Gesture recognition method based on 3D-CNN and convolutional LSTM

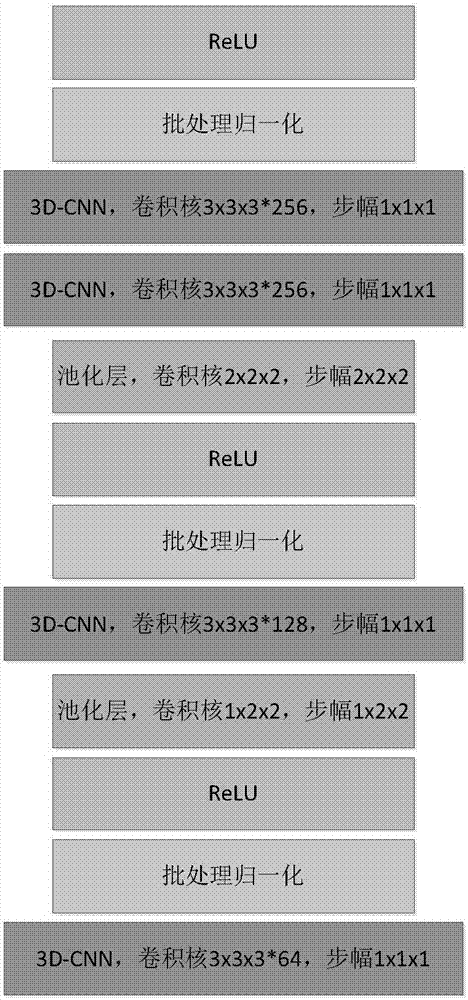

A gesture recognition technology based on a 3D-CNN, classified under character and pattern recognition, neural-network learning methods, and instruments. It addresses the problems of existing approaches: they extract only short-term spatiotemporal features, suffer from background interference, and have low recognition rates. The method reduces overfitting, reduces time consumption, and improves recognition accuracy.


Embodiment approach

[0080] 1. The network is trained on the public IsoGD gesture dataset, and the RGB and depth images in the training set are preprocessed as required by step S1. The IsoGD dataset contains 249 gesture classes, with 35,878 RGB and depth videos for training, 5,784 RGB and depth videos for validation, and 6,271 RGB and depth videos for testing. Each image frame is downsampled to a normalized size of 112 × 112 pixels. The RGB and depth videos in the training set are then length-normalized with a temporal jittering strategy, so that every gesture video has exactly 32 frames. For example, for a gesture video with 170 frames in total, the index of the first frame of the preprocessed video is Idx_1 = 170/32 × (1 + random(-1, 1)/2), where random(-1, 1) is a random value in [-1, 1]. If random(-1, 1) = 0.5, then Idx_1 = 170/32 × (1 + 0.5/2) = 5.3125 × 1.25 ≈ 6.64, which rounds to 7; that is, the first frame of the preprocessed video is the seventh frame of the original video, and the remaining frames are selected in the same way. It should be noted that uniform sampling with a temporal jitter strategy is used during tr...
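The temporal-jitter length normalization can be sketched in Python as below. The text only gives the formula for the first frame, so the per-frame rule Idx_i = N/32 × (i + random(-1, 1)/2), rounding to the nearest integer, and clamping into [1, N] are assumptions chosen to reproduce the worked example; the function names are hypothetical.

```python
import random
from typing import List, Optional


def jitter_frame_index(num_frames: int, target_len: int, i: int, r: float) -> int:
    """Return the 1-based source frame index for output frame i (1-based).

    Assumed generalization of the worked example:
        Idx_i = num_frames / target_len * (i + r / 2),  r in [-1, 1]
    The result is rounded to the nearest integer and clamped to [1, num_frames].
    """
    idx = round(num_frames / target_len * (i + r / 2))
    return min(max(idx, 1), num_frames)


def temporal_jitter_indices(
    num_frames: int, target_len: int = 32, rng: Optional[random.Random] = None
) -> List[int]:
    """Pick target_len source frame indices with an independent jitter per frame."""
    rng = rng or random.Random()
    return [
        jitter_frame_index(num_frames, target_len, i, rng.uniform(-1.0, 1.0))
        for i in range(1, target_len + 1)
    ]


# Worked example from the text: 170 frames, random(-1, 1) = 0.5 -> frame 7.
print(jitter_frame_index(170, 32, 1, 0.5))
print(temporal_jitter_indices(170, rng=random.Random(0)))
```

Because each jitter term shifts the sampling point by at most half a step, the selected indices stay non-decreasing, so the clip keeps its temporal order while still varying between training epochs.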
