405 patent results for "Multi modal fusion"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

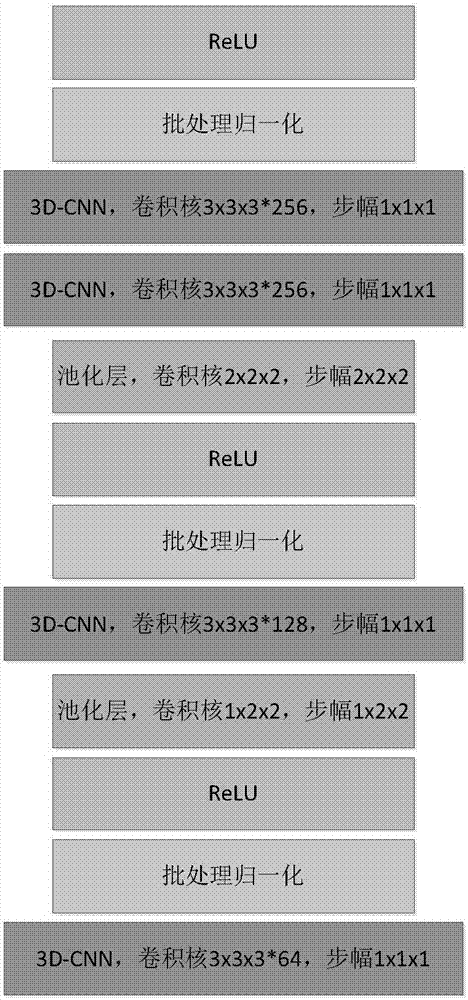

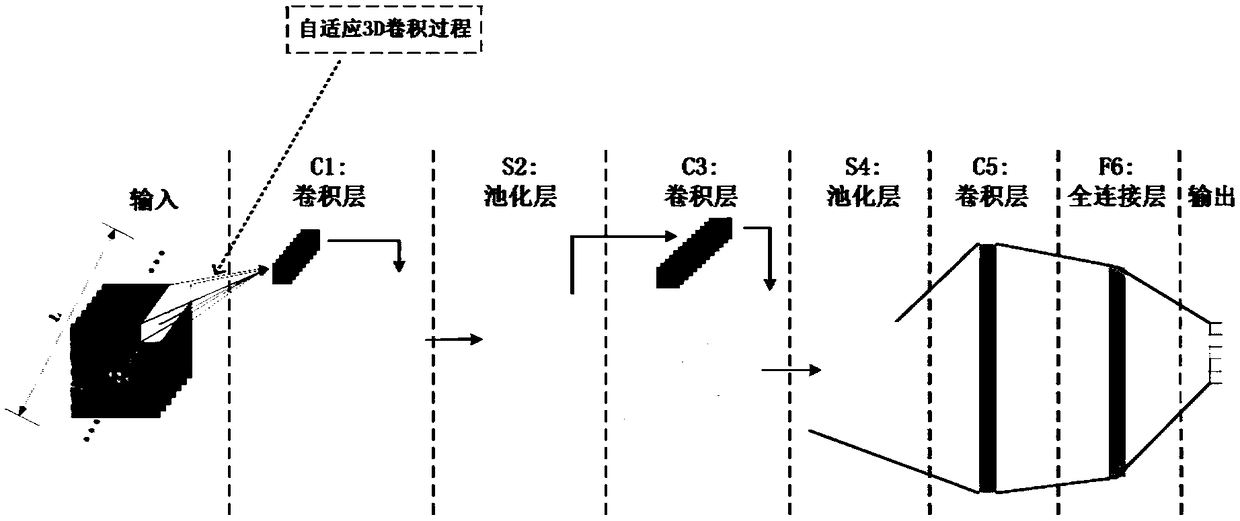

Gesture recognition method based on 3D-CNN and convolutional LSTM

Inactive | CN107451552A | Shorten the time; Reduce overfitting; Character and pattern recognition; Neural architectures; Pattern recognition; Short terms

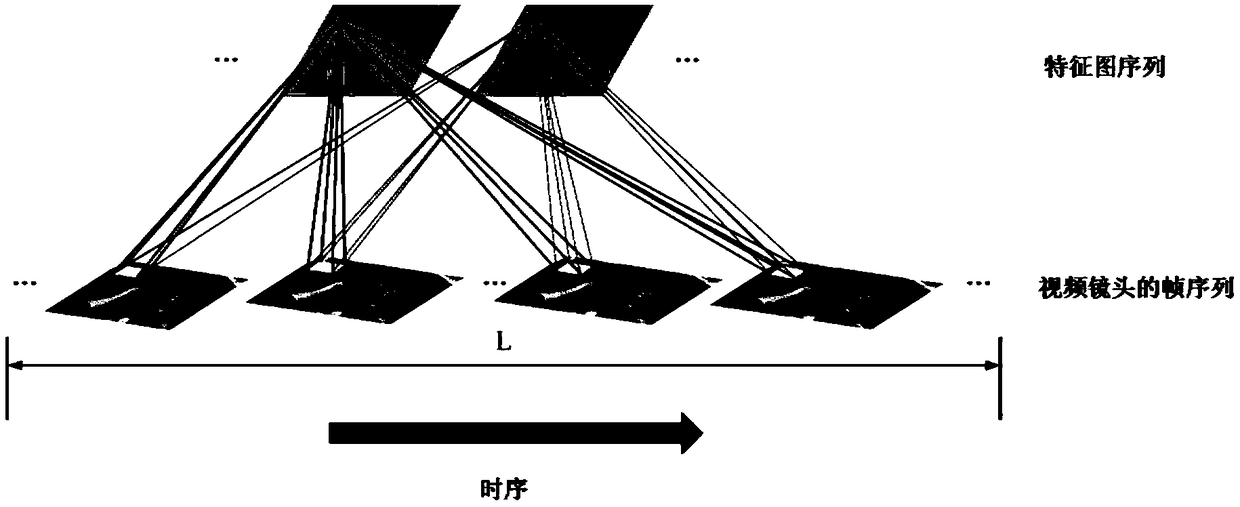

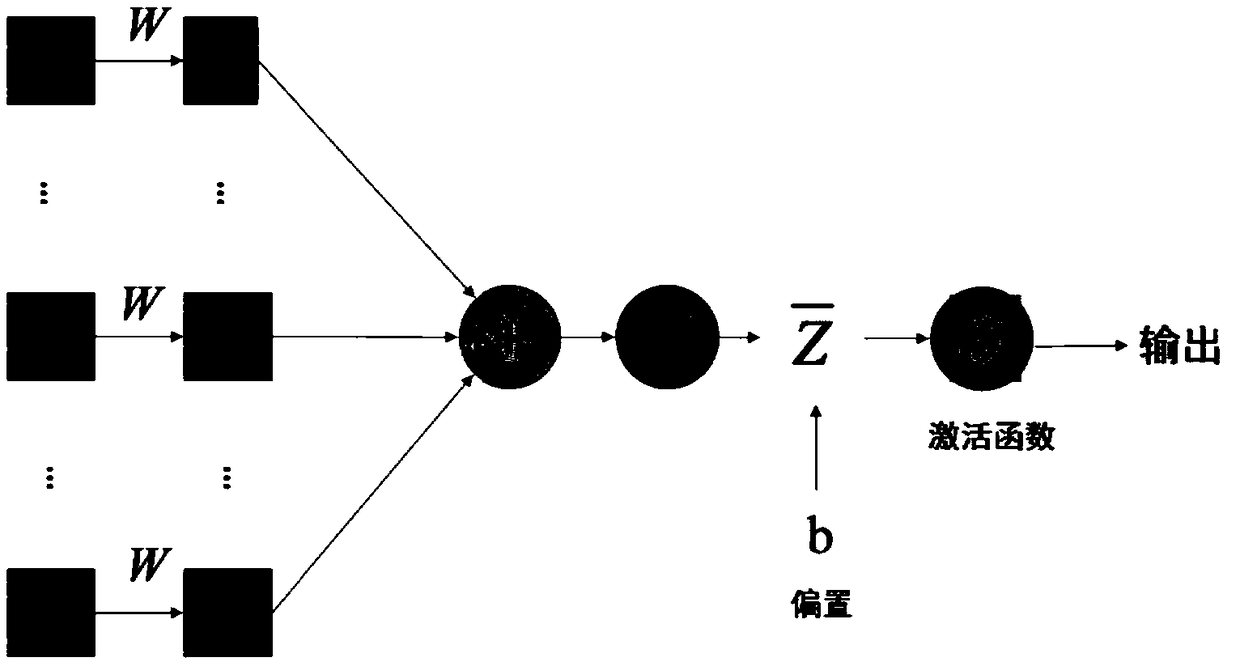

The invention discloses a gesture recognition method based on 3D-CNN and convolutional LSTM. The method comprises the following steps: the length of each video input to the 3D-CNN is normalized through a temporal jitter strategy; the normalized video is fed to the 3D-CNN to learn the short-term spatio-temporal features of a gesture; based on these short-term features, the long-term spatio-temporal features of the gesture are learned by a two-layer convolutional LSTM network, which eliminates the influence of complex backgrounds on gesture recognition; the dimension of the extracted long-term spatio-temporal features is reduced by a spatial pyramid pooling (SPP) layer, and the extracted multi-scale features are fed into the fully connected layer of the network; and finally, using a late multi-modal fusion method, the prediction results of the different networks are averaged and fused to obtain a final prediction score. By learning spatio-temporal features in this way, the invention combines short-term and long-term spatio-temporal features through different networks, trains the network with batch normalization, and improves the efficiency and accuracy of gesture recognition.

Owner:BEIJING UNION UNIVERSITY
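
As a rough illustration of two steps in the abstract above, the Python sketch below shows (a) clip-length normalization by jittered uniform sampling and (b) late fusion by averaging the class scores of separately trained networks. Both are assumptions: the patent's exact temporal jitter policy is not given, and `jitter_sample`, `late_fusion`, and the example scores are hypothetical.

```python
import random
from typing import List, Sequence


def jitter_sample(num_frames: int, target_len: int, rng: random.Random) -> List[int]:
    """Pick target_len frame indices from a clip with num_frames frames.

    The clip is split into target_len equal segments and one frame is drawn at a
    random offset inside each segment (temporal jitter). Clips shorter than
    target_len yield repeated indices, so every output has the same length.
    """
    step = num_frames / target_len
    indices = []
    for k in range(target_len):
        offset = rng.random() * step  # random position inside segment k
        indices.append(min(int(k * step + offset), num_frames - 1))
    return indices


def late_fusion(score_lists: Sequence[Sequence[float]]) -> List[float]:
    """Average the class-score vectors of independently trained networks."""
    n = len(score_lists)
    num_classes = len(score_lists[0])
    return [sum(scores[c] for scores in score_lists) / n for c in range(num_classes)]


if __name__ == "__main__":
    rng = random.Random(0)
    print(jitter_sample(num_frames=100, target_len=8, rng=rng))
    # Hypothetical scores from two networks for 3 gesture classes.
    fused = late_fusion([[0.7, 0.2, 0.1], [0.5, 0.4, 0.1]])
    print(fused, "-> class", fused.index(max(fused)))
```

Because late fusion only touches the final score vectors, each network can be trained on its own; an argmax over the averaged scores then gives the predicted class.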

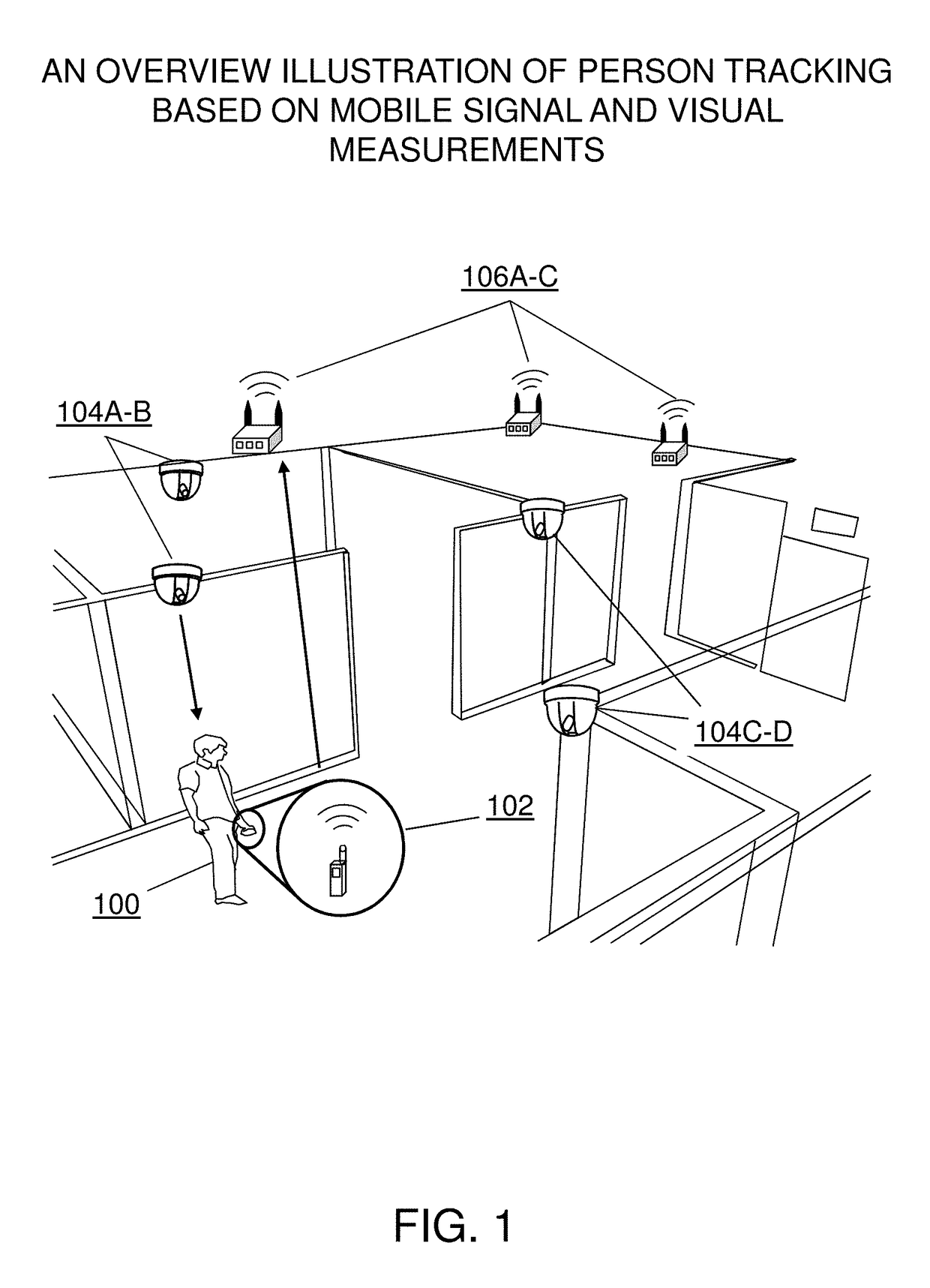

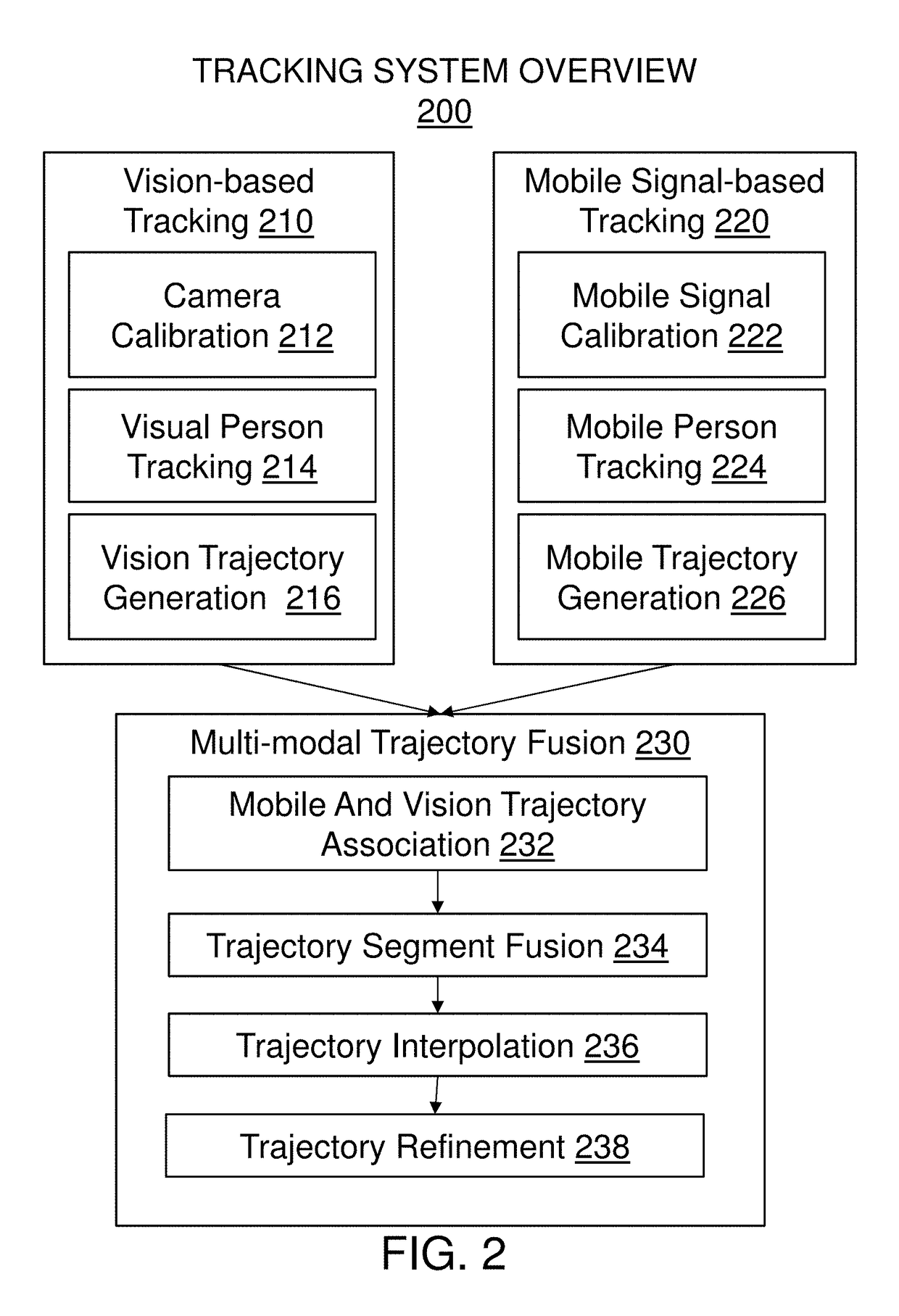

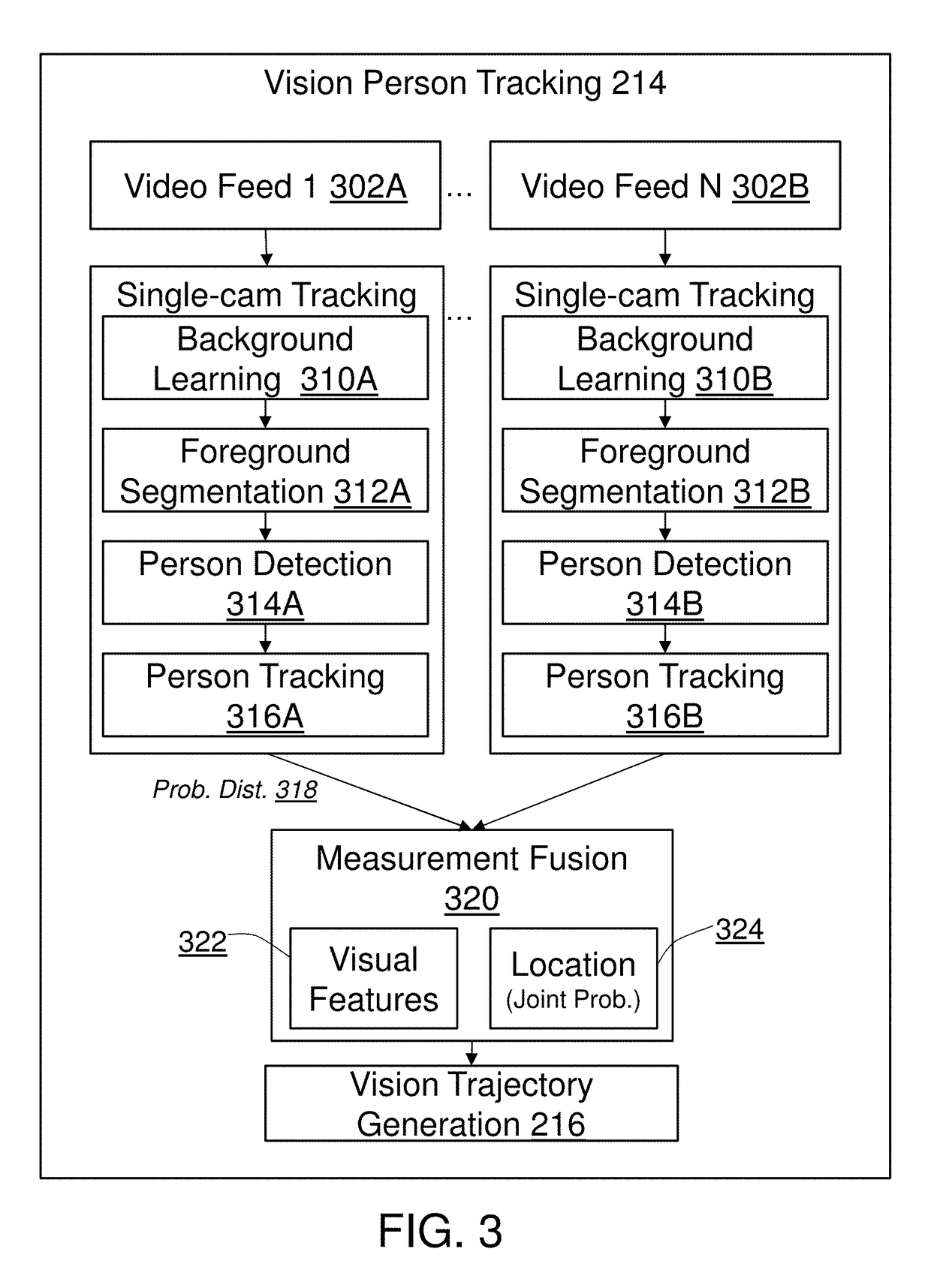

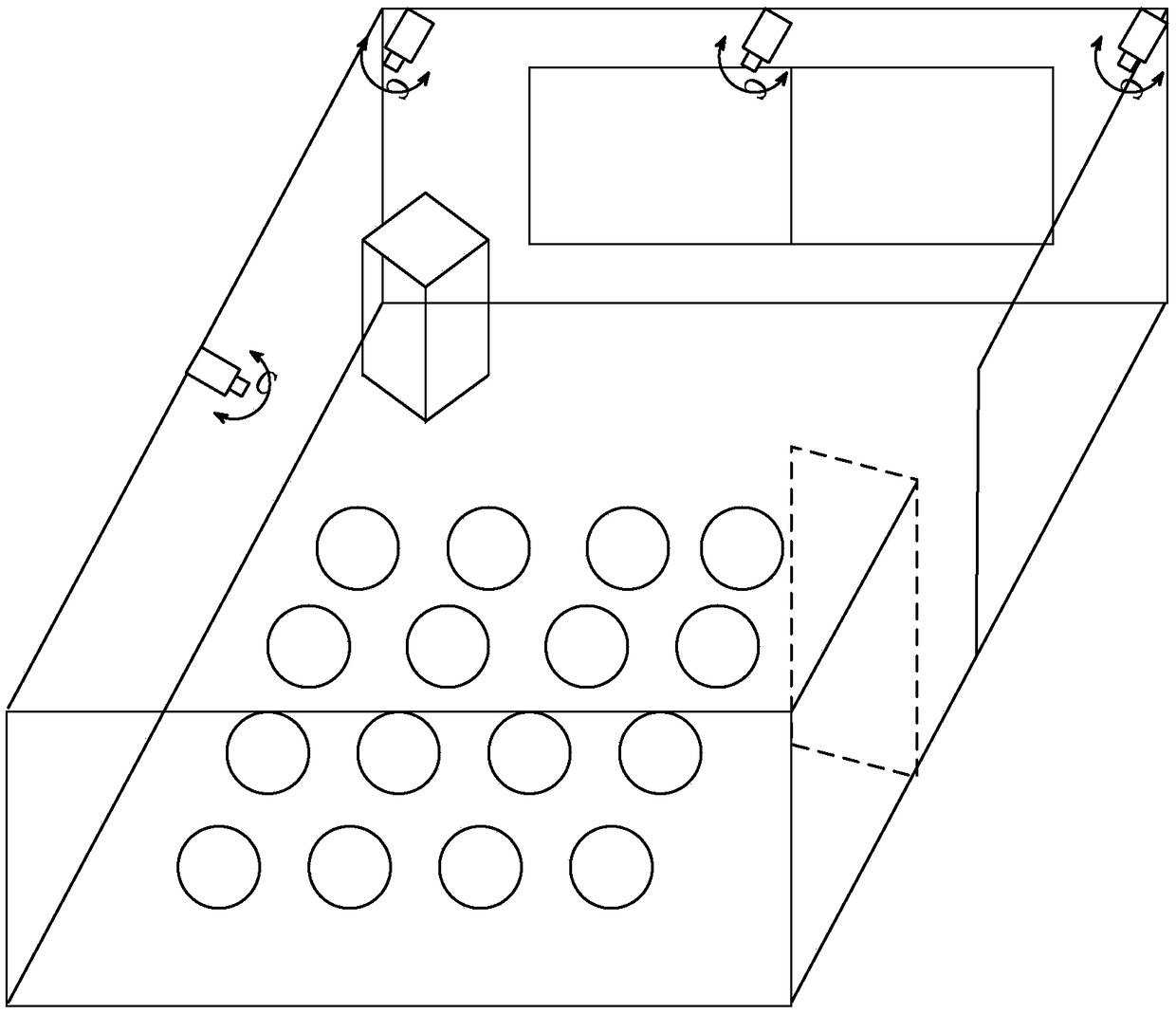

Method and system for in-store shopper behavior analysis with multi-modal sensor fusion

Active | US10217120B1 | Improve reliability; Improve balance; Television system details; Position fixation; Wi-Fi; Physical space

The present invention provides a comprehensive method for automatically and unobtrusively analyzing the in-store behavior of people visiting a physical space using multi-modal fusion of multiple types of sensors. The sensors may include cameras for capturing a plurality of images and mobile signal sensors for capturing a plurality of Wi-Fi signals. The invention integrates the input sensor measurements to reliably and persistently track people's physical attributes and detect their interactions with retail elements. The physical and contextual attributes collected from the processed shopper tracks include changes in motion dynamics triggered by implicit and explicit interactions with a retail element, and together they constitute the behavior information for each person's trip. The invention integrates point-of-sale transaction data with shopper behavior by finding the transaction data that corresponds to a shopper trajectory and fusing the two to generate a complete intermediate representation of the shopper trip, called a TripVector. Shopper behavior analyses are carried out on the extracted TripVector. The analyzed behavior information yields exemplary analyses comprising map generation to visualize the behavior and quantitative shopper metrics derived at multiple scales (e.g., store-wide and category-level), including path-to-purchase shopper metrics (e.g., traffic distribution, shopping action distribution, buying action distribution, conversion funnel) and category dynamics (e.g., dominant path, category correlation, category sequence). The invention includes a set of derived methods for different sensor configurations.

Owner:VIDEOMINING CORP
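
The transaction-to-trajectory association described above can be pictured with a small sketch. It assumes a single rectangular checkout zone and matches each transaction to the shopper whose track passed through that zone closest in time; the zone, the 30-second tolerance, and every name here (including this `TripVector` class) are hypothetical and not the patent's definitions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence, Tuple

# (timestamp in seconds, x in metres, y in metres)
Point = Tuple[float, float, float]
# Checkout area as (x_min, y_min, x_max, y_max); hypothetical layout.
Zone = Tuple[float, float, float, float]


@dataclass
class Trajectory:
    shopper_id: str
    points: List[Point]


@dataclass
class Transaction:
    timestamp: float
    items: List[str]


@dataclass
class TripVector:
    """Hypothetical stand-in for a TripVector: the track plus its purchases."""
    shopper_id: str
    points: List[Point]
    items: List[str] = field(default_factory=list)


def in_zone(x: float, y: float, zone: Zone) -> bool:
    x0, y0, x1, y1 = zone
    return x0 <= x <= x1 and y0 <= y <= y1


def associate(trajectories: Sequence[Trajectory],
              transactions: Sequence[Transaction],
              checkout: Zone,
              max_gap_s: float = 30.0) -> Dict[str, TripVector]:
    """Attach each transaction to the shopper seen at checkout closest in time."""
    trips = {t.shopper_id: TripVector(t.shopper_id, list(t.points)) for t in trajectories}
    for tx in transactions:
        best_id, best_gap = None, float("inf")
        for traj in trajectories:
            for ts, x, y in traj.points:
                gap = abs(ts - tx.timestamp)
                if in_zone(x, y, checkout) and gap <= max_gap_s and gap < best_gap:
                    best_id, best_gap = traj.shopper_id, gap
        if best_id is not None:  # unmatched transactions are dropped in this sketch
            trips[best_id].items.extend(tx.items)
    return trips
```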

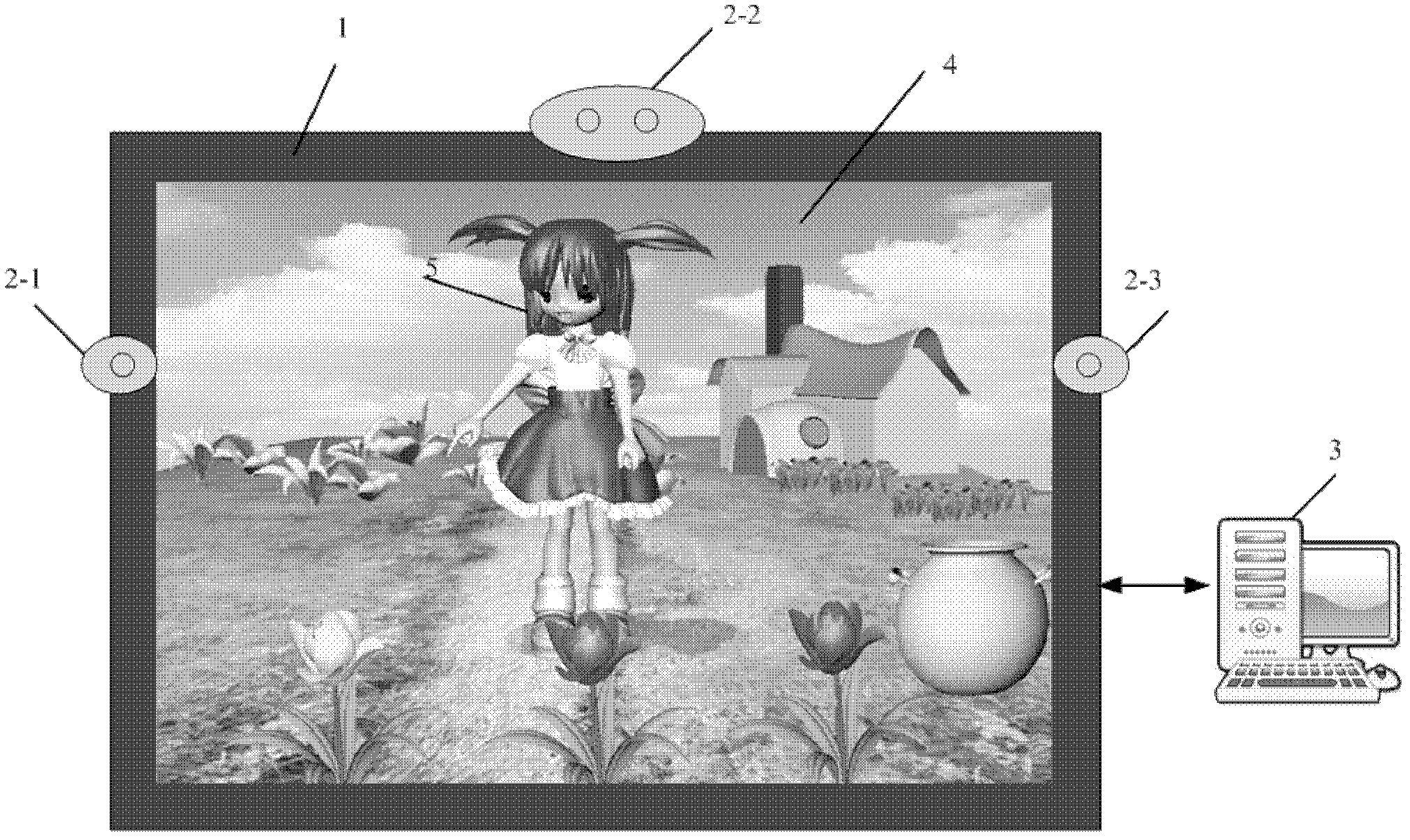

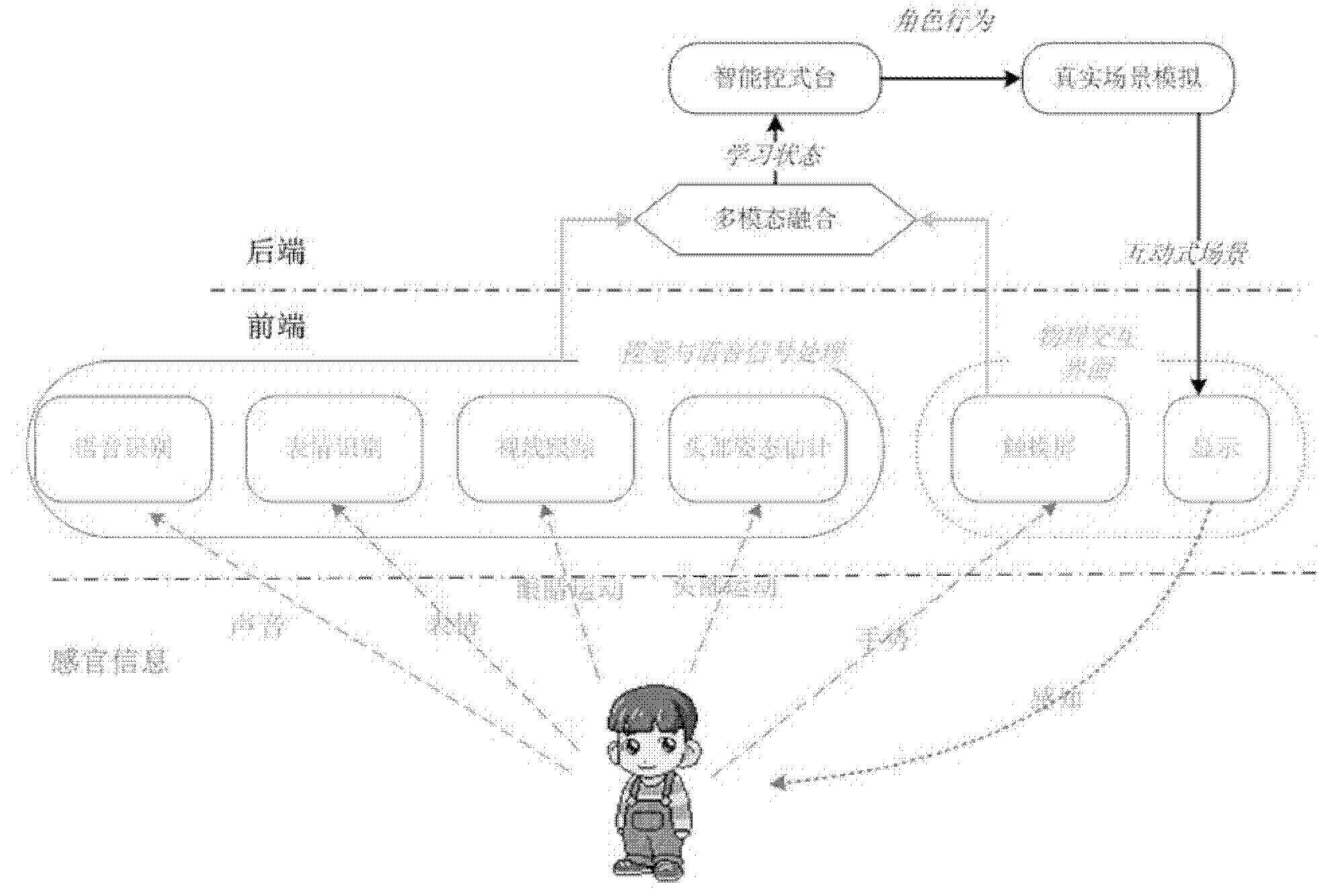

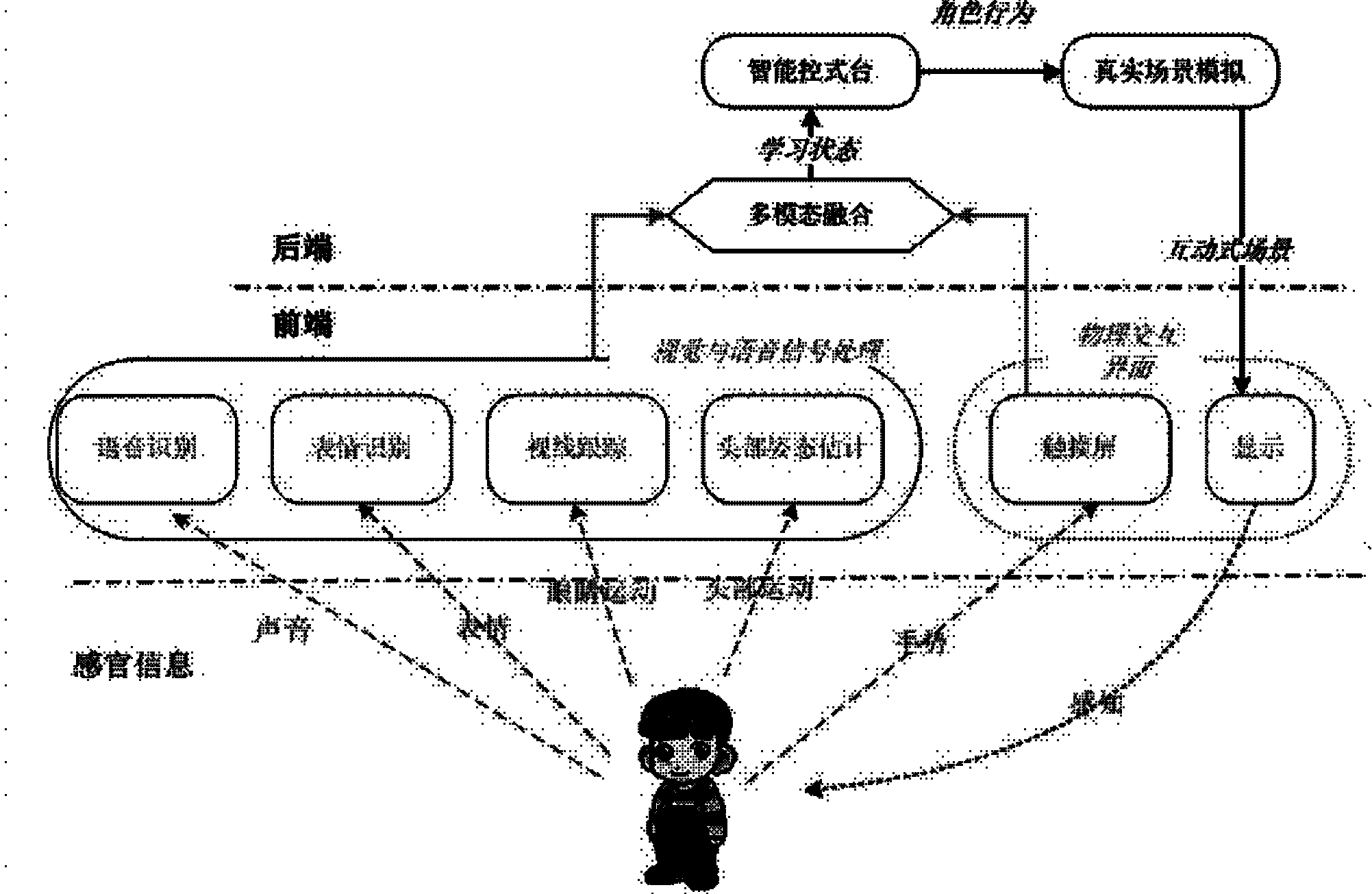

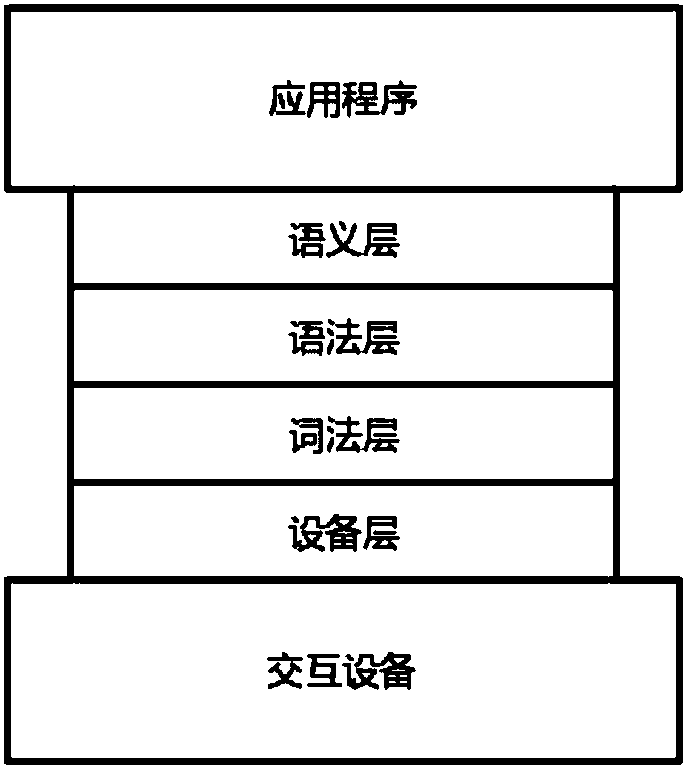

Human-machine interaction multi-mode early intervention system for improving social interaction capacity of autistic children

Active | CN102354349A | Improve social interaction skills; Improve social interaction; Input/output for user-computer interaction; Graph reading; USB; Visual perception

The invention discloses a human-machine interaction multi-mode early intervention system for improving the social interaction capacity of autistic children. The system comprises a multi-point touch screen, a computer, and three cameras mounted at the left and right sides of the touch screen and above its middle; each camera is provided with a microphone and connected to the computer through a USB (Universal Serial Bus) interface. The system has six basic modules: a visual signal processing module, a voice signal processing module, a physical interactive interface module, a multi-mode fusion module, an intelligent control console module, and a real-scene simulation module. These modules combine computer vision, speech recognition, behavior recognition, intelligent agent, and virtual reality technologies to support and improve the social interaction capacity of autistic children. Several children were tracked in the learning environment for half a year: the social interaction capacity of most of them improved markedly, and the others also made some progress in interaction capacity.

Owner:HUAZHONG NORMAL UNIV

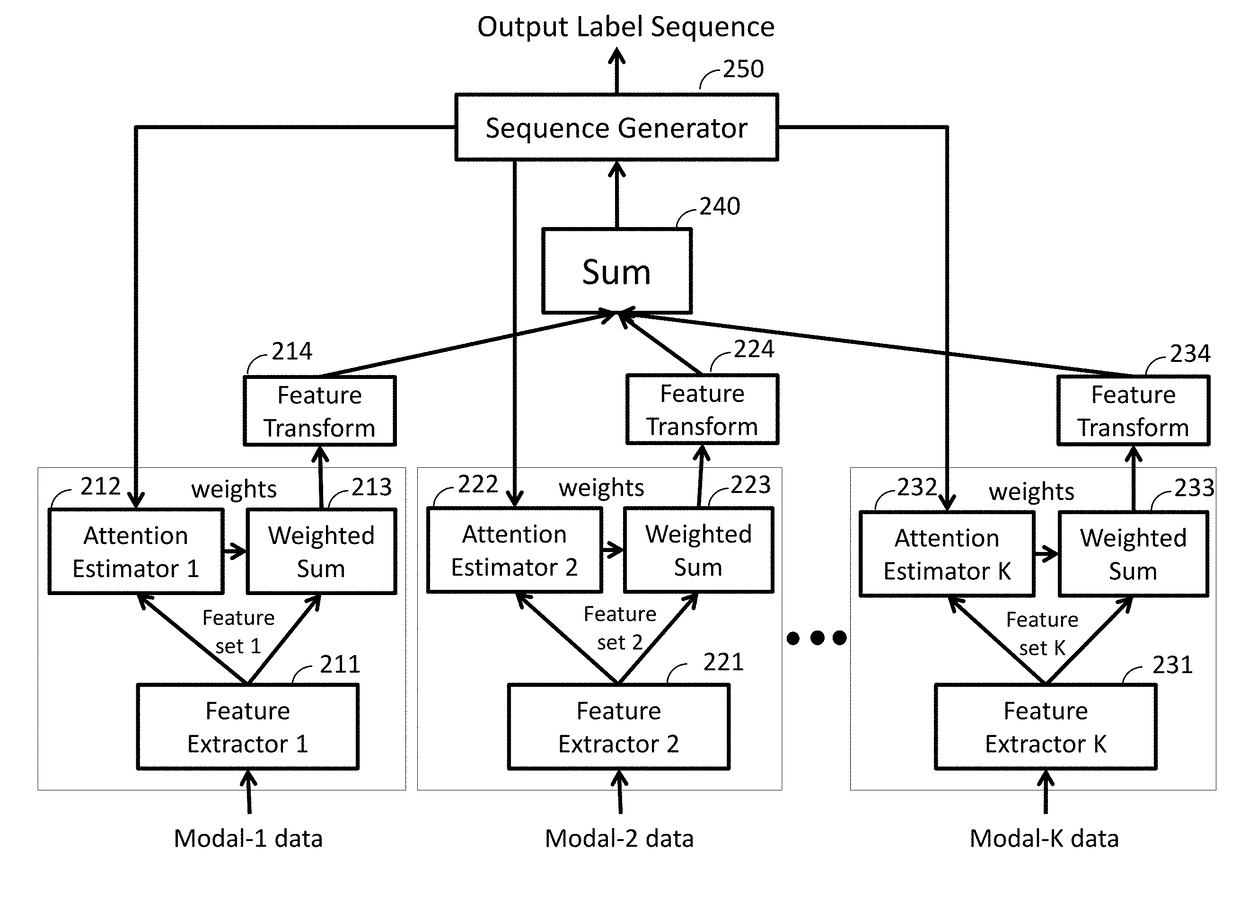

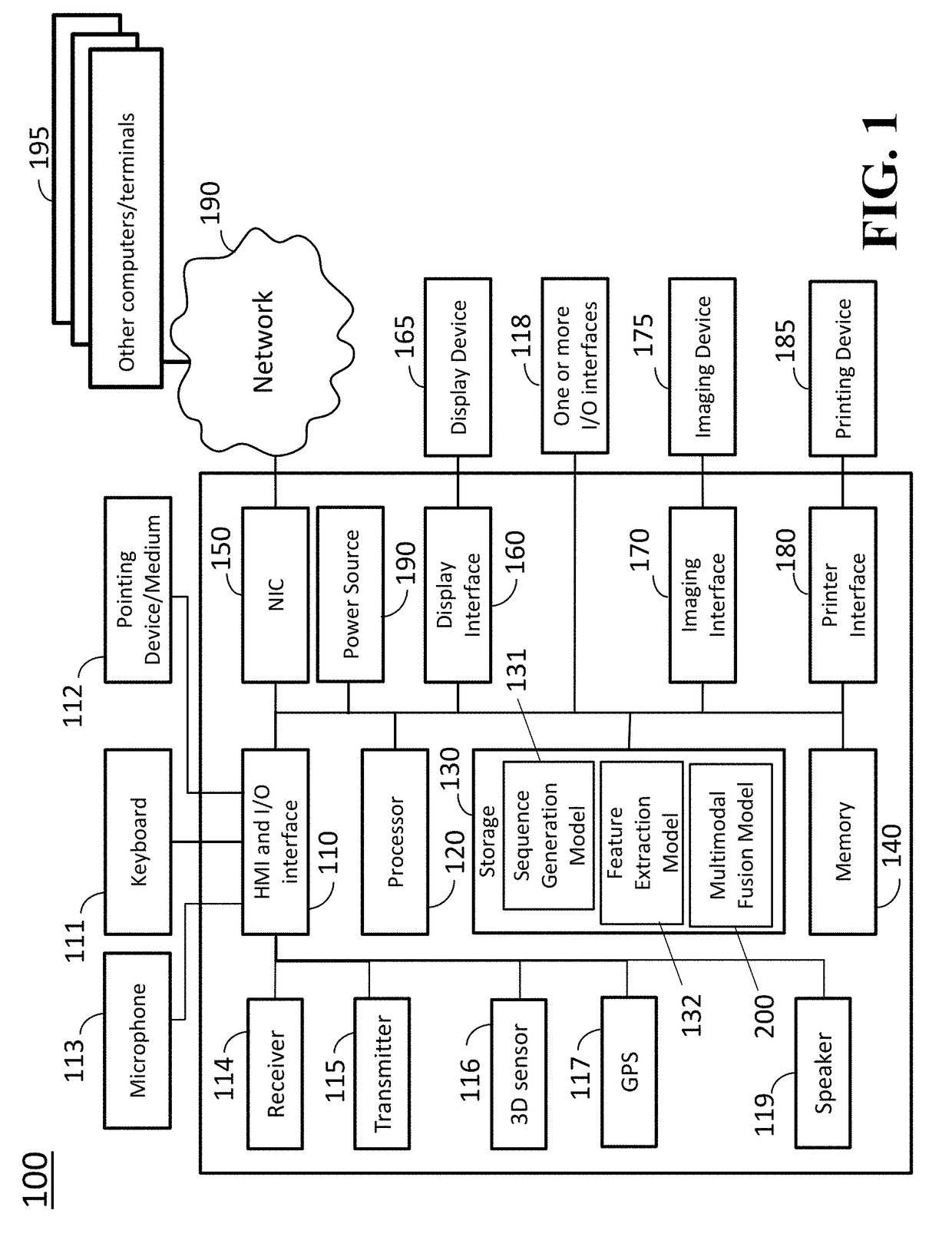

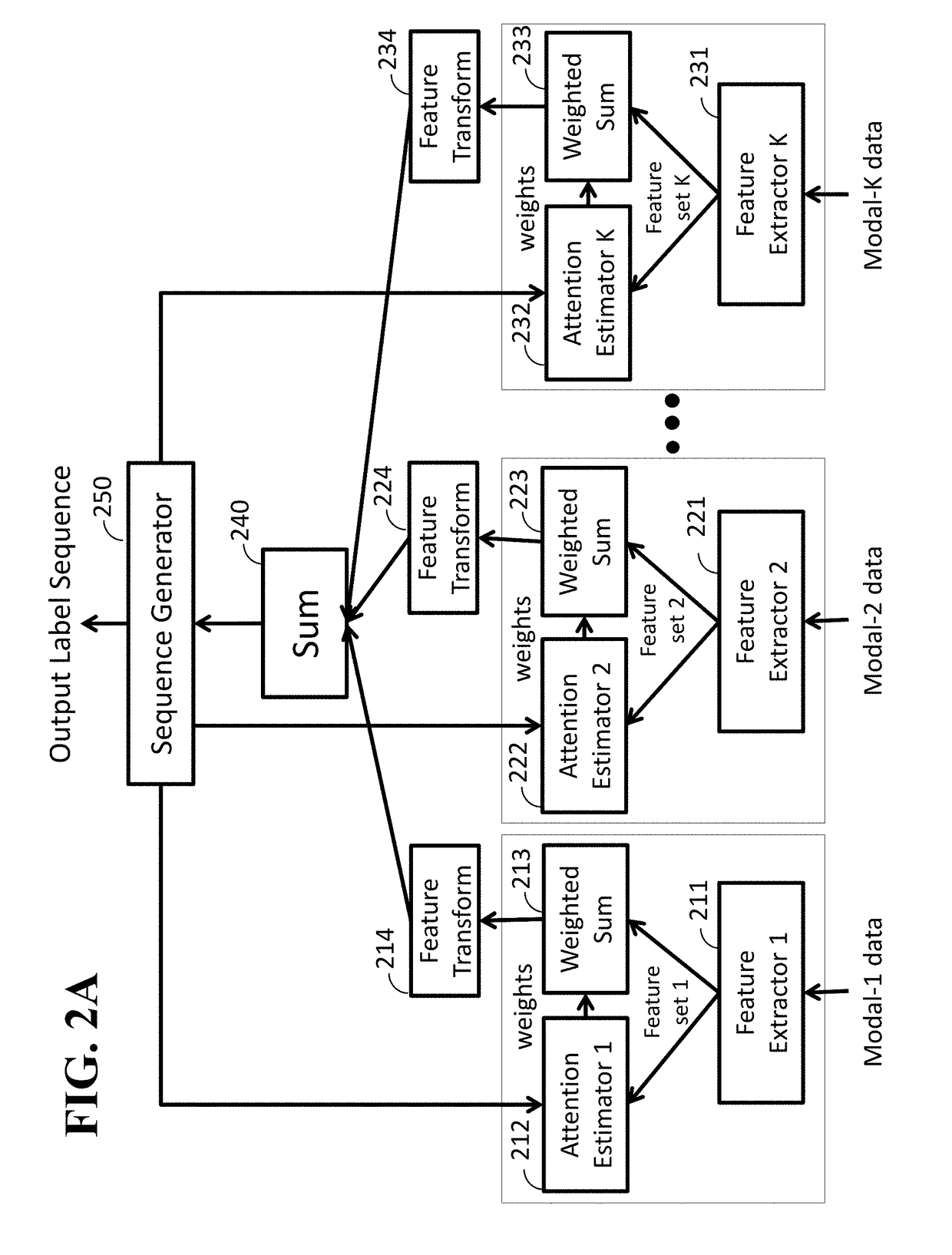

Method and System for Multi-Modal Fusion Model

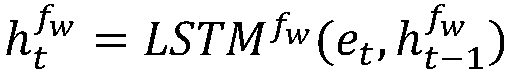

A system for generating a word sequence includes one or more processors connected to a memory and one or more storage devices storing instructions that cause operations including: receiving first and second input vectors; extracting first and second feature vectors; estimating a first set of weights and a second set of weights; calculating a first content vector from the first set of weights and the first feature vectors, and calculating a second content vector from the second set of weights and the second feature vectors; transforming the first content vector into a first modal content vector having a predetermined dimension and transforming the second content vector into a second modal content vector having the predetermined dimension; estimating a set of modal attention weights; generating a weighted content vector having the predetermined dimension from the set of modal attention weights and the first and second modal content vectors; and generating a predicted word using the sequence generator.

Owner:MITSUBISHI ELECTRIC RES LAB INC
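
The content-vector and modal-attention operations listed above can be pictured with a small pure-Python sketch. The scoring rule (a dot product between each projected modal content vector and a decoder state `state`), the projection matrices, and all function names are assumptions for illustration; the abstract does not say how the weights are estimated, and word generation is omitted.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs: Sequence[float]) -> Vector:
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def content_vector(features: Sequence[Vector], weights: Sequence[float]) -> Vector:
    """Weighted sum of one modality's feature vectors (weights assumed normalized)."""
    dim = len(features[0])
    return [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]


def modal_attention_fusion(content_vectors: Sequence[Vector],
                           projections: Sequence[Matrix],
                           state: Vector) -> Tuple[Vector, Vector]:
    """Project each modality to a shared dimension, then weight by attention on `state`."""
    projected = [matvec(p, c) for p, c in zip(projections, content_vectors)]
    betas = softmax([sum(s * x for s, x in zip(state, v)) for v in projected])
    dim = len(state)
    fused = [sum(b * v[i] for b, v in zip(betas, projected)) for i in range(dim)]
    return betas, fused


if __name__ == "__main__":
    image = content_vector([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], [0.5, 0.5])
    audio = [2.0, 0.0]
    w_image = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # projects 3 -> 2
    w_audio = [[1.0, 0.0], [0.0, 1.0]]            # projects 2 -> 2
    print(modal_attention_fusion([image, audio], [w_image, w_audio], state=[10.0, 0.0]))
```

Projecting every modality to the same predetermined dimension is what makes the final weighted sum well defined even when the raw feature sizes differ.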

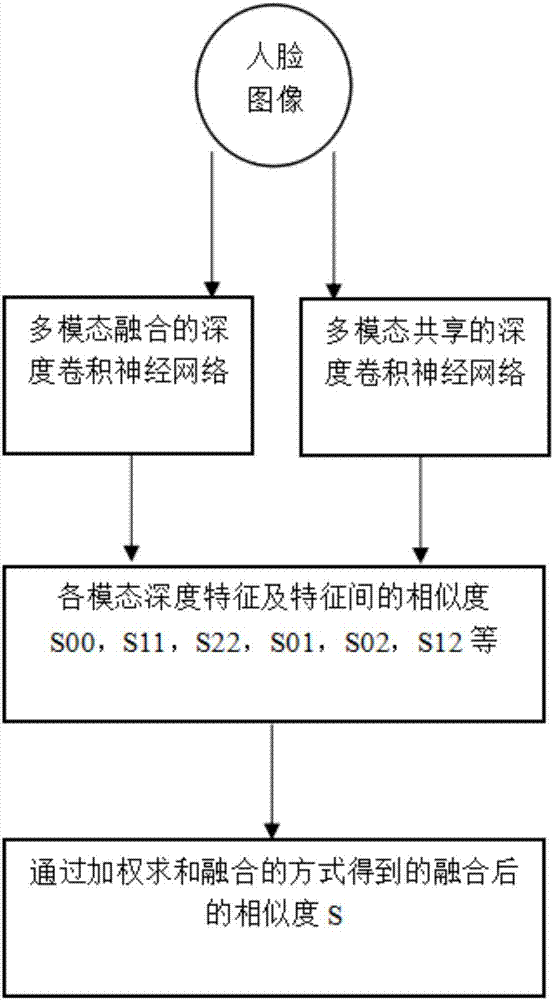

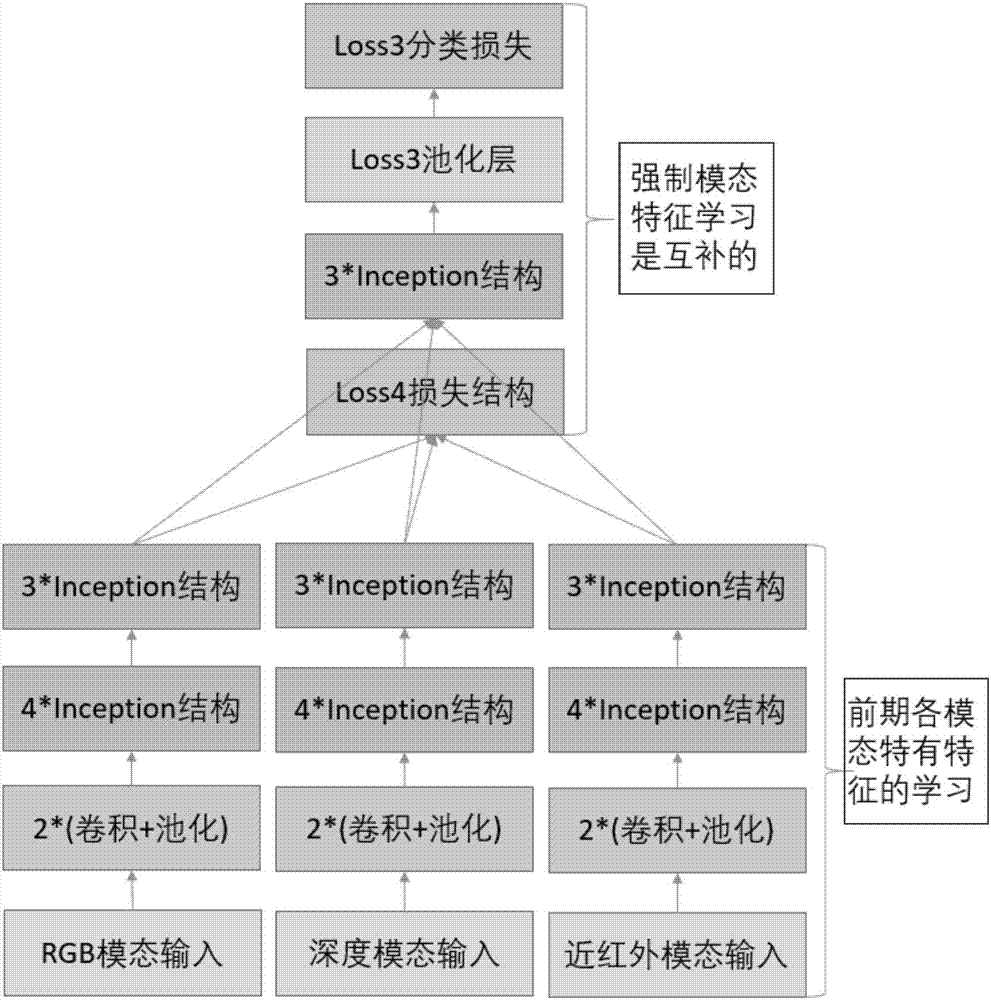

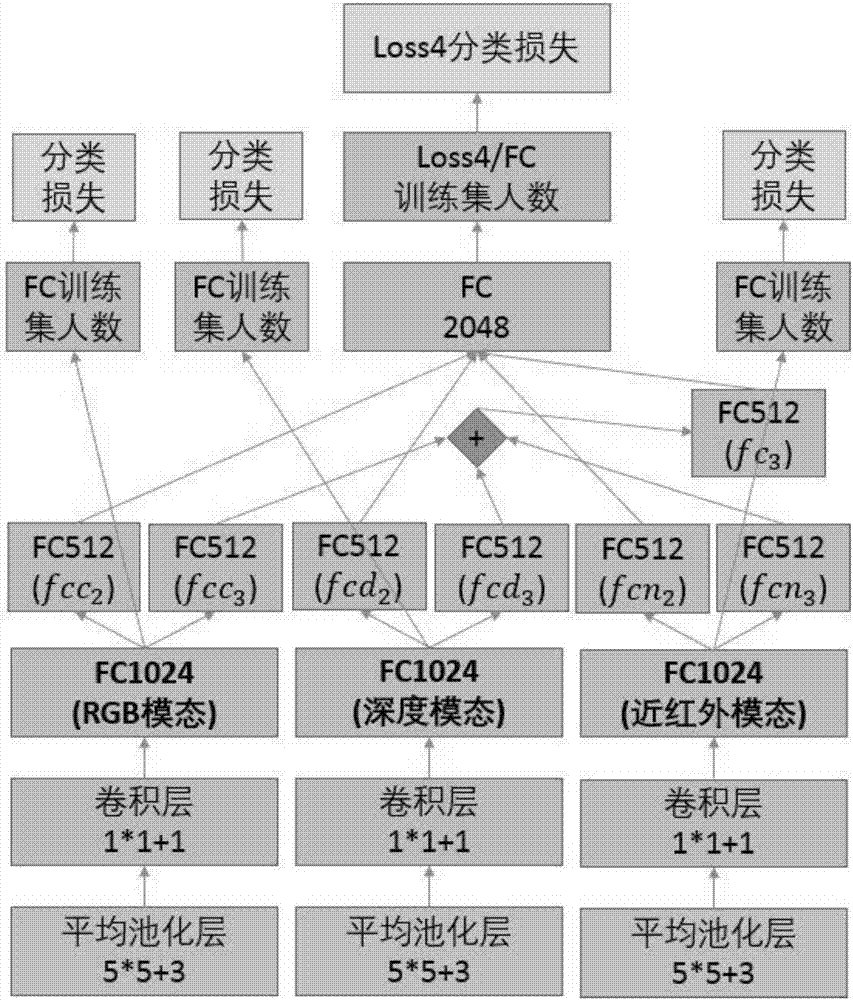

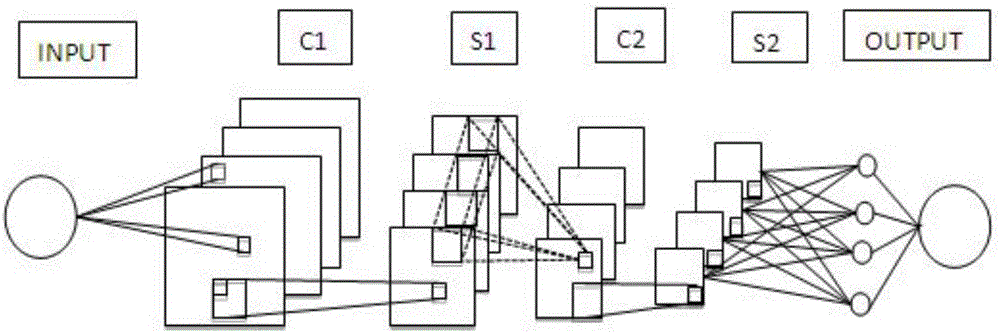

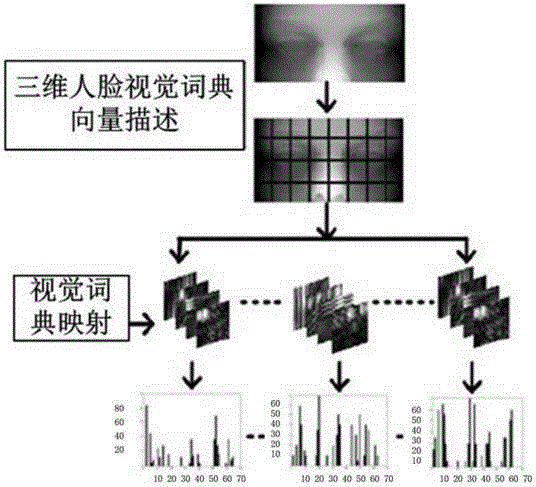

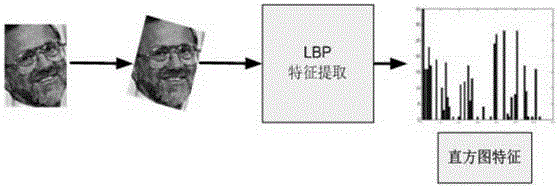

Multi-modal human face recognition method based on deep learning

Active | CN106909905A | Improve performance; Take advantage of; Character and pattern recognition; Face detection; Modal data

The invention discloses a multi-modal face recognition method based on deep learning. The method comprises the steps of: (1) performing face detection and alignment on an RGB face image; (2) designing a multi-modal fusion deep convolutional neural network structure N1 and training the N1 network; (3) designing a multi-modal shared deep convolutional neural network structure N2 and training the N2 network; (4) extracting features; (5) calculating similarity; and (6) fusing the similarities. By collecting various types of face modal data and combining the advantages of each modality with a fusion strategy, the multi-modal system overcomes some inherent shortcomings of single-modal systems, makes efficient use of the different types of modal information, effectively improves the performance of the face recognition system, and makes face recognition faster and more accurate.

Owner:SEETATECH BEIJING TECH CO LTD
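
Step (6) can be illustrated by a weighted combination of per-modality cosine similarities. The modality names (`rgb`, `depth`), the weights, and the choice of cosine similarity are assumptions; the abstract does not state which similarity measure or fusion weights the method uses.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def fused_similarity(probe: Dict[str, Sequence[float]],
                     gallery: Dict[str, Sequence[float]],
                     weights: Dict[str, float]) -> float:
    """Weighted average of per-modality cosine similarities."""
    total = sum(weights.values())
    return sum(w * cosine(probe[m], gallery[m]) for m, w in weights.items()) / total


if __name__ == "__main__":
    # Hypothetical feature vectors for one probe face and one enrolled face.
    probe = {"rgb": [0.9, 0.1, 0.3], "depth": [0.2, 0.8]}
    gallery = {"rgb": [0.8, 0.2, 0.3], "depth": [0.1, 0.9]}
    print(fused_similarity(probe, gallery, {"rgb": 0.5, "depth": 0.5}))
```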

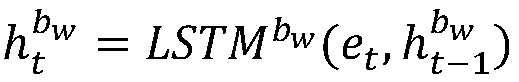

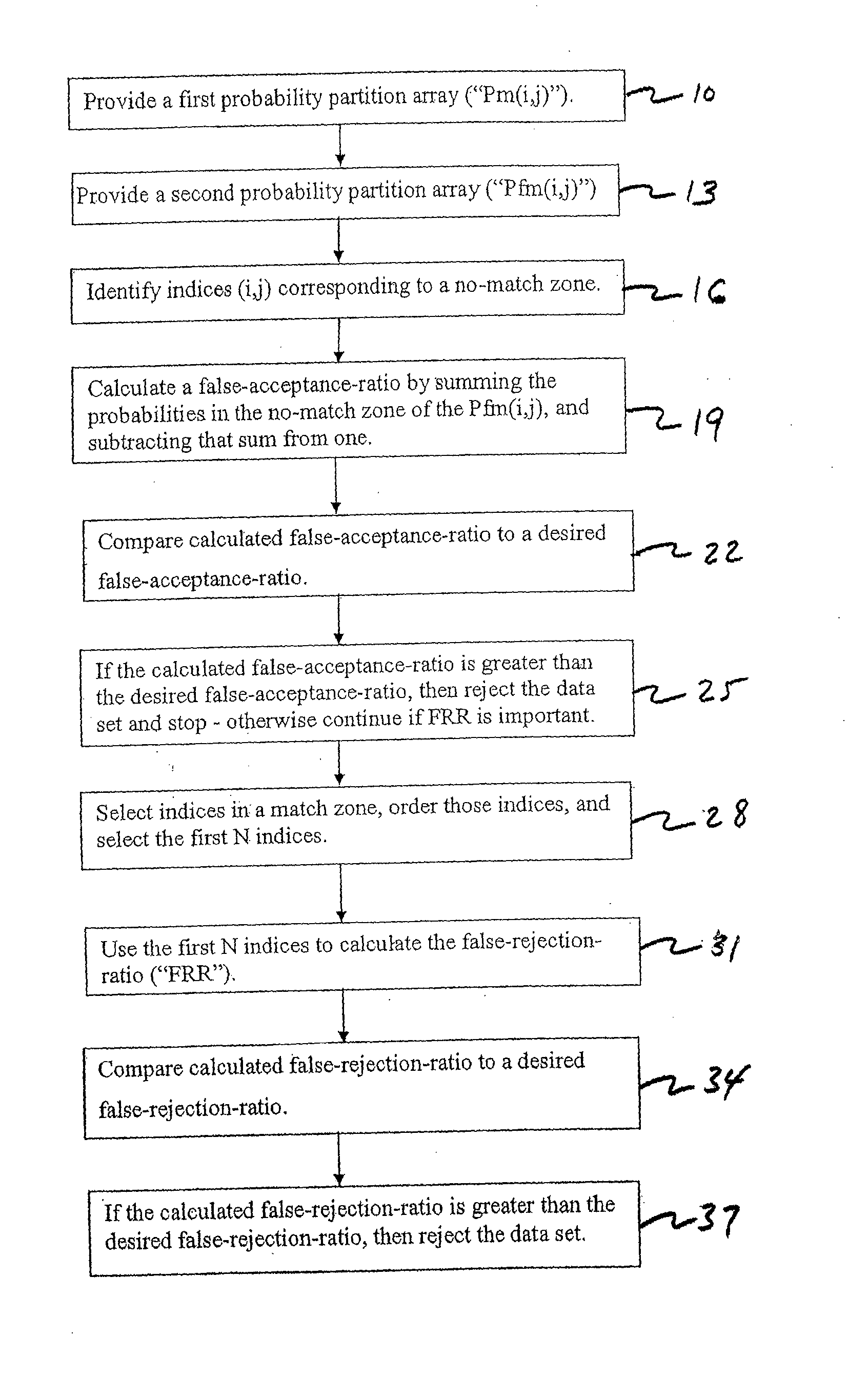

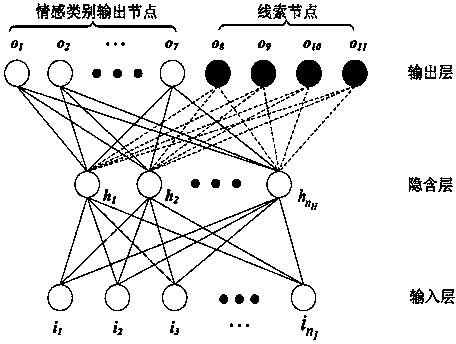

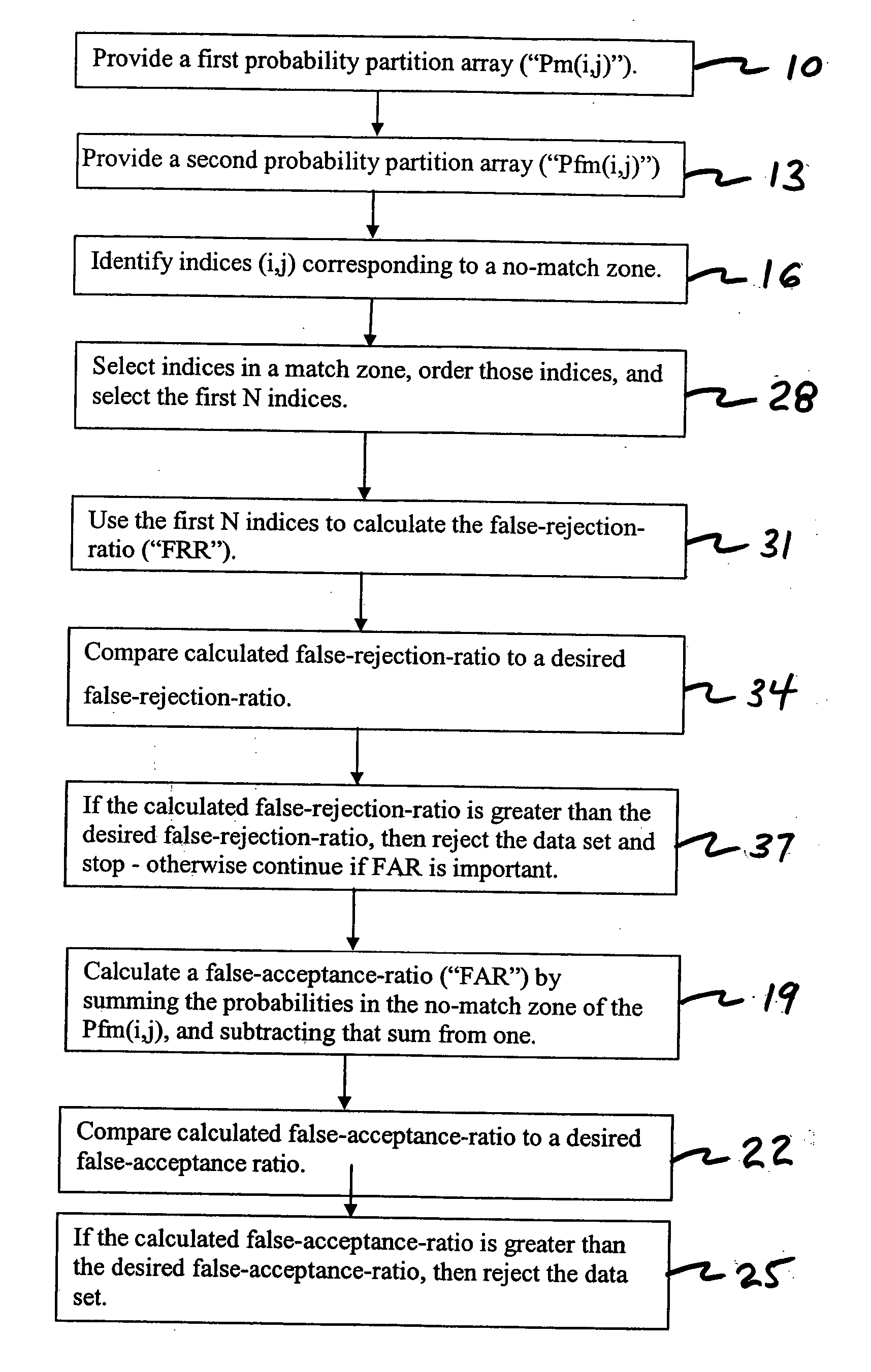

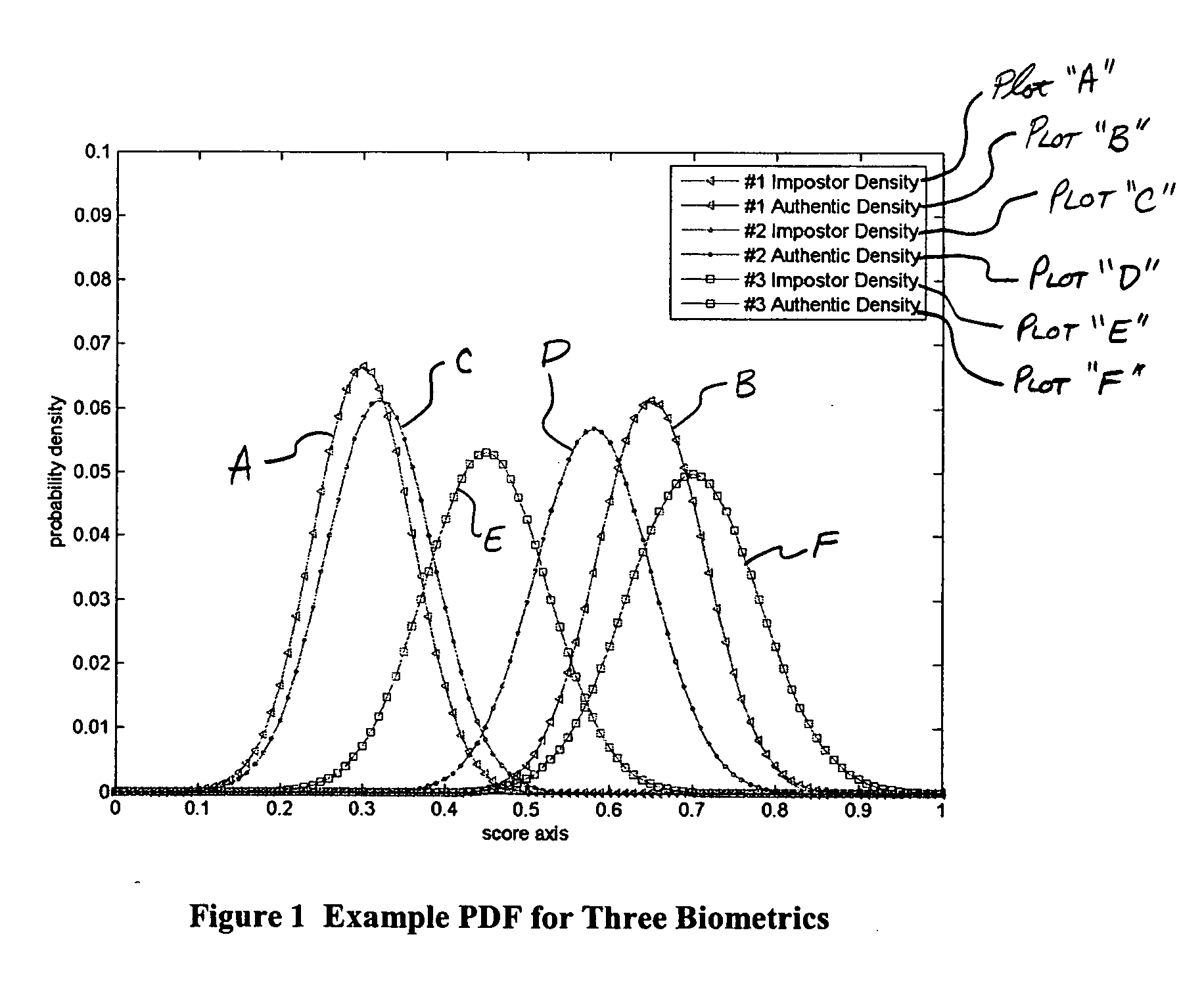

Multimodal Fusion Decision Logic System For Determining Whether To Accept A Specimen

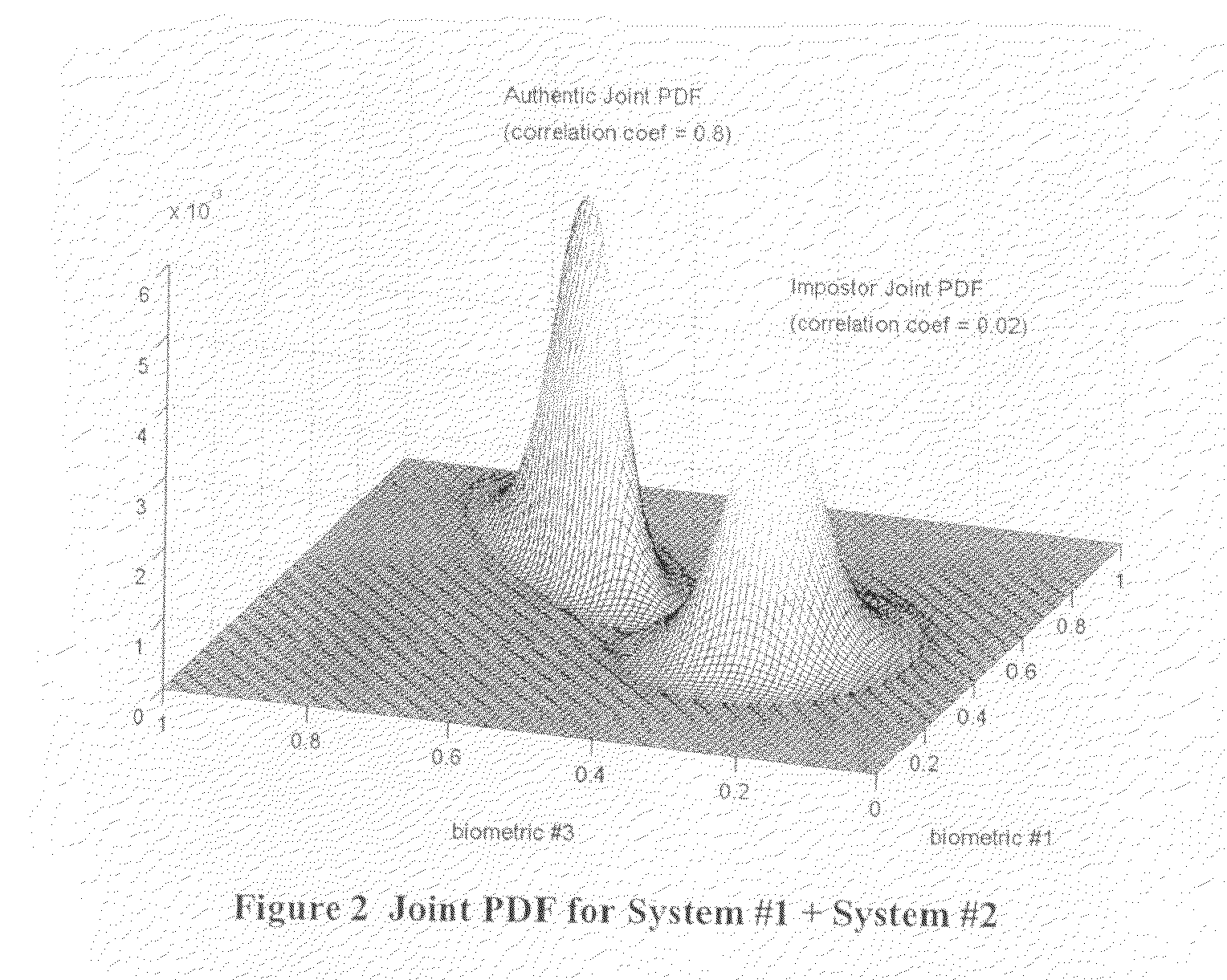

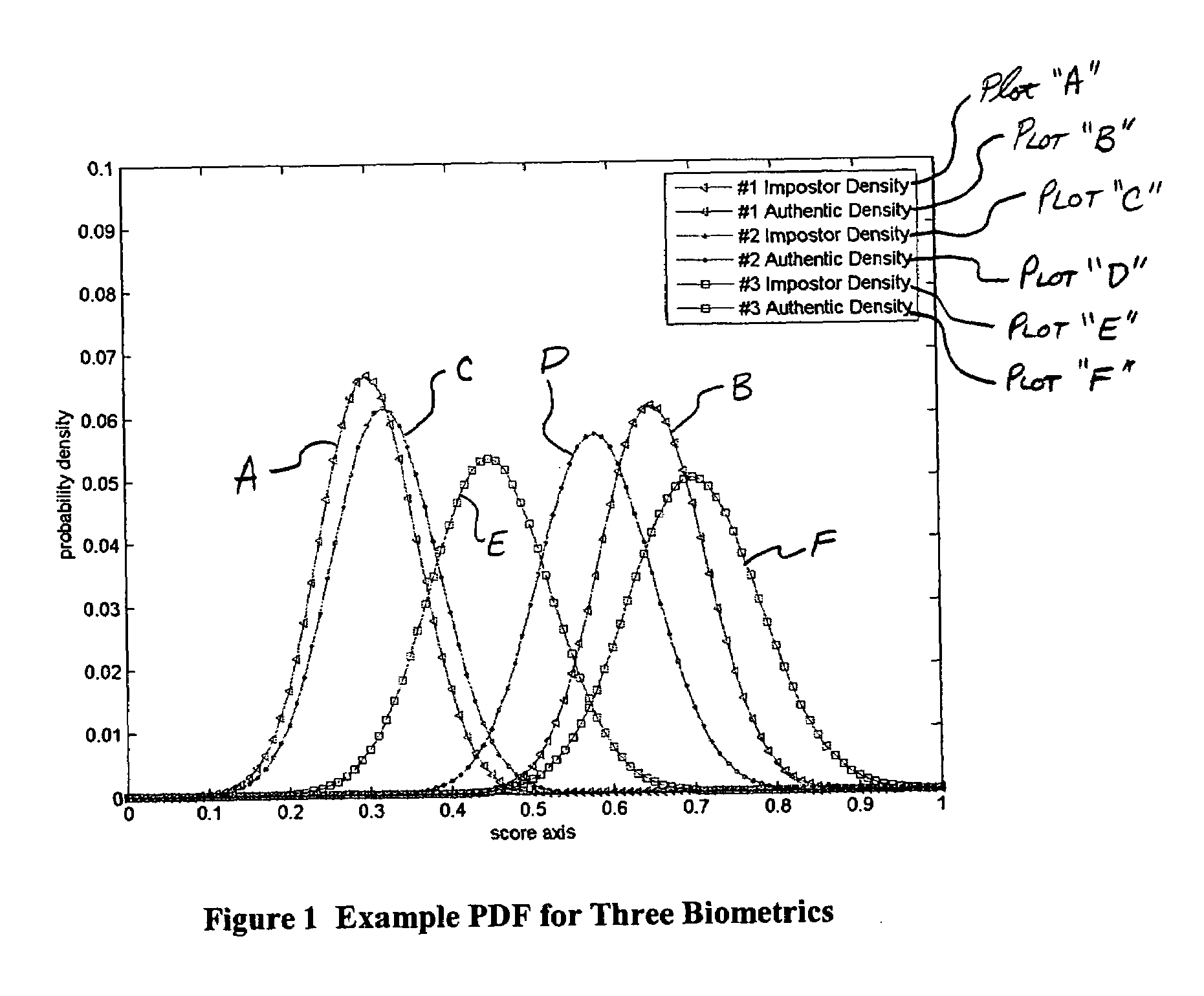

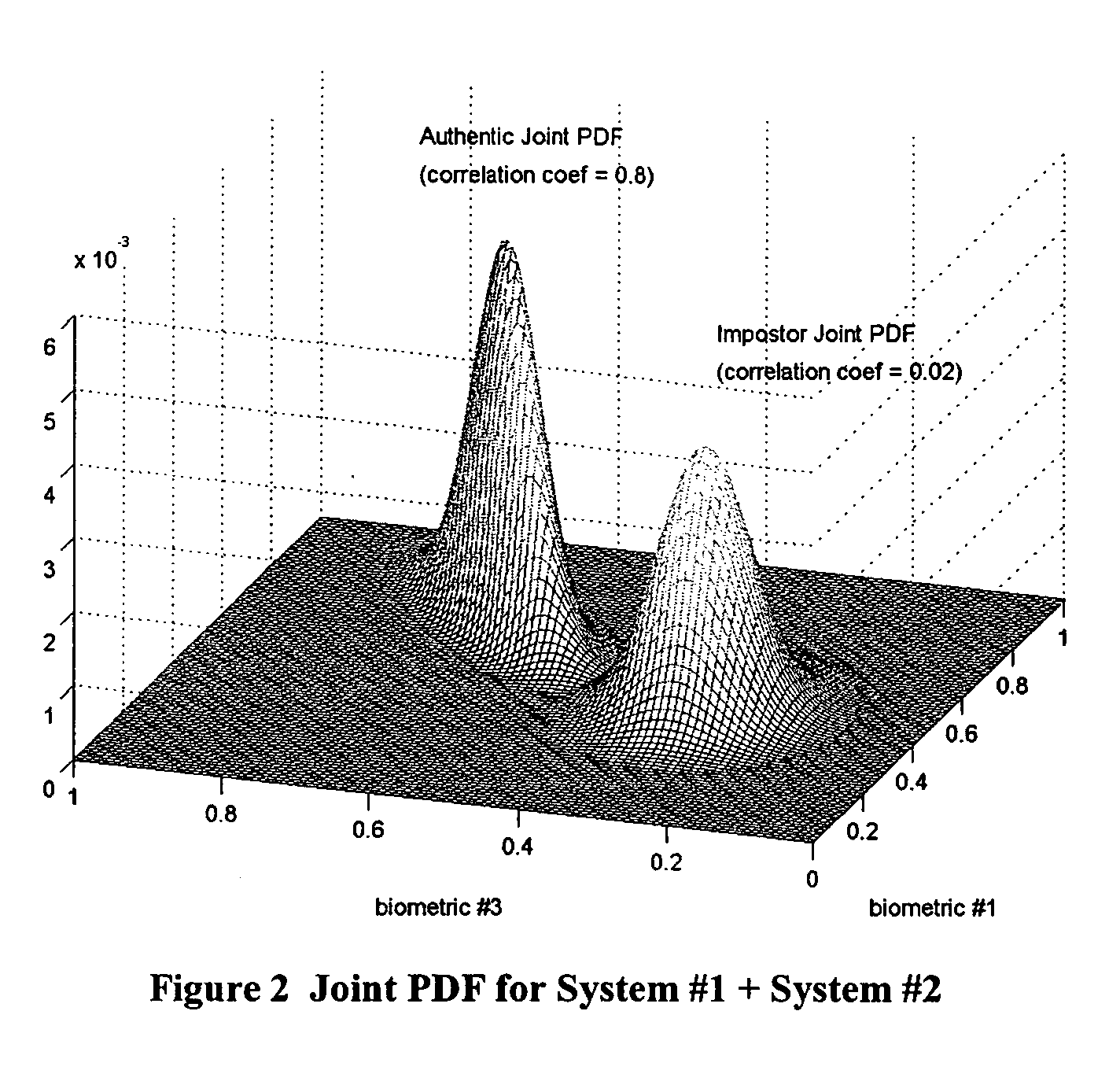

Inactive | US20090171623A1 | Mathematical models; Digital data processing details; Data set; False acceptance ratio

The present invention includes a method of deciding whether a data set is acceptable for making a decision. A first probability partition array and a second probability partition array may be provided. One or both of the probability partition arrays may be a Copula model. A no-match zone may be established and used to calculate a false-acceptance-rate ("FAR") and/or a false-rejection-rate ("FRR") for the data set. The FAR and/or the FRR may be compared to desired rates. Based on the comparison, the data set may be either accepted or rejected. The invention may also be embodied as a computer readable memory device for executing the methods.

Owner:QUALCOMM INC
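
A much-simplified sketch of the accept/reject logic described above: scores from two modalities form a pair, a hypothetical rectangular no-match zone rejects pairs that are low on both, and FAR and FRR are estimated from labelled impostor and genuine samples before being compared with desired rates. This replaces the probability partition arrays and the Copula model with plain empirical counts, so it illustrates the idea rather than the patented method.

```python
from typing import Callable, Sequence, Tuple

# One score per modality, e.g. (fingerprint score, face score); hypothetical.
ScorePair = Tuple[float, float]
Zone = Callable[[ScorePair], bool]


def error_rates(genuine: Sequence[ScorePair], impostor: Sequence[ScorePair],
                in_no_match_zone: Zone) -> Tuple[float, float]:
    """FAR: impostor pairs outside the no-match zone (falsely accepted).
    FRR: genuine pairs inside the no-match zone (falsely rejected)."""
    far = sum(not in_no_match_zone(s) for s in impostor) / len(impostor)
    frr = sum(in_no_match_zone(s) for s in genuine) / len(genuine)
    return far, frr


def accept_data_set(genuine: Sequence[ScorePair], impostor: Sequence[ScorePair],
                    in_no_match_zone: Zone, desired_far: float, desired_frr: float) -> bool:
    """Accept the data set only if both error rates meet the desired rates."""
    far, frr = error_rates(genuine, impostor, in_no_match_zone)
    return far <= desired_far and frr <= desired_frr


def low_on_both(s: ScorePair) -> bool:
    """Hypothetical no-match zone: both modality scores below 0.5."""
    return s[0] < 0.5 and s[1] < 0.5
```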

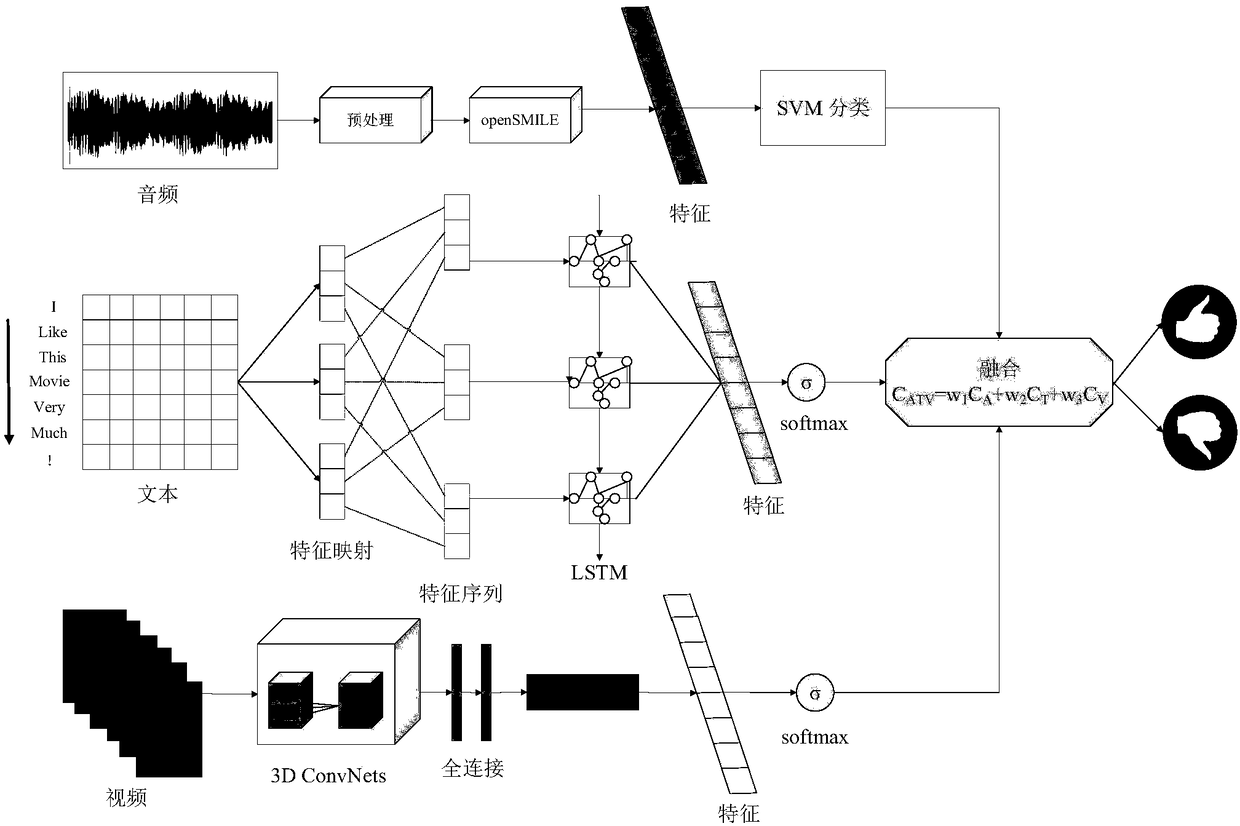

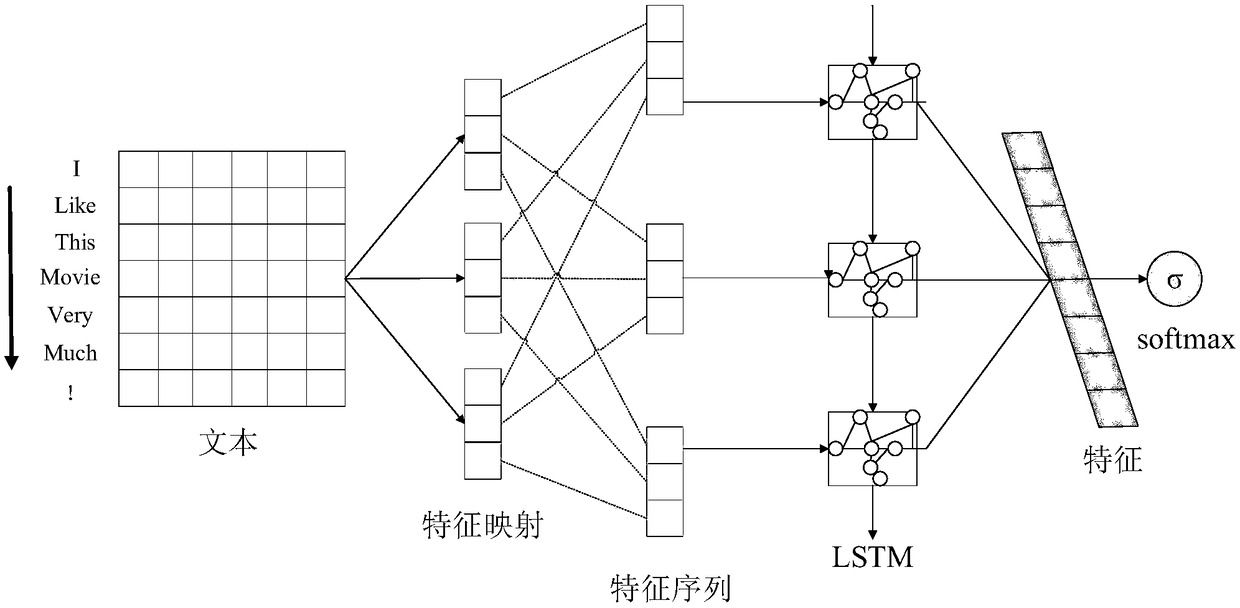

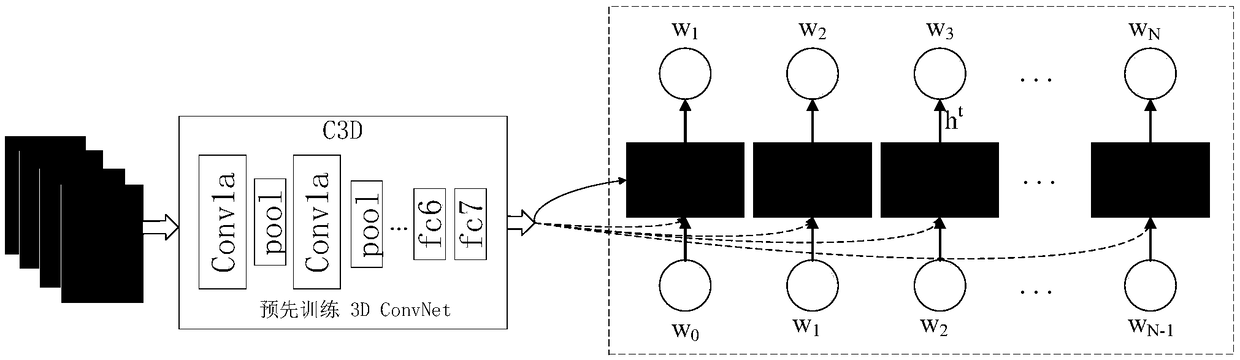

A socio-emotional classification method based on multimodal fusion

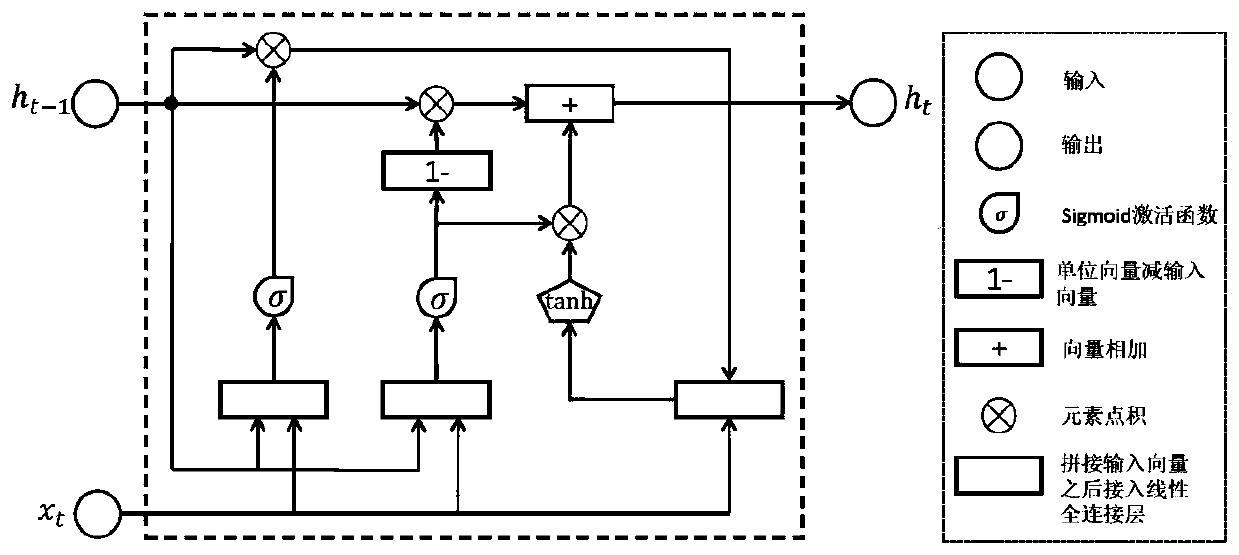

Inactive | CN109508375A | Efficient extraction; Improve accuracy; Semantic analysis; Neural architectures; Short-term memory; Classification methods

The invention provides a social emotion classification method based on multimodal fusion, which involves information in audio, visual, and text form. Most affective computing research extracts affective information by analyzing only single-modality information, ignoring the relationships between information sources. The invention proposes a 3D CNN ConvLSTM model for video information, which establishes spatio-temporal information for emotion recognition through a cascade of a three-dimensional convolutional neural network (C3D) and a convolutional long short-term memory recurrent neural network (ConvLSTM). For text, a CNN-RNN hybrid model is used to classify text emotion. The heterogeneous vision, audio, and text information is fused at the decision level. The deep spatio-temporal features learned by the invention effectively model visual appearance and motion information, and after fusion with the text and audio information, the accuracy of emotion analysis is effectively improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
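
Decision-level fusion, as described above, combines the outputs of the per-modality classifiers rather than their features. A minimal sketch, assuming each classifier emits class probabilities and that fusion is a weighted sum; the label set and weights are hypothetical, since the abstract does not specify the fusion rule.

```python
from typing import Dict, List, Sequence, Tuple


def decision_level_fusion(probs: Dict[str, Sequence[float]],
                          weights: Dict[str, float],
                          labels: Sequence[str]) -> Tuple[str, List[float]]:
    """Weighted sum of per-modality class probabilities; returns (label, fused scores)."""
    fused = [0.0] * len(labels)
    for modality, p in probs.items():
        w = weights.get(modality, 1.0)  # modalities without a weight count with weight 1
        for i, v in enumerate(p):
            fused[i] += w * v
    best = max(range(len(labels)), key=fused.__getitem__)
    return labels[best], fused


if __name__ == "__main__":
    labels = ["negative", "neutral", "positive"]  # hypothetical label set
    probs = {"video": [0.2, 0.3, 0.5], "audio": [0.6, 0.3, 0.1], "text": [0.1, 0.2, 0.7]}
    print(decision_level_fusion(probs, {"video": 1.0, "audio": 1.0, "text": 1.0}, labels))
```

Because only output probabilities are combined, each modality's model (C3D plus ConvLSTM for video, CNN-RNN for text, and so on) can keep its own architecture and feature space.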

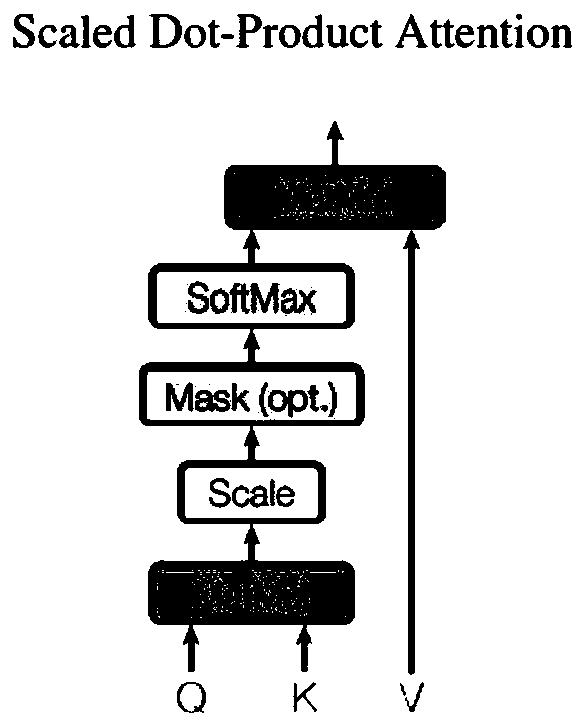

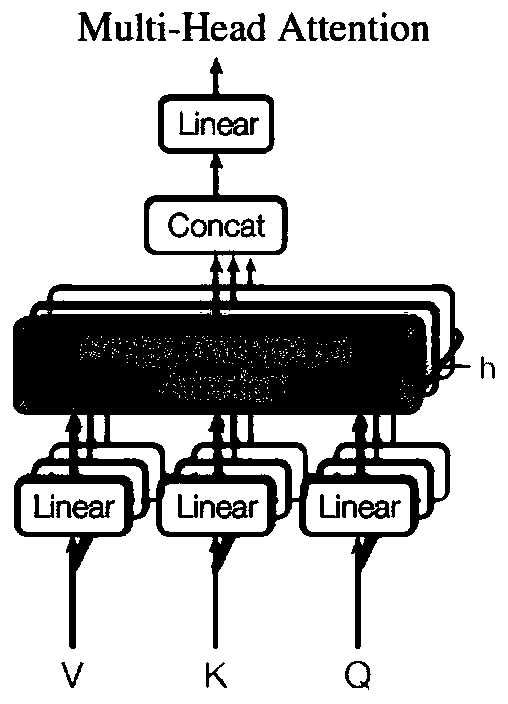

Visual question and answer fusion enhancement method based on multi-modal fusion

Active | CN110377710A | Improve accuracy; High expression; Digital data information retrieval; Character and pattern recognition; Attention model; Visually impaired

The invention discloses a visual question answering fusion enhancement method based on multi-modal fusion. The method comprises the following steps: 1, constructing a temporal model with a GRU structure to learn the feature representation of the question, and using the bottom-up attention outputs extracted by Faster R-CNN as the image feature representation; 2, performing multi-modal reasoning based on the Transformer attention model, which carries out multi-modal fusion on the image-question-answer triplet and establishes an inference relation; and 3, providing different reasoning processes and result outputs for different implicit relationships, and performing label distribution regression learning on the result outputs to determine the answer. Because answers are obtained from specific images and questions, the method can be applied directly in services for blind people, helping blind or visually impaired people better perceive their surroundings; it can also be applied in image retrieval systems to improve the accuracy and diversity of image retrieval.

Owner:HANGZHOU DIANZI UNIV
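
The Transformer attention in step 2 rests on scaled dot-product attention. A minimal single-head sketch, assuming a question embedding (for example from the GRU) acts as the query and Faster R-CNN region features act as keys and values; learned projections, multiple heads, and the answer triplet are omitted, so this is a simplification rather than the patented model.

```python
import math
from typing import List, Sequence, Tuple


def scaled_dot_product_attention(query: Sequence[float],
                                 keys: Sequence[Sequence[float]],
                                 values: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Return (attention weights over regions, attended value vector)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, out


if __name__ == "__main__":
    question = [1.0, 0.0]                           # hypothetical question embedding
    regions = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical region features
    print(scaled_dot_product_attention(question, regions, regions))
```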

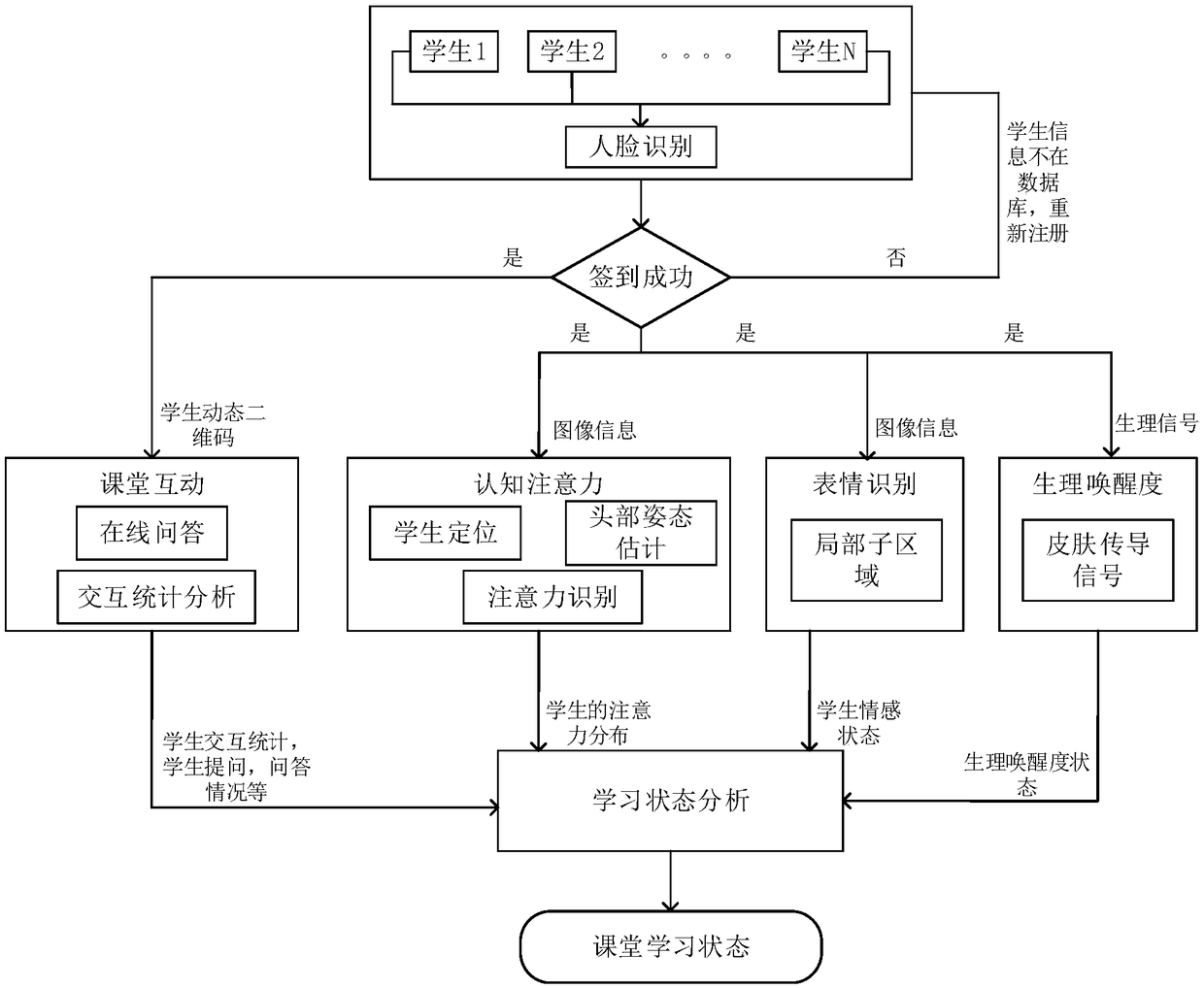

Multi-mode information fusion-based classroom learning state monitoring method and system

Inactive | CN108805009A | Increase credibility; Improve legibility; Data processing applications; Acquiring/recognising facial features; Skin conduction; Biological activation

The invention discloses a classroom learning state monitoring method and system based on multi-mode information fusion. The method comprises the following steps: acquiring an indoor scene image and locating the faces in it; estimating the face orientation pose in each face region and estimating the students' attention from it; estimating facial expressions in the face region; acquiring the students' skin conductance signals and estimating their physiological activation levels from them; recording the frequency and correctness of the students' interactive answers in class to estimate their participation; and fusing the four dimensions of information, namely attention, learning mood, physiological activation, and classroom participation, to analyze the students' classroom learning states. The invention further provides a system that implements the method. With the method and system, students' learning states in class can be monitored and analyzed objectively and accurately in real time, which refines the analysis of the teaching process and makes differences in teaching effectiveness easier to distinguish.

Owner:HUAZHONG NORMAL UNIV
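
The final fusion of the four dimensions can be sketched as a weighted average of normalized per-dimension scores. The weights, the [0, 1] normalization, and the three state labels with their thresholds are all hypothetical; the abstract does not publish the fusion rule.

```python
from dataclasses import asdict, dataclass
from typing import Dict, Tuple


@dataclass
class StudentObservation:
    attention: float      # e.g. share of frames with the face oriented to the front
    mood: float           # e.g. positive-expression score
    activation: float     # e.g. normalized skin-conductance level
    participation: float  # e.g. normalized answer frequency times correctness


# Hypothetical fusion weights; not taken from the patent.
WEIGHTS: Dict[str, float] = {"attention": 0.35, "mood": 0.2, "activation": 0.15, "participation": 0.3}


def learning_state(obs: StudentObservation,
                   weights: Dict[str, float] = WEIGHTS) -> Tuple[float, str]:
    """Fuse the four normalized dimensions into a score and a coarse (hypothetical) label."""
    values = asdict(obs)
    for name, value in values.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be normalized to [0, 1], got {value}")
    score = sum(weights[k] * values[k] for k in values) / sum(weights.values())
    if score >= 0.6:  # hypothetical thresholds
        label = "engaged"
    elif score >= 0.3:
        label = "passive"
    else:
        label = "disengaged"
    return score, label
```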

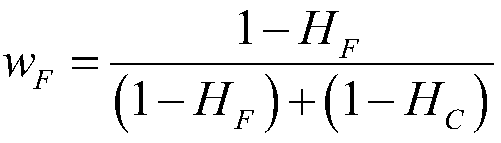

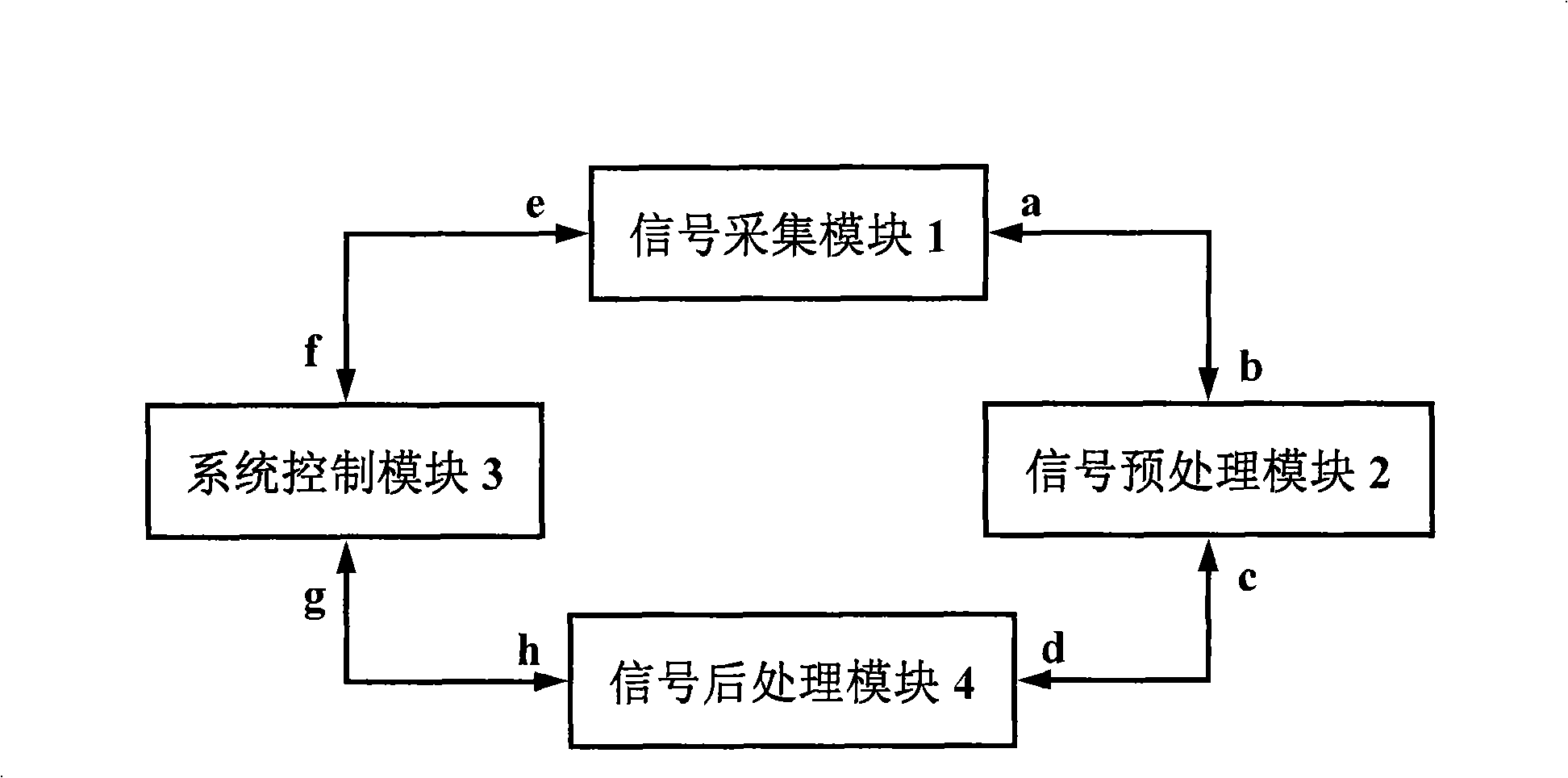

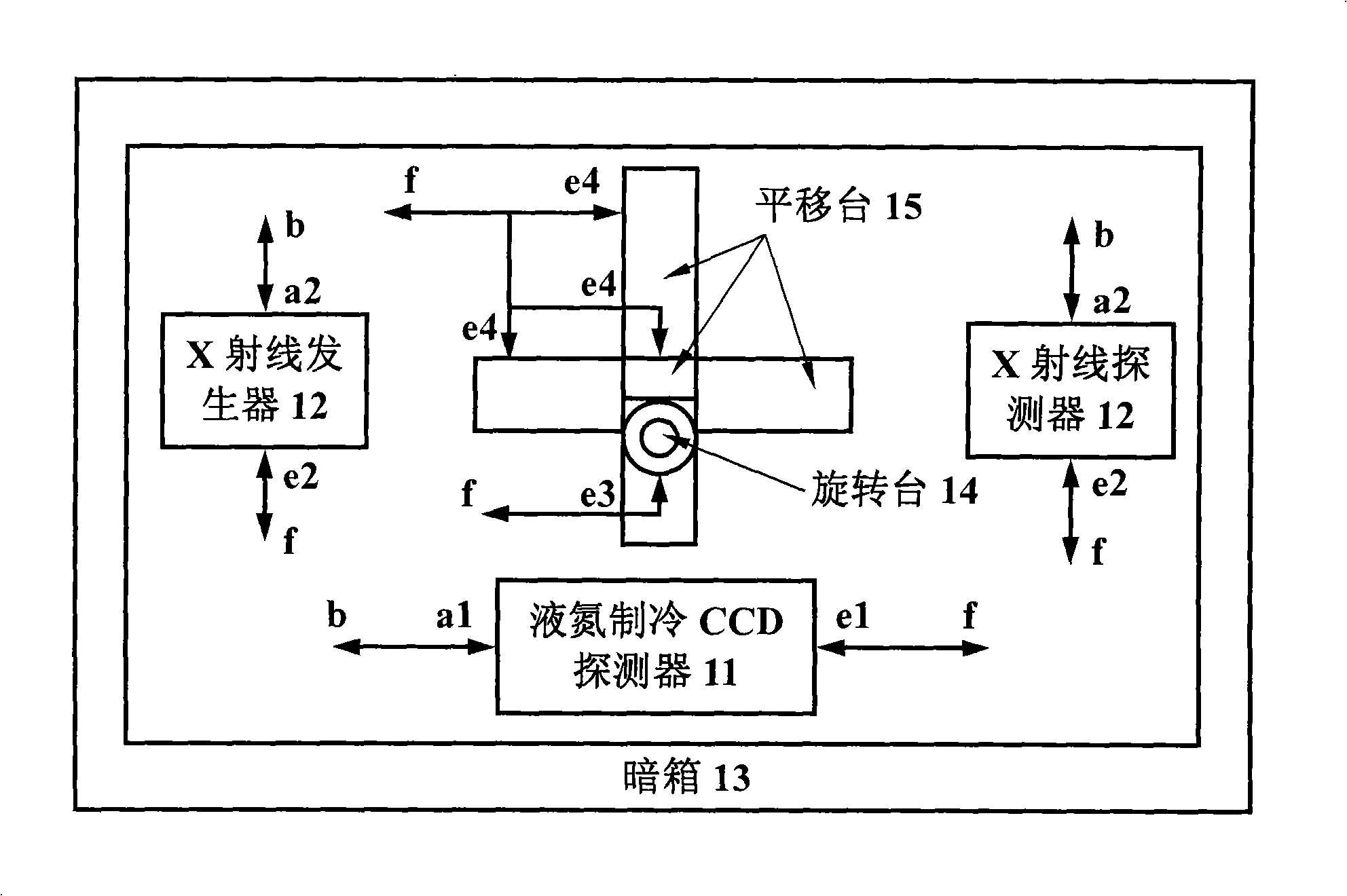

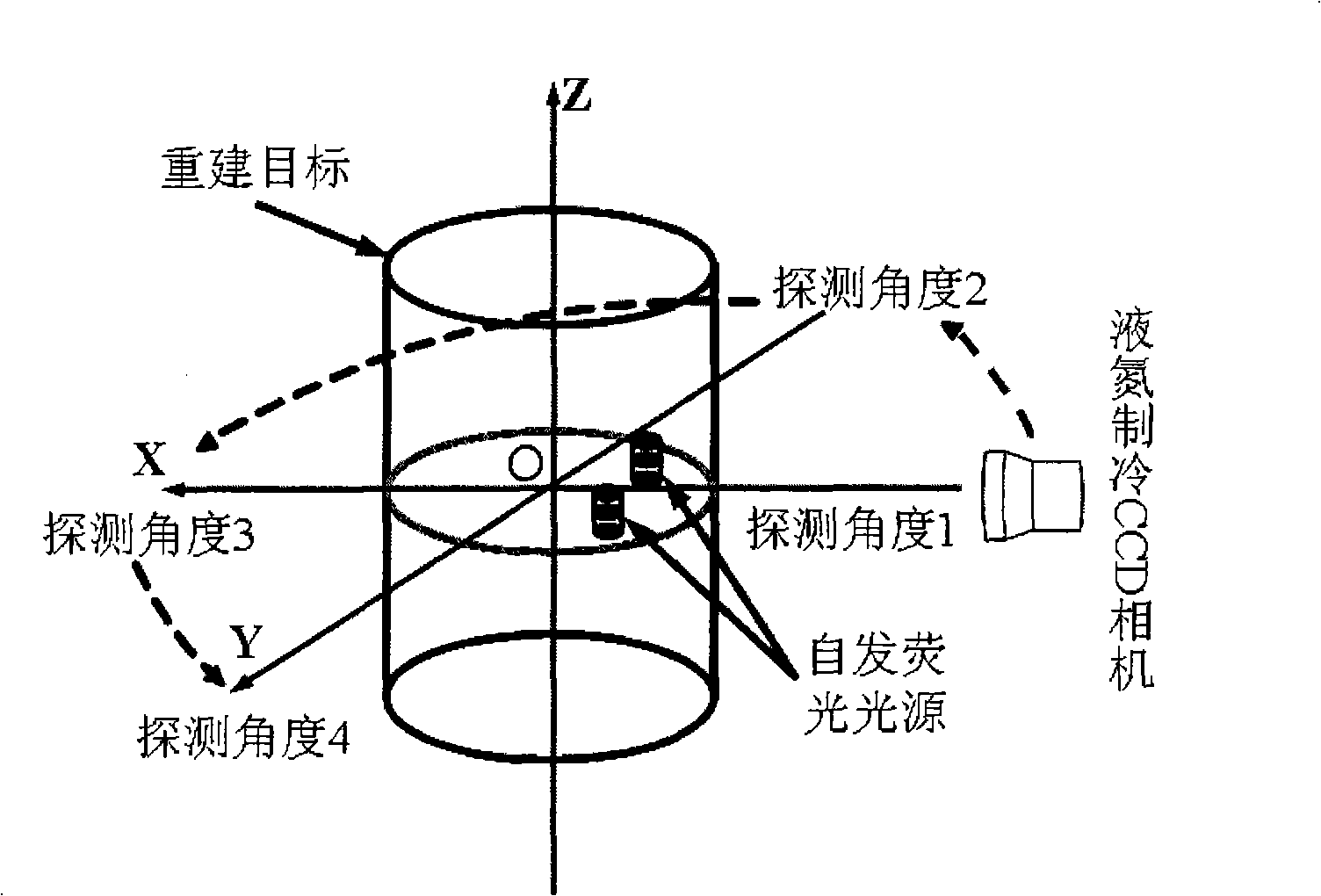

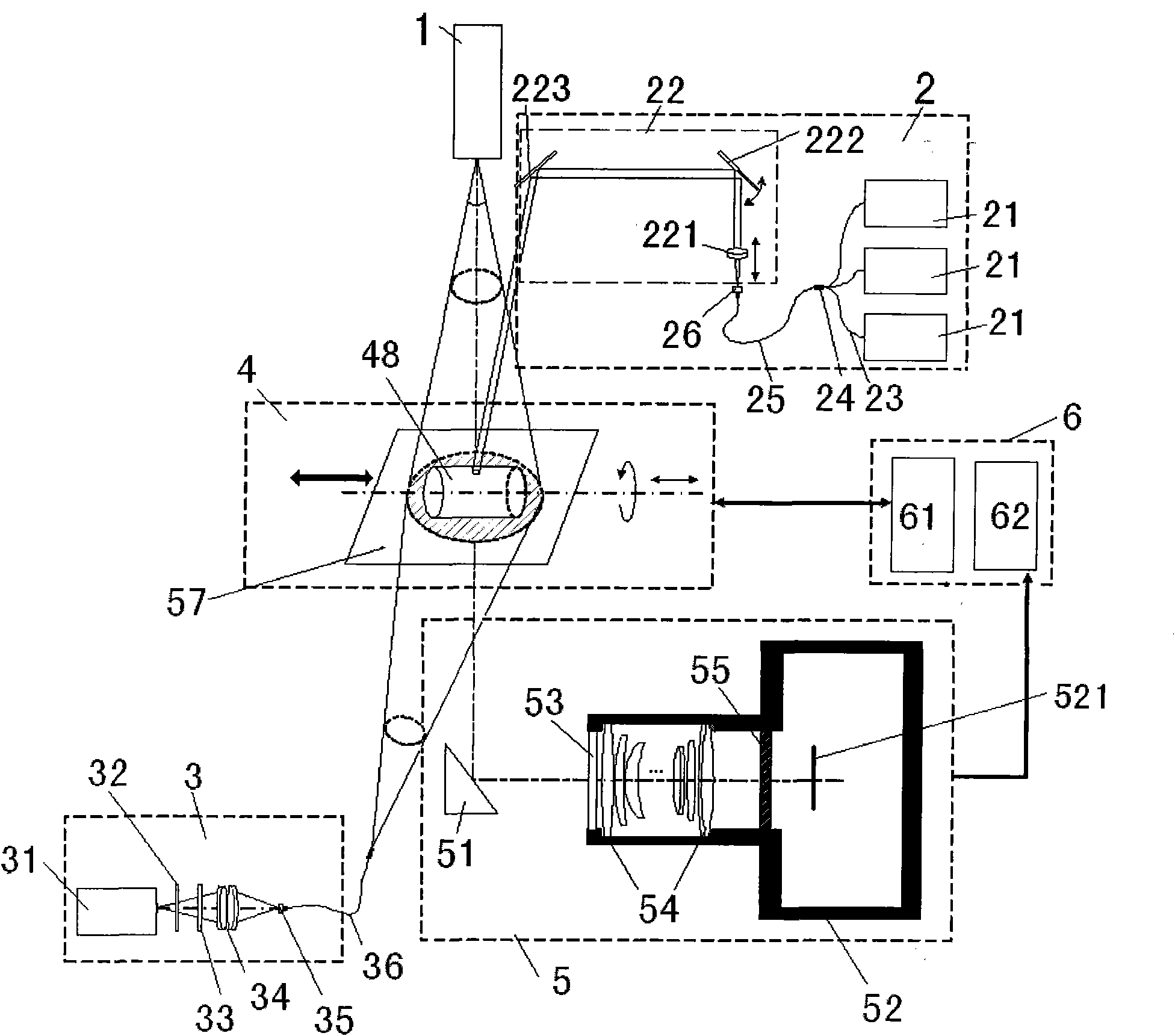

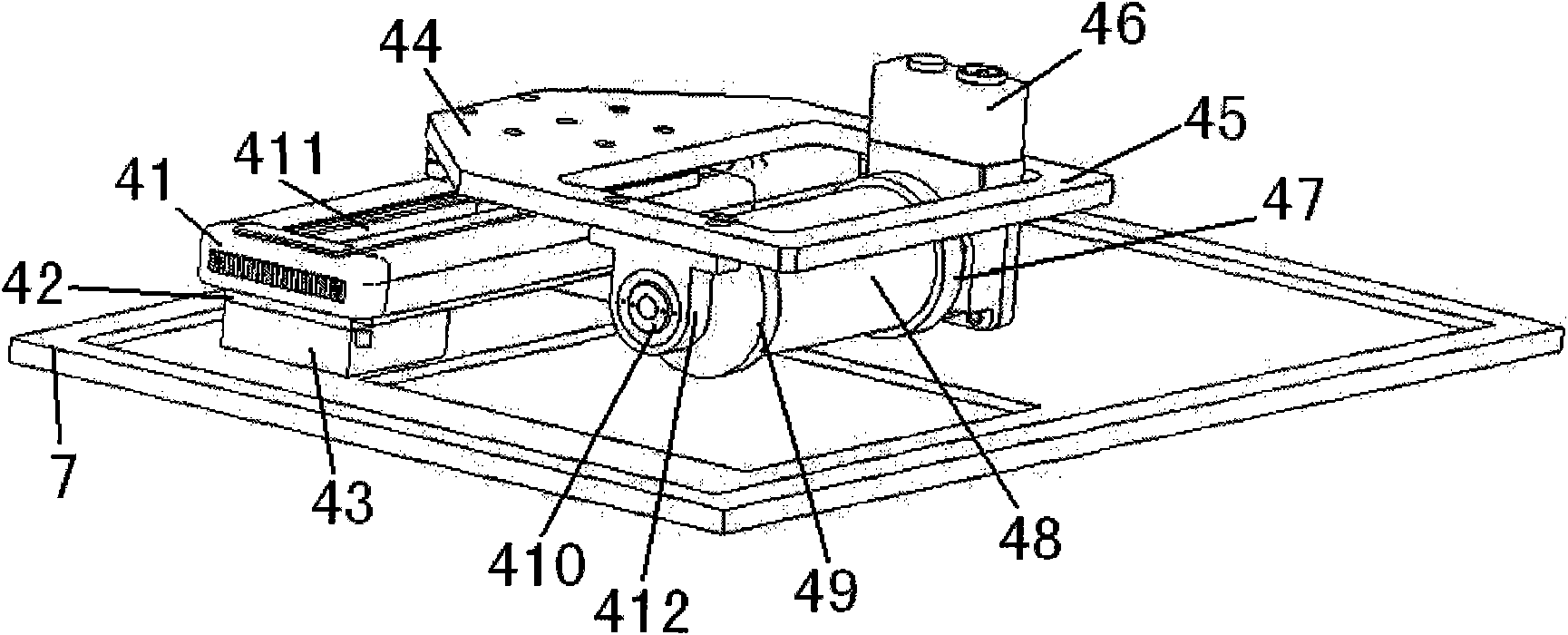

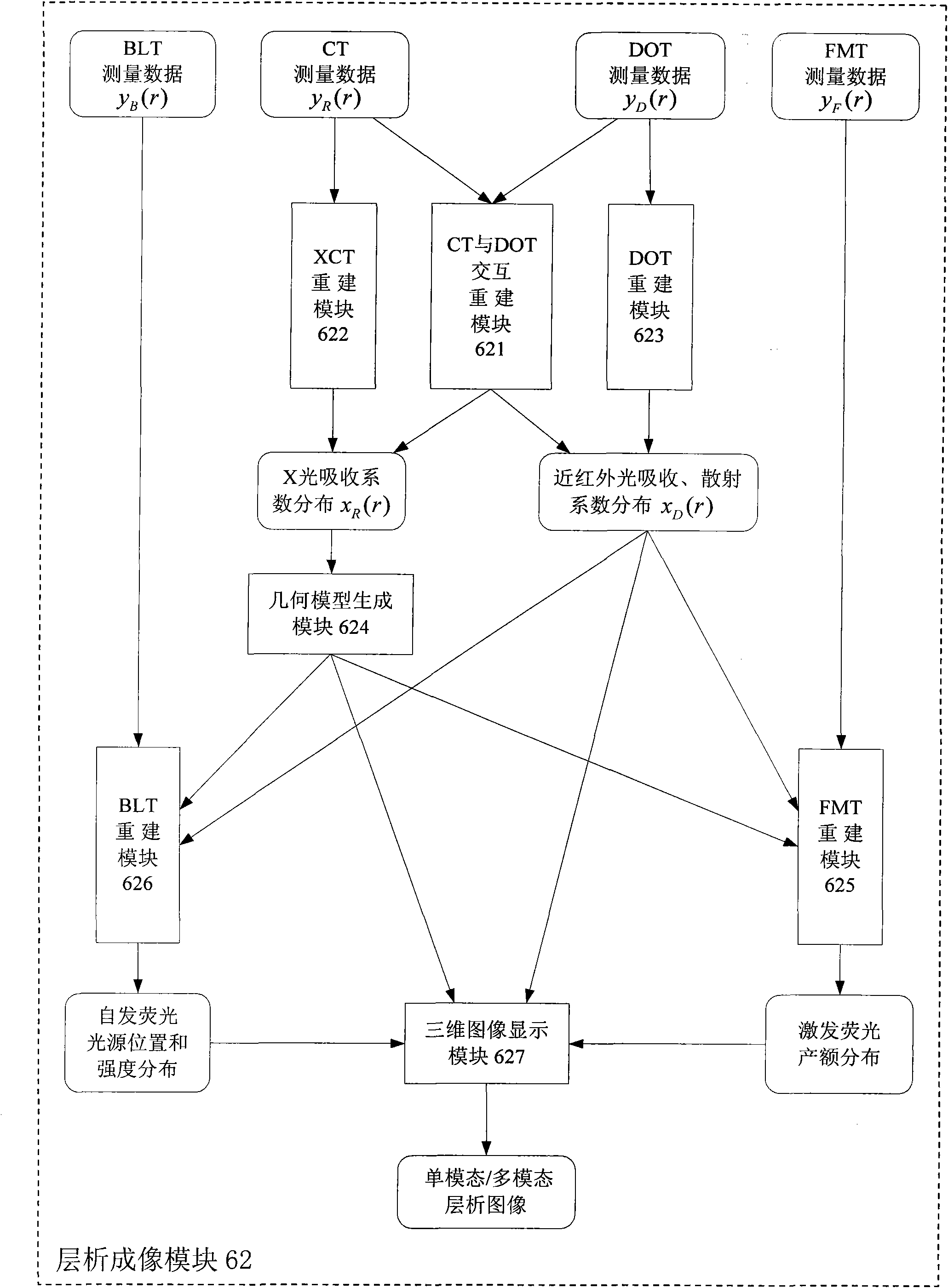

Multimode autofluorescence tomography molecule image instrument and rebuilding method

Active | CN101301192A | Improve signal-to-noise ratio; Increase the amount of information available; Image enhancement; Surgery; Diagnostic Radiology Modality; Liquid nitrogen cooling

The invention discloses a multi-modality autofluorescence molecular tomographic imaging instrument comprising a signal acquisition module, a signal preprocessing module, a system control module, and a signal post-processing module. The method of the invention comprises: determining the feasible region of the light source through X-ray imaging and autofluorescence tomographic imaging, based on a multi-stage adaptive finite element method combined with a digital mouse; reconstructing the optical characteristic parameters of the target area; performing modality fusion; and adaptively refining the local mesh according to a posteriori error estimation, to obtain the fluorescent light source in the reconstruction target area. This efficiently addresses the ill-posedness of autofluorescence molecular tomography, and the multi-modality fusion imaging mode allows the autofluorescence light source to be reconstructed precisely even in a complex reconstruction target area. Precise reconstruction is achieved by combining a liquid-nitrogen-cooled CCD detector, multi-angle fluorescence detection, multi-modality fusion, and an autofluorescence molecular tomographic reconstruction algorithm based on multi-stage adaptive finite elements that accounts for the heterogeneity of the reconstruction target area.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

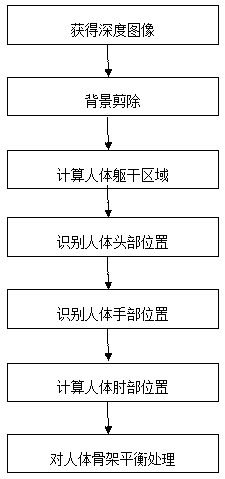

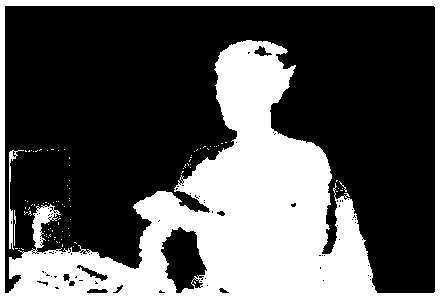

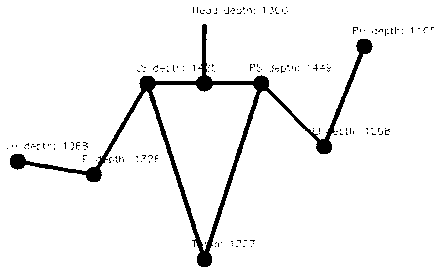

Method for recovering real-time three-dimensional body posture based on multimodal fusion

Inactive | CN102800126A | The motion capture process is easy; Improve stability; 3D-image rendering; 3D modelling; Color image; Time domain

The invention relates to a method for recovering real-time three-dimensional body posture based on multimodal fusion. The method recovers the three-dimensional skeleton of a human body, i.e. the real-world coordinates of its main joints, by combining several techniques such as depth map analysis, color identification, and face detection. Using scene depth images and scene color images acquired synchronously at successive moments, the position of the head is obtained by face detection; the positions of the endpoints of the four limbs, which carry color markers, are obtained by color identification; the positions of the elbows and knees are computed from the limb endpoint positions and the mapping between the color maps and the depth maps; and the resulting skeleton is smoothed using temporal information so that the body's motion is reconstructed in real time. Compared with conventional techniques that recover three-dimensional body posture with near-infrared equipment, the method improves recovery stability and makes human motion capture more convenient.

Owner:ZHEJIANG UNIV
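
Two generic pieces of the pipeline above can be sketched under stated assumptions: turning a detected pixel plus its depth into real-world (camera-frame) coordinates with a pinhole camera model, and smoothing joint positions over time. The intrinsics are hypothetical, and the exponential moving average is only one possible form of the temporal smoothing the abstract mentions.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole depth-camera intrinsics in pixels (hypothetical values)."""
    fx: float
    fy: float
    cx: float
    cy: float


def back_project(u: float, v: float, depth_m: float, k: Intrinsics) -> Vec3:
    """Convert pixel (u, v) with metric depth into camera-frame 3D coordinates."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


class SkeletonSmoother:
    """Exponential moving average of each joint position over time."""

    def __init__(self, alpha: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Dict[str, Vec3] = {}

    def update(self, joints: Dict[str, Vec3]) -> Dict[str, Vec3]:
        for name, p in joints.items():
            prev = self.state.get(name)
            if prev is None:
                self.state[name] = p  # first observation: no history yet
            else:
                a = self.alpha
                self.state[name] = (a * p[0] + (1 - a) * prev[0],
                                    a * p[1] + (1 - a) * prev[1],
                                    a * p[2] + (1 - a) * prev[2])
        return dict(self.state)
```

A smaller `alpha` gives steadier joints at the cost of more lag behind fast movements.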

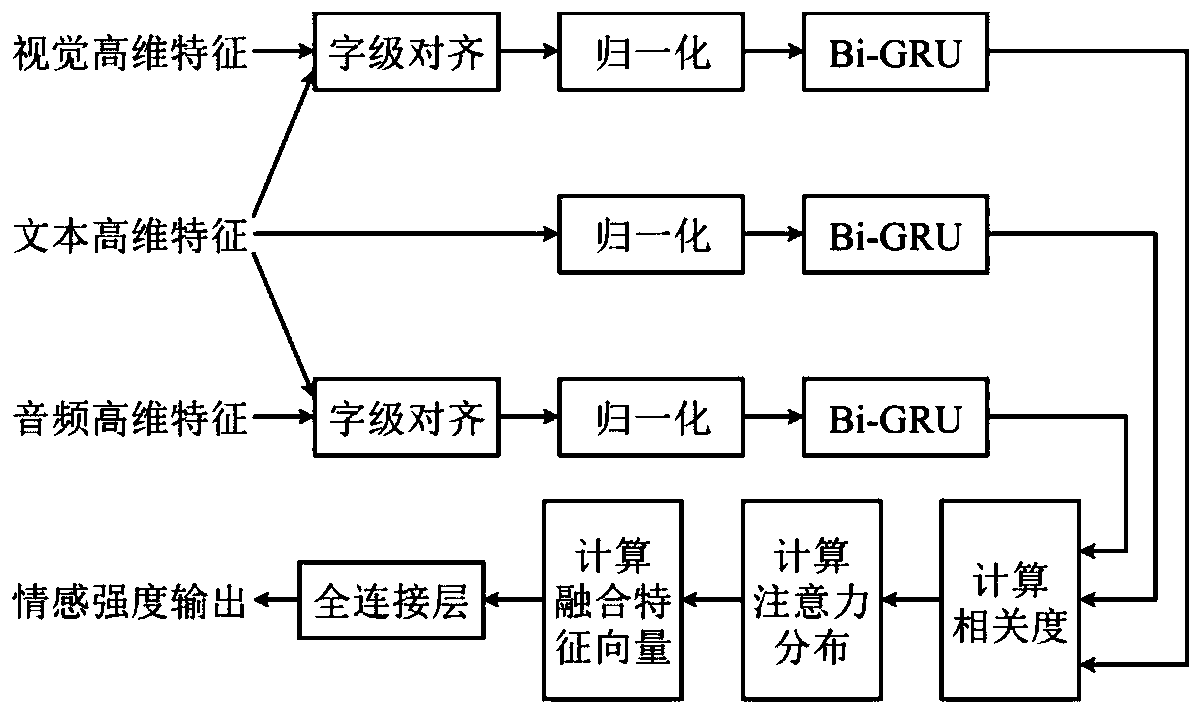

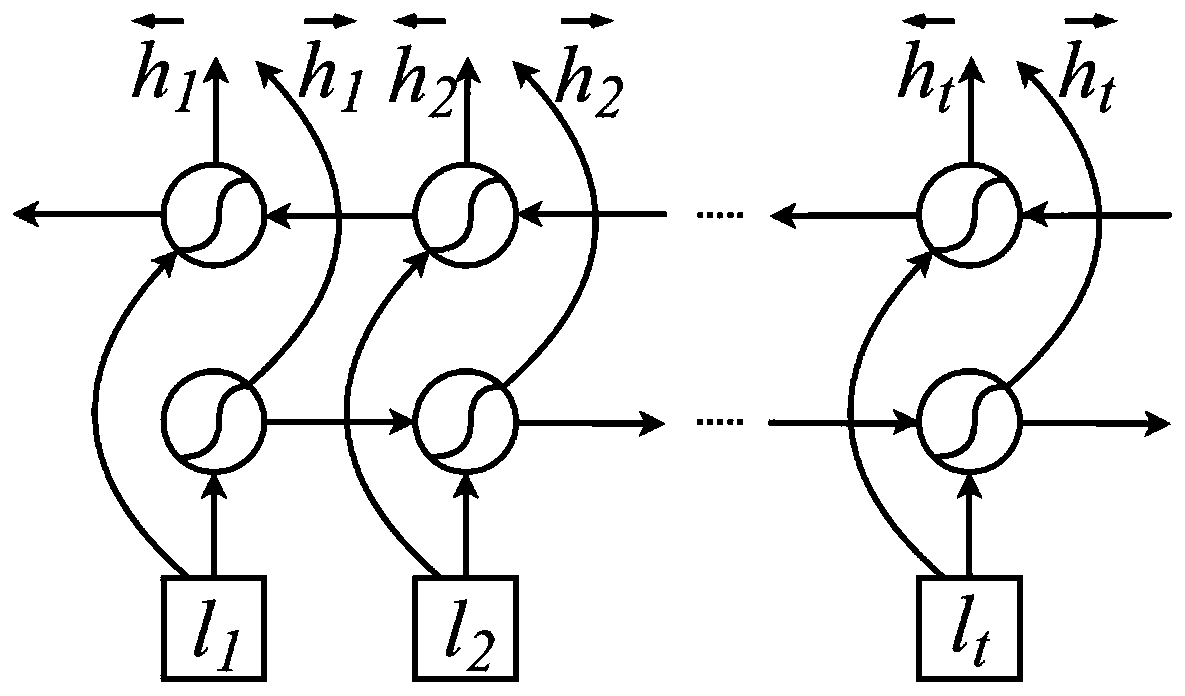

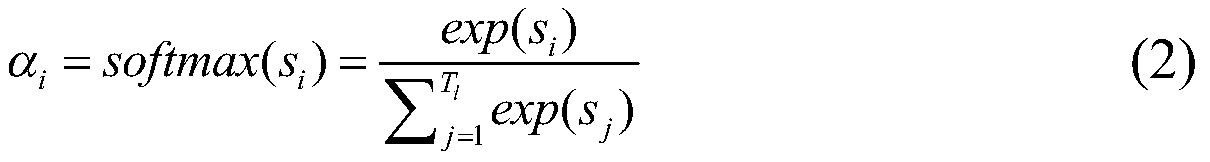

Multi-modal emotion recognition method based on fusion attention network

Active | CN110188343A | Improve accuracy; Overcoming Weight Consistency Issues; Character and pattern recognition; Natural language data processing; Diagnostic Radiology Modality; Feature vector

The invention discloses a multi-modal emotion recognition method based on a fusion attention network. The method comprises: extracting high-dimensional features of the three modalities of text, vision, and audio, and aligning and normalizing them at the word level; feeding them into bidirectional gated recurrent unit (BiGRU) networks for training; extracting the state information output by the BiGRU networks of the three single-modality sub-networks and calculating the degree of correlation of that state information among the modalities; calculating the attention distribution over the modalities at each moment, which serves as the weight of each modality's state information at that moment; and computing a weighted average of the state information of the three modality sub-networks with the corresponding weights to obtain a fusion feature vector, which is input to a fully connected network. The text, vision, and audio to be recognized are input into the trained BiGRU network of each modality to obtain the final emotion intensity output. The method addresses the problem of all modalities receiving uniform weights during multi-modal fusion and improves emotion recognition accuracy under multi-modal fusion.

Owner:ZHEJIANG UNIV OF TECH
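
The per-moment attention over modalities described above can be sketched as follows, assuming each modality's BiGRU state at step t is already available as a vector of a common size. Scoring each modality by its mean dot product with the other modalities' states, and averaging the fused vectors over time, are assumptions about how "correlation degree" and "weighting and averaging" are realized.

```python
import math
from typing import Dict, List, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: Sequence[float]) -> List[float]:
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse_states(states: Dict[str, Sequence[Sequence[float]]]) -> List[float]:
    """states[modality][t] is that modality's hidden state at step t (same size for all)."""
    names = list(states)
    if len(names) < 2:
        raise ValueError("need at least two modalities")
    steps = len(states[names[0]])
    dim = len(states[names[0]][0])
    total = [0.0] * dim
    for t in range(steps):
        h = {m: states[m][t] for m in names}
        # Correlation score: mean dot product with the other modalities' states.
        scores = [sum(dot(h[m], h[o]) for o in names if o != m) / (len(names) - 1)
                  for m in names]
        weights = softmax(scores)  # attention distribution over modalities at step t
        for w, m in zip(weights, names):
            for i in range(dim):
                total[i] += w * h[m][i]
    return [x / steps for x in total]  # average the per-step fused vectors over time
```

A modality whose state disagrees with the others receives a smaller weight at that moment, instead of every modality being weighted equally.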

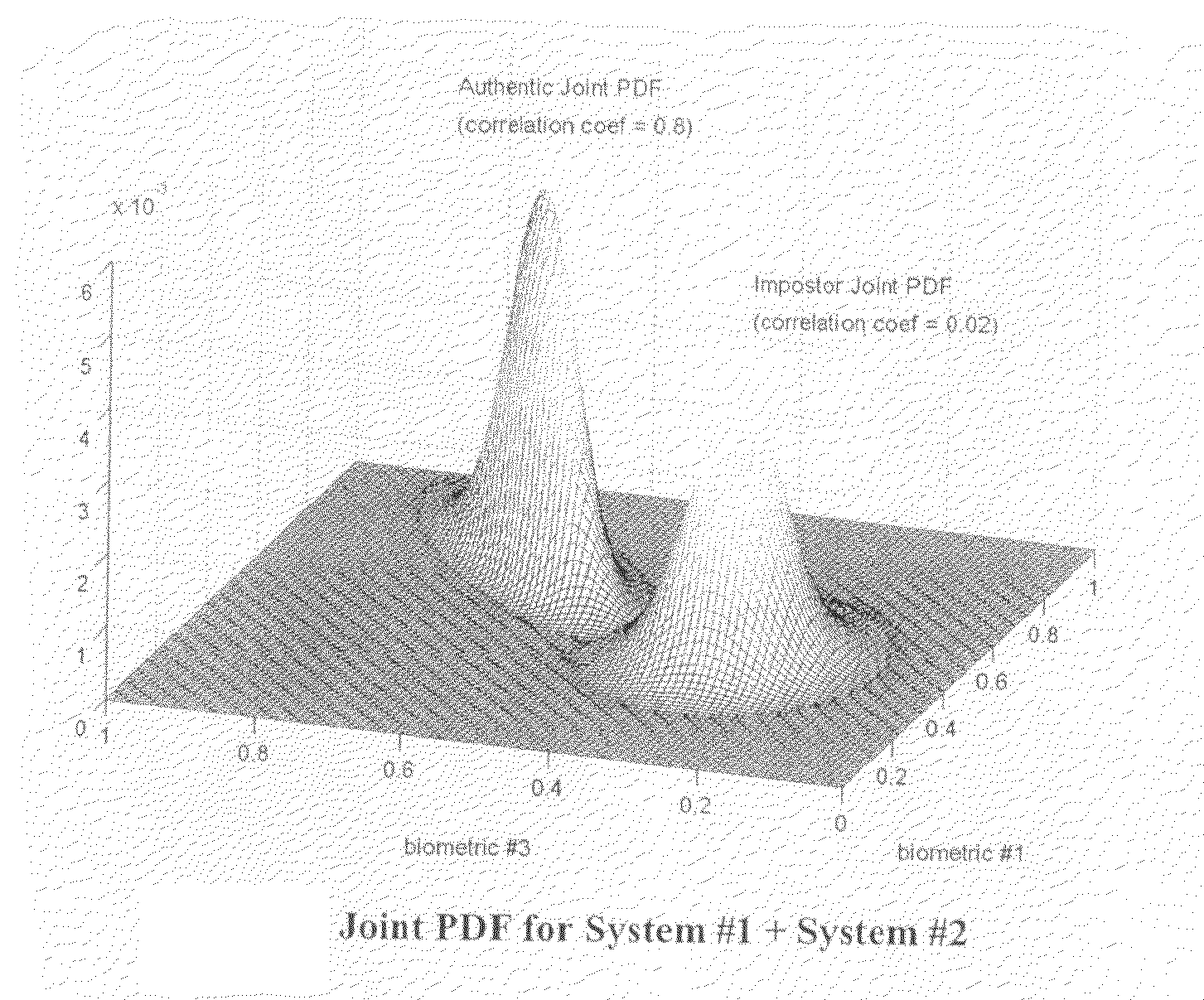

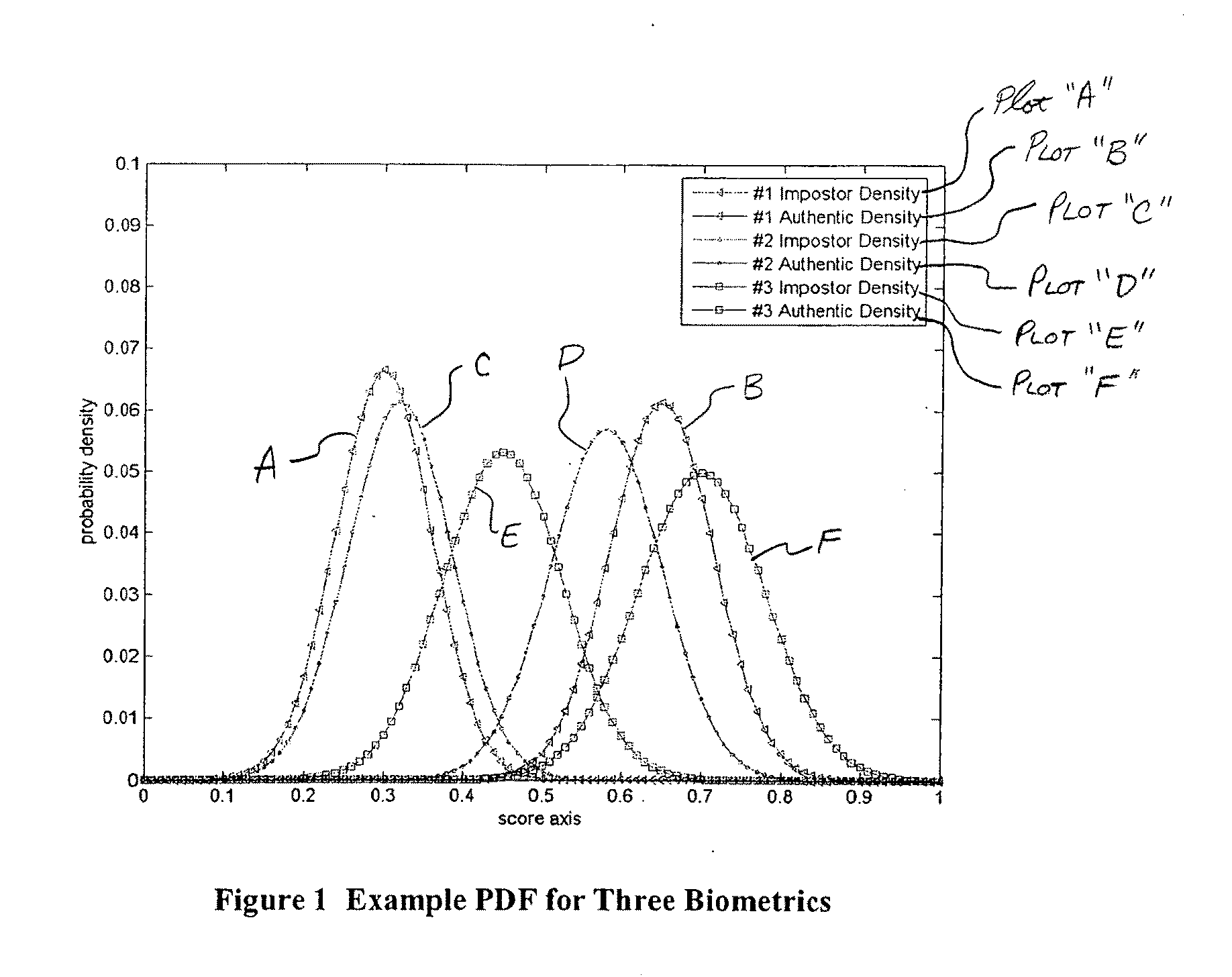

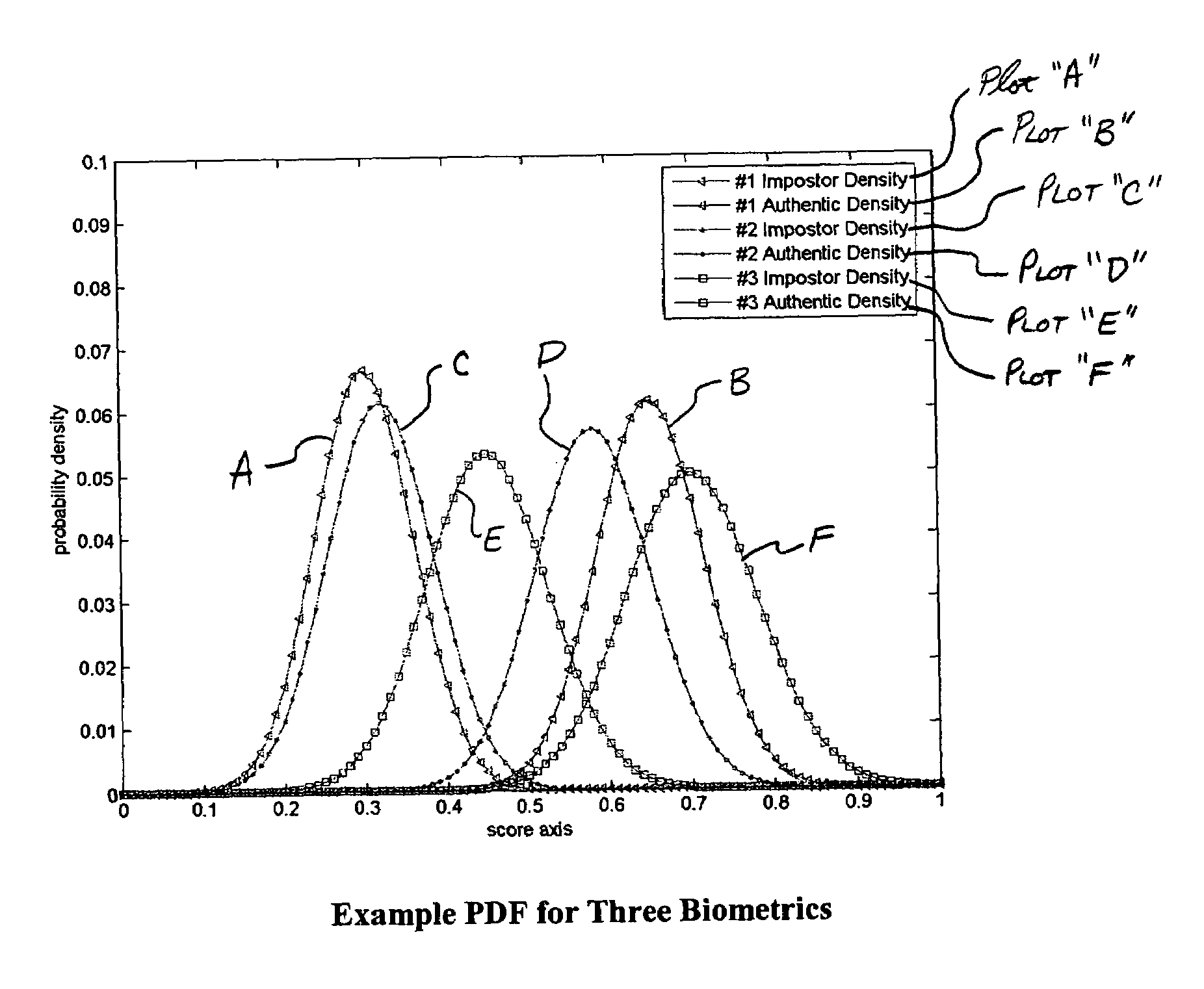

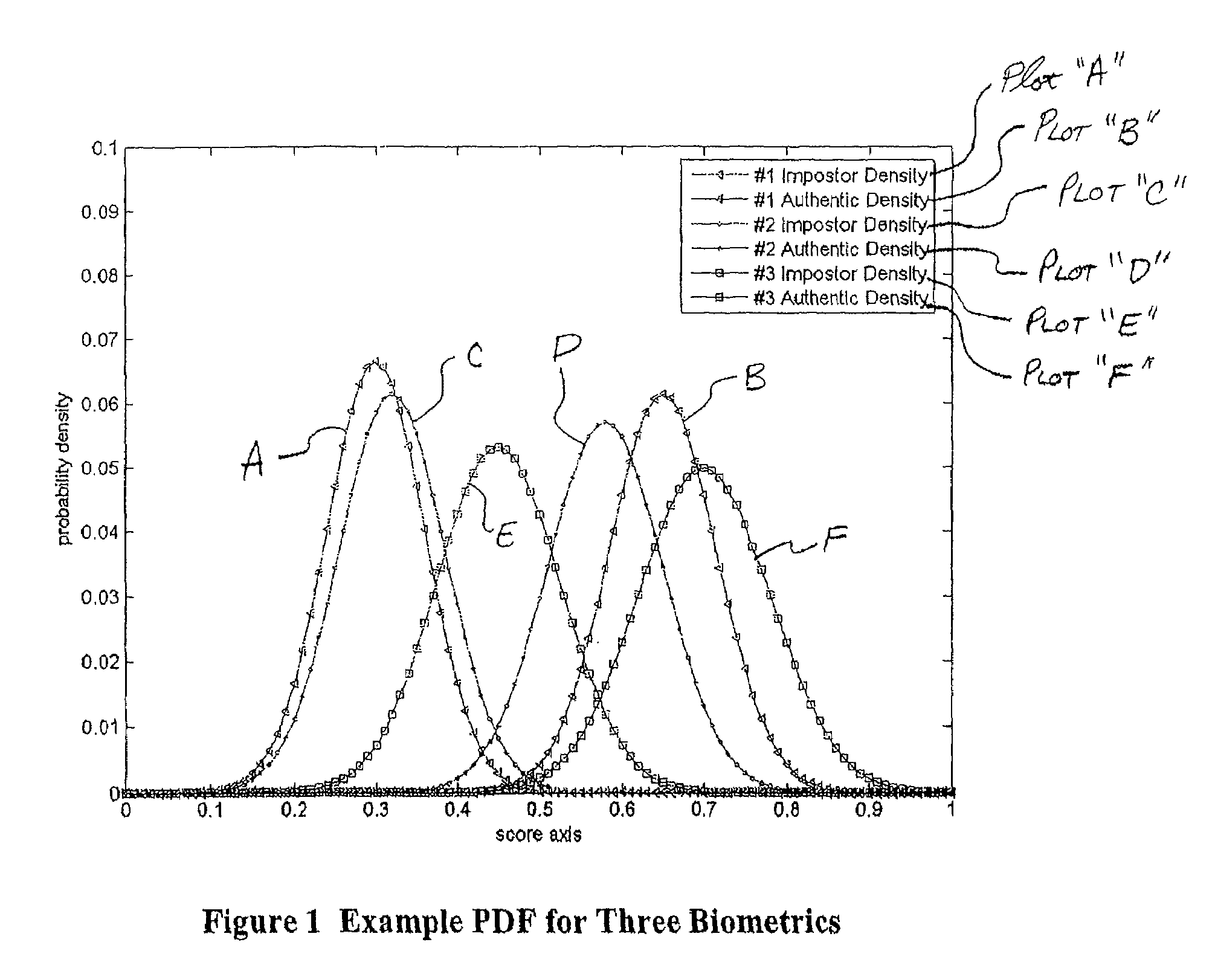

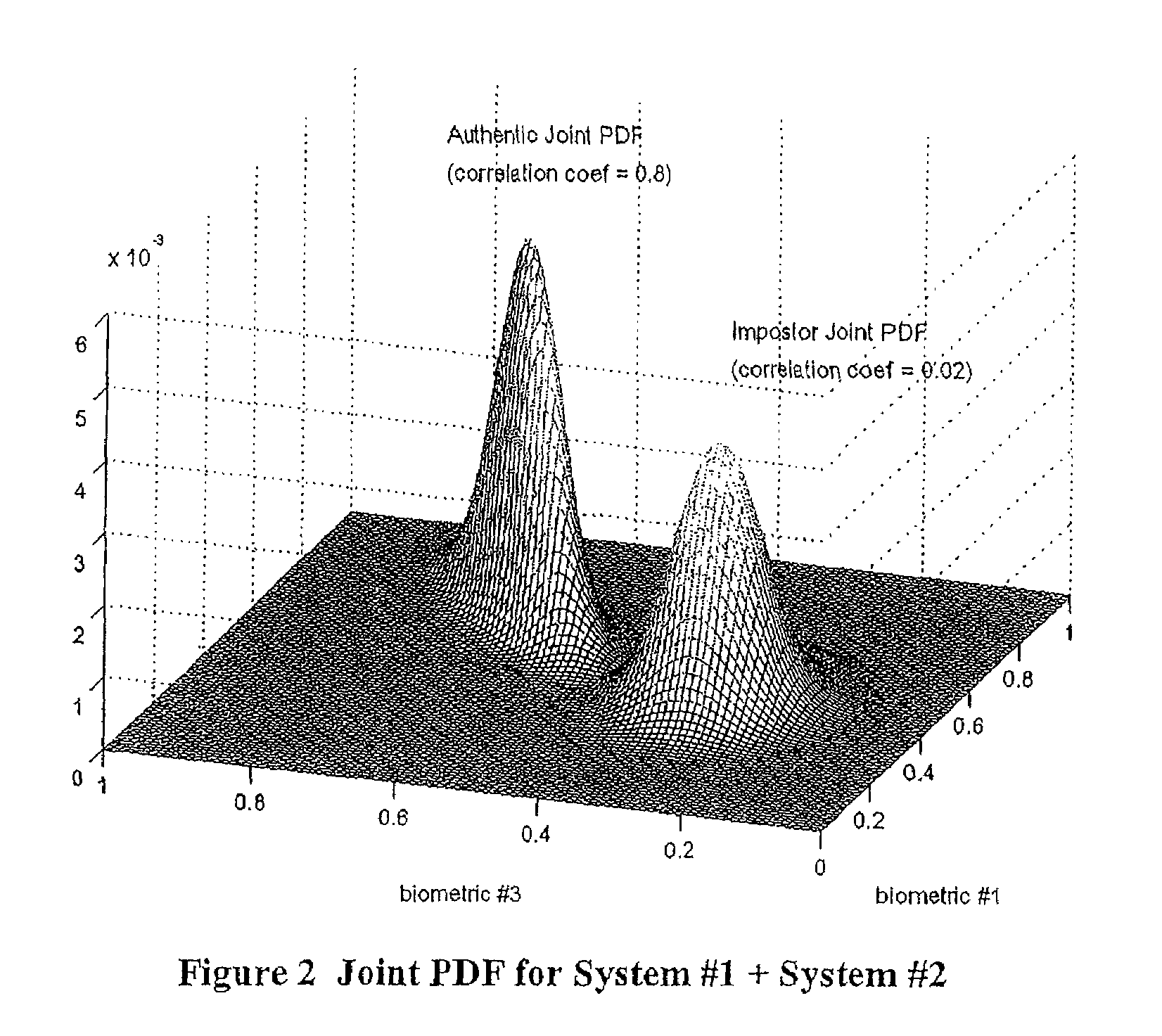

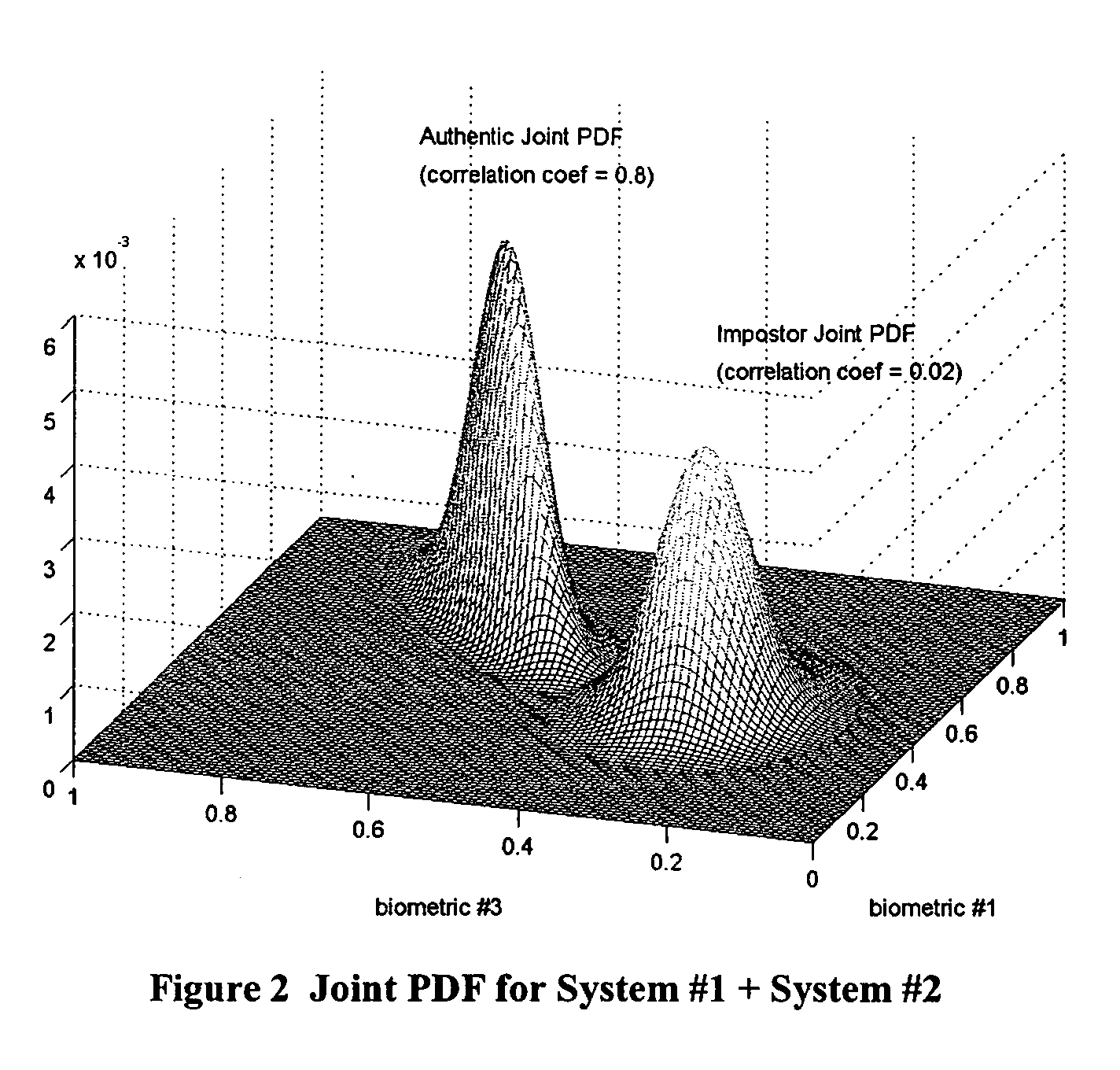

Multimodal fusion decision logic system

Inactive | US7287013B2 | Electric signal transmission systems; Multiple keys/algorithms usage; Data set; Computer science

The present invention includes a method of deciding whether a data set is acceptable for making a decision. A first probability partition array and a second probability partition array may be provided. A no-match zone may be established and used to calculate a false-acceptance-rate ("FAR") and/or a false-rejection-rate ("FRR") for the data set. The FAR and/or the FRR may be compared to desired rates. Based on the comparison, the data set may be either accepted or rejected. The invention may also be embodied as a computer readable memory device for executing the methods.

Owner:QUALCOMM INC

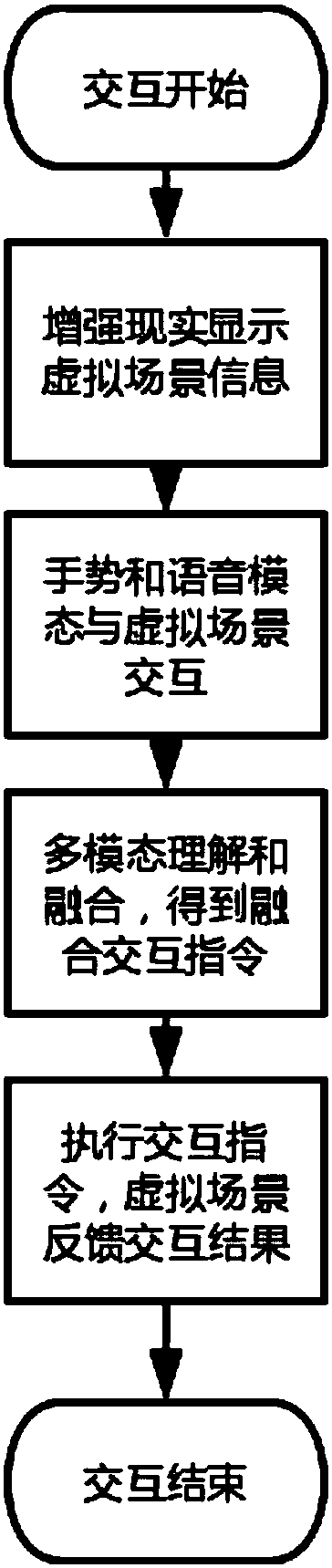

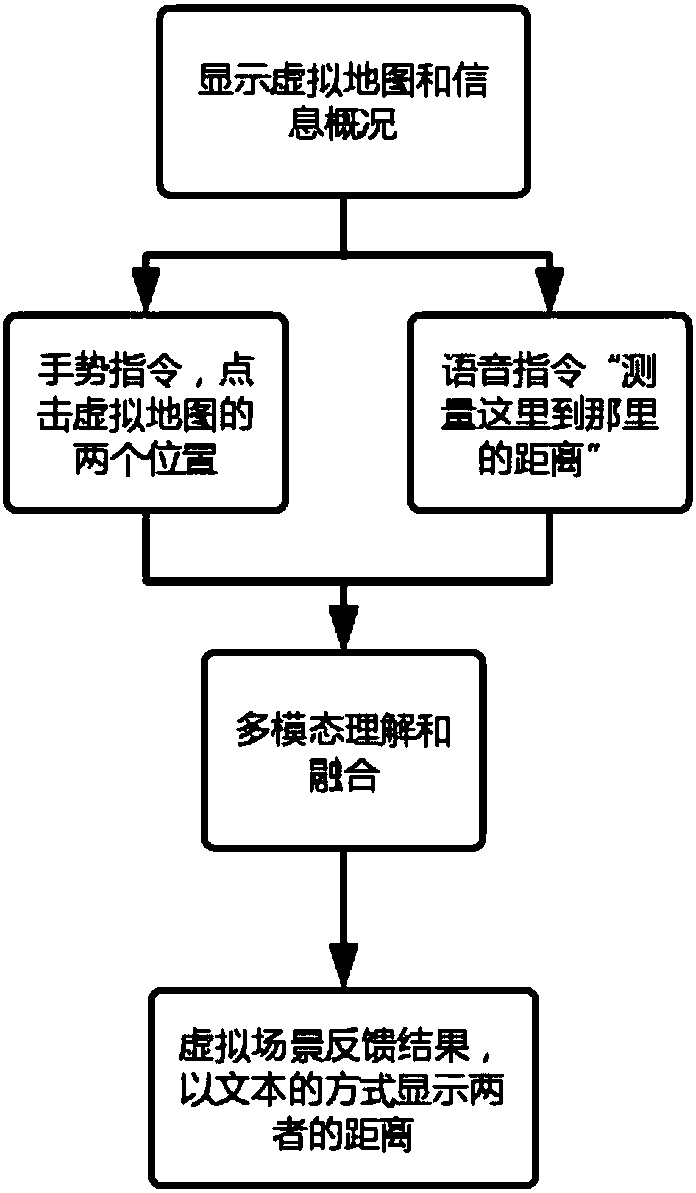

Mobile type multi-modal interaction method and device based on enhanced reality

Inactive | CN108334199A | Enhanced interactive information; Enhanced interaction; Input/output for user-computer interaction; Speech recognition; Modal data; Interaction interface

The invention discloses a mobile multi-modal interaction method and device based on augmented reality. The method comprises the following steps: displaying a human-computer interaction interface by means of augmented reality, where the augmented reality scene contains interaction information such as virtual objects; the user issues interaction instructions through gestures and voice, the semantics of the different modalities are interpreted through a multi-modal fusion method, and the gesture and voice modal data are fused to generate a multi-modal fusion interaction instruction; and after the user's interaction instruction takes effect, the result is returned to the augmented reality virtual scene and fed back to the user through changes in the scene. The device of the invention comprises a gesture sensor, a PC (Personal Computer), a microphone, optical see-through augmented reality display equipment, and a WiFi (Wireless Fidelity) router. The method and device are human-centered, natural, and intuitive; they lower the learning load and improve interaction efficiency.

Owner:SOUTH CHINA UNIV OF TECH
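
One simple way to fuse gesture and voice data into a single instruction, as described above, is to pair each spoken verb with the gesture closest to it in time. The event types, the 1.5-second window, and the verb/target split are hypothetical and only illustrate time-window fusion; the abstract does not specify the fusion method.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class SpeechEvent:
    t: float   # seconds
    verb: str  # e.g. "rotate", from a speech recognizer (hypothetical)


@dataclass
class GestureEvent:
    t: float     # seconds
    target: str  # virtual object the gesture points at (hypothetical)


@dataclass
class FusedCommand:
    verb: str
    target: str


def fuse_commands(speech: Sequence[SpeechEvent], gestures: Sequence[GestureEvent],
                  window_s: float = 1.5) -> List[FusedCommand]:
    """Pair each spoken verb with the closest-in-time gesture inside the window."""
    commands = []
    for s in speech:
        best: Optional[GestureEvent] = None
        for g in gestures:
            gap = abs(g.t - s.t)
            if gap <= window_s and (best is None or gap < abs(best.t - s.t)):
                best = g
        if best is not None:  # speech with no matching gesture is ignored here
            commands.append(FusedCommand(s.verb, best.target))
    return commands
```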

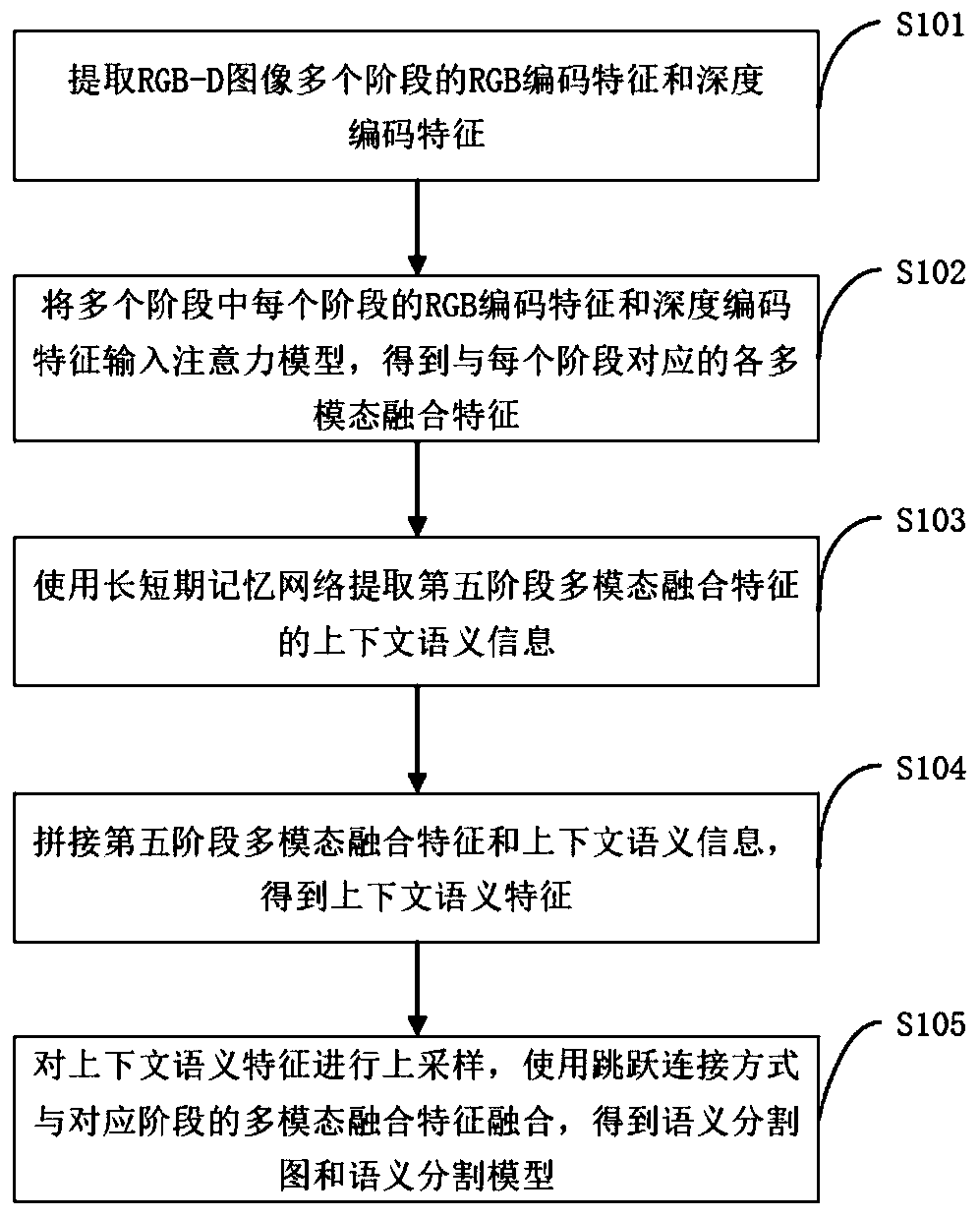

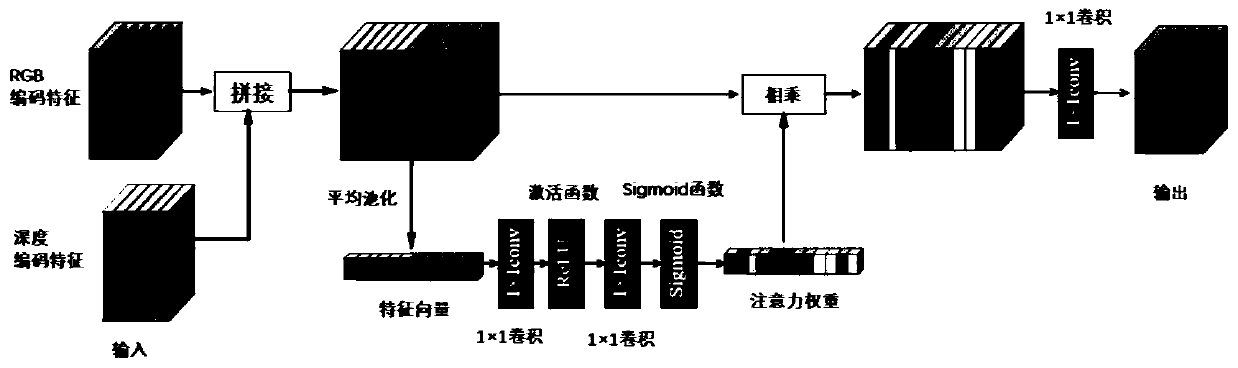

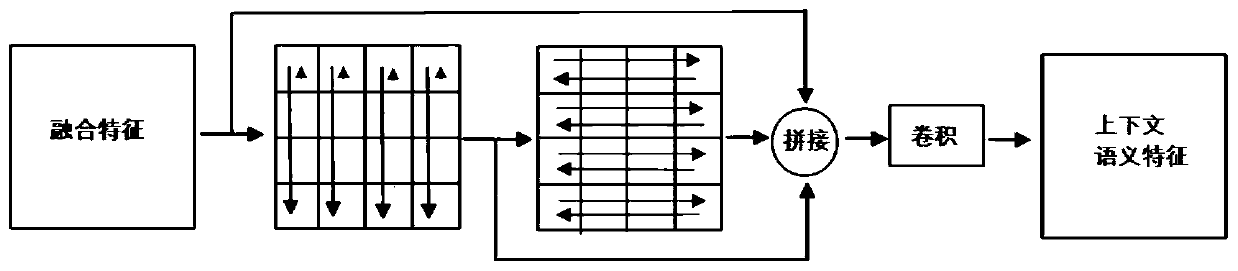

Semantic segmentation method and system for RGB-D image

Active | CN110298361A | Improving Semantic Segmentation Accuracy; Efficient use of; Character and pattern recognition; Neural architectures; Attention model; Computer vision

The invention discloses a semantic segmentation method and system for an RGB-D image. The semantic segmentation method comprises the steps of: extracting RGB coding features and depth coding features of an RGB-D image in multiple stages; inputting the RGB coding features and depth coding features of each stage into an attention model to obtain the multi-modal fusion features of that stage; extracting context semantic information from the fifth-stage multi-modal fusion features using a long short-term memory network; concatenating the fifth-stage multi-modal fusion features with the context semantic information to obtain context semantic features; and up-sampling the context semantic features and fusing them with the multi-modal fusion features of the corresponding stages through skip connections to obtain a semantic segmentation map and a semantic segmentation model. By extracting RGB and depth coding features in multiple stages, the method makes effective use of the color and depth information of the RGB-D image, and by using a long short-term memory network it effectively mines the context semantic information of the image, which improves the semantic segmentation accuracy for RGB-D images.

Owner:HANGZHOU WEIMING XINKE TECH CO LTD +1
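
The per-stage attention fusion of RGB and depth features can be pictured with a channel-gating sketch in the style of squeeze-and-excitation: each channel is summarized by its mean, squashed to a (0, 1) gate, and the gated RGB and depth maps are added. The learned layers of a real attention module are omitted and the whole form is an assumption; the abstract does not describe the attention model's internals.

```python
import math
from typing import List

# A feature map as [channel][flattened spatial position].
FeatureMap = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_gates(fmap: FeatureMap) -> List[float]:
    """Squeeze each channel to its mean and squash it into a (0, 1) gate."""
    return [sigmoid(sum(ch) / len(ch)) for ch in fmap]


def attention_fuse(rgb: FeatureMap, depth: FeatureMap) -> FeatureMap:
    """Gate each modality channel-wise, then add the gated maps element-wise."""
    if len(rgb) != len(depth):
        raise ValueError("RGB and depth features must have the same channel count")
    g_rgb, g_depth = channel_gates(rgb), channel_gates(depth)
    return [[a * x + b * y for x, y in zip(c_rgb, c_depth)]
            for a, b, c_rgb, c_depth in zip(g_rgb, g_depth, rgb, depth)]
```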

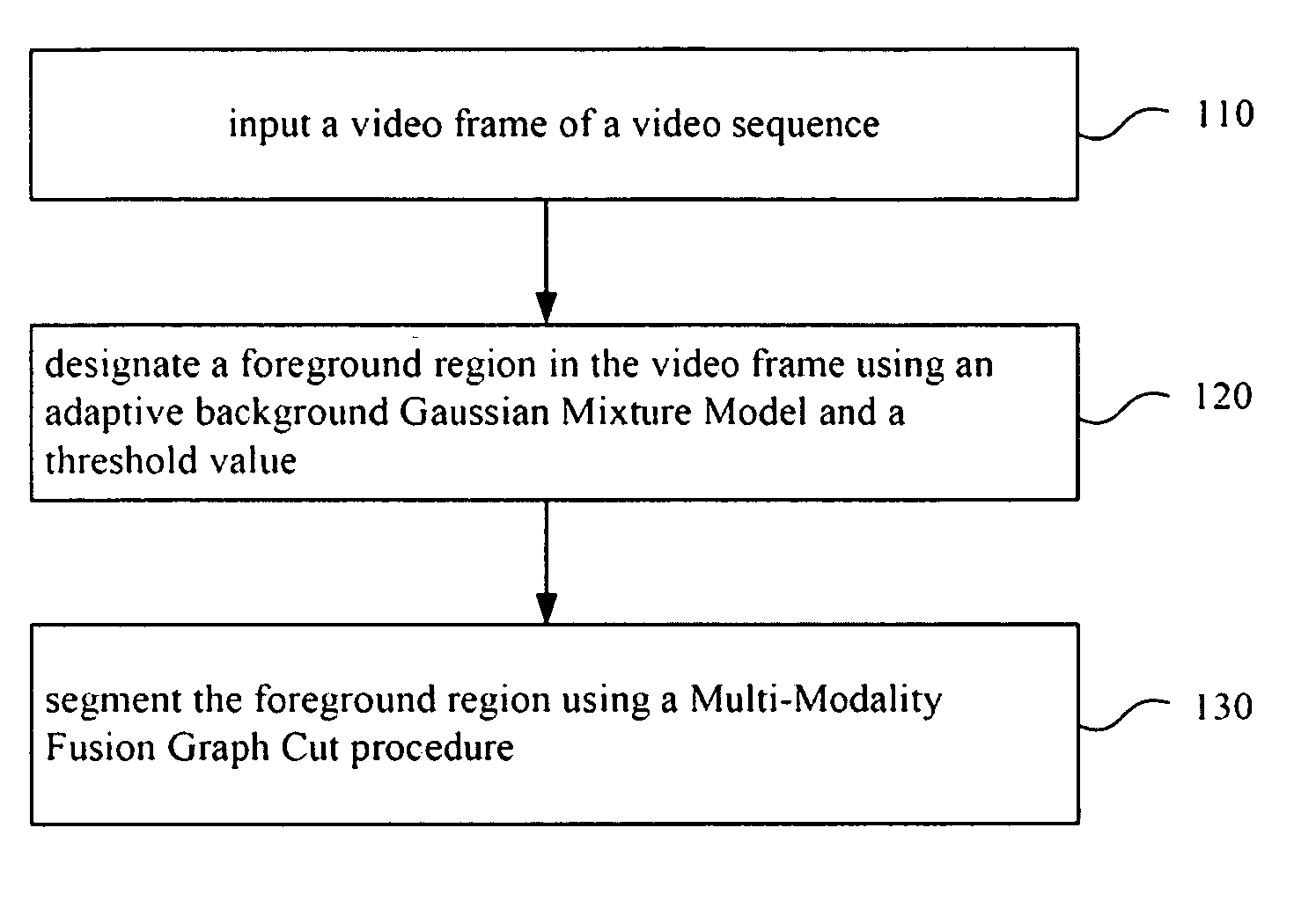

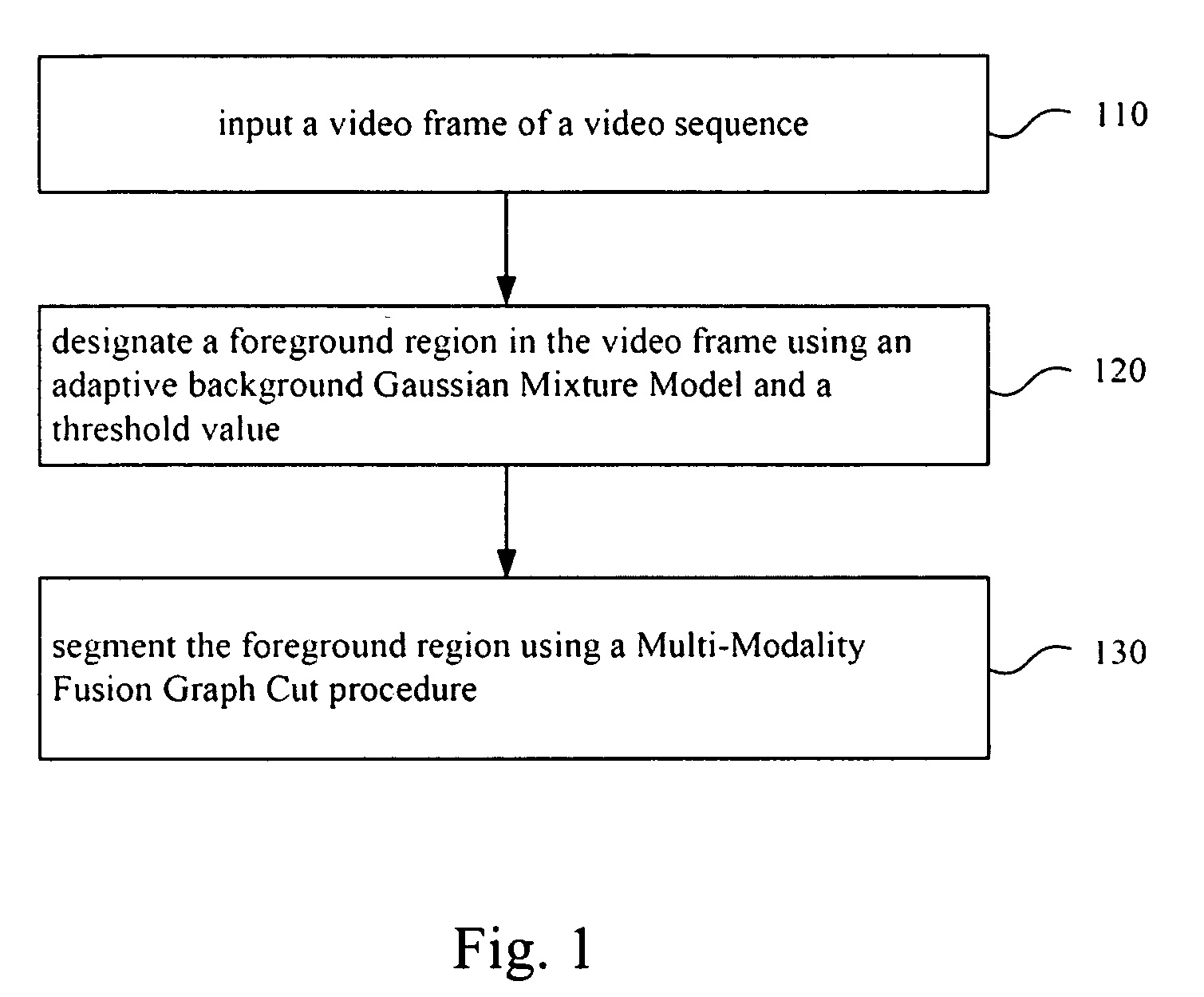

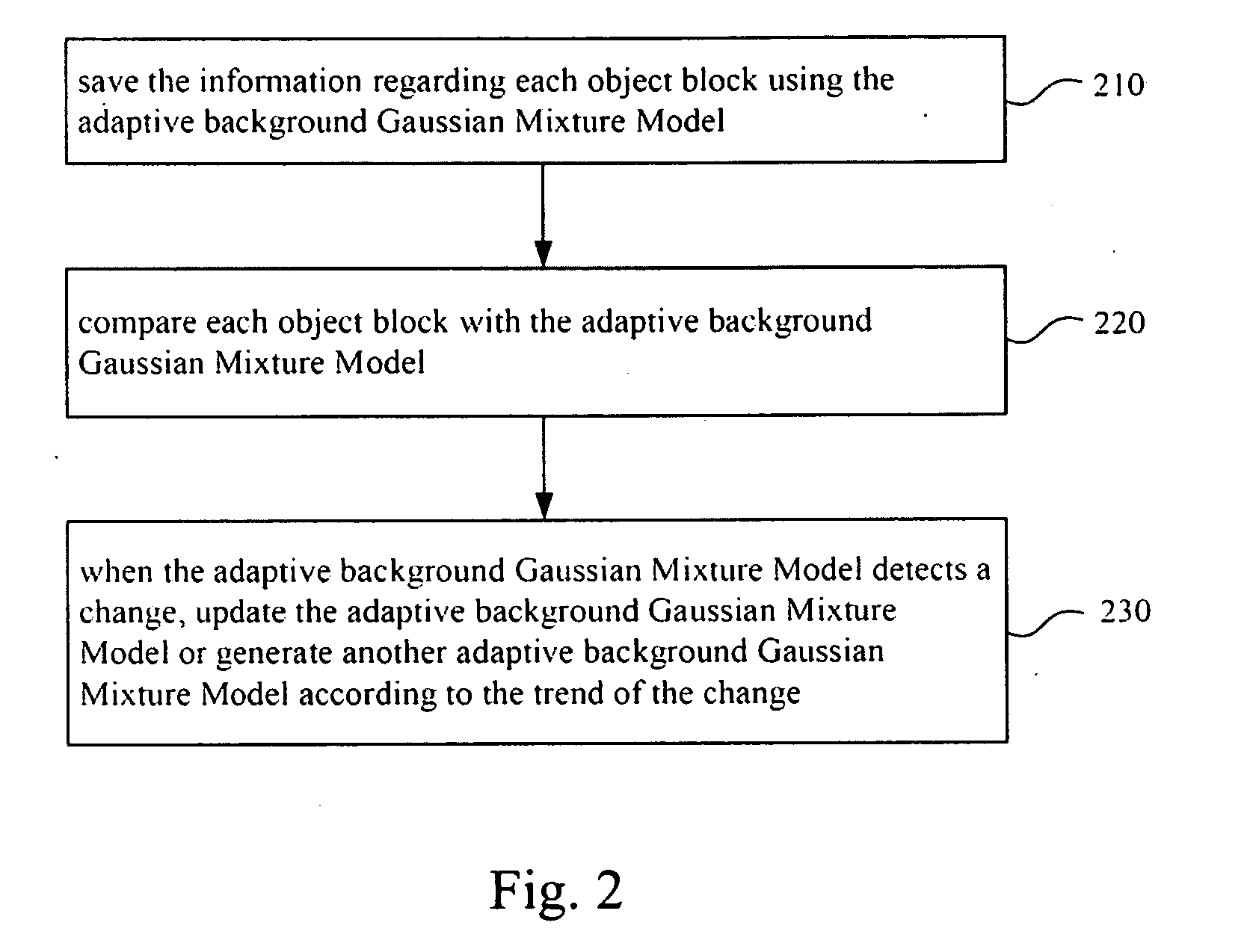

Method and system for foreground detection using multi-modality fusion graph cut

Inactive | US20100208987A1 | Improve integrity; Image enhancement; Image analysis; Video sequence; Foreground detection

A method for foreground detection using multi-modality fusion graph cut includes the following steps. A video frame of a video sequence is input. A foreground region in the video frame is designated using an adaptive background Gaussian mixture model (GMM) and a threshold value. The foreground region is then segmented using a multi-modality fusion graph cut procedure. A computer program product using the method and a system for foreground detection using multi-modality fusion graph cut are also disclosed.

Owner:INSTITUTE FOR INFORMATION INDUSTRY
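
The background-model-plus-threshold step can be sketched with a per-pixel running Gaussian. This is a deliberate simplification: the patent uses an adaptive Gaussian mixture model, the multi-modality fusion graph cut that refines the region is not shown, and the learning rate, `k`, and initial variance are hypothetical.

```python
import math
from typing import List, Sequence


class RunningGaussianBackground:
    """Per-pixel single-Gaussian background model (a simplification of an adaptive GMM)."""

    def __init__(self, first_frame: Sequence[float], learning_rate: float = 0.05,
                 k: float = 2.5, init_var: float = 25.0) -> None:
        self.mean = [float(p) for p in first_frame]
        self.var = [init_var] * len(first_frame)
        self.lr = learning_rate
        self.k = k  # a pixel is foreground if it deviates by more than k standard deviations

    def apply(self, frame: Sequence[float]) -> List[bool]:
        """Return a foreground mask for a flattened grayscale frame and adapt the model."""
        mask = []
        for i, p in enumerate(frame):
            d = p - self.mean[i]
            is_fg = abs(d) > self.k * math.sqrt(self.var[i])
            mask.append(is_fg)
            if not is_fg:  # adapt the background only where the pixel matched it
                self.mean[i] += self.lr * d
                self.var[i] = (1 - self.lr) * self.var[i] + self.lr * d * d
        return mask
```

In practice a library implementation such as OpenCV's `cv2.createBackgroundSubtractorMOG2` would be the usual starting point for the GMM stage.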

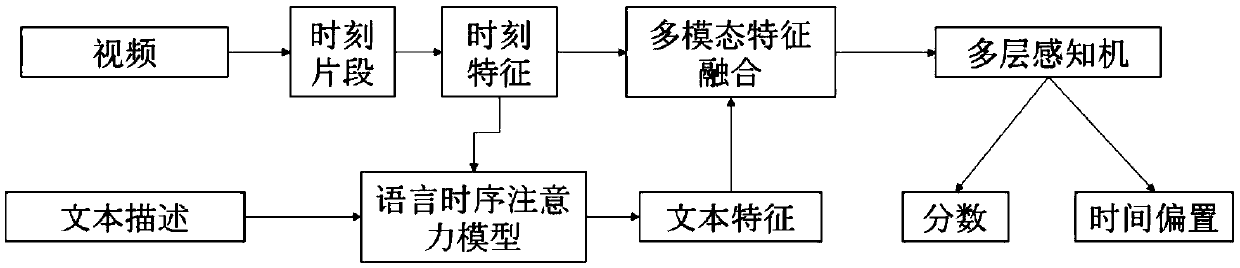

Method and system for cross-mode-based video time location, and storage medium

Active | CN108932304A | Adaptive coding; Improve accuracy; Character and pattern recognition; Natural language data processing; Feature extraction; Start time

The invention discloses a method, a system, and a storage medium for cross-modal video temporal localization, addressing the problem of locating a particular time segment in a video. The method comprises the following steps: establishing a language temporal model to extract text information useful for temporal localization and to extract its features; fusing text and visual features with a multimodal fusion model to generate enhanced temporal representation features; using a multilayer perceptron model to predict the matching degree between a time segment and the text description, as well as the start time of the segment; and training the model end to end. The method and system achieve higher accuracy than existing models on text-query-based temporal localization.

Owner:SHANDONG UNIV

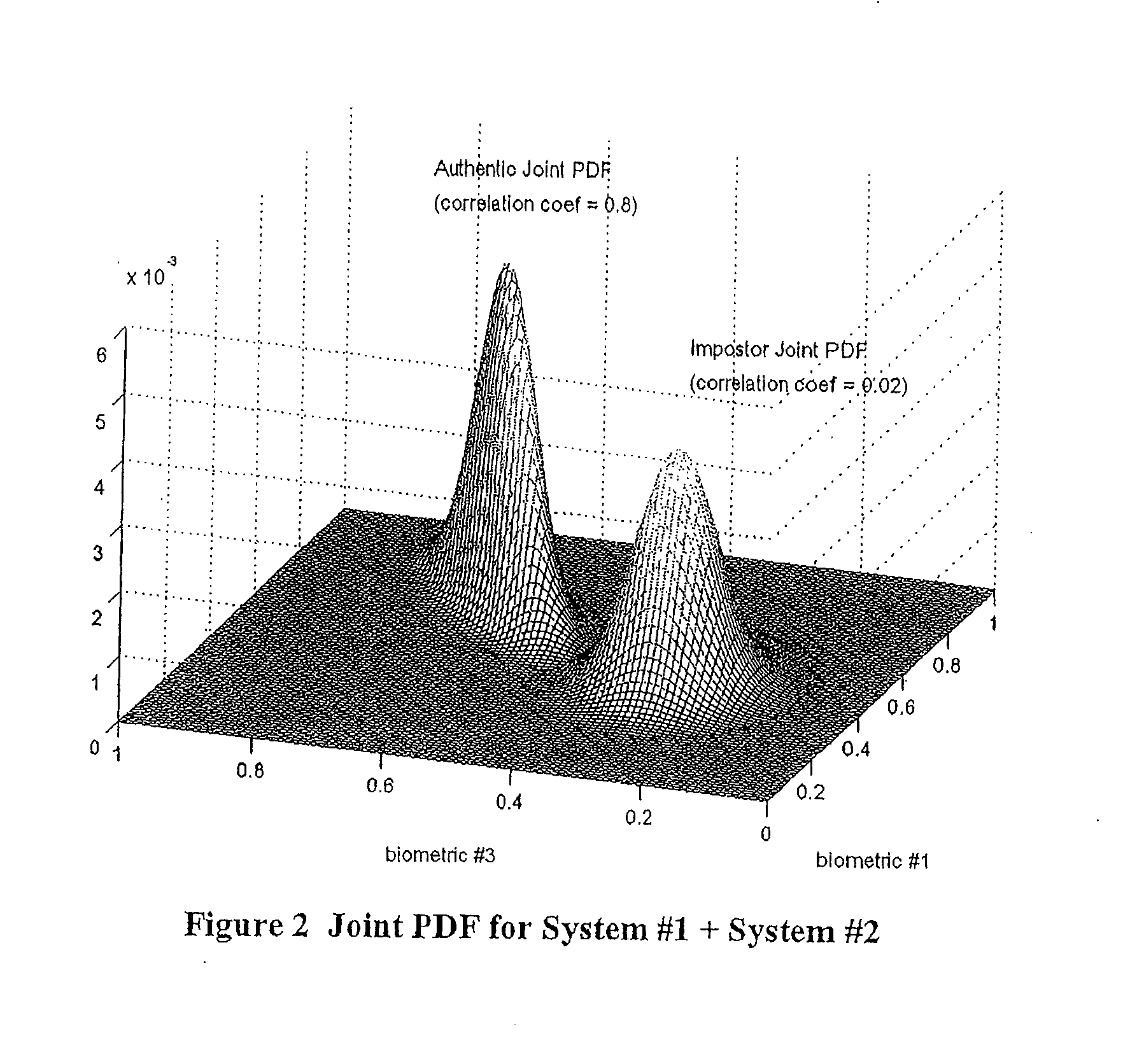

Multimodal Fusion Decision Logic System Using Copula Model

The present invention includes a method of deciding whether a data set is acceptable for making a decision. A first probability partition array and a second probability partition array may be provided. One or both of the probability partition arrays may be a Copula model. A no-match zone may be established and used to calculate a false-acceptance-rate ("FAR") and/or a false-rejection-rate ("FRR") for the data set. The FAR and/or the FRR may be compared to desired rates. Based on the comparison, the data set may be either accepted or rejected. The invention may also be embodied as a computer readable memory device for executing the methods.

Owner:QUALCOMM INC

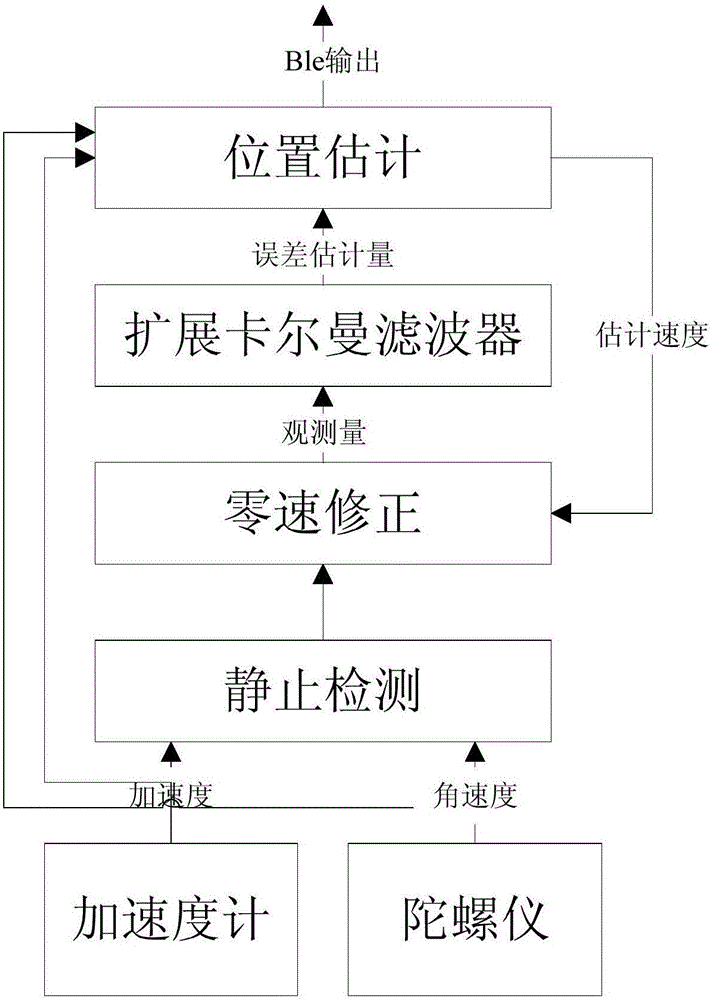

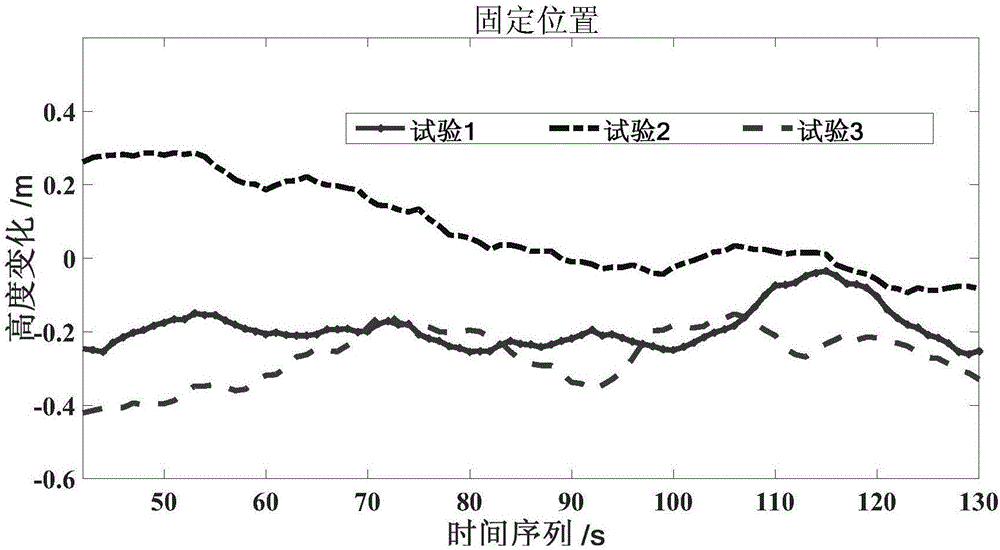

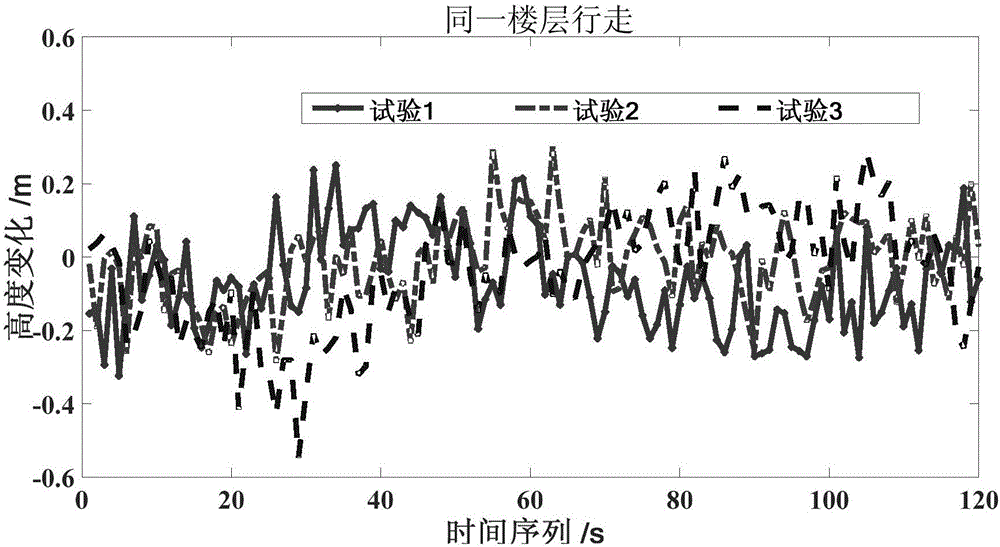

Multimodal fusion based indoor self-positioning method and device

Active | CN106500690A | Achieve positioning; Implement navigation; Navigational calculation instruments; Navigation by speed/acceleration measurements; Human–computer interaction; Self positioning

Owner:THE 22ND RES INST OF CHINA ELECTRONICS TECH GROUP CORP +1

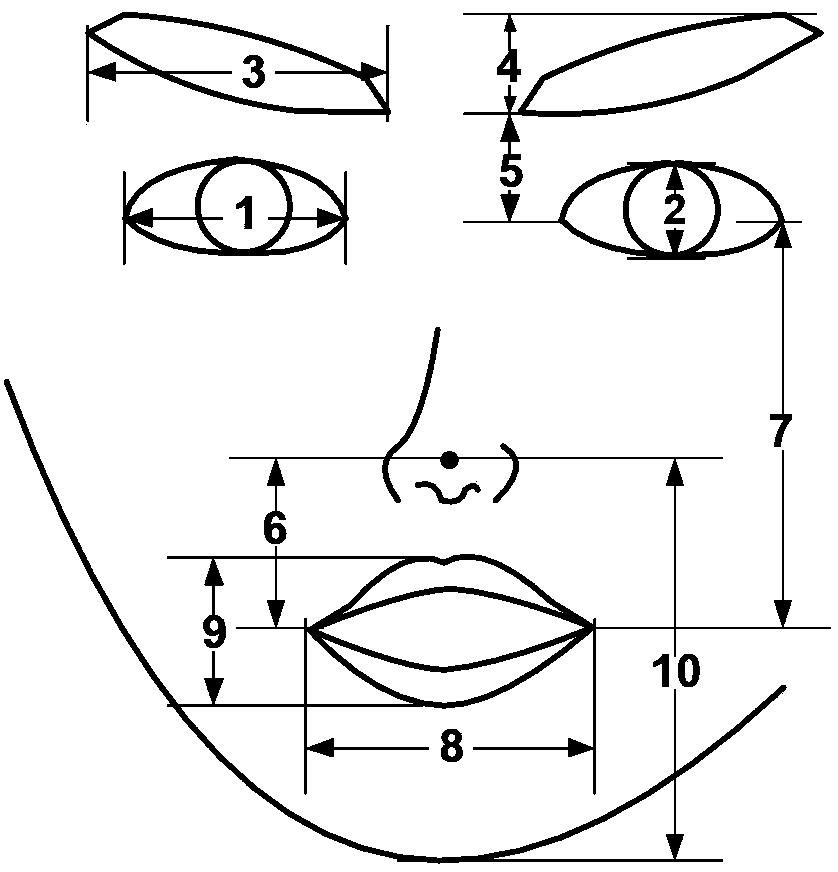

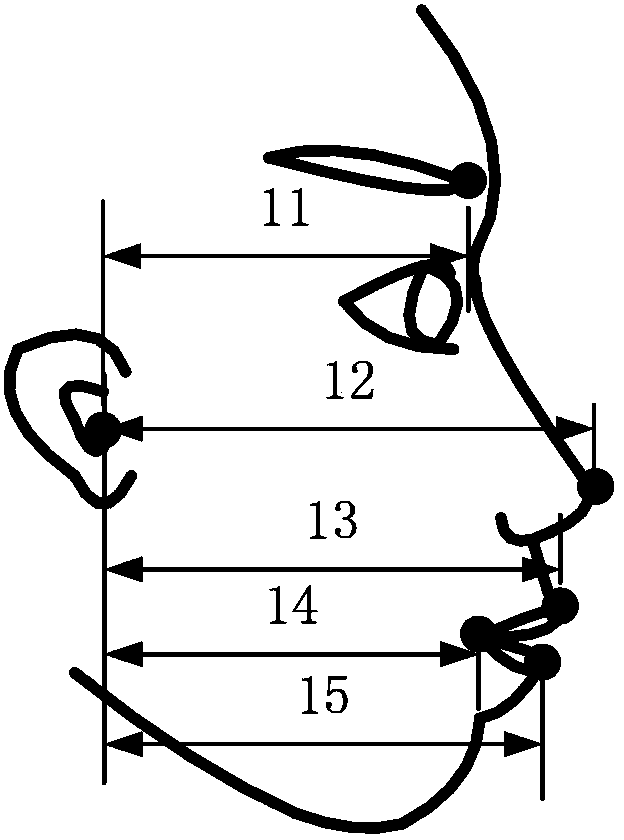

Voice-vision fusion emotion recognition method based on hint nerve networks

Inactive | CN103400145A | Improved feature selection; Good effect; Biological neural network models; Character and pattern recognition; NODAL; Vision convergence

The invention provides a voice-vision fusion emotion recognition method based on hint neural networks, in the field of automatic emotion recognition. The basic idea is as follows: first, the feature data of three channels, namely frontal facial expression, profile facial expression, and voice, are each used to independently train a neural network that recognizes discrete emotion types; during training, four hint nodes are added to the output layer of each neural network model, carrying hint information for four coarse-grained categories in the activation-evaluation space; then the output results of the three neural networks are fused by a multi-modal fusion model, which also uses a neural network trained with hint information. With the help of the hint information, the learning of the neural network weights produces better feature selection. The method has low computational cost, a high recognition rate, and strong robustness, and is especially effective when training data are scarce.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
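
The hint-node idea above can be illustrated by how a training target might be built: the usual one-hot emotion target is extended with four extra units, one per coarse (activation, evaluation) quadrant. The emotion set and its quadrant assignment are hypothetical, and reading only the emotion units at prediction time is an assumption about how the hint outputs are used.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical discrete emotion set and quadrant assignment.
EMOTIONS = ["angry", "happy", "sad", "calm", "fearful"]
QUADRANT: Dict[str, Tuple[str, str]] = {
    "angry": ("high", "negative"),
    "happy": ("high", "positive"),
    "sad": ("low", "negative"),
    "calm": ("low", "positive"),
    "fearful": ("high", "negative"),
}
# The four coarse (activation, evaluation) categories carried by the hint nodes.
HINTS = [("high", "positive"), ("high", "negative"), ("low", "positive"), ("low", "negative")]


def training_target(emotion: str) -> List[float]:
    """One-hot emotion target followed by a one-hot target for the four hint nodes."""
    target = [0.0] * (len(EMOTIONS) + len(HINTS))
    target[EMOTIONS.index(emotion)] = 1.0
    target[len(EMOTIONS) + HINTS.index(QUADRANT[emotion])] = 1.0
    return target


def predict_emotion(output: Sequence[float]) -> str:
    """Read only the emotion units; the hint units serve as extra training targets."""
    best = max(range(len(EMOTIONS)), key=lambda i: output[i])
    return EMOTIONS[best]
```

Emotions that share a quadrant, such as "angry" and "fearful" here, share a hint target, which gives the network a coarse grouping signal alongside the fine-grained labels.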

Multimodal fusion decision logic system using copula model

The present invention includes a method of deciding whether a data set is acceptable for making a decision. A first probability partition array and a second probability partition array may be provided. One or both of the probability partition arrays may be a Copula model. A no-match zone may be established and used to calculate a false-acceptance-rate ("FAR") and/or a false-rejection-rate ("FRR") for the data set. The FAR and/or the FRR may be compared to desired rates. Based on the comparison, the data set may be either accepted or rejected. The invention may also be embodied as a computer readable memory device for executing the methods.

Owner:QUALCOMM INC

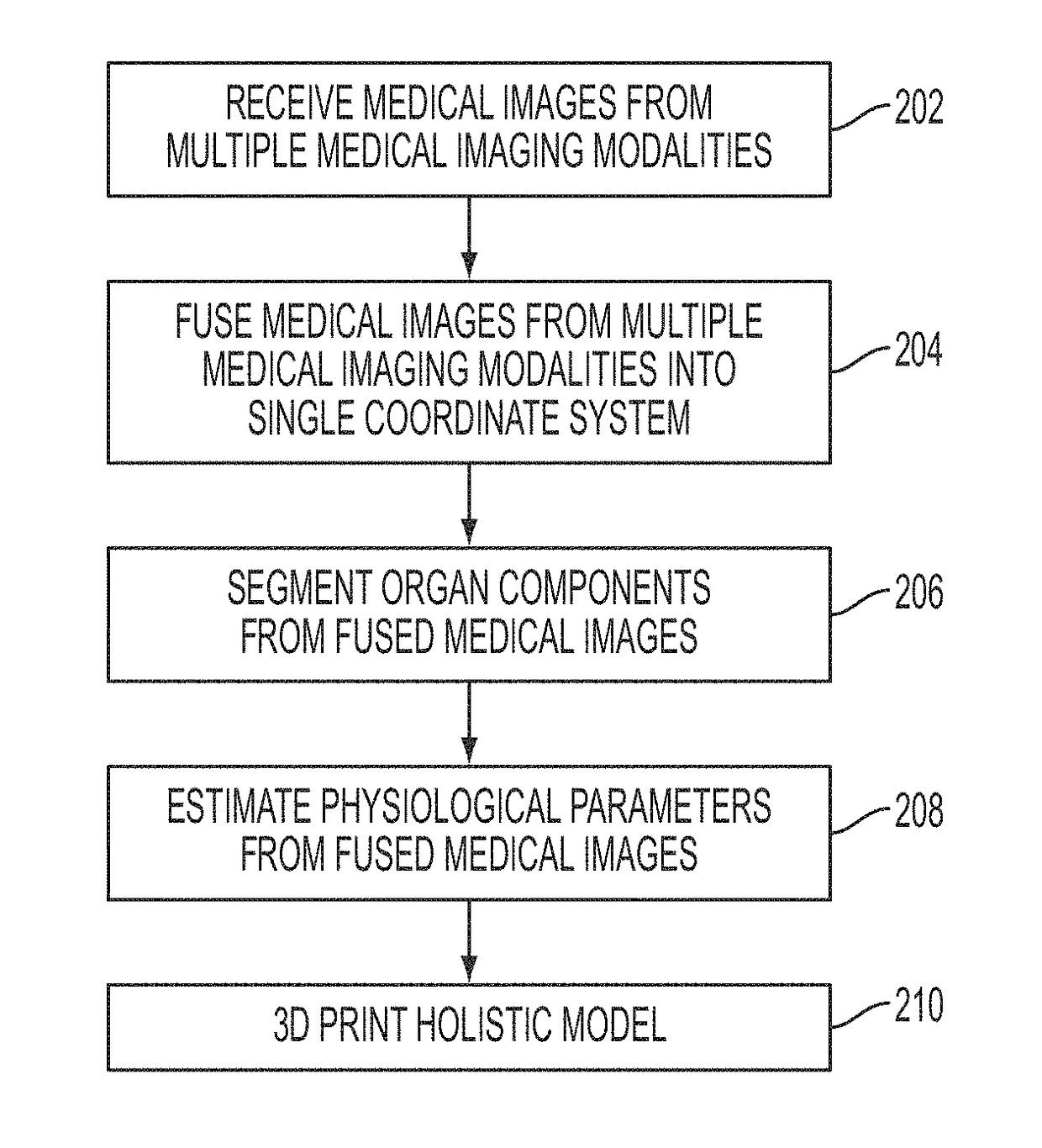

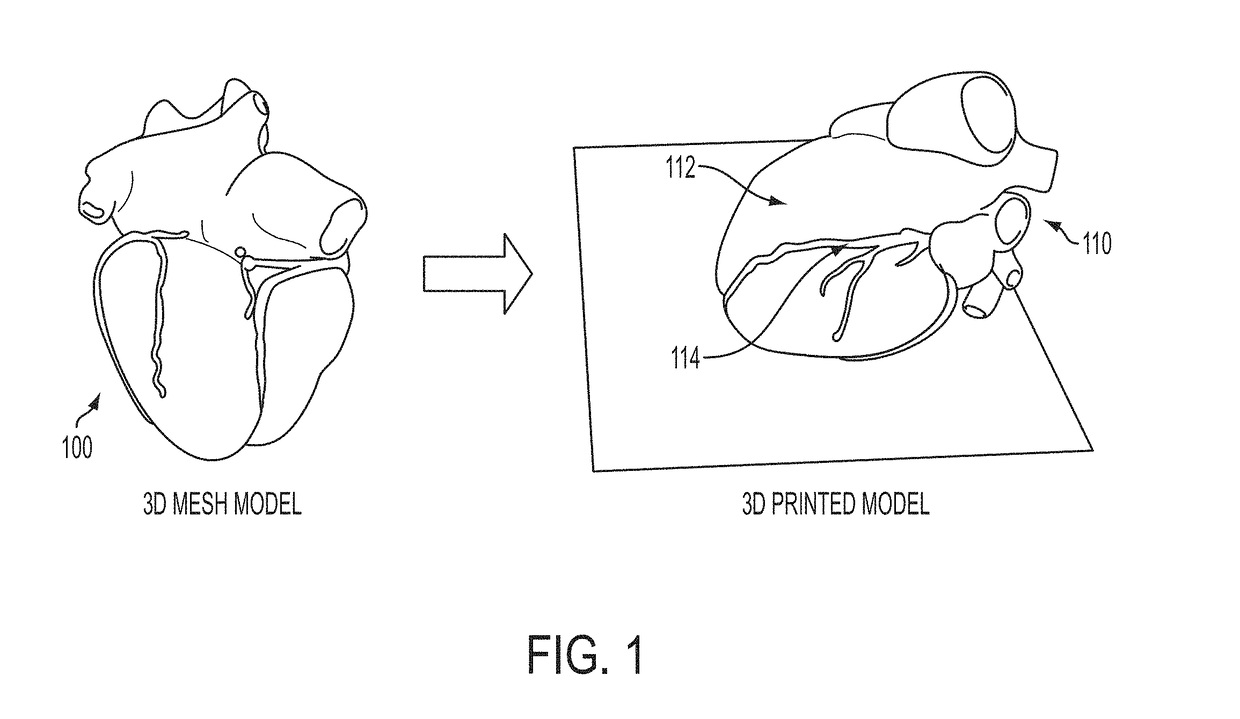

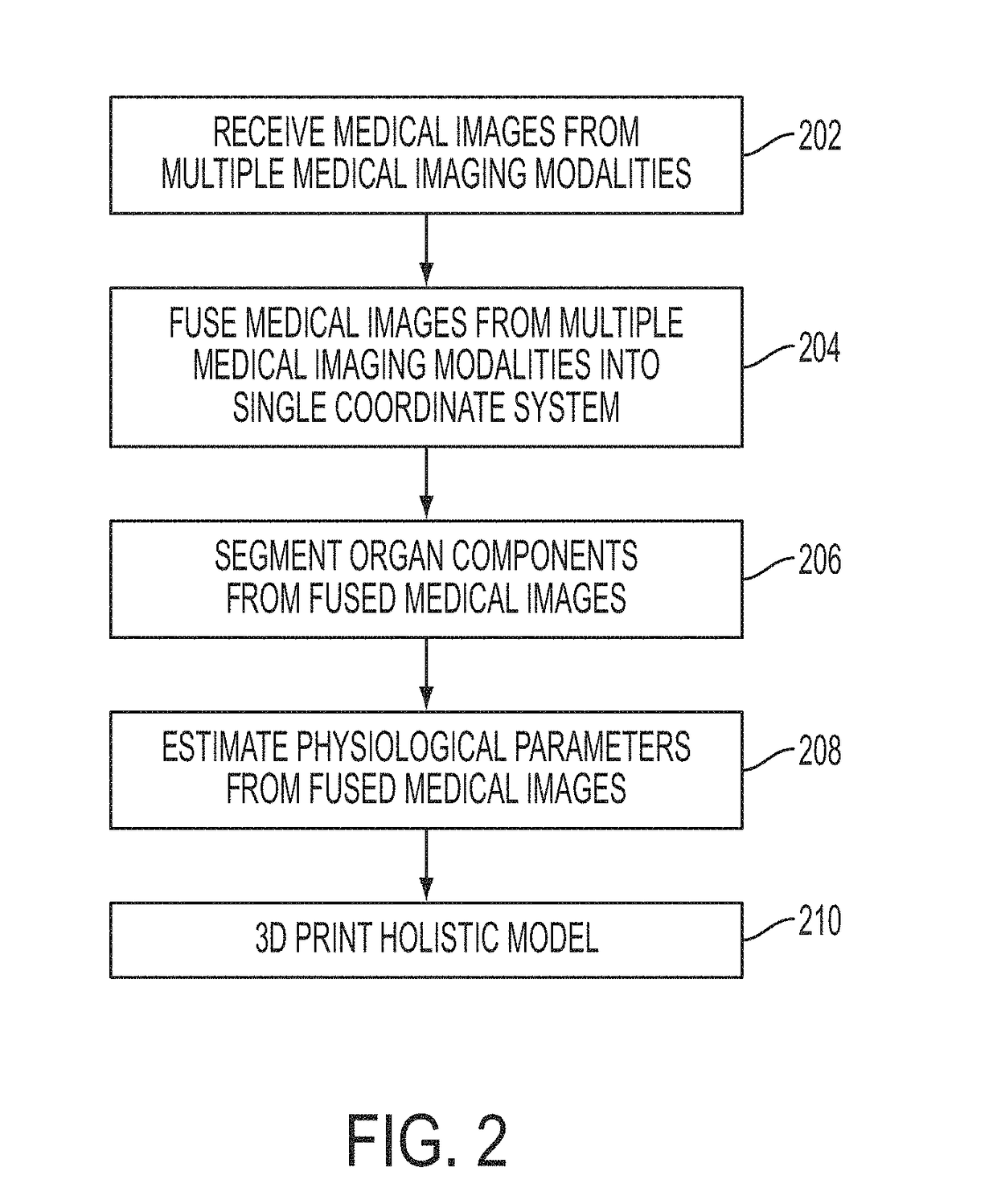

Multi-Modality Image Fusion for 3D Printing of Organ Morphology and Physiology

A system and method for multi-modality fusion for 3D printing of a patient-specific organ model is disclosed. A plurality of medical images of a patient's target organ from different medical imaging modalities are fused. A holistic mesh model of the target organ is generated by segmenting the target organ in the fused medical images. One or more spatially varying physiological parameters are estimated from the fused medical images and mapped to the holistic mesh model of the target organ. The holistic mesh model is then 3D printed, including a representation of the estimated spatially varying physiological parameters mapped to it. These parameters can be represented in the 3D-printed model using a spatially varying material property (e.g., stiffness), spatially varying material colors, and/or spatially varying material texture.

Owner:SIEMENS HEALTHCARE GMBH

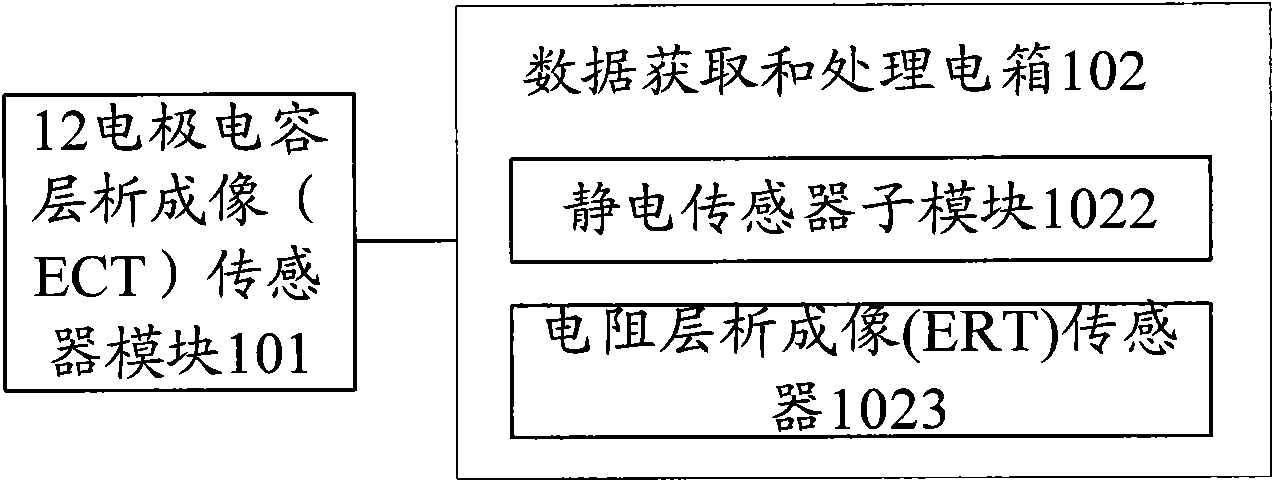

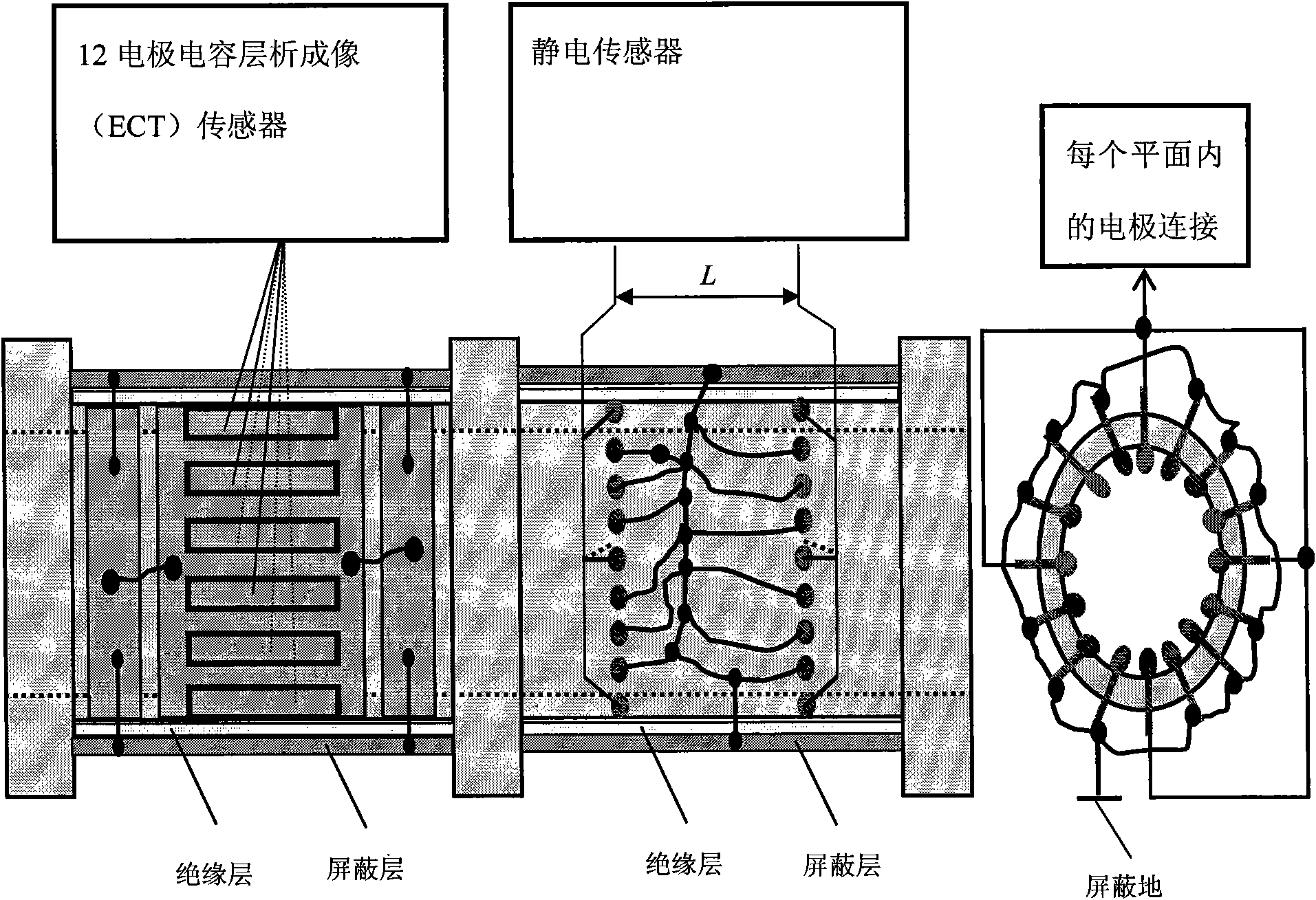

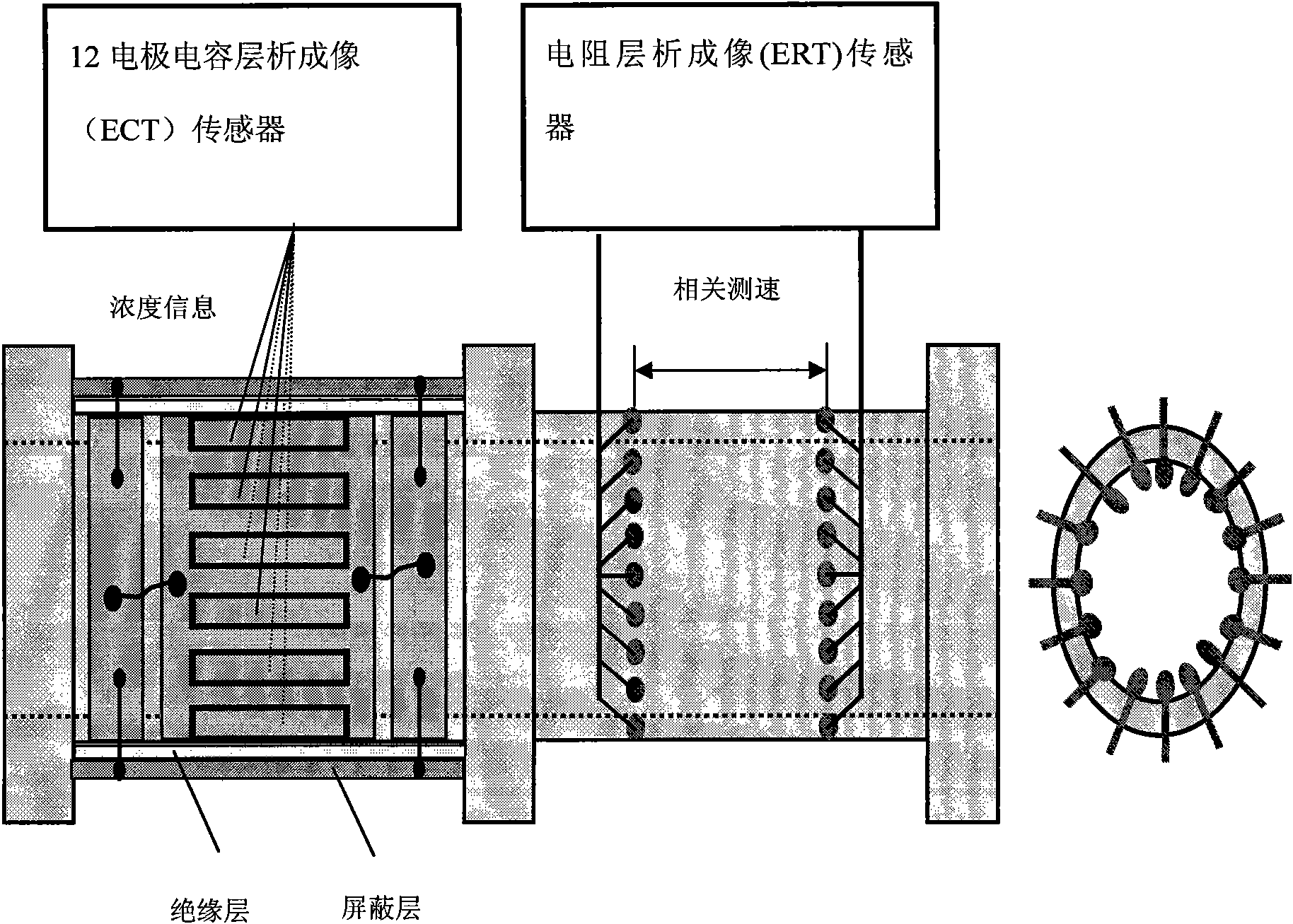

Novel multi-mode adaptive sensor system

Inactive | CN101975801A | Easy to measure; Resistance/reactance/impedance; Fluid speed measurement; Engineering; Self adaptive

The invention provides a novel multi-mode adaptive sensor system that can be used in gas/solid two-phase flow and gas/liquid two-phase flow environments. For different measurement environments, measurement is achieved simply by connecting the cables and selecting the corresponding mode on the multi-mode fusion sensor. This makes it convenient to integrate the imaging sensor system into a unified whole and to take measurements under different working conditions.

Owner:BEIJING JIAOTONG UNIV

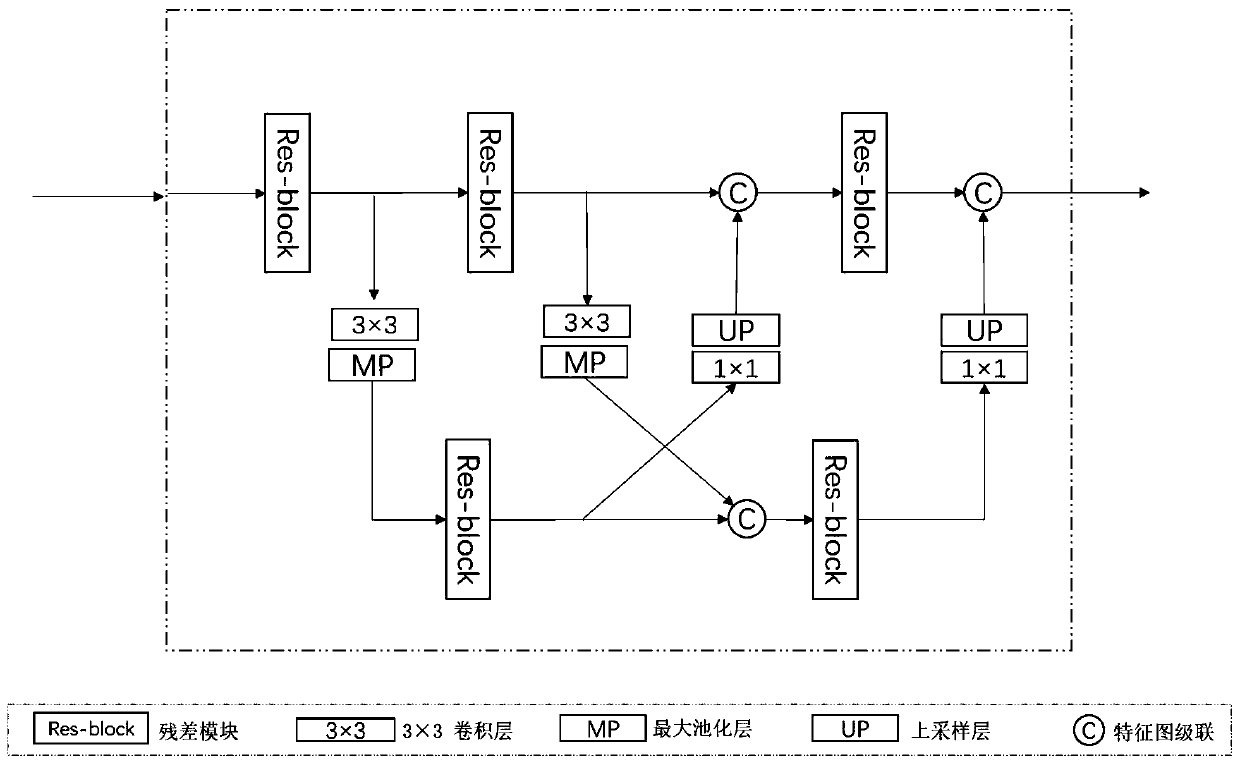

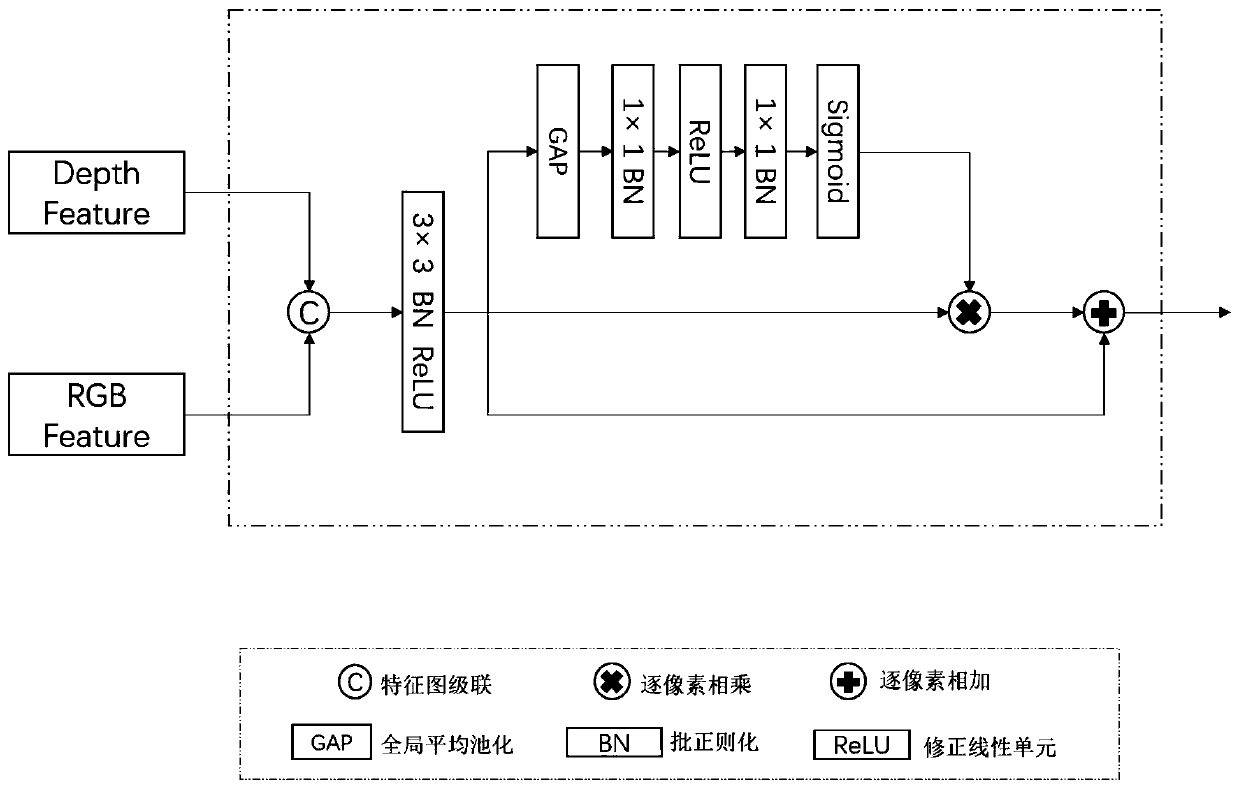

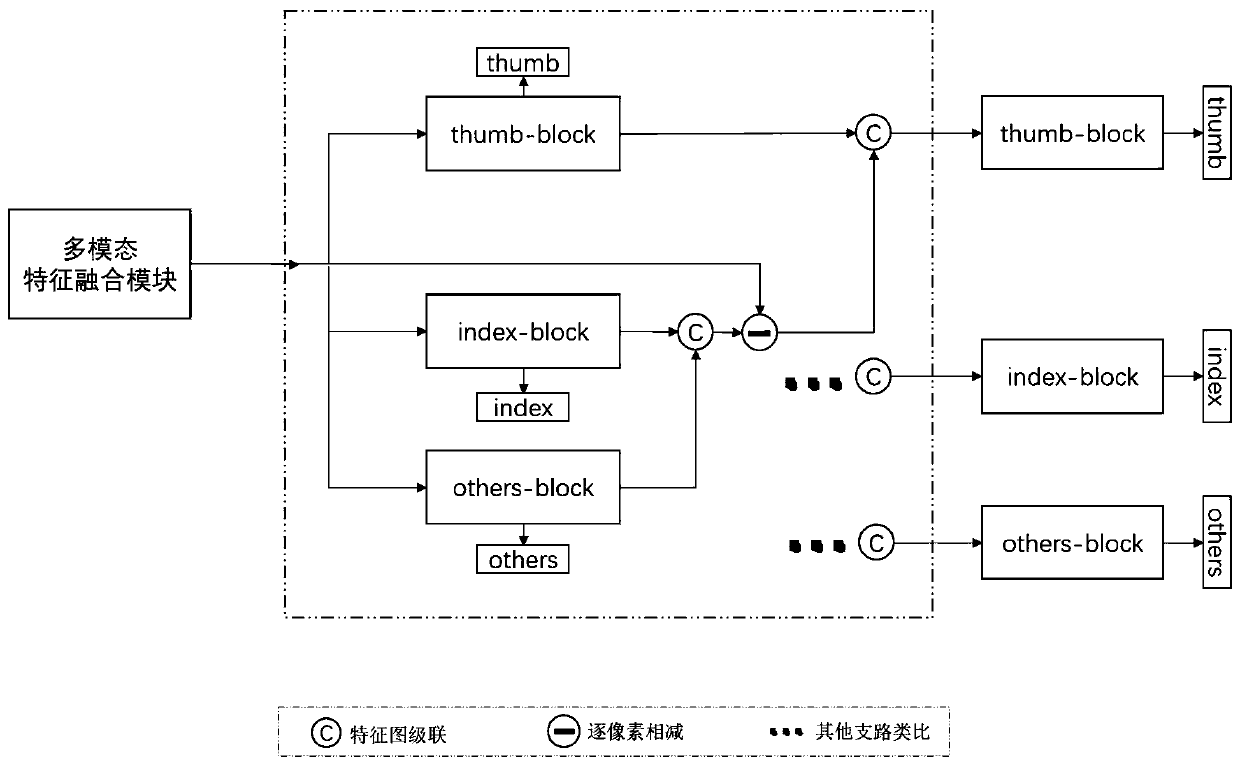

Hand posture estimation system and method based on RGBD fusion network

Active | CN110175566A | Improve accuracy; Improve applicability; Character and pattern recognition; Neural architectures; Imaging processing; Interference elimination

The invention provides a hand posture estimation system and method based on an RGBD fusion network. The system comprises a global depth feature extraction module, a residual module, a multi-mode feature fusion module, and a branch parallel interference elimination module. The global depth feature extraction module adopts two parallel, cross-fused residual network paths: the upper path carries a high-resolution feature map and the lower path a low-resolution feature map; multi-scale feature fusion is performed by cross-fusing the multi-resolution information, and the network output is finally predicted on the high-resolution feature map. The input part of the system is divided into a depth image processing branch and an RGB color image processing branch; the features extracted by the two branches undergo multi-mode fusion to form global features, which are sent to the branch parallel interference elimination module for feature extraction of the hand branches, and the resulting reinforced hand-branch features are used for the final joint position prediction. The method achieves more accurate hand posture estimation mainly by combining the information in the color and depth images.

Owner:DALIAN UNIV OF TECH

Video semantic representation method and system based on multi-mode fusion mechanism and medium

Active | CN109472232A | Tags: Consistent data representation dimensions, Improve performance, Character and pattern recognition, Neural architectures, Fusion mechanism, Semantic representation

The invention discloses a video semantic representation method, system and medium based on a multi-mode fusion mechanism. In the feature extraction step, visual, voice, motion, text and domain features are extracted from a video. In the feature fusion step, these features are fused by a constructed multi-level latent Dirichlet allocation (LDA) topic model. In the feature mapping step, the fused features are mapped to a high-level semantic space to obtain a fused feature representation sequence. The model exploits the particular strengths of topic models in semantic analysis, and the video representations obtained by training it are well separated in the semantic space.
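
The patent's fusion step uses a multi-level topic model. As a simplified, single-level stand-in, the sketch below runs standard collapsed Gibbs sampling for LDA over "documents" in which each video is a bag of quantized feature tokens from every modality. The token scheme (for example `visual:v3` or `text:goal`) and the function name `lda_gibbs` are assumptions for illustration. The per-video topic proportions form a vector of fixed length whatever the mix of modalities, which is one way to obtain consistent representation dimensions.

```python
import random
from typing import List


def lda_gibbs(docs: List[List[str]], num_topics: int, alpha: float = 0.1,
              beta: float = 0.01, iters: int = 200, seed: int = 0) -> List[List[float]]:
    """Collapsed Gibbs sampling for single-level LDA.

    Returns one smoothed topic distribution (length num_topics) per document.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    doc_topic = [[0] * num_topics for _ in docs]        # n(d, k)
    topic_word = [[0] * V for _ in range(num_topics)]   # n(k, w)
    topic_total = [0] * num_topics                      # n(k)
    z: List[List[int]] = []

    # Random initial topic for every token.
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(num_topics)
            z[d].append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1

    topics = range(num_topics)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], z[d][i]
                # Take the token out of the counts before resampling it.
                doc_topic[d][k] -= 1
                topic_word[k][v] -= 1
                topic_total[k] -= 1
                # Full conditional p(z_i = t | all other assignments), unnormalized.
                weights = [(doc_topic[d][t] + alpha) * (topic_word[t][v] + beta)
                           / (topic_total[t] + V * beta) for t in topics]
                k = rng.choices(topics, weights=weights)[0]
                z[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][v] += 1
                topic_total[k] += 1

    # Smoothed topic proportions: the fused, fixed-length video representation.
    return [[(doc_topic[d][t] + alpha) / (len(doc) + num_topics * alpha) for t in topics]
            for d, doc in enumerate(docs)]
```

Usage, with hypothetical tokens: `lda_gibbs([["visual:v1", "audio:a2", "text:goal", "motion:fast"], ["visual:v7", "text:recipe", "motion:slow"]], num_topics=3)` returns two length-3 vectors that each sum to 1, regardless of how many tokens each modality contributed.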

Owner:苏州吴韵笔墨教育科技有限公司

Multimodal fusion decision logic system

Inactive | US20060204049A1 | Tags: Electric signal transmission systems, Multiple keys/algorithms usage, Data set, Computer science

The present invention includes a method of deciding whether a data set is acceptable for making a decision. A first probability partition array and a second probability partition array may be provided. A no-match zone may be established and used to calculate a false-acceptance rate (“FAR”) and/or a false-rejection rate (“FRR”) for the data set. The FAR and/or the FRR may be compared to desired rates, and, based on the comparison, the data set may be either accepted or rejected. The invention may also be embodied as a computer-readable memory device for executing the methods.
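
One reading of this abstract, sketched under stated assumptions: the first array holds genuine-match probabilities and the second holds impostor probabilities over the same grid of score cells (for example, one axis per biometric matcher), and the no-match zone is a set of cells. FAR is then the impostor probability mass outside the zone (wrongly accepted) and FRR the genuine mass inside it (wrongly rejected). The helper names, the likelihood-ratio rule used to build the zone, and the choice to require both rates to pass are hypothetical, not the patented procedure.

```python
from typing import List, Set, Tuple

Grid = List[List[float]]   # probability mass per (score_a, score_b) cell
Cell = Tuple[int, int]


def likelihood_no_match_zone(genuine: Grid, impostor: Grid) -> Set[Cell]:
    """Hypothetical rule: a cell is 'no match' when impostors are more likely than genuine users."""
    return {(i, j)
            for i, row in enumerate(genuine)
            for j, p_gen in enumerate(row)
            if p_gen < impostor[i][j]}


def far_frr(genuine: Grid, impostor: Grid, zone: Set[Cell]) -> Tuple[float, float]:
    """FAR: impostor mass outside the zone; FRR: genuine mass inside the zone."""
    far = sum(p for i, row in enumerate(impostor)
              for j, p in enumerate(row) if (i, j) not in zone)
    frr = sum(p for i, row in enumerate(genuine)
              for j, p in enumerate(row) if (i, j) in zone)
    return far, frr


def accept_data_set(genuine: Grid, impostor: Grid, zone: Set[Cell],
                    desired_far: float, desired_frr: float) -> bool:
    """Accept the data set only if both error rates are within the desired limits."""
    far, frr = far_frr(genuine, impostor, zone)
    return far <= desired_far and frr <= desired_frr
```

With `genuine = [[0.05, 0.15], [0.10, 0.70]]` and `impostor = [[0.60, 0.20], [0.15, 0.05]]`, the zone is every cell except `(1, 1)`, giving FAR = 0.05 and FRR ≈ 0.30; the data set passes a 0.35 FRR target but fails a 0.2 one.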

Owner:QUALCOMM INC

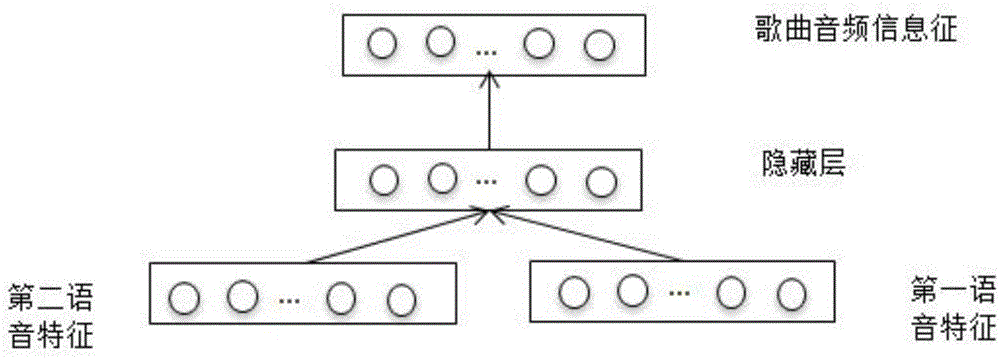

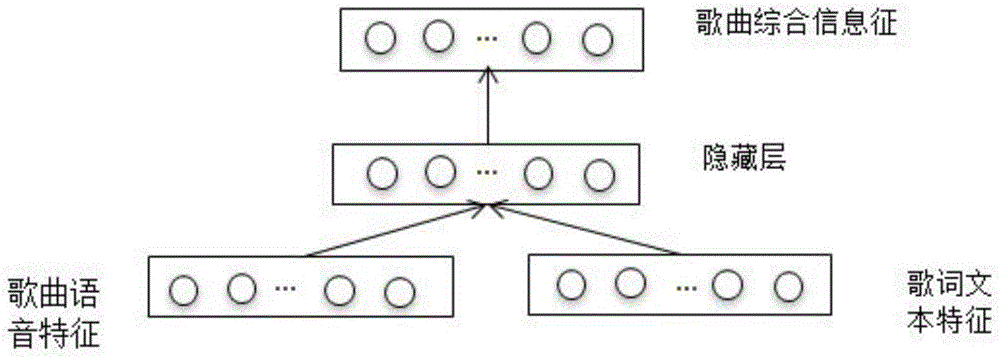

Recognition method for multi-mode fused song emotion based on deep learning

Active | CN106228977A | Tags: Good emotional differentiation, Solving order of magnitude, Speech recognition, Feature extraction, Data information

The invention discloses a song emotion recognition method based on deep learning and multi-mode fusion. The method comprises the following steps: 1) acquiring song lyric text data and audio voice data; 2) extracting text features from the lyric content to obtain lyric text information features; 3) performing a first fusion of a first voice feature and a second voice feature of the song's voice data to obtain song voice information features; 4) performing a second fusion of the lyric text information features with the song voice information features to obtain comprehensive information features of the song; 5) training a deep classifier on the comprehensive information features to obtain a song emotion recognition model, which performs multi-mode-fusion emotion recognition for songs. By combining both the lyric text information and the voice information of a song, the method improves the accuracy of judging a song's emotional state in human-machine interaction.
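
The two-stage fusion in steps 3) and 4) can be sketched as follows. Concatenation is assumed as the fusion operator, and a nearest-centroid classifier stands in for the deep classifier of step 5) only to keep the example short and dependency-free; the function names and feature values are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]


def fuse_voice(first_voice: Vector, second_voice: Vector) -> Vector:
    """First fusion (step 3): combine two voice feature sets into song voice features."""
    return first_voice + second_voice


def fuse_song(lyric: Vector, first_voice: Vector, second_voice: Vector) -> Vector:
    """Second fusion (step 4): combine lyric features with the fused voice features."""
    return lyric + fuse_voice(first_voice, second_voice)


class NearestCentroid:
    """Stand-in classifier: predicts the label whose mean training vector is closest."""

    def fit(self, X: Sequence[Vector], y: Sequence[str]) -> "NearestCentroid":
        sums: Dict[str, Vector] = {}
        counts: Dict[str, int] = {}
        for x, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(x))
            for i, v in enumerate(x):
                acc[i] += v
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {lab: [v / counts[lab] for v in acc] for lab, acc in sums.items()}
        return self

    def predict(self, x: Vector) -> str:
        # Euclidean distance to each class centroid; smallest wins.
        return min(self.centroids, key=lambda lab: math.dist(x, self.centroids[lab]))
```

Swapping `NearestCentroid` for a trained neural network would not change the fusion functions: the classifier only ever sees the single comprehensive vector produced by `fuse_song`.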

Owner:青岛类认知人工智能有限公司

Multi-mode molecular tomography system

Inactive | CN101984928A | Tags: Does not involve spatial registration issues, Consistent geometric coordinates, Surgery, Computerised tomographs, Soft x ray, Optical tomography

The invention relates to a multi-mode molecular tomography system comprising: one or more light sources (an X-ray source, a near-infrared laser light source and/or a finite-spectral-width light source) that project scanning light onto the object to be scanned; an electric loading device; an imaging device; and a control and processing device. The imaging device obtains the intensity distribution of the X-rays, visible light or near-infrared light emerging from the surface of the scanned object and passes this data to the control and processing device. The control and processing device controls the movement of the object through the electric loading device and includes a tomography module. The tomography module receives the data from the imaging device, uses the XCT (X-ray computed tomography) and DOT (diffuse optical tomography) modes to reconstruct the outer boundary of the object and similar information for all internal tissues, and then fuses and reconstructs XCT, DOT, FMT (fluorescence molecular tomography) and BLT (bioluminescence tomography) data, outputting single-mode or fused multi-mode tomographic images. The system is applicable to X-ray and optical biomedical imaging.

Owner:PEKING UNIV

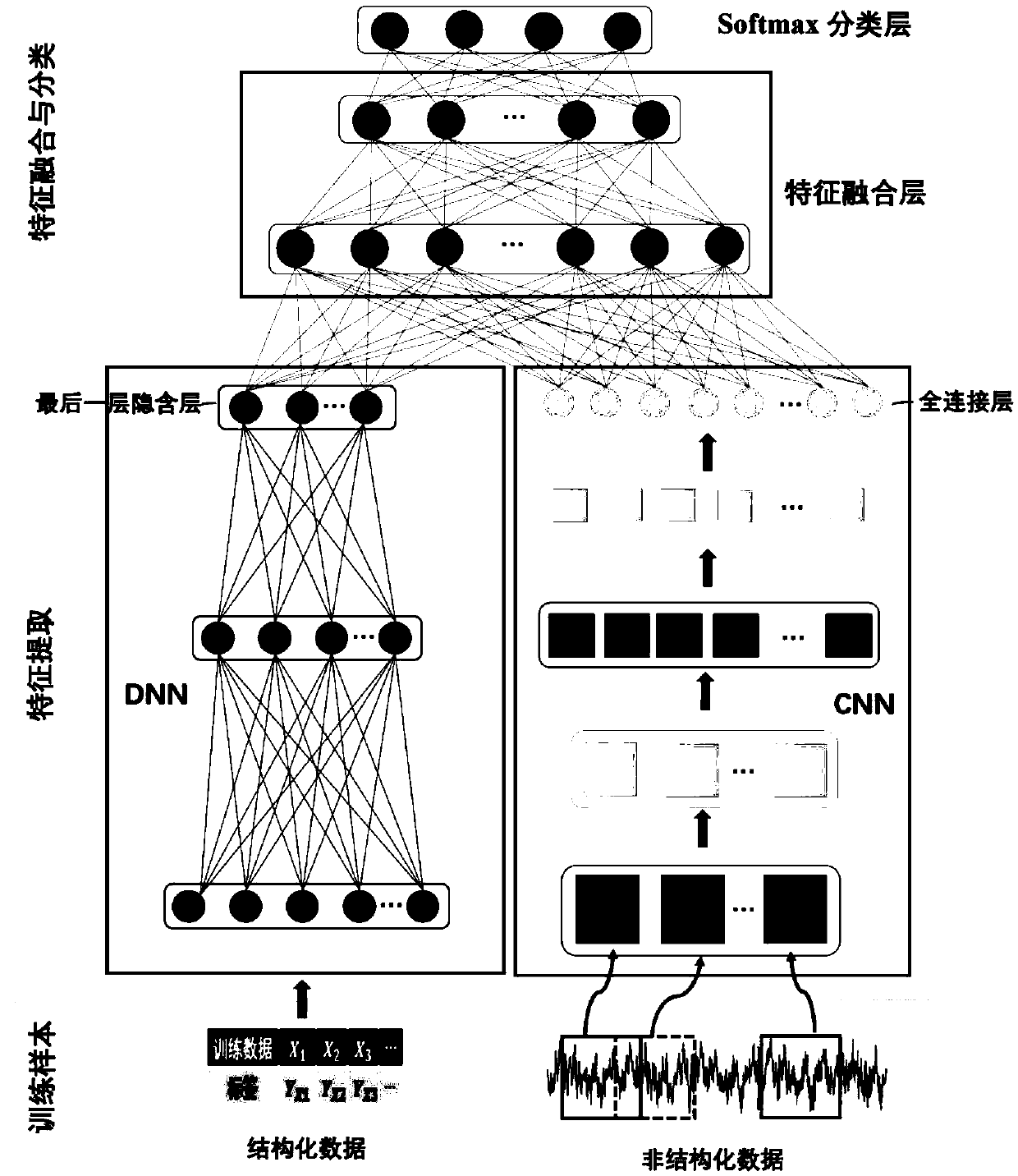

Intelligent fault diagnosis method based on multi-mode fusion deep learning

Active | CN108614548A | Tags: Achieve forecast, Implement diagnostics, Programme control, Electric testing/monitoring, Industrial equipment, Unstructured data

The invention belongs to the technical field of industrial equipment fault diagnosis and discloses an intelligent fault diagnosis method based on multi-mode fusion deep learning. Fault features implicit in structured data and in unstructured data are extracted separately, the extracted fault features are fused, and a softmax classifier performs fault classification to predict and diagnose the health state of industrial equipment. The method carries out fault feature extraction, feature fusion and fault classification on multi-mode heterogeneous data from different sensors, which reduces diagnosis costs and makes the method broadly applicable. It can be extended to fault diagnosis of a wide range of industrial devices.
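
A minimal sketch of the extract, fuse and classify pipeline. It assumes, for illustration only, that the structured data is a vibration signal summarized by RMS and peak amplitude, that the unstructured data is a maintenance log summarized by keyword counts, and that fusion is concatenation. The softmax classifier is multinomial logistic regression trained with plain stochastic gradient descent on the cross-entropy loss; the patent's deep feature extractors are not reproduced, and all names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def structured_features(signal: Sequence[float]) -> Vector:
    """Hypothetical structured features: RMS and peak amplitude of a vibration signal."""
    rms = math.sqrt(sum(x * x for x in signal) / len(signal))
    return [rms, max(abs(x) for x in signal)]


def unstructured_features(log_text: str, keywords: Sequence[str]) -> Vector:
    """Hypothetical unstructured features: keyword counts in a free-text maintenance log."""
    words = log_text.lower().split()
    return [float(words.count(k)) for k in keywords]


def fuse(a: Vector, b: Vector) -> Vector:
    """Feature-level fusion by concatenation."""
    return a + b


def softmax(z: Sequence[float]) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class SoftmaxClassifier:
    """Multinomial logistic regression trained by SGD on the cross-entropy loss."""

    def __init__(self, n_features: int, n_classes: int) -> None:
        self.W = [[0.0] * n_features for _ in range(n_classes)]
        self.b = [0.0] * n_classes

    def probs(self, x: Vector) -> Vector:
        logits = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.W, self.b)]
        return softmax(logits)

    def fit(self, X: Sequence[Vector], y: Sequence[int],
            lr: float = 0.1, epochs: int = 200) -> "SoftmaxClassifier":
        for _ in range(epochs):
            for x, label in zip(X, y):
                p = self.probs(x)
                for k in range(len(self.W)):
                    grad = p[k] - (1.0 if k == label else 0.0)  # d(loss)/d(logit_k)
                    for j, xj in enumerate(x):
                        self.W[k][j] -= lr * grad * xj
                    self.b[k] -= lr * grad
        return self

    def predict(self, x: Vector) -> int:
        p = self.probs(x)
        return max(range(len(p)), key=p.__getitem__)
```

Here class 0 could stand for "healthy" and class 1 for "faulty"; a real system would use many more classes and learned features, but the fused vector is consumed by the softmax layer in the same way.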

Owner:BEIJING INSTITUTE OF TECHNOLOGY

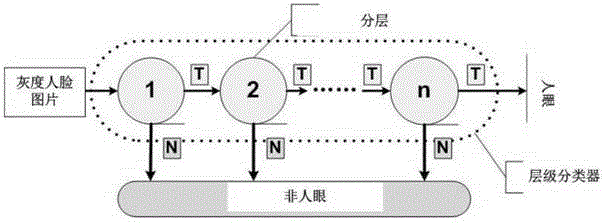

Multi-modal face recognition device and method based on multi-layer fusion of gray level and depth information

Inactive | CN104598878A | Tags: Improve performance, Character and pattern recognition, Pattern recognition, Gray level

The invention discloses a multi-modal face recognition device and method based on multi-layer fusion of gray-level and depth information. The method mainly comprises recognizing the gray-level information of a face, recognizing the depth information of the face, normalizing the resulting gray-level and depth matching scores, and then applying fusion strategies to the normalized scores to obtain multi-modal fused matching scores for face recognition. The multi-modal system collects two-dimensional gray-level information and three-dimensional depth information and exploits the advantages of both; through the fusion strategies it overcomes inherent shortcomings of single-modal systems, such as the sensitivity of gray-level images to illumination and of depth images to facial expression, thereby substantially improving performance and achieving accurate and fast face recognition.
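
A minimal sketch of score-level fusion as described above. It assumes that both matchers return similarity scores (higher means more similar) against the same gallery, that normalization is min-max scaling, and that the "fusion strategies" are the common sum, max and product rules. The patent does not specify these choices; the names and the equal weighting are hypothetical.

```python
from typing import List, Sequence, Tuple


def min_max_normalize(scores: Sequence[float]) -> List[float]:
    """Map scores linearly onto [0, 1]; a constant score list maps to all zeros."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0] * len(scores)
    return [(s - lo) / (hi - lo) for s in scores]


def fuse_scores(gray: Sequence[float], depth: Sequence[float],
                rule: str = "sum", w_gray: float = 0.5) -> List[float]:
    """Fuse normalized gray-level and depth similarity scores for the same gallery."""
    g, d = min_max_normalize(gray), min_max_normalize(depth)
    if rule == "sum":
        return [w_gray * a + (1.0 - w_gray) * b for a, b in zip(g, d)]
    if rule == "max":
        return [max(a, b) for a, b in zip(g, d)]
    if rule == "product":
        return [a * b for a, b in zip(g, d)]
    raise ValueError(f"unknown fusion rule: {rule}")


def identify(gallery: Sequence[str], gray: Sequence[float], depth: Sequence[float],
             rule: str = "sum") -> Tuple[str, float]:
    """Return the gallery identity with the highest fused score."""
    fused = fuse_scores(gray, depth, rule)
    best = max(range(len(fused)), key=fused.__getitem__)
    return gallery[best], fused[best]
```

For a gallery `["alice", "bob", "carol"]` with gray-level scores `[0.62, 0.80, 0.55]` and depth scores `[30.0, 12.0, 25.0]`, the gray-level matcher alone would pick `bob`, while the sum rule picks `alice` with a fused score of about 0.64. Normalizing first matters because the two matchers report scores on very different scales.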

Owner:SHENZHEN WEITESHI TECH

© 2025 PatSnap. All rights reserved.