A socio-emotional classification method based on multimodal fusion

A sentiment classification and multimodal technology, applied in text database clustering/classification, semantic analysis, biological neural network models, etc., to improve performance and increase accuracy.

Detailed Description of the Embodiments

[0029] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings.

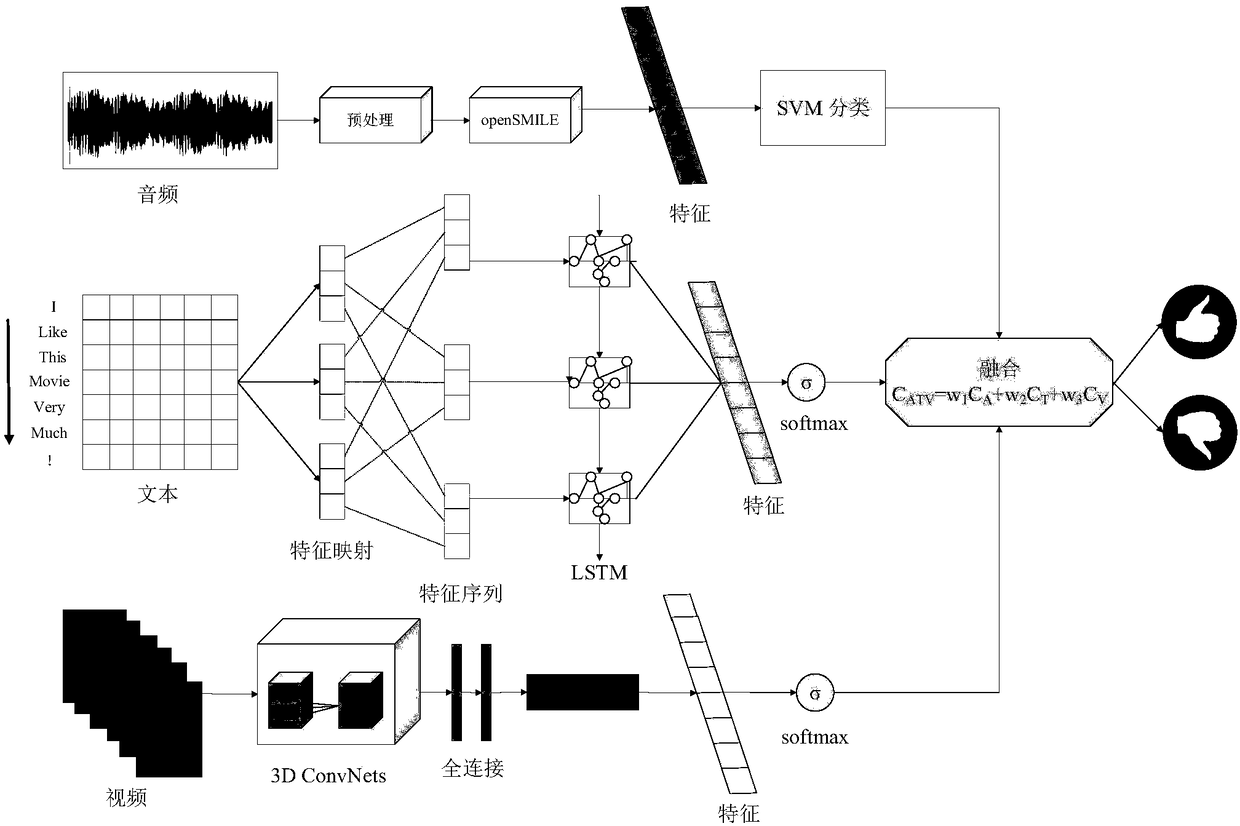

[0030] Figure 1 is the framework diagram of the model of the present invention. It covers feature extraction from audio, visual and text information, followed by decision-level fusion classification.
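The paragraph names decision fusion but does not state the fusion rule. As an illustration only, the sketch below assumes that each modality classifier (text, audio, visual) outputs a probability vector over the same emotion labels and that the vectors are combined by a weighted average, a common late-fusion rule. The function name `decision_fusion`, the weights, and the example scores are hypothetical and are not taken from the patent.

```python
from typing import Dict, List, Optional, Tuple


def decision_fusion(
    probs: Dict[str, List[float]],
    weights: Optional[Dict[str, float]] = None,
) -> Tuple[List[float], int]:
    """Late (decision-level) fusion: weighted average of per-modality class probabilities.

    probs maps a modality name (e.g. "text", "audio", "visual") to that
    classifier's probability vector over a shared, fixed label order.
    Returns the fused probability vector and the index of the winning class.
    """
    modalities = list(probs)
    if not modalities:
        raise ValueError("need at least one modality")
    n_classes = len(probs[modalities[0]])
    if any(len(p) != n_classes for p in probs.values()):
        raise ValueError("all modalities must score the same label set")
    if weights is None:
        weights = {m: 1.0 for m in modalities}  # equal trust in every modality
    total = sum(weights[m] for m in modalities)
    fused = [
        sum(weights[m] * probs[m][k] for m in modalities) / total
        for k in range(n_classes)
    ]
    best = max(range(n_classes), key=lambda k: fused[k])
    return fused, best


# Hypothetical scores over the labels (negative, neutral, positive).
scores = {
    "text": [0.7, 0.2, 0.1],
    "audio": [0.2, 0.5, 0.3],
    "visual": [0.6, 0.3, 0.1],
}
fused, best = decision_fusion(scores)
print(fused, best)
```

Per-modality weights let a more reliable modality dominate the decision; majority voting over each modality's top label is another common late-fusion rule, and this excerpt does not say which rule the invention uses.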

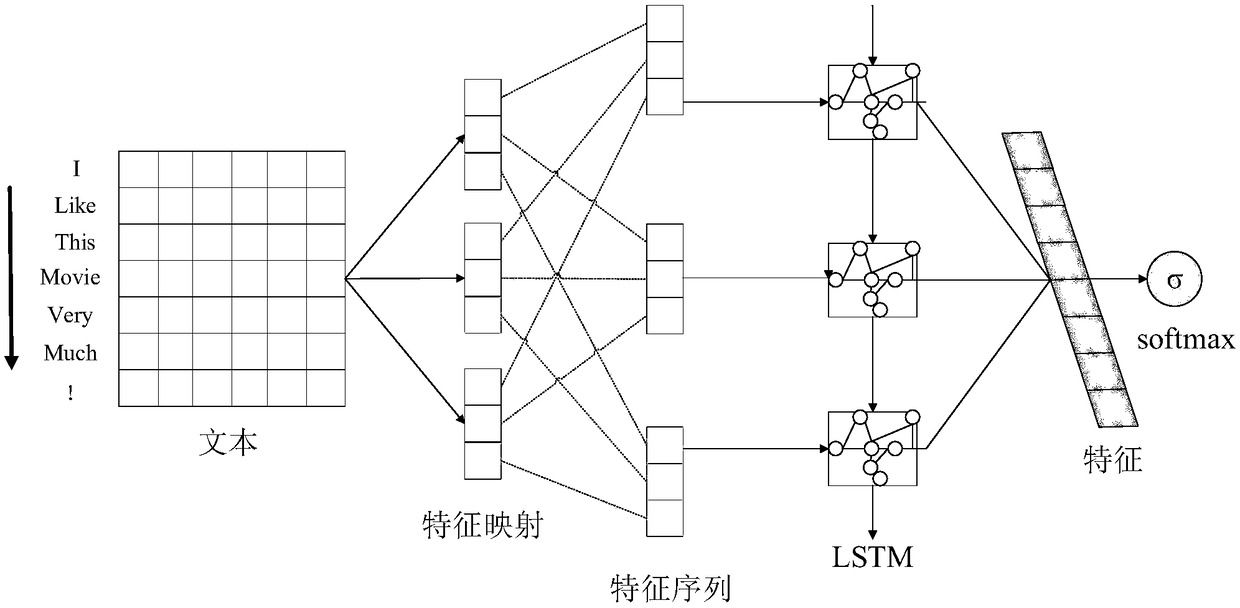

[0031] (1) Text sentiment classification based on a CNN-RNN hybrid model: for text information, a CNN-RNN hybrid model is used to perform text sentiment analysis. The CNN-RNN model consists of two parts: a convolutional neural network (CNN) extracts text features, and a recurrent neural network (RNN) performs emotion prediction.
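As a rough illustration of this two-stage text pipeline, the NumPy sketch below runs a forward pass of a minimal CNN-RNN: token embeddings pass through a 1-D convolution with ReLU, an Elman RNN reads the resulting feature sequence, and its final hidden state feeds a softmax over emotion classes. The layer sizes, the plain tanh RNN (the text does not say which RNN variant is used, e.g. LSTM or GRU), the class `CnnRnnTextClassifier`, and the random untrained weights are all assumptions for illustration; a real model would be trained, typically in a deep-learning framework.

```python
import numpy as np


def conv1d_relu(x, kernels, bias):
    """Valid 1-D convolution over the token axis, followed by ReLU.

    x: (seq_len, emb_dim); kernels: (n_filters, width, emb_dim).
    Returns (seq_len - width + 1, n_filters) local n-gram features.
    """
    n_filters, width, _ = kernels.shape
    steps = x.shape[0] - width + 1
    out = np.empty((steps, n_filters))
    for t in range(steps):
        window = x[t:t + width]  # (width, emb_dim)
        out[t] = np.tensordot(kernels, window, axes=([1, 2], [0, 1])) + bias
    return np.maximum(out, 0.0)


def rnn_last_state(seq, W_xh, W_hh, b_h):
    """Elman RNN, h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h); returns the last h_t."""
    h = np.zeros(W_hh.shape[0])
    for x_t in seq:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
    return h


def softmax(z):
    e = np.exp(z - z.max())  # shift for numerical stability
    return e / e.sum()


class CnnRnnTextClassifier:
    """Hypothetical, untrained CNN-RNN used only to show data flow and shapes."""

    def __init__(self, vocab_size, emb_dim=16, n_filters=8, width=3,
                 hidden=12, n_classes=3, seed=0):
        rng = np.random.default_rng(seed)
        self.width = width
        self.embedding = rng.normal(0.0, 0.1, (vocab_size, emb_dim))
        self.kernels = rng.normal(0.0, 0.1, (n_filters, width, emb_dim))
        self.conv_bias = np.zeros(n_filters)
        self.W_xh = rng.normal(0.0, 0.1, (hidden, n_filters))
        self.W_hh = rng.normal(0.0, 0.1, (hidden, hidden))
        self.b_h = np.zeros(hidden)
        self.W_out = rng.normal(0.0, 0.1, (n_classes, hidden))
        self.b_out = np.zeros(n_classes)

    def predict_proba(self, token_ids):
        if len(token_ids) < self.width:
            raise ValueError("sequence shorter than the convolution width")
        x = self.embedding[np.asarray(token_ids)]                     # embedded tokens
        features = conv1d_relu(x, self.kernels, self.conv_bias)       # CNN: local features
        h = rnn_last_state(features, self.W_xh, self.W_hh, self.b_h)  # RNN: sequence summary
        return softmax(self.W_out @ h + self.b_out)                   # emotion probabilities


model = CnnRnnTextClassifier(vocab_size=100)
probs = model.predict_proba([5, 17, 42, 8, 99])  # hypothetical token ids
print(probs)
```

The CNN captures short n-gram patterns, while the recurrent layer accumulates them in order, which is the division of labour the paragraph describes.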

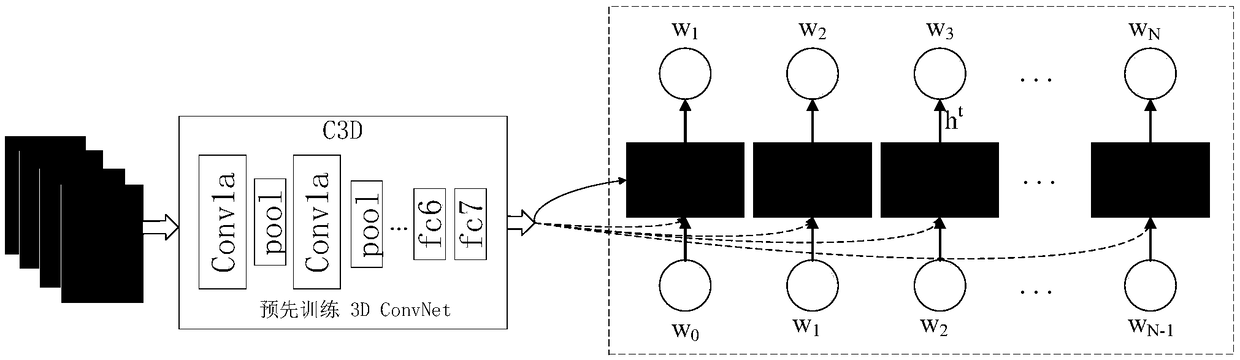

[0032] (2) Visual emotion classification based on the 3DCLS model: 3DCLS (3D CNN-ConvLSTM) consists of two parts: a three-dimensional convolutional neural network extracts spatiotemporal features from the input video, and a convolutional LSTM (Long Short-Term Memory) network further learns long-term spatiotemporal features and performs processing and emotion prediction of the extracte...
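The 3DCLS data flow can likewise be sketched in NumPy. The example below assumes a single-channel (grayscale) clip of shape (frames, height, width): a bank of 3-D kernels produces spatiotemporal feature maps, a ConvLSTM cell (here without the peephole terms of the original ConvLSTM formulation) steps over the remaining time axis using convolutional gates, and the final hidden state is spatially averaged and mapped to emotion probabilities. Kernel sizes, the pooling step, the class name `Sketch3DCLS`, and the random untrained weights are illustrative assumptions, not details from the patent; `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def conv3d_valid(video, kernels):
    """3-D cross-correlation (as in CNNs) of a (T, H, W) clip with (F, kt, kh, kw) kernels.

    Returns (F, T-kt+1, H-kh+1, W-kw+1): each map mixes motion (time) and appearance (space).
    """
    windows = sliding_window_view(video, kernels.shape[1:])  # (T', H', W', kt, kh, kw)
    return np.einsum("thwabc,fabc->fthw", windows, kernels)


def conv2d_same(x, kernels):
    """2-D 'same' cross-correlation: x (C_in, H, W), kernels (C_out, C_in, k, k), k odd."""
    p = kernels.shape[-1] // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding keeps H and W
    windows = sliding_window_view(padded, kernels.shape[-2:], axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwab,ocab->ohw", windows, kernels)


def convlstm_step(x, h, c, W, b):
    """One ConvLSTM step: the LSTM gates use convolutions over [x; h] instead of matmuls."""
    z = conv2d_same(np.concatenate([x, h], axis=0), W) + b[:, None, None]
    i, f, o, g = np.split(z, 4, axis=0)  # input, forget, output gates and candidate
    c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
    h = sigmoid(o) * np.tanh(c)
    return h, c


class Sketch3DCLS:
    """Hypothetical, untrained 3D CNN + ConvLSTM classifier showing the data flow only."""

    def __init__(self, n_filters=4, k3d=(3, 3, 3), hidden=6, k2d=3, n_classes=3, seed=0):
        rng = np.random.default_rng(seed)
        self.hidden = hidden
        self.k3 = rng.normal(0.0, 0.1, (n_filters, *k3d))
        self.W = rng.normal(0.0, 0.1, (4 * hidden, n_filters + hidden, k2d, k2d))
        self.b = np.zeros(4 * hidden)
        self.W_out = rng.normal(0.0, 0.1, (n_classes, hidden))
        self.b_out = np.zeros(n_classes)

    def predict_proba(self, video):
        feats = np.maximum(conv3d_valid(video, self.k3), 0.0)  # 3D CNN + ReLU: (F, T', H', W')
        _, steps, height, width = feats.shape
        h = np.zeros((self.hidden, height, width))
        c = np.zeros_like(h)
        for t in range(steps):  # ConvLSTM over the remaining time steps
            h, c = convlstm_step(feats[:, t], h, c, self.W, self.b)
        pooled = h.mean(axis=(1, 2))  # global average pooling over space
        return softmax(self.W_out @ pooled + self.b_out)


clip = np.random.default_rng(1).random((8, 16, 16))  # synthetic 8-frame 16x16 clip
video_probs = Sketch3DCLS().predict_proba(clip)
print(video_probs)
```

Keeping the hidden state as a feature map rather than a flat vector is what lets the ConvLSTM preserve spatial layout while it models longer-range temporal dependencies.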
