1743 results about "Emotionality" patented technology

Emotionality is the observable behavioral and physiological component of emotion. It is a measure of a person's emotional reactivity to a stimulus. Most of these responses can be observed by other people, while some can be observed only by the person experiencing them. Observable responses to emotion (e.g., smiling) do not have a single meaning: a smile can express happiness or anxiety, a frown can communicate sadness or anger, and so on. Psychology researchers often use emotionality to operationalize emotion in research studies.

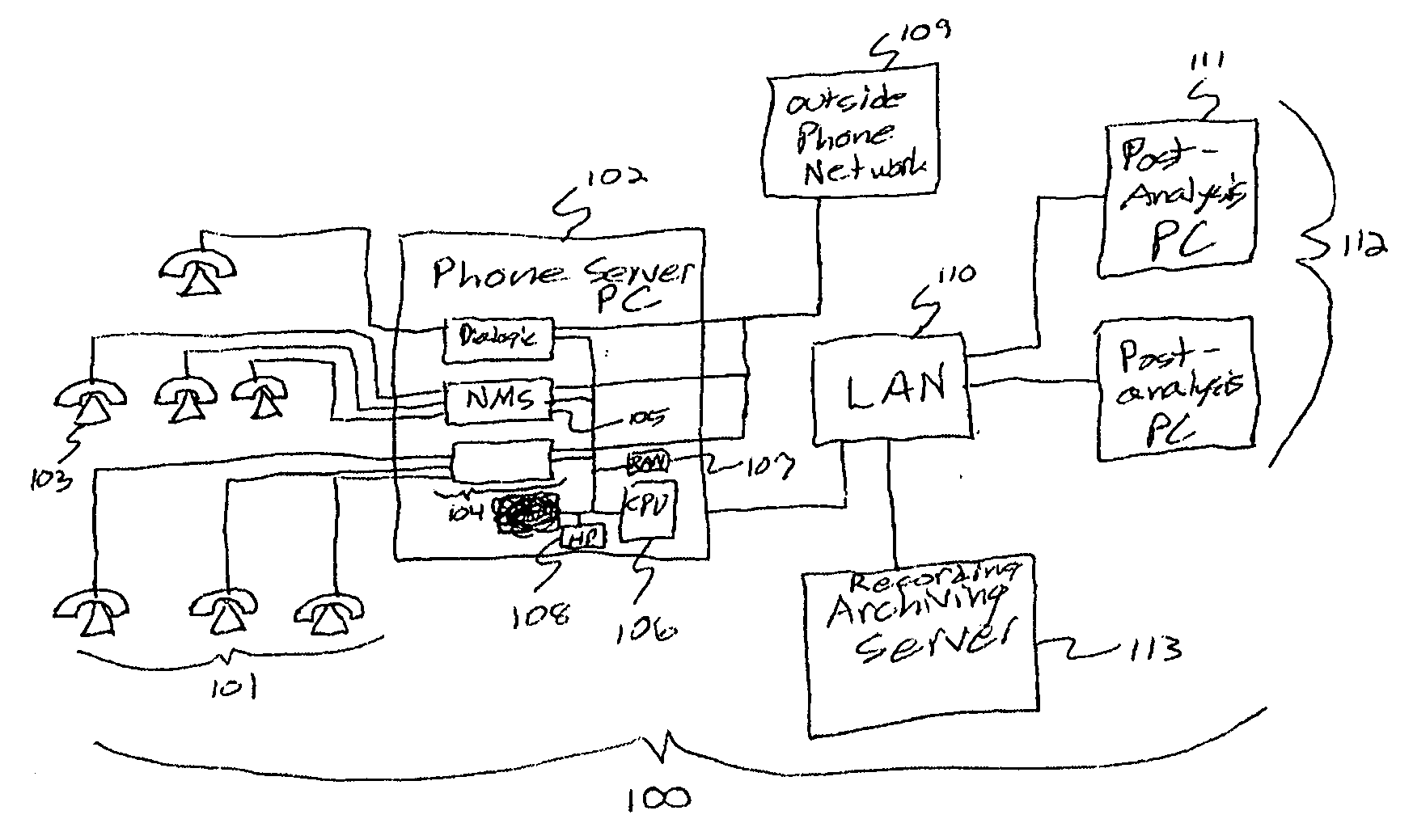

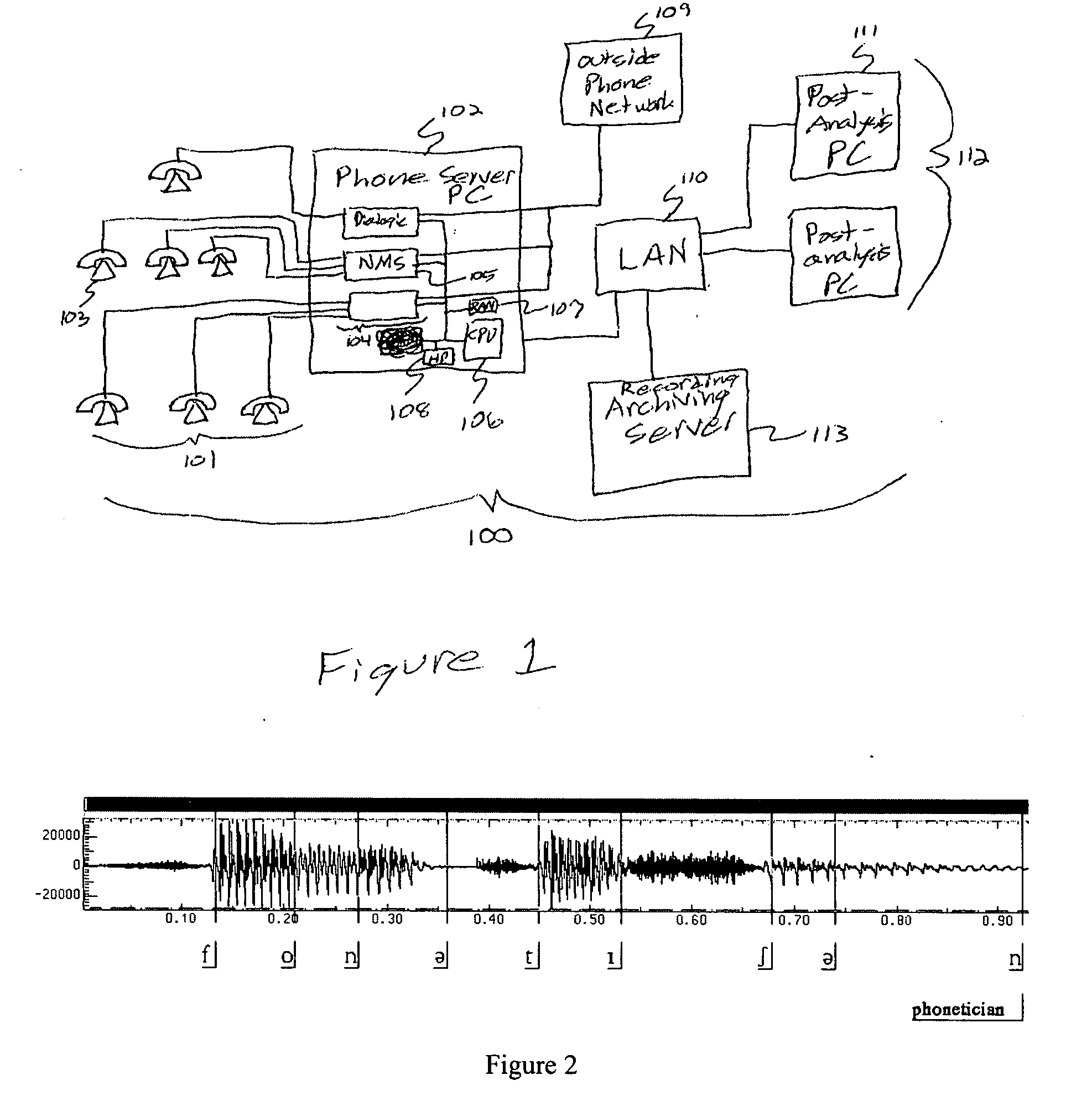

Multi-party conversation analyzer & logger

Active | US20070071206A1 | High precision | Facilitate interruption | Interconnection arrangements | Substation speech amplifiers | Graphics | Survey instrument

A multi-party conversation analyzer and logger uses a variety of techniques including spectrographic voice analysis, absolute loudness measurements, directional microphones, and telephonic directional separation to determine the number of parties who take part in a conversation, and segment the conversation by speaking party. In one aspect, the invention monitors telephone conversations in real time to detect conditions of interest (for instance, calls to non-allowed parties or calls of a prohibited nature from prison inmates). In another aspect, automated prosody measurement algorithms are used in conjunction with speaker segmentation to extract emotional content of the speech of participants within a particular conversation, and speaker interactions and emotions are displayed in graphical form. A conversation database is generated which contains conversation recordings, and derived data such as transcription text, derived emotions, alert conditions, and correctness probabilities associated with derived data. Investigative tools allow flexible queries of the conversation database.

Owner:SECURUS TECH LLC

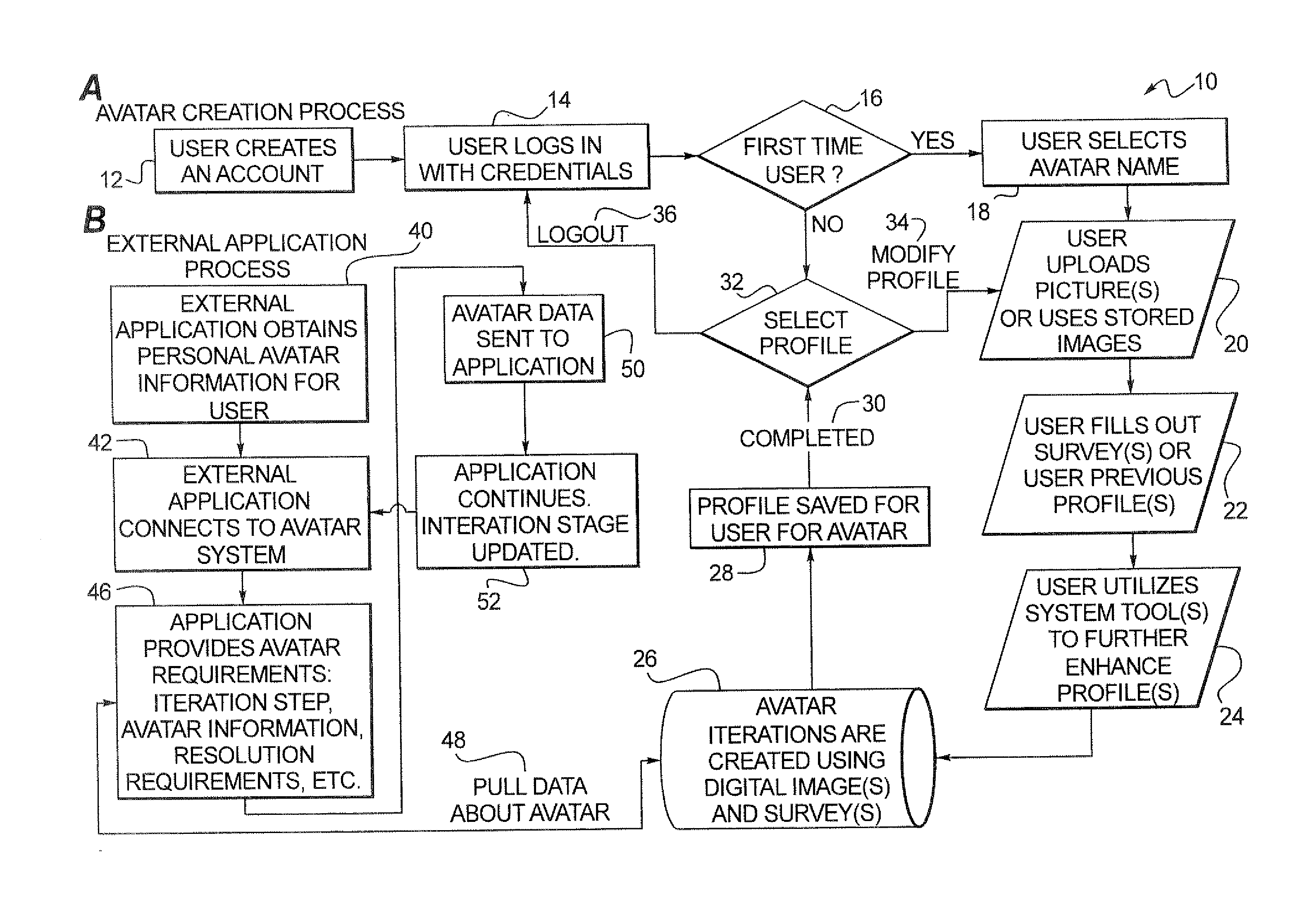

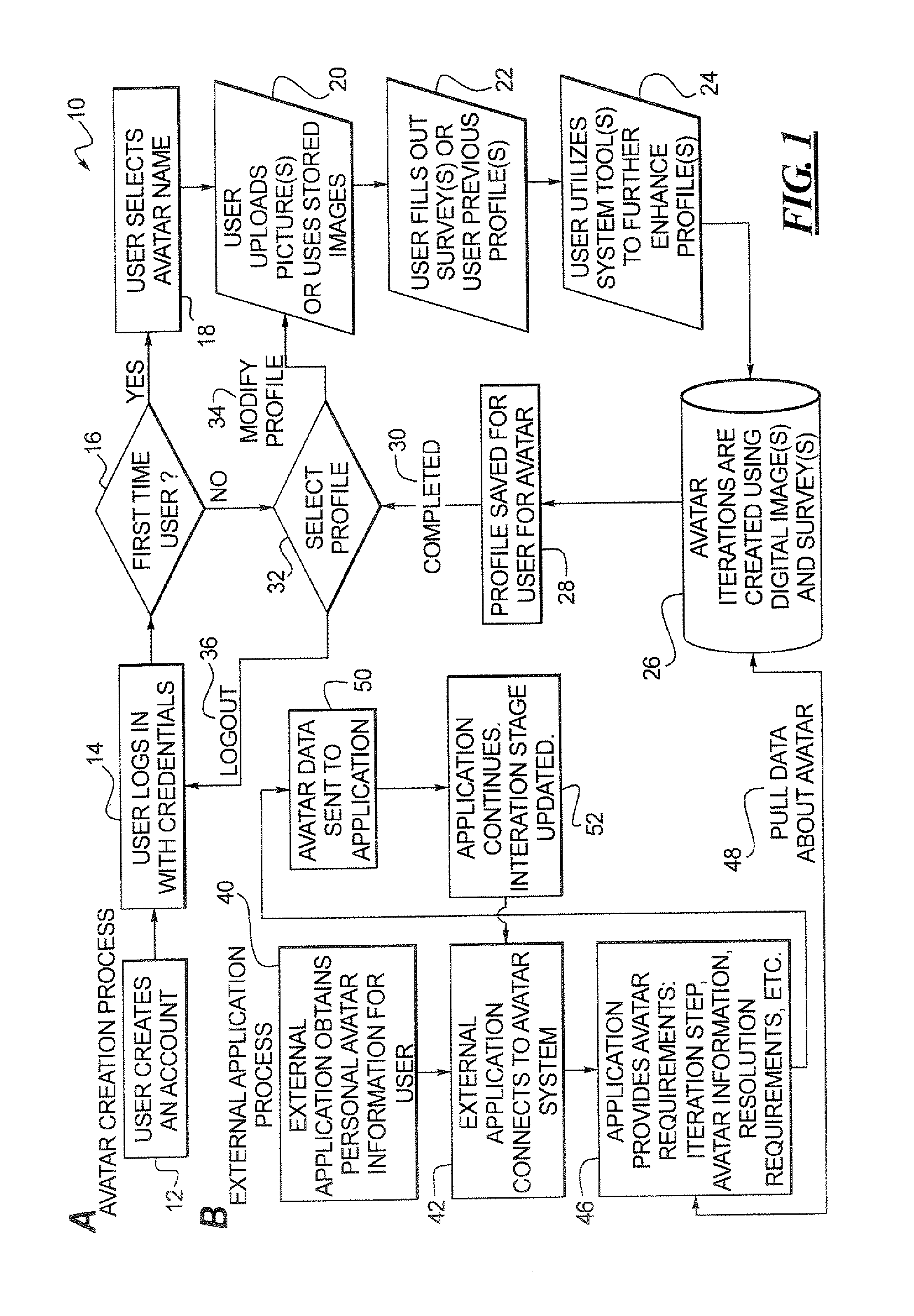

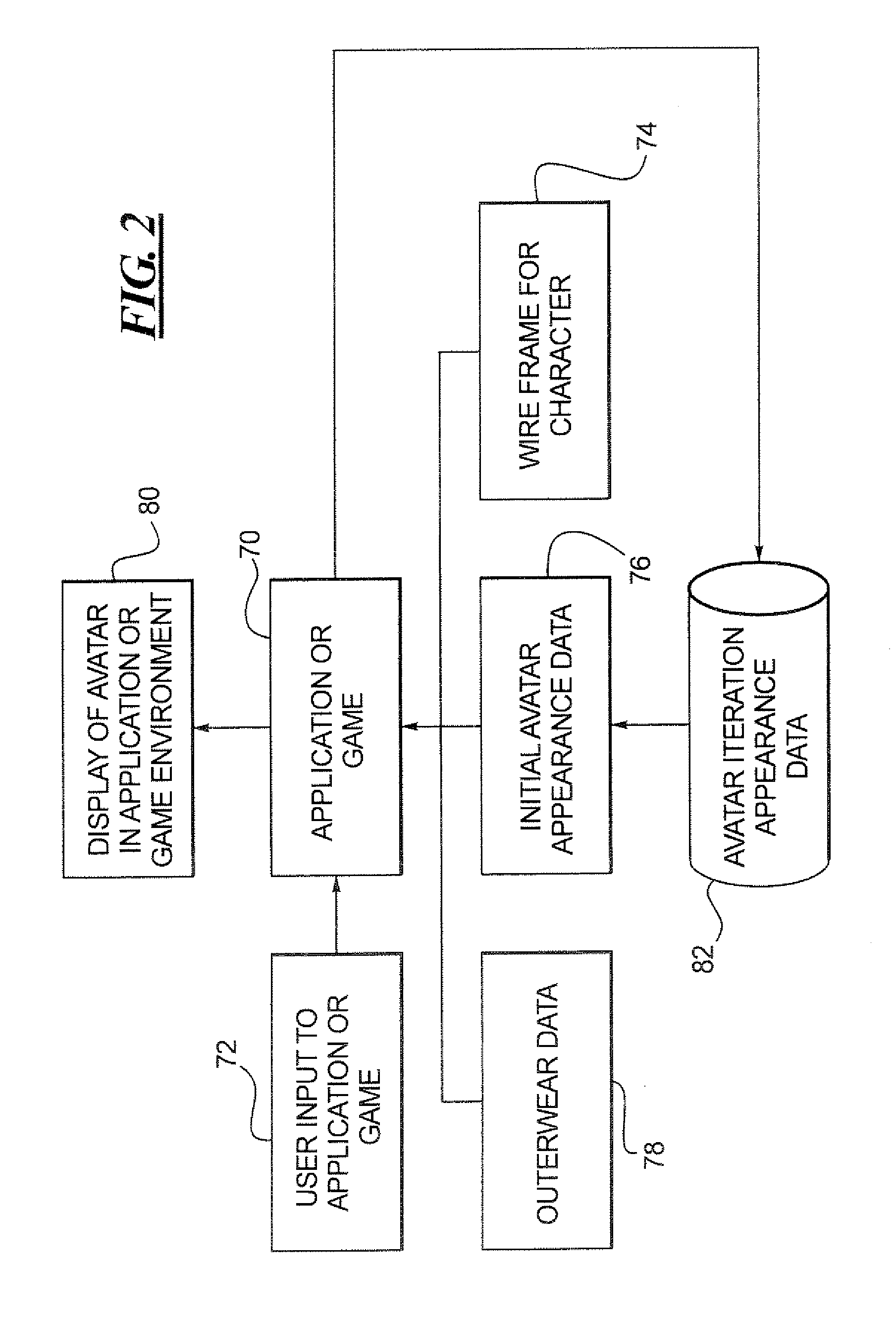

Character for computer game and method

Active | US8047915B2 | Enhance believability and realism and emotional connection | Increase impact | Video games | Special data processing applications | Human–computer interaction | Final version

A video game character or avatar is generated using images of the player so that the on-screen avatar being controlled by the player appears like the player. Answers to a questionnaire or a psychological profile are provided by the player to determine characteristics that the player views as desirable, which are used to generate a final version of the avatar. Iterations of the avatar appearance are generated from the initial appearance to the final, more desirable appearance. Using the avatar, as play of the game proceeds the player's avatar begins as a character appearing like themselves and gradually becomes a character that is more as they would like to appear. The avatar increases the emotional impact of games by providing strong visual and psychological connections with the player. The avatar may instead begin from an initial character that does not appear similar to the player. The iterations may make the avatar gradually less appealing or with other changes in appearance, in some embodiments.

Owner:LYLE DEV

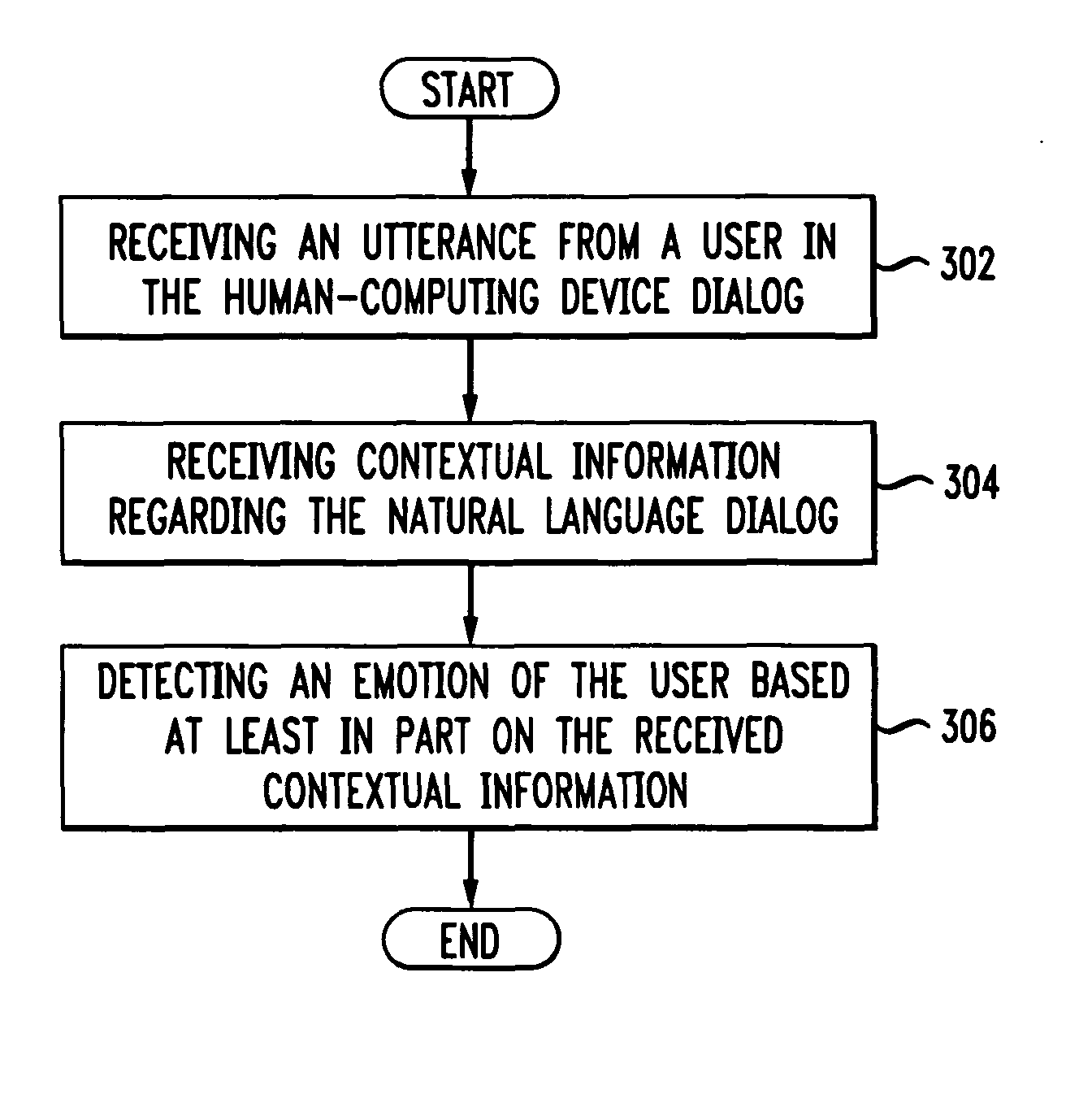

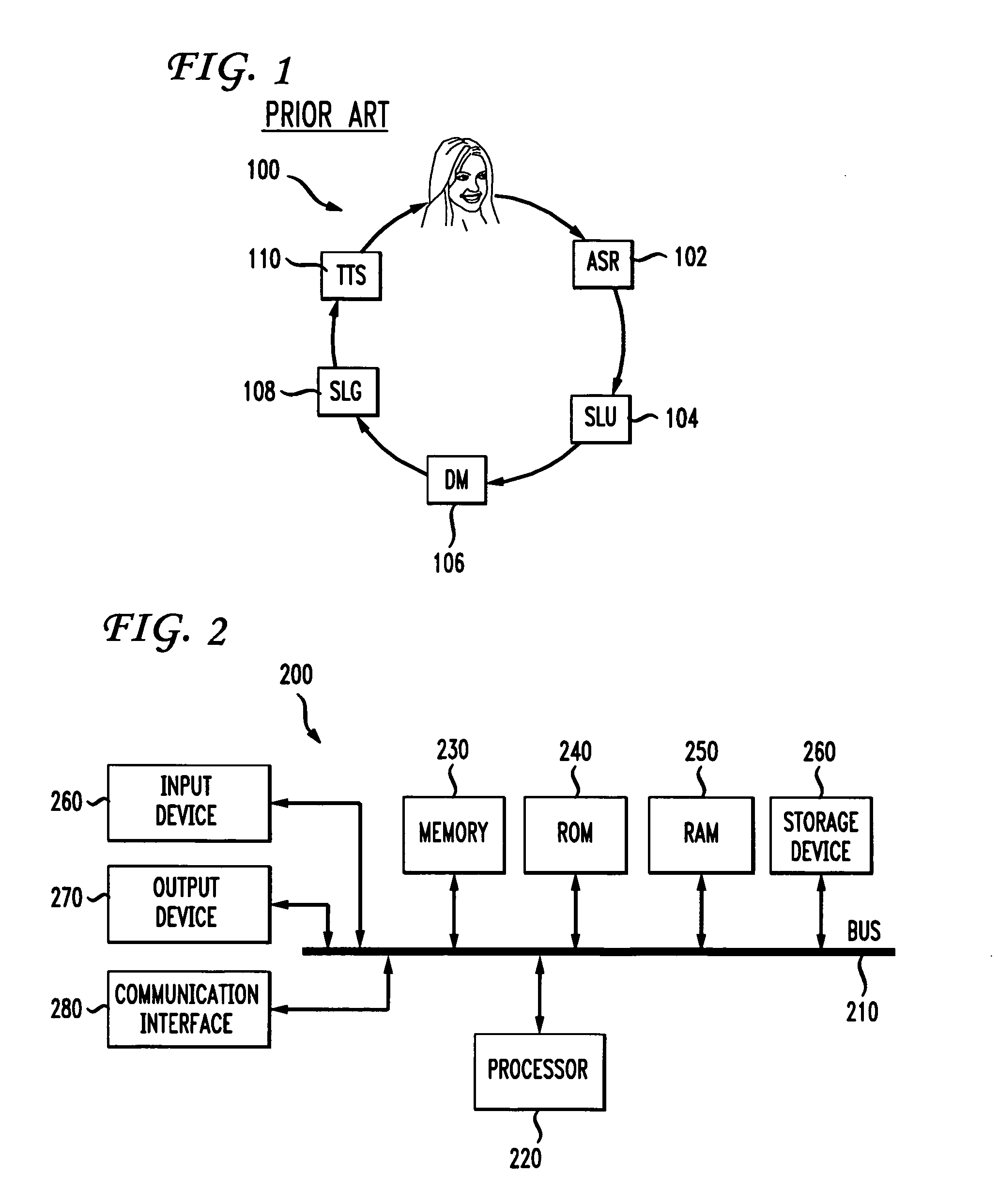

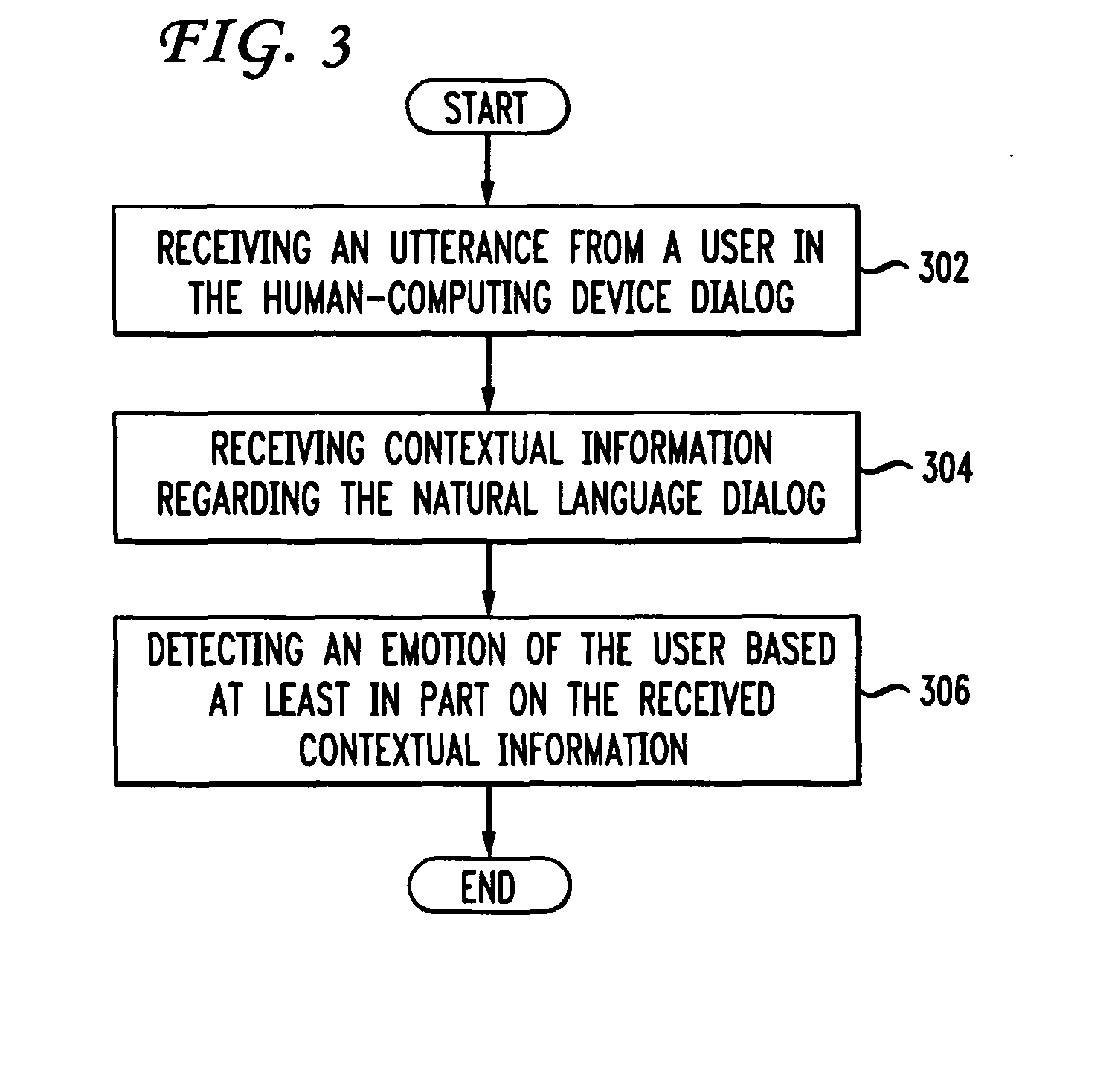

System and method for building emotional machines

Inactive | US7912720B1 | More natural flow | Accurate information | Natural language data processing | Speech recognition | Emotion detection | Natural language

A system, method and computer-readable medium for practicing a method of emotion detection during a natural language dialog between a human and a computing device are disclosed. The method includes receiving an utterance from a user in a natural language dialog between a human and a computing device, receiving contextual information regarding the natural language dialog which is related to changes of emotion over time in the dialog, and detecting an emotion of the user based on the received contextual information. Examples of contextual information include, for example, differential statistics, joint statistics and distance statistics.

Owner:NUANCE COMM INC

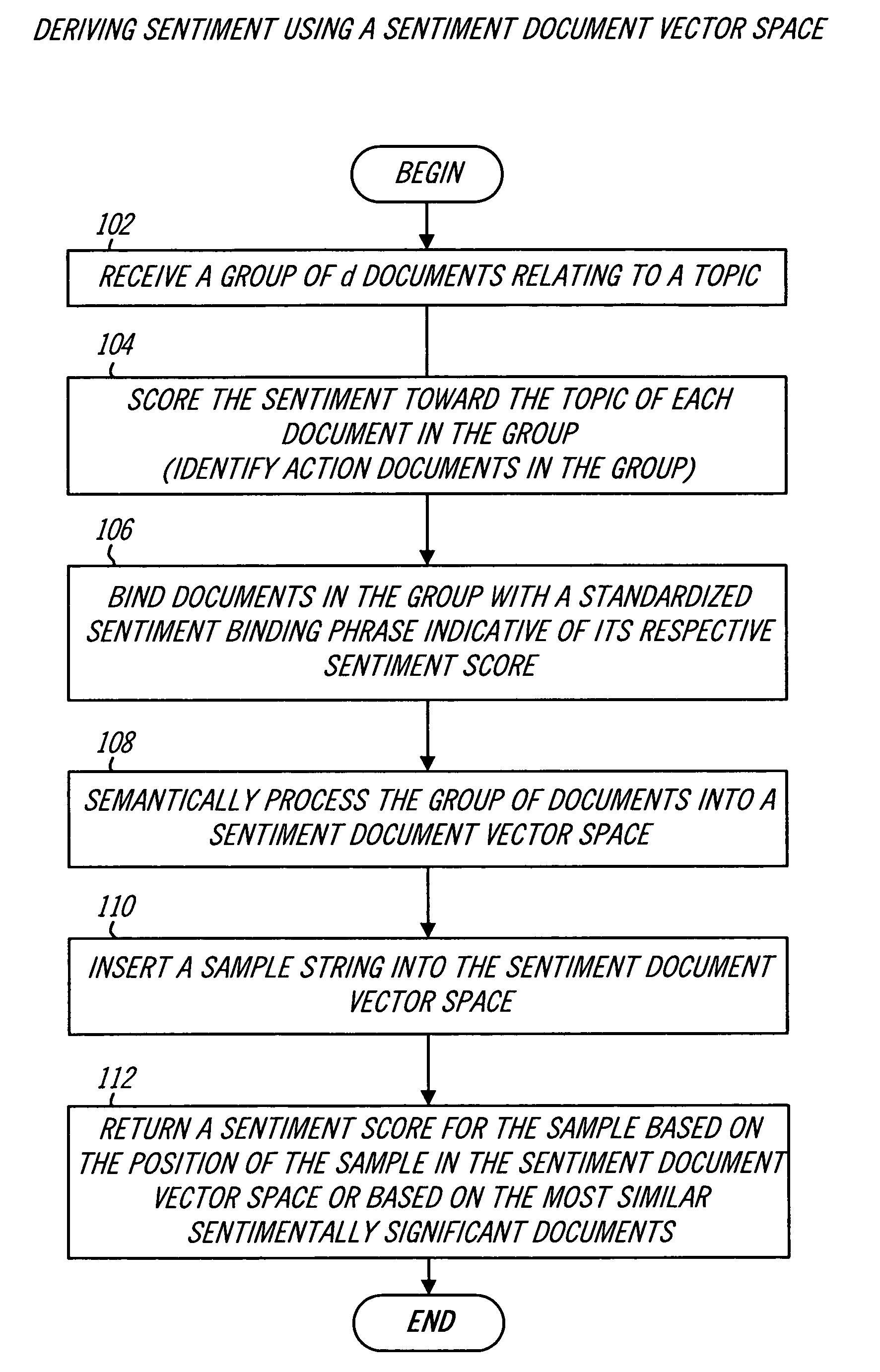

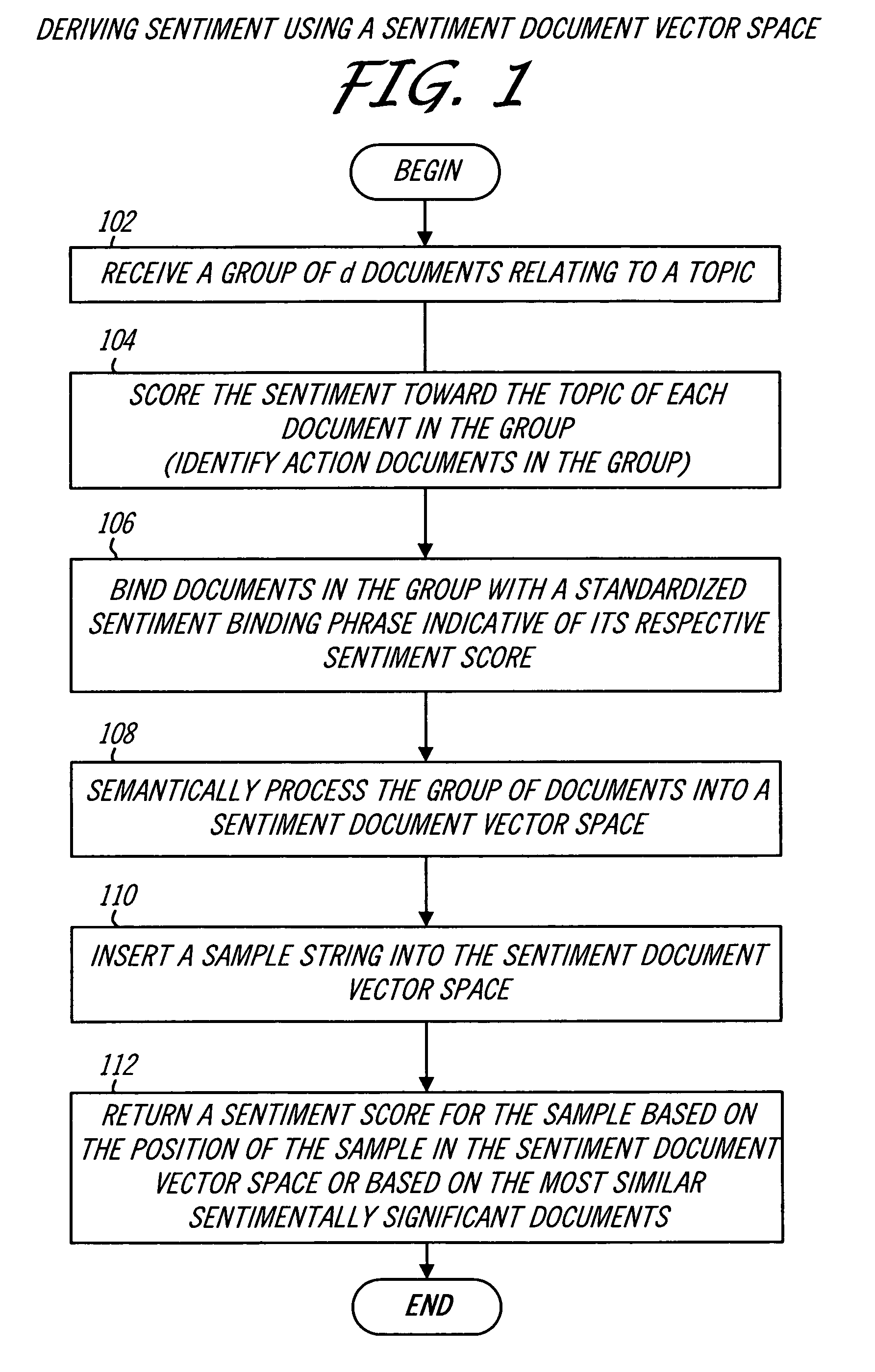

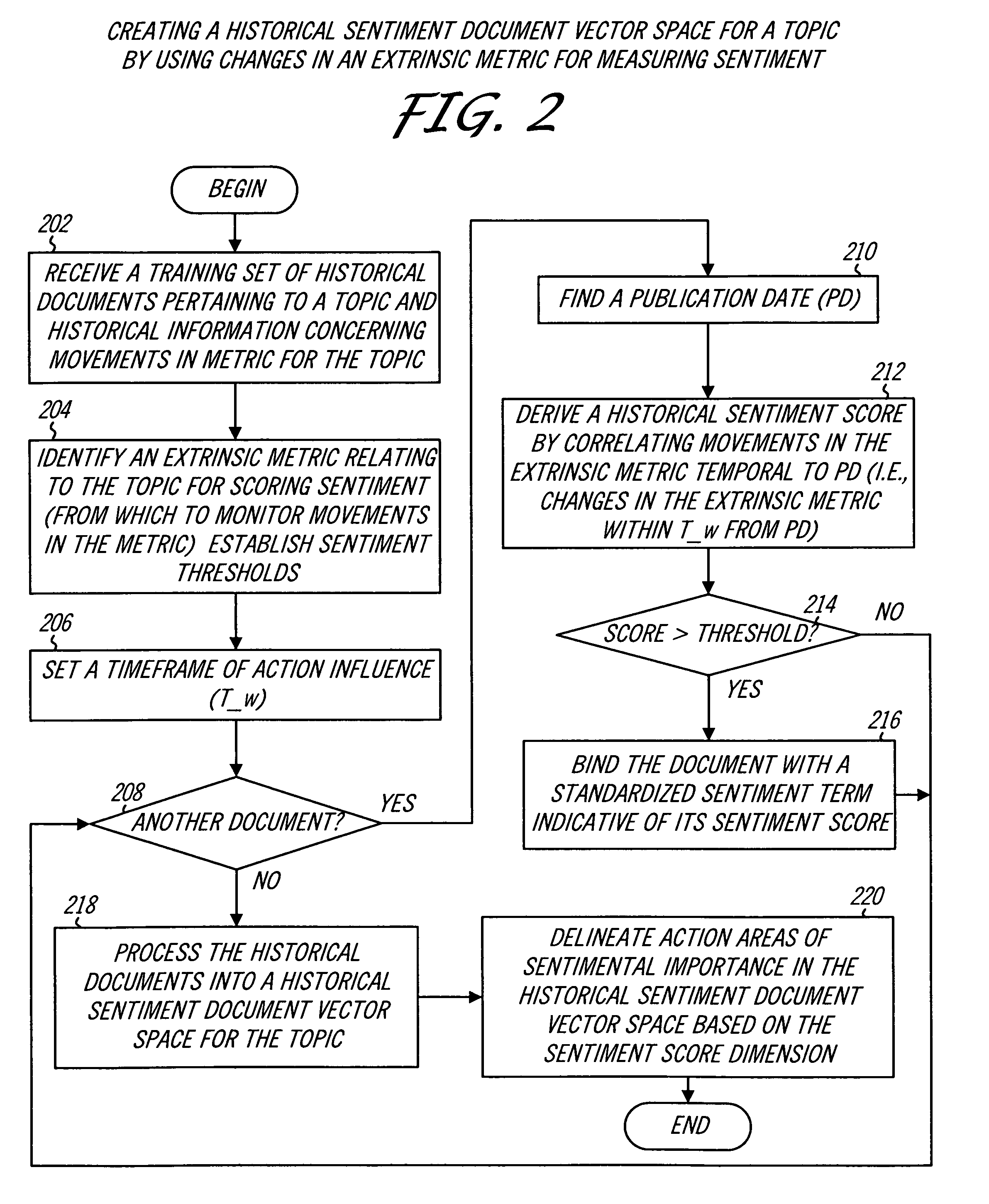

System and method for sentiment-based text classification and relevancy ranking

Active | US8166032B2 | Digital data information retrieval | Digital data processing details | Sentiment score | Text categorization

The sentimental significance of a group of historical documents related to a topic is assessed with respect to change in an extrinsic metric for the topic. A unique sentiment binding label is added to the content of action documents that are determined to have sentimental significance, and the group of documents is inserted into a historical document sentiment vector space for the topic. Action areas in the vector space are defined from the locations of action documents, and a singular sentiment vector may be created that describes the cumulative action area. Newly published documents are sentiment-scored by semantically comparing them to documents in the space and/or to the singular sentiment vector. The sentiment scores for the newly published documents are supplemented by human sentiment assessment of the documents, and a sentiment time decay factor is applied to the supplemented sentiment score of each newly published document. User queries are received and a set of sentiment-ranked documents with the highest age-adjusted sentiment scores is returned.

Owner:MARKETCHORUS
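
The age-adjusted ranking step described in the abstract above could be sketched roughly as follows. The abstract does not say what form the time decay factor takes, so the exponential half-life decay, the `half_life_days` parameter, and the document fields used here are all assumptions made for illustration.

```python
def age_adjusted_score(sentiment, age_days, half_life_days=30.0):
    """Decay a sentiment score by document age (assumed exponential form)."""
    decay = 0.5 ** (age_days / half_life_days)
    return sentiment * decay


def rank_documents(docs, half_life_days=30.0, top_k=3):
    """Return ids of the top_k documents by age-adjusted sentiment score."""
    scored = [
        (age_adjusted_score(d["sentiment"], d["age_days"], half_life_days), d["id"])
        for d in docs
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]


# Hypothetical documents: a strong but old score can rank below a weaker, fresh one.
docs = [
    {"id": "a", "sentiment": 0.9, "age_days": 60},
    {"id": "b", "sentiment": 0.5, "age_days": 0},
    {"id": "c", "sentiment": 0.8, "age_days": 30},
]
ranking = rank_documents(docs)
```

With a 30-day half-life, the 60-day-old document keeps only a quarter of its score, which is why it falls to the bottom of this ranking.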

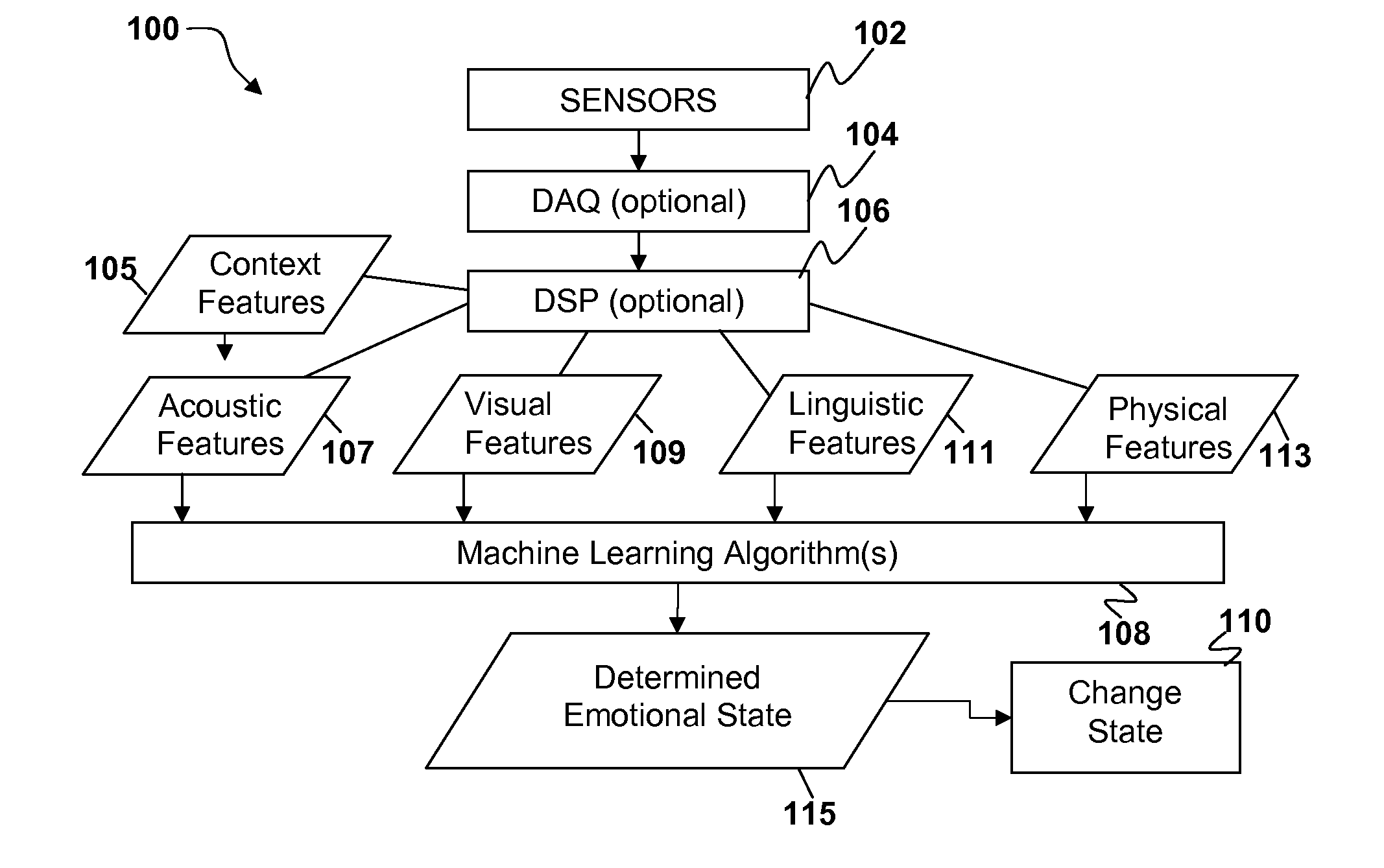

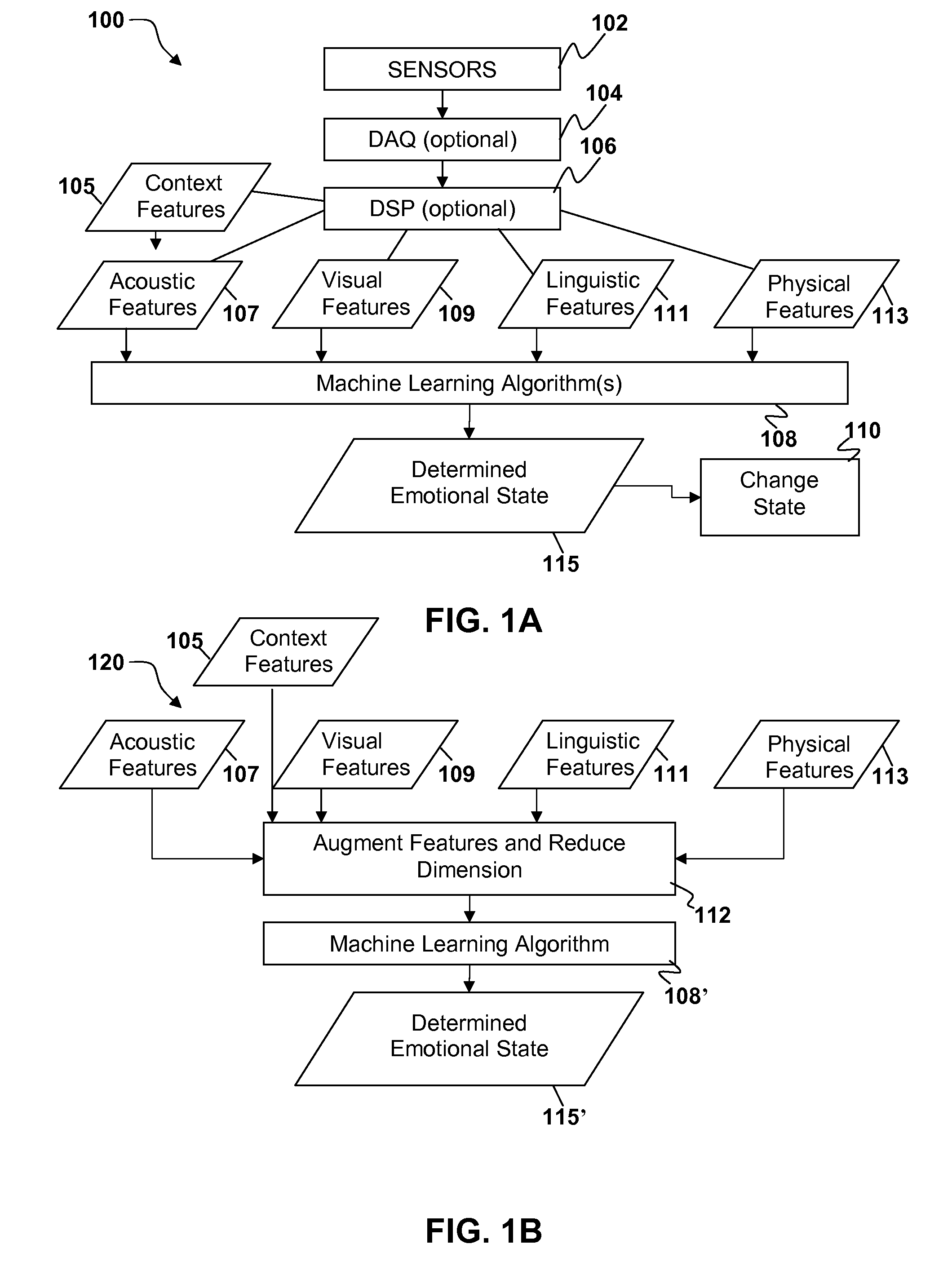

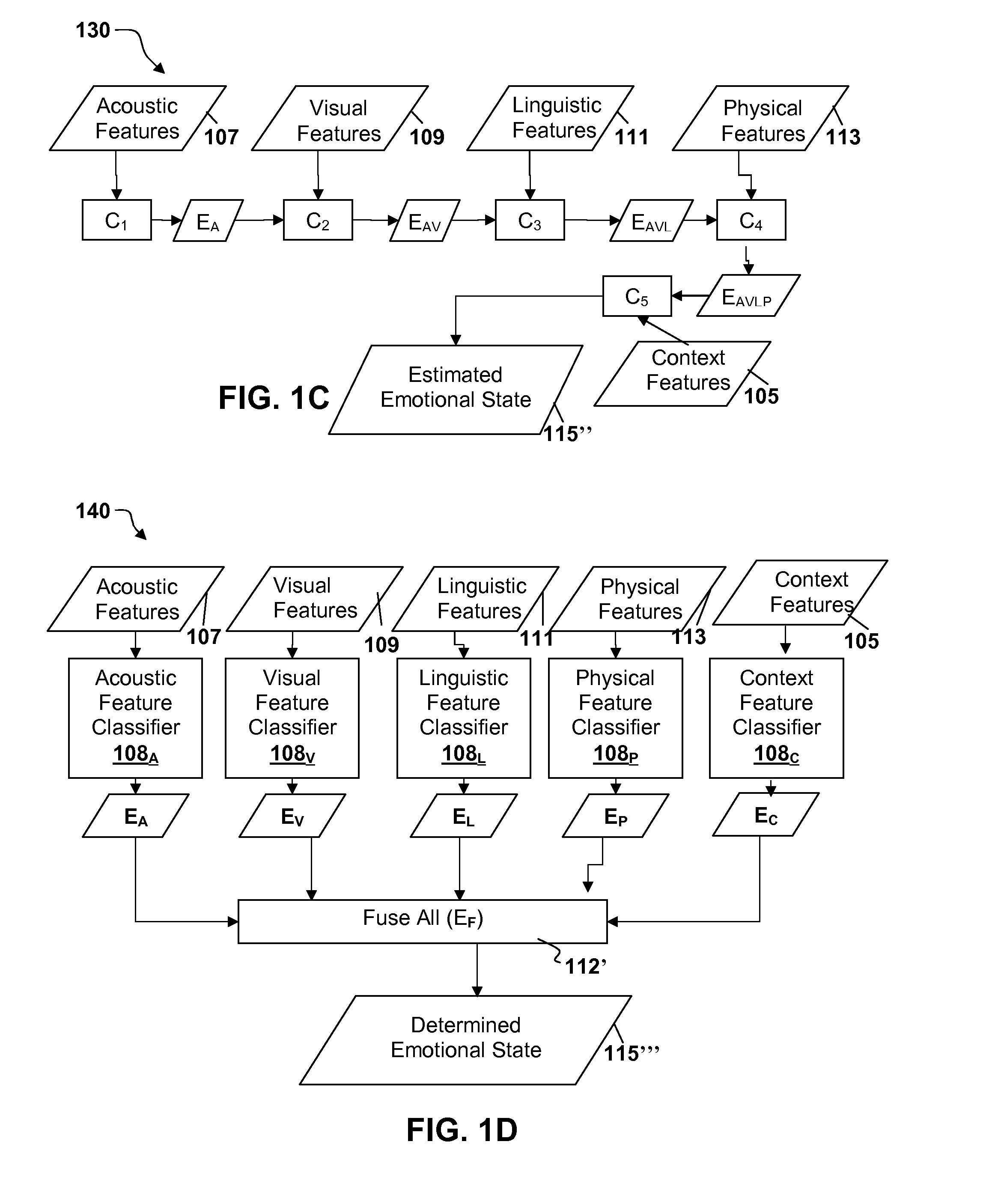

Multi-modal sensor based emotion recognition and emotional interface

Active | US20140112556A1 | Speech recognition | Acquiring/recognising facial features | Pattern recognition | Subject matter

Features, including one or more acoustic features, visual features, linguistic features, and physical features may be extracted from signals obtained by one or more sensors with a processor. The acoustic, visual, linguistic, and physical features may be analyzed with one or more machine learning algorithms and an emotional state of a user may be extracted from analysis of the features.

Owner:SONY COMPUTER ENTERTAINMENT INC

Compassion, Variety and Cohesion For Methods Of Text Analytics, Writing, Search, User Interfaces

Active | US20120166180A1 | Increases precision and recall | Reduced hardware resource | Natural language data processing | Special data processing applications | Social media | Resonance

The present invention increases precision and recall of search engines, while decreasing hardware resources needed, using musical rhythmic analysis to detect sentiment and emotion, using poetic and metaphoric resonances with dictionary meanings, to annotate, distinguish and summarize n-grams of word meanings, then intersecting n-grams to locate mutually salient sentences, using metaphor salience analysis to cluster sentences and paragraphs into automatically named concepts, automatically characterizing quality and depth to which documents describe concepts, using editorial metrics of compassion, variety of perspectives and logical cohesion, to automatically set pricing for written works and their copyrights, and to monitor blogs and social media for newly important concepts, and provide advanced user interfaces.

Owner:SYNAPSIFY

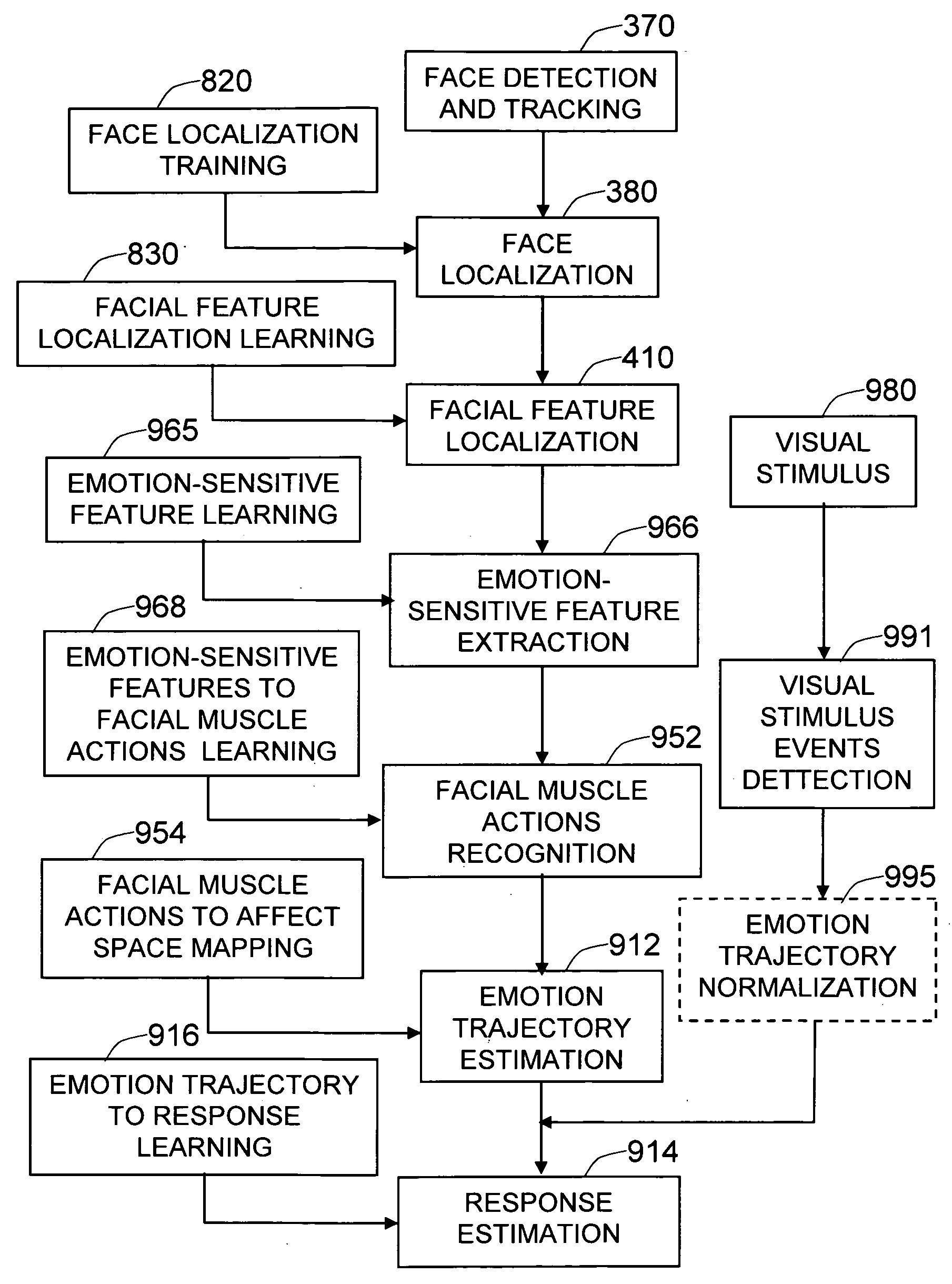

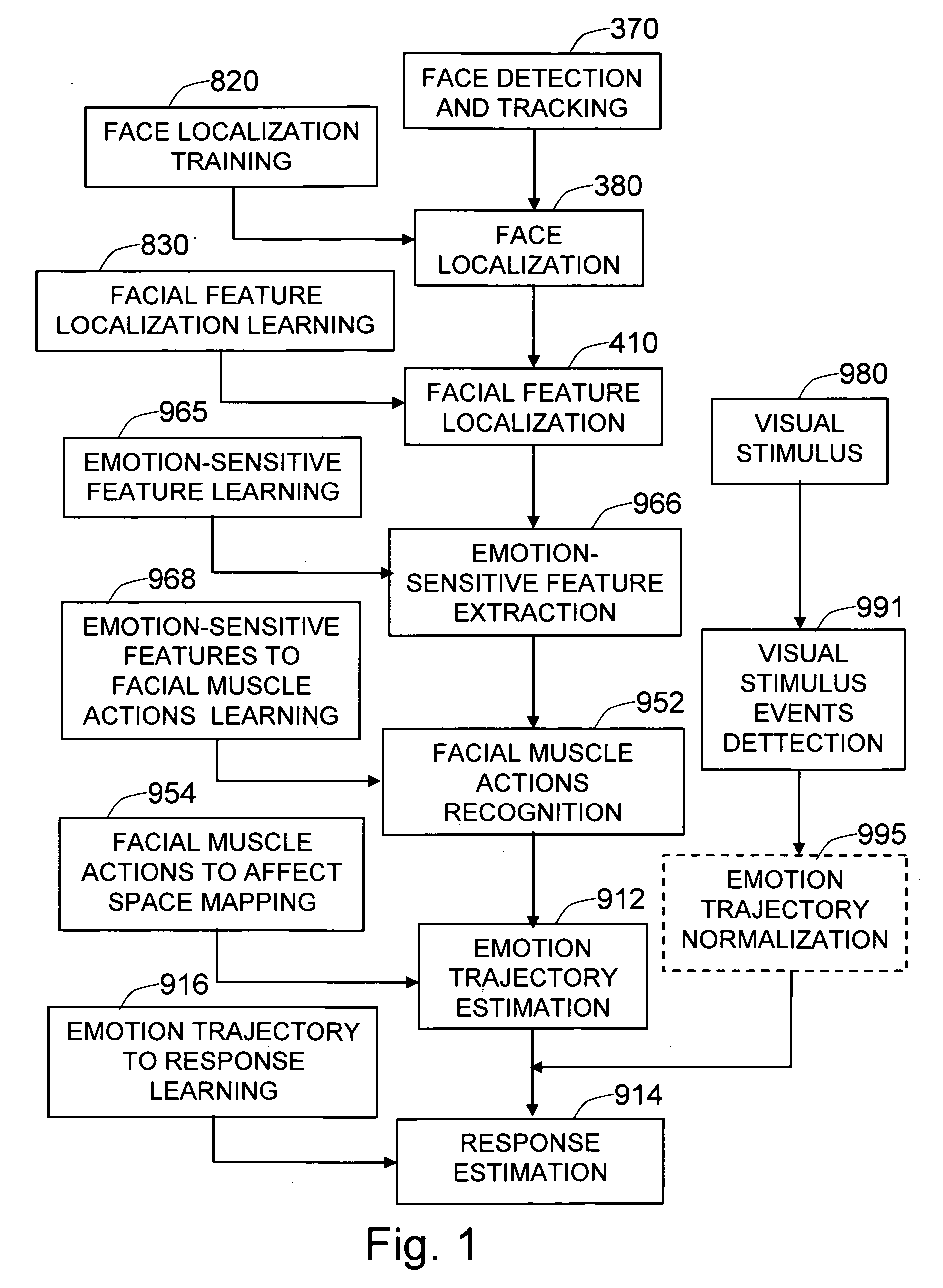

Method and system for measuring human response to visual stimulus based on changes in facial expression

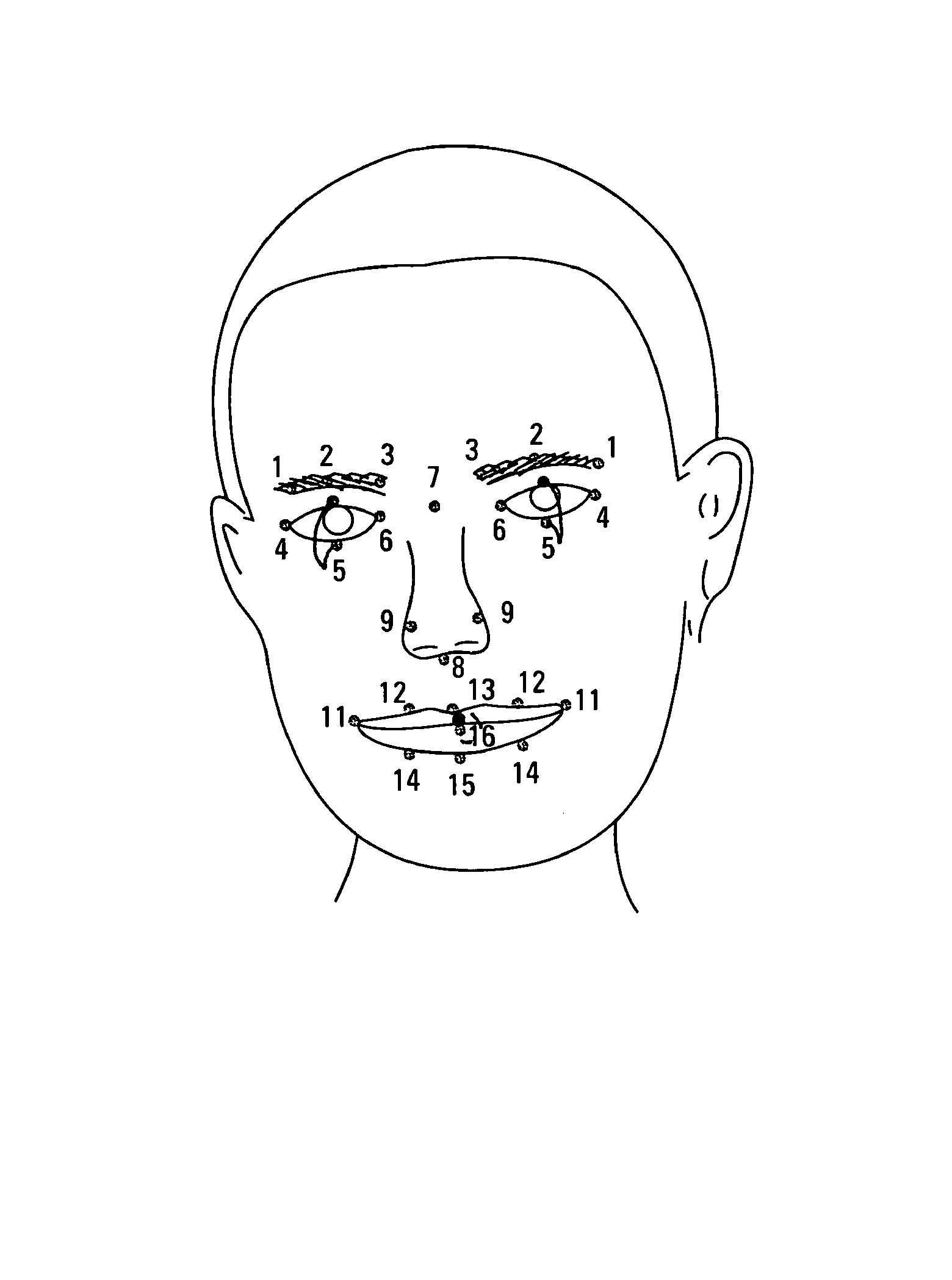

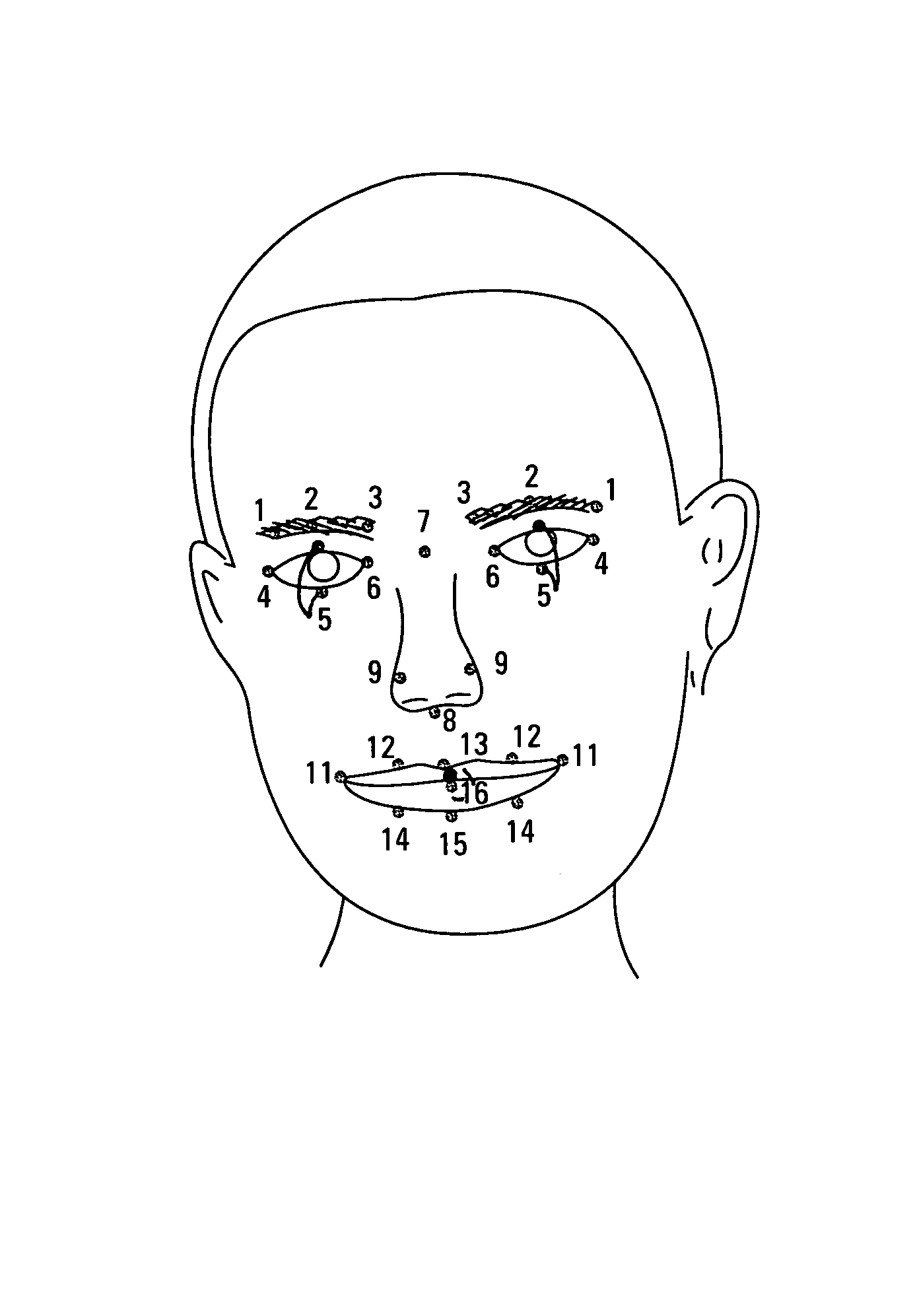

Active | US20090285456A1 | Improve response | Character and pattern recognition | Eye diagnostics | Wrinkle skin | Learning machine

The present invention is a method and system for measuring human emotional response to visual stimulus, based on the person's facial expressions. Given a detected and tracked human face, it is accurately localized so that the facial features are correctly identified and localized. Face and facial features are localized using the geometrically specialized learning machines. Then the emotion-sensitive features, such as the shapes of the facial features or facial wrinkles, are extracted. The facial muscle actions are estimated using a learning machine trained on the emotion-sensitive features. The instantaneous facial muscle actions are projected to a point in affect space, using the relation between the facial muscle actions and the affective state (arousal, valence, and stance). The series of estimated emotional changes renders a trajectory in affect space, which is further analyzed in relation to the temporal changes in visual stimulus, to determine the response.

Owner:MOTOROLA SOLUTIONS INC
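
As a rough illustration of the projection step in the abstract above, the sketch below maps per-frame facial muscle action intensities to a point in (arousal, valence, stance) space and collects the points into a trajectory. The abstract does not specify the relation between muscle actions and affective state; the linear map, the three action names, and every weight here are hypothetical.

```python
AFFECT_DIMS = ("arousal", "valence", "stance")

# Hypothetical action order: (brow_raise, lip_corner_pull, brow_lower).
# Hypothetical linear weights; a real system would learn this relation.
WEIGHTS = {
    "arousal": (0.6, 0.2, 0.4),
    "valence": (0.1, 0.8, -0.7),
    "stance": (0.3, 0.4, -0.5),
}


def project_to_affect_space(actions):
    """Map one frame of muscle action intensities to an affect-space point."""
    return tuple(
        sum(w * a for w, a in zip(WEIGHTS[dim], actions)) for dim in AFFECT_DIMS
    )


def emotion_trajectory(frames):
    """Project a sequence of frames, giving a trajectory in affect space."""
    return [project_to_affect_space(frame) for frame in frames]


# Neutral face, then a smile, then a lowered brow.
frames = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
trajectory = emotion_trajectory(frames)
```

The resulting list of points is what would then be compared against the timing of changes in the visual stimulus.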

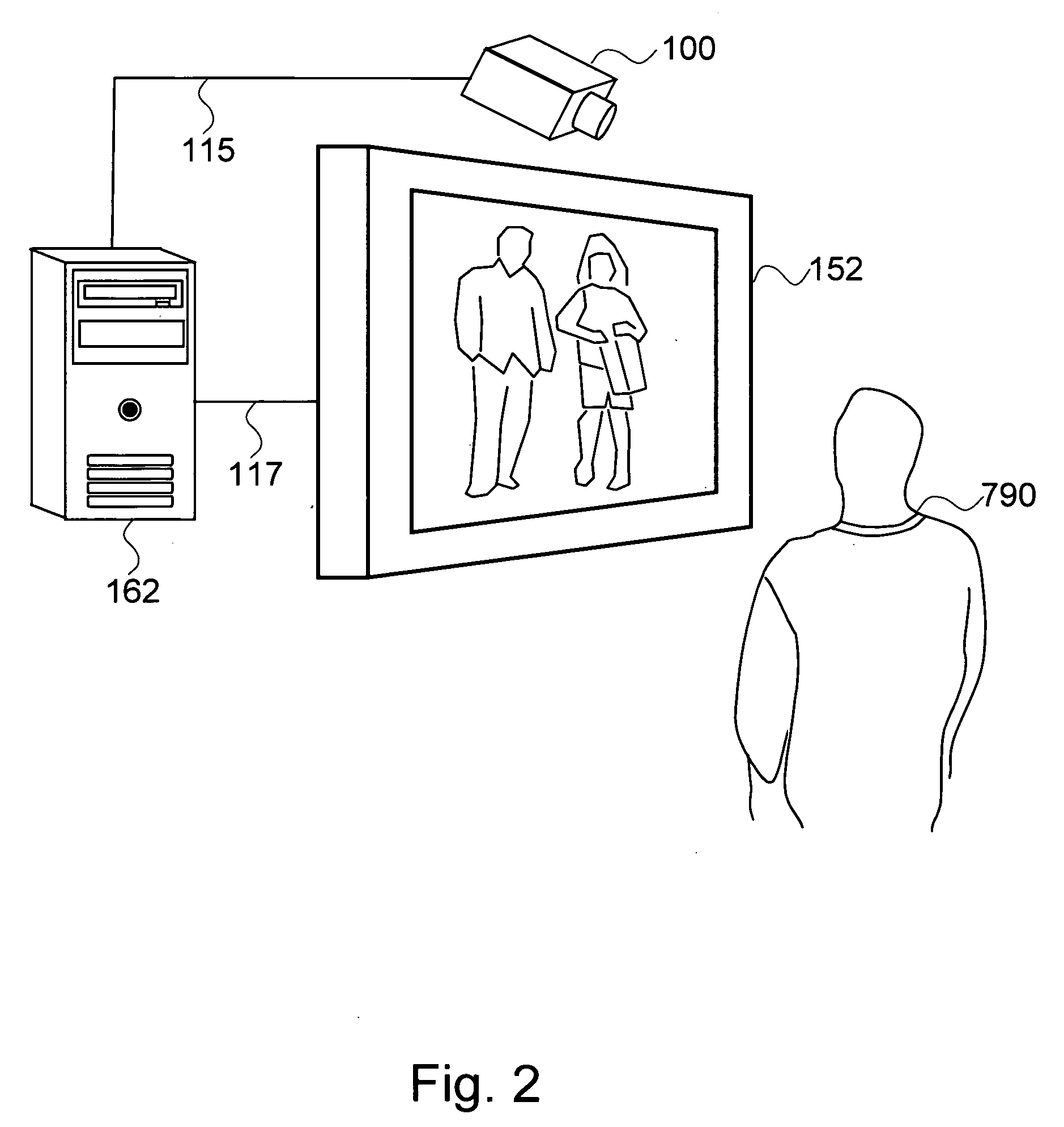

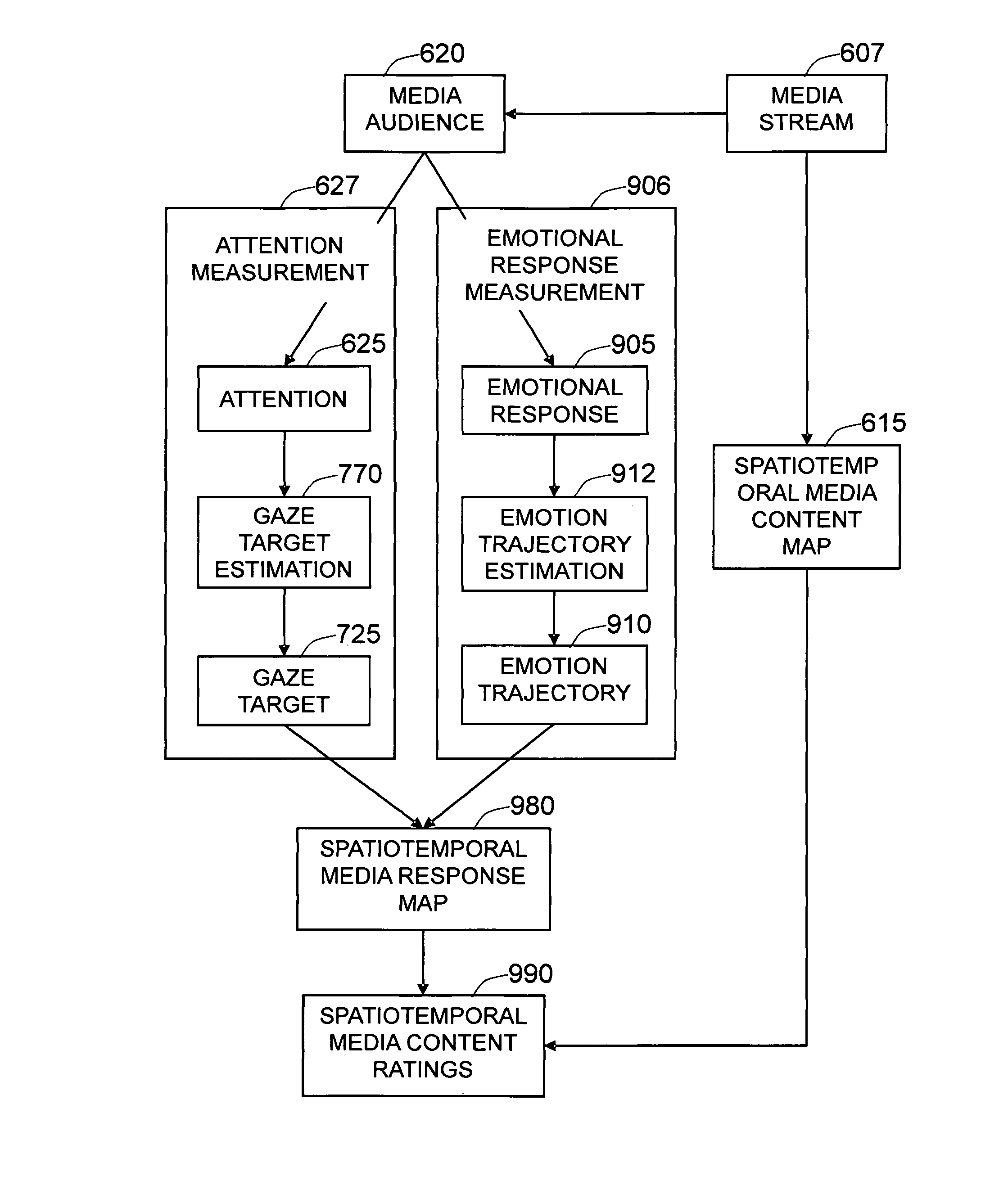

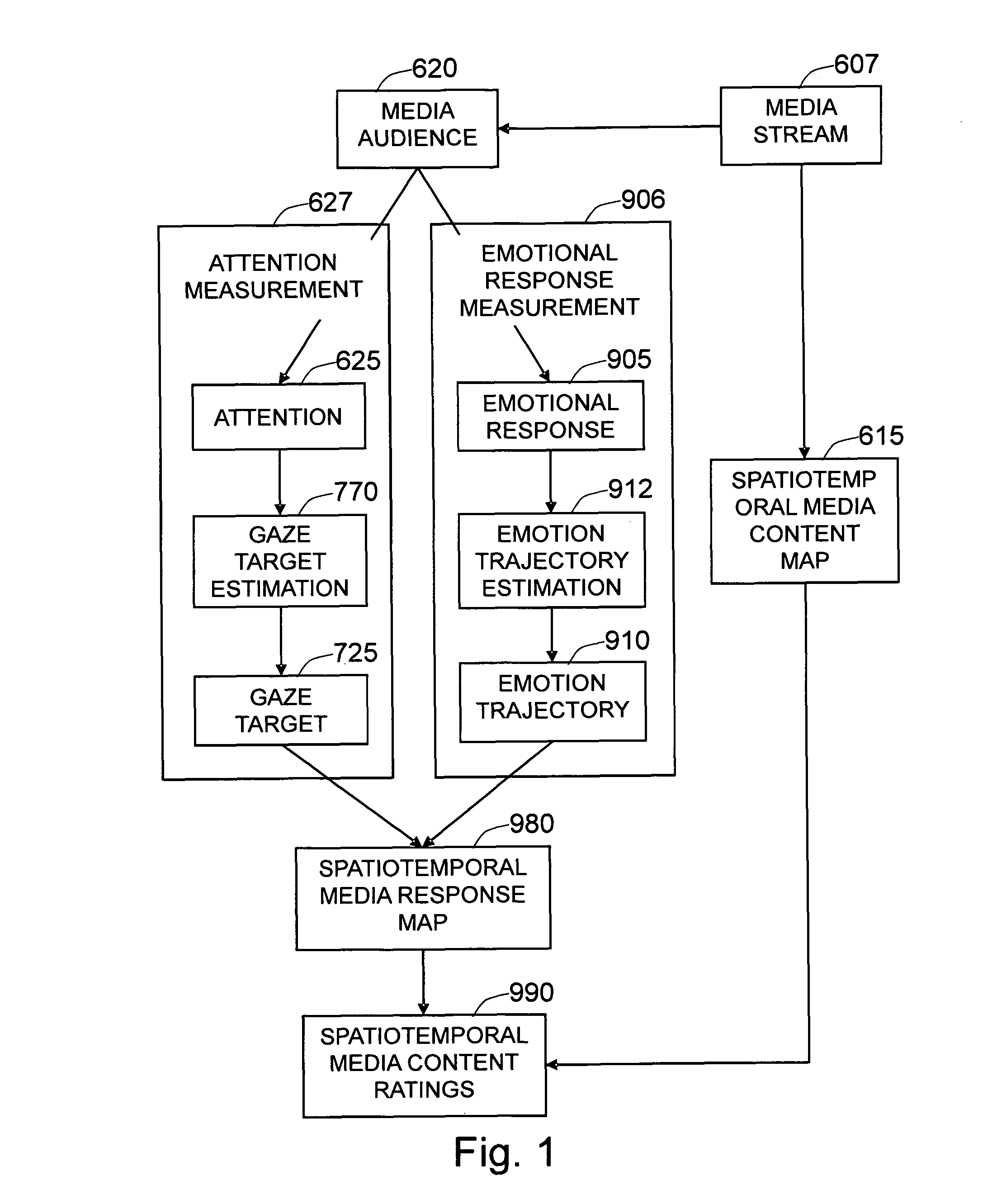

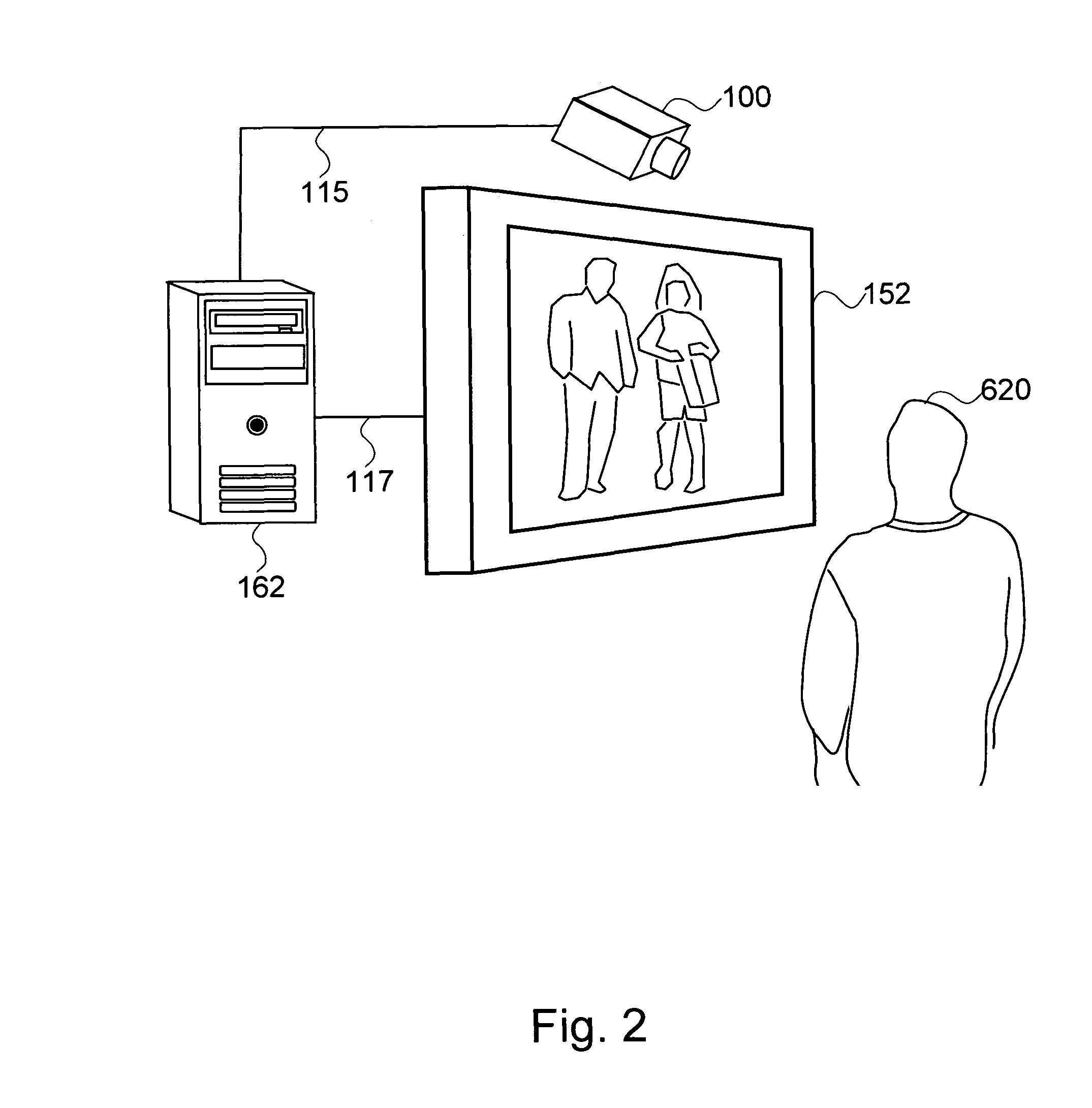

Method and system for measuring emotional and attentional response to dynamic digital media content

The present invention is a method and system to provide an automatic measurement of people's responses to dynamic digital media, based on changes in their facial expressions and attention to specific content. First, the method detects and tracks faces from the audience. It then localizes each of the faces and facial features to extract emotion-sensitive features of the face by applying emotion-sensitive feature filters, and determines the facial muscle actions of the face based on the extracted emotion-sensitive features. The changes in facial muscle actions are then converted to changes in affective state, called an emotion trajectory. In parallel, the method estimates eye gaze based on extracted eye images, and the three-dimensional facial pose based on localized facial images. The gaze direction of the person is estimated based on the estimated eye gaze and the three-dimensional facial pose of the person. The gaze target on the media display is then estimated based on the estimated gaze direction and the position of the person. Finally, the response of the person to the dynamic digital media content is determined by analyzing the emotion trajectory in relation to the time and screen positions of the specific digital media sub-content that the person is watching.

Owner:MOTOROLA SOLUTIONS INC
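
The gaze-target step in the abstract above can be pictured as a ray and plane intersection. This is a minimal sketch, assuming the display lies in the plane z = 0, the viewer stands at z > 0, and sub-content regions are axis-aligned rectangles; the coordinate convention, function names, and region layout are illustrative assumptions, not details from the patent.

```python
def gaze_target(position, direction):
    """Intersect a gaze ray with the display plane z = 0.

    Returns (x, y) on the display, or None if the person is not in front
    of the display or is not looking toward it.
    """
    px, py, pz = position
    dx, dy, dz = direction
    if pz <= 0 or dz >= 0:
        return None
    t = -pz / dz  # ray parameter at which z reaches 0
    return (px + t * dx, py + t * dy)


def watched_region(target, regions):
    """Name of the first region (x0, y0, x1, y1) containing the target."""
    if target is None:
        return None
    x, y = target
    for name, (x0, y0, x1, y1) in regions.items():
        if x0 <= x < x1 and y0 <= y < y1:
            return name
    return None


# Hypothetical screen layout in metres.
regions = {"ad": (0.0, 0.0, 1.0, 1.0), "video": (1.0, 0.0, 3.0, 2.0)}
straight = gaze_target((0.5, 0.5, 2.0), (0.0, 0.0, -1.0))
angled = gaze_target((0.0, 0.0, 2.0), (1.0, 0.0, -2.0))
```

Here `watched_region(straight, regions)` falls in the "ad" rectangle and `watched_region(angled, regions)` in the "video" rectangle, which is the kind of lookup needed to tie an emotion trajectory to specific on-screen sub-content.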

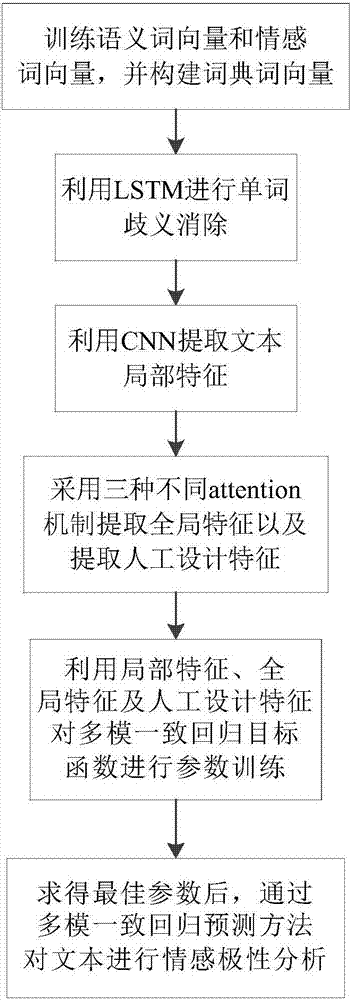

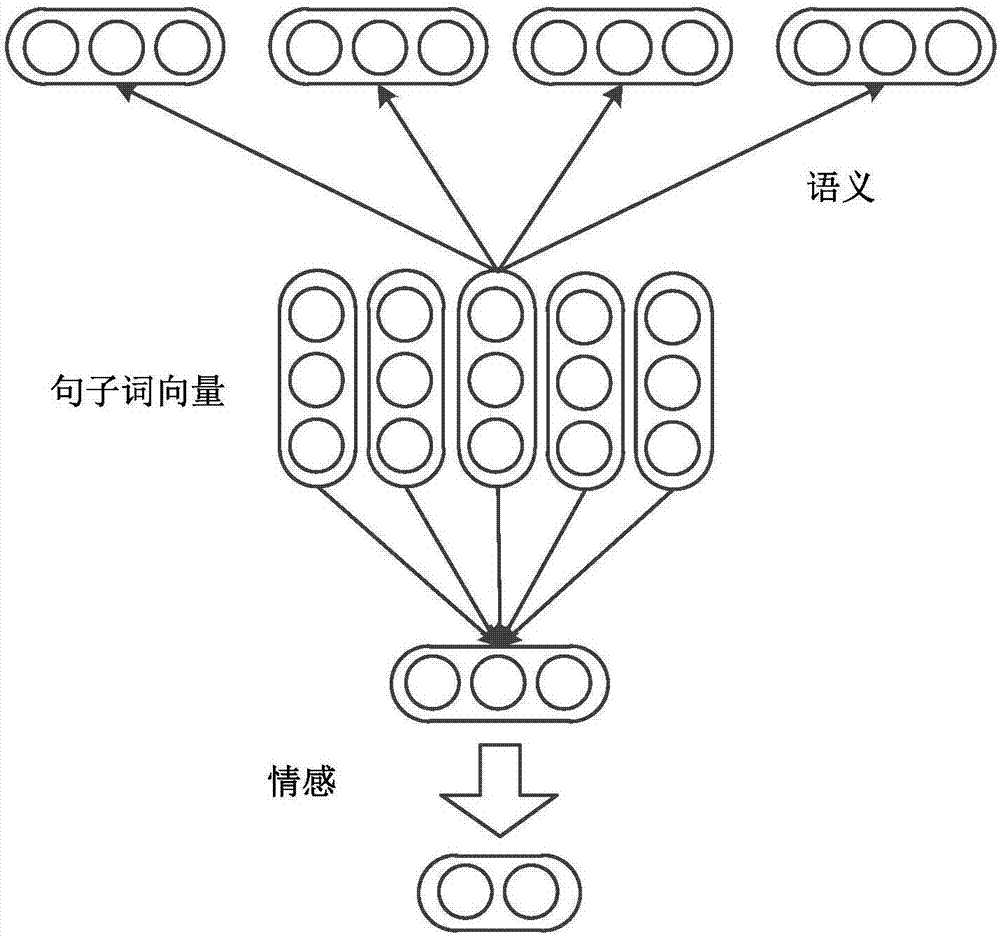

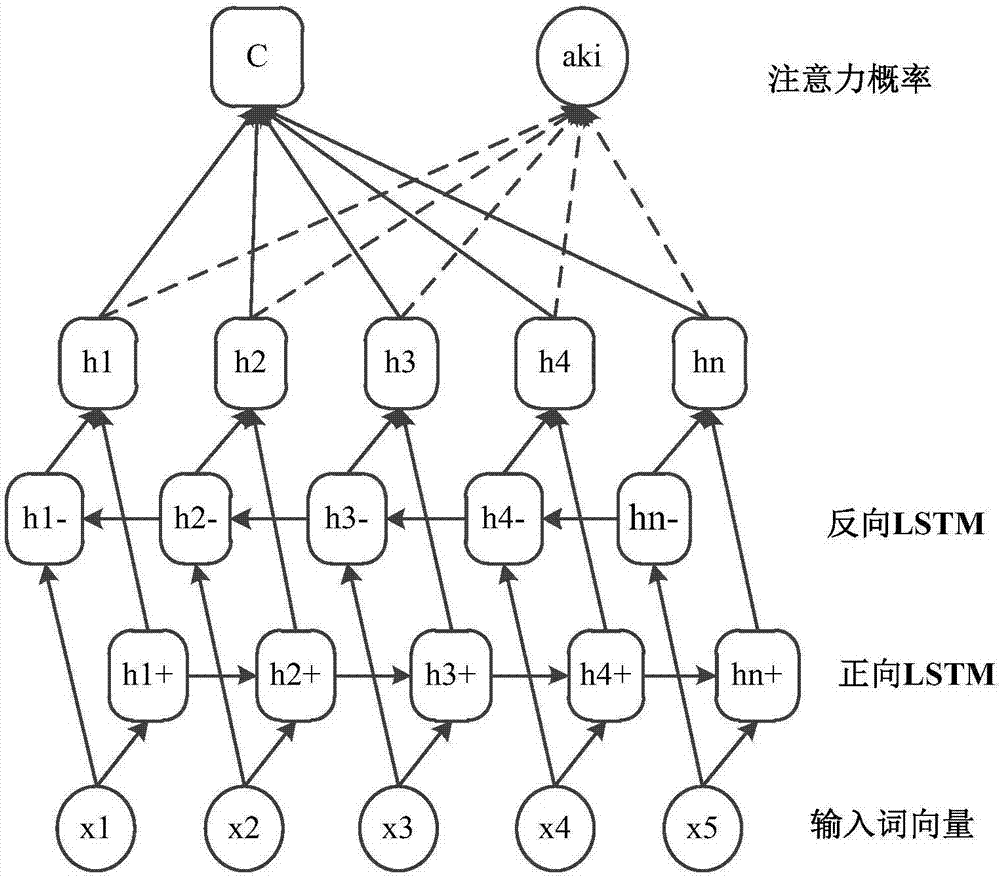

Attention CNNs and CCR-based text sentiment analysis method

Active | CN107092596A | High precision | Improve classification accuracy | Semantic analysis | Neural architectures | Feature extraction | Ambiguity

The invention discloses an attention CNNs and CCR-based text sentiment analysis method and belongs to the field of natural language processing. The method comprises the following steps: (1) training a semantic word vector and a sentiment word vector using the original text data, and building a dictionary word vector from a collected sentiment dictionary; (2) capturing the context semantics of words with a long short-term memory (LSTM) network to eliminate ambiguity; (3) extracting local features of a text with a convolutional neural network, using convolution kernels with different filter lengths; (4) extracting global features with three different attention mechanisms; (5) performing artificial feature extraction on the original text data; (6) training a multimodal uniform regression target function using the local features, the global features and the artificial features; and (7) predicting sentiment polarity with a multimodal uniform regression prediction method. Compared with methods that use a single word vector or extract only the local features of the text, this method can further improve sentiment classification precision.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

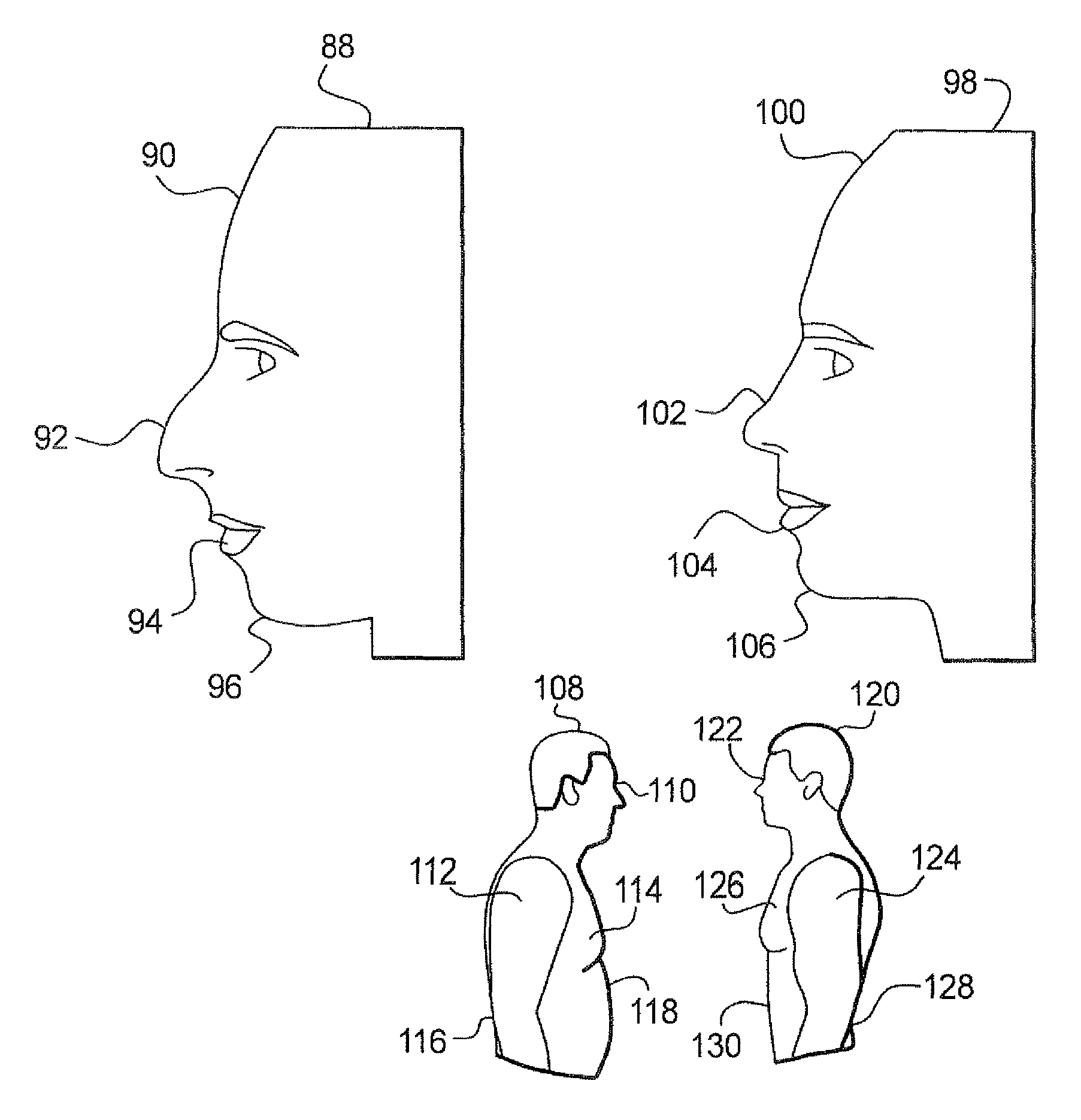

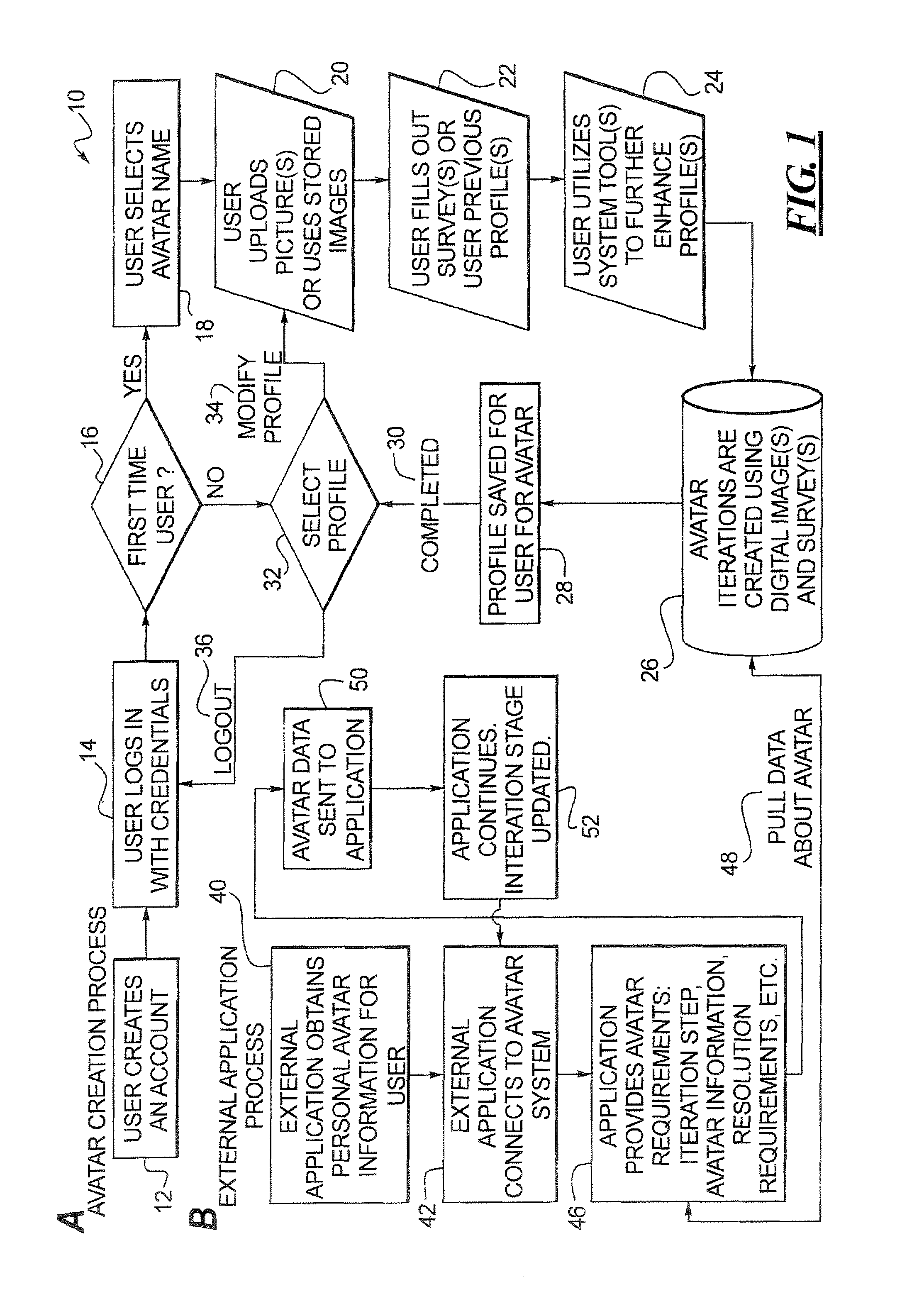

Character for computer game and method

Active | US20070167204A1 | Enhance believability | Improve realism | Video games | Special data processing applications | Final version | Human–computer interaction

A video game character or avatar is generated using images of the player so that the on-screen avatar being controlled by the player appears like the player. Answers to a questionnaire or a psychological profile are provided by the player to determine characteristics that the player views as desirable, which are used to generate a final version of the avatar. Iterations of the avatar appearance are generated from the initial appearance to the final, more desirable appearance. Using the avatar, as play of the game proceeds the player's avatar begins as a character appearing like themselves and gradually becomes a character that is more as they would like to appear. The avatar increases the emotional impact of games by providing strong visual and psychological connections with the player. The avatar may instead begin from an initial character that does not appear similar to the player. The iterations may make the avatar gradually less appealing or with other changes in appearance, in some embodiments.

Owner:LYLE DEV

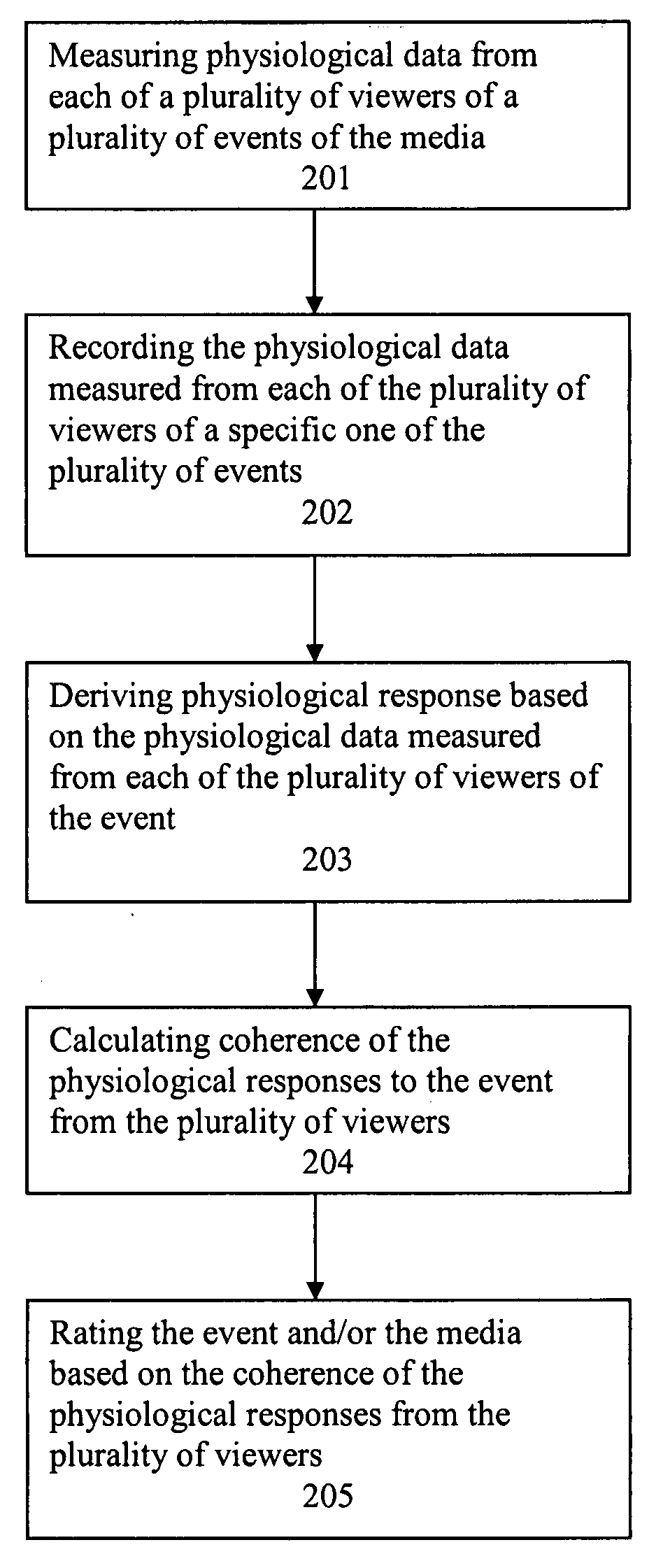

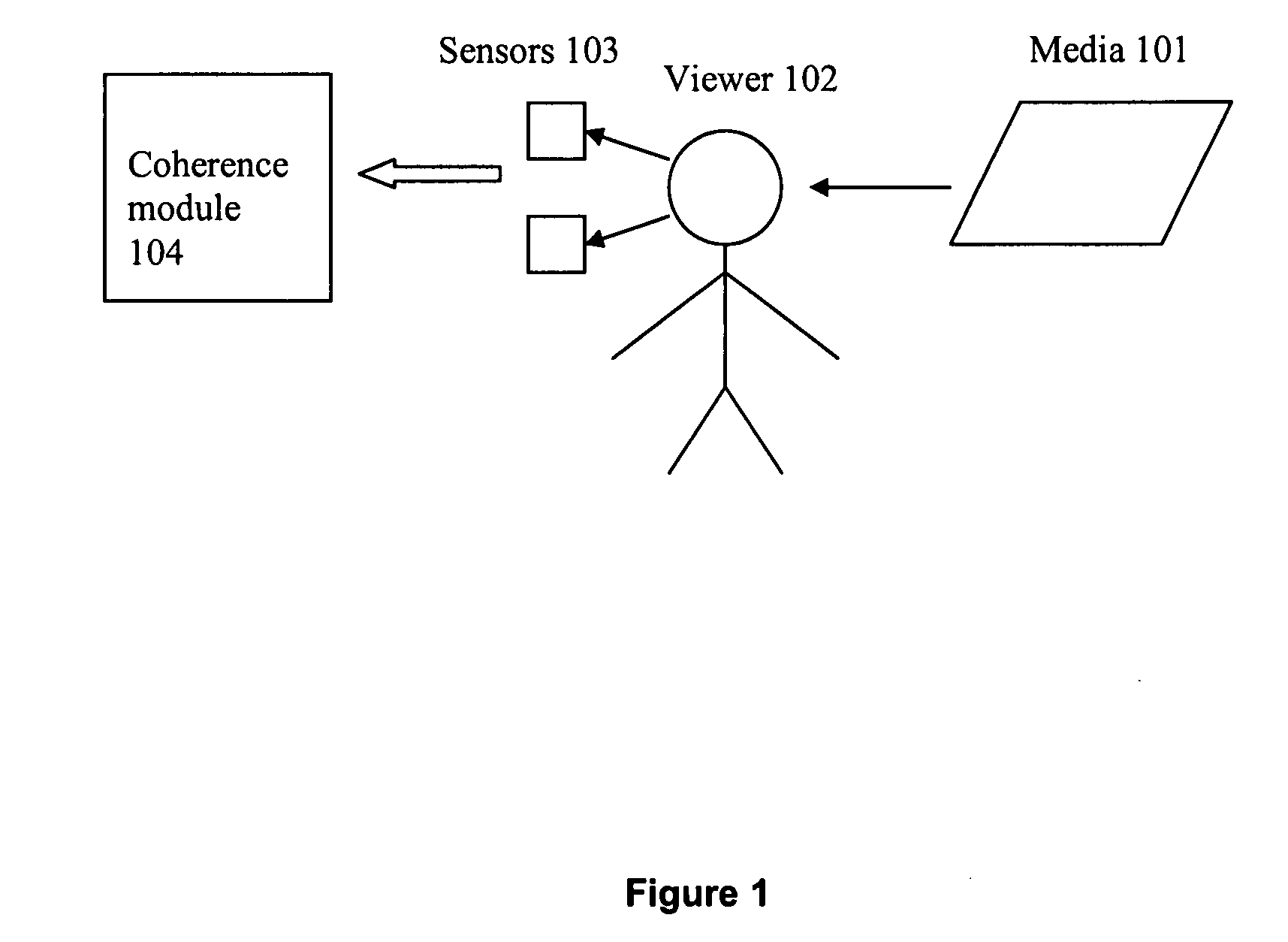

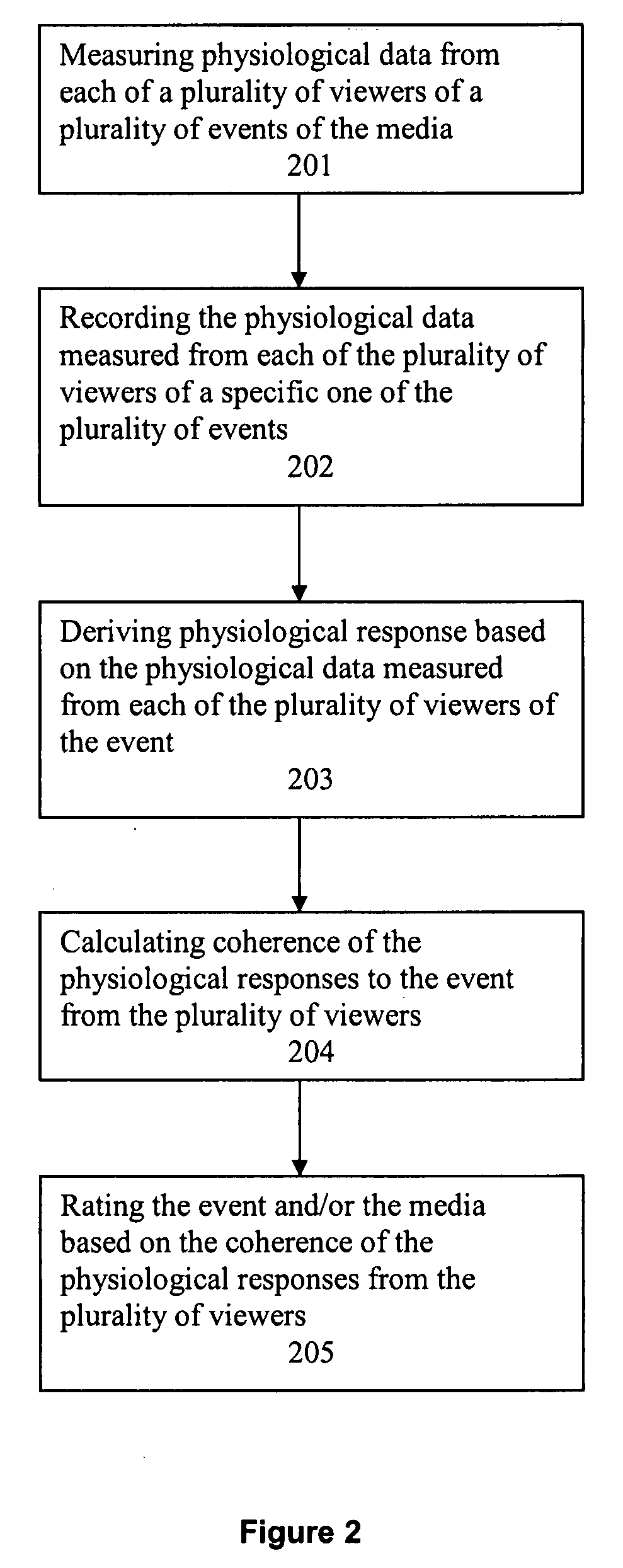

Method and system for using coherence of biological responses as a measure of performance of a media

Active | US20080222670A1 | Strong correlation | Improve fluency | Electroencephalography | Analogue secrecy/subscription systems | Computer science | Emotionality

Various embodiments of the present invention create a novel system for rating an event in a media based on the strength of the emotions viewers feel towards the event. The viewer's responses to the media can be measured and calculated via physiological sensors. The metric for rating the strength of the media is created based on the mathematical coherence of change (up or down) of all pertinent physiological responses across multiple viewers. Such rating offers an objective ability to compare the strengths of events of the media, as there is a strong correlation between high coherence of physiological responses (all viewers feel the same thing at the same time) and strong ratings of emotionality, engagement, likeability, success in the marketplace / on screen.

Owner:NIELSEN CONSUMER LLC
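
One way to picture the "coherence of change (up or down)" metric in the abstract above is as the degree to which viewers' signals move in the same direction at each time step. The sketch below is only an assumption about how such a score could be computed; the abstract does not give a formula, and the sign-agreement measure and sample signals are hypothetical.

```python
def direction(prev, cur):
    """Return +1 for a rise, -1 for a fall, 0 for no change."""
    return (cur > prev) - (cur < prev)


def coherence(signals):
    """Average directional agreement across viewers, from 0.0 to 1.0.

    signals: one equal-length list of samples per viewer, for a single
    physiological measure (for example, heart rate).
    """
    n_viewers = len(signals)
    n_steps = len(signals[0]) - 1
    if n_viewers == 0 or n_steps <= 0:
        return 0.0
    total = 0.0
    for t in range(n_steps):
        net = sum(direction(v[t], v[t + 1]) for v in signals)
        total += abs(net) / n_viewers  # 1.0 when every viewer moves together
    return total / n_steps


# Hypothetical heart-rate samples for three viewers.
in_sync = [[60, 62, 65], [70, 71, 74], [55, 58, 59]]
split = [[60, 62], [70, 68]]
```

In this toy data `coherence(in_sync)` is 1.0 because all three viewers rise at every step, while `coherence(split)` is 0.0 because the two viewers move in opposite directions.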

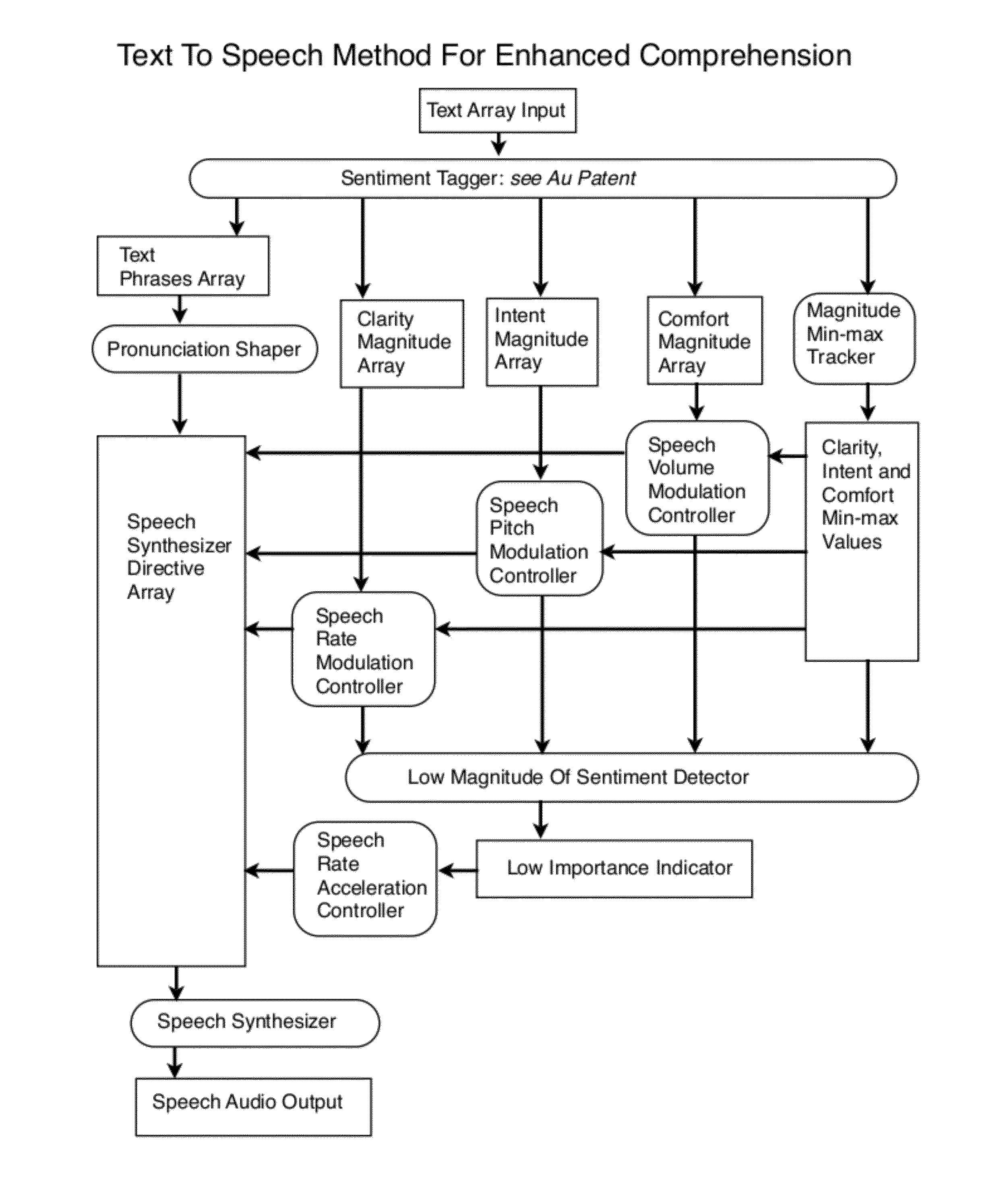

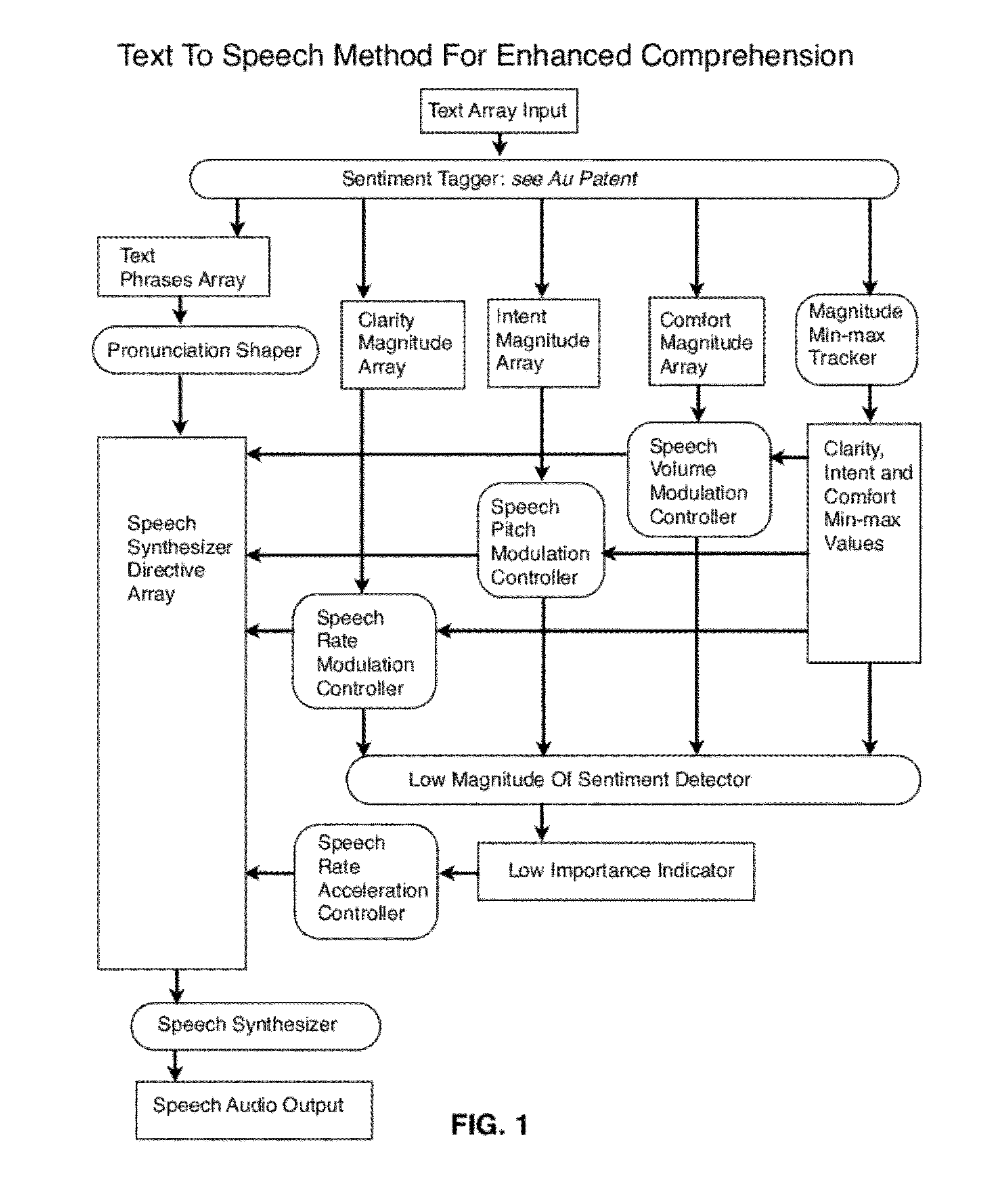

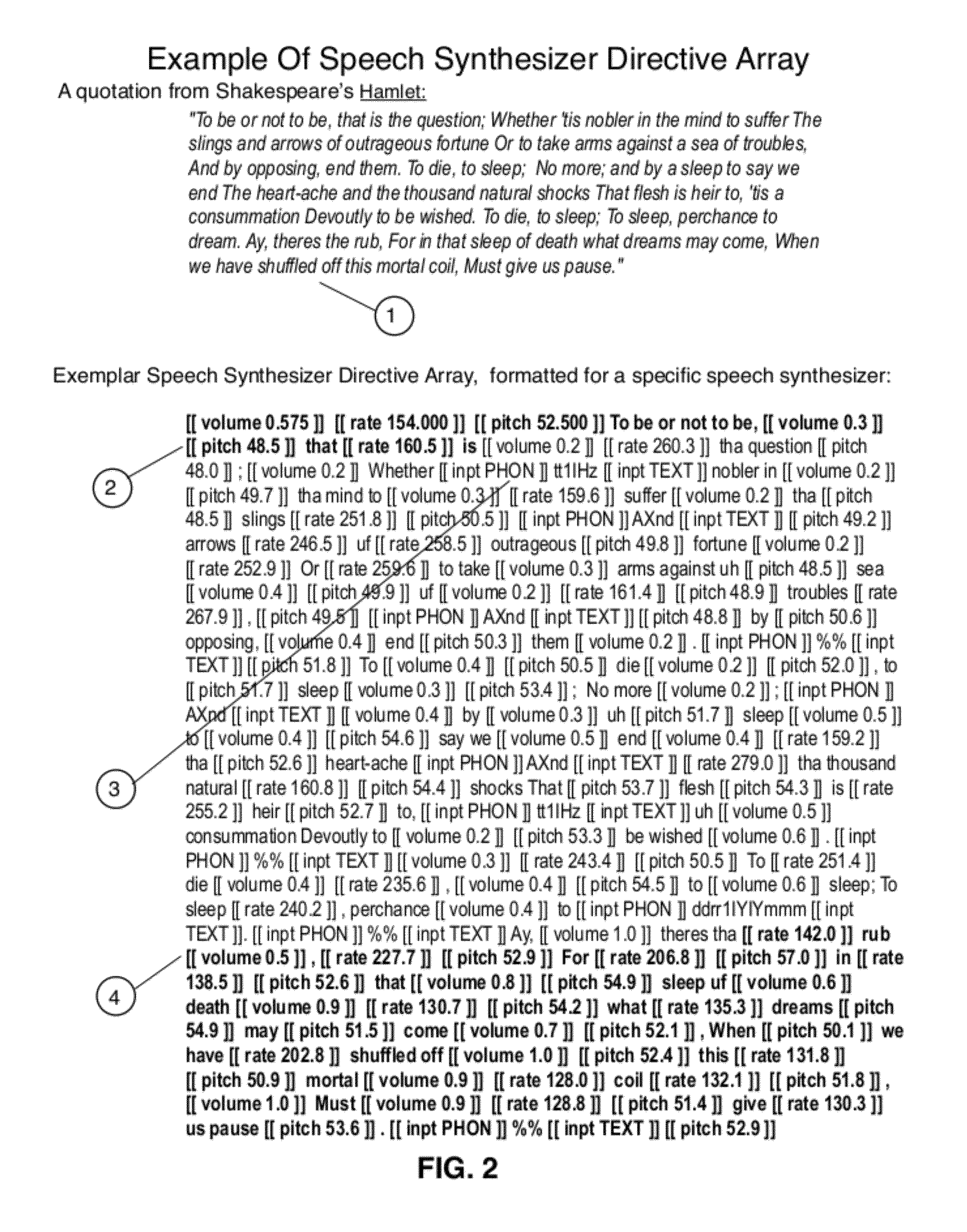

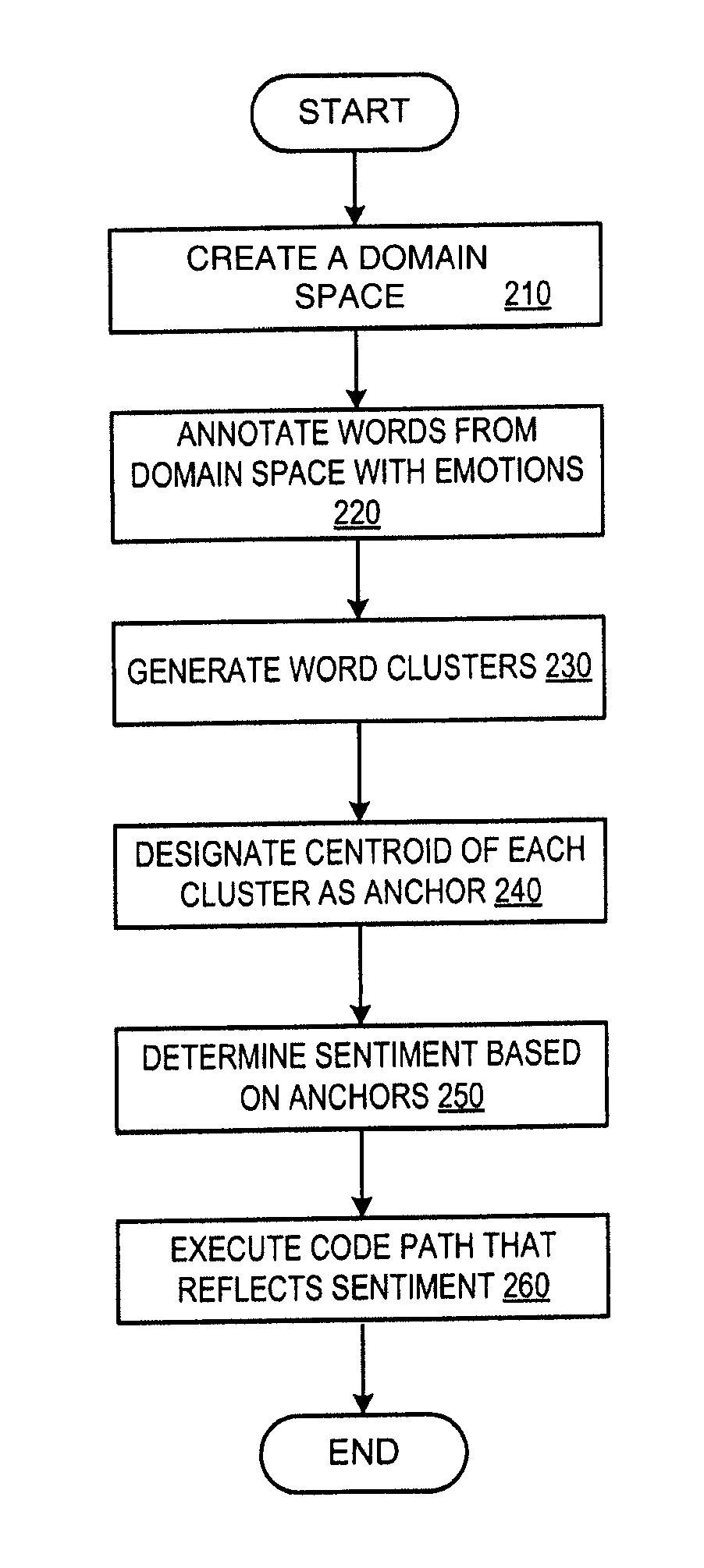

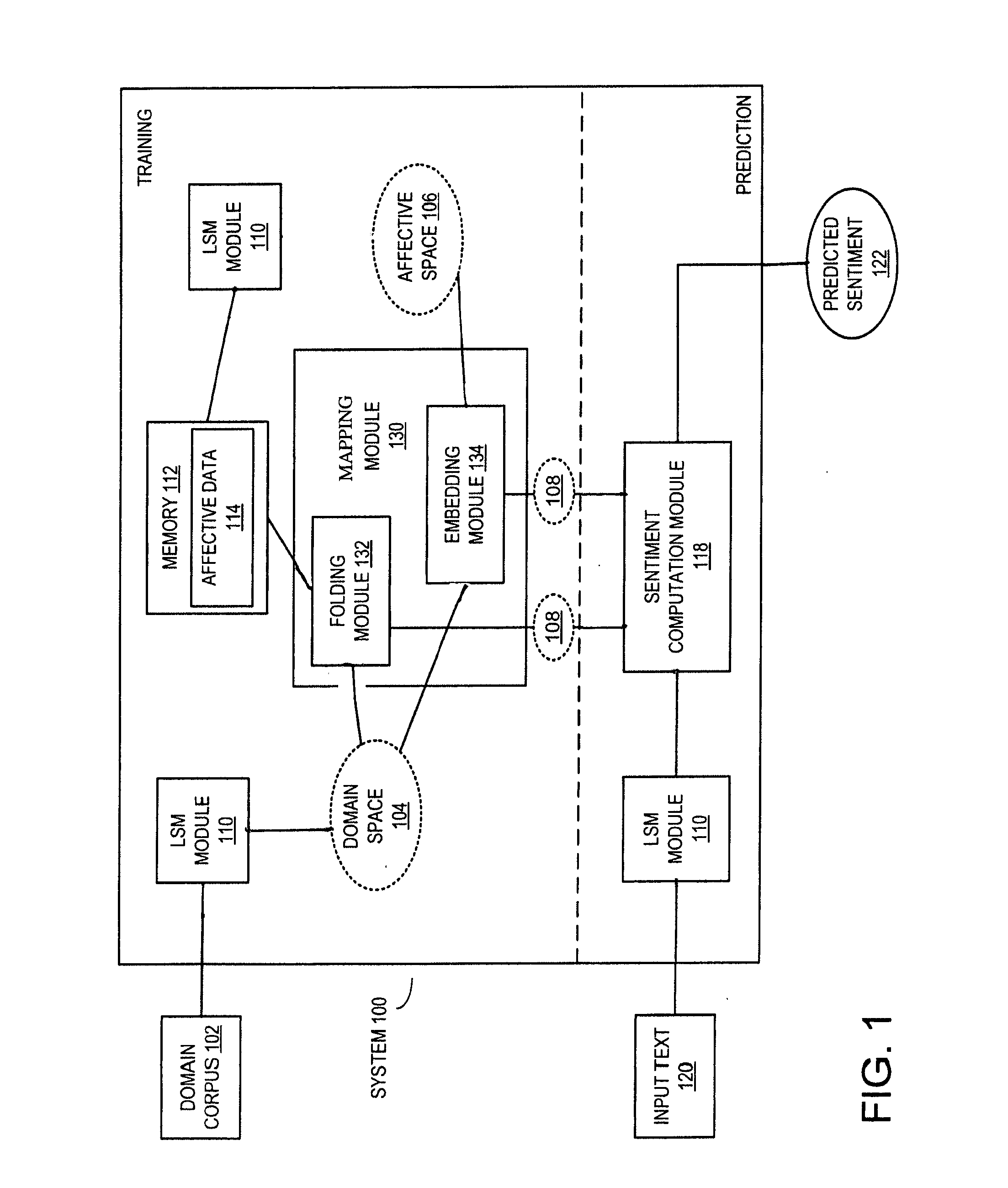

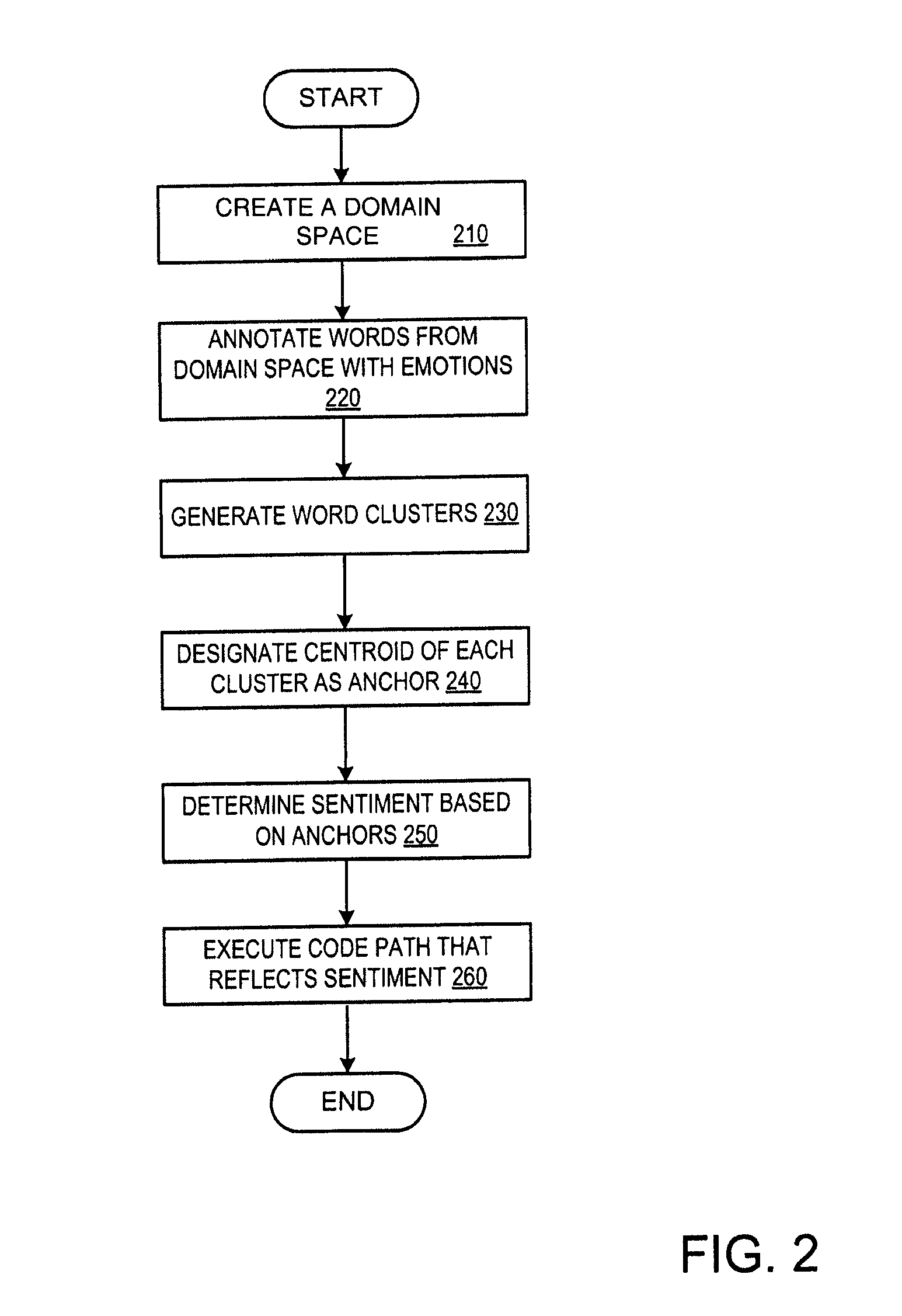

Sentiment prediction from textual data

Active | US20110112825A1 | Semantic analysis | Special data processing applications | Domain space | Data prediction

A semantically organized domain space is created from a training corpus. Affective data are mapped onto the domain space to generate affective anchors for the domain space. A sentiment associated with an input text is determined based on the affective anchors. A speech output may be generated from the input text based on the determined sentiment.

Owner:APPLE INC

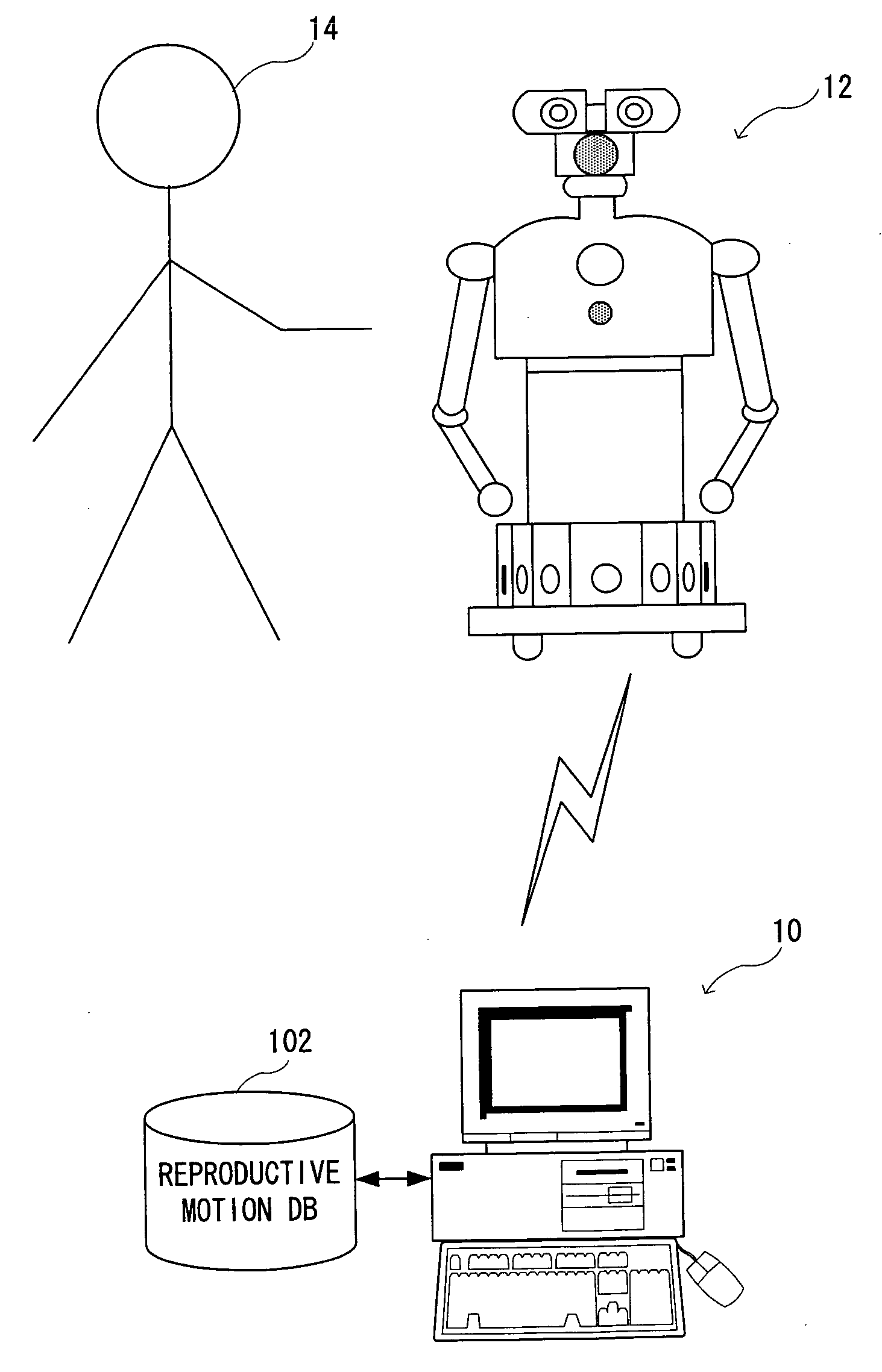

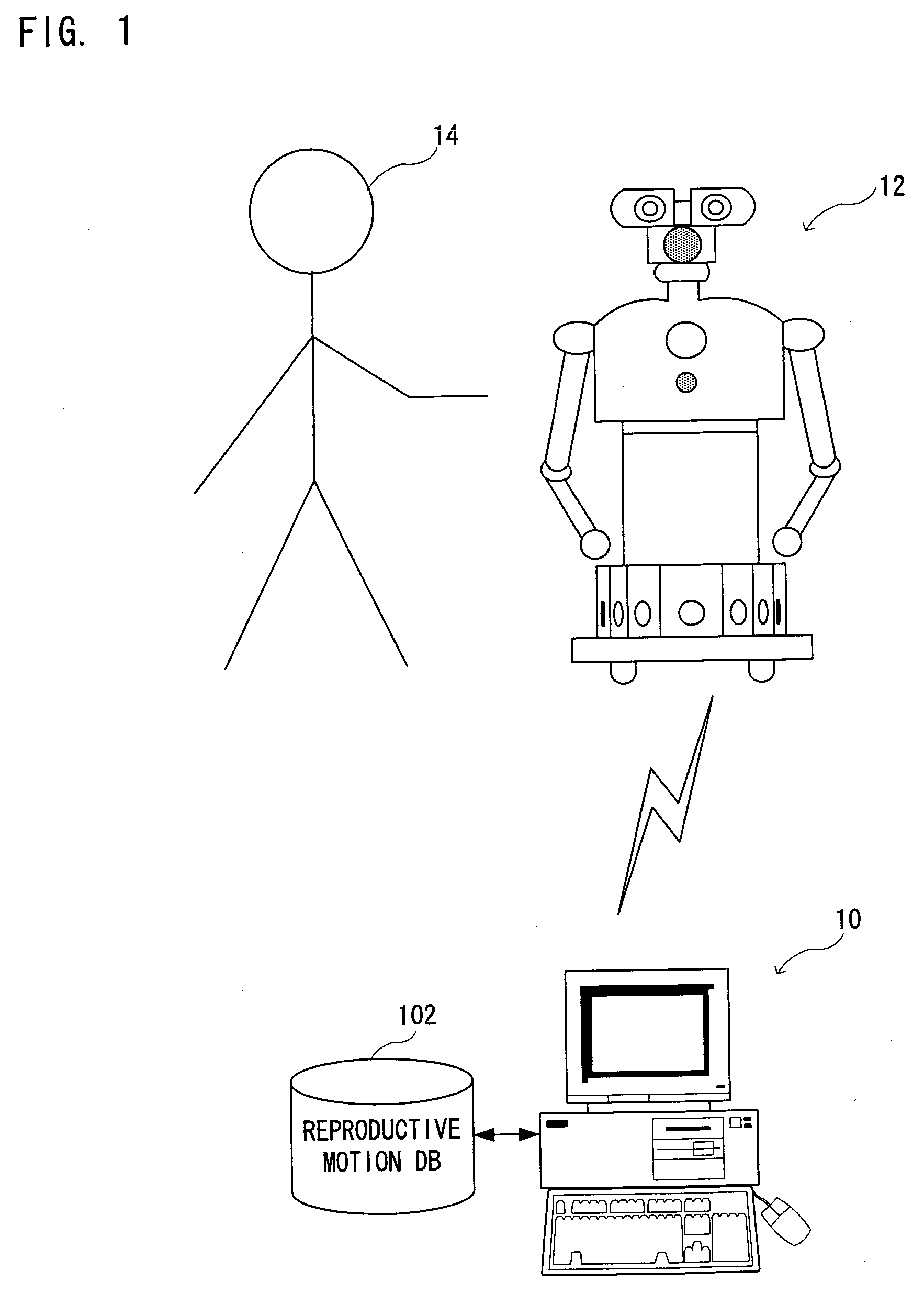

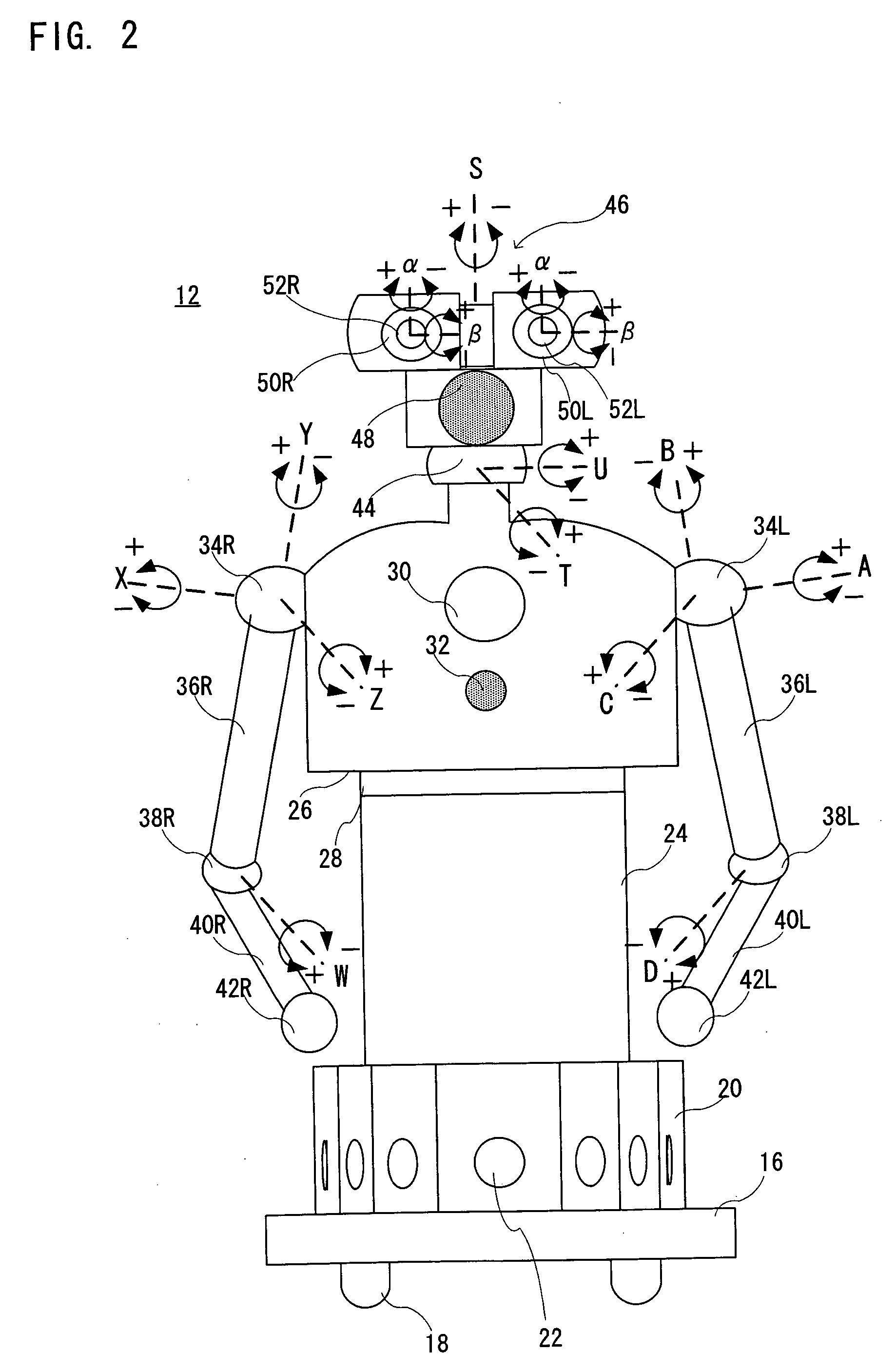

Communication robot control system

Active | US20060293787A1 | Promote generation | Easy input | Programme-controlled manipulator | Computer control | Reflex | Human behavior

A communication robot control system displays a selection input screen for supporting input of actions of a communication robot. The selection input screen displays in a user-selectable manner a list of a plurality of behaviors including not only spontaneous actions but also reactive motions (reflex behaviors) in response to behavior of a person as a communication partner, and a list of emotional expressions to be added to the behaviors. According to a user's operation, the behavior and the emotional expression to be performed by the communication robot are selected and decided. Then, reproductive motion information for interactive actions including reactive motions and emotional interactive actions, is generated based on input history of the behavior and the emotional expression.

Owner:ATR ADVANCED TELECOMM RES INST INT

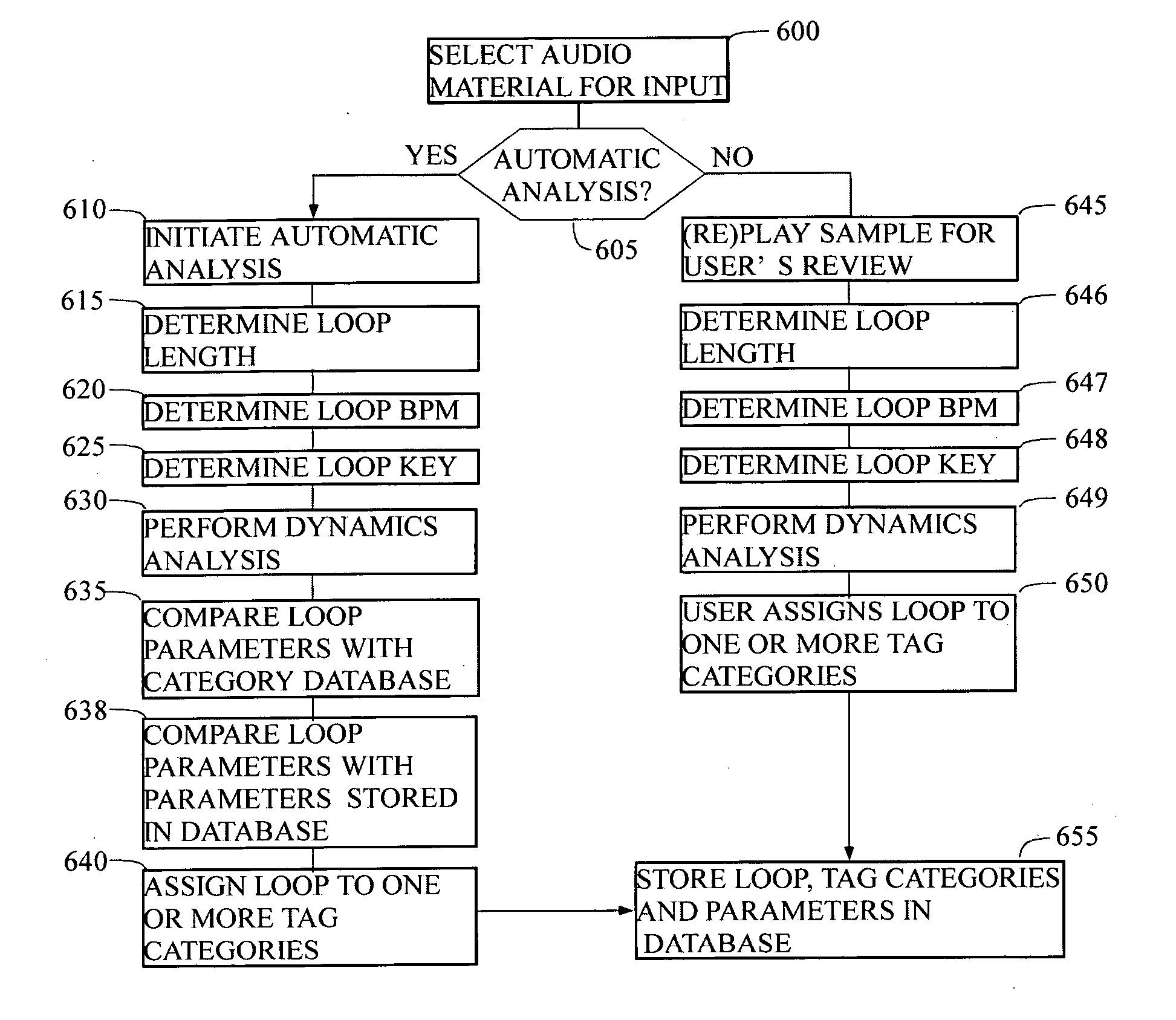

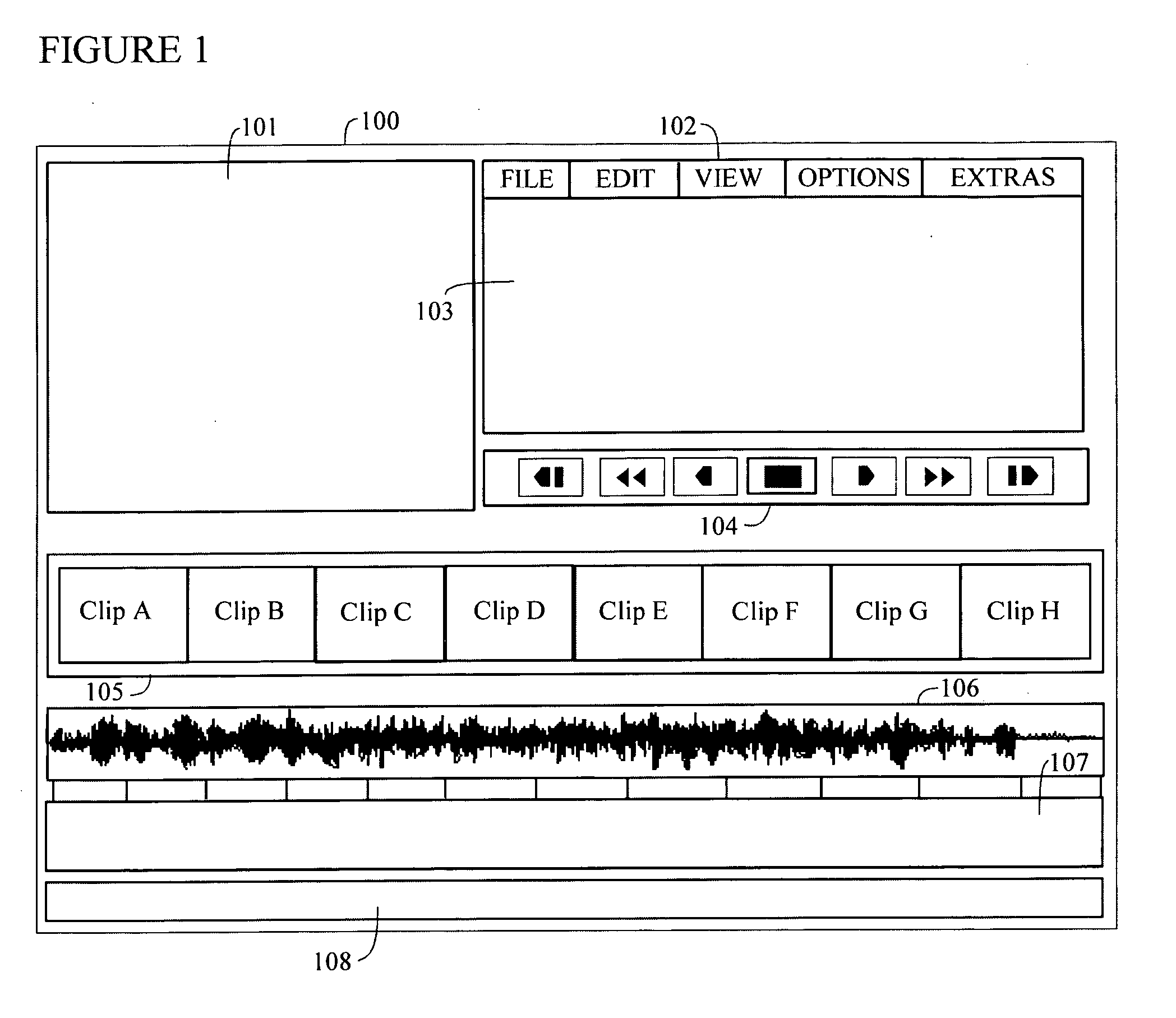

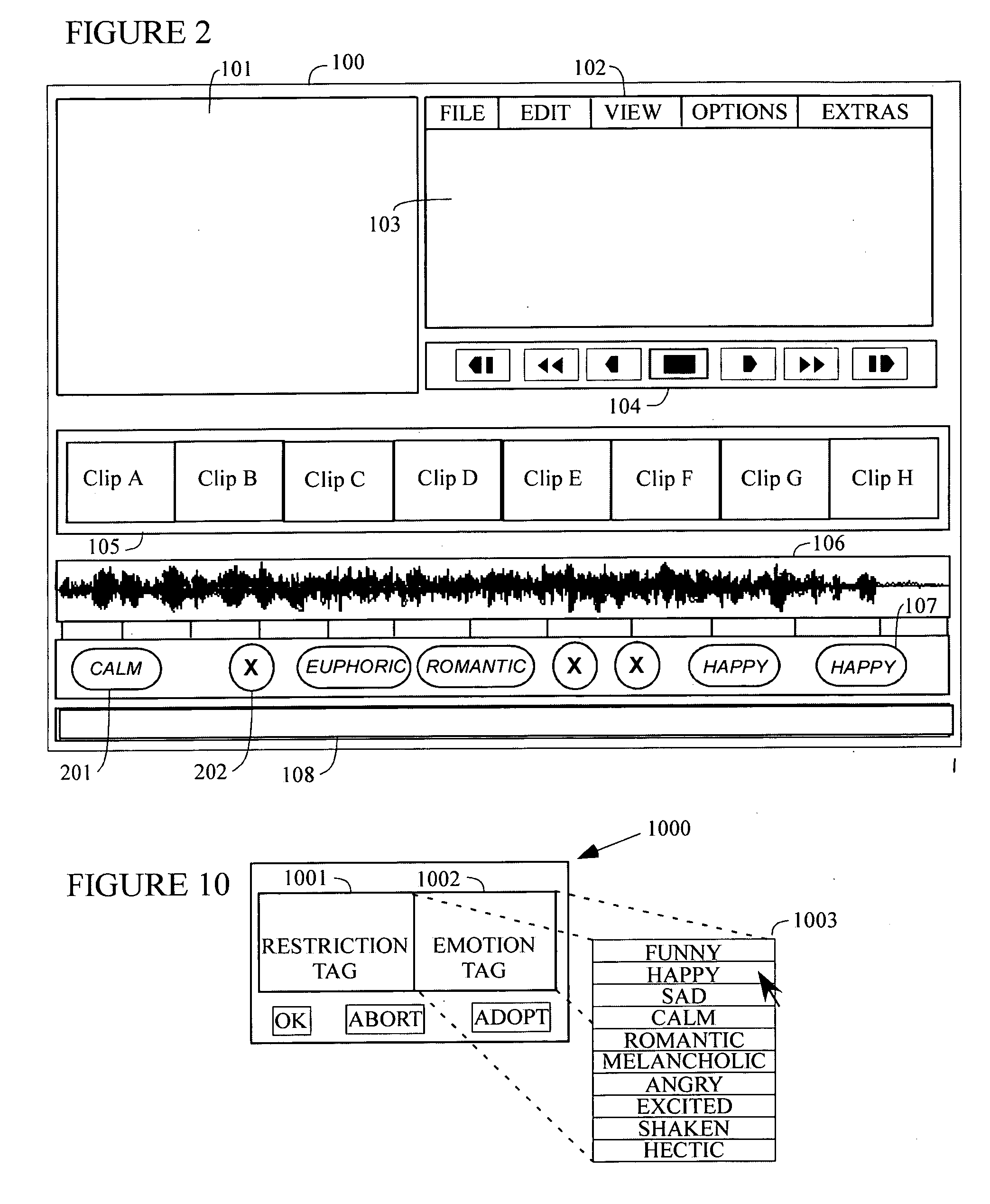

System and method of automatically creating an emotional controlled soundtrack

Active | US20060122842A1 | Pleasant video experience | Closely matched | Gearworks | Television system details | Digital video | Software

There is provided herein a system and method for enabling a user of digital video editing software to automatically create an emotionally controlled soundtrack that is matched in overall emotion or mood to the scenes in the underlying video work. In the preferred arrangement, the user will be able to control the generation of the soundtrack by positioning emotion tags in the video work that correspond to the general mood of each scene. The subsequent soundtrack generation step will utilize these tags to prepare a musical accompaniment to the video work that generally matches its on-screen activities.

Owner:MAGIX

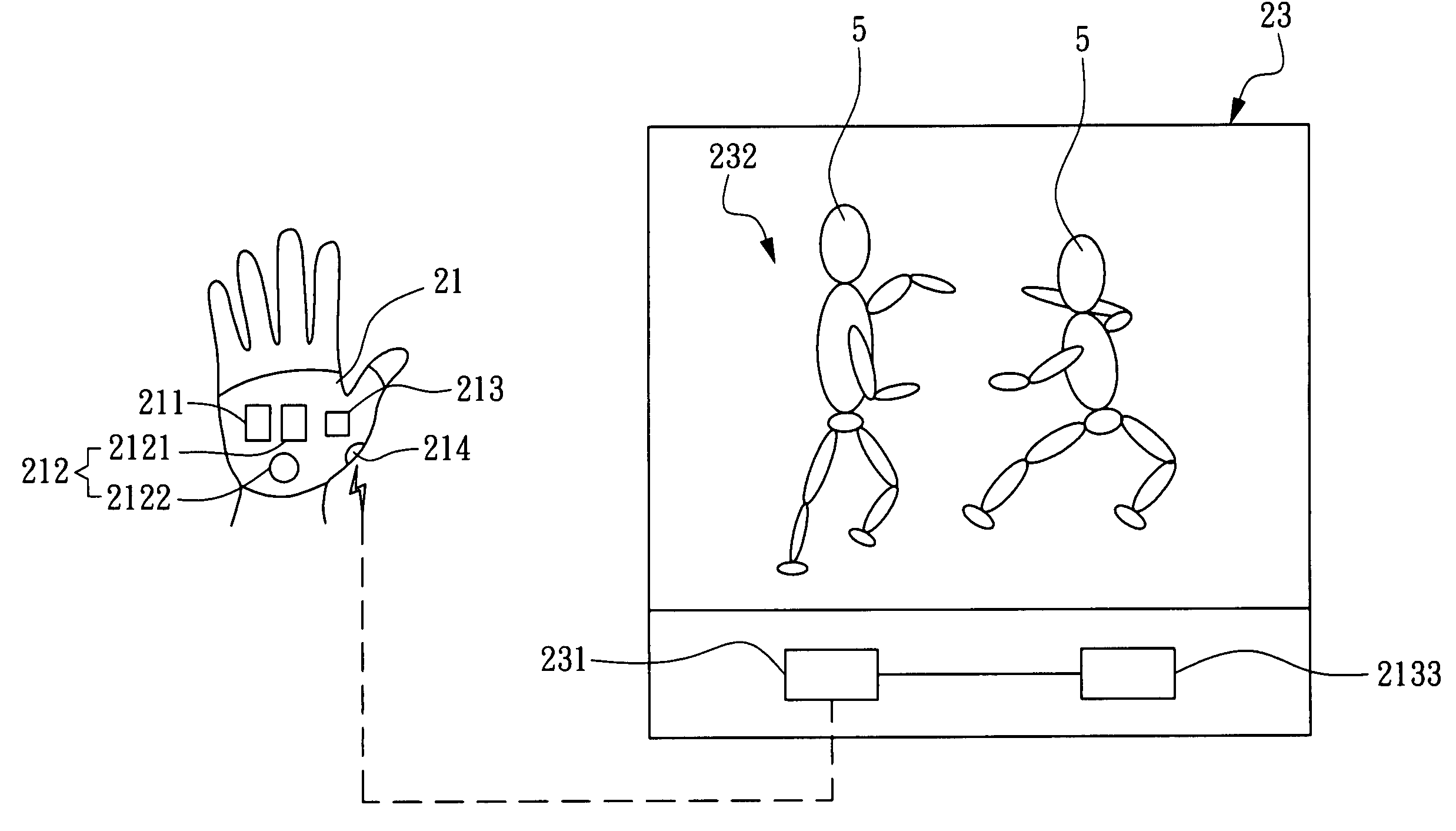

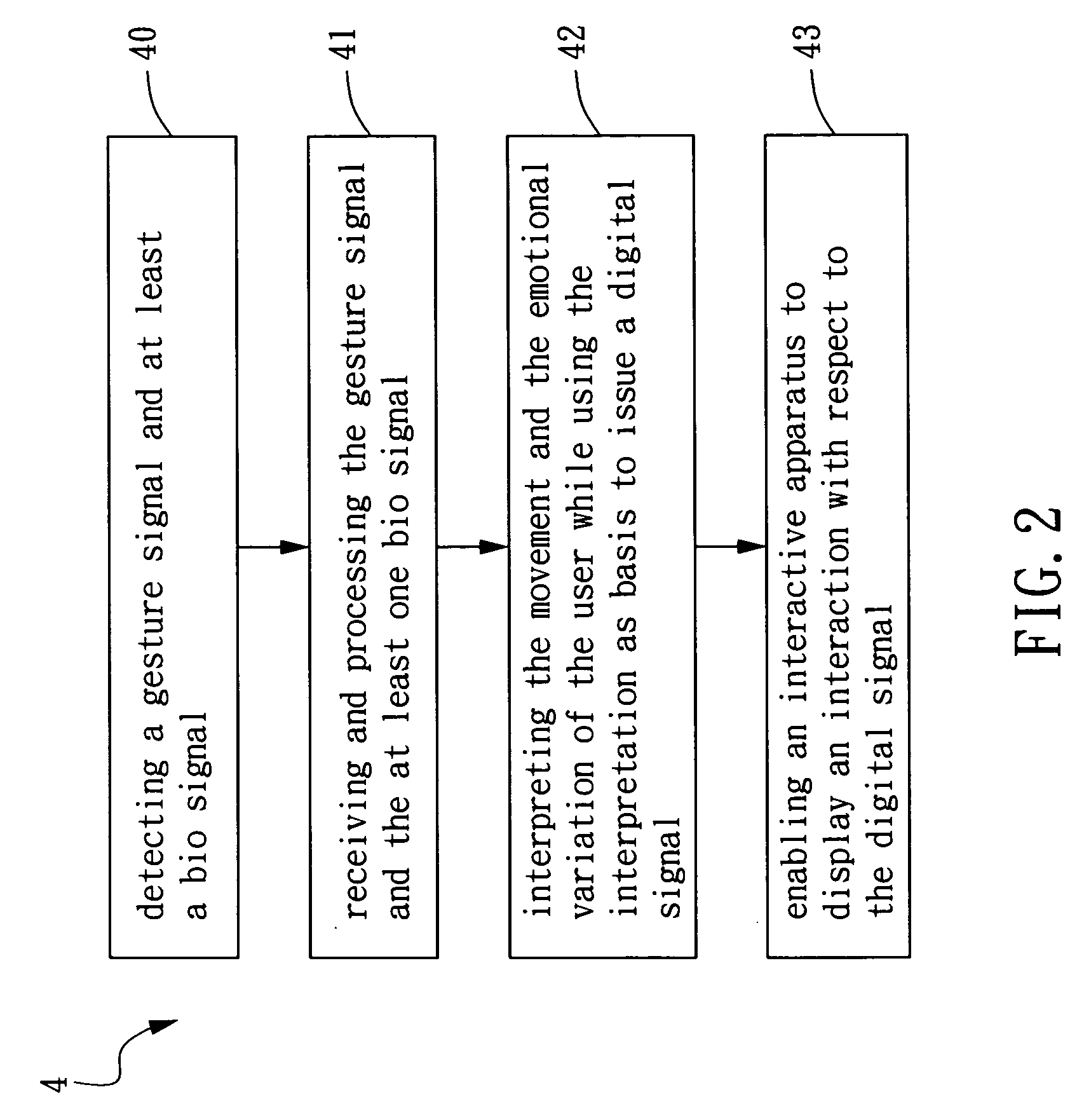

Interactive gaming method and apparatus with emotion perception ability

Inactive | US20070149282A1 | Compact and commercially feasible | Release fatigue | Video games | Special data processing applications | Emotion perception | Power flow

The present invention relates to an interactive gaming method and apparatus with emotion perception ability, by which not only gestures of a user can be detected and used as inputs for controlling a game, but also physiological attributes of the user such as heart beats, galvanic skin response (GSR), etc., can be sensed and used as emotional feedbacks of the game affecting the user. According to the disclosed method, the present invention further provides an interactive gaming apparatus that will interpret the signals detected by the inertial sensing module and the bio sensing module and use the interpretation as a basis for evaluating the movements and emotions of a user immediately, and then the evaluation obtained by the interactive gaming apparatus is sent to the gaming platform to be used as feedbacks for controlling the game to interact with the user accordingly. Therefore, by the method and apparatus according to the present invention, not only the harmonics of human motion can be trained to improve, but also the self-control of a user can be enhanced.

Owner:IND TECH RES INST

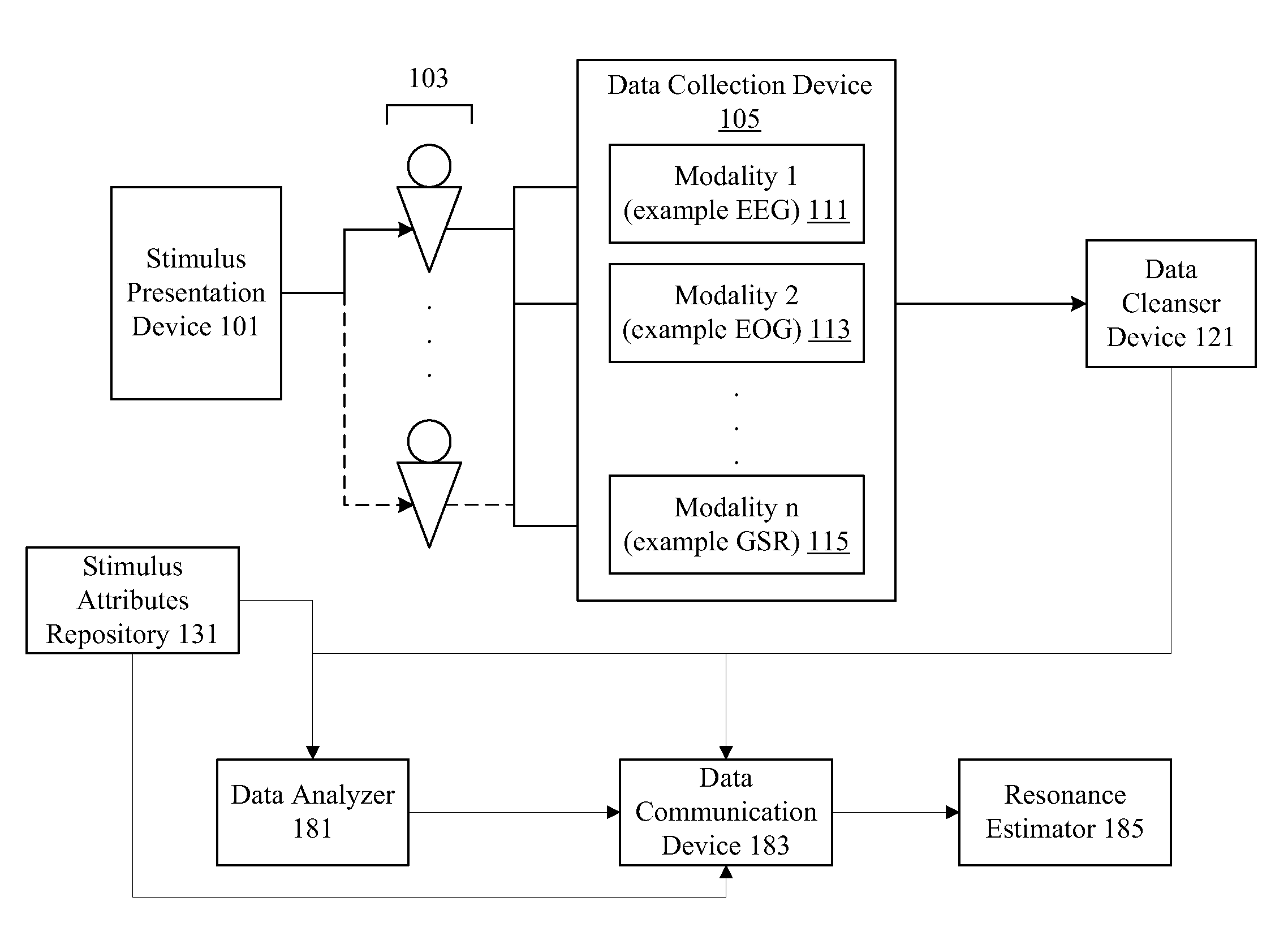

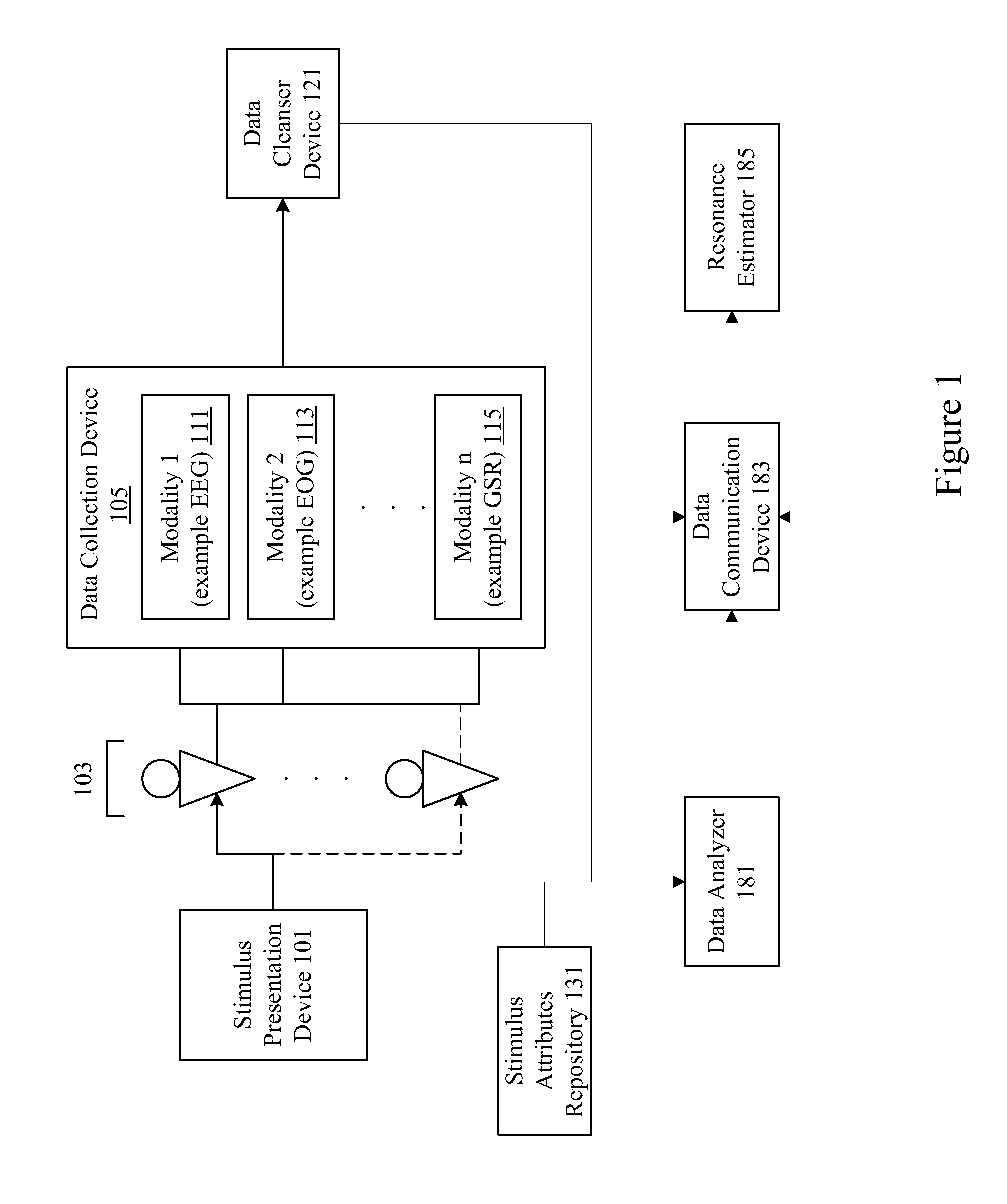

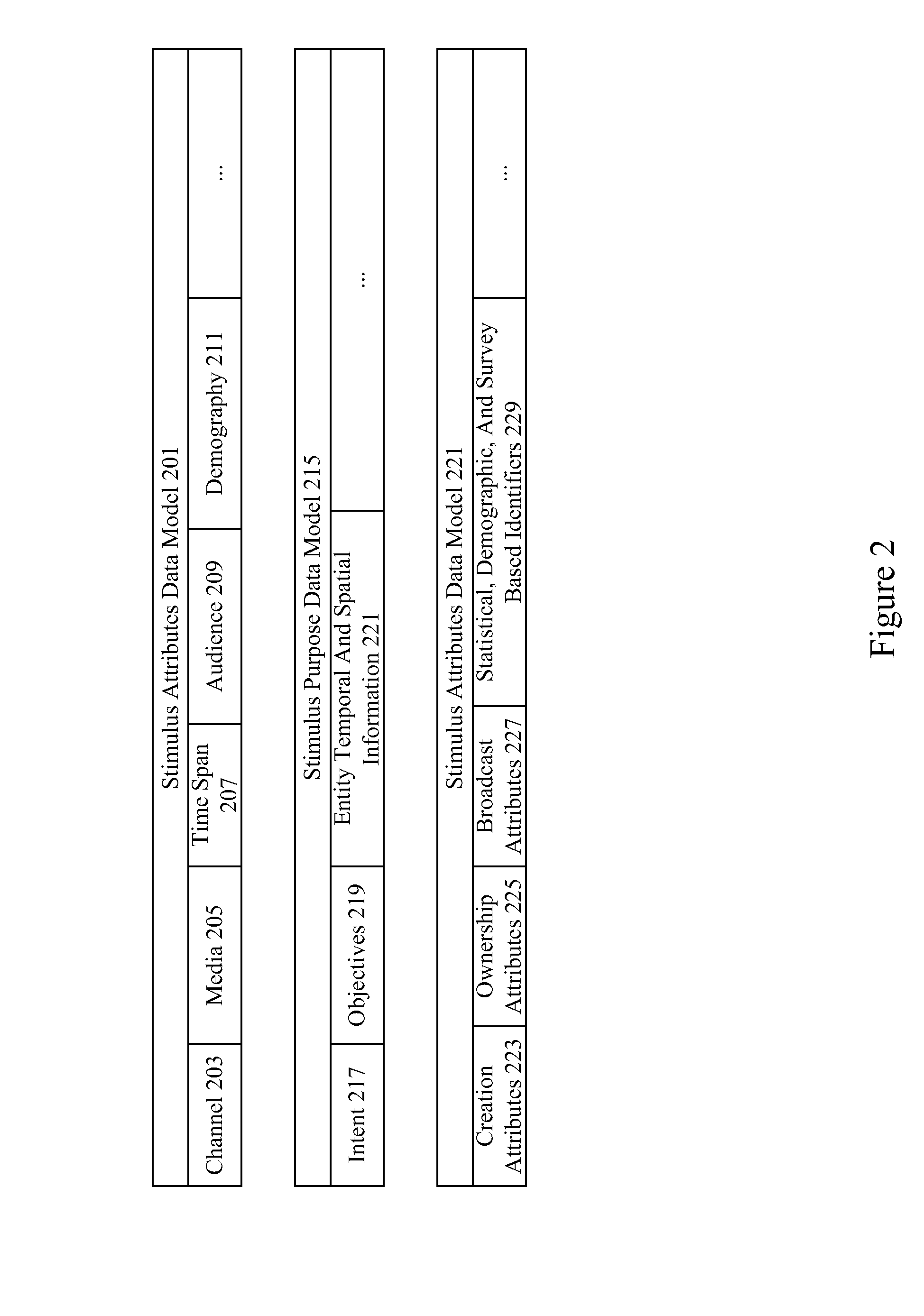

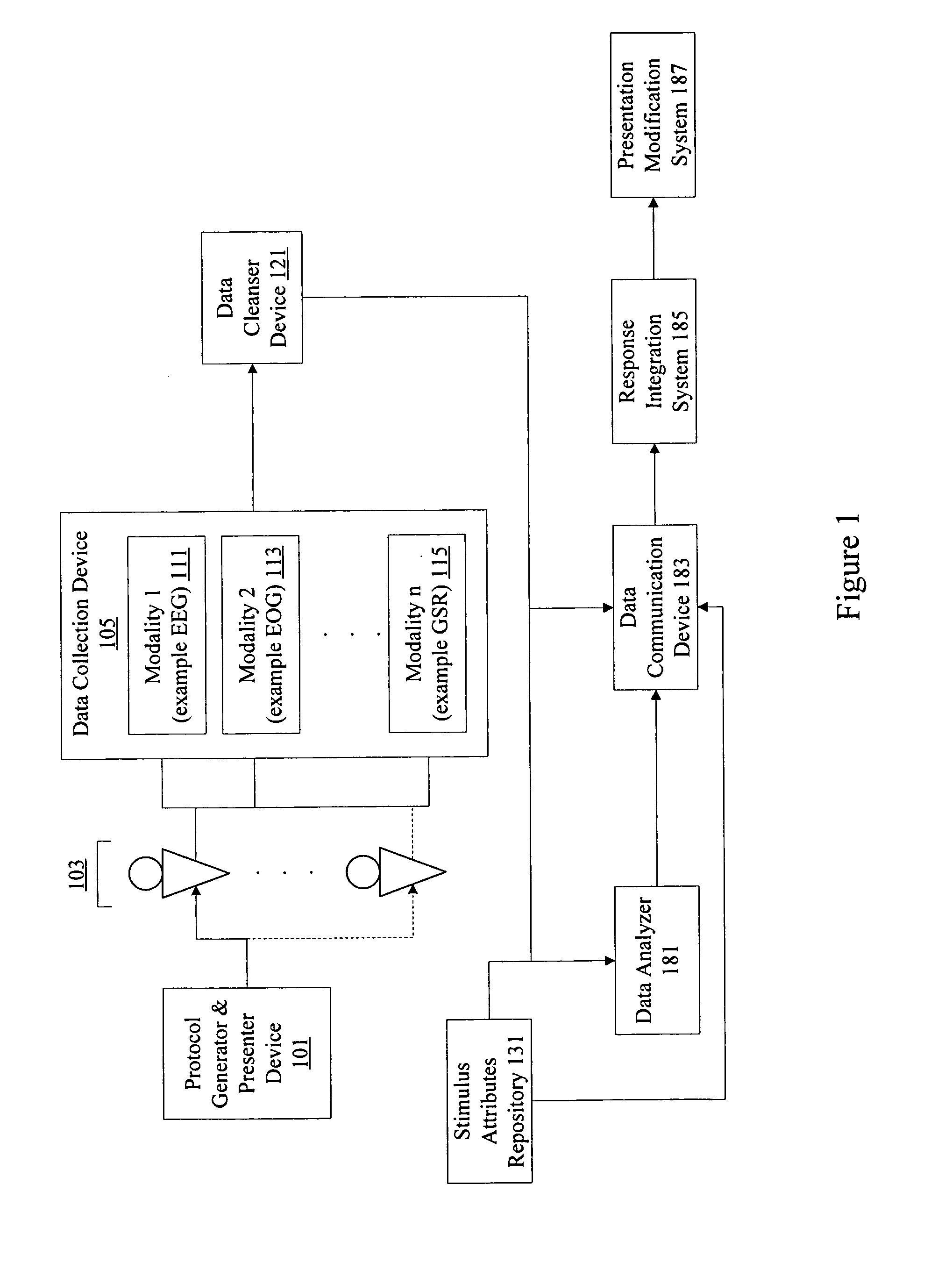

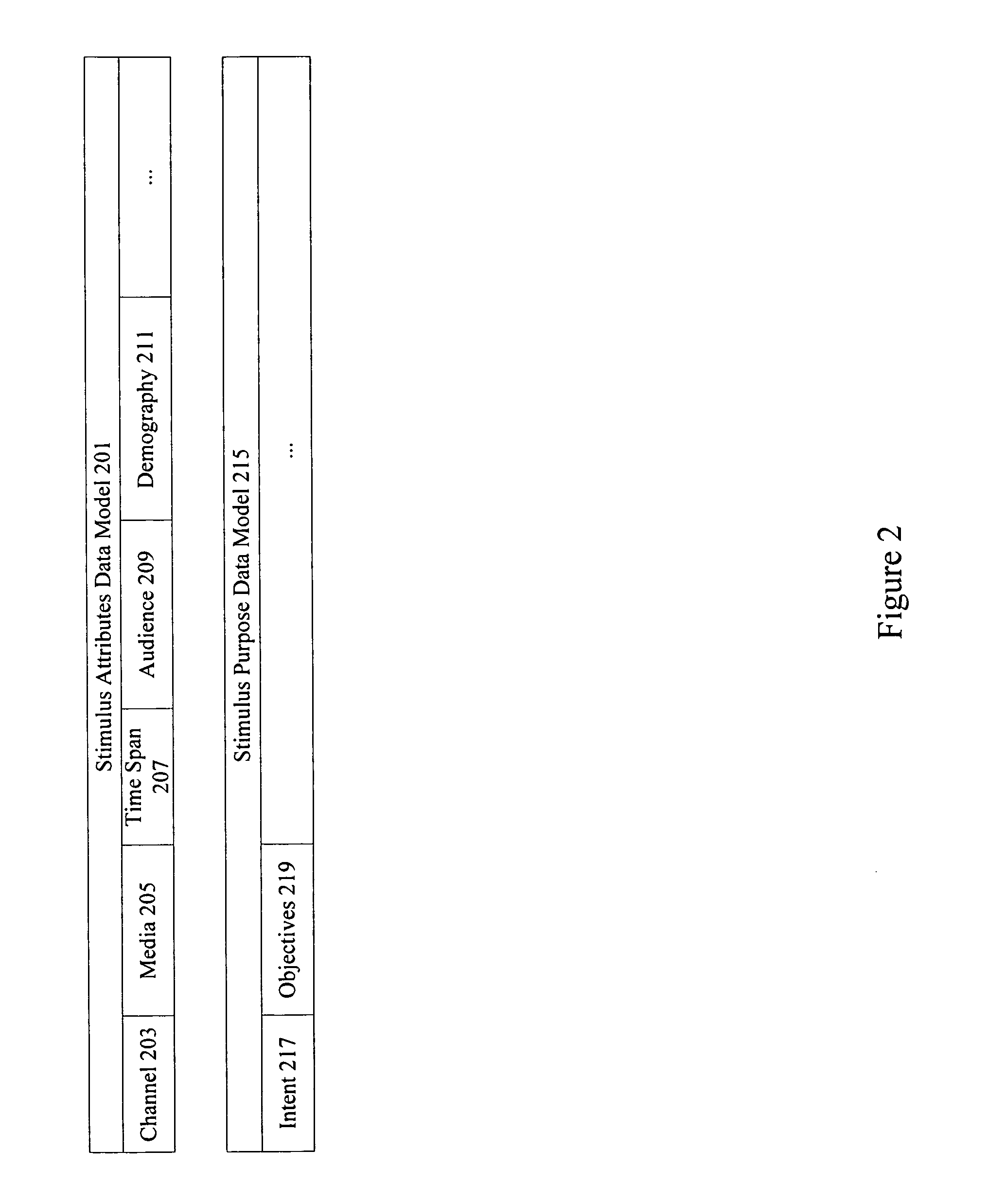

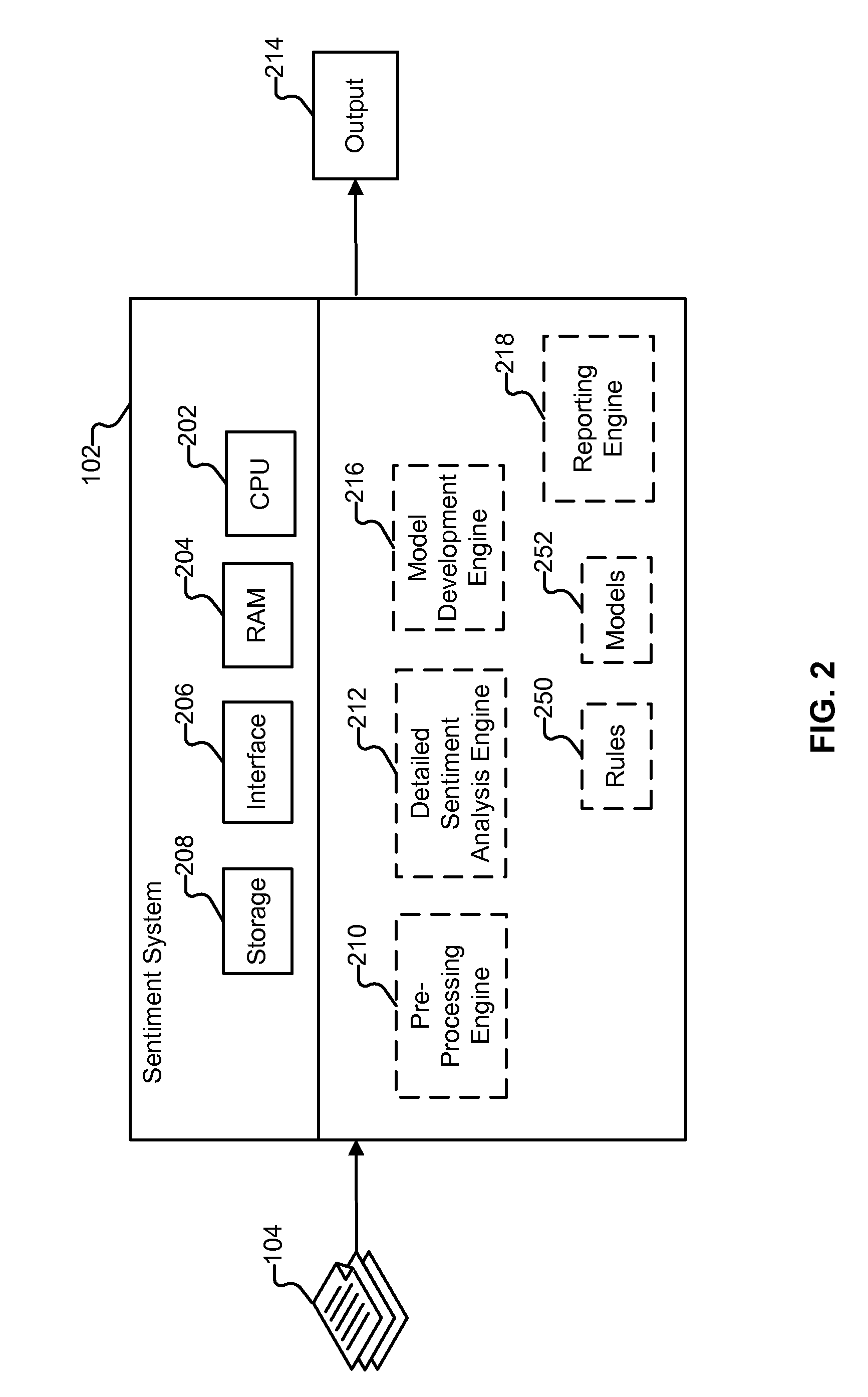

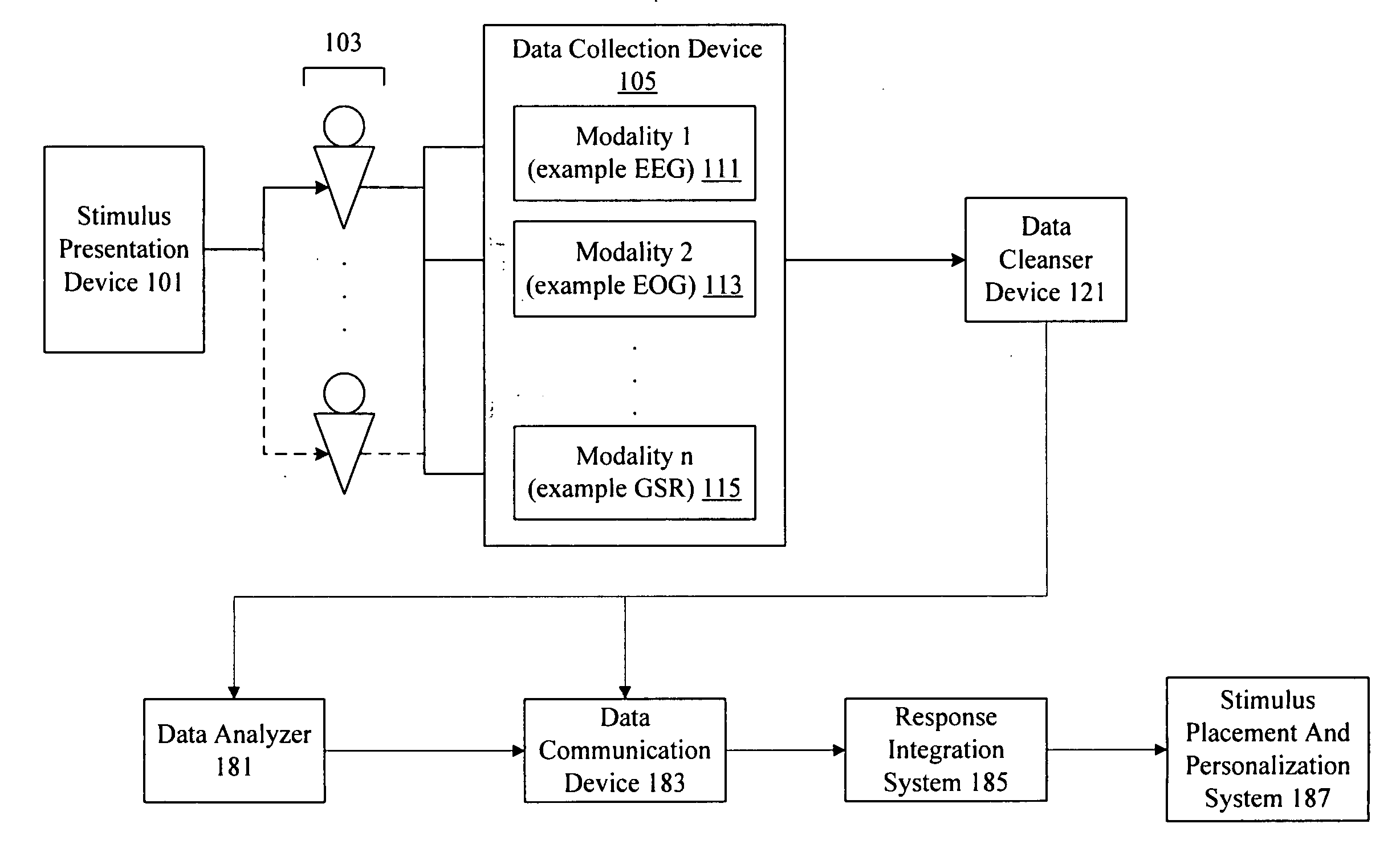

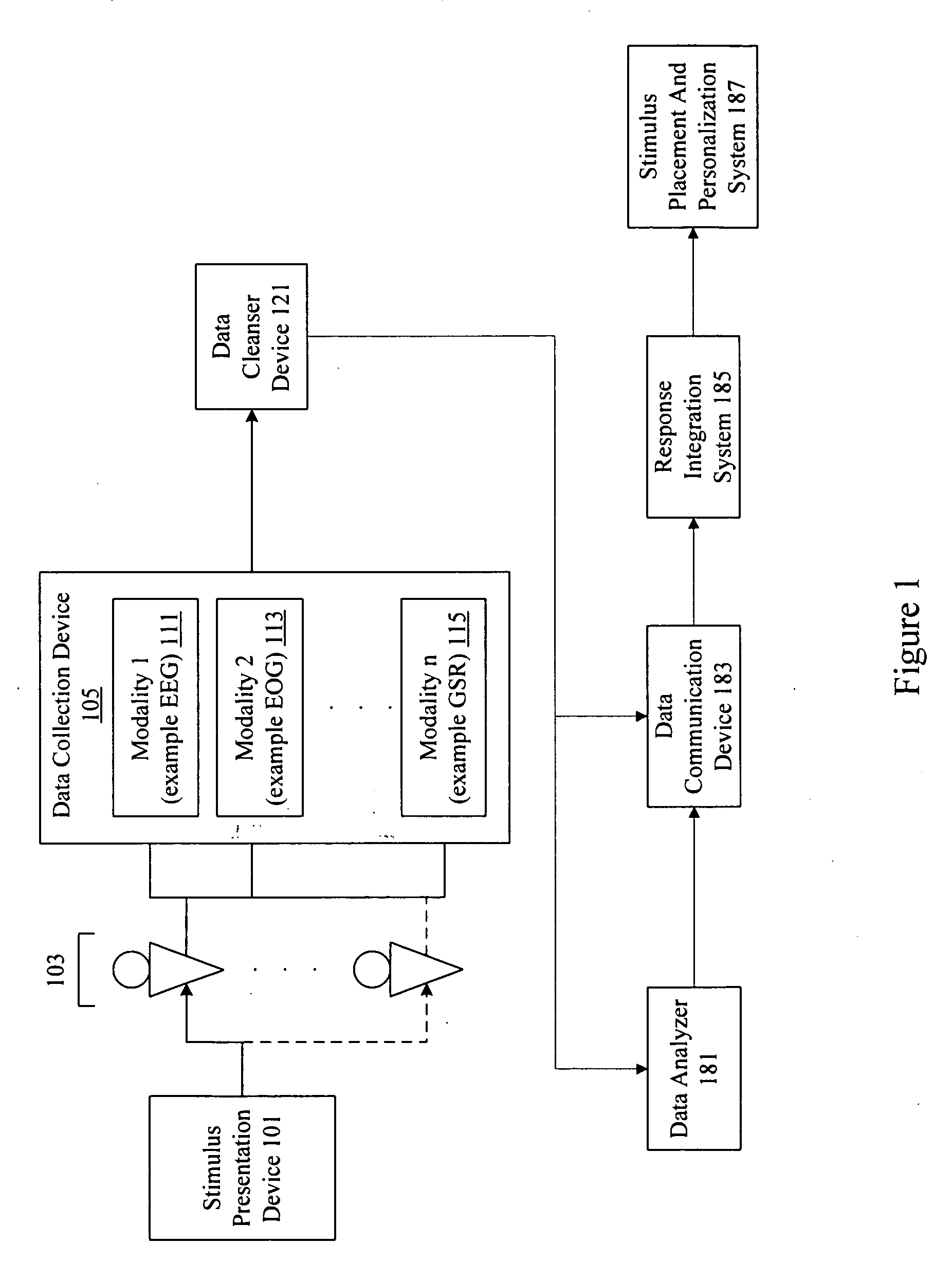

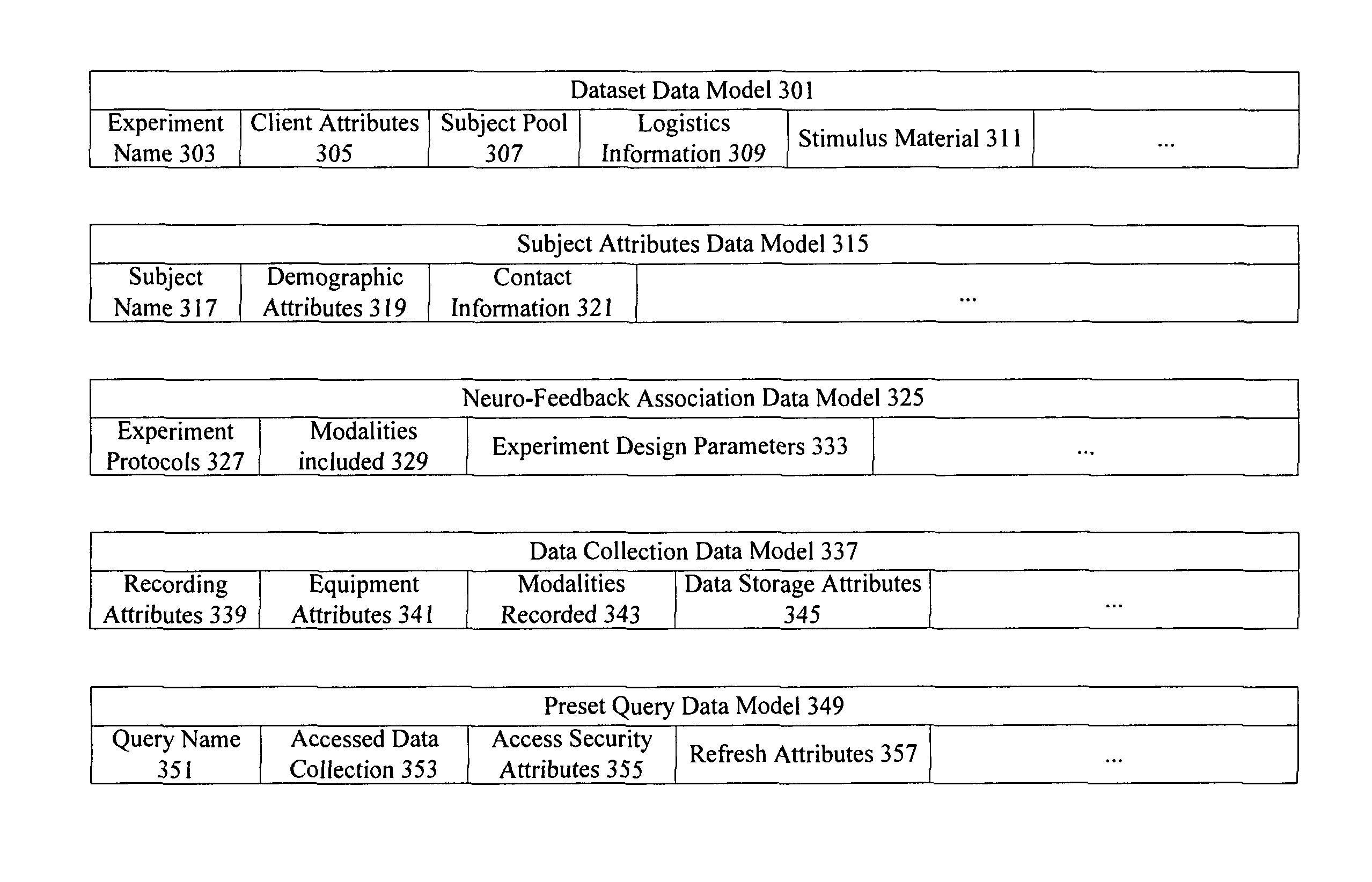

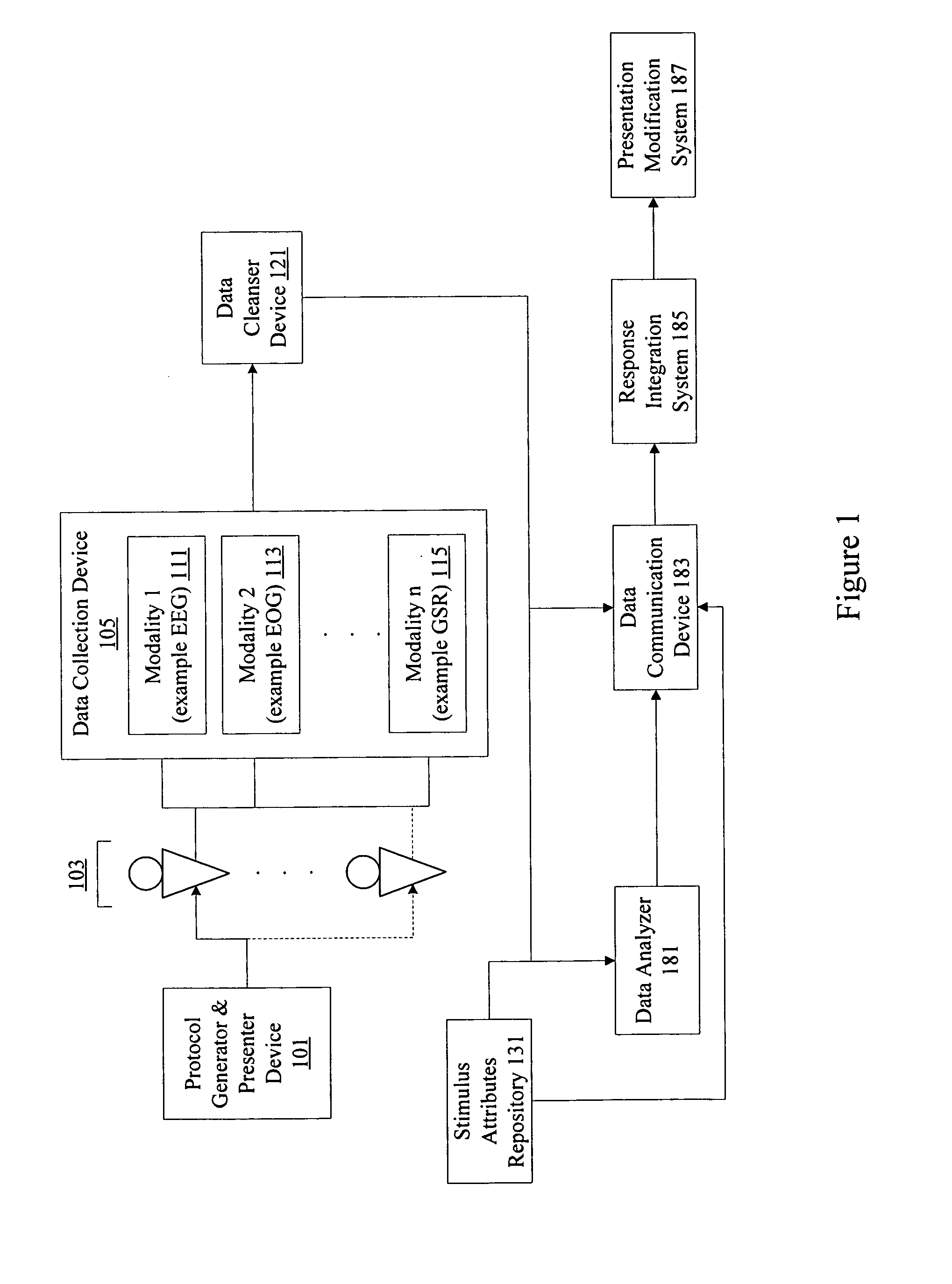

Neuro-response stimulus and stimulus attribute resonance estimator

A system determines neuro-response stimulus and stimulus attribute resonance. Stimulus material and stimulus material attributes such as communication, concept, experience, message, images, audio, pricing, and packaging are evaluated using neuro-response data collected with mechanisms such as Event Related Potential (ERP), Electroencephalography (EEG), Galvanic Skin Response (GSR), Electrocardiograms (EKG), Electrooculography (EOG), eye tracking, and facial emotion encoding. Neuro-response data is analyzed to determine stimulus and stimulus attribute resonance.

Owner:NIELSEN CONSUMER LLC

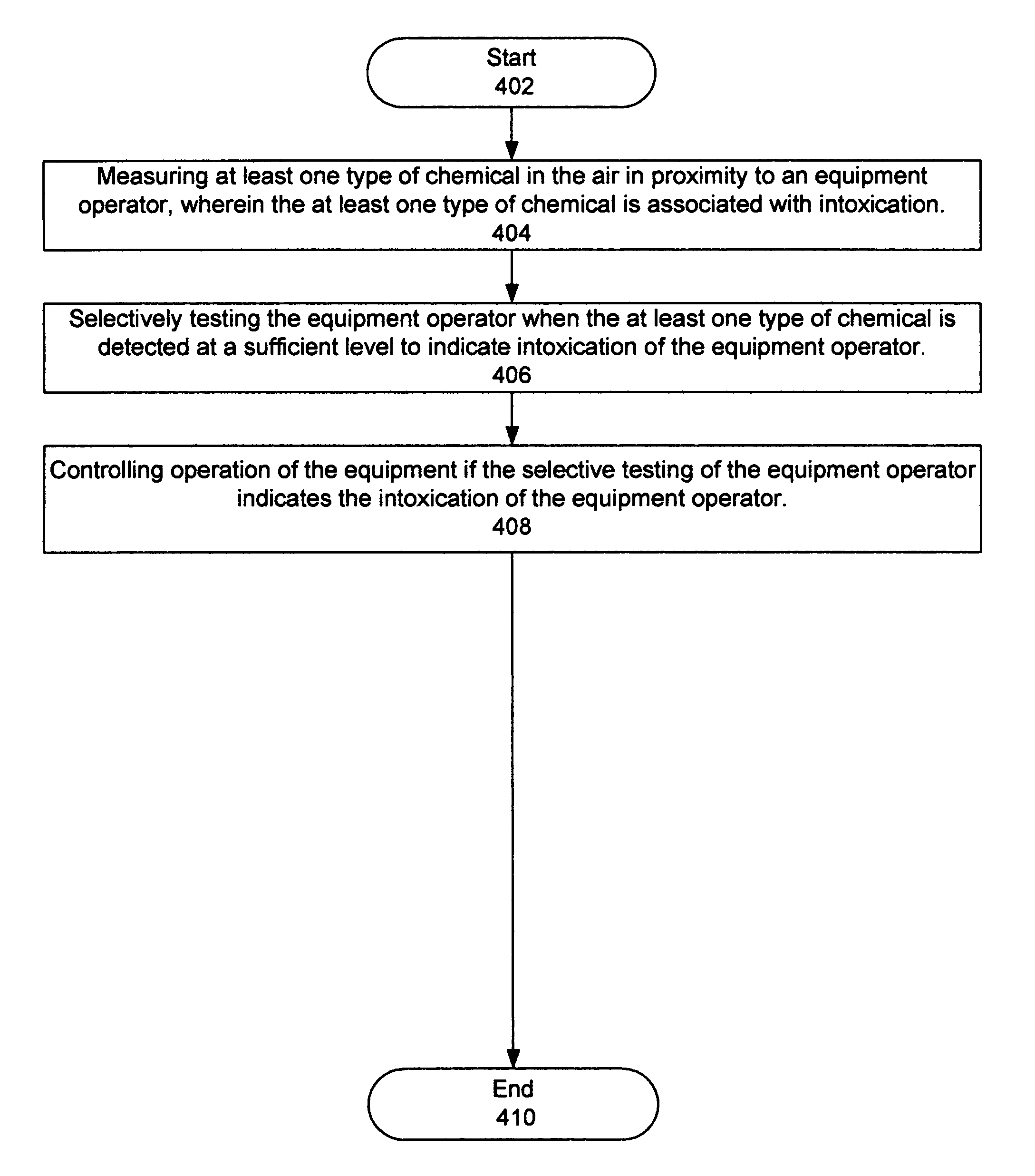

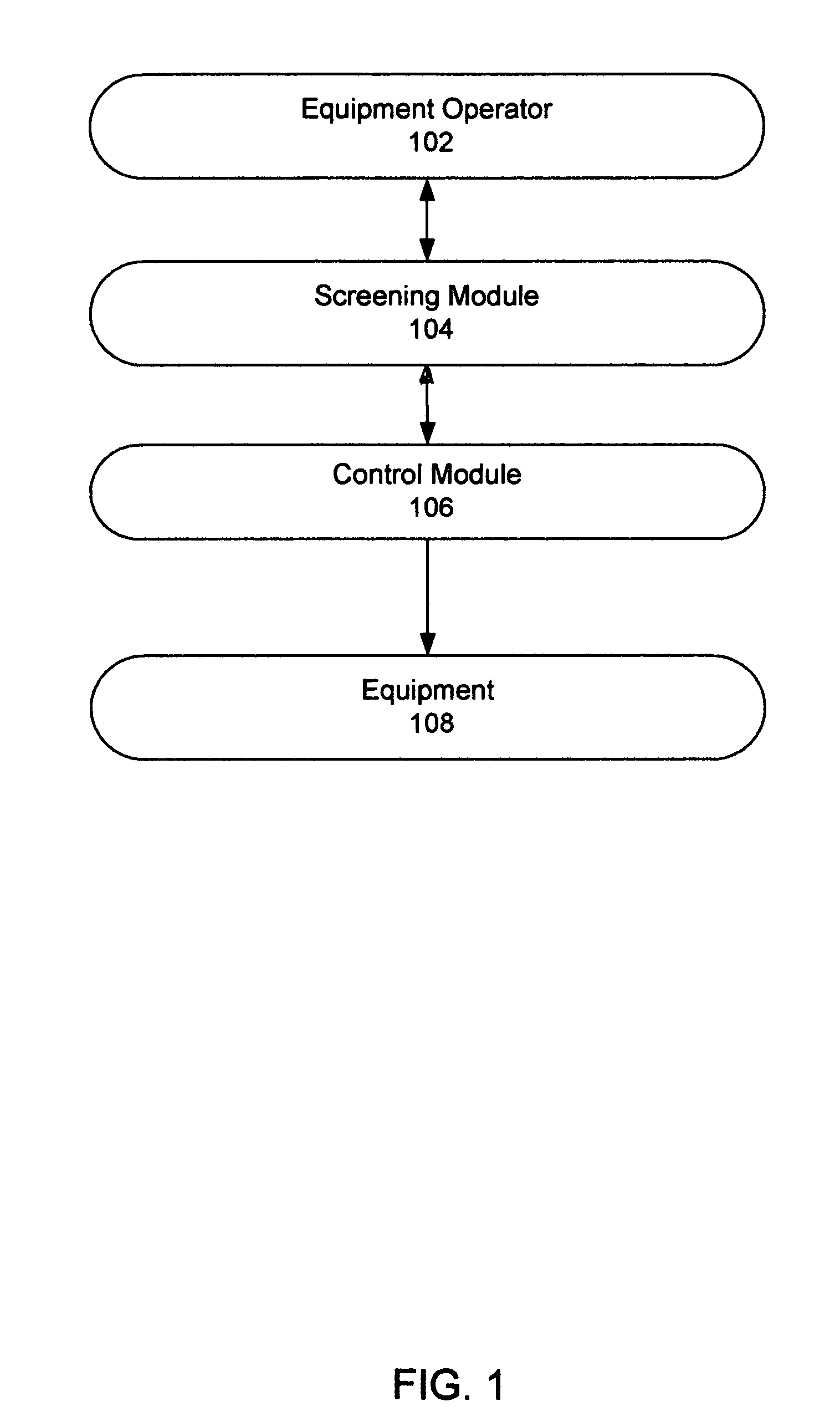

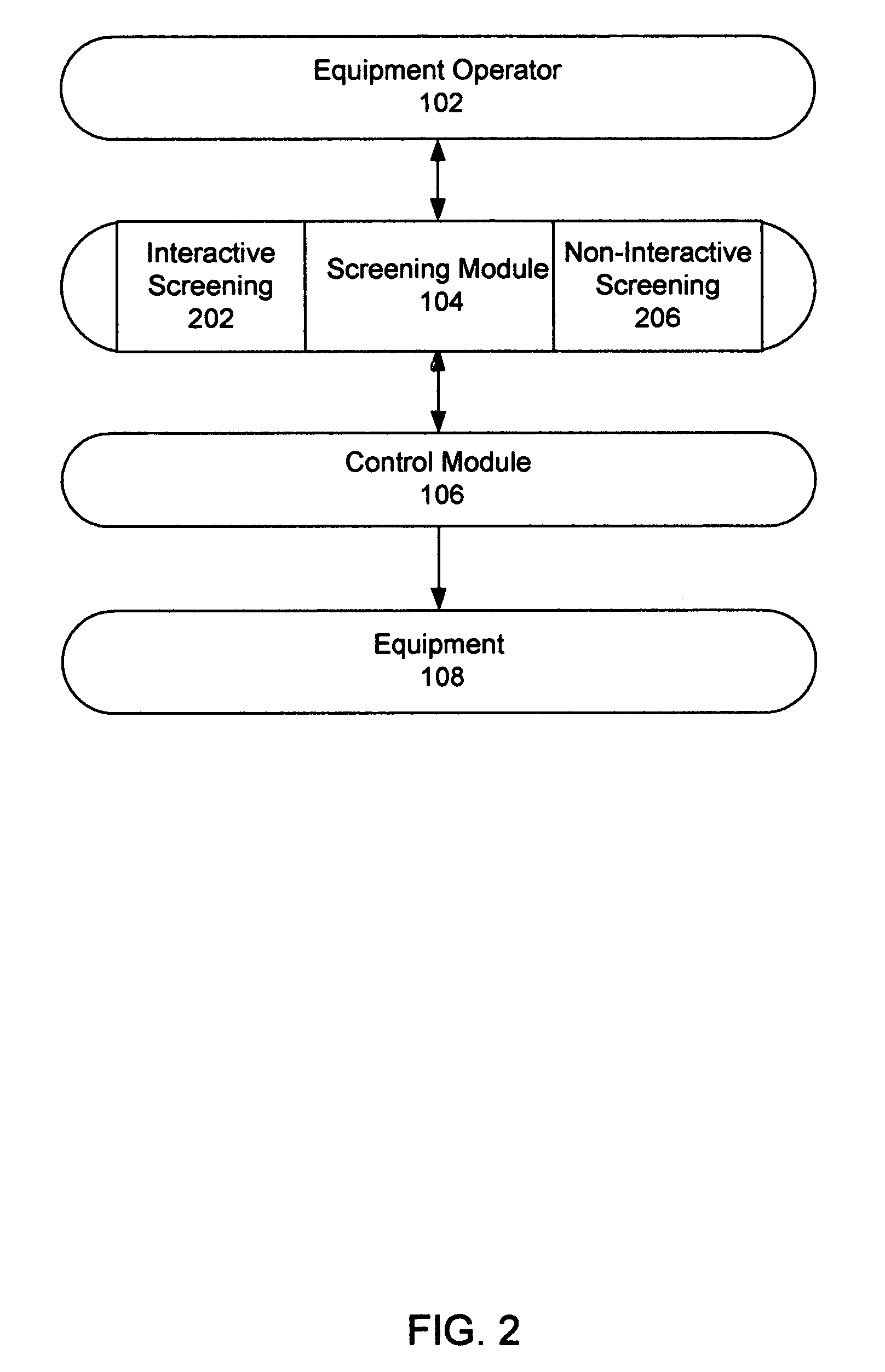

Multistage safety screening of equipment operators

Inactive | US7227472B1 | Increase probability | Minimize the annoyance to an operator | Electric devices | Layered products | Equipment Operator | Control equipment

Methods and systems to screen equipment operators for impairments, such as intoxication, physical impairment, medical impairment, or emotional impairment, to selectively test the equipment operators and control the equipment if impairment of the equipment operator is determined. One embodiment is a method to screen an equipment operator for intoxication. A second embodiment is a method to screen an equipment operator for impairment, such as intoxication, physical impairment, medical impairment, or emotional impairment. A third embodiment is an equipment operator screening system to determine impairment, such as intoxication, physical impairment, medical impairment, or emotional impairment.

Owner:VEHICLE INTELLIGENCE & SAFETY

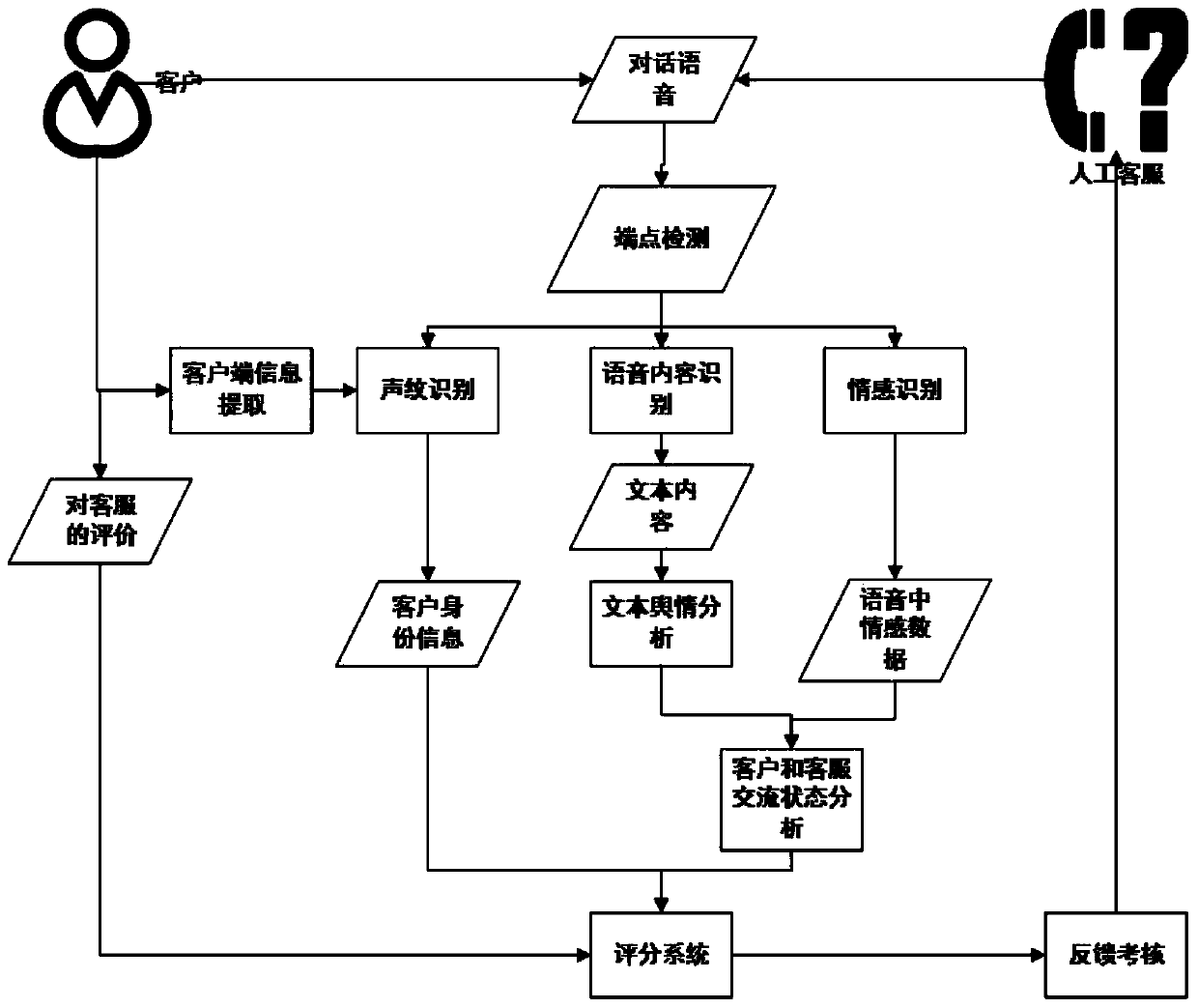

Smart phone customer service system based on speech analysis

Inactive | CN103811009A | Realize objective management | Easy to handle | Speech analysis | Special service for subscribers | Opinion analysis | Conversational speech

The invention provides a smart phone customer service system based on speech analysis. First, the conversation that takes place when a client calls a human customer service agent is recorded in real time and checked for validity. The client's personal information is then extracted, voiceprint recognition is performed on the client's speech in the conversation, and the verified voiceprint is recorded as the client's identity for the consultation or complaint. Meanwhile, speech content recognition is performed on the conversation to save a written record, and text public opinion analysis is performed on that record; speech emotion analysis is performed on the conversation to record speech emotion data. The outcome of the consultation or complaint is analyzed by combining the result of the text public opinion analysis with the emotion data, and the final grade of the customer service is obtained by combining these analysis results with a traditional grading evaluation for feedback assessment. Compared with traditional customer service systems, the smart customer service system can effectively improve the quality of service to clients and make customer service evaluation more objective.

Owner:EAST CHINA UNIV OF SCI & TECH

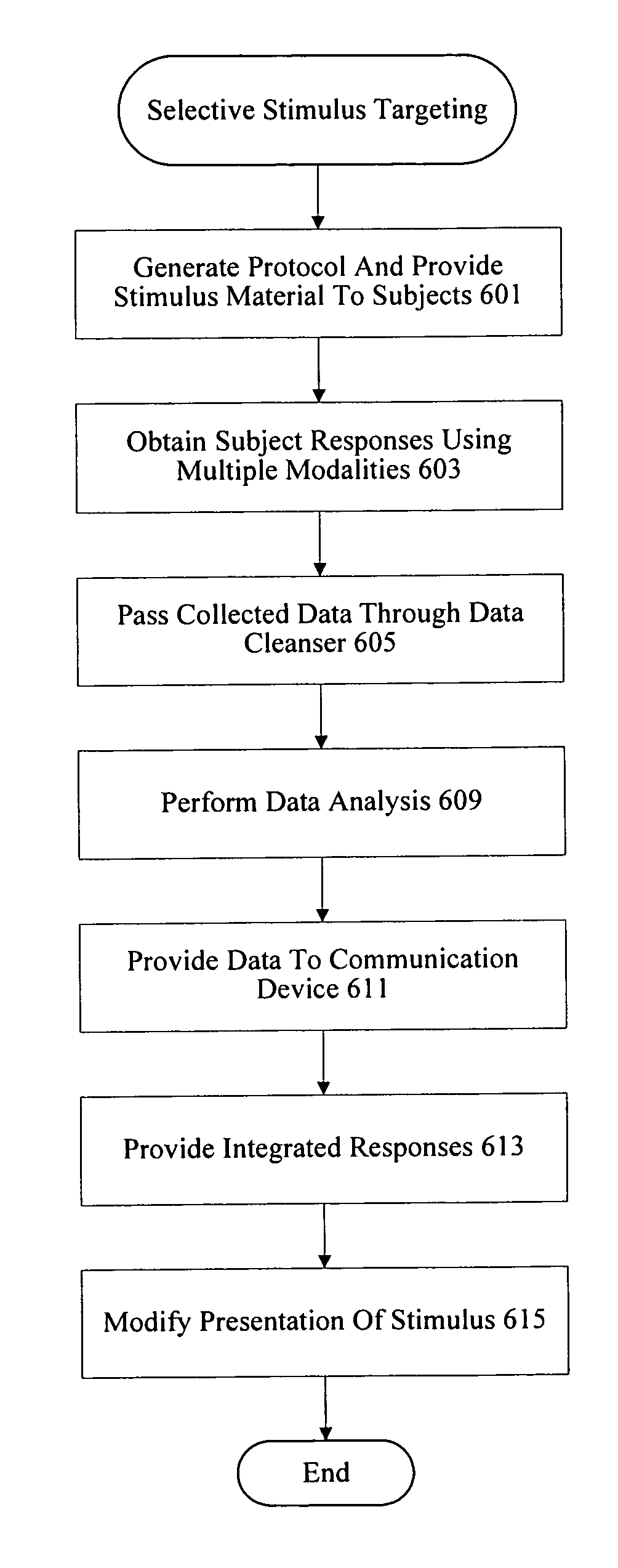

Neuro-physiology and neuro-behavioral based stimulus targeting system

A system performs stimulus targeting using neuro-physiological and neuro-behavioral data. Subjects are exposed to stimulus material such as marketing and entertainment materials and data is collected using mechanisms such as Electroencephalography (EEG), Galvanic Skin Response (GSR), Electrocardiograms (EKG), Electrooculography (EOG), eye tracking, and facial emotion encoding. Neuro-physiological and neuro-behavioral data collected is analyzed to select targeted stimulus materials. The targeted stimulus materials are provided to particular subjects for a variety of purposes.

Owner:NIELSEN CONSUMER LLC

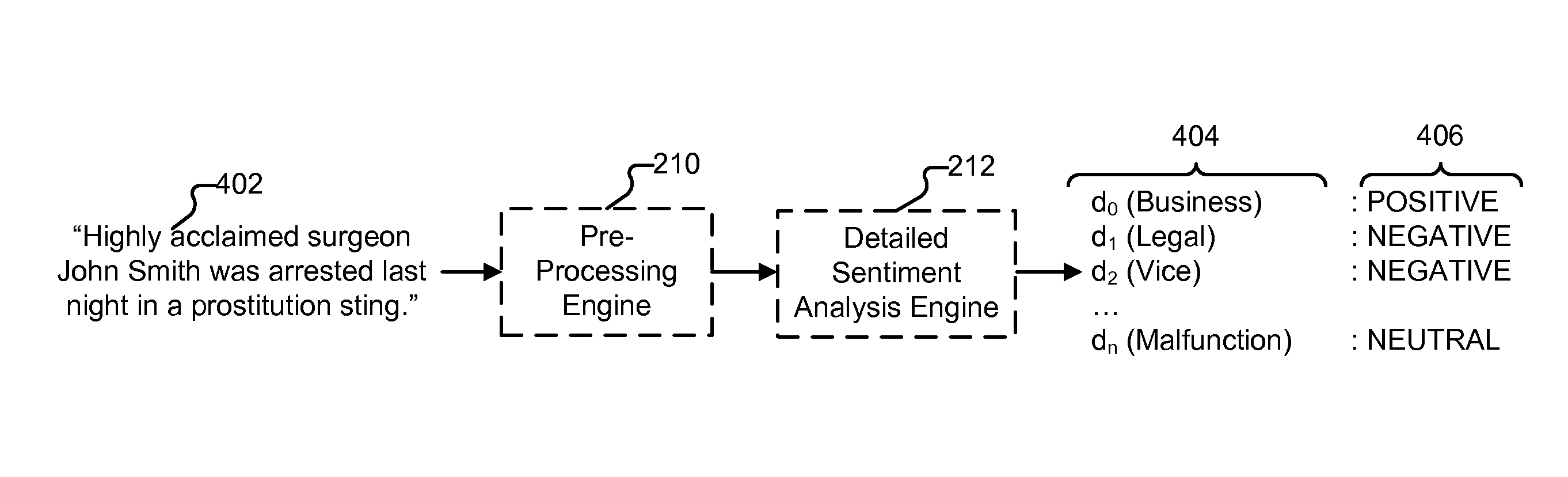

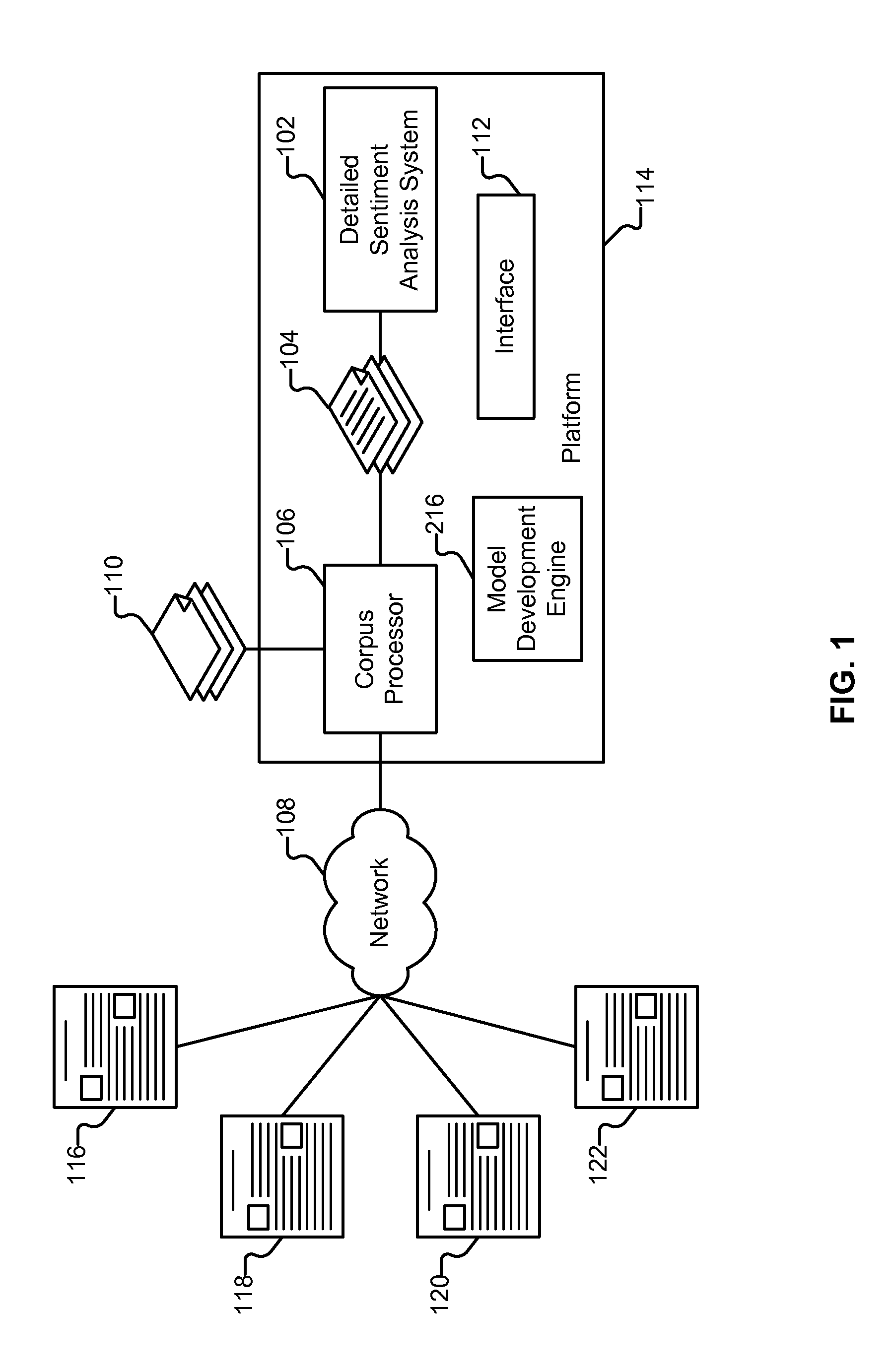

Detailed sentiment analysis

Performing detailed sentiment analysis includes generating a first sentiment score for a first entity based on a content source. The first sentiment score is generated with respect to a first dimension. A second sentiment score for the first entity is generated based on the content source. The second sentiment score is generated with respect to a second dimension.

Owner:REPUTATION COM
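
The idea of scoring one entity along more than one dimension from the same content source can be illustrated with a toy lexicon approach. The abstract above does not describe how scores are generated, so the word lists, the dimension keywords, and the sentence-level matching below are all hypothetical.

```python
# Hypothetical lexicons, for illustration only.
POSITIVE = {"great", "friendly", "fair"}
NEGATIVE = {"slow", "rude", "expensive"}
DIMENSIONS = {
    "service": {"staff", "service"},
    "price": {"price", "cost"},
}


def dimension_scores(entity, text):
    """Score an entity separately on each dimension.

    A sentence contributes to a dimension only if it mentions both the
    entity and one of that dimension's keywords.
    """
    scores = {dim: 0 for dim in DIMENSIONS}
    for sentence in text.lower().split("."):
        words = set(sentence.replace(",", " ").split())
        if entity.lower() not in words:
            continue
        polarity = len(words & POSITIVE) - len(words & NEGATIVE)
        for dim, keywords in DIMENSIONS.items():
            if words & keywords:
                scores[dim] += polarity
    return scores


review = "Acme staff were friendly. The Acme price is expensive."
scores = dimension_scores("Acme", review)
```

For this review the entity gets a positive score on the service dimension and a negative one on the price dimension, which a single overall sentiment score would blur together.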

Translating emotion to braille, emoticons and other special symbols

Inactive | US20050069852A1 | Substation equipment | Input/output processes for data processing | Graphics | Pattern recognition

A system for incorporating emotional information in a communication stream from a first person to a second person, including an electronic image analyzer for determining an emotional component of a speaker or presenter using subsystems such as facial expression recognition, hand gesture recognition, body movement recognition, and voice pitch analysis. A symbol generator generates one or more symbols such as emoticons, graphic symbols, or text modifications (bolding, underlining, etc.) corresponding to the emotional aspects of the presenter or speaker. The emotional symbols are merged with the audio or visual information from the speaker and are presented to one or more recipients.

Owner:IBM CORP +1
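
The symbol-generator and merge steps in the abstract above could look something like the following sketch, which assumes an upstream analyzer has already labeled each segment of the speaker's text with an emotion. The emotion labels and the emoticon table are hypothetical.

```python
# Hypothetical mapping from detected emotion labels to emoticons.
EMOTICONS = {"happy": ":-)", "sad": ":-(", "surprised": ":-O"}


def annotate(message, emotion):
    """Append the emoticon for a detected emotion, if one is defined."""
    symbol = EMOTICONS.get(emotion)
    return f"{message} {symbol}" if symbol else message


def merge_stream(segments):
    """Merge (text, emotion) pairs into an annotated message stream."""
    return [annotate(text, emotion) for text, emotion in segments]


stream = merge_stream([
    ("See you soon", "happy"),
    ("The meeting is cancelled", "sad"),
    ("Agenda attached", "neutral"),  # no symbol defined, text passes through
])
```

Segments whose emotion has no entry in the table are passed through unchanged rather than being given a guessed symbol.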

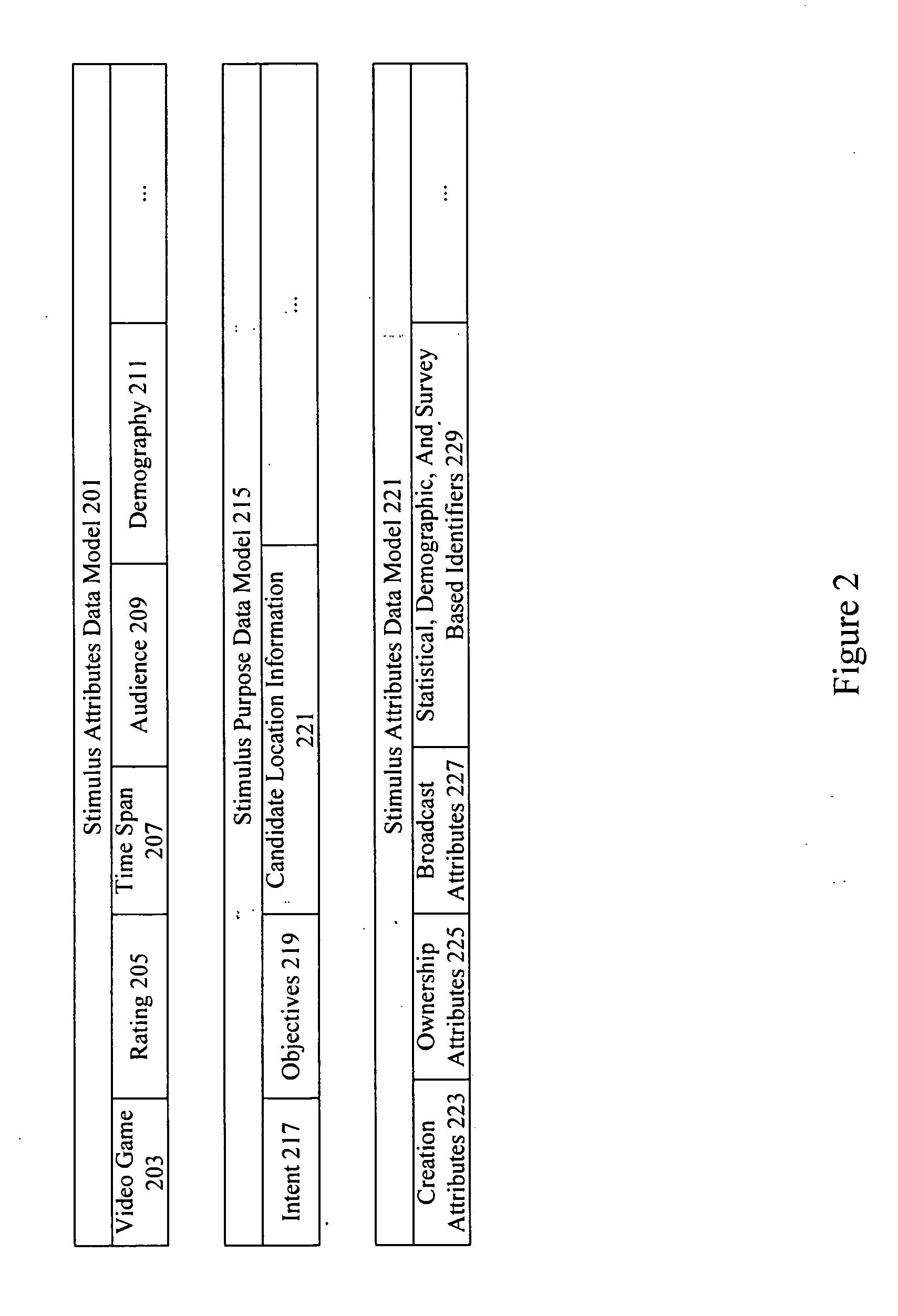

Personalized stimulus placement in video games

A system analyzes neuro-response measurements from subjects exposed to video games to identify neurologically salient locations for inclusion of stimulus material and personalized stimulus material such as video streams, advertisements, messages, product offers, purchase offers, etc. Examples of neuro-response measurements include Electroencephalography (EEG), optical imaging, and functional Magnetic Resonance Imaging (fMRI), eye tracking, and facial emotion encoding measurements.

Owner:THE NIELSEN CO (US) LLC

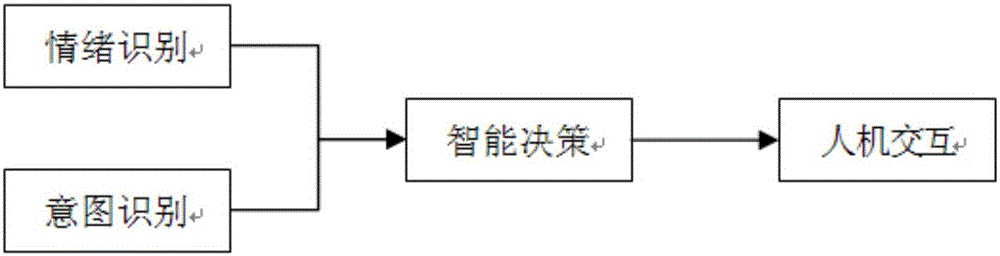

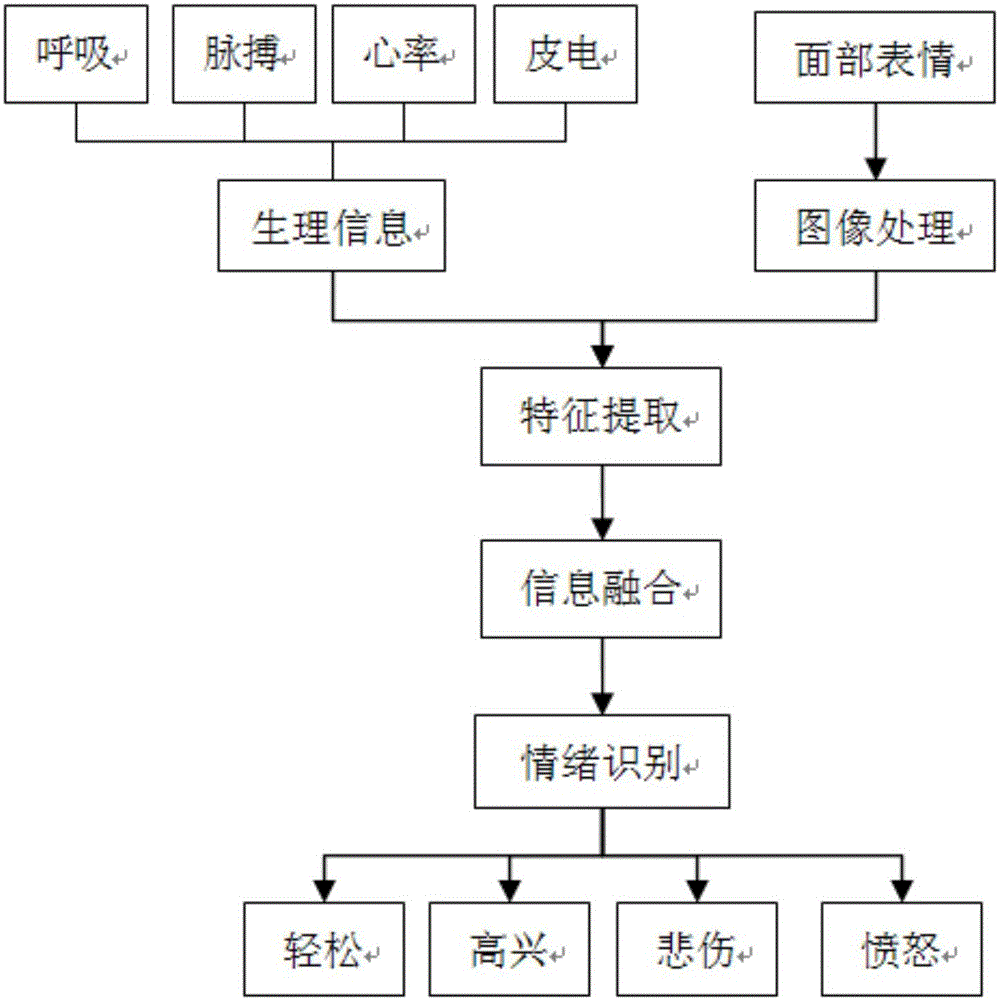

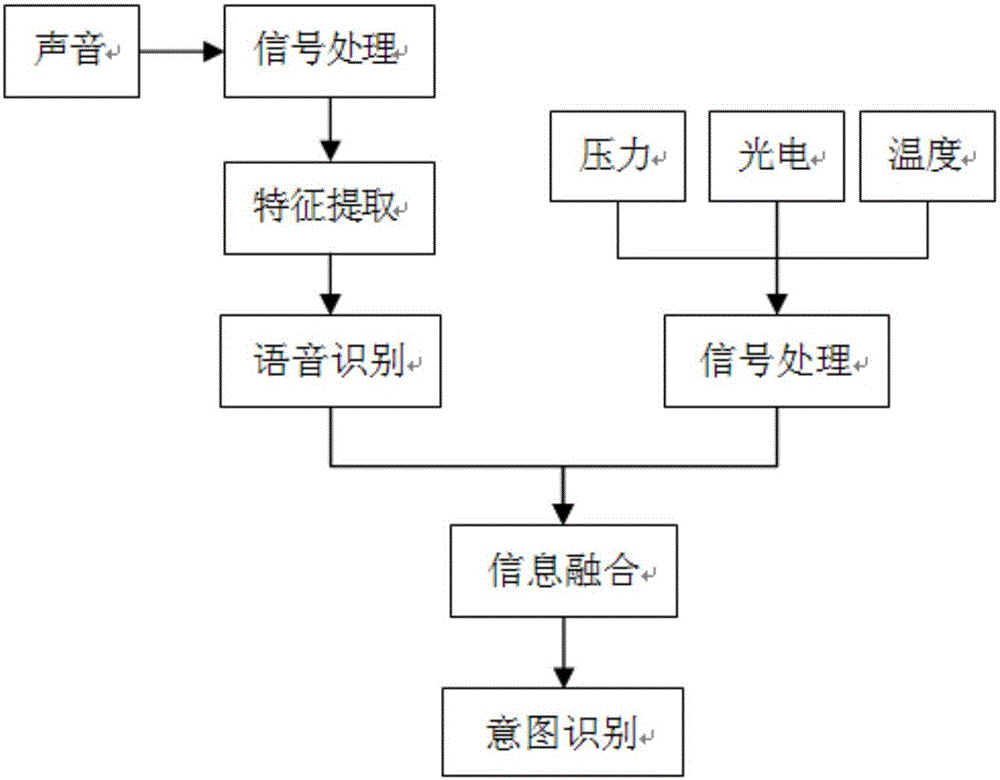

Robot man-machine interaction method based on user mood and intention recognition

The invention relates to a robot man-machine interaction method based on user mood and intention recognition. User mood recognition is carried out by combining human biological signals such as respiration, heart rate and electrodermal activity with facial expression recognition. The user's intention is recognized from different kinds of physical sensor information such as pressure, photoelectric and temperature readings. Intelligent control and decision making are carried out according to the user mood and intention recognition results. A corresponding execution mechanism of the robot is controlled to complete limb movements and voice interaction. With this method, the robot can understand the user's intention more thoroughly, track the user's mental changes, meet the functional requirement of emotional care for the user, and better participate in the lives of users such as elderly people and children.

Owner:NATIONAL RESEARCH CENTER FOR REHABILITATION TECHNICAL AIDS (国家康复辅具研究中心)

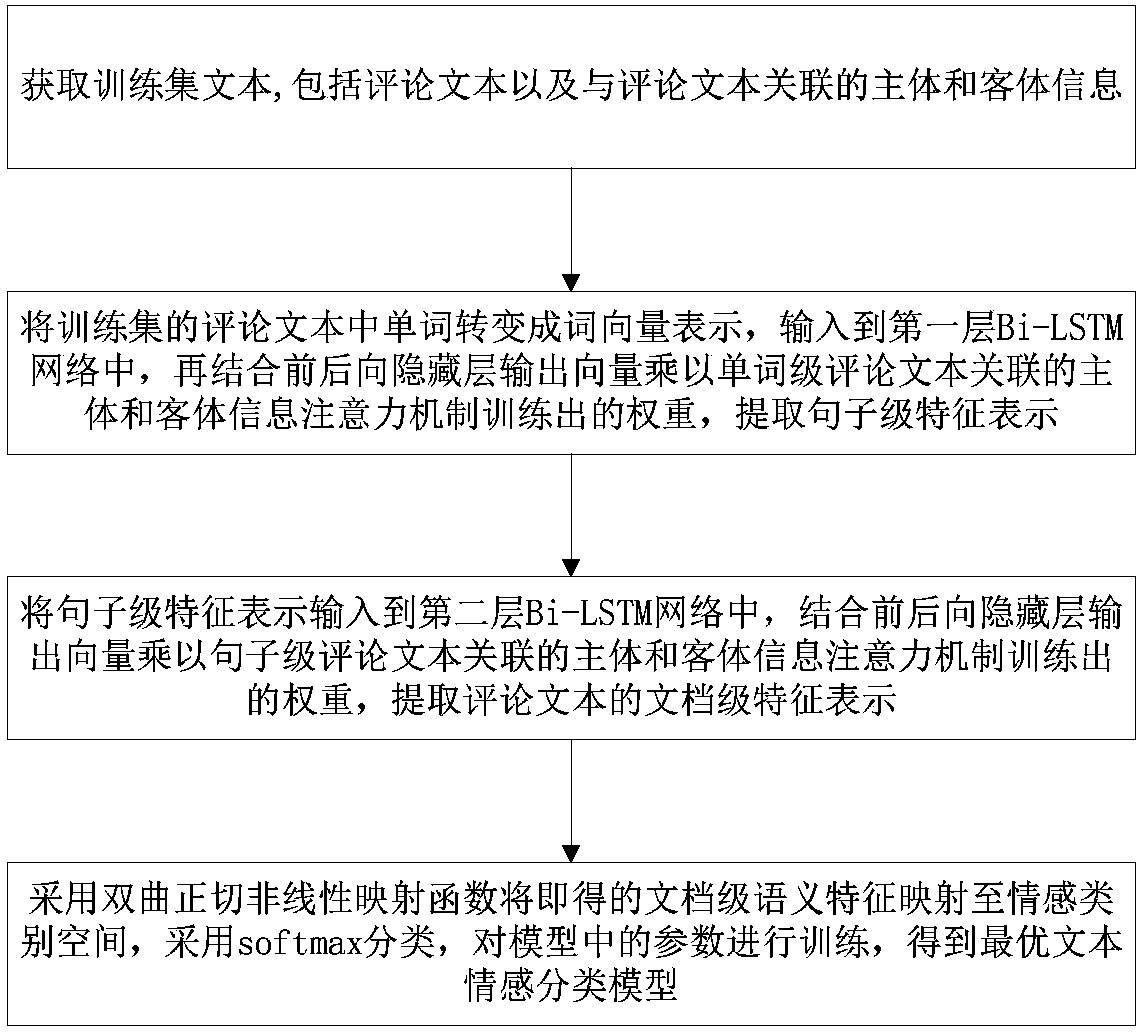

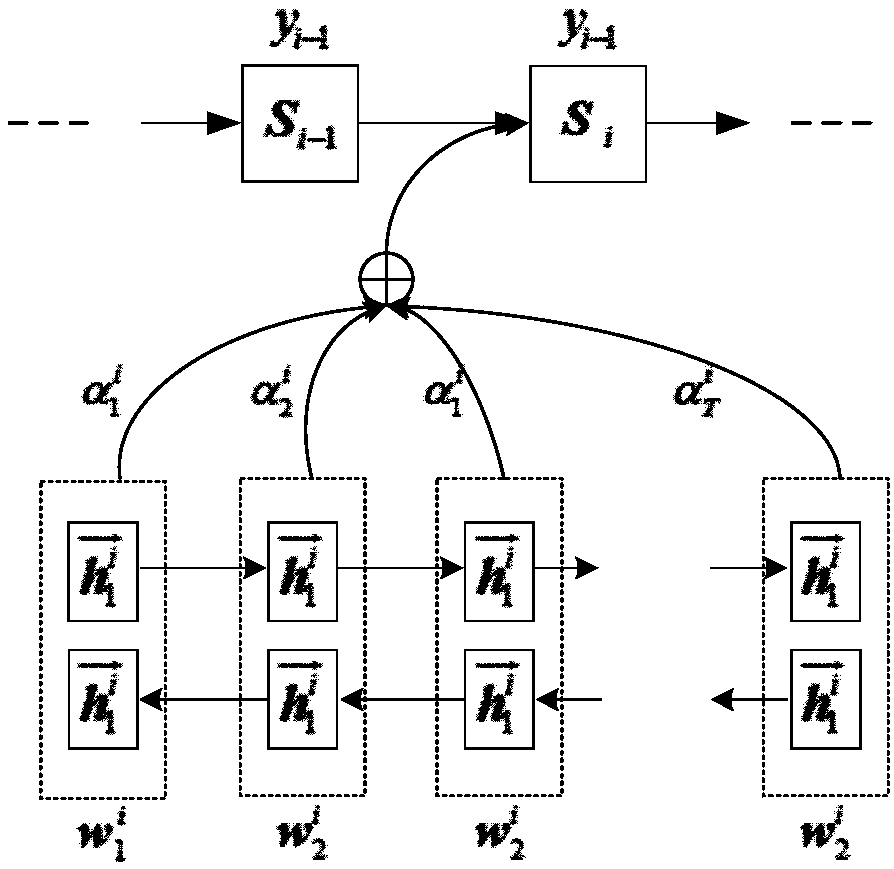

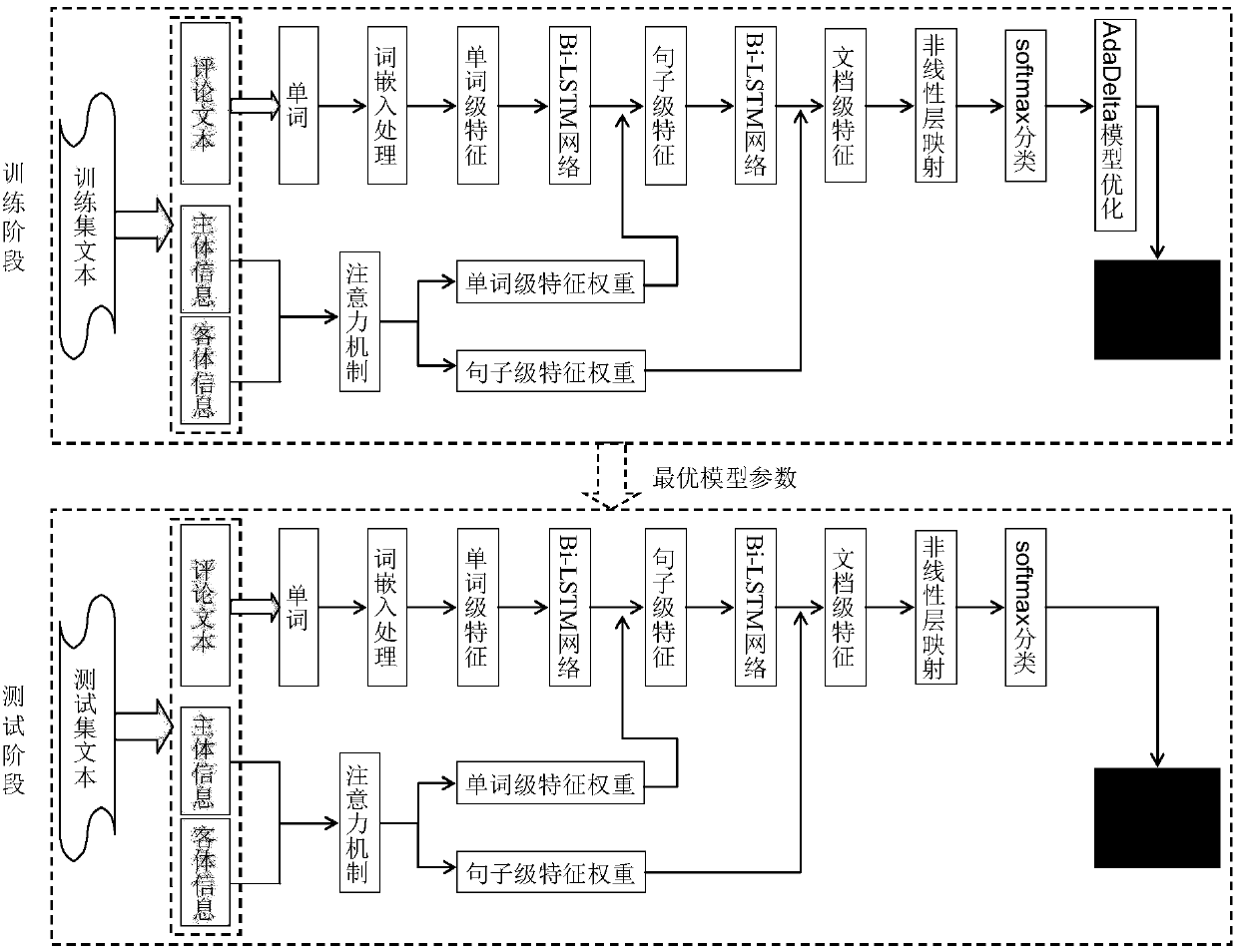

Comment text emotion classification model training and emotion classification method and device and equipment

Active | CN108363753A | Achieving Context Semantic Robust Awareness | Realize semantic expression | Semantic analysis | Special data processing applications | Classification methods | Network model

The invention discloses a comment text emotion classification model training and emotion classification method and device and equipment and belongs to the field of text emotion classification in natural language processing. Model training comprises the steps that a comment text and associated subject and object information are acquired; a comment subject and object attention mechanism is fused based on a first-layer Bi-LSTM network to extract sentence-level feature representation; the comment subject and object attention mechanism is fused based on a second-layer Bi-LSTM network to extract document-level feature representation; and a hyperbolic tangent non-linear mapping function is adopted to map document-level features to an emotion category space, softmax classification is adopted to train parameters in a model, and an optimal text emotion classification model is obtained. According to the method, the hierarchical bidirectional Bi-LSTM network model and the attention mechanism are adopted, context semantic robust perception and semantic expression of the text can be realized, the robustness of text emotion classification can be remarkably improved, and the correct rate of classification is increased.

Owner:NANJING UNIV OF POSTS & TELECOMM
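
The final classification step in the abstract above (a hyperbolic tangent mapping of document-level features into the emotion category space, followed by softmax) can be sketched in a few lines. The Bi-LSTM and attention layers that produce the document features are omitted, and the weights, bias, labels, and feature values below are hypothetical rather than trained.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def classify(doc_features, weights, bias):
    """Map features to category space with tanh, then apply softmax."""
    mapped = [
        math.tanh(sum(w * x for w, x in zip(row, doc_features)) + b)
        for row, b in zip(weights, bias)
    ]
    return softmax(mapped)


LABELS = ("negative", "positive")
weights = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical, untrained
bias = [0.0, 0.0]
probs = classify([-1.0, 1.0], weights, bias)
predicted = LABELS[probs.index(max(probs))]
```

In training, the softmax output would feed a loss used to fit `weights` and `bias` together with the upstream network; here they are fixed by hand so the example runs on its own.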

Computerized method of assessing consumer reaction to a business stimulus employing facial coding

Owner:SENSORY LOGIC

Neuro-physiology and neuro-behavioral based stimulus targeting system

A system performs stimulus targeting using neuro-physiological and neuro-behavioral data. Subjects are exposed to stimulus material such as marketing and entertainment materials and data is collected using mechanisms such as Electroencephalography (EEG), Galvanic Skin Response (GSR), Electrocardiograms (EKG), Electrooculography (EOG), eye tracking, and facial emotion encoding. Neuro-physiological and neuro-behavioral data collected is analyzed to select targeted stimulus materials. The targeted stimulus materials are provided to particular subjects for a variety of purposes.

Owner:NIELSEN CONSUMER LLC

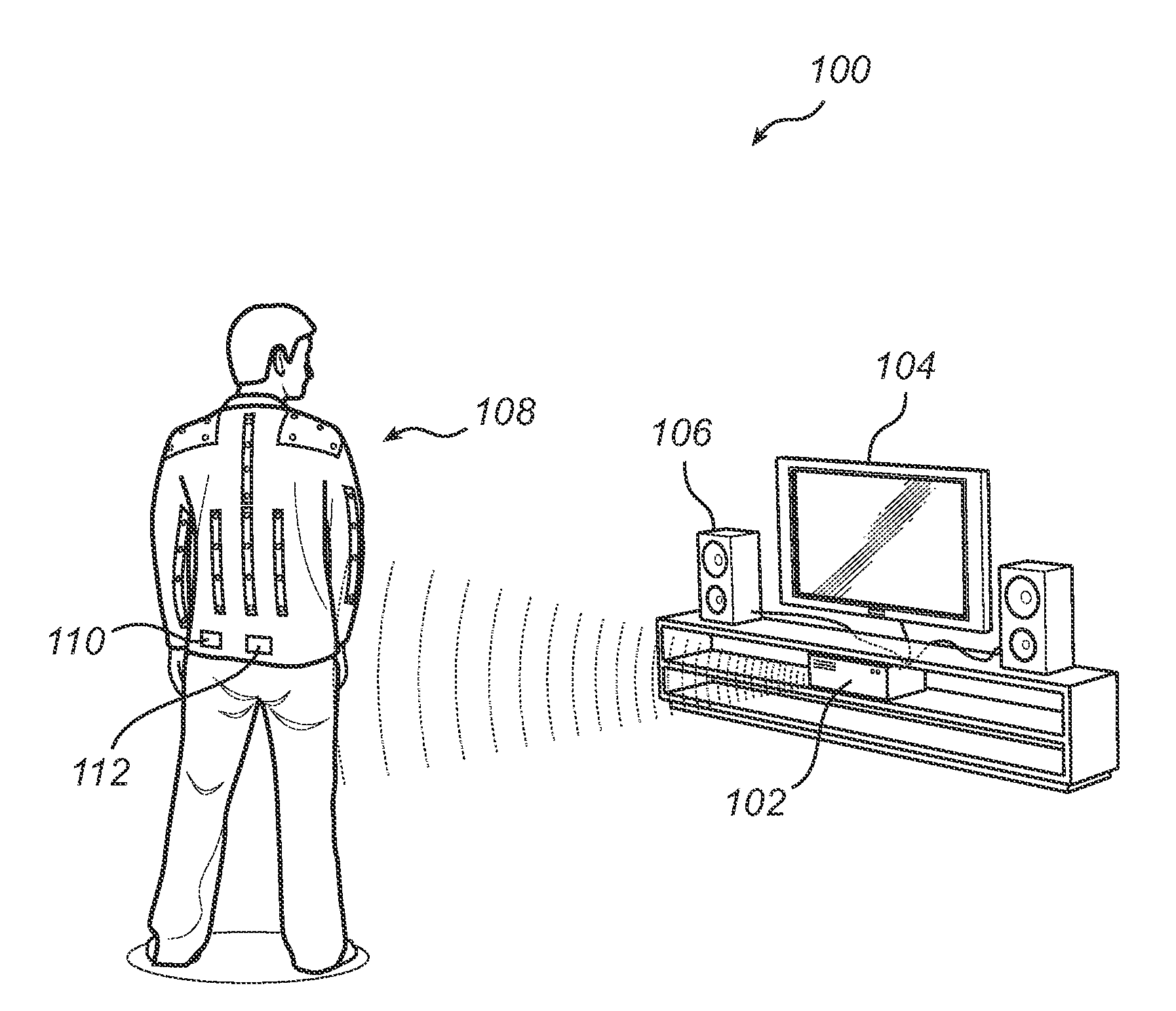

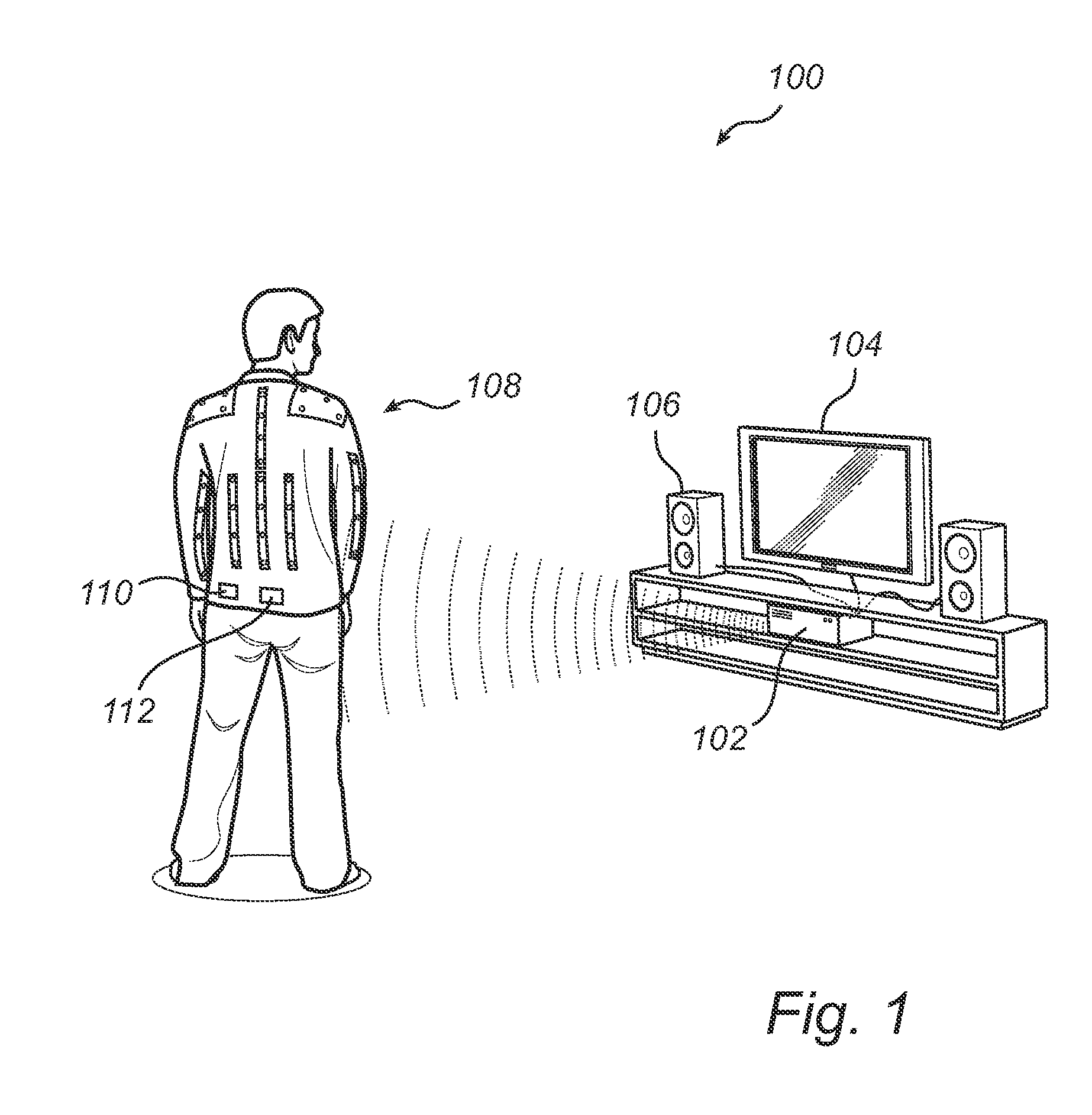

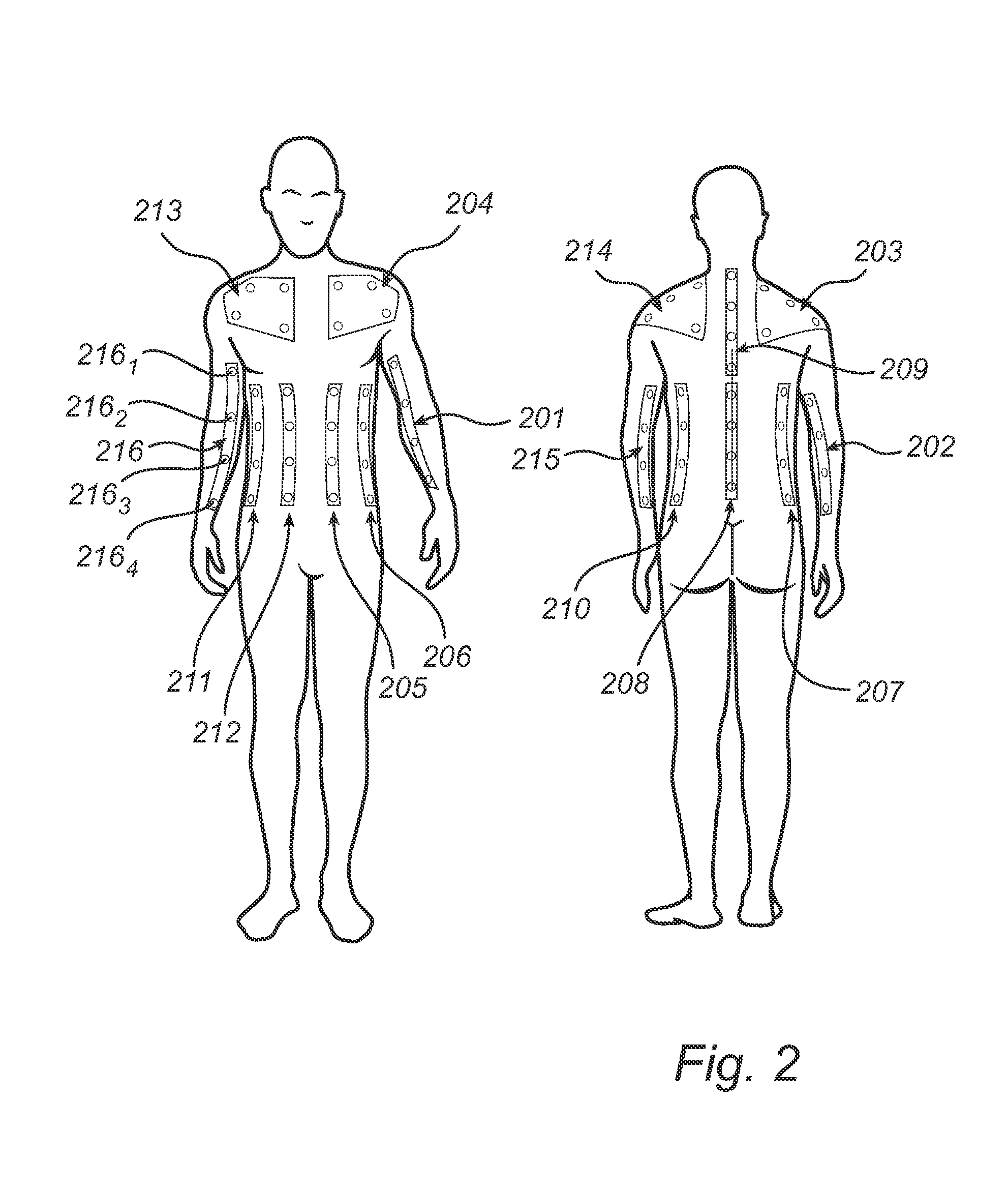

Method and system for conveying an emotion

Inactive | US20110063208A1 | Enhance versatility | Strengthen emotion | Repeater circuits | Cathode-ray tube indicators | Body region | Multimedia information

The present invention relates to a method for conveying an emotion to a person being exposed to multimedia information, such as a media clip, by way of tactile stimulation using a plurality of actuators arranged in a close vicinity of the person's body, the method comprising the step of providing tactile stimulation information for controlling the plurality of actuators, wherein the plurality of actuators are adapted to stimulate multiple body sites in a body region, the tactile stimulation information comprises a sequence of tactile stimulation patterns, wherein each tactile stimulation pattern controls the plurality of actuators in time and space to enable the tactile stimulation of the body region, and the tactile stimulation information is synchronized with the media clip. An advantage with the present invention is thus that emotions can be induced, or strengthened, at the right time (e.g. synchronized with a specific situation in the media clip).

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

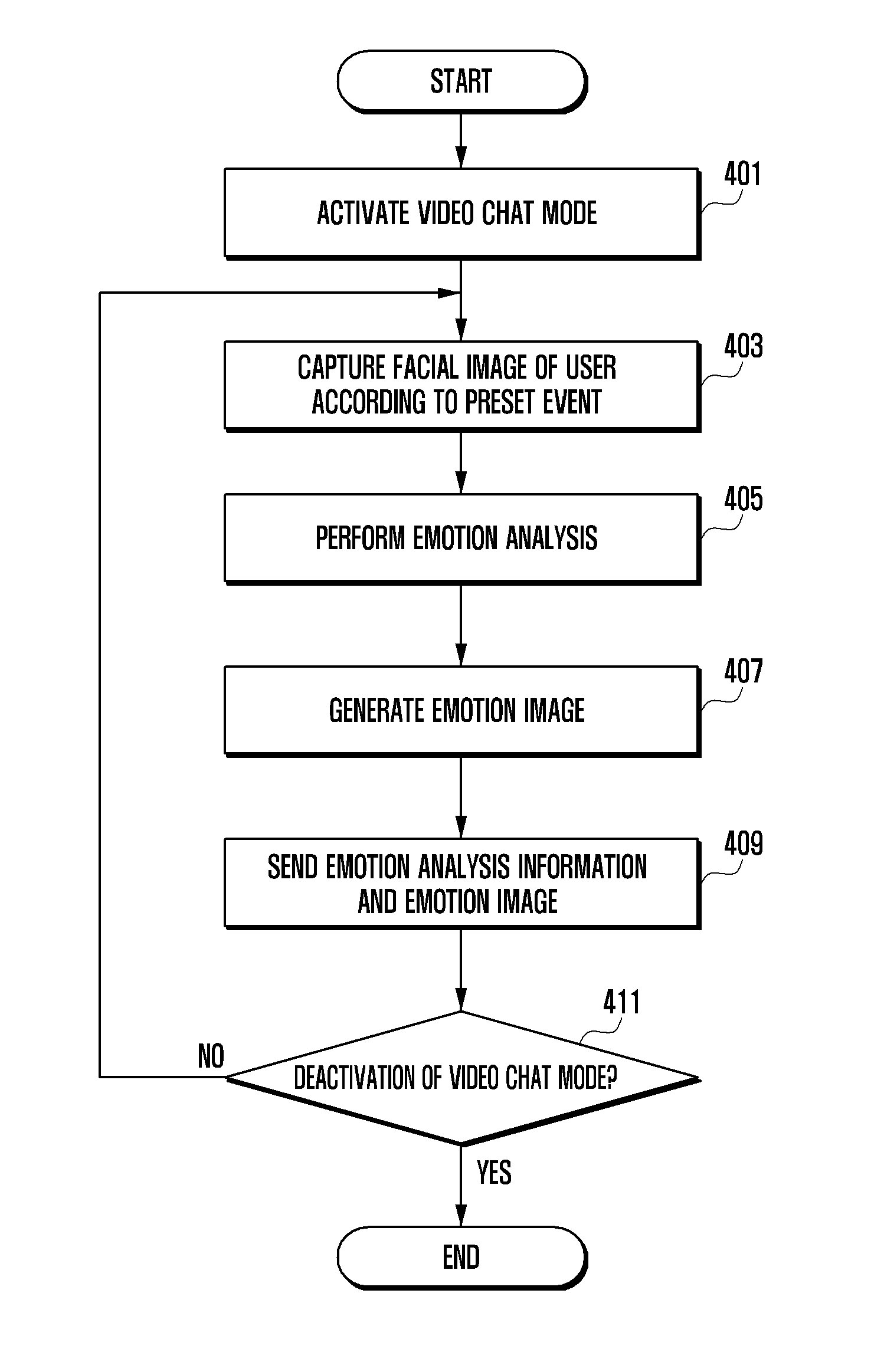

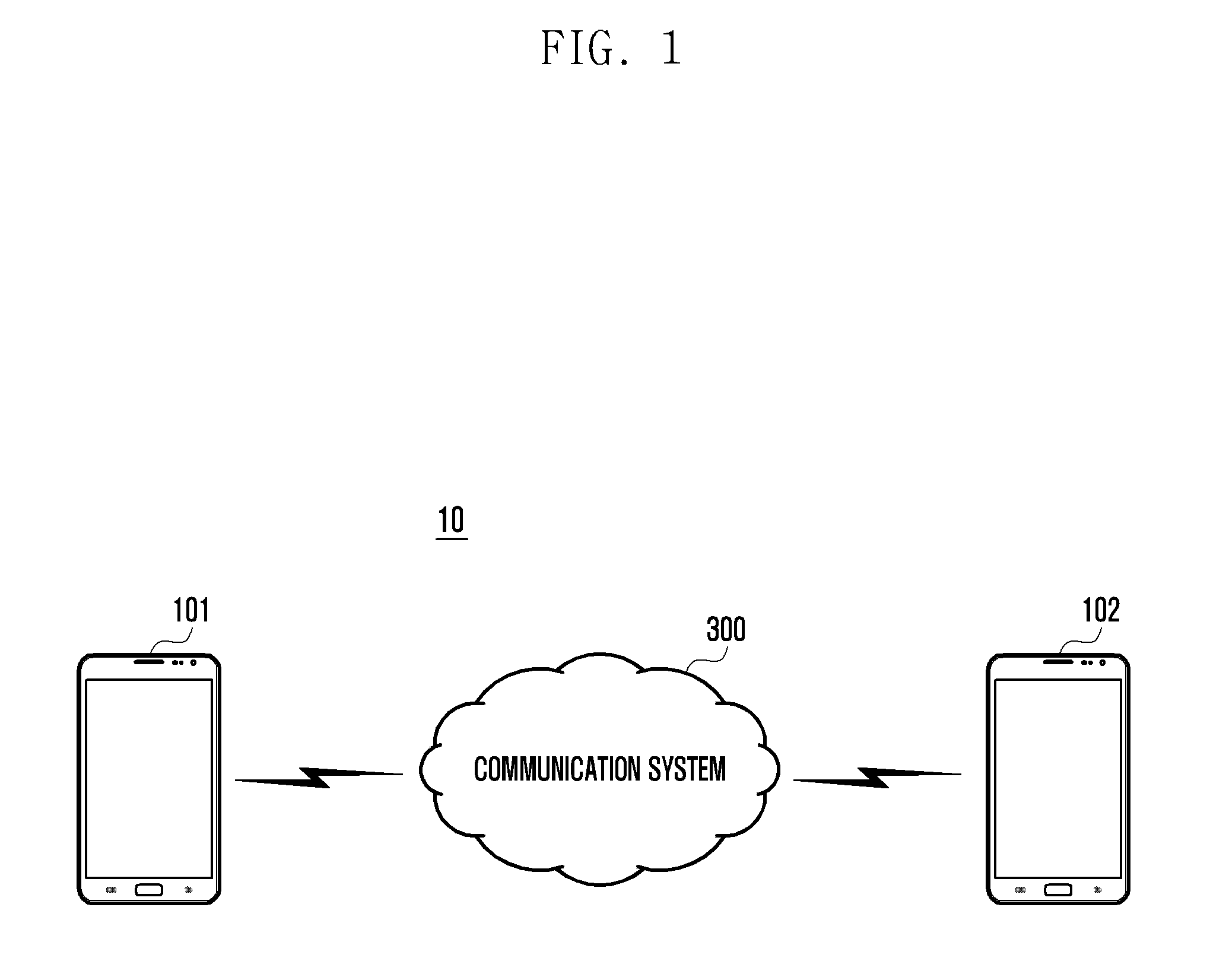

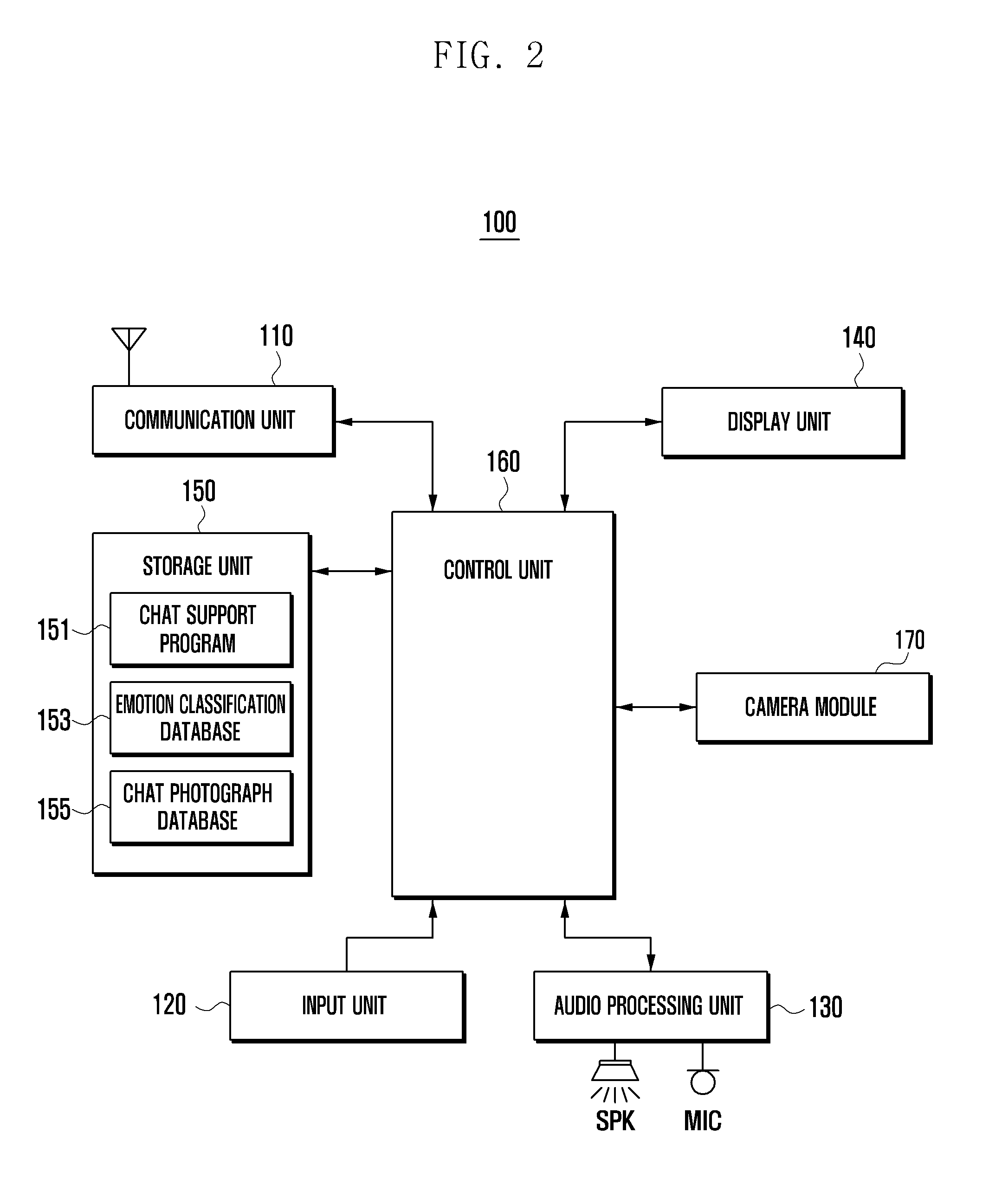

Method for user function operation based on face recognition and mobile terminal supporting the same

Active | US20140192134A1 | Facilitating video call function | Easy data management | Television system details | Color television details | Pattern recognition | Computer module

A mobile device user interface method activates a camera module to support a video chat function and acquires an image of a target object using the camera module. In response to detecting a face in the captured image, the facial image data is analyzed to identify an emotional characteristic of the face by identifying a facial feature and comparing the identified feature with a predetermined feature associated with an emotion. The identified emotional characteristic is compared with a corresponding emotional characteristic of previously acquired facial image data of the target object. In response to the comparison, an emotion indicative image is generated and the generated emotion indicative image is transmitted to a destination terminal used in the video chat.

Owner:SAMSUNG ELECTRONICS CO LTD

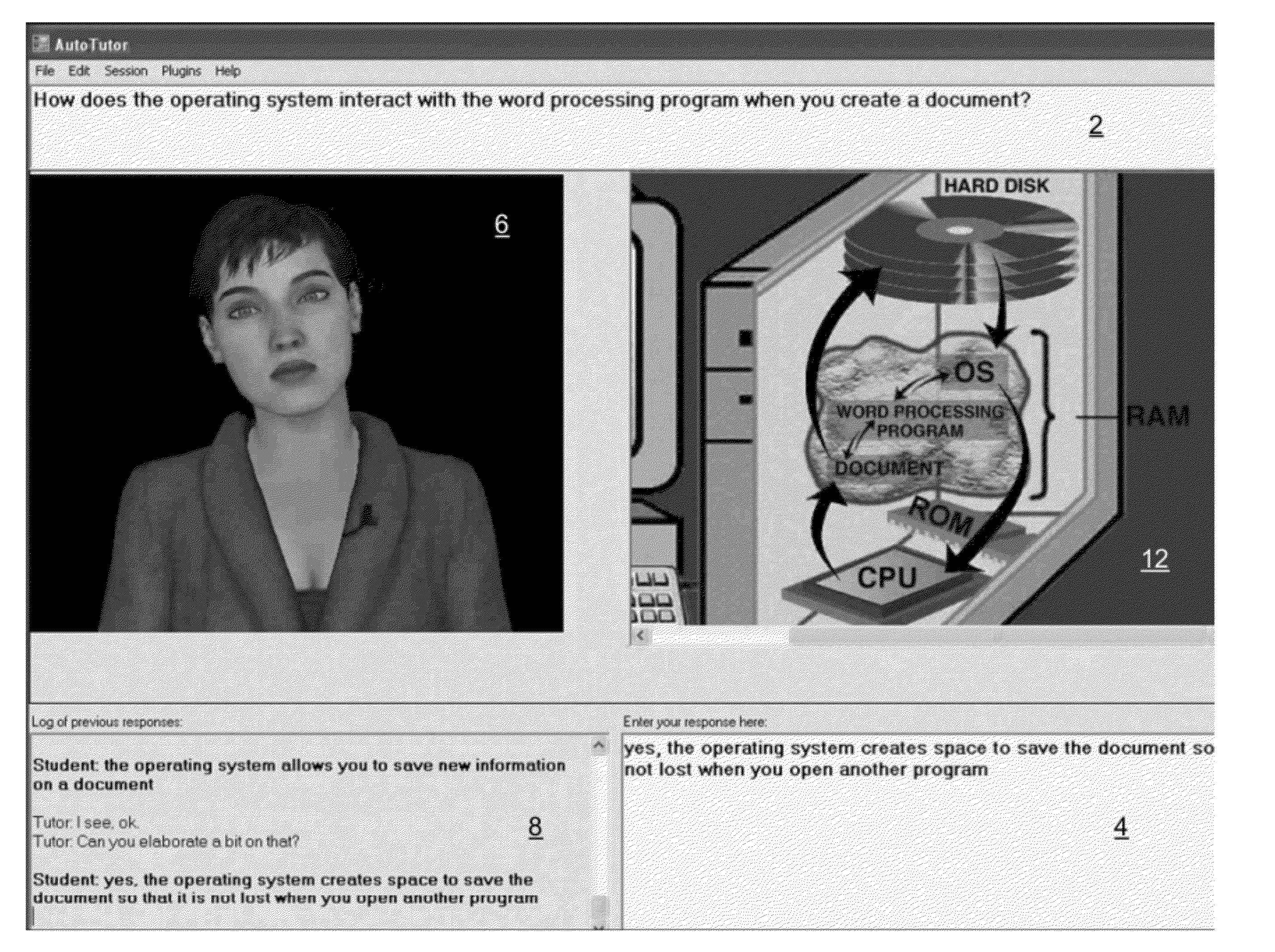

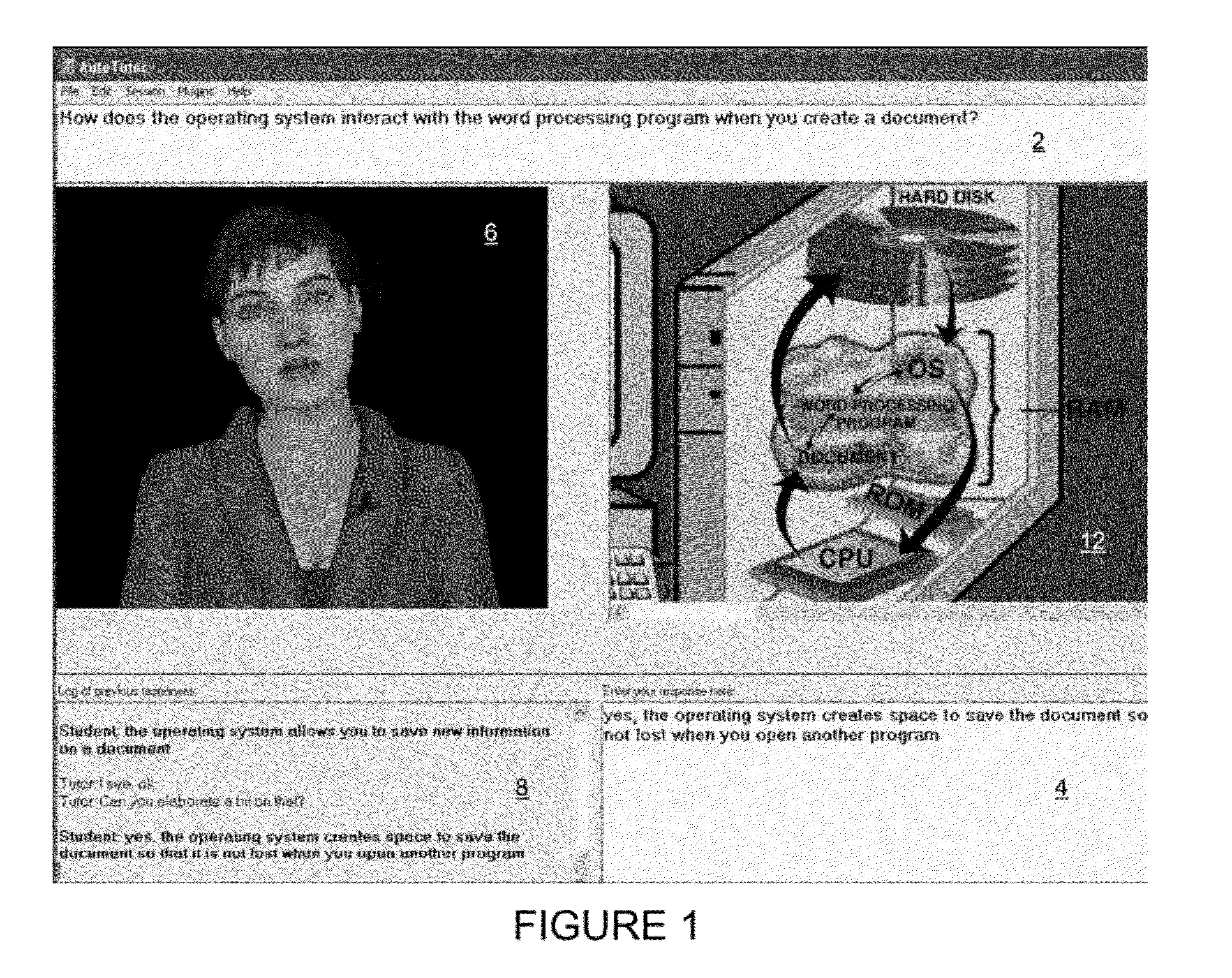

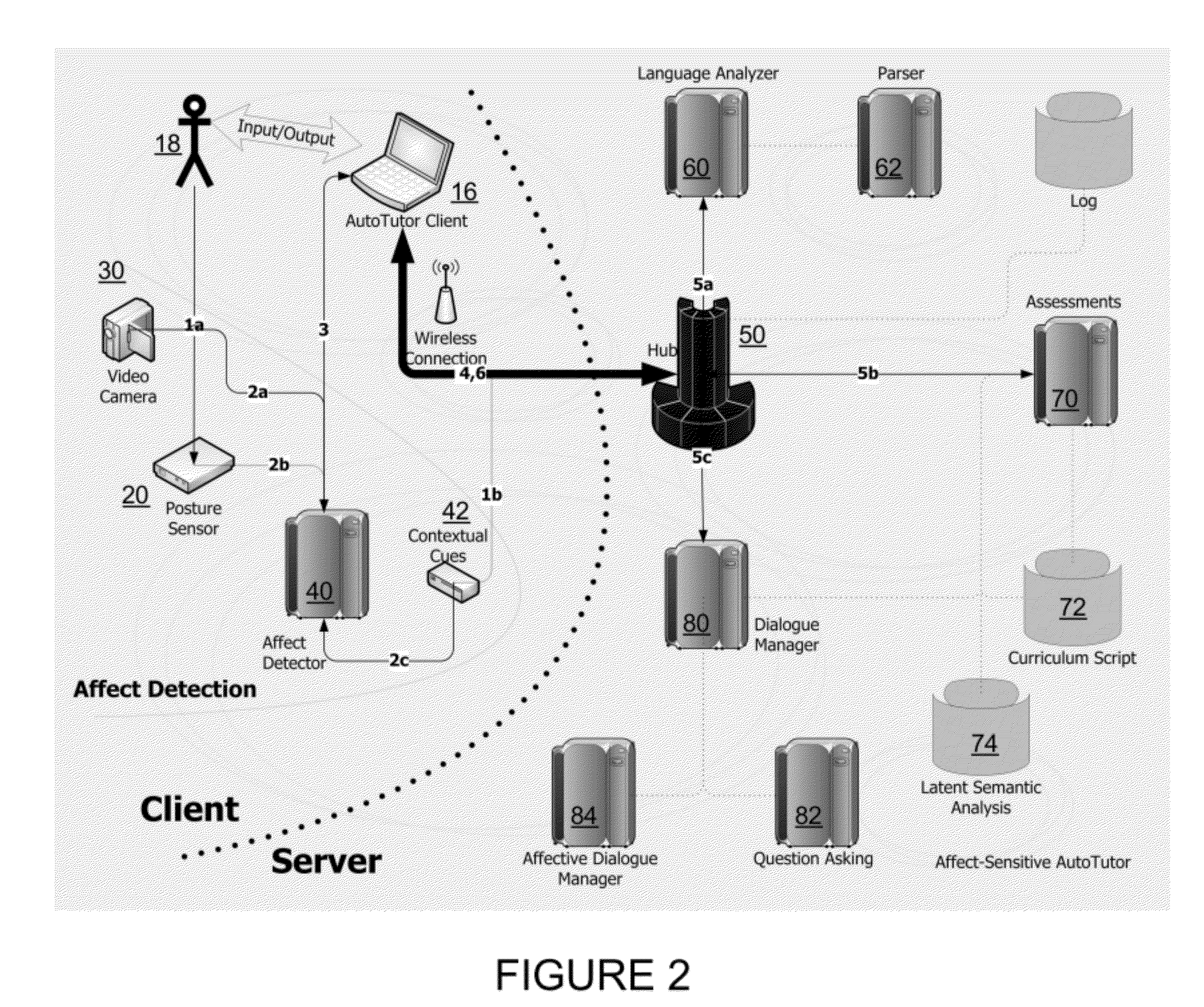

Affect-sensitive intelligent tutoring system

Active | US20120052476A1 | Good condition | Minimizing frustration | Electrical appliances | Frustration | Cognitive status

An Intelligent Tutoring System (ITS) is provided that is able to identify and respond adaptively to the learner's or student's affective states (i.e., emotional states such as confusion, frustration, boredom, and flow/engagement) during a typical learning experience, in addition to adapting to the learner's cognitive states. The system comprises a new signal processing model and algorithm, as well as several non-intrusive sensing devices, and identifies and assesses affective states through dialog assessment techniques, video capture and analysis of the student's face, determination of the body posture of the student, pressure on a pressure-sensitive mouse, and pressure on a pressure-sensitive keyboard. By synthesizing the output from these measures, the system responds with appropriate conversational and pedagogical dialog that helps the learner regulate negative emotions in order to promote learning and engagement.

Owner:GRAESSER ARTHUR CARL +1

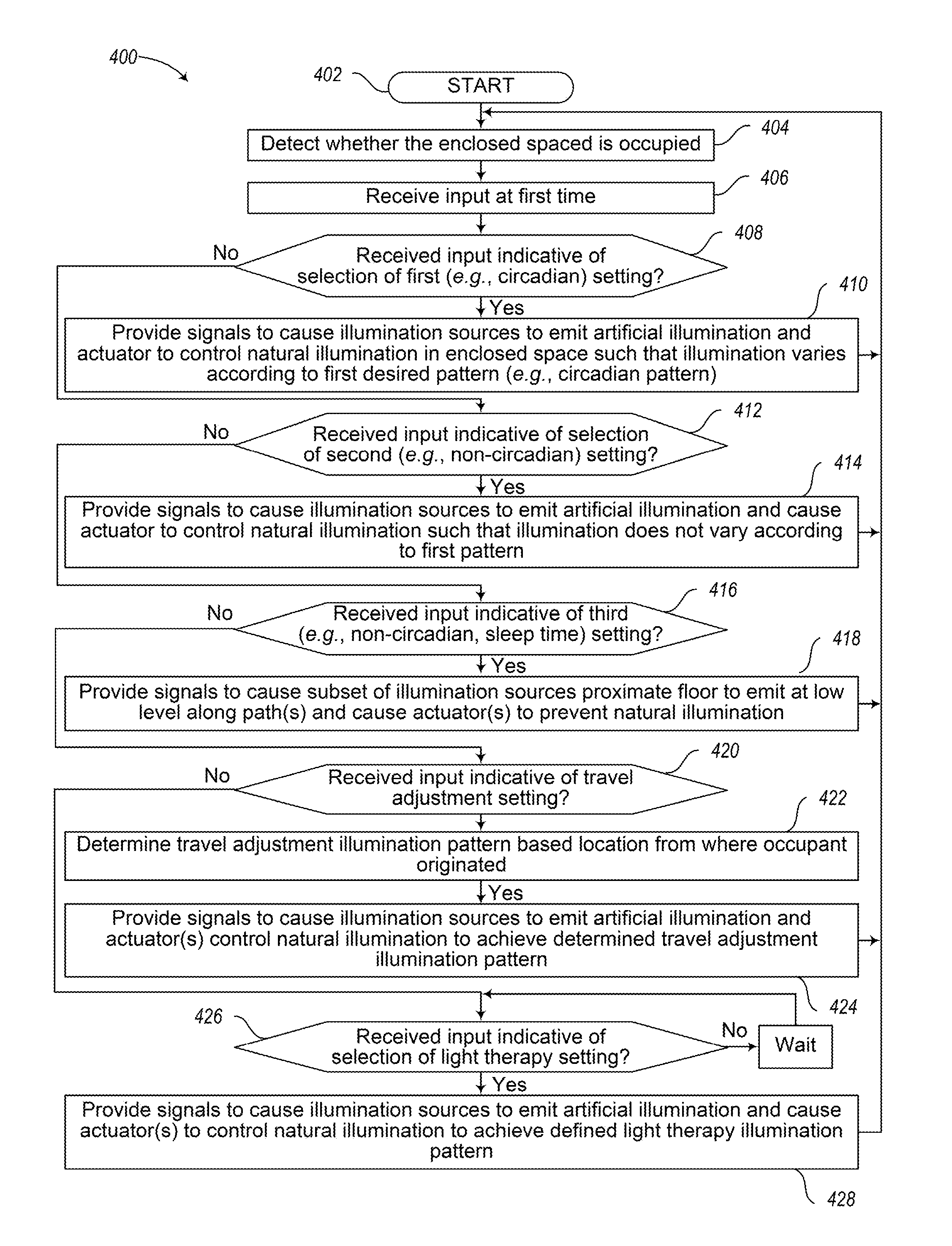

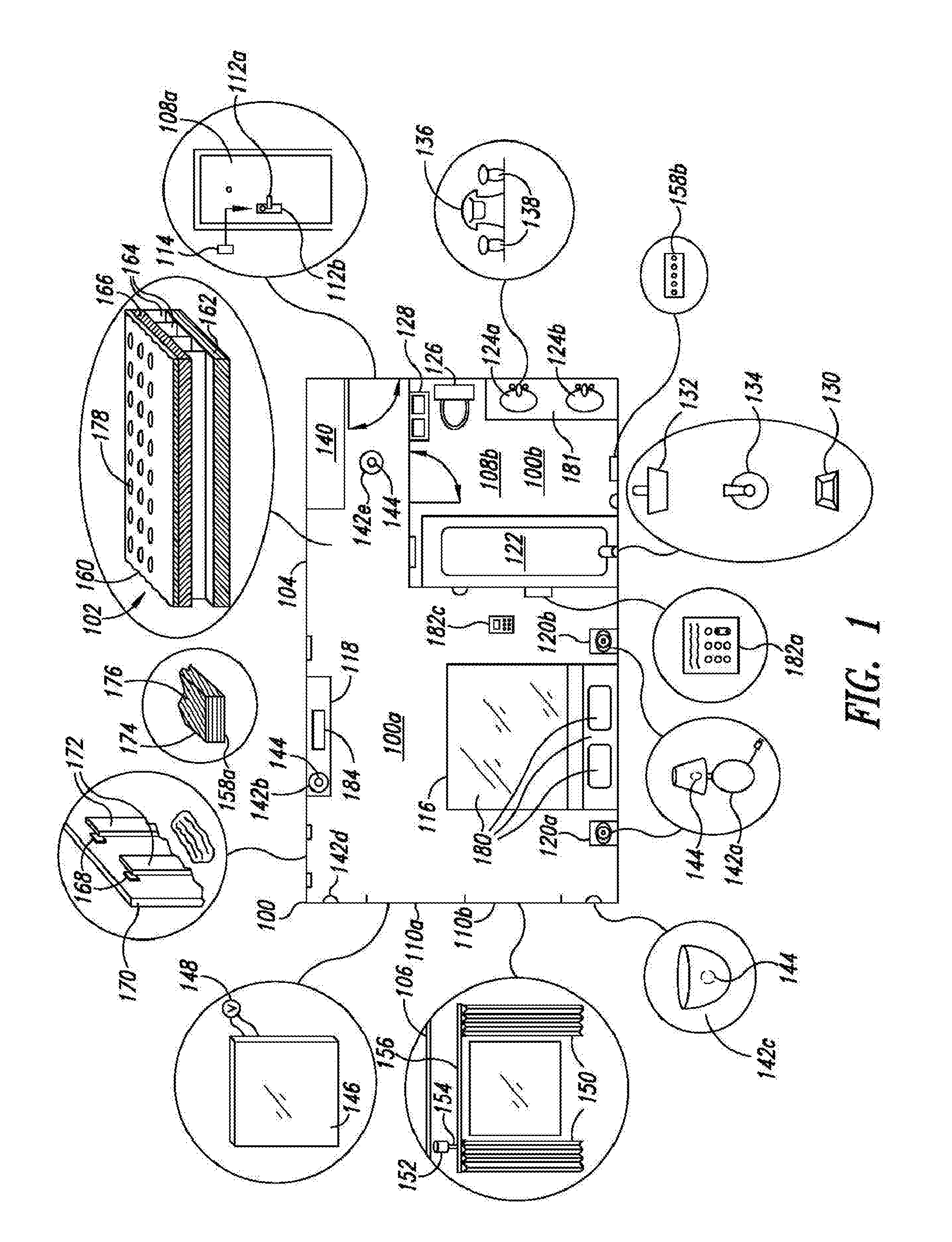

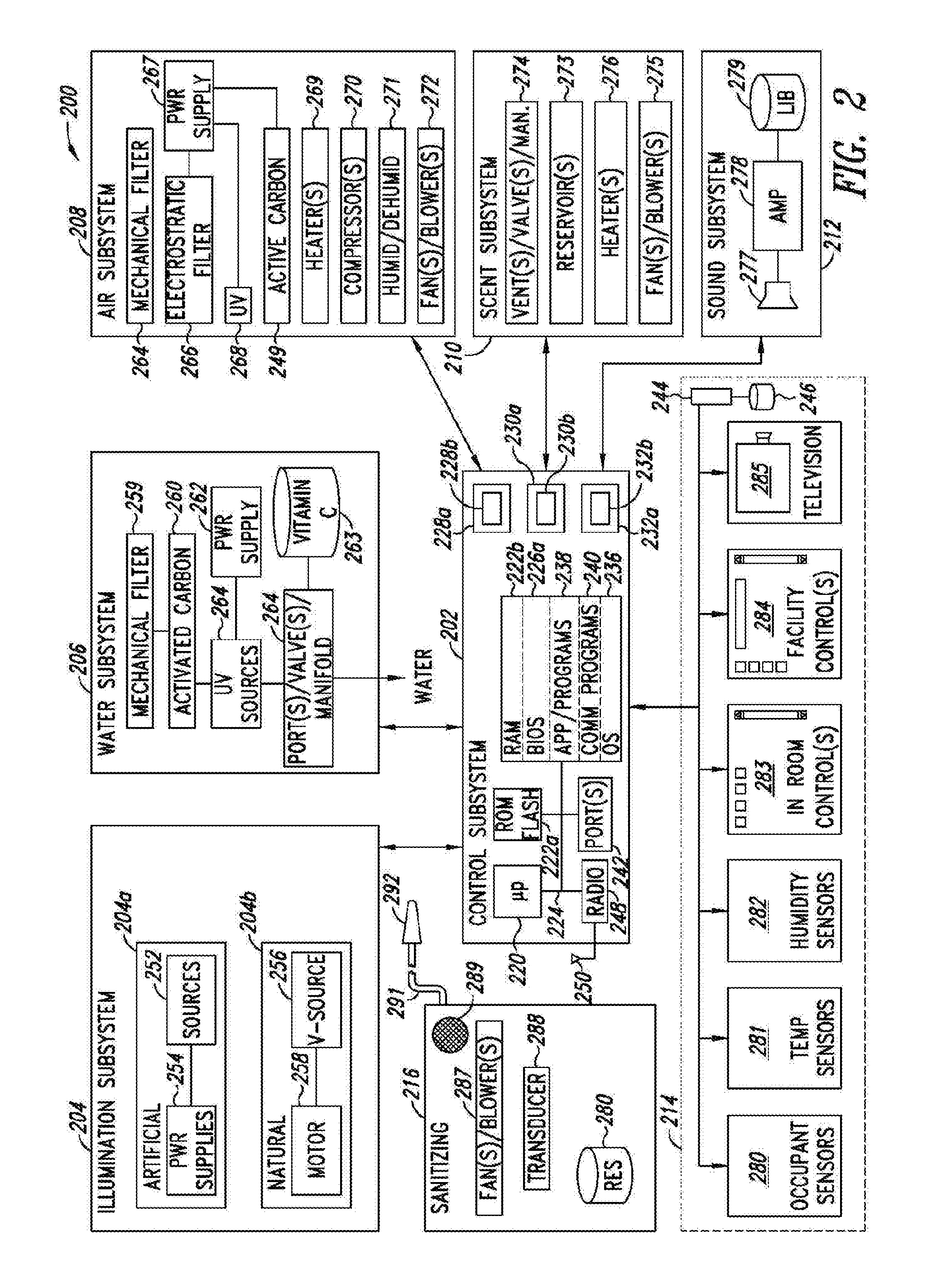

Methods for enhancing wellness associated with habitable environments

Active | US20170053068A1 | Improve the environment | Good environmental characteristics | Physical therapies and activities | Mechanical apparatus | Seasonal Affective Disorders | Residence

Environmental characteristics of habitable environments (e.g., hotel or motel rooms, spas, resorts, cruise boat cabins, offices, hospitals and/or homes, apartments or residences) are controlled to eliminate, reduce or ameliorate adverse or harmful aspects and introduce, increase or enhance beneficial aspects in order to improve a “wellness” or sense of “wellbeing” provided via the environments. Control of intensity and wavelength distribution of passive and active illumination addresses various issues, symptoms or syndromes, for instance to maintain a circadian rhythm or cycle, adjust for “jet lag” or seasonal affective disorder, etc. Air quality and attributes are controlled. Scent(s) may be dispersed. Noise is reduced and sounds (e.g., masking, music, natural) may be provided. Environmental and biometric feedback is provided. Experimentation and machine learning are used to improve health outcomes and wellness standards.

Owner:DELOS LIVING LLC

© 2025 PatSnap. All rights reserved.