46 results about "Emotion perception" patented technology

Emotion perception refers to the capacities and abilities of recognizing and identifying emotions in others, in addition to biological and physiological processes involved. Emotions are typically viewed as having three components: subjective experience, physical changes, and cognitive appraisal; emotion perception is the ability to make accurate decisions about another's subjective experience by interpreting their physical changes through sensory systems responsible for converting these observed changes into mental representations. The ability to perceive emotion is believed to be both innate and subject to environmental influence and is also a critical component in social interactions. How emotion is experienced and interpreted depends on how it is perceived. Likewise, how emotion is perceived is dependent on past experiences and interpretations. Emotion can be accurately perceived in humans. Emotions can be perceived visually, audibly, through smell and also through bodily sensations and this process is believed to be different from the perception of non-emotional material.

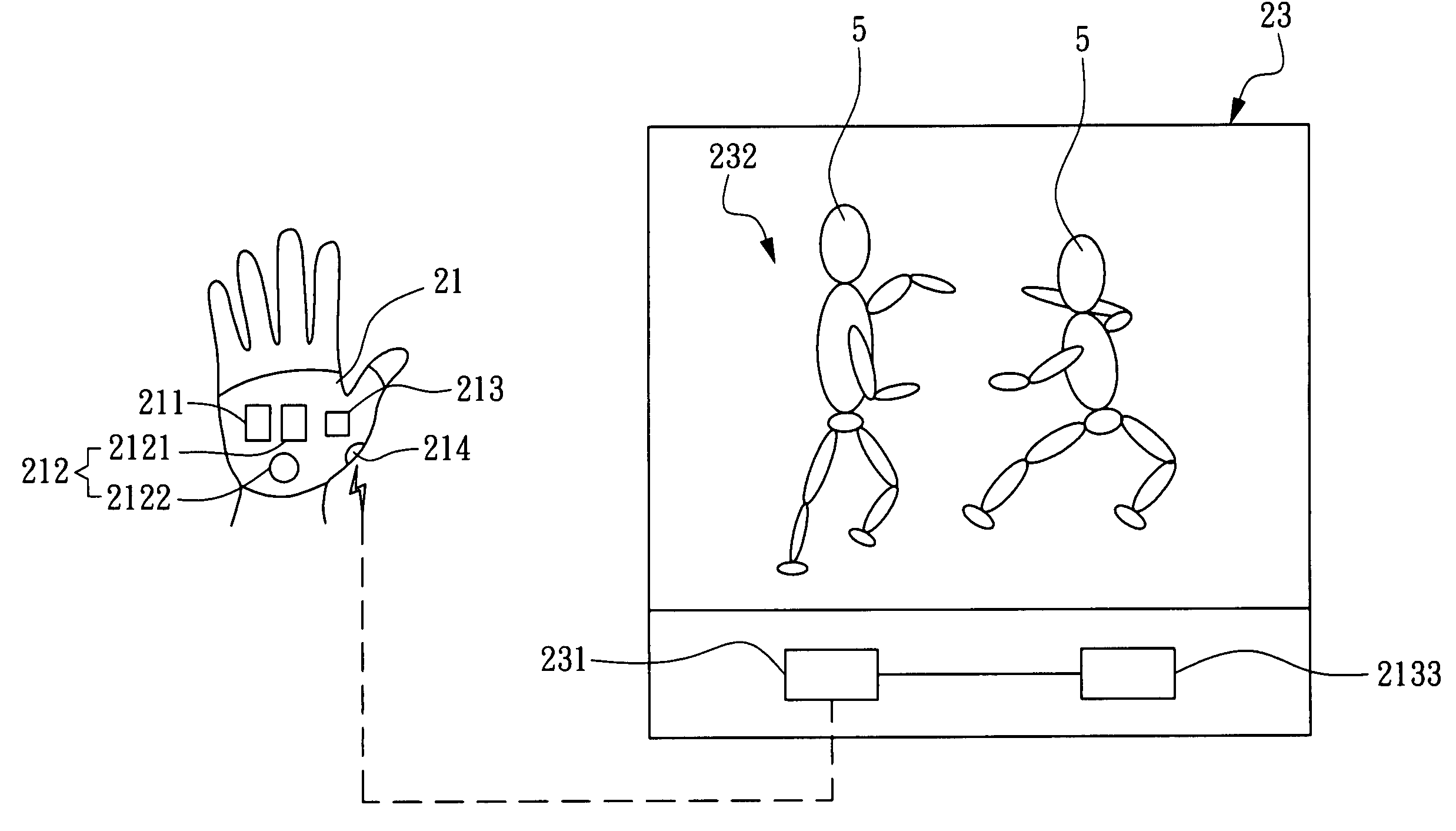

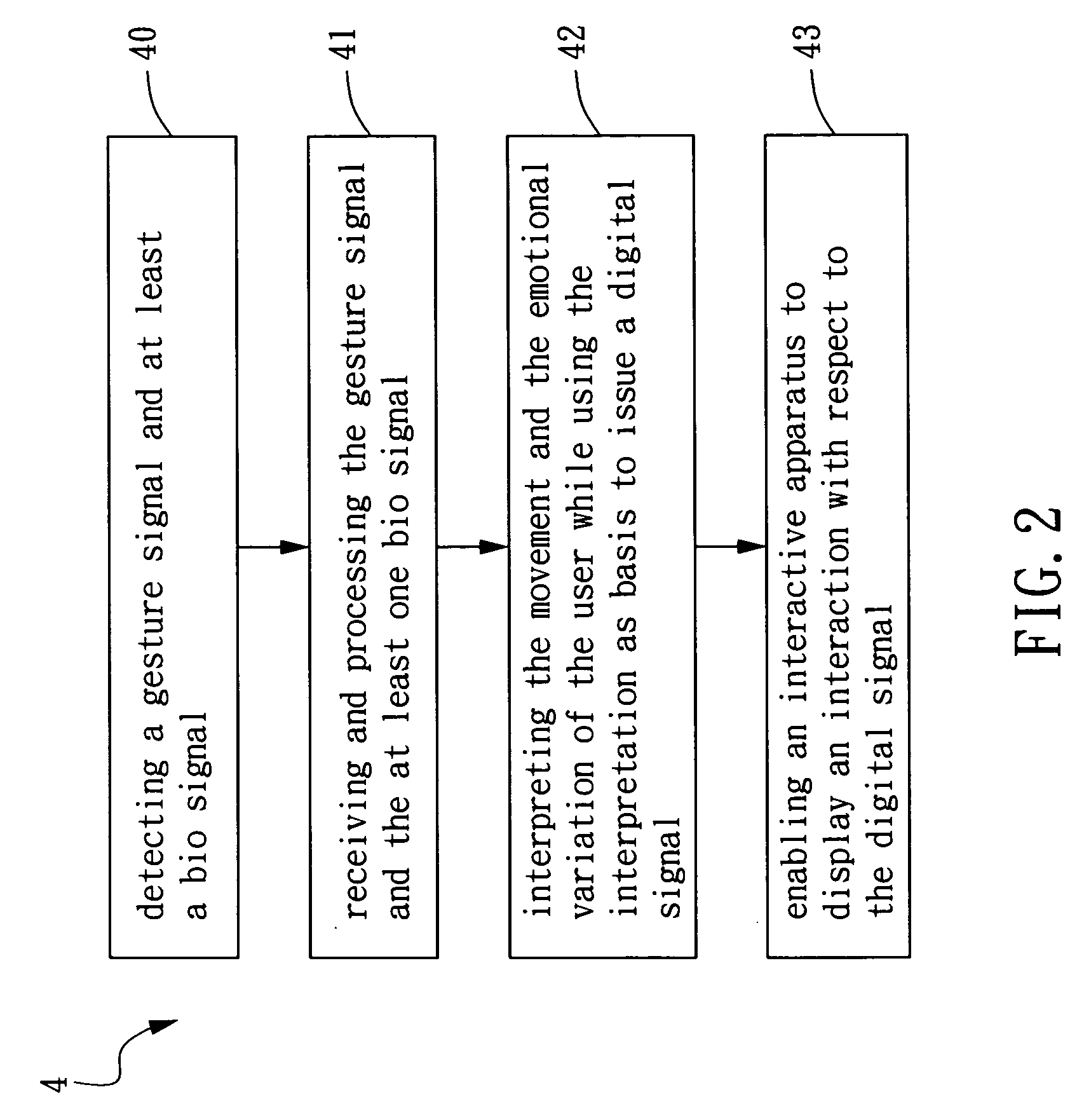

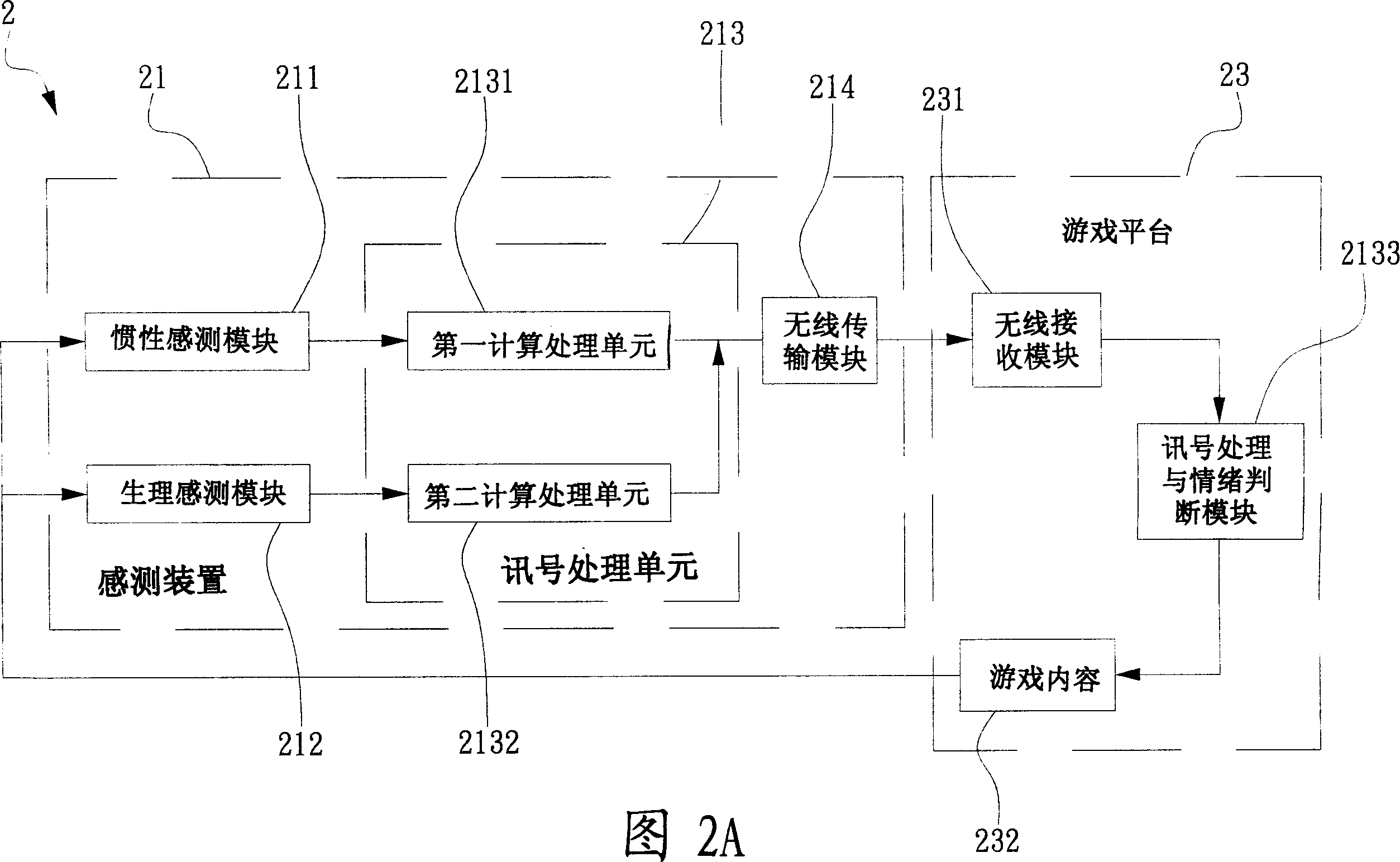

Interactive gaming method and apparatus with emotion perception ability

Inactive | US20070149282A1 | Compact and commercially feasible | Release fatigue | Video games | Special data processing applications | Emotion perception | Power flow

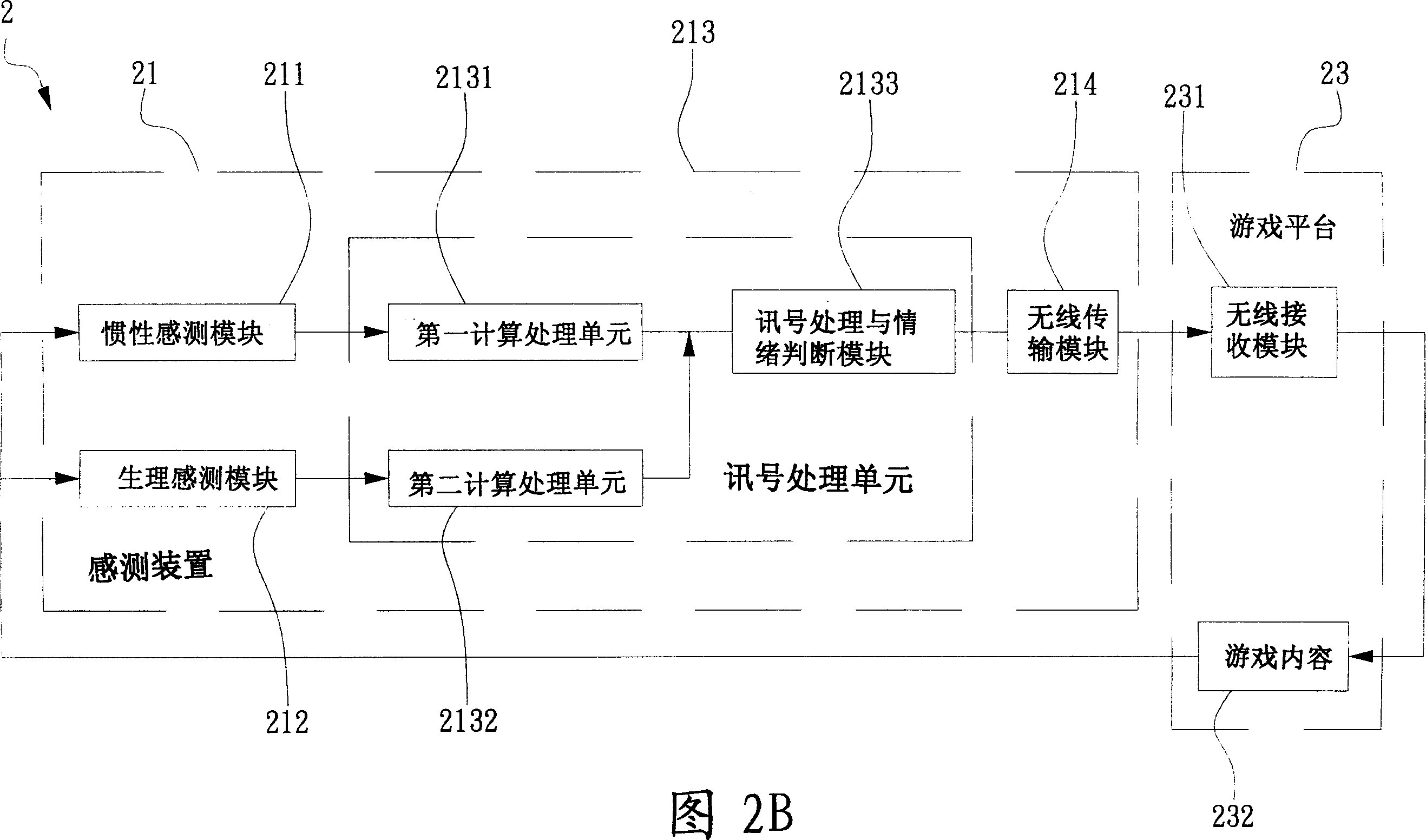

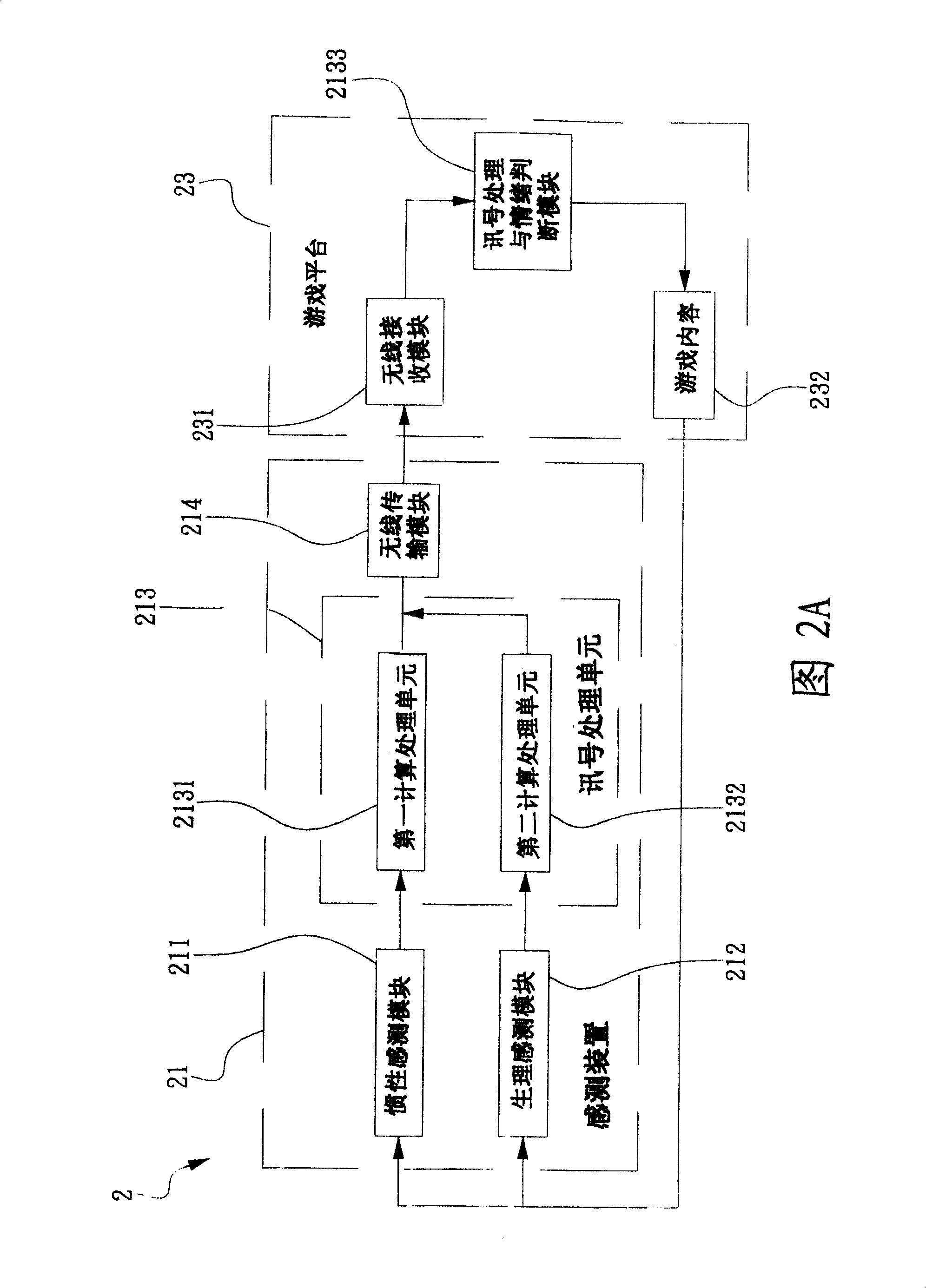

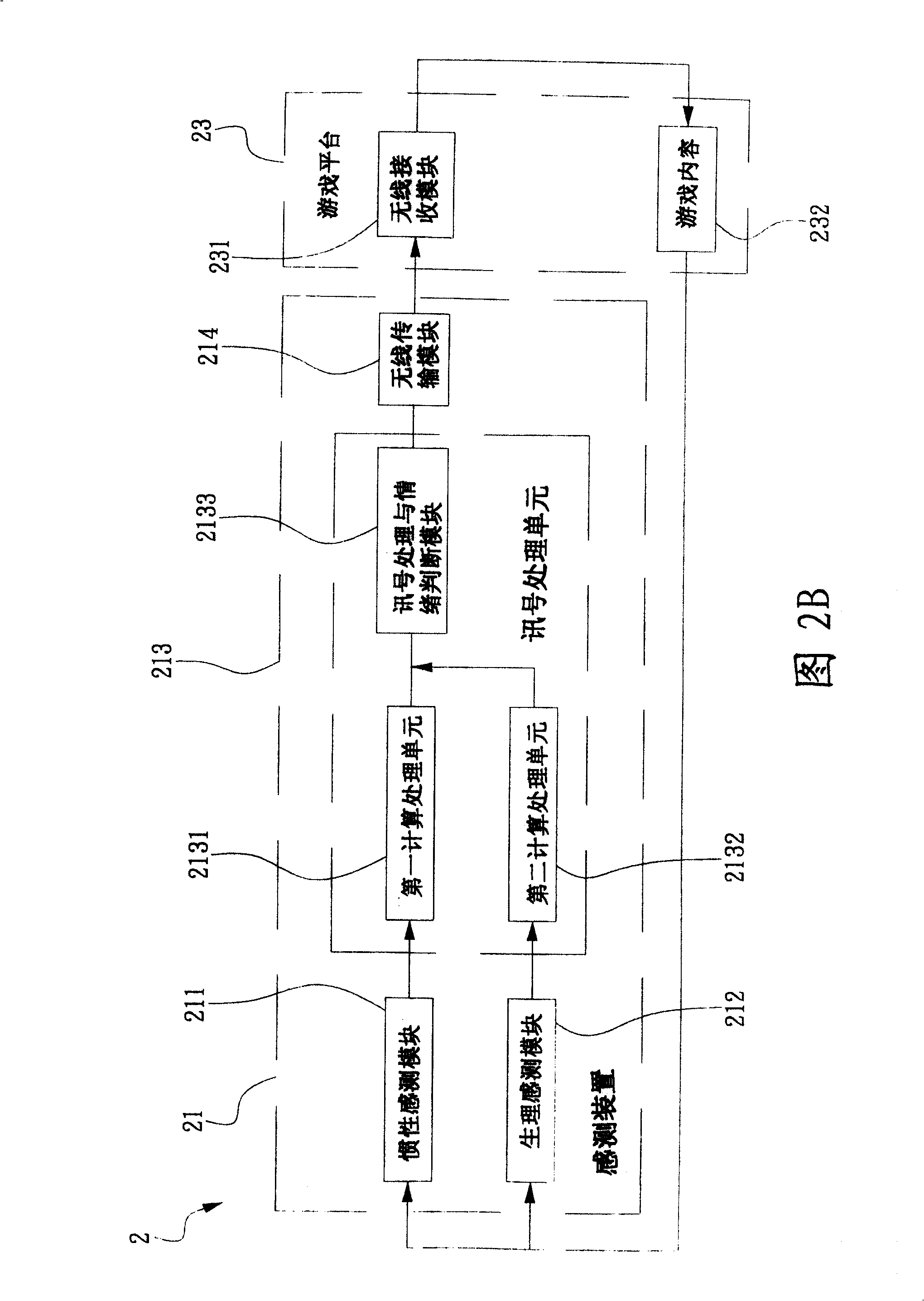

The present invention relates to an interactive gaming method and apparatus with emotion perception ability. Gestures of a user are detected and used as inputs for controlling a game, and physiological attributes of the user, such as heartbeat and galvanic skin response (GSR), are sensed and used as emotional feedback on how the game affects the user. The interactive gaming apparatus interprets the signals detected by the inertial sensing module and the bio sensing module, uses the interpretation to evaluate the user's movements and emotions immediately, and sends the evaluation to the gaming platform as feedback so that the game can interact with the user accordingly. The method and apparatus can therefore be used both to train the coordination of human motion and to enhance the user's self-control.

Owner:IND TECH RES INST

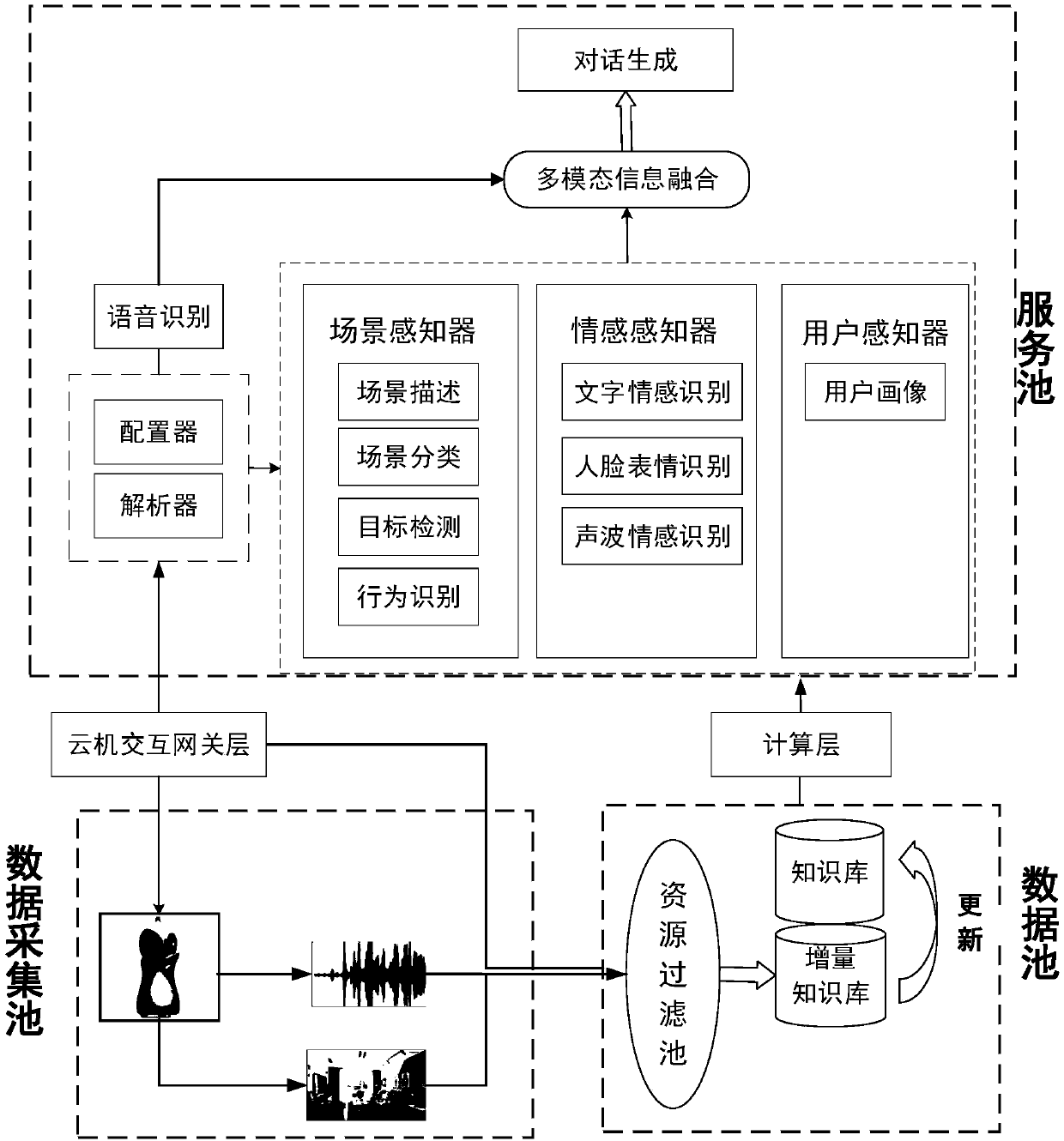

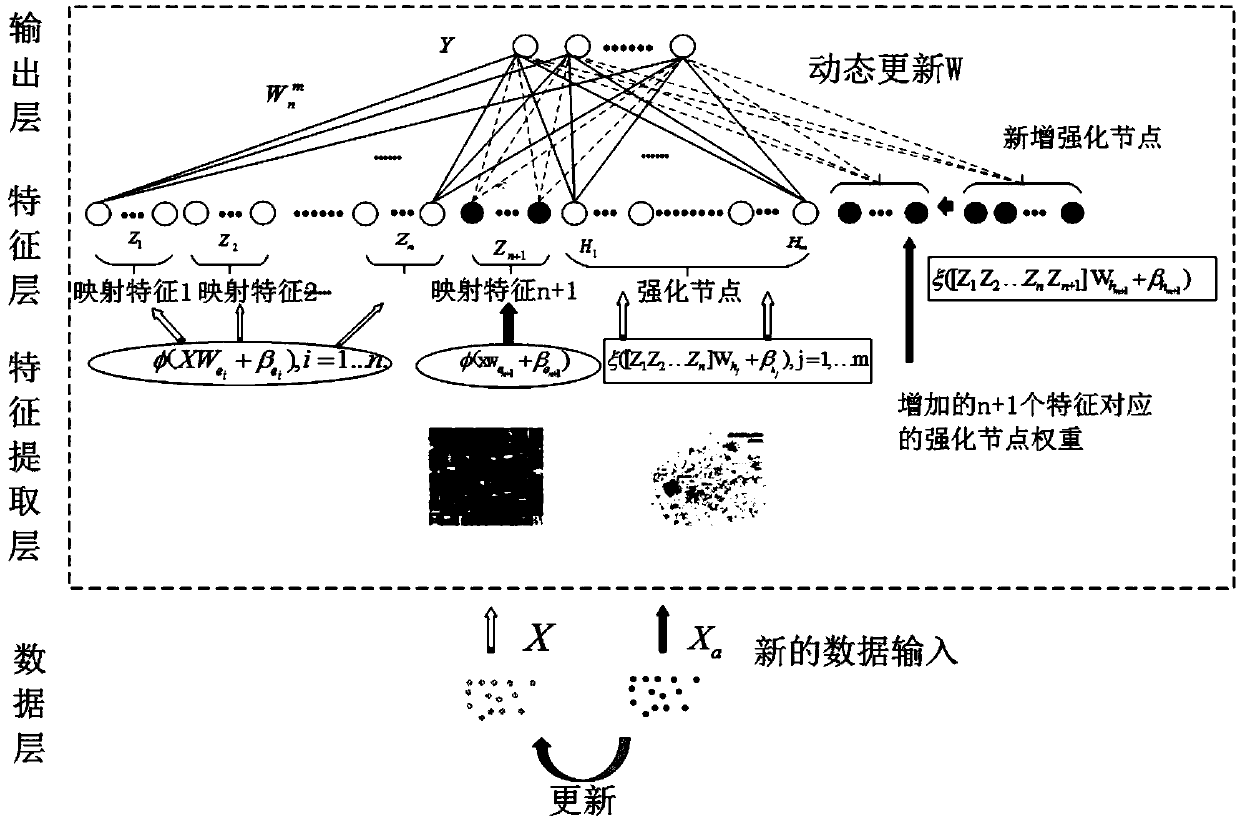

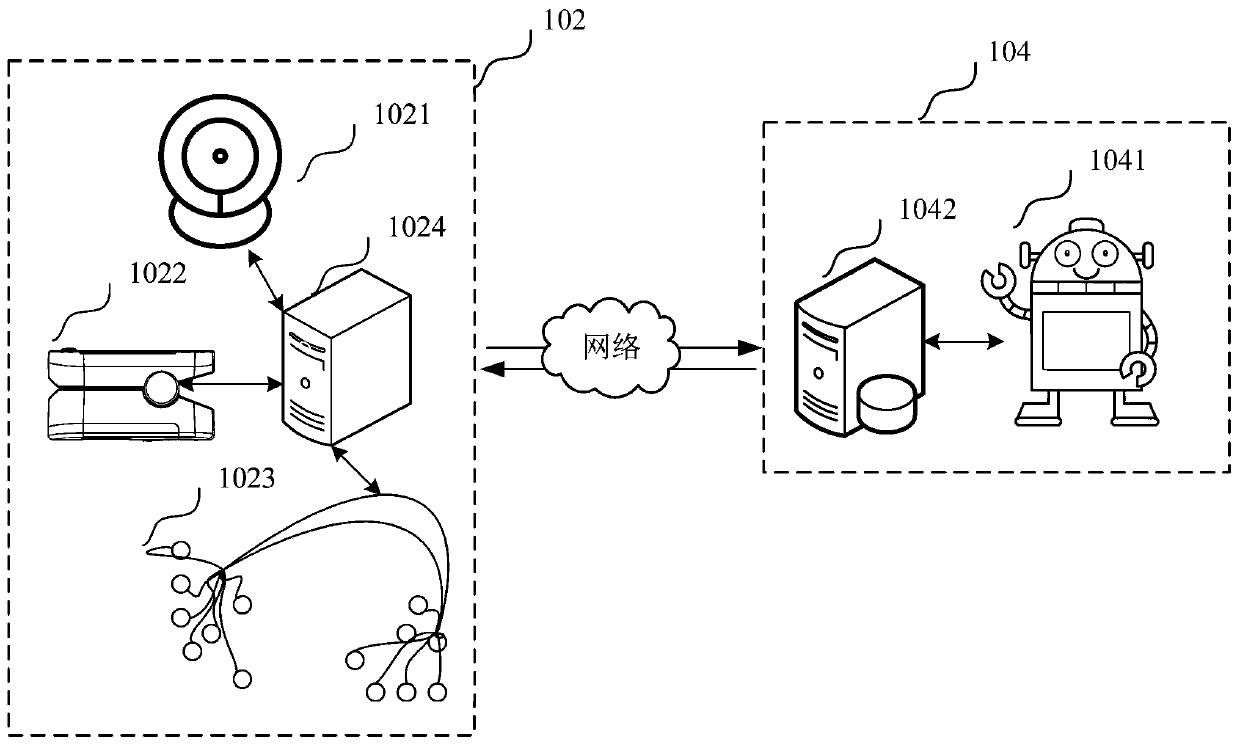

Home service robot cloud multimode dialogue method, device and system

Active | CN109658928A | Implement update | Increase profit | Natural language data processing | Speech recognition | Emotion perception | Service model

The invention discloses a home service robot cloud multimode dialogue method, device and system. The method includes the following steps: receiving user voice information and scene image information in real time; converting the user voice information into text information, and performing word segmentation and named entity recognition to determine the dialogue type; preprocessing the scene image information; screening the preprocessed image information and the processed text information, adding the information to an incremental knowledge base, performing incremental model training when the dialogue service load rate is below a threshold value, and updating the dialogue service model; performing scene perception, user perception and emotion perception on the preprocessed image information and/or the processed text information to obtain scene perception information, user perception information and emotion perception information; and combining the processed text information with the scene perception information, the user perception information and/or the emotion perception information according to the dialogue type, so that the dialogue service model generates the interactive dialogue between the user and the robot.

Owner:SHANDONG UNIV

Emotion perception interdynamic recreational apparatus

Inactive | CN1991692A | Add fun | Reduce volume | Psychotechnic devices | Input/output processes for data processing | Emotion perception | Physiologic States

The invention relates to an emotion perception interactive amusement apparatus that uses an inertial sensing module to sense the user's actions as input for game control, and adds a physiological sensing module that senses physiological features of the heartbeat and skin as indicators of emotional state. The apparatus interprets the signals sensed by the inertial sensing module and the physiological sensing module to determine the user's real-time emotion and actions, and feeds them back to the game platform to produce an interactive entertainment effect. It can train the user's body coordination and emotional self-control, providing an amusement apparatus for both body and mind.

Owner:IND TECH RES INST

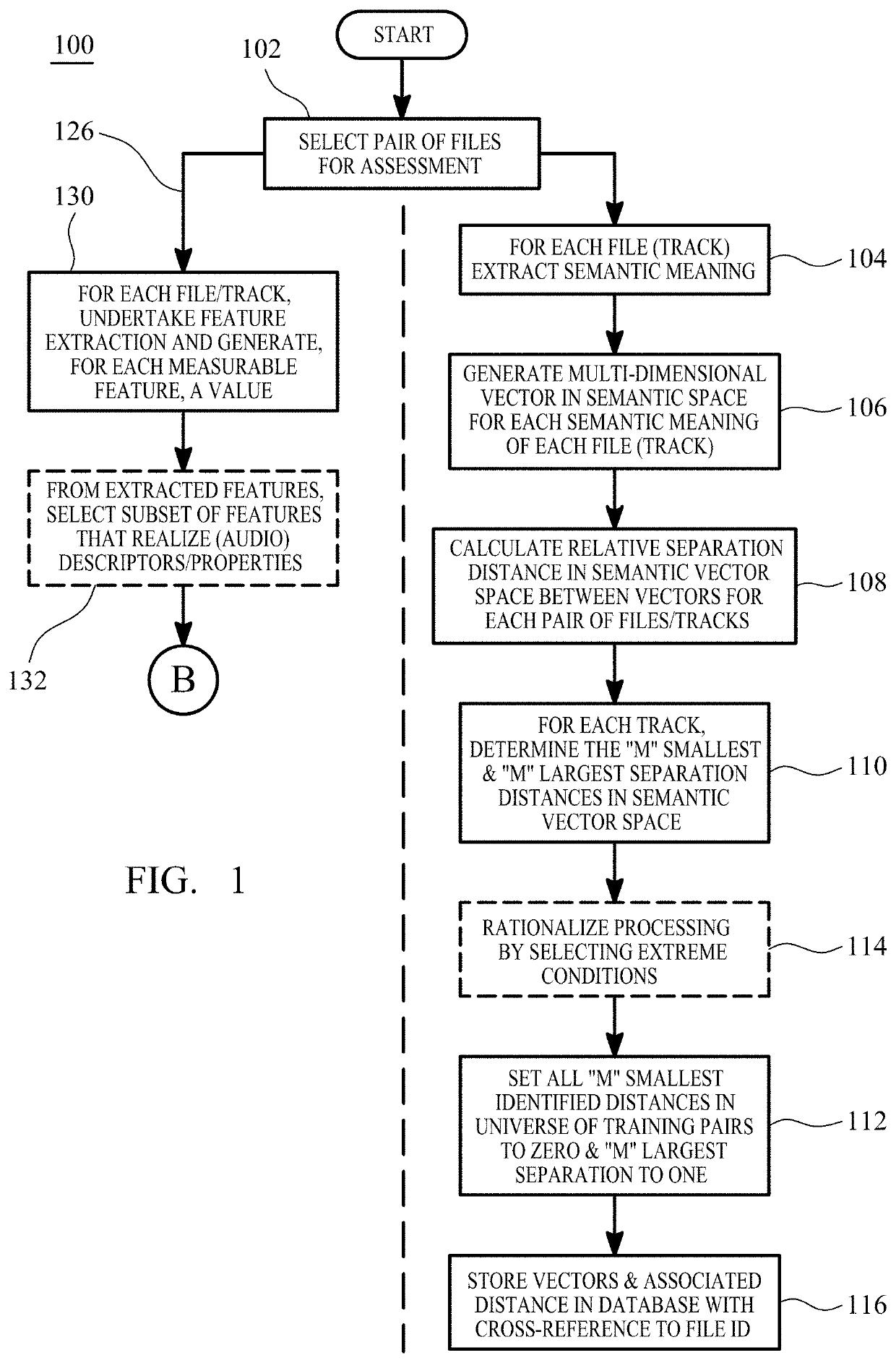

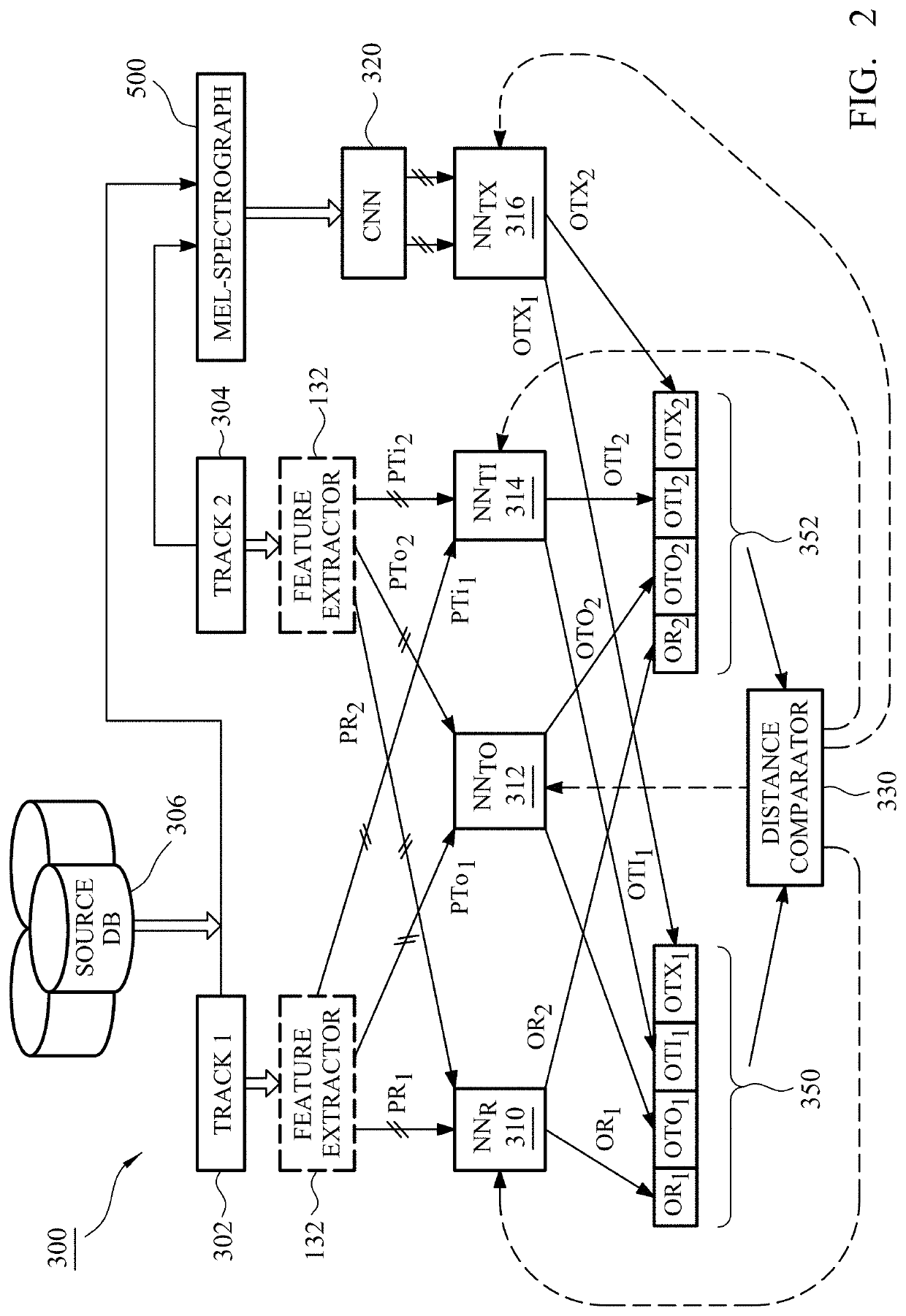

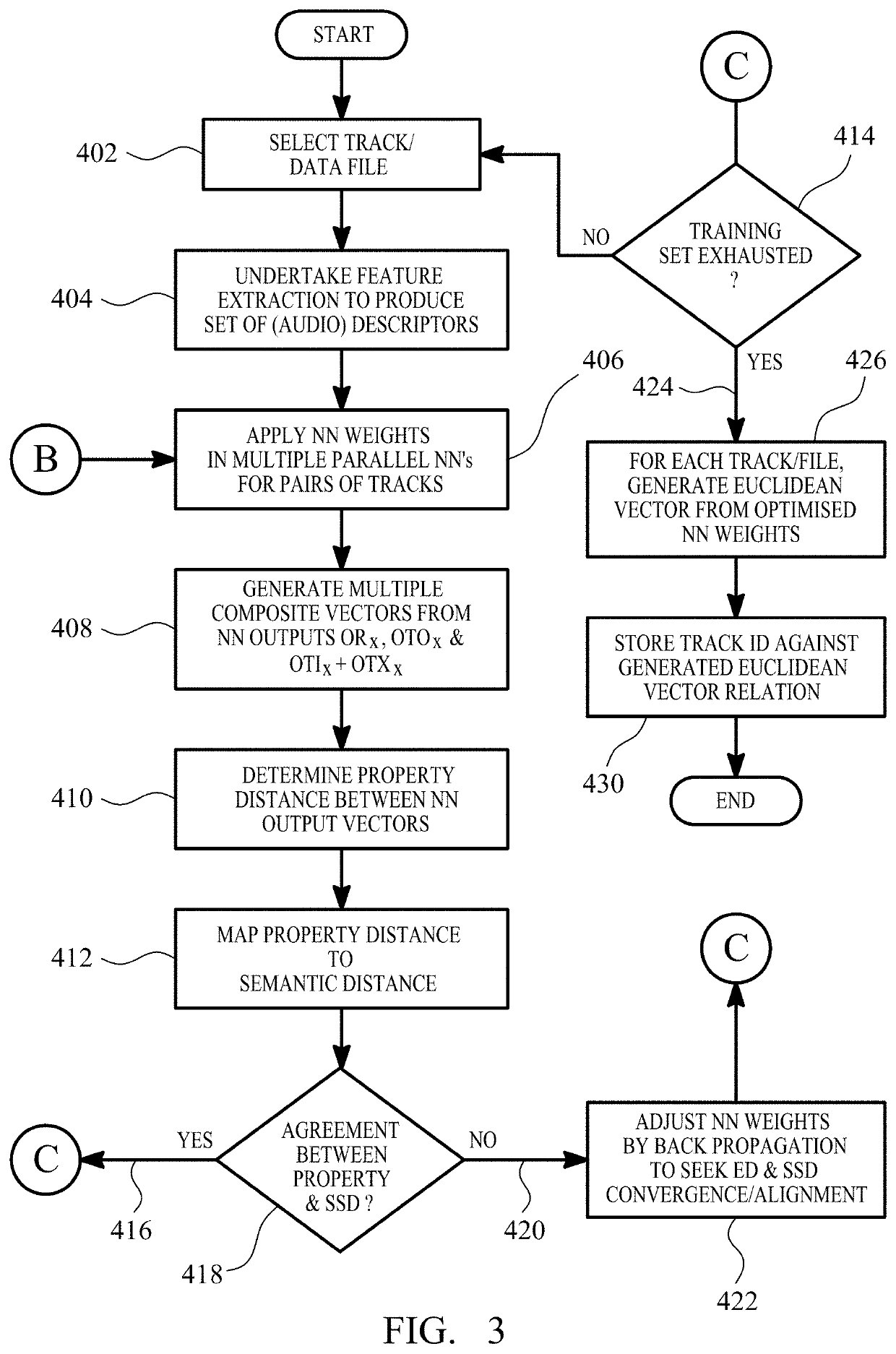

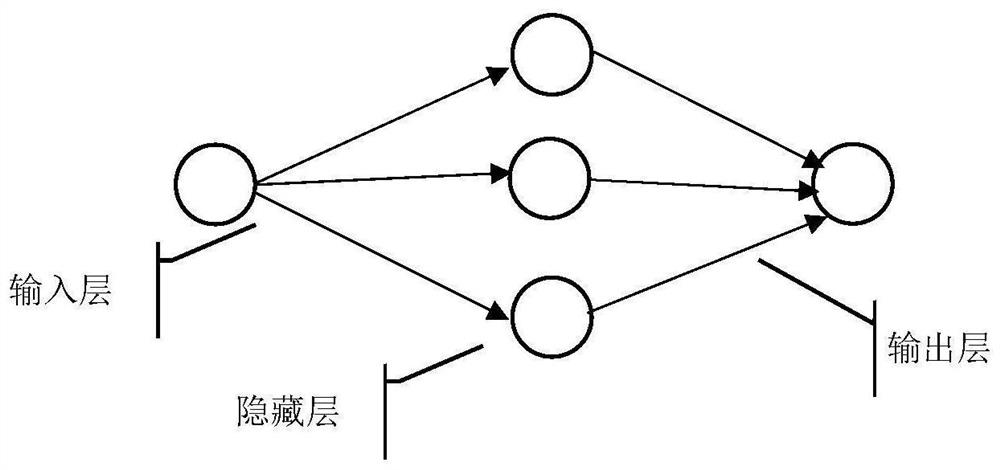

Method of training a neural network and related system and method for categorizing and recommending associated content

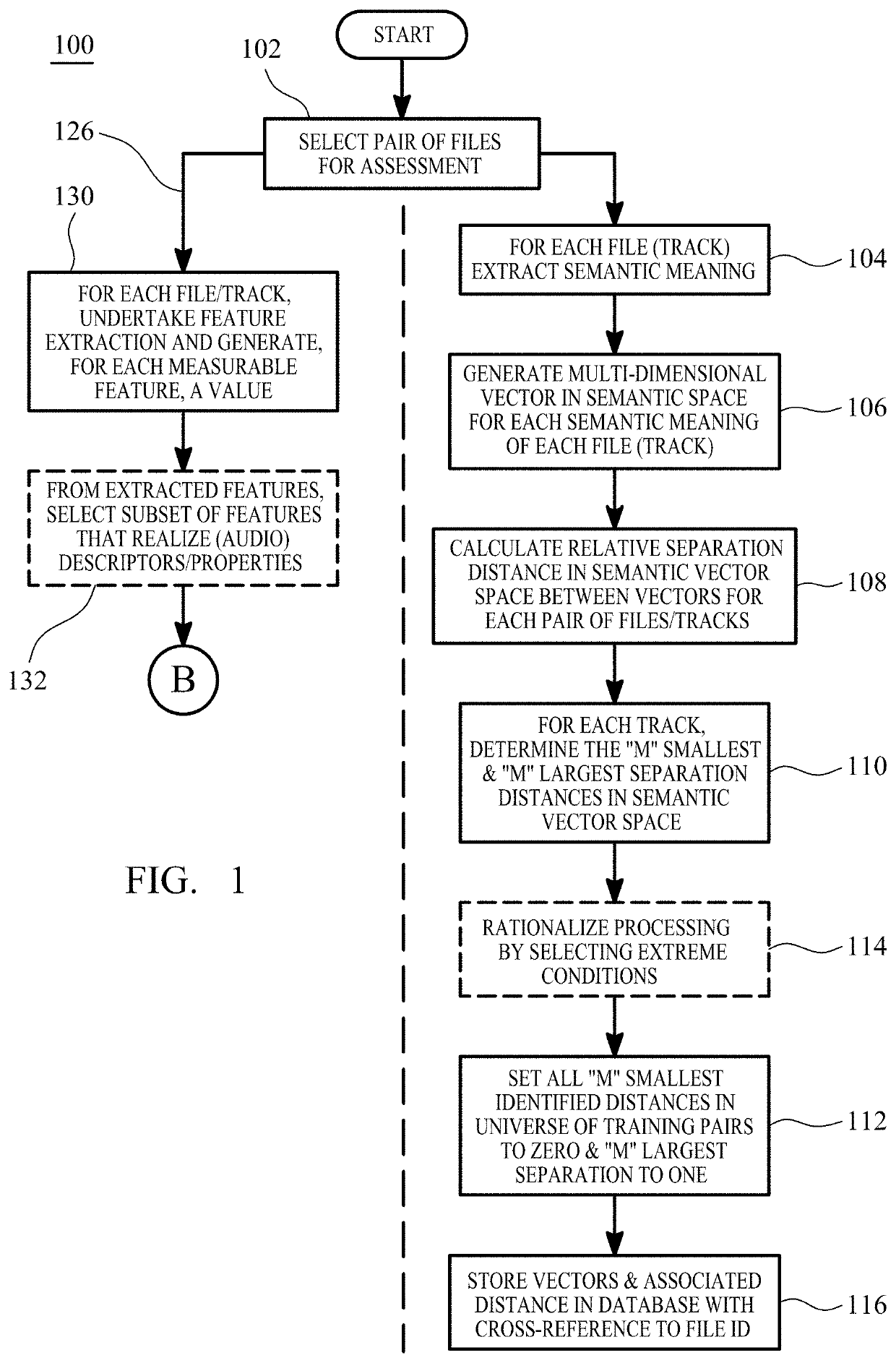

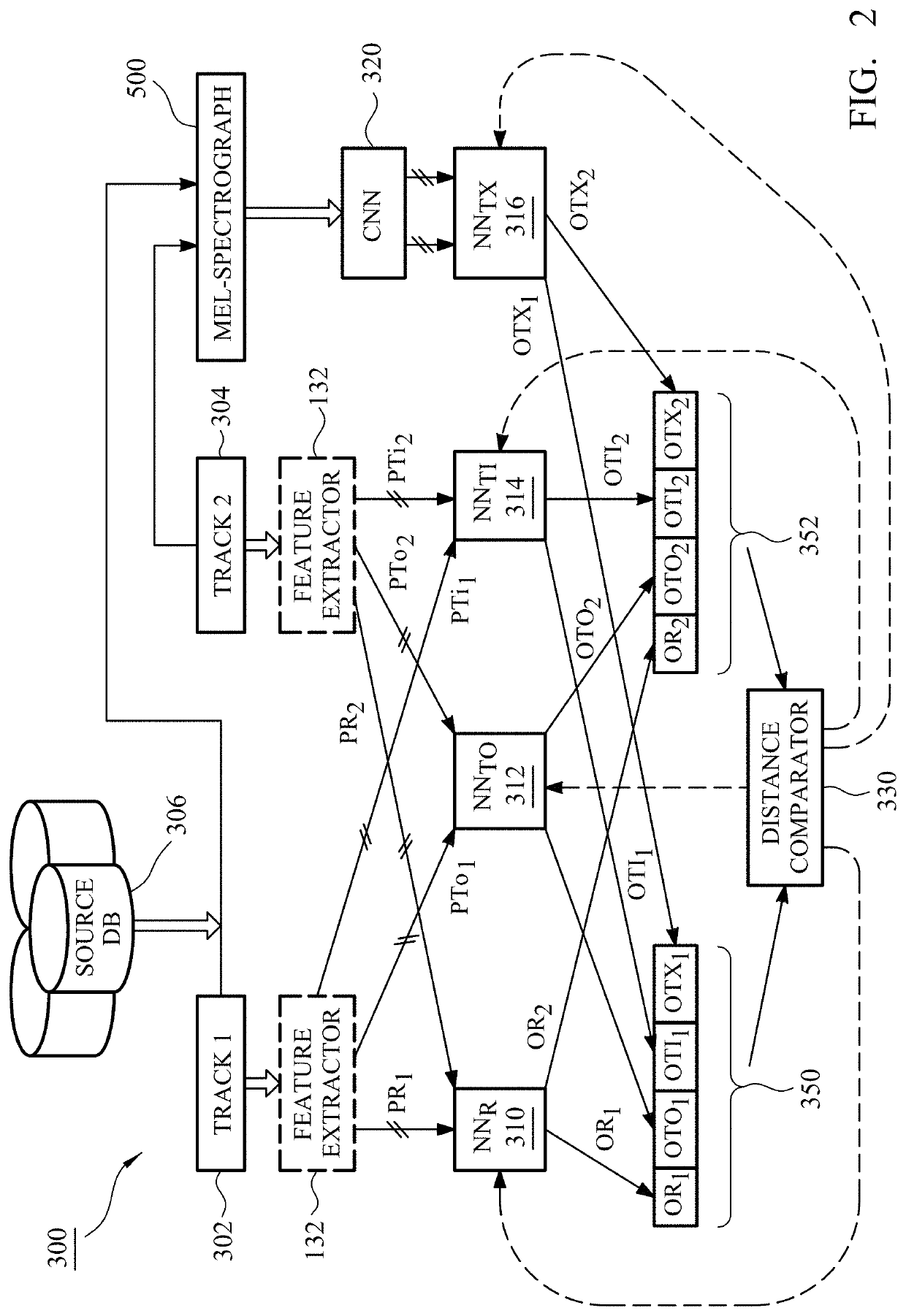

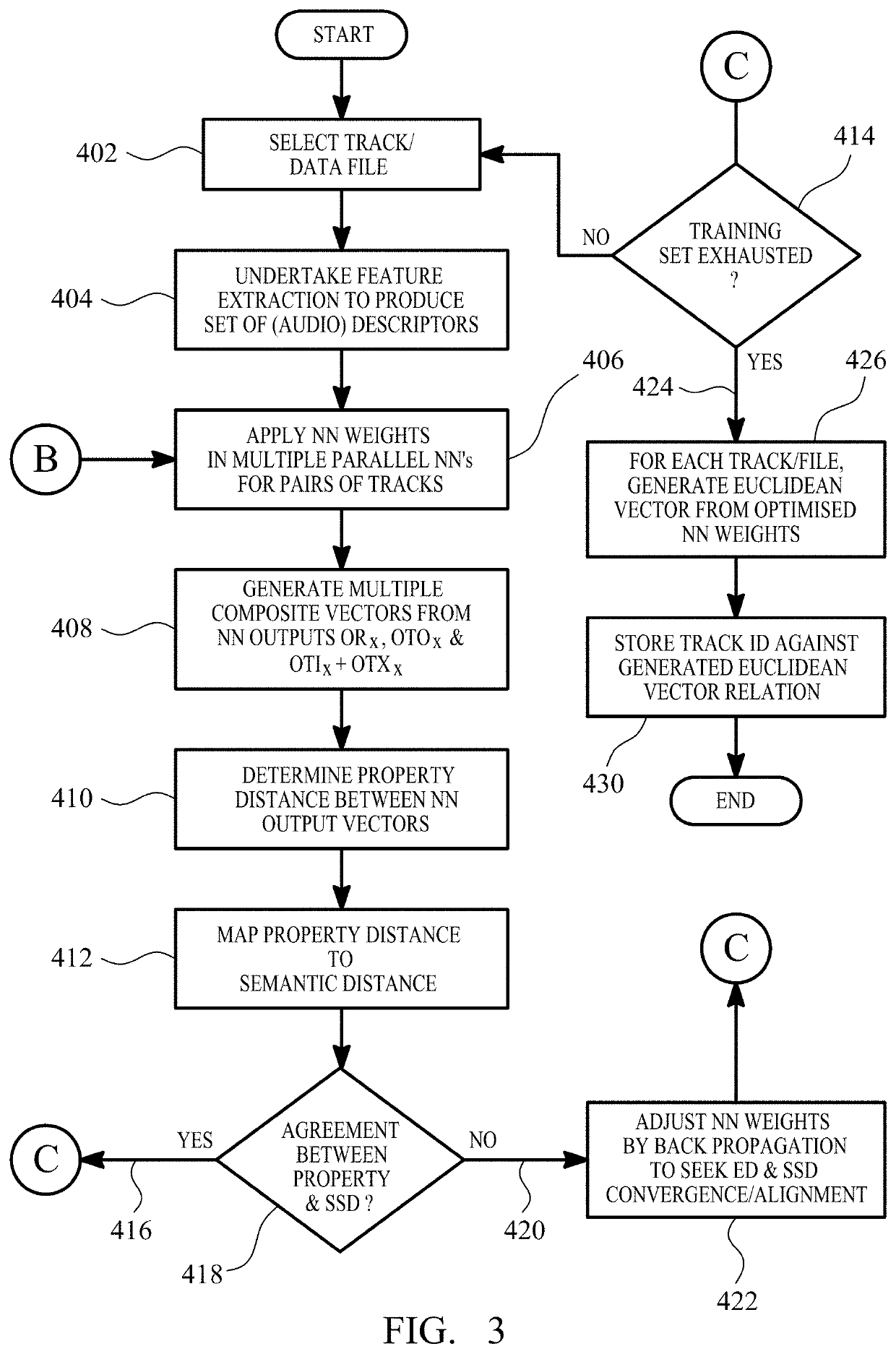

Pending | US20210012200A1 | Increased and rapid access | Mitigate issue | Electrophonic musical instruments | Semantic analysis | Emotion perception | Semantic property

A property vector representing extractable measurable properties, such as musical properties, of a file is mapped to semantic properties for the file. This is achieved by using artificial neural networks (ANNs) in which weights and biases are trained to align a distance dissimilarity measure in property space for pairwise comparative files back towards a corresponding semantic distance dissimilarity measure in semantic space for those same files. The result is that, once optimised, the ANNs can process any file, parsed with those properties, to identify other files sharing common traits reflective of emotional perception, thereby rendering a more reliable and true-to-life result of similarity / dissimilarity. This contrasts with simply training a neural network to consider extractable measurable properties that, in isolation, do not provide a reliable contextual relationship to the real world.

Owner:EMOTIONAL PERCEPTION AI LTD
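The training objective described in the abstract above can be illustrated with a minimal sketch. The abstract does not state which distance metric or loss is used, so the Euclidean distance, the squared-error penalty, and the function names `euclidean`, `alignment_loss` and `mean_alignment_loss` are all assumptions made for illustration. The sketch only scores how far a pair's property-space distance is from its semantic-space distance; in a real system the property vectors would be the outputs of the trained ANNs.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors (assumed metric)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def alignment_loss(prop_a, prop_b, sem_a, sem_b):
    """Squared gap between the property-space distance and the
    semantic-space distance for one pair of files (assumed loss)."""
    return (euclidean(prop_a, prop_b) - euclidean(sem_a, sem_b)) ** 2


def mean_alignment_loss(pairs):
    """Average alignment loss over (prop_a, prop_b, sem_a, sem_b) tuples."""
    return sum(alignment_loss(*pair) for pair in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical pair: far apart in property space, close in semantic space.
    pairs = [([0.0, 0.0], [3.0, 4.0], [0.0], [2.0])]
    print(mean_alignment_loss(pairs))
```

A training loop would adjust the network weights to drive this value towards zero, so that files judged semantically similar also end up close together in property space.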

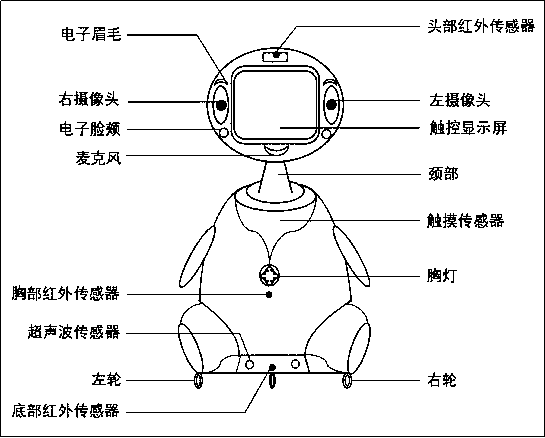

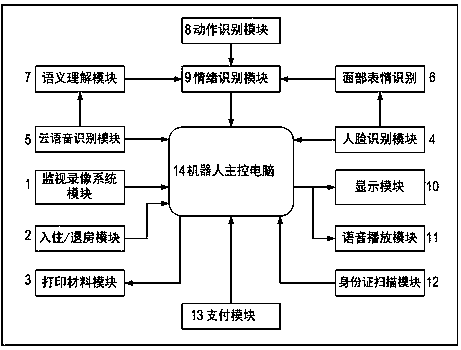

Hotel service robot system based on cloud voice communication and emotion perception

Pending | CN108090474A | Realize payment | Improve check-in experience | Data processing applications | Speech analysis | Emotion perception | Payment

The invention relates to a hotel service robot system based on cloud voice communication and emotion perception. The system includes a cloud voice recognition module, a semantic understanding module, a voice playing module, a facial expression recognition module, a face recognition module, a display module, an action recognition module, an emotion recognition module, a check-in / check-out module, a material printing module, a payment module, a surveillance video system module, an identity card scanning module and the like. These modules are integrated in a hotel service robot. The robot uses face recognition to judge whether a customer needs check-in or check-out service and handles the service by voice communication with the customer; it can also print related materials, take payment, scan identity cards and the like. The robot judges customer emotion from three kinds of information (facial expression, voice and action) and adjusts the emotional tone of its communication with the customer accordingly. It can also call the hotel monitoring system to perform face recognition, in order to remind customers whose face images have not yet been collected and recorded to have them collected and recorded.

Owner:SOUTH CHINA UNIV OF TECH +1

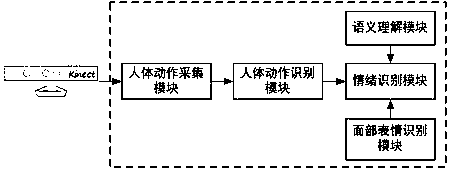

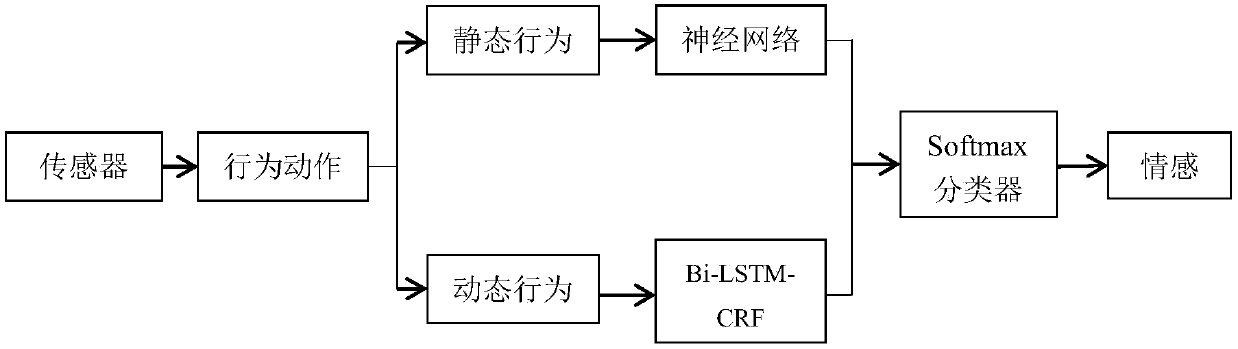

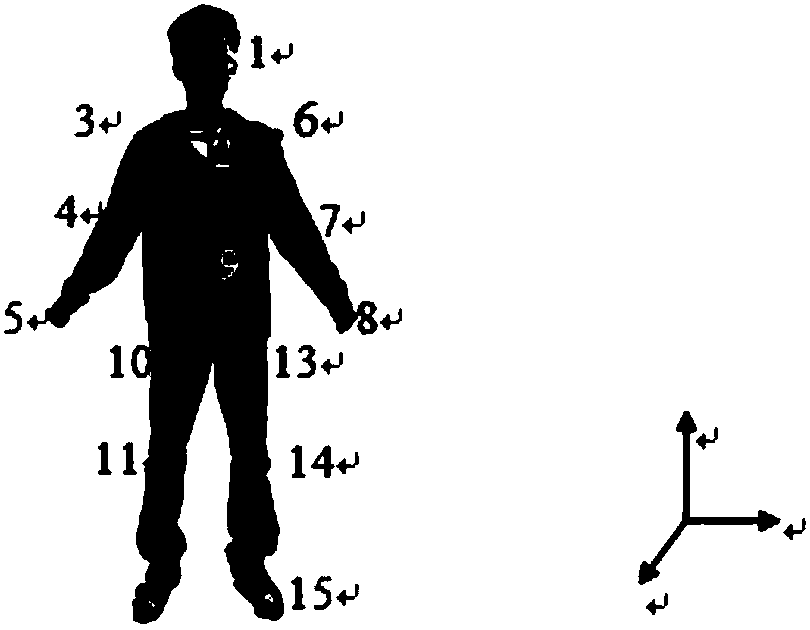

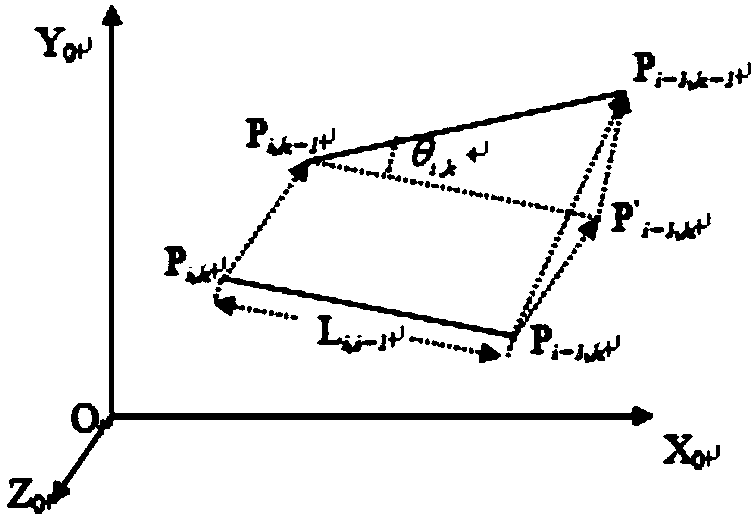

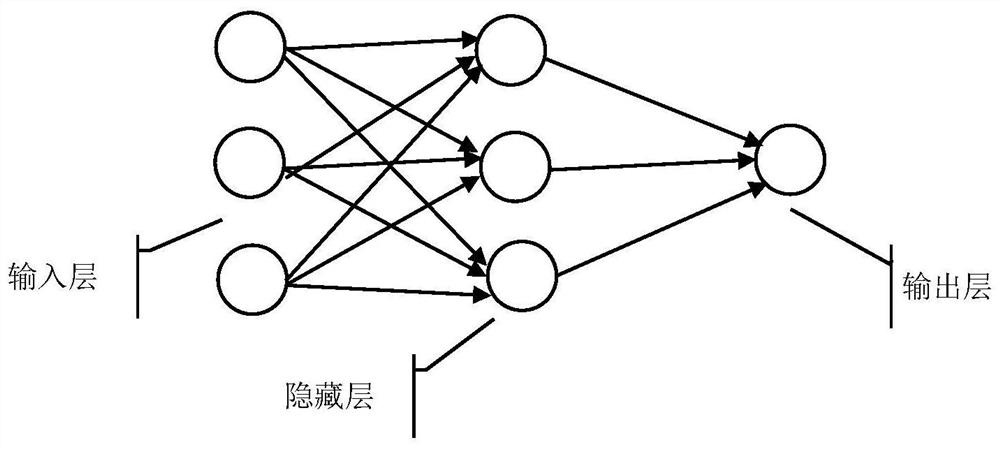

Body language-based emotion perception method adopting deep learning and UKF

Active | CN108363978A | Easy to catch | Low body language noise | Biometric pattern recognition | Conditional random field | Observational error

The invention discloses a body language-based emotion perception method adopting deep learning and UKF. The method comprises the following steps: monitoring a person entering the Kinect work area with a Kinect, and calculating the skeleton points of the person in real time; estimating the positions of the skeleton points by unscented Kalman filtering, and calculating the measurement error caused by tracking error and device noise; applying a convolutional neural network to static body actions, and a bidirectional long short-term memory conditional random field analysis to dynamic body actions; and feeding the features obtained after action processing directly into a softmax classifier, thereby identifying eight emotions. Body language-based emotion perception has the following advantages: first, body language is more easily captured by a sensor; second, body language used for emotion perception carries relatively little noise; third, body language is less deceptive; and fourth, capturing body actions does not influence or disturb the actions of participants.

Owner:SOUTH CHINA UNIV OF TECH
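The final classification step in the abstract above, a softmax classifier over eight emotions, can be sketched in a few lines. The abstract does not name the eight emotions or describe the feature extractor, so the placeholder labels `emotion_0` to `emotion_7`, the example scores, and the function names are assumptions; the sketch shows only how raw class scores become probabilities and a predicted label.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1.

    Subtracting the maximum first keeps exp() numerically stable.
    """
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores, labels):
    """Return the most probable label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


if __name__ == "__main__":
    # Placeholder labels: the abstract does not list the eight emotions.
    labels = [f"emotion_{i}" for i in range(8)]
    # Hypothetical scores, standing in for the output of the CNN or Bi-LSTM-CRF stage.
    scores = [0.1, 2.3, -1.0, 0.5, 0.0, 1.2, -0.4, 0.9]
    print(classify(scores, labels))
```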

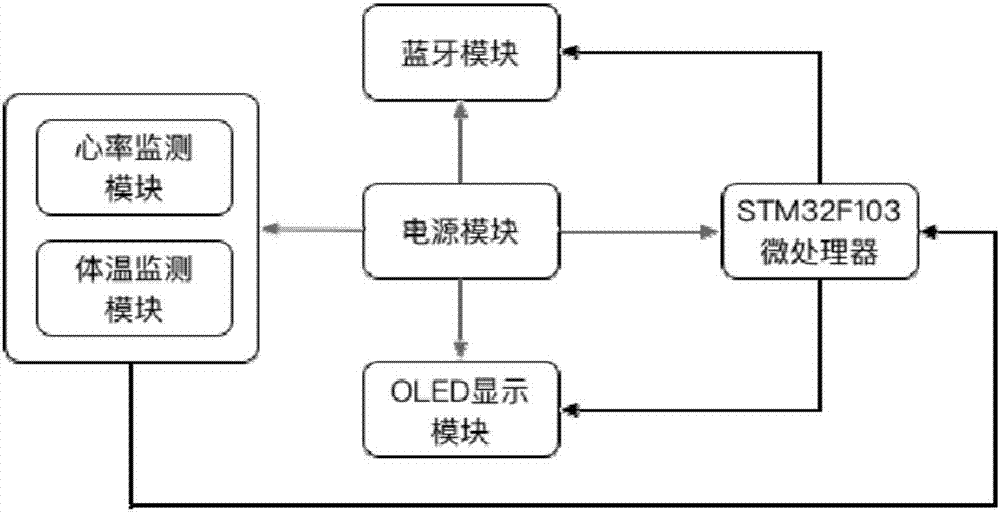

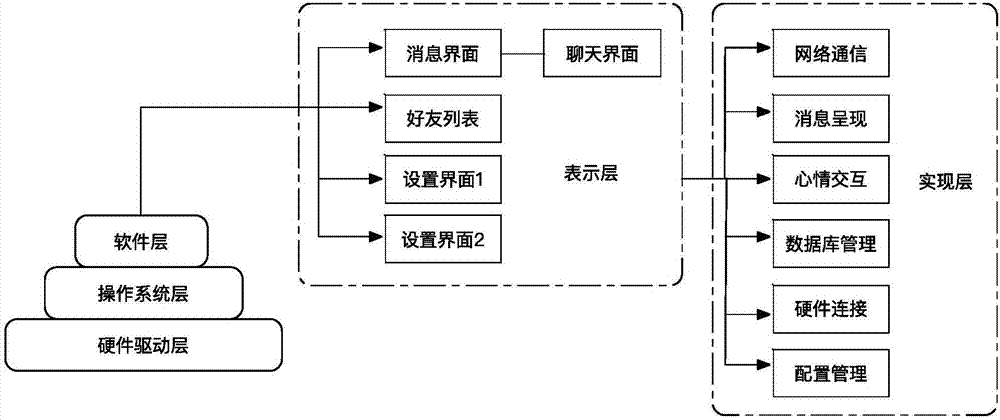

Internet-of-things emotion perception technology-based internet-of-things social application system

Inactive | CN107464188A | Rich information interaction mode | Improve social experience | Data processing applications | Software engineering | Emotion perception | Graphics

The invention discloses an internet-of-things emotion perception technology-based internet-of-things social application system. The system comprises a hardware acquisition module and a social APP. The hardware acquisition module measures and records the heart rate and body surface temperature of users in real time and sends the data to a mobile terminal. The social APP is installed on the mobile terminal, has a basic chat function and a built-in emotion perception algorithm. Once correctly paired with the hardware acquisition module, it receives the uploaded data in real time, analyzes the emotion of the user currently wearing the hardware, and conveys emotion perception information through contour pattern changes or vibration, so as to realize emotion perception and interaction. Current internet social applications lack scientific measurement of the real-time emotional changes of interacting users. By fusing internet-of-things emotion perception technology into internet social applications, the method fills the gap left by applications that ignore emotional interaction between users, improves the interaction experience of internet social contact and enriches its quality.

Owner:ZHEJIANG UNIV

Method of training a neural network to reflect emotional perception and related system and method for categorizing and finding associated content

Active | US20200320388A1 | Increased and rapid access | Mitigate issue | Electrophonic musical instruments | Semantic analysis | Emotion perception | Semantic property

A property vector representing extractable measurable properties, such as musical properties, of a file is mapped to semantic properties for the file. This is achieved by using artificial neural networks (ANNs) in which weights and biases are trained to align a distance dissimilarity measure in property space for pairwise comparative files back towards a corresponding semantic distance dissimilarity measure in semantic space for those same files. The result is that, once optimised, the ANNs can process any file, parsed with those properties, to identify other files sharing common traits reflective of emotional perception, thereby rendering a more reliable and true-to-life result of similarity / dissimilarity. This contrasts with simply training a neural network to consider extractable measurable properties that, in isolation, do not provide a reliable contextual relationship to the real world.

Owner:EMOTIONAL PERCEPTION AI LTD

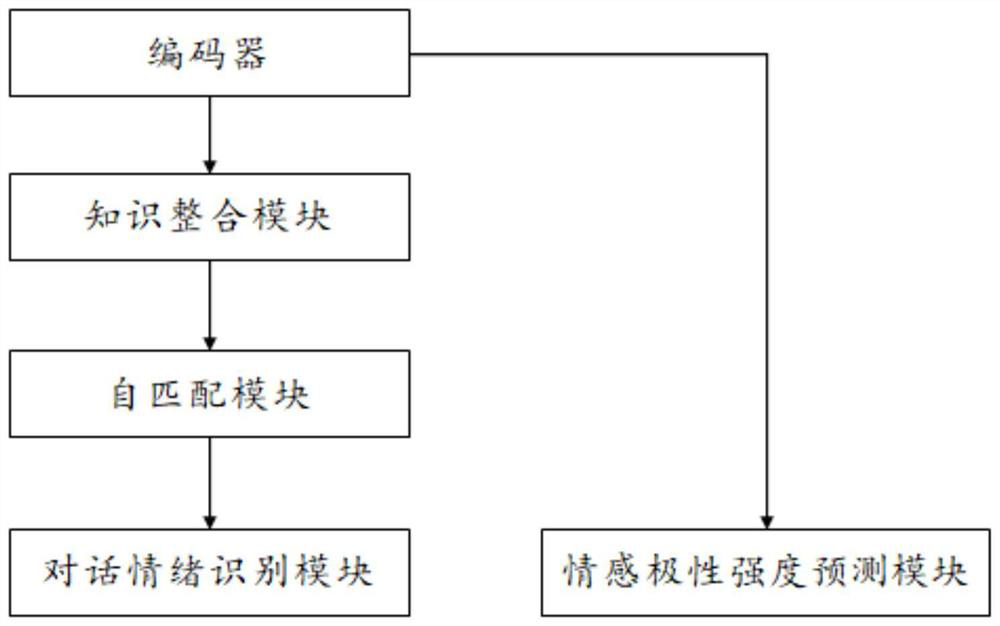

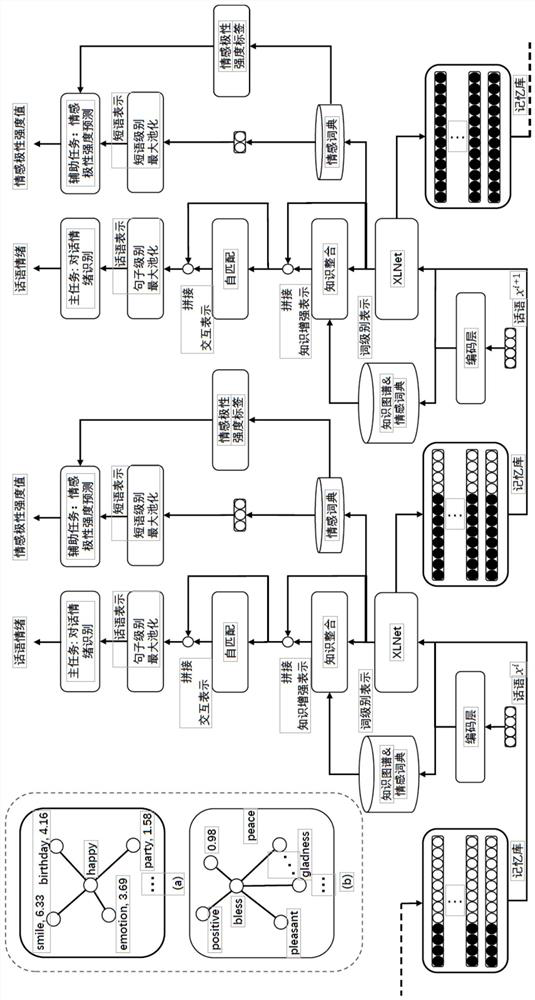

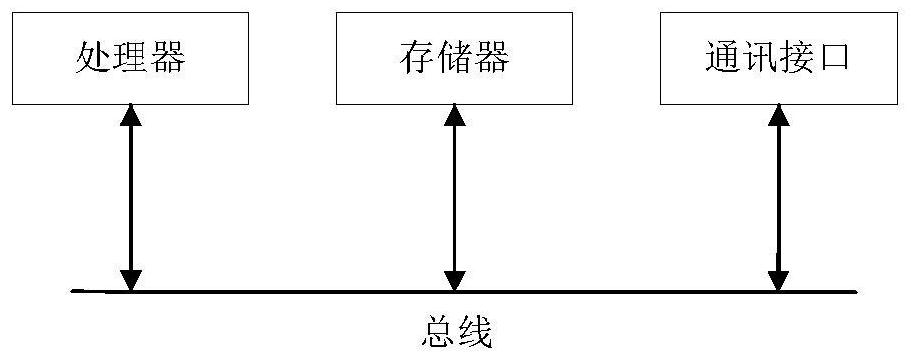

Dialogue emotion recognition network model based on double knowledge interaction and multi-task learning, construction method, electronic equipment and storage medium

Active | CN113535957A | Natural language data processing | Knowledge representation | Emotion perception | Emotional perception

The invention discloses a dialogue emotion recognition network model based on double knowledge interaction and multi-task learning, a construction method, electronic equipment and a storage medium, and belongs to the technical field of natural language processing. It addresses two problems: existing Emotion Recognition in Conversation (ERC) models neglect the direct interaction between the utterance and the knowledge, and an auxiliary task that is only weakly related to the main task can provide only limited emotion information for the ERC task. In the method, commonsense knowledge from a large-scale knowledge graph is used to enhance word-level representations. A self-matching module integrates the knowledge representations and the utterance representations, allowing complex interaction between the two. A phrase-level sentiment polarity intensity prediction task is taken as the auxiliary task. The labels of the auxiliary task come from the emotion polarity intensity values of an emotion dictionary and are highly related to the ERC task, so they provide direct guidance for emotion perception of a target utterance.

Owner:HARBIN INST OF TECH

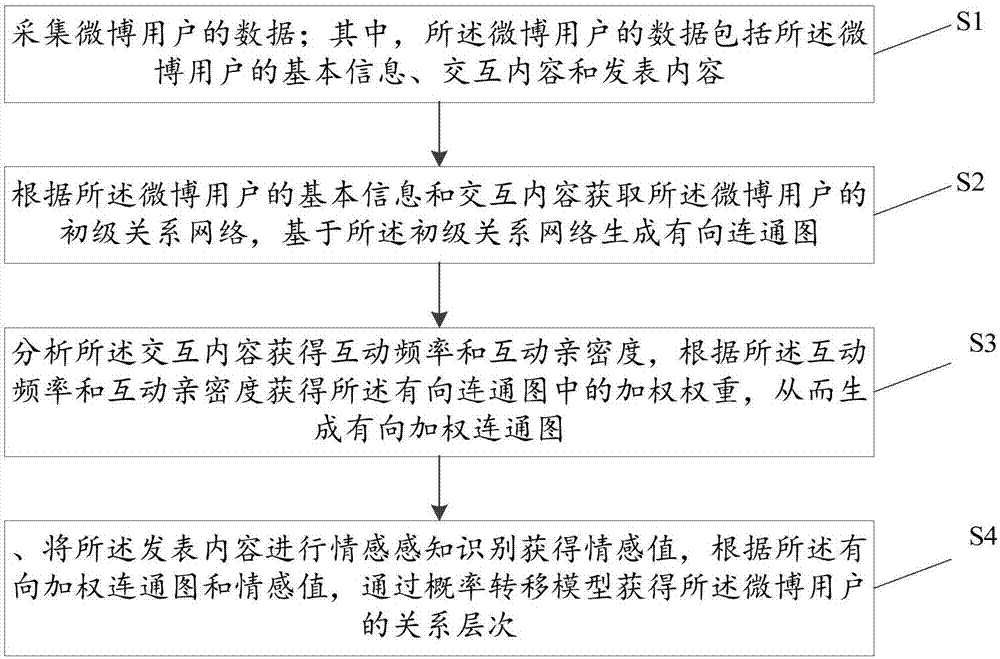

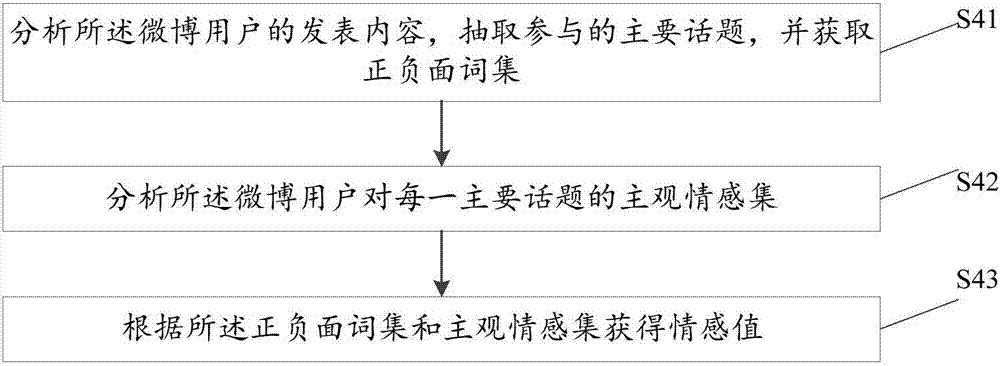

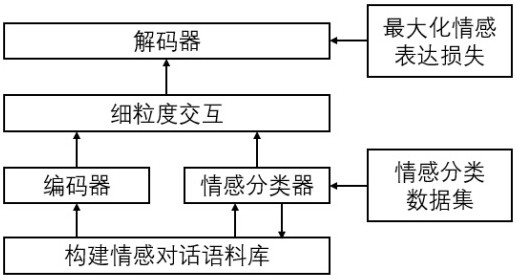

Social network interpersonal relationship analysis method and apparatus

Inactive | CN107463551A | Solve the problem of low relationship level | Rich in relationships | Data processing applications | Semantic analysis | Emotion perception | Transfer model

The invention discloses a social network interpersonal relationship analysis method. The method comprises the steps of: collecting data of microblog users; obtaining a primary relationship network of the microblog users from their basic information and interaction contents; generating a directed connected graph based on the primary relationship network; analyzing the interaction contents to obtain an interaction frequency and an interaction intimacy; obtaining the weights of the directed connected graph from the interaction frequency and the interaction intimacy, thereby generating a directed weighted connected graph; performing emotion perception identification on published contents to obtain an emotion value; and obtaining the relationship levels of the microblog users from the directed weighted connected graph and the emotion value through a probability transfer model. This solves the problem that the prior art obtains only shallow relationship levels, so that richer interpersonal relationships can be obtained.

Owner:GUANGZHOU TEDAO INFORMATION TECH CO LTD
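The step that turns interaction frequency and interaction intimacy into edge weights can be sketched as follows. The abstract does not give the weighting formula, so the linear blend controlled by `alpha`, the normalisation of frequency by the busiest edge, the assumption that intimacy is already scored in [0, 1], and the function name `build_weighted_graph` are all hypothetical choices for illustration.

```python
from collections import defaultdict


def build_weighted_graph(interactions, alpha=0.5):
    """Build a directed weighted graph from (source, target, intimacy) records.

    Each record is one interaction from `source` to `target` with an
    intimacy score assumed to lie in [0, 1]. The edge weight blends the
    normalised interaction frequency with the mean intimacy (assumed formula).
    """
    counts = defaultdict(int)
    intimacy_sum = defaultdict(float)
    for source, target, intimacy in interactions:
        counts[(source, target)] += 1
        intimacy_sum[(source, target)] += intimacy
    if not counts:
        return {}

    max_count = max(counts.values())
    graph = defaultdict(dict)
    for (source, target), count in counts.items():
        frequency = count / max_count
        mean_intimacy = intimacy_sum[(source, target)] / count
        graph[source][target] = alpha * frequency + (1 - alpha) * mean_intimacy
    return dict(graph)


if __name__ == "__main__":
    # Hypothetical interaction log between two users.
    log = [("a", "b", 1.0), ("a", "b", 0.5), ("b", "a", 0.5)]
    print(build_weighted_graph(log))
```

Because the graph is directed, the weight from `a` to `b` can differ from the weight from `b` to `a`, which lets one-sided relationships show up in the later relationship-level analysis.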

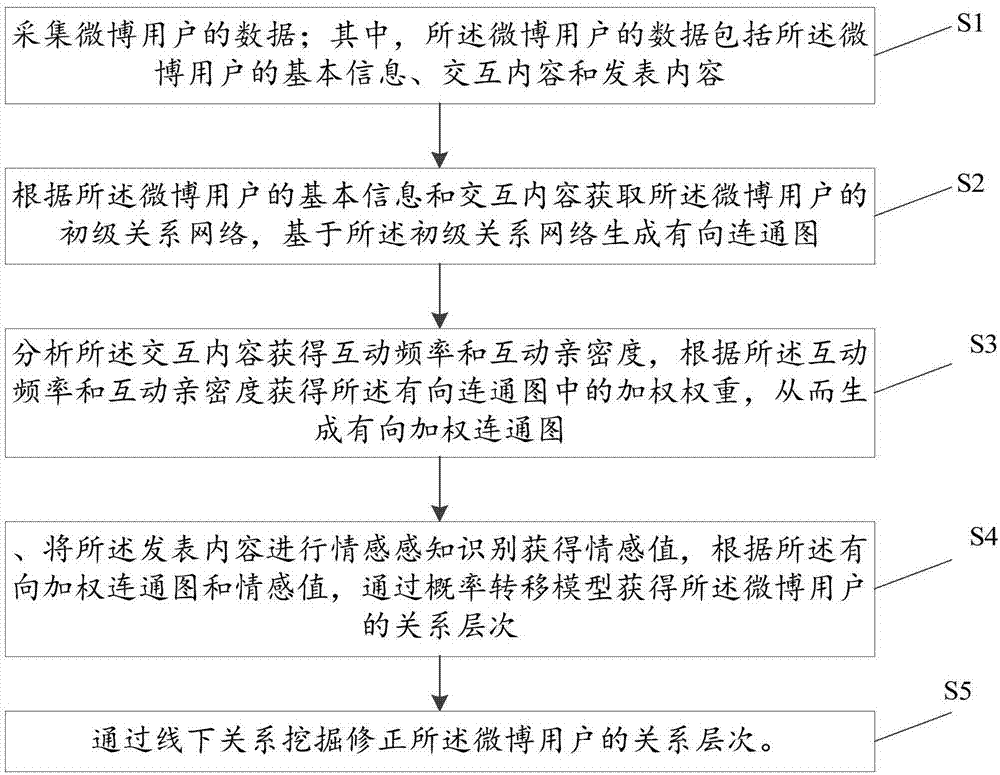

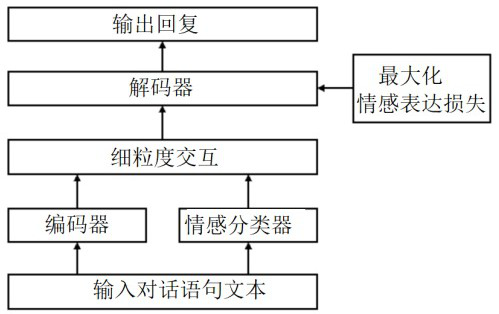

Emotional dialogue generation method and system based on interactive fusion

The invention discloses an emotional dialogue generation method and system based on interactive fusion. The method comprises the following steps: S100: receiving a dialogue statement text; S200, an emotion classifier identifying an emotion category contained in the dialogue statement text and representing the emotion category as a vector; S300, encoding the dialogue statement text into a context vector by an encoder; S400, a fine-grained interaction module fusing the emotion category representation vector and the context vector of the dialogue statement text to generate an interactive vector; S500, the decoder performing decoding by using the interactive vector to generate a reply; and S600, outputting a reply. The problems that an existing man-machine conversation system is weak in emotion perception ability and insufficient in emotion expression ability can be solved.

Owner:STATE GRID E COMMERCE CO LTD +1

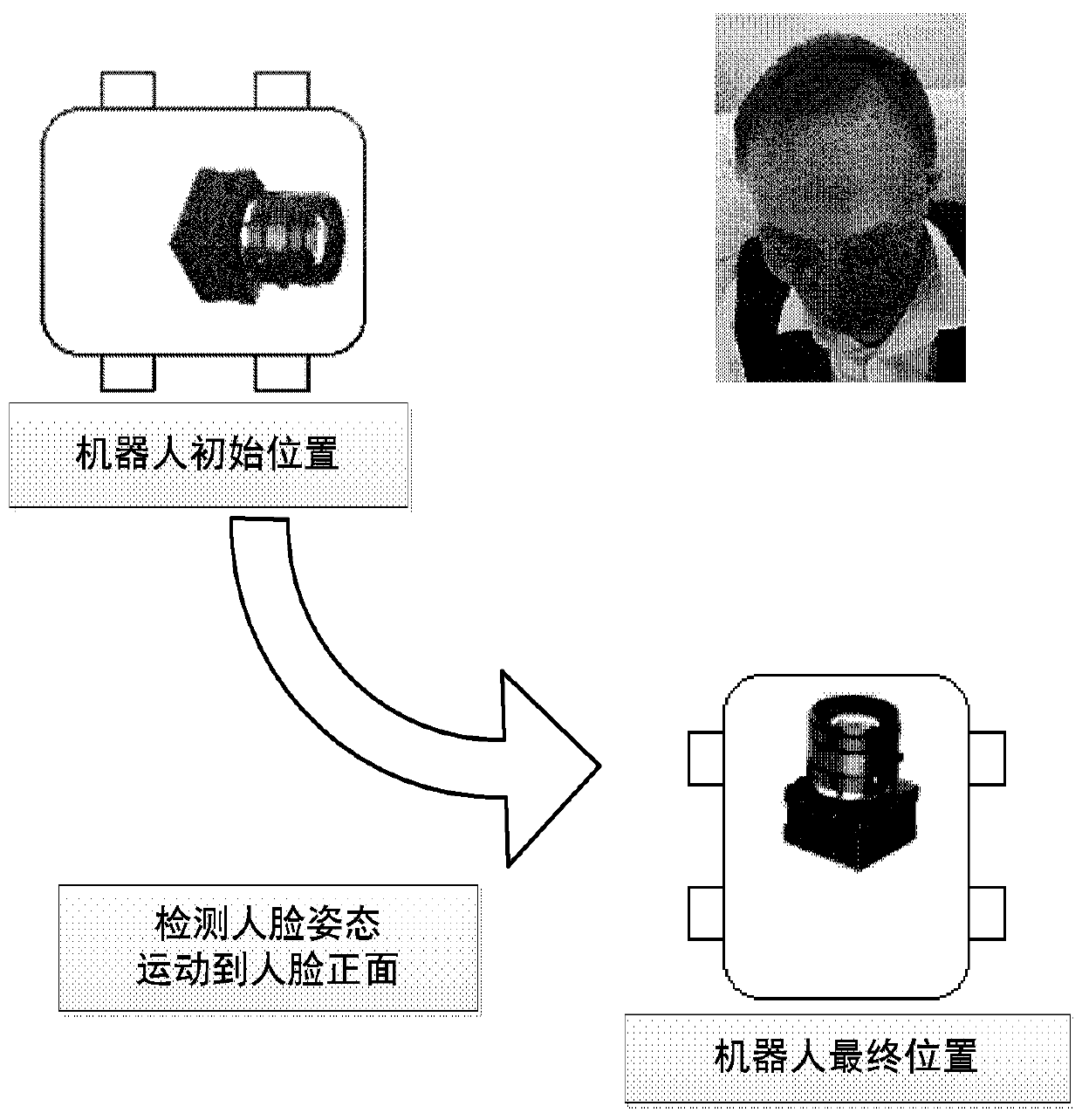

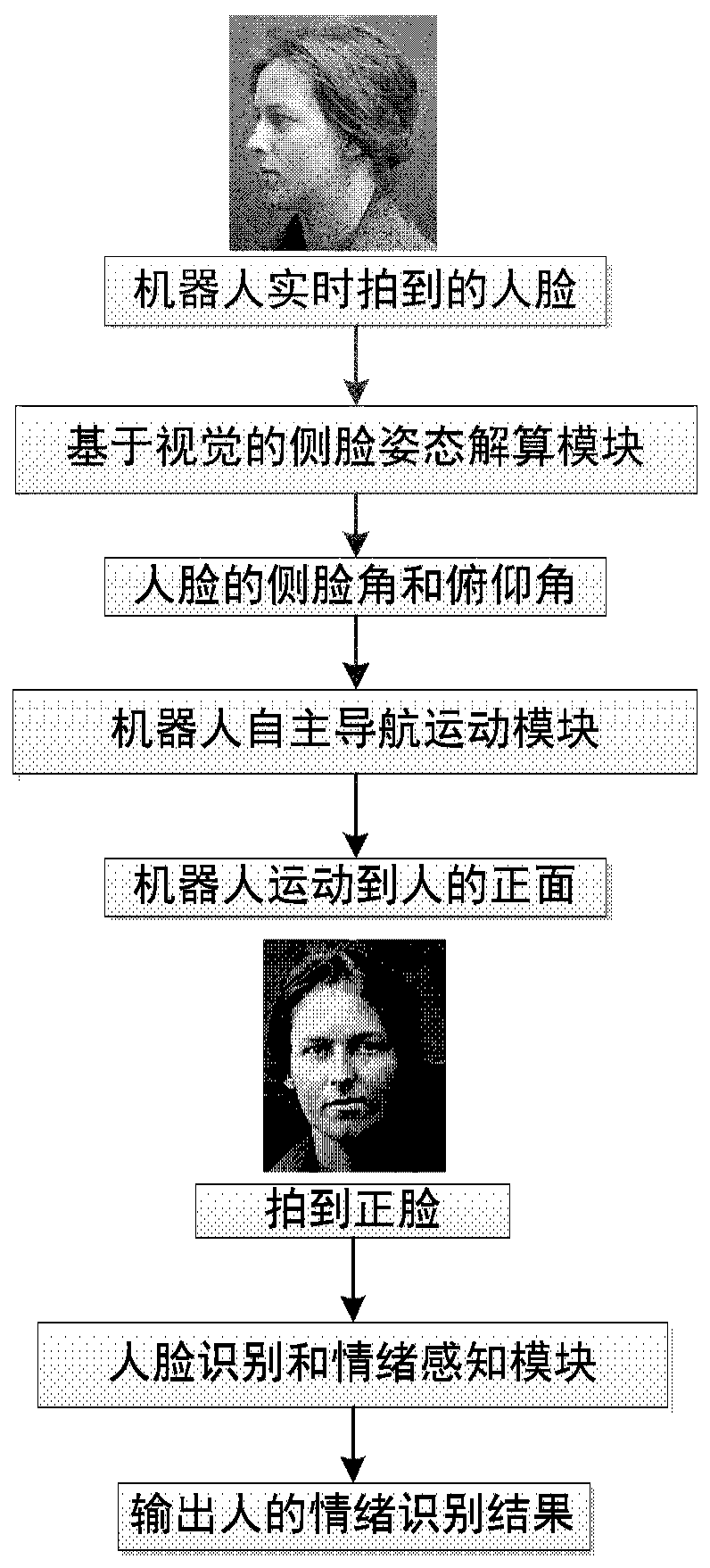

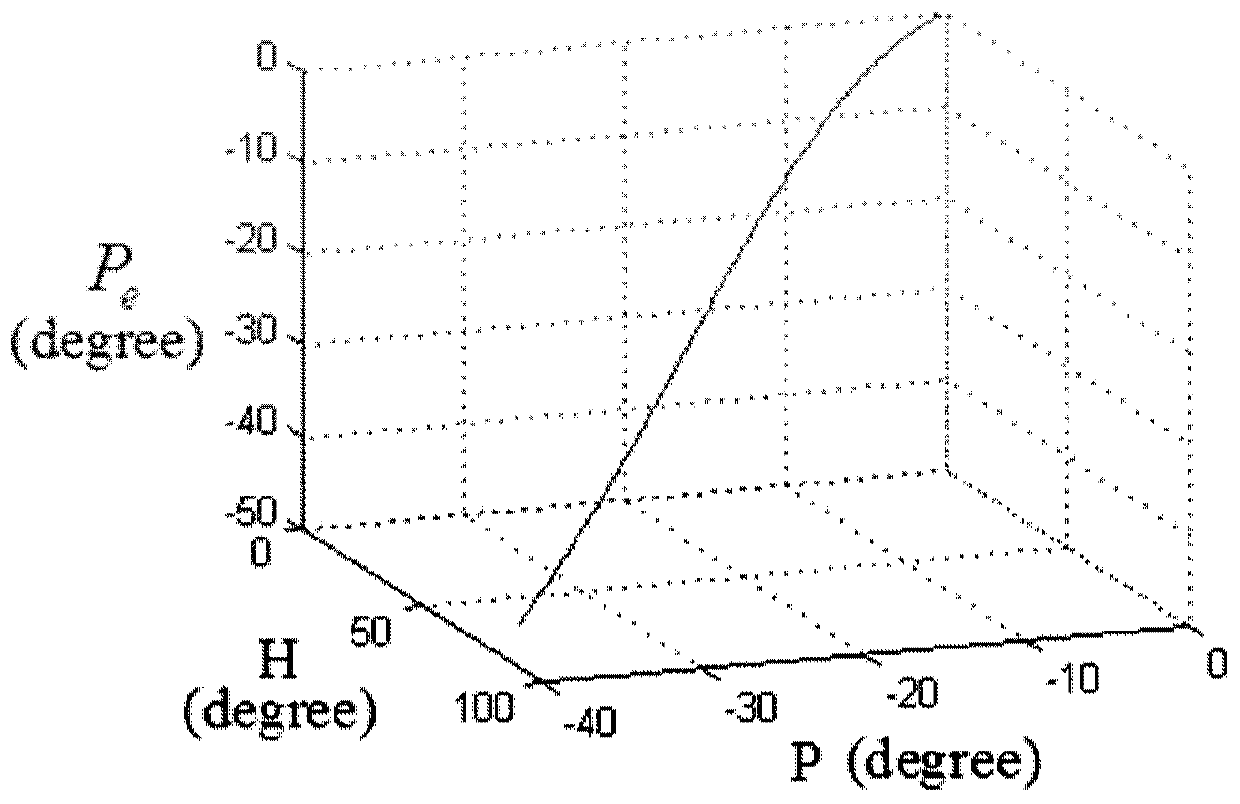

A vision-based side face posture resolving method and an emotion perception autonomous service robot

Active | CN109886173A | Character and pattern recognition | Emotion perception | Physical medicine and rehabilitation

The invention discloses a vision-based side face posture resolving method and an emotion perception autonomous service robot. The side face posture resolving method acquires, in real time, the attitude angle of the currently detected face image relative to the face in a frontal state, and comprises the following steps: constructing an attitude change model of the face area; constructing a shear angle model of the face; and constructing an attitude angle resolving model based on shear angle elimination. The emotion perception autonomous service robot comprises an acquisition module in which the side face posture resolving method is preinstalled; a navigation module that, according to the face attitude angle obtained by the acquisition module, moves the robot to a position directly facing the person in order to collect a frontal face image; and a face recognition and emotion sensing module that finally performs identity recognition and emotion detection. The method and robot solve the problem that the identity and emotion of a person cannot be identified from a side view of the face.

Owner:INST OF ELECTRONICS CHINESE ACAD OF SCI

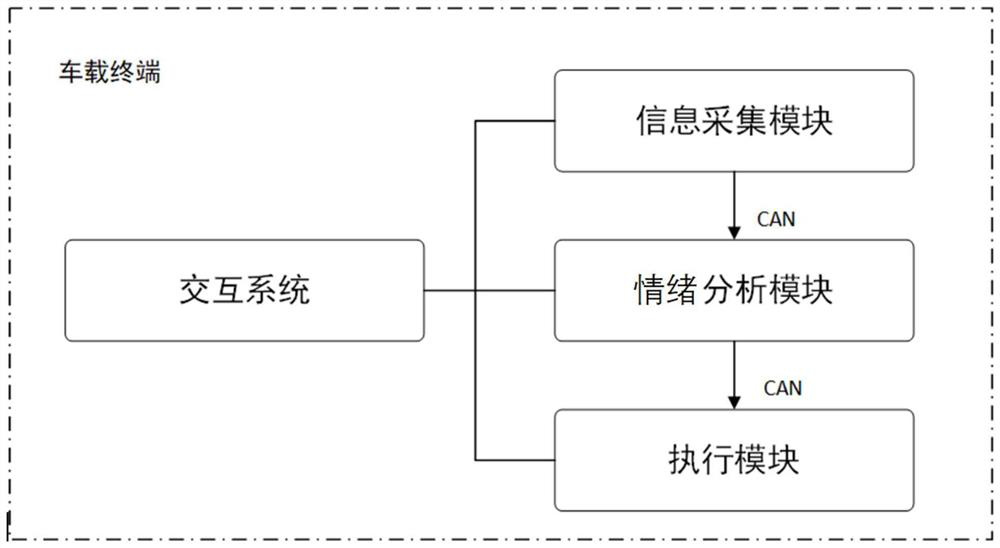

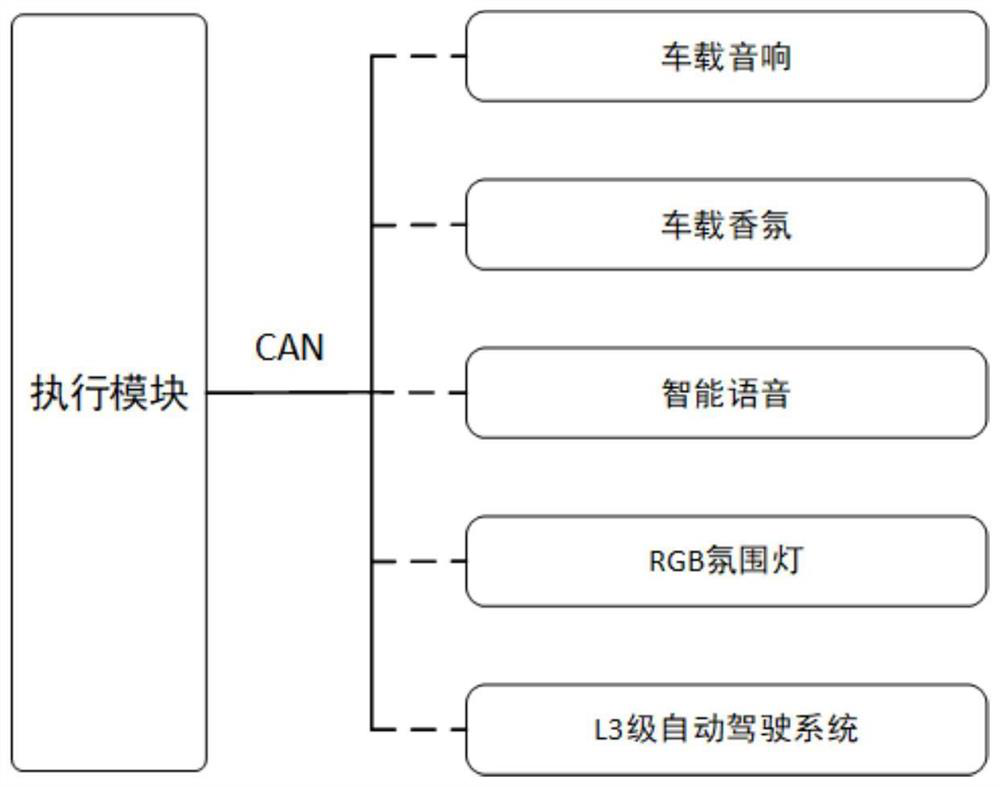

Vehicle-mounted interaction system based on emotion perception and voice interaction

Pending | CN114445888A | Improve experience | Realize natural interaction | Air-treating devices | Lighting circuits | Emotion perception | Emotional perception

The invention provides a vehicle-mounted interaction system based on emotion perception and voice interaction. The system is applied to a vehicle-mounted terminal and comprises an information acquisition module, an emotion analysis module and an execution module. A camera collects passenger information and transmits it to the analysis module, the emotional state of the passenger is obtained through a facial emotion analysis method, and the execution module starts a matching preset operation. The preset operations include intelligent voice interaction, starting the automatic driving system, RGB atmosphere lamp adjustment, music adjustment, in-vehicle fragrance adjustment and the like. The emotion and intention of the passenger can be captured and analyzed in real time and interaction is carried out by voice, which meets the user's expectations for intelligent voice and emotional perception. For the passenger, a change in emotion leads to a change in the in-vehicle environment that feels natural and comfortable, which improves the passenger's experience in the vehicle and realizes natural interaction between people and vehicles.

Owner:CHANGZHOU UNIV

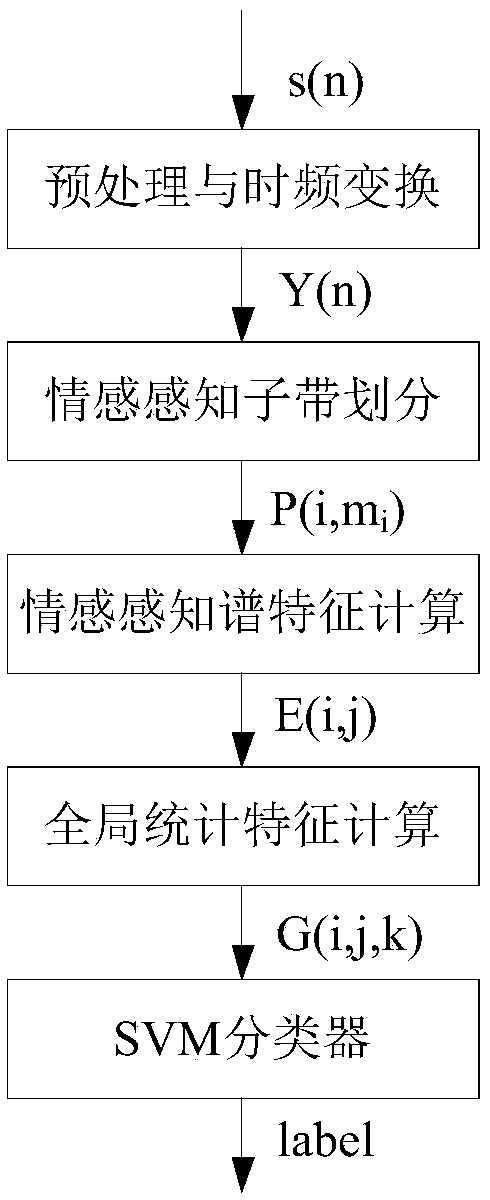

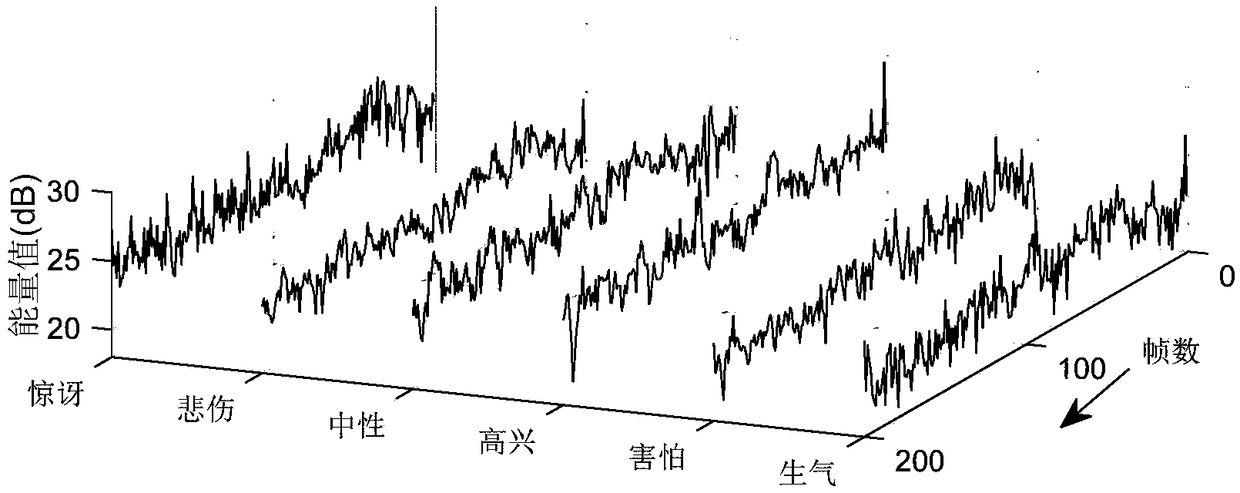

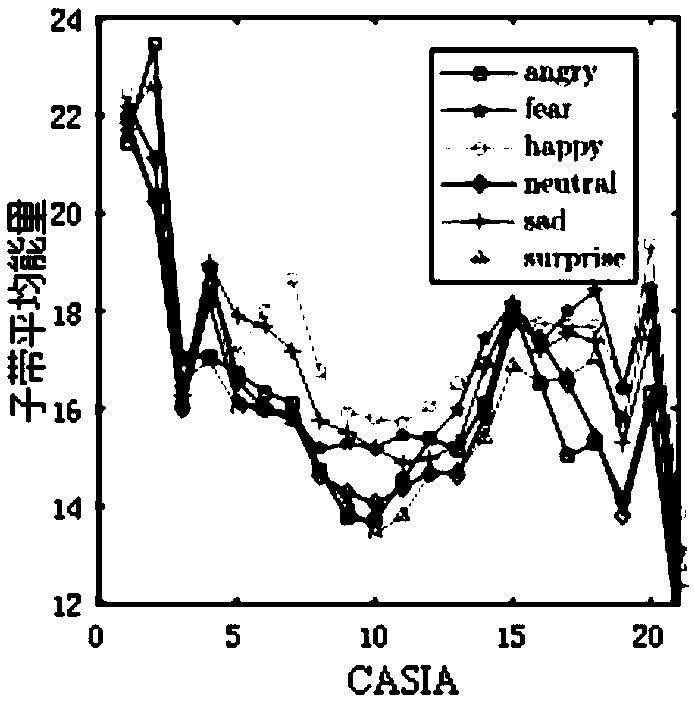

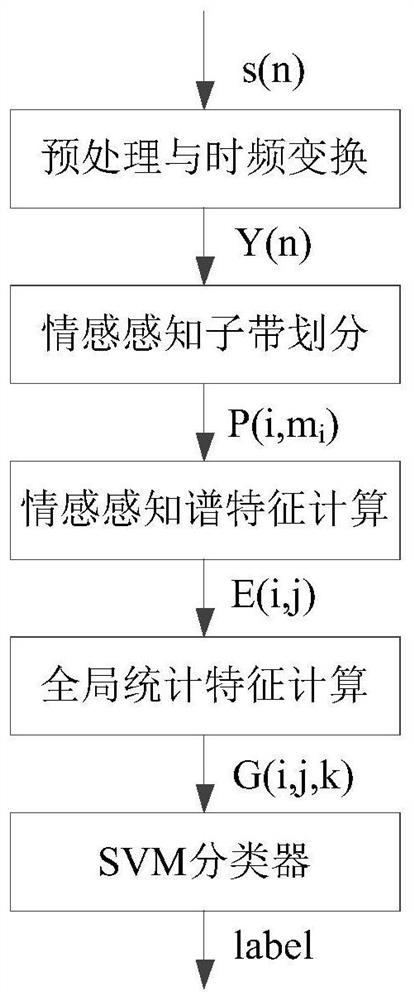

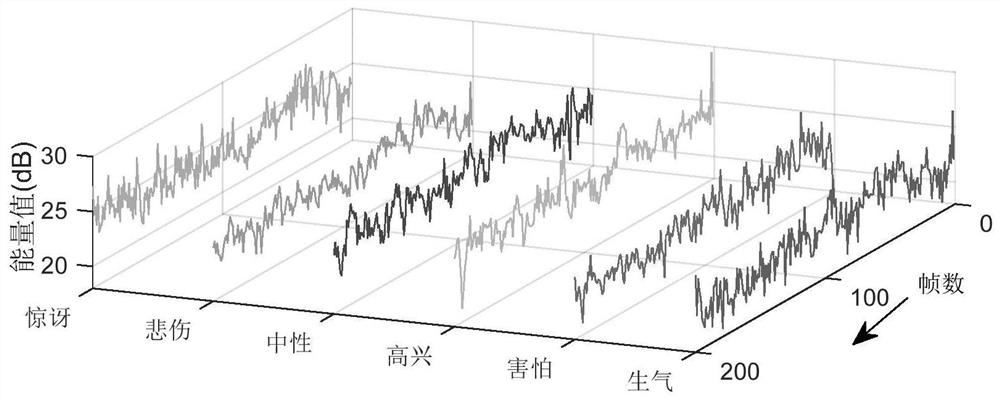

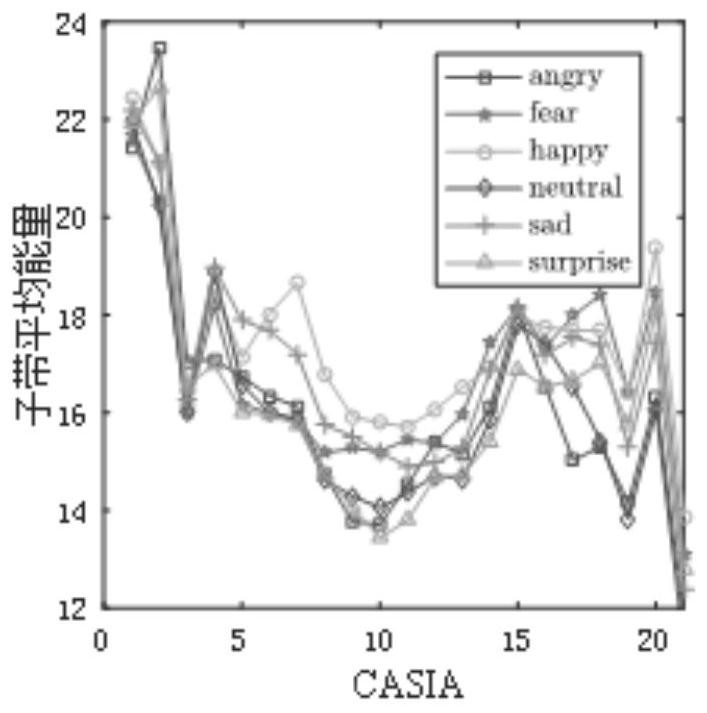

Method for speech emotion recognition by utilizing emotion perception spectrum characteristics

Active | CN108847255A | Increase effective resolution | Accurate distinction | Speech recognition | Emotion perception | Fourier transform on finite groups

The invention relates to a method for speech emotion recognition by utilizing emotion perception spectrum characteristics. First, a pre-emphasis method is used to perform high-frequency enhancement on an input speech signal, and the signal is then converted into the frequency domain by a fast Fourier transform to obtain a speech frequency signal. The speech frequency signal is divided into a plurality of sub-bands by an emotion perception sub-band dividing method. Emotion perception spectrum characteristics are calculated on each sub-band; these characteristics comprise an emotion entropy characteristic, an emotional spectrum harmonic inclination degree and an emotional spectrum harmonic flatness. Global statistical characteristics are then calculated over the spectrum characteristics to obtain a global emotion perception spectrum characteristic vector. Finally, the emotion perception spectrum characteristic vector is input to an SVM classifier to obtain the emotion category of the speech signal. Following the principle of the speech psychoacoustic model, the perceptual sub-band dividing method is used to accurately describe the emotion state information, emotion recognition is carried out on the sub-band spectrum characteristics, and the recognition rate is improved by 10.4% compared with prior MFCC characteristics.

Owner:湖南商学院 +1
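The front end of the pipeline in the abstract above (pre-emphasis, transform to the frequency domain, sub-band division, per-band spectral features) can be sketched with the standard library alone. This is an illustration under several assumptions, not the patented method: the sub-bands are split uniformly rather than by the emotion perception sub-band division the abstract refers to; generic spectral flatness (geometric mean over arithmetic mean of the power) stands in for the "harmonic flatness" feature; a naive DFT replaces the fast Fourier transform for brevity; and the pre-emphasis coefficient 0.97 and all function names are hypothetical.

```python
import cmath
import math


def pre_emphasis(signal, coeff=0.97):
    """High-frequency boost: y[n] = x[n] - coeff * x[n-1] (signal must be non-empty)."""
    return [signal[0]] + [
        signal[n] - coeff * signal[n - 1] for n in range(1, len(signal))
    ]


def power_spectrum(signal):
    """Power of the non-negative-frequency bins, via a naive O(N^2) DFT."""
    n = len(signal)
    powers = []
    for k in range(n // 2 + 1):
        acc = sum(
            x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(signal)
        )
        powers.append(abs(acc) ** 2)
    return powers


def spectral_flatness(powers, eps=1e-12):
    """Geometric mean divided by arithmetic mean: near 1 for flat, near 0 for peaky."""
    log_mean = sum(math.log(p + eps) for p in powers) / len(powers)
    return math.exp(log_mean) / (sum(powers) / len(powers) + eps)


def subband_flatness(signal, n_bands=4):
    """Flatness per sub-band, using a uniform split (assumption); the last band takes any remainder."""
    powers = power_spectrum(pre_emphasis(signal))
    width = len(powers) // n_bands
    bands = [powers[i * width:(i + 1) * width] for i in range(n_bands - 1)]
    bands.append(powers[(n_bands - 1) * width:])
    return [spectral_flatness(band) for band in bands]


if __name__ == "__main__":
    # Hypothetical test tone: a single sinusoid sampled 64 times.
    tone = [math.sin(2 * math.pi * 5 * t / 64) for t in range(64)]
    print(subband_flatness(tone))
```

In a full system the per-band values would be summarised by global statistics and passed to a classifier such as the SVM mentioned in the abstract.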

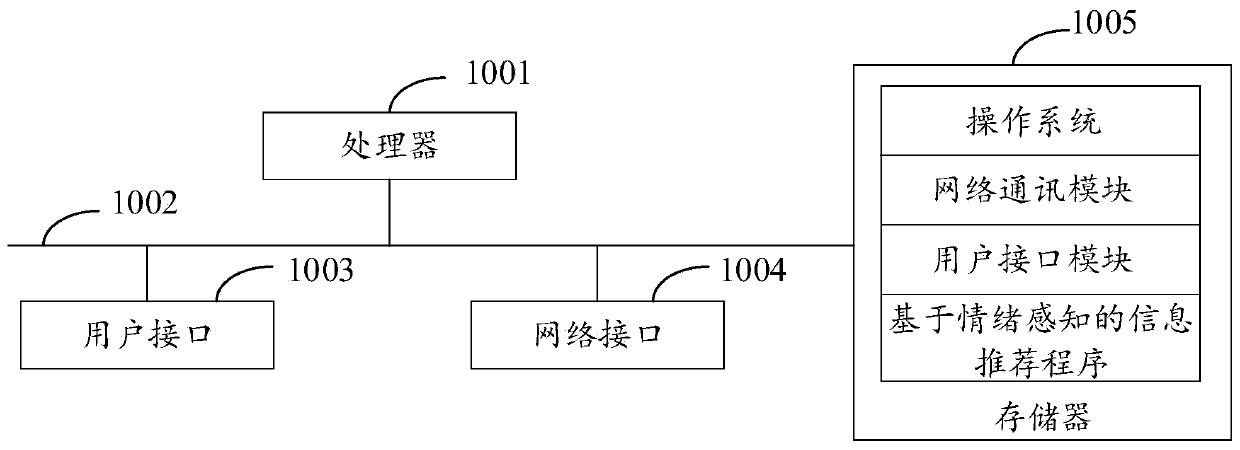

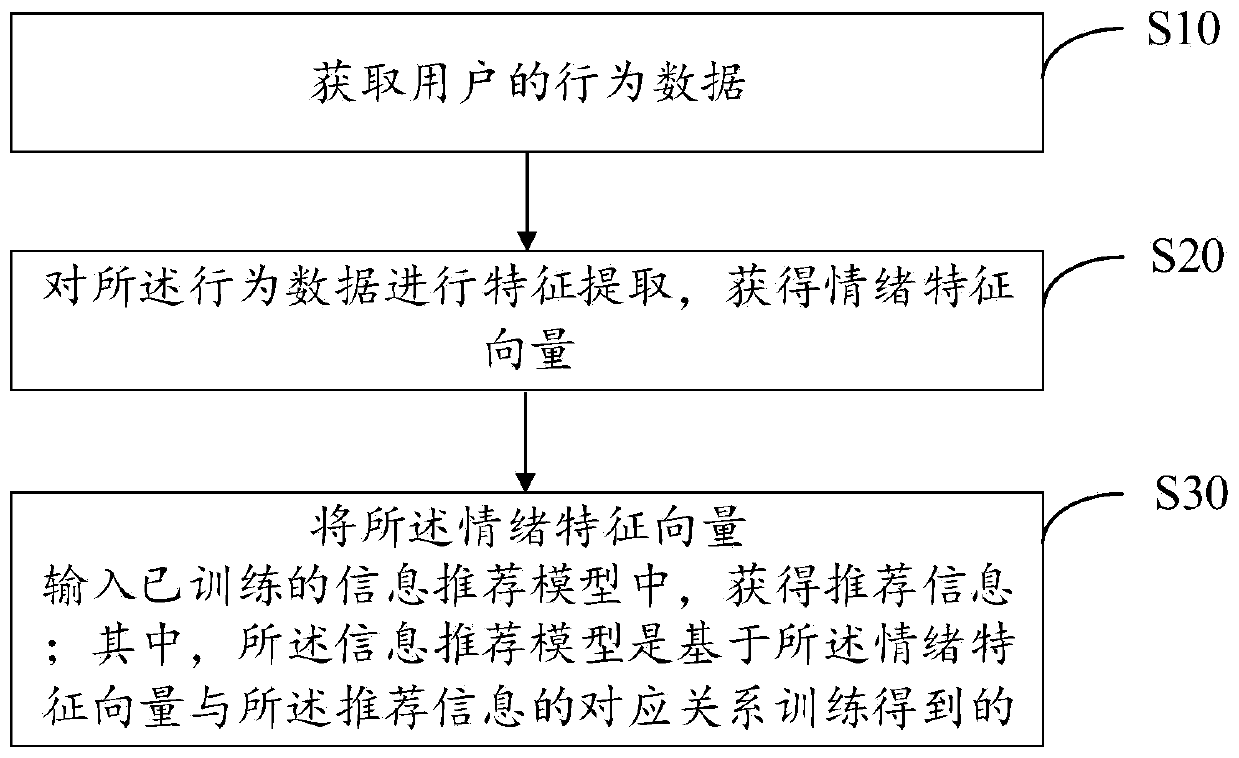

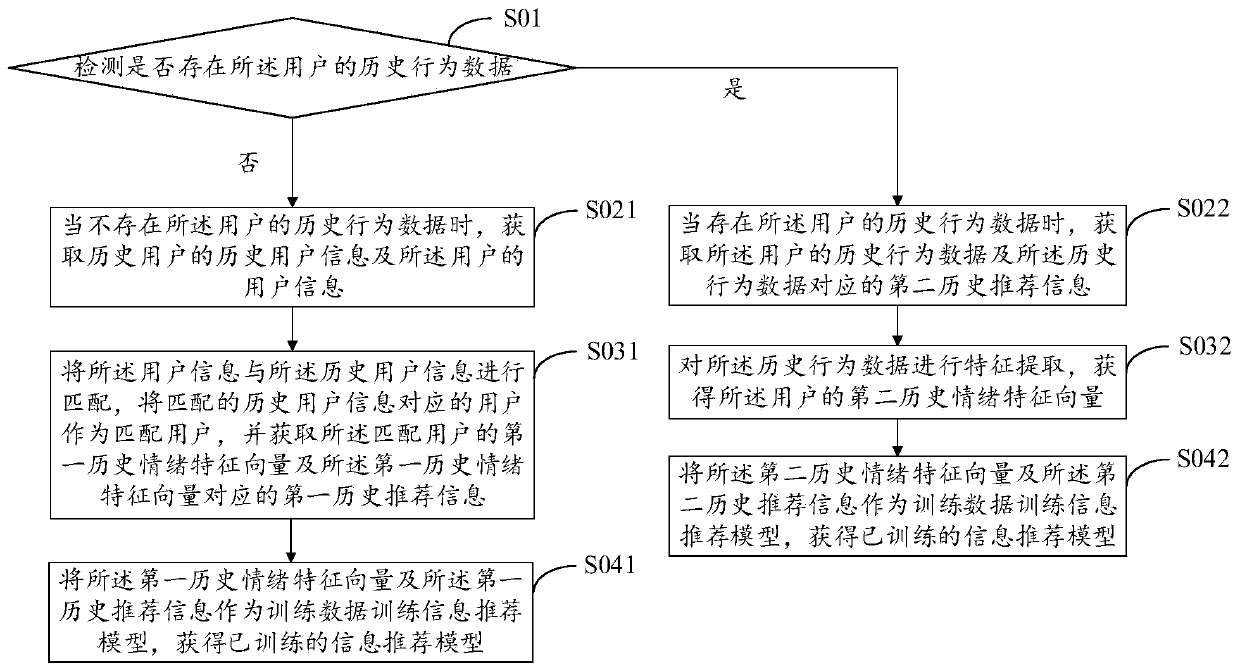

Information recommendation method and device based on emotion perception and storage medium

Pending | CN111222044A | Protect privacy and security | Digital data information retrieval | Character and pattern recognition | Emotion perception | Emotional perception

The invention discloses an information recommendation method and device based on emotion perception and a storage medium. The method comprises the steps of obtaining behavior data of a user; performing feature extraction on the behavior data to obtain an emotion feature vector; inputting the emotion feature vector into a trained information recommendation model to obtain recommendation information; wherein the information recommendation model is obtained by training based on the corresponding relationship between the emotion feature vector and the recommendation information. According to the method, the behavior data of the user is acquired, the equipment is controlled based on the behavior data, the content suitable for the emotion of the user is recommended to the user, the facial expression of the user does not need to be monitored, the conversation content of the user does not need to be analyzed, and the privacy safety of the user is protected.

Owner:SHENZHEN TCL DIGITAL TECH CO LTD

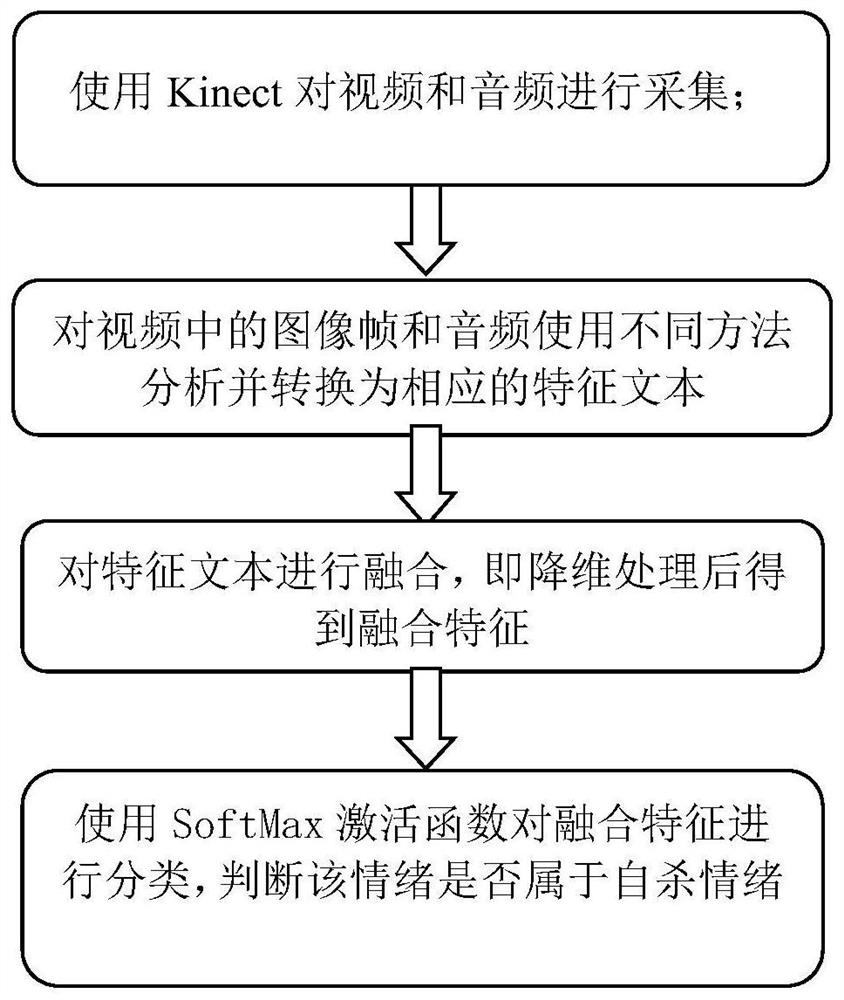

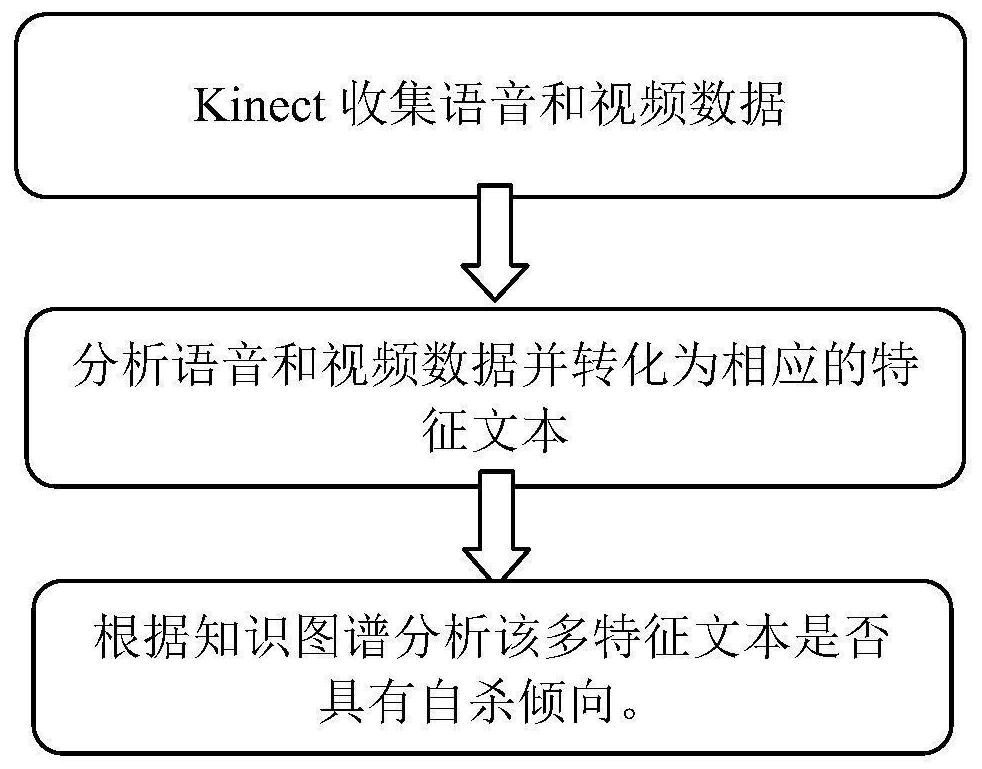

Suicide emotion perception method based on multi-modal fusion of voice and micro-expressions

Pending | CN112101096A | Reduce dimensionality | Improve performance | Neural architectures | Acquiring/recognising facial features | Emotion perception | Microexpression

The invention discloses a suicide emotion perception method based on multi-modal fusion of voice and micro-expressions. The method comprises the following steps: collecting video and audio using a Kinect with an infrared camera; analyzing the image frames and audio in the video with different methods and converting them into corresponding feature texts; fusing the feature texts, that is, performing dimension reduction to obtain fused features; and classifying the fused features with a SoftMax activation function to judge whether the emotion is a suicidal emotion. The multi-modal data is aligned at the text level. The intermediate text representation and the proposed fusion method form a framework that fuses speech and facial expressions. The method reduces the dimensionality of voice and facial expressions and unifies the two kinds of information into one component. Using the Kinect for data acquisition makes the method non-invasive, high-performing and convenient to operate.

Owner:SOUTH CHINA UNIV OF TECH

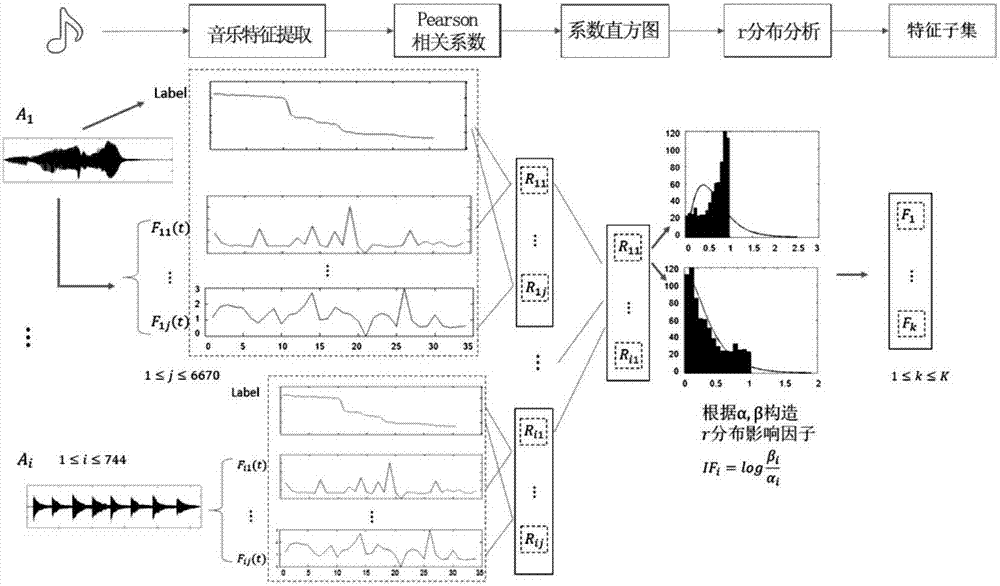

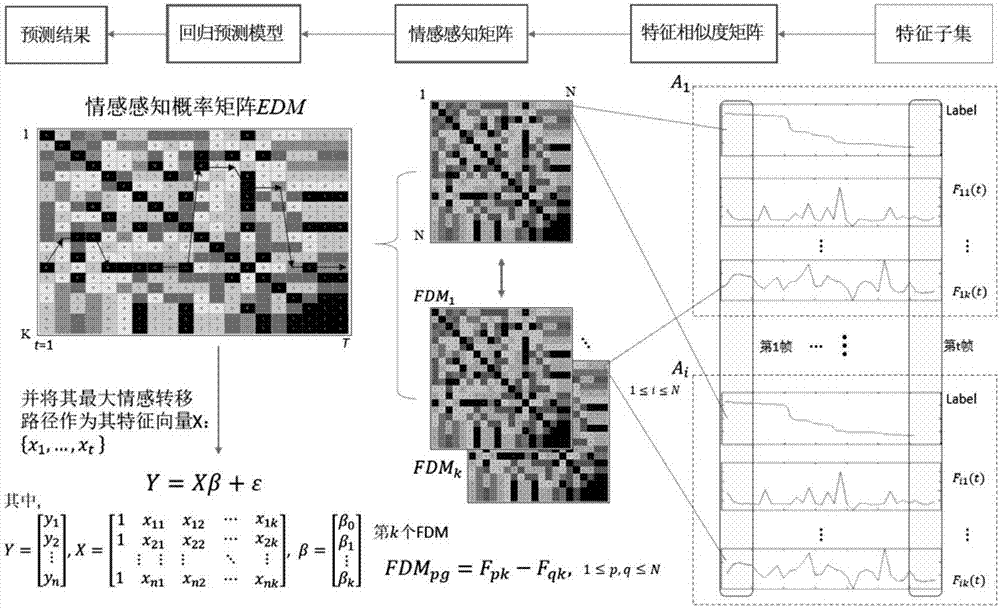

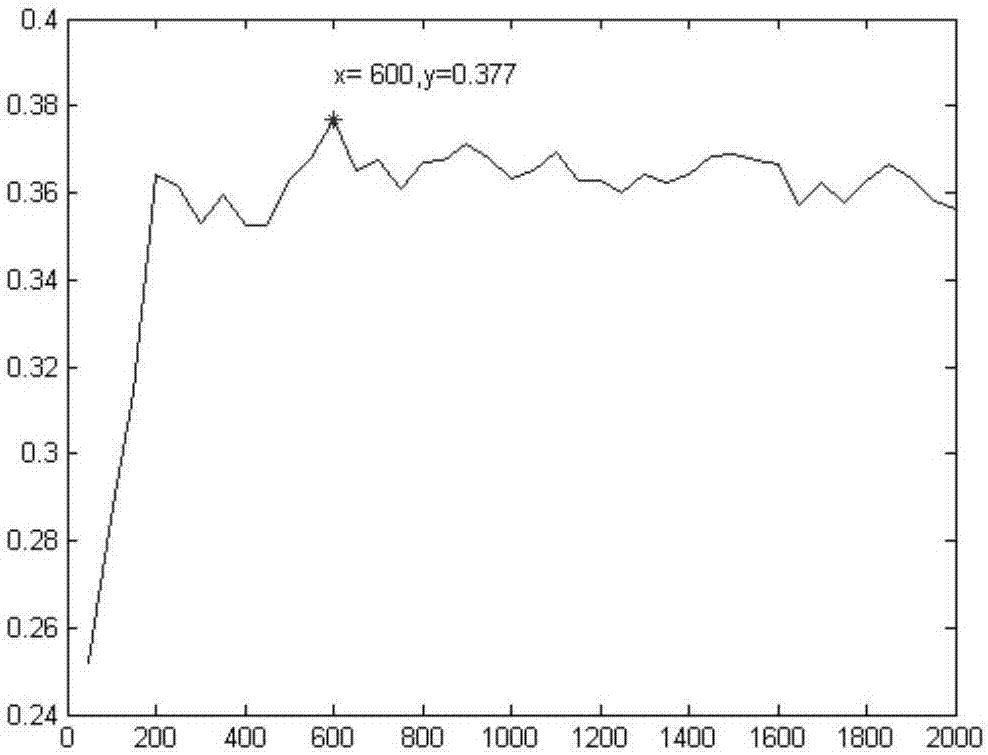

Music continuous emotion feature analysis evaluation method based on Gamma distribution analysis

Active | CN107578785A | High precision | Reduce the number of features | Speech analysis | Emotion perception | Pattern recognition

The invention provides a music continuous emotion feature analysis evaluation method based on Gamma distribution analysis. The method includes the following steps: first, building a Gamma distribution analysis evaluation method for continuous emotion features of music, and using it to find the emotion features most similar to emotion responses over a time sequence; second, building an emotion feature analysis method based on an emotion perception matrix, using it to evaluate the features in terms of emotion perception ability, and finding the emotion features with the best perception ability; and finally, automatically analyzing music emotions in real time with a Gamma distribution-based emotion analysis and forecasting method. The method can analyze music emotions and forecast emotion tags automatically, provides a basis for evaluating and selecting music emotions, and helps advance fields such as artificial intelligence and emotion perception.

Owner:HARBIN INST OF TECH +1

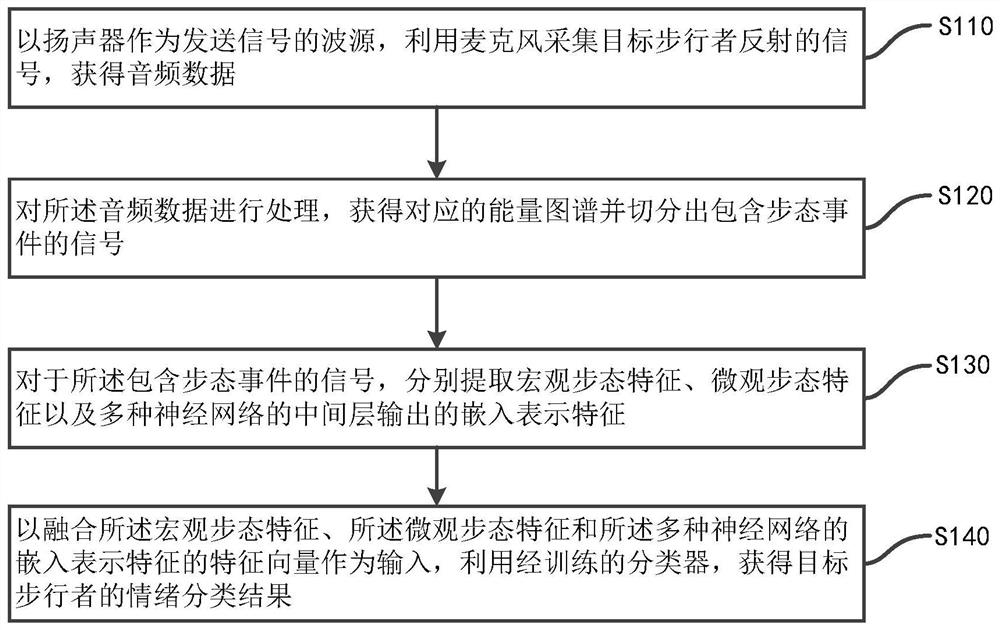

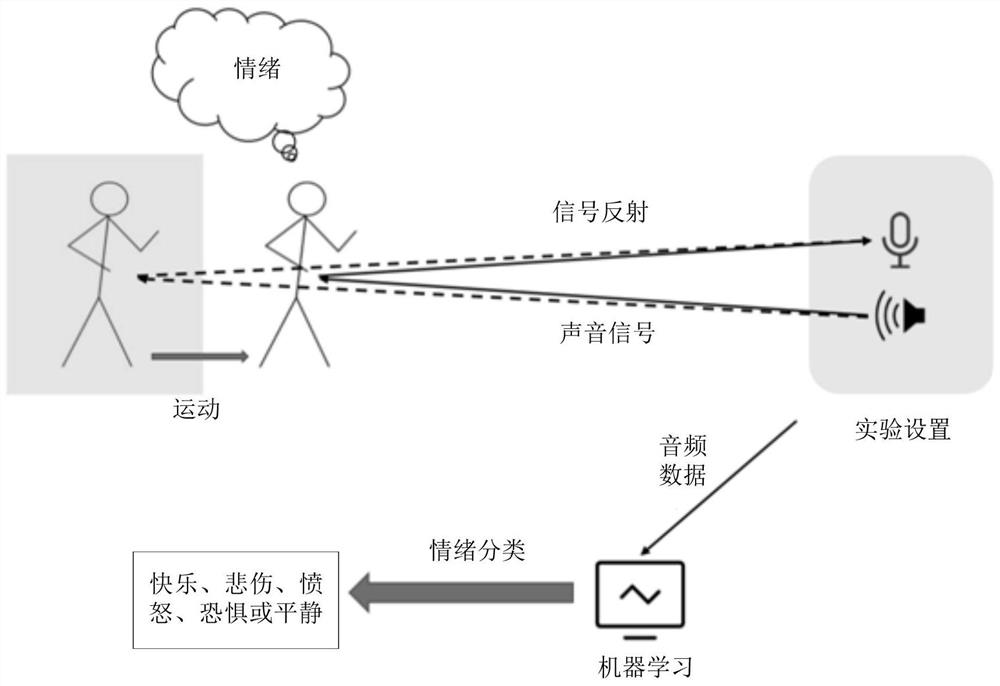

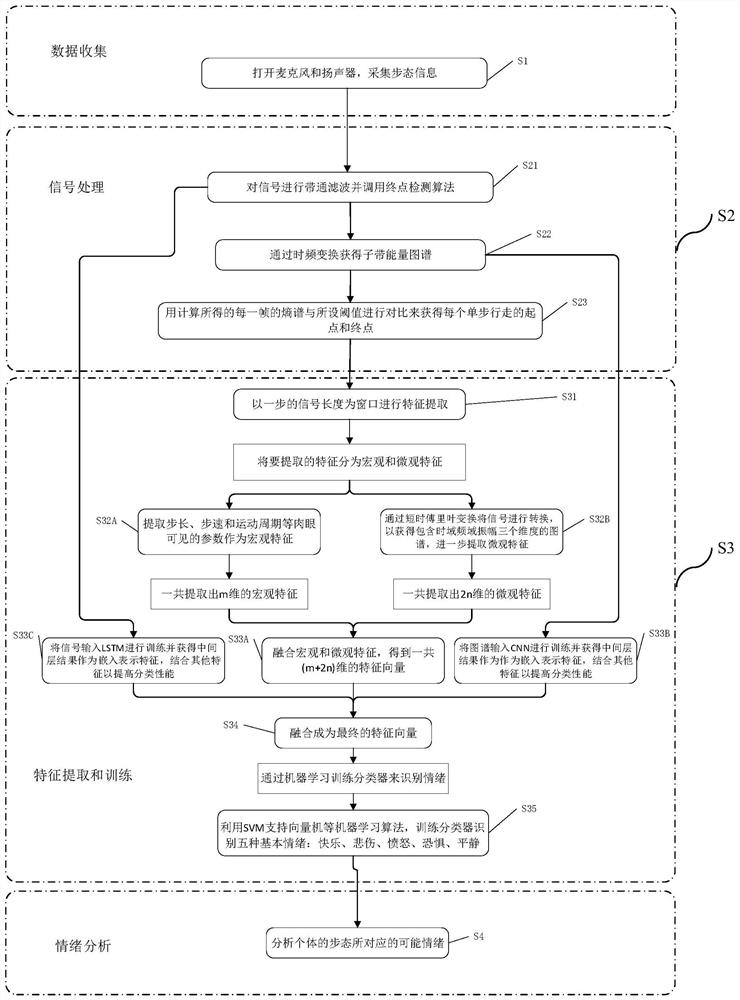

Gait recognition and emotion perception method and system based on intelligent acoustic equipment

The invention provides a gait recognition and emotion perception method and system based on intelligent acoustic equipment. The method comprises the following steps: using a loudspeaker as a wave source to send signals, and using a microphone to collect the signal reflected by a target pedestrian to obtain audio data; processing the audio data to obtain a corresponding energy map, and segmenting out the signal containing a gait event; for the signal containing the gait event, separately extracting macroscopic gait characteristics, microscopic gait characteristics and the embedding representation characteristics of various neural networks; and taking the fused feature vectors of the macroscopic gait characteristics, the microscopic gait characteristics and the embedding representation characteristics as input to a trained classifier to obtain the emotion classification result of the target pedestrian. The method can be applied in smart home environments, and can carry out emotion recognition without invading individual privacy and without requiring the user to carry additional equipment.

Owner:SHENZHEN UNIV

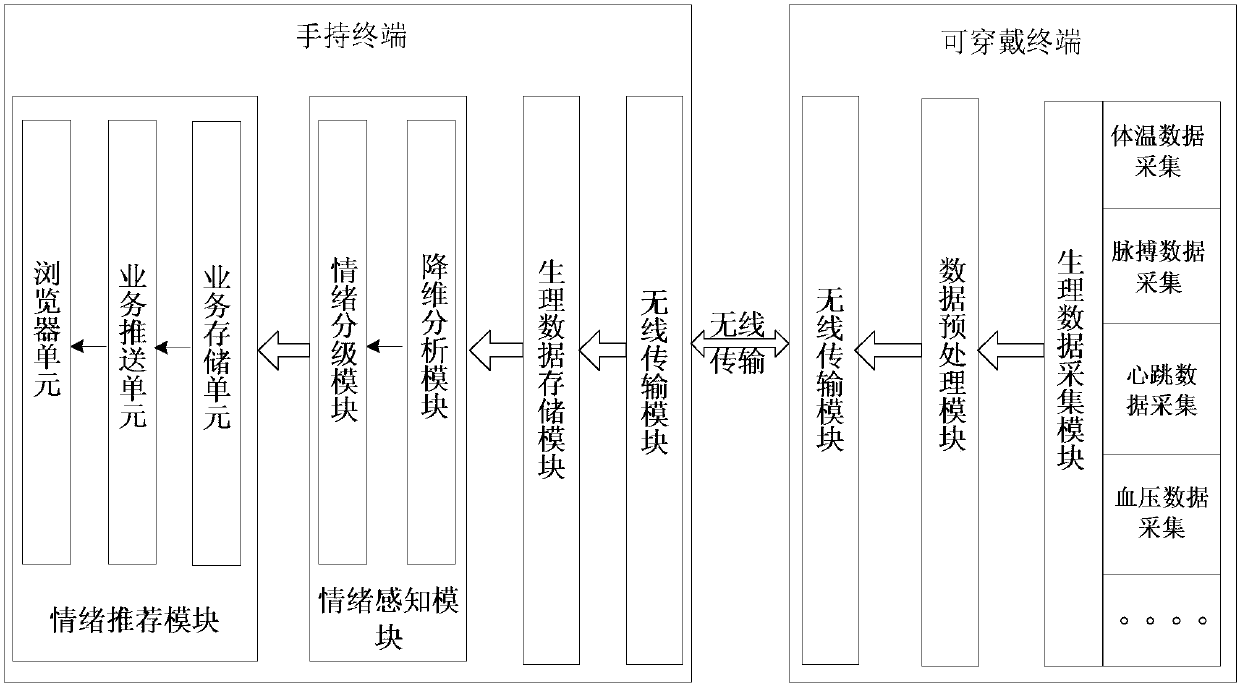

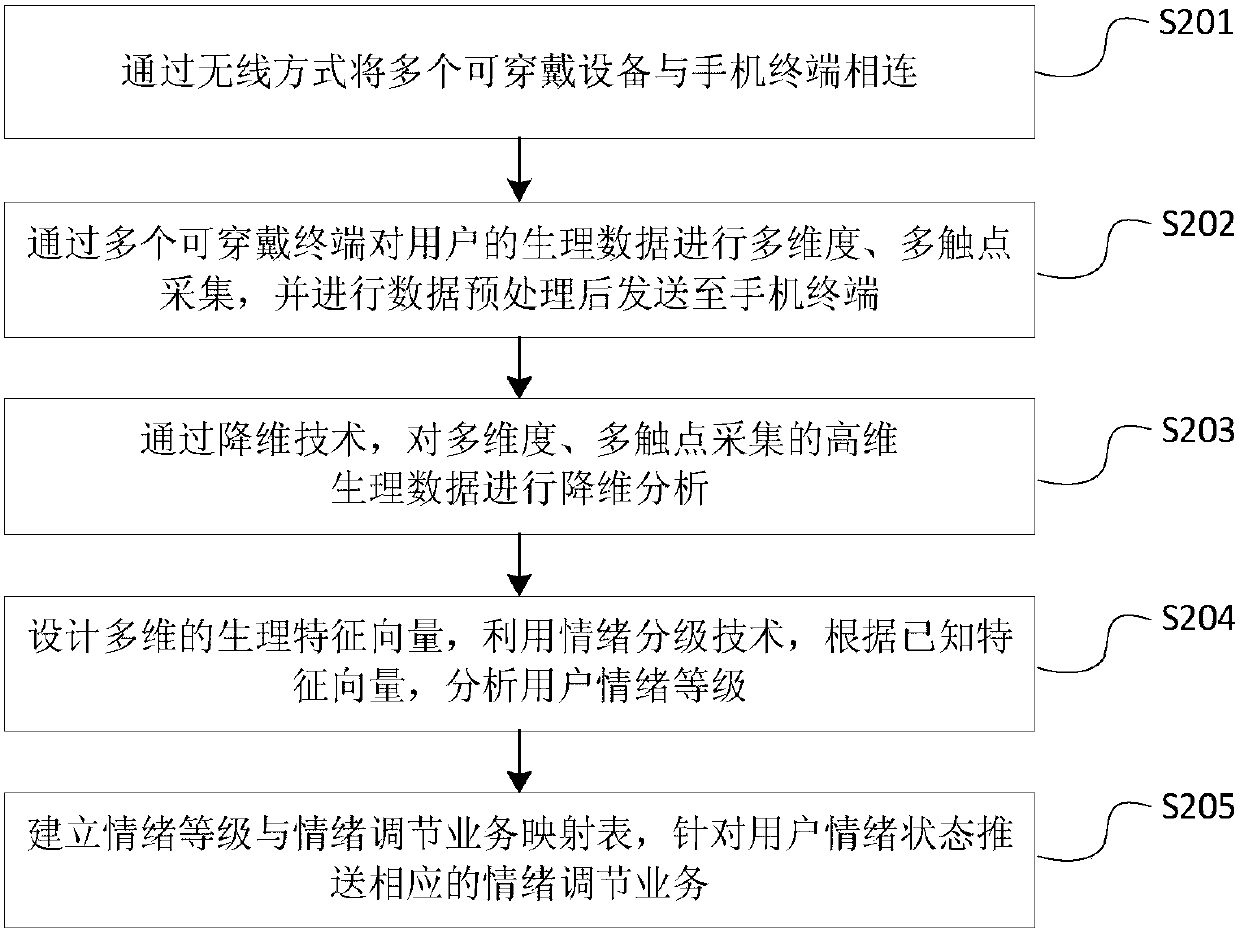

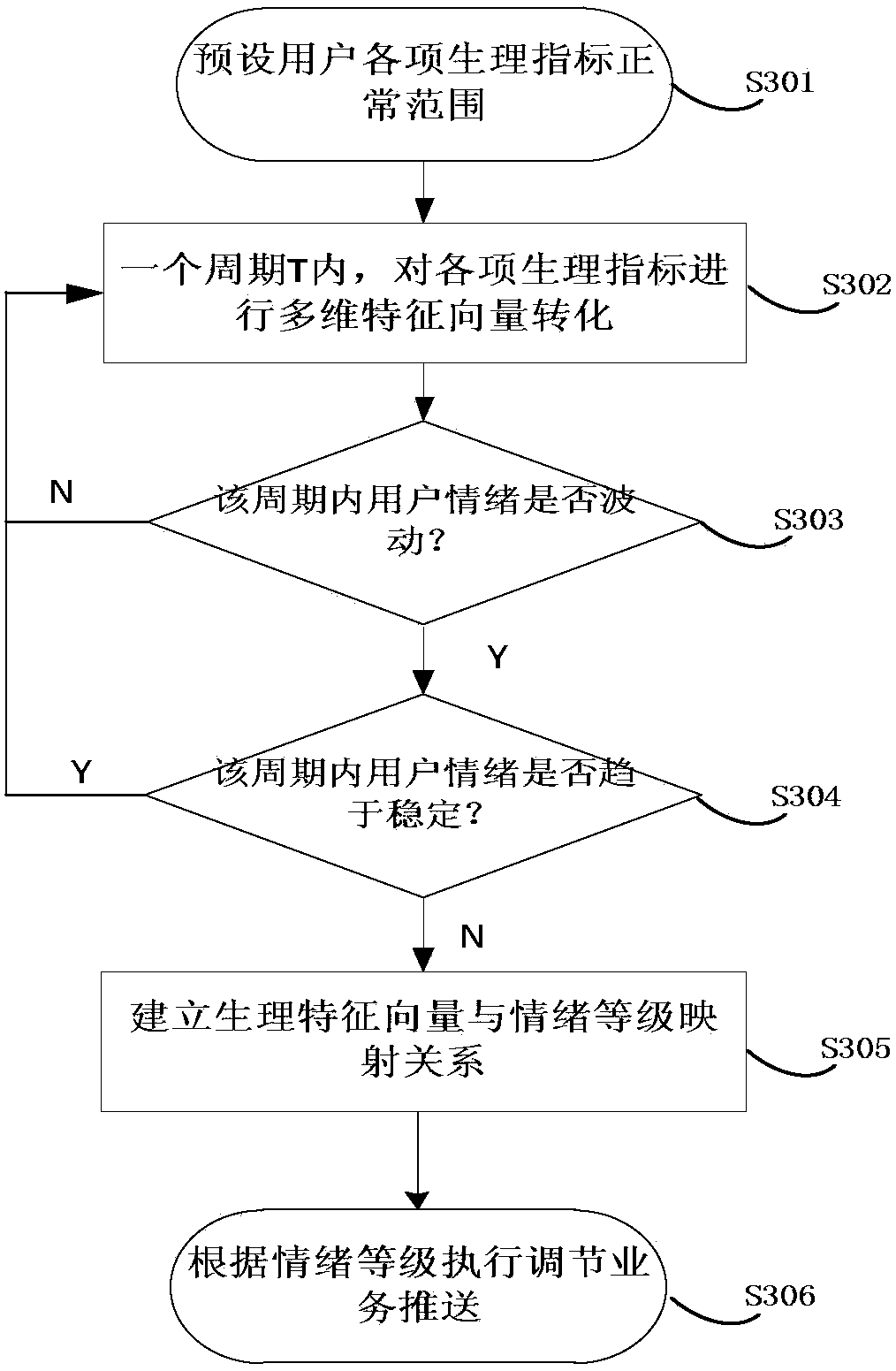

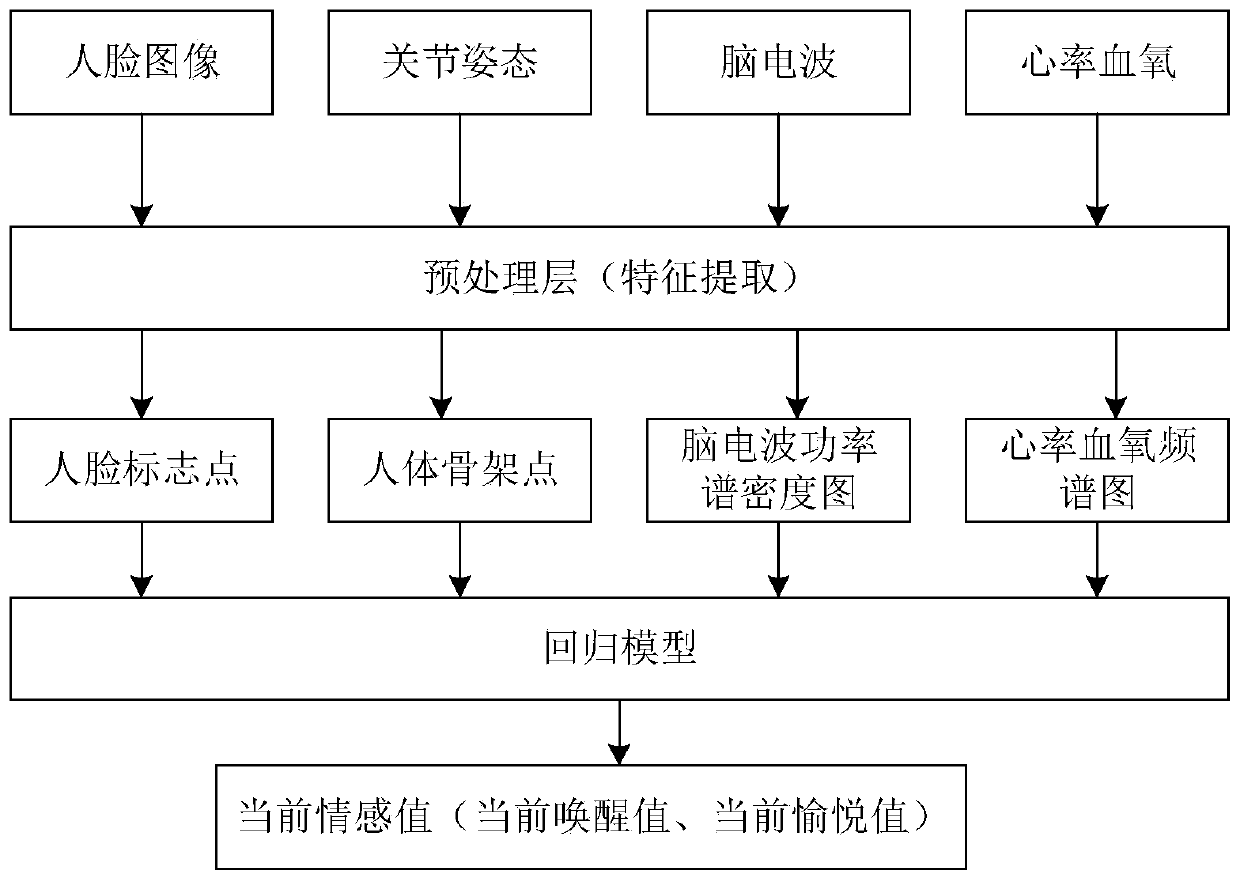

A method for pushing emotional regulation services

Active | CN106202860B | Improve user experience | Realize reasonable adjustment | Health-index calculation | Special data processing applications | Feature vector | Emotion perception

Owner:西安慧脑智能科技有限公司

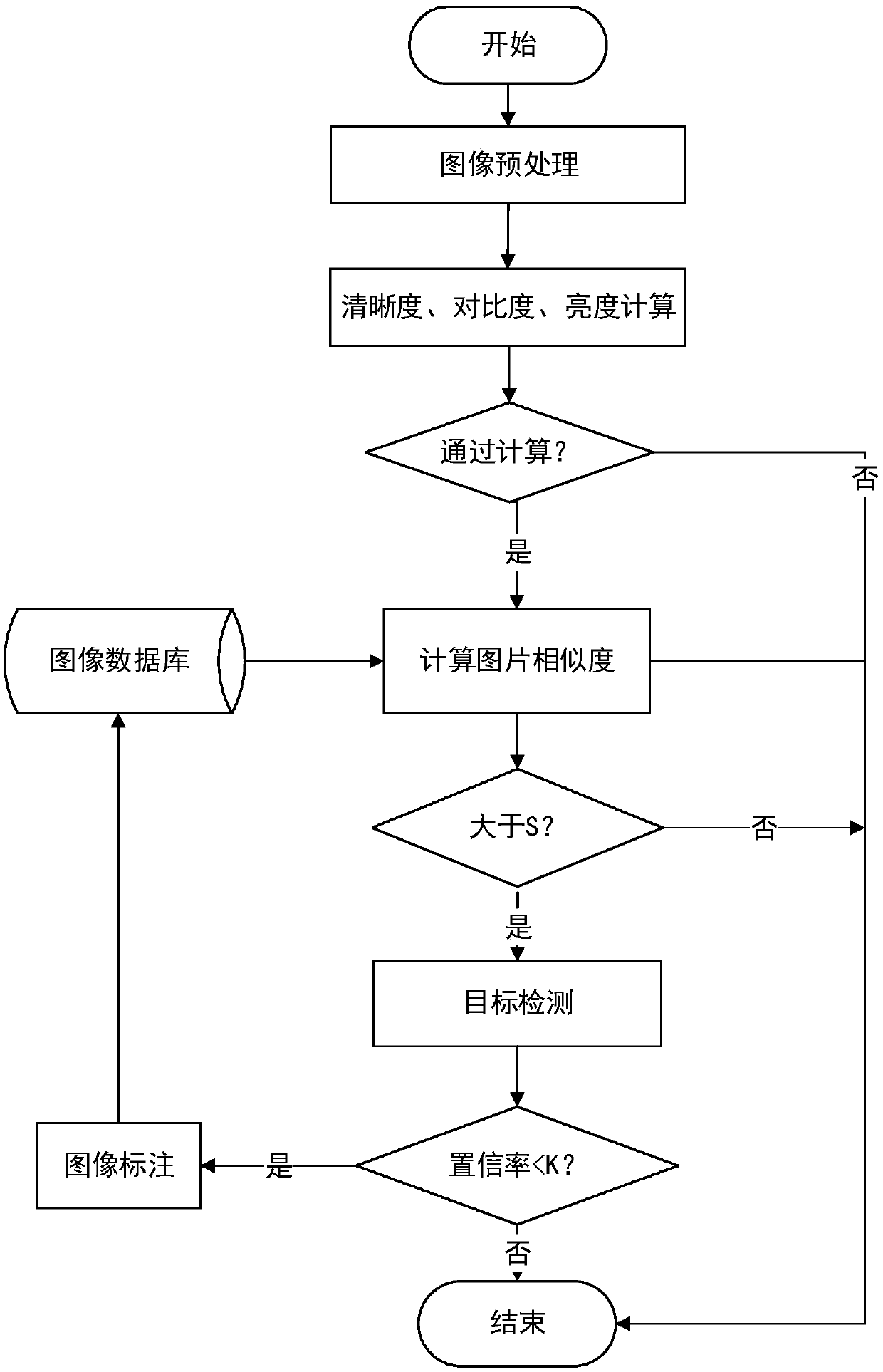

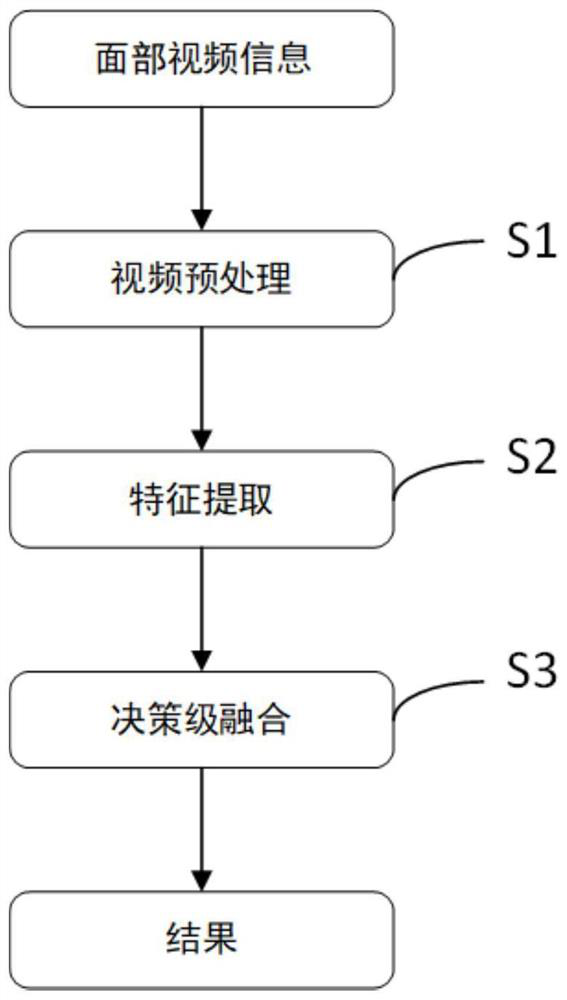

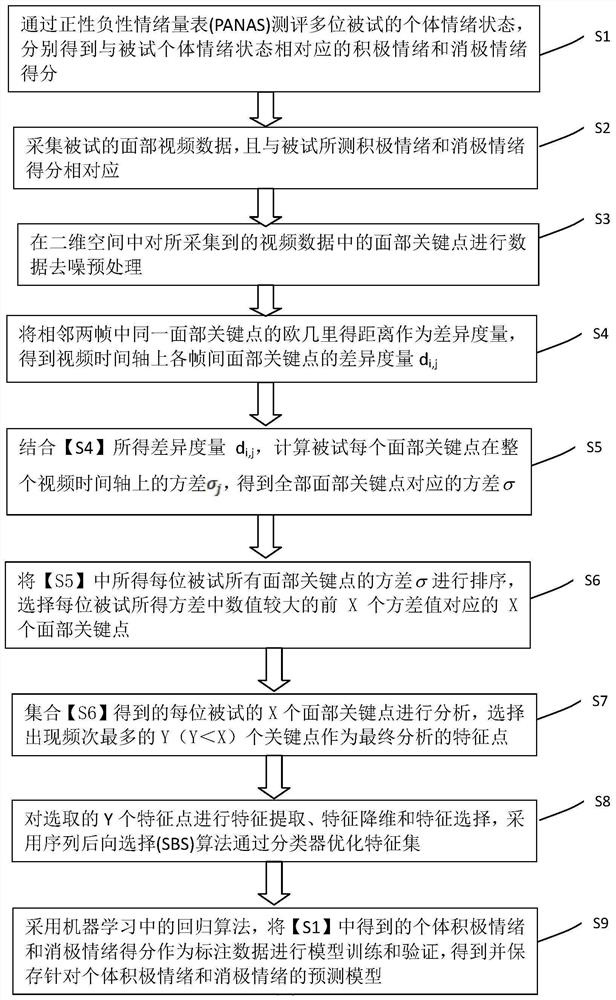

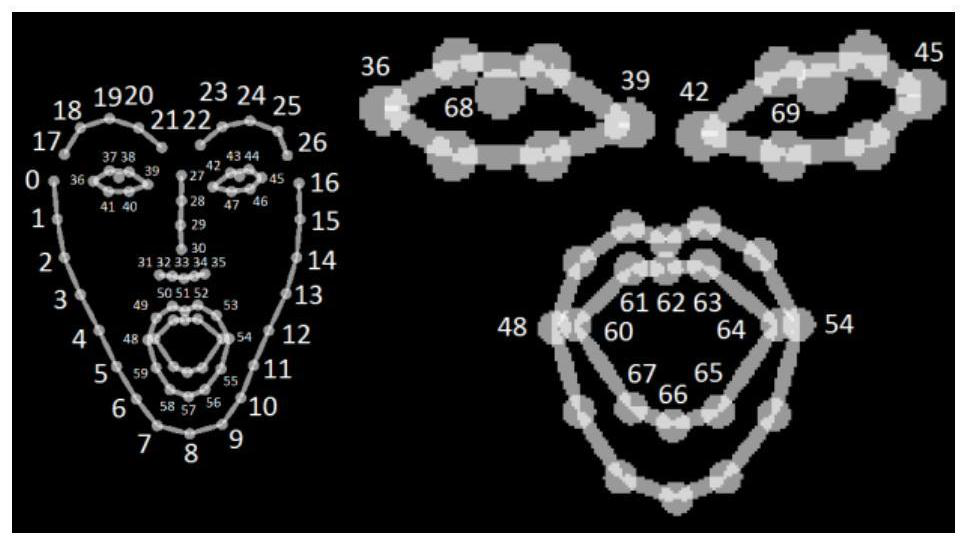

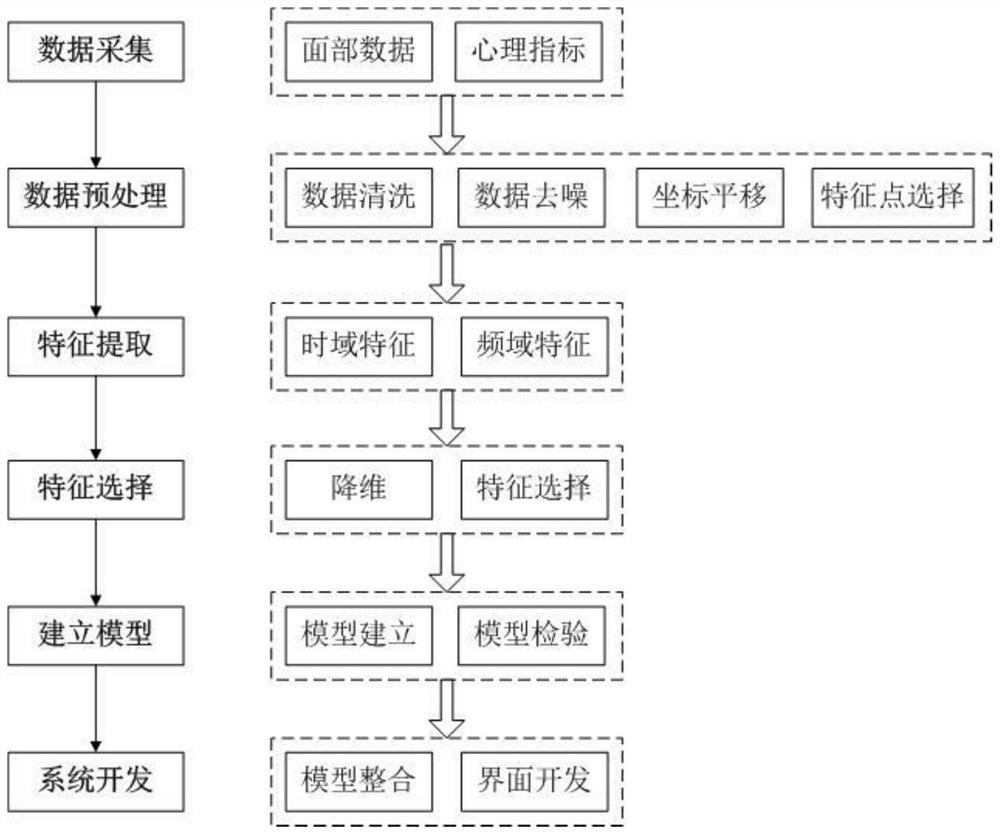

Method for establishing emotion perception model based on individual face analysis in video

Pending | CN112507959A | Realize automatic identification | Improve timeliness | Acquiring/recognising facial features | Emotion perception | Emotional perception

The invention provides a method for establishing an emotion perception model based on individual face analysis in a video, comprising the following steps: evaluating the emotional states of a plurality of subjects with a positive and negative emotion scale, and obtaining the positive emotion and negative emotion scores corresponding to each subject's emotional state; collecting face video data of the subjects, where the face video data corresponds to the subjects' emotional state scores; denoising the face key points in the acquired video data in two-dimensional space; selecting the facial feature points that represent emotion perception by calculating the variance of a difference measure between adjacent frames; carrying out feature extraction, feature dimension reduction and feature selection on the facial feature points, and optimizing the feature set through a classifier using a sequential backward selection (SBS) algorithm; and training and verifying a model with a machine learning regression algorithm, taking the obtained individual positive emotion and negative emotion scores as annotation data, thereby obtaining and storing a prediction model for individual positive and negative emotion. With this method the user does not need to self-report, timeliness is high, and the correlation coefficient between the model's predicted score and the scale score can reach a medium-to-strong level.

Owner:INST OF PSYCHOLOGY CHINESE ACADEMY OF SCI
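The feature-selection step named in the abstract above, sequential backward selection, can be sketched generically. The abstract says the feature set is optimised through a classifier but gives no details, so the `score` callable (which in practice might be cross-validated classifier accuracy), the stopping size `target_size`, and the toy utilities in the usage example are assumptions for illustration.

```python
def sequential_backward_selection(features, score, target_size):
    """Greedy SBS: start from all features and repeatedly drop the one
    whose removal leaves the highest-scoring subset, until `target_size`
    features remain.

    `score` is any callable mapping a list of features to a number
    (higher is better); it is a stand-in for classifier performance.
    Returns the final subset and its score.
    """
    selected = list(features)
    while len(selected) > target_size:
        # Every subset obtainable by removing exactly one feature.
        candidates = [
            [f for f in selected if f != dropped] for dropped in selected
        ]
        selected = max(candidates, key=score)
    return selected, score(selected)


if __name__ == "__main__":
    # Toy score: each feature contributes a fixed, hypothetical utility.
    utility = {"brow": 3.0, "mouth": 1.0, "noise": -2.0, "chin": 0.5}
    subset, value = sequential_backward_selection(
        list(utility), lambda fs: sum(utility[f] for f in fs), target_size=2
    )
    print(subset, value)
```

SBS is greedy, so it evaluates far fewer subsets than an exhaustive search, at the cost of possibly missing the best combination when features interact.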

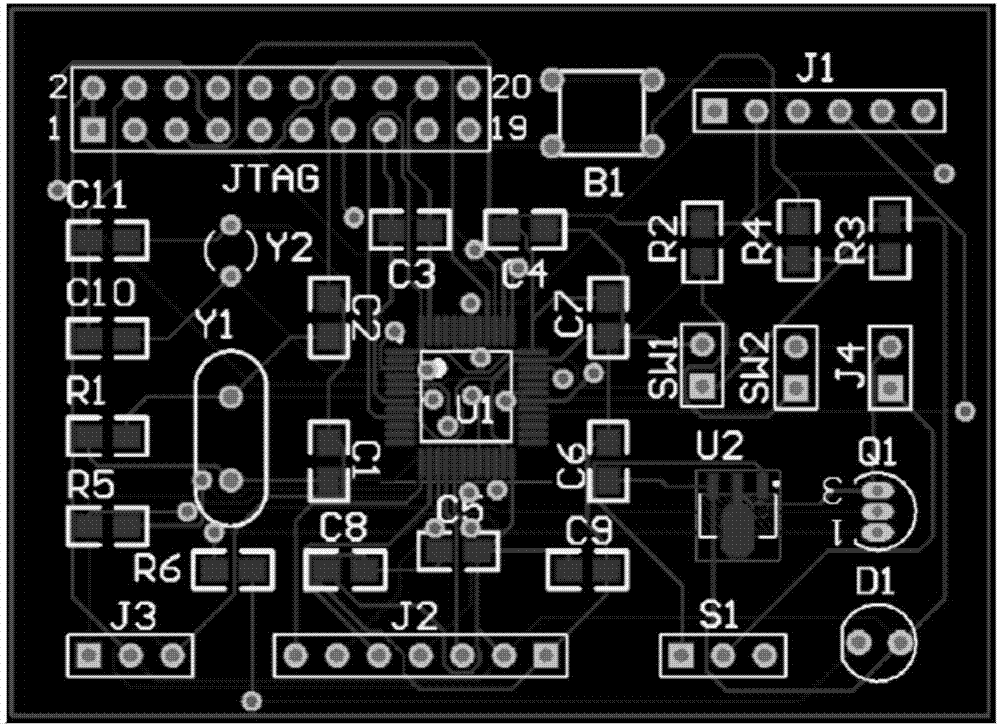

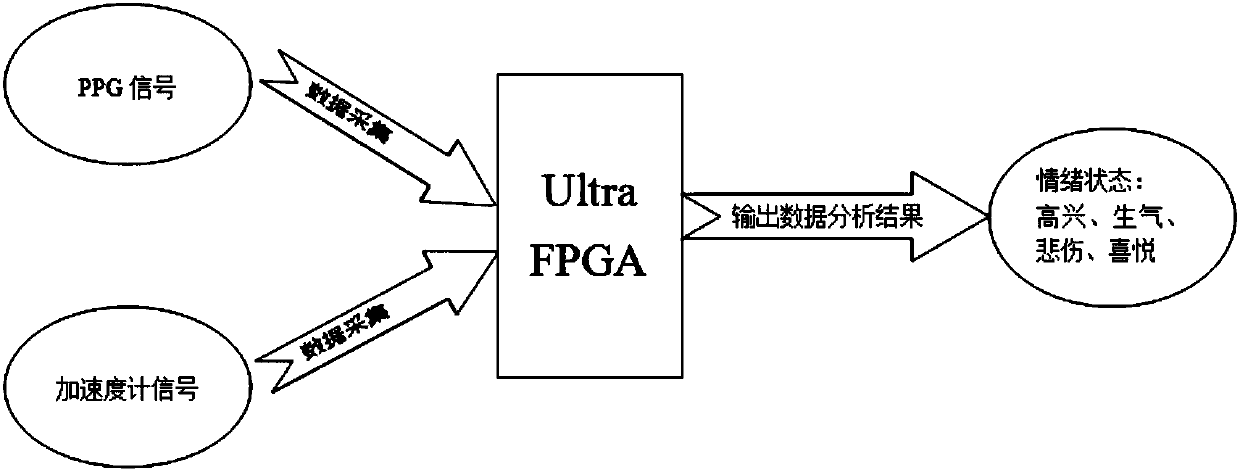

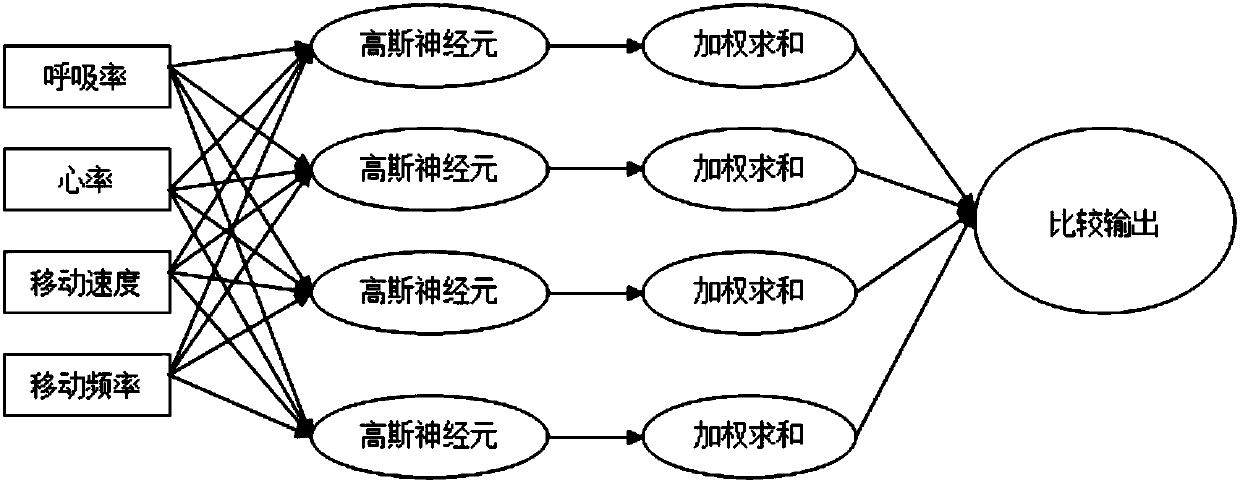

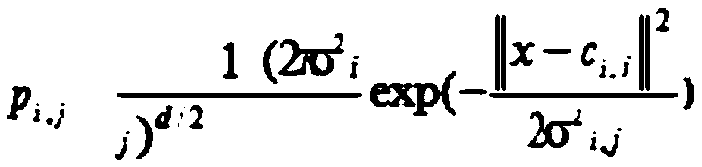

Hardware implementation method and combination of emotion perception function of intelligent wearable device

Active | CN109846496A | Timely reminder to pay attention to mental health | Pay attention to mental health protection | Psychotechnic devices | Emotion perception | Accelerometer

Disclosed is a hardware implementation method of an emotion perception function of an intelligent wearable device. The method includes the following steps: a PPG (photoplethysmography) sensor and an accelerometer transmit the collected PPG signals and acceleration signals to an FPGA (Field-Programmable Gate Array) chip; the FPGA chip performs feature extraction on the PPG signals and the acceleration signals to obtain four physical quantities: heart rate, respiration rate, moving speed and moving frequency; and the four physical quantities are input into a probability-based RHPNN (Robust Heteroscedastic Probabilistic Neural Network) to classify the emotion. The hardware implementation method and combination sense changes in the user's emotion in real time, remind the user to pay attention to mental health and to adjust their working state in time, help safeguard the user's mental health, and can improve the user's working efficiency.

Owner:昆山光微电子有限公司 +1

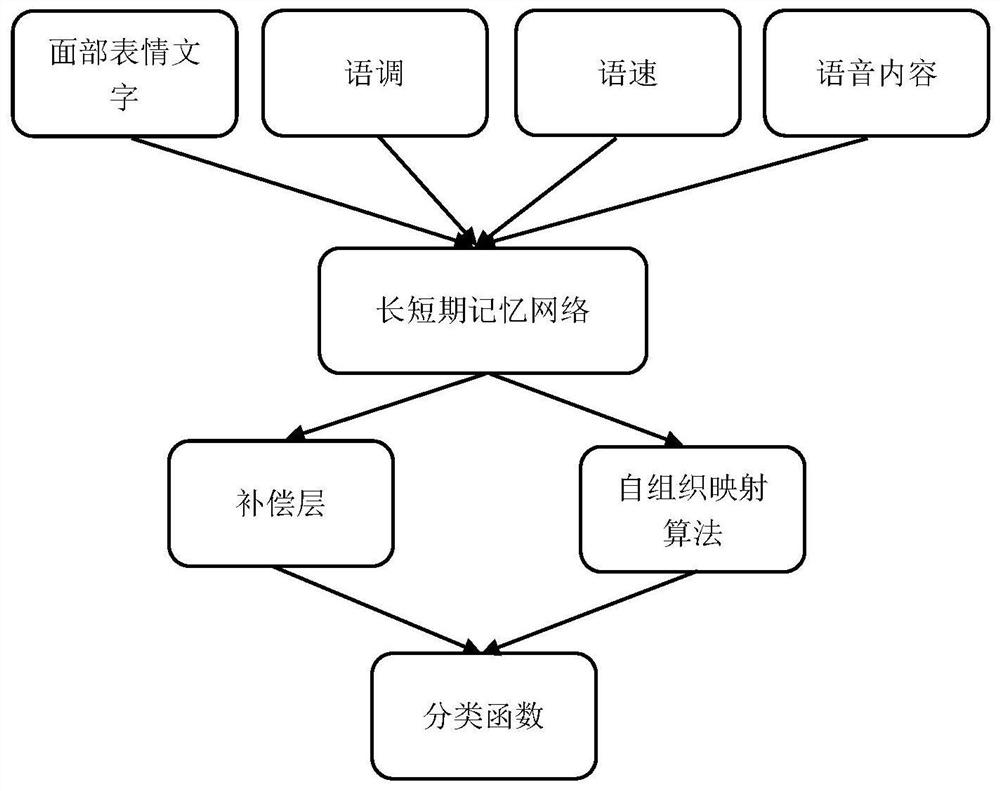

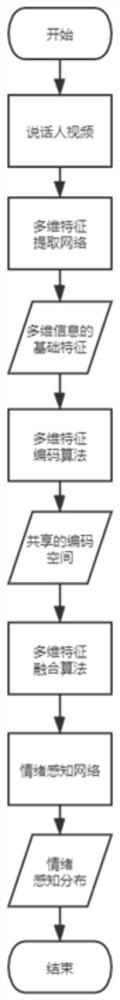

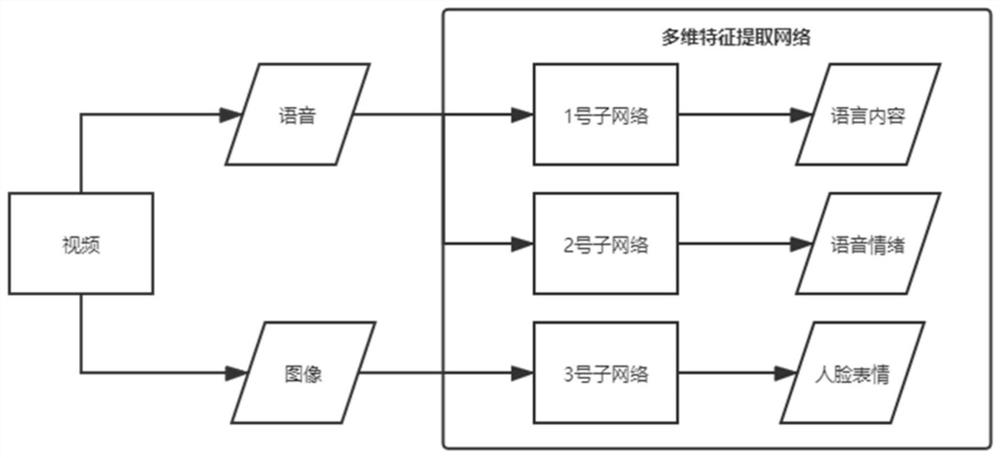

Speaker emotion perception method fusing multi-dimensional information

Pending | CN113837072A | Improve fusion effect | Quick analysis | Video data clustering/classification | Character and pattern recognition | Emotion perception | Feature vector

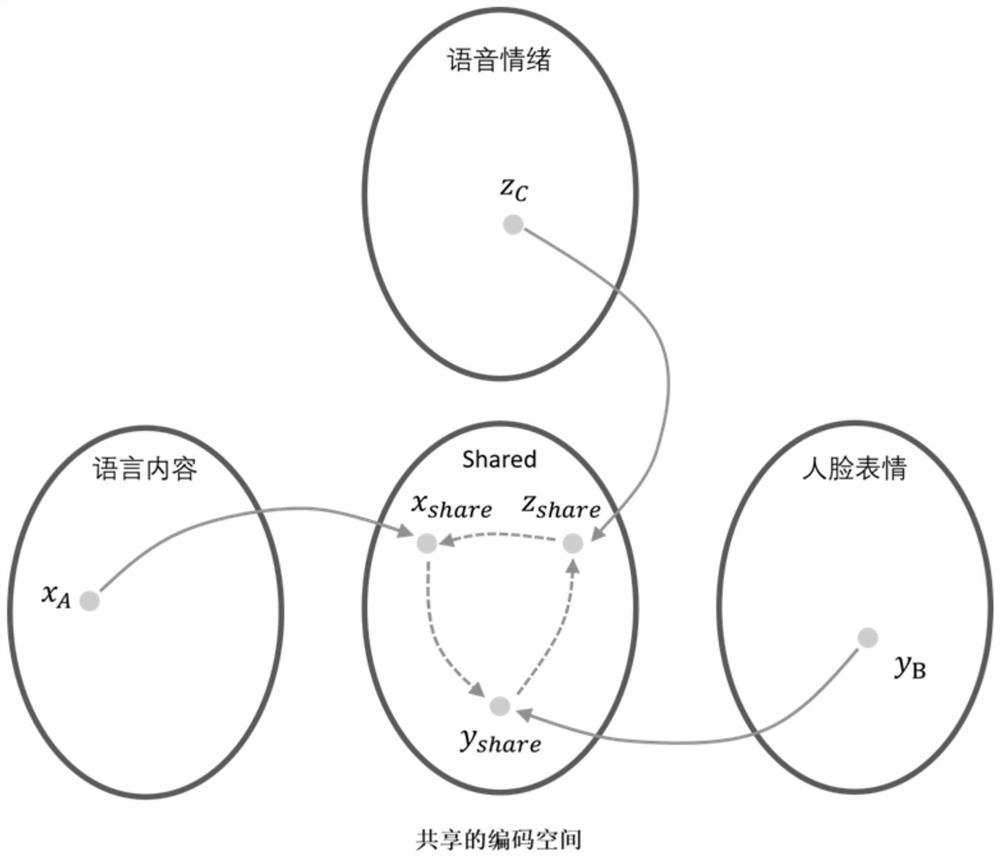

The invention discloses a speaker emotion perception method fusing multi-dimensional information, and relates to the technical field of deep learning and human emotion perception. The method includes: inputting a video of a speaker, and extracting an image and voice of the speaker from the video; inputting the image and the voice of the speaker into a multi-dimensional feature extraction network, extracting a language text and a language emotion in the voice, and extracting facial expression features of the speaker from image information; utilizing a multi-dimensional feature coding algorithm for coding various feature results of the multi-dimensional feature extraction network, and mapping the multi-dimensional information to a shared coding space; fusing the features in the coding space from low dimension to high dimension by using a multi-dimensional feature fusion algorithm, and obtaining feature vectors of multi-dimensional information highly related to the emotion of the speaker in a high-dimensional feature space; and inputting the fused multi-dimensional information into an emotion perception network for prediction, and outputting emotion perception distribution of the speaker. By means of the method, the ambiguity can be effectively eliminated according to the multi-dimensional information, and the emotion perception distribution of the speaker can be accurately predicted.

Owner:XIAMEN UNIV

Emotion perception interdynamic recreational apparatus

InactiveCN100451924CAdd funReduce volumePsychotechnic devicesInput/output processes for data processingEmotion perceptionPhysiologic States

Owner:IND TECH RES INST

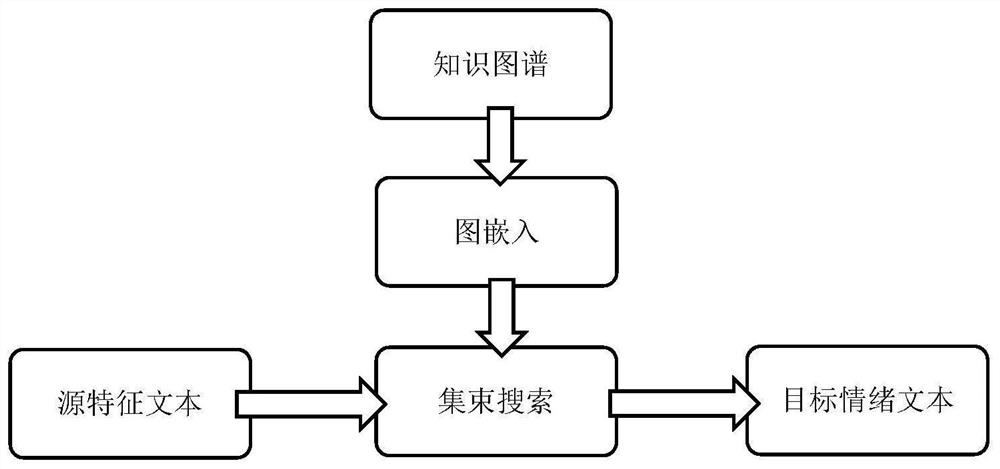

Voice and micro-expression recognition suicide emotion perception method based on knowledge graph

ActiveCN112069897AExpressiveObjective expressionSpeech analysisNeural architecturesNatural language processingEmotion perception

The invention discloses a voice and micro-expression recognition suicide emotion perception method based on a knowledge graph. The method comprises the following steps: acquiring voice and video using a Kinect with an infrared camera; analyzing the image frames and voice in the video and converting them into corresponding feature texts; and analyzing the feature texts based on the knowledge graph, generating a final target emotion text, and judging whether the target emotion text indicates suicidal emotion. The Kinect is used for data acquisition, and the method is characterized by high performance and convenient operation.

Owner:SOUTH CHINA UNIV OF TECH

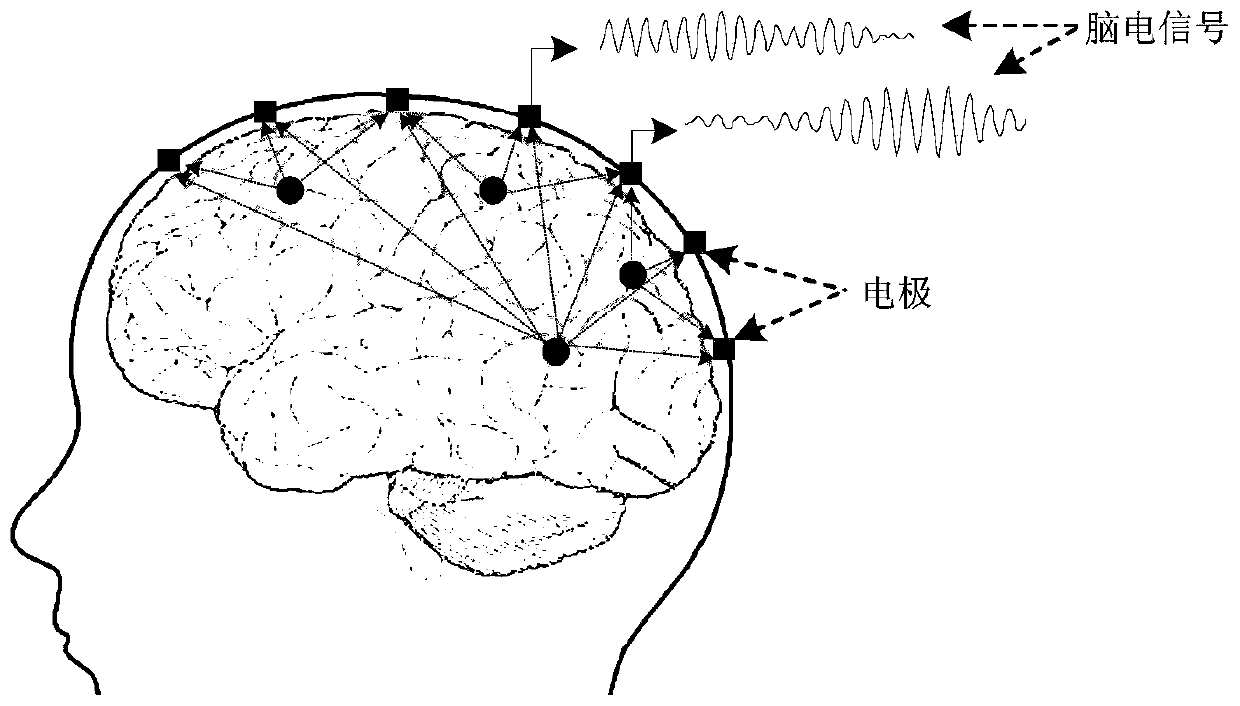

EEG-based emotional perception and stimulus sample selection method for adolescent environmental psychology

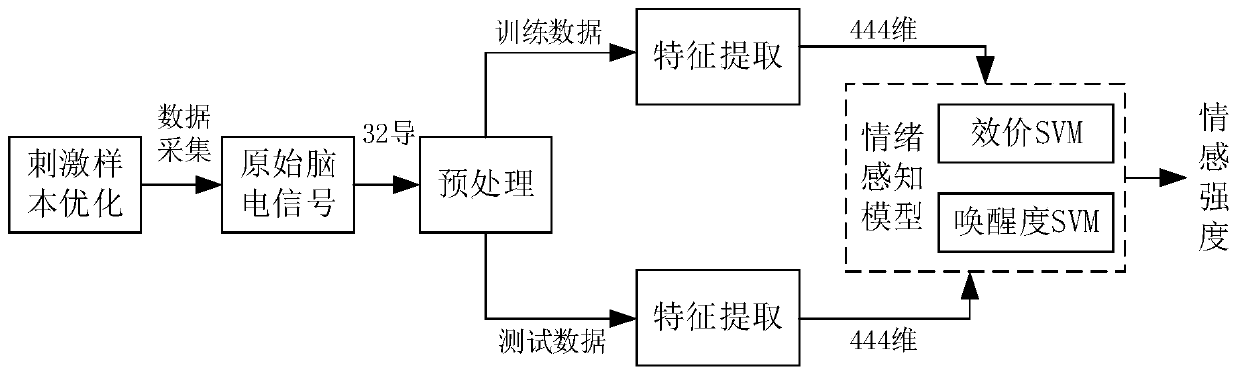

ActiveCN107080546BPerceived emotional intensityStrong emotional perceptionSensorsPsychotechnic devicesEmotion perceptionEmotional perception

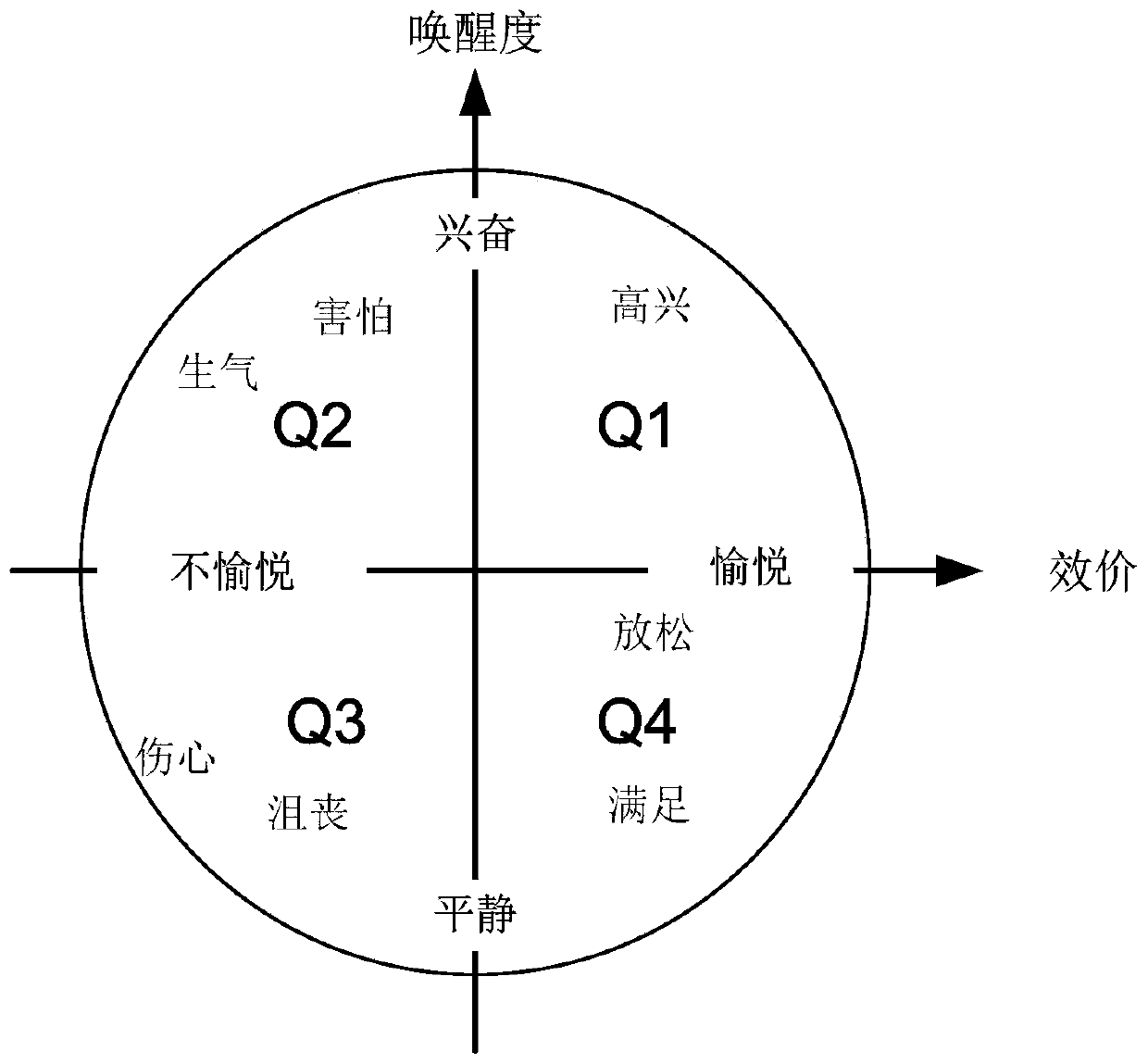

The invention discloses an electroencephalogram (EEG)-based system and method for sensing emotion in adolescent environmental psychology, and a method for selecting stimulus samples. The system comprises an EEG signal acquisition module, an EEG signal preprocessing module, an EEG signal feature extraction module, an emotion sensing module and the like. The visual stimulus source is a built environment that adolescents often come into contact with, participate in or are strongly attracted to. The emotion sensing method comprises the steps of selecting the visual stimulus source, acquiring the EEG signal, preprocessing the EEG signal, extracting EEG signal features, training a model, determining emotion intensity and the like. In the stimulus sample selection method, the arousal dimension and the valence dimension are divided according to 5 emotion intensities, and rectangular selection boxes are determined non-equidistantly according to the actual selection of the samples and their distribution in the two-dimensional space. The emotion sensing system, the emotion sensing method and the stimulus sample selection method offer strong emotion sensing ability, object extensibility and the like, and have high application value in environmental psychology research.

Owner:安徽智趣小天使信息科技有限公司

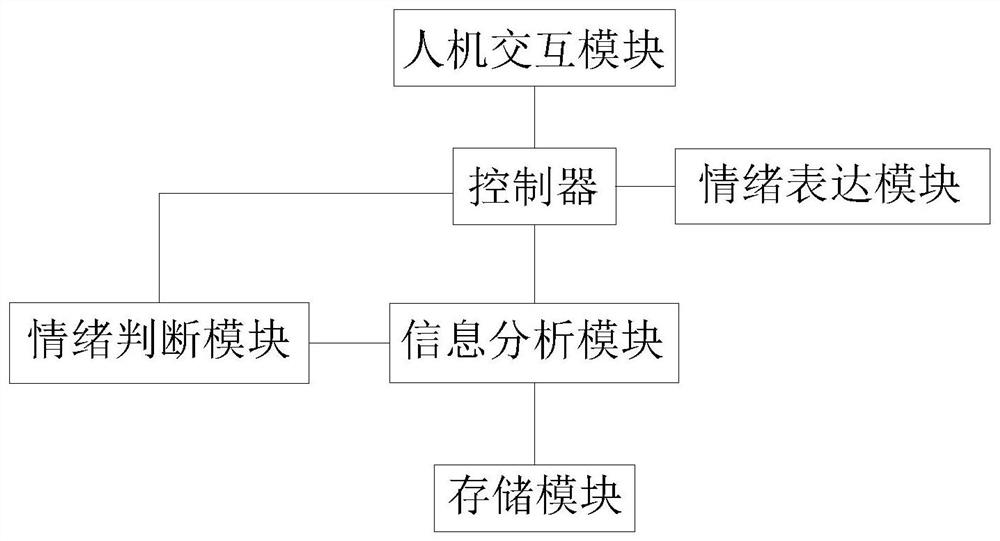

Language emotion perception response method for robot

InactiveCN111611384APersonality DiversityGood choiceText database queryingSpecial data processing applicationsEmotion perceptionInformation transmission

The invention provides a language emotion perception response method for a robot. The method comprises the following steps: S1, a man-machine interaction module transmits collected information to a controller; S2, the controller transmits the collected information to an information analysis module; S3, the information analysis module analyzes and decomposes the information received from the controller, and transmits the decomposed data to an emotion judgment module; S4, the emotion judgment module compares the received information with the data in the storage module, makes a judgment, and sends the judgment result to the controller; and S5, the controller controls the emotion expression module to express the corresponding emotion. With this method, the robot can express emotion through facial expression, limbs and sound, so that emotion expression is more three-dimensional, emotion management is more flexible, and people can relax while chatting.

Owner:TIANJIN WEIKA TECH CO LTD

A Method for Speech Emotion Recognition Using Emotion Perceptual Spectrum Features

ActiveCN108847255BIncrease effective resolutionAccurate distinctionSpeech recognitionEmotion perceptionSvm classifier

The invention relates to a method for speech emotion recognition using emotion perception spectrum features. First, pre-emphasis is applied to the input speech signal for high-frequency enhancement, and a fast Fourier transform converts the result to the frequency domain to obtain a speech frequency signal. The speech frequency signal is then divided into multiple sub-bands using an emotion-aware sub-band division method. Emotion perception spectrum features are calculated for each sub-band, including emotional entropy features, emotional spectrum harmonic inclination, and emotional spectrum harmonic flatness. Global statistical features are computed over these spectrum features to obtain a global emotion perception spectrum feature vector. Finally, the feature vector is input to an SVM classifier to obtain the emotion category of the speech signal. Based on the principles of the speech psychoacoustic model, the invention adopts the perceptual sub-band division method to accurately describe emotional state information and performs emotion recognition through the sub-band spectral features, improving the recognition rate by 10.4% compared with traditional MFCC features.
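The front end of this pipeline (pre-emphasis, frequency transform, sub-band split, per-band spectral features) can be sketched for a single frame as follows. This is only an illustration under several assumptions: the entropy and flatness below are the generic textbook definitions, not necessarily the patent's "emotional" variants; the band edges are arbitrary rather than psychoacoustically derived; a naive DFT stands in for the FFT to keep the example dependency-free; and the global statistics and SVM stages are omitted. All function names are hypothetical.

```python
import cmath
import math

def pre_emphasis(signal, alpha=0.97):
    """High-frequency enhancement: y[n] = x[n] - alpha * x[n-1]."""
    return [signal[0]] + [signal[n] - alpha * signal[n - 1]
                          for n in range(1, len(signal))]

def power_spectrum(frame):
    """Naive DFT power spectrum for bins 0..N/2 (stand-in for an FFT)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                  for t in range(n))
        spectrum.append(abs(acc) ** 2)
    return spectrum

def split_subbands(spectrum, edges):
    """Split the spectrum into bands [edges[i], edges[i+1])."""
    return [spectrum[lo:hi] for lo, hi in zip(edges[:-1], edges[1:])]

def spectral_entropy(band, eps=1e-12):
    """Shannon entropy of the band's normalised power distribution."""
    total = sum(band) + eps
    return -sum((p / total) * math.log(p / total + eps) for p in band)

def spectral_flatness(band, eps=1e-12):
    """Geometric mean divided by arithmetic mean of the band power."""
    geo = math.exp(sum(math.log(p + eps) for p in band) / len(band))
    arith = sum(band) / len(band) + eps
    return geo / arith

def subband_features(signal, edges):
    """Per-band [entropy, flatness] features for one frame."""
    spectrum = power_spectrum(pre_emphasis(signal))
    feats = []
    for band in split_subbands(spectrum, edges):
        feats.extend([spectral_entropy(band), spectral_flatness(band)])
    return feats

# A 32-sample test tone at DFT bin 4, split into three assumed bands.
tone = [math.sin(2 * math.pi * 4 * t / 32) for t in range(32)]
features = subband_features(tone, [0, 4, 8, 17])
```

In a fuller version, these per-frame features would be summarised across all frames (for example by mean and variance) before classification.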

Owner:湖南商学院 +1

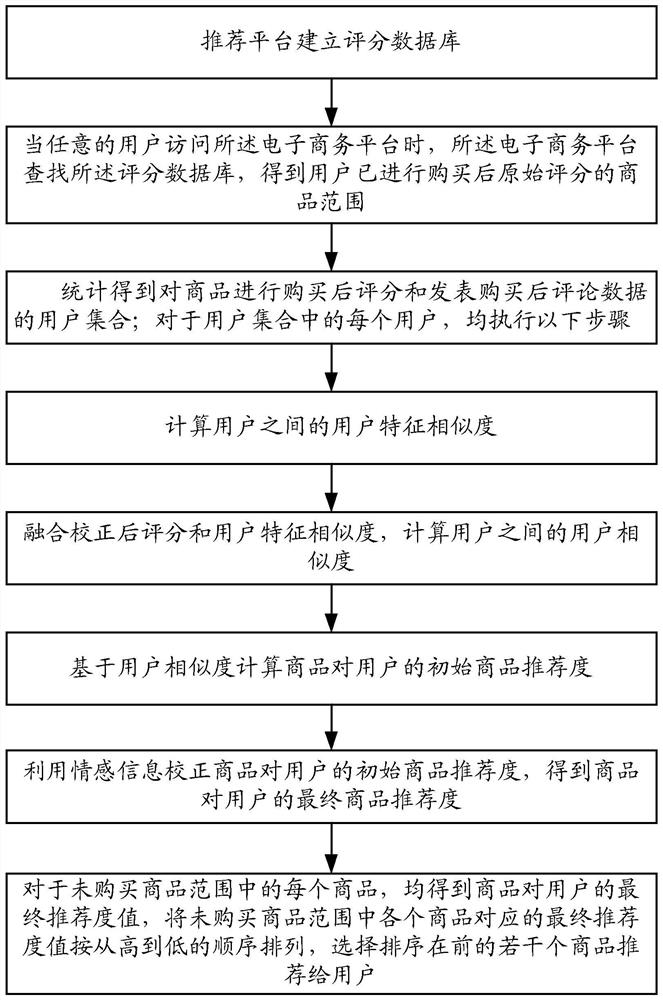

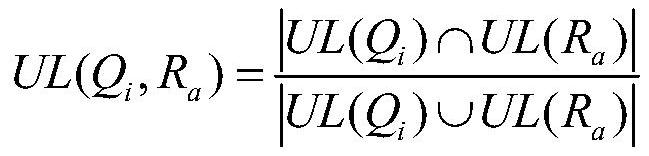

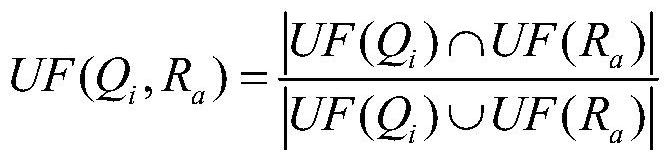

Emotion-aware product recommendation method based on mixed information fusion

ActiveCN111507804BImprove accuracyDigital data information retrievalCharacter and pattern recognitionEmotion perceptionEmotional perception

The present invention provides an emotion-aware product recommendation method based on mixed information fusion. The method includes: calculating the similarity between users; calculating an initial recommendation degree of each product for the user based on the user similarity; using emotional information to correct the initial recommendation degree, obtaining the final recommendation degree of the product for the user; and, for each product the user has not purchased, obtaining its final recommendation value, sorting these values from high to low, and recommending a number of top-ranked products to the user. The invention comprehensively considers the rating and comment data of all users who have purchased a product, the similarity between the target user and each user who has purchased the product, and the pre-purchase consultation and comment data of each product in order to obtain the recommendation degree. Because the information considered is comprehensive and sufficient, the accuracy of the product recommendation results is effectively improved.
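The flow described above (user similarity, an initial recommendation degree, an emotion-based correction, then a ranking of unpurchased products) can be sketched as below. The abstract does not give the formulas, so everything specific here is an assumption: cosine similarity over co-rated products, a similarity-weighted average rating as the initial degree, and a multiplicative correction `1 + weight * sentiment`, where `sentiment` is a hypothetical per-product score in the range -1..1 derived from comments. The user names, products and numbers are made up.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity over the products both users have rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[p] * b[p] for p in shared)
    norm_a = math.sqrt(sum(a[p] ** 2 for p in shared))
    norm_b = math.sqrt(sum(b[p] ** 2 for p in shared))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(target, ratings, sentiment, top_n=2, weight=0.5):
    """Rank products the target user has not purchased."""
    own = ratings[target]
    sums = {}
    for other, their in ratings.items():
        if other == target:
            continue
        sim = cosine_similarity(own, their)
        for product, rating in their.items():
            if product in own:
                continue  # only consider unpurchased products
            num, den = sums.get(product, (0.0, 0.0))
            sums[product] = (num + sim * rating, den + sim)
    final = {}
    for product, (num, den) in sums.items():
        initial = num / den if den else 0.0
        # Assumed correction: scale the initial degree by comment sentiment.
        final[product] = initial * (1.0 + weight * sentiment.get(product, 0.0))
    return sorted(final, key=final.get, reverse=True)[:top_n]

ratings = {
    "ann": {"tea": 5, "mug": 4},
    "bob": {"tea": 5, "mug": 4, "kettle": 5, "spoon": 4},
    "cy": {"tea": 4, "mug": 5, "kettle": 4, "spoon": 5},
}
sentiment = {"kettle": -0.8, "spoon": 0.6}

ranked = recommend("ann", ratings, sentiment)
ranked_without_emotion = recommend("ann", ratings, {})
```

In this toy data the two unpurchased products have nearly equal rating-based scores, so the sentiment correction decides their order.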

Owner:莫毓昌

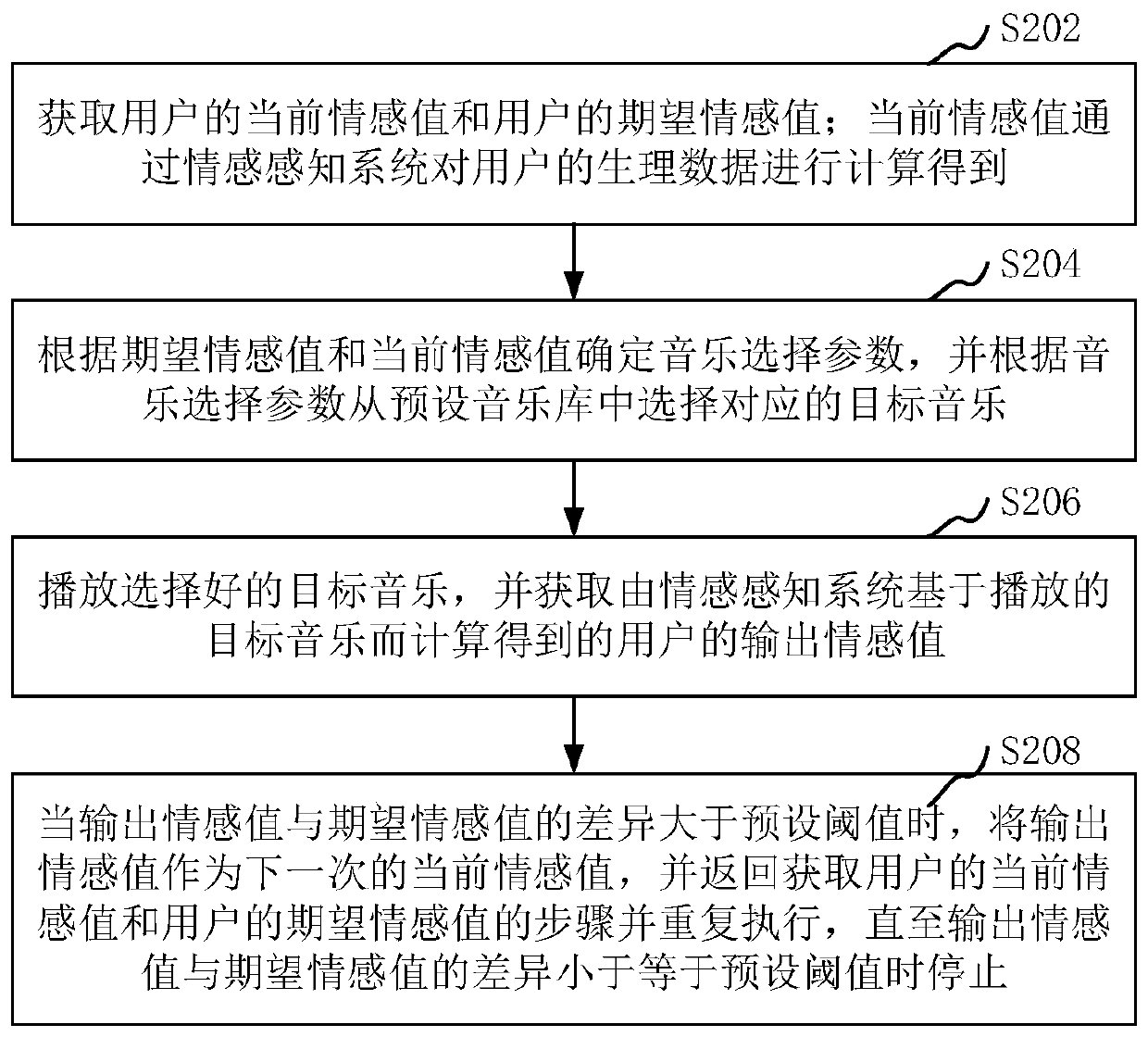

Emotion adjustment method, device, computer equipment and storage medium

ActiveCN111430006AImprove accuracyImprove the efficiency of emotion regulationRecord information storageMental therapiesEmotion perceptionEmotional perception

The application relates to an emotion adjustment method, device, computer equipment and storage medium. The method includes obtaining the current emotional value and the expected emotional value of a user, where the current emotional value is calculated from the user's physiological data by an emotion perception system. A music selection parameter is determined according to the expected emotional value and the current emotional value, and the corresponding target music is selected from a preset music library according to the music selection parameter. The selected target music is then played, and the emotion perception system calculates the user's output emotional value based on the played target music. When the difference between the output emotional value and the expected emotional value is greater than a preset threshold, the output emotional value is used as the current emotional value for the next iteration, and the method returns to the step of obtaining the current and expected emotional values. These steps repeat until the difference between the output emotional value and the expected emotional value is less than or equal to the preset threshold. The method can increase the efficiency of emotion adjustment.
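The feedback loop in this abstract can be sketched as a simple iteration. The abstract does not say how the music selection parameter is computed or how the emotion perception system works, so this sketch assumes the parameter is the gap `expected - current`, that each track in the library carries a nominal emotional shift, and that perception is simulated by a stub function. The names `adjust_emotion`, `simulated_perceive` and `library`, and the `max_rounds` safety cap, are hypothetical.

```python
def adjust_emotion(current, expected, library, perceive,
                   threshold=0.125, max_rounds=20):
    """Play tracks until the perceived emotion is within `threshold` of `expected`.

    `library` maps a track name to an assumed nominal emotional shift.
    `perceive(previous, shift)` stands in for the emotion perception system
    and returns the output emotional value after the track is played.
    """
    history = []
    for _ in range(max_rounds):
        selection = expected - current  # assumed music selection parameter
        track = min(library, key=lambda name: abs(library[name] - selection))
        output = perceive(current, library[track])
        history.append((track, output))
        current = output  # the output value becomes the next current value
        if abs(output - expected) <= threshold:
            break
    return current, history

library = {"calm": -0.5, "upbeat": 0.5, "energetic": 1.0}

def simulated_perceive(previous, shift):
    """Toy model: the listener moves by half of the track's nominal shift."""
    return previous + 0.5 * shift

final_value, history = adjust_emotion(0.0, 1.0, library, simulated_perceive)
```

The cap on rounds is an addition for safety in the sketch; the abstract itself only describes stopping once the threshold is met.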

Owner:SHENZHEN INST OF ARTIFICIAL INTELLIGENCE & ROBOTICS FOR SOC +1

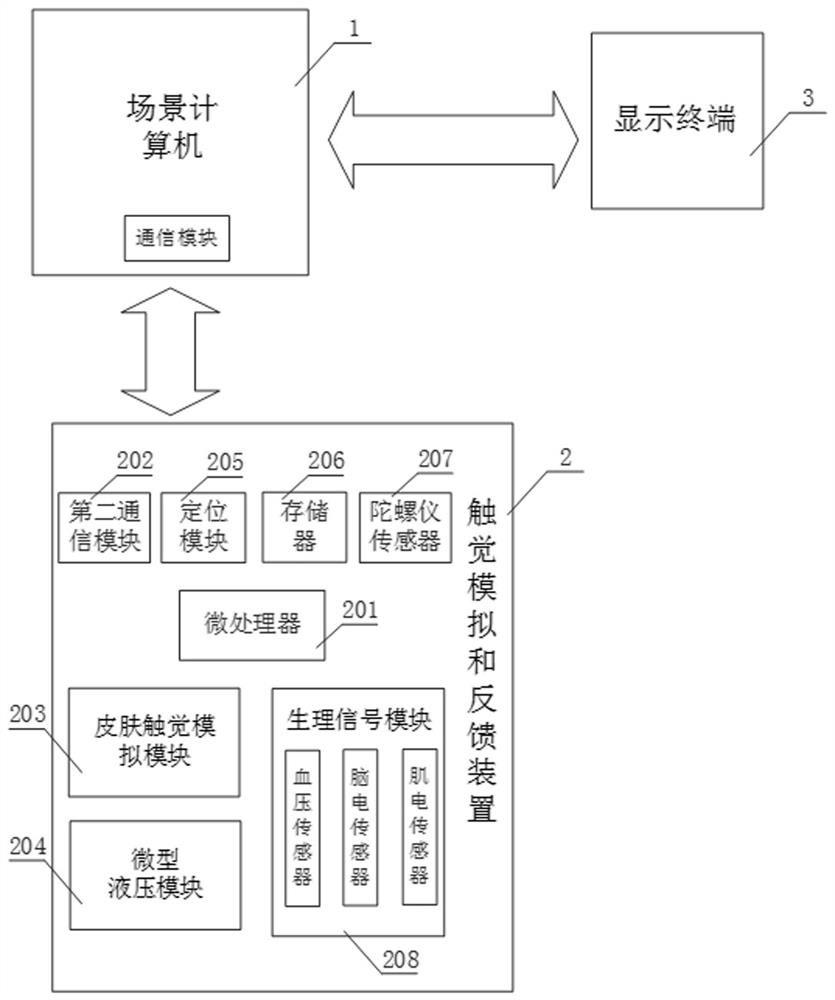

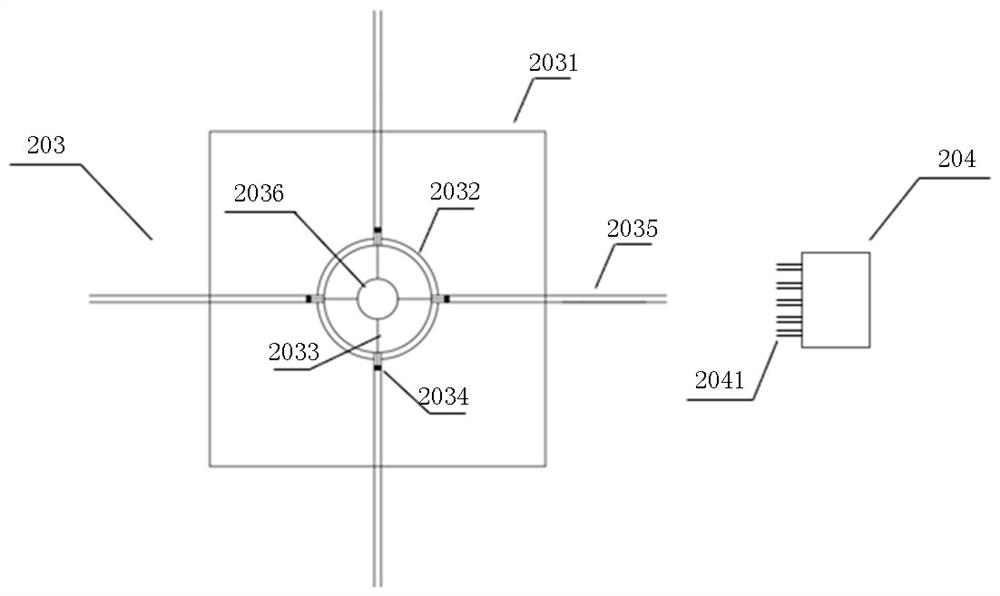

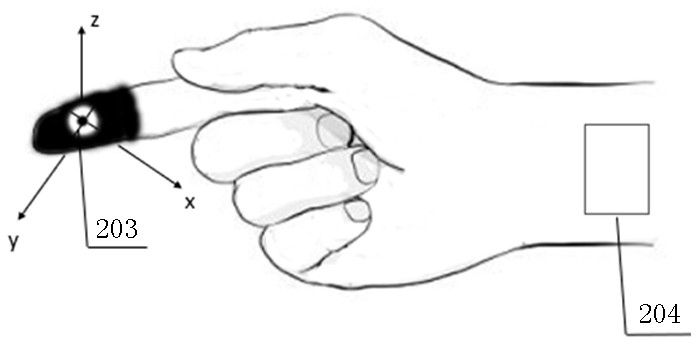

Virtual scene experience system and method with haptic simulation and emotion perception

ActiveCN113190114AImprove experienceRealistic tactile experienceInput/output for user-computer interactionKernel methodsEmotion perceptionPhysical medicine and rehabilitation

The invention relates to a virtual scene experience system with tactile simulation and emotion perception. The virtual scene experience system comprises a scene computer, and a display terminal and a touch simulation and feedback device which are respectively in communication connection with the scene computer; wherein the touch simulation and feedback device is worn on a limb of a user and comprises a microprocessor, and a second communication module, a positioning module, a skin tactile simulation module and a physiological signal module which are respectively connected with the microprocessor; the skin touch simulation module generates different pressures and shearing forces on the skin contacted with the skin touch simulation module according to the scene displayed by the display terminal and the limb position of the user, and simulates the touch of the user touching an object in the scene. The system provided by the invention provides a virtual scene for a user, accurately tracks the motion trail and posture of the hand of the user, simulates more vivid touch experience, can be widely applied to virtual simulation of various scenes, and greatly improves the user experience.

Owner:CHINA THREE GORGES UNIV


© 2025 PatSnap. All rights reserved.