Patents

Literature

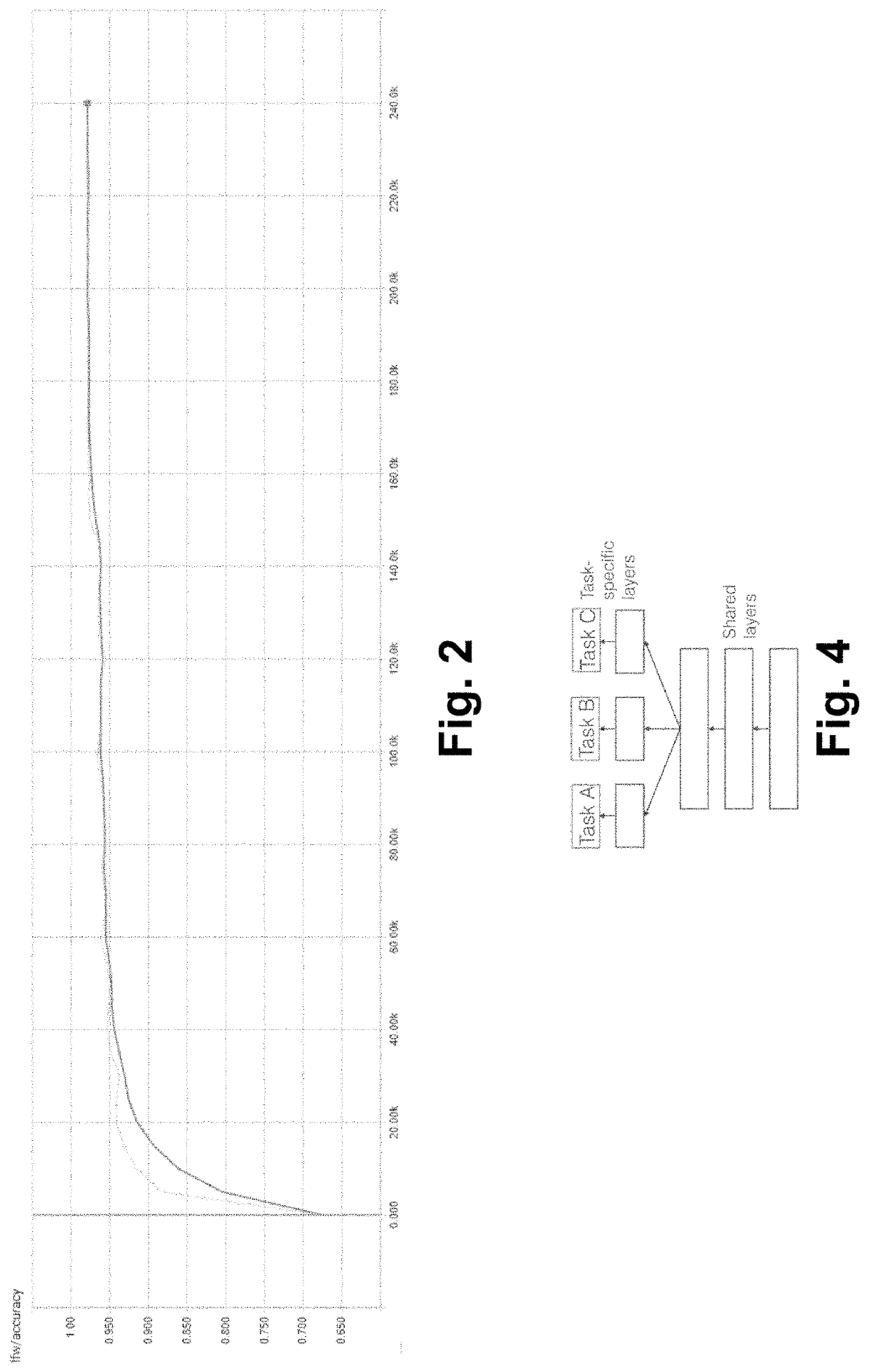

566 results about "Task learning" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

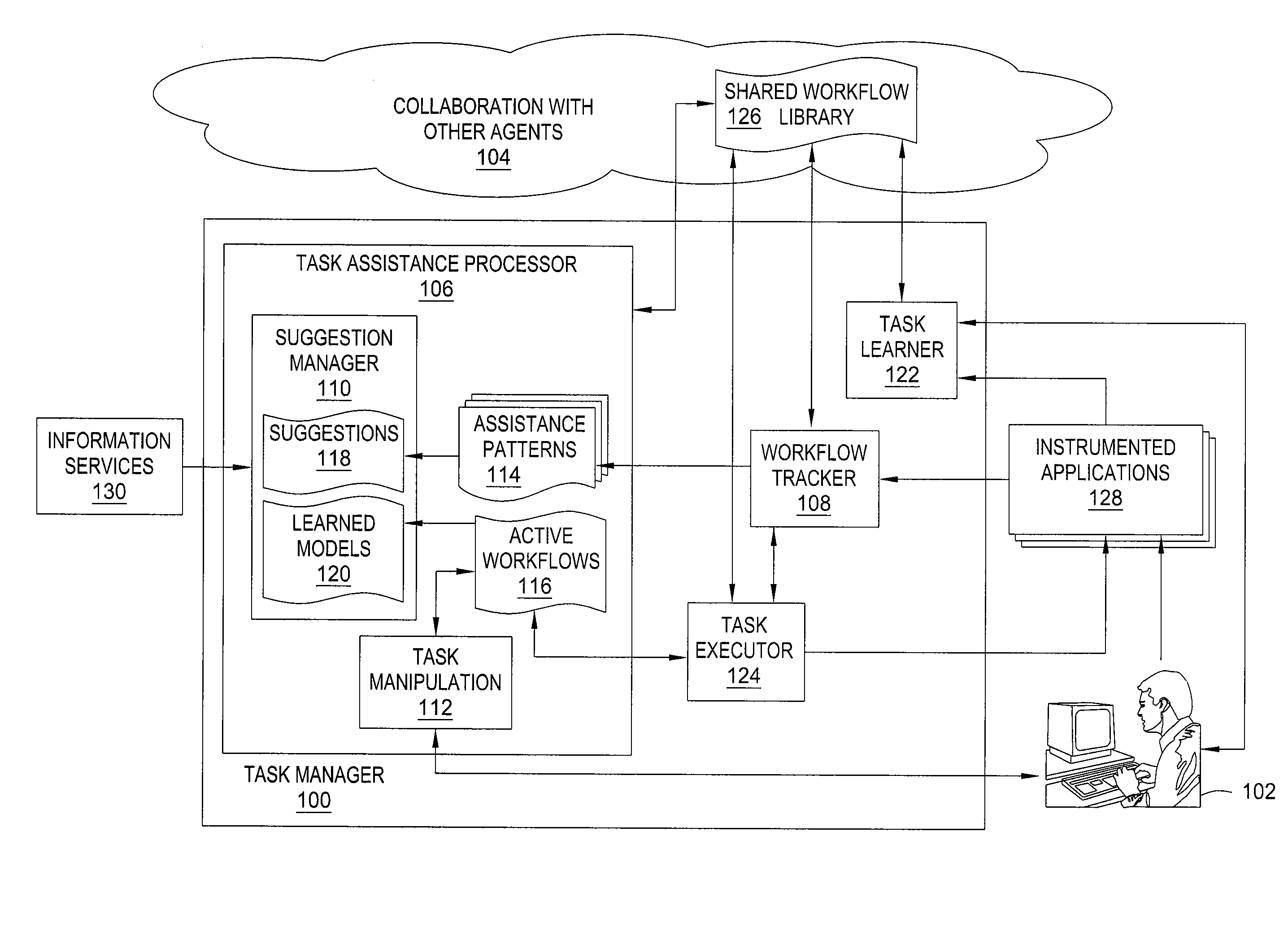

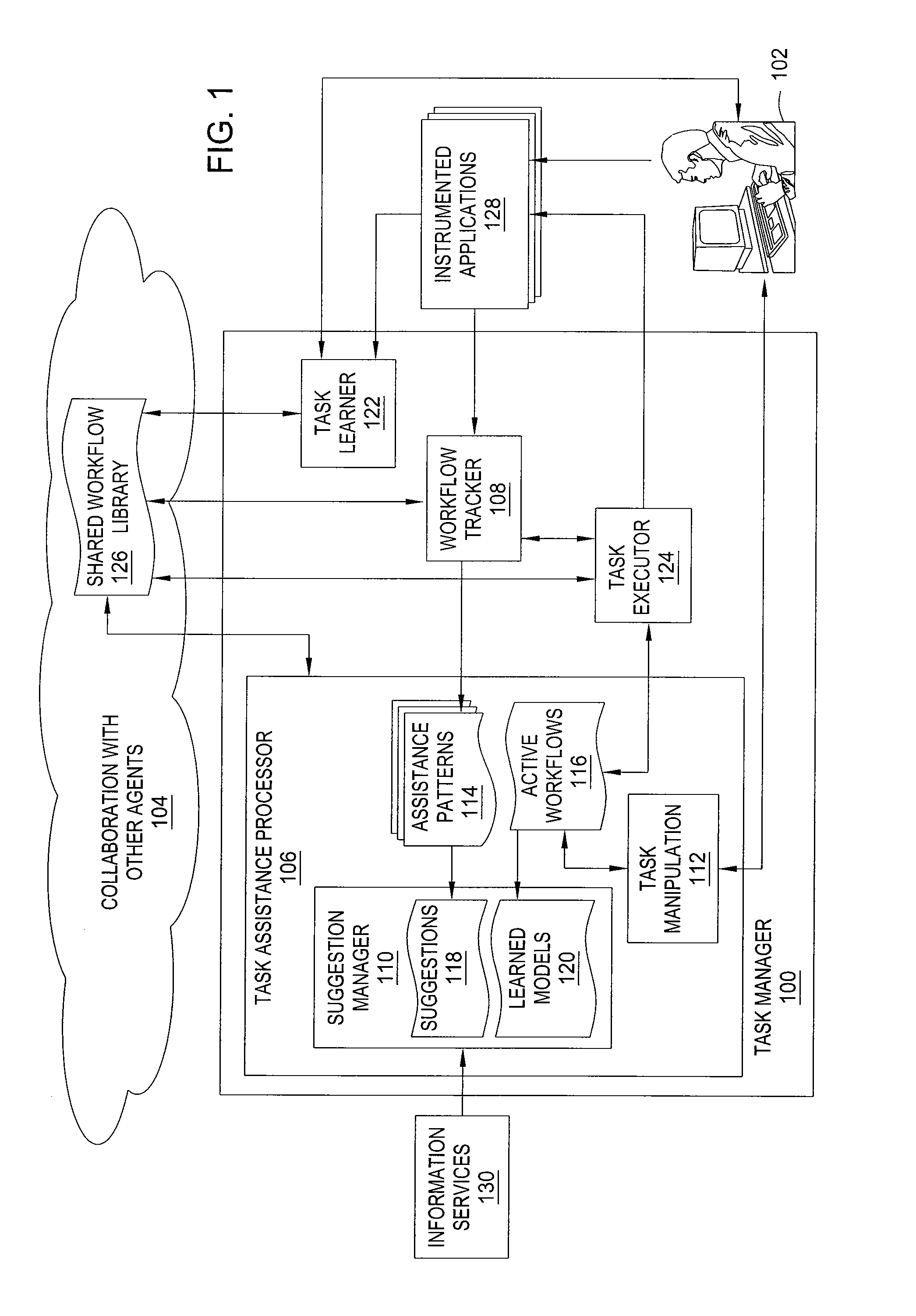

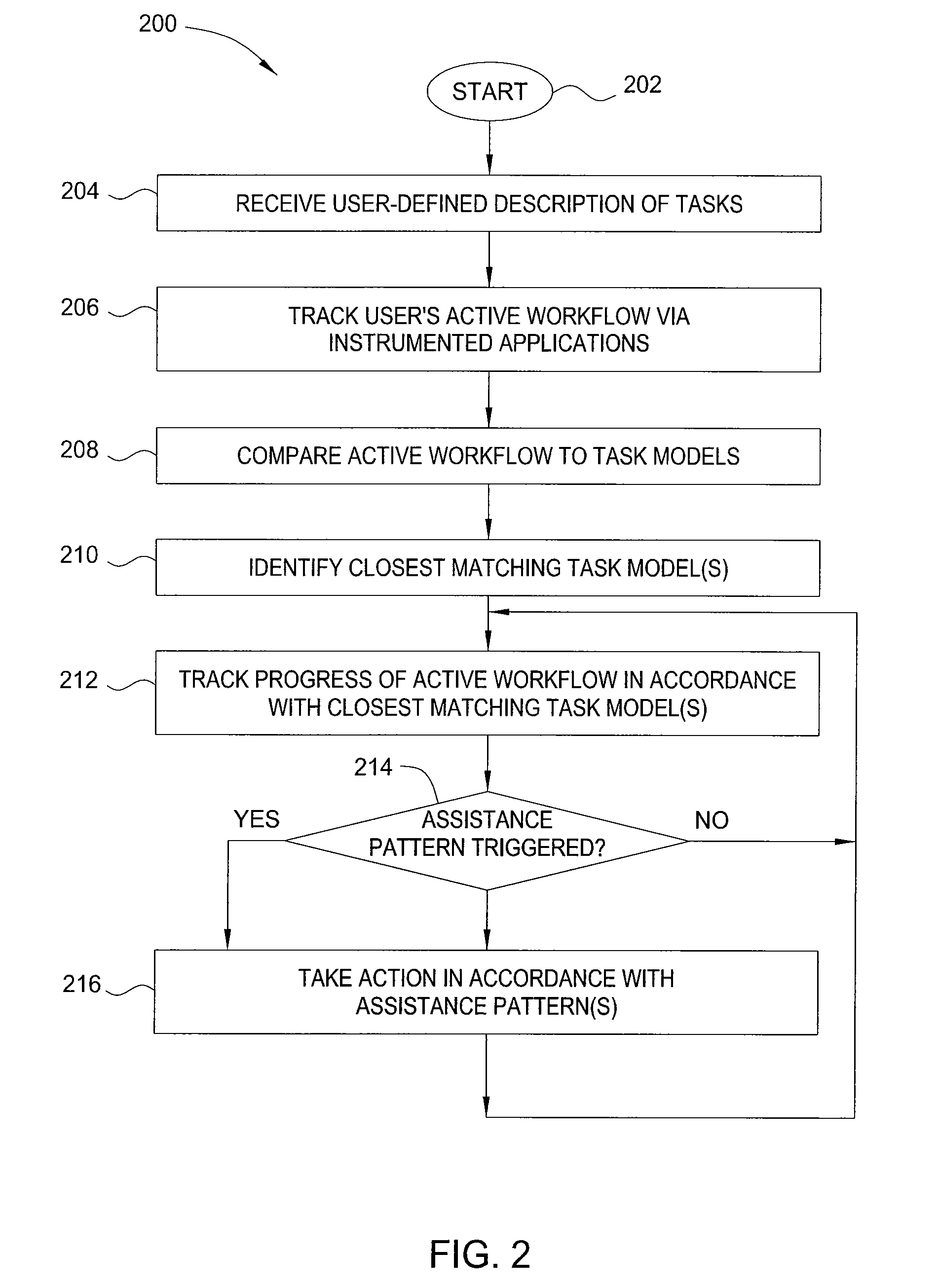

Method and apparatus for automated assistance with task management

Status: Active | Publication: US20090307162A1 | Tags: Digital computer details; Multiprogramming arrangements; Coprocessor; Application software

The present invention relates to a method and apparatus for assisting with automated task management. In one embodiment, an apparatus for assisting a user in the execution of a task, where the task includes one or more workflows required to accomplish a goal defined by the user, includes a task learner for creating new workflows from user demonstrations, a workflow tracker for identifying and tracking the progress of a current workflow executing on a machine used by the user, a task assistance processor coupled to the workflow tracker, for generating a suggestion based on the progress of the current workflow, and a task executor coupled to the task assistance processor, for manipulating an application on the machine used by the user to carry out the suggestion.

Owner:SRI INTERNATIONAL
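
The minimal sketch below shows how the task learner, workflow tracker and task assistance processor might divide the work. It rests on two illustrative assumptions: a workflow is an ordered list of action names recorded from a demonstration, and progress is tracked by matching the user's most recent actions against workflow prefixes. `learn_workflow`, `track` and `suggest` are hypothetical names. The task executor, which would drive the application, is left out.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical workflow library: workflow name -> ordered action names,
# e.g. recorded from a user demonstration by the "task learner".
Workflows = Dict[str, List[str]]


def learn_workflow(library: Workflows, name: str, demonstration: List[str]) -> None:
    """Store a demonstrated action sequence as a new workflow."""
    library[name] = list(demonstration)


def track(library: Workflows, observed: List[str]) -> Optional[Tuple[str, int]]:
    """Return (workflow name, steps completed) for the workflow whose prefix
    best matches the end of the observed actions, or None if nothing matches."""
    best: Optional[Tuple[str, int]] = None
    for name, steps in library.items():
        # Longest suffix of `observed` that equals a prefix of this workflow.
        for k in range(min(len(observed), len(steps)), 0, -1):
            if observed[-k:] == steps[:k]:
                if best is None or k > best[1]:
                    best = (name, k)
                break
    return best


def suggest(library: Workflows, observed: List[str]) -> Optional[str]:
    """Suggest the next action of the tracked workflow, if it is unfinished."""
    state = track(library, observed)
    if state is None:
        return None
    name, done = state
    steps = library[name]
    return steps[done] if done < len(steps) else None
```

For example, after `learn_workflow(library, "expense_report", ["open_form", "attach_receipt", "enter_amount", "submit"])`, the call `suggest(library, ["login", "open_form", "attach_receipt"])` returns `"enter_amount"`. Prefix matching is the simplest possible tracker. A practical one would have to tolerate interleaved or noisy actions.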

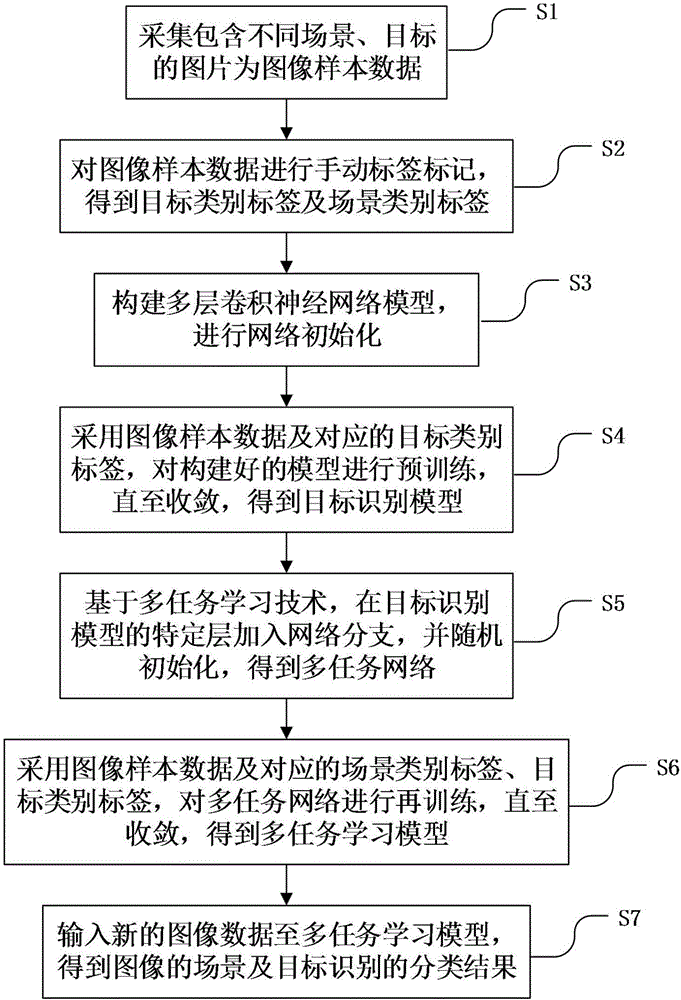

Scene and target identification method and device based on multi-task learning

Status: Inactive | Publication: CN106845549A | Tags: Realize integrated identification; Improve single-task recognition accuracy; Character and pattern recognition; Neural architectures; Task network; Goal recognition

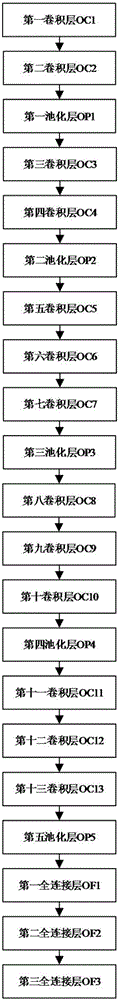

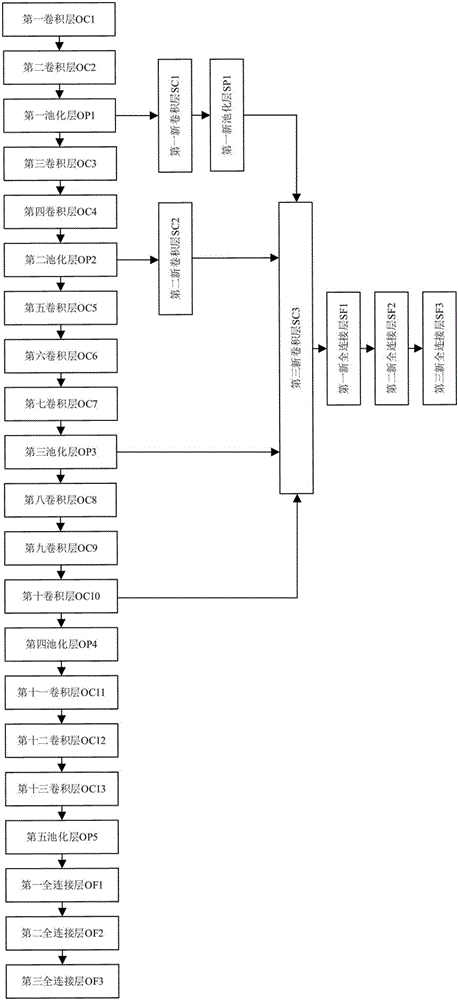

The invention relates to a scene and target identification method and device based on multi-task learning. The method comprises the following steps. Pictures containing different scenes and targets are collected as image sample data. The image sample data are labeled manually to obtain target class labels and scene class labels. A multi-layer convolutional neural network model is built and initialized. The model is pre-trained on the image sample data and the corresponding target class labels until convergence, yielding a target identification model. Using multi-task learning, network branches are added at a specific layer of the target identification model and randomly initialized, yielding a multi-task network. The multi-task network is re-trained on the image sample data with the corresponding scene class labels and target class labels until convergence, yielding a multi-task learning model. Finally, new image data are input to the multi-task learning model to obtain scene and target classification results for the images. The method thereby improves single-task identification accuracy.

Owner:Zhuhai Xiyue Information Technology Co., Ltd. (珠海习悦信息技术有限公司)

Multi-task named entity recognition and confrontation training method for medical field

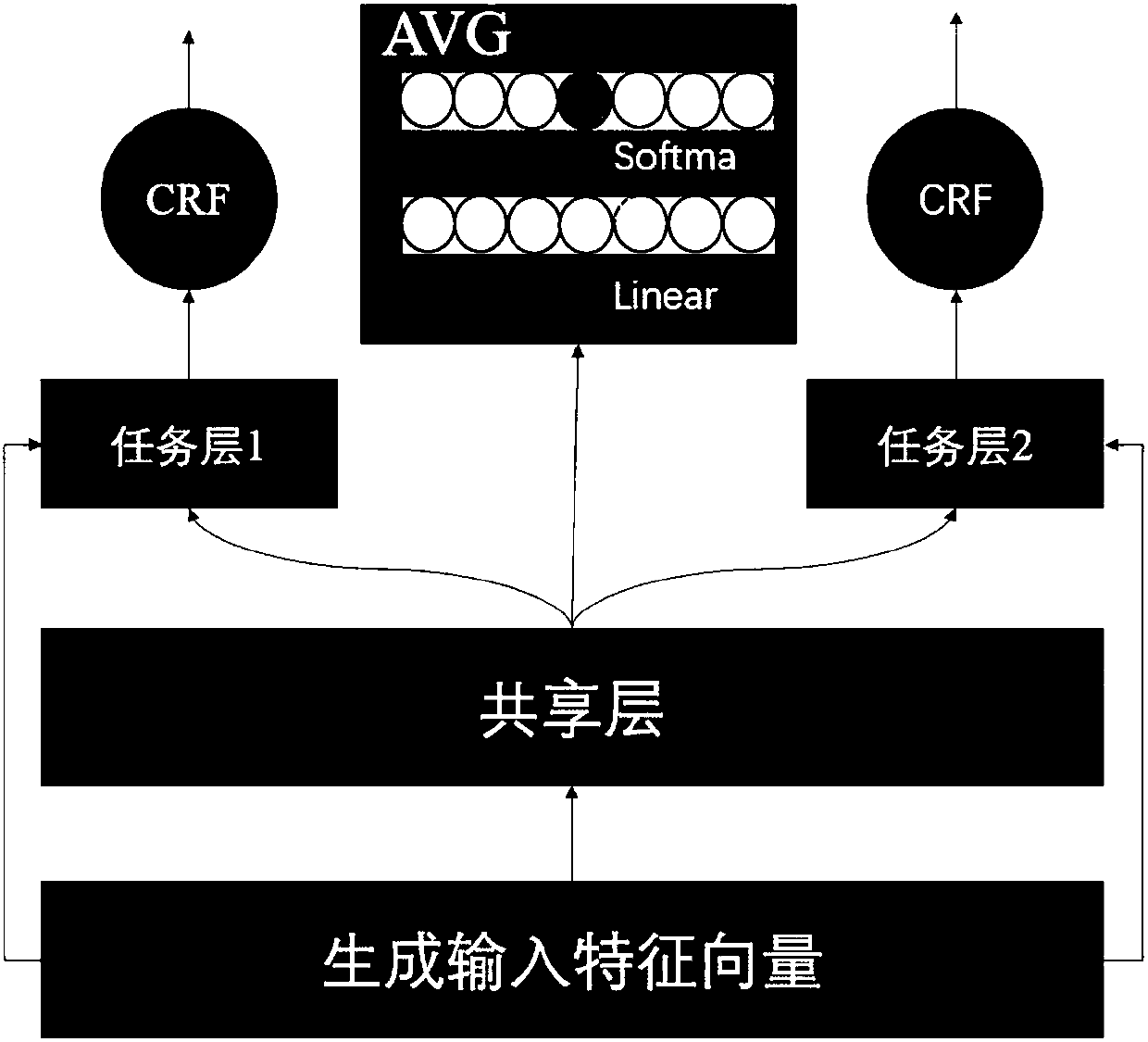

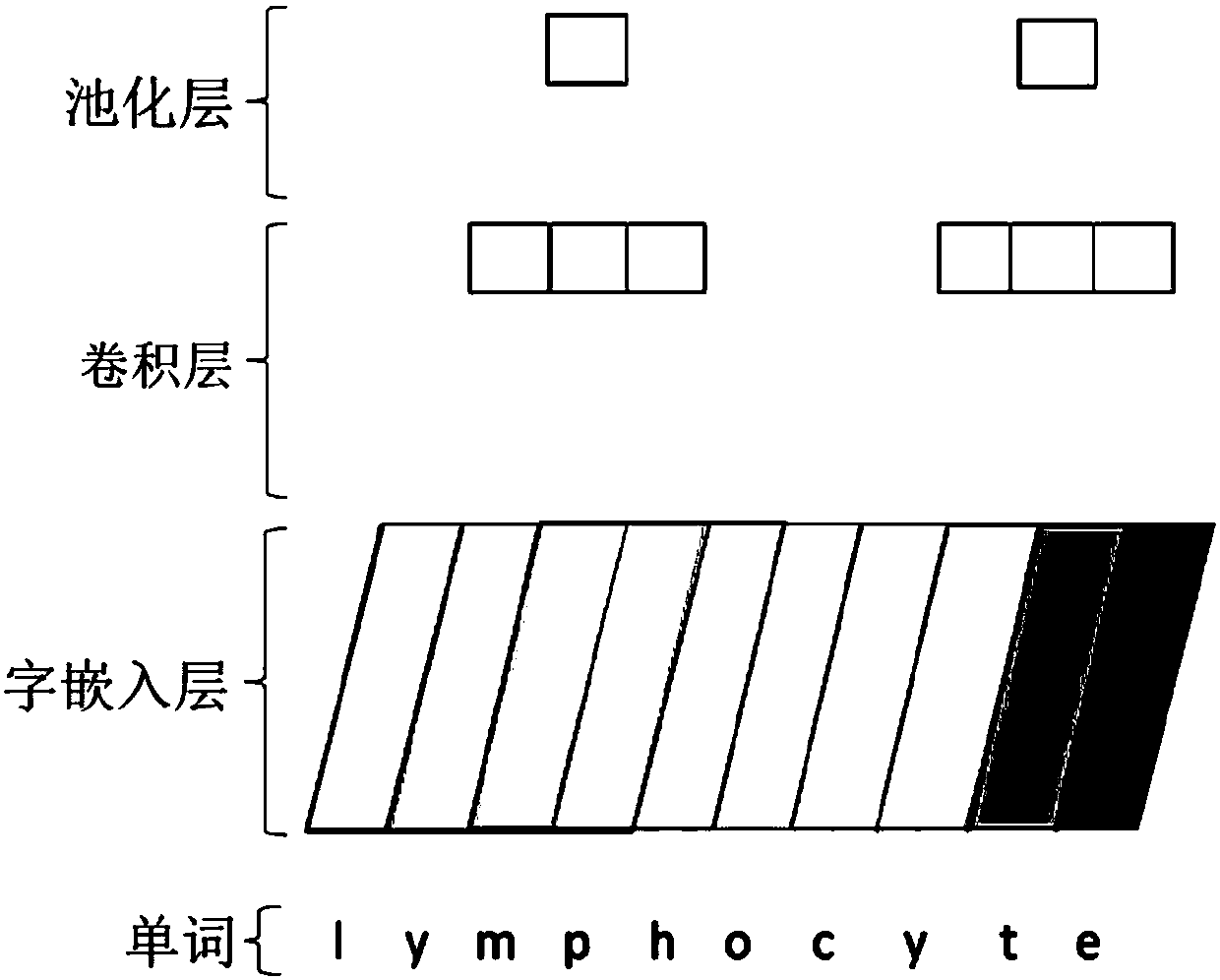

Status: Inactive | Publication: CN108229582A | Tags: Entity Recognition Facilitation; Improve accuracy; Character and pattern recognition; Neural architectures; Conditional random field; Data set

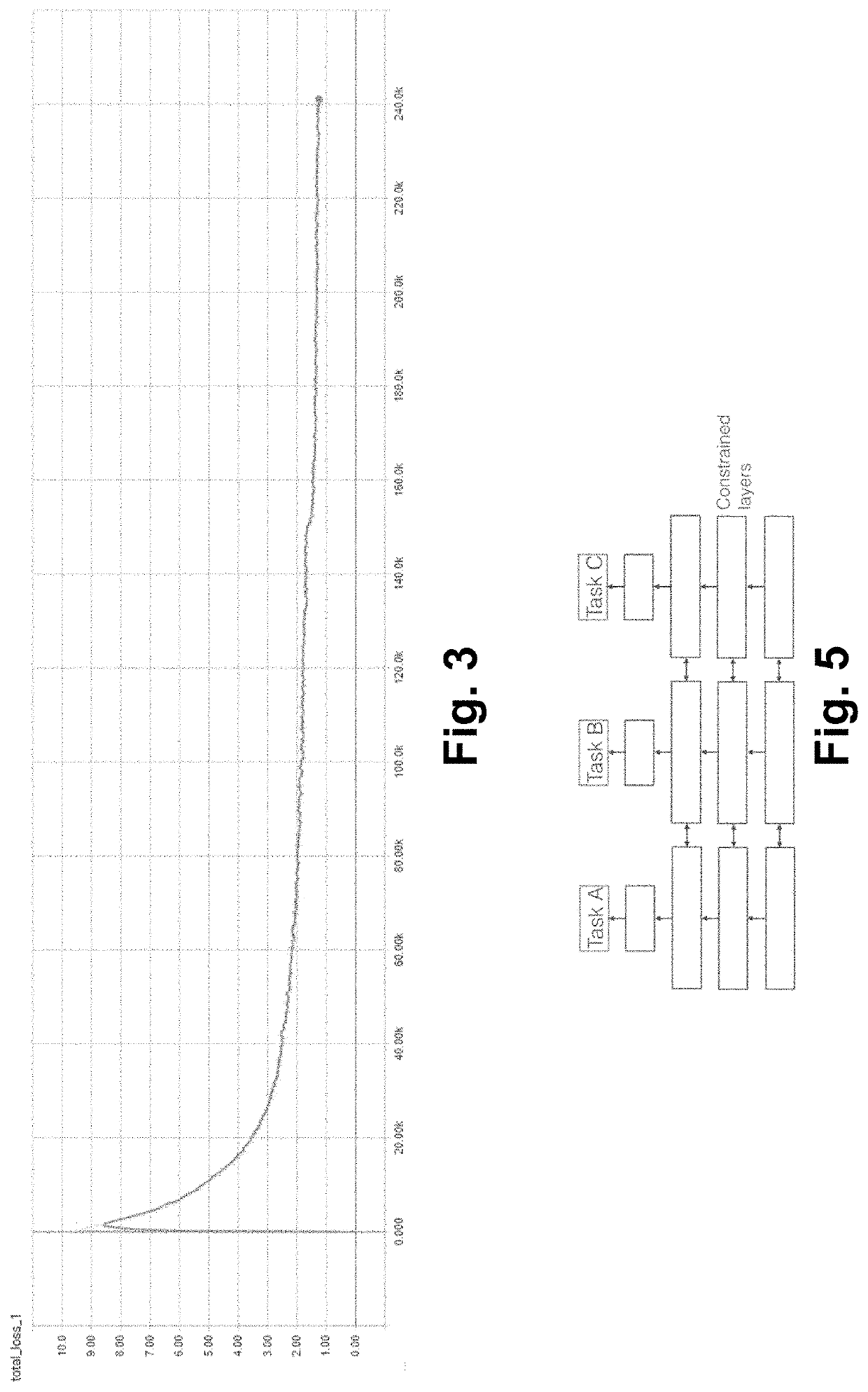

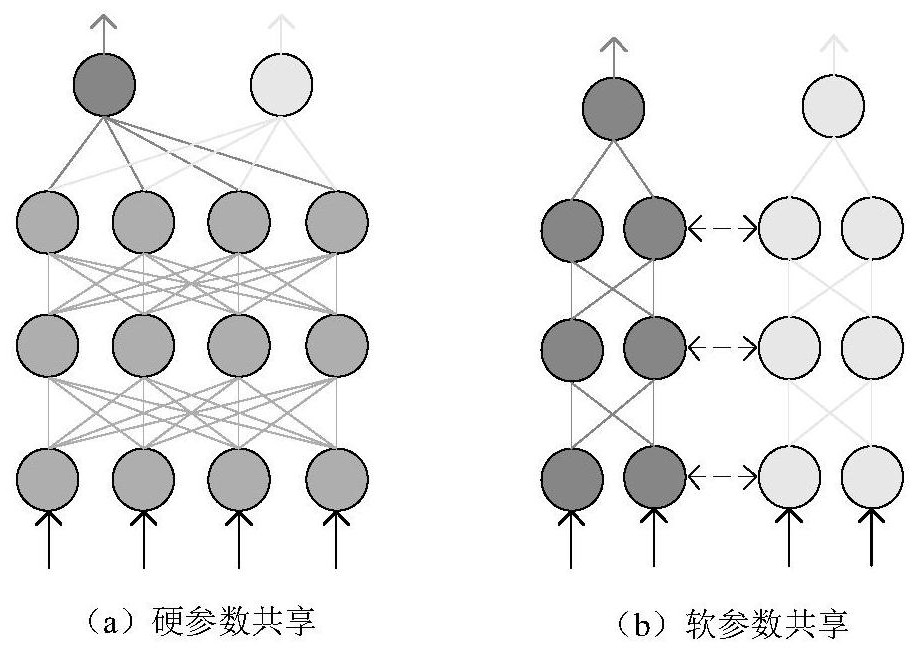

The invention discloses a multi-task named entity recognition and confrontation (adversarial) training method for the medical field. The method includes the following steps: (1) collecting and processing data sets so that each row consists of a word and a label; (2) using a convolutional neural network to encode character-level information, obtaining character vectors, and concatenating them with word vectors to form input feature vectors; (3) constructing a shared layer that uses a bidirectional long short-term memory (BiLSTM) network to model the input feature vectors of each word in a sentence and learn features common to all tasks; (4) constructing a task layer that models the input feature vectors, together with the output of step (3), through a BiLSTM network to learn task-private features; (5) using conditional random fields to decode labels from the outputs of steps (3) and (4); (6) using the shared-layer information to train an adversarial network that reduces the private features mixed into the shared layer. The method performs multi-task learning on data sets from multiple disease domains. It introduces adversarial training to make the shared-layer and task-layer features more independent, so that multiple named entity recognition tasks in a specific domain can be trained simultaneously, quickly and efficiently.

Owner:ZHEJIANG UNIV
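
Step (5) decodes labels with a conditional random field. At inference time a linear-chain CRF is usually decoded with the Viterbi algorithm. The minimal sketch below assumes the per-token emission scores (for example from the BiLSTM layers) and a tag-transition score matrix are already available. It omits start and end transitions for brevity, and `viterbi_decode` is a hypothetical name.

```python
from typing import List, Sequence


def viterbi_decode(emissions: Sequence[Sequence[float]],
                   transitions: Sequence[Sequence[float]]) -> List[int]:
    """Return the highest-scoring tag sequence for a linear-chain CRF.

    emissions[t][j]:   score of tag j at token t (e.g. from a BiLSTM)
    transitions[i][j]: score of moving from tag i to tag j
    Start/end transition scores are omitted in this sketch.
    """
    n_tags = len(transitions)
    # score[j]: best total score of any path ending in tag j at the current token
    score = list(emissions[0])
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score, bp = [], []
        for j in range(n_tags):
            # Best previous tag i for arriving in tag j at token t.
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            bp.append(best_i)
        score = new_score
        backpointers.append(bp)
    # Trace back from the best final tag.
    best = max(range(n_tags), key=lambda j: score[j])
    path = [best]
    for bp in reversed(backpointers):
        best = bp[best]
        path.append(best)
    return path[::-1]
```

Suppose the tags are `O`=0, `B`=1, `I`=2, and `transitions = [[0, 0, -10], [0, 0, 0], [0, 0, 0]]` penalizes `O` followed by `I`. With these made-up values, `viterbi_decode([[0.0, 2.0, 0.0], [0.0, 0.0, 1.5], [1.0, 0.0, 0.0]], transitions)` returns `[1, 2, 0]`, which is B, I, O.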

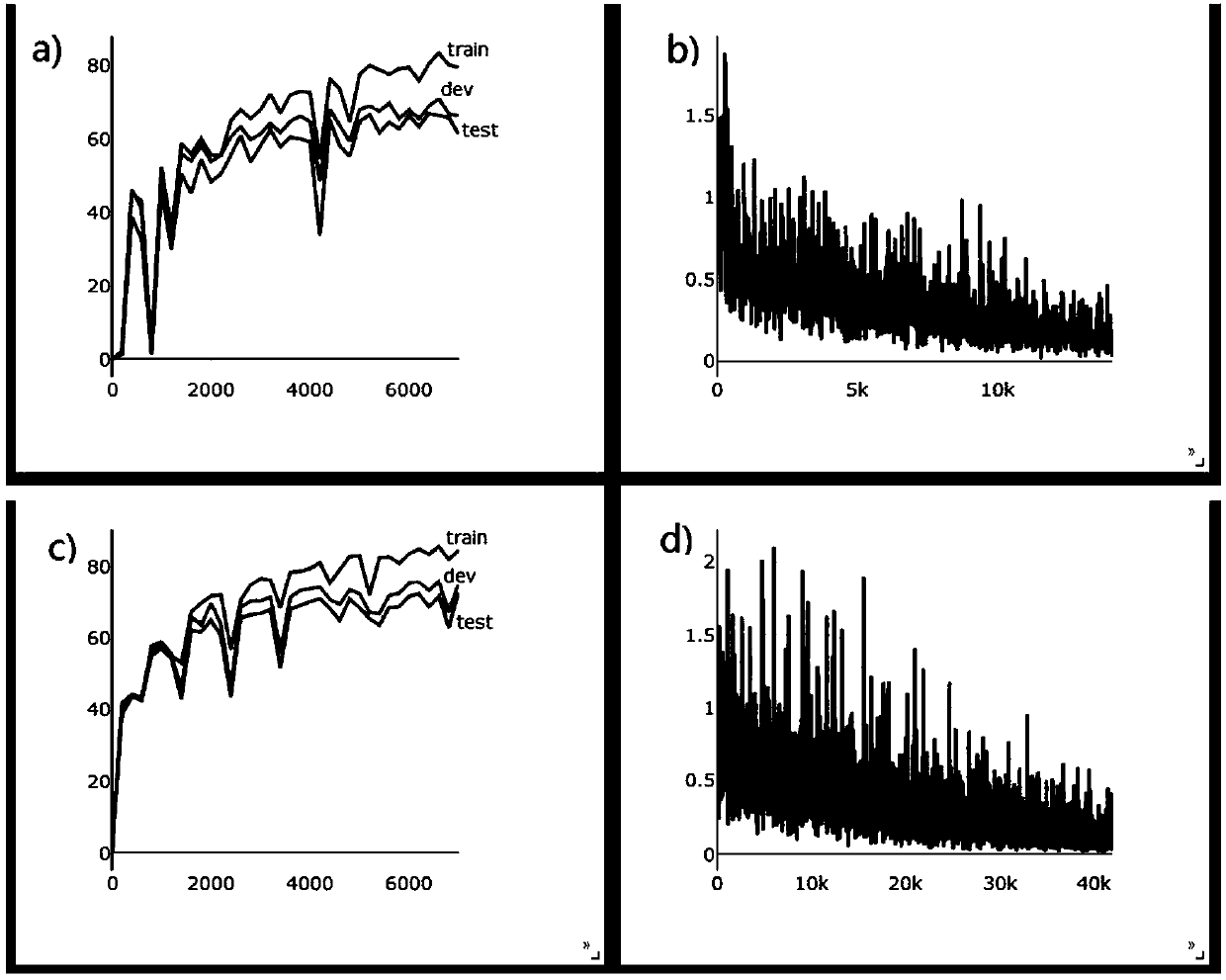

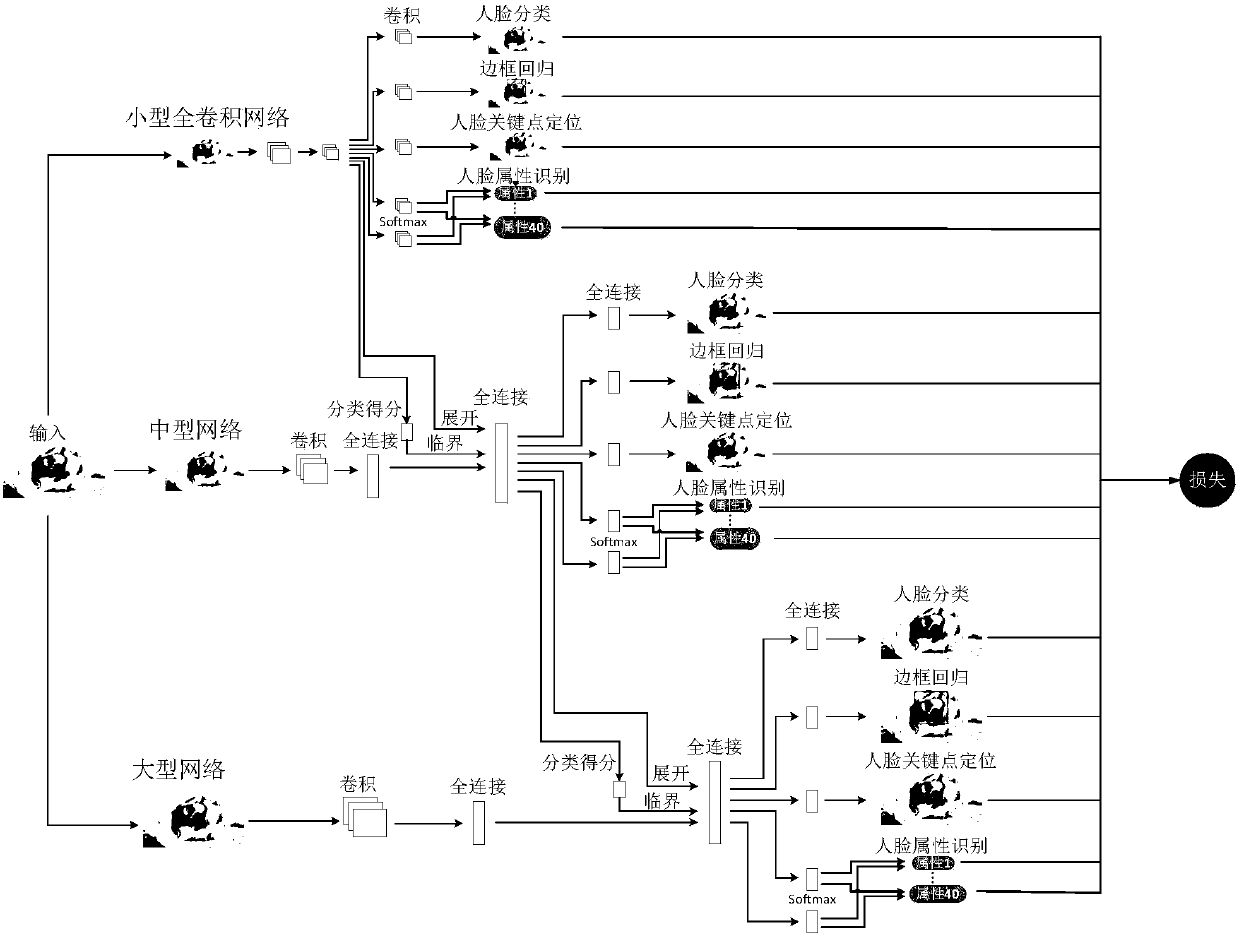

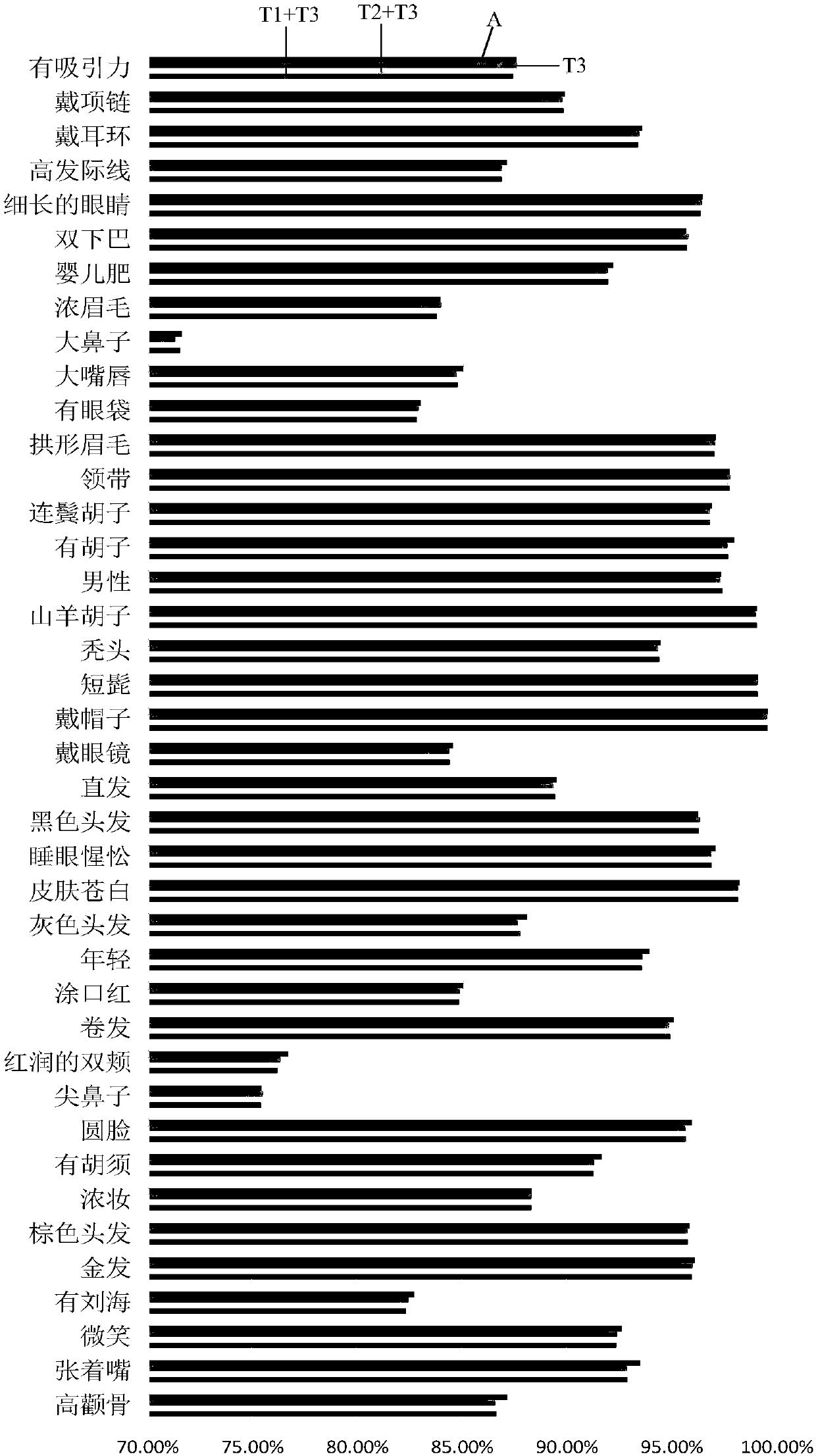

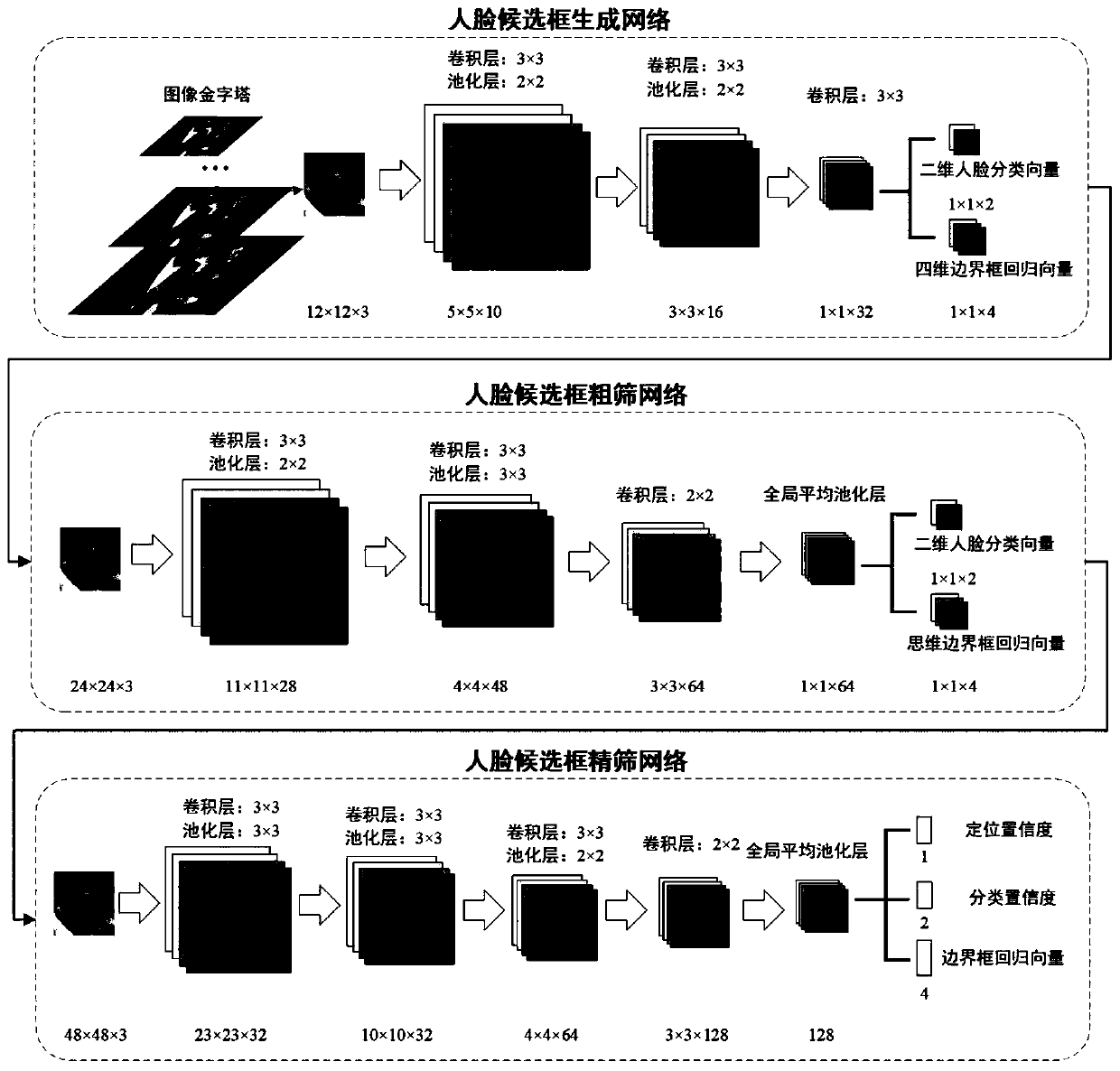

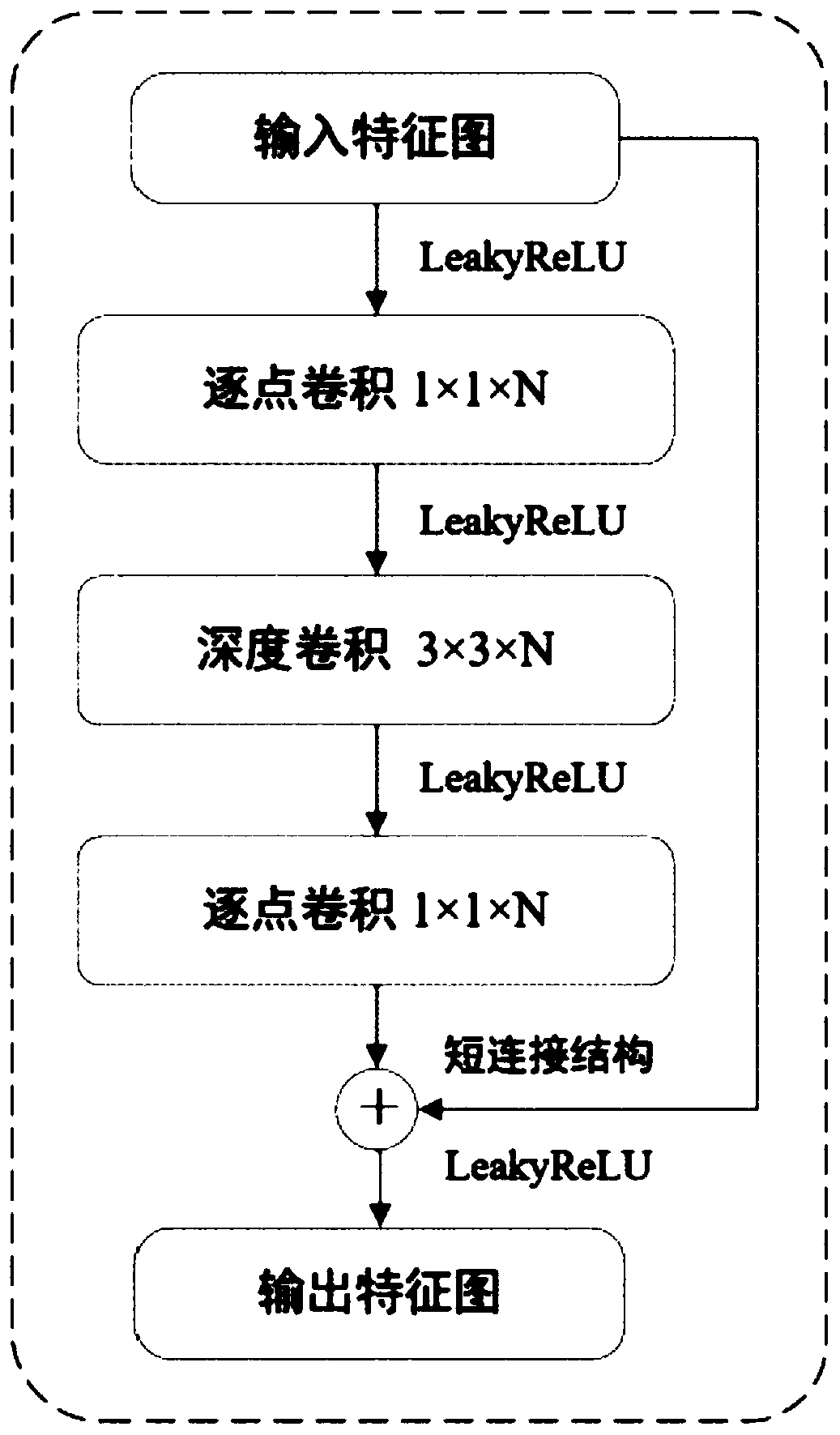

Face attribute recognition method of deep neural network based on cascaded multi-task learning

Status: Active | Publication: CN108564029A | Tags: Promote results; The result of face attribute recognition is improved; Character and pattern recognition; Neural architectures; Visual technology; Crucial point

The invention provides a face attribute recognition method that uses a deep neural network based on cascaded multi-task learning, in the field of computer vision. First, a cascaded deep convolutional neural network is designed. Multi-task learning is then applied to each cascaded sub-network, so that four tasks are learned simultaneously: face classification, bounding-box regression, face key point detection and face attribute analysis. Next, a dynamic loss weighting mechanism is used in the cascaded network to calculate the loss weights of the face attributes. Finally, based on the trained network model, the face attribute recognition result of the last cascaded sub-network is taken as the final result. The cascade allows three different sub-networks to be trained jointly and end to end, which improves face attribute recognition. Other approaches use fixed loss weights in the loss function; this invention instead takes the differences between face attributes into account.

Owner:XIAMEN UNIV

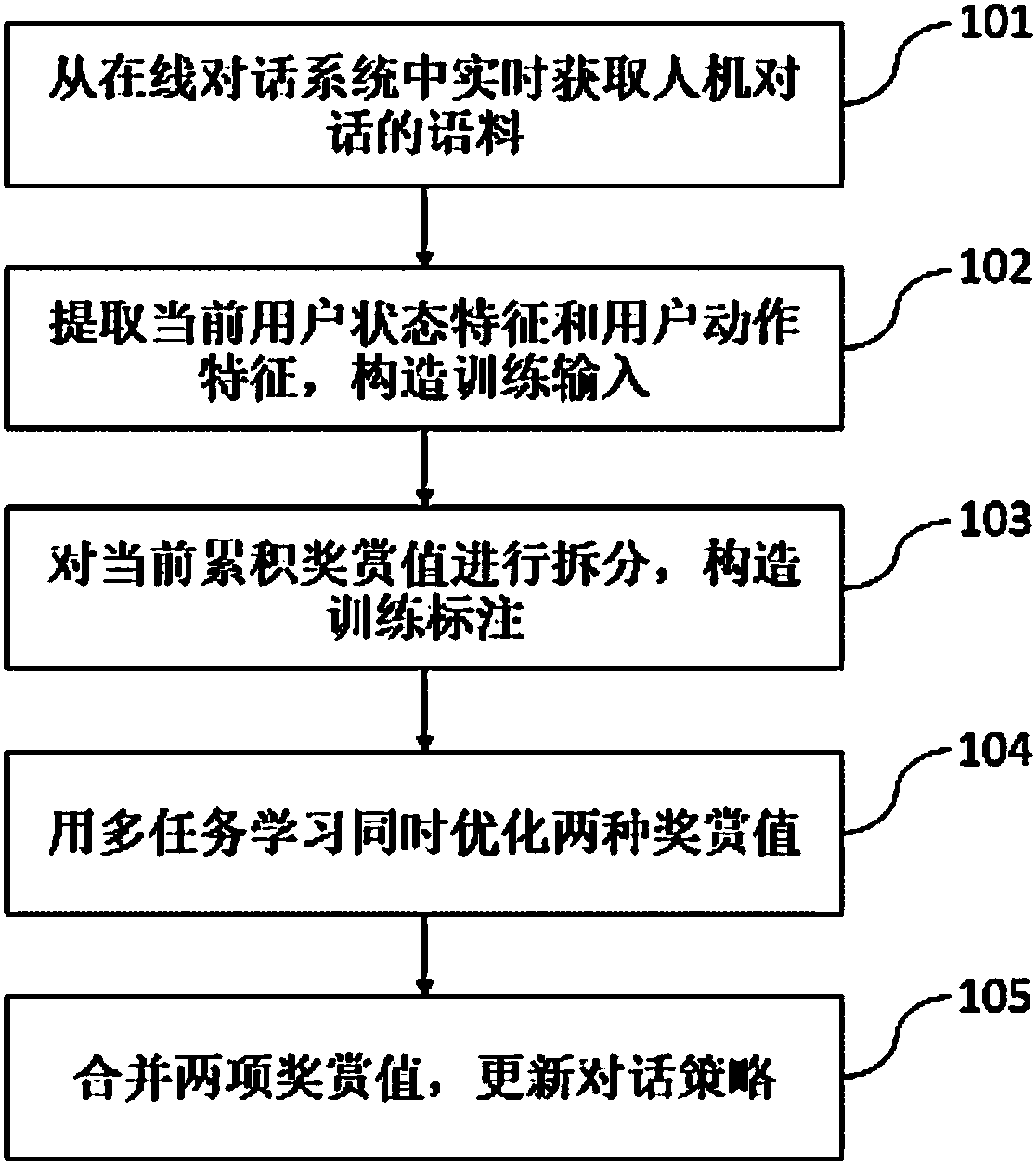

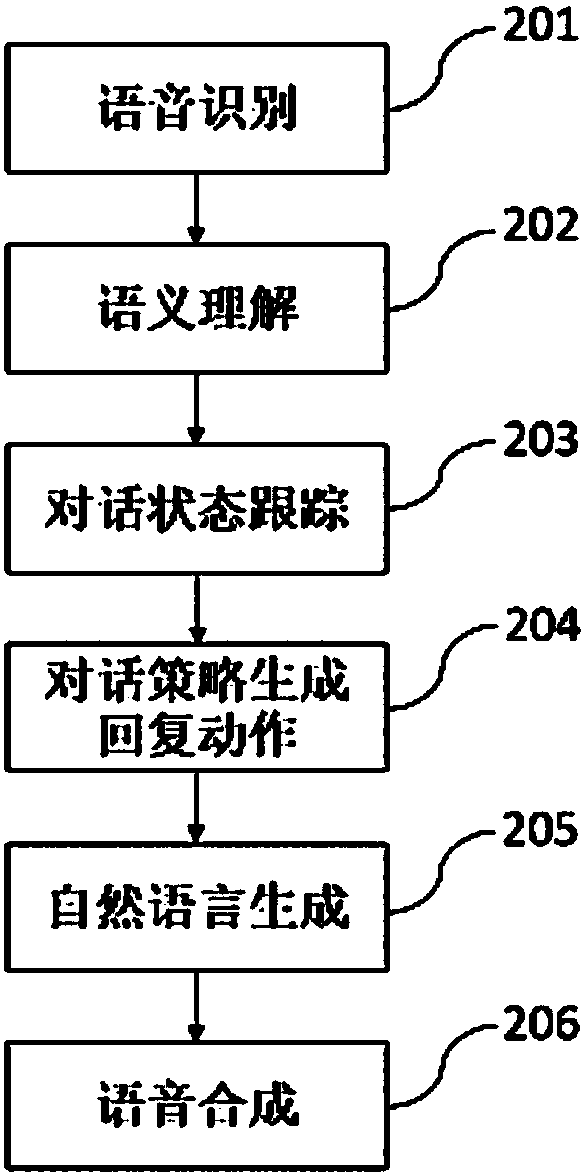

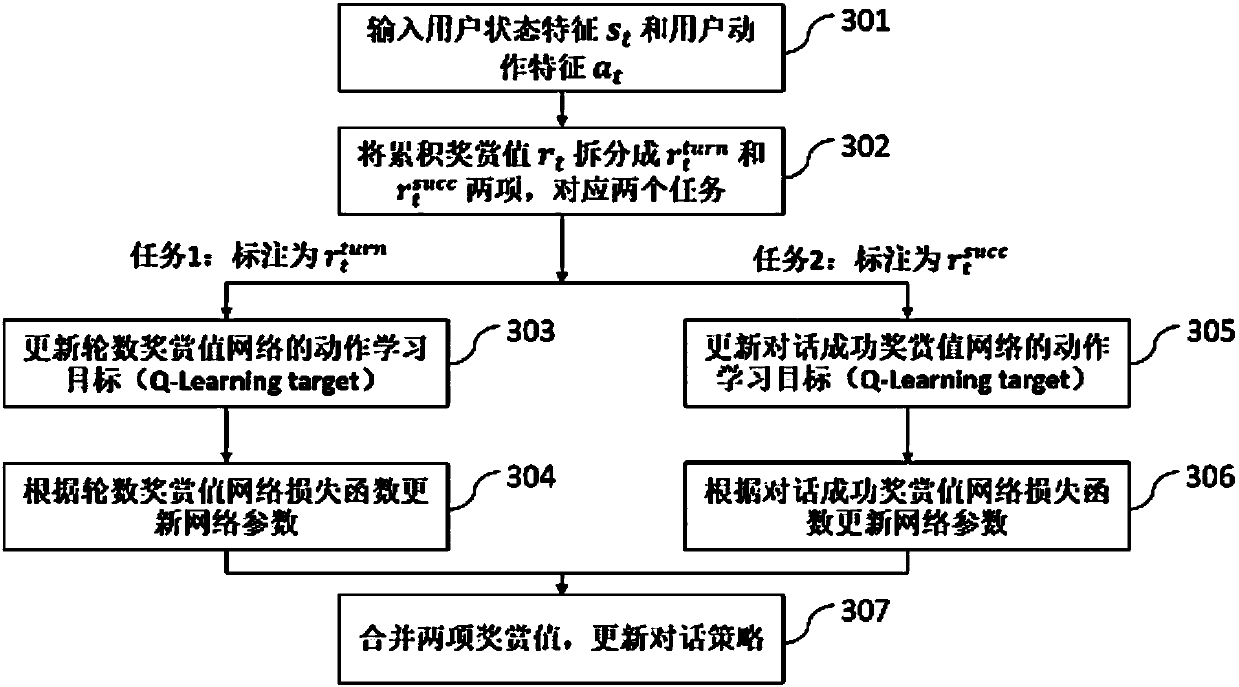

Dialog strategy online realization method based on multi-task learning

Status: Active | Publication: CN107357838A | Tags: Avoid Manual Design Rules; Save human effort; Speech recognition; Special data processing applications; Man machine; Network structure

The invention discloses an online dialog strategy method based on multi-task learning. Corpus information from human-machine dialogs is acquired in real time, current user state features and user action features are extracted, and the training input is constructed from them. The single accumulated reward value used in dialog strategy learning is then split into a dialog-turn-count reward and a dialog-success reward. These serve as training labels, and two separate value models are optimized simultaneously through multi-task learning during online training. Finally, the two reward values are merged and the dialog strategy is updated. Because the method uses a reinforcement learning framework and optimizes the dialog strategy through online learning, rules and strategies do not have to be designed manually for each domain. The method can also adapt to domain information structures of varying complexity and to data of different scales. Splitting the original task of optimizing a single accumulated reward, and optimizing the parts simultaneously with multi-task learning, yields a better network structure and lowers the variance of training.

Owner:AISPEECH CO LTD
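
The central idea is to split one accumulated reward into a turn-count reward and a success reward, learn a value estimate for each, and then merge the two. Tabular TD(0) value estimates can illustrate this. The sketch is deliberately simplified. The patent jointly optimizes two neural value models via multi-task learning, whereas here each "model" is a lookup table and the policy is fixed. The transition format, reward values and the names `train_decomposed` and `merged_value` are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, List, Optional, Tuple

# (state, turn_reward, success_reward, next_state); next_state is None at dialog end.
Transition = Tuple[str, float, float, Optional[str]]


def td_update(values: DefaultDict[str, float], state: str, reward: float,
              next_state: Optional[str], alpha: float, gamma: float) -> None:
    """One TD(0) update of a state-value table."""
    target = reward + (gamma * values[next_state] if next_state is not None else 0.0)
    values[state] += alpha * (target - values[state])


def train_decomposed(episodes: List[List[Transition]], alpha: float = 0.5,
                     gamma: float = 1.0, sweeps: int = 50):
    """Learn separate value tables for the turn reward and the success reward."""
    v_turn: DefaultDict[str, float] = defaultdict(float)
    v_success: DefaultDict[str, float] = defaultdict(float)
    for _ in range(sweeps):
        for episode in episodes:
            for state, r_turn, r_success, next_state in episode:
                # Both "tasks" are updated from the same dialog experience.
                td_update(v_turn, state, r_turn, next_state, alpha, gamma)
                td_update(v_success, state, r_success, next_state, alpha, gamma)
    return v_turn, v_success


def merged_value(v_turn: DefaultDict[str, float],
                 v_success: DefaultDict[str, float], state: str) -> float:
    """Merge the two estimates back into a single state value."""
    return v_turn[state] + v_success[state]
```

Consider a two-turn dialog that costs -1 per turn and earns +20 on success, `[[("s0", -1.0, 0.0, "s1"), ("s1", -1.0, 20.0, None)]]`. The merged value of `s0` converges to 18. Each component has a simpler target than the combined reward, and that is the intuition behind the lower variance the abstract claims.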

Systems and/or methods for accelerating facial feature vector matching with supervised machine learning

Status: Active | Publication: US20200065563A1 | Tags: Reduced dimension; Easy to compare; Character and pattern recognition; Knowledge representation; Feature vector; Machine learning

A face recognition neural network generates small dimensional facial feature vectors for faces in received images. A multi-task learning classifier network receives generated vectors and outputs classifications for the corresponding images. The classifications are built by respective sub-networks each comprising classification layers arranged in levels. The layers in at least some levels receive as input intermediate outputs from an immediately upstream classification layer in the same sub-network and in each other sub-network. After training, a resultant facial feature vector corresponding to an input image is received from the neural network. Resultant classifiers for the input image are received from the classifier network. A database is searched for a subset of reference facial feature vectors having associated classification features matching the resultant classifiers. Vector matching identifies which one(s) of the reference facial feature vectors in the subset of reference facial feature vectors most closely match(es) the resultant facial feature vector.

Owner:SOFTWARE AG
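
The search step works in two stages. It first narrows the database to reference vectors whose stored classification features match the classifier outputs, then runs vector matching only within that subset. The sketch below illustrates this flow. The record layout, attribute names, the hypothetical `match_face` function and the use of Euclidean distance are assumptions; the abstract does not specify the distance metric.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical reference record: (identity, feature vector, classification features)
Record = Tuple[str, Sequence[float], Dict[str, str]]


def match_face(query_vec: Sequence[float], query_classes: Dict[str, str],
               database: List[Record], top_k: int = 1) -> List[Tuple[str, float]]:
    """Filter references by classifier outputs, then rank the subset by distance."""
    # 1. Keep only references whose stored classes agree with every predicted class.
    subset = [rec for rec in database
              if all(rec[2].get(key) == value for key, value in query_classes.items())]
    # 2. Exhaustive vector matching inside the (smaller) subset.
    scored = [(ident, math.dist(query_vec, vec)) for ident, vec, _ in subset]
    scored.sort(key=lambda pair: pair[1])
    return scored[:top_k]
```

The benefit comes from step 1. Distance computations run only over references that share the predicted classes, so a reference that is numerically close but classified differently is never compared.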

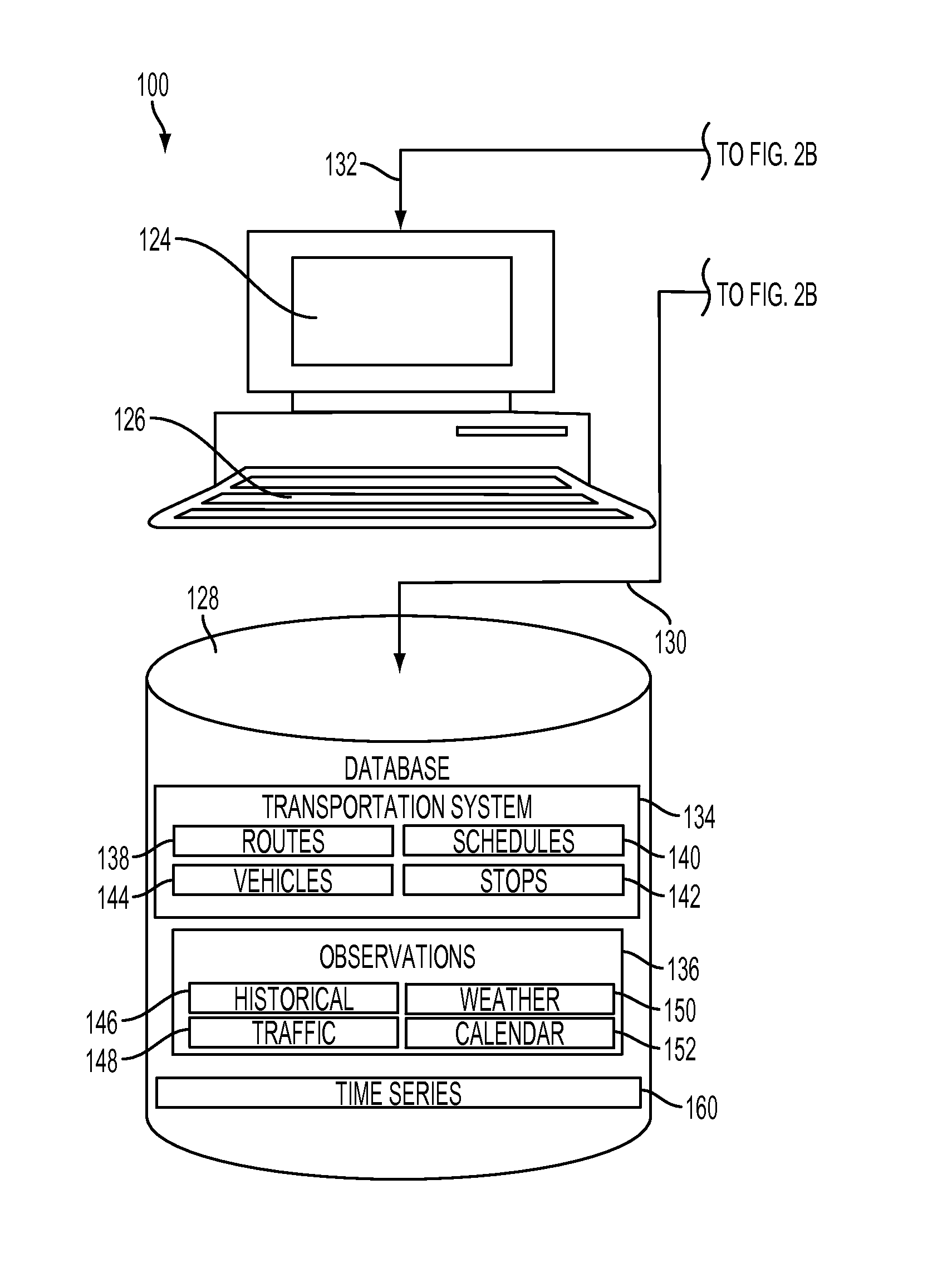

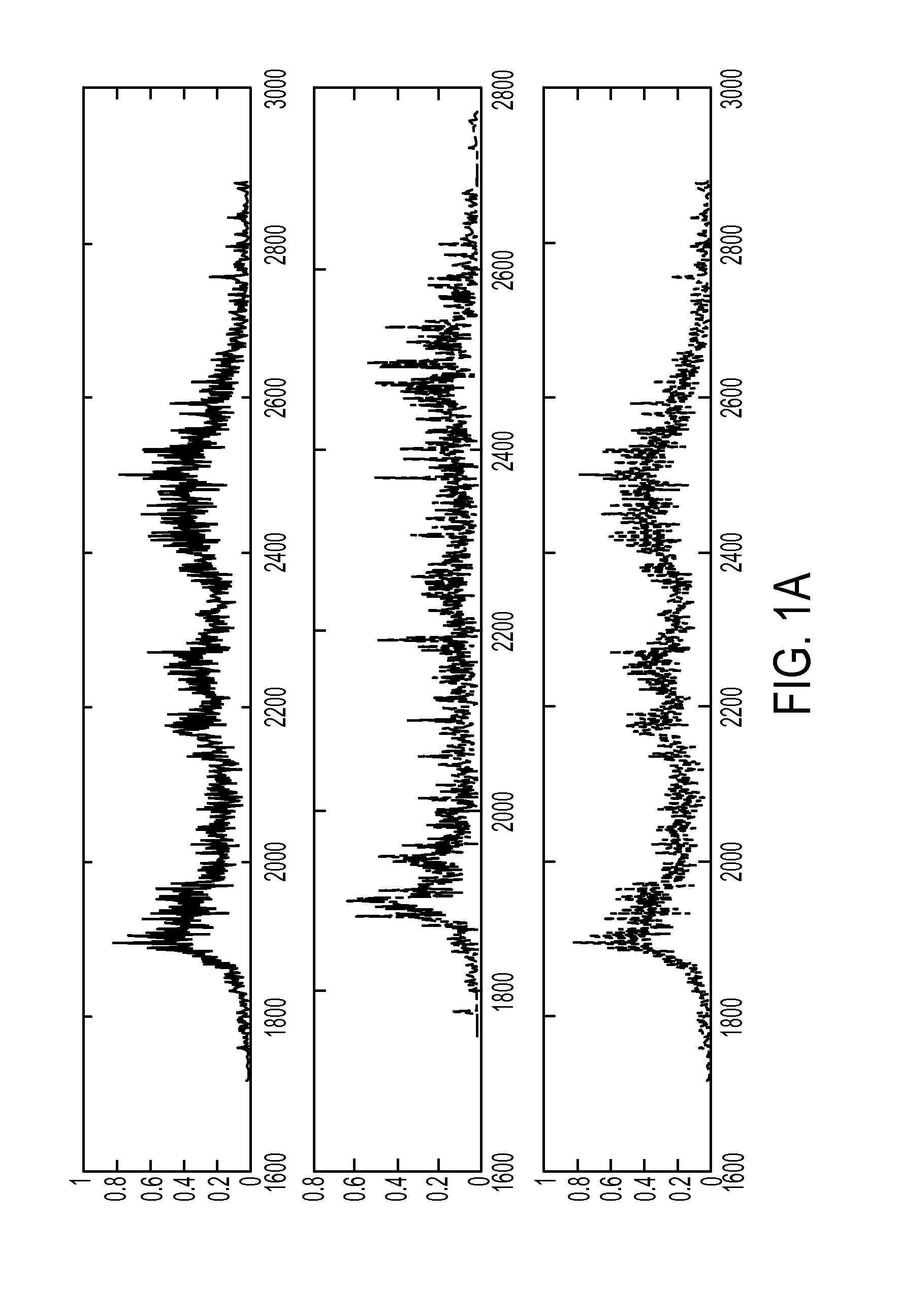

System and method for multi-task learning for prediction of demand on a system

Status: Inactive | Publication: US20150186792A1 | Tags: Road vehicles traffic control; Digital computer details; Traffic network; Engineering

A multi-task learning system and method for predicting travel demand on an associated transportation network are provided. Observations corresponding to the associated transportation network are collected and a set of time series corresponding to travel demand are generated. Clusters of time series are then formed and for each cluster, multi-task learning is applied to generate a prediction model. Travel demand on a selected segment of the associated transportation network corresponding to at least one of the set of time series is then predicted in accordance with the generated prediction model.

Owner:XEROX CORP

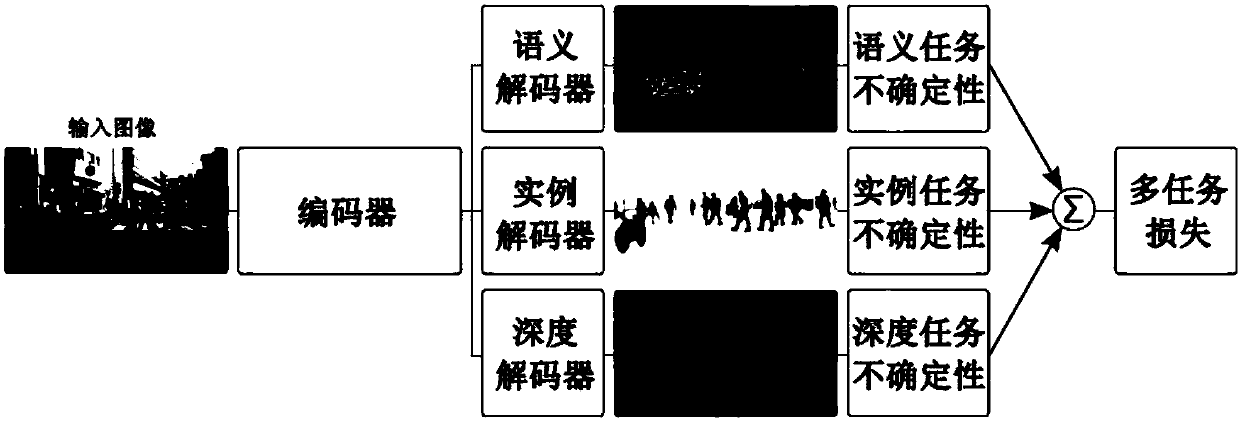

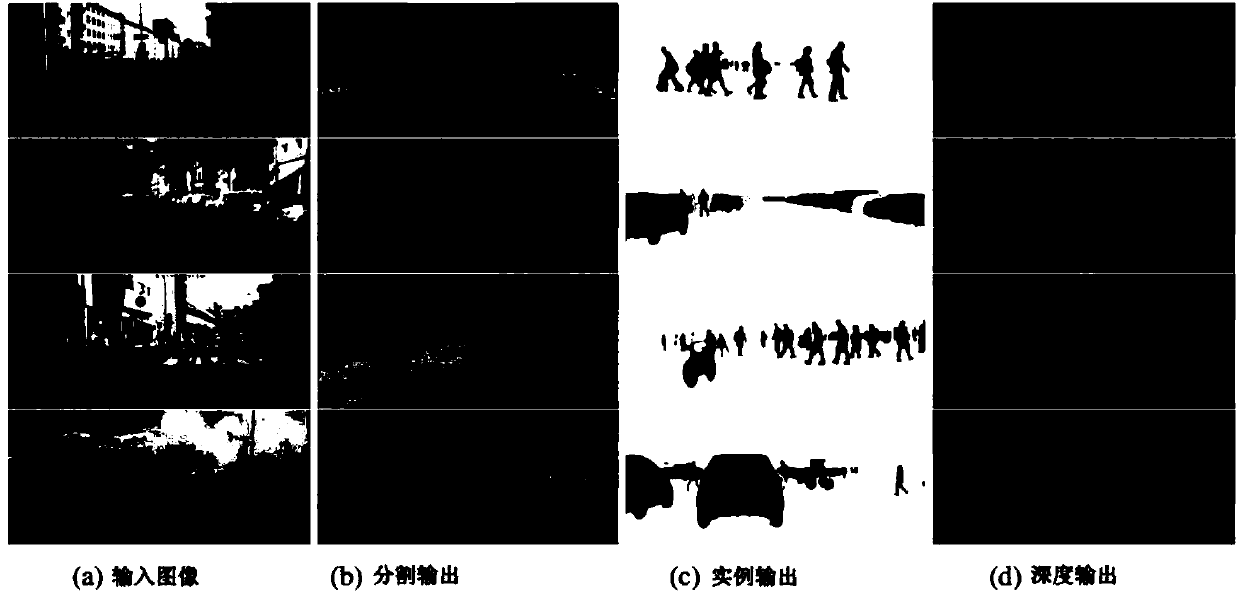

Scene understanding method based on multi-task learning

Status: Inactive | Publication: CN107451620A | Tags: Character and pattern recognition; Neural learning methods; Probit model; Gaussian function

The invention provides a scene understanding method based on multi-task learning. It comprises multi-task learning with homoscedastic (task-dependent) uncertainty, a multi-task likelihood function, and a scene understanding model. The method first computes a weighted linear sum of the individual task losses and learns the optimal task weights. It then derives a multi-task loss function from a probabilistic model in which the likelihood is a Gaussian whose mean is the model output. Finally, it models pixel-level regression and classification outputs, including semantic segmentation, instance segmentation and depth regression. Because the scene understanding model learns the multi-task weights, it has advantages over models that train each task independently. It reduces computation, increases learning efficiency and prediction accuracy, and can run in real time.

Owner:SHENZHEN WEITESHI TECH
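
The weighting described here uses a Gaussian likelihood per task with a learned noise parameter. This corresponds to the homoscedastic-uncertainty loss weighting commonly used in multi-task learning. Assuming that formulation, each task loss L_i is scaled by 1/(2·σ_i²), and a log σ_i term stops σ_i from growing without bound. The sketch parameterizes s_i = log σ_i² for numerical stability. It illustrates the general technique and is not the patent's exact loss. The function names are hypothetical.

```python
import math
from typing import Sequence


def uncertainty_weighted_loss(task_losses: Sequence[float],
                              log_vars: Sequence[float]) -> float:
    """Combine per-task losses using learned homoscedastic uncertainty.

    total = sum_i 0.5 * exp(-s_i) * L_i + 0.5 * s_i,  with s_i = log(sigma_i^2).
    A noisy task (large s_i) is down-weighted but pays a 0.5 * s_i penalty.
    """
    return sum(0.5 * math.exp(-s) * loss + 0.5 * s
               for loss, s in zip(task_losses, log_vars))


def grad_log_var(loss: float, s: float) -> float:
    """d(total)/d(s_i); it is zero at s_i = log(L_i), the optimal weighting."""
    return -0.5 * math.exp(-s) * loss + 0.5
```

With losses `[2.0, 0.5]` and `log_vars = [0.0, 0.0]`, the total is 1.25. Plain gradient descent on s for a task whose loss stays at 2.0 (`s -= 0.5 * grad_log_var(2.0, s)`) converges to s = log 2. In a real model the s_i are trained jointly with the network weights.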

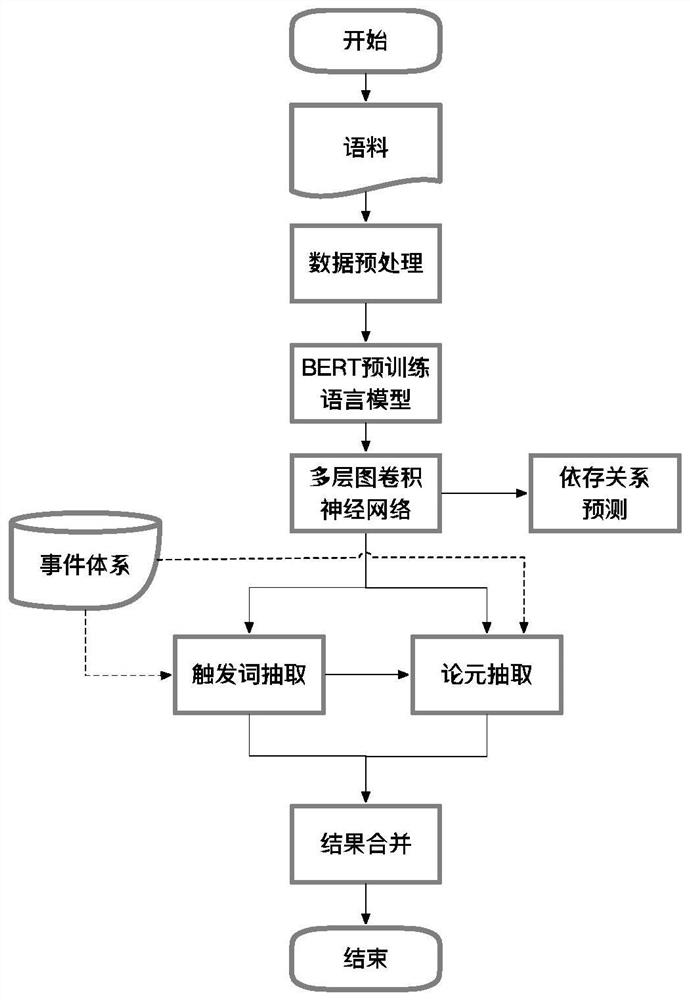

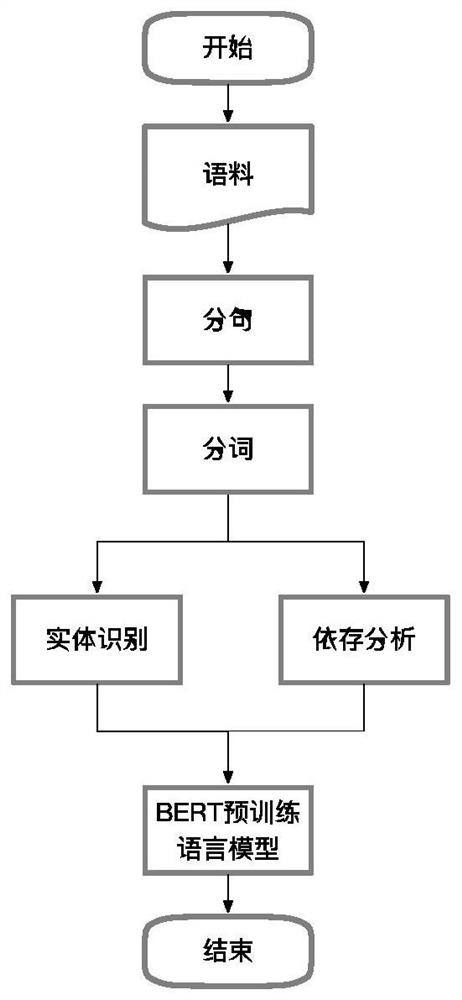

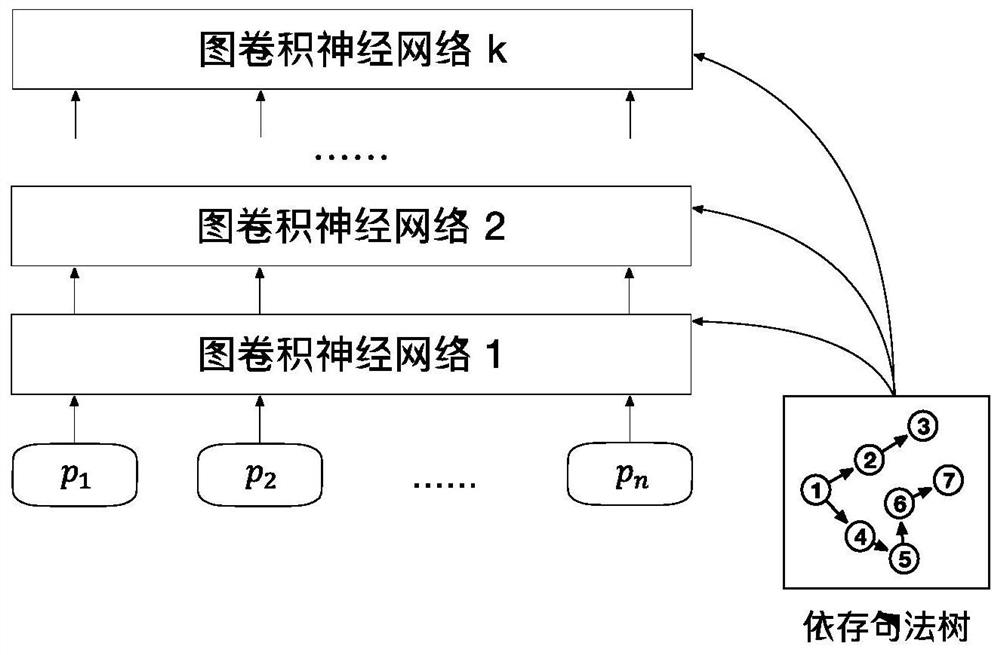

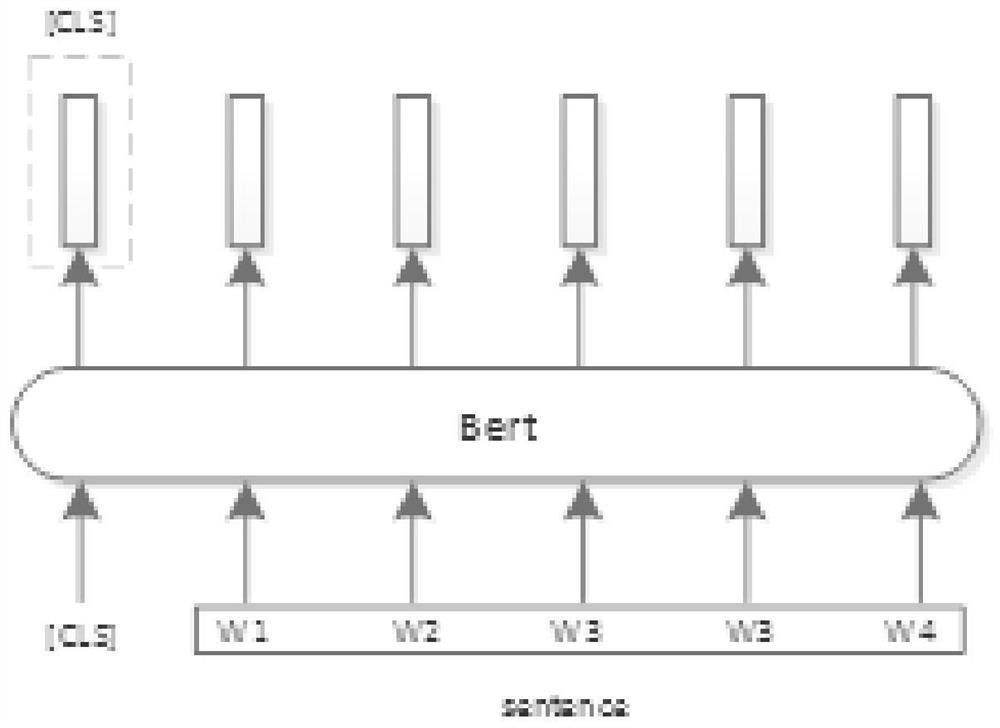

Event extraction method and system fusing dependency information and pre-trained language model

Status: Active | Publication: CN111897908A | Tags: Improve performance; Natural language data processing; Neural architectures; Algorithm; Multi-task learning

The invention provides an event extraction method and system that fuse dependency information with a pre-trained language model. The method takes the dependency syntax tree of a sentence as input and learns dependency syntax features with a graph convolutional neural network. It adds a dependency relation prediction task so that the more important dependency relations are captured through multi-task learning. Finally, it enhances the underlying syntactic representation with the BERT pre-trained language model to complete event extraction for Chinese sentences. This improves the performance of both trigger word extraction and argument extraction in the event extraction task.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
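
A single GCN layer over a dependency tree can illustrate the graph convolution step. The sketch uses the common symmetric normalization Â = D^(-1/2)(A + I)D^(-1/2) and treats dependency arcs as undirected edges. The patent does not say which GCN variant, edge directions or weights it uses. The toy sentence, features, identity weights and the names `gcn_layer` and `matmul` are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(n_words: int, arcs: Sequence[Tuple[int, int]],
              features: Matrix, weight: Matrix) -> Matrix:
    """One GCN layer, ReLU(D^-1/2 (A + I) D^-1/2 . H . W), on a dependency graph.

    arcs are (head, dependent) word-index pairs from a dependency parser.
    """
    # Adjacency with self-loops; arcs are symmetrized (treated as undirected).
    adj = [[1.0 if i == j else 0.0 for j in range(n_words)] for i in range(n_words)]
    for head, dep in arcs:
        adj[head][dep] = adj[dep][head] = 1.0
    deg = [sum(row) for row in adj]
    norm = [[adj[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n_words)]
            for i in range(n_words)]
    hidden = matmul(matmul(norm, features), weight)
    return [[max(0.0, x) for x in row] for row in hidden]
```

Take a three-word sentence whose middle word heads the other two, `arcs = [(1, 0), (1, 2)]`, with identity features and weights. Each output row then mixes a word with its syntactic neighbours only. Words 0 and 2 are not directly connected, so they do not exchange information in a single layer.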

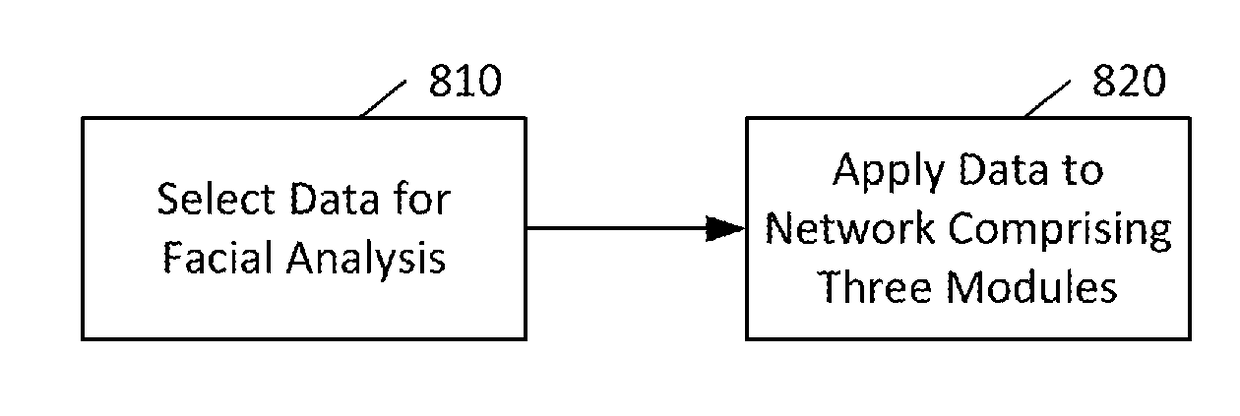

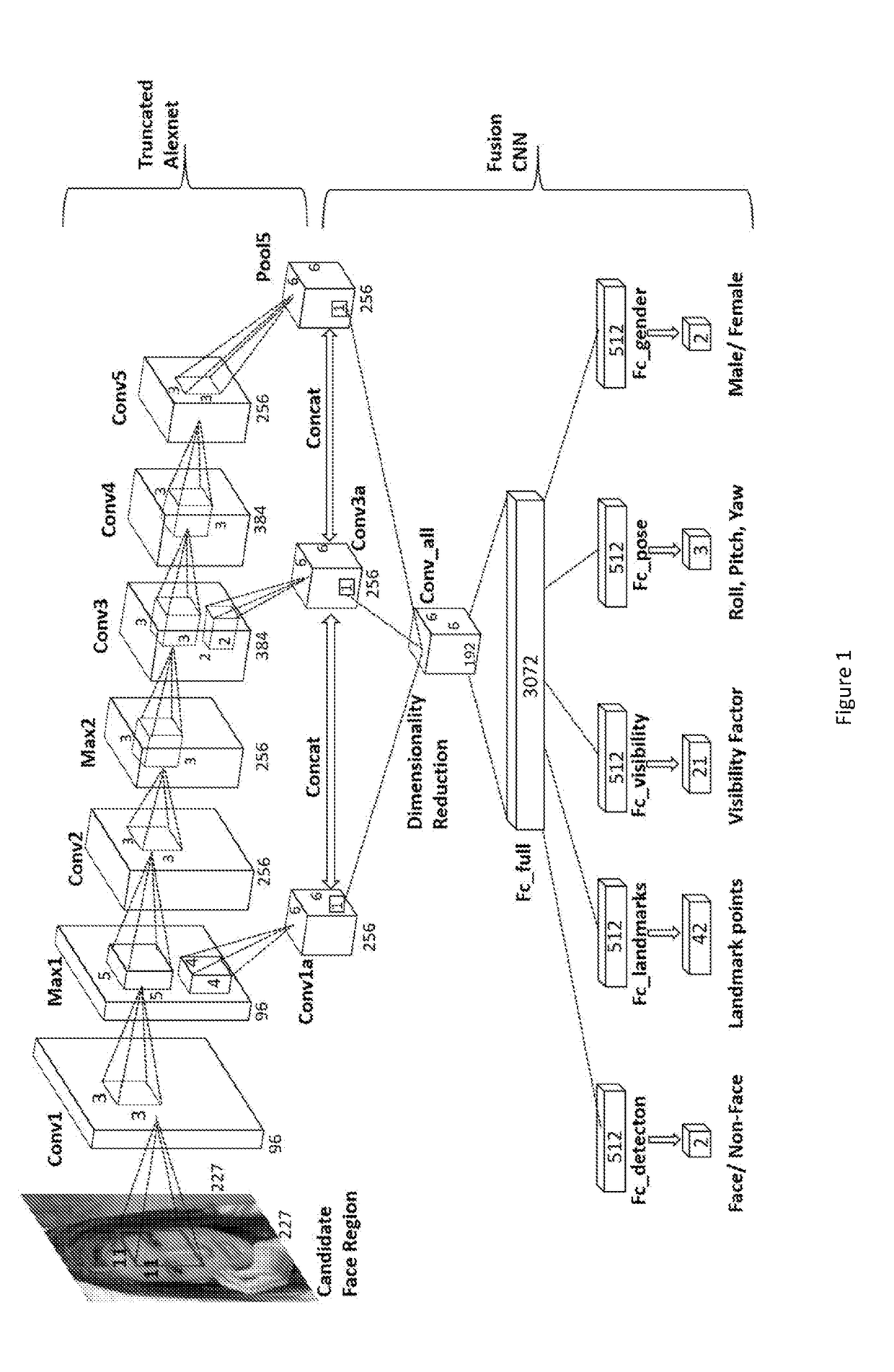

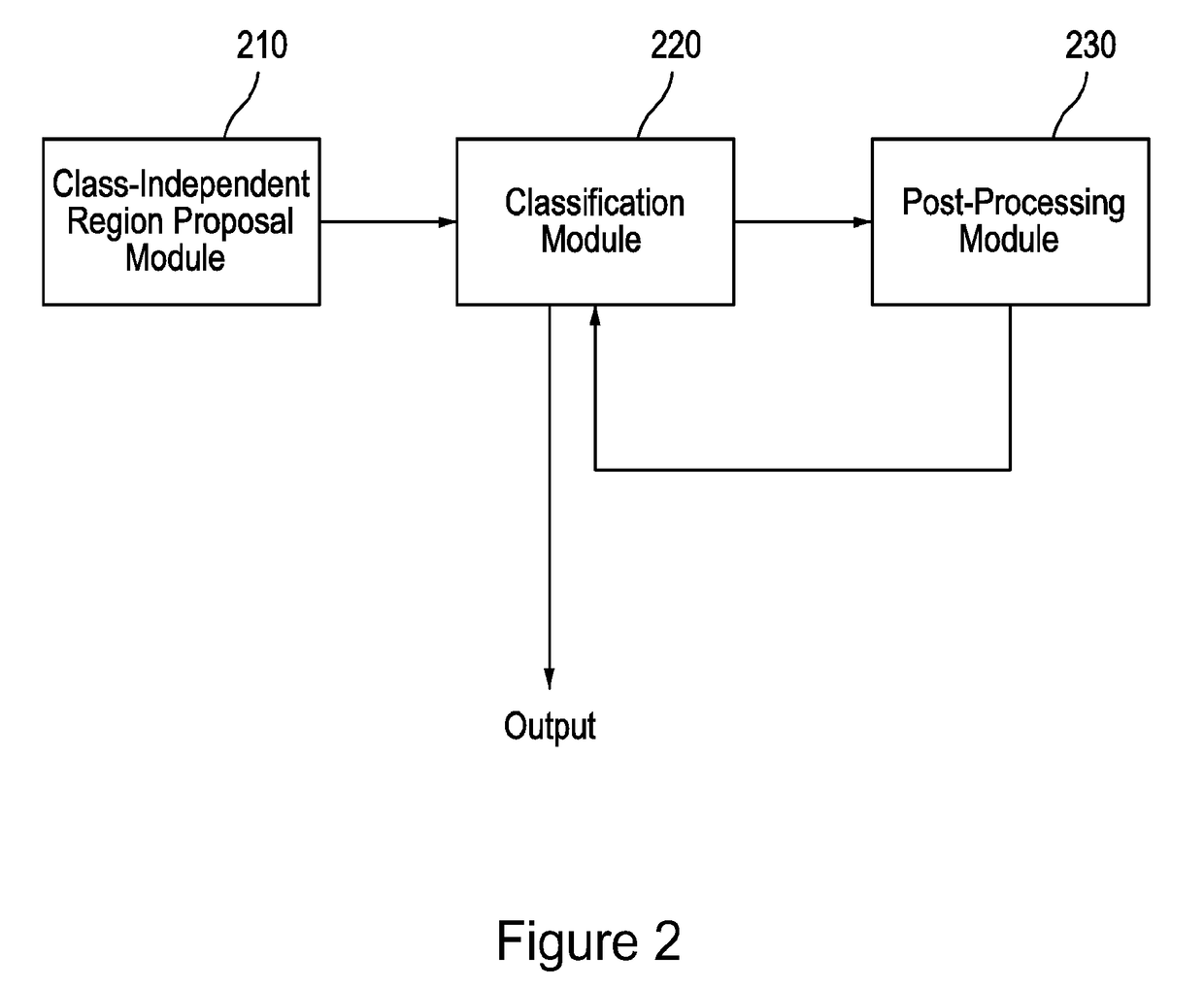

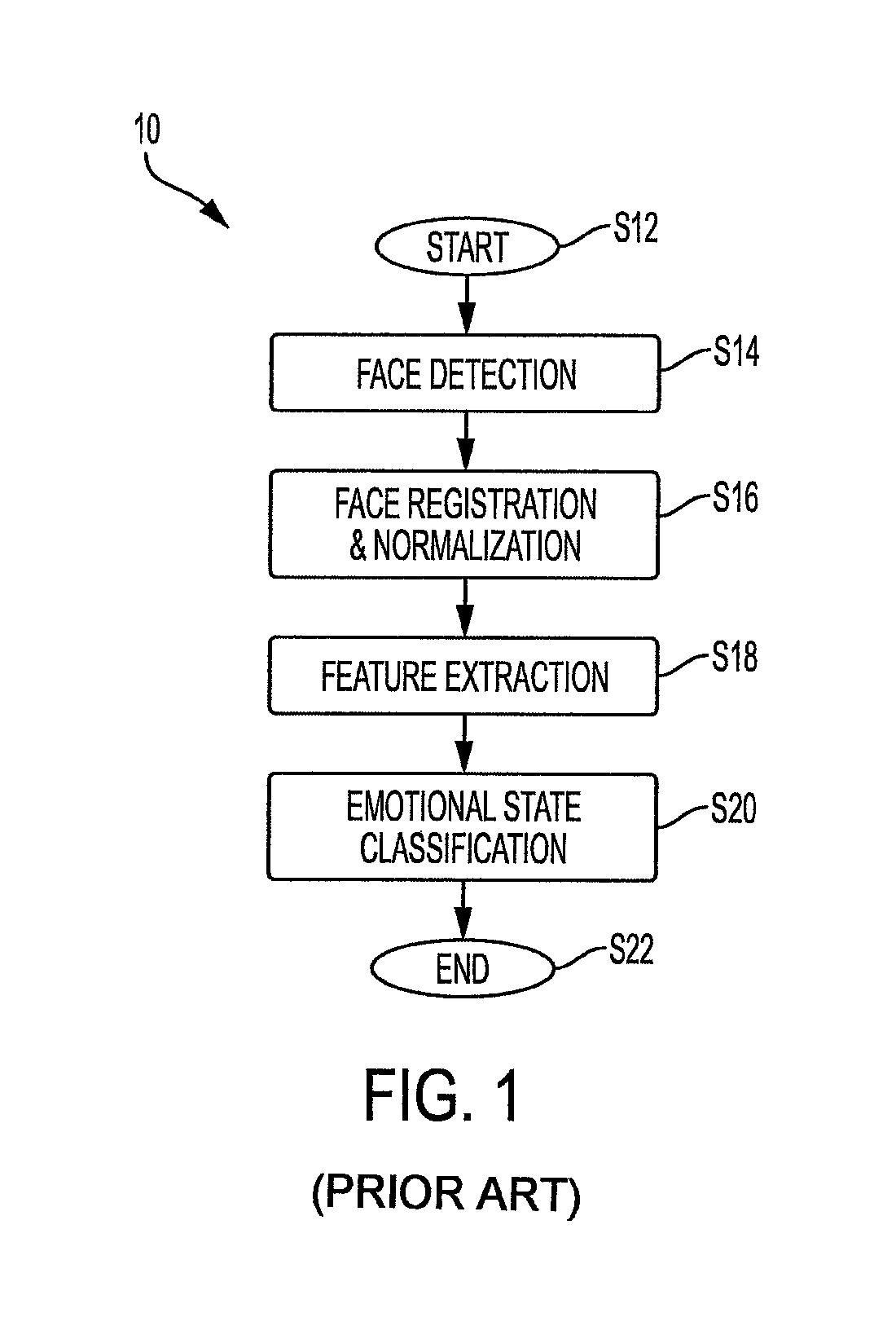

Deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition

Various image processing tasks may benefit from the application of deep convolutional neural networks. For example, a deep multi-task learning framework may assist face detection, particularly when combined with landmark localization, pose estimation and gender recognition. An apparatus can include a first module, of at least three modules, configured to generate class-independent region proposals that provide a region. The apparatus can also include a second module of the at least three modules, configured to classify the region as face or non-face using a multi-task analysis. The apparatus can further include a third module configured to perform post-processing on the classified region.

Owner:UNIV OF MARYLAND

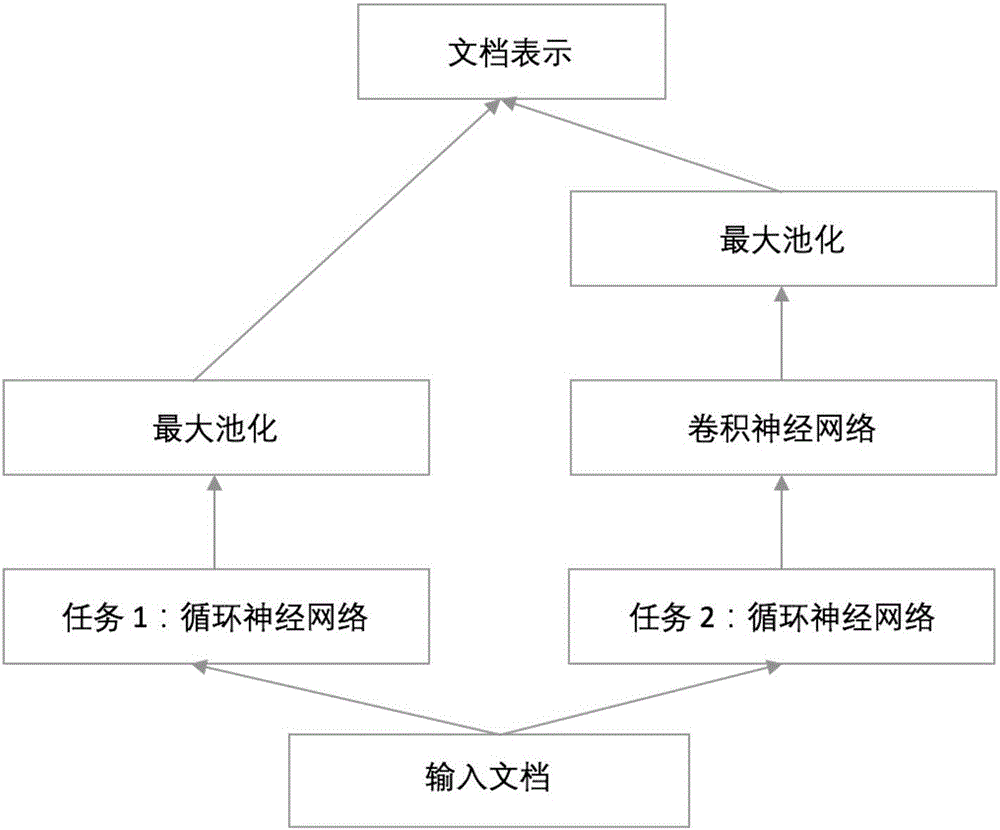

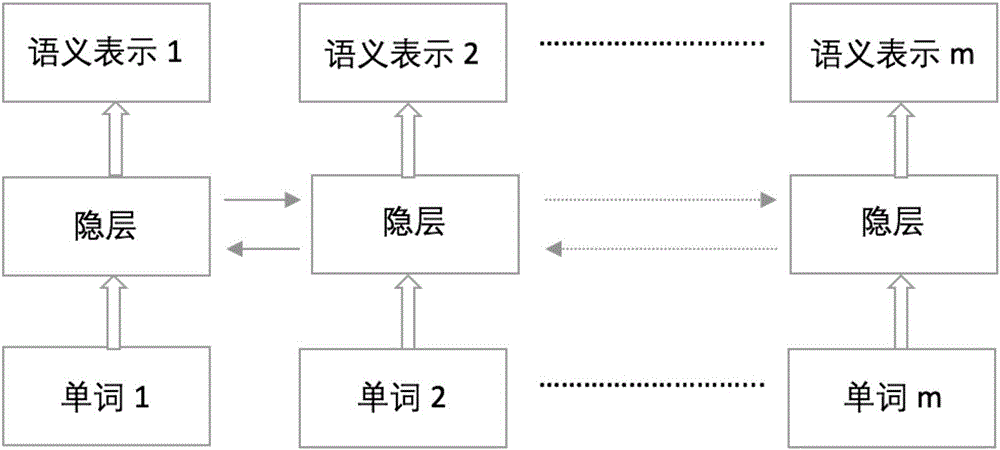

Text classification method based on deep multi-task learning

Status: Inactive | Publication: CN106777011A | Tags: Improve classification effect; Solve problems; Special data processing applications; Neural learning methods; Classification methods; Document preparation

The invention provides a text classification method based on deep multi-task learning. The method combines a recurrent neural network trained on other tasks with the learning ability of a convolutional neural network to obtain an additional document representation. In effect, a large amount of external information is introduced, which extends the semantic representation of the document and effectively alleviates the problem of insufficient training data. The method differs from traditional multi-task learning in that the convolutional neural network extracts features from the bottom-layer features of the auxiliary task. Features learned on other tasks can therefore be transferred effectively to the current task, improving text classification performance.

Owner:SUN YAT SEN UNIV

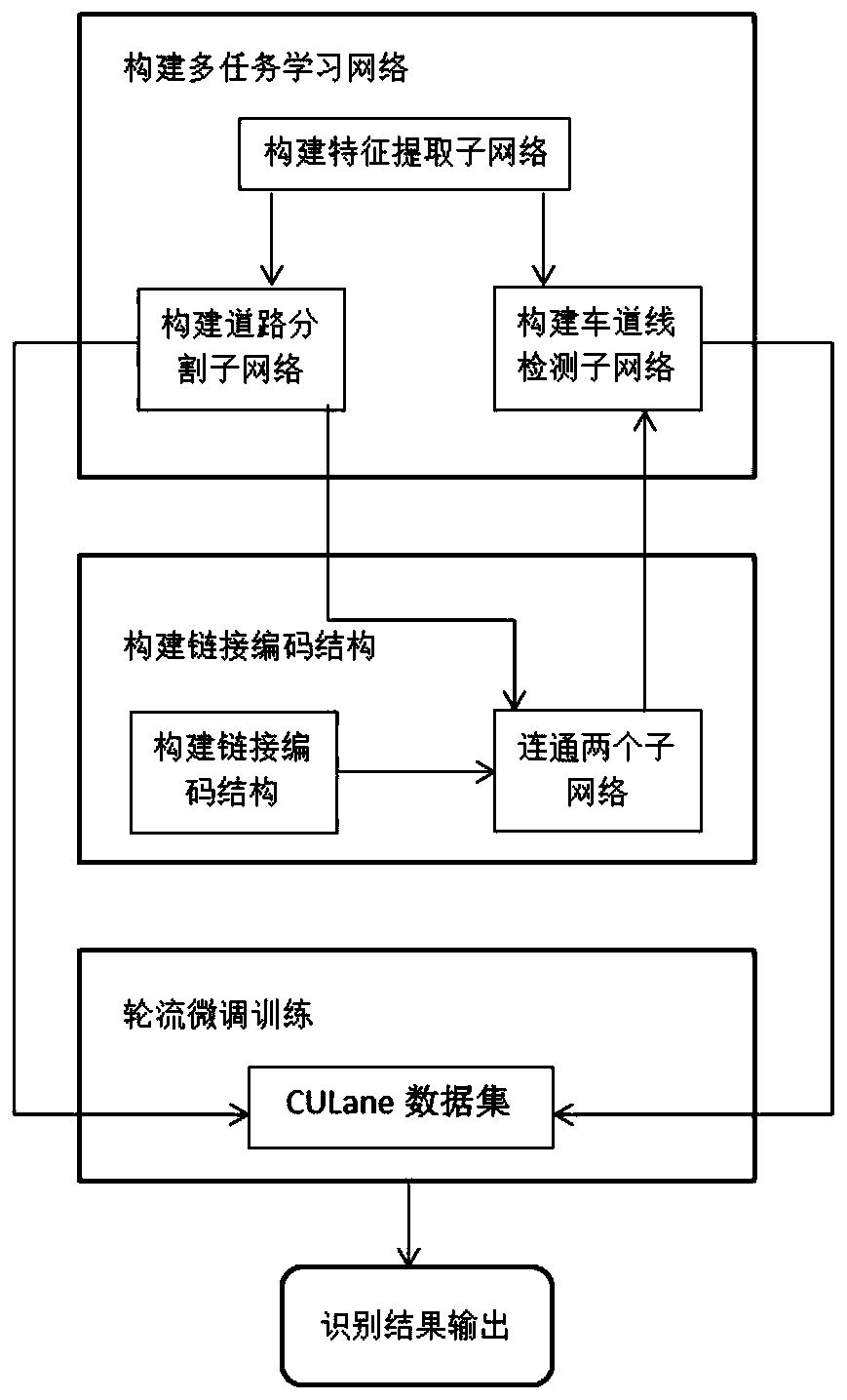

Lane line multi-task learning detection method based on road segmentation

Status: Active | Publication: CN110414387A | Tags: Detection scale diversification; Improve robustness; Character and pattern recognition; Neural architectures; Pattern recognition; Multi-task learning

The invention relates to the technical field of road traffic image recognition and discloses a multi-task learning lane line detection method based on road segmentation. The method comprises the following steps. A multi-task learning network is constructed that recognizes and processes an input road image and outputs road segmentation data and lane line detection data. A link coding structure is constructed to connect the two sub-networks. The two sub-networks are then fine-tuned alternately, a cross-entropy loss function is used to correct and improve the precision of the lane line detection data, and the corrected lane line detection data are output. The method diversifies the detection scale, improves robustness and detection precision in complex scenes, and runs fast. Connecting the two sub-networks through the link coding structure, with hard parameter sharing between them, increases the amount of information captured by the feature maps.

Owner:WUHAN UNIV OF TECH

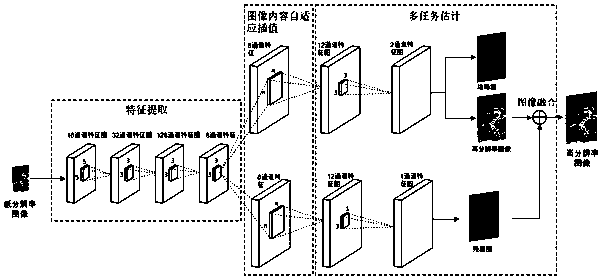

An image super-resolution algorithm based on context-dependent multi-task depth learning

Status: Active | Publication: CN109389552A | Tags: Simple structure; Ideal structure information; Geometric image transformation; Multi-task learning; High resolution image

The invention provides an image super-resolution algorithm based on context-related multi-task deep learning. Three deep neural networks are designed to capture the basic information, the main edge information and the fine detail information of the image. They are then trained within a multi-task learning framework that connects and unifies them contextually. Given an input low-resolution image, the trained networks output a basic image, a main edge image and a micro-detail image, and the final high-resolution image is fused from the basic image and the micro-detail image. The algorithm can recover high-resolution (HR) images from static low-resolution (LR) images alone. Moreover, the structure of the recovered HR image is well preserved, and the structural information of the ideal HR image is recovered as far as possible.

Owner:SUN YAT SEN UNIV

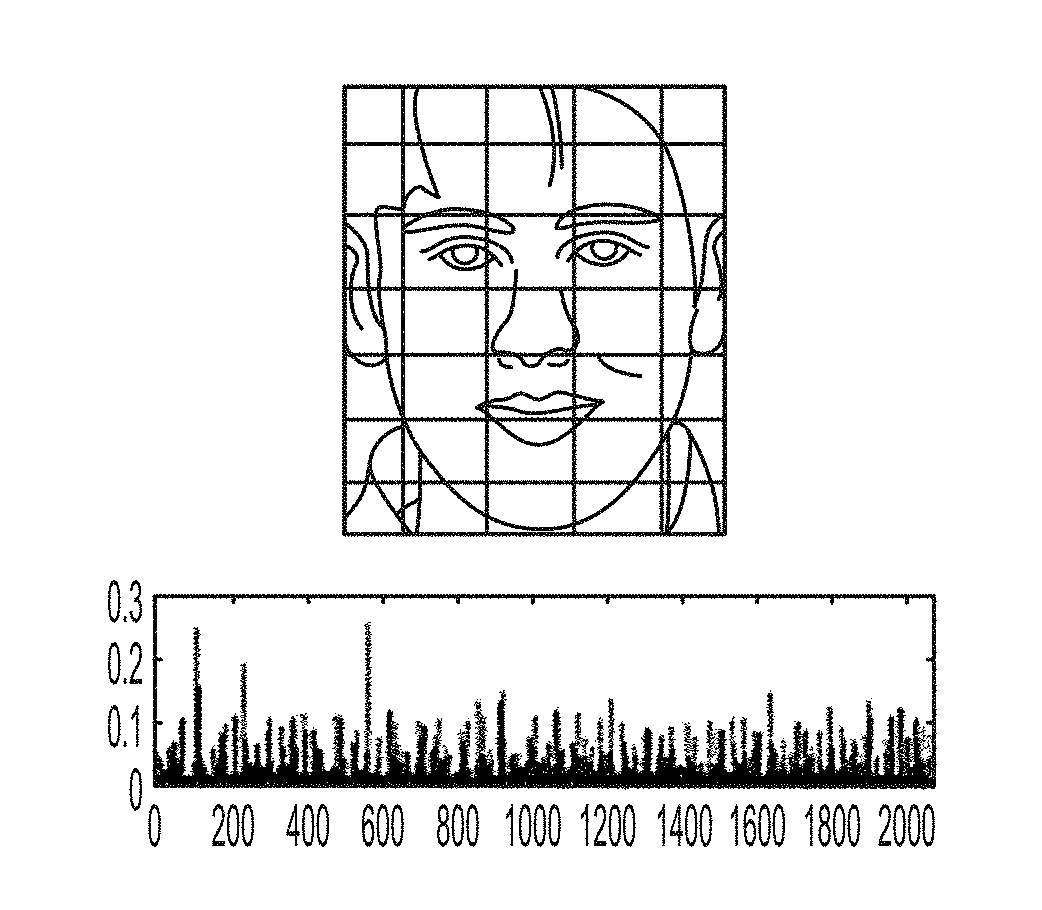

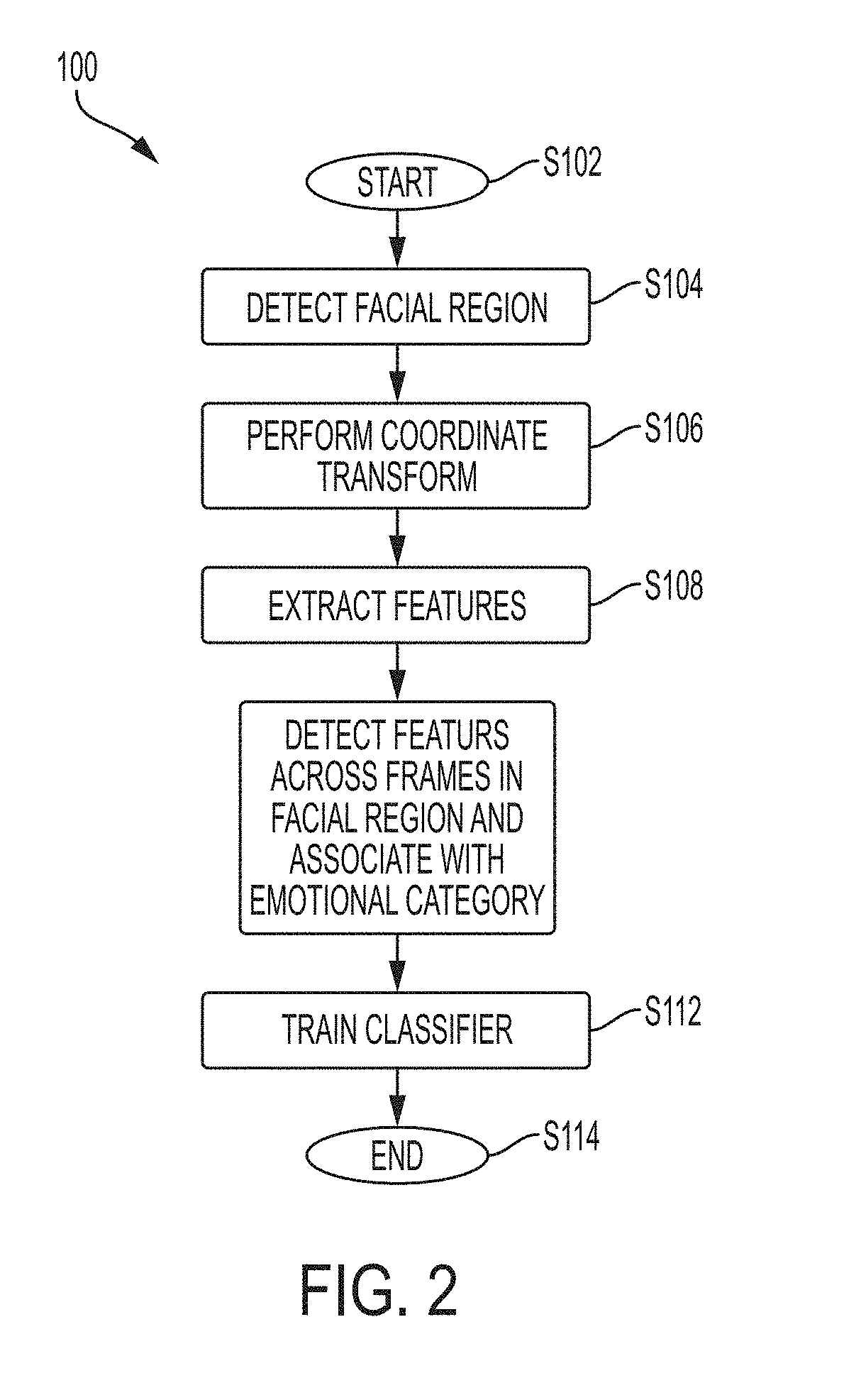

Learning emotional states using personalized calibration tasks

A method for determining an emotional state of a subject taking an assessment. The method includes eliciting predicted facial expressions from a subject administered questions each intended to elicit a certain facial expression that conveys a baseline characteristic of the subject; receiving a video sequence capturing the subject answering the questions; determining an observable physical behavior experienced by the subject across a series of frames corresponding to the sample question; associating the observed behavior with the emotional state that corresponds with the facial expression; and training a classifier using the associations. The method includes receiving a second video sequence capturing the subject during an assessment and applying features extracted from the second image data to the classifier for determining the emotional state of the subject in response to an assessment item administered during the assessment.

Owner:XEROX CORP

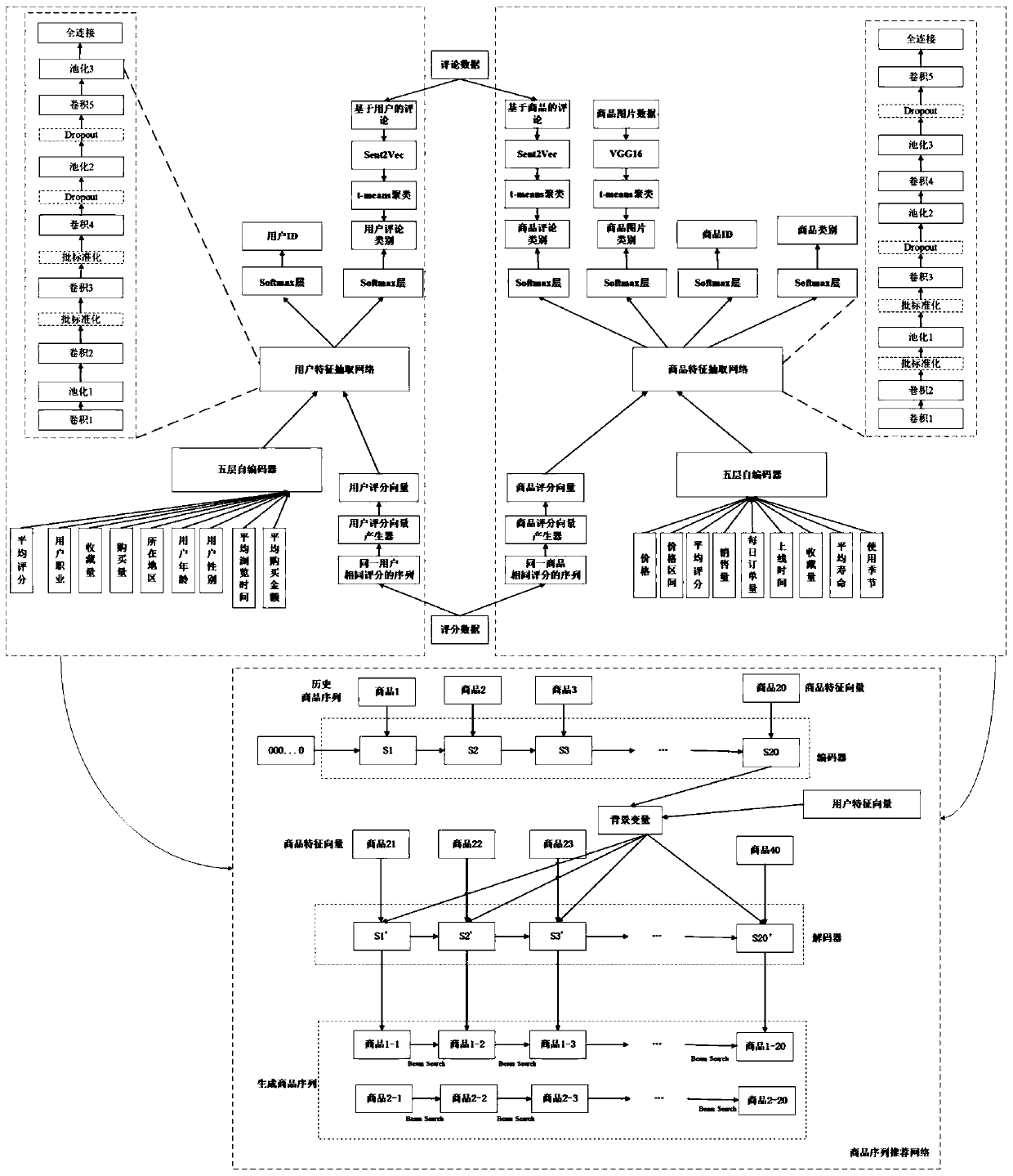

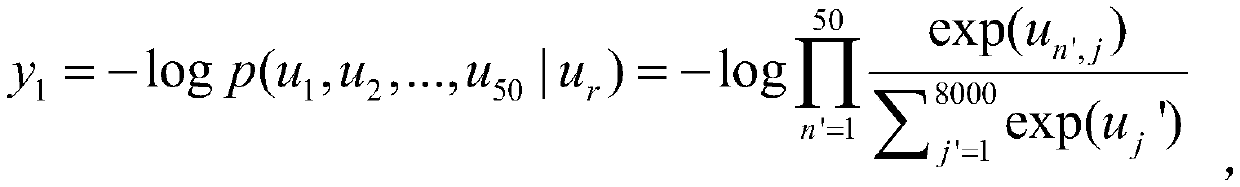

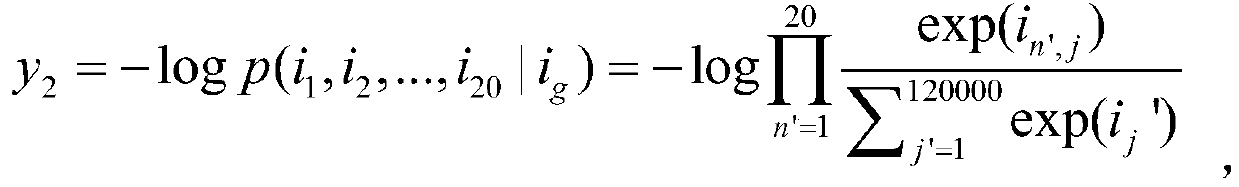

User dynamic preference oriented commodity sequence personalized recommendation method

Status: Active | Publication: CN110458627A | Tags: Easy to use; Score full use; Advertisements; Character and pattern recognition; Feature vector; Personalization

The invention discloses a personalized commodity sequence recommendation method oriented to users' dynamic preferences. The method comprises the following steps:

- extracting the sequence of commodities that the same user scored similarly, to construct a commodity score vector;
- extracting the sequence of users who gave similar scores to the same commodity, to obtain a user score vector;
- combining user personal information, basic commodity attribute information, user and item reviews, and commodity pictures;
- extracting user and commodity features based on multi-task learning;
- taking a user's feature vector and the feature vectors of that user's historical commodity sequence as input, and generating a commodity sequence by training an encoder-decoder;
- learning to recommend the optimal commodity sequence accurately in combination with a search strategy.

Working from multi-modal user-commodity data, the invention thoroughly extracts and fuses user and commodity features, realizes personalized recommendation of commodity sequences according to user preferences, and improves the user experience.

Owner:SOUTH CHINA NORMAL UNIVERSITY +1

Company announcement processing method for multi-task learning and server

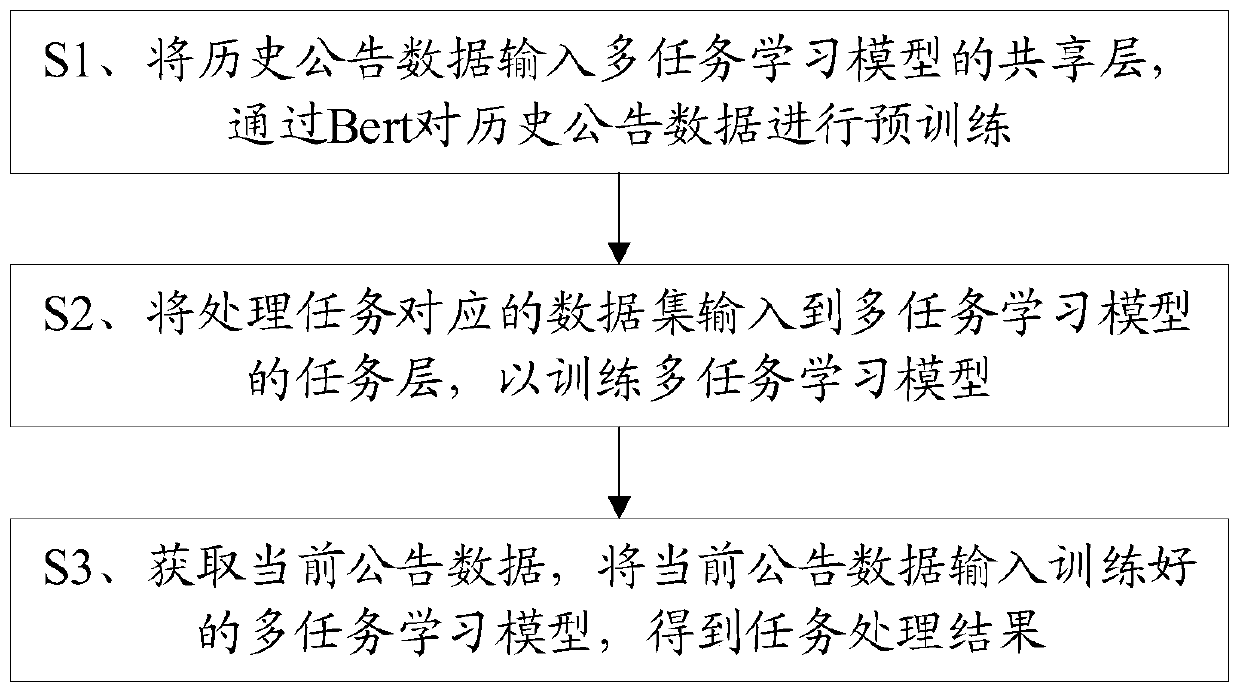

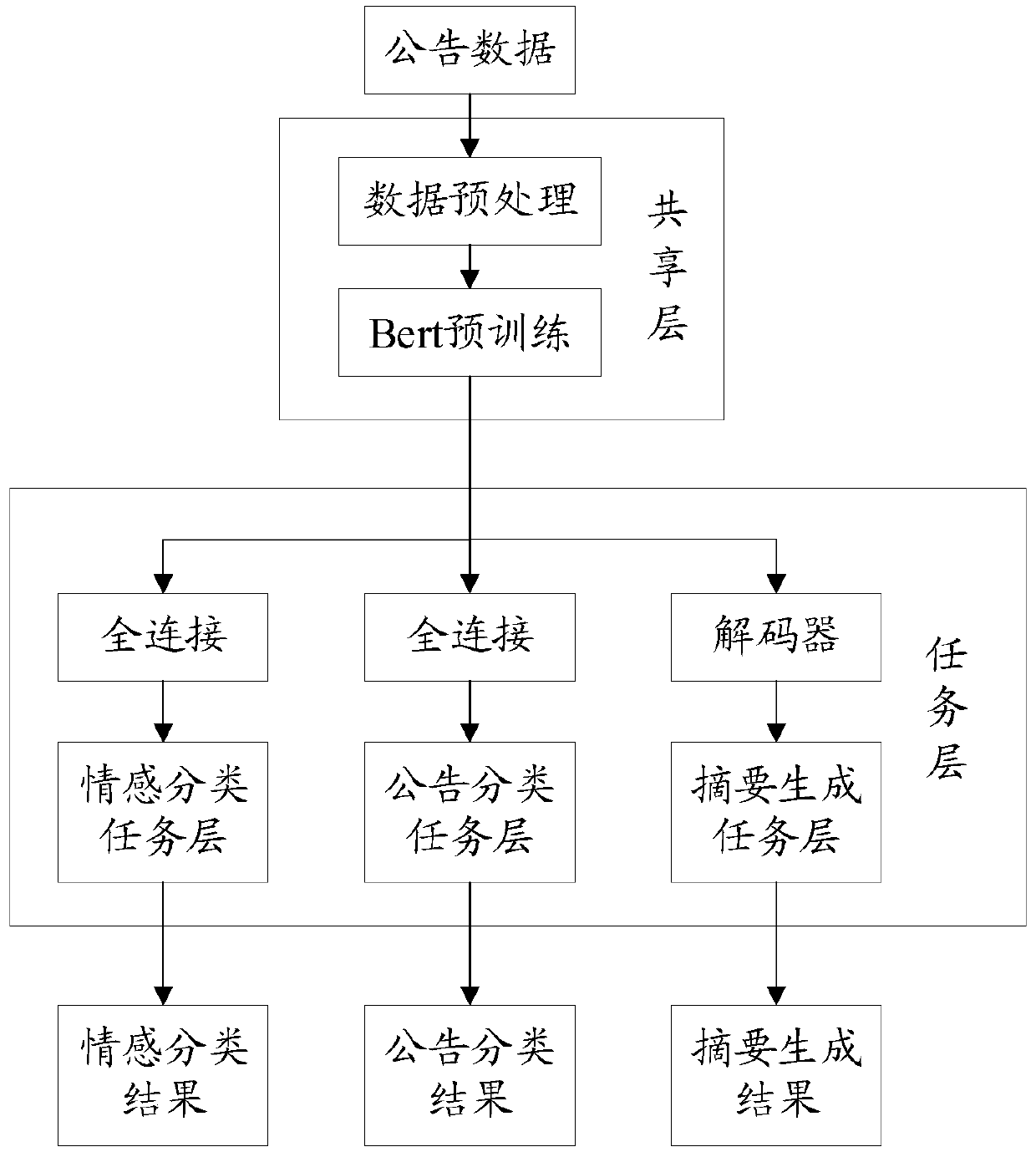

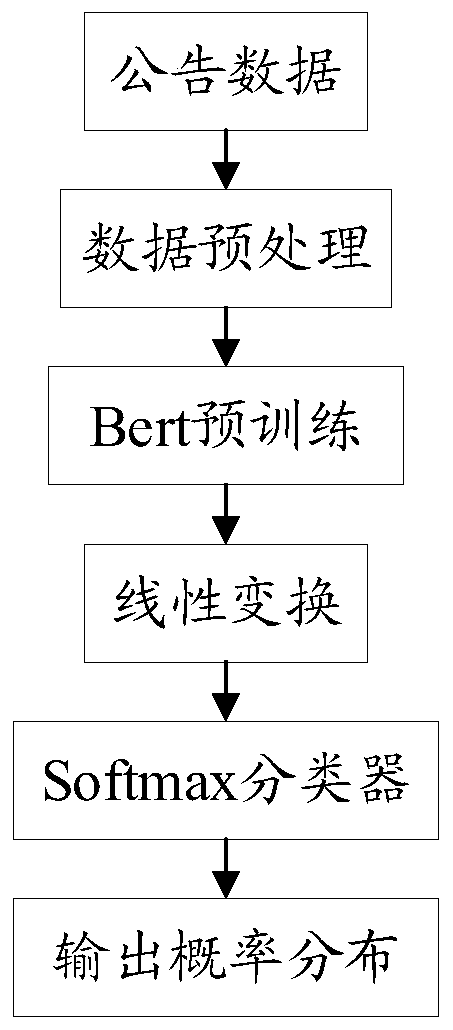

Status: Active | Publication: CN110222188A | Tags: Reduce learning; Improve learning efficiency; Semantic analysis; Energy efficient computing; Data set; Multi-task learning

The invention discloses a company announcement processing method based on multi-task learning, and a server. The method comprises three steps. First, historical announcement data are input into the shared layer of a multi-task learning model and pre-trained with BERT. Second, the data set for each processing task is input into the task layer of the multi-task learning model to train the model. Third, current announcement data are obtained and input into the trained model to obtain a task processing result. Because the model is built by combining transfer learning with multi-task learning, the method offers high learning efficiency and strong generalization. It also offers low manual maintenance cost, high accuracy and recall across multiple tasks, and convenient engineering deployment and maintenance.

Owner:Shenzhen Sinan Data Service Co., Ltd. (深圳司南数据服务有限公司)

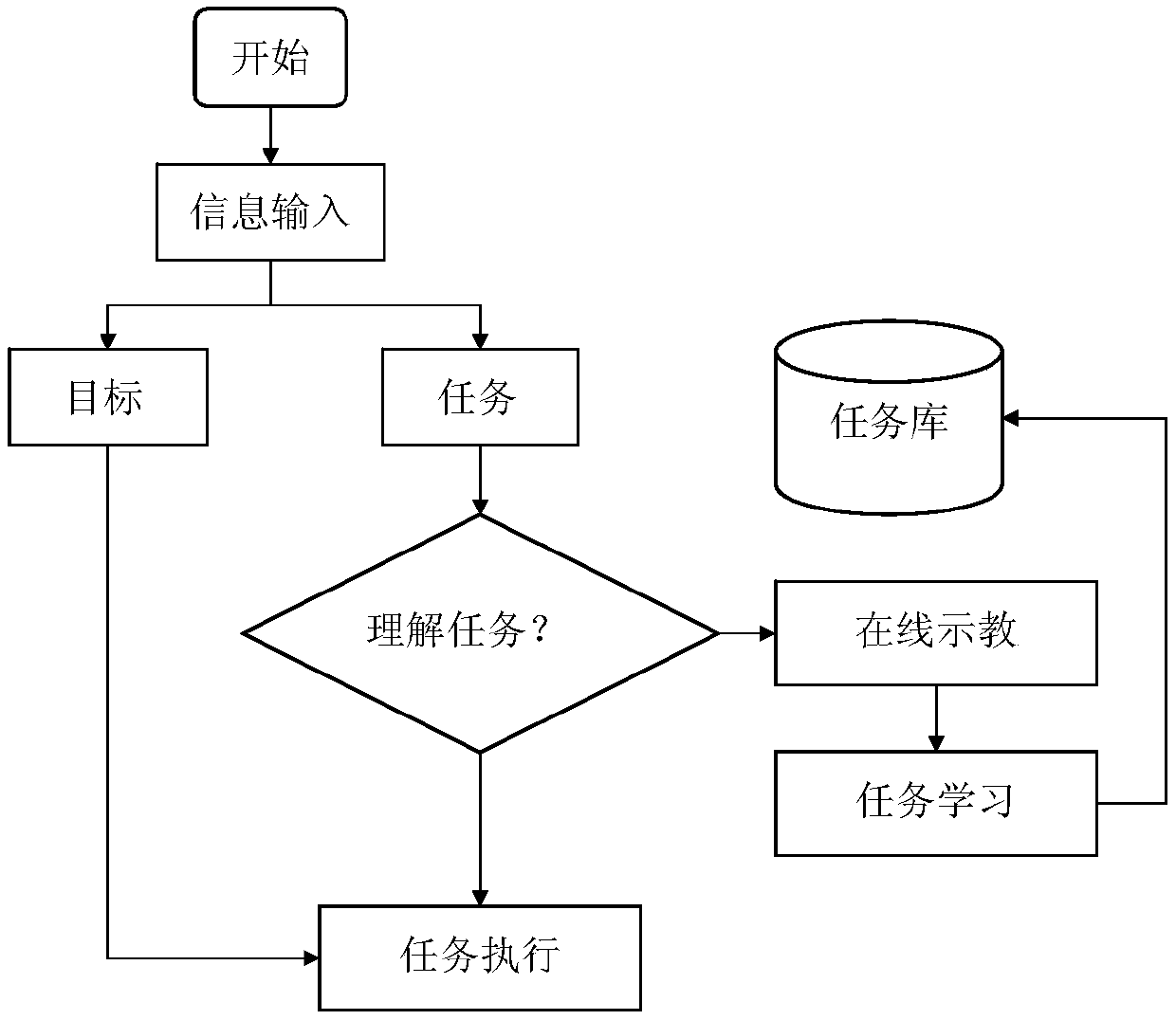

Robot self-directed learning method for human-machine cooperation

The invention provides a robot self-directed learning method for human-machine cooperation. The method comprises a target understanding method for human-machine cooperation and a task learning algorithm. It allows a robot to perceive a target rapidly with human help and to master new skills rapidly by imitating human actions. The method comprises the following steps: (1) at the target understanding level, designing a deep learning method for human-machine cooperation that introduces human experiential knowledge; and (2) at the task learning level, introducing human evaluation and feedback to optimize and strengthen the learning algorithm. By combining real-time human feedback and teaching, the robot can carry out self-learning and online learning effectively.

Owner:SOUTH CHINA UNIV OF TECH

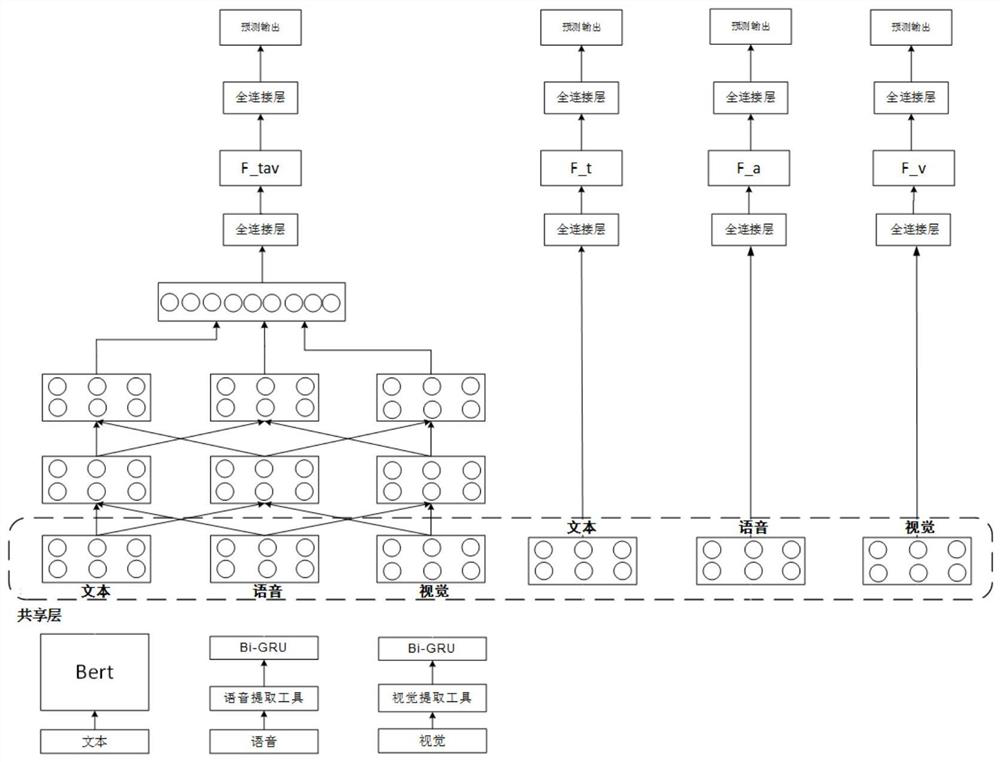

Multi-modal fusion emotion recognition system and method based on multi-task learning and attention mechanism and experimental evaluation method

Status: Inactive | Publication: CN113420807A | Tags: Accurate identification; Improve computing efficiency; Ensemble learning; Character and pattern recognition; Multi-task learning; Multi modal data

The invention relates to a multi-modal fusion emotion recognition system and method based on multi-task learning and an attention mechanism, together with an experimental evaluation method. It addresses a prior-art problem: multi-modal emotion recognition without a multi-modal fusion mechanism has low efficiency and accuracy. The invention belongs to the field of human-computer interaction. It provides a multi-modal fusion emotion recognition method that combines multi-task learning with an attention mechanism and is more widely applicable than single-modal emotion recognition. Multi-task learning introduces an auxiliary task so that the emotion representation of each modality is learned more efficiently. An interactive attention mechanism lets the emotion representations of different modalities learn from and complement each other, which improves multi-modal emotion recognition accuracy. In experiments on the multi-modal data sets CMU-MOSI and CMU-MOSEI, both accuracy and F1 score improved, along with the accuracy and efficiency of emotion information recognition.

Owner:HARBIN UNIV OF SCI & TECH

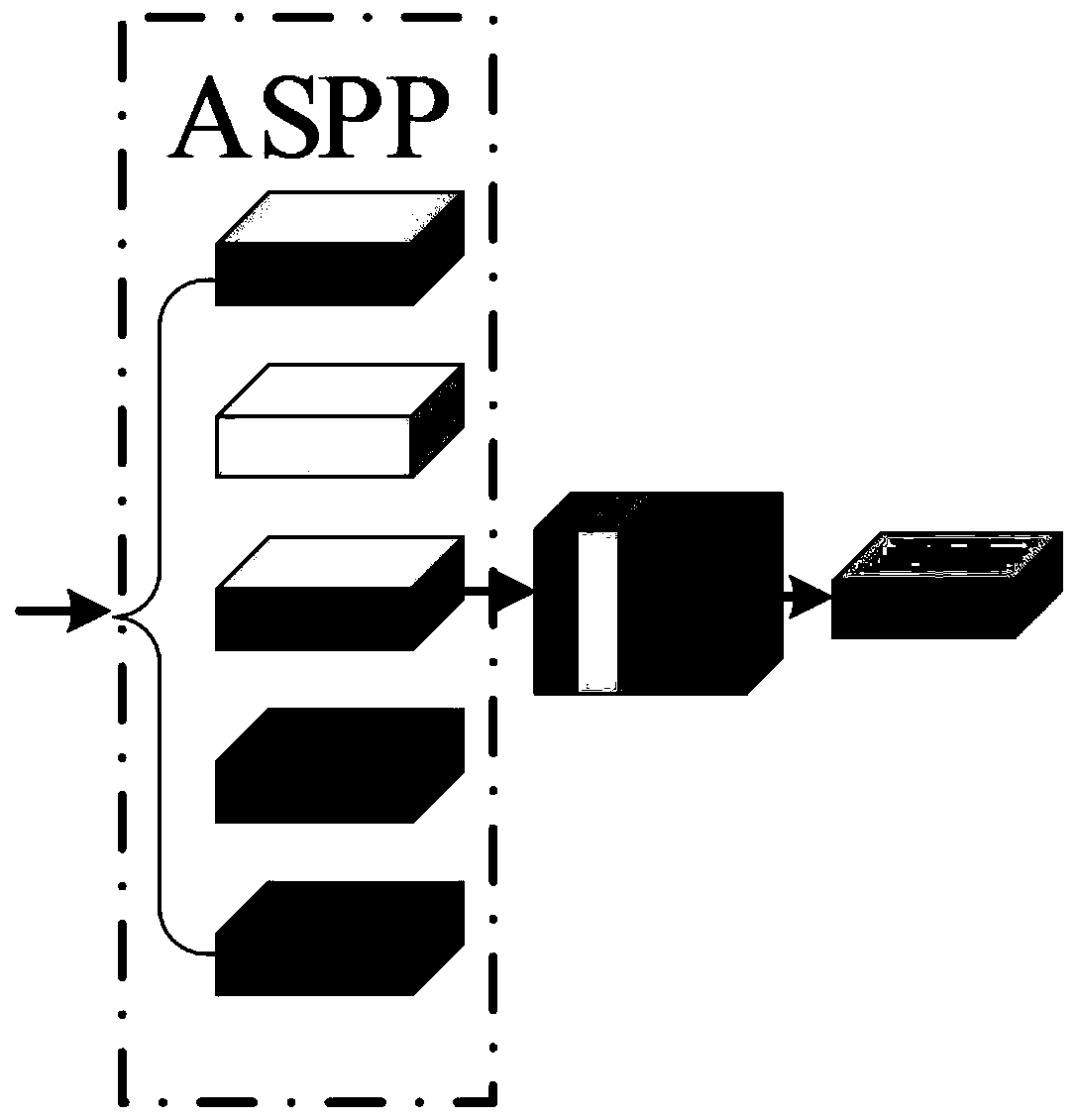

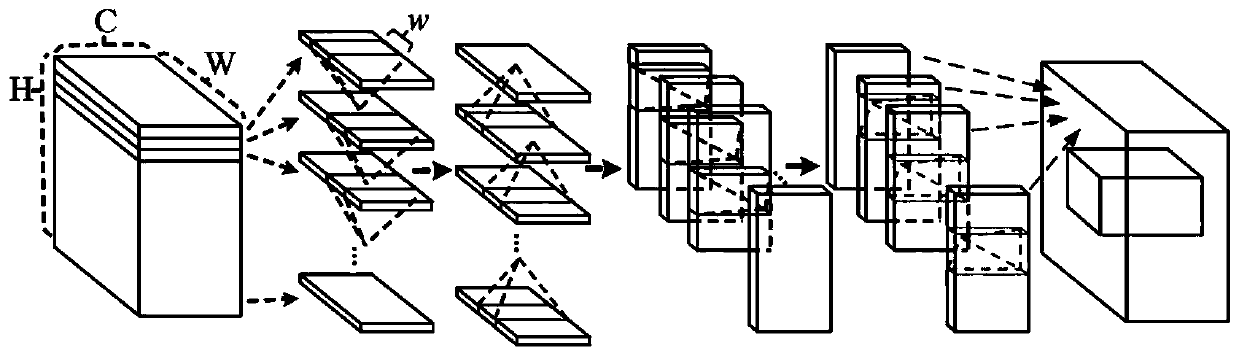

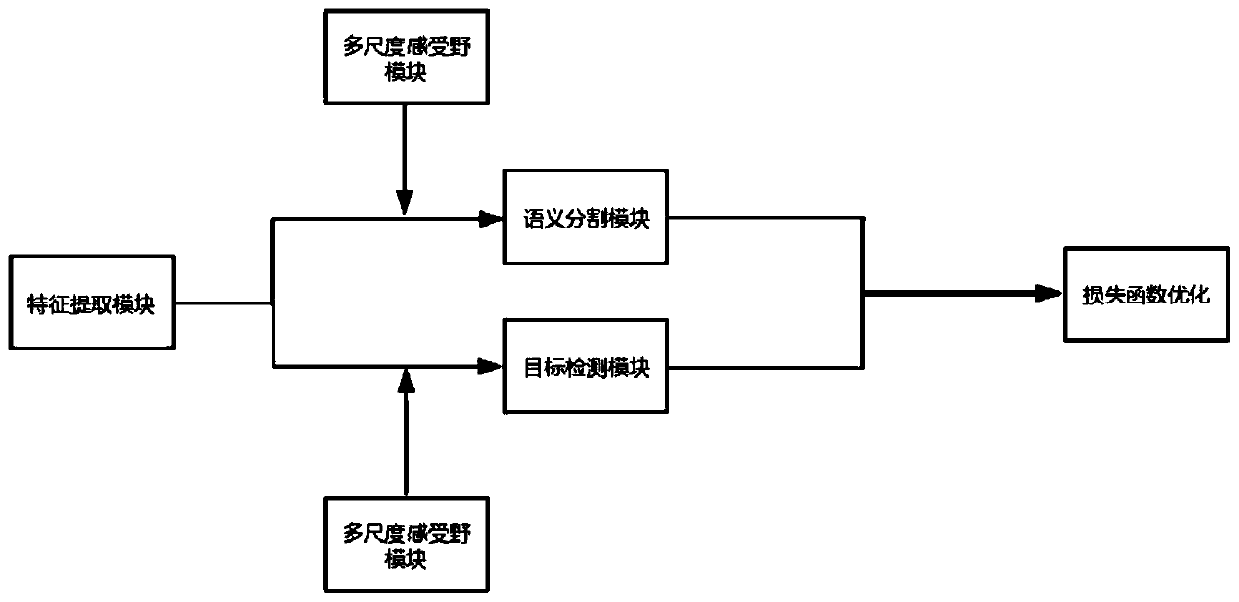

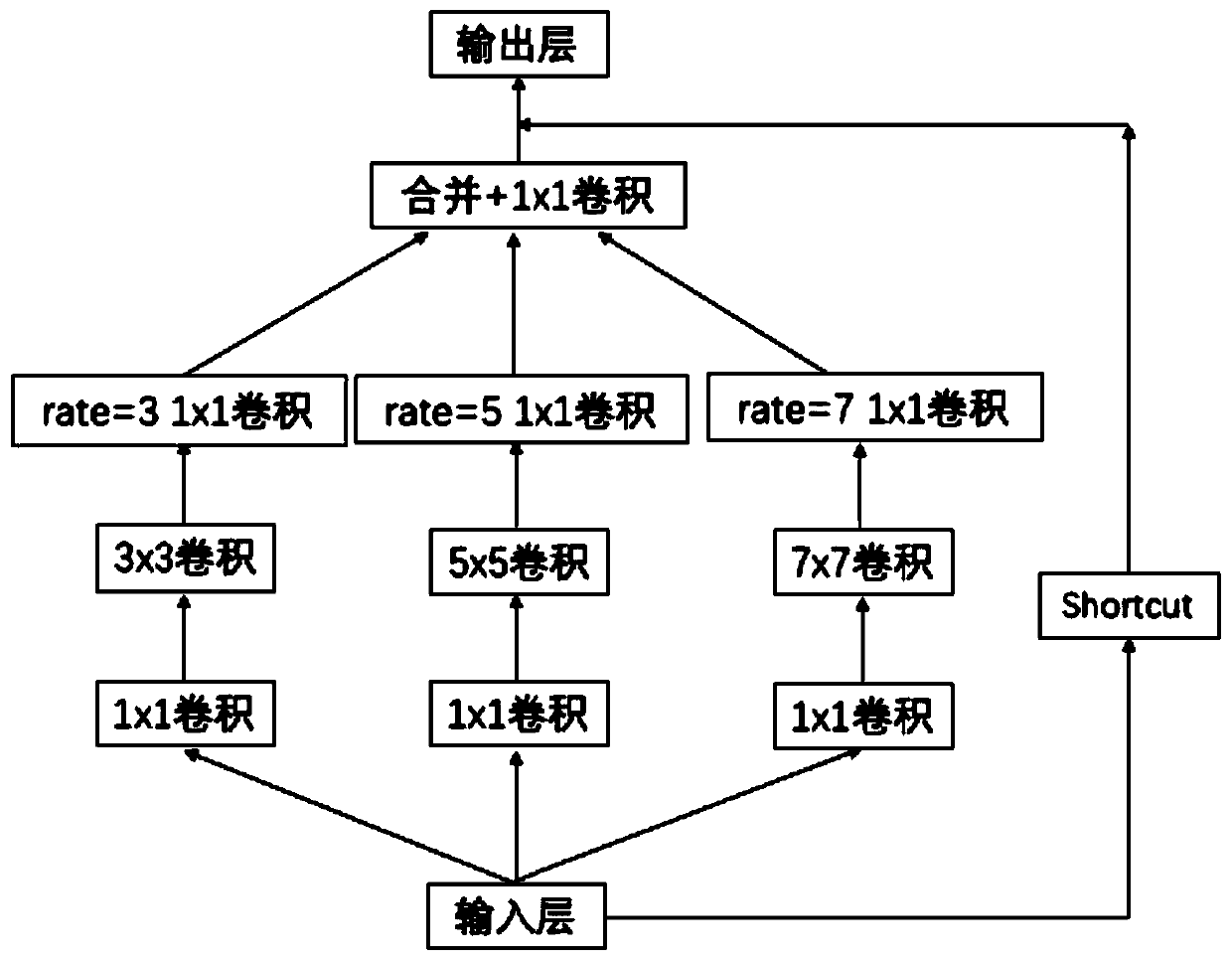

Multi-task learning method for real-time target detection and semantic segmentation based on lightweight network

The invention relates to a multi-task learning method for real-time target detection and semantic segmentation based on a lightweight network. The system used by the method comprises a feature extraction module, a semantic segmentation module, a target detection module and a multi-scale receptive field module. The feature extraction module uses the lightweight convolutional neural network MobileNet. Features extracted by MobileNet are sent to the semantic segmentation module, which segments the drivable area and the alternative drivable area of the road. At the same time they are sent to the target detection module, which detects objects appearing in the road scene. The multi-scale receptive field module enlarges the receptive field of the feature map and uses convolutions at different scales to address the multi-scale problem. Finally, the loss functions of the semantic segmentation module and the target detection module are combined by weighted summation, and the overall model is optimized. Compared with the prior art, the method completes two common autonomous-driving perception tasks, road object detection and drivable-area segmentation, more quickly and accurately.

Owner:SUN YAT SEN UNIV
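
In the overall structure, a shared (MobileNet) feature extractor feeds a segmentation head and a detection head, and their losses are combined by weighted summation. Toy stand-ins can illustrate this wiring. Every component below is a hypothetical placeholder: the "backbone" and "heads" are tiny linear maps over plain lists, both losses are mean squared errors, and the weights 1.0 and 0.5 are arbitrary. Only the hard parameter sharing and the weighted-sum objective reflect the abstract.

```python
from typing import Dict, List, Sequence


def linear(weights: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Toy stand-in for a network layer: y = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error, used here as a placeholder for both task losses."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def multitask_loss(params: Dict[str, List[List[float]]], image: Sequence[float],
                   seg_target: Sequence[float], det_target: Sequence[float],
                   w_seg: float = 1.0, w_det: float = 0.5) -> float:
    """Shared backbone -> two task heads -> weighted sum of the task losses."""
    shared = linear(params["backbone"], image)    # computed once, used by both heads
    seg_out = linear(params["seg_head"], shared)  # placeholder for drivable-area segmentation
    det_out = linear(params["det_head"], shared)  # placeholder for object detection
    return w_seg * mse(seg_out, seg_target) + w_det * mse(det_out, det_target)
```

The backbone is shared, so when this total loss is minimized, gradients from both tasks update the same feature extractor. Running the backbone once for both heads is what makes the approach cheaper than two separate networks.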

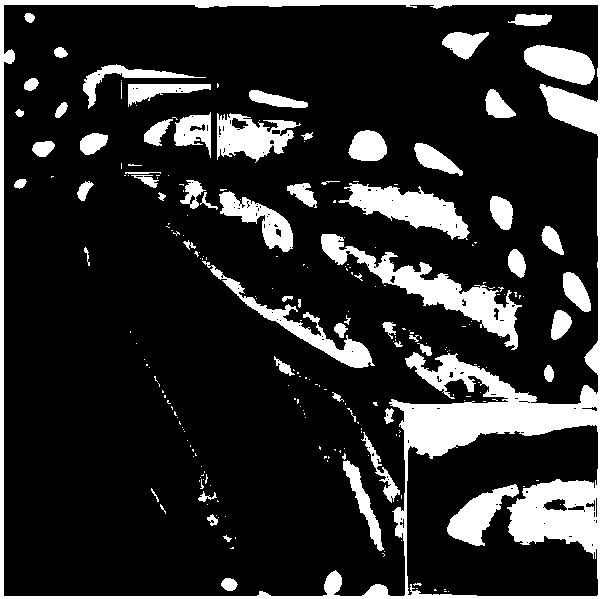

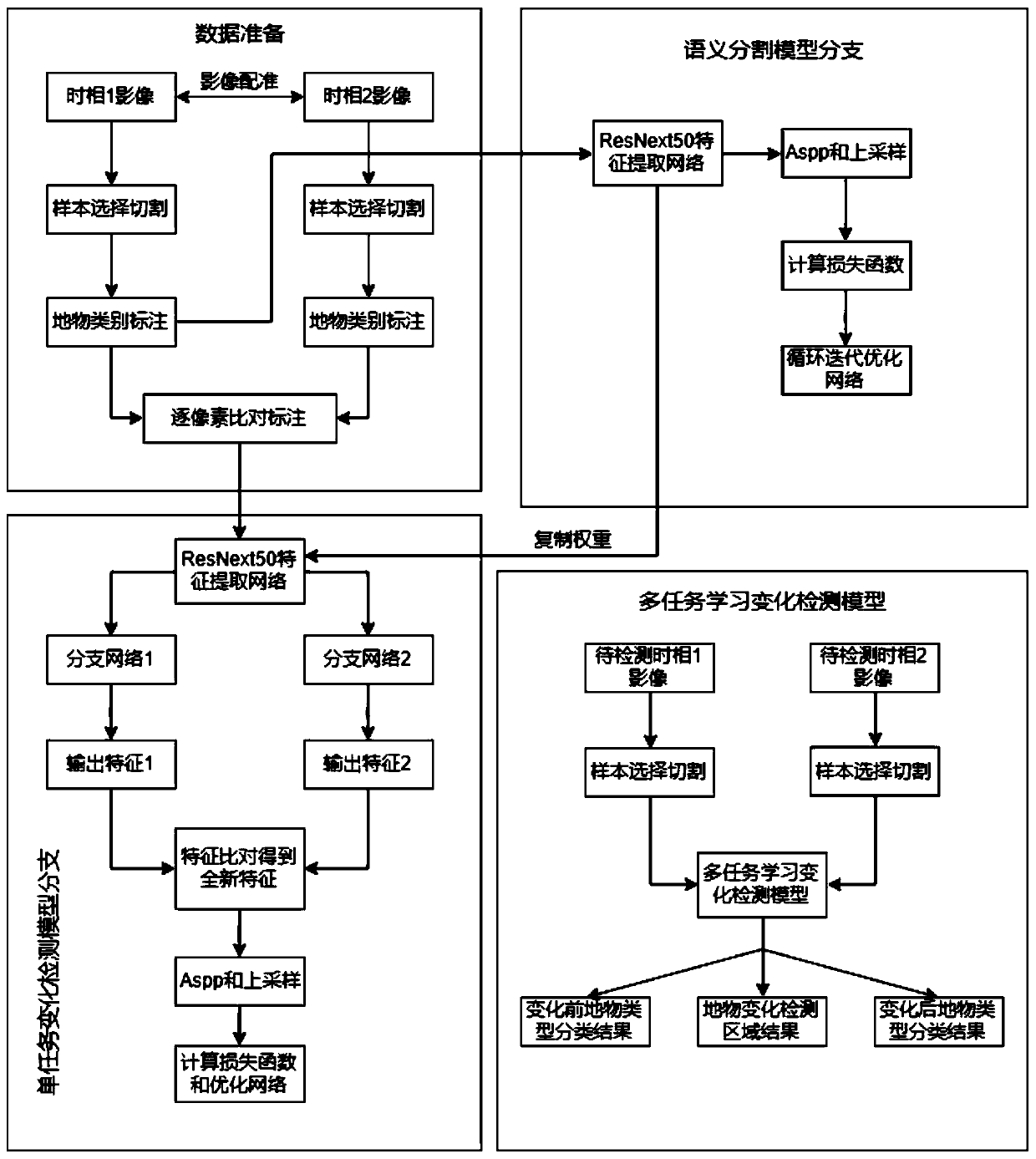

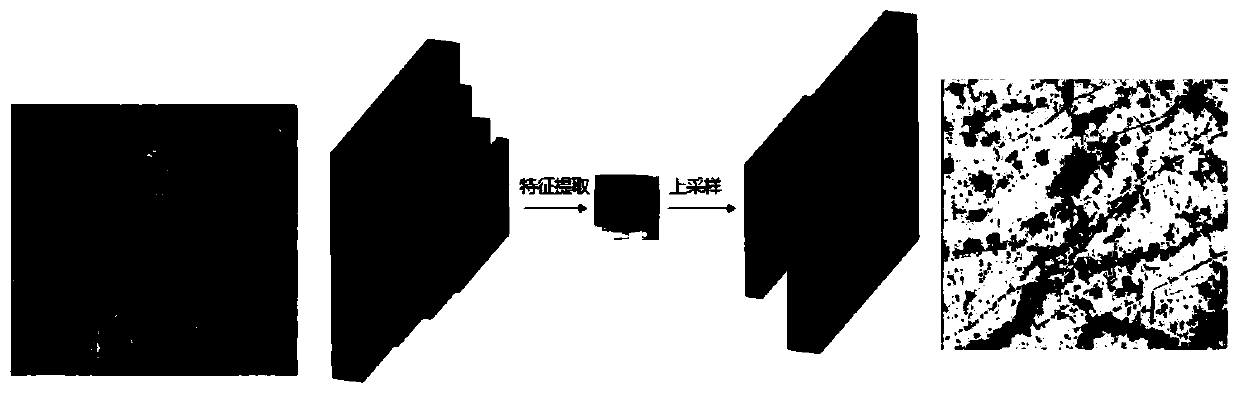

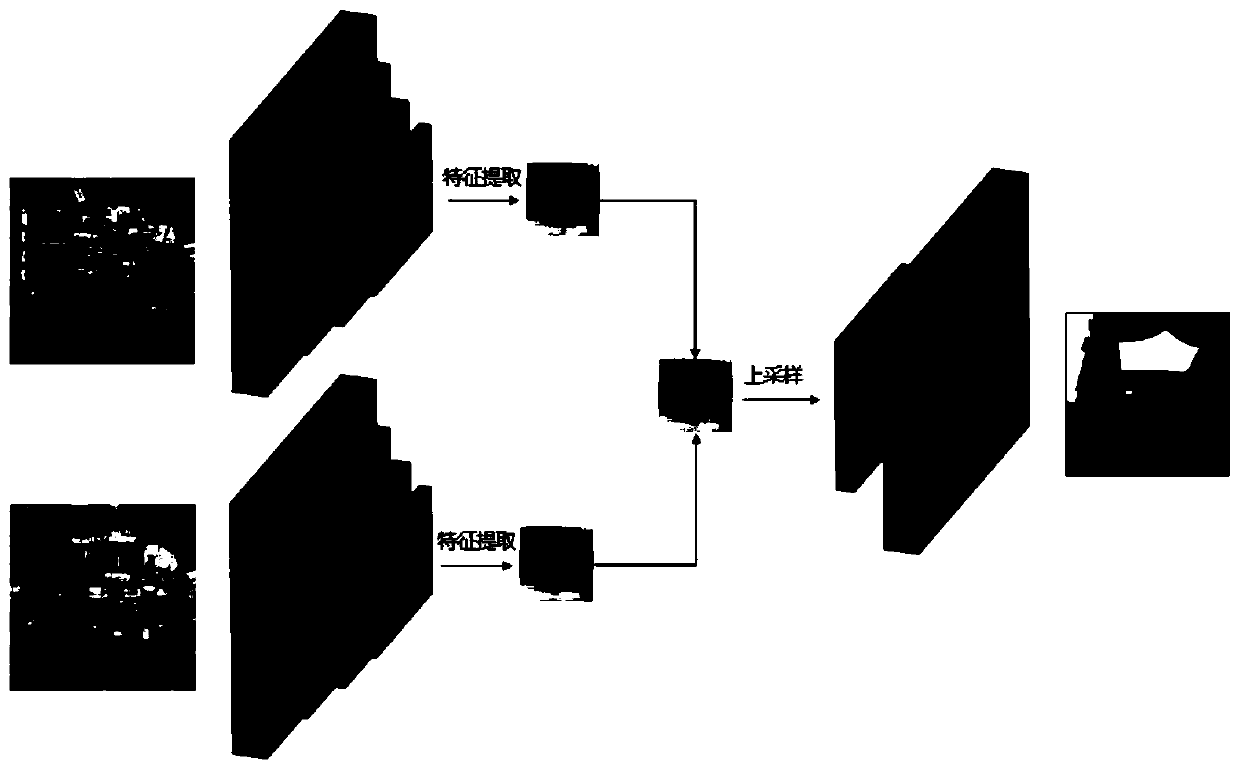

High-resolution remote sensing image ground object change detection method based on multi-task learning

Status: Active | Publication: CN111582043A | Tags: Efficient extraction; Avoid error accumulation; Scene recognition; Neural architectures; Feature extraction; Multi-task learning

The invention belongs to the technical field of ground object change detection in remote sensing images. It relates in particular to a change detection method based on multi-task learning that solves a prior-art problem: change detection accuracy depends on ground object classification accuracy, so errors accumulate. The method uses a multi-task change detection model comprising two semantic segmentation branches and one change detection branch. The semantic segmentation model is built on a segmentation network whose feature extraction module can effectively extract features from remote sensing images. A Siamese (twin) network is then constructed to train the change detection model, forming a multi-task learning mechanism. As a result, the changed areas can be determined, and change detection results for different ground objects, together with the ground object types before and after the change, can be obtained. At the same time, error accumulation is avoided and change detection accuracy is improved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA +1
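
The change branch decides where change occurred, and the two segmentation branches give the class at each date. Combining them yields the "from-to" information the abstract mentions. The sketch below assumes small integer label grids, a binary change mask and a made-up class list (0 = vegetation, 1 = building, 2 = water). `from_to_changes` is a hypothetical post-processing helper, not a step the patent describes.

```python
from collections import Counter
from typing import List

Grid = List[List[int]]


def from_to_changes(before: Grid, after: Grid, change_mask: Grid) -> Counter:
    """Tally (class_before, class_after) pairs at pixels the change branch flags.

    before/after: per-pixel class labels from the two segmentation branches.
    change_mask:  1 where the change-detection branch predicts a change, else 0.
    """
    transitions: Counter = Counter()
    for row_b, row_a, row_m in zip(before, after, change_mask):
        for cls_b, cls_a, changed in zip(row_b, row_a, row_m):
            if changed:
                transitions[(cls_b, cls_a)] += 1
    return transitions
```

Take `before = [[0, 0], [2, 1]]`, `after = [[1, 0], [2, 1]]` and a mask flagging only the top-left pixel. The result is `Counter({(0, 1): 1})`, meaning one pixel changed from vegetation to building. Taking the change location from a dedicated branch, rather than from comparing the two class maps alone, is what avoids the error accumulation described above.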

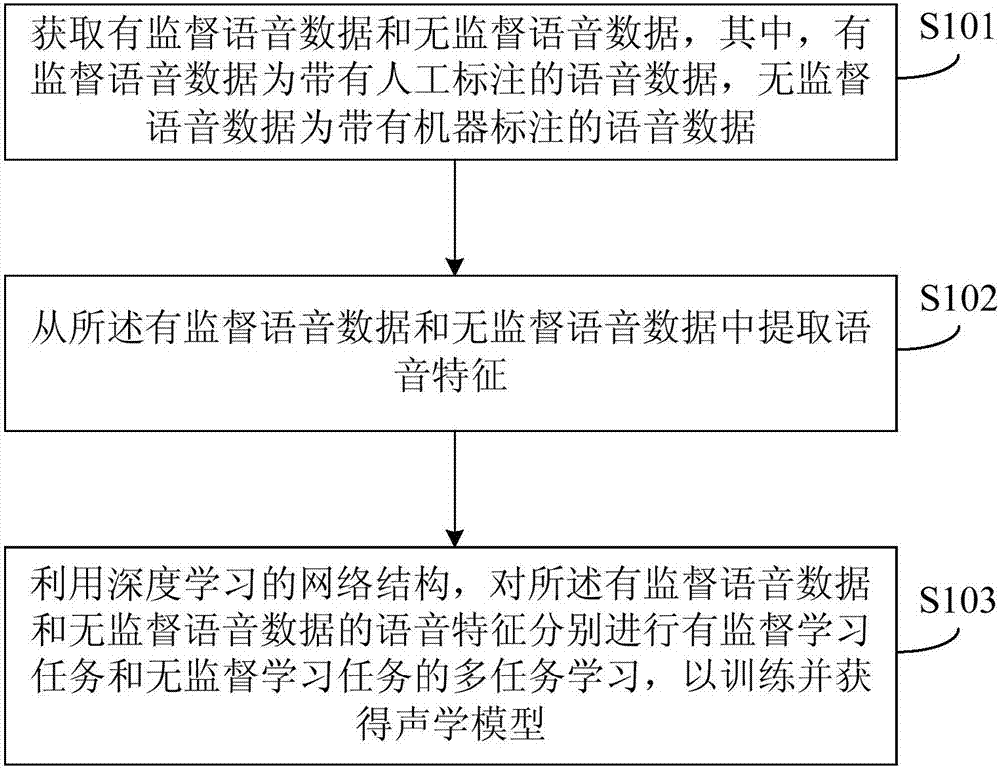

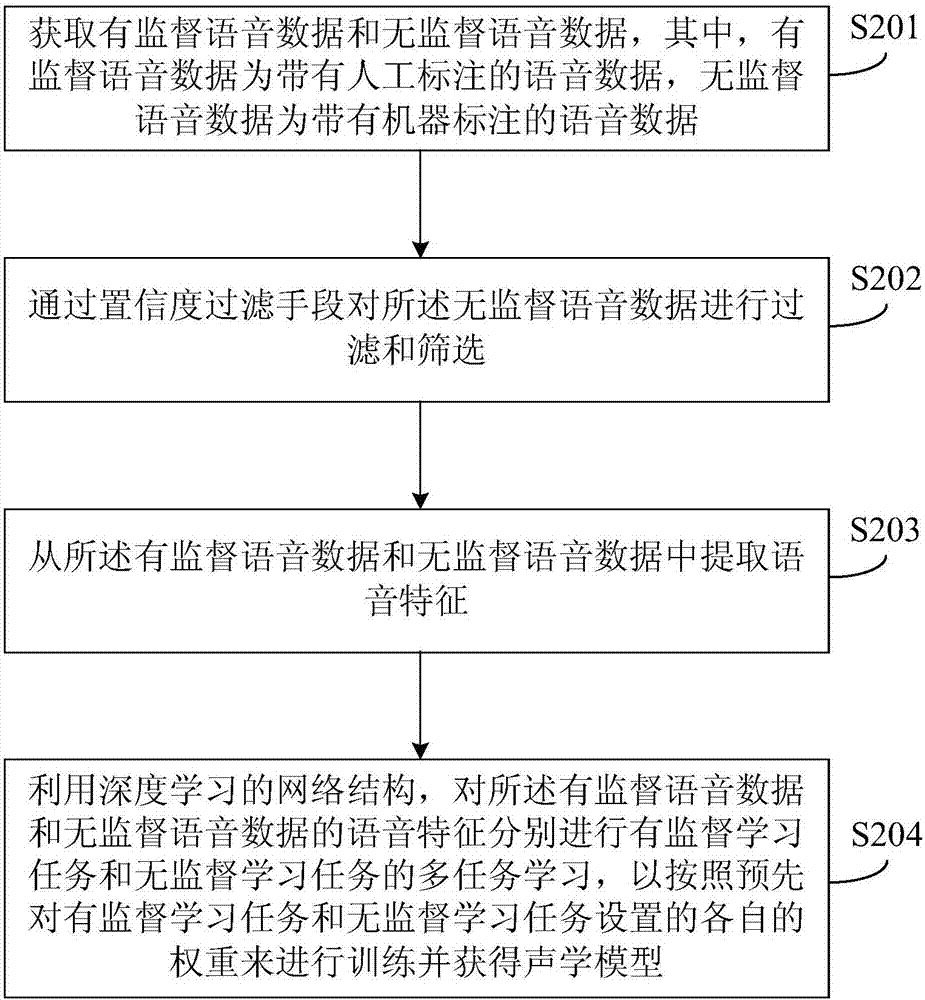

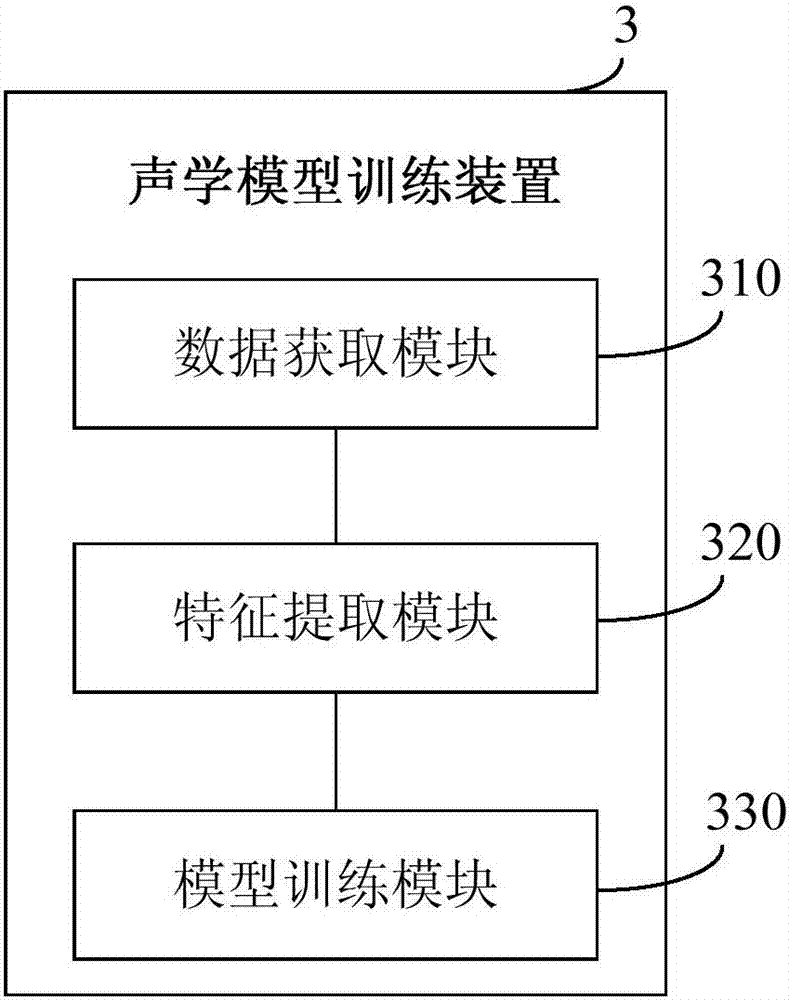

Acoustic model training method and device, computer equipment, and storage medium

The embodiment of the invention discloses an acoustic model training method and device, computer equipment, and a storage medium. The method comprises the following steps. Supervised and unsupervised voice data are obtained; supervised voice data carry manual labels, and unsupervised voice data carry machine-generated labels. Speech features are extracted from both. A deep learning network structure then performs multi-task learning, comprising a supervised learning task and an unsupervised learning task, on the speech features of the supervised and unsupervised data respectively, and training yields an acoustic model. This semi-supervised acoustic model training based on multi-task learning saves the cost of the manually labeled speech data needed to train an acoustic model. Because no expensive manually labeled speech data are required, speech recognition performance can be improved continuously.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

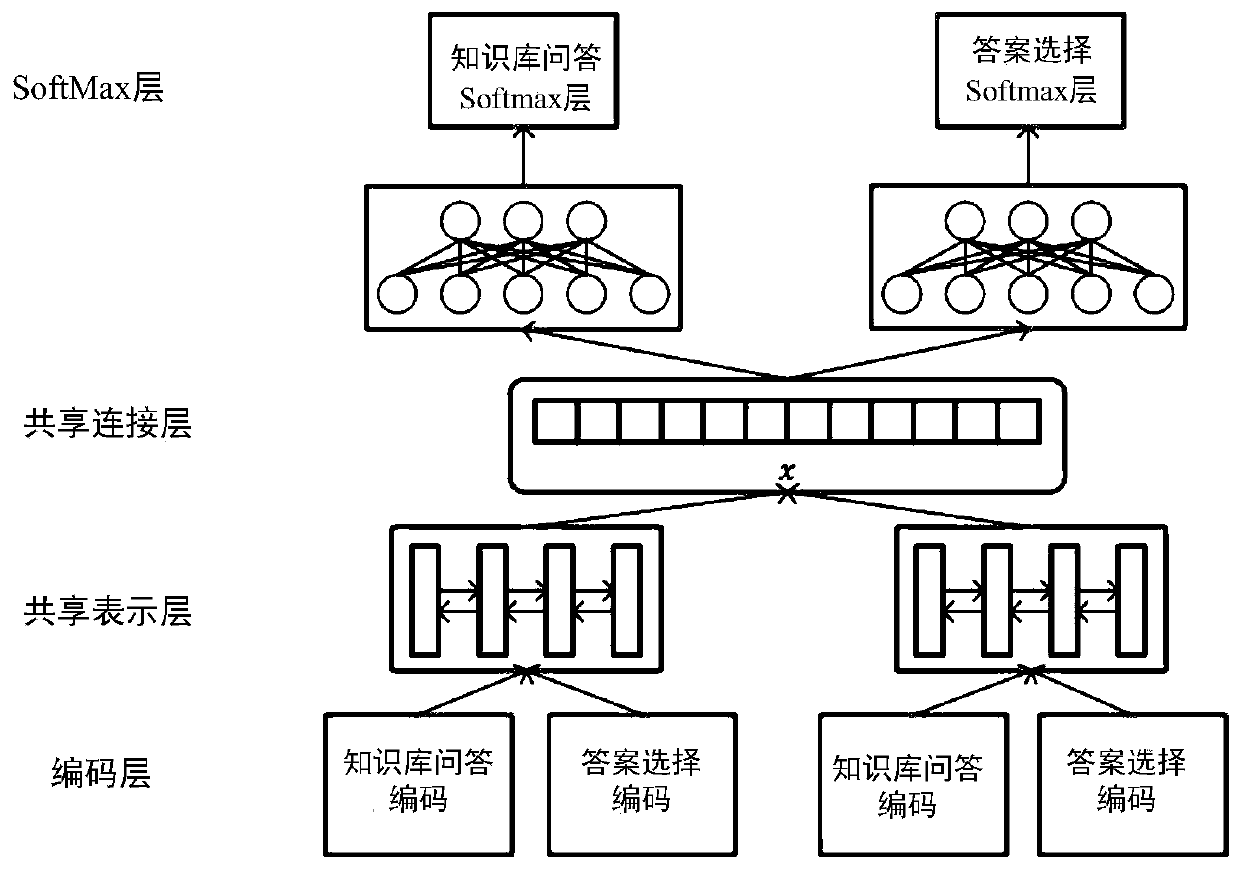

A question and answer method based on multi-task learning

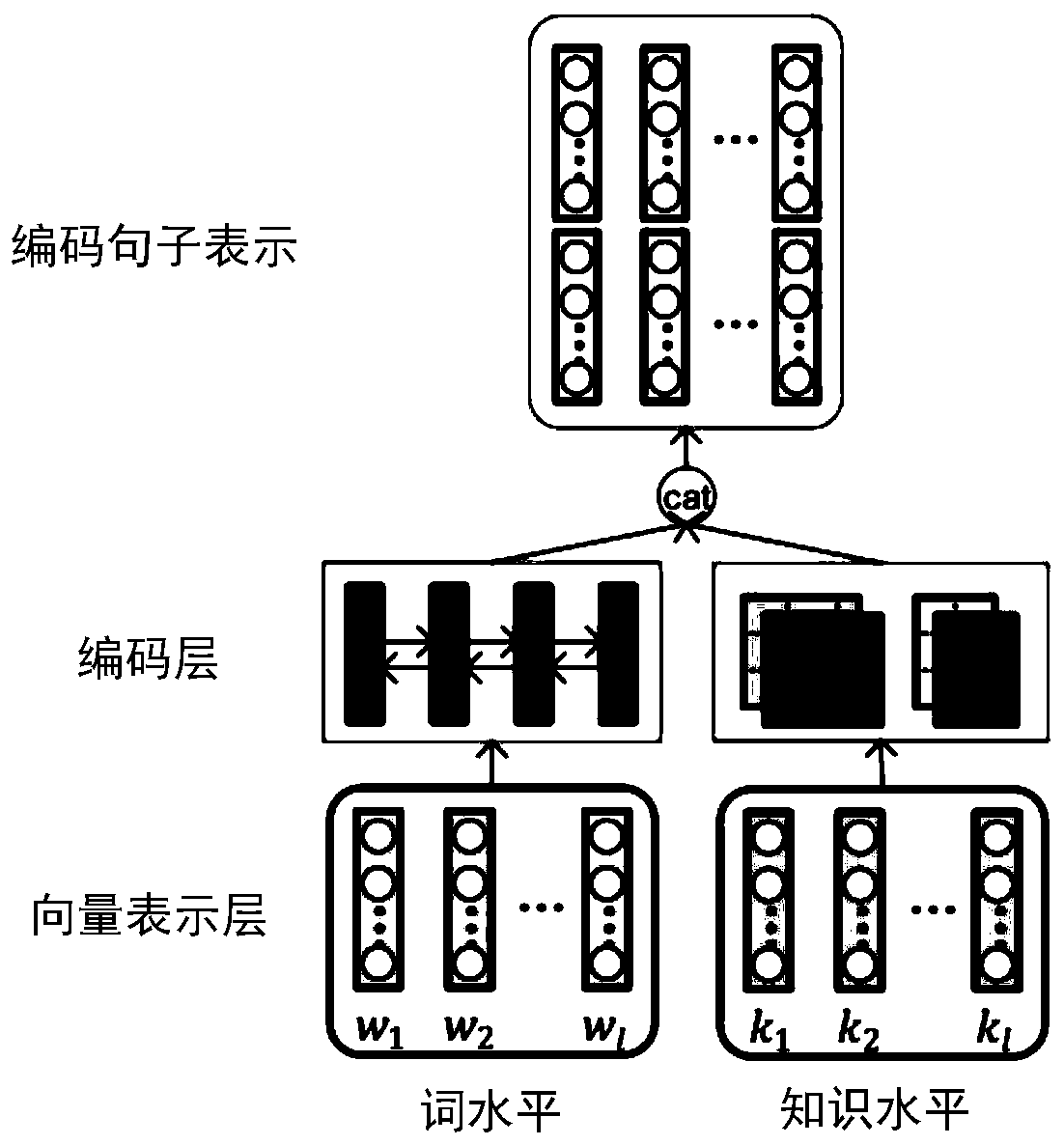

Status: Active | Publication: CN109885671A | Tags: Efficient collection; Improve the level of; Digital data information retrieval; Neural architectures; Feature learning; Multi-task learning

The invention relates to a question answering method based on multi-task learning, in the field of artificial intelligence. It comprises the following steps:

- S1: configuring a task-specific Siamese encoder for each task to encode a preprocessed sentence into a distributed vector representation.
- S2: using a shared representation learning layer to share high-level information among different tasks.
- S3: performing task-specific softmax classification. For each question-answer pair in task k and its label, the final feature representation is input into the task-specific softmax layer for binary classification.
- S4: multi-task learning, in which the multi-task learning model is trained to minimize a cross-entropy loss function.

The method uses multi-view attention learned from different angles, so that tasks can interact to learn more comprehensive sentence representations. The multi-view attention scheme also effectively gathers attention information from different representation views, improving the overall level of representation learning.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
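
Steps S3 and S4 use a task-specific softmax layer for binary classification and a cross-entropy objective minimized jointly across tasks. A small loss computation can spell these out. The sketch assumes the shared and task-specific encoders have already produced a feature vector for each question-answer pair, and that the joint objective is the unweighted sum of per-task mean losses. The function names and weights are hypothetical placeholders.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def task_logits(weights: Sequence[Sequence[float]], features: Sequence[float]) -> List[float]:
    """Task-specific linear layer producing two logits: [no match, match]."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def multitask_cross_entropy(task_weights: Dict[str, Sequence[Sequence[float]]],
                            batches: Dict[str, List[Tuple[Sequence[float], int]]]) -> float:
    """Sum over tasks of the mean cross-entropy of each task's own softmax layer."""
    total = 0.0
    for task, examples in batches.items():
        losses = [-math.log(softmax(task_logits(task_weights[task], x))[label])
                  for x, label in examples]
        total += sum(losses) / len(losses)
    return total
```

With all-zero task weights every pair is predicted 50/50, so each task contributes log 2 to the total. Giving every task its own output layer while the encoders share information is the split between S2 and S3 described above.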

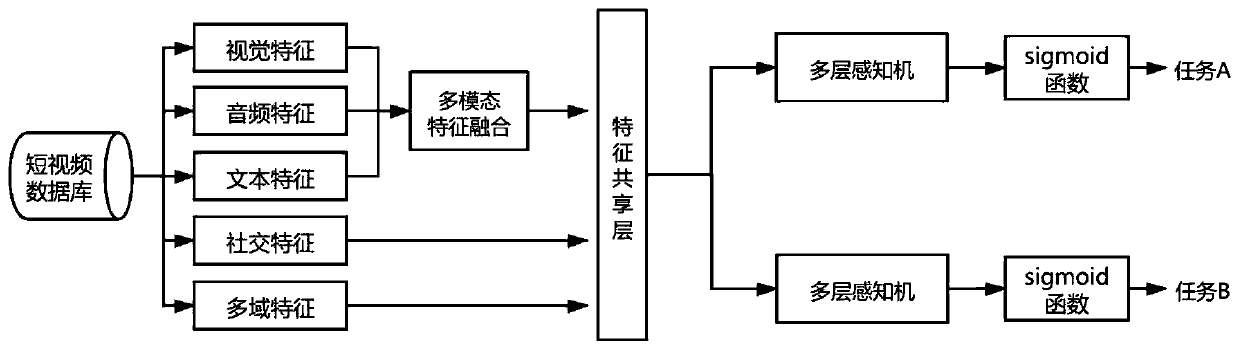

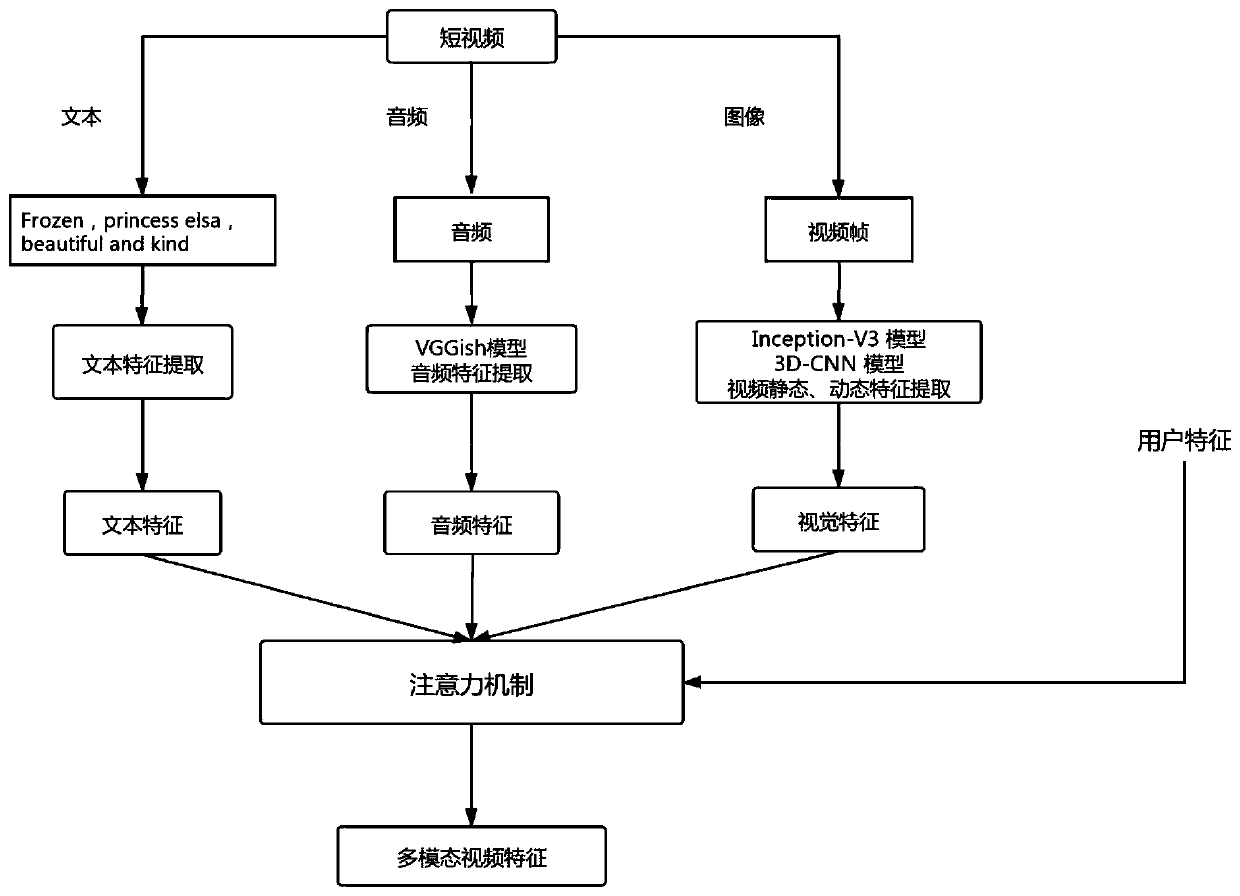

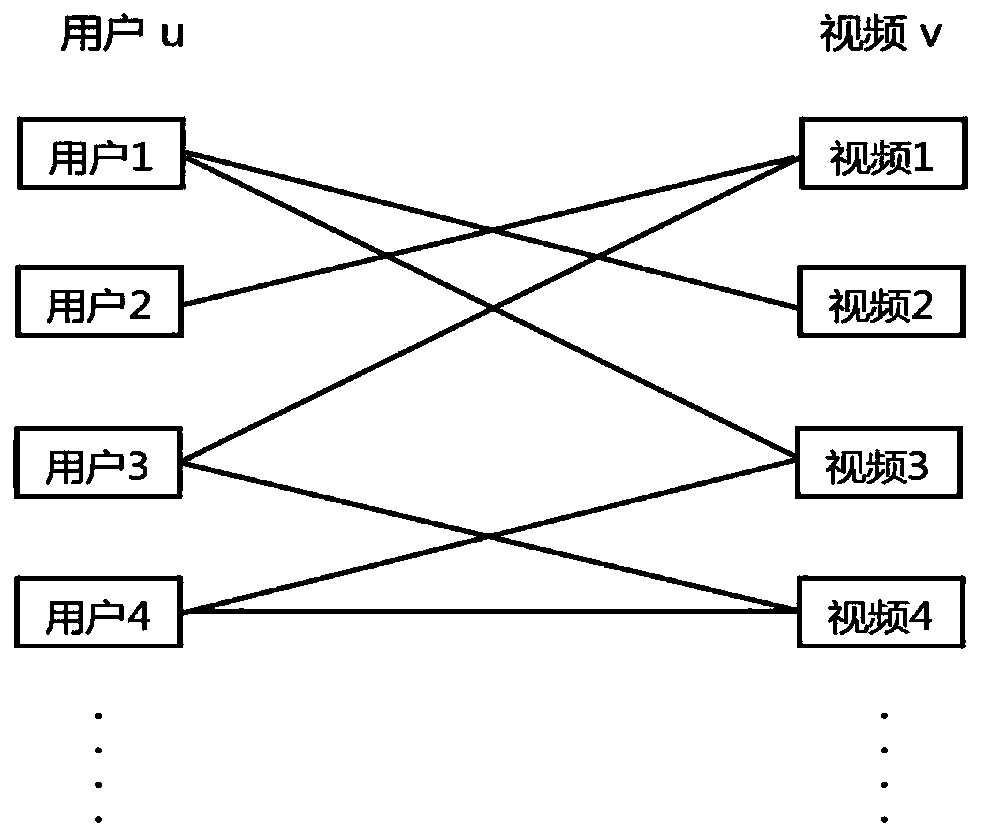

Video recommendation method based on multi-modal video content and multi-task learning

Status: Inactive | Publication: CN111246256A | Tags: Rich learning; Personalized; Digital data information retrieval; Neural architectures; Pattern recognition; Personalization

The invention discloses a video recommendation method based on multi-modal video content and multi-task learning. The method comprises the following steps:

- extracting visual, audio and text features of a short video with pre-trained models;
- fusing the video's multi-modal features with an attention mechanism;
- learning a feature representation of the user's social relationships with the DeepWalk method;
- learning multi-domain feature representations with a proposed attention-based deep neural network model;
- embedding the features generated in the above steps into the shared layer of a multi-task model, and generating predictions through a multi-layer perceptron.

Combining the attention mechanism with user features to fuse the video's multi-modal features makes recommendations richer and more personalized. The attention-based deep neural network model also accounts for multi-domain features and for the importance of interaction features in recommendation learning. It therefore enriches the learning of high-order features and provides users with more accurate personalized video recommendations.

Owner:SOUTH CHINA UNIV OF TECH +1
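
Dot-product attention can illustrate the attention-based fusion of visual, audio and text features. In the sketch, a user embedding serves as the query, so each user can weight the modalities differently. This is an assumed formulation, since the abstract does not give the exact attention function. It also presumes that all modality features have already been projected to a common dimension. `fuse_modalities` is a hypothetical name.

```python
import math
from typing import Dict, List, Sequence, Tuple


def fuse_modalities(user_vec: Sequence[float],
                    modality_feats: Dict[str, Sequence[float]]
                    ) -> Tuple[List[float], Dict[str, float]]:
    """Attention-weighted sum of modality vectors, using the user vector as query.

    All vectors are assumed to share the same dimension.
    """
    names = list(modality_feats)
    scale = math.sqrt(len(user_vec))
    # Scaled dot-product score between the user and each modality's features.
    scores = [sum(u * f for u, f in zip(user_vec, modality_feats[n])) / scale
              for n in names]
    # Numerically stable softmax over the modalities.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = {n: e / total for n, e in zip(names, exps)}
    fused = [sum(weights[n] * modality_feats[n][d] for n in names)
             for d in range(len(user_vec))]
    return fused, weights
```

A zero user vector weights all modalities equally and returns their average. A user vector aligned with the visual features shifts weight toward the visual and text vectors, which is the personalization effect the abstract attributes to combining attention with user features.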

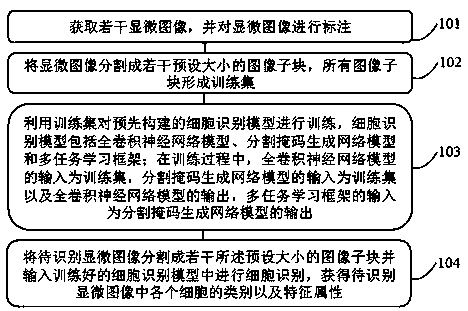

Microscopic image cell identification method and device based on multi-task learning

Status: Active | Publication: CN111524138A | Tags: Guaranteed accuracy; Enable multiple detection; Image enhancement; Image analysis; Microscopic image; Multi-task learning

The invention discloses a microscopic image cell identification method based on multi-task learning. The method comprises the following steps. First, an acquired microscopic image is labeled in a multi-labeling mode. The multi-labeled microscopic image is then segmented to form a training set, and a pre-constructed cell identification model is trained on it. Finally, the trained model performs cell identification on the microscopic image to be identified, obtaining the category and characteristic attributes of each cell in it. The key difference from the prior art is the multi-labeling mode. A model trained on multi-labeled images can perform multiple detections, which helps ensure the accuracy of the final detection result.

Owner:Hunan Guoke Zhitong Technology Co., Ltd. (湖南国科智瞳科技有限公司)
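
The abstract does not say how the multiple labels per cell enter training. A common way to train on such labels, assumed here rather than taken from the patent, is to encode each cell's labels as a multi-hot vector and use one independent sigmoid per label with binary cross-entropy. The label vocabulary and function names below are hypothetical:

```python
import math
from typing import List, Sequence

# Hypothetical label vocabulary: cell categories plus characteristic attributes.
VOCABULARY = ["lymphocyte", "neutrophil", "enlarged_nucleus", "irregular_membrane"]


def multi_hot(labels: Sequence[str], vocabulary: Sequence[str] = VOCABULARY) -> List[float]:
    """Encode a cell's set of labels as a 0/1 target vector over the vocabulary."""
    index = {name: i for i, name in enumerate(vocabulary)}
    target = [0.0] * len(vocabulary)
    for name in labels:
        target[index[name]] = 1.0  # an unknown label raises KeyError on purpose
    return target


def bce_with_logits(logits: Sequence[float], targets: Sequence[float]) -> float:
    """Mean binary cross-entropy, one independent sigmoid per label.

    Uses the numerically stable form max(z, 0) - z * t + log(1 + exp(-|z|)).
    """
    losses = [
        max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
        for z, t in zip(logits, targets)
    ]
    return sum(losses) / len(losses)


target = multi_hot(["lymphocyte", "enlarged_nucleus"])
print(target)  # → [1.0, 0.0, 1.0, 0.0]
print(round(bce_with_logits([0.0, 0.0, 0.0, 0.0], target), 4))  # → 0.6931
```

Independent sigmoids (rather than one softmax) let a single cell carry a category and several attributes at the same time.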

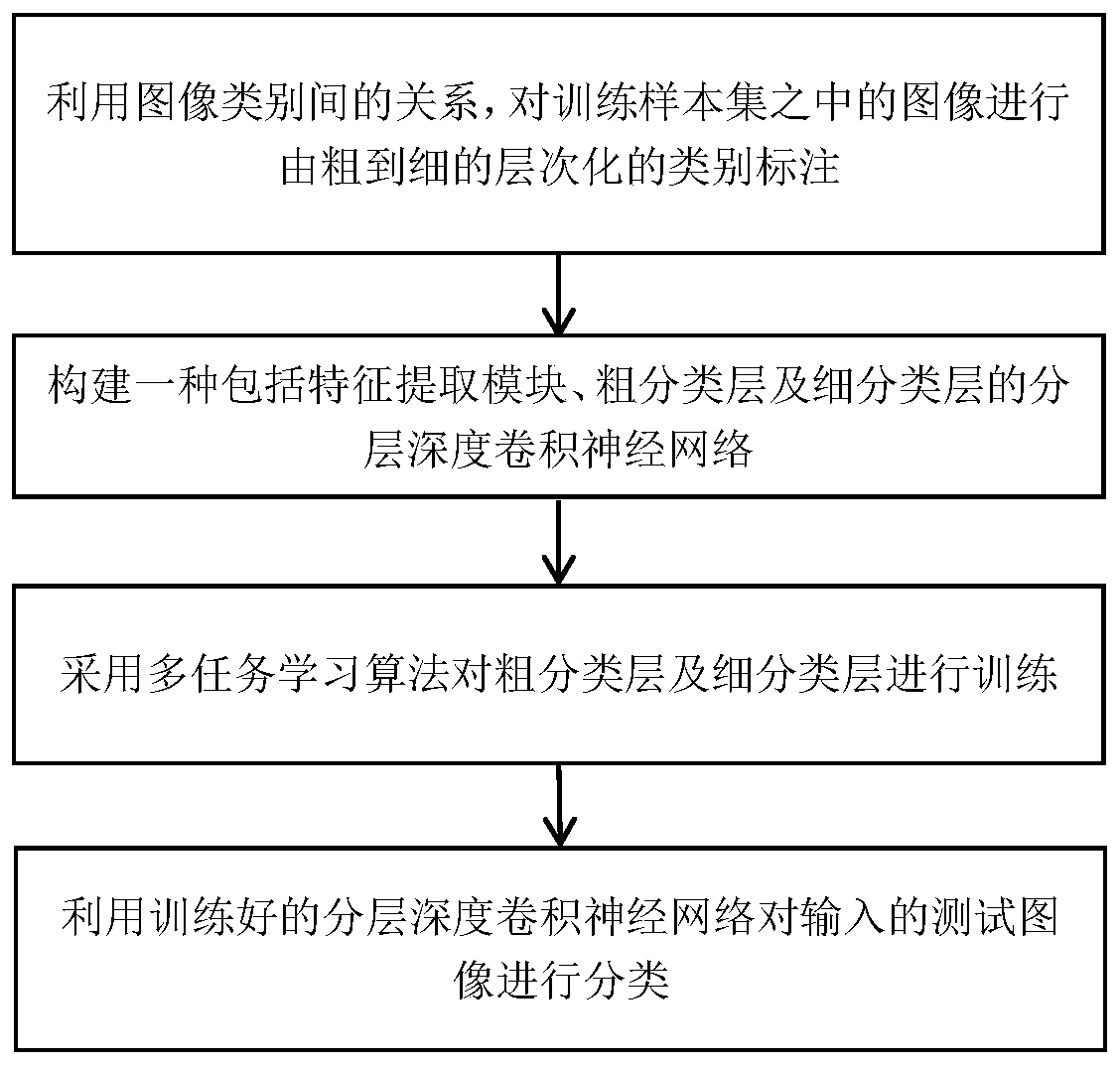

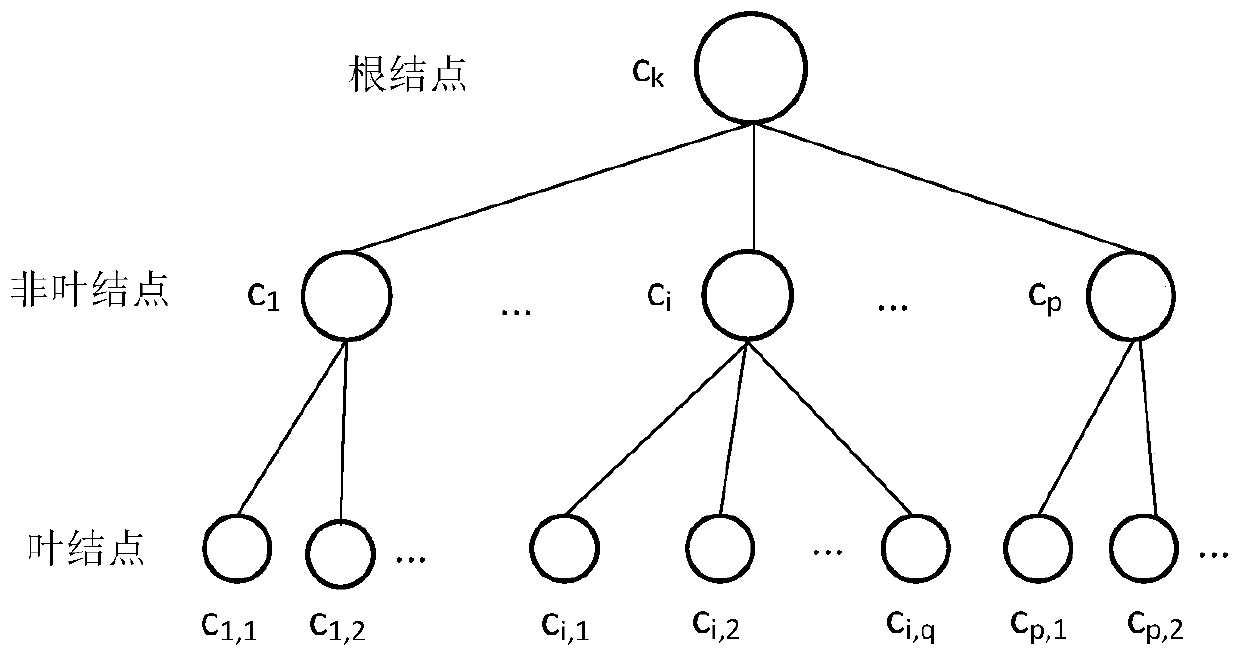

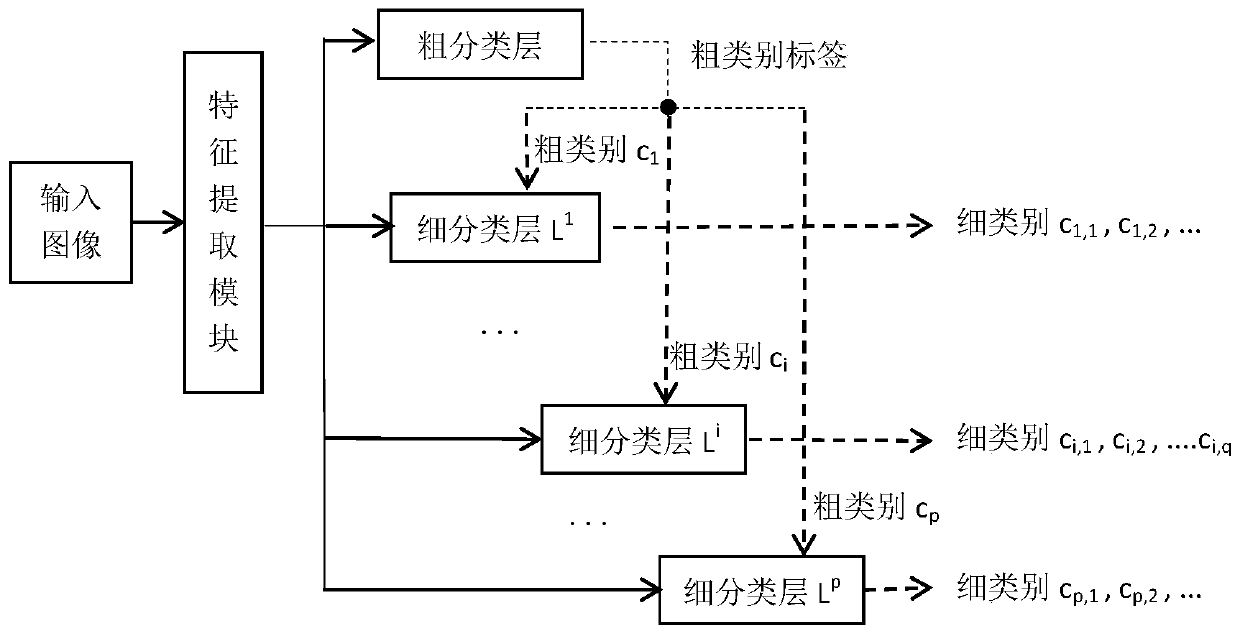

Image classification method and system based on hierarchical multi-task learning

Inactive · CN110309888A · Implement classification, Improve classification efficiency, Character and pattern recognition, Feature extraction, Classification methods

The invention discloses an image classification method and system based on hierarchical multi-task learning. The method comprises the following steps: first, labeling the images in a training sample set with hierarchical categories, from coarse to fine, using the relations between image categories and the expert knowledge of the relevant professional fields; second, constructing a hierarchical deep convolutional neural network comprising a feature extraction module, a coarse classification layer and a fine classification layer; third, training the coarse and fine classification layers with a multi-task learning algorithm; and finally, classifying input test images with the trained hierarchical deep convolutional neural network. By combining the hierarchical structure between image categories with a convolutional neural network and training it with a multi-task learning algorithm, the method achieves coarse-to-fine classification of images.

Owner:NANJING UNIV OF POSTS & TELECOMM
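
Training the coarse and fine classification layers together can be sketched as a weighted sum of two cross-entropy losses, with each image's coarse label read off the expert hierarchy from its fine label. The weighted-sum form, the default weights and the example hierarchy are assumptions for illustration; the abstract only says a multi-task learning algorithm is used:

```python
import math
from typing import Dict, Sequence


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one example, computed via log-sum-exp."""
    peak = max(logits)
    log_z = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_z - logits[label]


def hierarchical_loss(
    coarse_logits: Sequence[float],
    fine_logits: Sequence[float],
    fine_label: int,
    fine_to_coarse: Dict[int, int],
    coarse_weight: float = 1.0,
    fine_weight: float = 1.0,
) -> float:
    """Joint loss for the coarse and fine classification heads (assumed weighted sum).

    The coarse label is derived from the fine label through the category
    hierarchy, so each image only needs its fine label.
    """
    coarse_label = fine_to_coarse[fine_label]
    return (coarse_weight * cross_entropy(coarse_logits, coarse_label)
            + fine_weight * cross_entropy(fine_logits, fine_label))


# Hypothetical hierarchy: fine classes 0-1 belong to coarse class 0, 2-3 to coarse class 1.
HIERARCHY = {0: 0, 1: 0, 2: 1, 3: 1}
loss = hierarchical_loss([0.0, 0.0], [0.0, 0.0, 0.0, 0.0], fine_label=2, fine_to_coarse=HIERARCHY)
print(round(loss, 4))  # → 2.0794  (log 2 + log 4)
```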

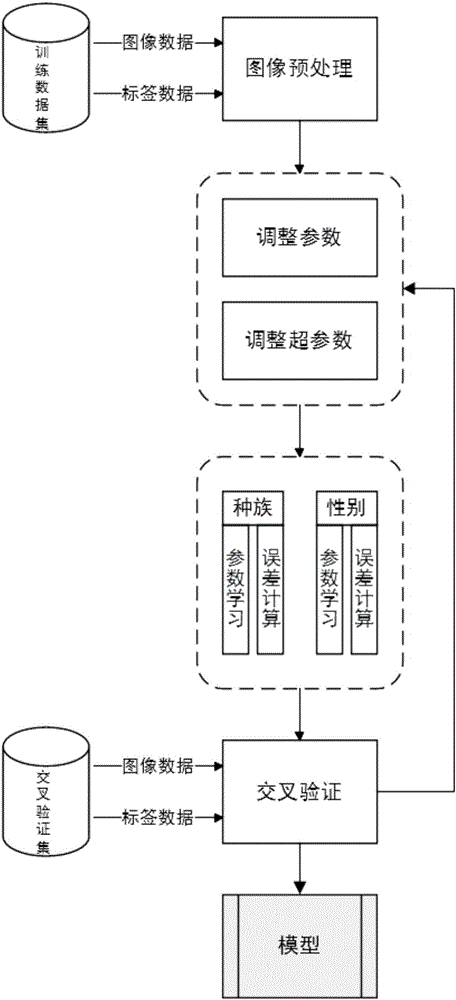

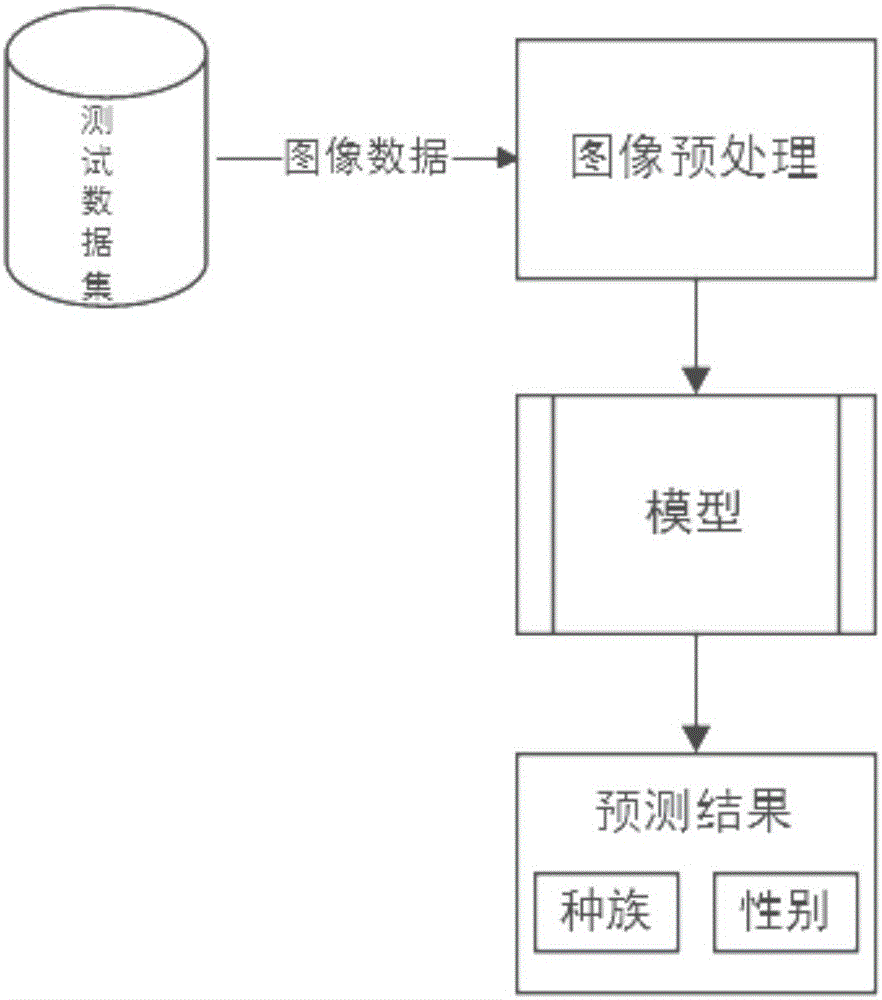

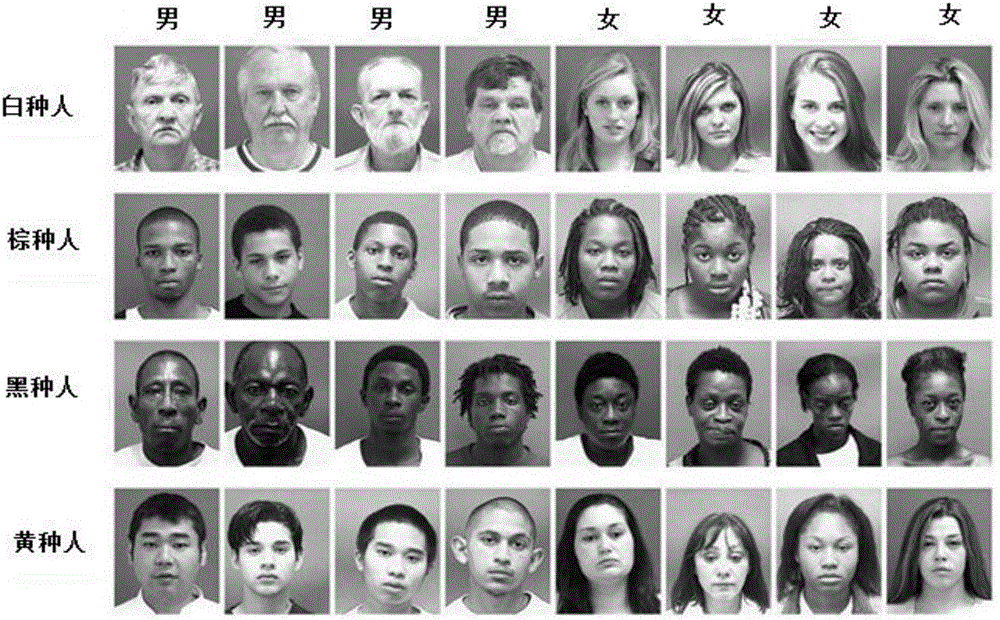

Multi-task learning based method for recognizing race and gender through human face image

Inactive · CN105894050A · Improve generalization ability, Easy to identify, Character and pattern recognition, Small sample, Semantics

The invention provides a multi-task learning based method for recognizing race and gender from a human face image. It relates to technical fields such as digital image processing, pattern recognition, computer vision and physiology, and aims to recognize race and gender from static face images or face videos in a variety of settings. Multi-task learning improves learning performance by learning related tasks together: it accounts for the differences between tasks, shares the features the tasks have in common, exploits their relatedness to improve performance, and reduces over-fitting in high-dimensional, small-sample problems. When this approach is applied to face-based race and gender recognition, different semantics are treated as different tasks, and on that basis a semantics-based multi-task feature selection method is proposed. The method can significantly improve the generalization capability of the learning system and its recognition performance.

Owner:BEIJING UNION UNIVERSITY
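
The abstract does not specify how the semantics-based multi-task feature selection works. One standard way to make feature selection shared across tasks, swapped in here as an assumption, is the ℓ2,1 (group-lasso) penalty, whose proximal step shrinks each feature's row of weights (one column per task) as a group and zeroes the whole row when its norm is small:

```python
import math
from typing import List, Sequence


def l21_prox(weights: Sequence[Sequence[float]], threshold: float) -> List[List[float]]:
    """Proximal step for threshold * ||W||_{2,1} (row-wise group soft-thresholding).

    weights[i][t] is the weight of feature i for task t (here, by assumption,
    t=0: race, t=1: gender). Each feature row is shrunk toward zero as a group,
    and a row whose L2 norm is at most `threshold` is zeroed for every task at
    once, so the surviving features are shared across tasks.
    """
    shrunk = []
    for row in weights:
        norm = math.sqrt(sum(w * w for w in row))
        scale = max(0.0, 1.0 - threshold / norm) if norm > 0.0 else 0.0
        shrunk.append([scale * w for w in row])
    return shrunk


def selected_features(weights: Sequence[Sequence[float]]) -> List[int]:
    """Indices of feature rows that still have any non-zero weight."""
    return [i for i, row in enumerate(weights) if any(w != 0.0 for w in row)]


W = [[3.0, 4.0], [0.3, 0.4], [0.0, 0.0]]  # hypothetical 3 features x 2 tasks
W_new = l21_prox(W, threshold=1.0)
print(selected_features(W_new))  # → [0]
```

In a full solver this step would alternate with gradient steps on the per-task losses; only the selection step is shown.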

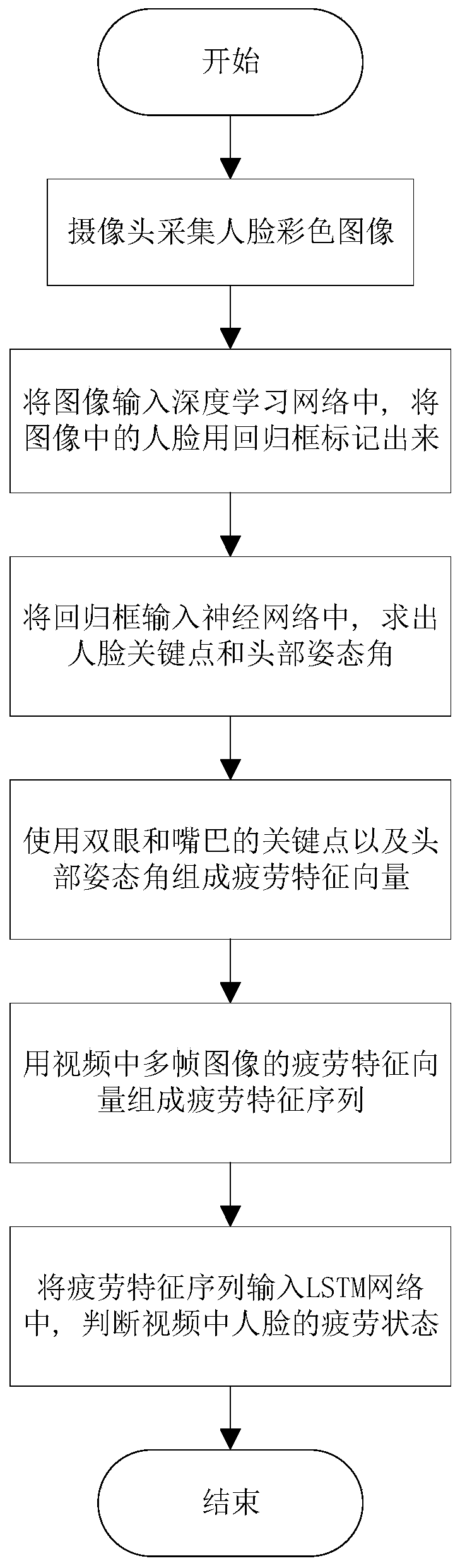

Driver fatigue state rapid detection method based on deep learning

Inactive · CN110674701A · Small amount of calculation, Reduce the amount of huge parameters, Character and pattern recognition, Neural architectures, Pattern recognition, Color image

The invention discloses a rapid driver fatigue state detection method based on deep learning, comprising the following steps: (1) collecting color images of the driver while driving, detecting the driver's face in each image with a deep learning method, and marking it with a regressed bounding box; (2) feeding the face bounding box into a multi-task learning network, which outputs the facial key points and the head attitude (pose) angles; and (3) building a spatio-temporal fatigue feature sequence from the facial key points and head attitude angles, and feeding this sequence into a fatigue recognition deep learning network that outputs the fatigue state recognition result. The method takes both the real-time and the accuracy requirements of driver fatigue detection into account: while preserving accuracy, it compresses the deep learning network model and optimizes it for the fatigue features, shrinking the network as far as possible and increasing the speed of the algorithm.

Owner:SOUTHEAST UNIV
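
Step (3) turns per-frame key points and head attitude angles into a spatio-temporal feature sequence, but the abstract does not list the per-frame features. A minimal sketch, assuming the eye aspect ratio (EAR, a widely used blink measure computed from six eye landmarks) plus yaw, pitch and roll per frame, cut into overlapping windows for the recognition network (all names here are hypothetical; the network itself is not shown):

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Eye aspect ratio from six landmarks p1..p6 (p1 and p4 are the eye corners).

    EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|); it drops toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    return (math.dist(p2, p6) + math.dist(p3, p5)) / (2.0 * math.dist(p1, p4))


def frame_features(left_eye: Sequence[Point], right_eye: Sequence[Point],
                   yaw: float, pitch: float, roll: float) -> List[float]:
    """Per-frame feature vector (assumed): mean EAR of both eyes plus head attitude angles."""
    ear = (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0
    return [ear, yaw, pitch, roll]


def sliding_windows(frames: Sequence[List[float]], length: int,
                    stride: int = 1) -> List[List[List[float]]]:
    """Cut the per-frame features into overlapping spatio-temporal sequences."""
    return [list(frames[s:s + length])
            for s in range(0, len(frames) - length + 1, stride)]


open_eye = [(0, 0), (1, 1), (3, 1), (4, 0), (3, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))  # → 0.5
```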

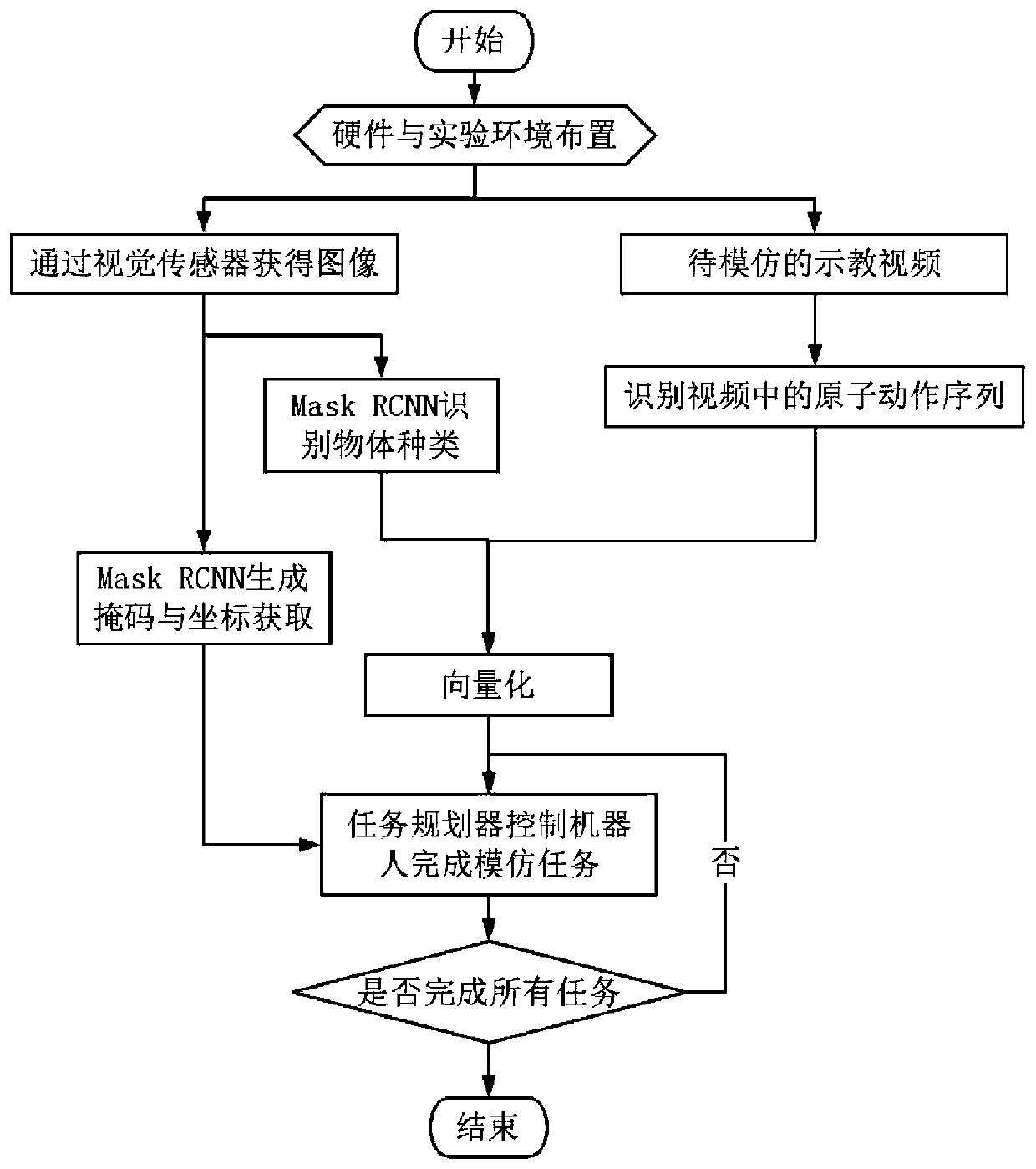

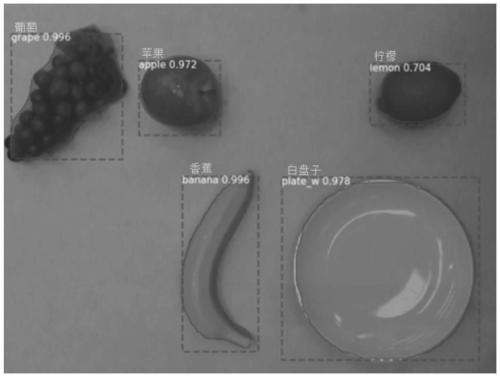

Robot sequence task learning method based on visual simulation

Active · CN111203878A · Improve generalization ability, Improve general performance, Programme-controlled manipulator, Computer vision, Task learning

The invention provides a robot sequence task learning method based on visual simulation, used to guide a robot to simulate and execute the human actions shown in a video. The method comprises the following steps: (1) identifying object types and masks in an input image with a region-based mask convolutional neural network (Mask R-CNN); (2) calculating the actual physical plane coordinates (x, y) of the objects from the masks; (3) identifying the atomic actions in a target video; (4) converting the atomic action sequence and the identified object types into a one-dimensional vector; (5) inputting the one-dimensional vector into a task planner, which outputs a task description vector that can guide the robot; and (6) controlling the robot to simulate the sequence task in the target video by combining the task description vector with the object coordinates. Taking the video and images as input, the method recognizes the objects, infers the task sequence and guides the robot to simulate the target video. It also generalizes well, so the task can still be simulated in different environments or with different object types.

Owner:BEIHANG UNIV
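
Step (2) computes plane coordinates (x, y) from each object's mask, but the abstract does not say how. A minimal sketch, assuming the objects rest on a flat work surface and a 3x3 image-to-plane homography H is available from a prior camera calibration (H and its values below are hypothetical): take the mask's pixel centroid and map it through H.

```python
from typing import Sequence, Tuple


def mask_centroid(mask: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Pixel centroid (u, v) of a binary mask; u is the column and v the row."""
    count, sum_u, sum_v = 0, 0.0, 0.0
    for v, row in enumerate(mask):
        for u, value in enumerate(row):
            if value:
                count += 1
                sum_u += u
                sum_v += v
    if count == 0:
        raise ValueError("mask contains no object pixels")
    return sum_u / count, sum_v / count


def pixel_to_plane(u: float, v: float,
                   H: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Map pixel (u, v) to plane coordinates (x, y) with a 3x3 homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return x / w, y / w


mask = [[0, 0, 0],
        [0, 1, 1],
        [0, 1, 1]]
# Hypothetical calibration: 2 mm per pixel, image origin at (10 mm, 20 mm) on the table.
H = [[2.0, 0.0, 10.0], [0.0, 2.0, 20.0], [0.0, 0.0, 1.0]]
print(pixel_to_plane(*mask_centroid(mask), H))  # → (13.0, 23.0)
```

The centroid is a simple grasp-point proxy; a real system might instead use the mask's bounding box or principal axis.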

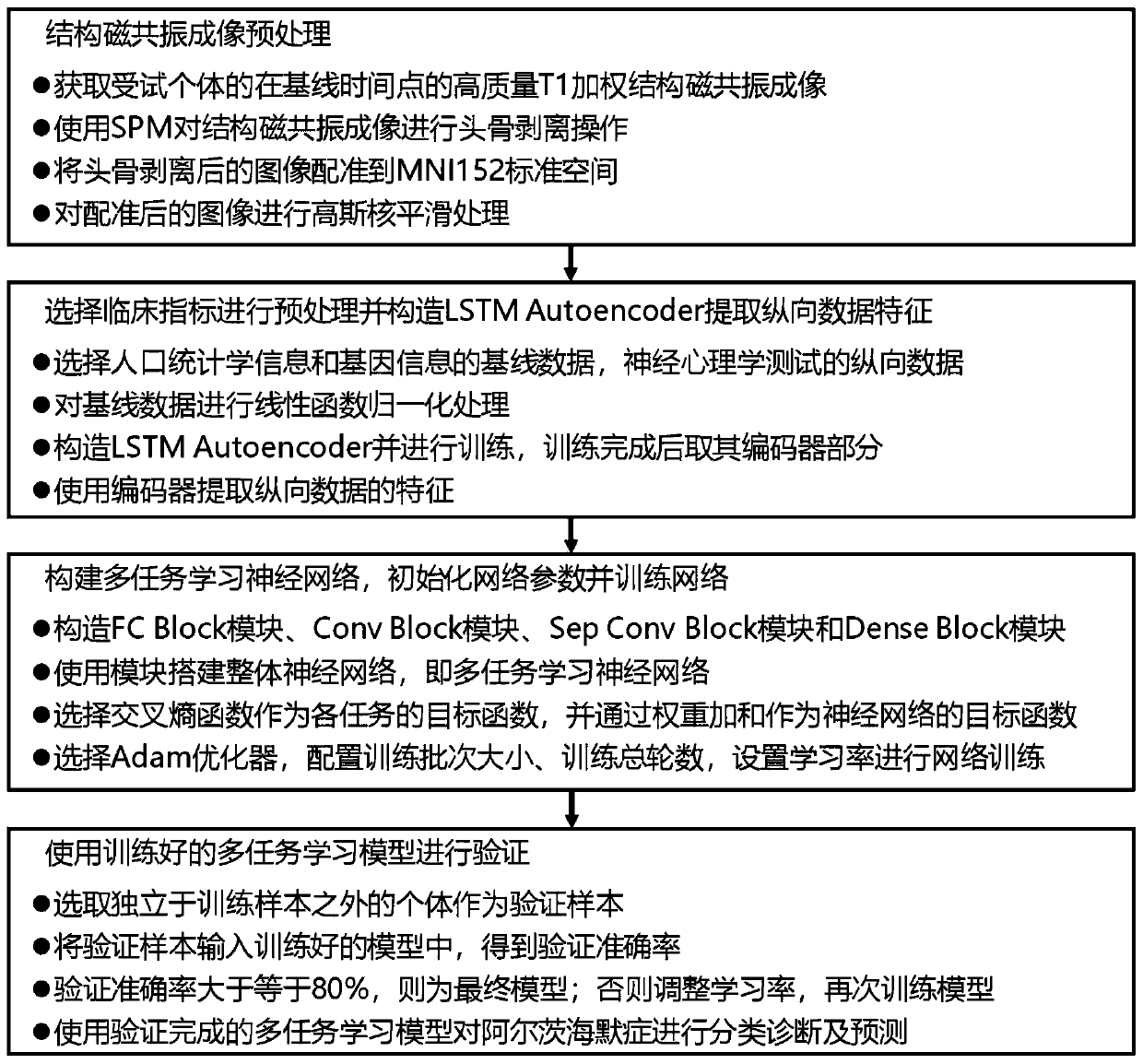

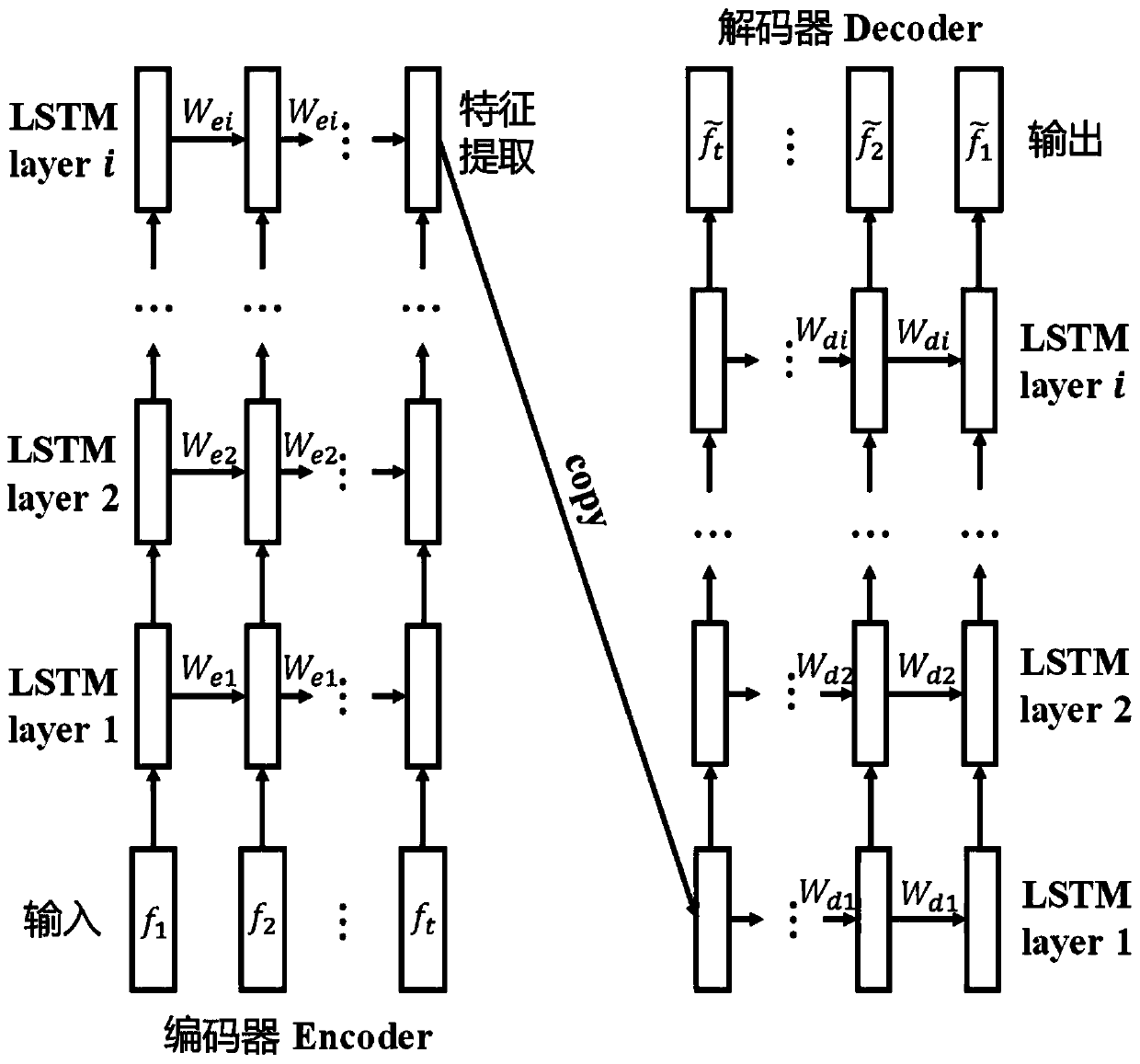

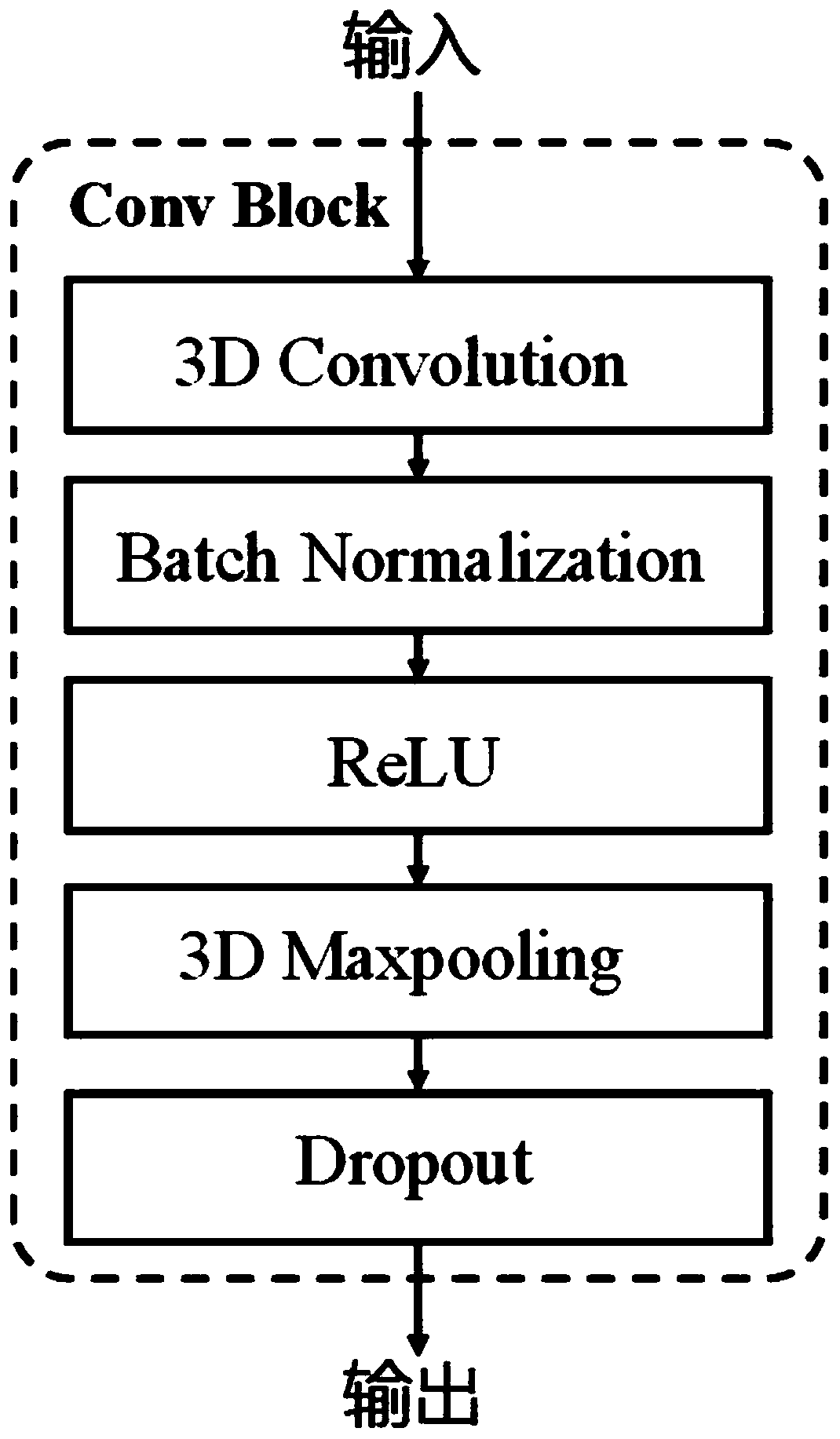

Alzheimer's disease classification and prediction system based on multi-task learning

Active · CN111488914A · Avoid complex processing, Improve classification efficiency, Medical automated diagnosis, Character and pattern recognition, Feature vector, Imaging processing

The invention discloses an Alzheimer's disease classification and prediction system based on multi-task learning. Its objective is to solve the problem that existing Alzheimer's disease classification systems cannot judge whether an individual with mild cognitive impairment will progress to Alzheimer's disease. The system comprises an image processing main module, a clinical index processing main module, a neural network main module, a training main module and a detection main module. The image processing main module acquires a head image, preprocesses it, and inputs the preprocessed image into the training main module and the detection main module; the clinical index processing main module selects clinical indexes, obtains their feature vectors, and inputs these feature vectors into the training main module and the detection main module; and the neural network main module builds the Alzheimer's disease classification and prediction model. The system is applied in the technical field of intelligent medical detection.

Owner:HARBIN INST OF TECH
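
The abstract pairs a diagnosis task with predicting whether a mild cognitive impairment (MCI) individual will progress to Alzheimer's disease, but gives no loss function. One plausible multi-task objective, assumed here for illustration, sums a diagnosis cross-entropy over all subjects and a conversion loss computed only for subjects that have a conversion label. The class coding and the `None` masking convention are hypothetical:

```python
import math
from typing import Optional, Sequence

# Hypothetical diagnosis classes: 0 = normal control, 1 = MCI, 2 = Alzheimer's disease.


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one subject, via log-sum-exp."""
    peak = max(logits)
    log_z = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_z - logits[label]


def bce_with_logits(z: float, t: float) -> float:
    """Stable binary cross-entropy for one logit z and target t in {0, 1}."""
    return max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))


def ad_multitask_loss(diag_logits: Sequence[Sequence[float]],
                      diag_labels: Sequence[int],
                      conv_logits: Sequence[float],
                      conv_labels: Sequence[Optional[int]],
                      conv_weight: float = 1.0) -> float:
    """Mean diagnosis loss over all subjects plus a masked MCI-conversion loss.

    conv_labels[i] is 1 if MCI subject i later converted to AD, 0 if not,
    and None for subjects without a conversion label; those are skipped.
    """
    diag = sum(cross_entropy(logits, y)
               for logits, y in zip(diag_logits, diag_labels)) / len(diag_labels)
    conv_terms = [bce_with_logits(z, t)
                  for z, t in zip(conv_logits, conv_labels) if t is not None]
    conv = sum(conv_terms) / len(conv_terms) if conv_terms else 0.0
    return diag + conv_weight * conv


loss = ad_multitask_loss([[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], [1, 2],
                         conv_logits=[0.0, 5.0], conv_labels=[1, None])
print(round(loss, 4))  # → 1.7918  (log 3 + log 2)
```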

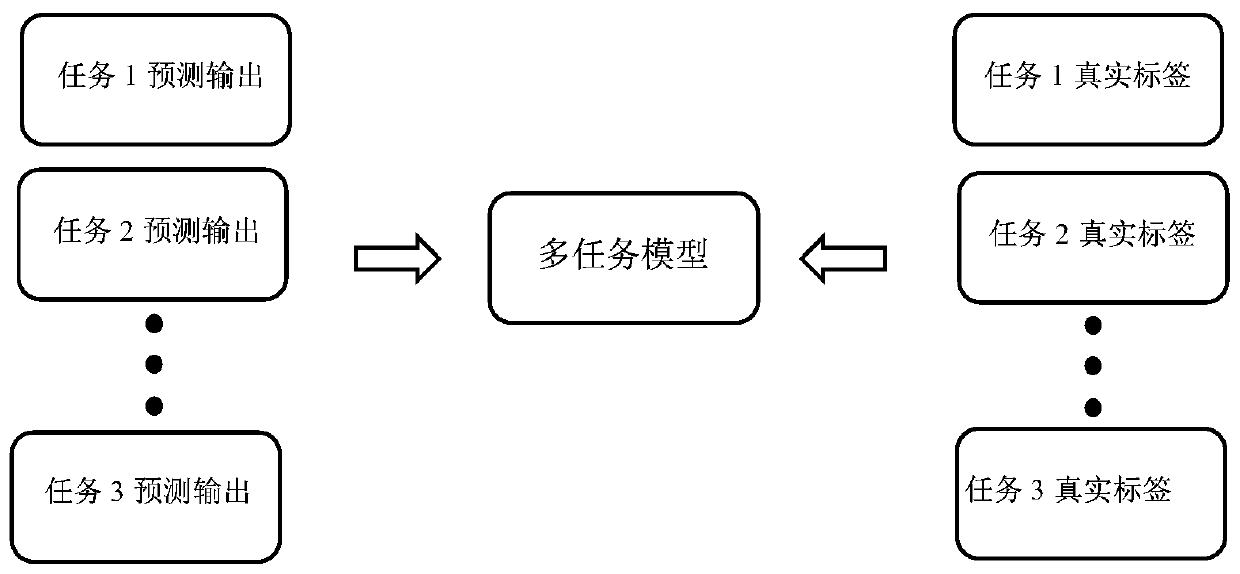

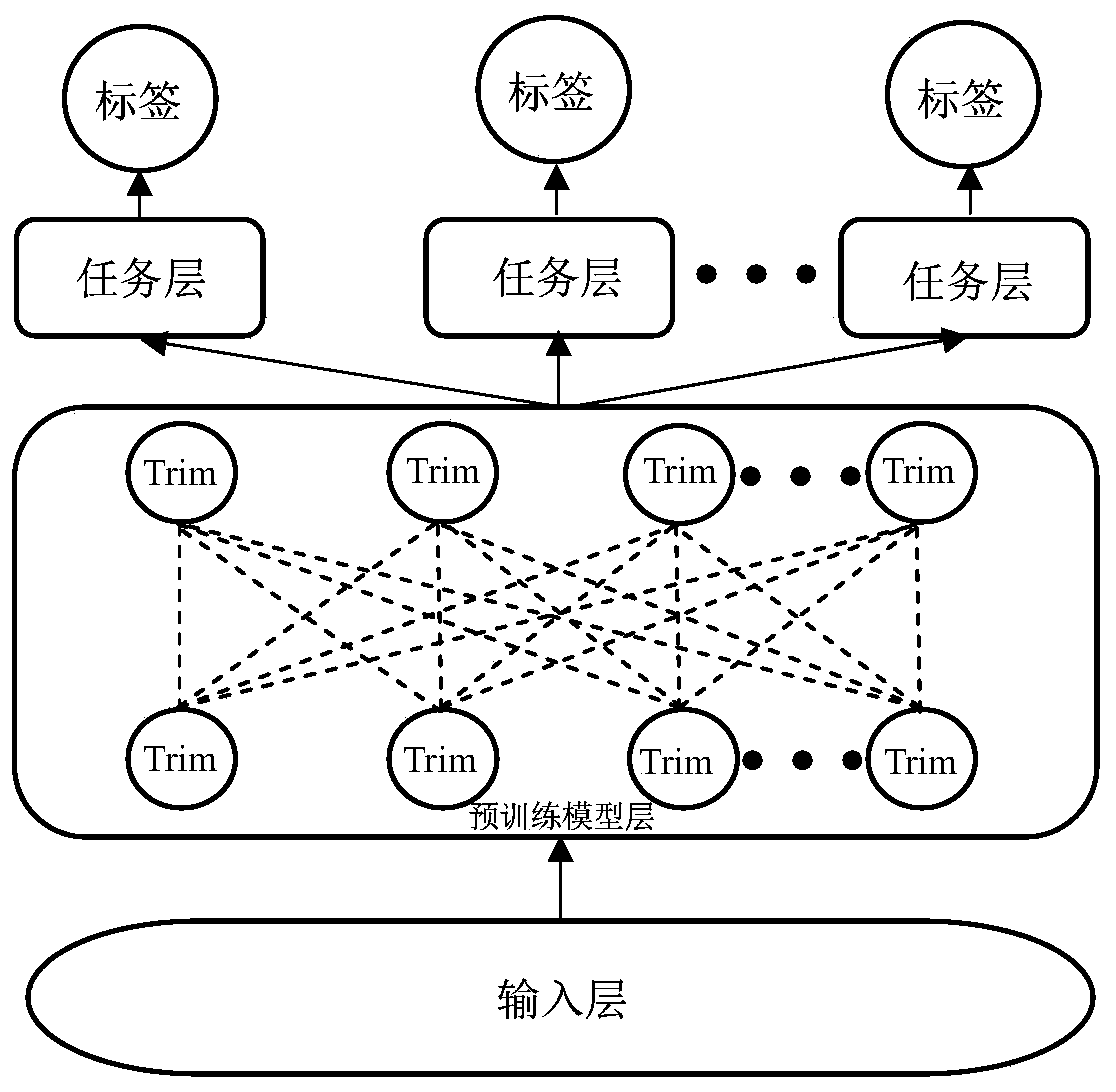

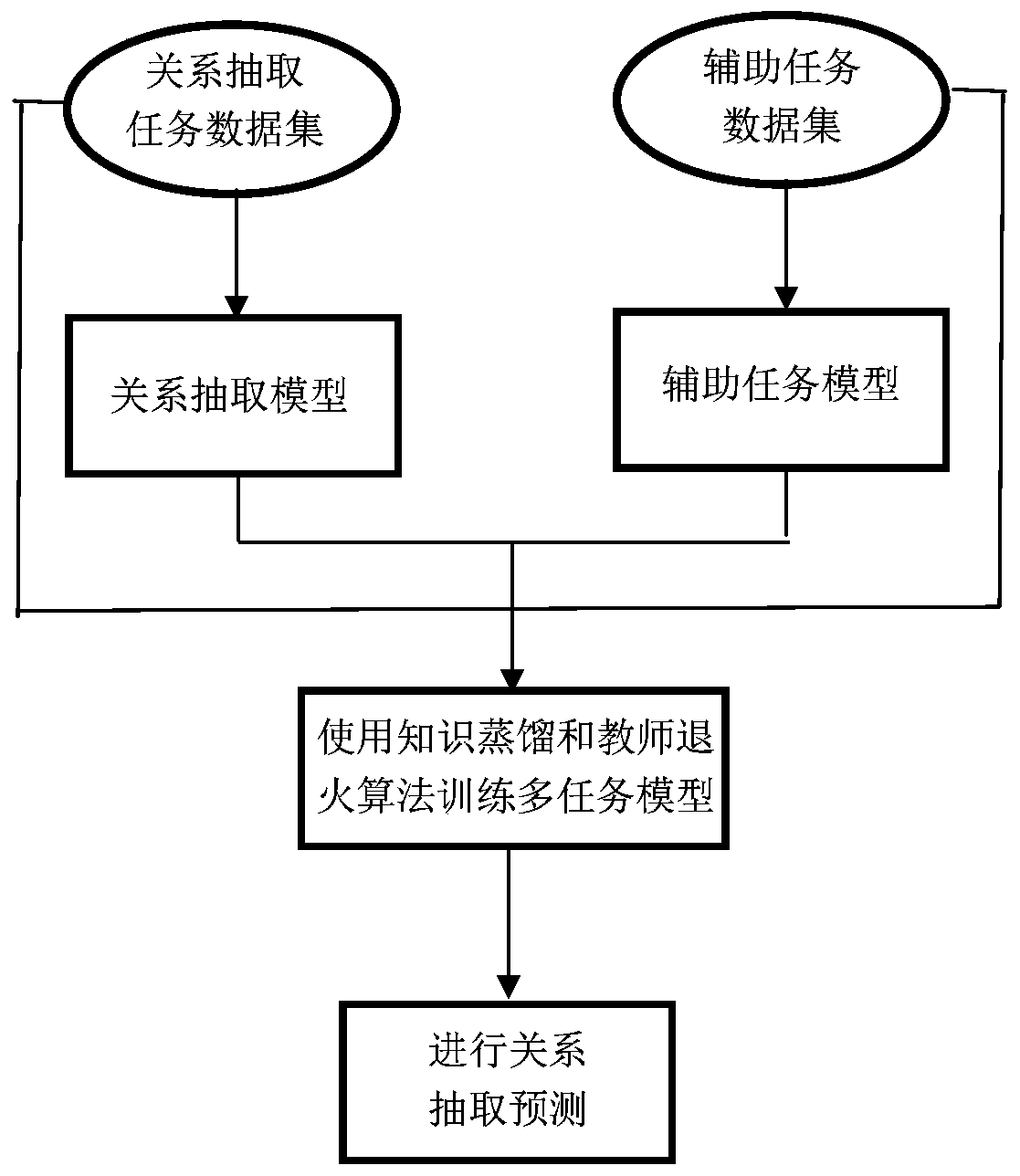

Natural language relation extraction method based on multi-task learning mechanism

Active · CN111241279A · Improve migration ability, Efficient method, Natural language data processing, Neural architectures, Data set, Engineering

The invention discloses a natural language relation extraction method based on a multi-task learning mechanism. The method uses several auxiliary tasks to bring in the information implied by those tasks and thereby improve relation extraction; introduces knowledge distillation to strengthen how the auxiliary tasks guide the training of the multi-task model; and introduces a teacher annealing algorithm into multi-task-learning-based relation extraction so that the multi-task model can far surpass the single-task models that serve as its guides, ultimately improving the accuracy of relation extraction. Concretely, models are first trained on the different auxiliary tasks to obtain the models that guide the training of the multi-task model; then the models learned on the auxiliary tasks and the true labels are used together as supervision to guide the learning of the multi-task model; finally, the method is evaluated on the SemEval-2010 Task 8 data set, where the model outperforms a model that uses an improved BERT alone for relation extraction and also outperforms mainstream deep-learning-based relation extraction models.

Owner:EAST CHINA NORMAL UNIV
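
Teacher annealing, as commonly formulated (for example in BAM!, Clark et al., 2019), trains the student against a mix of the teacher's prediction and the gold label, moving the mixing weight from the teacher toward the gold label over training. The linear schedule and the example numbers below are assumptions; the abstract does not state the schedule this patent uses:

```python
import math
from typing import List, Sequence


def annealed_target(gold_one_hot: Sequence[float], teacher_probs: Sequence[float],
                    step: int, total_steps: int) -> List[float]:
    """Mix teacher predictions with the gold label; the gold weight rises linearly 0 -> 1."""
    lam = min(1.0, max(0.0, step / total_steps))
    return [lam * g + (1.0 - lam) * p for g, p in zip(gold_one_hot, teacher_probs)]


def soft_cross_entropy(logits: Sequence[float], target: Sequence[float]) -> float:
    """Cross-entropy of the student's softmax against a soft target distribution."""
    peak = max(logits)
    log_z = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return -sum(t * (z - log_z) for t, z in zip(target, logits))


gold = [0.0, 1.0, 0.0]      # true relation label (hypothetical 3 relation classes)
teacher = [0.2, 0.5, 0.3]   # hypothetical output of an auxiliary-task teacher
print(annealed_target(gold, teacher, step=500, total_steps=1000))  # → [0.1, 0.75, 0.15]
```

Early in training the student mostly imitates the teacher; by the end it is fitted to the gold labels alone, which is what allows the multi-task student to end up better than its single-task teachers.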


© 2025 PatSnap. All rights reserved.