184 results about "Reward value" patented technology

Value of Rewards is a Total Reward, Remuneration, Compensation & Benefits activity by René Broekhuis.

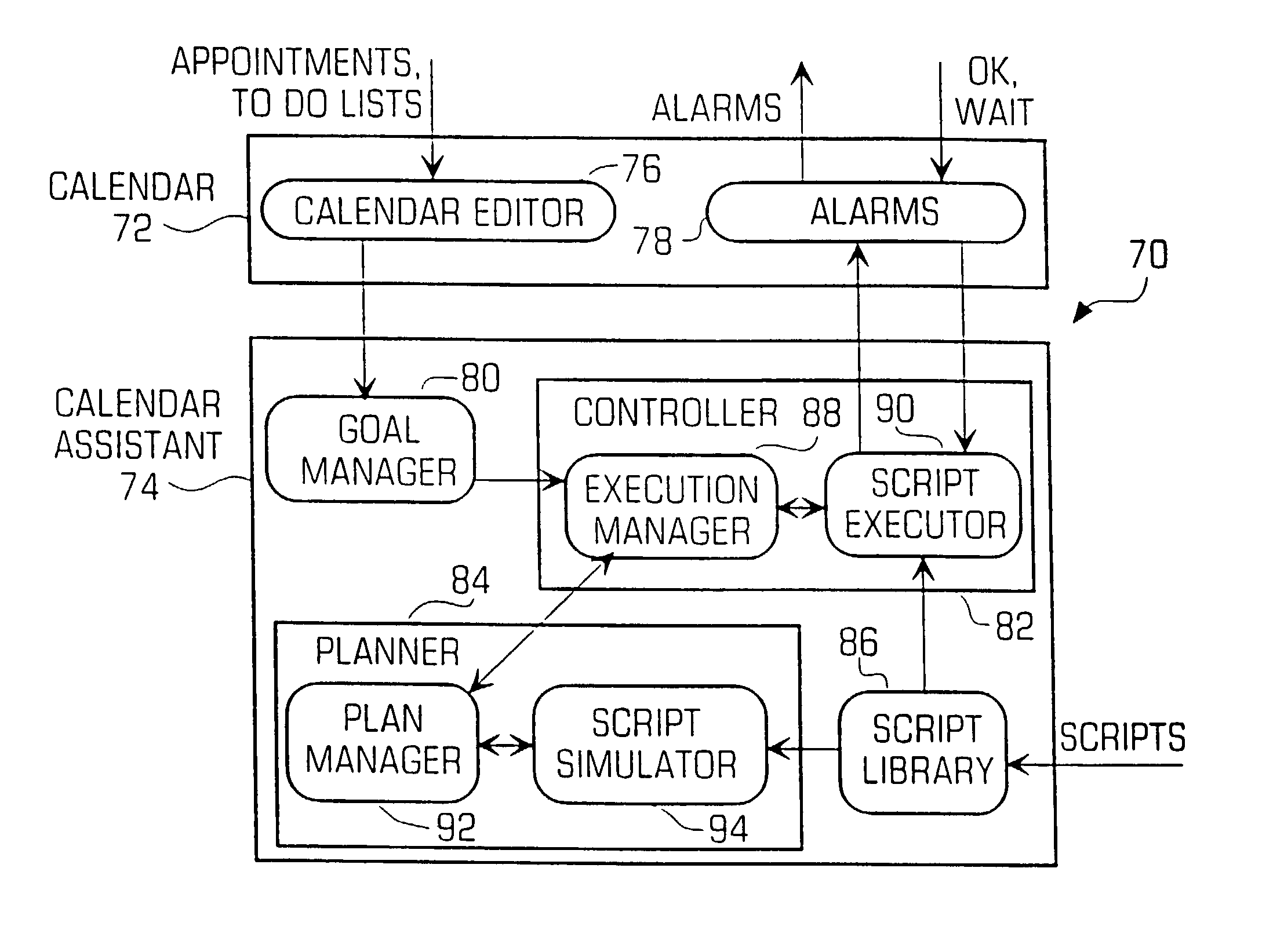

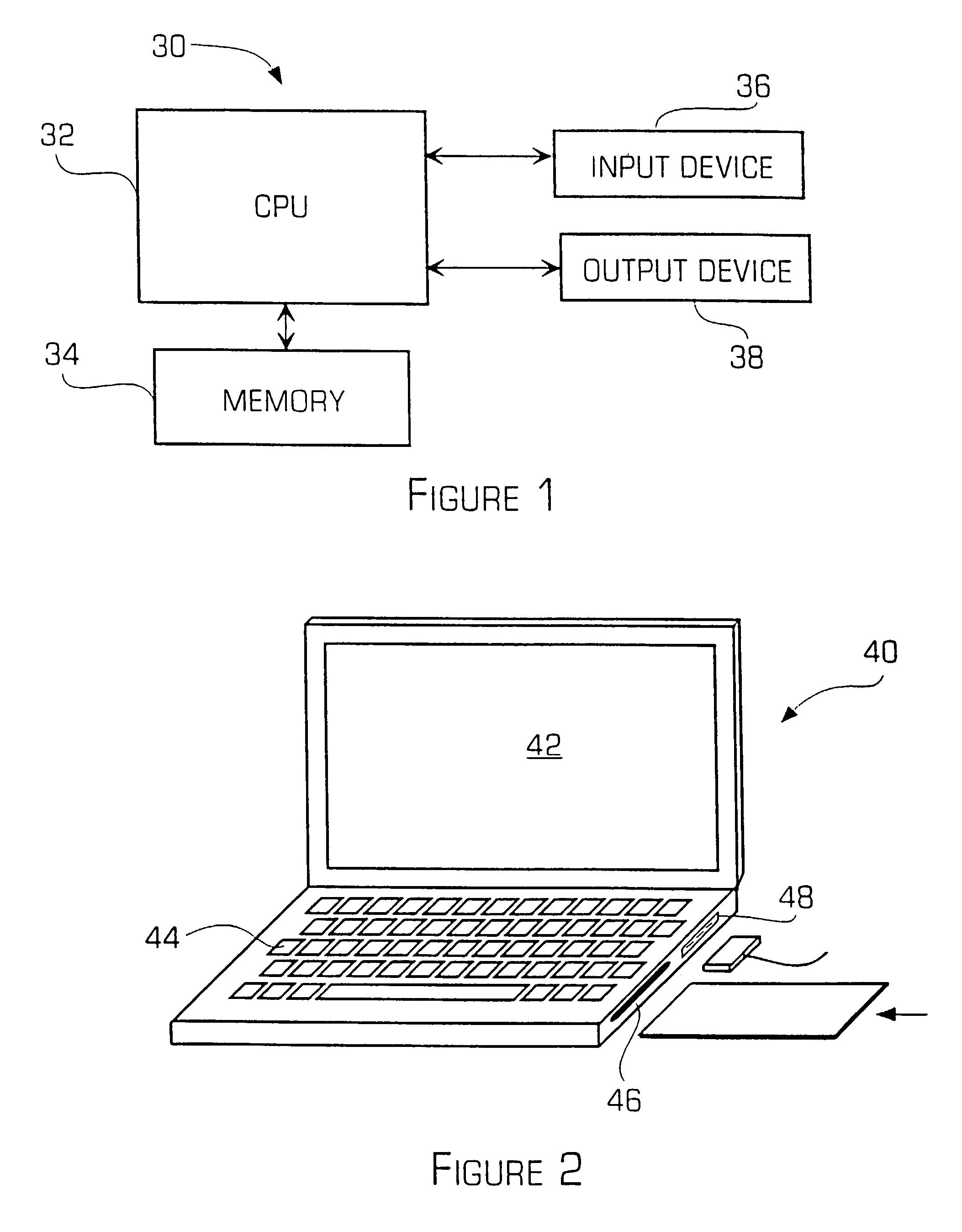

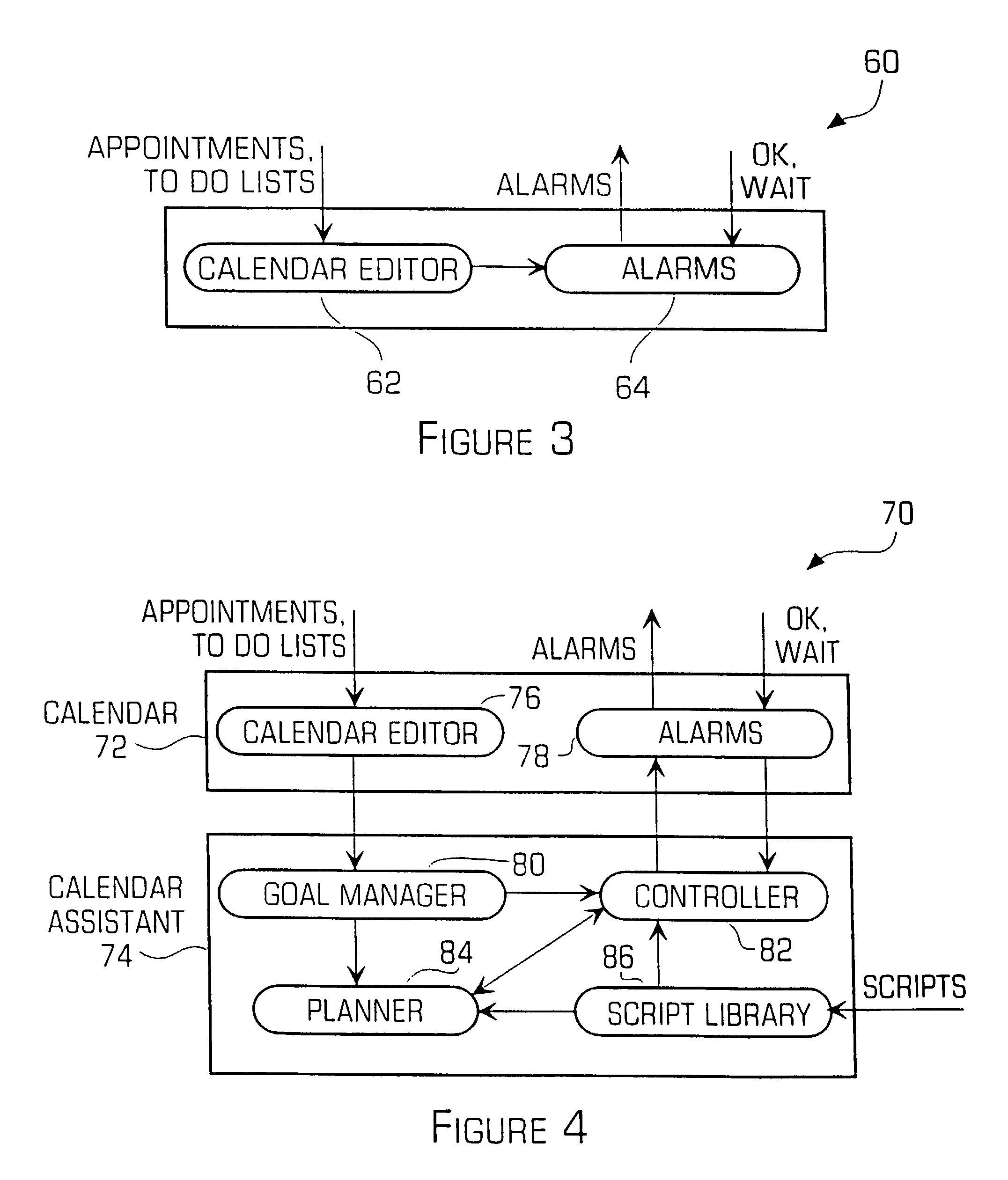

Automatic planning and cueing system and method

Inactive | US7027996B2 | Maximization | Improve variation | Computer control | Simulator control | User needs | Start time

A method for automatically planning is provided, comprising the steps of receiving a plurality of tasks that a user needs to perform, each task having an earliest start time, a latest stop time, a duration for completing the event and a reward value for completing the event, the tasks including a fixed task having the duration being equal to the time period between the earliest start time and the latest stop time and a floating task having a duration that is less than the time period between the earliest start time and the latest stop time, arranging said fixed task into a plan for the user based on the earliest start time, duration and reward of the fixed task, determining an actual start time for the floating task within the time period between the earliest start time and the latest stop time based on the earliest start time and duration of the fixed task, and arranging said floating task into the plan for the user based on the selected actual start time and the reward of the floating task. A system for automatically planning a series of events into a plan is also provided.

Owner:ATTENTION CONTROL SYST
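The fixed/floating distinction in this abstract can be illustrated with a small sketch. It is not the patented method: the `Task` class, the `build_plan` function, the minute-based time units and the "highest reward first, earliest free slot" rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    earliest_start: int  # minutes since midnight (assumed unit)
    latest_stop: int
    duration: int
    reward: float

    @property
    def is_fixed(self) -> bool:
        # A fixed task fills its whole window; a floating task has slack.
        return self.duration == self.latest_stop - self.earliest_start


def build_plan(tasks):
    """Return a list of (start, end, name) tuples sorted by start time."""
    plan = []

    def is_free(start, end):
        # True when [start, end) overlaps nothing already in the plan.
        return all(end <= s or start >= e for s, e, _ in plan)

    # Place fixed tasks first; a higher reward wins a conflict (assumed rule).
    for t in sorted((t for t in tasks if t.is_fixed), key=lambda t: -t.reward):
        if is_free(t.earliest_start, t.latest_stop):
            plan.append((t.earliest_start, t.latest_stop, t.name))

    # Slide each floating task to the earliest start that avoids placed tasks.
    for t in sorted((t for t in tasks if not t.is_fixed), key=lambda t: -t.reward):
        for start in range(t.earliest_start, t.latest_stop - t.duration + 1):
            if is_free(start, start + t.duration):
                plan.append((start, start + t.duration, t.name))
                break

    return sorted(plan)
```

For example, a fixed 10:00 to 11:00 meeting and a 30-minute floating task with a 9:30 to 12:00 window yield a plan in which the floating task is placed at 9:30, ahead of the meeting.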

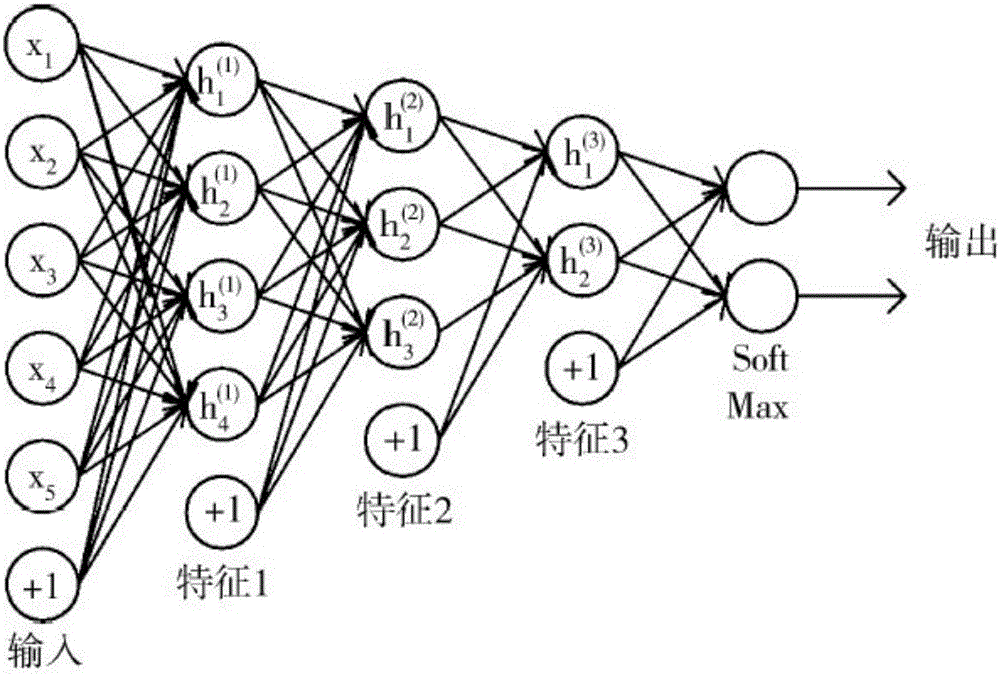

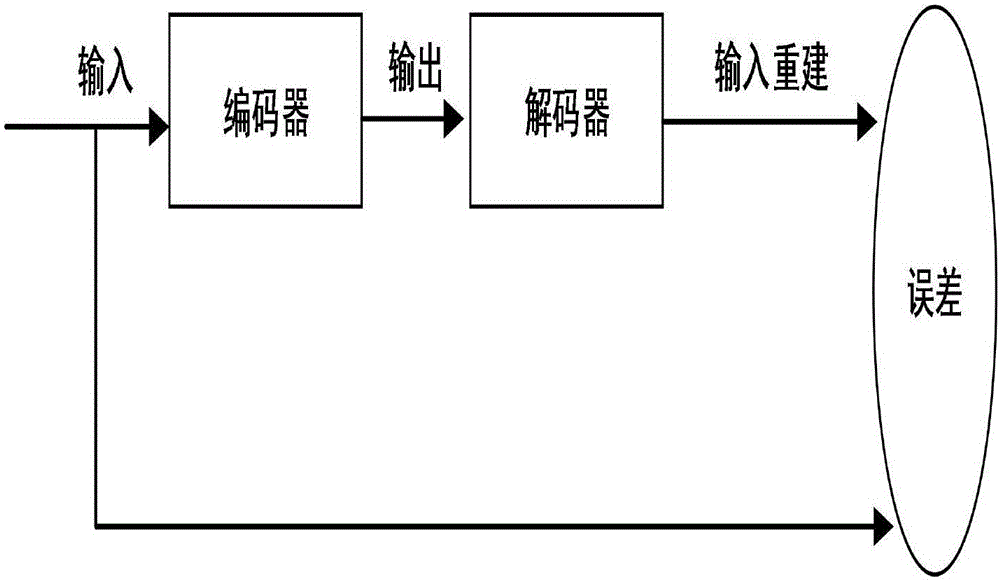

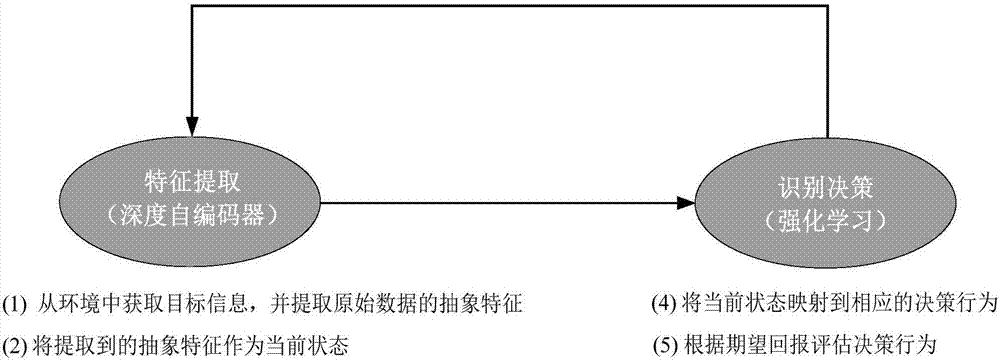

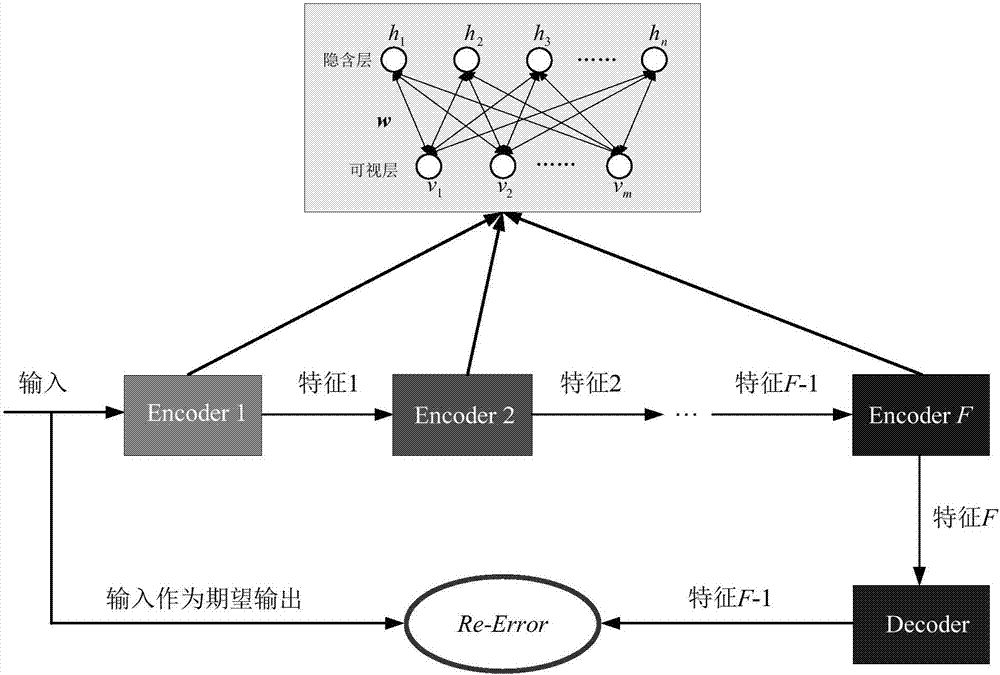

Mobile robot path planning method with combination of depth automatic encoder and Q-learning algorithm

Active | CN105137967A | Achieve cognition | Improve the ability to process images | Biological neural network models | Position/course control in two dimensions | Algorithm | Reward value

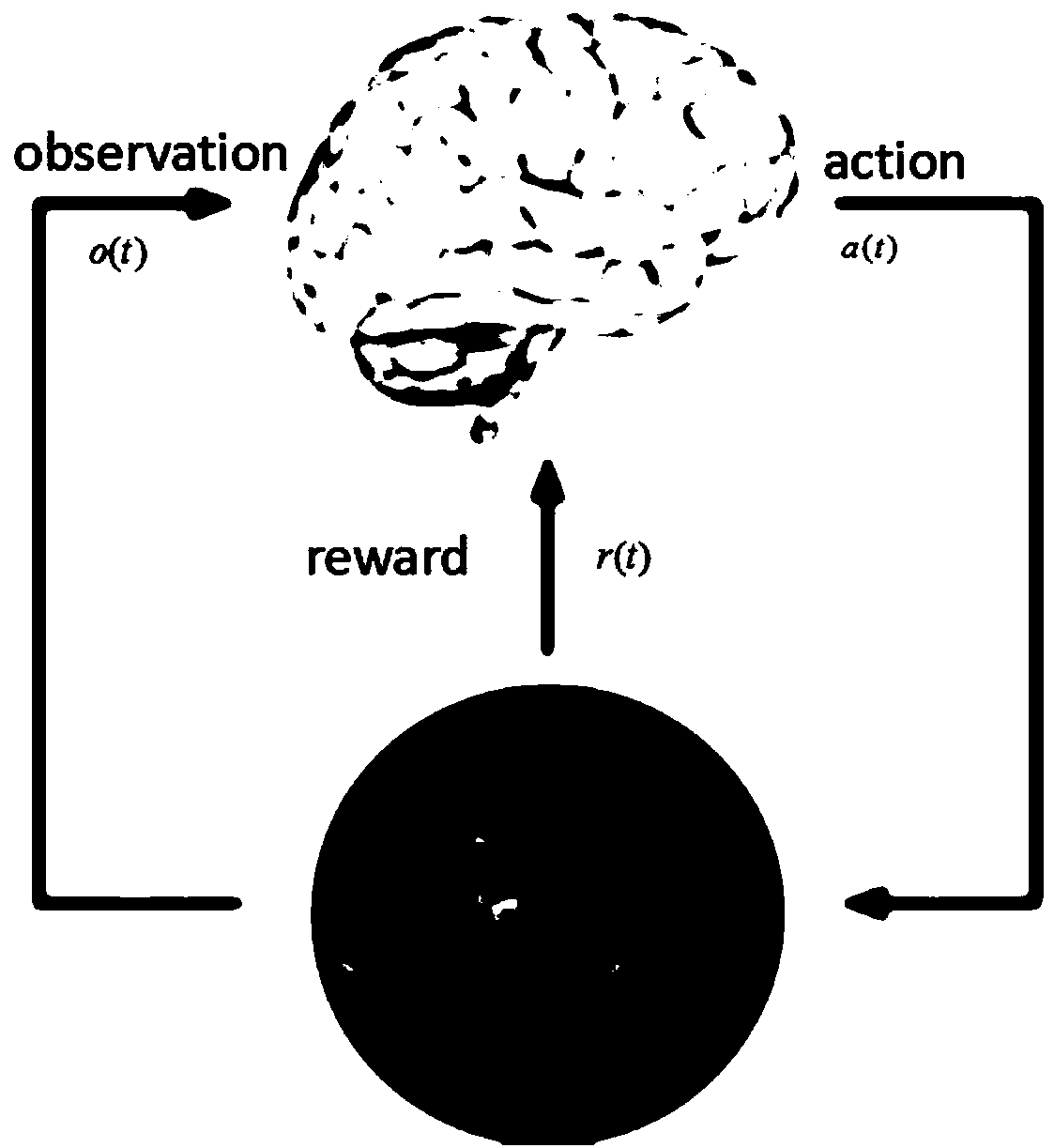

The invention provides a mobile robot path planning method with combination of a depth automatic encoder and a Q-learning algorithm. The method comprises a depth automatic encoder part, a BP neural network part and a reinforcement learning part. The depth automatic encoder part mainly adopts the depth automatic encoder to process images of the environment in which a robot is positioned so that the characteristics of the image data are acquired, laying a foundation for subsequent environment cognition. The BP neural network part mainly fits reward values to the image characteristic data so that the depth automatic encoder and reinforcement learning can be combined. According to the Q-learning algorithm, knowledge is obtained in an action-evaluation environment via interactive learning with the environment, and an action scheme is improved to suit the environment and achieve the desired purpose. The robot interacts with the environment to realize autonomous learning, and finally a feasible path from a start point to a terminal point can be found. System image processing capacity can be enhanced, and environment cognition can be realized via combination of the depth automatic encoder and the BP neural network.

Owner:BEIJING UNIV OF TECH
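The Q-learning part of this abstract can be sketched on its own with a lookup table. The sketch below leaves out the encoder and the BP network entirely; the 4x4 grid, the reward values (+10 at the goal, -1 per step) and the hyperparameters are assumptions picked for illustration, not values from the patent.

```python
import random

ACTIONS = [(0, 1), (1, 0), (0, -1), (-1, 0)]  # right, down, left, up


def step(state, action, size, goal):
    """Move on the grid; walls clamp the move. Returns (next_state, reward)."""
    dr, dc = ACTIONS[action]
    nxt = (min(max(state[0] + dr, 0), size - 1),
           min(max(state[1] + dc, 0), size - 1))
    return nxt, (10.0 if nxt == goal else -1.0)


def train(size=4, goal=(3, 3), episodes=1000, alpha=0.5, gamma=0.9,
          epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy behaviour policy."""
    rng = random.Random(seed)
    n = len(ACTIONS)
    q = {(r, c): [0.0] * n for r in range(size) for c in range(size)}
    for _ in range(episodes):
        state = (0, 0)
        for _ in range(200):  # cap on steps per episode
            if rng.random() < epsilon:
                a = rng.randrange(n)
            else:
                a = q[state].index(max(q[state]))
            nxt, reward = step(state, a, size, goal)
            # The goal is terminal, so no bootstrapped value is added there.
            target = reward if nxt == goal else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if state == goal:
                break
    return q


def greedy_path(q, size=4, start=(0, 0), goal=(3, 3), max_steps=50):
    """Follow the highest-valued action from start until the goal is reached."""
    path = [start]
    while path[-1] != goal and len(path) <= max_steps:
        a = q[path[-1]].index(max(q[path[-1]]))
        nxt, _ = step(path[-1], a, size, goal)
        path.append(nxt)
    return path
```

Calling `greedy_path(train())` returns the sequence of grid cells the learned policy visits from the start cell to the goal.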

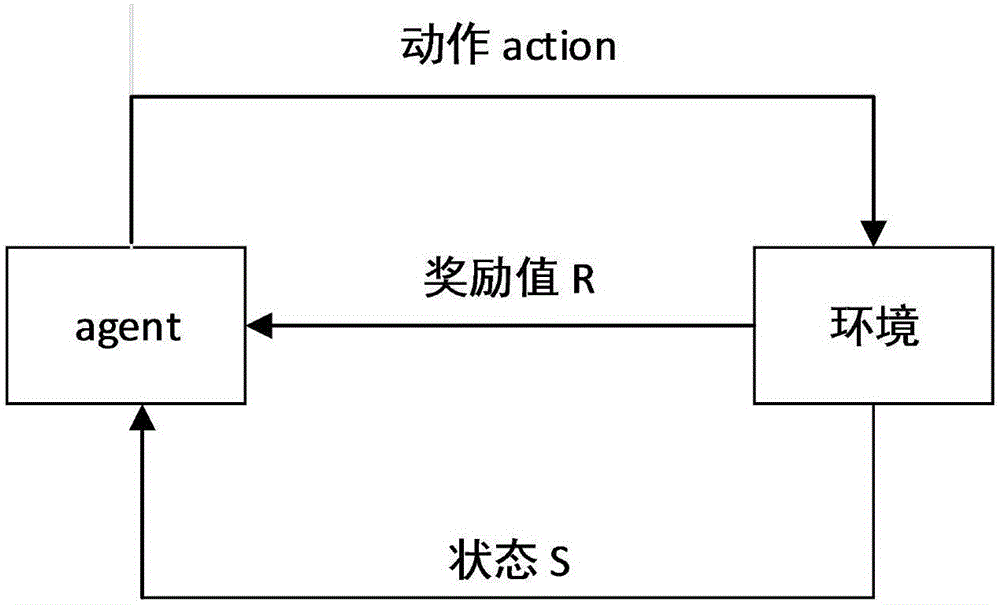

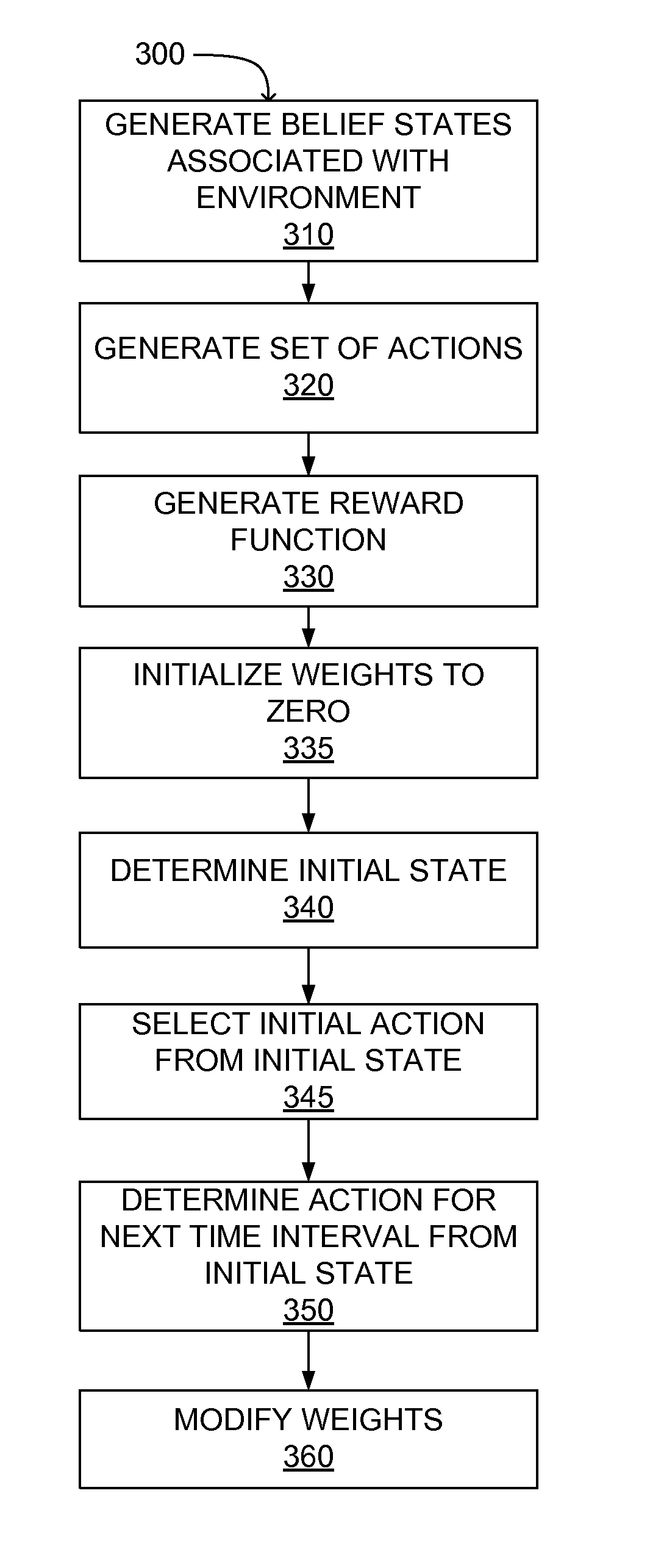

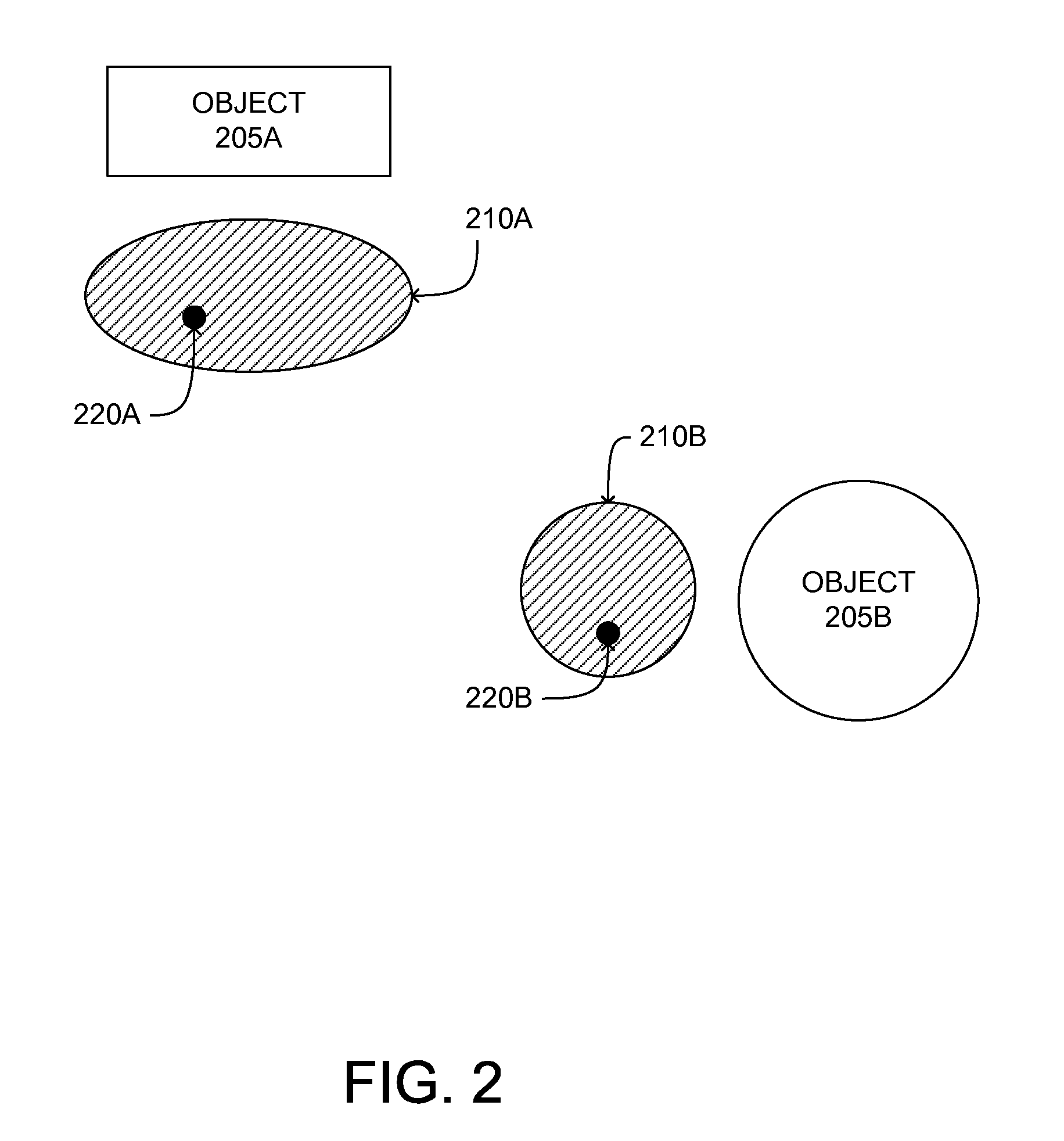

Smoothed Sarsa: Reinforcement Learning for Robot Delivery Tasks

The present invention provides a method for learning a policy used by a computing system to perform a task, such as delivery of one or more objects by the computing system. During a first time interval, the computing system determines a first state, a first action and a first reward value. As the computing system determines different states, actions and reward values during subsequent time intervals, a state description identifying the current state, the current action, the current reward and a predicted action is stored. Responsive to a variance of a stored state description falling below a threshold value, the stored state description is used to modify one or more weights in the policy associated with the first state.

Owner:HONDA MOTOR CO LTD

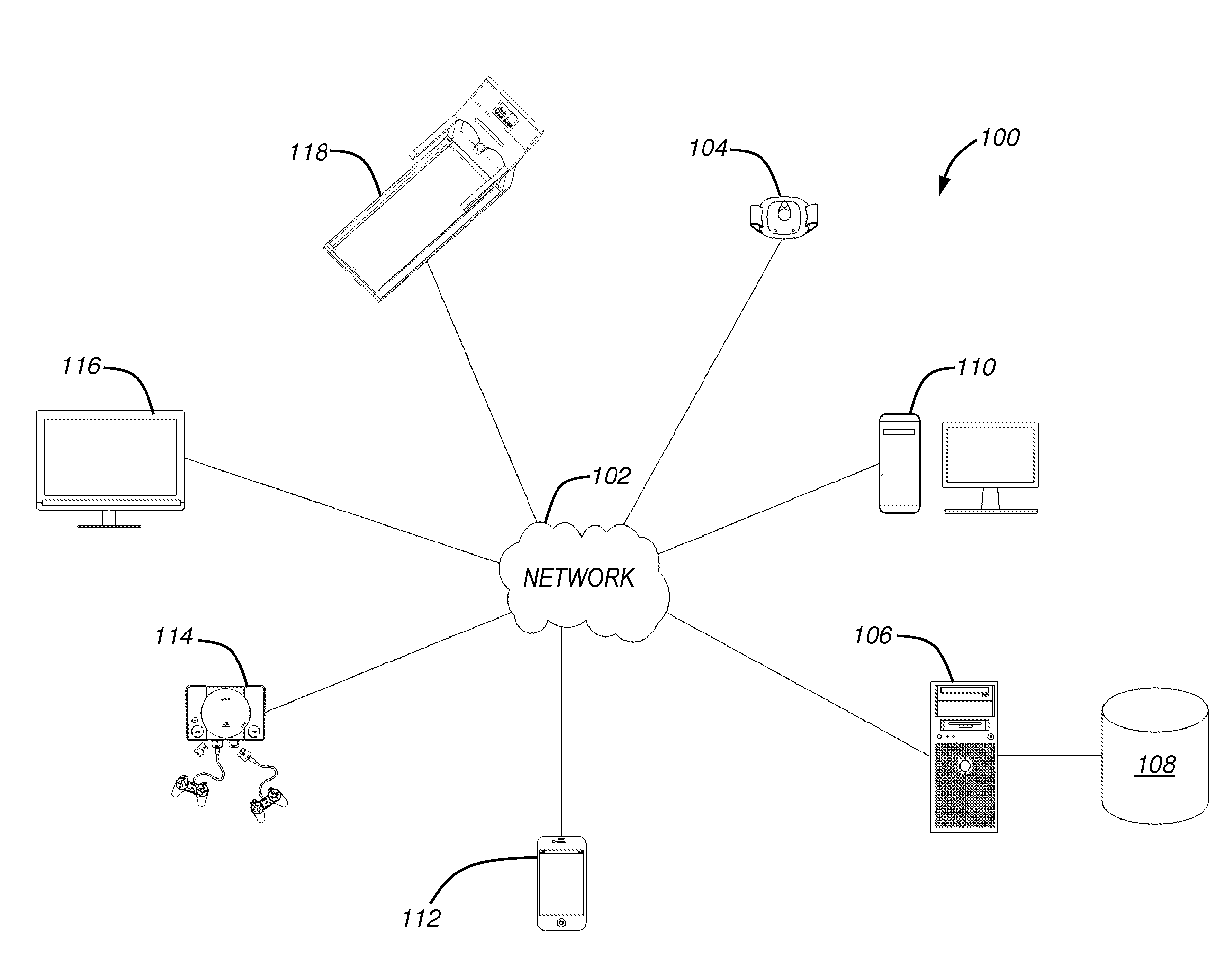

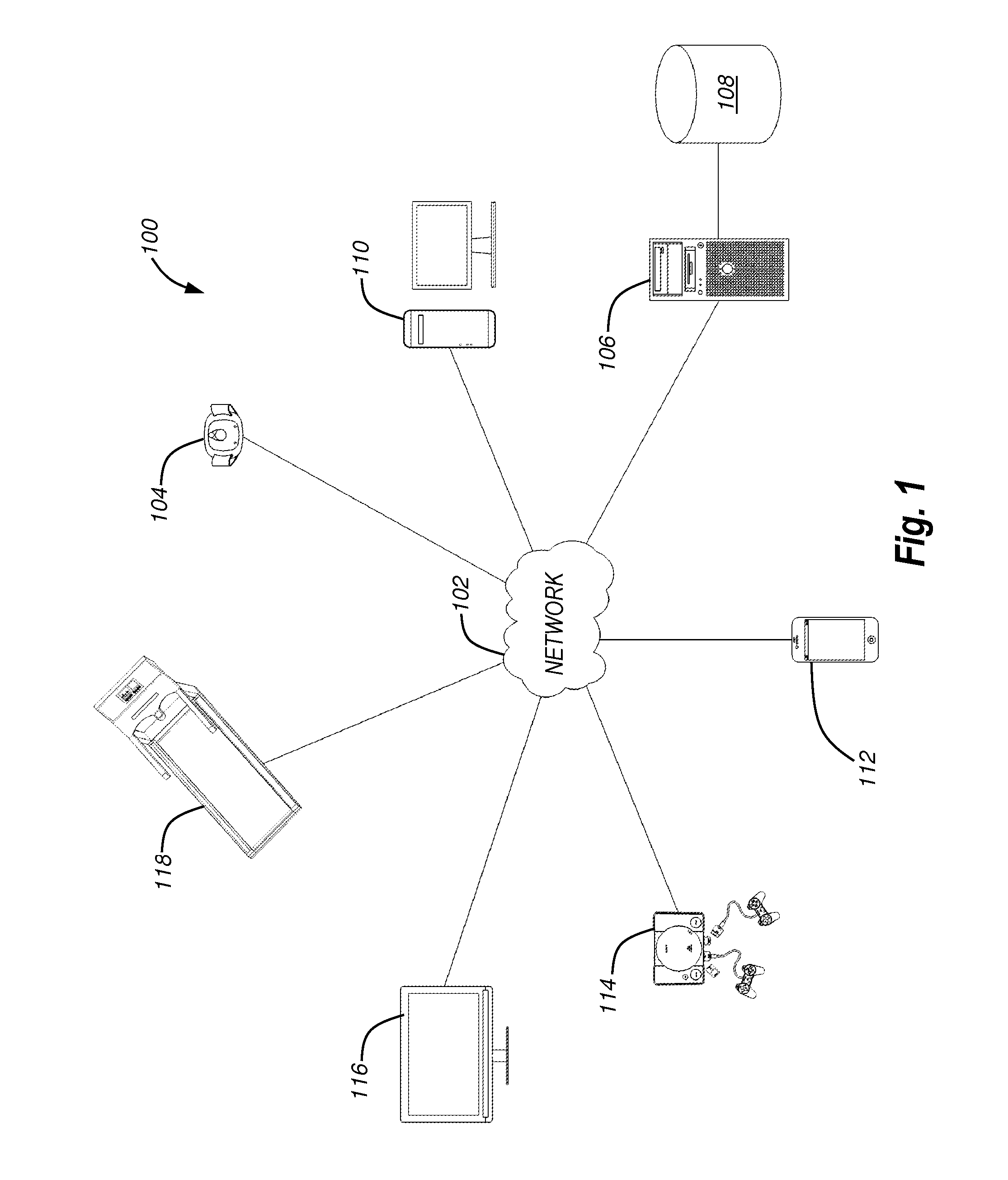

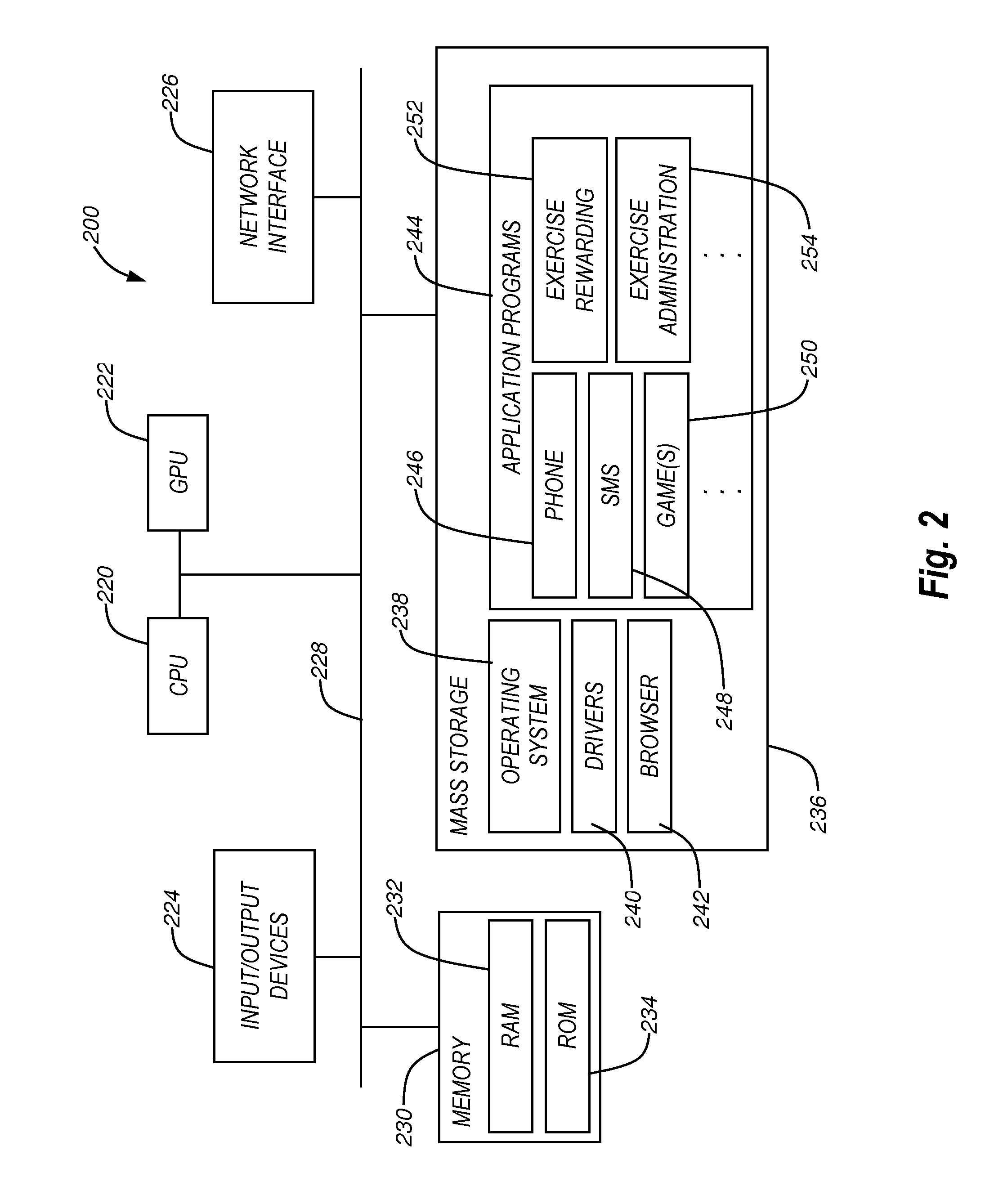

System and method for rewarding physical activity

Active | US20130330694A1 | Cosmonautic condition simulations | Diagnostic recording/measuring | Battery charge | Reward value

A system incentivizes people to engage in physical activity. A person's physical activity may be monitored over a period of time. Such monitoring may occur by using a sensing device carried or worn by the user. In the system, one or more thresholds for physical activity are established. Information tracked using the sensing device can be compared to the thresholds. A percentage of physical activity relative to the threshold can be translated to a reward established for the physical activity, and the percentage may be used to determine a reward value for an electronic device relative to a full available value. Rewards may include times or amounts. Time values may indicate durations during which particular activities or devices may be used. Amount values may indicate an amount of a battery charge, a number of communications, a currency value, or other values relative to use of an electronic device.

Owner:IFIT INC
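The percentage-to-reward translation described above amounts to simple arithmetic. The following is a minimal sketch; the function name `reward_value`, the step-count example and the decision to cap the reward at the full available value are assumptions, not details from the patent.

```python
def reward_value(activity, threshold, full_value):
    """Scale the full available reward by the fraction of the threshold reached."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    # Cap at 100% so exceeding the threshold never exceeds the full value (assumption).
    fraction = min(activity / threshold, 1.0)
    return fraction * full_value
```

With a threshold of 10,000 steps and a full value of 60 minutes of device time, 7,500 steps would translate to `reward_value(7500, 10000, 60)` minutes.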

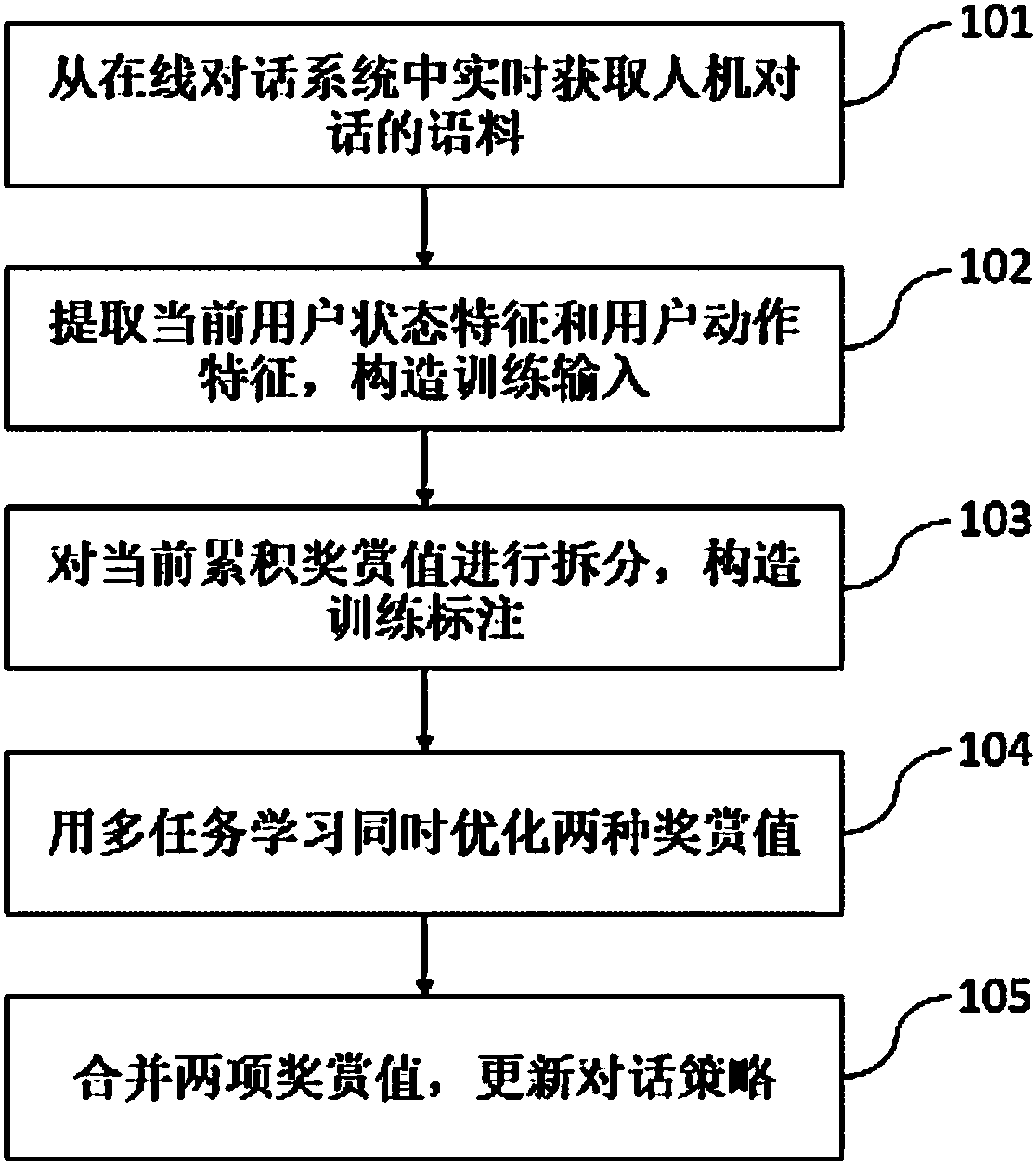

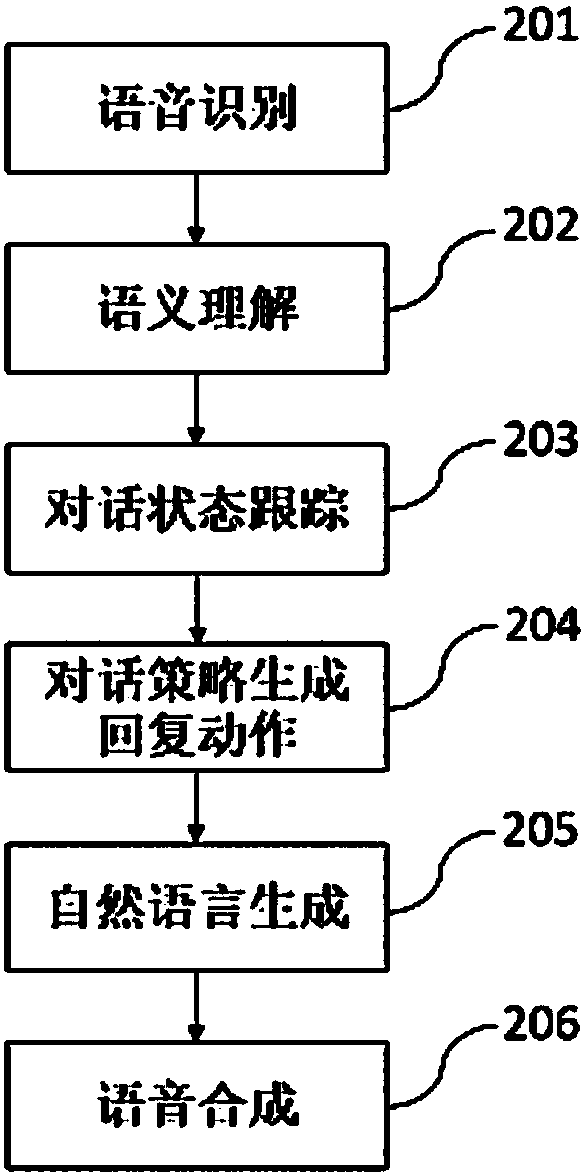

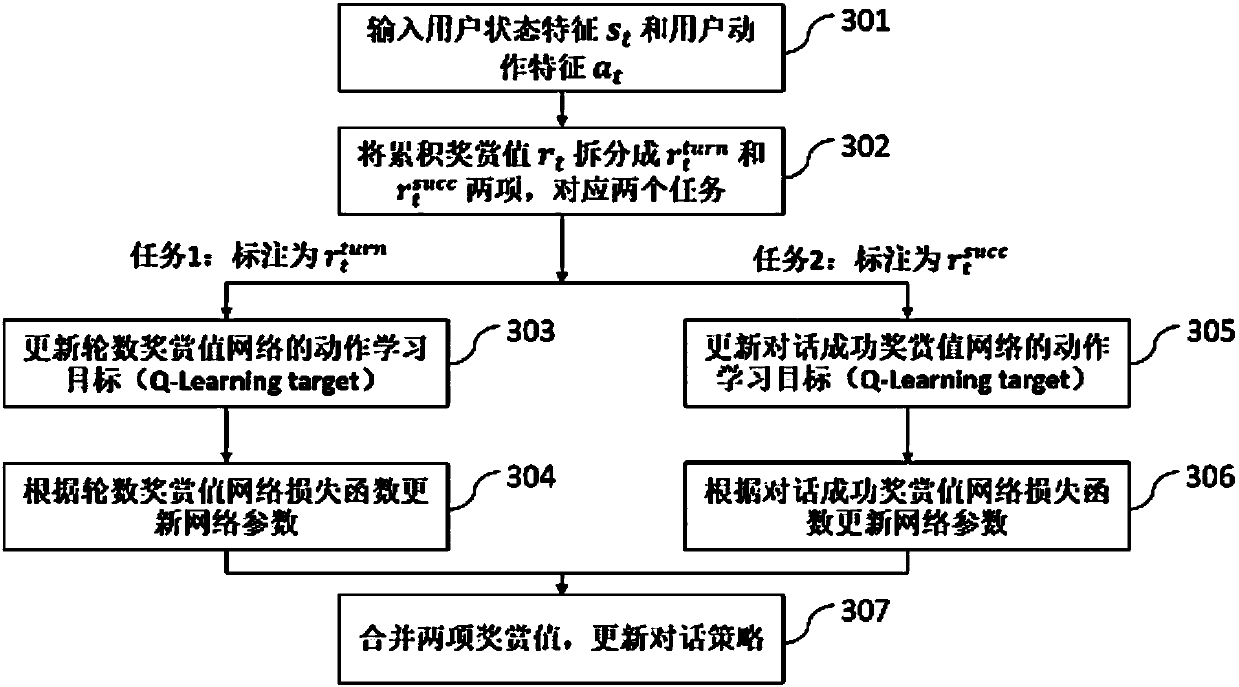

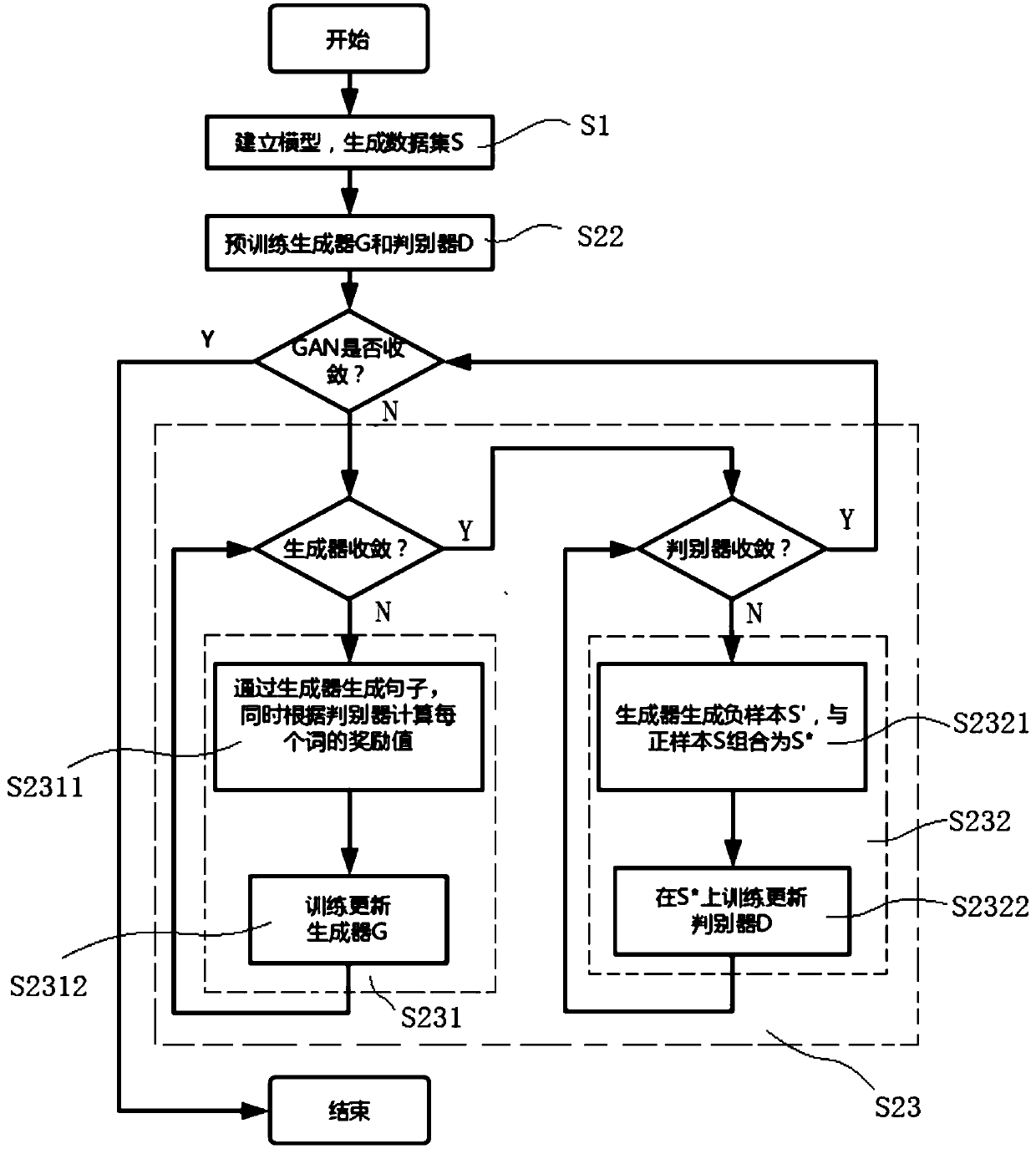

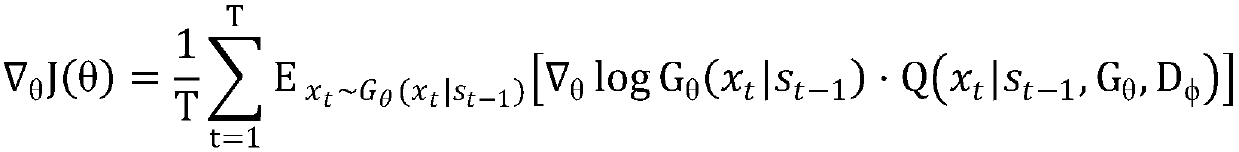

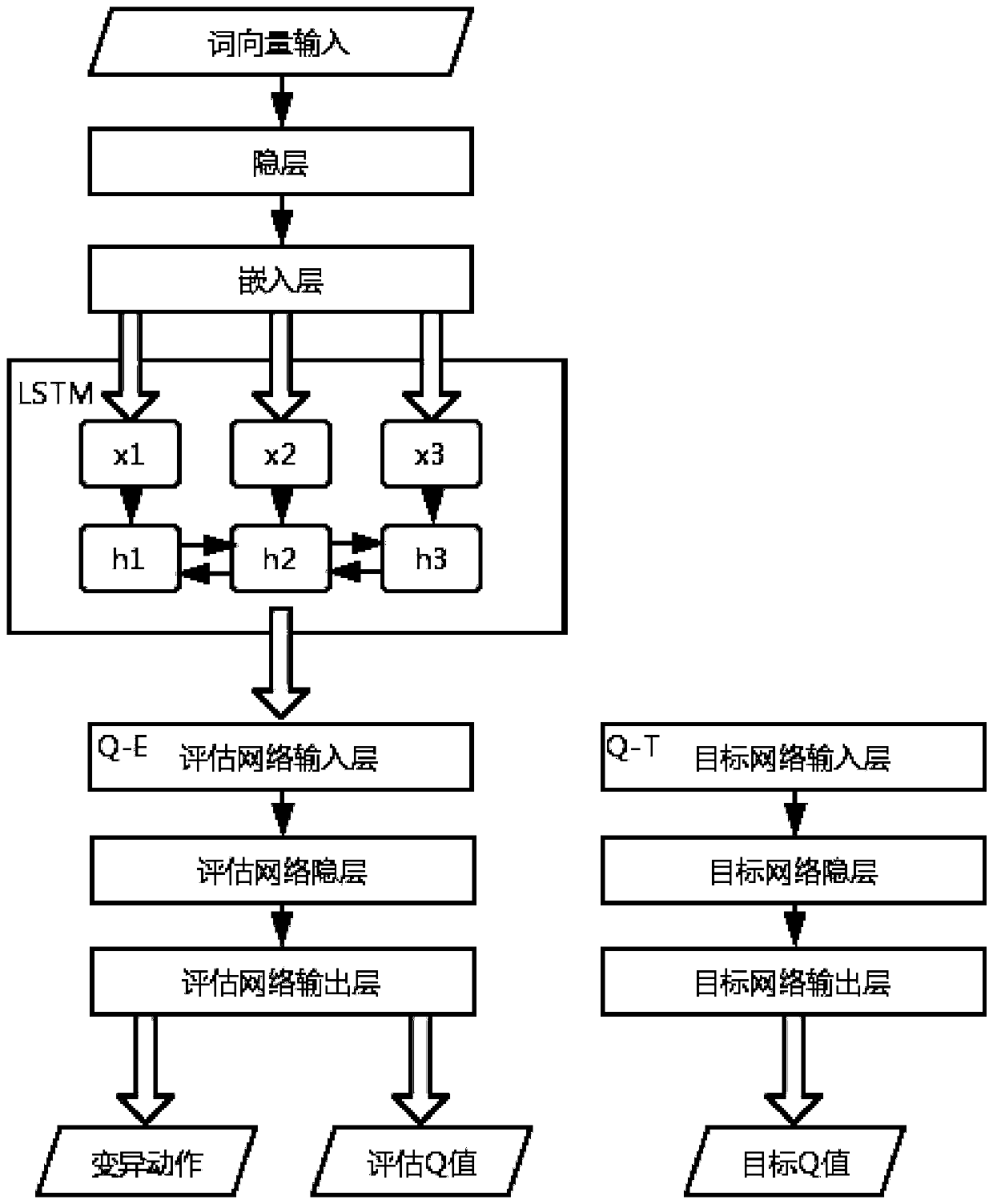

Dialog strategy online realization method based on multi-task learning

Active | CN107357838A | Avoid Manual Design Rules | Save human effort | Speech recognition | Special data processing applications | Man machine | Network structure

The invention discloses a dialog strategy online realization method based on multi-task learning. According to the method, corpus information of a man-machine dialog is acquired in real time, current user state features and user action features are extracted, and the training input is constructed from them; then the single accumulated reward value used in dialog strategy learning is split into a dialog round number reward value and a dialog success reward value, which serve as training annotations, and two different value models are optimized at the same time through multi-task learning during online training; finally the two reward values are merged, and the dialog strategy is updated. The method adopts a reinforcement learning framework and optimizes the dialog strategy through online learning, so rules and strategies need not be designed manually for each domain, and the method can adapt to domain information structures of different complexity and to data of different scales. Splitting the original task of optimizing a single accumulated reward value and optimizing the parts simultaneously with multi-task learning yields a better network structure and lowers the variance of the training process.

Owner:AISPEECH CO LTD
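The reward split described in this abstract can be illustrated as follows. This is only a sketch of the bookkeeping, not of the value models themselves; the per-turn penalty, the success bonus and the additive merge with a `weight` parameter are assumed for illustration.

```python
def split_reward(num_turns, success, turn_penalty=-1.0, success_bonus=20.0):
    """Split one dialog's reward into a round-number part and a success part."""
    turn_reward = turn_penalty * num_turns            # fewer rounds is better
    success_reward = success_bonus if success else 0.0
    return turn_reward, success_reward


def merge_values(turn_value, success_value, weight=1.0):
    """Recombine the two value estimates before the policy update (assumed rule)."""
    return turn_value + weight * success_value
```

A successful five-turn dialog would give `split_reward(5, True)` as the two training targets, and `merge_values` recombines the corresponding estimates into a single number.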

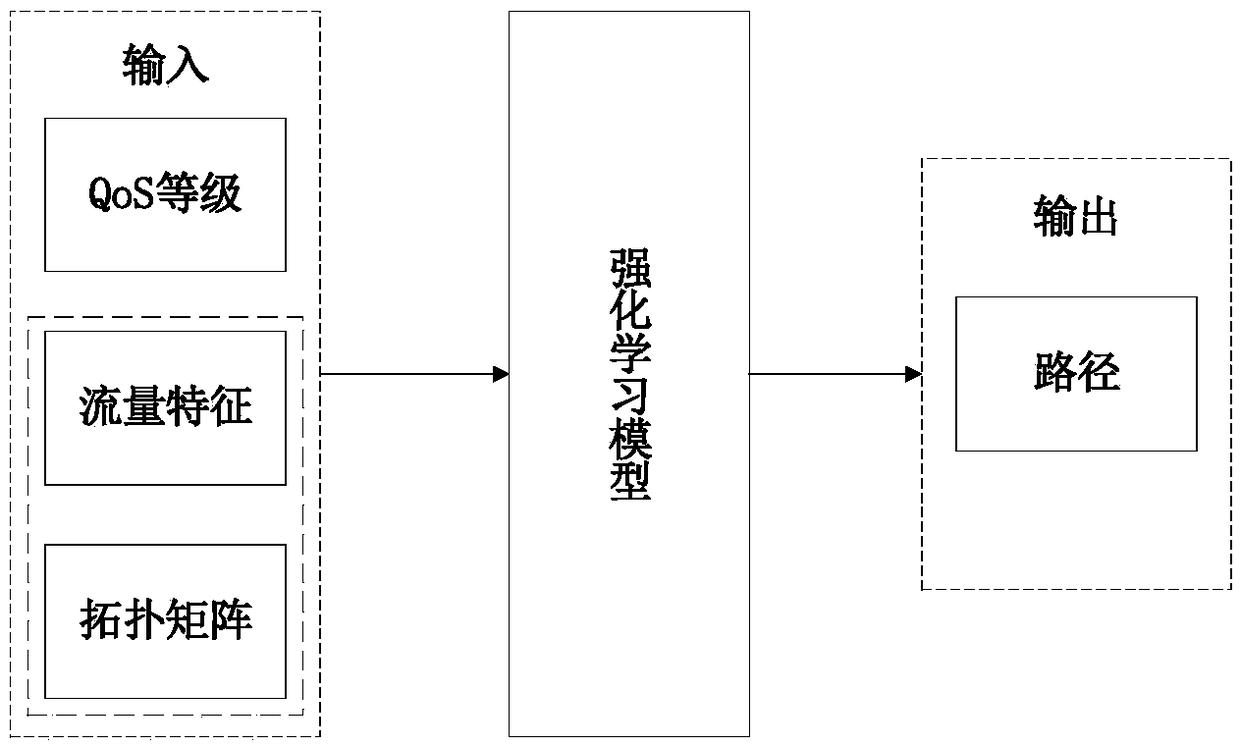

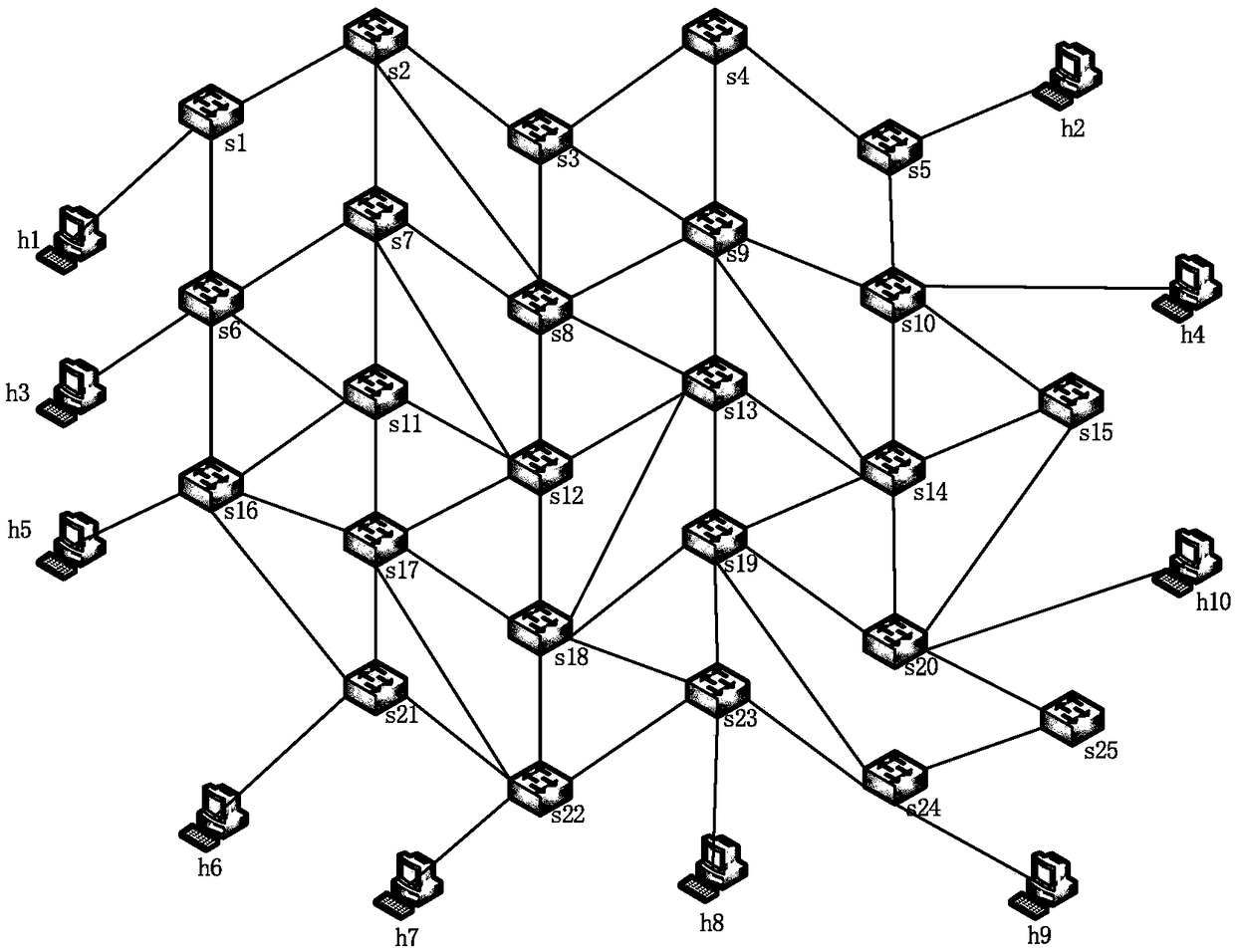

SDN route planning method based on reinforcement learning

Active | CN109361601A | Increase profit | Reduce congestion | Data switching networks | Traffic characteristic | Reward value

The invention discloses an SDN route planning method based on reinforcement learning. The method comprises the following steps: constructing a reinforcement learning model capable of generating a route by using Q learning in reinforcement learning on an SDN control plane, designing a reward function in the Q learning algorithm, generating different reward values according to different QoS levels of traffic; inputting the current network topology matrix, traffic characteristics, and QoS levels of traffic in the reinforcement learning model for training to implement traffic-differentiated SDN route planning, and finding the shortest forwarding path meeting the QoS requirements for each traffic. The SDN route planning method, by utilizing the characteristics of continuous learning and environment interaction and adjustment strategy, is high in link utilization rate and can effectively reduce the network congestion compared with the Dijkstra algorithm commonly used in traditional route planning.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

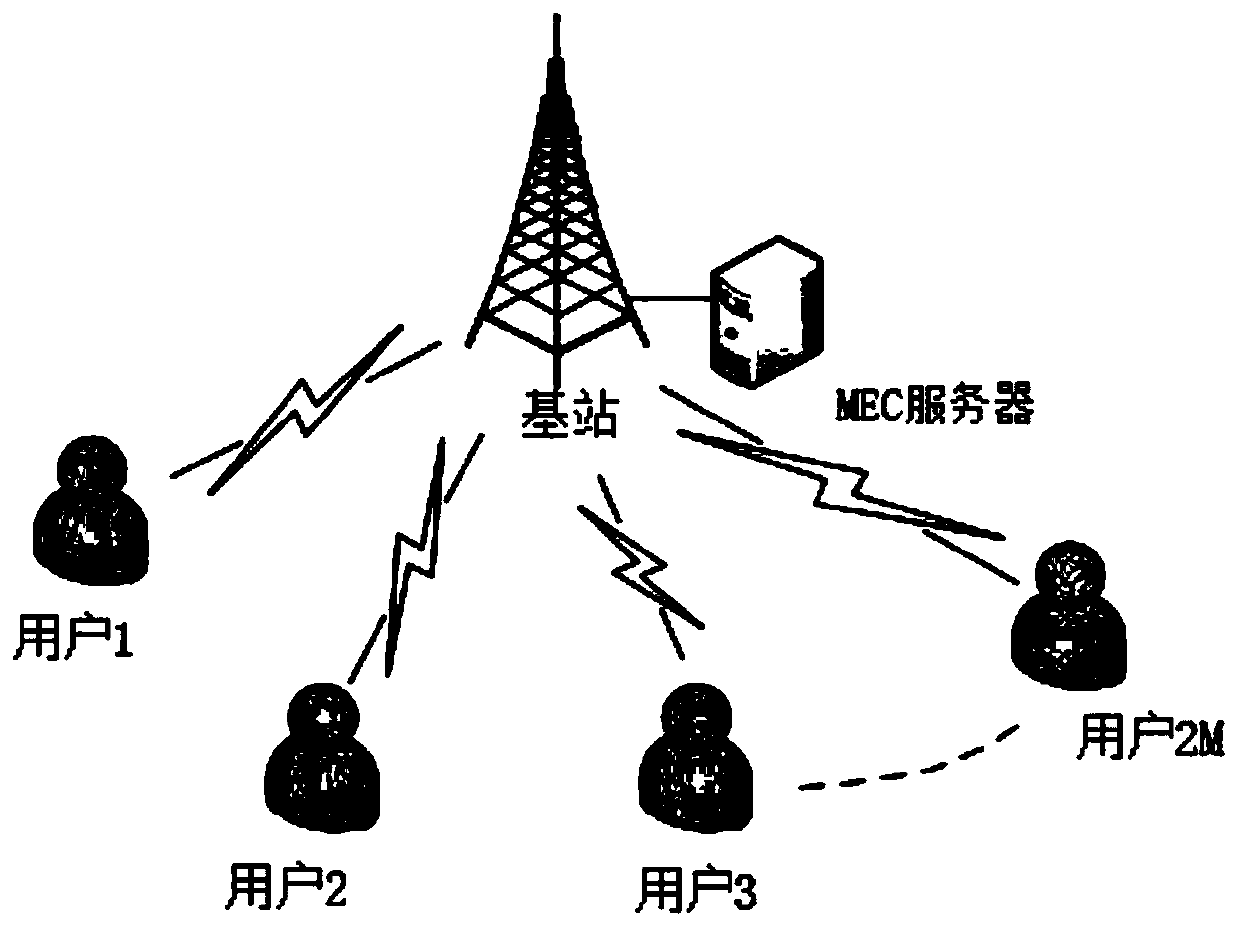

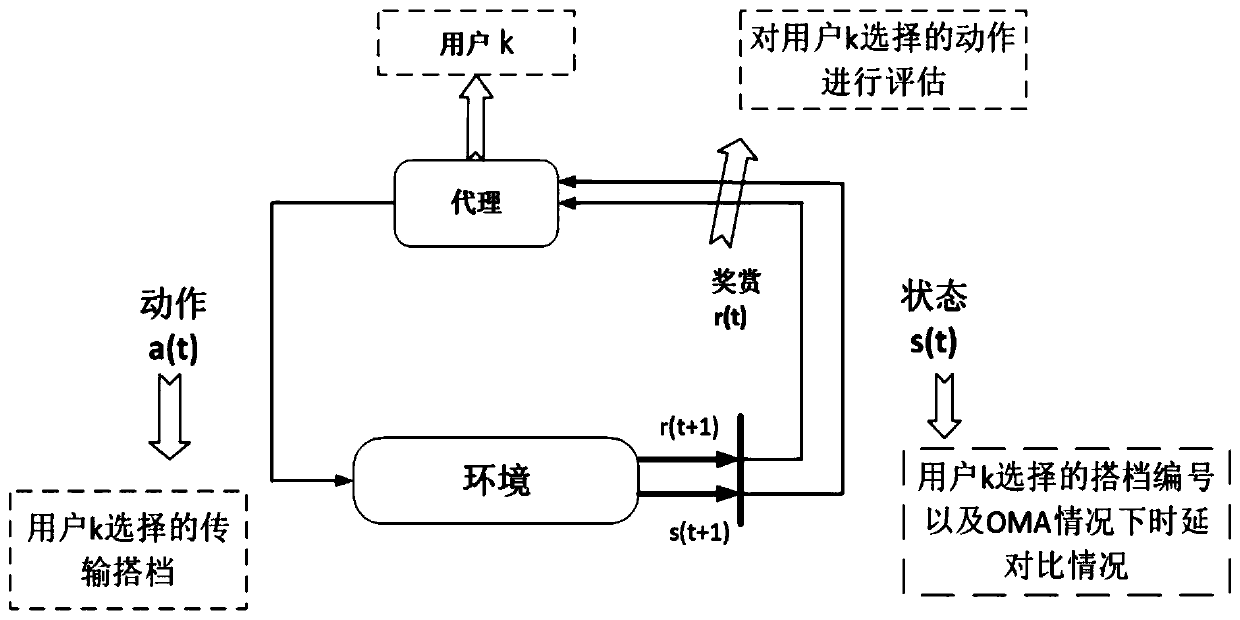

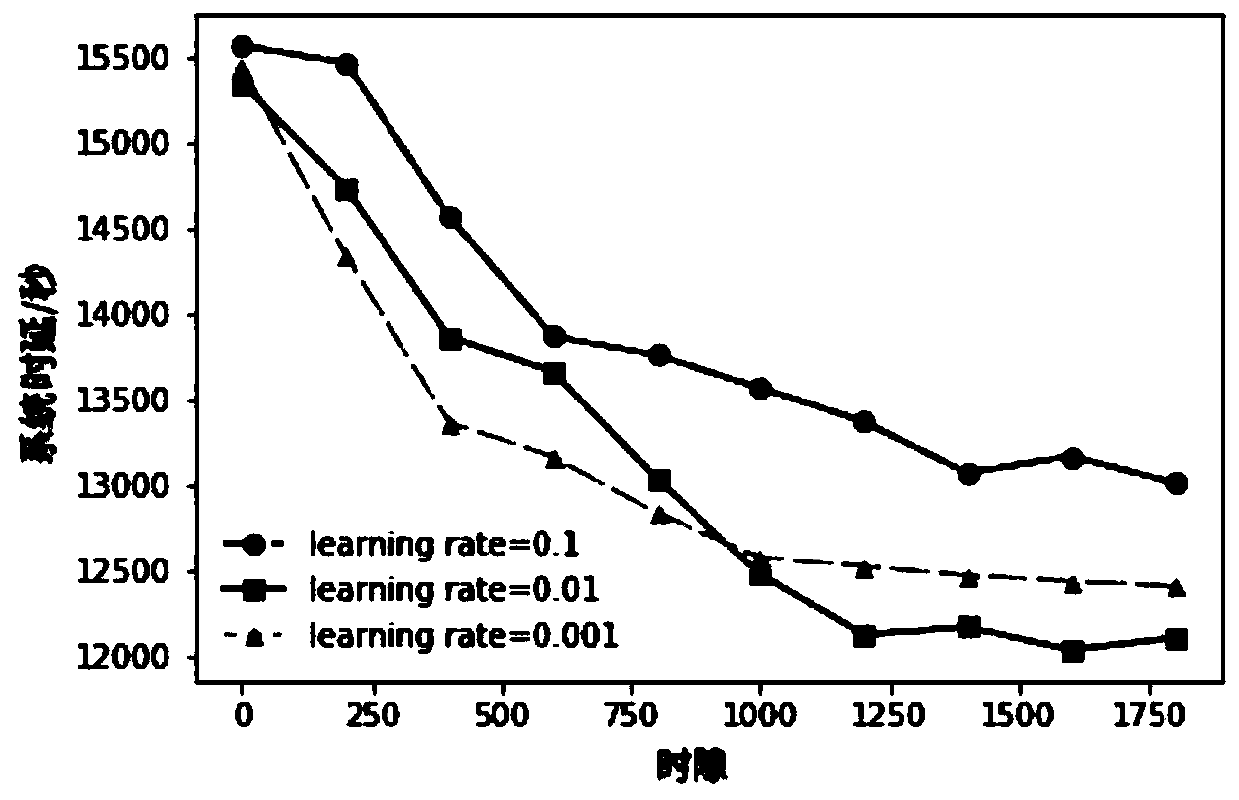

Unloading time delay optimization method in mobile edge computing scene

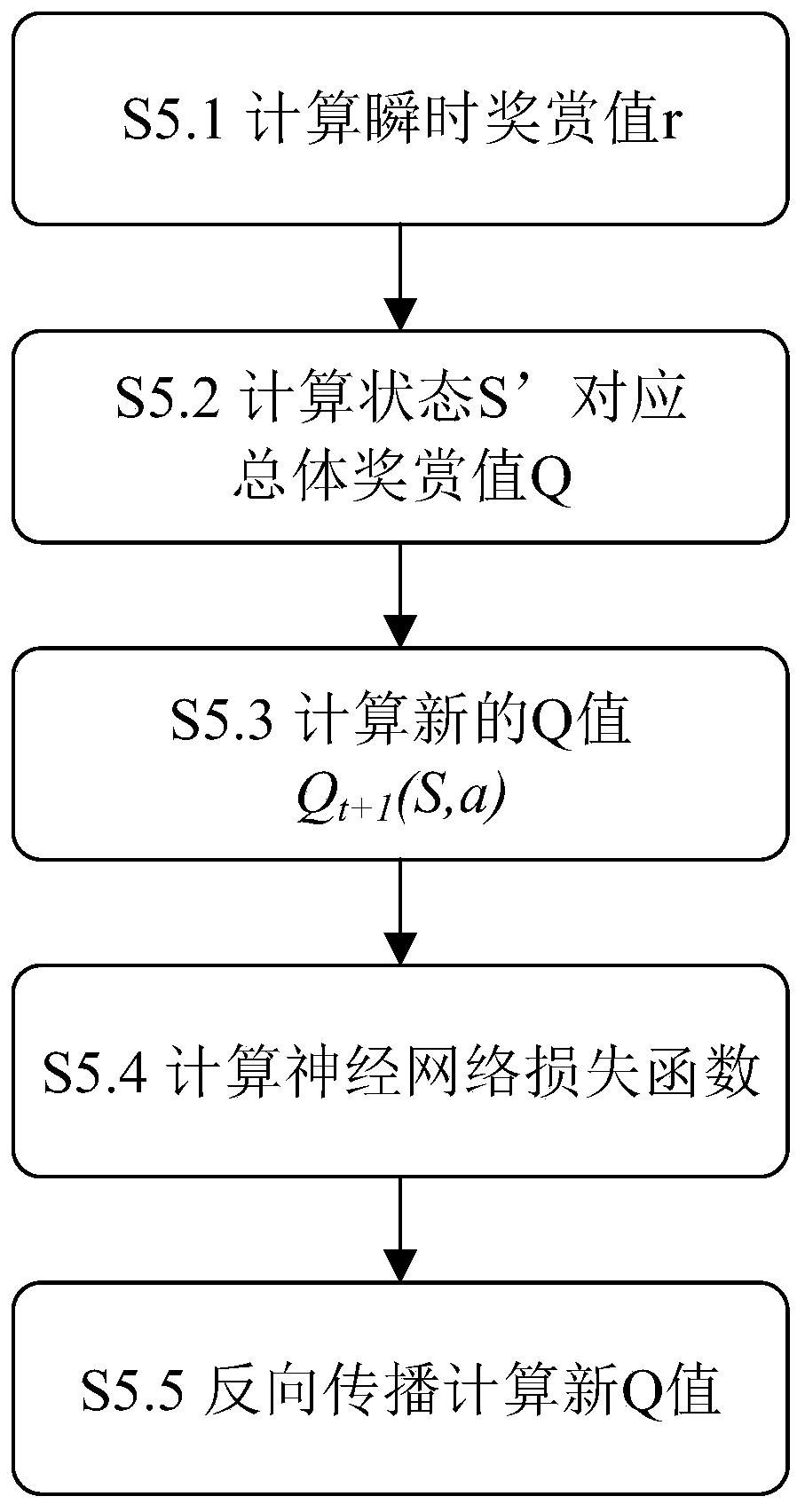

Active | CN110113190A | The total offload delay is continuously minimized | Neural architectures | Data switching networks | Algorithm | Time delays

The invention aims to provide an unloading delay optimization method in a mobile edge computing scene. The method comprises the following steps: step 1, constructing a system model, wherein the system model comprises 2M users and an MEC server, each user has L tasks and needs to be unloaded to the MEC server for calculation, and it is assumed that only two users are allowed to adopt a mixed NOMA strategy to unload at the same time; step 2, setting each user as an executor, and performing action selection by each executor according to a DQN algorithm, i.e., selecting one user from the rest 2M-1 users as the own transmission partner and unloading the transmission partner at the same time; step 3, performing system optimization by using a DQN algorithm: calculating the total unloading time delay of the system, updating a reward value, then training a neural network, and updating a Q function by using the neural network as a function approximator after the selection of all users is completed; and continuously carrying out the iterative optimization on the system until the optimal time delay is found. The problem of high time delay consumption in the existing multi-user MEC scene is solved.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

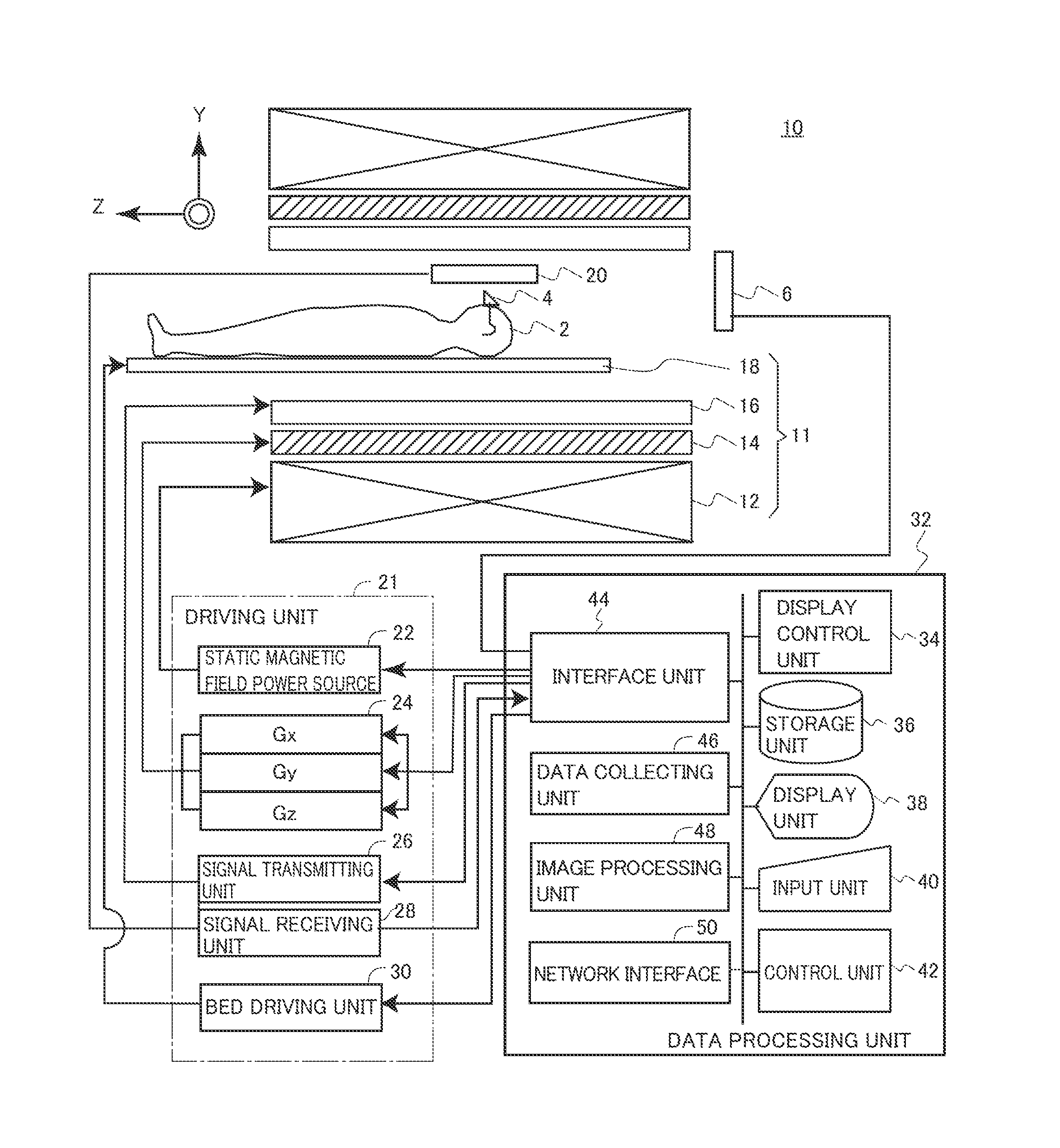

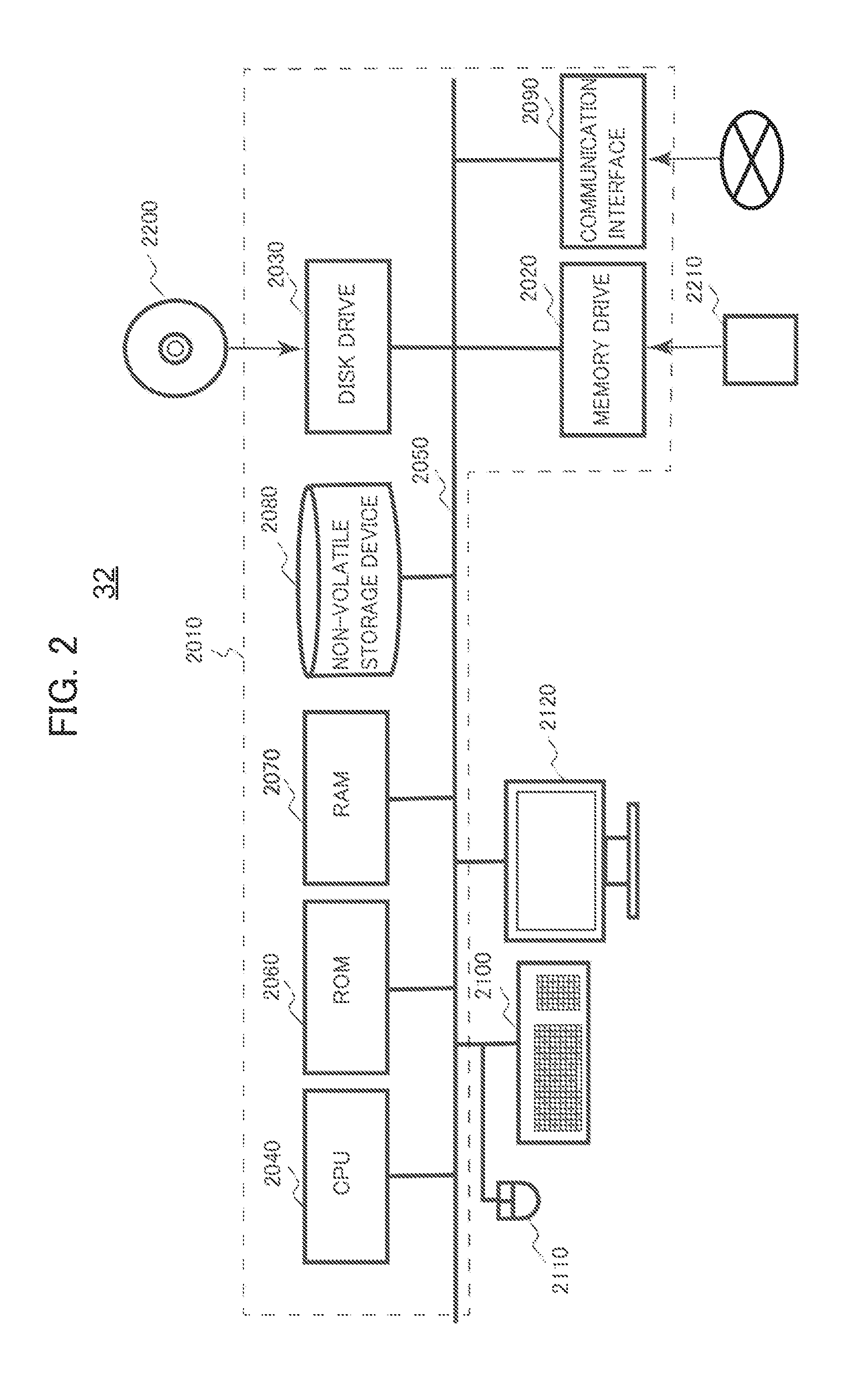

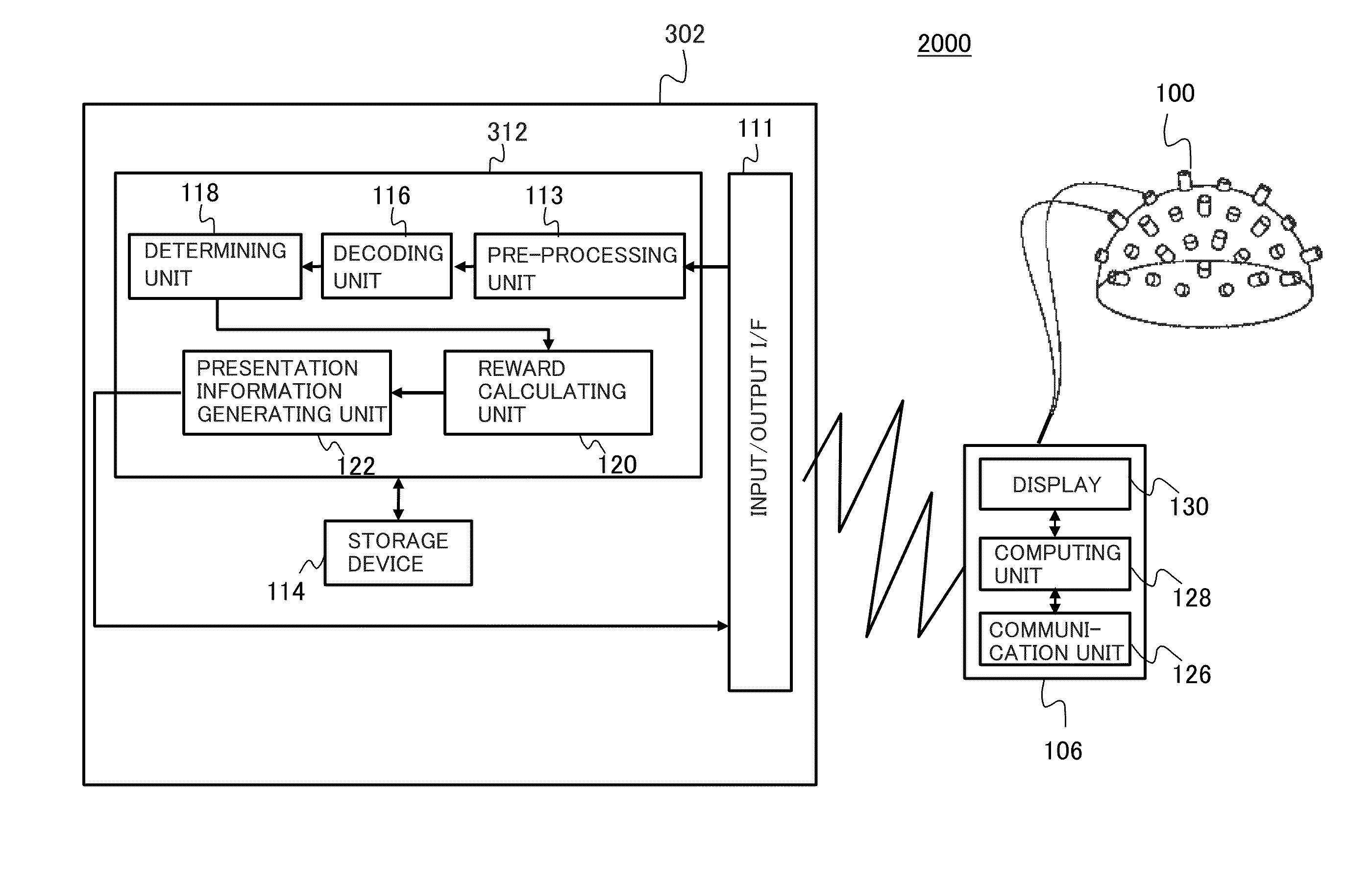

Brain activity training apparatus and brain activity training system

Provided is a brain activity training apparatus for training to cause a change in correlation of connectivity among brain regions, utilizing measured correlations of connections among brain regions as feedback information. From measured data of resting-state functional connectivity MRI of a healthy group and a patient group (S102), a correlation matrix of the degree of brain activities among prescribed brain regions is derived for each subject. Feature extraction is executed (S104) by regularized canonical correlation analysis on the correlation matrix and attributes of the subject including a disease / healthy label of the subject. Based on the result of regularized canonical correlation analysis, by discriminant analysis through sparse logistic regression, a discriminator is generated (S108). The brain activity training apparatus feeds back a reward value to the subject based on the result of the discriminator on the data of functional connectivity MRI of the subject.

Owner:ATR ADVANCED TELECOMM RES INST INT

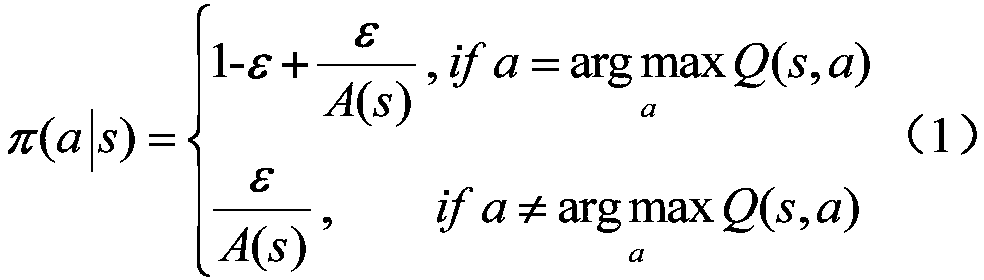

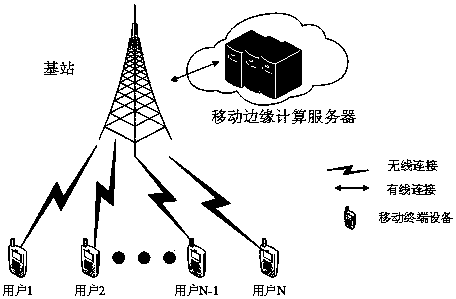

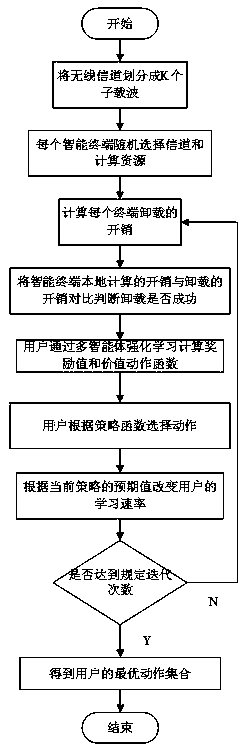

Resource allocation method based on multi-agent reinforcement learning in mobile edge computing system

Active | CN110418416A | Low cost | Reduce complexity | Transmission | High level techniques | Carrier signal | Reward value

The invention discloses a resource allocation method based on multi-agent reinforcement learning in a mobile edge computing system, which comprises the following steps: (1) dividing a wireless channel into a plurality of subcarriers, wherein each user can only select one subcarrier; (2) enabling each user to randomly select a channel and computing resources, and then calculating time delay and energy consumption generated by user unloading; (3) comparing the time delay energy consumption generated by the local calculation of the user with the time delay energy consumption unloaded to the edge cloud, and judging whether the unloading is successful or not; (4) obtaining a reward value of the current unloading action through multi-agent reinforcement learning, and calculating a value function; (5) enabling the user to perform action selection according to the strategy function; and (6) changing the learning rate of the user to update the strategy to obtain an optimal action set. Based on variable-rate multi-agent reinforcement learning, computing resources and wireless resources of the mobile edge server are fully utilized, and the maximum value of the utility function of each intelligent terminal is obtained while the necessity of user unloading is considered.

Owner:SOUTHEAST UNIV

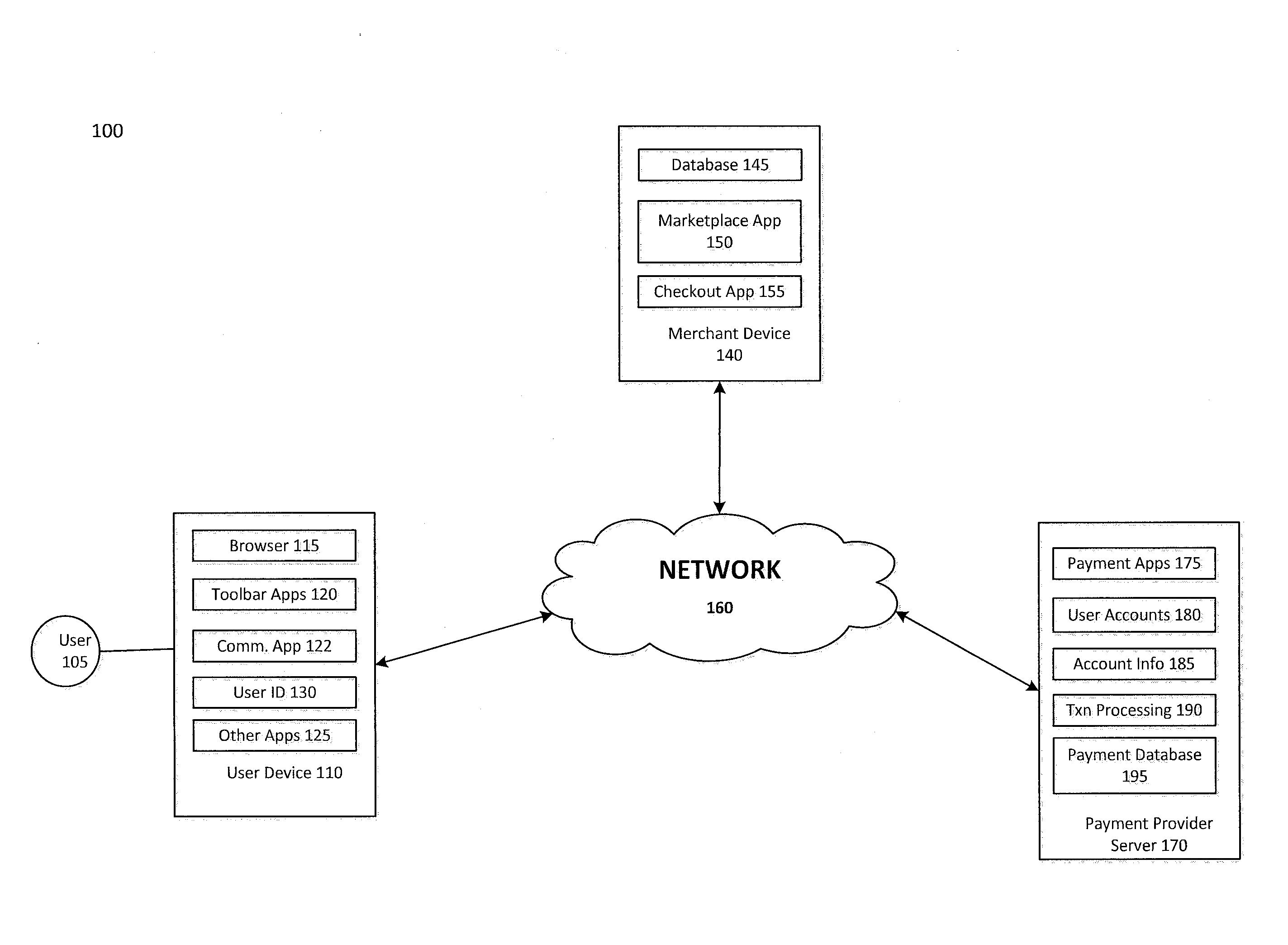

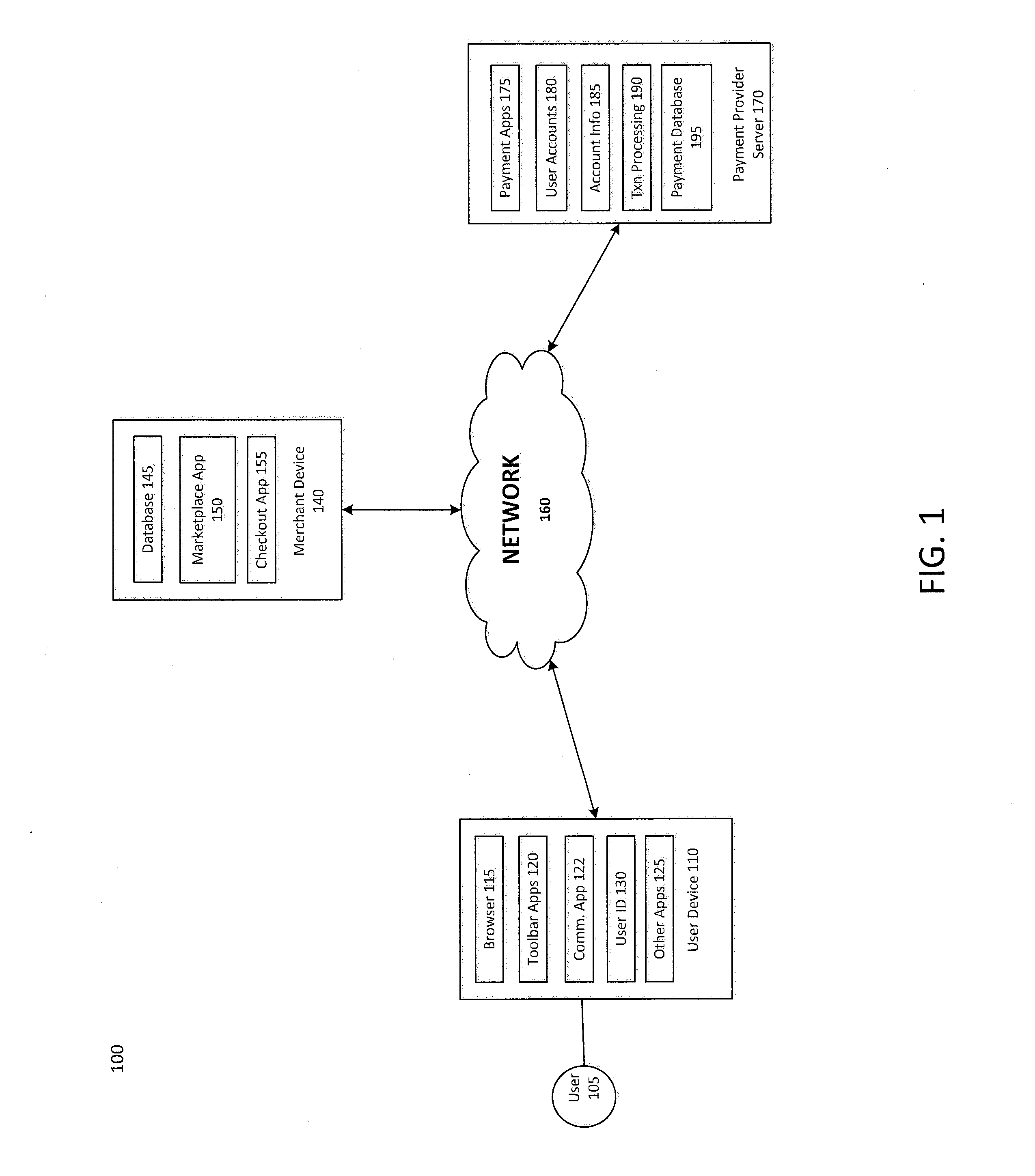

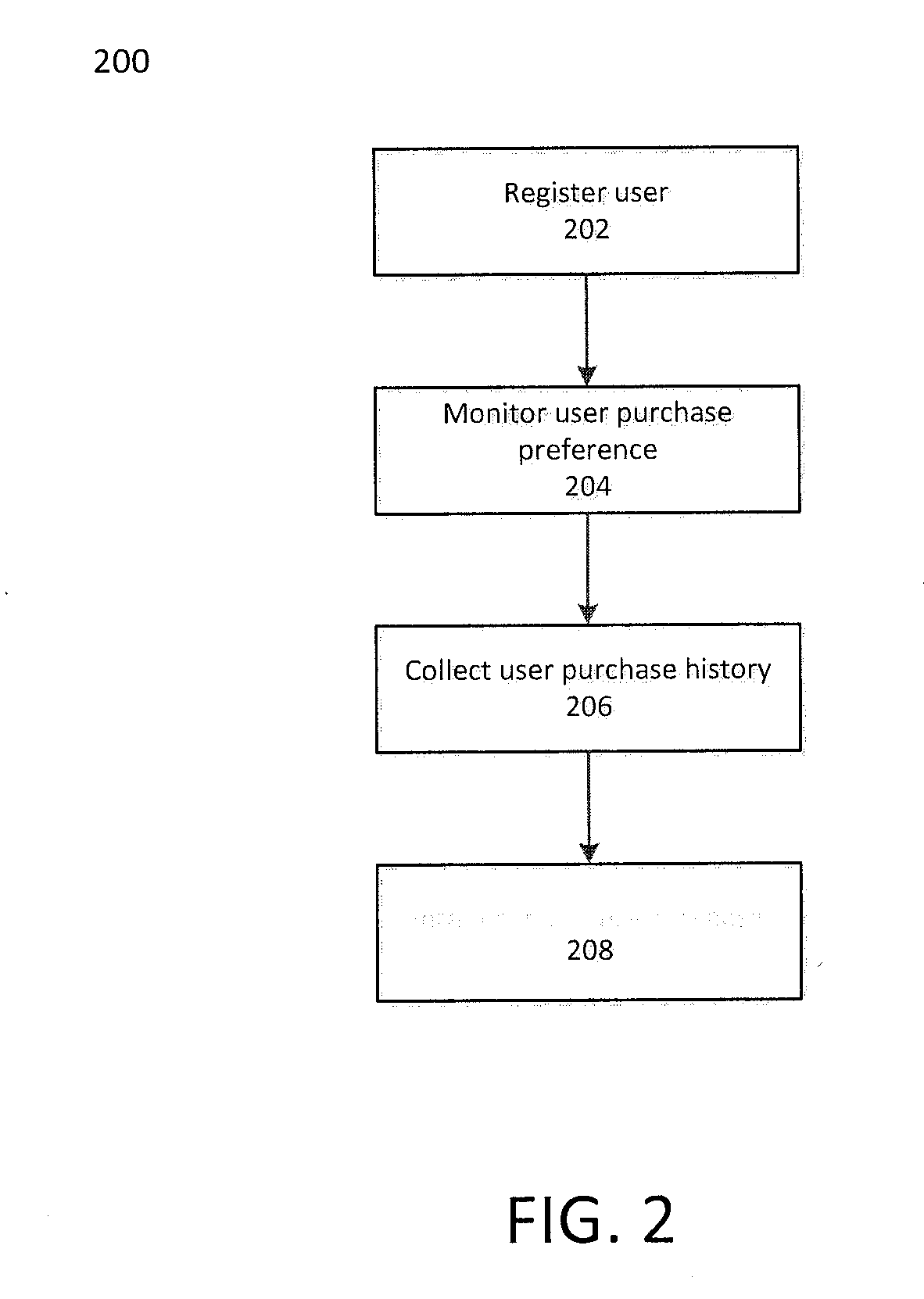

Systems and methods for managing loyalty reward programs

A system or a method is provided to manage a user's loyalty programs. In particular, the system may retrieve information from each of the user's loyalty programs to identify available loyalty programs for a given purchase. The system may infer or predict the user's future purchases. A comparison of different loyalty programs for a given purchase may be implemented in view of the user's future purchases. One or more loyalty programs that provide the user with good reward options based on the user's future purchases may be suggested to the user for certain purchases. In particular, loyalty programs that provide top reward values may be suggested to the user.

Owner:PAYPAL INC

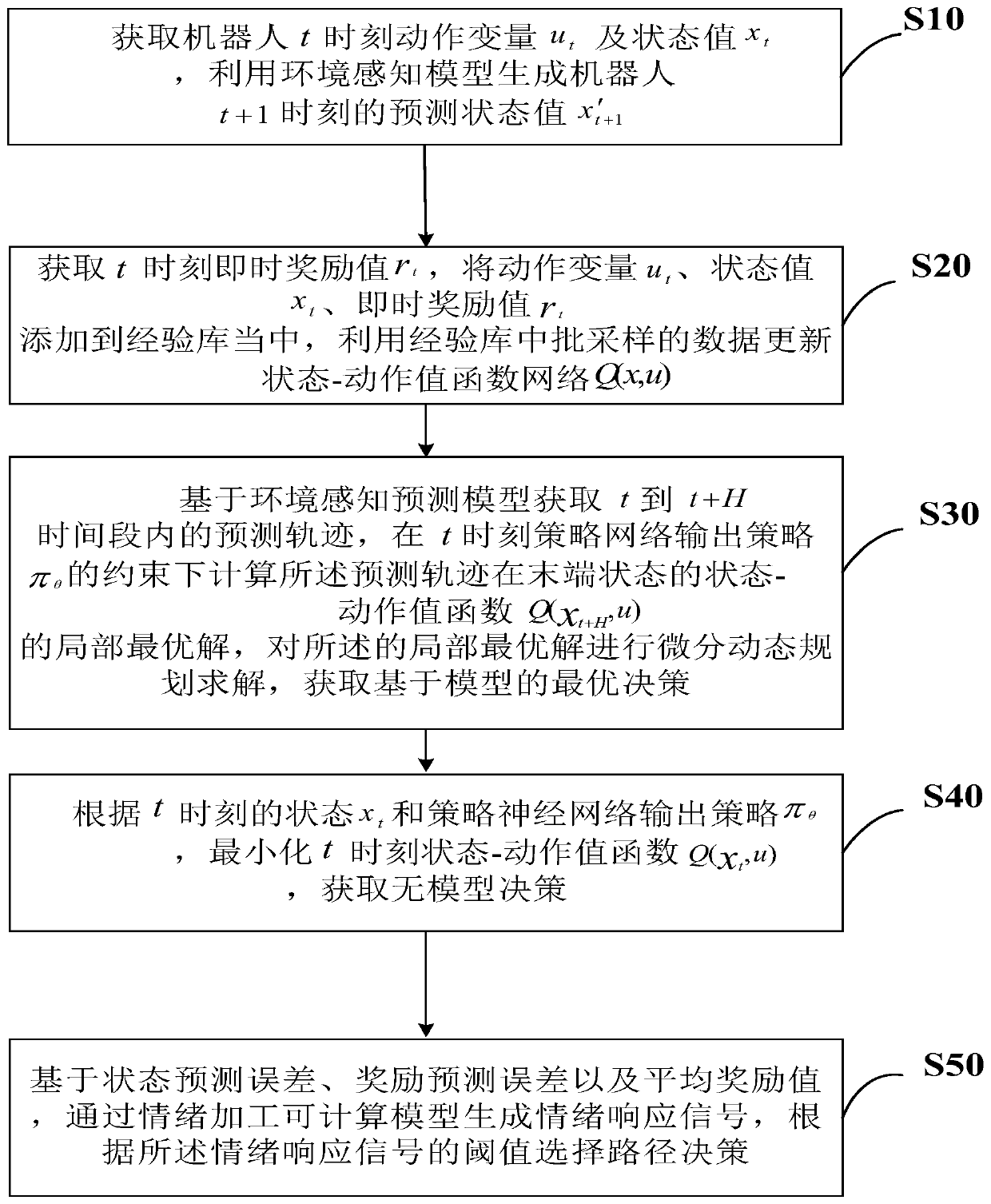

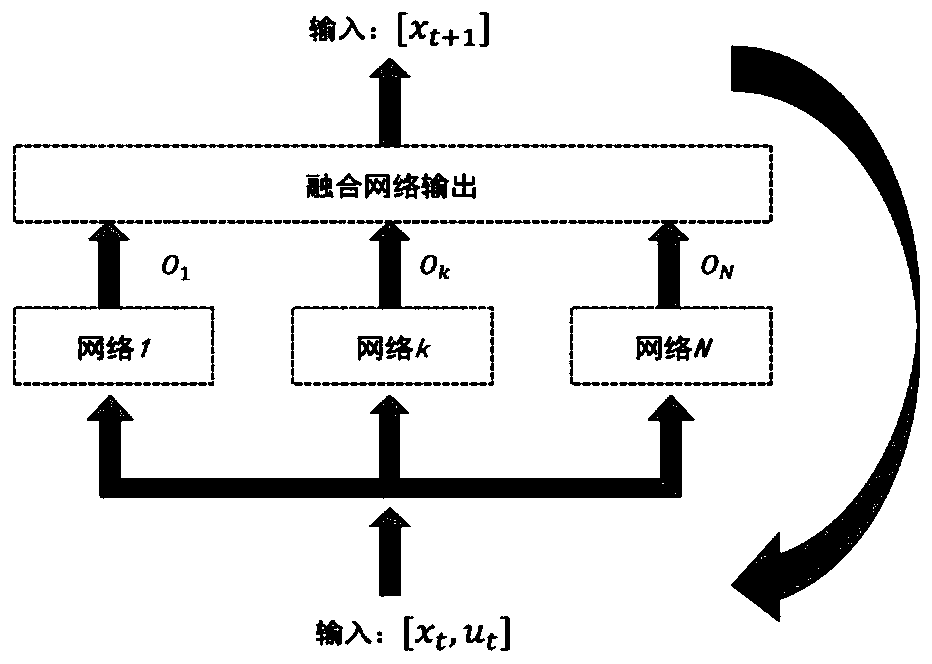

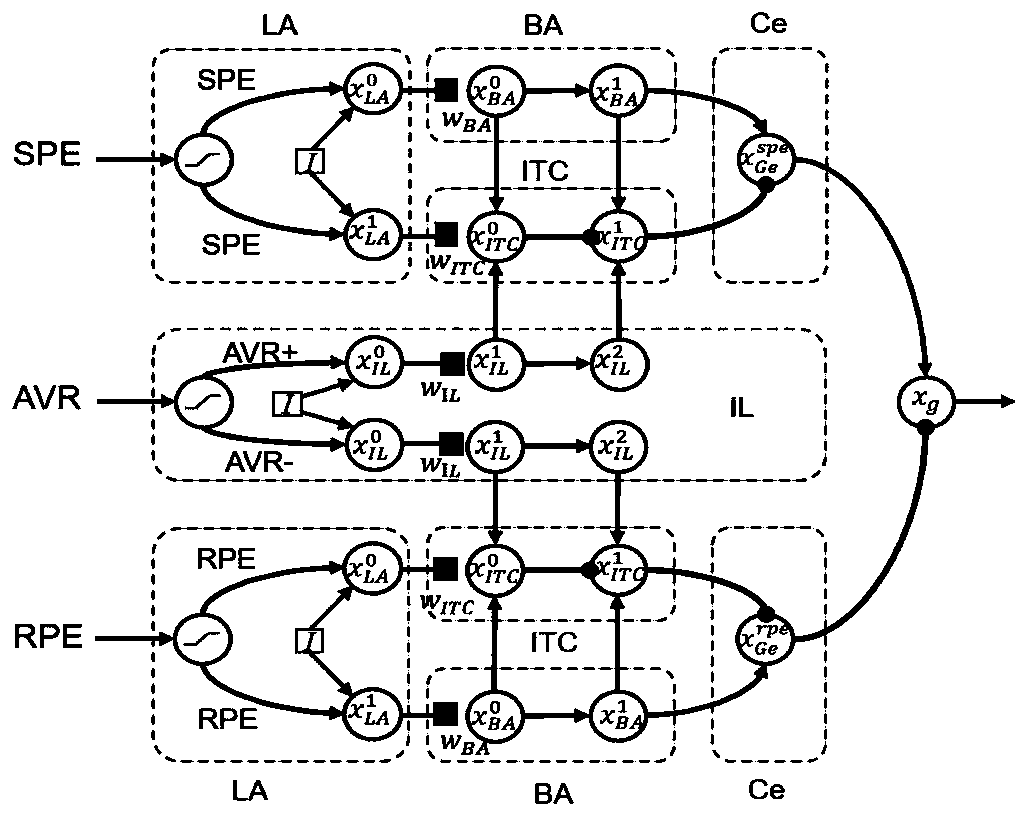

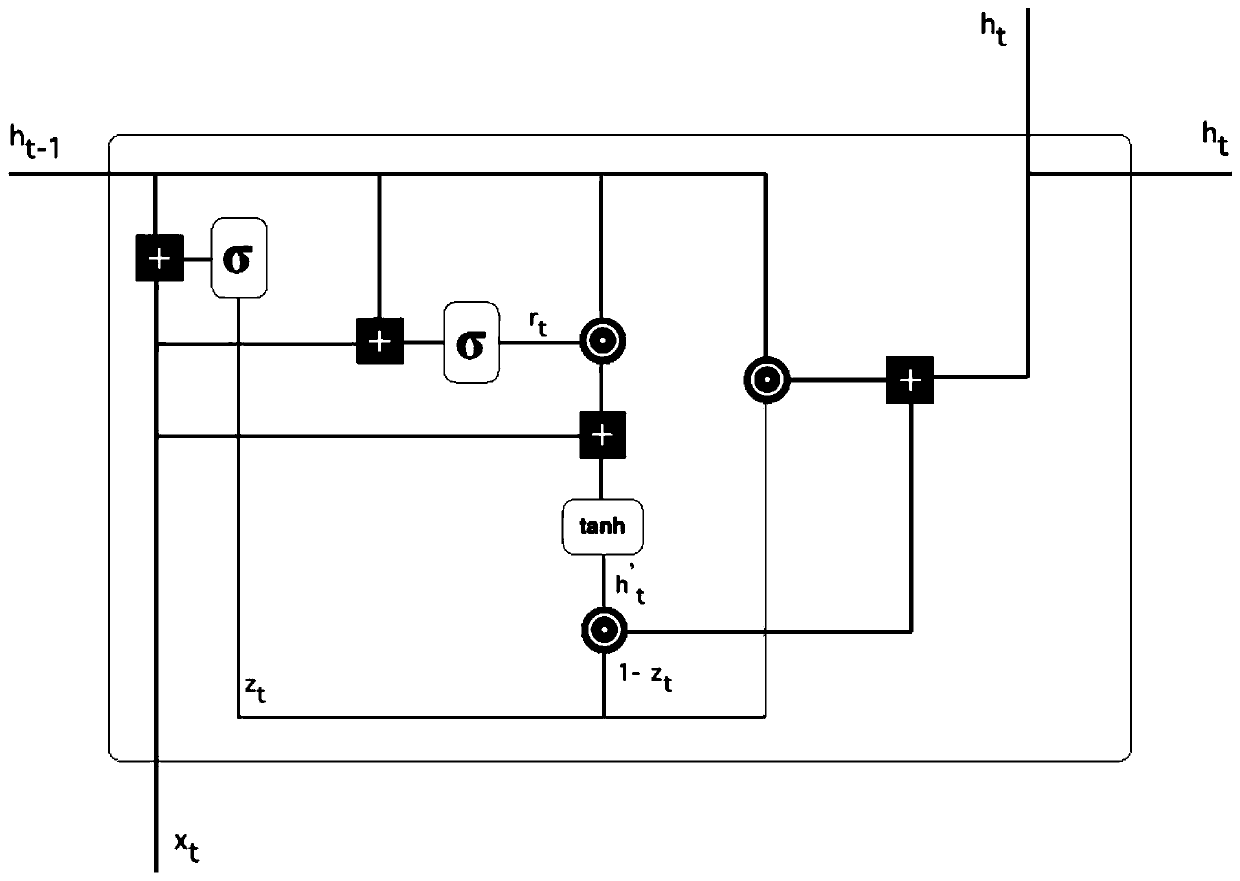

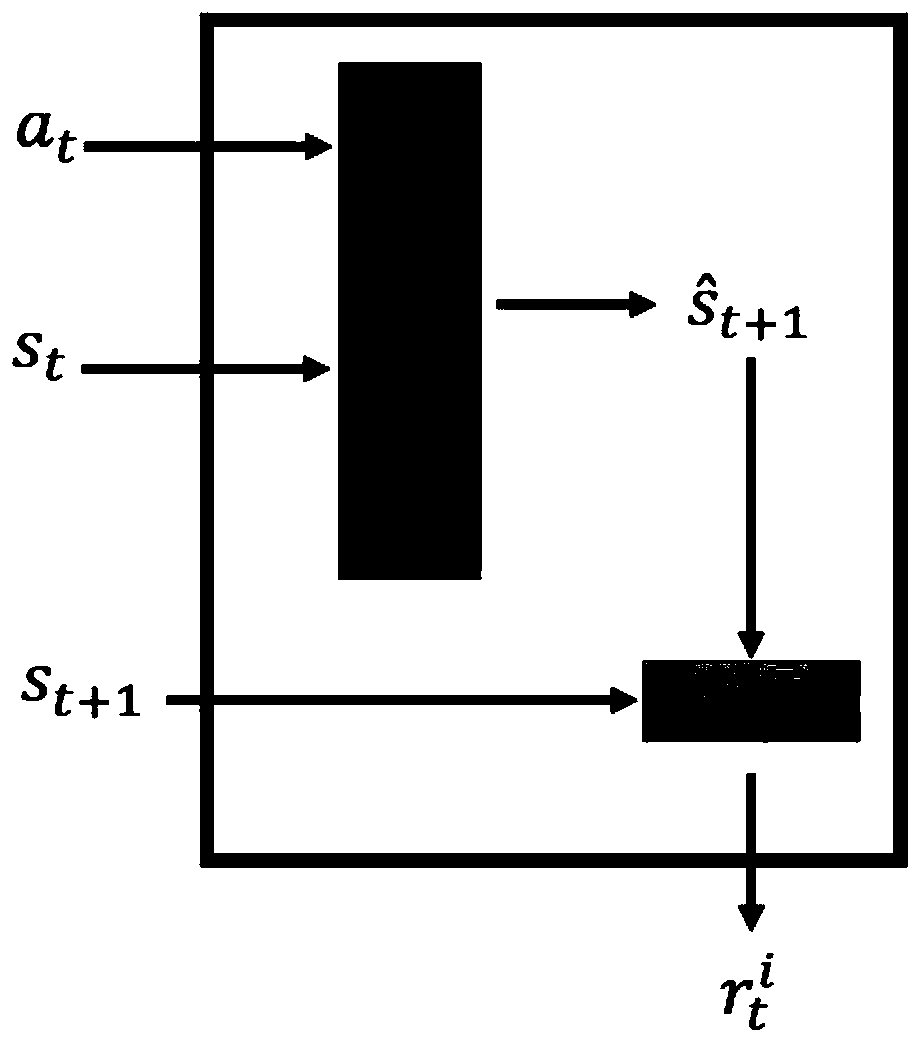

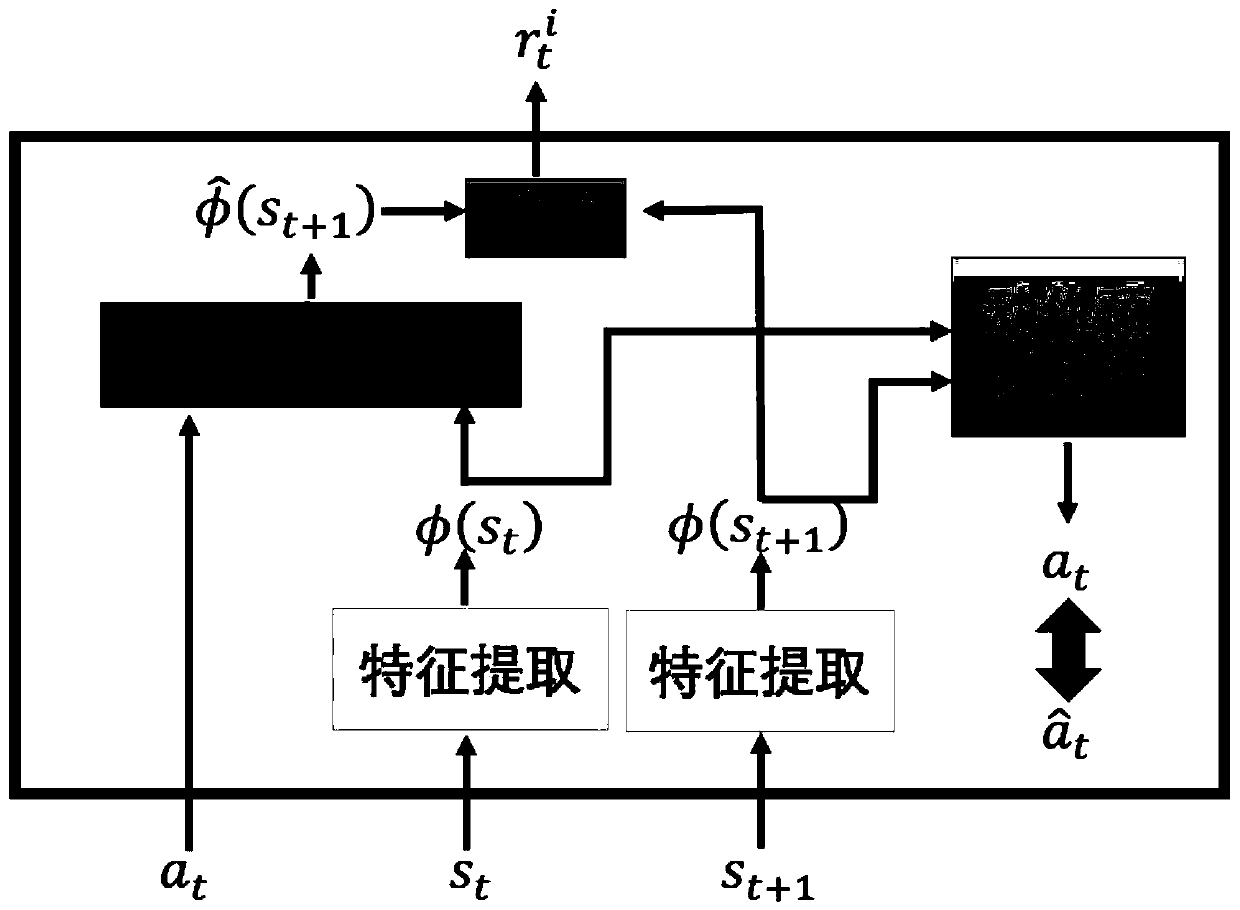

Robot motion decision-making method, system and device introducing emotion regulation and control mechanism

Active | CN110119844A | Optimize preset objective function | Guaranteed learning efficiency | Forecasting | Resources | Optimal decision | State prediction

The invention belongs to the field of intelligent robots, particularly relates to a robot motion decision-making method, system and device introducing an emotion regulation and control mechanism, and aims to solve the problems of robot decision-making speed and learning efficiency. The method comprises the following steps: generating a predicted state value of a next moment according to a current action variable and a state value by utilizing an environmental perception model; updating a state-action value function network based on action variables, state values and immediate rewards; obtaining a prediction track based on an environmental perception model, calculating a local optimal solution of the prediction track, carrying out differential dynamic programming, and obtaining an optimal decision based on the model; acquiring a model-free decision based on a current state and strategy as well as minimized state-motion functions; and, based on the state prediction error, the reward prediction error and the average reward value, generating an emotion response signal through an emotion processing computable model, and selecting a path decision according to a threshold value of the signal. The decision-making speed is gradually increased while learning efficiency is ensured.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Deep reinforcement learning-based incomplete information game method, device, system and storage medium

Active | CN110399920A | Improve solution efficiency | Effective exploration | Character and pattern recognition | Neural architectures | Time complexity | Reward value

The invention provides a deep reinforcement learning-based incomplete information game method, a device, a system and a storage medium. The method comprises the steps of exploring and utilizing a mechanism to improve a strategy gradient algorithm, adding a memory unit into a deep reinforcement learning network, and optimizing a reward value by a self-driven mechanism. The beneficial effects of the invention are that: the method is suitable for large-scale production. The problem of high variance frequently occurring in a strategy gradient algorithm is solved through a baseline function. For the problem of high time complexity in the reinforcement learning sampling and optimization process, a parallel mechanism is adopted to improve the model solving efficiency. Through a self-driven mechanism, an intelligent agent is helped to explore the environment more effectively while making up for the sparse environment reward value.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL

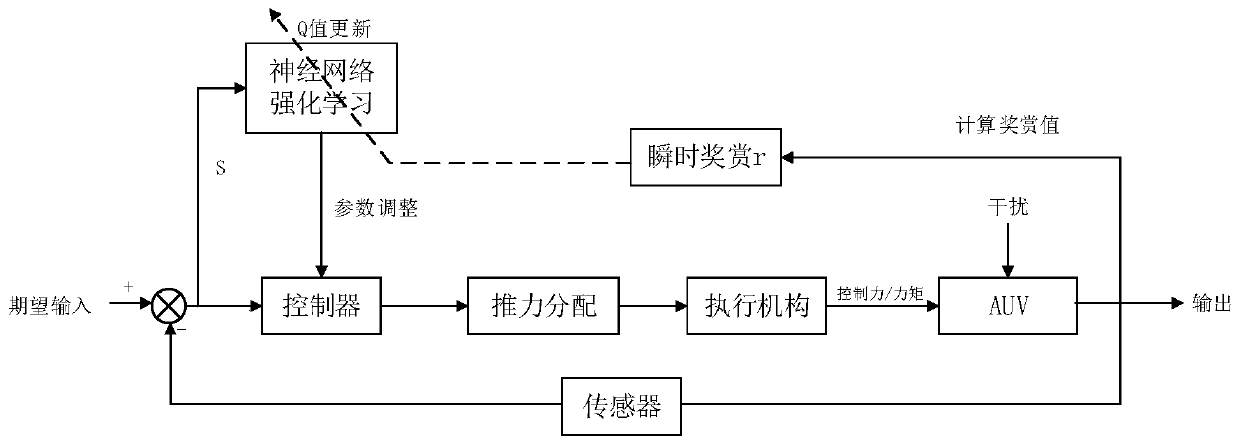

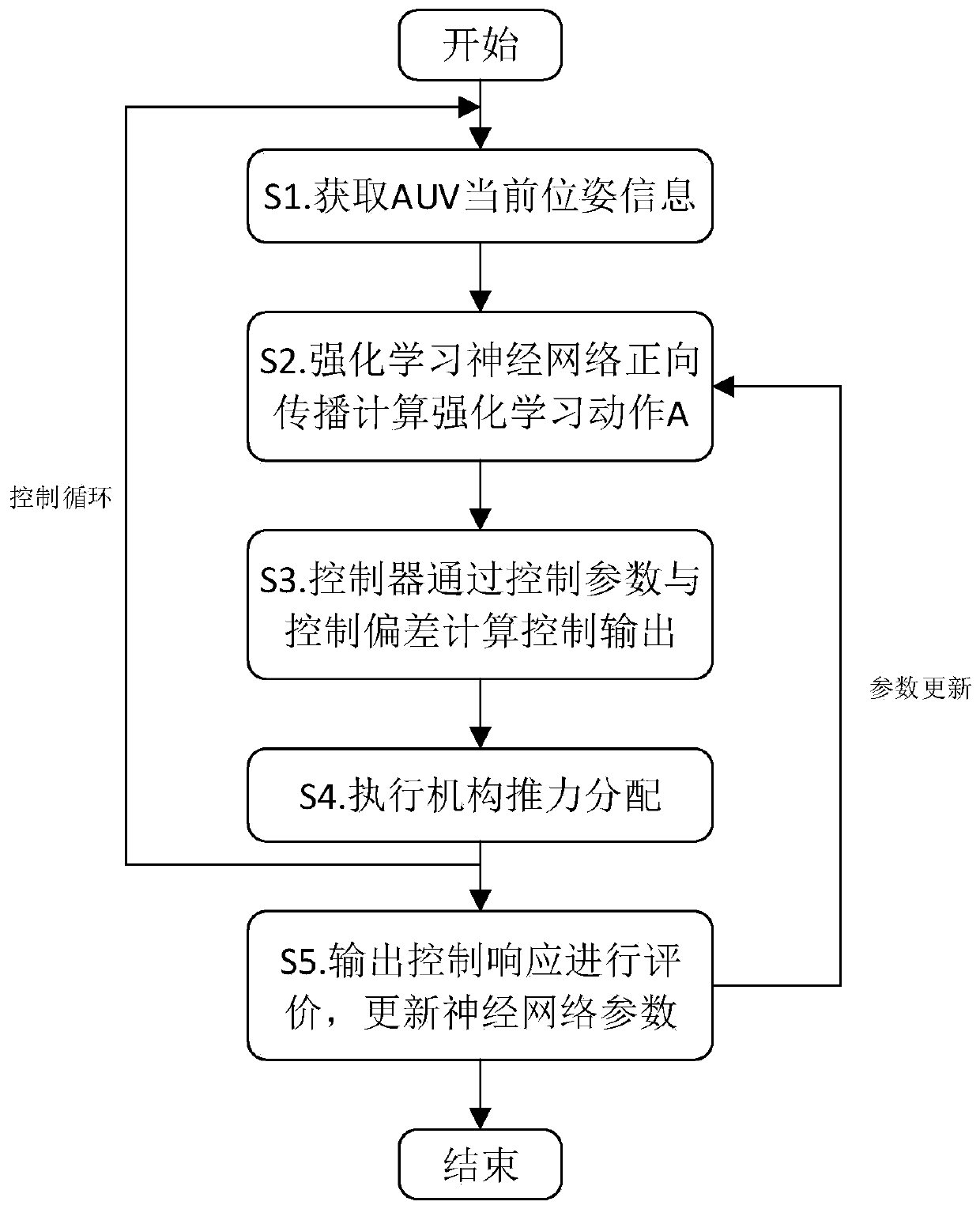

Neural network reinforcement learning control method of autonomous underwater robot

The invention provides a neural network reinforcement learning control method of an autonomous underwater robot. The neural network reinforcement learning control method of the autonomous underwater robot comprises the steps that current pose information of an autonomous underwater vehicle (AUV) is obtained; quantity of a state is calculated, the state is input into a reinforcement learning neural network to calculate a Q value in a forward propagation mode, and parameters of a controller are calculated by selecting an action A; the control parameters and control deviation are input into the controller, and control output is calculated; the autonomous robot performs thrust allocation according to executing mechanism arrangement; and a reward value is calculated through control response, reinforcement learning iteration is carried out, and reinforcement learning neural network parameters are updated. According to the neural network reinforcement learning control method of the autonomous underwater robot, a reinforcement learning thought and a traditional control method are combined, so that the AUV judges the self motion performance in navigation, the self controller performance is adjusted online according to experiences generated in the motion, a complex environment is adapted faster through self-learning, and thus, better control precision and control stability are obtained.

Owner:HARBIN ENG UNIV

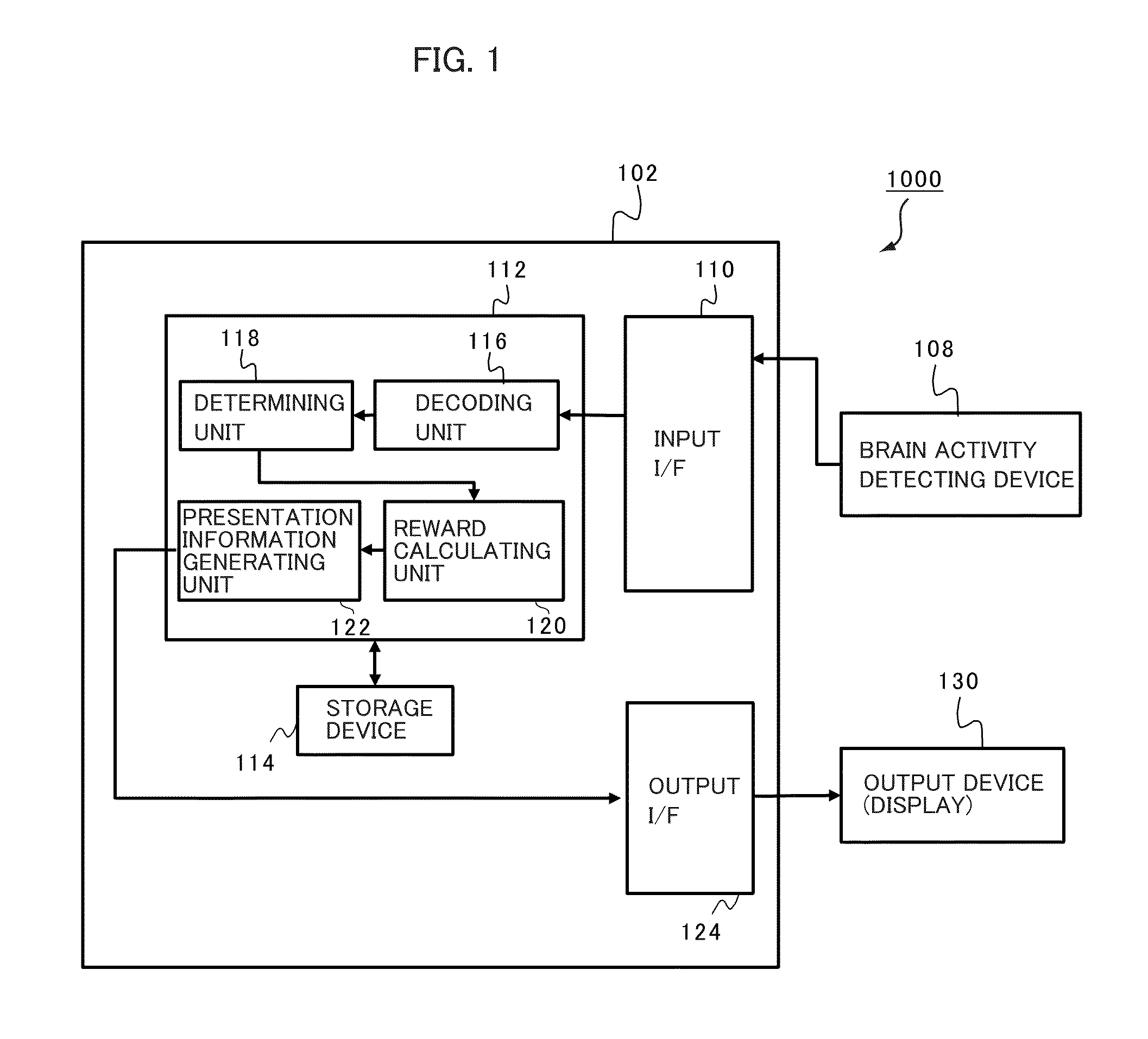

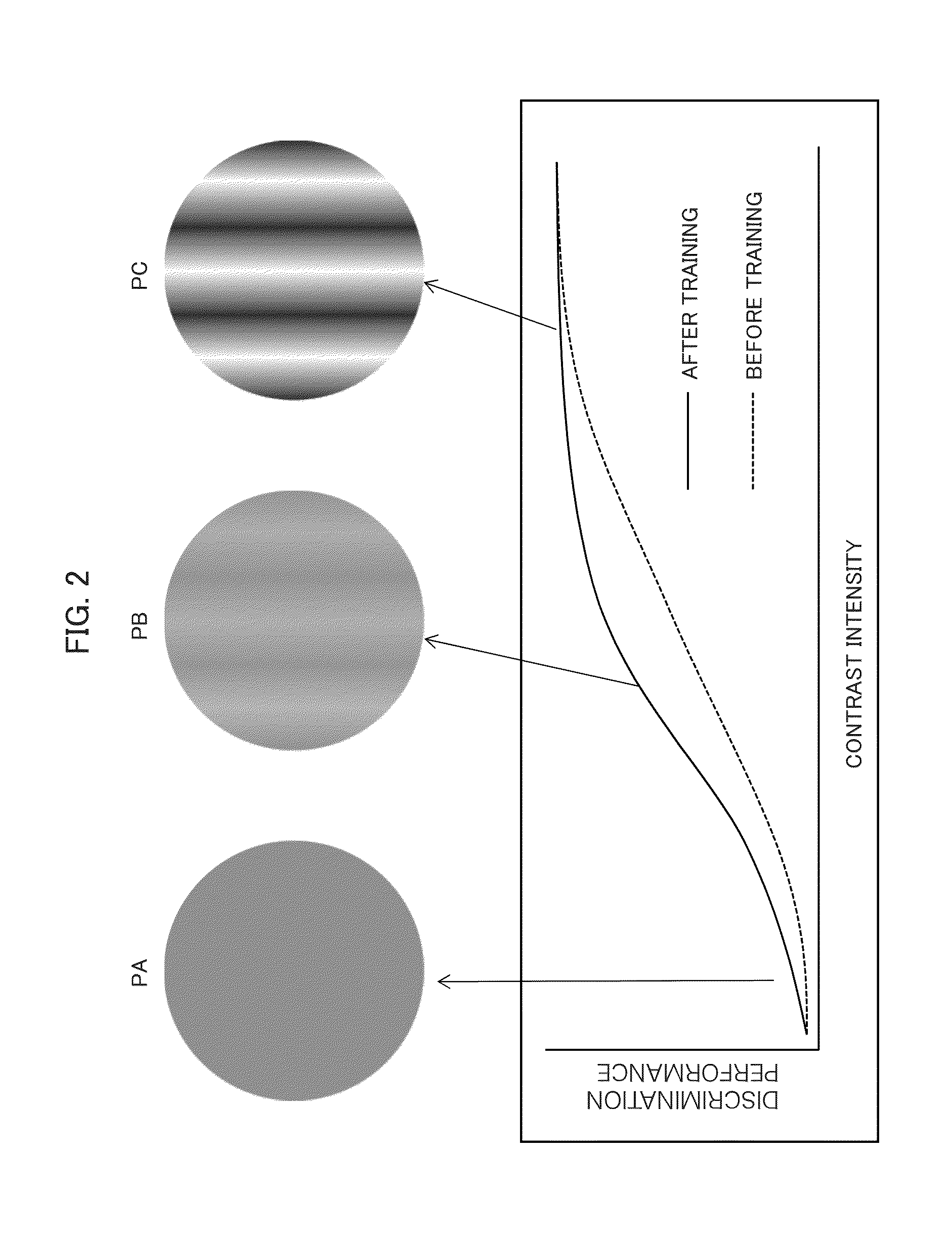

Apparatus and method for supporting brain function enhancement

Active | US20140171757A1 | Improve brain function | Medical imaging | Diagnostics using spectroscopy | Cranial nerves | Output device

A training apparatus 1000 using a method of decoding nerve activity includes: a brain activity detecting device 108 for detecting brain activity at a prescribed area within a brain of a subject; and an output device 130 for presenting neurofeedback information (presentation information) to the subject. A processing device 102 decodes a pattern of cranial nerve activity, generates a reward value based on a degree of similarity of the decoded pattern with respect to a target activation pattern obtained in advance for the event as the object of training, and generates presentation information corresponding to the reward value.

Owner:ATR ADVANCED TELECOMM RES INST INT
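One way to picture a reward value "based on a degree of similarity" between a decoded pattern and a target pattern is shown below. The abstract does not say which similarity measure is used; cosine similarity, the rescaling to the range 0 to 1 and the function name `similarity_reward` are assumptions made purely for illustration.

```python
import math


def similarity_reward(decoded, target):
    """Map cosine similarity between two activation vectors to a reward in [0, 1]."""
    dot = sum(d * t for d, t in zip(decoded, target))
    norm = (math.sqrt(sum(d * d for d in decoded))
            * math.sqrt(sum(t * t for t in target)))
    if norm == 0:
        return 0.0  # no signal in one of the patterns: give no reward (assumption)
    cosine = dot / norm
    return (cosine + 1.0) / 2.0  # rescale from [-1, 1] to [0, 1]
```

The presentation information shown to the subject (for instance the size of a displayed disc) could then be driven by this single number.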

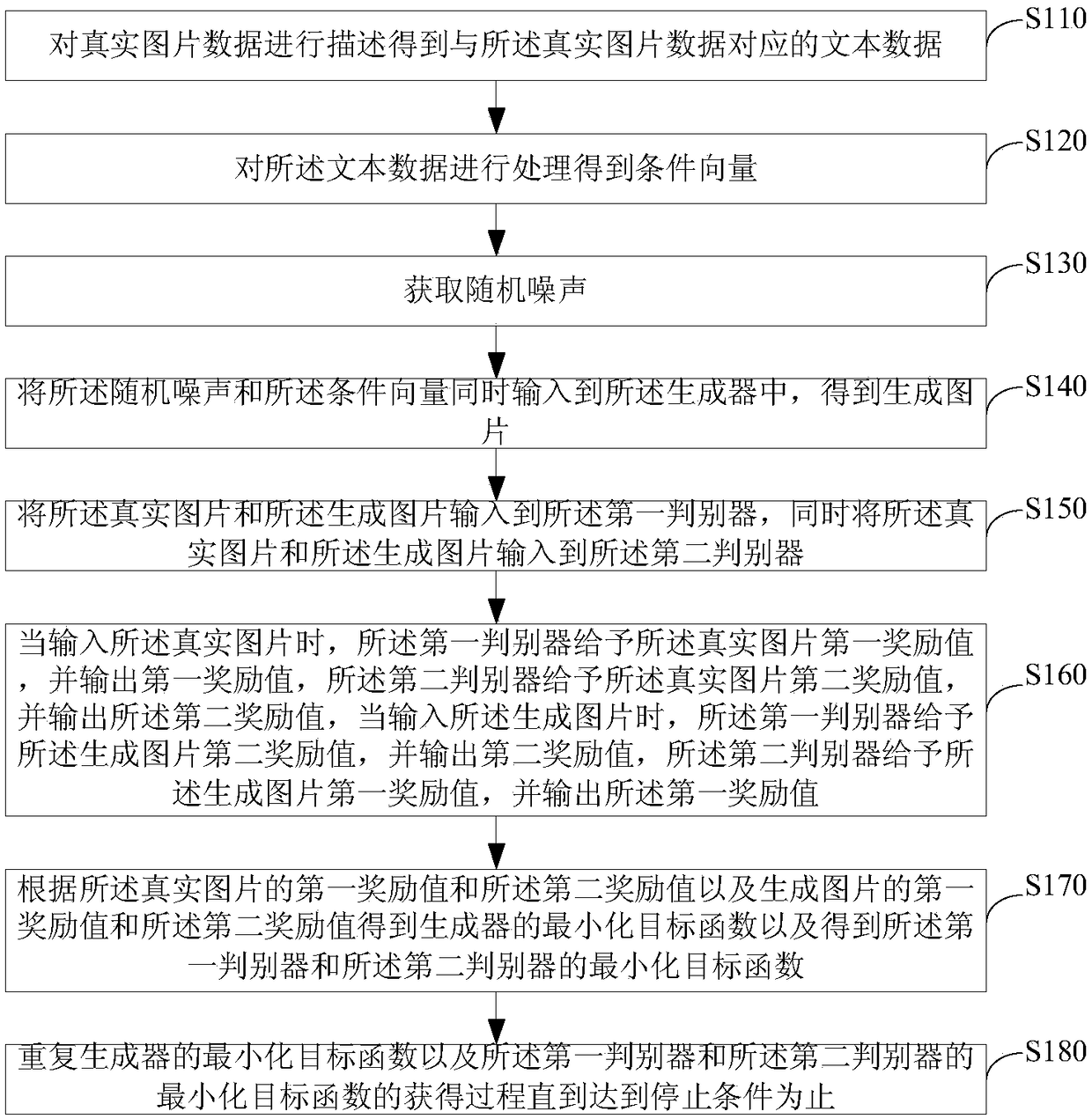

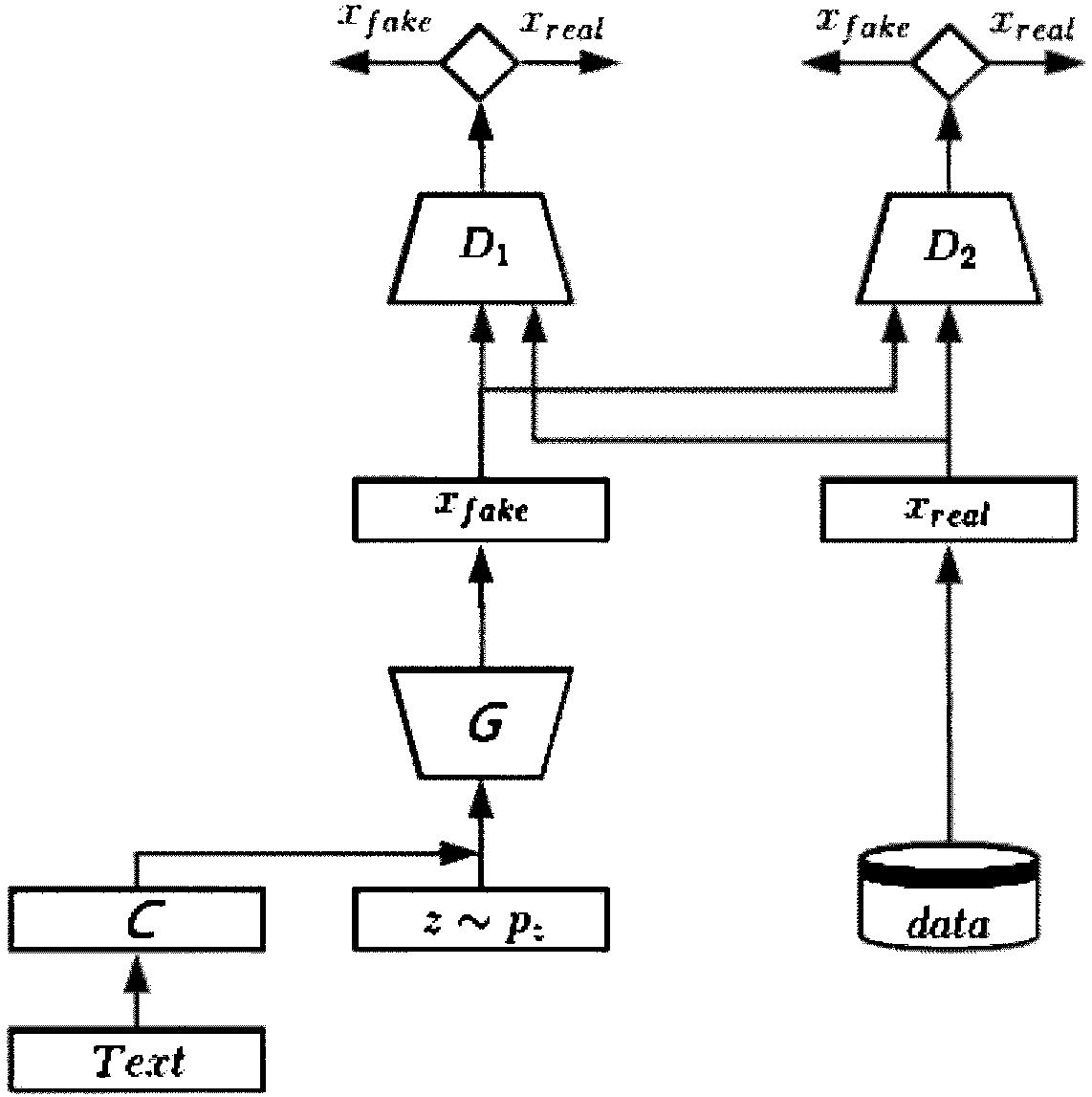

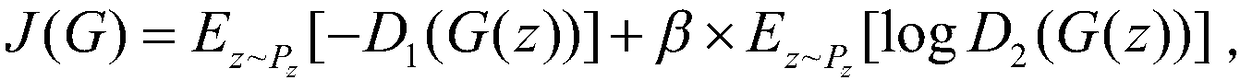

Image generation method of generative adversarial network based on dual discriminators

Inactive | CN108460717A | Increase diversity | Does not consume computing resources | 2D-image generation | Image acquisition | Discriminator | Algorithm

The present invention relates to the technical field of image generation of a generative adversarial network, especially to an image generation method of generative adversarial network based on dual discriminators. The method comprises the steps of: obtaining text data; performing processing of the text data to obtain condition vectors; obtaining random noise; inputting the random noise and the condition vectors into a generator at the same time to obtain a generation image; inputting a real image and the generation image into a first discriminator and a second discriminator; giving a first reward value to the real image by the first discriminator, giving a second reward value to the real image by the second discriminator, giving a second reward value to the generation image by the first discriminator, and giving the first reward value to the generation image by the second discriminator; and obtaining a minimum target function according to the first reward value and the second reward value. The image generation method of the generative adversarial network based on the dual discriminators improves the diversity of the generated images and cannot consume lots of calculation resources.

Owner:RUN TECH CO LTD

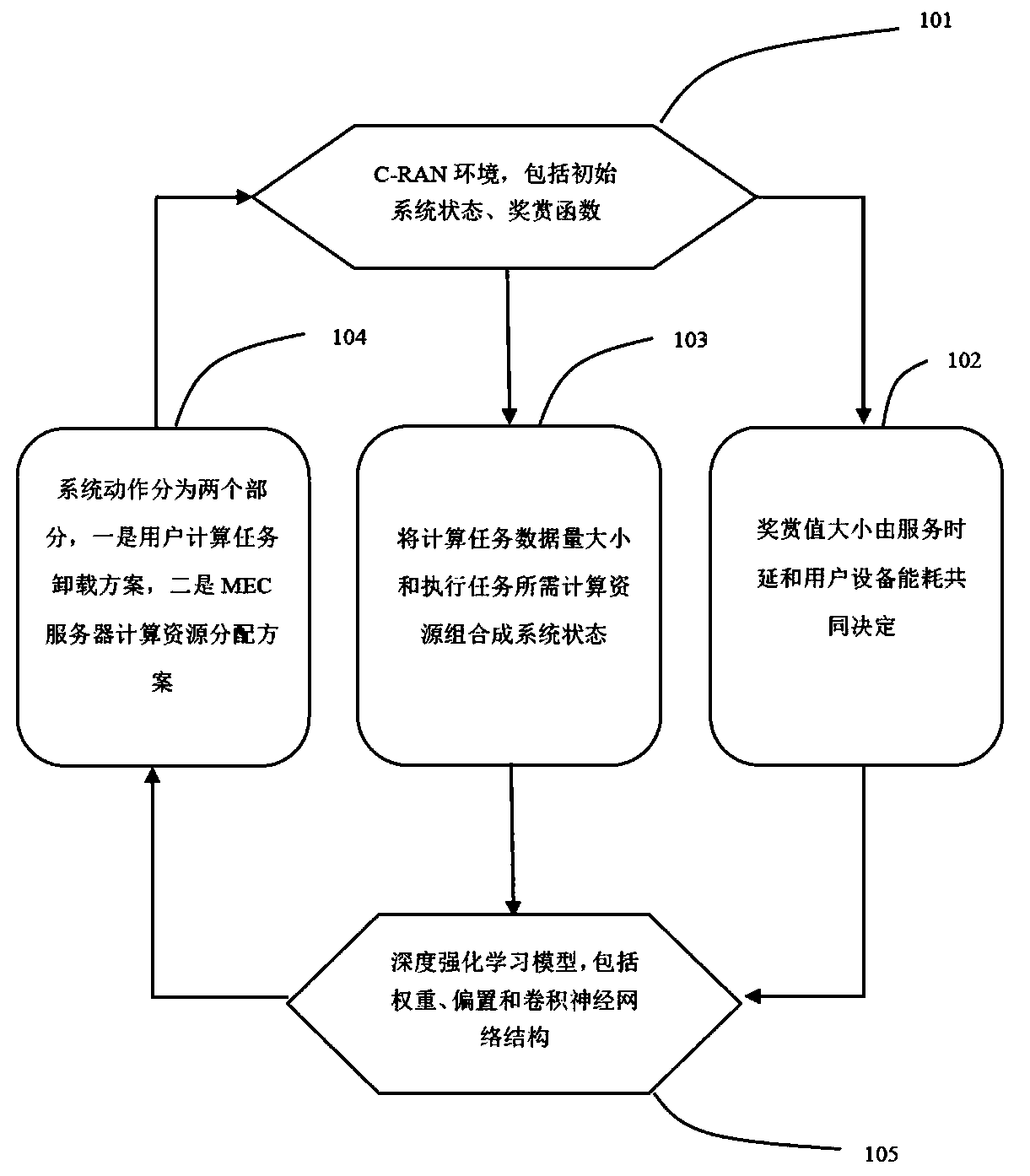

C-RAN calculation unloading and resource allocation method based on deep reinforcement learning

Pending | CN110557769A | Improve service quality | Reduce energy consumption | Machine learning | Wireless communication | Mobile edge computing | Reward value

The invention discloses a C-RAN calculation unloading and resource allocation method based on deep reinforcement learning in the technical field of mobile communication. The method comprises the following steps: 1) firstly constructing a deep reinforcement learning neural network; calculating a task data size and calculation resources required for executing a task; 2) inputting the system state into a deep reinforcement learning model; performing neural network training, and obtaining system actions; 3) enabling the user to unload the calculation task according to the unloading proportionality coefficient; enabling the mobile edge computing server to execute a computing task according to the computing resource allocation coefficient; obtaining a reward value of the system action according to the reward function; updating neural network parameters according to the reward value; and 4) repeating the above steps until the reward value tends to be stable, completing the training process, and unloading the user computing task and allocating the computing resources of the MEC server according to the final system action. The method can greatly reduce the user service time and energy consumption, so that the real-time low-energy-consumption service becomes possible.

Owner:NANJING UNIV OF POSTS & TELECOMM

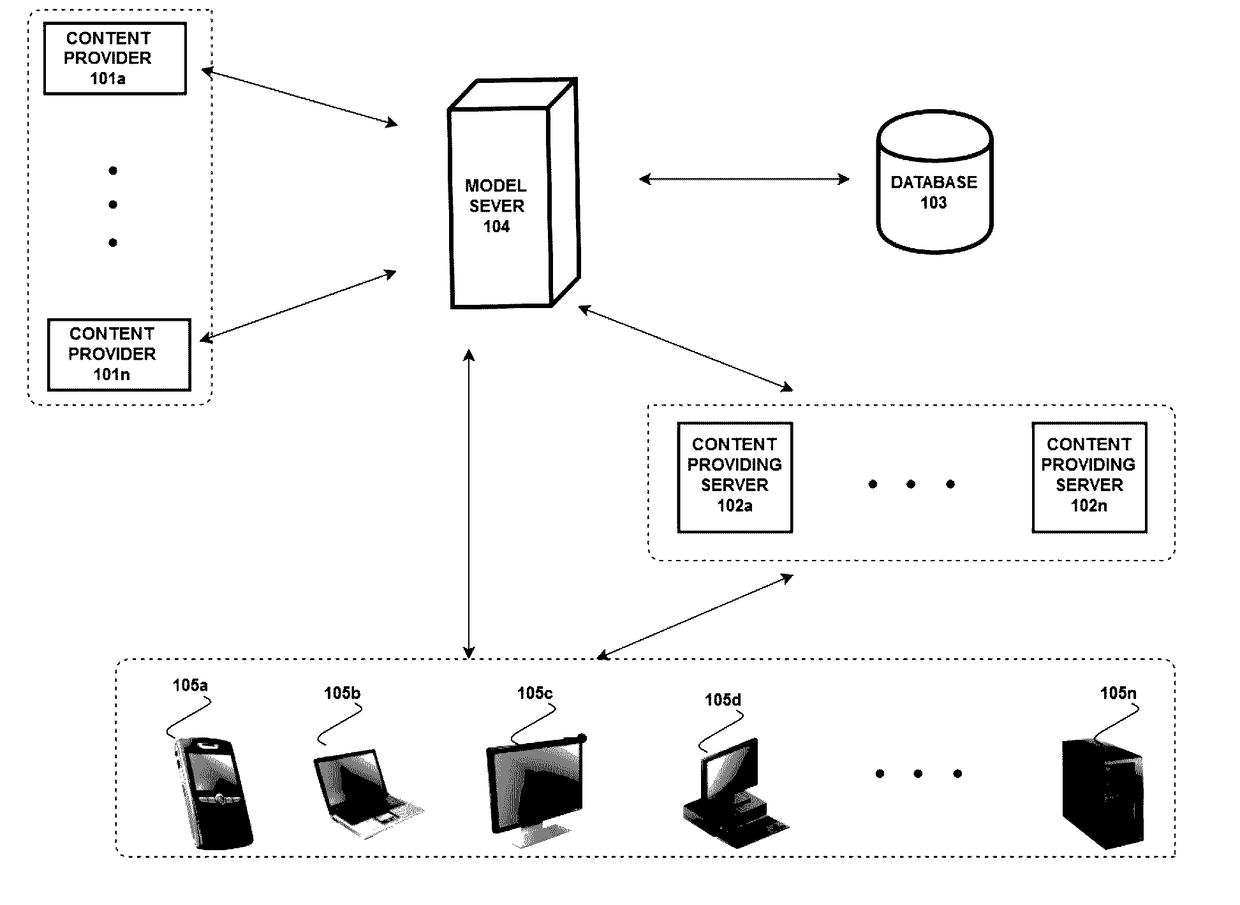

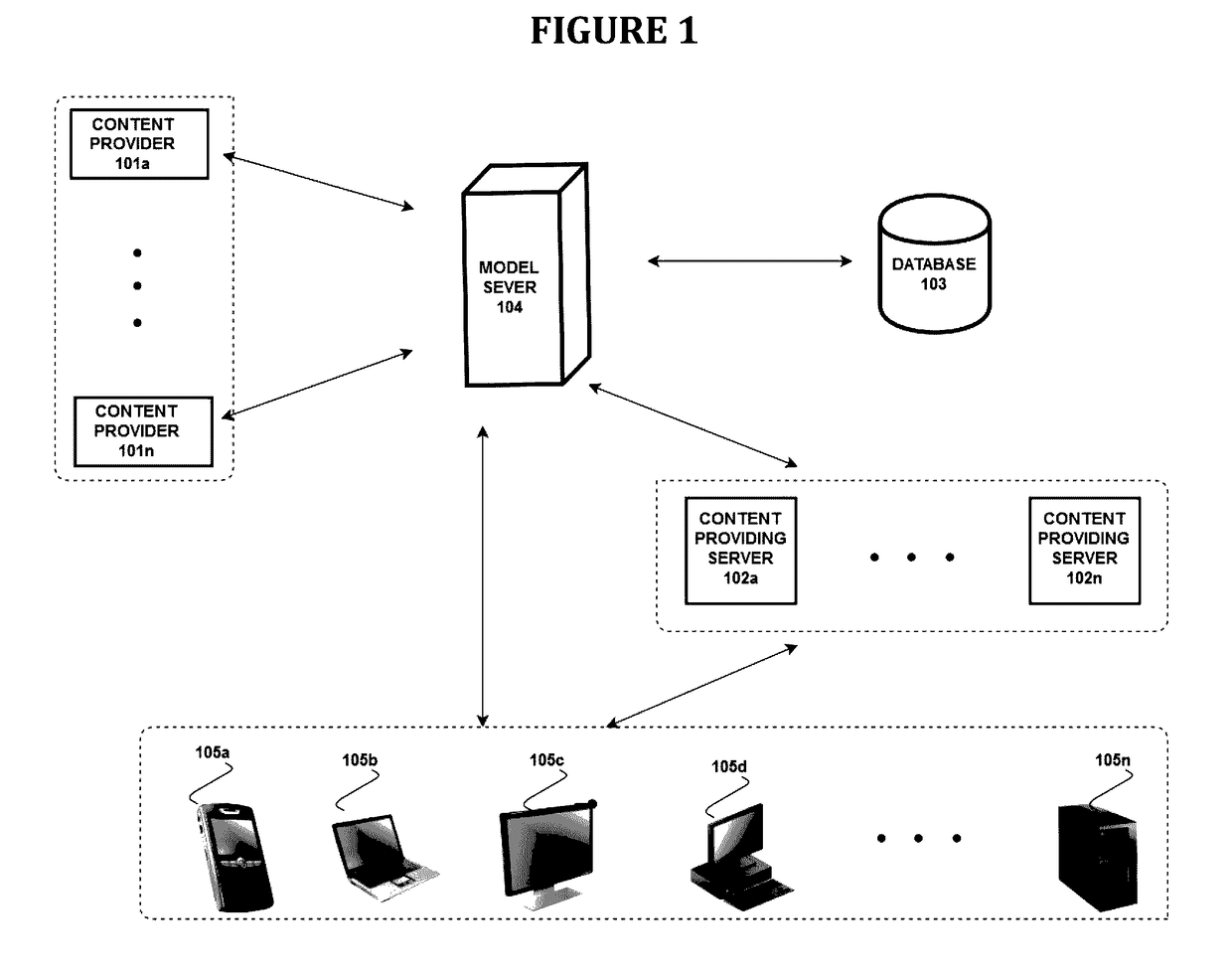

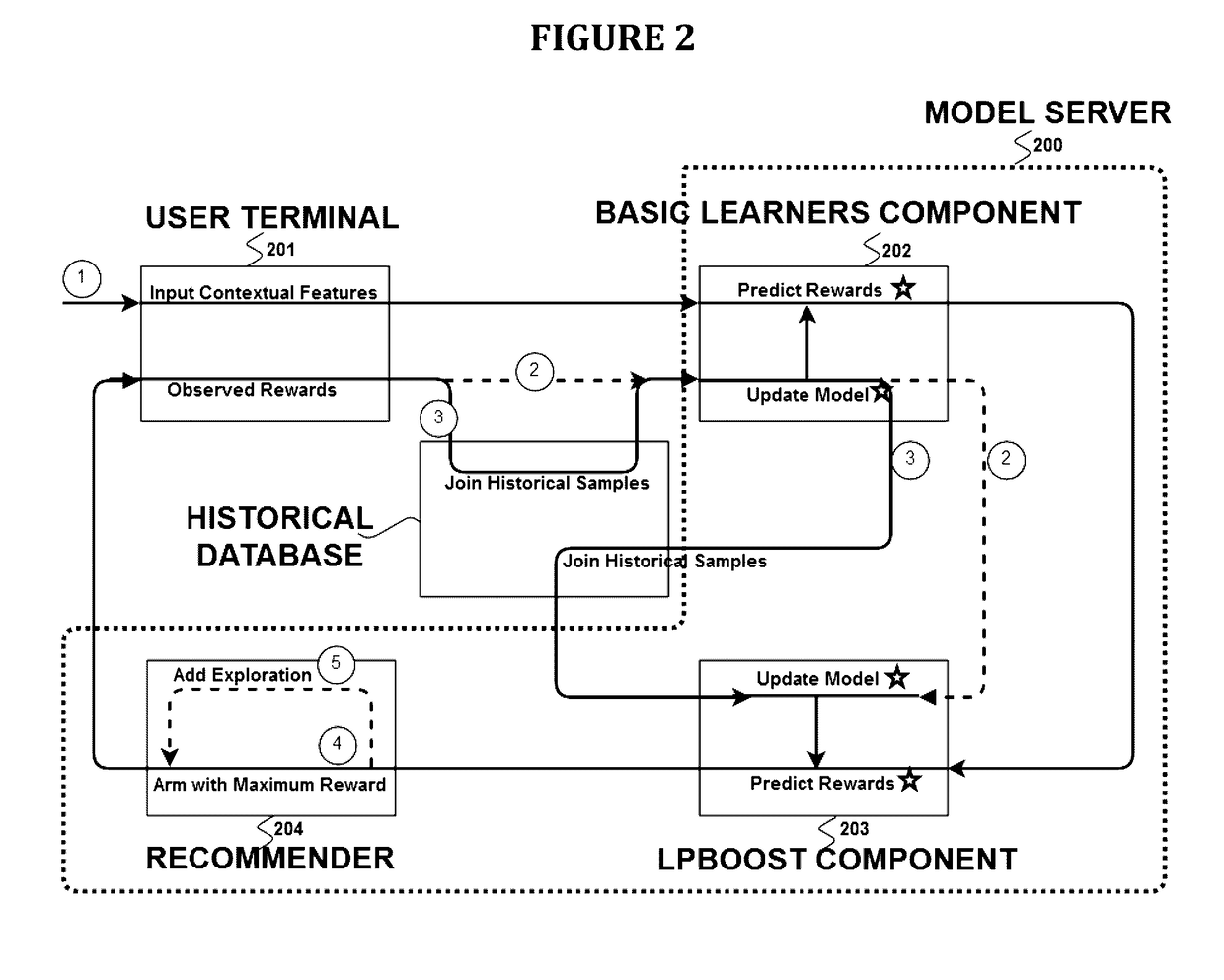

Device, method, and computer readable medium of generating recommendations via ensemble multi-arm bandit with an lpboost

A method, an apparatus, and a computer readable medium of recommending contents. The method includes receiving, by a computer, at least one of user input and contextual input, wherein the contextual input corresponds to a plurality of arms; calculating, by the computer, a plurality of reward values for each of the plurality of arms using a plurality of individual recommendation algorithms, based on the received input, such that each of the plurality of reward values is generated by a respective individual recommendation algorithm from the plurality of individual recommendation algorithms; calculating, by the computer, an aggregated reward value for each of the plurality of arms by applying linear program boosting to the plurality of reward values for the respective arm; selecting the arm from the plurality of arms which has the greatest calculated aggregated reward value; and outputting, by the computer, contents corresponding to the selected arm.

Owner:SAMSUNG SDS AMERICA INC

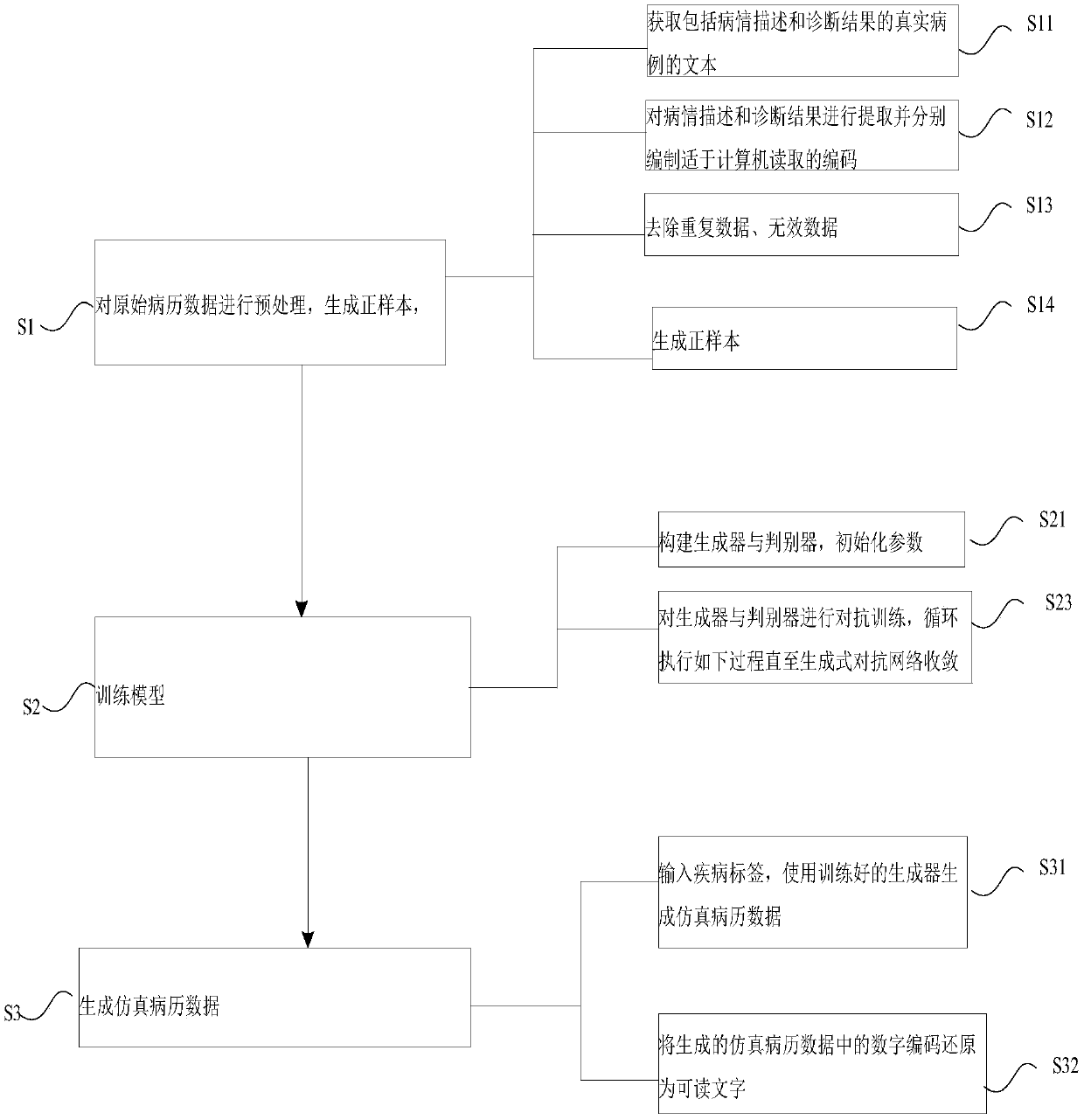

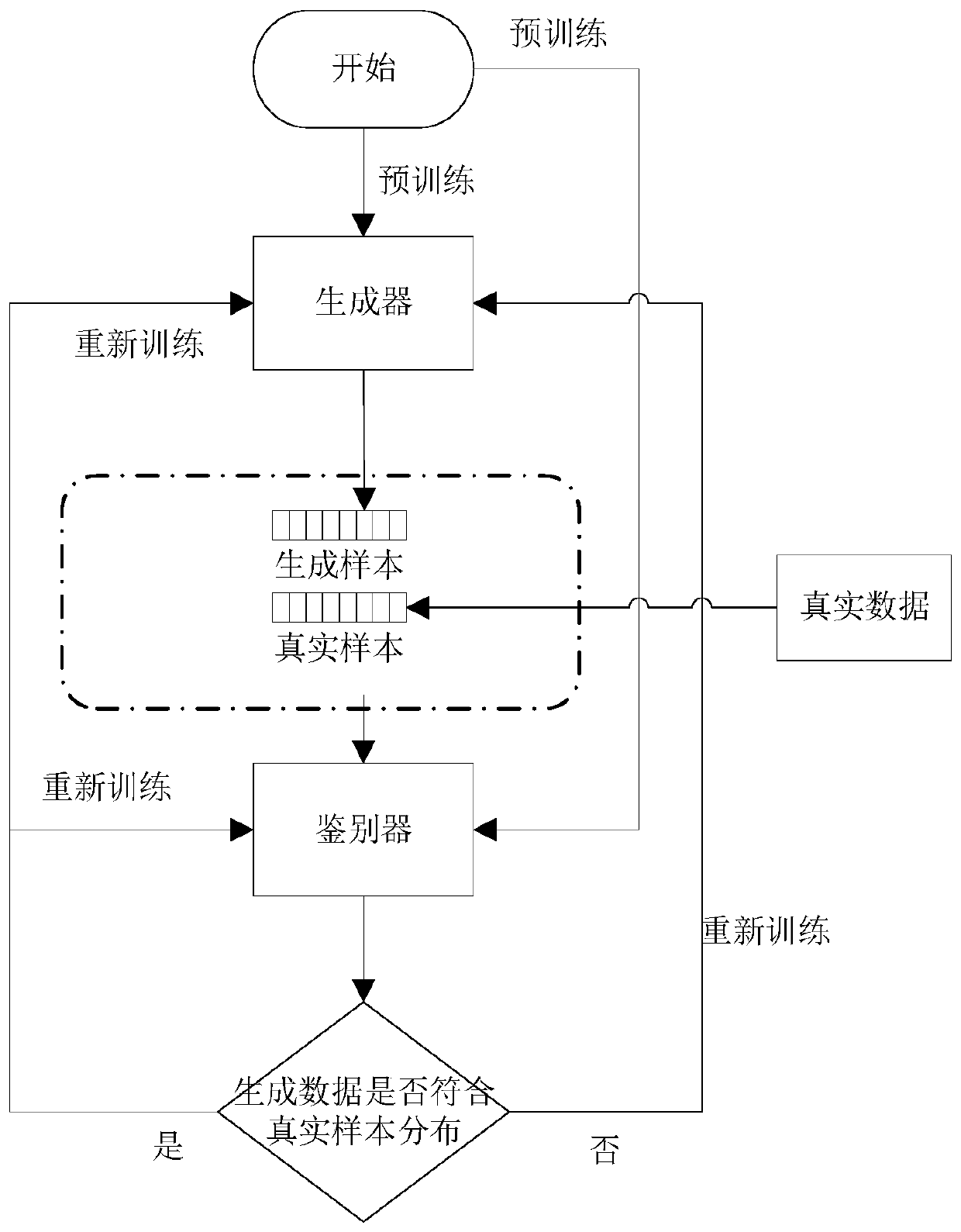

Simulation text medical record generation method and system

Active | CN109003678A | Easy to carry out | Convenient research | Medical data mining | Medical automated diagnosis | Medical record | Positive sample

The invention provides a simulation text medical record generation method and system. The original medical record is applied to generate positive samples, the word vector and the disease tag vector outputted by the generator in each cycle act as inputs, a new word vector is outputted and a sentence composed of multiple word vectors is generated by repetition for many times. As each word vector is generated, the generated word vector sequence is taken as the initial state, generator sampling is repeatedly operated to generate multiple sentences, and the discriminator takes an average of the reward values of all the sentences as the reward value of the present word vector, the generator is updated according to the obtained reward values of the sentences and the word vector and the process is repeated until convergence. The convergent generator generates negative samples and the negative samples and the positive samples form a mixed medical record data set. The disease tag vector and the word vector sequence act as the input, the probability of each medical record coming from the real medical record is obtained and the discriminator is updated and the process is repeated until convergence. The patient privacy is involved and the simulated text medical record can assist other machine learning tasks so as to facilitate the research on the disease.

Owner:TSINGHUA UNIV

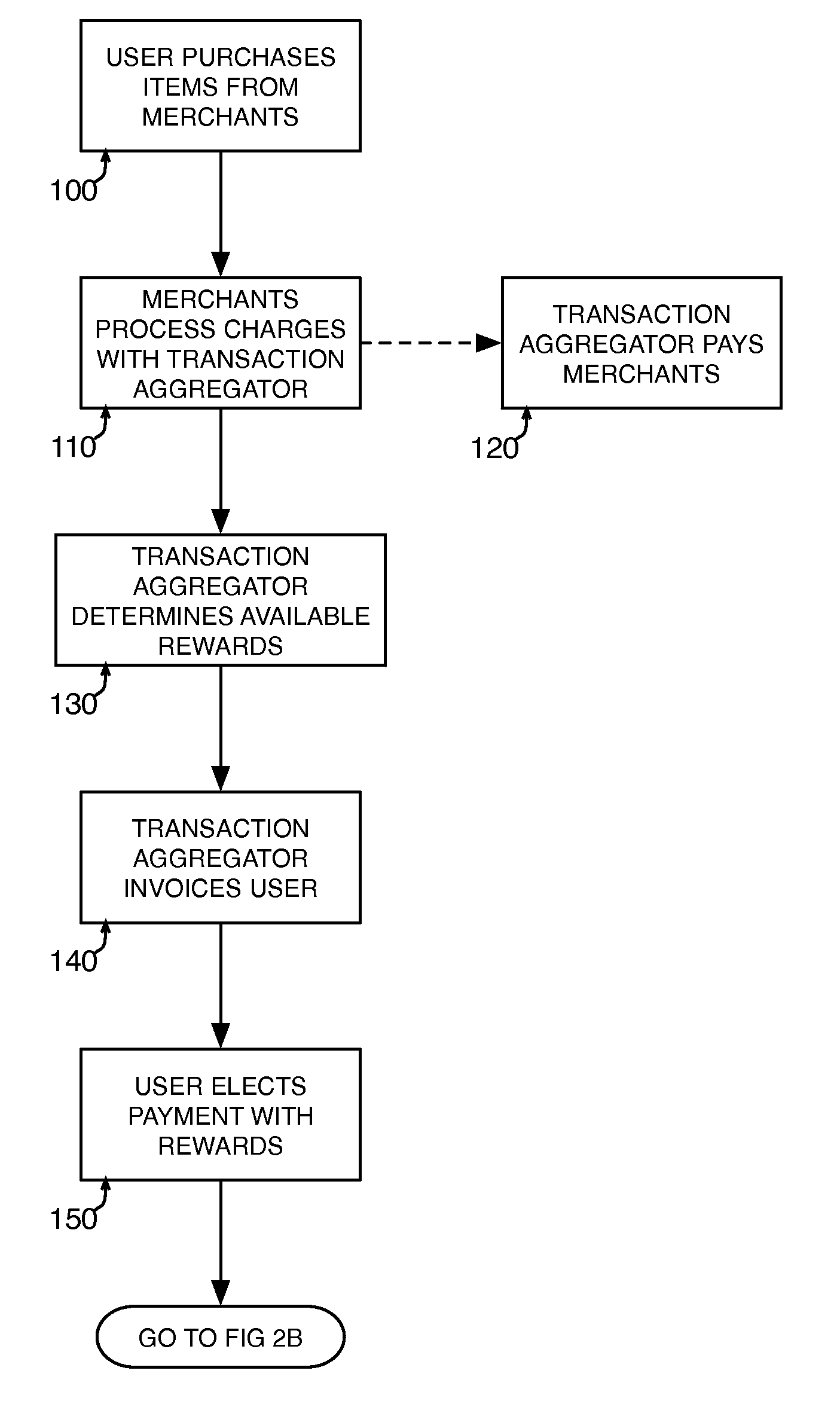

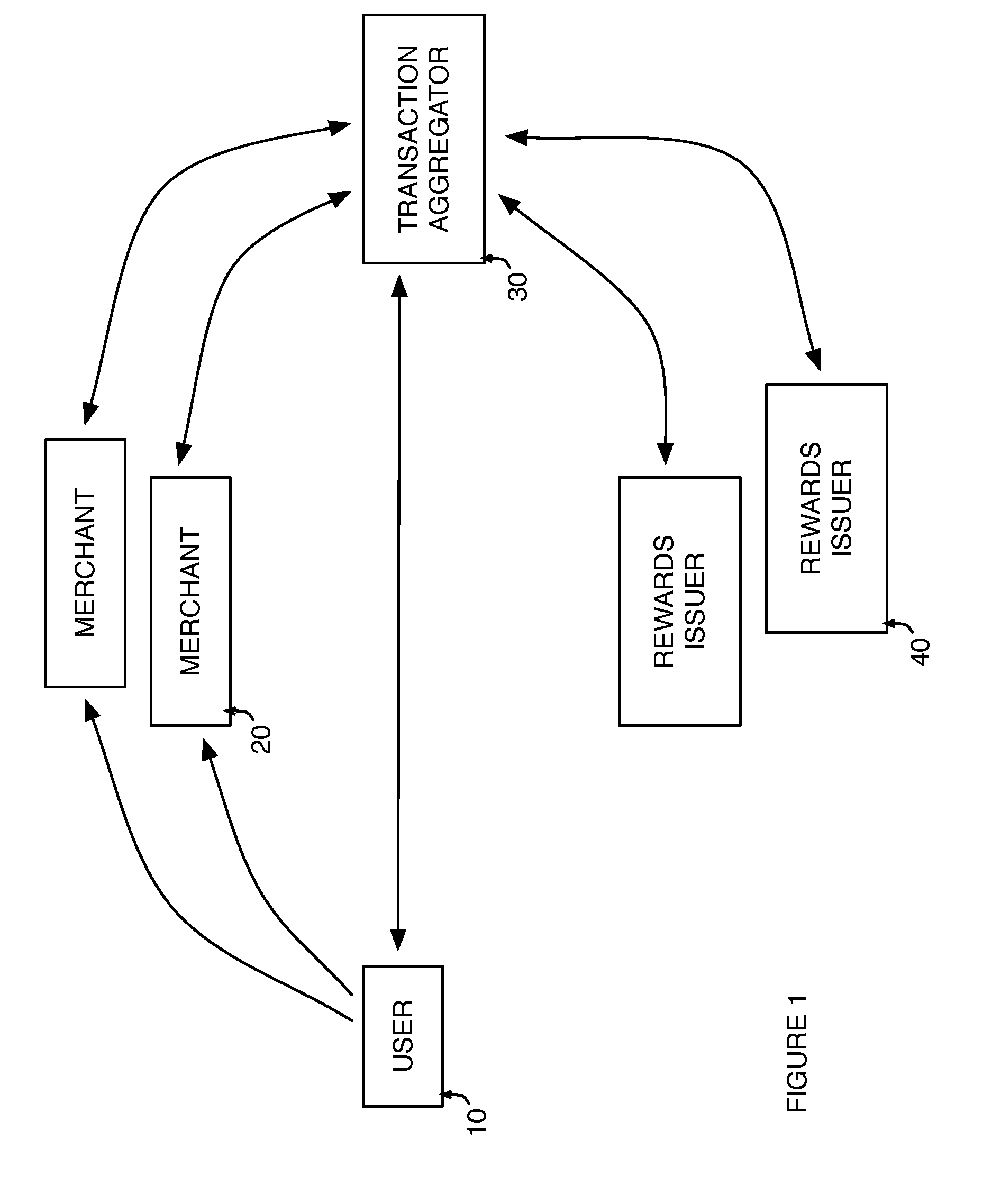

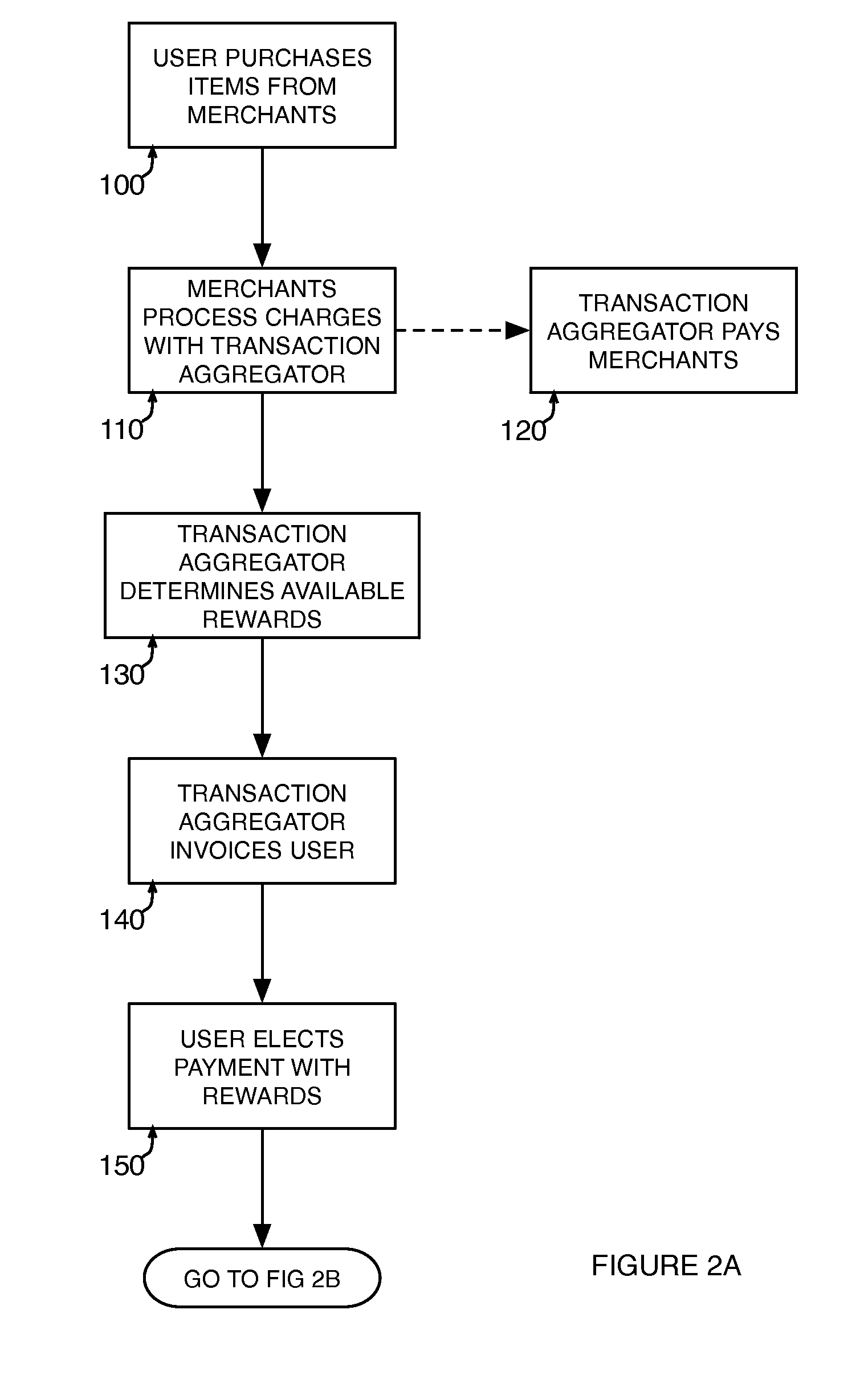

Method and system for redeeming rewards in payment of a transaction account

A transaction aggregator aggregates a plurality of transaction account charges for a user transaction account. The transaction charges are received from merchants for acquisitions made by a user. The user is offered an option to make at least a partial payment to the user transaction account by redeeming rewards from at least one user reward account associated with a rewards issuer. A reward redemption instruction is received from the user, the reward redemption instruction designating the redemption of rewards from at least one user reward account. The user reward account(s) designated by the user is caused to redeem the rewards by decreasing the rewards in the user reward account and conveying corresponding consideration to the transaction aggregator. A credit is provided on the user transaction account for an amount corresponding to the value of the rewards decreased in the user reward account(s).

Owner:SIGNATURE SYST

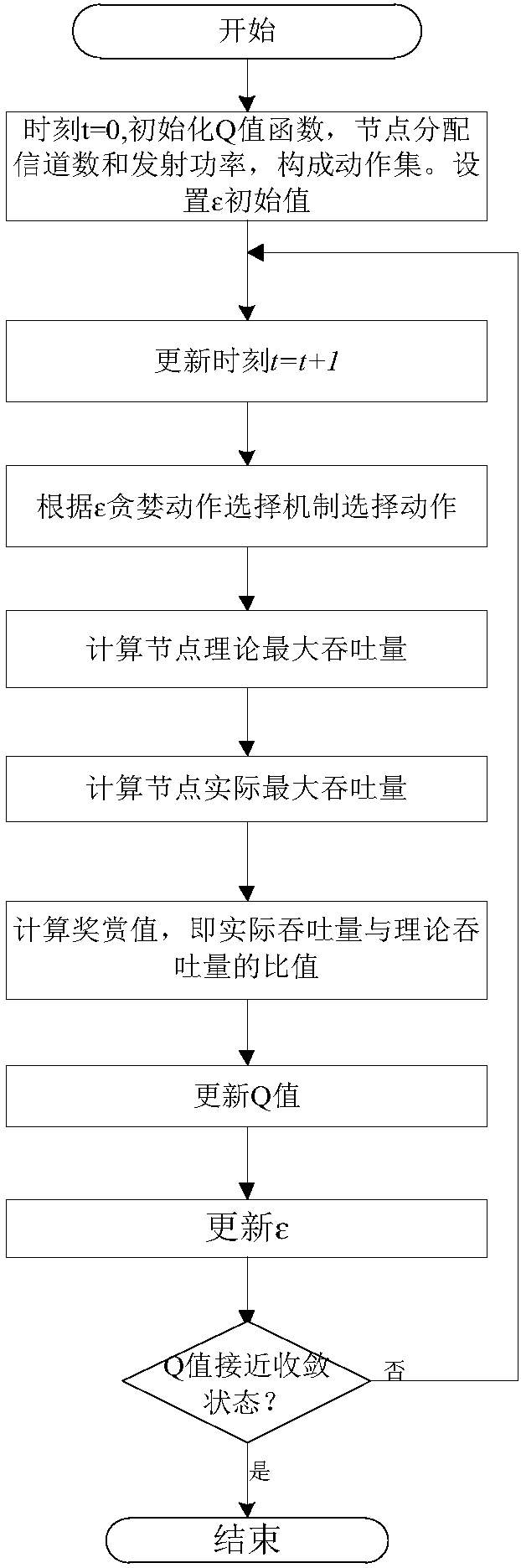

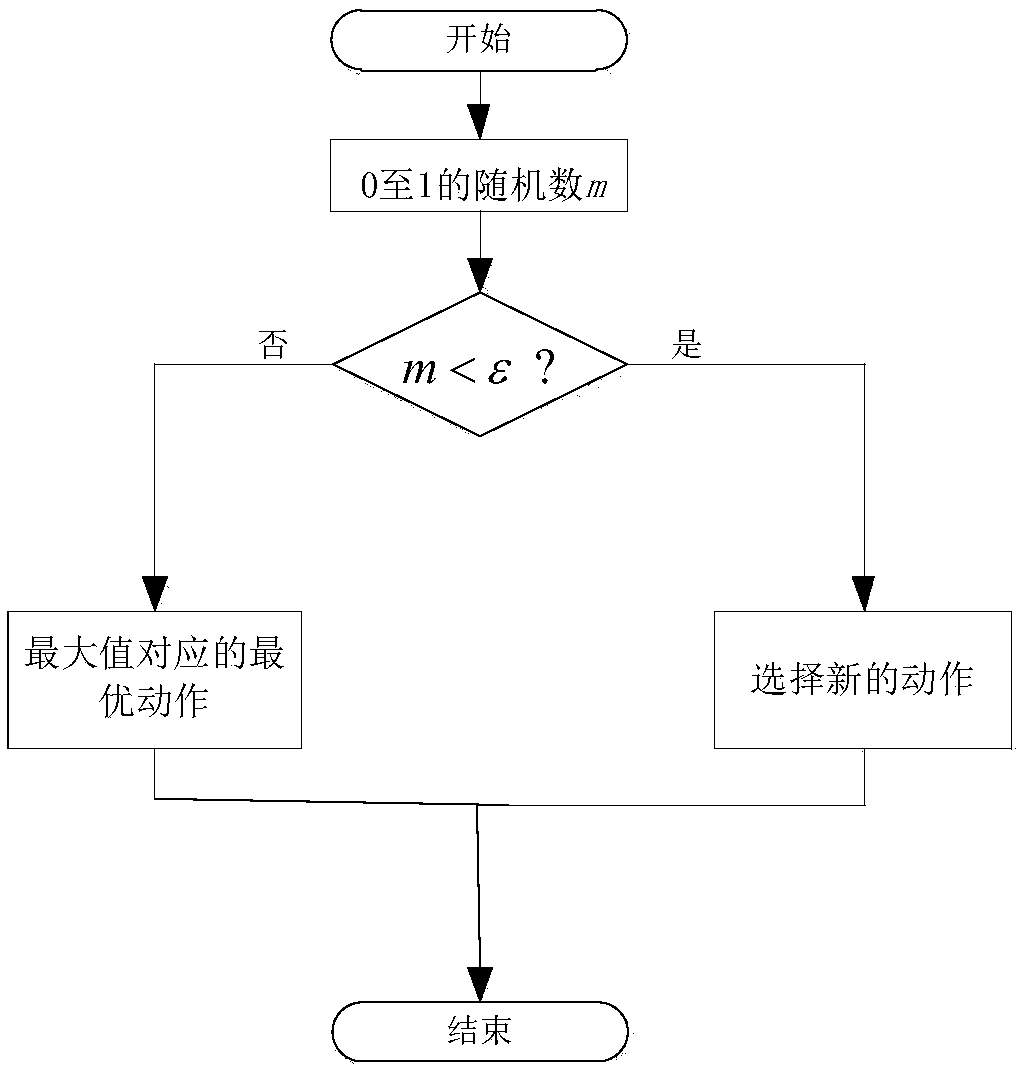

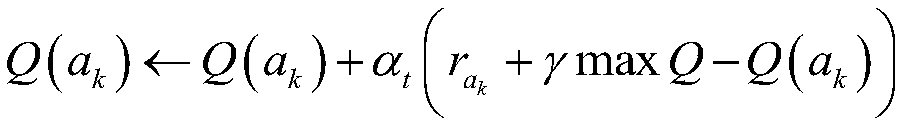

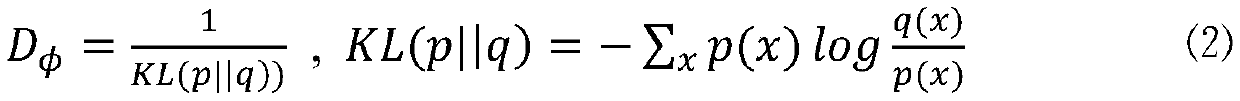

Wireless network distributed autonomous resource allocation method based on stateless Q learning

Active | CN108112082A | Excellent throughput | High level techniques | Wireless communication | Wireless mesh network | Prior information

The invention discloses a wireless network distributed autonomous resource allocation method based on stateless Q learning. First, the number of channels and the transmitted power are taken as a set of actions, and a set of actions is randomly selected to calculate the actual network throughput; then the ratio of the actual network throughput and the theoretical throughput is taken as a reward after the action selection, and an action value function is updated according to the reward; and finally, the iterative adjustment of the actions can find the maximum solution of a cumulative reward value function, and the corresponding action thereof can reach the optimum performance of the wireless network. The method provided by the invention allows each node to autonomously perform channel allocation and transmitted power control to maximize the network throughput under the condition that prior information such as resource configuration of other nodes in the network is unknown.

Owner:BEIJING UNIV OF TECH
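The update loop in this abstract (pick an action, measure throughput, use the throughput ratio as the reward, update the action value) can be sketched with a stateless Q table. The throughput numbers, the action set and the hyperparameters below are hypothetical; a real node would measure throughput rather than look it up.

```python
import random

# Hypothetical measured throughput (Mbit/s) per (channels, tx_power_dBm) action.
TOY_THROUGHPUT = {(1, 10): 20.0, (1, 20): 35.0, (2, 10): 50.0, (2, 20): 40.0}
THEORETICAL_THROUGHPUT = 100.0  # assumed upper bound used to normalise the reward


def stateless_q_learning(steps=2000, alpha=0.5, epsilon=0.2, seed=0):
    """Learn one value per action; there is no state, so Q depends on the action only."""
    rng = random.Random(seed)
    actions = list(TOY_THROUGHPUT)
    q = {a: 0.0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)      # explore
        else:
            action = max(actions, key=q.get)  # exploit the current best estimate
        # Reward is the ratio of actual to theoretical throughput, as in the abstract.
        reward = TOY_THROUGHPUT[action] / THEORETICAL_THROUGHPUT
        q[action] += alpha * (reward - q[action])
    return q
```

After training, `max(q, key=q.get)` gives the channel and power setting with the highest learned value.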

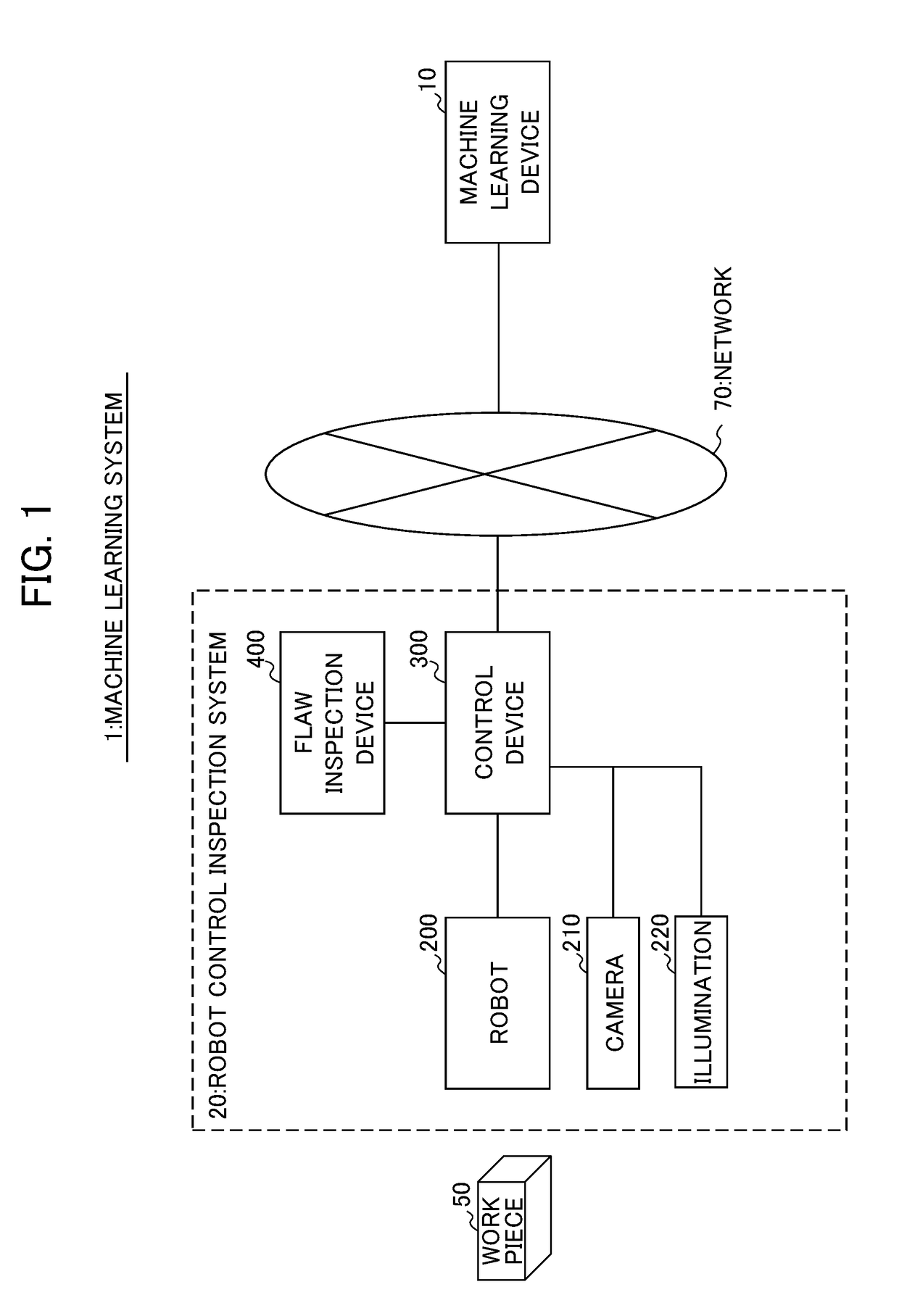

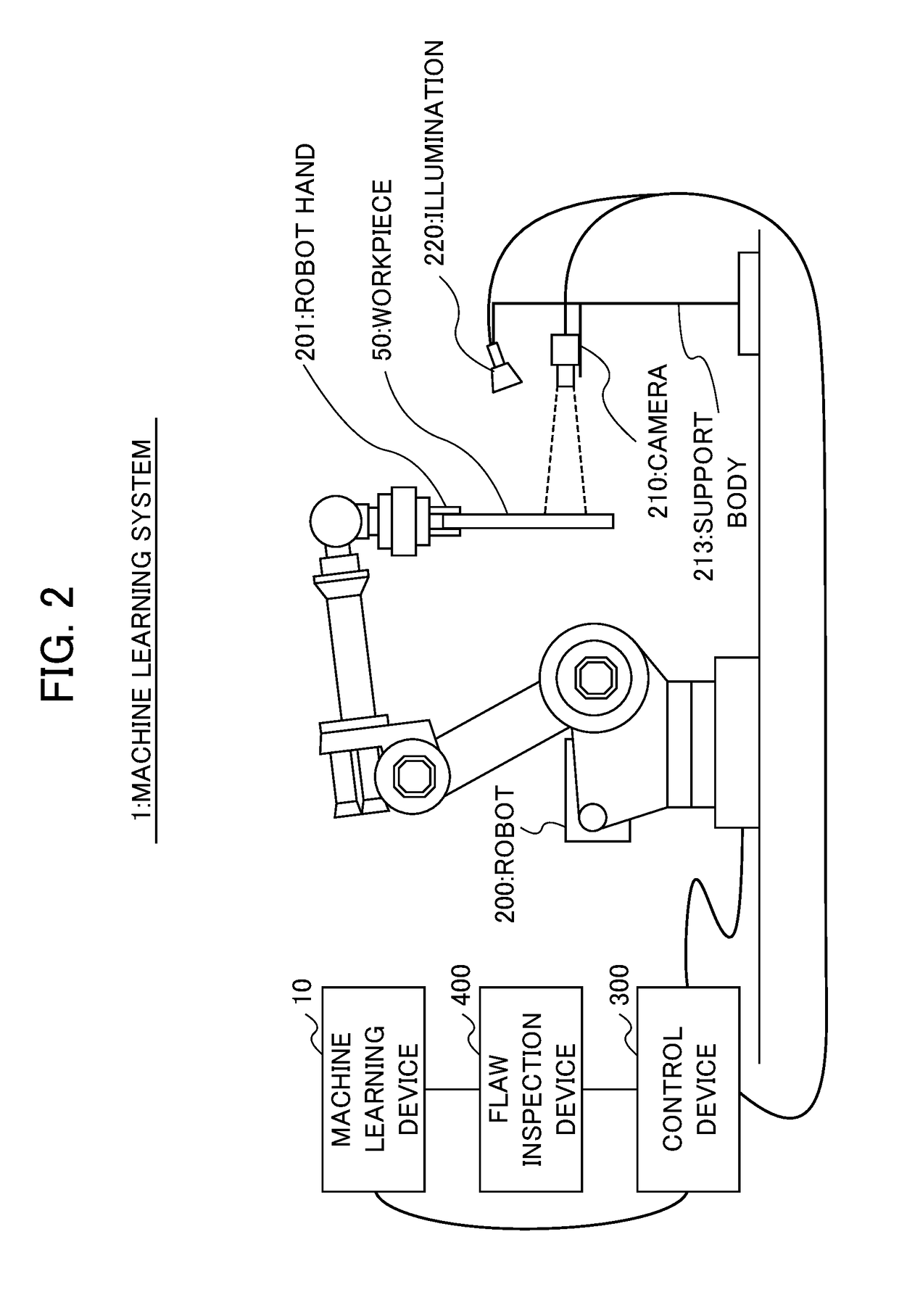

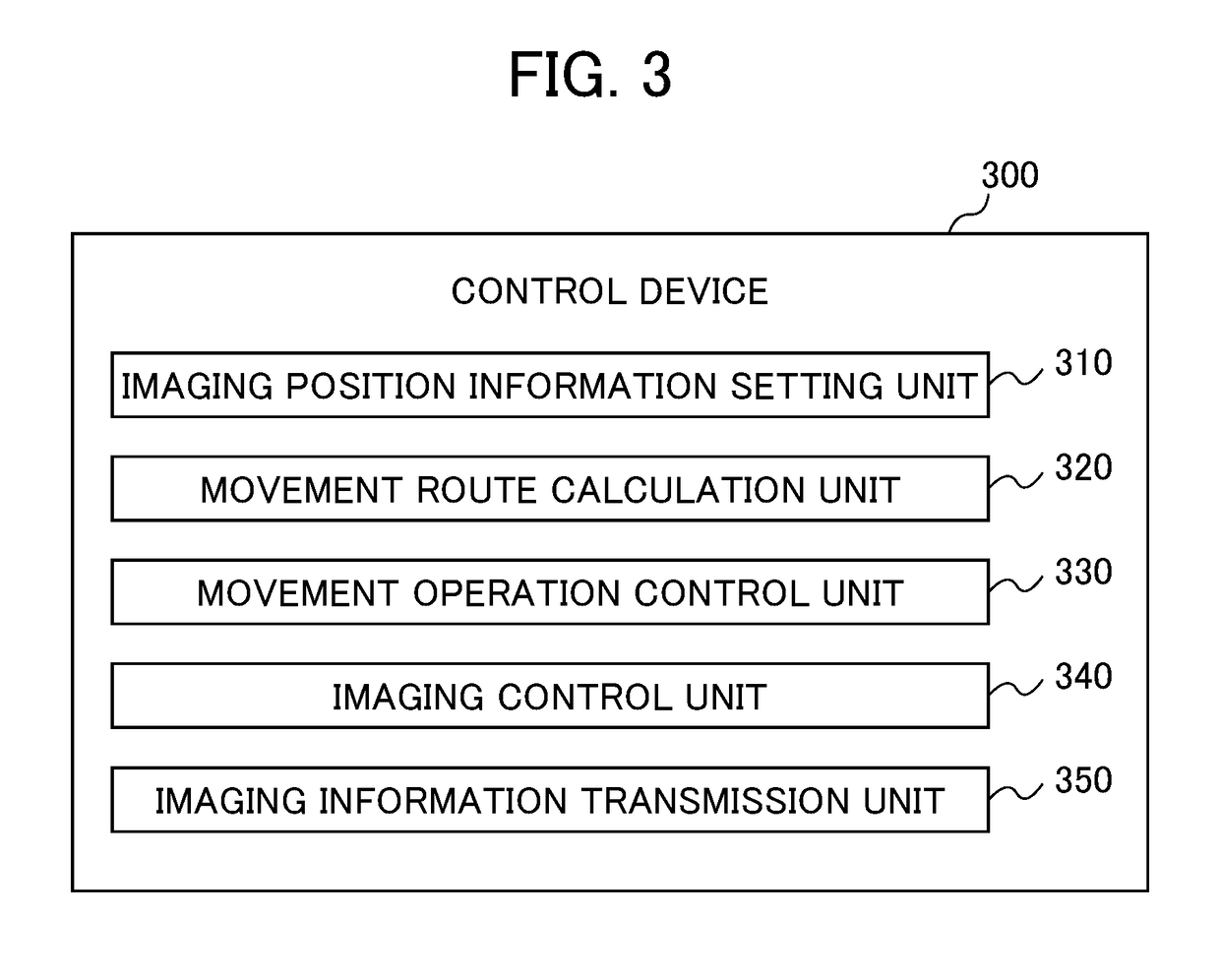

Machine learning device, robot control system, and machine learning method

Active | US20180370027A1 | Optimize the number | Shorten cycle time | Programme control | Image enhancement | Pattern recognition | Robot hand

A machine learning device that acquires state information from a robot control inspection system. The system has a robot hand to hold a workpiece or camera. The state information includes a flaw detection position of the workpiece, a movement route of the robot hand, an imaging point of the workpiece, and the number of imaging by the camera. A reward calculator calculates a reward value in reinforcement learning based on flaw detection information including the flaw detection position. A value function updater updates an action value function by performing the reinforcement learning based on the reward value, the state information, and the action.

Owner:FANUC LTD

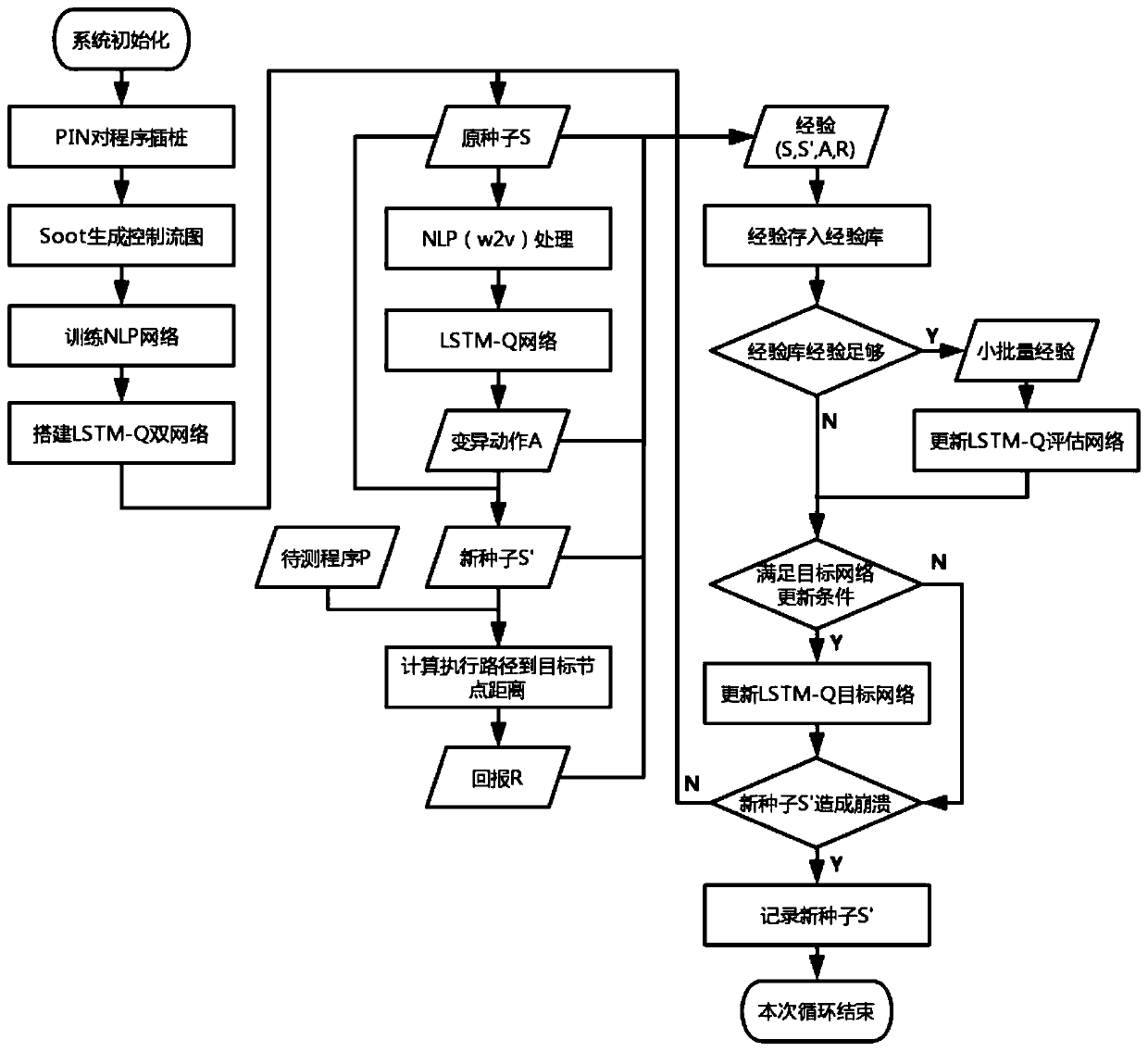

Vulnerability detection method based on deep reinforcement learning and program path instrumentation

Active | CN110008710A | Good effect | Good orientation | Platform integrity maintenance | Neural architectures | NODAL | Code coverage

The invention discloses a vulnerability detection method combining deep reinforcement learning and a program path instrumentation technology. The method includes: obtaining a path corresponding to input from a control flow diagram of a to-be-tested program in an instrumentation mode; calculating and obtaining a reward value according to the path and a target node in the control flow graph, using the reward value to train a neural network of deep reinforcement learning so as to select a variation action; and performing variation on the input of the to-be-tested program according to the variation action to obtain an updated input and an updated path thereof, calculating an updated reward value, training the neural network again and performing input variation processing, and performing circulating until the to-be-tested program is collapsed to obtain a corresponding input vulnerability. According to the method, the accuracy is higher, the input corresponding to the path where the vulnerability is located can be obtained more efficiently, and compared with a traditional fuzzy test, the method is higher in detection speed and has a certain code coverage amount.

Owner:SHANGHAI JIAO TONG UNIV +1

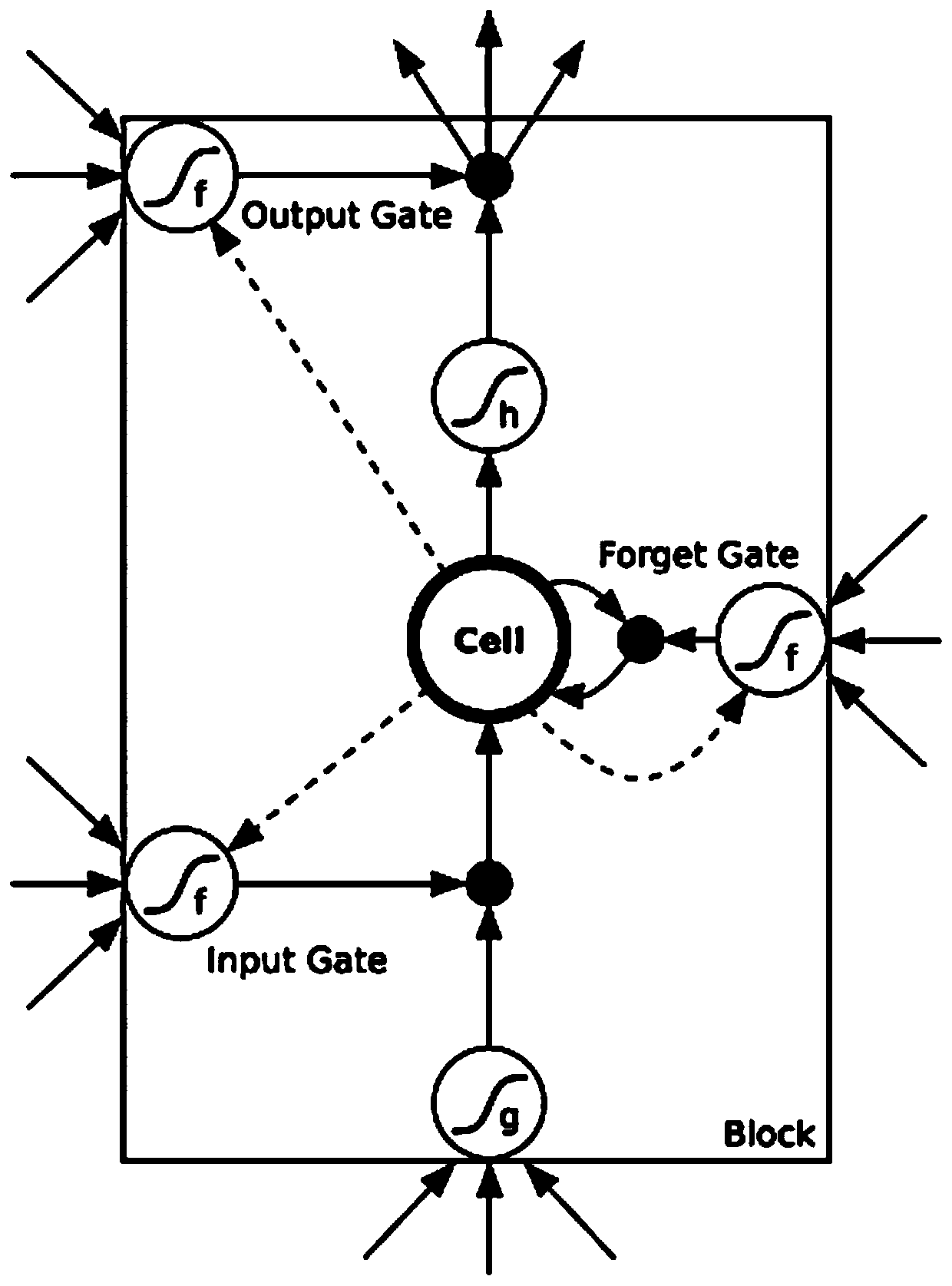

An industrial data generation method based on a neural network model

Inactive | CN109886403A | Increase collection capacity | Reduce computational complexity | Neural architectures | Neural learning methods | Discriminator | Extensibility

The invention provides an industrial data generation method based on a neural network model, and the method comprises the following steps of generating a large-scale data set by taking a time sequence generation process as a continuous decision-making process through an identification feedback mechanism based on the idea of a generative adversarial network; wherein the discriminator extracts time sequence characteristics and evaluates the importance of each characteristic to the sequence, and the quality of the generated data is measured by training a real sample and a generated sample, and the discriminator feeds back a corresponding reward value of a data probability generated in each step in the generator through time difference learning, the generator based on the LSTM is trained by a strategy gradient of reinforcement learning, and the reward value is provided by a return value of the discriminator. According to the industrial data generation method based on the neural network model, a new mode of generating big data from small data is realized, so that the mining analysis effect of data mining in a big data environment is improved, and the whole framework has the reliable performance and the excellent expandability.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

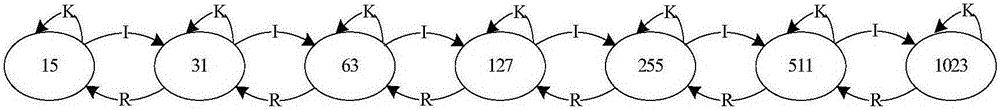

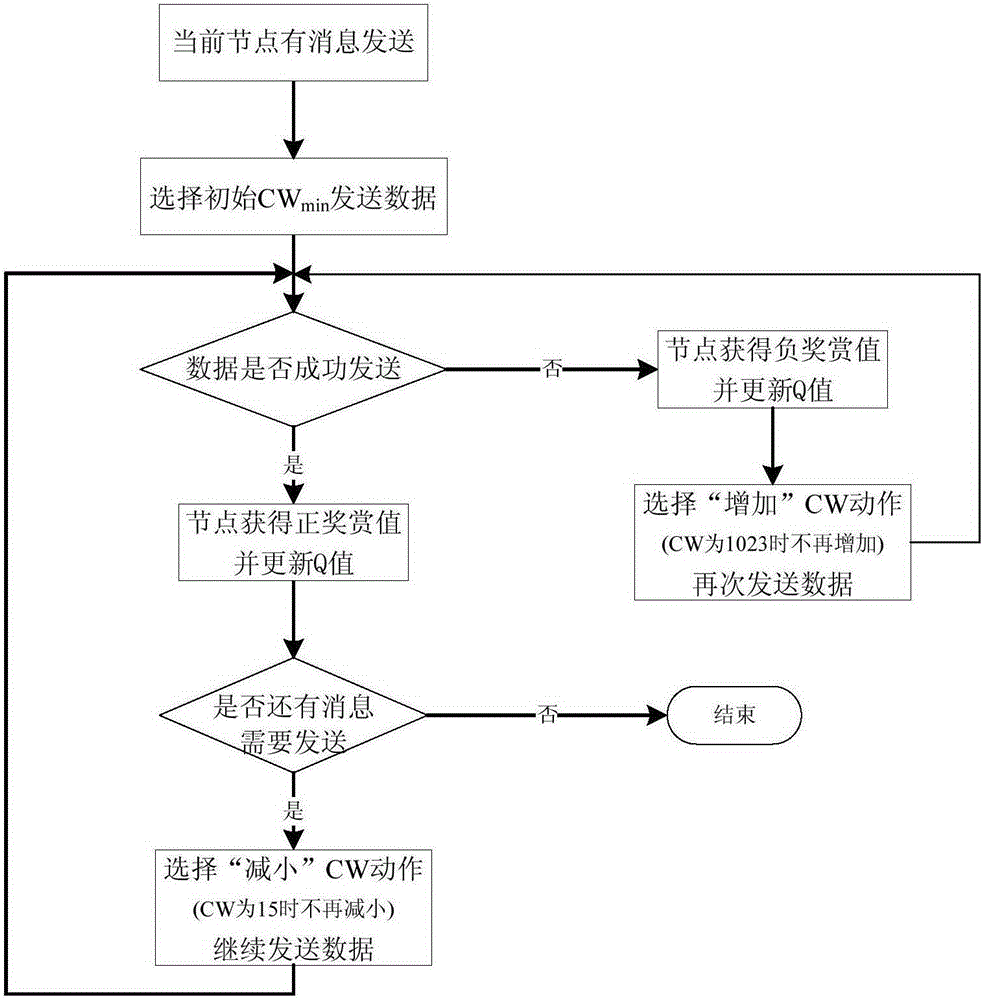

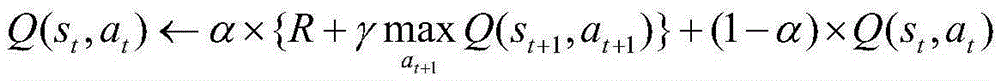

Realization method for Q learning based vehicle-mounted network media access control (MAC) protocol

Active | CN105306176A | Increase the probability of successful delivery | Reduce the number of dodges | Error prevention/detection by using return channel | Network traffic/resource management | Learning based | Reward value

The invention discloses a realization method for a Q learning based vehicle-mounted network media access control (MAC) protocol. According to the method, a vehicle node uses a Q learning algorithm to constantly interact with the environment through repeated trial and error in a VANETs environment, and dynamically adjusts a competitive window (CW) according to a feedback signal (reward value) given by the VANETs environment, thereby always accessing the channel via the best CW (the best CW is selected when the reward value obtained from surrounding environment is maximum). Through adoption of the method, the data frame collision rate and transmission delay are lowered, and fairness of the node in channel accessing is improved.

Owner:NANJING NANYOU INST OF INFORMATION TECHNOVATION CO LTD
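
The contention-window adjustment described in the abstract above can be sketched as tabular Q-learning. This is a minimal illustration under stated assumptions, not the patented protocol: the candidate window sizes in `CW_VALUES`, the three actions (shrink, keep, grow), the learning parameters, and the `toy_reward` function are all hypothetical stand-ins for the real channel feedback.

```python
import random

# Assumed candidate contention windows; the state is an index into this list.
CW_VALUES = [15, 31, 63, 127, 255, 511, 1023]
# Assumed actions: move the index down, keep it, or move it up.
ACTIONS = (-1, 0, 1)


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy choice over the action indices."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    row = q[state]
    return max(range(len(ACTIONS)), key=lambda a: row[a])


def next_state(state, action):
    """Apply the action and clamp the index to the valid range."""
    return min(max(state + ACTIONS[action], 0), len(CW_VALUES) - 1)


def q_update(q, state, action, reward, new_state, alpha=0.5, gamma=0.9):
    """Standard one-step Q-learning update."""
    best_next = max(q[new_state])
    q[state][action] += alpha * (reward + gamma * best_next - q[state][action])


def toy_reward(state, best_index=3):
    """Hypothetical feedback: +1 on an assumed best window, -1 otherwise."""
    return 1.0 if state == best_index else -1.0


def train(episodes=2000, epsilon=0.2, seed=0):
    """Run the trial-and-error loop and return the learned Q table."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in CW_VALUES]
    state = 0
    for _ in range(episodes):
        action = choose_action(q, state, epsilon, rng)
        new_state = next_state(state, action)
        q_update(q, state, action, toy_reward(new_state), new_state)
        state = new_state
    return q
```

In a real deployment the reward would come from observed transmission outcomes (for example, success or collision) rather than from `toy_reward`.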

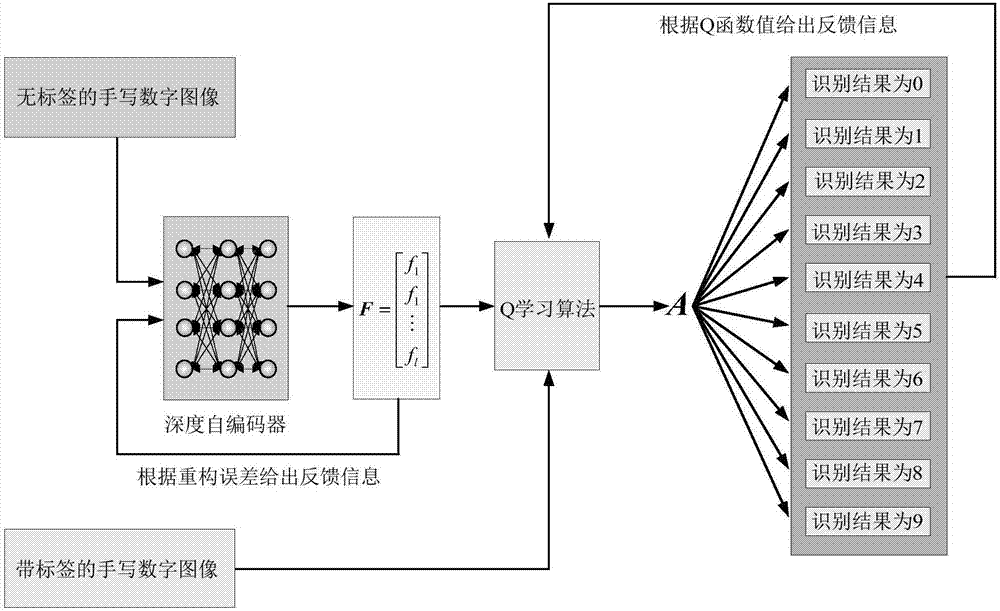

Handwritten numeral recognition method based on deep Q learning strategy

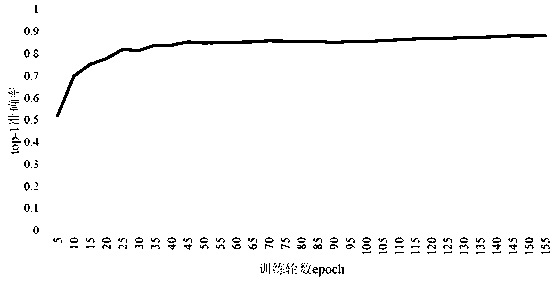

ActiveCN107229914AStrong decision-making abilityHigh precisionDigital ink recognitionDeep belief networkFeature extraction

The invention provides a handwritten numeral recognition method based on a deep Q-learning strategy. It belongs to the field of artificial intelligence and pattern recognition and addresses the low recognition precision on the standard handwritten numeral database MNIST. The method first extracts abstract features from the original signal with a deep auto-encoder (DAE), and the Q-learning algorithm takes the DAE's encoding of the original signal as the current state. It then classifies the current state to obtain a reward value and returns the reward value to the Q-learning algorithm for iterative updating. Maximizing the reward value yields high-precision recognition of handwritten numerals. By combining deep learning, which provides perception capability, with reinforcement learning, which provides decision-making ability, the method forms a Q deep belief network (Q-DBN) from the deep auto-encoder and the Q-learning algorithm, improving recognition precision while reducing recognition time.

Owner:BEIJING UNIV OF TECH

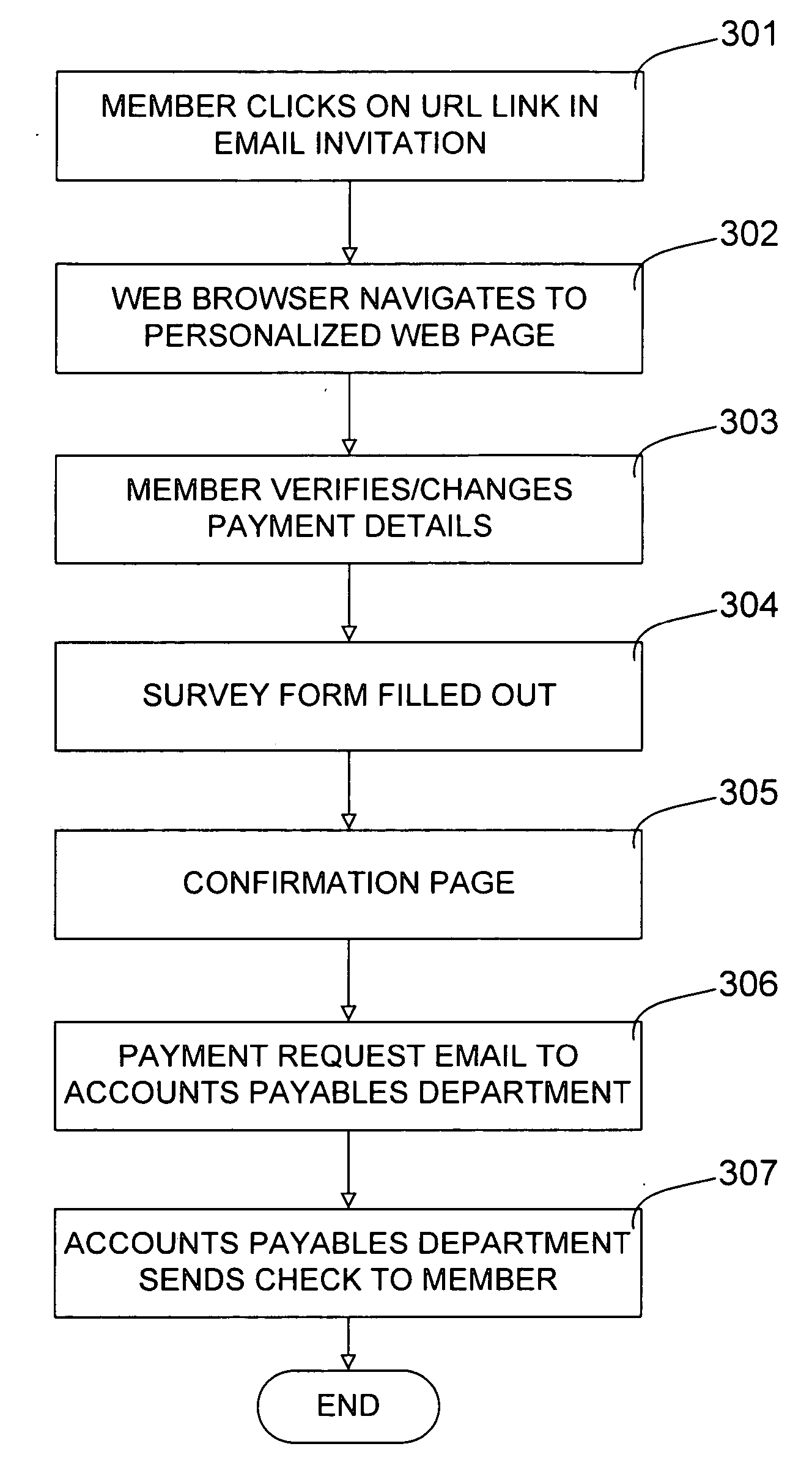

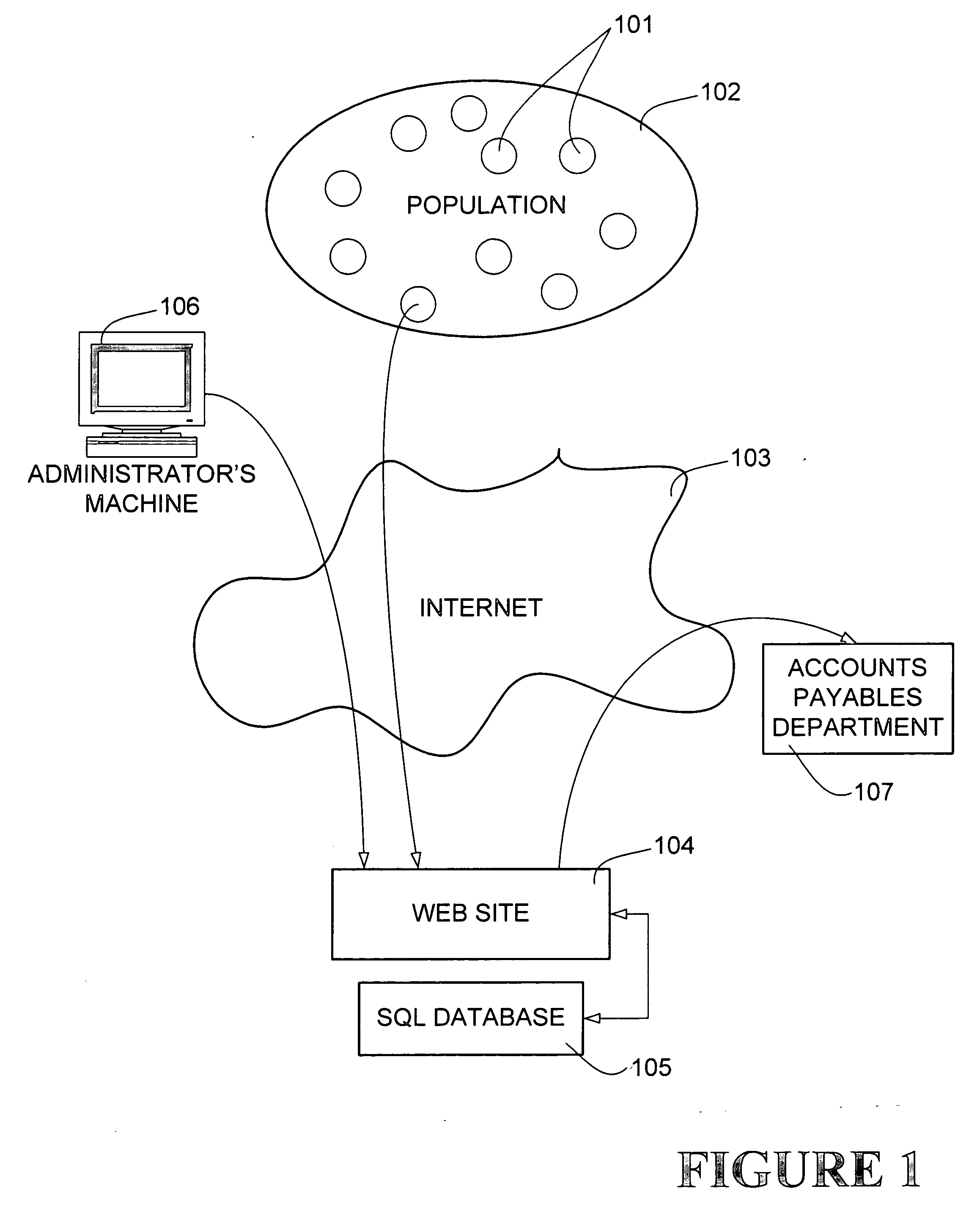

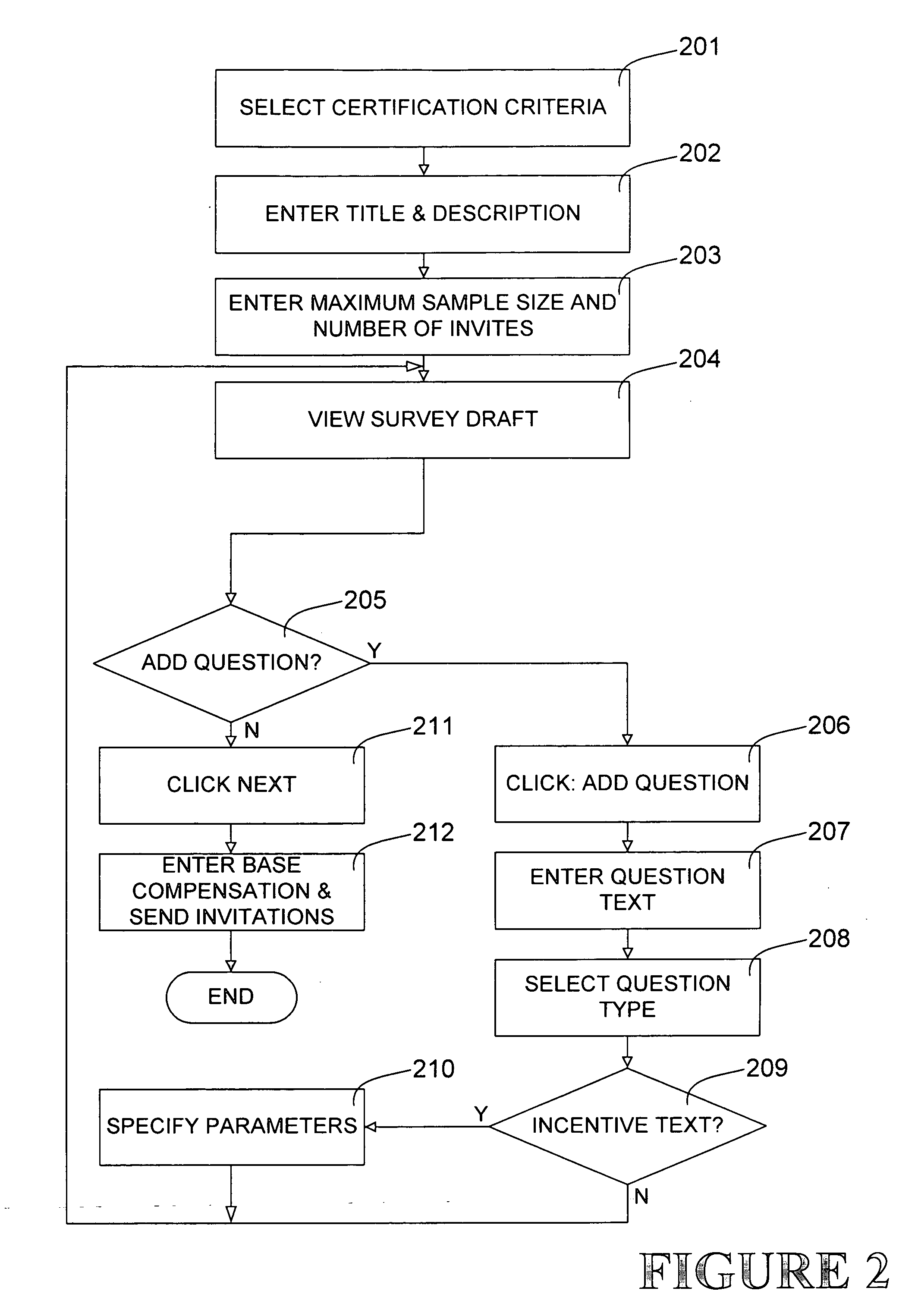

Methods and systems for providing real-time incentive for text submissions

In one aspect of a preferred embodiment, the invention comprises a system for providing an incentive for text entry, comprising: a server computer in communication with an electronic computer network; a database in communication with the server computer; an administrator computer in communication with the server computer; and an accounts payable computer in communication with the server computer; wherein the server computer is operable to transmit a form over the electronic computer network to a user computer; and wherein the form comprises at least one text input area; and at least one reward value display wherein a displayed reward value is related to an amount of text entered into the at least one text input area.

Owner:SILICON VALLEY BANK

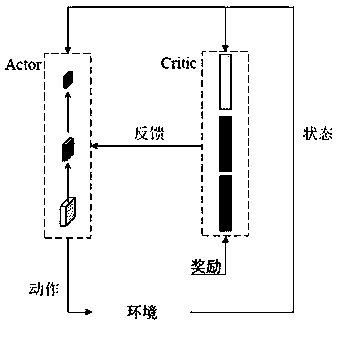

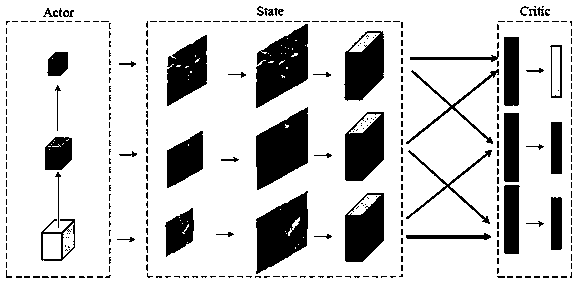

Image fine-grained recognition method based on reinforcement learning strategy

Active | CN110135502A | Easy to dig | Good attention | Character and pattern recognition | Energy efficient computing | Experimental validation | Data set

The invention provides a fine-grained recognition method based on reinforcement learning and cross bilinear features, aimed at the difficulty of mining the most discriminative area of a fine-grained image. An Actor-Critic strategy is used to mine the most attention-grabbing areas of an image. The Actor module generates the top M candidate areas with the best discrimination capability. The Critic module evaluates the state value of the action using the cross bilinear feature, calculates the reward value of the action in the current state using a ranking-type reward, derives a value advantage from it, and feeds the advantage back to the Actor module to update its output of the most attention-worthy region. Finally, the fine-grained category is predicted from the most discriminative region in combination with the original image features. The method mines the most attention-worthy region of a fine-grained image more effectively. Experiments show that its recognition accuracy on the public CUB-200-2011 data set is improved over existing methods, achieving a high fine-grained recognition accuracy.

Owner:SOUTHEAST UNIV

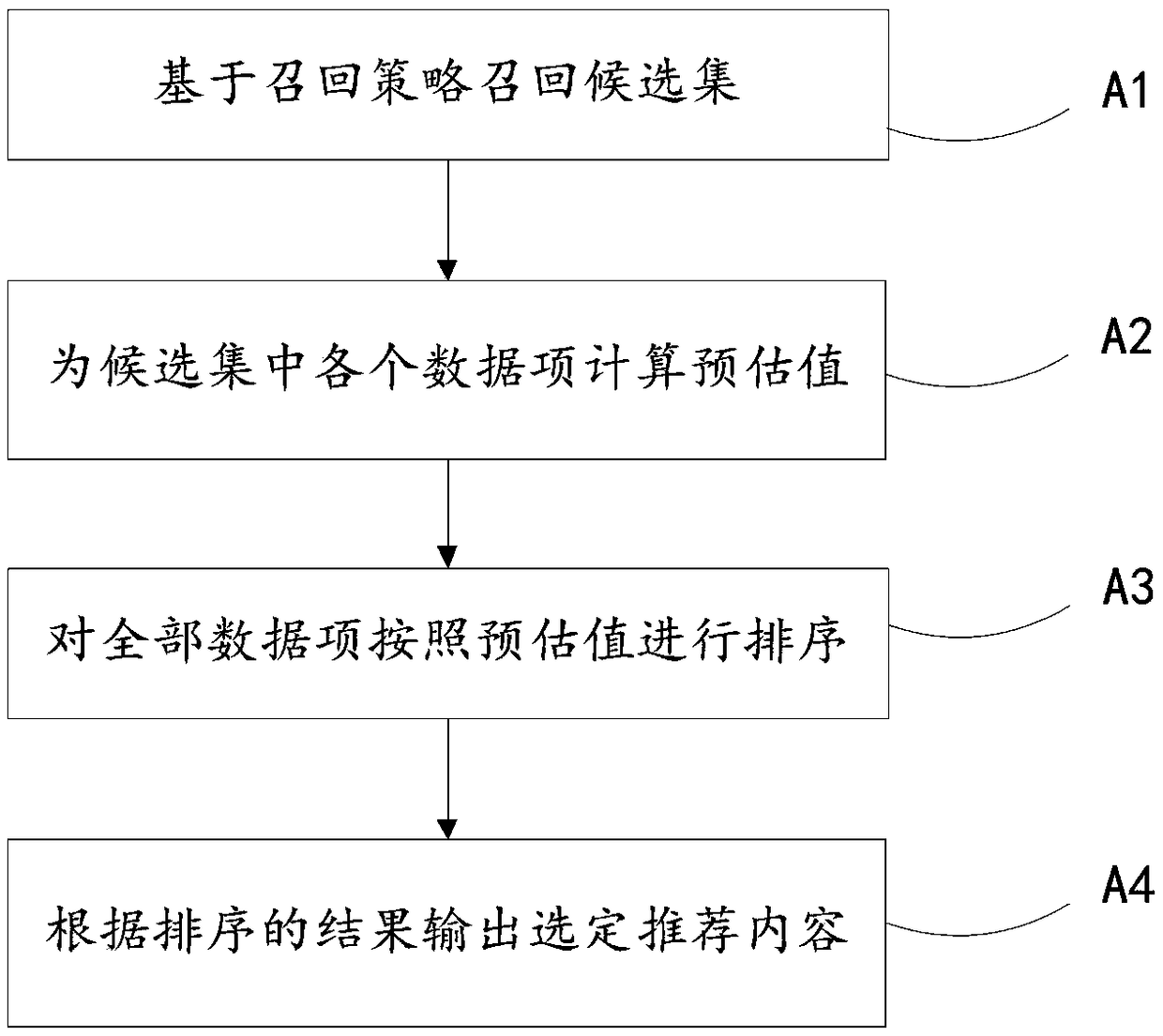

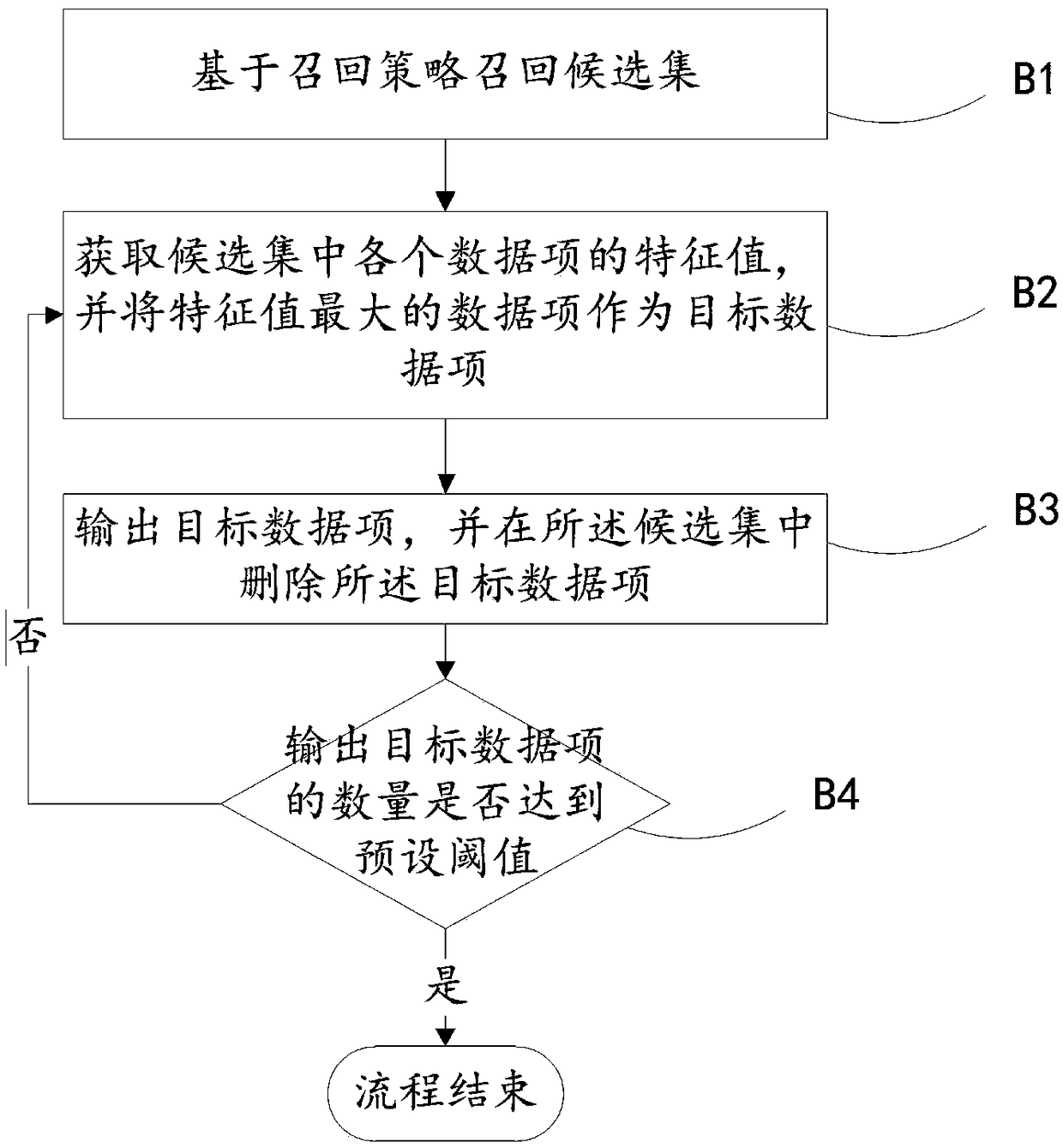

Content recommendation method and apparatus based on depth reinforcement learning

Active | CN109062919A | Excellent recommendation result | Inspire browsing intent | Computing models | Special data processing applications | Depth enhancement | Ranking

The invention provides a content recommendation method and device based on depth reinforcement learning. The method comprises the following steps: training a depth reinforcement function to obtain a training result for the parameter set of the function; obtaining an ordered candidate set of recommended contents and the number of recommended contents to be selected; calculating, based on the training result of the parameter set, a comprehensive reward value for each recommended content in the candidate set using the depth reinforcement function, where the comprehensive reward value of a recommended content is associated with that content and with the other recommended contents ranked after it; and, according to the calculation result, selecting recommended contents as the selected contents and outputting them in order. By using depth reinforcement learning, the invention jointly considers each recommended content and its ranking, thereby obtaining a better recommendation result.

Owner:云南腾云信息产业有限公司
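
One way to read the "comprehensive reward value" in the abstract above is as a backward accumulation over the ordered candidate set, so that each item's value reflects both itself and the items ranked after it. The sketch below illustrates that reading only. The per-item rewards, the discount factor `gamma`, and both function names are hypothetical, and in the patent the values come from a trained function rather than a fixed list.

```python
def comprehensive_rewards(item_rewards, gamma=0.9):
    """Accumulate rewards from the end of the ranking toward the front.

    values[i] = item_rewards[i] + gamma * values[i + 1]
    """
    values = [0.0] * len(item_rewards)
    running = 0.0
    for i in range(len(item_rewards) - 1, -1, -1):
        running = item_rewards[i] + gamma * running
        values[i] = running
    return values


def select_in_order(candidates, item_rewards, k, gamma=0.9):
    """Pick the k items with the highest comprehensive value, keeping rank order."""
    values = comprehensive_rewards(item_rewards, gamma)
    top = sorted(range(len(candidates)), key=lambda i: values[i], reverse=True)[:k]
    return [candidates[i] for i in sorted(top)]
```

For example, `select_in_order(["a", "b", "c"], [1.0, 2.0, 3.0], k=2, gamma=0.5)` scores the items as 2.75, 3.5 and 3.0 and keeps `"b"` and `"c"` in their original order.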

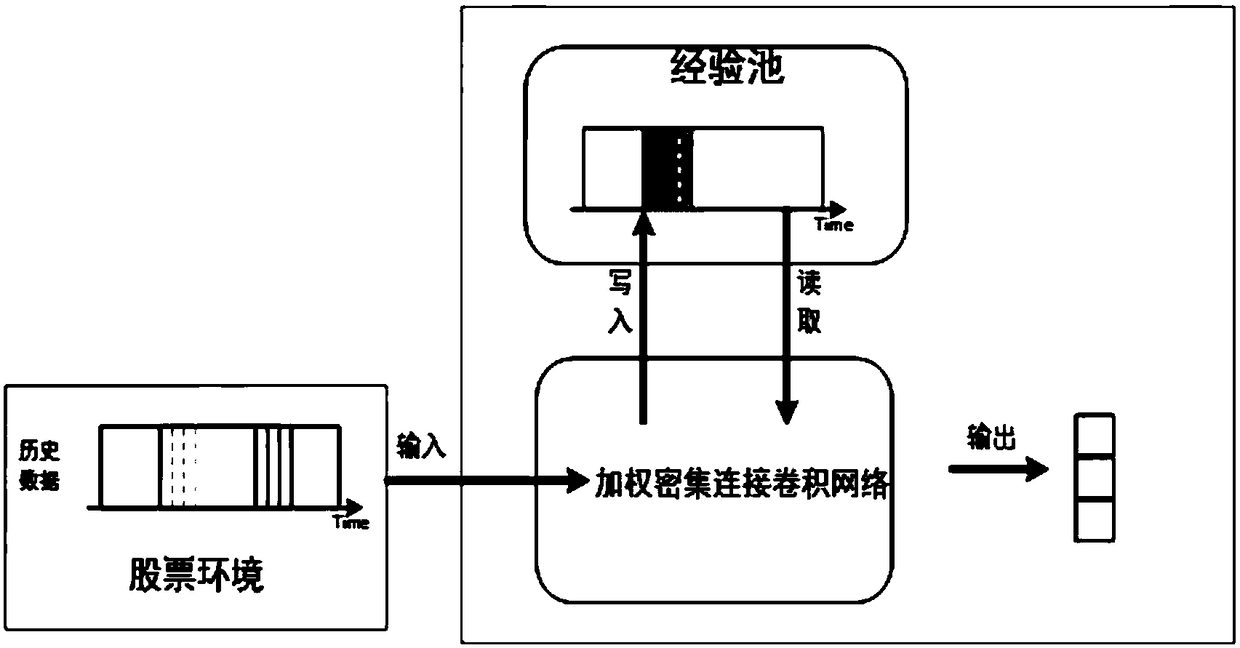

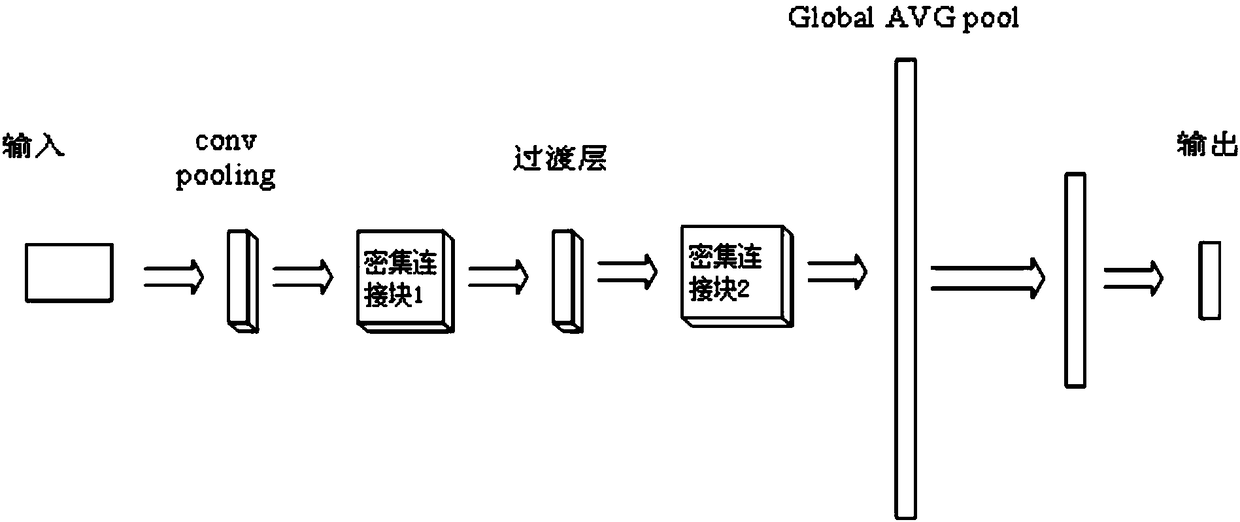

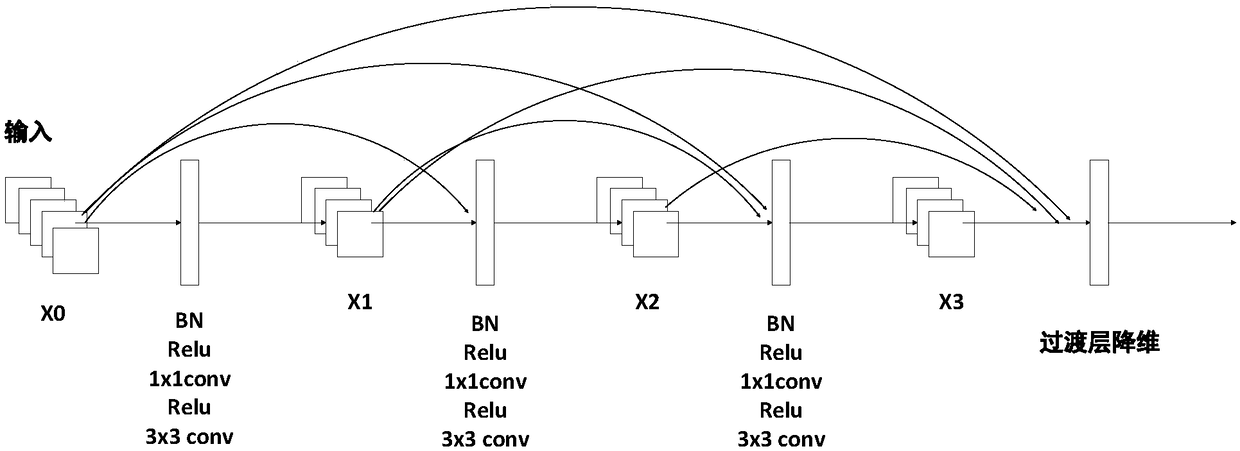

Stock investment method based on weighted dense connection convolution neural network deep learning

Inactive | CN108090836A | Small size | Maximize feature | Finance | Neural architectures | Feature extraction | Decision taking

The invention relates to a stock investment method based on deep learning with a weighted densely connected convolutional neural network. Features are extracted from the input stock data through weighted dense connection convolution. Through cross-layer connections, the feature maps of different layers are given different initial weights, which are adjusted dynamically during training. This uses the feature maps more effectively, increases the information flow between all layers in the network, and to some extent alleviates the difficulty of convergence caused by vanishing gradients when the network is too deep. An appropriate stock trading action is selected according to the Q value output by the weighted dense connection convolutional network, and a corresponding reward value is obtained. The reward value and states are stored in an experience pool. During training, batches are sampled at random from the experience pool, and the weighted dense connection convolutional neural network is used to approximate the Q-value function of the Q-learning algorithm. By learning the environmental factors of the stock market directly, the method gives a trading decision directly.

Owner:NANJING UNIV OF INFORMATION SCI & TECH
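
The experience pool and random batch sampling mentioned in the abstract above follow the usual replay-buffer pattern, which can be sketched as below. The class and function names, the tuple layout, and the `q_next` callable standing in for the convolutional network are assumptions for illustration, not details from the patent.

```python
import random
from collections import deque


class ExperiencePool:
    """Fixed-capacity store of (state, action, reward, next_state) tuples."""

    def __init__(self, capacity, seed=None):
        # The oldest experience is dropped automatically once capacity is reached.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def store(self, state, action, reward, next_state):
        self.buffer.append((state, action, reward, next_state))

    def sample(self, batch_size):
        """Draw a random batch without replacement."""
        size = min(batch_size, len(self.buffer))
        return self.rng.sample(list(self.buffer), size)

    def __len__(self):
        return len(self.buffer)


def td_targets(batch, q_next, gamma=0.99):
    """Q-learning targets: reward + gamma * max over actions of Q(next_state).

    q_next is a hypothetical callable that maps a state to a list of Q values.
    """
    return [reward + gamma * max(q_next(ns)) for (_, _, reward, ns) in batch]
```

The network would then be fitted so that its Q value for each stored state and action moves toward the corresponding target.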

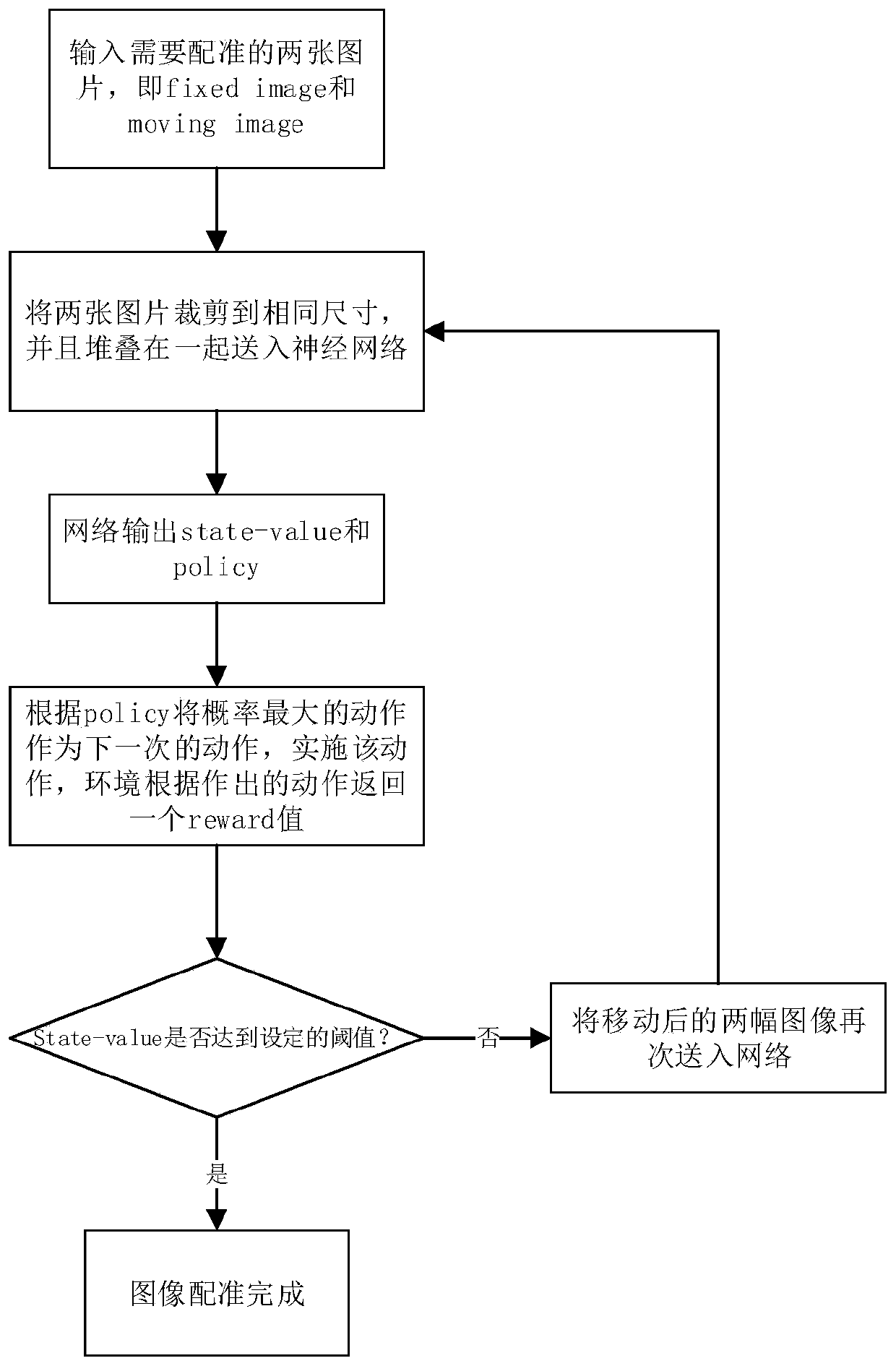

Image multi-mode registration method based on asynchronous deep reinforcement learning

Active | CN110211165A | Registration stabilization | Improve registration | Image enhancement | Image analysis | Algorithm | Reward value

The invention discloses an image multi-mode registration method based on asynchronous deep reinforcement learning. The registration method comprises the following steps: inputting two pictures of different modes (such as CT and MRI) into a neural network in a stacked manner for processing, and outputting current state value information and probability distribution information of policy actions; moving the moving image in the environment according to the probability distribution information and returning a reward value; judging whether the current network state value information reaches a threshold; and sampling the current image registration and outputting a final result. The method is based on reinforcement learning (the A3C algorithm). The technical scheme provides a user-defined reward function, adds a recurrent convolution structure to make full use of spatio-temporal information, and adopts a Monte Carlo method for image registration. Registration performance is improved: compared with existing registration methods, the result is closer to the standard registered image, and the registration of images with large differences is more stable.

Owner:CHENGDU UNIV OF INFORMATION TECH
