80 results about "Reinforcement learning control" patented technology

Humanoid robot gait control method based on model correlated reinforcement learning

Active · CN106094813A · Improve convergence rate · Improve control effect · Position/course control in two dimensions · Learning controller · Simulation

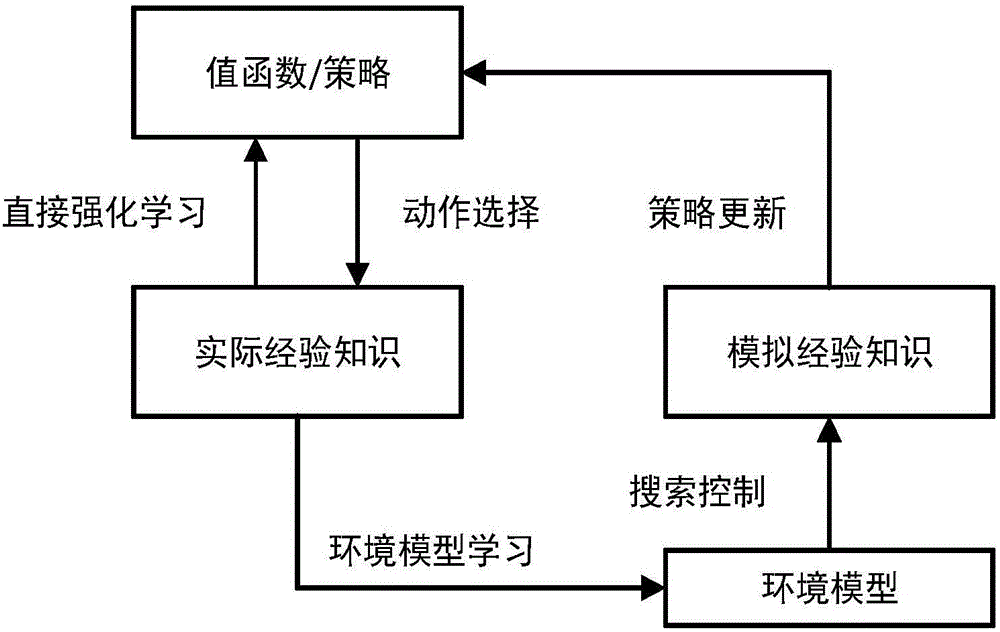

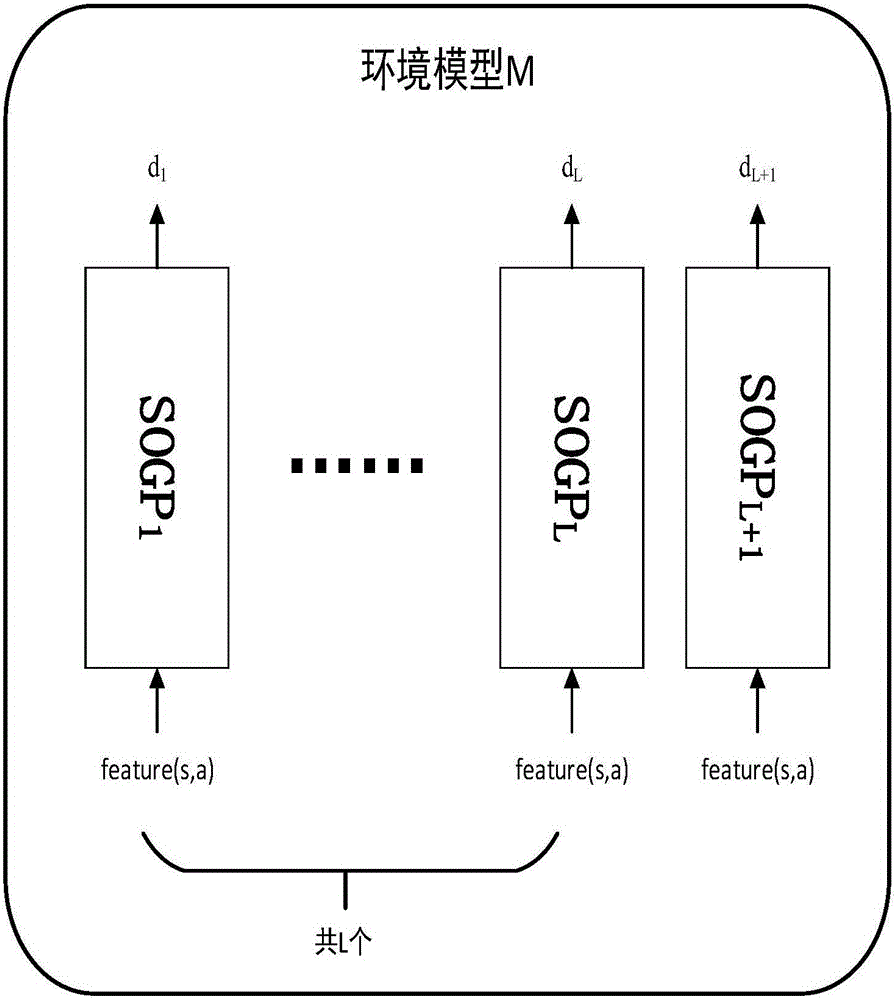

The invention discloses a humanoid robot gait control method based on model correlated reinforcement learning. The method comprises the steps of 1) defining a reinforcement learning framework for a stable control task in forward and backward movements of a humanoid robot; 2) carrying out gait control of the humanoid robot with a model correlated reinforcement learning method based on a sparse online Gaussian process; and 3) improving a motion selection method of a reinforcement learning humanoid robot controller by a PID controller, and taking the improved operation as an optimizing initial point for the PID controller obtaining the motion selection operation of the reinforcement learning controller. The invention uses reinforcement learning to control the gait of the humanoid robot in movement, so that the movement control of the humanoid robot is adjusted automatically through interaction with the environment, a better control effect is achieved, and the humanoid robot remains stable in the forward and backward directions.

Owner:SOUTH CHINA UNIV OF TECH

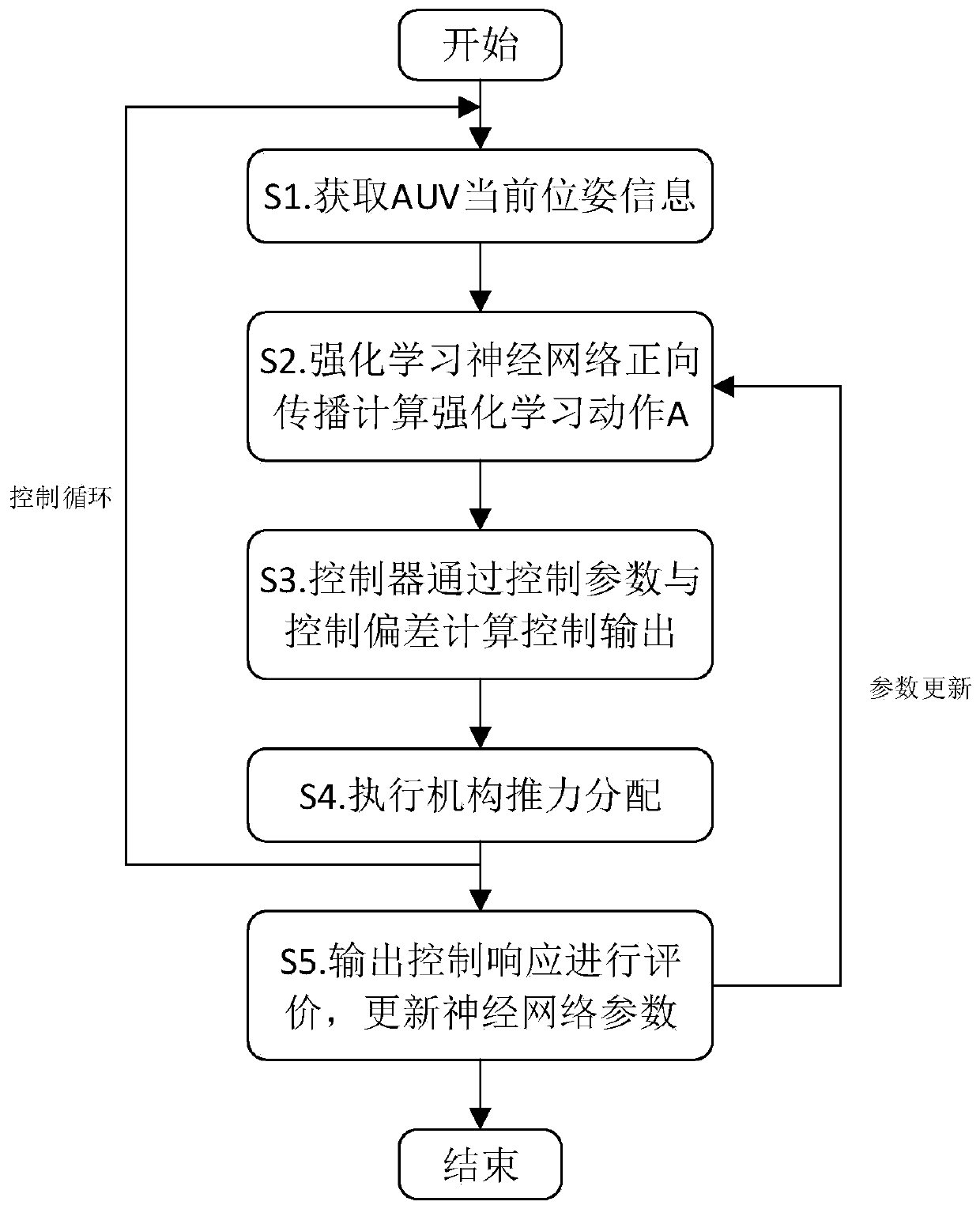

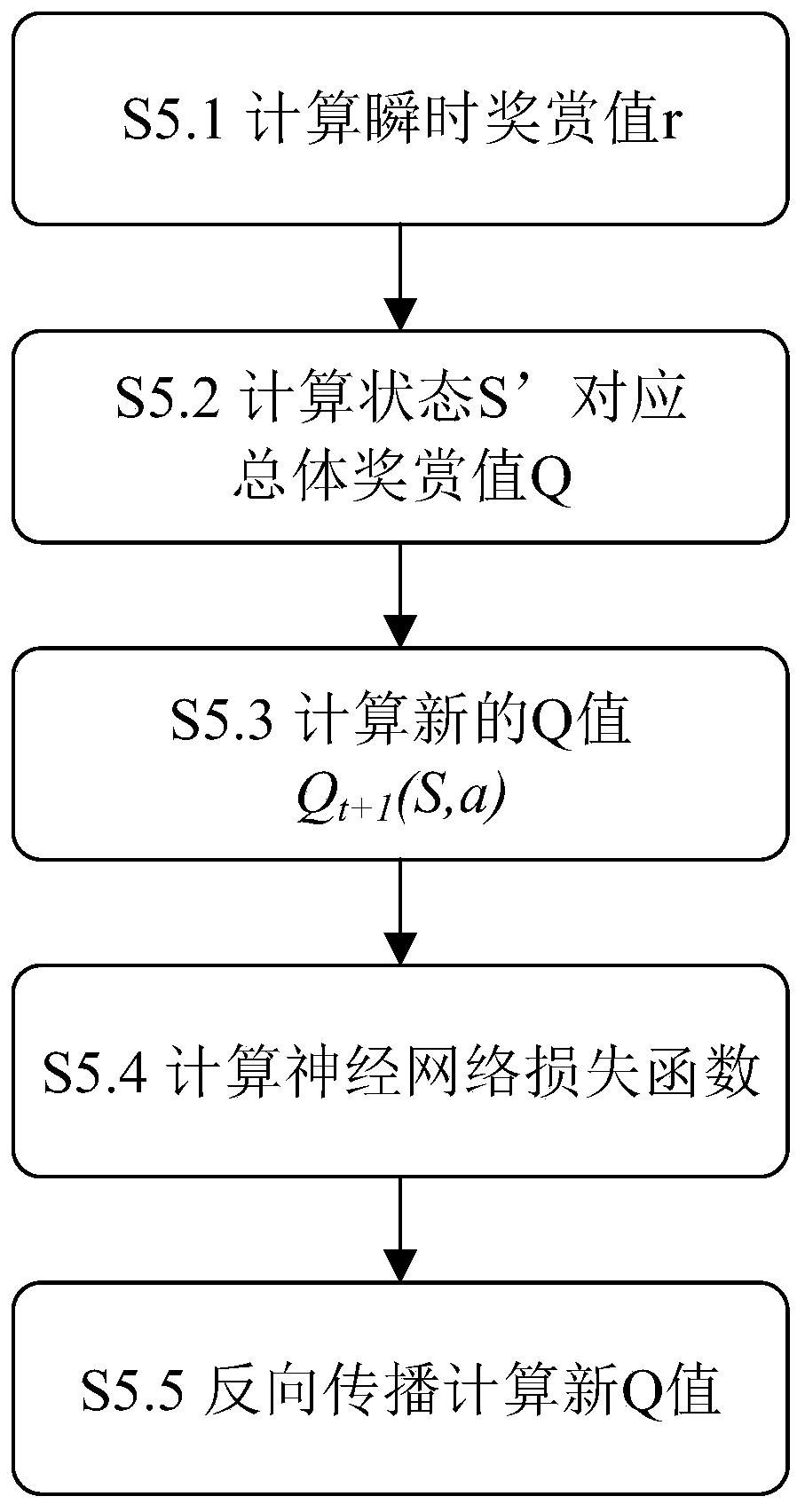

Neural network reinforcement learning control method of autonomous underwater robot

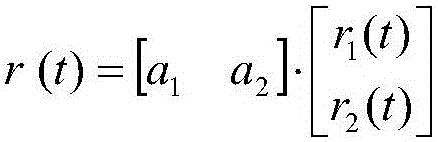

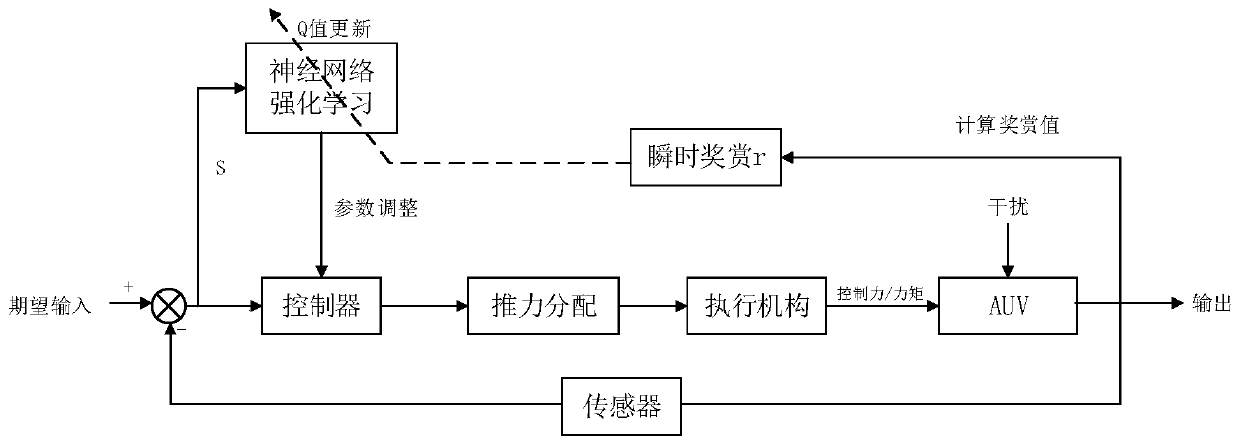

The invention provides a neural network reinforcement learning control method of an autonomous underwater robot. The method comprises the following steps: the current pose of the autonomous underwater vehicle (AUV) is obtained; the state quantity is calculated and input into a reinforcement learning neural network, which computes the Q values by forward propagation, and the controller parameters are determined by selecting an action A; the control parameters and the control deviation are input into the controller, and the control output is calculated; the vehicle allocates thrust according to its actuator arrangement; and a reward value is calculated from the control response, a reinforcement learning iteration is carried out, and the parameters of the reinforcement learning neural network are updated. The method combines reinforcement learning with a traditional control method, so that the AUV evaluates its own motion performance during navigation, adjusts its controller online according to the experience generated in motion, adapts to a complex environment faster through self-learning, and thereby obtains better control precision and control stability.
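The action-selection and control-output steps in the abstract above can be illustrated with a minimal sketch. The patent does not give the network, the action set, or the control law, so everything here is assumed: the Q-values are a hard-coded stand-in for the network's forward pass, `GAIN_TABLE` is a hypothetical mapping from actions to controller gains, and the controller is a simple PD law.

```python
import random

# Hypothetical table mapping each discrete action index to (Kp, Kd) gains.
GAIN_TABLE = [(0.5, 0.1), (1.0, 0.2), (2.0, 0.4)]


def select_action(q_values, epsilon, rng=random):
    """Epsilon-greedy choice over the Q-values produced by the network."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore
    return max(range(len(q_values)), key=lambda i: q_values[i])  # exploit


def control_output(action, error, error_rate):
    """PD-style control law (an assumption) using the gains chosen by the action."""
    kp, kd = GAIN_TABLE[action]
    return kp * error + kd * error_rate


# Stand-in for the forward pass of the reinforcement learning network.
q_values = [0.2, 0.9, 0.4]
action = select_action(q_values, epsilon=0.0)  # greedy: picks index 1
u = control_output(action, error=1.5, error_rate=-0.5)
```

With `epsilon=0.0` the choice is purely greedy; during training a small positive `epsilon` would let the agent occasionally try other gain sets.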

Owner:HARBIN ENG UNIV

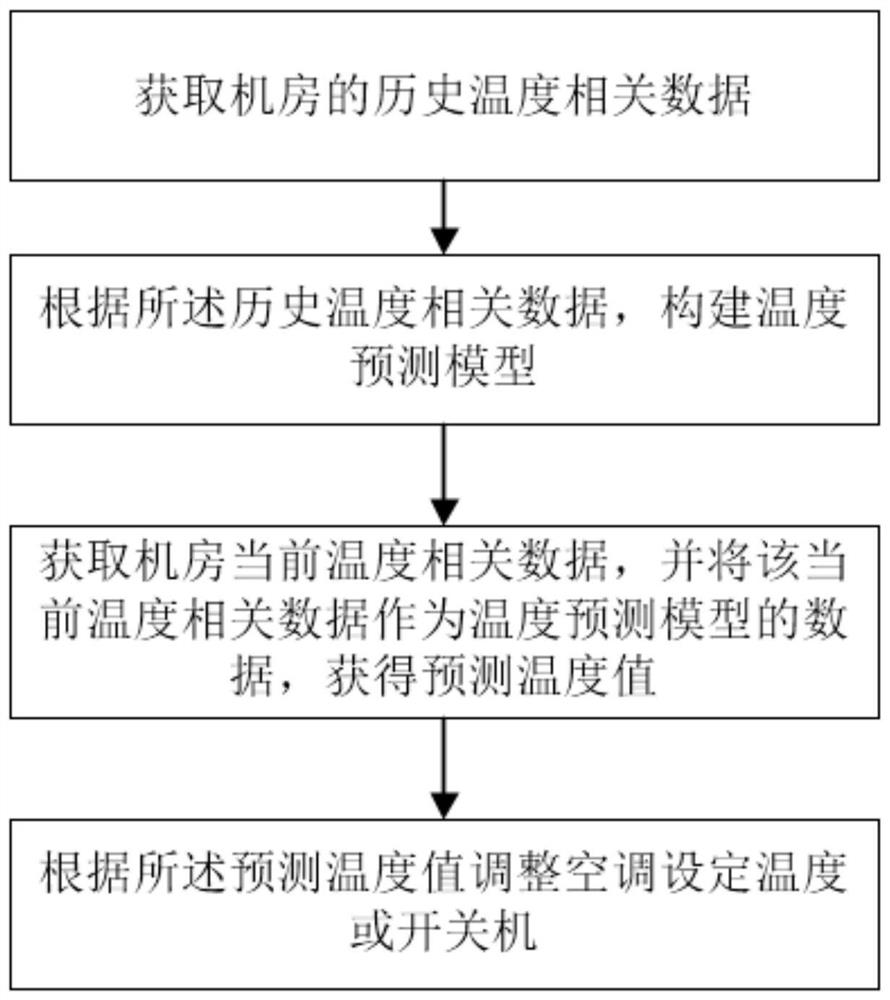

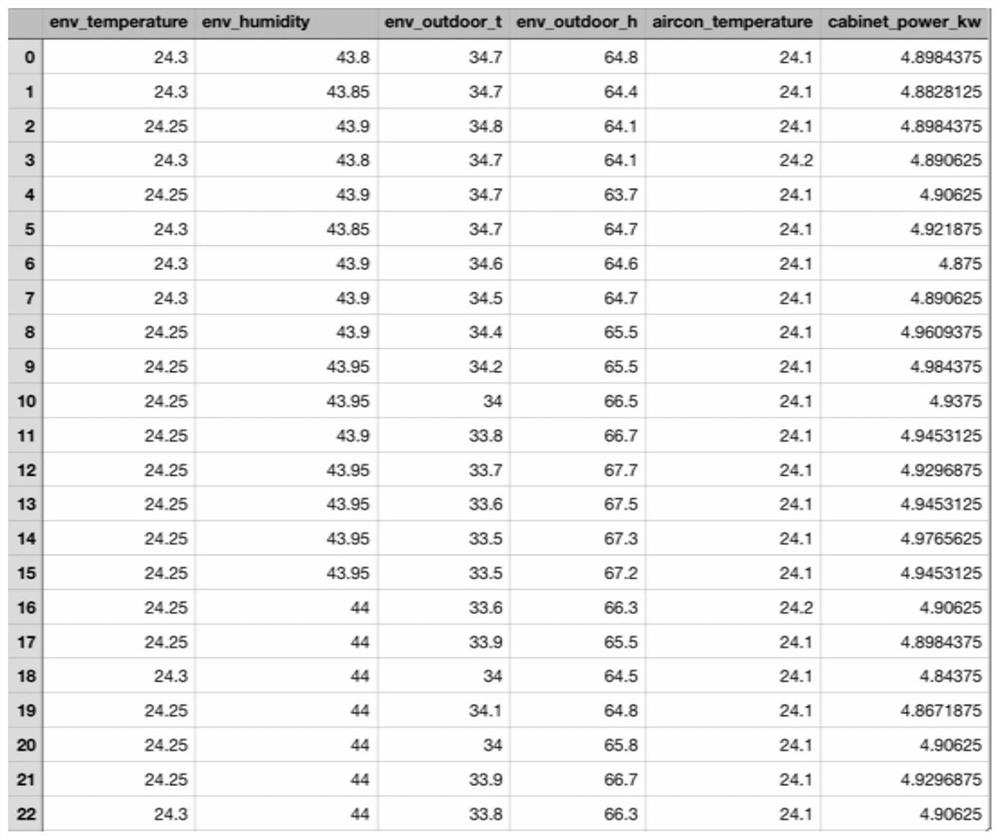

Machine room temperature regulation method and system

Pending · CN112050397A · Accurate setting · Stable temperature and humidity · Mechanical apparatus · Space heating and ventilation safety systems · Adaptive learning · Control engineering

The invention provides a machine room temperature regulation and control method and system. The method comprises the steps of obtaining historical temperature-related data of a machine room; constructing a temperature prediction model from the historical temperature-related data; obtaining current temperature-related data of the machine room and using it as input to the temperature prediction model to obtain a predicted temperature value; and adjusting the set temperature or the startup and shutdown of an air conditioner according to the predicted temperature value. By combining temperature prediction with reinforcement learning control strategies, the method adaptively learns air conditioner control strategies for different machine rooms, so that the control parameters of the air conditioner are set more accurately and the temperature and humidity in the machine rooms both meet the normal working requirements of the equipment and remain stable.

Owner:浙江省邮电工程建设有限公司

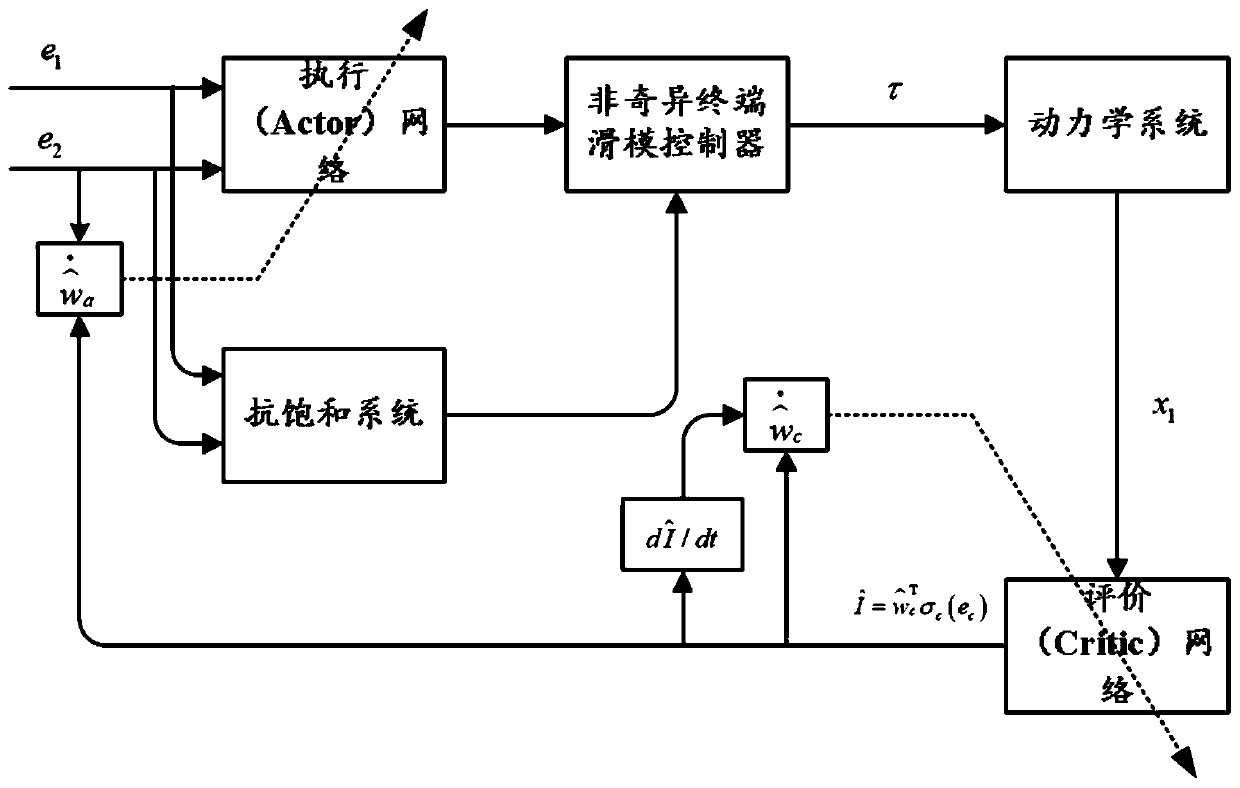

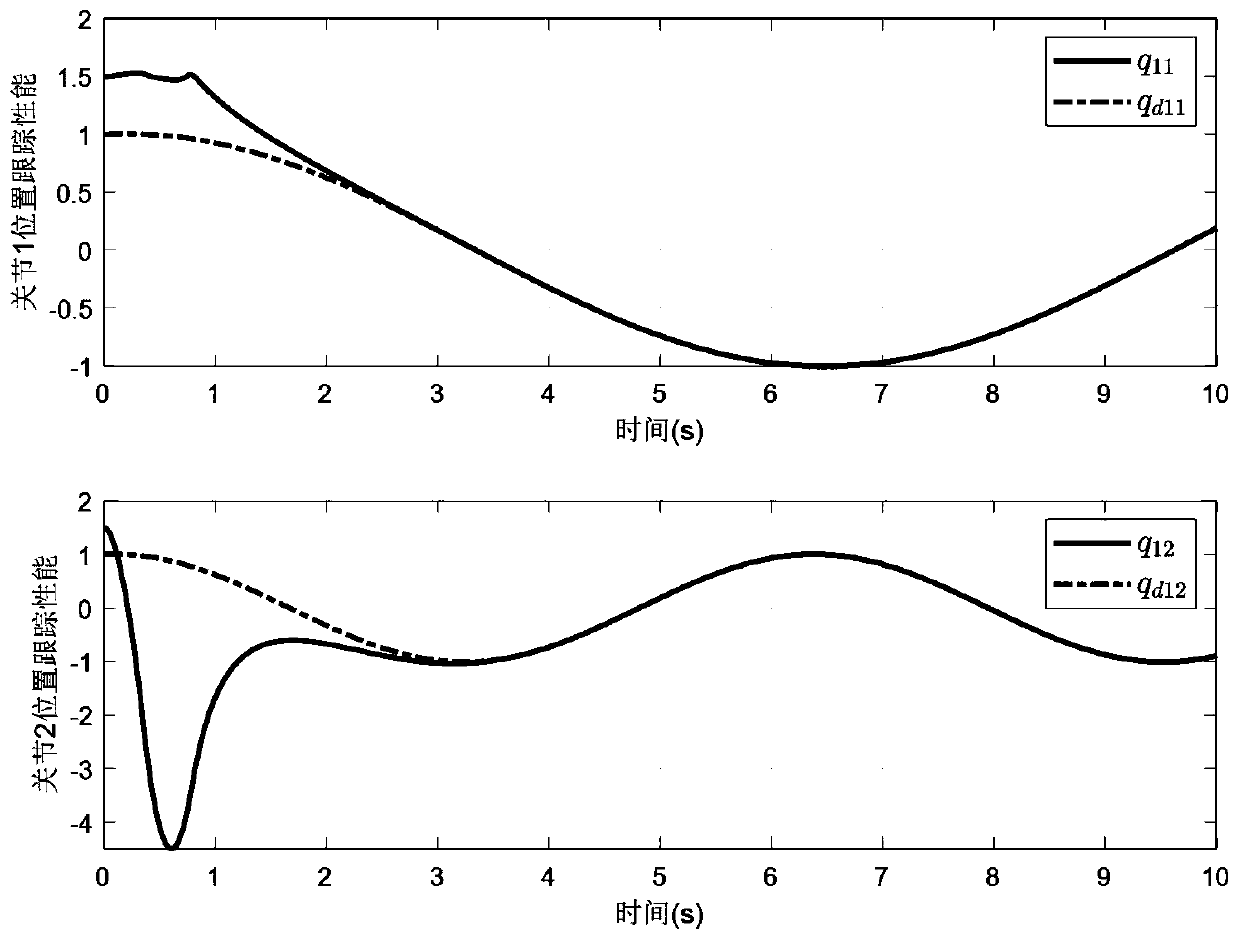

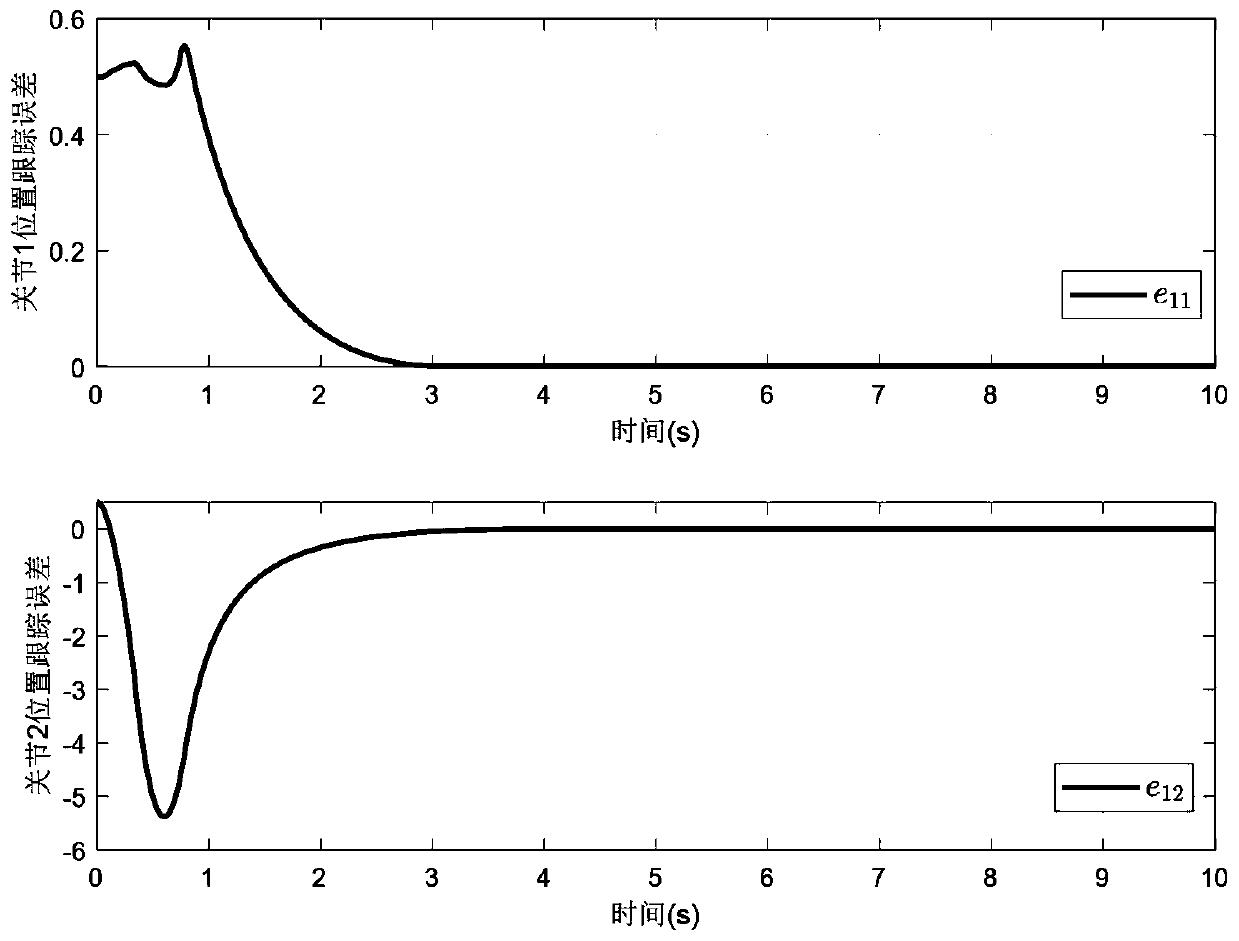

Mechanical arm input saturation fixed time trajectory tracking control method and system

Active · CN111496792A · The convergence time does not depend on · Programme-controlled manipulator · Simulation · Online learning

The invention provides a mechanical arm input saturation fixed time trajectory tracking control method and system. The method includes the following steps: a desired trajectory of a mechanical arm is obtained, and state data of the mechanical arm are obtained through a mechanical arm sensor; a reinforcement learning control algorithm is adopted to suppress the model uncertainty of the mechanical arm according to the obtained state data; a nonlinear anti-saturation compensator is designed to compensate for the saturation overflow effect of a joint torque actuator in real time; and a non-singular fast terminal sliding mode controller is designed so that the joint trajectory tracking error of the mechanical arm converges to a small neighborhood of the origin within a fixed time, realizing fixed-time desired-trajectory tracking control of the mechanical arm under input saturation. The method and system can learn the model uncertainty online, so that the mechanical arm can track a trajectory accurately and quickly.

Owner:UNIV OF SCI & TECH BEIJING

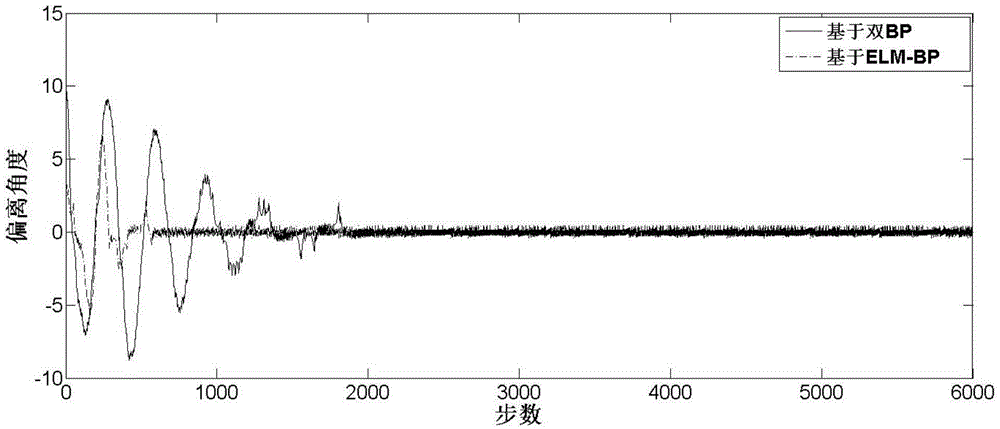

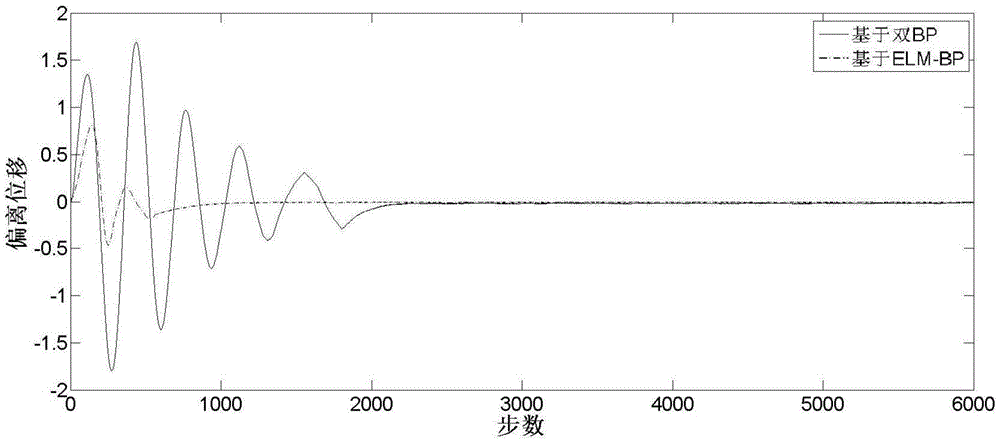

Inverted pendulum control method based on neural network and reinforced learning

Inactive · CN105549384A · Solve control problems · Solve the "curse of dimensionality" problem · Programme control · Neural learning methods · Curse of dimensionality · Algorithm

The invention belongs to the technical field of artificial intelligence and control and relates to a neural network and reinforcement learning algorithm, in particular to an inverted pendulum control method based on a neural network and reinforcement learning, which learns by itself to control an inverted pendulum. The method comprises: step one, obtaining inverted pendulum system model information; step two, obtaining state information of the inverted pendulum and initializing a neural network; step three, completing ELM training using a training sample SAM; step four, controlling the inverted pendulum with a reinforcement learning controller; step five, updating the training sample and a BP neural network; and step six, checking whether the control result meets the learning termination condition; if not, returning to step two and continuing the loop; if so, ending the algorithm. The invention effectively addresses the curse of dimensionality that easily arises in a continuous state space, as well as the control of nonlinear systems with continuous states, and it speeds up updating.

Owner:CHINA UNIV OF MINING & TECH

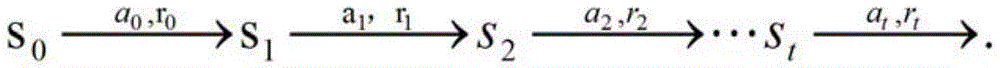

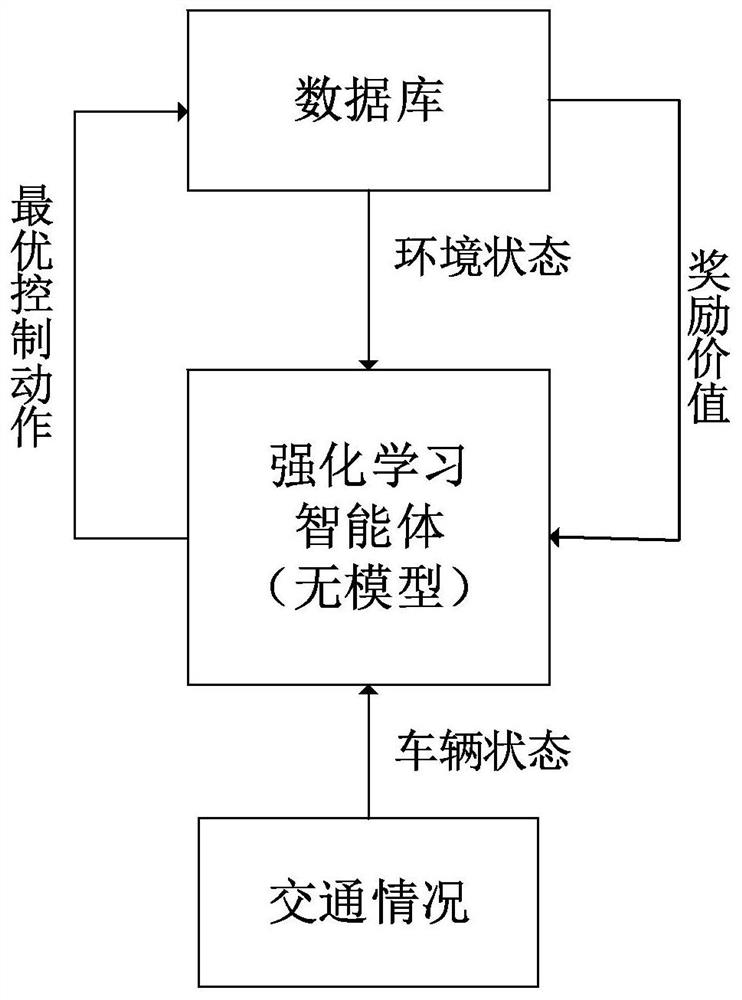

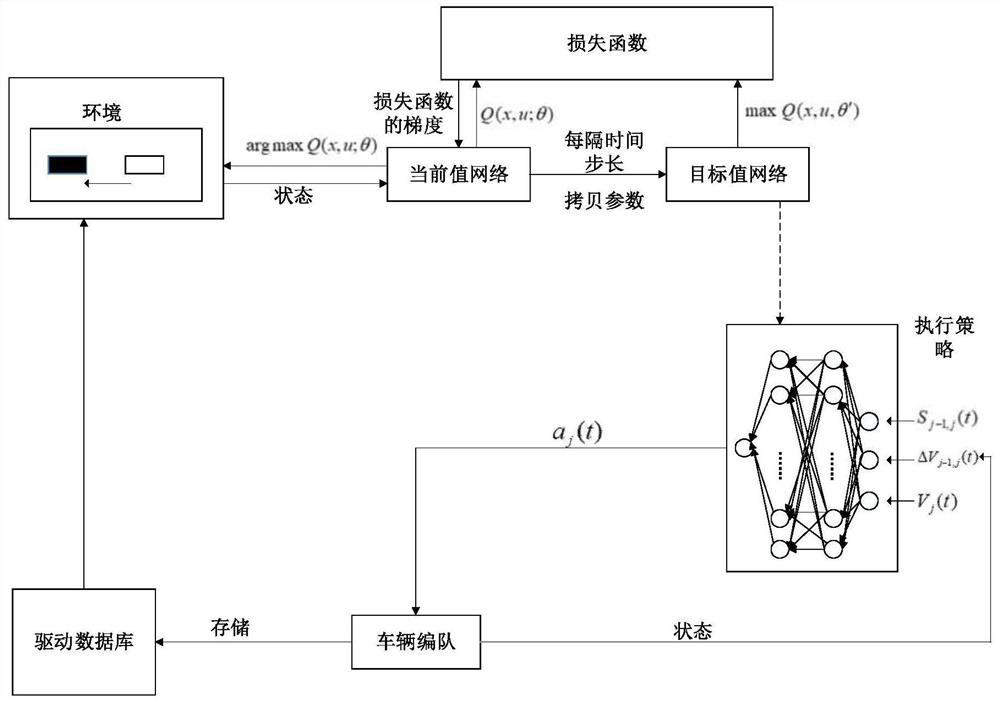

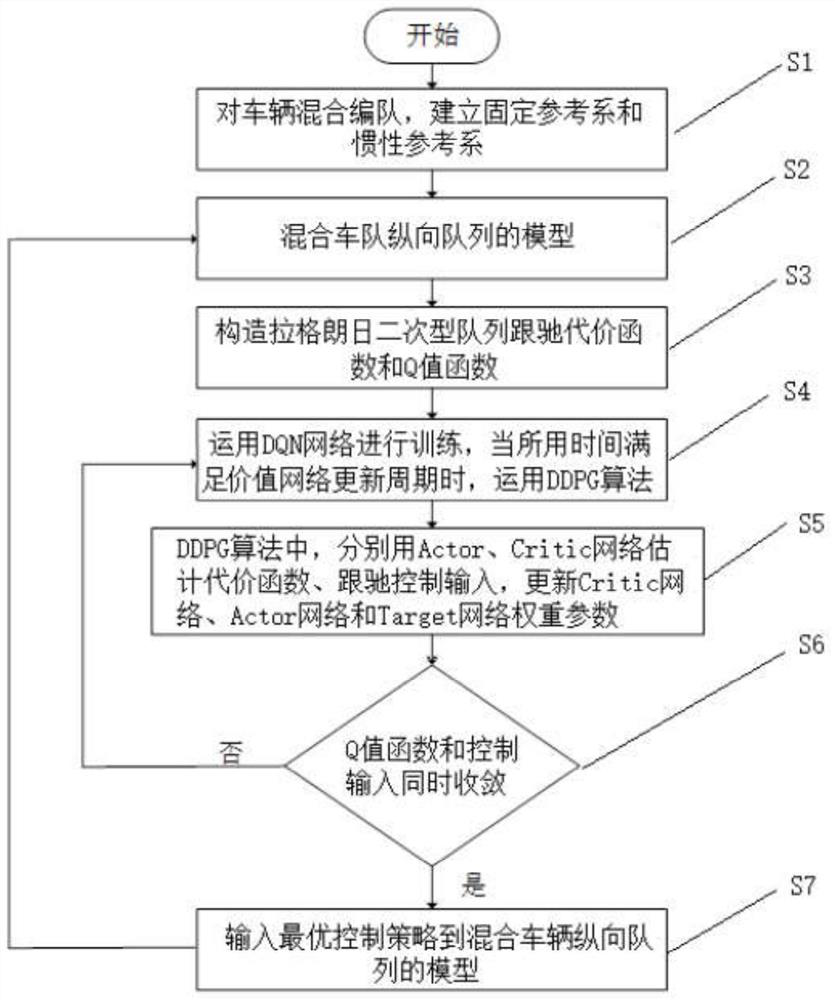

Vehicle control method based on reinforcement learning control strategy in hybrid fleet

Active · CN112162555A · Exact output statistics · Reduce computing cost · Position/course control in two dimensions · Vehicles · Q-learning · Hybrid vehicle

The invention provides a vehicle control method based on a reinforcement learning control strategy in a hybrid fleet. The method comprises the steps of: initializing a hybrid fleet, and building a fixed reference system and an inertial reference system; establishing a model of a mixed vehicle longitudinal queue in the inertial reference system; constructing a Lagrange quadratic queue car-following cost function, and obtaining an expression of a Q value function; training, by using a deep Q learning network, on the information obtained from the influence of surrounding vehicles on the own vehicle; training parameters by using a DDPG algorithm, and if the Q value function process and the control input process converge at the same time, completing the solution of the current optimal control strategy; inputting the optimal control strategy into the model of the longitudinal queue of the hybrid vehicle, and updating the state of the hybrid vehicle by the hybrid vehicle queue; and repeating the steps to finally complete the control task of the vehicles in the hybrid fleet. The method solves the problem of autonomous training in a hybrid fleet.

Owner:YANSHAN UNIV

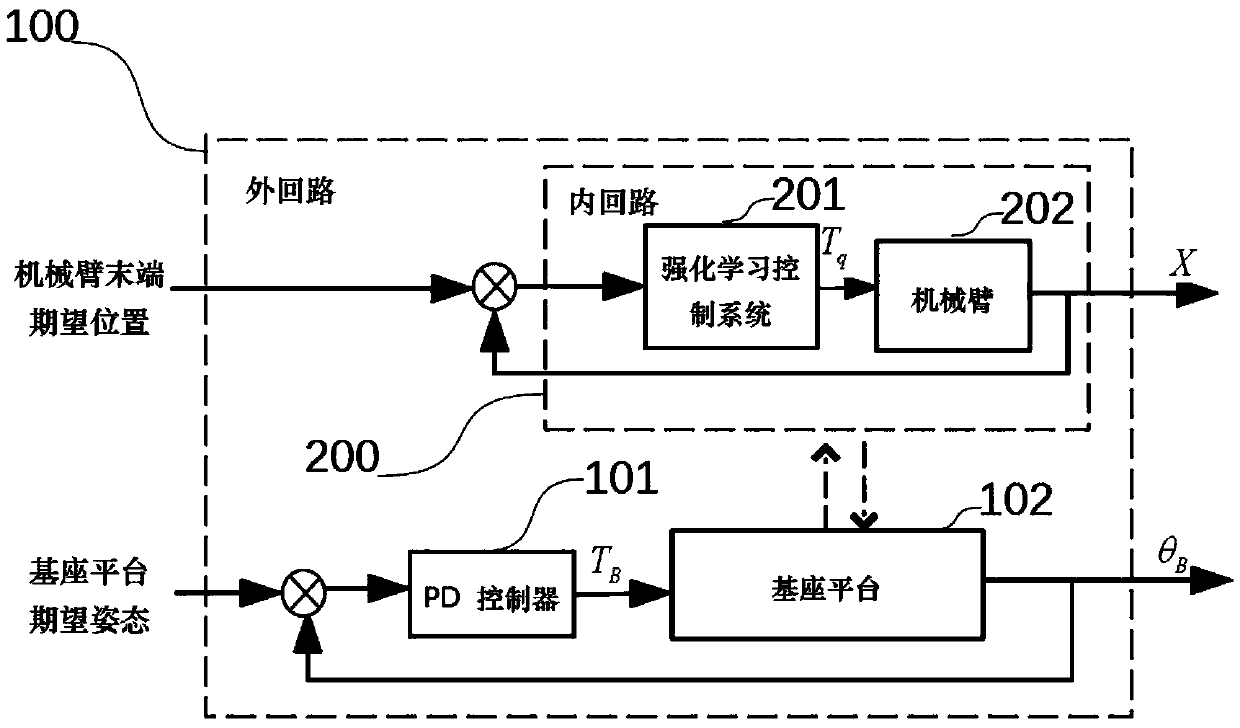

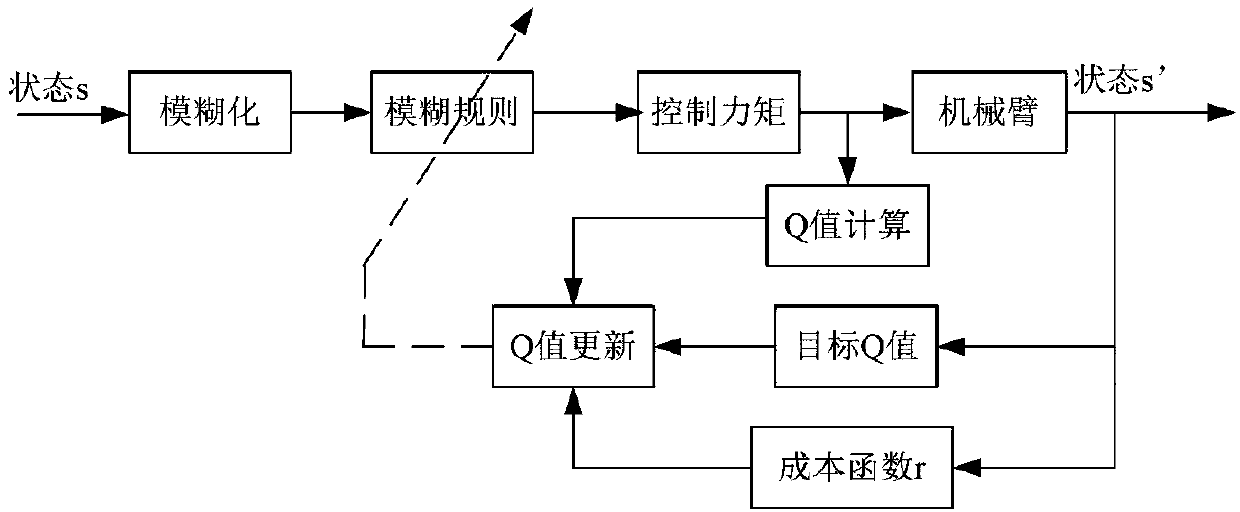

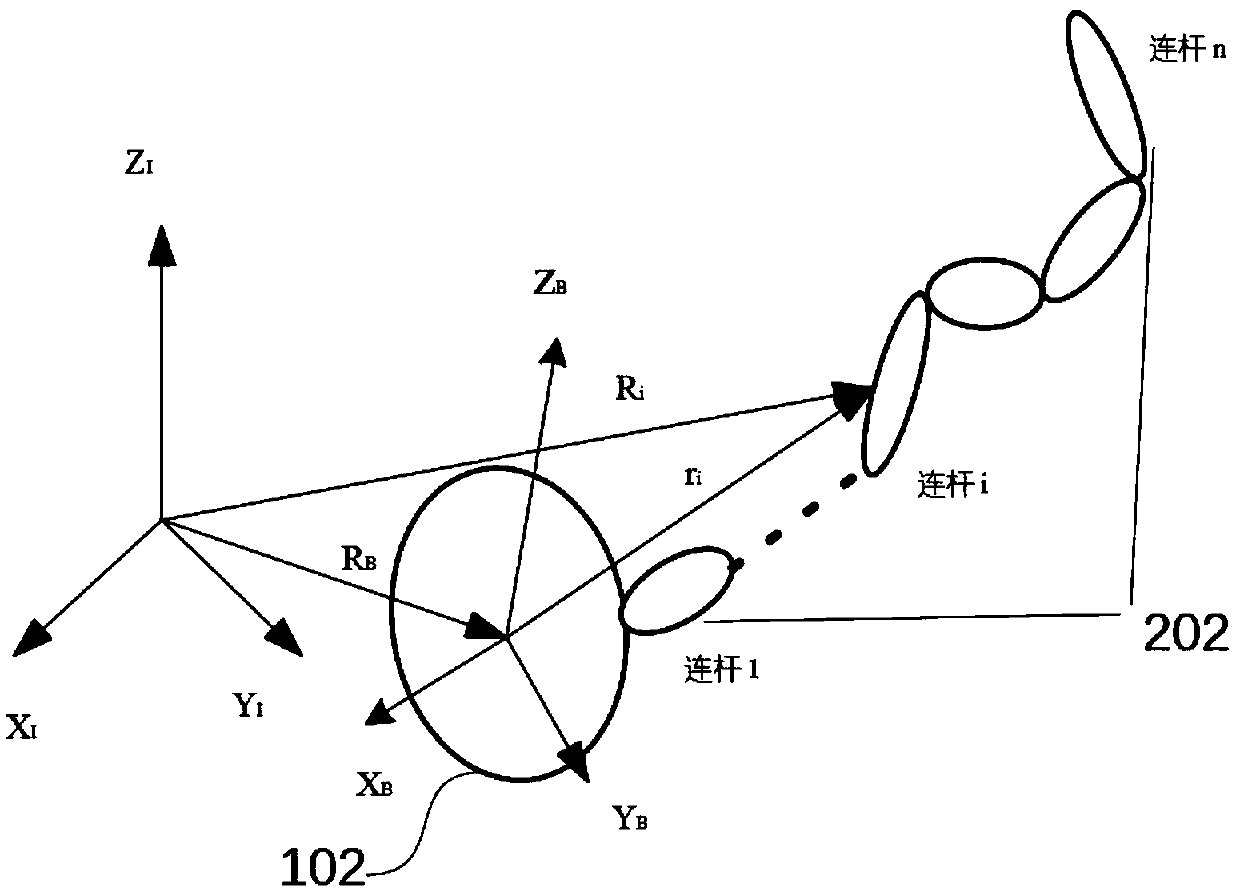

Space robot arresting control system, reinforce learning method and dynamics modeling method

Inactive · CN109605365A · Reduce disturbance · Smooth terminal movement · Programme-controlled manipulator · Inner loop · Control system

The invention discloses a space robot mechanical arm arresting control system. The system comprises two loops, an inner loop and an outer loop. In the outer loop, the system keeps the attitude of the space robot mechanical arm base platform stable during the arresting process through a PD controller; in the inner loop, the system controls the mechanical arm to perform the arresting maneuver on a non-cooperative target through a control system based on reinforcement learning. The invention further discloses a reinforcement learning method for the reinforcement learning control system that controls the mechanical arm in the inner loop, and a space robot dynamics modeling method for the arresting control system. Compared with PD control, under reinforcement learning (RL) control the attitude disturbance of the base platform is smaller, the movement of the tail end of the mechanical arm is more stable, the control precision is higher, the motion flexibility of the mechanical arm is good, and a greater degree of autonomous intelligence is achieved.

Owner:DALIAN UNIV OF TECH

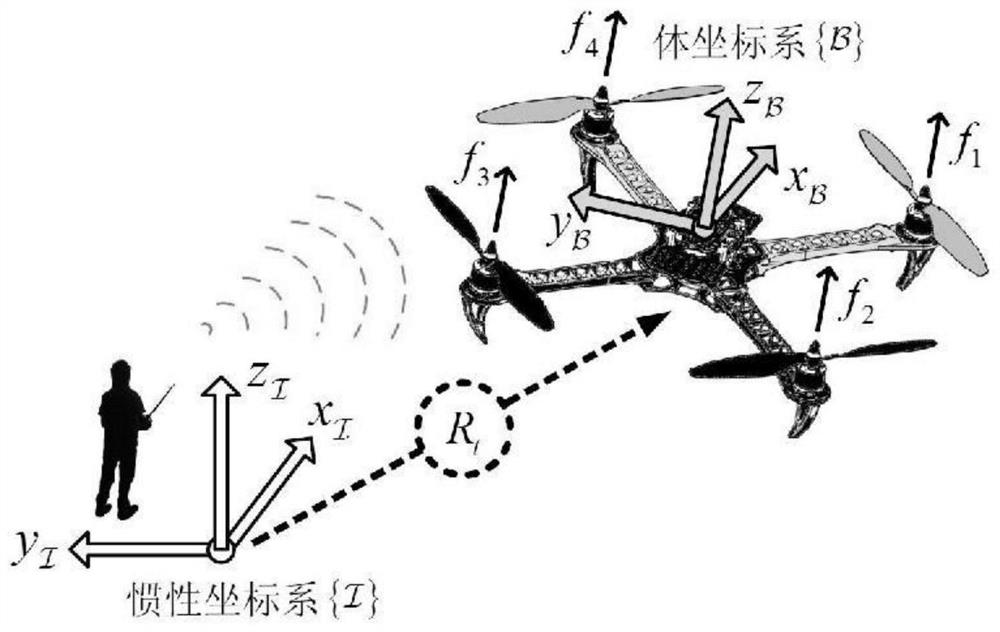

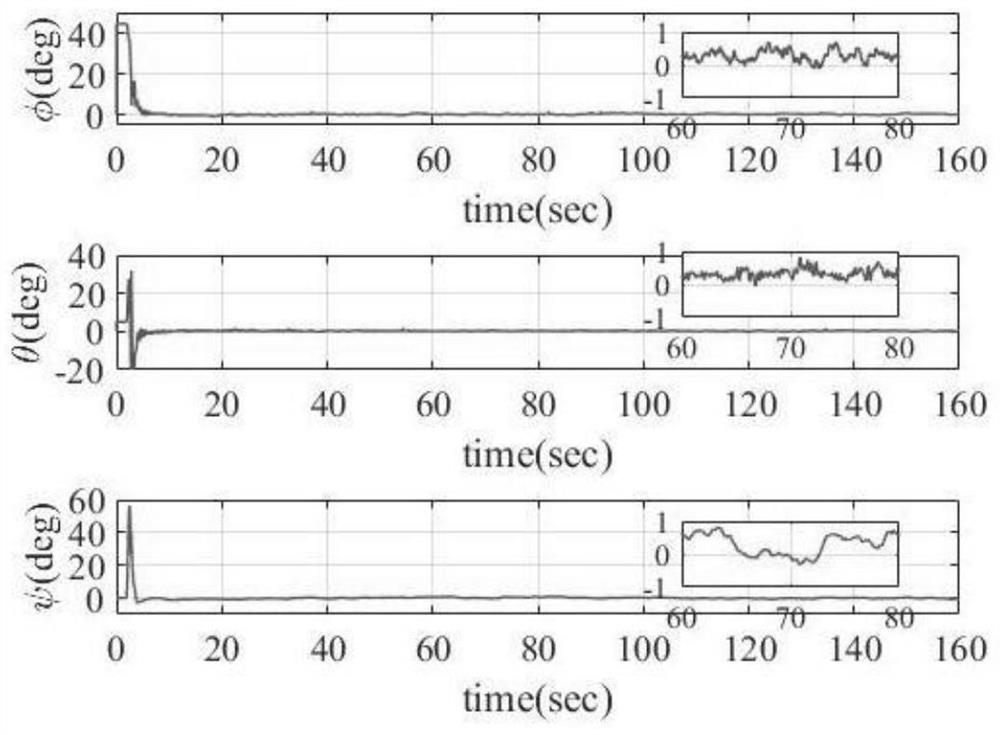

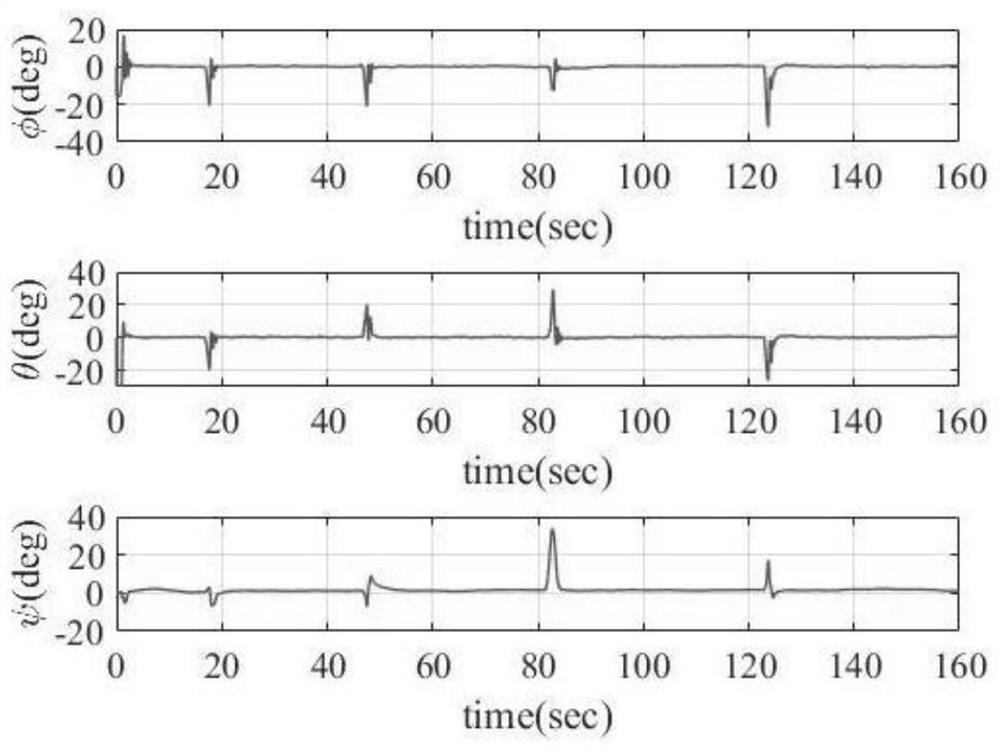

Quad-rotor unmanned aerial vehicle reinforcement learning nonlinear attitude control method

Active · CN112363519A · Achieving finite-time convergent control · Improve robustness · Attitude control · Attitude control · Learning controller

The invention relates to a quad-rotor unmanned aerial vehicle reinforcement learning nonlinear attitude control method, and aims to solve the attitude control problem for a quad-rotor unmanned aerial vehicle whose dynamical model has an unmodeled part. A reinforcement learning controller based on an execution-evaluation neural network is designed to estimate the unmodeled part of the model. Meanwhile, a nonlinear robust controller based on multivariable super-twisting is designed, so that stable attitude control of the quad-rotor unmanned aerial vehicle is realized.

Owner:TIANJIN UNIV

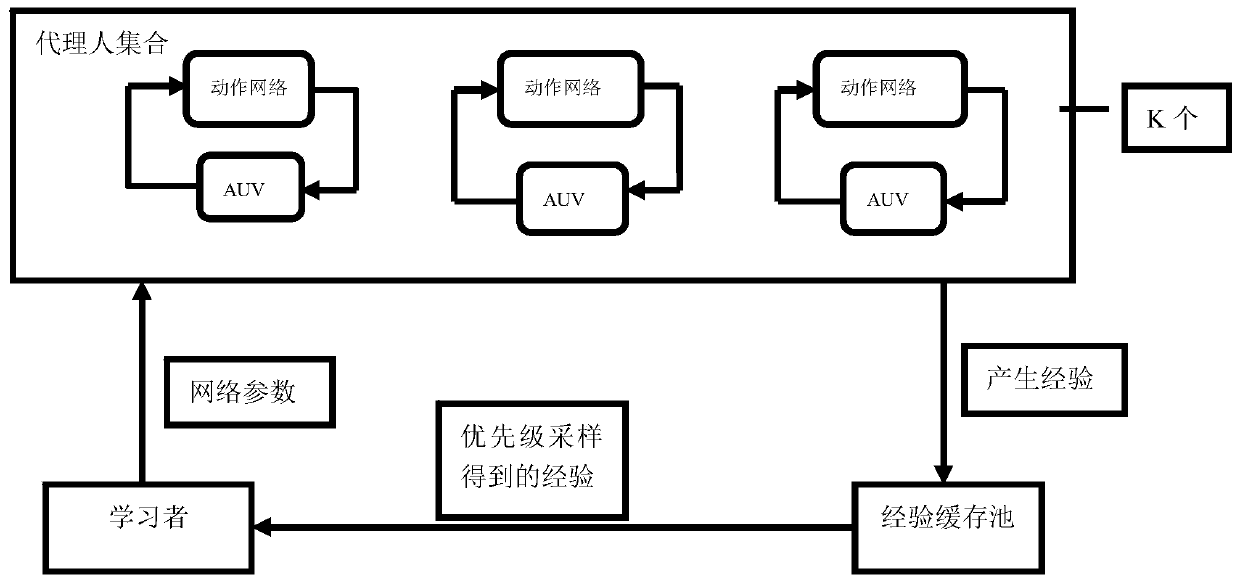

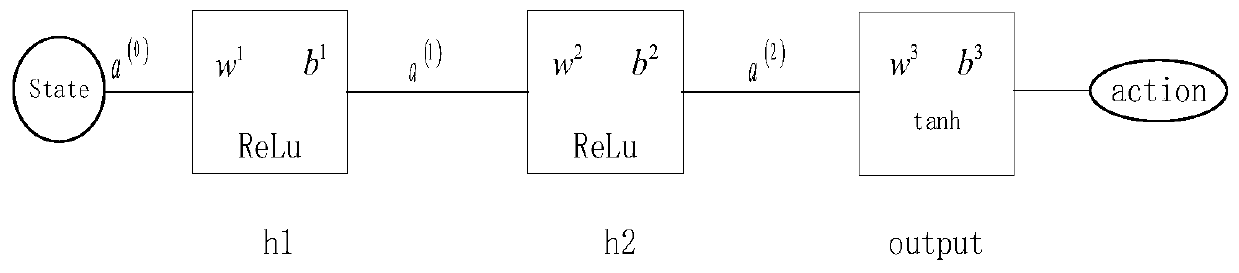

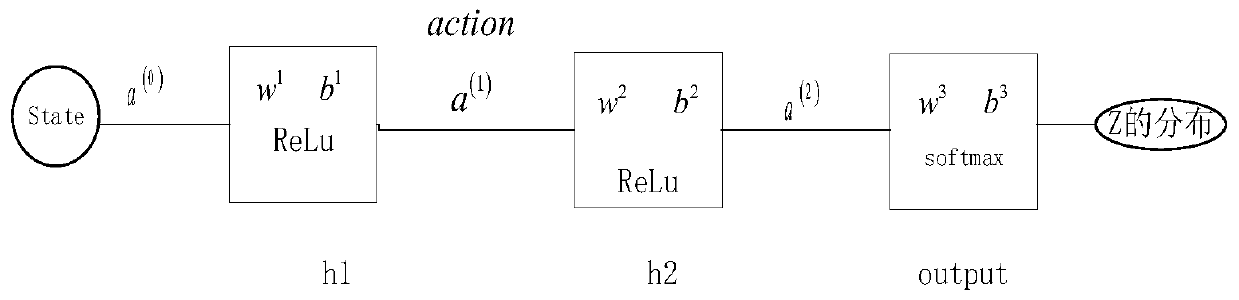

Deep reinforcement learning control method for vertical path following of intelligent underwater robot

Active · CN110209152A · Self-learning · High precision · Mathematical models · Autonomous decision making process · Robot environment · Artificial intelligence

The invention provides a deep reinforcement learning control method for vertical path following of an intelligent underwater robot. The method comprises the following steps: firstly, according to the path following control requirements of the intelligent underwater robot, an intelligent underwater robot environment is established to interact with an agent; secondly, an agent set is established; thirdly, an experience buffer pool is established; fourthly, a learner is established; and fifthly, path following control of the intelligent underwater robot is conducted by using a distributed deterministic strategy gradient. The method is designed to solve the problem that the marine environment in which the intelligent underwater robot operates is complex and variable, so that a traditional control method cannot interact with the environment. The path following and control task of the intelligent underwater robot can be completed in a distributed mode by using a deterministic strategy gradient, and the method has the advantages of self-learning, high precision, good adaptability and a stable learning process.

Owner:HARBIN ENG UNIV

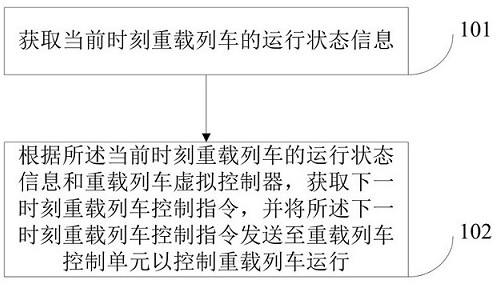

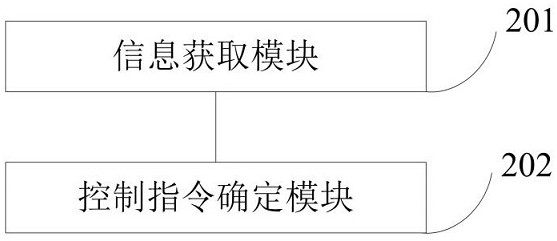

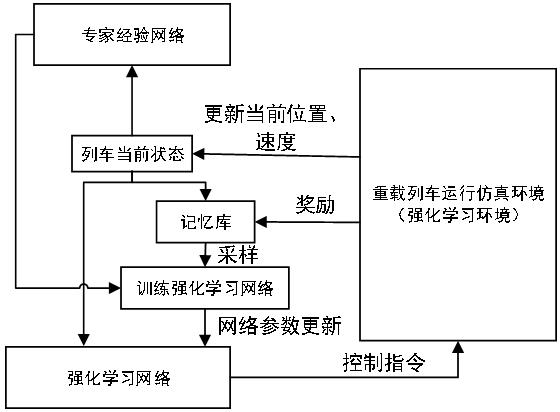

Heavy haul train reinforcement learning control method and system

Active · CN112193280A · Automatic systems · Signalling indicators on vehicle · Control cell · Reinforcement learning algorithm

The invention relates to a heavy haul train reinforcement learning control method and system, and relates to the technical field of heavy haul train intelligent control, and the method comprises the steps: obtaining the running state information of a heavy haul train at a current moment; according to the running state information of the heavy haul train at the current moment and a heavy haul train virtual controller, obtaining a heavy haul train control instruction at a next moment, and sending the heavy haul train control instruction at the next moment to a heavy haul train control unit to control the heavy haul train to run; the heavy haul train virtual controller is obtained by training a reinforcement learning network according to heavy haul train running state data and an expert experience network, wherein the reinforcement learning network comprises a control network and two evaluation networks, and the reinforcement learning network is constructed according to an SAC reinforcement learning algorithm. According to the invention, the heavy haul train can have the properties of safety, stability and high efficiency in the running process.
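The abstract mentions one control network and two evaluation networks built according to SAC. In the standard SAC formulation (assumed here, since the patent text gives no equations), the two evaluation networks are combined by taking the smaller of their estimates when forming the training target. A minimal sketch with scalar inputs standing in for network outputs:

```python
def soft_td_target(reward, q1_next, q2_next, log_prob_next,
                   alpha=0.2, gamma=0.99, done=False):
    """Soft Bellman target as used in standard SAC (assumed formulation).

    q1_next, q2_next: estimates of the two evaluation (critic) networks
                      for the next state and the next sampled action.
    log_prob_next:    log-probability of that action under the control
                      (policy) network.
    alpha:            entropy temperature; gamma: discount factor.
    """
    # Taking the minimum of the two critics reduces overestimation bias.
    min_q = min(q1_next, q2_next)
    # The entropy bonus rewards keeping the policy stochastic.
    soft_value = min_q - alpha * log_prob_next
    return reward + (0.0 if done else gamma * soft_value)


target = soft_td_target(1.0, q1_next=2.0, q2_next=3.0,
                        log_prob_next=-1.0, alpha=0.5, gamma=0.9)
```

The default values of `alpha` and `gamma` are illustrative only; how the expert experience network enters training is not described in enough detail in the abstract to sketch.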

Owner:EAST CHINA JIAOTONG UNIVERSITY

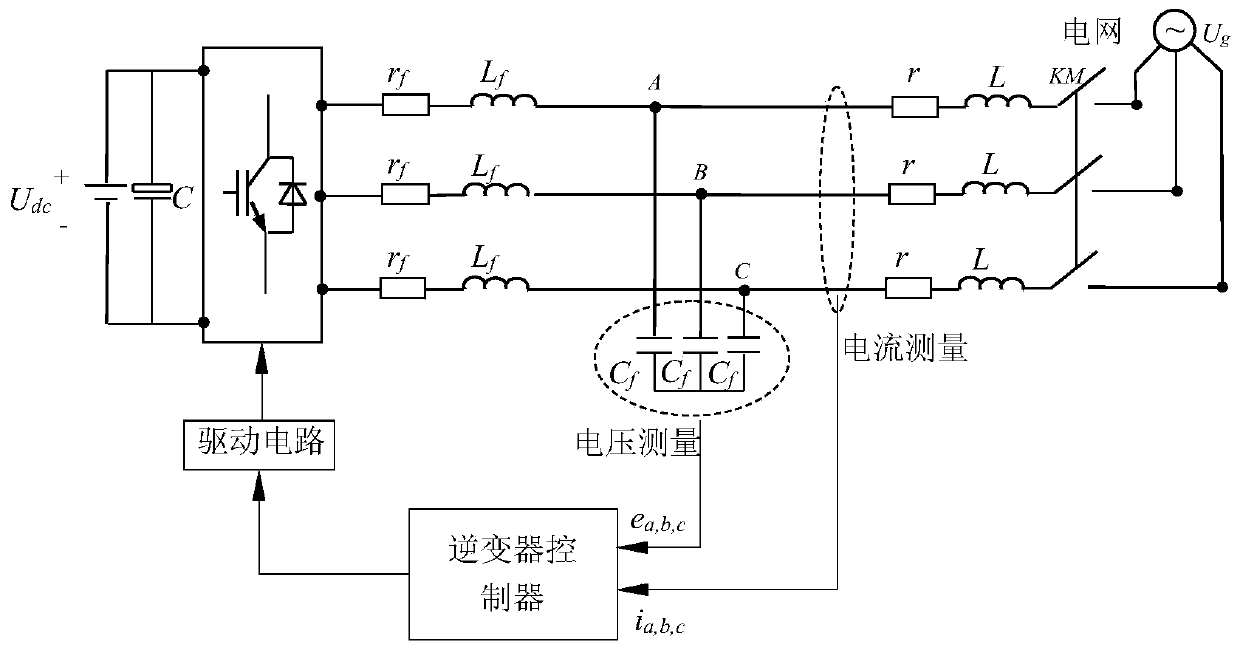

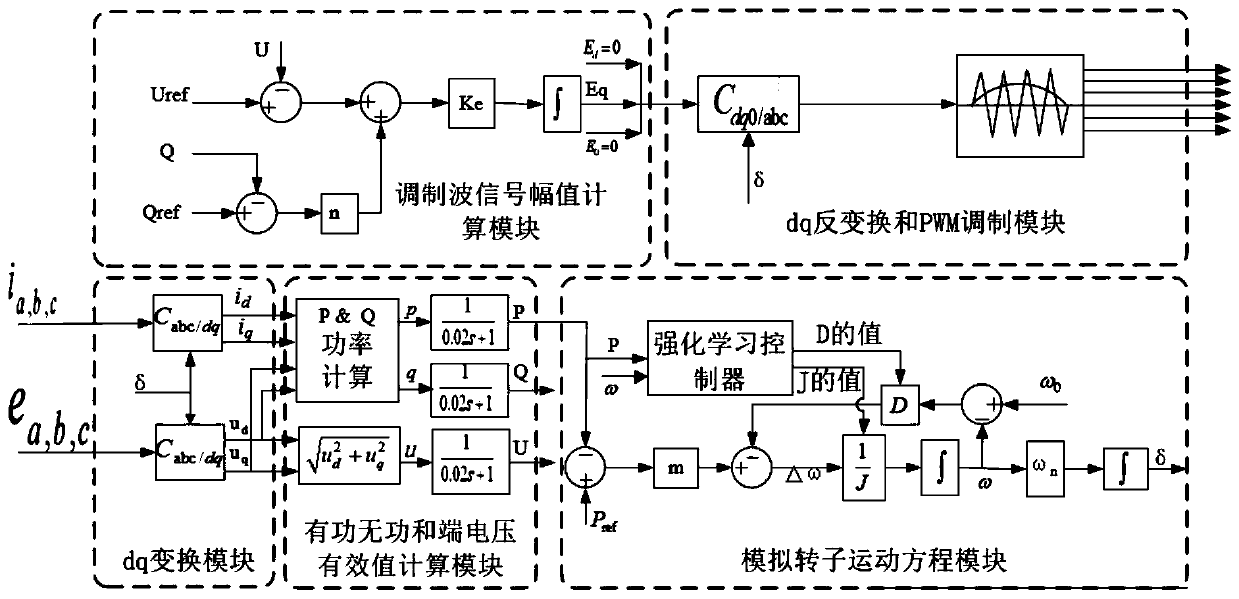

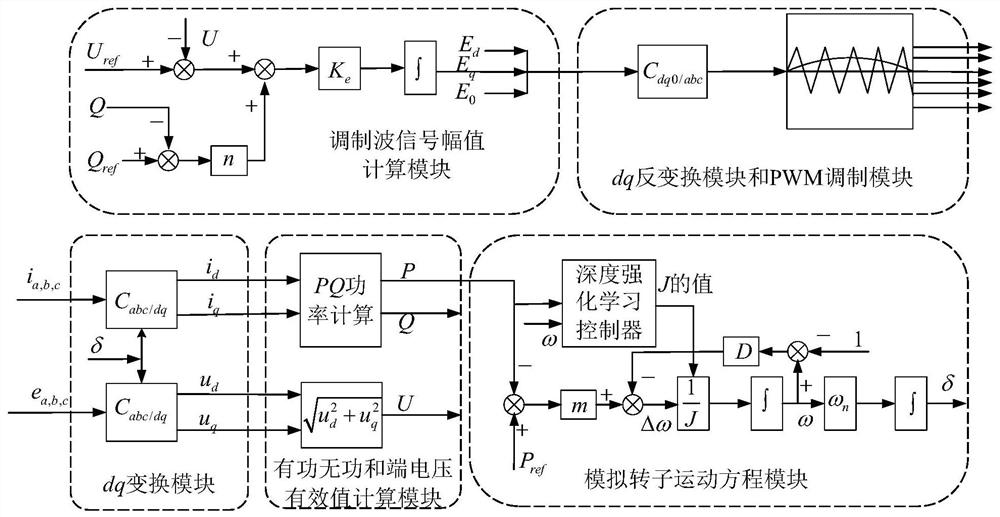

Adaptive adjustment inverter controller

The invention discloses a self-adaptive adjustment inverter controller. The inverter controller comprises a dq conversion module, an output active and reactive power and terminal voltage effective value calculation module, a modulation wave signal amplitude calculation module, a rotor motion equation simulation module, a reinforcement learning control module and a dq inverse transformation and PWM modulation module. The inverter controller simulates a synchronous generator rotor motion equation, and adjusts the virtual moment of inertia and the damping coefficient on line through the reinforcement learning control module so as to obtain a good power system low-frequency oscillation suppression effect.
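To make the role of the virtual moment of inertia and the damping coefficient concrete, the sketch below integrates one common textbook form of the rotor motion (swing) equation, J·dω/dt = (P_ref − P_out)/ω0 − D·(ω − ω0), with a forward Euler step. The patent abstract does not state which form it uses, so the equation, the 50 Hz nominal frequency, and all parameter values are assumptions.

```python
import math

OMEGA_0 = 2 * math.pi * 50.0  # nominal angular frequency, rad/s (assumed 50 Hz grid)


def rotor_step(omega, p_ref, p_out, inertia, damping, dt):
    """One Euler step of the emulated rotor motion equation.

    inertia (J) and damping (D) are the two quantities that a learning
    module could adjust online between steps.
    """
    d_omega = ((p_ref - p_out) / OMEGA_0 - damping * (omega - OMEGA_0)) / inertia
    return omega + dt * d_omega


# The same power imbalance moves the virtual rotor less when J is larger.
low_inertia = rotor_step(OMEGA_0, 1000.0, 2000.0, inertia=0.5, damping=10.0, dt=1e-3)
high_inertia = rotor_step(OMEGA_0, 1000.0, 2000.0, inertia=2.0, damping=10.0, dt=1e-3)
```

A larger `inertia` slows the frequency response to a power imbalance, while a larger `damping` pulls the frequency back toward nominal more strongly, which is why tuning both online can help suppress low-frequency oscillation.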

Owner:STATE GRID SICHUAN ECONOMIC RES INST

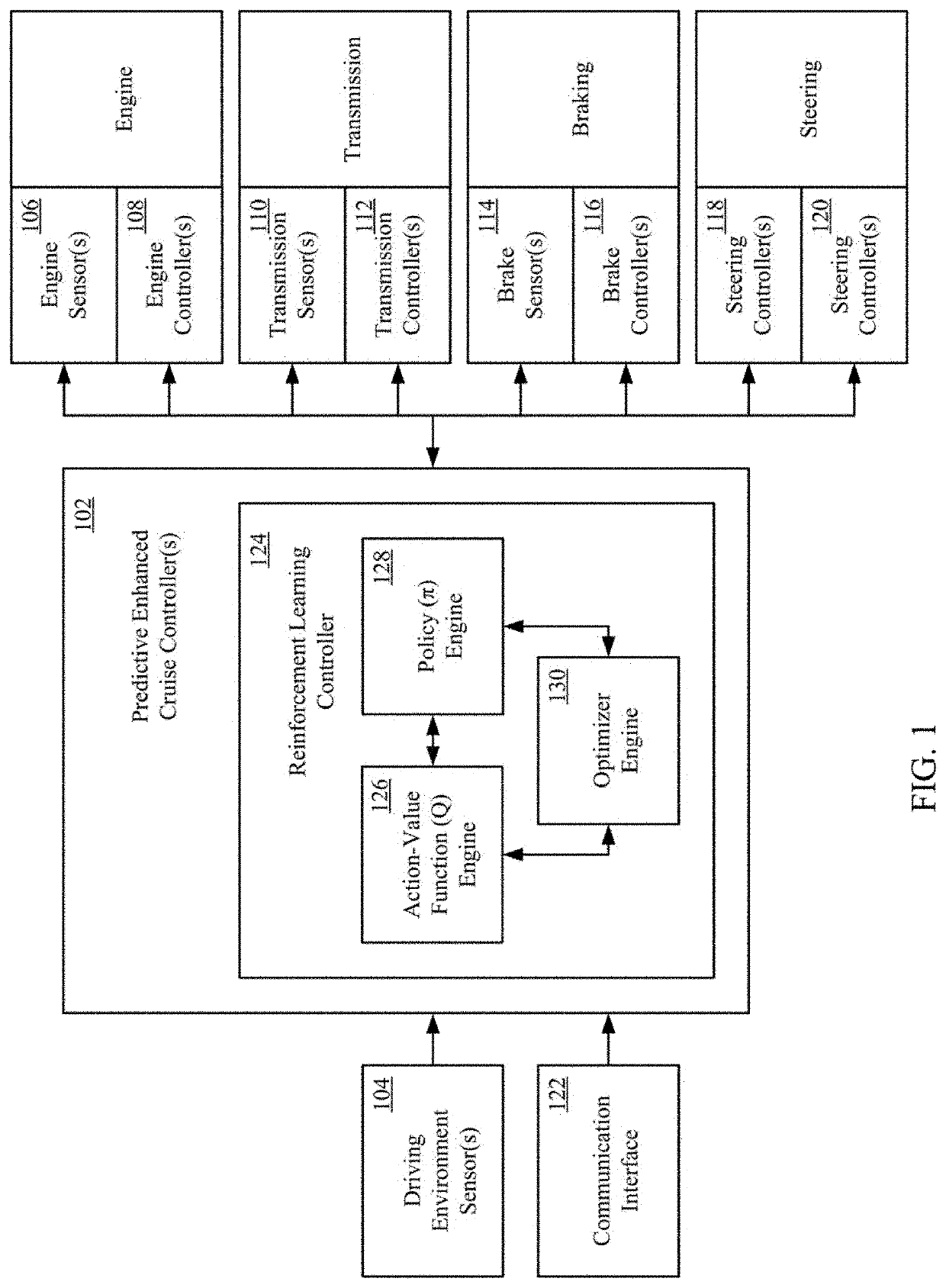

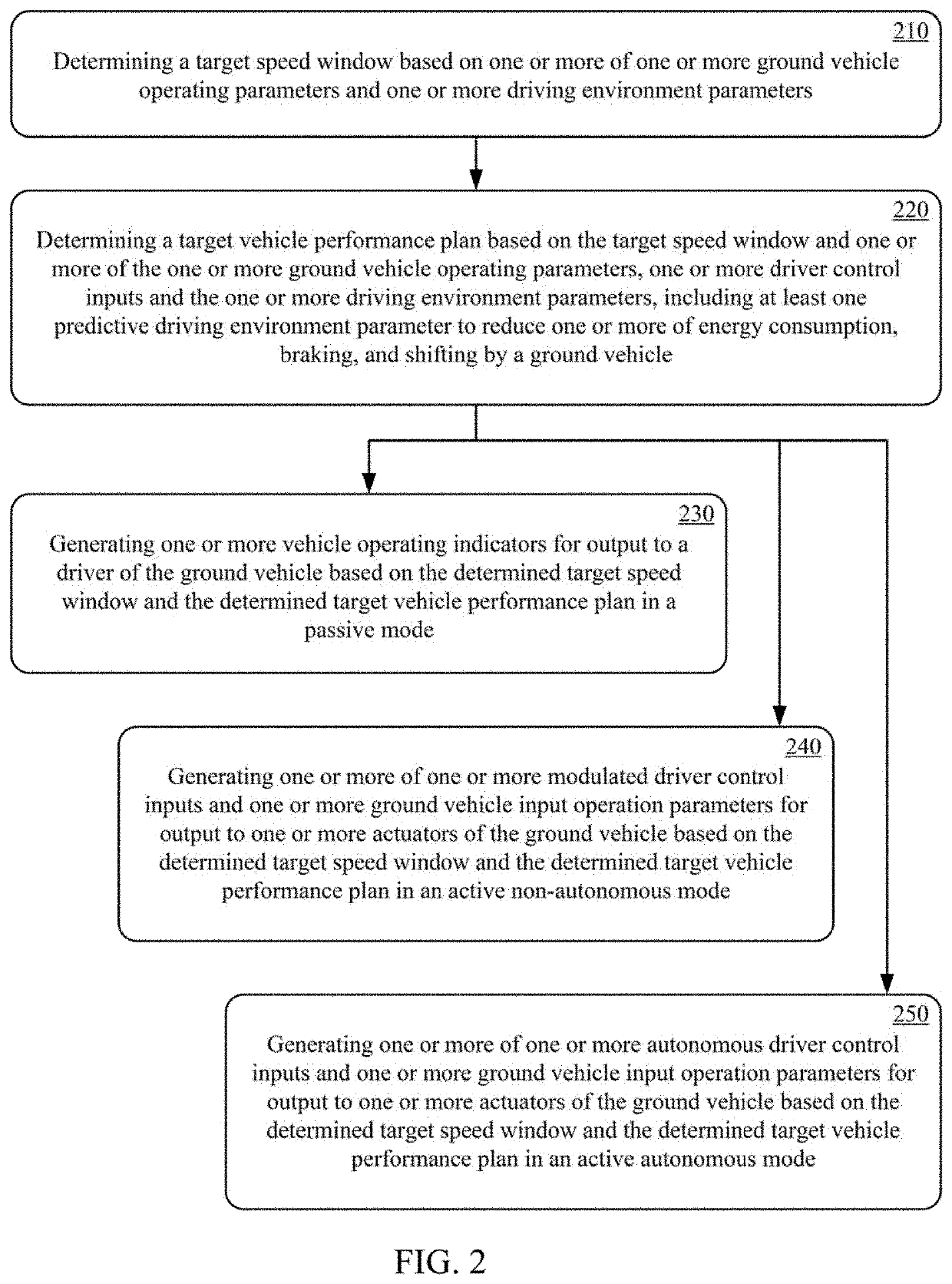

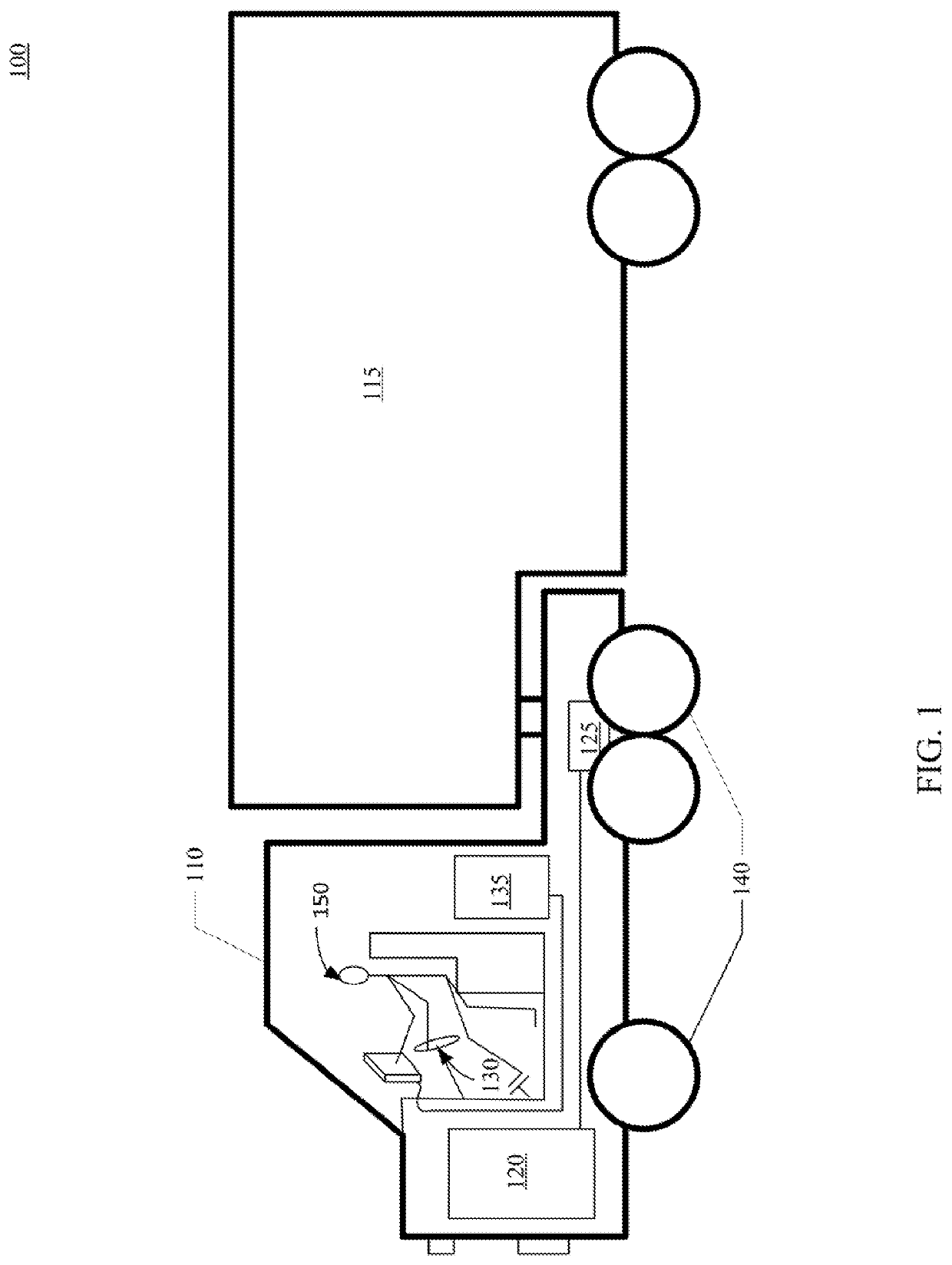

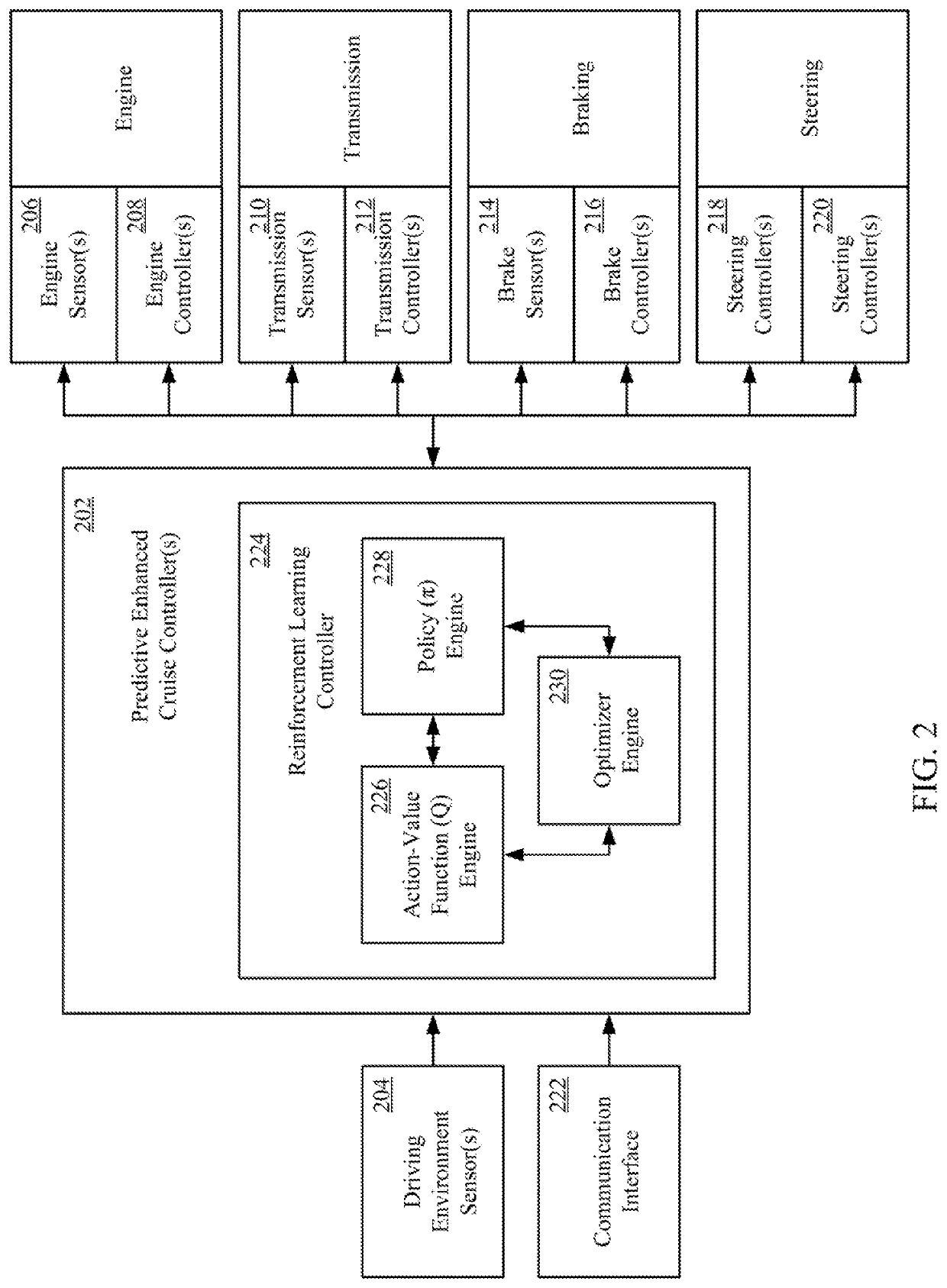

Reinforcement Learning Based Ground Vehicle Control Techniques

Active · US20190378036A1 · Optimize ground vehicle control technique · Reduce energy consumption · Mathematical models · Artificial life · Learning controller · Operation mode

Reinforcement learning based ground vehicle control techniques adapted to reduce energy consumption, braking, shifting, travel distance, travel time, and/or the like. The reinforcement learning techniques can include training a reinforcement learning controller based on a simulated ground vehicle environment during a simulation mode, and then further training the reinforcement learning controller based on a ground vehicle environment during an operating mode of a ground vehicle.

Owner:TRAXEN INC

Blind guiding robot of optimal output feedback controller and based on reinforcement learning

Active · CN112130570A · Realize multifunctional collaborative processing · High precision · Position/course control in two dimensions · Vehicles · Robotic systems · Simulation

The invention relates to a blind guiding robot of an optimal output feedback controller and based on reinforcement learning, and belongs to the technical field of robots. By adopting a RealSense D435i depth camera as a visual sensor, real-time environment information of the blind guiding robot in the advancing guiding process can be accurately and efficiently obtained. In order to solve the problem that a blind guiding robot faces many unstable factors in the moving process, a model-free synchronous integral reinforcement learning controller based on an ADP method is designed, an HJB equation of a constructed cost function is established by constructing the cost function of a blind guiding robot system based on reinforcement learning, the HJB equation is solved through a method based on synchronous reinforcement learning, and finally an optimal solution is obtained through an iterative method, so that the optimal control of the blind guiding robot system is achieved.

Owner:CHONGQING UNIV

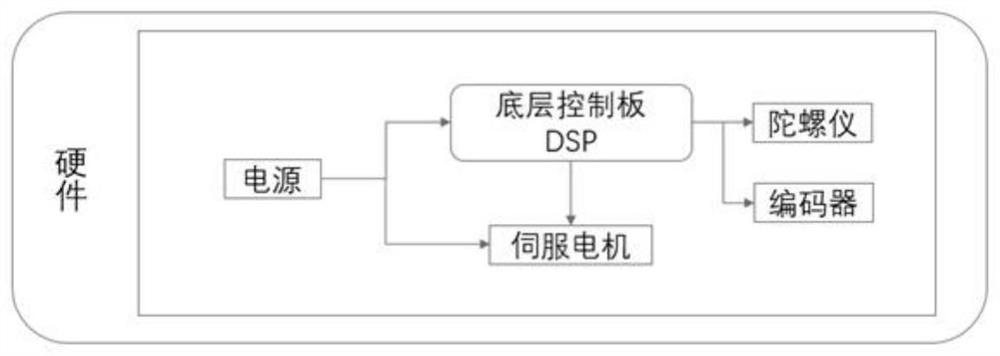

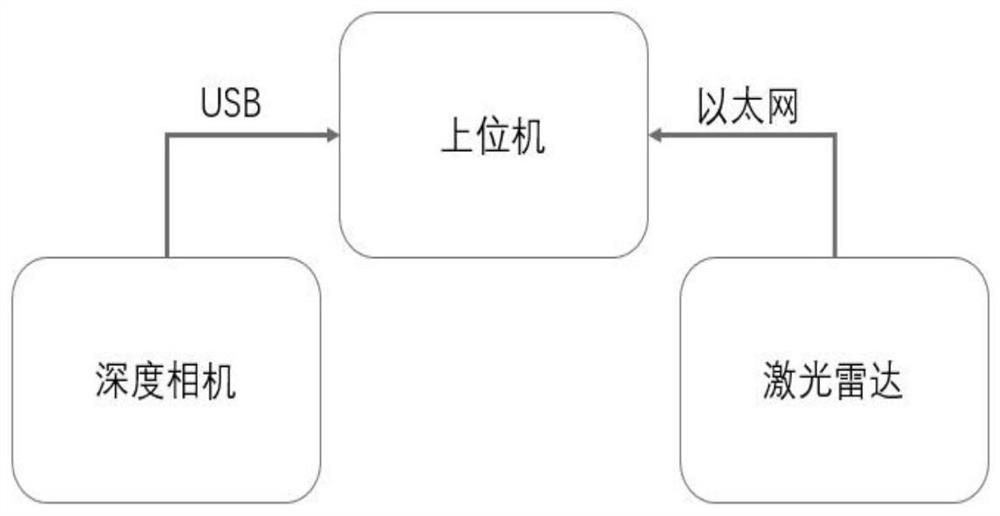

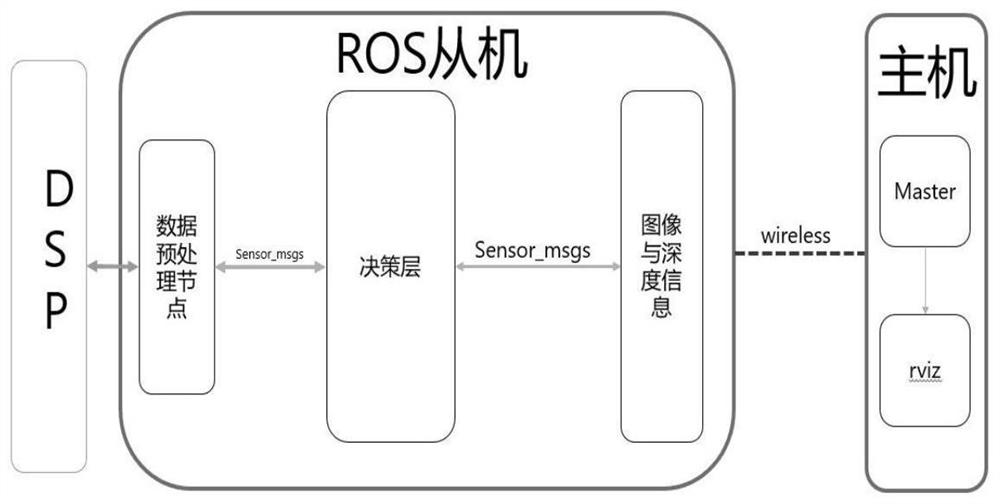

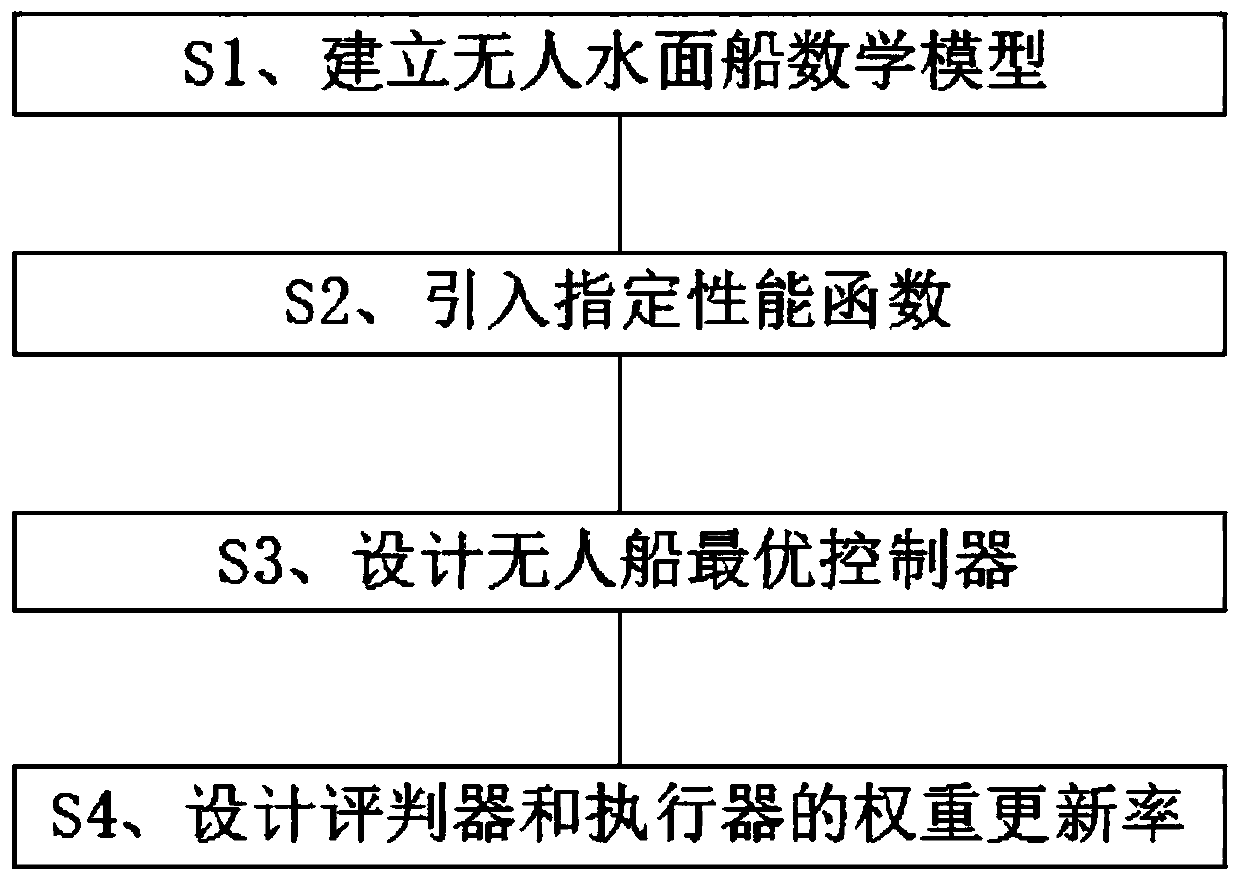

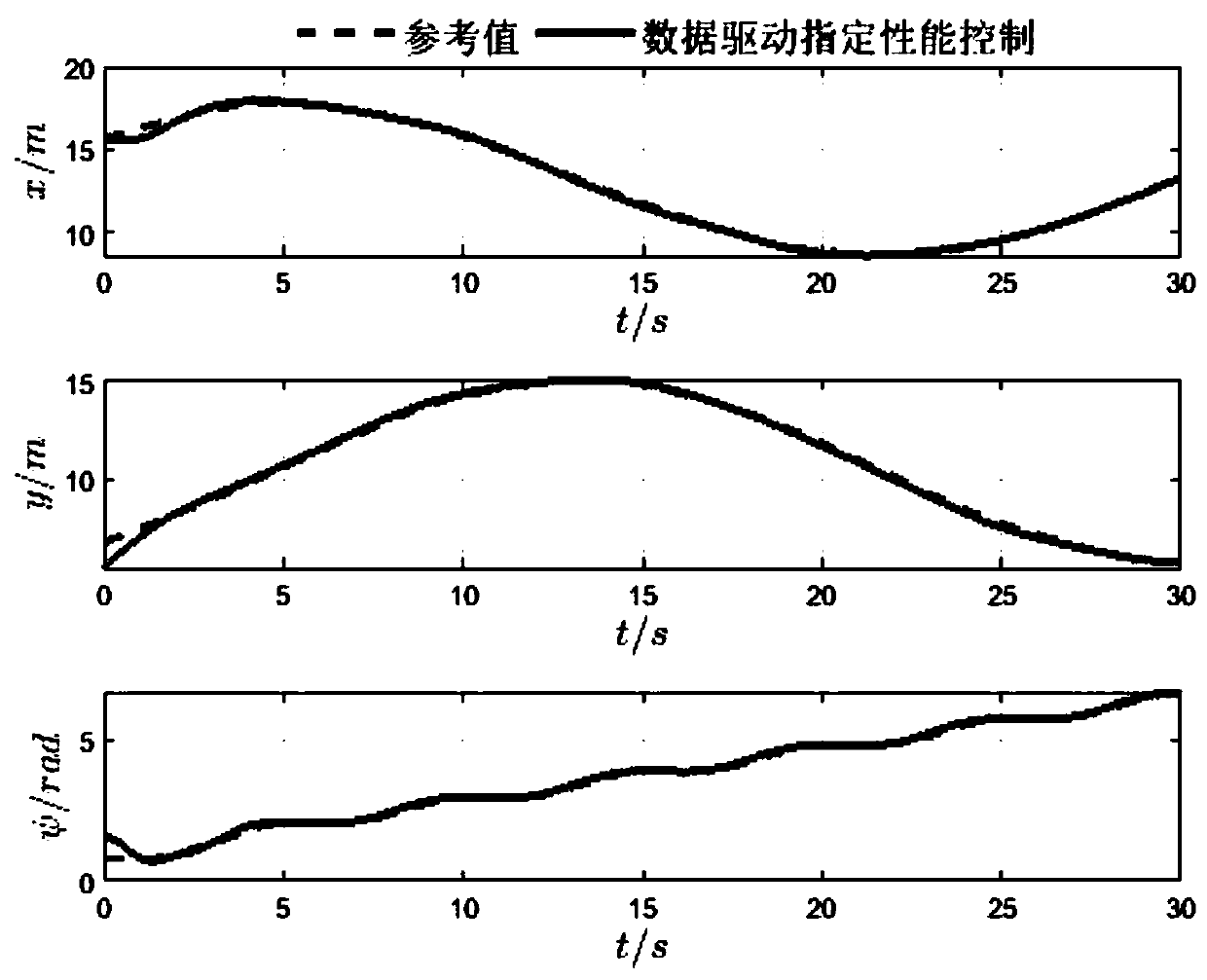

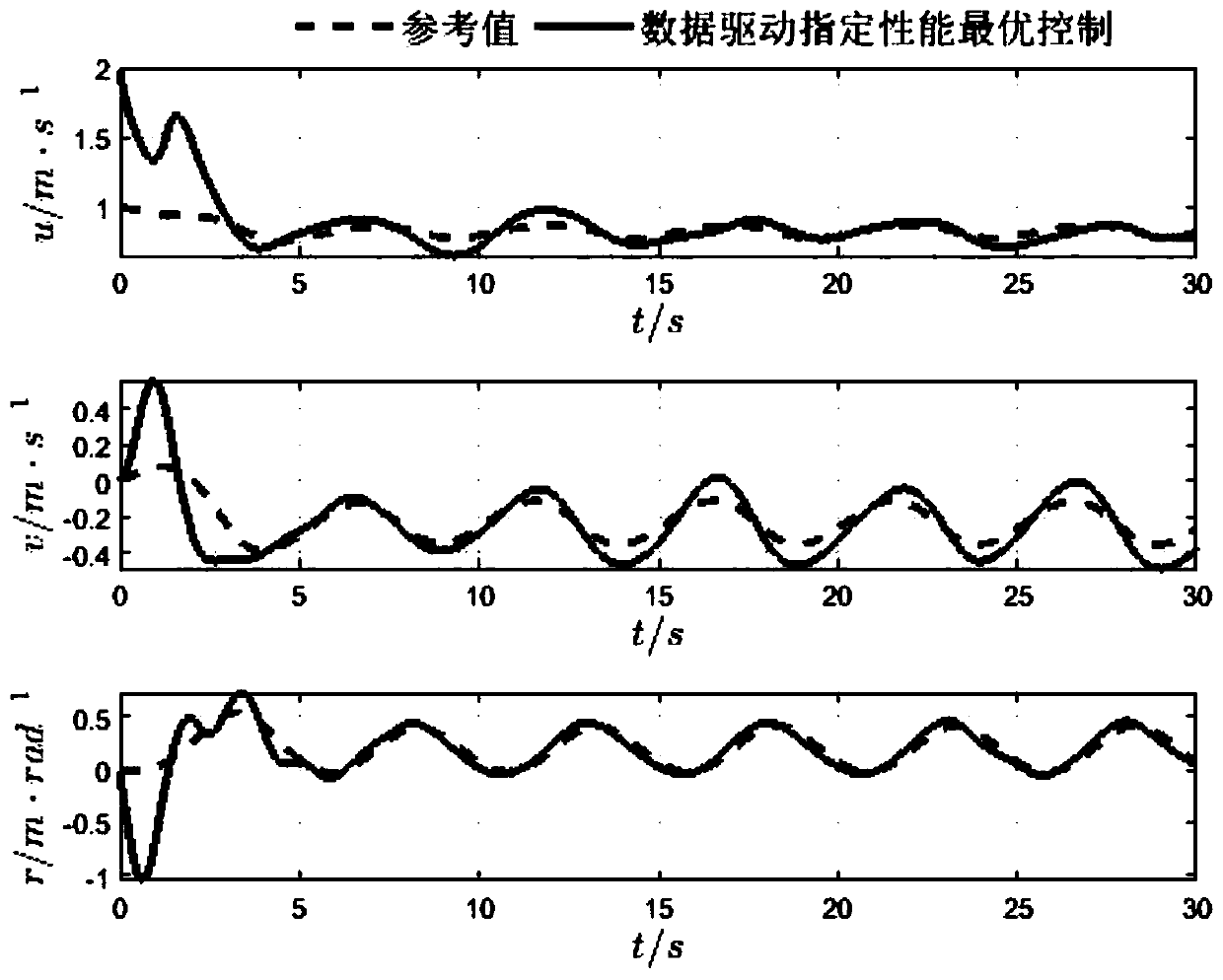

Unmanned vessel data driving reinforcement learning control method with specified performance

Active · CN111308890A · Extending Research on Reinforcement Learning Control · Reduce usage · Sustainable transportation · Adaptive control · Performance function · Control system

The invention provides an unmanned vessel data driving reinforcement learning control method with specified performance for an unmanned surface vessel system. The method comprises the steps of S1, building an unmanned surface vessel mathematic model, S2, introducing a specified performance function, S3, designing an optimal controller of the unmanned vessel, and S4, designing weight updating rates of the evaluator and the actuator. According to the method, simultaneous updating of the actuator and the evaluator can be realized, and the error can be within a specified range, so that the optimal control strategy is obtained. Meanwhile, the method accelerates the convergence speed of the control system, and obviously improves the adaptability and reliability of the operation of the unmanned vessel system in an unknown environment.

Owner:DALIAN MARITIME UNIVERSITY

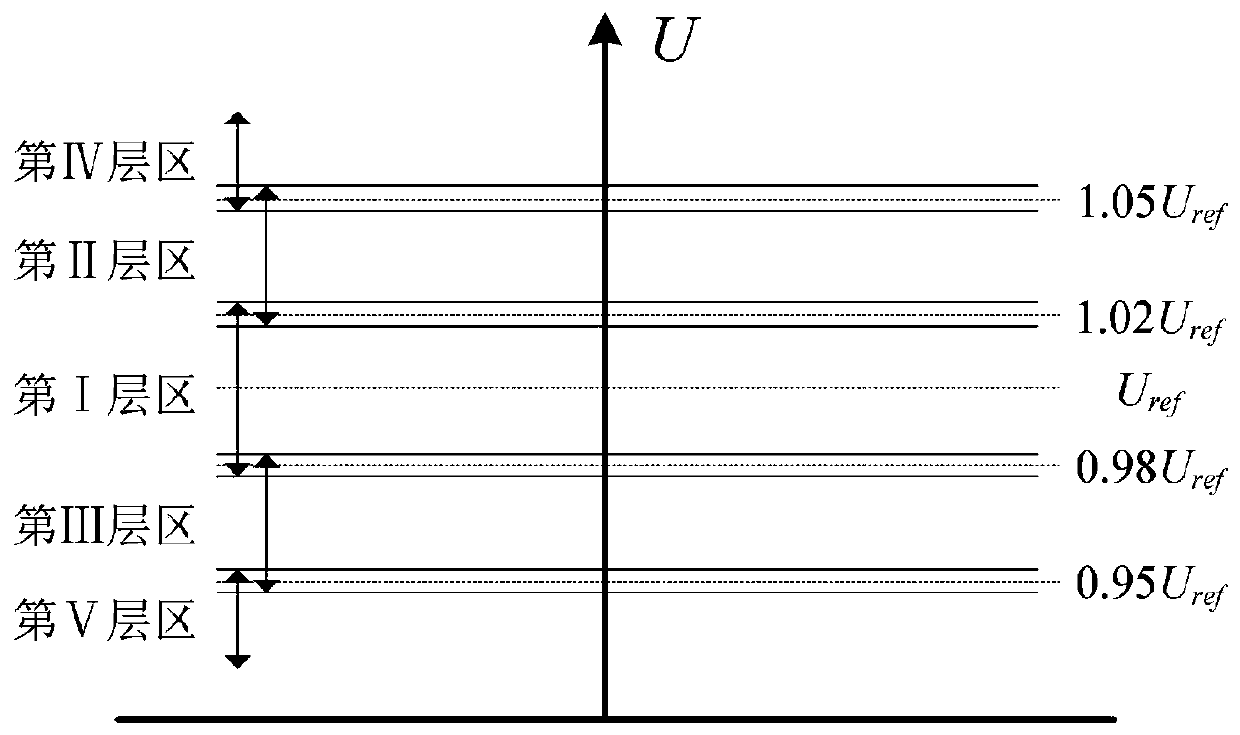

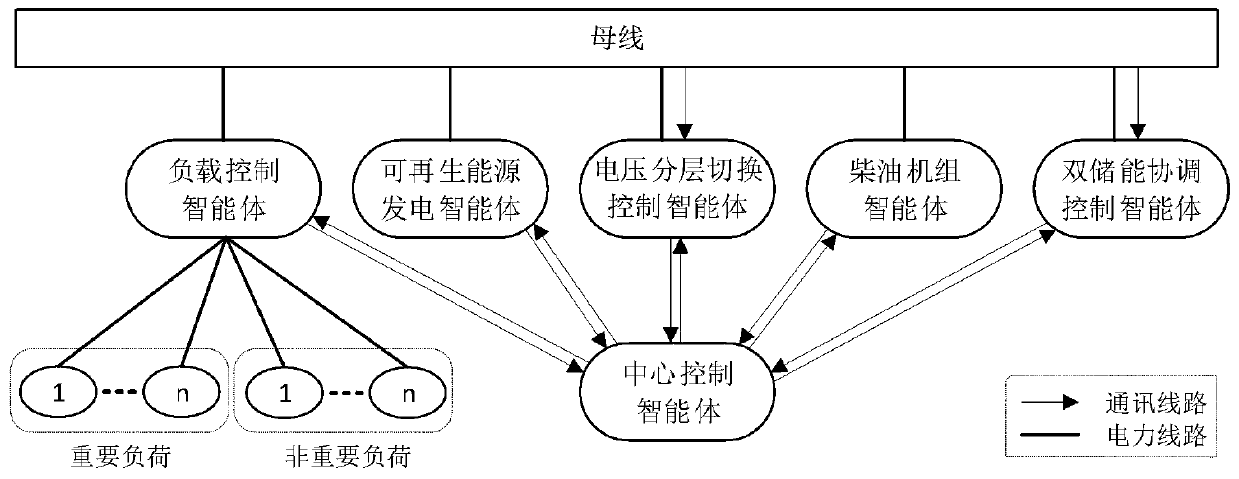

Microgrid hybrid coordination control method based on reinforcement learning and multi-agent theory

Pending · CN111200285A · Reduce instability · Stabilize bus voltage · Single network parallel feeding arrangements · Energy storage · Microgrid · Control engineering

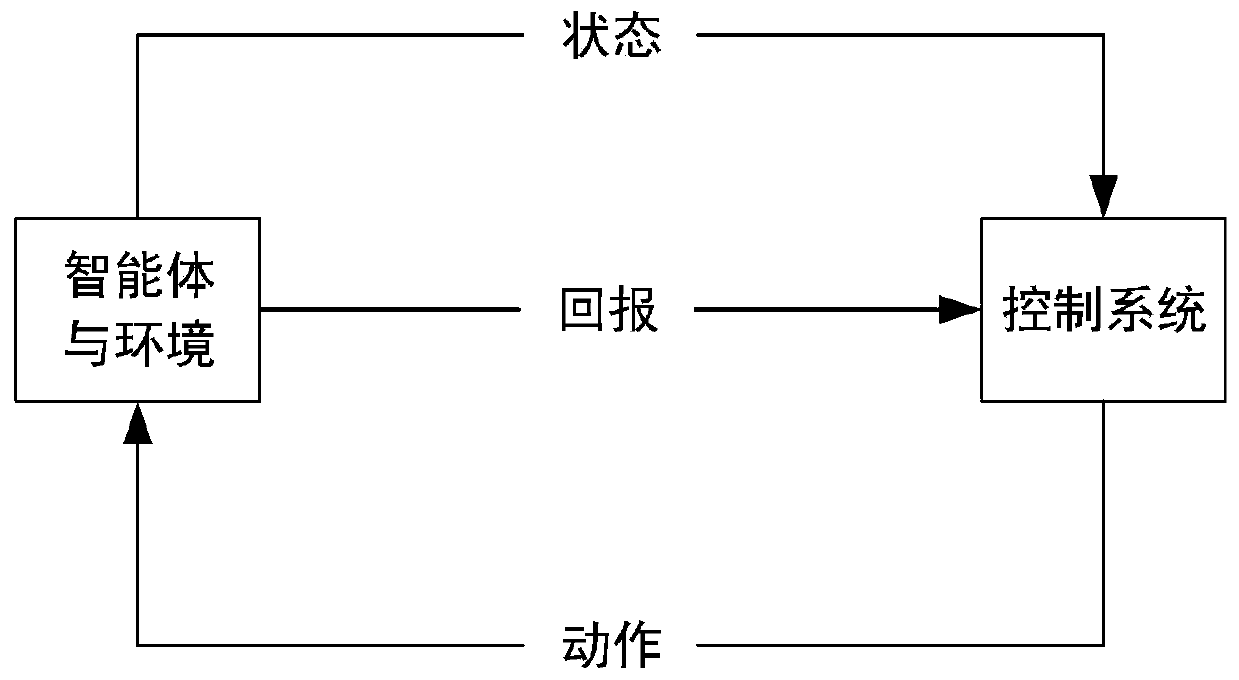

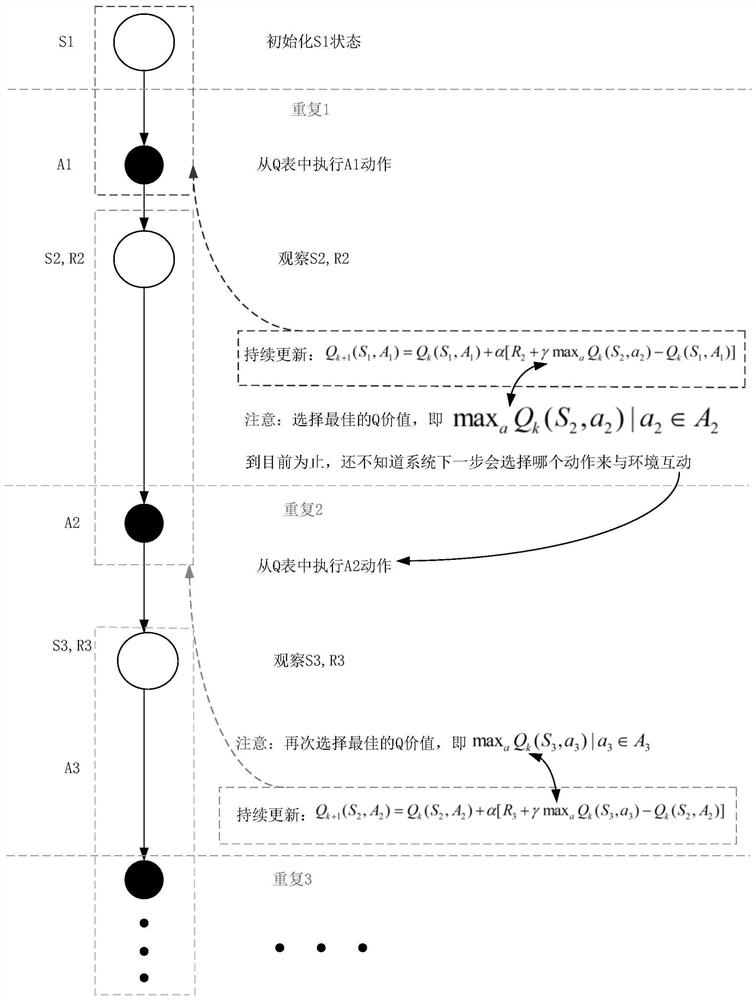

The invention discloses a microgrid hybrid coordination control method based on reinforcement learning and a multi-agent theory. The method comprises the steps of designing a transition voltage layer control strategy based on a voltage layering mode, designing a double-energy-storage sub-role control strategy, and enabling two energy storage to work separately when an energy storage unit works in a voltage stabilization mode; when energy storage assistance is needed to continuously absorb power or supplement power, converting the two-energy-storage working mode into cooperative charging/discharging; constructing an action space and a state space based on Q-Learning; designing a reinforcement learning control framework based on multiple agents, specifically, designing a basic updating rule of a state-action pair and selecting a corresponding value function; designing a basic action selection mechanism and a return value strategy, specifically, designing a selection strategy adopted by the system in an initial state and a return value in each state; and designing a reinforcement learning algorithm flow, specifically, designing a proper algorithm flow based on the strategy to realize a control strategy.
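The "basic updating rule of a state-action pair" is not spelled out in the abstract. Since the method is based on Q-Learning, a reasonable reading is the standard tabular update Q(s, a) ← Q(s, a) + α·(r + γ·max Q(s′, ·) − Q(s, a)). The sketch below shows that rule for a single agent; the state and action names are hypothetical placeholders, not taken from the patent.

```python
from collections import defaultdict

# Hypothetical action set for one energy storage agent.
ACTIONS = ("charge", "discharge", "idle")


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Standard tabular Q-learning update for one state-action pair."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    td_error = reward + gamma * best_next - q[(state, action)]
    q[(state, action)] += alpha * td_error
    return q[(state, action)]


q = defaultdict(float)  # unseen state-action pairs start at 0.0
new_value = q_update(q, "low_voltage", "discharge", reward=1.0, next_state="normal")
```

In a multi-agent setting each agent could keep its own table of this kind; how the agents coordinate is specific to the patent and is not sketched here.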

Owner:YANSHAN UNIV

Reinforcement learning algorithm-based self-correction control method for double-fed induction wind power generator

Inactive · CN106877766A · High output · Improve robustness · Electronic commutation motor control · Vector control systems · Mathematical model · Control system

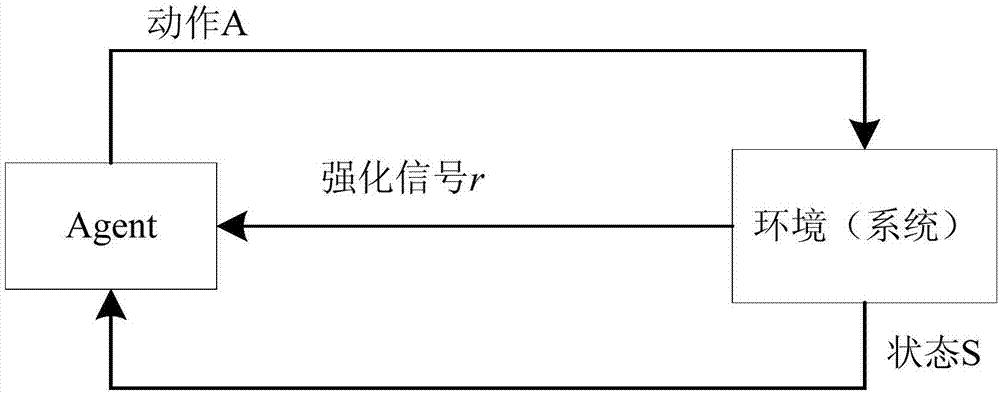

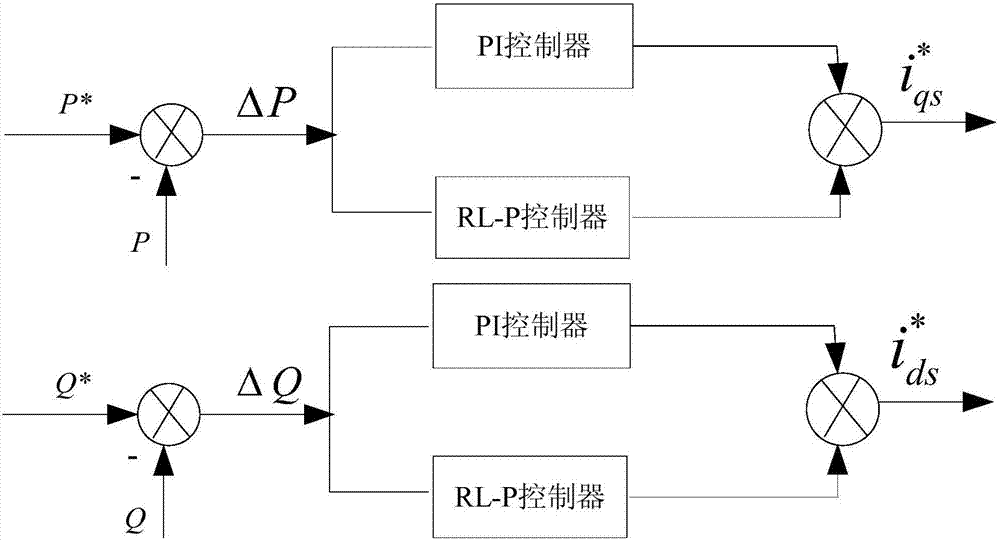

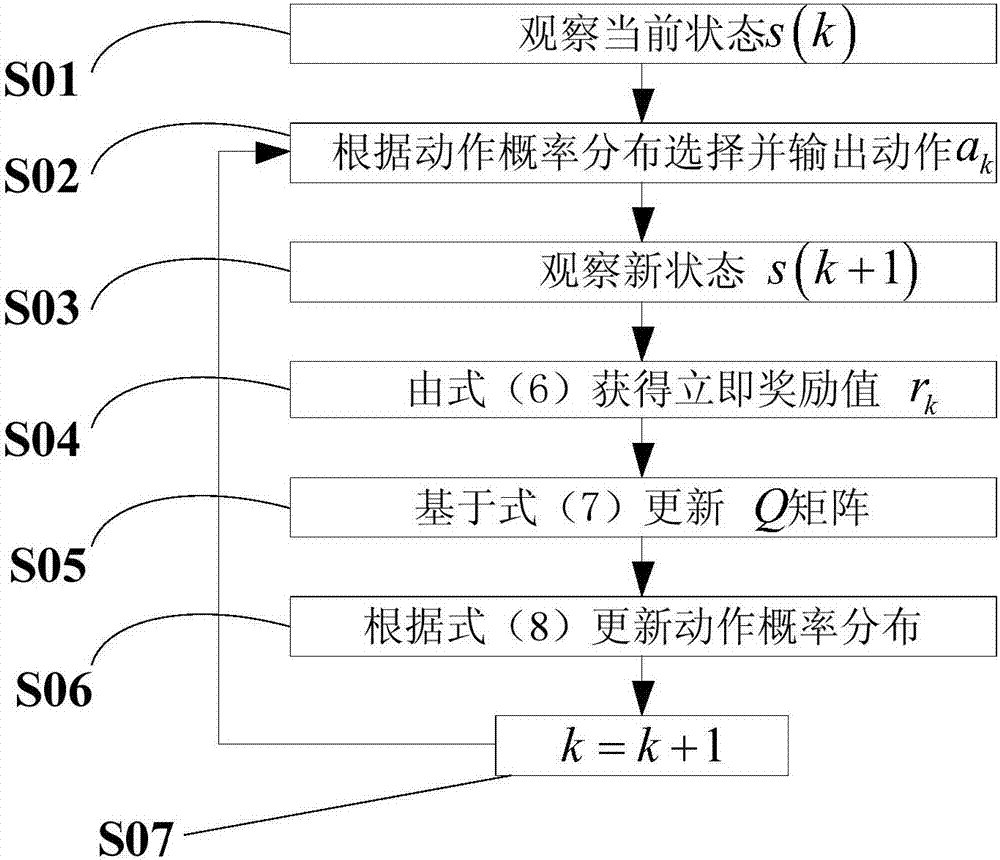

The invention discloses a reinforcement learning algorithm-based self-correction control method for a double-fed induction wind power generator. According to the method, an RL controller is added to a PI controller of a vector control system based on PI control to dynamically correct the output of the PI controller; the RL controller comprises an RL-P controller and an RL-Q controller; and the RL-P controller and the RL-Q controller are used for correcting active and reactive power control signals respectively. The Q learning algorithm is introduced to the method to be used as the reinforcement learning core algorithm; the reinforcement learning control algorithm is insensitive to a mathematical model and an operating state of a controlled object while the learning capability has relatively high adaptivity and robustness on parameter changes or external interference, so that output of the PI controller can be optimized rapidly and automatically online; and by virtue of the reinforcement learning algorithm-based self-correction control method, high dynamic performance is achieved, and the robustness and the adaptivity of the control system are obviously reinforced.
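The structure described above, an RL controller that adds a correction to the output of an existing PI controller, can be sketched as follows. The PI gains, the set of candidate corrections, and the Q-values are all illustrative assumptions; in the patent, separate RL-P and RL-Q controllers would each apply this idea to the active and reactive power signals respectively.

```python
class PIController:
    """Discrete PI controller with rectangular integration."""

    def __init__(self, kp, ki, dt):
        self.kp, self.ki, self.dt = kp, ki, dt
        self.integral = 0.0

    def step(self, error):
        self.integral += error * self.dt
        return self.kp * error + self.ki * self.integral


# Hypothetical discrete corrections the RL controller can choose from.
CORRECTIONS = (-0.1, 0.0, 0.1)


def corrected_output(pi_output, q_row):
    """Add the correction whose learned Q-value is highest for the current state."""
    best = max(range(len(CORRECTIONS)), key=lambda i: q_row[i])
    return pi_output + CORRECTIONS[best]


pi = PIController(kp=2.0, ki=1.0, dt=0.1)
u_pi = pi.step(error=0.5)                           # PI output alone
u = corrected_output(u_pi, q_row=[0.0, 0.3, 0.7])   # PI output plus RL correction
```

Because the correction is additive, the PI loop keeps working unchanged if the learned correction is zero, which is one plausible reason for layering RL on top of an existing controller rather than replacing it.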

Owner:SOUTH CHINA UNIV OF TECH

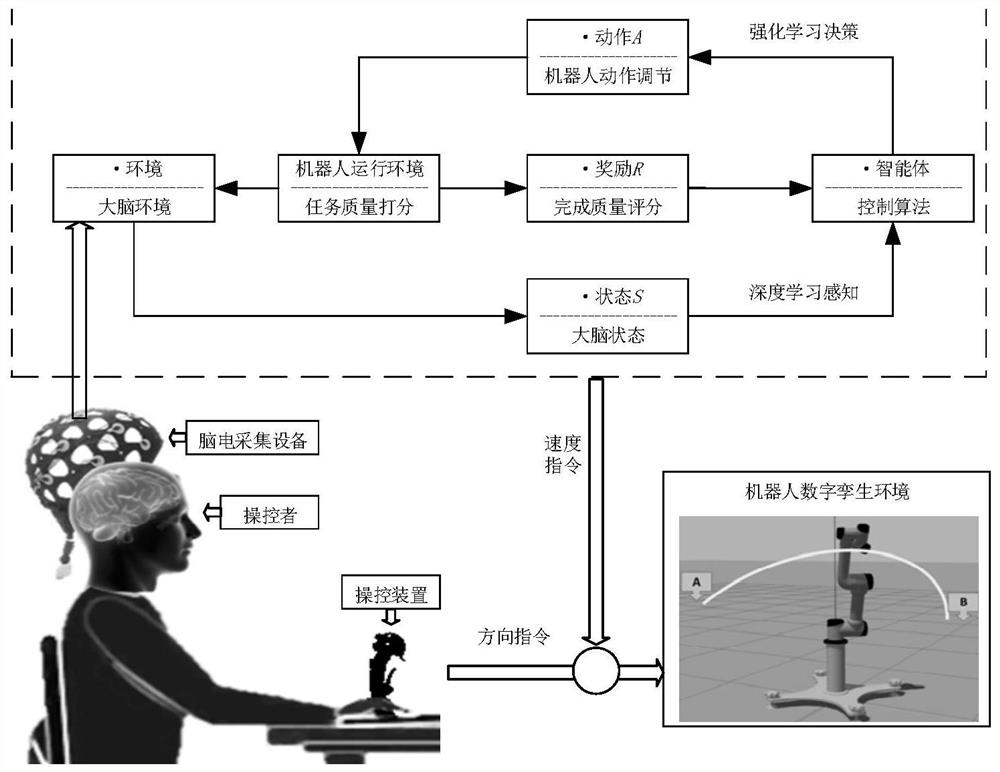

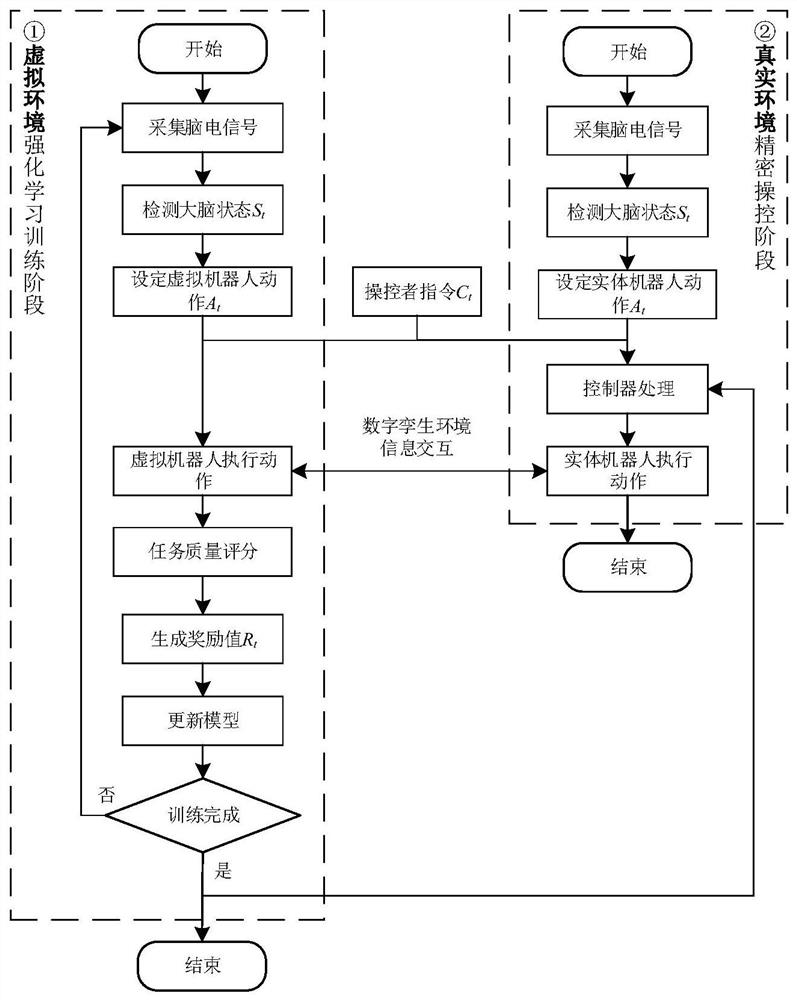

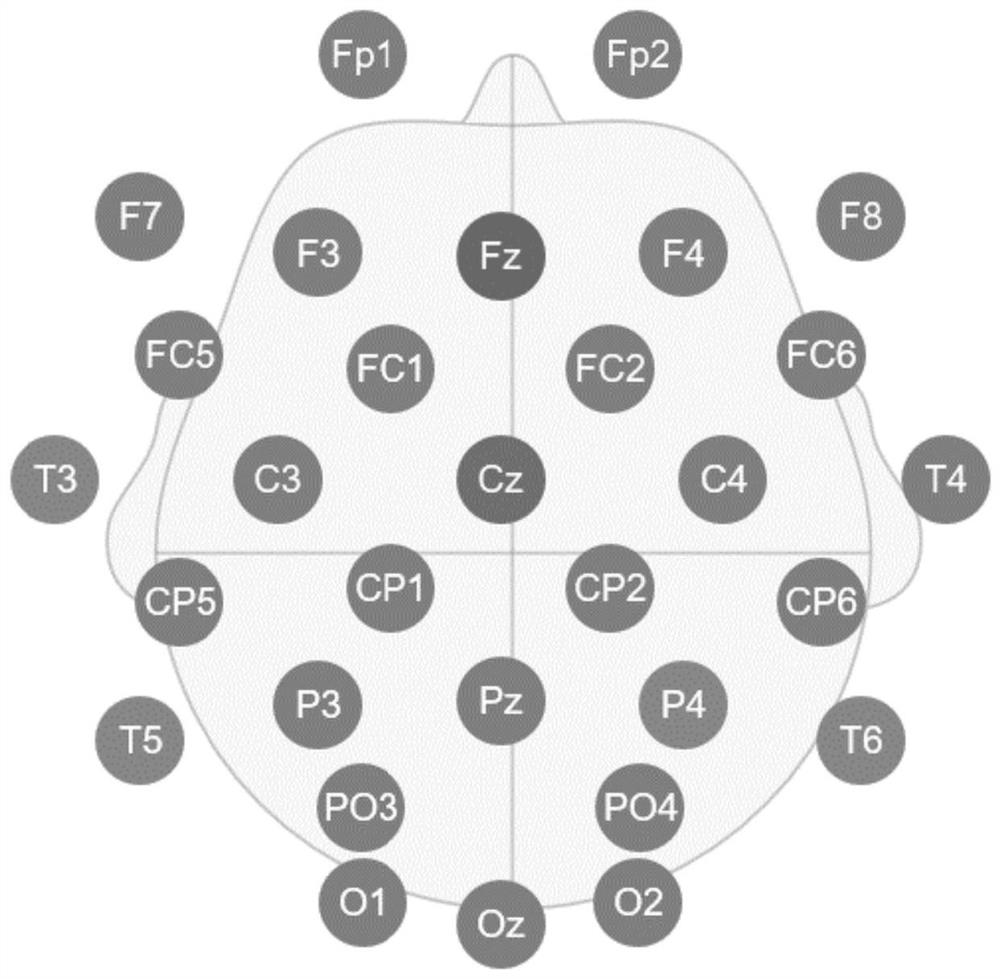

Brain-computer cooperation digital twinning reinforcement learning control method and system

Active · CN112171669A · To achieve mutual adaptation · Improve portability · Input/output for user-computer interaction · Programme-controlled manipulator · Information layer · Brain state

The invention discloses a brain-computer cooperation digital twinning reinforcement learning control method and system. A brain-computer cooperation control model is constructed; an operator gives a direction instruction to a virtual robot, and the electroencephalogram signal generated while the operator gives the instruction is collected; a corresponding speed instruction is given to the virtual robot according to the collected electroencephalogram signal so that it completes a specified action; a reward value for the brain-computer cooperation control model is calculated according to the completion quality, and training of the model is completed. A double-loop information interaction mechanism between the brain and the computer is realized through a brain-computer cooperation digital twinning environment by means of reinforcement learning, and interaction of an information layer and an instruction layer between the brain and the computer is realized. According to the method and the system, the brain state of the operator is detected through the electroencephalogram signals, and compensation control is applied to the robot instruction according to the brain state of the operator, so that accurate control is achieved. Compared with other brain-computer cooperation methods, the method improves robustness and generalization ability and achieves mutual adaptation and mutual growth between the brain and the computer.

Owner:XI AN JIAOTONG UNIV

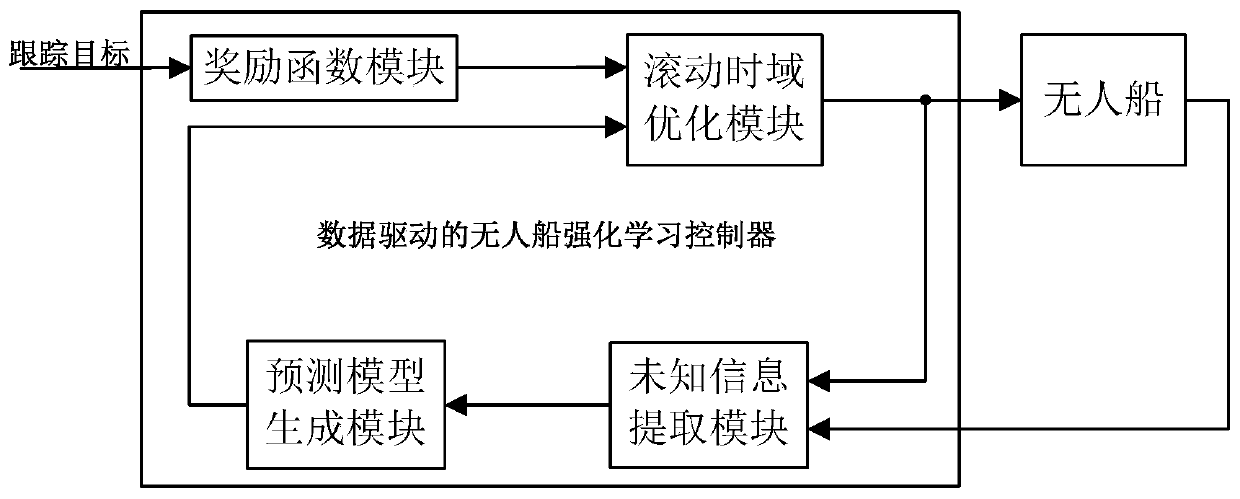

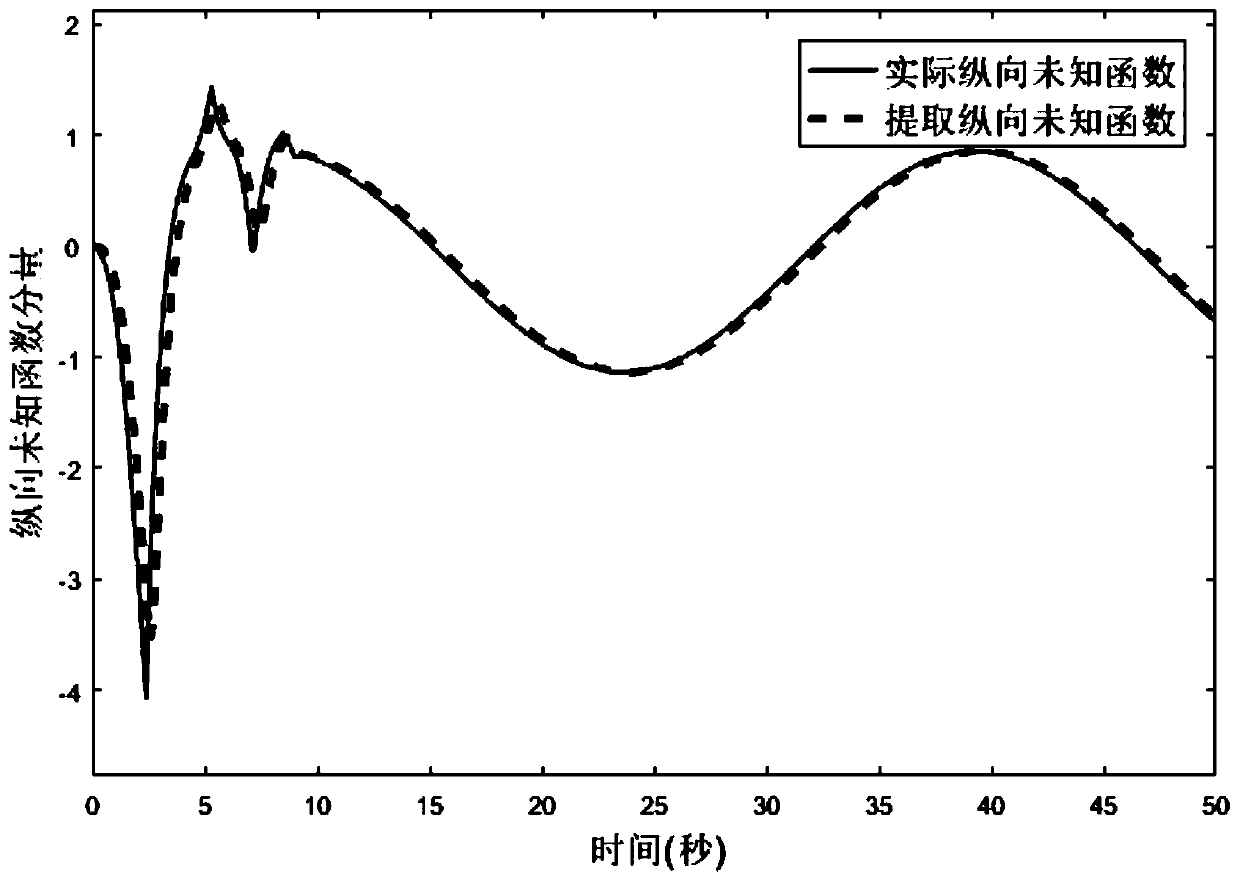

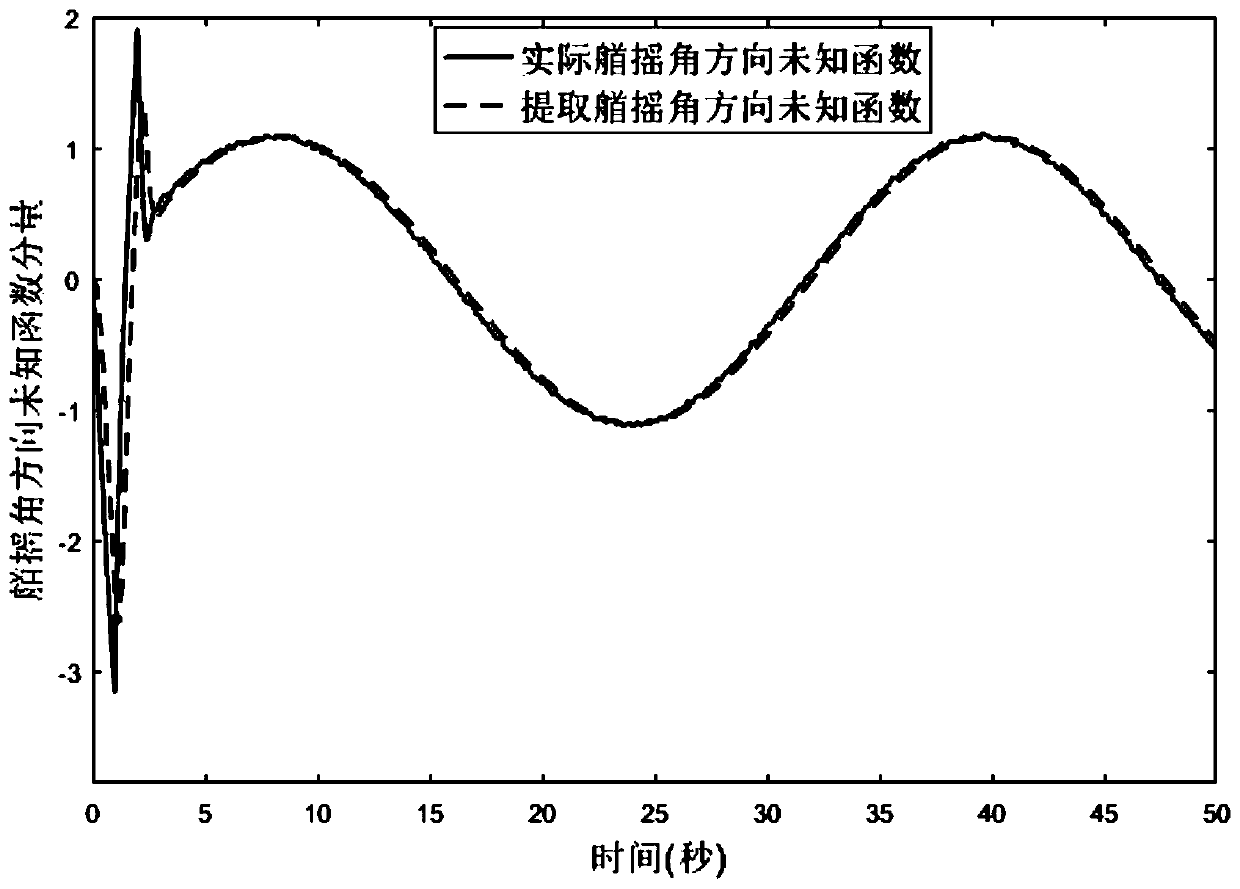

Unmanned ship reinforcement learning controller structure with data drive and design method thereof

The invention discloses an unmanned ship reinforcement learning controller structure with data drive and a design method thereof. The controller structure comprises an unknown information extraction module, a prediction model generation module, a reward function module and a moving horizon optimization module. The unmanned ship reinforcement learning controller structure with data drive and the design method thereof are based on the data drive with no need for accurate mathematical modeling for a controlled unmanned ship. The controller only employs the unknown information extraction module to collect control input and output state data information of the unmanned ship and extract a dynamics unknown function, the prediction model generation module reconstructs the extraction information to obtain a prediction model, and the controller does not depend on accurate manual modeling of the unmanned ship. The unmanned ship reinforcement learning controller structure with data drive and the design method thereof do not need to design different controllers for the two levels of the kinematics and the dynamics. Through a prediction model and a set reward function, the control input is subjected to moving horizon optimization to achieve an optimal control effect. The unmanned ship reinforcement learning controller structure with data drive and the design method thereof can be suitable for an all-drive unmanned ship and an under-actuated unmanned ship.

Owner:DALIAN MARITIME UNIVERSITY

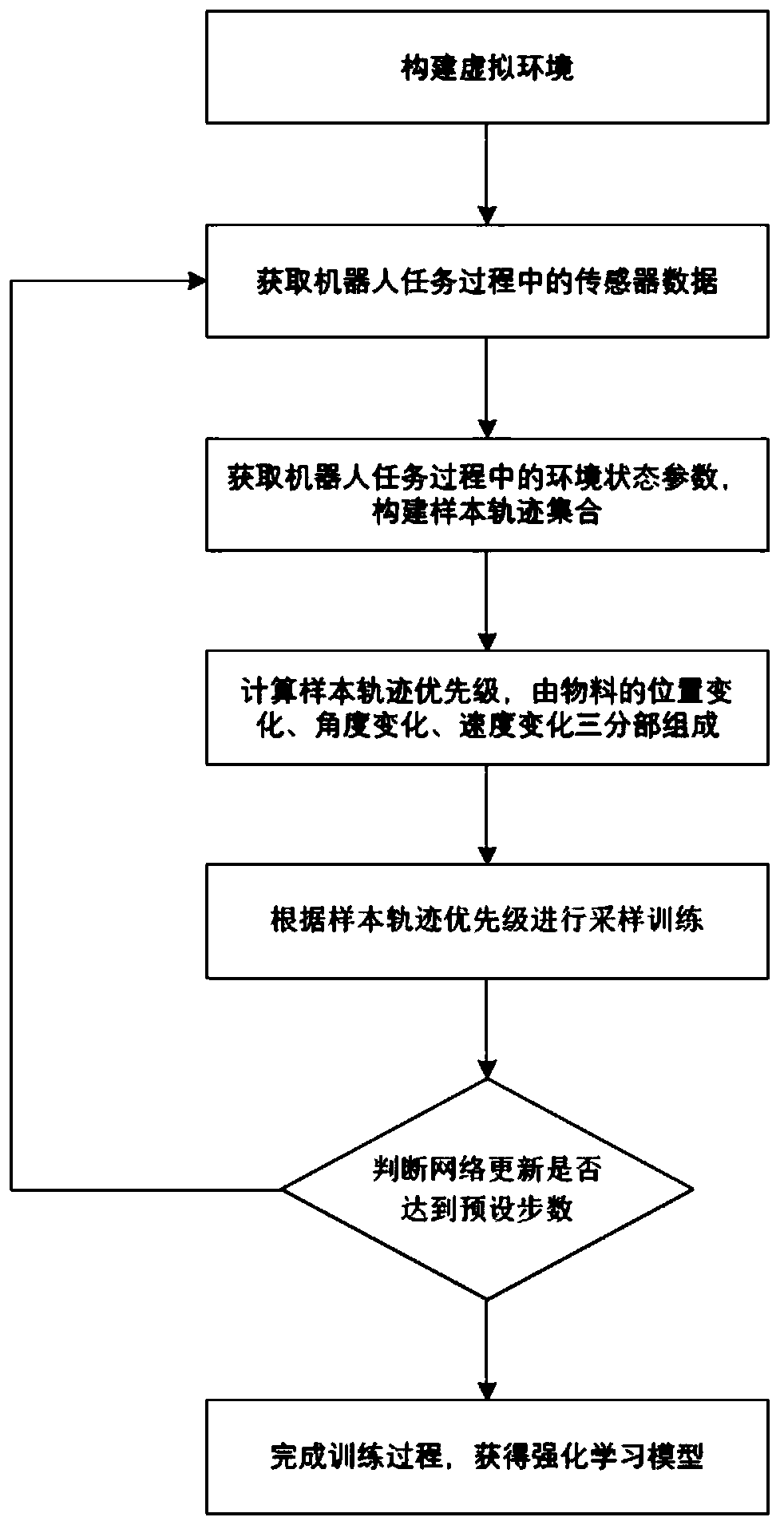

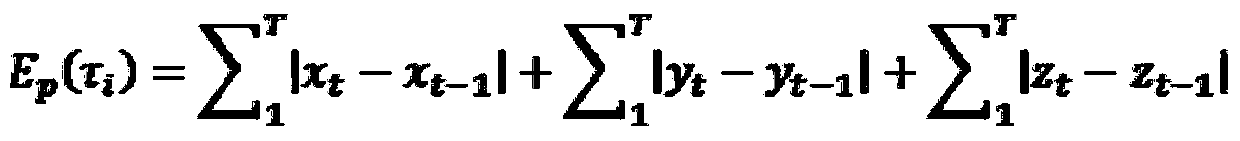

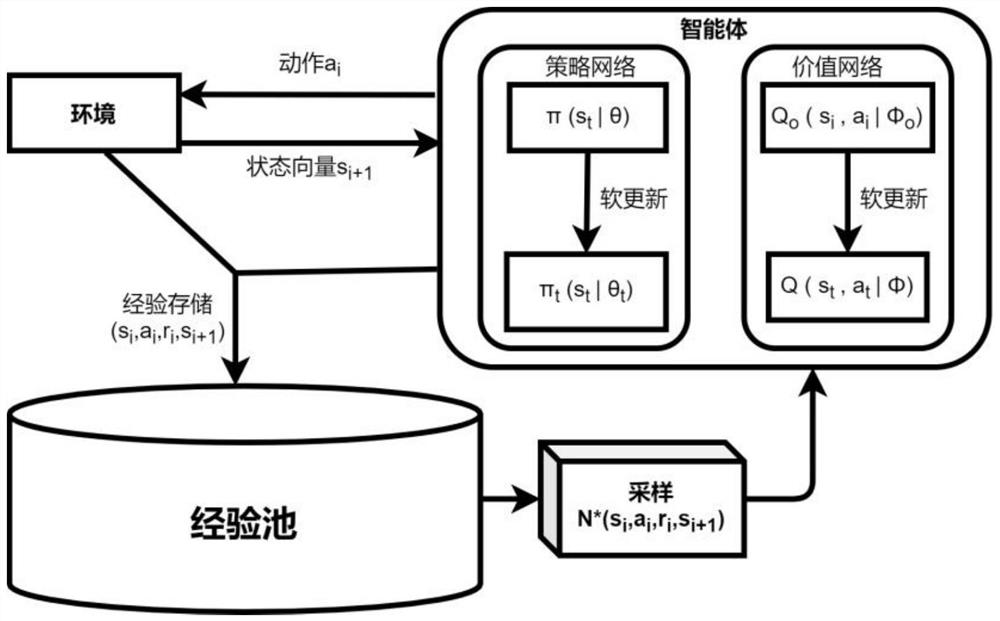

Deep reinforcement learning robot control method based on priority experience playback

Active · CN111421538A · Improve learning efficiency · Improve learning effect · Programme-controlled manipulator · Total factory control · Reinforcement learning algorithm · Engineering

The invention discloses a deep reinforcement learning control algorithm based on a priority experience playback mechanism. The priority is calculated through employing state information of an object operated by a robot, an end-to-end robot control model is completed through employing a deep reinforcement learning method, and a deep reinforcement learning intelligent agent is enabled to autonomously learn and complete a specified task in the environment. In a training process, the state information of the target object is collected in real time and used for calculating the priority of experience playback, and then data in an experience playback pool is sampled and learned by a reinforcement learning algorithm according to the priority to obtain the control model. According to the method, on the premise of ensuring the robustness of the deep reinforcement learning algorithm, environment information is utilized to the maximum extent, the effect of the control model is improved, and the learning convergence speed is increased.
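As an illustration of sampling an experience playback pool according to priority, the sketch below stores a priority with each transition and samples in proportion to it. The abstract says only that the priority is computed from the state of the manipulated object; the distance-to-goal rule in `state_priority` is a hypothetical stand-in, and the buffer is a simplified list rather than an efficient sum-tree.

```python
import random


class PriorityReplay:
    """Fixed-capacity replay pool sampled in proportion to stored priorities."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = []
        self.priorities = []

    def add(self, transition, priority):
        # Drop the oldest transition once the pool is full.
        if len(self.items) >= self.capacity:
            self.items.pop(0)
            self.priorities.pop(0)
        self.items.append(transition)
        self.priorities.append(priority)

    def sample(self, batch_size, rng=random):
        # random.choices draws with replacement, weighted by priority.
        return rng.choices(self.items, weights=self.priorities, k=batch_size)


def state_priority(object_pos, goal_pos, eps=1e-3):
    """Hypothetical rule: the closer the object is to the goal, the higher the priority."""
    dist = sum((o - g) ** 2 for o, g in zip(object_pos, goal_pos)) ** 0.5
    return 1.0 / (dist + eps)


pool = PriorityReplay(capacity=2)
pool.add("far_from_goal", state_priority((1.0, 0.0), (0.0, 0.0)))
pool.add("near_goal", state_priority((0.1, 0.0), (0.0, 0.0)))
batch = pool.sample(4)
```

Transitions with higher priority appear more often in `batch`, so the learner spends more updates on the experience judged most informative.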

Owner:XI AN JIAOTONG UNIV

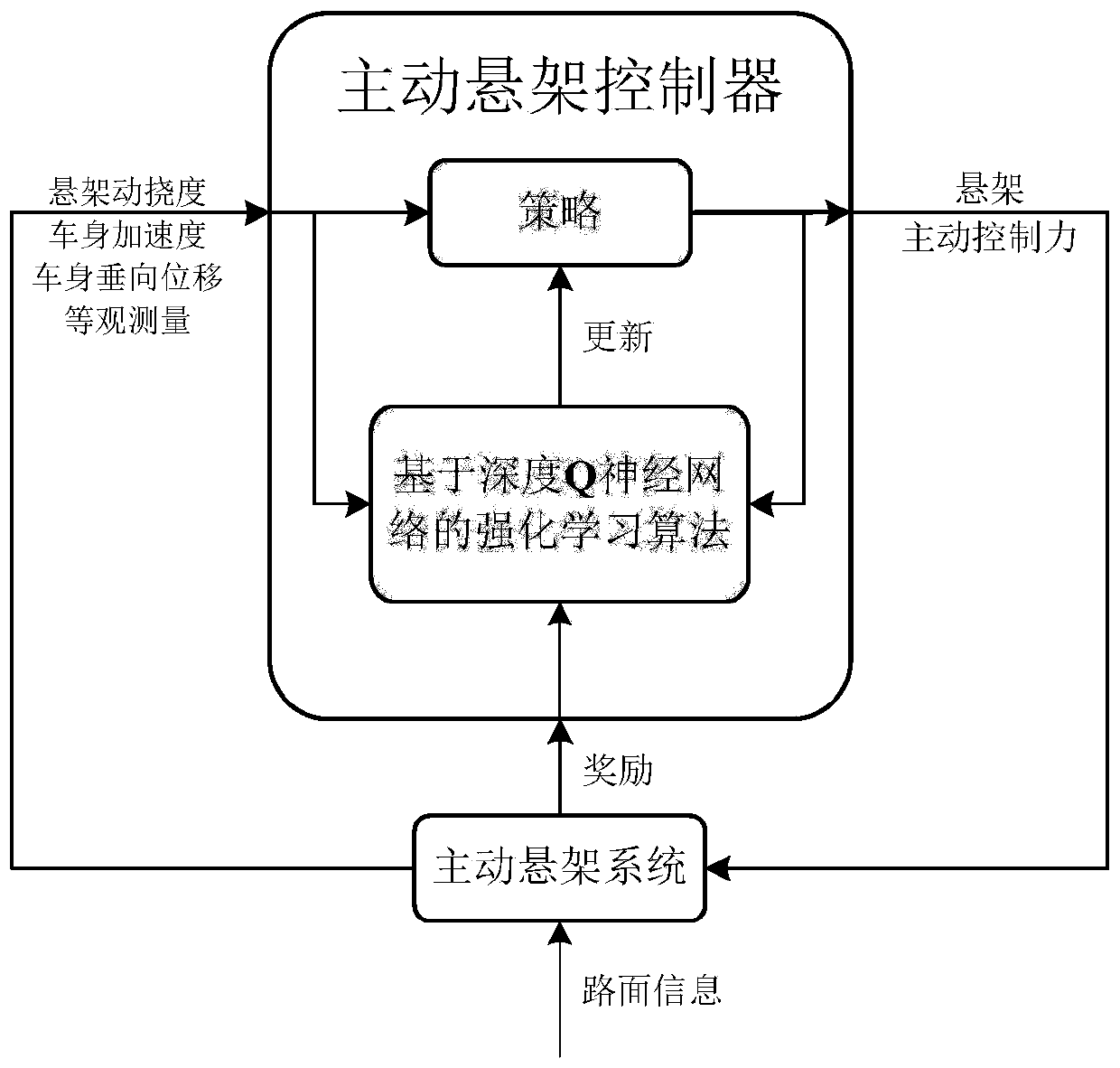

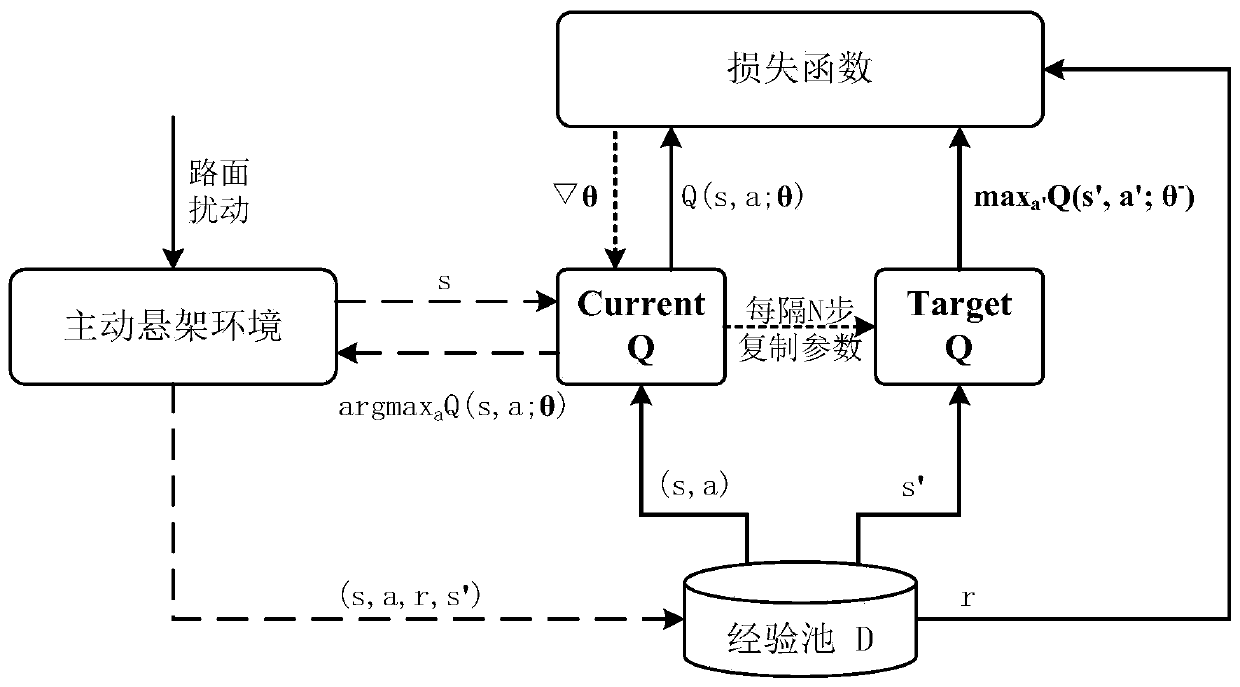

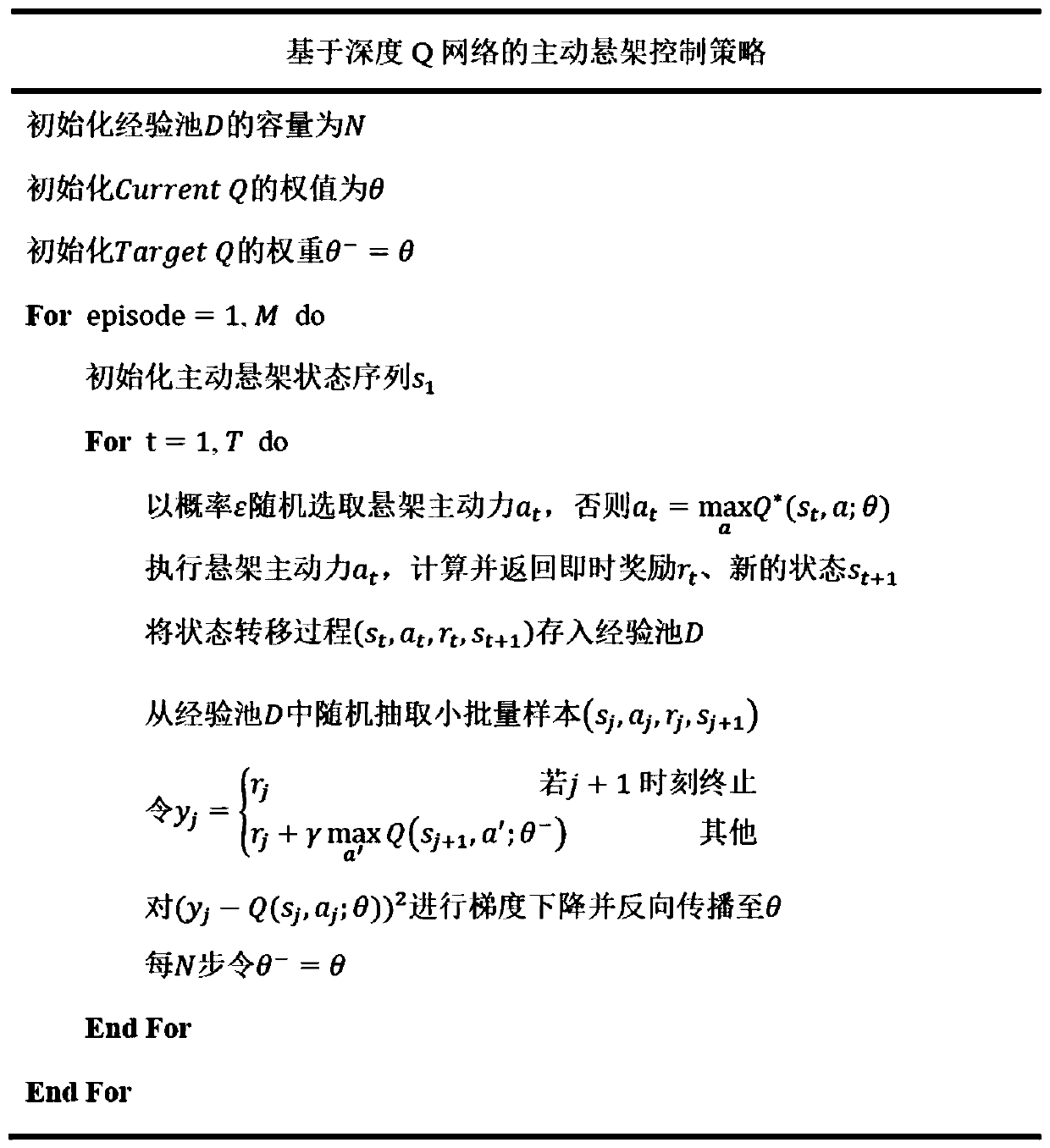

Active suspension reinforcement learning control method based on deep Q neural network

Active · CN111487863A · Improve comfort · Improve adaptability · Adaptive control · Control system · Learning controller

The invention relates to an active suspension reinforcement learning control method based on a deep Q neural network, and belongs to the technical field of automobile dynamic control and artificial intelligence. The reinforcement learning controller obtains state observations such as the vehicle body acceleration and the suspension dynamic deflection from the suspension system and uses a strategy to determine a reasonable active force to apply to the suspension system; the suspension system changes its state at the current moment according to the active force, and a reward value is generated to judge the quality of the current active force. By setting a reasonable reward function and combining it with dynamic data obtained from the environment, an optimal strategy for determining the magnitude of the active control force can be found after a large amount of training, so that the overall performance of the control system improves. With the reinforcement learning control method based on the deep Q neural network, the active suspension system can be adjusted dynamically and adaptively; the influences of factors such as parameter uncertainty and changeable road surface interference, which are difficult to handle in a traditional suspension control method, are therefore overcome, and the riding comfort of passengers is improved as much as possible on the premise that the overall safety of the vehicle is guaranteed.
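The abstract names the observations (body acceleration, suspension dynamic deflection) and says a reward judges the quality of the applied force, but gives no formula. The sketch below assumes a simple quadratic penalty and a small discrete force set, which is one common way to pose such a problem for a deep Q network; the weights and force values are hypothetical.

```python
# Hypothetical discrete actuator forces in newtons (a deep Q network needs a finite action set).
FORCES = (-1000.0, -500.0, 0.0, 500.0, 1000.0)


def reward(body_accel, deflection, w_accel=1.0, w_defl=100.0):
    """Assumed reward: penalize body acceleration (comfort) and deflection (travel limits)."""
    return -(w_accel * body_accel ** 2 + w_defl * deflection ** 2)


def greedy_force(q_values):
    """Pick the force whose Q-value, as output by the network, is highest."""
    best = max(range(len(FORCES)), key=lambda i: q_values[i])
    return FORCES[best]


# Stand-in for the network output for one observed state.
force = greedy_force([0.0, 0.1, 0.5, 0.2, 0.0])
r = reward(body_accel=2.0, deflection=0.01)
```

The relative size of `w_accel` and `w_defl` sets the trade-off between ride comfort and suspension travel, so in practice these weights would need tuning for the vehicle in question.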

Owner:SOUTHEAST UNIV +1

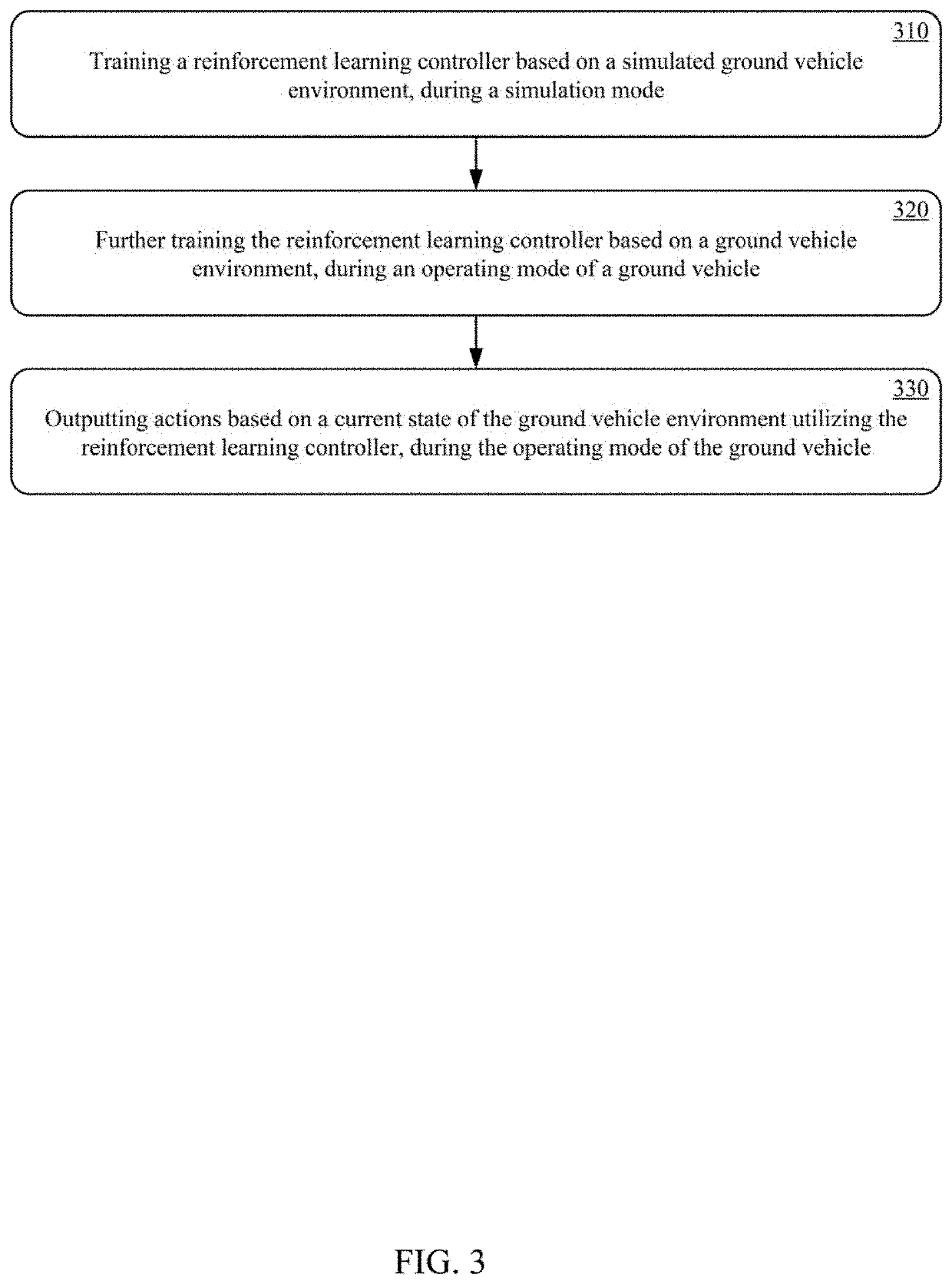

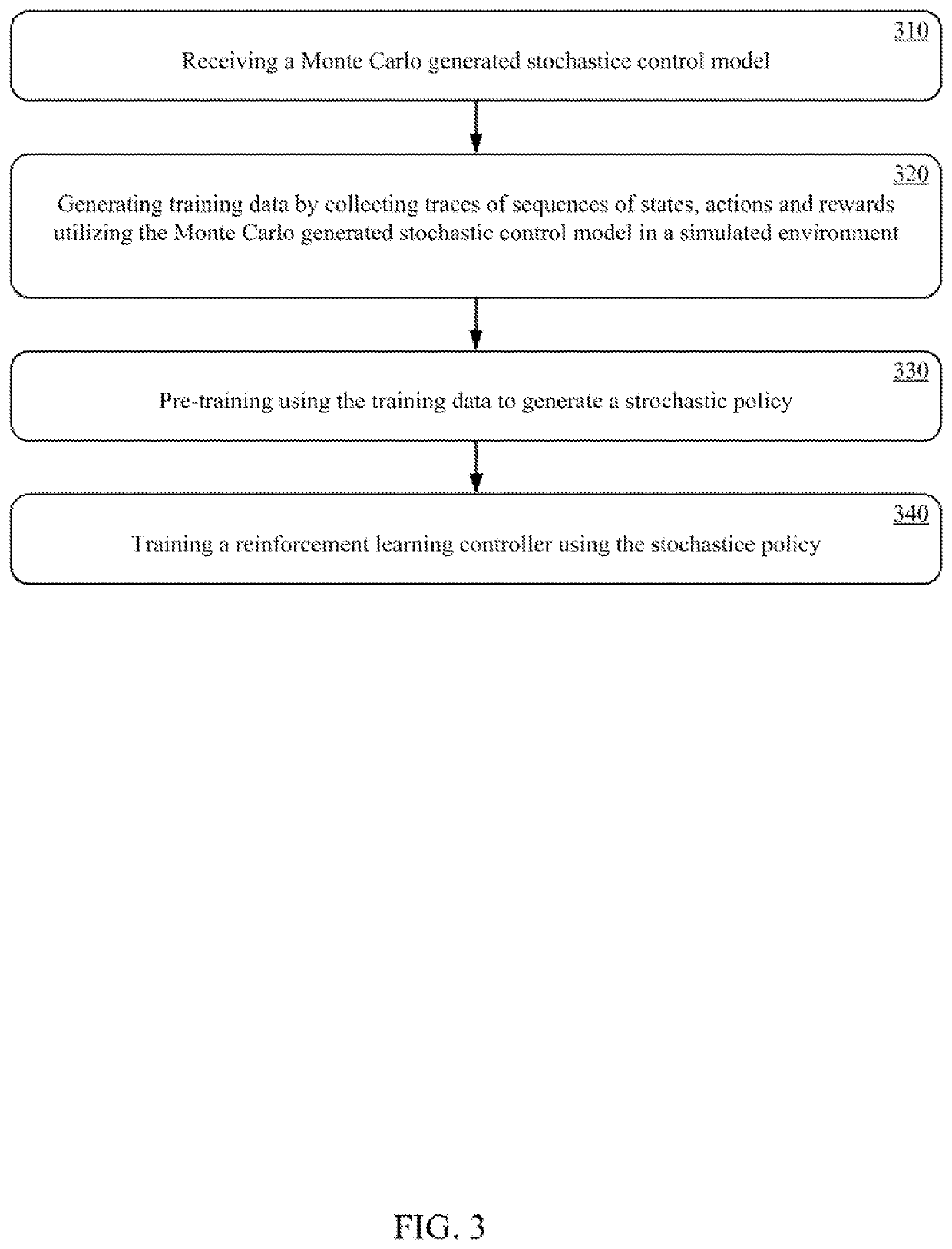

Pre-Training of a Reinforcement Learning Ground Vehicle Controller Using Monte Carlo Simulation

Active · US20190378042A1 · Well formed · Mathematical models · Artificial life · Learning controller · Ground vehicles

Techniques for utilizing a Monte Carlo model to perform pre-training of a ground vehicle controller. A sampled distribution of actions and corresponding states can be utilized to train a reinforcement learning controller policy, learn an action-value function, or select a set of control parameters with a predetermined loss.

Owner:TRAXEN INC

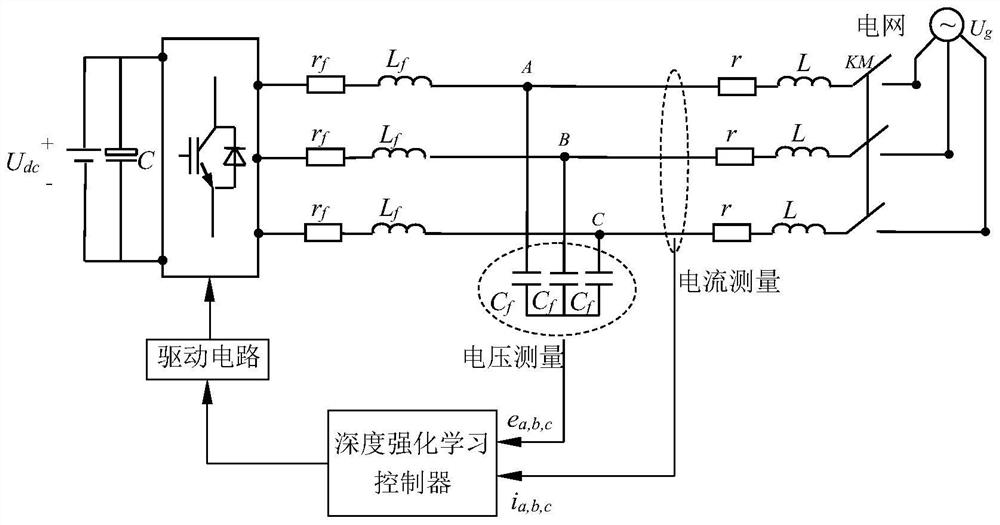

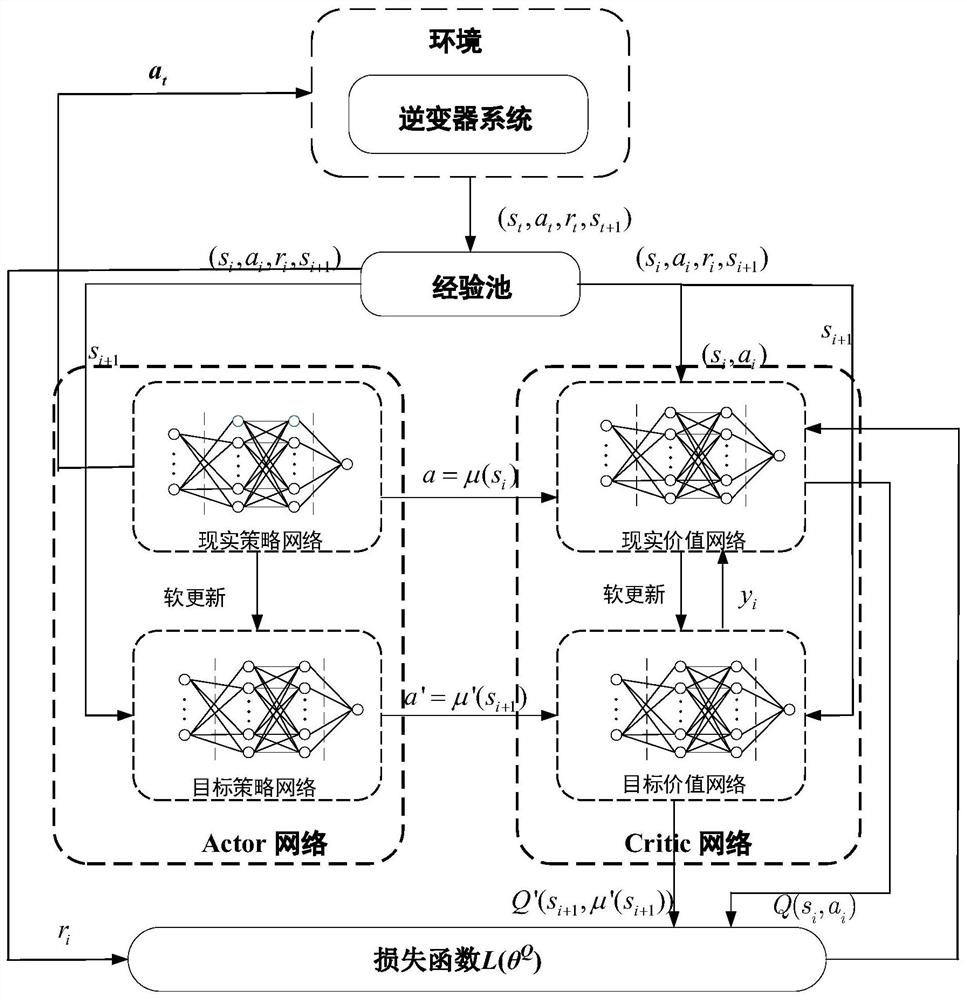

Inverter controller based on deep reinforcement learning

Active · CN112187074A · Flicker reduction in ac network · Single network parallel feeding arrangements · Terminal voltage · Classical mechanics

The invention discloses an inverter controller based on deep reinforcement learning. The inverter controller comprises a dq conversion module, an output active, reactive and terminal voltage effective value calculation module, a modulation wave signal amplitude calculation module, a simulation rotor motion equation module, a deep reinforcement learning control module, and a dq inverse transformation and PWM modulation module, wherein the inverter controller simulates a synchronous generator rotor motion equation, and adjusts the virtual moment of inertia through the deep reinforcement learning control module so as to obtain a good power system low-frequency oscillation suppression effect.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

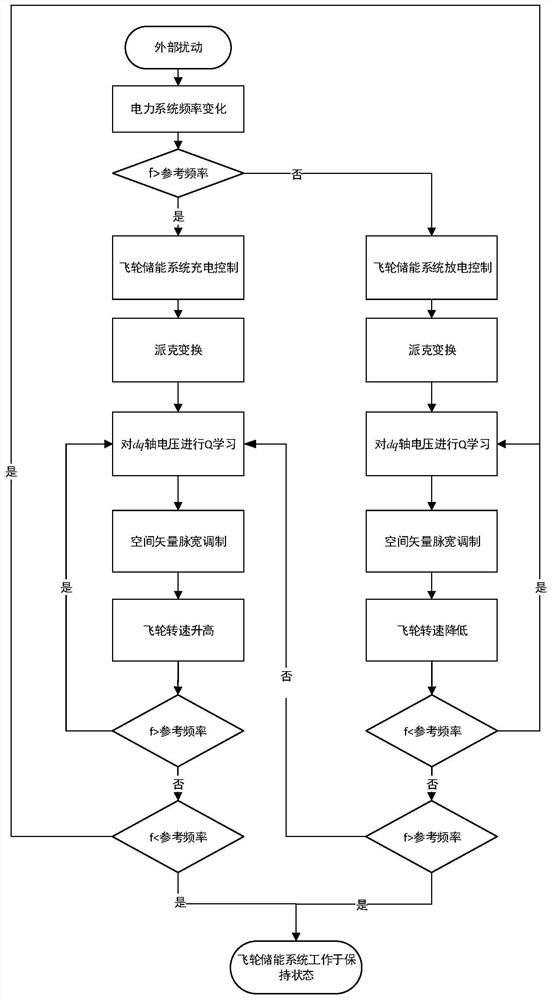

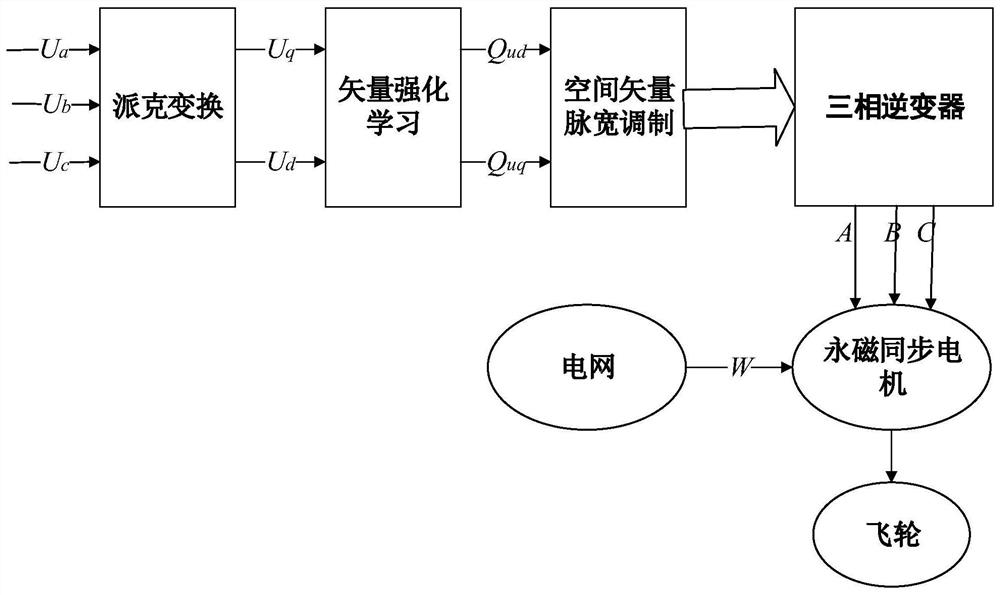

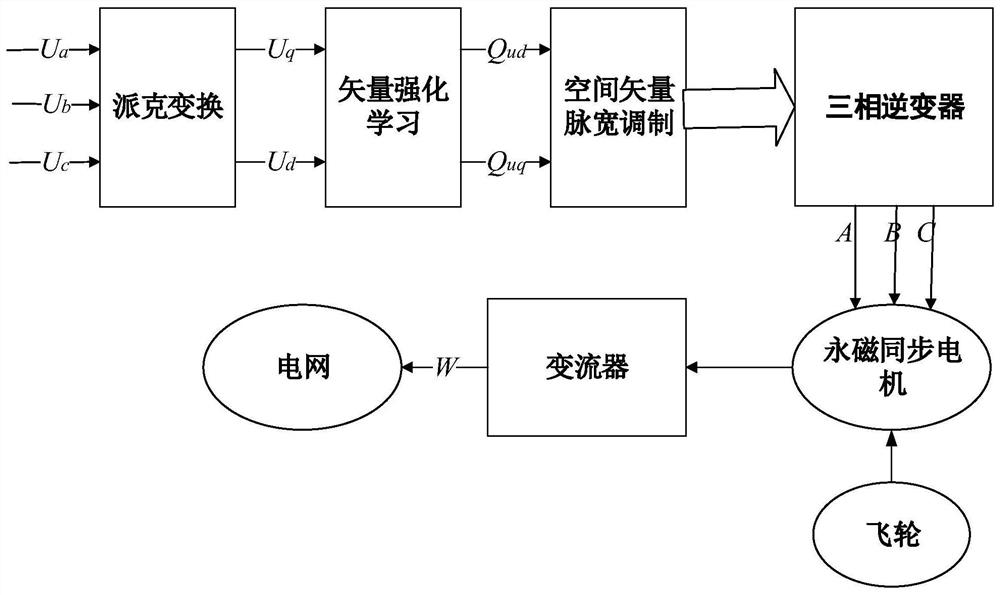

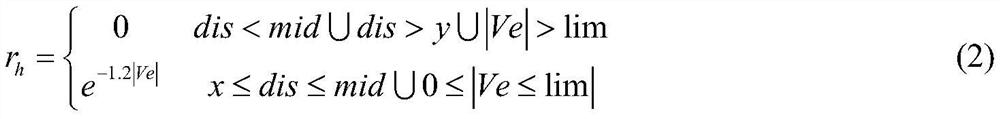

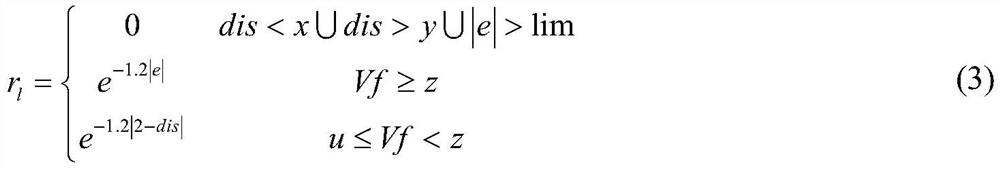

Vector reinforcement learning control method of power grid frequency modulation type flywheel energy storage system

Active · CN112103971A · Overcome the shortcoming of slow FM response · Fast action · Electronic commutation motor control · AC motor control · New energy · Electric power system

The invention provides a vector reinforcement learning control method of a power grid frequency modulation type flywheel energy storage system. The method can overcome the current situation that traditional frequency modulation resources cannot meet frequency modulation requirements due to impact of randomness, volatility and uncertainty of new energy power generation and distributed power generation on a power grid in an existing power system. Frequency modulation of the flywheel energy storage system is combined with vector reinforcement learning, the optimal action of the flywheel energy storage system is selected by performing vector reinforcement learning on voltage, a motor of the system is controlled to work in a generator/motor state to achieve the purpose that the system works in a discharging/charging mode, and therefore the purpose of adjusting the frequency of a power system is achieved. According to the vector reinforcement learning control method of the power grid frequency modulation type flywheel energy storage system, the response speed is much higher than that of a traditional frequency modulation resource, the power grid frequency can be rapidly adjusted, the power grid frequency is kept within the allowable deviation range, the system frequency stability is maintained, and therefore the reliability and safety of power grid operation are guaranteed.

Owner:GUANGXI UNIV

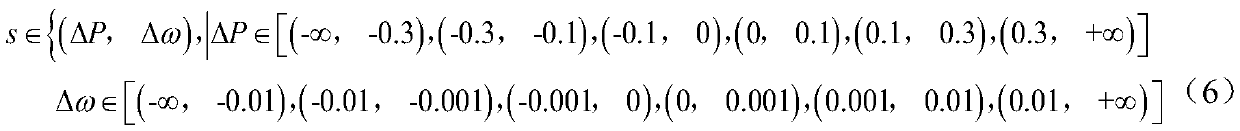

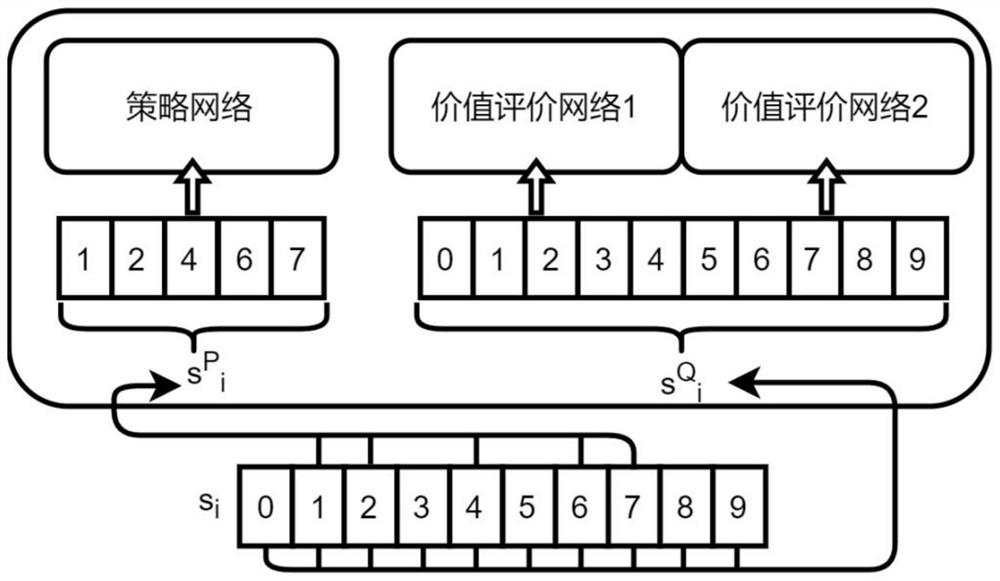

Automobile longitudinal multi-state control method based on deep reinforcement learning priority extraction

Active | CN112861269A | Good control smoothness | Improve control stability | Geometric CAD | Internal combustion piston engines | Traffic crash | Traffic accident

The invention discloses an automobile longitudinal multi-state control method based on deep reinforcement learning priority extraction. The method comprises the following steps: 1, defining a state parameter set s and a control parameter set a for automobile driving; 2, initializing the deep reinforcement learning parameters and constructing a deep neural network; 3, defining a deep reinforcement learning reward function and a priority extraction rule; 4, training the deep neural network to obtain an optimal network model; and 5, obtaining the state parameter s_t of the automobile at time t, inputting s_t into the optimal network model to obtain an output a_t, and having the automobile execute a_t. By combining the priority extraction algorithm with the deep reinforcement learning control method, the method achieves multi-state longitudinal driving of the automobile, which makes driving safer and reduces traffic accidents.

Owner:HEFEI UNIV OF TECH
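
The abstract above does not define its priority extraction rule. One plausible reading, assumed here purely for illustration, is proportional prioritized experience replay, where transitions with a larger temporal-difference error are sampled more often during training. The class name, the `alpha` exponent, and the small offset are hypothetical choices, not details from the patent.

```python
import random


class PrioritizedBuffer:
    """Toy replay buffer that samples transitions in proportion to priority."""

    def __init__(self, capacity, alpha=0.6):
        self.capacity = capacity
        self.alpha = alpha          # 0 gives uniform sampling, 1 gives fully proportional
        self.items = []
        self.priorities = []

    def add(self, transition, td_error):
        # Drop the oldest transition once the buffer is full.
        if len(self.items) >= self.capacity:
            self.items.pop(0)
            self.priorities.pop(0)
        self.items.append(transition)
        # The small offset keeps zero-error transitions sampleable.
        self.priorities.append((abs(td_error) + 1e-6) ** self.alpha)

    def sample(self, batch_size, rng=random):
        # random.choices draws with replacement, weighted by priority.
        return rng.choices(self.items, weights=self.priorities, k=batch_size)


if __name__ == "__main__":
    buf = PrioritizedBuffer(capacity=100)
    buf.add(("s0", "a0", 0.0, "s1"), td_error=0.0)
    buf.add(("s1", "a1", 1.0, "s2"), td_error=10.0)
    print(buf.sample(5, rng=random.Random(0)))
```

A full implementation would usually also apply importance-sampling weights to correct the bias that non-uniform sampling introduces; that part is omitted to keep the sketch short.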

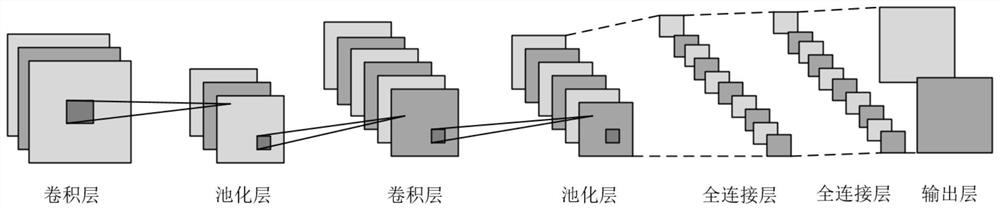

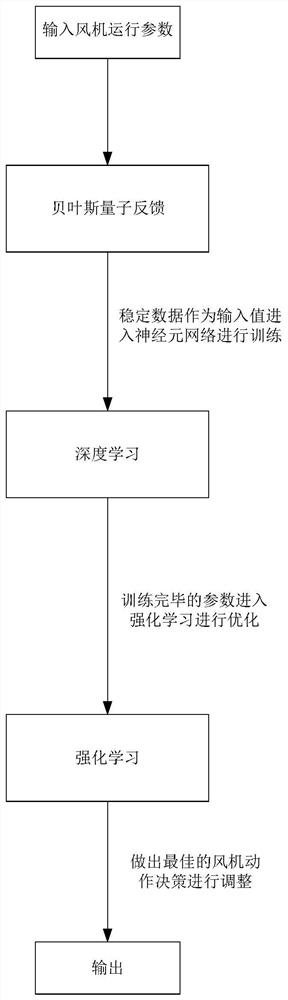

Quantum deep reinforcement learning control method of doubly-fed wind generator

Active | CN112202196A | Single network parallel feeding arrangements | Character and pattern recognition | Algorithm | Simulation

The invention provides a quantum deep reinforcement learning control method for a doubly-fed wind generator. The method addresses the control problem posed by stator flux linkage changes in the doubly-fed wind generator after a grid fault is cleared and when the grid voltage rises suddenly and asymmetrically. It combines Bayesian quantum feedback control, deep learning, and reinforcement learning. The Bayesian quantum feedback control process is divided into two steps, state estimation and feedback control, and the feedback input consists of historical and current measurement records. Bayesian quantum feedback can effectively control decoherence in solid-state quantum bits. The deep learning part adopts a convolutional neural network model and the back propagation method. In the reinforcement learning part, Q-learning based on a Markov decision process serves as the control framework of the whole method. The method effectively improves the control stability of the doubly-fed wind generator and improves wind energy utilization efficiency.

Owner:GUANGXI UNIV
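
To make the Q-learning part of the abstract above concrete, here is a minimal tabular sketch of the standard update, Q(s, a) ← Q(s, a) + α [r + γ max Q(s', a') − Q(s, a)]. It is not the patented method: the convolutional network and the Bayesian quantum feedback stage are not modelled, and the state and action labels are placeholders.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value."""
    # Greedy estimate of the value of the next state.
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


if __name__ == "__main__":
    q = defaultdict(float)            # unseen (state, action) pairs start at 0.0
    actions = ["raise", "lower"]      # placeholder action labels
    print(q_update(q, "s0", "raise", 1.0, "s1", actions))
```

With a neural network in place of the table, the same temporal-difference target is typically used as the regression target for the network's output.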

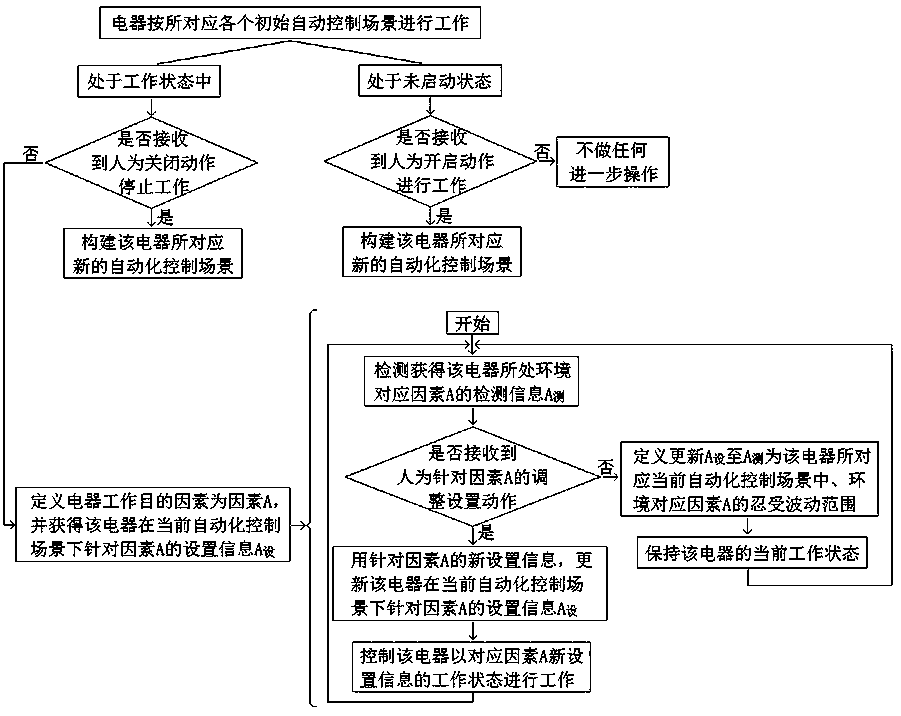

Electronic and electrical appliance control method and control equipment based on reinforcement learning, and storage medium

Active | CN111338227A | Improve efficiency | Efficient use effect | Computer control | Machine learning | Automatic control | Simulation

The invention relates to an electronic appliance control method based on reinforcement learning. For an electronic appliance with an automatic scene control function, a reinforcement learning control policy is applied: the user's manual interventions on the electronic and electrical equipment are continuously acquired as decision input for reinforcement learning, and a scene algorithm model that adapts automatic control of the equipment to the user's different scenes is dynamically generated. This yields the scene algorithm model of the appliance's automatic working mode that is closest to the user's usage habits, which improves the use efficiency of the appliance. The design method applies to various electronic appliances, so that the self-learning mode of automatic scene control can be optimized and updated across all electronic appliances, providing a better automatic scene control method for smart homes and smart offices.

Owner:南京三满互联网络科技有限公司

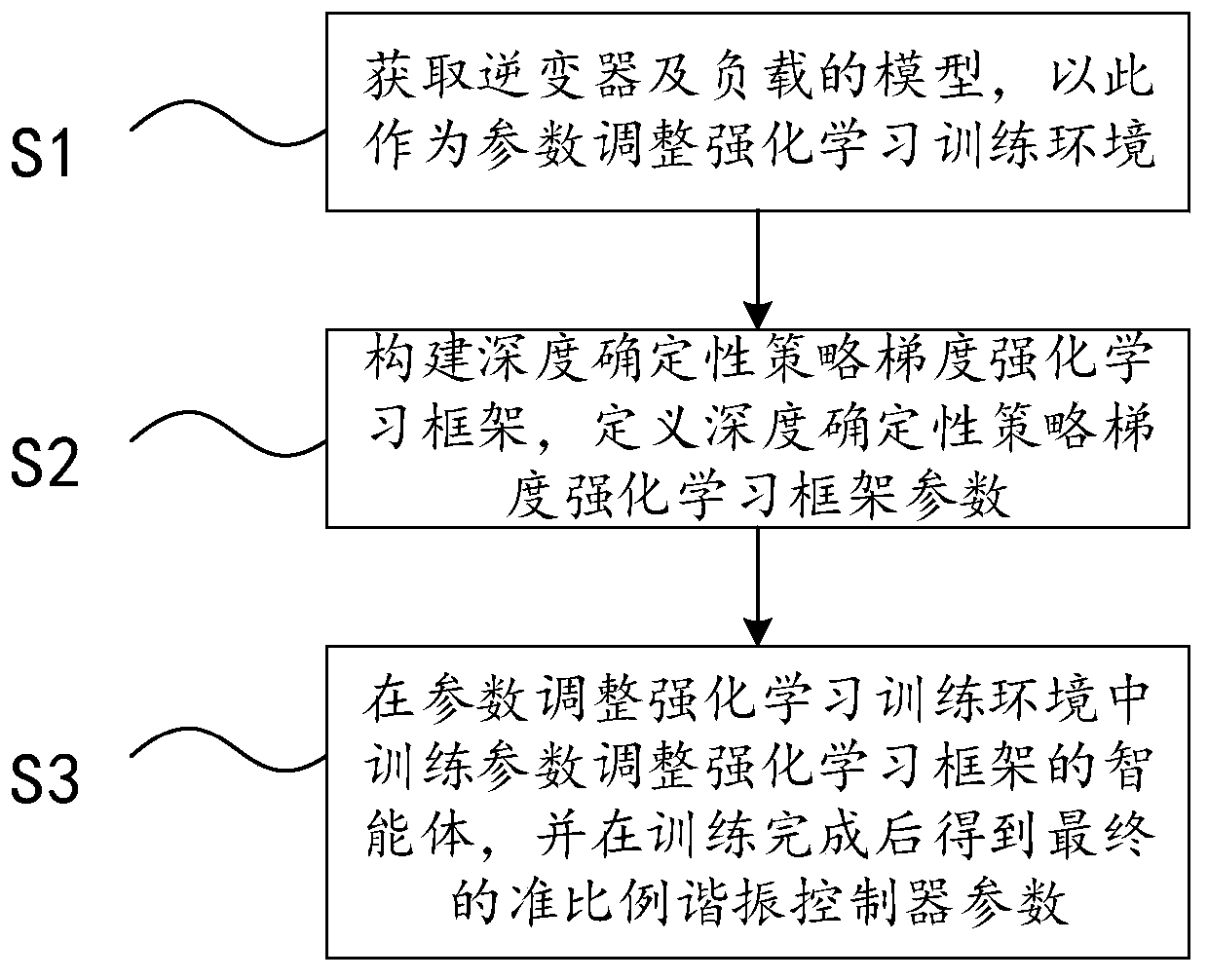

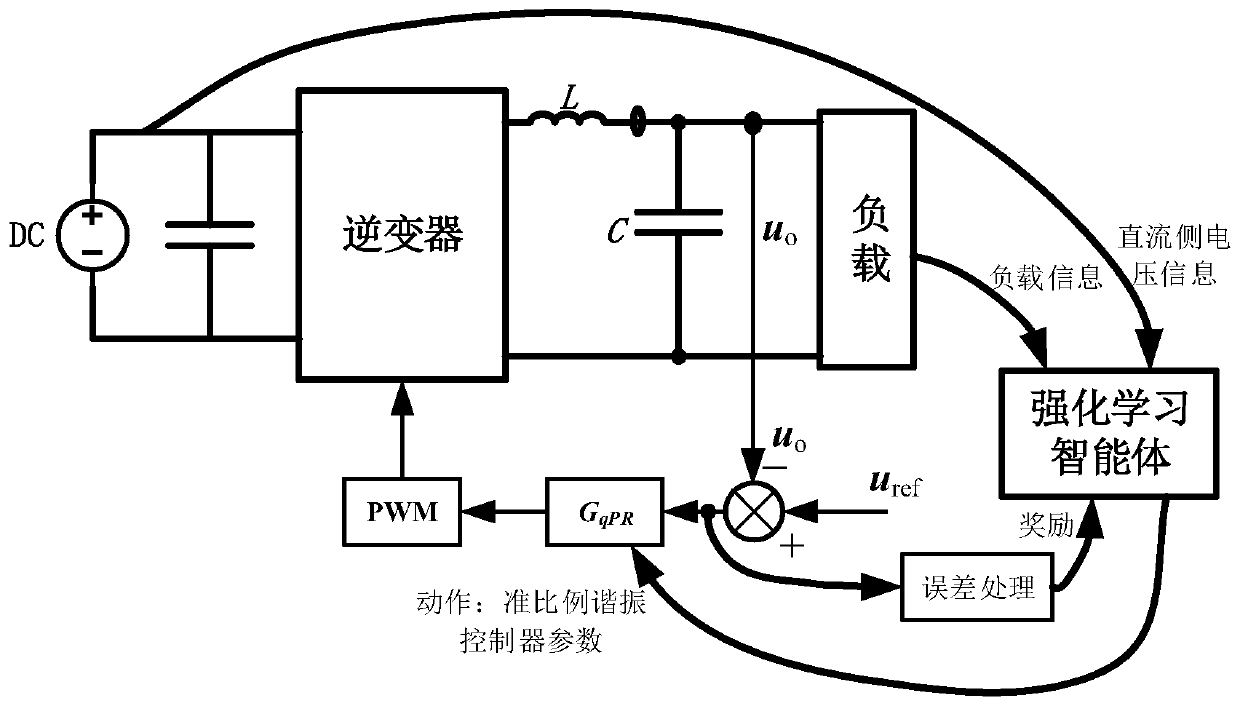

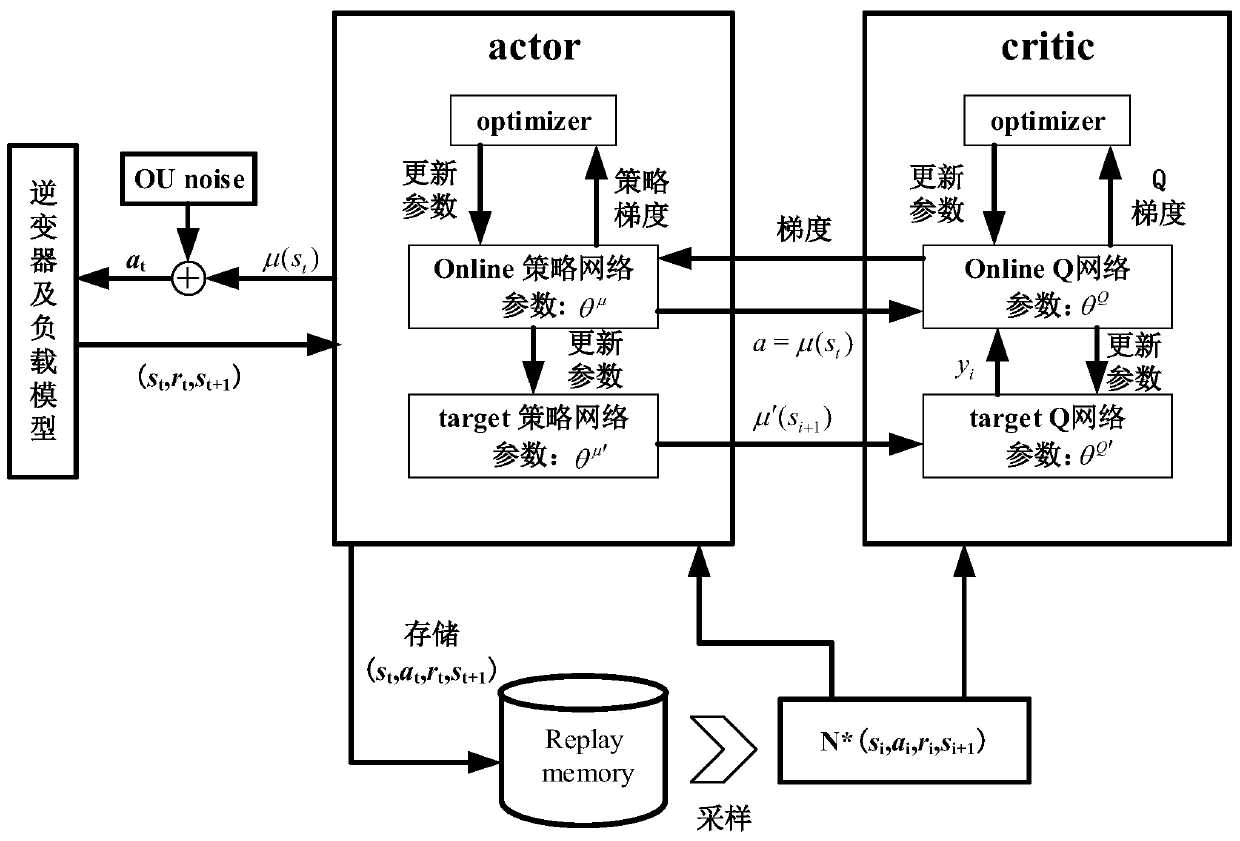

Quasi-proportional resonance controller parameter adjusting method and system

Pending | CN111008708A | Adaptable | Improve robustness | Design optimisation/simulation | Machine learning | Mathematical model | Self adaptive

The invention discloses a parameter adjustment method for a quasi-proportional resonance controller. The method comprises the following steps: obtaining models of an inverter and a load, and setting up a parameter-adjustment reinforcement learning training environment with the models as parameters; constructing a deep deterministic policy gradient (DDPG) reinforcement learning framework and defining its parameters, which comprise a state, an action, and a reward value; and training the agent of the parameter-adjustment reinforcement learning framework in the parameter-adjustment reinforcement learning training environment. The method sets the control parameters of multiple parallel quasi-proportional resonance controllers by reinforcement learning. Because the reinforcement learning control algorithm is insensitive to the mathematical model and operating state of the controlled object, and its self-learning capability gives it strong adaptability and robustness to parameter changes and external interference, the control requirement can be met when multiple quasi-proportional resonance controllers are connected in parallel, and the control effect is maintained when the load changes.

Owner:GUANGDONG POWER GRID CO LTD +1
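
The abstract above does not say which controller parameters the agent tunes. For orientation, the sketch below evaluates a commonly used quasi-proportional resonance transfer function, G(s) = Kp + 2·Kr·ωc·s / (s² + 2·ωc·s + ω0²), along the frequency axis. Treating `kp`, `kr`, and `wc` as the quantities a DDPG action might set is an assumption, as are the 50 Hz fundamental and the example gains.

```python
import math


def quasi_pr_response(freq_hz, kp, kr, wc, f0_hz=50.0):
    """Complex gain of an assumed quasi-PR controller at the given frequency."""
    w = 2 * math.pi * freq_hz         # evaluation frequency in rad/s
    w0 = 2 * math.pi * f0_hz          # resonant frequency in rad/s
    s = complex(0.0, w)               # s = j*w on the imaginary axis
    return kp + (2 * kr * wc * s) / (s * s + 2 * wc * s + w0 * w0)


if __name__ == "__main__":
    for f in (50.0, 150.0):
        g = quasi_pr_response(f, kp=1.0, kr=100.0, wc=5.0)
        print(f"{f} Hz: |G| = {abs(g):.2f}")
```

At the resonant frequency the gain of this form reduces to Kp + Kr, while the cutoff term ωc widens the resonance peak, which is why these three values are natural candidates for automatic tuning.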

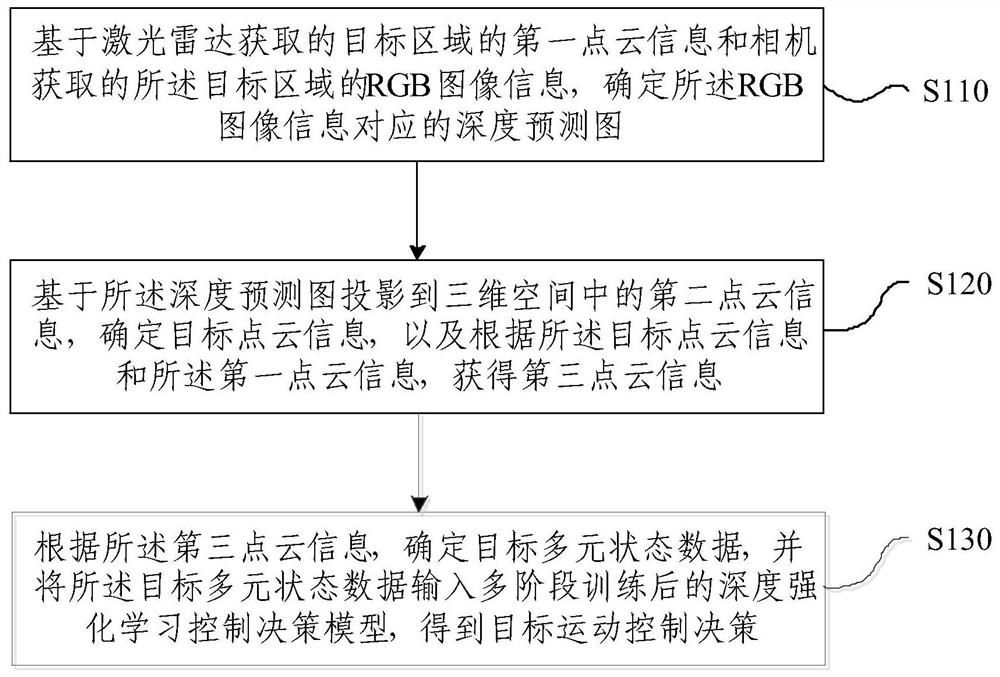

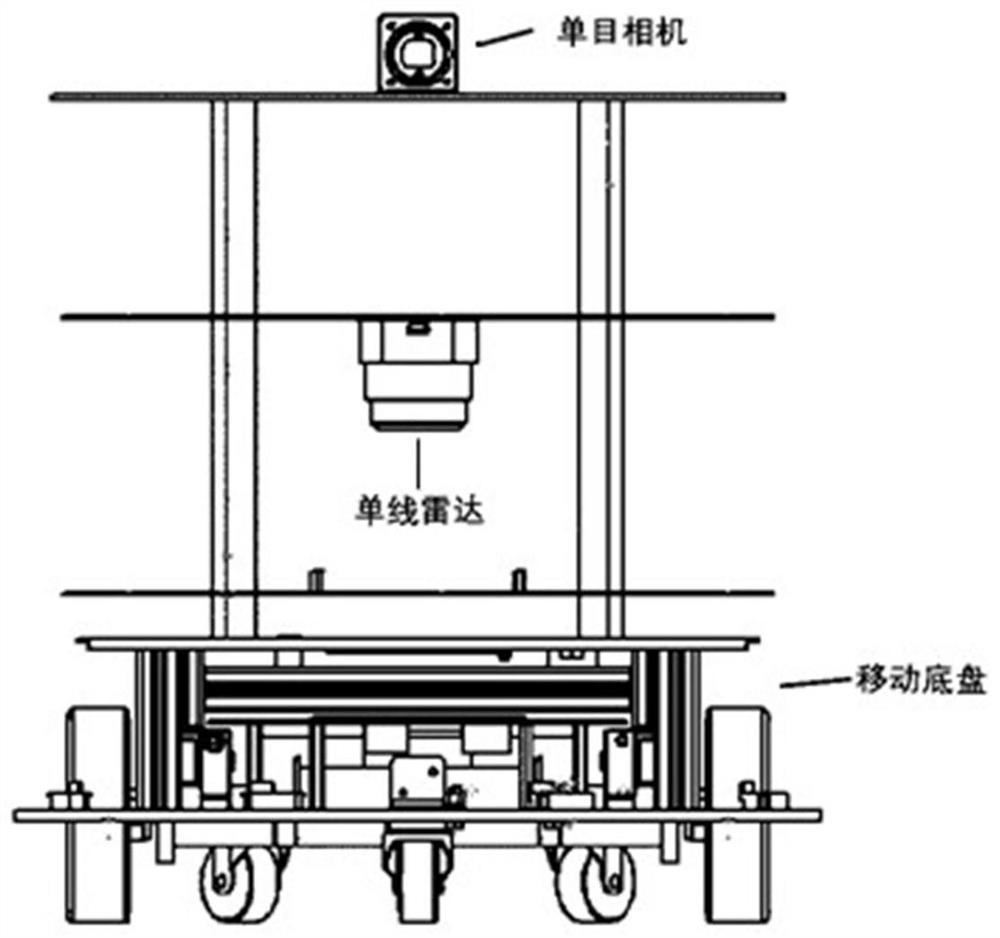

Motion control decision generation method and device, electronic equipment and storage medium

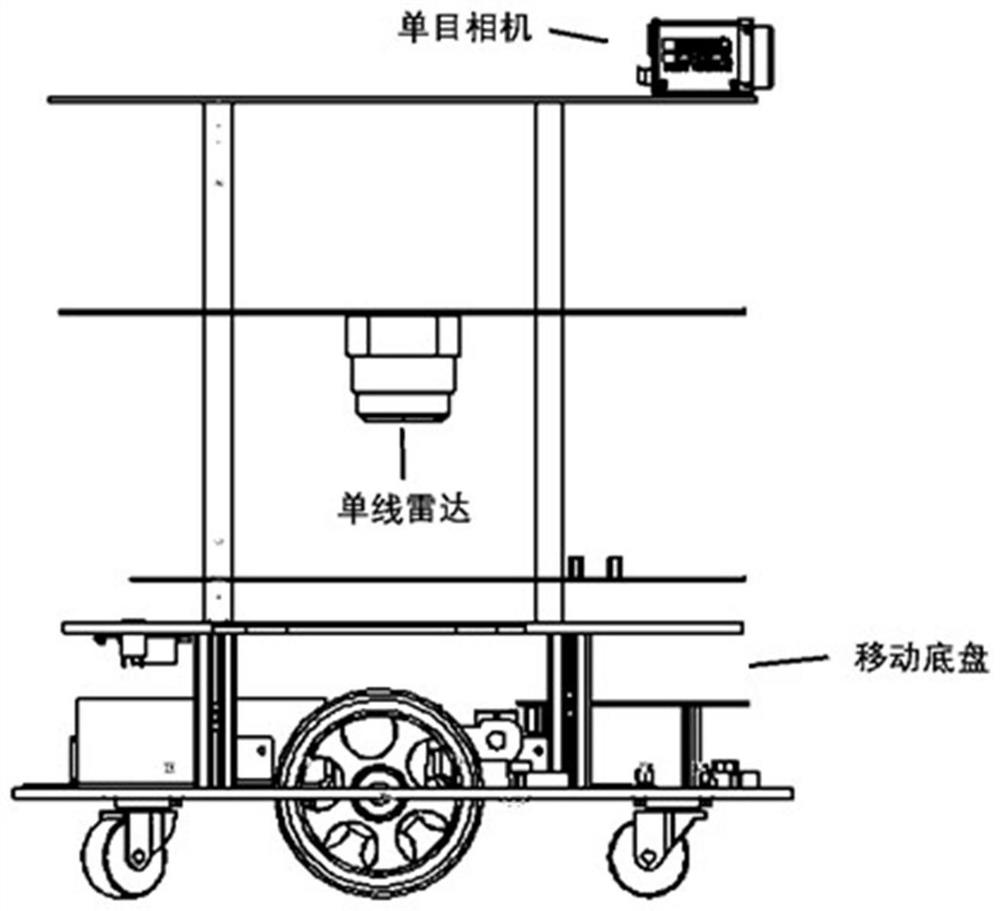

Pending | CN113593035A | Overcome plane limitations | Improve practicality | Image enhancement | Image analysis | Decision model | RGB image

The invention provides a motion control decision generation method and device, electronic equipment, and a storage medium. The method comprises: determining a depth prediction map corresponding to RGB image information, based on first point cloud information of a target region obtained by a lidar and the RGB image information of the target region obtained by a camera; determining target point cloud information based on second point cloud information obtained by projecting the depth prediction map into three-dimensional space, and obtaining third point cloud information from the target point cloud information and the first point cloud information; and determining target multivariate state data from the third point cloud information, and inputting the target multivariate state data into a deep reinforcement learning control decision model that has undergone multi-stage training to obtain a target motion control decision. The method effectively overcomes the planar limitation of radar detection, obtains the target motion control decision efficiently, and achieves better obstacle avoidance while moving.

Owner:北京进睿科技有限公司
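
The step of projecting the depth prediction map into three-dimensional space can be sketched with a pinhole camera model. This is an illustration under stated assumptions, not the patent's procedure: the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical, the depth map is a plain nested list, and simple concatenation stands in for however the method actually combines the projected points with the lidar points.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of metres) into a list of (x, y, z) points."""
    points = []
    for v, row in enumerate(depth):          # v is the pixel row
        for u, z in enumerate(row):          # u is the pixel column
            if z <= 0:
                continue                     # skip pixels with no valid depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


def merge_clouds(lidar_points, projected_points):
    """Assumed fusion step: concatenate the two point sets."""
    return list(lidar_points) + list(projected_points)


if __name__ == "__main__":
    depth = [[2.0, 2.0], [0.0, 2.0]]
    cloud = depth_to_points(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
    print(merge_clouds([(0.0, 0.0, 1.0)], cloud))
```

Because the camera supplies depth over its whole field of view, points recovered this way can lie above or below the scanning plane of a planar lidar, which matches the limitation the abstract says the method overcomes.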

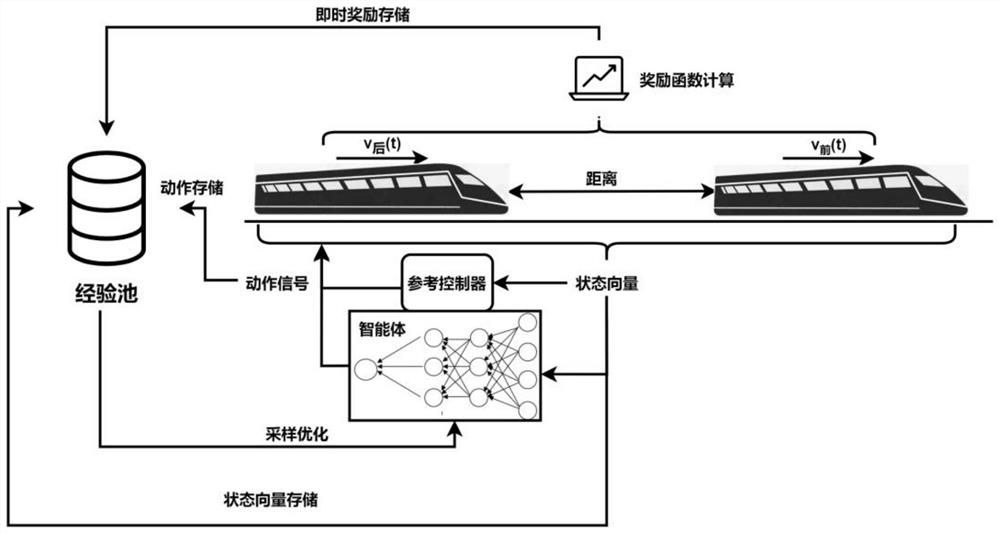

Train cooperative operation control method based on reference deep reinforcement learning

Active | CN114880770A | Guaranteed positioning error | Guaranteed control latency | Geometric CAD | Internal combustion piston engines | Control signal | Dimensionality reduction

The invention discloses a train cooperative operation control method based on reference deep reinforcement learning. The method comprises: building a train cooperative operation simulation environment, setting parameters such as the train safety distance, and calculating the estimated shortest real-time distance between two trains; setting a reward function and establishing a reinforcement learning controller with input dimensionality reduction; adding a reference controller, so that when a train meets the reference control strategy condition, the reference control signal replaces the reinforcement learning control signal, and the resulting data is used to optimize the reinforcement learning control strategy; training the network until its global reward is optimal and the control result meets expectations, at which point preliminary training is considered complete; and loading the reference control strategy and the reinforcement learning control strategy onto the real train, and outputting train control signals according to real train information to complete cooperative operation control. The method speeds up training of the optimal strategy and ensures the robustness of the control strategy in actual operation.

Owner:SOUTHWEST JIAOTONG UNIV
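
The reference-controller override described in the abstract above can be sketched as a small selection function. The abstract does not state the reference control strategy condition, so the check used here (the gap to the train ahead falling below a safe gap) is an assumption, as are all names and the toy policies.

```python
def control_step(gap_m, safe_gap_m, rl_policy, reference_policy, replay):
    """Pick a control signal and log the transition for later policy updates."""
    state = (gap_m,)
    if gap_m < safe_gap_m:
        # Assumed condition: too close, so the reference signal replaces the RL signal.
        action, source = reference_policy(state), "reference"
    else:
        action, source = rl_policy(state), "rl"
    # Reference-driven samples are stored too, so they can guide the RL policy.
    replay.append((state, action, source))
    return action


if __name__ == "__main__":
    replay = []
    brake = lambda state: -1.0        # toy reference policy: full braking
    cruise = lambda state: 0.3        # toy stand-in for the learned policy
    print(control_step(80.0, 100.0, cruise, brake, replay))
    print(control_step(250.0, 100.0, cruise, brake, replay))
    print(replay)
```

Logging which controller produced each action keeps the two data sources distinguishable when the stored transitions are later used to update the learned policy.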

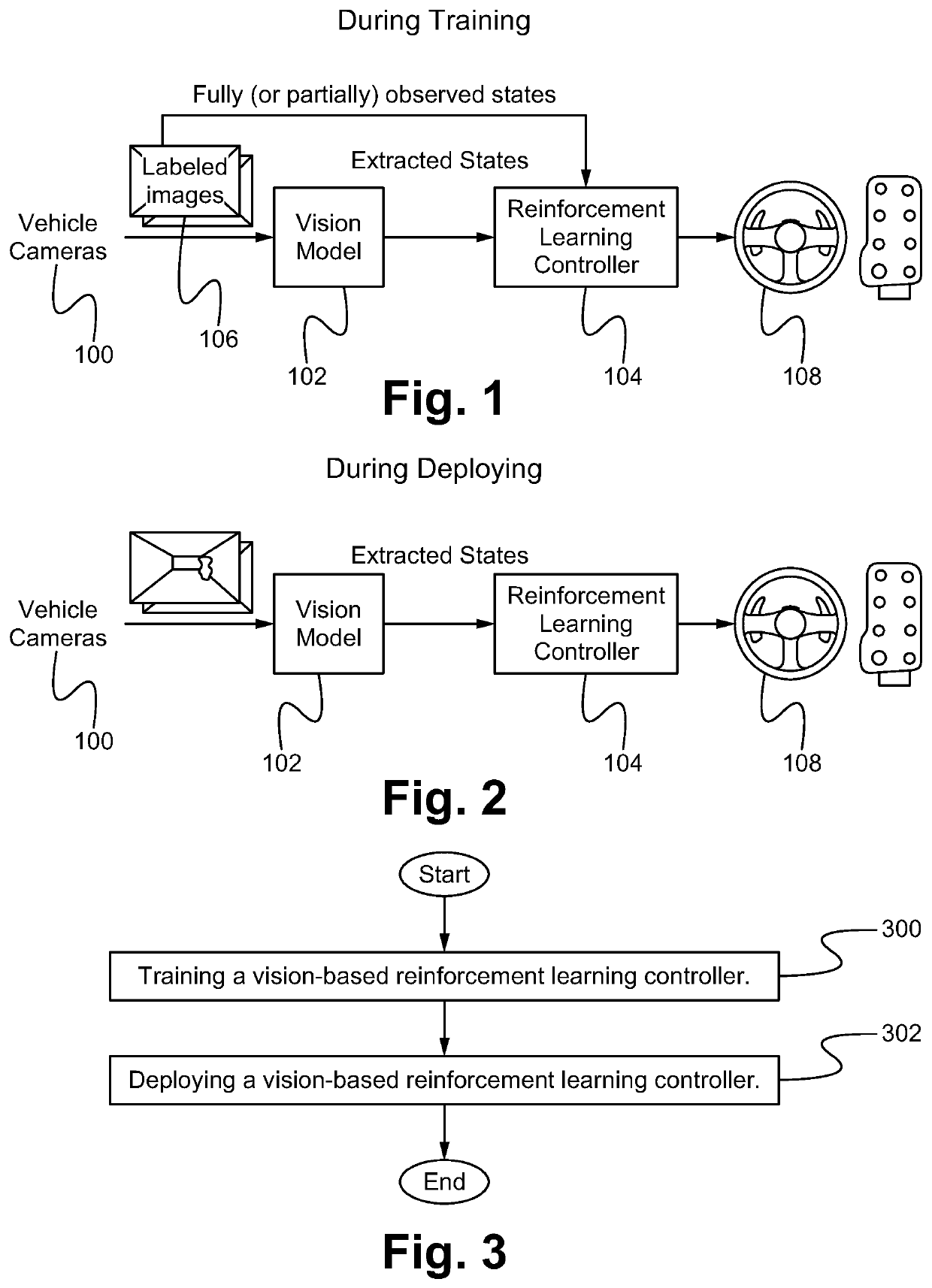

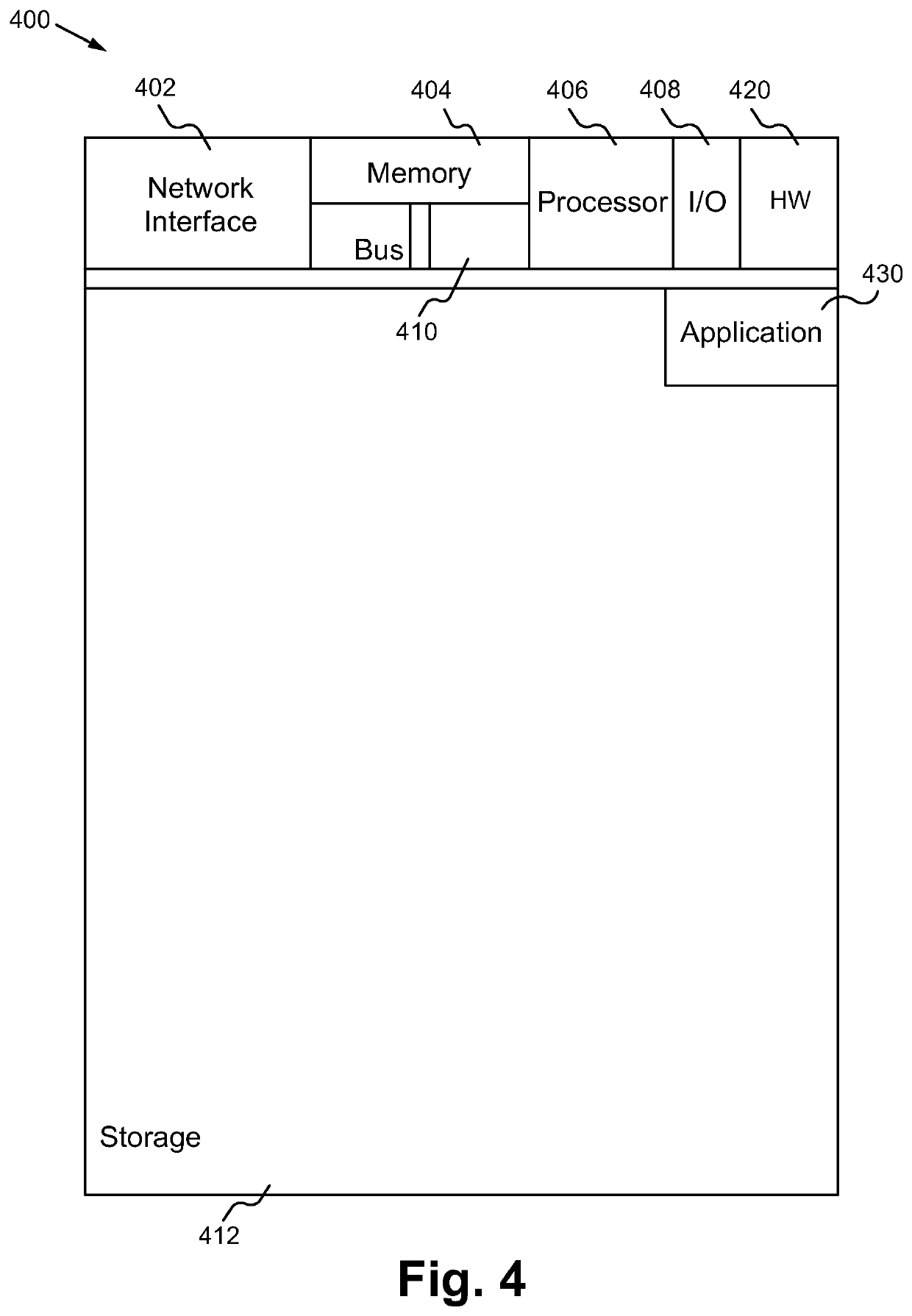

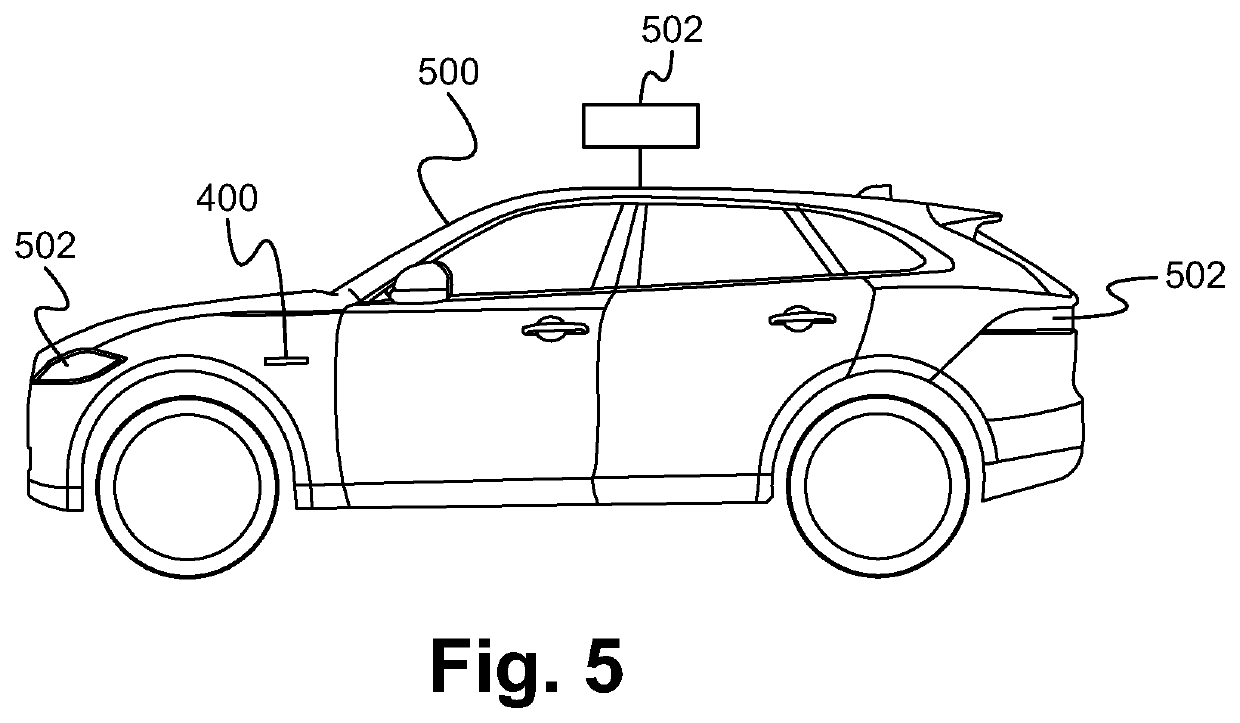

Vision-based sample-efficient reinforcement learning framework for autonomous driving

A framework combines vision and sample-efficient reinforcement-learning based on guided policy search for autonomous driving. A controller extracts environmental information from vision and is trained to drive using reinforcement learning.

Owner:SONY CORP


© 2025 PatSnap. All rights reserved.