Neural network reinforcement learning control method of autonomous underwater robot

The invention combines underwater robot and neural network technology and is applied in the field of neural network reinforcement learning control of autonomous underwater robots. It addresses problems such as poor adaptability to environmental changes, long time consumption, and difficult identification, and achieves the effect of improving the controller.

Examples

Embodiment 1

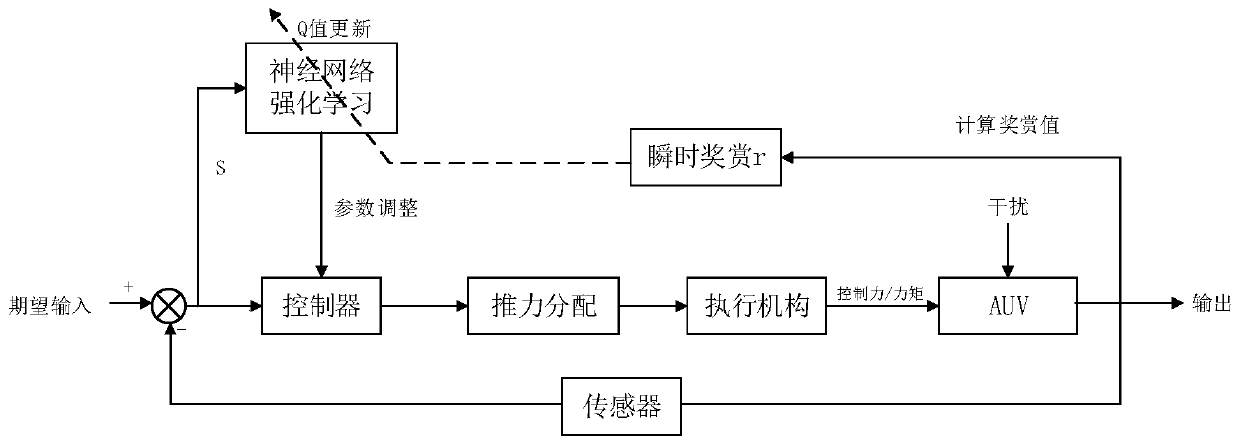

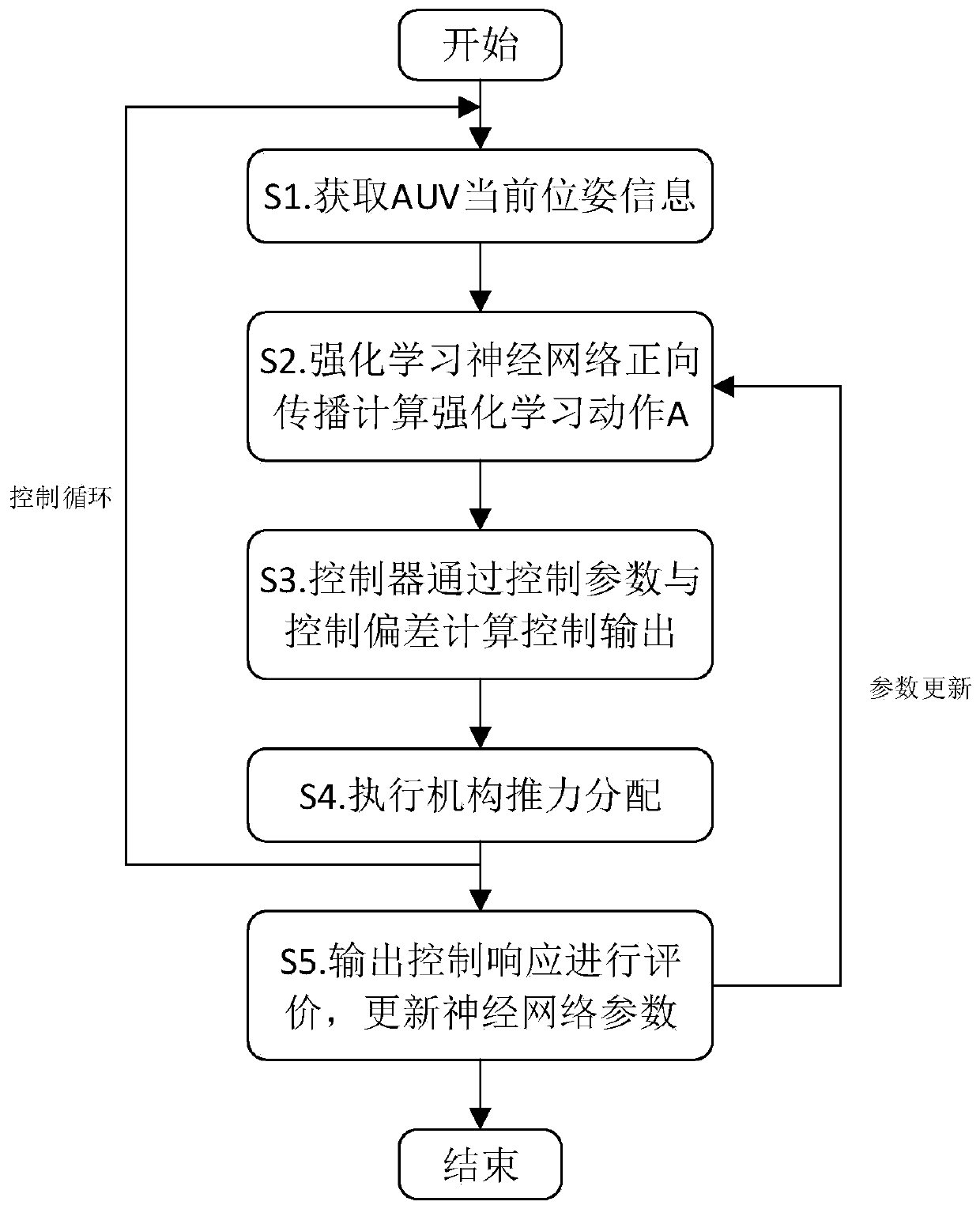

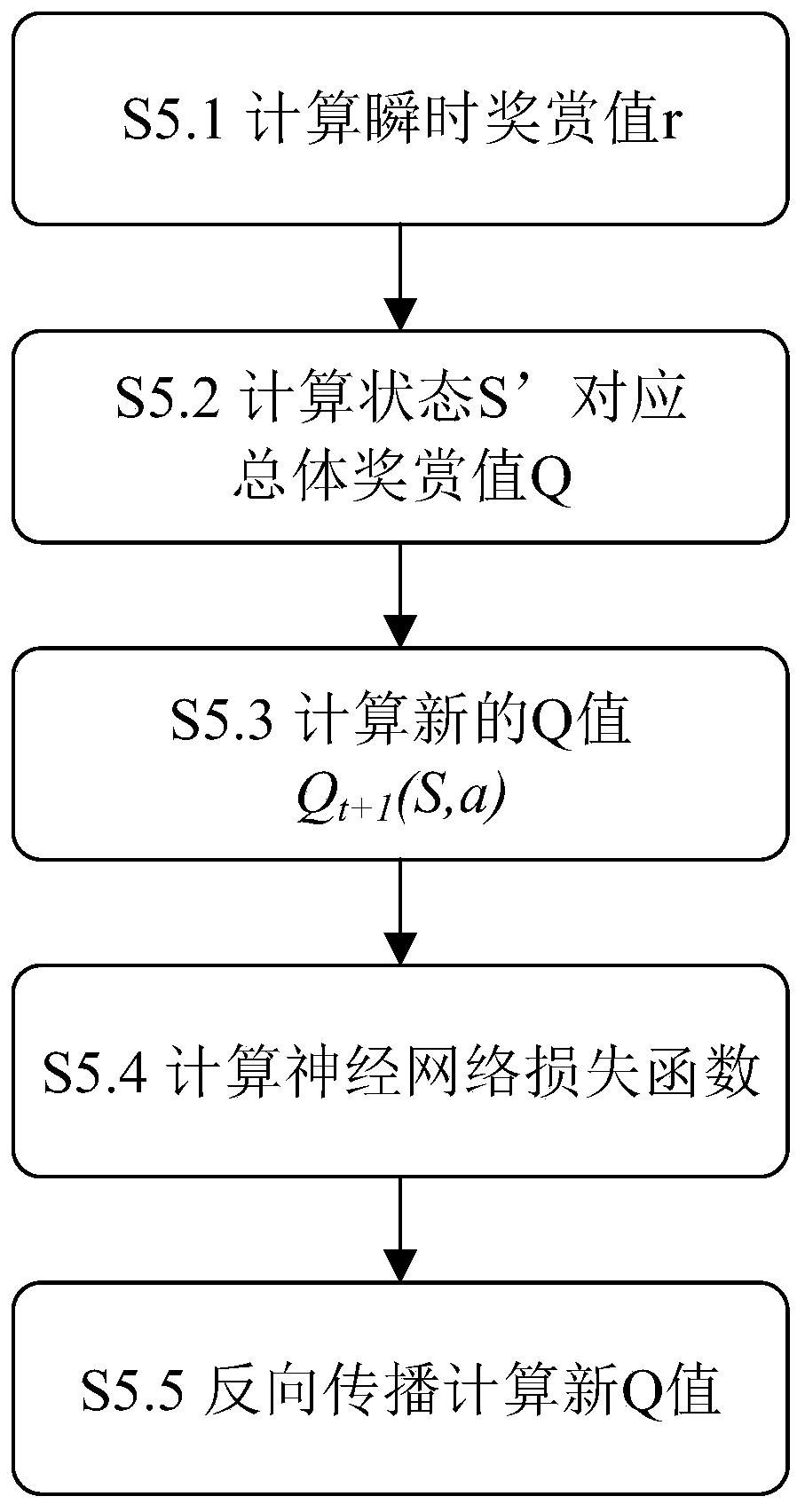

[0055] With reference to Figures 1 to 3, this embodiment takes the combined speed and heading control of an underactuated AUV as an example to illustrate the basic principle of the neural network reinforcement learning controller. The basic idea of reinforcement learning is to solve sequential decision-making problems by establishing a mapping between state-action behavior and the environment. A sequential decision-making problem is usually defined as a Markov decision process (MDP) quadruple E={S,A,P,R}, whose basic model is shown in Figure 4. Here E represents the environment of the Agent, S is the state space of the Agent, A is the action space corresponding to the states of the Agent, P is the state transition probability of the Agent, and R is the immediate reward value for the action taken in a state. The core idea is to find a strategy for the Agent, that is, an action sequence, so that the value of the d...
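The quadruple E={S,A,P,R} described above can be illustrated with a minimal Python sketch. This is not the patent's controller: the two-state "heading" problem, the state and action names, the probabilities, and the reward values are all hypothetical and were chosen only to show how S, A, P, and R fit together and how an Agent following a fixed strategy interacts with the environment.

```python
import random
from dataclasses import dataclass


@dataclass
class MDP:
    """Container for the quadruple E = {S, A, P, R} (illustrative only)."""
    states: list       # S: state space
    actions: list      # A: action space
    transitions: dict  # P[(s, a)] -> {next_state: probability}
    rewards: dict      # R[(s, a)] -> immediate reward

    def step(self, state, action, rng):
        """Sample the next state from P and return it with the reward from R."""
        dist = self.transitions[(state, action)]
        next_state = rng.choices(list(dist.keys()),
                                 weights=list(dist.values()), k=1)[0]
        return next_state, self.rewards[(state, action)]


def rollout(mdp, policy, start, steps, rng):
    """Follow a fixed state -> action policy and record (s, a, r) tuples."""
    state, trajectory = start, []
    for _ in range(steps):
        action = policy[state]
        next_state, reward = mdp.step(state, action, rng)
        trajectory.append((state, action, reward))
        state = next_state
    return trajectory


# Hypothetical toy problem: the vehicle is either on or off its desired heading.
toy = MDP(
    states=["off_course", "on_course"],
    actions=["hold", "turn"],
    transitions={
        ("off_course", "turn"): {"on_course": 0.8, "off_course": 0.2},
        ("off_course", "hold"): {"off_course": 1.0},
        ("on_course", "hold"): {"on_course": 0.9, "off_course": 0.1},
        ("on_course", "turn"): {"off_course": 1.0},
    },
    rewards={
        ("off_course", "turn"): -1.0,
        ("off_course", "hold"): -2.0,
        ("on_course", "hold"): 1.0,
        ("on_course", "turn"): -1.0,
    },
)

# A hand-written strategy: turn when off course, hold when on course.
policy = {"off_course": "turn", "on_course": "hold"}
trajectory = rollout(toy, policy, "off_course", 10, random.Random(0))
```

Each entry of `trajectory` records one state, the action the strategy chose in it, and the immediate reward received. A learning controller would adjust `policy` from such experience rather than keeping it fixed as this sketch does.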
