1194 results about "Reinforcement learning algorithm" patented technology

Reinforcement learning refers to goal-oriented algorithms, which learn how to attain a complex objective (goal) or maximize along a particular dimension over many steps; for example, maximize the points won in a game over many moves. They can start from a blank slate,...

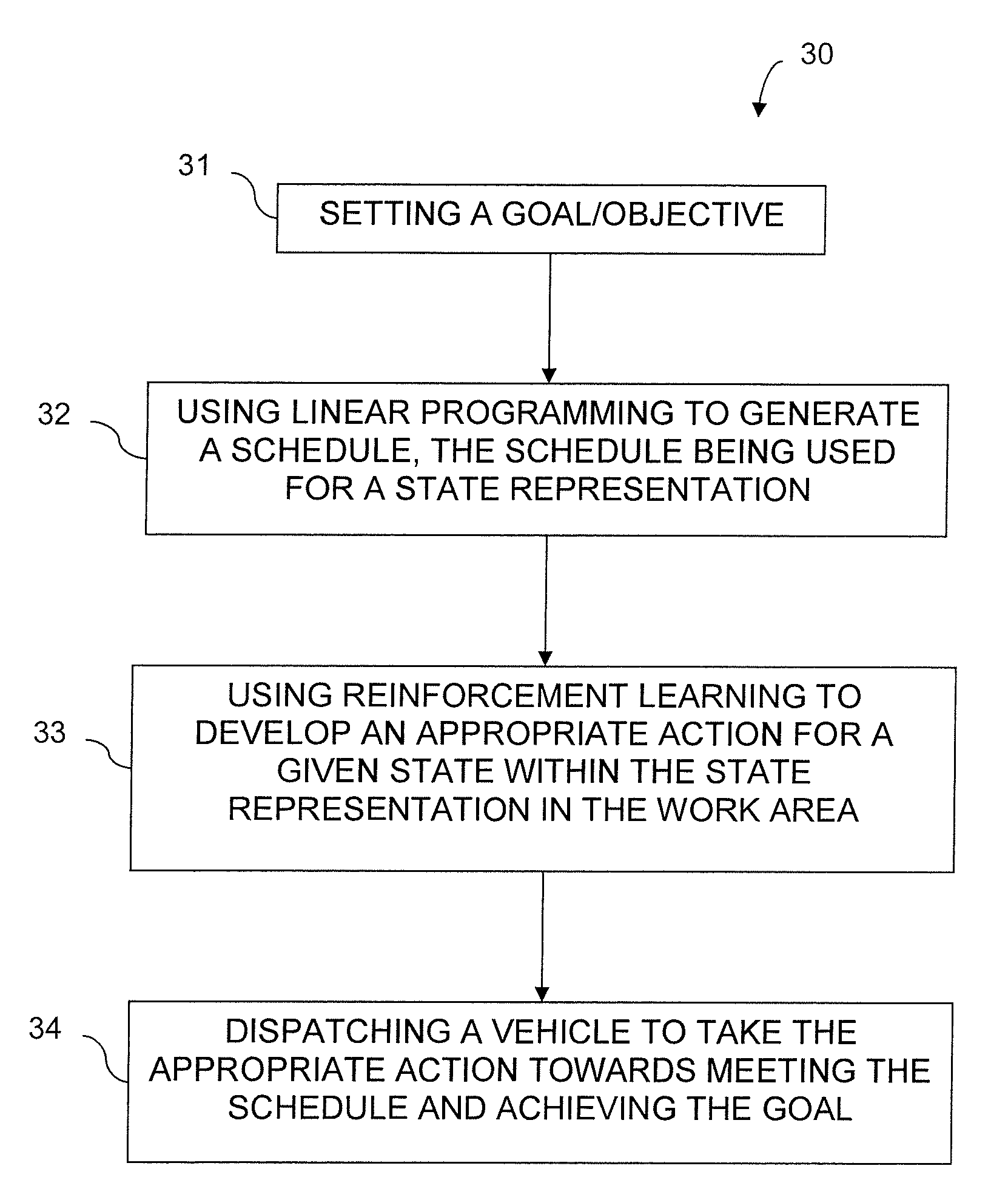

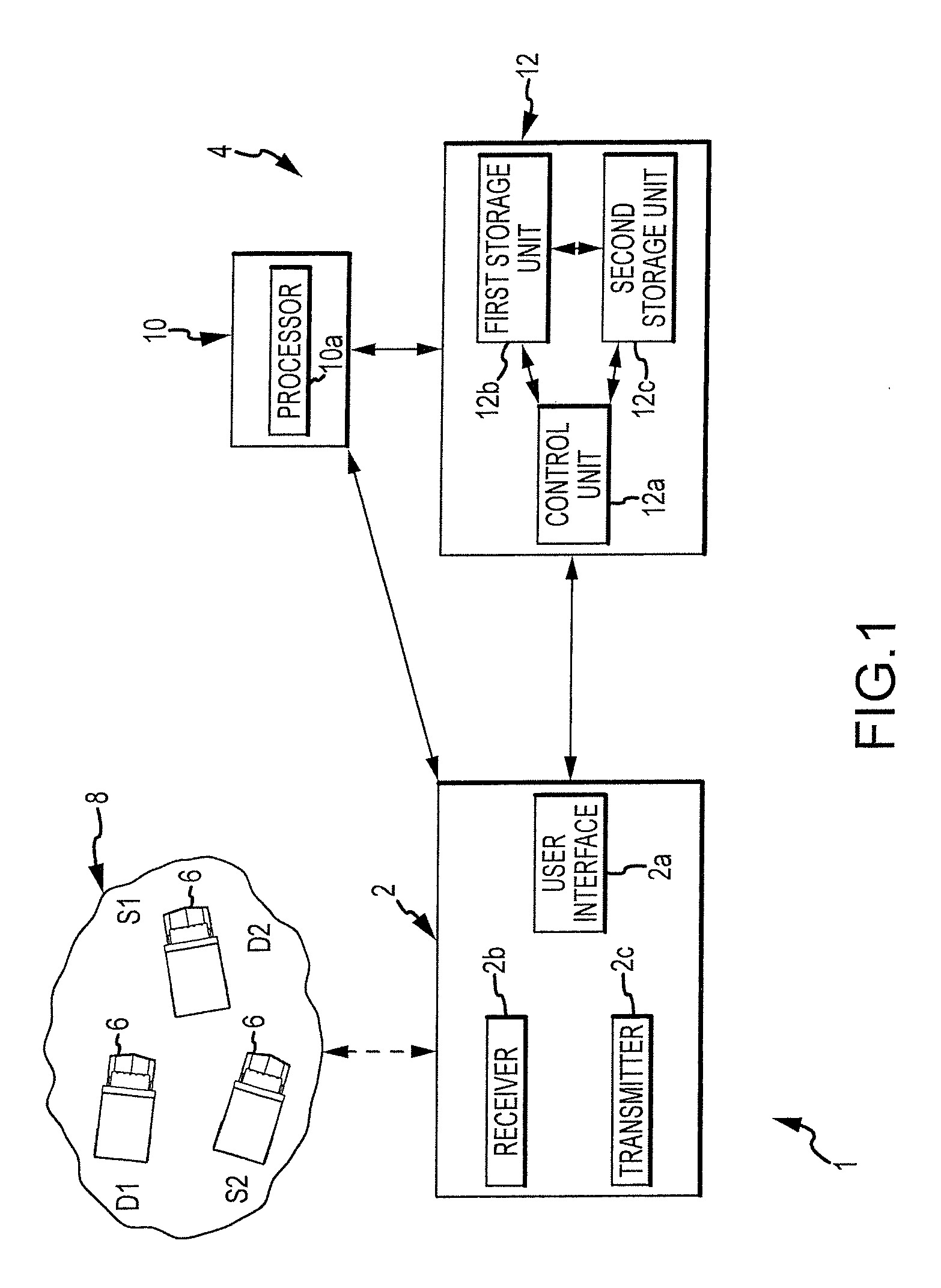

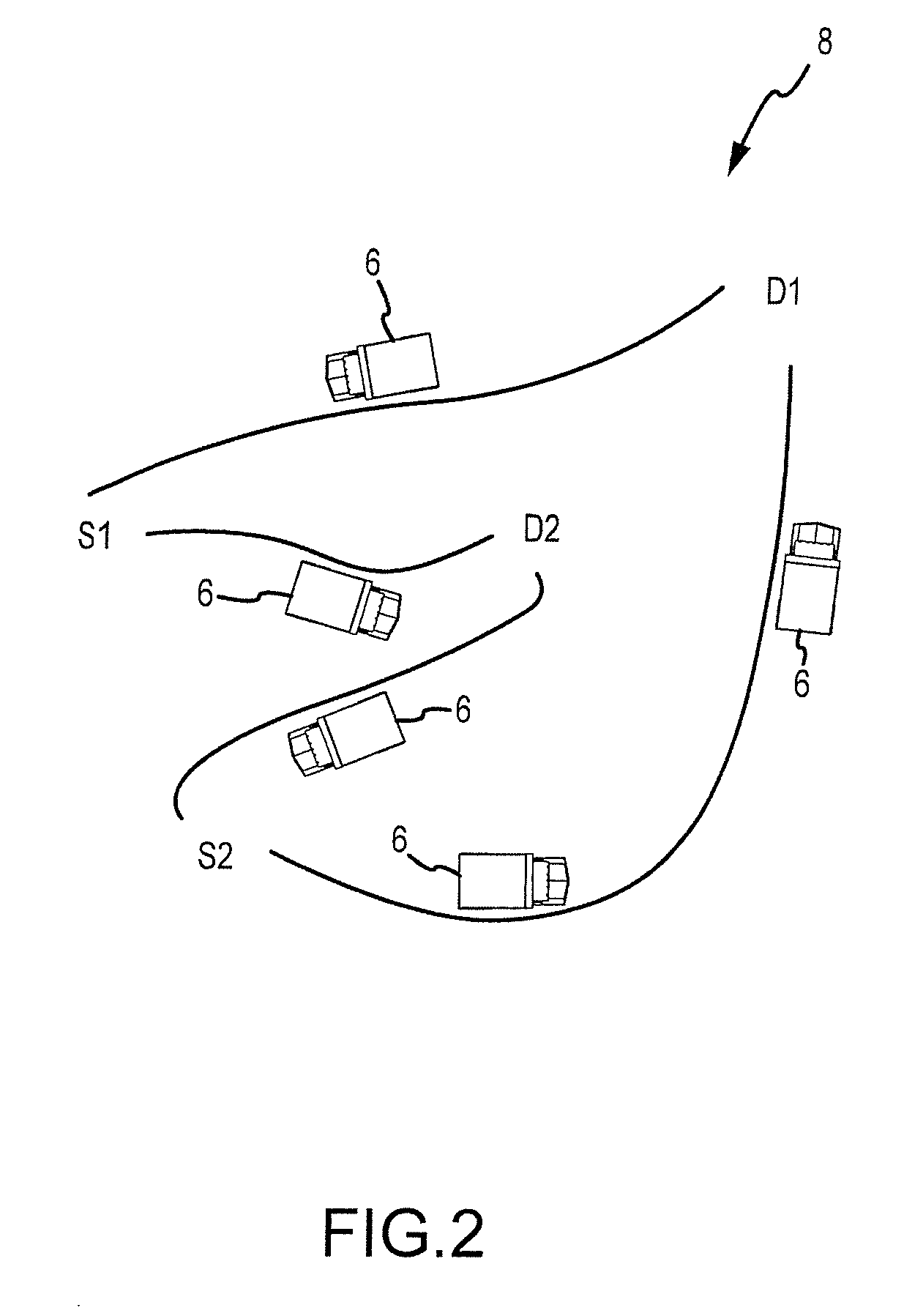

Vehicle dispatching method and system

Active | US20090327011A1 | Autonomous decision making process | Transportation facility access | Time schedule | Reinforcement learning algorithm

A system and method for dispatching a plurality of vehicles operating in a work area among a plurality of destination locations and a plurality of source locations includes implementing linear programming that takes in an optimization function and constraints to generate an optimum schedule for optimum production, utilizing a reinforcement learning algorithm that takes in the schedule as input and cycles through possible environmental states that could occur within the schedule by choosing one possible action for each possible environmental state and by observing the reward obtained by taking the action at each possible environmental state, developing a policy for each possible environmental state, and providing instructions to follow an action associated with the policy.

Owner:AUTONOMOUS SOLUTIONS

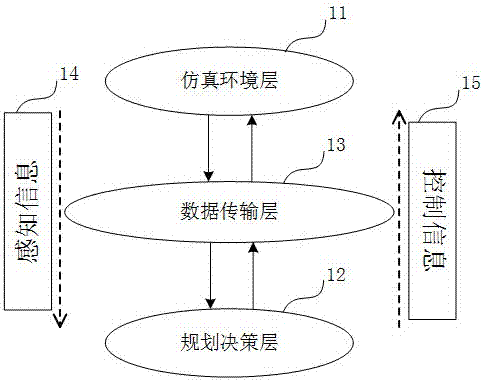

Artificial intelligent training platform for intelligent networking vehicle plan decision-making module

Inactive | CN107506830A | Improve generalization ability | Safe and "Real Training Scenarios" | Mathematical models | Machine learning | Frame based | Reinforcement learning algorithm

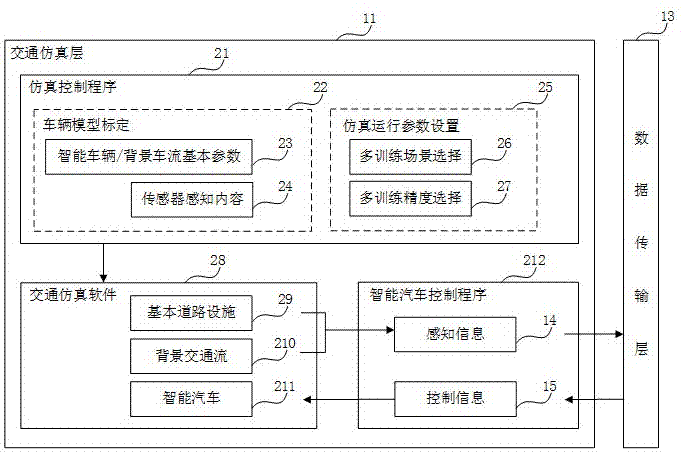

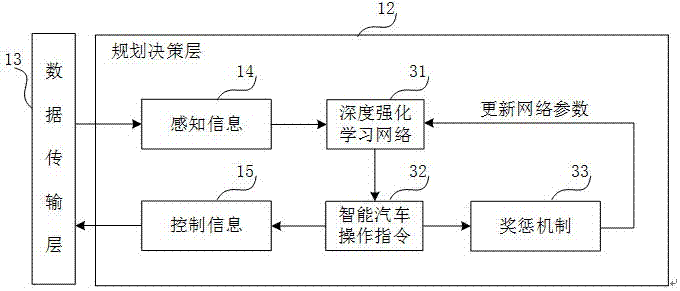

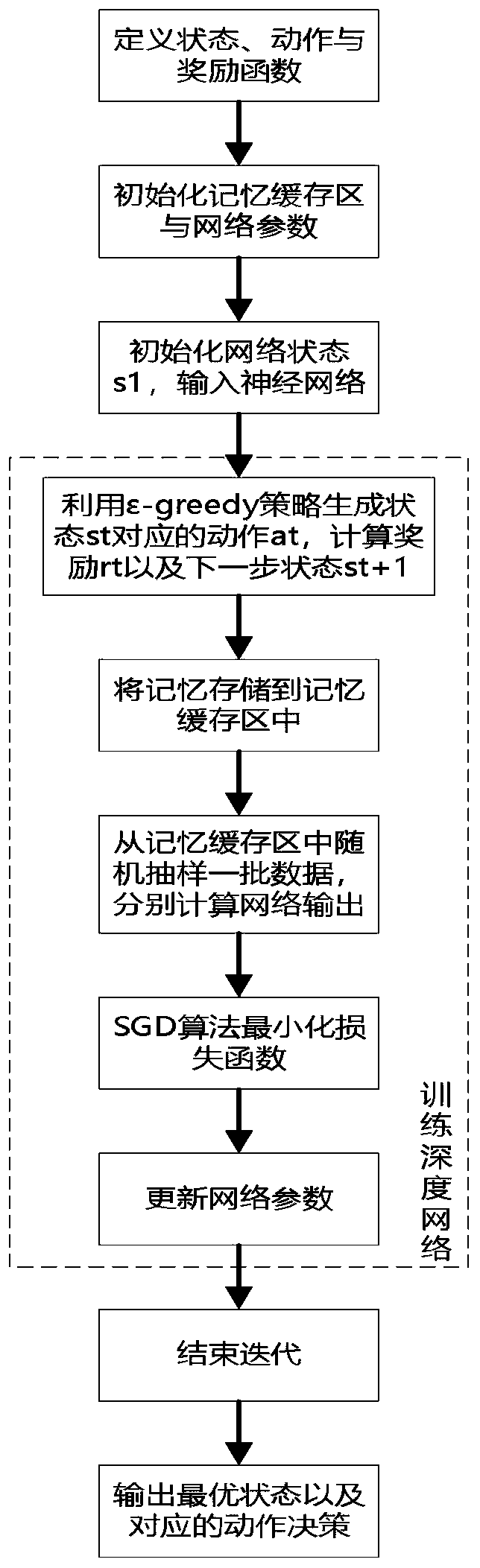

The invention relates to the technical field of intelligent vehicle automatic driving and traffic simulation. It provides an artificial intelligent training platform for an intelligent networking vehicle plan decision-making module and aims to raise the intelligence level of that module by training it on rich, realistic traffic scenes. The platform comprises a simulation environment layer, a data transmission layer, and a plan decision-making layer. The simulation environment layer generates realistic traffic scenes from a traffic simulation module and simulates how the intelligent vehicle senses and reacts to the environment, which allows multiple scenes to be loaded. The plan decision-making layer takes environment sensing information as input and outputs the decision-making behavior of the intelligent vehicle using a deep reinforcement learning algorithm, so that the network parameters are optimized through training. The data transmission layer connects the traffic environment module to the deep reinforcement learning framework over TCP/IP and carries sensing information and vehicle control information between the simulation environment layer and the plan decision-making layer.

Owner:TONGJI UNIV

Automatic-driving intelligent vehicle trajectory tracking control strategy based on deep reinforcement learning

Inactive | CN110322017A | Realize autonomous driving | Avoid uncertainty | Artificial life | Knowledge representation | Steering wheel | Simulation

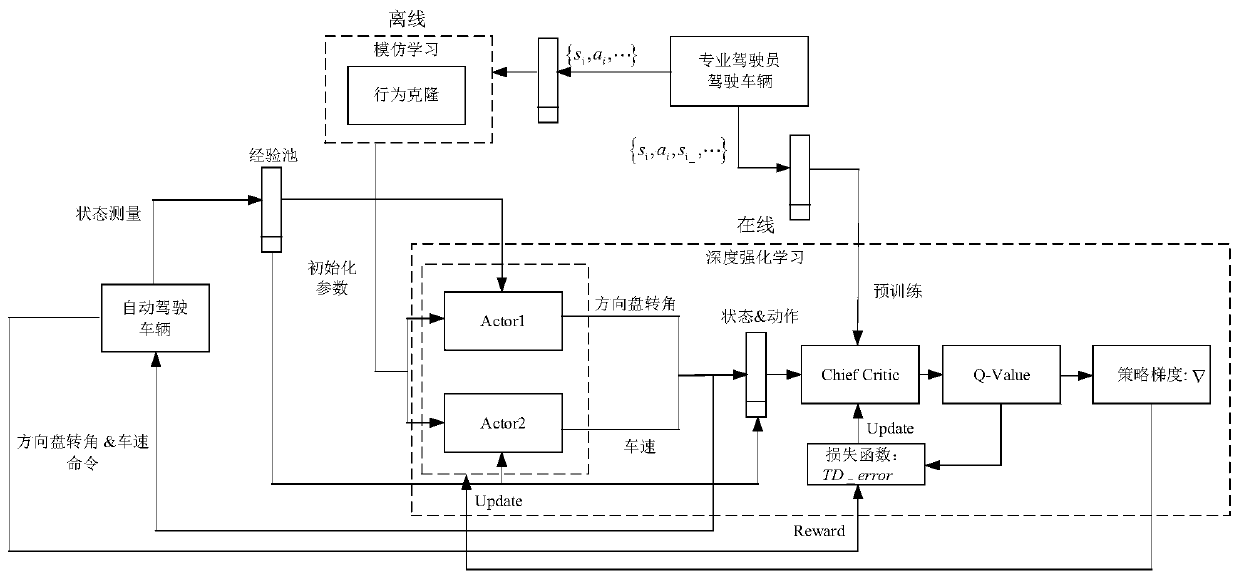

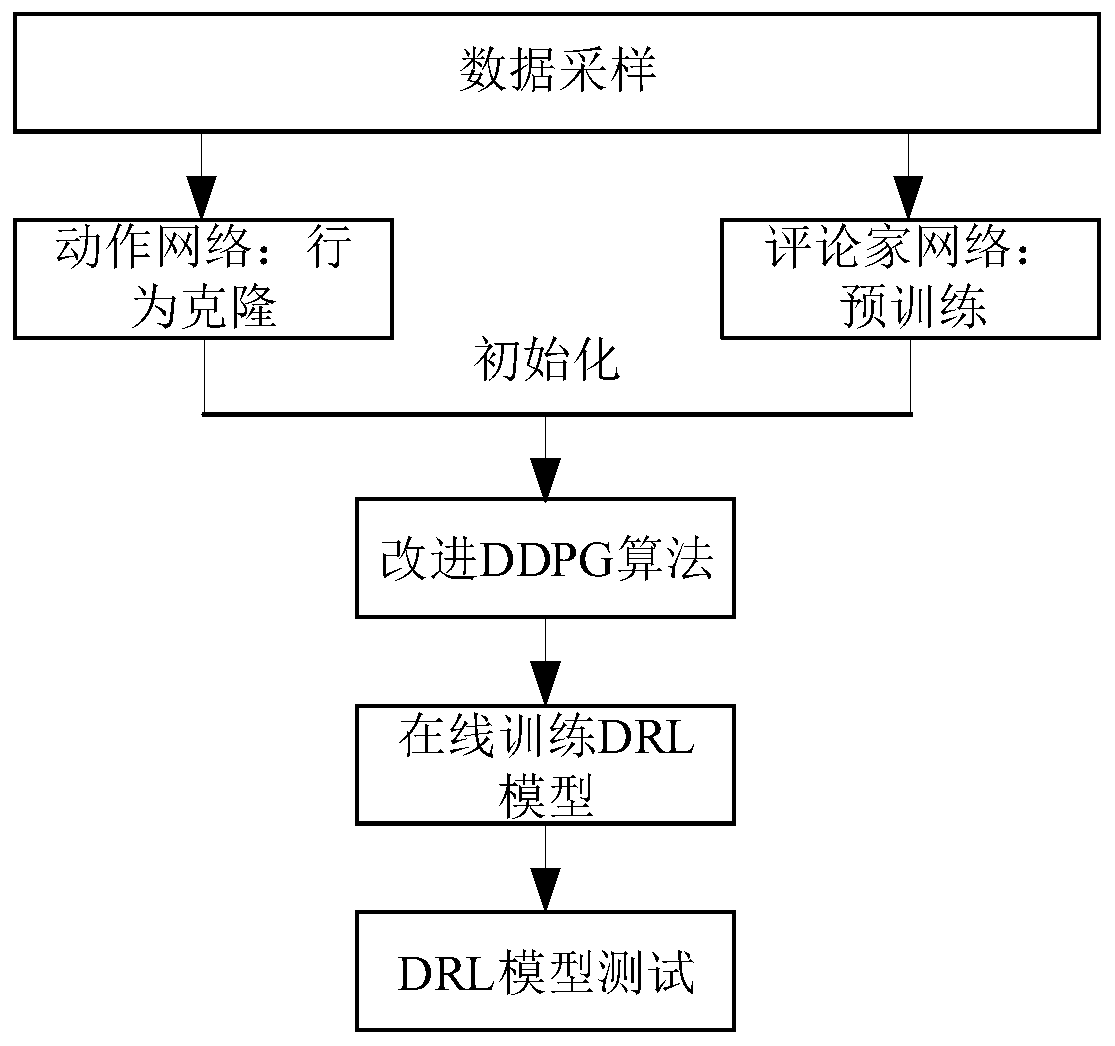

The invention discloses a trajectory tracking control strategy for an automatic-driving intelligent vehicle based on deep reinforcement learning. For the automatic-driving task, following the actor-critic structure of the deep deterministic policy gradient (DDPG) algorithm, a 'double-action' network is adopted to output a steering wheel angle command and a vehicle speed command, and a main 'reviewer' (critic) network is designed to guide the updating of the double-action network. The strategy comprises the steps of: describing the automatic driving task as a Markov decision process <s_t, a_t, R_t, s_{t+1}>; initializing the 'double-action' network in the improved DDPG algorithm with a behavior cloning algorithm; pre-training the 'reviewer' network in the DDPG algorithm; designing a training road containing various driving scenes for online reinforcement learning training; and setting up a new road to test the trained deep reinforcement learning (DRL) model. The control strategy is designed by imitating how humans learn to drive, and it achieves automatic driving of the intelligent vehicle in a simple road environment.

Owner:JILIN UNIV

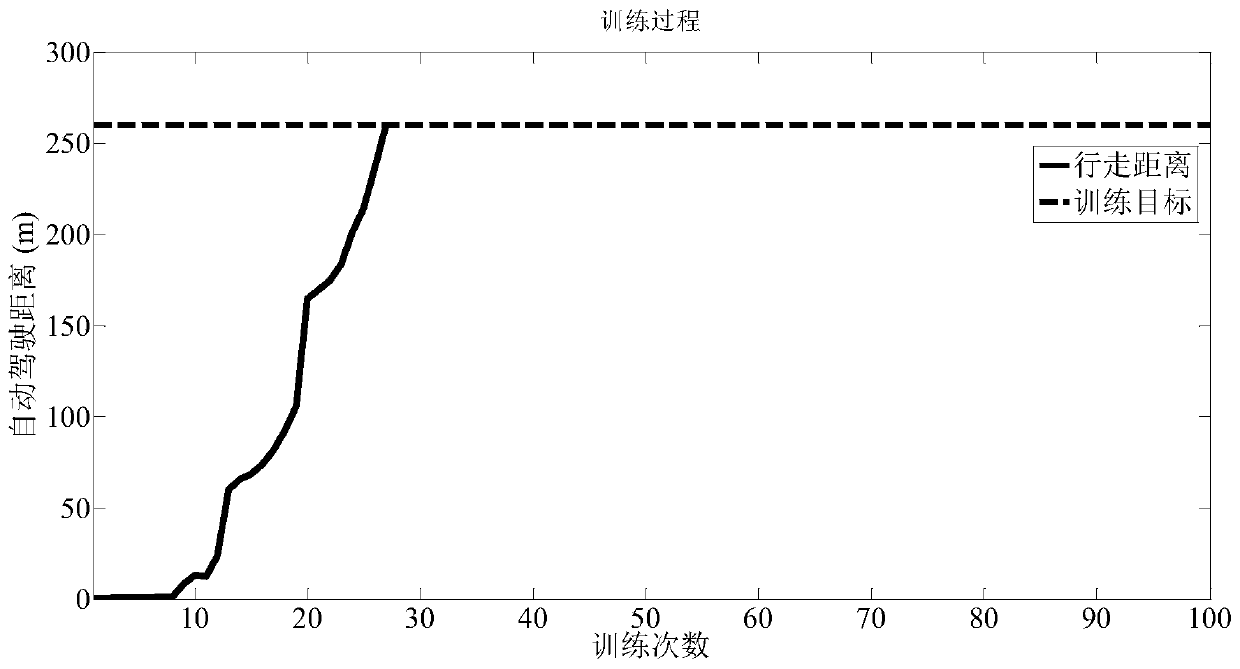

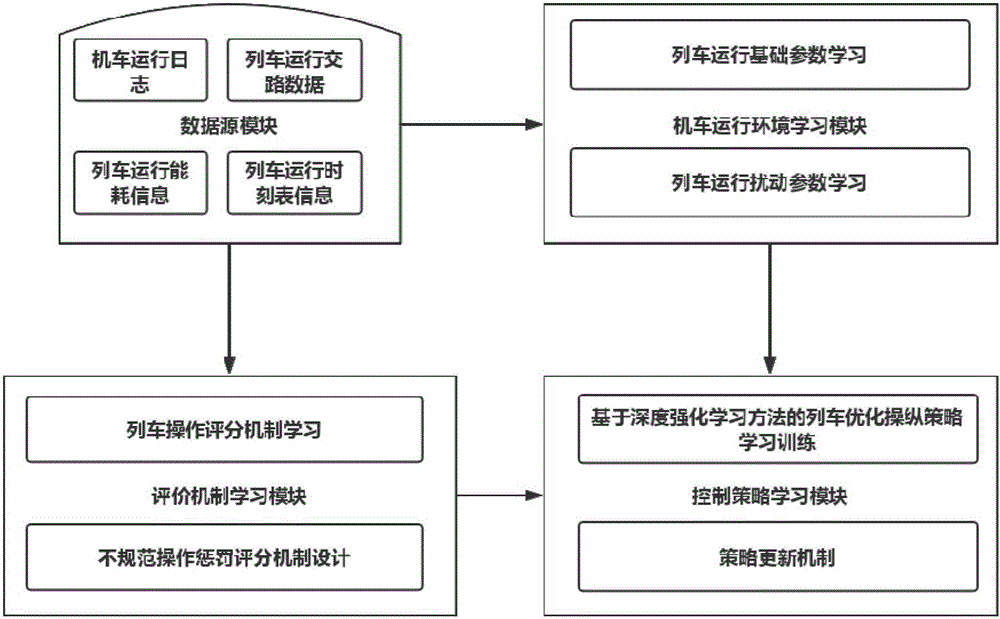

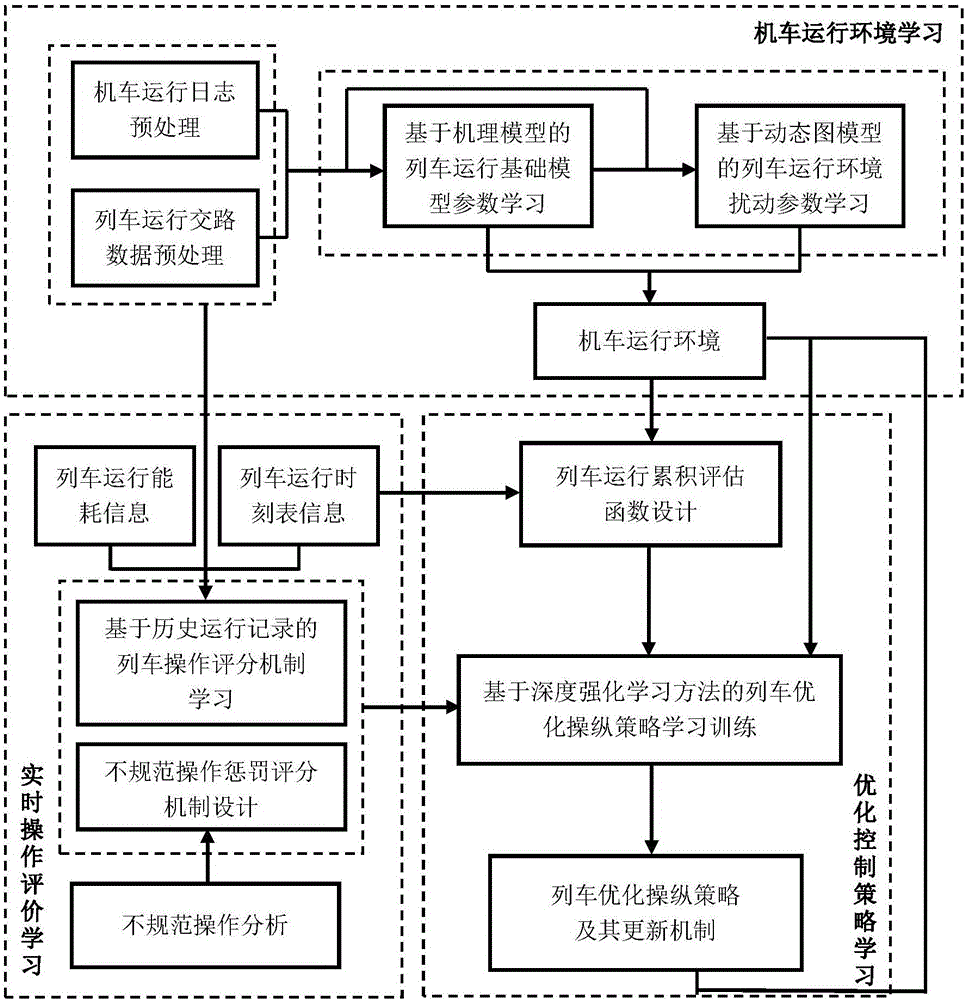

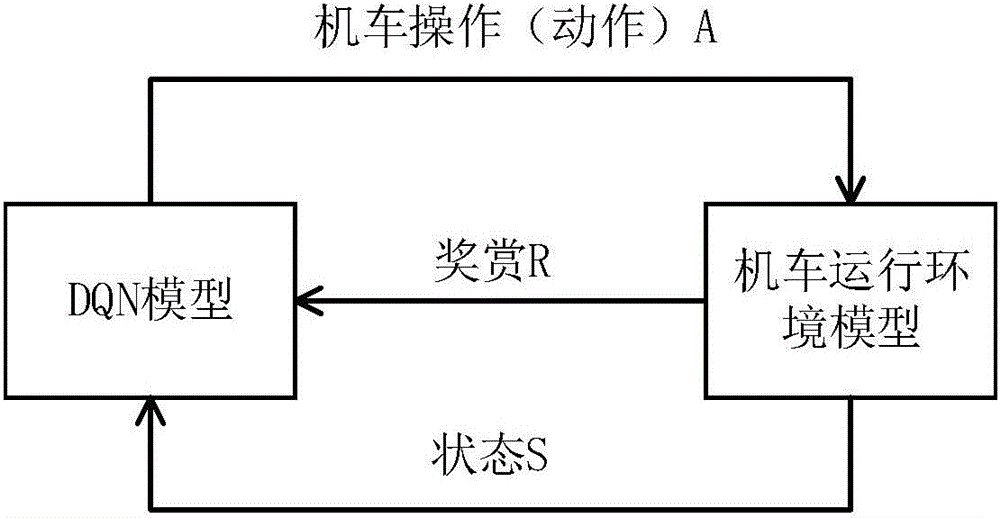

Intelligent locomotive operation method and system based on deep reinforcement learning

Active | CN106842925A | Easy to operate | Avoid human involvement | Adaptive control | Reinforcement learning algorithm | Data source

The invention relates to an intelligent locomotive operation method and system based on deep reinforcement learning. The system comprises a data source module, a locomotive operation environment learning module, an evaluation mechanism learning module and a control strategy learning module. The data source module provides the required data input for the locomotive operation environment learning module and the evaluation mechanism learning module, which in turn output the obtained specific operating environment and a reward function value to the control strategy learning module. On the basis of a deep reinforcement learning algorithm, the locomotive operation environment model uses real-time evaluation of the locomotive operation action as feedback: the current operation action is rewarded or punished, the resulting reward function value is fed back to the control strategy as a reward evaluation value, and the control strategy combines it with the operating state to iteratively update and optimize itself. Intelligent, optimized locomotive operation can thus be better achieved, and human involvement is greatly reduced.

Owner:TSINGHUA UNIV +2

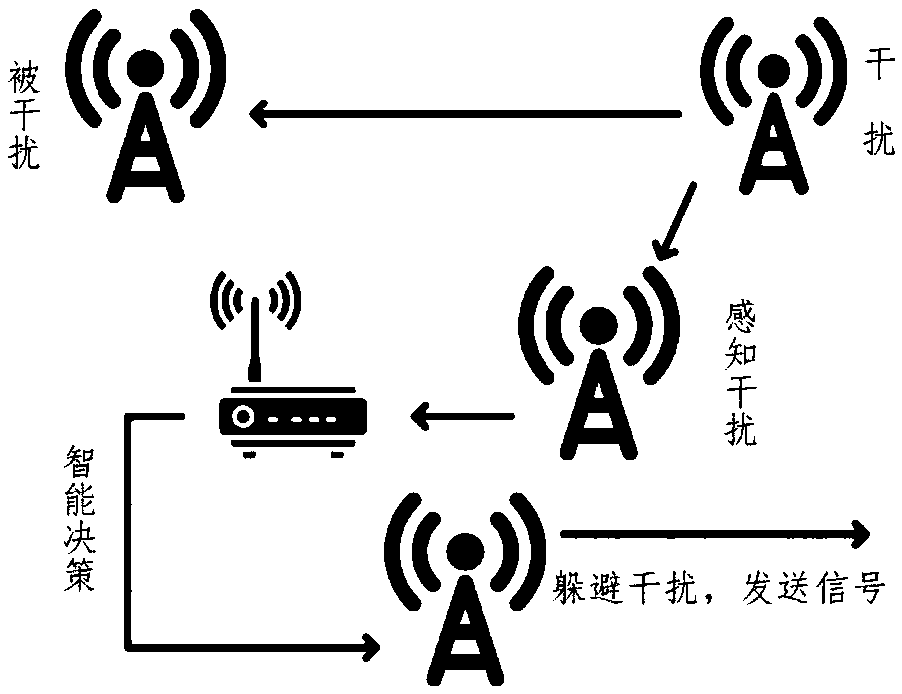

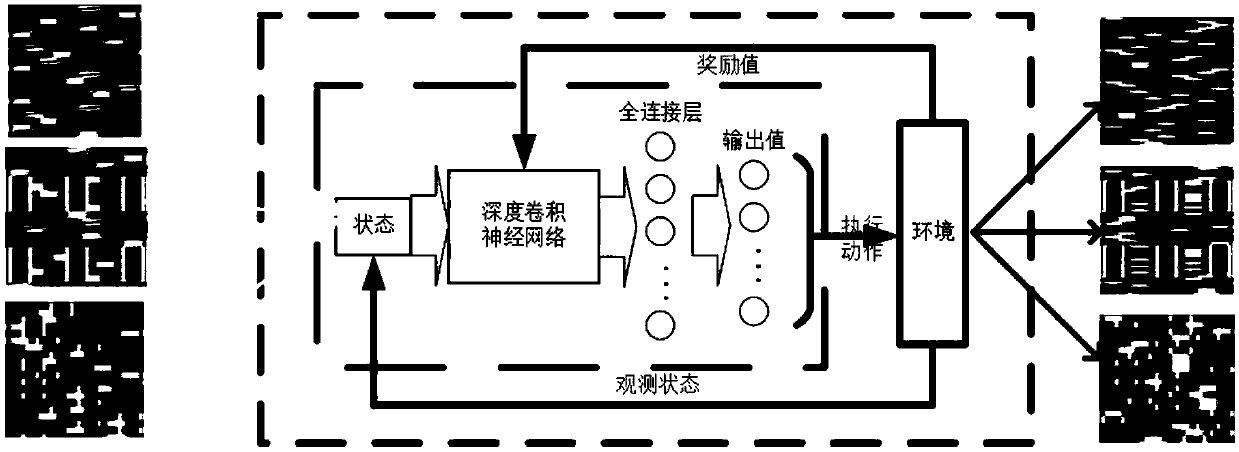

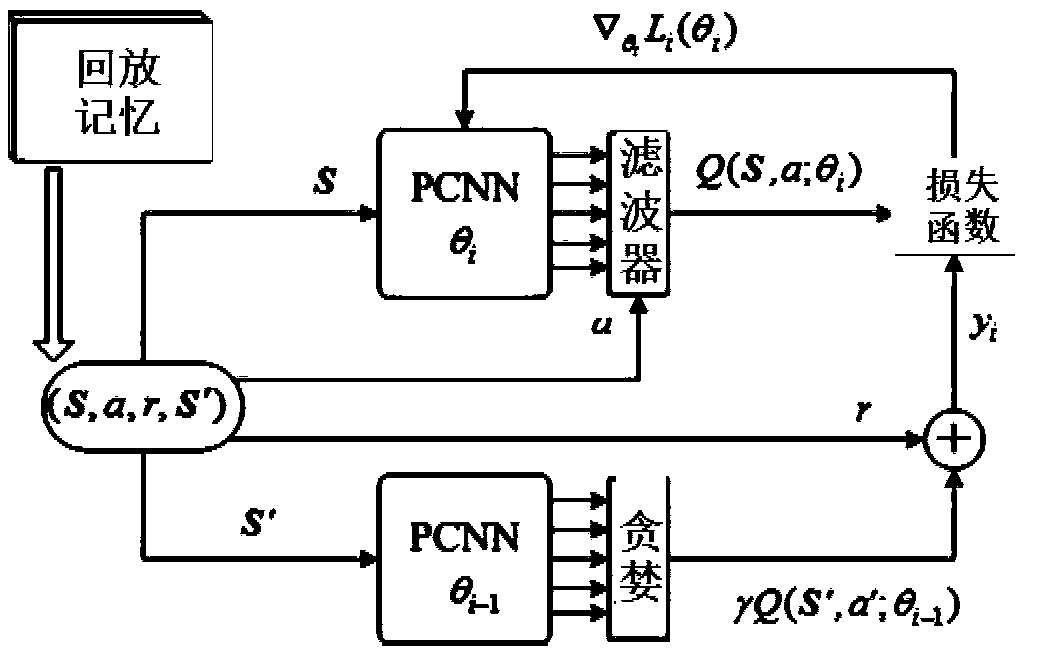

Deep Q neural network anti-interference model and intelligent anti-interference algorithm

Active | CN108777872A | Reduce computational complexity | Efficient solution | Network traffic/resource management | Neural architectures | Time domain | Frequency spectrum

The invention discloses a deep Q neural network anti-interference model and an intelligent anti-interference algorithm. A transmitter-receiver pair constitutes a user that carries out communication, and the user's communication is interfered with by one or more jammers. The spectrum waterfall map at the receiving end is used as the input state for learning, and the frequency-domain and time-domain characteristics of the interference are calculated. The algorithm comprises the following steps: first, the fitted Q-value table is obtained through the deep Q neural network; second, the user selects a strategy according to a probability, training is carried out based on the return value of the strategy and the next environmental state, and the network weights and the frequency selection strategy are updated; the algorithm ends when the maximum number of cycles is reached. The model is complete and has a clear physical meaning, the algorithm design is reasonable and effective, and the anti-interference scenario based on the deep reinforcement learning algorithm can be described well.
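The abstract above says only that the user selects a strategy "according to the probability". One common reading is epsilon-greedy selection over the Q-values, sketched below. This is an assumption rather than the patent's stated rule, and the names `epsilon_greedy`, `q_values`, and `epsilon` are hypothetical.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a frequency-channel index from a list of Q-values.

    Assumption: with probability `epsilon` a random channel is explored;
    otherwise the channel with the highest Q-value is exploited.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


if __name__ == "__main__":
    # Hypothetical Q-values for three candidate channels.
    print(epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.1))
```

Lowering `epsilon` over the training cycles is a typical way to shift from exploration to exploitation, although the abstract does not say whether the patent does so.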

Owner:ARMY ENG UNIV OF PLA

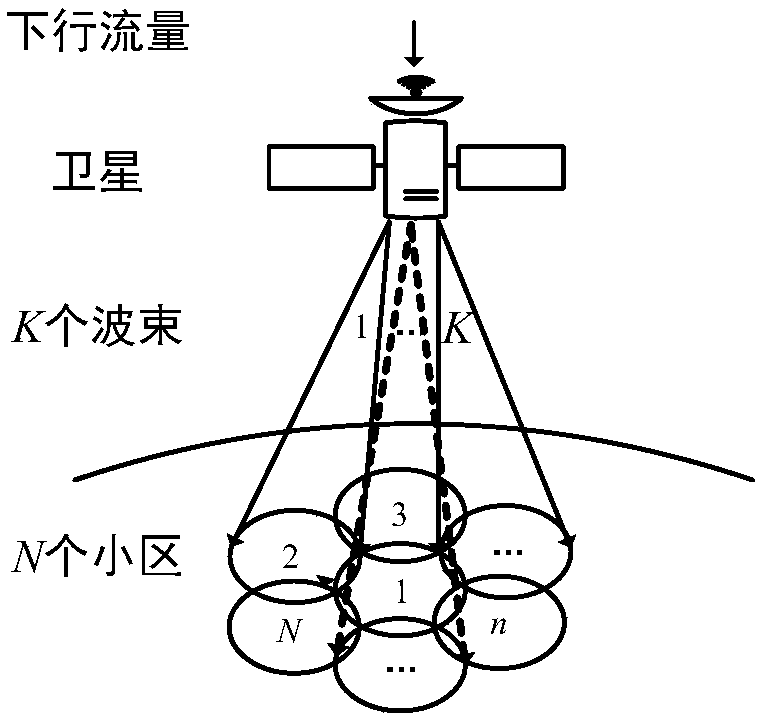

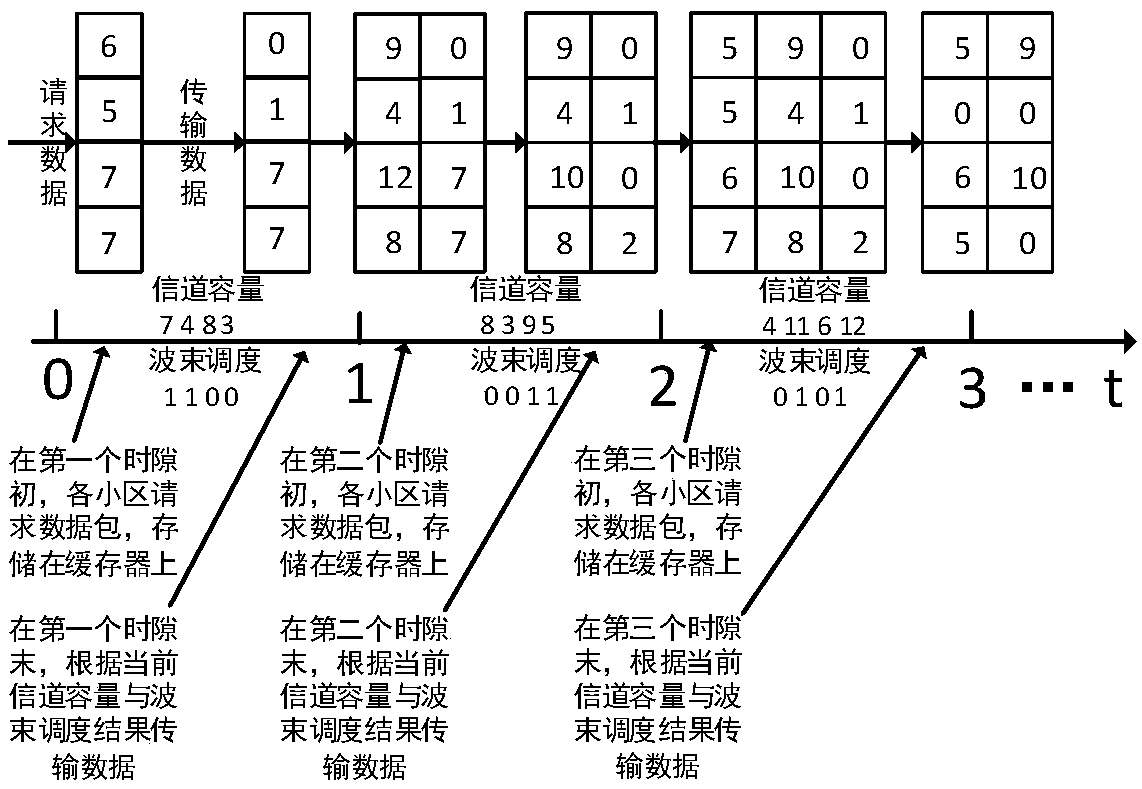

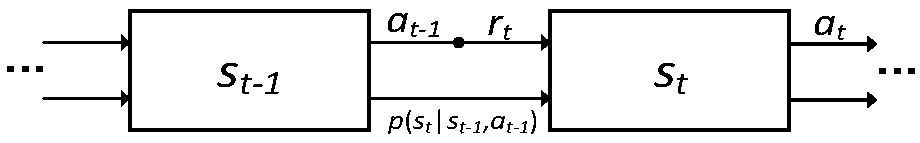

Dynamic beam scheduling method based on deep reinforcement learning

Active | CN108966352A | Specific beam scheduling actions | With online learning function | Radio transmission | Wireless communication | Network packet | Reinforcement learning algorithm

The invention provides a dynamic beam scheduling method based on deep reinforcement learning, which belongs to the field of multi-beam satellite communication systems. The dynamic beam scheduling method comprises the steps of: firstly, modeling a dynamic beam scheduling problem into a Markov decision process, wherein states of each time slot comprise a data matrix, a delay matrix and a channel capacity matrix in a satellite buffer, actions represent a dynamic beam scheduling strategy, and a target is the long-term reduction of accumulated waiting delay of all data packets; and secondly, solving a best action strategy by utilizing a deep reinforcement learning algorithm, establishing a Q network of a CNN+DNN structure, training the Q network, using the trained Q network to make action decisions, and acquiring the best action strategy. According to the dynamic beam scheduling method, a satellite directly outputs a current beam scheduling result according to the environment state at the moment through a large amount of autonomous learning, maximizes the overall performance of the system in the long term, and greatly reduces the transmission waiting delay of the data packets while keeping the system throughput almost unchanged.

Owner:BEIJING UNIV OF POSTS & TELECOMM

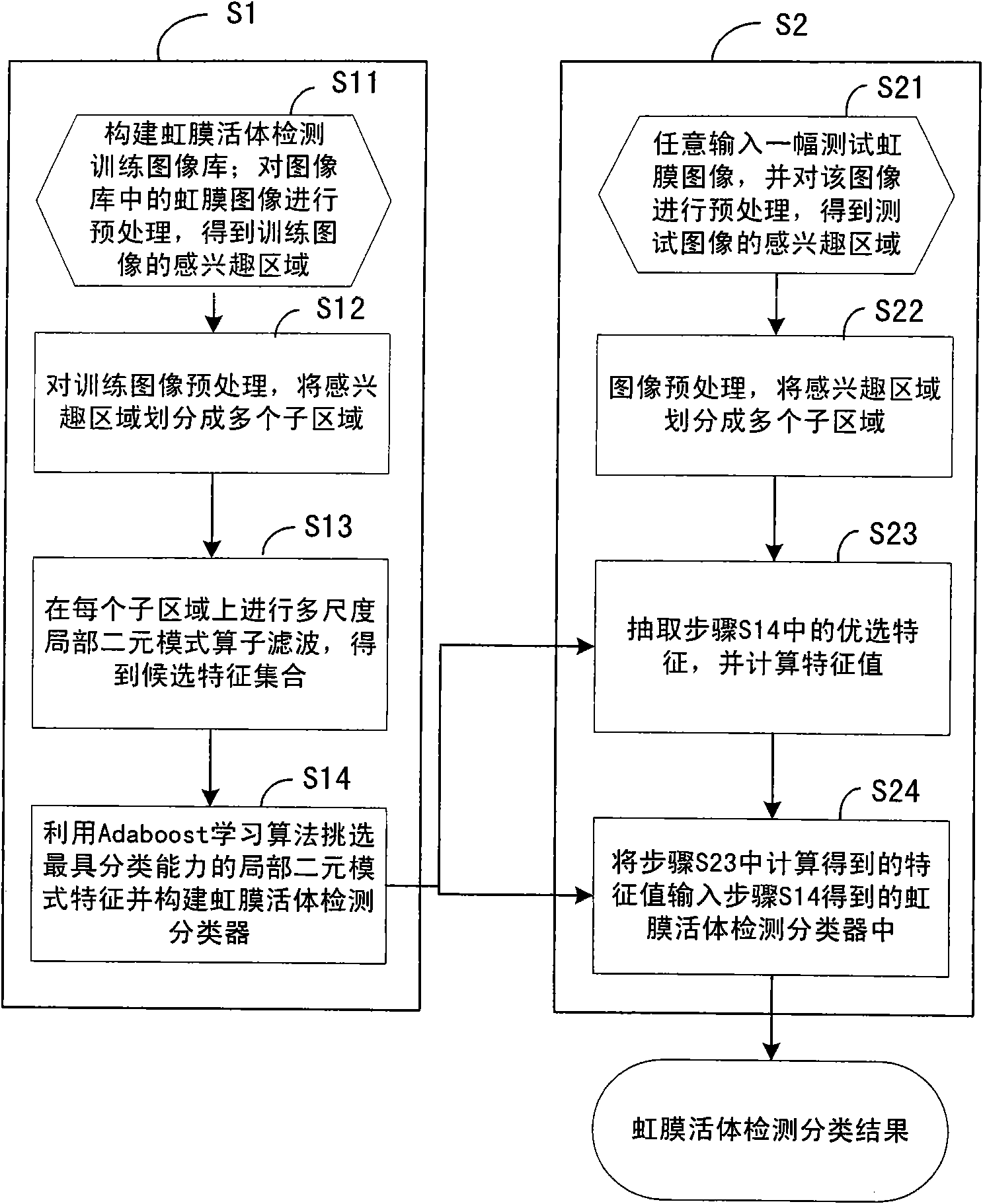

Living iris detection method

Active | CN101833646A | High precision | Improve security | Character and pattern recognition | Pattern recognition | Medicine

The invention relates to a living iris detection method comprising the following steps. S1: preprocess the living iris images and artificial iris images in a training image library; extract multi-scale local binary pattern features in the resulting region of interest; select the preferred features from the candidates using an adaptive reinforcement learning algorithm; and build a classifier for living iris detection. S2: preprocess an arbitrary input test iris image and calculate the preferred local binary pattern features in the resulting region of interest; input the calculated feature values into the classifier obtained in step S1; and judge from the classifier's output whether the test image comes from a living iris. The invention can perform effective anti-forgery detection and alarm for iris images and reduce the error rate of iris recognition. It is widely applicable to identification and security systems that use iris recognition.

Owner:BEIJING IRISKING

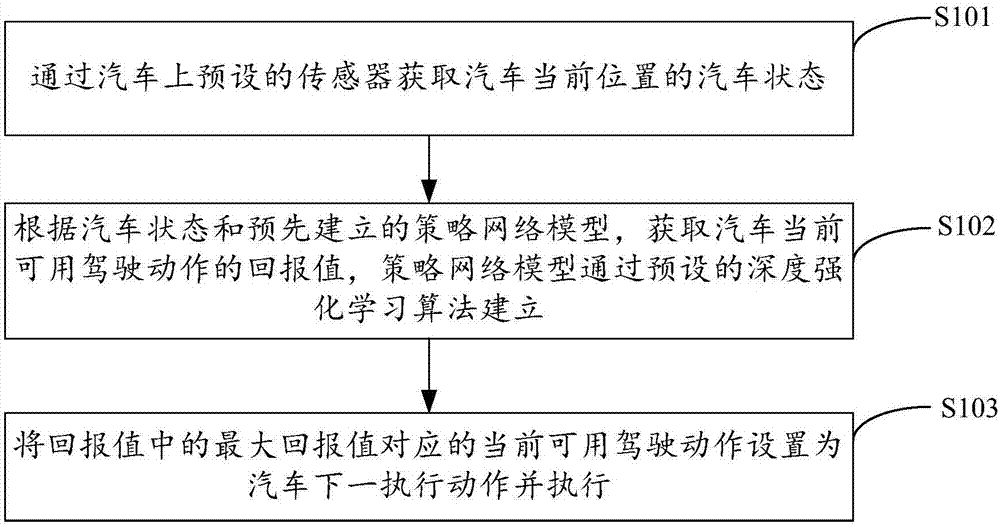

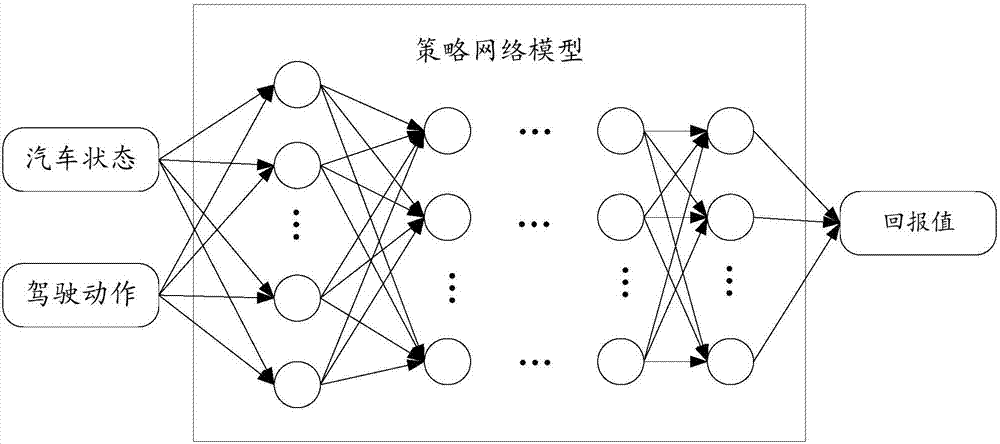

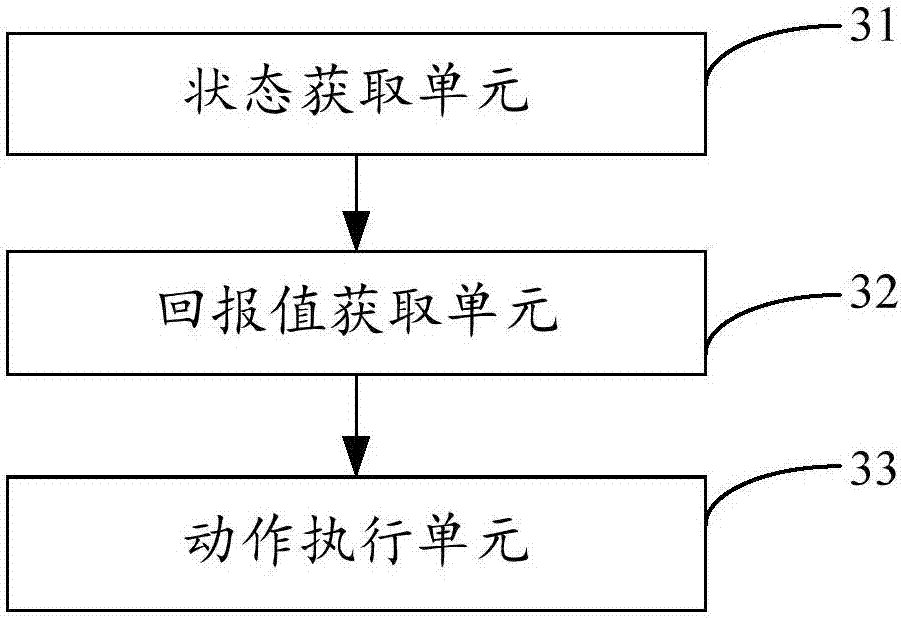

Automatic driving method and device of automobile

Inactive | CN107168303A | Realize autonomous driving | Various driving scenarios | Position/course control in two dimensions | Vehicles | Reinforcement learning algorithm | Network model

The present invention belongs to the automobile automatic driving technical field and provides an automatic driving method and device of an automobile. The method comprises the following steps that: the automobile state of the current position of the automobile is obtained through sensors preset on the automobile; the return values of the current available driving actions of the automobile are obtained according to the automobile state and a pre-established strategic network model, wherein the strategic network model is established through a preset deep reinforcement learning algorithm; and a current available driving action corresponding to a maximum return value in the return values is set to be a next execution action of the automobile and is executed. Thus, with the automatic driving method and device of the automobile of the invention adopted, under diverse driving conditions and complex traffic conditions, favorable driving actions are obtained timely and effectively and executed, and the automatic driving of the automobile can be realized.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

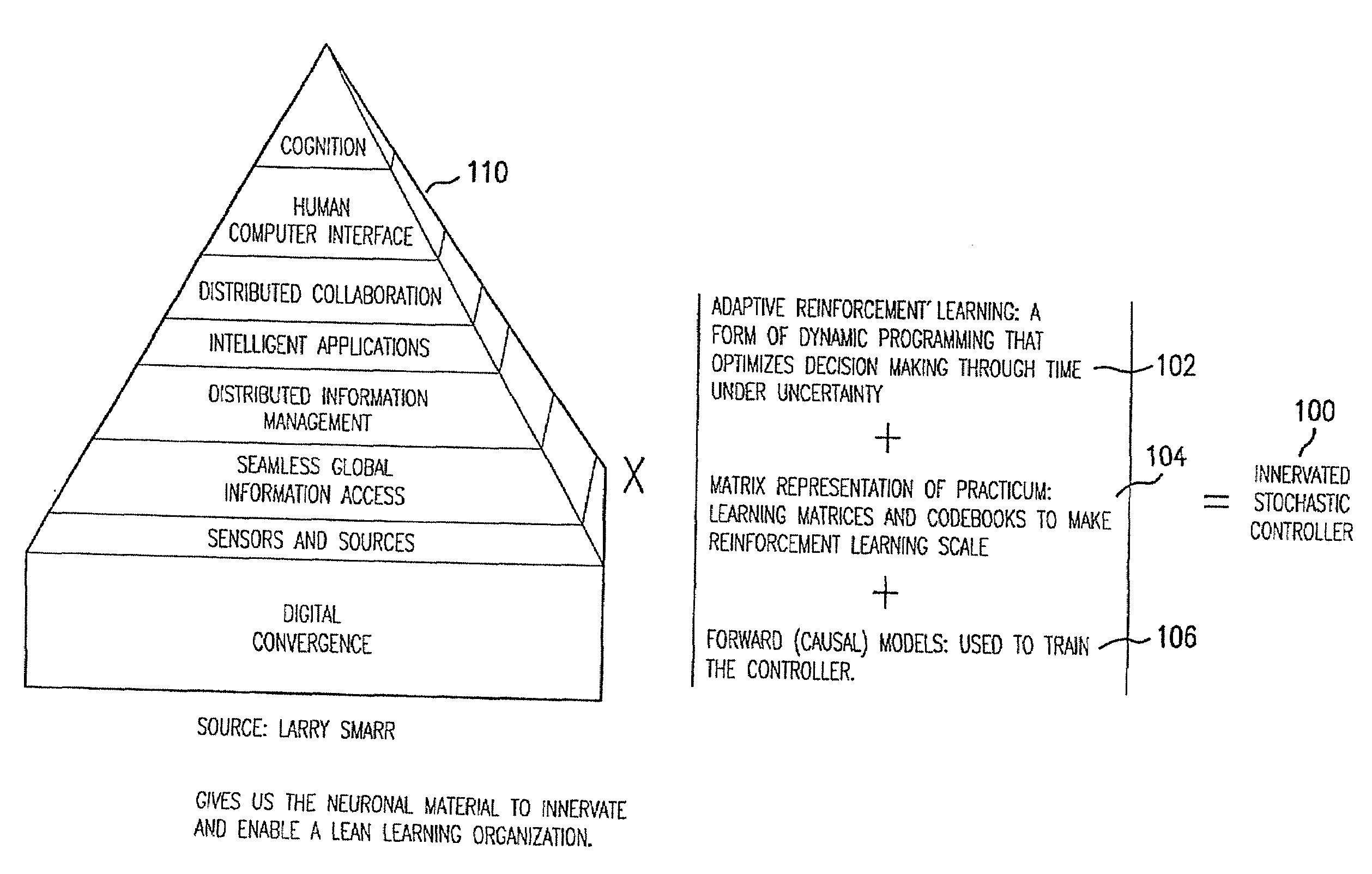

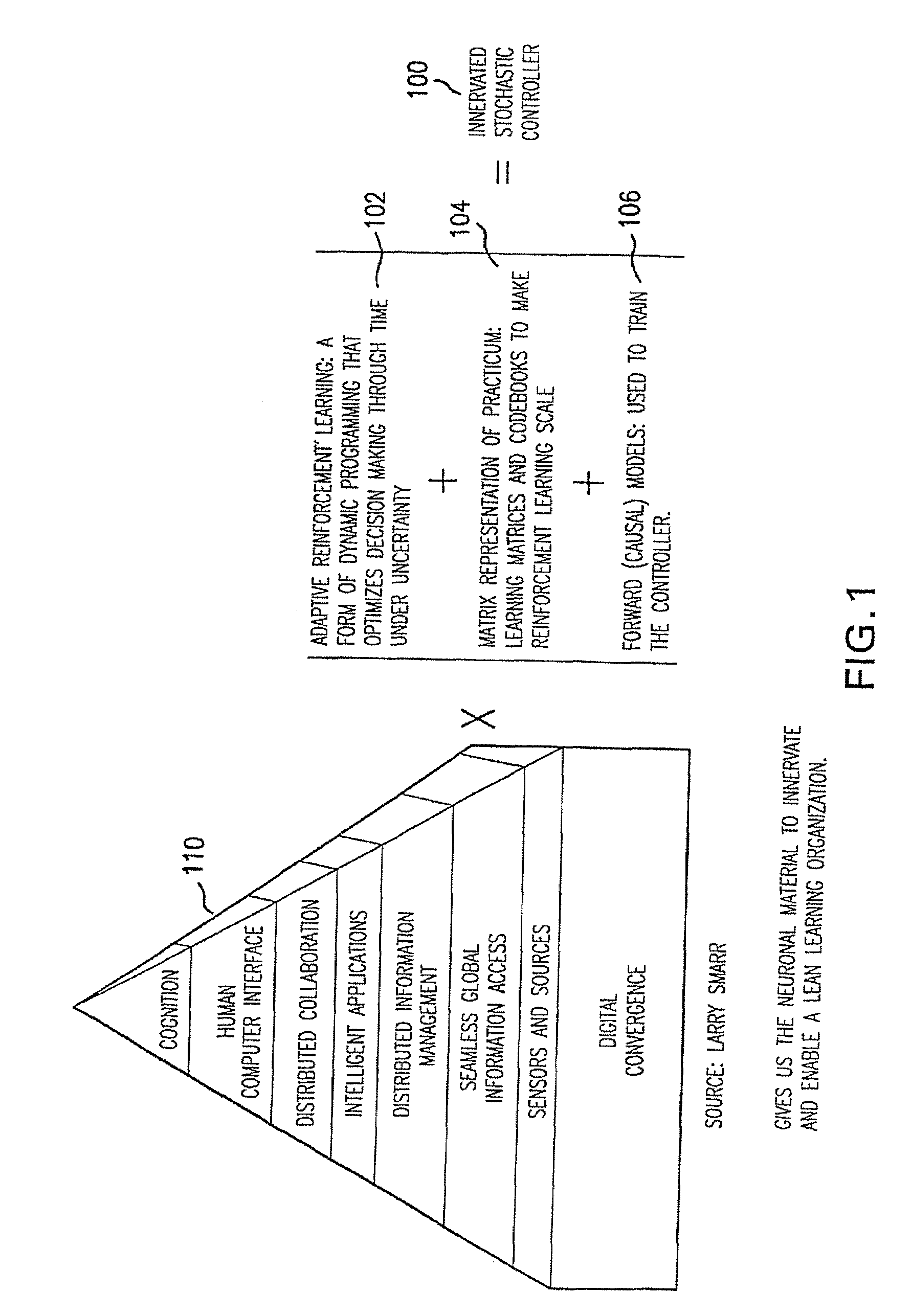

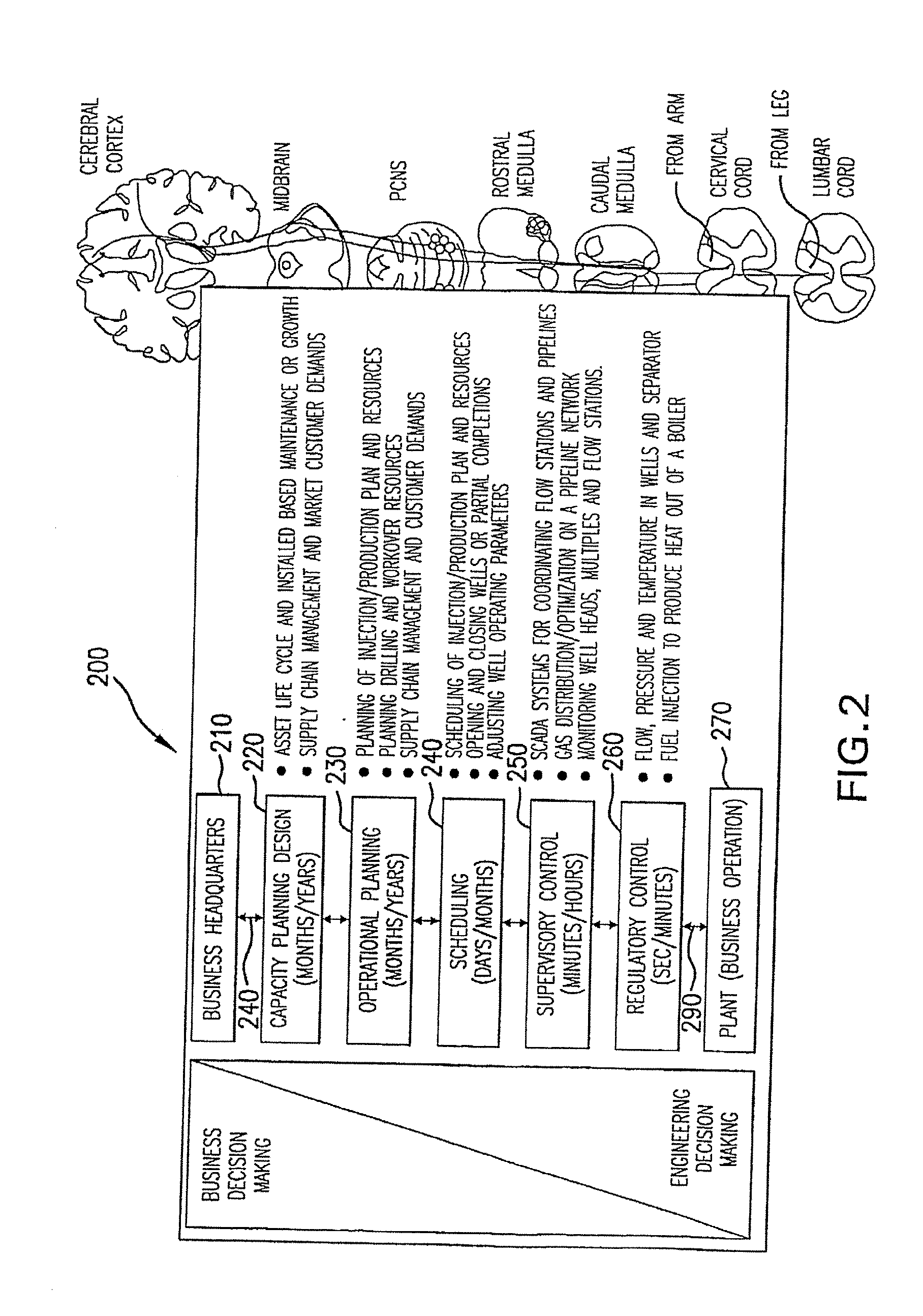

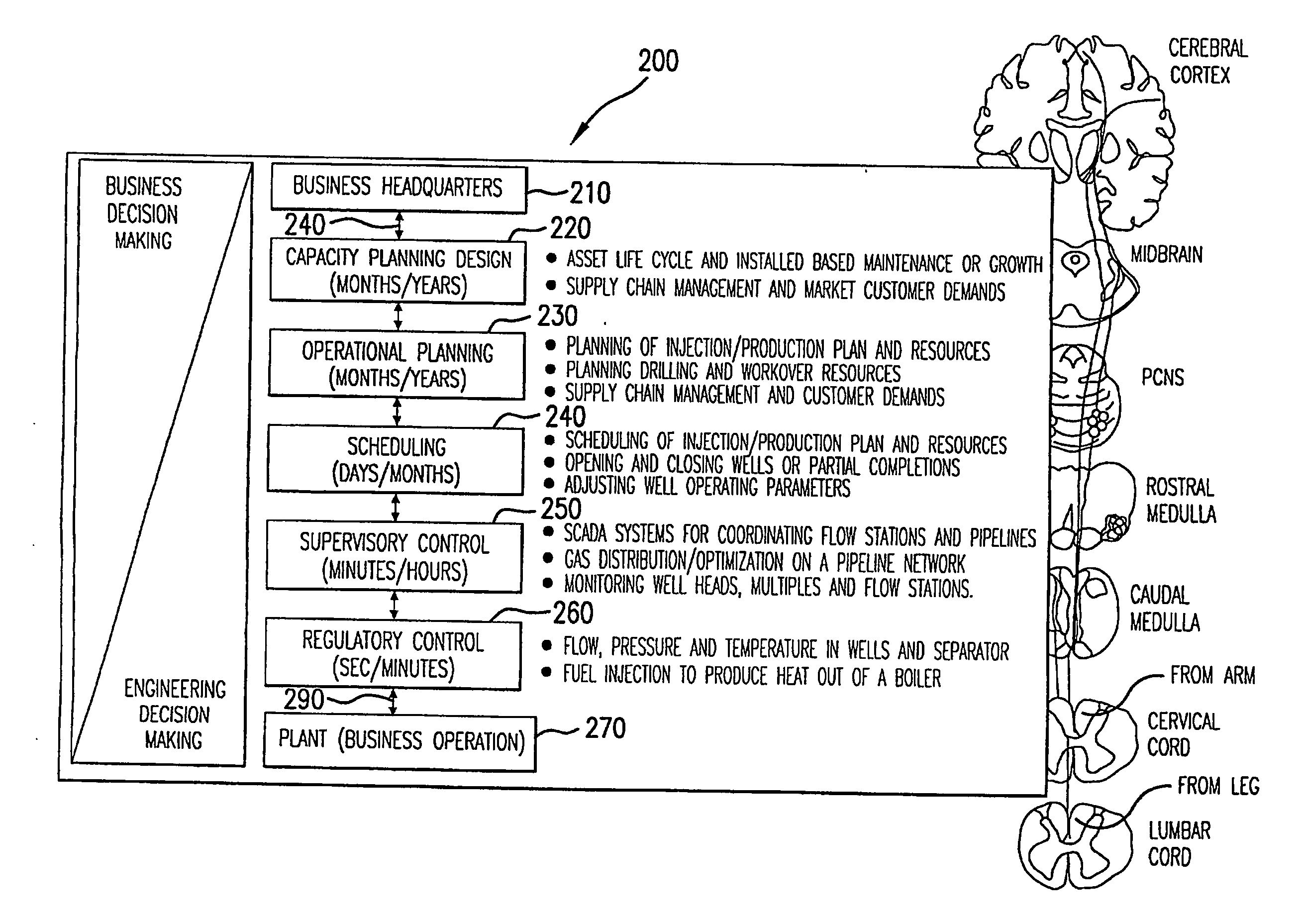

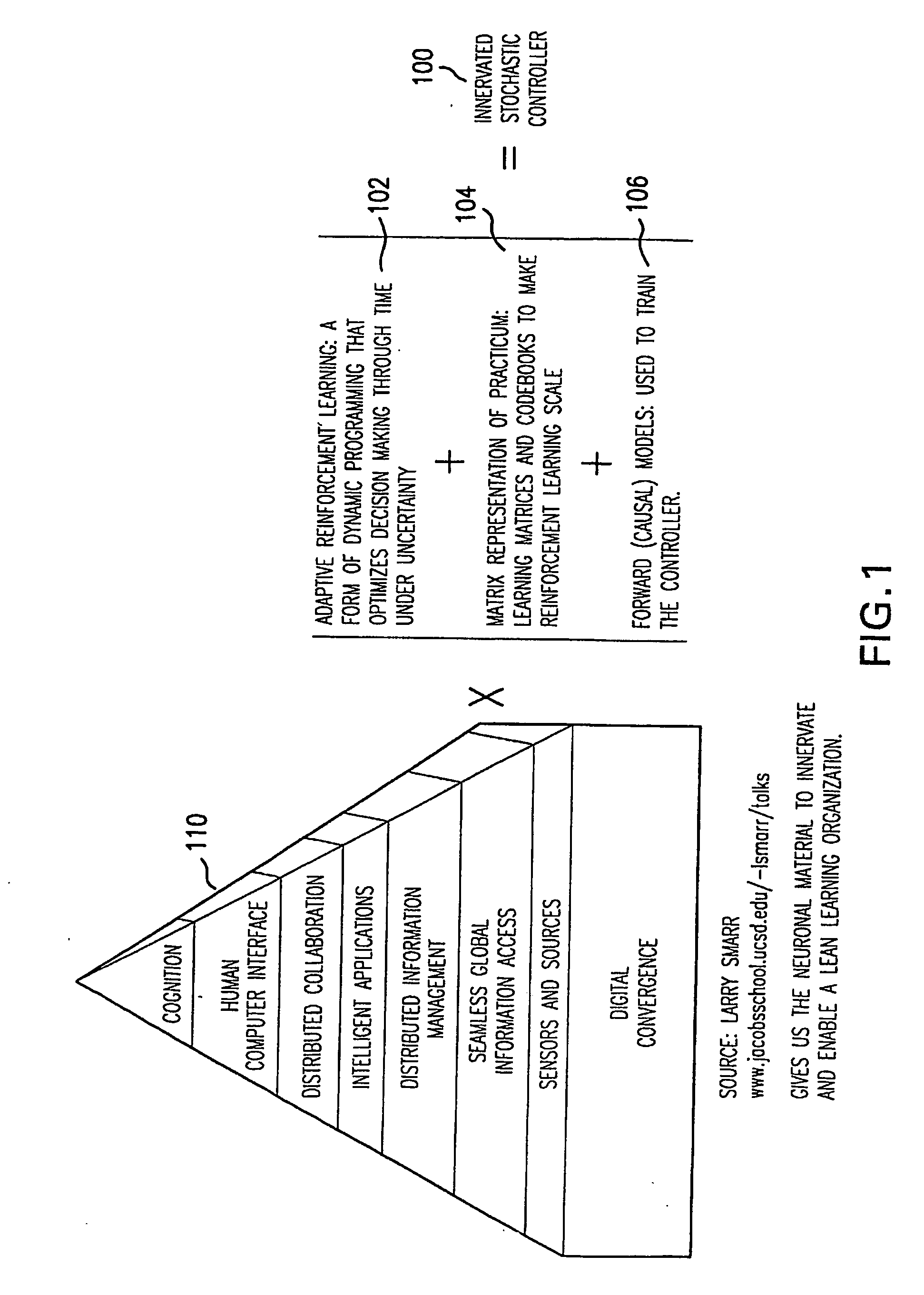

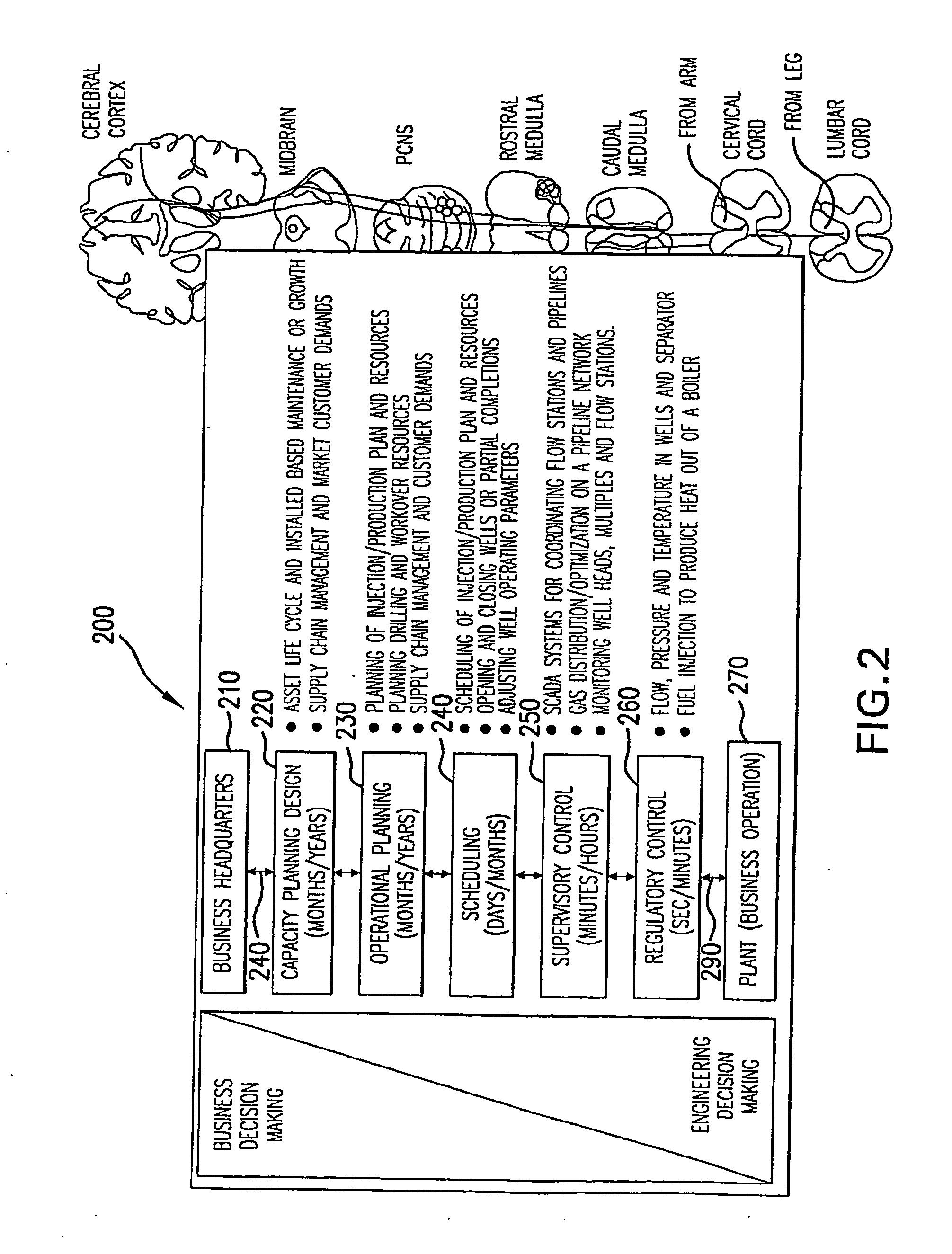

Innervated stochastic controller for real time business decision-making support

Inactive | US7395252B2 | Reduce demand | Input/output for user-computer interaction | Digital data processing details | Reinforcement learning algorithm | Computer based learning

An Innervated Stochastic Controller optimizes business decision-making under uncertainty through time. The Innervated Stochastic Controller uses a unified reinforcement learning algorithm to treat multiple interconnected operational levels of a business process in a unified manner. The Innervated Stochastic Controller generates actions that are optimized with respect to both financial profitability and engineering efficiency at all levels of the business process. The Innervated Stochastic Controller can be configured to evaluate real options. In one embodiment of the invention, the Innervated Stochastic Controller is configured to generate actions that are martingales. In another embodiment of the invention, the Innervated Stochastic Controller is configured as a computer-based learning system for training power grid operators to respond to grid exigencies.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK

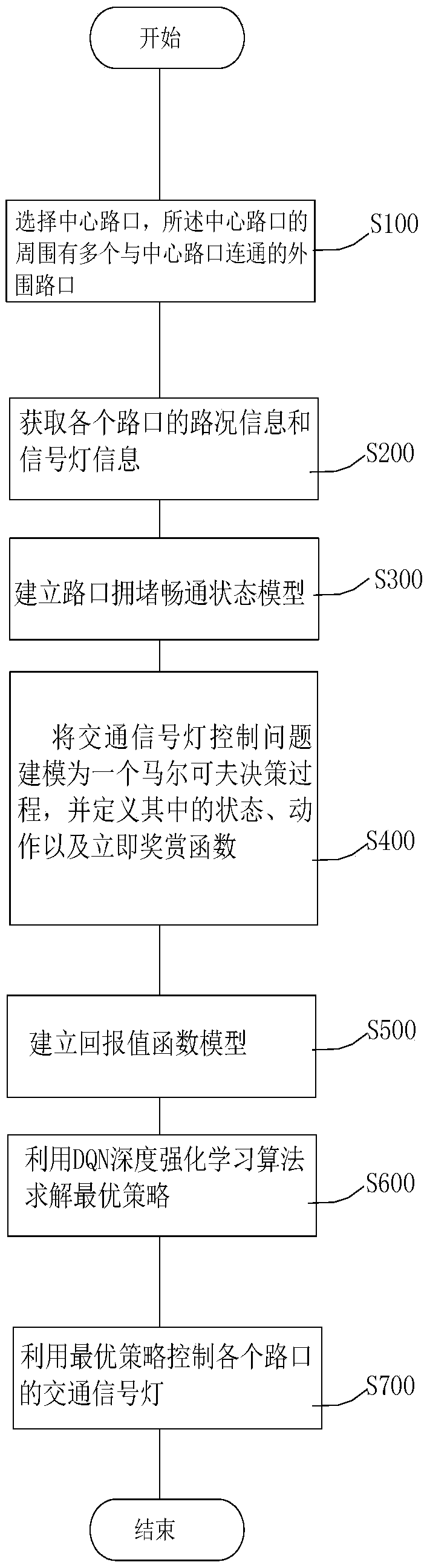

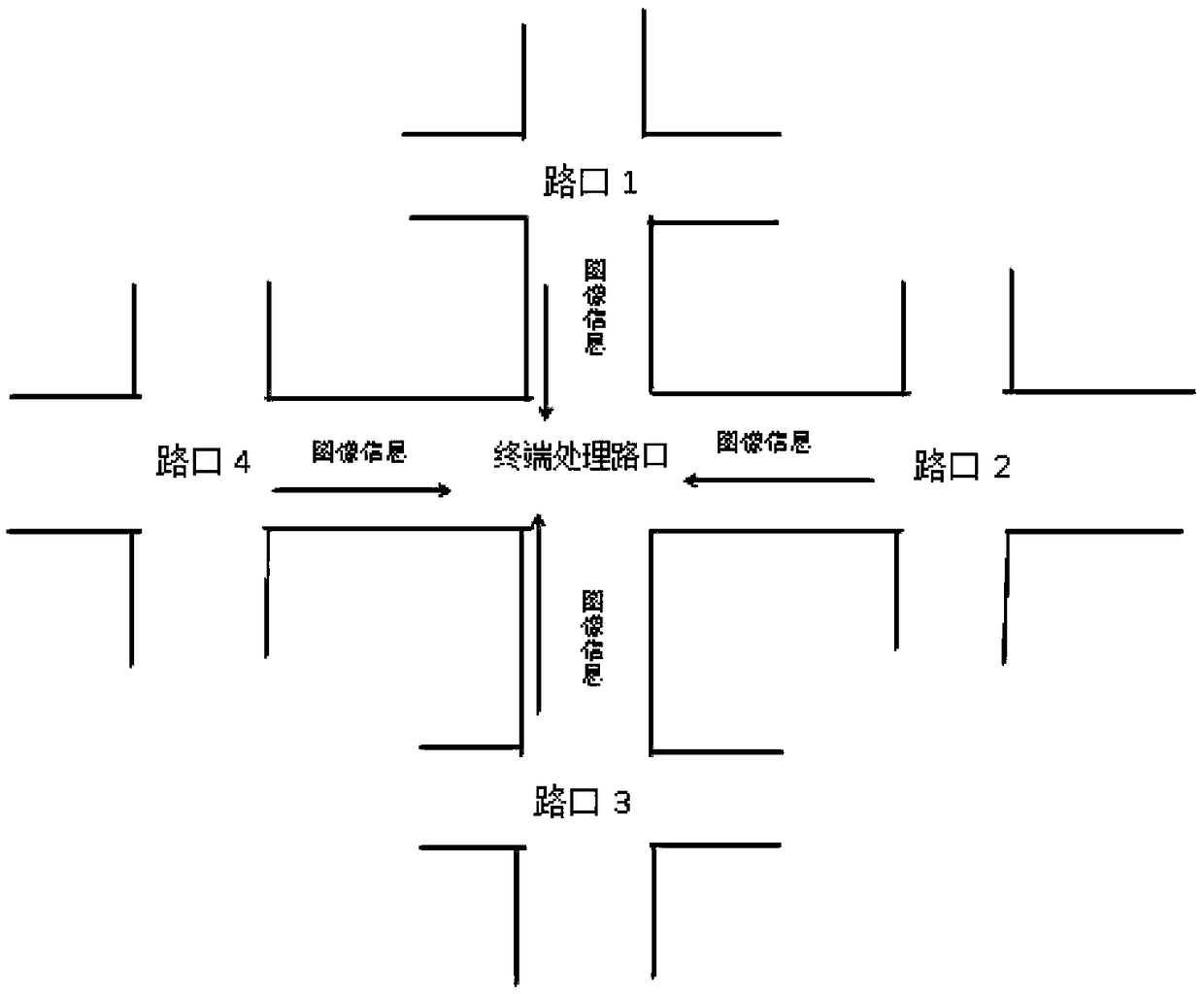

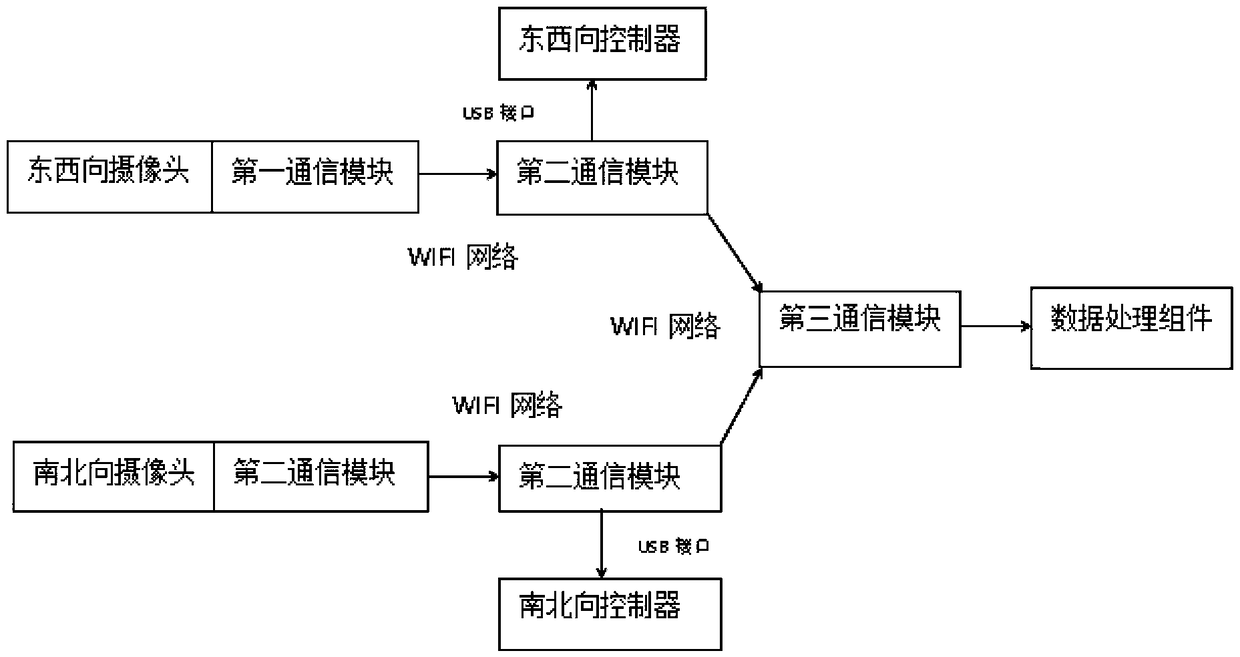

Signal lamp control method and system based on deep reinforcement learning, and storage medium

The invention relates to an intelligent traffic light control method based on deep reinforcement learning. The method comprises the steps of: selecting a center intersection that is surrounded by multiple peripheral intersections connected to it; obtaining road condition information and signal lamp information for each intersection; building a model of intersection congestion and free-flow states; modeling the traffic signal control problem as a Markov decision process and defining the state, action, and immediate reward function of the process; and building a return value function model, using a DQN deep reinforcement learning algorithm to solve for an optimal strategy, and using the optimal strategy to control the traffic lights of all the intersections. With this method, the traffic light control strategy can be adjusted adaptively and dynamically according to real-time road condition information. At the same time, multiple intersections are adjusted synchronously, so the traffic capacity of all the intersections can be fully used.

Owner:SUZHOU UNIV OF SCI & TECH

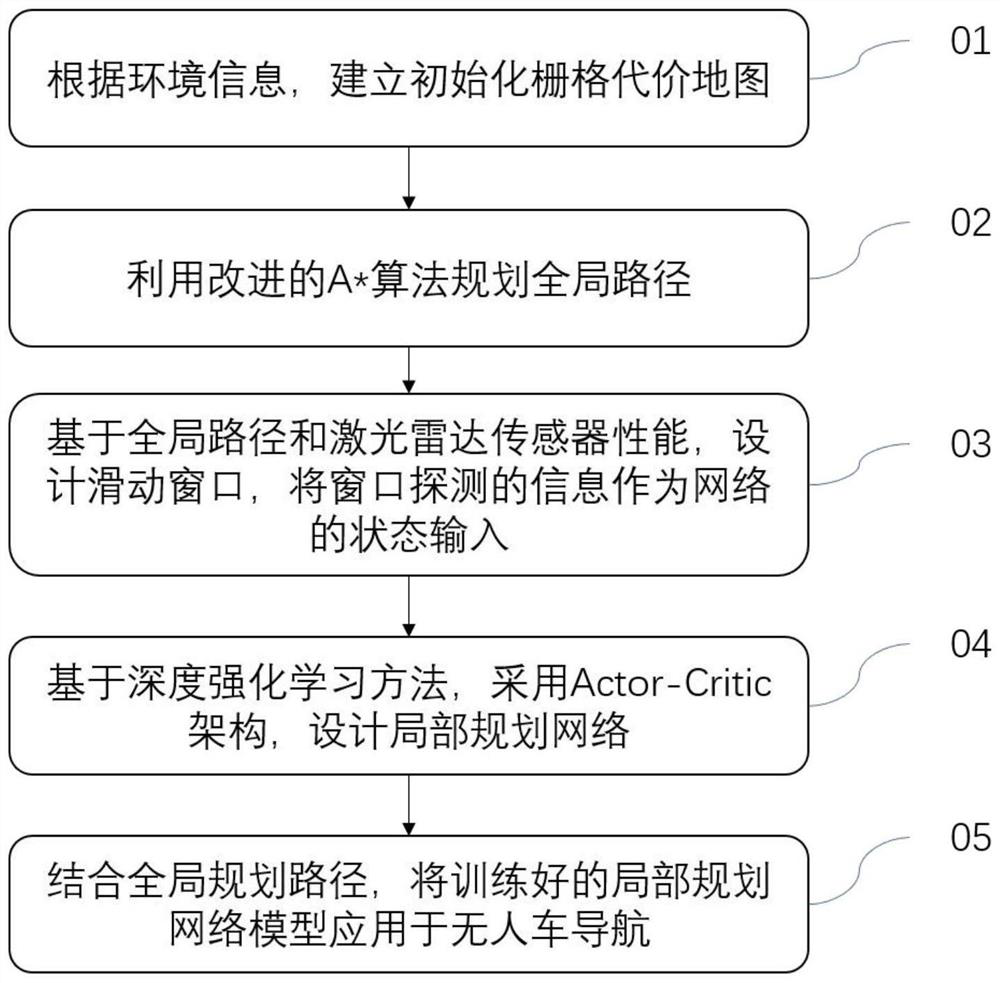

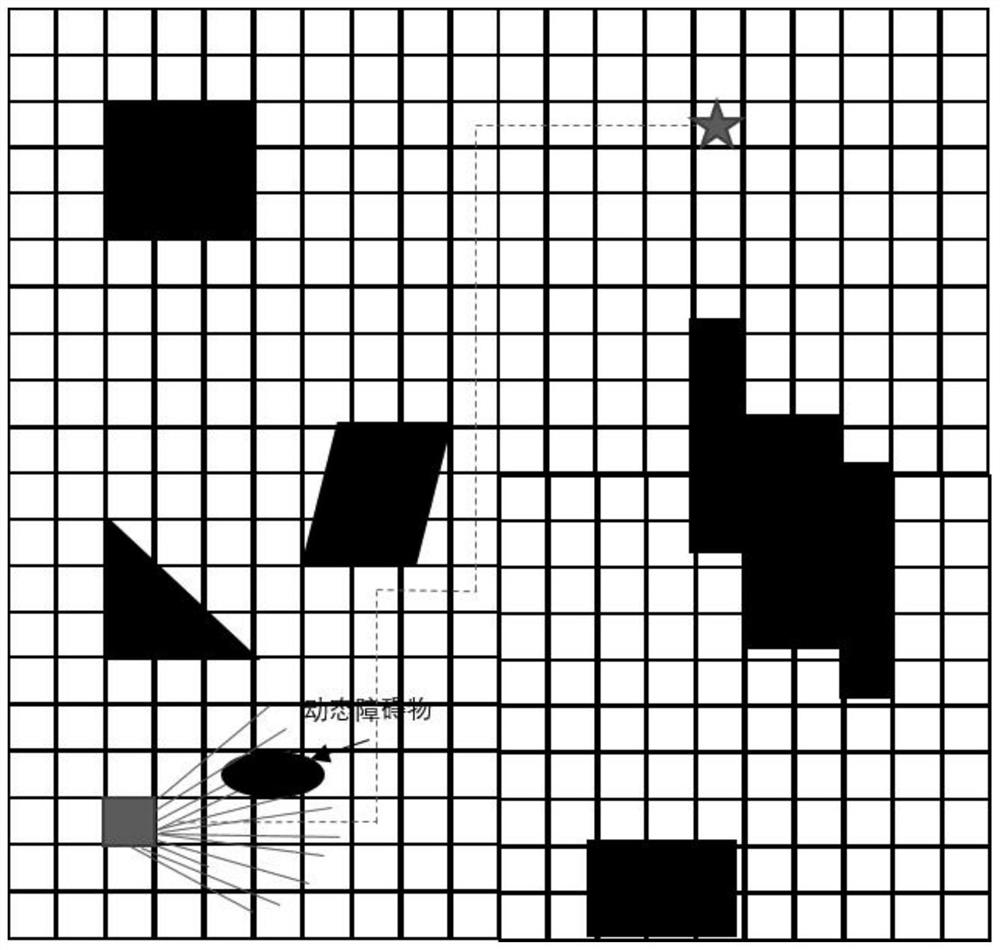

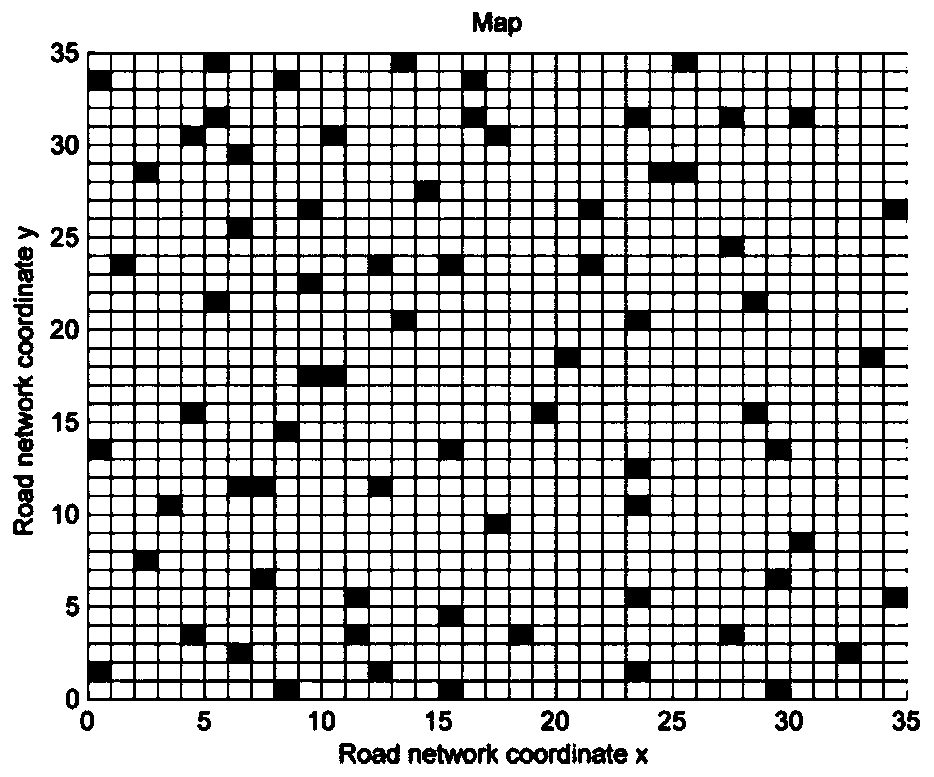

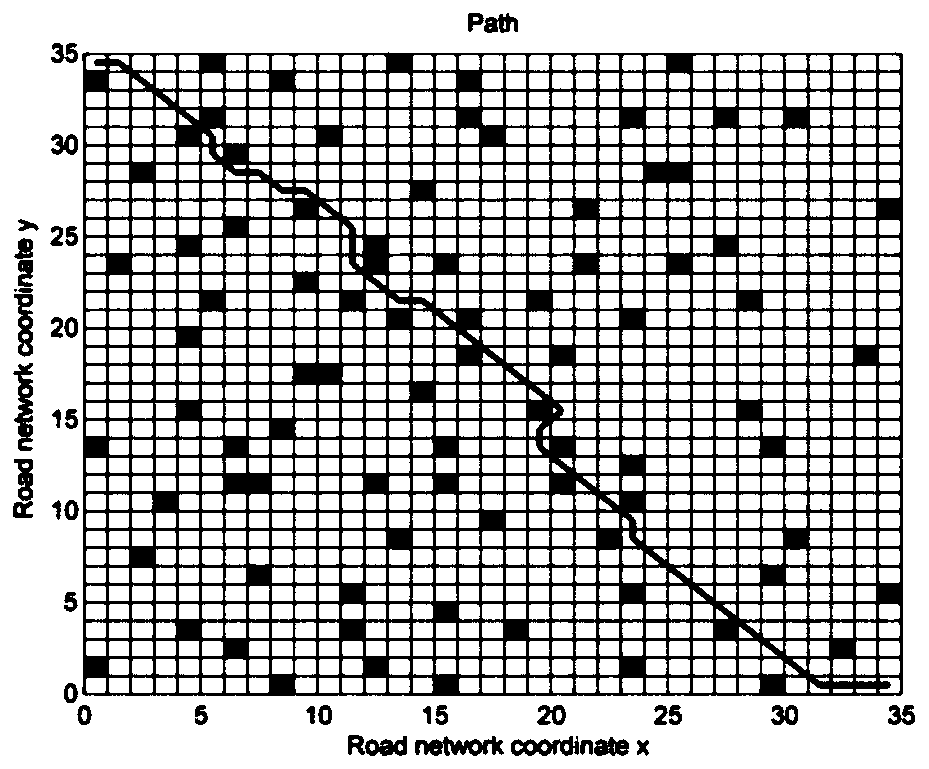

Unmanned vehicle path planning method based on improved A * algorithm and deep reinforcement learning

Active | CN111780777A | Instruments for road network navigation | Internal combustion piston engines | Simulation | Reinforcement learning algorithm

The invention belongs to the technical field of unmanned vehicle navigation and particularly relates to an unmanned vehicle path planning method based on an improved A* algorithm and deep reinforcement learning. The method aims to combine the global optimality of global path planning with the real-time obstacle avoidance of local planning, improve the real-time speed of the A* algorithm and the adaptability of the deep reinforcement learning algorithm to complex environments, and rapidly plan a collision-free optimal path for the unmanned vehicle from a starting point to a target point. The planning method comprises the following steps: establishing an initialized grid cost map from environmental information; planning a global path with the improved A* algorithm; designing a sliding window based on the global path and the performance of the laser radar sensor, and taking the information detected within the window as the state input of the network; and designing a local planning network with an Actor-Critic architecture on the basis of a deep reinforcement learning method. The invention combines knowledge-based and data-driven methods, so an optimal path can be planned rapidly and the unmanned vehicle has higher autonomy.
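To make the global planning step concrete, here is a minimal sketch of plain A* on an occupancy grid. It is not the patent's improved variant, which the abstract does not detail. The 4-connected moves, unit step cost, Manhattan heuristic, and the name `astar` are all assumptions made for illustration.

```python
import heapq


def astar(grid, start, goal):
    """Plain A* on a 4-connected grid; grid[r][c] == 1 marks an obstacle.

    Returns the list of cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance is admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(
                        open_heap, (new_cost + heuristic(nxt), new_cost, nxt)
                    )
    return None


if __name__ == "__main__":
    demo = [[0, 0, 0],
            [1, 1, 0],
            [0, 0, 0]]
    print(astar(demo, (0, 0), (2, 0)))
```

In the method described above, a path like this would serve only as the global reference; the sliding window and the Actor-Critic network handle local obstacle avoidance.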

Owner:江苏泰州港核心港区投资有限公司

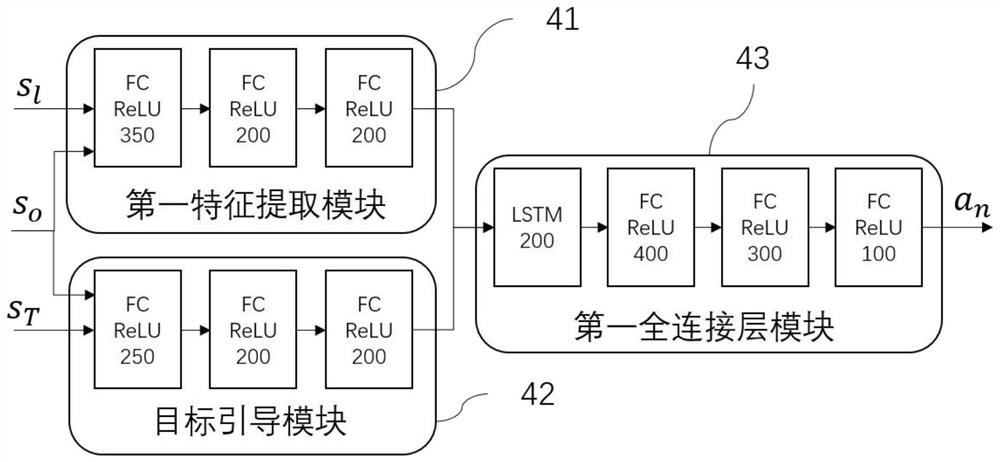

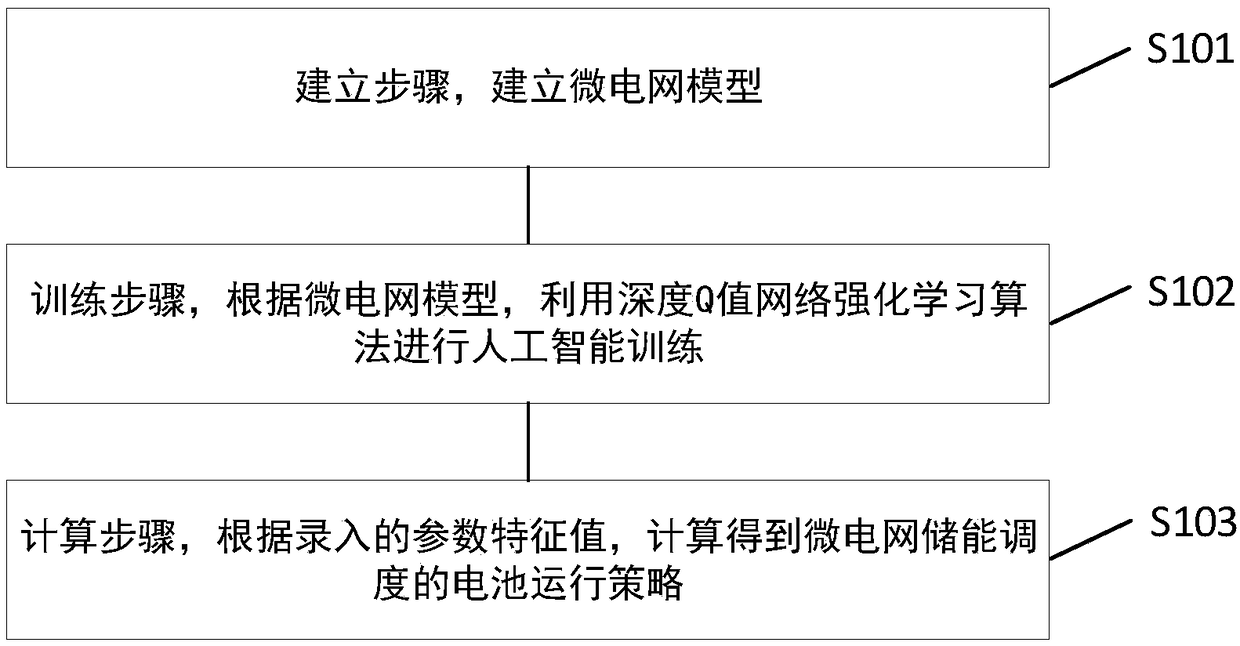

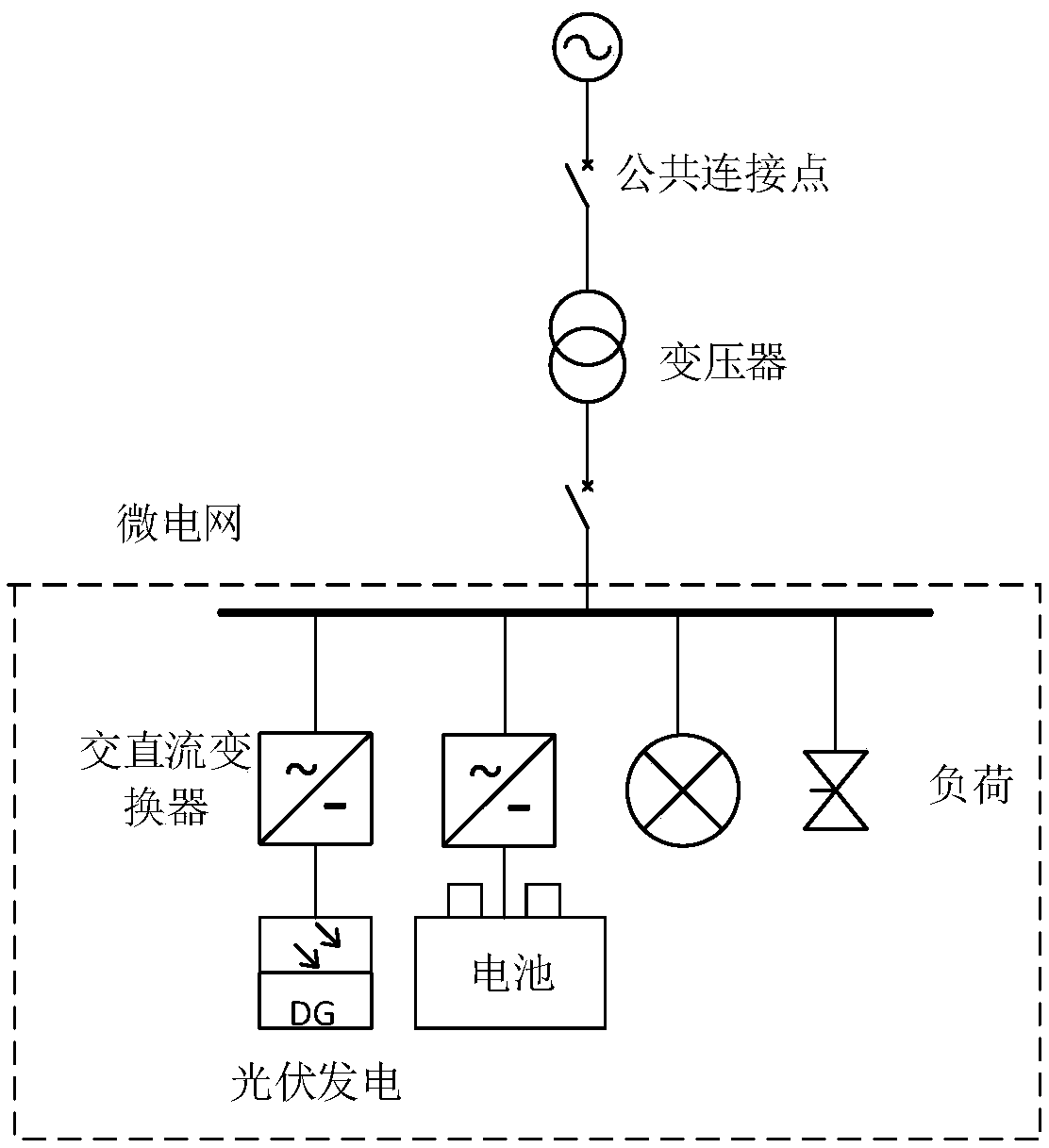

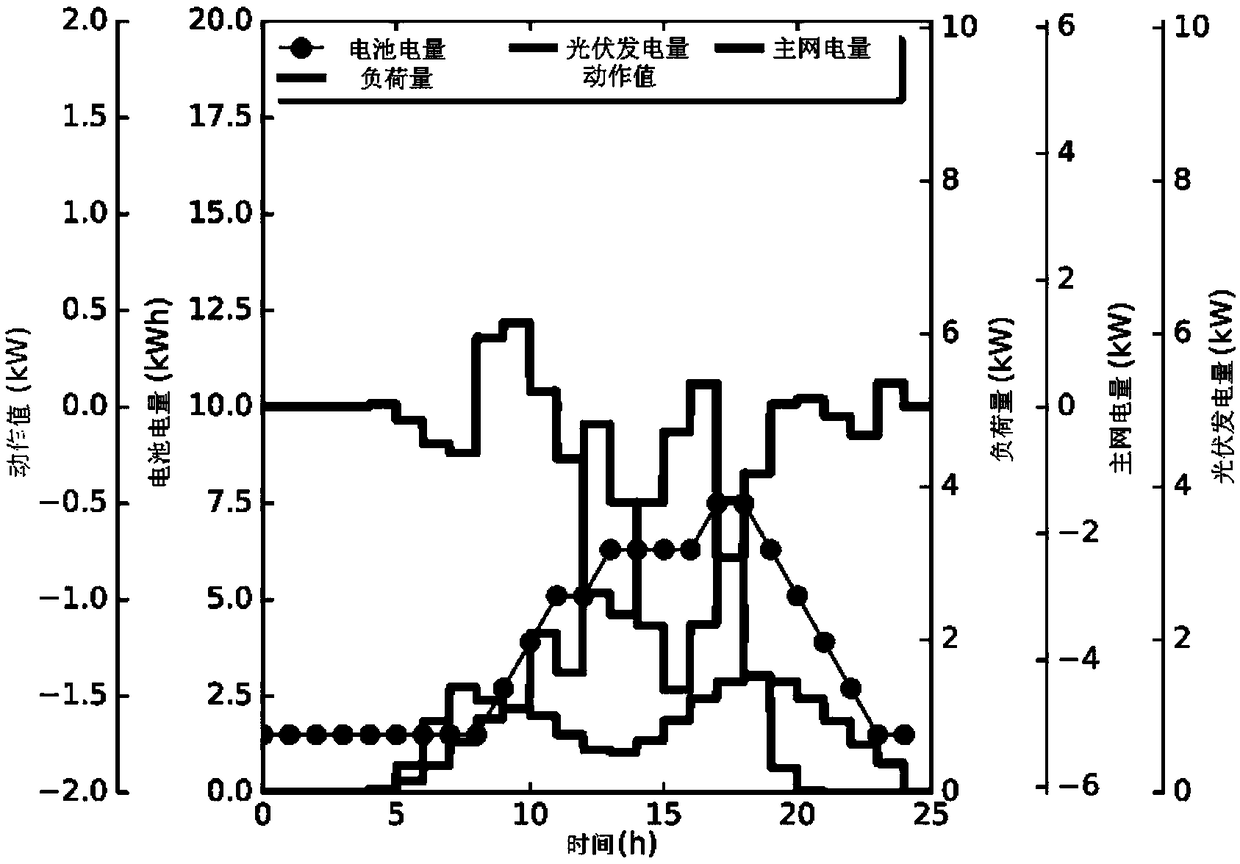

Micro-power-grid energy storage scheduling method and device based on deep Q-value network (DQN) reinforcement learning

Active | CN109347149A | Solved the estimation problem | Strong estimation ability | Single network parallel feeding arrangements | Ac network load balancing | Decomposition | Power grid

The invention discloses a micro-power-grid energy storage scheduling method and device based on deep Q-value network reinforcement learning. A micro-power-grid model is established; a deep Q-value network reinforcement learning algorithm is trained on the micro-power-grid model; and a battery running strategy for micro-power-grid energy storage scheduling is calculated from the input parameter feature values. In the embodiment of the invention, deep Q-value networks are used for scheduling and managing micro-power-grid energy: an agent decides the optimal energy storage scheduling strategy through interaction with the environment, the running mode of the battery is controlled in a constantly changing environment, the features of energy storage management are determined dynamically on the basis of the micro-power-grid, and the micro-power-grid obtains the maximum running benefit in its interaction with the main power grid. Using a competitive (dueling) Q-value network model, the network separately calculates an evaluation value of the environment and the additional value brought by an action; decomposing the two parts makes the learning objective more stable and accurate and strengthens the deep Q-value network's ability to estimate the environment status.
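The "competitive" Q-value network described above splits the estimate into a value for the environment state and an additional value per action, which matches what is usually called a dueling architecture. A minimal sketch of how the two parts could be recombined follows. The mean-subtraction rule is the common dueling-DQN aggregation and is an assumption here, since the abstract does not give the formula; `dueling_q_values` is a hypothetical name.

```python
def dueling_q_values(state_value, advantages):
    """Combine a state value V(s) and per-action advantages A(s, a) into Q(s, a).

    Assumed aggregation: Q(s, a) = V(s) + A(s, a) - mean(A(s, .)).
    """
    mean_advantage = sum(advantages) / len(advantages)
    return [state_value + adv - mean_advantage for adv in advantages]


if __name__ == "__main__":
    # Hypothetical battery actions: charge, idle, discharge.
    print(dueling_q_values(2.0, [1.0, 0.0, -1.0]))
```

Subtracting the mean keeps the split identifiable: without it, a constant could be moved freely between the state value and the advantages without changing any Q-value.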

Owner:STATE GRID HENAN ELECTRIC POWER ELECTRIC POWER SCI RES INST +3

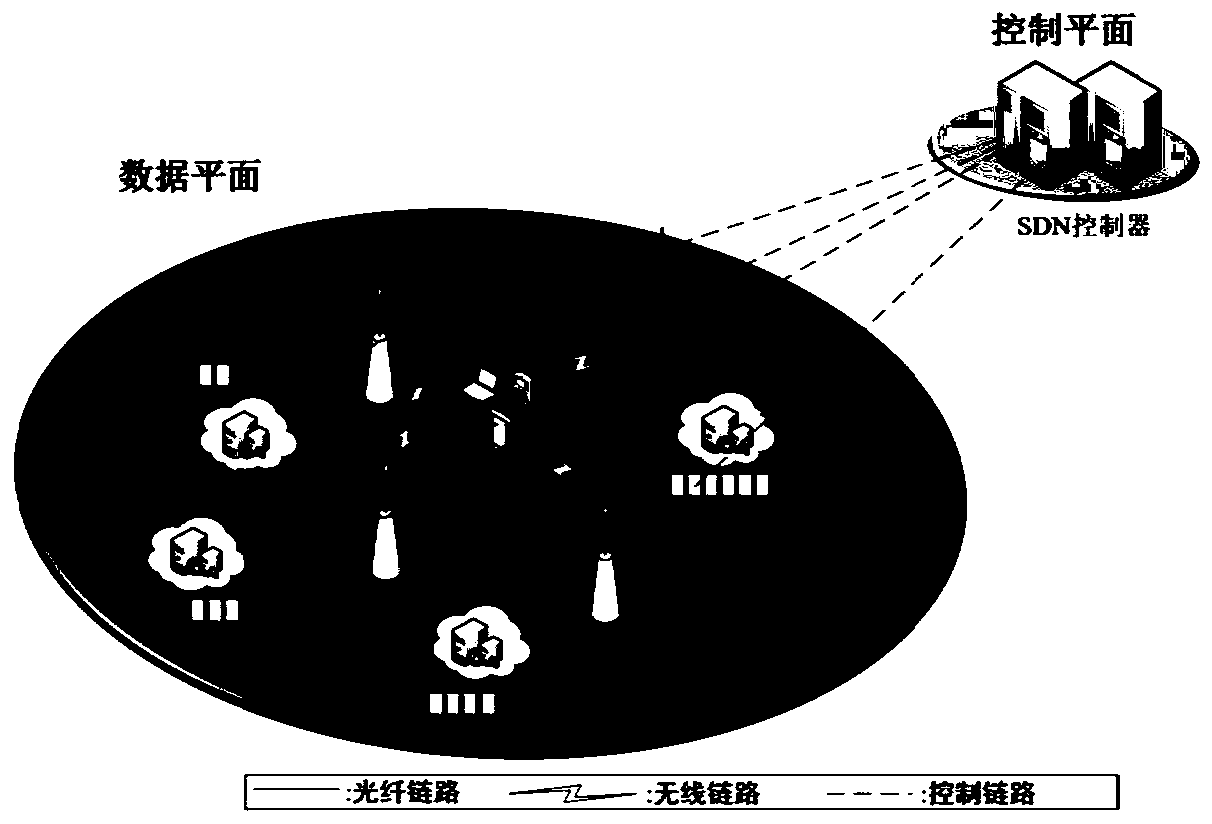

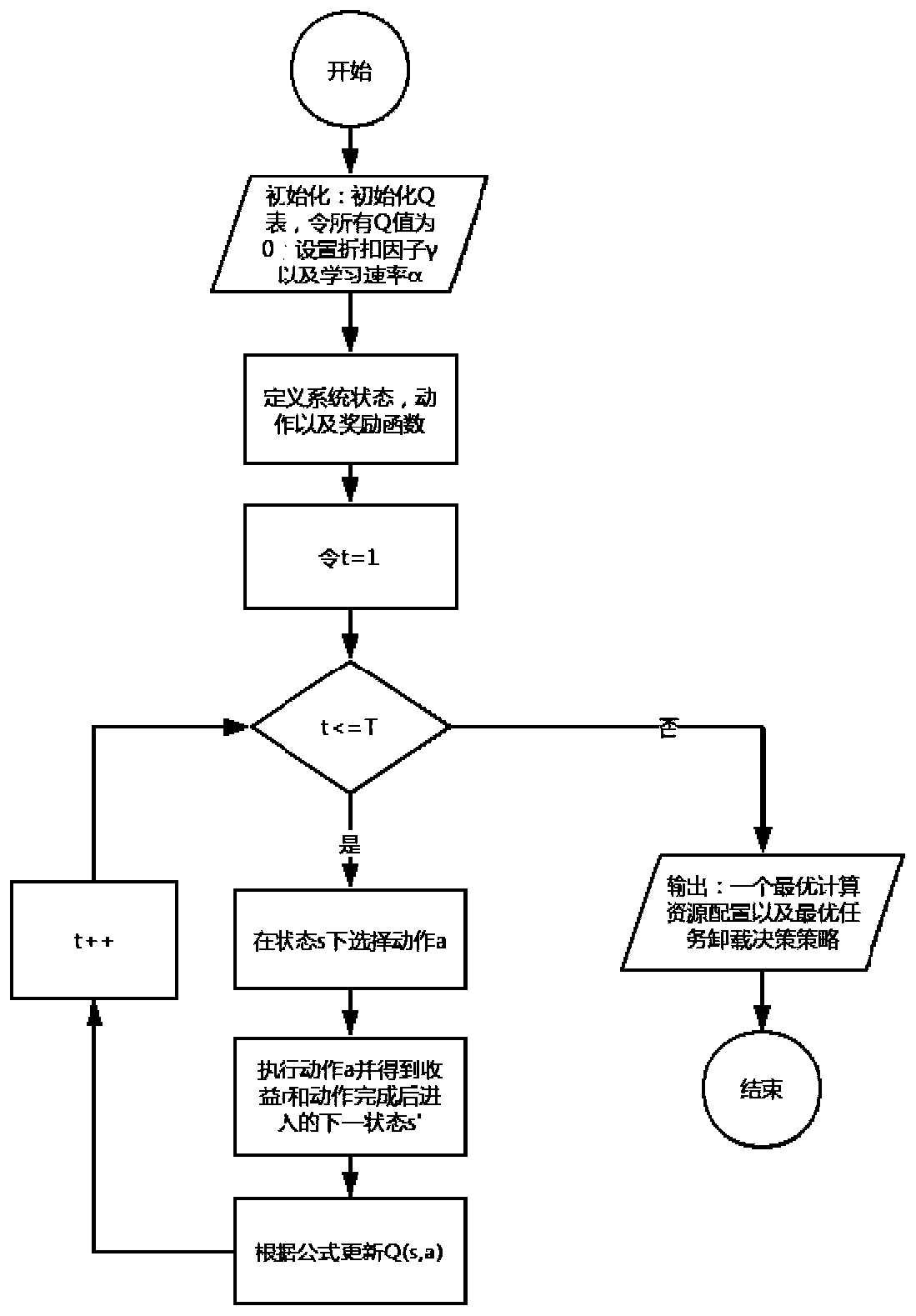

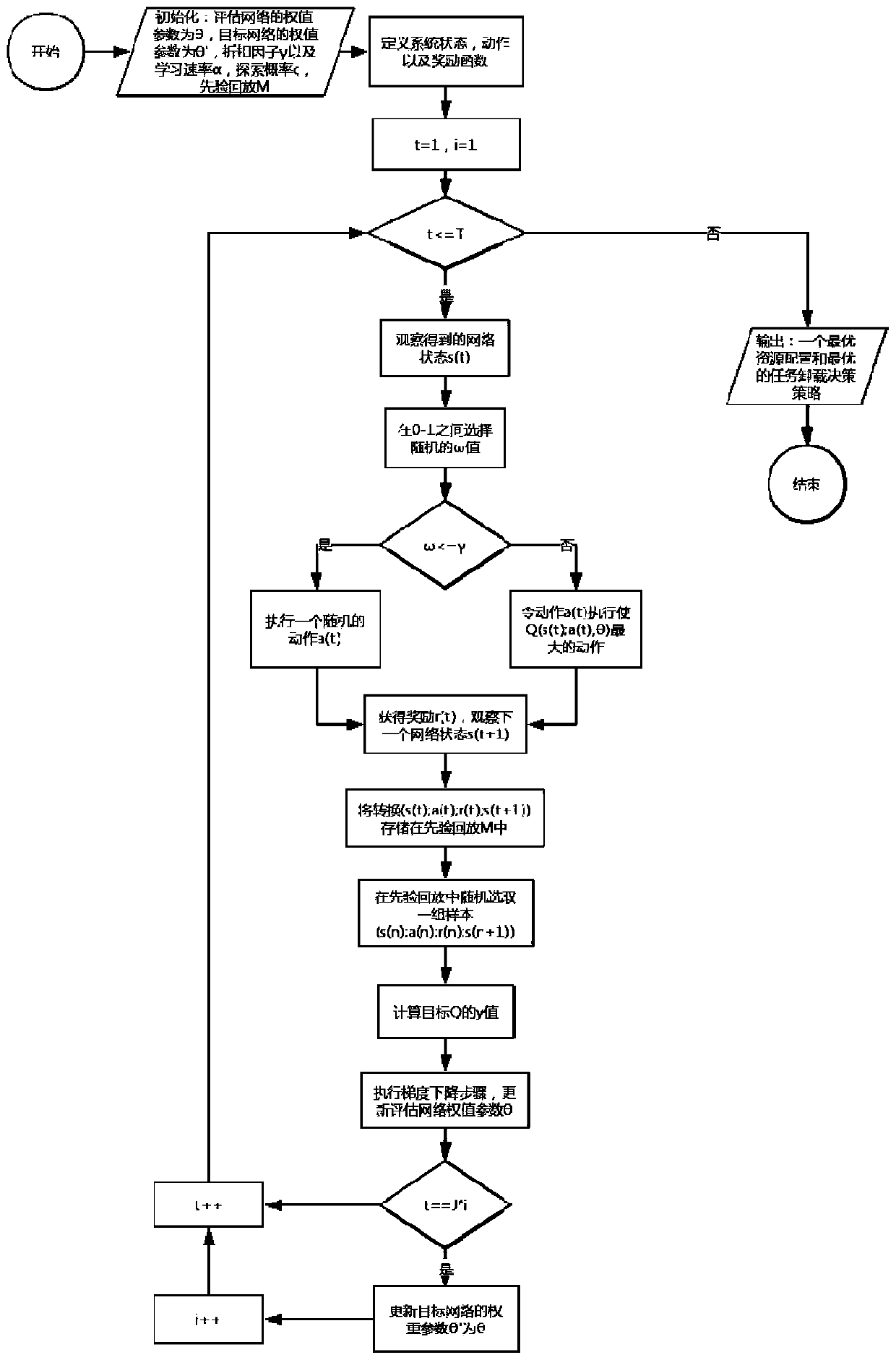

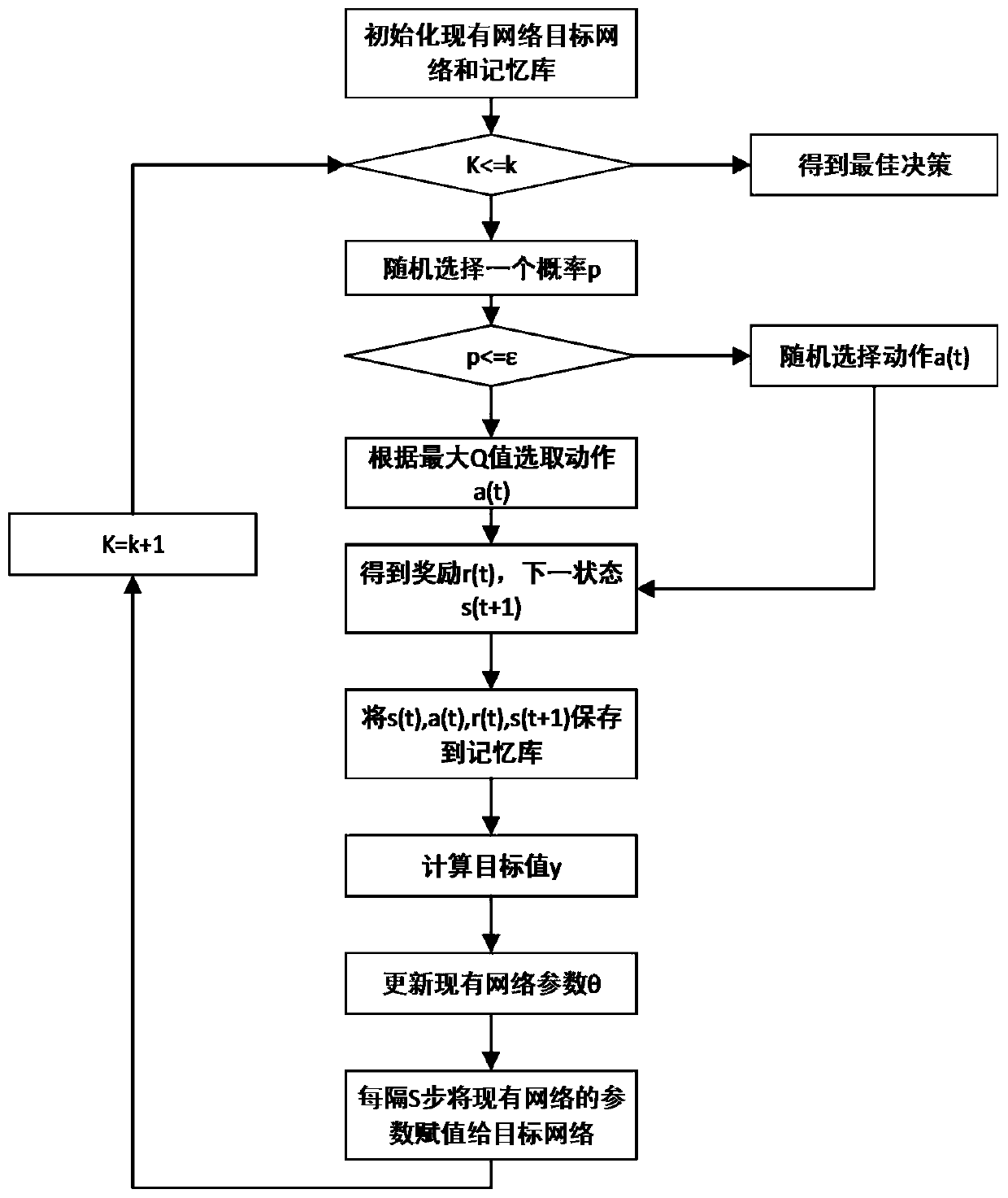

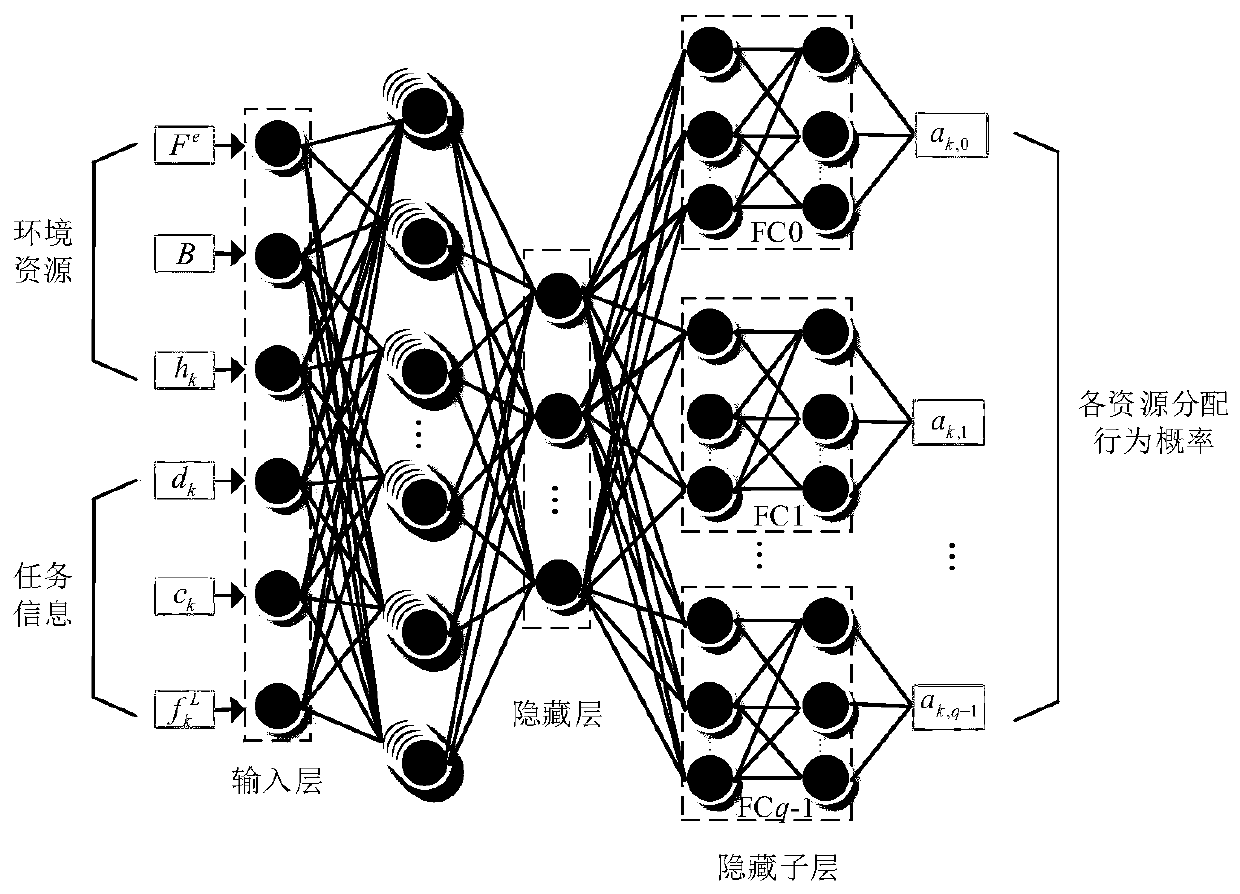

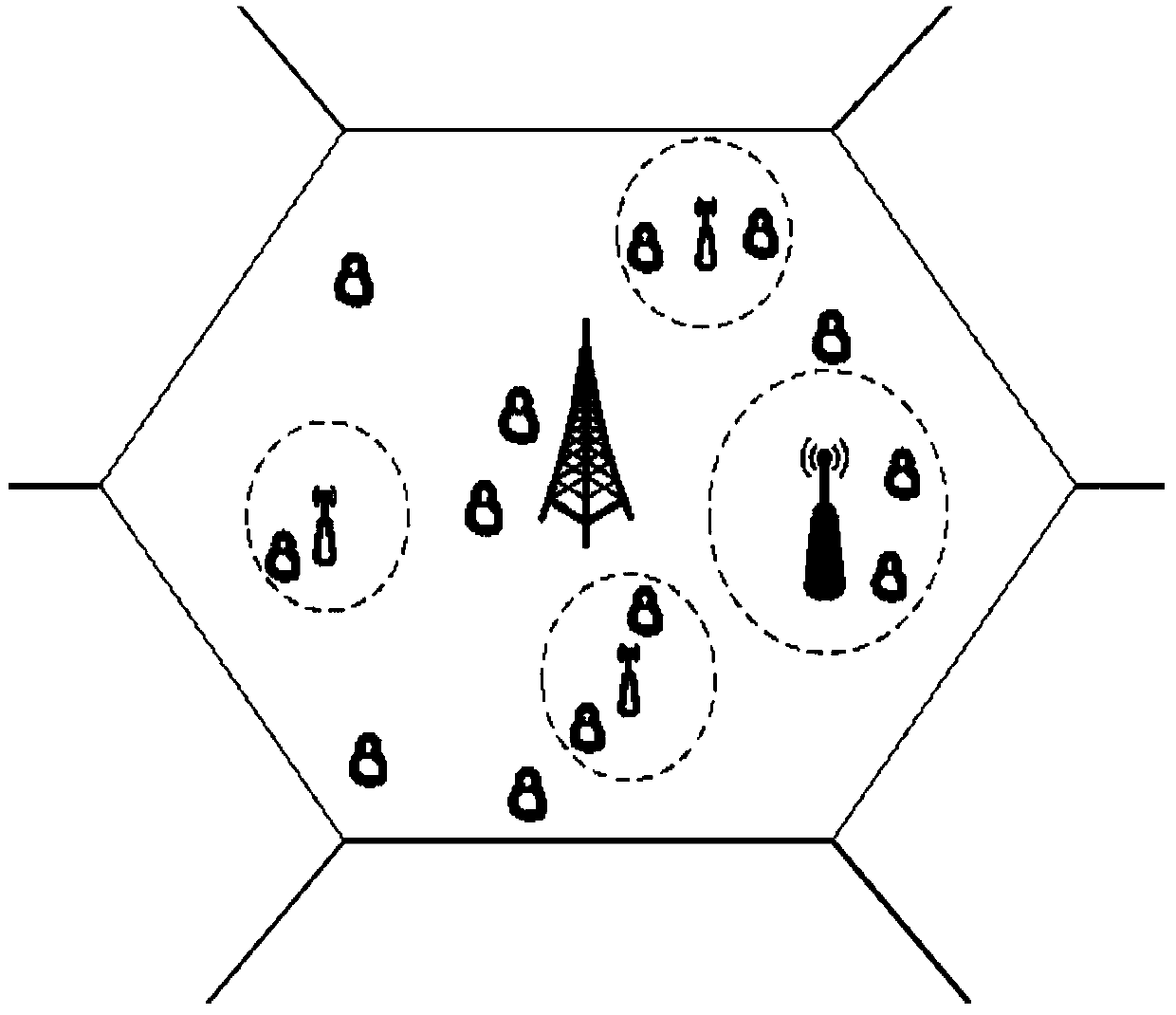

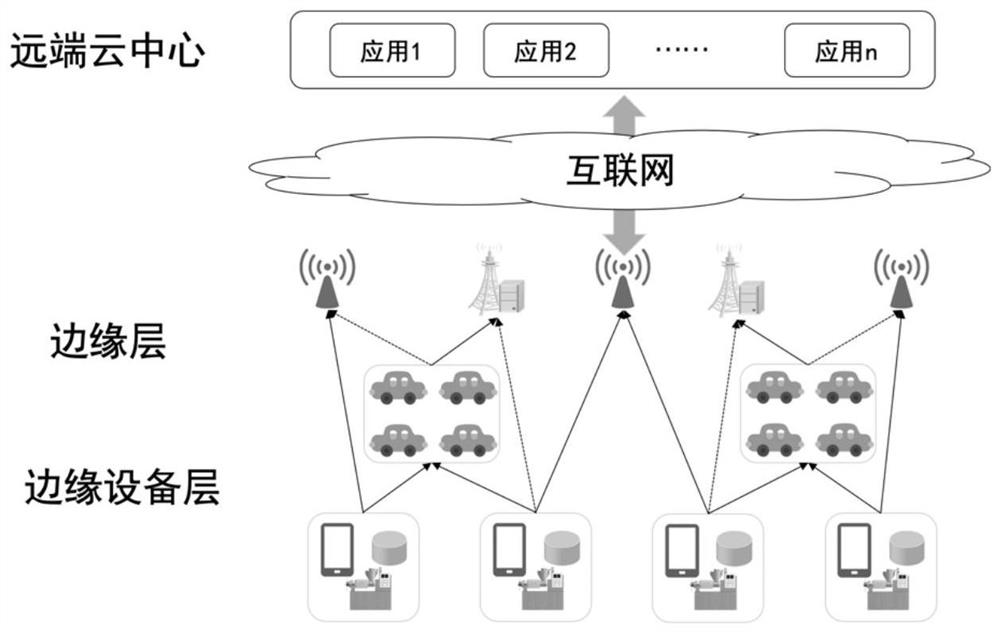

Computing resource allocation and task unloading method for edge computing of super-dense network

Inactive | CN110798849A | Increase study time | Fast convergence | Wireless communication | Macro base stations | Industrial engineering

A computing resource allocation and task unloading method for super-dense network edge computing comprises the following steps: step 1, establishing a system model of an SDN-based super-dense edge computing network and obtaining network parameters; step 2, obtaining parameters required by edge calculation: unloading the parameters to an edge server of a macro base station and an edge server connected with a small base station s through local calculation in sequence to obtain an uplink data rate of a transmission calculation task; step 3, adopting a Q-learning scheme to obtain an optimal computing resource allocation and task unloading strategy; and step 4, adopting a DQN scheme to obtain an optimal computing resource allocation and task unloading strategy. The method suits a dynamic system because it stimulates an intelligent agent to find an optimal solution on the basis of learning variables. Among reinforcement learning (RL) algorithms, Q-learning performs well in some time-varying networks. Deep learning is combined with Q-learning to provide a learning scheme based on a deep Q network (DQN), which optimizes the benefits of mobile devices (MDs) and operators simultaneously in a time-varying environment; compared with the Q-learning-based method, it has a shorter learning time and faster convergence.
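The Q-learning scheme of step 3 can be sketched in tabular form as below. This shows only the standard Q-learning update, not the patent's state or reward design. The state labels, the three action names (local execution, macro-base-station server, small-base-station server), and the `alpha` and `gamma` values are hypothetical.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning step and return the updated Q(state, action).

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


if __name__ == "__main__":
    # Hypothetical unloading choices for one task.
    actions = ["local", "macro_server", "small_server"]
    q_table = defaultdict(float)  # unseen (state, action) pairs start at 0.0
    print(q_learning_update(q_table, "s0", "local", 1.0, "s1", actions))
```

The DQN scheme of step 4 replaces the table with a neural network that estimates the same quantity, which is what lets it cope with state spaces too large to enumerate.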

Owner:NORTHWESTERN POLYTECHNICAL UNIV

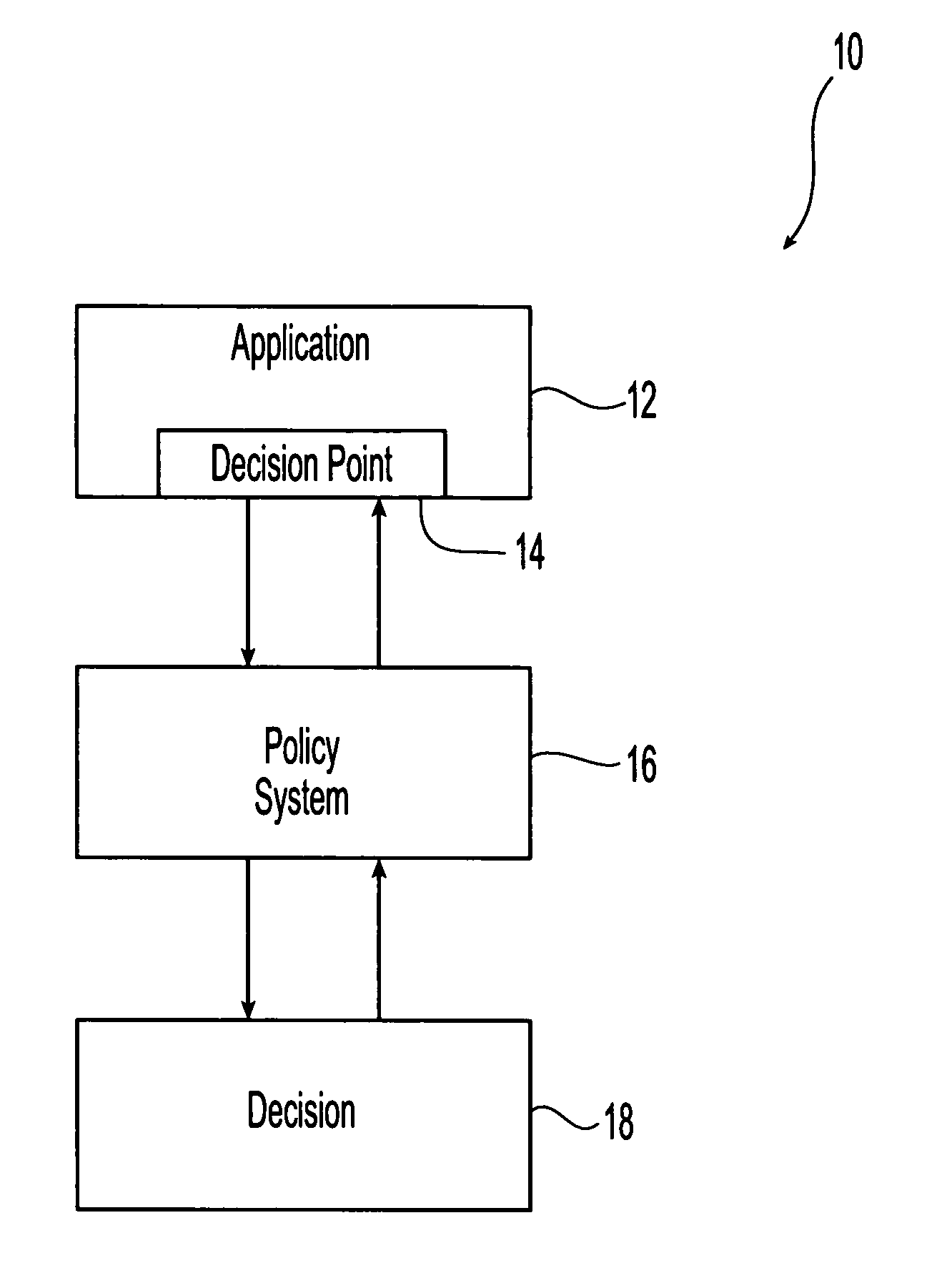

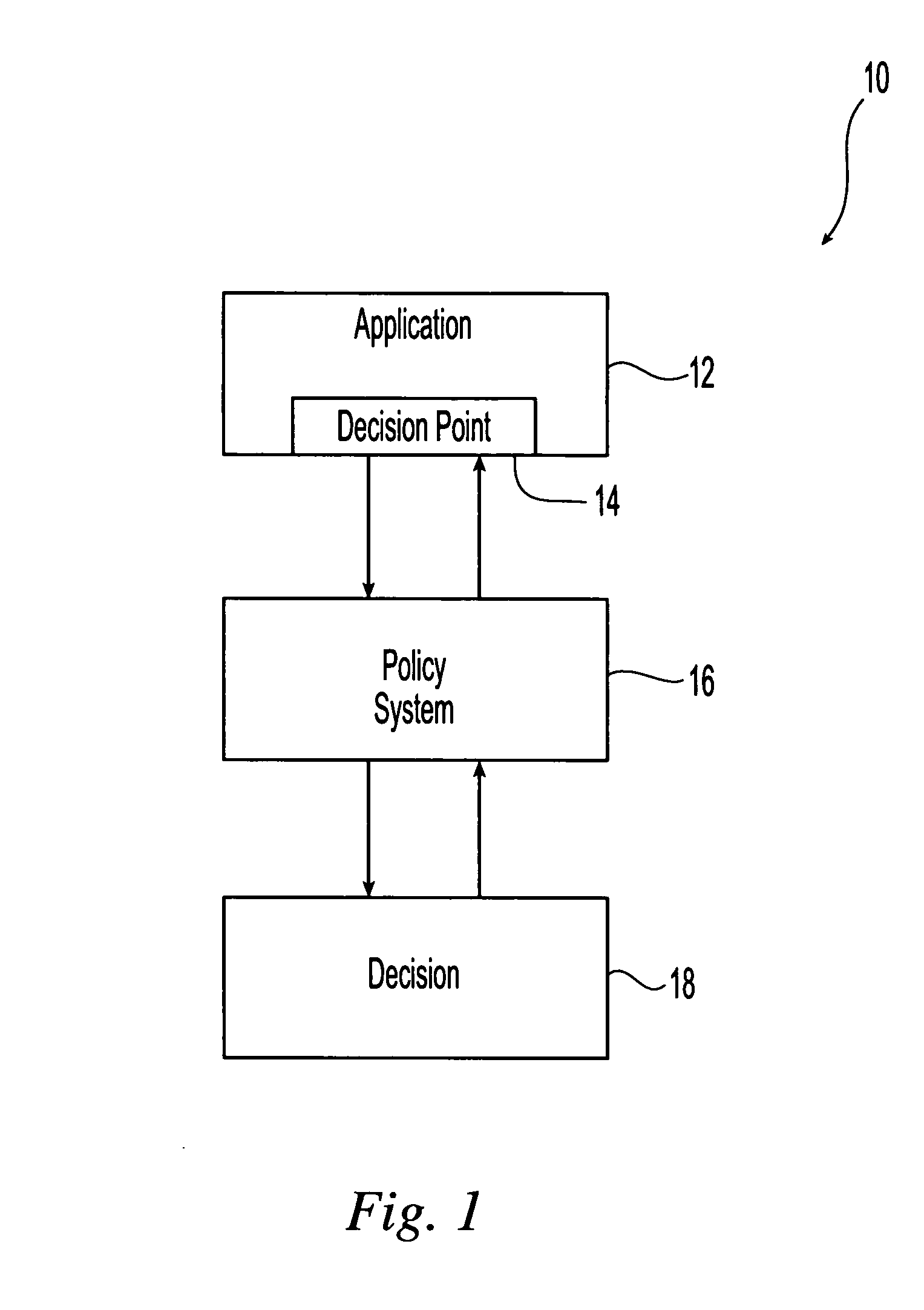

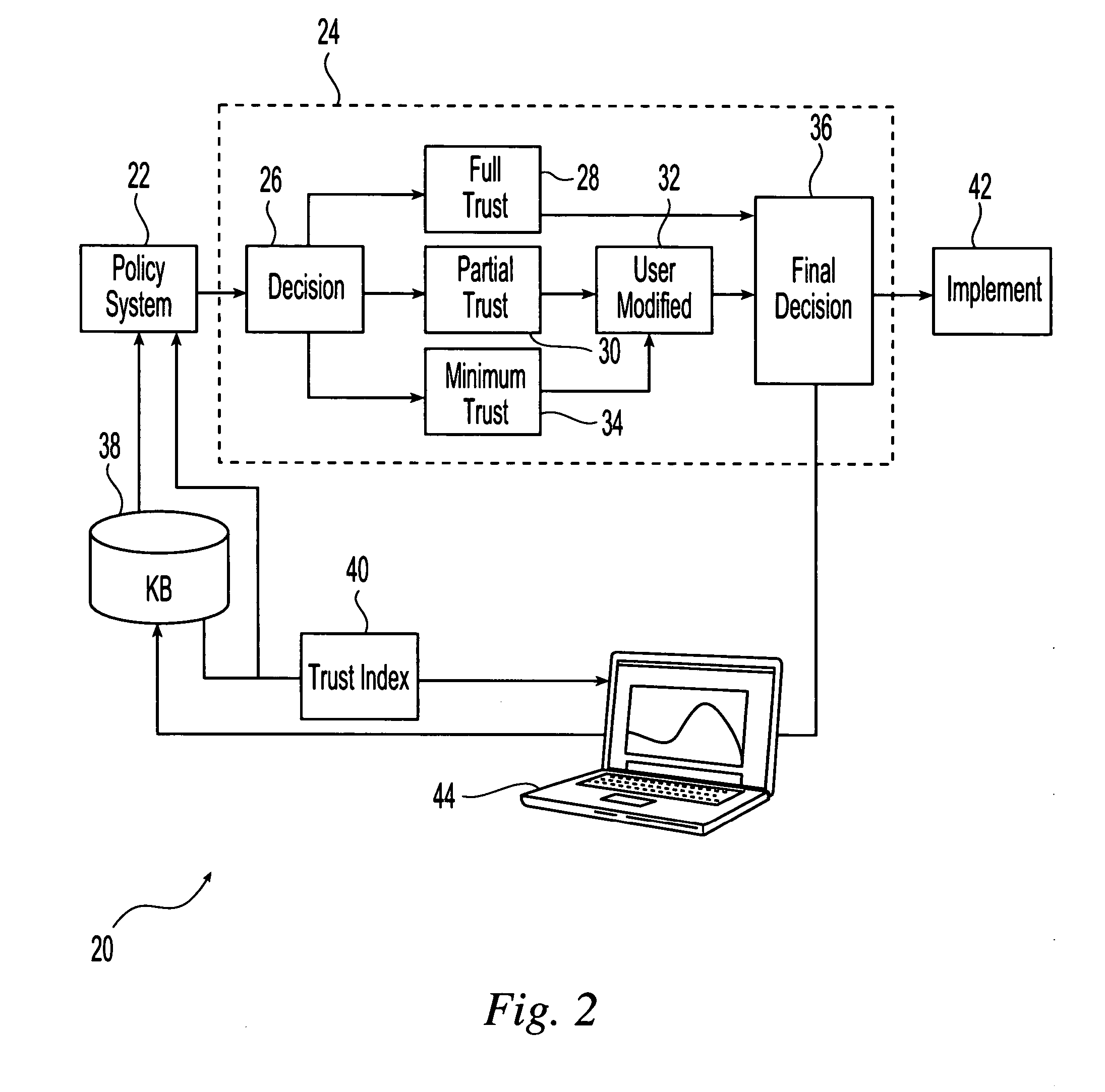

System to establish trust between policy systems and users

Inactive | US20060277591A1 | Improve trust | Large index | Digital data protection | Special data processing applications | Internet privacy | User input

A system and method are provided to establish trust between a user and a policy system that generates recommended actions in accordance with specified policies. Trust is introduced into the policy-based system by assigning a value, called the instantaneous trust index, to each execution of each policy with respect to the policy-based system. The instantaneous trust indices for each one of the policies, for each execution of a given policy, or for both are combined into the overall trust index for a given policy or for a given policy-based system. The recommended actions are processed in accordance with the level of trust associated with a given policy as expressed by the trust indices. Manual user input is provided to monitor or change the recommended actions. In addition, reinforcement learning algorithms are used to further enhance the level of trust between the user and the policy-based system.

Owner:IBM CORP

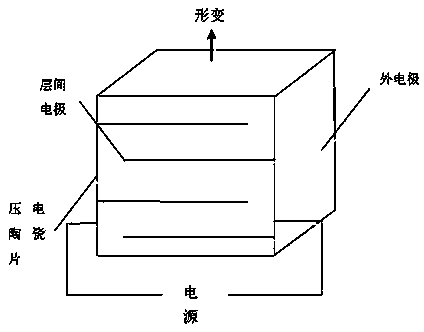

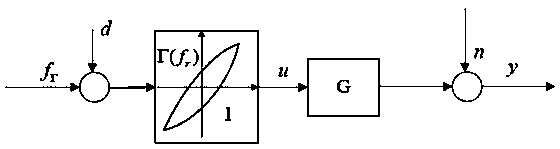

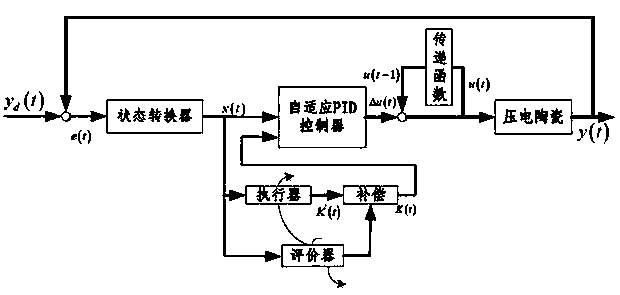

Adaptive learning control method of piezoelectric ceramics driver

Inactive | CN103853046A | Solving Hysteretic Nonlinear Problems | High repeat positioning accuracy | Adaptive control | Hysteresis | Actuator

The invention relates to an adaptive learning control method of a piezoelectric ceramics driver, comprising the following steps: (1) building a dynamic hysteresis model of the piezoelectric ceramics driver and designing a control method that combines an artificial neural network with PID control; (2) adopting a reinforcement learning algorithm to tune the PID parameters adaptively online; (3) adopting a three-layer radial basis function network to approximate the strategy function of the actuator and the value function of the evaluator in the reinforcement learning algorithm; (4) inputting the system error, the first-order difference of the error, and the second-order difference of the error through the first layer of the radial basis function network; (5) mapping the system state to the three PID parameters through the actuator in the reinforcement learning algorithm; and (6) judging the output of the actuator and generating an error signal through the evaluator in the reinforcement learning algorithm, and updating the system parameters with that signal. The method solves the hysteresis nonlinearity problem of the piezoelectric ceramics driver, improves the repeat positioning accuracy of the piezoelectric ceramics drive platform, and eliminates the influence of the hysteresis nonlinearity of piezoelectric ceramics on the system.
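The three network inputs named in step (4), the error and its first- and second-order differences, are exactly the terms of an incremental PID law. The sketch below assumes that form, which the abstract does not state explicitly. The fixed gains stand in for the values that the actuator network would output at each step, and `pid_increment` is a hypothetical name.

```python
def pid_increment(errors, kp, ki, kd):
    """Return the control increment of an incremental (velocity-form) PID.

    `errors` holds (e_t, e_{t-1}, e_{t-2}). Assumed law:
    delta_u = kp * (e_t - e_{t-1}) + ki * e_t + kd * (e_t - 2*e_{t-1} + e_{t-2})
    """
    e_now, e_prev, e_prev2 = errors
    first_difference = e_now - e_prev
    second_difference = e_now - 2.0 * e_prev + e_prev2
    return kp * first_difference + ki * e_now + kd * second_difference


if __name__ == "__main__":
    # Hypothetical gains; in the described method they would be tuned online.
    print(pid_increment((1.0, 0.5, 0.25), kp=2.0, ki=1.0, kd=4.0))
```

The drive signal is then the previous signal plus this increment, so retuning the gains at every step changes only how the next correction is computed.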

Owner:GUANGDONG UNIV OF TECH

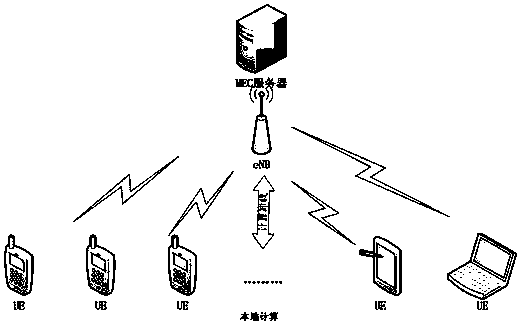

MEC unloading method under energy consumption and delay constraints

Pending | CN109951897A | Troubleshoot Compute Offloading | Wireless communication | Reinforcement learning algorithm | Decision taking

The invention relates to an MEC unloading method under energy consumption and delay constraints. The method comprises: system modeling, in which a base station and N mobile user devices are arranged in a cell, the base station is deployed together with an MEC server, each mobile user device has a computing-intensive task to complete, and each mobile user device can either unload the task to the MEC server or execute it locally; constructing a local calculation model and an unloading model to obtain the total local calculation cost and the total MEC unloading calculation cost; and searching for an optimal computing unloading and resource allocation scheme through a reinforcement learning algorithm, i.e., the computing unloading decisions of all mobile user devices in the cell and the computing resource allocation of the MEC server. The optimal strategy can be obtained in a dynamic system, so that the energy consumption of the mobile user devices is reduced, the delay is reduced, and the service quality is ensured.

Owner:DONGHUA UNIV

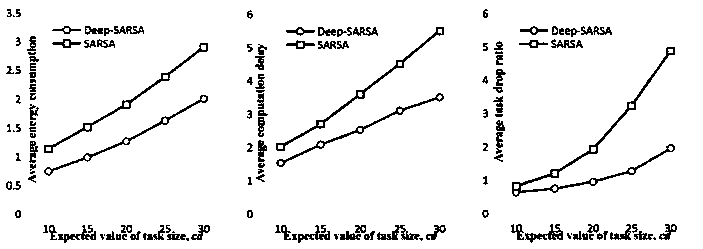

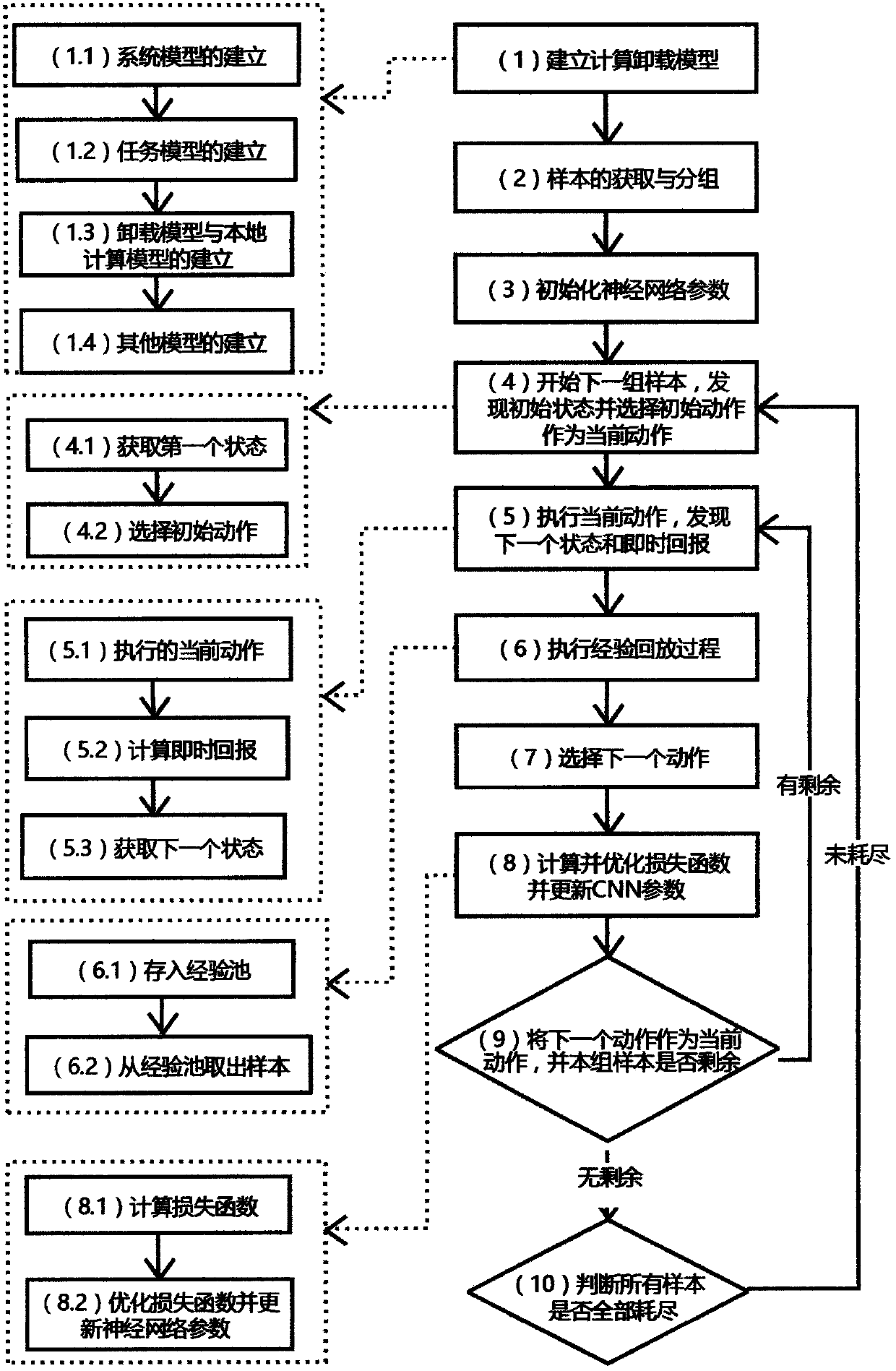

A computing unloading scheduling method based on depth reinforcement learning

Inactive | CN109257429A | Reduce correlation | Effective calculation | Neural architectures | Transmission | Algorithm | State model

The invention provides a computing unloading scheduling method based on deep reinforcement learning. It gives Internet of Things equipment a way to make unloading decisions, including decisions on the various aspects to be unloaded according to the basic model of computing unloading. Different optimization objectives can be achieved by changing the value function. The Deep-SARSA algorithm is similar to the DQN algorithm in that it combines reinforcement learning and deep learning. Together with an experience pool, it can effectively turn unloading states and unloading actions into training samples for deep learning. The invention can effectively carry out machine learning on an unloading state model of unlimited dimension and reduce the complexity of learning. The method uses a neural network as the linear approximator of the Q value, which can effectively improve the training speed and reduce the samples required for training. Under a given model and optimization objective, the method can effectively make the best decision through deep reinforcement learning.
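The abstract compares Deep-SARSA with DQN without saying where they differ. In their standard forms the difference lies in the training target: SARSA bootstraps from the action actually taken next, while Q-learning (and DQN) bootstraps from the best next action. The sketch below shows the two targets side by side; the function names and numbers are hypothetical, and the patent's network and experience pool are not reproduced.

```python
def sarsa_target(reward, gamma, next_q_values, next_action):
    """On-policy target: uses the Q-value of the next action actually chosen."""
    return reward + gamma * next_q_values[next_action]


def q_learning_target(reward, gamma, next_q_values):
    """Off-policy target: uses the largest next Q-value, as in DQN."""
    return reward + gamma * max(next_q_values)


if __name__ == "__main__":
    # Hypothetical Q-values of two unloading actions in the next state.
    next_q = [1.0, 4.0]
    print(sarsa_target(1.0, 0.5, next_q, next_action=0))
    print(q_learning_target(1.0, 0.5, next_q))
```

The two targets coincide whenever the next action happens to be the greedy one, so they differ only on exploratory steps.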

Owner:NANJING UNIV

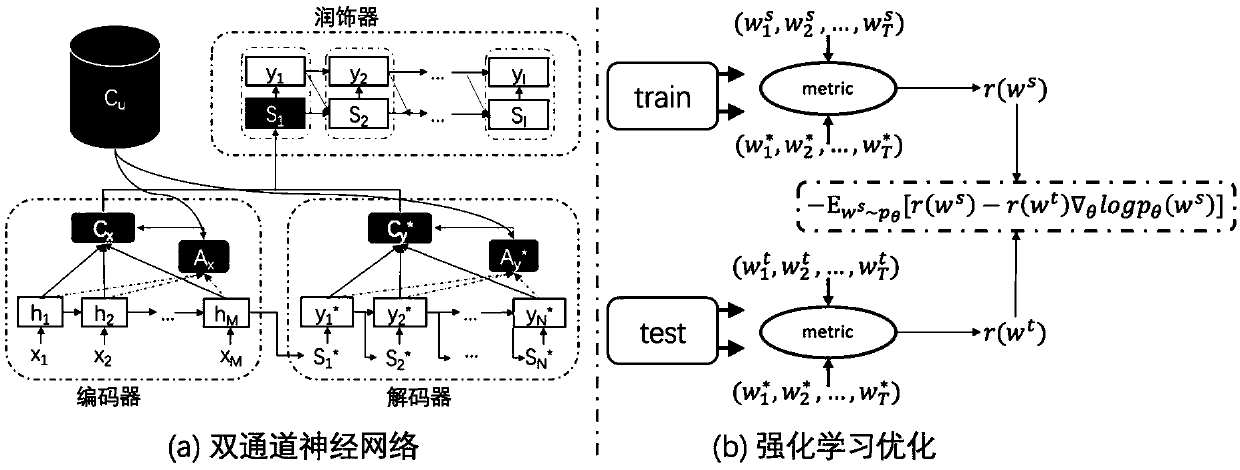

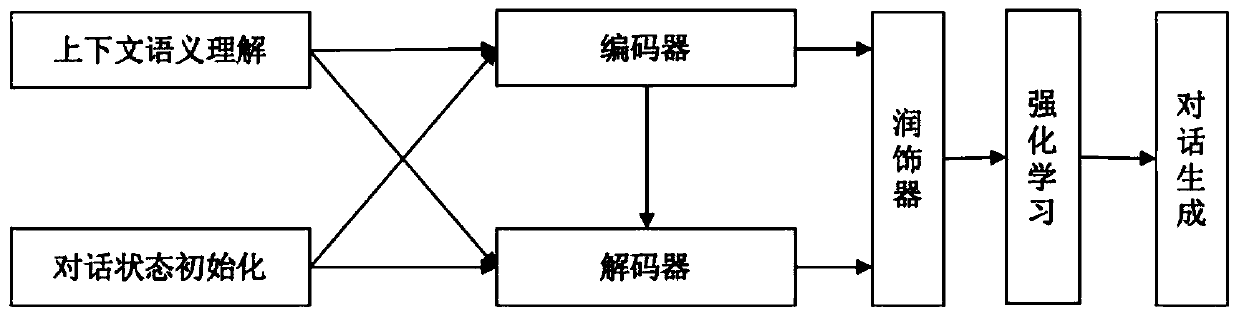

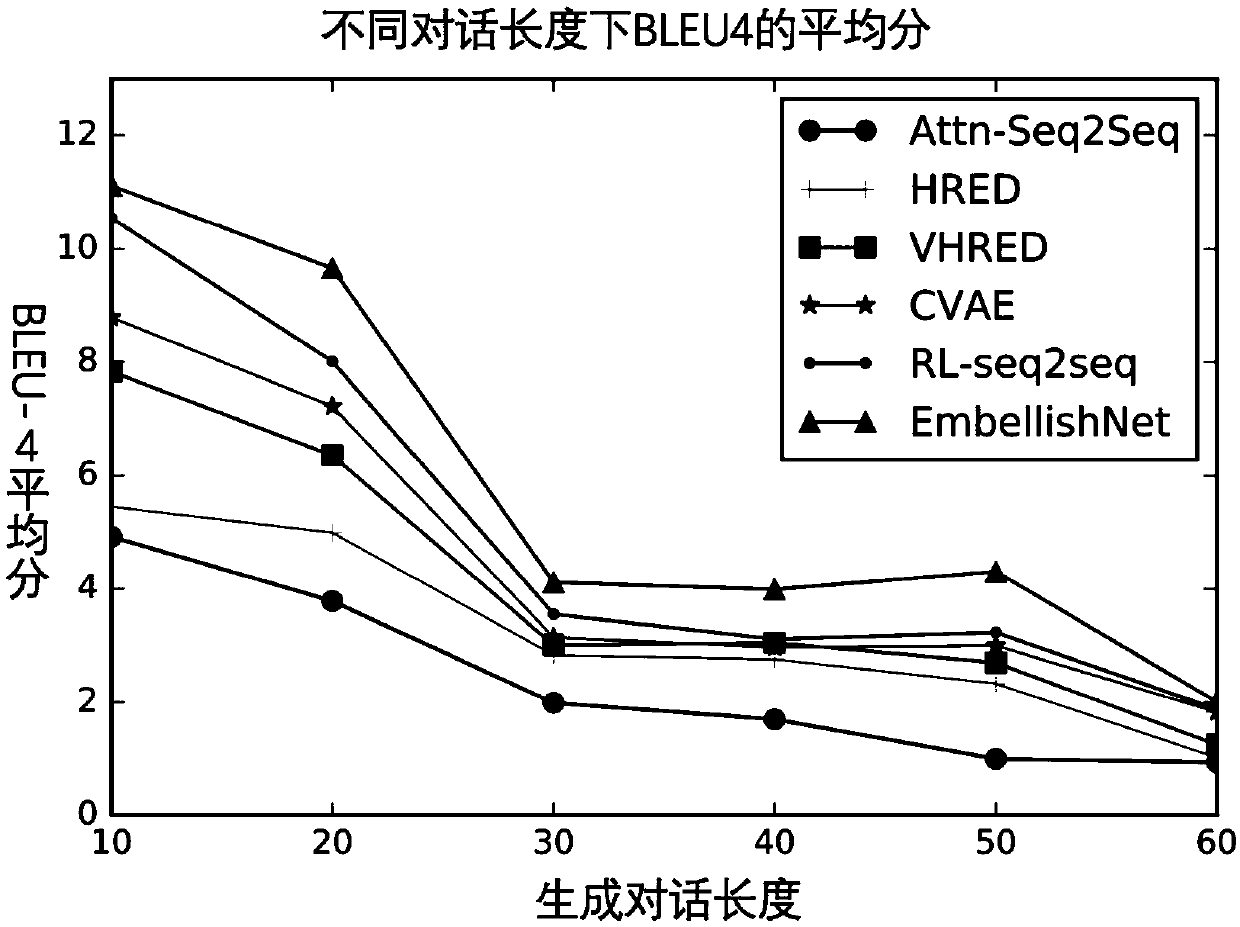

Reinforcement dual-channel sequence learning-based dialog reply generation method and system

Active | CN108763504A | Global understanding | Avoid Hard-to-Regularize Difficulties | Semantic analysis | Special data processing applications | Semantic vector | Learning based

The invention discloses a reinforcement dual-channel sequence learning-based dialog reply generation method and system. The method comprises the following steps: (1) modeling a context to obtain a context semantic vector; (2) carrying out combined learning on a current dialog and the context semantic vector with an encoder to obtain a current dialog vector and an encoder vector; (3) inputting the context semantic vector and the current dialog vector into a decoder to obtain a first-channel dialog reply draft and a decoder vector; (4) inputting the encoder vector, the decoder vector and the first-channel dialog reply draft into an embellishing device for polishing, so as to generate an embellished dialog reply of a second channel; (5) optimizing a target function with a reinforcement learning algorithm; and (6) ending model training, then generating and outputting a dialog reply. With the method and system, dialog generation models can grasp global information more deeply and generate replies that better fit the dialog scene and have substantial content.

Owner:ZHEJIANG UNIV

Innervated stochastic controller for real time business decision-making support

Inactive | US20070094187A1 | Reduce demand | Input/output for user-computer interaction | Digital data processing details | Power grid | Reinforcement learning algorithm

An Innervated Stochastic Controller optimizes business decision-making under uncertainty through time. The Innervated Stochastic Controller uses a unified reinforcement learning algorithm to treat multiple interconnected operational levels of a business process in a unified manner. The Innervated Stochastic Controller generates actions that are optimized with respect to both financial profitability and engineering efficiency at all levels of the business process. The Innervated Stochastic Controller can be configured to evaluate real options. In one embodiment of the invention, the Innervated Stochastic Controller is configured to generate actions that are martingales. In another embodiment of the invention, the Innervated Stochastic Controller is configured as a computer-based learning system for training power grid operators to respond to grid exigencies.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK

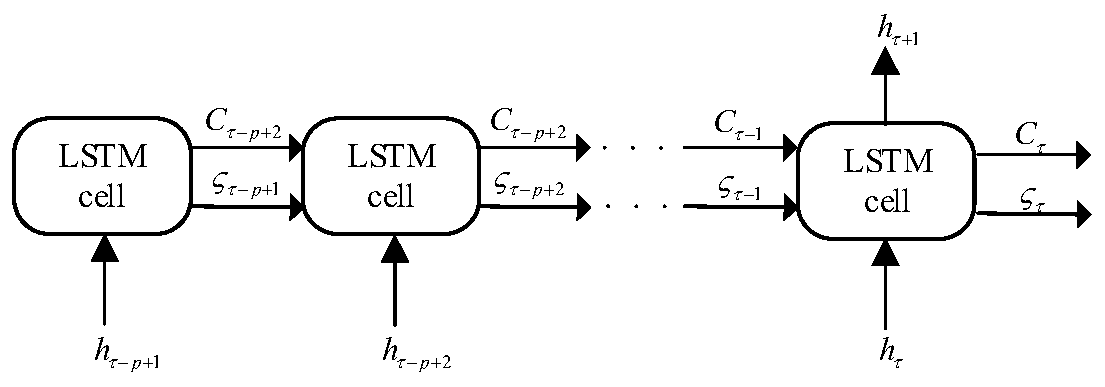

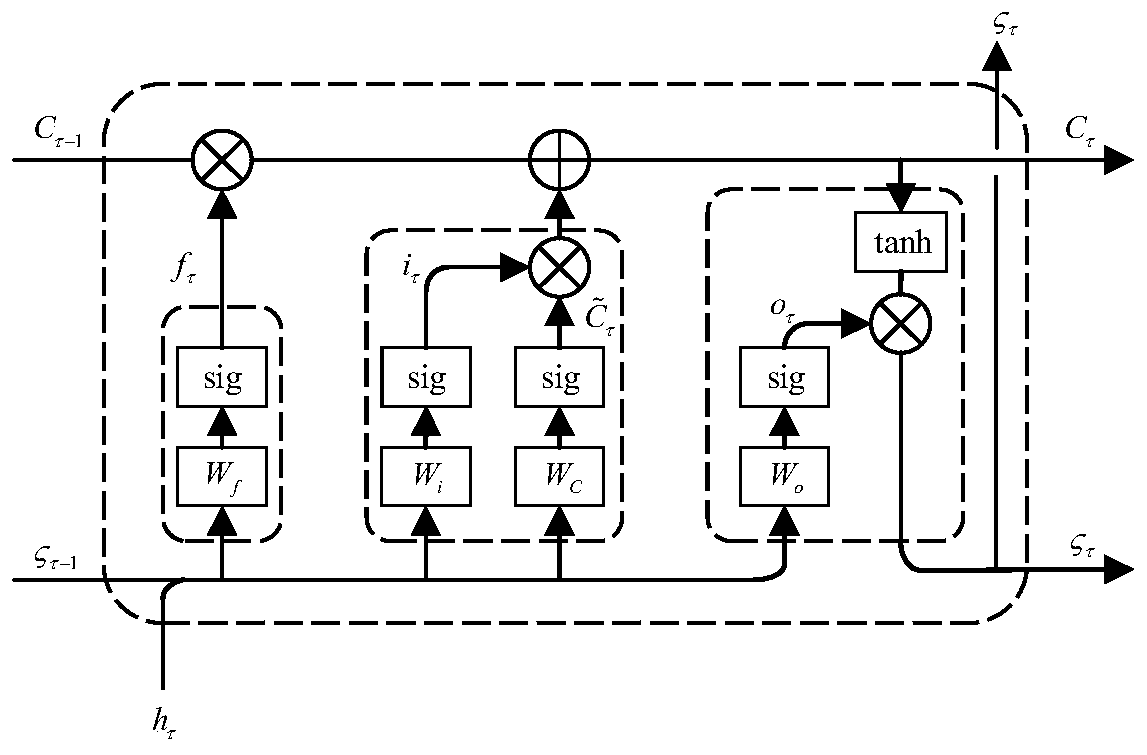

Edge computing task allocation method based on deep Monte Carlo tree search

Inactive | CN110427261A | Fewer searches | Less search performance | Program initiation/switching | Resource allocation | Edge server | Edge computing

The invention discloses an edge computing task allocation method based on deep Monte Carlo tree search, which aims to support optimization of resource allocation by an edge server. The edge server takes the state of a mobile edge computing system as input, the edge server resource scheduling module outputs an optimal resource allocation scheme through a deep reinforcement learning algorithm, and the mobile equipment terminal unloads the task according to the optimal resource allocation scheme and executes the task together with the edge server. The deep reinforcement learning algorithm is realized through the cooperation of a DNN, MCTS, and an LSTM; compared with greedy search and DQN algorithms, the proposed algorithm greatly improves the service time delay and the service energy consumption of the mobile terminal.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

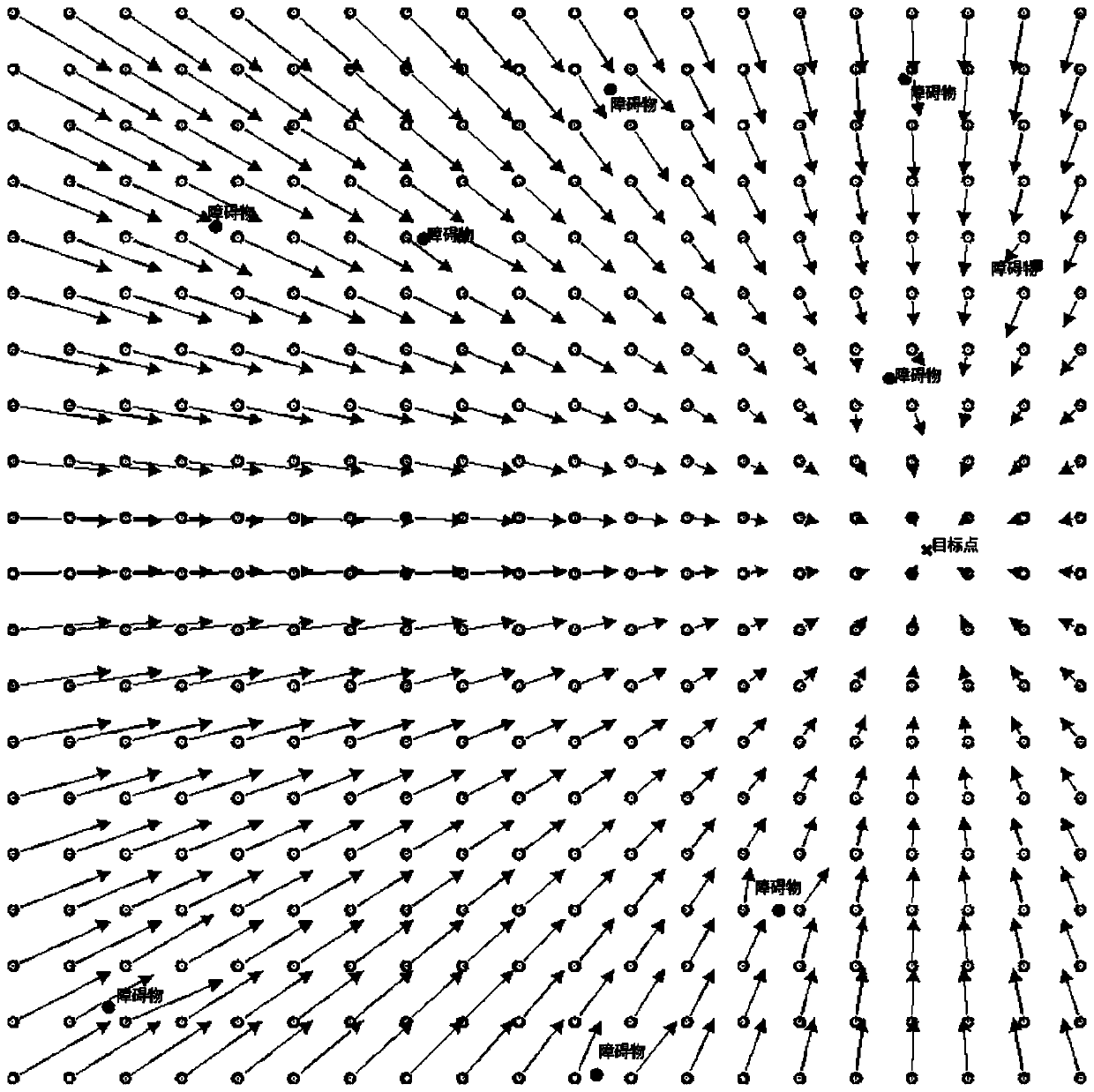

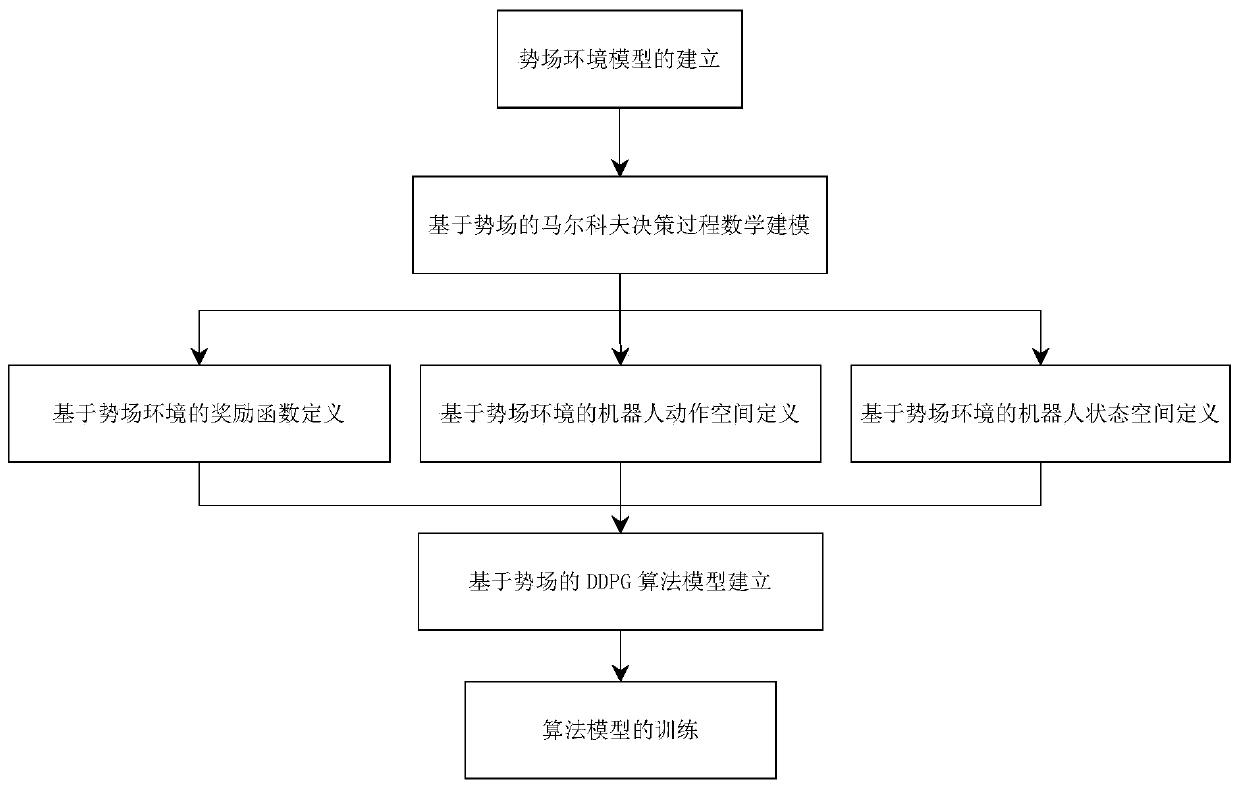

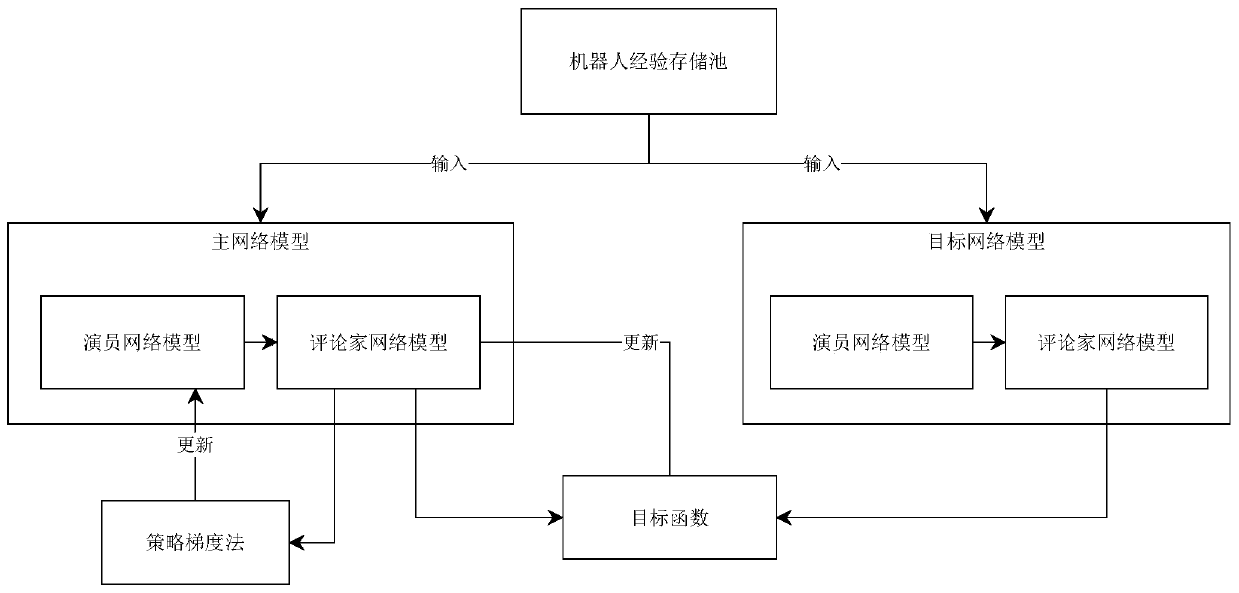

Reinforced learning path planning algorithm based on potential field

Inactive | CN110794842A | Realize path planning | Guaranteed randomness | Position/course control in two dimensions | Reinforcement learning algorithm | Engineering

The invention belongs to the field of intelligent algorithm optimization and provides a potential-field-based reinforcement learning algorithm for robot path planning in complex environments, realizing robot path planning in a complex dynamic environment where a large number of movable obstacles exist in the scene. The method comprises the following steps: modeling the environment space with the traditional artificial potential field method; defining a state function, a reward function and an action function in a Markov decision process according to the potential field model, and training them in a simulation environment with the deep deterministic policy gradient reinforcement learning algorithm; and thus enabling a robot to make decisions for collision-free path planning in a complex obstacle environment. Experimental results show that the method has a short decision-making time, low system resource occupation, and a certain robustness, and that robot path planning under complex environmental conditions can be realized.
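The abstract does not give the potential function it uses. As an illustration, the sketch below assumes the classic artificial potential field: a quadratic attractive term toward the goal plus a repulsive term that is active only within an influence radius of each obstacle. The gains `k_att` and `k_rep`, the radius `d0`, and the name `potential` are hypothetical.

```python
import math


def potential(pos, goal, obstacles, k_att=1.0, k_rep=1.0, d0=2.0):
    """Total potential at `pos` under the assumed classic formulation.

    Attractive: 0.5 * k_att * dist(pos, goal)**2
    Repulsive:  0.5 * k_rep * (1/d - 1/d0)**2 for each obstacle with d <= d0
    """
    total = 0.5 * k_att * math.dist(pos, goal) ** 2
    for obstacle in obstacles:
        d = math.dist(pos, obstacle)
        if d == 0.0:
            return math.inf  # standing on an obstacle
        if d <= d0:
            total += 0.5 * k_rep * (1.0 / d - 1.0 / d0) ** 2
    return total


if __name__ == "__main__":
    print(potential((0.0, 0.0), (3.0, 4.0), obstacles=[(1.0, 0.0)]))
```

A reward built from such a field could, for example, pay the agent for moving to a lower potential, though how the patent maps the field to its reward is not stated in the abstract.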

Owner:BEIJING UNIV OF POSTS & TELECOMM

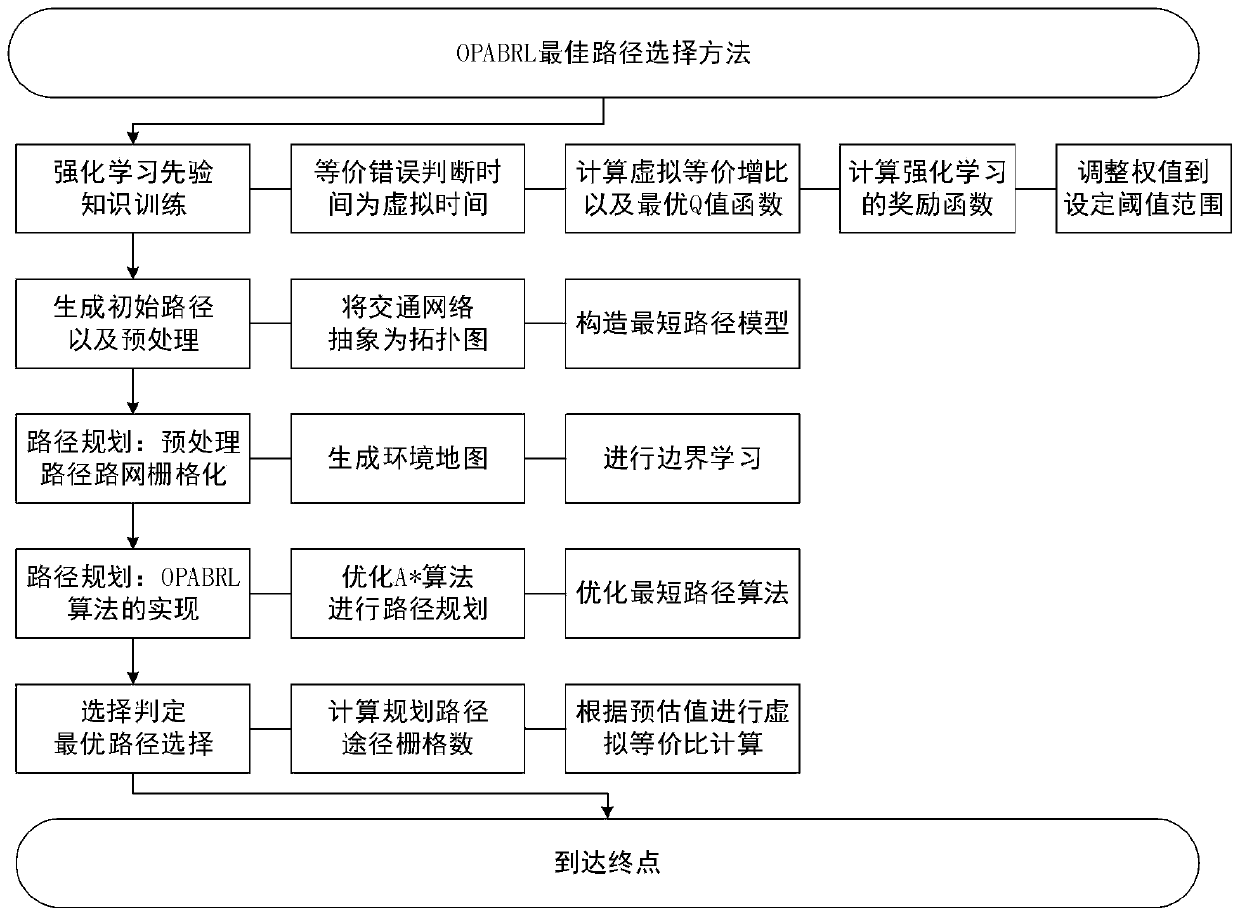

Machine learning strategy based distance preferred optimal path selection method

Inactive | CN109947098A | Refine search settings | Easy to handle | Biological models | Position/course control in two dimensions | Traffic network | Algorithm

Provided is a machine learning strategy based distance preferred optimal path selection method (OPABRL). A local path is planned according to path direction, width, curvature, road intersection, and fault detail information in the practical application of an intelligent driving vehicle. A reinforcement learning algorithm is studied and used to design the optimal path selection method, a reinforcement learning strategy based on prior knowledge; the search direction for the shortest path is set in the program, which simplifies the shortest path search. The path optimization method can help different types of intelligent driving vehicles smoothly plan an optimal path in a traffic network with height, width and weight limits or under accident/congestion conditions. According to simulation and scene experiments, the method is more efficient and practical than the existing ACO, GA, ANNs and PSO algorithms.

Owner:TIANJIN UNIVERSITY OF TECHNOLOGY

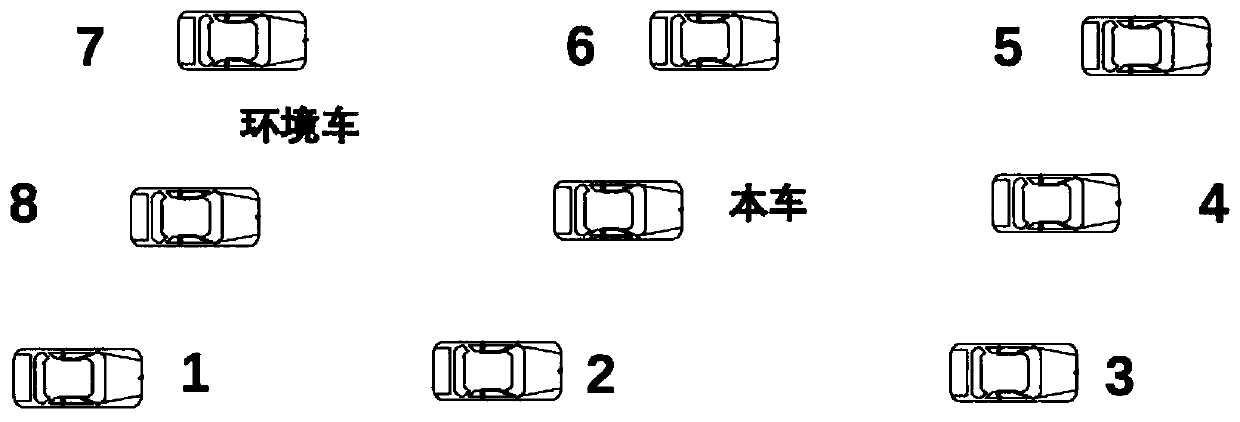

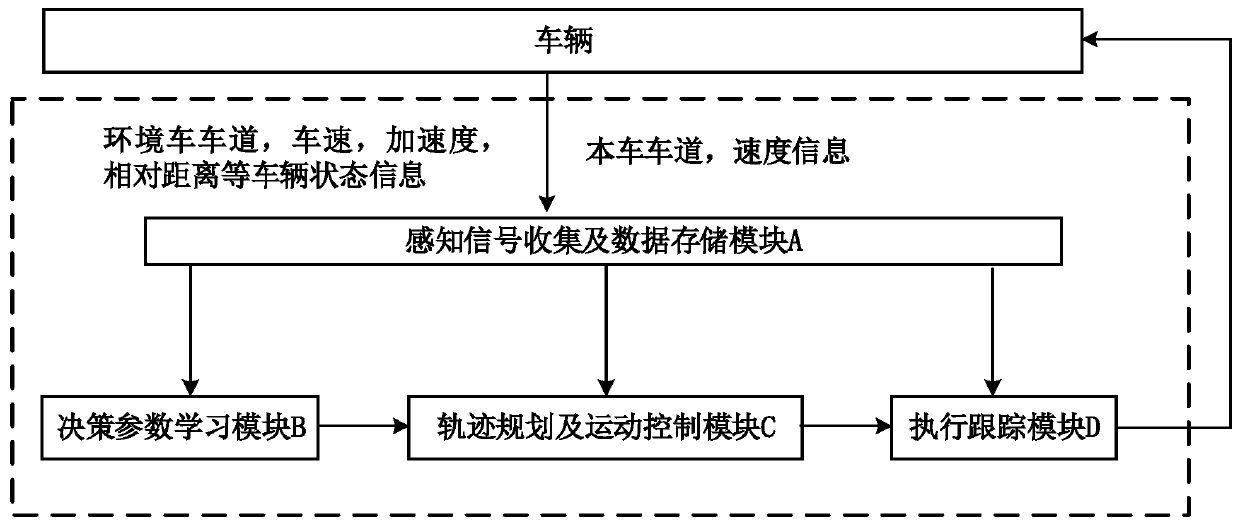

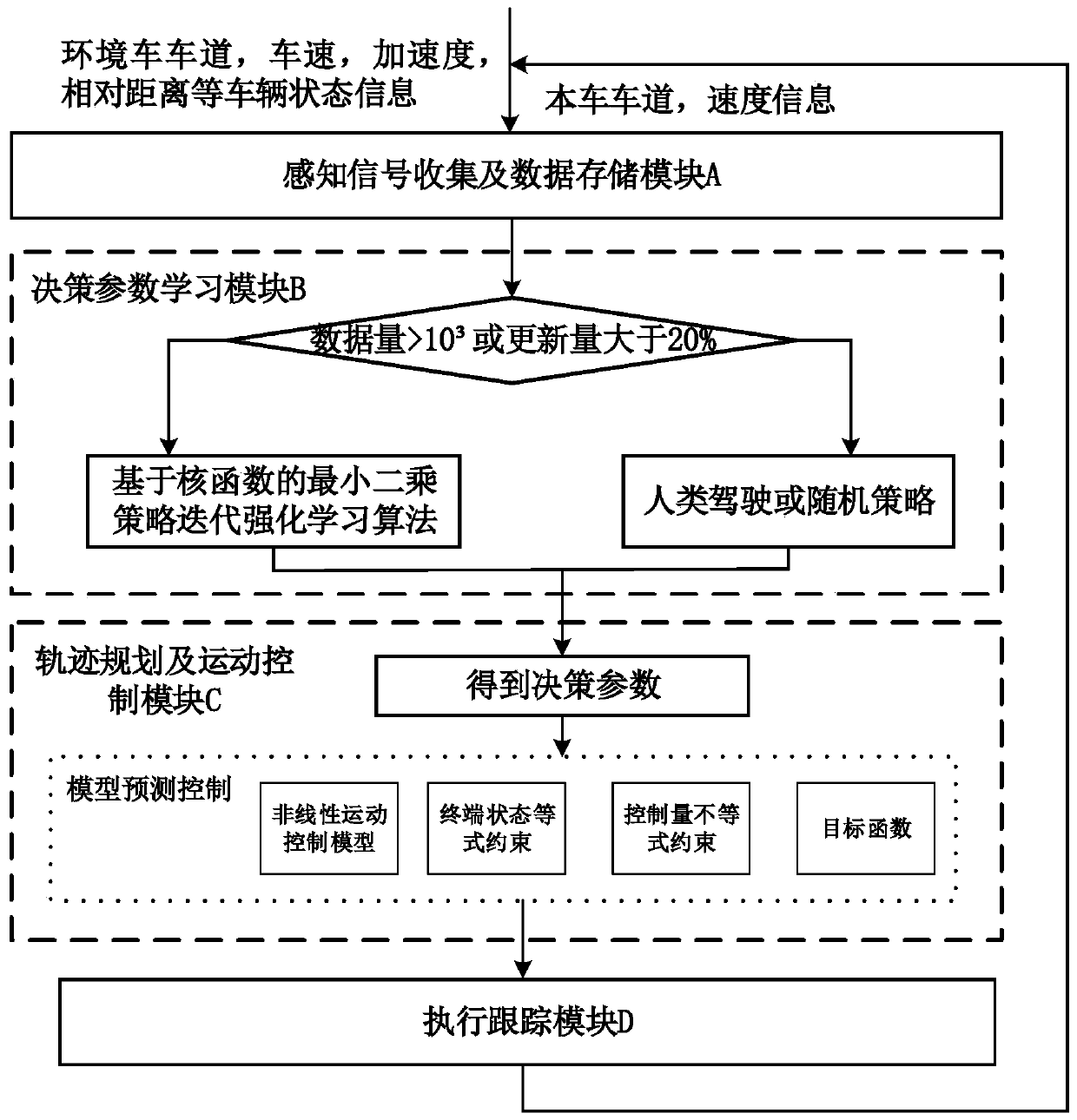

Parameterized learning decision control system suitable for lane changing and lane keeping and method

The invention belongs to the technical field of advanced driver assistance and unmanned driving system design for automobiles, and relates to a parameterized learning decision control system and method suitable for lane changing and lane keeping behaviors. The system is designed based on a parameterized decision framework and comprises a learning decision-making method and a trajectory planning controller. The learning decision-making method is designed for vehicle lane changing and lane keeping scenarios on the basis of a reinforcement learning algorithm, and the trajectory planning controller can be parameterized accordingly in these scenarios so as to adapt to planning on both straight and curved roads. The system is suitable for high-level automated driving vehicles; online learning effectively improves its adaptability to the different driving behavior characteristics of different drivers, so that the system obtains better driving performance with safety guaranteed.

Owner:JILIN UNIV

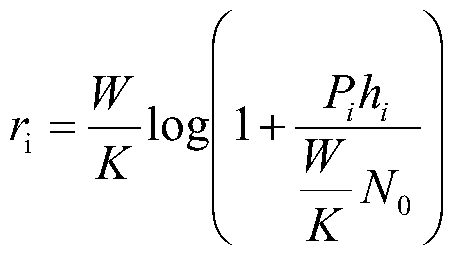

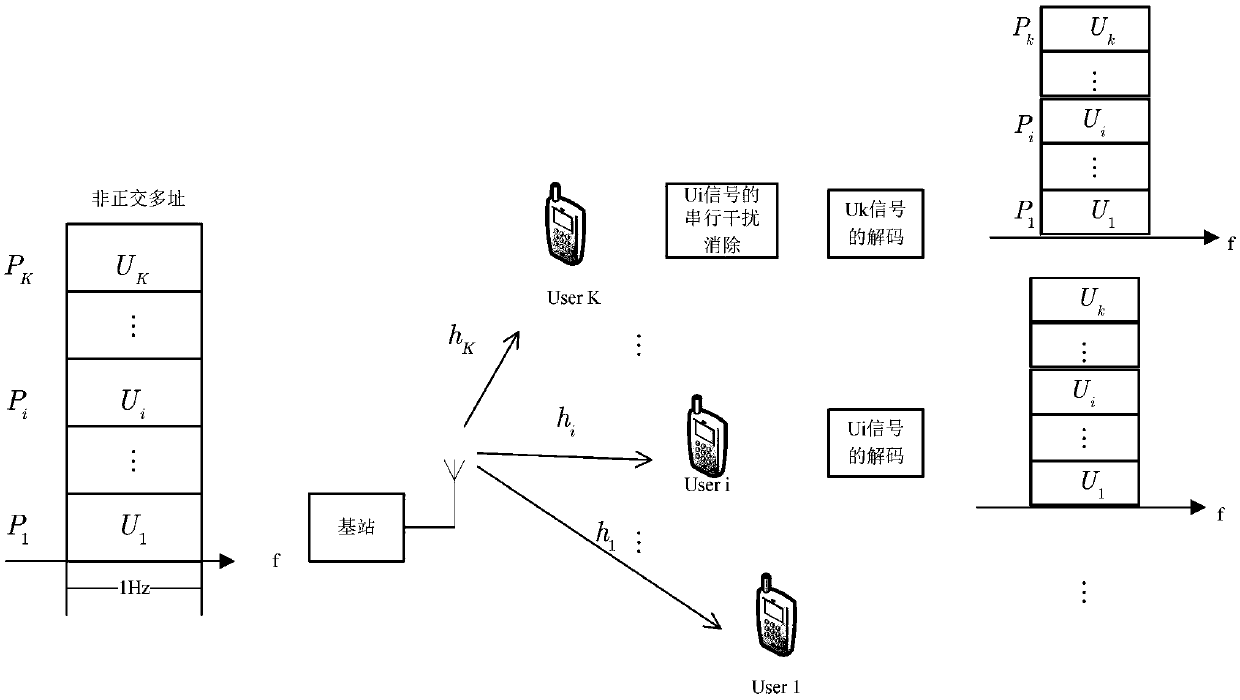

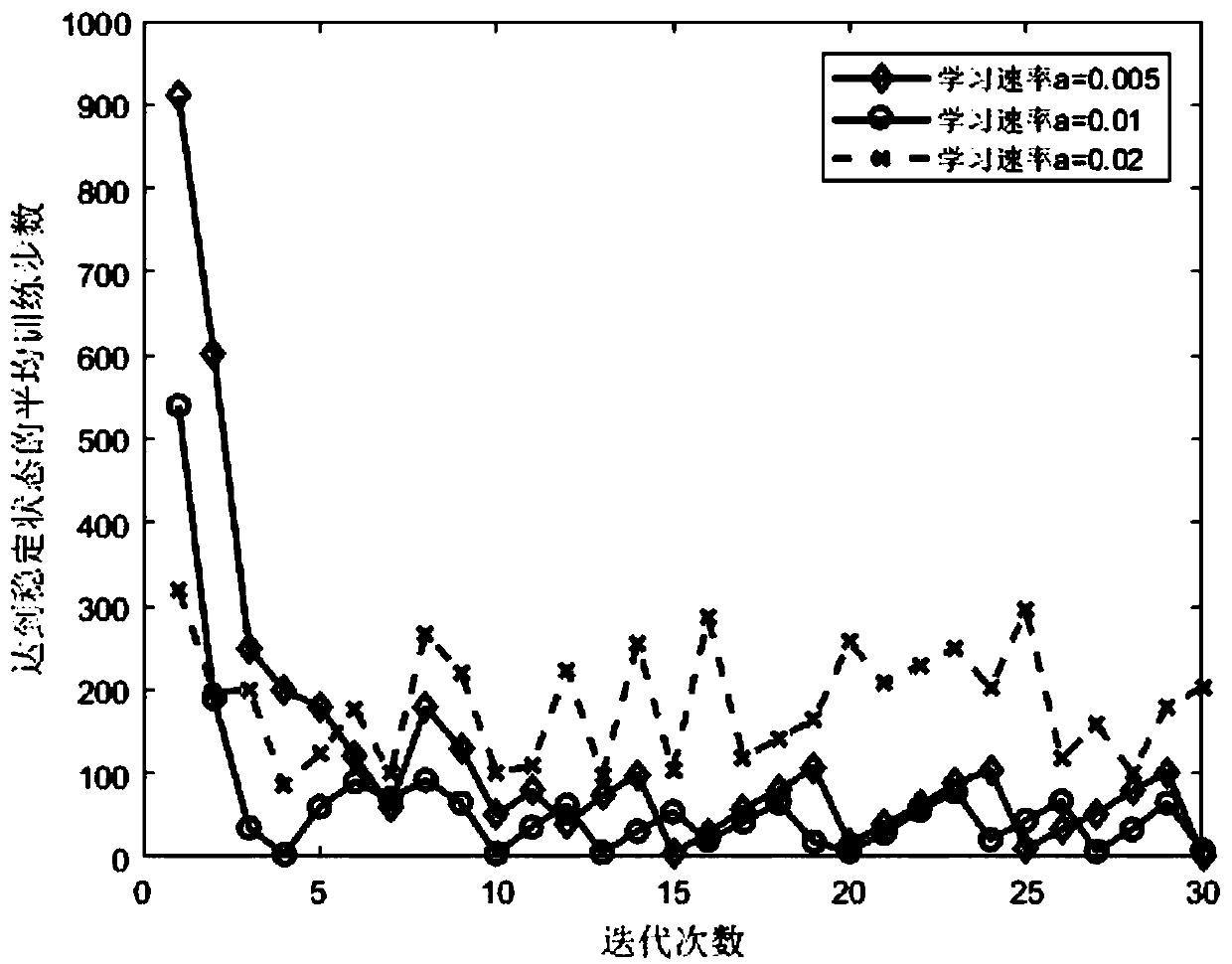

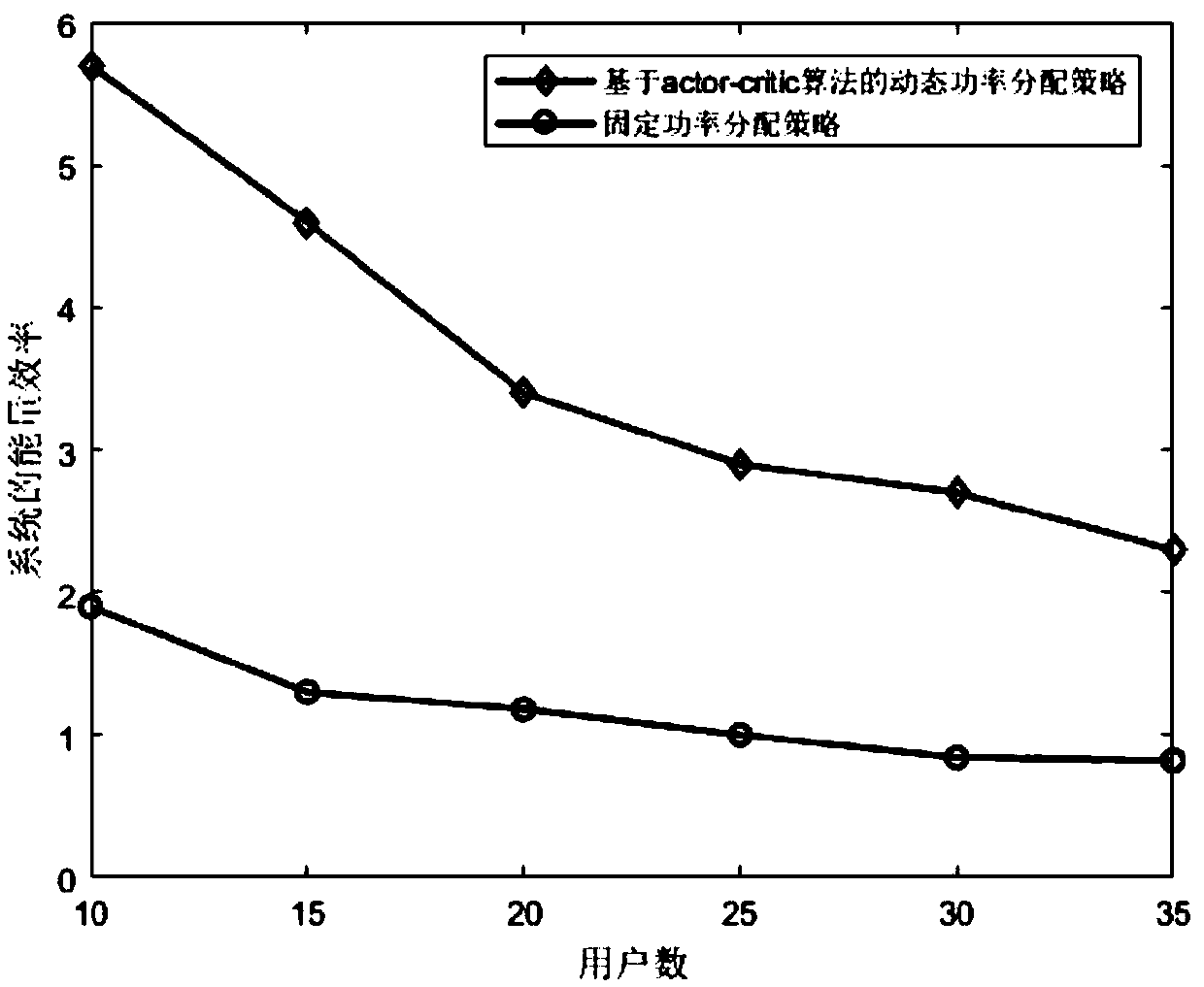

Power allocation method in power domain NOMA based on reinforcement learning algorithm

InactiveCN108924935AImprove energy efficiencyComputing modelsHigh level techniquesAlgorithmTheoretical computer science

The invention discloses a power allocation method in power domain NOMA based on a reinforcement learning algorithm. The value function is updated in the Critic part of the Actor-Critic algorithm, and the instant reward and the temporal-difference (TD) error are then fed back to the Actor part to update the policy. Through continuous iteration, the state-action value function and the policy converge toward the optimal value function and the optimal policy, at which point the energy efficiency of the system is optimal. This solves the problems that existing power allocation methods are highly complex and cannot effectively optimize system performance.
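The Critic-then-Actor update described above can be sketched with a small tabular Actor-Critic step. This is a generic textbook form, not the patent's implementation: the discrete states and actions (for example, candidate power levels), the softmax policy, and the step sizes `alpha_critic` and `alpha_actor` are all assumptions.

```python
import math

def softmax(prefs):
    """Turn action preferences into a probability distribution."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def actor_critic_step(V, prefs, s, a, reward, s_next,
                      gamma=0.9, alpha_critic=0.1, alpha_actor=0.1):
    """One update: the Critic computes the TD error, the Actor uses it."""
    # Critic: temporal-difference error and value function update.
    td_error = reward + gamma * V[s_next] - V[s]
    V[s] += alpha_critic * td_error
    # Actor: policy-gradient step on the softmax preferences,
    # scaled by the TD error fed back from the Critic.
    probs = softmax(prefs[s])
    for b in range(len(prefs[s])):
        grad_log_pi = (1.0 if b == a else 0.0) - probs[b]
        prefs[s][b] += alpha_actor * td_error * grad_log_pi
    return td_error
```

A positive TD error raises the preference for the action just taken and lowers the others; repeated over many transitions, the value estimates and the policy are refined together.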

Owner:NORTHWESTERN POLYTECHNICAL UNIV

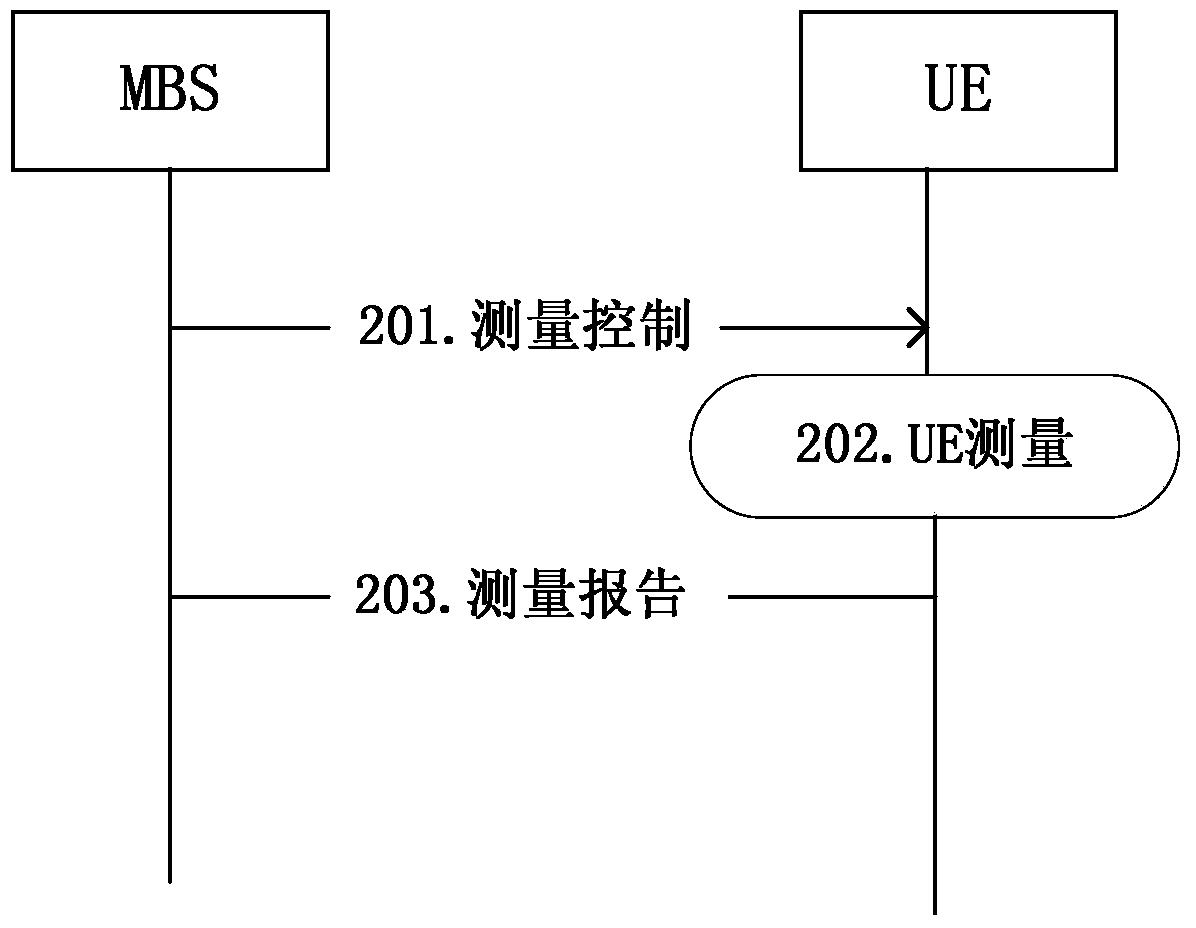

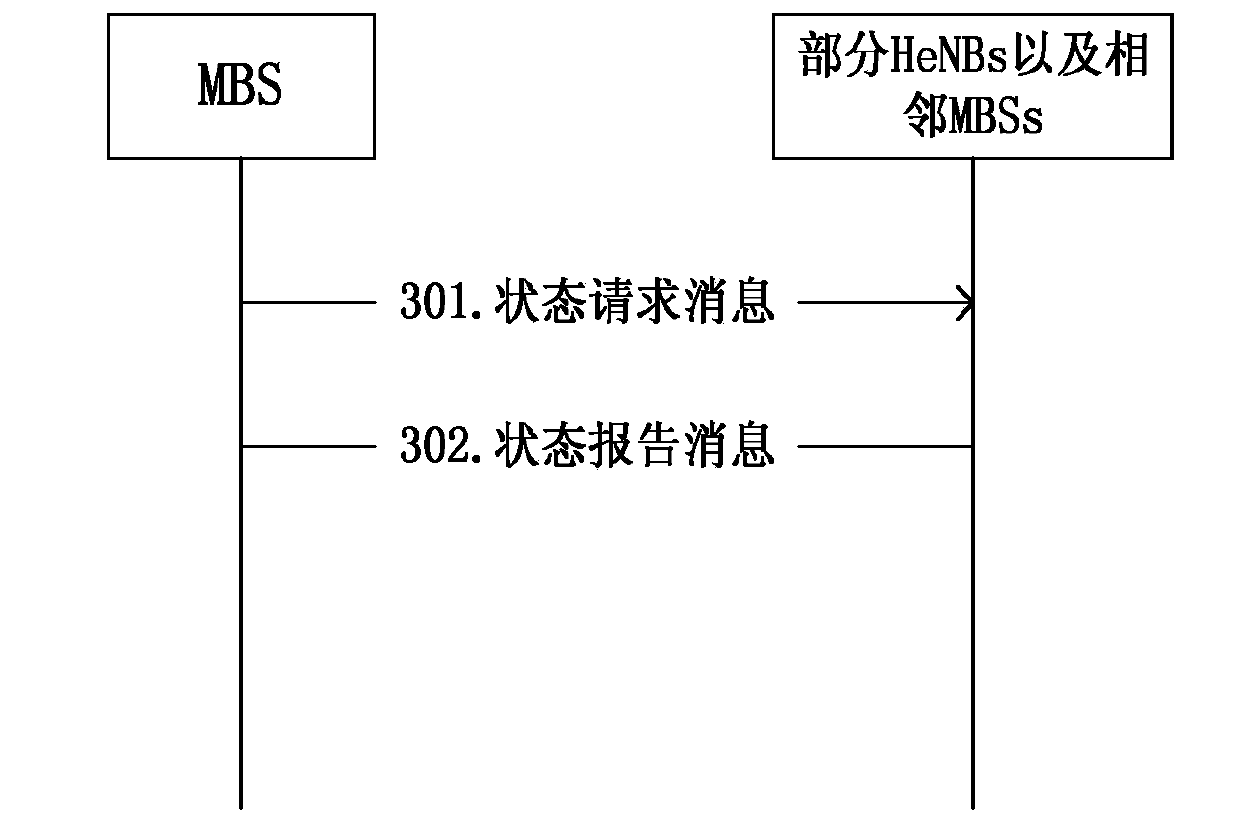

Method for achieving dynamic load balancing in heterogeneous network

InactiveCN103763747ARealize intelligenceImprove performanceNetwork planningReinforcement learning algorithmHeterogeneous network

The invention discloses a method for achieving dynamic load balancing in a heterogeneous network, and relates to the technical field of heterogeneous communication networks. According to the method, each base station senses changes in network load in the heterogeneous network, learns from historical network load information, and forecasts the future network load; according to the forecast load value of each base station, inter-cell or intra-cell handover is carried out on users newly connected to the network to achieve load balancing. The method includes a reinforcement learning algorithm, an optimal relevance algorithm, a bias factor solution algorithm and an optimal resource distribution algorithm based on load balancing. The method makes the network intelligent to a degree, combines rapid load balancing with dynamic adjustment of network resource distribution, and improves the performance of the whole network. The method can also be used in homogeneous networks and has good compatibility.

Owner:重庆固守大数据有限公司

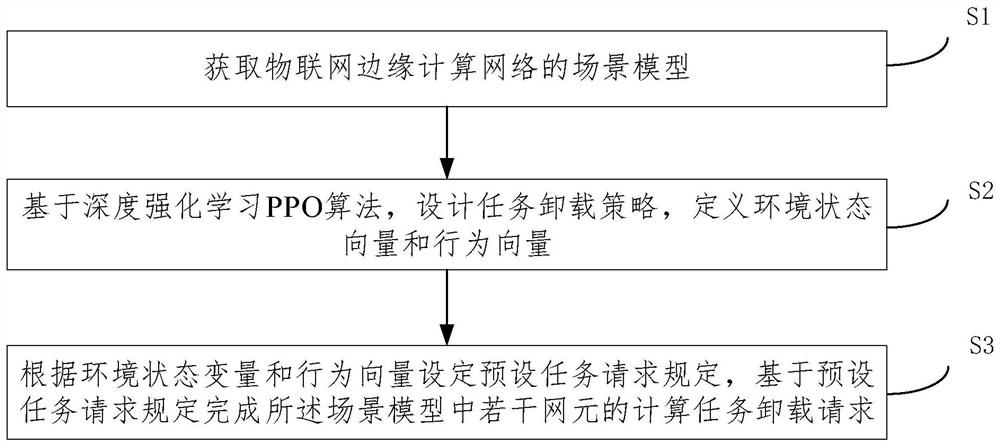

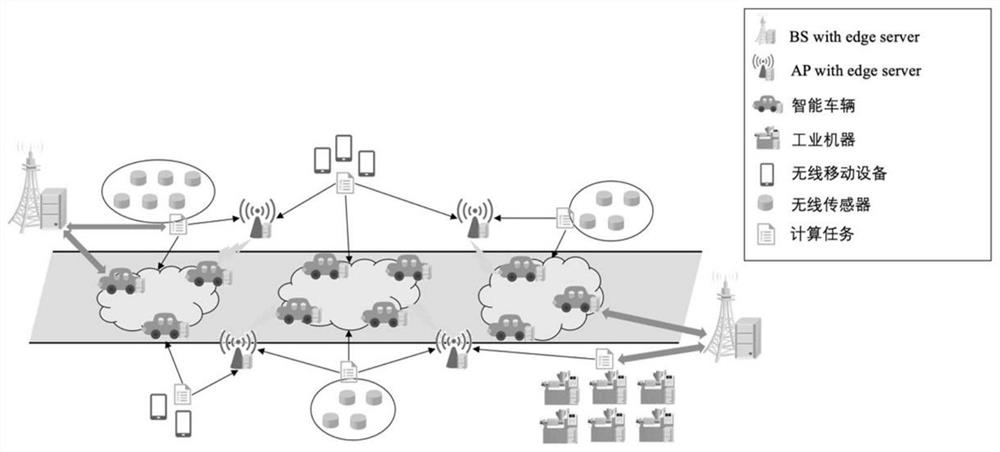

Internet of Things edge computing task unloading method and system

ActiveCN111835827AReduce latencyReduce complexityData switching networksEdge computingReinforcement learning algorithm

The embodiment of the invention provides an Internet of Things edge computing task unloading method and system. The method comprises the steps of obtaining a scene model of an Internet of Things edge computing network; designing a task unloading strategy based on a deep reinforcement learning PPO algorithm, and defining an environment state vector and a behavior vector; and setting a preset task request specification according to the environment state variable and the behavior vector, and completing calculation task unloading requests of a plurality of network elements in the scene model based on the preset task request specification. According to the embodiment of the invention, the edge computing technology and the deep reinforcement learning technology are introduced in the Internet of Things scenario, step-by-step learning is achieved by using the PPO algorithm in deep reinforcement learning, the neural network model is refined, and a better edge computing task unloading strategy is applied, so that the network delay can be flexibly reduced while keeping complexity low.
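For readers unfamiliar with PPO, the sketch below shows the standard clipped surrogate objective for a single sample. It is the generic published form of PPO, not something taken from the patent; the clip range `eps=0.2` is a commonly used default and is an assumption here.

```python
def ppo_clipped_objective(ratio, advantage, eps=0.2):
    """Clipped surrogate objective for one (state, action) sample.

    ratio     -- new-policy probability divided by old-policy probability
    advantage -- estimated advantage of the action taken
    """
    clipped_ratio = max(1.0 - eps, min(1.0 + eps, ratio))
    # Taking the minimum keeps the update pessimistic: the objective
    # gains nothing from moving the ratio outside [1 - eps, 1 + eps].
    return min(ratio * advantage, clipped_ratio * advantage)
```

Averaging this quantity over a batch and maximizing it gives the small, bounded policy updates that the step-by-step learning in the abstract appears to refer to.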

Owner:BEIJING UNIV OF POSTS & TELECOMM +3

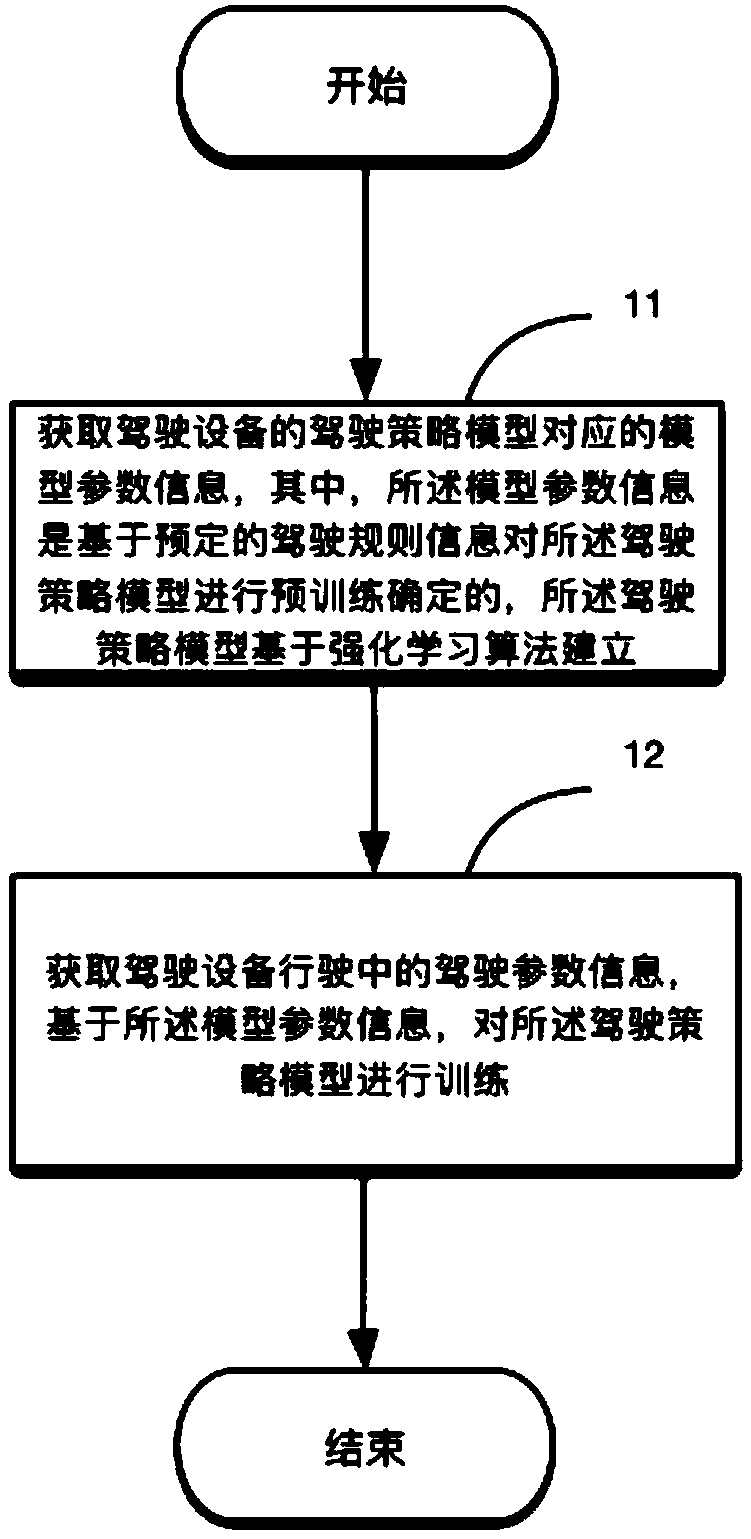

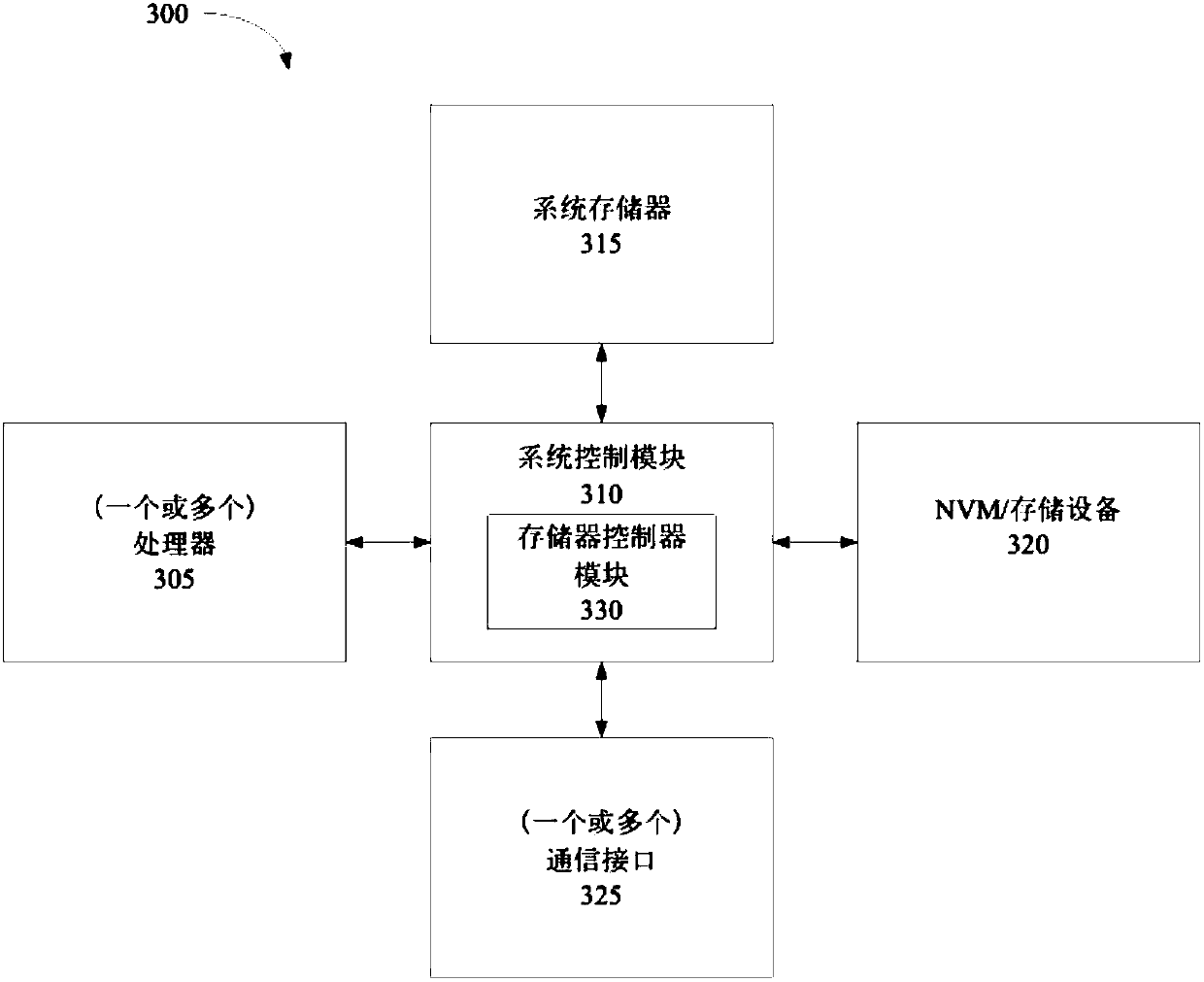

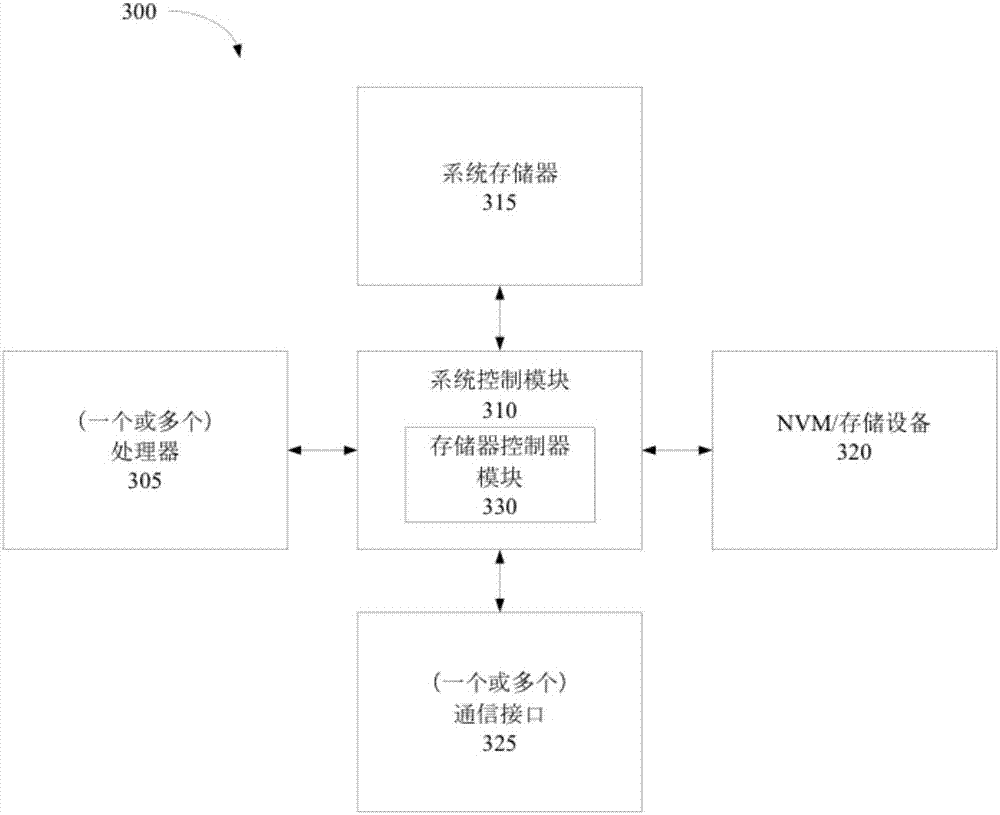

Driving strategy model training method and device

ActiveCN107862346AAvoid damageImprove rationalityCharacter and pattern recognitionMachine learningSimulationReinforcement learning algorithm

The invention aims at providing a driving strategy model training method and device. The method comprises acquiring model parameter information corresponding to a driving strategy model of a driving device, wherein the model parameter information is determined by training the driving strategy model on the basis of preset driving rule information, and the driving strategy model is established on the basis of reinforcement learning algorithms; and acquiring the driving parameter information of the driving device during a driving process and, based on the model parameter information, training the driving strategy model. Compared with the prior art, the method avoids exploring from scratch when training the driving strategy model; instead, the driving device can drive as if it had already learnt the driving rules before training starts. On this basis, the training process of the driving strategy model can be greatly shortened, and the number of unreasonable driving strategies, as well as damage to vehicles during training, can be greatly reduced.

Owner:UISEE TECH BEIJING LTD

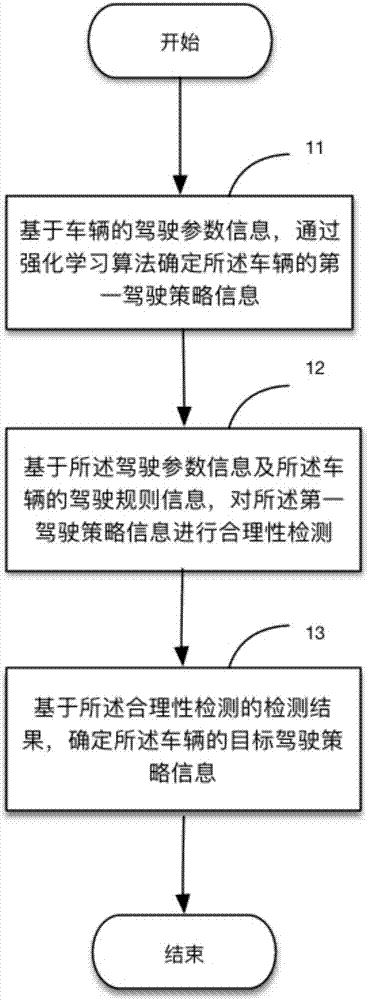

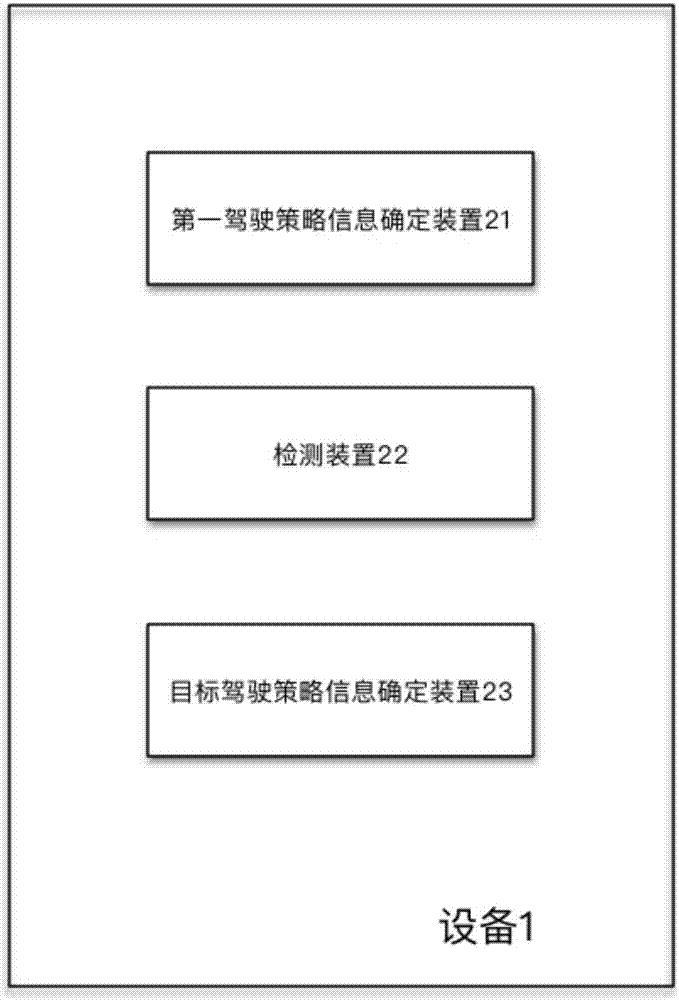

Method and device for determining driving strategy based on reinforcement learning and rule

ActiveCN108009587AEasy to controlImprove rationalityCharacter and pattern recognitionNeural learning methodsAlgorithmReinforcement learning algorithm

The purpose of the present application is to provide a method or a device for determining a driving strategy based on reinforcement learning and rule fusion. The method comprises: based on the driving parameter information of a vehicle, determining first driving strategy information of the vehicle through a reinforcement learning algorithm; based on the driving parameter information and the driving rule information of the vehicle, performing rationality detection on the first driving strategy information; and determining target driving strategy information of the vehicle based on the detection result of the rationality detection. Compared with the prior art, the first driving strategy information calculated by the reinforcement learning algorithm is constrained by rules, so the method is more intelligent than existing methods that implement vehicle control using either a rule algorithm alone or a reinforcement learning algorithm alone, and the rationality and stability of the finally determined driving strategy are improved.
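The three steps above (propose with reinforcement learning, check against rules, pick the target strategy) can be sketched as follows. Everything specific here is hypothetical: the `DrivingState` fields, the speed-limit and time-gap rules, and the fallback behavior are illustrative assumptions, not the rules used in the application.

```python
from dataclasses import dataclass

@dataclass
class DrivingState:
    speed: float        # current speed, m/s
    gap: float          # distance to the lead vehicle, m
    speed_limit: float  # legal speed limit, m/s

def is_rational(state, accel, dt=1.0, min_time_gap=2.0):
    """Rule check: stay within the speed limit and keep a safe time gap."""
    next_speed = state.speed + accel * dt
    if next_speed < 0.0 or next_speed > state.speed_limit:
        return False
    return state.gap >= min_time_gap * next_speed

def rule_fallback(state, dt=1.0, min_time_gap=2.0,
                  max_brake=-3.0, max_accel=3.0):
    """Rule-based action: steer the speed toward the largest safe value."""
    safe_speed = min(state.speed_limit, state.gap / min_time_gap)
    accel = (safe_speed - state.speed) / dt
    return max(max_brake, min(max_accel, accel))

def target_strategy(state, rl_accel):
    """Keep the RL proposal if it passes the check, else use the rule."""
    return rl_accel if is_rational(state, rl_accel) else rule_fallback(state)
```

Here `rl_accel` stands in for the first driving strategy information produced by a trained policy, and the return value of `target_strategy` plays the role of the target driving strategy information.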

Owner:UISEE TECH BEIJING LTD

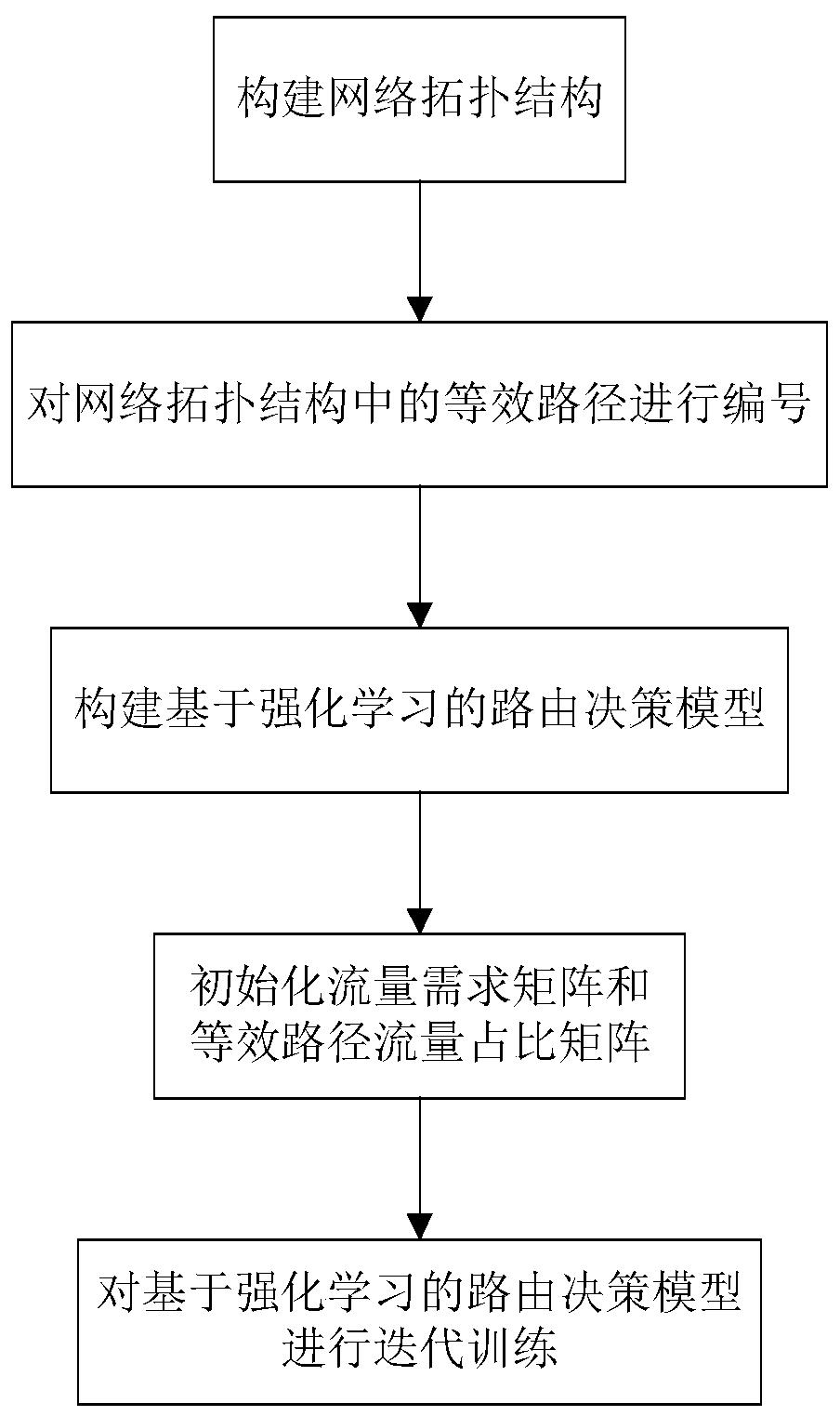

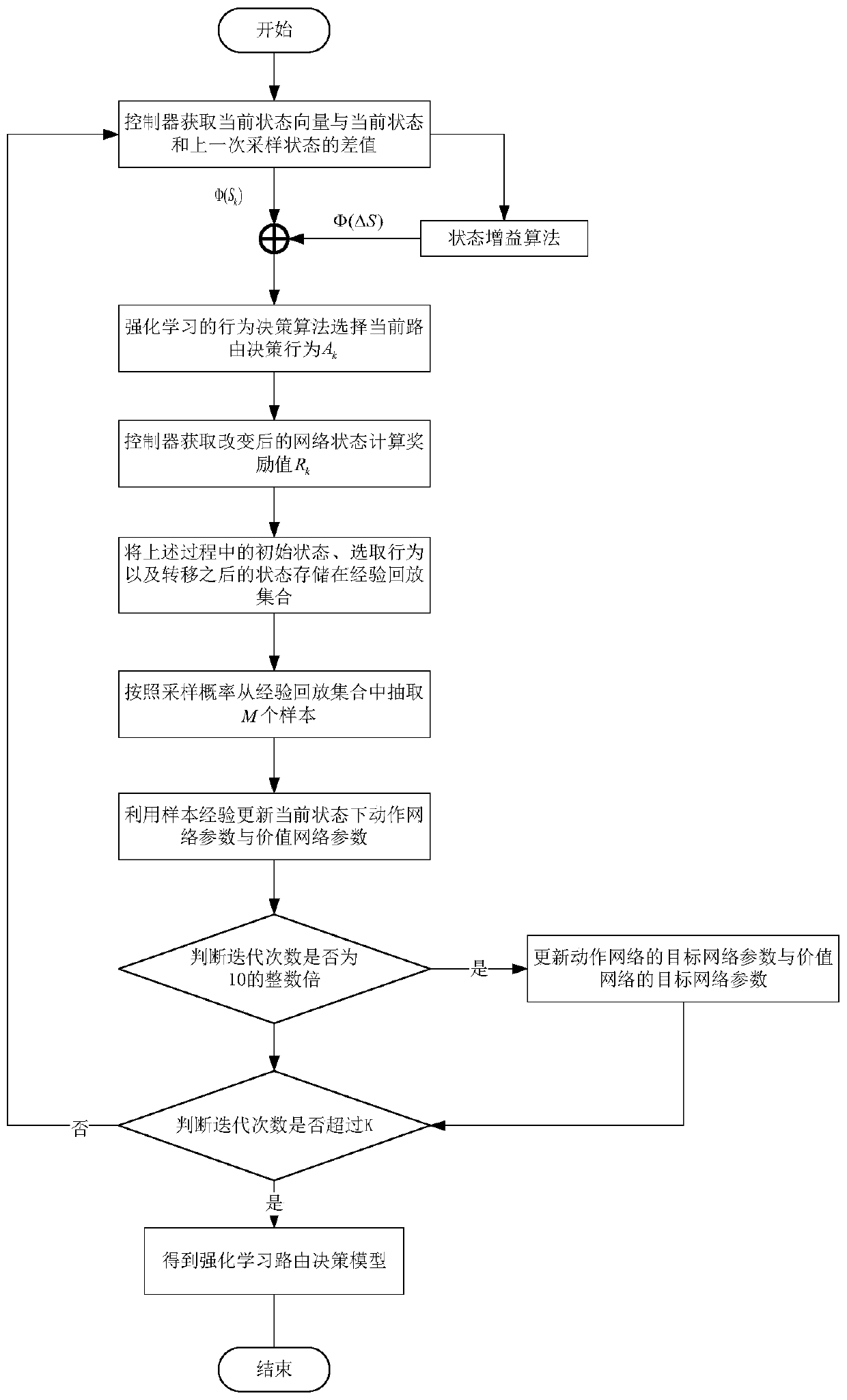

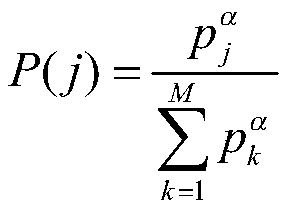

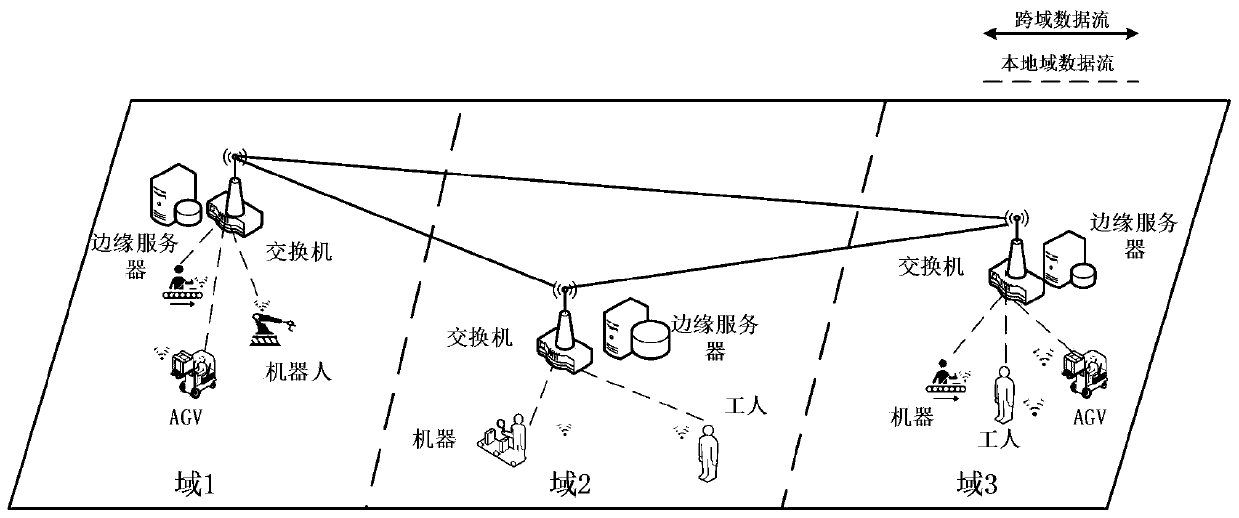

Intelligent routing decision method based on DDPG reinforcement learning algorithm

ActiveCN110611619AImprove equalization performanceSolve the congestion problem caused by unbalanced traffic distributionData switching networksNeural learning methodsRouting decisionData center

The invention provides an intelligent routing decision method based on reinforcement learning, in particular one based on the DDPG reinforcement learning algorithm. The method aims to design an intelligent routing decision using reinforcement learning, balance the flow load across equivalent paths, and improve the network's capacity to handle burst flow. An experience decision mechanism based on sampling probability is adopted, in which experience with poorer performance has a higher probability of being selected, improving the training efficiency of the algorithm. In addition, noise is added to the neural network parameters, which facilitates system exploration and improves algorithm performance. The method comprises the following steps: (1) constructing a network topology structure; (2) numbering the equivalent paths in the network topology structure G0; (3) constructing a routing decision model based on the DDPG reinforcement learning algorithm; (4) initializing a flow demand matrix DM and an equivalent path flow proportion matrix PM; and (5) iteratively training the routing decision model based on reinforcement learning. The method can be used in scenarios such as data center networks.
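One way to read the sampling-probability mechanism is as weighted replay in which lower-reward experiences are drawn more often. The sketch below is only one possible interpretation: the weighting rule (distance from the best reward plus a small `eps`) and the dictionary layout of an experience are assumptions, since the abstract does not give the actual probability formula.

```python
import random

def sampling_probabilities(rewards, eps=1e-3):
    """Give experiences with lower reward a higher selection probability."""
    best = max(rewards)
    weights = [best - r + eps for r in rewards]  # eps keeps every weight > 0
    total = sum(weights)
    return [w / total for w in weights]

def sample_batch(buffer, batch_size, rng=random):
    """Draw a training batch (with replacement) from the replay buffer."""
    probs = sampling_probabilities([exp["reward"] for exp in buffer])
    return rng.choices(buffer, weights=probs, k=batch_size)
```

For example, `sample_batch(buffer, 32, rng=random.Random(0))` draws a reproducible batch in which poorly performing routing decisions are over-represented, so the model sees them more often during training.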

Owner:XIDIAN UNIV +1

Task unloading method based on power control and resource allocation

ActiveCN111245651AReduce offload overheadReduce time complexityData switching networksQuality of serviceOptimal decision

The invention discloses a task unloading method based on power control and resource allocation, and relates to the field of industrial Internet of Things. The method comprises the steps of: establishing a cross-domain network model of an industrial field; constructing a calculation model of an equipment task; according to the model, constructing a mixed integer nonlinear programming model for the communication power control, resource allocation and calculation unloading problems; decomposing the problem into three sub-problems; solving for the optimal communication power and resource allocation strategy using convex optimization, the Lagrange multiplier method and the KKT (Karush-Kuhn-Tucker) conditions; and, after substituting these into the original objective function, solving for the optimal decision on the task calculation position using a deep reinforcement learning algorithm, thereby obtaining an optimal strategy for communication power, resource allocation and the calculation position of task unloading. The method can obtain the optimal strategy for task unloading in industrial networks, and has the technical effects of reducing task delay, reducing equipment energy consumption and ensuring service quality.

Owner:SHANGHAI JIAO TONG UNIV
