1739 results about "Edge server" patented technology

Differentiated content and application delivery via internet

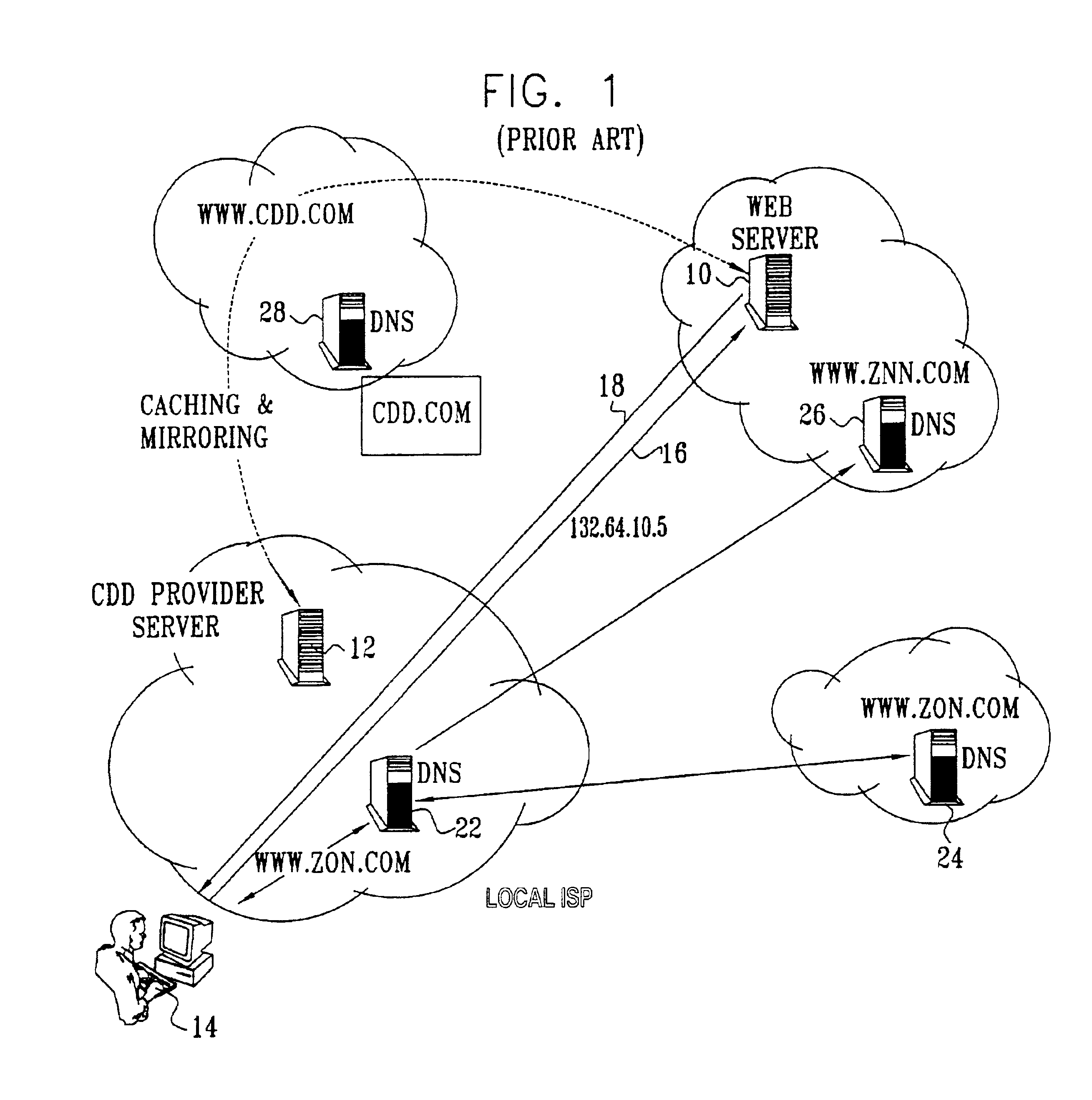

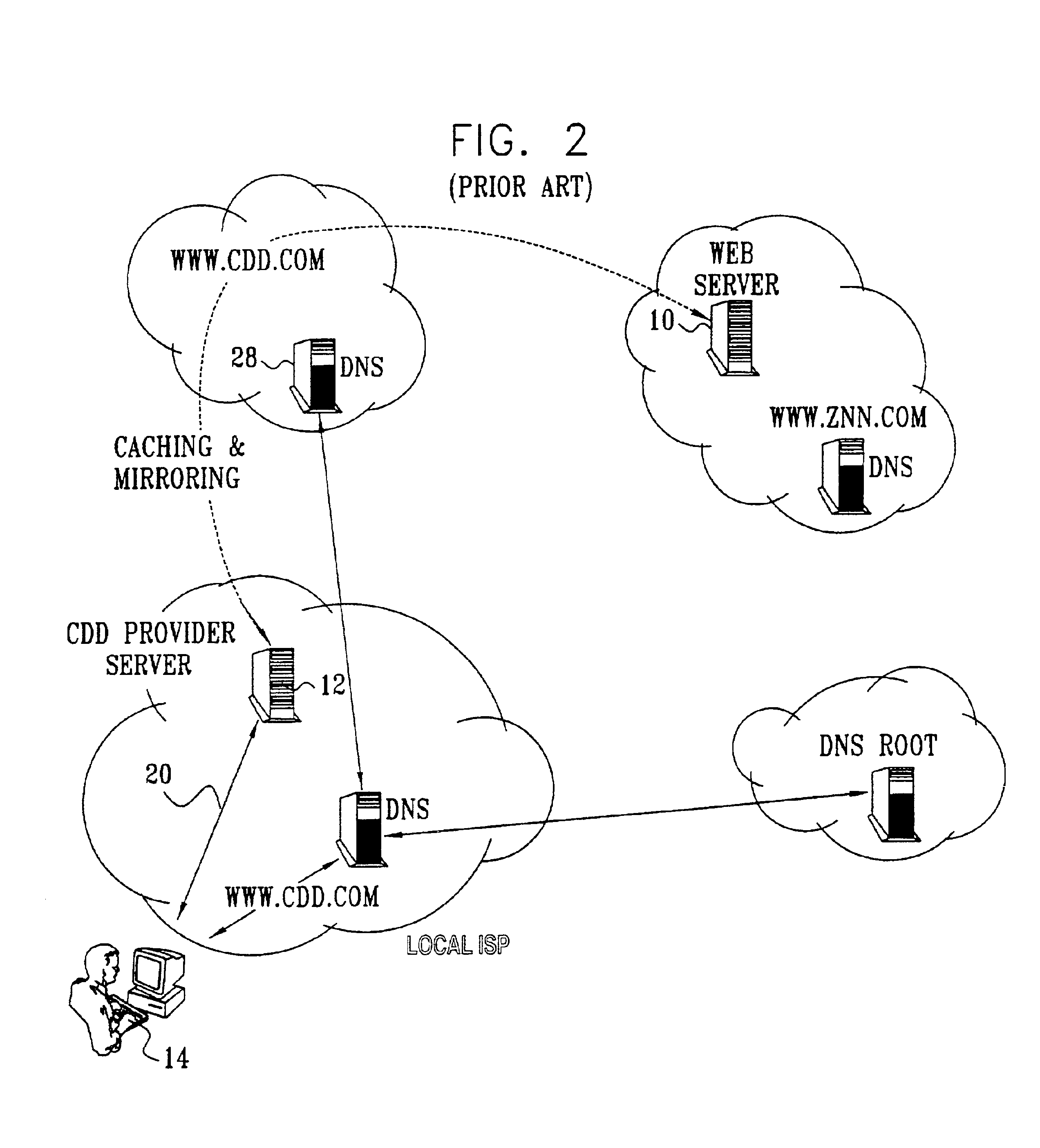

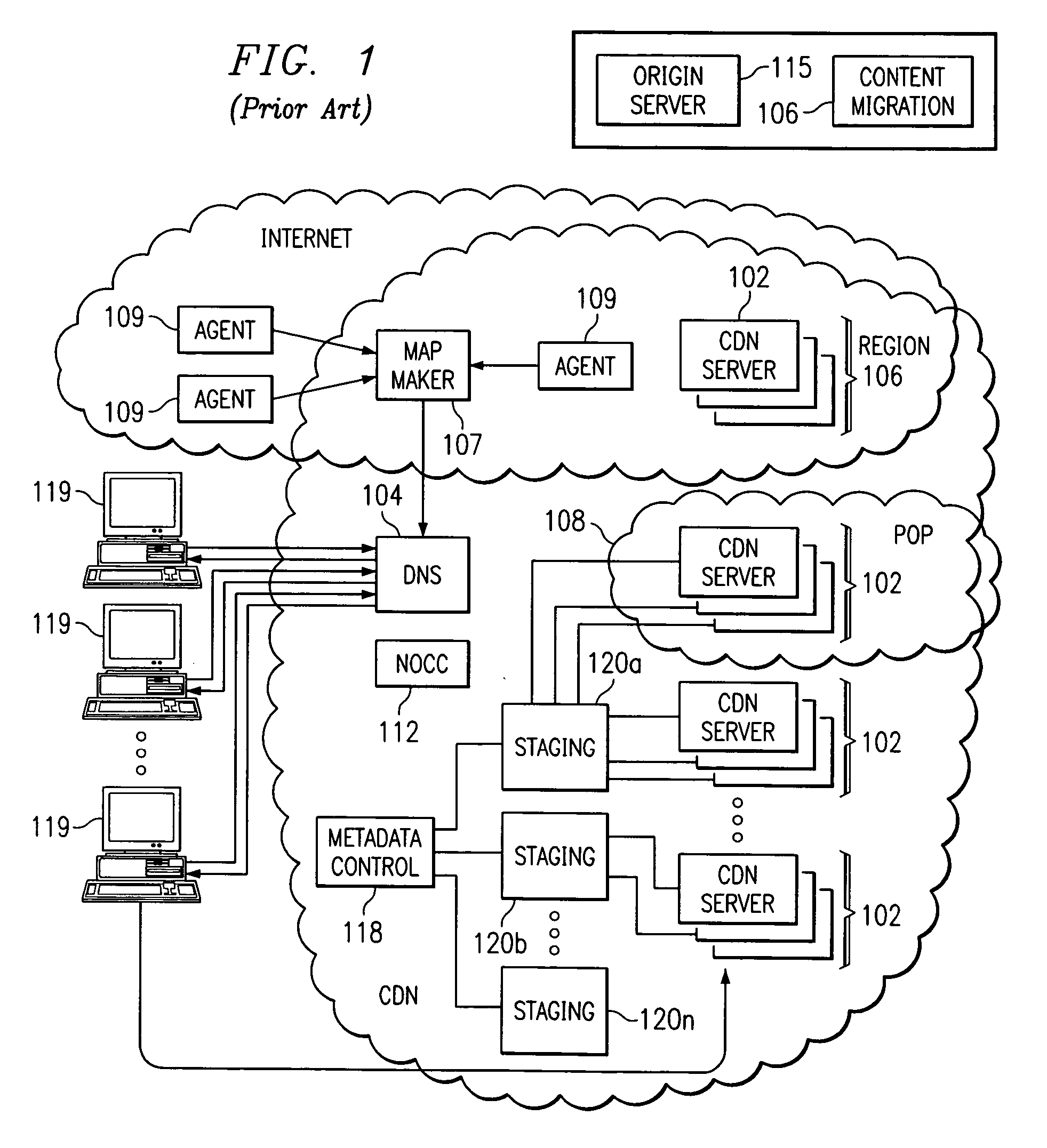

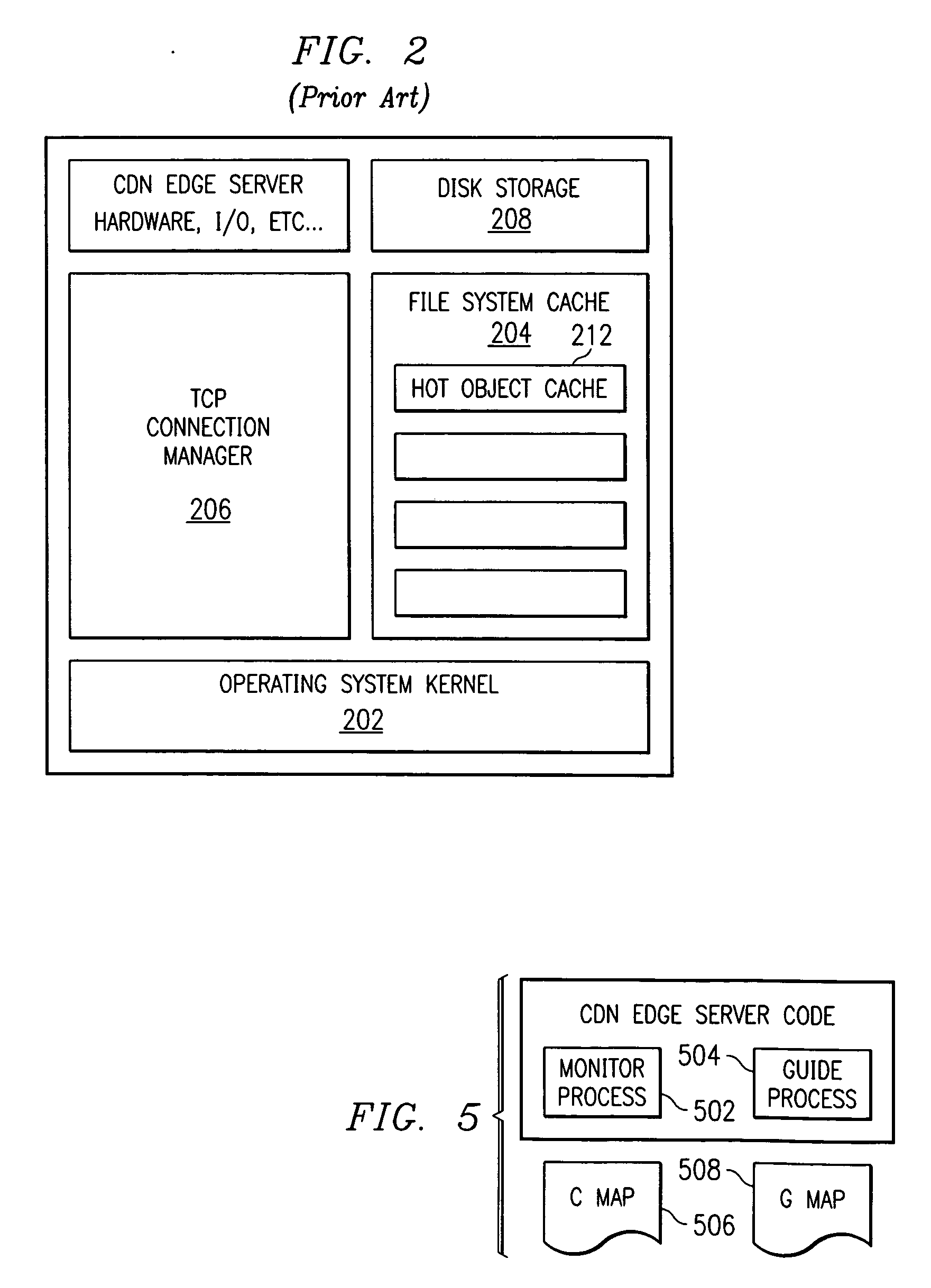

Inactive · US20020010798A1 · Multiple digital computer combinations · Website content management · Scalable system · Edge server

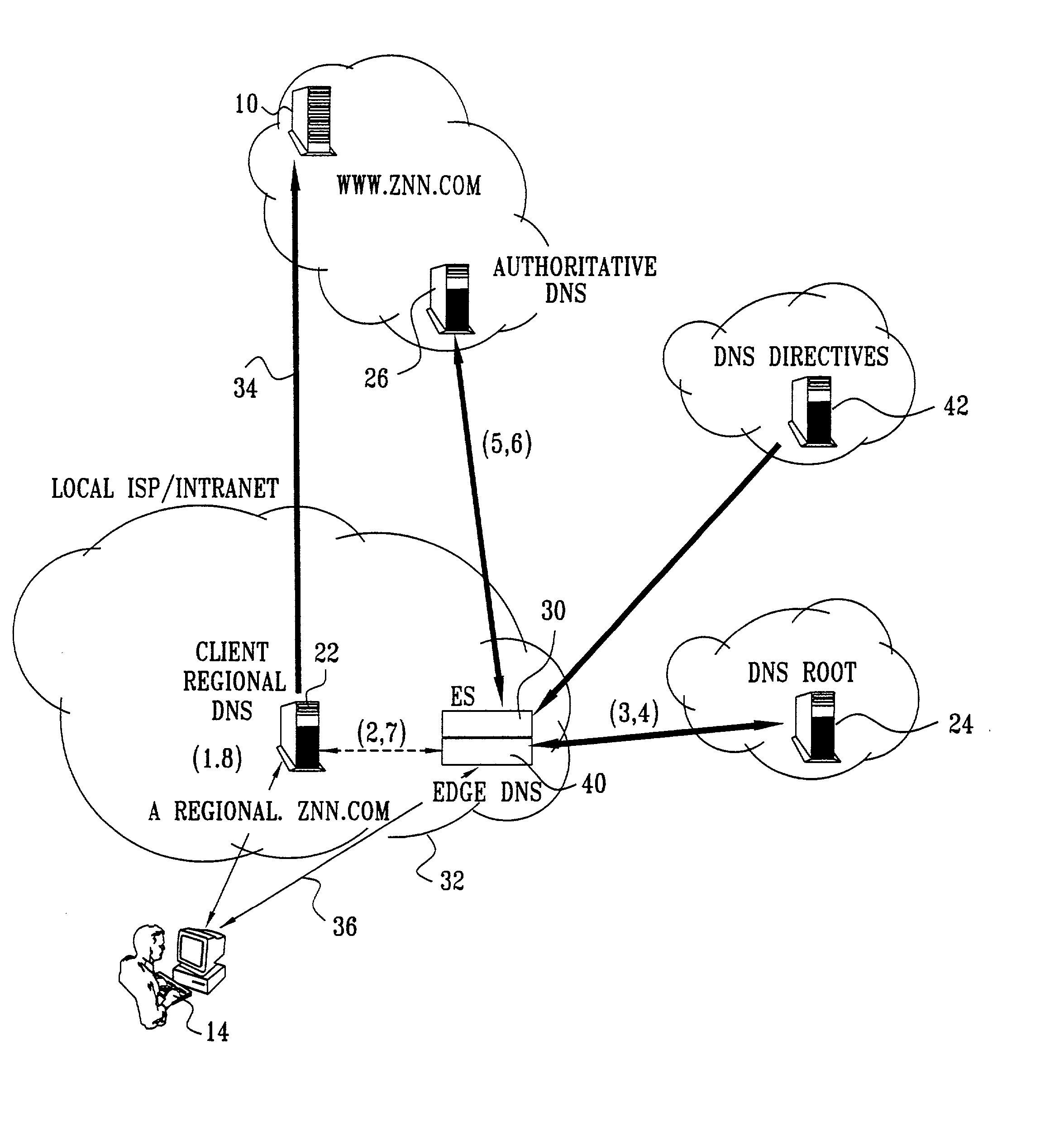

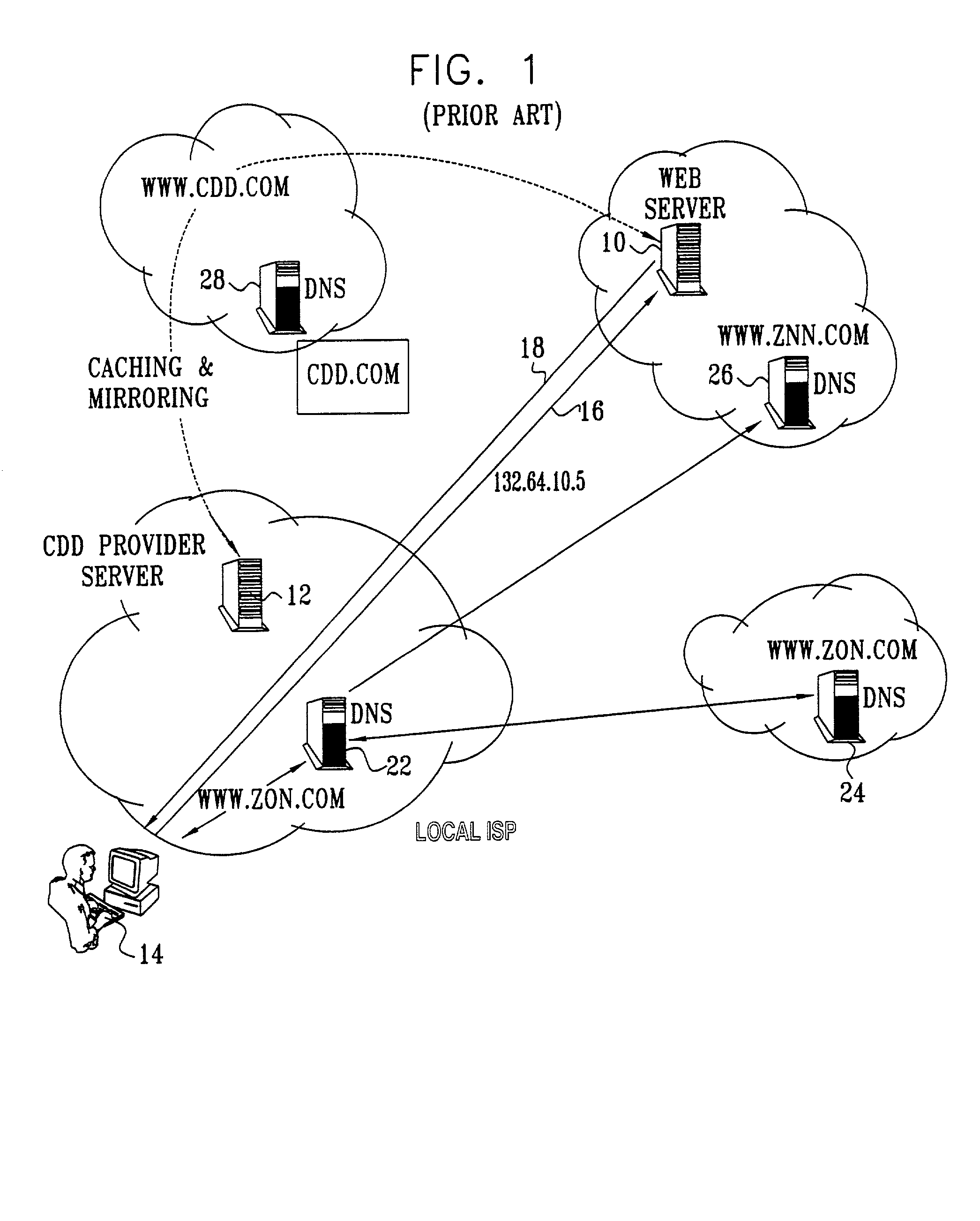

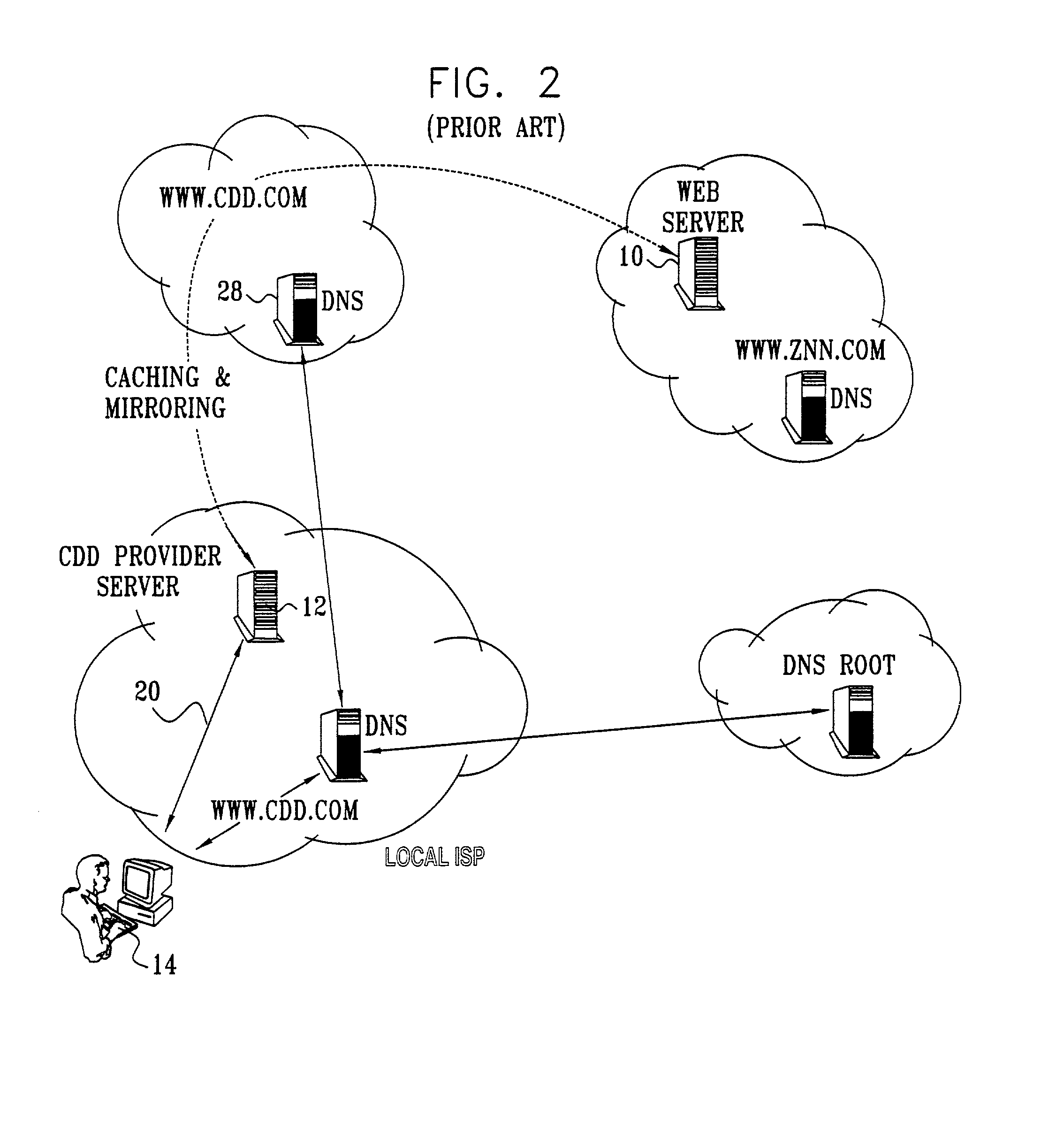

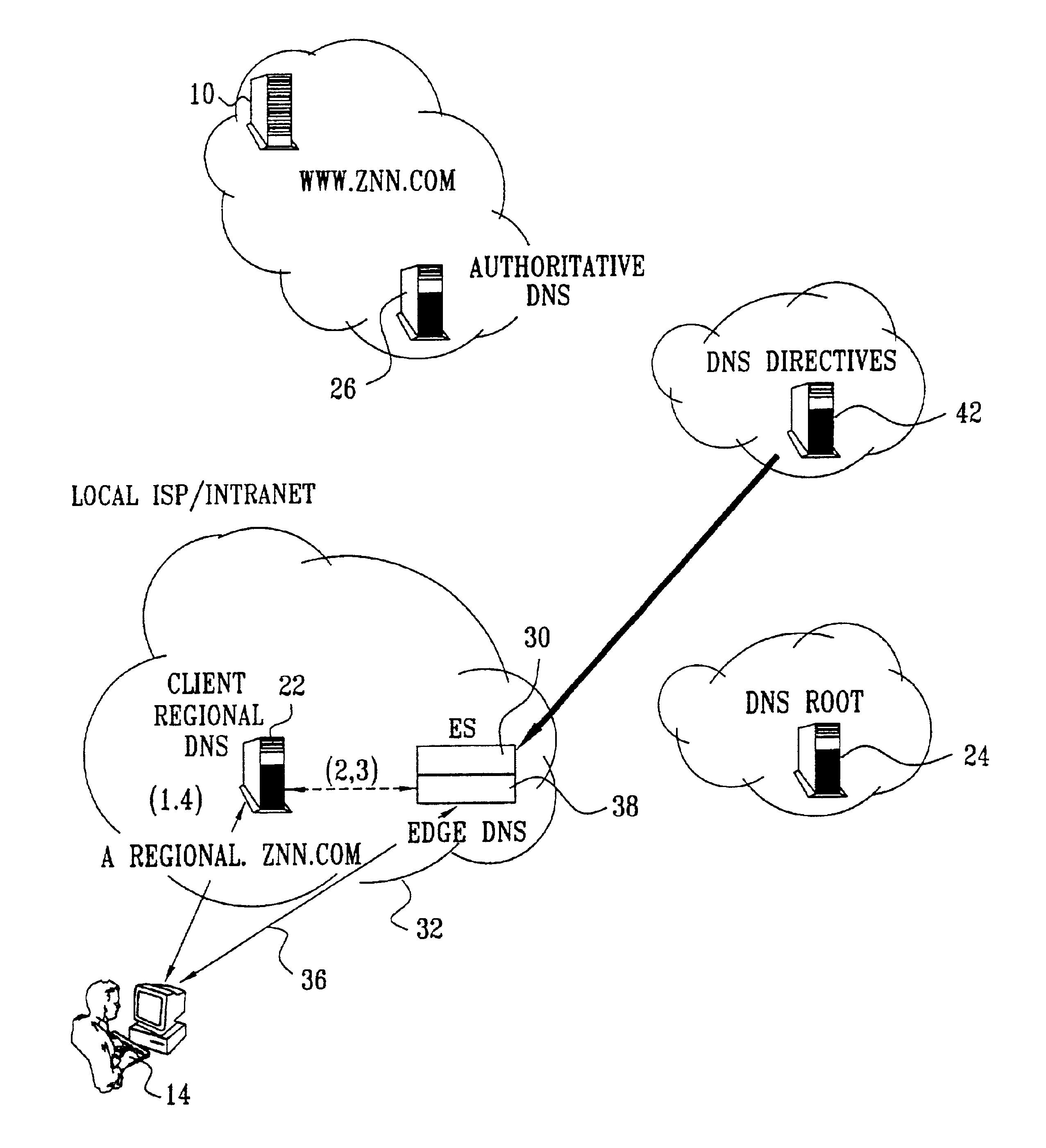

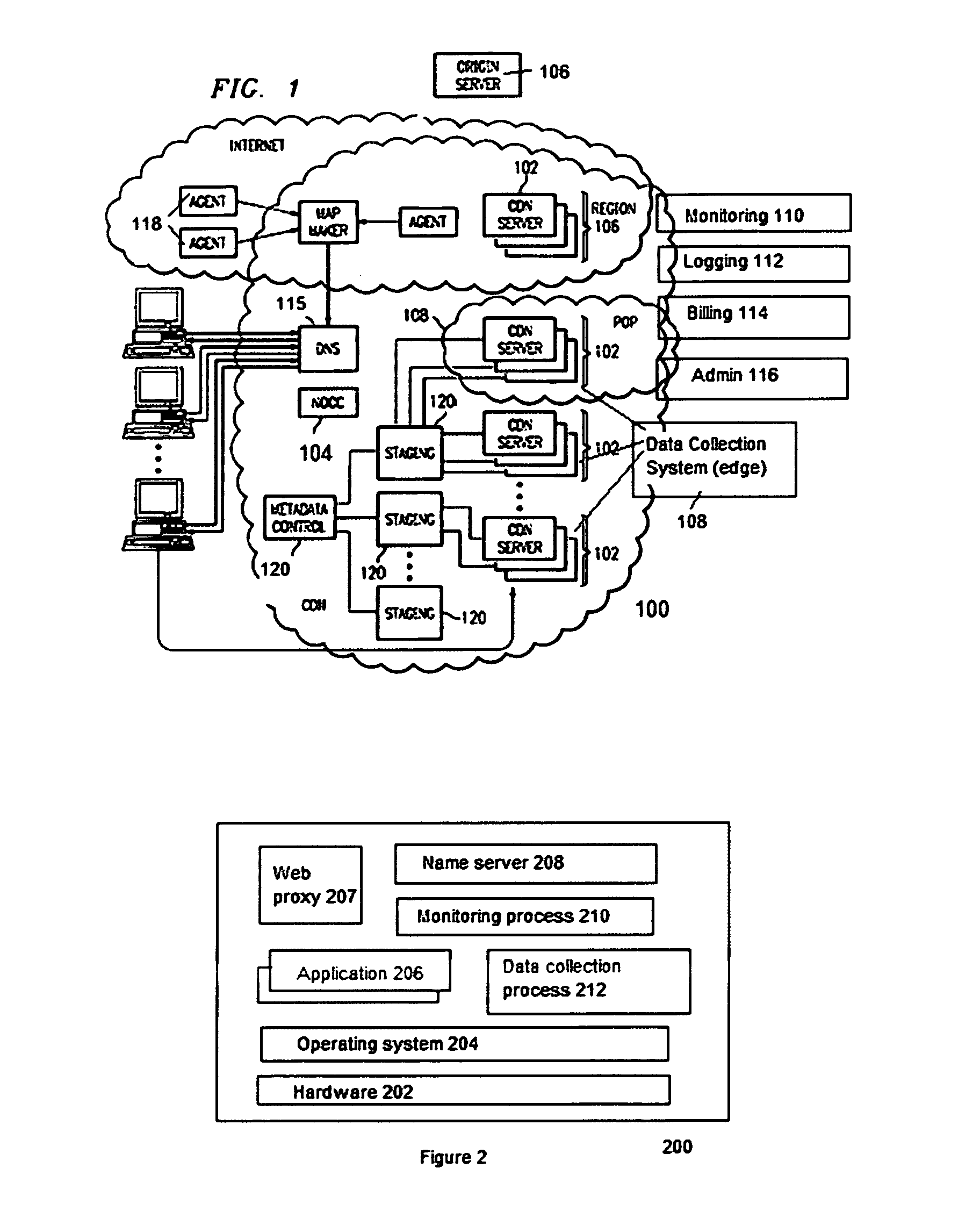

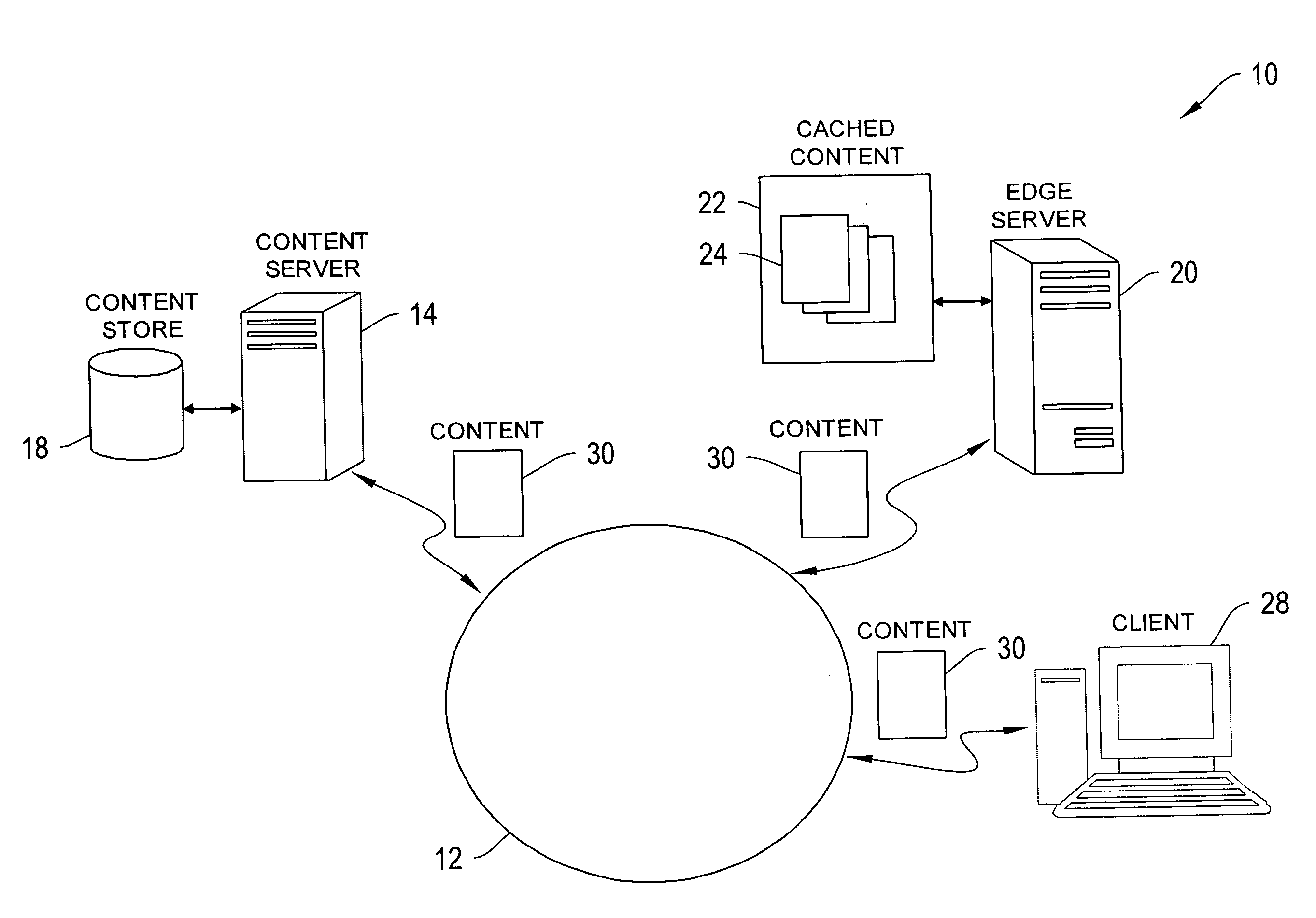

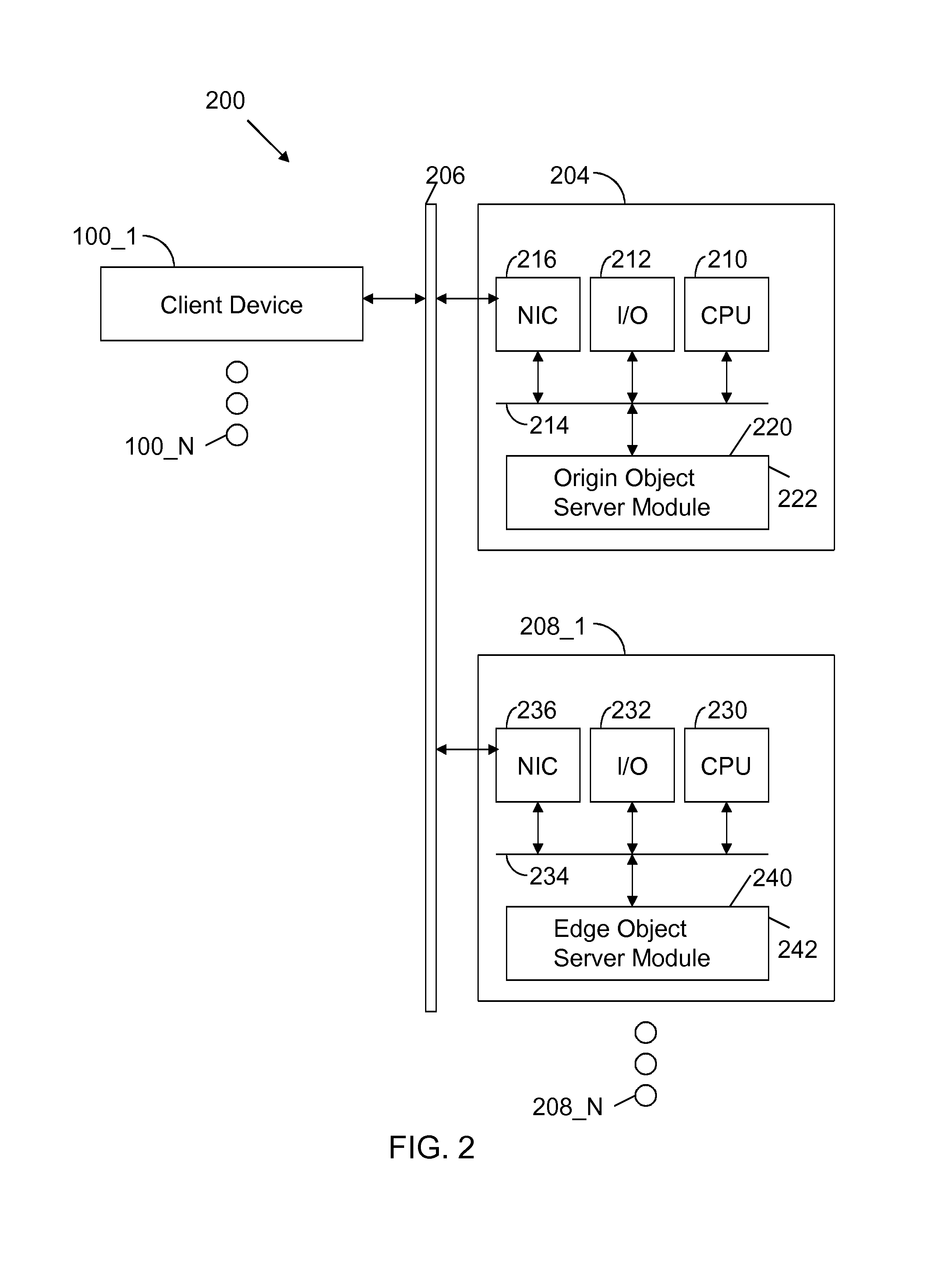

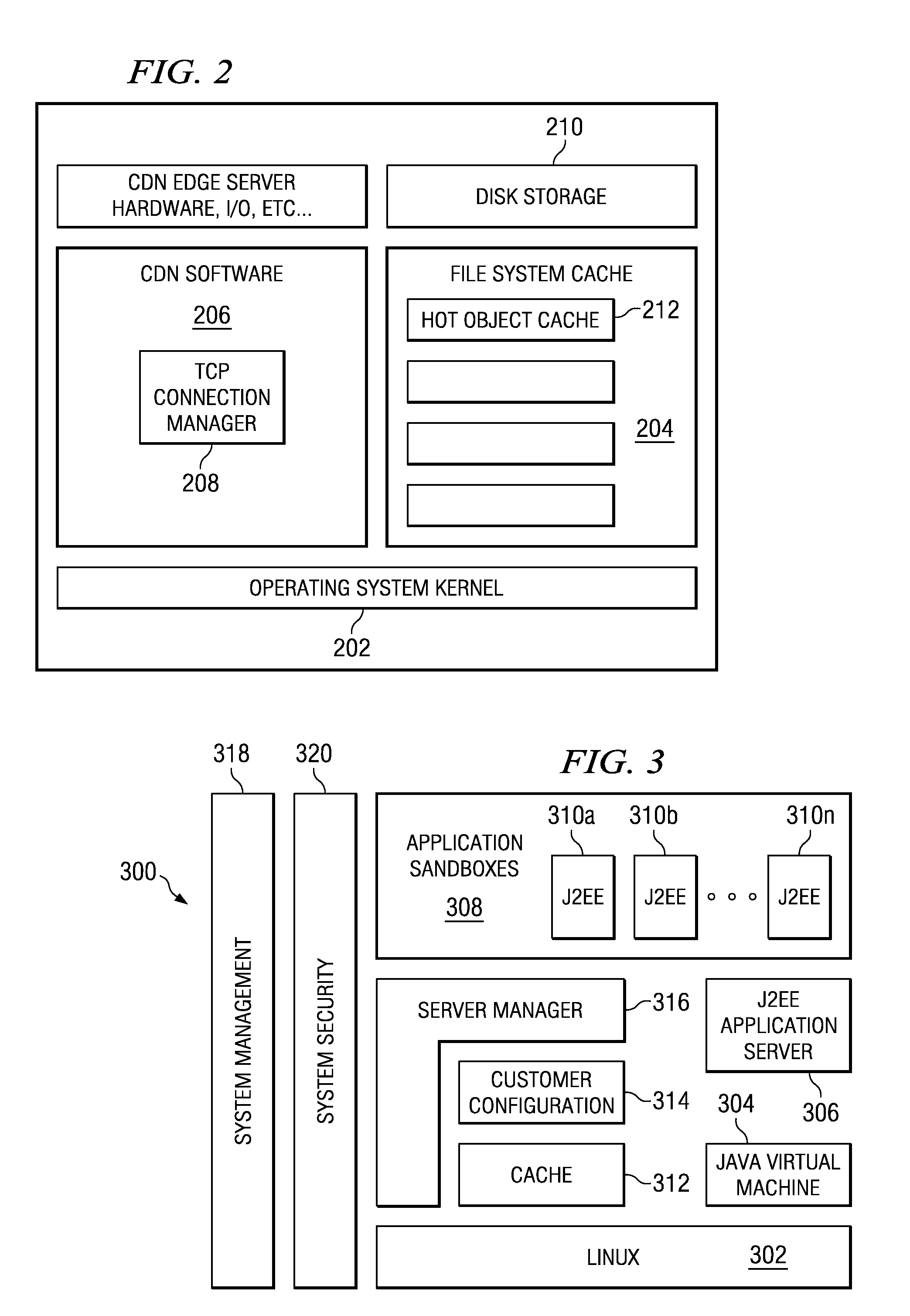

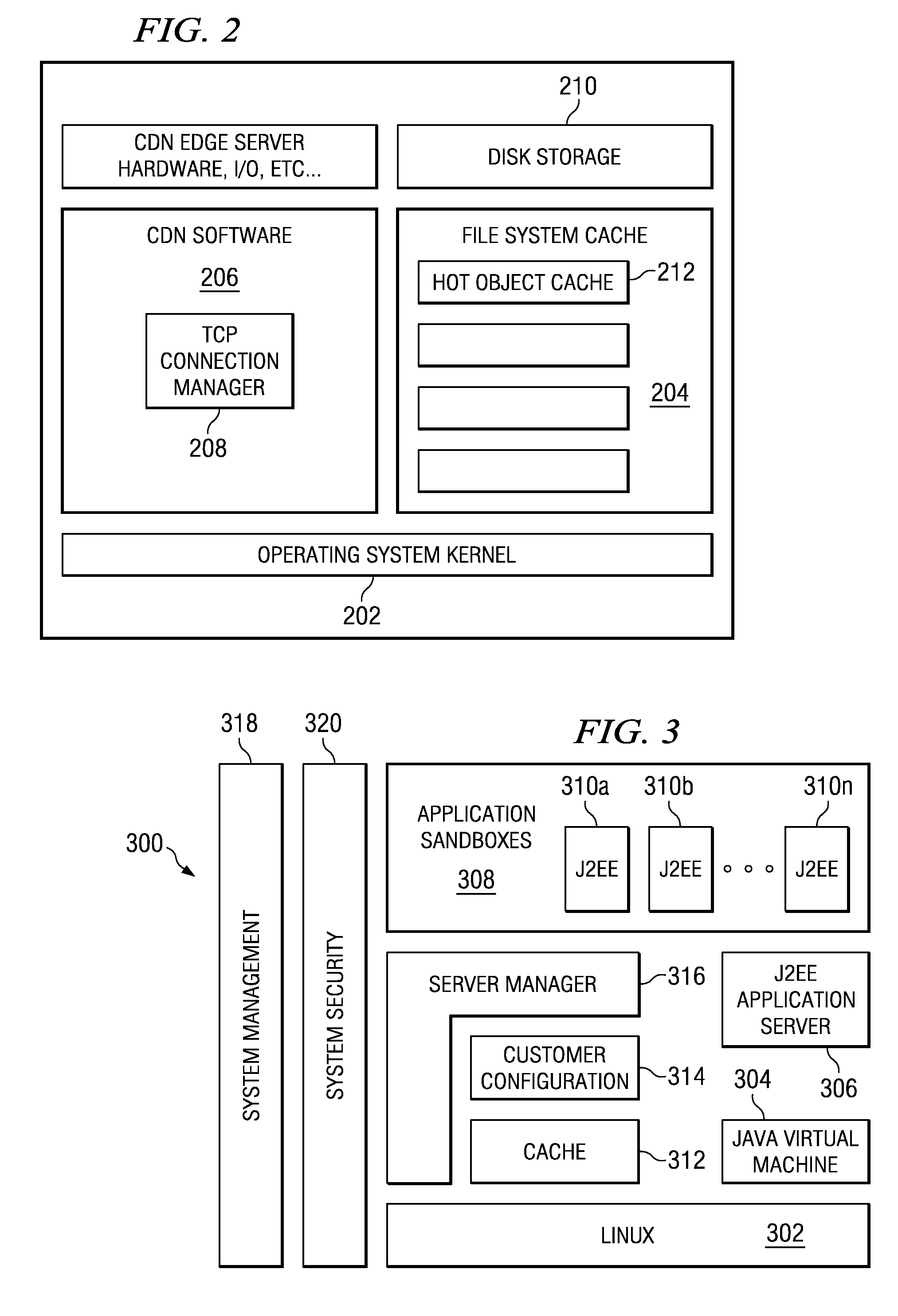

A technique for a centralized and differentiated content and application delivery system allows content providers to directly control the delivery of content based on regional and temporal preferences, client identity and content priority. A scalable system is provided in an extensible framework for edge services, employing a combination of a flexible profile definition language and an open edge server architecture in order to add new and unforeseen services on demand. In one or more edge servers, content providers are allocated dedicated resources, which are not affected by the demand or the delivery characteristics of other content providers. Each content provider can differentiate different local delivery resources within its global allocation. Since the per-site resources are guaranteed, intra-site differentiation can be guaranteed. Administrative resources are provided to dynamically adjust service policies of the edge servers.

Owner:CISCO TECH INC

Differentiated content and application delivery via internet

Inactive · US6976090B2 · Decentralized and differentiated · Advanced technology · Multiple digital computer combinations · Website content management · Scalable system · Edge server

Owner:CISCO TECH INC

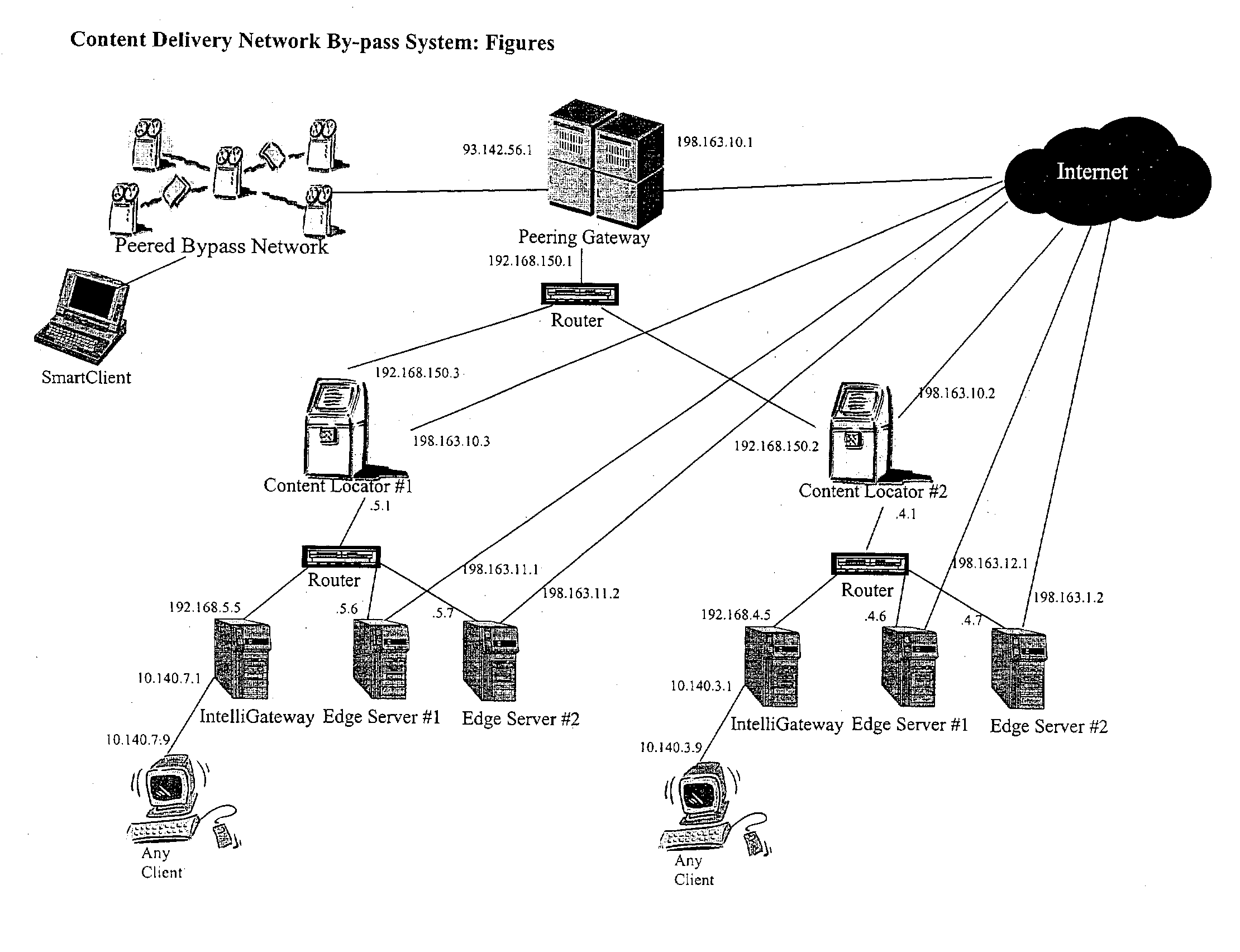

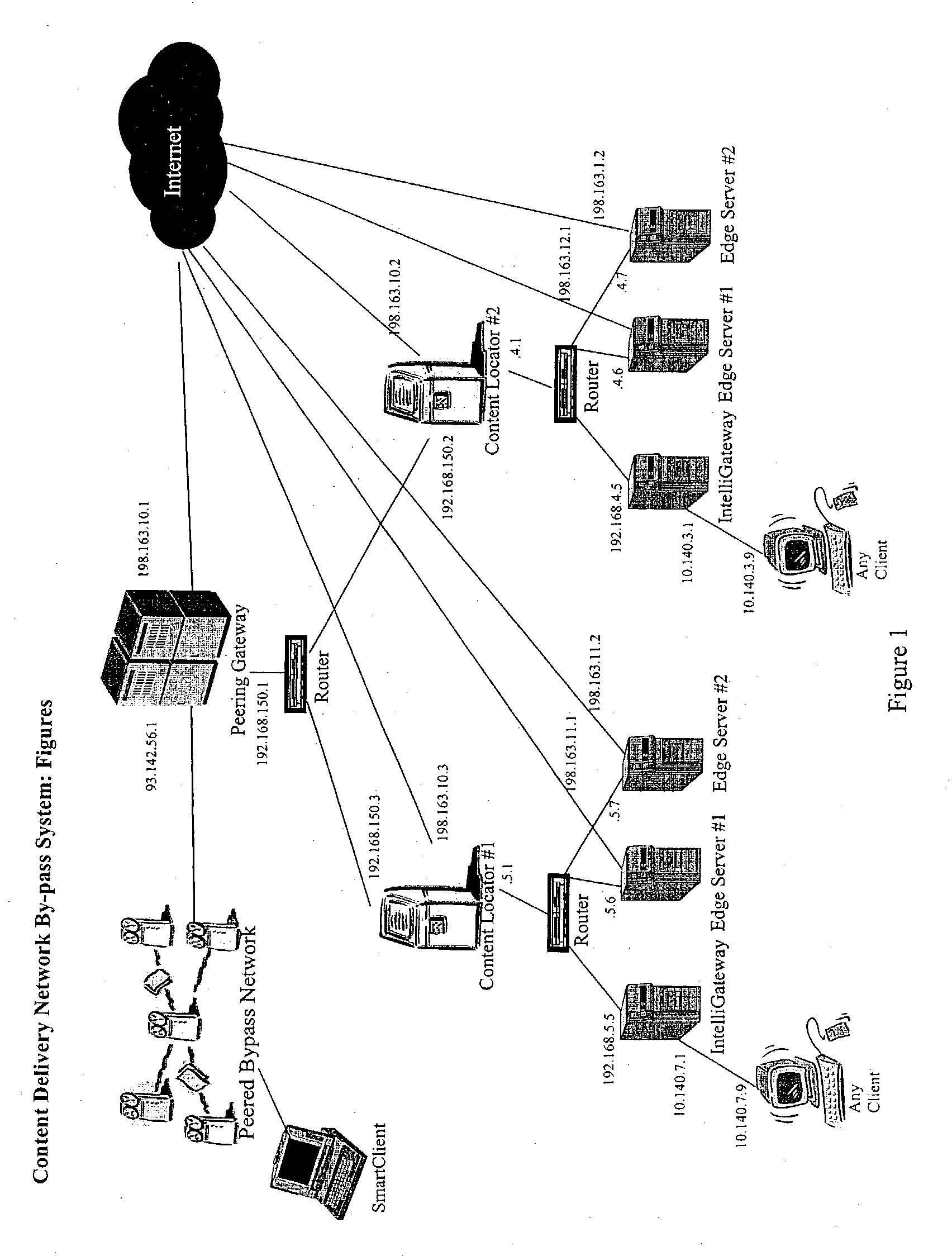

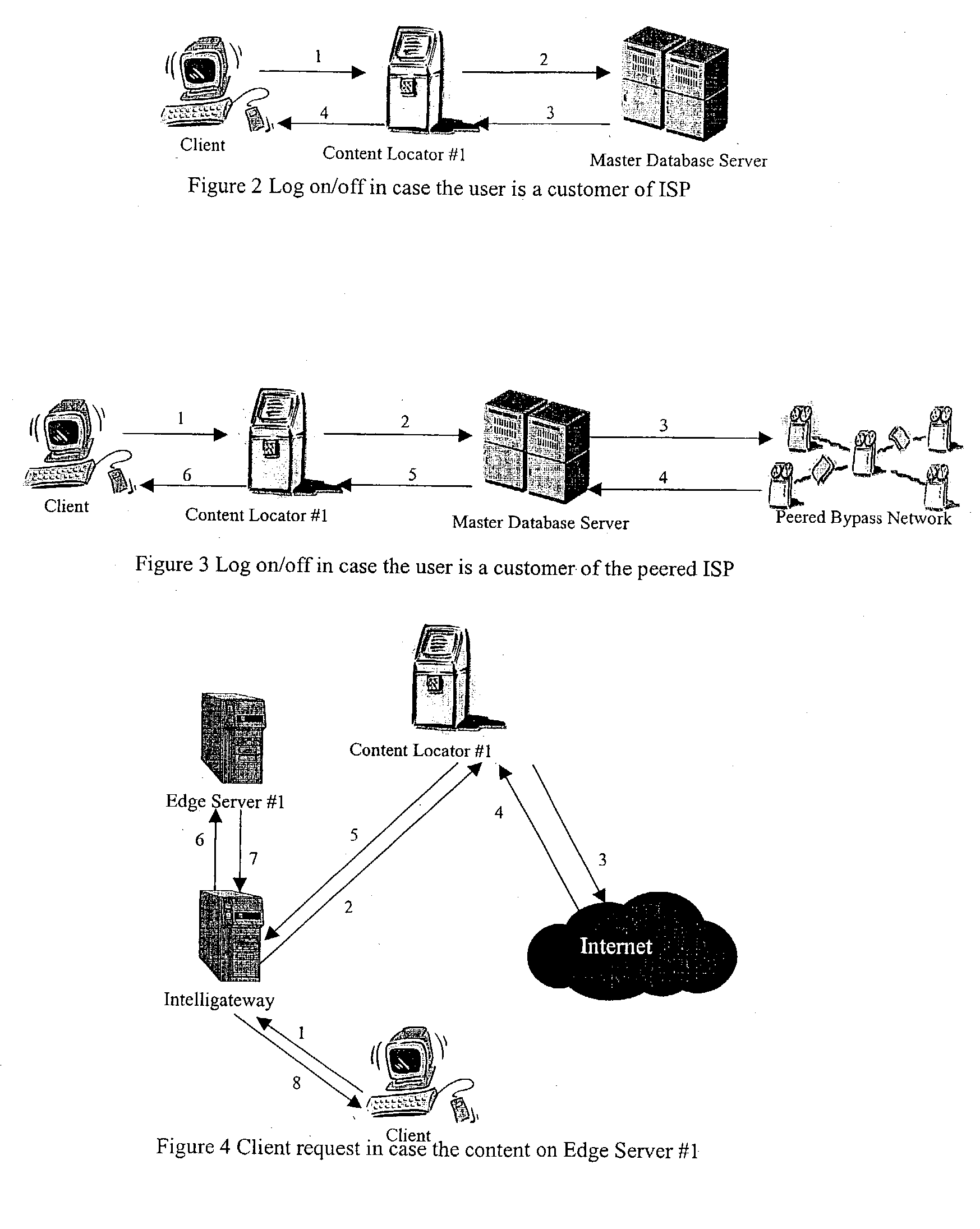

Content delivery network by-pass system

Inactive · US20030174648A1 · Increase capacity · Increase in size · Error prevention · Transmission systems · Web site · Peering

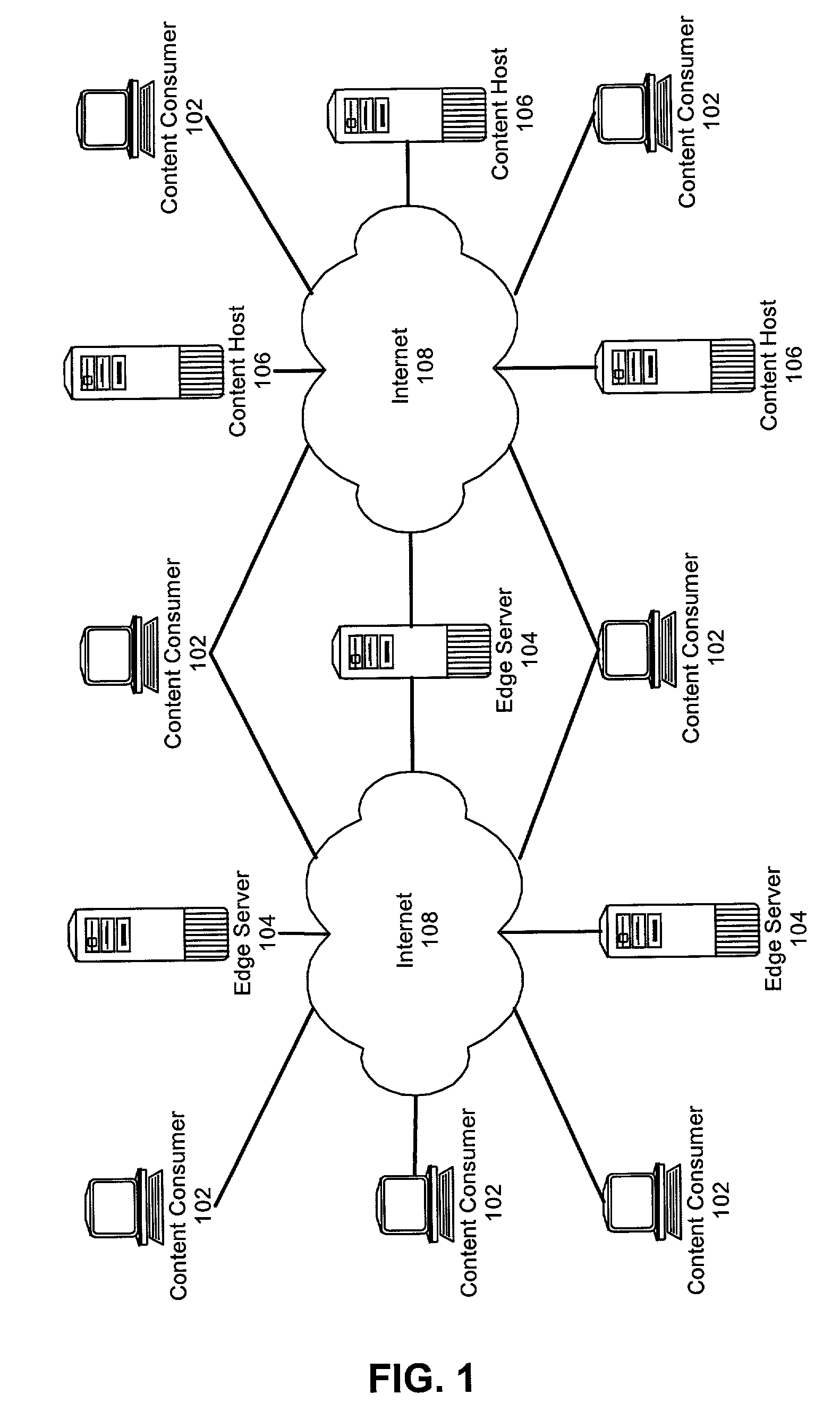

The bypass network is designed to provide fast access and high quality streaming media services anywhere, anytime. There are five major components: Peering Gateway, Content Locator, Edge Server, Gateway and Client. The whole bypass network is divided into a number of self-managed sub-networks, which are referred to as local networks in this document. Each local network contains Edge Servers, gateways, and a Content Locator. The Edge Servers serve as cache storage and streaming servers for the local network. The gateways provide a connection point for the client computers. Each local network is managed by a Content Locator. The Content Locator handles all client requests by communicating with the Peering Gateway and actual web sites, and makes the content available on local Edge Servers. The Content Locator also balances the load on each Edge Server by monitoring the workload on them. One embodiment is designed for home users whose home machine does not move around frequently. A second embodiment is designed for business users who travel frequently, whose laptops self-configure as clients of the network.

Owner:TELECOMM RES LAB

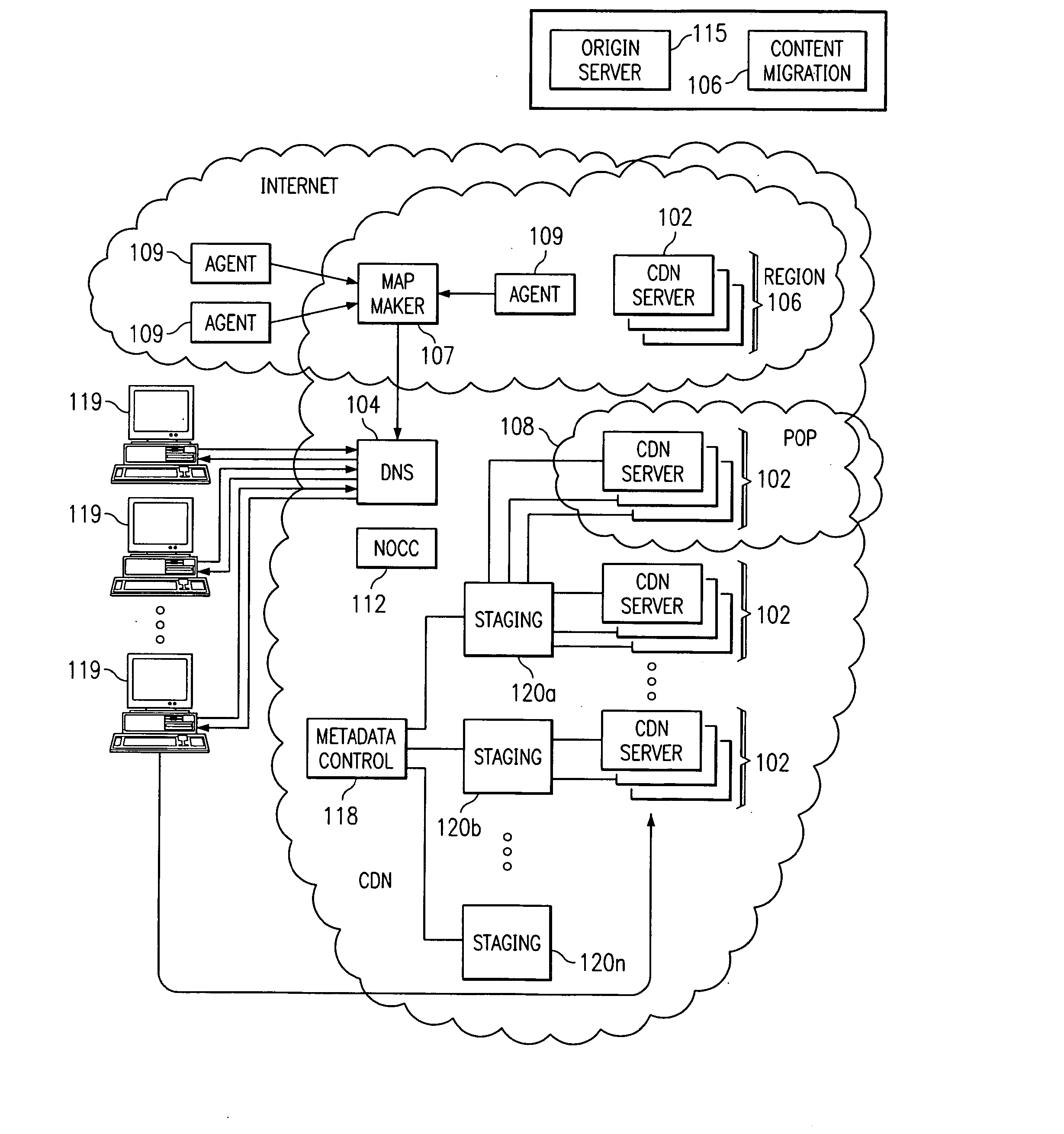

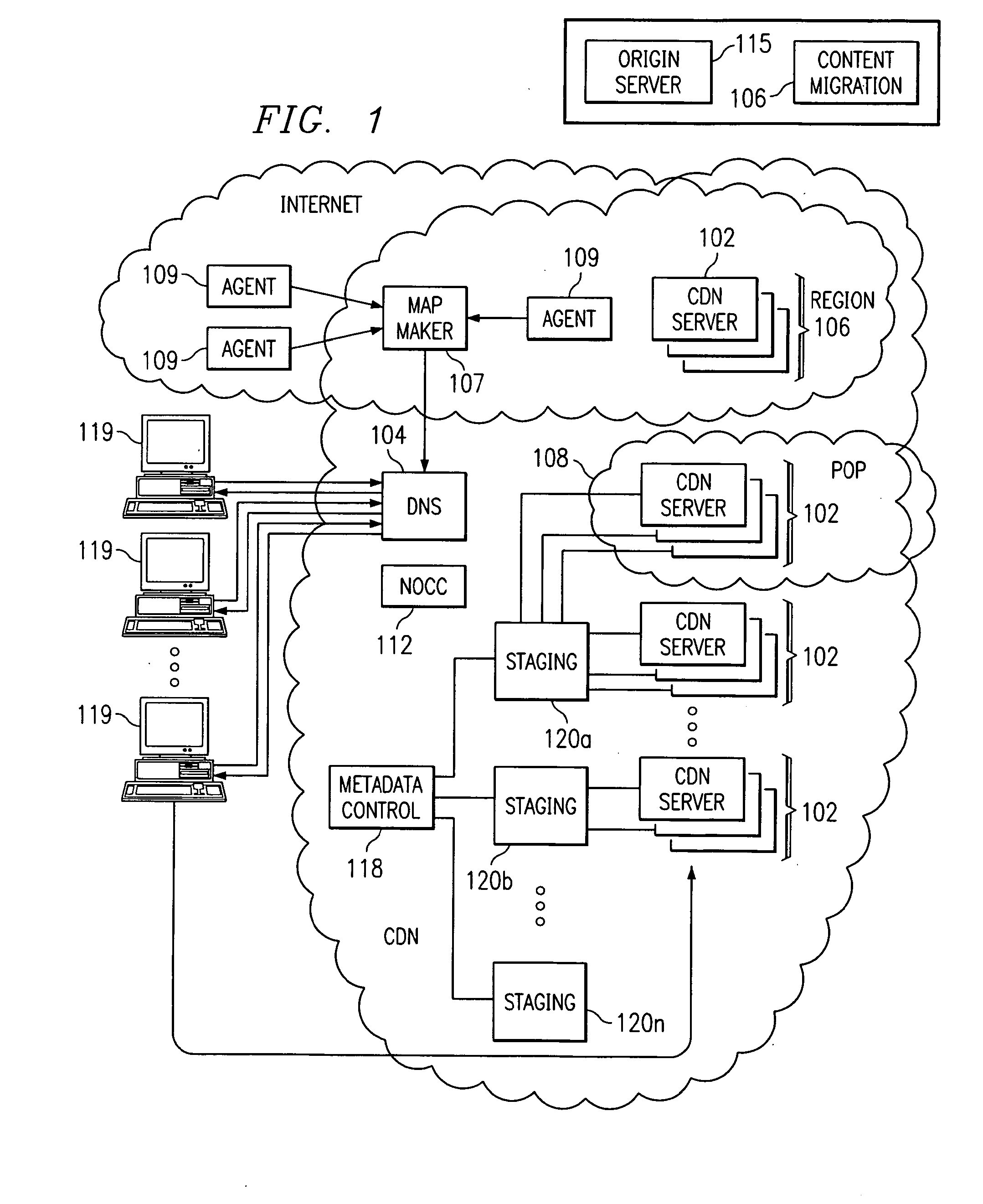

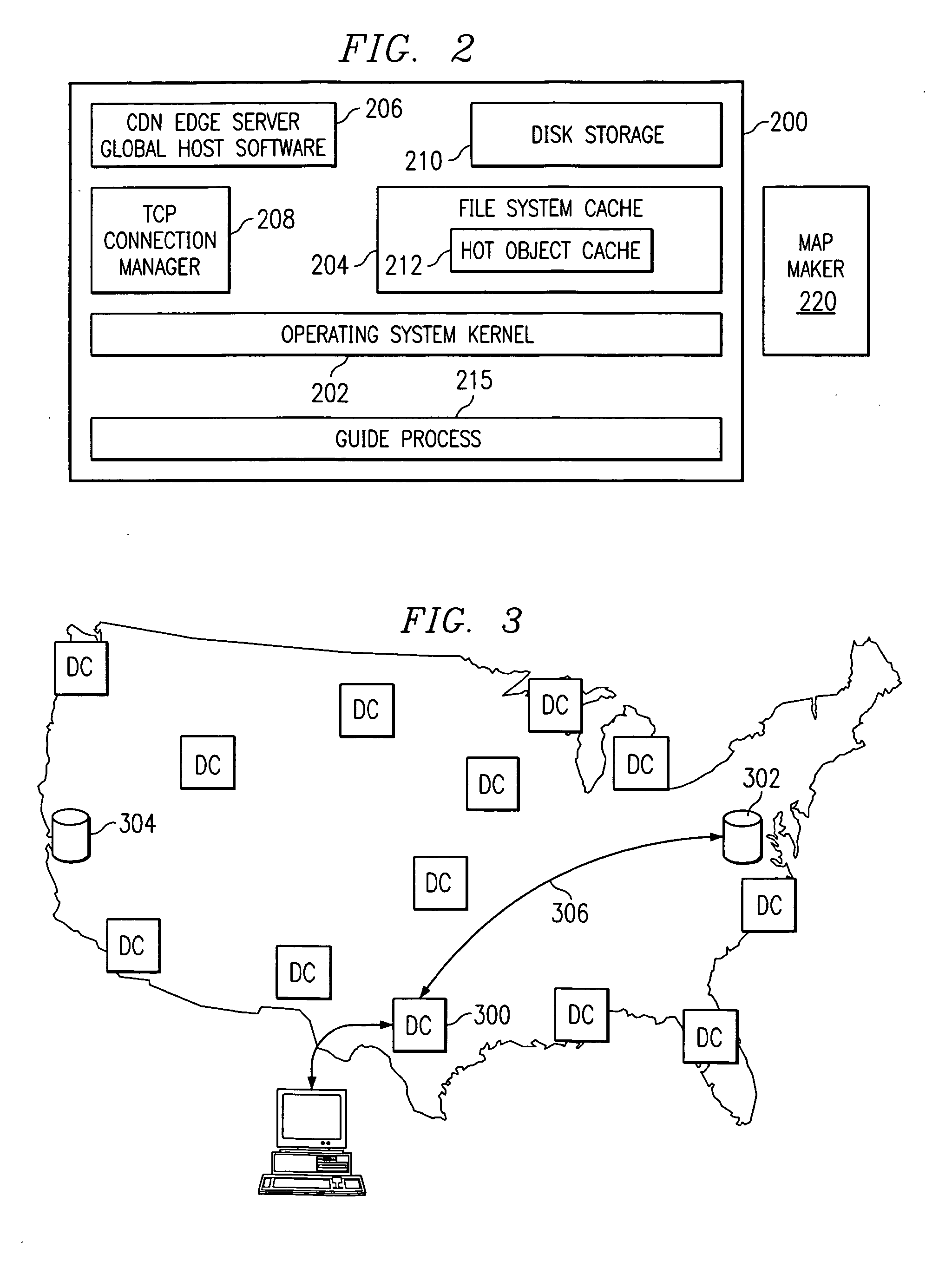

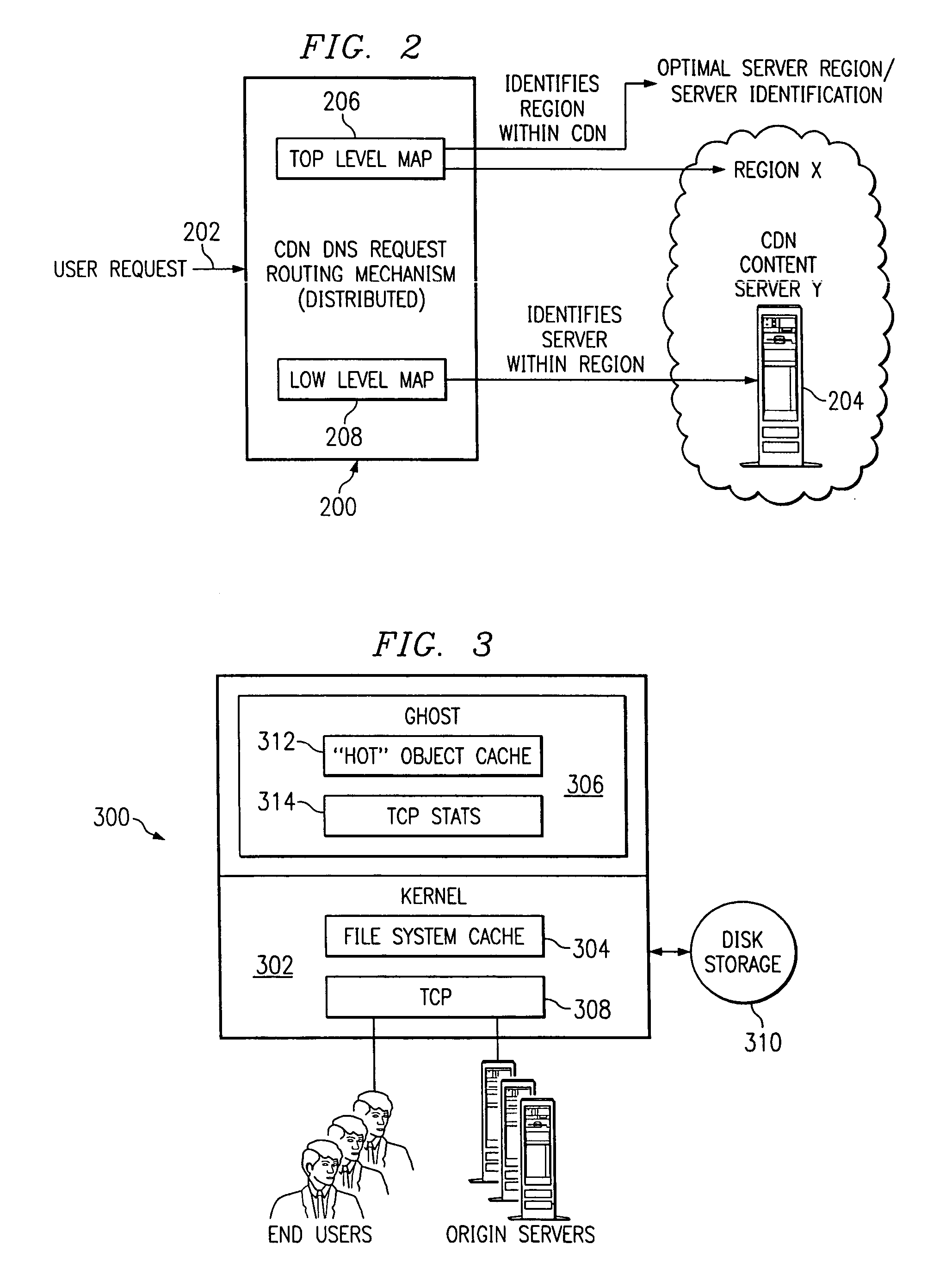

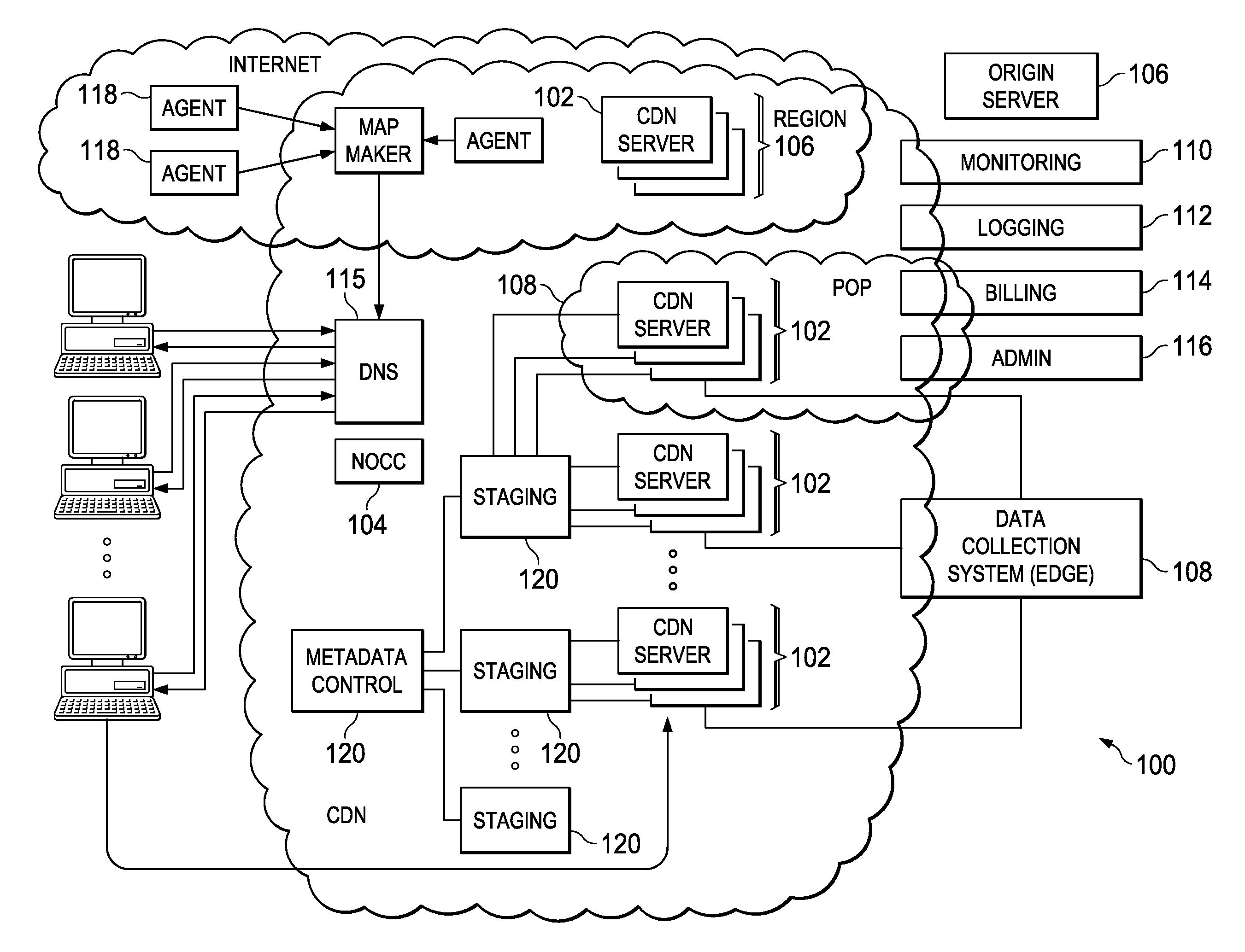

Optimal route selection in a content delivery network

Inactive · US20080008089A1 · Improve speed and reliability · Retrieve content (cacheable · Error prevention · Transmission systems · File area network · Peer-to-peer

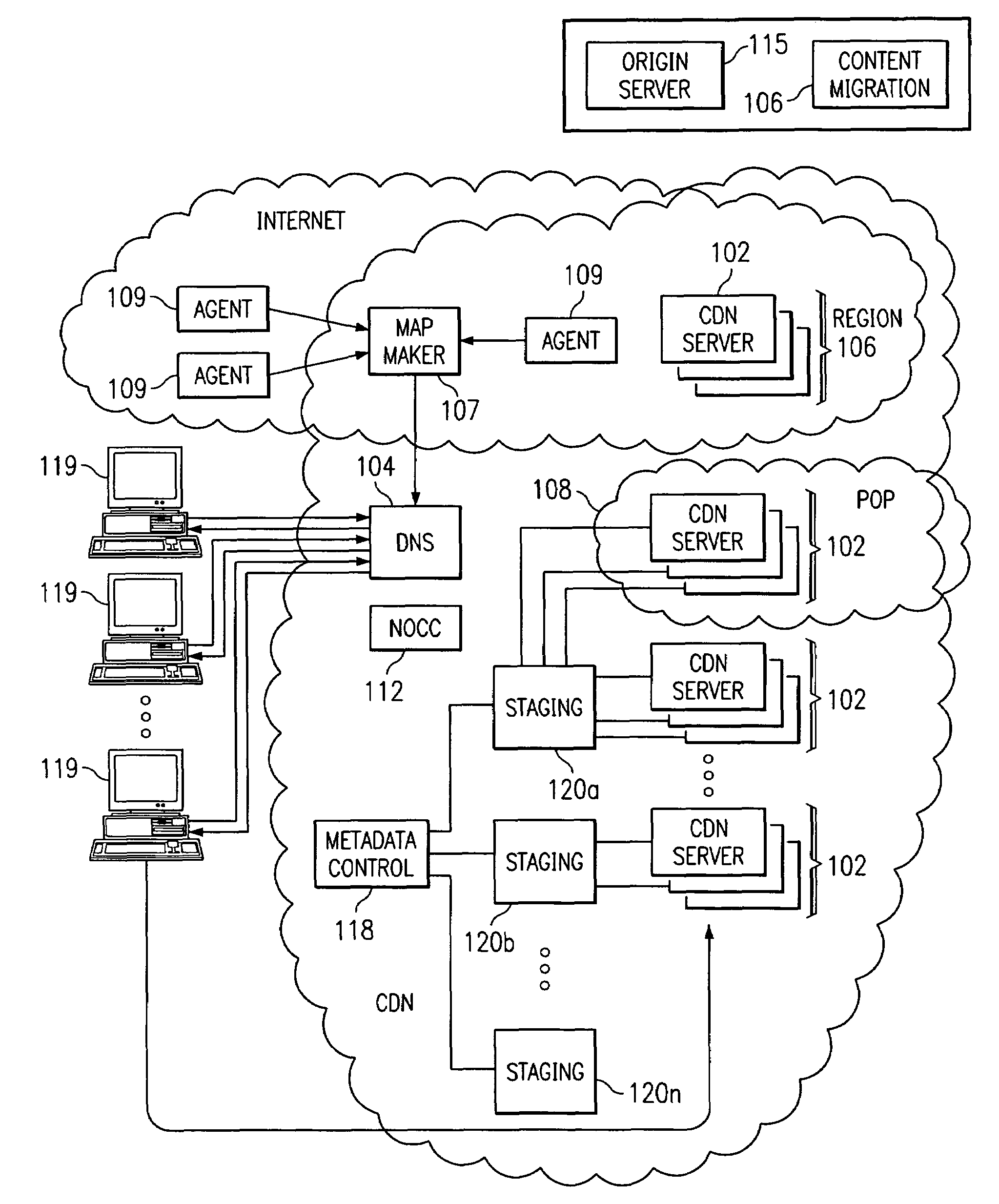

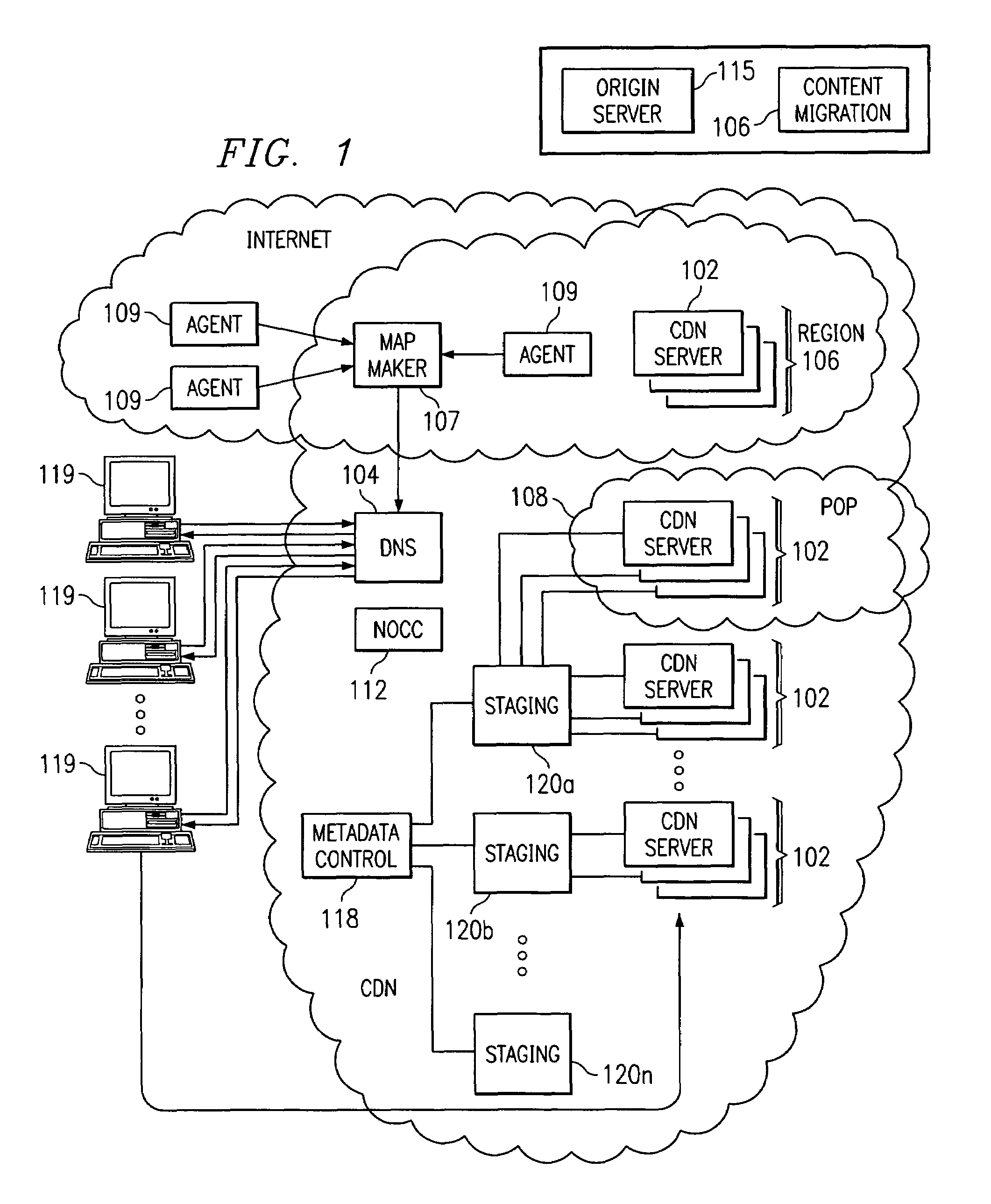

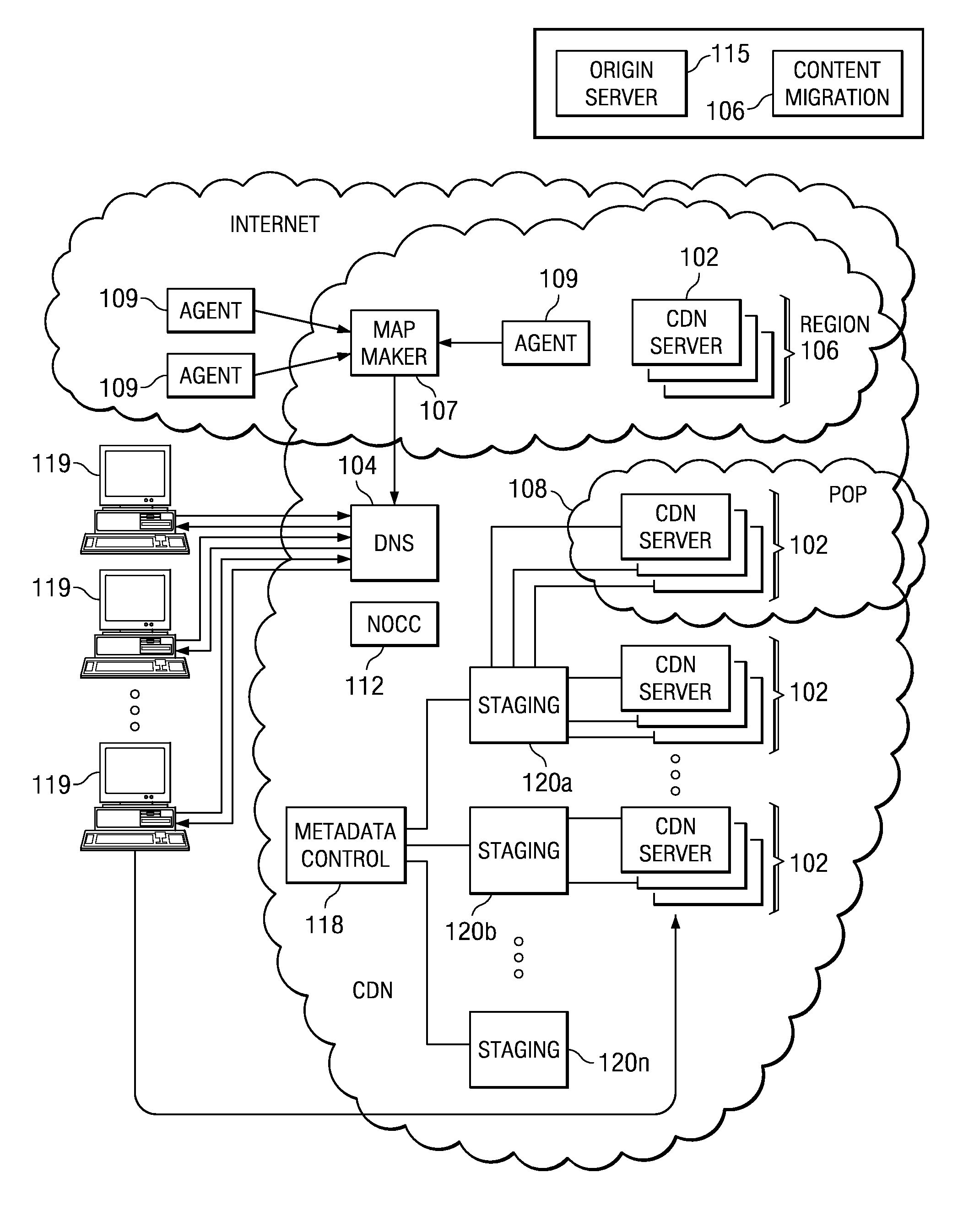

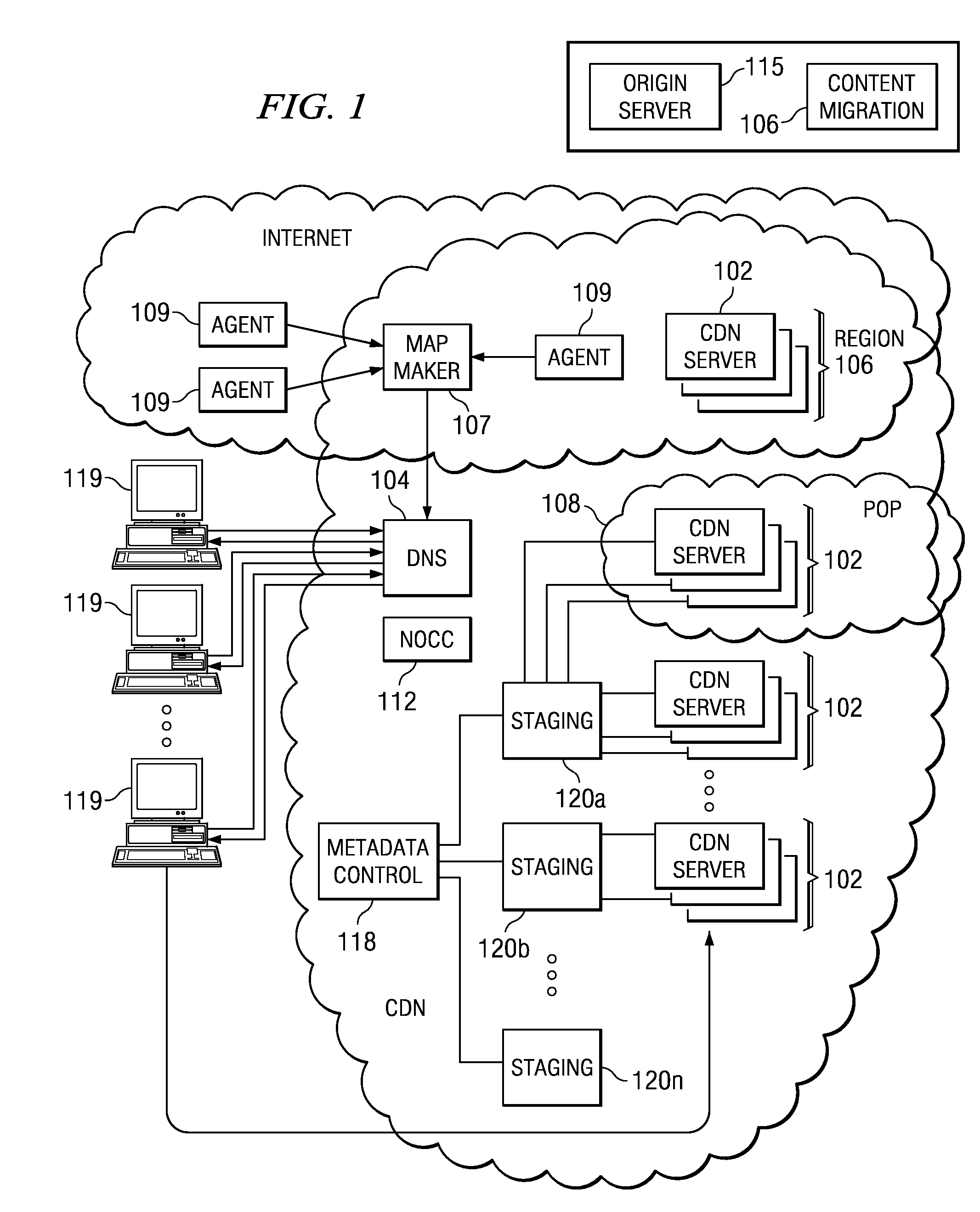

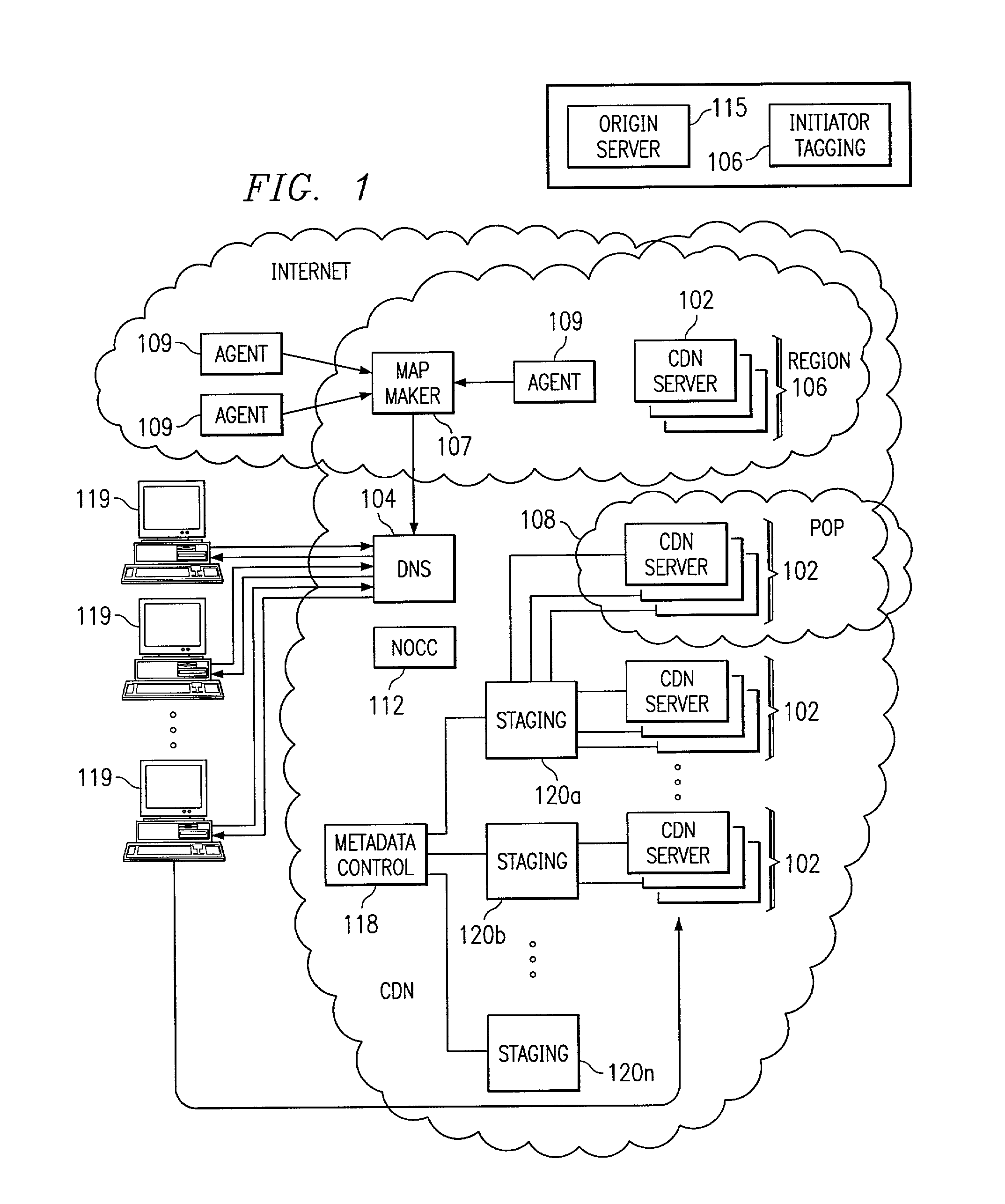

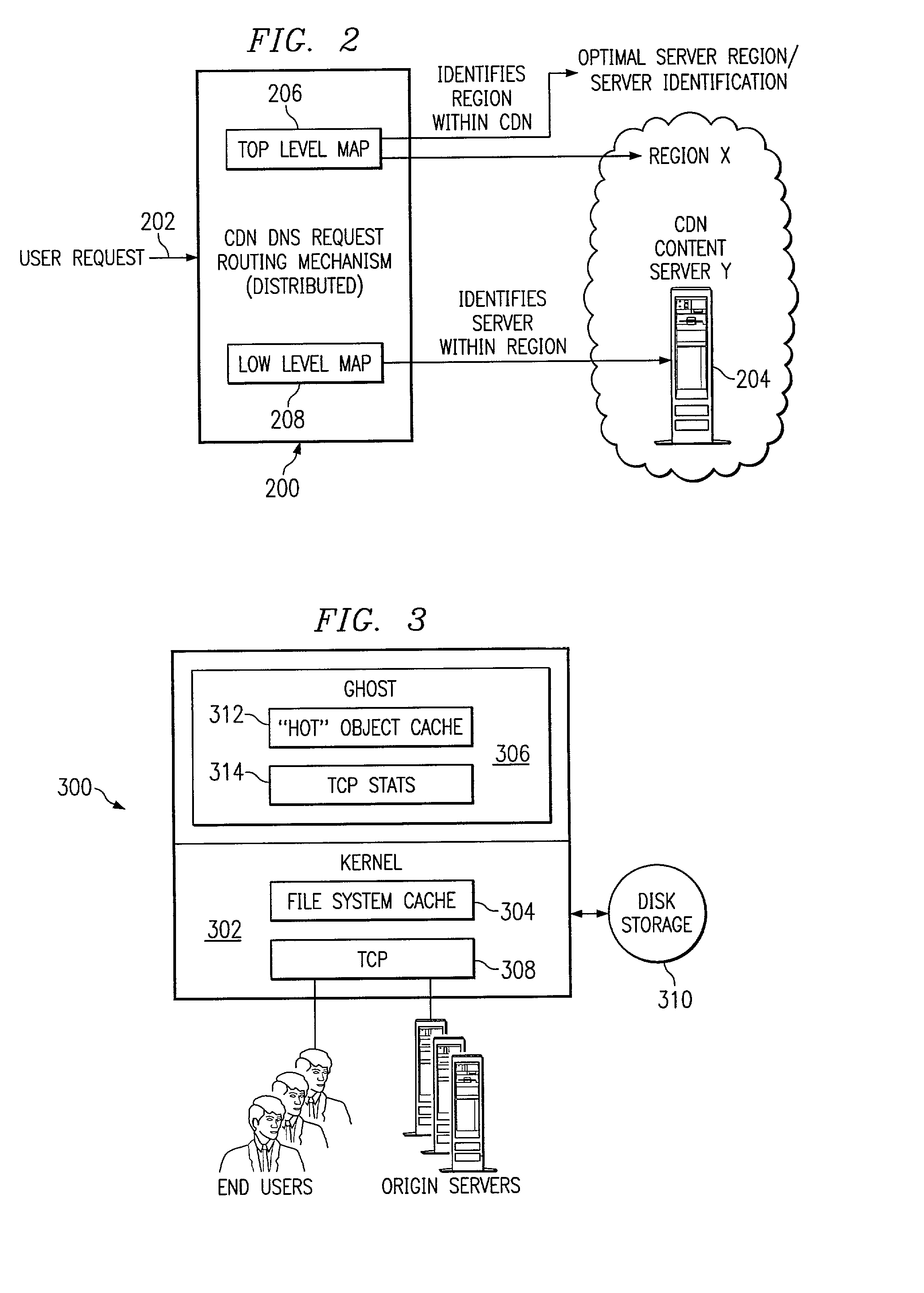

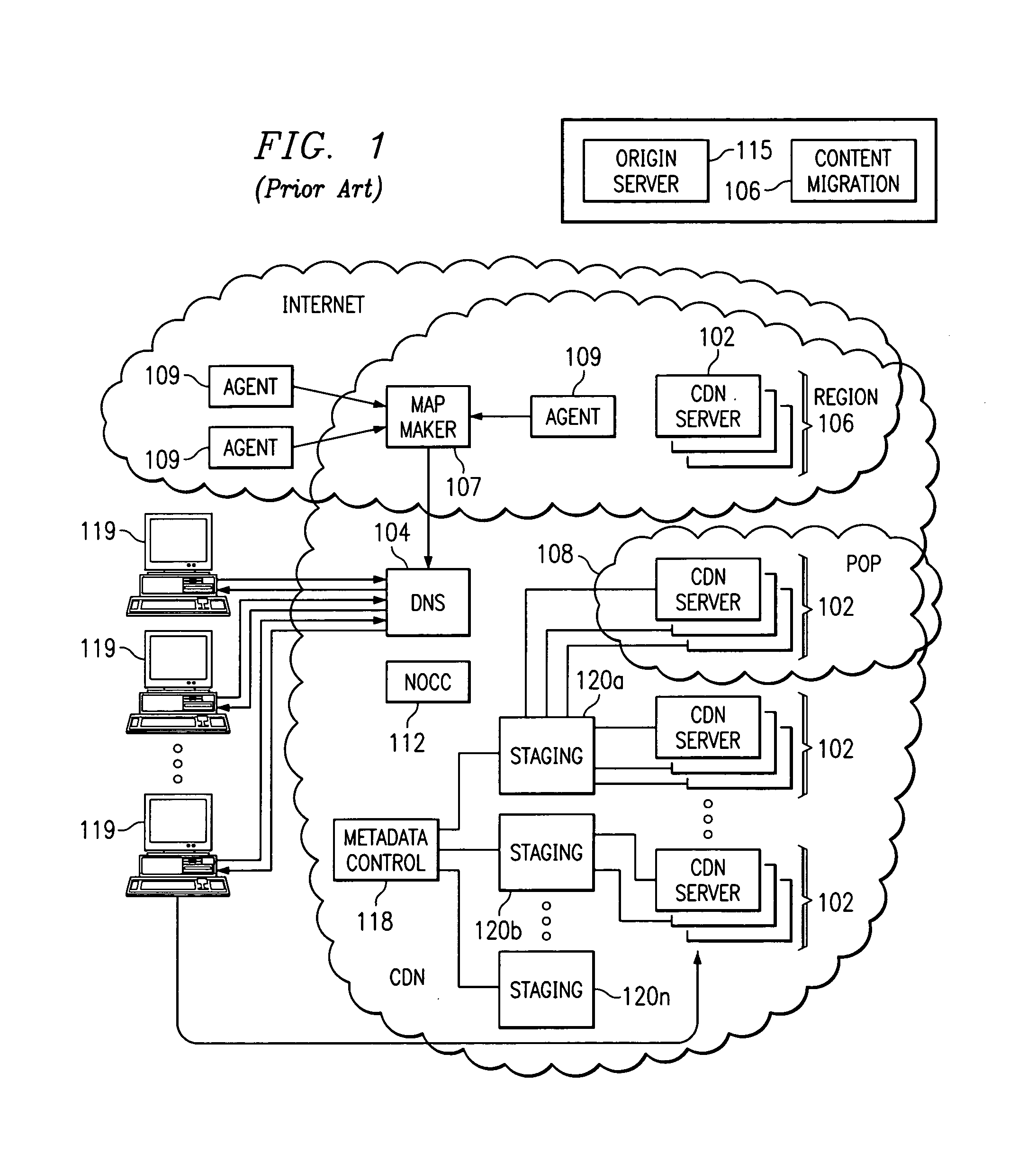

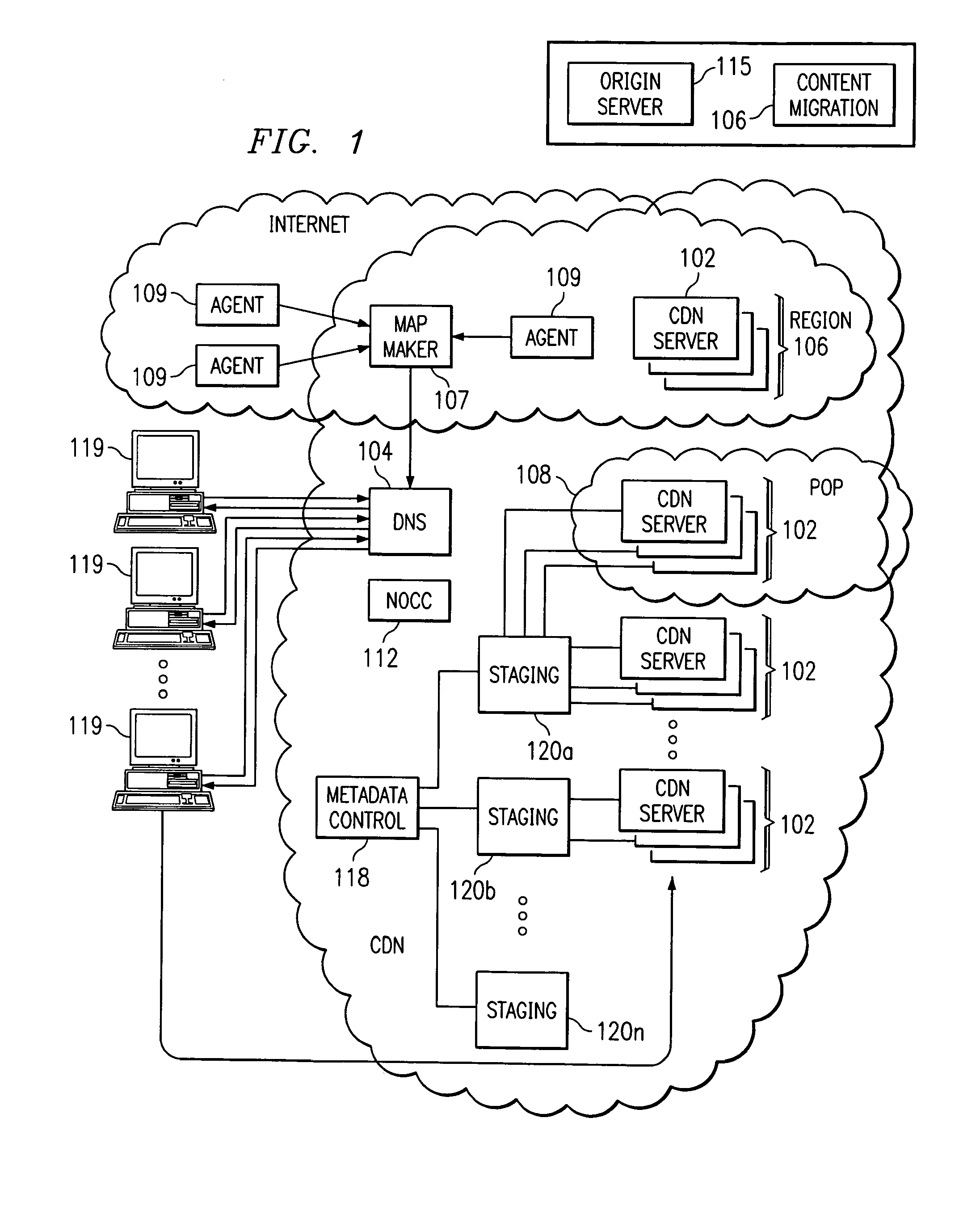

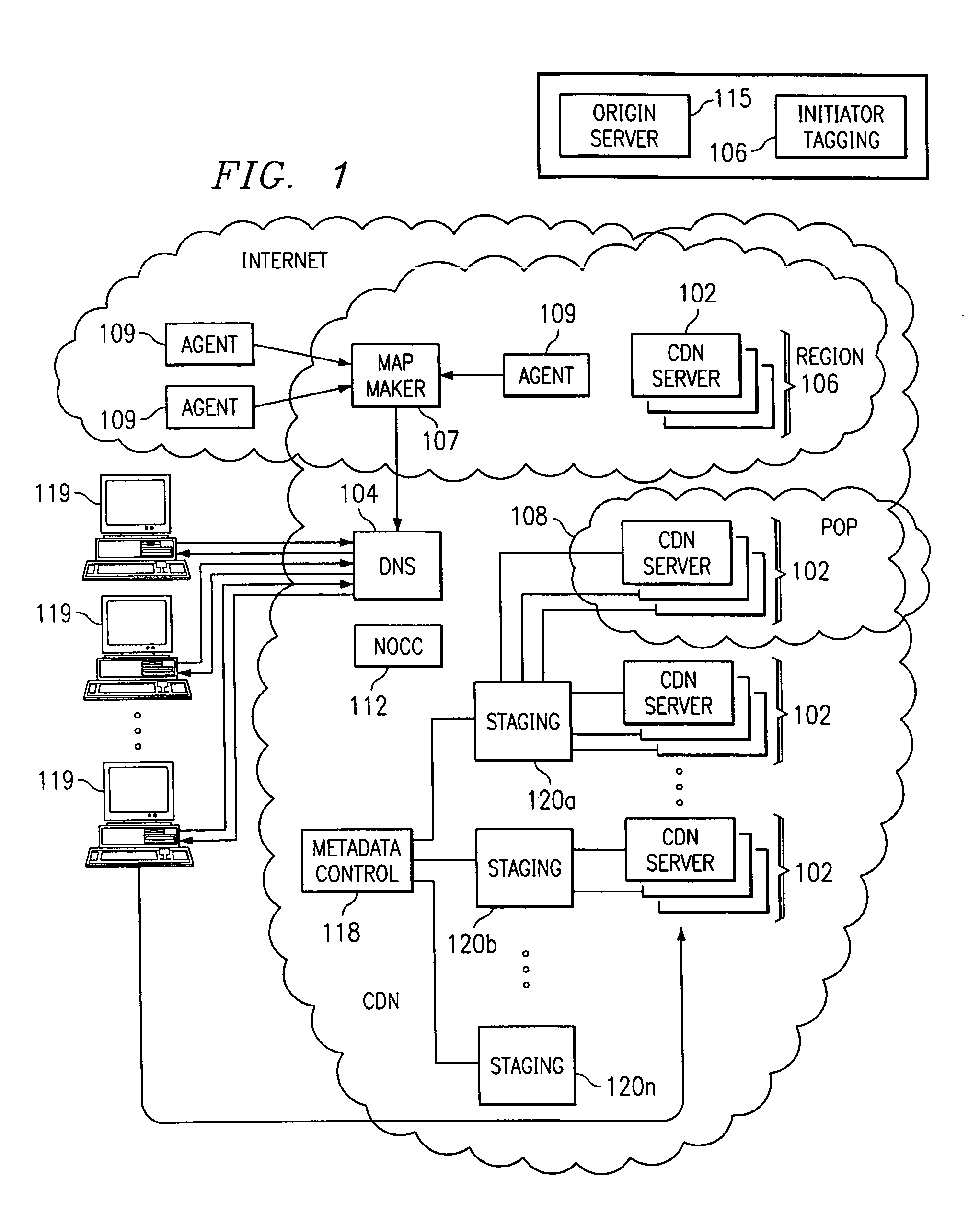

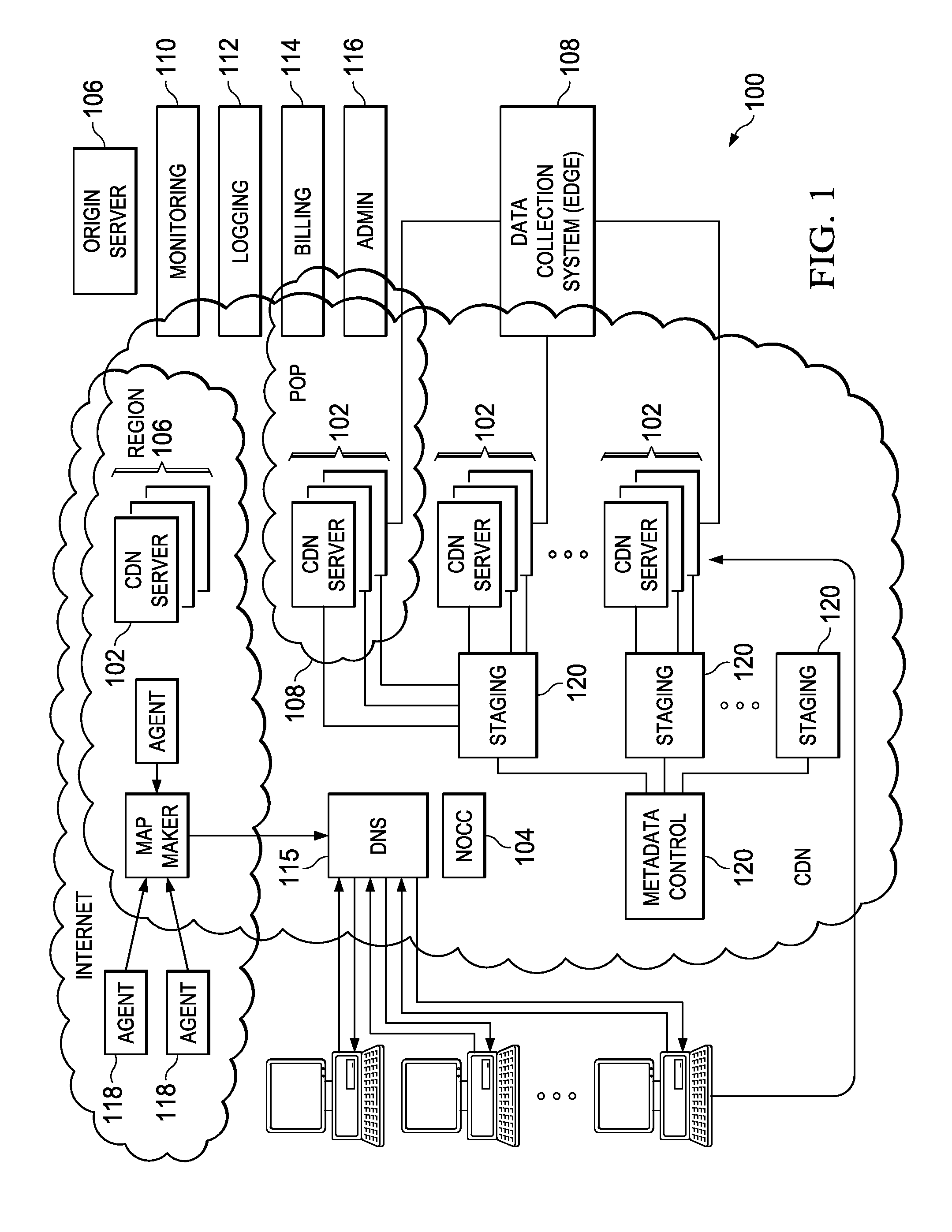

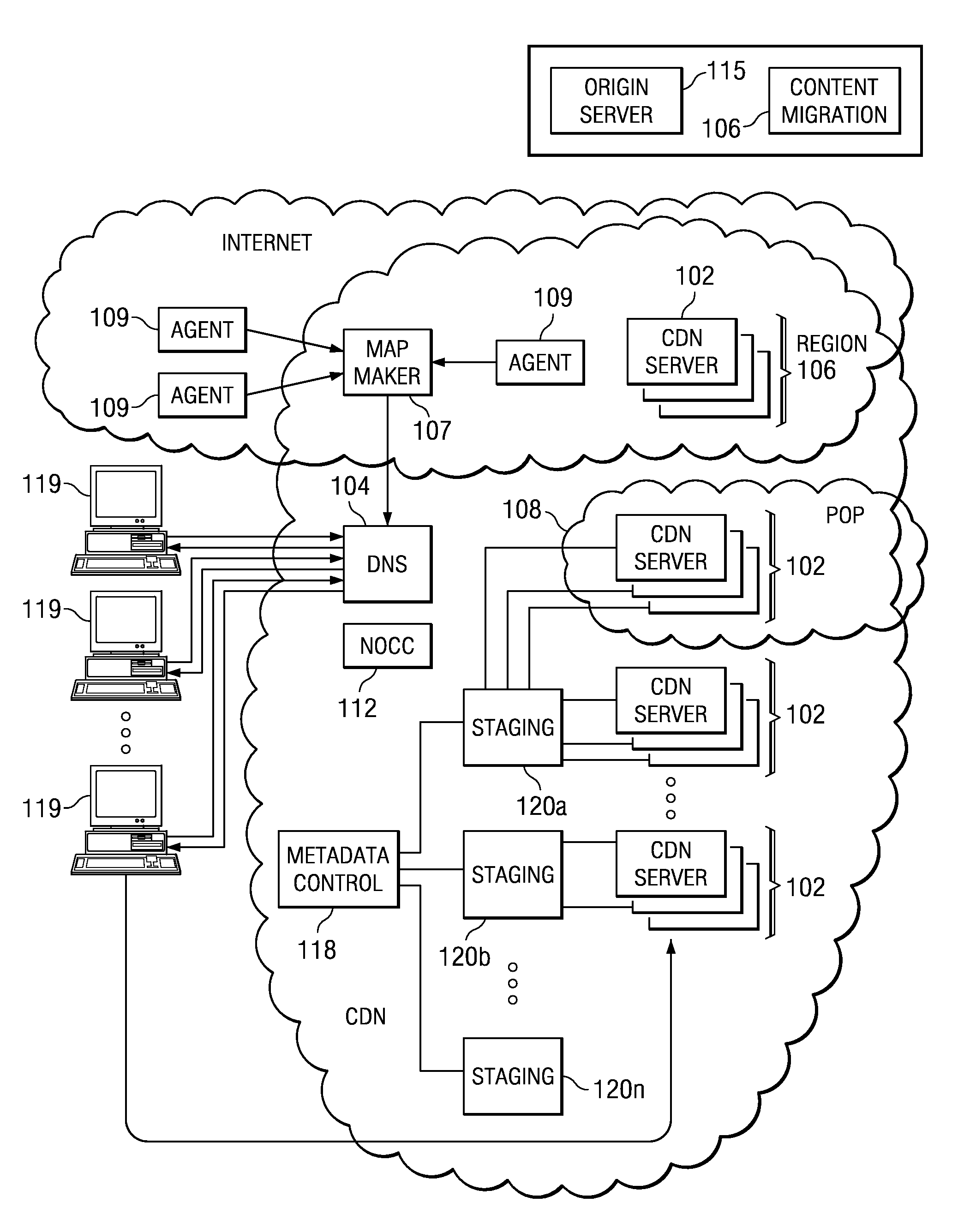

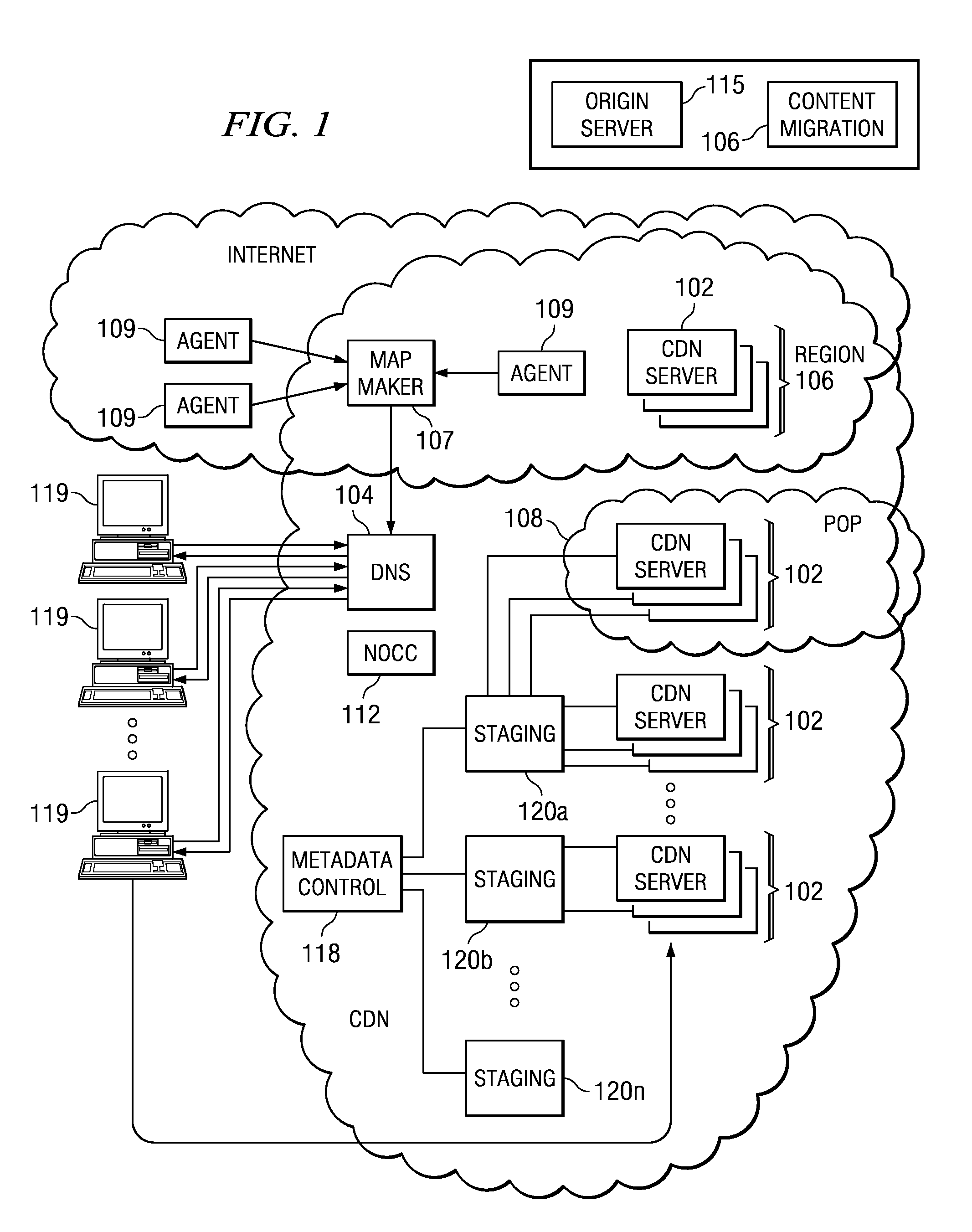

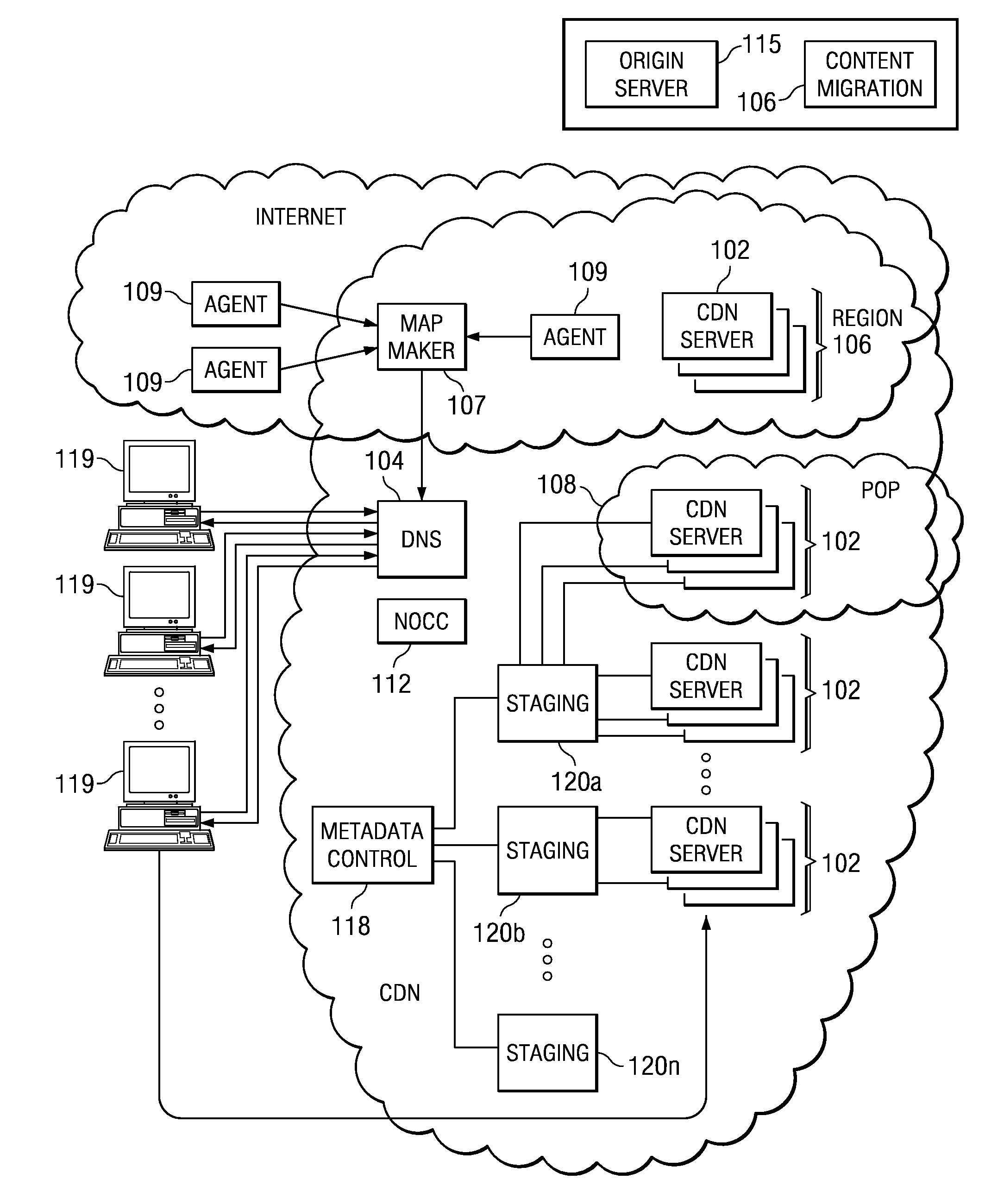

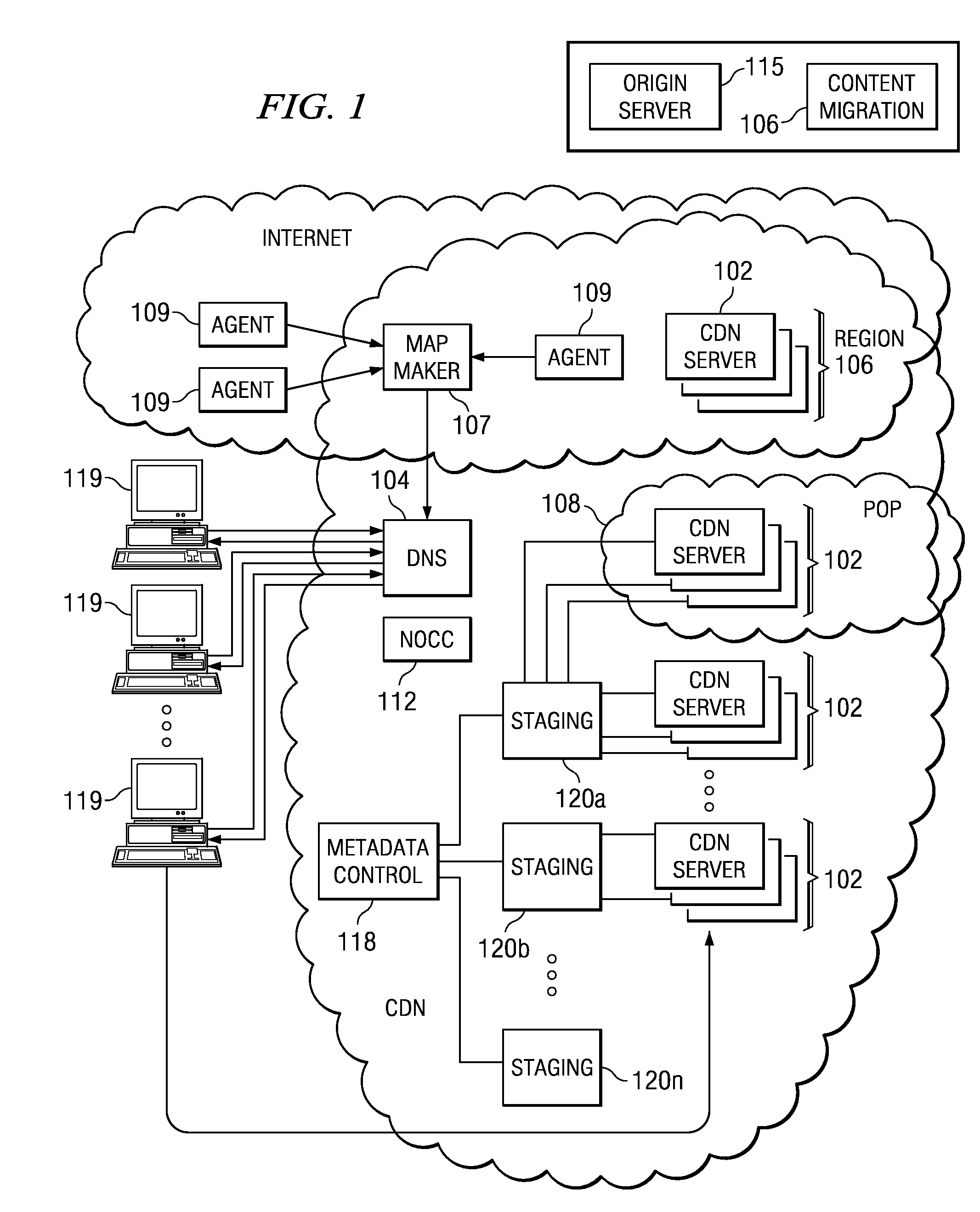

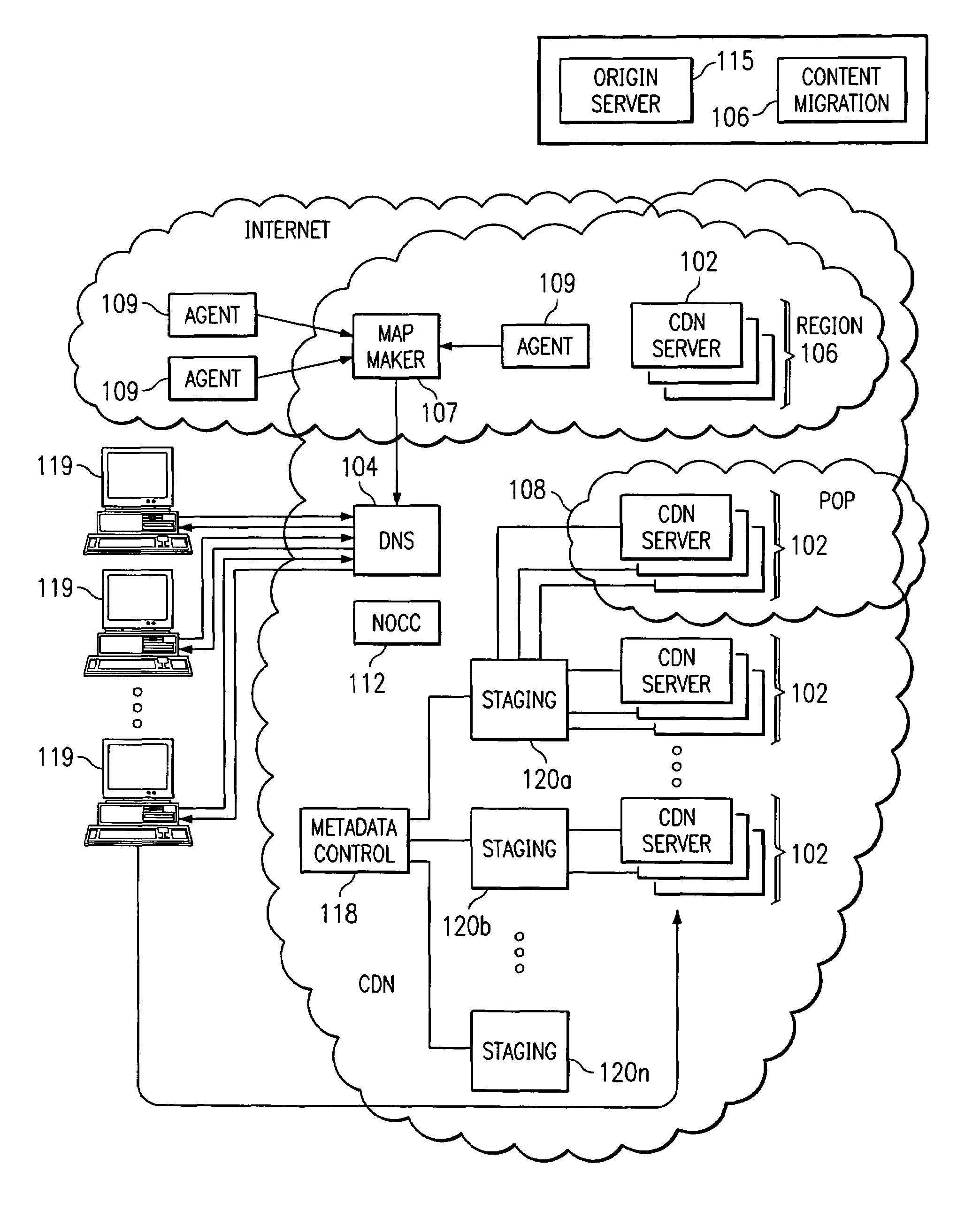

A routing mechanism, service or system operable in a distributed networking environment. One preferred environment is a content delivery network (CDN) wherein the present invention provides improved connectivity back to an origin server, especially for HTTP traffic. In a CDN, edge servers are typically organized into regions, with each region comprising a set of content servers that preferably operate in a peer-to-peer manner and share data across a common backbone such as a local area network (LAN). The inventive routing technique enables an edge server operating within a given CDN region to retrieve content (cacheable, non-cacheable and the like) from an origin server more efficiently by selectively routing through the CDN's own nodes, thereby avoiding network congestion and hot spots. The invention enables an edge server to fetch content from an origin server through an intermediate CDN server or, more generally, enables an edge server within a given first region to fetch content from the origin server through an intermediate CDN region.

Owner:AKAMAI TECH INC
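
The abstract above does not say how a route is selected, so the following is only a sketch under an assumed policy: the edge server compares a measured time for the direct path to the origin with the summed times of each two-hop path through an intermediate CDN region, and takes the fastest. The function name `choose_route`, the region names, and the latency figures are all hypothetical.

```python
from typing import Dict, Optional, Tuple


def choose_route(
    direct_ms: float,
    via_ms: Dict[str, Tuple[float, float]],
) -> Optional[str]:
    """Return the intermediate region to route through, or None for the direct path.

    via_ms maps an intermediate region name to a pair of measured times:
    (edge -> intermediate, intermediate -> origin).
    """
    best_region: Optional[str] = None
    best_ms = direct_ms
    for region, (first_hop, second_hop) in via_ms.items():
        total = first_hop + second_hop
        if total < best_ms:          # strictly faster than the best path so far
            best_region, best_ms = region, total
    return best_region


# Hypothetical measurements in which the direct path is congested.
route = choose_route(
    direct_ms=180.0,
    via_ms={"region-east": (20.0, 90.0), "region-west": (60.0, 140.0)},
)
```

Here `route` names the intermediate region whose two hops add up to less than the direct path. When no intermediate path beats the direct one, the function returns `None` and the edge server would contact the origin directly.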

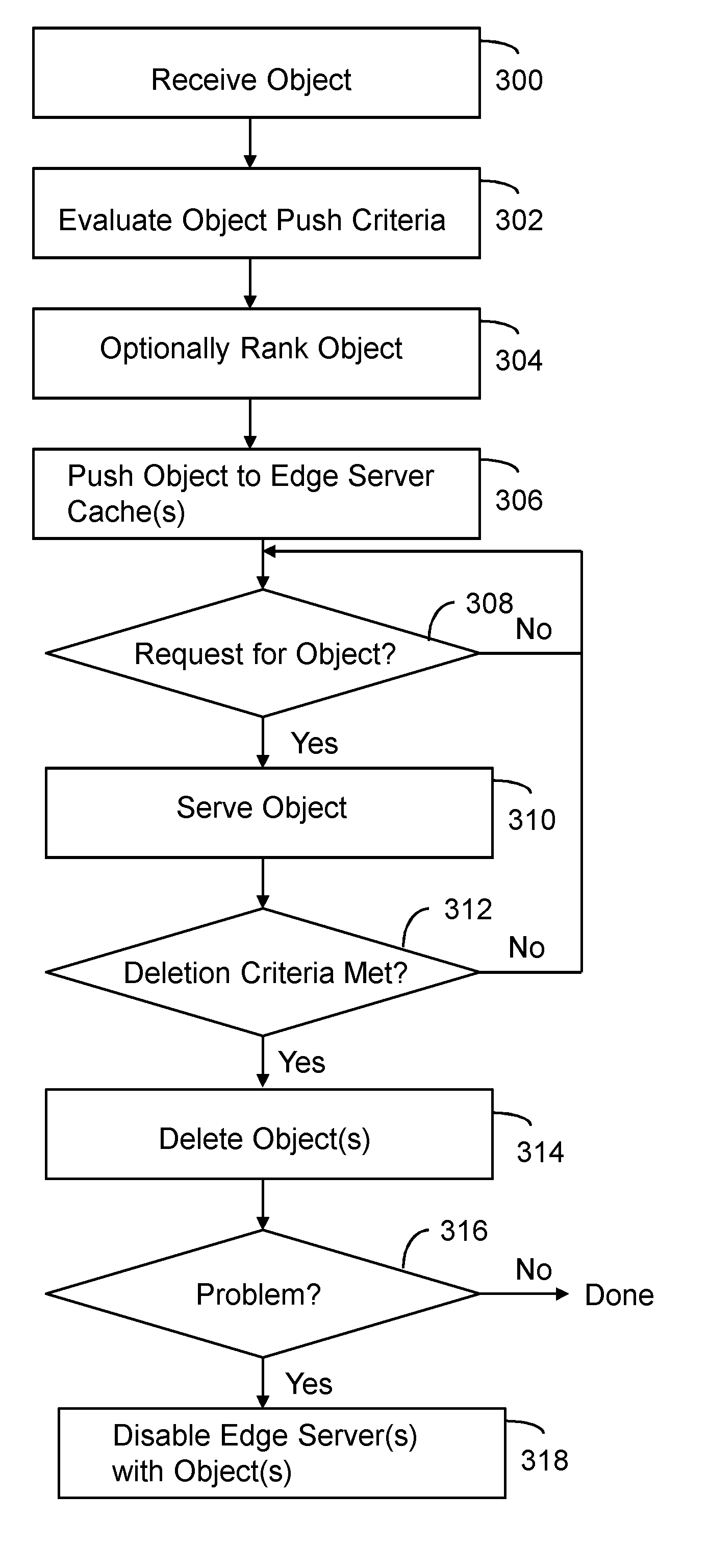

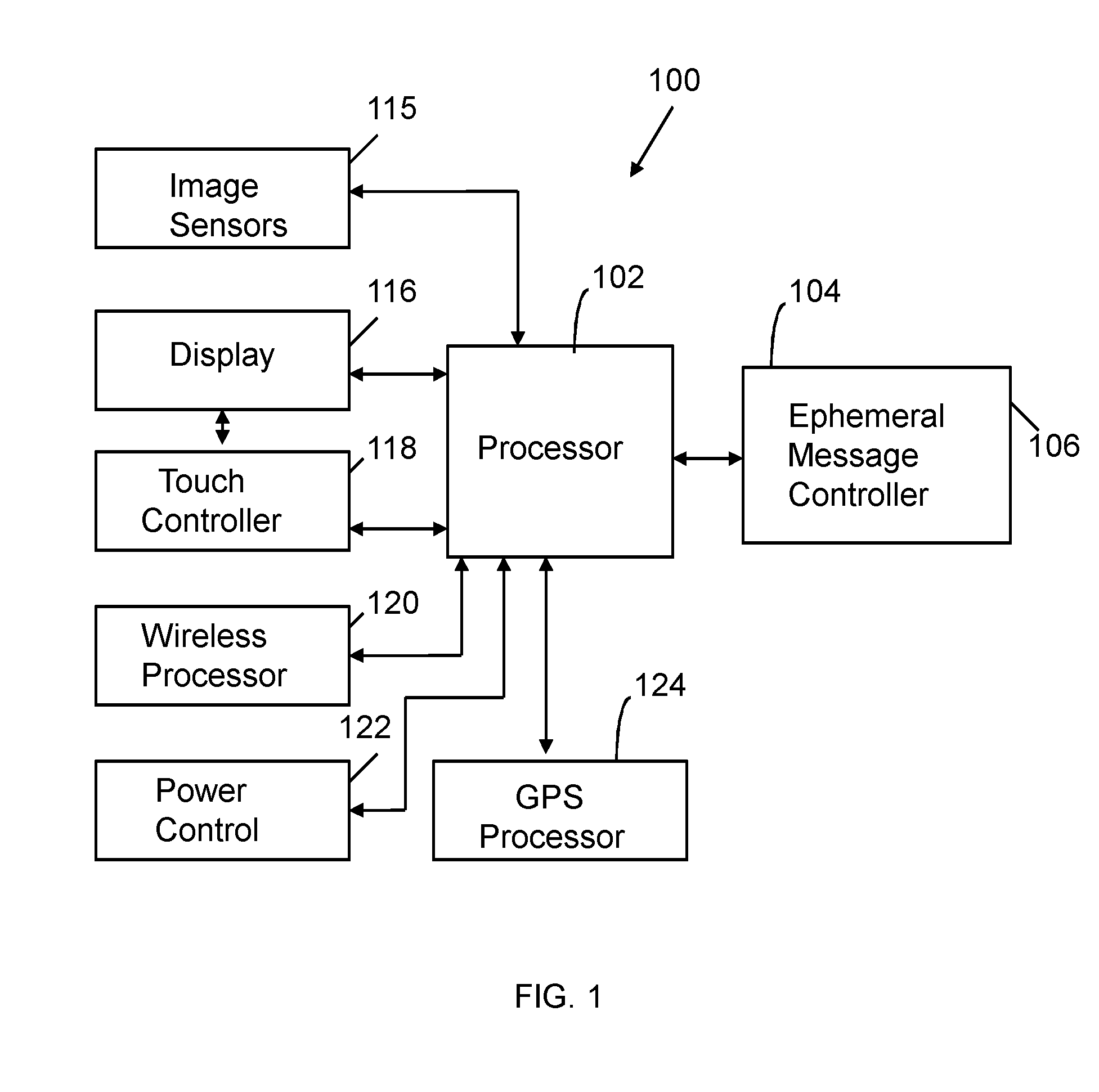

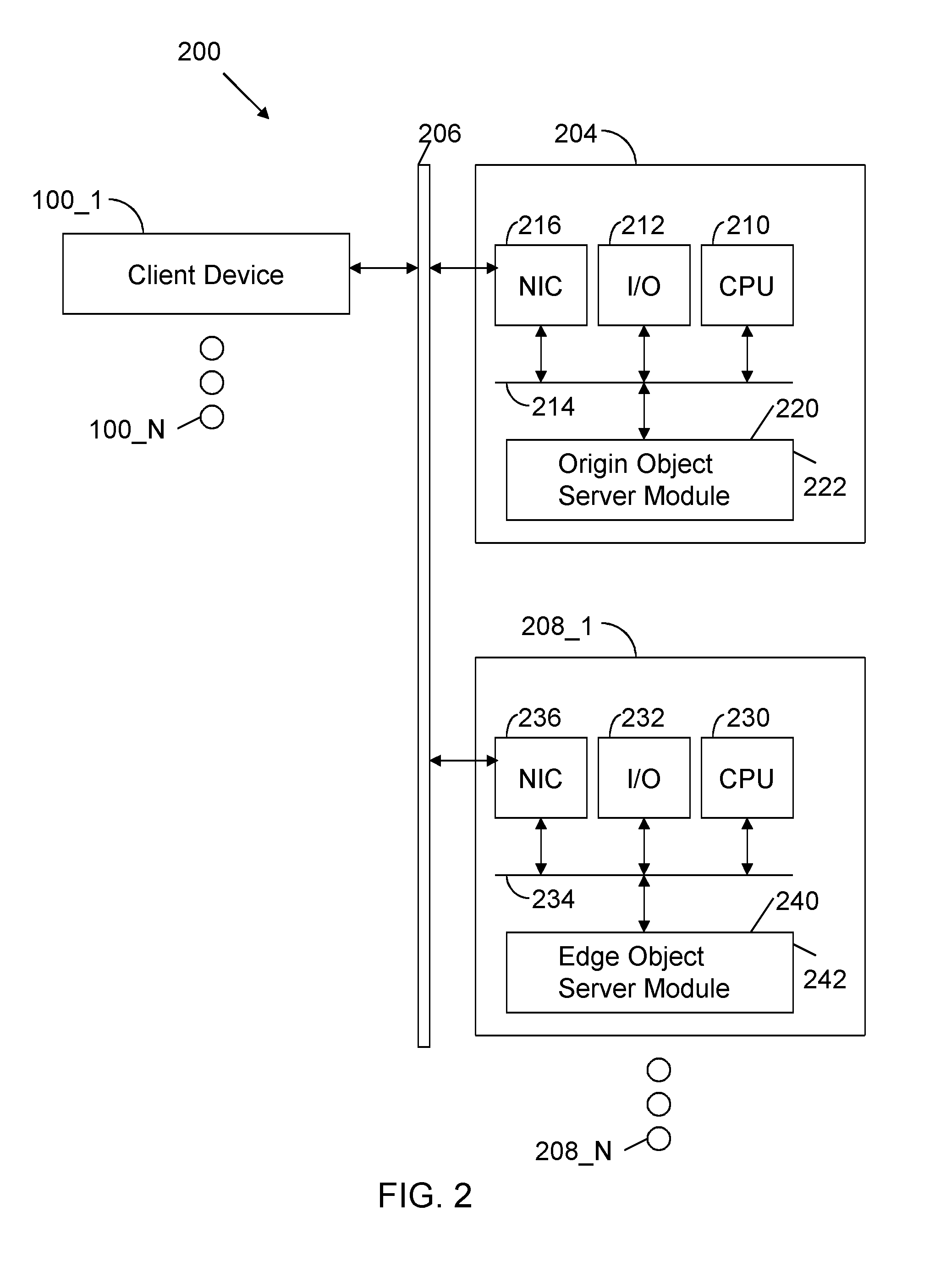

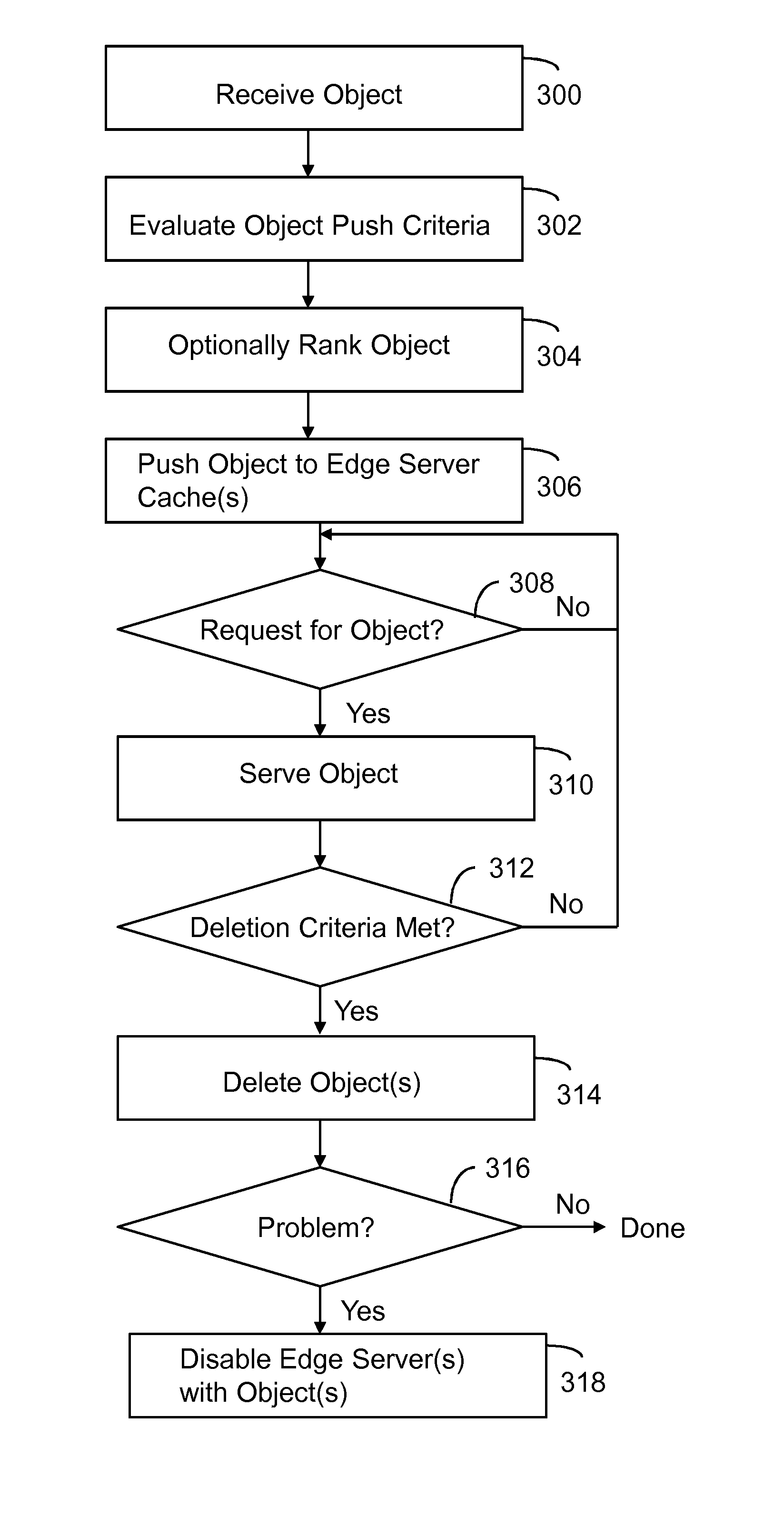

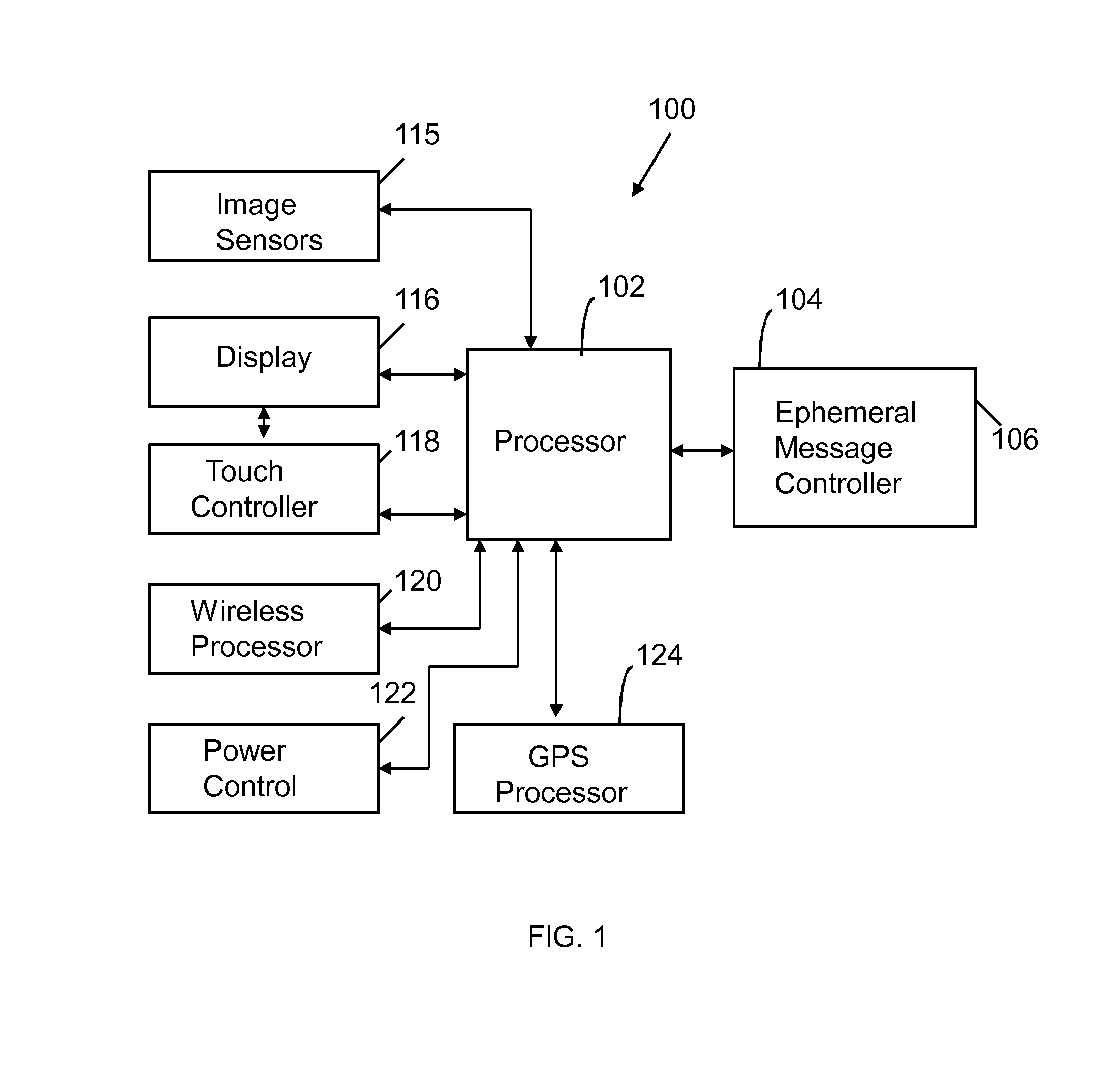

Content delivery network for ephemeral objects

Active · US9407712B1 · Multiple digital computer combinations · Data switching networks · Edge server · Time segment

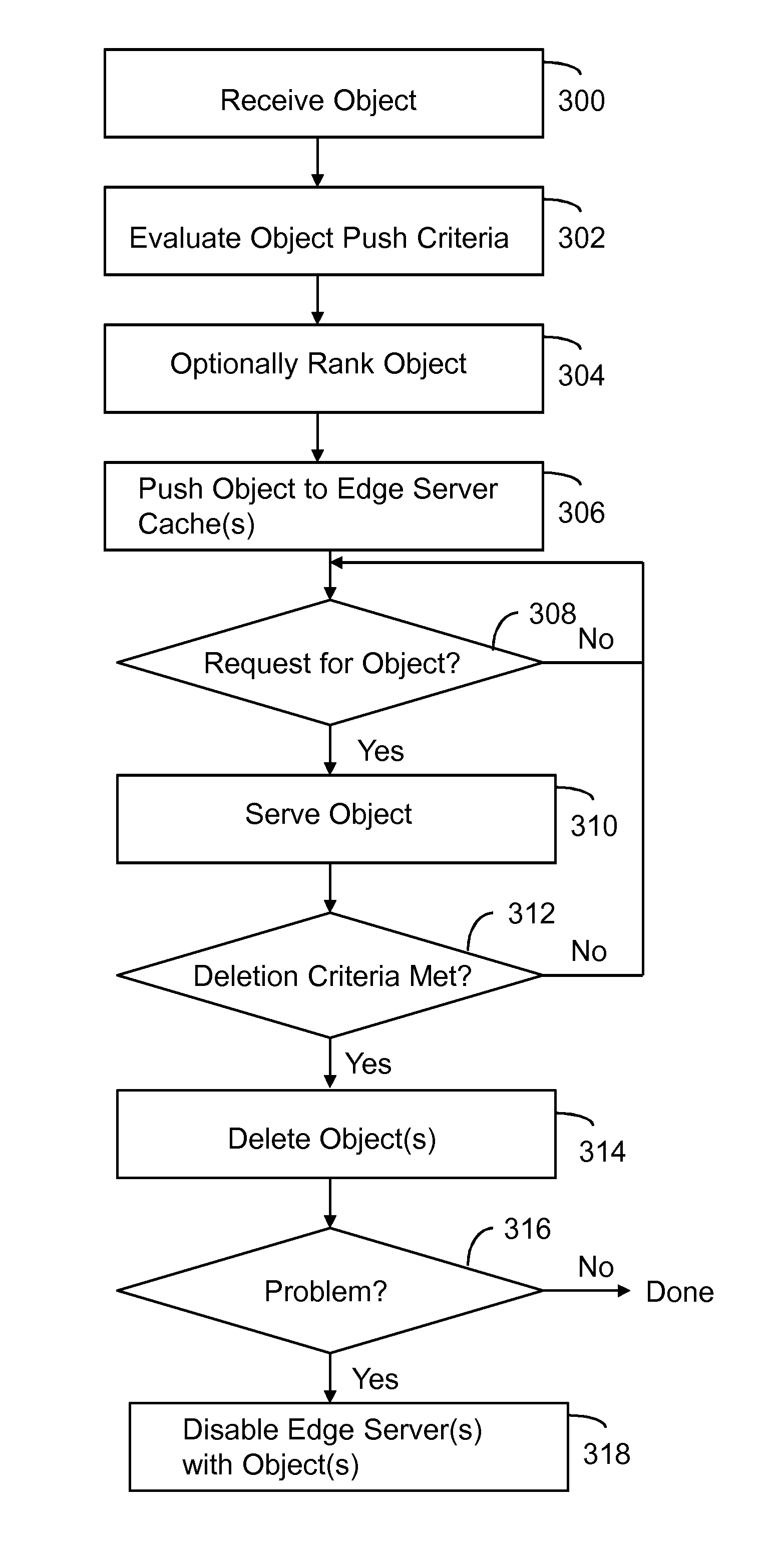

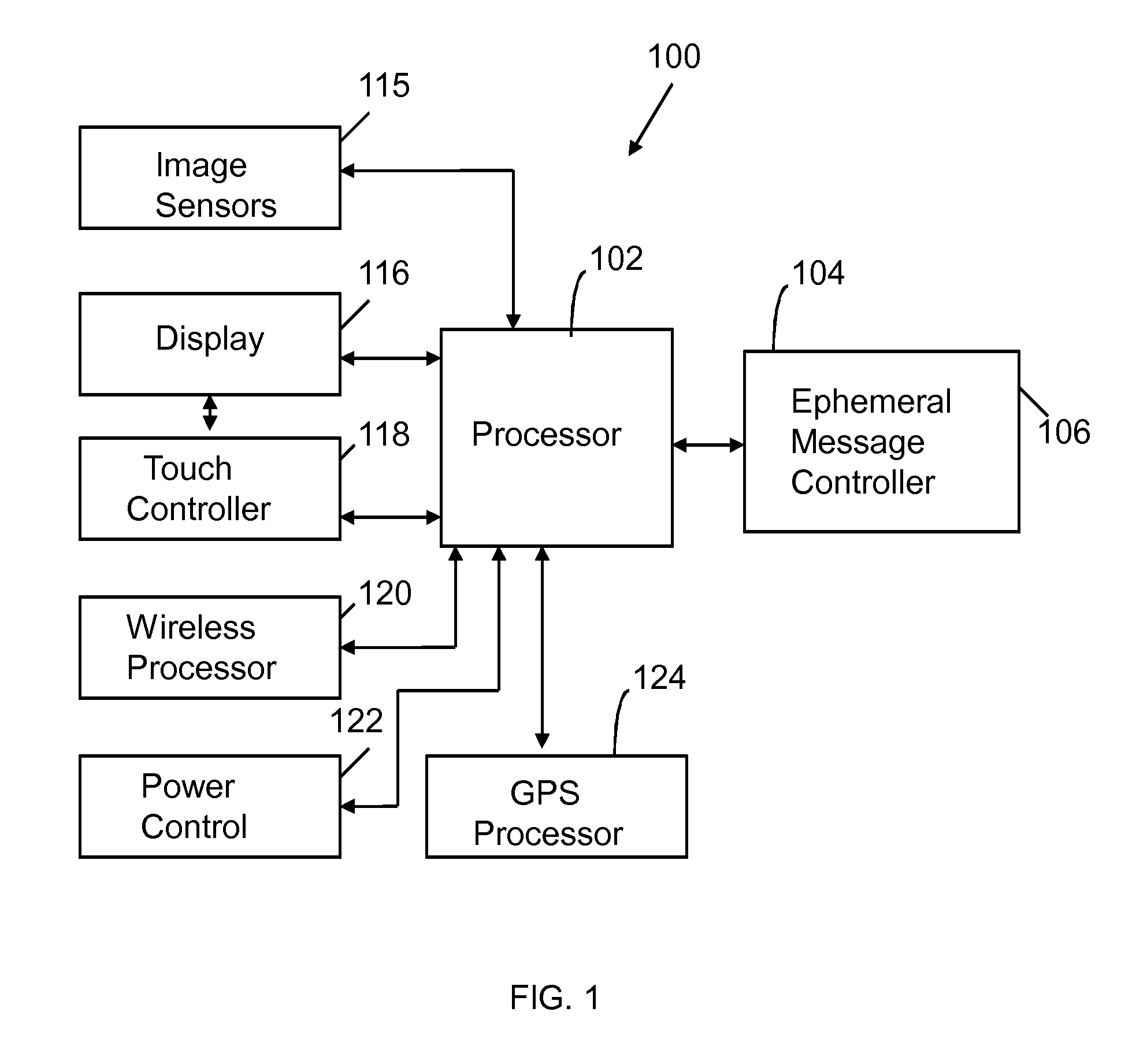

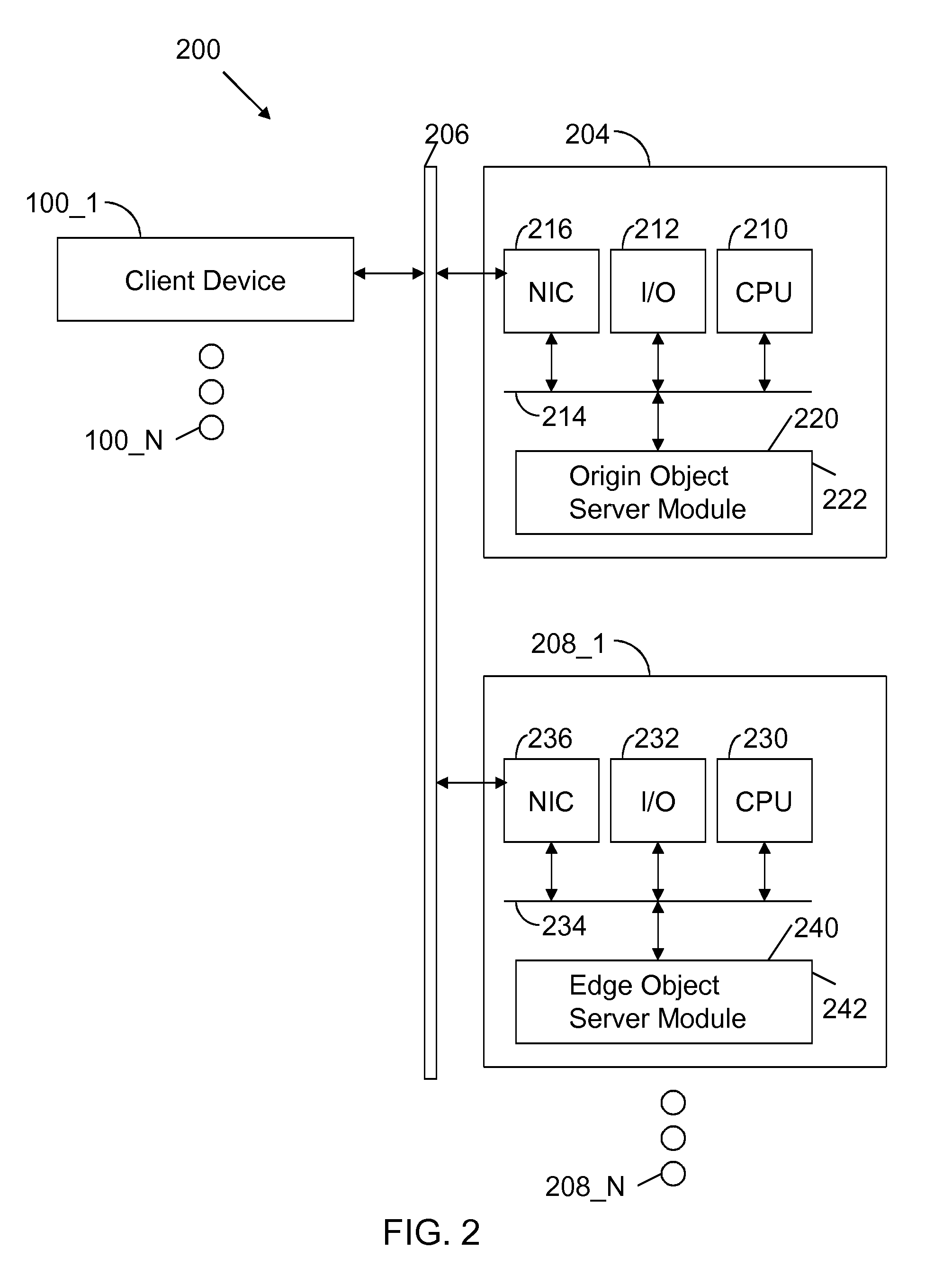

A computer implemented method includes receiving an object scheduled for automatic deletion after a specified viewing period, a specified number of views or a specified period of time. Object push criteria are evaluated. The object is pushed to an edge server cache in response to evaluating. The object is served in response to a request for the object.

Owner:SNAP INC
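
The four steps in the abstract above (receive, evaluate push criteria, push, serve) can be sketched as follows. The abstract does not state what the push criteria are, so `should_push` uses an invented rule, and enforcing the deletion conditions at the edge cache is likewise an assumption; all class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class EphemeralObject:
    key: str
    body: bytes
    expires_at: float   # time after which the object must no longer be served
    max_views: int      # number of views after which it must no longer be served
    views: int = 0


def should_push(obj: EphemeralObject, expected_viewers: int, now: float) -> bool:
    """Hypothetical push criteria: still live and likely to be requested more than once."""
    return obj.expires_at > now and expected_viewers >= 2


class EdgeCache:
    def __init__(self) -> None:
        self.objects: Dict[str, EphemeralObject] = {}

    def push(self, obj: EphemeralObject) -> None:
        self.objects[obj.key] = obj

    def serve(self, key: str, now: float) -> Optional[bytes]:
        obj = self.objects.get(key)
        if obj is None:
            return None
        if now >= obj.expires_at or obj.views >= obj.max_views:
            del self.objects[key]   # deletion condition reached: evict instead of serving
            return None
        obj.views += 1
        return obj.body


cache = EdgeCache()
message = EphemeralObject(key="msg-1", body=b"hello", expires_at=100.0, max_views=2)
if should_push(message, expected_viewers=3, now=0.0):
    cache.push(message)
```

With `max_views=2`, the first two calls to `cache.serve("msg-1", ...)` before the expiry time return the body, and the third evicts the object and returns `None`.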

Optimal route selection in a content delivery network

Active · US7274658B2 · Improve speed and reliability · Retrieve content (cacheable · Error prevention · Transmission systems · File area network · Peer-to-peer

A routing mechanism, service or system operable in a distributed networking environment. One preferred environment is a content delivery network (CDN) wherein the present invention provides improved connectivity back to an origin server, especially for HTTP traffic. In a CDN, edge servers are typically organized into regions, with each region comprising a set of content servers that preferably operate in a peer-to-peer manner and share data across a common backbone such as a local area network (LAN). The inventive routing technique enables an edge server operating within a given CDN region to retrieve content (cacheable, non-cacheable and the like) from an origin server more efficiently by selectively routing through the CDN's own nodes, thereby avoiding network congestion and hot spots. The invention enables an edge server to fetch content from an origin server through an intermediate CDN server or, more generally, enables an edge server within a given first region to fetch content from the origin server through an intermediate CDN region. As used herein, this routing through an intermediate server, node or region is sometimes referred to as “tunneling.”

Owner:AKAMAI TECH INC

Managing web tier session state objects in a content delivery network (CDN)

Inactive · US20070271385A1 · Error detection/correction · Multiple digital computer combinations · Edge server · Client-side

Business applications running on a content delivery network (CDN) having a distributed application framework can create, access and modify state for each client. Over time, a single client may desire to access a given application on different CDN edge servers within the same region and even across different regions. Each time, the application may need to access the latest “state” of the client even if the state was last modified by an application on a different server. A difficulty arises when a process or a machine that last modified the state dies or is temporarily or permanently unavailable. The present invention provides techniques for migrating session state data across CDN servers in a manner transparent to the user. A distributed application thus can access a latest “state” of a client even if the state was last modified by an application instance executing on a different CDN server, including a nearby (in-region) or a remote (out-of-region) server.

Owner:AKAMAI TECH INC

Content delivery network for ephemeral objects

A computer implemented method includes receiving an object scheduled for automatic deletion after a specified viewing period, a specified number of views or a specified period of time. Object push criteria are evaluated. The object is pushed to an edge server cache in response to evaluating. The object is served in response to a request for the object.

Owner:SNAP INC

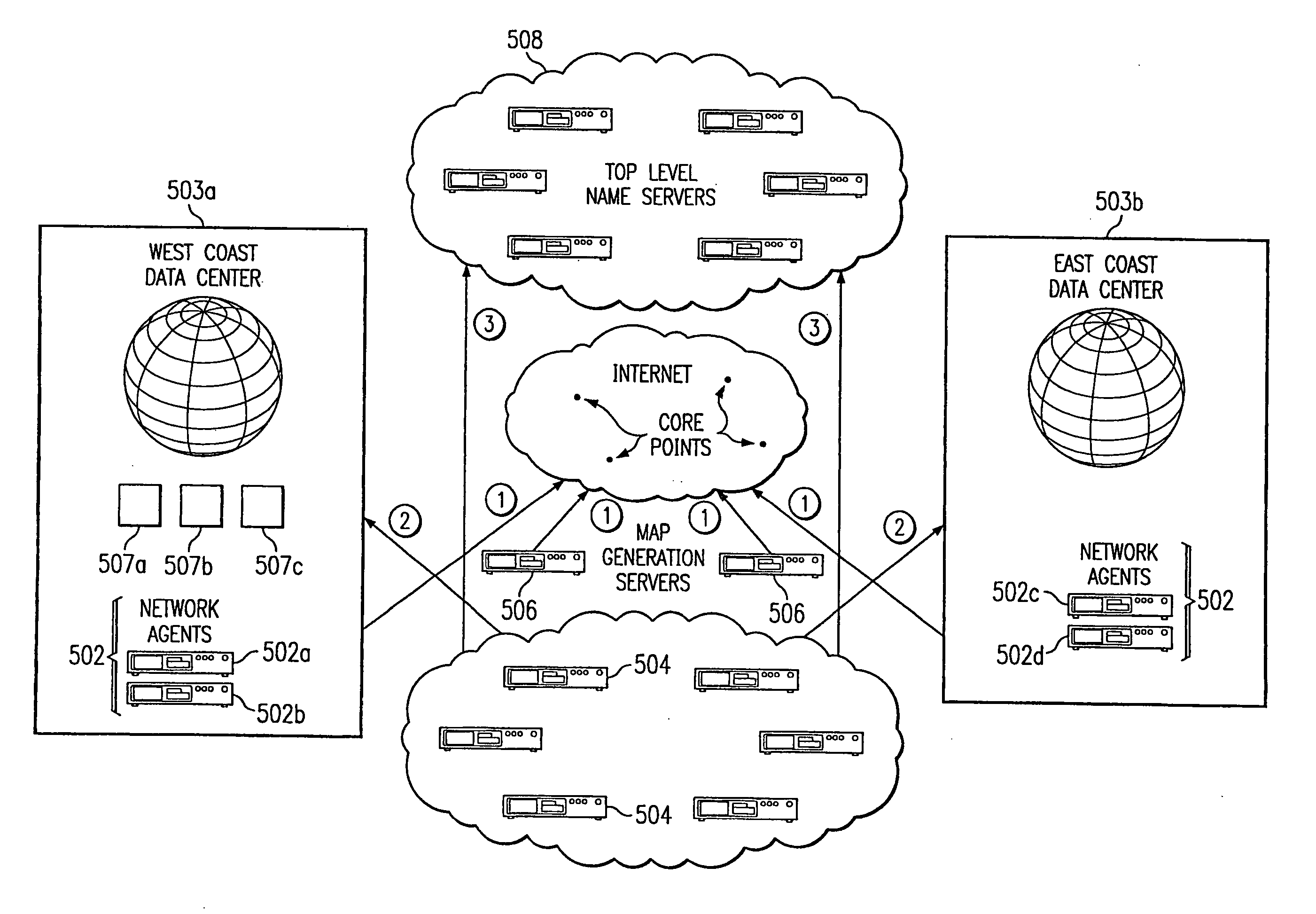

Content delivery network map generation using passive measurement data

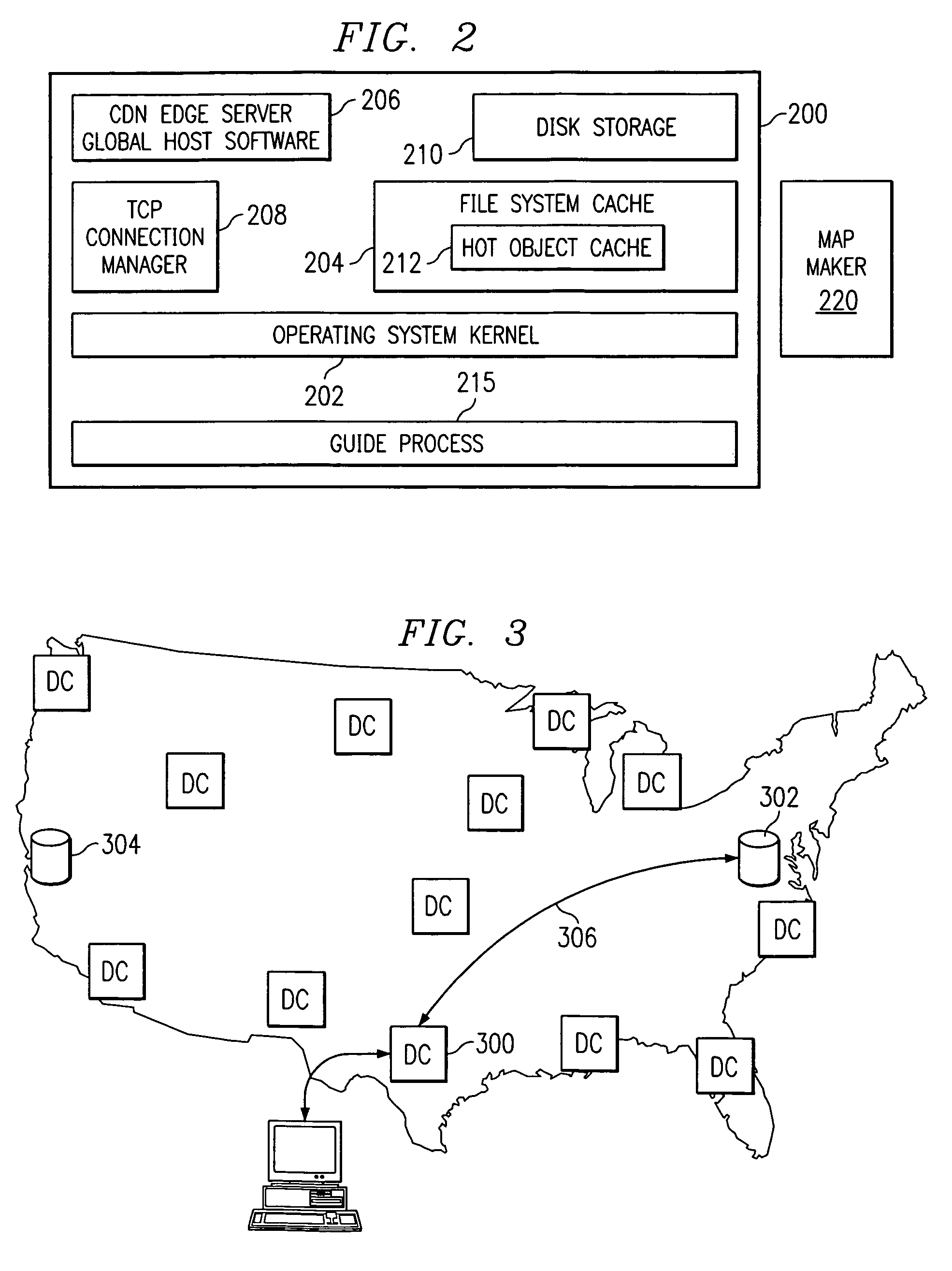

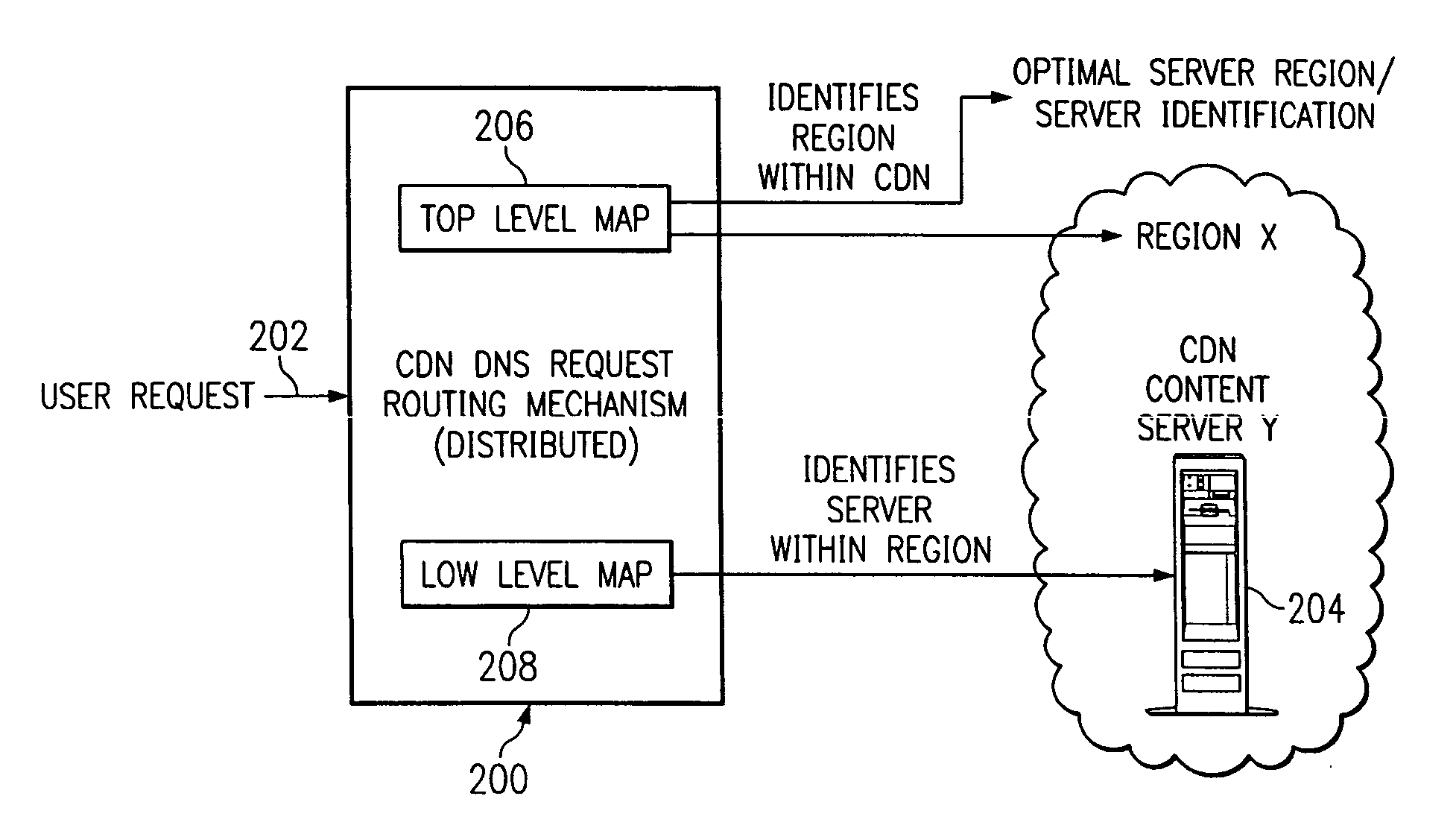

Active · US7007089B2 · Easy mapping · Improve subsequent routing · Digital computer details · Data switching networks · Generation process · Routing decision

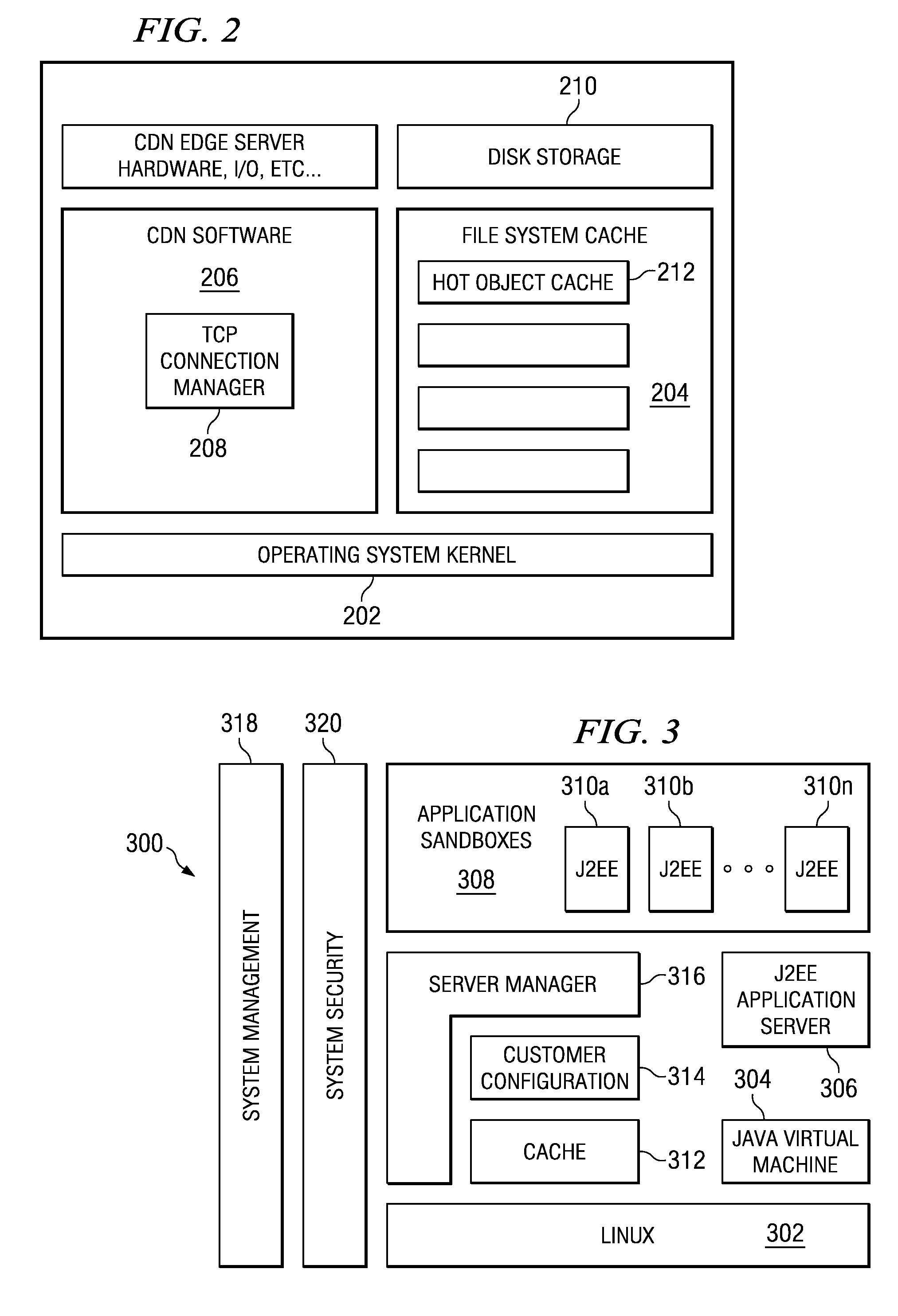

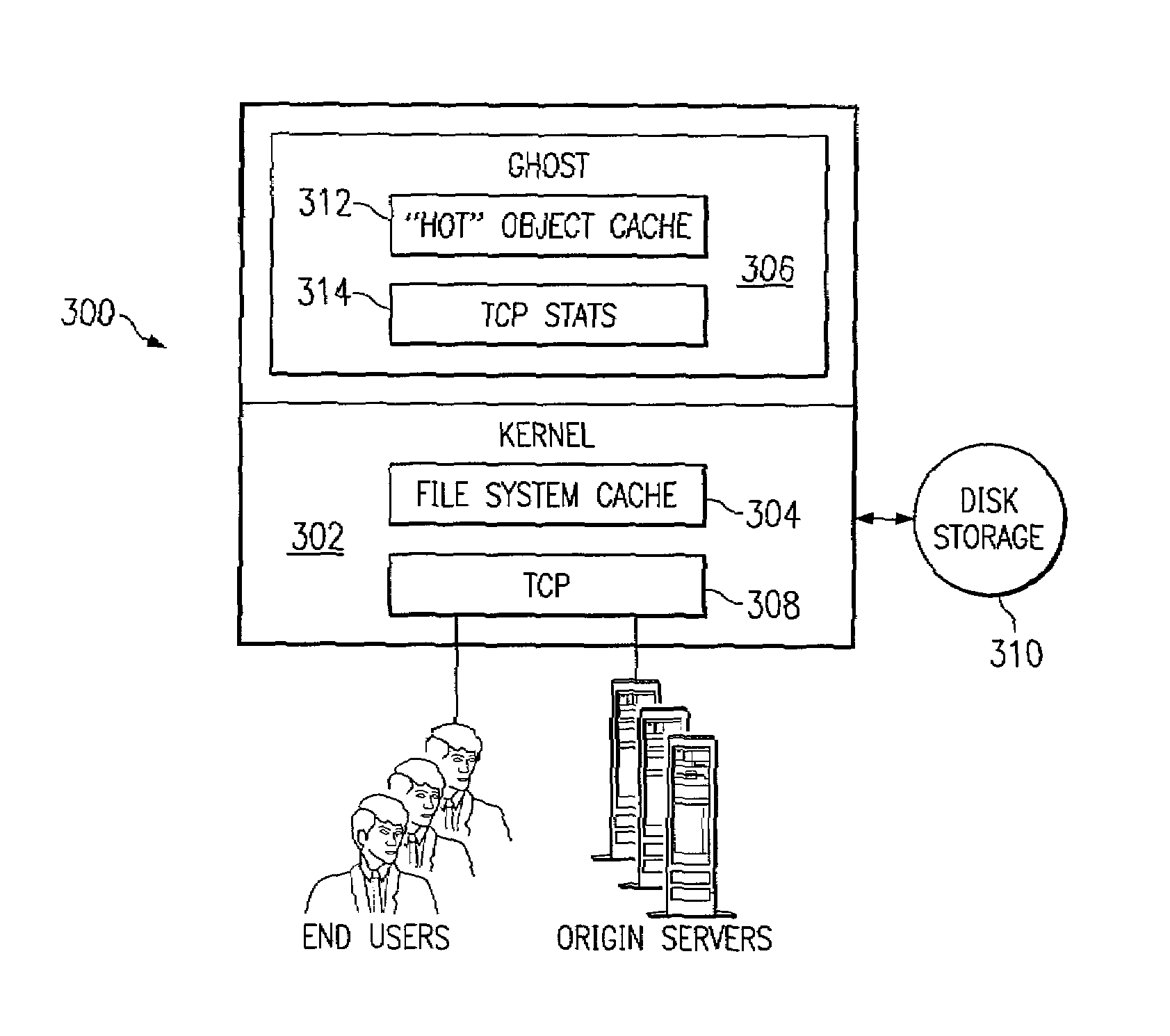

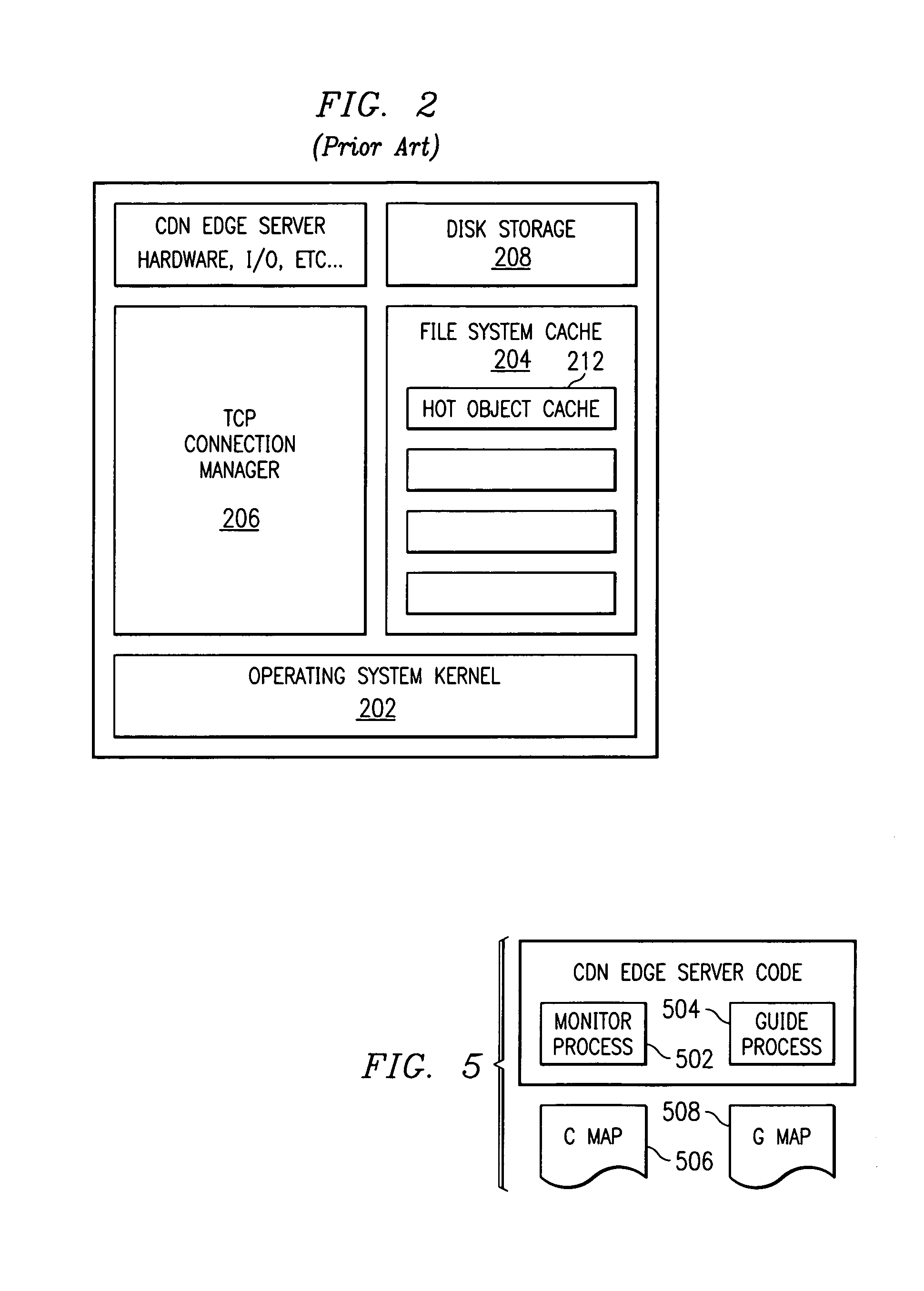

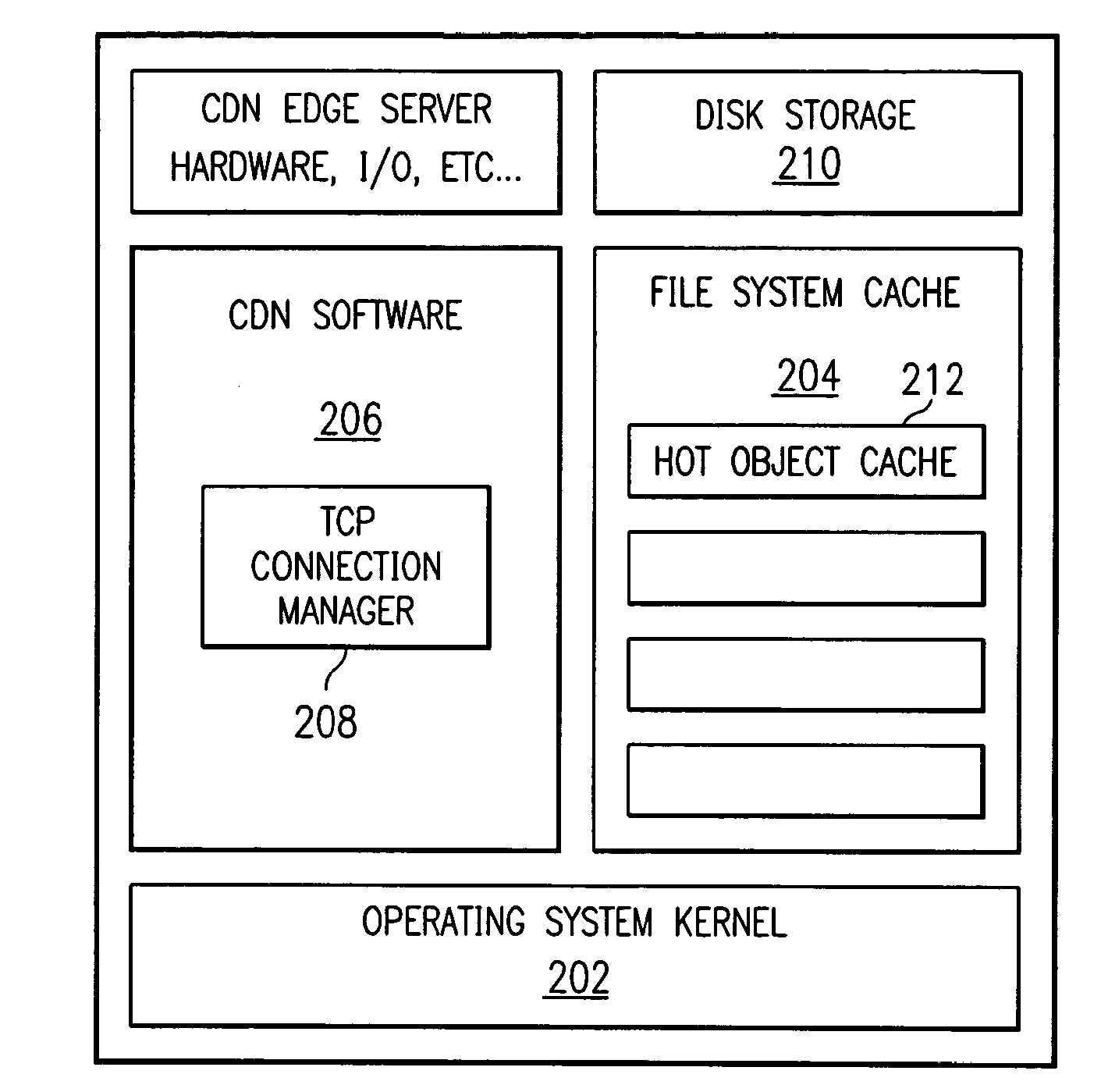

A routing method operative in a content delivery network (CDN) where the CDN includes a request routing mechanism for routing clients to subsets of edge servers within the CDN. According to the routing method, TCP connection data statistics are collected at edge servers located within a CDN region. The TCP connection data statistics are collected as connections are established between requesting clients and the CDN region and requests are serviced by those edge servers. Periodically, e.g., daily, the connection data statistics are provided from the edge servers in a region back to the request routing mechanism. The TCP connection data statistics are then used by the request routing mechanism in subsequent routing decisions and, in particular, in the map generation processes. Thus, for example, the TCP connection data may be used to determine whether a given quality of service is being obtained by routing requesting clients to the CDN region. If not, the request routing mechanism generates a map that directs requesting clients away from the CDN region for a given time period or until the quality of service improves.

Owner:AKAMAI TECH INC
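
As a rough illustration of the map generation step described in the abstract above, the sketch below steers a client group away from a region whose reported statistics indicate poor quality. The abstract does not name a specific metric or threshold, so the retransmission rate, the 5% cutoff, the client-group keys, and the function name `generate_map` are all assumptions.

```python
from typing import Dict, List, Tuple


def generate_map(
    loss_rate: Dict[Tuple[str, str], float],
    preferences: Dict[str, List[str]],
    max_loss: float = 0.05,
) -> Dict[str, str]:
    """Assign each client group to its first preferred region with acceptable quality.

    loss_rate maps (client_group, region) to a retransmission rate aggregated
    from the TCP statistics that the region's edge servers reported.
    A pair with no measurement is treated as acceptable.
    """
    mapping: Dict[str, str] = {}
    for group, regions in preferences.items():
        acceptable = [r for r in regions if loss_rate.get((group, r), 0.0) <= max_loss]
        # If every region looks bad, keep the first preference rather than drop the group.
        mapping[group] = acceptable[0] if acceptable else regions[0]
    return mapping


# Hypothetical daily report: clients of isp-a see heavy loss toward region-1.
stats = {
    ("isp-a", "region-1"): 0.12,
    ("isp-a", "region-2"): 0.01,
    ("isp-b", "region-1"): 0.02,
}
prefs = {"isp-a": ["region-1", "region-2"], "isp-b": ["region-1", "region-2"]}
region_map = generate_map(stats, prefs)
```

Re-running `generate_map` with each new batch of statistics would let a client group return to its preferred region once the measured quality improves.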

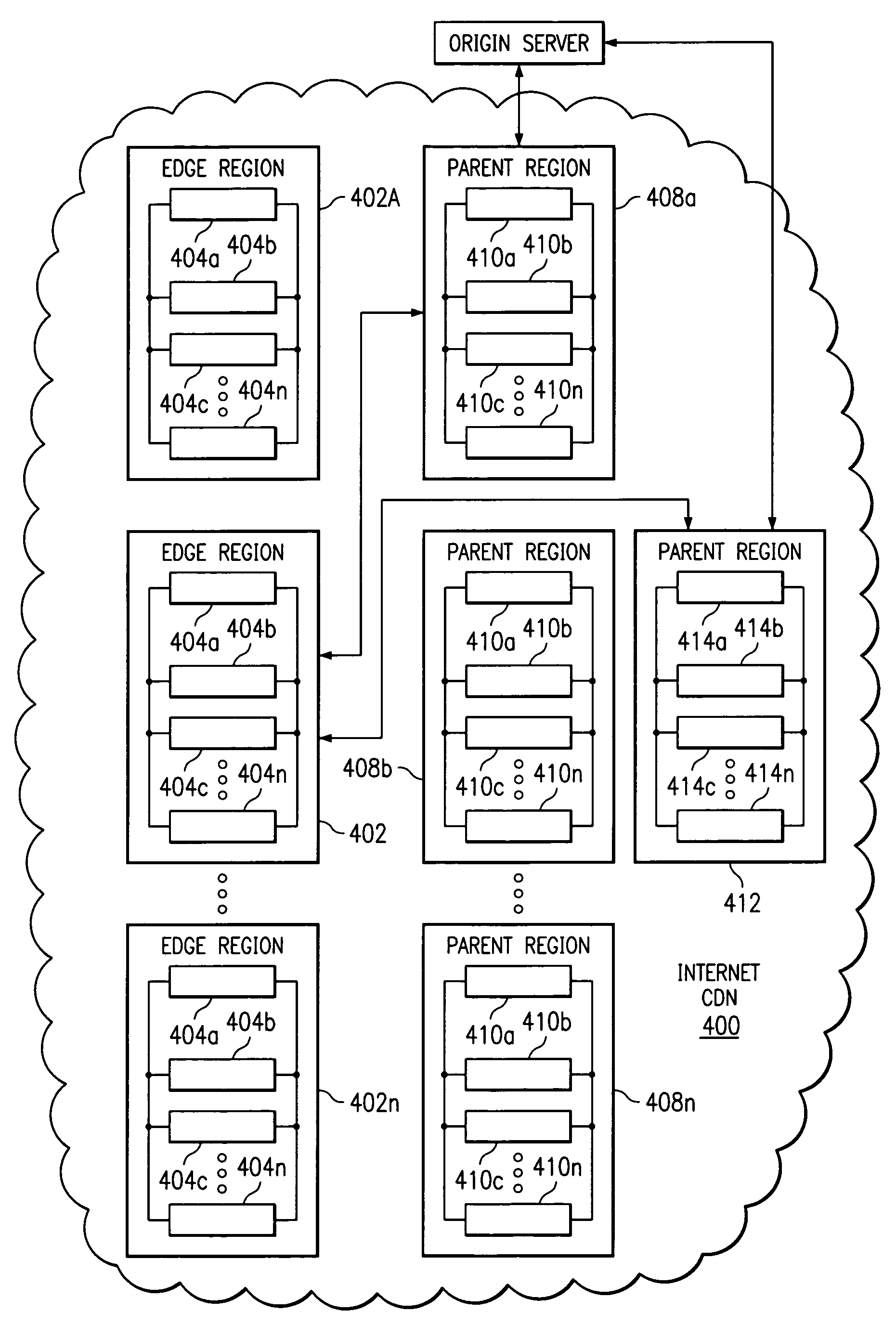

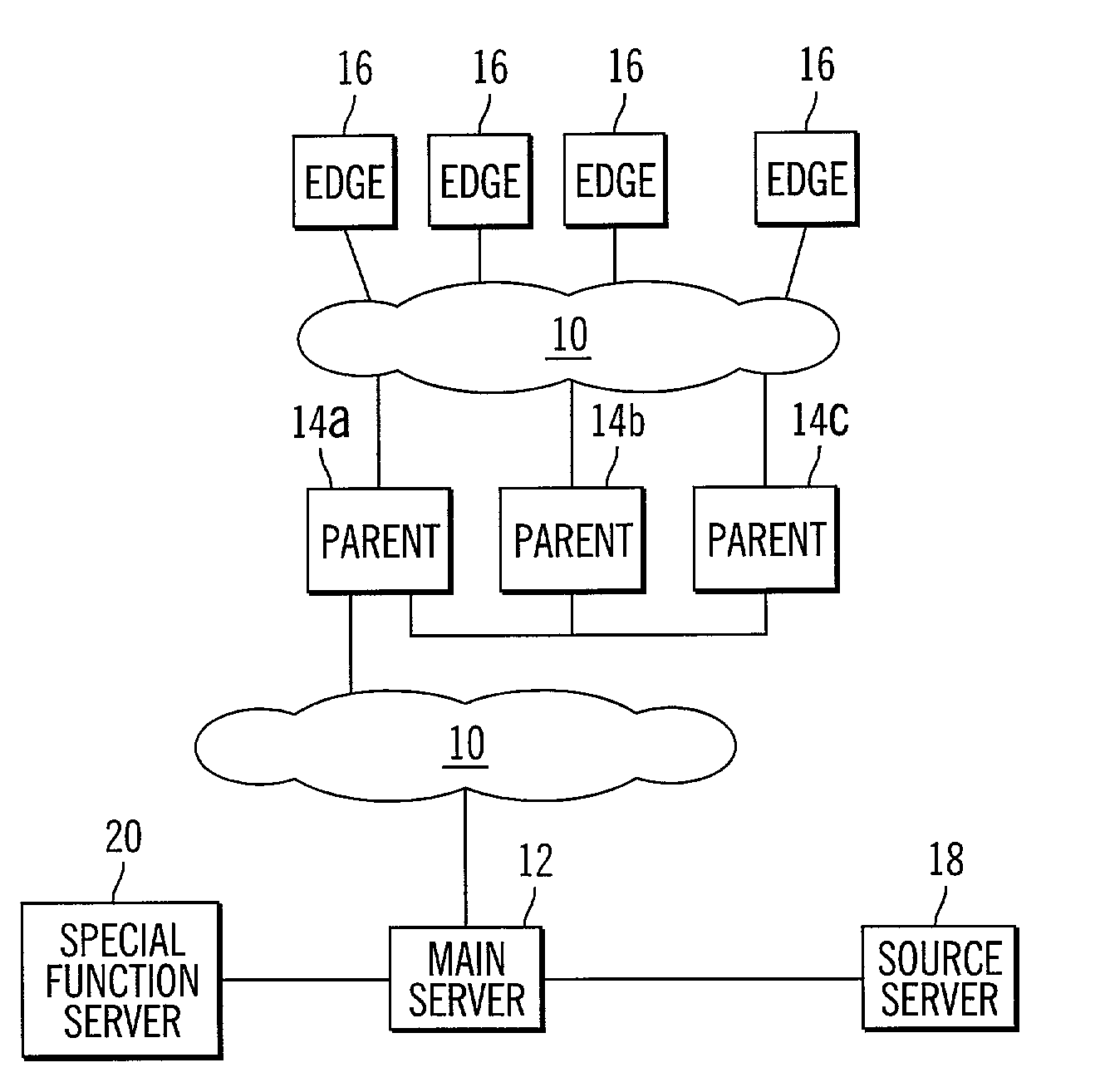

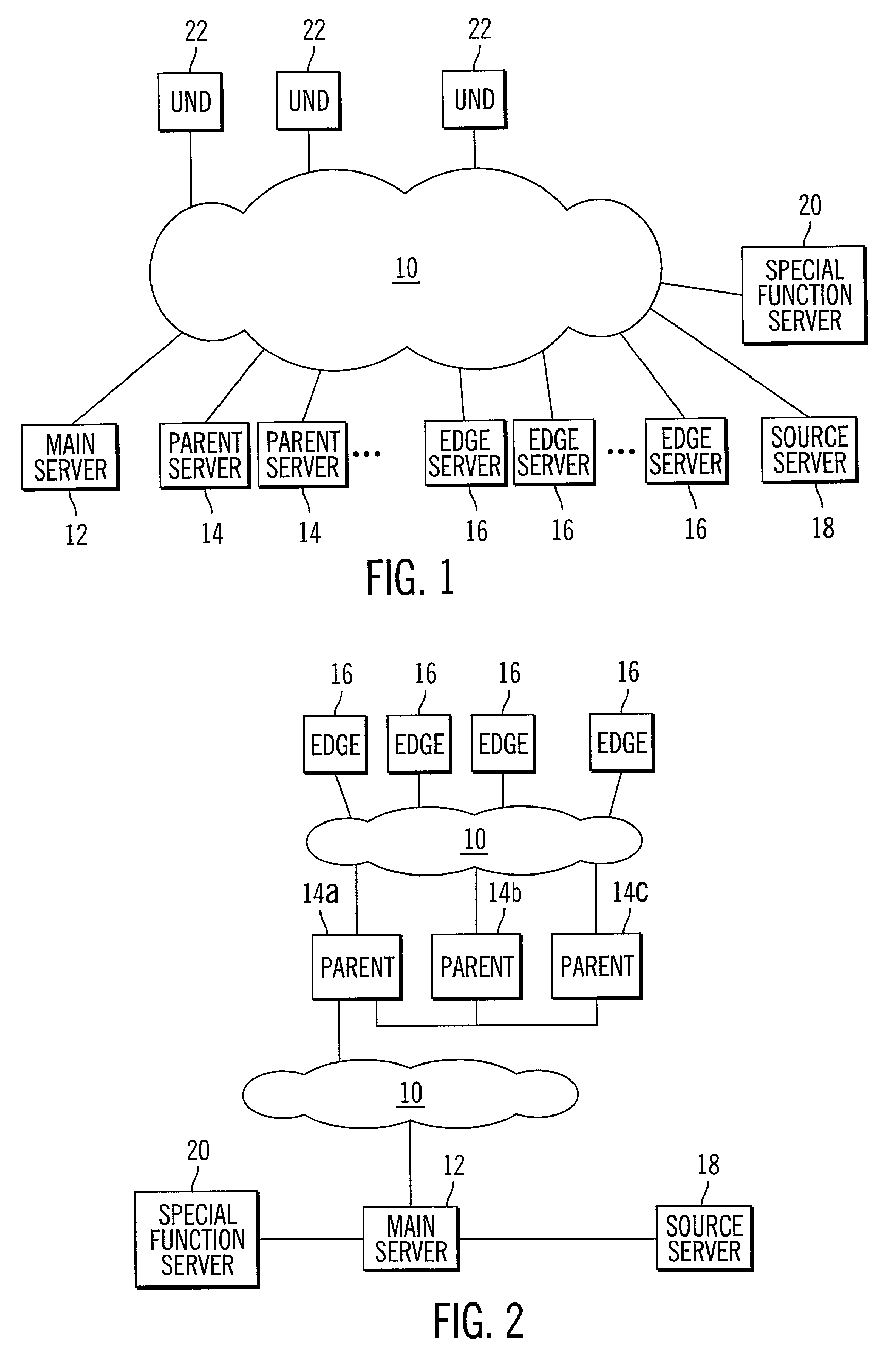

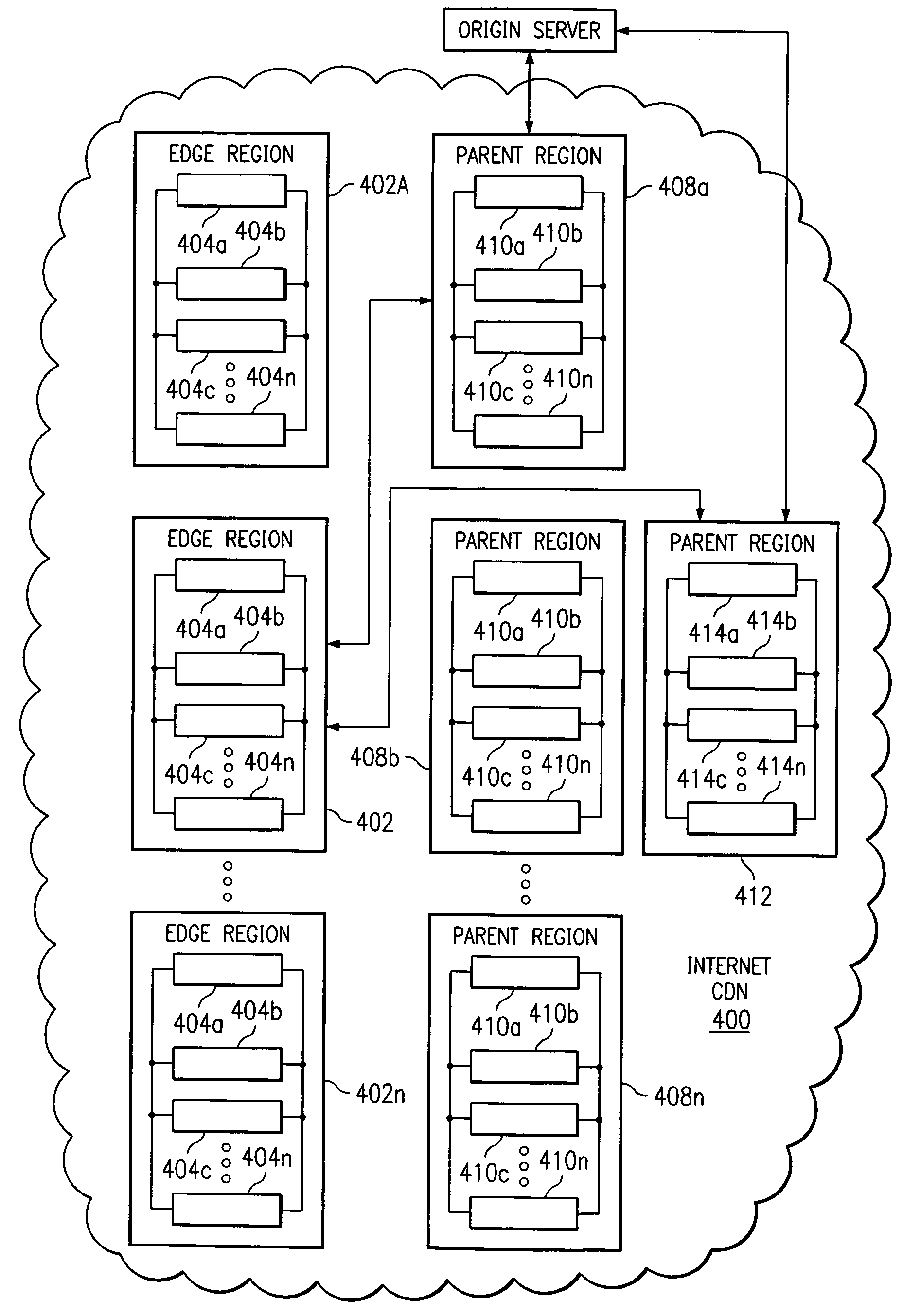

Method and system for tiered distribution in a content delivery network

Inactive · US7133905B2 · Effective buffer · Excessive traffic · Multiple digital computer combinations · Location information based service · Cache hierarchy · Edge server

A tiered distribution service is provided in a content delivery network (CDN) having a set of surrogate origin (namely, “edge”) servers organized into regions and that provide content delivery on behalf of participating content providers, wherein a given content provider operates an origin server. According to the invention, a cache hierarchy is established in the CDN comprising a given edge server region and either (a) a single parent region, or (b) a subset of the edge server regions. In response to a determination that a given object request cannot be serviced in the given edge region, instead of contacting the origin server, the request is provided to either the single parent region or to a given one of the subset of edge server regions for handling, preferably as a function of metadata associated with the given object request. The given object request is then serviced, if possible, by a given CDN server in either the single parent region or the given subset region. The original request is only forwarded on to the origin server if the request cannot be serviced by an intermediate node.

Owner:AKAMAI TECH INC
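
The cache hierarchy in the abstract above can be illustrated with a minimal sketch of the single-parent case: an edge region that misses asks its parent region, and only the top tier contacts the origin. The class `CacheTier` and the region names are hypothetical, and the metadata-driven choice of parent mentioned in the abstract is left out.

```python
from typing import Callable, Dict, List, Optional


class CacheTier:
    """A cache region that falls back to a parent region, then to the origin."""

    def __init__(self, name: str, parent: Optional["CacheTier"] = None) -> None:
        self.name = name
        self.parent = parent
        self.store: Dict[str, bytes] = {}

    def get(self, key: str, fetch_origin: Callable[[str], bytes]) -> bytes:
        if key in self.store:                  # request can be serviced in this region
            return self.store[key]
        if self.parent is not None:            # miss: ask the parent region first
            body = self.parent.get(key, fetch_origin)
        else:                                  # only the top tier contacts the origin
            body = fetch_origin(key)
        self.store[key] = body
        return body


origin_hits: List[str] = []

def fetch_origin(key: str) -> bytes:
    origin_hits.append(key)
    return f"body of {key}".encode()

parent = CacheTier("parent-region")
edge_a = CacheTier("edge-a", parent)
edge_b = CacheTier("edge-b", parent)

edge_a.get("/logo.png", fetch_origin)   # misses everywhere, so the origin is contacted once
edge_b.get("/logo.png", fetch_origin)   # misses at edge-b but is served by the parent region
```

After both requests `origin_hits` holds a single entry, which is the buffering effect the tiered arrangement is meant to provide for the origin server.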

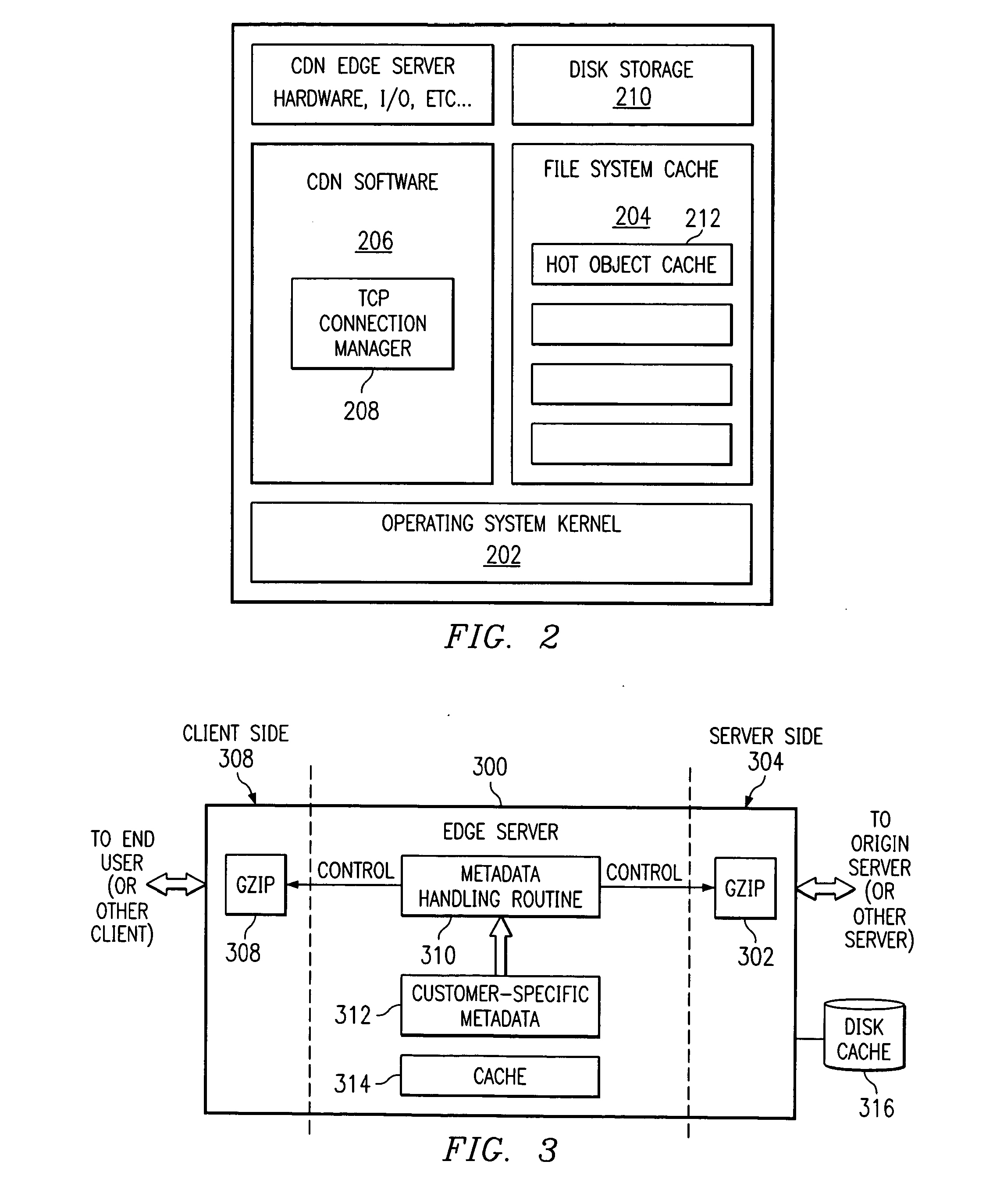

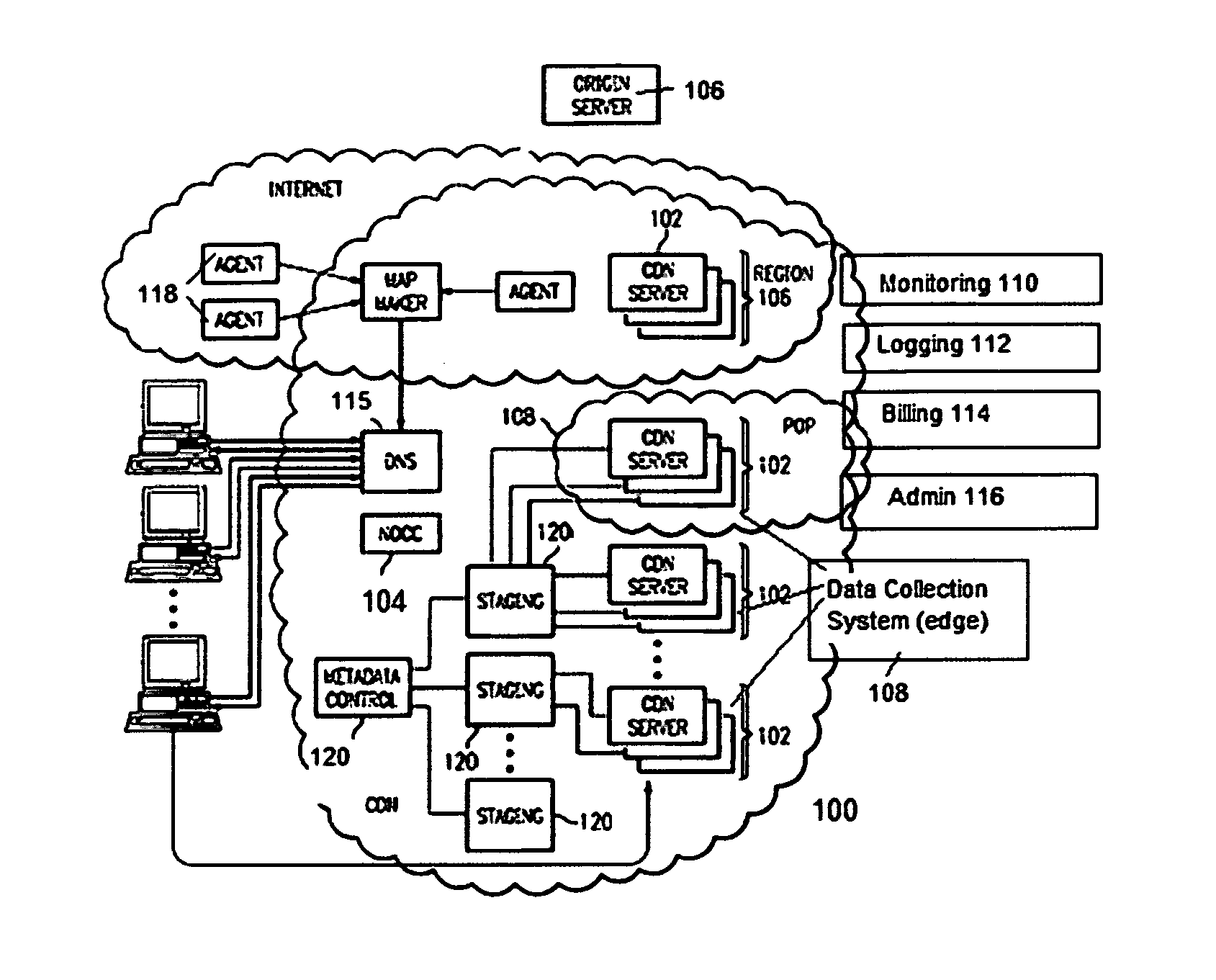

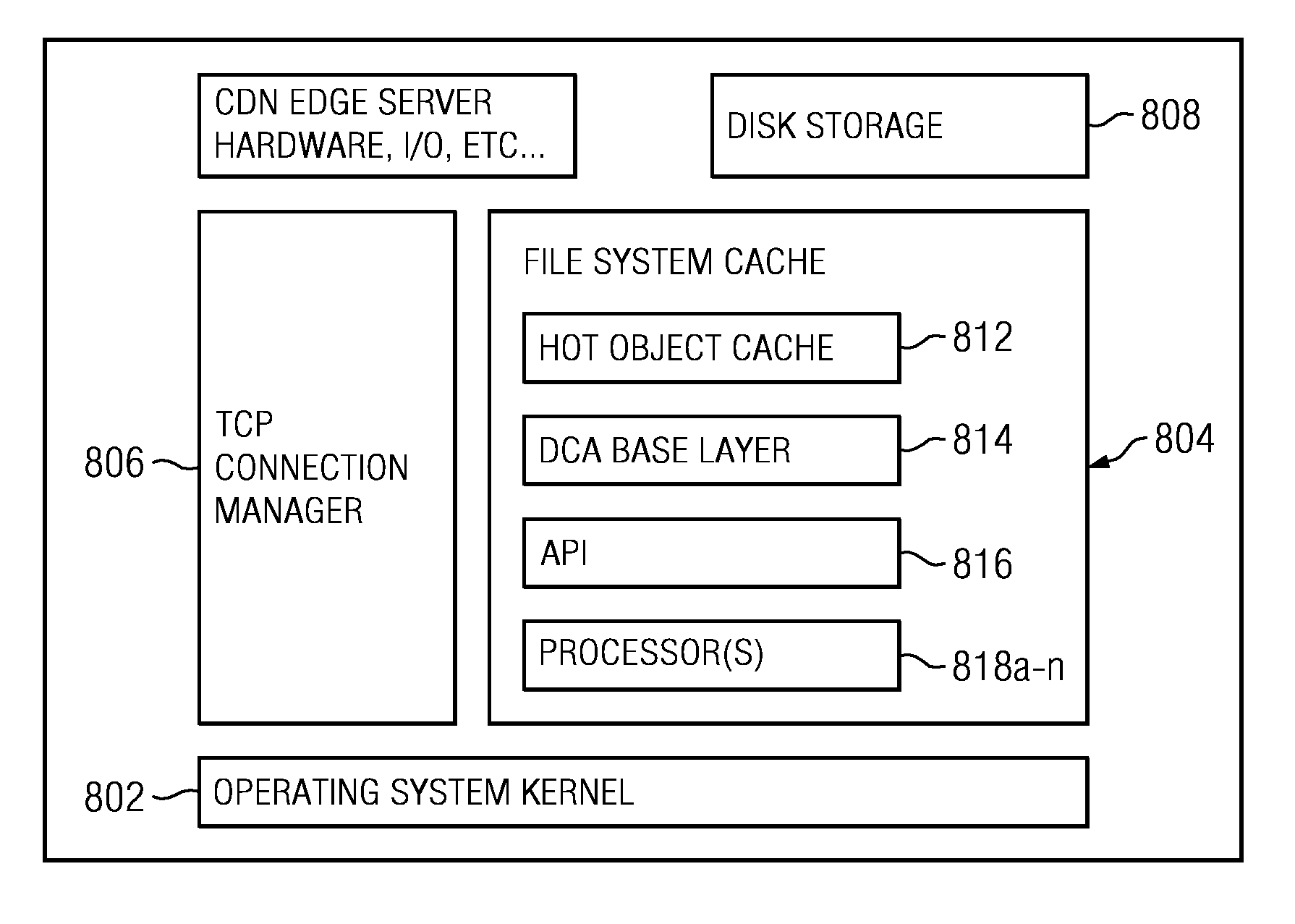

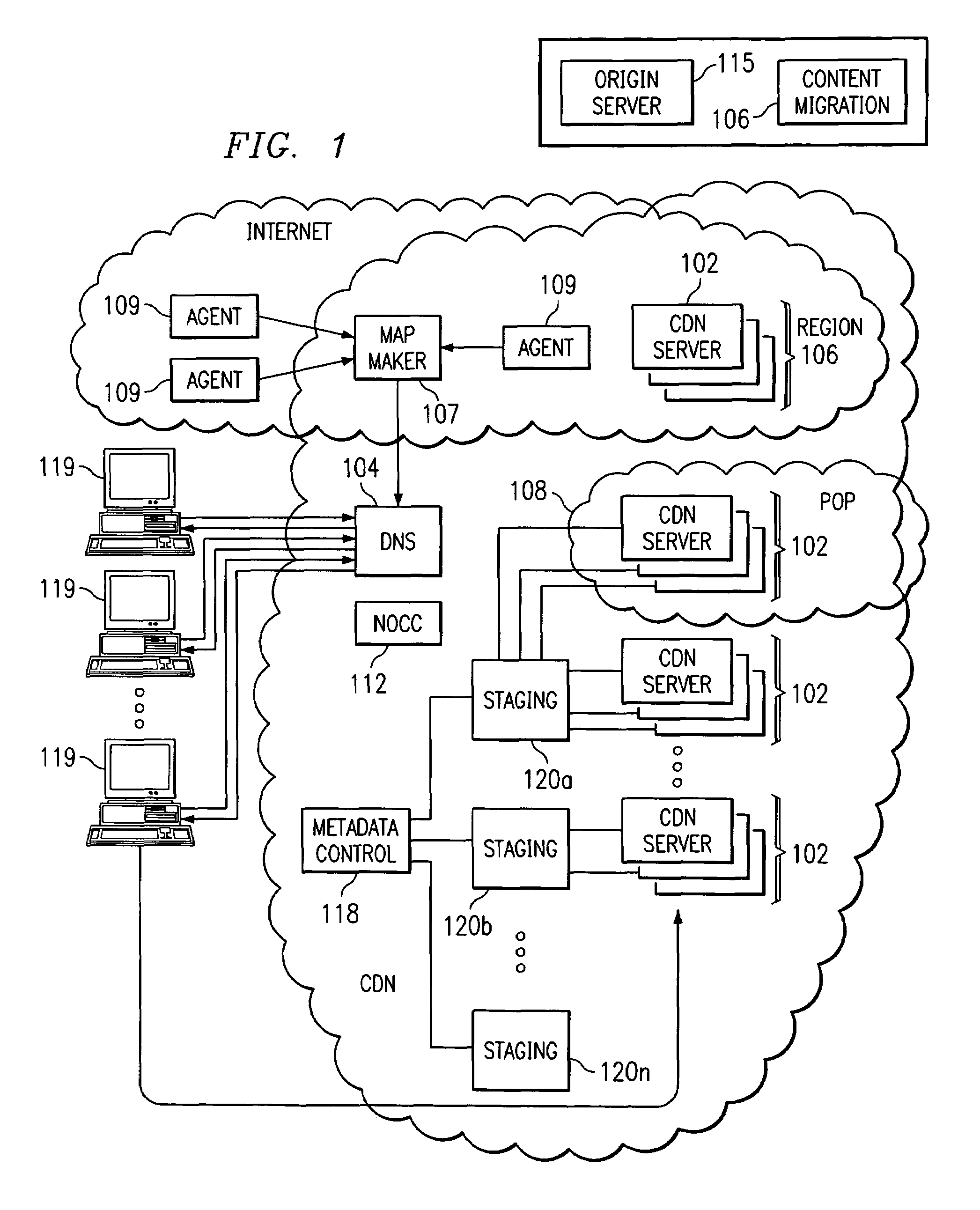

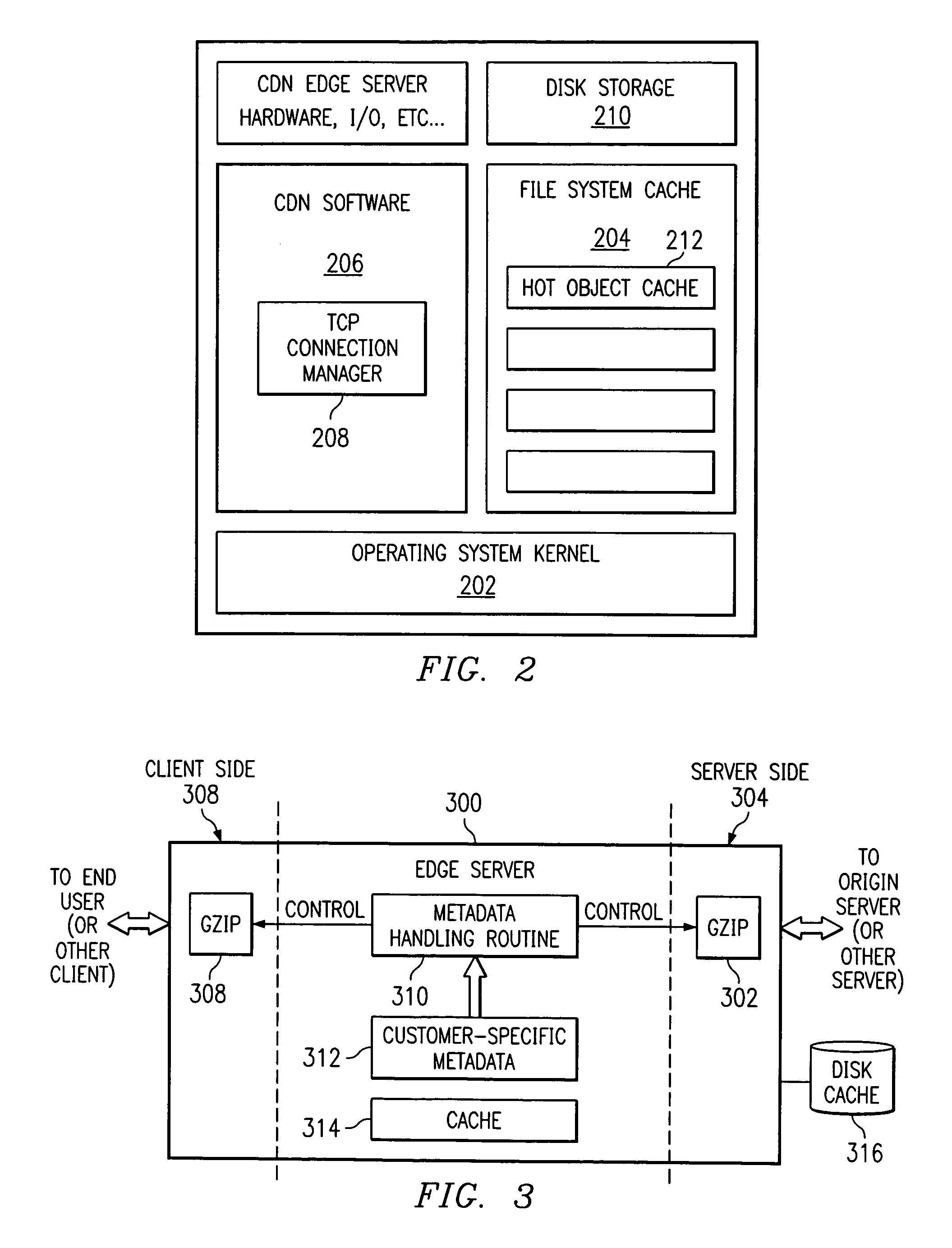

Method for caching and delivery of compressed content in a content delivery network

Inactive · US20080046596A1 · Easy to use · Reduce transfer time · Digital data information retrieval · Multiple digital computer combinations · Edge server · Computer science

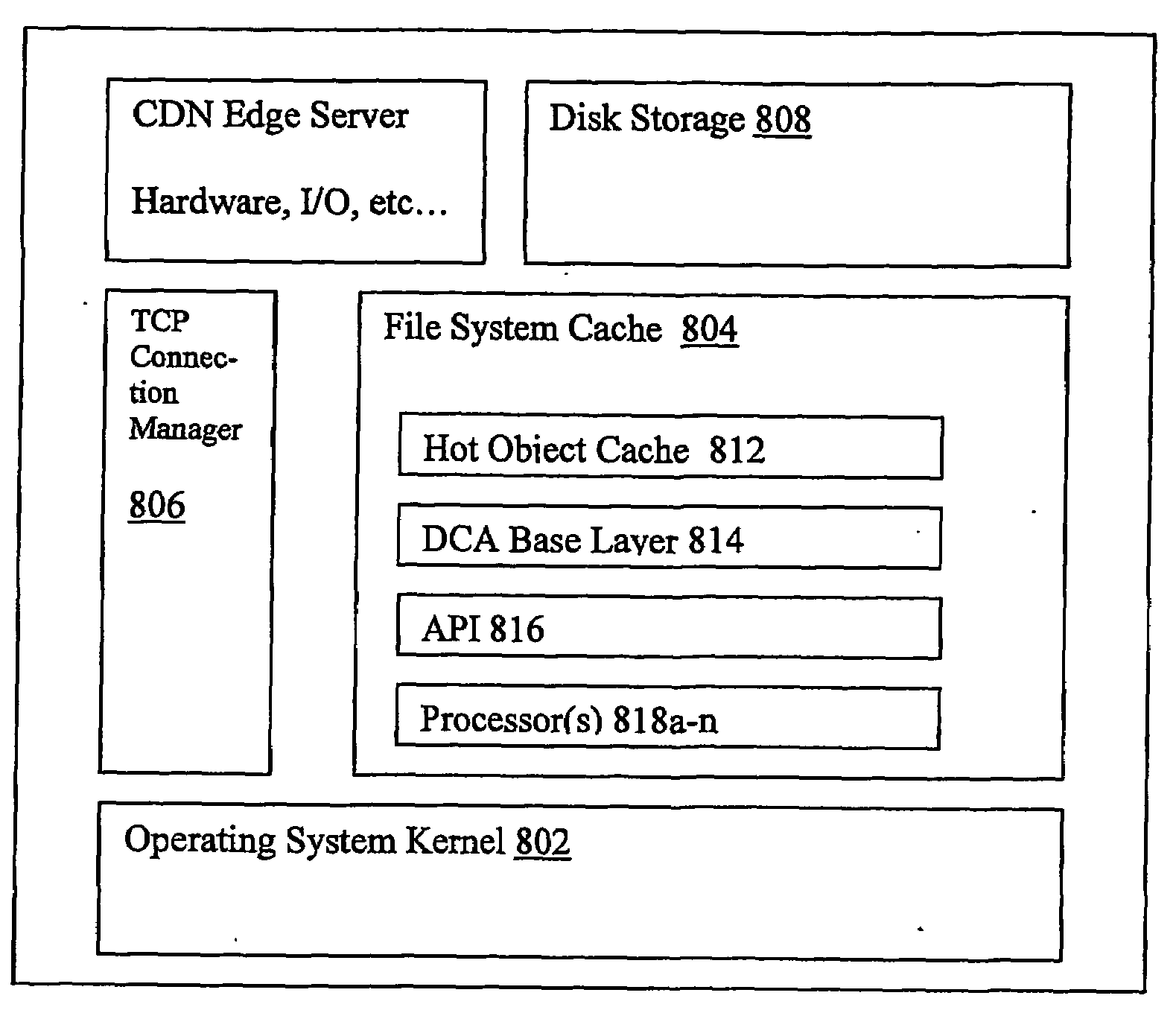

A content delivery network (CDN) edge server is provisioned to provide last mile acceleration of content to requesting end users. The CDN edge server fetches, compresses and caches content obtained from a content provider origin server, and serves that content in compressed form in response to receipt of an end user request for that content. It also provides “on-the-fly” compression of otherwise uncompressed content as such content is retrieved from cache and is delivered in response to receipt of an end user request for such content. A preferred compression routine is gzip, as most end user browsers support the capability to decompress files that are received in this format. The compression functionality preferably is enabled on the edge server using customer-specific metadata tags.

Owner:AFERGAN MICHAEL M +3
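
The fetch, compress, cache and serve sequence in the abstract above might look roughly like this, using Python's standard `gzip` module. The class name, the substring check on `Accept-Encoding`, and the fallback of decompressing for clients that do not advertise gzip are assumptions made for the sketch; the customer-specific metadata tags are not modeled.

```python
import gzip
from typing import Callable, Dict, List, Tuple


class CompressingEdgeCache:
    def __init__(self, fetch_origin: Callable[[str], bytes]) -> None:
        self.fetch_origin = fetch_origin
        self.cache: Dict[str, bytes] = {}       # path -> gzip-compressed body

    def serve(self, path: str, accept_encoding: str = "") -> Tuple[Dict[str, str], bytes]:
        if path not in self.cache:
            # Fetch once from the origin, then compress and cache the result.
            self.cache[path] = gzip.compress(self.fetch_origin(path))
        compressed = self.cache[path]
        # Simplified check; a real server would parse the header and its q-values.
        if "gzip" in accept_encoding.lower():
            return {"Content-Encoding": "gzip"}, compressed
        # Assumption: clients that do not advertise gzip get the decompressed body.
        return {}, gzip.decompress(compressed)


origin_requests: List[str] = []

def fetch_origin(path: str) -> bytes:
    origin_requests.append(path)
    return b"<html>" + b"hello " * 200 + b"</html>"

edge = CompressingEdgeCache(fetch_origin)
headers, body = edge.serve("/index.html", accept_encoding="gzip, deflate")
plain_headers, plain_body = edge.serve("/index.html")
```

Both requests are answered from a single origin fetch, and the compressed response is considerably smaller than the plain one for repetitive markup like this.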

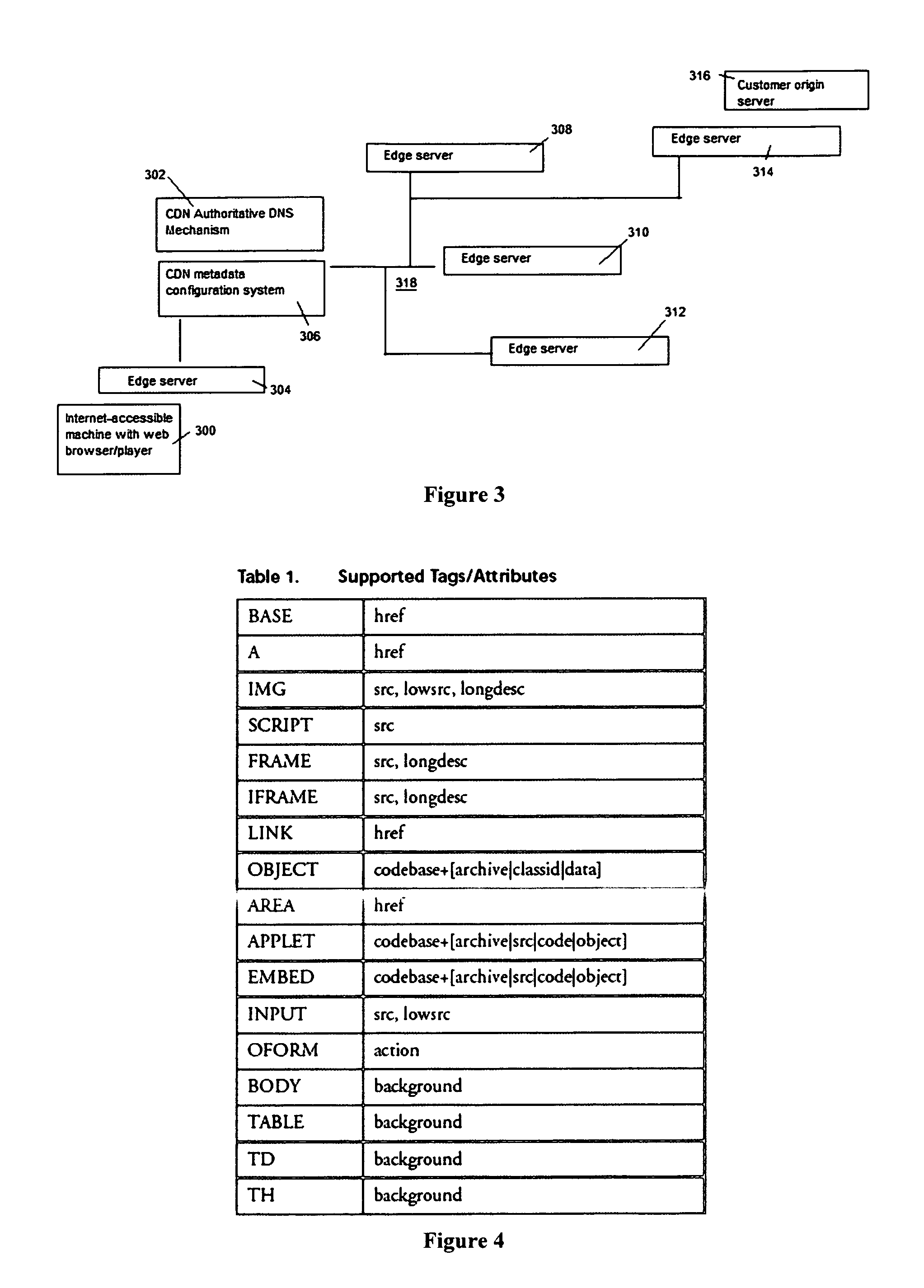

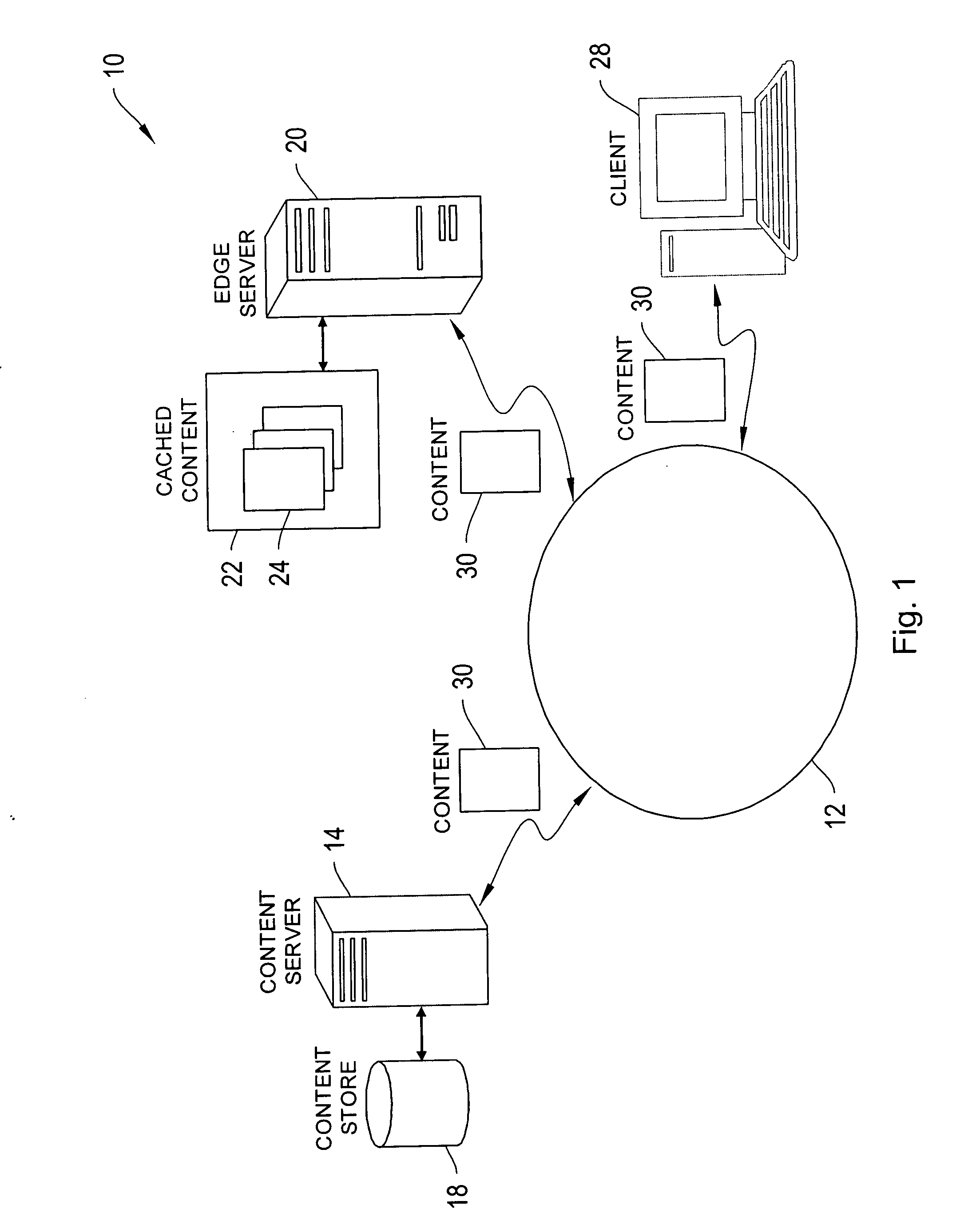

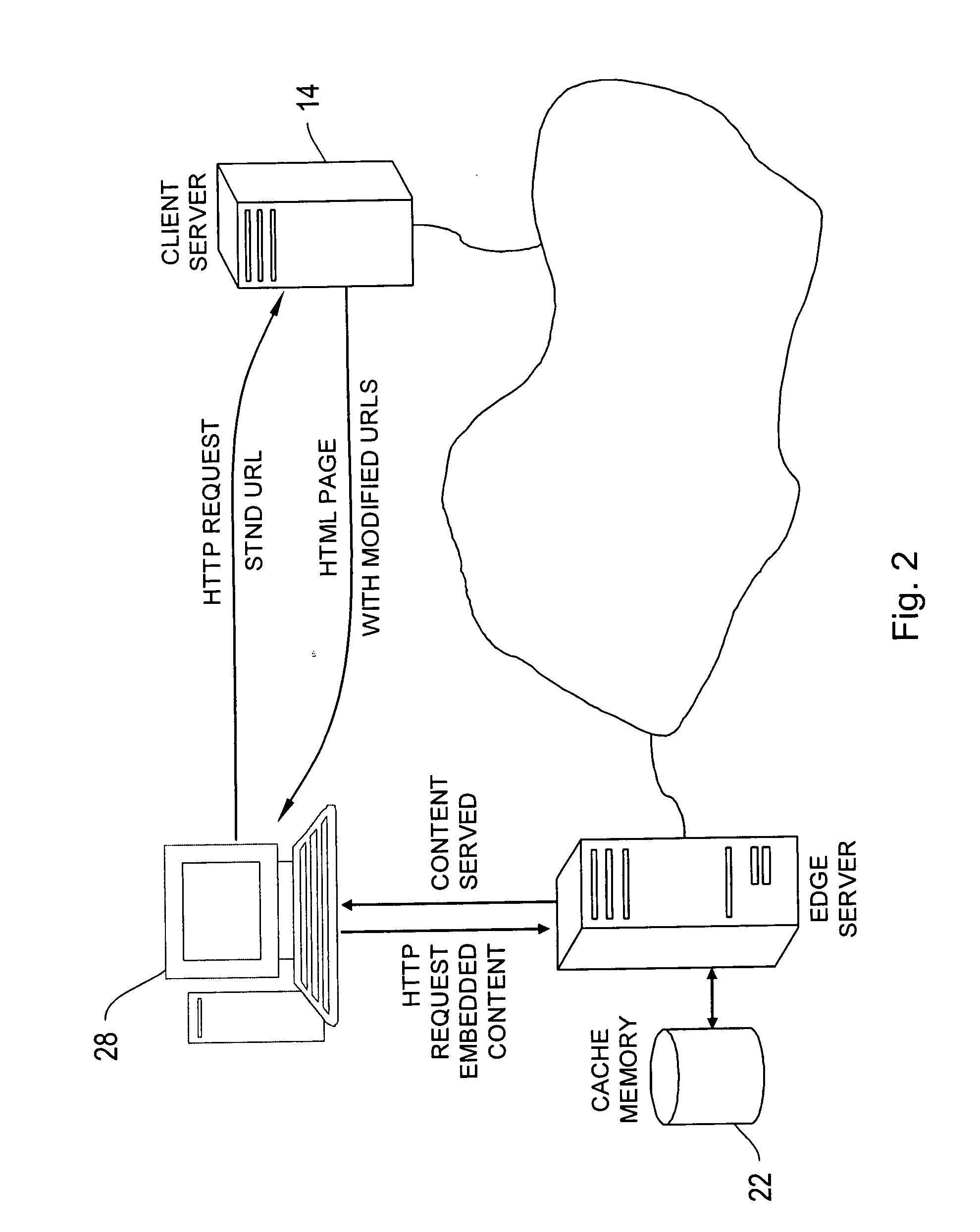

Site acceleration with content prefetching enabled through customer-specific configurations

Active · US20070156845A1 · More feature · Shorten the time · Digital data information retrieval · Multiple digital computer combinations · Web site · Edge server

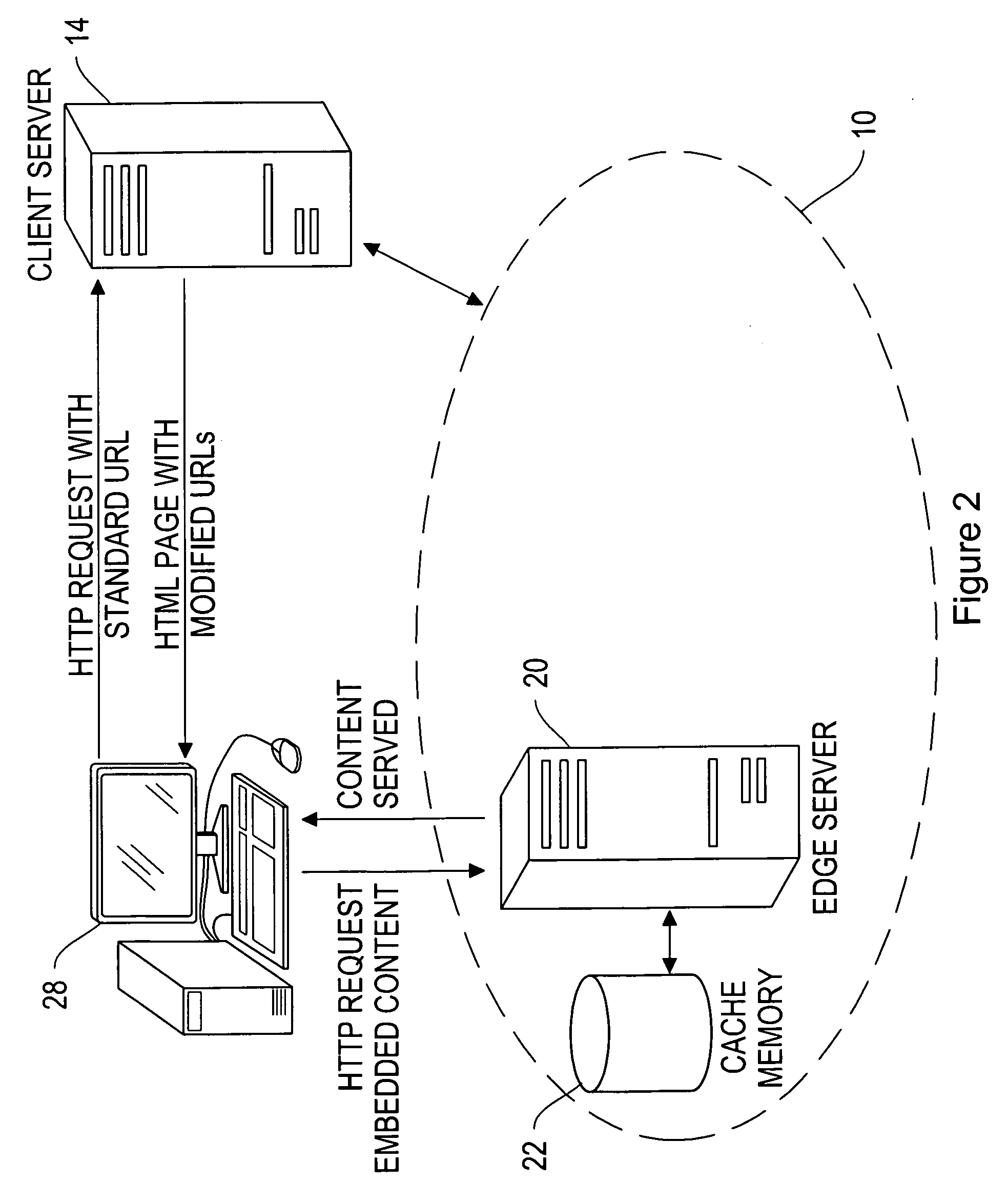

A CDN edge server is configured to provide one or more extended content delivery features on a domain-specific, customer-specific basis, preferably using configuration files that are distributed to the edge servers using a configuration system. A given configuration file includes a set of content handling rules and directives that facilitate one or more advanced content handling features, such as content prefetching. When prefetching is enabled, the edge server retrieves objects embedded in pages (normally HTML content) at the same time it serves the page to the browser rather than waiting for the browser's request for these objects. This can significantly decrease the overall rendering time of the page and improve the user experience of a Web site. Using a set of metadata tags, prefetching can be applied to either cacheable or uncacheable content. When prefetching is used for cacheable content, and the object to be prefetched is already in cache, the object is moved from disk into memory so that it is ready to be served. When prefetching is used for uncacheable content, preferably the retrieved objects are uniquely associated with the client browser request that triggered the prefetch so that these objects cannot be served to a different end user. By applying metadata in the configuration file, prefetching can be combined with tiered distribution and other edge server configuration options to further improve the speed of delivery and/or to protect the origin server from bursts of prefetching requests.

Owner:AKAMAI TECH INC
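
A minimal sketch of prefetching for cacheable content, as described in the abstract above: the edge server scans the page it is about to serve and fetches any embedded objects it does not already hold. It uses the standard `html.parser` module; the set of tags inspected and the names `EmbeddedObjectFinder` and `serve_with_prefetch` are assumptions. The sketch fetches sequentially for brevity, whereas the abstract describes retrieval happening while the page is being served.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, List


class EmbeddedObjectFinder(HTMLParser):
    """Collects URLs of objects a browser would request after parsing the page."""

    def __init__(self) -> None:
        super().__init__()
        self.urls: List[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in ("img", "script") and attributes.get("src"):
            self.urls.append(attributes["src"])
        elif tag == "link" and attributes.get("href"):
            self.urls.append(attributes["href"])


def serve_with_prefetch(page: str, cache: Dict[str, bytes], fetch: Callable[[str], bytes]) -> str:
    """Return the page and warm the cache with its embedded objects."""
    finder = EmbeddedObjectFinder()
    finder.feed(page)
    for url in finder.urls:
        if url not in cache:        # already-cached objects are left alone
            cache[url] = fetch(url)
    return page


page = (
    '<html><link rel="stylesheet" href="/site.css">'
    '<img src="/logo.png"><script src="/app.js"></script></html>'
)
cache: Dict[str, bytes] = {"/logo.png": b"cached"}
fetched: List[str] = []

def fetch(url: str) -> bytes:
    fetched.append(url)
    return b"from origin"

serve_with_prefetch(page, cache, fetch)
```

Only the stylesheet and the script are fetched here, because the image is already cached; when the browser later requests them, all three objects can be served from the edge.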

Content network global replacement policy

Active · US20090070533A1 · Efficiently and controllably deliver · Memory addressing/allocation/relocation · Transmission · Edge server · Data store

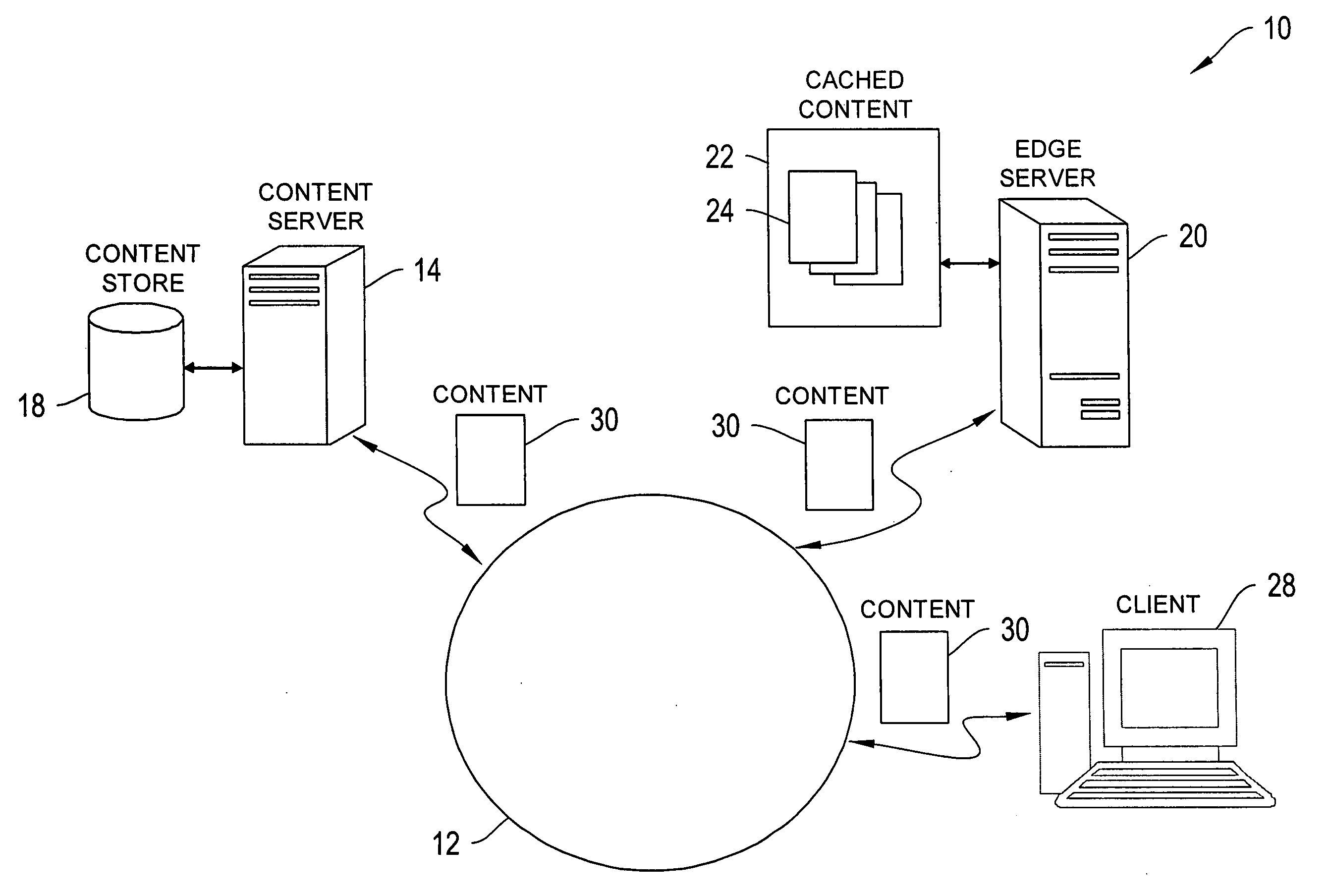

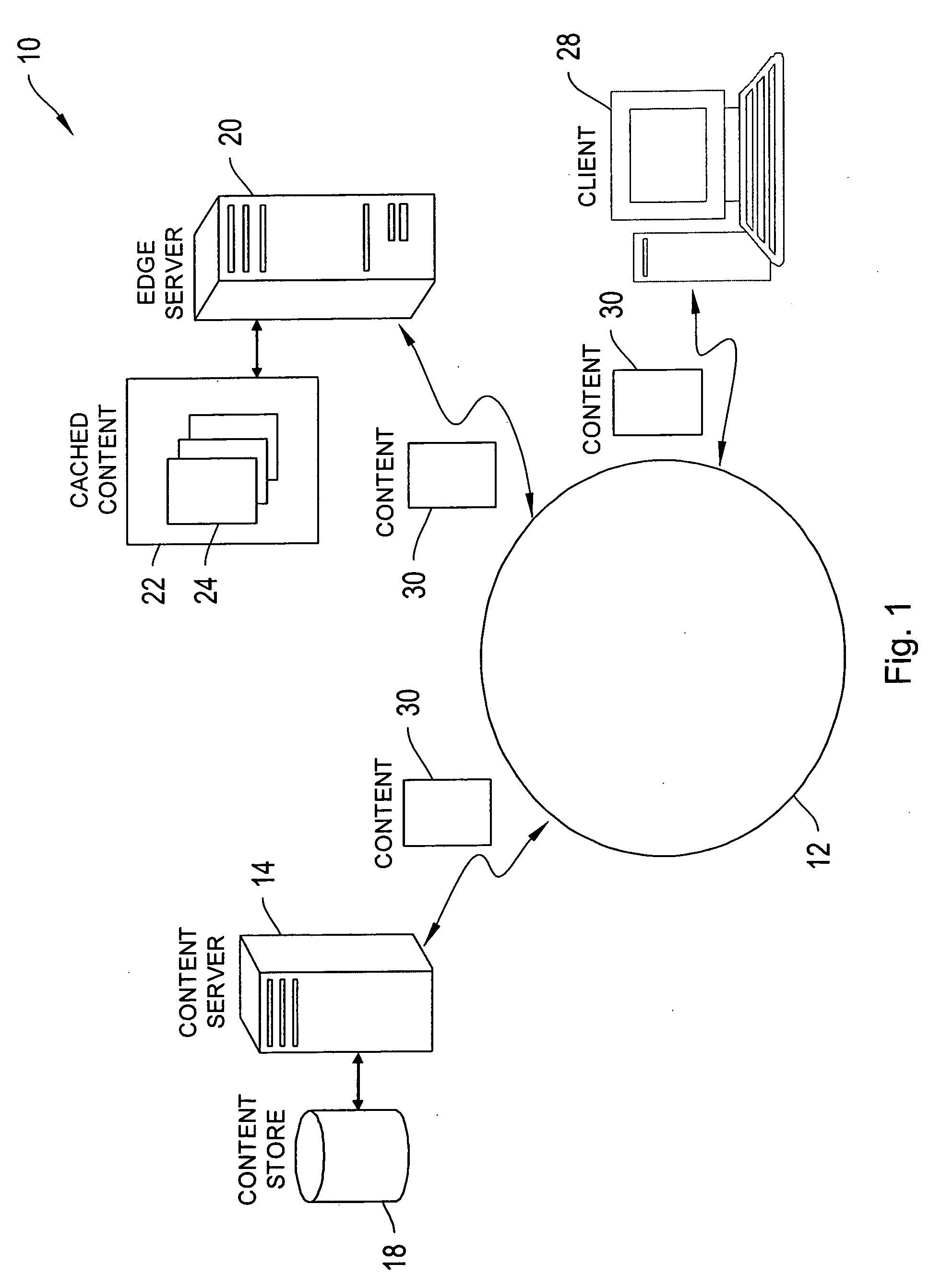

This invention is related to content delivery systems and methods. In one aspect of the invention, a content provider controls a replacement process operating at an edge server. The edge server services content providers and has a data store for storing content associated with respective ones of the content providers. A content provider sets a replacement policy at the edge server that controls the movement of content associated with the content provider, into and out of the data store. In another aspect of the invention, a content delivery system includes a content server storing content files, an edge server having cache memory for storing content files, and a replacement policy module for managing content stored within the cache memory. The replacement policy module can store portions of the content files at the content server within the cache memory, as a function of a replacement policy set by a content owner.

Owner:EDGIO INC

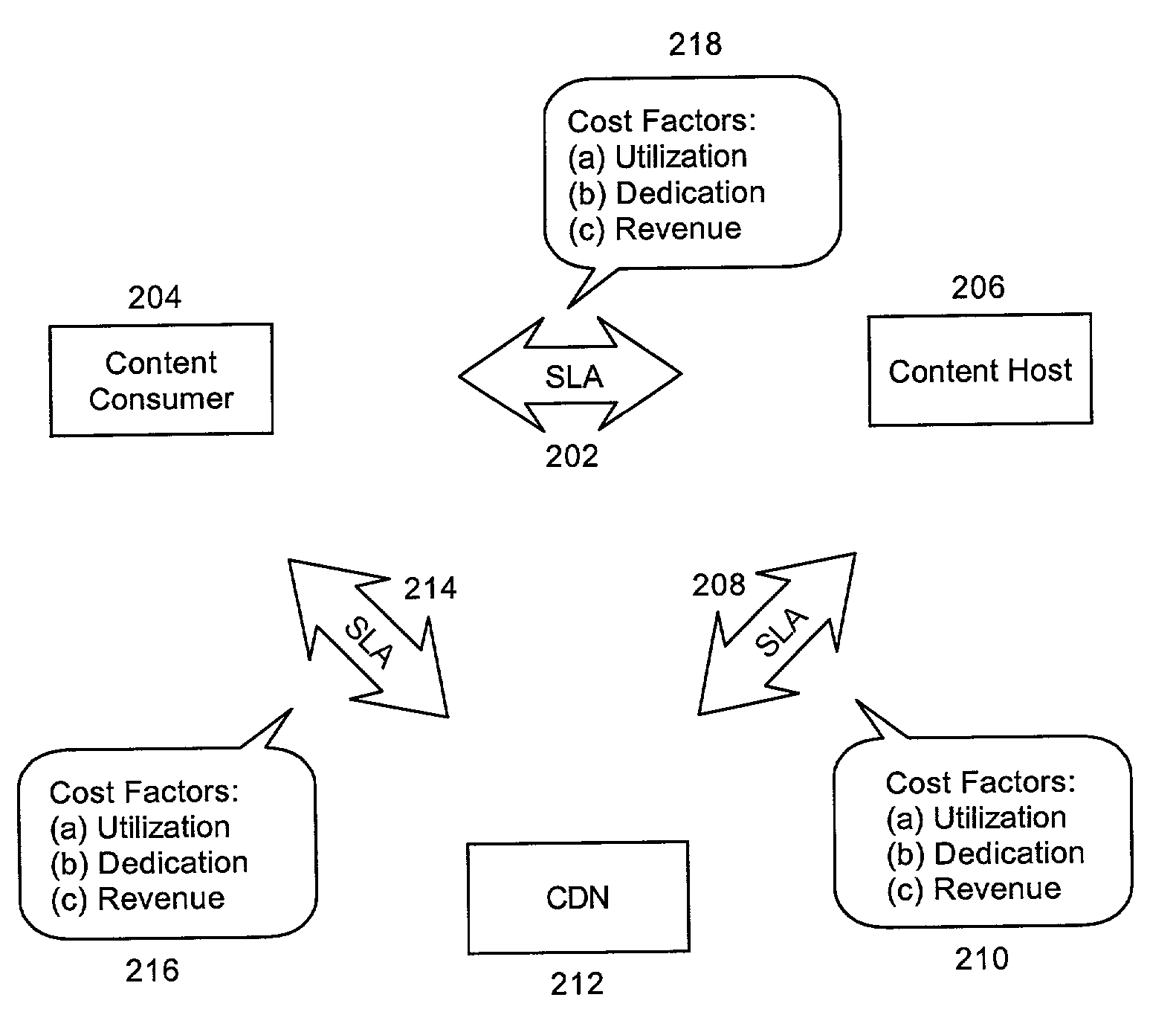

Multi-tier service level agreement method and system

Active · US7099936B2 · Overcome limitations · Multiple digital computer combinations · Resources · Service-level agreement · Edge server

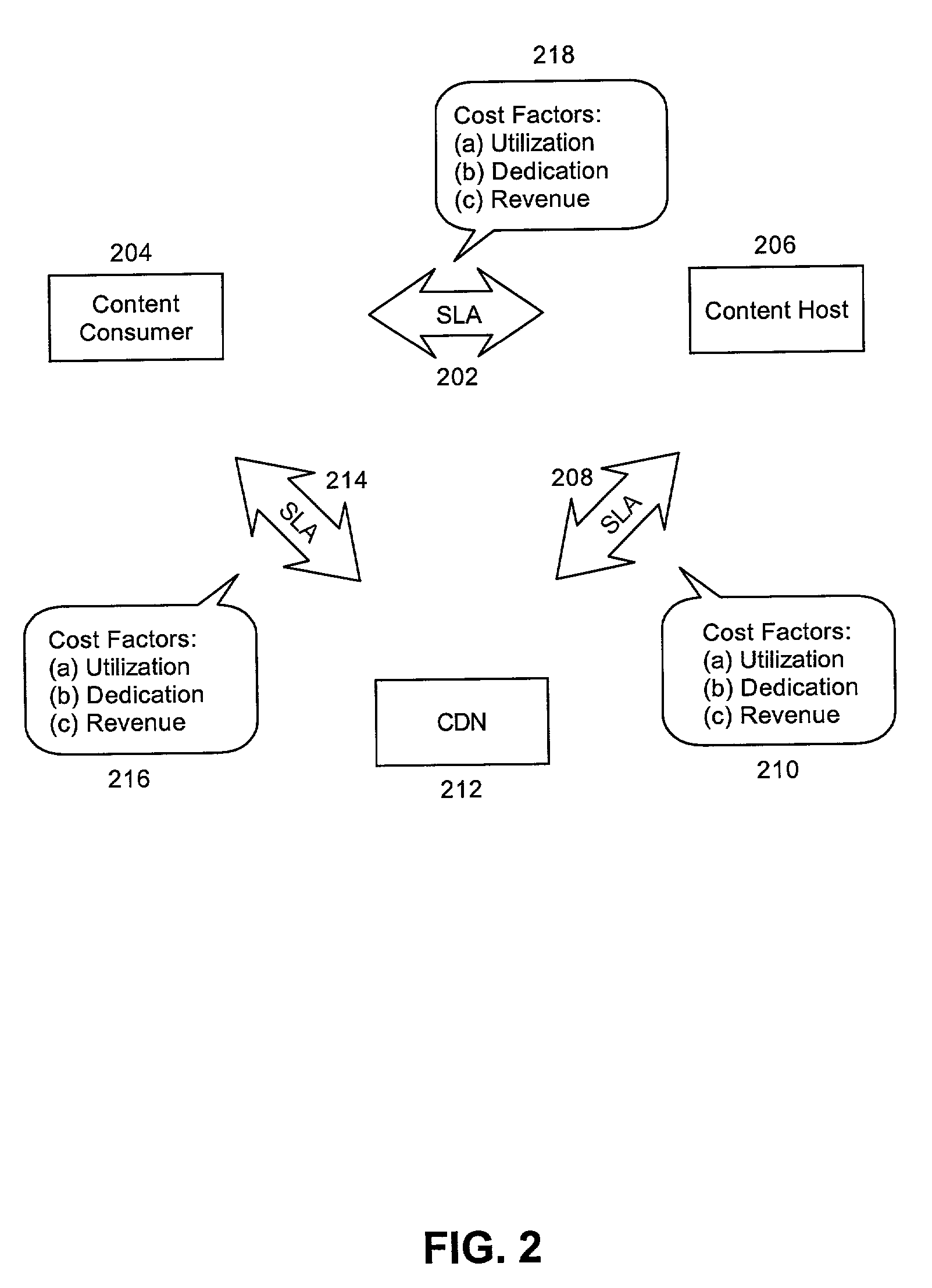

A method for managing multi-tier SLA relationships. The method can include first computing costs of utilizing edge server resources in a CDN, costs of dedicating content host resources in a content host in lieu of the utilization, and prospective revenues which can be generated by the content host providing services based on the resources to content consumers. Minimum QoS levels can be identified which must be maintained when providing the services to the content consumers according to QoS terms in established SLAs between the content host and individual ones of the content consumers. Finally, a new SLA can be established between the content host and the CDN. Importantly, the new SLA can include QoS terms for selectively allocating resources in the CDN. Moreover, the QoS terms can optimize revenues generated by the content host providing services based on the selective allocation of resources and the computed costs.

Owner:IBM CORP

Content delivery network map generation using passive measurement data

Inactive · US20060143293A1 · Improve subsequent routing · Digital computer details · Data switching networks · Generation process · Routing decision

A routing method operative in a content delivery network (CDN) where the CDN includes a request routing mechanism for routing clients to subsets of edge servers within the CDN. According to the routing method, TCP connection data statistics are collected at edge servers located within a CDN region. The TCP connection data statistics are collected as connections are established between requesting clients and the CDN region and requests are serviced by those edge servers. Periodically, e.g., daily, the connection data statistics are provided from the edge servers in a region back to the request routing mechanism. The TCP connection data statistics are then used by the request routing mechanism in subsequent routing decisions and, in particular, in the map generation processes. Thus, for example, the TCP connection data may be used to determine whether a given quality of service is being obtained by routing requesting clients to the CDN region. If not, the request routing mechanism generates a map that directs requesting clients away from the CDN region for a given time period or until the quality of service improves.

Owner:AKAMAI TECH INC

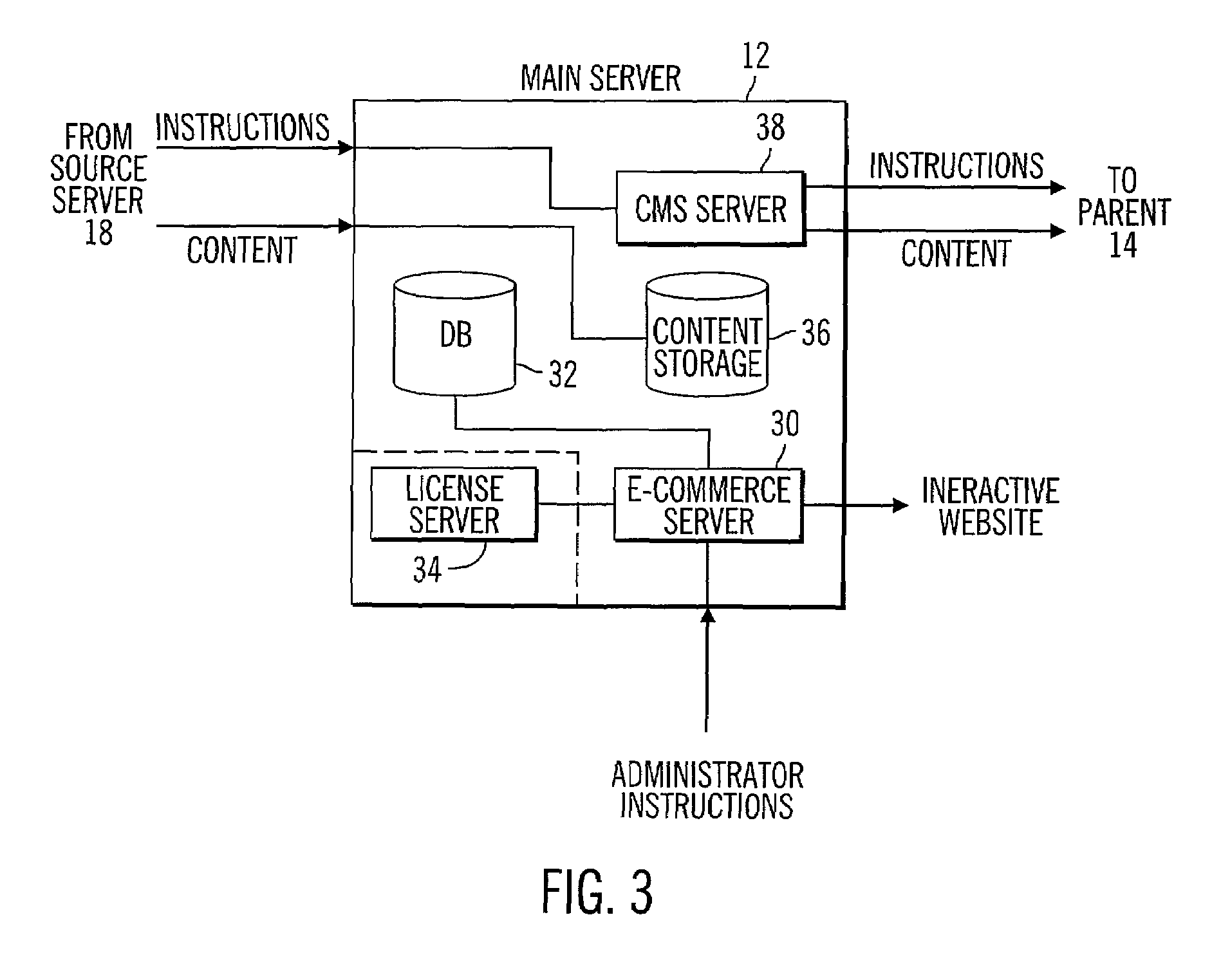

Network configured for delivery of content for download to a recipient

Inactive · US7024466B2 · Increase speed and efficiency · Effective control · Multiprogramming arrangements · Multiple digital computer combinations · Edge server · Network architecture

Owner:BLOCKBUSTER LLC

Method and system for tiered distribution in a content delivery network

Inactive · US20070055764A1 · Excessive traffic · Effectively buffering web site infrastructure · Digital computer details · Location information based service · Cache hierarchy · Edge server

Owner:AKAMAI TECH INC

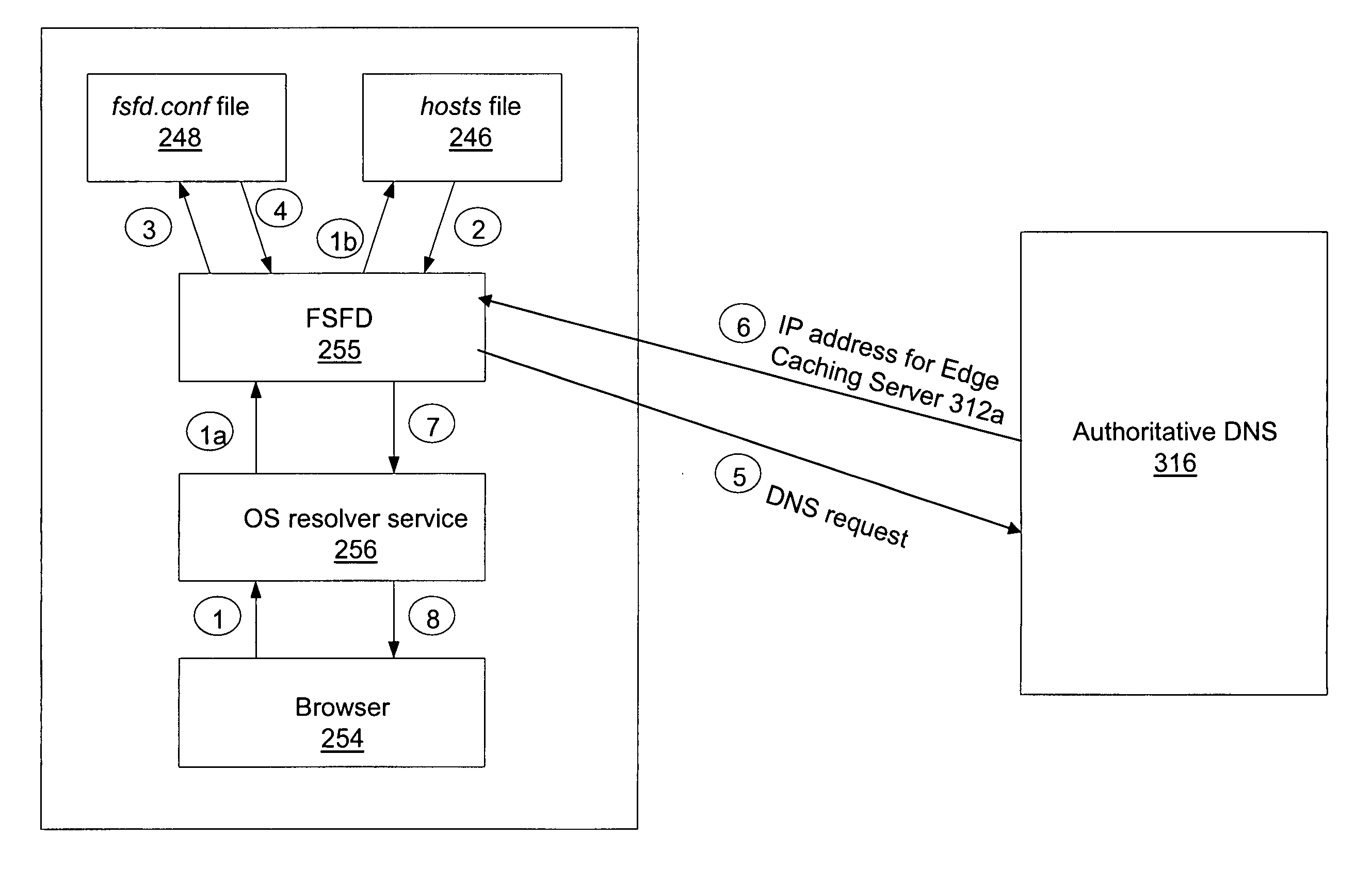

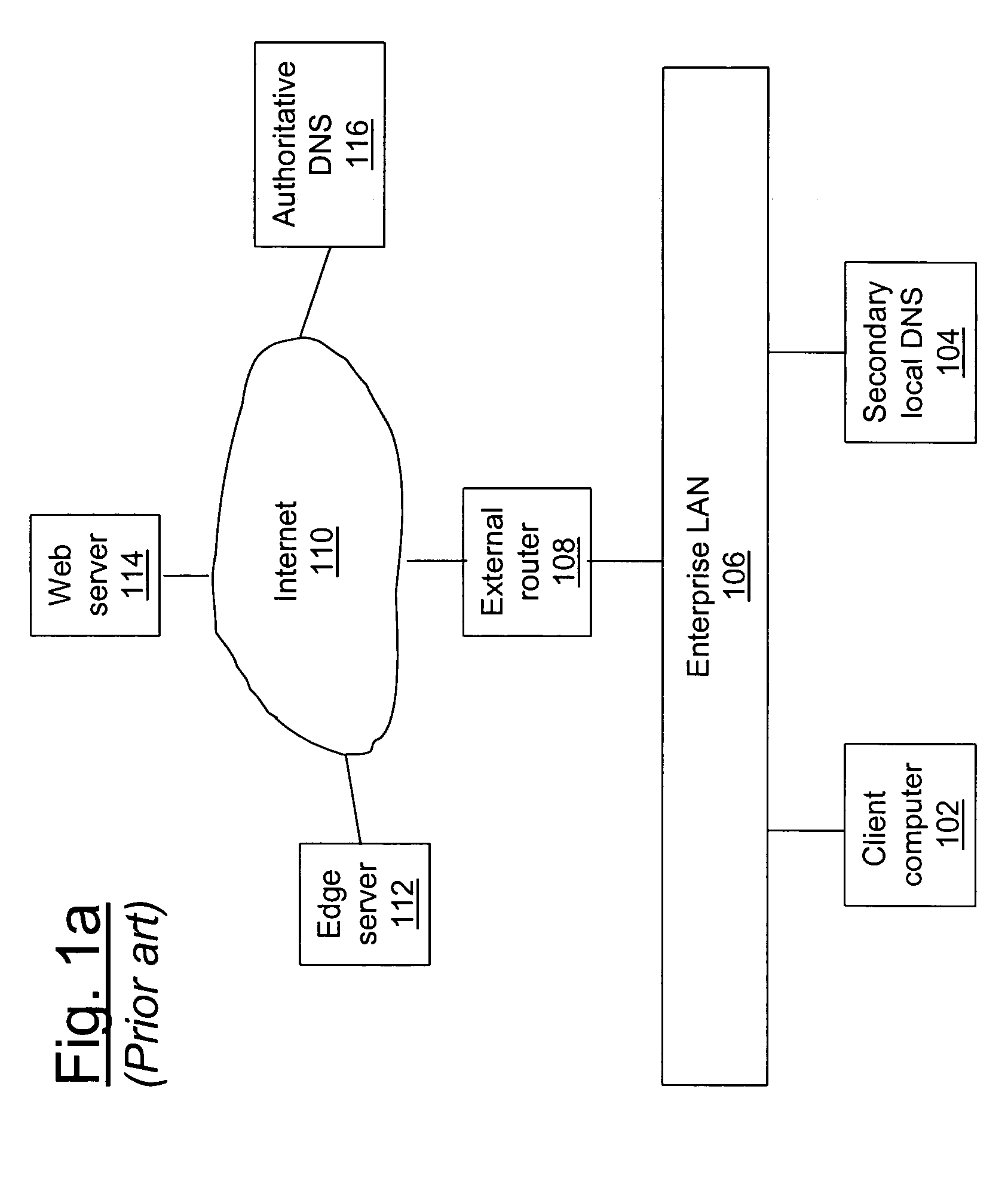

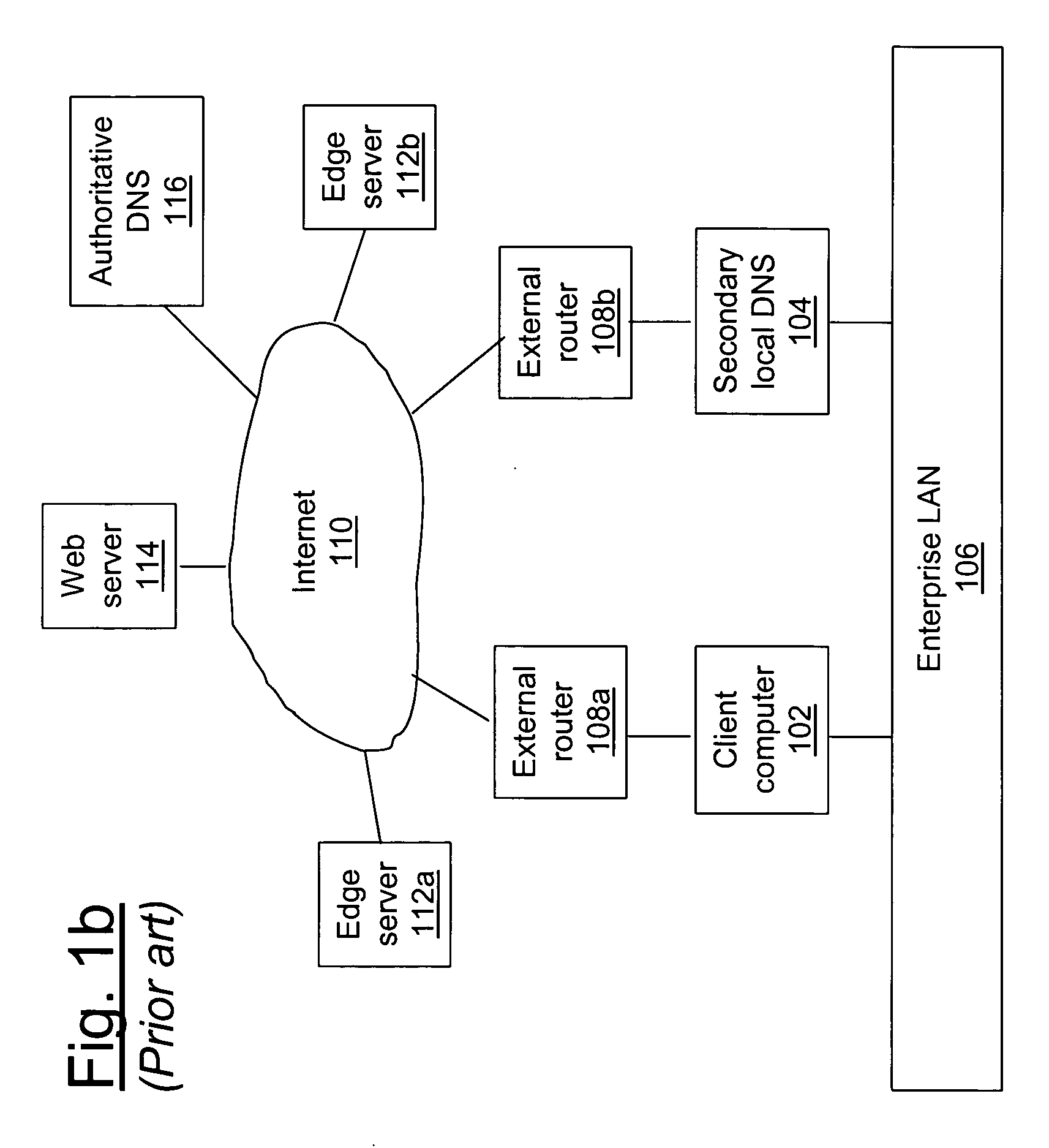

Determining address of closest edge server

A method and system is presented for bypassing a local Domain Name Server (DNS) when using edge caching servers. Domain names of frequently used business applications that are known to rely upon edge servers, together with the corresponding authoritative DNSs, are listed in both the local hosts file and the user-defined FSFD local configuration file fsfd.conf. When the client computer's browser attempts to resolve a domain name, a File System Filtering Driver (FSFD) in the client computer intercepts the browser's request. If the domain name being resolved is found in the local FSFD configuration file fsfd.conf, then the FSFD initiates a DNS request directly to the appropriate authoritative DNS, whose IP address is extracted from the fsfd.conf record, thus bypassing the local DNS. The authoritative DNS returns the IP address for an edge caching server that is topographically proximate to the client computer's browser.

Owner:LINKEDIN

Content delivery network for ephemeral objects

Active · US9237202B1 · Multiple digital computer combinations · Data switching networks · Edge server · Computer science

A computer implemented method includes receiving an object scheduled for automatic deletion after a specified viewing period, a specified number of views or a specified period of time. Object push criteria are evaluated. The object is pushed to an edge server cache in response to evaluating. The object is served in response to a request for the object.

Owner:SNAP INC

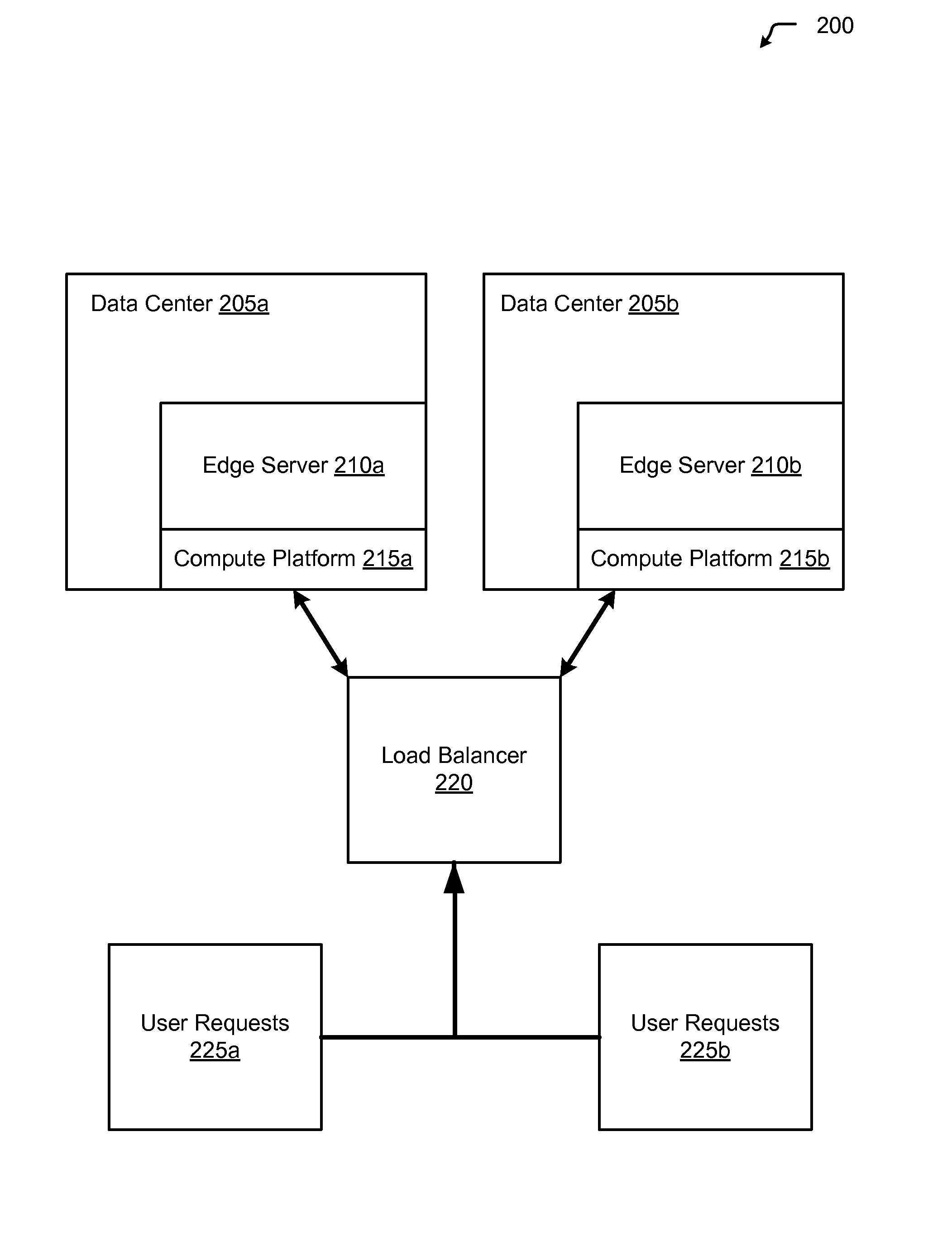

Global load balancing on a content delivery network

Active · US20100036954A1 · Improve performance · Improve efficiency · Hybrid transport · Multiple digital computer combinations · Edge server · Network connection

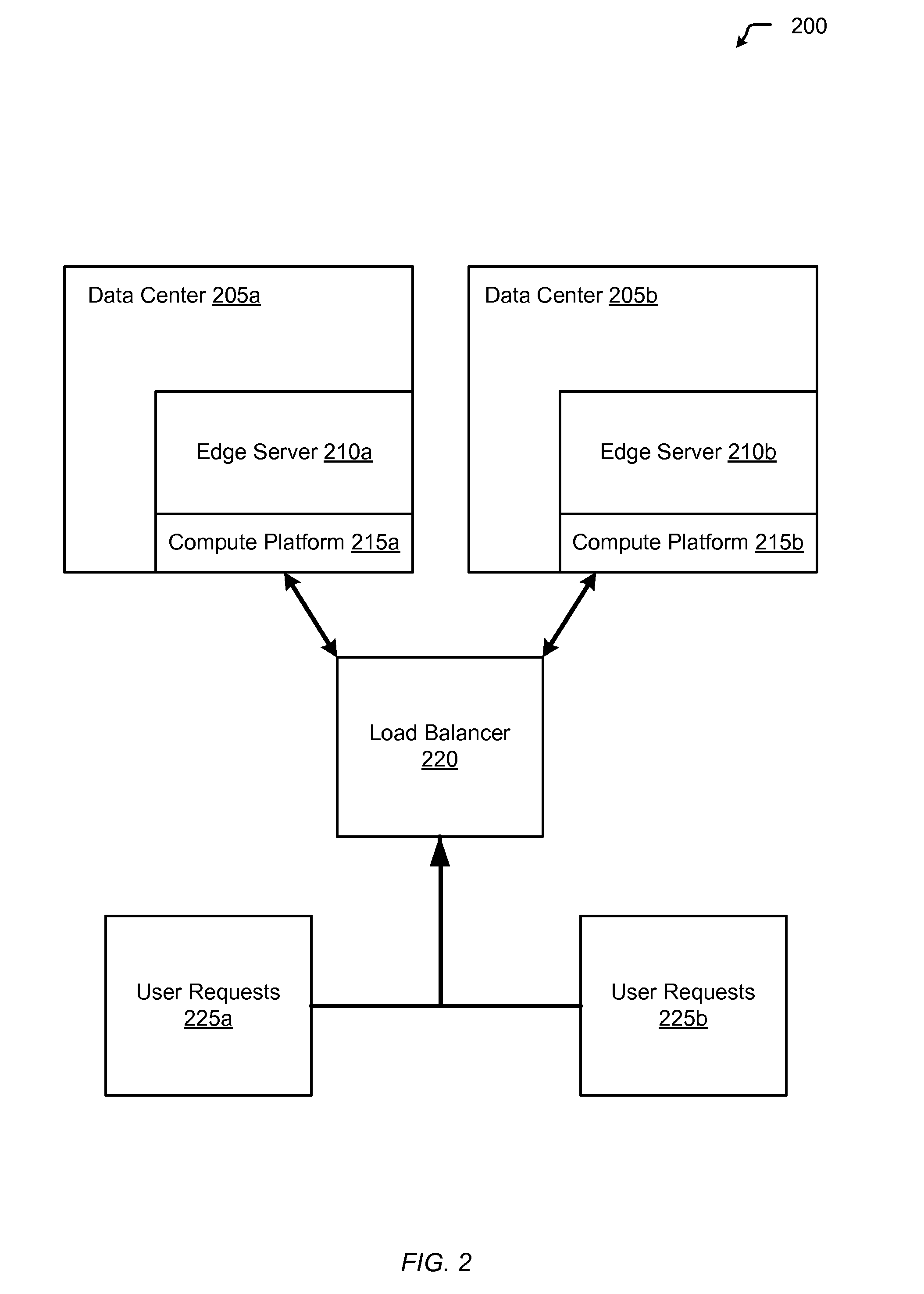

The invention relates to systems and methods of global load balancing in a content delivery network having a plurality of edge servers which may be distributed across multiple geographic locations. According to one aspect of the invention, a global load balancing system includes a first load balancing server for receiving a packet requesting content to be delivered to a client, selecting one of the plurality of edge servers to deliver the requested content to the client, and forwarding the packet across a network connection to a second load balancing server, which forwards the packet to the selected edge server. The selected edge server, in response to receiving the packet, sends across a network connection the requested content with an address for direct delivery to the client, thereby allowing the requested content to be delivered to the client while bypassing a return path through the first load balancing server.

Owner:EDGIO INC

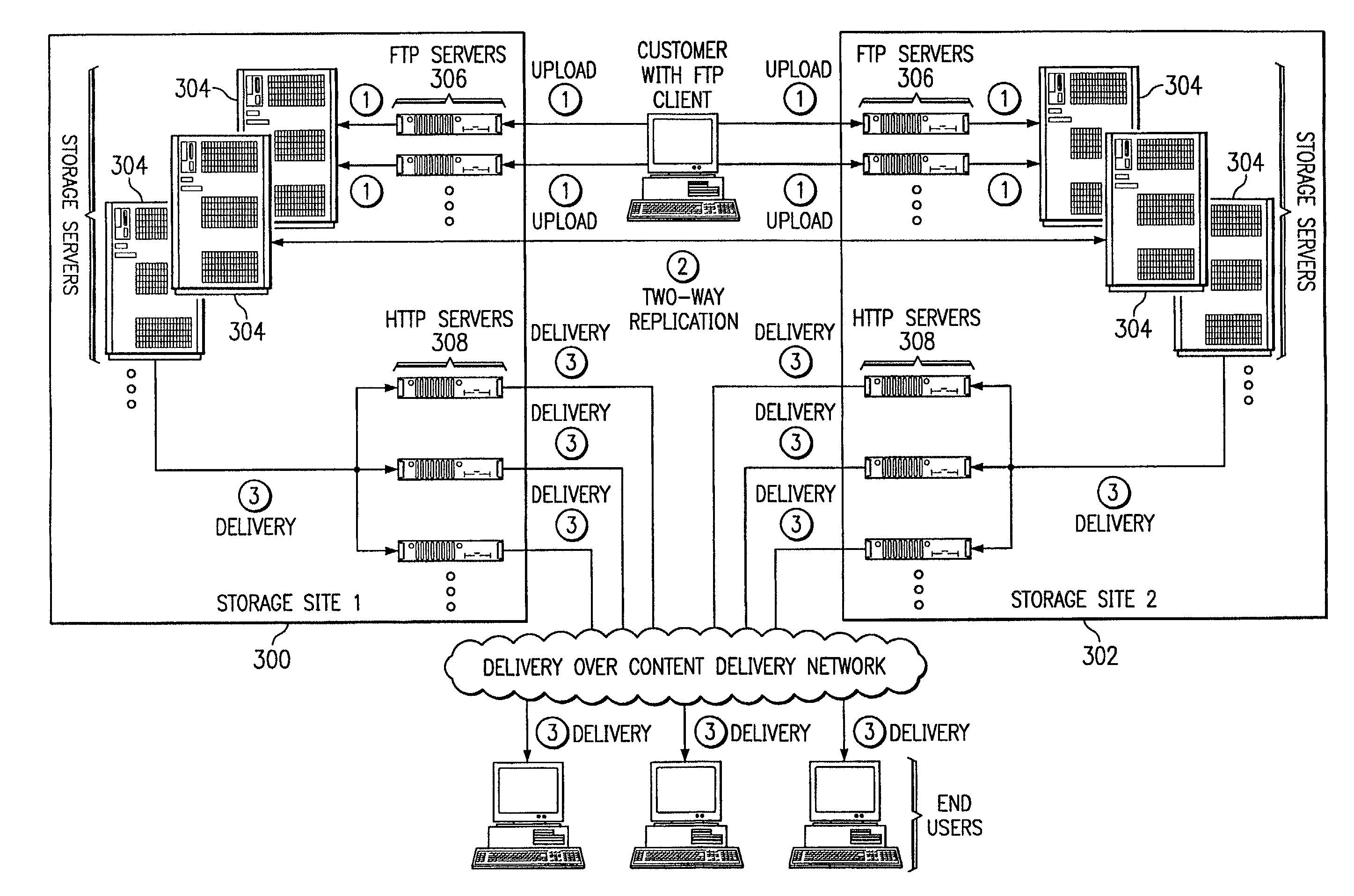

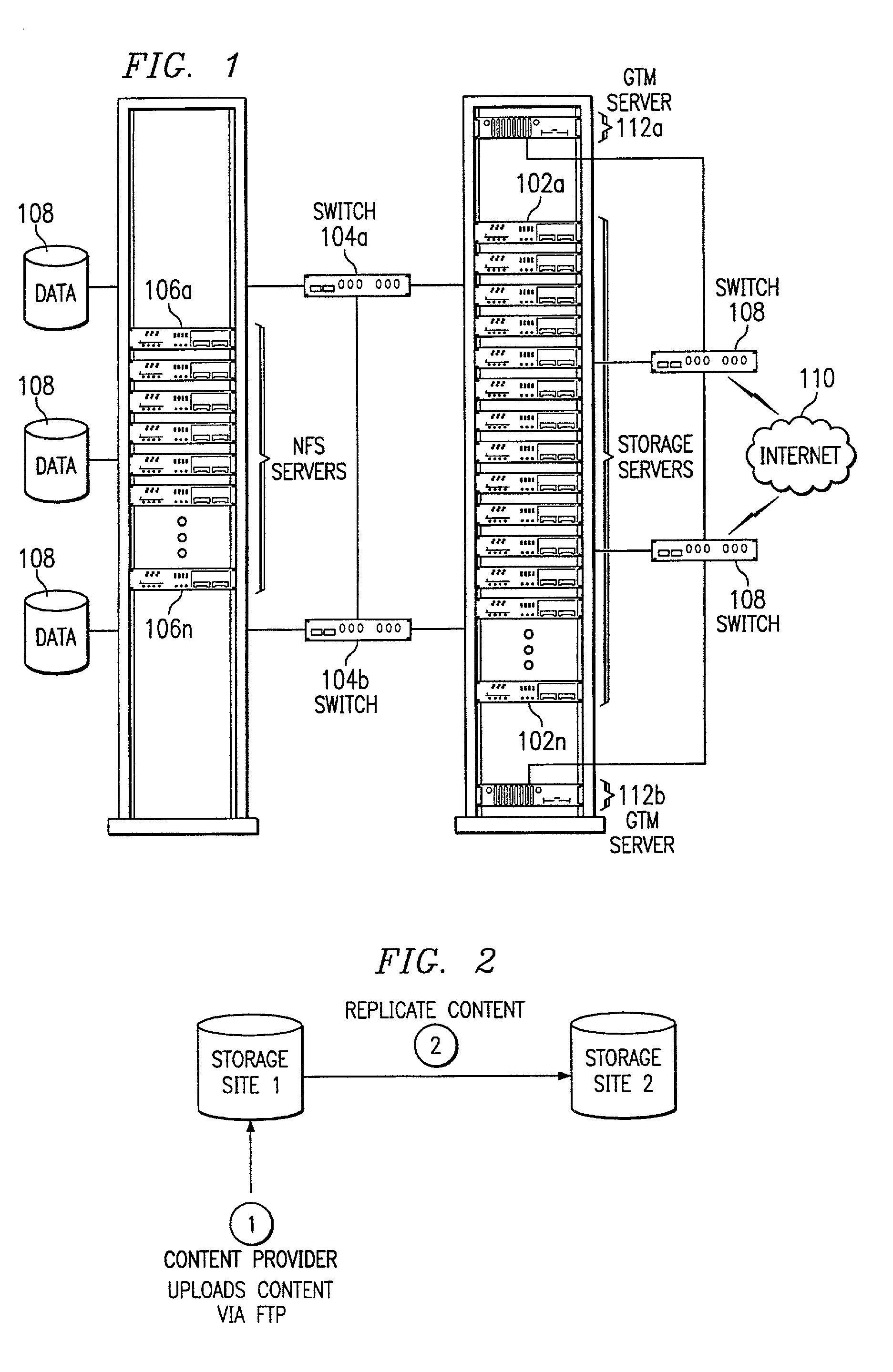

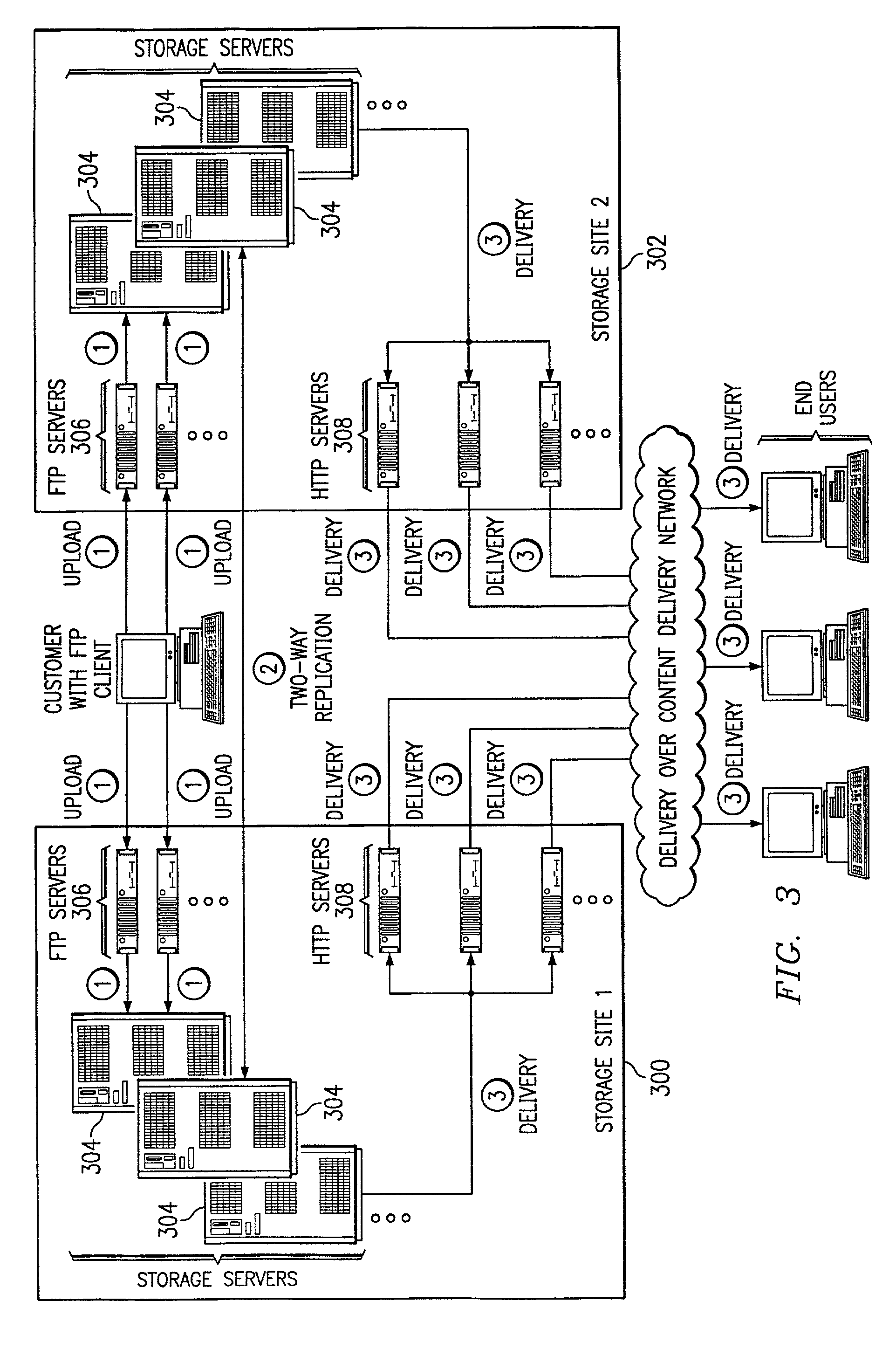

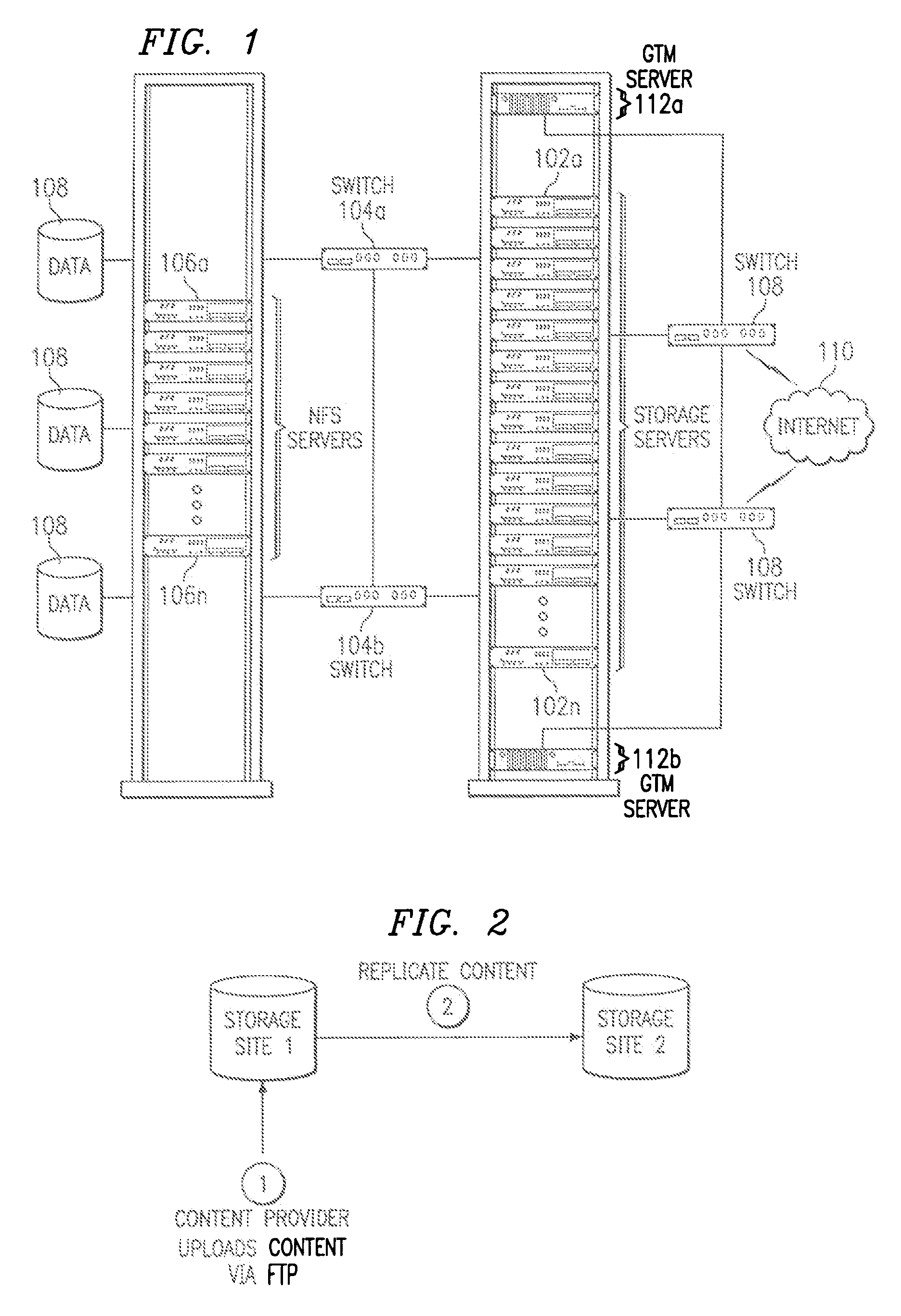

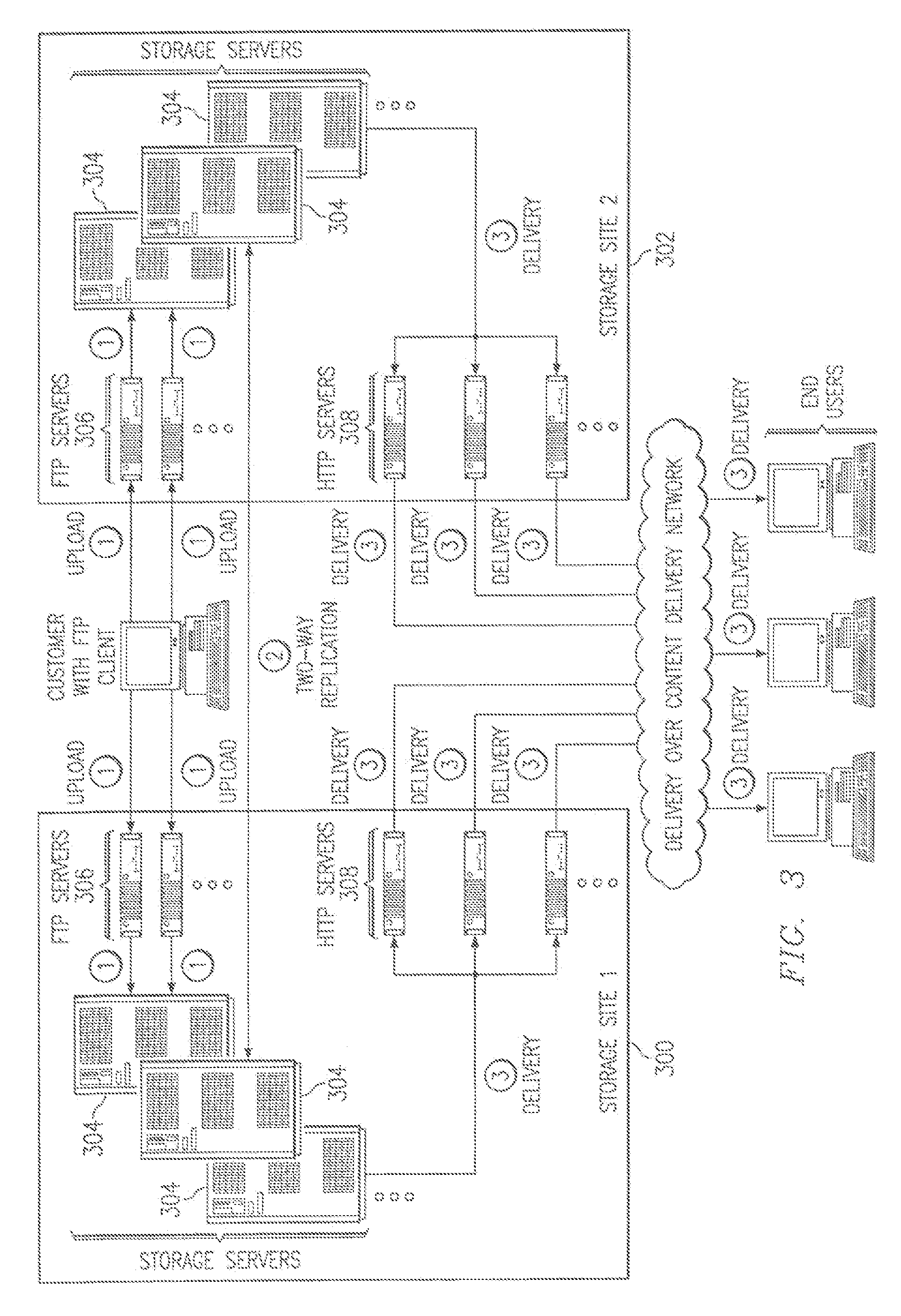

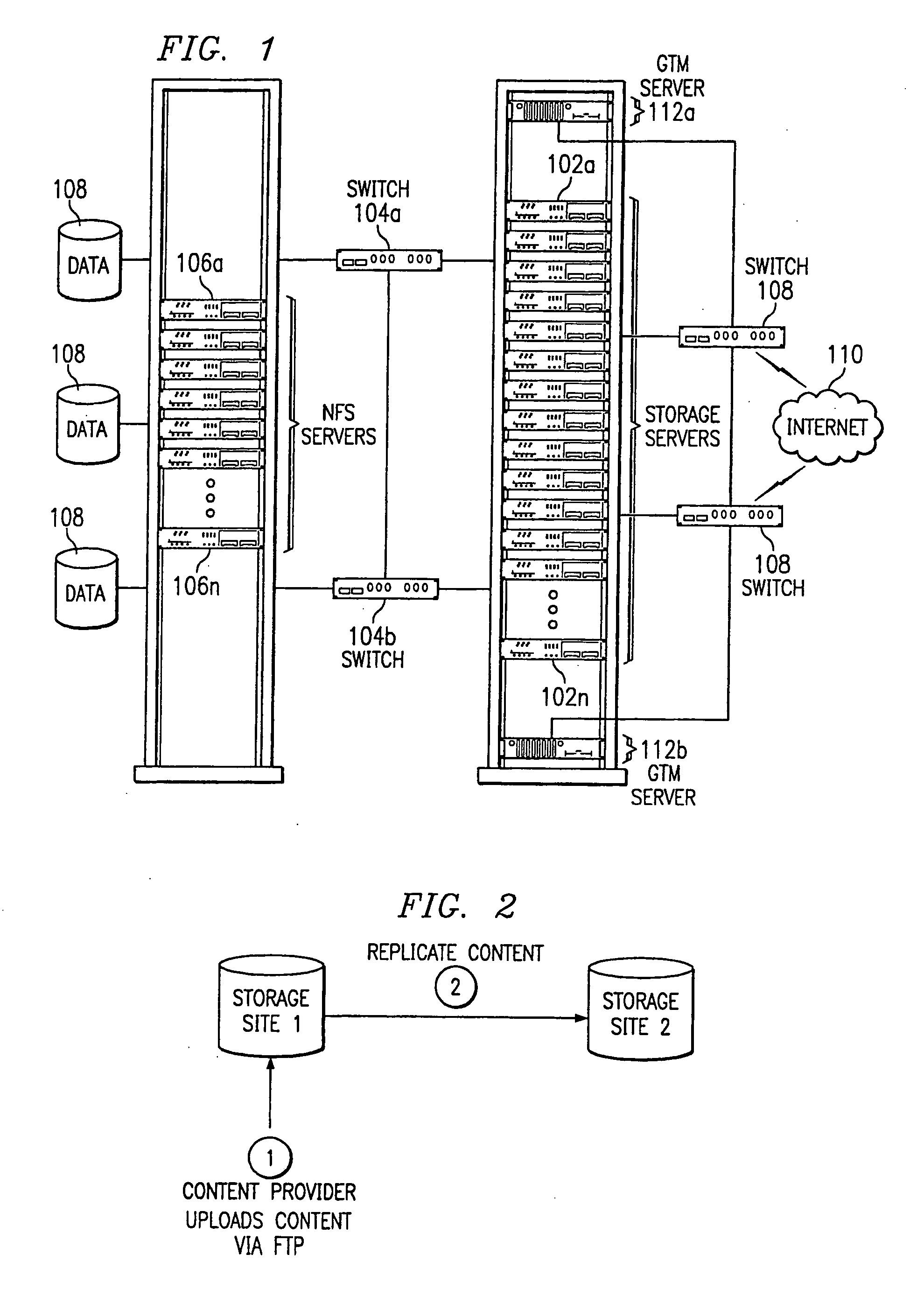

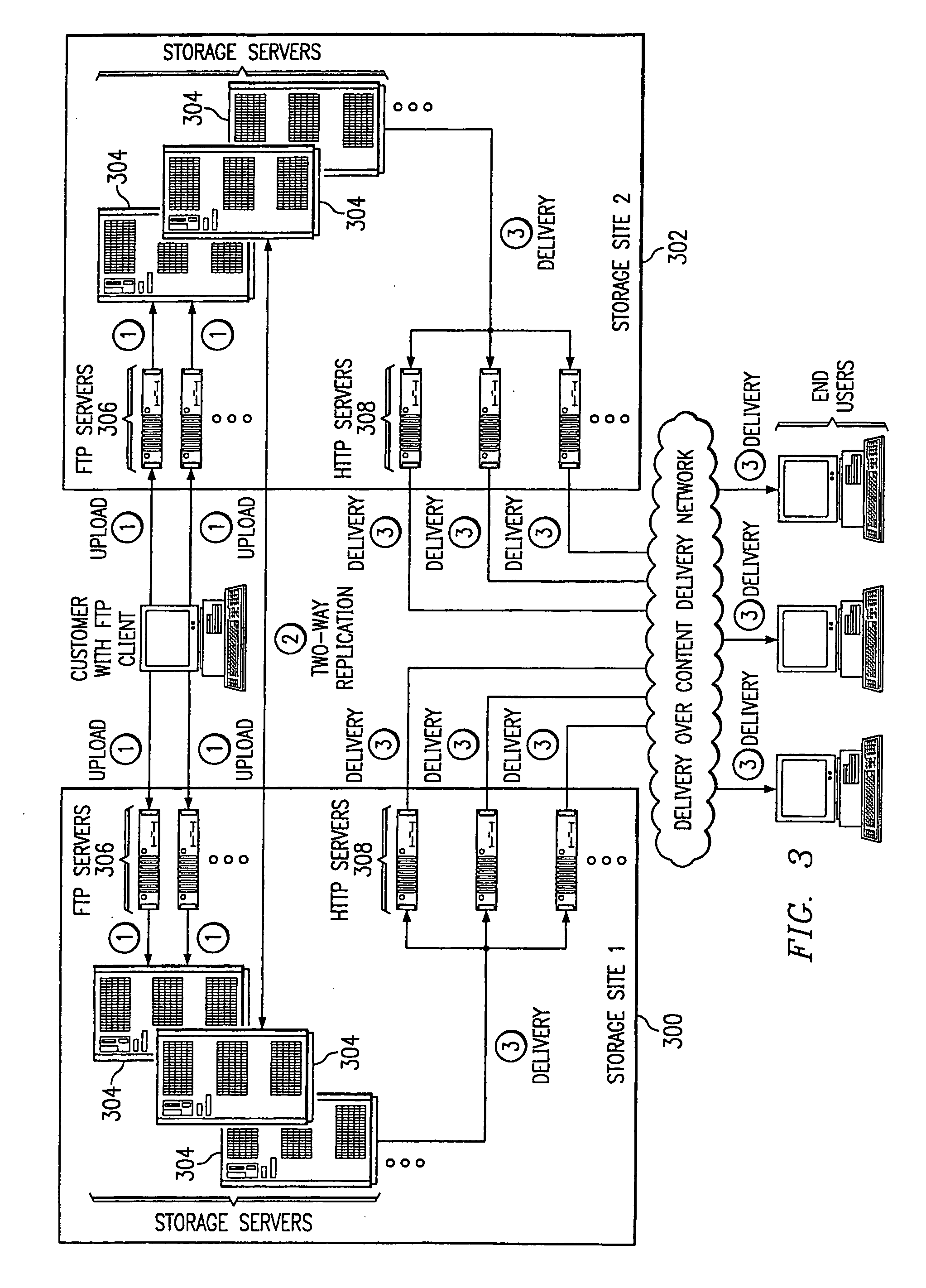

Scalable, high performance and highly available distributed storage system for Internet content

Inactive · US7472178B2 · Improve performance · Increase content · Multiple digital computer combinations · Website content management · Edge server · Internet content

A method for content storage on behalf of participating content providers begins by having a given content provider identify content for storage. The content provider then uploads the content to a given storage site selected from a set of storage sites. Following upload, the content is replicated from the given storage site to at least one other storage site in the set. Upon request from a given entity, a given storage site from which the given entity may retrieve the content is then identified. The content is then downloaded from the identified given storage site to the given entity. In an illustrative embodiment, the given entity is an edge server of a content delivery network (CDN).

Owner:AKAMAI TECH INC

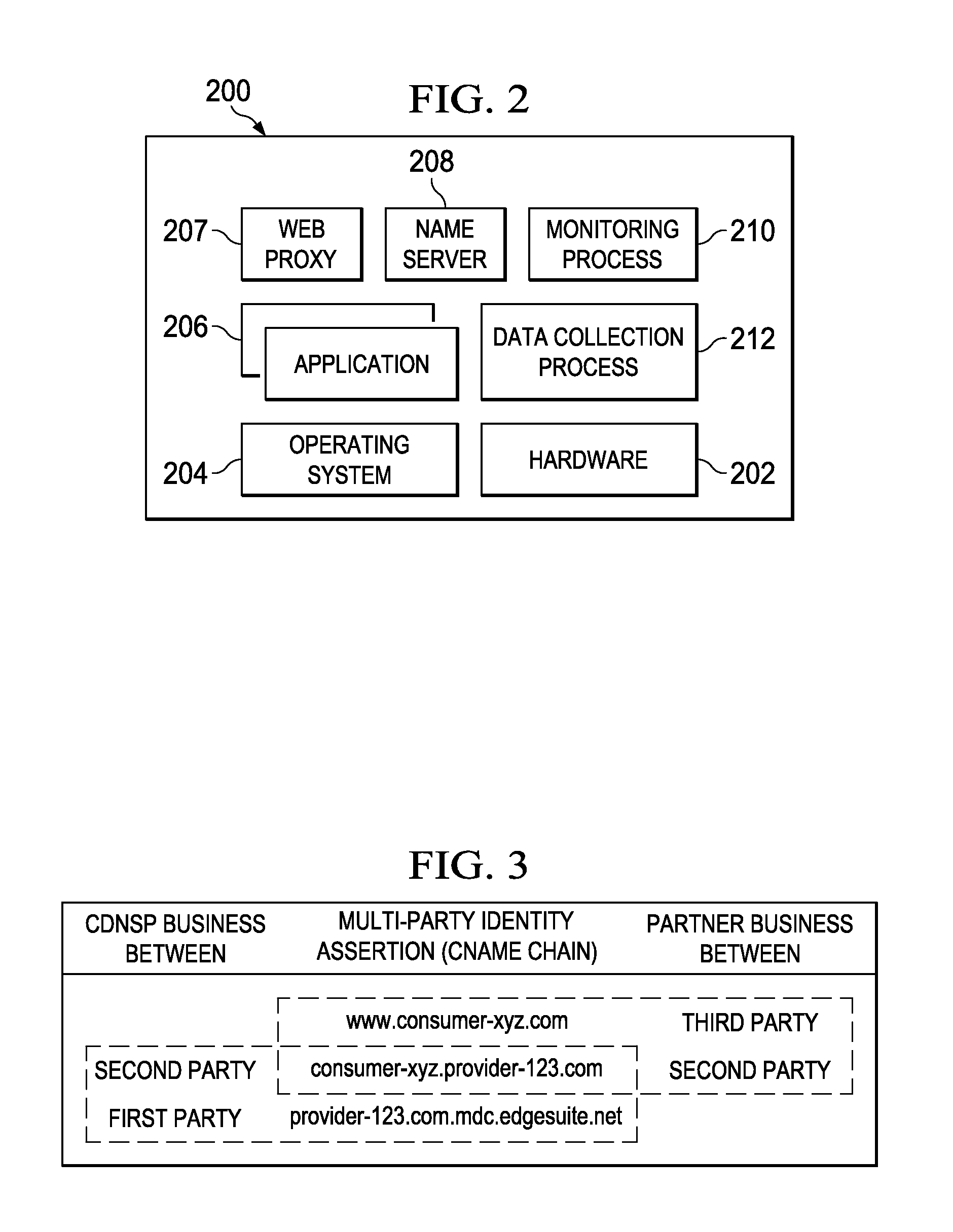

Multi-domain configuration handling in an edge network server

An Internet infrastructure delivery platform operated by a provider enables HTTP-based service to identified third parties at large scale. The platform provides this service to one or more cloud providers. The approach enables the CDN platform provider (the first party) to service third party traffic on behalf of the cloud provider (the second party). In operation, an edge server handling mechanism leverages DNS to determine if a request with an unknown host header should be serviced. Before serving a response, and assuming the host header includes an unrecognized name, the edge server resolves the host header and obtains an intermediate response, typically a list of aliases (e.g., DNS CNAMEs). The edge server checks the returned CNAME list to determine how to respond to the original request. Using just a single edge configuration, the CDN service provider can support instant provisioning of a cloud provider's identified third party traffic.

Owner:AKAMAI TECH INC
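
The host-header check described in the abstract above can be sketched as a small decision function: an unrecognized name is resolved, and the returned CNAME list decides whether the request is serviced. The resolver is injected as a plain callable with canned answers so the sketch runs without network access; the function name, the trusted-suffix rule, and all domain names are hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Set


def should_serve(
    host: str,
    configured_hosts: Set[str],
    resolve_cnames: Callable[[str], List[str]],
    trusted_suffixes: Iterable[str],
) -> bool:
    """Decide whether a request for `host` should be serviced by this edge server."""
    host = host.lower().rstrip(".")
    if host in configured_hosts:          # name is already known to the edge configuration
        return True
    suffixes = tuple(trusted_suffixes)
    for alias in resolve_cnames(host):    # intermediate response: the CNAME chain
        if alias.lower().rstrip(".").endswith(suffixes):
            return True
    return False


# Stand-in resolver with canned answers; a real edge server would query DNS.
FAKE_DNS: Dict[str, List[str]] = {
    "shop.customer.example": ["customer.cloud-provider.example."],
    "rogue.example": ["elsewhere.example."],
}

def fake_resolver(name: str) -> List[str]:
    return FAKE_DNS.get(name, [])

allowed = should_serve("shop.customer.example", set(), fake_resolver, [".cloud-provider.example"])
denied = should_serve("rogue.example", set(), fake_resolver, [".cloud-provider.example"])
```

Keeping the leading dot in each trusted suffix avoids matching an unrelated name that merely ends with the same characters.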

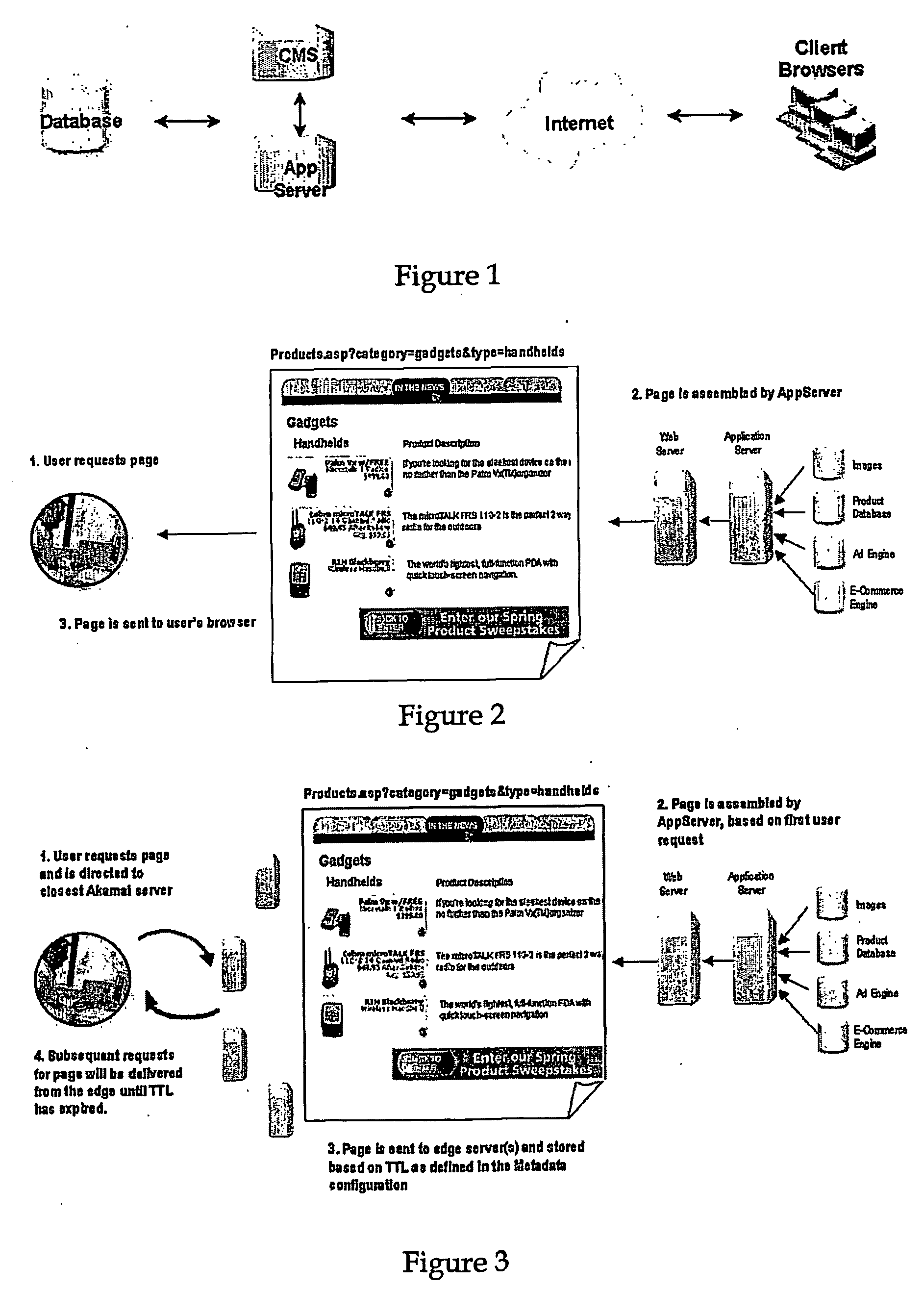

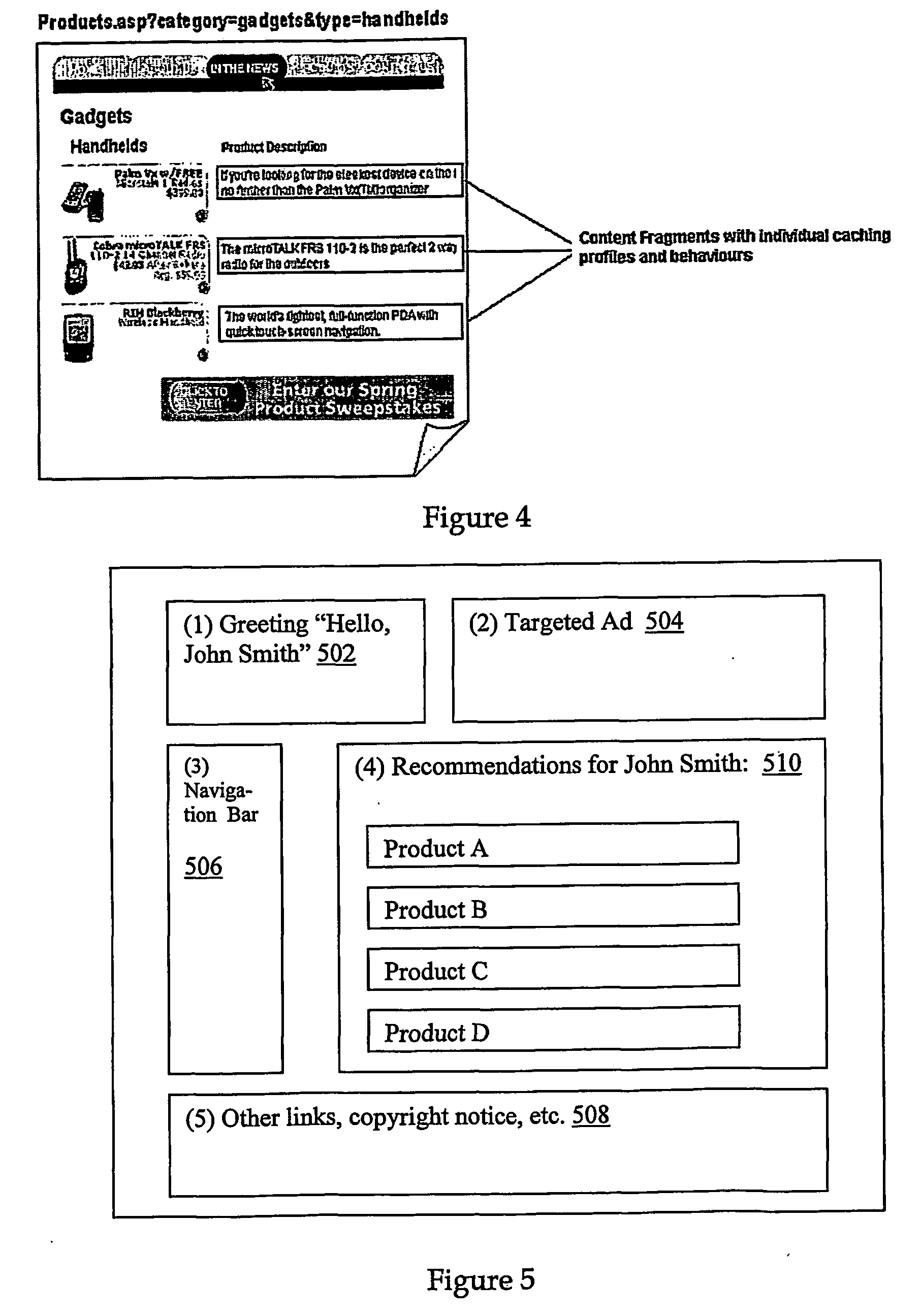

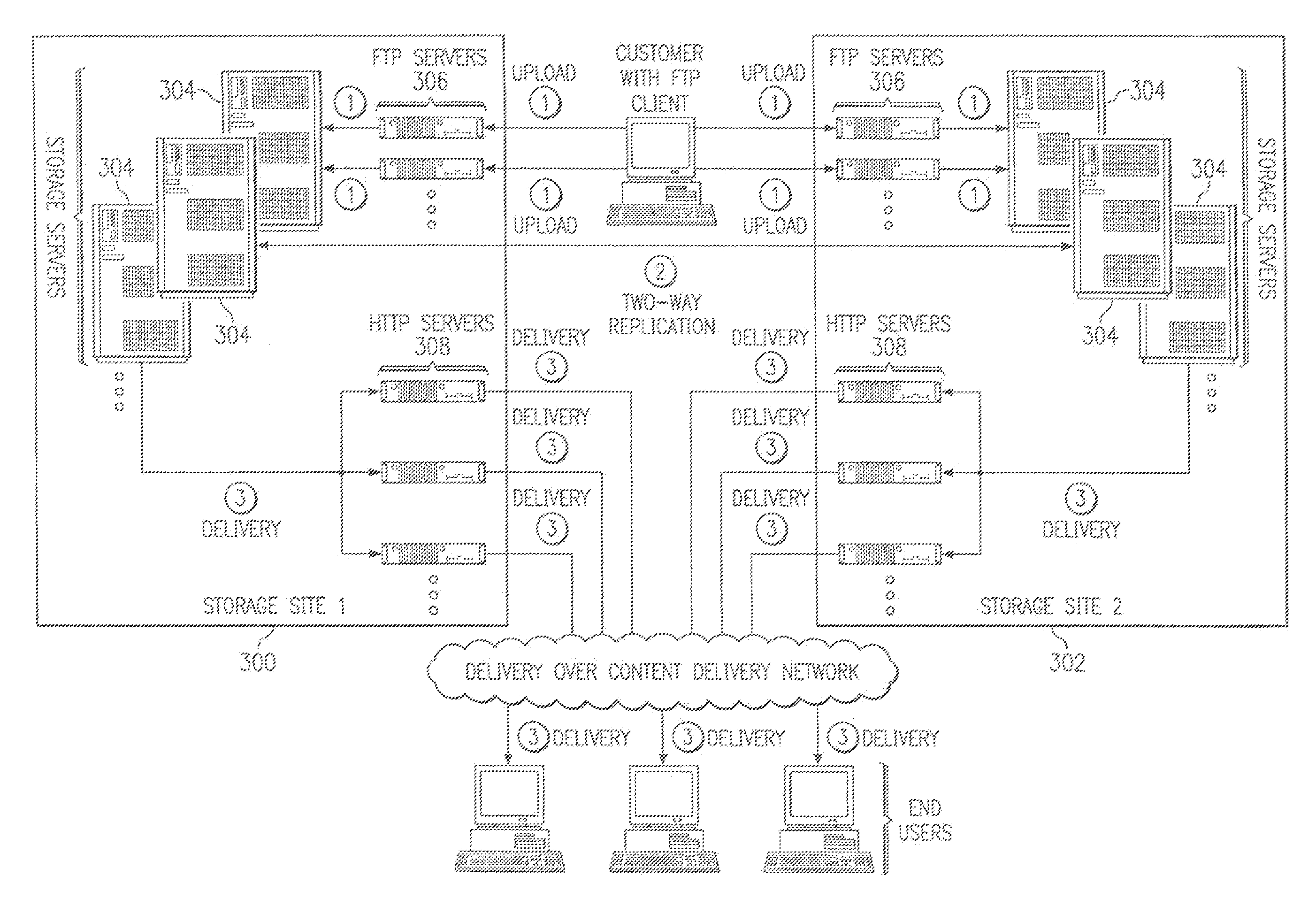

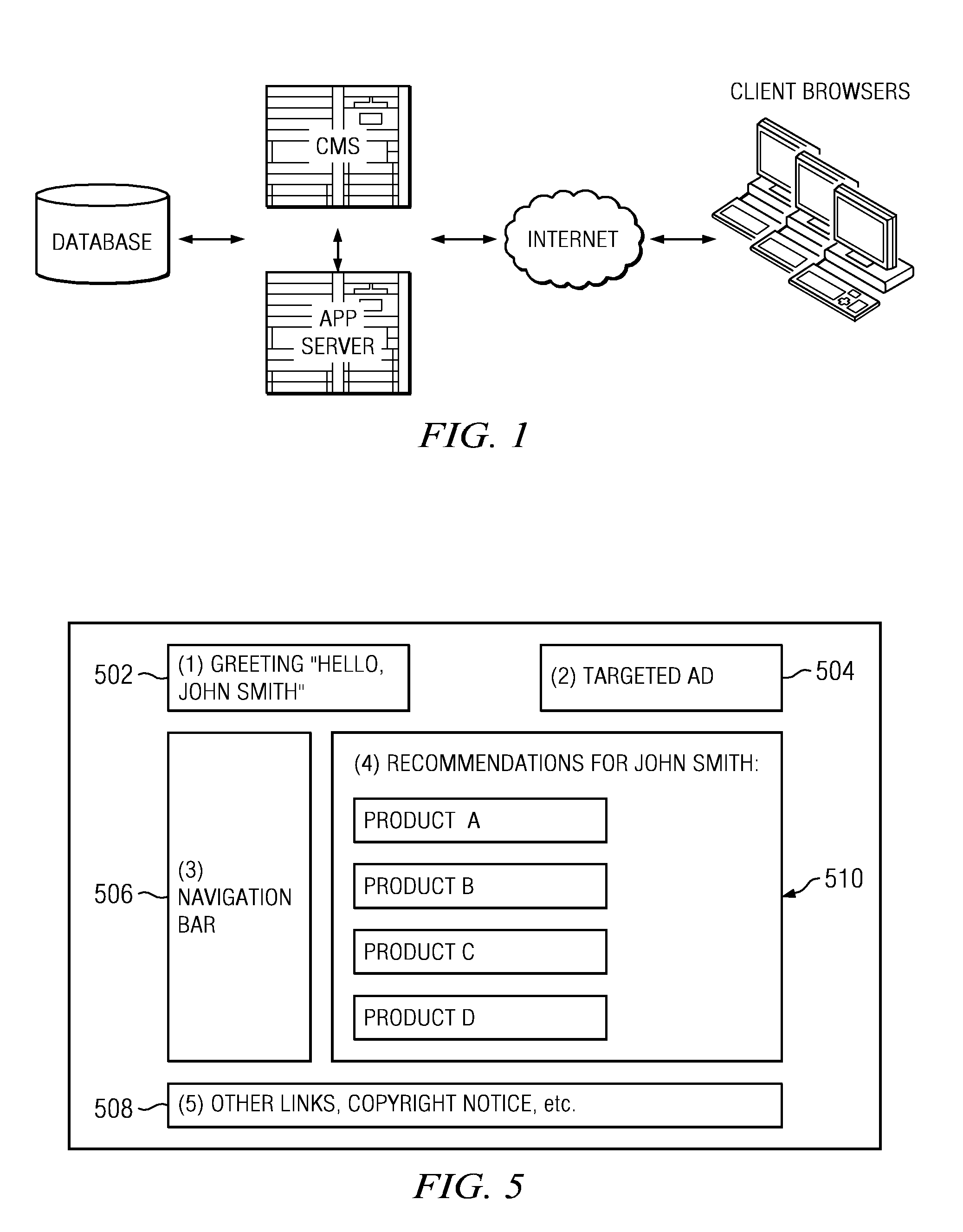

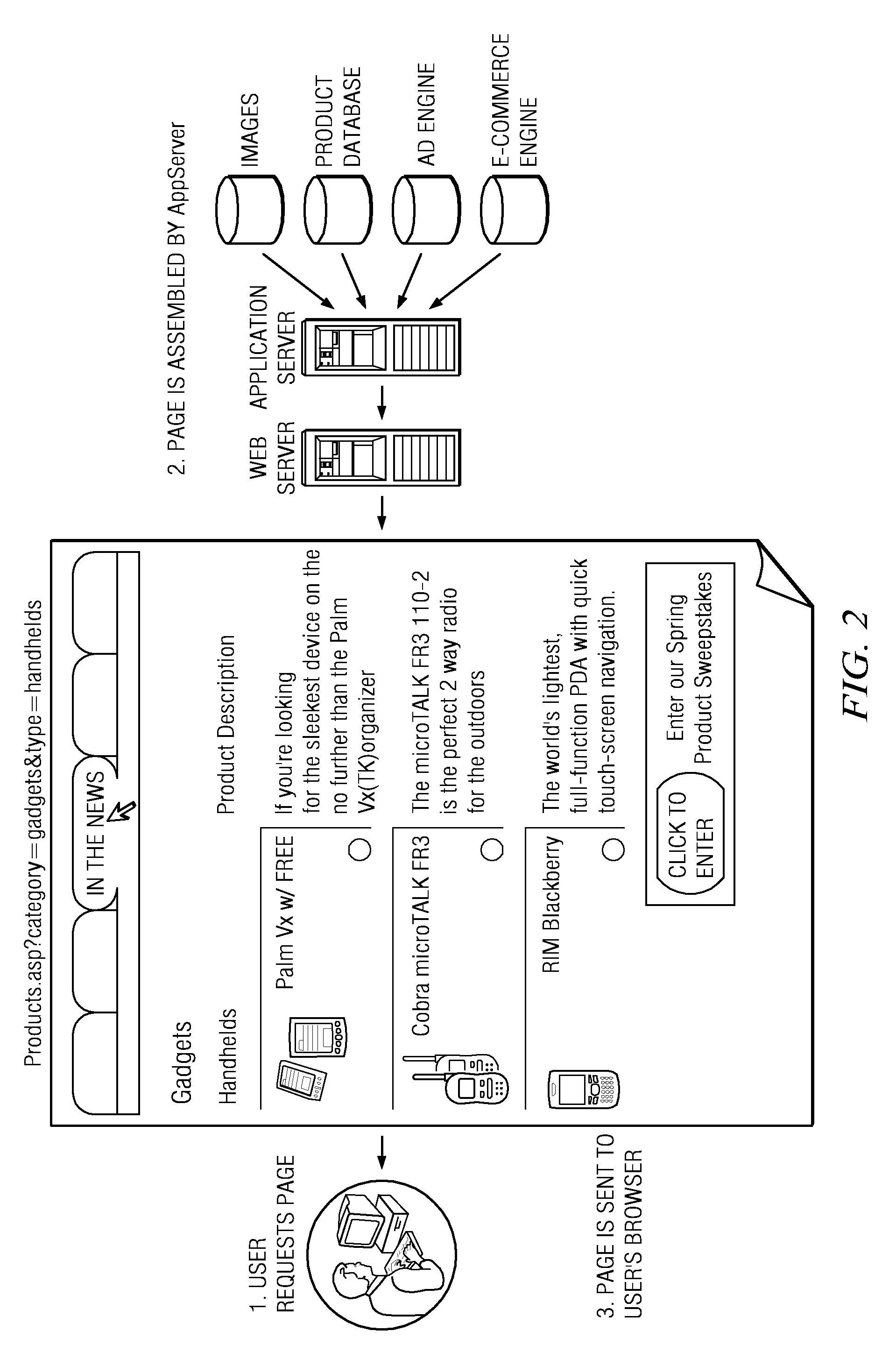

Dynamic content assembly on edge-of-network servers in a content delivery network

Active · US20090150518A1 · Improve site performance · Improve performance · Digital data information retrieval · Interprogram communication · Edge server · Web service

The present invention enables a content provider to dynamically assemble content at the edge of the Internet, preferably on content delivery network (CDN) edge servers. Preferably, the content provider leverages an “edge side include” (ESI) markup language that is used to define Web page fragments for dynamic assembly at the edge. Dynamic assembly improves site performance by caching the objects that comprise dynamically generated pages at the edge of the Internet, close to the end user. The content provider designs and develops the business logic to form and assemble the pages, for example, by using the ESI language within its development environment. Instead of being assembled by an application/web server in a centralized data center, the application/web server sends a page template and content fragments to a CDN edge server where the page is assembled. Each content fragment can have its own cacheability profile to manage the “freshness” of the content. Once a user requests a page (template), the edge server examines its cache for the included fragments and assembles the page on-the-fly.

Owner:AKAMAI TECH INC
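
To make the assembly step in the abstract above concrete, here is a minimal sketch that expands `<esi:include src="..."/>` tags in a template from a fragment cache in which each fragment carries its own time-to-live. Only that one ESI tag is handled, and the cache class, the fetch callback, the TTL values, and the fake clock are assumptions made for the example.

```python
import re
from typing import Callable, Dict, List, Tuple

INCLUDE = re.compile(r'<esi:include\s+src="([^"]+)"\s*/>')


class FragmentCache:
    """Caches fragments individually, each with its own time-to-live."""

    def __init__(self, fetch: Callable[[str], Tuple[str, float]], clock: Callable[[], float]) -> None:
        self.fetch = fetch      # src -> (fragment body, ttl in seconds), e.g. from the origin
        self.clock = clock
        self.entries: Dict[str, Tuple[str, float]] = {}   # src -> (body, expiry time)

    def get(self, src: str) -> str:
        now = self.clock()
        entry = self.entries.get(src)
        if entry is not None and entry[1] > now:
            return entry[0]                     # still fresh
        body, ttl = self.fetch(src)             # missing or stale: refetch
        self.entries[src] = (body, now + ttl)
        return body


def assemble(template: str, cache: FragmentCache) -> str:
    """Replace every include tag in the template with its fragment."""
    return INCLUDE.sub(lambda m: cache.get(m.group(1)), template)


# Hypothetical origin and clock for demonstration.
fetch_log: List[str] = []
now = [0.0]

def fetch_fragment(src: str) -> Tuple[str, float]:
    fetch_log.append(src)
    ttl = 5.0 if src == "/price" else 3600.0    # the price fragment goes stale quickly
    return f"[{src}@{now[0]:.0f}]", ttl

cache = FragmentCache(fetch_fragment, clock=lambda: now[0])
template = '<h1><esi:include src="/title"/></h1><p><esi:include src="/price"/></p>'
first = assemble(template, cache)
now[0] = 10.0
second = assemble(template, cache)
```

On the second assembly only the short-lived `/price` fragment is refetched, while `/title` is still served from cache, which is the per-fragment freshness behavior the abstract describes.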

Content storage and replication in a managed internet content storage environment

Active · US7340505B2 · Improve performance · Increase content · Error prevention · Frequency-division multiplex details · Edge server · Internet content

A method for content storage on behalf of participating content providers begins by having a given content provider identify content for storage. The content provider then uploads the content to a given storage site selected from a set of storage sites. Following upload, the content is replicated from the given storage site to at least one other storage site in the set. Upon request from a given entity, a given storage site from which the given entity may retrieve the content is then identified. The content is then downloaded from the identified given storage site to the given entity. In an illustrative embodiment, the given entity is an edge server of a content delivery network (CDN).

Owner:AKAMAI TECH INC

Managing web tier session state objects in a content delivery network (CDN)

Active · US7254634B1 · Error detection/correction · Multiple digital computer combinations · Edge server · Application software

Business applications running on a content delivery network (CDN) having a distributed application framework can create, access and modify state for each client. Over time, a single client may desire to access a given application on different CDN edge servers within the same region and even across different regions. Each time, the application may need to access the latest “state” of the client even if the state was last modified by an application on a different server. A difficulty arises when a process or a machine that last modified the state dies or is temporarily or permanently unavailable. The present invention provides techniques for migrating session state data across CDN servers in a manner transparent to the user. A distributed application thus can access a latest “state” of a client even if the state was last modified by an application instance executing on a different CDN server, including a nearby (in-region) or a remote (out-of-region) server.

Owner:AKAMAI TECH INC

Managing web tier session state objects in a content delivery network (CDN)

Inactive · US7765304B2 · Error detection/correction · Multiple digital computer combinations · Edge server · Application software

Business applications running on a content delivery network (CDN) having a distributed application framework can create, access and modify state for each client. Over time, a single client may desire to access a given application on different CDN edge servers within the same region and even across different regions. Each time, the application may need to access the latest “state” of the client even if the state was last modified by an application on a different server. A difficulty arises when a process or a machine that last modified the state dies or is temporarily or permanently unavailable. The present invention provides techniques for migrating session state data across CDN servers in a manner transparent to the user. A distributed application thus can access a latest “state” of a client even if the state was last modified by an application instance executing on a different CDN server, including a nearby (in-region) or a remote (out-of-region) server.

Owner:AKAMAI TECH INC

Scalable, high performance and highly available distributed storage system for Internet content

Inactive · US20070055765A1 · Infinite sequence · High degree · Multiple digital computer combinations · Website content management · Internet content · Edge server

A method for content storage on behalf of participating content providers begins by having a given content provider identify content for storage. The content provider then uploads the content to a given storage site selected from a set of storage sites. Following upload, the content is replicated from the given storage site to at least one other storage site in the set. Upon request from a given entity, a given storage site from which the given entity may retrieve the content is then identified. The content is then downloaded from the identified given storage site to the given entity. In an illustrative embodiment, the given entity is an edge server of a content delivery network (CDN).

Owner:AKAMAI TECH INC

Dynamic content assembly on edge-of-network servers in a content delivery network

Active · US7752258B2 · Improve performance · Fast loading · Digital data information retrieval · Multiple digital computer combinations · Edge server · Data center

Owner:AKAMAI TECH INC

Method for caching and delivery of compressed content in a content delivery network

Active · US7395355B2 · Effective last mile acceleration of content delivery · Easy to use · Digital data information retrieval · Multiple digital computer combinations · Edge server · Engineering

A content delivery network (CDN) edge server is provisioned to provide last mile acceleration of content to requesting end users. The CDN edge server fetches, compresses and caches content obtained from a content provider origin server, and serves that content in compressed form in response to receipt of an end user request for that content. It also provides “on-the-fly” compression of otherwise uncompressed content as such content is retrieved from cache and is delivered in response to receipt of an end user request for such content. A preferred compression routine is gzip, as most end user browsers support the capability to decompress files that are received in this format. The compression functionality preferably is enabled on the edge server using customer-specific metadata tags.

Owner:AKAMAI TECH INC

Dynamic route requests for multiple clouds

Inactive · US20130080623A1 · Affect service quality · Improve application performance · Digital computer details · Program control · Edge server · User device

Aspects of the present invention include a method of dynamically routing requests within multiple cloud computing networks. The method includes receiving a request for an application from a user device, forwarding the request to an edge server within a content delivery network (CDN), and analyzing the request to gather metrics about responsiveness provided by the multiple cloud computing networks running the application. The method further includes analyzing historical data for the multiple cloud computing networks regarding performance of the application, based on the performance metrics and the historical data, determining an optimal cloud computing network within the multiple cloud computing networks to route the request, routing the request to the optimal cloud computing network, and returning the response from the optimal cloud computing network to the user device.

Owner:LIMELIGHT NETWORKS
