96 results for "Forgery detection" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

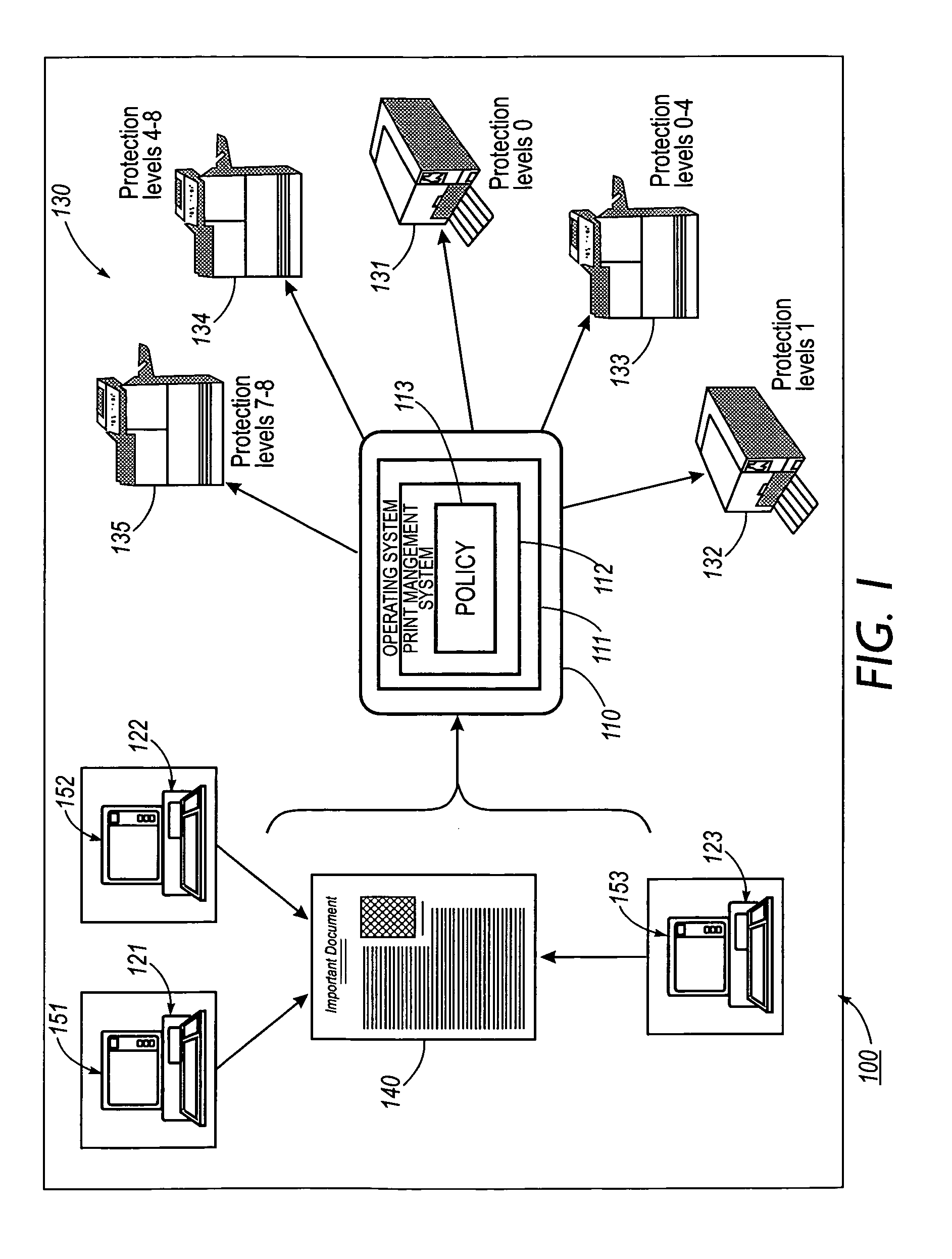

Systems and methods for forgery detection and deterrence of printed documents

Inactive | US6970259B1 | Digitally marking record carriers; User identity/authority verification; Digital signature; Glyph

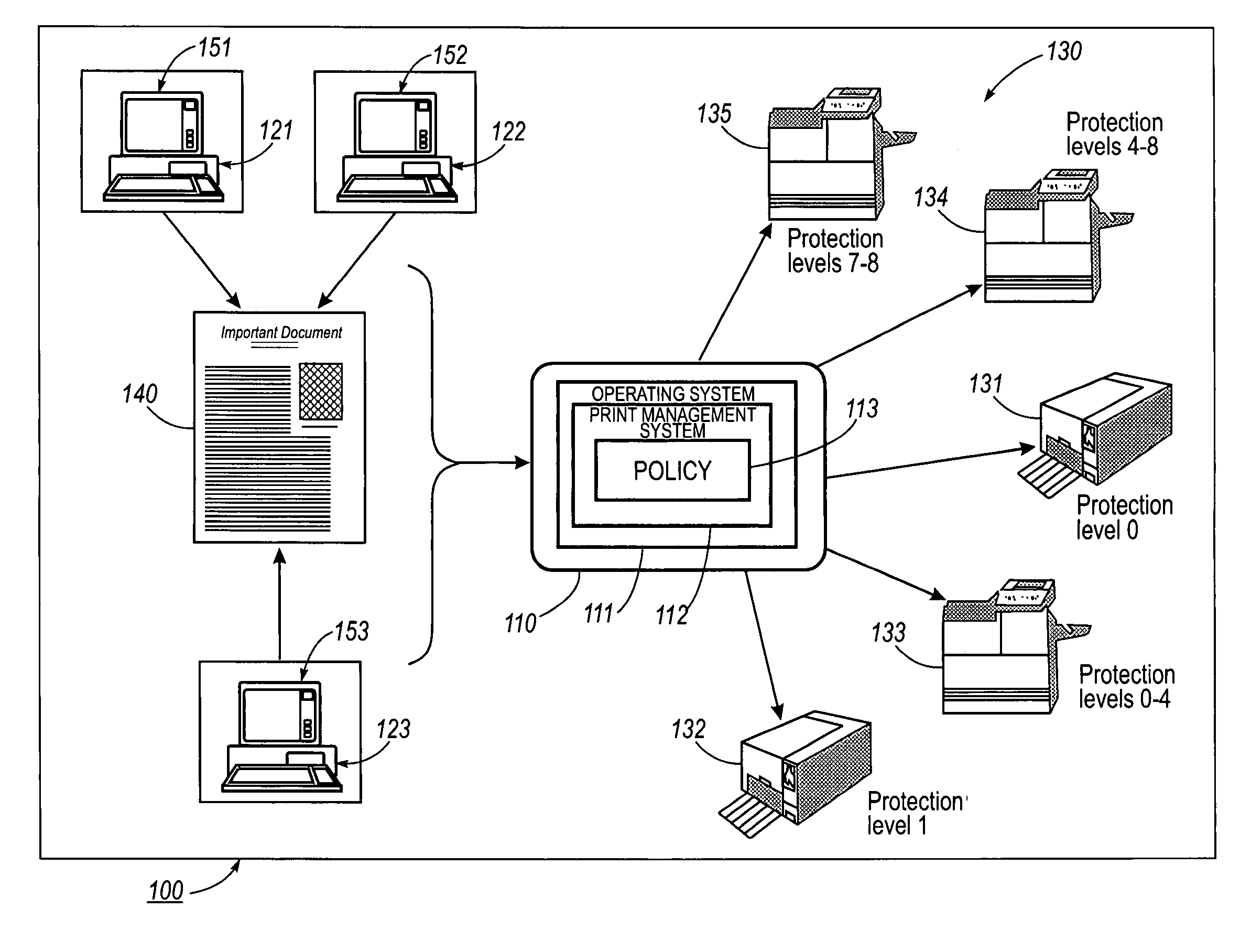

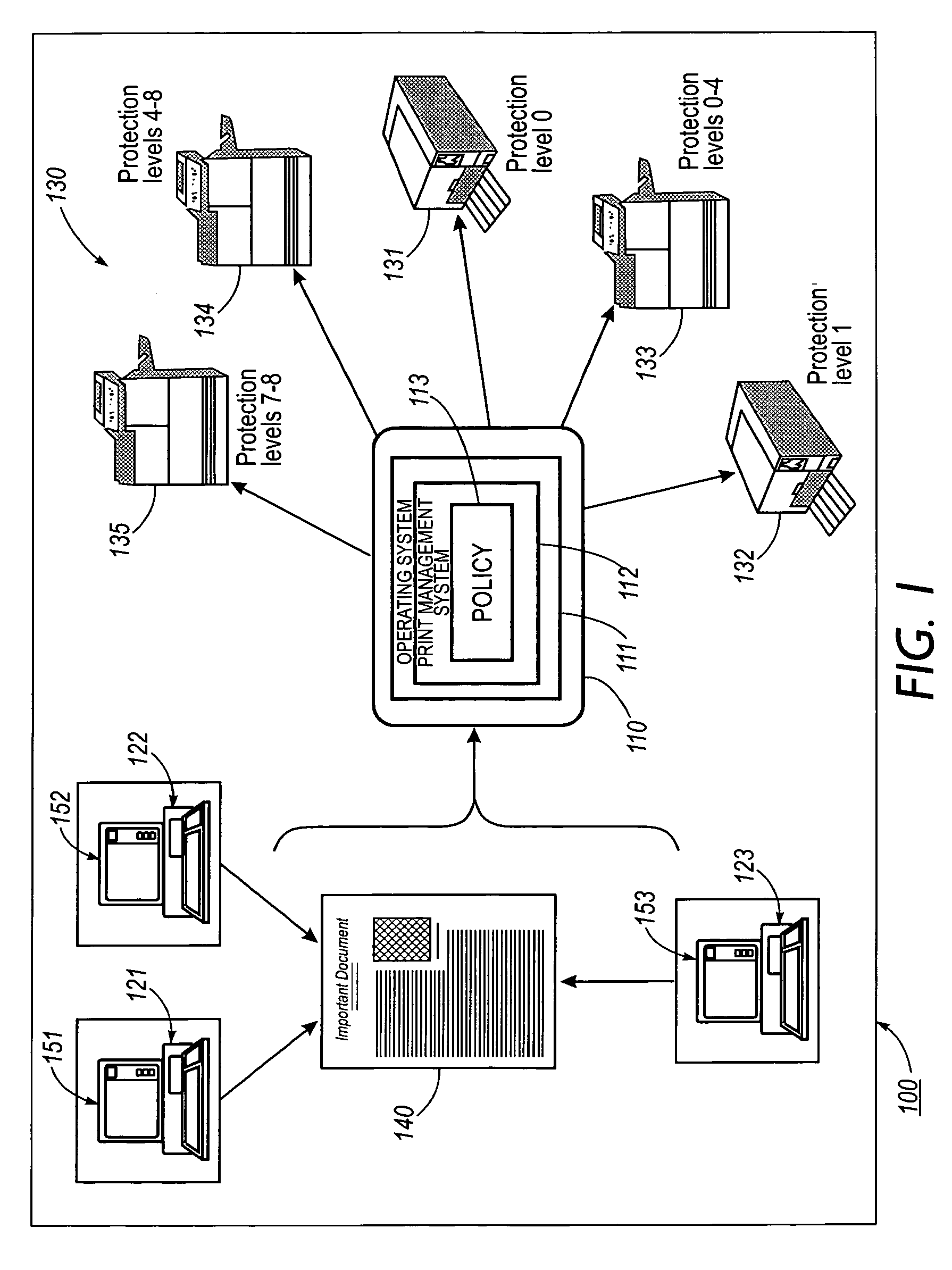

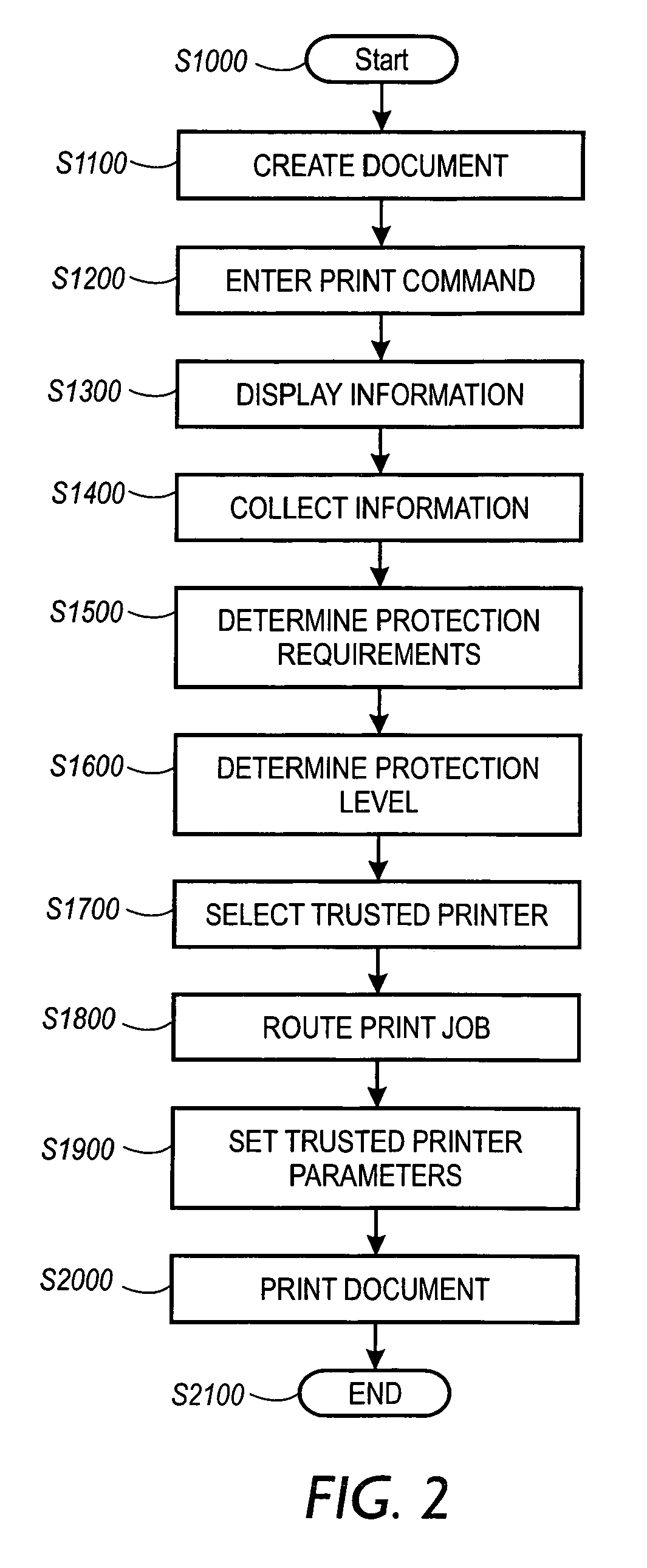

A print management system includes a policy that determines a protection level for a document to be printed. The document is printed using forgery detection and deterrence technologies, such as fragile and robust watermarks, glyphs, and digital signatures, that are appropriate to the level of protection determined by the policy. A plurality of printers are managed by a print management system. Each printer can provide a range of protection technologies. The policy determines the protection technologies for the document to be printed. The print management system routes the print job to a printer that can apply the appropriate protections and sets the appropriate parameters in the printer. Copy evidence that can establish that a document is a forgery and / or tracing information that identifies the custodian of the document and restrictions on copying of the document and use of the information in the document are included in the watermark that is printed on the document. A document can be verified as an original or established as a forgery by inspecting the copy evidence and / or tracing information in the watermark.

Owner:XEROX CORP
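The routing step in the abstract above can be sketched as a subset test between the protections a policy requires and the protections each printer offers. This is a minimal sketch, assuming a hypothetical three-level policy table (`POLICY`) and hypothetical protection names; neither comes from the patent, and a real print-management system would also set printer parameters and embed copy-evidence and tracing data in the watermark.

```python
from dataclasses import dataclass

# Hypothetical policy table: protection level -> required deterrence technologies.
# These names and levels are illustrative assumptions, not taken from the patent.
POLICY = {
    "low": {"robust_watermark"},
    "medium": {"robust_watermark", "glyph"},
    "high": {"robust_watermark", "fragile_watermark", "glyph", "digital_signature"},
}


@dataclass
class Printer:
    name: str
    capabilities: set  # protection technologies this printer can apply


def route_print_job(level, printers):
    """Return (printer name, protections to enable) for the first printer
    that supports every protection required by the policy level."""
    required = POLICY[level]
    for printer in printers:
        if required <= printer.capabilities:  # subset test
            return printer.name, sorted(required)
    raise LookupError(f"no printer supports protection level {level!r}")
```

For example, with a lobby printer that only prints robust watermarks and a secure-room printer that supports all four technologies, a "low" job goes to the lobby printer and a "high" job is routed to the secure-room printer.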

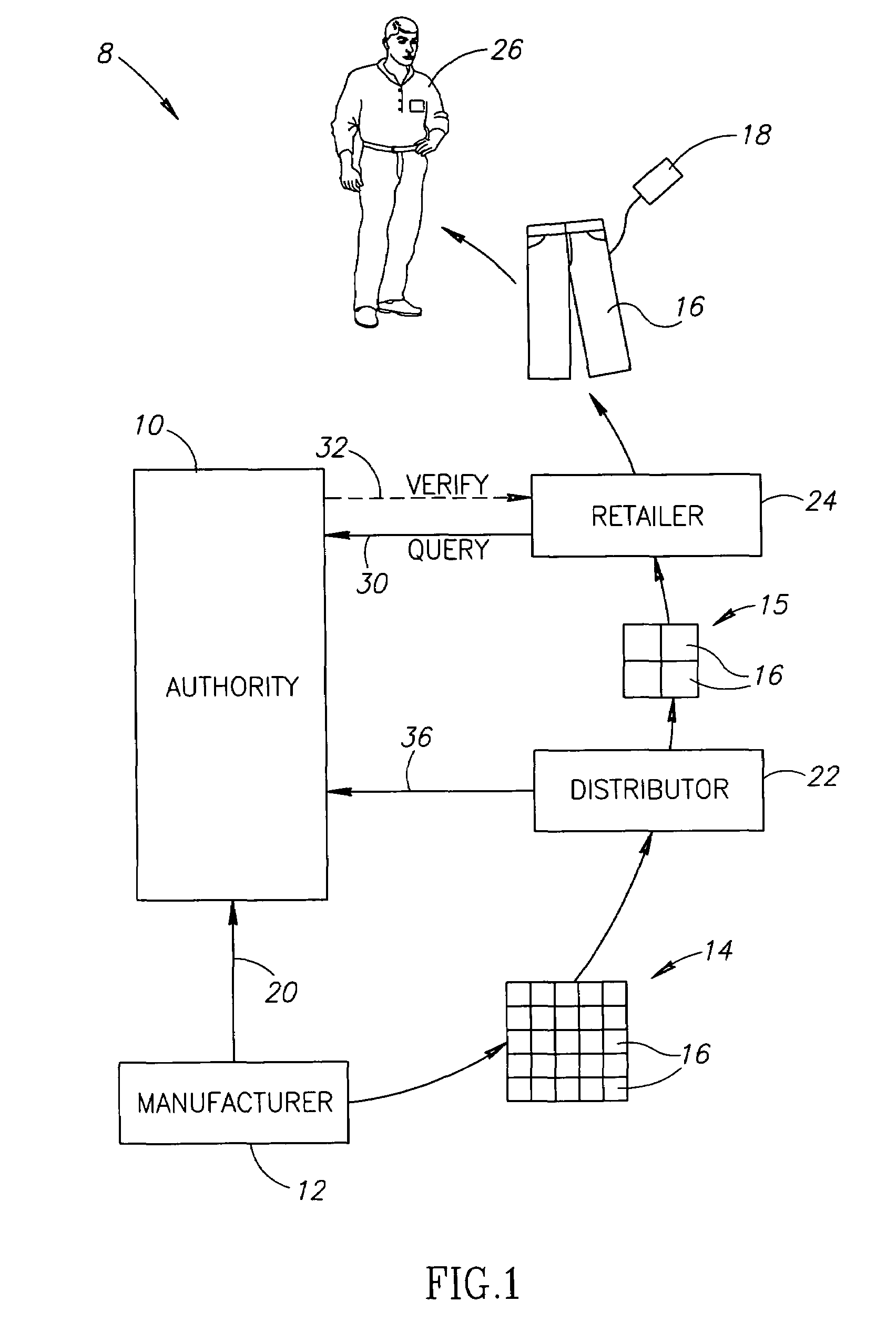

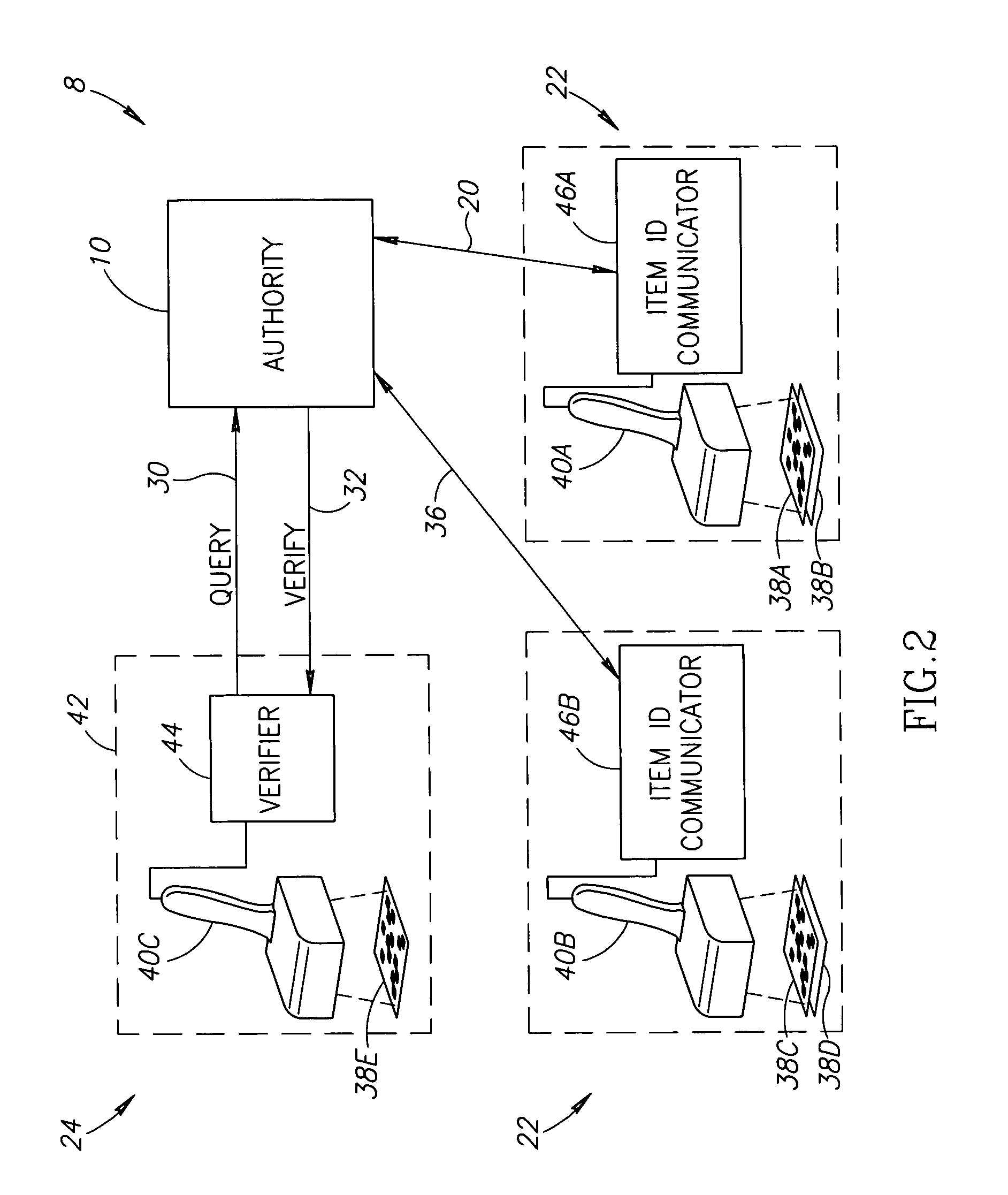

Counterfeit detection method

Inactive | US7222791B2 | Character and pattern recognition; Visual presentation; Item generation; Forgery detection

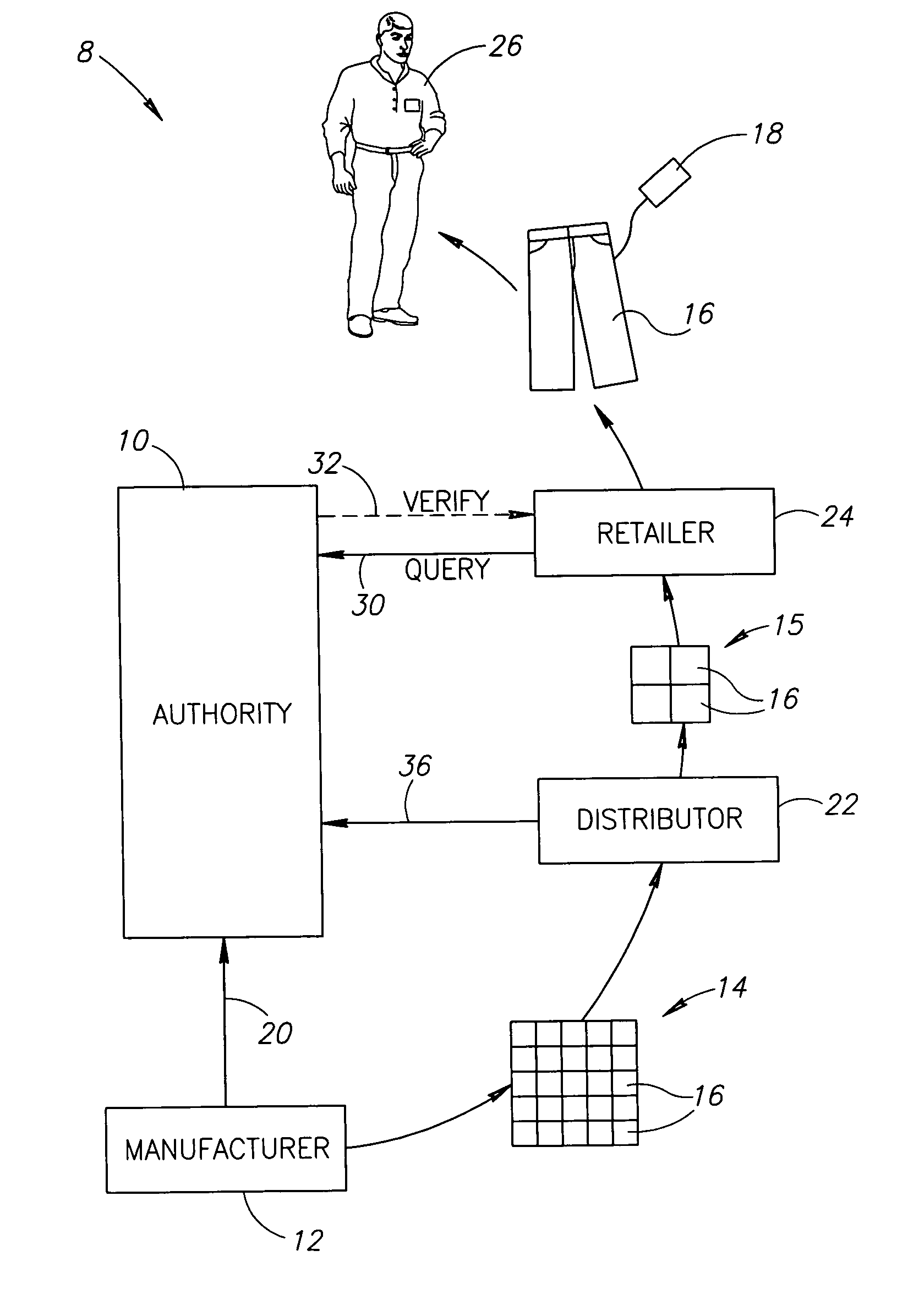

A counterfeit detection method includes electronically reading a label on a desired item in a store, transmitting an item identification code encoded in the read label to an authentication unit, receiving an indication from the authentication unit whether or not the item identification code is registered to the store and if the indication is positive, generating a certificate of authenticity for the desired item.

Owner:INT BUSINESS MASCH CORP
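The store/authentication-unit exchange described above can be sketched as a registry lookup followed by certificate issuance. This is a minimal sketch under stated assumptions: the in-memory `REGISTRY`, the store and item identifiers, and the use of an HMAC-SHA256 tag as the "certificate of authenticity" are all hypothetical choices for illustration, not details from the patent.

```python
import hashlib
import hmac

# Hypothetical registry held by the authentication unit: store ID -> registered item codes.
REGISTRY = {"store-17": {"ITEM-0001", "ITEM-0002"}}
# Assumption: the unit signs certificates with a secret key (demo value only).
SIGNING_KEY = b"demo-key-not-for-production"


def authenticate(store_id, item_code):
    """Authentication unit: is this item code registered to this store?"""
    return item_code in REGISTRY.get(store_id, set())


def check_item(store_id, label_code):
    """Store side: send the code read from the label; issue a certificate
    only if the authentication unit's indication is positive."""
    if not authenticate(store_id, label_code):
        return None  # not registered to this store: possible counterfeit
    message = f"{store_id}|{label_code}".encode()
    signature = hmac.new(SIGNING_KEY, message, hashlib.sha256).hexdigest()
    return {"store": store_id, "item": label_code, "signature": signature}
```

Binding the certificate to both the store and the item code means a genuine label copied onto goods sold elsewhere would fail the lookup for the other store.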

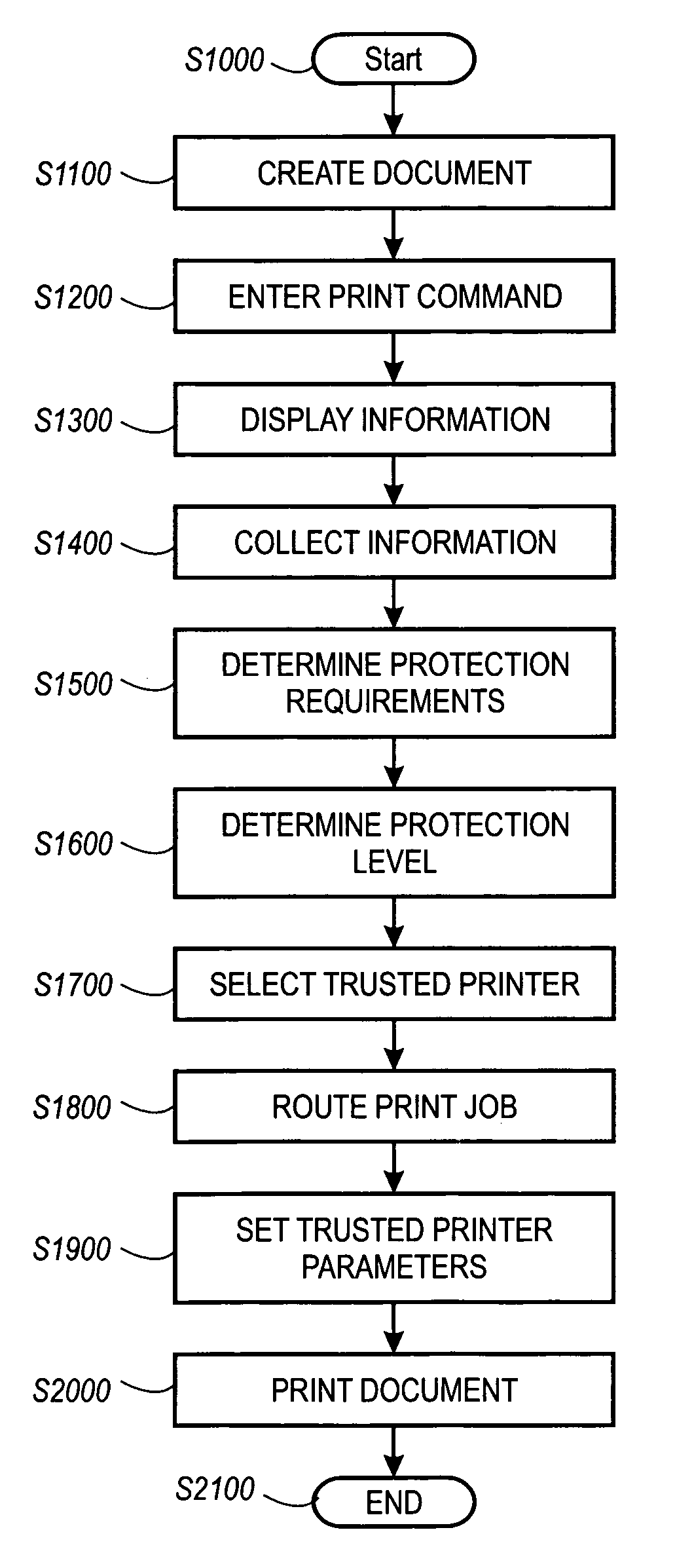

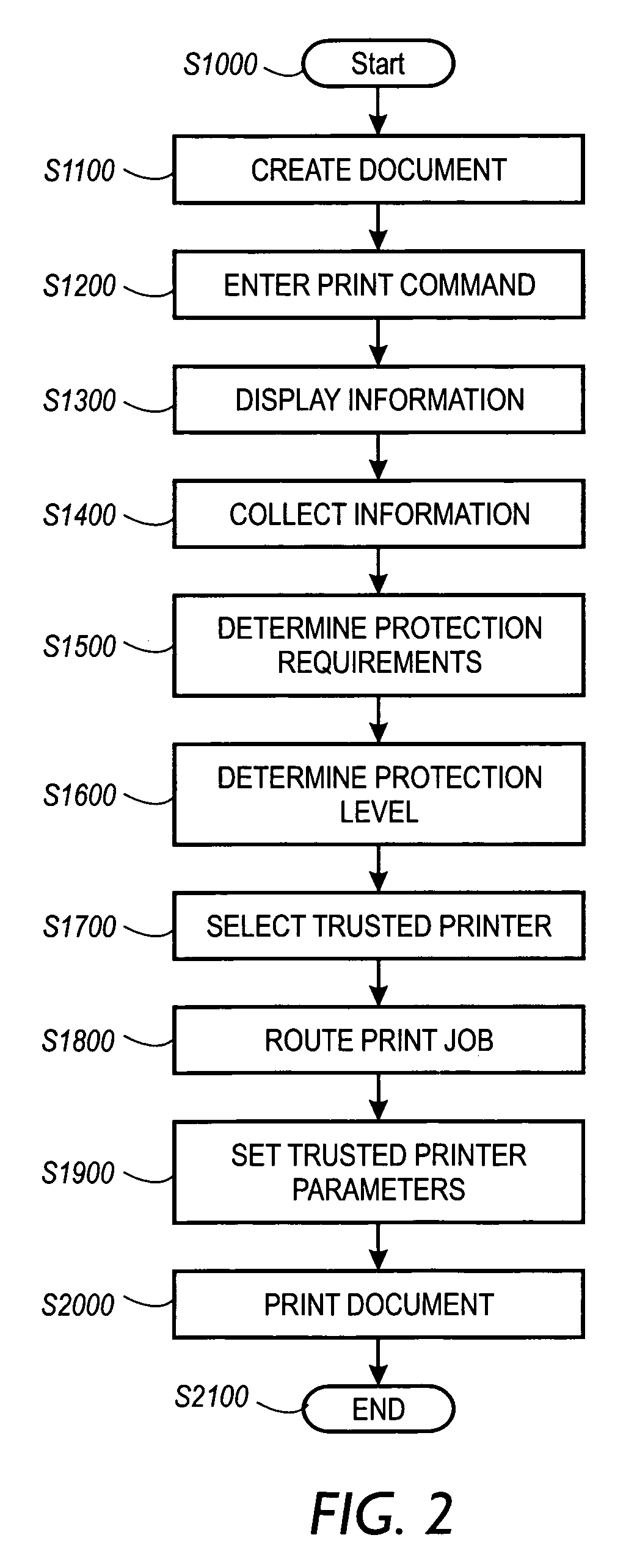

Systems and methods for policy based printing

Inactive | US7110541B1 | User identity/authority verification; Character and pattern recognition; Digital signature; Glyph

A print management system includes a policy that determines a protection level for a document to be printed. The document is printed using forgery detection and deterrence technologies, such as fragile and robust watermarks, glyphs, and digital signatures, that are appropriate to the level of protection determined by the policy. A plurality of printers are managed by a print management system. Each printer can provide a range of protection technologies. The policy determines the protection technologies for the document to be printed and the print management system routes the print job to a printer that can apply the appropriate protections and sets the appropriate parameters in the printer. Copy evidence that can verify that a document is a forgery and / or tracing information that identifies the custodian(s) of the document and restrictions on copying of the document and use of the information in the document are included in the watermark that is printed with the document information. A document can be verified as an original or a forgery by inspecting the copy evidence and / or tracing information in the watermark.

Owner:XEROX CORP

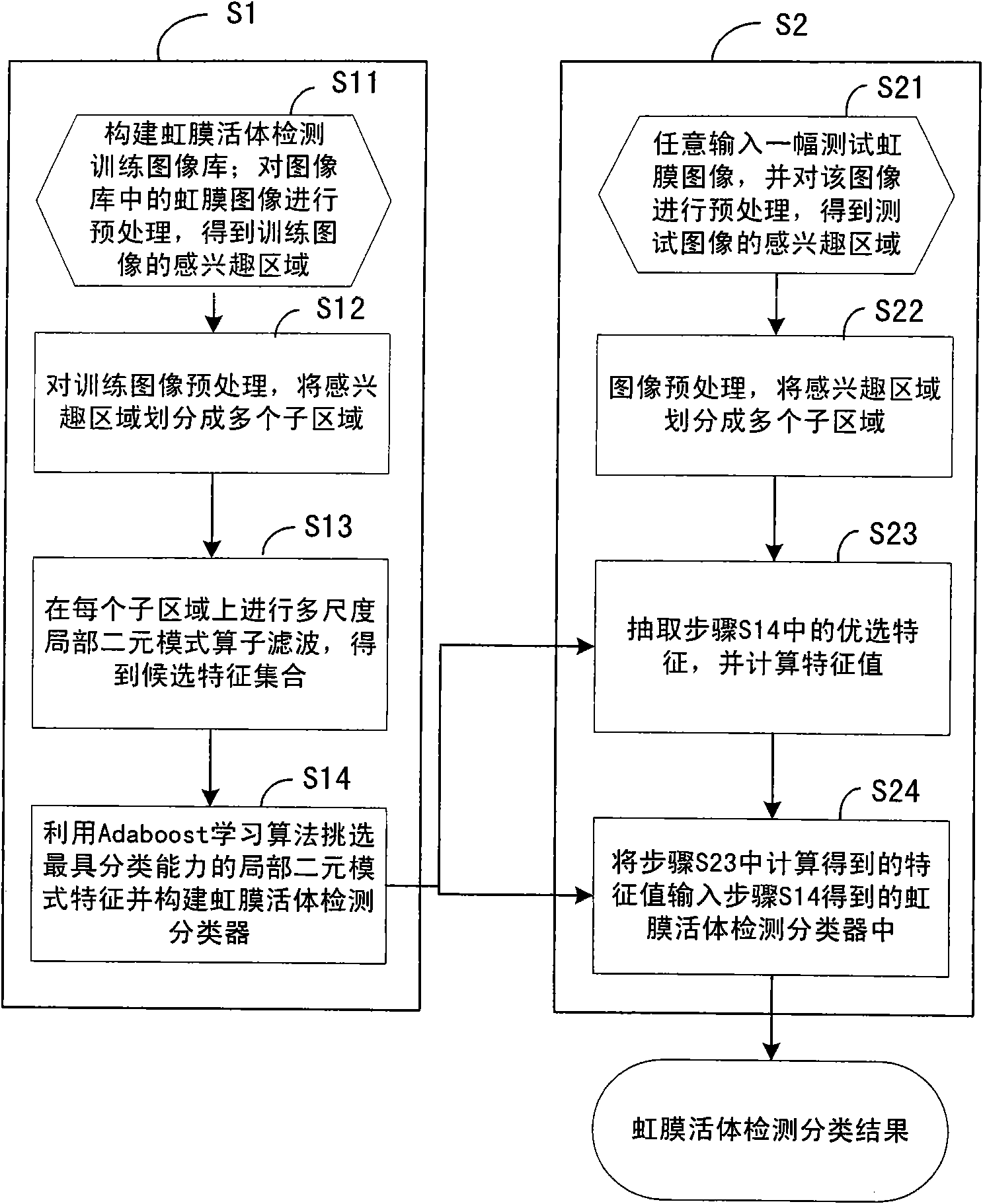

Living iris detection method

Active | CN101833646A | High precision; Improve security; Character and pattern recognition; Pattern recognition; Medicine

The invention relates to a living iris detection method comprising the following steps. S1: preprocess the living iris images and artificial iris images in a training image library; perform multi-scale local binary pattern (LBP) feature extraction in the resulting region of interest; select the preferred features from the candidate features with an adaptive boosting (AdaBoost) learning algorithm; and build a classifier for living iris detection. S2: preprocess a randomly input test iris image and compute the selected LBP features in its region of interest; input the computed feature values into the classifier obtained in step S1; and judge from the classifier output whether the test image comes from a living iris. The invention can perform effective anti-forgery detection and alarming for iris images and reduces the error rate of iris recognition. It is widely applicable to identification and security systems that use iris recognition.

Owner:BEIJING IRISKING
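The multi-scale LBP feature extraction in step S1 can be sketched in plain Python. This is a minimal sketch, assuming a grey-level image given as a 2-D list and sampling the 8 neighbours on a square of radius r (a simplification of circular LBP with interpolation); the region-of-interest extraction, feature selection, and classifier are not shown.

```python
# Offsets of the 8 neighbours, clockwise from the top-left pixel.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_histogram(image, radius=1):
    """256-bin histogram of 8-neighbour LBP codes at an integer radius.

    Each neighbour that is >= the centre pixel sets one bit of the code.
    Only pixels whose neighbours fall inside the image are counted."""
    height, width = len(image), len(image[0])
    hist = [0] * 256
    for y in range(radius, height - radius):
        for x in range(radius, width - radius):
            center = image[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(OFFSETS):
                if image[y + dy * radius][x + dx * radius] >= center:
                    code |= 1 << bit
            hist[code] += 1
    return hist


def multiscale_lbp(image, radii=(1, 2, 3)):
    """Concatenate the LBP histograms from several radii into one feature vector."""
    features = []
    for r in radii:
        features.extend(lbp_histogram(image, r))
    return features
```

Each histogram bin is one candidate feature; a boosting algorithm would then pick the most discriminative bins across scales.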

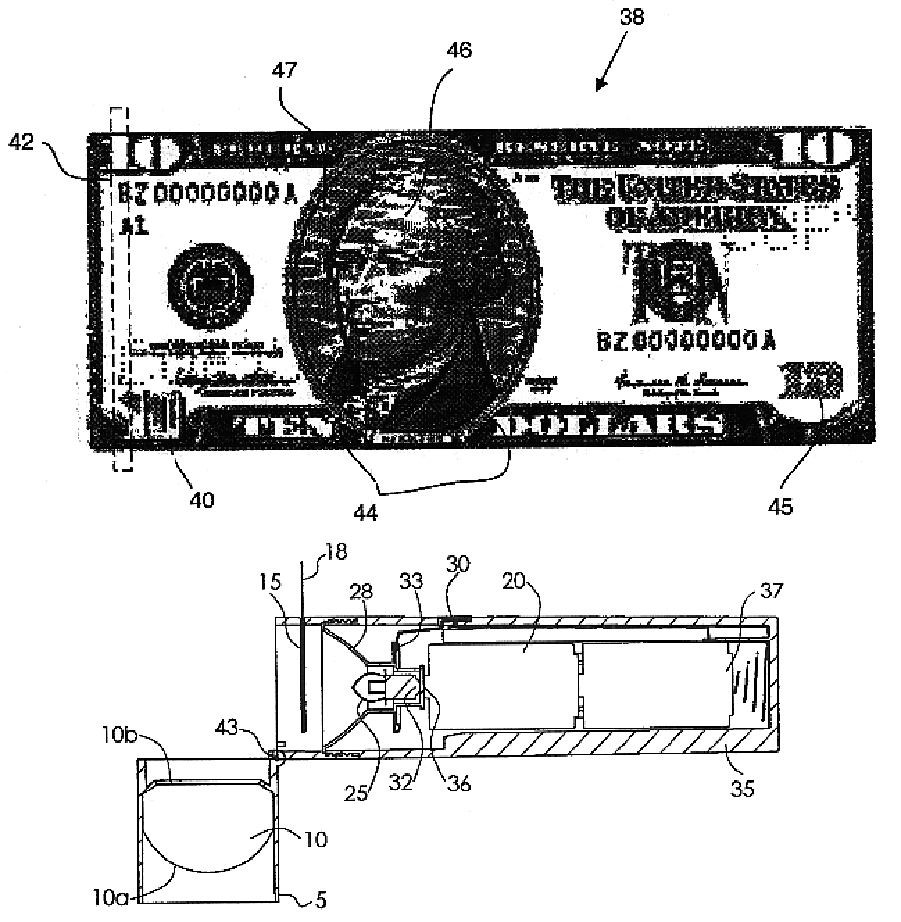

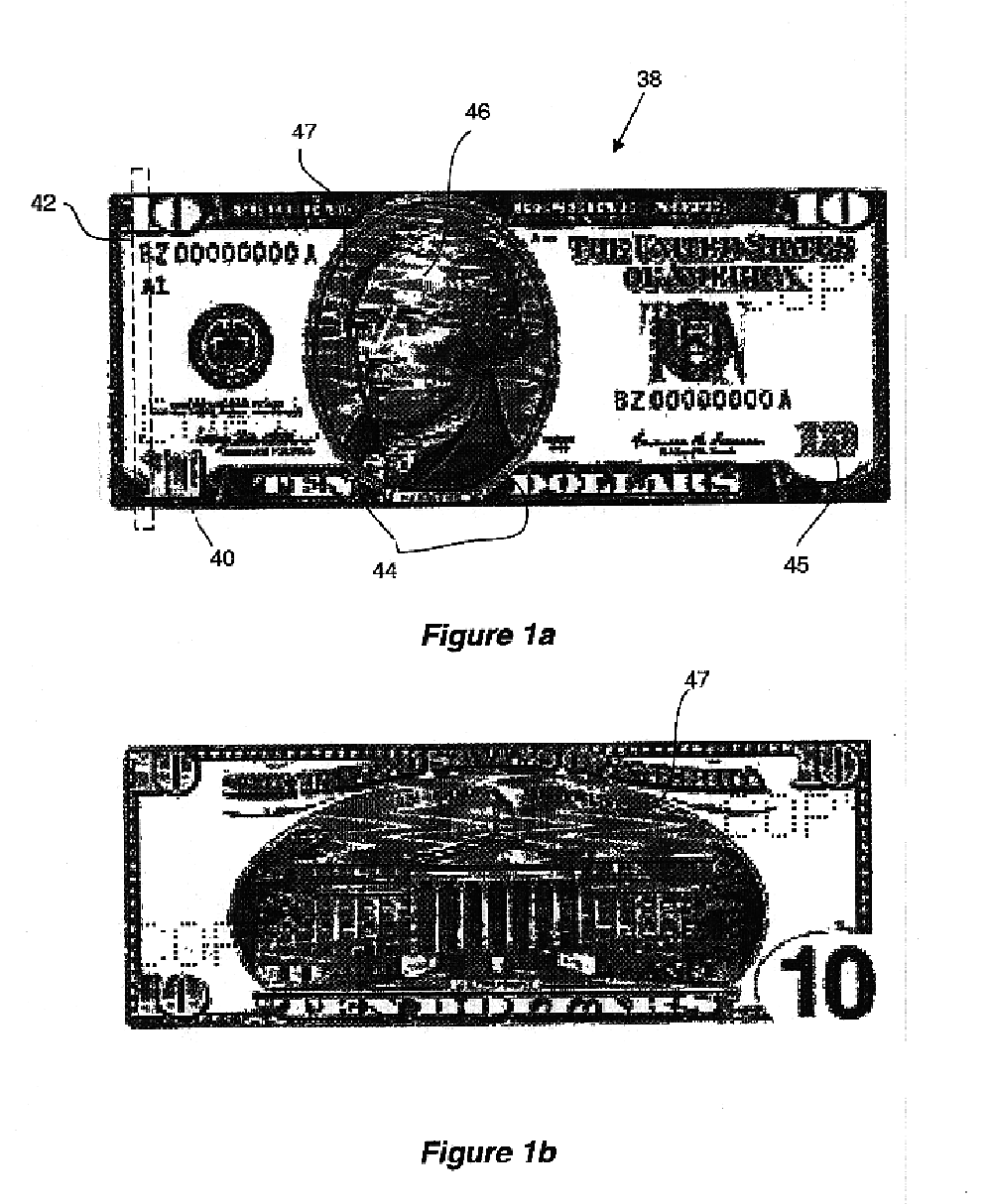

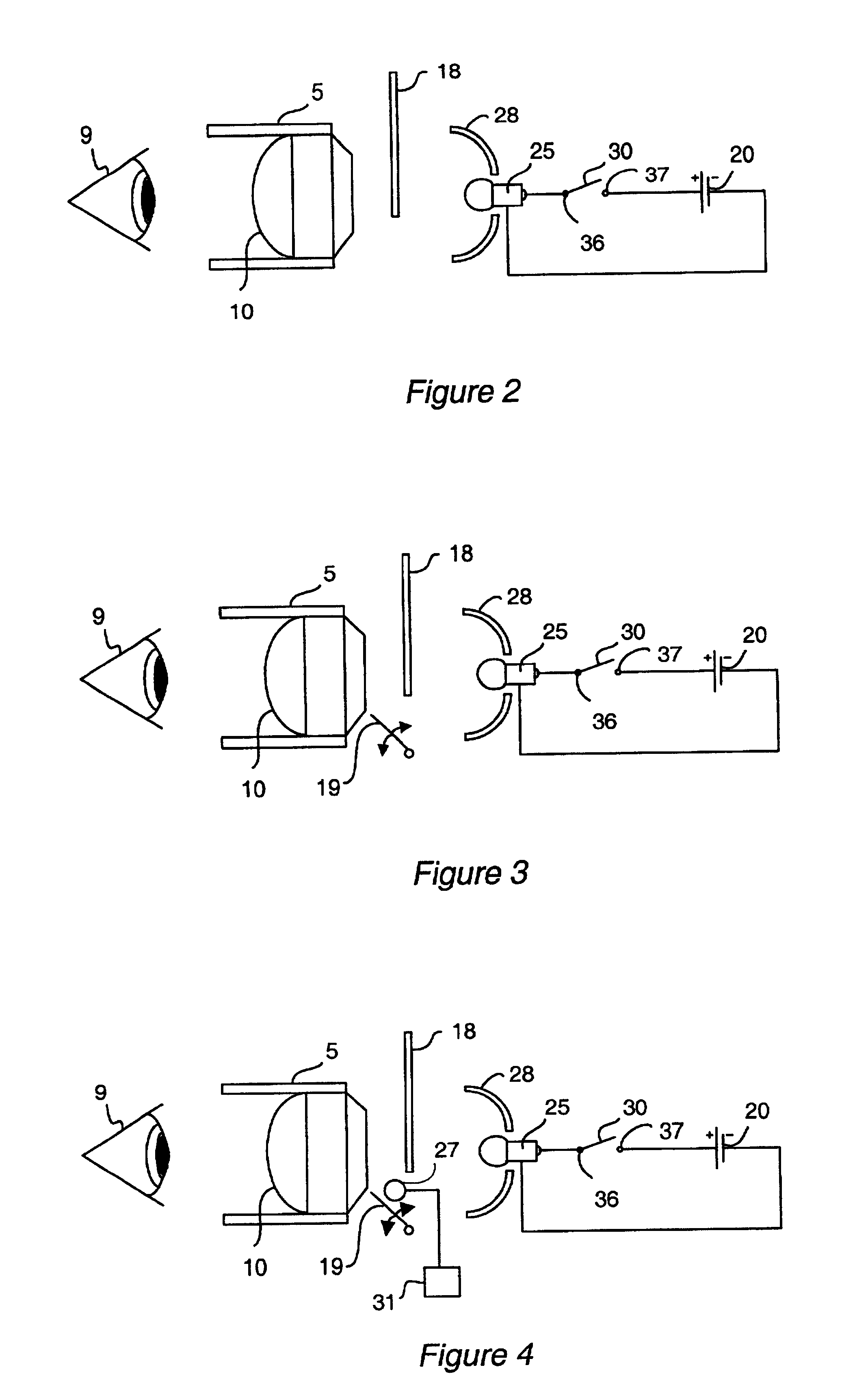

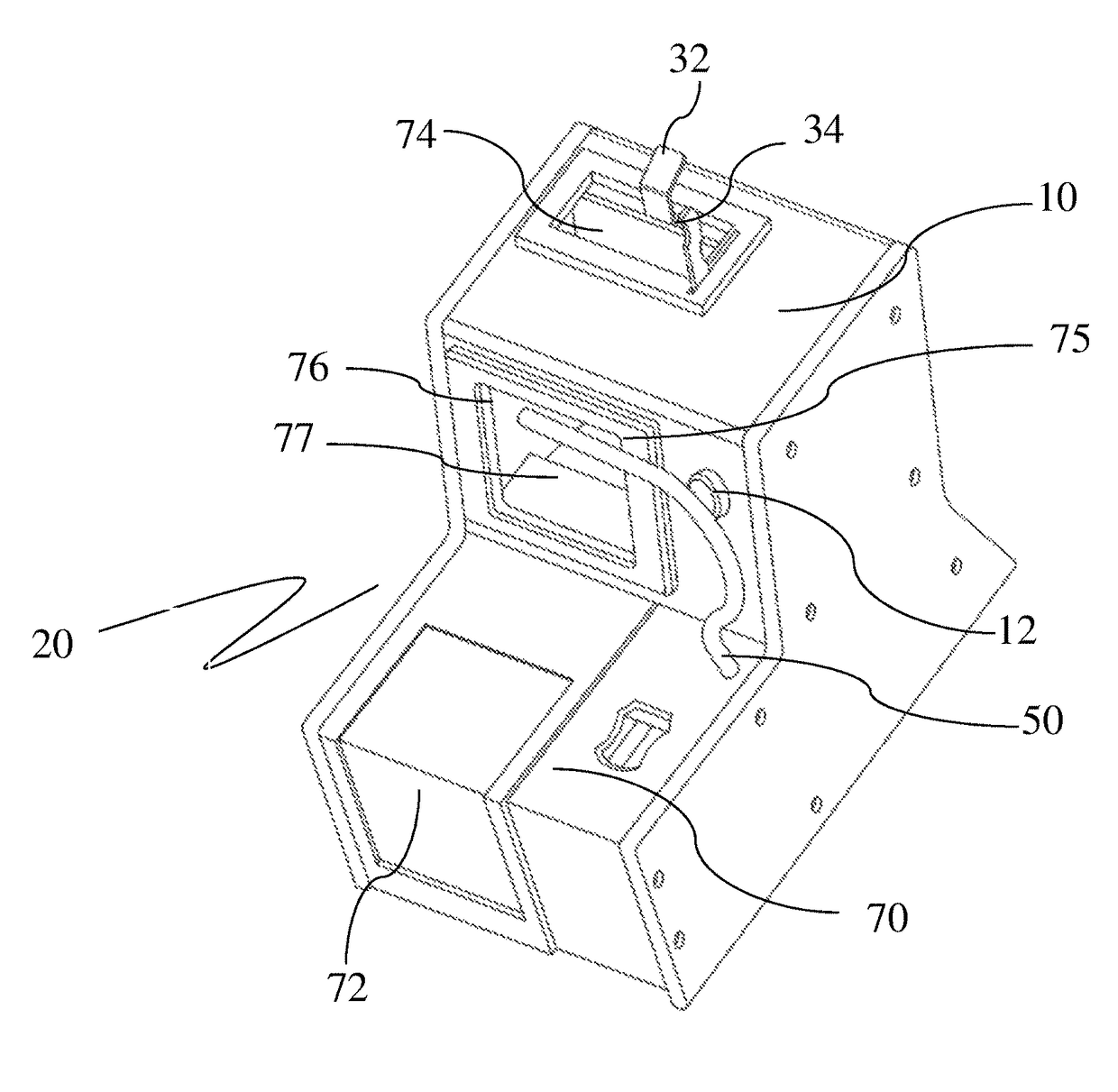

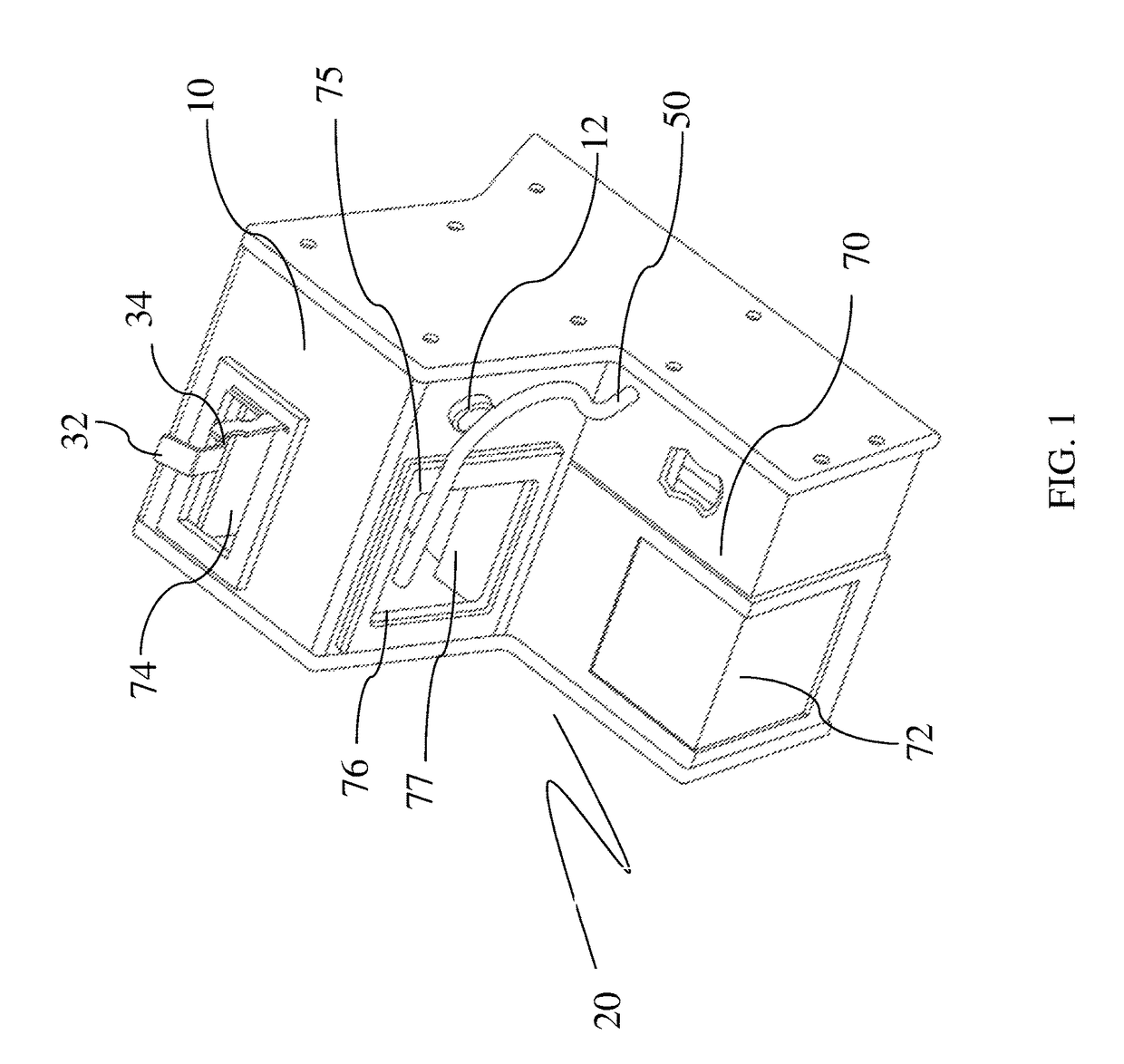

Counterfeit detection apparatus

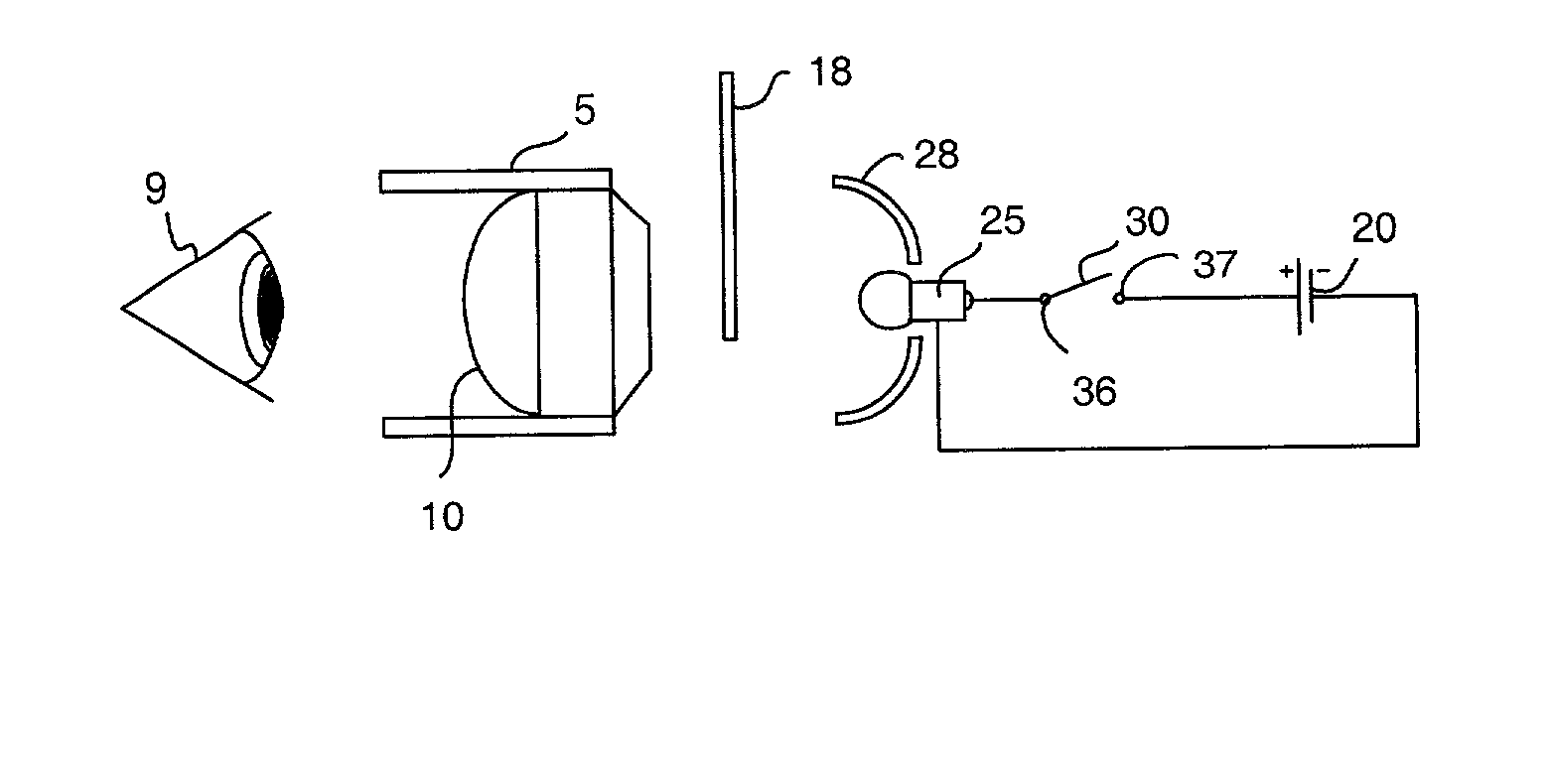

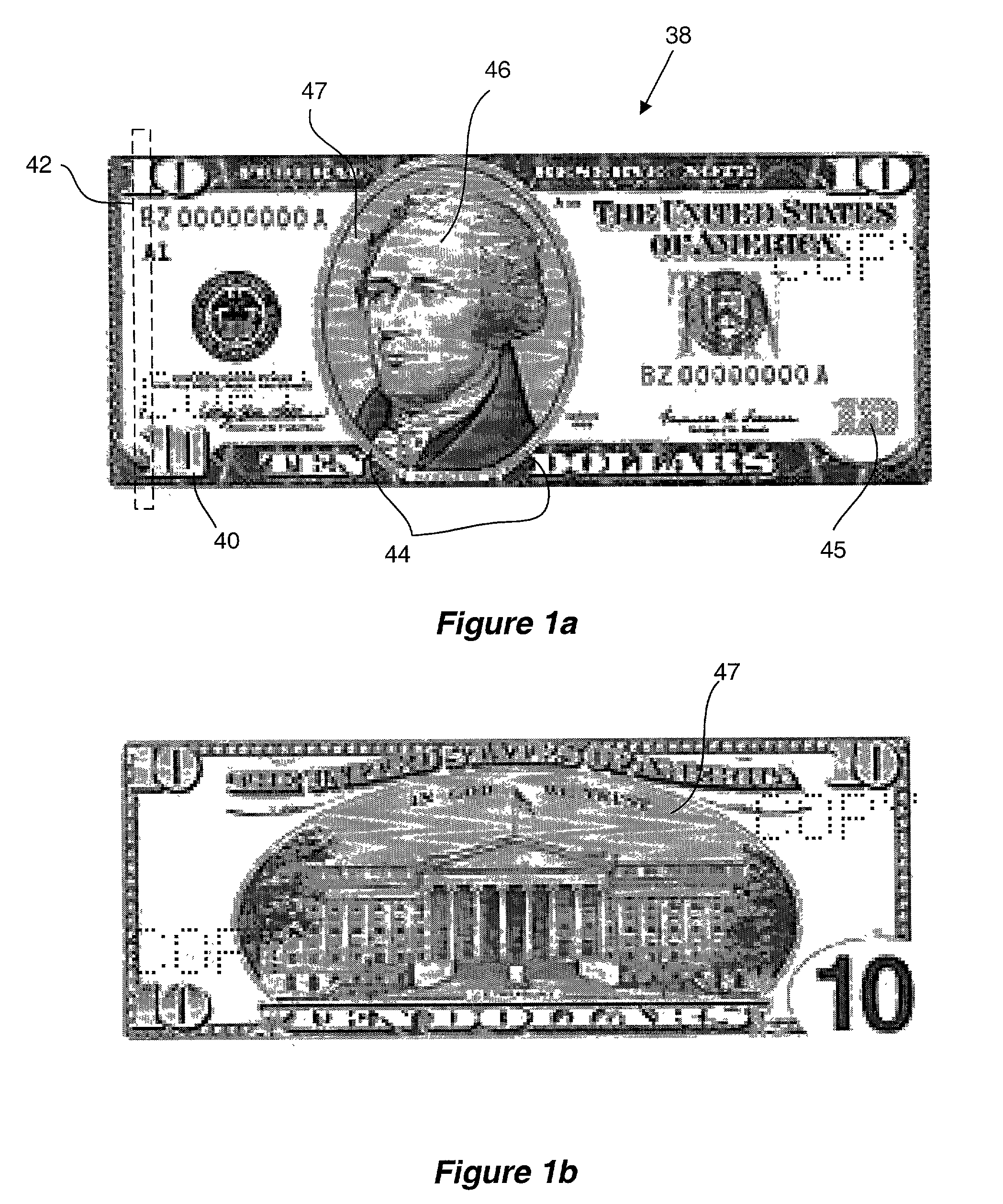

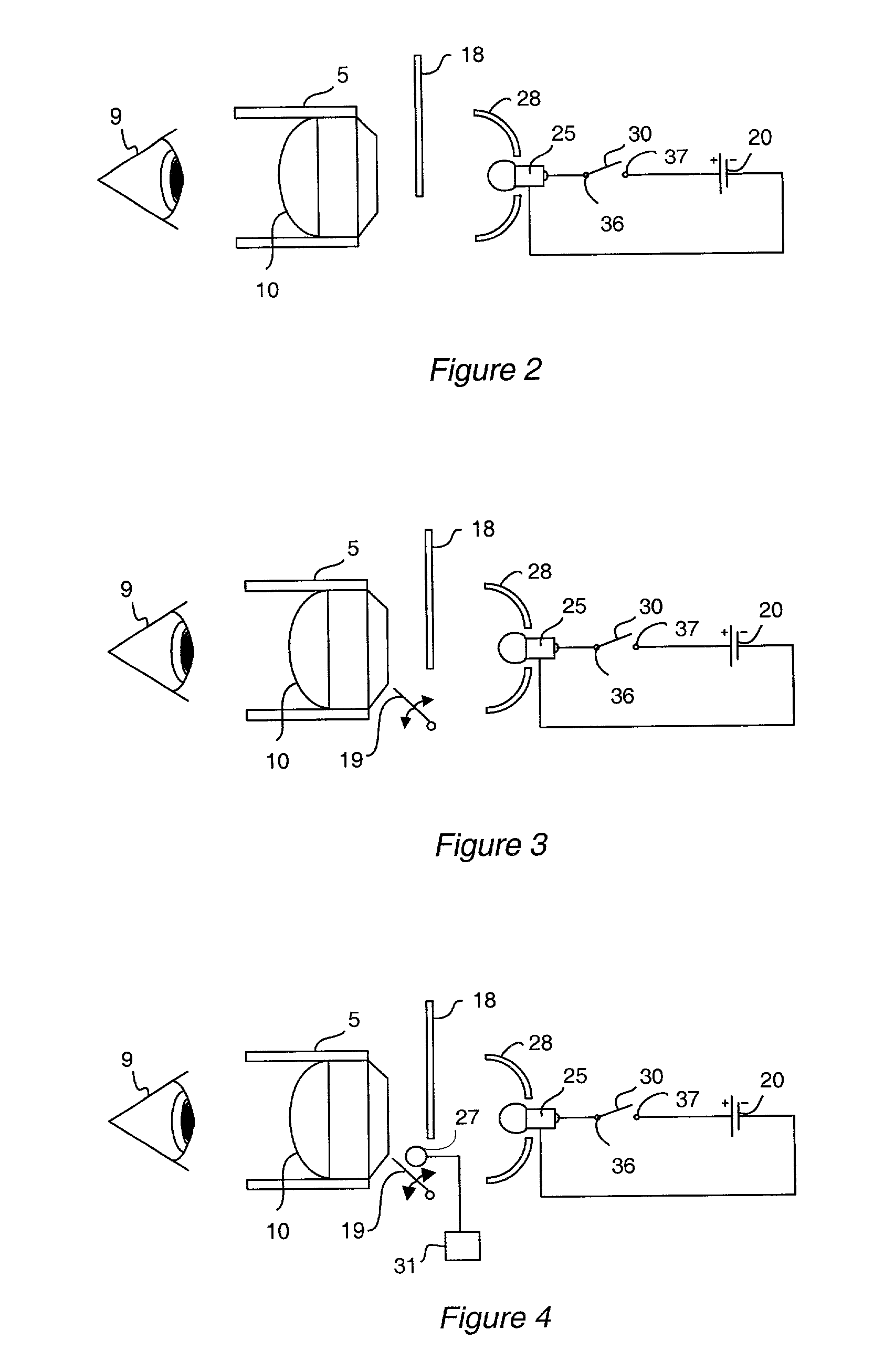

Inactive | US20020163633A1 | Enlarging microprinting; Easy to see; Paper-money testing devices; Character and pattern recognition; Polyester; Camera lens

A counterfeit detection device is useful for optically examining the security features of currency to determine its authenticity. The apparatus places the currency at the object plane of a magnifying lens suitable for enlarging the microprinting and examining other fine features of the bill. In one embodiment, the device consists of a rear illuminating light source, a bill positioning slot, a magnifying lens, and an environmental light shade. Further embodiments add front illumination to detect the color-shifting ink and an ultraviolet light source for viewing the phosphorescent glow of the polyester thread with its identifying color. Finally, one version has a swing-away lens for viewing the watermark unmagnified.

Owner:COHEN ROY

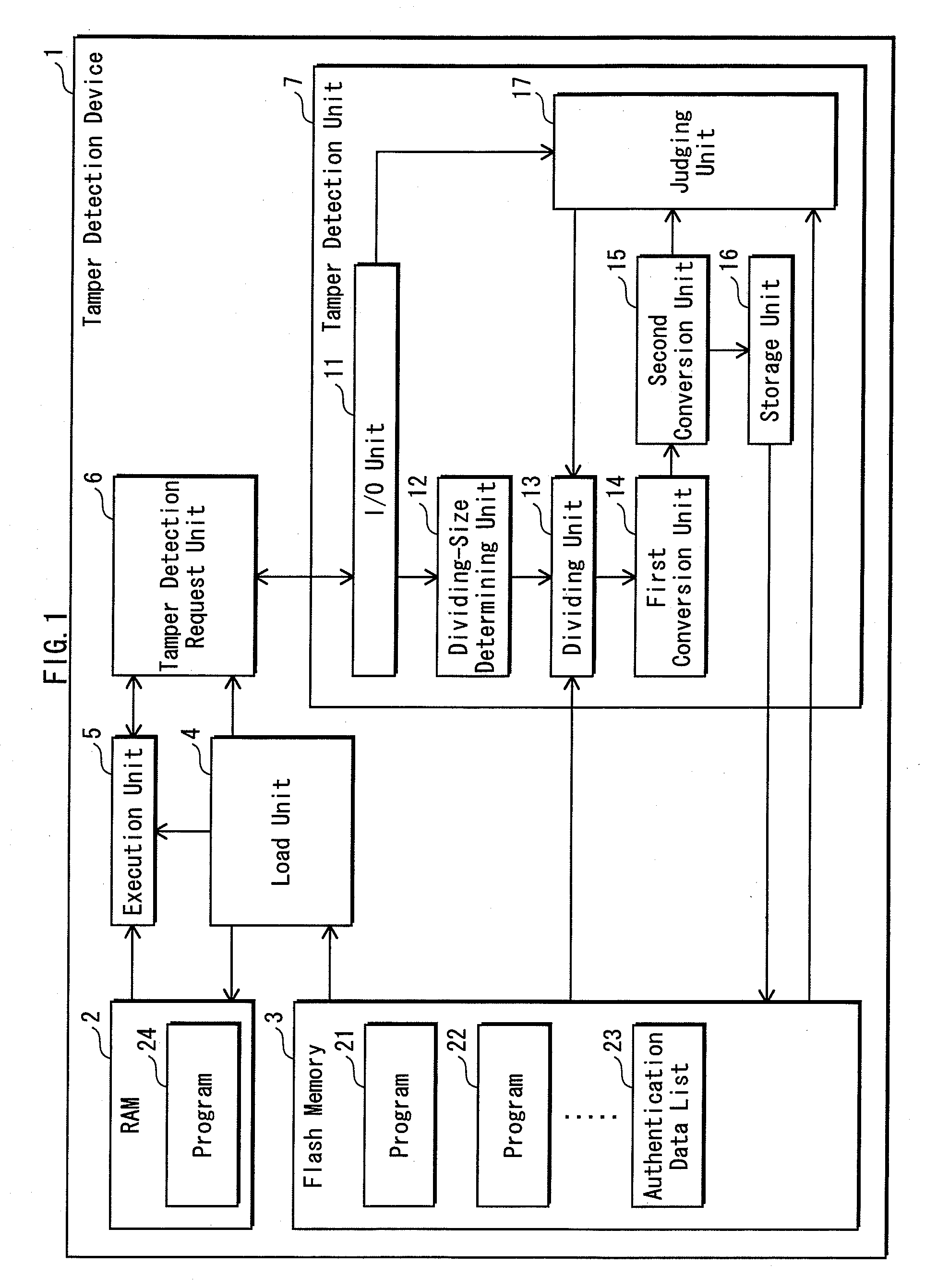

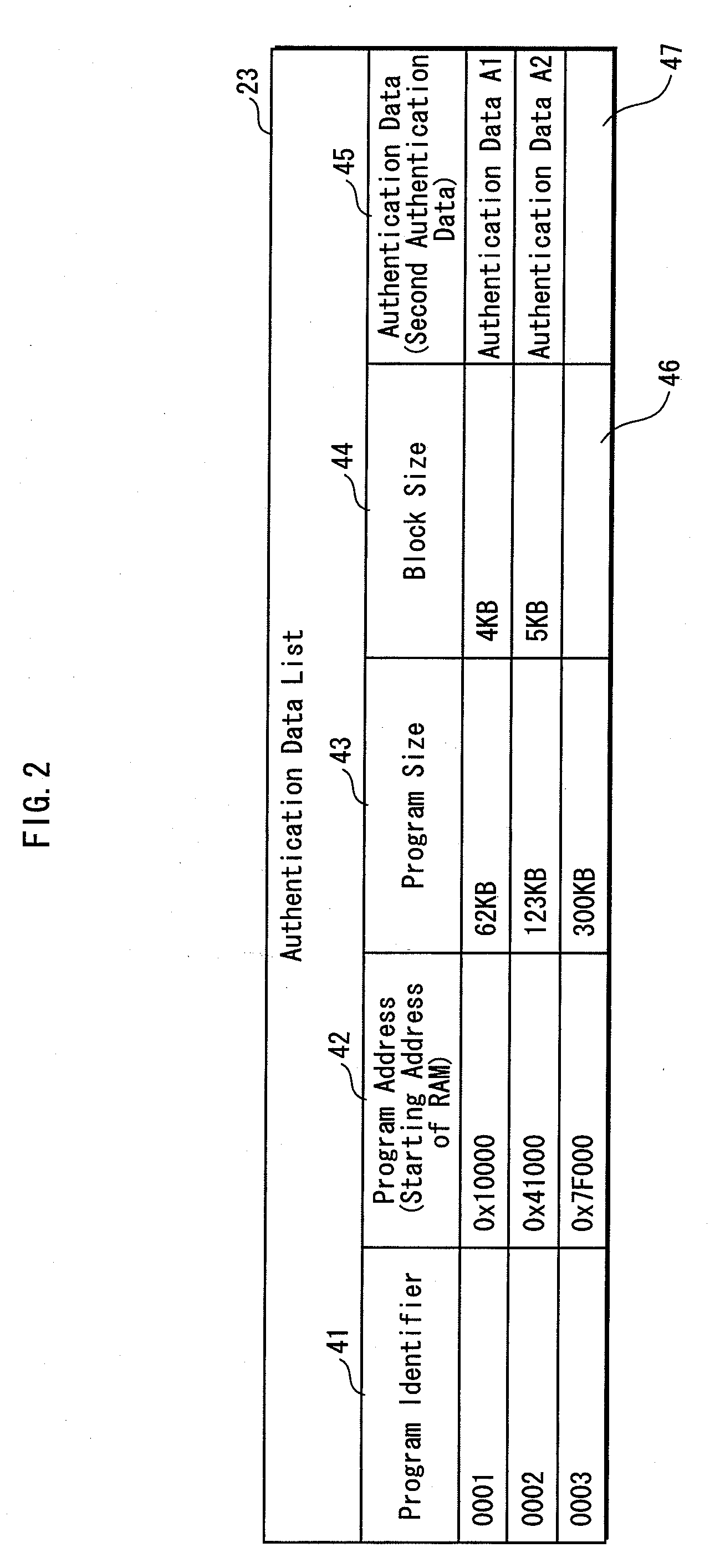

Falsification detecting system, falsification detecting method, falsification detecting program, recording medium, integrated circuit, authentication information generating device and falsification detecting device

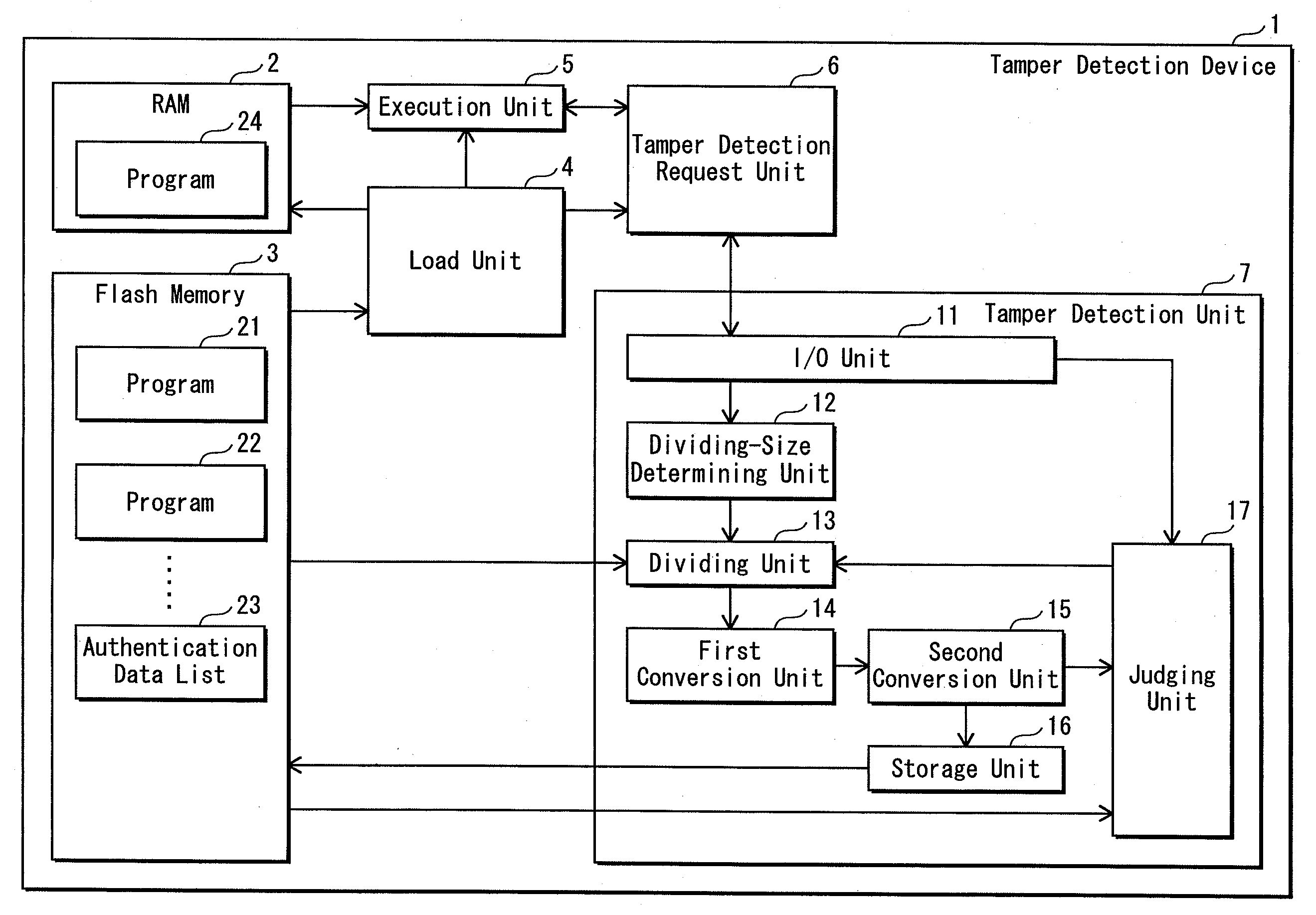

Active | US20100162352A1 | Reduce size of target; Increase speed; Digital data processing details; Unauthorized memory use protection; Computer hardware; Logical operations

A tamper detection device detects tampering with a program loaded into memory at high speed and without compromising security. Before the program is loaded, a dividing-size determining unit 12 determines a block size based on random number information, a dividing unit 13 divides the program into data blocks of that size, a first conversion unit 14 converts the data blocks, by a logical operation, into intermediate authentication data no larger than the block size, and a second conversion unit 15 applies a second conversion to the intermediate authentication data to generate authentication data. The authentication data and the block size are stored. After loading, the loaded program is divided by the same block size and put through the first and second conversions to generate comparison data, which is compared with the authentication data to detect tampering of the loaded program.

Owner:PANASONIC CORP
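The two-stage conversion above can be sketched as follows. This is a minimal sketch, assuming XOR folding as the "logical operation" of the first conversion (its output is exactly one block long) and SHA-256 as the second conversion; the candidate block sizes are hypothetical. Neither choice is confirmed by the abstract.

```python
import hashlib
import random


def make_auth_data(program: bytes, block_size: int) -> bytes:
    """First conversion: XOR-fold all blocks into one block-size buffer.
    Second conversion: hash the folded buffer (SHA-256 is an assumption)."""
    folded = bytearray(block_size)
    for start in range(0, len(program), block_size):
        block = program[start:start + block_size]
        for i, byte in enumerate(block):
            folded[i] ^= byte
    return hashlib.sha256(bytes(folded)).digest()


def prepare(program: bytes, rng: random.Random):
    """Before loading: pick a block size from random information and keep it
    together with the authentication data."""
    block_size = rng.choice([16, 32, 64, 128])  # hypothetical candidate sizes
    return block_size, make_auth_data(program, block_size)


def is_tampered(loaded: bytes, block_size: int, auth_data: bytes) -> bool:
    """After loading: recompute with the stored block size and compare."""
    return make_auth_data(loaded, block_size) != auth_data
```

One caveat of this particular sketch: XOR folding ignores block order, so swapping two whole blocks leaves the authentication data unchanged. It illustrates the data flow, not a secure construction.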

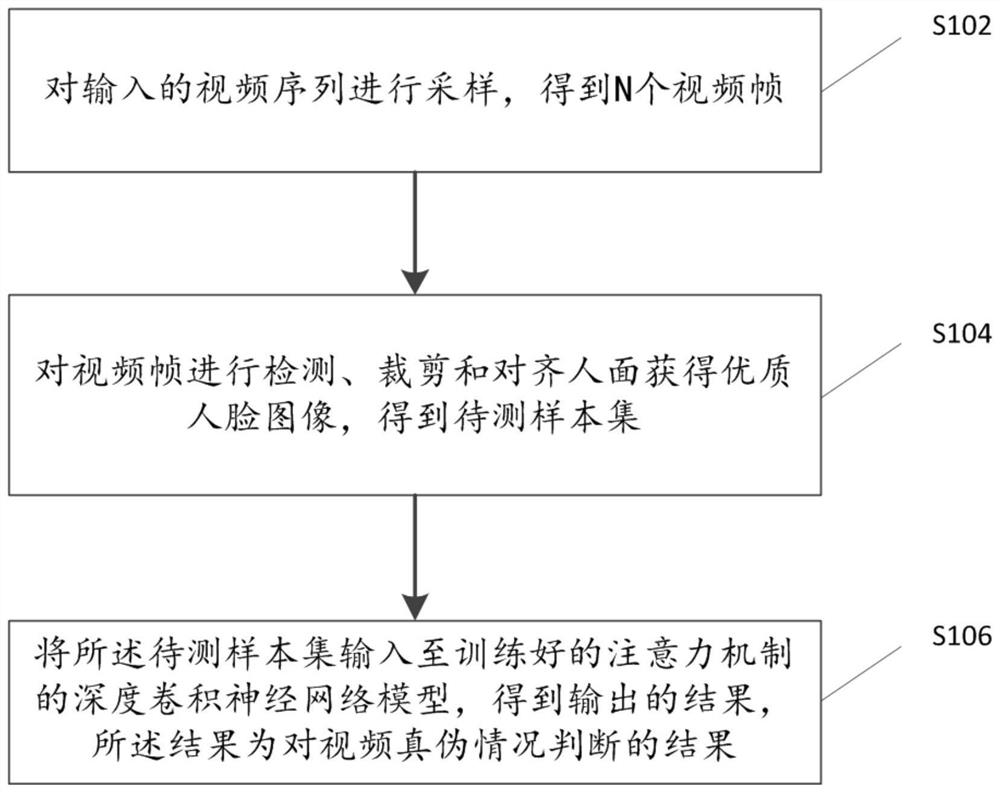

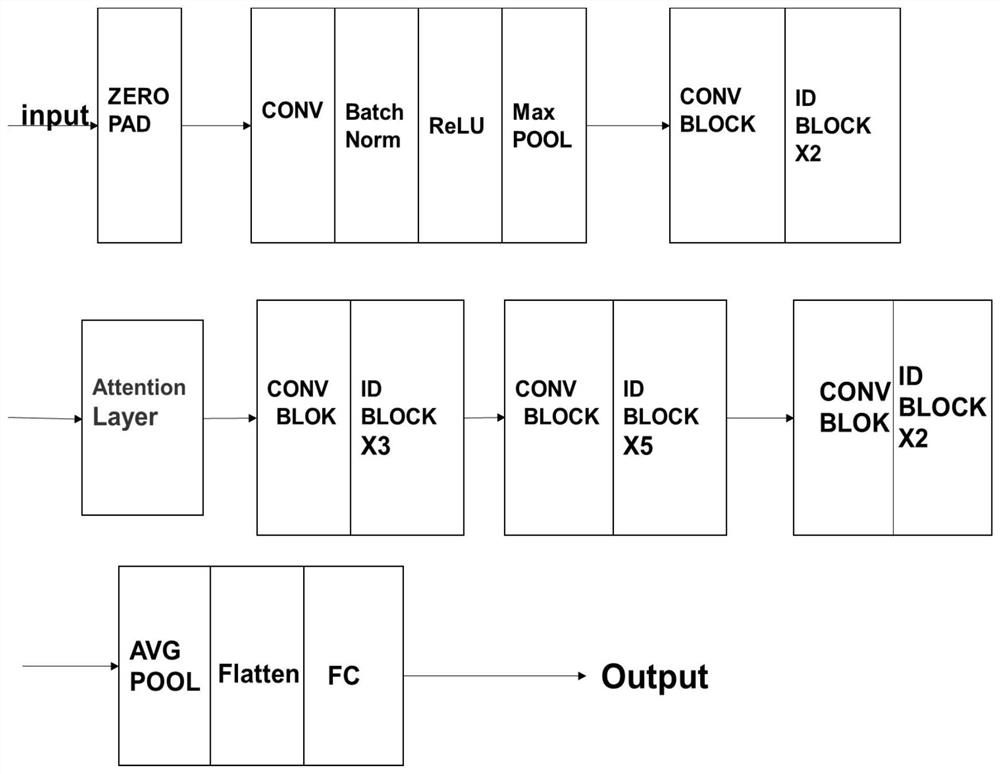

False face video identification method and system and readable storage medium

Pending | CN111967427A | High simulation; Good identification effect; Character and pattern recognition; Neural architectures; Test sample; Engineering

The invention discloses a false face video identification method and system based on a convolutional neural network and an attention mechanism, and a readable storage medium. The method comprises the steps of: sampling an input video sequence to obtain N video frames; detecting, cropping, and aligning the face in each video frame to obtain high-quality face images, which form the sample set to be tested; and inputting this sample set into a trained deep convolutional neural network with an attention mechanism, whose output is the judgment of the video's authenticity. In this method, negative samples are obtained by specially processing positive samples, which reduces the time cost of obtaining negative samples, simulates the face images of fake face videos well, and gives the trained network good identification capability. In addition, the method can highlight manipulated image regions, guiding the neural network to attend to those regions, which facilitates the detection of face forgery and improves accuracy over the original CNN model.

Owner:GUANGDONG UNIV OF TECH

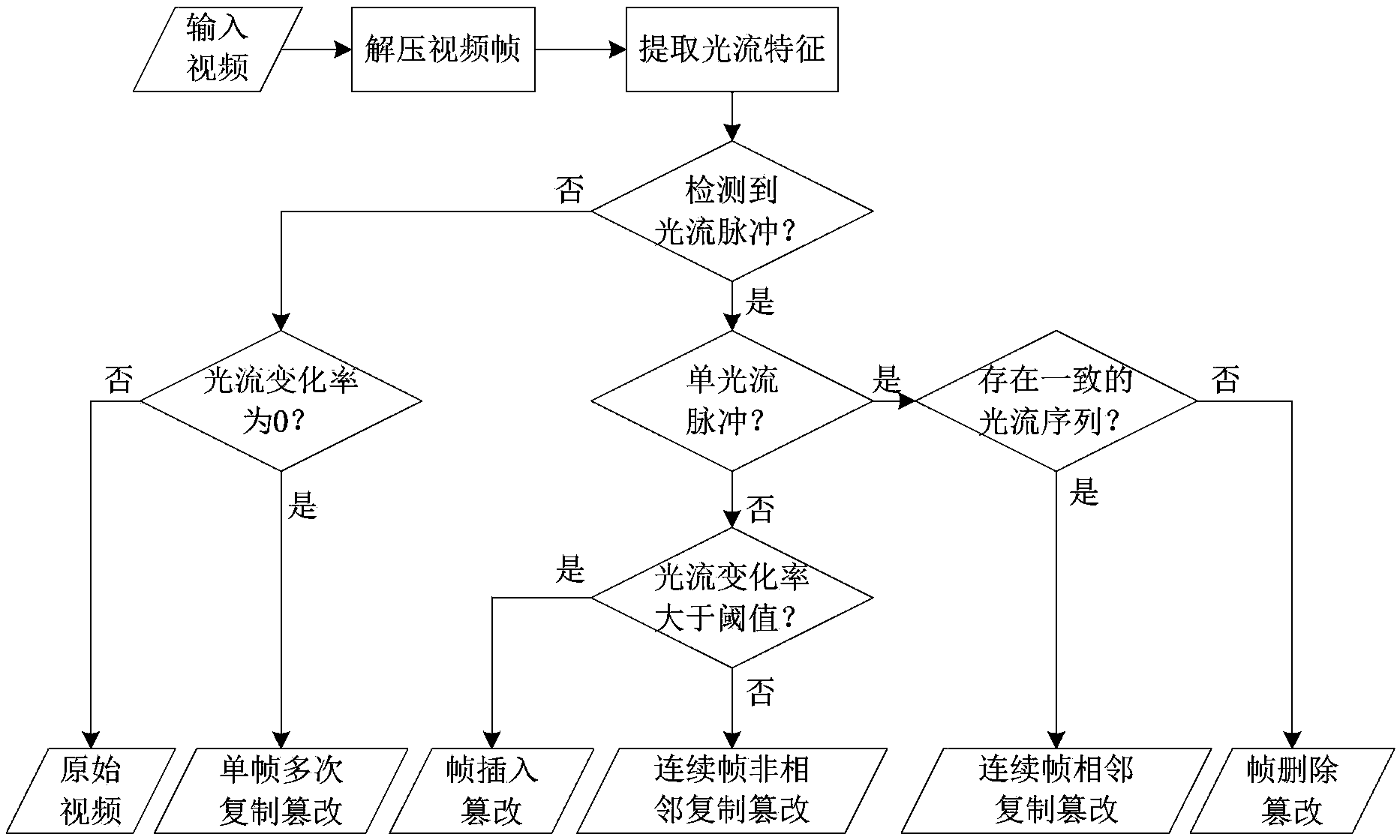

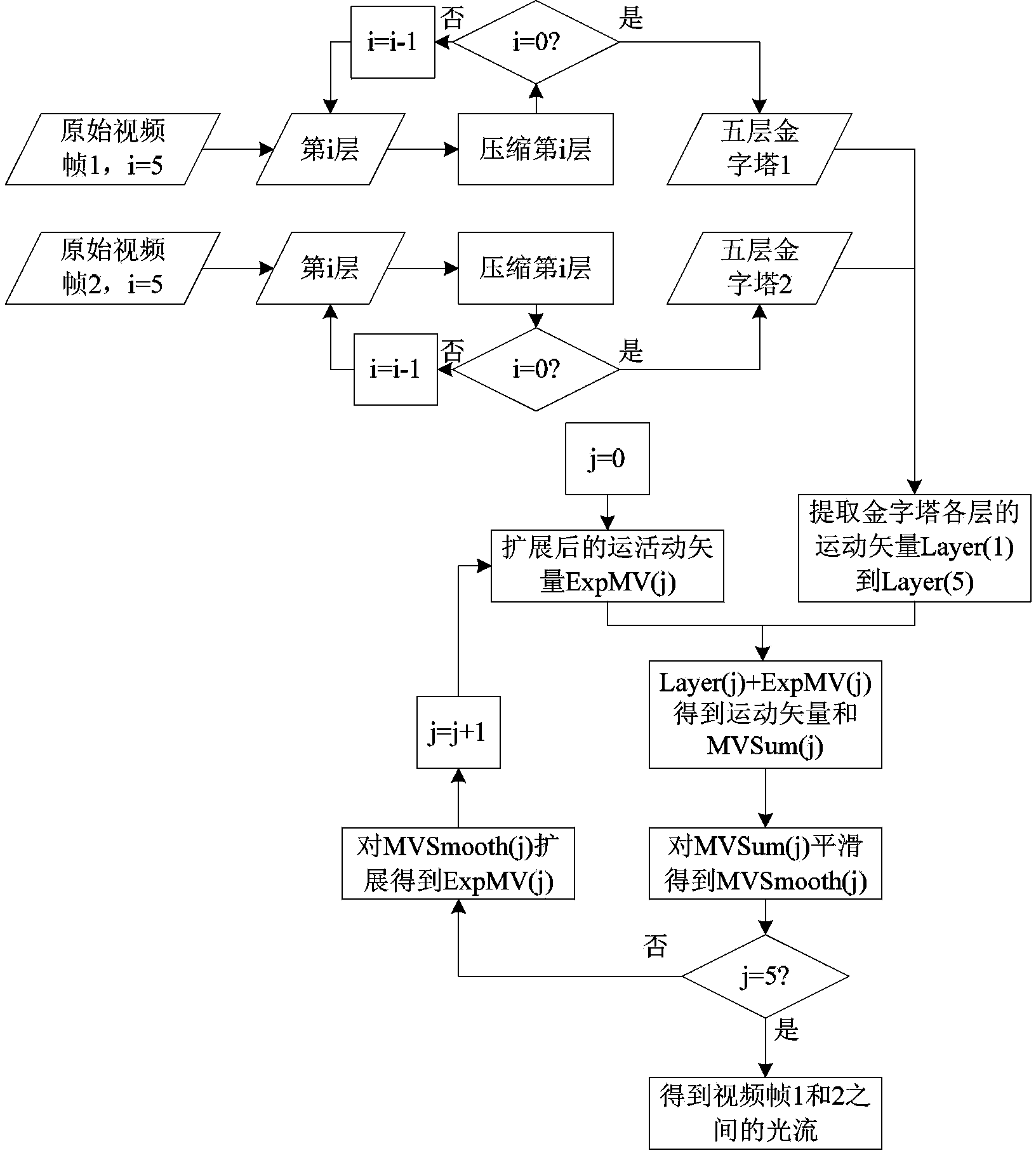

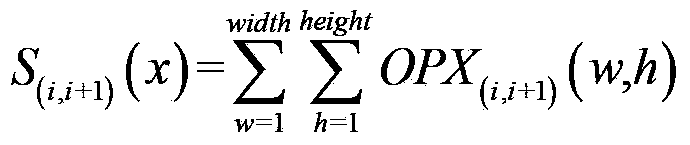

Video inter-frame forgery detection method based on optical flow consistency

Inactive | CN103384331A | Cumulative impact; Improve time and efficiency; Television systems; Selective content distribution; Passive detection; Content security

The invention provides a passive video inter-frame forgery detection method based on optical flow consistency, in the field of video content security. The method mainly comprises: extracting the optical flow features of each pair of adjacent frames in a surveillance video shot by a static camera; summing the absolute optical flow values in the horizontal and vertical directions to generate two optical flow sequences (horizontal and vertical) for the whole video; and analyzing these sequences to detect inter-frame forgery according to the characteristics of the different forgery modes. Because optical flow is a highly robust video feature, the method effectively detects five types of inter-frame forgery: frame insertion, frame deletion, repeated duplication of a single frame, non-adjacent duplication of consecutive frames, and adjacent duplication of consecutive frames. It provides a powerful tool for verifying the integrity and authenticity of video evidence submitted in court.

Owner:SHANGHAI JIAO TONG UNIV
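The sequence-analysis step can be illustrated with a simple anomaly rule on one optical flow sequence. This is a hypothetical sketch, not the patent's decision rule: it assumes the per-pair flow sums are already computed, and it flags a position when its value departs sharply from the median of its neighbours (a spike suggests inserted or deleted frames; a near-zero dip suggests a duplicated frame). The window size and the `high`/`low` ratios are illustrative assumptions.

```python
from statistics import median


def flag_flow_anomalies(flow_sums, window=2, high=3.0, low=0.5, eps=1e-9):
    """Flag positions whose optical-flow total departs sharply from its neighbours.

    flow_sums[t] is the summed |flow| between frames t and t+1 in one direction.
    Returns a list of (position, "spike" | "dip")."""
    flagged = []
    n = len(flow_sums)
    for t in range(n):
        neighbours = [flow_sums[i]
                      for i in range(max(0, t - window), min(n, t + window + 1))
                      if i != t]
        if not neighbours:
            continue
        ratio = flow_sums[t] / (median(neighbours) + eps)
        if ratio > high:
            flagged.append((t, "spike"))
        elif ratio < low:
            flagged.append((t, "dip"))
    return flagged
```

Using the median of a small window, rather than the mean of the two immediate neighbours, keeps a single spike from also flagging the positions next to it.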

Counterfeit detection apparatus

Inactive | US6714288B2 | Enlarging microprinting; Easy to see; Paper-money testing devices; Character and pattern recognition; Polyester; Microprinting

A counterfeit detection device is useful for optically examining the security features of currency to determine its authenticity. The apparatus places the currency at the object plane of a magnifying lens suitable for enlarging the microprinting and examining other fine features of the bill. In one embodiment, the device consists of a rear illuminating light source, a bill positioning slot, a magnifying lens, and an environmental light shade. Further embodiments add front illumination to detect the color-shifting ink and an ultraviolet light source for viewing the phosphorescent glow of the polyester thread with its identifying color. Finally, one version has a swing-away lens for viewing the watermark unmagnified.

Owner:COHEN ROY

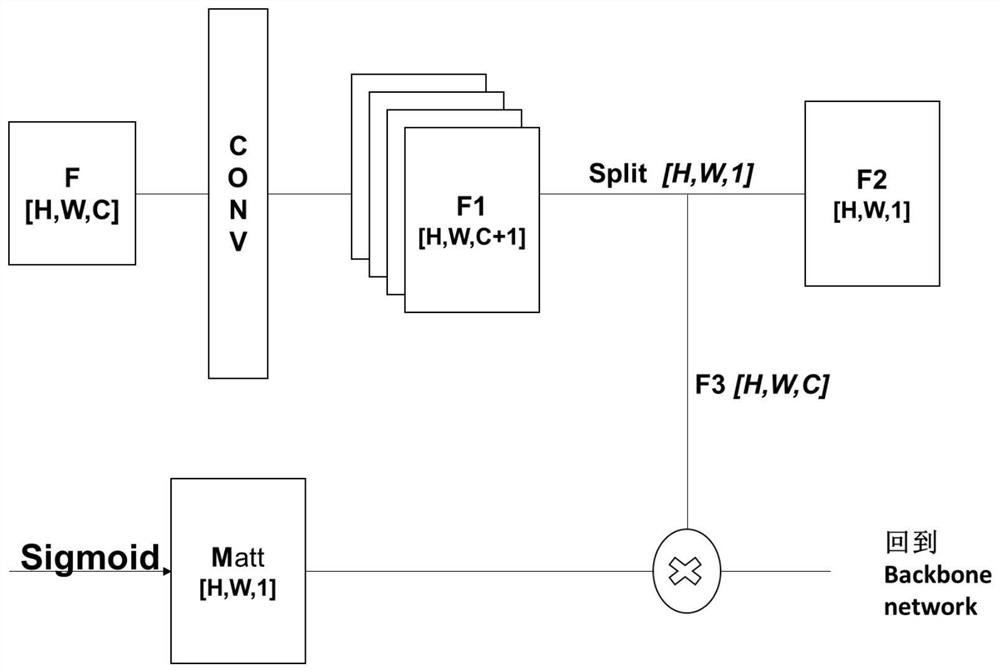

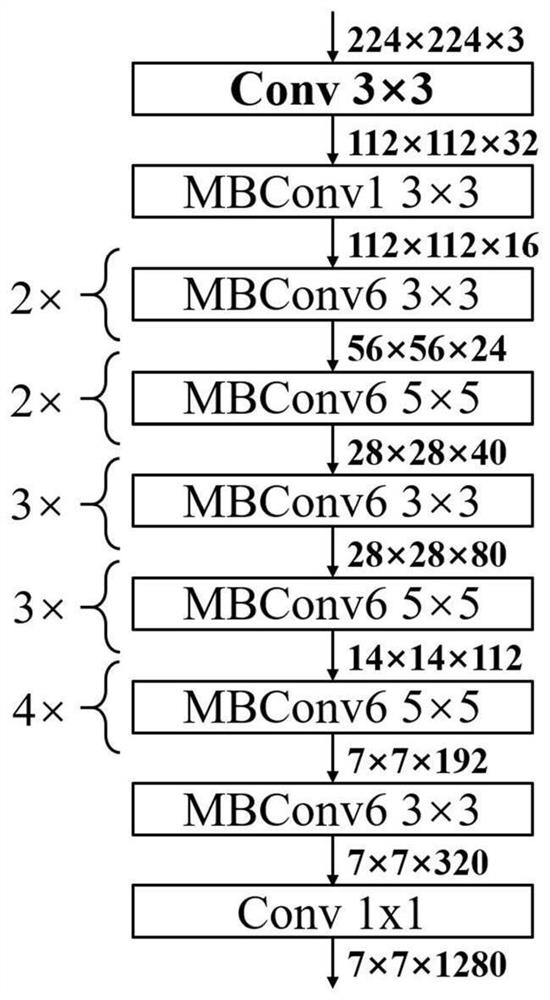

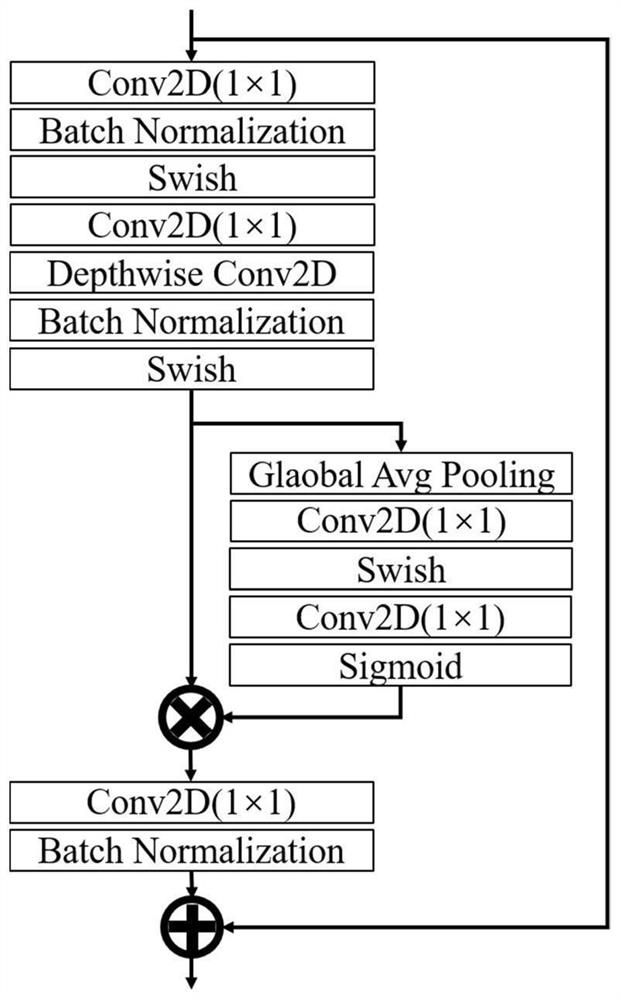

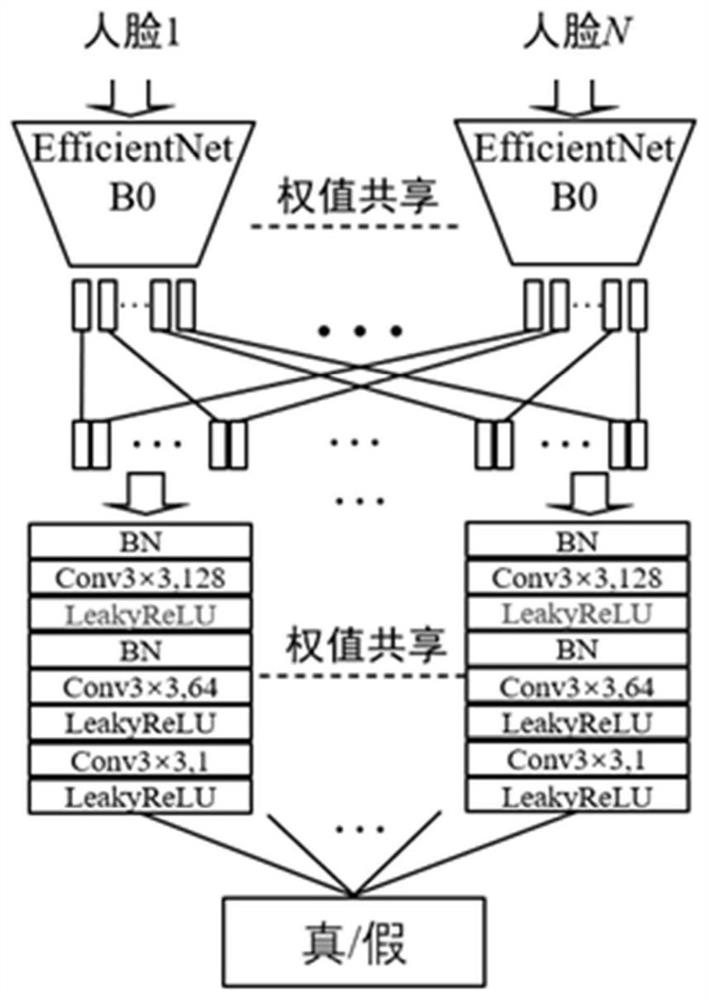

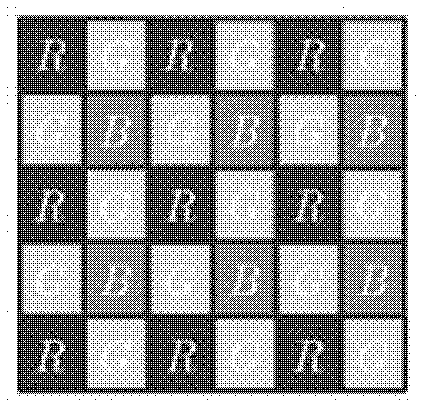

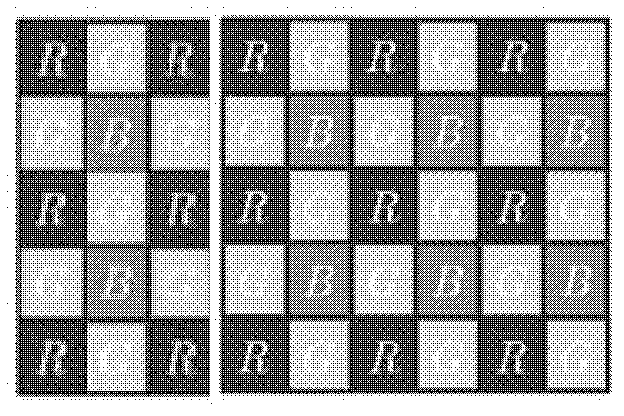

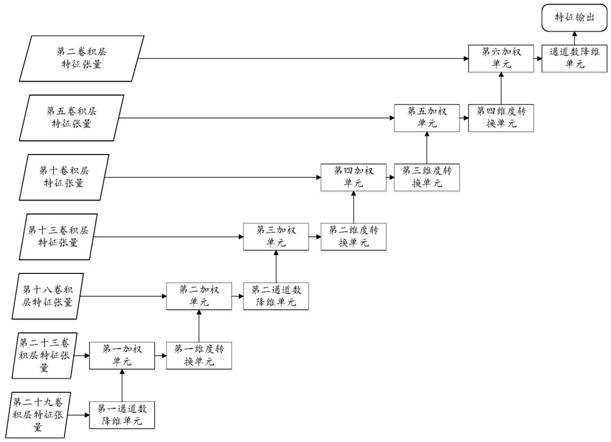

Refined feature fusion method for face counterfeit video detection

Active | CN111967344A | Fully extracted; Improving Forgery Detection Accuracy; Internal combustion piston engines; Neural architectures; Time domain; Feature vector

The invention discloses a refined feature fusion method for face counterfeit video detection, in the field of pattern recognition. The method comprises the steps of: decomposing real and fake face videos into frames, converting each video file into a continuous image frame sequence; performing face position detection on the frame sequence and enlarging the detection result so that the face box includes some background; cropping the face box from each frame to obtain a face image training set, and training an EfficientNet-B0 model; randomly selecting N consecutive frames from the face image sequence and inputting them into the EfficientNet-B0 model to obtain a feature map group; and decomposing the feature map group into individual feature maps, re-stacking the feature maps of the same channel in their original frame order to obtain a new feature map group, performing secondary feature extraction to obtain a feature vector, connecting the feature vector to a single neuron, and performing the final real/fake classification of the video clip with a sigmoid activation function. The method preserves spatial-domain information, fully extracts time-domain information, and effectively improves counterfeit detection accuracy.

Owner:NANJING UNIV OF INFORMATION SCI & TECH
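Two data-handling steps in this method, selecting N consecutive frames and re-stacking per-frame feature maps by channel, can be sketched without a deep learning framework. This is a minimal sketch, assuming the feature maps are held as nested lists indexed `[frame][channel]`; the EfficientNet-B0 backbone and the secondary feature extractor are not shown.

```python
import random


def sample_consecutive(frames, n, rng=random):
    """Randomly choose N consecutive frames from a face image sequence."""
    start = rng.randrange(len(frames) - n + 1)
    return frames[start:start + n]


def restack_by_channel(feature_maps):
    """feature_maps[n][c] is the 2-D map of channel c for frame n.

    Returns restacked[c][n]: for each channel, its maps in original frame
    order, so a second extractor can look along the time axis per channel."""
    num_frames = len(feature_maps)
    num_channels = len(feature_maps[0])
    return [[feature_maps[n][c] for n in range(num_frames)]
            for c in range(num_channels)]
```

The re-stacking is a transpose of the frame and channel axes; in a tensor library it would be a single axis permutation.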

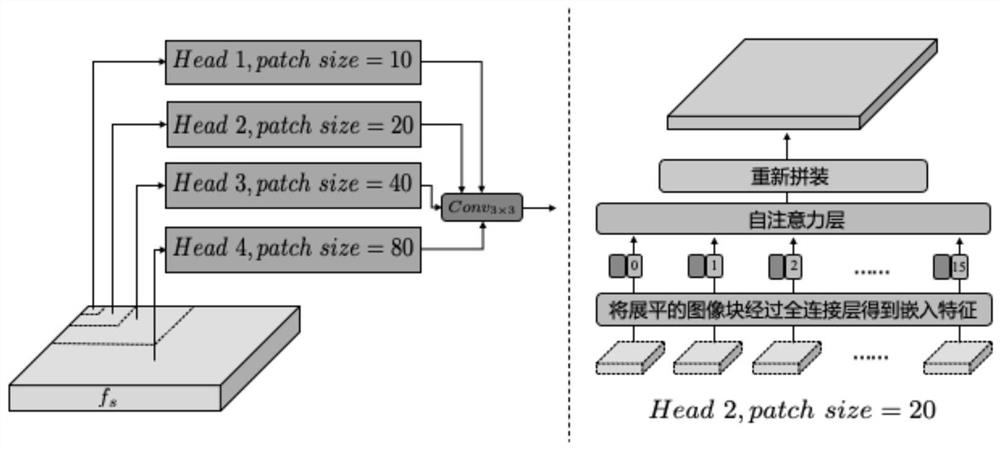

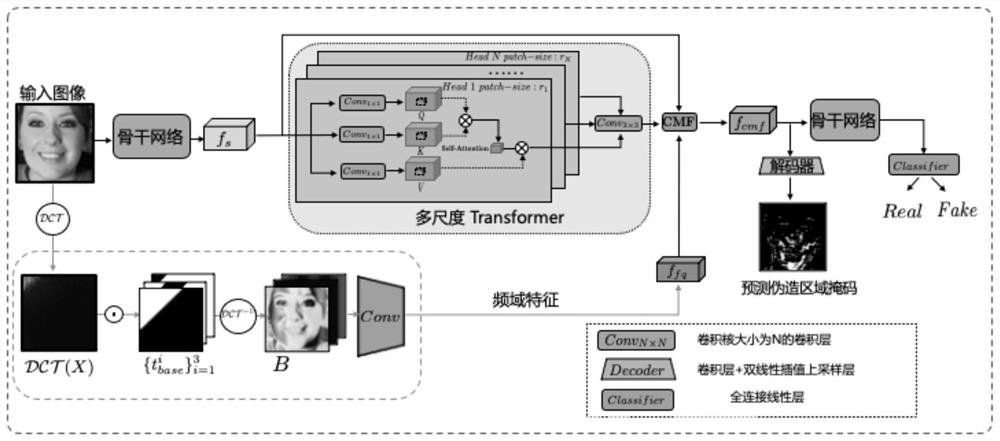

Deep fake face data identification method

Inactive | CN113536990A | Powerful long-term dependency modeling capabilities; Improve generalization ability; Image enhancement; Image analysis; Pattern recognition; Engineering

The invention belongs to the technical field of neural network security, and particularly relates to a deepfake face data identification method. The method captures subtle forgery features at different scales and uses them for deepfake detection. Specifically, a multi-modal multi-scale transformer (M2TR) is introduced, which operates on image patches of different sizes to detect local inconsistencies at different scales. To improve the detection results and the robustness to image compression, frequency information is introduced into M2TR and combined with RGB features through a cross-modal fusion module. Extensive experiments verify the effectiveness of the method, and its performance exceeds that of current state-of-the-art deepfake detection methods.

Owner:FUDAN UNIV

Forgery detection method for spliced and distorted digital photos

Active | CN102609947A | Improve versatility; Reduce misjudgment; Image analysis; Pattern recognition; Digital pictures

The invention discloses a forgery detection method for spliced and distorted digital photos, comprising the steps of: selecting random pixels; iterating resampling and a neural network algorithm to estimate the CFA (color filter array) interpolation in the digital photo; continuously removing suspicious distorted points with an error mean deviation degree model during the resampling and iterative computation, finally obtaining an undistorted pixel set over the whole primary color plane; and, using the pixels in this set as the standard, estimating the CFA interpolation function and judging suspicious distorted points with the error mean deviation degree model to determine whether the photo is fake. The method has good generality, produces fewer misjudgments, and gives more accurate forgery detection.

Owner:神州网云(北京)信息技术有限公司

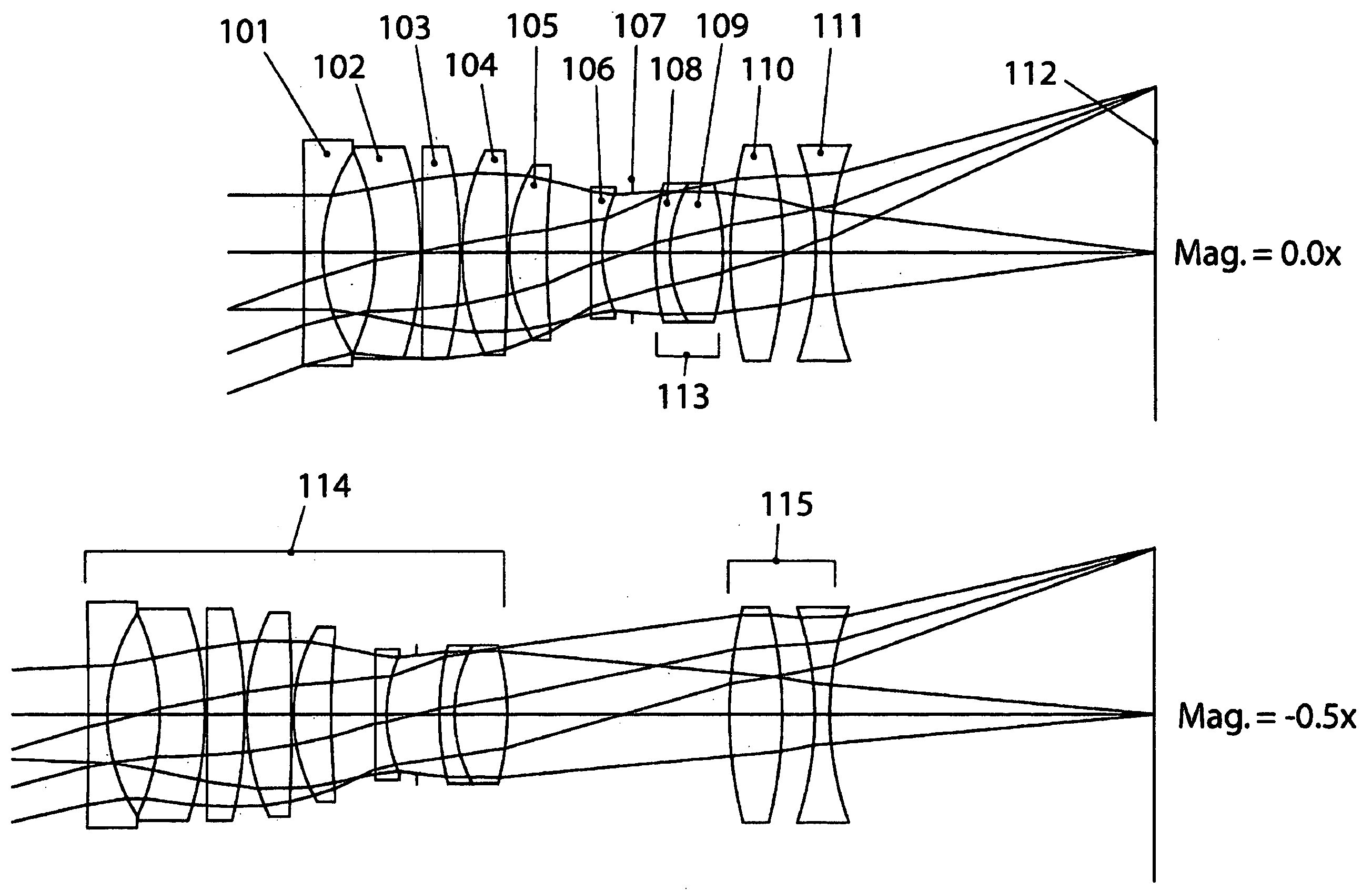

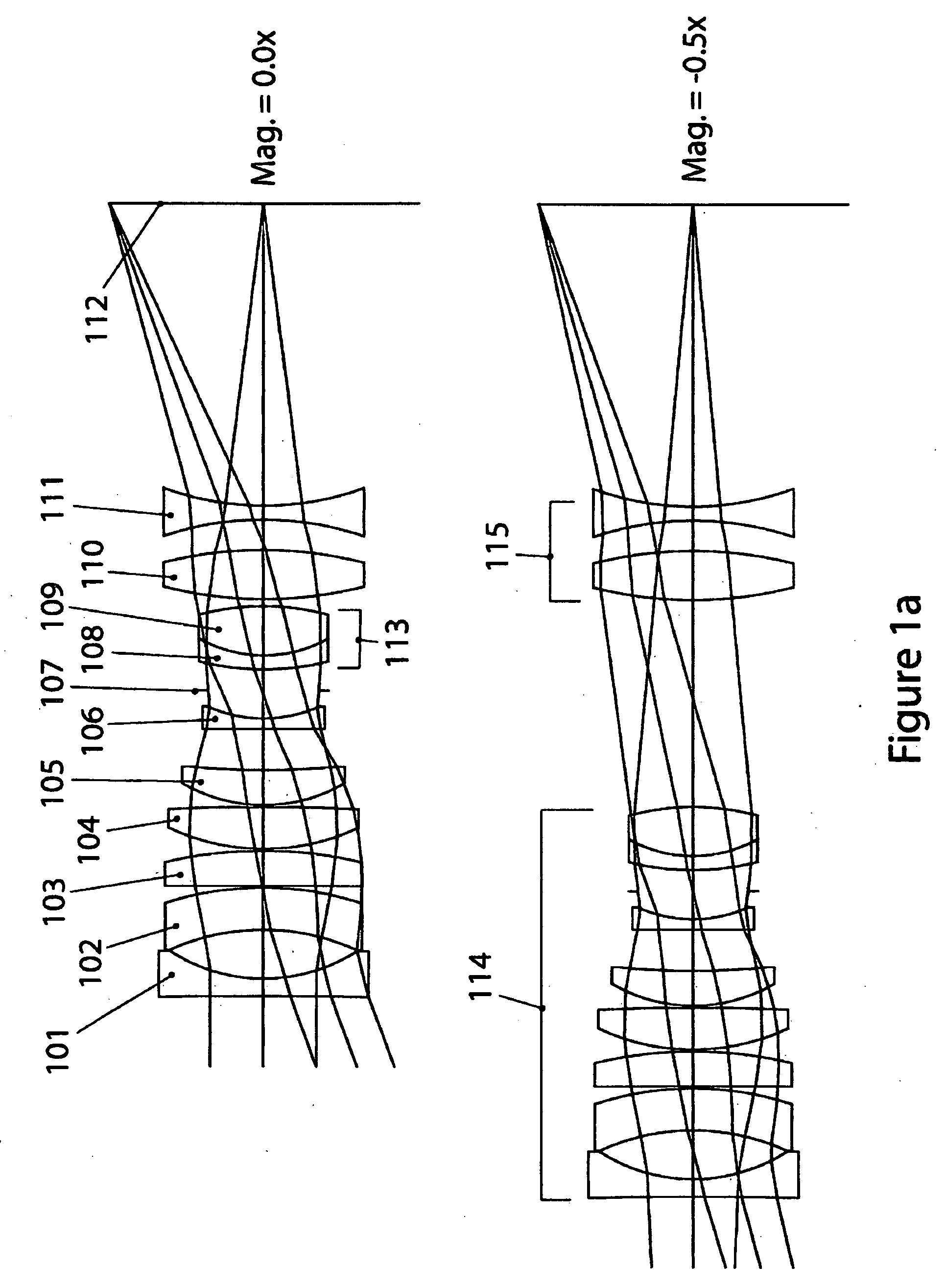

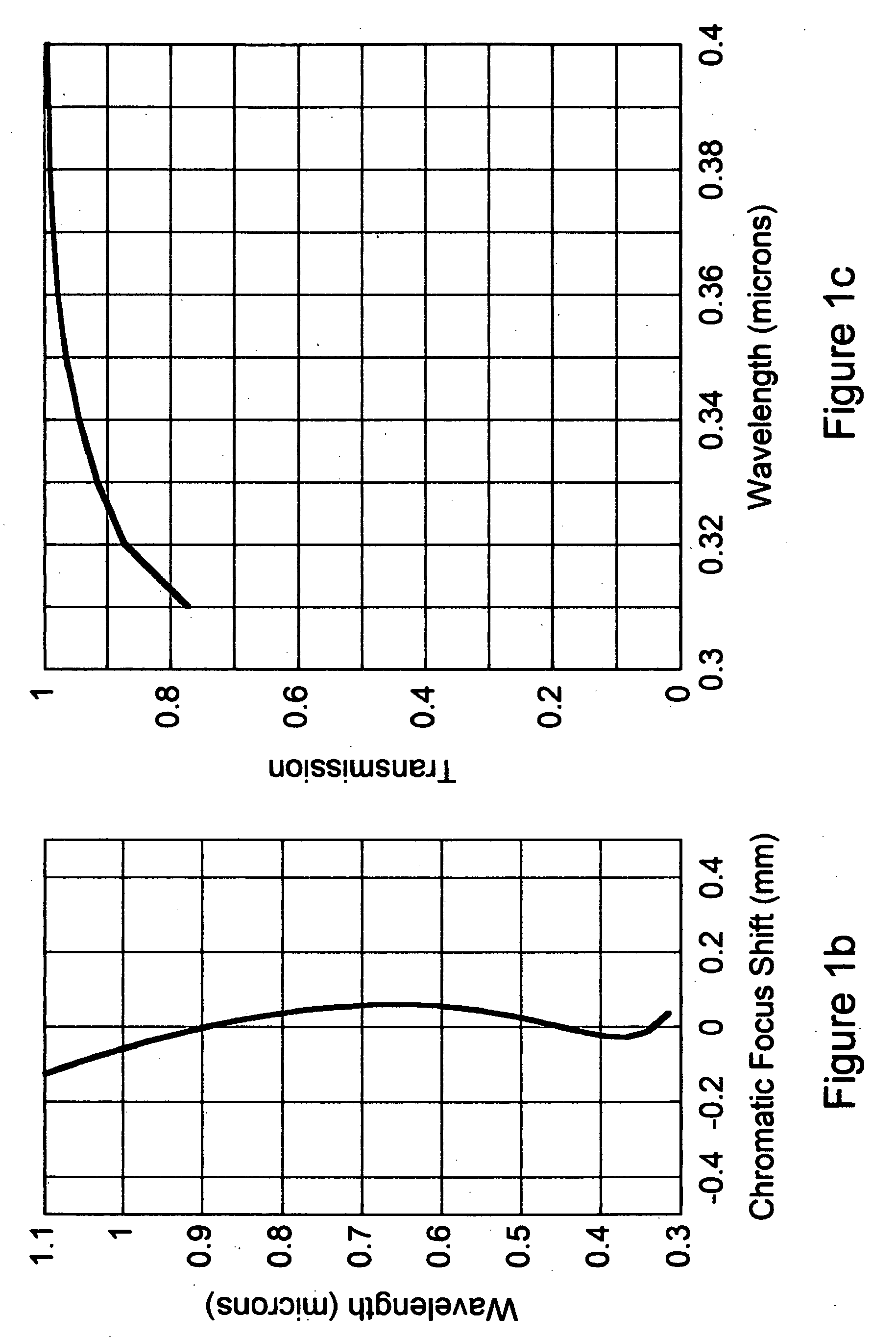

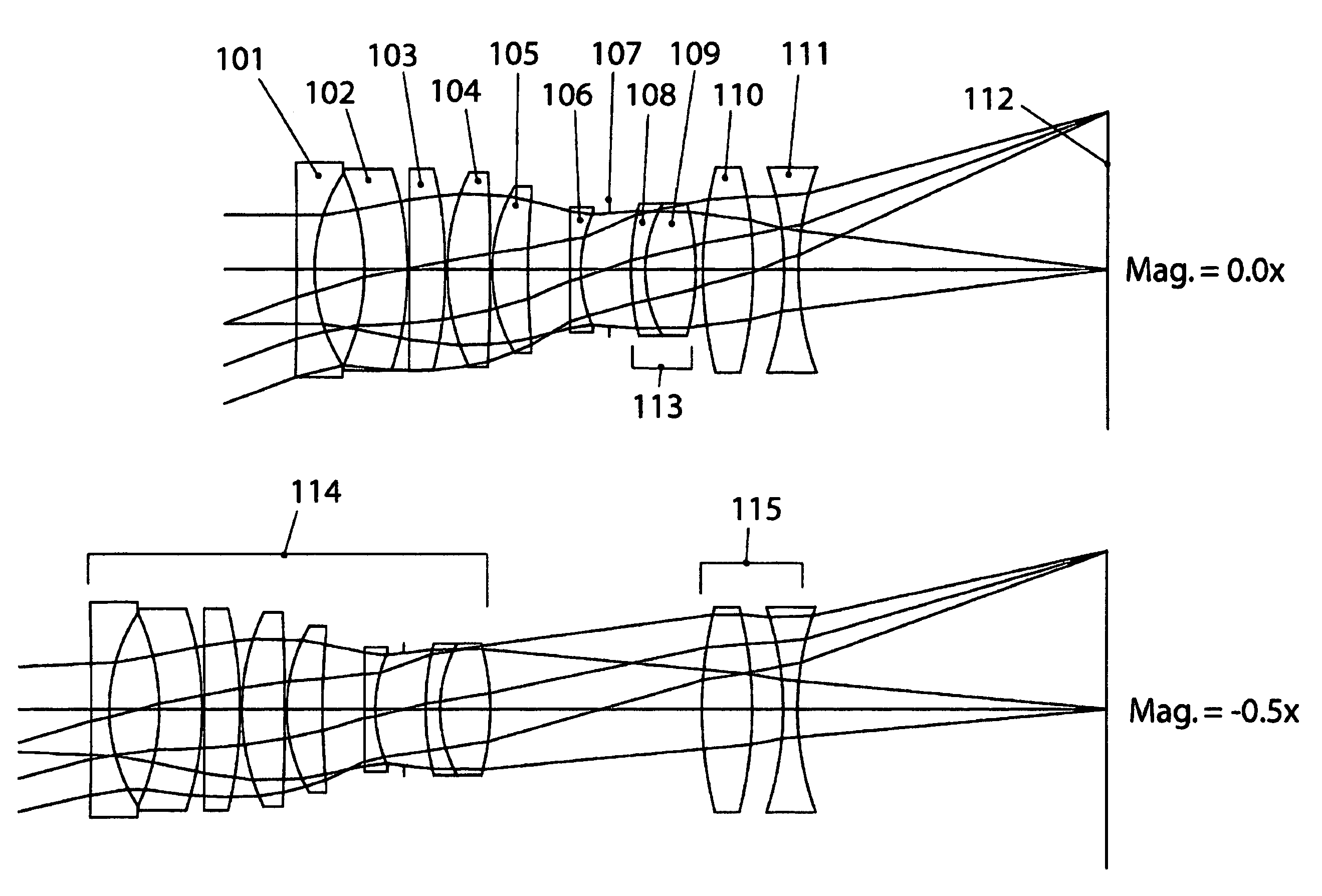

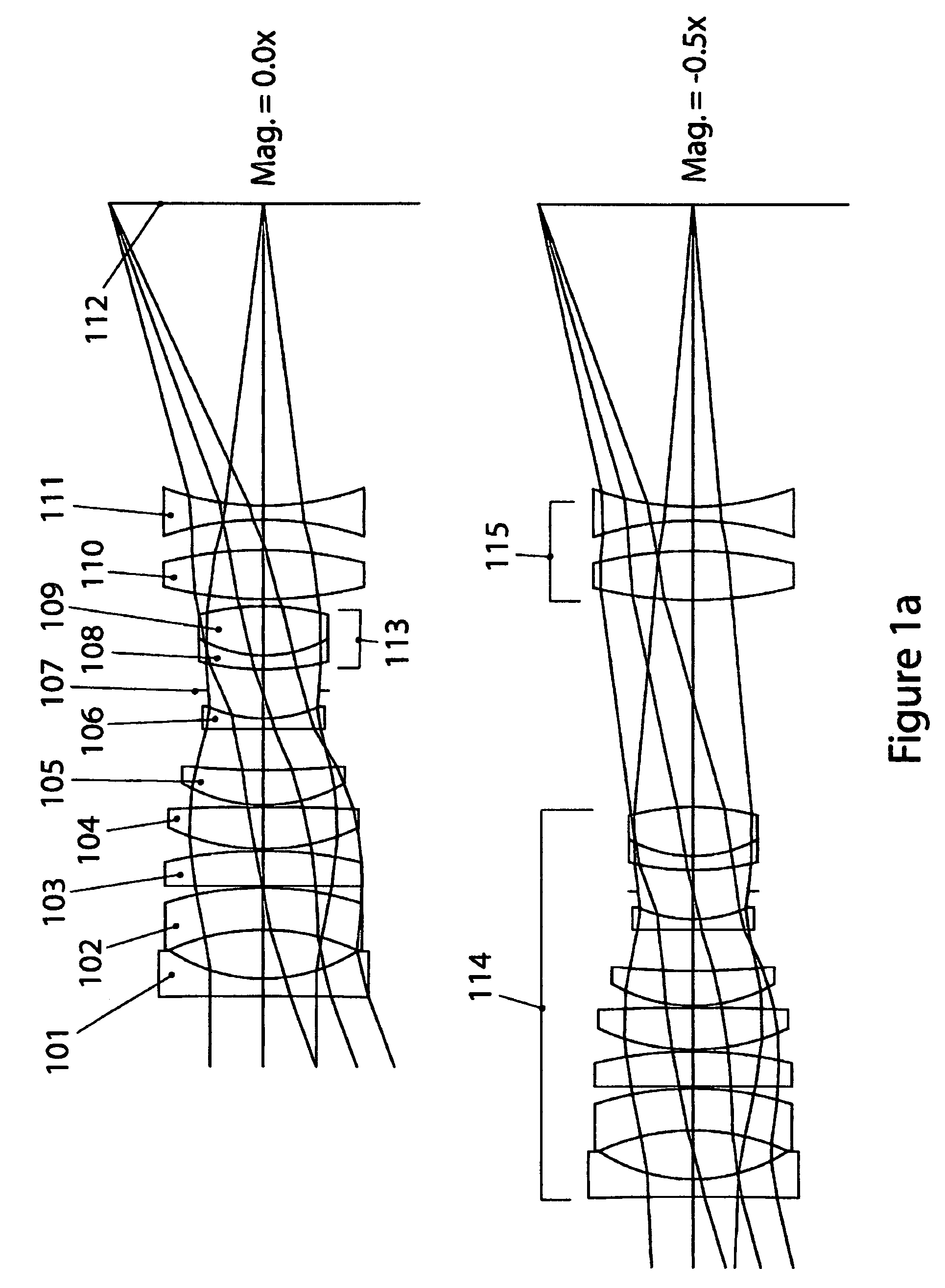

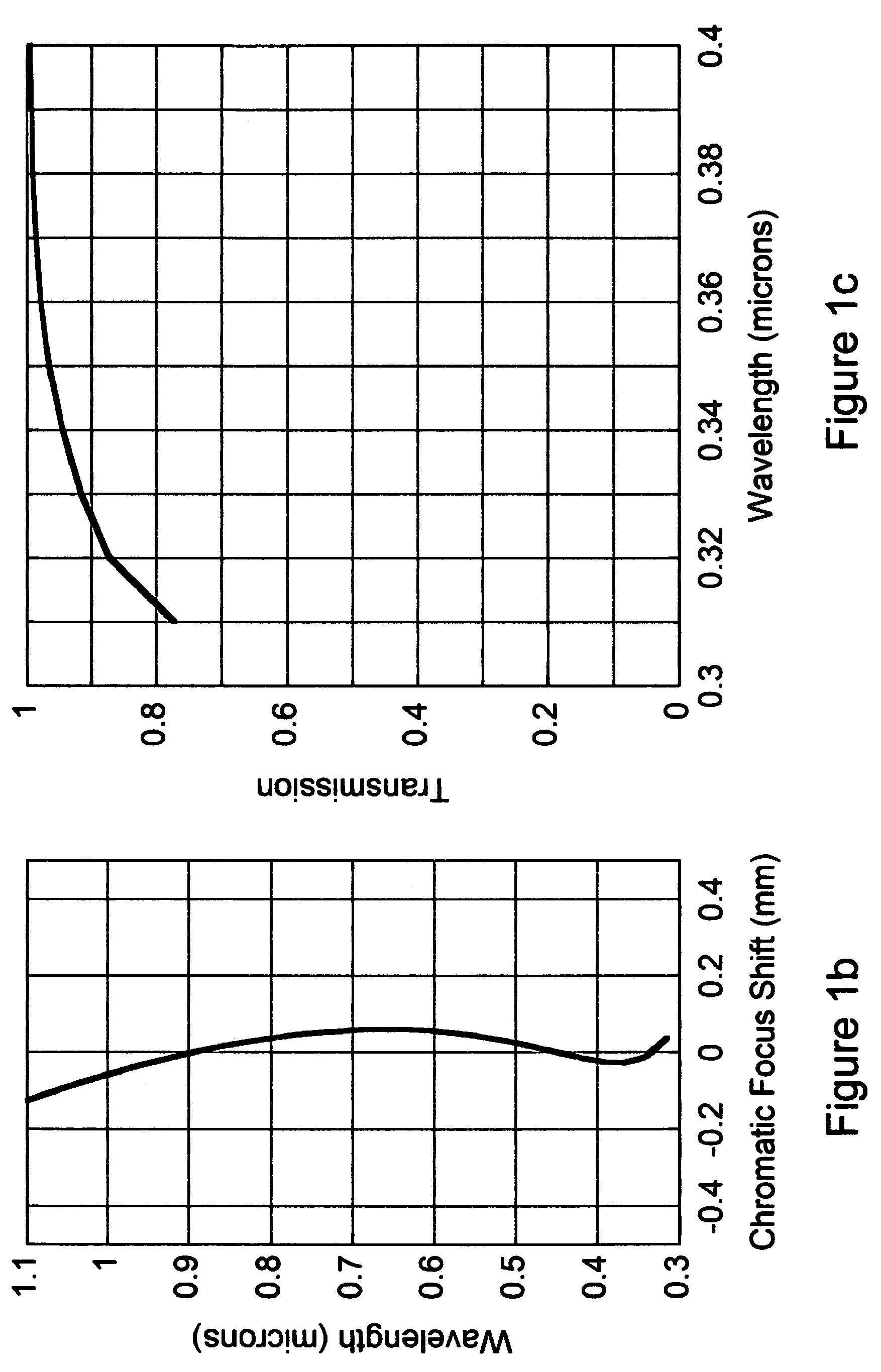

UV-VIS-IR imaging optical systems

Imaging optical systems having good transmission and that are well corrected over the full 315 nm-1100 nm ultraviolet-visible-infrared (UV-VIS-IR) wavelength band are disclosed. A wide variety of apochromatic and superachromatic design examples are presented. The imaging optical systems have a broad range of applications in fields where large-bandwidth imaging is called for, including but not limited to forensics, crime scene documentation, art conservation, forgery detection, medicine, scientific research, remote sensing, and fine art photography.

Owner:CALDWELL JAMES BRIAN

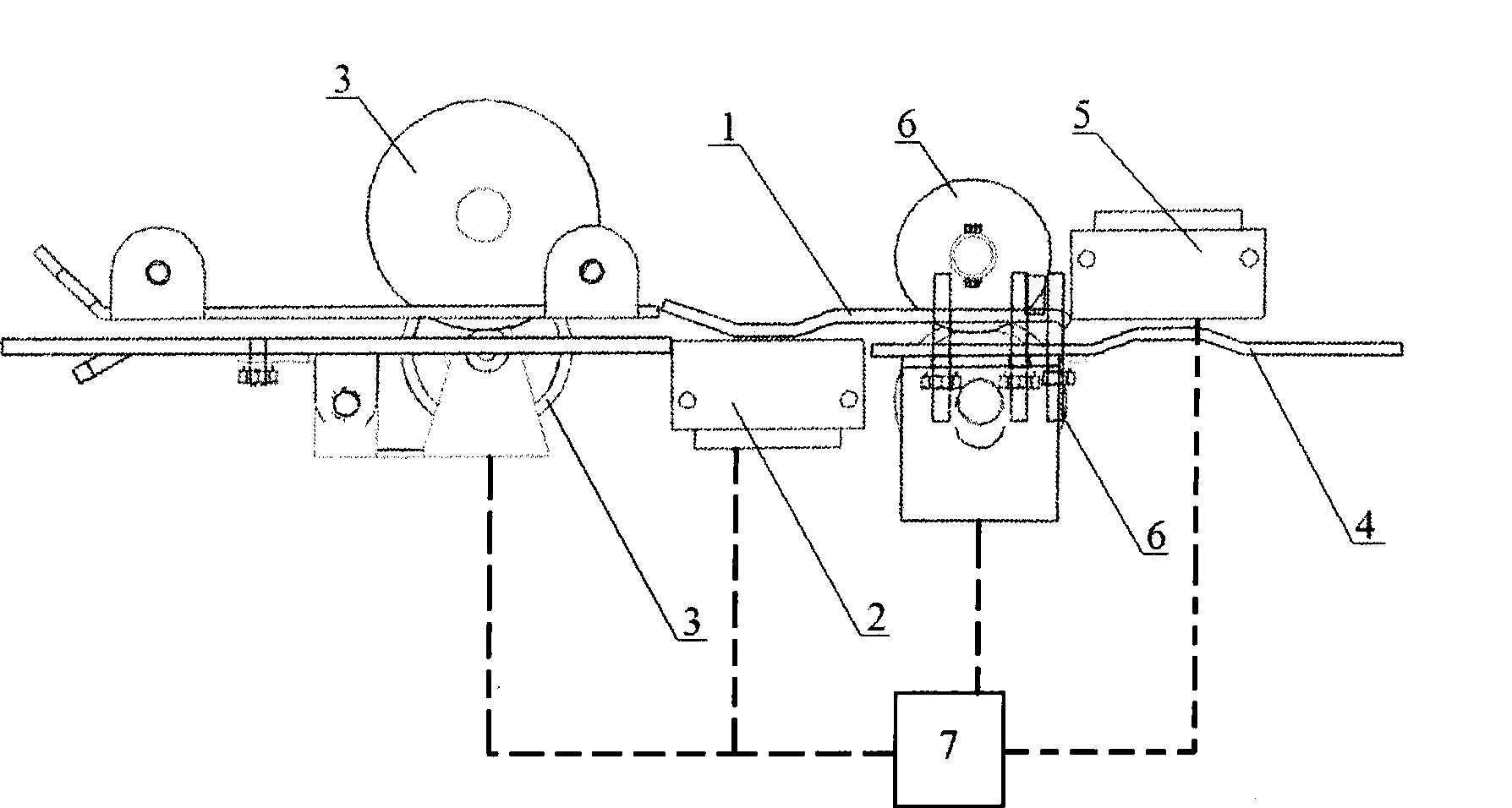

Note transportation and detection apparatus

Inactive | CN101499188A | Valid entry; Guaranteed treatment effect; Paper-money testing devices; Character and pattern recognition; Imaging processing; Slide plate

A paper currency transmitting and detecting device in the field of paper currency anti-forgery technology includes a guidance wheel set, a first money transmitting sliding plate, a first image sensor, a second money transmitting sliding plate, a second image sensor, and a control module. The first money transmitting sliding plate is fixed at the front end of the second money transmitting sliding plate; the first image sensor is arranged below the first sliding plate and the second image sensor above the second sliding plate; the guidance wheel set is arranged between the two sliding plates; and the control module is connected to the guidance wheel set, the first image sensor, and the second image sensor. Under the control of a controller program, the device performs anti-forgery detection twice, and the specifically shaped sliding plates guide the notes smoothly into the detection area of the image sensors, which ensures the image processing effect and reduces misjudgments.

Owner:上海长江计算机信息技术有限公司 +1

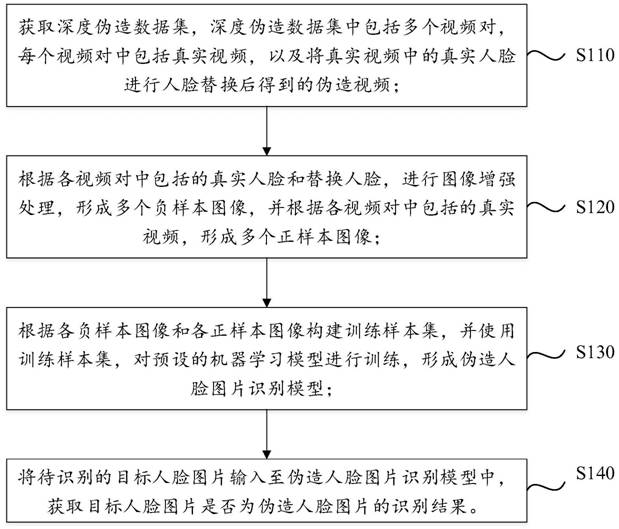

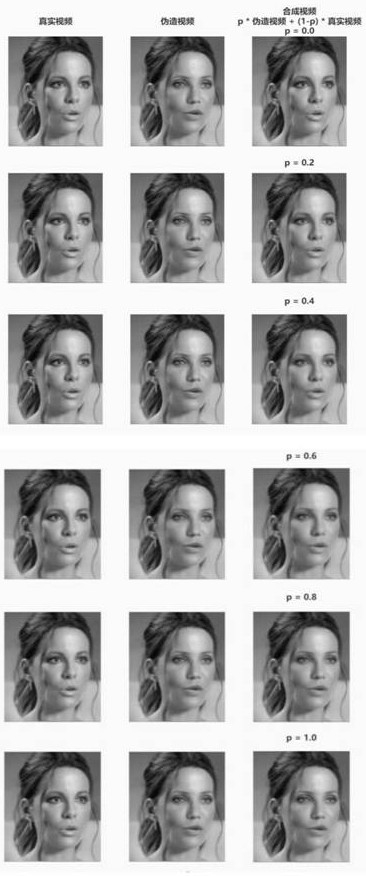

Method and device for identifying forged face picture, computer equipment and storage medium

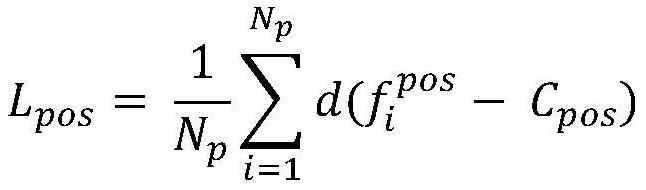

Pending | CN113762138A | Meet the needs of deep forgery detection and identification; Character and pattern recognition; Machine learning; Positive sample; Data set

The embodiment of the invention discloses a method and a device for identifying a forged face picture, computer equipment, and a storage medium. The method comprises the steps of: acquiring a deepfake data set comprising a plurality of video pairs, each consisting of a real video and a counterfeit video obtained by replacing the real face in the real video; forming a plurality of negative sample images from the real face and the replacement face, and a plurality of positive sample images from the real video; constructing a training sample set from the negative and positive sample images and training a machine learning model to obtain a fake face image recognition model; and inputting a target face picture into the recognition model to obtain a result indicating whether it is a forged face picture. The embodiment addresses two problems, namely that public data sets contain only a single type of counterfeit face and that the network may over-fit; it achieves data augmentation and meets the requirements of deepfake detection and recognition.

Owner:EVERSEC BEIJING TECH

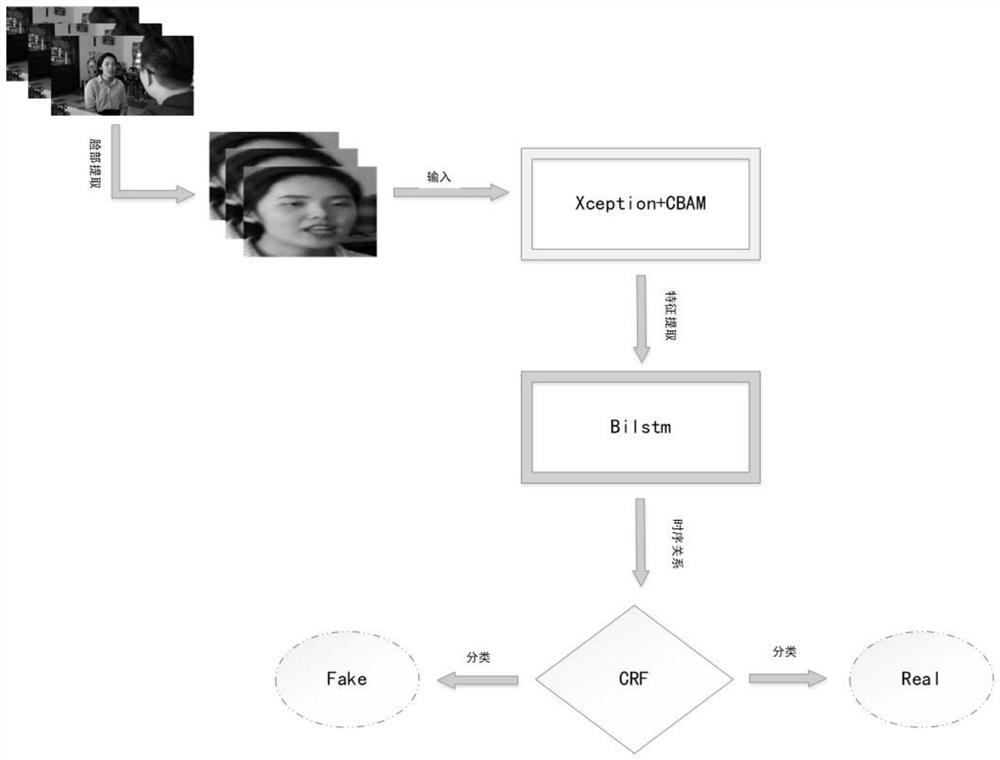

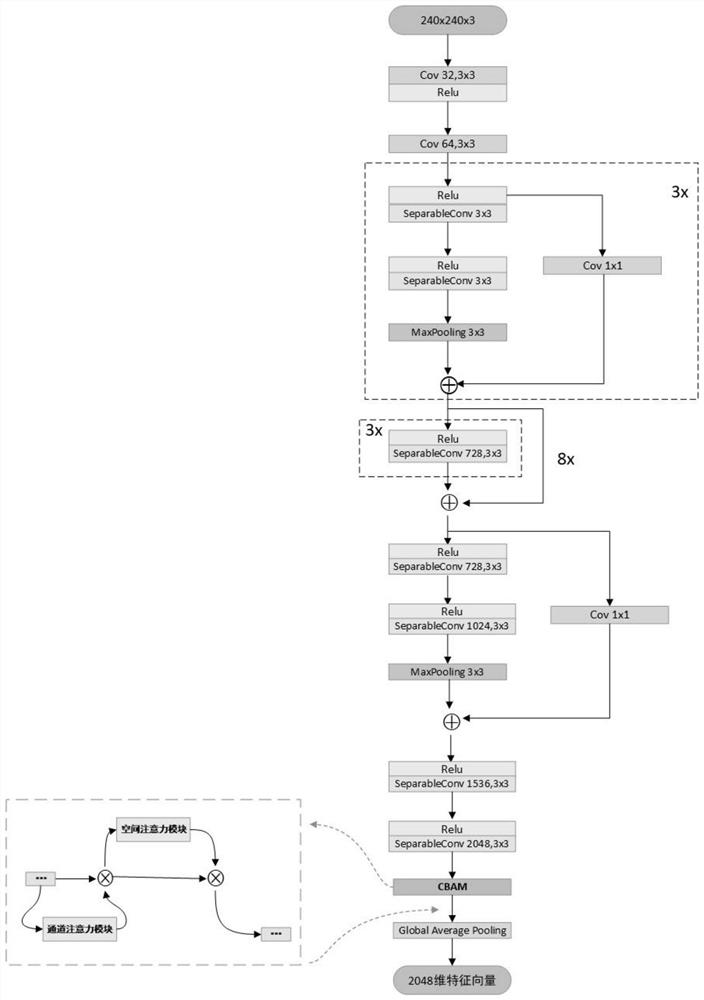

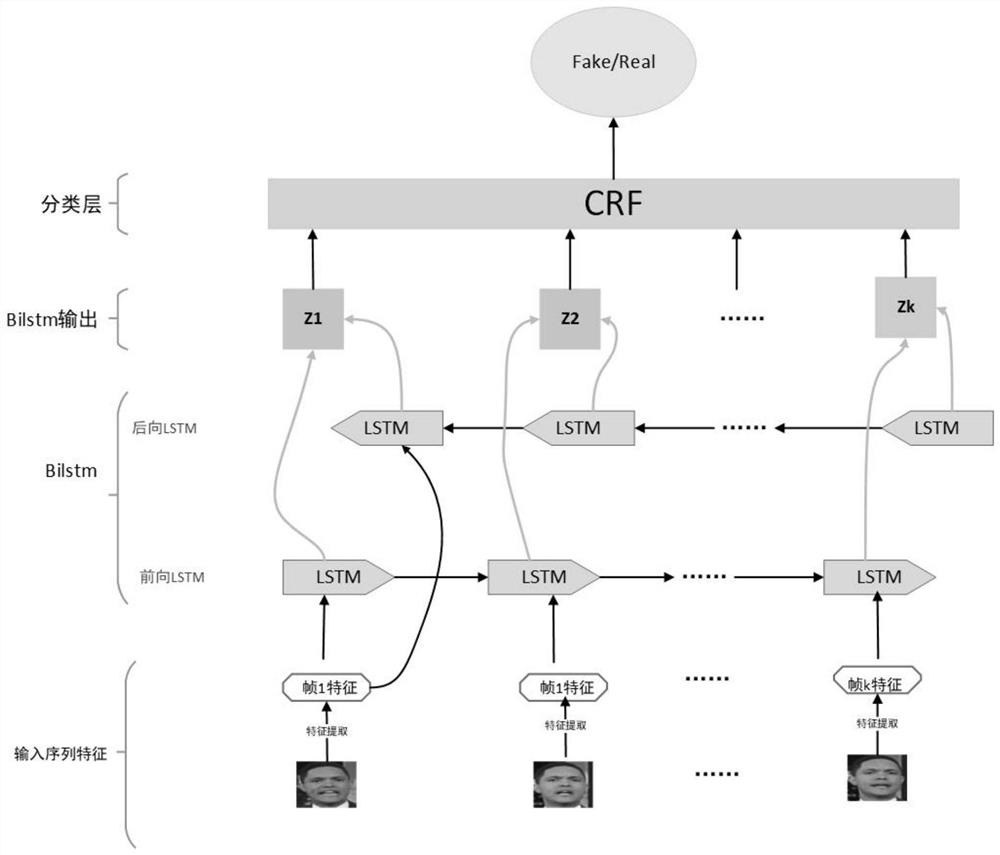

Method and system for detecting deep forged video based on time sequence inconsistency

Active | CN112488013A | Improve detection accuracy; Odds of Overcoming Misjudgment; Character and pattern recognition; Neural architectures; Conditional random field; Data set

The invention relates to a deepfake video detection method and system based on temporal inconsistency, in the field of video detection. The method comprises the following steps: S1, acquire a video data set and preprocess it to obtain face images from the video frames; S2, input the video frames into a network that combines a fine-tuned Xception network with a convolutional attention module and train it, the attention module being used to extract frame-level features; S3, use the trained Xception network to extract features from consecutive video frames, and input these features into a bidirectional long short-term memory (BiLSTM) network plus conditional random field (CRF) model for training; S4, use the trained model to perform forgery detection on the video under test. The method exploits the temporal inconsistency between frames that forgery techniques introduce, and by combining a BiLSTM network with a CRF it improves deepfake video detection to a certain extent.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

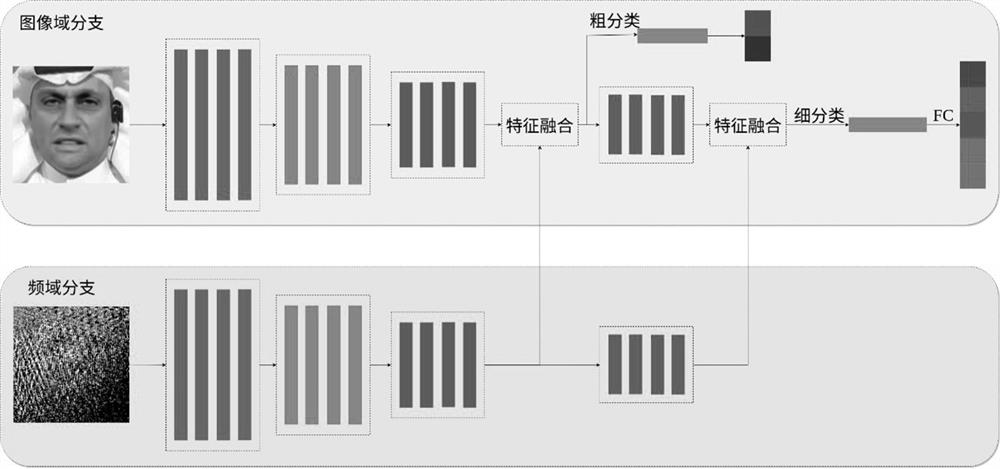

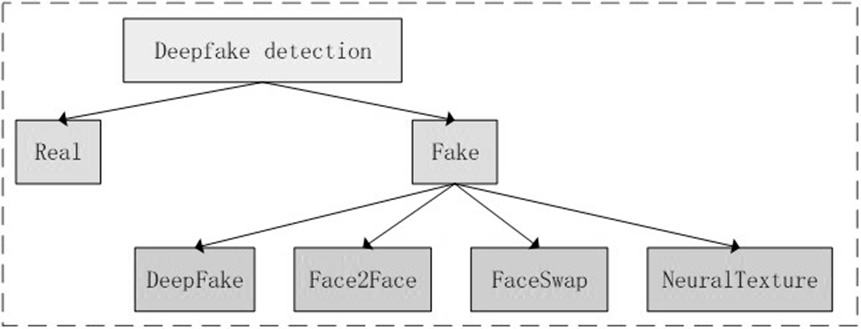

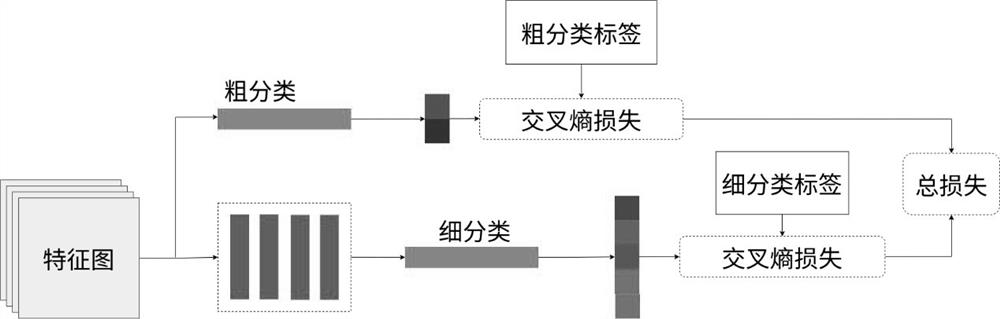

Face forgery detection method based on image domain and frequency domain two-stream network

Pending | CN113723295A | Implement hierarchical classification; Improve detection accuracy; Character and pattern recognition; Neural architectures; Visual technology; Engineering

The invention relates to the technical field of computer vision, in particular to a face forgery detection method based on an image domain and frequency domain two-stream network. The detection method comprises a model training stage and a model inference stage. In the training stage, a server with high computing performance trains the network model, optimizing the network parameters by minimizing the loss function until the network converges, which yields a two-stream network model based on the image domain and the frequency domain. In the inference stage, the trained model is used to judge whether a new image is a forged face image. Compared with methods that treat face forgery detection as a binary classification problem, this method performs hierarchical classification and, by using the diversified supervision information of forged images, achieves better detection accuracy.

Owner:ZHEJIANG UNIV

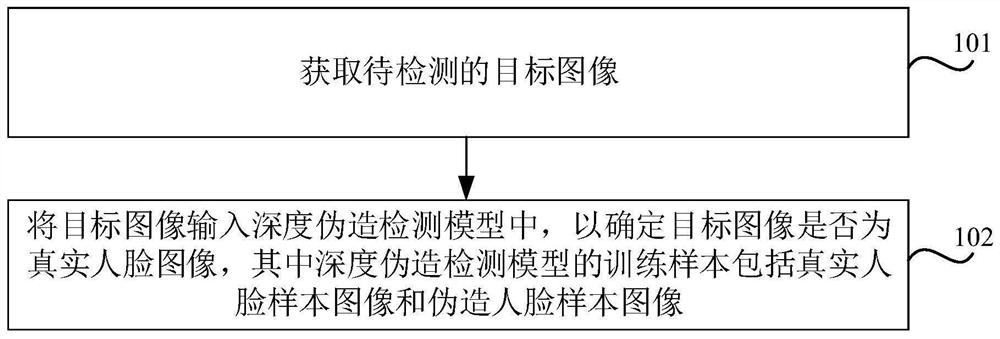

Deep forgery detection method and device, storage medium and electronic equipment

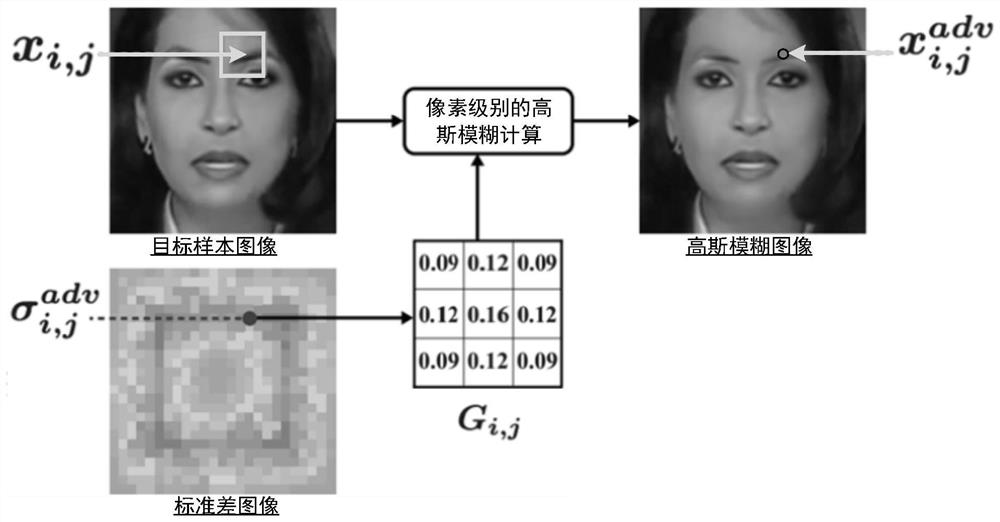

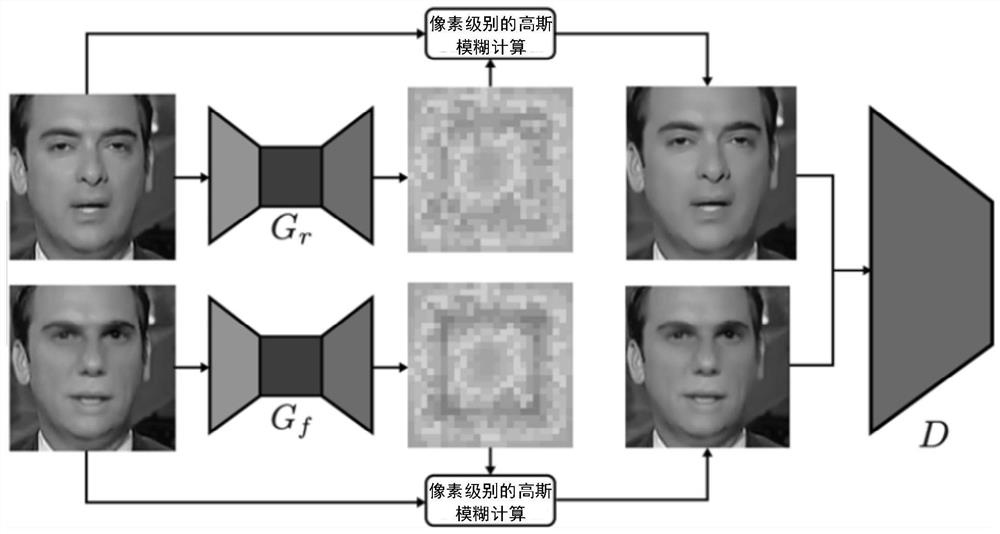

Active | CN112766189A | Improve generalization ability; Spoof detection; Internal combustion piston engines; Engineering; Sample image

The invention relates to a deep forgery detection method and device, a storage medium, and electronic equipment, and aims to improve the generalization performance of the deep forgery detection model and thereby the range of scenarios in which deep forgery detection can be applied. The method comprises: acquiring a target image to be detected; and inputting the target image into a deep forgery detection model to determine whether it is a real face image. The training samples of the model comprise real face sample images and counterfeit face sample images, and training the model comprises generating, with a generator, a first adversarial sample image corresponding to the real face sample image and a second adversarial sample image corresponding to the counterfeit face sample image, and adjusting the parameters of the deep forgery detection model according to the first and second adversarial sample images.

Owner:BEIJING YOUZHUJU NETWORK TECH CO LTD

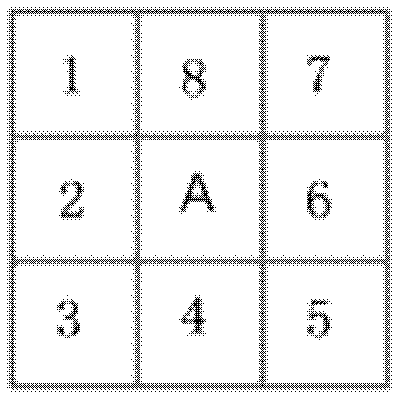

Face forgery detection method based on multi-region attention mechanism

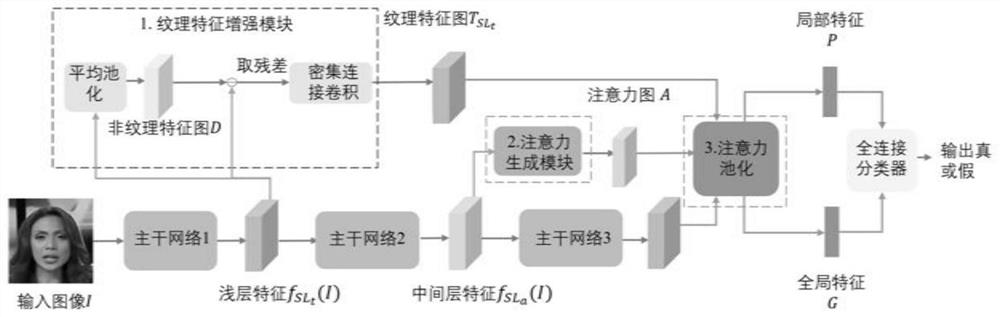

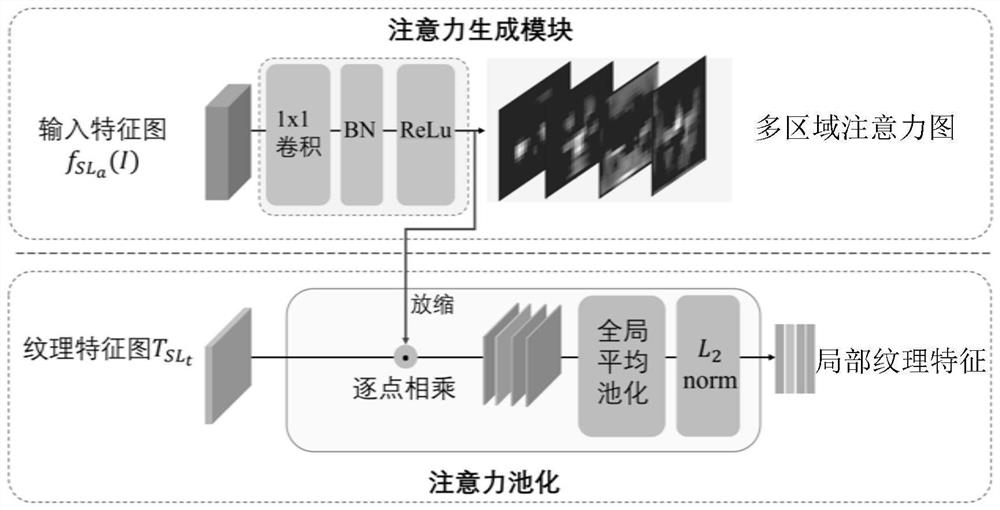

Pending | CN113011332A | Improve accuracy; Image analysis; Neural architectures; Texture enhancement; Forgery detection

The invention discloses a face forgery detection method based on a multi-region attention mechanism. The method comprises the steps of: inputting the face image to be detected into a convolutional neural network to obtain a shallow-layer feature map, a middle-layer feature map, and a deep-layer feature map; performing a texture enhancement operation on the shallow feature map to obtain a texture feature map; generating multi-region attention maps from the middle-layer feature map through a multi-attention mechanism; performing attention pooling on the texture feature map with the multi-region attention maps to obtain local texture features; summing the attention maps and performing attention pooling on the deep feature map to obtain global features; and fusing the global features with the local texture features and classifying the result to obtain the face forgery detection result. The method uses multiple attention regions, each extracting mutually independent features, so that the network pays more attention to local texture information, which improves the accuracy of the detection result.

Owner:UNIV OF SCI & TECH OF CHINA
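The attention pooling and fusion steps described above can be sketched with nested lists. This is a minimal sketch under stated assumptions: the texture map, deep map, and attention maps are taken to share the same spatial size (a real network would resize them), attention pooling is a weighted spatial sum per channel, and "fusion" is shown as plain concatenation. These choices are illustrative, not confirmed by the abstract.

```python
def attention_pool(features, attention):
    """Weighted sum over spatial positions.

    features: C x H x W nested lists; attention: H x W weights.
    Returns a length-C vector."""
    return [
        sum(a * f
            for a_row, f_row in zip(attention, channel)
            for a, f in zip(a_row, f_row))
        for channel in features
    ]


def multi_region_features(texture, deep, attention_maps):
    """Local texture features (one vector per attention region), plus a global
    feature pooled from the deep map with the summed attention maps."""
    local = [attention_pool(texture, a) for a in attention_maps]
    height, width = len(attention_maps[0]), len(attention_maps[0][0])
    summed = [[sum(a[y][x] for a in attention_maps) for x in range(width)]
              for y in range(height)]
    global_feature = attention_pool(deep, summed)
    # Fusion shown as concatenation (an assumption); a classifier would follow.
    fused = [v for vec in local for v in vec] + global_feature
    return local, global_feature, fused
```

Because each attention map pools the texture map separately, every region contributes its own feature vector, which is what lets the regions capture independent local cues.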

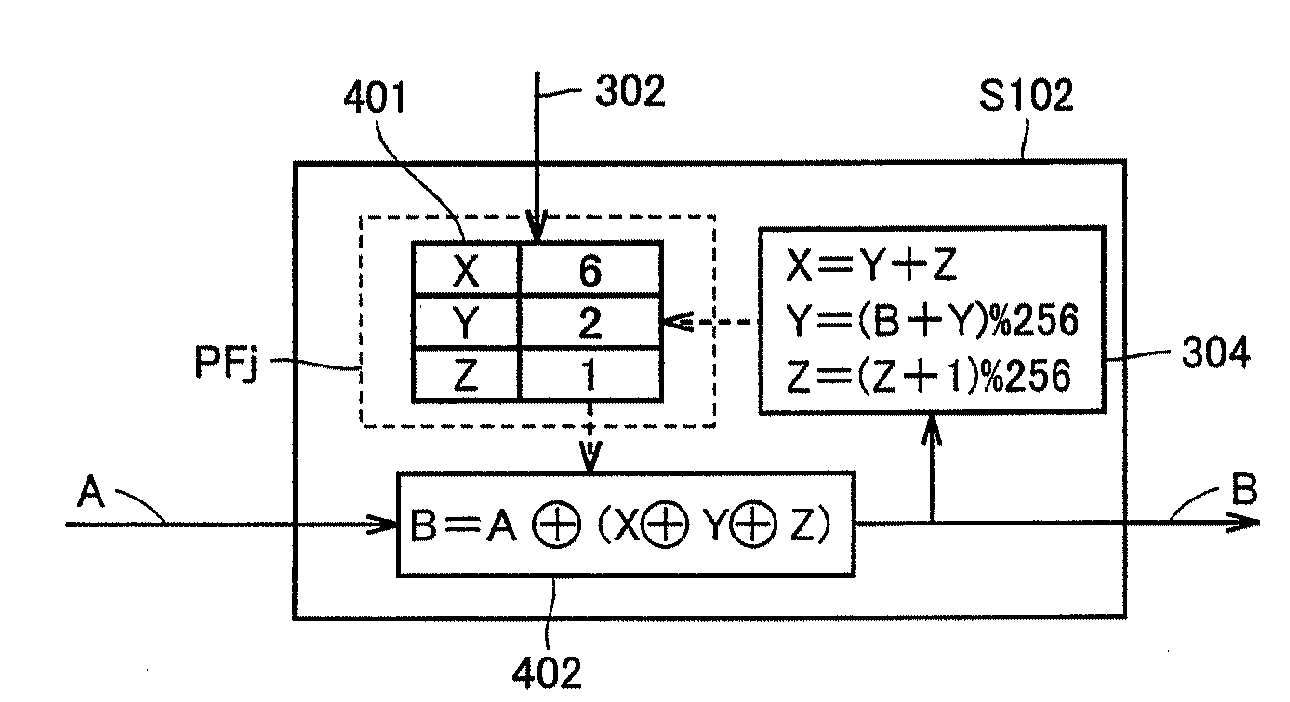

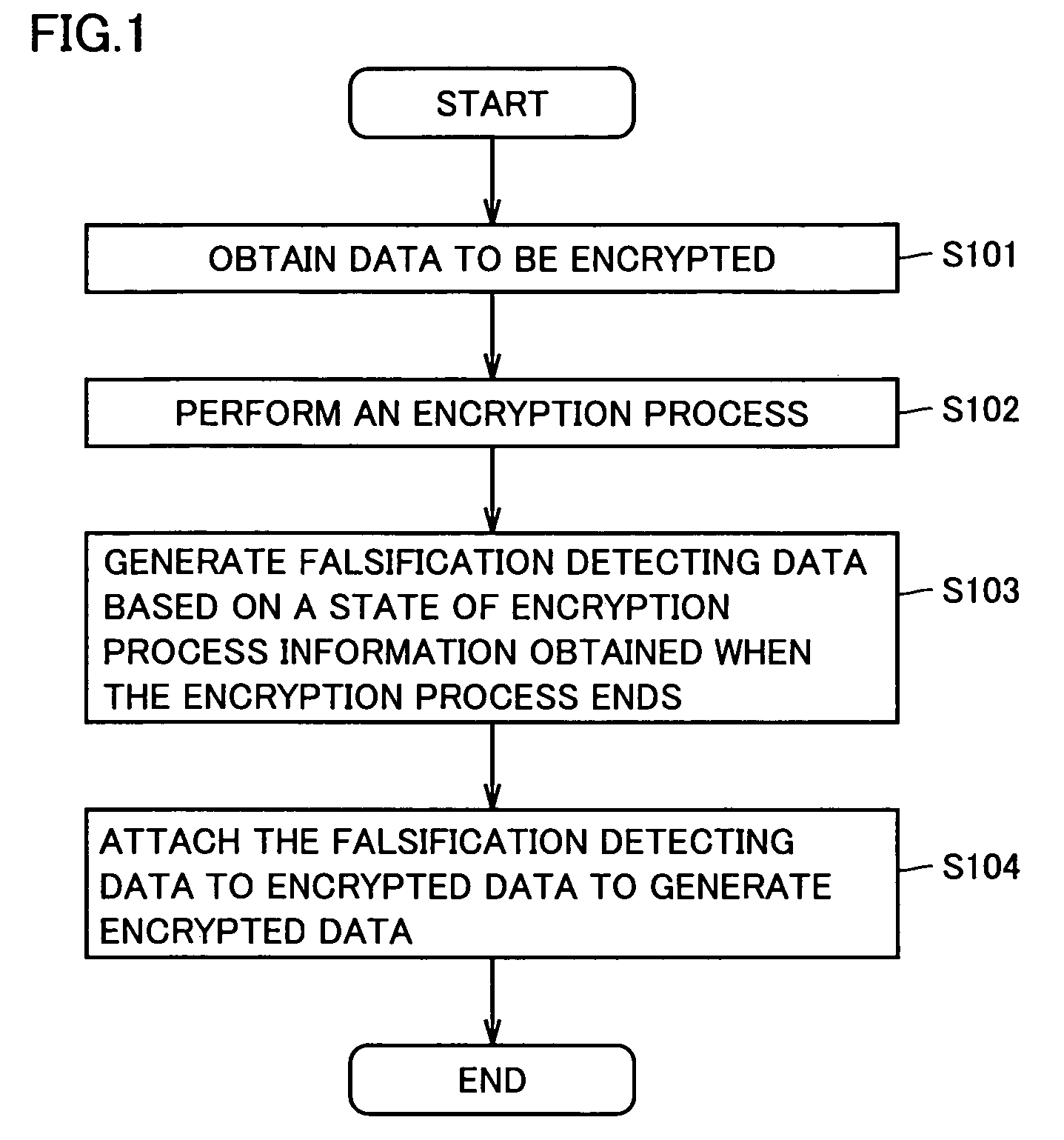

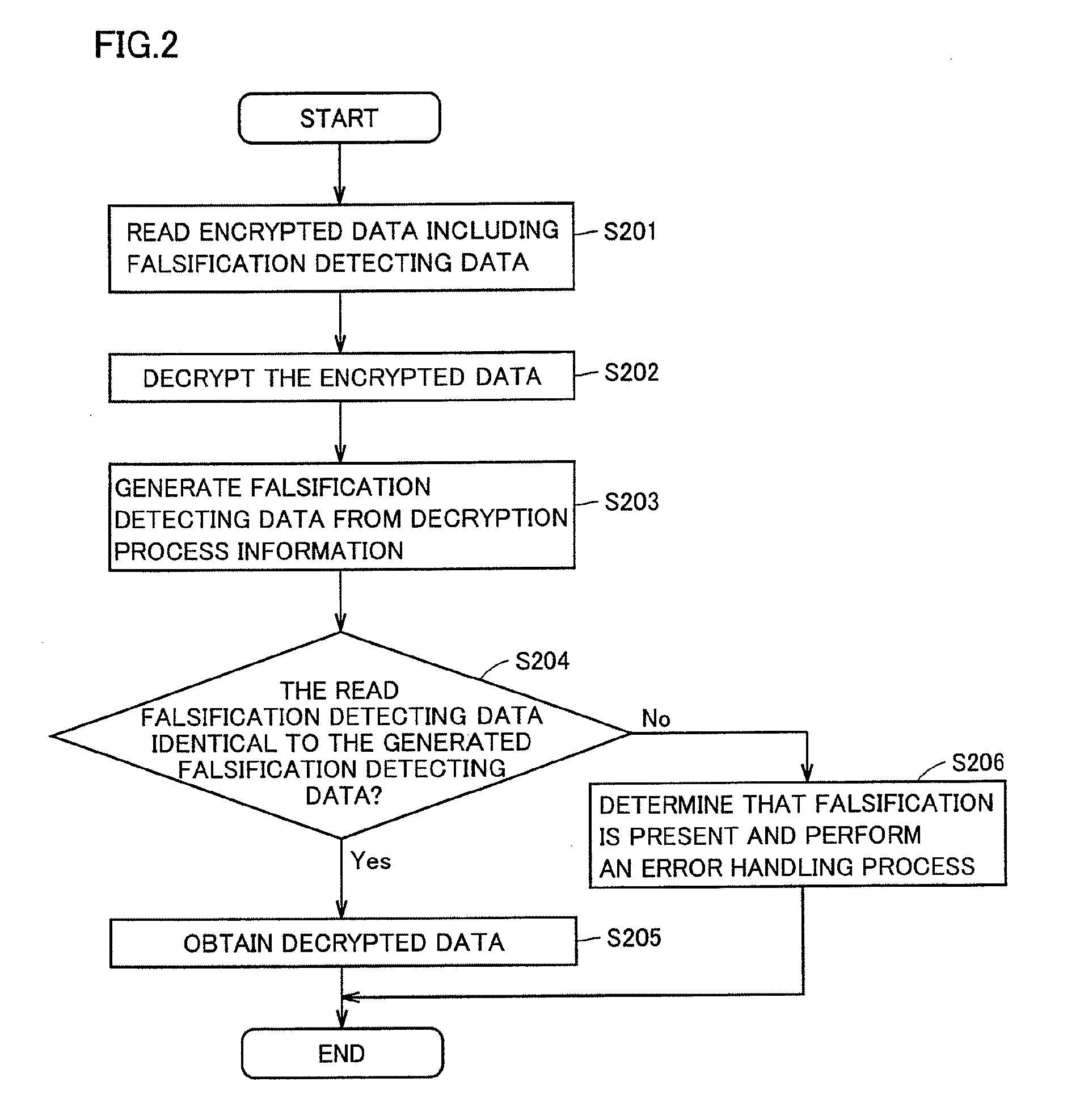

Apparatus and method of generating falsification detecting data of encrypted data in the course of process

Inactive | US7751556B2 | Reduce the amount of processing; Data detected; Encryption apparatus with shift registers/memories; User identity/authority verification; Computer hardware; Forgery detection

Data to be encrypted (301) is extracted portion by portion. The result of encrypting the previously extracted portion is used to calculate the result of encrypting the currently extracted portion, and the successively calculated results are used to generate the encrypted data (305). The finally calculated result of the encryption (PF(z+1)) is attached to the generated encrypted data and serves as falsification detecting data (308) for detecting whether the data to be encrypted has been falsified.

Owner:SHARP KK
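The chaining idea, where each step's result feeds the next and the final result becomes the check value, can be sketched with the standard library. This is a hypothetical stand-in, not the patent's scheme: HMAC-SHA256 replaces the block cipher, so the sketch produces only the falsification detecting data and does not encrypt anything. The chunk size and the all-zero initial value are assumptions.

```python
import hashlib
import hmac


def chained_check_value(data: bytes, key: bytes, chunk_size: int = 16) -> bytes:
    """Process the data chunk by chunk; each step mixes in the previous result,
    so the final state depends on every chunk and on their order."""
    state = bytes(32)  # assumed all-zero initial value, like an IV
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        state = hmac.new(key, state + chunk, hashlib.sha256).digest()
    return state


def seal(data: bytes, key: bytes) -> bytes:
    """Attach the final chained result to the data as its check value."""
    return data + chained_check_value(data, key)


def verify(sealed: bytes, key: bytes) -> bool:
    """Recompute the chain over the data and compare with the attached value."""
    data, tag = sealed[:-32], sealed[-32:]
    return hmac.compare_digest(chained_check_value(data, key), tag)
```

The appeal of this structure, as the abstract suggests, is that the check value falls out of the same pass that processes the data, so no separate pass over the data is needed.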

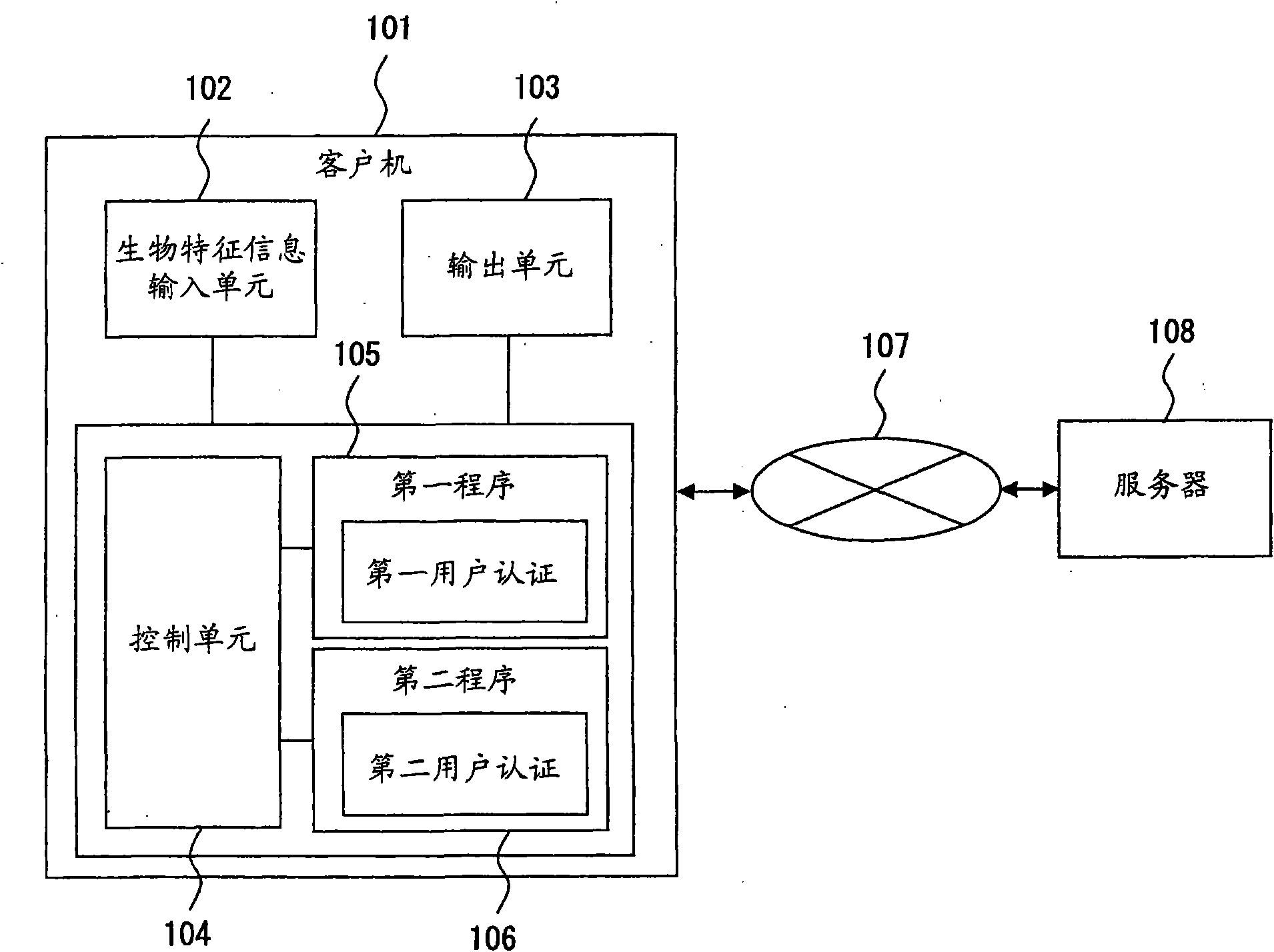

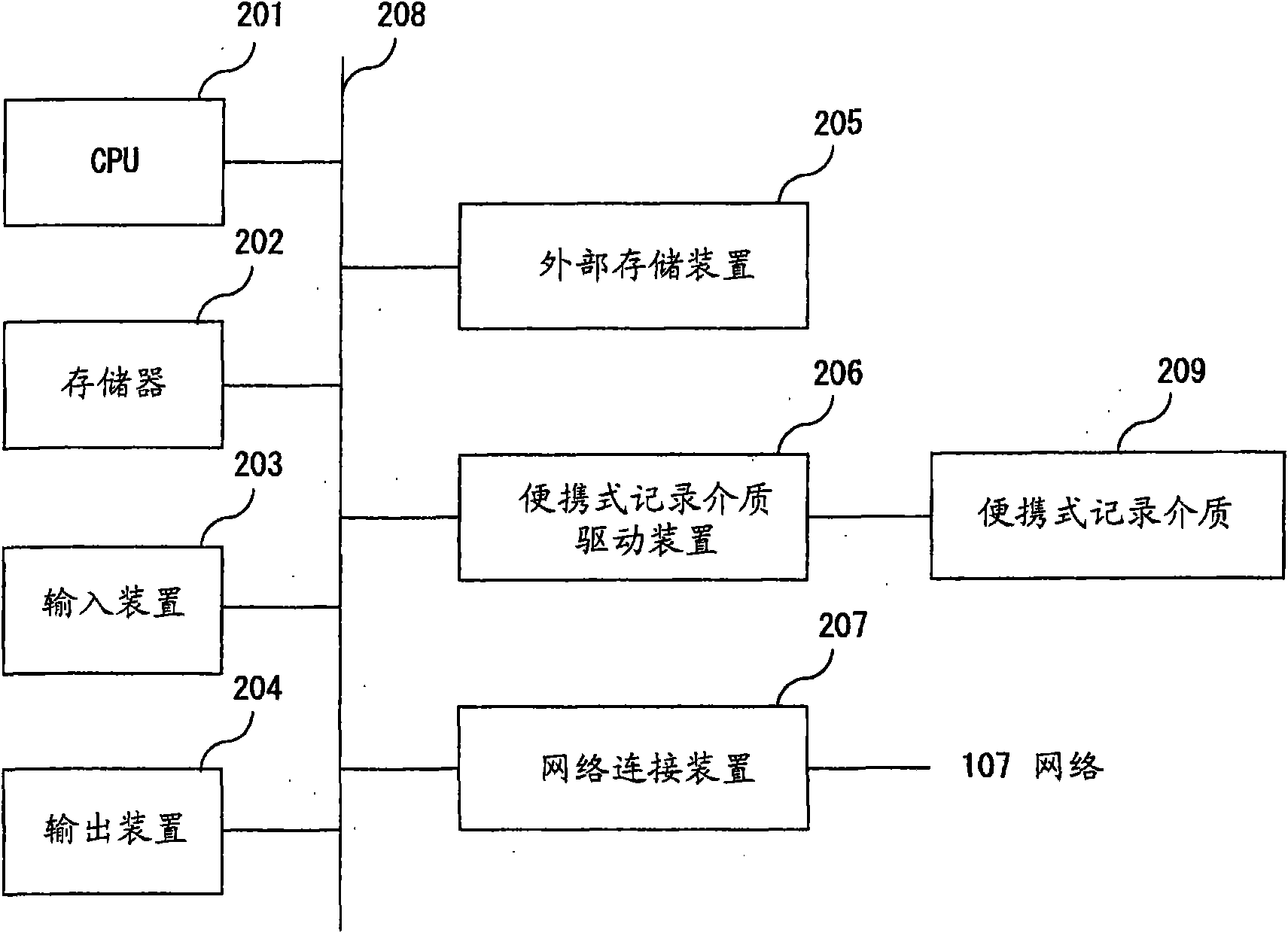

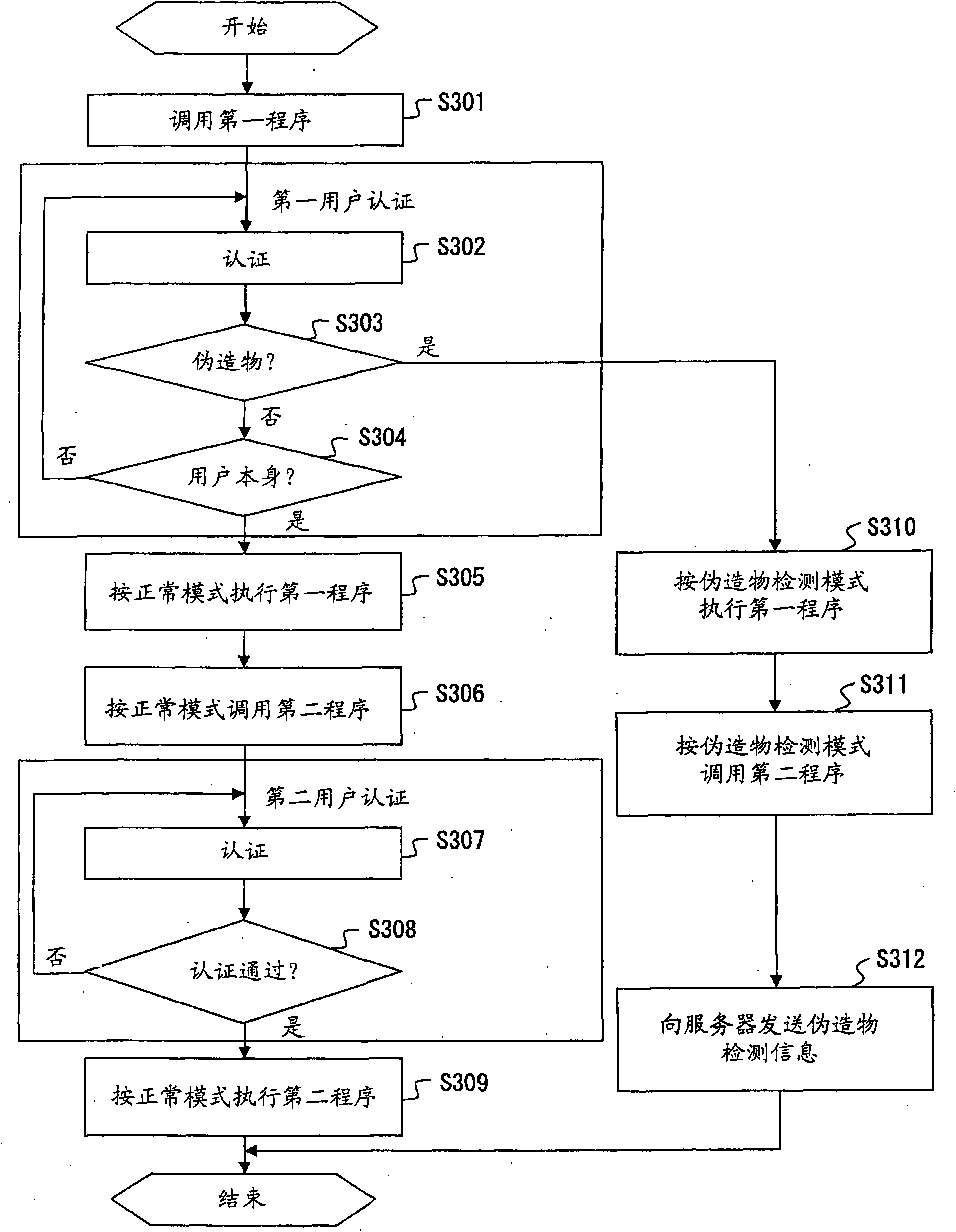

Authentication method and apparatus

Inactive | CN101647701A | Person identification; Character and pattern recognition; Network connection; User authentication

The invention relates to an authentication method and apparatus. The method is used in an apparatus that can execute a first program and a second program. The first program executes a predetermined process after a first user authentication by biometrics and has no network connection function; the second program is invoked after the first program has executed, runs after a second user authentication, and has a network connection function. If a forged biometric is detected during the first user authentication, the method executes the first program in a forgery detection mode regardless of the result of the user determination, invokes the second program in the forgery detection mode after the first program has executed in that mode, and then uses the network connection function to notify a device connected to an external network of the forgery detection information.

Owner:FUJITSU LTD

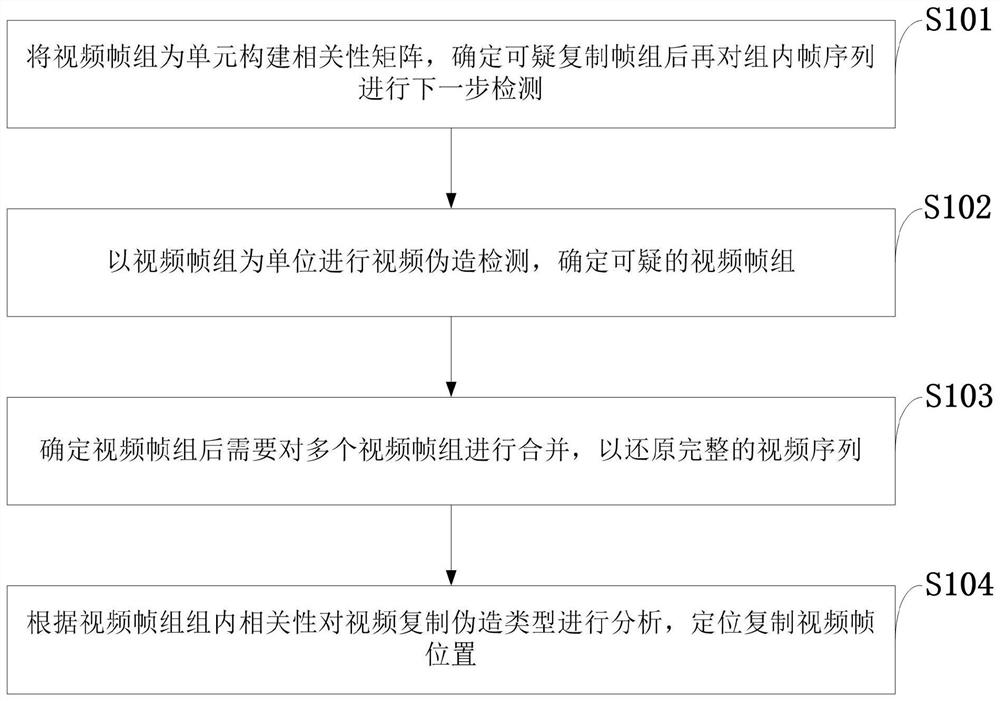

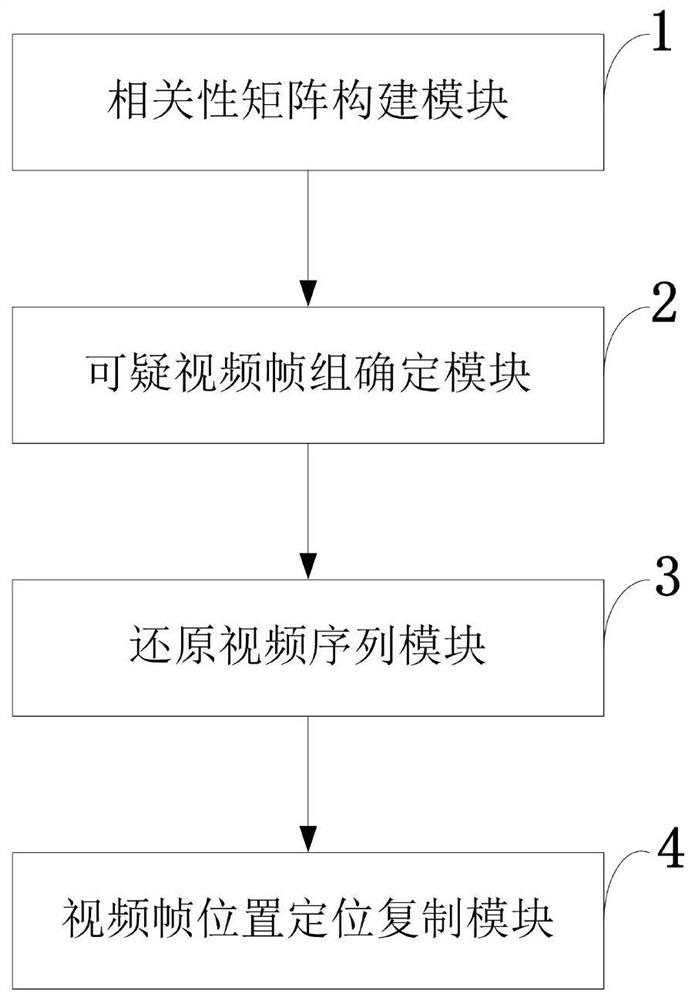

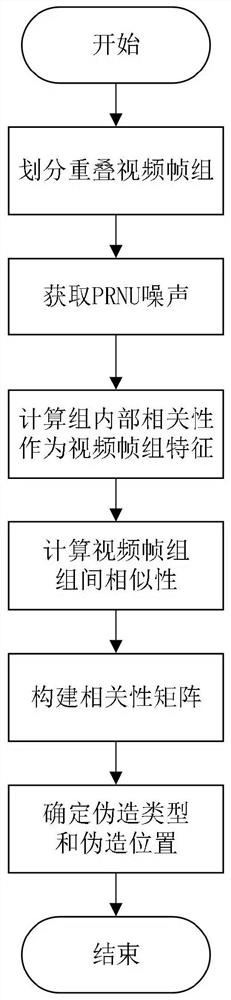

Video counterfeiting detection method and system, storage medium and video monitoring terminal

Active | CN111652875A | Effectively detect and determine; Improve precision; Image analysis; Character and pattern recognition; Video monitoring; Information processing

The invention belongs to the technical field of video monitoring information processing, and discloses a video counterfeiting detection method and system, a storage medium, and a video monitoring terminal. The method comprises the steps of: dividing the video frame sequence into overlapping sub-frame groups and using each sub-frame group as a query segment for a similarity check; taking the correlation between adjacent frames within a sub-frame group as that group's feature and calculating the similarity between sub-frame groups; selecting a sub-frame group as a forged-frame-group candidate if its similarity exceeds a threshold; and determining the forgery type and location by examining the temporal relationship between adjacent frames in the sub-frame group. In the experiments, the sample videos are forged by frame copying in two ways, and the results over all sample videos are averaged to obtain the final result. The experimental results show that the method effectively detects both types of copy forgery in videos, locates the forged frames, and achieves higher precision and recall than the comparison algorithm from the literature.

Owner:XIDIAN UNIV
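The sub-frame-group comparison can be sketched as follows. This is a minimal sketch, assuming frames are flat lists of pixel values, Pearson correlation as the adjacent-frame correlation, a similarity of one minus the mean absolute difference between group features, and a hypothetical threshold of 0.999; the abstract does not specify these details. Overlapping group pairs are skipped because they share frames.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length pixel lists (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def group_features(frames, size):
    """Overlapping sub-frame groups (step 1), each described by the
    correlations between its adjacent frames."""
    groups = []
    for start in range(len(frames) - size + 1):
        feats = [pearson(frames[i], frames[i + 1]) for i in range(start, start + size - 1)]
        groups.append((start, feats))
    return groups


def find_duplicate_groups(frames, size=3, threshold=0.999):
    """Return (start_a, start_b) pairs of non-overlapping groups whose
    features are nearly identical: candidates for copied frames."""
    groups = group_features(frames, size)
    candidates = []
    for i, (start_a, feats_a) in enumerate(groups):
        for start_b, feats_b in groups[i + 1:]:
            if start_b - start_a < size:  # groups share frames; skip
                continue
            diff = sum(abs(x - y) for x, y in zip(feats_a, feats_b)) / len(feats_a)
            if 1 - diff > threshold:
                candidates.append((start_a, start_b))
    return candidates
```

Correlation features alone can also match in static scenes where every adjacent pair is nearly identical, which is one reason the method goes on to examine the temporal relationships inside candidate groups.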

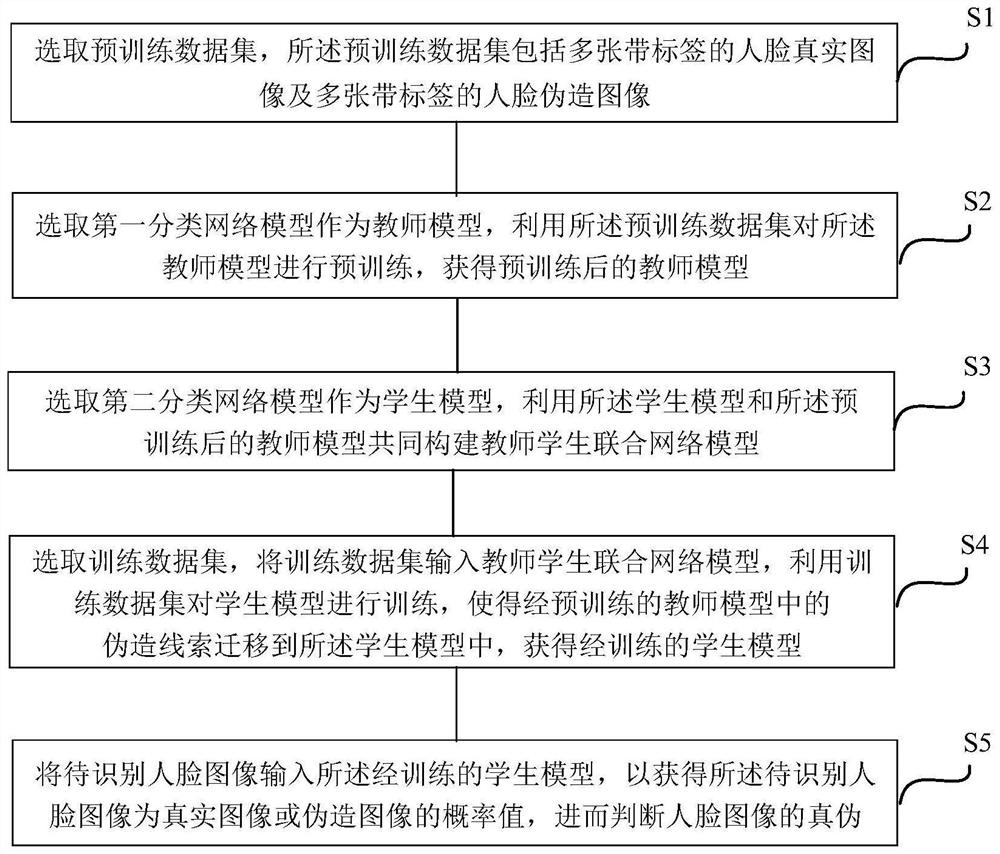

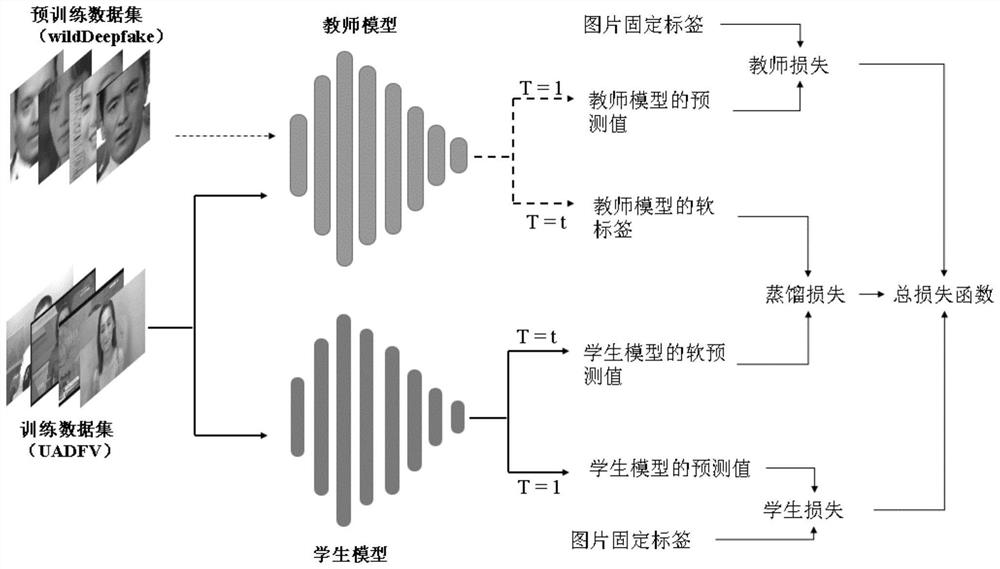

Human face forgery clue transfer method based on knowledge distillation

Pending | CN114170655A | Correct mistakes; Training accurately; Neural architectures; Spoof detection; Pattern recognition; Data set

The invention discloses a face forgery clue transfer method based on knowledge distillation. The method comprises the following steps: selecting a pre-training data set; selecting a first classification network model as the teacher model and pre-training it on the pre-training data set; selecting a second classification network model as the student model and constructing a teacher-student joint network model from the student model and the pre-trained teacher model; selecting a training data set, inputting it into the teacher-student joint network model, and training the student model on it; and inputting the face image to be recognized into the trained student model to obtain the probability that it is a real or a forged image, from which its authenticity is judged. The invention improves the accuracy and generalization of face forgery detection while avoiding the loss of prior knowledge about forgery clues.

Owner:XIDIAN UNIV
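The abstract does not state the training objective of the teacher-student network. A common choice for this kind of setup is the classic temperature-softened distillation loss, which combines cross-entropy on the hard label with a KL term toward the teacher's softened outputs. The sketch below implements that standard loss as an assumption, in plain Python; the temperature, the weight `alpha`, and the two-class convention (0 = real, 1 = forged) are illustrative.

```python
from math import exp, log


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * CE(student, label) + (1 - alpha) * T^2 * KL(teacher_T || student_T).

    label is the index of the true class (assumed 0 = real, 1 = forged)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * (log(pt) - log(ps)) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -log(softmax(student_logits)[label])
    return alpha * hard + (1 - alpha) * temperature ** 2 * kl
```

The soft term is what carries the teacher's "forgery clue" knowledge to the student; scaling it by T squared keeps its gradient magnitude comparable to the hard-label term as the temperature changes.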

Picture counterfeiting detection method based on shadow matte consistency

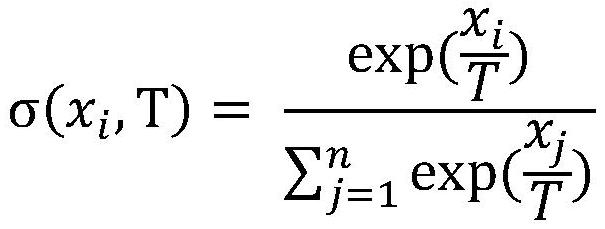

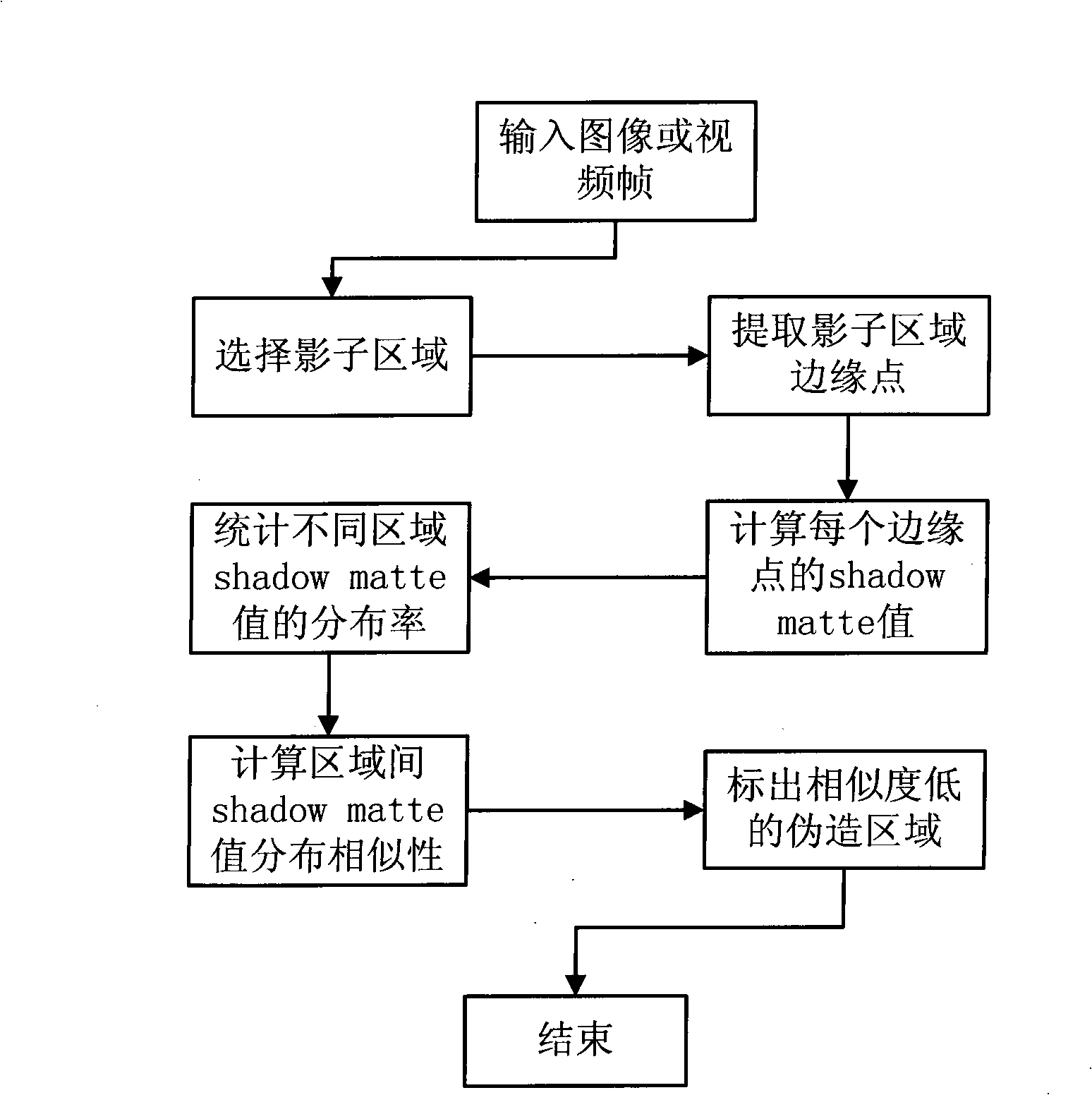

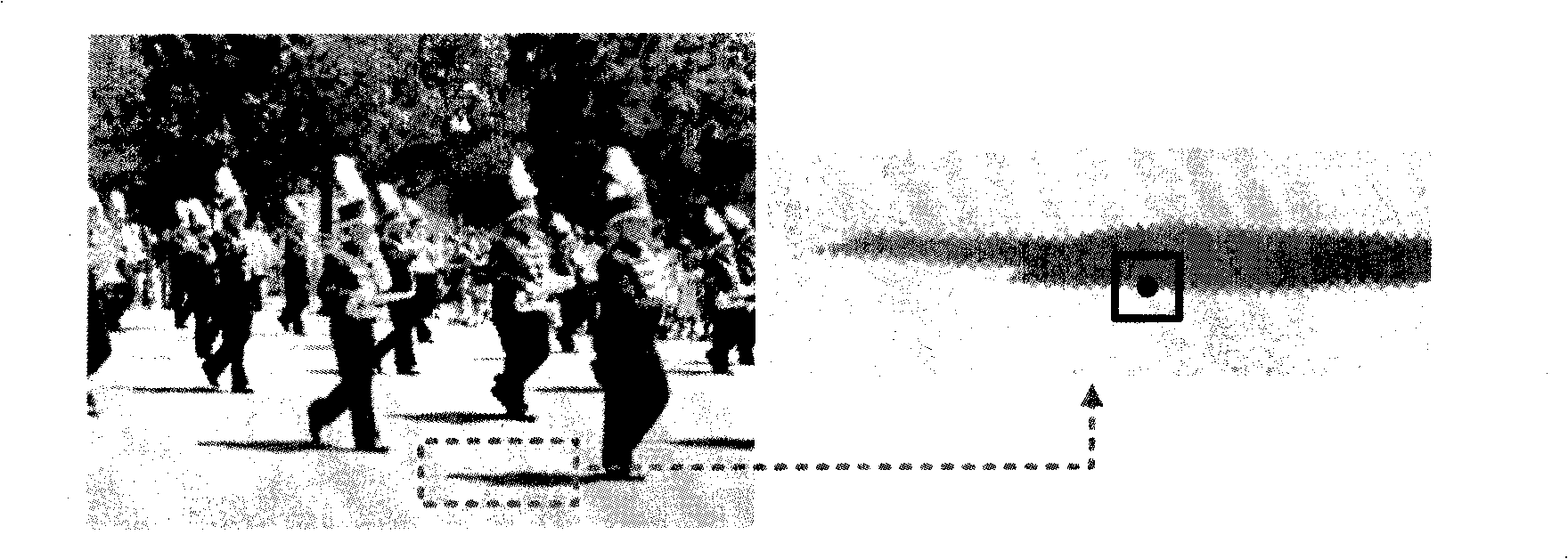

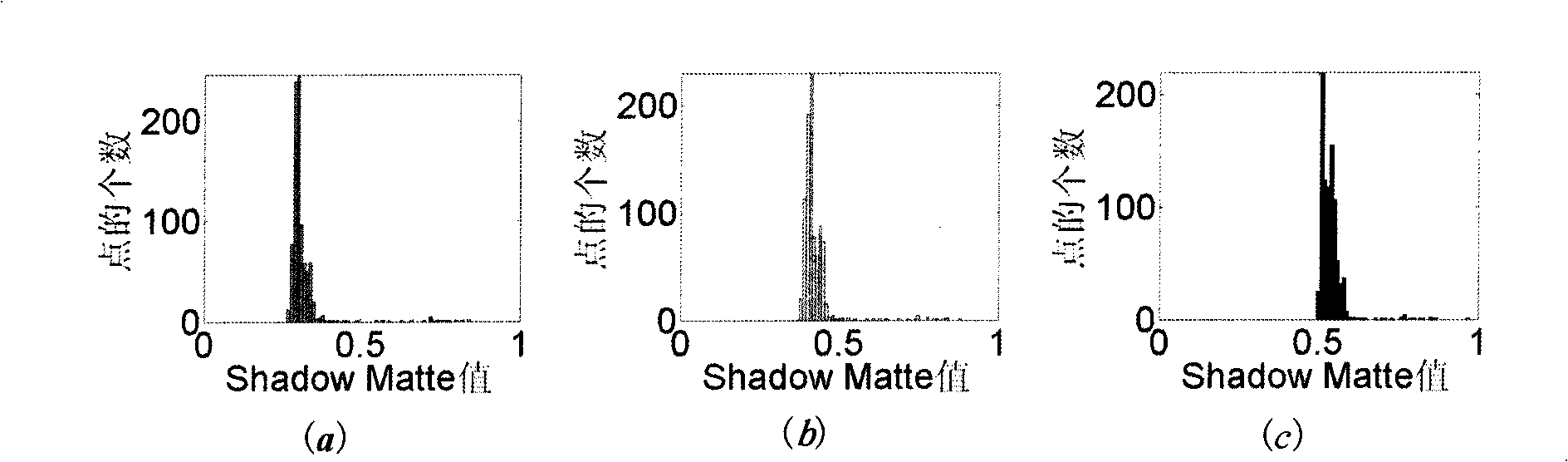

Inactive | CN101527041A | Increase the area; Reduce computational complexity; Image analysis; Pattern recognition; Computation complexity

The invention belongs to the technical field of digital image authenticity identification and image processing, and relates to a picture counterfeiting detection method based on shadow matte consistency. The method comprises the following steps: finding two or more shadow areas to be judged in the image under test and, for each shadow area, extracting all of its shadow boundary points; for each boundary point, forming an M×M rectangular window centered on it, classifying the points in the window into two classes (inside and outside the shadow), and calculating the shadow matte value at the boundary point; computing the distribution of shadow matte values for each shadow area; and calculating the similarity between the distributions of different areas to locate the counterfeit areas. The method requires no image preprocessing, has low computational complexity, and offers high feasibility and applicability.

Owner:TIANJIN UNIV

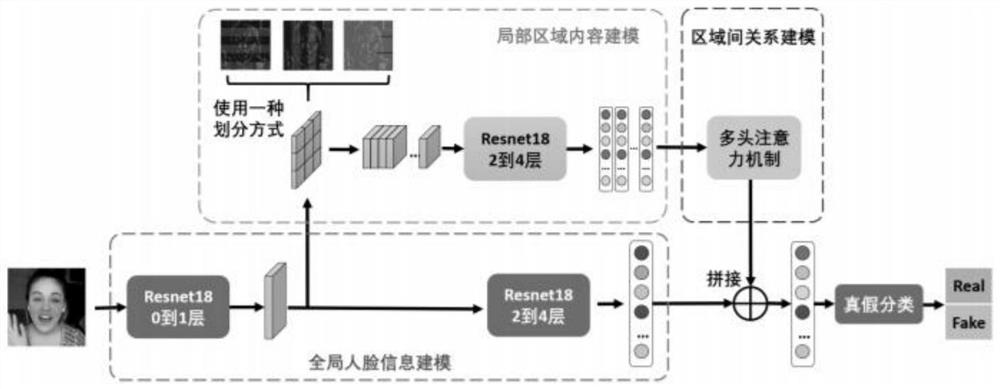

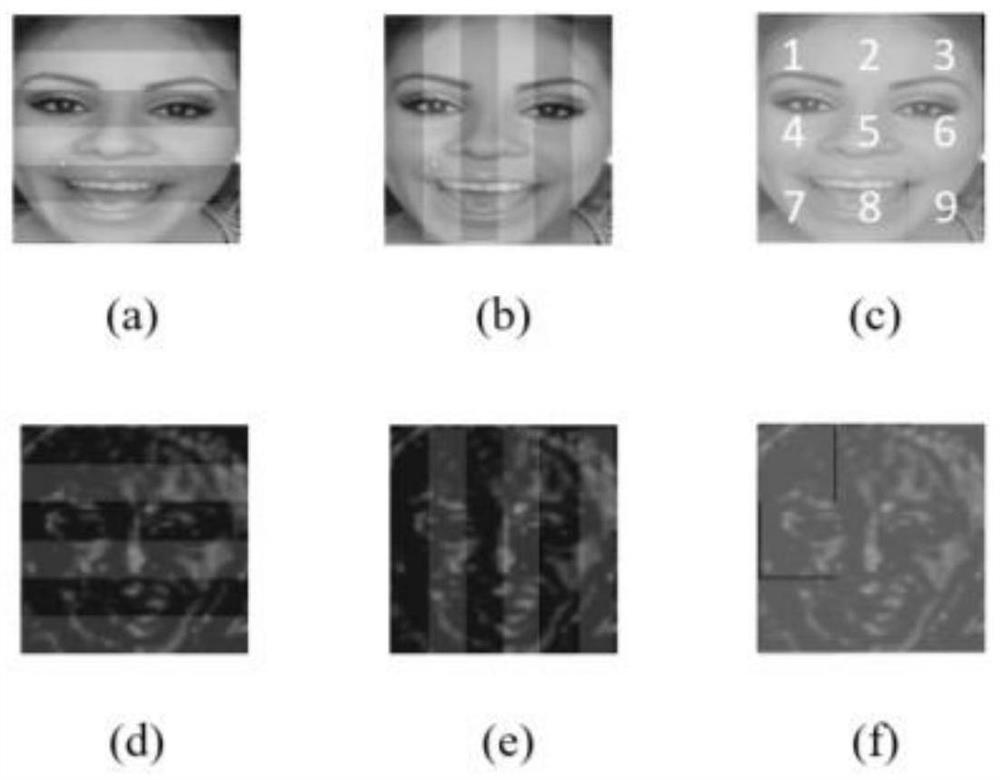

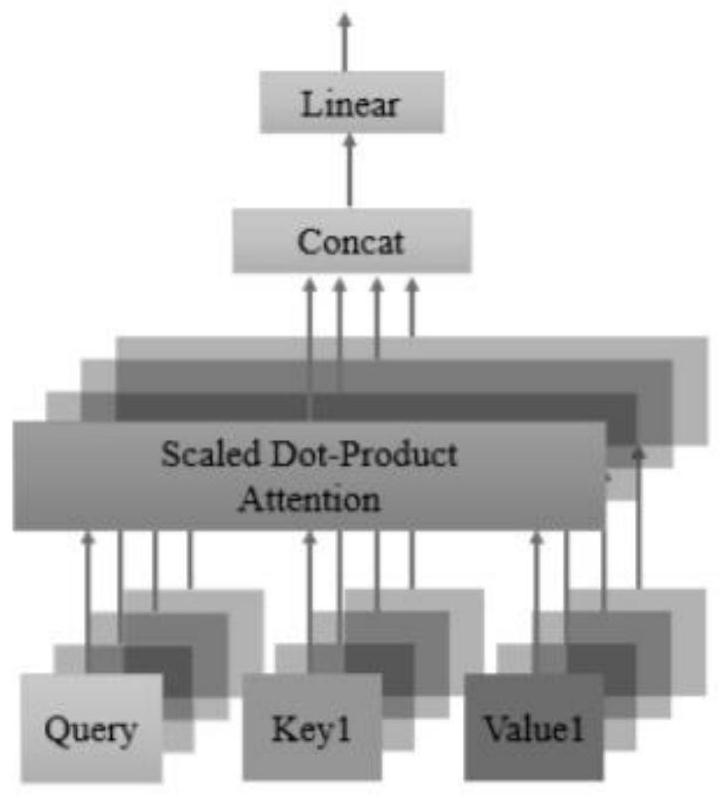

Face depth forgery detection method and system based on face division

Active | CN113537027A | Promote results | Generalization results are stable | Character and pattern recognition | Machine learning | Attention model | Feature extraction

The invention provides a face depth-forgery (deepfake) detection method and system based on face division. The method comprises the following steps: extracting global face features from the training data; dividing the shallow convolutional features generated while extracting the global features into several image regions according to a preset face-division scheme, and feeding these regions into a local face feature extraction model to obtain multiple local features of the face image; and extracting relation features among the local features with an attention model, concatenating the relation features with the global features, and feeding the result into a binary classification model to obtain a detection result for the training data. A loss function constructed from this result and the label is then used to train the global face feature extraction model, the local face feature extraction model, the attention model, and the binary classification model.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
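The forward pass in that abstract can be sketched as follows: split the shallow features into face regions, relate the regions with attention, concatenate with the global feature, and classify. Several parts of this sketch are assumptions. The face division is replaced by equal horizontal bands, the learned local extractor is replaced by average pooling, and the attention model is plain scaled dot-product self-attention without learned projections. Training is omitted entirely. The function names are hypothetical.

```python
import math


def split_regions(feature_map, n_bands):
    """Split an H x W grid of channel vectors into horizontal bands and
    average-pool each band (hypothetical stand-in for face division)."""
    h, channels = len(feature_map), len(feature_map[0][0])
    step = h // n_bands
    regions = []
    for b in range(n_bands):
        end = h if b == n_bands - 1 else (b + 1) * step
        cells = [vec for row in feature_map[b * step:end] for vec in row]
        regions.append([sum(v[k] for v in cells) / len(cells) for k in range(channels)])
    return regions


def relation_features(local_feats):
    """Scaled dot-product self-attention over the local features, mean-pooled."""
    d = len(local_feats[0])
    attended = []
    for q in local_feats:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in local_feats]
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        attended.append([sum(w * k[j] for w, k in zip(weights, local_feats)) / total
                         for j in range(d)])
    return [sum(v[j] for v in attended) / len(attended) for j in range(d)]


def classify(global_feat, local_feats, weights, bias=0.0):
    """Concatenate global and relation features; logistic score = P(forged)."""
    fused = list(global_feat) + relation_features(local_feats)
    z = sum(w * x for w, x in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-z))
```

In the patented system, each of these stages is a trainable model updated through the shared loss. Here the stages are fixed functions, so the sketch shows only how the data flows between them.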

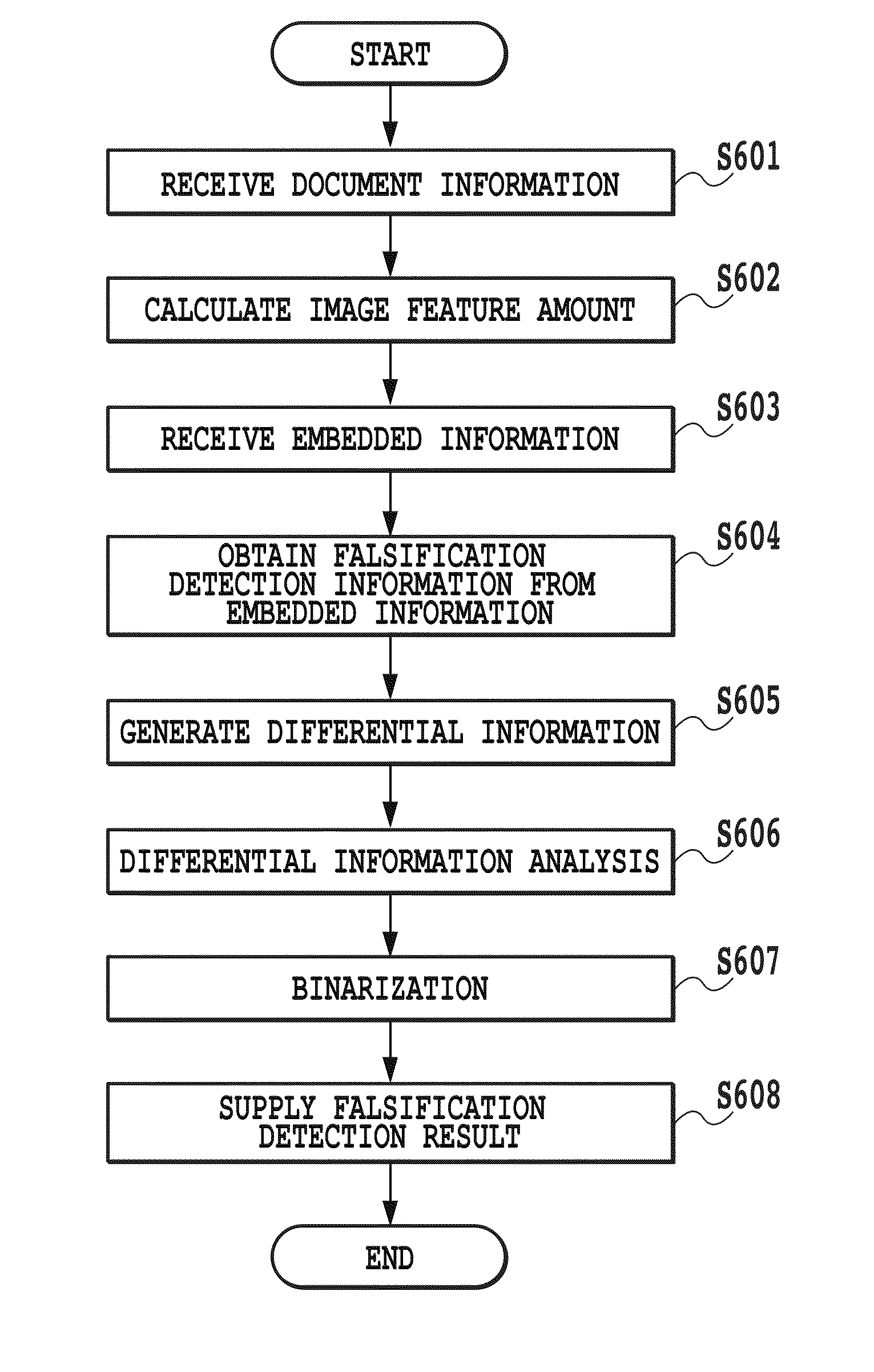

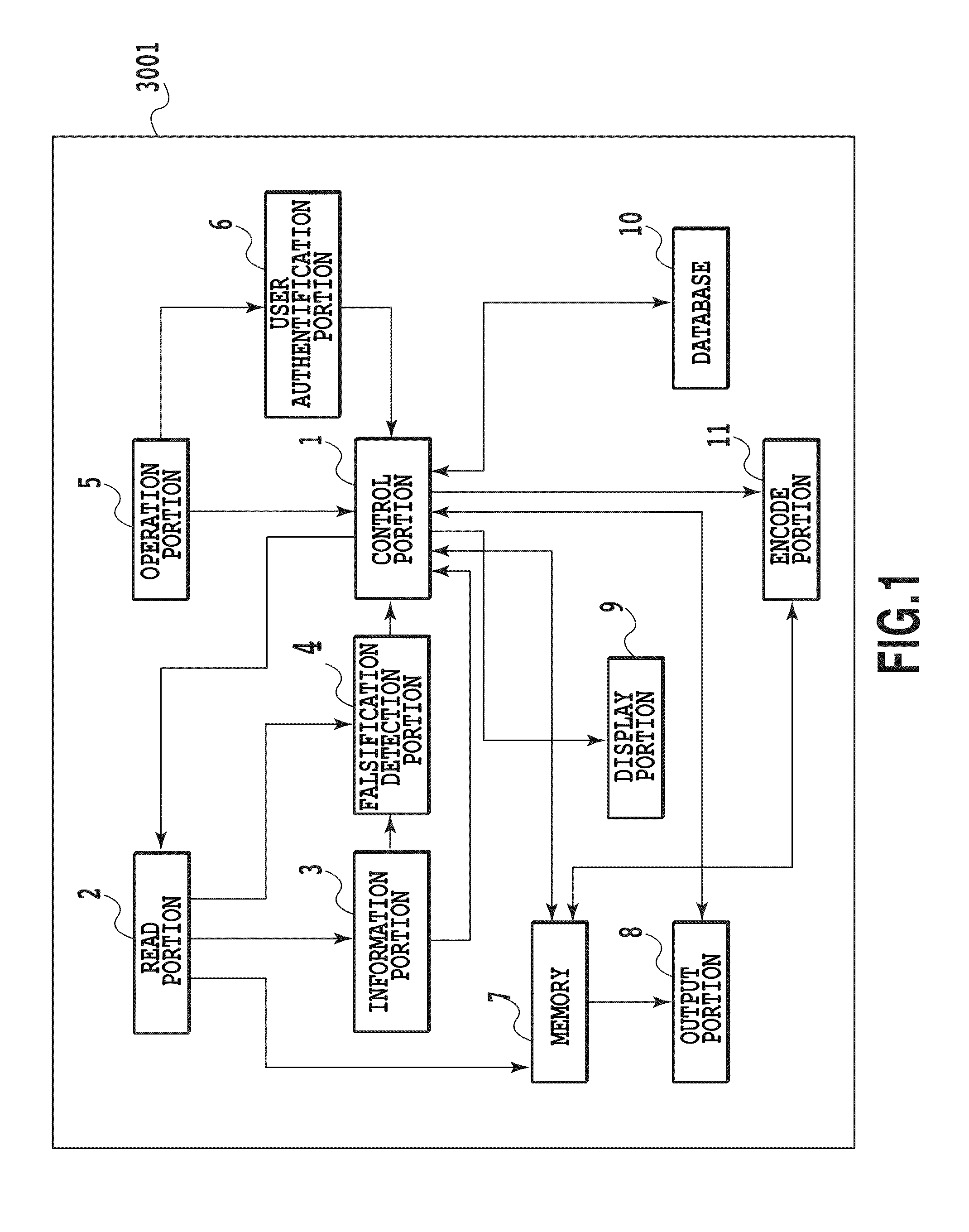

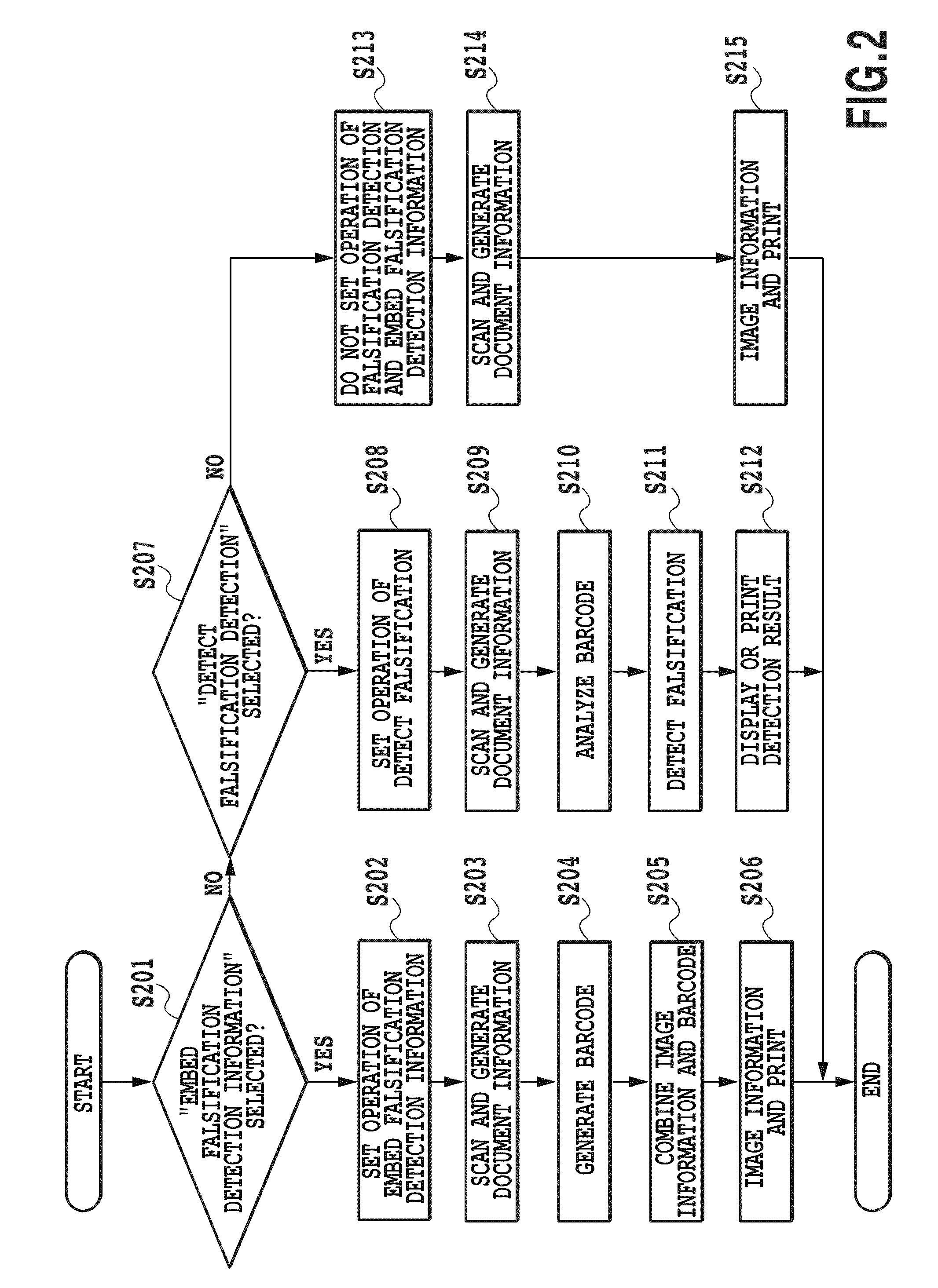

Image falsification detection device and image falsification detection method

Inactive | US8387860B2 | Image data processing details | Record carriers used with machines | Detection threshold | Computer science

A threshold for falsification detection is determined automatically. A falsification detection portion (4) divides the image into uniform detection units and, for each cell of the detection units, calculates the variation of the differential information in the regions contained in that unit (S802). For example, cells (901), (902) and (903) have values of 8, 170 and 140, respectively. The falsification detection portion (4) uses these variations to calculate a differential-information threshold for identifying falsified regions in an image read by scanning (S803). Regions that have not been falsified have a relatively small mean and standard deviation, whereas falsified regions have a relatively large mean and standard deviation.

Owner:CANON KK
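Canon's abstract does not give the threshold formula. One way to derive a threshold automatically from the per-cell variation values is a robust outlier rule: the median plus k times the scaled median absolute deviation (MAD). The sketch below uses that rule as an assumed substitute. It relies on the idea that untouched cells dominate and have small variations, so a few large values from falsified cells barely move the threshold. The multiplier `k` and both function names are hypothetical.

```python
import statistics


def variation_threshold(variations, k=3.0):
    """Robust automatic threshold: median + k * scaled MAD of cell variations."""
    med = statistics.median(variations)
    mad = statistics.median([abs(v - med) for v in variations])
    return med + k * 1.4826 * mad  # 1.4826 makes MAD comparable to a std. dev.


def falsified_cells(variations, k=3.0):
    """Indices of detection cells whose variation exceeds the threshold."""
    threshold = variation_threshold(variations, k)
    return [i for i, v in enumerate(variations) if v > threshold]
```

For example, `falsified_cells([5, 6, 7, 8, 6, 5, 7, 150, 160])` returns `[7, 8]`. A plain mean-plus-k-standard-deviations rule would be inflated by the very outliers it is trying to find, which is why the median and MAD are used instead.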

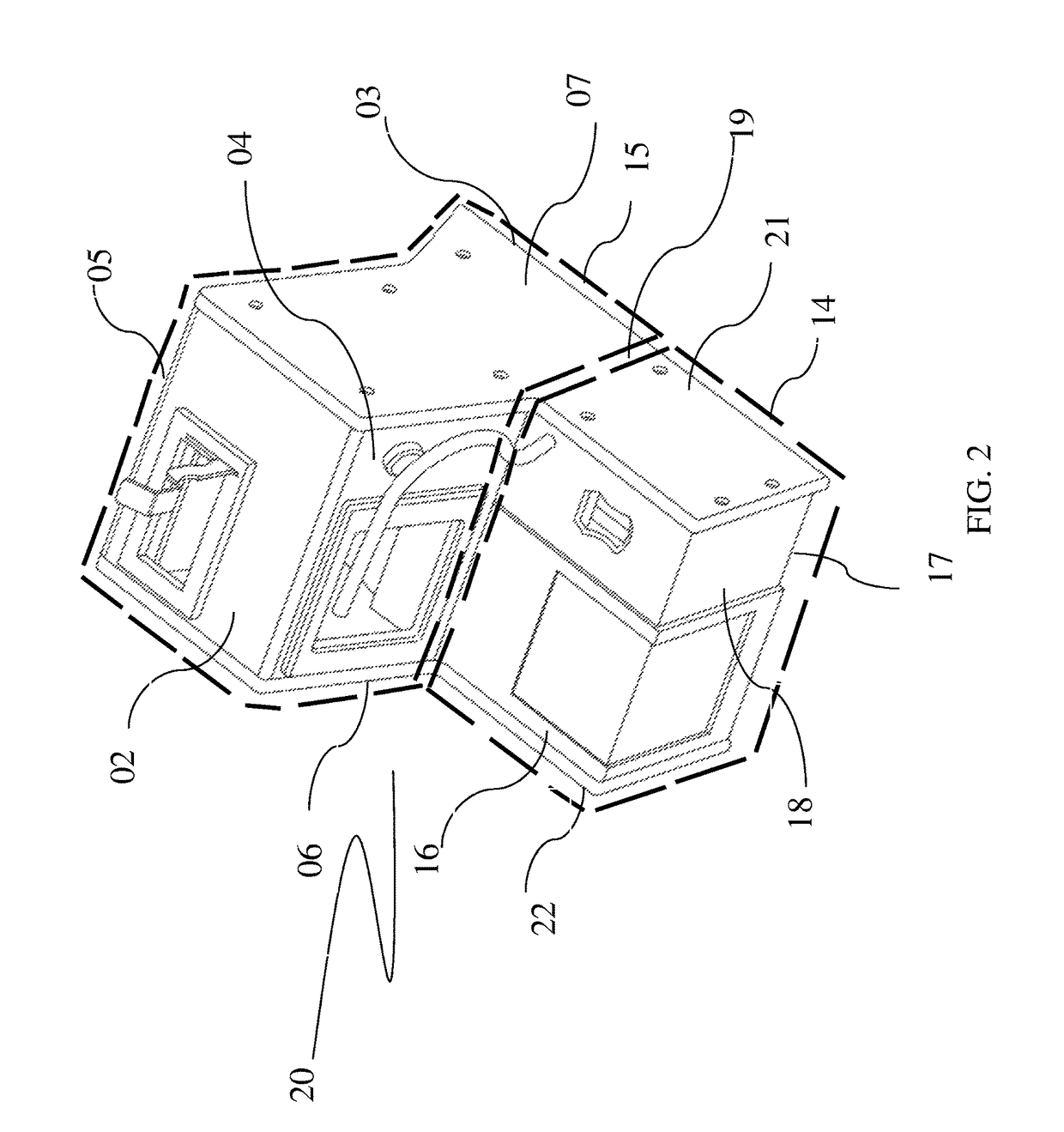

Document forgery detection system

Inactive | US9894240B1 | Paper-money testing devices | Character and pattern recognition | Magnifying glass | Validation methods

Owner:ALJABRI AMER SAID RABIA MUBARAK

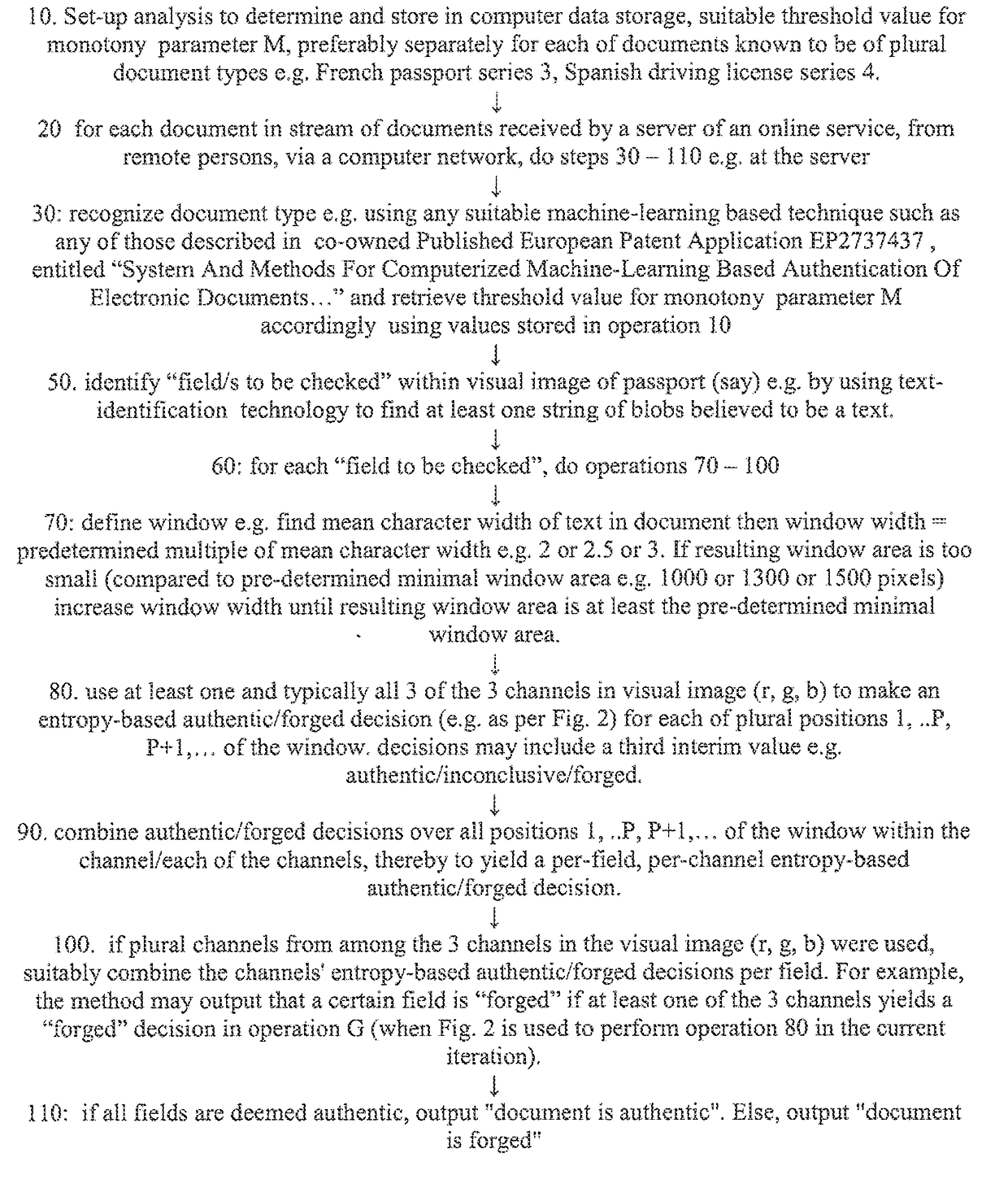

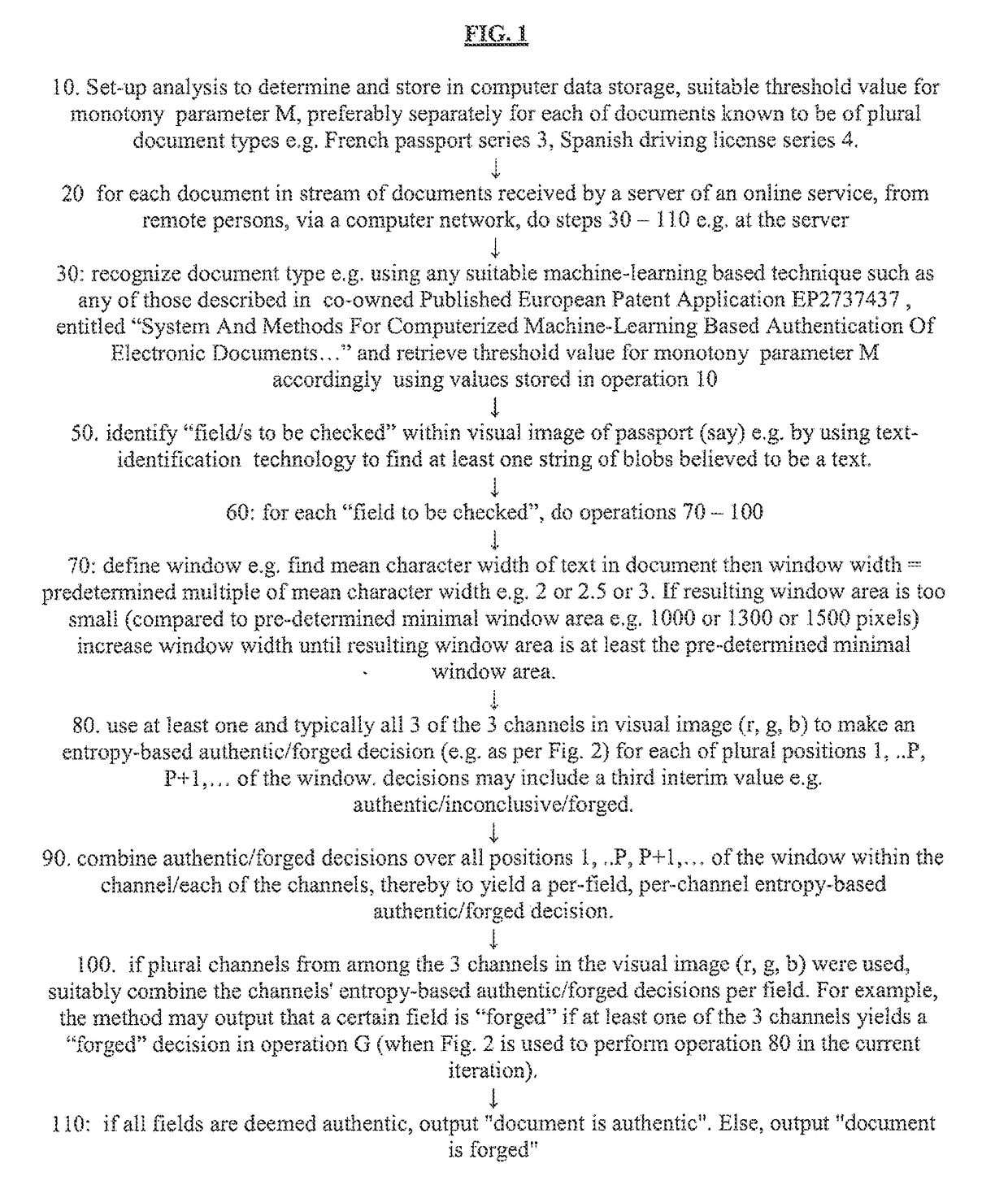

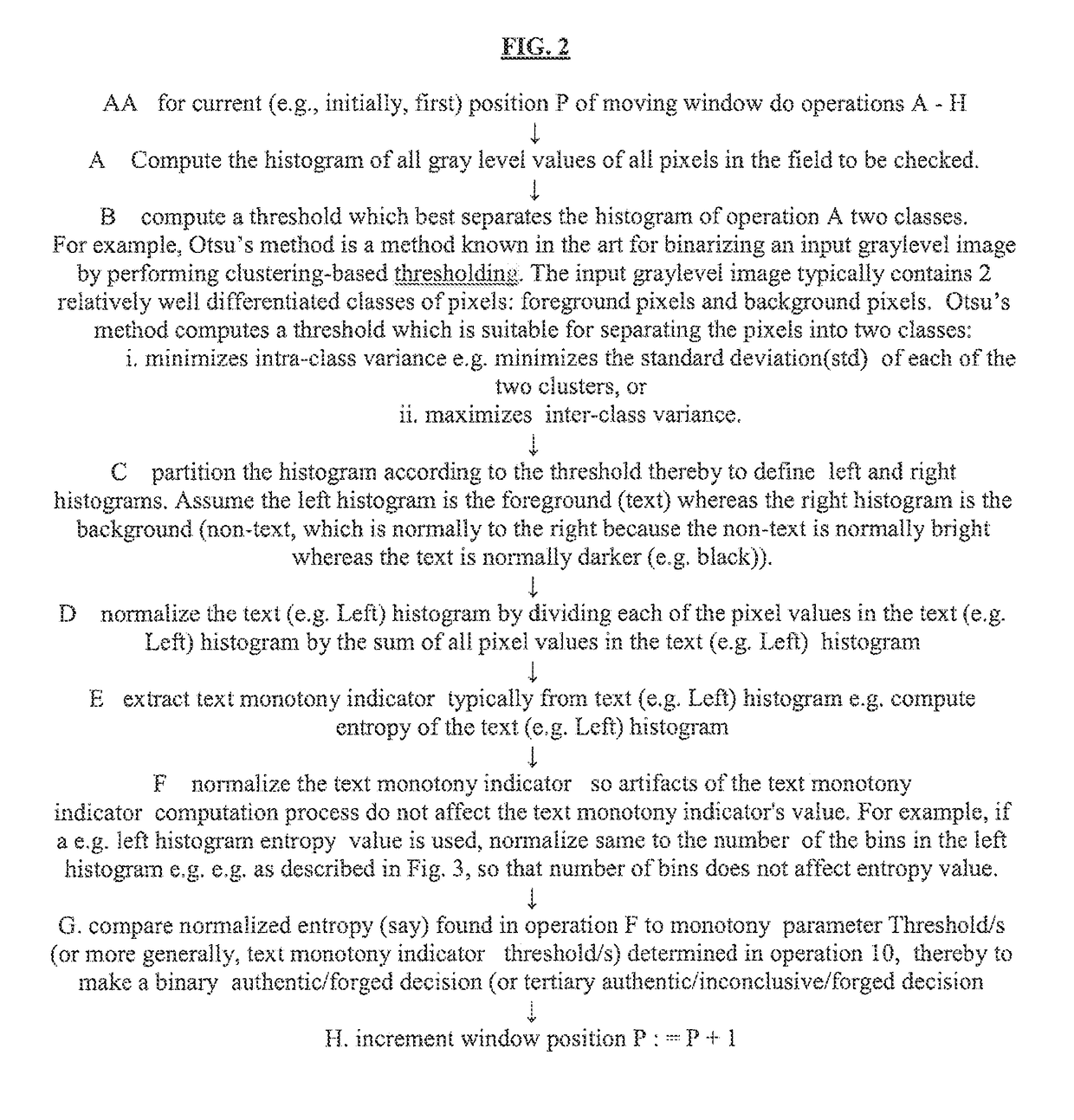

System and method for detecting forgeries

A document forgery detection method in which at least one processor: provides at least one histogram of the gray-level values occurring in at least a portion of at least one channel of an image assumed to represent a document containing text, the histogram having been generated by image processing and the image having been sent by a remote end user to an online service over a computer network; evaluates the monotony of at least a portion of the histogram; and determines, based on at least one output of that evaluation, whether the image is authentic or forged.

Owner:AU10TIX
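The AU10TIX claim does not specify how "monotony" is scored. The sketch below assumes it means monotonicity. It counts how often the slope of the gray-level histogram changes direction within a chosen bin range, on the further assumption that a genuine capture yields smooth monotonic runs there and digital editing introduces extra reversals. The bin range, the `max_reversals` cut-off, and all function names are hypothetical.

```python
def gray_histogram(pixels, bins=256):
    """Histogram of 8-bit gray levels for one image channel."""
    hist = [0] * bins
    for p in pixels:
        hist[p] += 1
    return hist


def count_reversals(hist, start, end):
    """Count slope sign changes in hist[start:end]; flat steps are ignored."""
    reversals, prev_sign = 0, 0
    for a, b in zip(hist[start:end - 1], hist[start + 1:end]):
        sign = (b > a) - (b < a)
        if sign == 0:
            continue
        if prev_sign and sign != prev_sign:
            reversals += 1
        prev_sign = sign
    return reversals


def is_forged(pixels, start=0, end=256, max_reversals=2):
    """Flag the image if the inspected histogram range is not close to monotonic."""
    return count_reversals(gray_histogram(pixels), start, end) > max_reversals
```

In practice, the inspected range would be chosen around the transition between text and background gray levels. The reversal count is the output that the final authentic-or-forged decision is based on.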

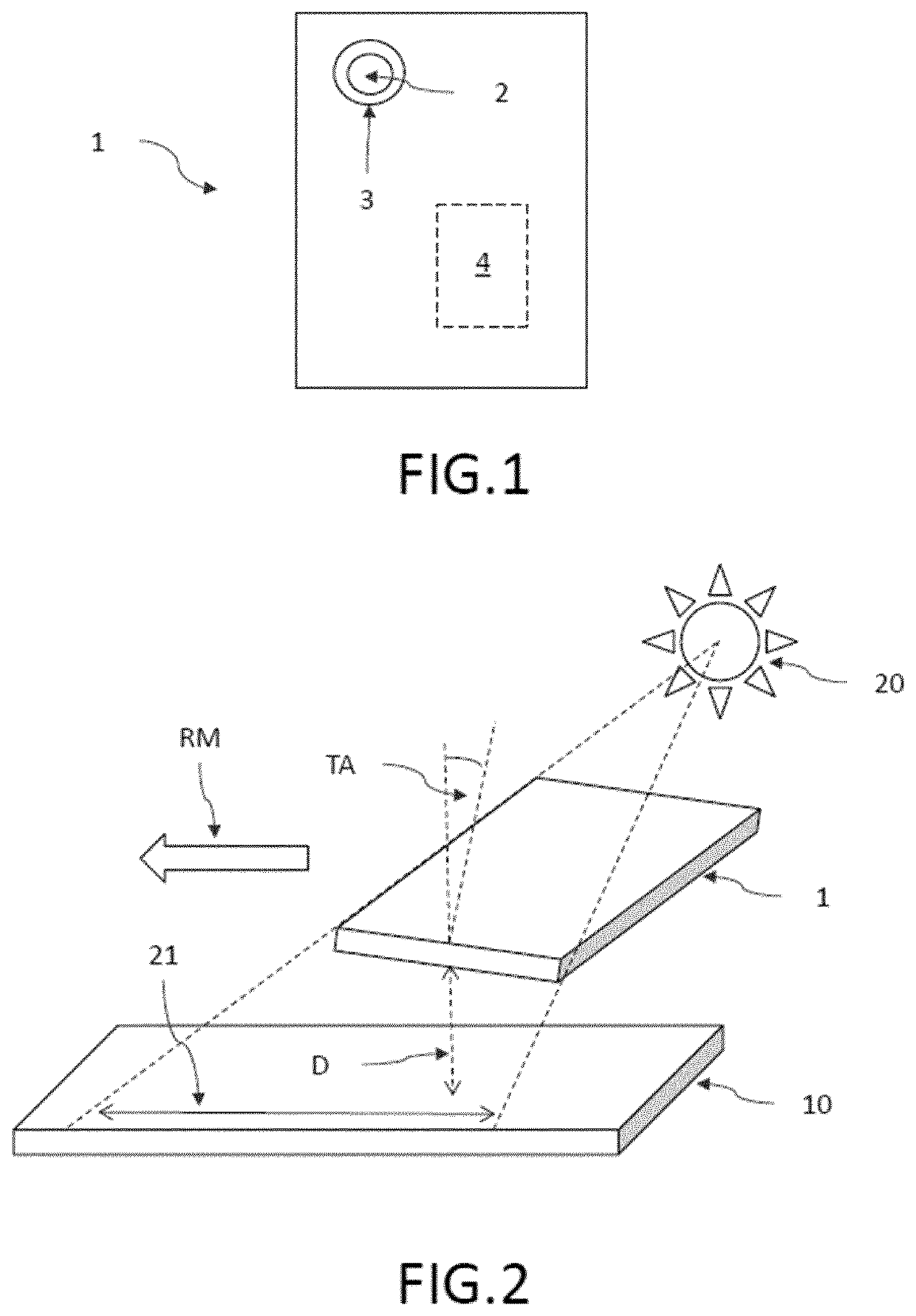

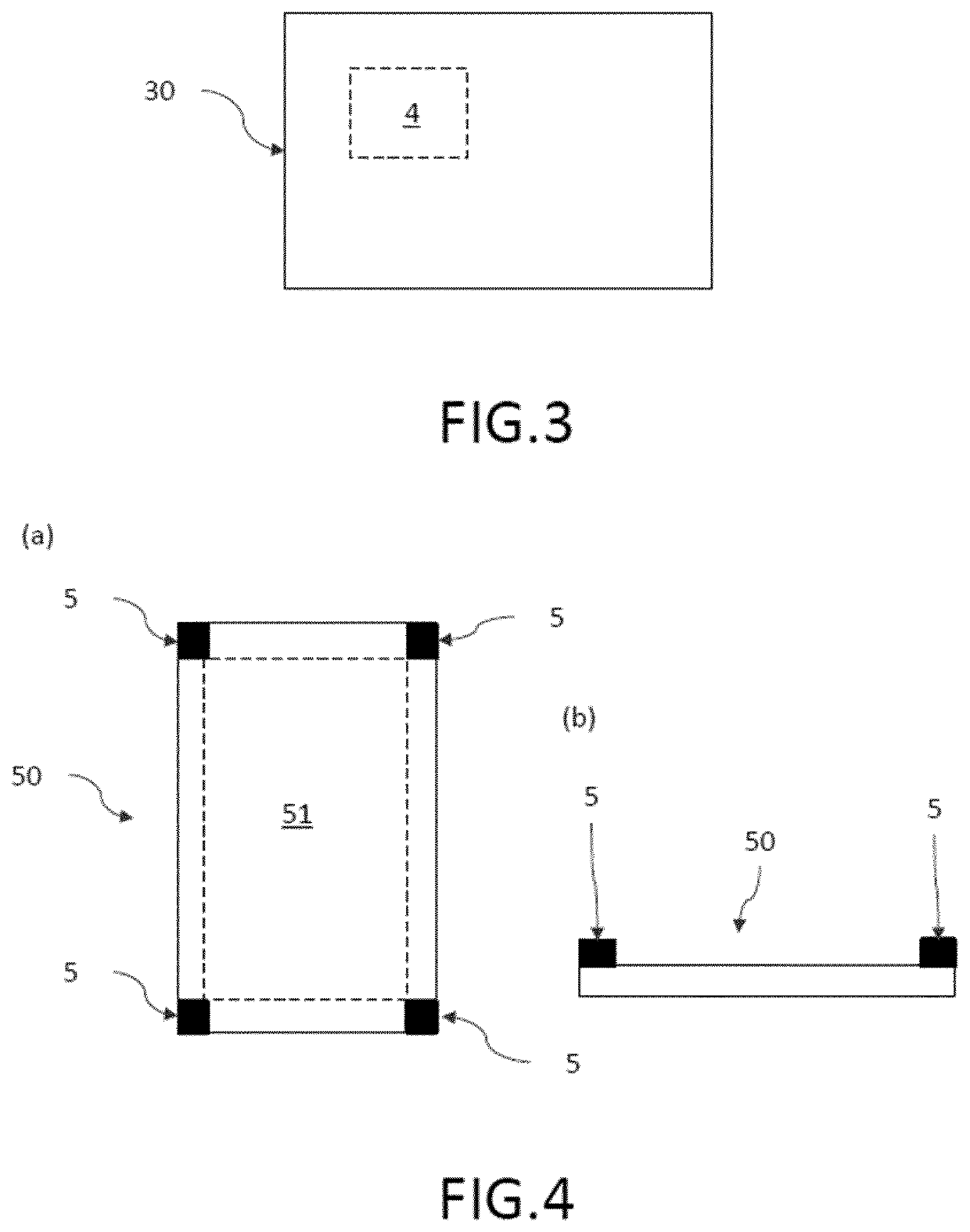

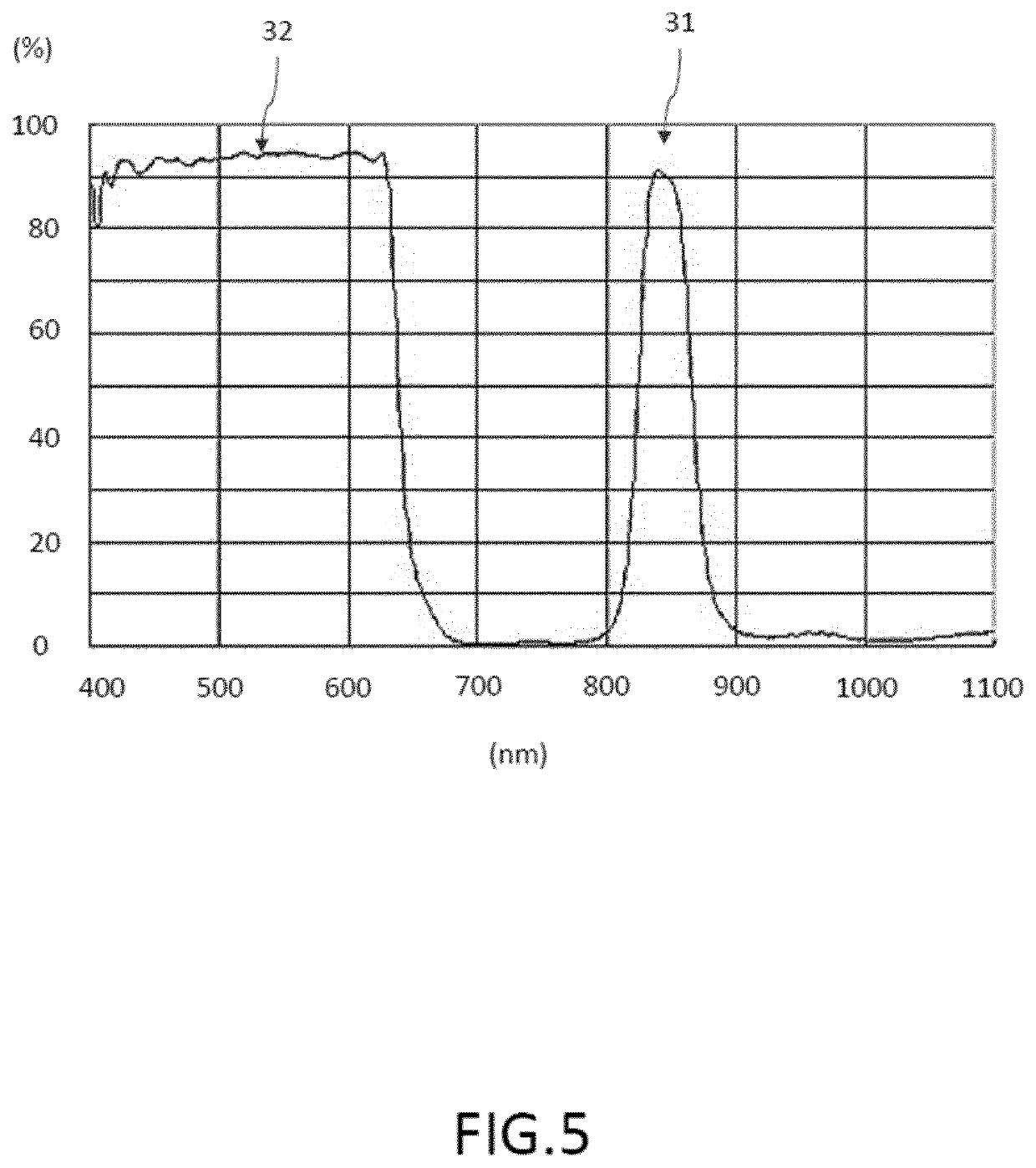

Counterfeit Detection In Bright Environment

Active | US20200312075A1 | Simple and reliable process | Image enhancement | Television system details | Imaging processing | Computer graphics (images)

The invention relates to a method (100) for counterfeit detection using a portable device (1) with an installed counterfeit detection application. It also relates to a data carrier (30) comprising this application, to the portable device (1) used in the method, and to a cover (50) with guiding structures for performing the method. The method comprises the following steps: taking multiple overlapping pictures (110) of at least a portion of the object (10) with the camera (2) of the portable device (1), positioned in front of the object (10), while moving the portable device (1) relative to and along the object (10) (RM); combining (120) all the pictures into a single combined image of at least that portion of the object (10) with increased resolution, performed by the counterfeit detection application (4) running on the portable device (1); applying image processing (130) to the combined image, also performed by the application (4), to obtain an improved image that visualizes or enhances hidden features of the object (10), including increased contrast in the infrared and/or ultraviolet wavelength range transmitted by the adapted band-pass filter (3); and comparing (140) the improved image with a reference image of the object (10) containing the hidden features, again by the application (4), to provide (200) an authentication result.

Owner:LUMILEDS
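The last two steps of the Lumileds method, enhancing the combined image (130) and comparing it with a reference (140), can be sketched for small grayscale images. The sketch assumes linear contrast stretching as the enhancement and normalized cross-correlation (NCC) as the comparison, with a hypothetical acceptance threshold of 0.9. The patent does not name these techniques. Capturing and combining the overlapping pictures (110, 120) is not shown, and the input is assumed to be already aligned with the reference.

```python
import math


def contrast_stretch(img):
    """Linearly rescale a 2D grayscale image (list of rows) to 0..255."""
    flat = [p for row in img for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0 for _ in row] for row in img]  # no contrast to stretch
    scale = 255.0 / (hi - lo)
    return [[(p - lo) * scale for p in row] for row in img]


def ncc(a, b):
    """Normalized cross-correlation of two equally sized images, in [-1, 1]."""
    fa = [p for row in a for p in row]
    fb = [p for row in b for p in row]
    ma, mb = sum(fa) / len(fa), sum(fb) / len(fb)
    num = sum((x - ma) * (y - mb) for x, y in zip(fa, fb))
    den = math.sqrt(sum((x - ma) ** 2 for x in fa) * sum((y - mb) ** 2 for y in fb))
    return num / den if den else 0.0


def authenticate(captured, reference, threshold=0.9):
    """Accept the item if its enhanced image correlates with the reference."""
    return ncc(contrast_stretch(captured), contrast_stretch(reference)) >= threshold
```

NCC ignores global brightness and gain differences. This suits the bright-environment setting, where hidden features may appear in the captured image only at low contrast.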

UV-VIS-IR imaging optical systems

Inactive | US8289633B2 | Good dispersion | Promote absorption | Mountings | Lens | Documentation procedure | Visible infrared

Owner:CALDWELL JAMES BRIAN

© 2025 PatSnap. All rights reserved.