1688 results about "Positive sample" patented technology

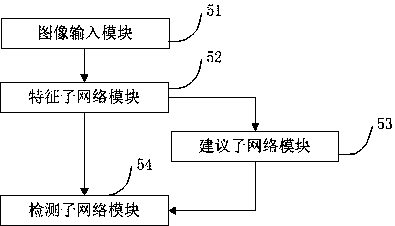

A visual target detection and labeling method

Active | CN104217225A | Keep boundaries | High overlap rate | Character and pattern recognition | Positive sample | Data set

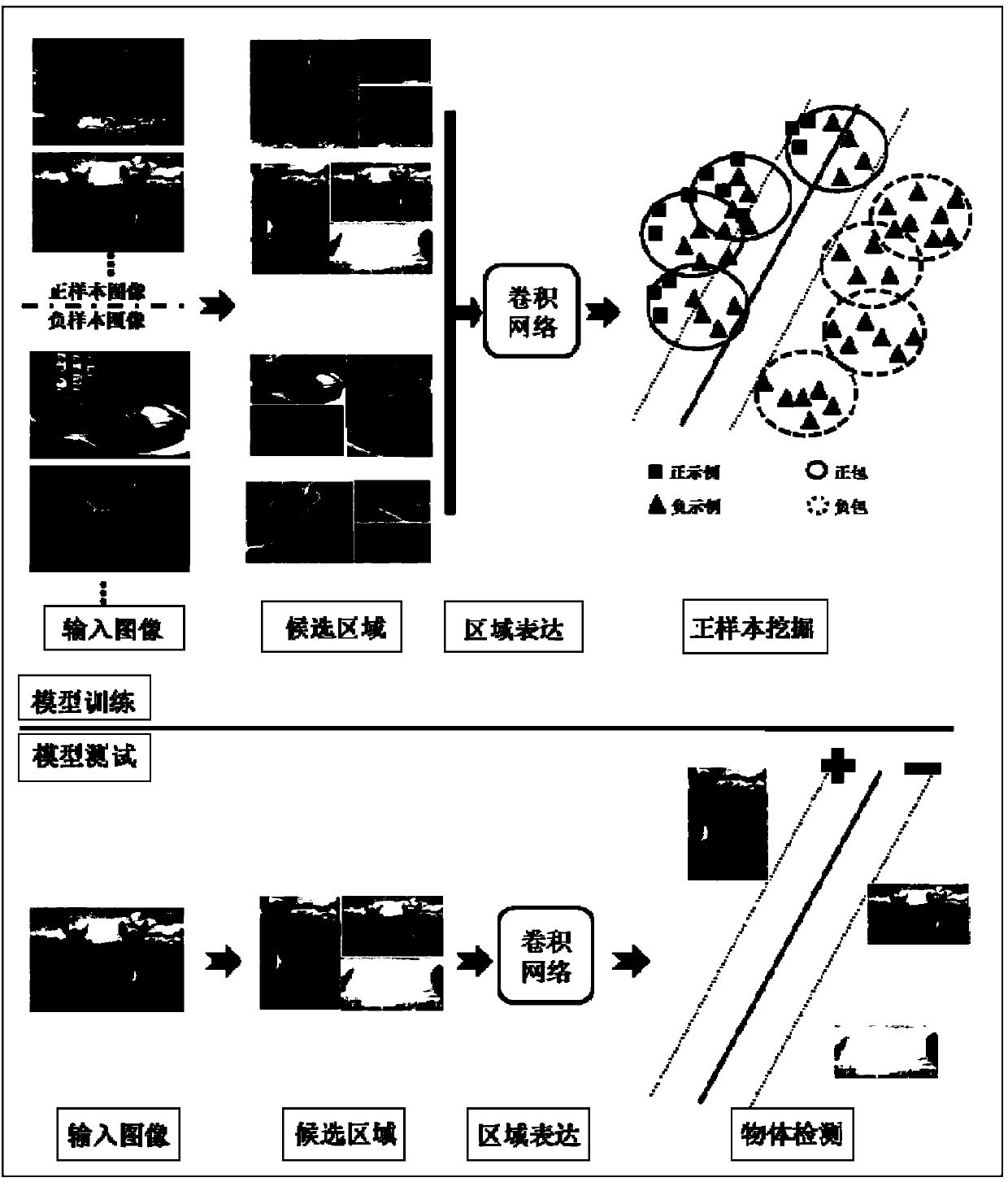

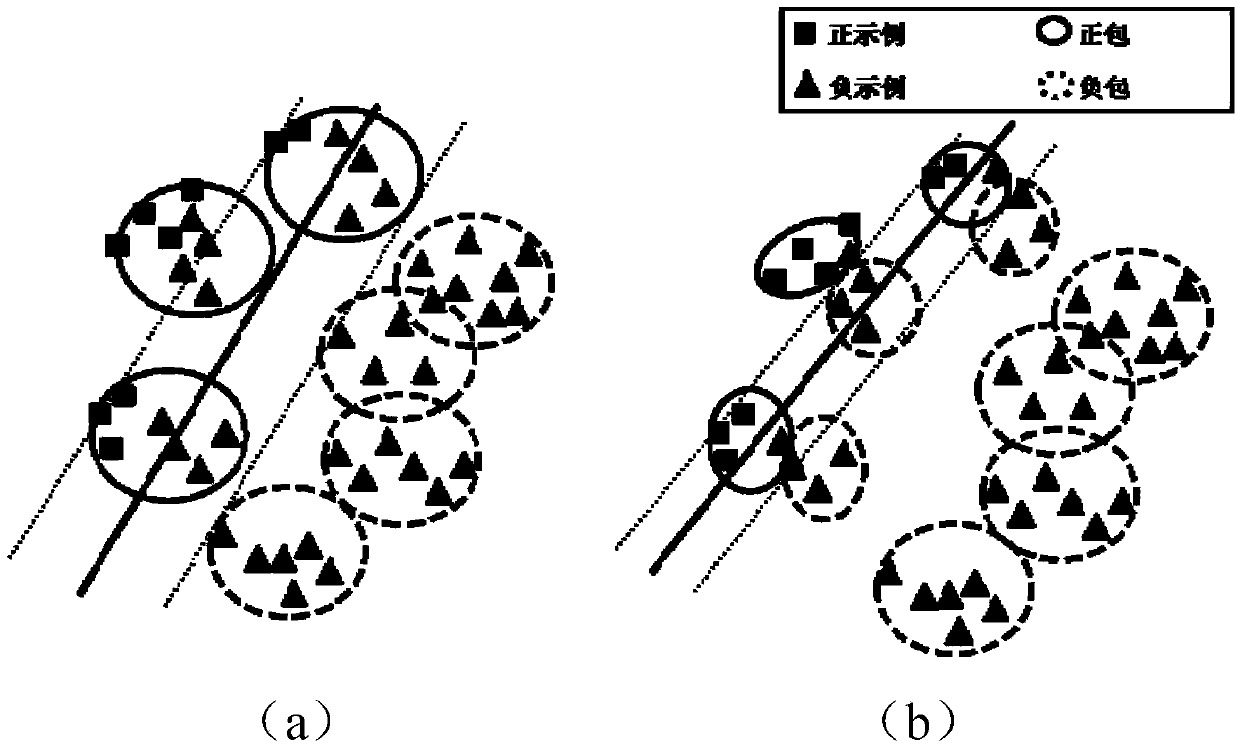

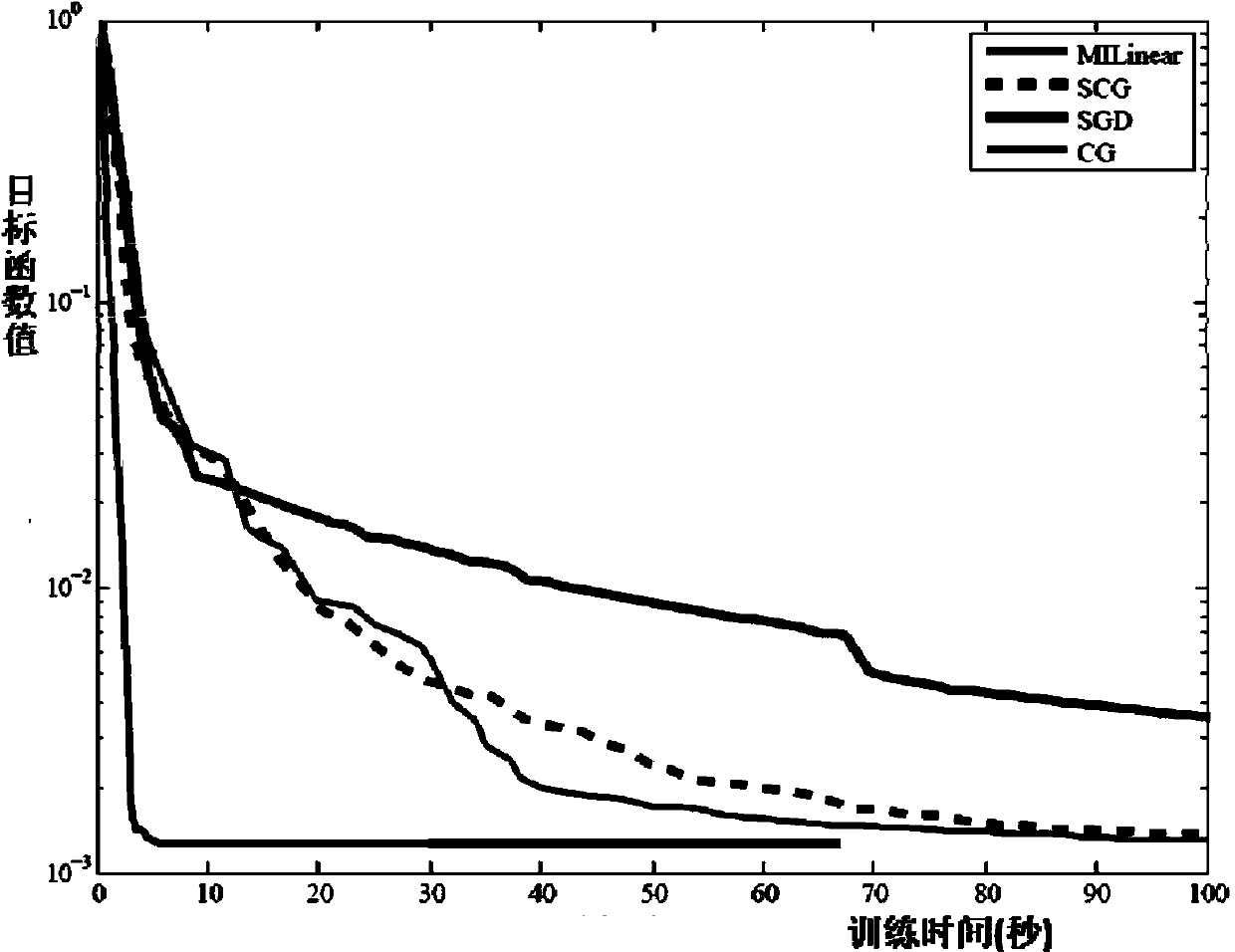

The present invention discloses a visual target detection and labeling method. The method includes: an image inputting step, to input an image to be detected; a candidate region extracting step, to extract candidate windows as candidate regions from the image to be detected using the selective search algorithm; a feature description extracting step, to describe each candidate region using a pre-trained large-scale convolutional neural network and output its feature description; a visual target predicting step, to classify each candidate region from its feature description using a pre-trained object detection module and so estimate the regions containing the visual target; and a position labeling step, to label the position of the visual target according to the estimated result. Experiments show that, compared with mainstream weakly supervised visual target detection and labeling methods, the present invention has a stronger ability to mine positive samples and broader applicability, and is suitable for visual target detection and automatic labeling tasks on large-scale data sets.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Biometric Identification Systems and Methods

An exemplary embodiment of the present invention provides a method of verifying an identity of a person-to-be-identified using biometric signature data. The method comprises creating a sample database based on biometric signature data from a plurality of individuals, calculating a feature database by extracting selected features from entries in the sample database, calculating positive samples and negative samples based on entries in the feature database, calculating a key bin feature using an adaptive boosting learning algorithm, the key bin feature distinguishing each of the positive samples and negative samples, and calculating a classifier from the key bin feature for use in identifying and authenticating a person-to-be-identified.

Owner:STONE LOCK GLOBAL INC

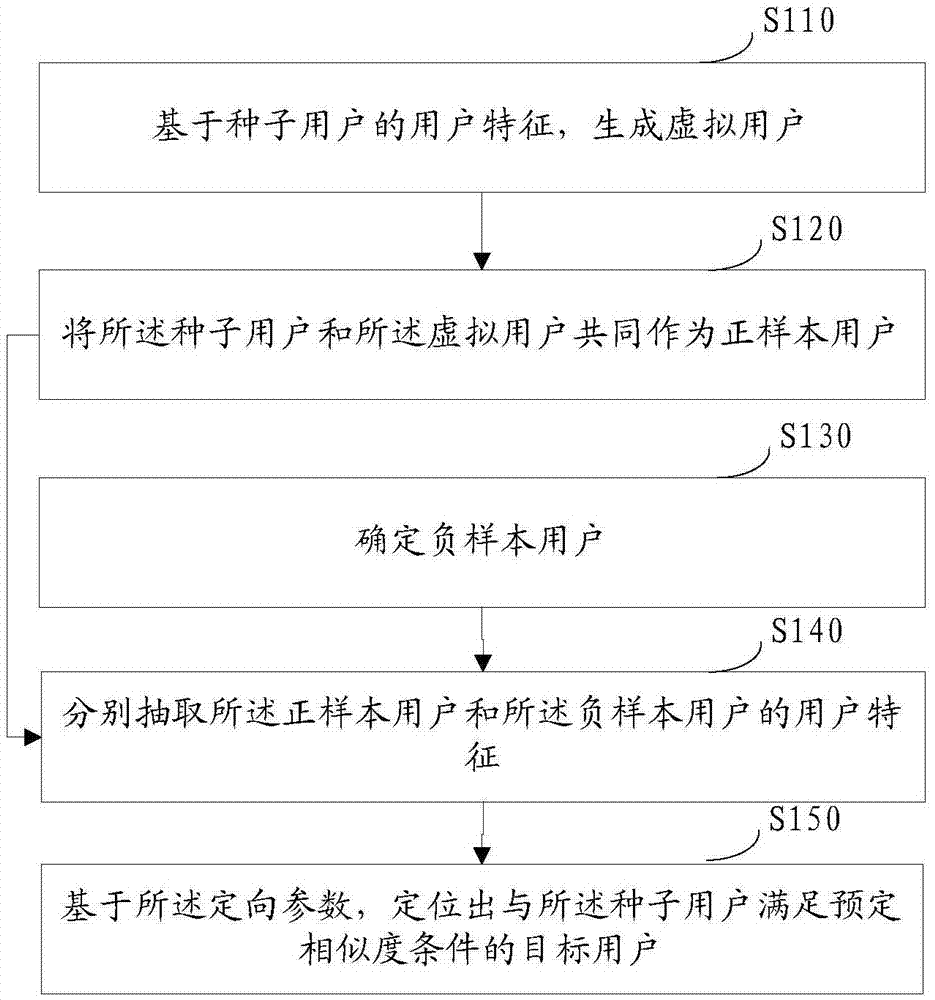

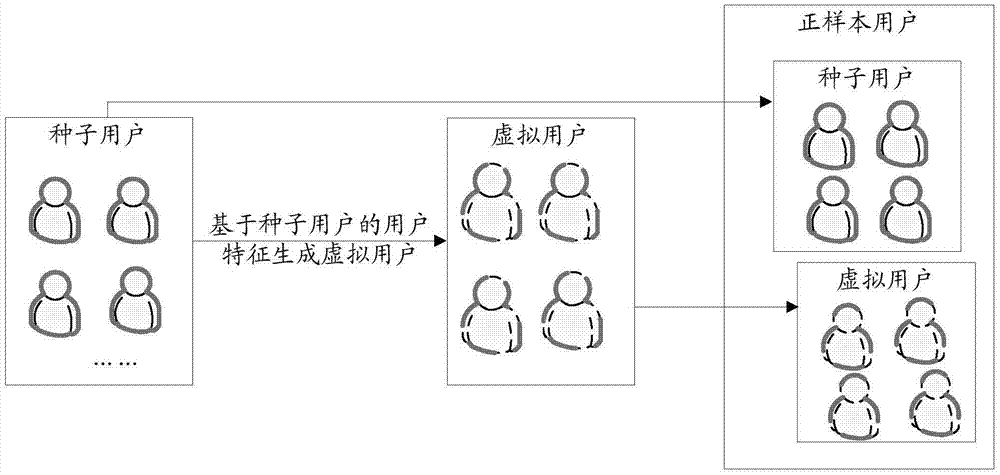

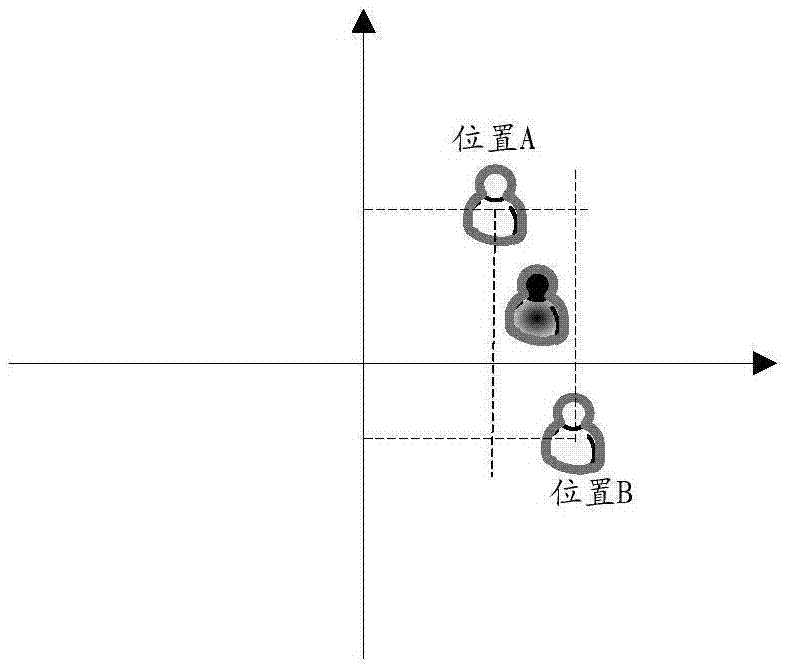

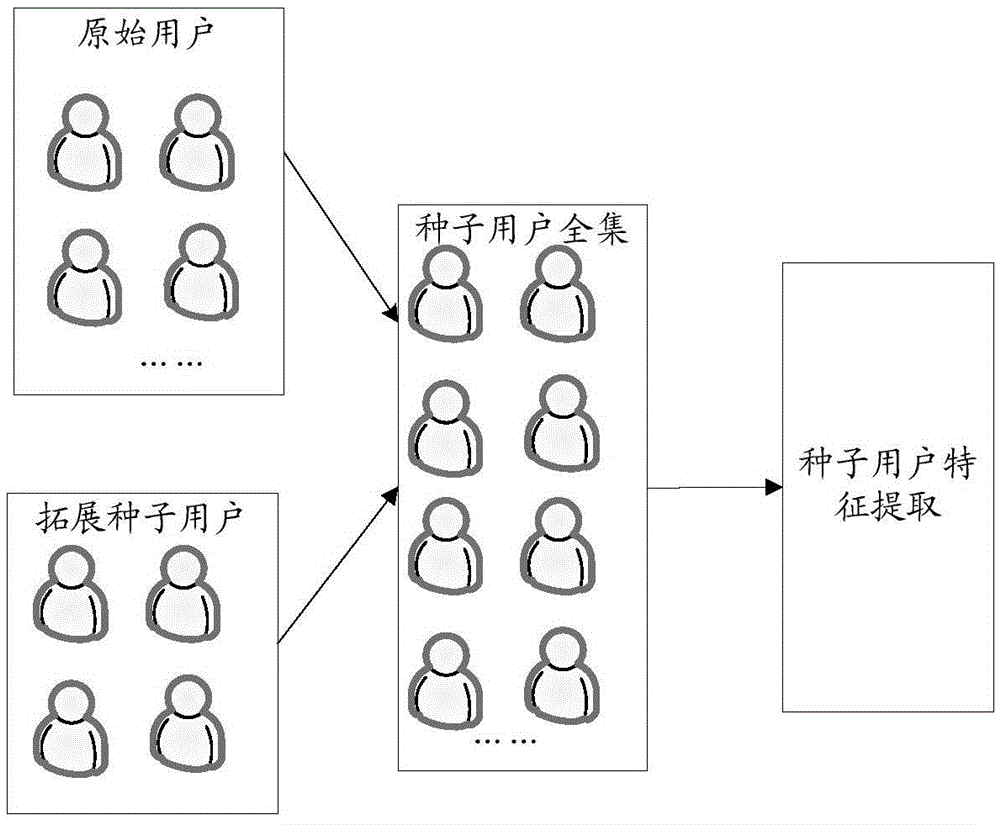

Target user orientation method and device

Active | CN105447730A | Alleviate the phenomenon of low positioning accuracy | Increase the number of | Advertisements | Positive sample | Virtual user

The embodiment of the invention discloses a target user orientation method and device. The method comprises: generating a virtual user based on the user characteristics of a seed user; taking the seed user and the virtual user as positive sample users; determining negative sample users; extracting the user characteristics of the positive sample users and of the negative sample users; training on the user characteristics of the positive and negative sample users to determine orientation parameters; and, based on the orientation parameters, orienting to the target users that satisfy a predetermined similarity condition with the seed user.

Owner:TENCENT TECH (SHENZHEN) CO LTD

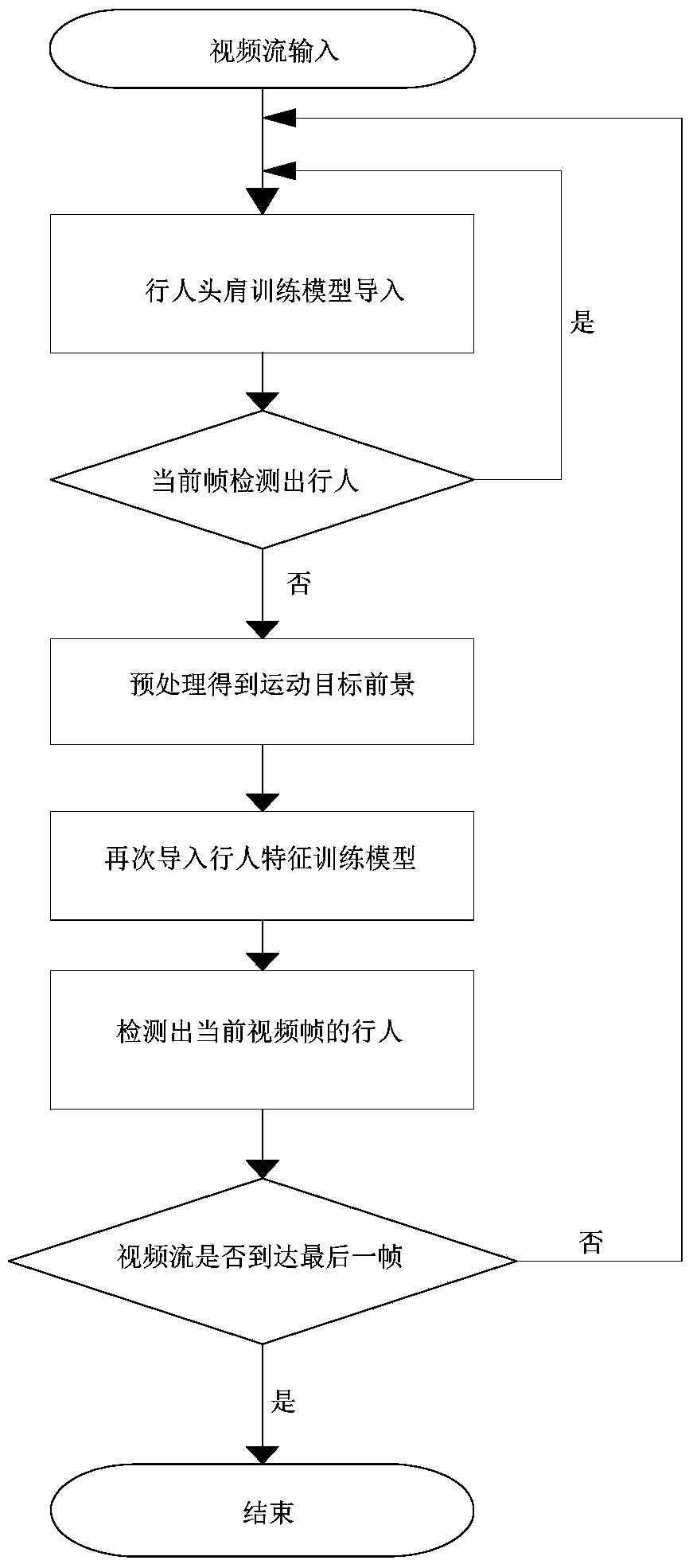

Pedestrian detection method

Inactive | CN104166861A | Guaranteed accuracy | Reduce redundancy | Character and pattern recognition | Positive sample | Data file

Provided is a pedestrian detection method comprising the following steps: a pedestrian positive sample set and a pedestrian negative sample set needed for training a convolutional neural network are prepared; the sample sets are preprocessed and normalized to a unified standard, and a data file is generated; the structure of the convolutional neural network is designed, training is carried out, and a weight connection matrix is obtained when the network converges; self-adaptive background modeling is carried out on videos to obtain the moving objects in each frame, the detected moving object regions are first coarsely selected by excluding regions whose height-to-width ratios do not satisfy requirements, and candidate regions are generated; each candidate region is input into the convolutional neural network, which judges whether a pedestrian is present.
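
The coarse selection step above can be sketched as a simple filter over moving-object bounding boxes. This is only an illustration: the `(x, y, width, height)` box format, the function name `coarse_select`, and the ratio bounds of 1.5 and 4.0 are assumptions, not values given in the abstract.

```python
from typing import List, Tuple

# Hypothetical box format: (x, y, width, height) in pixels.
Box = Tuple[int, int, int, int]


def coarse_select(boxes: List[Box],
                  min_ratio: float = 1.5,
                  max_ratio: float = 4.0) -> List[Box]:
    """Keep boxes whose height-to-width ratio lies in [min_ratio, max_ratio].

    The bounds are illustrative assumptions; the abstract only says that
    regions with unsuitable height-to-width ratios are excluded.
    """
    candidates = []
    for x, y, w, h in boxes:
        if w <= 0 or h <= 0:
            continue  # skip degenerate boxes
        if min_ratio <= h / w <= max_ratio:
            candidates.append((x, y, w, h))
    return candidates


if __name__ == "__main__":
    moving_regions = [(10, 20, 40, 100), (0, 0, 120, 60), (5, 5, 30, 200)]
    # Only the first box (ratio 2.5) passes; the others have ratios 0.5 and ~6.7.
    print(coarse_select(moving_regions))
```

Only the surviving boxes would then be passed to the convolutional neural network, which is what reduces redundant classification work.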

Owner:成都六活科技有限责任公司

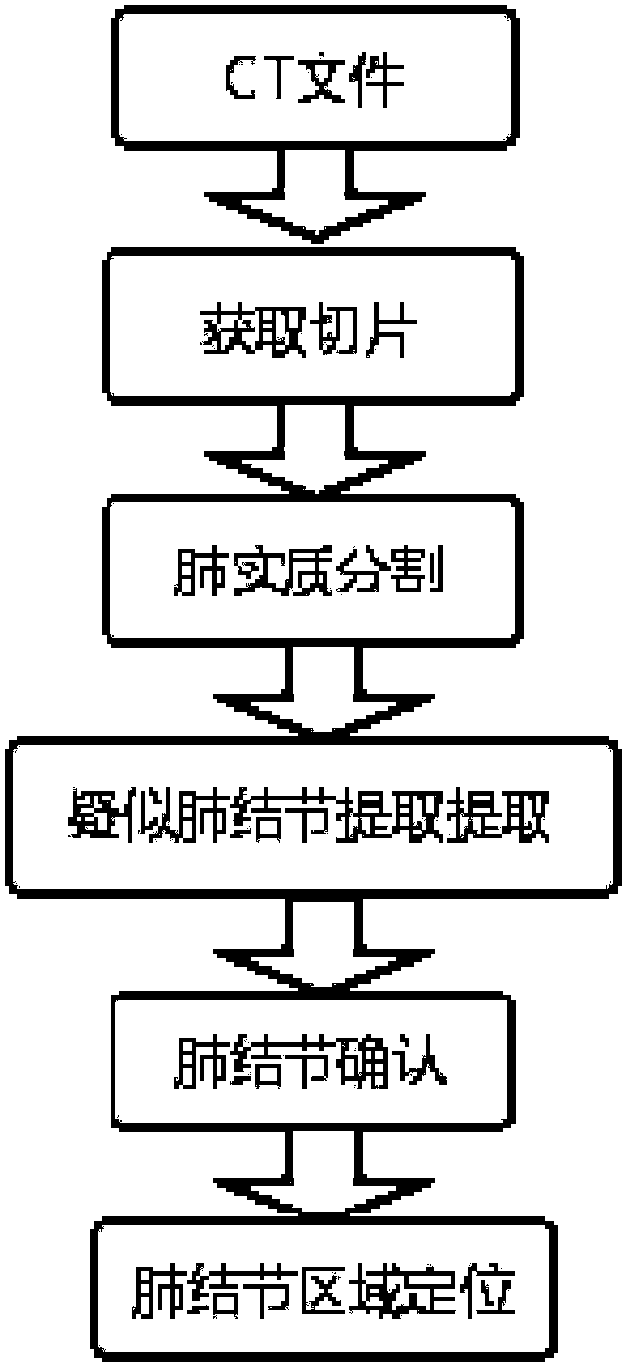

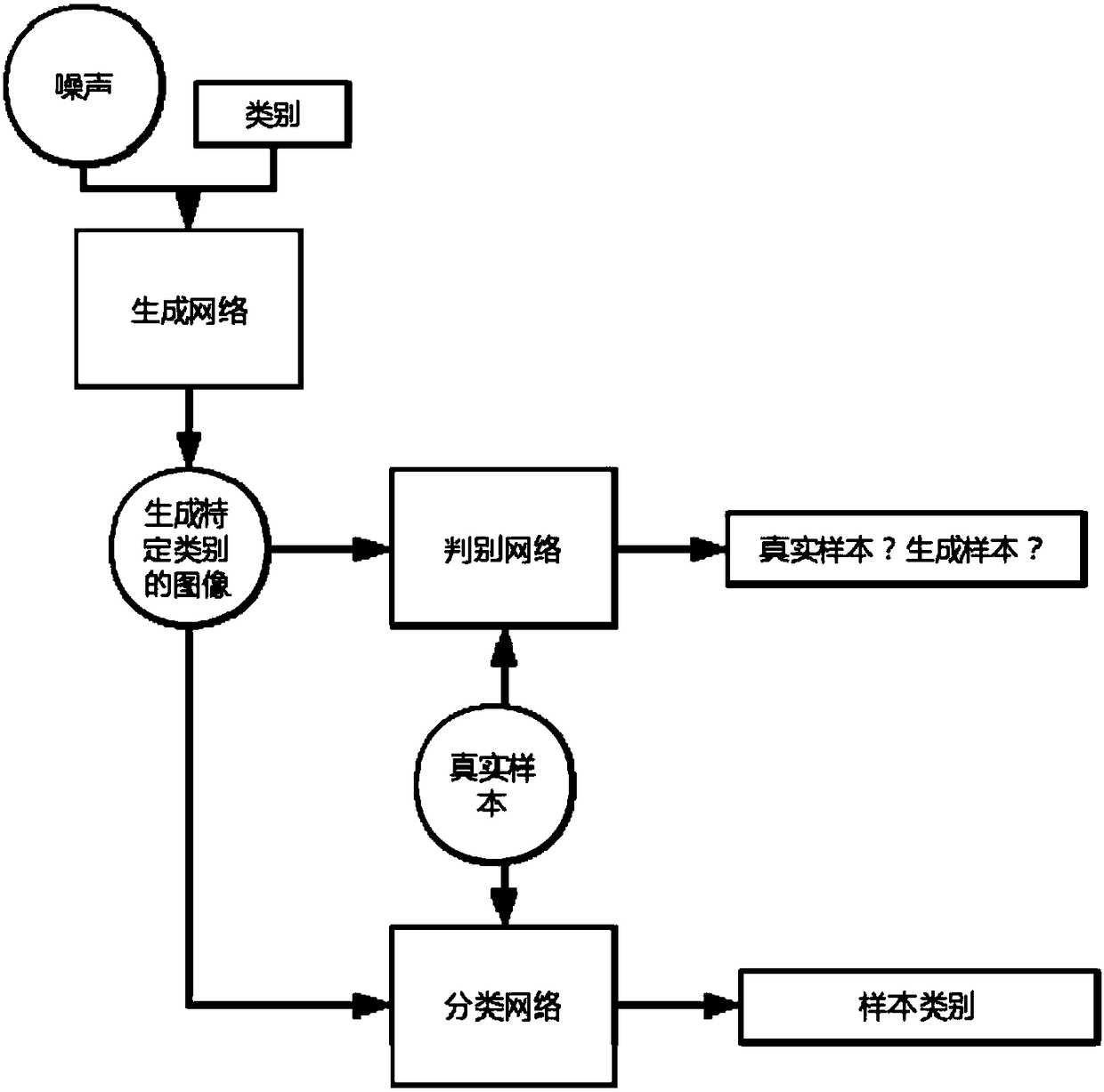

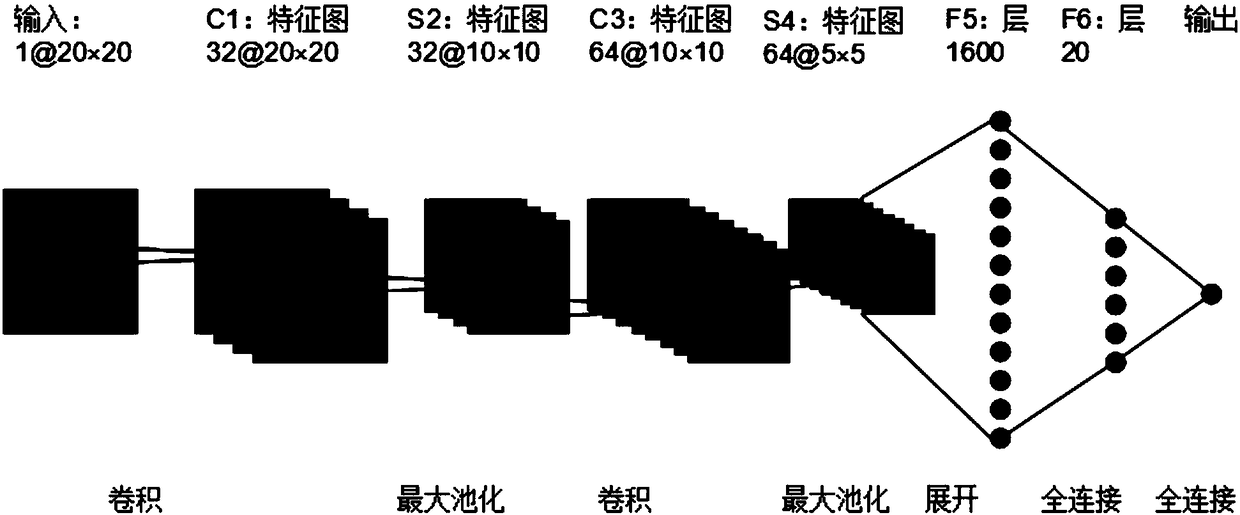

Generative adversarial network improved CT medical image pulmonary nodule detection method

Inactive | CN108198179A | Artifact Avoidance | Avoid preference | Image enhancement | Image analysis | Pulmonary nodule | Pulmonary parenchyma

The invention discloses a generative adversarial network improved CT medical image pulmonary nodule detection method. The method includes: 1) acquiring a section of a pulmonary CT image; 2) separating out the ROI pulmonary parenchyma area according to image morphological properties; 3) acquiring different suspected pulmonary nodule candidate sets according to the connected domains formed in a binarized image; 4) building an auxiliary classifier generative adversarial network model to generate positive samples and so overcome the imbalance between the numbers of positive and negative samples; 5) building a convolutional neural network to classify the suspected pulmonary nodule parts and acquire pulmonary nodule areas; 6) using a non-maximum suppression algorithm to acquire the final pulmonary nodule areas. The method makes full use of the processing performance of a computer, offers a degree of expandability, and improves data processing efficiency; the convolutional neural network algorithm improves classification accuracy and CT image data processing performance, so that pulmonary nodule images can be built and analyzed more efficiently.
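
Step 6 relies on non-maximum suppression. The following is a minimal sketch of the standard greedy variant, assuming boxes in `(x1, y1, x2, y2)` form and an IoU threshold of 0.5; the abstract does not specify either, and the function names are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    candidates = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    confidences = [0.9, 0.8, 0.7]
    print(nms(candidates, confidences))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed in favor of the higher-scoring one.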

Owner:SOUTH CHINA UNIV OF TECH

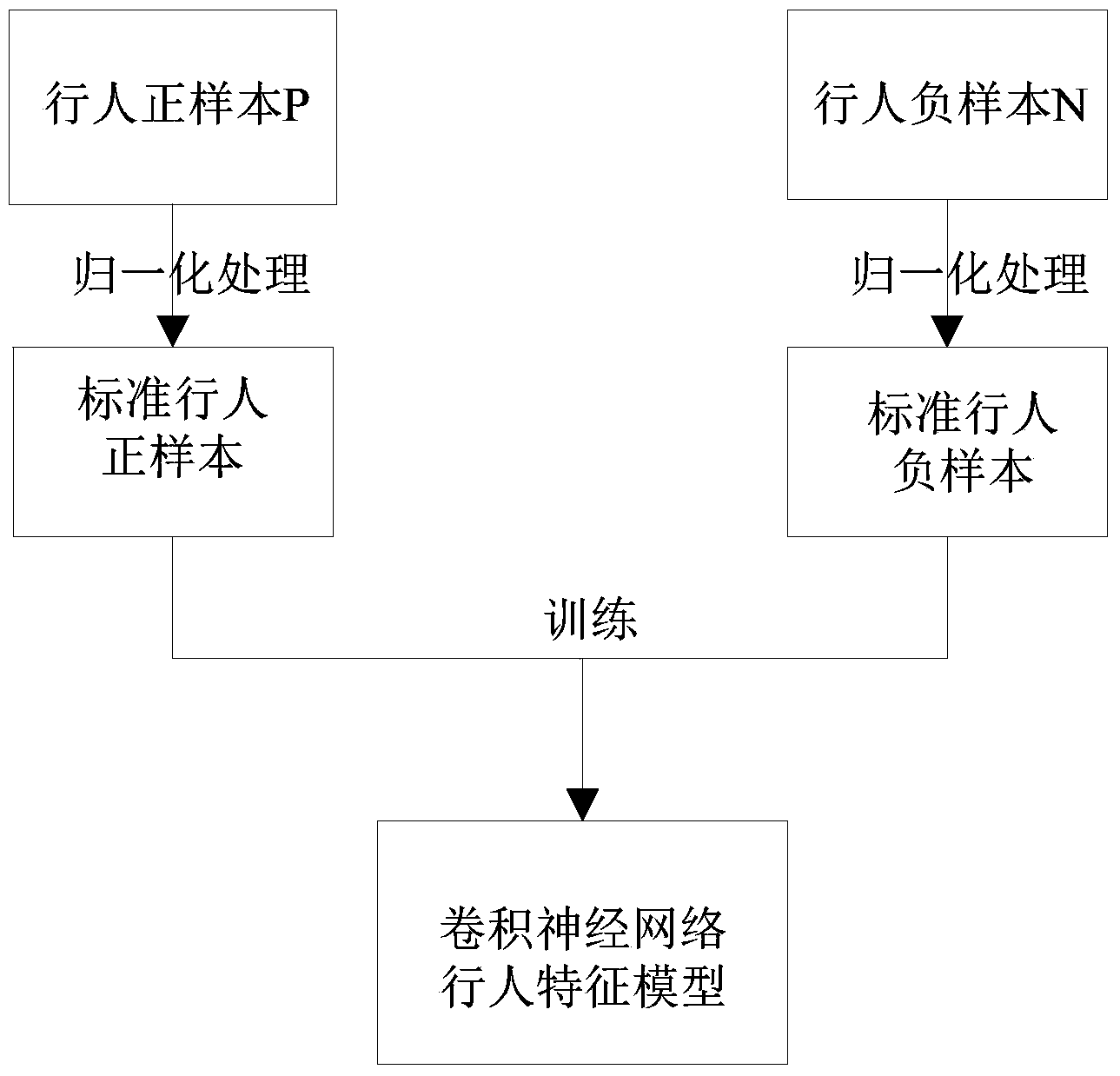

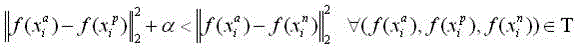

Triple loss-based improved neural network pedestrian re-identification method

Active | CN106778527A | Guaranteed robustness | Improve recognition accuracy | Character and pattern recognition | Feature vector | Positive sample

The invention discloses a triple loss-based improved neural network pedestrian re-identification method. The method comprises the following steps: constructing a sample database, establishing positive and negative sample libraries based on the sample database, and randomly selecting two positive samples and one negative sample to form a triplet; constructing a neural network based on the triple (triplet) loss, formed by connecting three parallel convolutional neural networks to a triplet loss layer, and training it; inputting a to-be-tested picture together with each sample picture in the expanded sample database, as a pair of inputs, to the trained neural network in sequence, with the remaining input of the network set to zero; and calculating the Euclidean distance between the feature vectors that the network outputs for the two input pictures, sorting the distances in ascending order and taking the first 20, and then performing simple manual screening to obtain the final identification result. The method suits picture scenes with relatively large variation, ensures robustness, and has relatively high identification accuracy.
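
The loss and the retrieval step can be illustrated on plain feature vectors. This sketch uses a common hinge-style triplet loss with unsquared Euclidean distances; the margin of 1.0 and the function names are assumptions, and the abstract does not state the exact loss formula used in the patent.

```python
import math
from typing import List, Sequence


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 1.0) -> float:
    """Hinge-style triplet loss; zero once the negative is farther than
    the positive by at least `margin` (an assumed hyperparameter)."""
    return max(0.0, euclidean(anchor, positive)
               - euclidean(anchor, negative) + margin)


def rank_gallery(query: Sequence[float], gallery: List[Sequence[float]],
                 top_k: int = 20) -> List[int]:
    """Indices of the top_k gallery features, nearest to the query first."""
    dists = sorted((euclidean(query, feat), idx)
                   for idx, feat in enumerate(gallery))
    return [idx for _, idx in dists[:top_k]]


if __name__ == "__main__":
    print(triplet_loss([0, 0], [3, 4], [0, 0]))  # 6.0: a badly ordered triplet
    print(rank_gallery([0, 0], [[3, 4], [1, 0], [0, 2]], top_k=2))  # [1, 2]
```

The default `top_k=20` mirrors the first 20 distances that the abstract passes on to manual screening.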

Owner:CHINACCS INFORMATION IND

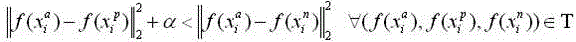

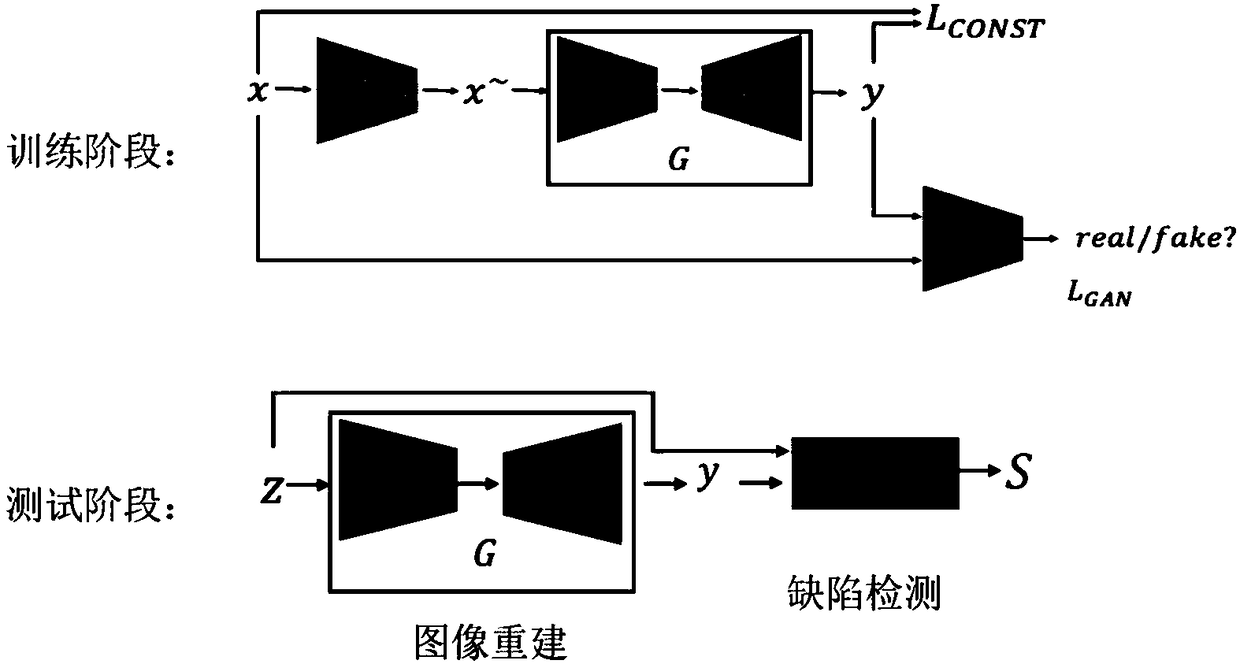

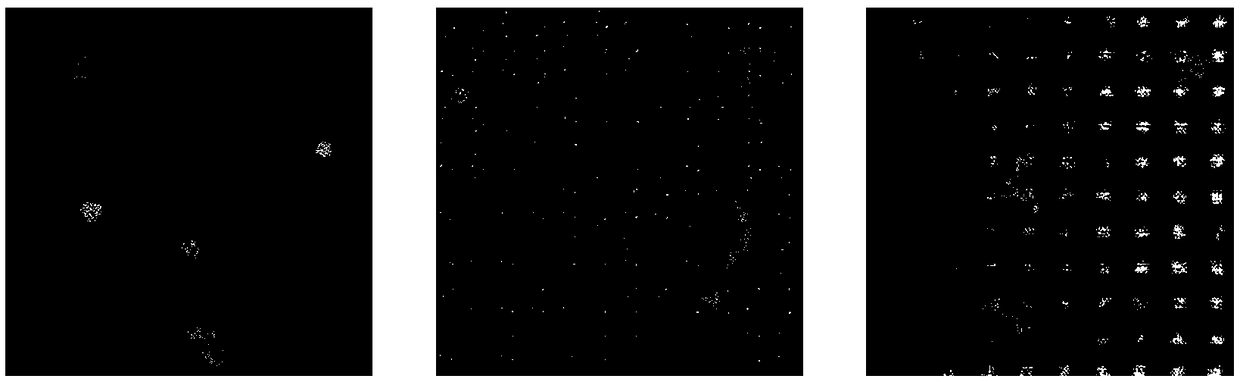

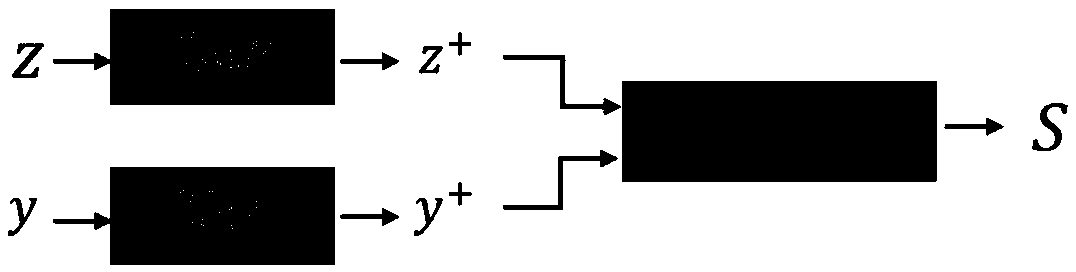

Surface defect detection method based on positive case training

Active | CN108961217A | Large capacity | Comprehensive parameters | Image enhancement | Image analysis | Positive sample | Manual annotation

The invention relates to a surface defect detection method based on positive case training. The method comprises two steps, image reconstruction and defect detection. Image reconstruction reconstructs an input original image into a defect-free image: during training, artificial defects and noise are added to the positive case image, an autoencoder reconstructs it, the L1 distance between the reconstruction result and the noise-free original image is calculated and minimized as the reconstruction objective, and a generative adversarial network is used in cooperation to improve the quality of the reconstructed image. Defect detection follows image reconstruction: LBP features of the reconstructed image and the original image are calculated, the two feature images are differenced, and the result is binarized with a fixed threshold to locate the defects. Because it uses deep learning, the method is robust to environmental changes when enough positive samples are available; moreover, because it is trained on positive cases, it does not rely on a large number of negative samples or manual annotation, making it suitable for real-world scenarios and better at detecting surface defects.

Owner:NANJING UNIV +2

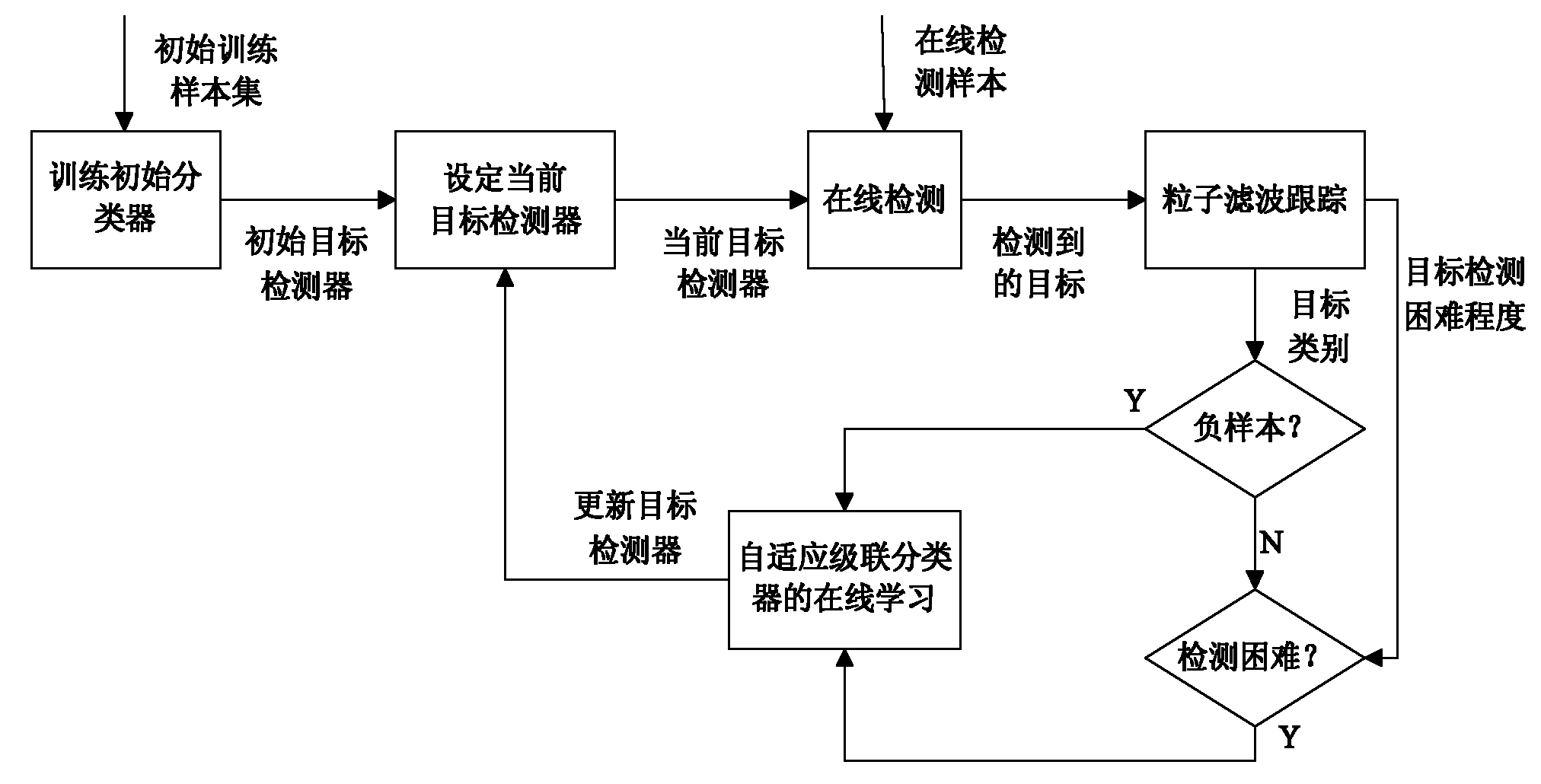

Self-adaptive cascade classifier training method based on online learning

Inactive | CN101814149A | High precision | High degree of intelligence | Character and pattern recognition | Positive sample | Value set

The invention discloses a self-adaptive cascade classifier training method based on online learning, which comprises the following steps: (1) preparing a training sample set with a small number of samples, and training an initial cascade classifier HC(x) with a cascade classifier algorithm; (2) using HC(x) to traverse the image frames to be detected, extracting areas of the same size as the training samples one by one, calculating a feature value set, classifying the areas with the initial cascade classifier, and judging whether the areas are target areas, thereby completing target detection; (3) tracking the detected targets with a particle filtering algorithm, verifying the target detection results through tracking, marking erroneous detections as negative samples for online learning, and obtaining different attitudes of real targets through tracking to extract positive samples for online learning; and (4) whenever an online learning sample is obtained, training and updating the initial cascade classifier HC(x) online with a self-adaptive cascade classifier algorithm, thereby gradually improving the target detection accuracy of the classifier.

Owner:HUAZHONG UNIV OF SCI & TECH

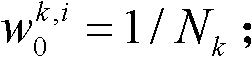

Method for constructing fracture recognition model and application

Active | CN108305248A | Accurate prediction | Shorten diagnostic time | Image enhancement | Image analysis | Positive sample | Fracture type

The invention provides a method for constructing a fracture recognition model and its application. The method comprises: step A, acquiring X-ray images of different fracture types at different fracture parts; step B, carrying out image preprocessing on the X-ray images; step C, carrying out image feature extraction on the preprocessed images; step D, generating candidate regions according to the image features; step F, carrying out fracture type target detection and region localization on the candidate regions; step G, carrying out hard sample mining according to the fracture type target detection scores to obtain negative samples for training; step K, expanding the positive samples for training based on an adversarial learning strategy; and step H, training with the positive samples and the negative samples to obtain fracture recognition models for all the different fracture parts. The models predict the fracture type and its regional position accurately, substantially reducing the doctor's diagnosis time and the rates of missed diagnosis and misdiagnosis.

Owner:HUIYING MEDICAL TECH (BEIJING) CO LTD

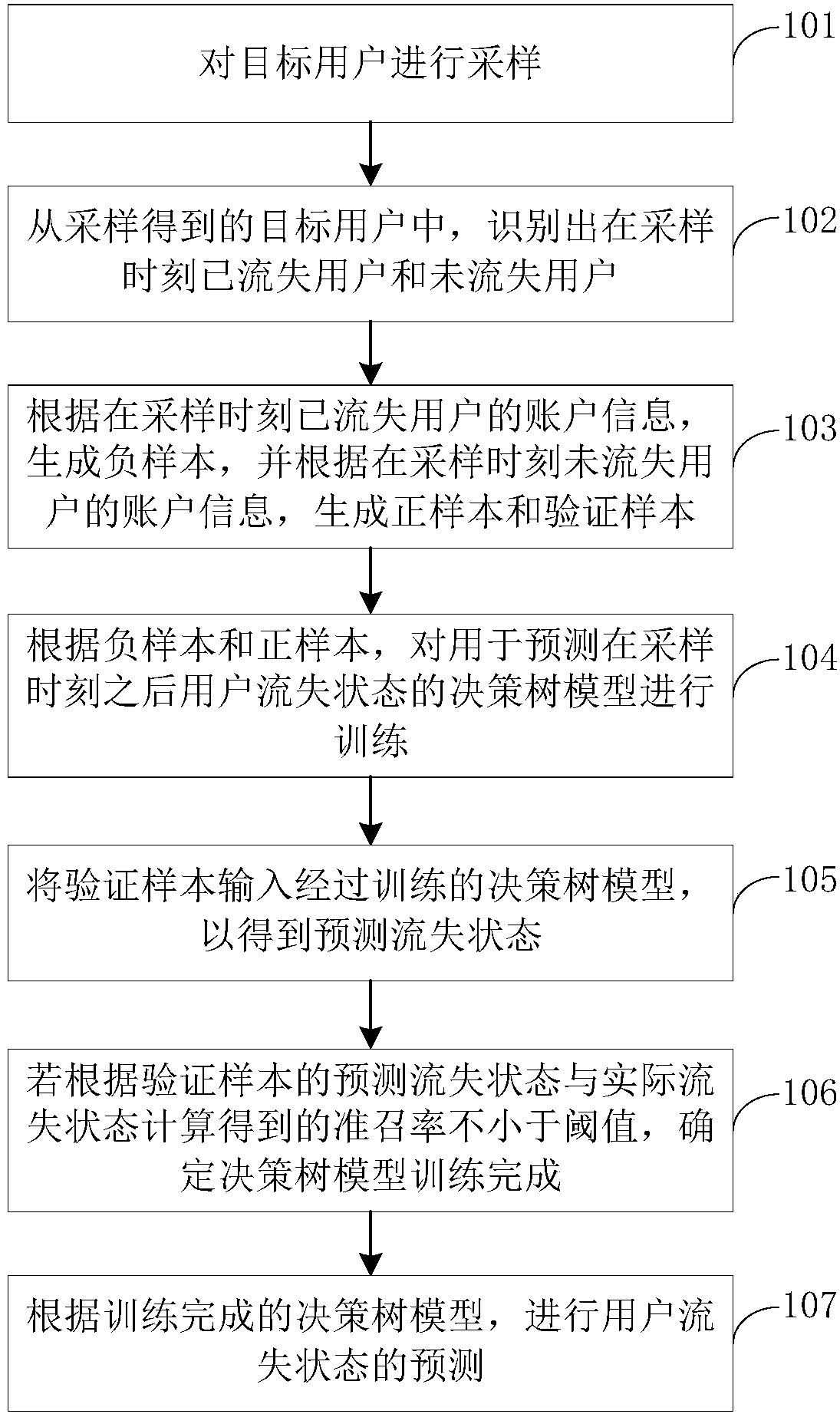

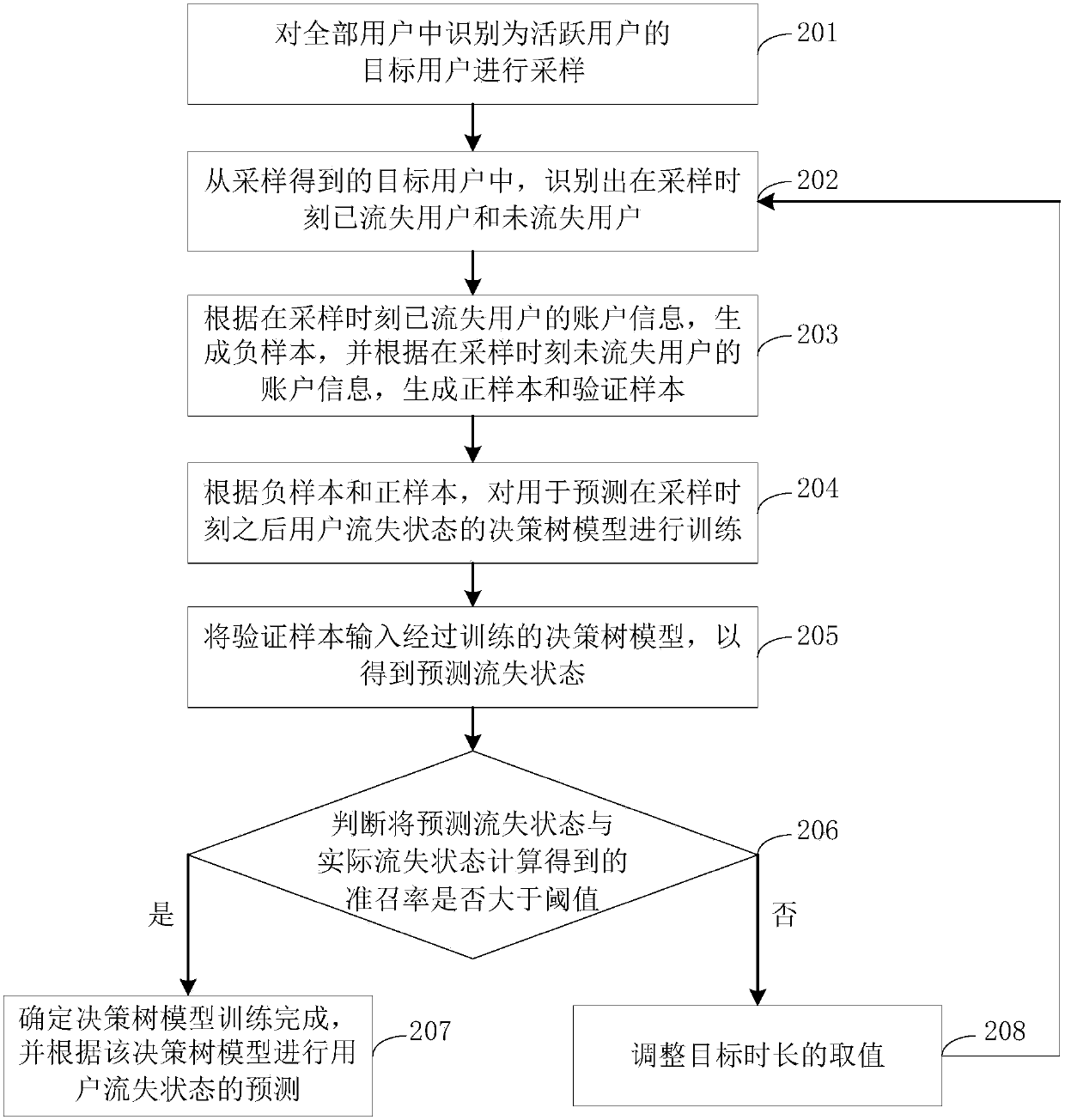

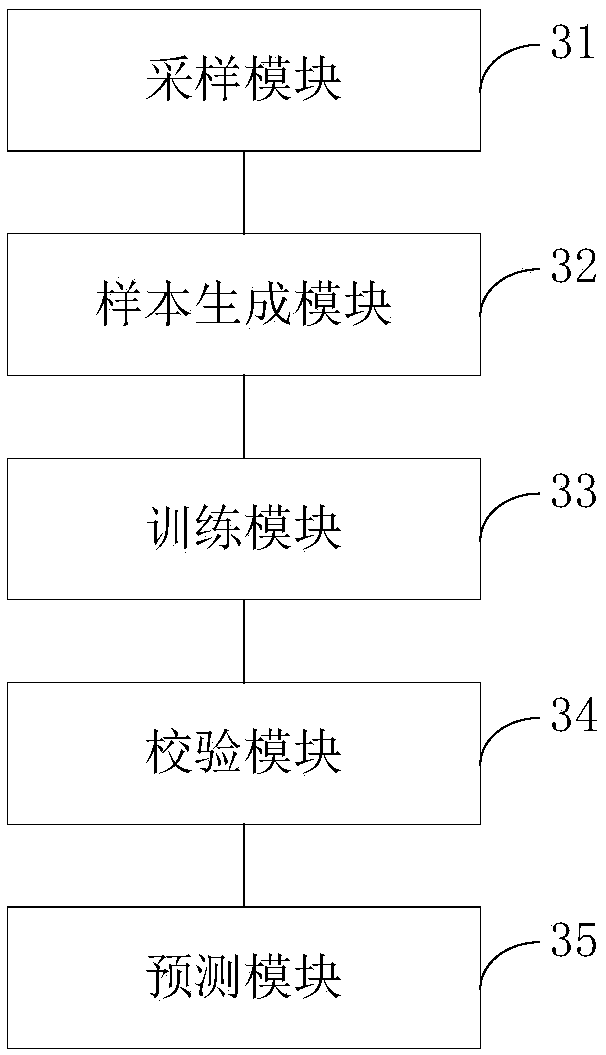

State prediction method and device

The invention discloses a state prediction method and device. The method comprises the following steps: sampling target users; generating a negative sample, a positive sample and a verification sample from the account information of lost and retained users at a determined sampling moment; training a decision tree model that predicts the user loss state after the sampling moment; inputting the verification sample into the trained decision tree model to obtain a predicted loss state; and, if the recall rate calculated from the predicted loss state and the actual loss state is not smaller than a threshold value, determining that training of the decision tree model is complete and carrying out user loss state prediction. Because the decision tree model is trained on samples generated by sampling the target users and user loss state prediction is carried out with the trained model, the method solves the prior-art problems of relatively low recognition efficiency and difficult reuse that arise when user loss state is predicted from human experience or hand-written rules.
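
The stopping criterion described above reduces to a recall check on the verification sample. A minimal sketch follows, assuming labels of 1 for a lost user and 0 otherwise and a threshold of 0.8; both conventions and the function names are illustrative, not taken from the patent.

```python
from typing import Sequence


def recall(predicted: Sequence[int], actual: Sequence[int]) -> float:
    """Recall for the 'lost' class (label 1): TP / (TP + FN)."""
    tp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    fn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 1)
    return tp / (tp + fn) if (tp + fn) else 0.0


def training_complete(predicted: Sequence[int], actual: Sequence[int],
                      threshold: float = 0.8) -> bool:
    """True when recall on the verification sample reaches the threshold.

    The threshold value is an assumption; the abstract only says the
    recall must not be smaller than a threshold.
    """
    return recall(predicted, actual) >= threshold


if __name__ == "__main__":
    predicted_state = [1, 0, 1, 1]
    actual_state = [1, 1, 1, 0]
    print(recall(predicted_state, actual_state))             # 2 of 3 lost users found
    print(training_complete(predicted_state, actual_state))  # False
```

If the check fails, the decision tree would be retrained or re-sampled before being used for prediction.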

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

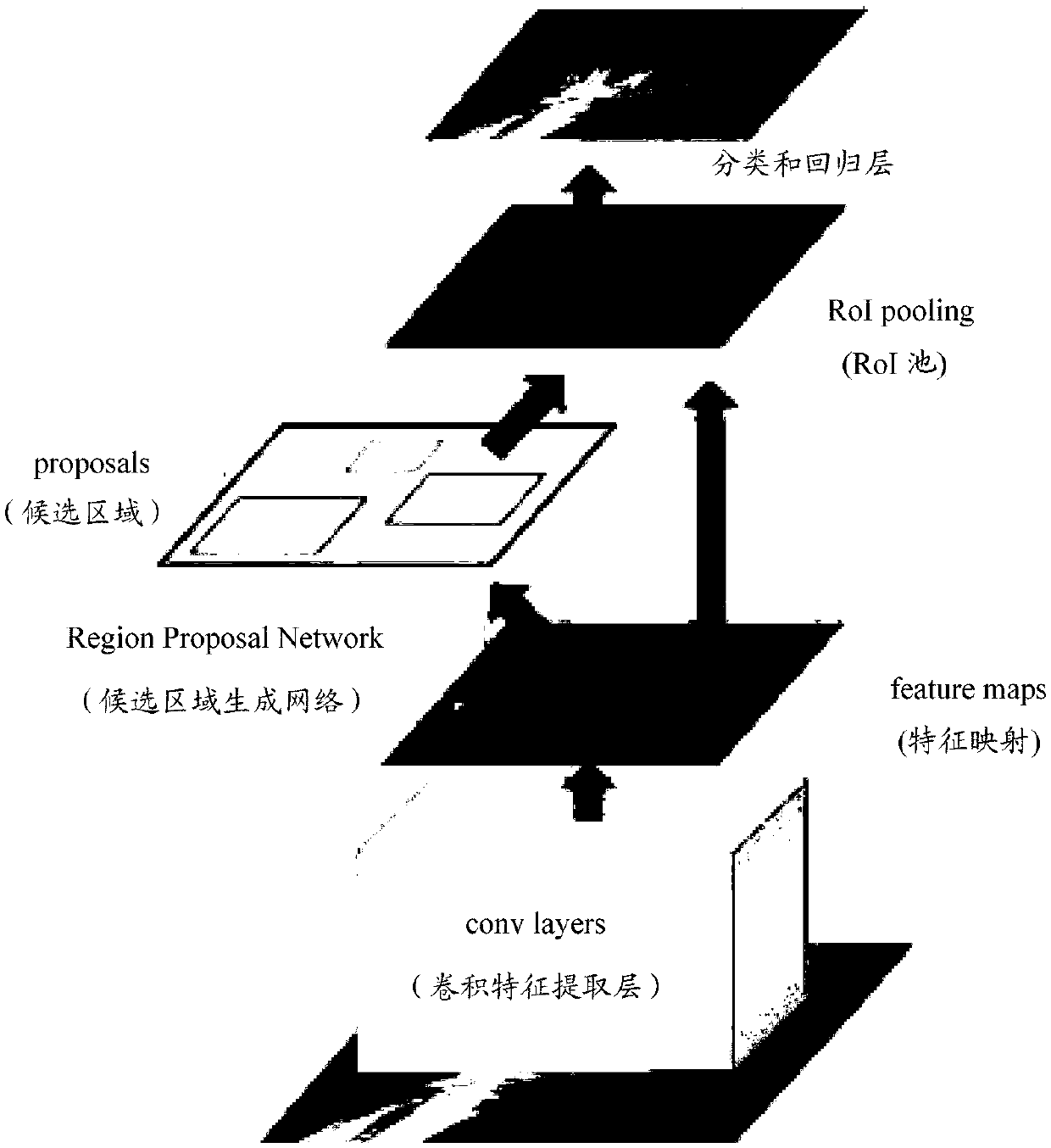

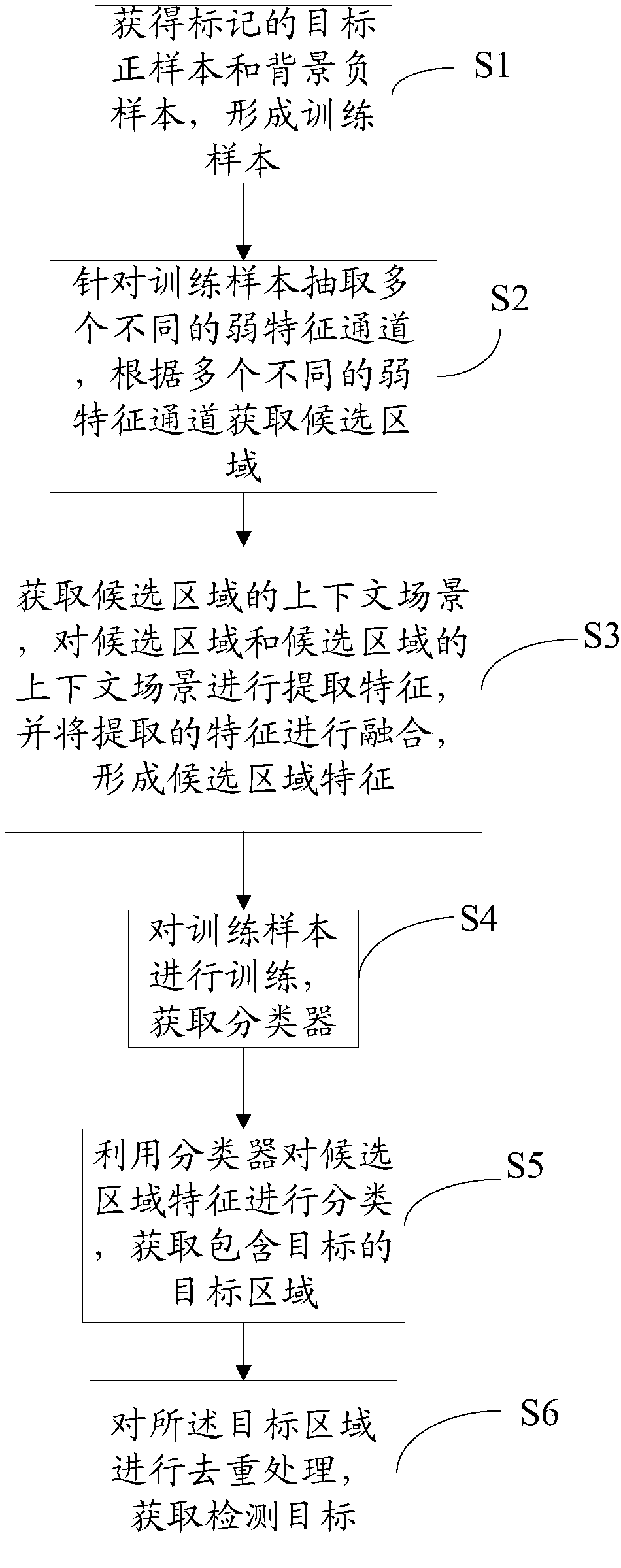

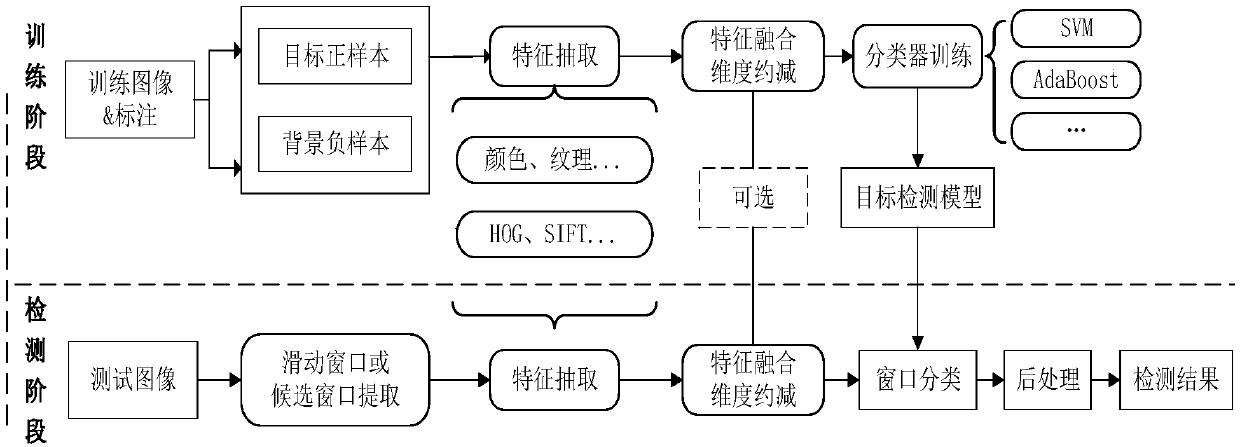

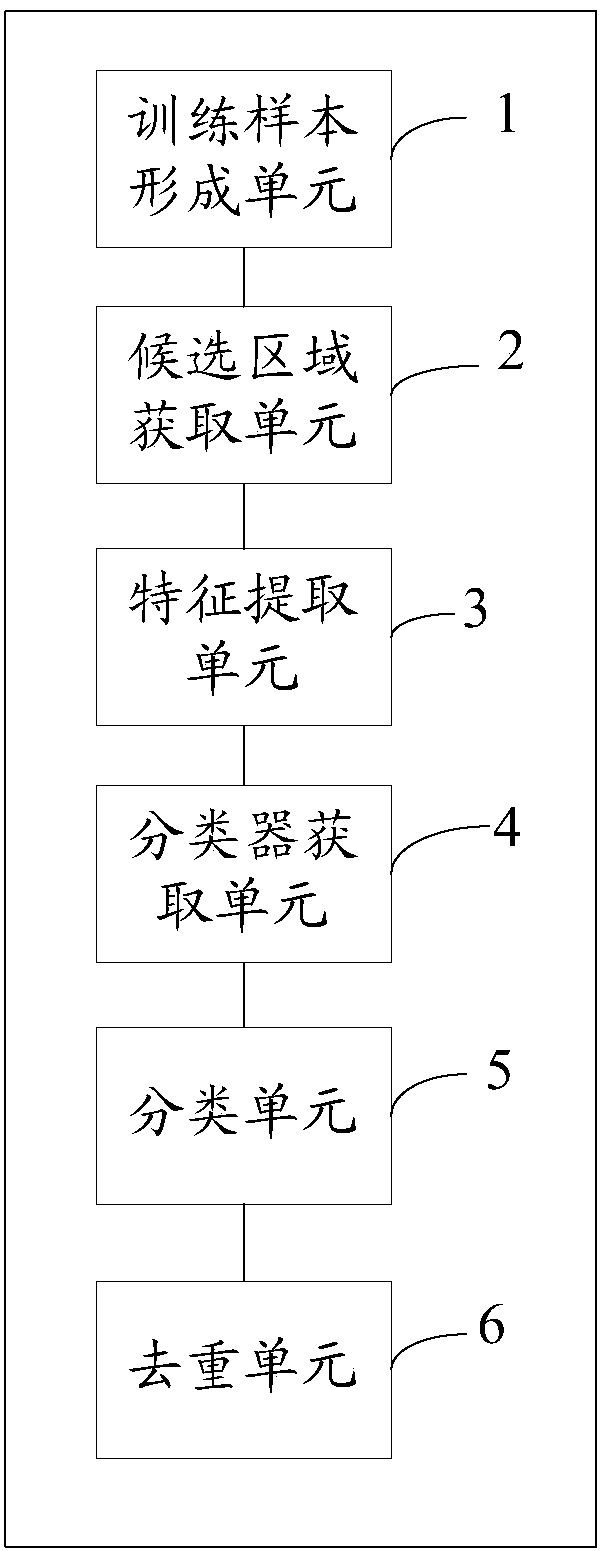

Target detection method based on high resolution optical satellite remote sensing images, and system thereof

Active | CN108304873A | Object detection optimization | Image enhancement | Image analysis | Positive sample | Computer vision

The invention relates to a target detection method based on high resolution optical satellite remote sensing images, and a system thereof. The method includes the steps of: obtaining marked target positive samples and marked background negative samples to form training samples; extracting a plurality of different weak characteristic channels from the training samples and obtaining candidate regions according to those channels; obtaining the context scene of each candidate region, performing characteristic extraction on the candidate region and on its context scene, and fusing the extracted characteristics to form the characteristics of the candidate region; training on the training samples to obtain a classifier; classifying the characteristics of the candidate regions with the classifier to obtain target regions that contain the target; and performing duplicate removal on the target regions to obtain the detected targets. The method realizes target detection on large-format remote sensing images and improves detection of closely spaced targets and of targets with unusual length-to-breadth ratios.

Owner:深圳市国脉畅行科技股份有限公司

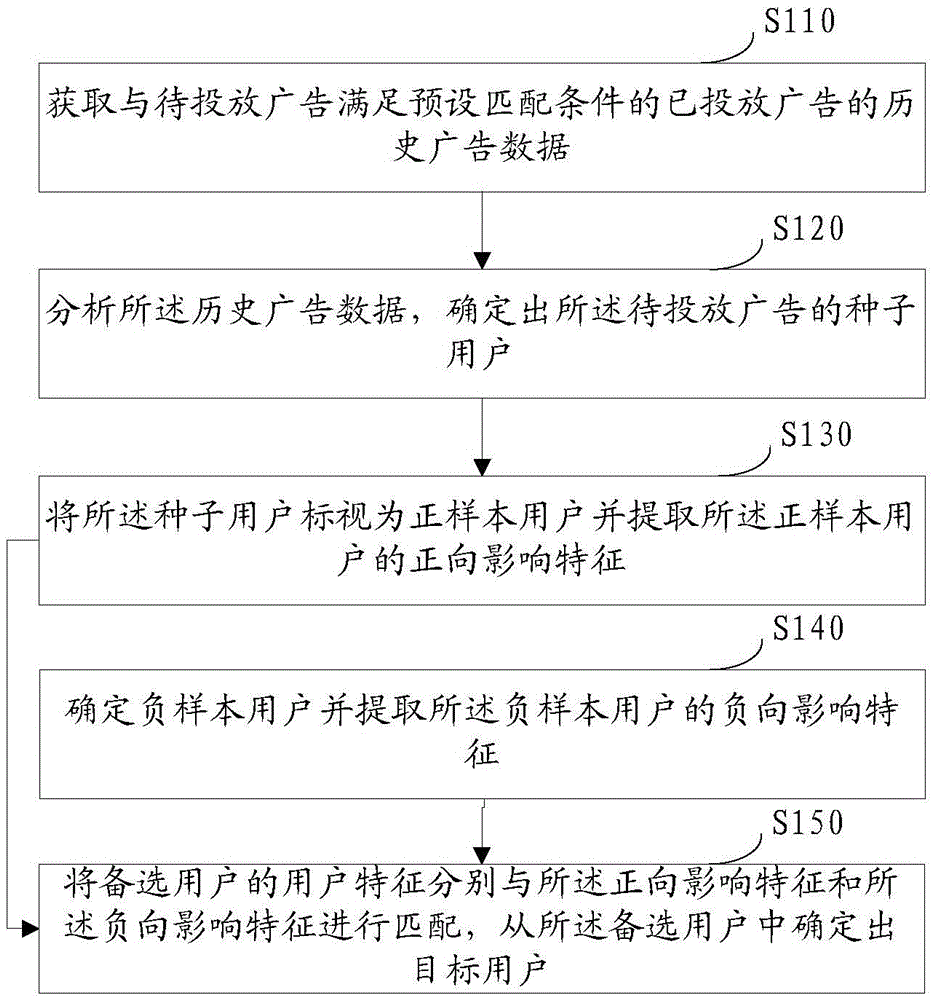

Target user determination method and apparatus

Active | CN105550903A | Precise positioning | Easy to determine | Marketing | Positive sample | Human–computer interaction

Embodiments of the invention provide a target user determination method and apparatus. The method comprises the steps of obtaining historical advertisement data of a transmitted advertisement meeting a preset matching condition with a to-be-transmitted advertisement; analyzing the historical advertisement data to determine a seed user of the to-be-transmitted advertisement; marking the seed user as a positive sample user and extracting a positive influence characteristic of the positive sample user; determining a negative sample user and extracting a negative influence characteristic of the negative sample user; and matching user characteristics of alternative users with the positive and negative influence characteristics to determine a target user from the alternative users.

Owner:TENCENT TECH (SHENZHEN) CO LTD

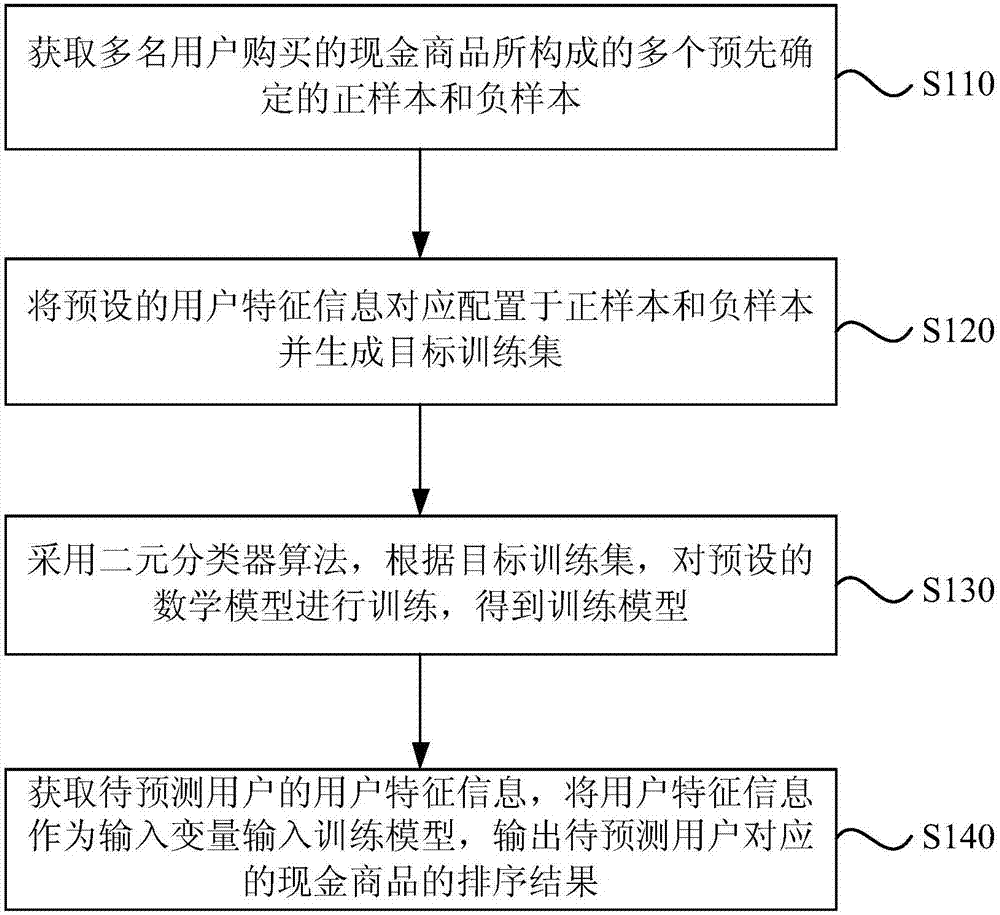

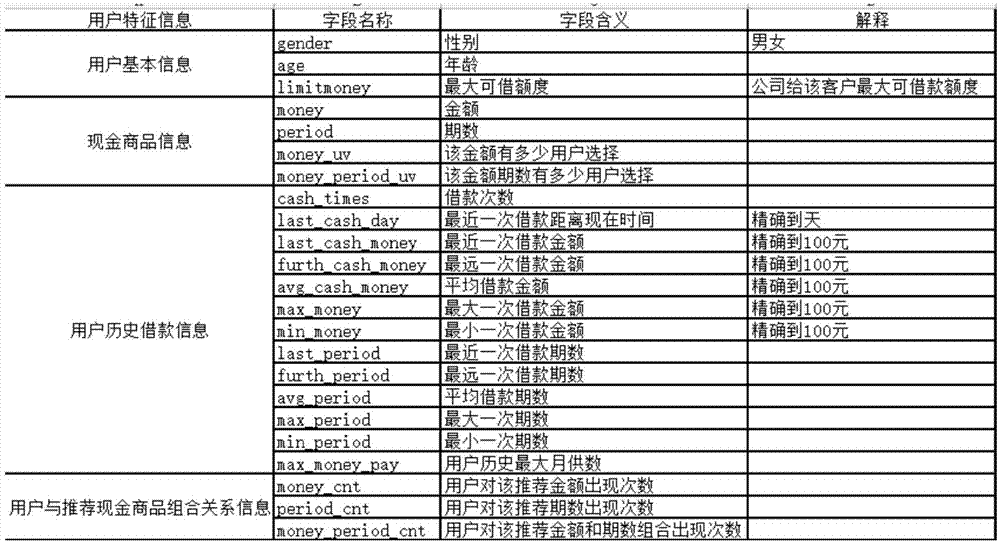

Method and device for recommending cash goods, equipment and storage medium

Inactive | CN107578332A | Improve experience | Solve the problem of large deviation | Finance | Buying/selling/leasing transactions | Positive sample | Mathematical model

The invention discloses a method and device for recommending cash goods, equipment and a storage medium. The method comprises the steps of: obtaining multiple pre-determined positive samples and negative samples composed of cash goods purchased by multiple users; pairing preset user feature information with the corresponding positive and negative samples to generate a target training set; training a preset mathematical model on the target training set with a binary classifier algorithm to obtain a training model; and obtaining the user feature information of a user to be predicted, inputting it into the training model as the input variable, and outputting a ranking of cash goods for that user. The method and device solve the problem that the cash goods recommended to a user deviate greatly from the cash goods the user actually purchases, improving recommendation accuracy, profit and user experience.

Owner:SHENZHEN LEXIN SOFTWARE TECH CO LTD

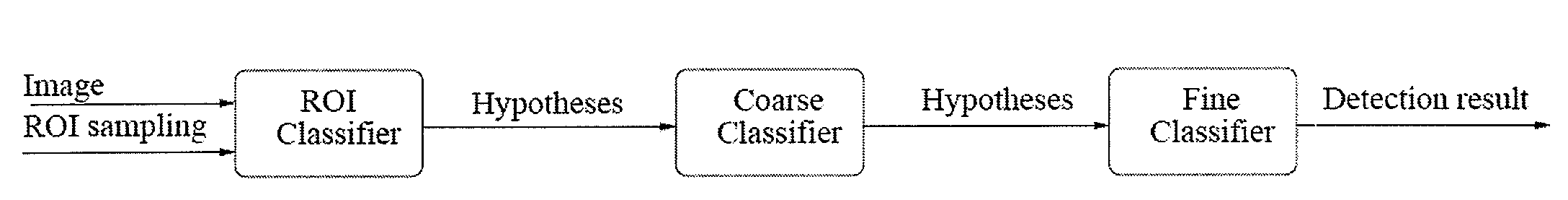

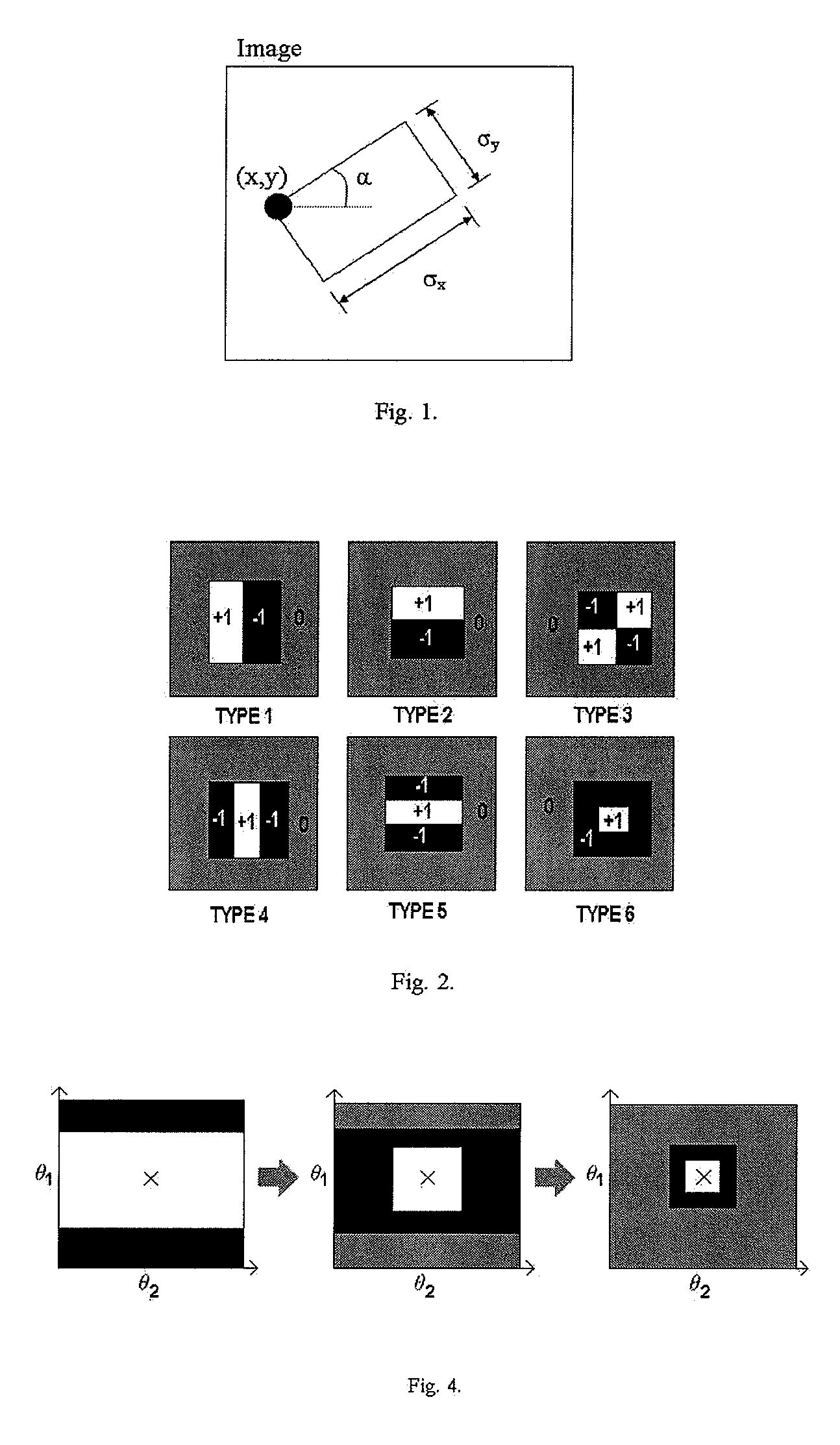

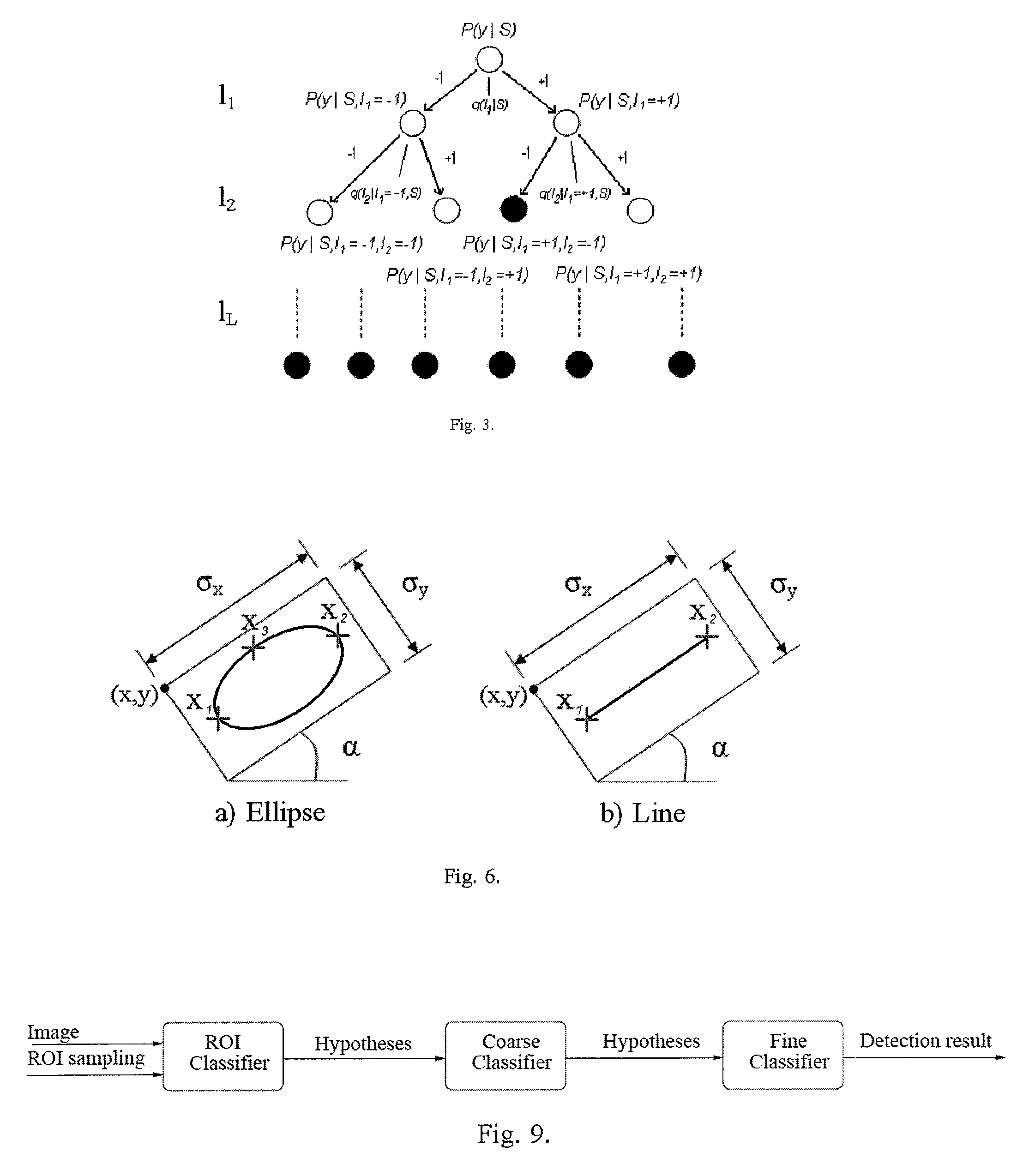

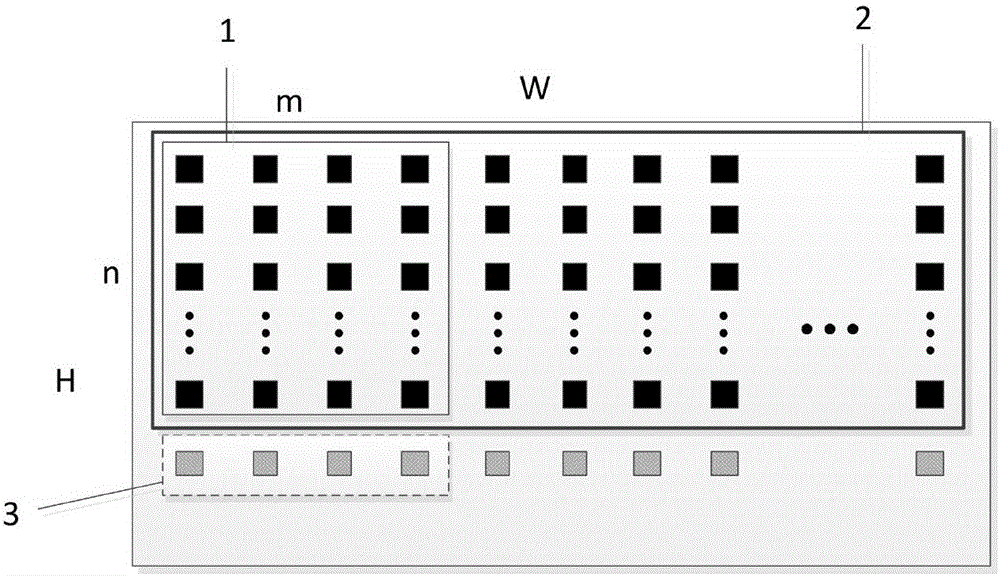

System and Method for Detection of Fetal Anatomies From Ultrasound Images Using a Constrained Probabilistic Boosting Tree

Active | US20080240532A1 | Easy to reinforce | Noise robust | Ultrasonic/sonic/infrasonic diagnostics | Character and pattern recognition | Pattern recognition | Positive sample

A method for detecting fetal anatomic features in ultrasound images includes providing an ultrasound image of a fetus, specifying an anatomic feature to be detected in a region S determined by parameter vector θ, providing a sequence of probabilistic boosting tree classifiers, each with a pre-specified height and number of nodes. Each classifier computes a posterior probability P(y|S) where y ∈ {−1,+1}, with P(y=+1|S) representing a probability that region S contains the feature, and P(y=−1|S) representing a probability that region S contains background information. The feature is detected by uniformly sampling a parameter space of parameter vector θ using a first classifier with a sampling interval vector used for training said first classifier, and having each subsequent classifier classify positive samples identified by a preceding classifier using a smaller sampling interval vector used for training said preceding classifier. Each classifier forms a union of its positive samples with those of the preceding classifier.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

Focus-image detection device, method and computer-readable storage medium

Active | CN109544534A | Reduce false positive rate | Realize multi-scale detection | Image enhancement | Image analysis | Pattern recognition | Positive sample

The invention provides a focus (lesion) image detecting device. The device comprises an image obtaining module, which obtains a medical image to be detected, and a detection module, which detects lesions in the medical image using a trained neural network model. The neural network model comprises M levels of neural network structure cascaded in sequence, where M is a positive integer greater than 1; during training, the negative samples input to the second through M-th levels include the false positive samples of the preceding level.
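
The way false positives are passed down the cascade as negatives can be sketched independently of any particular network. In this illustration a level is represented by a `predict` callable returning 1 (lesion) or 0 (background); that interface, the label convention, and the function names are assumptions made for the example.

```python
from typing import Callable, Iterable, List, Sequence, TypeVar

Sample = TypeVar("Sample")


def false_positives(samples: Sequence[Sample], labels: Sequence[int],
                    predict: Callable[[Sample], int]) -> List[Sample]:
    """Samples whose true label is 0 (background) but which the current
    level classifies as 1 (lesion)."""
    return [s for s, y in zip(samples, labels) if y == 0 and predict(s) == 1]


def negatives_for_next_level(samples: Sequence[Sample], labels: Sequence[int],
                             predict: Callable[[Sample], int],
                             extra_negatives: Iterable[Sample] = ()) -> List[Sample]:
    """Negative set for the next level: any extra negatives plus the
    false positives produced by the current level."""
    return list(extra_negatives) + false_positives(samples, labels, predict)


if __name__ == "__main__":
    # Toy stand-in for a trained level: a score threshold on a scalar feature.
    def level_one(score: float) -> int:
        return 1 if score > 0.5 else 0

    scores = [0.2, 0.6, 0.9, 0.7]
    truth = [0, 0, 1, 0]
    print(negatives_for_next_level(scores, truth, level_one))  # [0.6, 0.7]
```

Each later level therefore trains on exactly the background cases that the earlier level found hardest to reject.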

Owner:SHANGHAI UNITED IMAGING INTELLIGENT MEDICAL TECH CO LTD

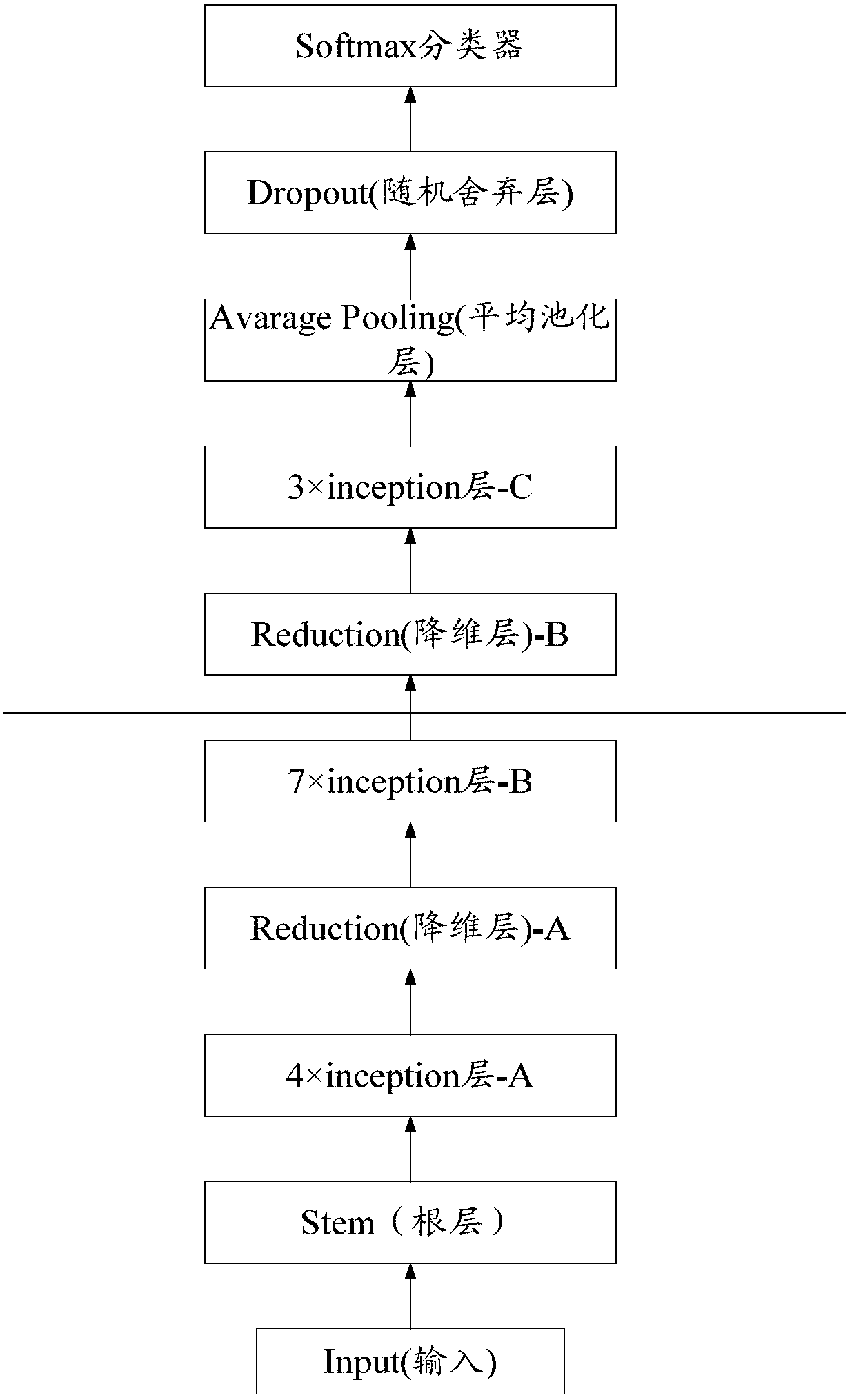

Mammary gland lesion area detection method based on deep learning and transfer learning

Pending | CN109635835A | Solve the binary classification problem | Improve predictive performance | Character and pattern recognition | Neural architectures | Positive sample | Data set

The invention provides a mammary gland lesion area detection method based on deep learning and transfer learning. The method comprises: preparing and augmenting a training set and a test set; extracting usable lump images according to the lump position information marked by doctors in the breast data set and normalizing them to 100 × 100 pixels as positive samples; training the parameters of an AlexNet classification model on natural images from the ImageNet data set; and carrying out further training and transfer learning on the specific breast image data set. In this way the binary classification problem of a convolutional neural network on a small-scale breast data set can be solved, lesion areas in breast images can be identified, and the prediction performance for breast lesions is improved.

Owner:深圳蓝影医学科技股份有限公司

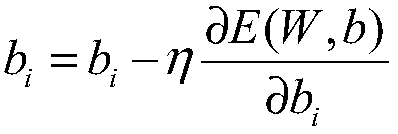

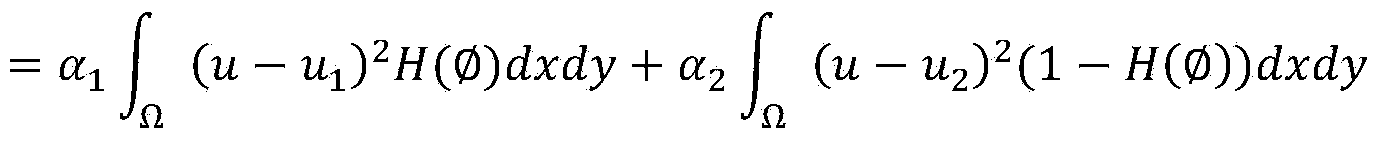

Optical remote-sensing image, GIS automatic registration and water body extraction integrated method

Active | CN103400151A | Taking into account relative positional constraints | Exact match | Image analysis | Character and pattern recognition | Positive sample | Svm classifier

An optical remote-sensing image, GIS (Geographic Information System) automatic registration and water body extraction integrated method comprises the following steps: 1, segmenting input optical remote-sensing images to obtain initial segmentation results of water systems; 2, performing local level set evolution segmentation to obtain a set R; 3, matching basic geographic information water system layer vector objects with objects in the set R, registering the images, executing the step 4 if the registration is successful, otherwise, returning to the step 2; 4, obtaining unchanged water bodies, suspected newly-added water bodies and suspected changed water bodies through buffer detection, further filtering out suspected water body objects, and confirming real changed water bodies and unchanged water bodies; 5, taking the multispectral values of corresponding pixels in unchanged water body objects as positive samples, randomly selecting the multispectral values of pixels on the optical remote-sensing image except the area in the set R as negative samples, training an SVM (Support Vector Machine) classifier, verifying whether the objects in a filtered suspected water body object set are water bodies or not, and obtaining water body results of segmentation, registration and extraction.

Owner:WUHAN UNIV

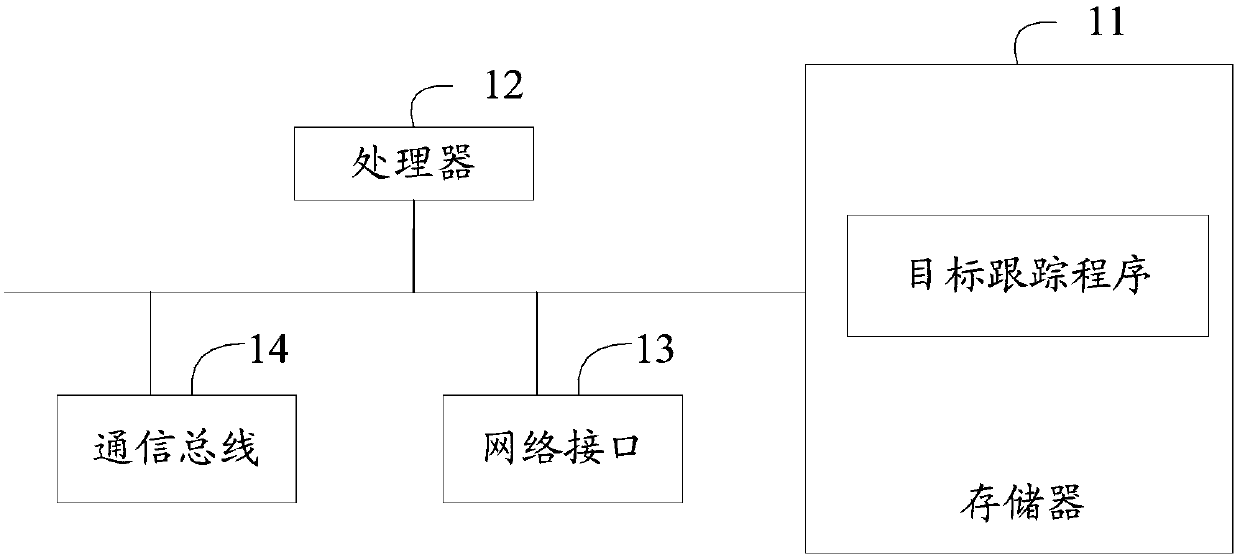

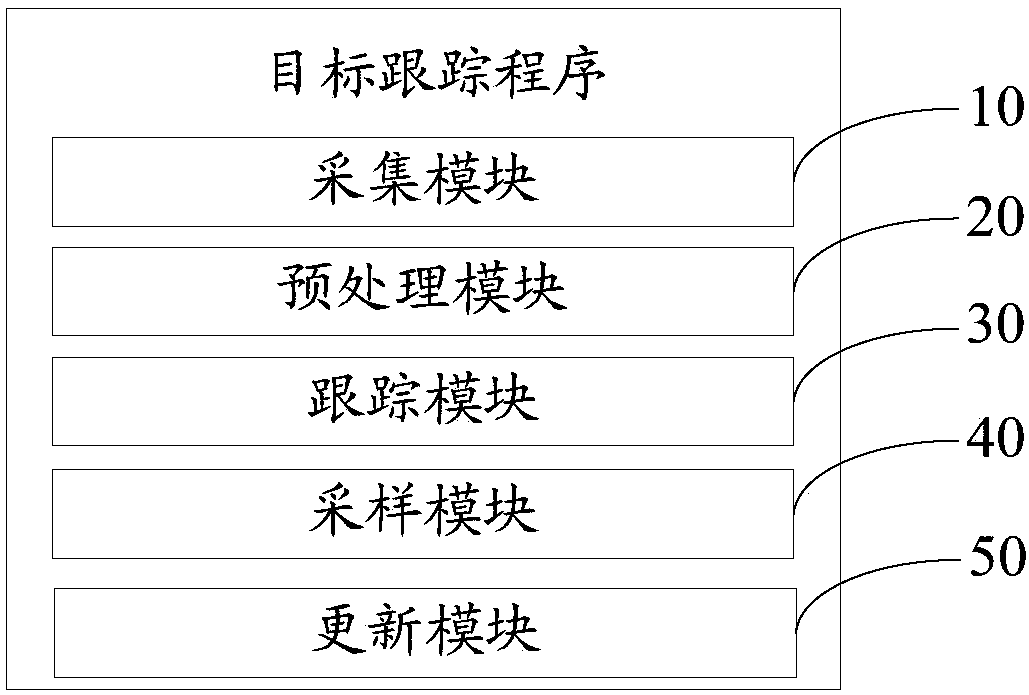

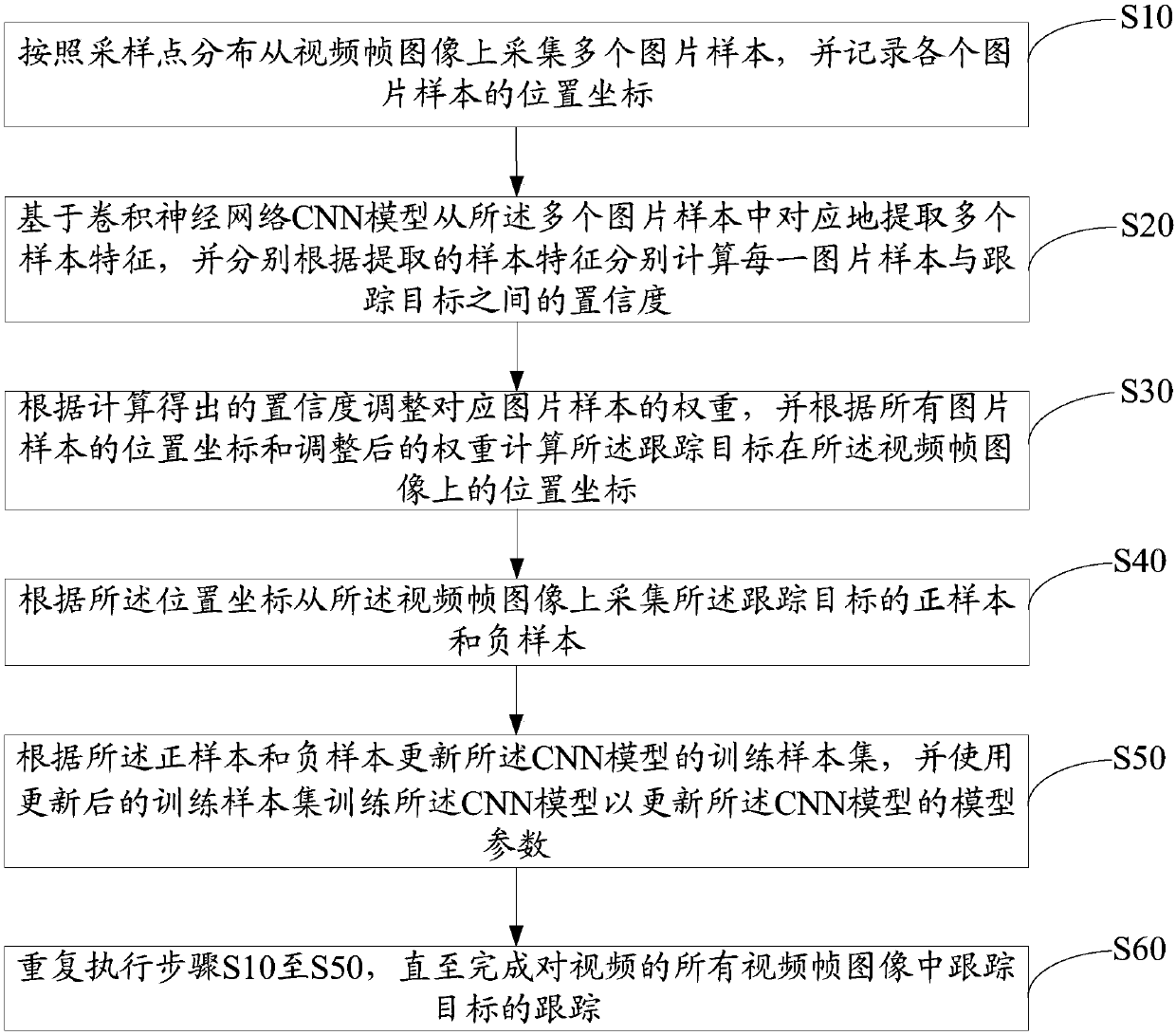

Target tracking device and method, and computer readable storage medium

Inactive | CN107679455A | Improve accuracy | Character and pattern recognition | Neural architectures | Positive sample | Algorithm

The invention discloses a target tracking device based on a convolutional neural network. The device comprises a memory and a processor. A target tracking program that can run on the processor is stored in the memory, and when executed by the processor it performs the following steps: collecting picture samples from a video frame image according to a sampling point distribution and recording the position coordinate of each picture sample; extracting a sample characteristic from each picture sample with a CNN model and, from that characteristic, calculating a confidence coefficient between the picture sample and the tracking target; adjusting the weight of each picture sample according to its confidence coefficient, and calculating the position coordinate of the tracking target from the position coordinates and the weights; collecting positive samples and negative samples from the video frame image according to the target's position coordinate to form a training sample set, training the CNN model on it, and updating the model parameters of the CNN model; and repeating the above steps until tracking of the video is completed. The invention also provides a target tracking method based on the convolutional neural network and a computer readable storage medium. The invention increases the accuracy of target tracking.
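
One plausible reading of the position update is a confidence-weighted average of the sample coordinates. The sketch below assumes exactly that: weights proportional to the confidence coefficients, normalized to sum to one. The abstract does not give the weighting formula, so treat both the formula and the function name as assumptions.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def estimate_position(coords: Sequence[Point],
                      confidences: Sequence[float]) -> Point:
    """Confidence-weighted mean of the sample positions.

    Assumes non-negative confidences with a positive sum; each weight is
    the sample's confidence divided by the total confidence.
    """
    total = sum(confidences)
    if total <= 0:
        raise ValueError("confidences must have a positive sum")
    weights = [c / total for c in confidences]
    x = sum(w * p[0] for w, p in zip(weights, coords))
    y = sum(w * p[1] for w, p in zip(weights, coords))
    return (x, y)


if __name__ == "__main__":
    sample_positions = [(0.0, 0.0), (10.0, 0.0)]
    sample_confidences = [1.0, 3.0]
    # The second sample is three times as confident, so the estimate
    # lands three quarters of the way toward it.
    print(estimate_position(sample_positions, sample_confidences))  # (7.5, 0.0)
```

Positive and negative samples for the model update would then be collected relative to this estimated position.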

Owner:PING AN TECH (SHENZHEN) CO LTD

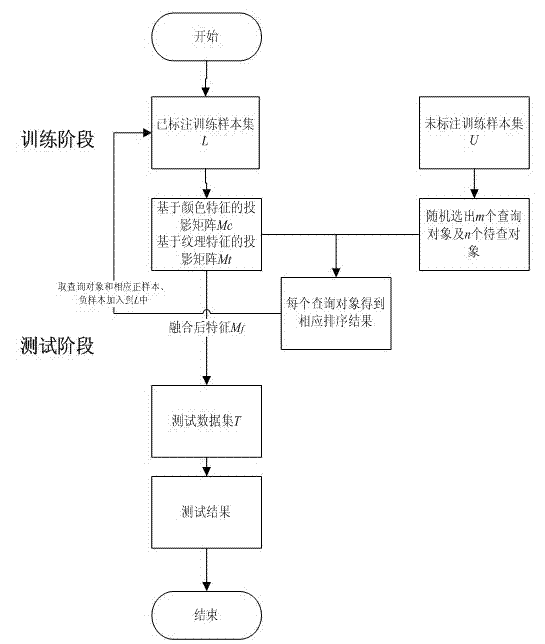

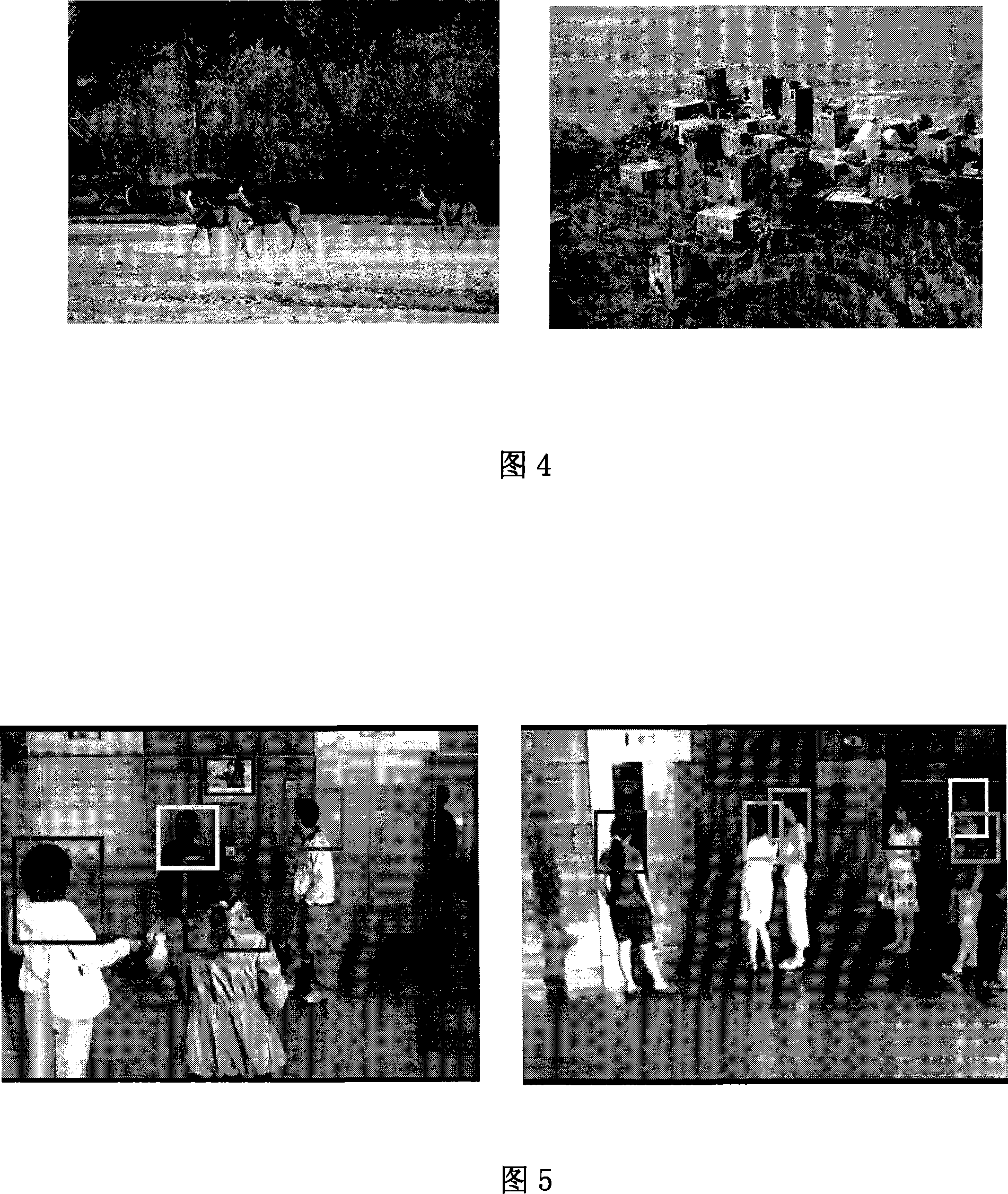

Pedestrian re-identifying method based on coordination scale learning

Active | CN103793702A | Meet hard-to-get requirements | Improve re-identification performance | Character and pattern recognition | Positive sample | Video retrieval

The invention discloses a pedestrian re-identifying method based on coordination scale learning and belongs to the technical field of surveillance video retrieval. First, scale learning (that is, distance metric learning) is carried out on the color and texture features of the images in a marked training sample set L, yielding the matrices Mc and Mt of the corresponding Mahalanobis distances. Query targets are then selected randomly, Mc and Mt are used for Mahalanobis distance measurement to obtain the corresponding ranking results, positive samples and negative samples are drawn from those results to form a new marked training sample set L, and Mc and Mt are updated; this repeats until the unmarked training sample set U is empty, giving a final marked sample set L*. The color and texture features are then fused to obtain Mf, and a Mahalanobis distance function based on Mf is used for pedestrian re-identification. The method studies scale-learning-based pedestrian re-identification under a semi-supervised framework: learning from marked samples is assisted by unmarked samples, which addresses the difficulty of obtaining marked training samples in practical video investigation applications and effectively improves re-identification performance when few marked samples are available.
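
For reference, a Mahalanobis-style distance parameterized by a learned matrix M is d(x, y) = sqrt((x − y)ᵀ M (x − y)). The sketch below evaluates that distance and ranks a gallery by it in plain Python. It assumes M is supplied as a nested list and is positive semi-definite; how Mc, Mt and Mf are actually learned is outside the scope of this example, and the function names are illustrative.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def mahalanobis(x: Sequence[float], y: Sequence[float], M: Matrix) -> float:
    """sqrt((x - y)^T M (x - y)) for a positive semi-definite matrix M."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    Md = [sum(M[i][j] * d[j] for j in range(n)) for i in range(n)]
    q = sum(d[i] * Md[i] for i in range(n))
    return math.sqrt(max(q, 0.0))  # clamp tiny negative rounding errors


def rank_by_distance(query: Sequence[float],
                     gallery: List[Sequence[float]], M: Matrix) -> List[int]:
    """Gallery indices sorted from nearest to farthest under the metric M."""
    return sorted(range(len(gallery)),
                  key=lambda i: mahalanobis(query, gallery[i], M))


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(mahalanobis([0, 0], [3, 4], identity))  # 5.0, the Euclidean case

    # A metric that weights the first feature dimension more heavily.
    M = [[4.0, 0.0], [0.0, 1.0]]
    print(rank_by_distance([0, 0], [[2, 0], [0, 3]], M))  # [1, 0]
```

With the identity matrix the ranking would be reversed, which shows how the learned matrix changes which gallery images count as similar.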

Owner:WUHAN UNIV

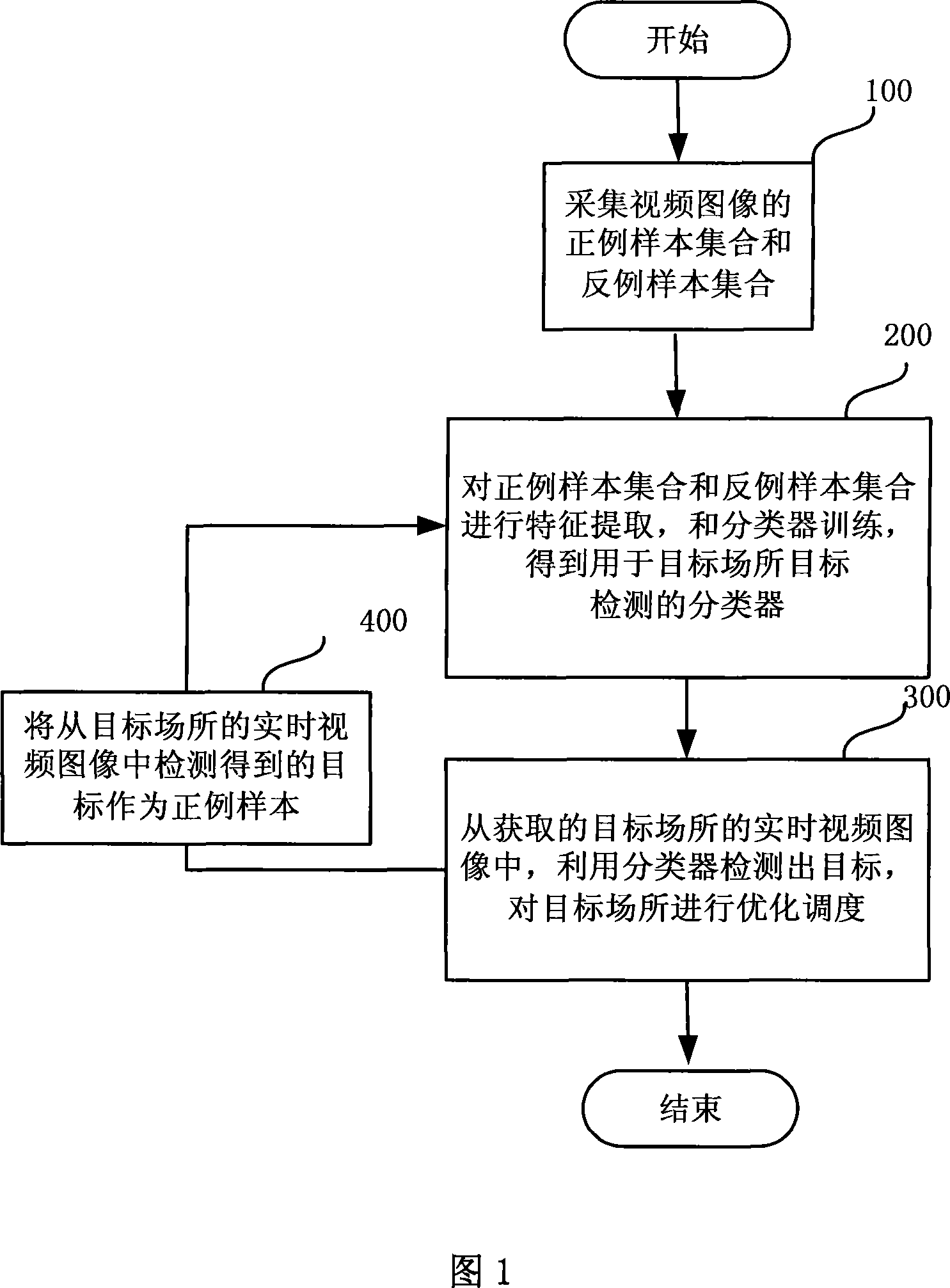

Target place optimized dispatching method and system

The invention discloses a target place optimized scheduling method and system. The method comprises the following steps: collecting a positive sample set and a negative sample set for the target to be detected in the target place; extracting image features from the positive and negative sample sets and training on them to obtain a classifier for target detection in the target place; and, in real-time video images of the target place, using the classifier to detect the target and performing optimized scheduling of the target place. Regions of targets detected in the real-time video images are used as positive examples and training is repeated, further improving the classification accuracy of the classifier; optimized scheduling of the target place then improves its working efficiency.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

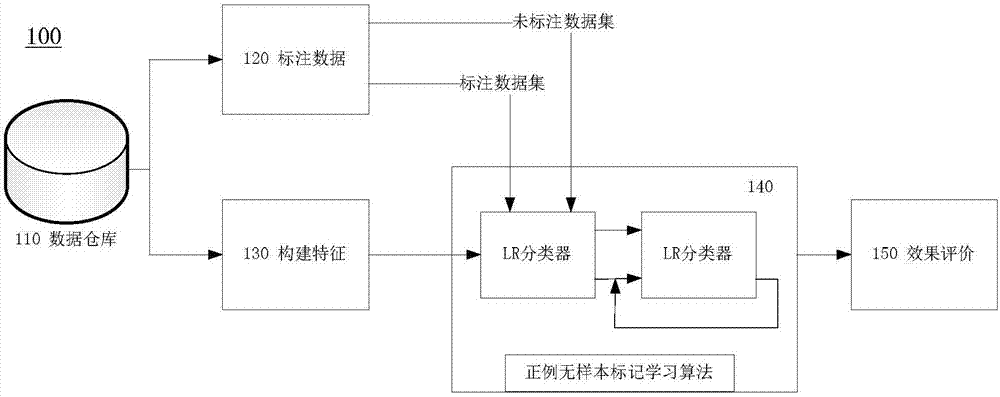

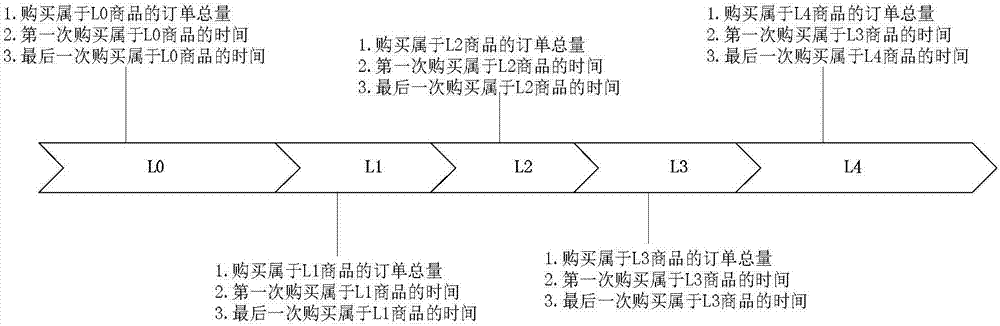

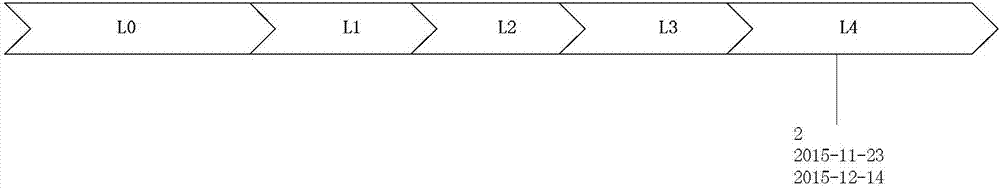

User data classification method and device, server and computer readable storage medium

Active | CN107273454A | Accurate classification | Good personalized recommendation | Relational databases | Multi-dimensional databases | Positive sample | Feature extraction

The invention provides a user data classification method. The method comprises the steps of: generating user data characteristics; generating a tagged data set and an untagged data set of user data according to tagging rules; building, for one classification among multiple classifications, a positive sample tagged data set P and an unknown sample data set U from the tagged and untagged data sets; generating a classifier from the positive sample tagged data set P, the unknown sample data set U and the corresponding user data characteristics; and using the classifier to determine whether the user data in the untagged data set belongs to that classification. The user data is thus classified by an improved positive sample tag learning algorithm. The method is suited to extracting the characteristics of groups of people, mining people at similar life stages in a system, and then delivering e-commerce advertisements precisely targeted at those groups.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

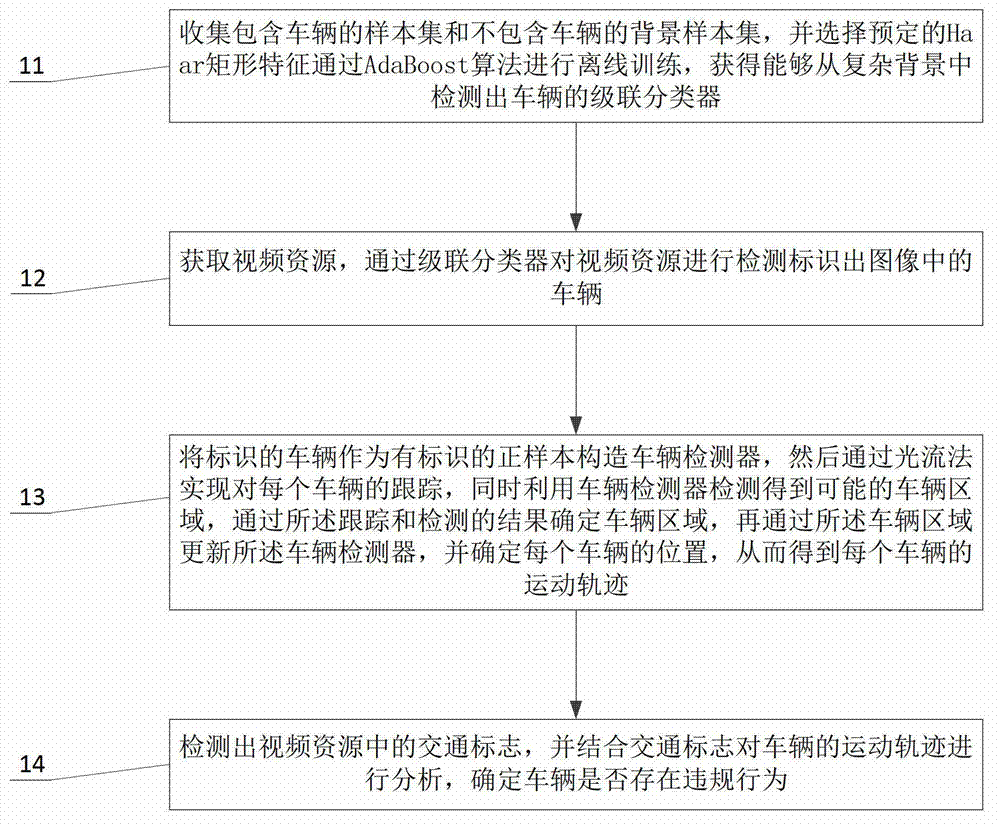

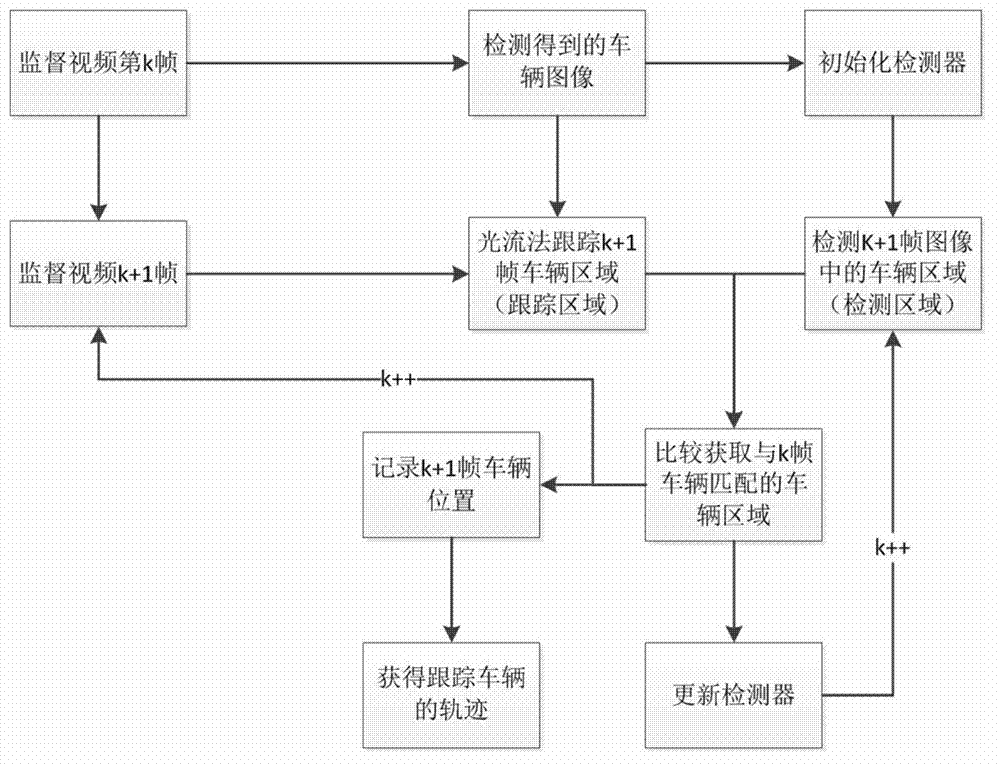

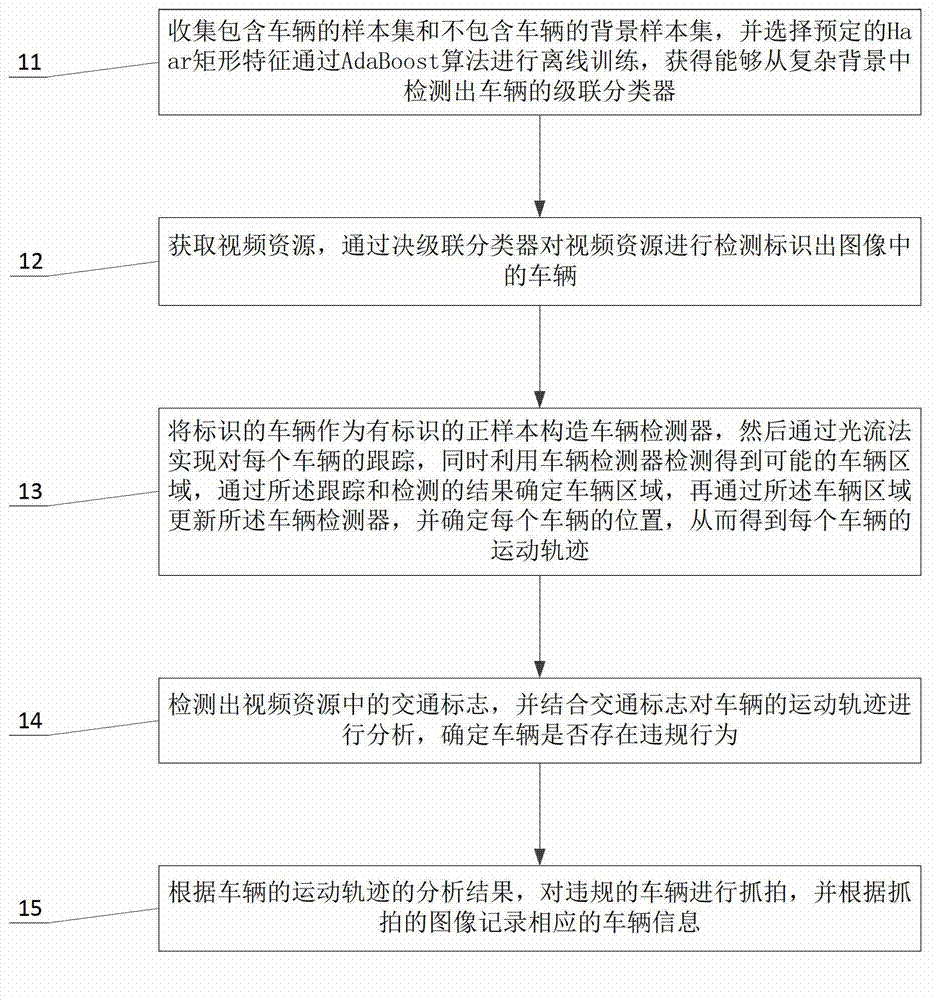

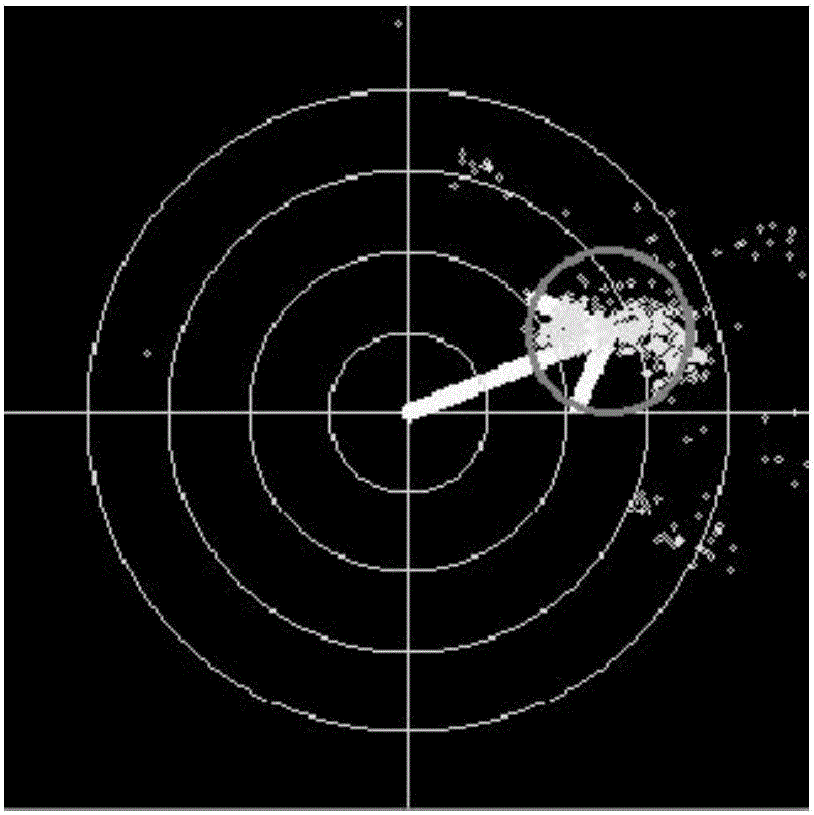

Method and system for intelligently analyzing vehicle behaviour

Active | CN102902955A | Detection of traffic movement | Character and pattern recognition | Positive sample | Vehicle behavior

Owner:UNIV OF SCI & TECH OF CHINA

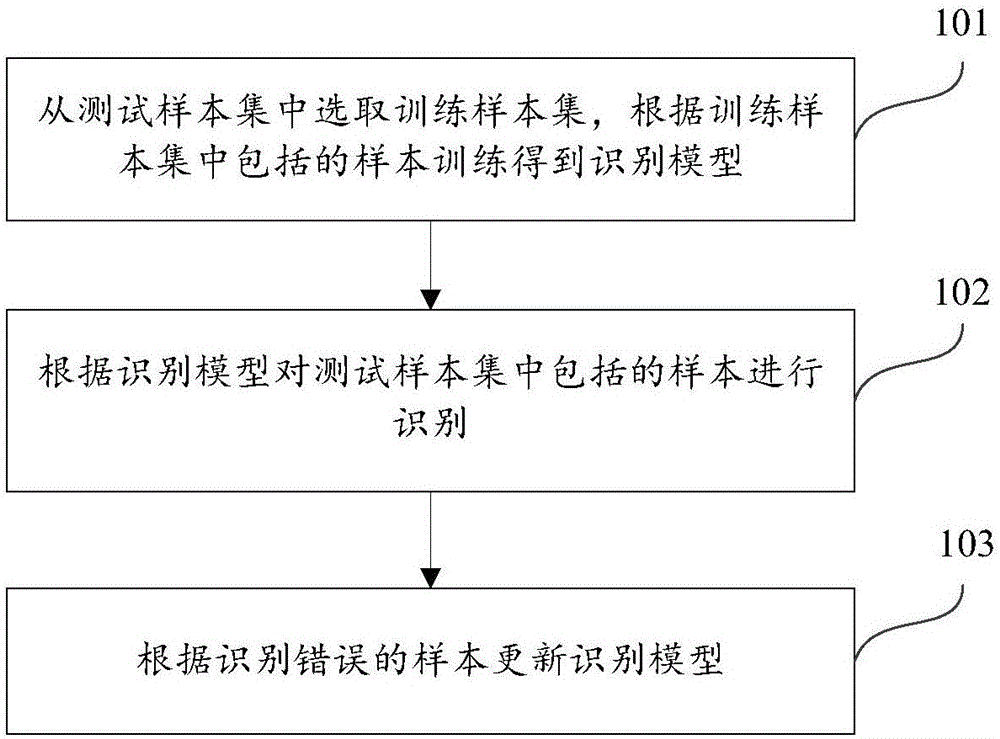

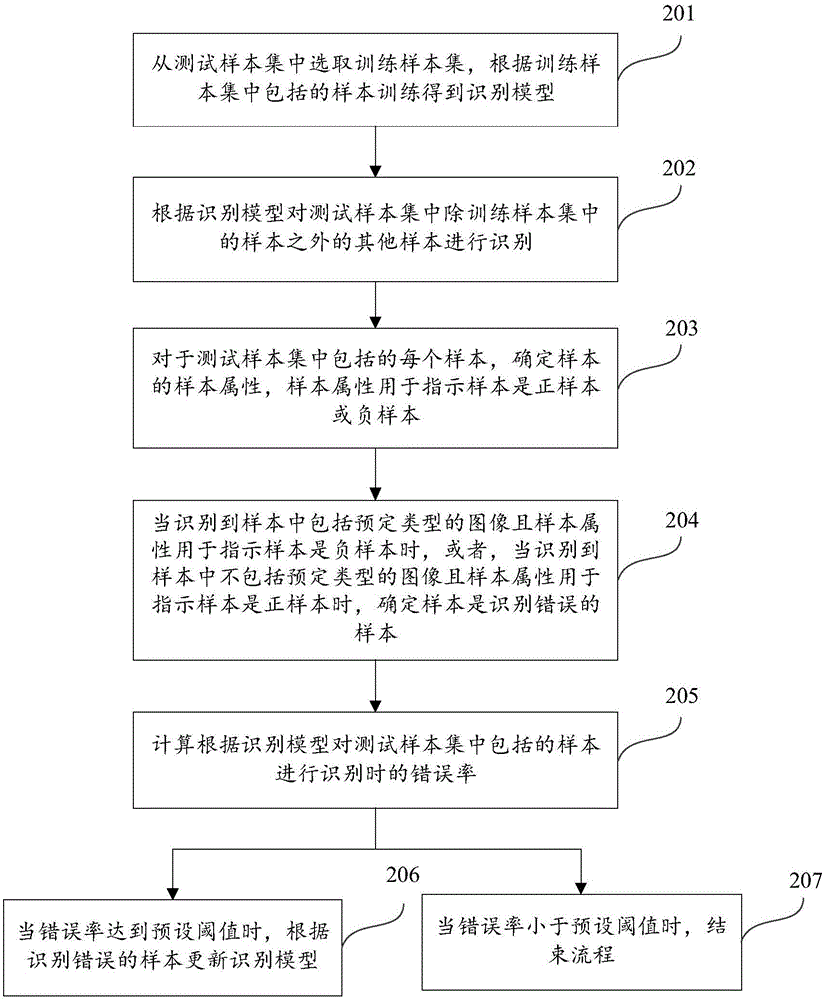

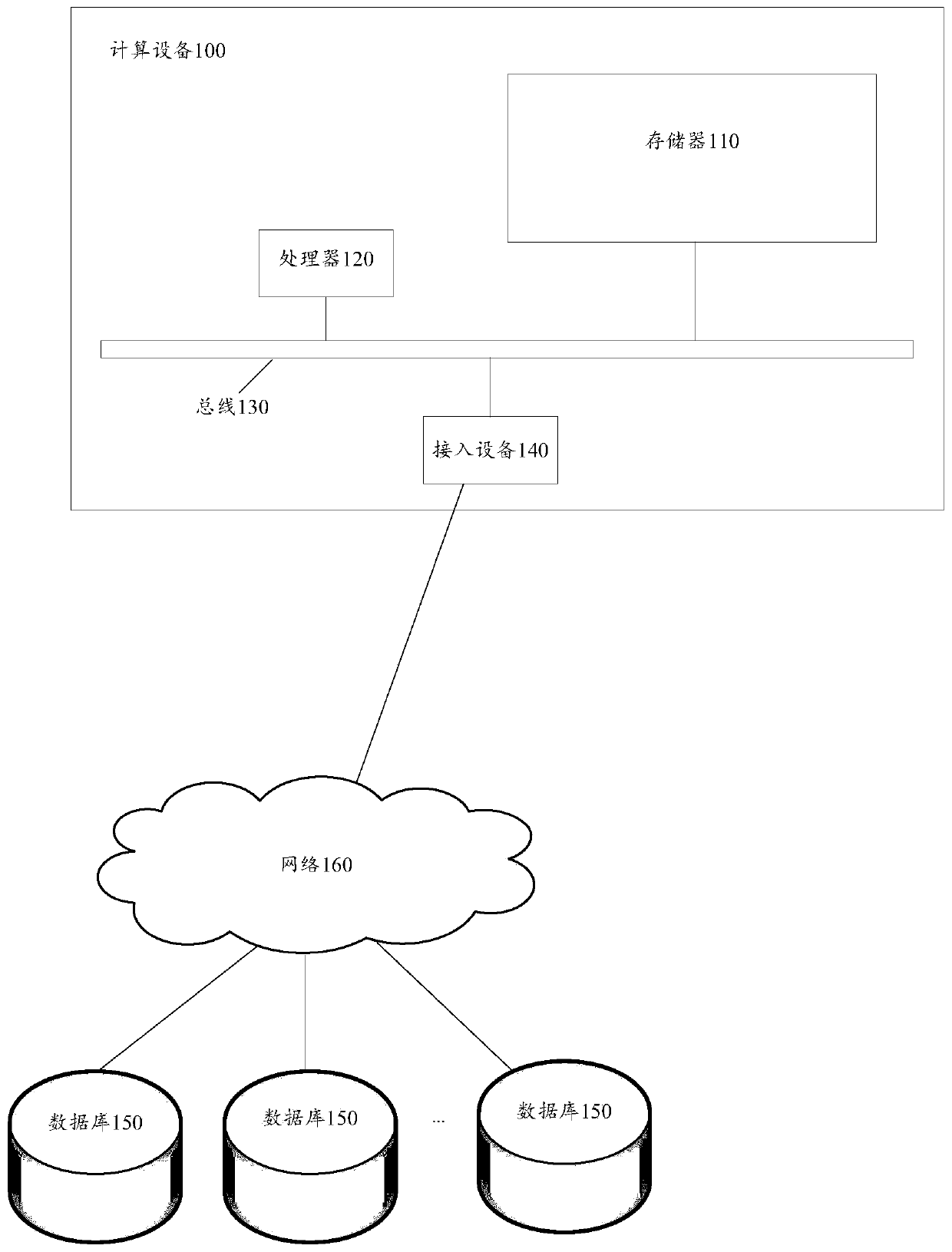

Model training method and device

Inactive | CN106503617A | Improve accuracy | The solution is not accurate enough | Character and pattern recognition | Positive sample | Imaging processing

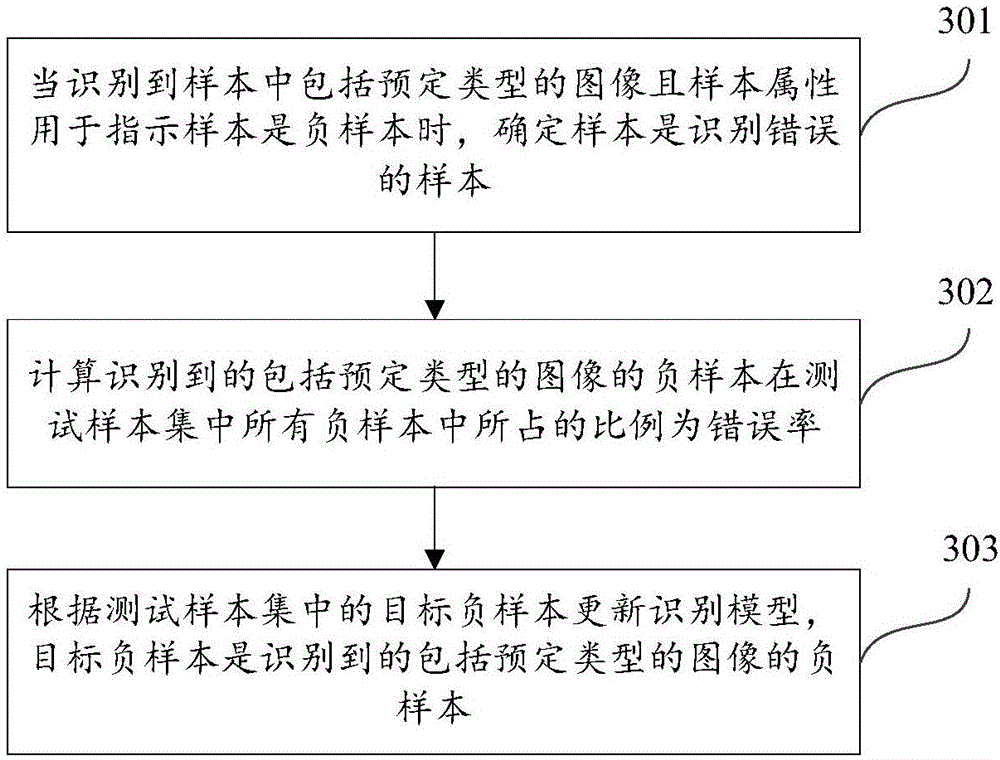

The invention discloses a model training method and device, and belongs to the field of image processing. The method includes: selecting a training sample set from a test sample set, and training an identification model on the samples in the training sample set, where the training sample set is a subset of the test sample set, includes positive samples and negative samples, and contains fewer negative samples than the test sample set; identifying the samples in the test sample set with the identification model; and updating the identification model according to the samples that are wrongly identified. This solves the problem that a face identification model trained on a limited number of samples is not accurate enough: after the identification model has been trained on a limited number of samples, it is updated again with the wrongly identified samples, so the model can be optimized continually and identification accuracy improves.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

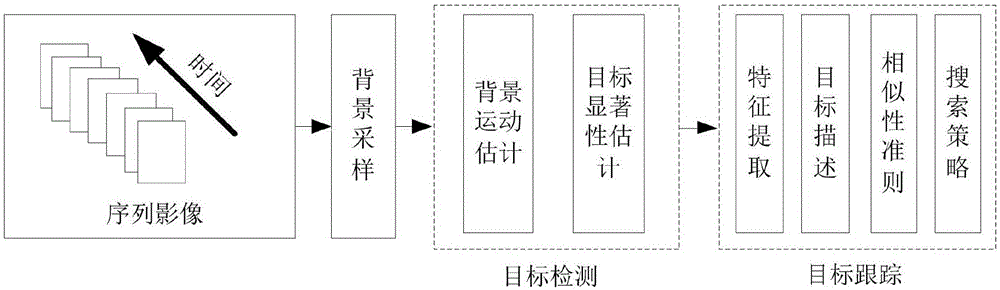

Video target detecting and tracking method based on optical flow features

Inactive | CN106709472A | Avoid drifting | Overcoming cavitation | Scene recognition | Positive sample | Particle filtering algorithm

The invention provides a video target detecting and tracking method based on optical flow features. In the first step, an input image frame sequence is subjected to background sampling, and the optical flow vector of each sampled pixel point is calculated. The background motion is then estimated with the Mean Shift algorithm, and the overall saliency of the target is estimated. Finally, a threshold value is set according to the result of the target saliency detection, so that a target region and a background region are separated. In the second step, the video target is tracked: first, the target region is selected as a positive sample and the background region as a negative sample. The target is described by its Haar features and global color features, and the original features are sampled and compressed with a random matrix. The similarity between the target and the target in the previous frame is judged by the Bayesian criterion. Finally, the target is tracked continuously with the particle filtering algorithm. Multiple features, including target motion saliency, color and texture, are thereby fused, which improves the success rate of target detection and allows the target to be tracked quickly, effectively and continuously.

Owner:湖南优象科技有限公司
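
The first step of the abstract above, separating target from background by thresholding motion saliency, might look roughly like the sketch below. It is a simplification under stated assumptions: the median flow vector is swapped in for the Mean Shift background estimate, saliency is taken as the distance of each pixel's flow from that estimate, and the function name `split_by_motion_saliency` and its `flow` input format are hypothetical.

```python
import math
from statistics import median

def split_by_motion_saliency(flow, threshold):
    """flow: dict mapping (row, col) -> (dx, dy) optical-flow vector per sampled pixel."""
    # Assumption: the median flow stands in for the Mean Shift background-motion estimate.
    bg_dx = median(v[0] for v in flow.values())
    bg_dy = median(v[1] for v in flow.values())
    target, background = [], []
    for pixel, (dx, dy) in flow.items():
        # Saliency here is how far the pixel's motion deviates from the background motion.
        saliency = math.hypot(dx - bg_dx, dy - bg_dy)
        (target if saliency > threshold else background).append(pixel)
    return target, background  # candidate positive and negative sample regions
```

The pixels returned as `target` would supply the positive sample and those returned as `background` the negative sample for the tracking step.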

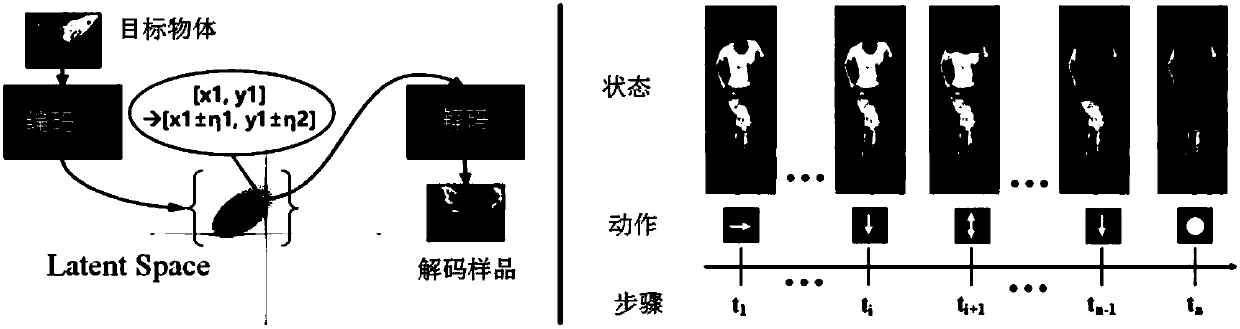

Target tracking method based on difficult positive sample generation

ActiveCN108596958AImprove robustnessImprove tracking accuracyImage enhancementImage analysisPositive sampleVideo processing

The invention discloses a target tracking method based on difficult positive sample generation. For each video in the training data, a variational auto-encoder is used to learn the corresponding manifold, which serves as the positive sample generation network; the code obtained by encoding an input image is slightly adjusted, and a large number of positive samples are generated. The positive samples are input into a difficult positive sample conversion network, in which an agent is trained to occlude the target object with a background image block; the agent continuously adjusts the bounding box so that the samples become difficult to recognize, and occluded difficult positive samples are output. Based on the generated difficult positive samples, a Siamese network is trained and used to match the target image block against candidate image blocks, and the target is located in the current frame, until the whole video has been processed. The method learns the manifold distribution of the target directly from the data and can obtain a large number of diversified positive samples.

Owner:ANHUI UNIVERSITY

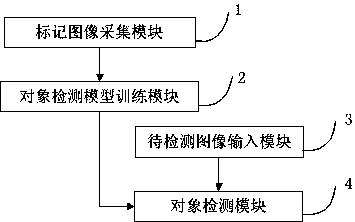

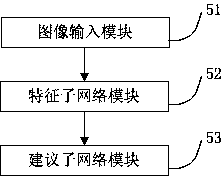

Improved convolutional neural network-based object detection device and method

ActiveCN107944442AOptimize network architectureEasy to detectCharacter and pattern recognitionNeural architecturesPositive sampleObject detection

The invention provides an improved convolutional neural network-based object detection method. The method comprises the following steps: acquiring labeled images of labeled objects; preliminarily training an improved convolutional neural network using random sampling to obtain preliminarily classified positive samples and negative samples and their classification probability values; selecting a certain proportion of positive samples and negative samples according to the classification probability values and training the improved convolutional neural network on them to obtain a trained object detection model; inputting a to-be-detected image; and carrying out object detection on the to-be-detected image with the object detection model and outputting a detection result. Compared with the prior art, the method can detect objects in images rapidly and correctly.

Owner:BEIJING ICETECH SCI & TECH CO LTD
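
The selection step in the abstract above, choosing a proportion of positive and negative samples by classification probability, could be sketched as follows. The abstract does not state which samples are preferred, so this example assumes the hardest ones are kept (positives with a low positive probability, negatives with a high one); the function name `select_by_probability` and the tuple layout are hypothetical.

```python
def select_by_probability(samples, pos_fraction, neg_fraction):
    """samples: list of (sample_id, label, prob_positive) from the preliminary model."""
    positives = [s for s in samples if s[1] == 1]
    negatives = [s for s in samples if s[1] == 0]
    # Assumption: hard positives score low, hard negatives score high.
    positives.sort(key=lambda s: s[2])
    negatives.sort(key=lambda s: s[2], reverse=True)
    # Keep at least one sample per class whenever that class is present.
    n_pos = max(1, round(len(positives) * pos_fraction)) if positives else 0
    n_neg = max(1, round(len(negatives) * neg_fraction)) if negatives else 0
    return positives[:n_pos], negatives[:n_neg]
```

The two returned lists would then form the training set for the next round of network training.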

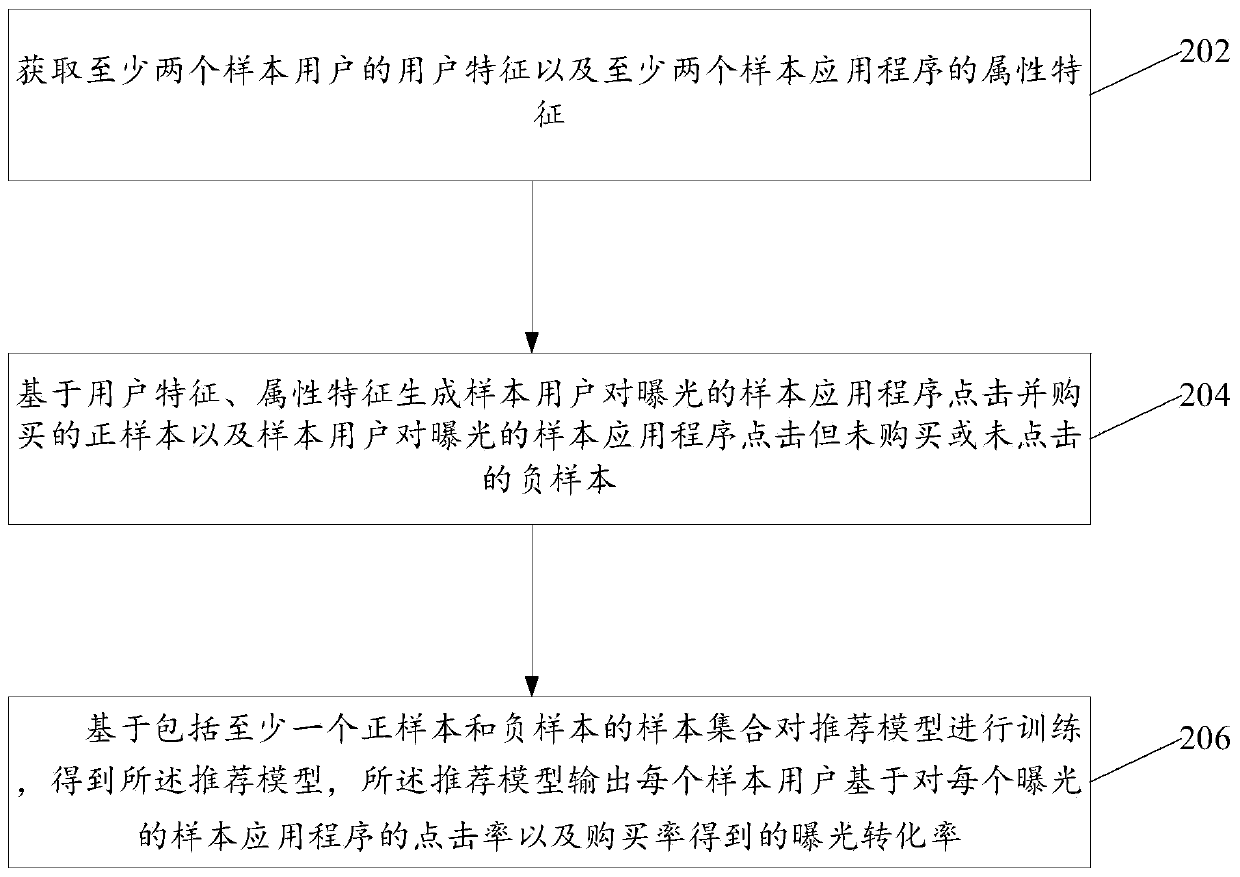

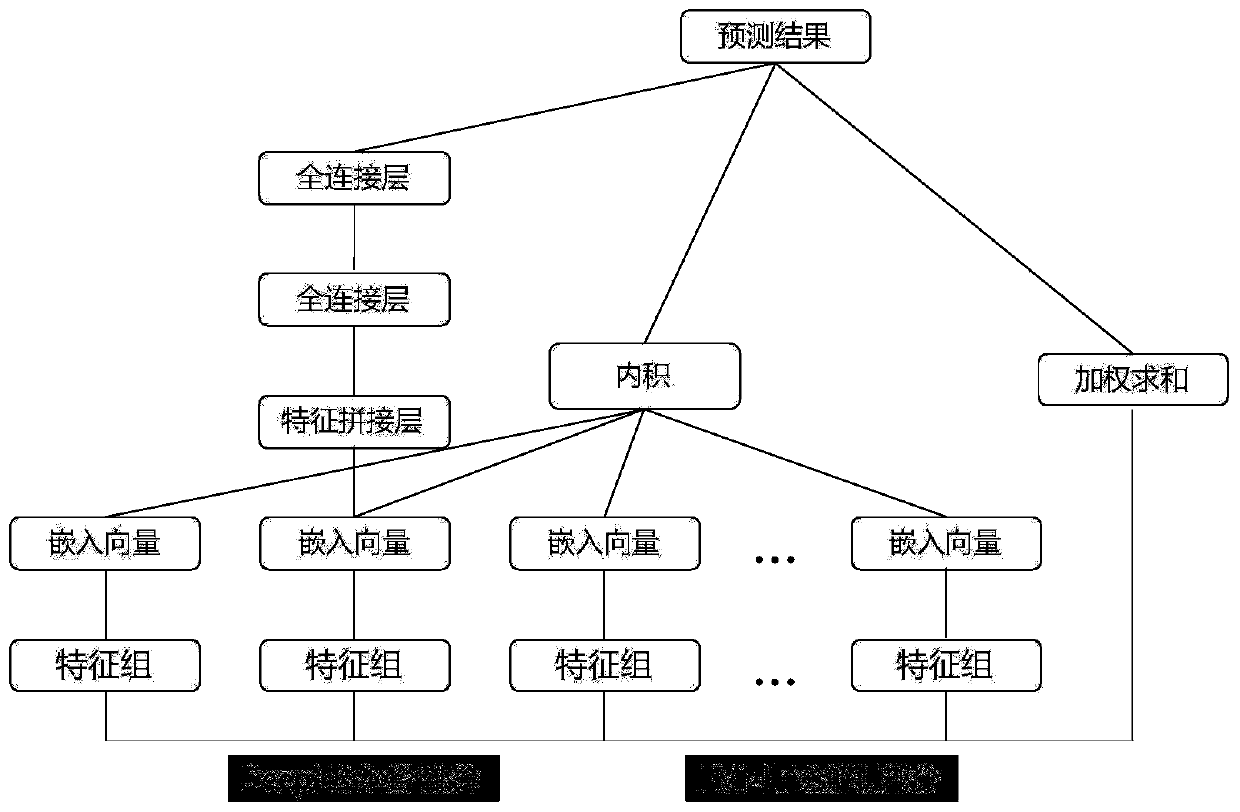

Training method and device of recommendation model, and recommendation method and device

ActiveCN110008399ASolve the problem of feature sparsityImprove recommendation effectDigital data information retrievalCharacter and pattern recognitionPositive sampleRecommendation model

The invention provides a training method and device of a recommendation model, and a recommendation method and device. The training method comprises: obtaining the user characteristics of at least two sample users and the attribute characteristics of at least two sample application programs; generating, based on the user characteristics and the attribute characteristics, positive samples in which the sample user clicked and purchased an exposed sample application program, and negative samples in which the sample user either clicked but did not purchase or did not click the exposed sample application program; and training on a sample set comprising at least one positive sample and at least one negative sample to obtain the recommendation model. The recommendation model outputs, for each sample user, an exposure conversion rate obtained from the click rate and the purchase rate of each exposed sample application program.

Owner:ADVANCED NEW TECH CO LTD
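
The labeling rule in the abstract above is simple enough to write down directly. The sketch below shows it together with one possible way of combining the two rates; the abstract only says the exposure conversion rate is based on the click rate and the purchase rate, so the multiplication is an assumption, and both function names are hypothetical.

```python
def label_exposure(clicked, purchased):
    """Return 1 (positive) only when an exposed app was both clicked and purchased."""
    # Clicked-but-not-purchased and unclicked exposures are both negative samples.
    return 1 if clicked and purchased else 0

def exposure_conversion_rate(click_rate, purchase_rate):
    """Combine the two predicted rates into one exposure-level rate."""
    # Assumption: the rates multiply, as in P(click) * P(purchase | click).
    return click_rate * purchase_rate
```

For example, under this assumption a predicted click rate of 0.2 and purchase rate of 0.5 give an exposure conversion rate of about 0.1.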

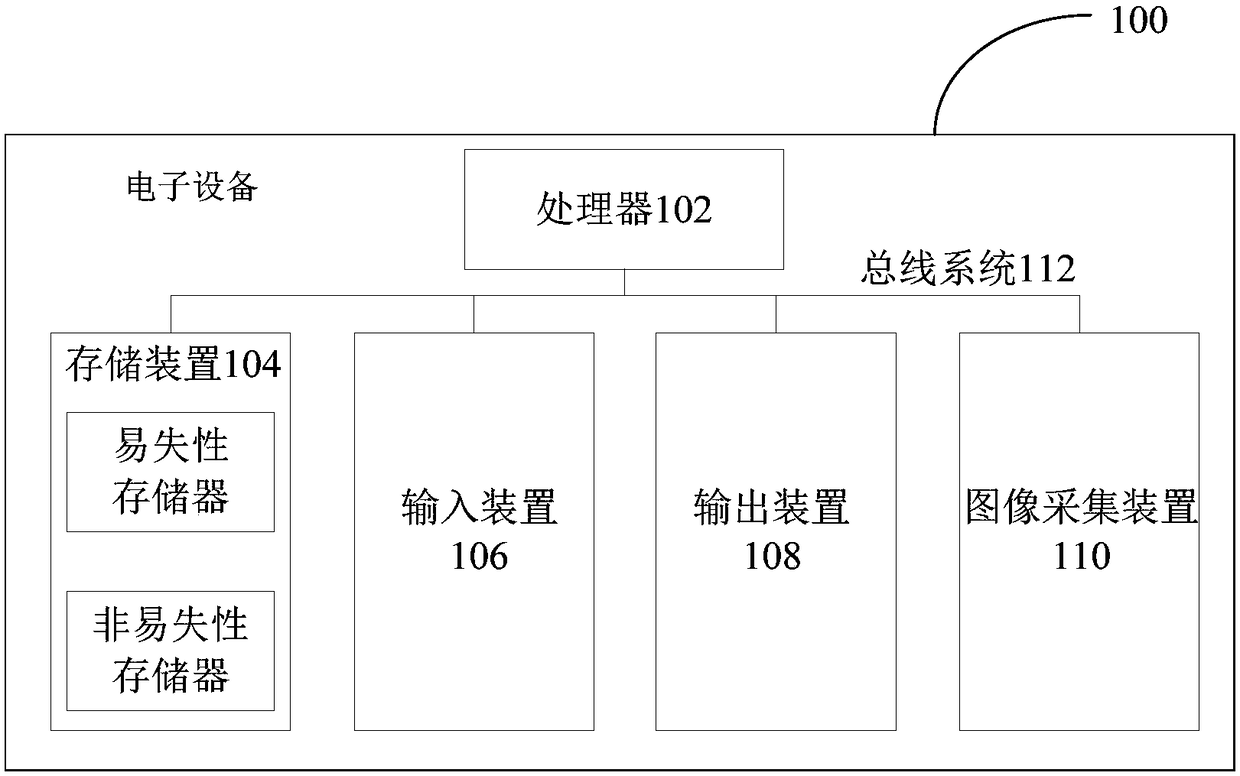

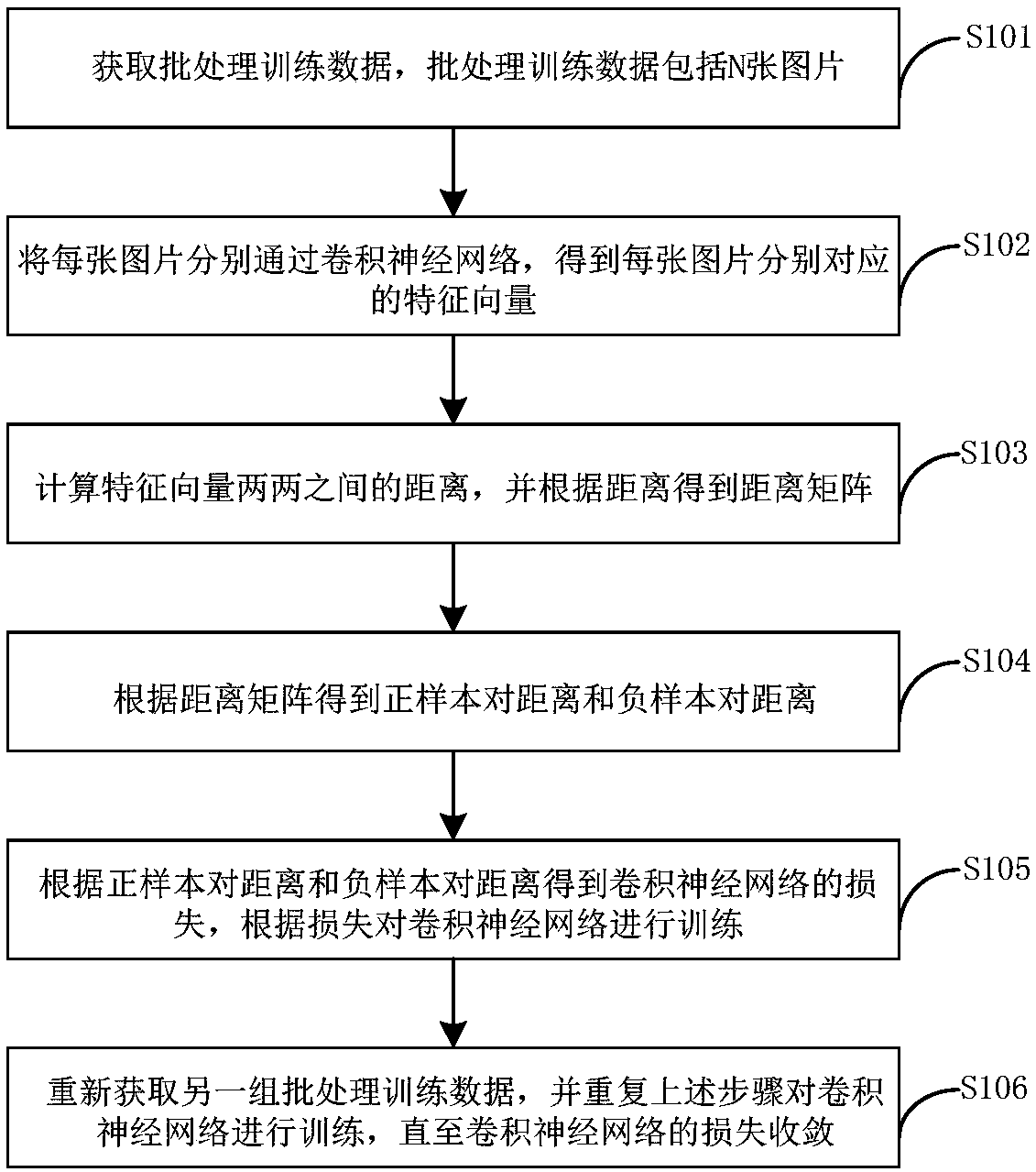

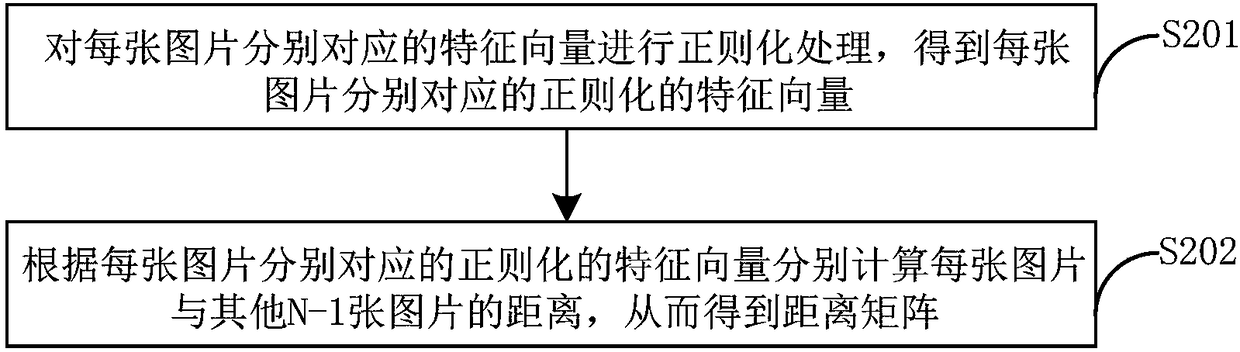

Re-recognition network training method, device and system, and re-recognition method, device and system

ActiveCN108108754AImprove generalization abilityImprove recognition accuracyCharacter and pattern recognitionNeural architecturesFeature vectorPositive sample

The present invention provides a re-recognition network training method, device and system, and a re-recognition method, device and system. The re-recognition network training method comprises the steps of: obtaining batch processing training data; obtaining the feature vectors corresponding to the N images included in the batch processing training data; calculating the distance between each pair of feature vectors to obtain a distance matrix; and, according to the distance matrix, selecting the positive sample pair with the maximum distance and the negative sample pair with the minimum distance, and using these two boundary sample pairs to calculate the loss of a convolutional neural network and train the model. Because the loss is calculated from the most difficult positive sample pair and the most difficult negative sample pair, the generalization capability of the convolutional neural network model and its identification precision are improved.

Owner:MEGVII BEIJINGTECH CO LTD
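
The pair selection in the abstract above can be illustrated with a short sketch: build the distance matrix, then take the farthest same-identity pair and the closest different-identity pair. The Euclidean distance, the hinge-style `margin_loss`, its `margin` value, and the function names are assumptions; the abstract does not specify the distance metric or the form of the loss.

```python
import math

def hardest_pairs(features, labels):
    """Return (distance, i, j) for the hardest positive pair and the hardest negative pair."""
    n = len(features)
    # Distance matrix over all N feature vectors in the batch (Euclidean, by assumption).
    dist = [[math.dist(features[i], features[j]) for j in range(n)] for i in range(n)]
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    # Hardest positive: same identity, maximum distance.
    hardest_pos = max((dist[i][j], i, j) for i, j in pairs if labels[i] == labels[j])
    # Hardest negative: different identity, minimum distance.
    hardest_neg = min((dist[i][j], i, j) for i, j in pairs if labels[i] != labels[j])
    return hardest_pos, hardest_neg

def margin_loss(pos_dist, neg_dist, margin=0.3):
    """Hinge-style loss on the two boundary pairs (the form and margin are assumptions)."""
    return max(0.0, pos_dist - neg_dist + margin)
```

The batch must contain at least one same-identity pair and one different-identity pair, otherwise `max` or `min` raises `ValueError` on an empty sequence.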

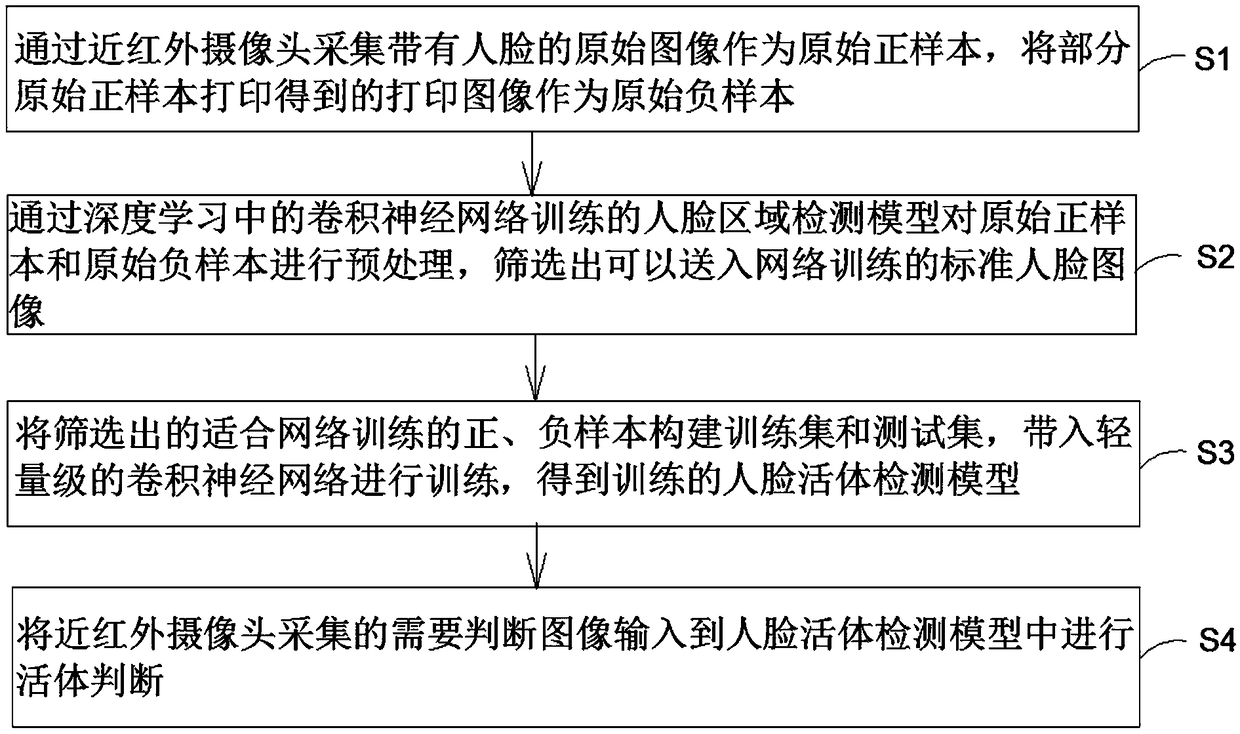

Near-infrared face vivo detection method and system

InactiveCN108898112AReduce sizeReduce the amount of parametersSpoof detectionPositive sampleTest set

The invention provides a near-infrared face vivo detection method. The method comprises the steps of: S1, collecting original positive samples and original negative samples with a near-infrared camera; S2, preprocessing the original samples with a deep-learning face region detection model to select standard face images that can be fed into the network to be trained; S3, constructing a training set and a test set from the selected positive and negative samples suitable for network training, and feeding the samples into the lightweight convolutional neural network to be trained to obtain the trained face vivo detection model; and S4, inputting images to be judged, collected by the near-infrared camera, into the face vivo detection model for vivo judgment. The lightweight convolutional neural network reduces the number of parameters and the size of the model, which makes porting to mobile terminals more convenient, so the method and system can be widely applied in the field of biometric identification.

Owner:NORTHEASTERN UNIV

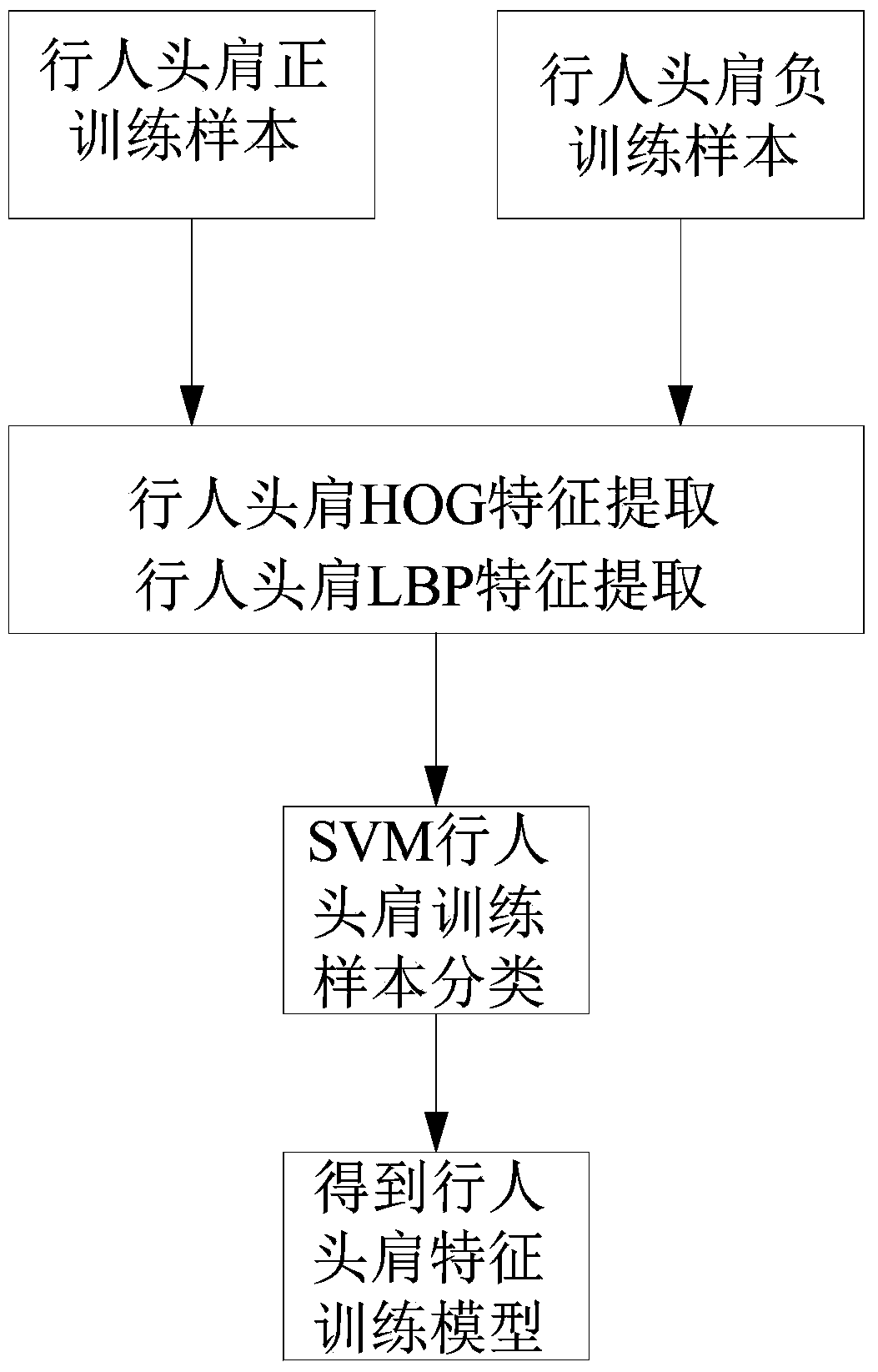

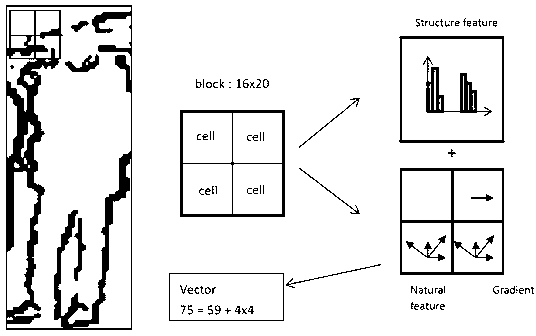

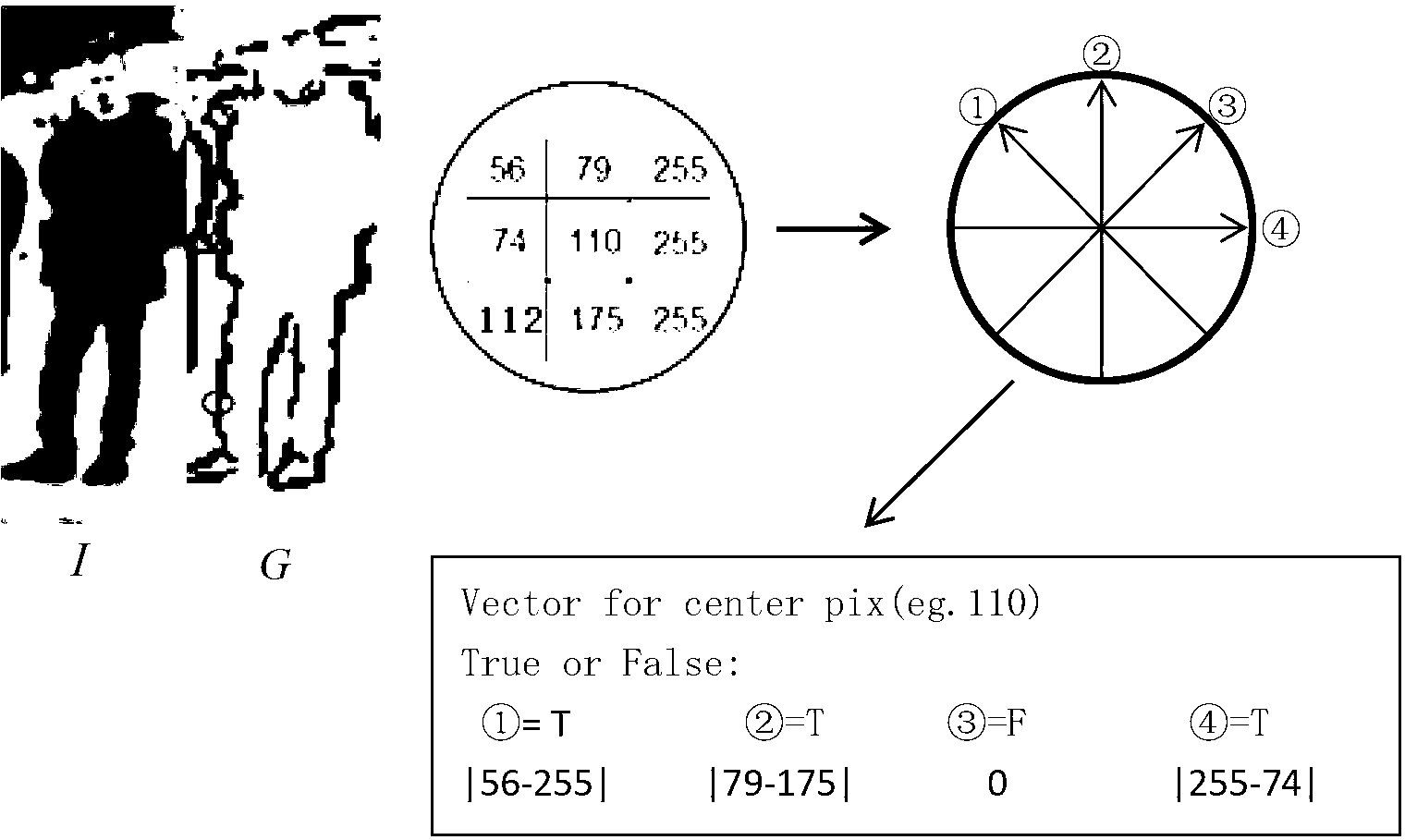

Pedestrian detection method based on combined features

ActiveCN102842045AAccurate descriptionIncrease technical speedCharacter and pattern recognitionSupport vector machinePositive sample

The invention discloses a pedestrian detection method based on combined features. A number of equally sized training samples are prepared, comprising positive samples that contain pedestrians and randomly cropped negative samples of background that contain no pedestrians. Statistical structure gradient features of the training samples are extracted and passed to a support vector machine for training to obtain a classifier. A cascade structure is then used to train an n-layer cascade classifier, and the resulting offline cascade classifier serves as the final classifier for distinguishing pedestrians; pedestrians in a photo or video are detected by the final classifier and marked. The method describes pedestrians accurately, is simple to compute, and balances detection accuracy and detection speed well.

Owner:HUAQIAO UNIVERSITY
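
How an n-layer cascade classifier like the one in the abstract above is typically applied at detection time can be sketched as below: a window counts as a pedestrian only if every layer accepts it, and most background windows are rejected by an early layer. The linear decision function, the weights, and the names `linear_stage` and `cascade_predict` are hypothetical; the patent's stages are trained with a support vector machine on its own gradient features.

```python
def linear_stage(weights, bias, threshold=0.0):
    """Build one cascade layer from a linear decision function w.x + b."""
    def accept(features):
        score = sum(w * x for w, x in zip(weights, features)) + bias
        return score >= threshold
    return accept

def cascade_predict(stages, features):
    """Return True only if every layer of the cascade accepts the window."""
    for accept in stages:
        if not accept(features):
            return False  # rejected early; later layers are never evaluated
    return True
```

Early rejection is what lets a cascade trade a small amount of accuracy for speed, which matches the balance the abstract claims.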

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com