677 results about "Manual annotation" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

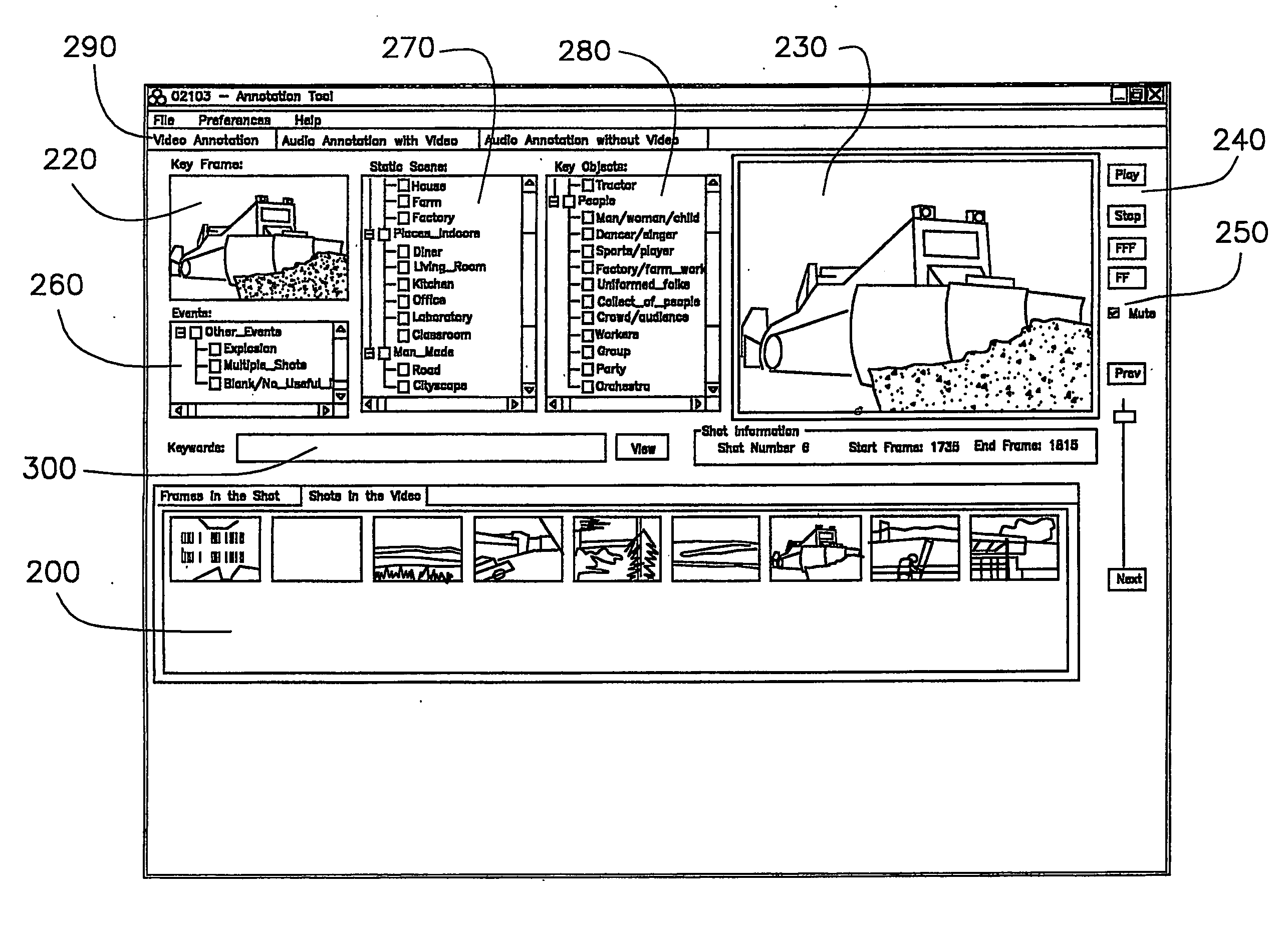

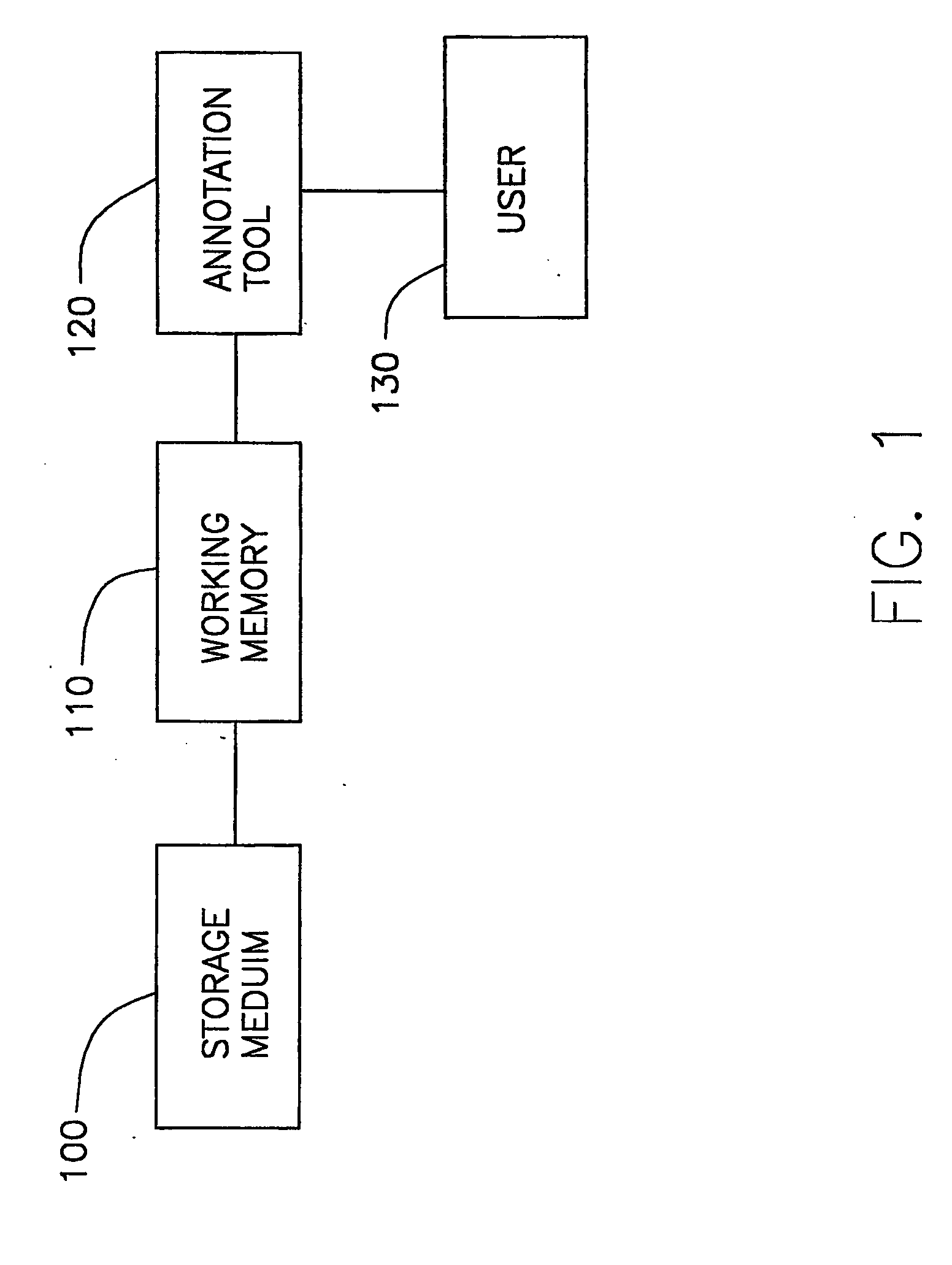

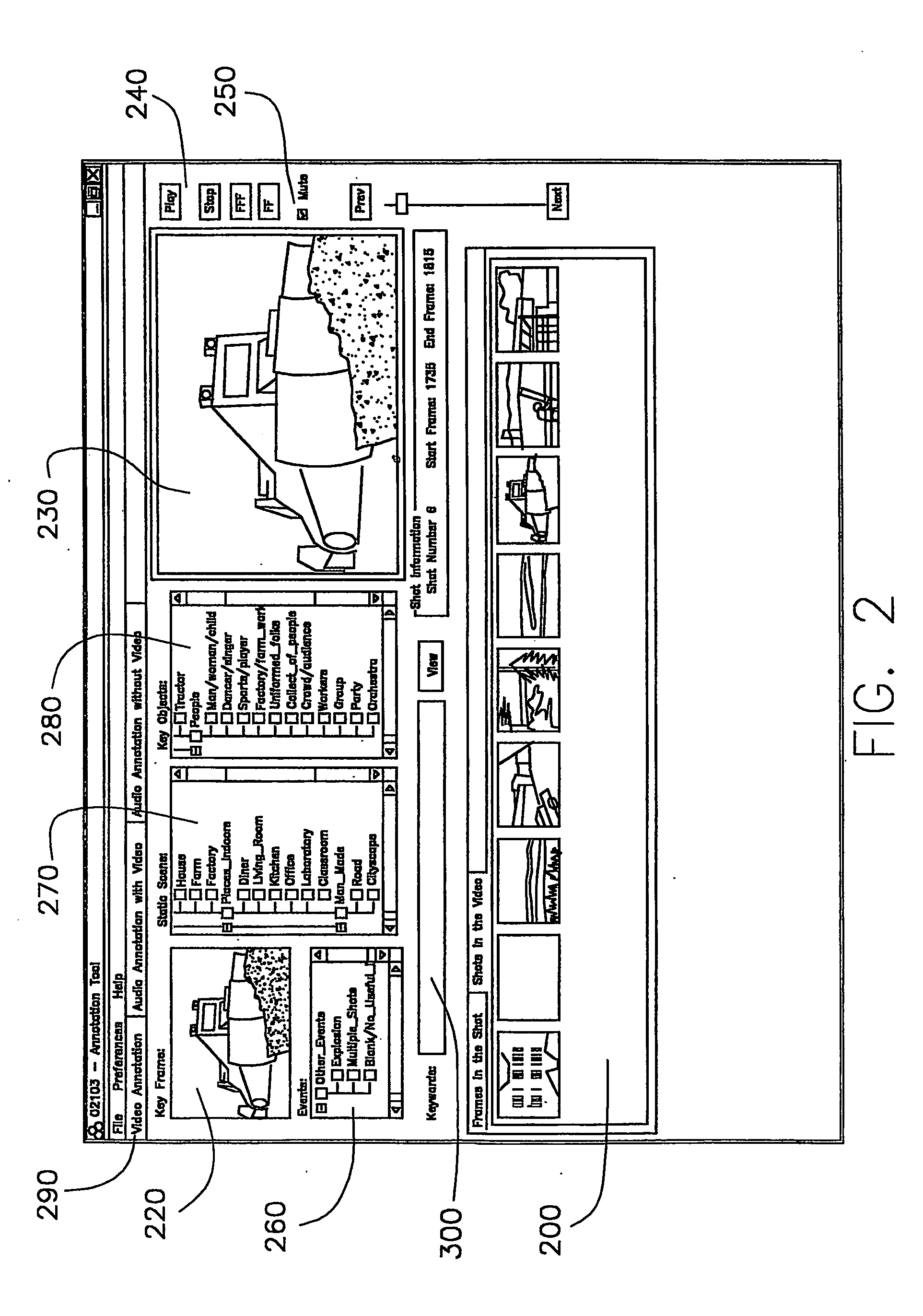

System and method for annotating multi-modal characteristics in multimedia documents

Status: Active | Publication: US20060218481A1 | Tags: Metadata multimedia retrieval, Natural language data processing, Manual annotation, Documentation

A manual annotation system for multi-modal characteristics in multimedia files. An arrangement is provided for selecting the observation modality used to annotate multimedia content: video with audio, video without audio, audio with video, or audio without video. Annotating video or audio features in isolation yields lower confidence in the identification of features, whereas observing audio and video simultaneously and annotating that observation yields a higher confidence level.

Owner:SINOEAST CONCEPT

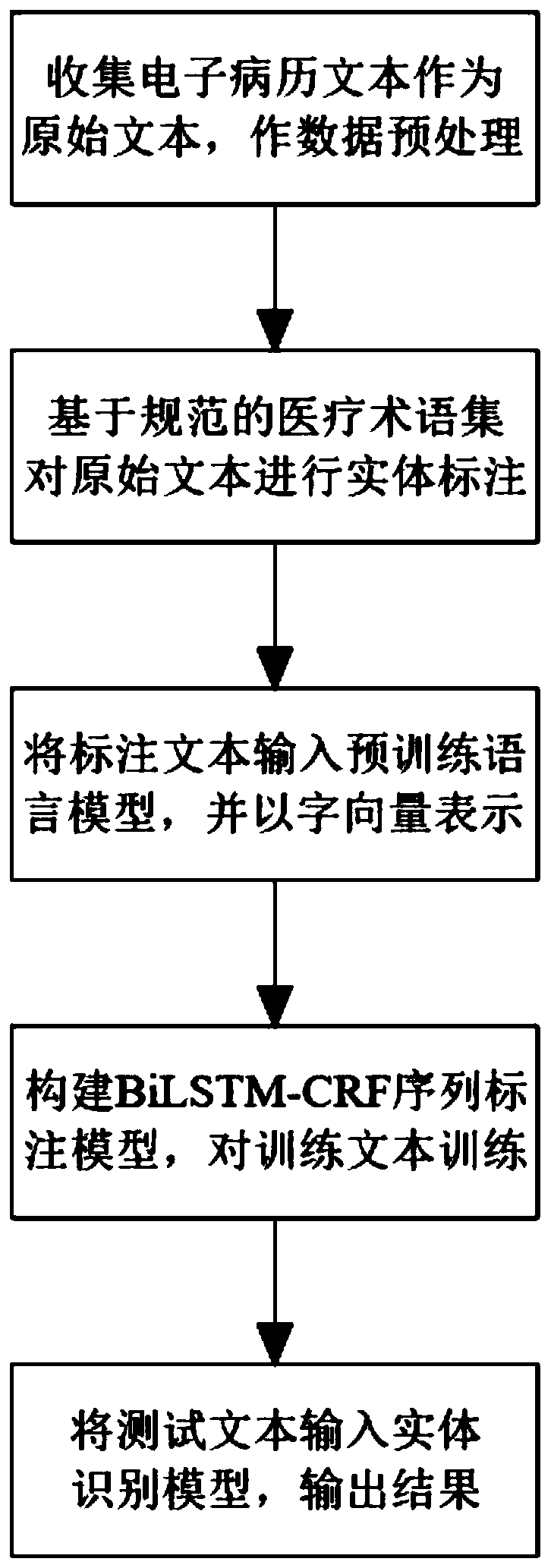

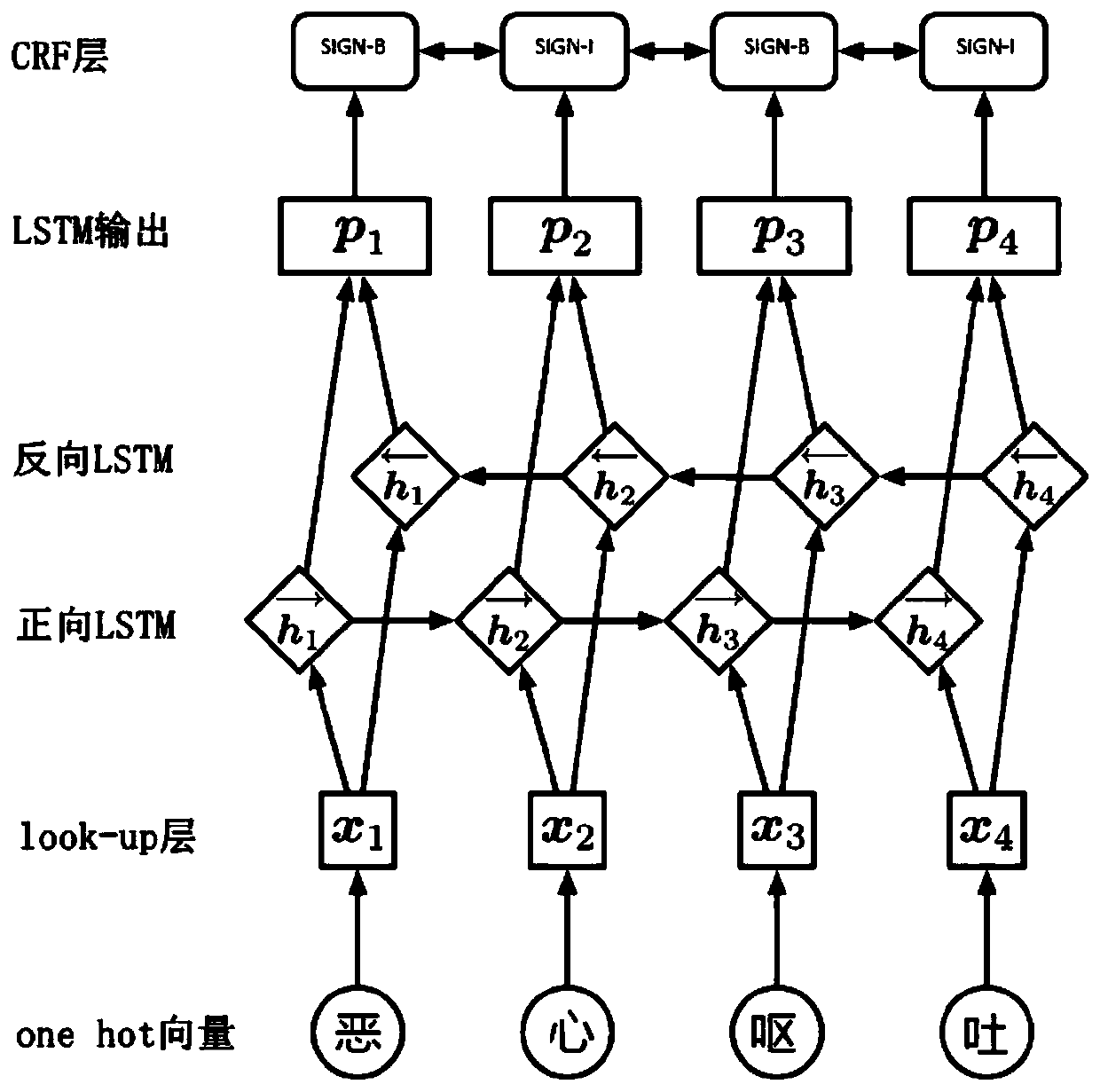

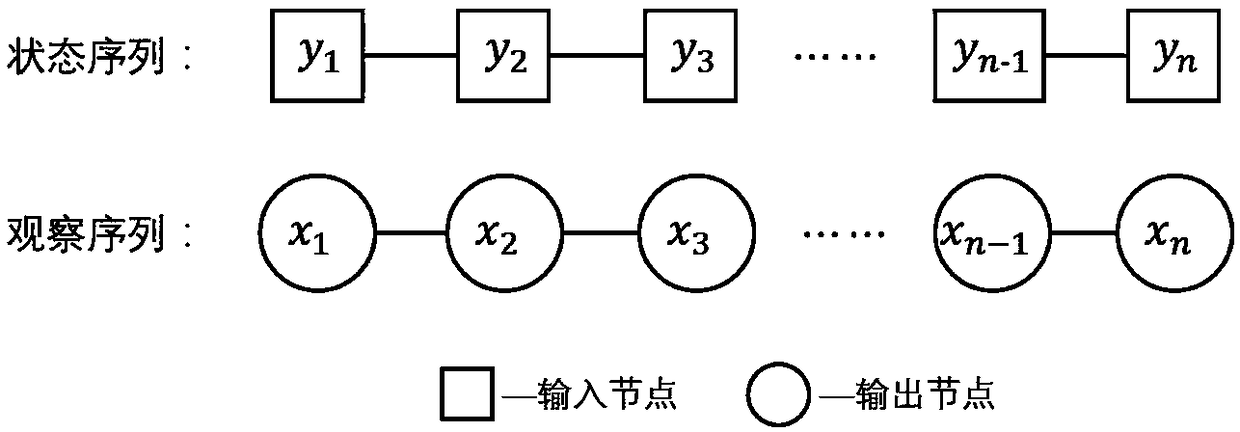

Electronic medical record text named entity recognition method based on pre-trained language model

Status: Pending | Publication: CN110705293A | Tags: Improve recall, Improve accuracy, Semantic analysis, Character and pattern recognition, Medical record, Manual annotation

The invention belongs to the technical field of medical information data processing and relates to a named entity recognition method for electronic medical record text based on a pre-trained language model. The method comprises the following steps: collecting electronic medical record text from a public dataset as the original text and preprocessing it; labeling the entities in the preprocessed text against a standard medical terminology set to obtain labeled text; feeding the labeled text into the pre-trained language model to obtain training text represented by word vectors; constructing a BiLSTM-CRF sequence labeling model and training it on the training text; and using the trained labeling model as the entity recognition model, which takes test text as input and outputs a sequence of category labels. Because the deep language model learns text features and semantic information by training on a very large Chinese corpus, it provides better semantic compression, avoids the tedium and complexity of manual annotation, does not depend on dictionaries or rules, and improves the recall and accuracy of named entity recognition.

Owner:SUZHOU INST OF BIOMEDICAL ENG & TECH CHINESE ACADEMY OF SCI

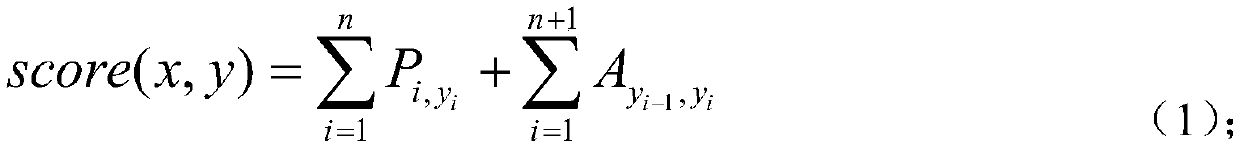

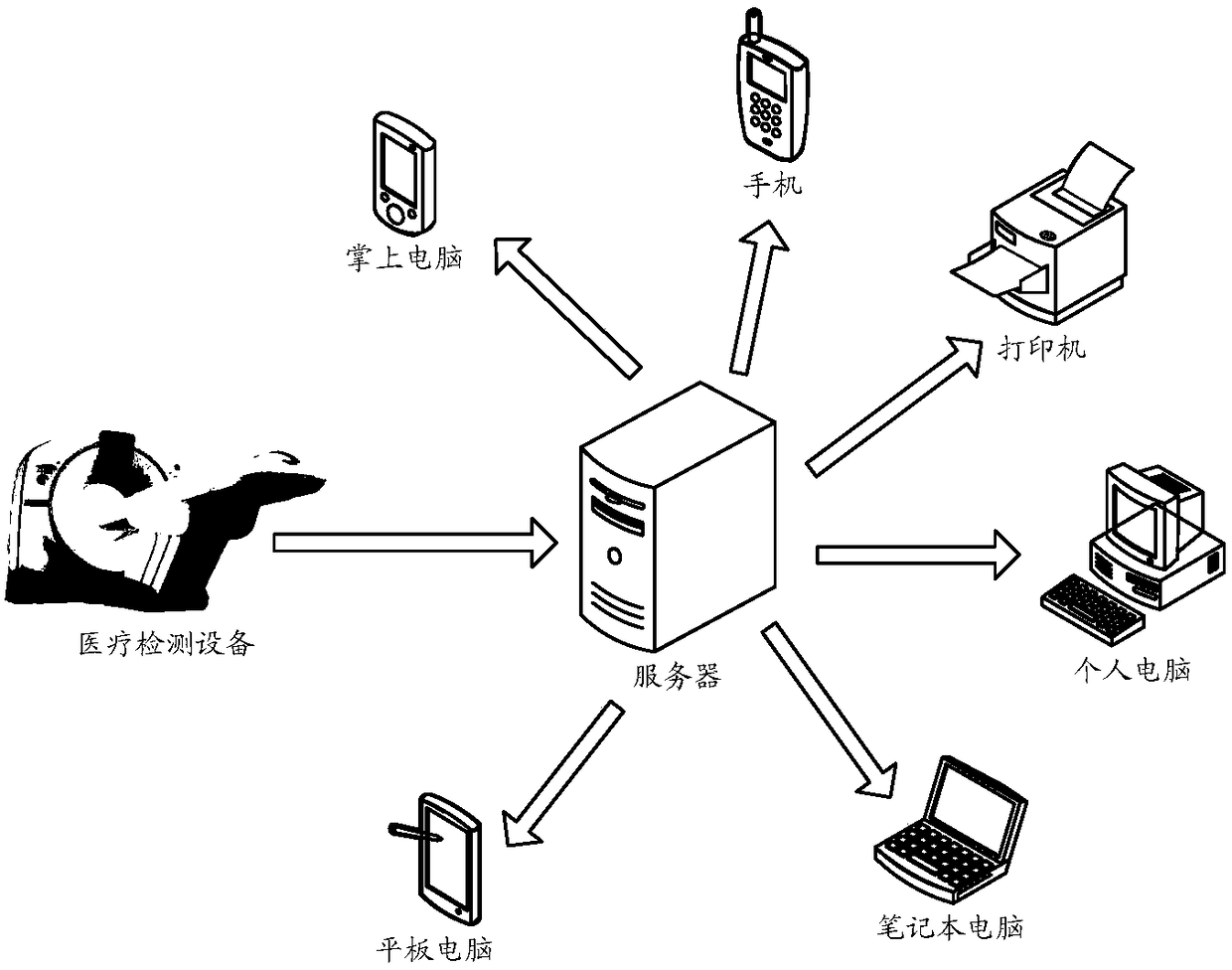

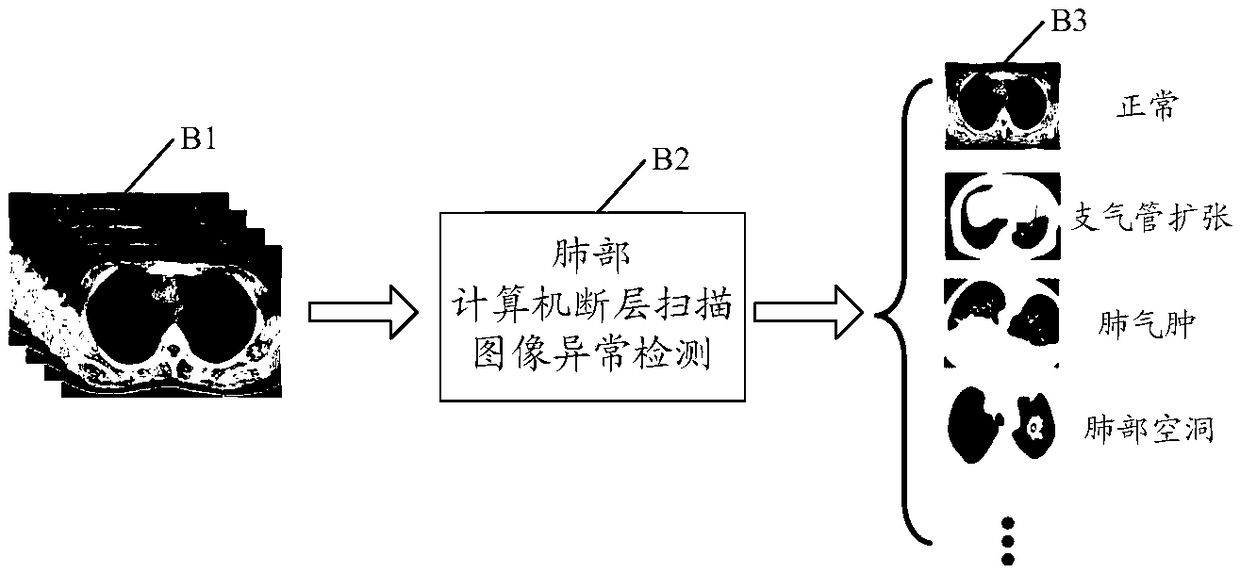

Method for identifying medical image, method for model training and server

Status: Active | Publication: CN109461495A | Tags: Save time and cost, Accuracy bias, Ultrasonic/sonic/infrasonic diagnostics, Image enhancement, Pattern recognition, Manual annotation

The embodiment of the invention discloses a method for identifying a medical image. The method comprises: obtaining a set of medical images to be identified, the set containing at least one image; extracting, from each image in the set, the region to be identified, which is a part of that image; and determining a recognition result for each region with a medical image recognition model. The model is trained on a medical image sample set containing at least one sample, each sample carrying annotation information that indicates its type; the recognition result indicates the type of the medical image being identified. The embodiment also discloses a model training method and a server. The disclosed identification method, training method, and server greatly reduce manual annotation cost and time, and offer greater reliability and credibility.

Owner:TENCENT TECH (SHENZHEN) CO LTD

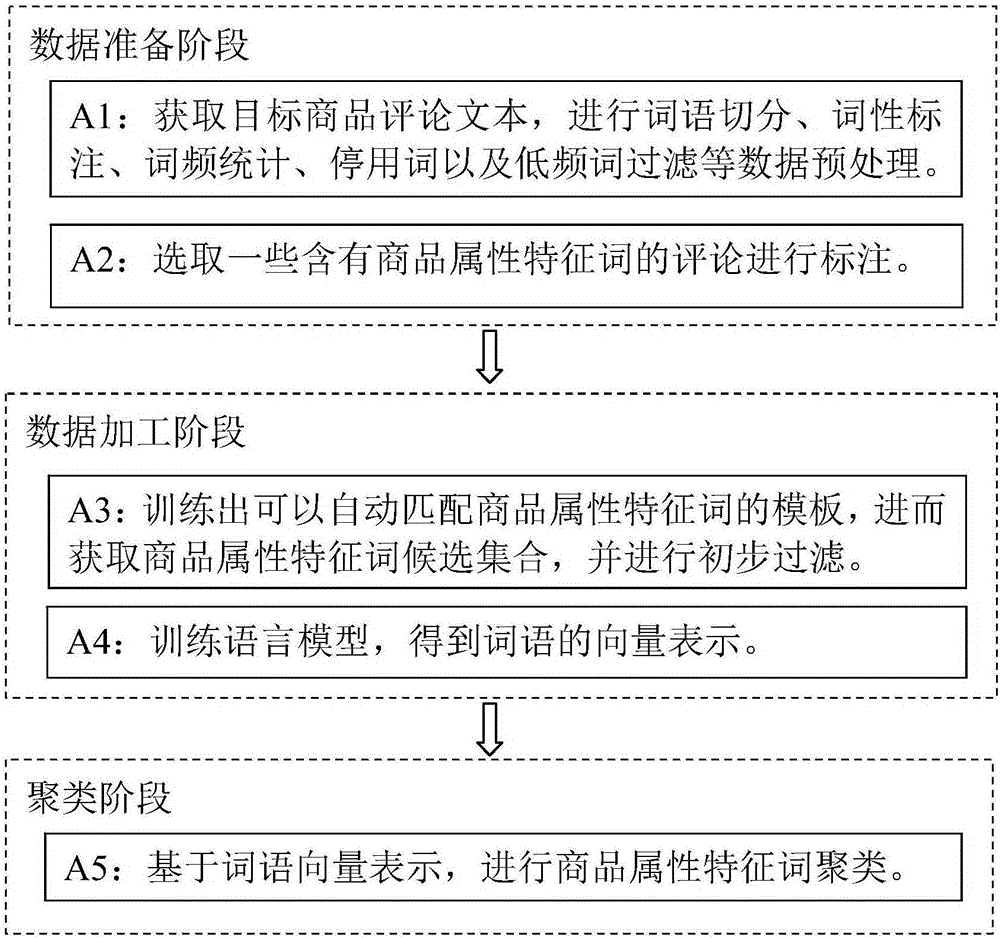

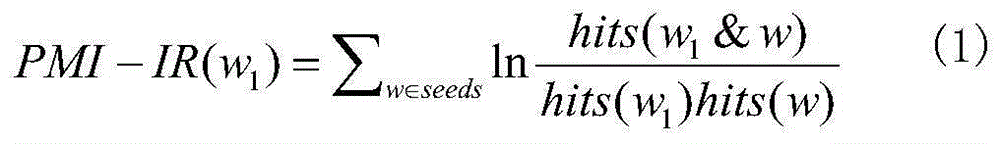

Commodity property characteristic word clustering method

Status: Active | Publication: CN105243129A | Tags: Enhance expressive ability, Reduce the number, Semantic analysis, Special data processing applications, Part of speech, Manual annotation

The present invention relates to a method for clustering commodity property characteristic words. The method comprises the following steps: A1: obtaining review texts of a target commodity from relevant e-commerce websites and preprocessing the data; A2: selecting review texts that contain commodity property characteristic words, manually annotating those words, and using the annotated words as training samples for learning part-of-speech templates; A3: training the part-of-speech templates on the manually annotated data from A2; A4: training a language model on the data from A1 to obtain word vector representations; and A5: clustering the commodity property characteristic words obtained in A3 using the word vectors from A4, yielding the final property characteristic word set of the target commodity. The method can be applied to commodity recommendation systems based on review text. Clustering reduces the number of property characteristic words, and hence the feature dimensionality and sparsity, making the recommendation system faster and more accurate.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
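Step A5 clusters the annotated characteristic words using the word vectors from A4. The abstract does not name a clustering algorithm, so the sketch below assumes plain k-means; the helper `kmeans_words` and the toy 2-D vectors are hypothetical, and real vectors would come from the language model trained in A4.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def _dist2(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_words(vectors: Dict[str, Vector], k: int, iters: int = 20) -> List[List[str]]:
    """Cluster words into k groups with plain k-means over their word vectors.

    Hypothetical helper: the first k words (in insertion order) seed the
    centroids, a deterministic choice made only to keep the sketch simple.
    """
    words = list(vectors)
    centroids = [list(vectors[w]) for w in words[:k]]
    clusters: List[List[str]] = []
    for _ in range(iters):
        # Assignment step: each word joins its nearest centroid.
        clusters = [[] for _ in range(k)]
        for w in words:
            nearest = min(range(k), key=lambda c: _dist2(vectors[w], centroids[c]))
            clusters[nearest].append(w)
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c, members in enumerate(clusters):
            if not members:  # an empty cluster keeps its previous centroid
                new_centroids.append(centroids[c])
                continue
            dim = len(centroids[c])
            new_centroids.append(
                [sum(vectors[w][d] for w in members) / len(members) for d in range(dim)]
            )
        if new_centroids == centroids:  # assignments have stabilised
            break
        centroids = new_centroids
    return clusters
```

With the toy input `{"screen": (1.0, 0.0), "battery": (0.0, 1.0), "display": (0.9, 0.1), "charge": (0.1, 0.9)}` and `k=2`, the sketch groups "screen" with "display" and "battery" with "charge". Seeding from the first k words keeps the example deterministic but is fragile (two similar words listed first would start as separate seeds); k-means++ seeding is the usual, more robust choice.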

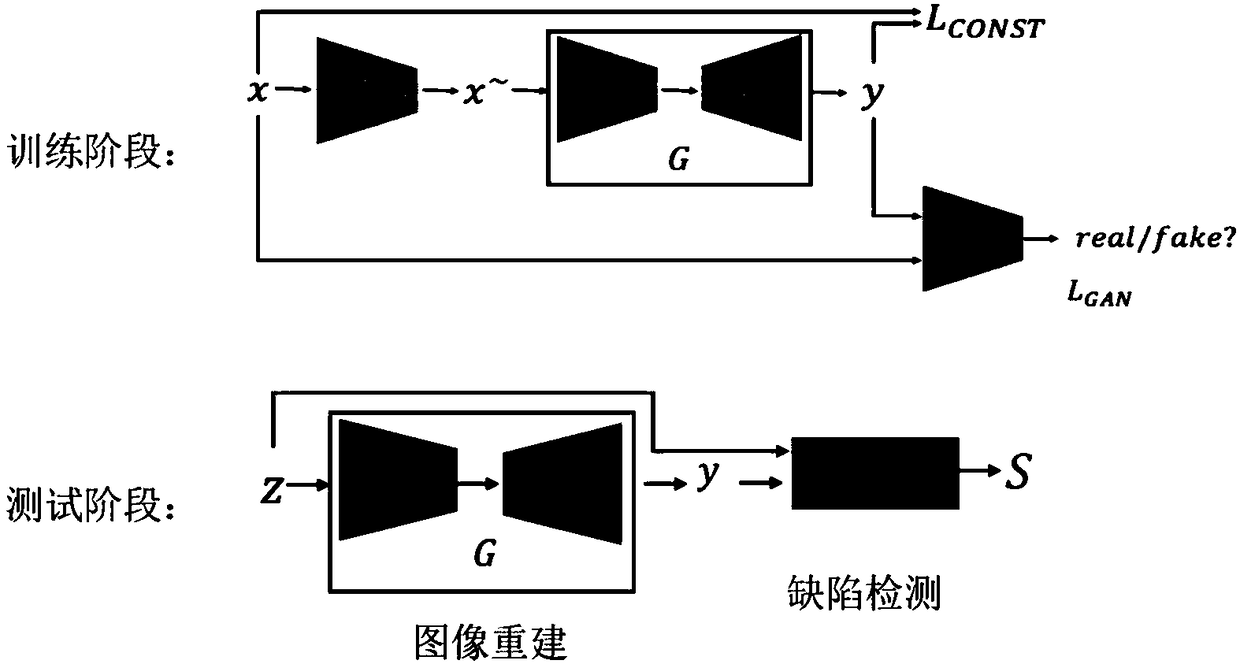

Surface defect detection method based on positive case training

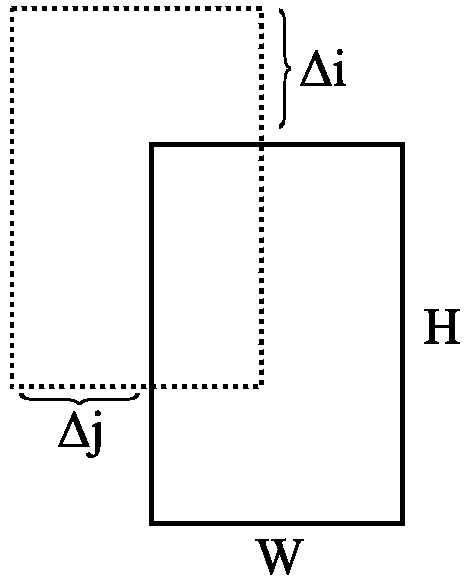

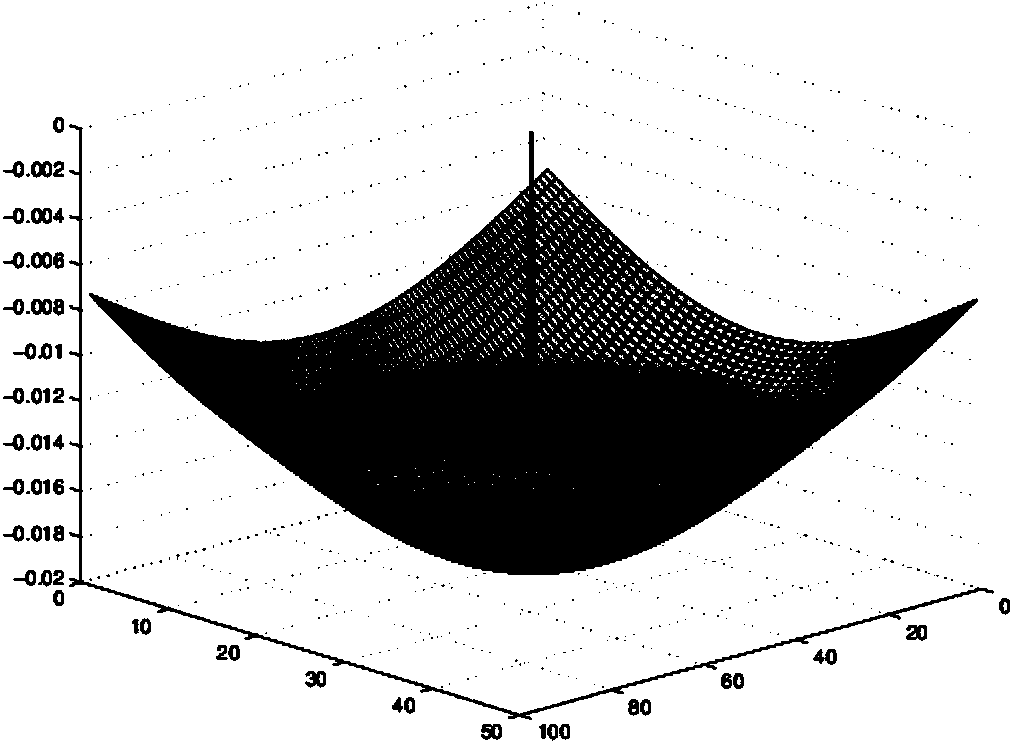

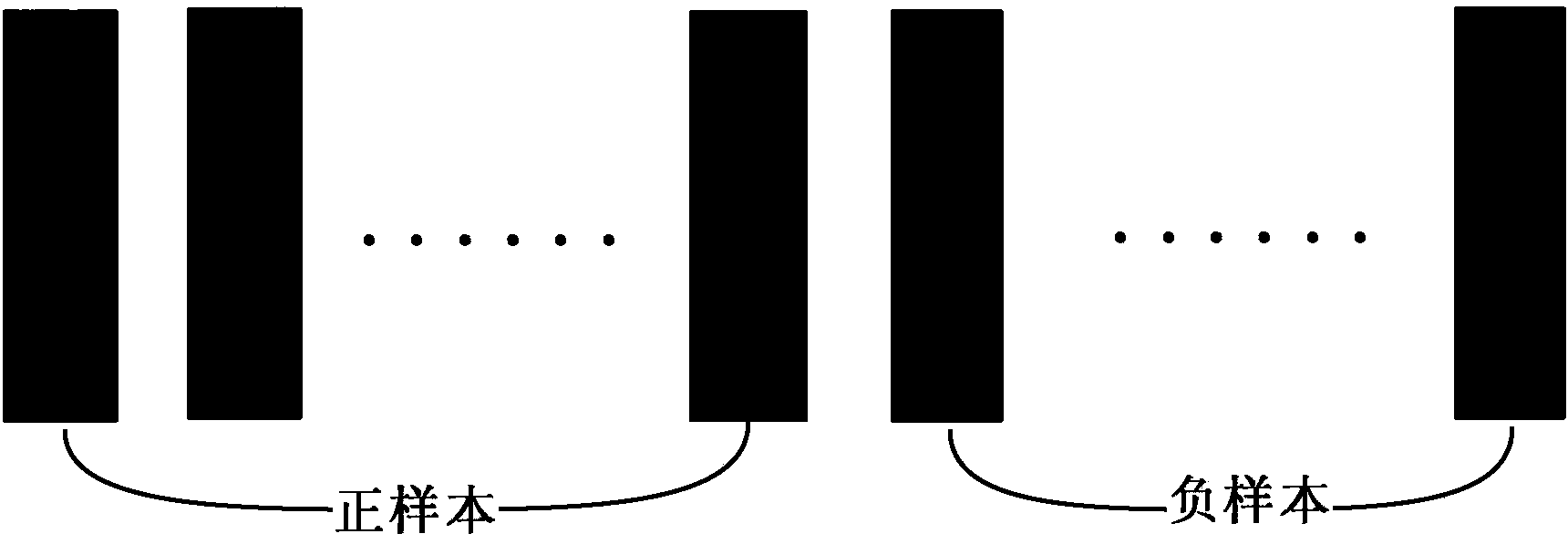

Status: Active | Publication: CN108961217A | Tags: Large capacity, Comprehensive parameters, Image enhancement, Image analysis, Positive sample, Manual annotation

The invention relates to a surface defect detection method based on positive-sample training. The method comprises two stages: image reconstruction and defect detection. Image reconstruction turns an input original image into a defect-free image, as follows: during training, artificial defects and noise are added to positive (defect-free) sample images; an autoencoder reconstructs them; the L1 distance between the reconstruction and the noise-free original image is computed and minimized as the reconstruction objective; and a generative adversarial network is used jointly to improve the reconstructed images. Defect detection follows reconstruction: LBP features are computed for the reconstructed image and the original image, the two feature maps are subtracted, and the difference is binarized with a fixed threshold to locate the defects. The method uses deep learning and, given enough positive samples, is robust enough to be less susceptible to environmental changes. Because it trains only on positive samples, it does not rely on large numbers of negative samples or on manual annotation, which makes it suitable for real-world scenarios and better at detecting surface defects.

Owner:NANJING UNIV +2
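The detection stage compares LBP feature maps of the original and reconstructed images and binarizes their difference with a fixed threshold. Below is a minimal sketch of that stage only, assuming grayscale images given as lists of integer rows and a reconstruction already produced by the trained autoencoder/GAN (not shown). The function names are hypothetical, and taking the absolute difference of basic 3x3 LBP codes is an assumption, since the abstract does not say how the difference is computed.

```python
from typing import List

Image = List[List[int]]

# Offsets of the 8 neighbours, clockwise from the top-left pixel.
_NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp(img: Image) -> Image:
    """Basic 3x3 LBP code for each interior pixel; border pixels are left as 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            centre = img[y][x]
            code = 0
            # Set one bit per neighbour that is at least as bright as the centre.
            for bit, (dy, dx) in enumerate(_NEIGHBOURS):
                if img[y + dy][x + dx] >= centre:
                    code |= 1 << bit
            out[y][x] = code
    return out


def defect_mask(original: Image, reconstructed: Image, threshold: int) -> Image:
    """Binarize |LBP(original) - LBP(reconstructed)| with a fixed threshold (1 = defect).

    Assumption: the "difference" of the two LBP maps is the absolute
    difference of the 8-bit codes, pixel by pixel.
    """
    a, b = lbp(original), lbp(reconstructed)
    return [
        [1 if abs(p - q) > threshold else 0 for p, q in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]
```

Comparing a flat 5x5 reconstruction with an original that has one bright pixel at its centre yields a mask with a single 1 at that pixel.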

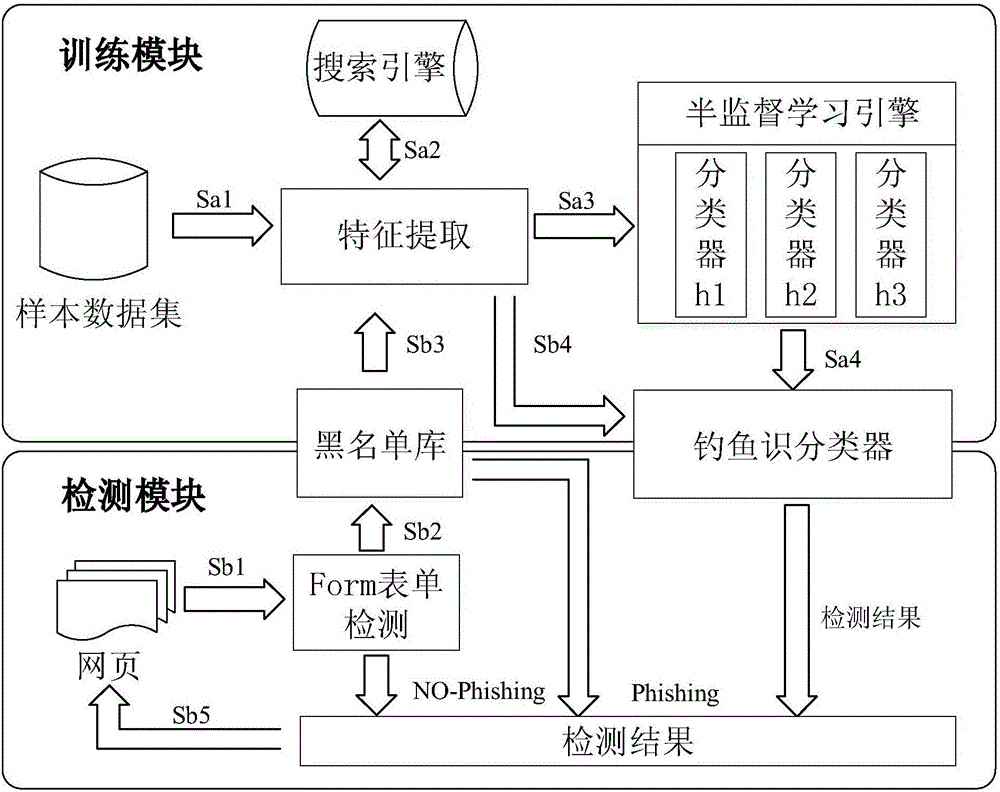

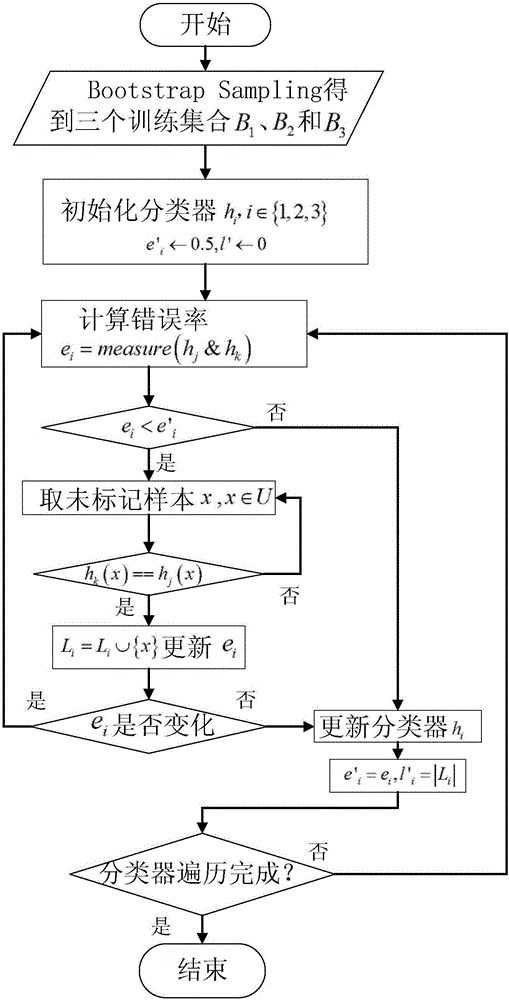

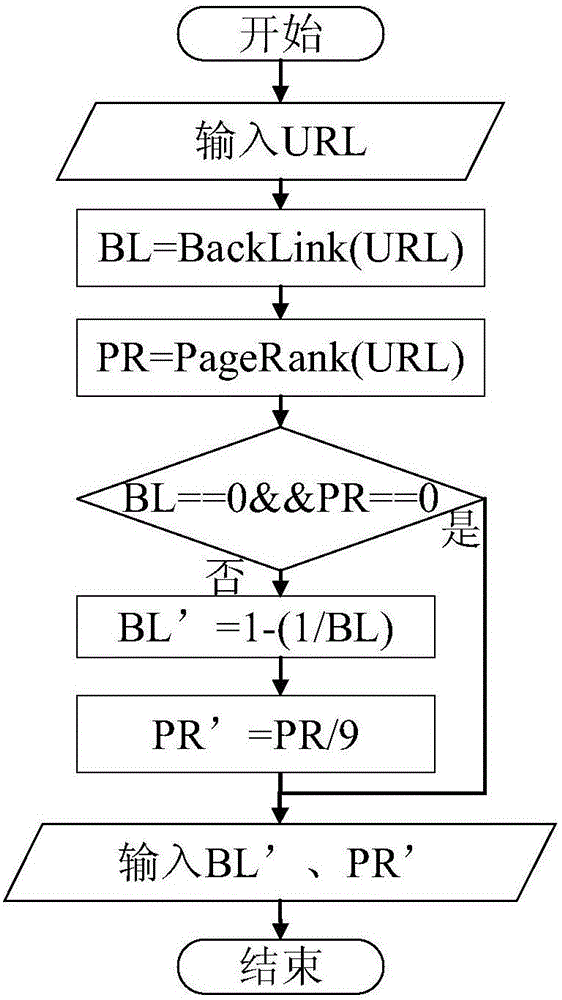

Multi-feature fusion phishing webpage detection method

Status: Active | Publication: CN106789888A | Tags: Easy economic loss, High false positive rate, Web data retrieval, Transmission, Manual annotation, Categorical models

The invention relates to a multi-feature fusion phishing webpage detection method comprising a training process and a detection process. The method combines three views of phishing webpage characteristics with tri-training, a semi-supervised learning method, and mainly addresses the problem that most existing phishing detection methods train classification models by supervised learning on large amounts of annotated data. Using a co-training algorithm, the method draws on webpage URL features, webpage information features, and webpage search-information features, and applies the multi-view, multi-classifier idea to phishing detection. Through co-training among the different classifiers, it reduces the total number of manually annotated training samples and recognizes phishing webpages in a timely manner.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
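The core rule of tri-training is that an unlabeled sample receives a pseudo-label for one classifier when the other two classifiers agree on it. The sketch below shows only that rule, assuming three already-trained classifiers, one per view (URL, page information, search information). The function name and the toy threshold classifiers in the usage note are hypothetical, and the error-rate checks that full tri-training uses to decide whether to accept new pseudo-labels are left out.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Sample = Sequence[float]
Classifier = Callable[[Sample], str]


def tri_training_pseudo_labels(
    classifiers: Sequence[Classifier], unlabeled: List[Sample]
) -> Dict[int, List[Tuple[Sample, str]]]:
    """For each classifier i, collect unlabeled samples on which the other two agree.

    Returns {i: [(sample, agreed_label), ...]}; in tri-training these
    pseudo-labeled samples are added to classifier i's training set for the
    next round. Error-rate checks of full tri-training are omitted.
    """
    if len(classifiers) != 3:
        raise ValueError("tri-training uses exactly three classifiers")
    new_data: Dict[int, List[Tuple[Sample, str]]] = {0: [], 1: [], 2: []}
    for x in unlabeled:
        preds = [clf(x) for clf in classifiers]
        for i in range(3):
            j, k = [m for m in range(3) if m != i]  # the two "teacher" classifiers
            if preds[j] == preds[k]:
                new_data[i].append((x, preds[j]))
    return new_data
```

For example, with three hypothetical view classifiers of the form `lambda x: "phish" if x[v] > 0.5 else "legit"` for v = 0, 1, 2, the sample `(0.9, 0.8, 0.1)` is pseudo-labeled "phish" for the third classifier only, because only the first two agree.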

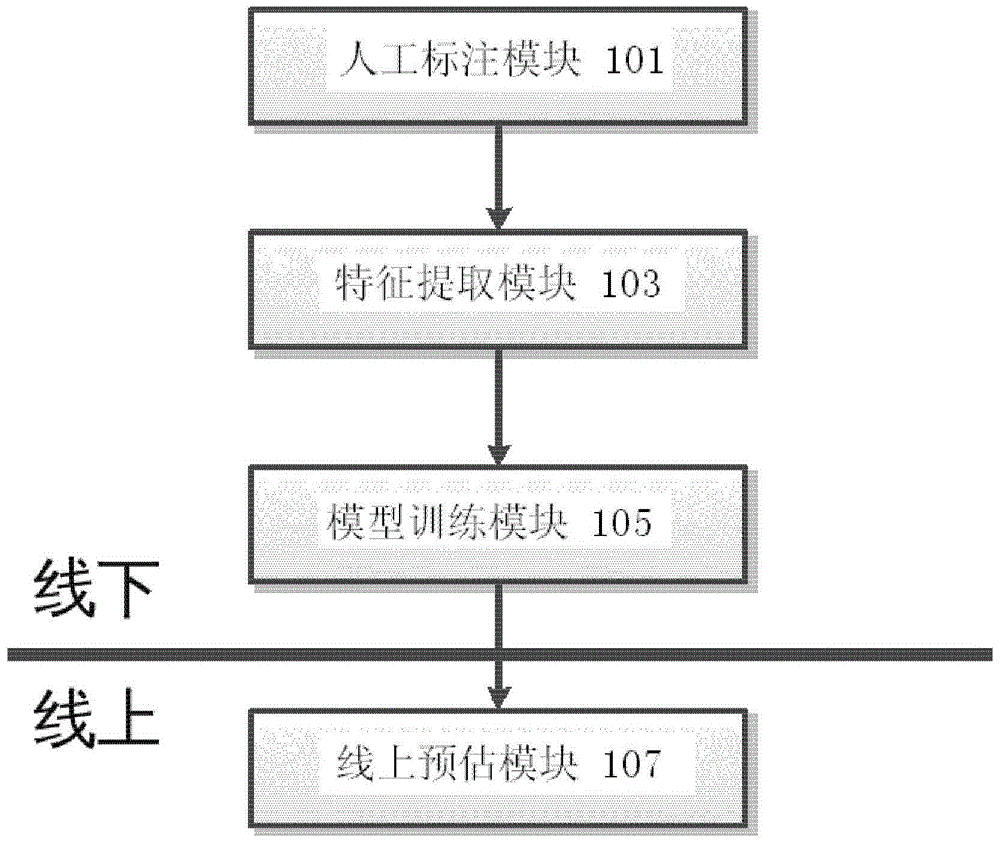

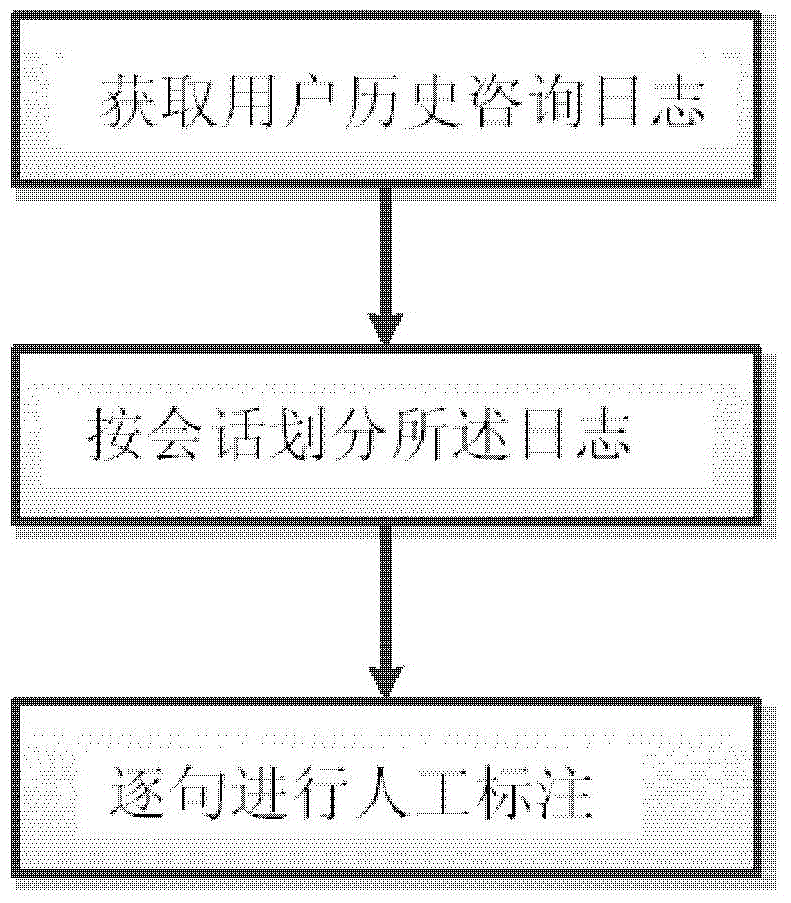

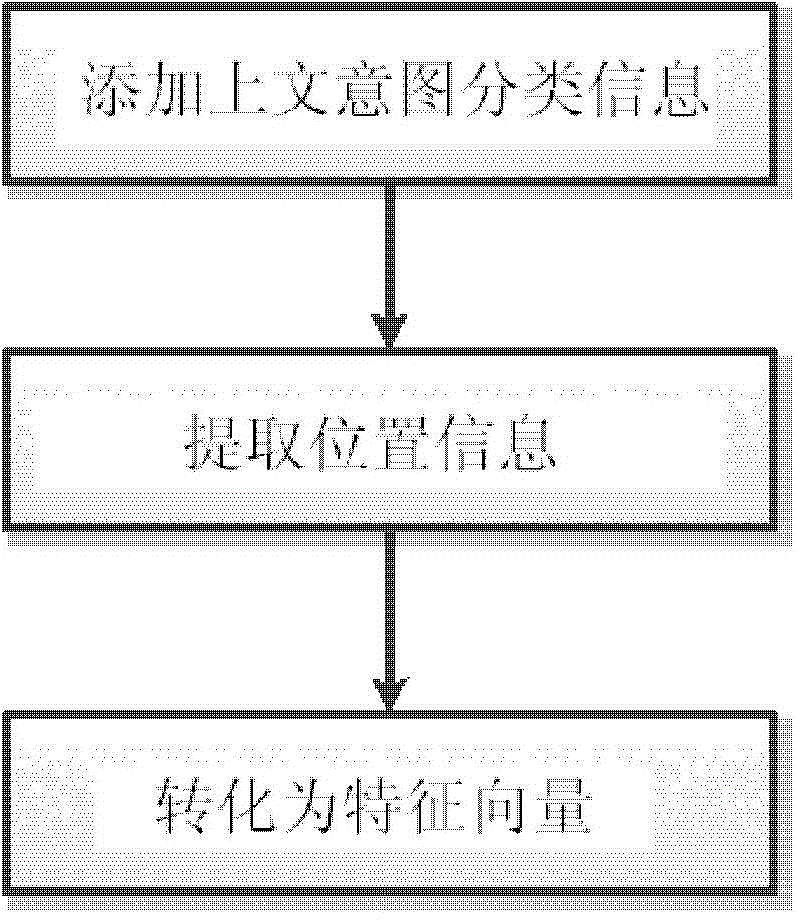

Method and system for intention recognition based on context

The invention discloses a context-based method and system for intention recognition. The method comprises: manually annotating the questions users asked, as recorded in historical user consultation logs; extracting features from each question to build a training corpus; training a model on the corpus with a supervised learning algorithm; and using the trained model to predict the intention behind a current question, yielding an estimated user intention recognition result.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

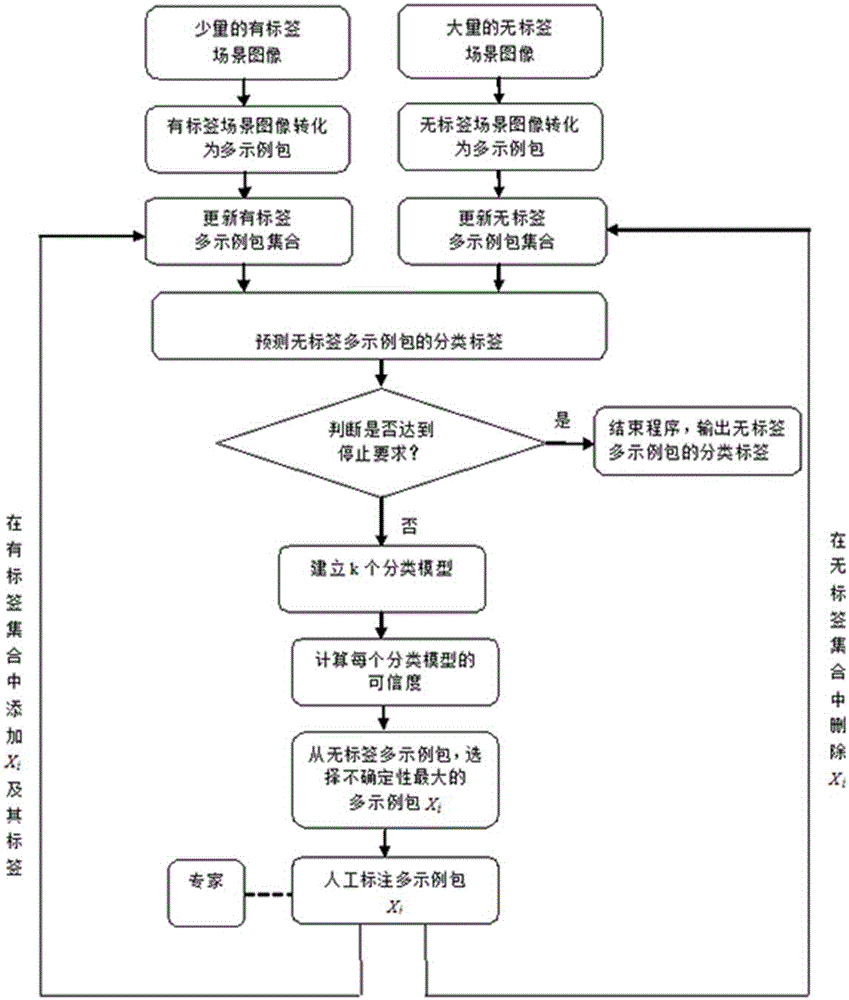

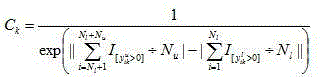

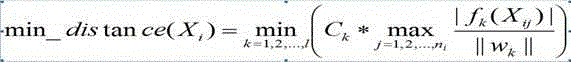

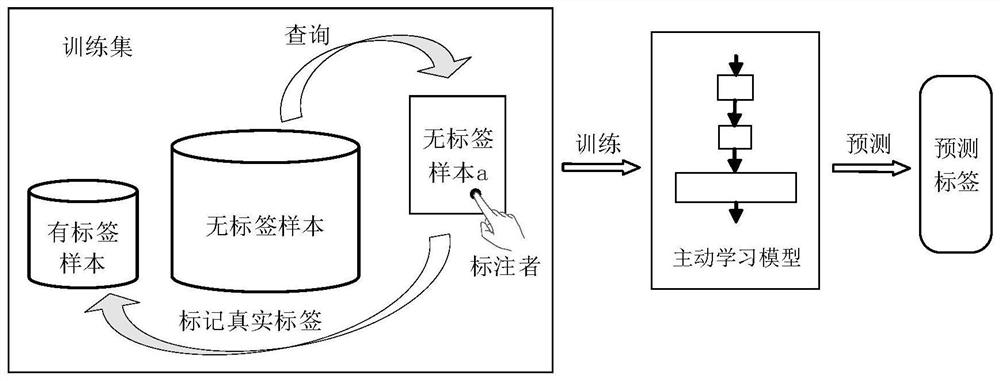

Scenario image annotation method based on active learning and multi-label multi-instance learning

Status: Inactive | Publication: CN105117429A | Tags: Guaranteed accuracy, High precision, Character and pattern recognition, Special data processing applications, Pattern recognition, Manual annotation

The invention addresses two fundamental characteristics of scene images: (1) a scene image often contains complex semantics; and (2) manually annotating a large number of images incurs a high labor cost. It discloses a scene image annotation method based on active learning and multi-label multi-instance learning. The method comprises: training an initial classification model on labeled images; predicting labels for unlabeled images; calculating the confidence of the classification model; selecting the unlabeled image with the greatest uncertainty; having experts manually annotate that image; updating the image set; and stopping once the algorithm meets the requirements. The active learning strategy preserves the accuracy of the classification model while significantly reducing the number of scene images that must be manually annotated, thereby lowering annotation cost. Moreover, by converting each image into multi-label multi-instance data, the method represents the image's complex semantics reasonably and improves annotation accuracy.

Owner:GUANGDONG UNIV OF TECH
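Each round of the loop selects the unlabeled image about which the model is least certain. The abstract does not define the uncertainty measure, so the sketch assumes the model outputs an independent probability per label (as in multi-label classification) and scores an image by its mean per-label uncertainty, 1 - |2p - 1|. The function names and image ids are hypothetical; training, expert annotation, and the stopping test would wrap around this selection step.

```python
from typing import Dict, List


def label_uncertainty(p: float) -> float:
    """1.0 when the model is maximally unsure (p = 0.5), 0.0 when p is 0 or 1."""
    return 1.0 - abs(2.0 * p - 1.0)


def select_for_annotation(probs: Dict[str, List[float]], batch: int = 1) -> List[str]:
    """Pick the `batch` unlabeled images with the highest mean per-label uncertainty.

    `probs` maps a (hypothetical) image id to the model's per-label probabilities.
    """
    def score(img_id: str) -> float:
        ps = probs[img_id]
        return sum(label_uncertainty(p) for p in ps) / len(ps)

    return sorted(probs, key=score, reverse=True)[:batch]
```

An image whose label probabilities all sit near 0.5 is sent to the experts before one the model already scores confidently near 0 or 1.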

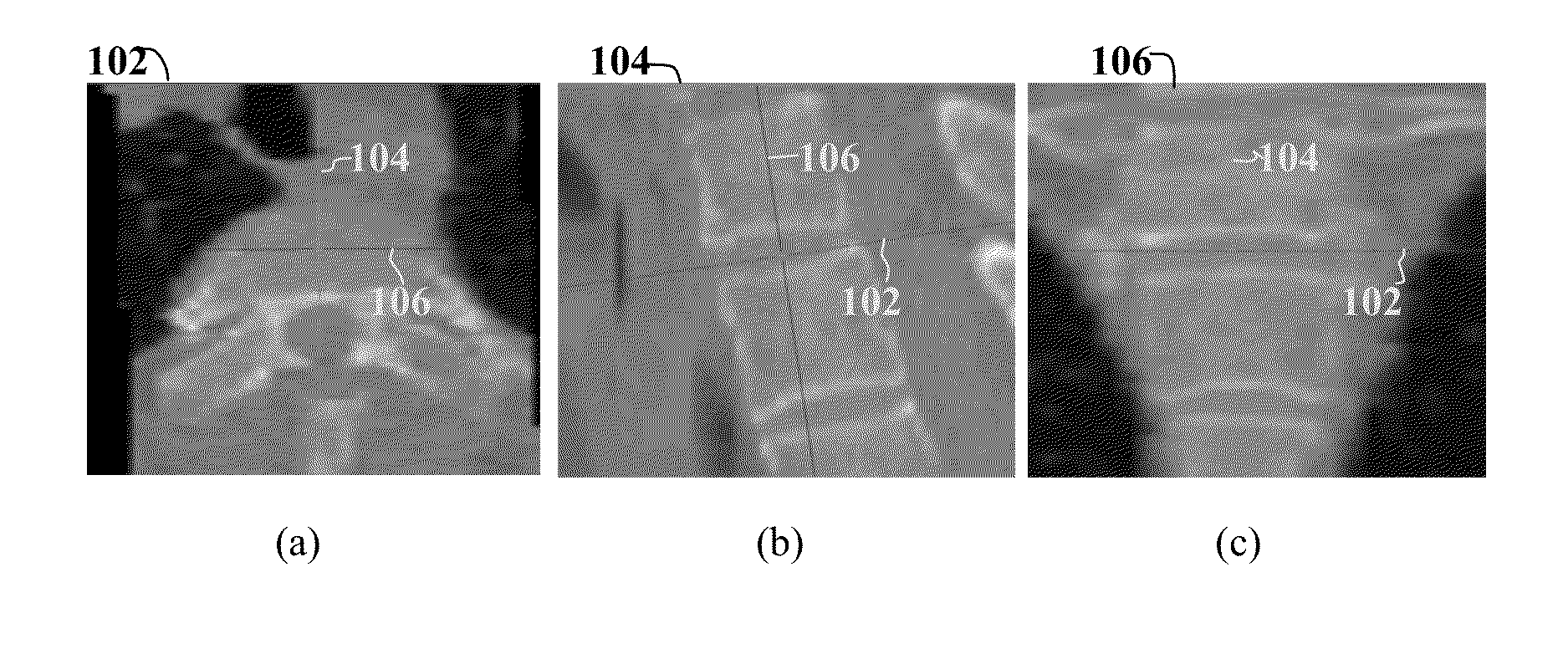

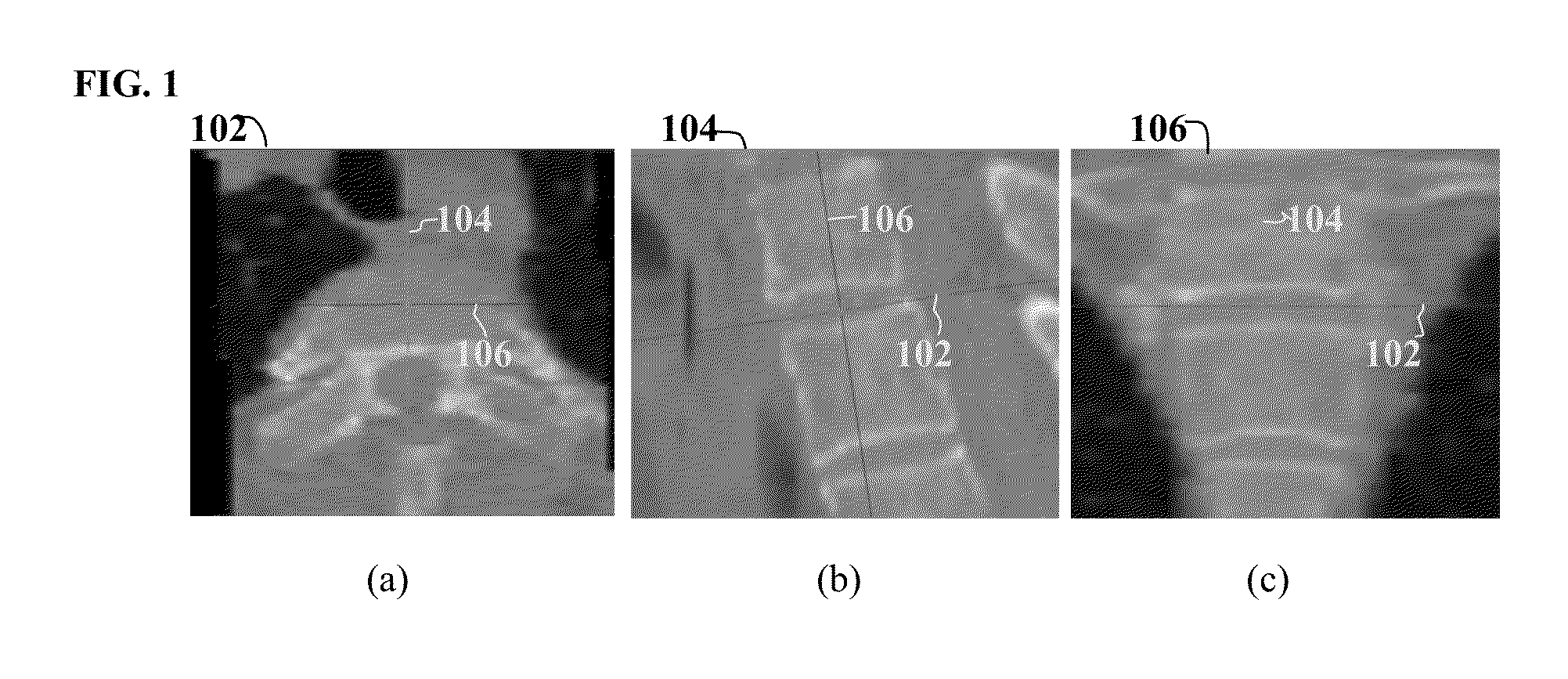

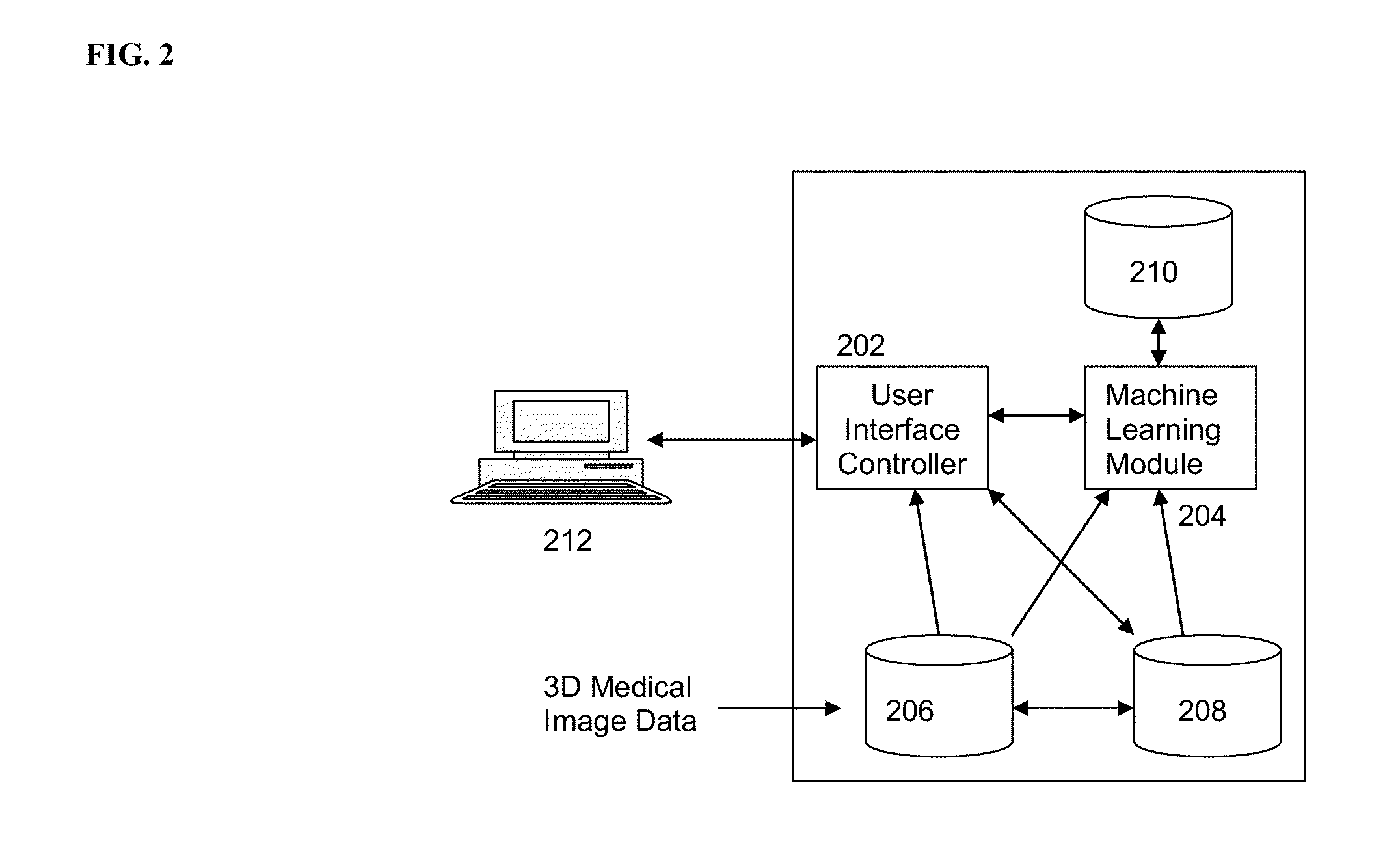

Method and System for On-Site Learning of Landmark Detection Models for End User-Specific Diagnostic Medical Image Reading

A method and system for on-line learning of landmark detection models for end-user specific diagnostic image reading is disclosed. A selection of a landmark to be detected in a 3D medical image is received. A current landmark detection result for the selected landmark in the 3D medical image is determined by automatically detecting the selected landmark in the 3D medical image using a stored landmark detection model corresponding to the selected landmark or by receiving a manual annotation of the selected landmark in the 3D medical image. The stored landmark detection model corresponding to the selected landmark is then updated based on the current landmark detection result for the selected landmark in the 3D medical image. The landmark selected in the 3D medical image can be a set of landmarks defining a custom view of the 3D medical image.

Owner:SIEMENS HEALTHCARE GMBH

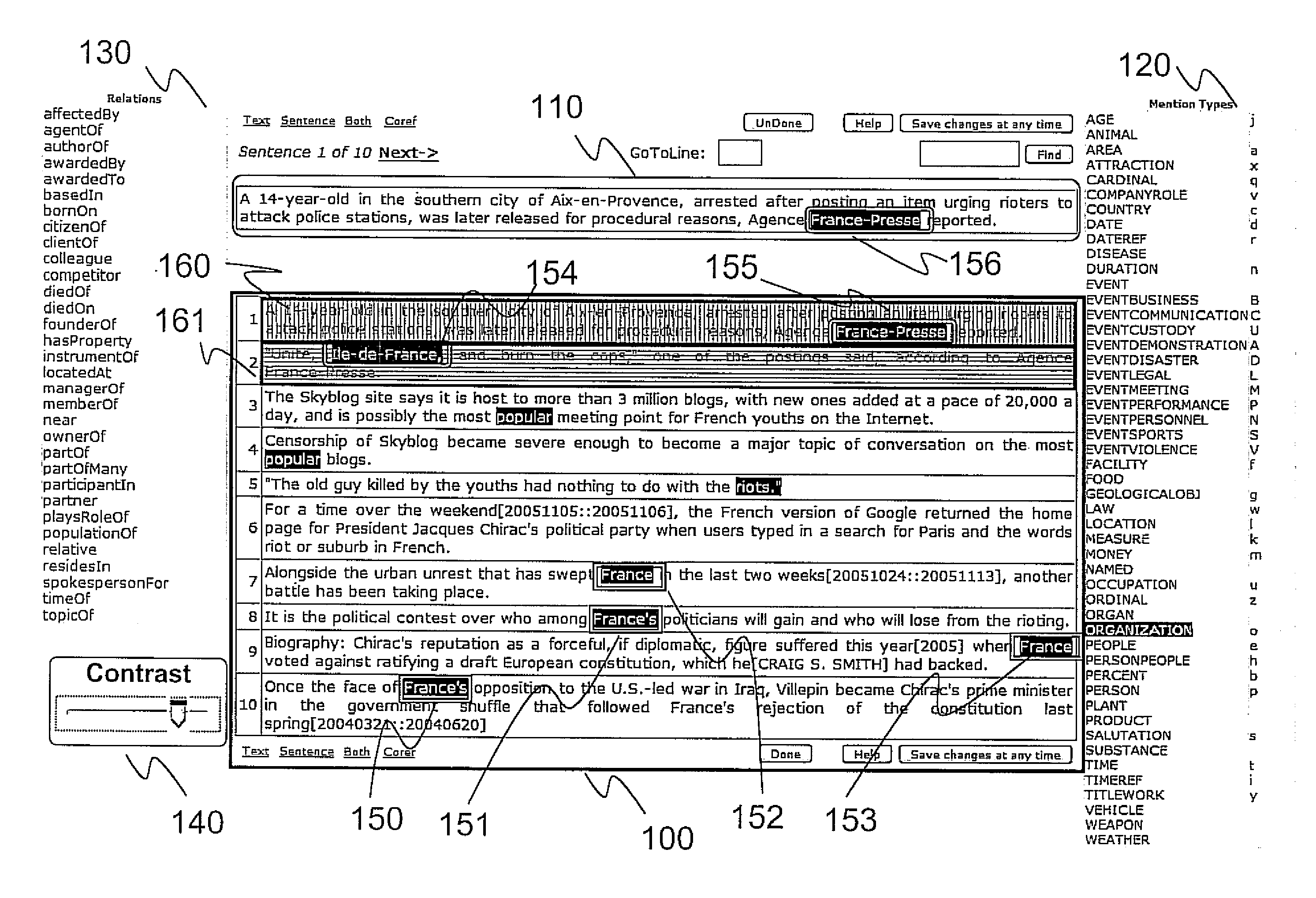

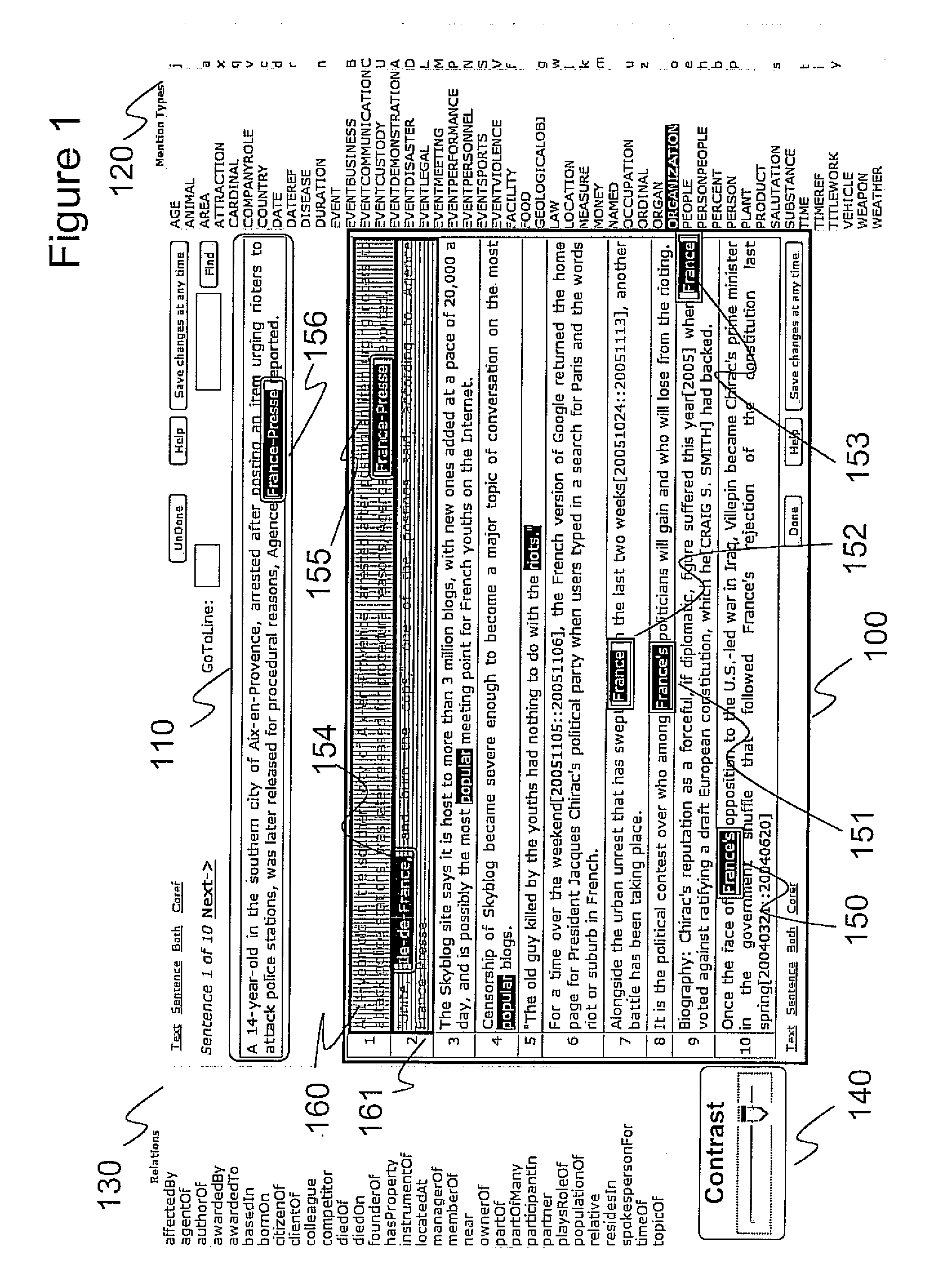

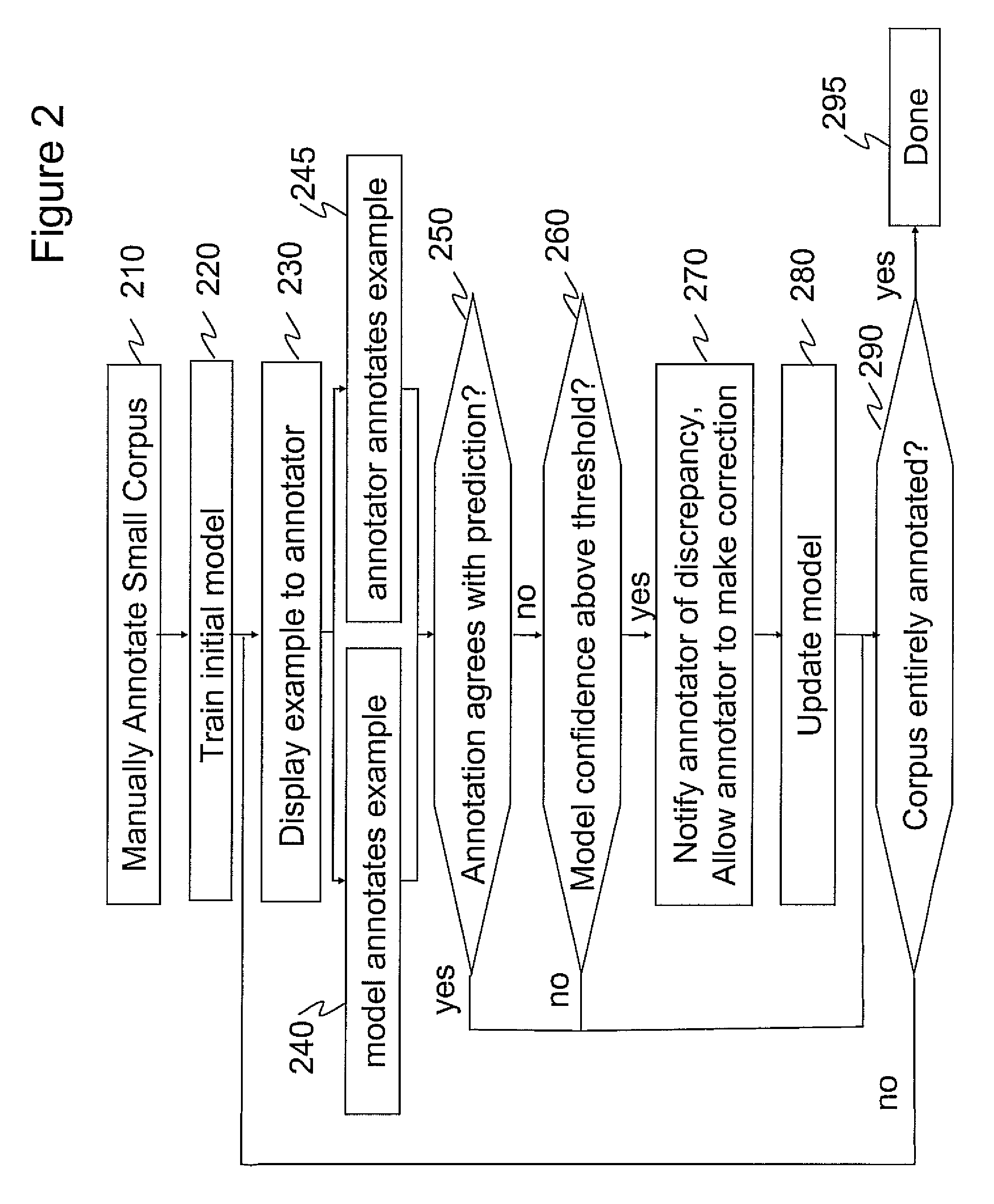

Model-driven feedback for annotation

Status: Inactive | Publication: US20100023319A1 | Tags: Confidence, Natural language analysis, Special data processing applications, Data set, Manual annotation

A system, a method and a computer readable media for providing model-driven feedback to human annotators. In one exemplary embodiment, the method includes manually annotating an initial small dataset. The method further includes training an initial model using said annotated dataset. The method further includes comparing the annotations produced by the model with the annotations produced by the annotator. The method further includes notifying the annotator of discrepancies between the annotations and the predictions of the model. The method further includes allowing the annotator to modify the annotations if appropriate. The method further includes updating the model with the data annotated by the annotator.

Owner:IBM CORP
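The feedback step compares the model's annotations with the annotator's and reports the discrepancies for review. A minimal sketch, assuming annotations are stored as a mapping from item id to label; the names `find_discrepancies` and `apply_review` and the example labels are hypothetical, and the training and model-update steps are not shown.

```python
from typing import Dict, List, Tuple


def find_discrepancies(human: Dict[str, str], model: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """List (item_id, human_label, model_label) wherever the two annotations disagree."""
    return [
        (item, human[item], model[item])
        for item in sorted(human)
        if item in model and human[item] != model[item]
    ]


def apply_review(human: Dict[str, str], decisions: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the human annotations with the annotator's revisions applied."""
    revised = dict(human)  # leave the original annotations untouched
    revised.update(decisions)
    return revised
```

If the annotator labeled item "s2" as ORG and the model predicted LOC, `find_discrepancies` returns `[("s2", "ORG", "LOC")]`; if the annotator then accepts the model's label, `apply_review(human, {"s2": "LOC"})` returns the corrected annotations, which would feed the next model update.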

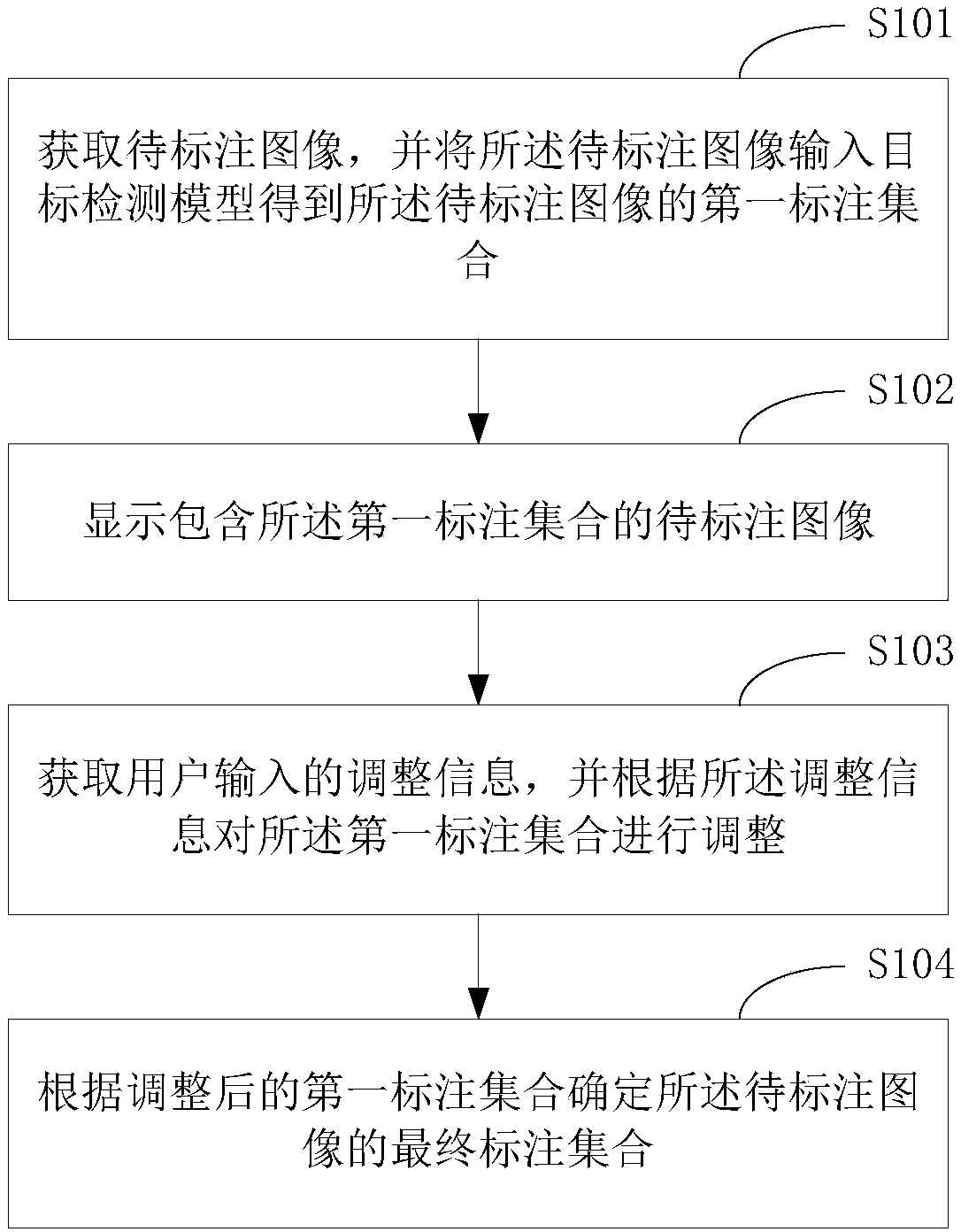

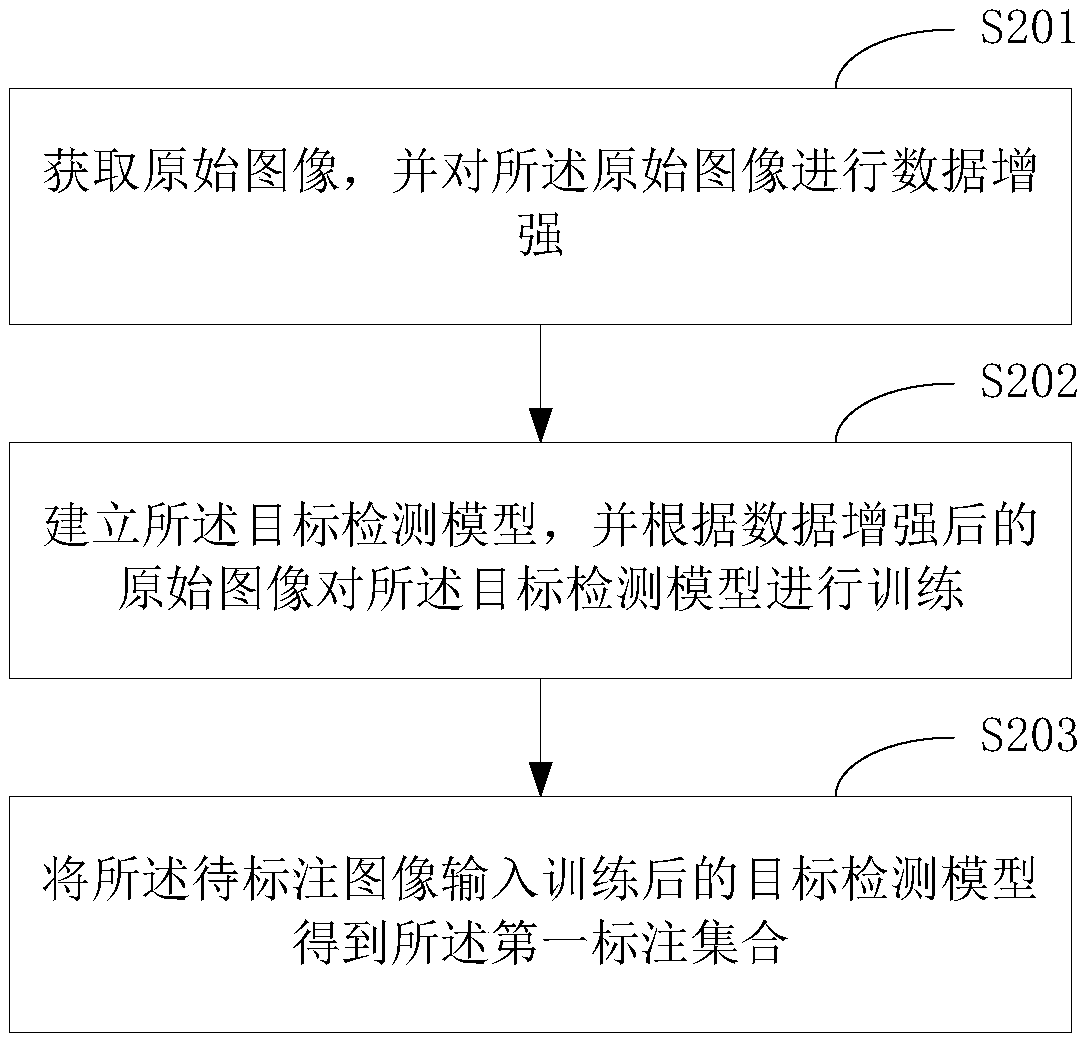

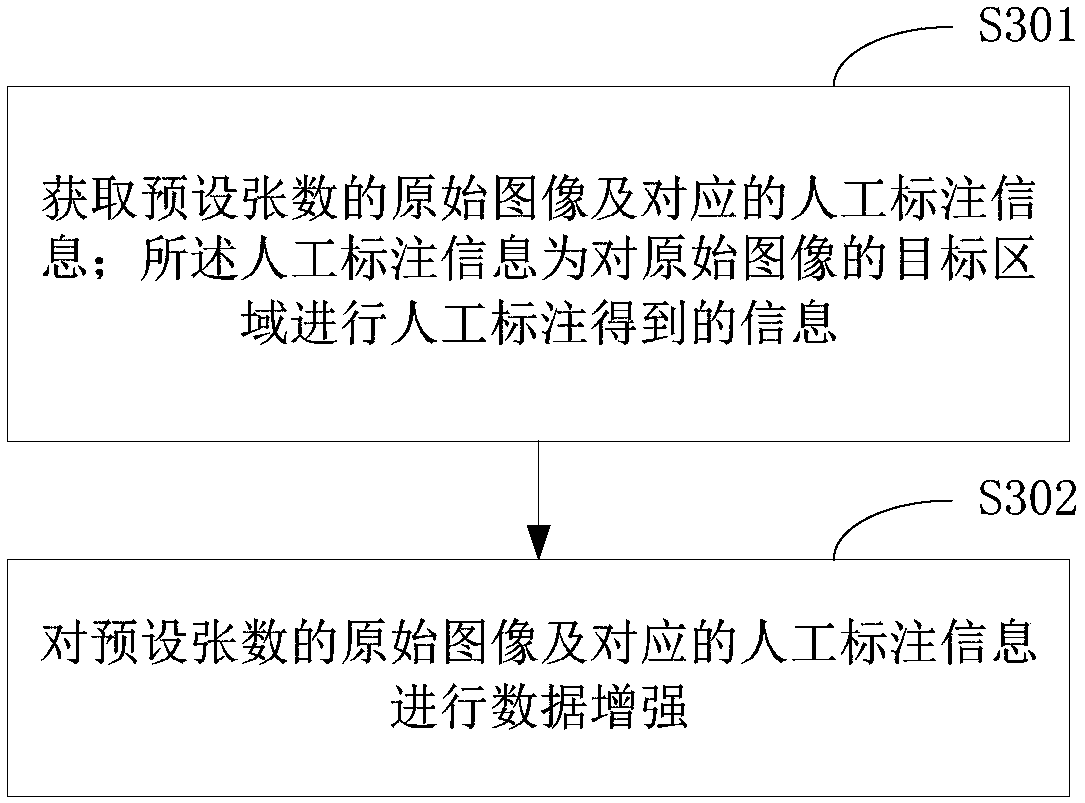

Image annotation method and terminal device

Status: Inactive | Publication: CN108573279A | Tags: Guaranteed accuracy, Improving the efficiency of image annotation, Character and pattern recognition, Imaging processing, Manual annotation

The invention relates to the image processing technology field and provides an image annotation method and a terminal device. The method comprises the following steps of acquiring an image to be annotated, and inputting the image to be annotated into a target detection model so as to obtain the first annotation set of the image to be annotated; displaying the image to be annotated containing the first annotation set; acquiring adjusting information input by a user, and according to the adjusting information, adjusting the first annotation set; and according to the adjusted first annotation set, determining the final annotation set of the image to be annotated. In the invention, the automatic annotation and the manual annotation of the image can be combined, image annotation efficiency is greatly increased, and annotation accuracy can be ensured.

Owner:RISEYE INTELLIGENT TECH SHENZHEN CO LTD

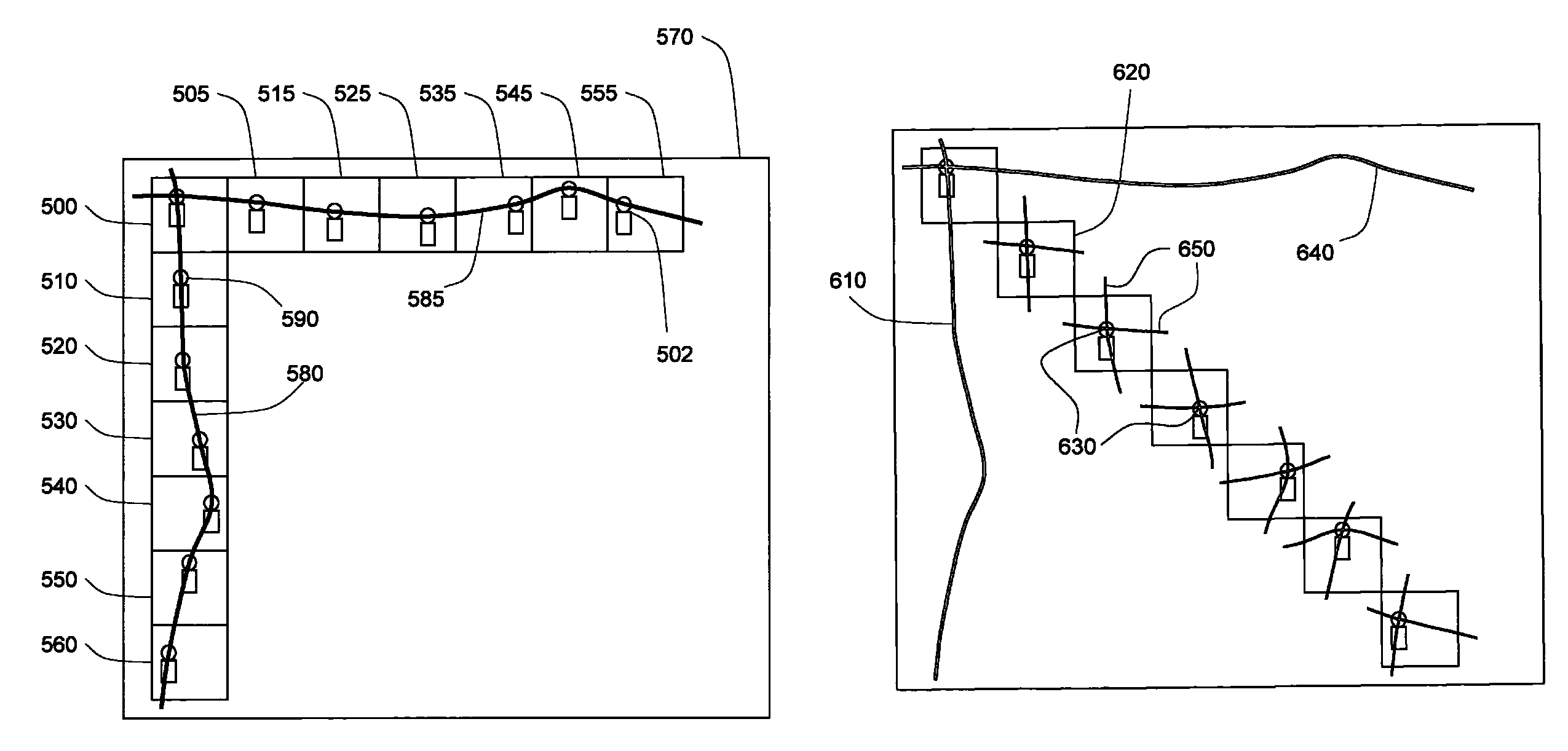

Method and system for efficient annotation of object trajectories in image sequences

Status: Inactive | Publication: US7911482B1 | Tags: Effective marking, Speed up annotation, Digital data information retrieval, Record information storage, Manual annotation, Track algorithm

Owner:XENOGENIC DEV LLC

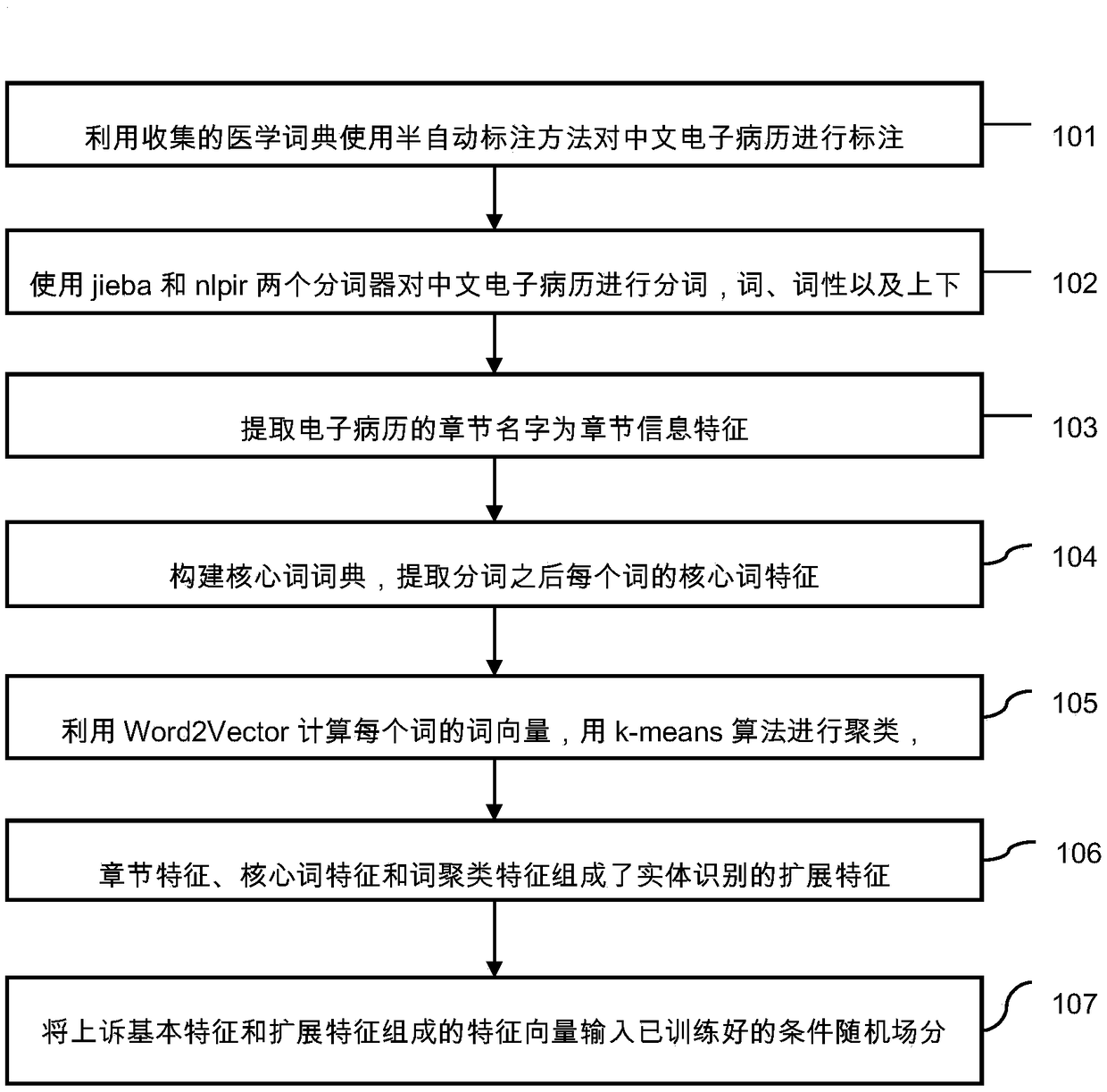

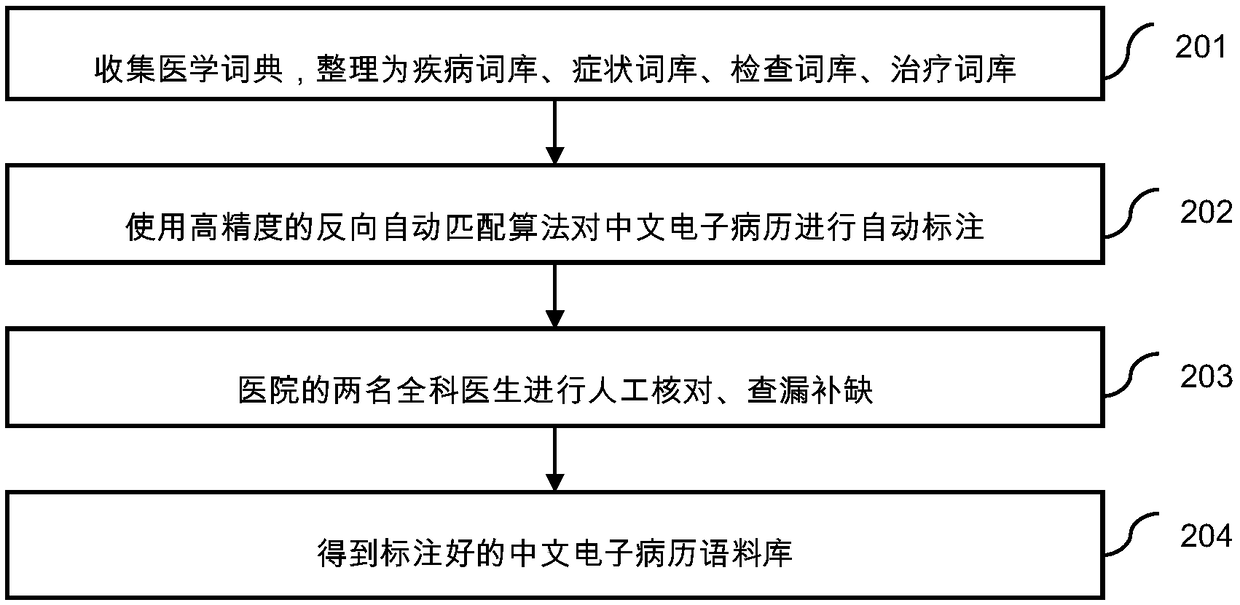

Entity identification method based on Chinese electronic medical records

Status: Inactive | Publication: CN108628824A | Tags: Advancing Medical Automated Question Answering, Medical data mining, Natural language data processing, Medical record, Manual annotation

The invention provides an entity identification method based on Chinese electronic medical records and relates to the technical field of medical entity identification. To address the current lack of a public annotated corpus of Chinese electronic medical records in China, it proposes a semi-automatic corpus annotation method built on constructing and managing a medical dictionary, which reduces the complexity of manual annotation. Second, it addresses the problem that existing feature-based entity recognition methods for electronic medical records mostly target ordinary text or generic electronic medical record text and ignore the unique characteristics of Chinese electronic medical records. In addition to the basic features of general text, the method extracts the chapter information features unique to Chinese electronic medical records; after the collected dictionary is split into single characters and segmented into words, core-word features obtained by counting character and word frequencies are added to the extended features, and relationships between words, obtained by clustering word vectors, are also added to the extended features. This effectively improves the accuracy of entity identification in Chinese electronic medical records.

Owner:Shanghai Xiye Information Technology Co., Ltd. (上海熙业信息科技有限公司)

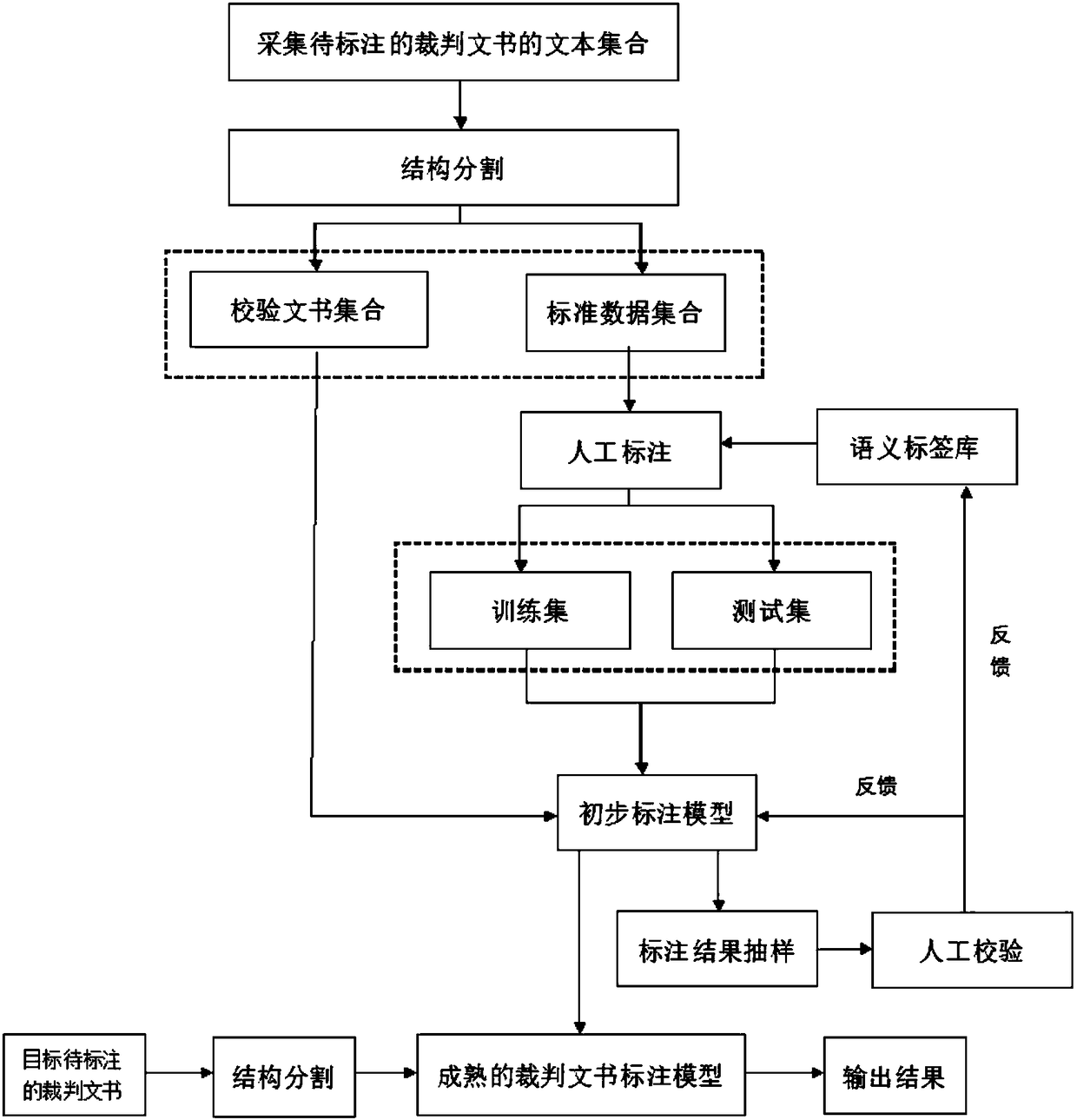

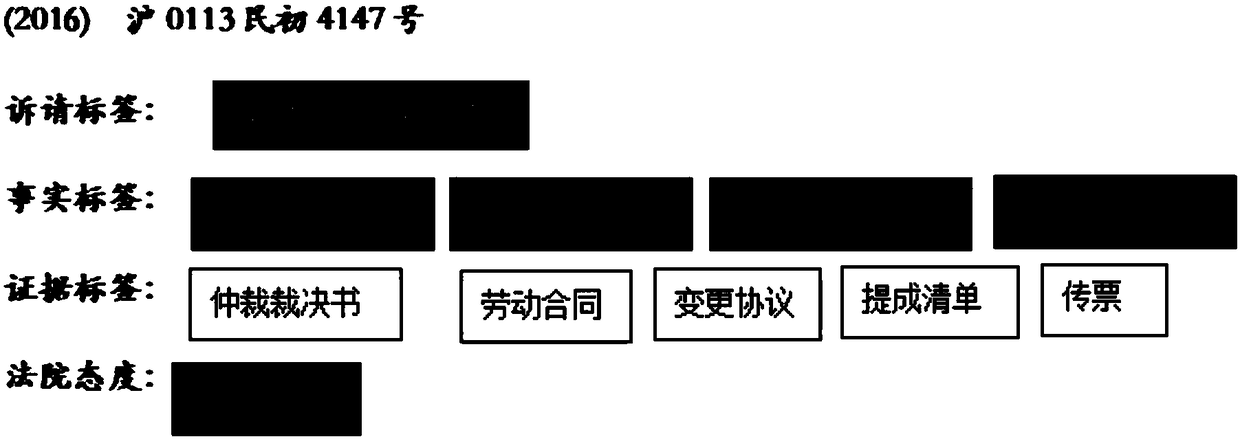

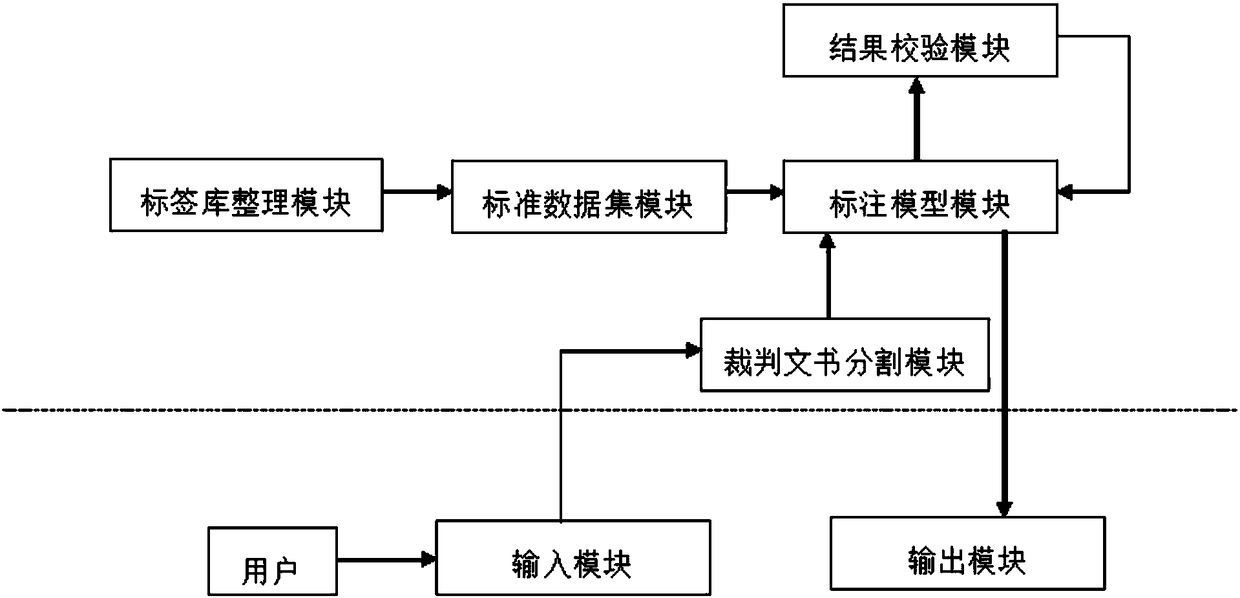

Adjudication document annotation method and device based on machine learning algorithm

Status: Active | Publication: CN108334500A | Tags: Easy to see visually, View accurately, Semantic analysis, Special data processing applications, Manual annotation, Document model

The invention discloses an adjudication document annotation method and device based on a machine learning algorithm. The method comprises the following steps: collecting a text set of adjudication documents to be annotated; segmenting the texts in the set by structure; establishing a semantic tag library; manually annotating the adjudication documents on the basis of the semantic tag library; selecting part of the manually annotated documents as a standard dataset for machine learning, and training and optimizing a preliminary annotation model; using the remaining manually annotated documents to obtain a mature adjudication document annotation model; and, after segmenting a target adjudication document, inputting it into the mature model to obtain an annotation result. The method addresses the problem in the related art that the legal elements of adjudication documents are extracted incompletely and case information is extracted with low accuracy.

Owner:Jiangxi Sixian Data Technology Co., Ltd. (江西思贤数据科技有限公司)

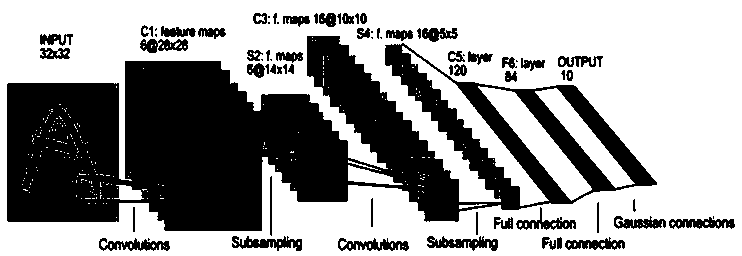

Dangerous behavior automatic identification method based on double-flow convolutional neural network

Status: Inactive | Publication: CN110084228A | Tags: Reduce distractions, Avoid interference, Character and pattern recognition, Neural architectures, Neural network, Manual annotation

The invention discloses an automatic dangerous behavior identification method based on a double-flow (two-stream) convolutional neural network. The method reduces the influence of the video background on person behavior identification by partially annotating the persons in a video by hand; it learns temporal and spatial features of the video with a LeNet-5 network and feeds the fused spatio-temporal features into a 3D convolutional neural network to identify human actions in the video. Because videos contain a large amount of irrelevant background information, the method manually marks the persons in a subset of video frames, reducing noise interference by adding supervision information to the input, and thereby effectively mitigates the interference of irrelevant background with action recognition. Using the two-stream convolutional neural network and the 3D convolutional neural network, the method builds a network for automatic recognition of dangerous human actions, trains it on videos of dangerous human actions, and obtains an automatic dangerous-action recognition model.

Owner:Jiangsu Deshao Information Technology Co., Ltd. (江苏德劭信息科技有限公司)

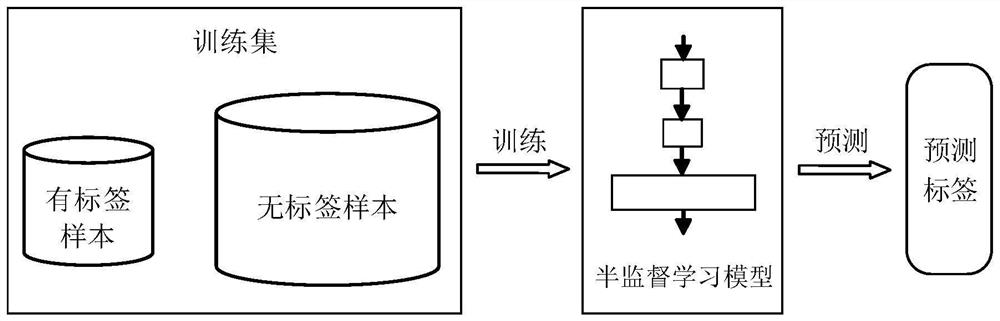

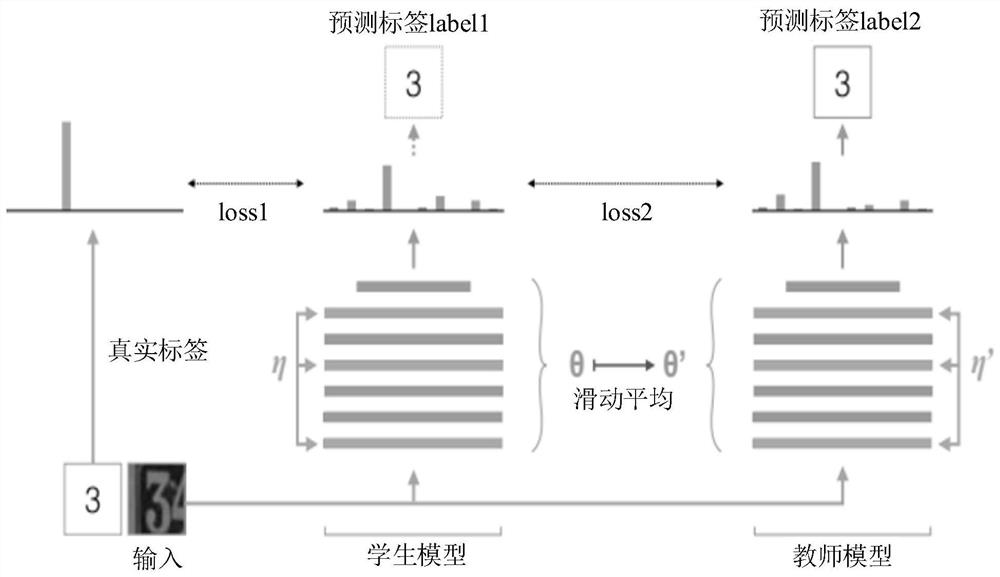

Training method of semi-supervised learning model, image processing method and equipment

Status: Pending | Publication: CN112183577A | Tags: Improve accuracy, Character and pattern recognition, Machine learning, Manual annotation, Imaging processing

The embodiment of the invention discloses a training method for a semi-supervised learning model, an image processing method, and equipment, applicable to computer vision within the field of artificial intelligence. The method first uses a trained first semi-supervised learning model to predict the classes of part of the unlabeled samples, obtaining predicted labels. Each predicted label is then checked by one-bit labeling: if the prediction is correct, the sample's correct label (a positive label) is obtained; otherwise, the wrong label (a negative label) is eliminated for that sample. In the next training stage, this information is used to reconstruct a training set (the first training set), and the initial semi-supervised learning model is retrained on it, improving prediction accuracy. Because the annotator only needs to answer 'Yes' or 'No' to each predicted label in one-bit annotation, this annotation mode eases the manual annotation burden of machine learning, which otherwise requires large amounts of correctly labeled data.

Owner:HUAWEI TECH CO LTD
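One-bit annotation turns each Yes/No answer into either a confirmed (positive) label or an excluded (negative) label. The sketch below only records that information per sample, assuming string class labels; `LabelState`, `apply_one_bit_feedback`, and `candidate_labels` are hypothetical names, and how the negative labels enter the retraining objective is not specified in the abstract, so it is not shown.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class LabelState:
    """What is known about one sample after one-bit queries."""
    positive: Optional[str] = None                   # label confirmed by a "Yes"
    excluded: Set[str] = field(default_factory=set)  # labels ruled out by "No"


def apply_one_bit_feedback(
    states: Dict[str, LabelState], predicted: Dict[str, str], answers: Dict[str, bool]
) -> None:
    """Update each sample's state in place from a Yes/No answer about its predicted label."""
    for sample_id, is_correct in answers.items():
        state = states.setdefault(sample_id, LabelState())
        label = predicted[sample_id]
        if is_correct:
            state.positive = label
        else:
            state.excluded.add(label)


def candidate_labels(state: LabelState, classes: List[str]) -> List[str]:
    """Labels still possible for a sample, given the annotator's answers so far."""
    if state.positive is not None:
        return [state.positive]
    return [c for c in classes if c not in state.excluded]
```

With classes cat, dog, and bird, a "Yes" for a predicted cat fixes that sample's label to cat, while a "No" for a predicted dog leaves cat and bird as candidates.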

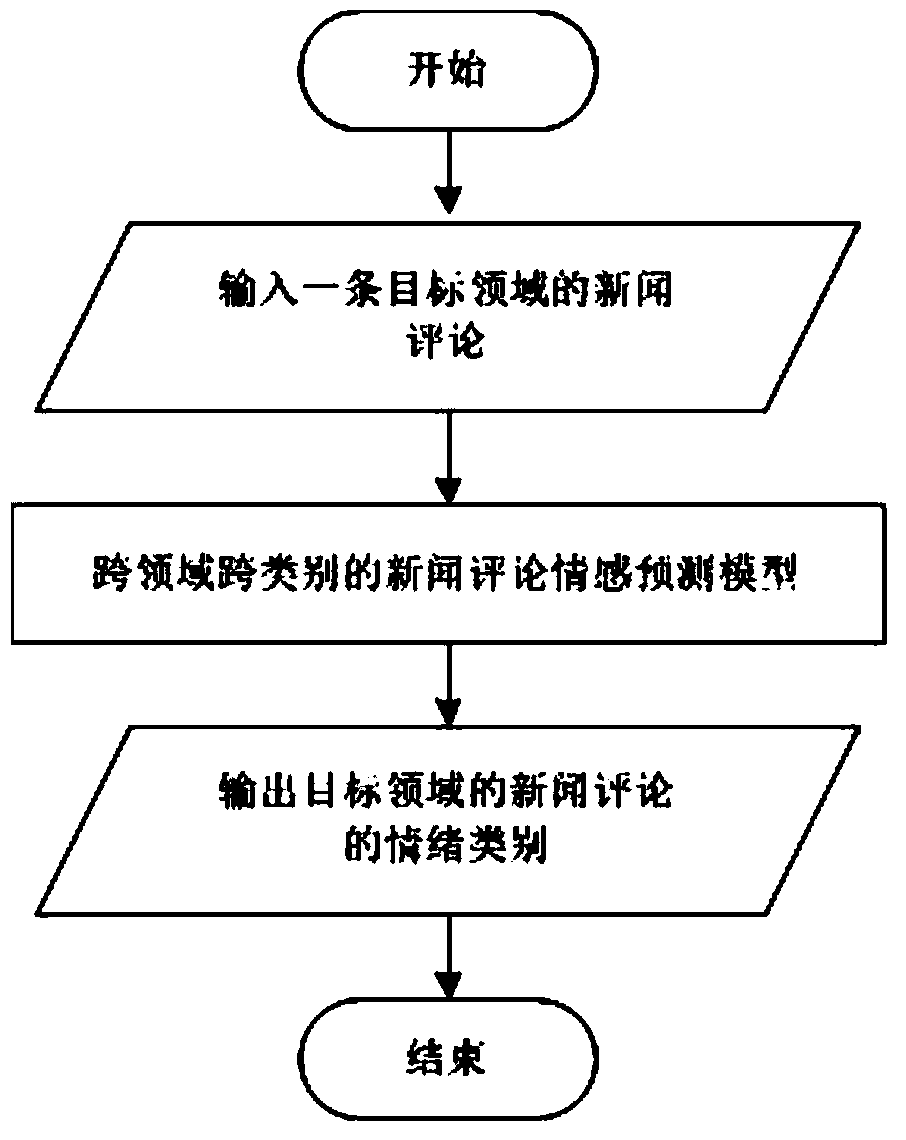

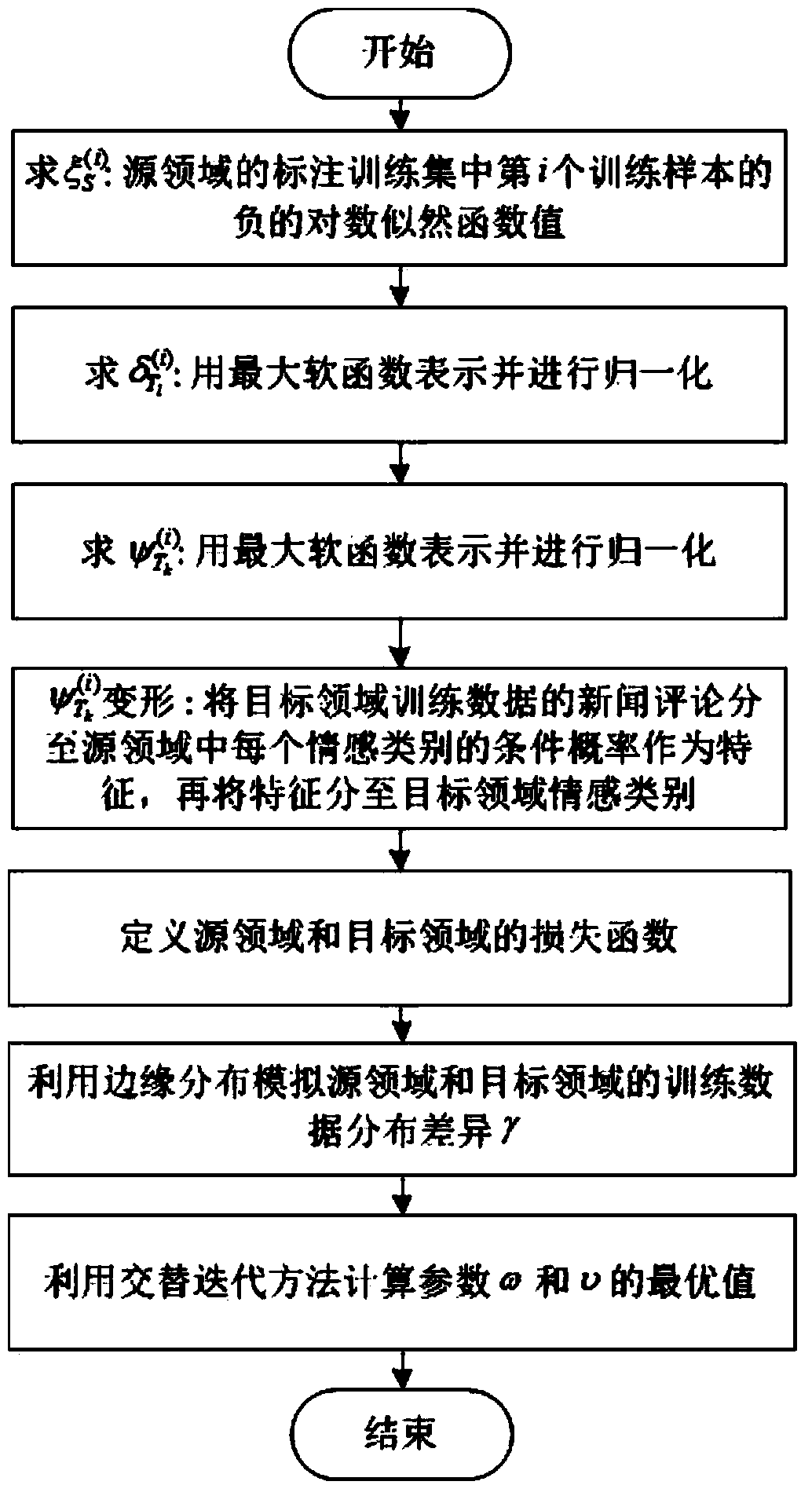

Cross-domain and cross-category news commentary emotion prediction method

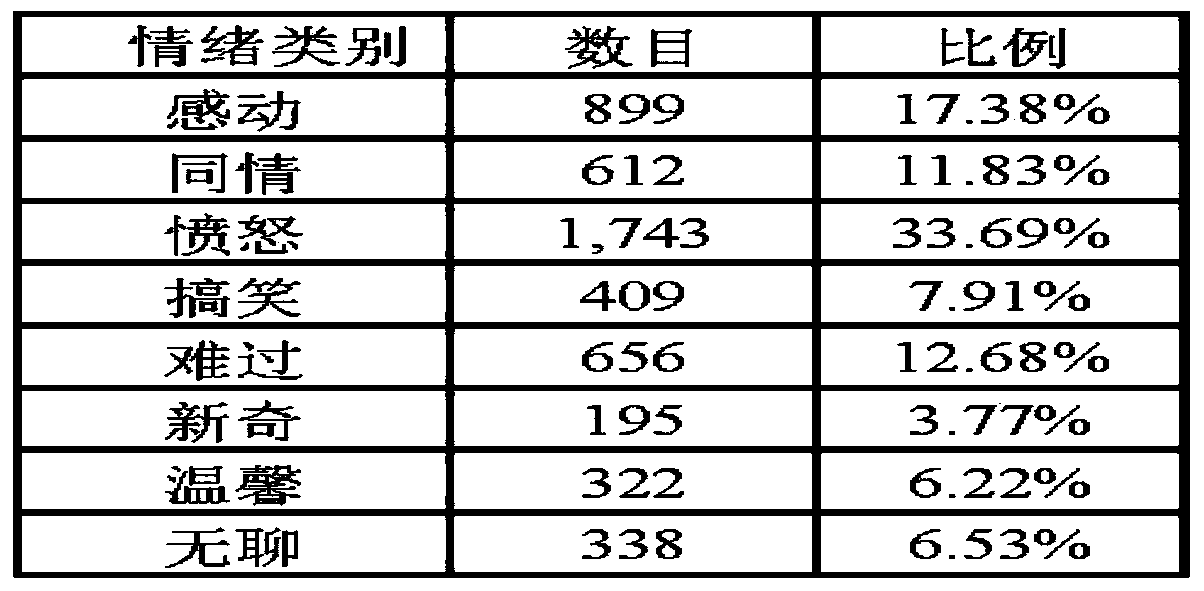

Status: Inactive | Publication: CN104239554A | Tags: Solving the Sentiment Prediction Problem, Achieve knowledge transfer, Energy efficient computing, Special data processing applications, Manual annotation, Predictive methods

The invention provides a cross-domain and cross-category news commentary emotion prediction method. When the target domain has only a small amount of annotated data and a related but different source domain has a large amount, the method achieves knowledge transfer between domains by modeling the relationship between the emotion category sets of the source and target domains, and builds a cross-domain, cross-category news commentary emotion prediction model, thereby addressing the difficulty of predicting the emotions of news comments in the target domain. When the emotion category sets of the source and target domains differ, the method significantly outperforms alternative cross-domain and cross-category online news commentary emotion prediction methods, and it greatly reduces both the high cost of manual annotation and the energy spent training additional classification models. The method can be applied to user sentiment analysis and public opinion monitoring.

Owner:NANKAI UNIV

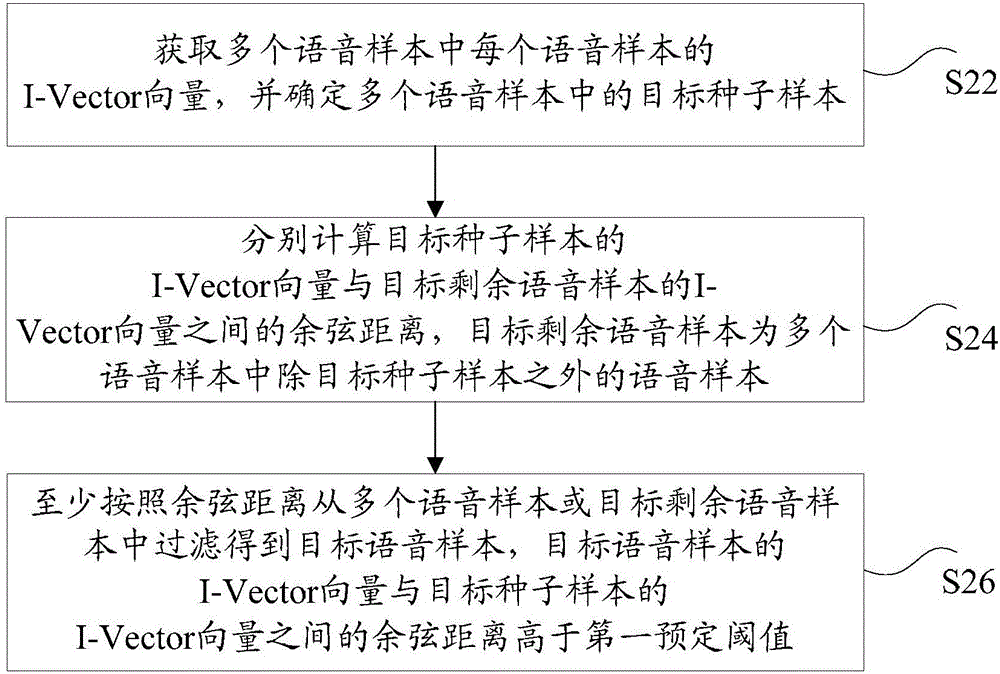

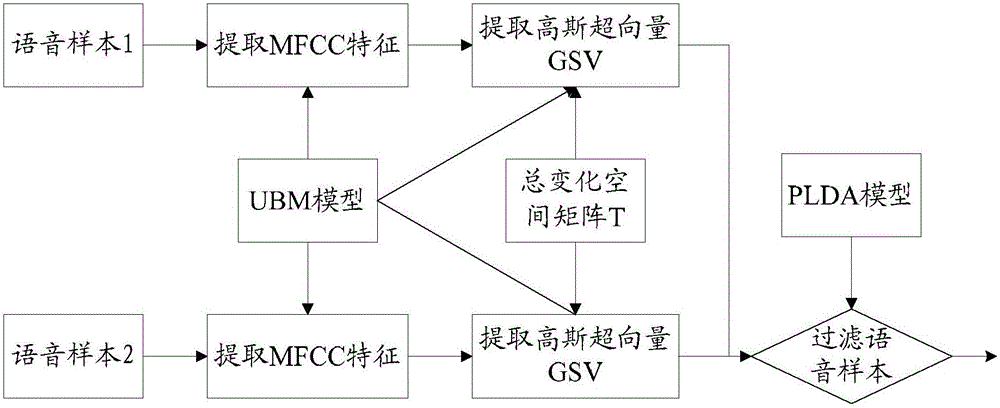

Voice data processing method and device

Status: Active | Publication: CN105869645A | Tags: Improve cleaning efficiency, Solve the technical problem of low cleaning efficiency, Speech analysis, Manual annotation, Seed sample

The invention discloses a voice data processing method and device. The method comprises: acquiring the I-vector of each of a plurality of voice samples and determining a target seed sample among them; calculating the cosine distance between the I-vector of the target seed sample and the I-vector of each target residual voice sample, the target residual voice samples being the voice samples other than the target seed sample; and filtering at least the plurality of voice samples or the target residual voice samples according to the cosine distances to obtain target voice samples, where the cosine distance between the I-vector of a target voice sample and that of the target seed sample is higher than a first preset threshold. The method and device address the technical problem in related technologies that cleaning voice data by manual annotation is inefficient.

Owner:TENCENT TECH (SHENZHEN) CO LTD
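The filtering step keeps residual samples whose I-vectors are close to the seed's. The abstract says the cosine "distance" must exceed a threshold, which only makes sense if the score grows with similarity, so the sketch assumes cosine similarity. I-vector extraction and seed selection are outside the sketch, and the function names and toy 3-D vectors are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between a and b; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filter_residuals(ivectors: Dict[str, Sequence[float]], seed_id: str, threshold: float) -> List[str]:
    """Ids of non-seed samples whose cosine similarity to the seed exceeds `threshold`."""
    seed = ivectors[seed_id]
    return [
        sample_id
        for sample_id, vec in ivectors.items()
        if sample_id != seed_id and cosine_similarity(seed, vec) > threshold
    ]
```

With a seed vector `(1.0, 0.0, 0.0)`, a residual sample at `(0.9, 0.1, 0.0)` (similarity about 0.99) survives a threshold of 0.8, while one at `(0.7, 0.7, 0.0)` (about 0.71) does not.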

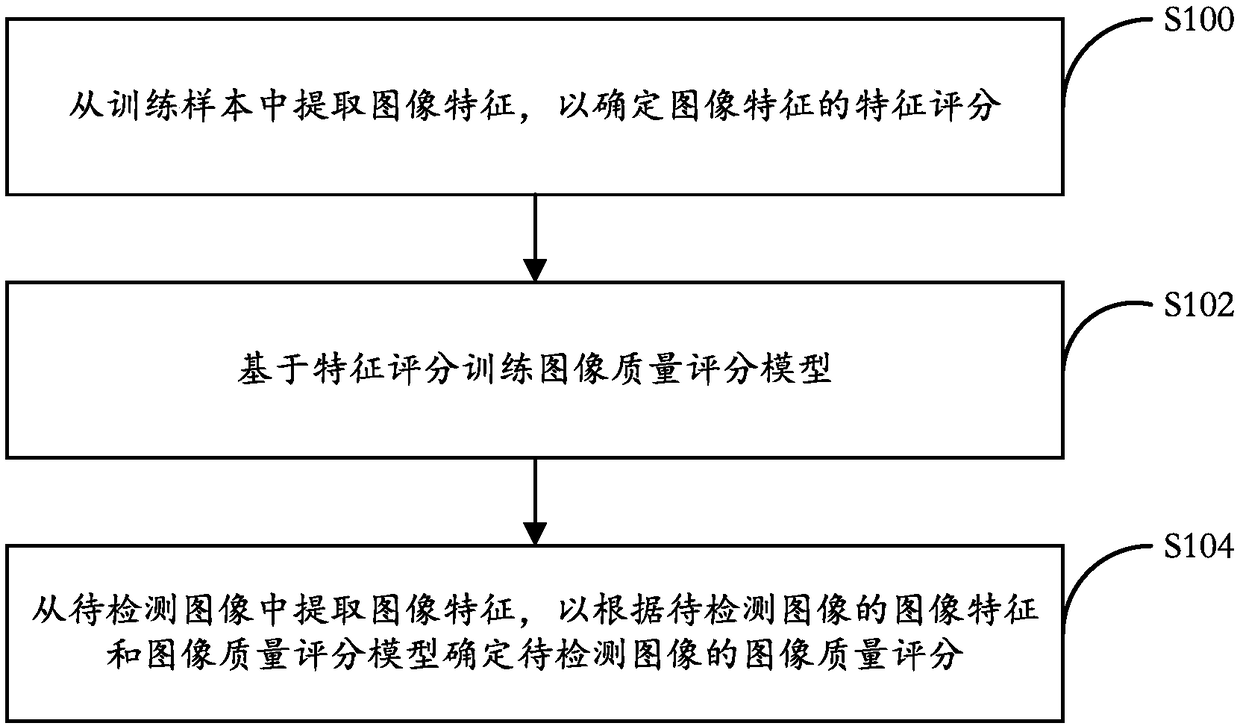

Image quality detection method, device and equipment

Status: Active | Publication: CN108288027A | Tags: Reduce processing costs, Improve accuracy, Character and pattern recognition, Pattern recognition, Manual annotation

The invention discloses an image quality detection method, device, and equipment. The method comprises: extracting image features from training samples to determine feature scores for those features; training an image quality scoring model on the feature scores; and extracting image features from an image to be checked and determining its image quality score from those features and the scoring model. When the scoring model is trained, original images acquired under different environments, together with extended images obtained by processing the original images, serve as the training samples. Because the feature scores (rather than manually assigned scores) are derived from the image features themselves and the model is trained on them, the quality scores the model produces are more objective, intuitive, and accurate; computer scoring is also far faster than manual annotation, which lowers the cost of processing training samples.

Owner:ENNEW DIGITAL TECH CO LTD

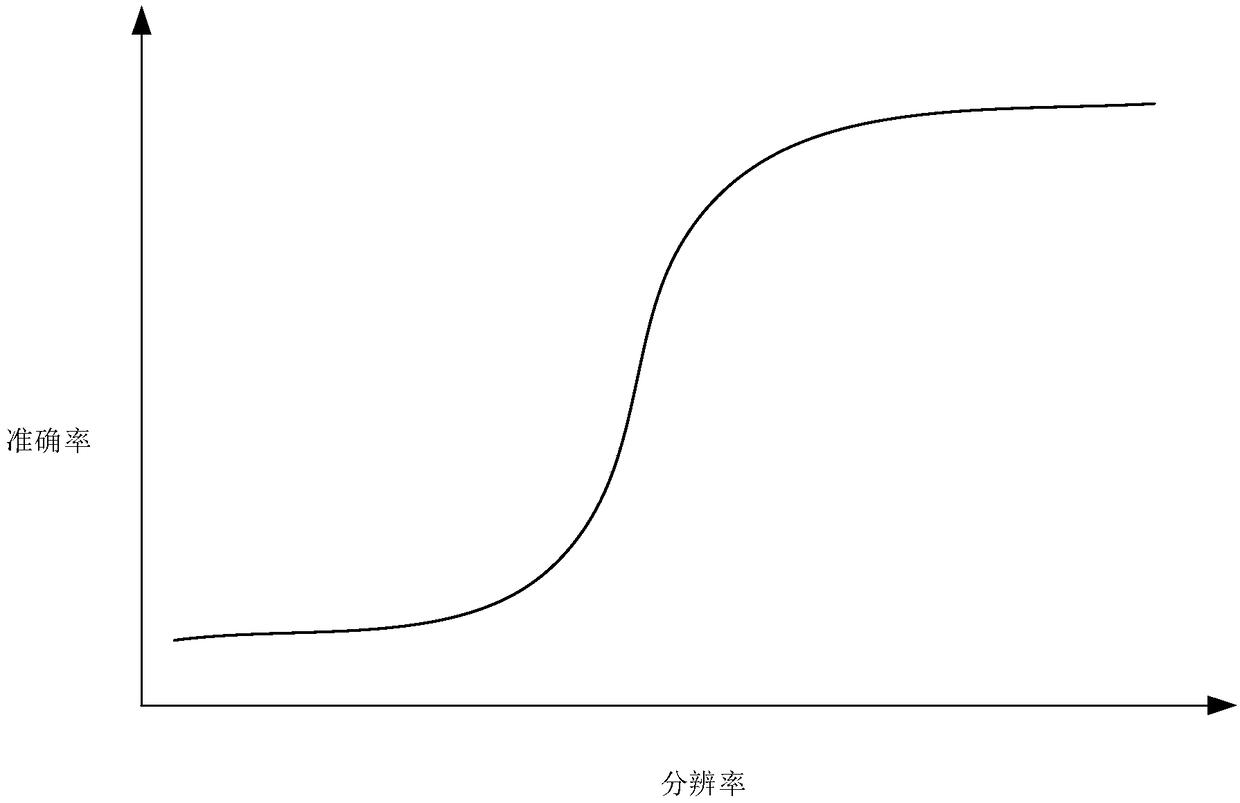

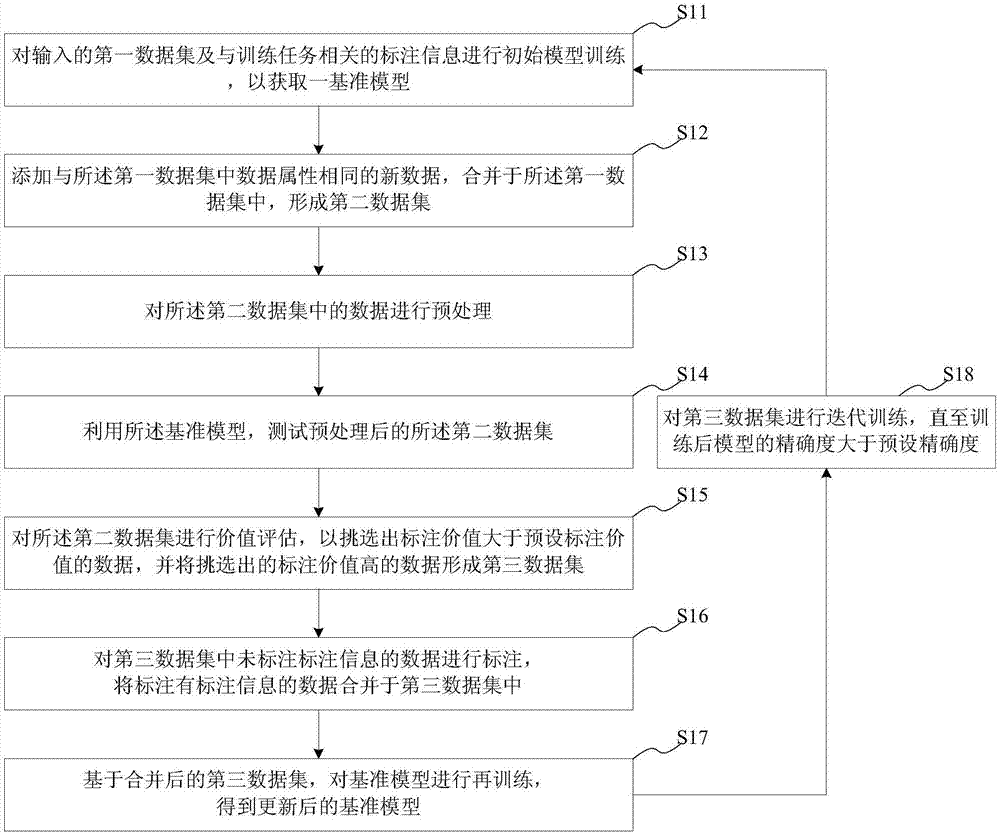

Training method/system of intelligent model, computer readable storage medium and terminal

Status: Inactive | Publication: CN107392125A | Tags: Improve training efficiency, Reduce the amount of manual annotation, Character and pattern recognition, Machine learning, Reference model, Data set

The present invention provides a training method/system for an intelligent model, a computer-readable storage medium, and a terminal. The training method includes the following steps: performing initial model training on an input first data set and the annotation information related to a training task, to obtain a reference model; adding new data and merging it into the first data set to form a second data set; testing and assessing the value of the data in the second data set, and selecting the data whose annotation value exceeds a preset annotation value to form a third data set; annotating the unannotated data in the third data set and merging the annotations into it; retraining the reference model to obtain an updated reference model; and taking the third data set as the new first data set, adding new data to it, and repeating the above steps until the accuracy of the iteratively trained model exceeds a preset accuracy. With this training method/system, storage medium, and terminal, the amount of manual annotation is decreased because not all data need to be annotated, which saves annotation costs and improves model training efficiency.

Owner:SHANGHAI ADVANCED RES INST CHINESE ACADEMY OF SCI +1

Semi-automatic image annotation sample generating method based on target tracking

Status: Active | Publication: CN103559237A | Tags: Character and pattern recognition, Metadata still image retrieval, Pattern recognition, Manual annotation

The invention discloses a semi-automatic image annotation sample generation method based on target tracking, comprising a target tracking process and a semi-automatic annotation process. A series of samples is generated through a target tracking mechanism; a template learning mechanism is designed to track and detect target regions; videos or images are then searched with the learned templates, and manual annotation is used to confirm the detections, producing annotated samples. The method can obtain a large number of annotated image samples with less labor.

Owner:NANJING UNIV

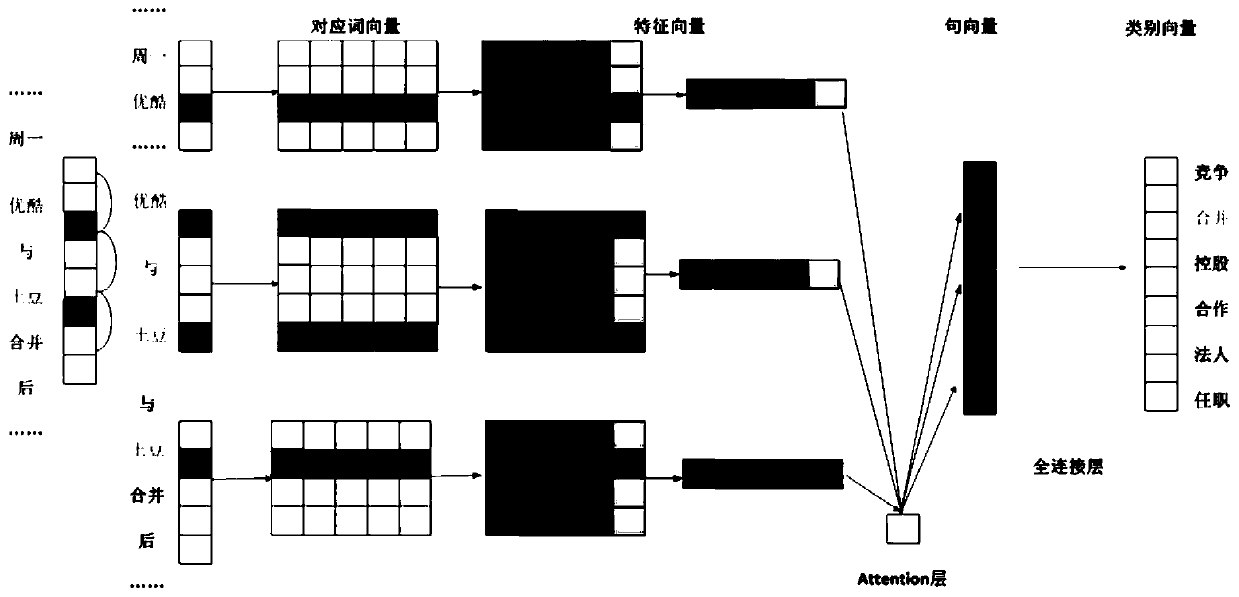

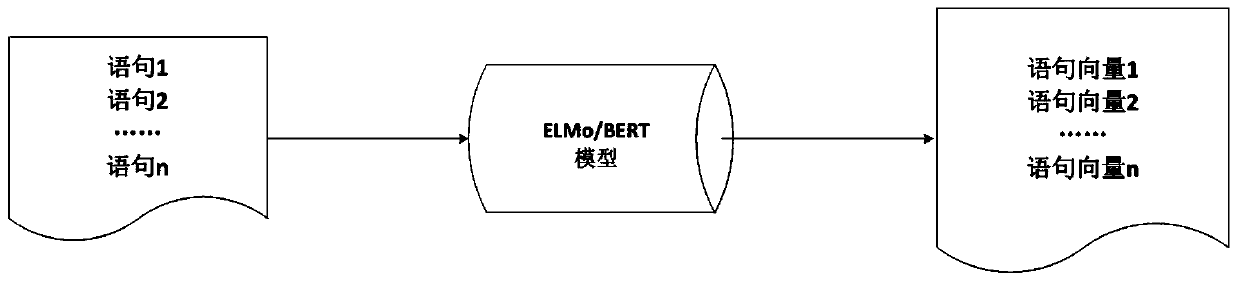

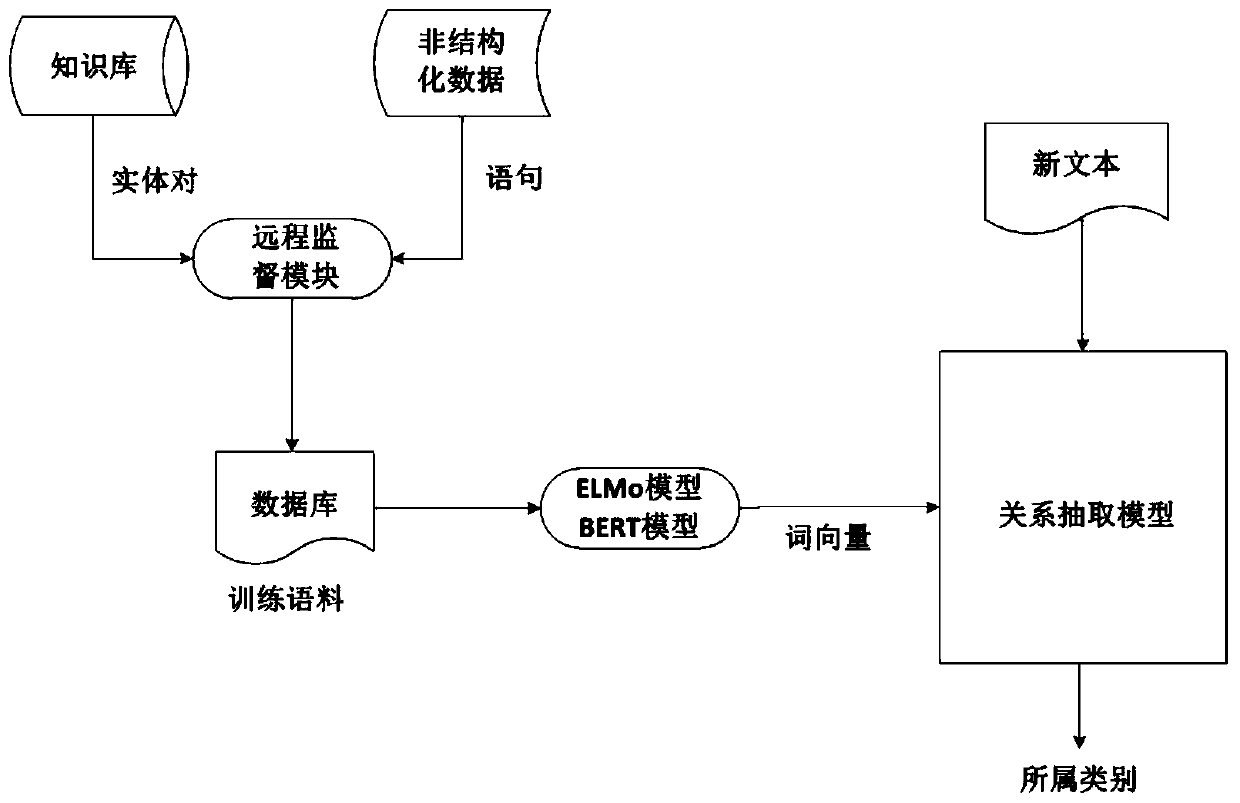

Entity relationship extraction method and system integrated with dynamic word vector technology

Status: Active | Publication: CN109871451A | Tags: Insufficient relief, Reduce dependence, Semantic analysis, Neural architectures, Manual annotation, Neural network

The invention provides an entity relationship extraction method and system integrated with dynamic word vector technology. The system uses distant supervision to align an existing knowledge base with abundant unstructured data, generating a large amount of training data; this alleviates the shortage of manually annotated corpora and reduces dependence on annotated data, thereby effectively lowering labor cost. To capture as much feature information between entities as possible, the model's basic architecture is a piecewise (segmented) convolutional neural network, and the semantic information of instance sentences is further extracted by integrating dynamic word vector technology.

Owner:GLOBAL TONE COMM TECH
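Distant supervision labels every sentence that mentions both entities of a knowledge-base triple with that triple's relation. A minimal sketch, assuming entity mentions are found by plain substring matching; the function name, triple format, and example data are hypothetical. Labels produced this way are noisy, because a sentence can mention both entities without expressing the relation.

```python
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


def distant_label(sentences: List[str], kb: List[Triple]) -> List[Tuple[str, str, str, str]]:
    """Label each sentence mentioning both entities of a KB triple with that relation.

    Returns (sentence, head, tail, relation) training instances. Mentions are
    detected by substring match, a deliberate simplification of entity linking.
    """
    instances = []
    for sentence in sentences:
        for head, relation, tail in kb:
            if head in sentence and tail in sentence:
                instances.append((sentence, head, tail, relation))
    return instances
```

Given the triple `("Beijing", "capital_of", "China")`, the sentence "Beijing is the capital of China." becomes a `capital_of` training instance, while sentences mentioning only one of the two entities are skipped.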

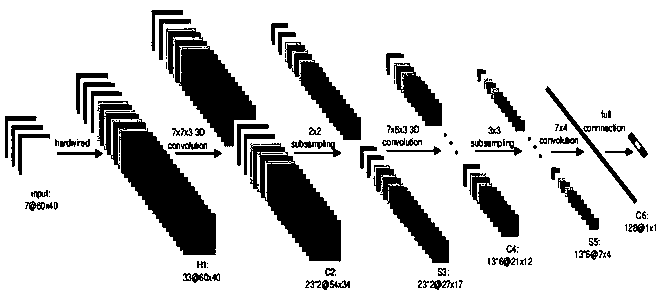

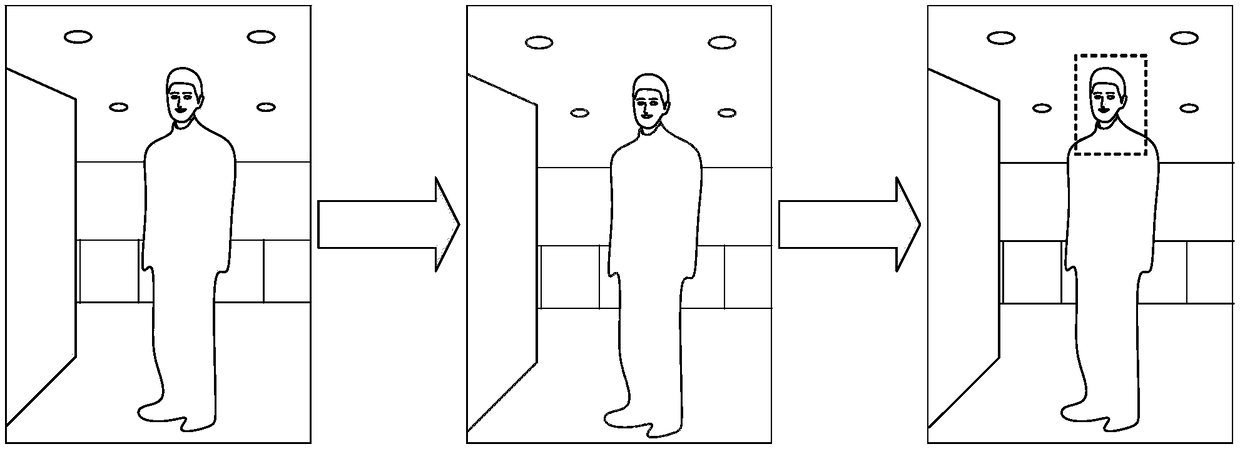

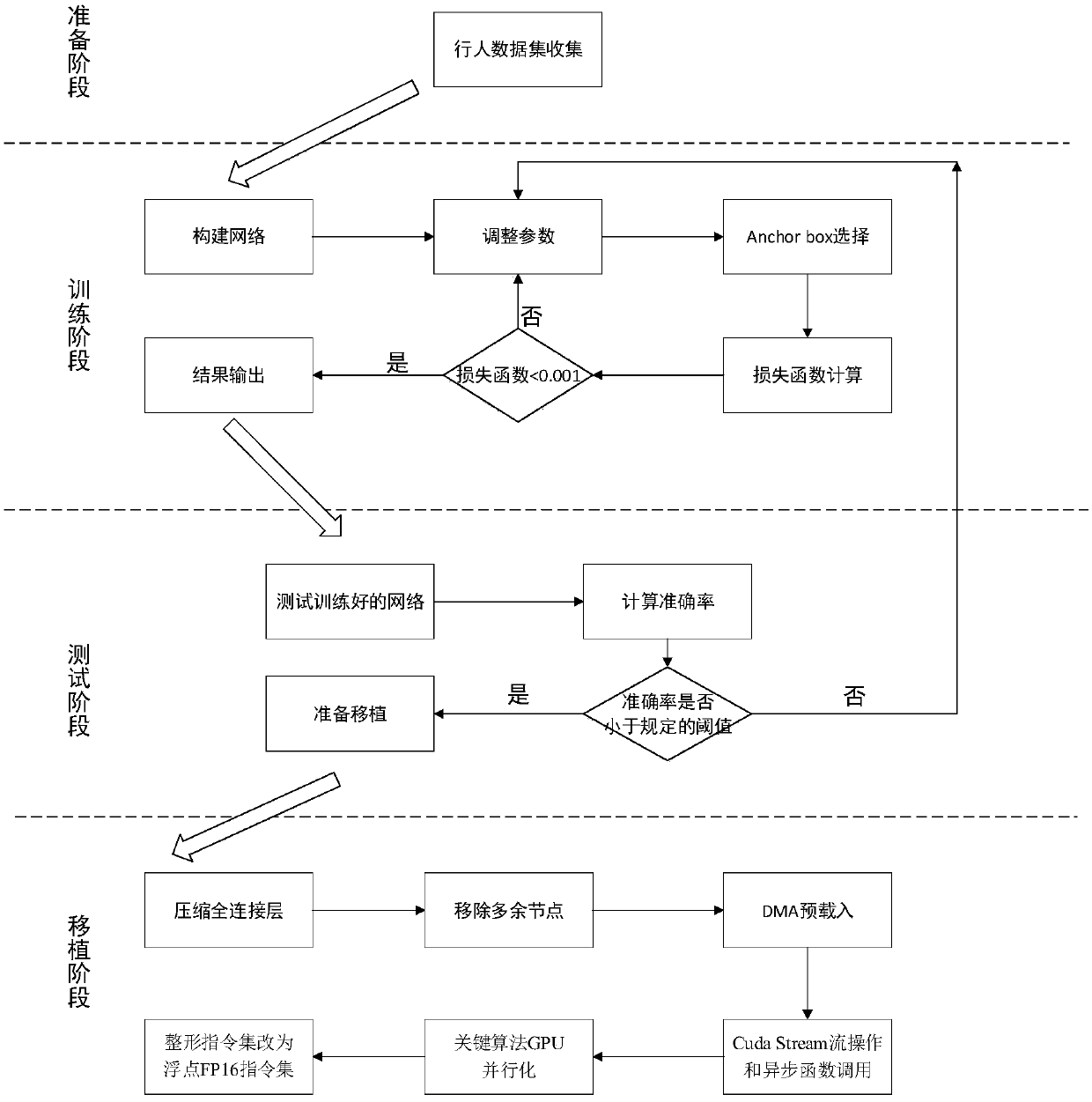

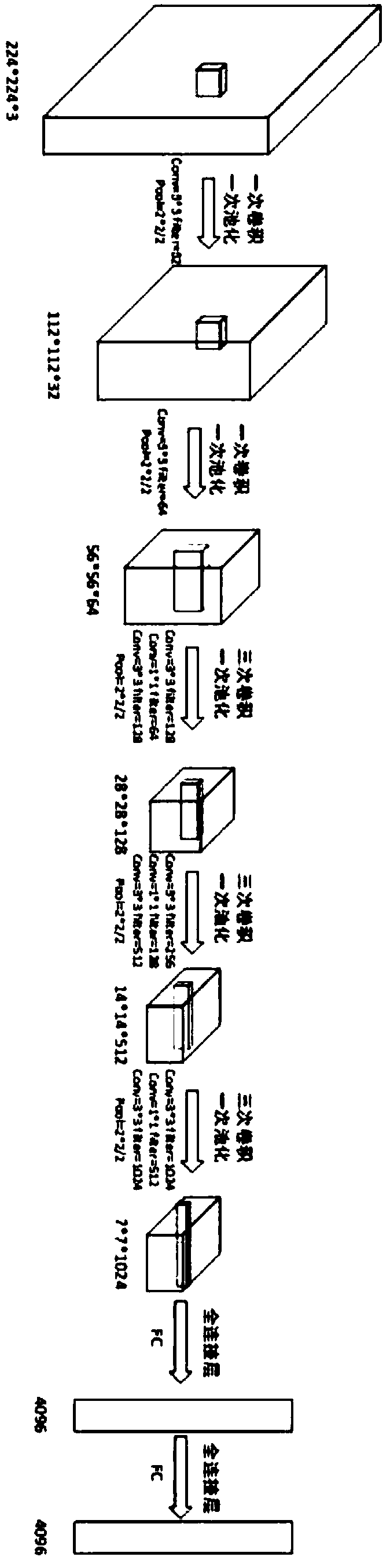

Deep learning pedestrian detection method based on embedded terminal

Status: Inactive | Publication: CN108805070A | Tags: Obvious speed advantage, Reduce complexity, Character and pattern recognition, Manual annotation, Data set

The invention discloses a deep learning pedestrian detection method based on an embedded terminal. The method comprises the following steps: first, in a sample preparation stage, acquiring an existing autonomous-driving dataset, or collecting manual annotations of videos shot with a fixed camera and a mobile camera; second, in a training stage, training the parameters of a constructed convolutional neural network on a large number of training images to learn detection features; third, in a test stage, inputting a large number of test images into the trained network to obtain detection results; and fourth, in a porting stage, performing code-level optimization and porting the network to the embedded terminal. The method uses an 18-layer convolutional neural network for pedestrian feature learning, an innovation over traditional machine learning methods; it also proposes an optimization strategy for embedded terminals that further reduces network scale and algorithmic complexity, making the method suitable for ADAS applications.

Owner:Hefei Zhanda Intelligent Technology Co., Ltd. (合肥湛达智能科技有限公司)

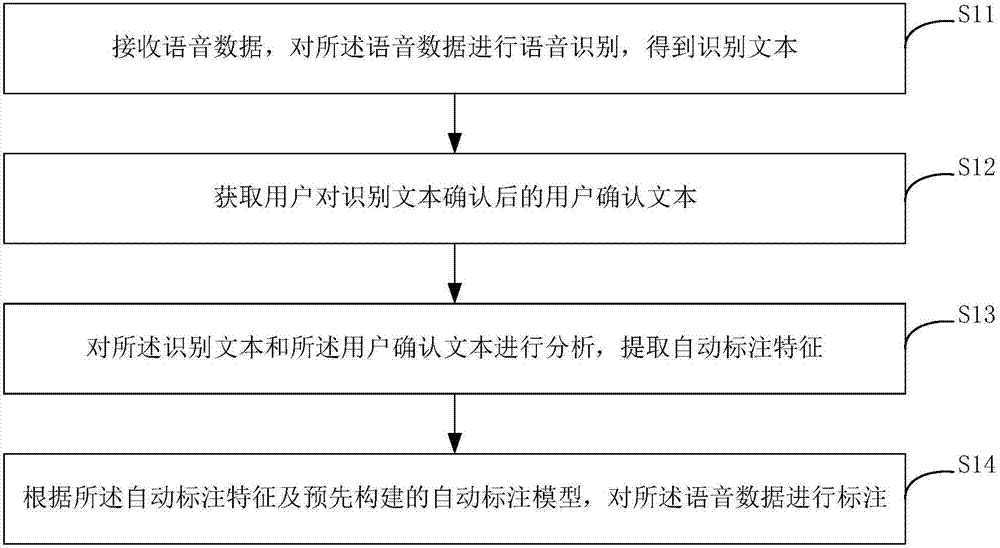

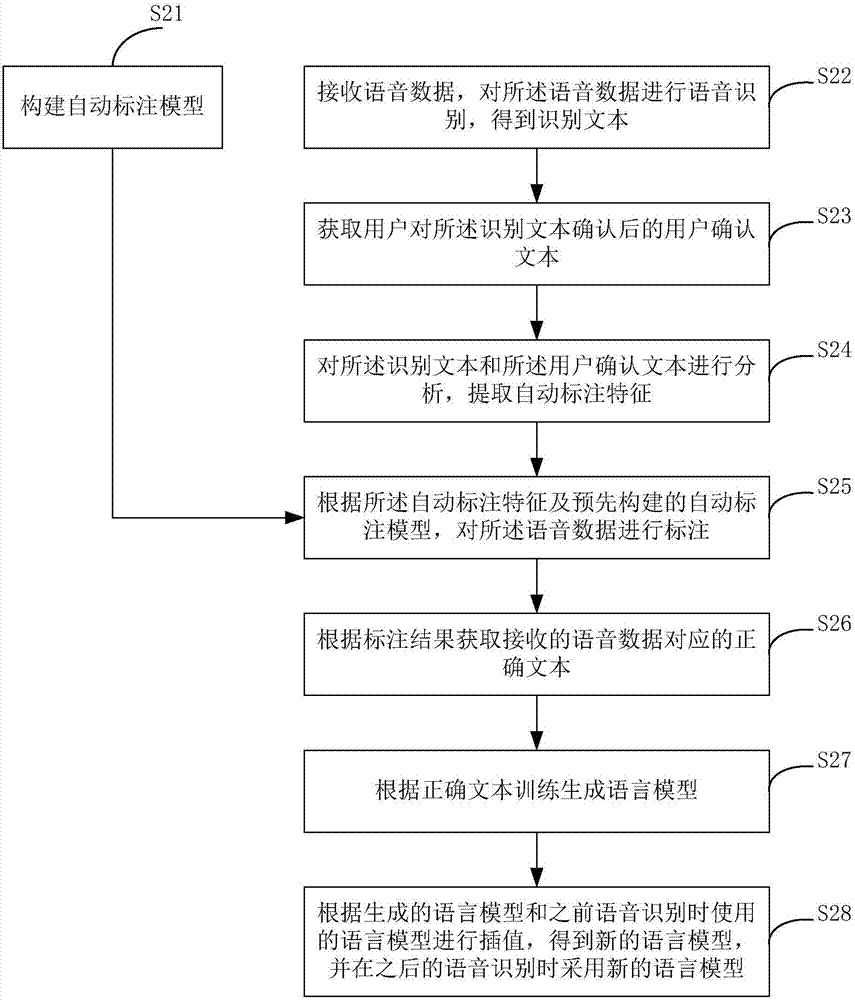

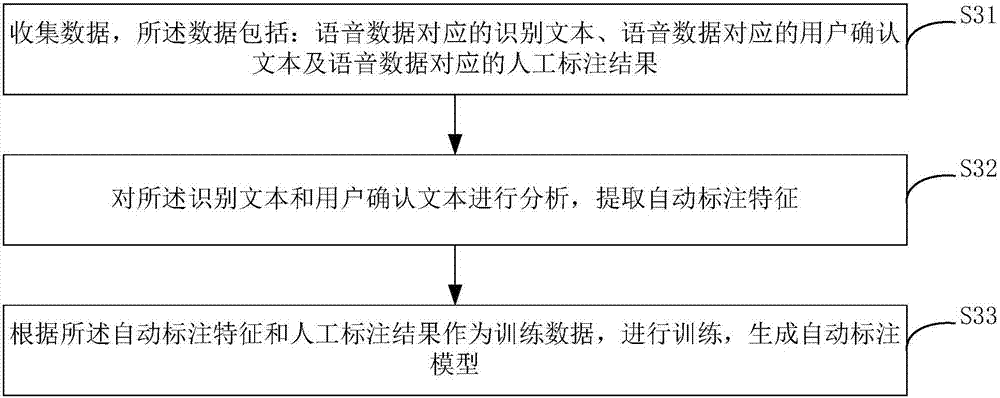

A voice data annotation method and apparatus

Status: Active | Publication: CN107578769A | Tags: Realize automatic labeling, Improve efficiency, Speech recognition, Speech synthesis, Manual annotation, Data Annotation

The invention proposes a voice data annotation method and apparatus. The method includes: receiving voice data and performing speech recognition on it to obtain a recognized text; obtaining a user-confirmed text after the user confirms the recognized text; analyzing the recognized text and the user-confirmed text to extract automatic annotation features; and annotating the voice data according to those features and a pre-constructed automatic annotation model. The method addresses problems of manual annotation, improves the efficiency of voice data annotation, and reduces its cost.

Owner:IFLYTEK CO LTD
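The abstract does not list which annotation features are extracted from the recognized and user-confirmed texts. One plausible family, sketched here purely as an assumption, is the token-level edit operations that turn the recognized text into the confirmed one. The feature names and the `annotation_features` and `edit_counts` helpers are hypothetical; tokenization defaults to whitespace splitting, so Chinese text would need `tokenize=list` (characters) or a word segmenter.

```python
def edit_counts(hyp, ref):
    """Levenshtein alignment of two token lists.

    Returns (total_edits, substitutions, extra, missing): `extra` counts
    recognized tokens absent from the confirmed text, `missing` counts
    confirmed tokens the recognizer left out.
    """
    n, m = len(hyp), len(ref)
    # dp[i][j] holds the best (edits, subs, extra, missing) for hyp[:i] -> ref[:j]
    dp = [[(0, 0, 0, 0)] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = (i, 0, i, 0)
    for j in range(1, m + 1):
        dp[0][j] = (j, 0, 0, j)
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            e, s, x, mi = dp[i - 1][j - 1]
            same = hyp[i - 1] == ref[j - 1]
            diag = (e, s, x, mi) if same else (e + 1, s + 1, x, mi)
            e, s, x, mi = dp[i - 1][j]
            drop = (e + 1, s, x + 1, mi)
            e, s, x, mi = dp[i][j - 1]
            add = (e + 1, s, x, mi + 1)
            # Tuples compare by total edits first, so min() keeps the cheapest path
            dp[i][j] = min(diag, drop, add)
    return dp[n][m]


def annotation_features(recognized, confirmed, tokenize=str.split):
    """Hypothetical feature set comparing recognized and user-confirmed text."""
    hyp, ref = tokenize(recognized), tokenize(confirmed)
    edits, subs, extra, missing = edit_counts(hyp, ref)
    return {
        "substitutions": subs,
        "extra_tokens": extra,
        "missing_tokens": missing,
        "token_error_rate": edits / max(1, len(ref)),
        "confirmed_unchanged": edits == 0,
    }


print(annotation_features("turn on the lights", "turn off the lights"))
```

An utterance whose confirmed text needed zero edits might be a candidate for fully automatic labeling, while a high error rate might flag it for review; how the patent's annotation model actually weighs its features is not disclosed.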

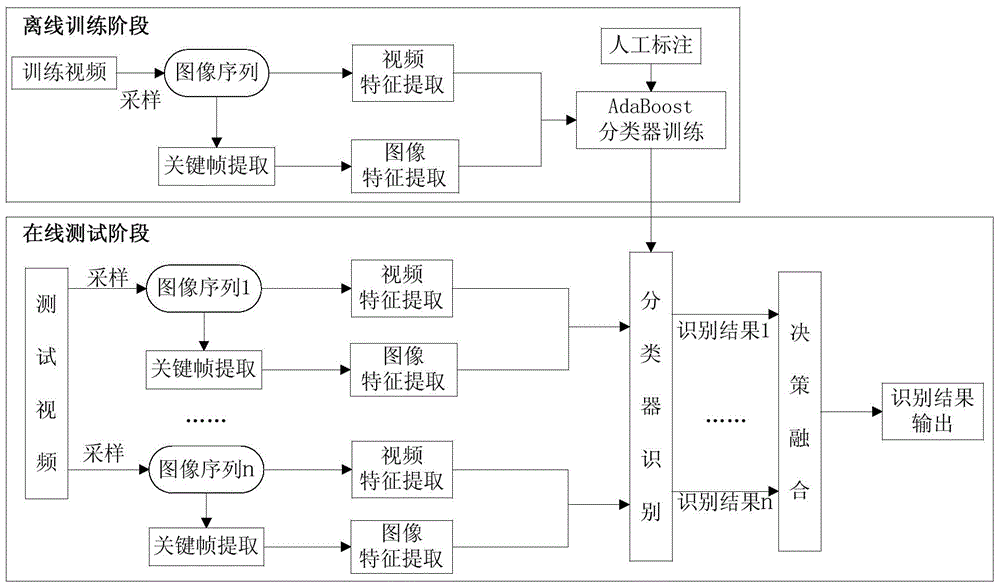

Video-based weather phenomenon recognition method

Active | CN104463196A | Informative | Improve the ability to distinguish | Character and pattern recognition | Pattern recognition | Manual annotation

The invention discloses a video-based weather phenomenon recognition method that classifies common weather phenomena such as sun, cloud, rain, snow and fog. The method has two phases. In offline classifier training, an image sequence is sampled from a given training video; on the one hand, video features of the image sequence are extracted; on the other hand, key frames are extracted from the image sequence together with their image features; AdaBoost is then trained on the extracted video features, image features and manual annotations to obtain the classifier. In online recognition, several image sequences are sampled from a test video, the video features and image features of each sequence are extracted and fed into the classifier to obtain a recognition result per sequence, and these results are fused by voting; the voting result is taken as the weather phenomenon recognized for the test video.

Owner:PLA UNIV OF SCI & TECH
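The online phase fuses the per-sequence classifier outputs by voting. A minimal sketch of plain majority voting, assuming each sampled image sequence yields exactly one label; the tie-breaking rule (the label seen first wins) and the `classify` callable are assumptions, since the abstract specifies neither.

```python
from collections import Counter


def fuse_by_vote(labels):
    """Majority vote over per-sequence labels; on a tie, the label seen first wins."""
    if not labels:
        raise ValueError("at least one per-sequence label is required")
    counts = Counter(labels)
    top = max(counts.values())
    for label in labels:  # scan in order so ties resolve deterministically
        if counts[label] == top:
            return label


def recognize_video(sequences, classify):
    """Classify each sampled image sequence, then fuse the results by voting."""
    return fuse_by_vote([classify(seq) for seq in sequences])


print(fuse_by_vote(["rain", "fog", "rain"]))
```

Voting over several sequences makes the video-level decision less sensitive to a single misclassified sequence, for example a few frames dominated by glare.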

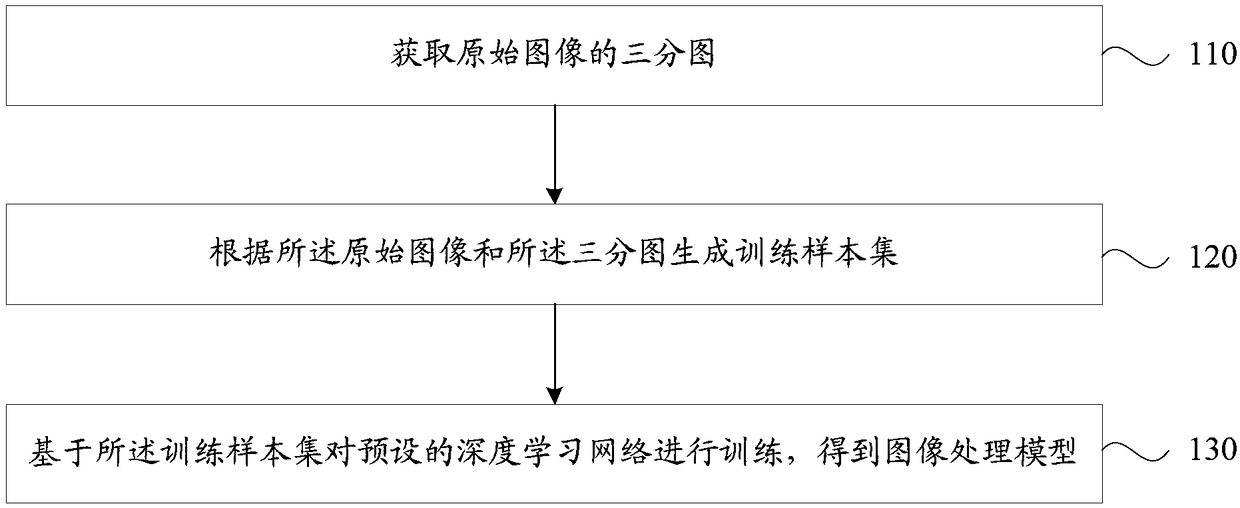

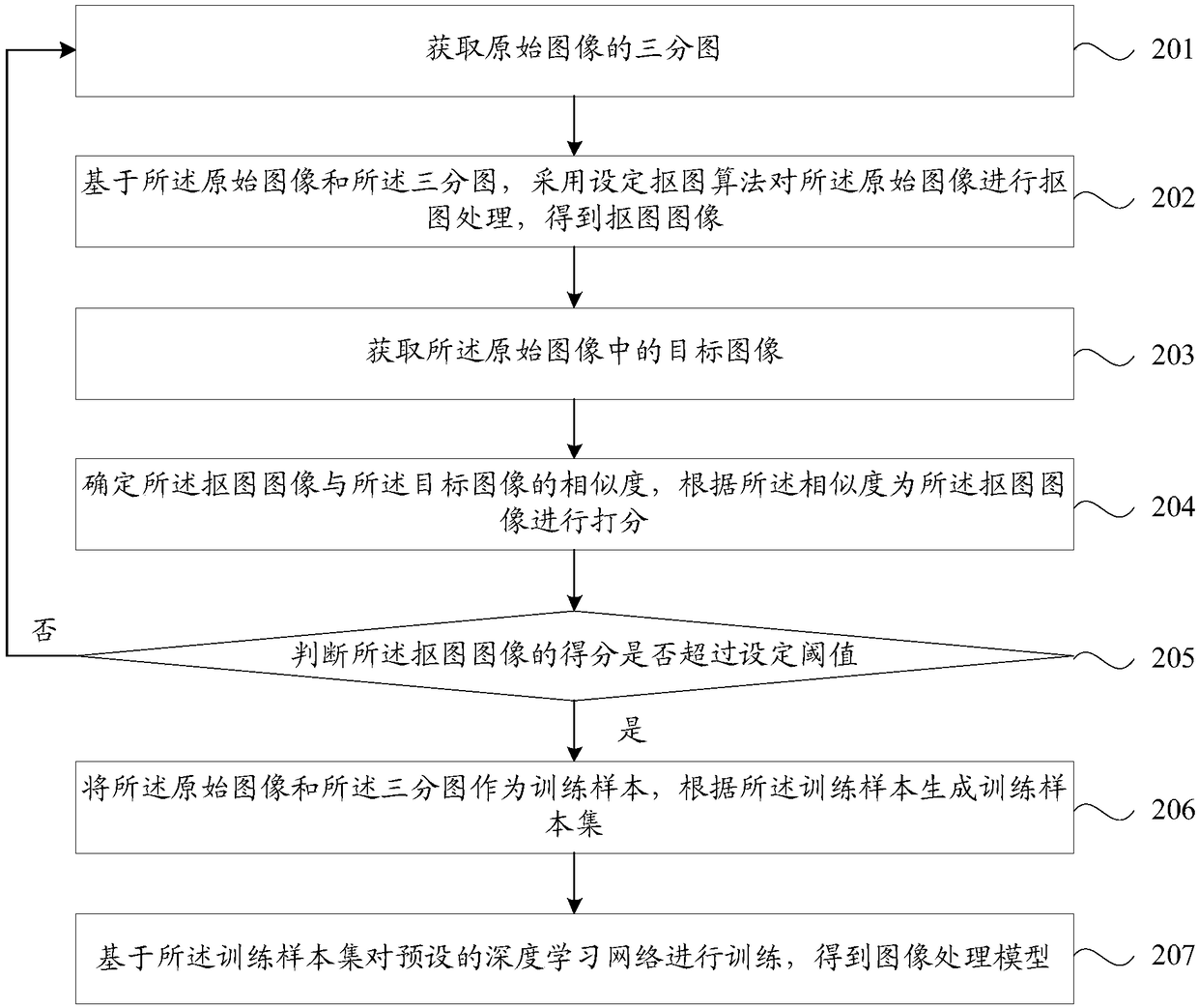

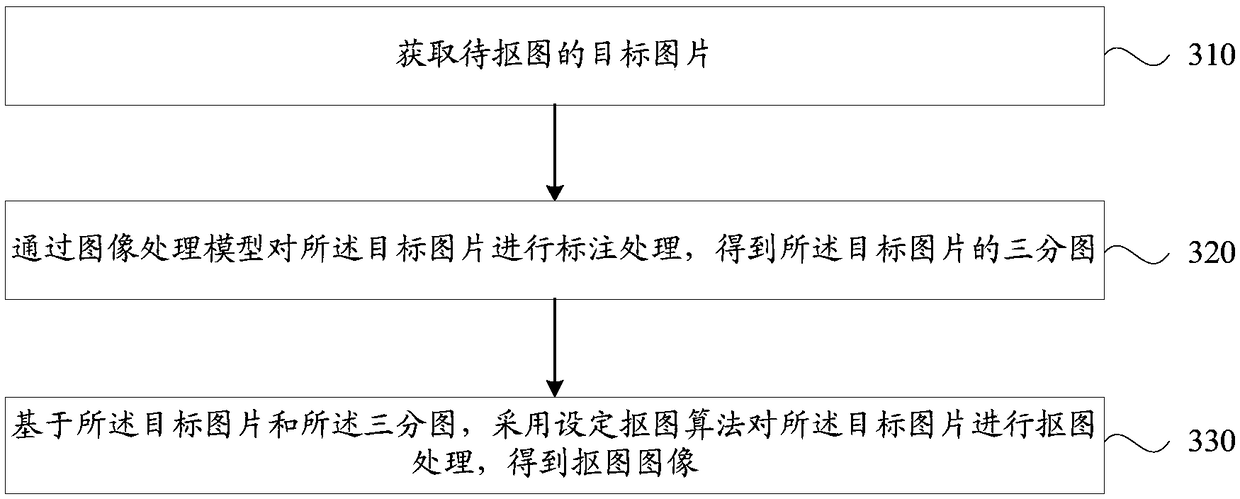

Training method, matting method, device, medium and terminal for image processing model

Active | CN109461167A | Improve labeling efficiency | Reduce workload | Image enhancement | Image analysis | Pattern recognition | Manual annotation

The embodiments of the application disclose a training method for an image processing model, a matting method, a device, a medium and a terminal. The training method includes: obtaining a trimap of an original image; generating a training sample set from the original image and the trimap; and training a preset deep learning network on the training sample set to obtain the image processing model, which is used to label an original image to obtain its trimap. With this technical solution, an input original image can be annotated automatically to obtain a trimap, so large amounts of hair-level data can be annotated without manual annotation, reducing the annotation workload and improving image annotation efficiency. In addition, using the image processing model to label the original image can improve the matting result by avoiding errors that manual labeling may introduce.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
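A trimap marks each pixel as definite foreground, definite background or unknown, and matting then only has to resolve the unknown band (such as hair edges). The patent trains a network to produce trimaps; the sketch below instead shows a common hand-crafted way to derive one from a binary mask, by erosion and dilation, to illustrate what such a label looks like. The 0/128/255 encoding, the square window and the helper names are assumptions.

```python
BACKGROUND, UNKNOWN, FOREGROUND = 0, 128, 255  # assumed 8-bit trimap encoding


def _morph(mask, radius, reduce_fn):
    """Square-window morphology on a 2D boolean grid.

    reduce_fn=all gives erosion, reduce_fn=any gives dilation. Pixels outside
    the image are ignored rather than padded.
    """
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = (mask[yy][xx]
                      for yy in range(max(0, y - radius), min(h, y + radius + 1))
                      for xx in range(max(0, x - radius), min(w, x + radius + 1)))
            out[y][x] = reduce_fn(window)
    return out


def trimap_from_mask(mask, radius=1):
    """Eroded mask -> foreground, dilated-minus-eroded band -> unknown, rest -> background."""
    sure_fg = _morph(mask, radius, all)
    maybe_fg = _morph(mask, radius, any)
    return [[FOREGROUND if sure_fg[y][x] else UNKNOWN if maybe_fg[y][x] else BACKGROUND
             for x in range(len(mask[0]))]
            for y in range(len(mask))]


# 7x7 image with a 3x3 foreground square in the middle
mask = [[2 <= y <= 4 and 2 <= x <= 4 for x in range(7)] for y in range(7)]
for row in trimap_from_mask(mask):
    print(row)
```

A larger `radius` widens the unknown band, which trades more work for the matting step against tolerance to an inaccurate mask.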

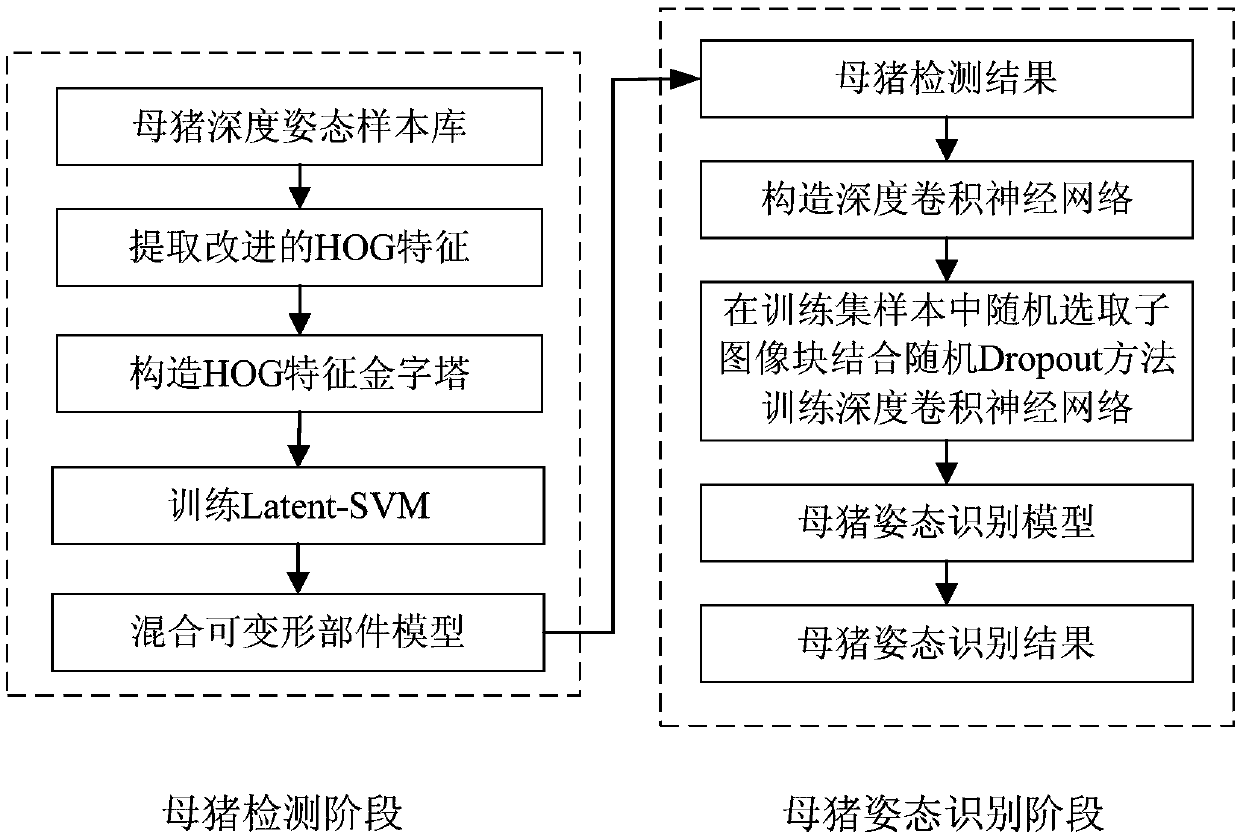

Automatic identification method for milking sow posture on the basis of depth image

Inactive | CN107844797A | Overcome the difficult problem of identification and analysis at night | Precise positioning | Character and pattern recognition | Neural architectures | Manual annotation | RGB image

The invention discloses an automatic method for identifying the posture of a milking sow from depth images. The method comprises the following steps: collecting original depth image data, preprocessing it, and annotating it manually to form a milking sow posture identification dataset; designing and training a hybrid deformable part model of the milking sow based on an improved HOG (Histogram of Oriented Gradients) feature; constructing a deep convolutional neural network for milking sow posture identification and training it on the annotated bounding boxes and posture categories of the training set, combined with random Dropout; inputting the test set into the hybrid deformable part model to obtain the target region of the milking sow; and inputting the target region into the posture identification network to identify the sow's posture. Because it works on depth images, the method overcomes the susceptibility of RGB (red, green, blue) images to changes in external illumination, shadows and similar factors, solves the difficulty of identifying sow posture at night, and meets the practical requirement of all-weather milking sow posture monitoring.

Owner:SOUTH CHINA AGRI UNIV
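The abstract does not describe how its HOG feature is "improved", so this sketch shows only the standard building block it starts from: the orientation histogram of a single cell, with 9 unsigned orientation bins over 0 to 180 degrees and gradient-magnitude votes. Applying it to depth values instead of intensities is the only adaptation assumed; bilinear bin interpolation and block normalization, used in full HOG, are omitted for brevity, and the function name is hypothetical.

```python
import math


def hog_cell_histogram(cell, bins=9):
    """Orientation histogram of one cell (list of rows of depth or intensity values)."""
    h, w = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(h):
        for x in range(w):
            # Central differences, clamped at the cell border
            gx = cell[y][min(x + 1, w - 1)] - cell[y][max(x - 1, 0)]
            gy = cell[min(y + 1, h - 1)][x] - cell[max(y - 1, 0)][x]
            mag = math.hypot(gx, gy)
            if mag == 0:
                continue
            # Unsigned orientation: opposite gradient directions share a bin
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += mag
    return hist


# Depth increasing left to right: all gradient energy lands in the 0-20 degree bin
ramp = [[x for x in range(4)] for _ in range(4)]
print(hog_cell_histogram(ramp))
```

Because depth values encode distance rather than brightness, a histogram like this responds to the sow's body contour even in darkness, which is the property the abstract relies on for night-time identification.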

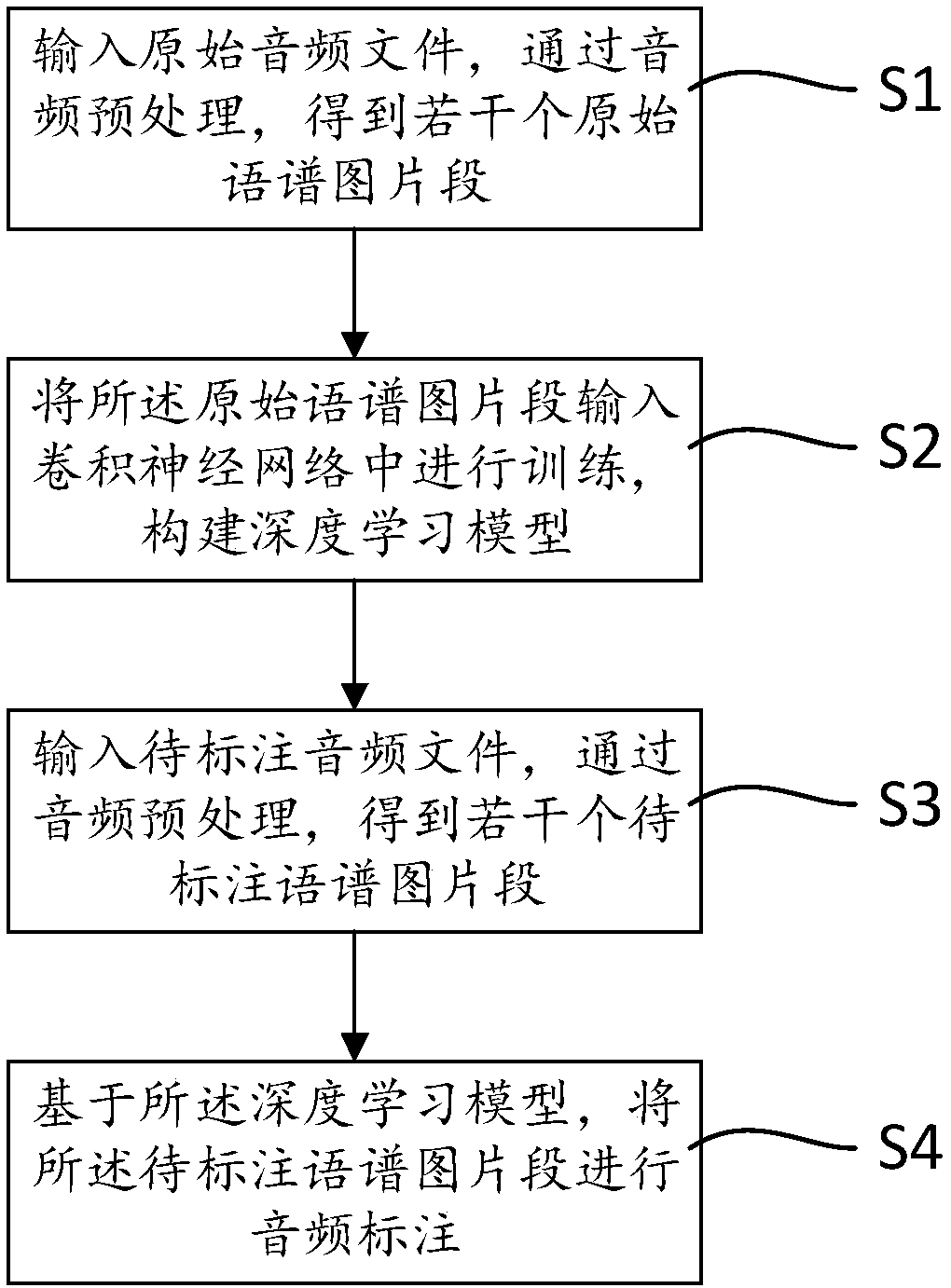

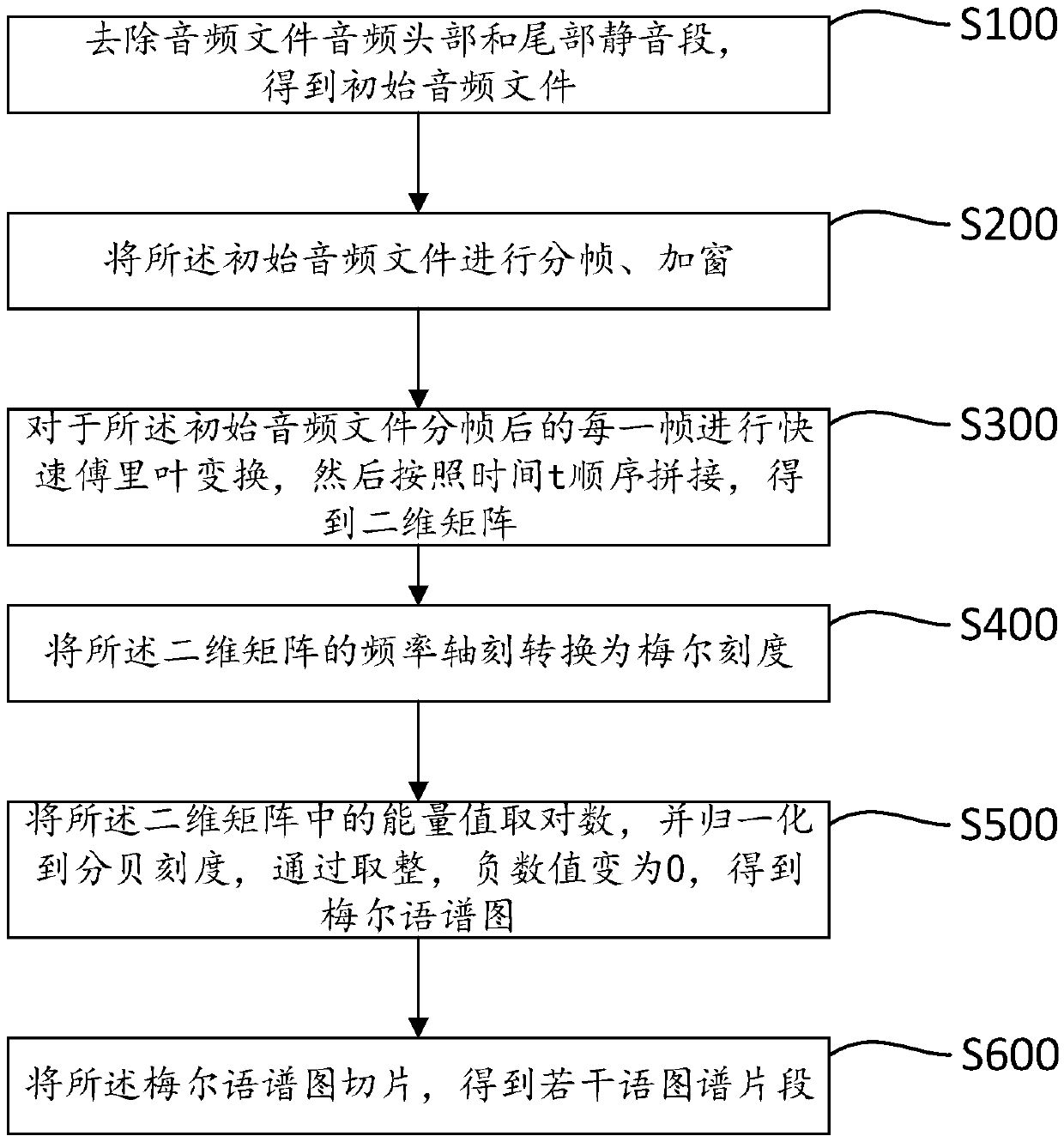

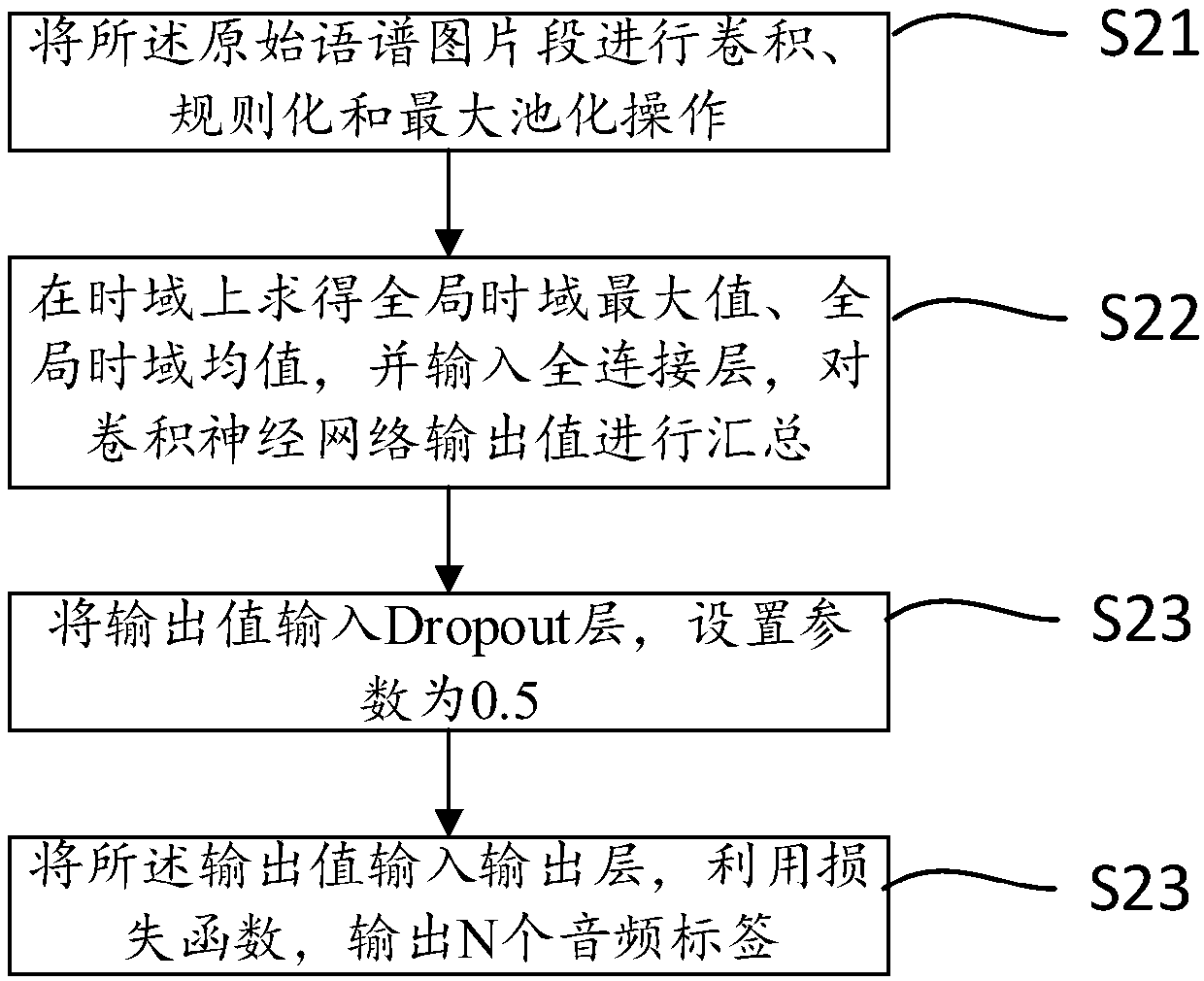

Deep-learning-based automatic audio annotation method

Active | CN108053836A | Improve labeling efficiency | Improve labeling accuracy | Speech analysis | Special data processing applications | Manual annotation | Learning network

The invention relates to audio annotation methods, in particular to a deep-learning-based automatic audio annotation method. The method comprises the following steps: original audio files are input and preprocessed to obtain multiple original spectrogram segments; the original spectrogram segments are input into a convolutional neural network for training, building a deep-learning model; audio files to be annotated are input and preprocessed to obtain multiple to-be-annotated spectrogram segments; and the to-be-annotated spectrogram segments are annotated on the basis of the deep-learning model. The method thus uses a convolutional neural network to train an audio deep-learning network and realize automatic audio annotation; compared with traditional manual annotation, it improves both annotation accuracy and annotation efficiency.

Owner:成都潜在人工智能科技有限公司
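The preprocessing step turns each audio file into spectrogram segments for the convolutional network, but the abstract gives no parameters. A minimal sketch, assuming a Hann-windowed short-time Fourier transform with a naive DFT (for clarity, not speed), a 64-sample frame, a 32-sample hop and 8-frame segments; all of these values and the helper names are hypothetical. A production pipeline would use an FFT library instead.

```python
import cmath
import math


def spectrogram(signal, frame_len=64, hop=32):
    """Magnitude spectrogram: Hann-windowed frames, naive DFT, bins 0..frame_len//2."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_len)]
        mags = []
        for k in range(frame_len // 2 + 1):
            acc = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n in range(frame_len))
            mags.append(abs(acc))
        frames.append(mags)
    return frames


def split_segments(frames, seg_frames=8):
    """Cut the spectrogram into fixed-size, non-overlapping segments; drop the remainder."""
    return [frames[i:i + seg_frames]
            for i in range(0, len(frames) - seg_frames + 1, seg_frames)]


# Pure tone that completes exactly 8 cycles per 64-sample frame
tone = [math.sin(2 * math.pi * 8 * n / 64) for n in range(640)]
spec = spectrogram(tone)
segments = split_segments(spec)
print(len(spec), len(segments))
```

Fixed-size segments give the CNN inputs of a constant shape, so files of any length can be annotated segment by segment.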

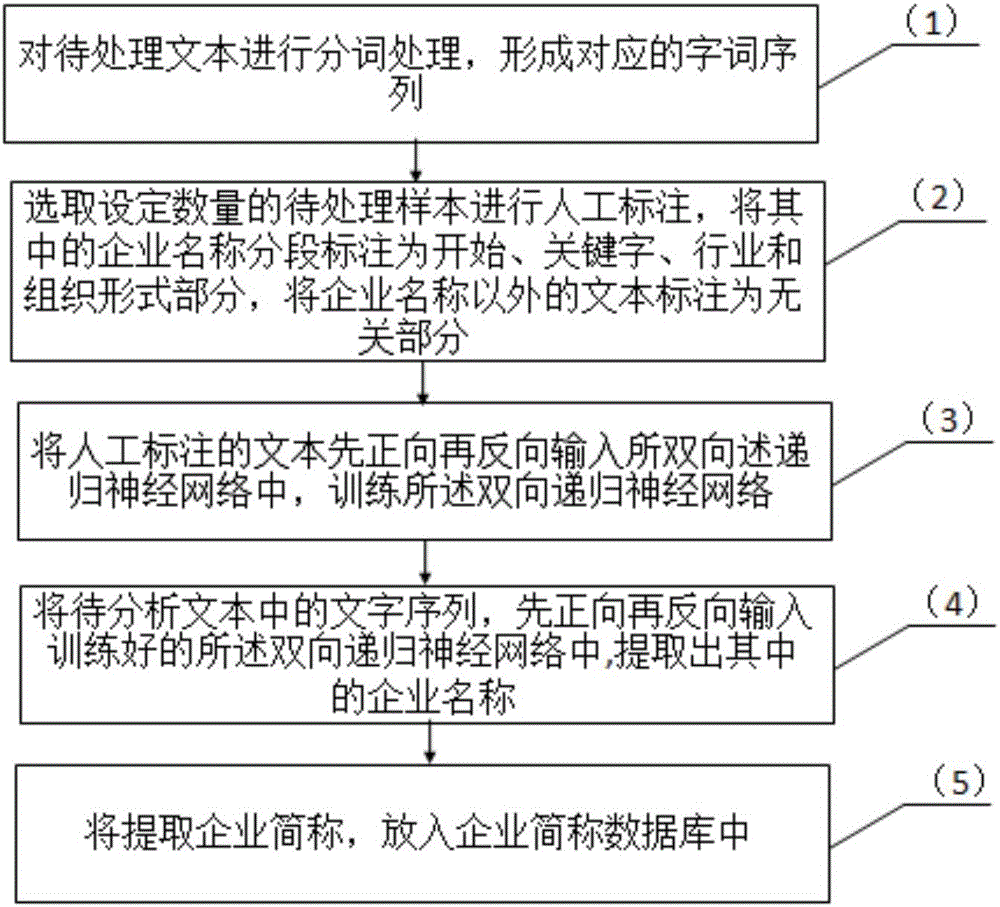

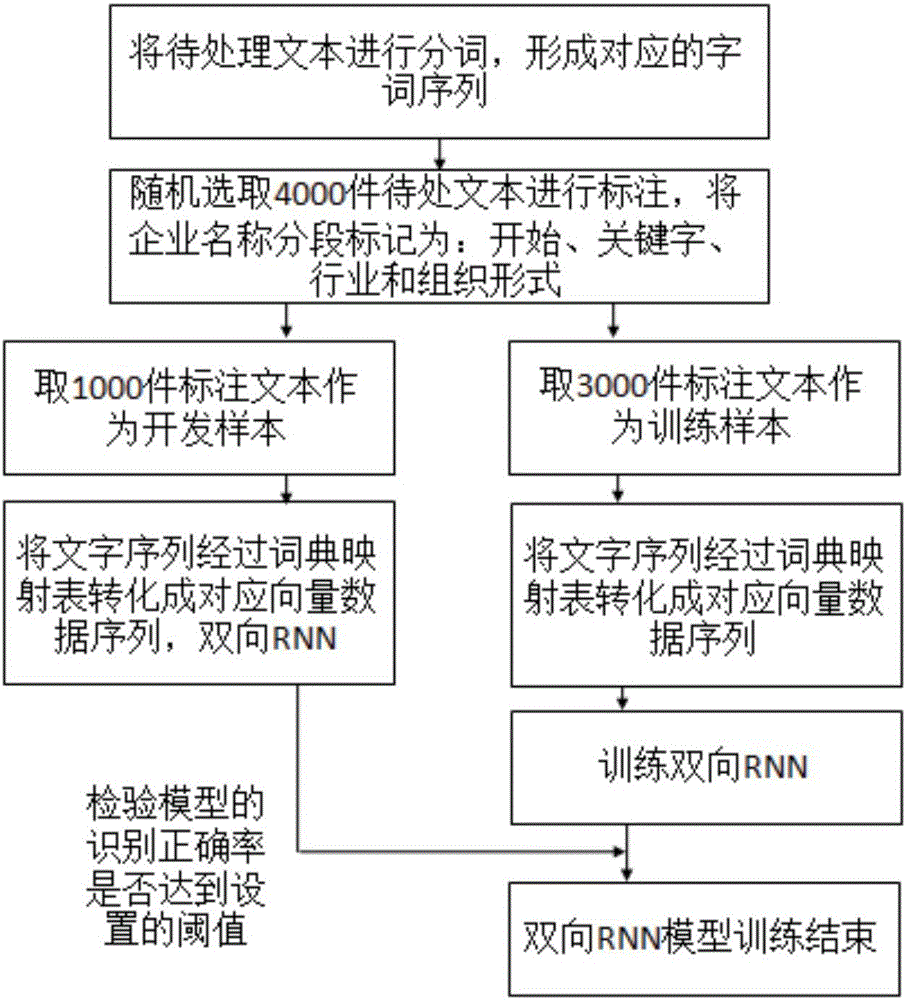

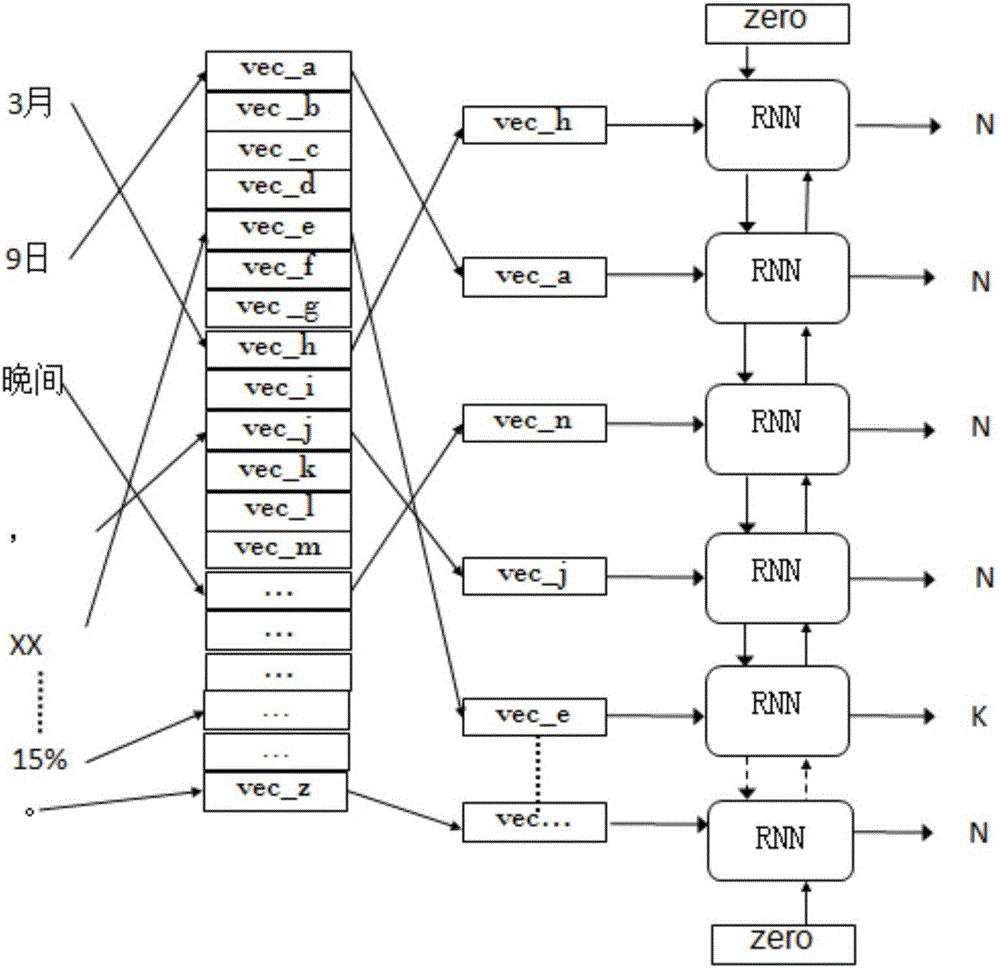

Bidirectional recursive neural network-based enterprise abbreviation extraction method

Inactive | CN105975555A | Prediction is accurate | Save human effort | Biological neural network models | Special data processing applications | Manual annotation | Relevant information

The invention relates to the field of natural language processing, in particular to an enterprise abbreviation extraction method based on a bidirectional recursive neural network. The method comprises: serializing the texts to be processed through word segmentation; selecting a certain number of these texts for manual annotation, in which each enterprise name is annotated segment by segment as a start part, a keyword part, an industry part and an organization-form part, and all data other than enterprise names is annotated as unrelated; inputting the annotated training samples into a bidirectional recursive neural network to train it; extracting the word sequences that belong to enterprise names from the network's predictions, and further extracting the fields belonging to the keyword part of each name as the enterprise abbreviation; and building a corresponding enterprise abbreviation database. The method thus provides strong technical support for analyzing related information in informal texts.

Owner:成都数联铭品科技有限公司
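Once the network has tagged each token, turning its predictions into abbreviations is simple post-processing. A minimal sketch of that step only (the neural network itself is not shown), assuming hypothetical tag strings `START`, `KEY`, `IND` and `ORG` for the four name parts and `O` for unrelated text; the helper name and the rule that a new `START` closes the previous name are also assumptions.

```python
NAME_PARTS = {"START", "KEY", "IND", "ORG"}  # hypothetical tags for the four name parts


def extract_names(tokens, tags, sep=""):
    """Return (full_name, abbreviation) pairs from a predicted tag sequence."""
    results = []
    name, keyword = [], []

    def flush():
        # Keep a name only if the network tagged at least one keyword token
        if name and keyword:
            results.append((sep.join(name), sep.join(keyword)))
        name.clear()
        keyword.clear()

    for token, tag in zip(tokens, tags):
        if tag not in NAME_PARTS:
            flush()  # unrelated token ends any open name
            continue
        if tag == "START" and name:
            flush()  # a new name begins right after the previous one
        name.append(token)
        if tag == "KEY":
            keyword.append(token)
    flush()
    return results


tokens = ["成都", "数联铭品", "科技", "有限公司", "发布", "公告"]
tags = ["START", "KEY", "IND", "ORG", "O", "O"]
abbreviation_db = dict(extract_names(tokens, tags))
print(abbreviation_db)
```

Segmented Chinese tokens are joined with `sep=""`; for space-separated languages, pass `sep=" "`.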

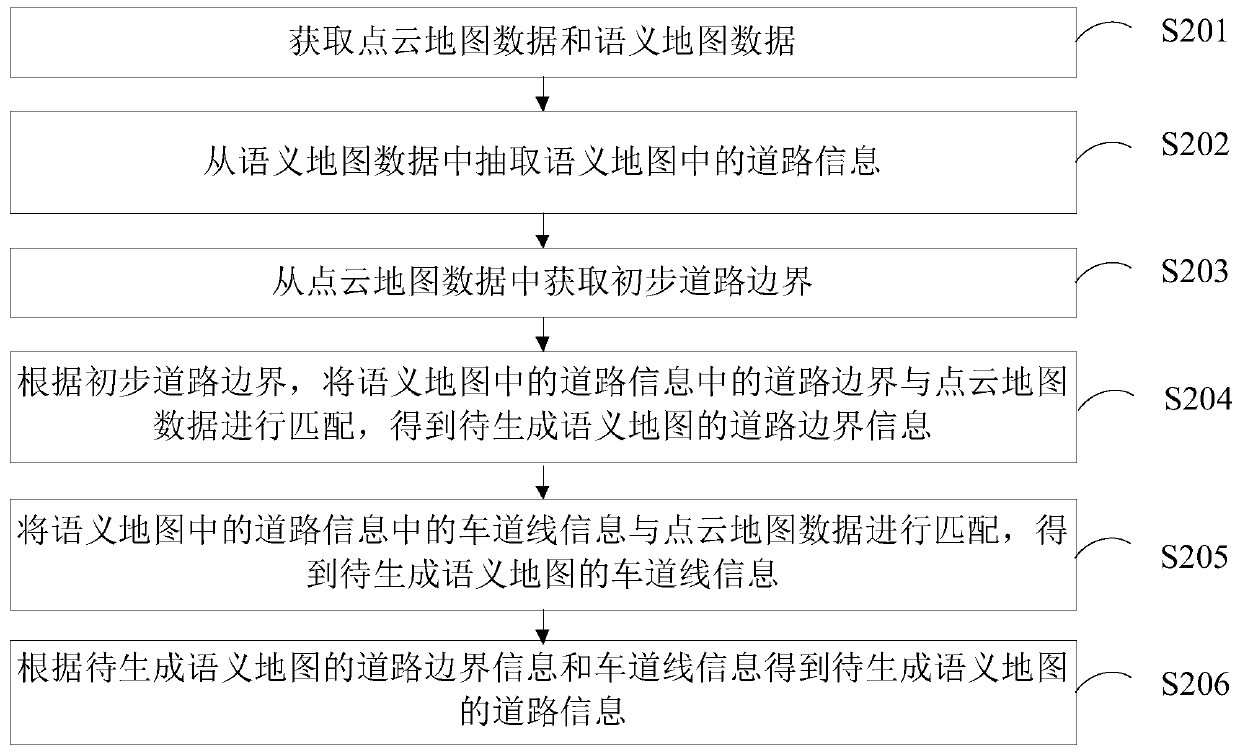

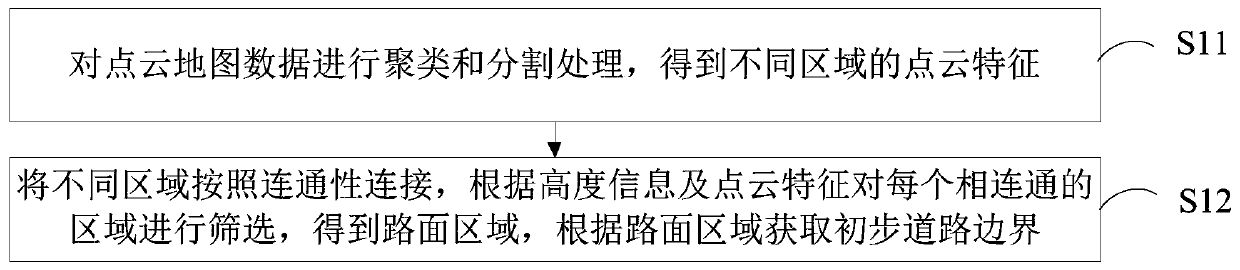

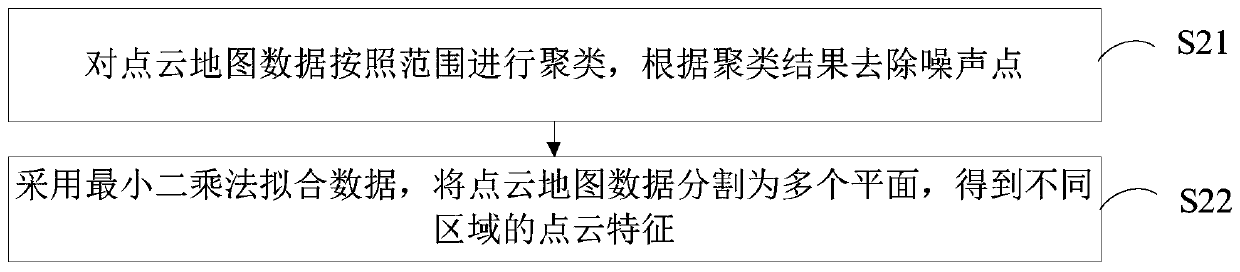

Device and method for acquiring road information from map data

Active | CN109798903A | Improve drawing speed | Efficient acquisition | Instruments for road network navigation | Manual annotation | Point cloud

The invention relates to a device and method for acquiring road information from map data. The method includes: acquiring point cloud map data and semantic map data; extracting the road information of a semantic map from the semantic map data; acquiring a primary road boundary from the point cloud map data; matching the road boundary in the semantic map's road information with the point cloud map data to obtain the road boundary information of the semantic map to be generated; matching the lane line information in the semantic map's road information with the point cloud map data to obtain the lane line information of the semantic map to be generated; and obtaining the road information of the semantic map to be generated from its road boundary information and lane line information. By matching data in a traditional map with the point cloud in high-precision map data, the method greatly reduces manual annotation and speeds up map drawing.

Owner:GUANGZHOU WERIDE TECH LTD CO
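The abstract does not say how semantic-map boundaries are matched against the point cloud. A minimal sketch of one simple possibility, assuming 2D coordinates in metres and a set of boundary points already extracted from the point cloud: each semantic-map boundary vertex snaps to its nearest cloud point within a distance threshold, and vertices with no nearby point are reported. The threshold, the brute-force search and the `match_boundary` name are assumptions; practical systems typically use a spatial index such as a k-d tree, or a registration method such as ICP.

```python
import math


def match_boundary(boundary, cloud_points, max_dist=0.5):
    """Snap each semantic-map boundary vertex to its nearest point-cloud point.

    Vertices with no cloud point within `max_dist` are kept unchanged and their
    indices returned, so they can be flagged for review.
    """
    matched, unmatched = [], []
    for i, (bx, by) in enumerate(boundary):
        best, best_d = None, max_dist
        for px, py in cloud_points:  # brute force: O(len(boundary) * len(cloud_points))
            d = math.hypot(px - bx, py - by)
            if d <= best_d:
                best, best_d = (px, py), d
        if best is None:
            matched.append((bx, by))
            unmatched.append(i)
        else:
            matched.append(best)
    return matched, unmatched


boundary = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
cloud = [(0.1, 0.0), (1.0, 0.2), (10.0, 10.0)]
print(match_boundary(boundary, cloud))
```

Only the unmatched vertices would then need a human's attention, which is the sense in which such matching saves manual annotation.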

© 2025 PatSnap. All rights reserved.