122 patent results for "Decision fusion"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

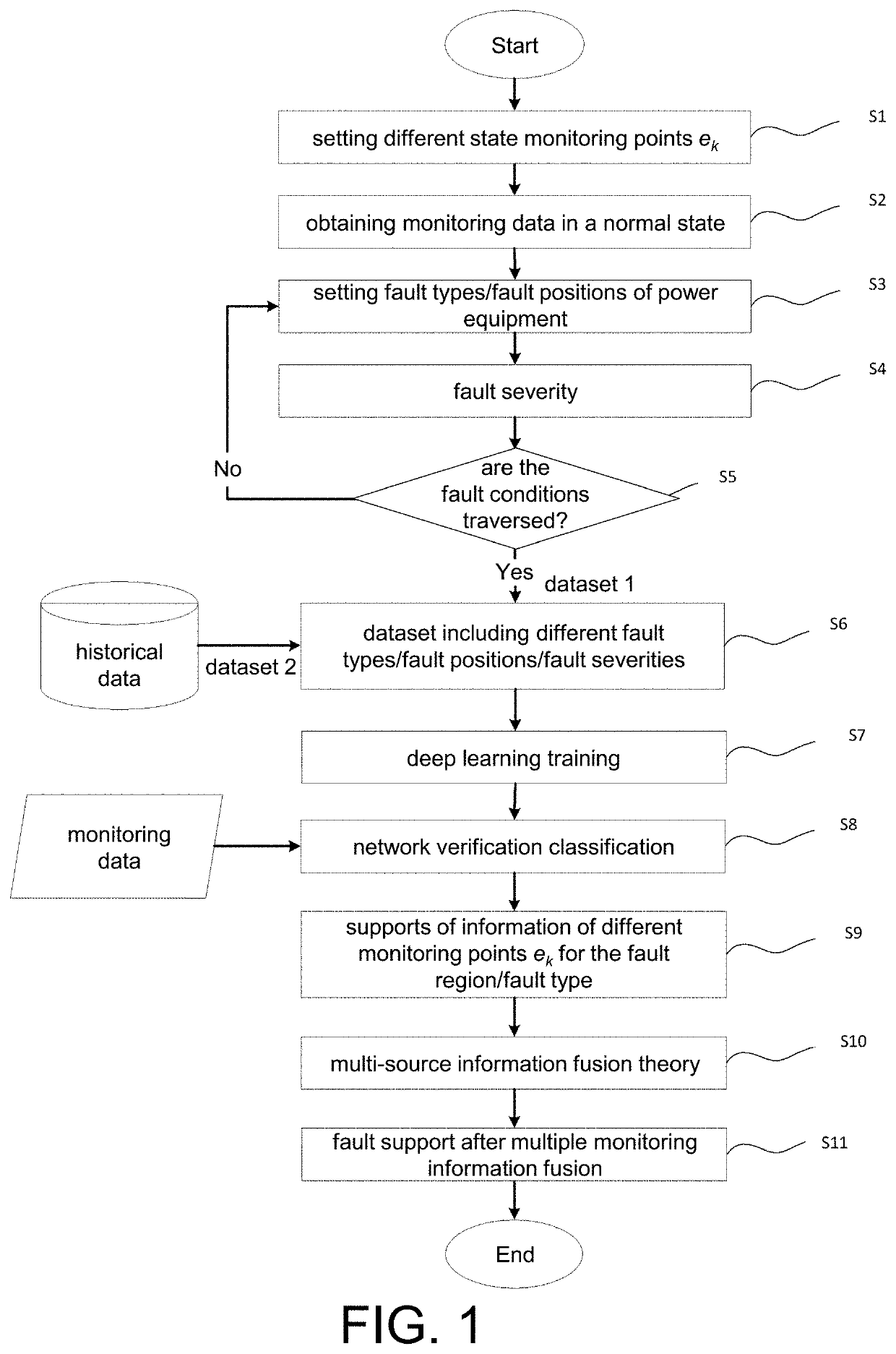

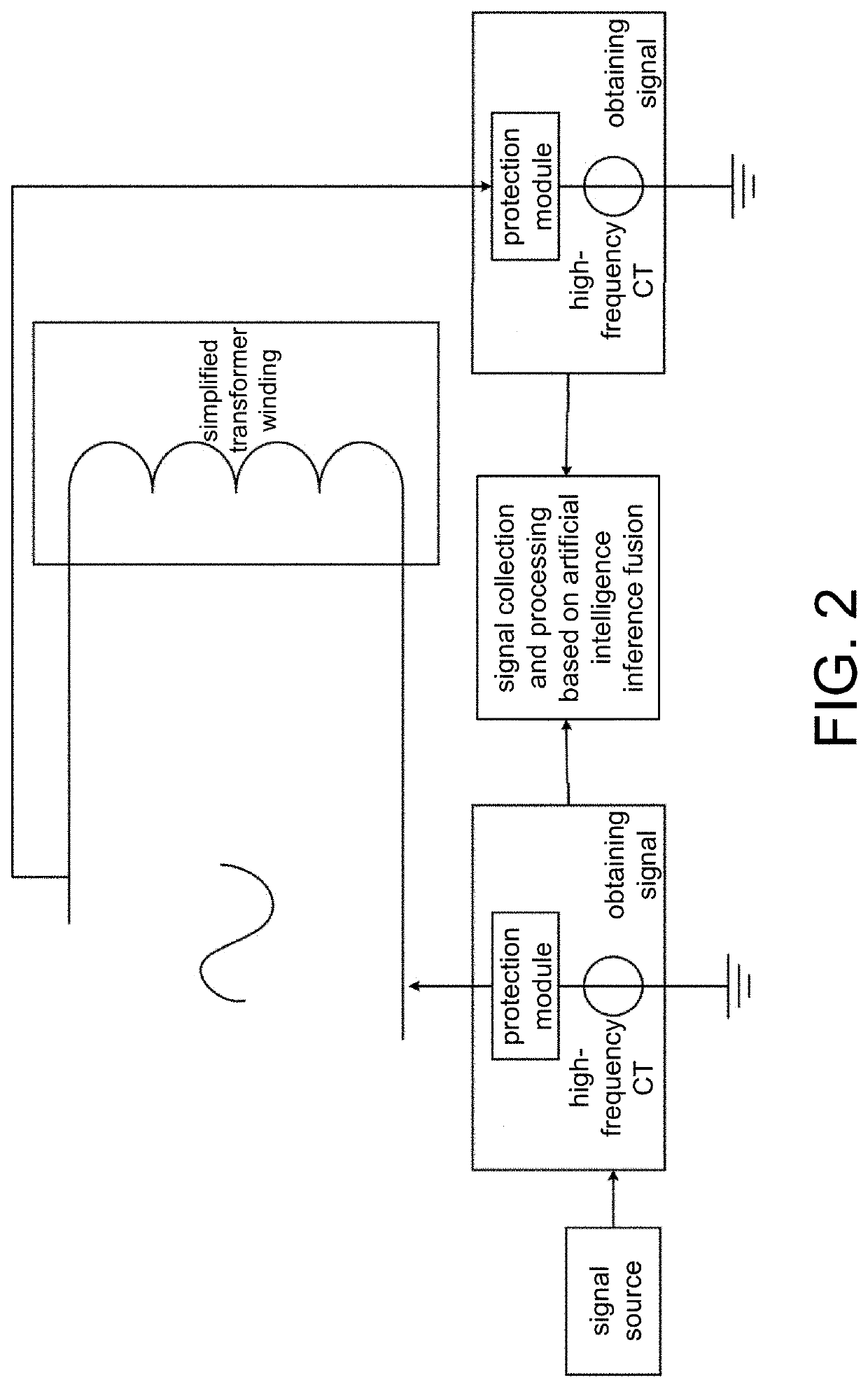

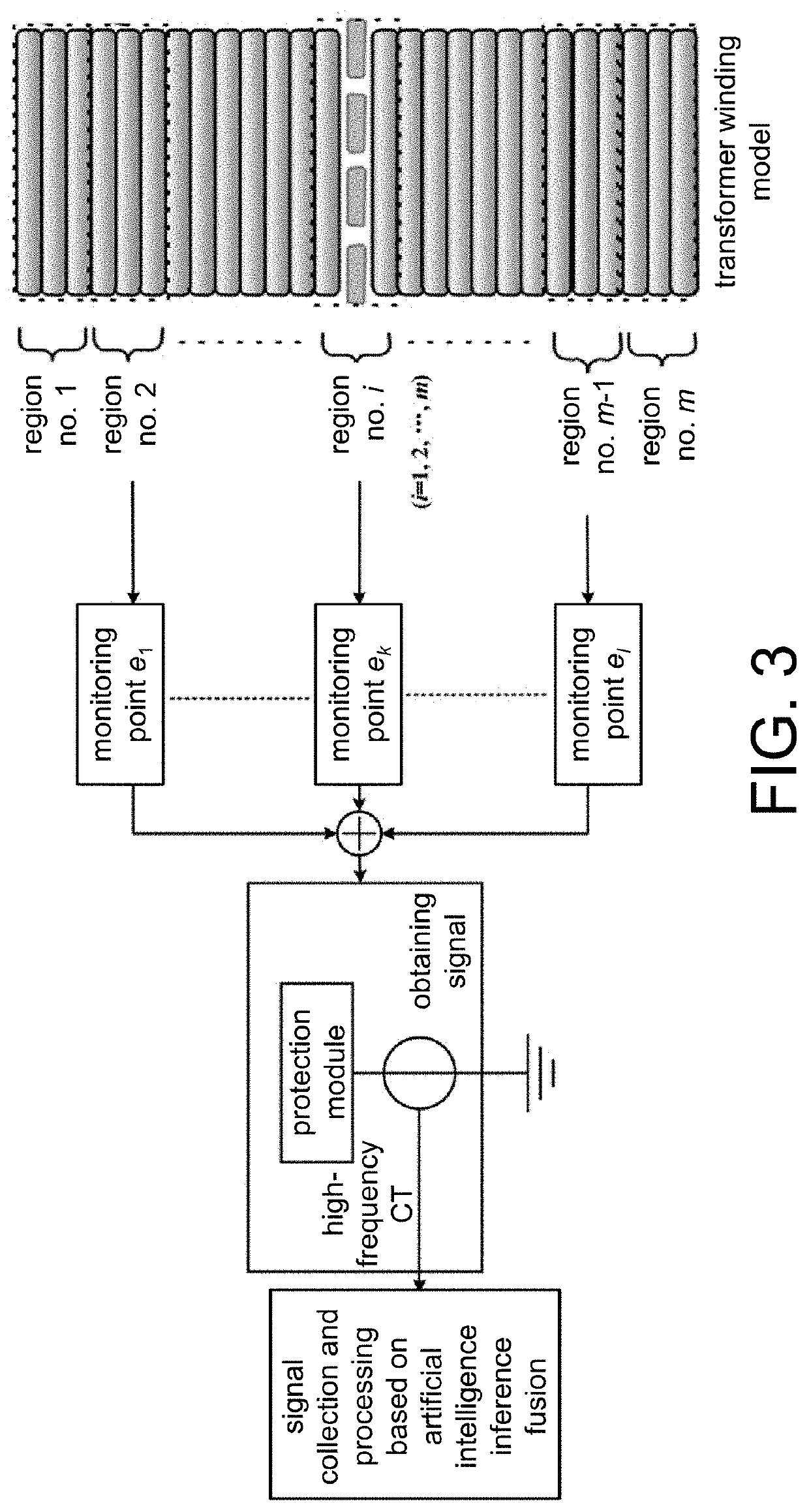

Power equipment fault detecting and positioning method of artificial intelligence inference fusion

Active | US20200387785A1 | Improve accuracy, Mathematical models, Digital data processing details, Multiple sensor, Electric power equipment

A method includes the following steps: 1) obtaining monitoring information from different monitoring points while the power equipment is in a normal state; 2) introducing faults and obtaining monitoring information for different fault types, fault positions, and monitoring points of the equipment; 3) taking the monitoring information obtained in steps 1) and 2) as the training dataset, taking the fault types and positions as labels, and inputting the training dataset and labels into a deep CNN for training; 4) collecting monitoring data, performing verification and classification with the network trained in step 3), and obtaining the probability value corresponding to each label; 5) taking the classification results for the different labels as basic probability assignment (BPA) values and, for a monitoring system composed of multiple sensors, treating the different sensors as different pieces of evidence for decision fusion, then performing fusion with Dempster-Shafer (DS) evidence theory to obtain the fault diagnosis result. The invention can intelligently perform fault detection, fault type determination, and fault localization for power equipment.

Owner:WUHAN UNIV
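A minimal sketch of step 5), assuming each sensor's CNN softmax output is used directly as a BPA over singleton fault hypotheses (the abstract does not say whether compound hypotheses are formed). `dempster_combine` and `fuse_sensors` are illustrative names, not taken from the patent, and the labels and probabilities are made up.

```python
from itertools import product

def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule of combination.

    Each mass function maps a frozenset of hypotheses (a focal element) to its
    mass; the masses of one function are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb  # mass that would fall on the empty set
    if conflict >= 1.0:
        raise ValueError("total conflict: the evidence cannot be combined")
    # Renormalize so the non-conflicting masses sum to 1.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}

def fuse_sensors(bpas):
    """Fold Dempster's rule over the BPAs of all sensors."""
    result = bpas[0]
    for m in bpas[1:]:
        result = dempster_combine(result, m)
    return result

# Usage: hypothetical CNN softmax outputs for two sensors, as singleton BPAs.
sensor_a = {"normal": 0.2, "fault_pos1": 0.7, "fault_pos2": 0.1}
sensor_b = {"normal": 0.1, "fault_pos1": 0.6, "fault_pos2": 0.3}
bpas = [{frozenset([k]): v for k, v in s.items()} for s in (sensor_a, sensor_b)]
fused = fuse_sensors(bpas)
best = max(fused, key=fused.get)  # frozenset({'fault_pos1'})
```

Because Dempster's rule is commutative and associative, folding it over the sensors in any order yields the same fused BPA.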

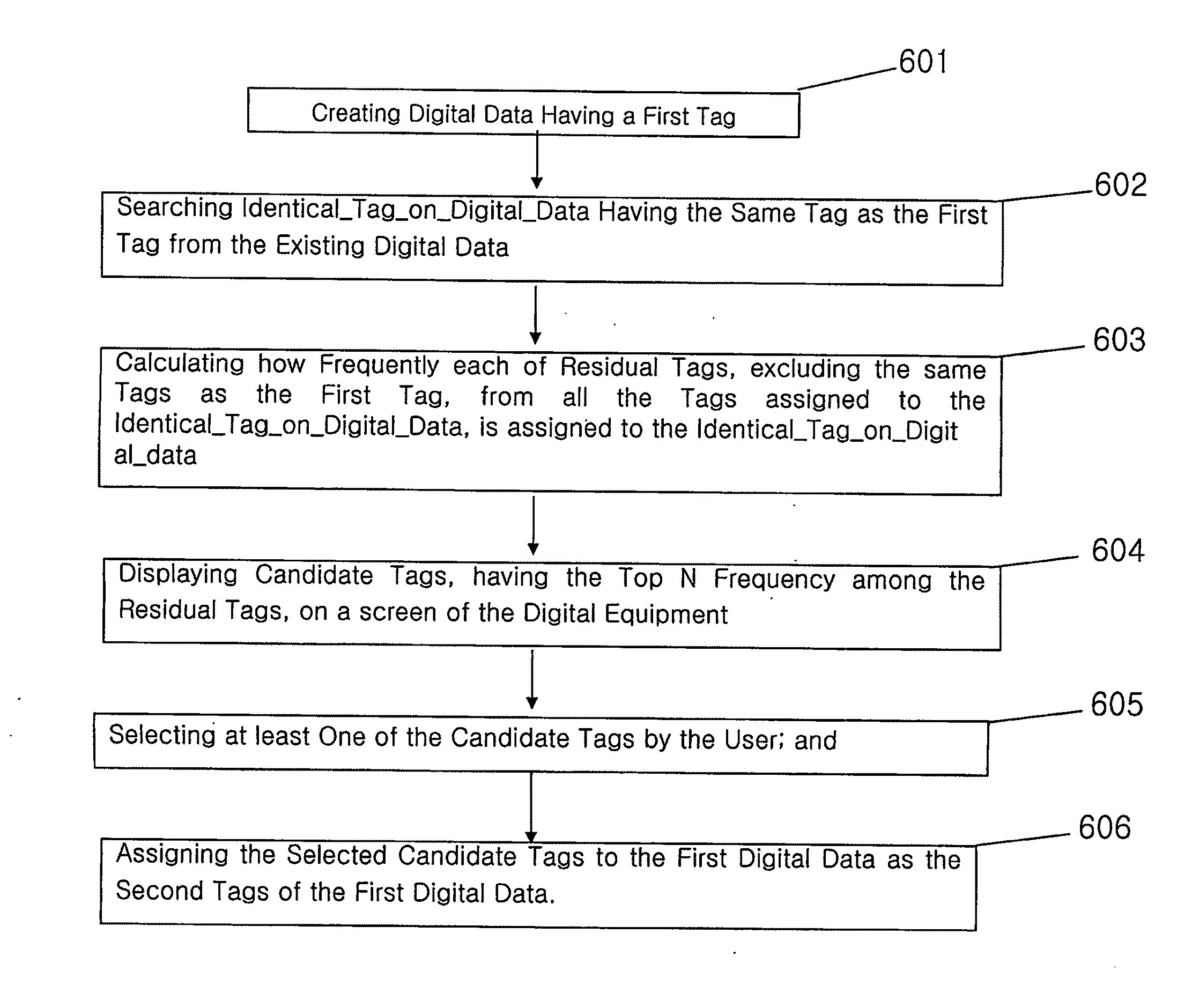

Methods for tagging person identification information to digital data and recommending additional tag by using decision fusion

Inactive | US20090256678A1 | Improve accuracy, Reduce user burden, Television system details, Electric signal transmission systems, Digital data, Management system

Person identification information is extracted from digital data with high accuracy, and additional tags are recommended by means of decision fusion. In a digital data management system that extracts person identification information from digital data by referring to the data's attributes and contents, the person identification information is obtained using various kinds of additional information and automatically attached to the digital data as a person tag. In addition, stored digital data carrying the same tags as newly created digital data are found; the other tags attached to those stored data (excluding the shared tags) are then presented on a monitor as candidate tags, selected by decision fusion, so that the user can choose one or more of them to attach to the newly created digital data.

Owner:INTEL CORP
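The tag-recommendation step can be pictured as a vote over previously stored items. This sketch assumes that an item qualifies when it shares at least one tag with the new item and that its vote is weighted by the size of the overlap; the abstract specifies neither rule, and `recommend_tags` is a hypothetical helper.

```python
from collections import Counter

def recommend_tags(new_tags, library, top_k=3):
    """Suggest extra tags for a new item from stored items that share its tags.

    new_tags: set of tags already attached to the new item (e.g. person tags).
    library:  list of tag sets attached to previously stored items.
    """
    votes = Counter()
    for tags in library:
        shared = tags & new_tags
        if not shared:
            continue  # item has nothing in common with the new one
        # Candidates: the stored item's tags other than the shared ones.
        for tag in tags - new_tags:
            votes[tag] += len(shared)  # larger overlap -> stronger vote
    return [tag for tag, _ in votes.most_common(top_k)]

library = [
    {"alice", "bob", "beach"},
    {"alice", "birthday"},
    {"alice", "bob", "beach", "sunset"},
    {"carol", "office"},
]
print(recommend_tags({"alice", "bob"}, library))  # ['beach', 'sunset', 'birthday']
```

The user still makes the final choice; the vote only orders the candidates shown on the monitor.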

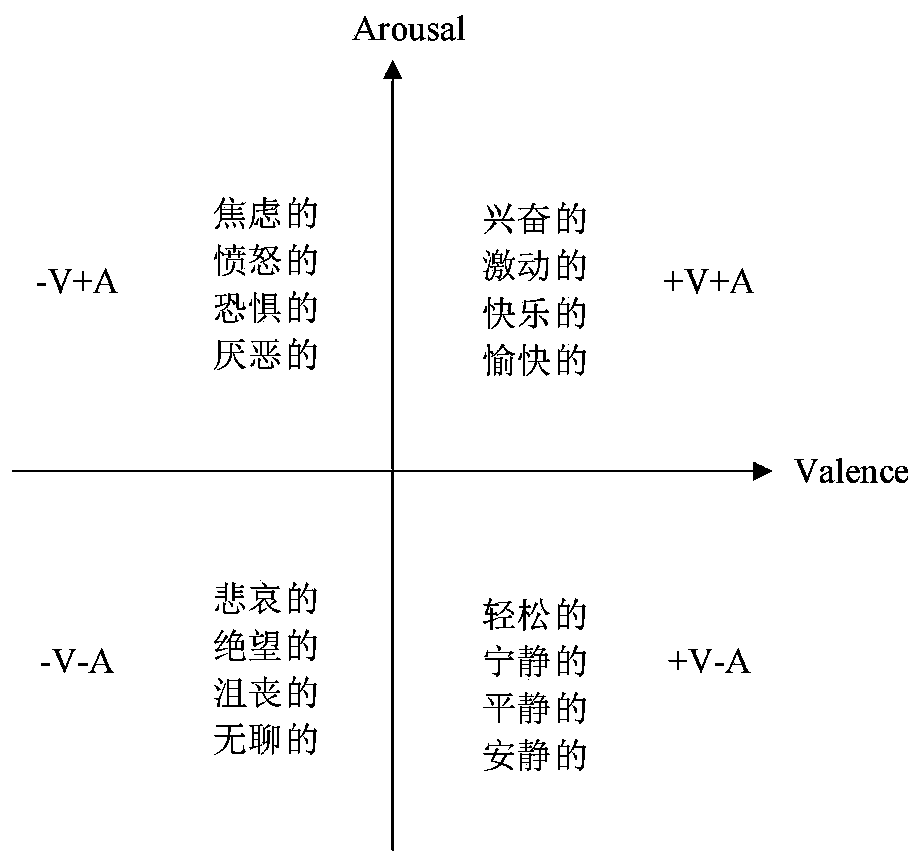

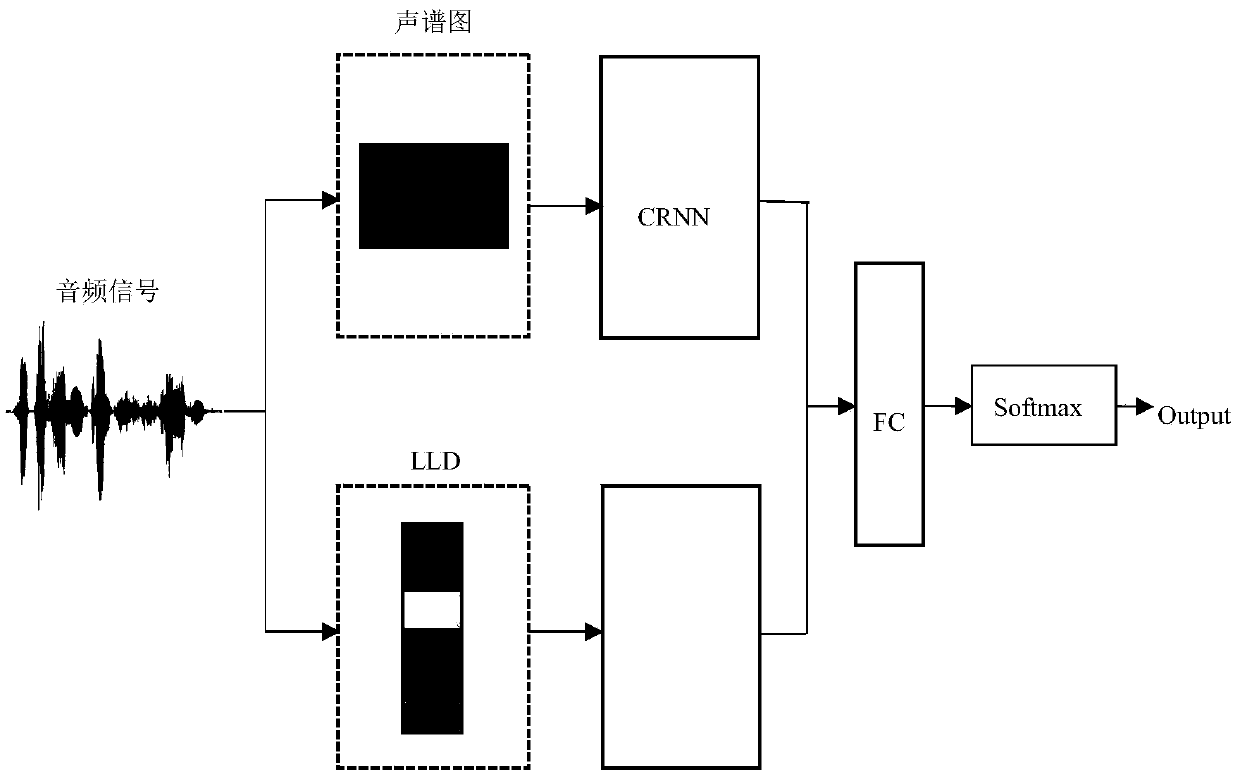

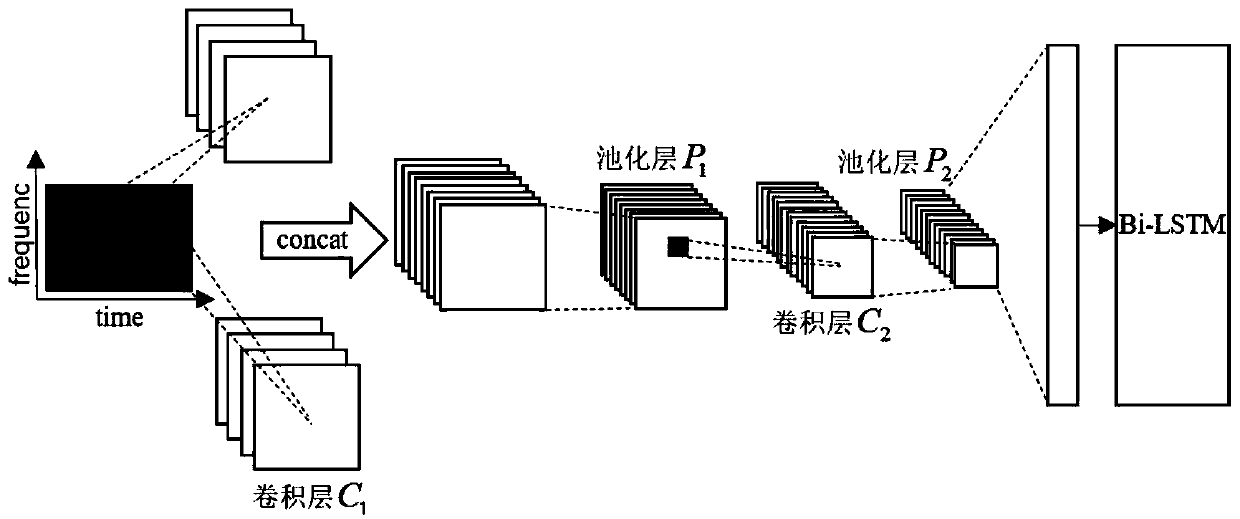

Chinese song emotion classification method based on multi-modal fusion

Active | CN110674339A | Strong complementarity, Dig rich, Neural architectures, Special data processing applications, Time domain, Emotion classification

The invention discloses a Chinese song emotion classification method based on multi-modal fusion. First, a spectrogram is obtained from the audio signal and audio low-level descriptors (LLDs) are extracted; audio feature learning is then performed with an LLD-CRNN model to obtain the audio features of a Chinese song. For the lyrics and comments, a music emotion dictionary is first constructed, and emotion vectors based on emotion intensity and part of speech are then built from the dictionary to obtain the text features of the song. Finally, multi-modal fusion is performed with both a decision fusion method and a feature fusion method to obtain the emotion category of the song. The LLD-CRNN music emotion classification model takes the spectrogram and the audio LLDs as its input sequence. LLDs are concentrated in either the time domain or the frequency domain, whereas the spectrogram is a two-dimensional time-frequency representation of the audio signal that loses less information for signals whose time and frequency characteristics change jointly; the two inputs therefore complement each other.

Owner:BEIJING UNIV OF TECH
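The abstract builds text features from an emotion dictionary using emotion intensity and part of speech but does not give the formula. The sketch below assumes a simple one: each dictionary hit adds its intensity times a part-of-speech weight to its emotion category, and the vector is then normalized. Tokens are assumed to be already segmented and POS-tagged, and the dictionary entries, categories, and weights are all hypothetical.

```python
# Hypothetical emotion dictionary: word -> (emotion category, intensity in [0, 1]).
EMOTION_DICT = {
    "happy": ("joy", 0.8),
    "smile": ("joy", 0.6),
    "tears": ("sadness", 0.7),
    "lonely": ("sadness", 0.9),
}
# Hypothetical part-of-speech weights; unknown tags fall back to 0.5.
POS_WEIGHT = {"ADJ": 1.0, "VERB": 0.8, "NOUN": 0.6}
CATEGORIES = ("joy", "sadness")

def emotion_vector(tagged_tokens):
    """Map (word, part-of-speech) pairs from lyrics or comments to a normalized emotion vector."""
    scores = dict.fromkeys(CATEGORIES, 0.0)
    for word, pos in tagged_tokens:
        entry = EMOTION_DICT.get(word)
        if entry is None:
            continue  # word not in the emotion dictionary
        category, intensity = entry
        scores[category] += intensity * POS_WEIGHT.get(pos, 0.5)
    total = sum(scores.values())
    # Normalize to sum to 1; a text with no emotion words gets an all-zero vector.
    return [scores[c] / total if total else 0.0 for c in CATEGORIES]

print(emotion_vector([("happy", "ADJ"), ("smile", "NOUN"), ("tears", "NOUN")]))  # about [0.734, 0.266]
```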

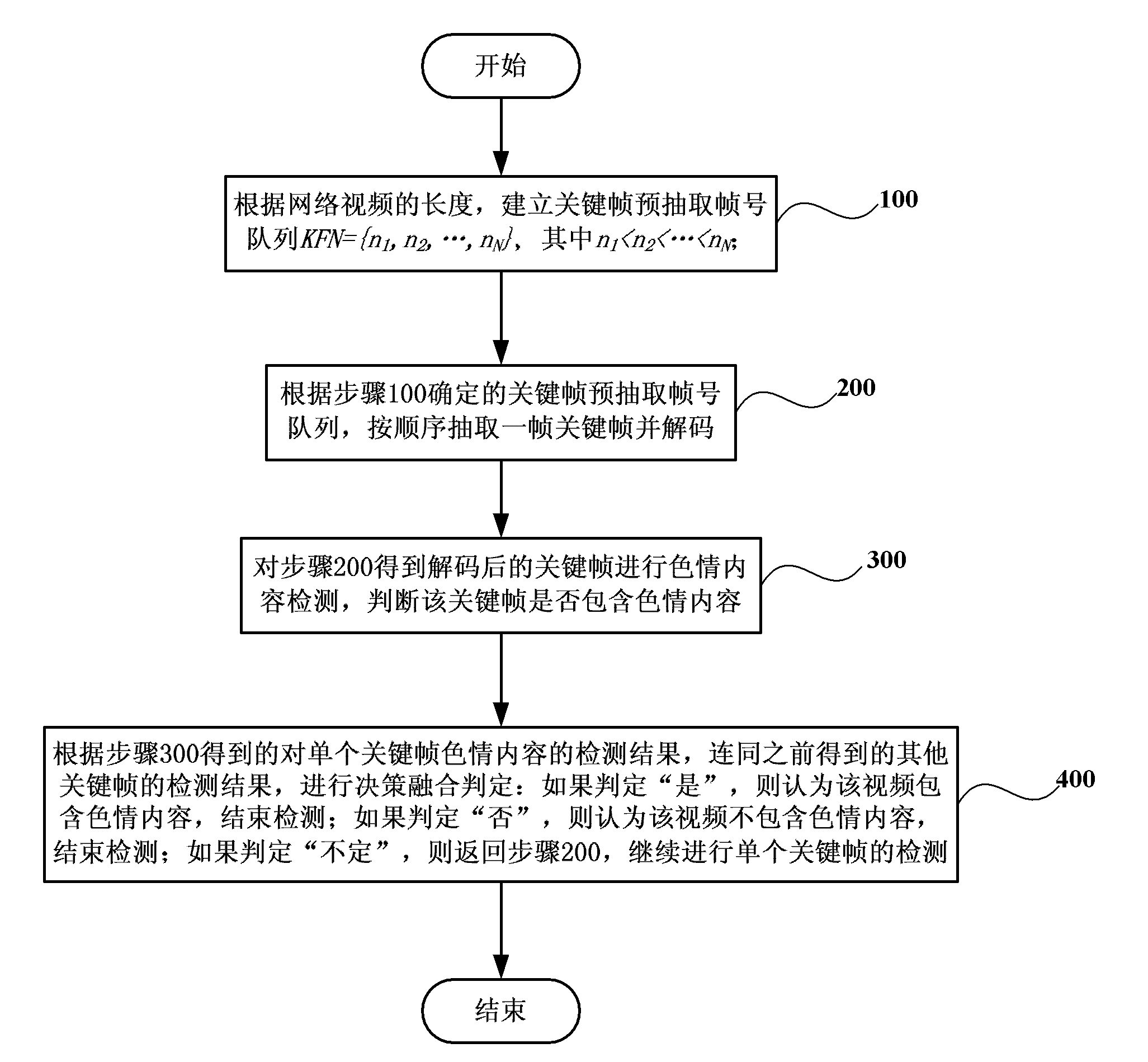

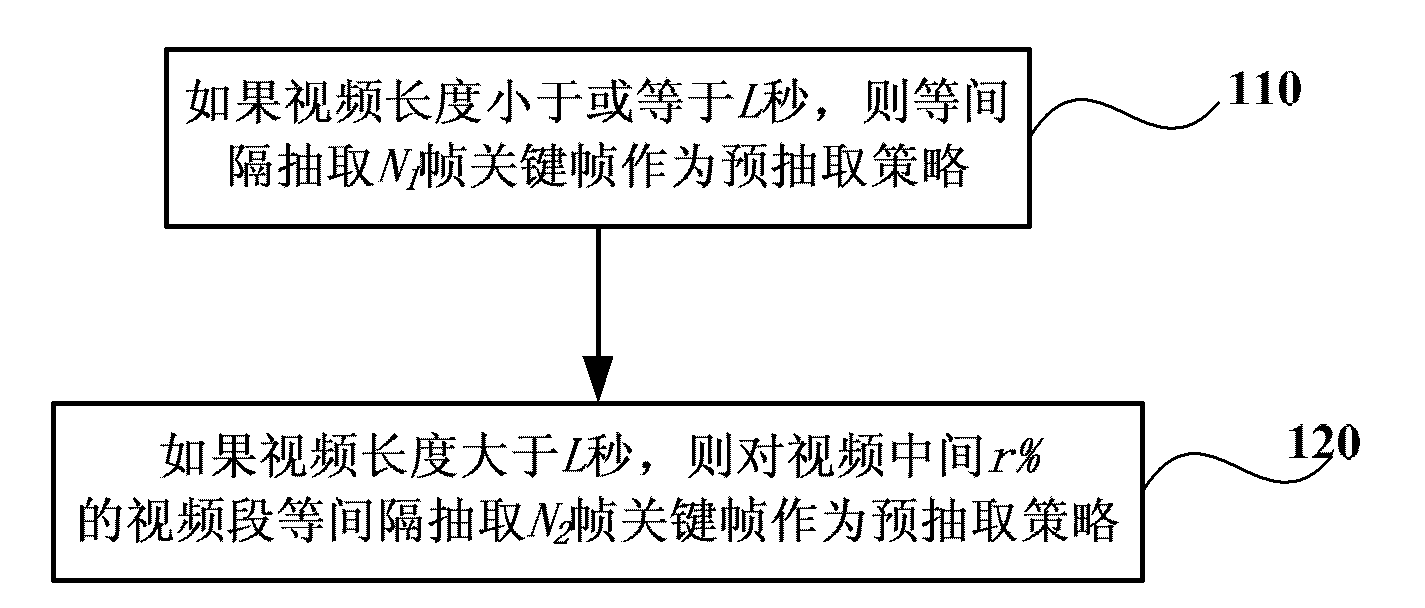

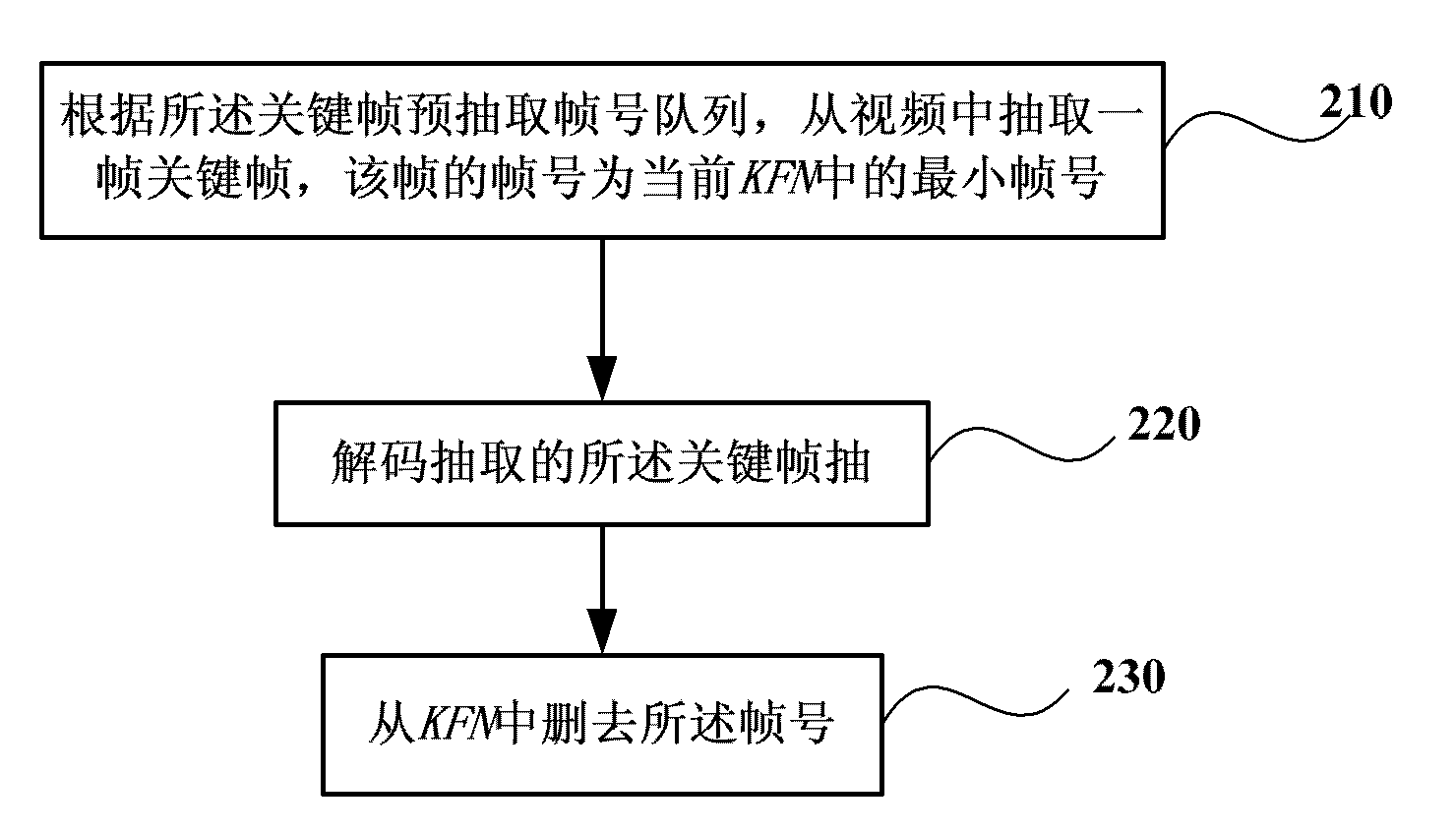

Method and system for detecting network pornography videos in real time

Inactive | CN102073676A | Improve detection efficiency, Improve detection accuracy, Character and pattern recognition, Special data processing applications, Computer hardware, Key frame

The invention discloses a method and system for detecting pornographic network videos in real time. The method comprises the following steps: according to the length of a network video, establishing a queue of pre-extracted key-frame numbers KFN = {n1, n2, ..., nN} with n1 < n2 < ... < nN; extracting and decoding key frames in the order given by the queue; performing pornographic content detection on each decoded key frame to judge whether it contains pornographic content; and making a decision fusion judgment from the detection result of the current key frame together with the previously obtained results for the other key frames. If the fused judgment is that the content is pornographic, the video is considered to contain pornographic content and detection is complete; if the fused judgment is that the content is not pornographic, the video is considered free of pornographic content and detection is complete; and if the judgment is uncertain, detection continues with the next key frame.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
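One way to realize the accept/reject/continue logic is a sequential test that accumulates per-frame evidence and stops as soon as the total crosses an upper or lower threshold. This is an assumed stand-in for the patent's fusion judgment: the even spacing of the key-frame queue, the use of additive scores (for example log-likelihood ratios from a frame classifier), and both thresholds are hypothetical.

```python
def key_frame_queue(num_frames, n):
    """Evenly spaced key-frame numbers n1 < n2 < ... < nN (spacing rule assumed)."""
    step = num_frames / (n + 1)
    return sorted({int(step * (i + 1)) for i in range(n)})

def detect_video(frame_scores, high=2.0, low=-2.0):
    """Fuse per-key-frame evidence sequentially, stopping as early as possible.

    frame_scores: iterable of per-frame scores, positive meaning "pornographic"
    (e.g. log-likelihood ratios). Returns "porn", "clean", or "uncertain" if
    the key frames run out before either threshold is reached.
    """
    total = 0.0
    for score in frame_scores:
        total += score  # combine this frame with all earlier frames
        if total >= high:
            return "porn"
        if total <= low:
            return "clean"
        # otherwise uncertain: go on to the next key frame
    return "uncertain"

print(key_frame_queue(1000, 4))       # [200, 400, 600, 800]
print(detect_video([0.8, 0.9, 0.5]))  # porn (stops after the third frame)
```

Passing a lazy generator as `frame_scores` means frames after the stopping point are never decoded or classified, which is one plausible source of the real-time saving.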

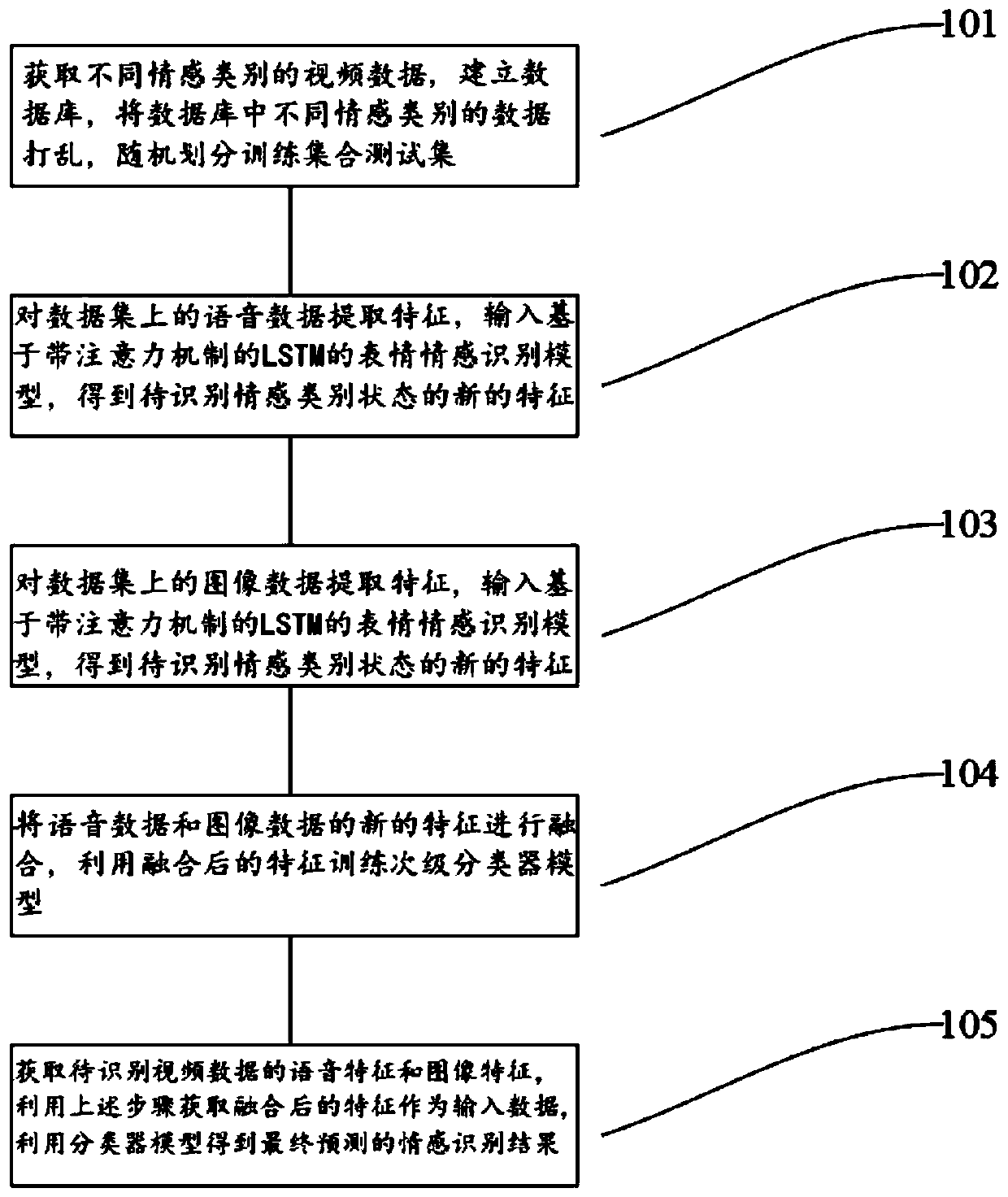

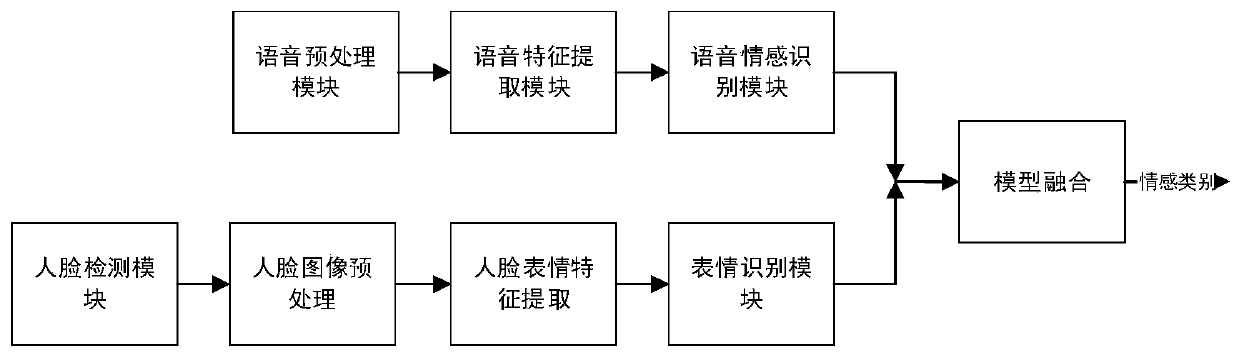

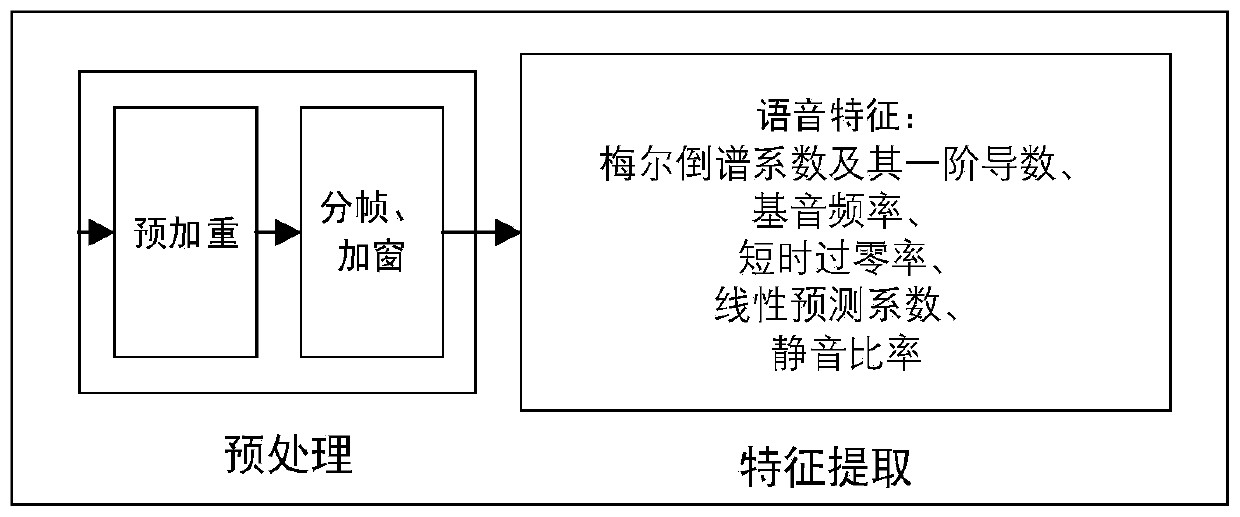

Emotion recognition method and device based on LSTM audio and video fusion and storage medium

Pending | CN110826466A | Improve accuracy, Improve robustness, Speech analysis, Neural architectures, Computer vision, Biology

The invention discloses an emotion recognition method, device, and storage medium based on LSTM (Long Short-Term Memory) audio-video fusion. An LSTM model is trained on finer-grained frame-level features, which yields accurate emotion recognition. A scheme that combines decision fusion with late fusion is adopted so that the recognition results of the two modalities, speech emotion features and facial expression features, are fused more effectively, and a more accurate emotion recognition result is obtained by calculation. The method obtains the emotional state of the subject more accurately and improves the accuracy and robustness of emotion recognition.

Owner:南京励智心理大数据产业研究院有限公司 (Nanjing Lizhi Psychological Big Data Industry Research Institute Co., Ltd.)

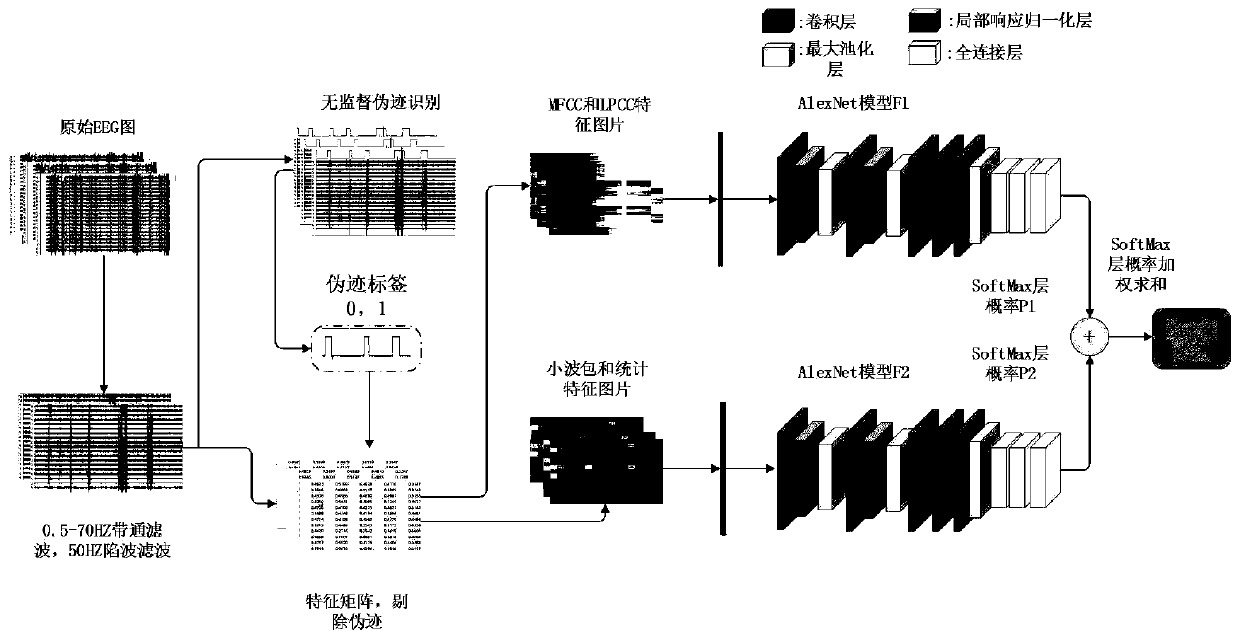

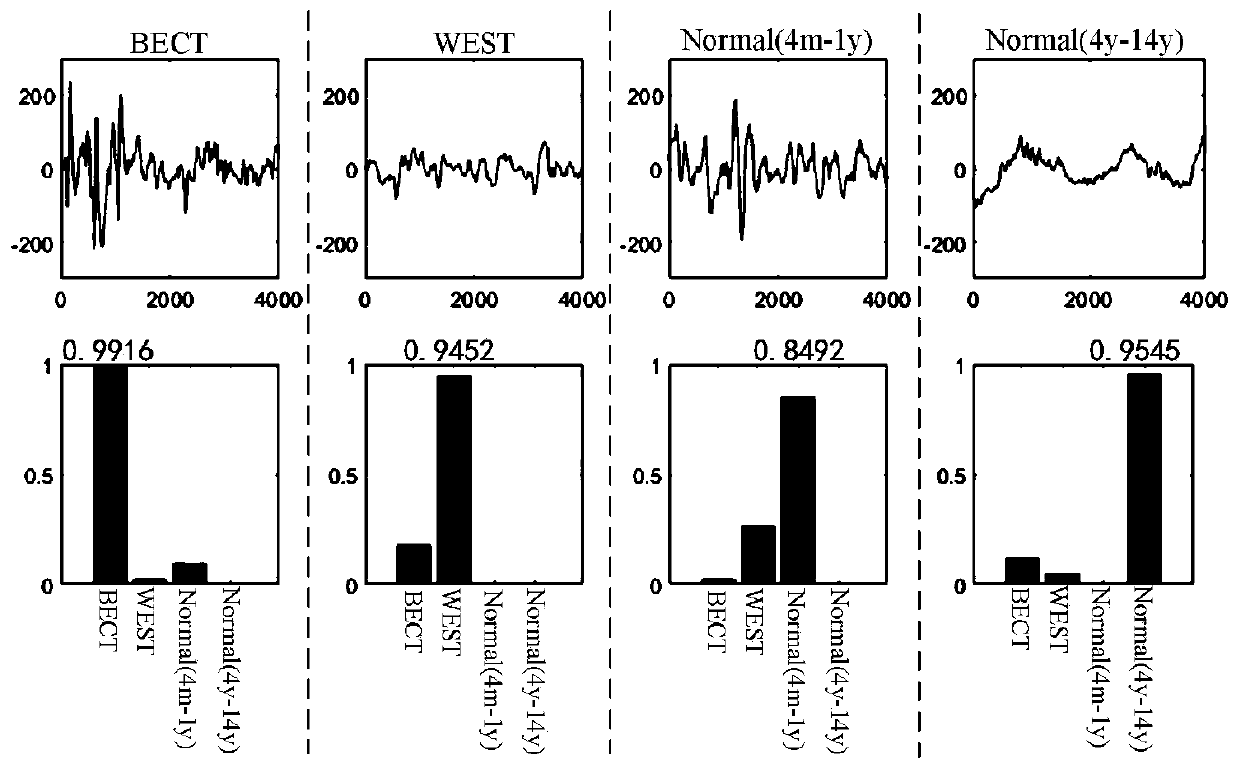

Children epilepsy syndrome classification method based on transfer learning multi-model decision fusion

Pending | CN111291614A | Express ability, Easy to move, Character and pattern recognition, Diagnostic recording/measuring, Cluster algorithm, Unsupervised clustering

The invention discloses a childhood epilepsy syndrome classification method based on transfer-learning multi-model decision fusion. The method comprises the following steps: step 1, applying digital filtering to the original multi-channel EEG data to remove unwanted frequency bands and retain the band of interest, then eliminating artifacts with an unsupervised clustering algorithm based on Riemannian geometry and removing abnormal data points with median filtering to obtain a clean EEG signal; step 2, extracting MFCC features, LPCC features, wavelet packet features, and statistical features from the EEG signal; and step 3, training a model F1 on the MFCC and LPCC feature images and a model F2 on the wavelet packet and statistical features, computing a weighted sum of the softmax probability outputs of F1 and F2, and assigning the sample to the childhood epilepsy syndrome category indicated by the resulting final probability. The method achieves precise classification of childhood epilepsy syndromes.

Owner:HANGZHOU DIANZI UNIV
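Step 3 fuses F1 and F2 by a weighted sum of their softmax outputs. A minimal sketch, assuming two-model fusion with weights that sum to 1 and an argmax over the fused probabilities; the weight values are hypothetical, since the abstract does not say how they are chosen (validation accuracy would be one option).

```python
def fuse_softmax(p1, p2, w1=0.5):
    """Weighted sum of two models' softmax outputs.

    Returns (index of the winning class, fused probabilities); w1 weights model
    F1 and 1 - w1 weights model F2.
    """
    if len(p1) != len(p2):
        raise ValueError("both models must score the same classes")
    w2 = 1.0 - w1
    fused = [w1 * a + w2 * b for a, b in zip(p1, p2)]
    best = max(range(len(fused)), key=fused.__getitem__)
    return best, fused

f1 = [0.6, 0.3, 0.1]  # hypothetical softmax output of F1 (MFCC/LPCC images)
f2 = [0.2, 0.7, 0.1]  # hypothetical softmax output of F2 (wavelet/statistics)
print(fuse_softmax(f1, f2, w1=0.4)[0])  # 1
print(fuse_softmax(f1, f2, w1=0.8)[0])  # 0
```

The two usage lines show why the weight matters: with the same model outputs, shifting trust from F2 to F1 changes the predicted syndrome.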

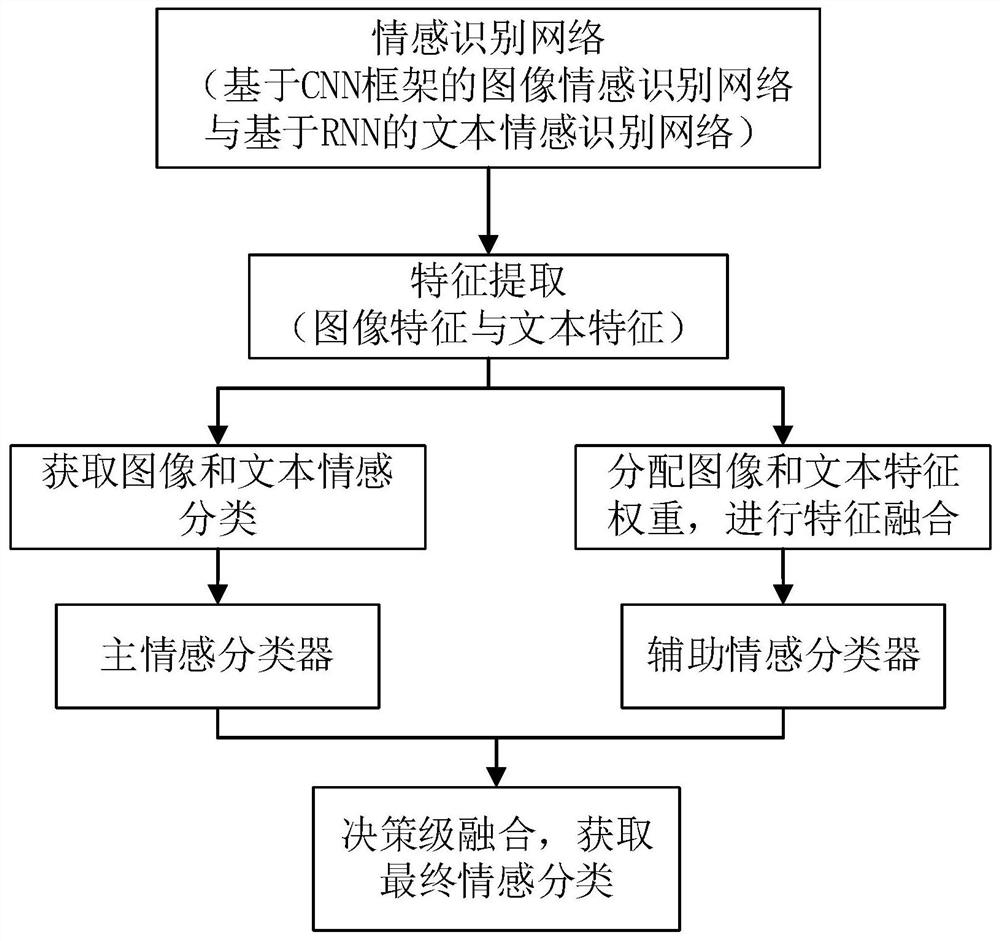

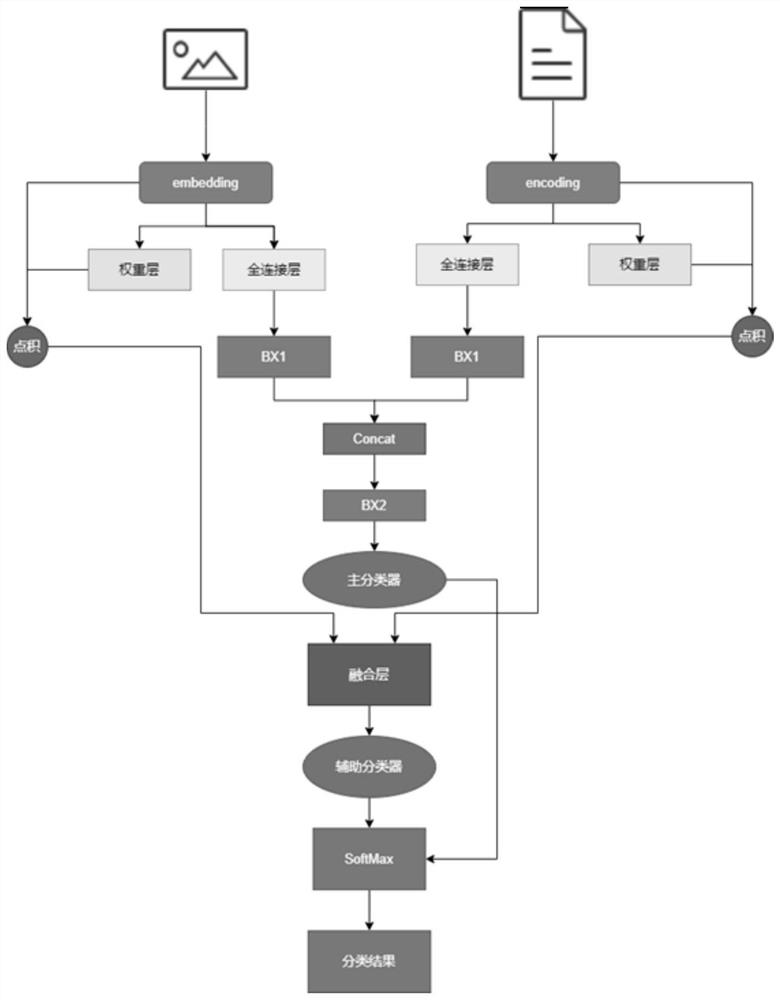

Feature fusion and decision fusion mixed multi-modal emotion recognition method

Inactive | CN112800875A | Easy to solve, Easy to identify, Semantic analysis, Neural architectures, Emotion classification, Multi modal data

The invention discloses a multi-modal emotion recognition method that mixes feature fusion and decision fusion, and belongs to the fields of pattern recognition and emotion recognition. The method comprises the following steps: 1, constructing an image emotion recognition network on a convolutional neural network framework to obtain image features and image emotion states; 2, constructing a text emotion recognition network on a recurrent neural network framework to obtain text features and text emotion states; and 3, constructing a multi-modal information fusion emotion recognition network, in which a main classifier fuses the image and text emotion states to obtain a main emotion classification, an auxiliary classifier fuses the image and text features to obtain an auxiliary emotion classification, and the main and auxiliary classifications are fused to obtain the final emotion classification. By exploiting the complementarity of multi-modal information, the method avoids the low recognition accuracy caused by ambiguous or missing single-modal information, and offers a new approach to multi-modal data fusion and emotion recognition.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
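A sketch of the main/auxiliary structure under stated assumptions: the main classifier is taken to be a plain average of the two modalities' emotion states (decision-level fusion), the auxiliary classifier is a single linear softmax layer over the concatenated features (feature-level fusion), and the two are mixed with a hypothetical weight `alpha`. The patent trains real networks for both; the weights here are placeholders.

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def linear(x, weights, bias):
    """Dense layer: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def hybrid_fusion(img_state, txt_state, img_feat, txt_feat, aux_w, aux_b, alpha=0.6):
    # Main classifier (decision-level): average the two modalities' emotion states.
    main = [(a + b) / 2 for a, b in zip(img_state, txt_state)]
    # Auxiliary classifier (feature-level): linear softmax over concatenated features.
    aux = softmax(linear(img_feat + txt_feat, aux_w, aux_b))
    # Final decision: mix the main and auxiliary classifications.
    final = [alpha * m + (1 - alpha) * x for m, x in zip(main, aux)]
    return max(range(len(final)), key=final.__getitem__), final

# Usage with made-up inputs: two emotion classes, 1-D features per modality.
label, probs = hybrid_fusion([0.7, 0.3], [0.4, 0.6], [1.0], [0.0],
                             aux_w=[[0.0, 0.0], [2.0, 0.0]], aux_b=[0.0, 0.0])
print(label)  # 1
```

Here the decision-level vote alone would favor class 0; the feature-level classifier tips the final result to class 1, which is the kind of complementarity the abstract appeals to.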

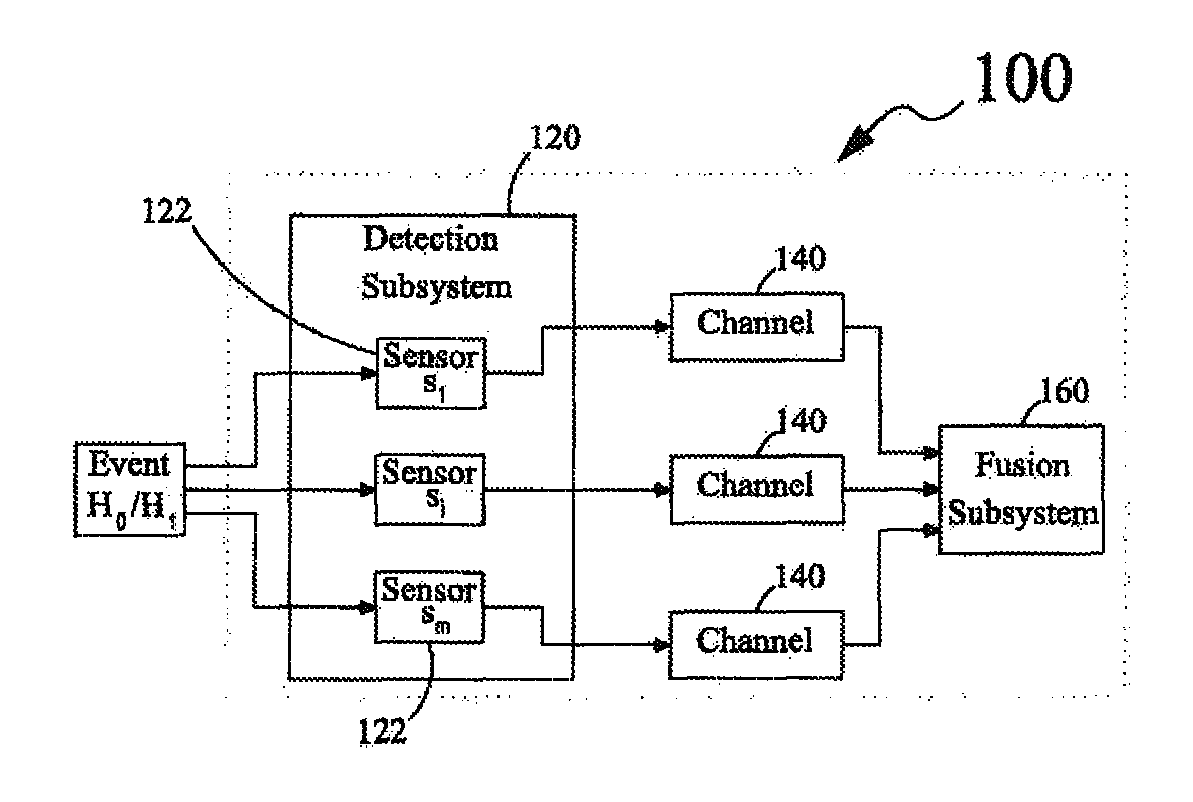

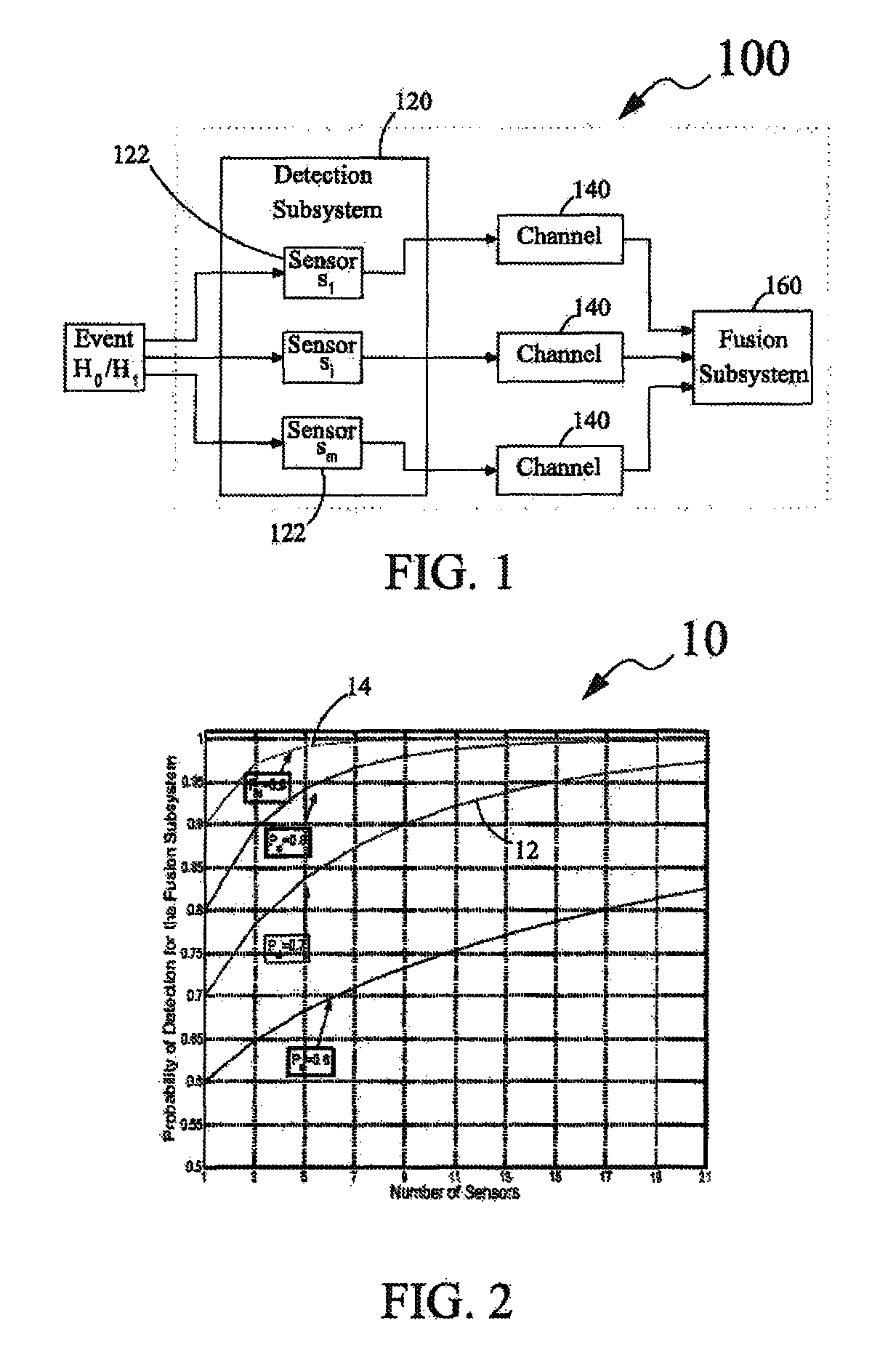

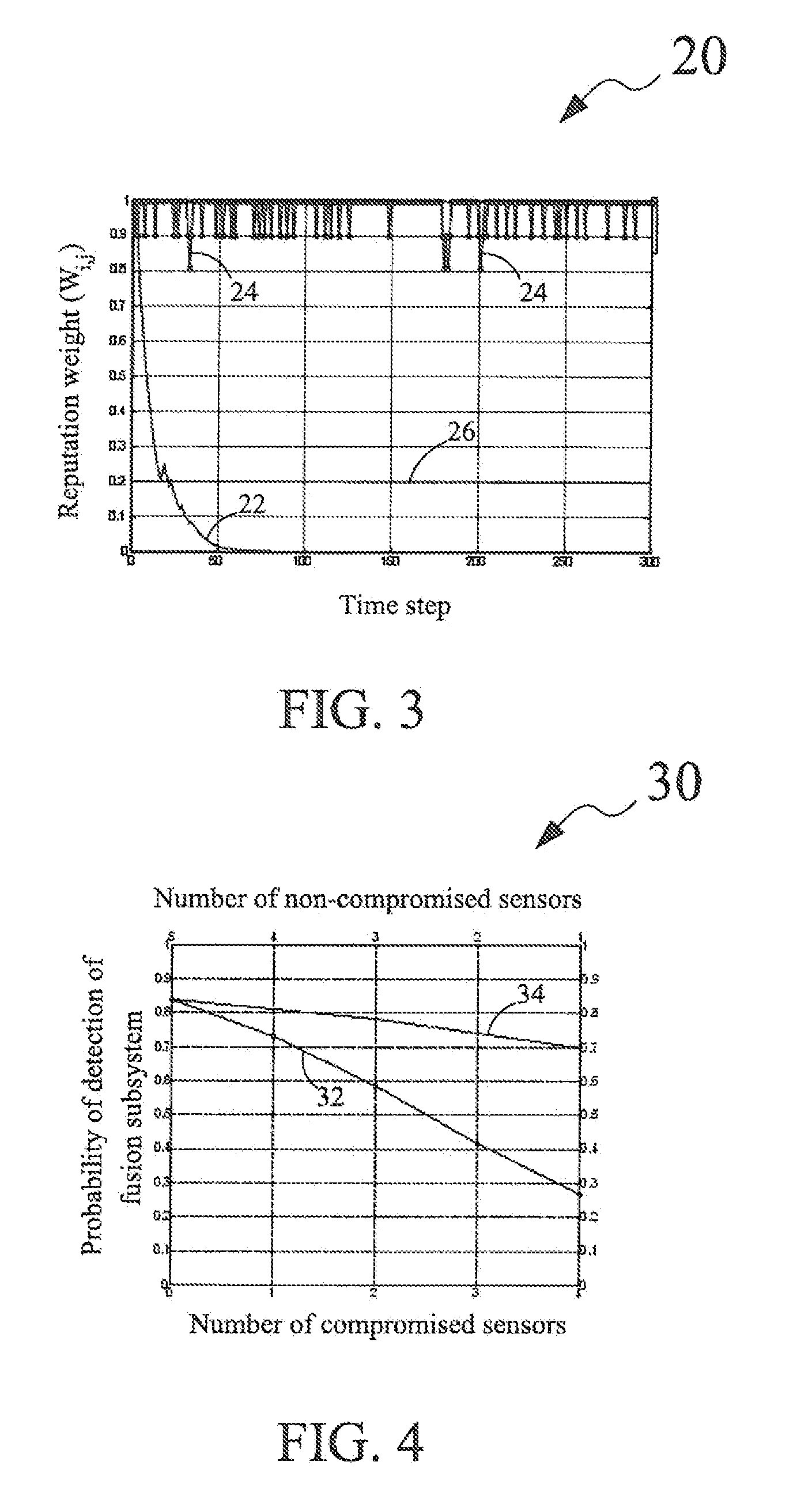

Trust management system for decision fusion in networks and method for decision fusion

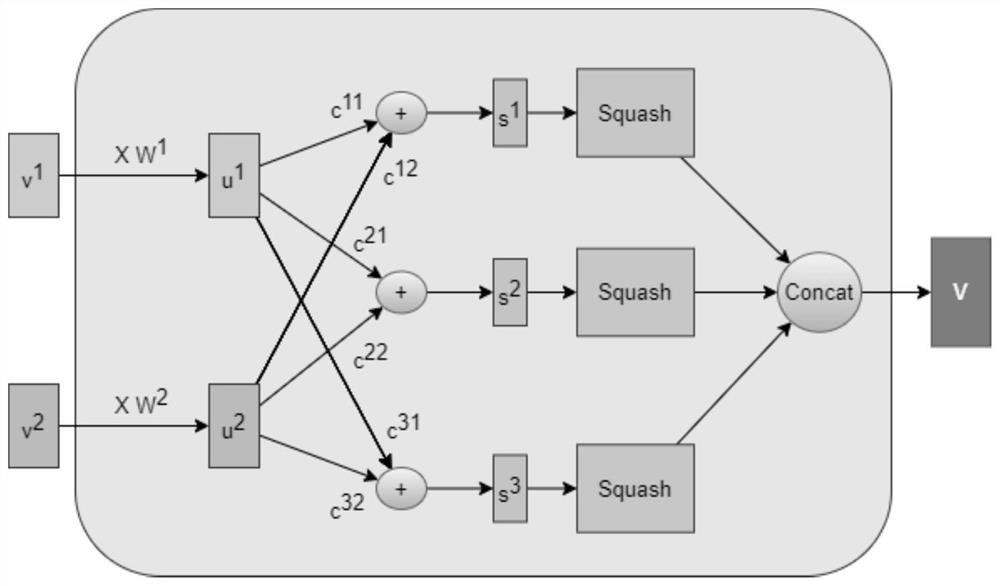

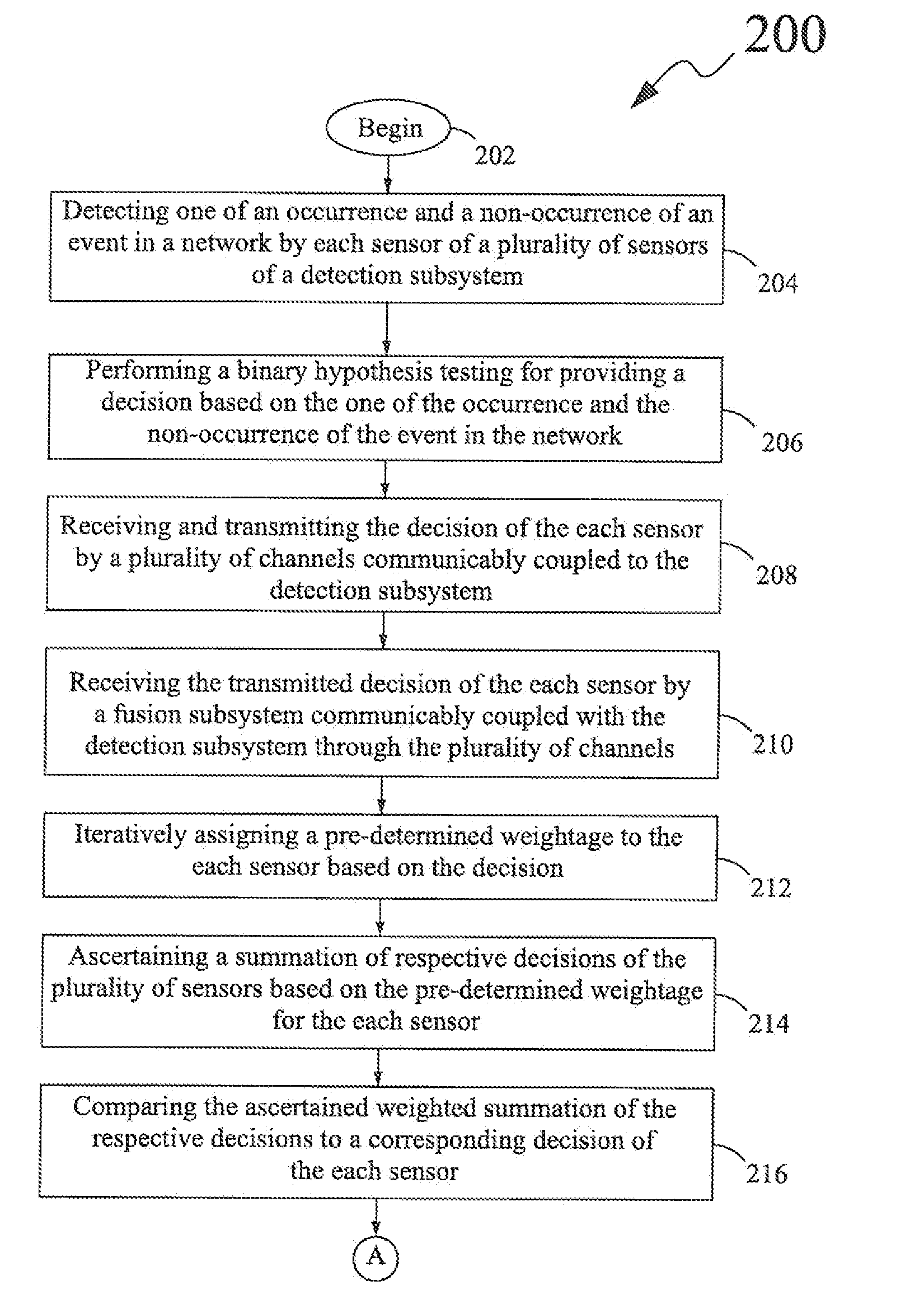

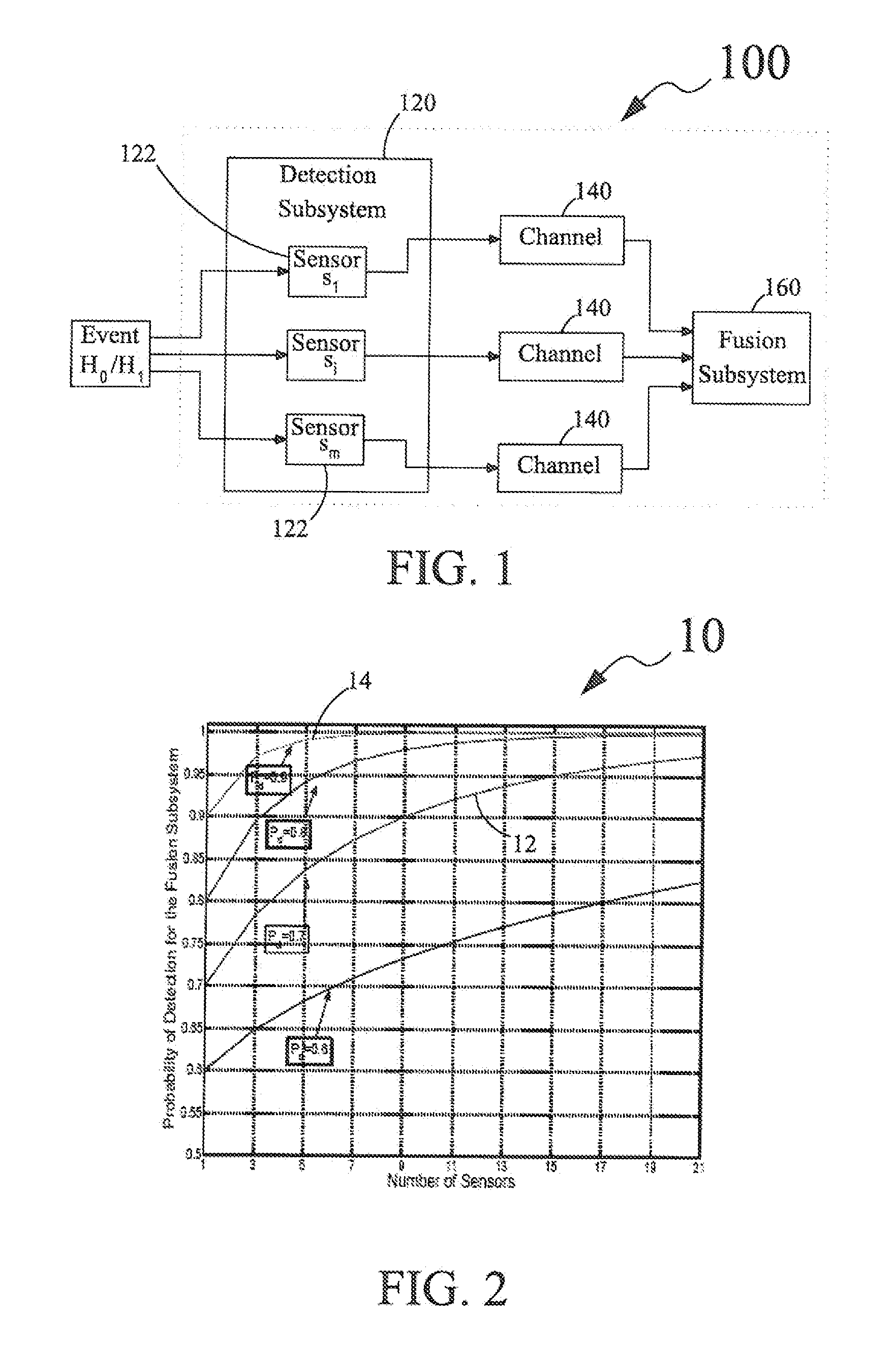

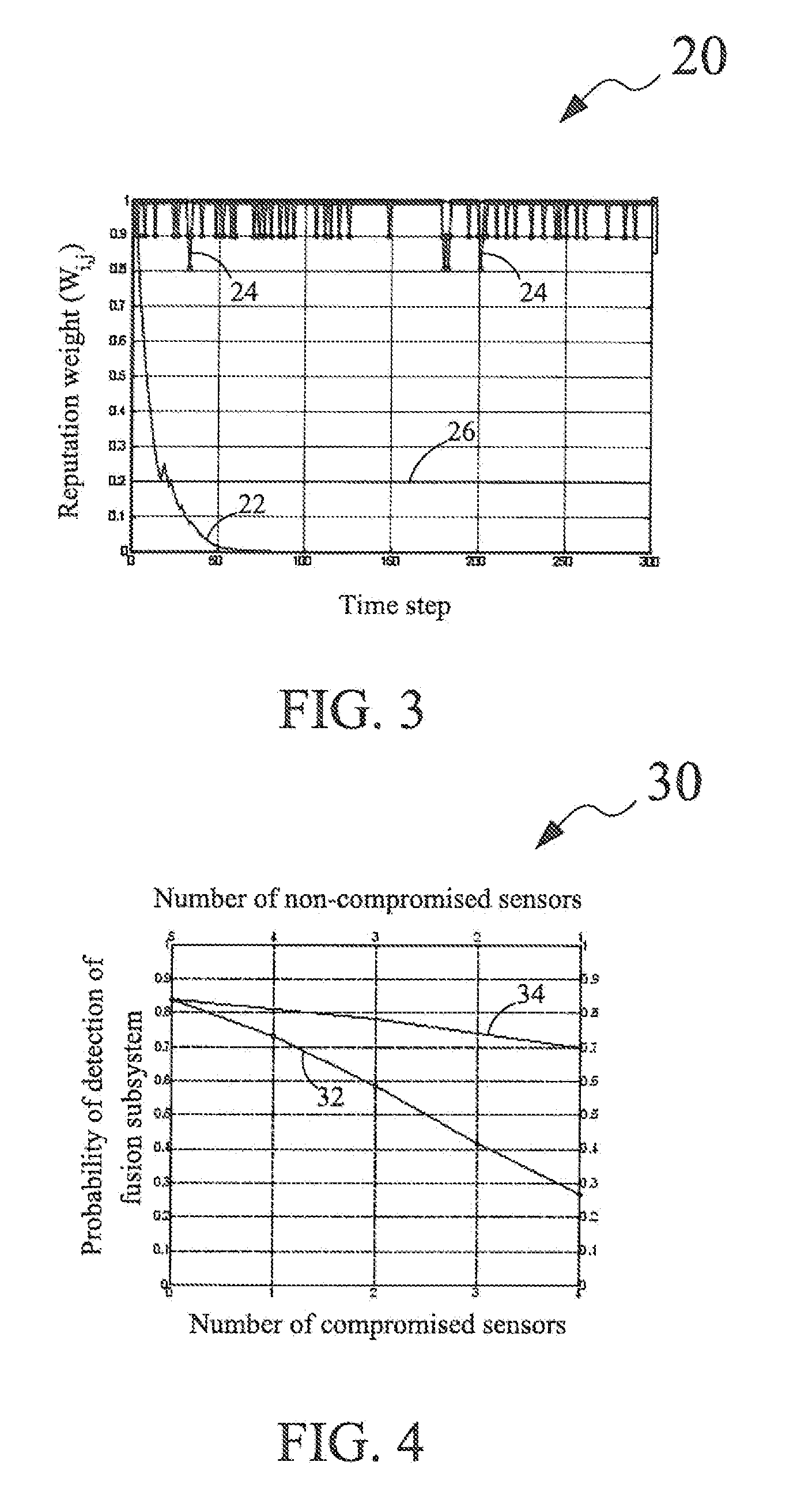

Inactive | US20120066169A1 | Improve fusion effect, Efficiently and effectively manage trust for decision fusion, Probabilistic networks, Fuzzy logic based systems, Engineering, Data mining

Disclosed is a trust management system for decision fusion in a network. The system includes a detection subsystem having a plurality of sensors and a plurality of channels. Each sensor detects either the occurrence or the non-occurrence of an event in the network. The system further includes a fusion subsystem, communicably coupled to the detection subsystem through the channels, that receives the decision of each sensor and iteratively assigns it a predetermined weight. The fusion subsystem computes the weighted summation of the sensors' decisions and compares it with each sensor's decision. It then updates the assigned weights and determines whether each sensor is compromised or non-compromised. Also disclosed is a method for decision fusion in a network.

Owner:BAE SYST INFORMATION & ELECTRONICS SYST INTEGRATION INC
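A minimal sketch of the iterative weighting loop, assuming binary decisions, a weighted majority vote as the fused decision (ties count as "no event"), and a fixed reward/penalty step for sensors that agree or disagree with it. The step size, the clipping to [0, 1], and the compromise threshold are hypothetical choices, not the patent's values.

```python
def trust_fusion(rounds, step=0.1, threshold=0.5):
    """Weighted-vote decision fusion with iteratively updated sensor weights.

    rounds: list of rounds, each a list of 0/1 sensor decisions
            (1 = event occurred). Returns (fused decisions, final weights,
            indices of sensors judged compromised).
    """
    weights = [1.0] * len(rounds[0])  # every sensor starts fully trusted
    fused = []
    for decisions in rounds:
        # Weighted summation of the decisions, mapped to -1/+1.
        score = sum(w * (1 if d else -1) for w, d in zip(weights, decisions))
        verdict = 1 if score > 0 else 0
        fused.append(verdict)
        # Compare each sensor with the fused decision and update its weight.
        for i, d in enumerate(decisions):
            delta = step if d == verdict else -step
            weights[i] = min(1.0, max(0.0, weights[i] + delta))
    compromised = [i for i, w in enumerate(weights) if w < threshold]
    return fused, weights, compromised

# Sensor 3 always reports the opposite of the other three.
rounds = [[1, 1, 1, 0], [0, 0, 0, 1]] * 3
fused, weights, compromised = trust_fusion(rounds)
print(fused, compromised)  # [1, 0, 1, 0, 1, 0] [3]
```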

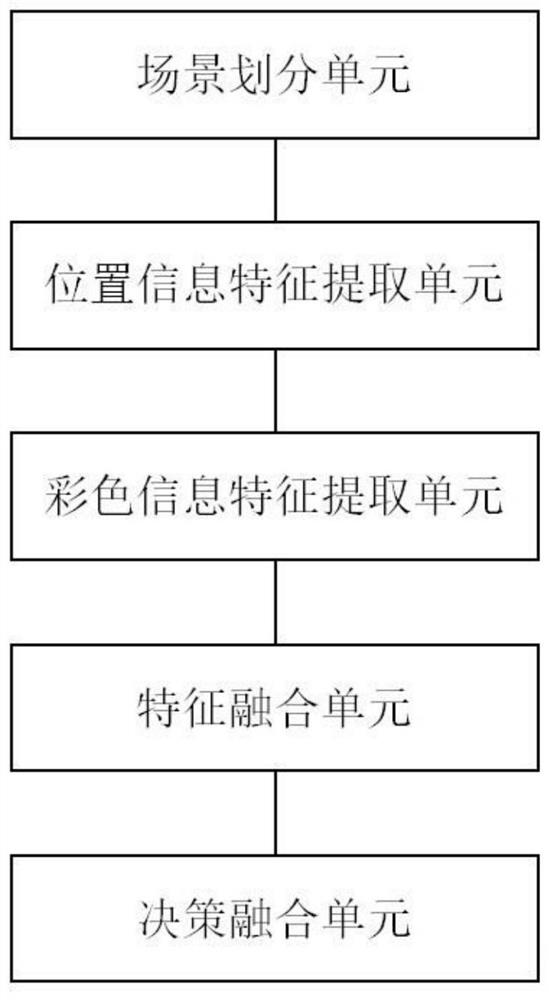

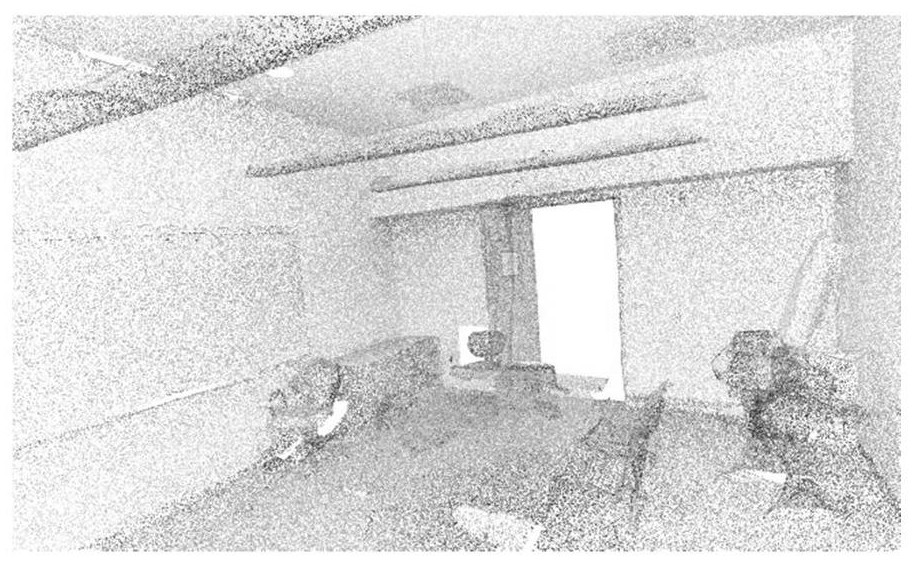

Multi-modal three-dimensional point cloud segmentation system and method

Pending | CN111753698A | Improve robustness, Improve generalization ability, Neural architectures, Three-dimensional object recognition, Scene segmentation, Algorithm

The invention discloses a multi-modal three-dimensional point cloud segmentation system and method. The invention achieves good fusion of the modal data; a prior mask is introduced, which improves the robustness of the scene segmentation result and yields high segmentation precision. Good predictions are obtained for different scenes such as toilets, meeting rooms, and offices, so the model generalizes well. For backbone networks not used here for extracting point cloud features, the feature and decision fusion module can also be applied to improve precision. If computing resources allow, more points and a larger area can be used, for example by increasing the number of points and the size of the scene area by the same factor, which enlarges the receptive field of the whole model and improves its perception of the whole scene.

Owner:SOUTHEAST UNIV +1

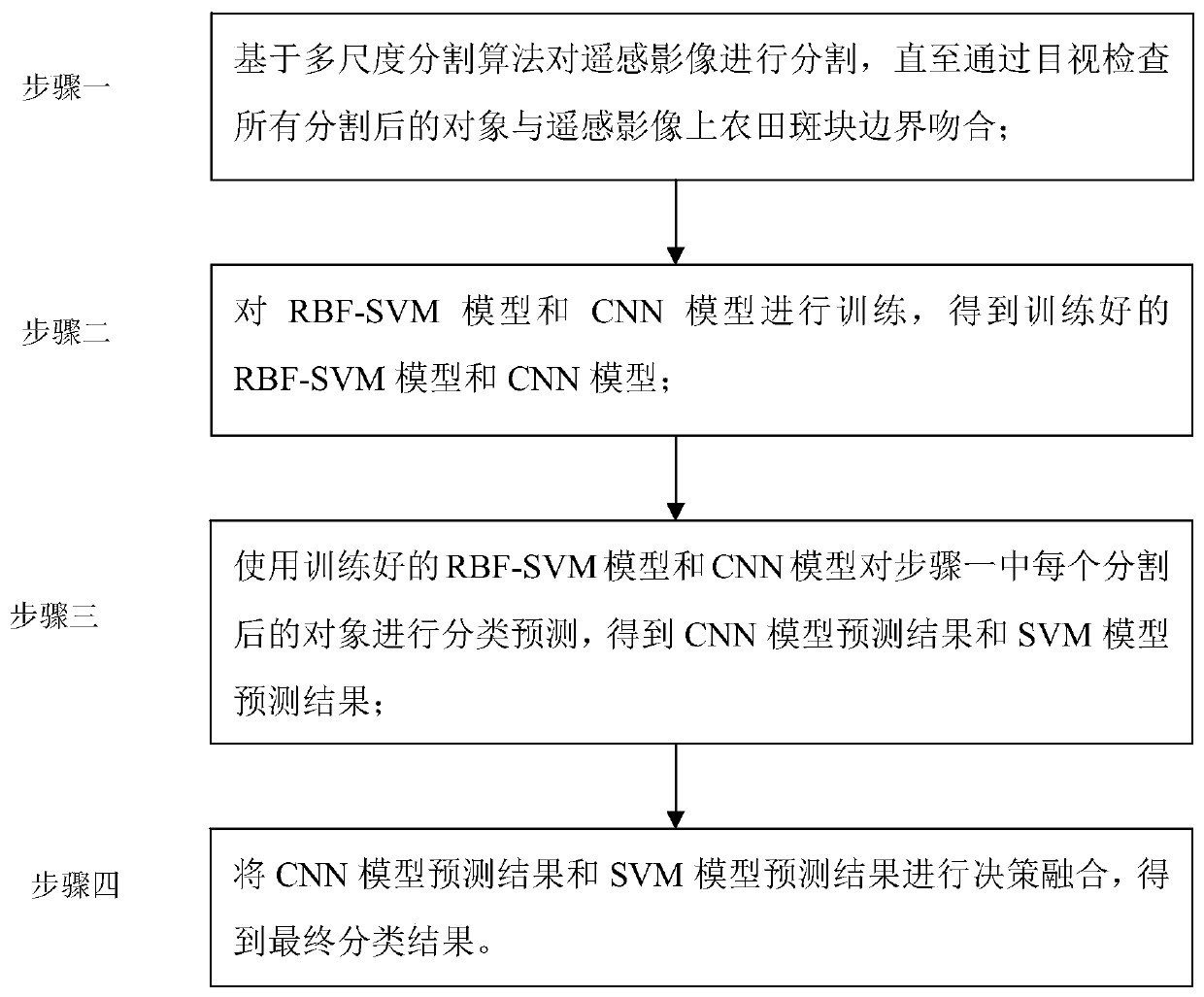

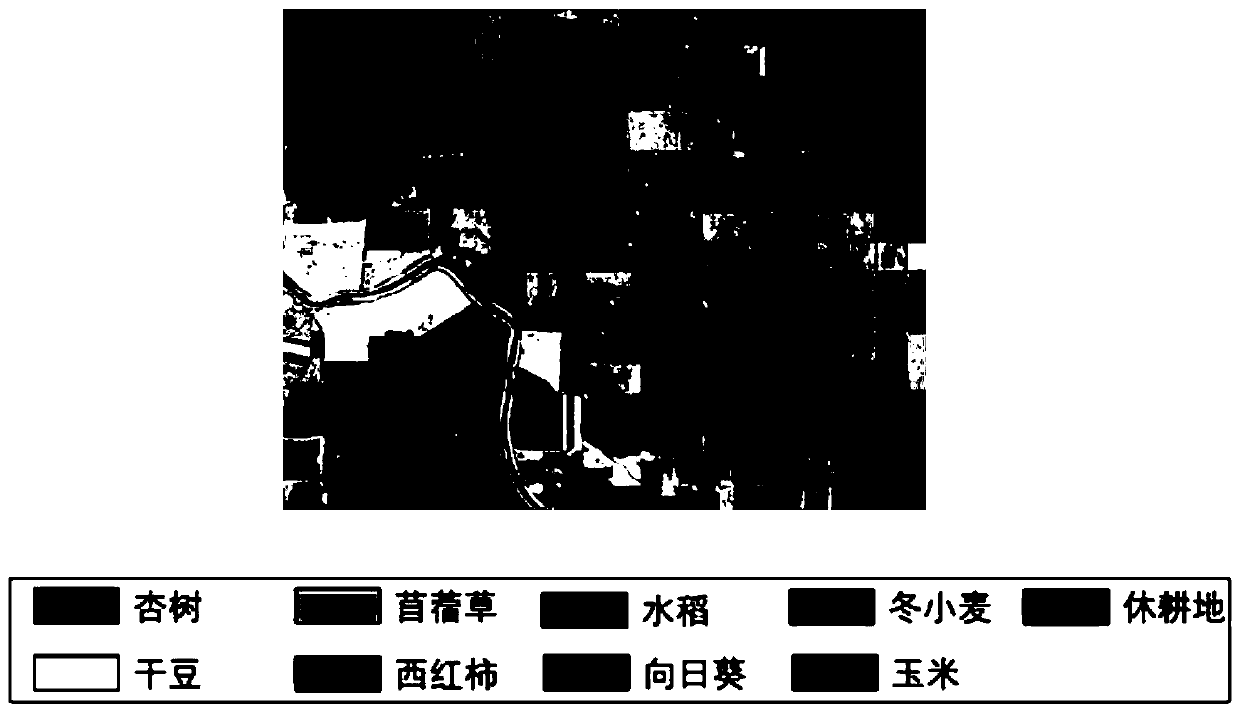

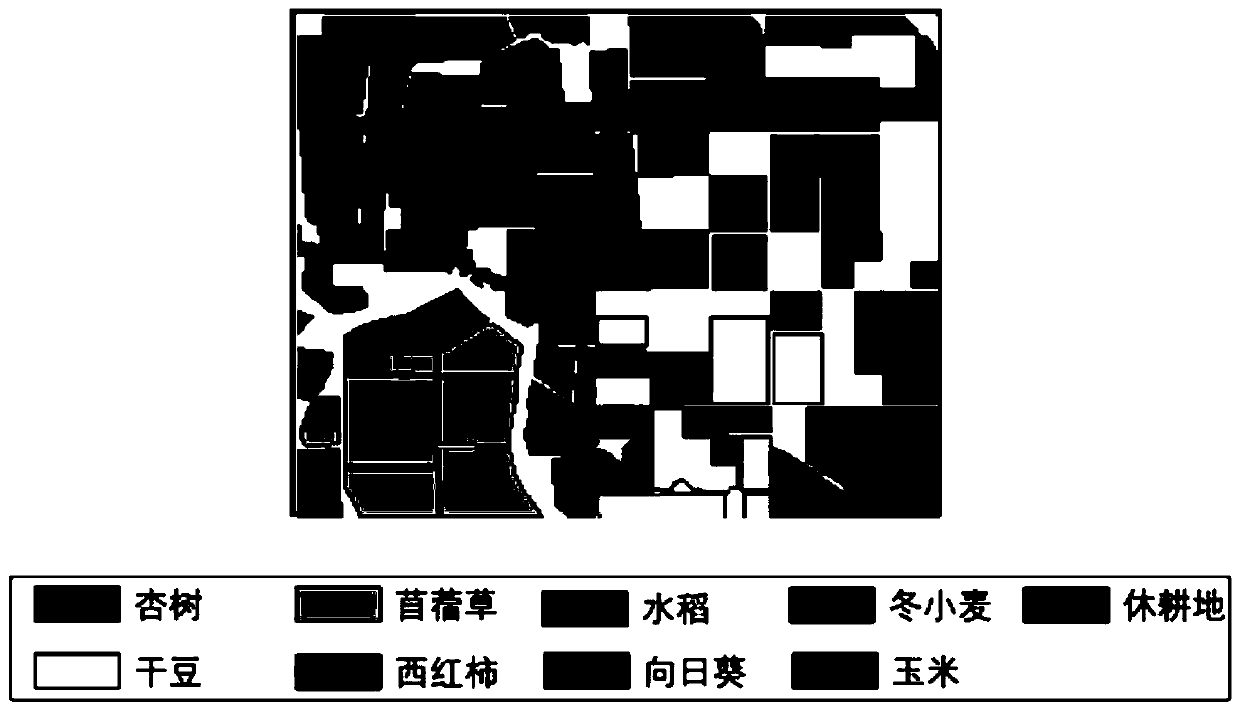

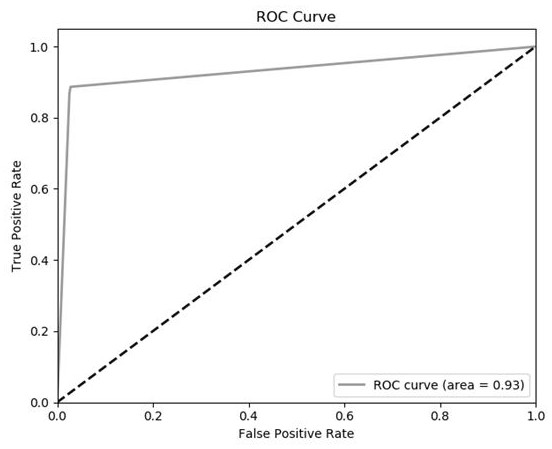

Object-based OBIA-SVM-CNN remote sensing image classification method

Active | CN109740631A | Improving the classification accuracy of remote sensing, Improve classification accuracy, Character and pattern recognition, Object based, Visual inspection

The invention provides an object-based OBIA-SVM-CNN remote sensing image classification method. Its objective is to solve the problem of low classification and identification accuracy for remote sensing images of complex farmland areas. The method comprises the following steps: 1, segmenting the remote sensing image with a multi-scale segmentation algorithm until, by visual inspection, all segmented objects match the farmland patch boundaries in the image; 2, training an RBF-SVM model and a CNN model; 3, using the trained RBF-SVM and CNN models to classify each object segmented in step 1, obtaining the predictions of the CNN model and of the SVM model; and 4, performing decision fusion on the CNN and SVM predictions to obtain the final classification result. The method is applied to remote sensing image classification.

Owner:NORTHEAST INST OF GEOGRAPHY & AGRIECOLOGY C A S
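The abstract does not state the fusion rule for step 4. A common, simple choice is to keep, for each segmented object, the label of whichever model is more confident; the sketch assumes that rule and assumes the SVM outputs class probabilities (for example via Platt scaling). The probabilities below are made up.

```python
def fuse_object_predictions(cnn_probs, svm_probs):
    """Per-object decision fusion: keep the label of the more confident model.

    cnn_probs, svm_probs: one class-probability list per segmented object.
    Ties go to the CNN.
    """
    labels = []
    for p_cnn, p_svm in zip(cnn_probs, svm_probs):
        chosen = p_cnn if max(p_cnn) >= max(p_svm) else p_svm
        labels.append(max(range(len(chosen)), key=chosen.__getitem__))
    return labels

cnn = [[0.9, 0.1], [0.55, 0.45]]
svm = [[0.6, 0.4], [0.2, 0.8]]
print(fuse_object_predictions(cnn, svm))  # [0, 1]
```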

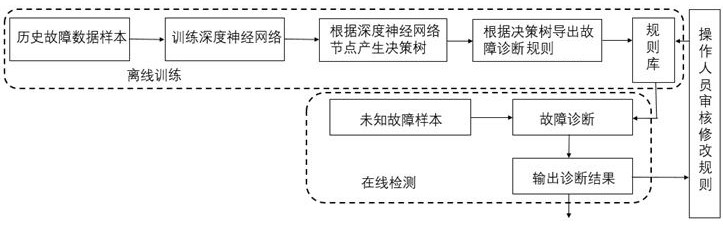

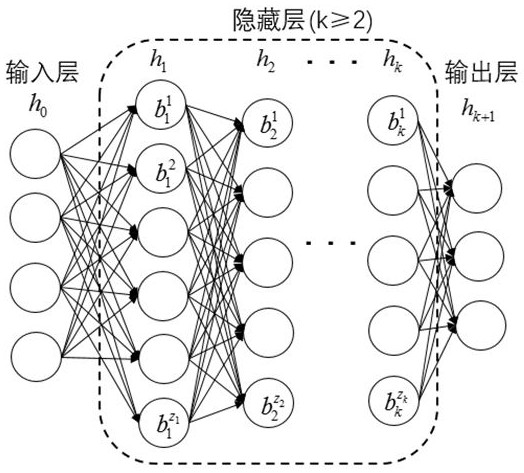

Blast furnace fault diagnosis rule exporting method based on deep neural network

Active | CN111651931A | Confidence, Well mixed, Steel manufacturing process aspects, Blast furnace details, Industrial systems, Engineering

The invention discloses a method for exporting blast furnace fault diagnosis rules based on a deep neural network, in the field of industrial process monitoring, modeling, and simulation. First, historical blast furnace fault data are modeled with a deep neural network. Then, for each fault, starting from the output layer of the network, decision trees are used to build sub-models of the nodes of each pair of adjacent layers in turn, and if-then rules are exported. Finally, the if-then rules are merged layer by layer to obtain blast furnace fault diagnosis rules that take blast furnace process variables as rule antecedents and fault categories as rule consequents. The method exploits the high diagnostic precision of the deep neural network to extract fault diagnosis knowledge from historical blast furnace data and converts that knowledge into rules that blast furnace operators can easily understand, achieving human-machine collaborative fusion of knowledge and decisions. It can be widely applied to industrial systems with high reliability and accuracy requirements for fault diagnosis.

Owner:ZHEJIANG UNIV
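The layer-by-layer merging can be illustrated as rule substitution: every hidden-node condition in an upper-layer rule is replaced by the antecedents of a lower-layer rule that concludes it. This sketch assumes the rules have already been extracted (by the decision trees) and are represented as condition strings; the variable names and thresholds are invented for illustration, and contradictory conditions are not pruned.

```python
from itertools import product

def merge_rules(upper_rules, lower_rules):
    """Substitute hidden-node conditions with the lower layer's antecedents.

    upper_rules: list of (antecedents, consequent); antecedents is a tuple of
                 hidden-node conditions such as "h1=high".
    lower_rules: dict mapping a hidden-node condition to a list of alternative
                 antecedent tuples over the layer below.
    Returns rules whose antecedents only mention the lower layer.
    """
    merged = []
    for antecedents, consequent in upper_rules:
        options = [lower_rules[cond] for cond in antecedents]
        # One merged rule per combination of alternatives (logical OR -> separate rules).
        for combo in product(*options):
            conds = []
            for part in combo:
                for c in part:
                    if c not in conds:  # drop duplicate conditions
                        conds.append(c)
            merged.append((tuple(conds), consequent))
    return merged

upper = [(("h1=high", "h2=low"), "hanging")]
lower = {
    "h1=high": [("blast_volume<3000",), ("top_pressure>0.2",)],
    "h2=low": [("permeability<0.8",)],
}
for rule in merge_rules(upper, lower):
    print(rule)
```

Applying `merge_rules` repeatedly, from the output layer down to the input layer, yields rules whose antecedents are process variables only.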

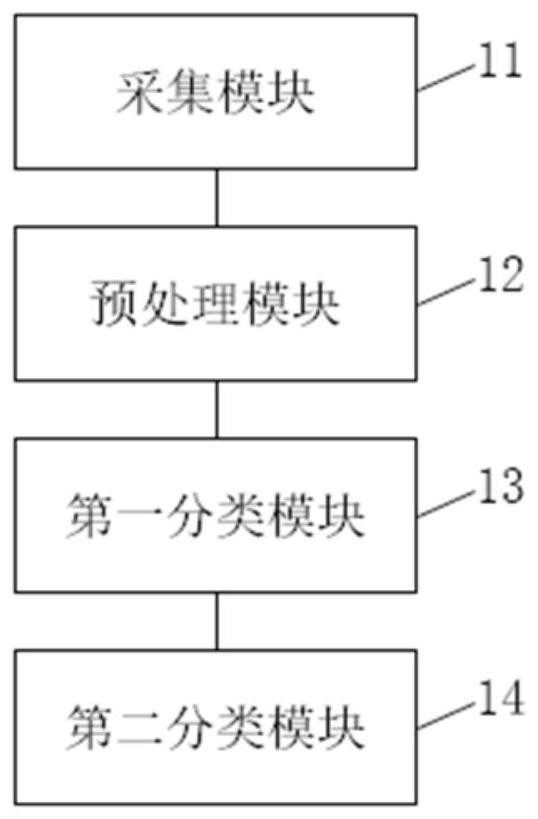

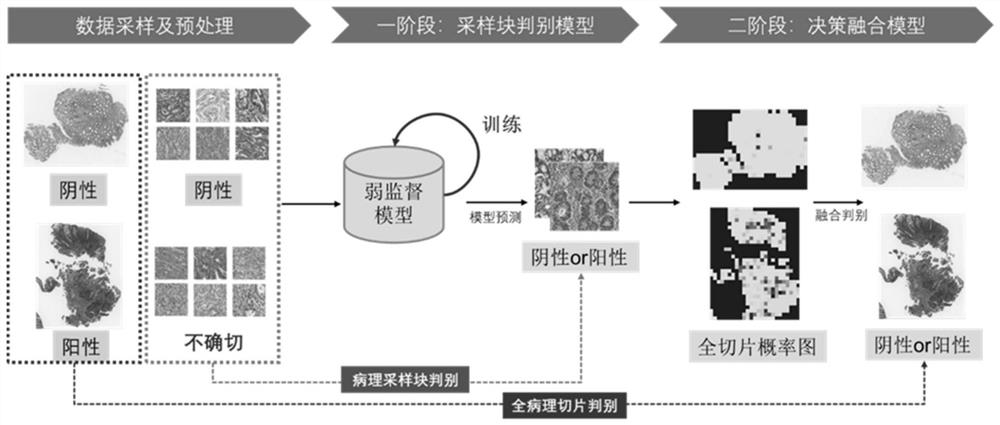

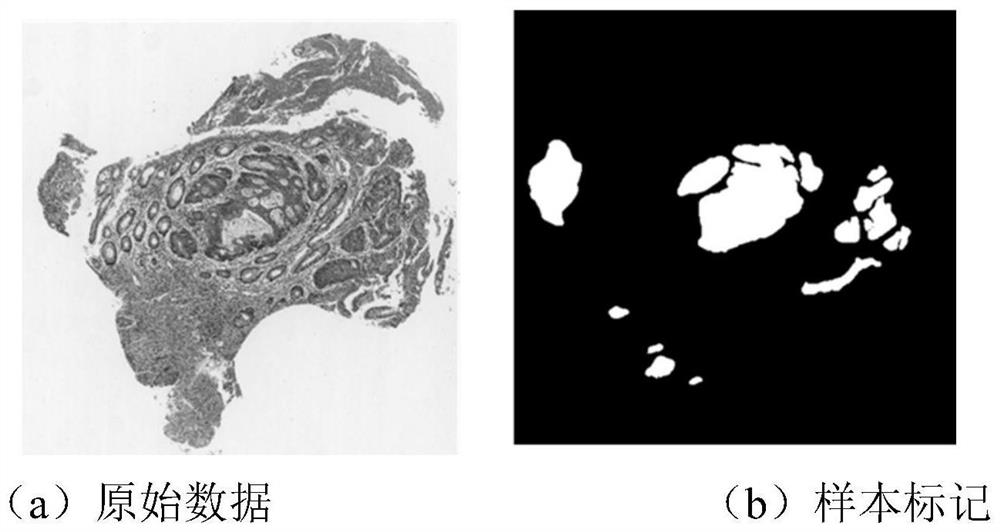

Colorectal cancer digital pathological image discrimination method and system based on weak supervised learning

Pending | CN113221978A | Implement data sampling, Achieve cleaning, Medical automated diagnosis, Character and pattern recognition, Data set, Radiology

The invention discloses a weakly supervised learning-based method and system for discriminating colorectal cancer digital pathology images. The system comprises: an acquisition module that collects a colorectal cancer digital pathology image data set; a preprocessing module that preprocesses the collected data; a first classification module that constructs a sampling-block discrimination model based on a weakly supervised learning algorithm, inputs the preprocessed data into this model, and obtains a classification result for every pathology image block in the whole-slide sampling bag; and a second classification module that constructs a decision fusion model, inputs the block-level classification results into it for fusion, and obtains the classification result for the whole-slide digital pathology image.

Owner:ZHEJIANG NORMAL UNIVERSITY
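The abstract leaves the decision fusion model unspecified. A simple threshold-based stand-in for slide-level aggregation counts the share of patches classified as tumor and compares it with a cutoff; both thresholds below are hypothetical, and a learned fusion model could replace this rule.

```python
def slide_decision(patch_probs, patch_threshold=0.5, ratio_threshold=0.1):
    """Fuse patch-level tumor probabilities into a whole-slide label.

    A patch counts as positive when its probability reaches patch_threshold;
    the slide is called "tumor" when the share of positive patches reaches
    ratio_threshold. Returns (label, share of positive patches).
    """
    if not patch_probs:
        raise ValueError("no patches were sampled from this slide")
    positives = sum(p >= patch_threshold for p in patch_probs)
    ratio = positives / len(patch_probs)
    return ("tumor" if ratio >= ratio_threshold else "normal"), ratio

print(slide_decision([0.9, 0.2, 0.1, 0.05, 0.3]))  # ('tumor', 0.2)
```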

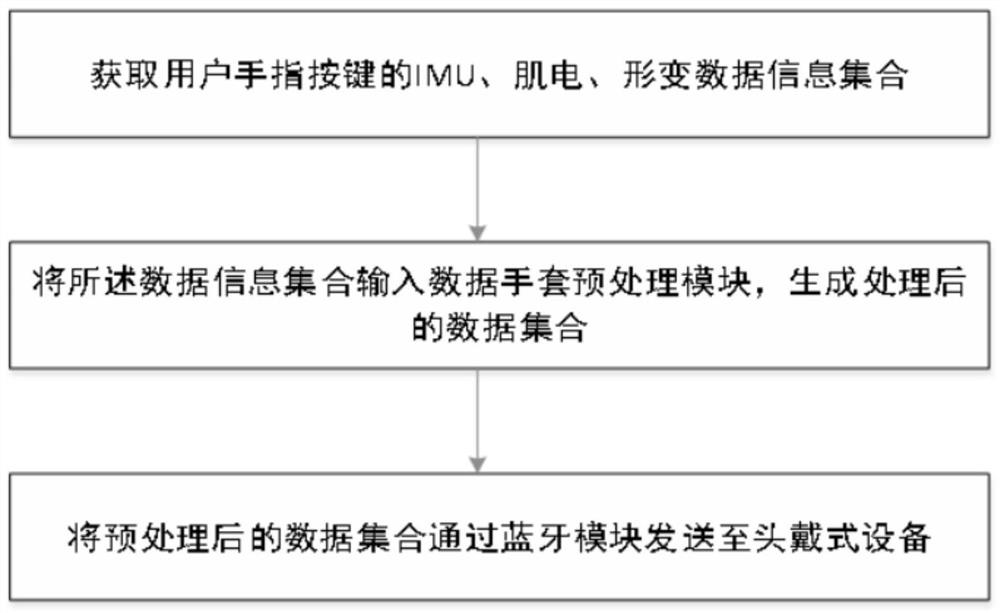

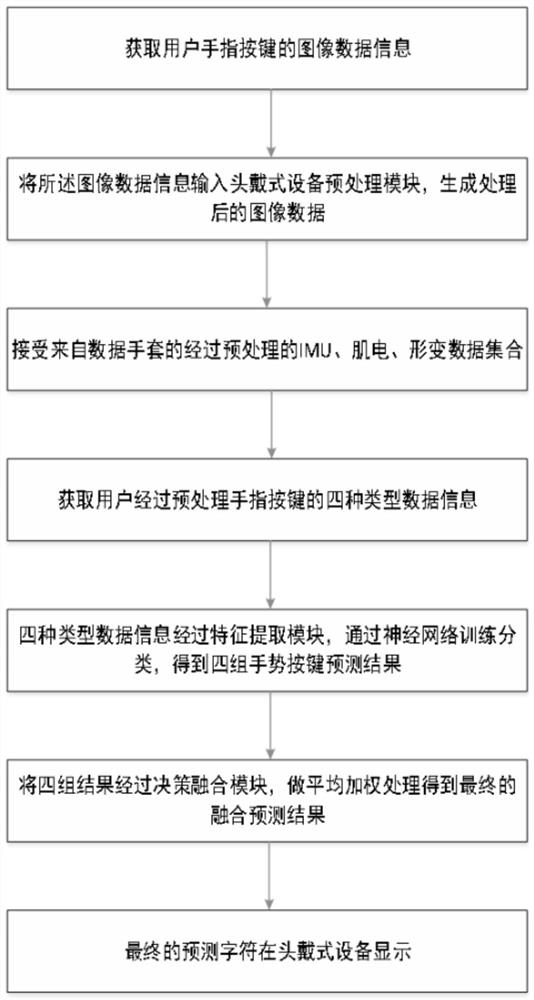

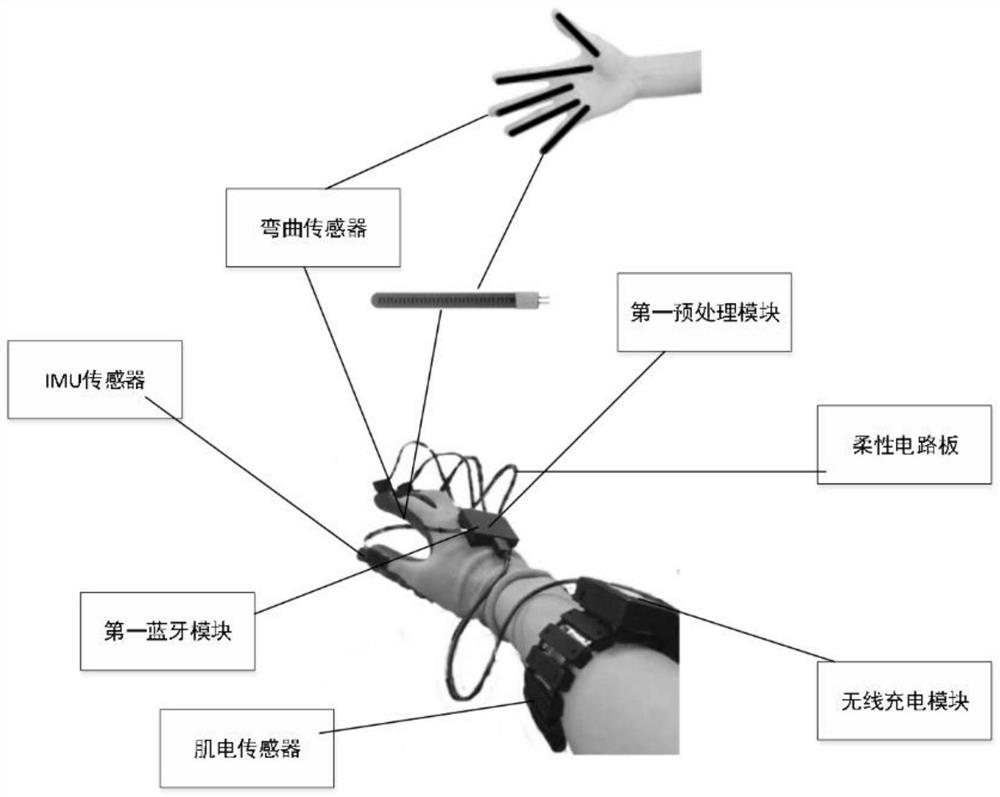

Multi-mode fusion gesture keyboard input method, device and system and storage medium

Pending | CN111722713A | Good sampling result, Easy to control, Input/output for user-computer interaction, Character and pattern recognition, Feature extraction, Engineering

The invention discloses a multi-modal fusion gesture keyboard input method, device, system, and storage medium. The method comprises: acquiring IMU sensor data, electromyography (EMG) sensor data, and bend sensor data of the user's key presses; capturing images of the user's hand region to obtain hand image data; and feeding the four kinds of preprocessed data into corresponding classifiers for feature extraction, performing decision fusion, and recognizing the result as the corresponding gesture key input signal. The device comprises a data glove or a head-mounted device; the system comprises a data glove and a head-mounted device. The storage medium comprises a processor and a memory. Interaction between gestures and virtual keyboard characters is achieved in the head-mounted device, character images are displayed in real time, and keyboard input is given an interactive interface with more three-dimensional scenes, richer information, and a more natural and friendly environment.

Owner:TIANJIN UNIV
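With four classifiers voting on one key press, majority voting is a natural decision fusion rule. This sketch assumes that rule plus a fixed modality priority for breaking ties; the patent does not say which fusion rule it uses, and the modality names are placeholders.

```python
from collections import Counter

def majority_vote(predictions, priority):
    """Fuse per-modality key predictions by majority vote.

    predictions: dict mapping modality name -> predicted key.
    priority:    modality names, most trusted first; used only to break ties.
    """
    counts = Counter(predictions.values())
    top = max(counts.values())
    tied = {key for key, c in counts.items() if c == top}
    for modality in priority:
        if predictions.get(modality) in tied:
            return predictions[modality]
    return min(tied)  # deterministic fallback if no prioritized modality voted

votes = {"imu": "A", "emg": "A", "bend": "S", "image": "S"}
print(majority_vote(votes, ["image", "imu", "emg", "bend"]))  # S (2-2 tie, image wins)
```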

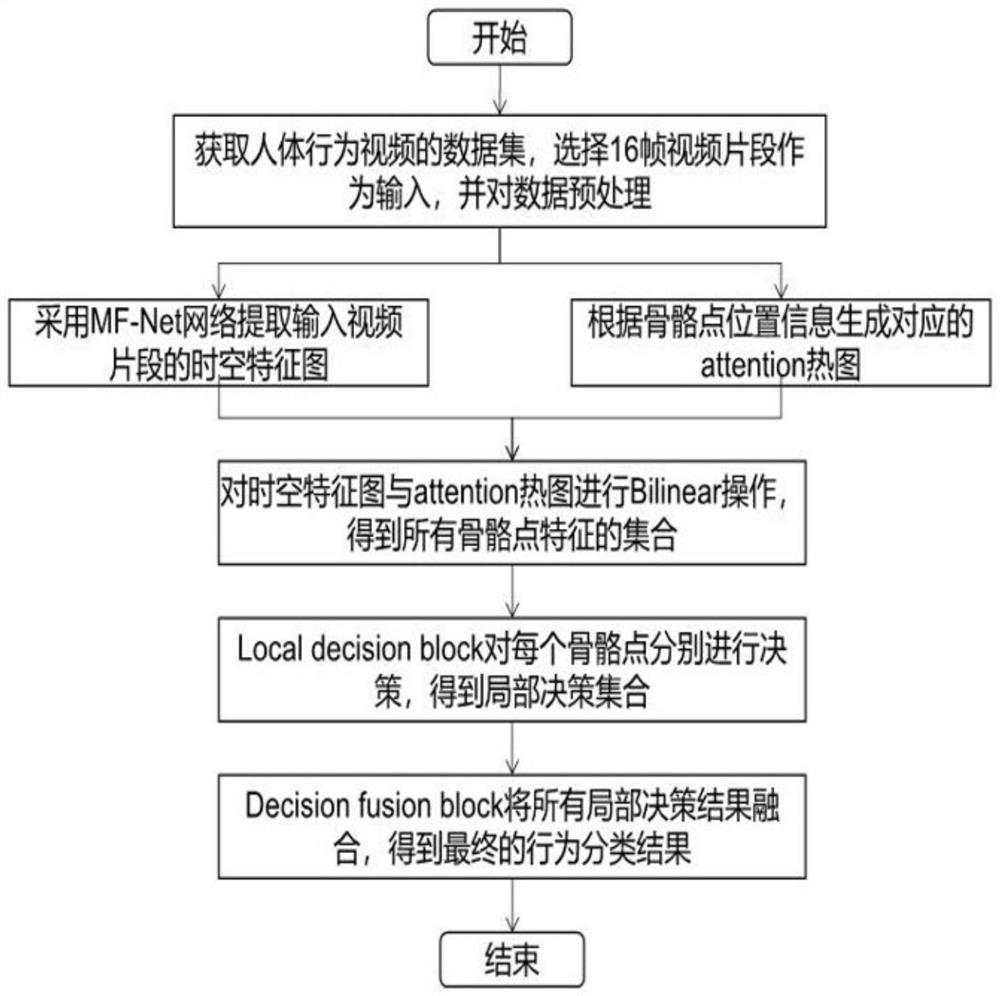

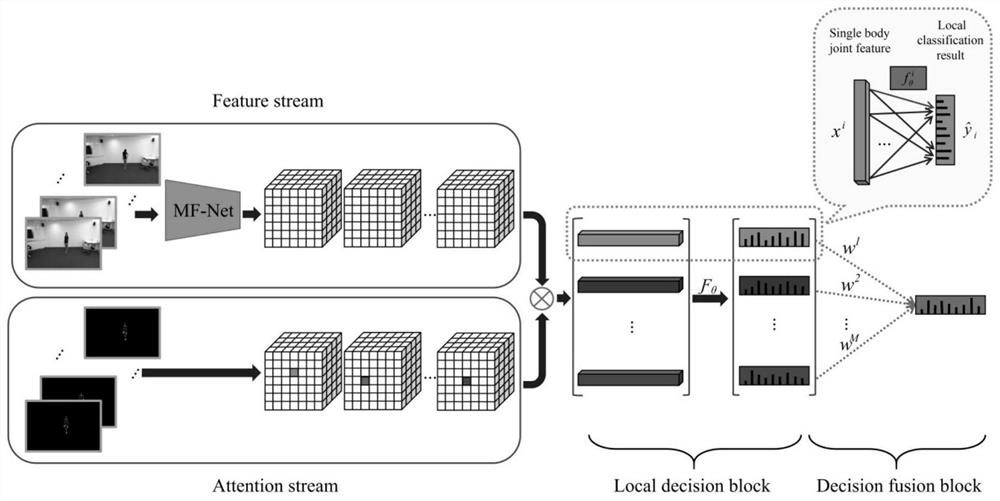

Human body behavior recognition method based on RGB video and skeleton sequence

Active | CN111967379A | Reduce the amount of parameters, Improve performance, Character and pattern recognition, Neural architectures, Human body, Data set

The invention relates to a human behavior recognition method based on RGB video and skeleton sequences, in the technical field of computer vision and pattern recognition. The method comprises the following steps: 1, extracting features from the input video segment through a feature stream to obtain a spatio-temporal feature map; 2, generating a heat map of the skeleton regions with an attention stream; 3, extracting the spatial and temporal features of the skeleton regions through the binariar; 4, generating local decision results with the local decision block; and 5, fusing the local decision results with the decision fusion block to obtain a global decision result. Two plug-and-play modules, a Local decision block and a Decision fusion block, implement the decision fusion: the Local decision block makes a decision on the spatial and temporal features of each key region, and the Decision fusion block fuses all of these decisions into the final result. The method effectively improves behavior recognition accuracy on the Penn Action and NTU RGB+D data sets.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
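The internals of the Decision fusion block are not described. One plausible design, assumed here, weights each key region's local class scores by a softmax over per-region attention scores and sums them into a global score vector.

```python
import math

def fuse_local_decisions(local_scores, attention):
    """Fuse per-region class scores into one global decision.

    local_scores: one list of class scores per key region.
    attention:    one raw attention score per region, softmax-normalized here.
    """
    m = max(attention)
    exp = [math.exp(a - m) for a in attention]
    total = sum(exp)
    weights = [e / total for e in exp]
    n_classes = len(local_scores[0])
    global_scores = [
        sum(w * scores[c] for w, scores in zip(weights, local_scores))
        for c in range(n_classes)
    ]
    best = max(range(n_classes), key=global_scores.__getitem__)
    return best, global_scores

regions = [[2.0, 0.0], [0.0, 1.0]]  # two key regions, two action classes
print(fuse_local_decisions(regions, [0.0, 0.0])[0])  # 0 (equal attention)
print(fuse_local_decisions(regions, [0.0, 5.0])[0])  # 1 (attention on region 2)
```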

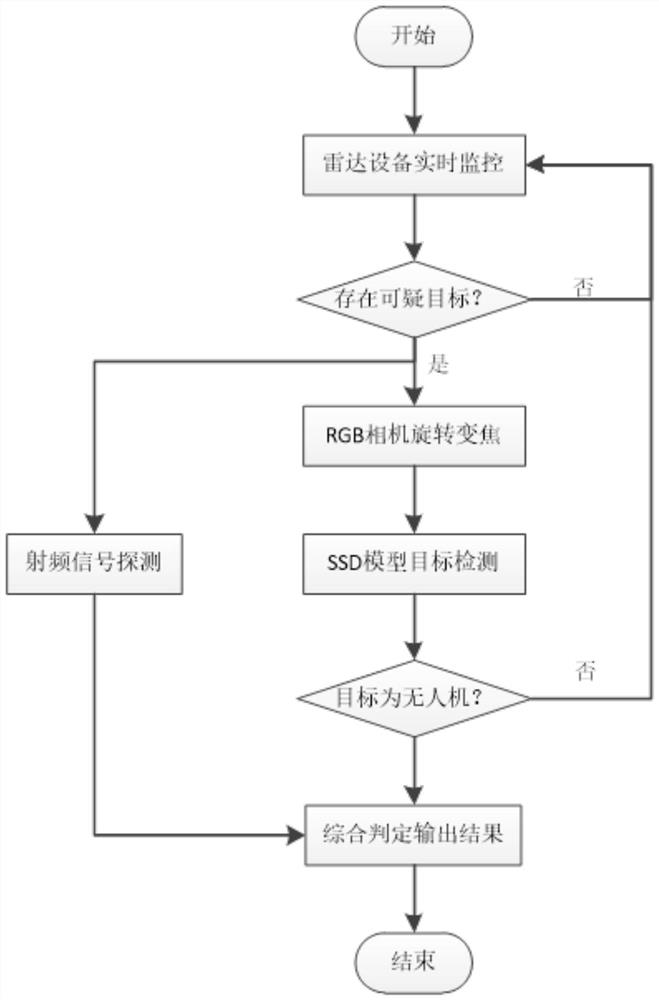

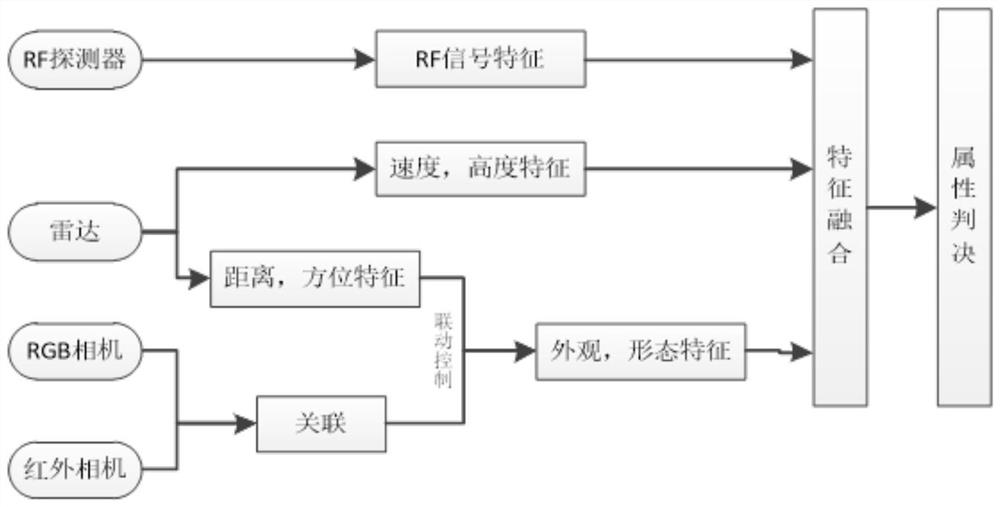

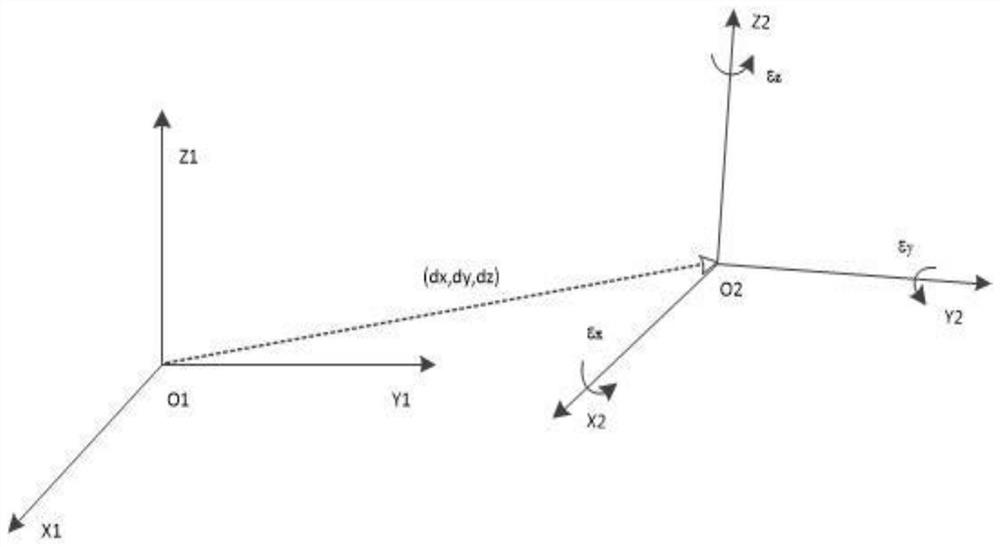

Unmanned aerial vehicle target detection method based on multi-sensor information fusion

Pending | CN112068111A | Improve accuracy, Reduce mistakes, Neural architectures, Neural learning methods, Data set, Uncrewed vehicle

The invention relates to an unmanned aerial vehicle (UAV) target detection method based on multi-sensor information fusion. The method comprises the following steps: step 1, performing time and coordinate registration of the radar and the photoelectric equipment, monitoring the low-altitude protection area in real time to obtain feature information of small targets, and fusing this information at the feature level; step 2, collecting and augmenting images of various UAV targets to form a UAV target detection data set, and training an SSD deep learning network on it to obtain an SSD prediction model; and step 3, performing target detection with the SSD model on images acquired by the photoelectric equipment, performing decision fusion on multiple types of information about the same target by setting threshold ranges, and finally making a fused decision over the different prediction results and judgment results to determine whether the target is a UAV. Fusing multi-sensor information extends the detection range for UAV targets, improves detection efficiency, and provides some resistance to environmental interference.

Owner:NAVAL UNIV OF ENG PLA
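A sketch of the threshold-range step, assuming each radar attribute casts a vote when it falls within a range typical of small UAVs and the SSD detector votes when its confidence reaches a threshold; the target is declared a UAV when at least `k` votes agree. The attributes, ranges, and `k` are illustrative assumptions, not values from the patent.

```python
# Hypothetical acceptance ranges for a small UAV.
RANGES = {
    "speed": (0.0, 30.0),      # m/s, from radar
    "altitude": (0.0, 500.0),  # m, from radar
    "rcs": (0.001, 0.1),       # m^2, radar cross-section
}

def is_uav(measurements, ssd_score, ssd_threshold=0.5, k=3):
    """k-out-of-n decision fusion over radar range checks and the SSD detector."""
    votes = [lo <= measurements[name] <= hi for name, (lo, hi) in RANGES.items()]
    votes.append(ssd_score >= ssd_threshold)  # image-based vote
    return sum(votes) >= k

print(is_uav({"speed": 12.0, "altitude": 120.0, "rcs": 0.01}, ssd_score=0.8))  # True
print(is_uav({"speed": 80.0, "altitude": 3000.0, "rcs": 5.0}, ssd_score=0.6))  # False
```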

Oil-gas pipeline pre-warning system based on decision fusion and pre-warning method

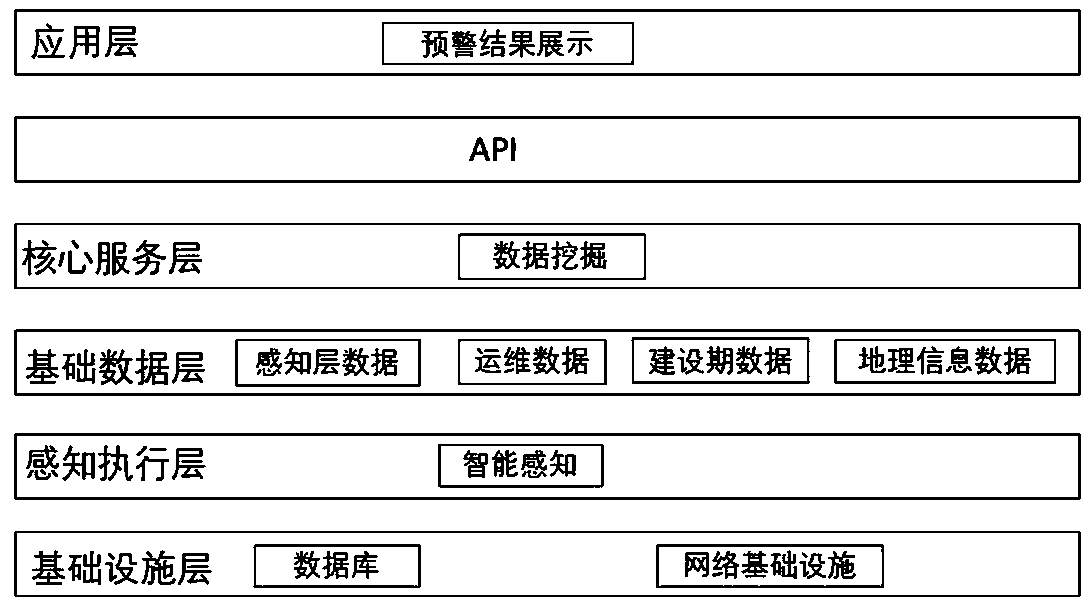

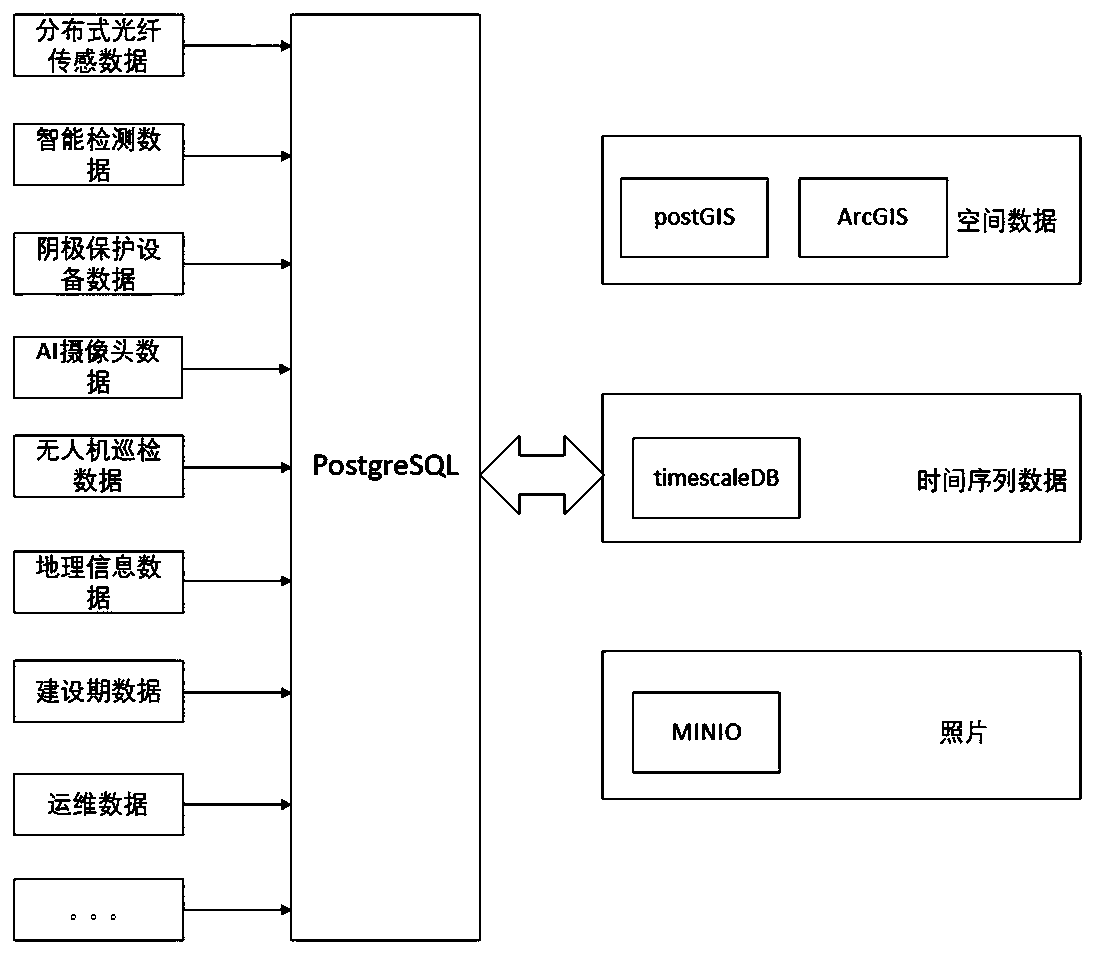

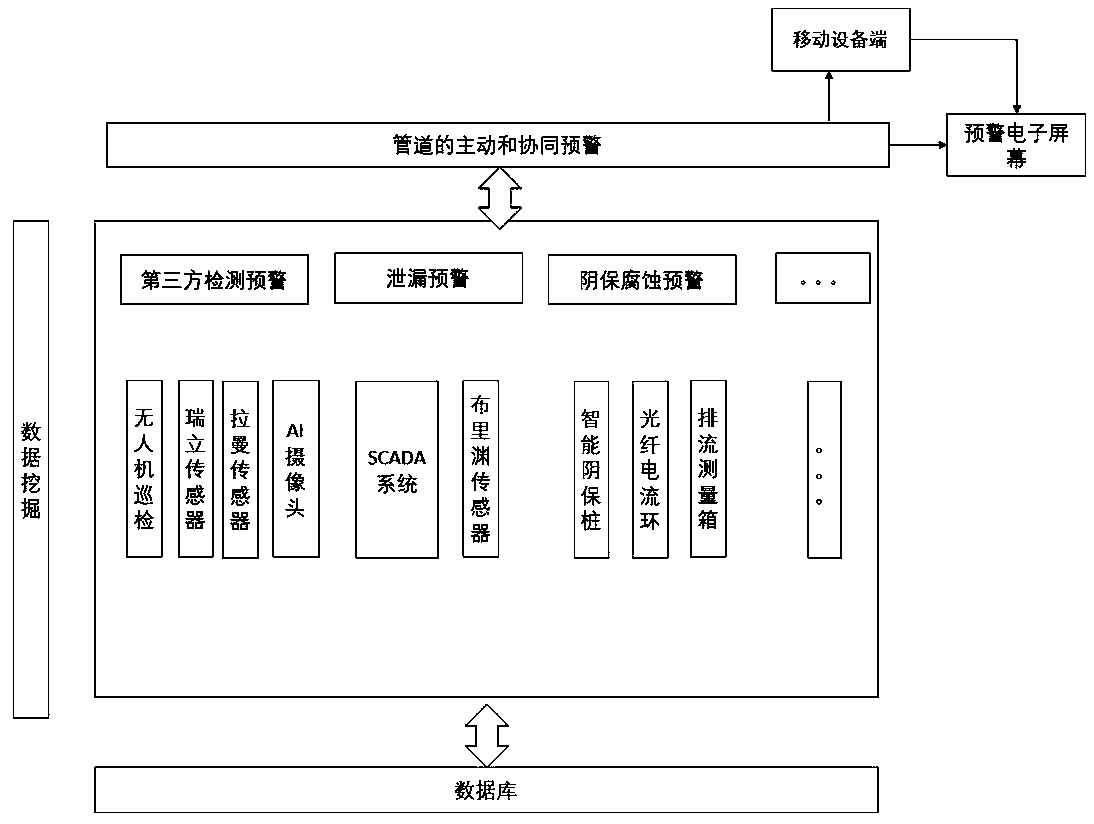

Active | CN111536429A | Improve performance, Improve integrity, Checking time patrols, Pipeline systems, Information processing, Data acquisition

The invention belongs to the technical field of oil-gas pipeline monitoring and pre-warning systems, and relates to an oil-gas pipeline pre-warning system and pre-warning method based on decision fusion. The system comprises an infrastructure layer, a sensing-actuation layer, a basic data layer, and a core service layer. The infrastructure layer comprises intelligent sensing equipment. The sensing-actuation layer monitors the oil-gas pipeline and acquires data through the intelligent sensing equipment. The basic data layer stores, in a database, the data acquired by the sensing-actuation layer together with data covering the full life cycle of the pipeline. The core service layer mines the data in the database. The pre-warning grades produced by multiple pre-warning methods for each pre-warning task are combined by decision fusion to obtain the pre-warning grade of that task; the importance ranking of all pre-warning tasks and methods is obtained through fault tree analysis, enabling active pre-warning. Multi-source data can be fused, information processing performance is effectively improved, pre-warning accuracy is high, and the system and method are better targeted to oil-gas pipeline pre-warning.

Owner:浙江浙能天然气运行有限公司 (Zhejiang Zheneng Natural Gas Operation Co., Ltd.) +2
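The grade-level decision fusion could be as simple as an importance-weighted average of the grades that the different methods assign to one task. This sketch assumes integer grades (higher means more severe), method weights derived elsewhere (for example from the fault tree analysis), and rounding up so that the fused grade is never below the weighted mean; all of these are assumptions.

```python
import math

def fuse_warning_grades(grades, weights):
    """Fuse the warning grades that several methods give one pre-warning task.

    grades:  integer grades, one per method (higher = more severe).
    weights: hypothetical importance of each method.
    """
    if len(grades) != len(weights) or not grades:
        raise ValueError("need exactly one weight per grade")
    score = sum(g * w for g, w in zip(grades, weights)) / sum(weights)
    # Round up (with a small tolerance for float noise) so fusion errs on the safe side.
    return math.ceil(score - 1e-9)

print(fuse_warning_grades([2, 3, 1], [0.5, 0.3, 0.2]))  # 3 (weighted mean 2.1)
print(fuse_warning_grades([2, 2, 2], [0.5, 0.3, 0.2]))  # 2
```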

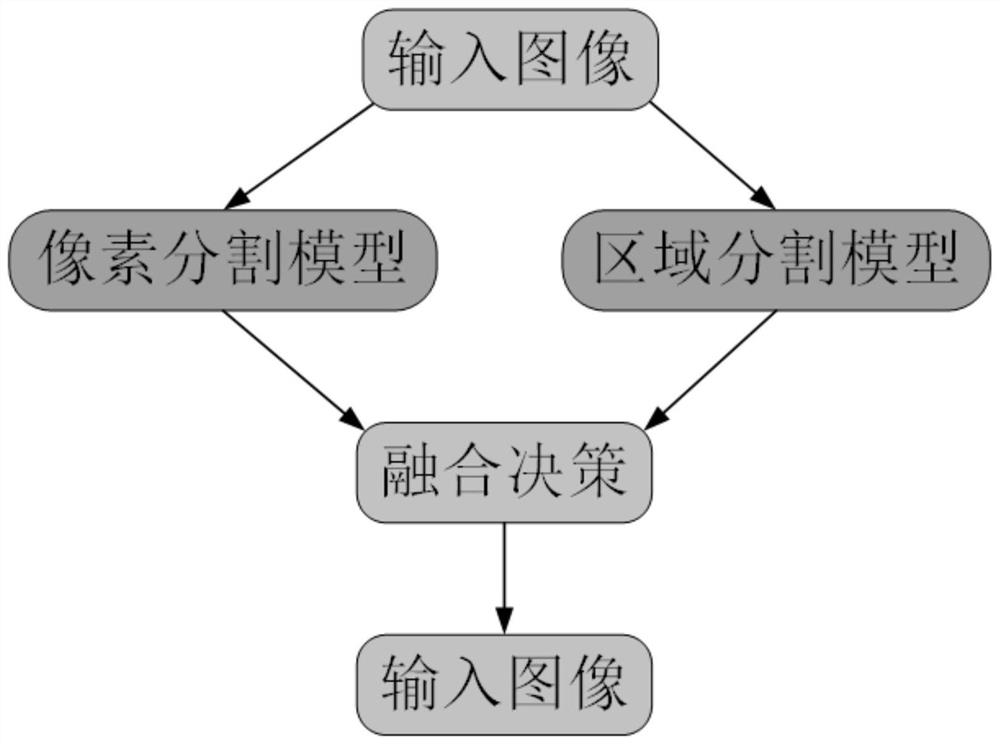

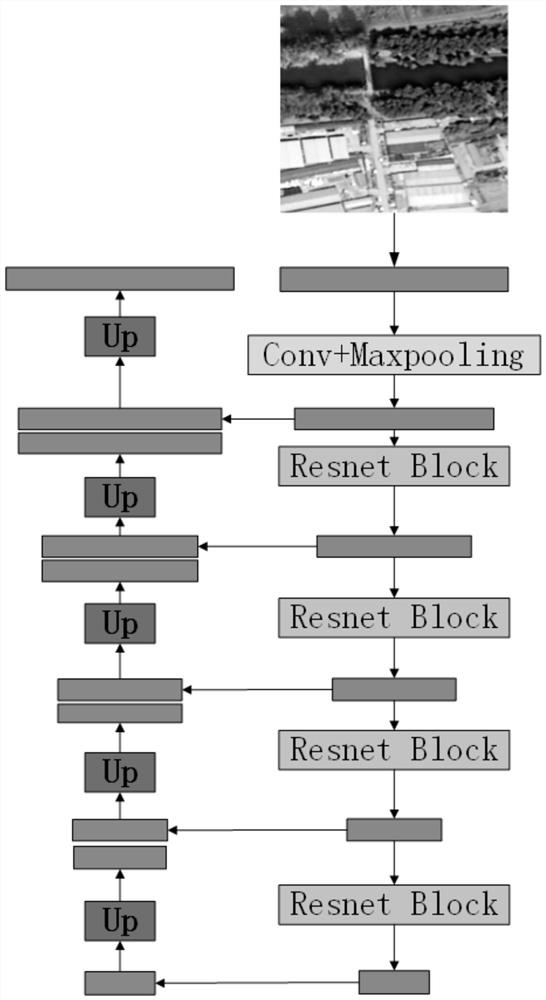

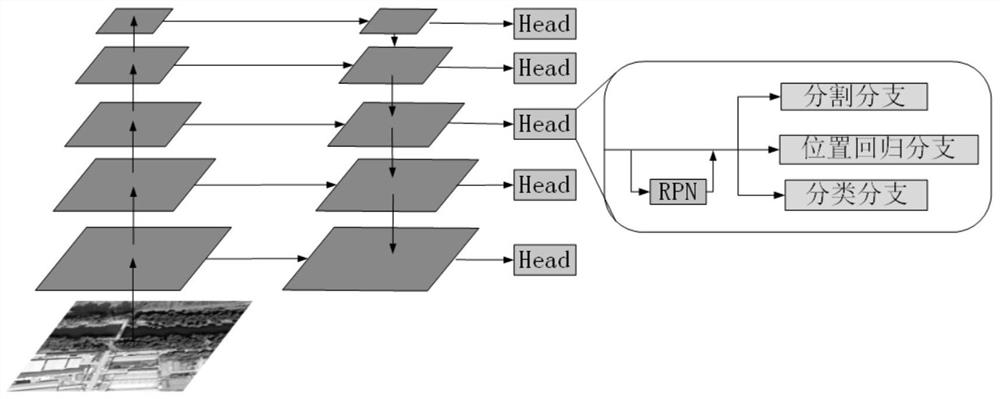

Building detection method based on pixel and region segmentation decision fusion

Pending | CN111968088A | Improve spatial continuity, Improve liquidity, Image enhancement, Image analysis, Sensing data, Test sample

The invention discloses a building detection method based on decision fusion of pixel and region segmentation. The method comprises: constructing a pixel segmentation model that introduces a residual structure and a region-based segmentation model that introduces a feature pyramid network, which together form a dual segmentation network; generating a training sample set and a test sample set from an optical remote sensing data set; preprocessing the images in the training set; training the pixel segmentation model with a mixed supervision loss that adds Dice loss to cross-entropy loss; inputting the test sample set into the trained dual segmentation network to obtain the prediction of each branch; and fusing the two predictions according to the decision scheme to output the final detection result for the test sample set. The method preserves spatial consistency for large buildings and retains multi-scale features for small buildings, keeping the building features rich and improving detection accuracy.

Owner:XIDIAN UNIV
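The mixed supervision loss adds a Dice term to a cross-entropy term. A minimal pure-Python version for binary building masks, assuming the two terms are simply added (with an optional, hypothetical weight on the cross-entropy term) and that predictions and labels are flattened per-pixel lists:

```python
import math

def dice_bce_loss(pred, target, bce_weight=1.0, eps=1e-7):
    """Soft Dice loss plus (weighted) binary cross-entropy for a binary mask.

    pred:   per-pixel building probabilities (flattened list, values in [0, 1]).
    target: per-pixel ground truth (flattened list of 0/1).
    """
    n = len(pred)
    # Binary cross-entropy, with probabilities clipped away from 0 to avoid log(0).
    bce = -sum(
        t * math.log(max(p, eps)) + (1 - t) * math.log(max(1 - p, eps))
        for p, t in zip(pred, target)
    ) / n
    # Soft Dice loss: 1 - 2 * sum(p * t) / (sum(p) + sum(t)), smoothed by eps.
    intersection = sum(p * t for p, t in zip(pred, target))
    dice = 1 - (2 * intersection + eps) / (sum(pred) + sum(target) + eps)
    return dice + bce_weight * bce

print(dice_bce_loss([0.8, 0.2], [1, 0]) < dice_bce_loss([0.2, 0.8], [1, 0]))  # True
```

The Dice term rewards overlap over the whole mask, which helps with large, spatially coherent buildings, while cross-entropy supervises every pixel individually.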

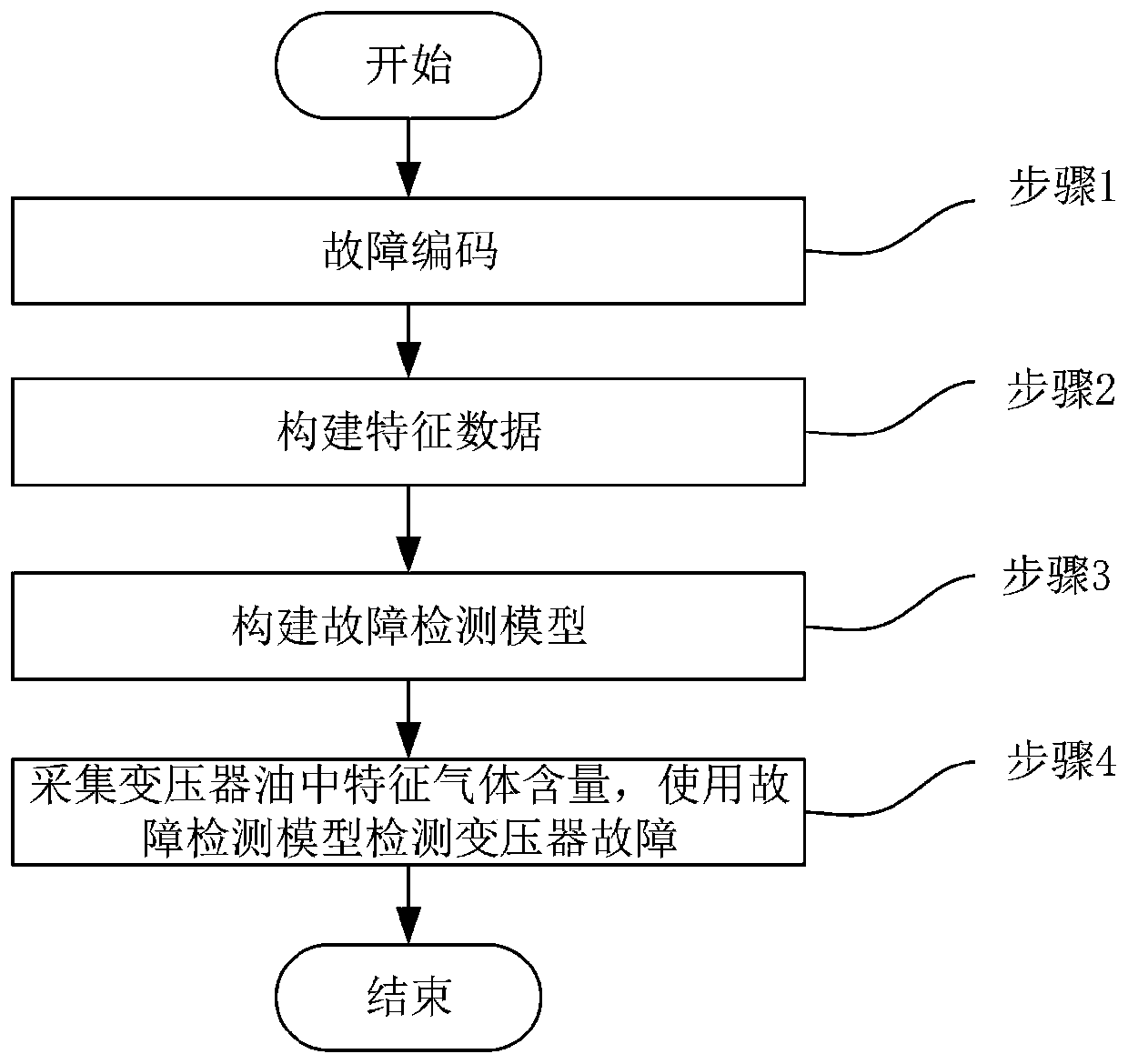

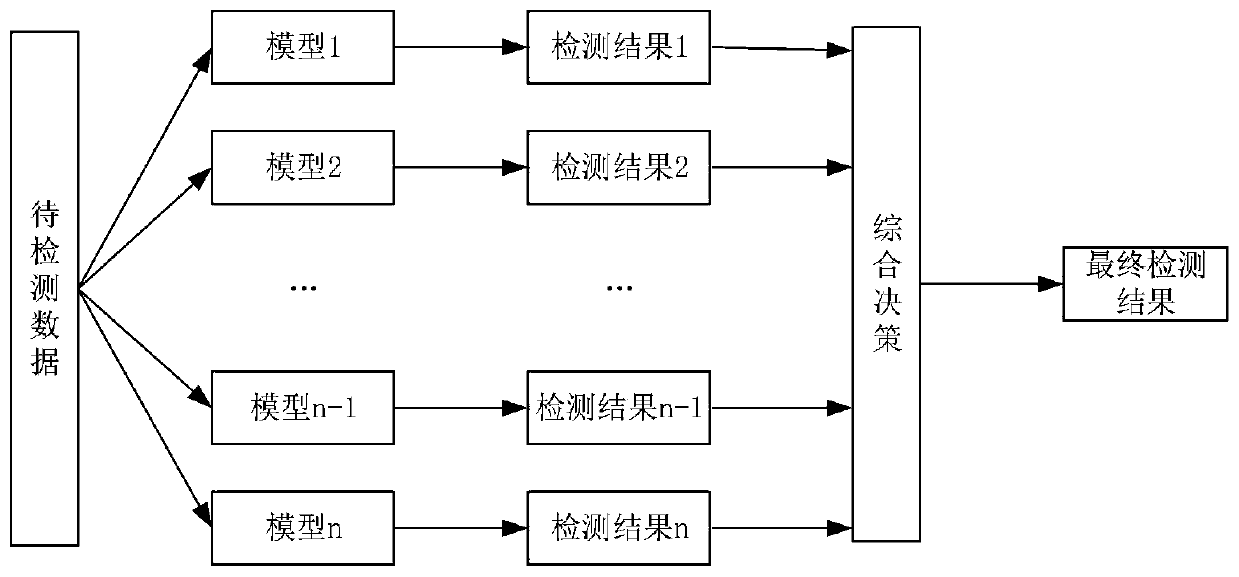

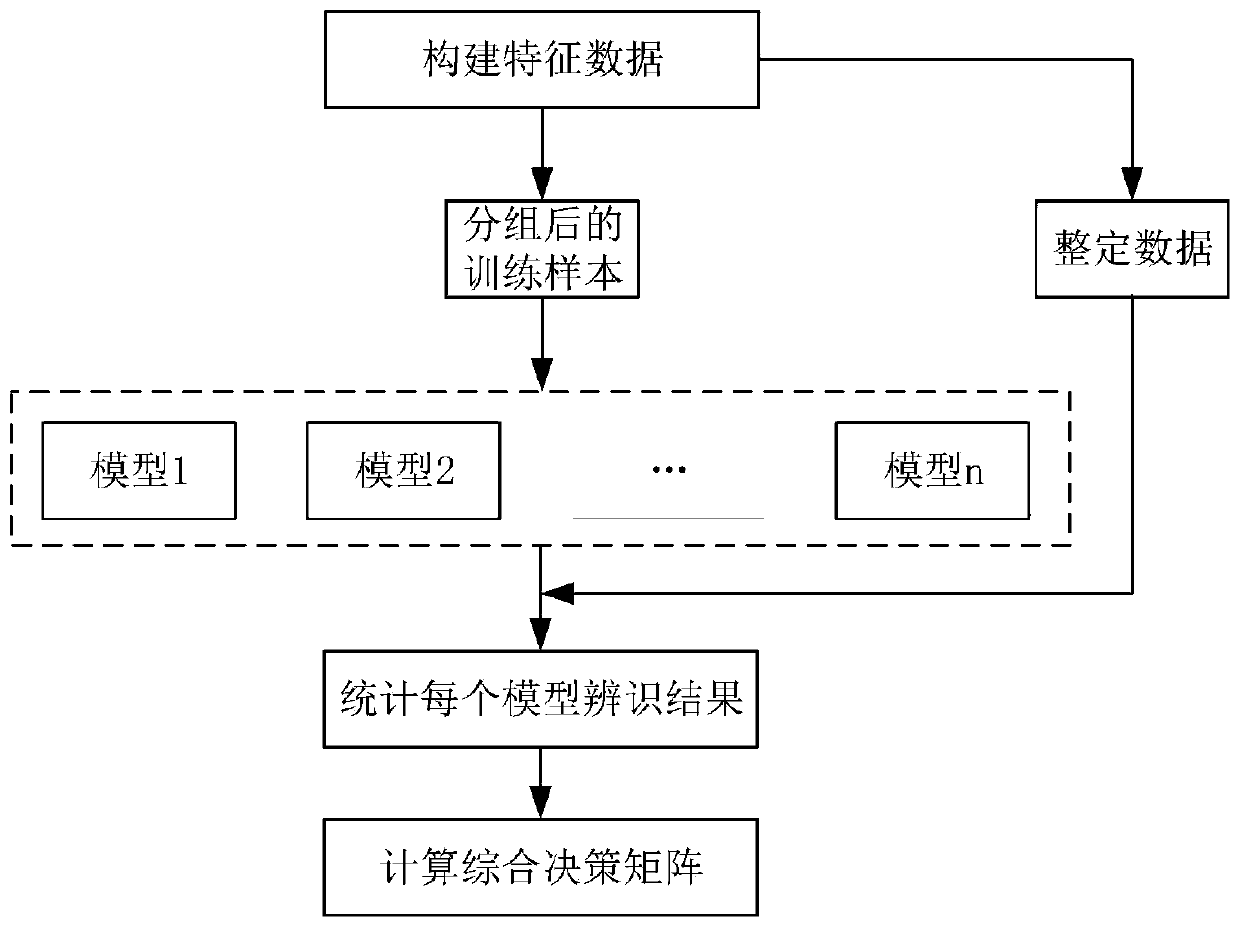

Oil-immersed transformer fault diagnosis method based on neural network and decision fusion

Active | CN110879373A | Improve recognition accuracy, Reduce identification error, Transformers testing, Neural architectures, Engineering, Network model

The invention provides an oil-immersed transformer fault diagnosis method based on neural networks and decision fusion. The method comprises fault coding, construction and training of neural network models, and calculation of a decision fusion matrix. Specifically, the faults low-temperature overheating, medium-temperature overheating, high-temperature overheating, partial discharge, low-energy discharge, and high-energy discharge are encoded; several neural networks are trained using the concentrations of five gases dissolved in the transformer oil as identification features; and a decision fusion matrix is calculated from the test accuracy of each network to fuse the decisions of the multiple networks. The weight given to each network for a specific fault is adjusted according to that network's recognition performance on that fault, which improves diagnostic accuracy; this supports timely handling of transformer faults and the stable, reliable operation of the power system.

Owner:WUHAN NARI LIABILITY OF STATE GRID ELECTRIC POWER RES INST
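One reading of the decision fusion matrix is a networks-by-faults table of test accuracies, normalized per fault so that each fault's column of weights sums to 1; the fused score for a fault is then the weighted sum of the networks' outputs for that fault. The sketch assumes that reading and uses two faults instead of six for brevity; the accuracies and outputs are made up.

```python
def build_fusion_matrix(accuracy):
    """Turn per-network, per-fault test accuracies into fusion weights.

    accuracy[i][j]: test accuracy of network i on fault class j. Each fault's
    column is normalized so the networks' weights for that fault sum to 1.
    """
    n_nets, n_faults = len(accuracy), len(accuracy[0])
    matrix = [[0.0] * n_faults for _ in range(n_nets)]
    for j in range(n_faults):
        col_sum = sum(accuracy[i][j] for i in range(n_nets))
        for i in range(n_nets):
            matrix[i][j] = accuracy[i][j] / col_sum if col_sum else 1.0 / n_nets
    return matrix

def fuse_networks(outputs, matrix):
    """Fault-wise weighted sum of the networks' outputs; returns (fault index, scores)."""
    n_faults = len(matrix[0])
    scores = [sum(matrix[i][j] * outputs[i][j] for i in range(len(outputs)))
              for j in range(n_faults)]
    return max(range(n_faults), key=scores.__getitem__), scores

acc = [[0.9, 0.5], [0.6, 0.9]]  # network 1 is better on fault 0, network 2 on fault 1
out = [[0.6, 0.4], [0.3, 0.7]]  # the two networks disagree on this sample
print(fuse_networks(out, build_fusion_matrix(acc))[0])  # 1
```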

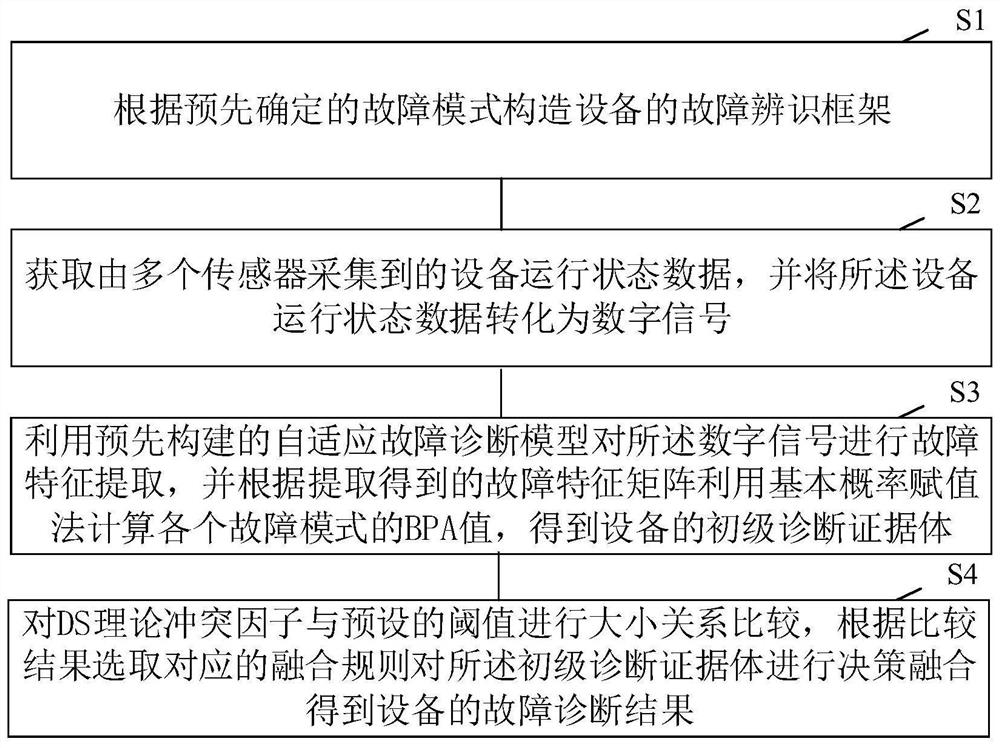

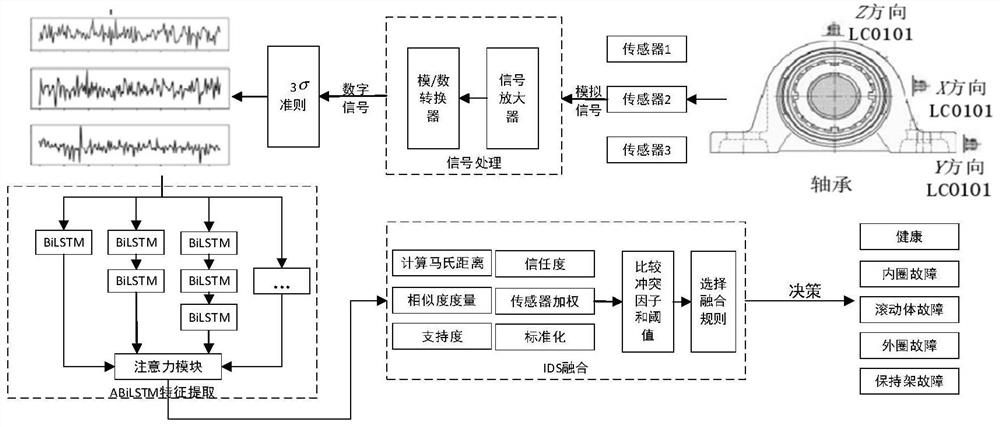

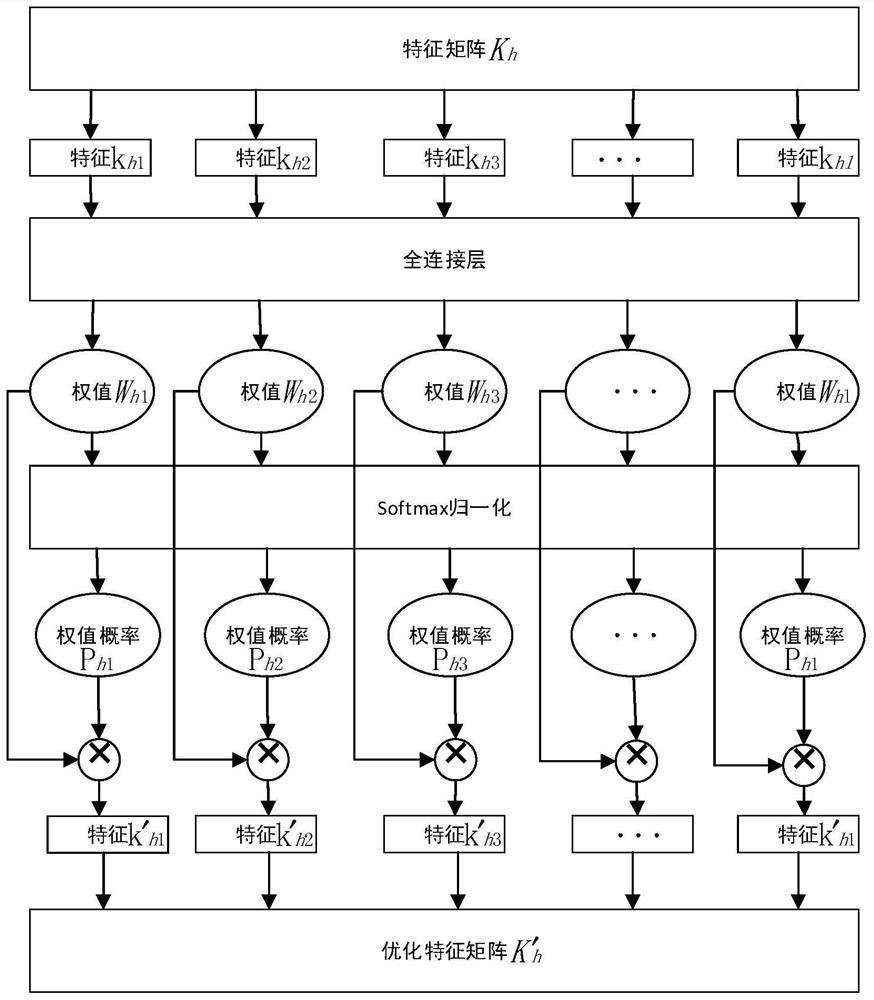

Equipment fault diagnosis method and device based on multi-sensor data fusion

Pending | CN111931806A | Improve efficiency, Improve accuracy, Character and pattern recognition, Neural architectures, Decision fusion, Basic probability

The invention provides an equipment fault diagnosis method and device based on multi-sensor data fusion. The method comprises: constructing a fault frame of discernment for the equipment from predetermined fault modes; acquiring equipment operating-state data from multiple sensors and converting it into digital signals; extracting fault features from the digital signals with a pre-constructed adaptive fault diagnosis model, and computing a basic probability assignment (BPA) value for each fault mode from the extracted fault feature matrix to obtain a preliminary diagnosis evidence body for the equipment; and comparing the Dempster-Shafer (DS) conflict factor with a preset threshold, selecting the fusion rule according to the comparison, and performing decision fusion on the preliminary evidence bodies to obtain the fault diagnosis result. Multi-source fault signals can thus be fused for equipment condition monitoring and intelligent diagnosis, effectively improving the efficiency and accuracy of fault diagnosis.

Owner:GCI SCI & TECH +2
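The rule-switching step can be sketched as follows: compute the conflict factor K between two evidence bodies, use Dempster's rule when K is below a threshold, and otherwise fall back to a rule that tolerates high conflict. Yager's rule, which assigns the conflicting mass to the whole frame of discernment, is used here as the fallback; the patent does not name its alternative rule, and the threshold is hypothetical.

```python
from itertools import product

def conflict_factor(m1, m2):
    """DS conflict factor K: product mass that falls on disjoint focal sets."""
    return sum(wa * wb for (a, wa), (b, wb) in product(m1.items(), m2.items())
               if not a & b)

def combine(m1, m2, frame, k_threshold=0.8):
    """Dempster's rule when conflict is low, Yager's rule when it is high.

    Returns (fused mass function, name of the rule used, K).
    """
    k = conflict_factor(m1, m2)
    fused = {}
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            fused[inter] = fused.get(inter, 0.0) + wa * wb
    if k < k_threshold:
        # Dempster: renormalize, discarding the conflicting mass.
        return {s: v / (1.0 - k) for s, v in fused.items()}, "dempster", k
    # Yager: keep the conflicting mass as ignorance on the whole frame.
    fused[frame] = fused.get(frame, 0.0) + k
    return fused, "yager", k

A, B, C = frozenset("A"), frozenset("B"), frozenset("C")
frame = A | B | C
# Zadeh's classic high-conflict example: K = 0.9999.
fused, rule, k = combine({A: 0.99, B: 0.01}, {C: 0.99, B: 0.01}, frame)
print(rule)  # yager
```

In this example Dempster's rule would put all belief on B, which both sources considered nearly impossible; Yager's rule instead reports near-total ignorance.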

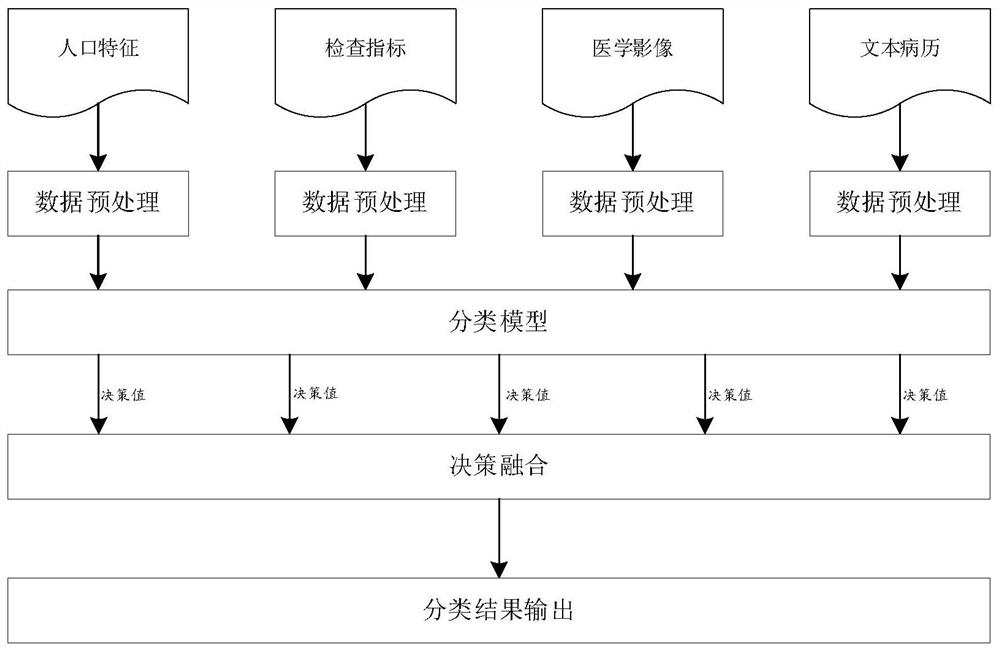

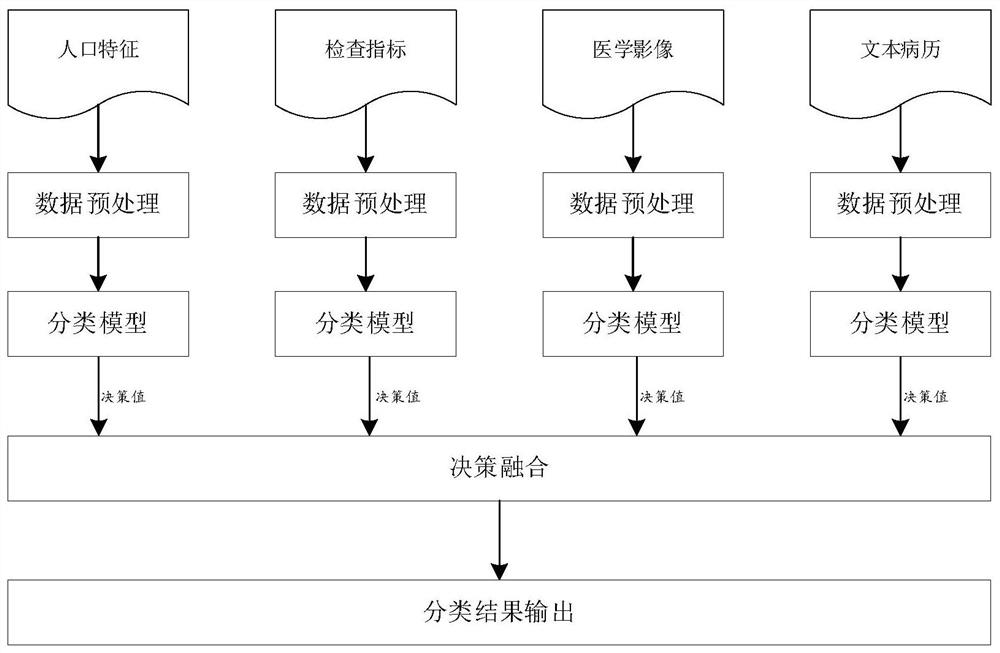

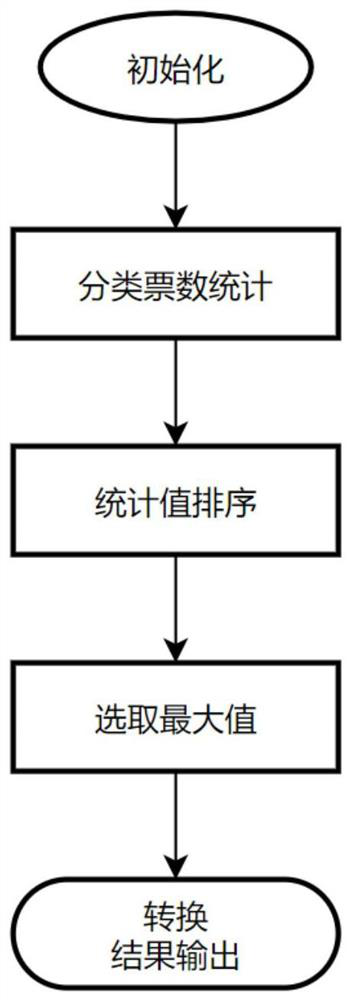

Medical diagnosis auxiliary method and system

Pending | CN112530584A | Incomplete solution, Solve the problem of single model, Medical automated diagnosis, Neural architectures, Computer assistance, Data source

The invention provides a computer-assisted medical diagnosis method comprising the following steps: classifying various kinds of medical data with various classification models to obtain multiple classification decision values; and performing decision fusion on these values to obtain a single classification decision value, which is output as the classification result. The invention further provides a medical diagnosis assistance system. Running various classification models on various kinds of medical data and then fusing their decisions largely alleviates the problems of incomplete data sources and reliance on a single model, and improves the overall accuracy of a general-purpose medical diagnosis assistance system at the level of its technical framework.

Owner:贵州小宝健康科技有限公司 (Guizhou Xiaobao Health Technology Co., Ltd.) +1
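The abstract does not say how the classification decision values are fused. If each model outputs a ranking of candidate diagnoses, a Borda count is one simple, model-agnostic option; the sketch assumes that setting, and the diagnosis labels are placeholders.

```python
def borda_fuse(rankings):
    """Borda count over per-model rankings (each list is best-first).

    A candidate at position r in a list of n earns n - 1 - r points.
    Ties in the total are broken alphabetically for reproducibility.
    Returns (winning label, point totals).
    """
    scores = {}
    for ranking in rankings:
        n = len(ranking)
        for r, label in enumerate(ranking):
            scores[label] = scores.get(label, 0) + (n - 1 - r)
    winner = sorted(scores, key=lambda lab: (-scores[lab], lab))[0]
    return winner, scores

rankings = [
    ["pneumonia", "bronchitis", "normal"],  # model on imaging data
    ["bronchitis", "pneumonia", "normal"],  # model on lab results
    ["bronchitis", "normal", "pneumonia"],  # model on clinical notes
]
print(borda_fuse(rankings)[0])  # bronchitis
```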

A radar radiation source signal intra-pulse characteristic comprehensive evaluation method and system

Active | CN109766926A | Feature Evaluation Perfect, Increasing the SNR affects the significance index, Wave based measurement systems, Internal combustion piston engines, Feature extraction, Feature evaluation

The invention belongs to the technical field of radar emitter signal feature evaluation in electronic countermeasures, and discloses a method and system for comprehensively evaluating the intra-pulse features of radar emitter signals. First, features are extracted from the received radar emitter signal, and the feature evaluation indices are measured and normalized according to an established evaluation system. Next, an improved interval analytic hierarchy process (AHP) that combines expert prior knowledge with the actual environment is applied, and a nonlinear optimization model is established with an improved projection pursuit algorithm. Finally, subjective and objective decision fusion is performed with a spectral projected gradient algorithm. The method and system can reasonably and effectively evaluate various intra-pulse features of radar emitter signals under actual conditions, helping to select the features that best distinguish radar emitter signals and thereby facilitating subsequent signal sorting and identification.

Owner:XIDIAN UNIV
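The patent's pipeline (improved interval AHP, improved projection pursuit, and a spectral projected gradient solver) is not reproduced here. As a simplified stand-in, the sketch derives subjective weights with the crisp AHP geometric-mean method, objective weights with the entropy weight method, and fuses them linearly with a hypothetical coefficient `alpha`. The entropy method needs positive, normalized index values and at least one index that varies across samples.

```python
import math

def ahp_weights(pairwise):
    """Subjective weights from an AHP pairwise comparison matrix (geometric-mean method)."""
    n = len(pairwise)
    geo = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geo)
    return [g / total for g in geo]

def entropy_weights(scores):
    """Objective weights by the entropy weight method.

    scores[i][j]: normalized, positive value of evaluation index j for sample i.
    Indices whose values vary more across samples receive larger weights.
    """
    m, n = len(scores), len(scores[0])
    k = 1.0 / math.log(m)
    divergence = []
    for j in range(n):
        col = [scores[i][j] for i in range(m)]
        total = sum(col)
        e = -k * sum((v / total) * math.log(v / total) for v in col if v > 0)
        divergence.append(1.0 - e)  # 0 for an index that never varies
    s = sum(divergence)
    return [d / s for d in divergence]

def combine_weights(subjective, objective, alpha=0.5):
    """Linear subjective/objective fusion (a stand-in for the patent's optimizer)."""
    return [alpha * a + (1 - alpha) * b for a, b in zip(subjective, objective)]

subjective = ahp_weights([[1, 3], [1 / 3, 1]])          # experts: index 0 matters 3x more
objective = entropy_weights([[0.5, 0.1], [0.5, 0.9]])  # index 1 varies, index 0 does not
print(combine_weights(subjective, objective))           # about [0.375, 0.625]
```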

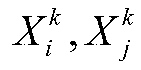

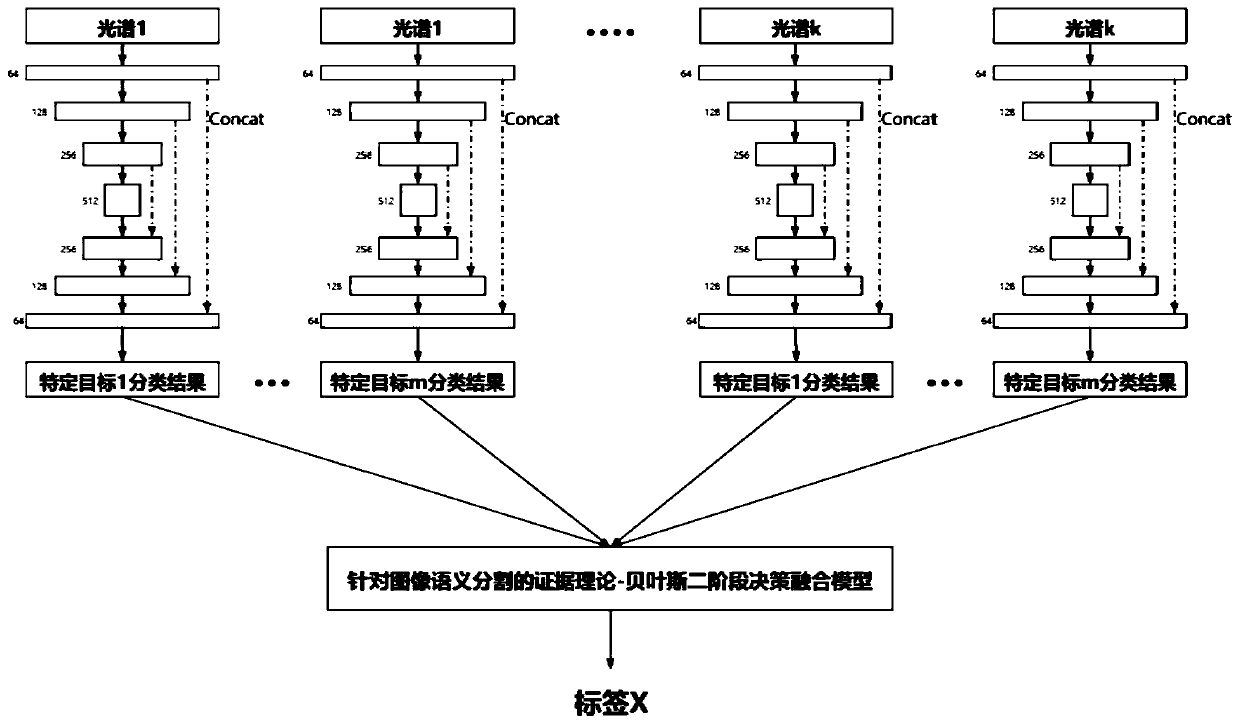

Data deep fusion image segmentation method for multispectral rescue robot

Active | CN111582280A | Discriminating, Improve accuracy, Character and pattern recognition, Neural architectures, Pattern recognition, Rescue robot

The invention provides a deep data fusion image segmentation method for a multispectral rescue robot. Its aims are to further improve the precision of the robot's image semantic segmentation, to improve the accuracy of its search and analysis at disaster sites, and to let it carry out detection autonomously without manual command and control. The method comprises the following steps: generating a target segmentation training data set; constructing a U-shaped network for single-spectrum, single-target image segmentation; establishing an evidence theory-Bayesian two-stage decision fusion model for image semantic segmentation; and training to obtain a semantic segmentation model based on multispectral data fusion. The two-stage decision fusion model significantly improves accuracy, recall, and related metrics, allowing the robot to search disaster sites autonomously in complex disaster-relief environments, improving detection accuracy and efficiency, reducing labor costs, shortening disaster-relief time, and reducing casualties.

Owner:吉林省森祥科技有限公司 (Jilin Senxiang Technology Co., Ltd.)
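
The abstract does not say how the two fusion stages are computed. One plausible reading, sketched below purely as an assumption, treats each single-spectrum network's per-pixel softmax output as a basic probability assignment over singleton classes and combines the spectra with Dempster's rule (for singleton-only masses this reduces to a normalized product). It then applies a Bayesian step that weights the fused evidence by an assumed class prior before taking the maximum a posteriori label. All names and the prior are hypothetical.

```python
from typing import List, Sequence

Grid = List[List[List[float]]]  # [row][col][class] probabilities


def dempster_singletons(m1: Sequence[float], m2: Sequence[float]) -> List[float]:
    """Dempster's rule when both sources assign mass only to single classes."""
    joint = [a * b for a, b in zip(m1, m2)]
    agreement = sum(joint)  # equals 1 - K, where K is the conflict mass
    if agreement == 0.0:
        raise ValueError("total conflict: sources share no supported class")
    return [j / agreement for j in joint]


def bayes_reweight(mass: Sequence[float], prior: Sequence[float]) -> List[float]:
    """Assumed second stage: weight fused evidence by a (positive) class prior."""
    unnorm = [m * p for m, p in zip(mass, prior)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def fuse_segmentation(maps: Sequence[Grid], prior: Sequence[float]) -> List[List[int]]:
    """Fuse per-pixel class probabilities from several spectral bands.

    Returns a label map holding the arg-max class index at each pixel.
    """
    rows, cols = len(maps[0]), len(maps[0][0])
    labels = []
    for r in range(rows):
        row_labels = []
        for c in range(cols):
            fused = maps[0][r][c]
            for other in maps[1:]:
                fused = dempster_singletons(fused, other[r][c])
            post = bayes_reweight(fused, prior)
            row_labels.append(max(range(len(post)), key=post.__getitem__))
        labels.append(row_labels)
    return labels
```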

Stock trend classification prediction method based on intelligent fusion calculation

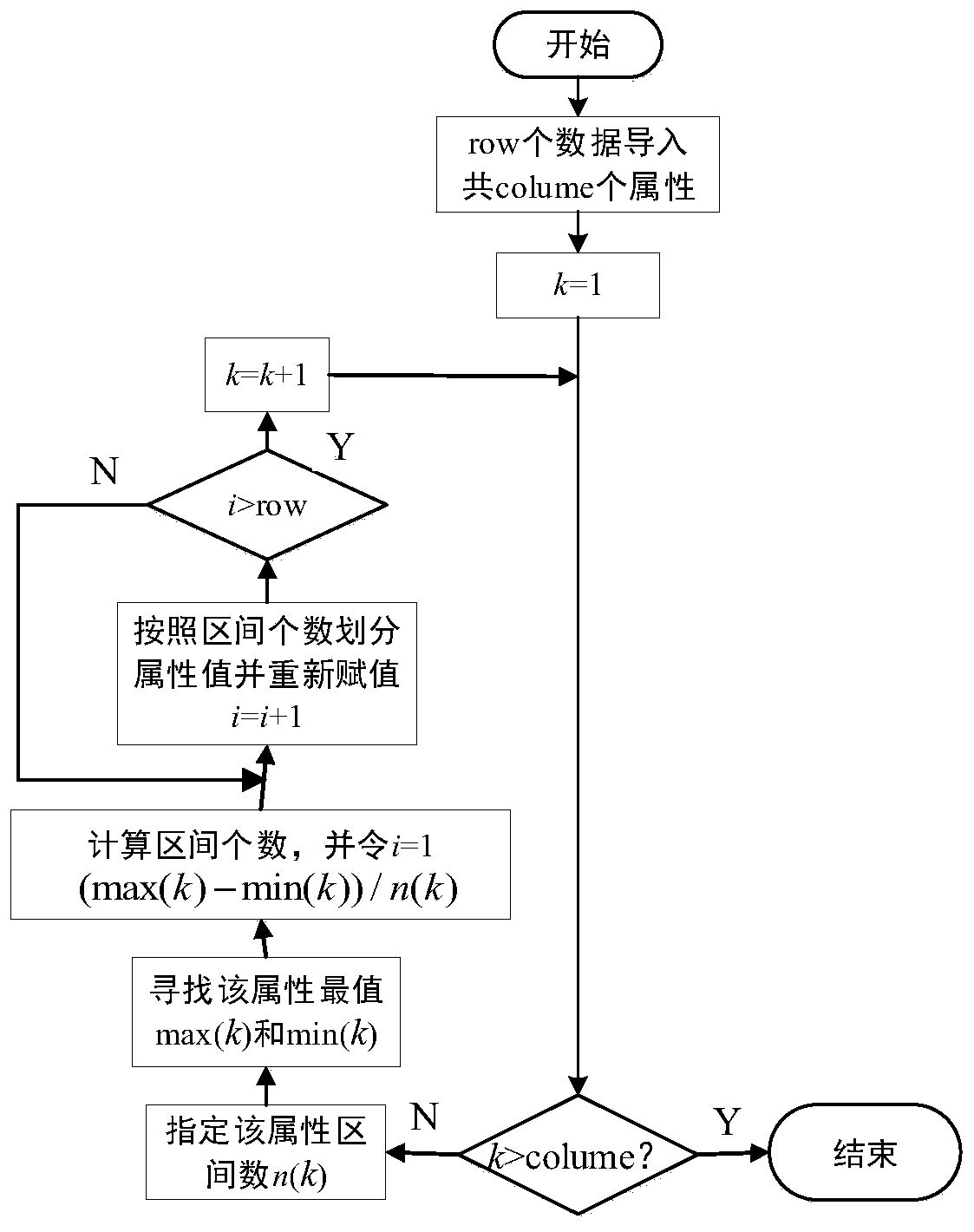

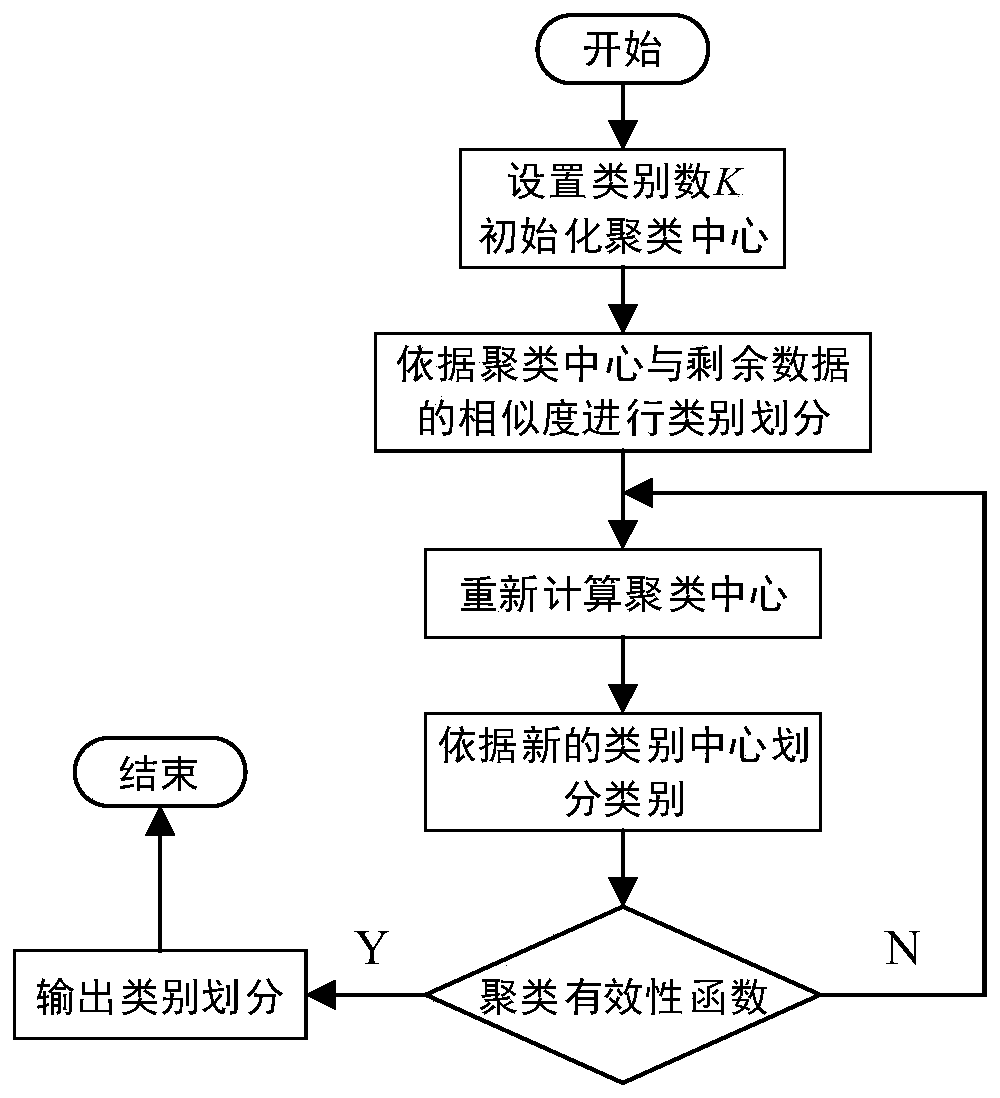

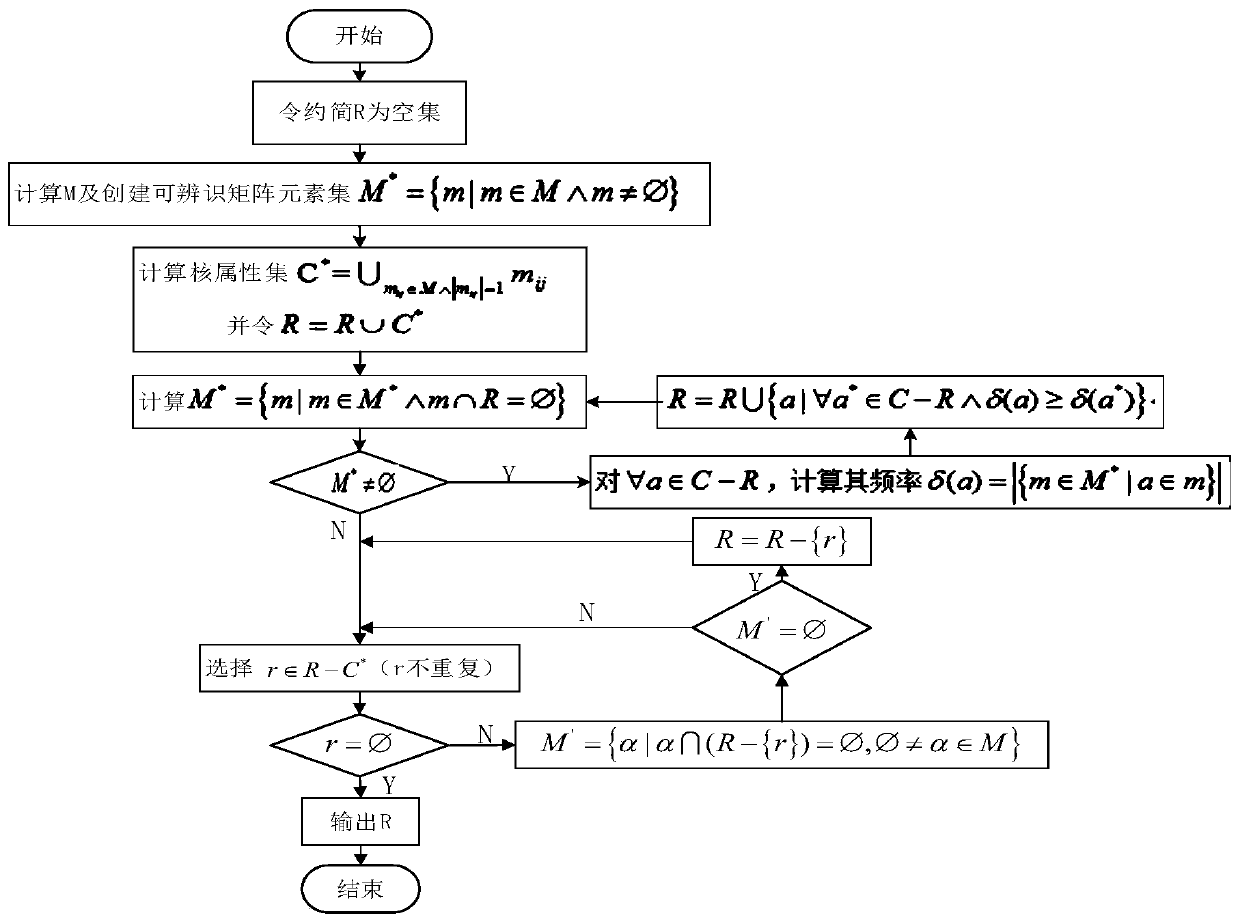

Pending | CN110956541A | Improve forecast accuracy, Finance, Character and pattern recognition, Data set, Discretization algorithm

The method comprises the following steps: discretizing the data in a complete data set for a target stock over a target time period, using an equidistant discretization algorithm and a one-dimensional K-Means clustering discretization algorithm; performing attribute reduction on the technical indicators; using a naive Bayes classifier and a K-nearest neighbor classifier to predict, from the attribute-reduced data set, the class of the target stock's rise or fall amplitude on the next trading day; and fusing the two classifiers' predictions with the D-S evidence combination rule, taking the fused decision as the final classification prediction of the stock's future rise or fall. The invention can markedly improve prediction accuracy relative to various stock trend prediction methods based on neural networks, SVMs, and the like. When the method is used to construct a multi-factor stock selection model, the nonlinear relationship between the various stock indicator data and stock returns becomes more evident.

Owner:XI AN JIAOTONG UNIV
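
The two discretization schemes named in the abstract can be sketched as follows. Equal-width (equidistant) binning splits the value range into bins of identical width; one-dimensional K-Means places bin centers where the data cluster and assigns each value to its nearest center. The bin counts, the iteration limit, and the initialization (evenly spaced positions in the sorted data) are assumptions of this sketch, not details from the patent.

```python
from typing import List, Sequence


def equal_width_bins(values: Sequence[float], n_bins: int) -> List[int]:
    """Assign each value to one of n_bins equal-width intervals."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0] * len(values)
    width = (hi - lo) / n_bins
    # min(...) keeps the maximum value inside the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


def kmeans_1d_bins(values: Sequence[float], k: int, max_iter: int = 100) -> List[int]:
    """Discretize by 1-D K-Means; bin labels are ordered by center value."""
    data = sorted(values)
    # Initialize centers at evenly spaced positions in the sorted data.
    centers = [data[(2 * i + 1) * len(data) // (2 * k)] for i in range(k)]
    for _ in range(max_iter):
        clusters: List[List[float]] = [[] for _ in range(k)]
        for v in data:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Keep the old center if a cluster ends up empty.
        new_centers = [sum(c) / len(c) if c else centers[i]
                       for i, c in enumerate(clusters)]
        if new_centers == centers:
            break
        centers = new_centers
    # Relabel clusters so that label 0 has the smallest center.
    order = sorted(range(k), key=centers.__getitem__)
    rank = {idx: r for r, idx in enumerate(order)}
    return [rank[min(range(k), key=lambda i: abs(v - centers[i]))]
            for v in values]
```

Equal-width bins are cheap but sensitive to outliers stretching the range; the K-Means variant adapts bin boundaries to where daily indicator values actually concentrate.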

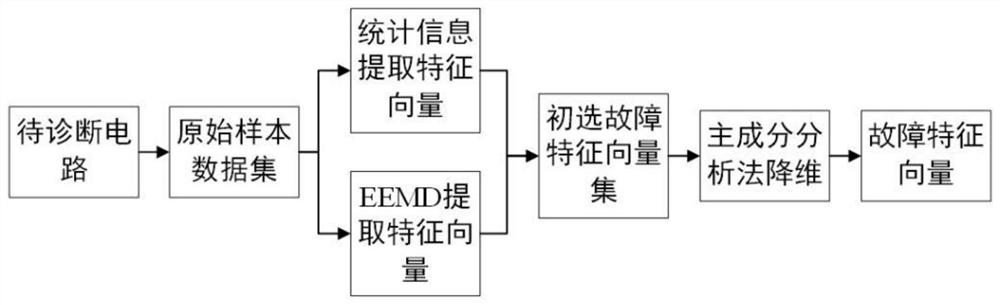

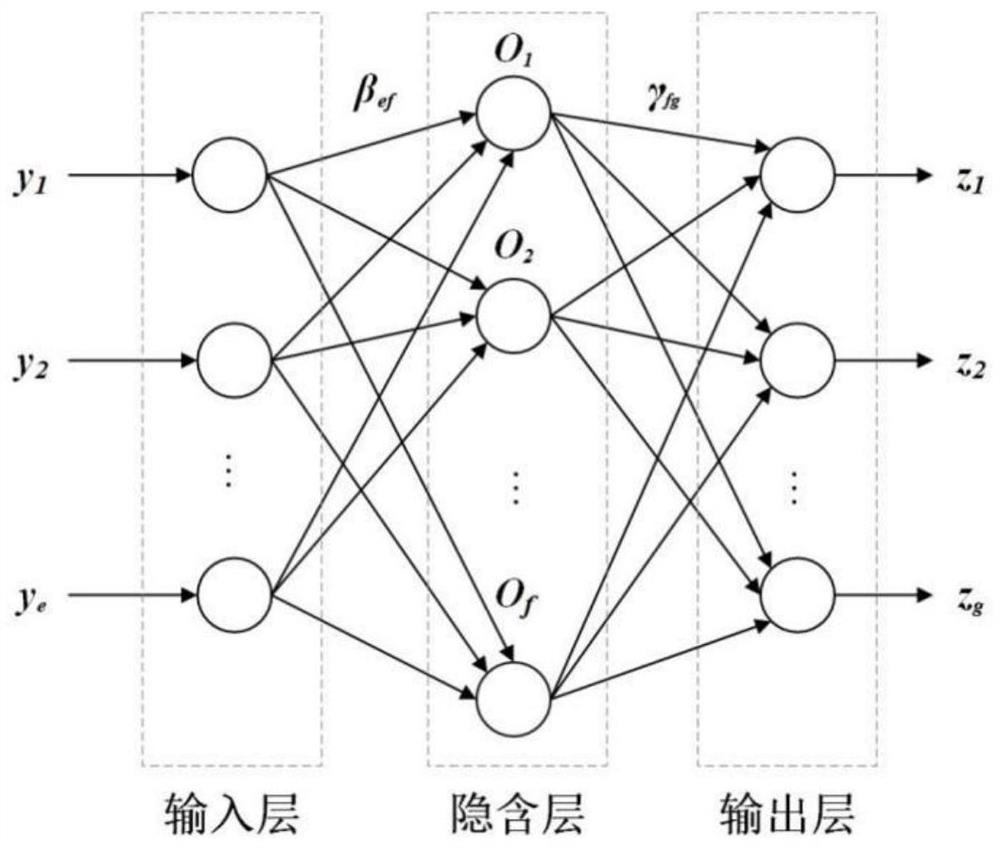

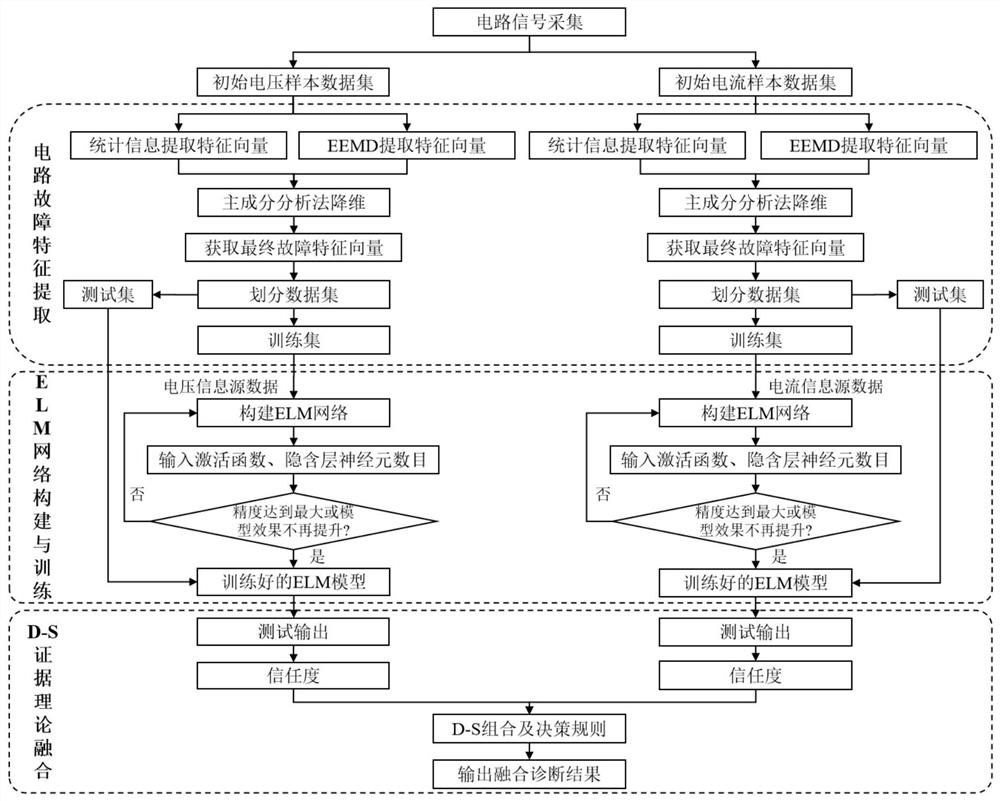

Circuit fault diagnosis method based on multi-feature information fusion

Inactive | CN113484738A | Improve accuracy, Improve effectiveness, Analog circuit testing, Feature vector, Feature extraction

The invention provides a circuit fault diagnosis method based on multi-feature information fusion. Fault feature information is extracted by combining statistical characteristics with integrated empirical mode decomposition, and the feature vector is reduced in dimension by principal component analysis to obtain the final fault feature vector. Each fault feature subspace is used to build a sample set for a sub-ELM (extreme learning machine) neural network; the sample sets are fed into the ELM network models in turn to train them and determine their optimal parameters, and the trained models then perform decision diagnosis to produce initial outputs. The initial diagnosis outputs of the ELM networks are taken as separate bodies of evidence and input into D-S evidence theory, and a decision result is obtained after diagnosis fusion according to a decision fusion rule. The method extracts analog circuit fault features effectively and, compared with using a single source of information, achieves higher diagnostic precision and accurate classification of circuit faults, giving it good engineering application value.

Owner:BEIHANG UNIV
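
The evidence-fusion stage can be sketched with Dempster's rule over a frame of discernment Θ of fault classes. This minimal sketch rests on assumptions the abstract does not state: each network's non-negative outputs are normalized into a basic probability assignment, a fixed fraction of mass (the hypothetical `ignorance` parameter) is placed on the whole frame Θ to represent uncertainty, and the decision rule picks the class with the largest combined mass only if it exceeds the mass remaining on Θ. That rule is one common choice, not necessarily the patent's.

```python
from functools import reduce
from typing import List, Sequence, Tuple

Mass = Tuple[List[float], float]  # (singleton masses per class, mass on Theta)


def to_bpa(outputs: Sequence[float], ignorance: float = 0.1) -> Mass:
    """Turn one network's outputs into a BPA with some mass on Theta."""
    clipped = [max(o, 0.0) for o in outputs]
    total = sum(clipped)
    if total == 0.0:
        return [0.0] * len(outputs), 1.0  # no evidence: all mass on Theta
    scale = (1.0 - ignorance) / total
    return [c * scale for c in clipped], ignorance


def dempster(a: Mass, b: Mass) -> Mass:
    """Combine two BPAs whose focal elements are singletons and Theta."""
    (sa, ta), (sb, tb) = a, b
    # A singleton survives if both agree on it or one side is uncommitted.
    combined = [x * y + x * tb + ta * y for x, y in zip(sa, sb)]
    theta = ta * tb
    agreement = sum(combined) + theta  # equals 1 - K (conflict mass)
    if agreement == 0.0:
        raise ValueError("evidence is in total conflict")
    return [m / agreement for m in combined], theta / agreement


def diagnose(network_outputs: Sequence[Sequence[float]],
             ignorance: float = 0.1) -> int:
    """Fuse all evidence bodies; return the fault index, or -1 if undecided."""
    singles, theta = reduce(dempster, (to_bpa(o, ignorance) for o in network_outputs))
    best = max(range(len(singles)), key=singles.__getitem__)
    return best if singles[best] > theta else -1
```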

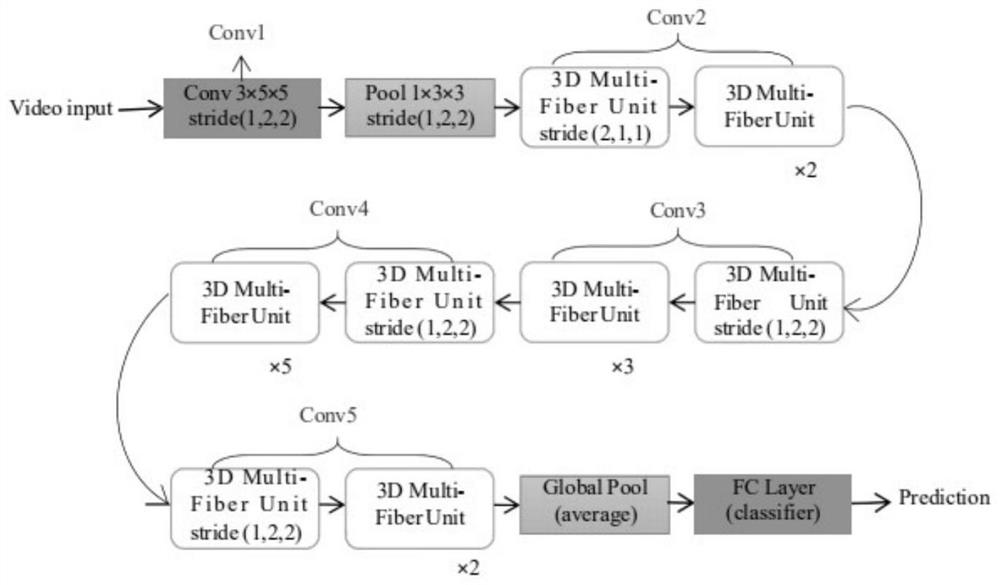

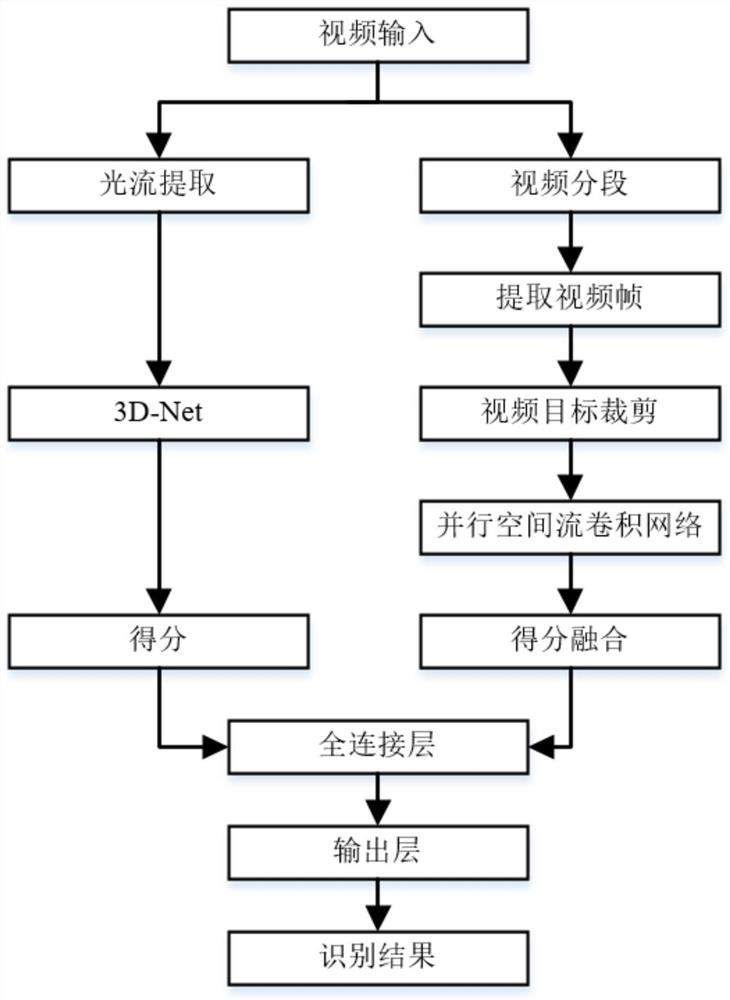

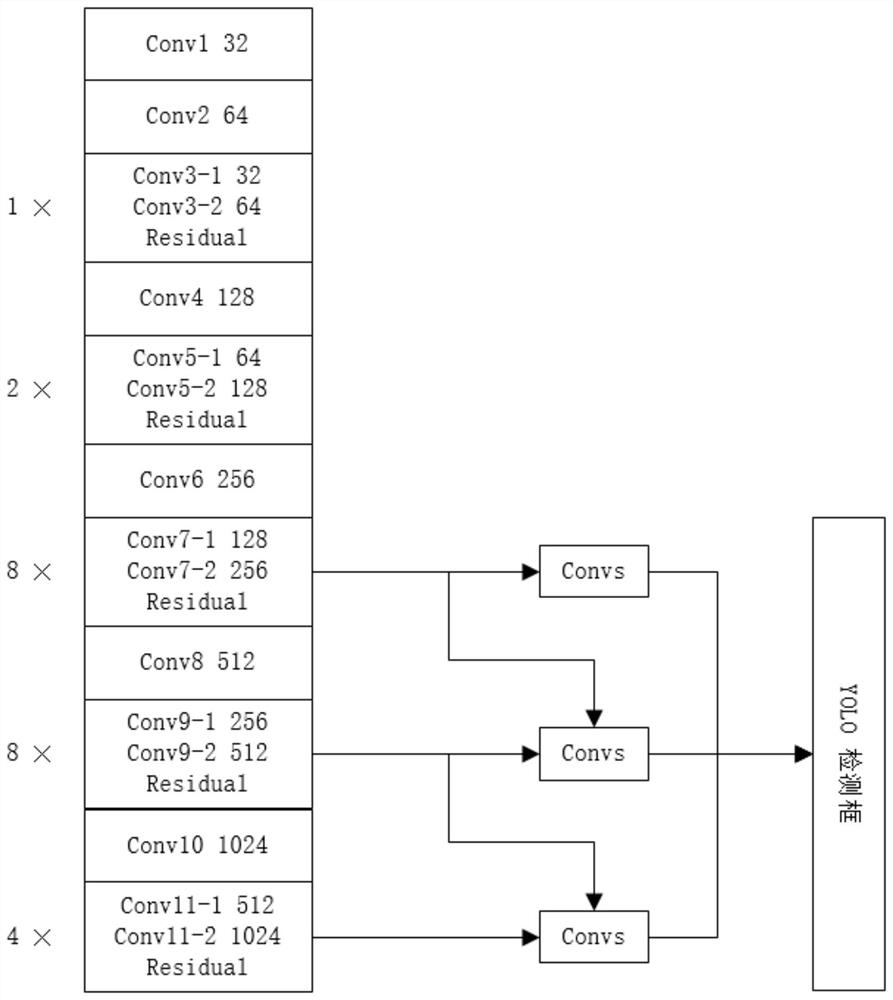

Double-flow convolution behavior recognition method based on 3D time flow and parallel spatial flow

Active | CN112183240A | The recognition result is accurate, Reduce false recognition rate, Character and pattern recognition, Neural architectures, Feature extraction, Algorithm

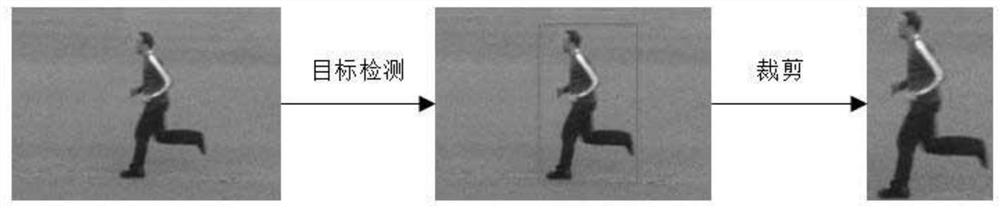

The invention discloses a double-flow (two-stream) convolutional behavior recognition method based on a 3D temporal stream and parallel spatial streams. The method comprises the following steps: first, optical flow blocks are extracted from an input video; second, the input video is segmented, video frames are extracted, and human body parts are cropped out; the optical flow blocks are then input into a 3D convolutional neural network, and the cropped frames into parallel spatial-stream convolutional networks; finally, the classification results of the parallel spatial streams are fused and concatenated with the temporal-stream scores to form a fully connected layer, and the recognition result is produced by an output layer. Cropping human body parts and running parallel spatial-stream networks for single-frame recognition improves spatial single-frame accuracy; extracting motion features from the optical flow with the 3D convolutional neural network improves the accuracy of the temporal stream; and fusing the spatial appearance features and temporal motion features at the decision level through the final single-layer neural network improves overall recognition performance.

Owner:SHANDONG UNIV
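
The final fusion step (spatial-stream results concatenated with temporal-stream scores and passed through a single fully connected layer) can be sketched as a small forward pass. The weights are hypothetical placeholders that would be learned during training, and averaging the parallel spatial streams is an assumption about how their results are "fused" before concatenation.

```python
import math
from typing import List, Sequence


def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def fuse_streams(spatial_scores: Sequence[Sequence[float]],
                 temporal_scores: Sequence[float],
                 weights: Sequence[Sequence[float]],
                 bias: Sequence[float]) -> List[float]:
    """Single-layer decision fusion of spatial and temporal stream scores.

    spatial_scores: one class-score vector per parallel spatial stream
        (e.g. one per cropped body part); averaged here (assumed).
    temporal_scores: class-score vector from the 3D-CNN temporal stream.
    weights: n_classes rows, each of length 2 * n_classes.
    """
    n = len(temporal_scores)
    spatial = [sum(s[k] for s in spatial_scores) / len(spatial_scores)
               for k in range(n)]
    features = spatial + list(temporal_scores)  # concatenation ("splicing")
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)
```

With weights that simply add each class's spatial and temporal scores, the layer degenerates to classic score-sum late fusion; a trained layer can additionally learn cross-class interactions between the two streams.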

Trust management system for decision fusion in networks and method for decision fusion

Inactive | US8583585B2 | Efficiently and effectively manage trust for decision fusion, Improve fusion effect, Probabilistic networks, Fuzzy logic based systems, Data mining, Computer science

Owner:BAE SYST INFORMATION & ELECTRONICS SYST INTEGRATION INC

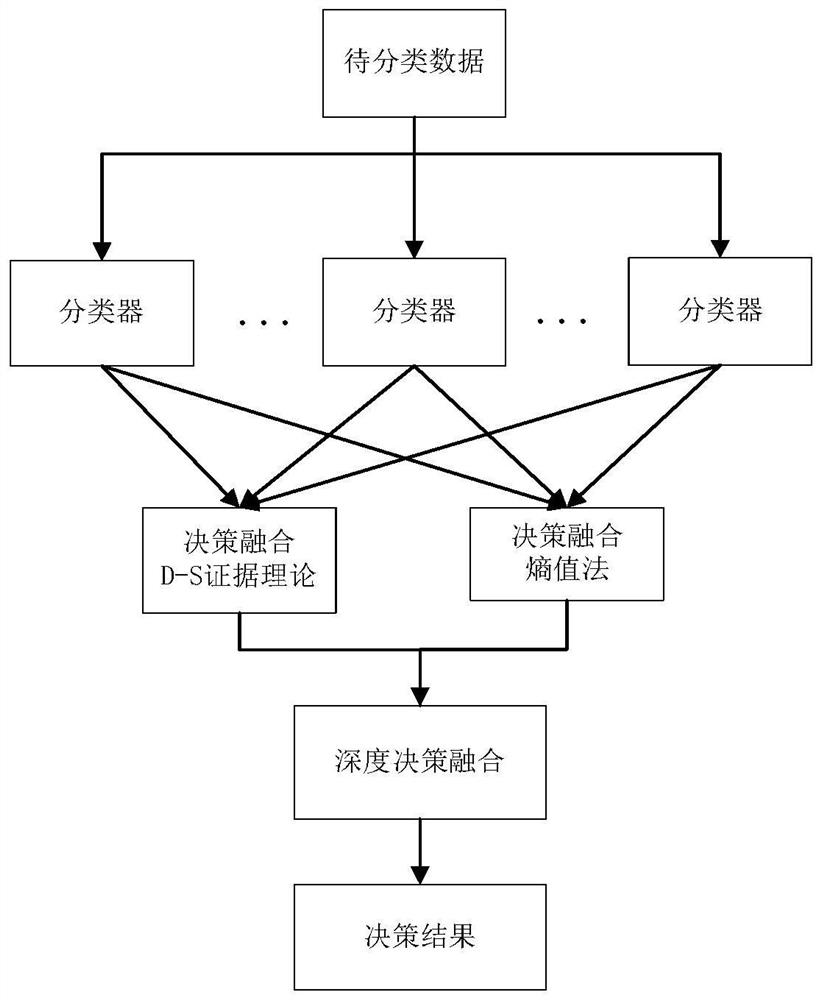

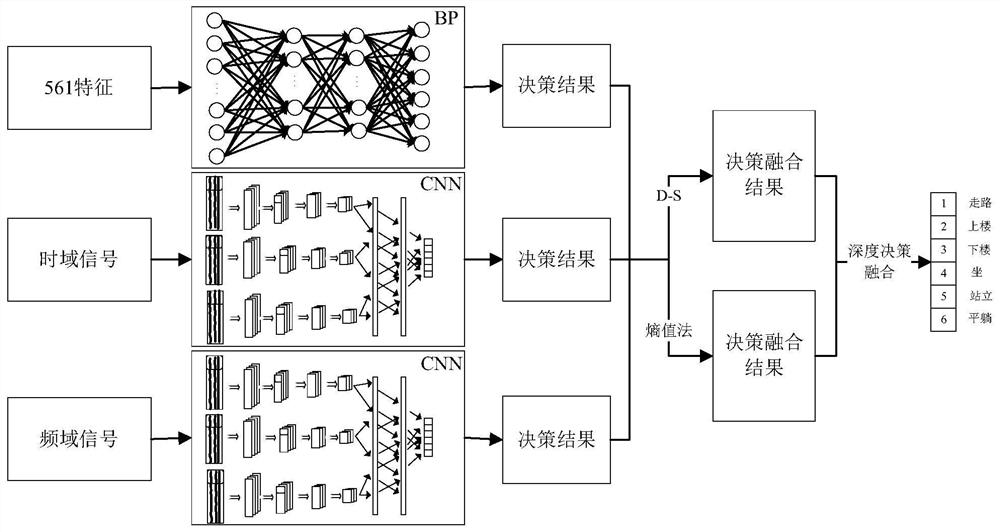

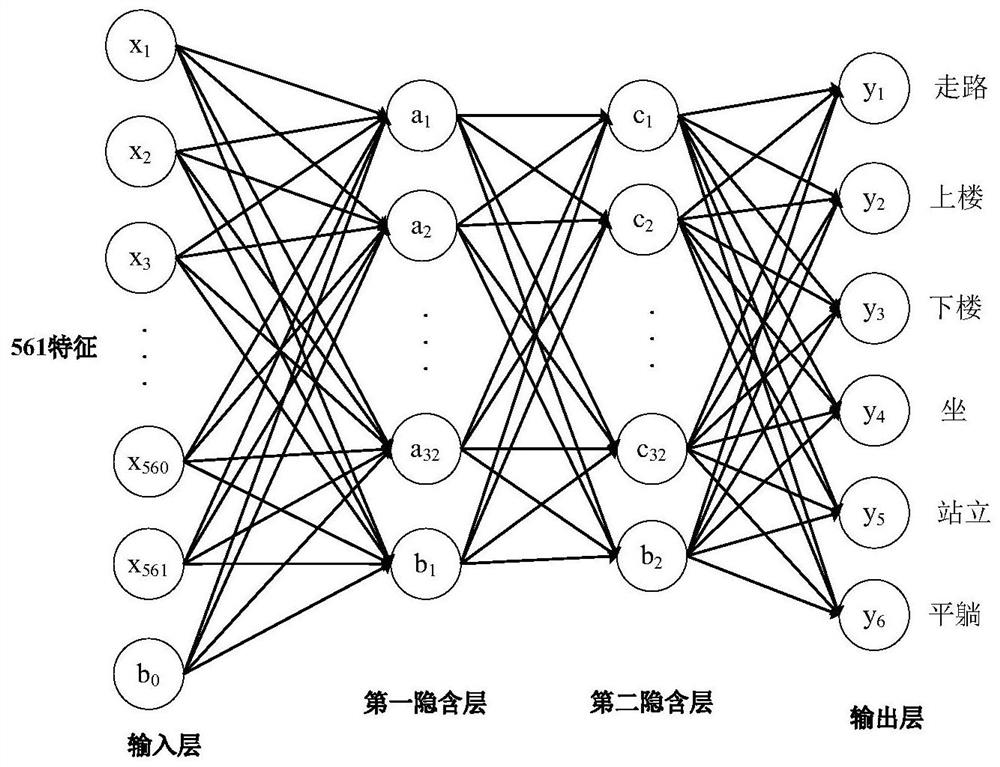

Deep decision fusion method based on entropy evaluation method and D-S evidence theory

Inactive | CN113240034A | Fully excavated, Improve recognition accuracy, Character and pattern recognition, Neural architectures, Multiple classifier, Machine learning

The invention discloses a deep decision fusion method based on an entropy evaluation method and D-S evidence theory, belonging to the field of information fusion. First, several classifiers are designed to train on and recognize samples; then single-layer decision fusion is applied to the classifiers' recognition results using both the entropy evaluation method and the D-S evidence theory method. Finally, in the deep decision fusion step, each recognition round keeps the result of whichever of the two decision fusion methods has the higher recognition rate, so that the respective strengths of both methods are fully exploited. Compared with single-classifier recognition and single-method decision fusion, the deep decision fusion method achieves higher recognition accuracy, robustness, and fault tolerance, and can therefore be applied in various pattern recognition fields such as human behavior recognition.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
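
The abstract leaves the "entropy evaluation method" unspecified. A common interpretation, assumed here, weights each classifier by how confident its output distribution is (one minus its Shannon entropy divided by log K, for K ≥ 2 classes) and averages the weighted outputs. The D-S fuser uses Dempster's rule on singleton-only masses. The "deep" step is sketched as keeping whichever fuser has the higher recognition rate on held-out validation samples. All function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence

Outputs = Sequence[Sequence[float]]  # one probability vector per classifier
Fuser = Callable[[Outputs], List[float]]


def entropy_fusion(outputs: Outputs) -> List[float]:
    """Weight each classifier by 1 - H(p) / log(K), then average."""
    k = len(outputs[0])
    weights = []
    for p in outputs:
        h = -sum(x * math.log(x) for x in p if x > 0)
        weights.append(1.0 - h / math.log(k))
    total = sum(weights)
    if total == 0.0:  # every classifier is uniform: fall back to equal weights
        weights, total = [1.0] * len(outputs), float(len(outputs))
    return [sum(w * p[c] for w, p in zip(weights, outputs)) / total
            for c in range(k)]


def ds_fusion(outputs: Outputs) -> List[float]:
    """Dempster's rule with singleton-only masses (normalized product)."""
    fused = list(outputs[0])
    for p in outputs[1:]:
        joint = [a * b for a, b in zip(fused, p)]
        s = sum(joint)
        if s == 0.0:
            raise ValueError("total conflict")
        fused = [j / s for j in joint]
    return fused


def accuracy(fuser: Fuser, samples: Sequence[Outputs], labels: Sequence[int]) -> float:
    hits = 0
    for outputs, y in zip(samples, labels):
        fused = fuser(outputs)
        hits += max(range(len(fused)), key=fused.__getitem__) == y
    return hits / len(labels)


def pick_fuser(samples: Sequence[Outputs], labels: Sequence[int]) -> Fuser:
    """Assumed 'deep' step: keep the fuser with higher validation accuracy."""
    return max([entropy_fusion, ds_fusion],
               key=lambda f: accuracy(f, samples, labels))
```

The two fusers genuinely disagree in some cases: a single very confident classifier dominates the entropy-weighted average, whereas Dempster's product rewards agreement among several moderately confident classifiers.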

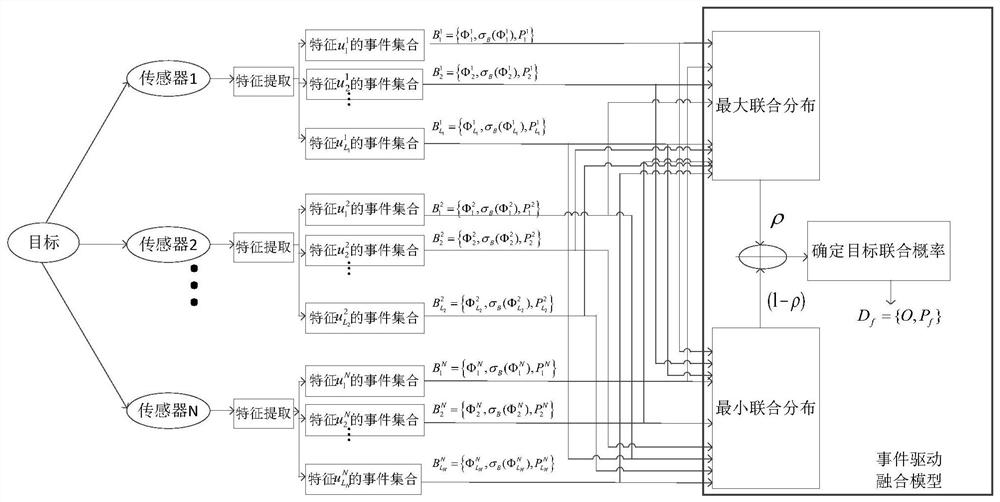

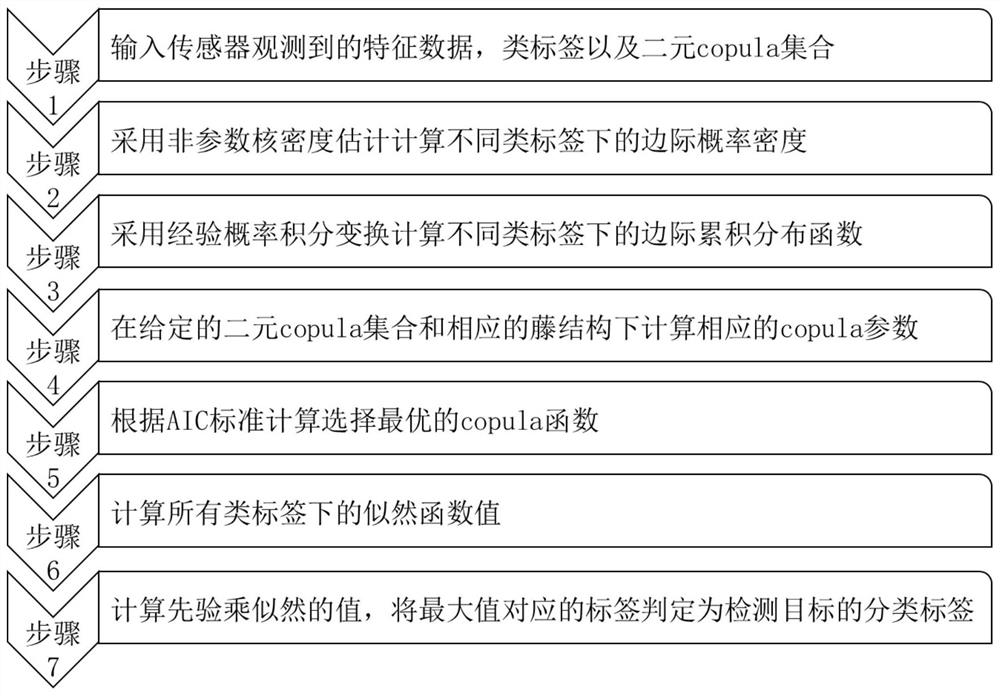

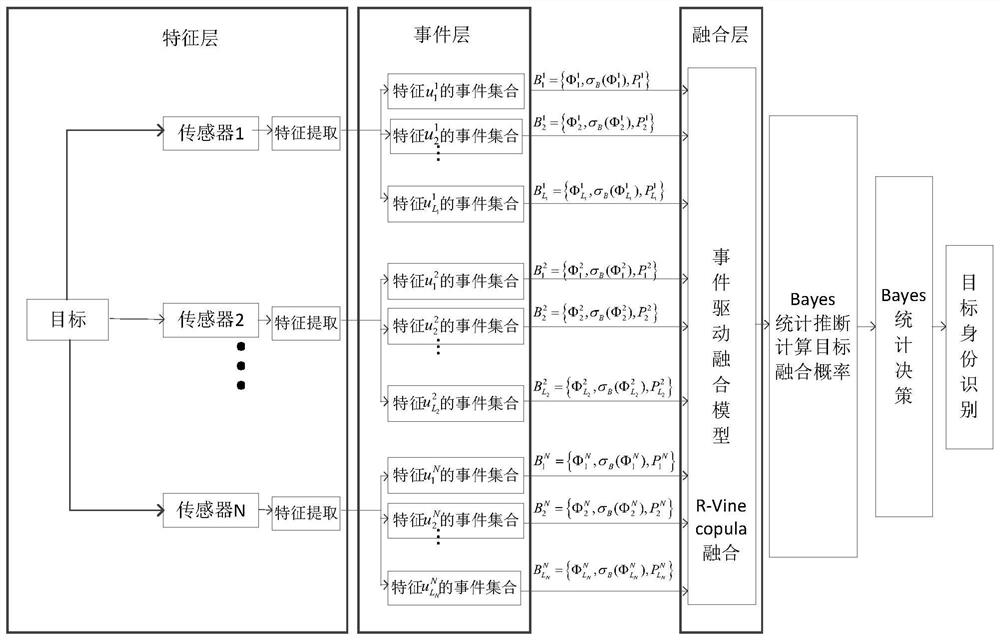

Multi-sensor Vine Copula heterogeneous information decision fusion method

Active | CN112116019A | Exact joint probability distribution, Improve fusion effect, Internal combustion piston engines, Scene recognition, Feature extraction, Prior information

The invention discloses a multi-sensor Vine Copula heterogeneous information decision fusion method, belonging to the field of information fusion. The method provides a Vine copula decision fusion algorithm for heterogeneous sensors: data from different types of sensors are unified into the same event space through feature extraction and event driving, an event set corresponding to a target is established from the data features and prior information of the target, and the correlation between sensor data feature events is modeled with a Vine copula. The method can flexibly construct the correlation between the feature events of heterogeneous information, generate a more accurate joint probability distribution of events, and improve the decision fusion performance of heterogeneous sensors. Numerical analysis shows that the new method makes better decisions on target classification and identification problems and fuses heterogeneous sensor information more soundly.

Owner:SICHUAN UNIV
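
A vine copula builds a joint density from bivariate "pair-copulas". The sketch below shows a three-sensor D-vine (order 1-2-3) under assumptions the abstract does not fix: every pair-copula is Gaussian, the marginals are `statistics.NormalDist` objects, and the correlation parameters are given rather than estimated. The density factorizes as f1·f2·f3·c12(F1, F2)·c23(F2, F3)·c13|2(F1|2, F3|2), where the conditional CDFs come from the Gaussian h-function. Function names are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Sequence

_STD = NormalDist()


def gaussian_copula_density(u: float, v: float, rho: float) -> float:
    """Density c(u, v) of a bivariate Gaussian copula; u, v in (0, 1)."""
    a, b = _STD.inv_cdf(u), _STD.inv_cdf(v)
    one_minus = 1.0 - rho * rho
    expo = -(rho * rho * (a * a + b * b) - 2.0 * rho * a * b) / (2.0 * one_minus)
    return math.exp(expo) / math.sqrt(one_minus)


def h_function(u: float, v: float, rho: float) -> float:
    """Conditional CDF F(u | v) for the Gaussian pair-copula."""
    a, b = _STD.inv_cdf(u), _STD.inv_cdf(v)
    return _STD.cdf((a - rho * b) / math.sqrt(1.0 - rho * rho))


def dvine3_density(x: Sequence[float], marginals: Sequence[NormalDist],
                   rho12: float, rho23: float, rho13_2: float) -> float:
    """Joint density of three sensor feature events via a D-vine."""
    u = [m.cdf(xi) for m, xi in zip(marginals, x)]
    f = math.prod(m.pdf(xi) for m, xi in zip(marginals, x))
    c12 = gaussian_copula_density(u[0], u[1], rho12)
    c23 = gaussian_copula_density(u[1], u[2], rho23)
    # Second tree: condition sensors 1 and 3 on sensor 2.
    u1_given_2 = h_function(u[0], u[1], rho12)
    u3_given_2 = h_function(u[2], u[1], rho23)
    c13_2 = gaussian_copula_density(u1_given_2, u3_given_2, rho13_2)
    return f * c12 * c23 * c13_2
```

With all correlations set to zero, every pair-copula density equals 1 and the joint density reduces to the product of the marginals; choosing other pair-copula families (Clayton, Gumbel, and so on) per edge is what gives vines their flexibility for heterogeneous sensors.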

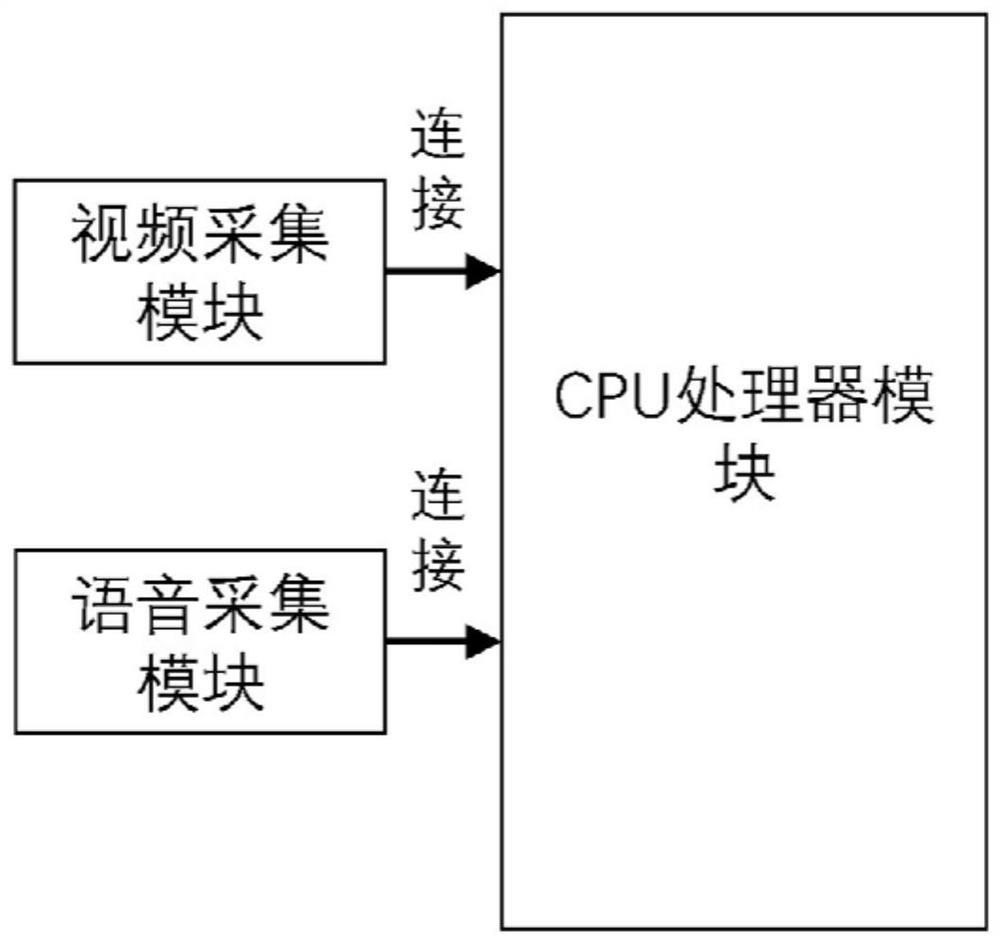

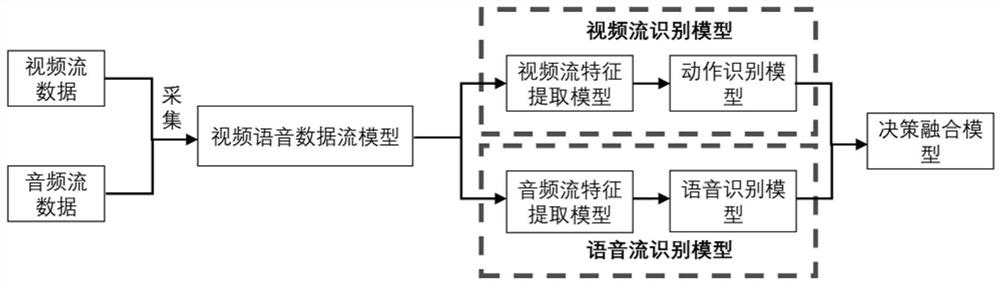

Bus-oriented system and method for recognizing violent behavior of passenger to driver

Active | CN112766035A | Improve accuracy, Guaranteed reasonableness, Internal combustion piston engines, Character and pattern recognition, Violent behaviour, Human–computer interaction

The invention relates to a bus-oriented system and method for recognizing violent behavior by passengers toward the driver. The system comprises a processor module, a video collection module, and a voice collection module; the processor module, which is connected to both collection modules, contains a video and voice data collection model, a video stream recognition model, a voice stream recognition model, and a decision fusion model. By performing multimodal recognition of the scene from both video and voice, the system improves the accuracy of scene recognition.

Owner:SOUTH CHINA UNIV OF TECH
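
The decision fusion model is not specified in the abstract. A minimal late-fusion sketch, assuming each recognition model outputs a probability that the current clip shows violence, combines the two with hypothetical modality weights and raises an alarm above a hypothetical threshold. The numbers are illustrative, not taken from the patent.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class FusionConfig:
    video_weight: float = 0.6  # hypothetical; would be tuned on validation data
    audio_weight: float = 0.4  # hypothetical
    threshold: float = 0.5     # hypothetical alarm threshold


def fuse_violence_scores(p_video: float, p_audio: float,
                         cfg: FusionConfig = FusionConfig()) -> Tuple[float, bool]:
    """Weighted late fusion of per-modality violence probabilities."""
    for p in (p_video, p_audio):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    total = cfg.video_weight + cfg.audio_weight
    fused = (cfg.video_weight * p_video + cfg.audio_weight * p_audio) / total
    return fused, fused >= cfg.threshold
```

Making the config frozen keeps the shared default instance safe to reuse across calls.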

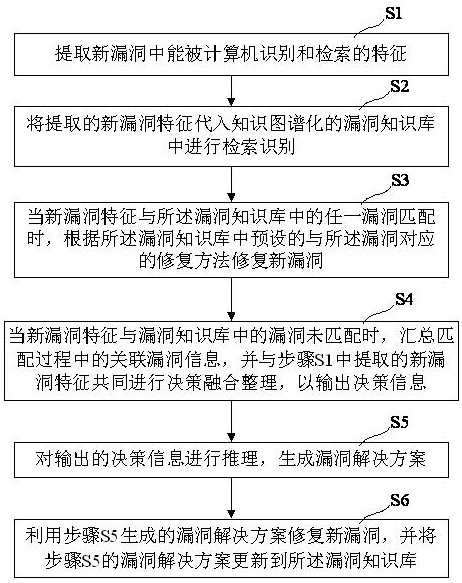

Vulnerability restoration method based on knowledge graph

Active | CN113239365A | Improve security, Avoid attack, Platform integrity maintenance, Knowledge representation, Theoretical computer science, Engineering

The invention relates to the technical field of network security and discloses a vulnerability restoration (repair) method based on a knowledge graph that offers high repair efficiency, a low error rate, and good security. The method comprises the following steps: S1, extracting features of a new vulnerability that a computer can identify and retrieve; S2, querying the knowledge-graph vulnerability knowledge base with the extracted features for retrieval and identification; S3, when the new vulnerability's features match a vulnerability in the knowledge base, repairing the new vulnerability with the preset repair method associated with that vulnerability; S4, when the features match no vulnerability in the knowledge base, collecting the associated vulnerability information gathered during matching and performing decision fusion on it together with the features extracted in step S1 to output decision information; S5, reasoning over the output decision information to generate a vulnerability solution; and S6, repairing the new vulnerability with the solution generated in step S5 and adding that solution to the vulnerability knowledge base.

Owner:SHENZHEN Y&D ELECTRONICS CO LTD
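
Steps S2 to S4 can be sketched as set-based matching against a knowledge base. In this sketch vulnerability features are plain string tokens, matching uses Jaccard similarity with a hypothetical threshold, and the "associated vulnerability information" of step S4 is taken to be the partially overlapping entries. A real knowledge graph would store typed entities and relations instead; all names here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Optional


@dataclass
class KnowledgeBase:
    # vulnerability id -> feature tokens, and vulnerability id -> repair method
    features: Dict[str, FrozenSet[str]] = field(default_factory=dict)
    repairs: Dict[str, str] = field(default_factory=dict)

    def add(self, vuln_id: str, feats: FrozenSet[str], repair: str) -> None:
        """Register an entry; step S6 would also write new solutions back here."""
        self.features[vuln_id] = feats
        self.repairs[vuln_id] = repair


def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    return len(a & b) / len(a | b) if a or b else 0.0


@dataclass
class MatchResult:
    repair: Optional[str]  # S3: preset repair method, if a match was found
    associated: List[str]  # S4: partially related entries to pass to fusion


def match_vulnerability(kb: KnowledgeBase, new_feats: FrozenSet[str],
                        threshold: float = 0.8) -> MatchResult:
    scored = sorted(((jaccard(new_feats, f), vid) for vid, f in kb.features.items()),
                    reverse=True)
    if scored and scored[0][0] >= threshold:
        return MatchResult(kb.repairs[scored[0][1]], [])
    associated = [vid for score, vid in scored if score > 0.0]
    return MatchResult(None, associated)
```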

© 2025 PatSnap. All rights reserved.