Multi-mode fusion gesture keyboard input method, device and system and storage medium

This gesture keyboard input technology, applied in the fields of computer vision, gesture recognition, and human-computer interaction, addresses problems such as cumbersome use, large space occupation, and poor portability.


Examples

Embodiment 1

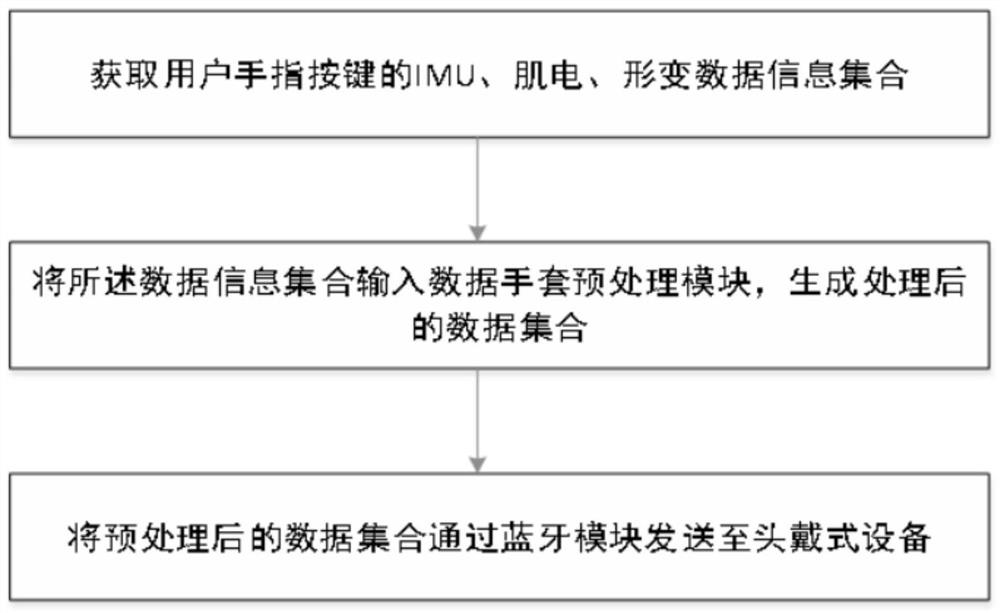

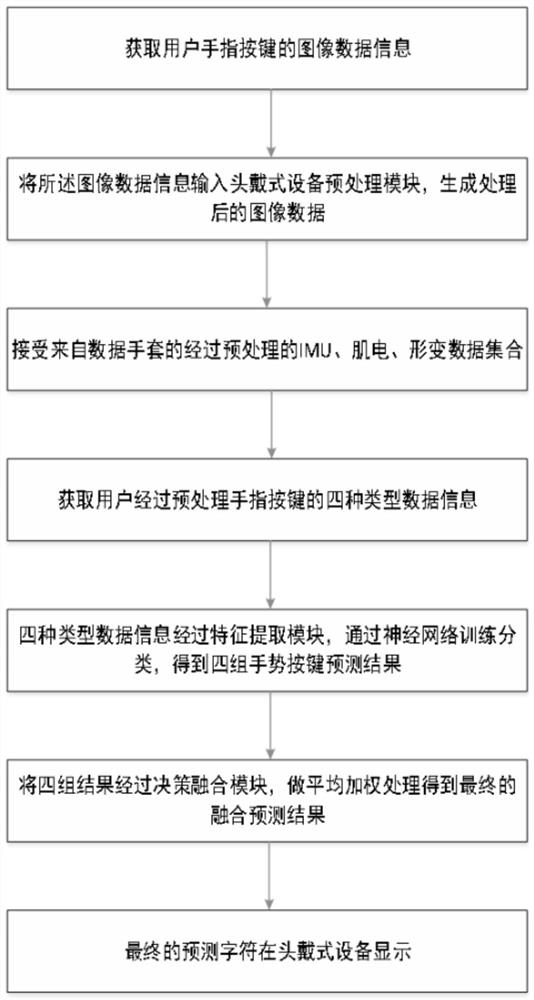

[0035] This embodiment of the present invention proposes a multimodal fusion gesture keyboard input method (see Figure 1 and Figure 2). The method includes the following steps:

[0036] Obtain the IMU sensor data, EMG sensor data, and bending sensor data of the user's key actions through the data gloves worn by the user;

[0037] The IMU sensor data, EMG sensor data, and bending sensor data are filtered and denoised by the preprocessing module and then sent to the head-mounted device through the Bluetooth module for processing. The preprocessed IMU sensor data, EMG sensor data, deformation data (i.e., bending sensor data), and hand image data are fed into their corresponding classifiers for feature extraction, and decision fusion is then performed to identify the corresponding gesture key input signal.
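The decision fusion step in [0037] can be illustrated with a minimal sketch. The source does not state which fusion rule is used, so the weighted averaging of per-modality class probabilities below is an assumption, as are the key labels in `KEYS`, the values in `WEIGHTS`, and the function name `fuse_decisions`.

```python
from typing import Dict, List

# Hypothetical key labels; the source does not list the key set.
KEYS: List[str] = ["A", "S", "D", "F"]

# Hypothetical per-modality weights (an assumption, not from the source).
WEIGHTS: Dict[str, float] = {"imu": 0.3, "emg": 0.2, "bend": 0.2, "image": 0.3}


def fuse_decisions(probs: Dict[str, List[float]],
                   weights: Dict[str, float] = WEIGHTS) -> str:
    """Combine per-modality class probabilities by weighted averaging
    and return the key with the highest fused score."""
    # Normalise over the modalities that are actually present.
    total_weight = sum(weights[m] for m in probs)
    fused = [0.0] * len(KEYS)
    for modality, p in probs.items():
        if len(p) != len(KEYS):
            raise ValueError(f"{modality}: expected {len(KEYS)} probabilities")
        for i, value in enumerate(p):
            fused[i] += weights[modality] * value / total_weight
    best = max(range(len(KEYS)), key=lambda i: fused[i])
    return KEYS[best]


# Example classifier outputs, one probability vector per modality.
example = {
    "imu":   [0.1, 0.6, 0.2, 0.1],
    "emg":   [0.2, 0.5, 0.2, 0.1],
    "bend":  [0.4, 0.3, 0.2, 0.1],
    "image": [0.1, 0.7, 0.1, 0.1],
}
print(fuse_decisions(example))  # prints "S"
```

Here the bending classifier alone would pick "A", but the other three modalities outweigh it. Combining modalities this way lets one noisy sensor be corrected by the others.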

Embodiment 2

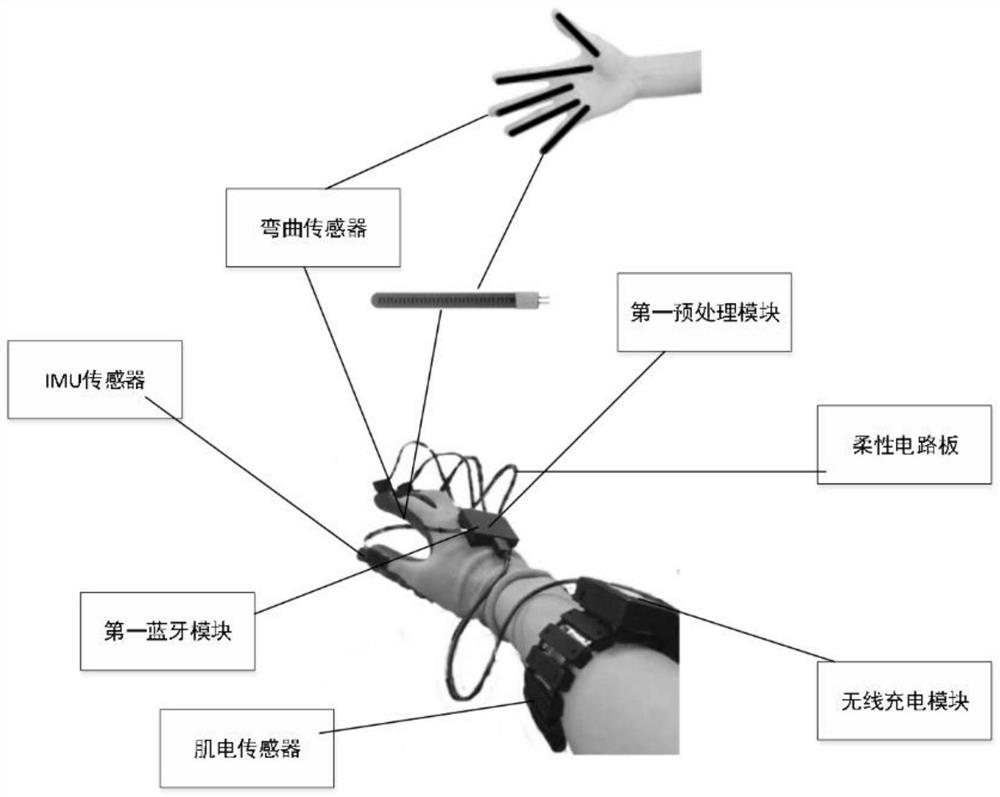

[0039] A multimodal fusion gesture keyboard input device, comprising a data glove.

[0040] As shown in Figure 3, the data glove includes: an IMU sensor module, an electromyography (EMG) sensor module, a bending sensor module, a first preprocessing module, a first Bluetooth module, and a wireless charging module;

[0041] The IMU sensor module is a six-axis inertial measurement unit (IMU) motion sensor, used to record the gestures of both hands and the motion information during key actions. It includes a three-axis accelerometer to record acceleration and a three-axis gyroscope (x, y, z axes) to record angular velocity. There are five sensors in total, one on the back of each of the five fingers, connected to the micro-control unit on the back of the hand through a flexible circuit board;

[0042] The EMG sensor module is composed of six muscle pulse detection modules surrounded by a metal contact, which is ...
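The IMU layout described in [0041] (five six-axis sensors, one per finger) could be represented as in the sketch below. The names `ImuSample`, `FINGERS`, and `frame_to_features`, the finger ordering, and the units (m/s² and rad/s) are assumptions made for illustration. The source does not specify a data format.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed finger ordering; the source only states one sensor per finger.
FINGERS = ("thumb", "index", "middle", "ring", "little")


@dataclass(frozen=True)
class ImuSample:
    """One six-axis reading: three-axis acceleration plus three-axis angular velocity."""
    accel: Tuple[float, float, float]  # (x, y, z), assumed m/s^2
    gyro: Tuple[float, float, float]   # (x, y, z), assumed rad/s


def frame_to_features(frame: List[ImuSample]) -> List[float]:
    """Flatten one reading per finger into a 30-value feature vector."""
    if len(frame) != len(FINGERS):
        raise ValueError(f"expected {len(FINGERS)} samples, got {len(frame)}")
    features: List[float] = []
    for sample in frame:
        features.extend(sample.accel)
        features.extend(sample.gyro)
    return features


# A hand at rest: gravity on the z axis, no rotation.
rest = ImuSample(accel=(0.0, 0.0, 9.81), gyro=(0.0, 0.0, 0.0))
vector = frame_to_features([rest] * 5)
print(len(vector))  # prints 30
```

A flat vector of 5 × 6 = 30 values per time step is one convenient input shape for a classifier. Other layouts, such as a 5 × 6 matrix, would work equally well.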

Embodiment 3

[0052] A multimodal fusion gesture keyboard input device, comprising a head-mounted device.

[0053] As shown in Figure 5, the head-mounted device includes: a binocular camera module, a second preprocessing module, a feature extraction module, a decision fusion module, a second Bluetooth module, a display module, and a power supply module;

[0054] The binocular camera module is located at the bottom of the head-mounted device and is used to capture gesture images of the finger movements of the user wearing the data glove. A 50-frame binocular camera records image information of the key actions of both hands over multiple frames;

[0055] The second preprocessing module is used to perform noise reduction processing on the collected gesture images;

[0056] The feature extraction module is used to perform neural network classification training on the inertial data information, myoelectric data information, and bending deformation data information collected by the data glove,...
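The noise reduction performed by the second preprocessing module in [0055] is not specified in the source. As one possible illustration, the sketch below applies a 3×3 median filter to a grayscale image stored as nested lists. The choice of filter and the name `median_filter_3x3` are assumptions.

```python
from statistics import median_low
from typing import List

Image = List[List[int]]  # grayscale pixel values, row-major


def median_filter_3x3(image: Image) -> Image:
    """Replace each pixel with the median of its 3x3 neighbourhood.
    Border pixels use only the neighbours that exist."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for y in range(height):
        for x in range(width):
            window = [
                image[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            ]
            # median_low always returns a value from the window, so pixels stay integers.
            out[y][x] = median_low(window)
    return out


# A flat patch with a single "salt" noise pixel in the centre.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
print(median_filter_3x3(noisy))  # prints [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
```

A median filter removes isolated outlier pixels while preserving edges better than simple averaging, which is why it is a common first choice for impulse noise. A production system would more likely use an optimized image library than pure Python.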
