Multi-modal three-dimensional point cloud segmentation system and method

A multi-modal 3D point cloud technology, applied in 3D object recognition, character and pattern recognition, biological neural network models, and related fields. It addresses the inability to directly process large-scale real point cloud scenes, susceptibility to noise interference, and the lack of information fusion, and achieves good generalization, good robustness, and improved accuracy.

Embodiment Construction

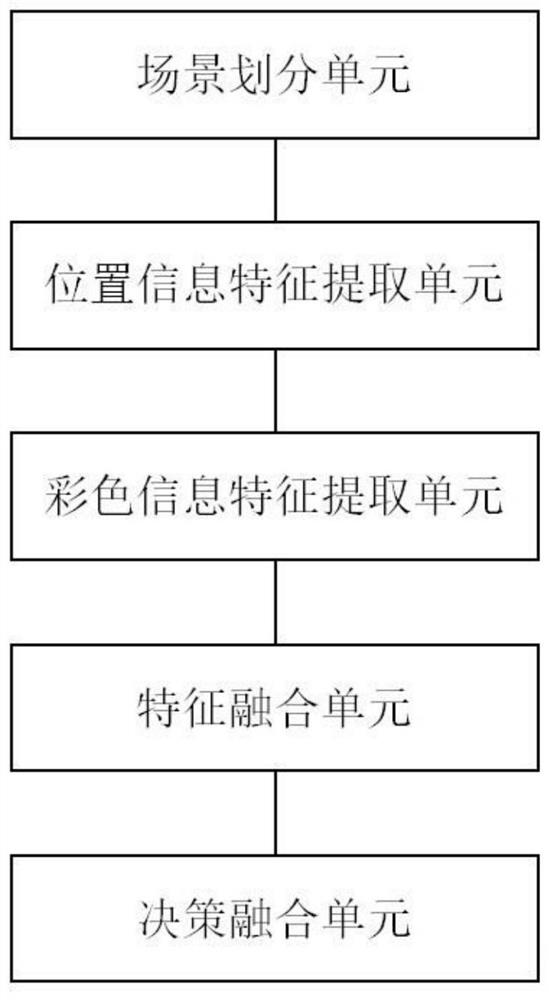

[0033] Figure 1 is a flow chart of multi-modal 3D point cloud scene segmentation according to Embodiment 1 of the present invention. Each step is described in detail below with reference to Figure 1.

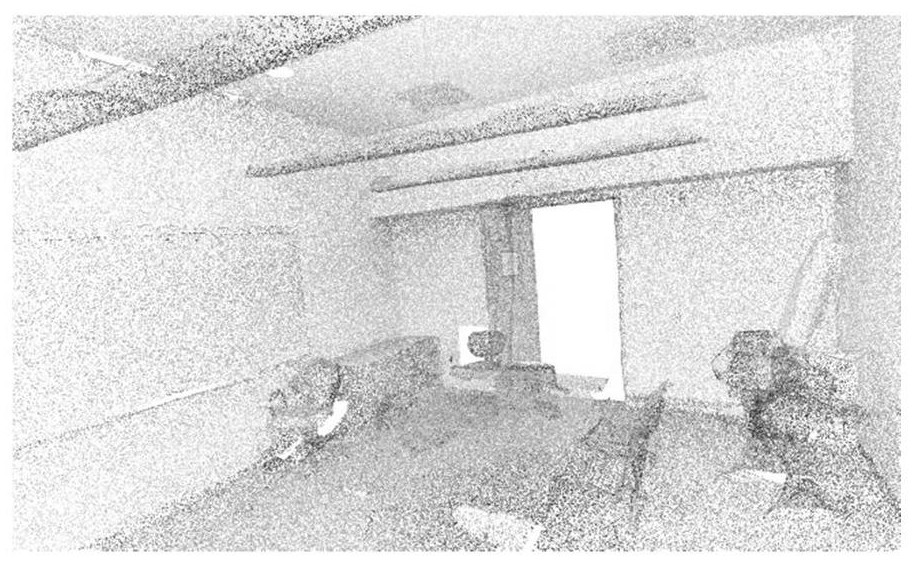

[0034] Step 1. Preprocess the collected data. Using the correspondence between point cloud data and image pixels, back-project to obtain point cloud data that carries both color information and spatial coordinates, and divide the whole scene into smaller areas.
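The preprocessing in Step 1 can be illustrated with a minimal Python sketch. It is not the method of the invention: it assumes a pinhole camera model, a depth image already aligned with the RGB image, and a zero depth value meaning "no measurement". The function names `back_project` and `split_into_blocks`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the parameter `block_size` are all hypothetical and do not appear in the original text.

```python
import numpy as np

def back_project(depth, rgb, fx, fy, cx, cy):
    """Turn an aligned depth/RGB pair into an (N, 6) array of XYZRGB points.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal
    point (cx, cy); these intrinsics are illustrative assumptions.
    """
    h, w = depth.shape
    # Pixel coordinate grids: u is the column index, v is the row index.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0  # assumed convention: zero depth means no measurement
    z = depth[valid].astype(np.float64)
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    xyz = np.stack([x, y, z], axis=1)
    colors = rgb[valid].astype(np.float64)
    return np.hstack([xyz, colors])

def split_into_blocks(points, block_size):
    """Group points by the grid cell that their first two coordinates fall into."""
    cells = np.floor(points[:, :2] / block_size).astype(np.int64)
    keys, inverse = np.unique(cells, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # keep a flat index across NumPy versions
    return {
        tuple(int(c) for c in key): points[inverse == i]
        for i, key in enumerate(keys)
    }
```

In a world frame with the z axis pointing up, splitting on the first two coordinates corresponds to tiling the floor plan. The sketch works directly in camera coordinates, so a pose transform (for example from the camera metadata) would be needed before tiling a whole scene.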

[0035] In this example, the data is collected with a camera that combines 3 structured-light sensors at different pitches and captures 18 RGB and depth images during a 360° rotation at each scanning position. Each 360° scan is performed in 60° increments, providing 6 sets of three RGB-D images for each position. The output is a reconstructed 3D textured mesh of the scanned area, the raw RGB-D images, and camera metadata. Additional RGB-D data was generated from this data, and a point cloud was made by sampling the mesh.
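The capture arithmetic and the mesh-sampling step can be sketched as follows. The text does not say how the mesh was sampled, so the area-weighted uniform surface sampling below is an assumption chosen for illustration; the function name `sample_mesh` and the constant names are hypothetical.

```python
import numpy as np

# Capture arithmetic from the description: 3 sensors, one shot every 60 degrees.
SENSORS_PER_POSITION = 3
ROTATION_STEP_DEG = 60
stops_per_scan = 360 // ROTATION_STEP_DEG
images_per_position = SENSORS_PER_POSITION * stops_per_scan

def sample_mesh(vertices, faces, n_points, rng):
    """Draw n_points uniformly from the surface of a triangle mesh.

    vertices: (V, 3) float array, faces: (F, 3) integer index array.
    Triangles are picked with probability proportional to their area,
    then a point is drawn uniformly inside each picked triangle.
    """
    tri = vertices[faces]  # (F, 3, 3): three corners per triangle
    a, b, c = tri[:, 0], tri[:, 1], tri[:, 2]
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    idx = rng.choice(len(faces), size=n_points, p=areas / areas.sum())
    # Square-root trick gives uniform barycentric weights inside a triangle.
    r1 = np.sqrt(rng.random(n_points))
    r2 = rng.random(n_points)
    w0, w1, w2 = 1.0 - r1, r1 * (1.0 - r2), r1 * r2
    return w0[:, None] * a[idx] + w1[:, None] * b[idx] + w2[:, None] * c[idx]
```

A call such as `sample_mesh(vertices, faces, 4096, np.random.default_rng(0))` would return a `(4096, 3)` array. Colors could be carried over by interpolating per-vertex colors with the same barycentric weights, which this sketch leaves out.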

[0...
