449 results about how to "Expand the receptive field" patented technology

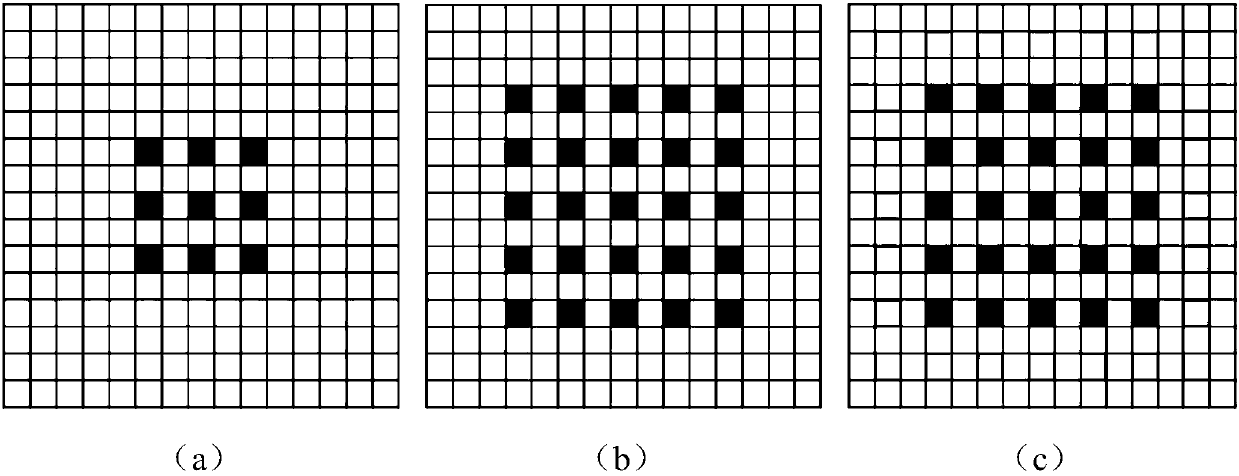

Multi-scale image semantic segmentation method

Active · CN110232394A · Increase profit · Easy to handle · Character and pattern recognition · Neural architectures · Sample image · Minutiae

The invention discloses a multi-scale image semantic segmentation method. The method comprises the following steps: obtaining a to-be-segmented image and a corresponding label; constructing a fully convolutional deep neural network comprising a convolution module, a dilated ("hole") convolution module, a pyramid pooling module, a 1 × 1 × depth convolution layer and a deconvolution structure; performing the dilated convolution channel by channel, and making targeted use of low-scale, medium-scale and high-scale features; establishing a loss function and training the fully convolutional deep neural network on the sample images to determine its parameters; and inputting the to-be-segmented image into the trained network to obtain a semantic segmentation result. The method handles images with complex details, holes and large targets well while reducing the amount of computation and the number of parameters, and it preserves the consistency of category labels while segmenting target edges accurately.

Owner:SOUTH CHINA UNIV OF TECH
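
The abstract above relies on dilated convolution to widen the receptive field. As a rough illustration of why that works, the sketch below computes the receptive field of a stack of convolution layers. The layer configurations (`plain`, `dilated`) and both function names are assumptions chosen for the example, not values taken from the patent.

```python
def effective_kernel(kernel_size: int, dilation: int) -> int:
    """Span covered by one dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def receptive_field(layers):
    """Receptive field of stacked conv layers.

    `layers` is a list of (kernel_size, stride, dilation) tuples, input side first.
    """
    rf, jump = 1, 1  # jump: distance in input pixels between adjacent outputs
    for kernel_size, stride, dilation in layers:
        rf += (effective_kernel(kernel_size, dilation) - 1) * jump
        jump *= stride
    return rf


# Hypothetical configurations: three 3x3 layers, without and with dilation.
plain = [(3, 1, 1), (3, 1, 1), (3, 1, 1)]
dilated = [(3, 1, 1), (3, 1, 2), (3, 1, 4)]

print(receptive_field(plain))    # 7
print(receptive_field(dilated))  # 15
```

Both stacks have the same number of weights per layer; only the spacing between kernel taps differs, which is why dilation enlarges the receptive field without adding parameters.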

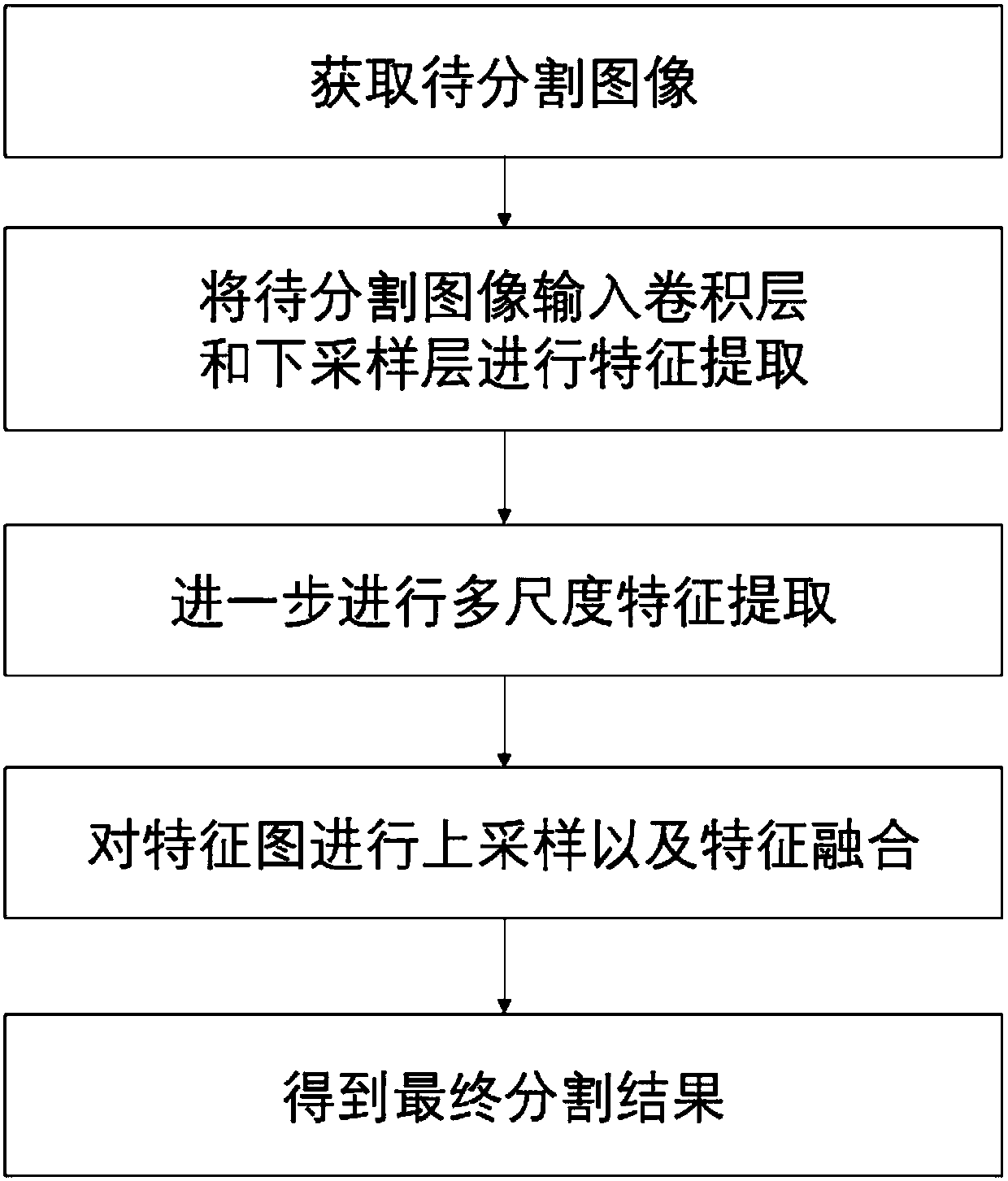

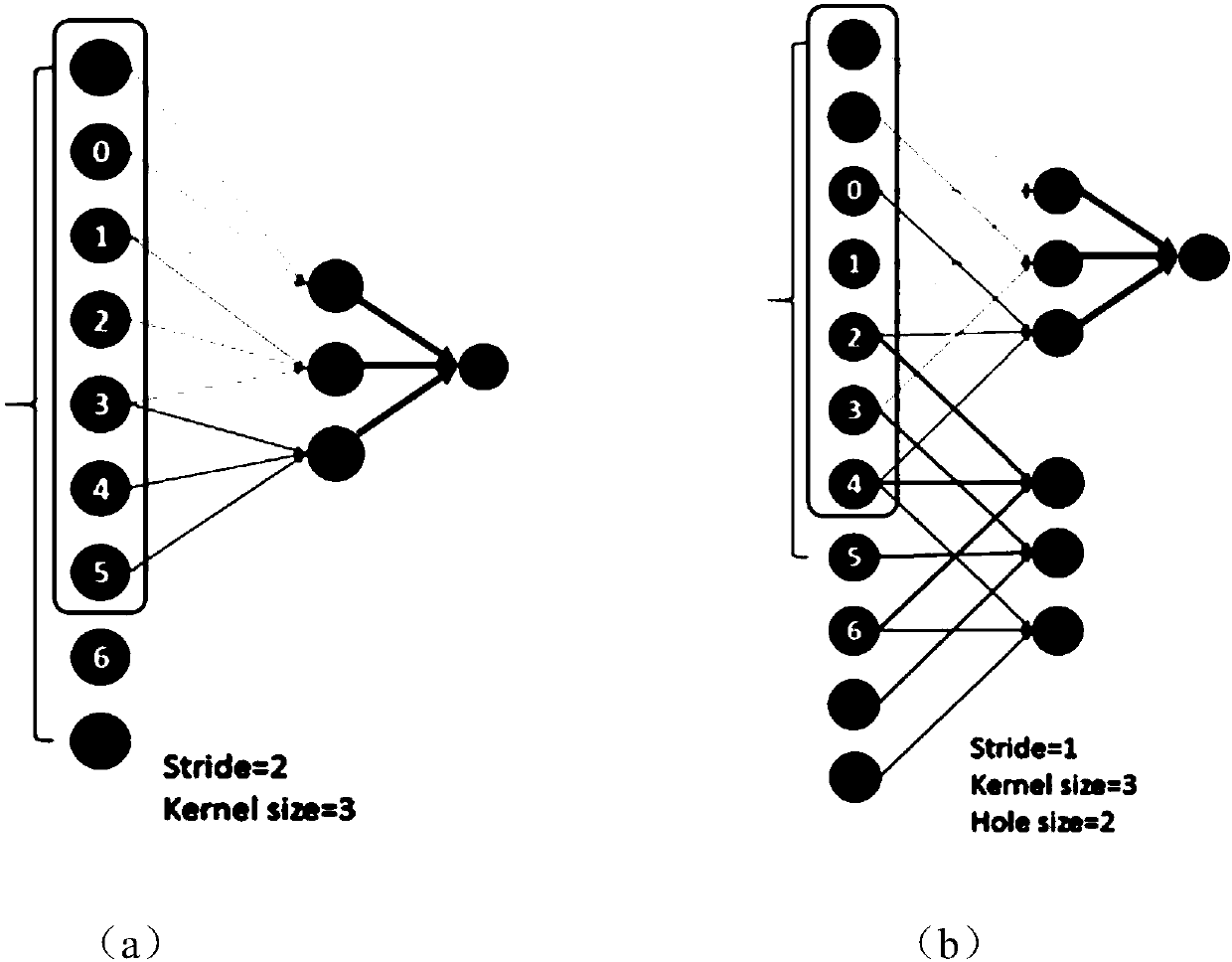

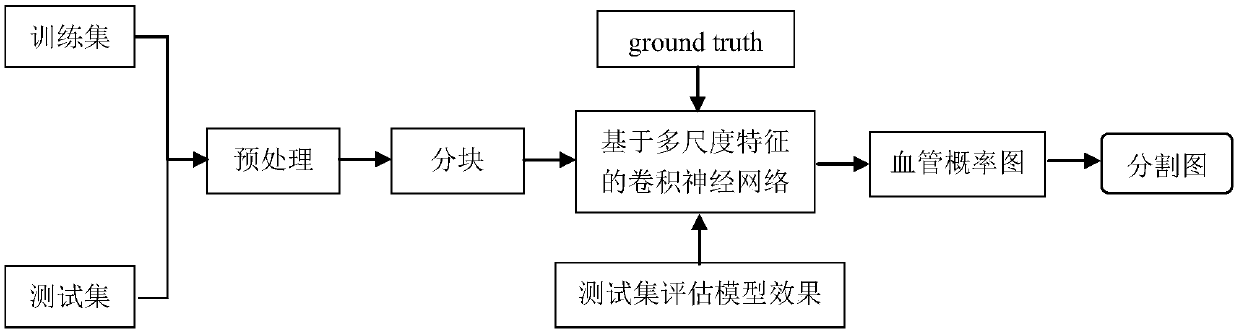

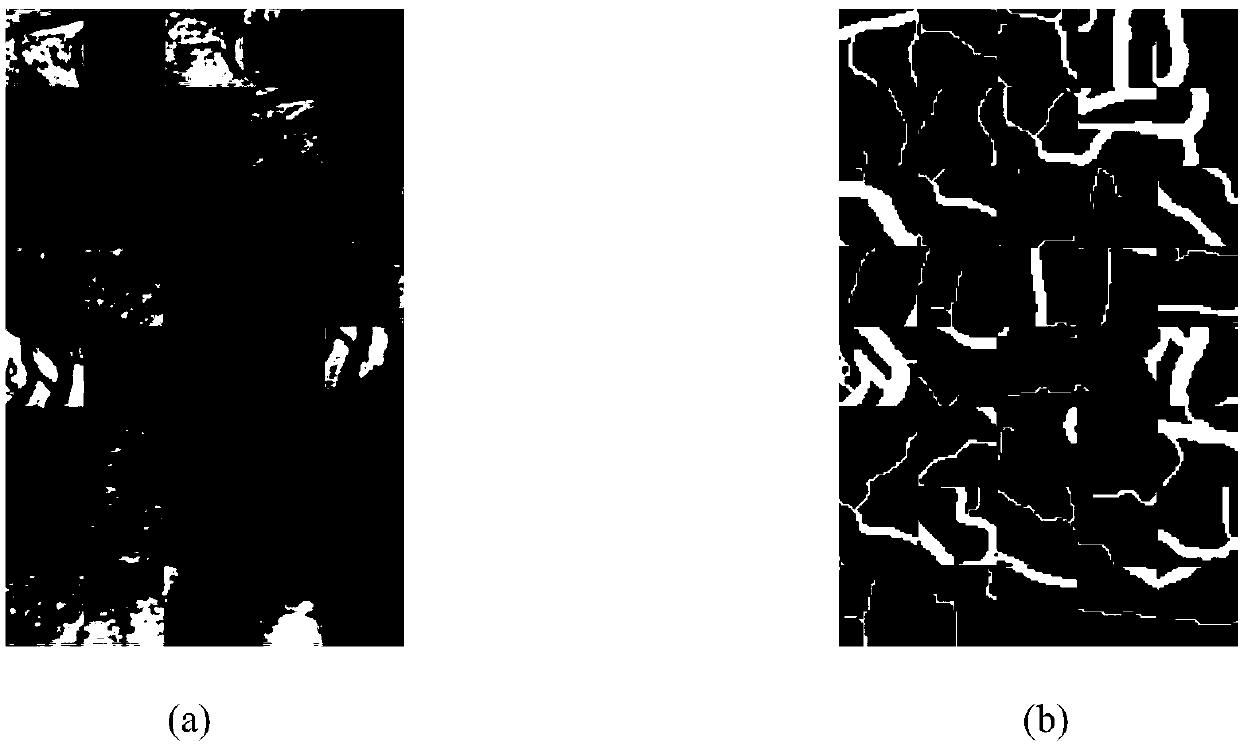

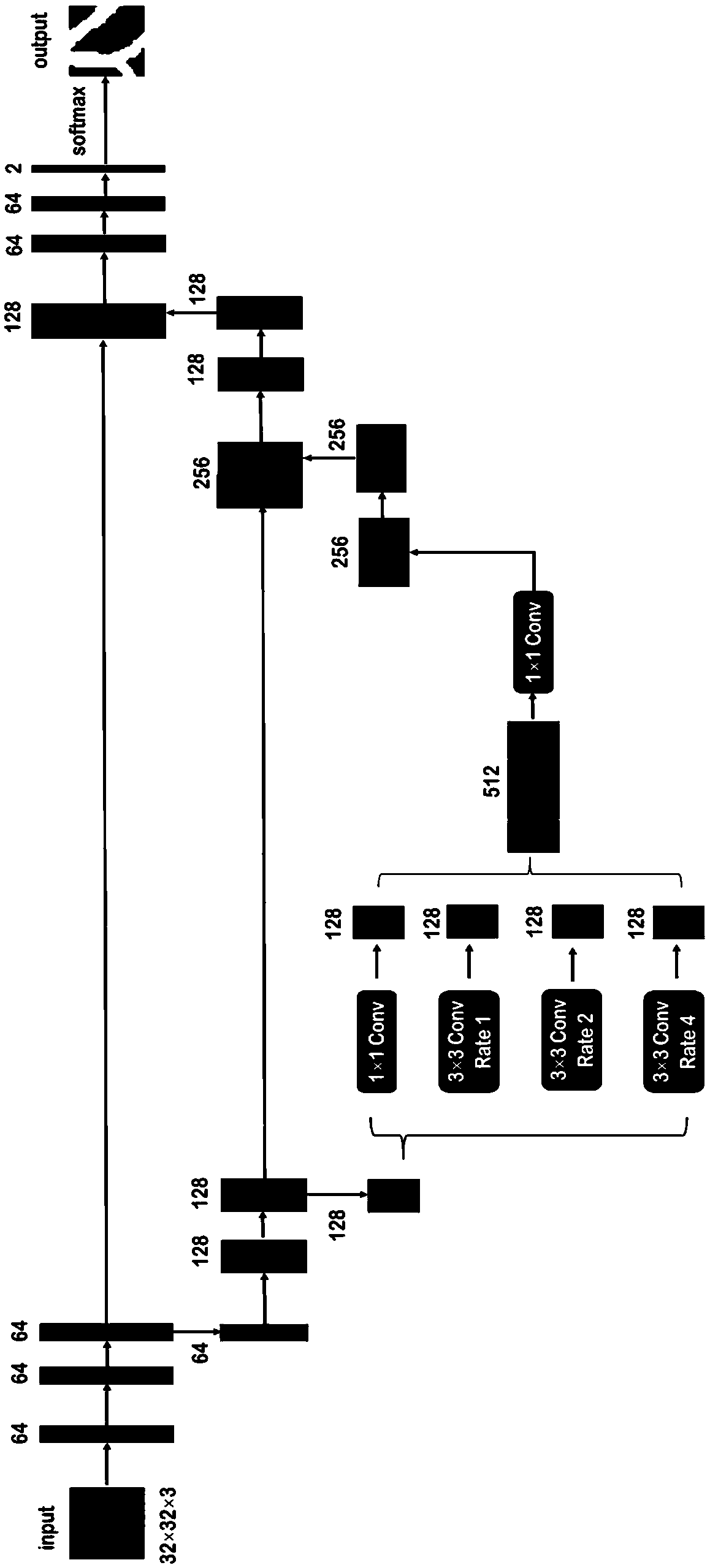

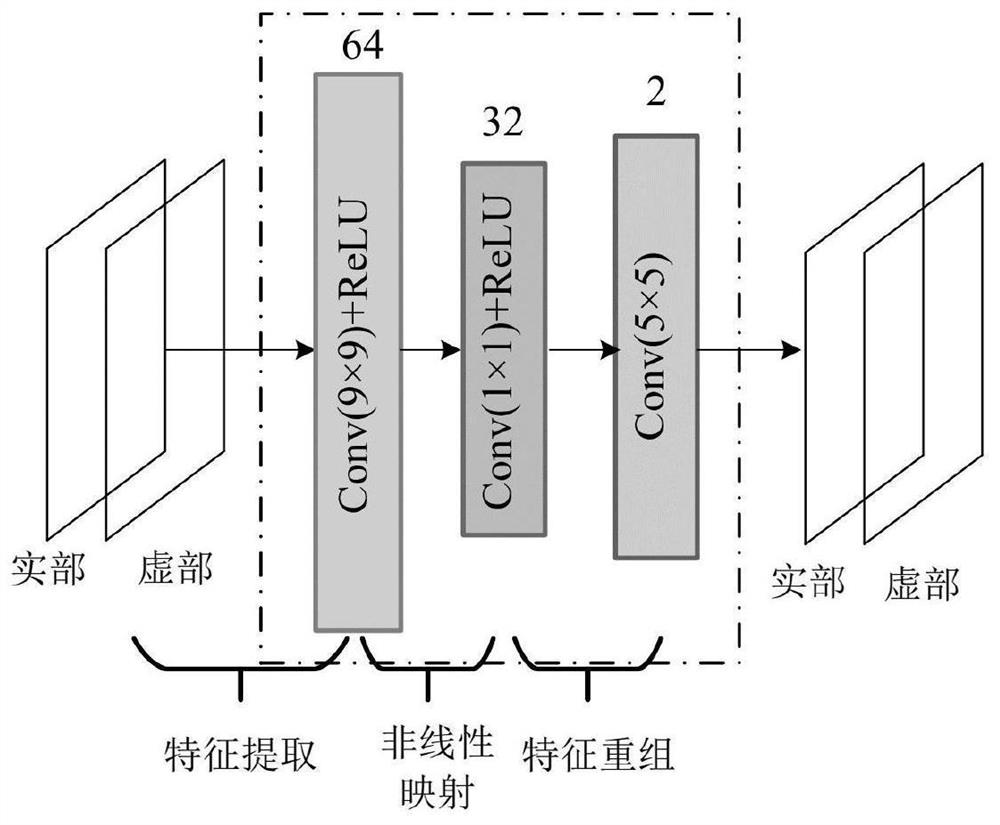

A retinal blood vessel image segmentation method based on a multi-scale feature convolutional neural network

Inactive · CN108986124A · Expand the receptive field · Reduce training parameters · Image enhancement · Image analysis · Adaptive histogram equalization · Histogram

The invention belongs to the technical field of image processing. It aims to extract and segment retinal blood vessels automatically, improve robustness to interference such as blood vessel shadows and tissue deformation, and raise the average accuracy of the vessel segmentation result. The invention relates to a retinal blood vessel image segmentation method based on a multi-scale feature convolutional neural network. First, the retinal images are preprocessed with adaptive histogram equalization and gamma brightness adjustment. At the same time, because retinal image data are scarce, the data are augmented: the experimental images are cropped and divided into blocks. Second, a multi-scale retinal vessel segmentation network is constructed by introducing atrous spatial pyramid pooling into a convolutional neural network with an encoder-decoder structure, and the model parameters are optimized automatically over many iterations, realizing pixel-level automatic segmentation of retinal blood vessels and producing the retinal blood vessel segmentation map. The invention is mainly applied to the design and manufacture of medical devices.

Owner:TIANJIN UNIV
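
Two of the preprocessing steps named in the abstract above, gamma brightness adjustment and cutting the image into blocks, can be sketched with NumPy as follows. The gamma value, patch size, stride and the synthetic `fundus` array are illustrative assumptions; adaptive histogram equalization is not shown.

```python
import numpy as np


def adjust_gamma(image, gamma):
    """Gamma brightness adjustment for a uint8 image: out = 255 * (in / 255) ** gamma."""
    normalized = image.astype(np.float64) / 255.0
    return np.round(255.0 * normalized ** gamma).astype(np.uint8)


def extract_patches(image, patch, stride):
    """Cut a 2-D image into square patches, scanning rows first."""
    h, w = image.shape
    patches = [
        image[r:r + patch, c:c + patch]
        for r in range(0, h - patch + 1, stride)
        for c in range(0, w - patch + 1, stride)
    ]
    return np.stack(patches)


# Synthetic 8x8 grayscale image standing in for a retinal image (assumption).
fundus = (np.arange(64).reshape(8, 8) * 4).astype(np.uint8)
brighter = adjust_gamma(fundus, gamma=0.5)   # gamma < 1 brightens dark regions
blocks = extract_patches(brighter, patch=4, stride=4)
print(blocks.shape)  # (4, 4, 4)
```

A stride smaller than the patch size yields overlapping blocks, which is one common way to multiply the number of training samples from a small image set.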

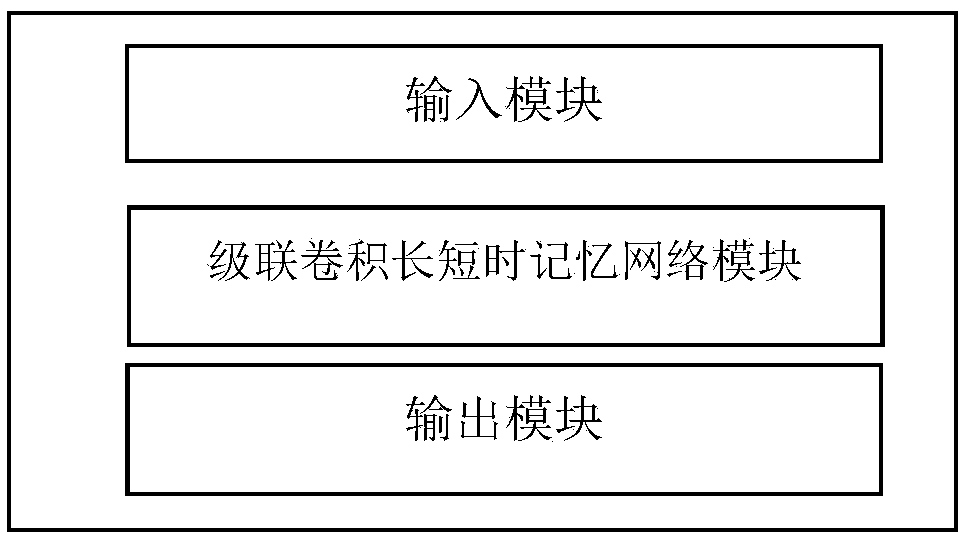

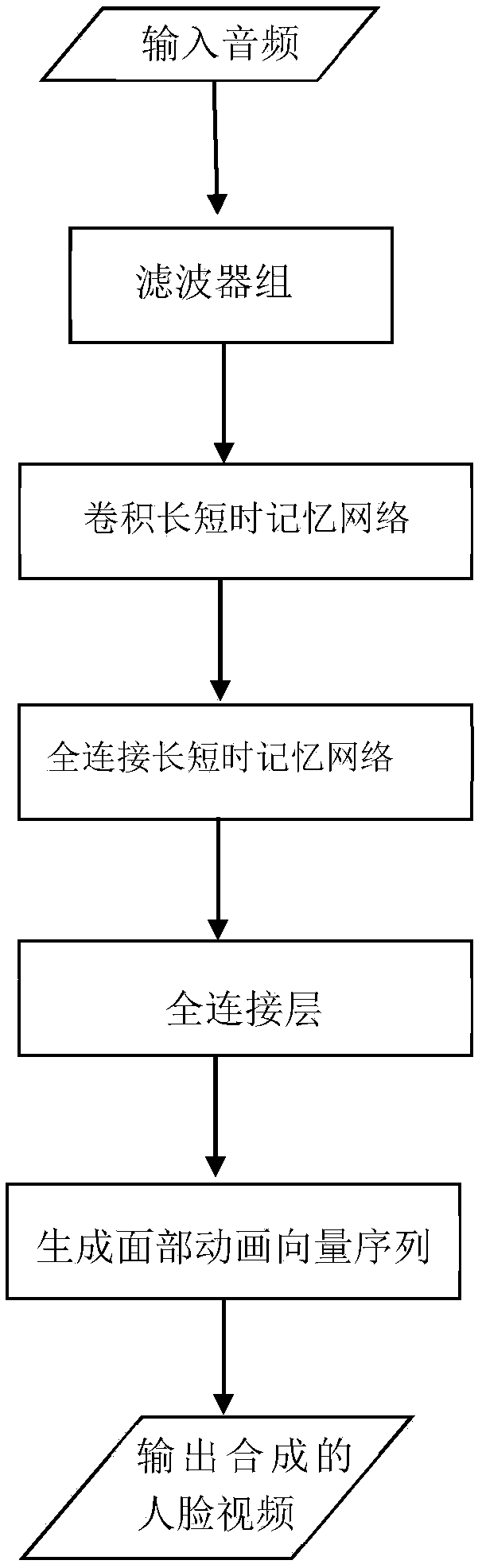

Speech-driven lip-synchronous face video synthesis algorithm based on concatenated convolution LSTM

Pending · CN109308731A · Expand the receptive field · Add depth · Speech analysis · Animation · Image synthesis · Audio frequency

The invention discloses a speech-driven lip-synchronized face video synthesis algorithm based on a cascaded convolutional LSTM. A speech video of the target person is captured as the background video; the 3D face model of the target is obtained by 3D face reconstruction from the image sequence, and the facial animation vector sequence of the background video is obtained. Filter bank speech features are extracted from the audio signal. The filter bank speech features are used as the input of the cascaded convolutional long short-term memory network, and the facial animation vector sequence is used as the output, for training and testing. The facial animation vector sequence predicted from the audio signal replaces the facial animation vector sequence of the target 3D face model to generate a new 3D face model, and face images are rendered to synthesize a lip-synchronized face video. The invention retains more voiceprint information, obtains the filter bank speech features through a two-dimensional convolutional neural network, expands the receptive field of the convolutional neural network, increases the network depth, and obtains an accurate lip-synchronized face video.

Owner:ZHEJIANG UNIV

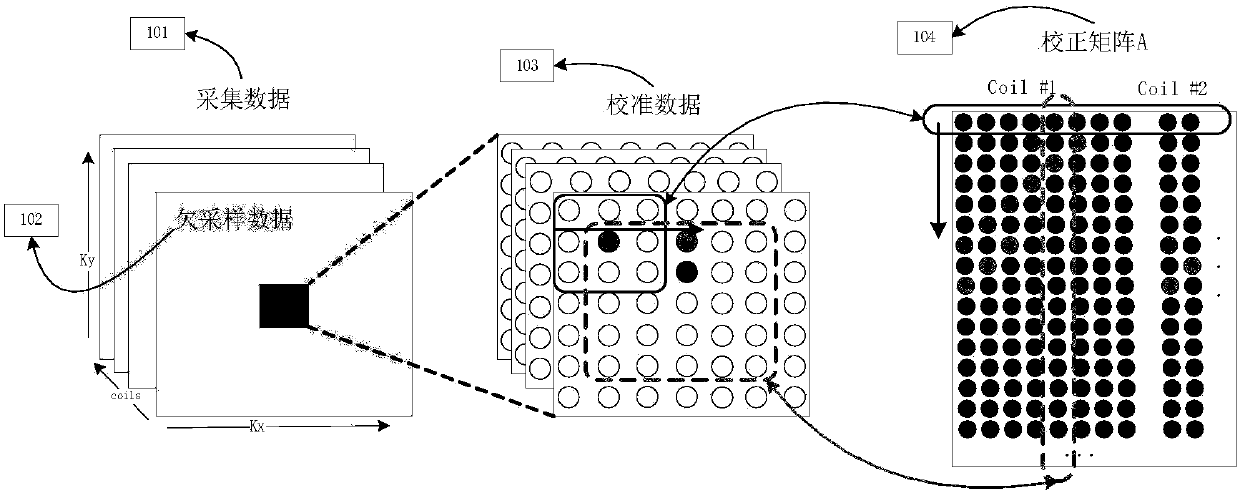

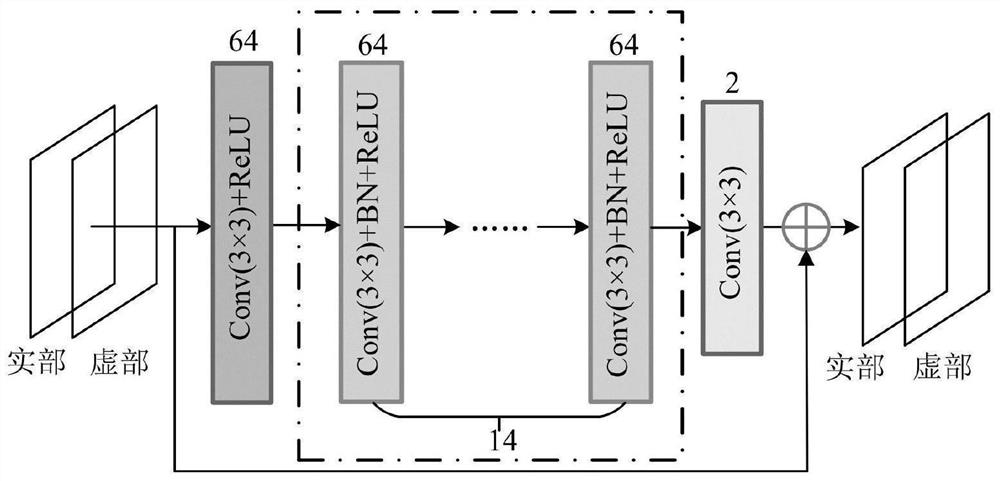

Magnetic resonance reconstruction method based on deep learning and convex set projection

Active · CN108335339A · Fix work · Solve the technical problems of backpropagation · Image enhancement · Reconstruction from projection · Resonance · Forward propagation

The invention discloses a magnetic resonance reconstruction method based on deep learning and projection onto convex sets, and relates to the technical field of magnetic resonance. The method comprises the following steps: S1, a network is constructed according to a stacked structure of multiple convolutional neural network modules and multiple convex set projection layers, together with shared data, wherein the shared data include the acquired k-space data and coil sensitivity information, and the convex set projection layers are obtained on the basis of the shared data; S2, after the network is constructed, all network parameters are trained through backpropagation and verified; S3, the structure and operating characteristics of the network are determined according to the verified network parameters, known test set data are input, forward propagation through the network is conducted, the unknown mapping data are obtained, and magnetic resonance reconstruction is completed. The method solves the problem that current deep-learning-based magnetic resonance reconstruction techniques support only single-channel magnetic resonance data and cannot process multi-channel magnetic resonance data.

Owner:朱高杰
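
A convex set projection layer of the kind mentioned in the abstract above is often realized as a k-space data-consistency step: wherever a k-space sample was actually acquired, the current estimate is overwritten with the measured value. The sketch below is a single-coil simplification under that assumption; it ignores the coil sensitivity information that the patent's layers use, and the function name and random test data are hypothetical.

```python
import numpy as np


def project_onto_measurements(image, acquired_kspace, mask):
    """Project an image estimate onto the set of images whose k-space
    agrees with the acquired samples at the sampled locations."""
    kspace = np.fft.fft2(image)
    kspace = np.where(mask, acquired_kspace, kspace)  # keep measured samples
    return np.fft.ifft2(kspace)


rng = np.random.default_rng(0)
truth = rng.standard_normal((8, 8))          # stand-in for the true image
mask = rng.random((8, 8)) < 0.5              # True where k-space was sampled
acquired = np.fft.fft2(truth) * mask         # under-sampled measurement
estimate = np.zeros((8, 8))                  # e.g. the output of a CNN module
projected = project_onto_measurements(estimate, acquired, mask)
```

Because the operation is a projection, applying it twice gives the same result as applying it once, so it can be interleaved with learned modules without drifting away from the measured data.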

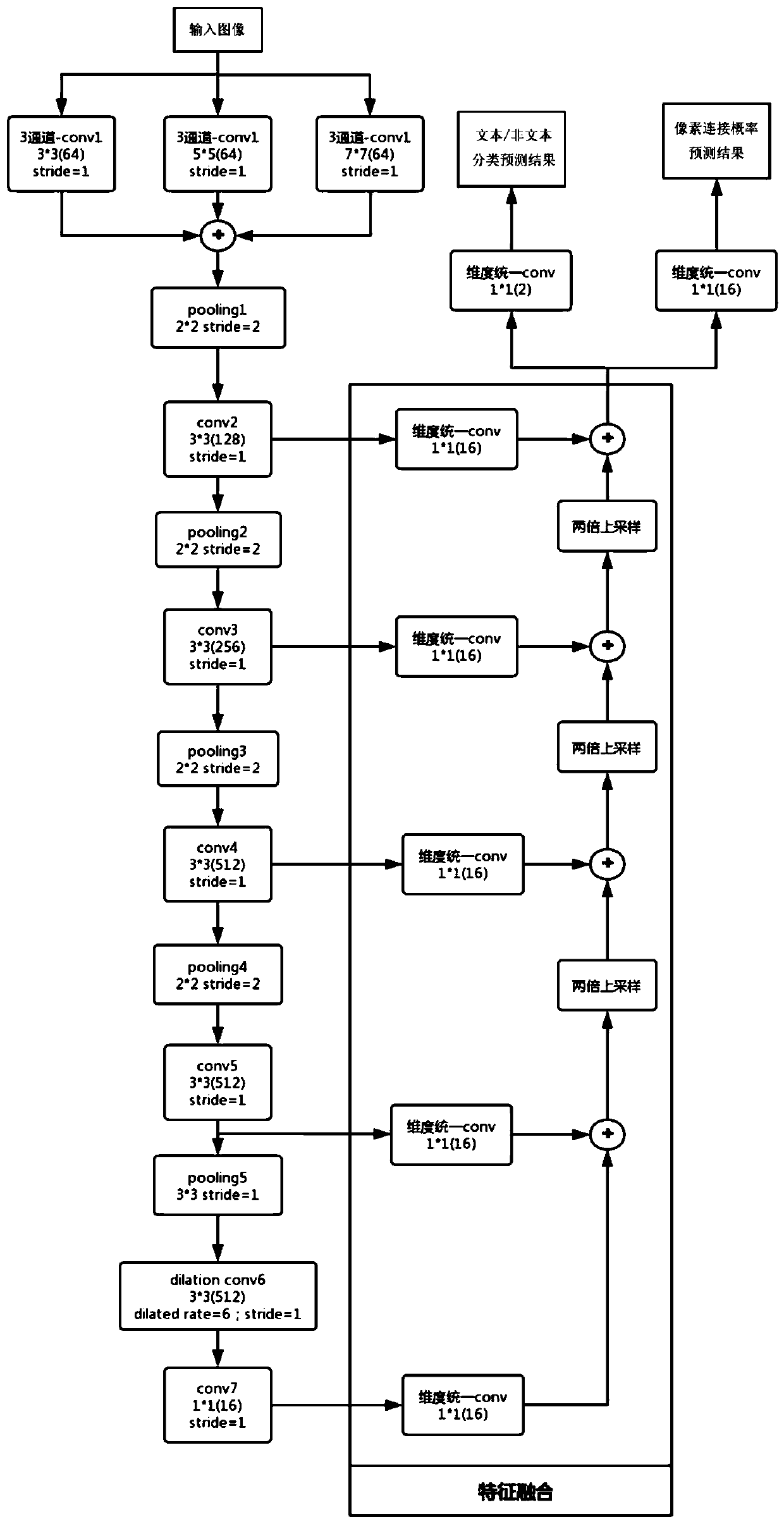

Text detection method, system and equipment based on multi-receptive field depth characteristics and medium

Inactive · CN110020676A · Improve detection accuracy · Improve recall · Image analysis · Character and pattern recognition · Text detection · Truth value

The invention discloses a text detection method, system, device and medium based on multi-receptive-field deep features. The method comprises the steps of: obtaining a text detection database and using it as the network training database; building a multi-receptive-field deep network model; inputting natural scene text pictures and the corresponding ground-truth text box coordinates from the network training database into the model for training; calculating an image mask for segmentation with the trained model to obtain a segmentation result, and converting each segmented region into regressed text box coordinates; and collecting statistics on the text box sizes in the network training database, designing a text box filtering condition, and screening out the target text boxes according to that condition. The method makes full use of the feature learning capability and classification performance of the deep network model, combines the characteristics of image segmentation, offers high detection accuracy, a high recall rate and strong robustness, and achieves a good text detection effect in natural scenes.

Owner:SOUTH CHINA UNIV OF TECH

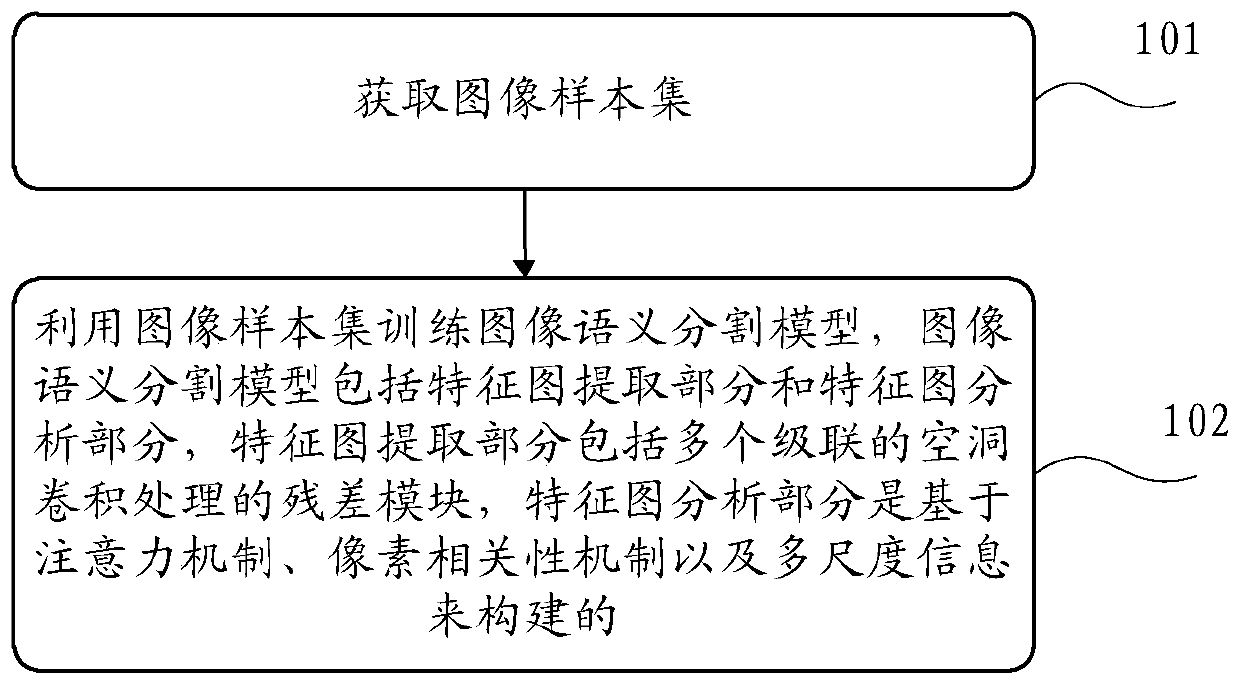

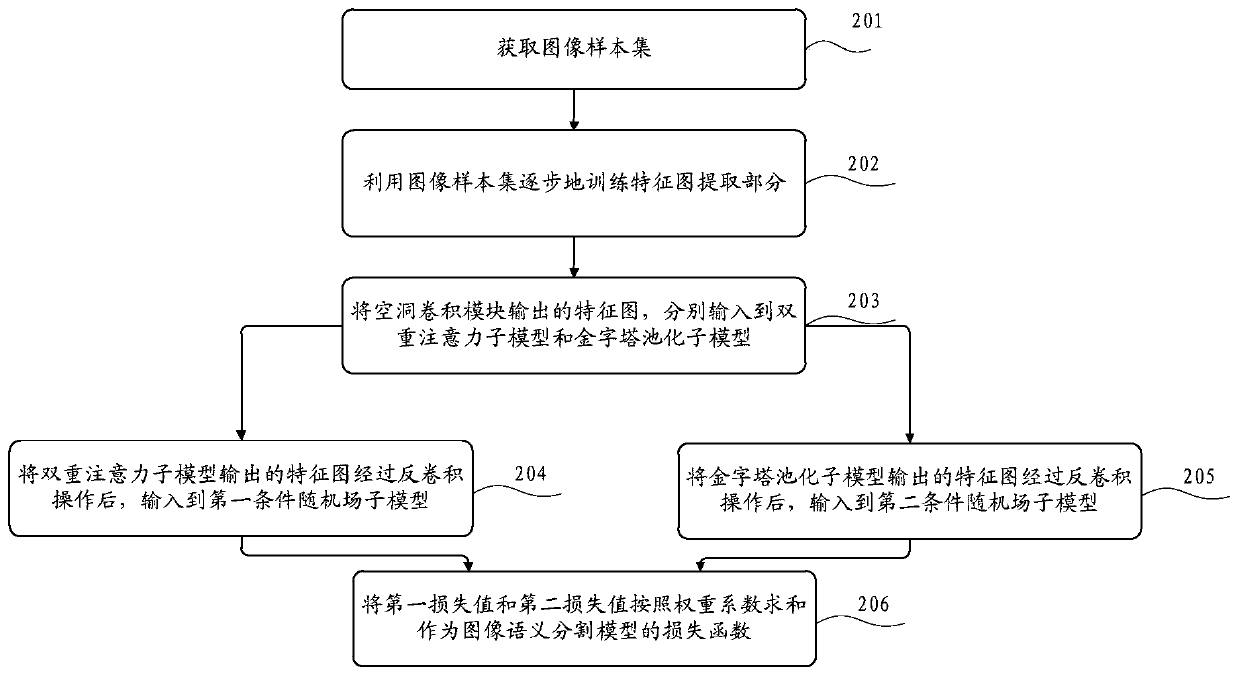

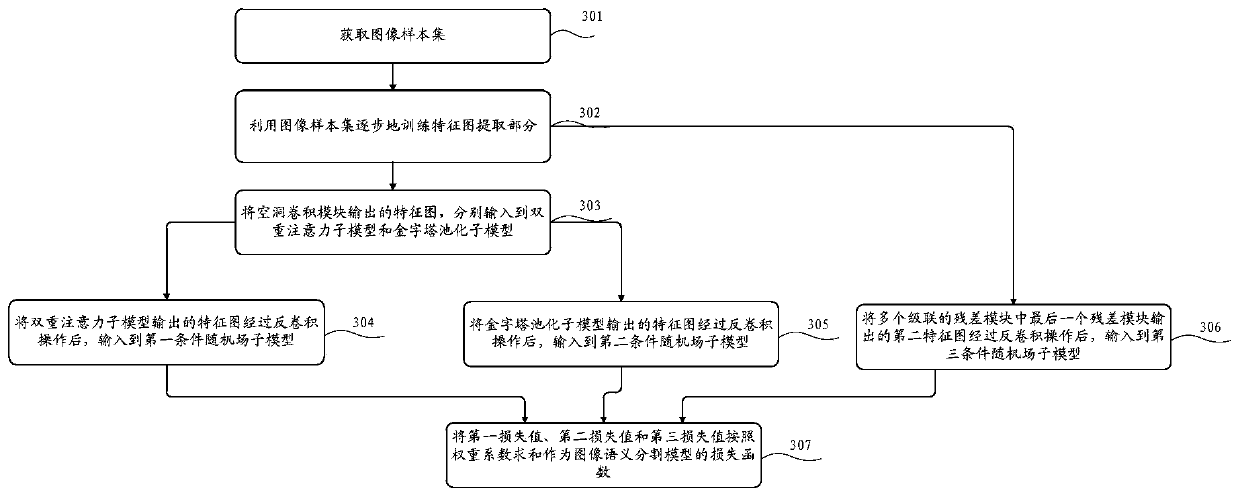

Image semantic segmentation model generation method and device, equipment and storage medium

Active · CN110188765A · Expand the receptive field · Enhance feature expression · Image enhancement · Image analysis · Pattern recognition · Pixel correlation

The invention discloses an image semantic segmentation model generation method and device, equipment and a storage medium. The method comprises the steps of obtaining an image sample set and using it to train an image semantic segmentation model. The model comprises a feature map extraction part and a feature map analysis part: the feature map extraction part comprises a plurality of cascaded dilated-convolution residual modules, and the feature map analysis part is constructed based on an attention mechanism, a pixel correlation mechanism and multi-scale information. According to the technical scheme provided by the embodiment of the invention, the attention mechanism is used to learn dependencies across spatial positions and channel dimensions, which enhances the feature expression capability; the pixel correlation mechanism makes the segmentation result more accurate; and the multi-scale feature information is used to learn the global scene, which improves the accuracy of pixel classification.

Owner:BOE TECH GRP CO LTD

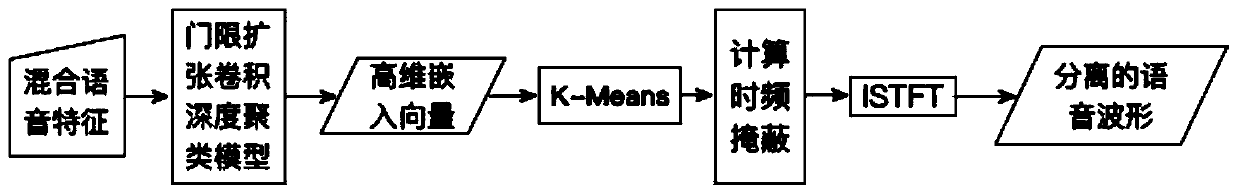

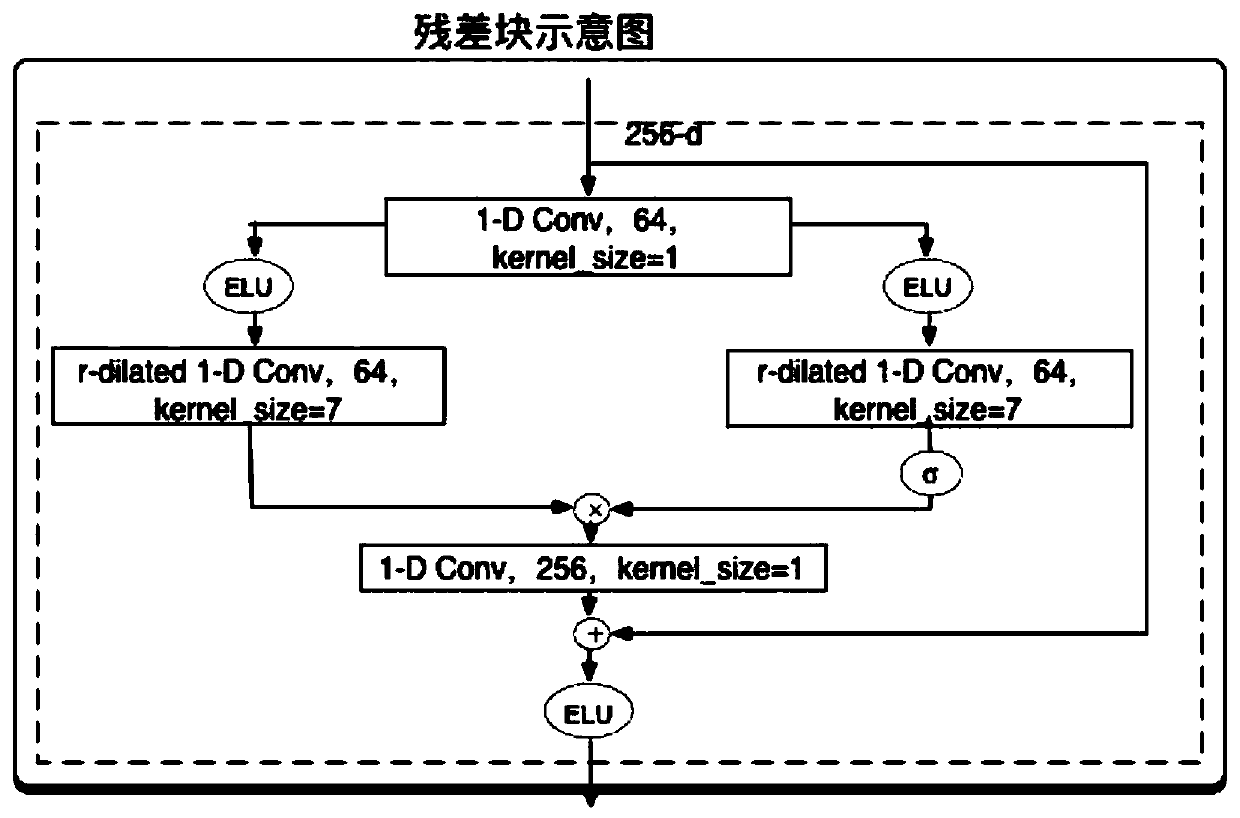

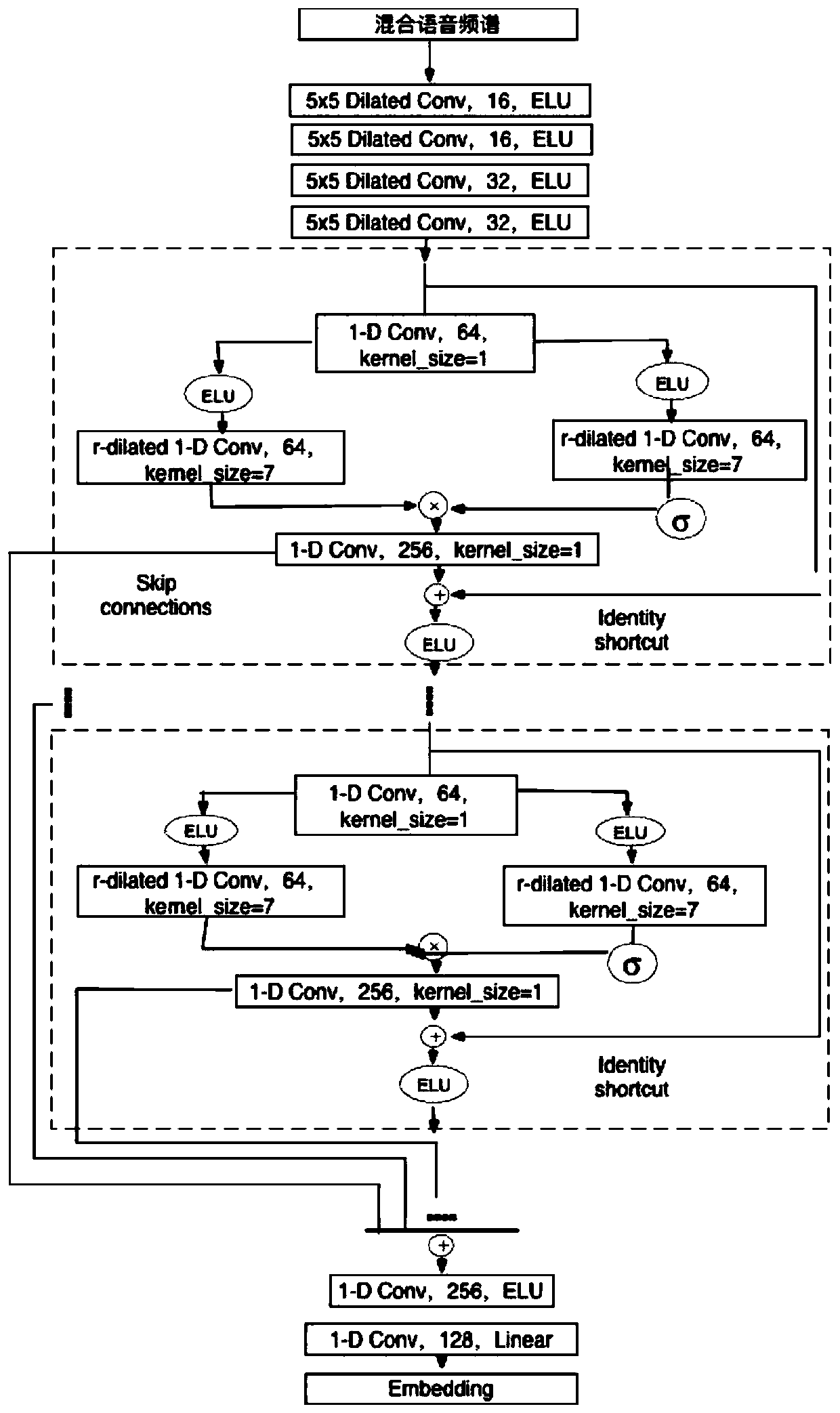

Multi-speaker voice separation method based on convolutional neural network and depth clustering

Inactive · CN110459240A · Expand the receptive field · Few parameters · Speech analysis · Character and pattern recognition · Frequency spectrum · Sound sources

The invention discloses a multi-speaker voice separation method based on a convolutional neural network and deep clustering. The method comprises the following steps: 1, a training stage: framing, windowing and the short-time Fourier transform are applied to the single-channel multi-speaker mixed speech and to the corresponding single-speaker speech, and the magnitude spectra of the mixed speech and of the single-speaker speech are used as the input for training the neural network model; 2, a testing stage: the magnitude spectrum of the mixed speech is used as the input of the gated dilated convolutional deep clustering model to obtain a high-dimensional embedding vector for each time-frequency unit of the mixed spectrum; the K-means clustering algorithm groups the vectors according to a preset number of speakers, a time-frequency masking matrix for each sound source is obtained from the time-frequency units corresponding to each vector, and each matrix is multiplied by the mixed-speech magnitude spectrum to obtain a speaker spectrum; finally, each speaker spectrum is combined with the phase spectrum of the mixed speech, and the inverse short-time Fourier transform yields the separated time-domain speech waveforms.

Owner:XINJIANG UNIVERSITY
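
The testing stage described in the abstract above (cluster the per-unit embeddings with K-means, turn cluster labels into time-frequency masks, multiply the masks with the mixture magnitude spectrum) can be sketched as follows. The embeddings here are synthetic and the deterministic initialization is a simplification; in the patent the embeddings come from the trained network, which is not reproduced.

```python
import numpy as np


def kmeans_masks(embeddings, n_speakers, n_iter=20):
    """Cluster embeddings of shape (T, F, D) and return binary masks
    of shape (n_speakers, T, F), one per cluster."""
    t, f, d = embeddings.shape
    points = embeddings.reshape(-1, d)
    # Deterministic start: evenly spaced points (a simplification).
    start = np.linspace(0, len(points) - 1, n_speakers).astype(int)
    centers = points[start].copy()
    for _ in range(n_iter):
        dist = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = dist.argmin(axis=1)
        for k in range(n_speakers):
            if np.any(labels == k):
                centers[k] = points[labels == k].mean(axis=0)
    return np.stack([(labels == k).reshape(t, f) for k in range(n_speakers)])


# Synthetic example: 4 frames x 3 frequency bins, 2-D embeddings (assumption).
tt, ff = np.meshgrid(np.arange(4), np.arange(3), indexing="ij")
speaker_a = (tt + ff) % 2 == 0
embeddings = np.where(speaker_a[..., None], [1.0, 0.0], [0.0, 1.0])
mixture_magnitude = np.full((4, 3), 2.0)

masks = kmeans_masks(embeddings, n_speakers=2)
speaker_spectra = masks * mixture_magnitude  # one masked spectrum per speaker
```

Each time-frequency unit is assigned to exactly one speaker, so the masked spectra add back up to the mixture magnitude spectrum.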

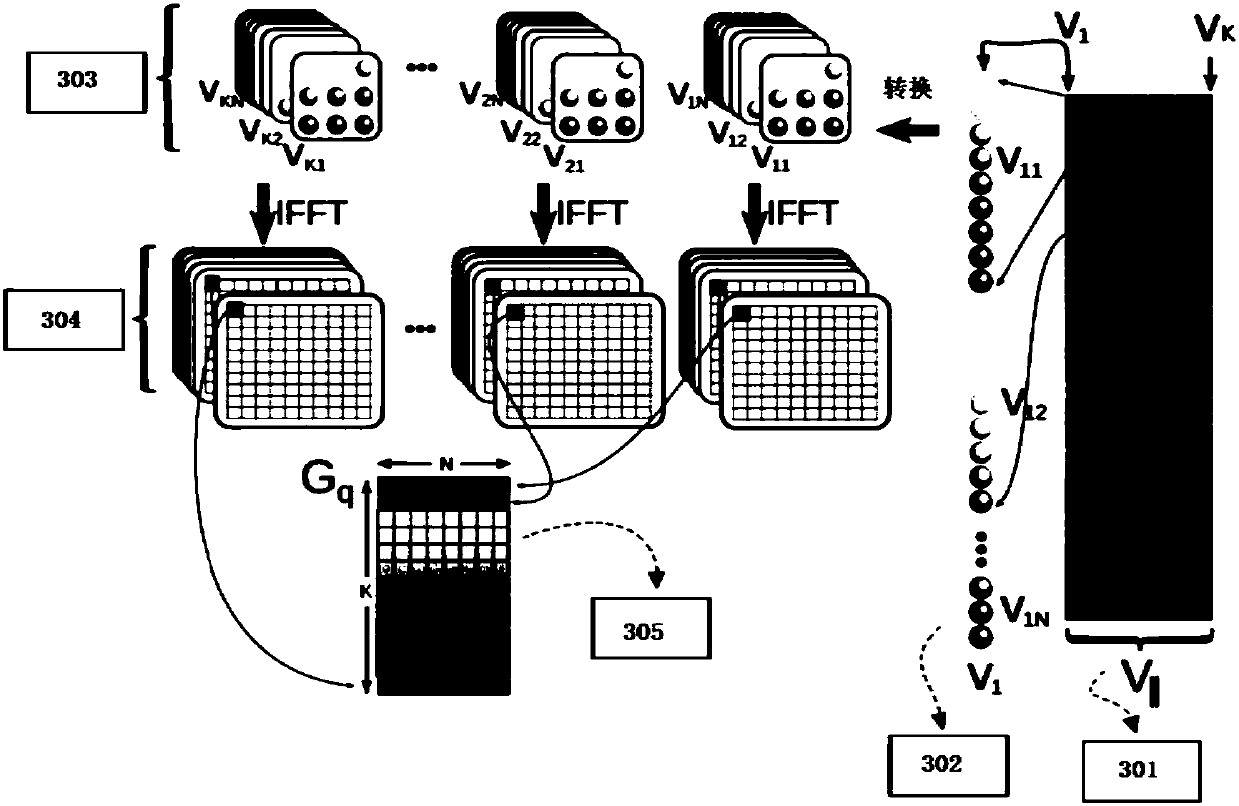

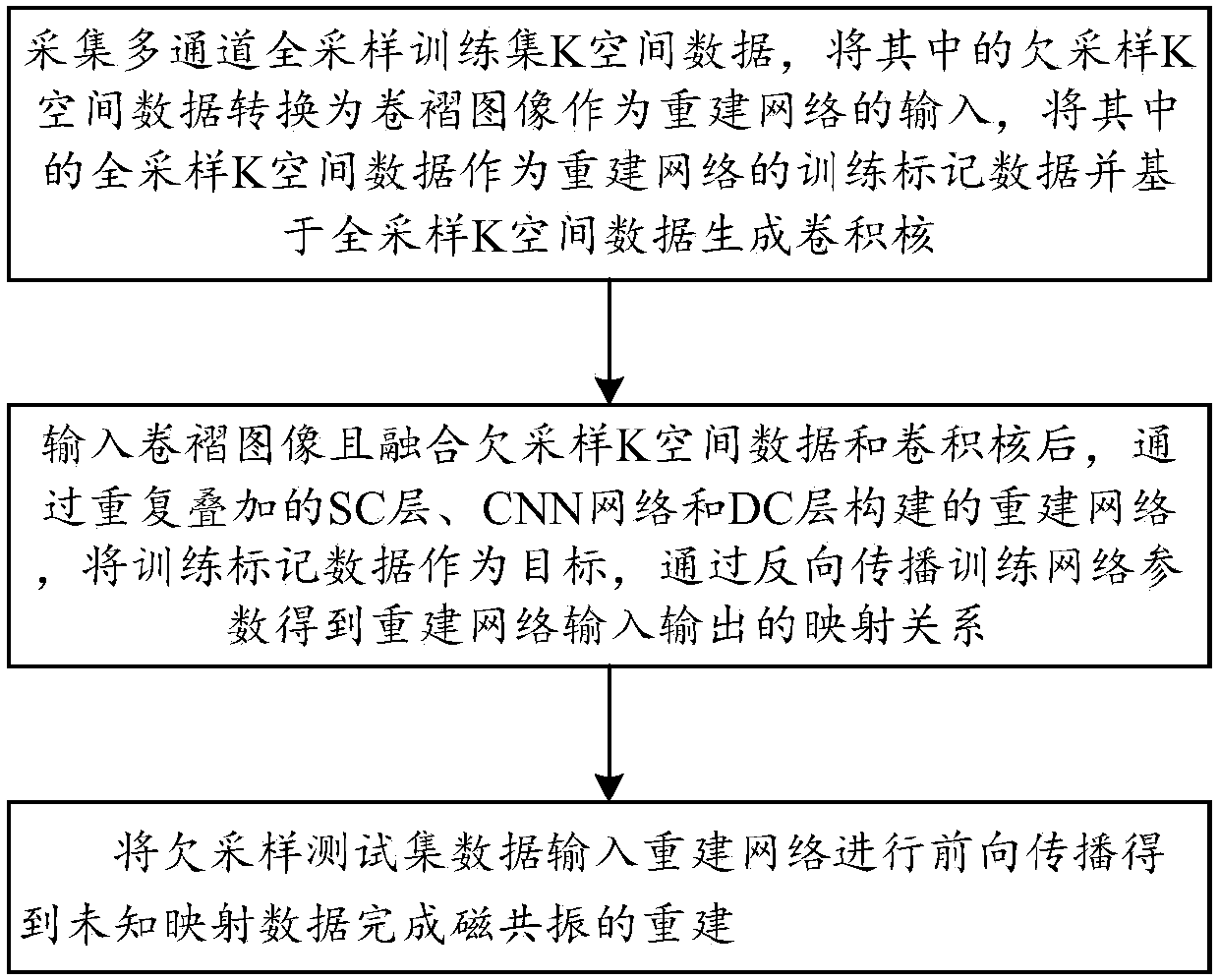

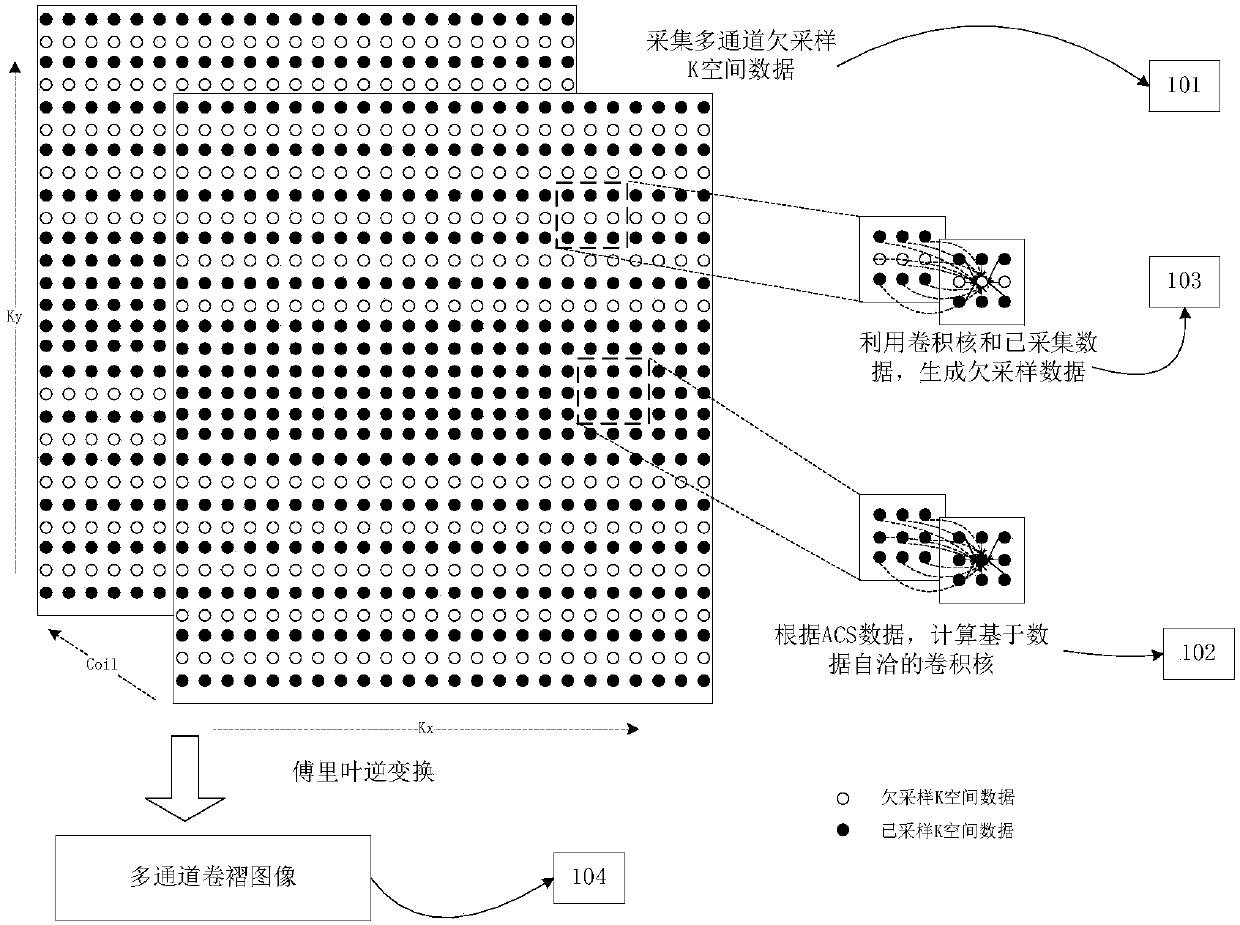

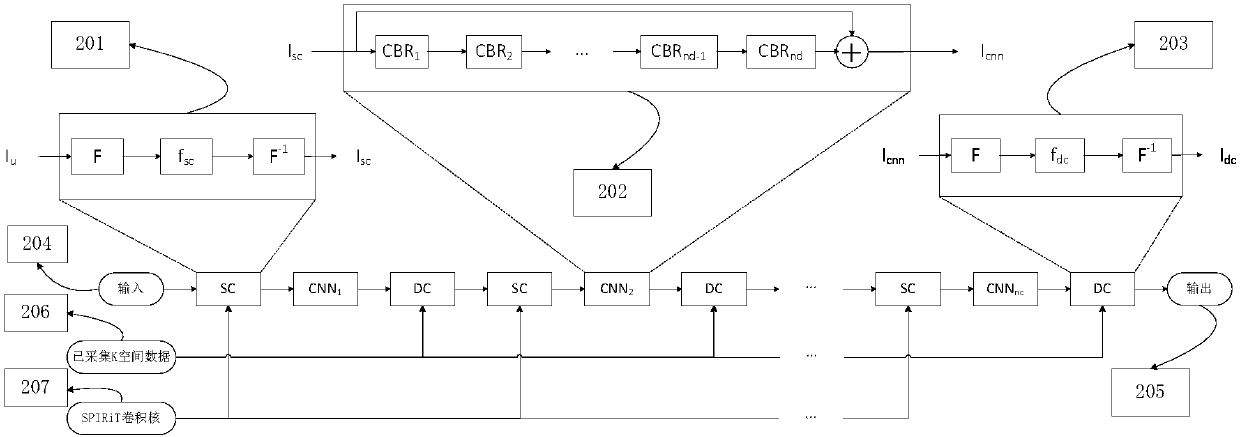

Magnetic resonance multi-channel reconstruction method based on deep learning and data self-consistence

Active · CN108535675A · Precise end-to-end mapping relationship · Quality improvement · Measurements using NMR imaging systems · Channel data · Reconstruction method

The invention discloses a magnetic resonance multi-channel reconstruction method based on deep learning and data self-consistency, and belongs to the field of magnetic resonance reconstruction methods. The method includes the following steps: 1, multi-channel fully sampled training set data are collected, the under-sampled data are converted into wrap-around (aliased) images that serve as the input of a reconstruction network, the fully sampled data serve as the training label data, and a convolution kernel is generated from the fully sampled data; 2, the data from step 1 are input into the reconstruction network, which is built by repeatedly stacking SC layers, CNNs and DC layers, and the network parameters are trained by backpropagation with the training label data as the target, so that the mapping between the input and output of the reconstruction network is obtained; and 3, test set data are input into the reconstruction network and forward propagated, so that the unknown mapping data are obtained and the magnetic resonance reconstruction is completed. The method solves the problem that existing magnetic resonance reconstruction methods can process only single-channel data; it obtains more stable and accurate end-to-end mapping relationships, fundamentally improves the quality of magnetic resonance reconstruction, and significantly shortens magnetic resonance scanning time.

Owner:朱高杰

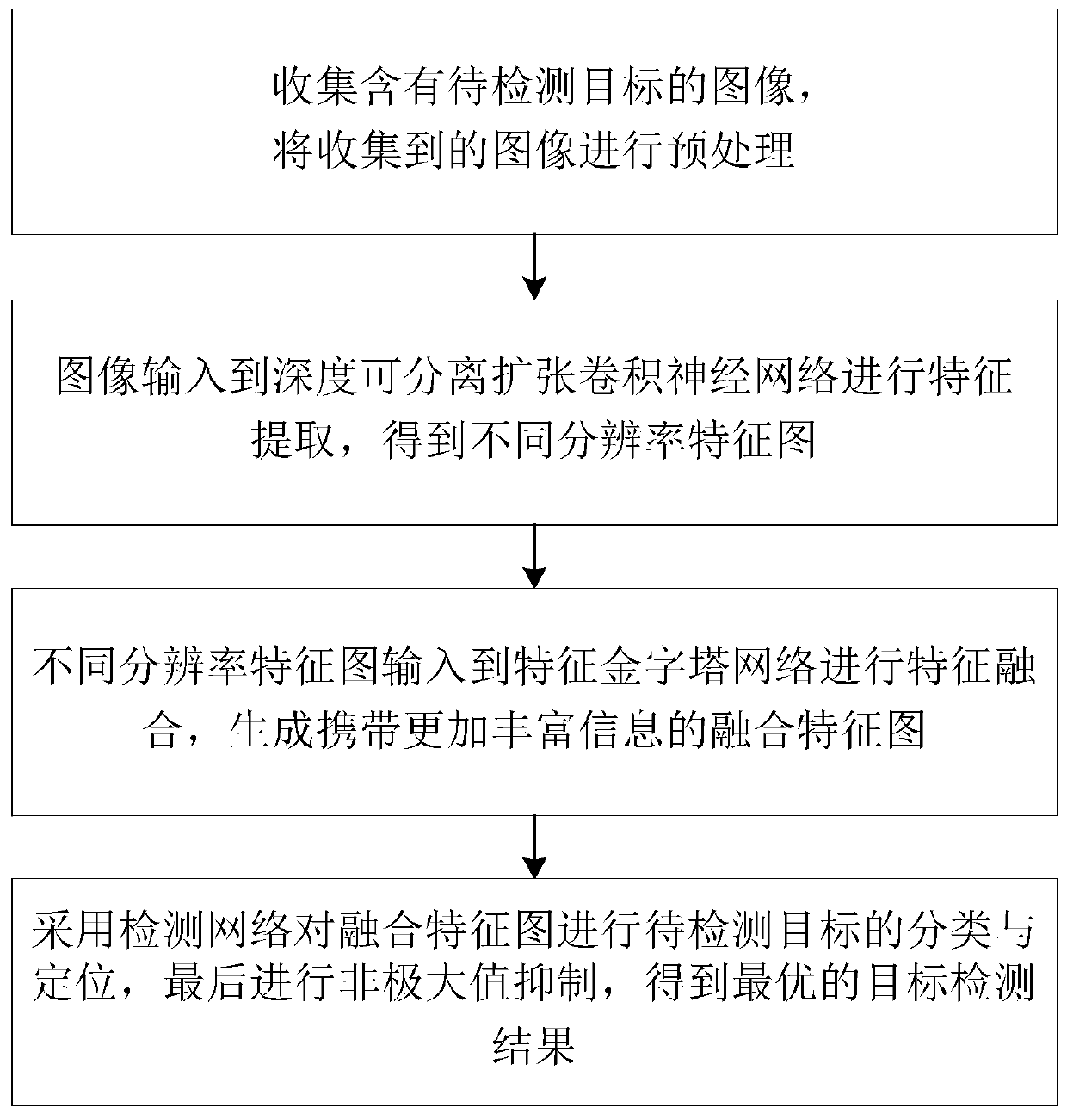

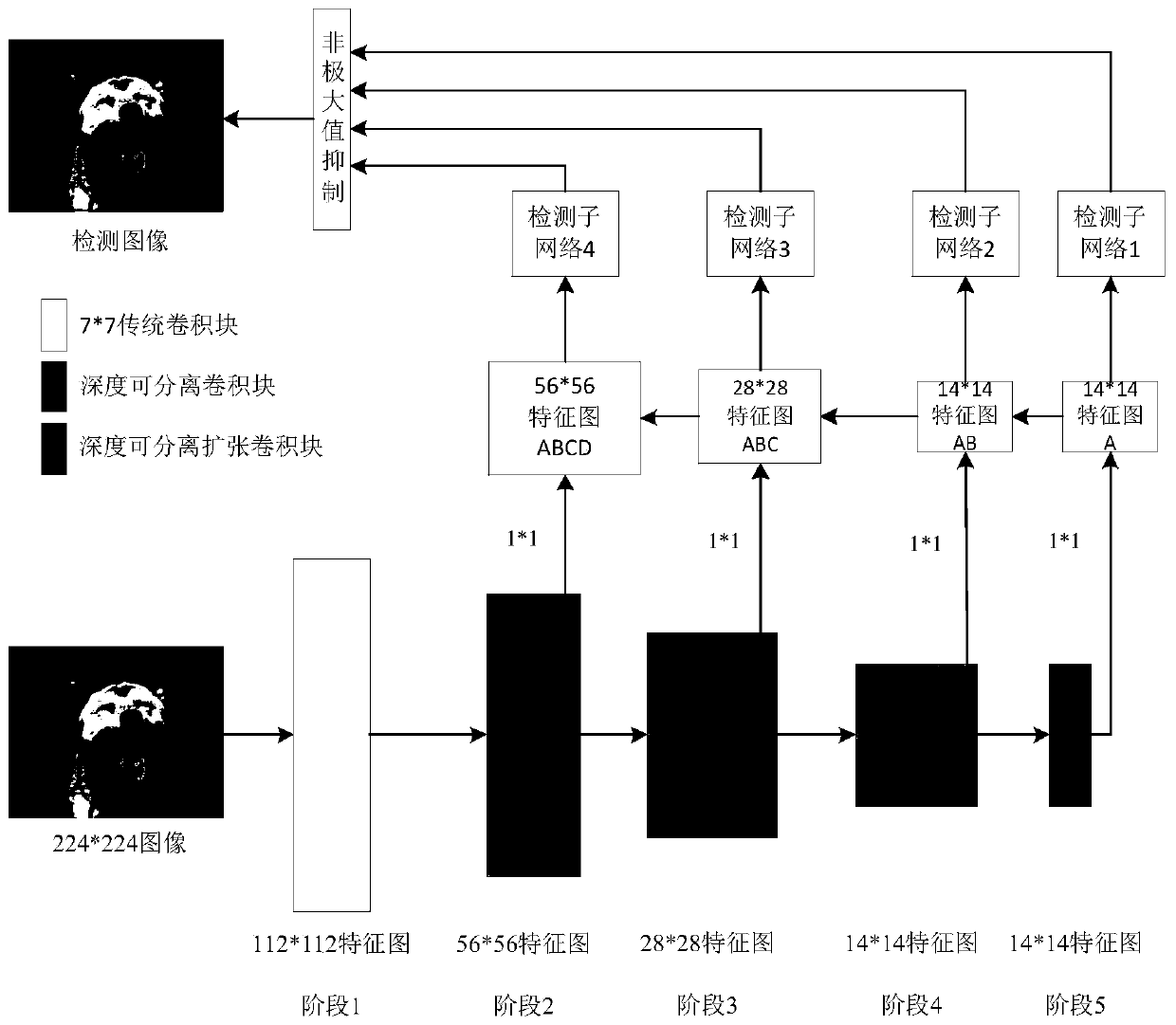

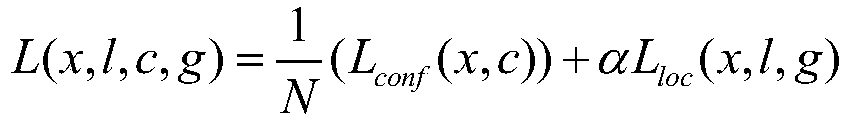

Lightweight deep network image target detection method suitable for Raspberry Pi

Active · CN110287849A · Expand the receptive field · Improve return rate · Character and pattern recognition · Neural architectures · Deep neural networks · Image resolution

A lightweight deep network image target detection method suitable for the Raspberry Pi belongs to the field of deep learning and target detection, and comprises the following steps: first, collecting images containing the to-be-detected target and preprocessing them for network training; second, inputting the preprocessed images into a depthwise separable dilated convolutional neural network for feature extraction to obtain feature maps with different resolutions; inputting the feature maps with different resolutions into a feature pyramid network for feature fusion, generating a fused feature map that carries richer information; then classifying and localizing the to-be-detected target on the fused feature map with a detection network; and finally applying non-maximum suppression to obtain the optimal target detection result. The method overcomes two difficulties: detection methods based on deep neural networks are hard to run on the Raspberry Pi platform, and detection methods based on lightweight networks have low detection accuracy on that platform.

Owner:BEIJING UNIV OF TECH
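
The final step in the abstract above is non-maximum suppression. A minimal NumPy sketch of the standard greedy procedure follows; the IoU threshold of 0.5 and the sample boxes are assumptions for illustration, and the patent does not state which variant it uses.

```python
import numpy as np


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS. boxes: (N, 4) array of [x1, y1, x2, y2].
    Returns the indices of the kept boxes, highest score first."""
    order = np.argsort(scores)[::-1]
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        # Intersection of the best box with every remaining box.
        x1 = np.maximum(boxes[best, 0], boxes[rest, 0])
        y1 = np.maximum(boxes[best, 1], boxes[rest, 1])
        x2 = np.minimum(boxes[best, 2], boxes[rest, 2])
        y2 = np.minimum(boxes[best, 3], boxes[rest, 3])
        inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
        iou = inter / (areas[best] + areas[rest] - inter)
        order = rest[iou <= iou_threshold]  # drop heavily overlapping boxes
    return keep


boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
kept = non_max_suppression(boxes, scores)
print(kept)  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed; the third box does not overlap and is kept.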

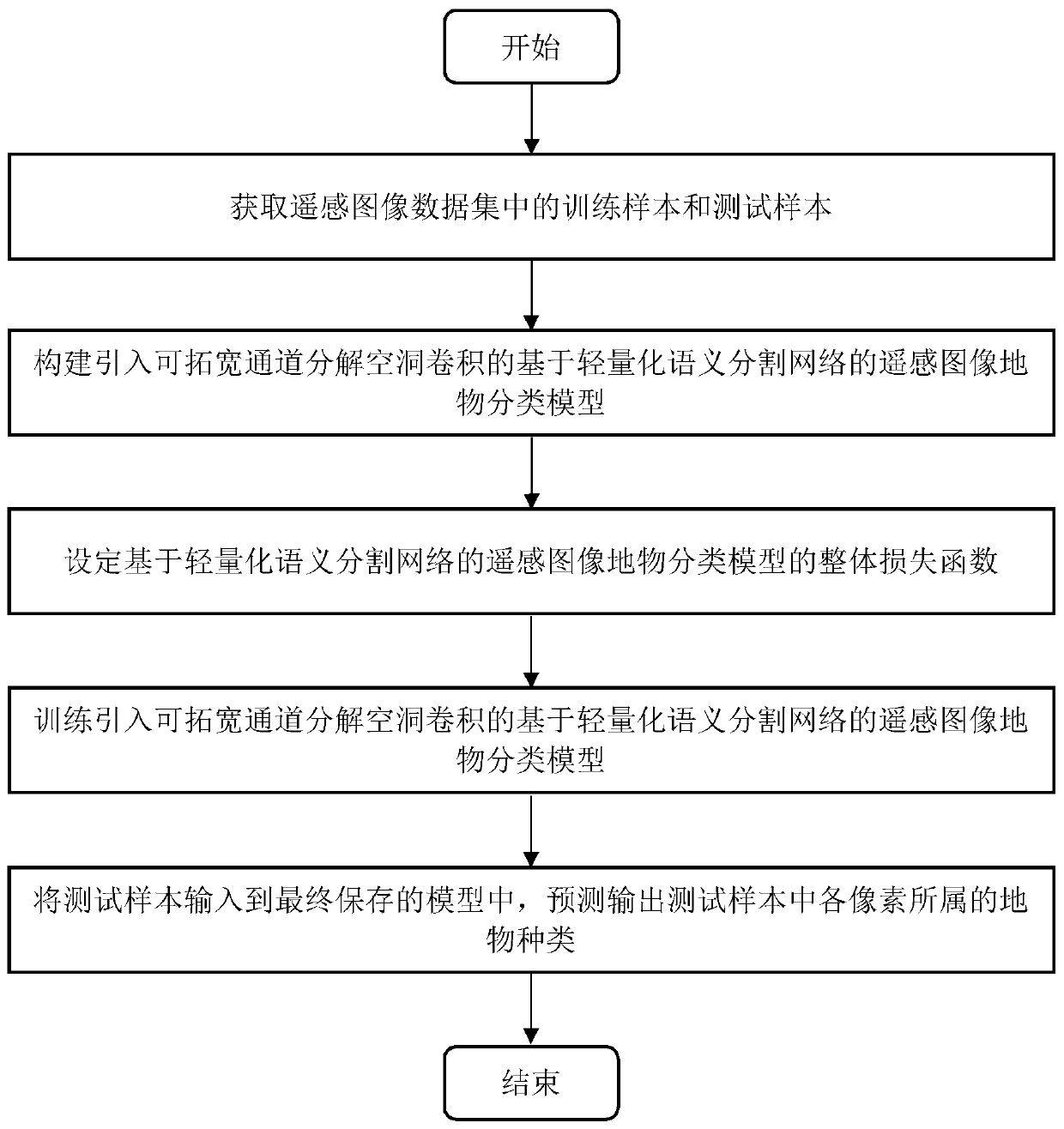

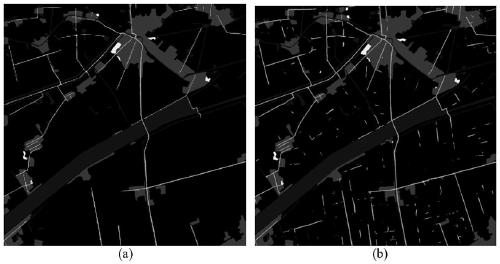

Remote sensing image terrain classification method based on lightweight semantic segmentation network

Active · CN111079649A · Preserve spatial features · Multi-context features · Scene recognition · Data set · Test sample

The invention discloses a remote sensing image terrain classification method based on a lightweight semantic segmentation network. It mainly solves the problems of existing methods, namely low terrain classification precision and slow training caused by insufficient use of spatial and channel feature information in the image and by an oversized model. The scheme includes: obtaining training samples and test samples from a remote sensing image terrain classification data set; constructing a lightweight remote sensing image terrain classification model that introduces widened channel-decomposed dilated convolution, and designing an overall loss function that attends to terrain edges; inputting the training samples into the constructed terrain classification model to obtain a trained model; and inputting the test samples into the trained model to predict and output the terrain classification result for the remote sensing image. The method improves the feature expression capability, reduces the network parameters, and improves the average precision and training speed of remote sensing image terrain classification; it can be used to obtain the terrain distribution of a remote sensing image.

Owner:XIDIAN UNIV

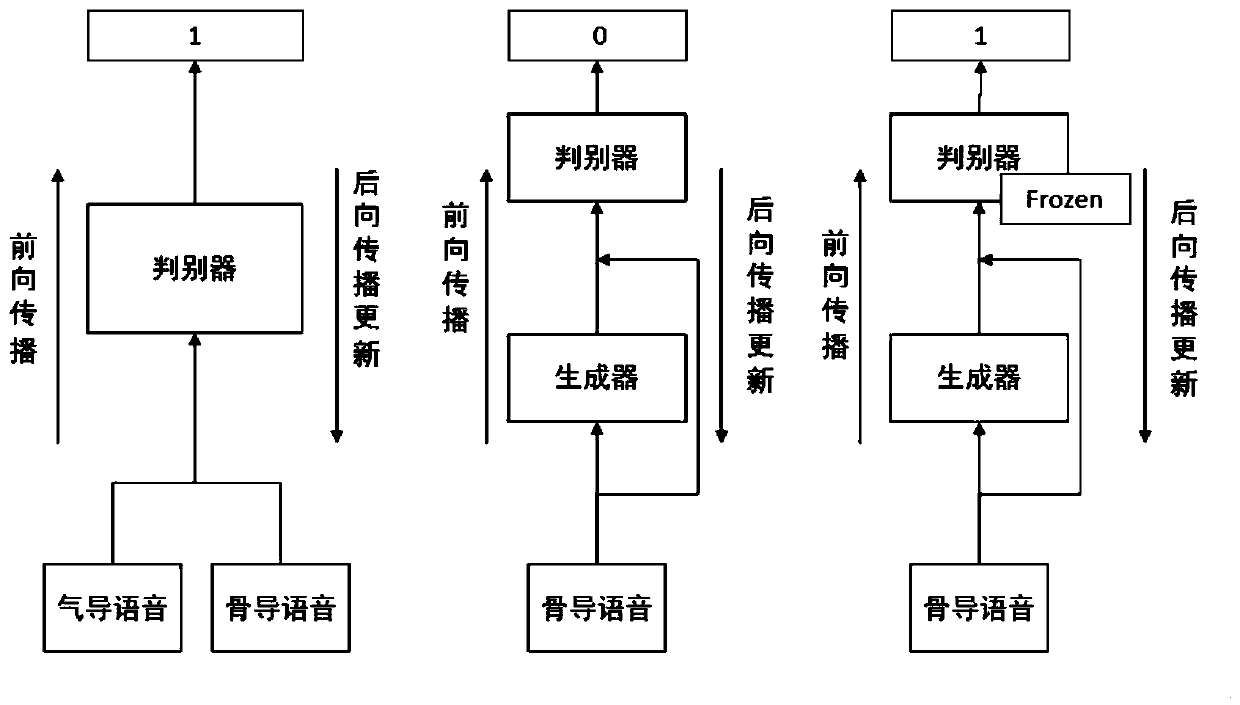

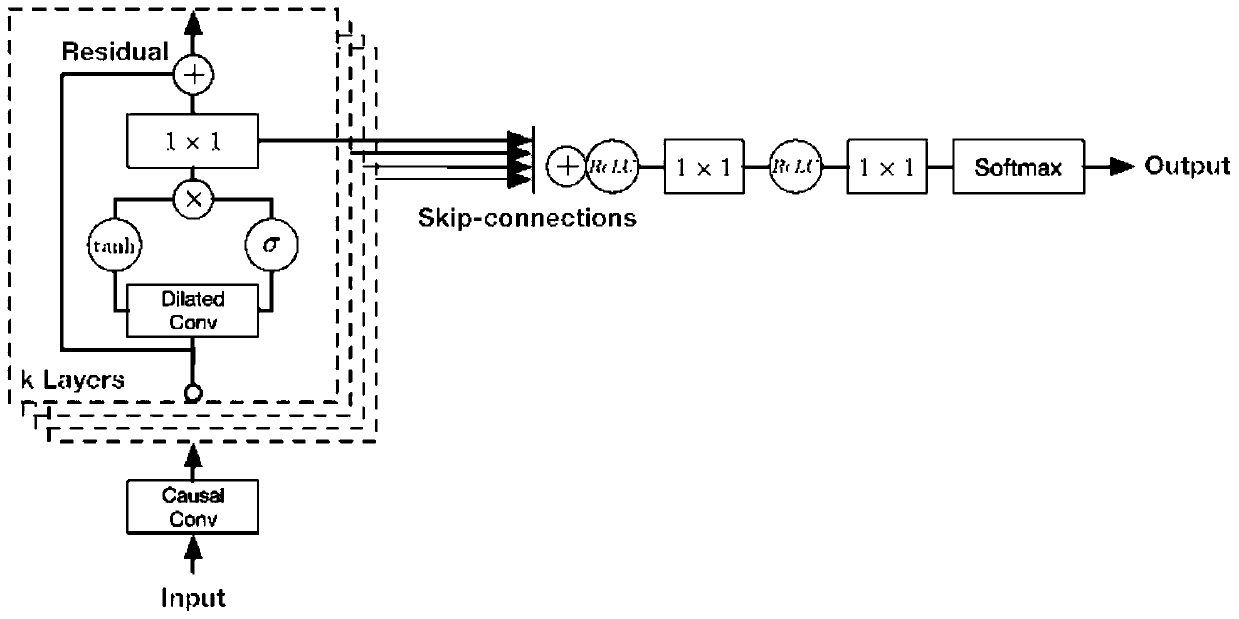

Dilated causal convolution generative adversarial network end-to-end bone conduction speech blind enhancement method

Active · CN110136731A · Enhancement effect is good · Expand the receptive field · Speech analysis · Discriminator · Generative adversarial network

The invention relates to the fields of artificial intelligence and medical rehabilitation instruments. It aims to provide an end-to-end bone conduction speech enhancement method that addresses the missing high-frequency components of bone conduction speech, its poor auditory perception, and communication against strong background noise. In the dilated causal convolution generative adversarial network end-to-end bone conduction speech blind enhancement method, the raw bone conduction audio samples are taken as the input data and the clean air conduction raw audio is taken as the training target; bone conduction speech is input into the trained dilated causal convolution generative adversarial network, which comprises a generator and a discriminator. The generator adopts dilated causal convolution and outputs an enhanced sample; the discriminator takes the original audio data and the enhanced speech sample generated by the generator as input, and its convolution layers extract deep nonlinear features to judge the deep similarity of the samples. The method is mainly applied to the design and manufacture of bone conduction speech enhancement equipment.

Owner:TIANJIN UNIV
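
The generator in the abstract above is built from dilated causal convolutions. The following is a minimal NumPy sketch of a single such layer on a 1-D signal, where each output sample depends only on the current and earlier inputs. The weights, dilation and impulse input are assumptions for illustration; the patent's network stacks many learned layers.

```python
import numpy as np


def dilated_causal_conv1d(x, weights, dilation):
    """y[t] = sum_k weights[k] * x[t - k * dilation], with zeros before t = 0."""
    pad = (len(weights) - 1) * dilation
    padded = np.concatenate([np.zeros(pad), x])  # left padding only: causal
    y = np.zeros(len(x))
    for k, w in enumerate(weights):
        start = pad - k * dilation
        y += w * padded[start:start + len(x)]
    return y


impulse = np.array([1.0, 0.0, 0.0, 0.0, 0.0, 0.0])
response = dilated_causal_conv1d(impulse, weights=[1.0, 1.0], dilation=2)
print(response)  # [1. 0. 1. 0. 0. 0.]
```

With kernel size 2, stacking layers with dilations 1, 2, 4, 8 lets the last output see 16 input samples, so the receptive field grows exponentially with depth while the parameter count grows linearly.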

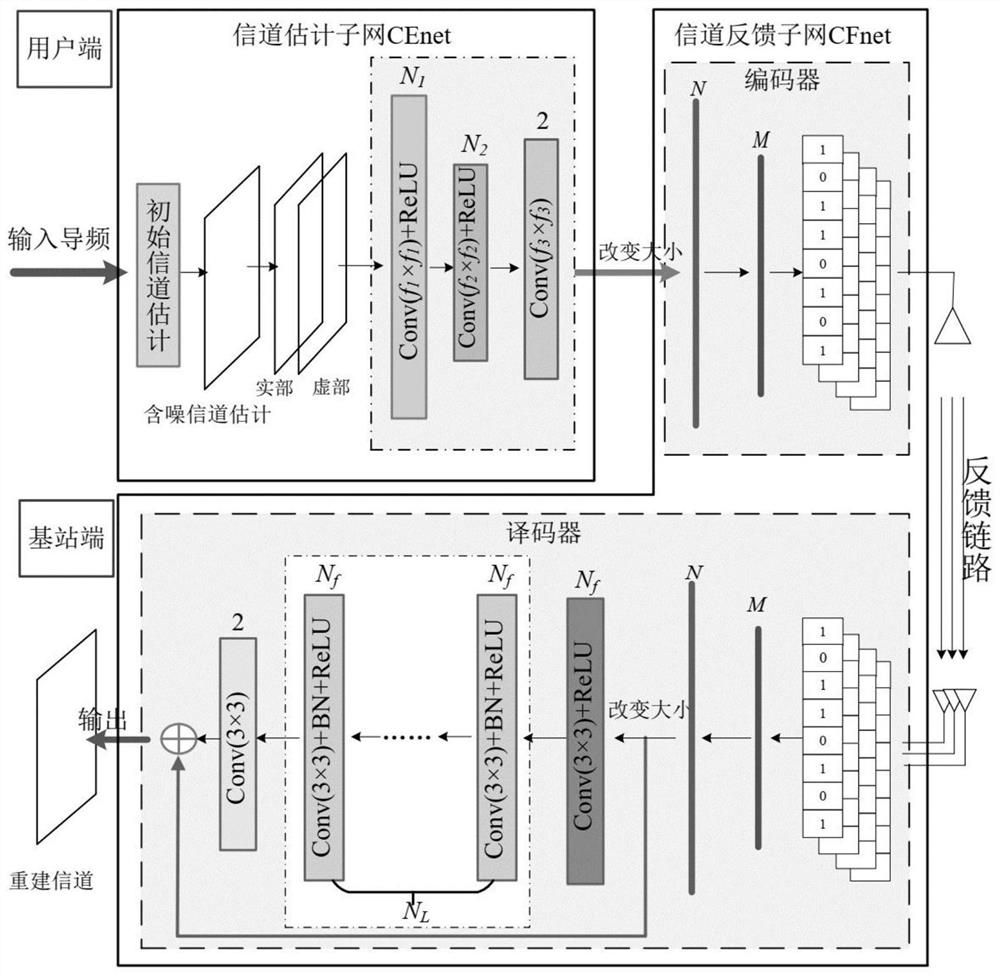

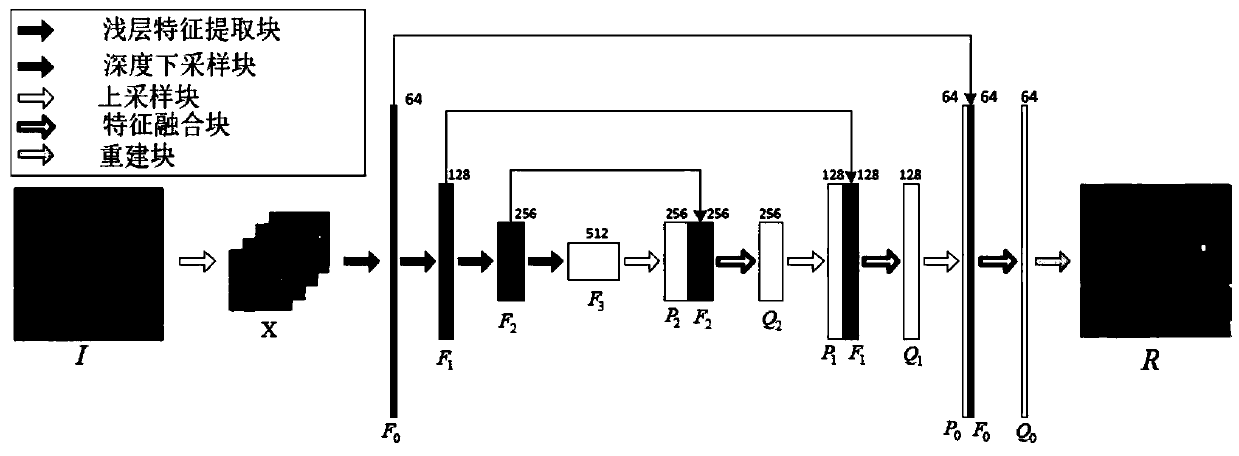

Large-scale MIMO channel joint estimation and feedback method based on deep learning

Active · CN112737985A · Expand the receptive field · Improve reconstruction effect · Radio transmission · Channel estimation · Channel state information · Algorithm

The invention discloses a large-scale MIMO channel joint estimation and feedback method based on deep learning. The method comprises the steps of: performing initial channel estimation at the user side; constructing a channel estimation subnet, CEnet, and minimizing the estimation error through training; constructing a channel feedback subnet; at the user side, inputting the optimized channel estimate and outputting compressed code words; and at the base station, inputting the code words and outputting the reconstructed channel matrix. The two subnets jointly form a channel estimation and feedback joint network, CEFnet. Previous CSI feedback networks assume that perfect channel state information is available and do not consider that, in practice, the channel is obtained by estimation and therefore contains errors and noise. By constructing the joint network CEFnet, the invention realizes a complete downlink channel estimation and feedback process, uses a new network architecture to eliminate errors and noise, and improves the reconstruction precision while reducing the feedback overhead.

Owner:SOUTHEAST UNIV

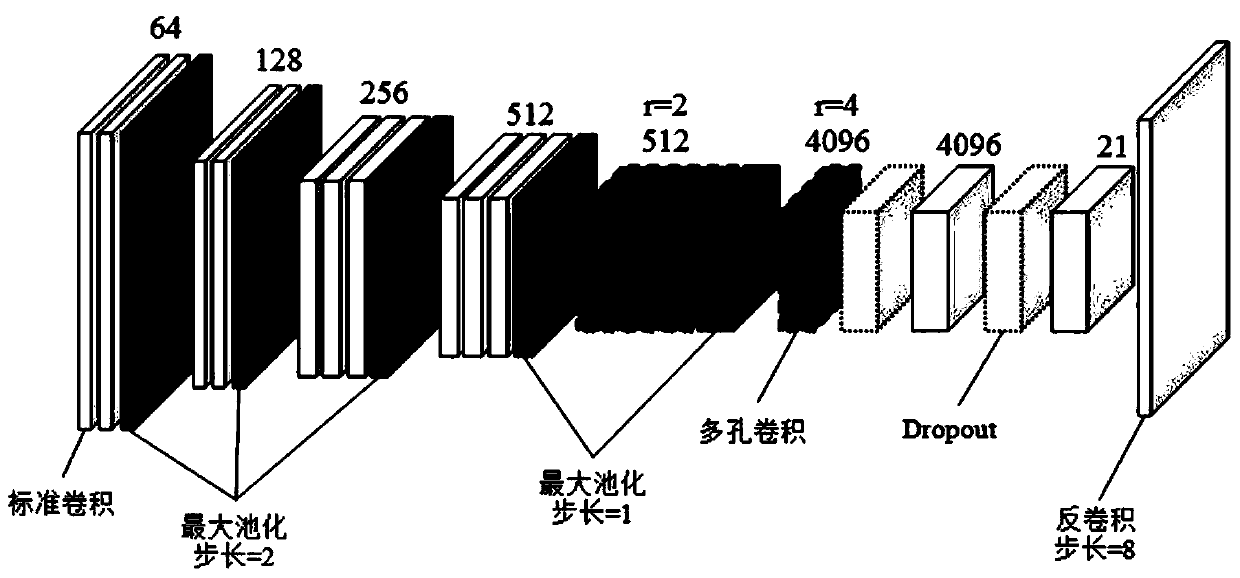

Semantic segmentation method based on improved full convolutional neural network

Inactive · CN108921196A · Precise Control of Resolution · Expand the receptive field · Character and pattern recognition · Stochastic gradient descent · Algorithm

The invention discloses a semantic segmentation method based on an improved fully convolutional neural network. The method comprises the steps of: acquiring training image data; inputting the training image data into a dilated ("porous") fully convolutional neural network and obtaining a size-reduced feature map through standard convolution and pooling layers; extracting denser features through dilated convolutional layers while maintaining the feature map size; predicting the feature map pixel by pixel to obtain a segmentation result; training the parameters of the dilated convolutional neural network with stochastic gradient descent (SGD); and acquiring the image data that need semantic segmentation, inputting them into the trained dilated convolutional neural network, and obtaining the corresponding semantic segmentation result. The invention alleviates the problem that the feature map recovered by the final upsampling in a fully convolutional network loses sensitivity to image details, and it effectively expands the receptive field of a filter without increasing the number of parameters or the amount of computation.

Owner:NANJING UNIV OF POSTS & TELECOMM

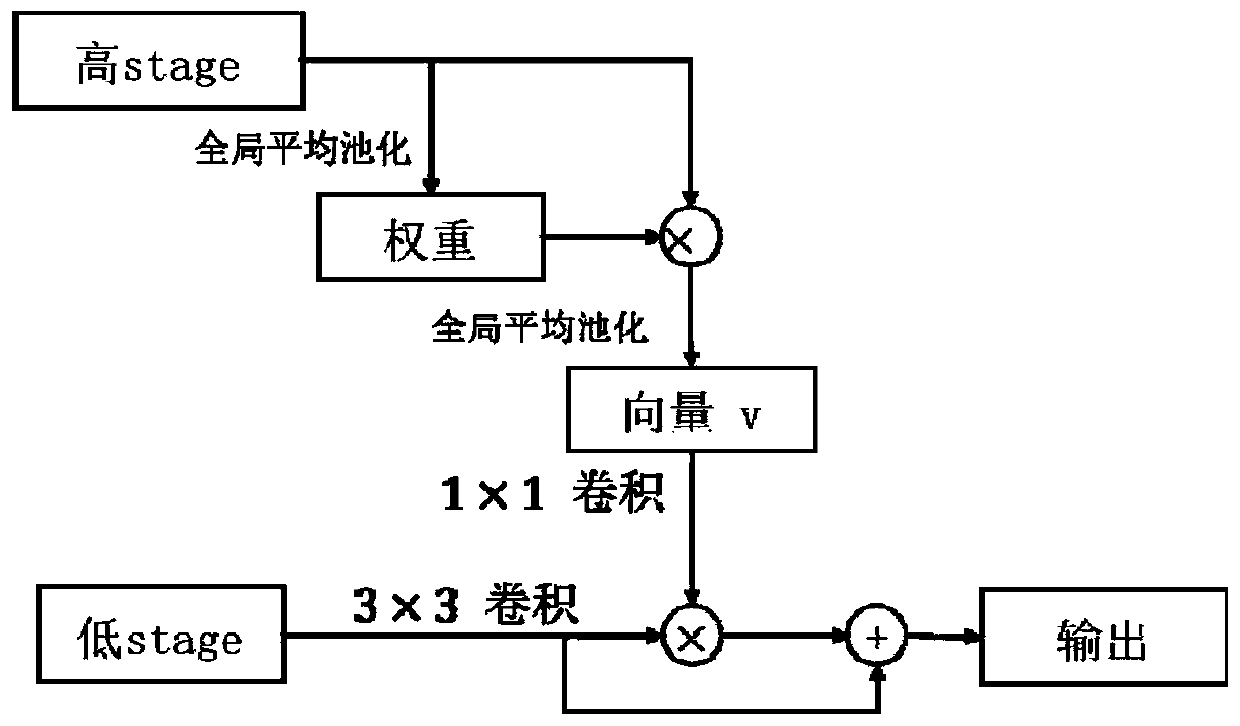

Lane line detection method based on structural information

Active · CN111242037A · Improve features · Inhibitory response · Character and pattern recognition · Neural architectures · Engineering · Computer vision

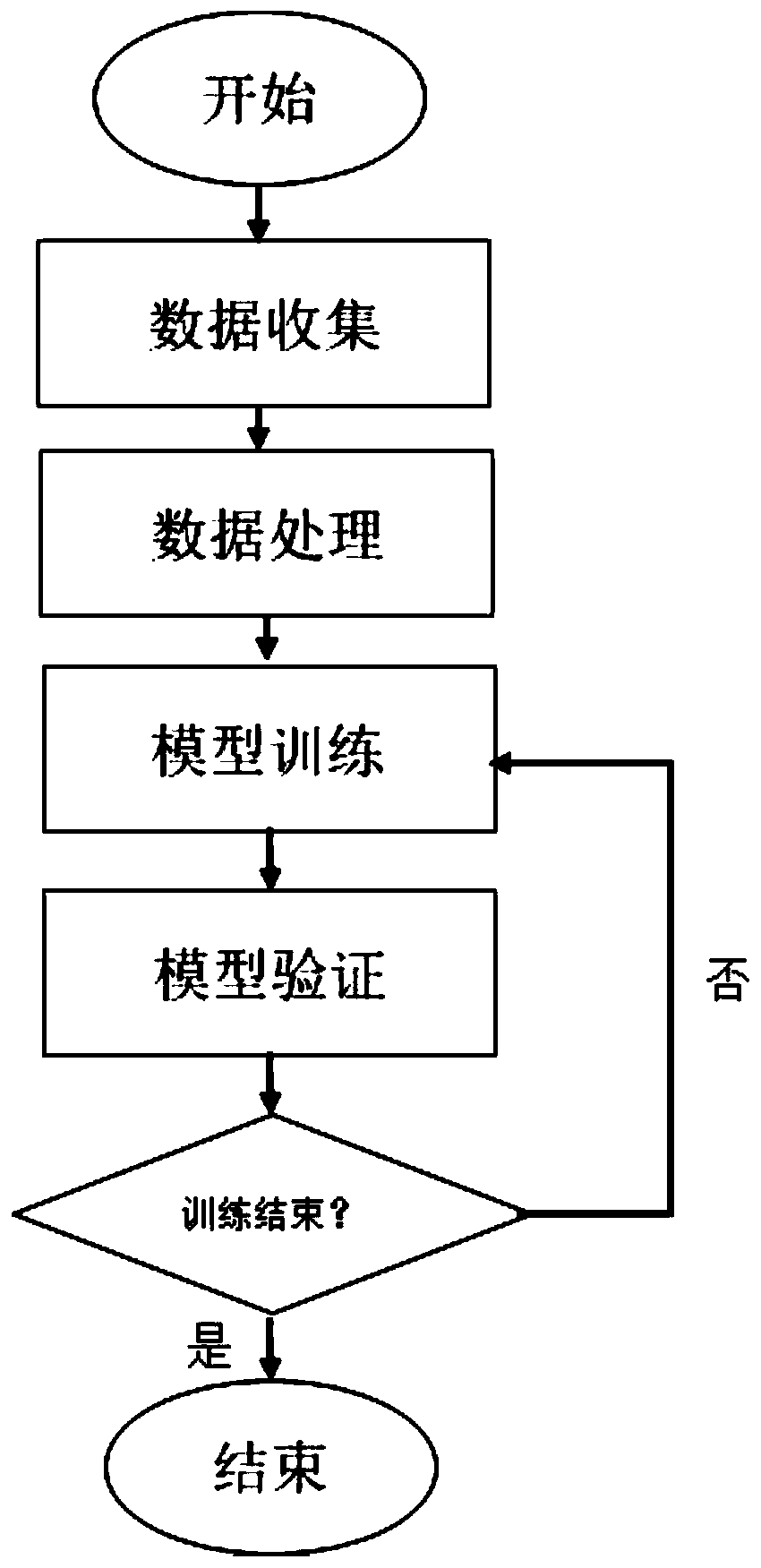

The invention discloses a lane line detection method based on structural information. The lane line detection method comprises the following steps: 1) acquiring data; 2) preprocessing the data; 3) constructing a model; 4) defining a loss function; 5) training a model; and 6) verifying the model. According to the method, the multi-scale features of the image are extracted by combining the deep convolutional neural network, the features of a lane line can be enhanced by a semantic information guided attention mechanism, the structural features of the lane line can be captured by multi-scale deformable convolution, the segmentation accuracy is improved by a decoding network, and the detection of the lane line is completed more accurately.

Owner:SOUTH CHINA UNIV OF TECH

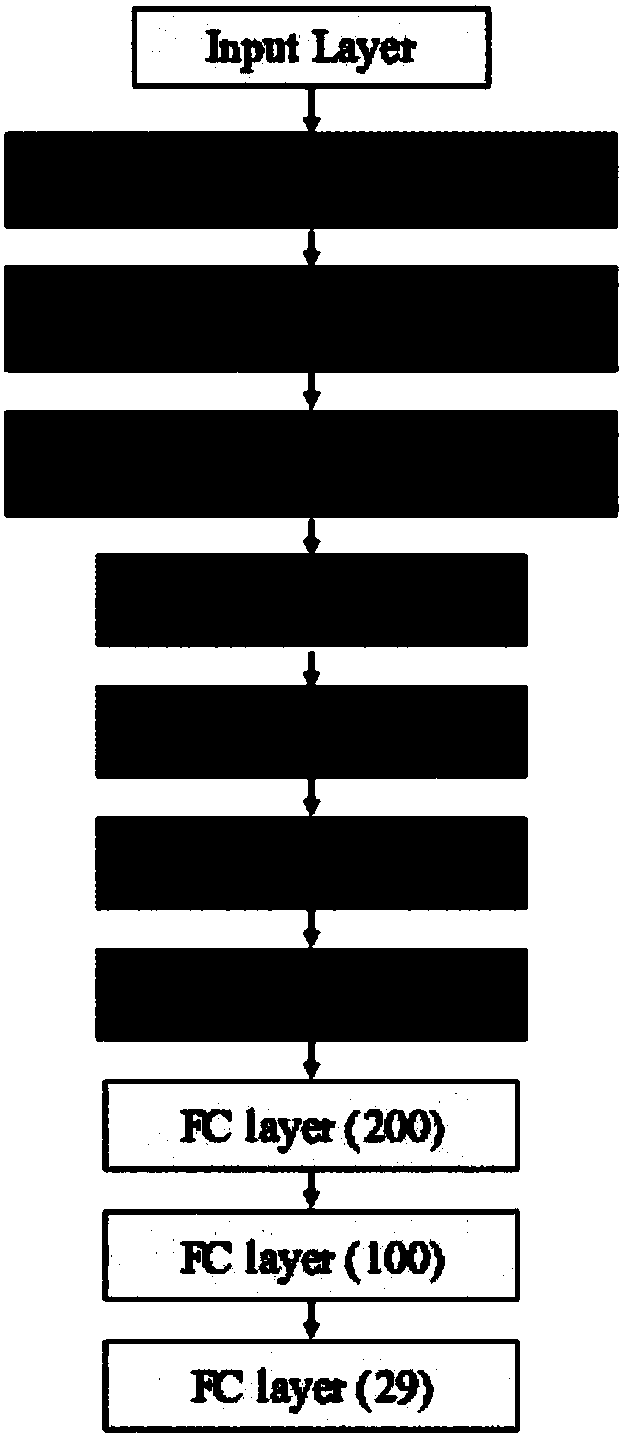

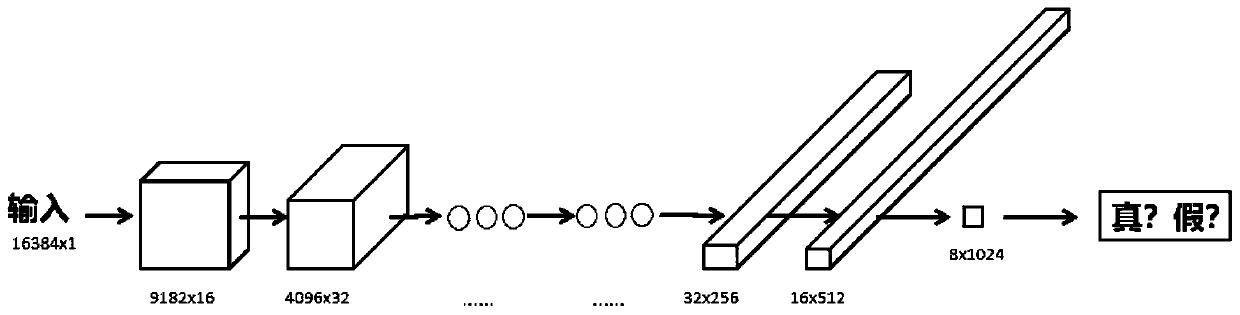

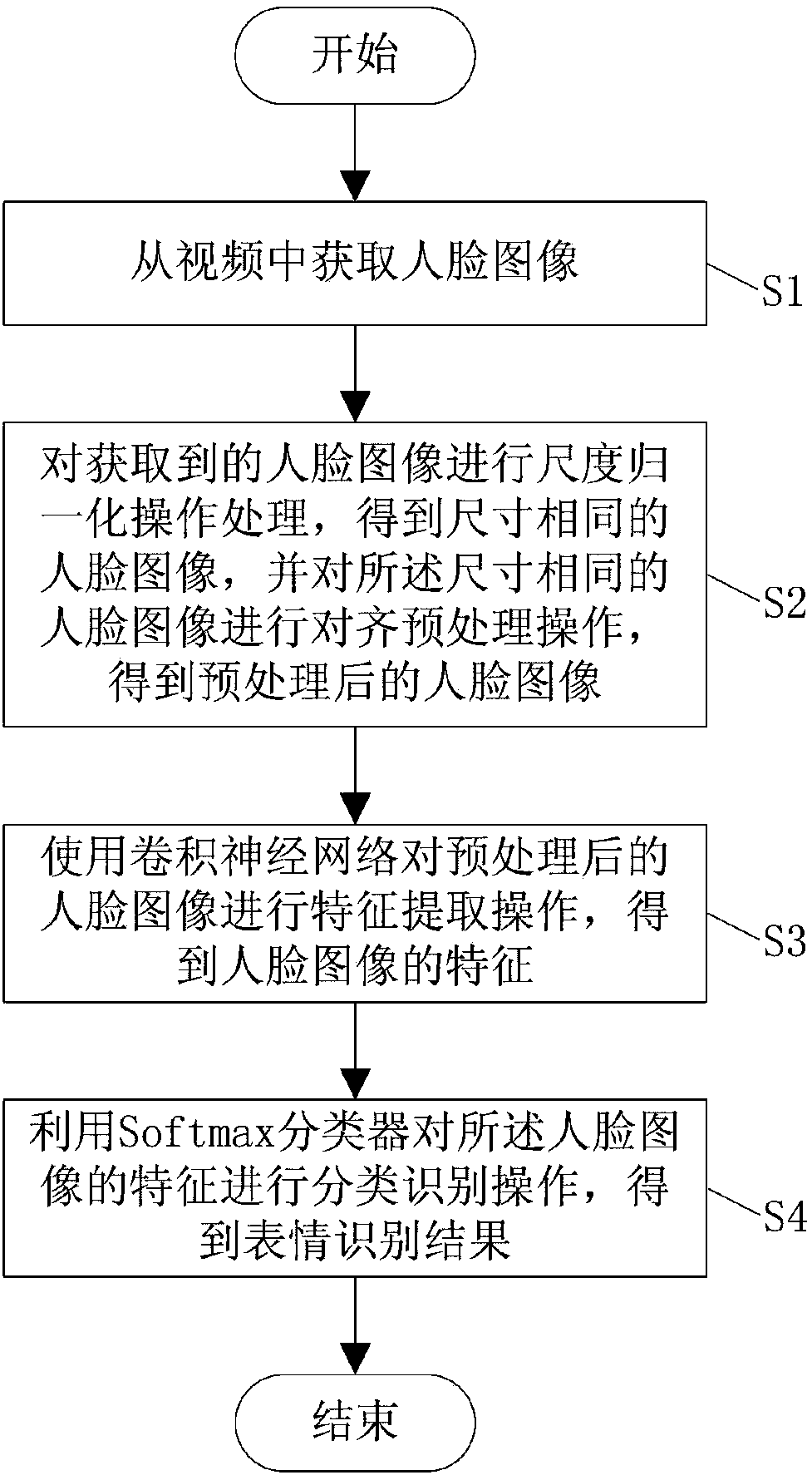

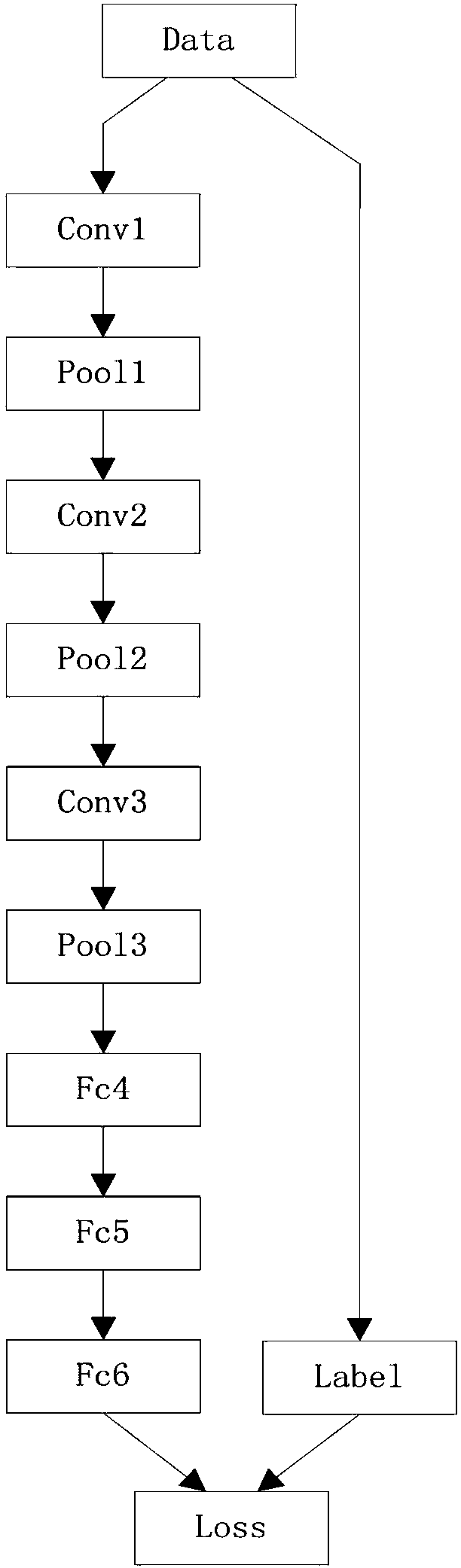

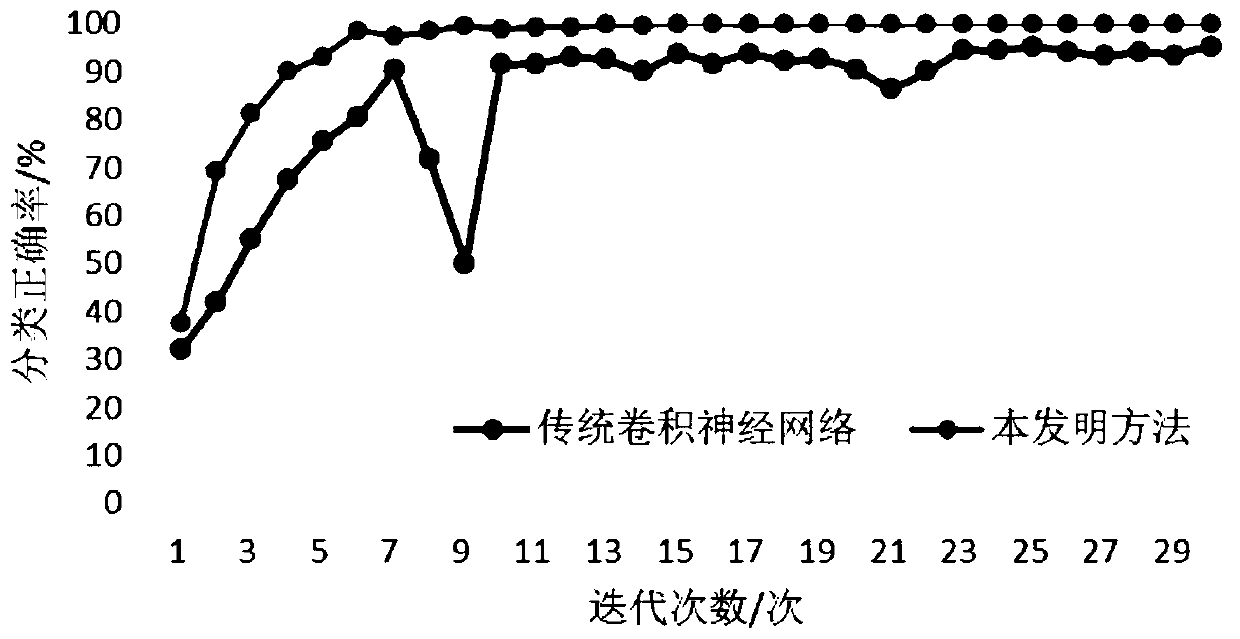

Convolutional neural network-based human face expression identification method

Inactive · CN108256426A · Improve accuracy · Few adjustable parameters · Neural architectures · Acquiring/recognising facial features · Feature extraction · Convolutional neural network

The invention relates to a human face expression identification method, in particular to a convolutional neural network-based human face expression identification method. The method first obtains face images from a video, applies scale normalization so that all face images have the same size, and aligns the normalized images to obtain preprocessed face images. A convolutional neural network then extracts features from the preprocessed face images, and a Softmax classifier classifies those features. A face expression identification algorithm realized with the convolutional neural network is an end-to-end process: the face images only need simple preprocessing before being fed into the network, which extracts features automatically and gives a classification result, so that accuracy is greatly improved, the number of adjustable parameters is reduced, and intermediate processing steps are greatly simplified.

Owner:ANHUI SUN CREATE ELECTRONICS
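
The last stage in the abstract above is a Softmax classifier over the features produced by the network. A minimal, numerically stable softmax in NumPy is sketched below; the logits and the three expression labels are hypothetical values chosen for the example.

```python
import numpy as np


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1 along the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # avoids overflow
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


labels = ["happy", "sad", "surprised"]   # hypothetical expression classes
logits = np.array([2.0, 0.5, 0.1])       # hypothetical network outputs
probabilities = softmax(logits)
predicted = labels[int(np.argmax(probabilities))]
print(predicted)  # happy
```

Subtracting the maximum before exponentiating leaves the result unchanged but keeps the computation safe for large scores.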

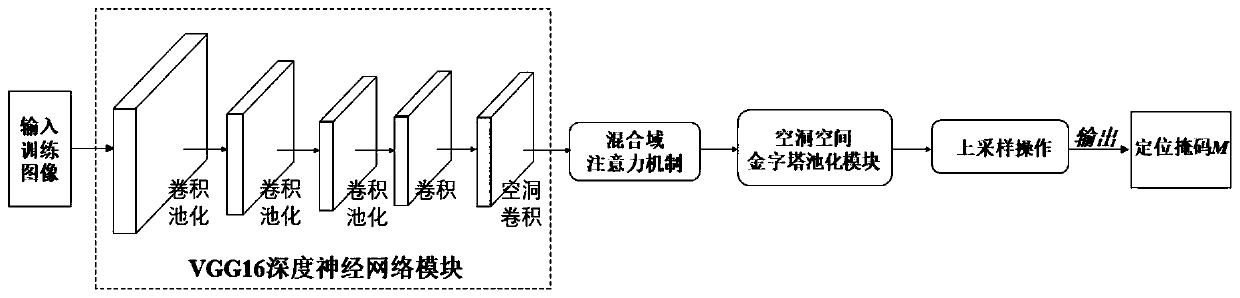

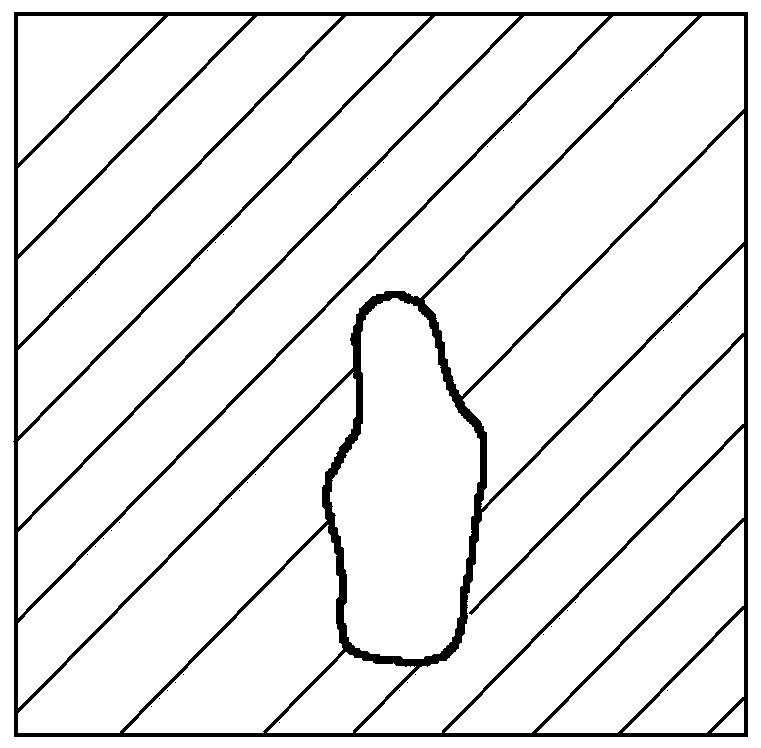

Image stitching tampering detection method

Active · CN111080629A · Take advantage of · Expand the receptive field · Image enhancement · Image analysis · Computer graphics (images) · Imaging analysis

The invention discloses an image stitching tampering detection method and relates to the field of image analysis. The method is based on a mixed-domain attention mechanism and an atrous spatial pyramid pooling module, and comprises the steps of: extracting a deep feature map F of an input image; obtaining a feature map Ffinal of the tampered area with the mixed-domain attention mechanism; obtaining a final localization mask M with the atrous spatial pyramid pooling module; and training the stitching tampering detection method based on the mixed-domain attention mechanism and the atrous spatial pyramid pooling module. The method overcomes two defects of the prior art: stitched-image tampered areas cannot be located accurately because detection rests on a specific hypothesis, and tampered targets with a small area are easily missed.

Owner:HEBEI UNIV OF TECH

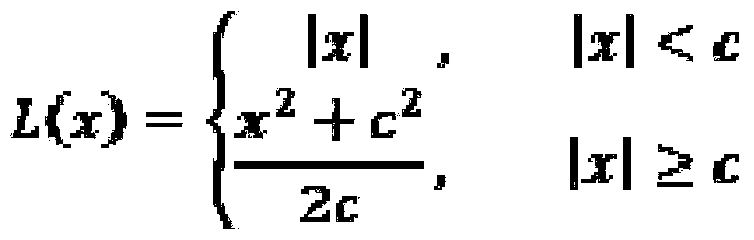

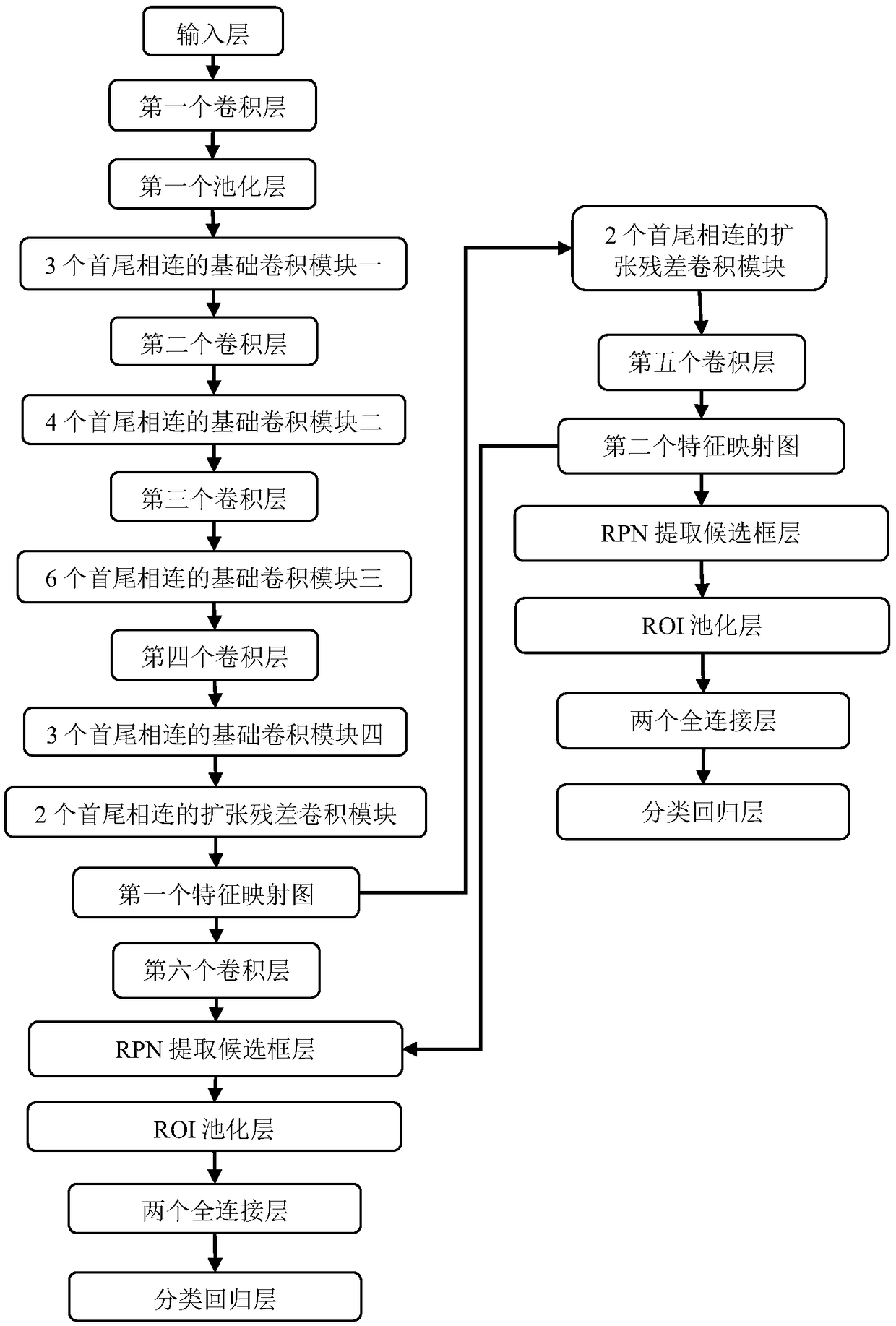

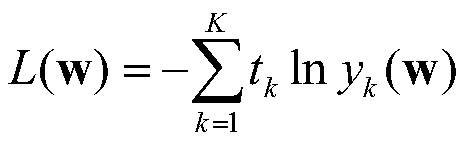

Monocular image depth estimation method based on pyramid pooling module

Active · CN109410261A · Add depth · Expand the receptive field · Image analysis · Neural architectures · Hidden layer · Computation complexity

The invention discloses a monocular image depth estimation method based on a pyramid pooling module. In the training stage, a neural network is first constructed, comprising an input layer, a hidden layer and an output layer. The hidden layer includes a separate first convolution layer, a feature extraction network framework, a scale recovery network framework, a separate second convolution layer, a pyramid pooling module and a separate connection layer. Each original monocular image in the training set is used as an original input image and fed into the neural network for training; the optimal weight vector and optimal bias term of the trained model are obtained by calculating the loss function value between the predicted depth image and the real depth image corresponding to each original monocular image in the training set. In the testing phase, the monocular image to be predicted is input into the neural network model, and the predicted depth image is obtained using the optimal weight vector and the optimal bias term. The advantages are high prediction accuracy and low computational complexity.

Owner:ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY
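
A pyramid pooling module, as named in the abstract above, typically averages a feature map over grids of several sizes, upsamples each pooled map back to the input size, and stacks the results with the original map. The sketch below does this for a single-channel map with nearest-neighbor upsampling; the bin sizes (1, 2, 3, 6) are a common choice and an assumption here, since the abstract does not list them.

```python
import numpy as np


def adaptive_avg_pool(feature, bins):
    """Average-pool a 2-D map of shape (H, W) into a bins x bins grid."""
    h, w = feature.shape
    pooled = np.empty((bins, bins))
    for i in range(bins):
        r0, r1 = (i * h) // bins, -(-((i + 1) * h) // bins)  # floor, ceil
        for j in range(bins):
            c0, c1 = (j * w) // bins, -(-((j + 1) * w) // bins)
            pooled[i, j] = feature[r0:r1, c0:c1].mean()
    return pooled


def pyramid_pool(feature, bin_sizes=(1, 2, 3, 6)):
    """Stack the input with its pooled-and-upsampled versions: (1 + levels, H, W)."""
    h, w = feature.shape
    levels = [feature.astype(float)]
    for bins in bin_sizes:
        pooled = adaptive_avg_pool(feature, bins)
        rows = (np.arange(h) * bins) // h   # nearest-neighbor upsampling indices
        cols = (np.arange(w) * bins) // w
        levels.append(pooled[np.ix_(rows, cols)])
    return np.stack(levels)


feature = np.arange(36, dtype=float).reshape(6, 6)
stacked = pyramid_pool(feature)
print(stacked.shape)  # (5, 6, 6)
```

The coarsest level (one bin) carries the global average to every position, which is how the module injects whole-image context into each pixel's features.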

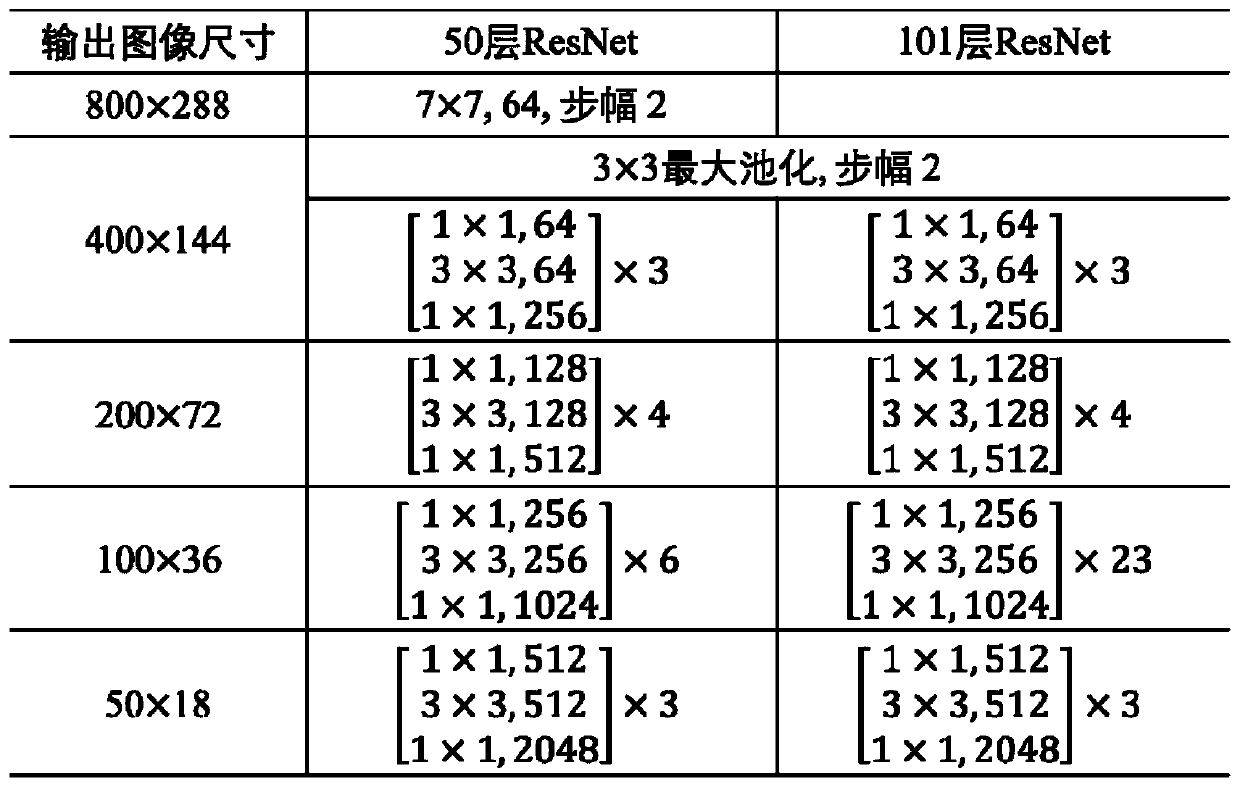

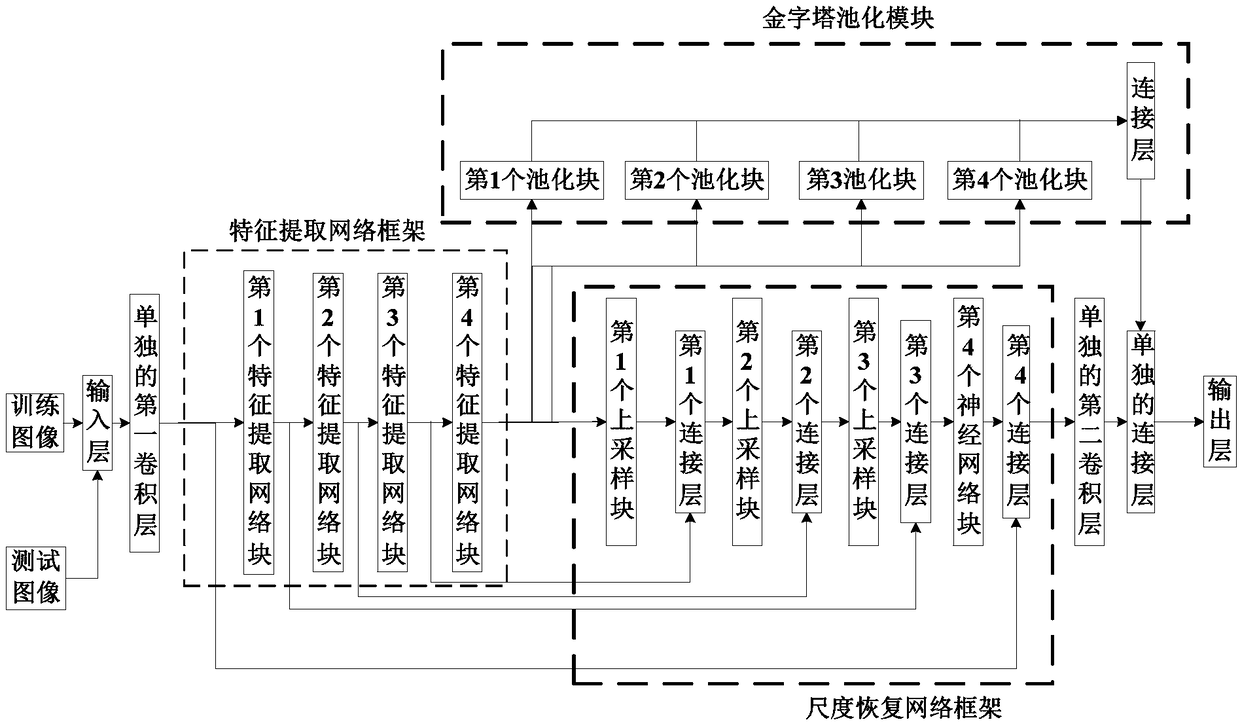

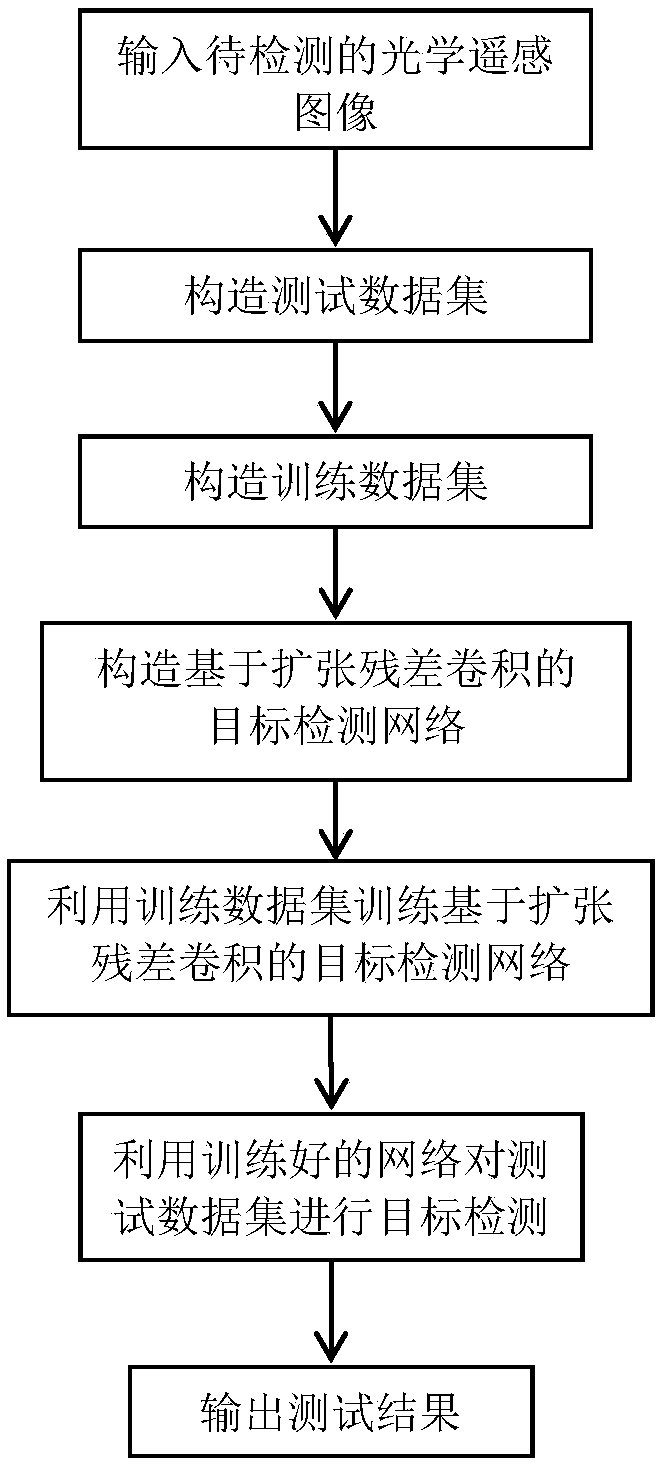

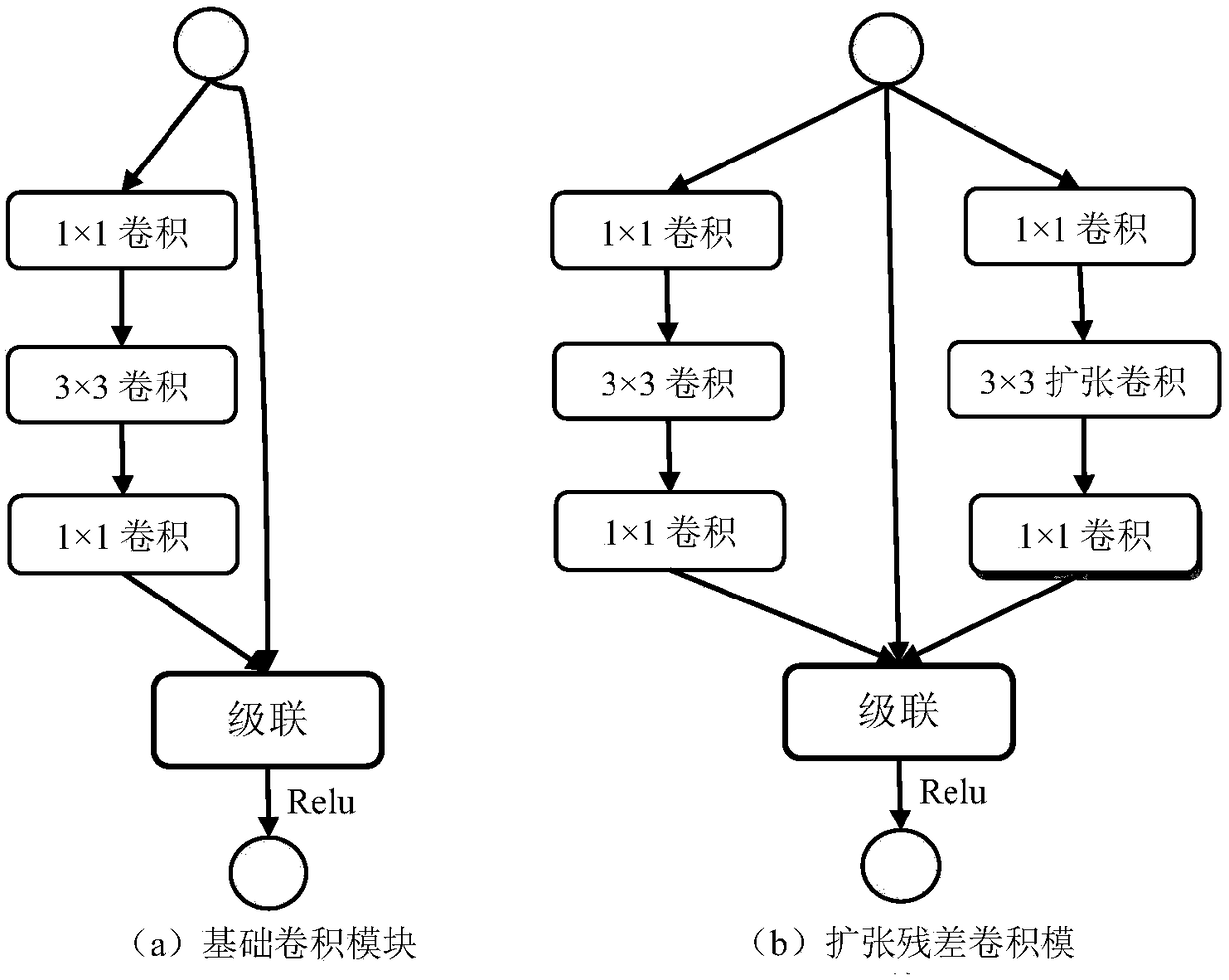

Optical remote sensing image target detection method based on extended residual convolution

Active · CN109271856A · Expand the receptive field · Improve detection accuracy · Scene recognition · Neural architectures · Data set · Small target

The invention discloses a deep convolutional network method for optical remote sensing image target detection based on dilated ("extended") residual convolution, which solves the prior-art problems of low detection accuracy and a high false alarm rate for aircraft and ships in optical remote sensing images. The implementation steps are as follows: constructing a test data set; constructing a training data set; constructing a target detection network based on dilated residual convolution, which expands the receptive field of the features; training that network with the training data set; and using the trained network to detect targets in the test data set and output the test results. By using a dilated residual convolution module and feature fusion, the network constructed by the invention is better suited to target detection in optical remote sensing images: it improves the accuracy on common targets and markedly improves the accuracy of small target detection. The method is used for object detection in optical remote sensing images.

Owner:XIDIAN UNIV

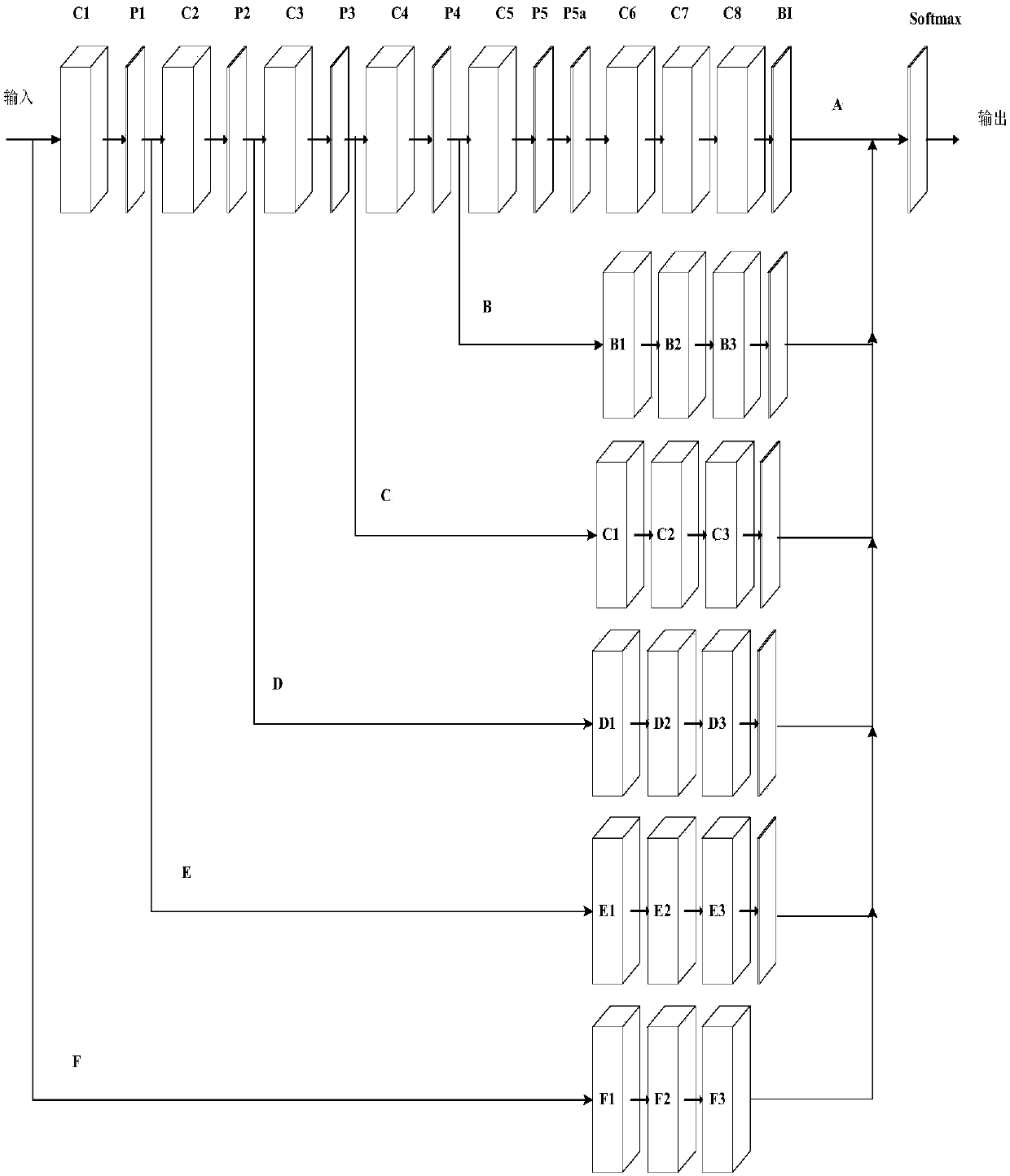

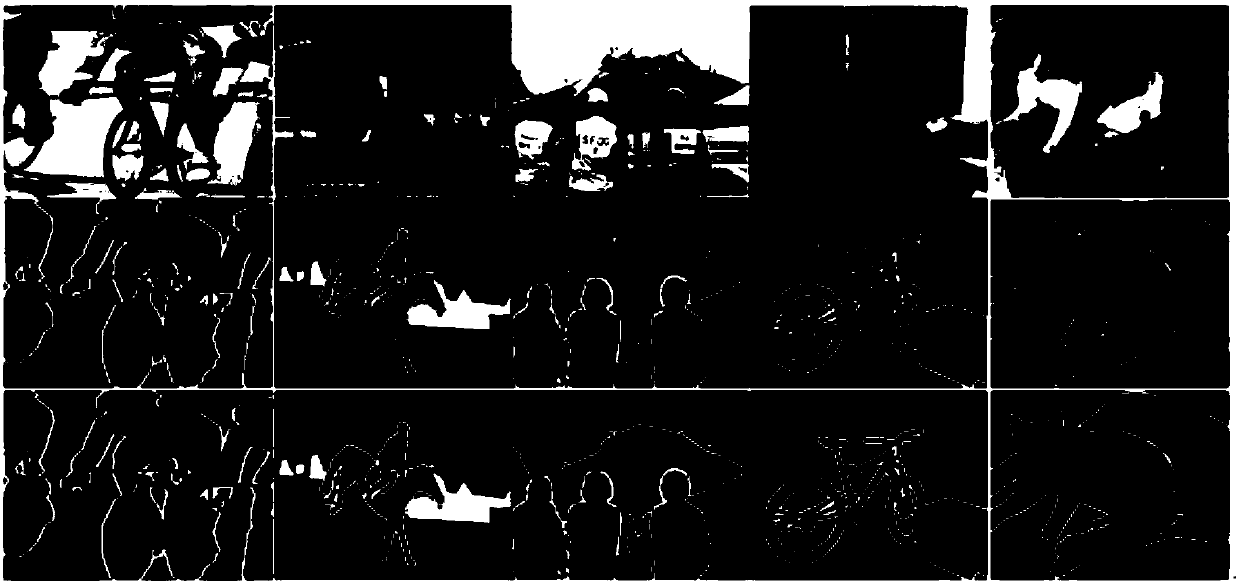

Semantic image segmentation method based on multichannel convolutional neural network

Inactive · CN107657257A · Expand the receptive field · Rich global information · Character and pattern recognition · Neural architectures · Receptive field · Test phase

The invention provides a semantic image segmentation method based on a multichannel convolutional neural network. The proposed network model comprises 6 channels; shallow and deep features are fused by adding the outputs of the channels, and compared with a single-channel network this structure improves semantic image segmentation performance. The whole network model enlarges the receptive field by incorporating the à trous algorithm, so that the captured global information is richer. In the test phase, the segmentation result is refined by a fully connected conditional random field, which further improves the segmentation performance.

Owner:CHINA UNIV OF MINING & TECH

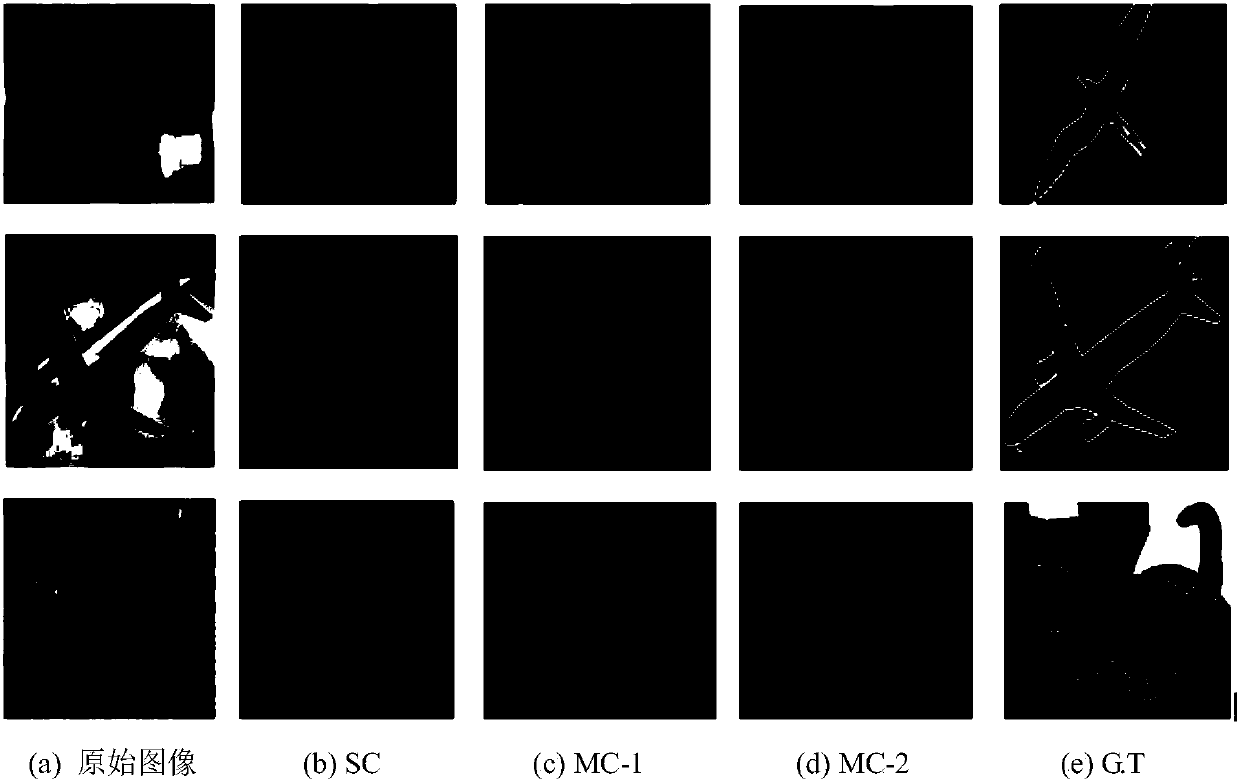

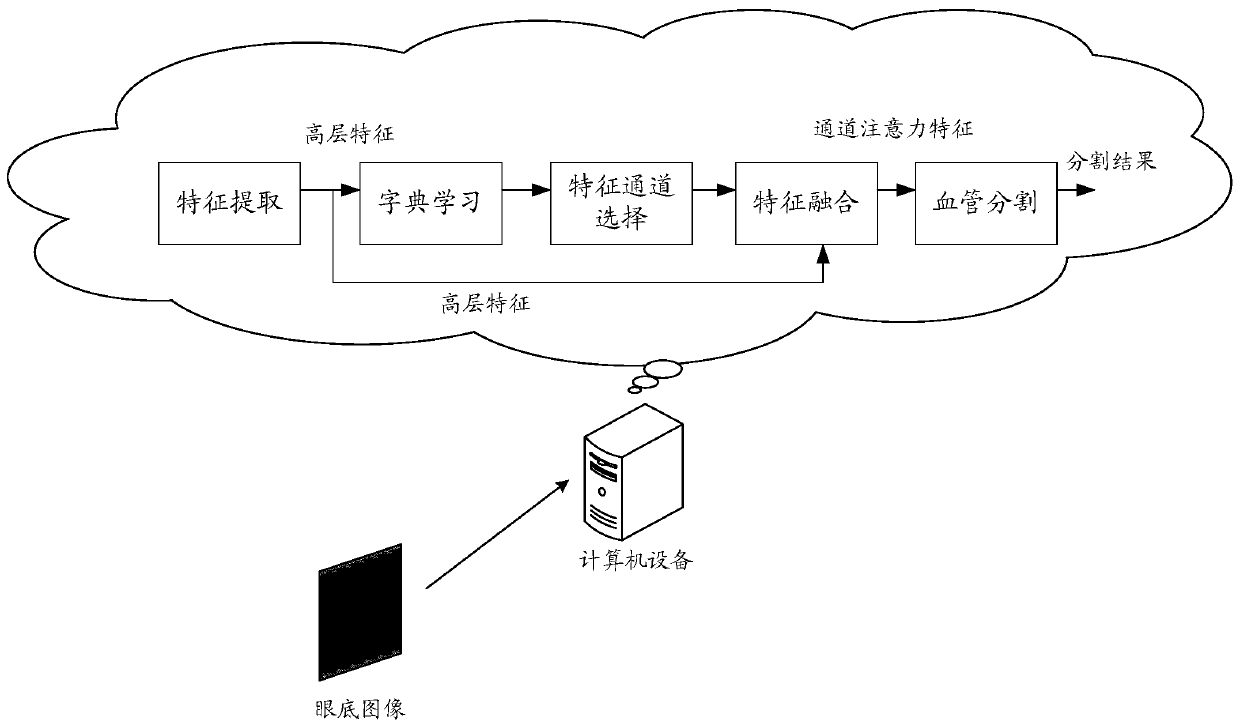

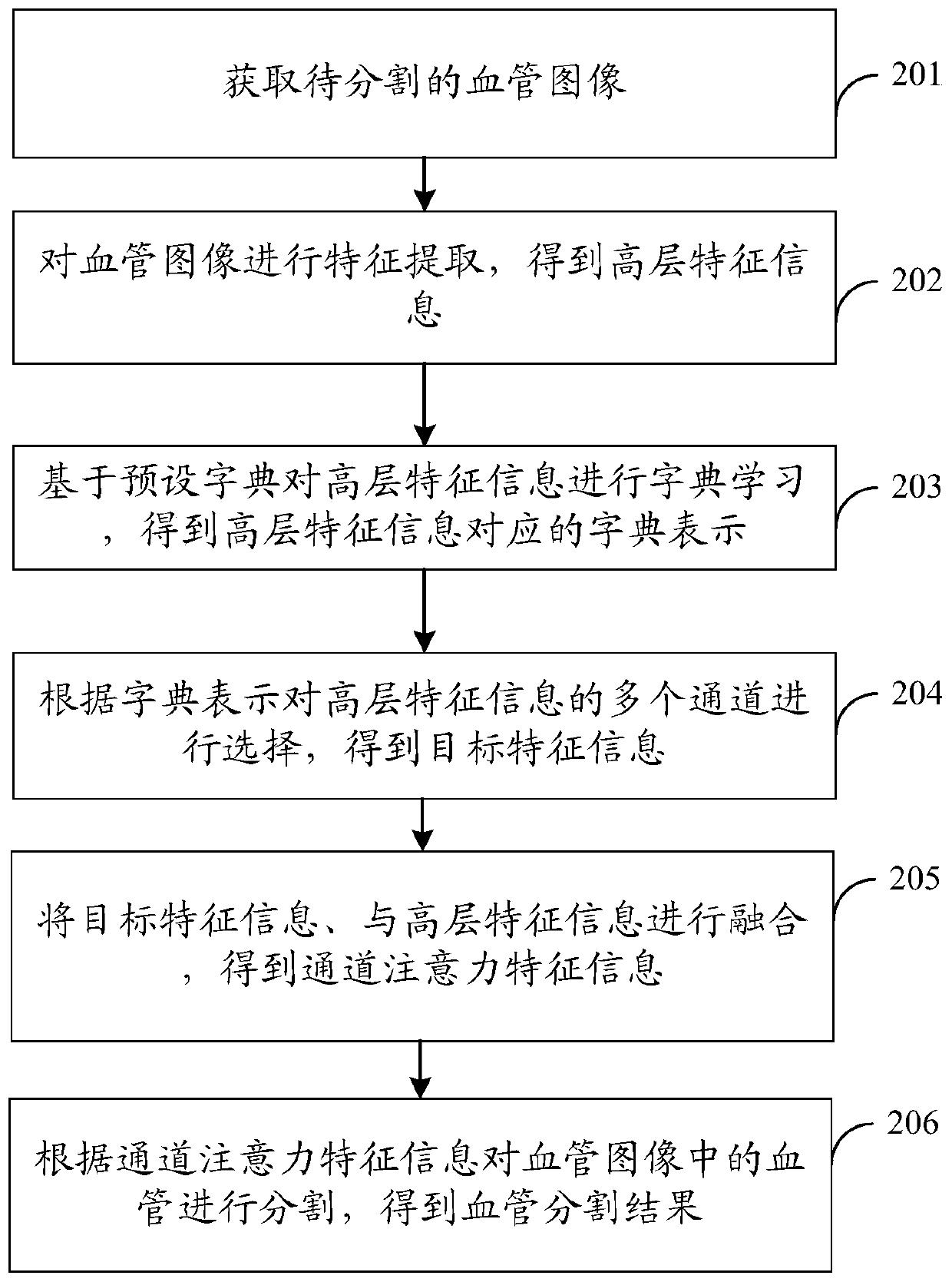

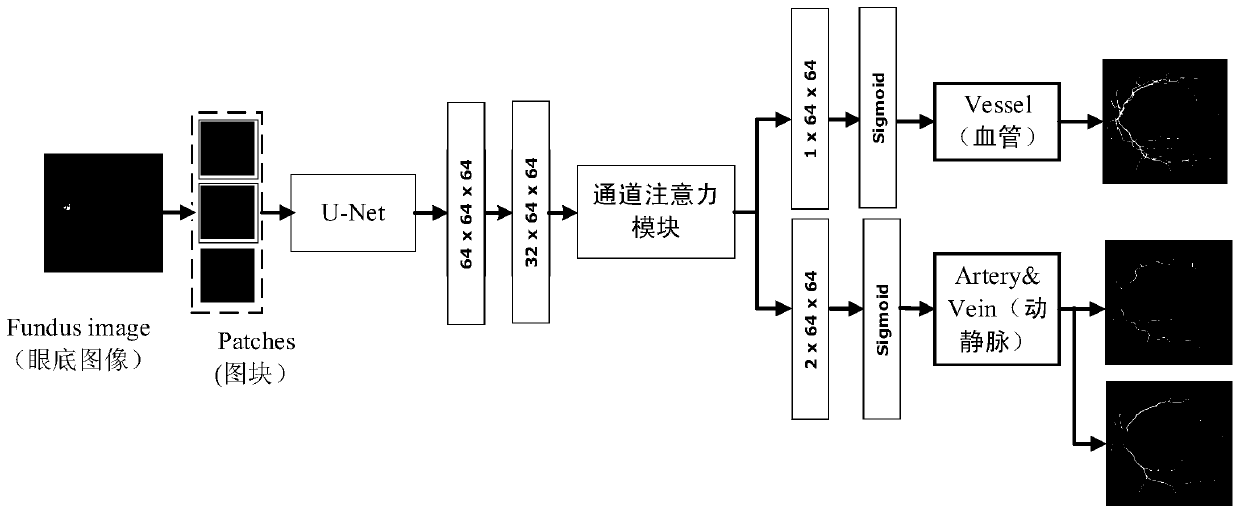

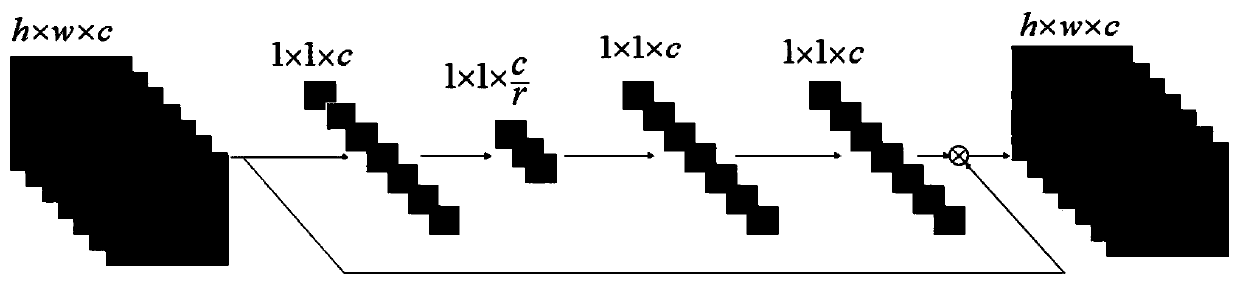

Blood vessel and fundus image segmentation method, device and equipment and readable storage medium

Pending · CN110443813A · Improve Segmentation Accuracy · Avoid loss · Image enhancement · Image analysis · Dictionary learning · Feature extraction

The embodiment of the invention discloses a blood vessel and fundus image segmentation method, device and equipment and a readable storage medium, and relates to the computer vision technology of artificial intelligence. Specifically, the method comprises the steps of: acquiring a blood vessel image to be segmented, such as a fundus image; performing feature extraction on the blood vessel image to obtain high-level feature information; performing dictionary learning on the high-level feature information based on a preset dictionary to obtain the dictionary representation corresponding to the high-level feature information; selecting a plurality of channels of the high-level feature information according to the dictionary representation to obtain target feature information; fusing the target feature information with the high-level feature information to obtain channel attention feature information; and segmenting the blood vessels in the blood vessel image according to the channel attention feature information to obtain a blood vessel segmentation result. The scheme avoids the loss of global feature information of the blood vessel image, such as the fundus image, and greatly improves the segmentation accuracy.

Owner:腾讯医疗健康(深圳)有限公司

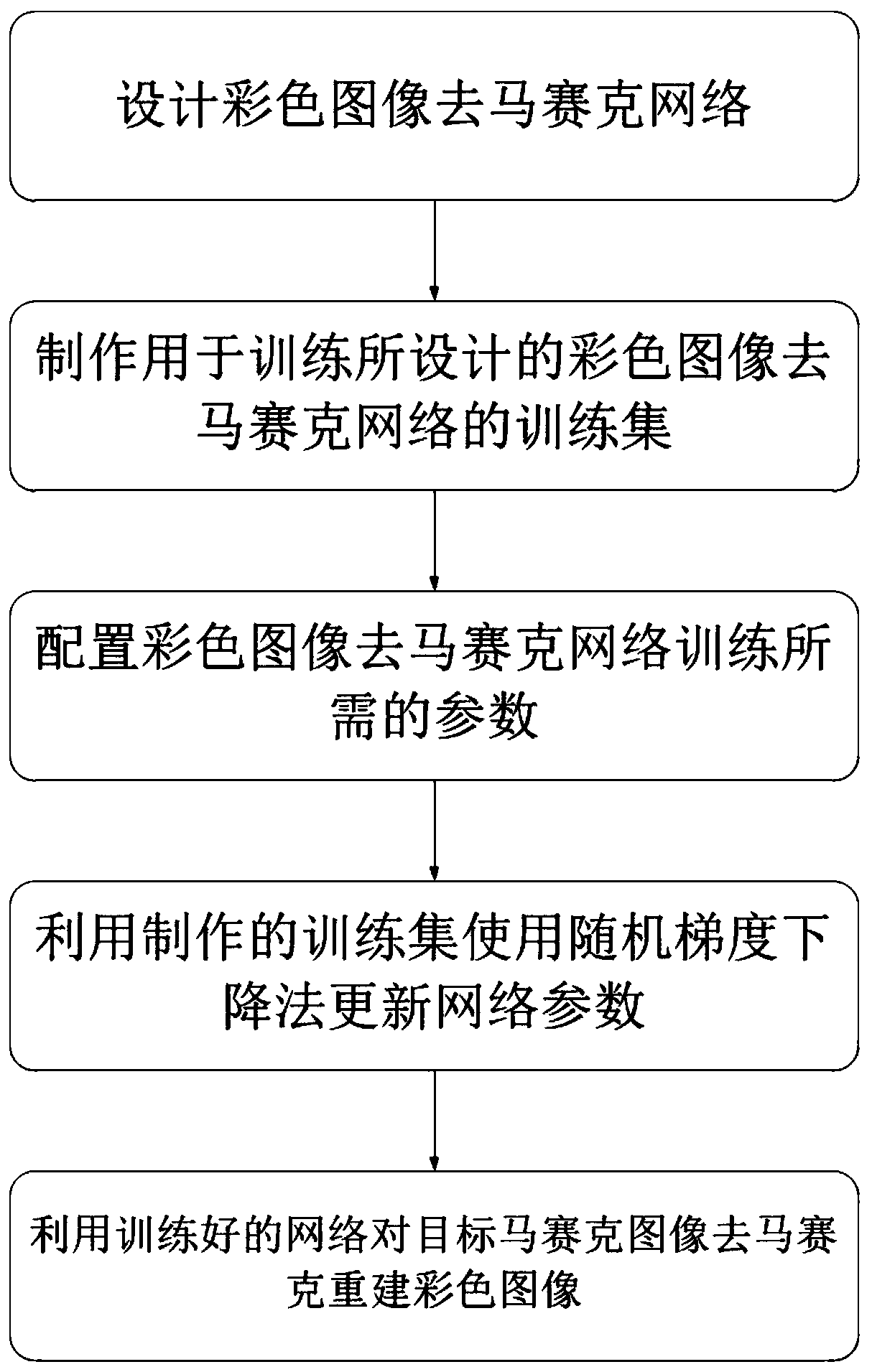

High-quality color image demosaicing method based on convolutional neural network

Active · CN110009590A · Avoid inaccuracies · Quality improvement · Image enhancement · Image analysis · Color image · Network model

The invention discloses a high-quality color image demosaicing method based on a convolutional neural network, and belongs to the field of image signal processing. In the color image demosaicing process, the method makes full use of the self-similarity and redundant information of the image without greatly increasing the amount of computation. A color image demosaicing network is designed to represent the end-to-end mapping from the mosaic image to the color image; a training set for the designed network is prepared; the parameters required for training the network are configured; the network parameters are updated by stochastic gradient descent on the prepared training set; and a target color image of any resolution is reconstructed directly with the trained network model to obtain a high-quality color image. The method offers high reconstruction efficiency and high reconstructed color image quality, and it can be used for image demosaicing in all cameras based on the Bayer color filter array.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
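
The demosaicing abstract above describes a network that maps a mosaic image to a color image. As a rough illustration of what the network's input looks like, the sketch below samples an RGB image through a Bayer color filter array. The RGGB layout, the function name `bayer_mosaic`, and the nested-list image format are assumptions made for this example; the abstract does not specify them.

```python
def bayer_mosaic(rgb):
    """Keep one color value per pixel, following an assumed RGGB Bayer layout.

    rgb: H x W nested list of (r, g, b) tuples.
    Returns an H x W nested list of single sensor values.
    """
    mosaic = []
    for y, row in enumerate(rgb):
        out_row = []
        for x, (r, g, b) in enumerate(row):
            if y % 2 == 0 and x % 2 == 0:
                out_row.append(r)  # red site: even row, even column
            elif y % 2 == 1 and x % 2 == 1:
                out_row.append(b)  # blue site: odd row, odd column
            else:
                out_row.append(g)  # green sites: the two remaining positions
        mosaic.append(out_row)
    return mosaic


# A tiny 2 x 2 image covering exactly one RGGB tile.
image = [[(10, 20, 30), (11, 21, 31)],
         [(12, 22, 32), (13, 23, 33)]]
mosaic = bayer_mosaic(image)
print(mosaic)
```

Two thirds of the color values are discarded by this sampling, which is the information a demosaicing method has to reconstruct.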

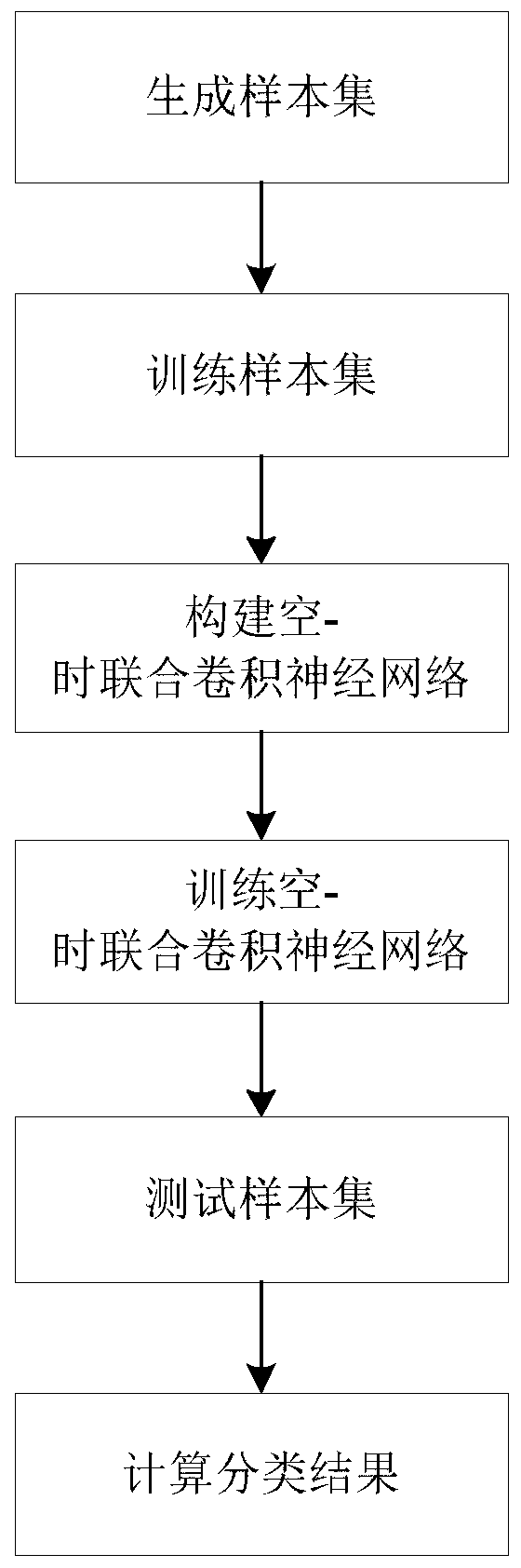

SAR sequence image classification method based on space-time joint convolution

Active · CN110781830A · Improve effectiveness · Overcome the problem of destroying the time information of sequence images · Scene recognition · Neural architectures · Time information · Goal recognition

The invention discloses an SAR (Synthetic Aperture Radar) sequence image classification method based on space-time joint convolution, which mainly solves the problems of insufficient use of time information and low classification accuracy that arise because existing SAR target recognition technology uses only single-image features. The method comprises the following steps: 1) generating a sample set, and generating a training sequence sample set and a test sequence sample set from the sample set; 2) constructing a space-time joint convolutional neural network; 3) training the space-time joint convolutional neural network by using the training sequence sample set to obtain a trained space-time joint convolutional neural network; and 4) inputting the test sequence sample set into the trained space-time joint convolutional neural network to obtain a classification result. According to the method, the space-time joint convolutional neural network is used to extract the change characteristics of the time dimension and the space dimension of the SAR sequence image, and the accuracy of SAR target classification and recognition is improved. The method can be used for automatic target identification based on SAR sequence images.

Owner:XIDIAN UNIV

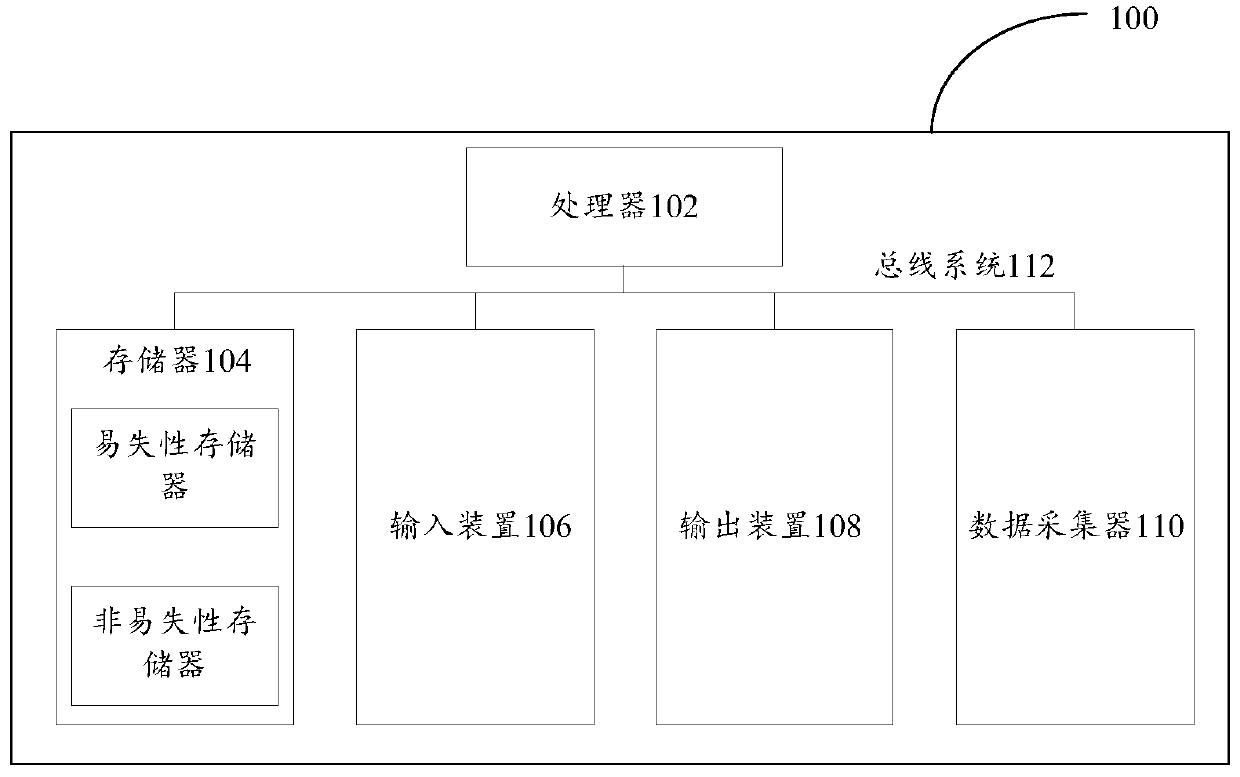

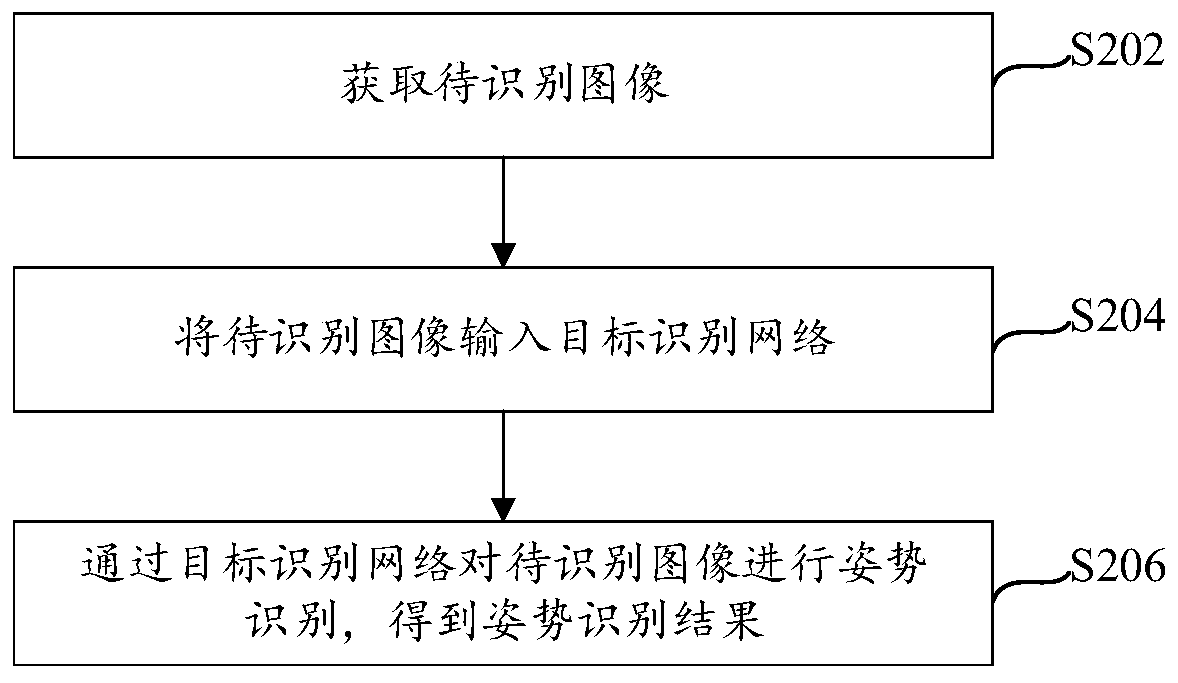

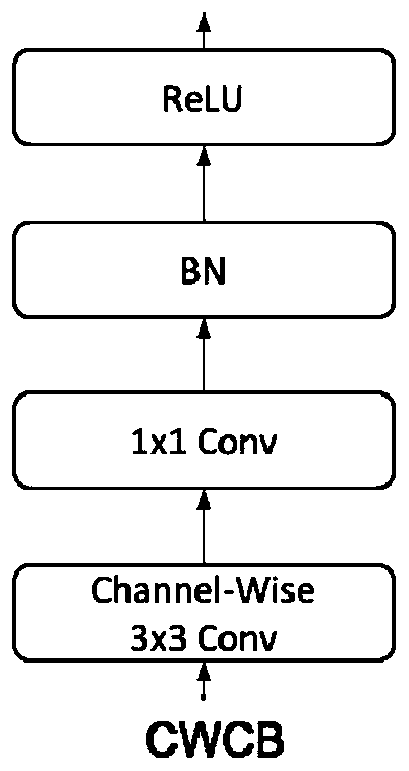

Image processing method and device and processing equipment

Active · CN109740534A · Reduce the amount of parameters · Expand the receptive field · Character and pattern recognition · Neural architectures · Pattern recognition · Imaging processing

The invention provides an image processing method and device and processing equipment, and relates to the technical field of image recognition. The method comprises the steps of: obtaining a to-be-recognized image; inputting the to-be-recognized image into a target recognition network, wherein the target recognition network comprises a plurality of convolution calculation layers and a plurality of residual calculation layers which are connected in sequence, the convolution calculation layer comprises a convolution block, the residual calculation layer comprises a residual block, the residual block comprises at least two convolution blocks which are sequentially connected, and the convolution block comprises at least one channel-invariant convolution layer; when the channel-invariant convolution layer processes the input feature map, each channel of the input feature map is independently subjected to convolution transformation to obtain one channel of the output feature map; and performing posture recognition on the to-be-recognized image through the target recognition network to obtain a posture recognition result, the posture recognition result comprising the position and the mode of the target contained in the to-be-recognized image. According to the embodiment of the invention, the calculation amount can be reduced, the receptive field is increased, and the position and mode are accurately determined.

Owner:BEIJING KUANGSHI TECH
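
The abstract above describes a convolution layer in which each input channel is convolved independently to produce one output channel. This matches what is commonly called a depthwise convolution. The sketch below is an illustration under that assumption, not the patent's implementation: the function names, the nested-list data layout, valid padding, and stride 1 are all choices made for the example.

```python
def depthwise_conv2d(feature_map, kernels):
    """Apply one k x k kernel per channel (valid padding, stride 1).

    feature_map: list of C channels, each an H x W nested list.
    kernels: list of C kernels, each a k x k nested list.
    As in most deep learning code, the kernel is not flipped
    (strictly speaking this is cross-correlation).
    """
    output = []
    for channel, kernel in zip(feature_map, kernels):
        k = len(kernel)
        h, w = len(channel), len(channel[0])
        out = [[sum(channel[y + i][x + j] * kernel[i][j]
                    for i in range(k) for j in range(k))
                for x in range(w - k + 1)]
               for y in range(h - k + 1)]
        output.append(out)  # one output channel per input channel
    return output


def conv_weight_counts(channels_in, channels_out, k):
    """Weights (without biases) of a standard vs. a per-channel convolution."""
    standard = channels_in * channels_out * k * k
    depthwise = channels_in * k * k
    return standard, depthwise


features = [
    [[1, 2, 3], [4, 5, 6], [7, 8, 9]],  # channel 0
    [[1, 1, 1], [1, 1, 1], [1, 1, 1]],  # channel 1
]
kernels = [
    [[1, 1], [1, 1]],  # sums each 2 x 2 window of channel 0
    [[1, 0], [0, 0]],  # picks the top-left value of each window of channel 1
]
result = depthwise_conv2d(features, kernels)
print(result)
print(conv_weight_counts(64, 64, 3))
```

For 64 input and 64 output channels with 3 x 3 kernels, the per-channel layer needs 576 weights against 36864 for a standard convolution, which is consistent with the reduced calculation amount the abstract claims.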

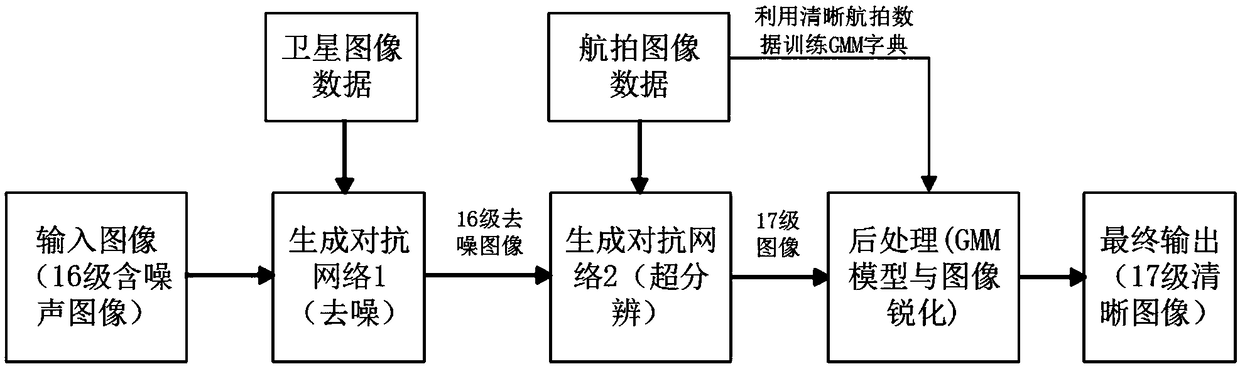

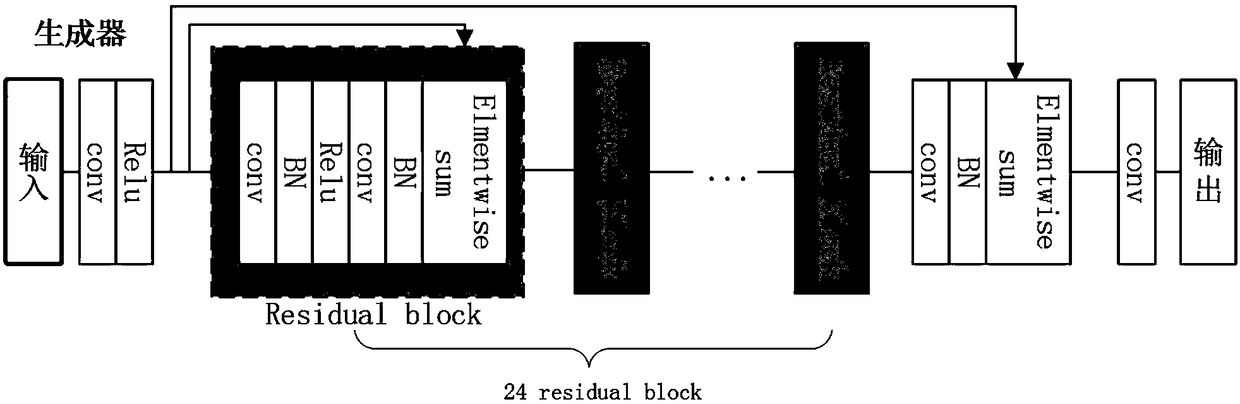

A satellite image super-resolution method based on adversarial network and aerial image a priori

Active · CN109035142A · High resolution · Improve visualization · Geometric image transformation · Imaging quality · Satellite image

The invention discloses a satellite image super-resolution method combining an adversarial network with an aerial image a priori. Firstly, image pairs composed of a 16-level noisy image and the corresponding 16-level non-noisy image are used for training a denoising model, and then the image super-resolution model is trained by using clear aerial data. Because no paired satellite and aerial images are available, clear aerial images are used to construct an external prior dictionary based on a GMM model in the post-processing of the generated super-resolution images, which then guides the reconstruction of the internal unclear satellite images. In order to further improve the image quality, a Gaussian filter is used to sharpen the image after reconstruction. Finally, the high-resolution image of the original satellite image is obtained, with improved visual quality relative to the original satellite image. The effectiveness of the scheme is also shown by experiment. It provides an effective way to solve the problem of satellite image super-resolution and image quality improvement under real-world constraints.

Owner:XI AN JIAOTONG UNIV +1

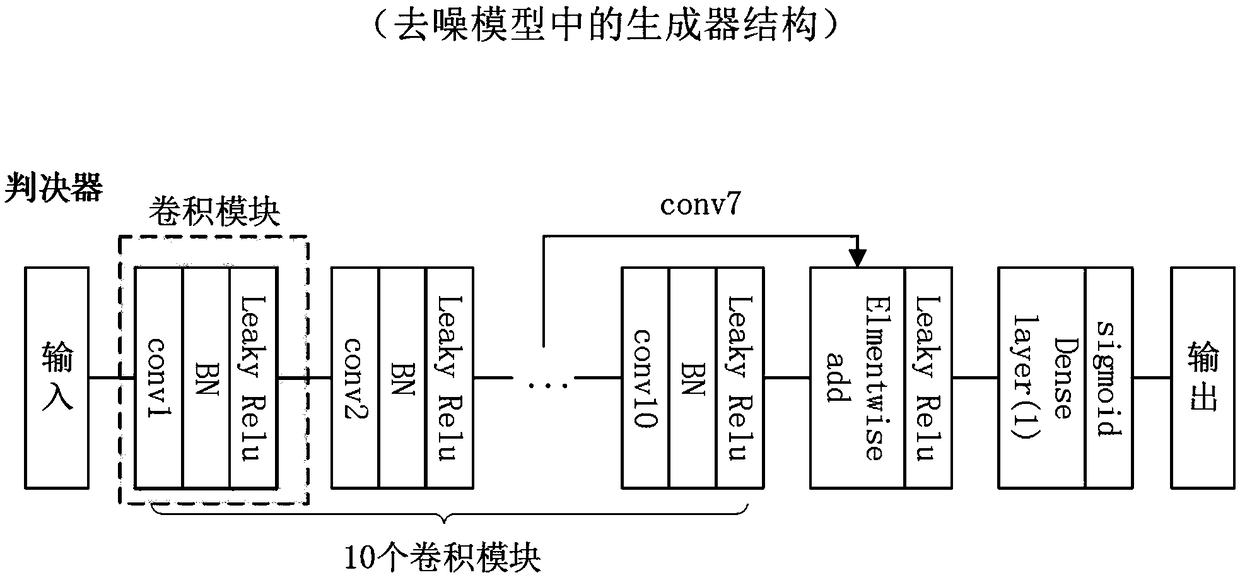

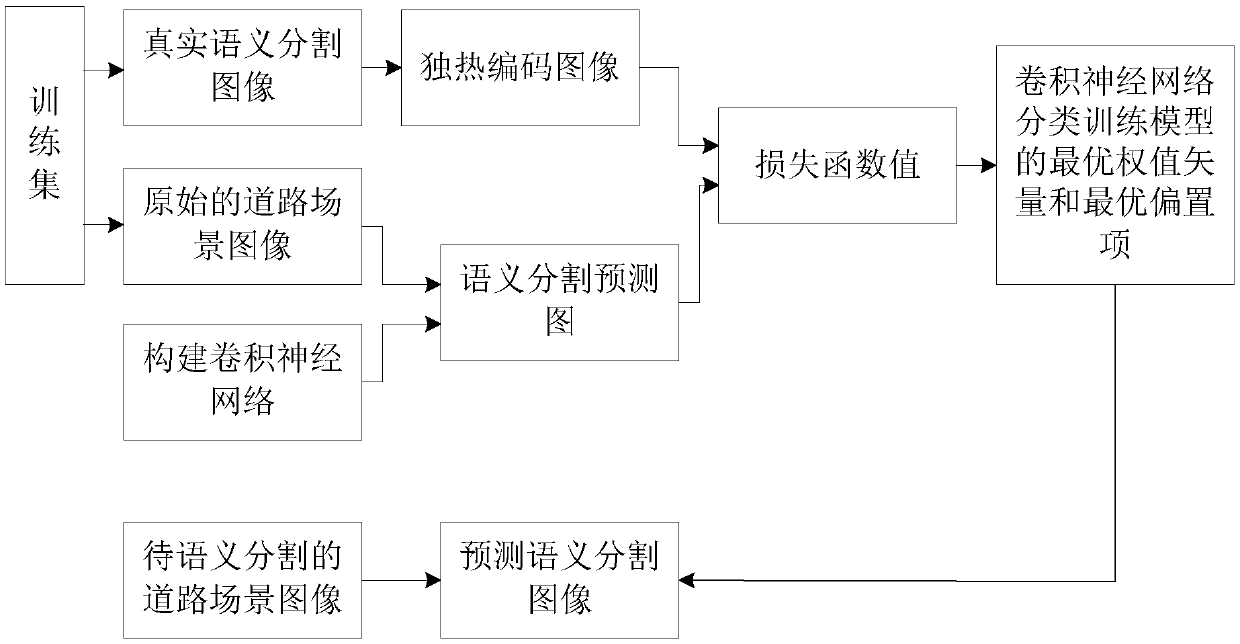

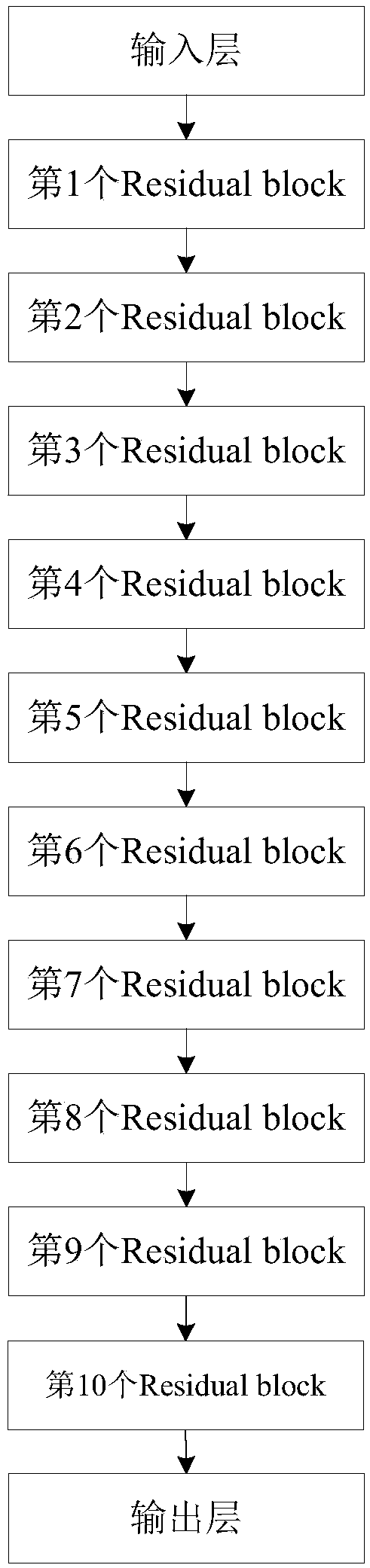

Road scene segmentation method based on residual network and expanded convolution

Inactive · CN109635642A · Improve the extraction effect · Fully absorbing structural efficiency · Character and pattern recognition · Hidden layer · Computation complexity

The invention discloses a road scene segmentation method based on a residual network and expanded convolution. In the training stage, a convolutional neural network is constructed whose hidden layer is composed of ten Respondial blocks arranged in sequence; each original road scene image in the training set is input into the convolutional neural network for training to obtain 12 semantic segmentation prediction images corresponding to each original road scene image; and a loss function value is calculated between the set formed by the 12 semantic segmentation prediction images corresponding to each original road scene image and the set formed by the 12 one-hot encoded images obtained from the corresponding real semantic segmentation image, to obtain an optimal weight vector of the convolutional neural network classification training model. In the test stage, prediction is carried out by using the optimal weight vector of the convolutional neural network classification training model, and a predicted semantic segmentation image corresponding to the road scene image to be segmented is obtained. The method has the advantages of low computational complexity, high segmentation efficiency, high segmentation precision and good robustness.

Owner:ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY
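
The road scene abstract above compares 12 prediction images against 12 images derived from the real segmentation image, one per class. A minimal sketch of that encoding step follows, assuming the ground truth is stored as a map of integer class indices from 0 to 11. The function name `one_hot_maps` and the nested-list format are hypothetical, and the loss function itself is left out because the abstract does not name it.

```python
def one_hot_maps(label_map, num_classes):
    """Split an H x W map of class indices into num_classes binary maps.

    Map c holds 1 where the pixel belongs to class c and 0 elsewhere.
    """
    return [[[1 if label == c else 0 for label in row] for row in label_map]
            for c in range(num_classes)]


# A 2 x 2 ground-truth map using three of the 12 classes.
labels = [[0, 3],
          [11, 3]]
maps = one_hot_maps(labels, 12)
print(maps[3])
```

Every pixel is set in exactly one of the 12 maps, so the stack can be compared channel by channel with the network's 12 prediction images.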

Model training method and device, frame image generation method and device, frame insertion method and device, equipment and medium

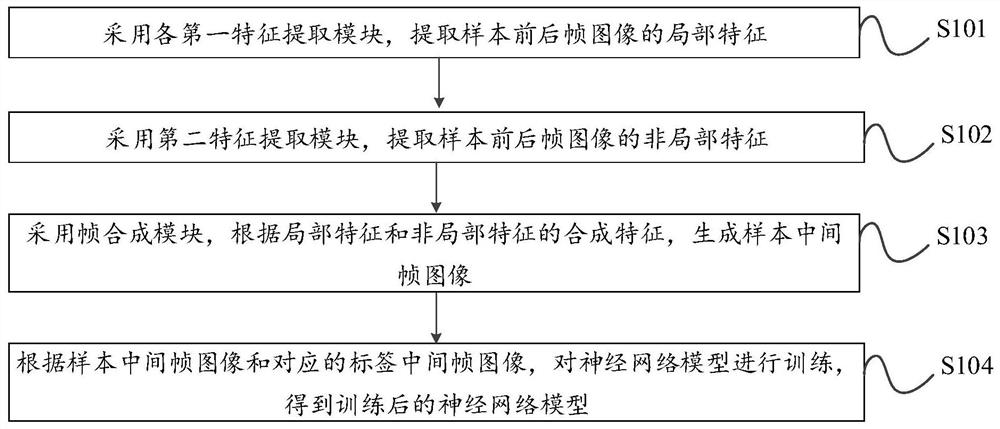

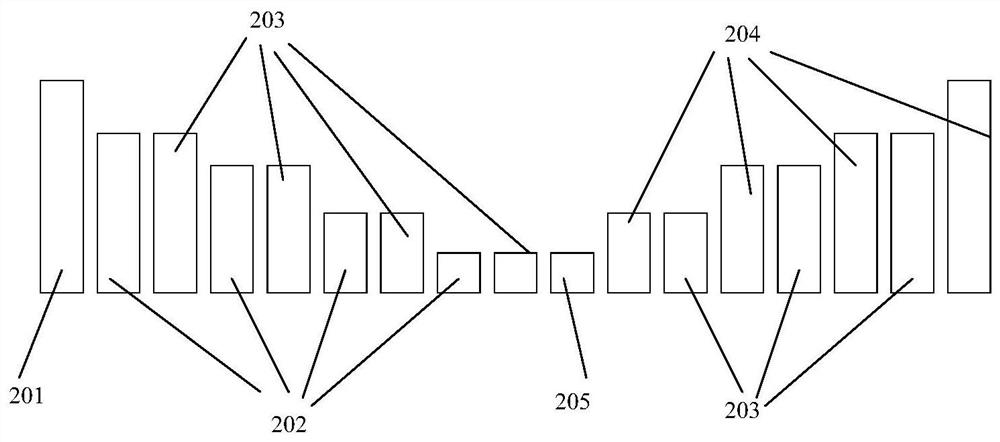

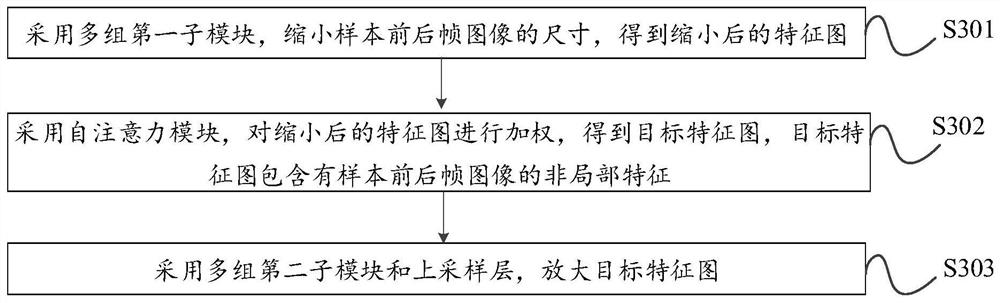

Pending · CN111898701A · Expand the receptive field · Improve learning effect · Character and pattern recognition · Neural architectures · Feature extraction · Engineering

The invention provides a model training method and device, a frame image generation method and device, a frame insertion method and device, equipment and a medium, and relates to the technical field of model training. The method is applied to a neural network model: a first feature extraction module is adopted to extract local features of the front and back frame images of a sample; a second feature extraction module is adopted to extract non-local features of the front and back frame images of the sample; a frame synthesis module is adopted to generate a sample intermediate frame image according to the synthesized features of the local features and the non-local features; and the neural network model is trained according to the sample intermediate frame image and the corresponding label intermediate frame image to obtain a trained neural network model. In the neural network model trained in this way, the receptive field is expanded and the ability to learn large changes between the front and back frame images is enhanced, so that when the front and back frame images are processed by the trained neural network model, the generated intermediate frame image is more accurate.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

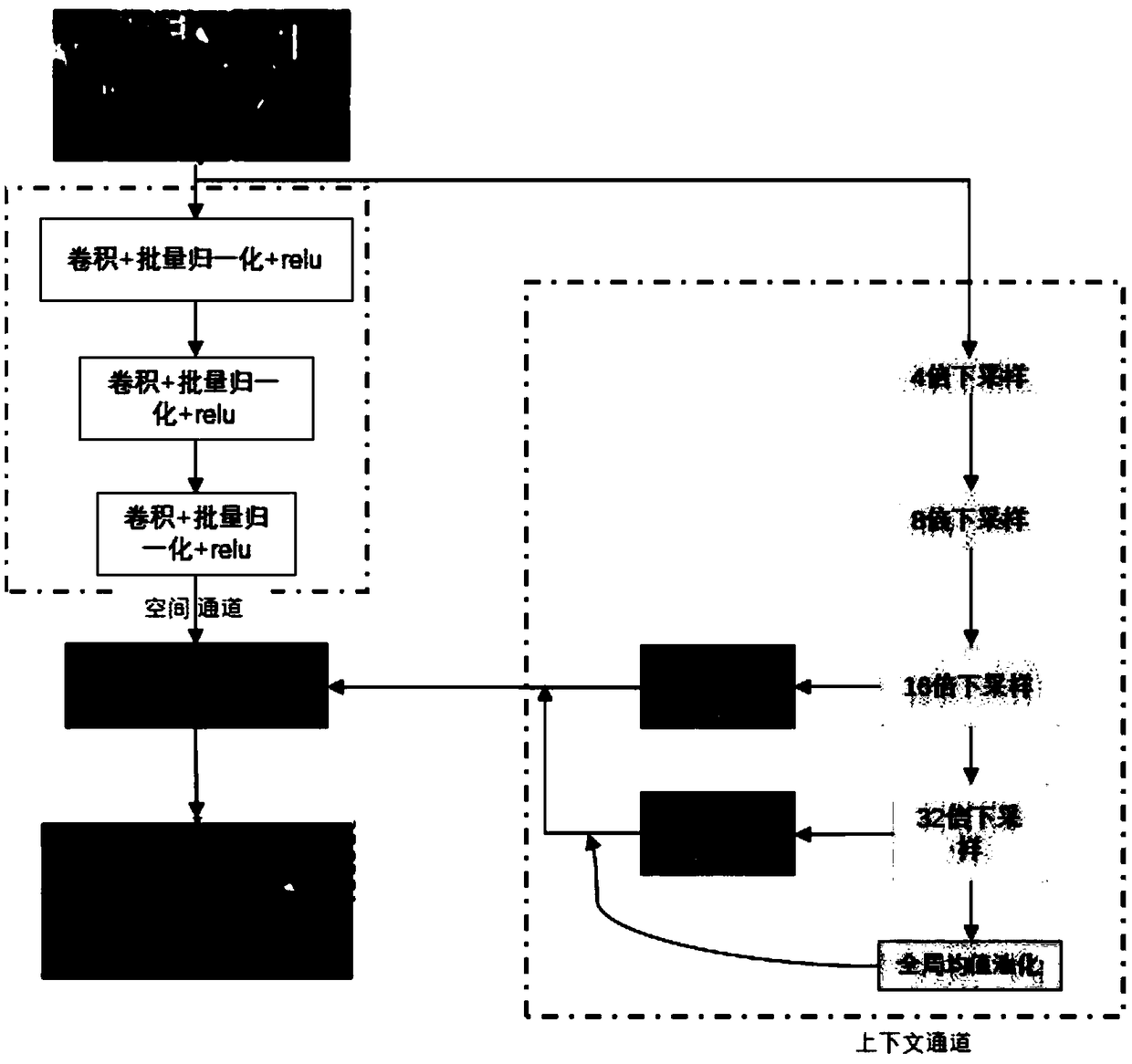

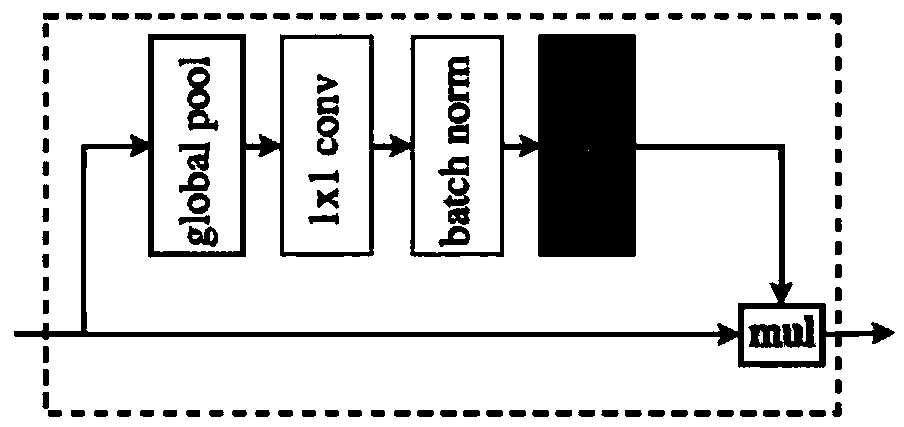

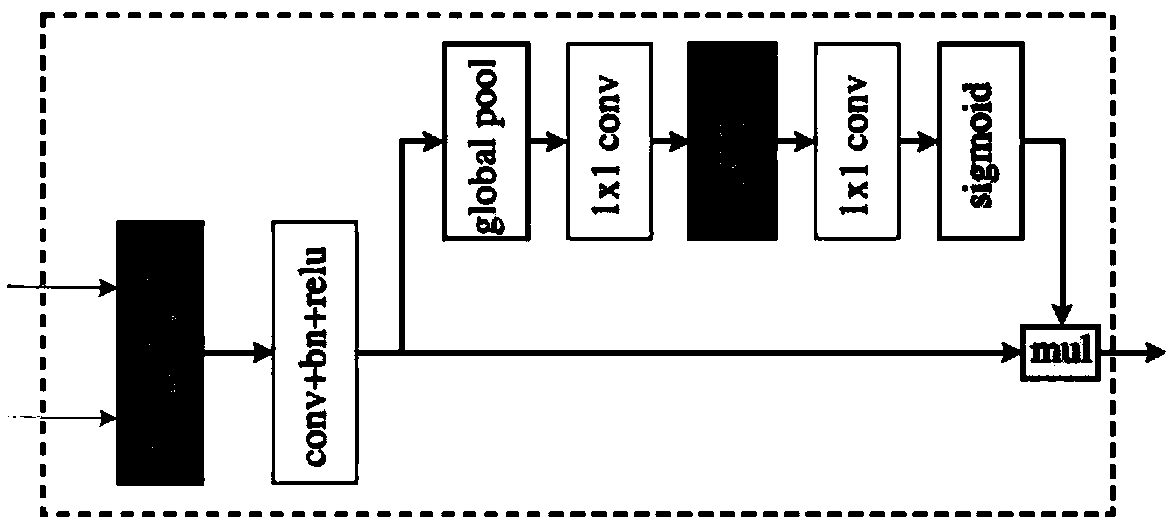

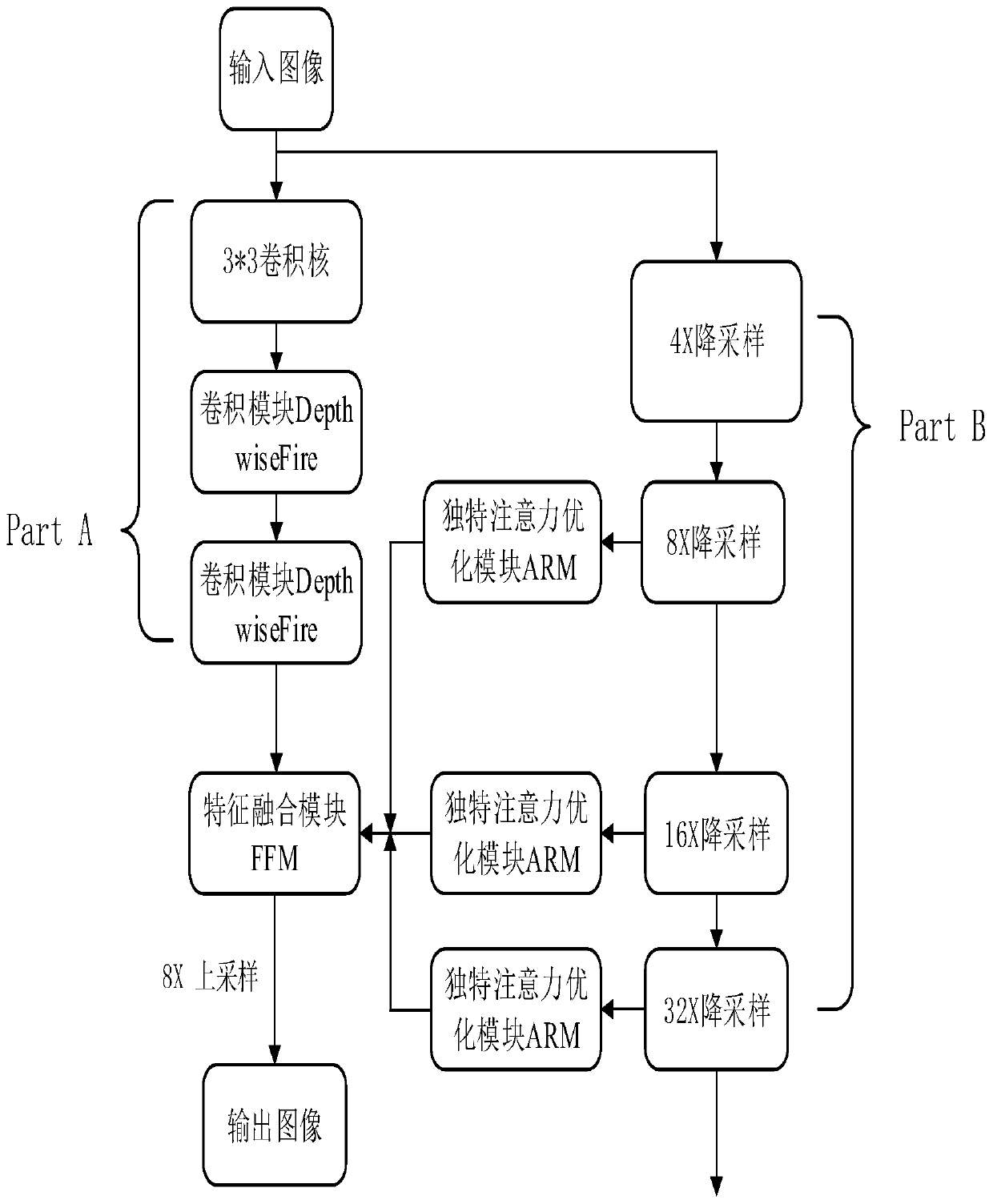

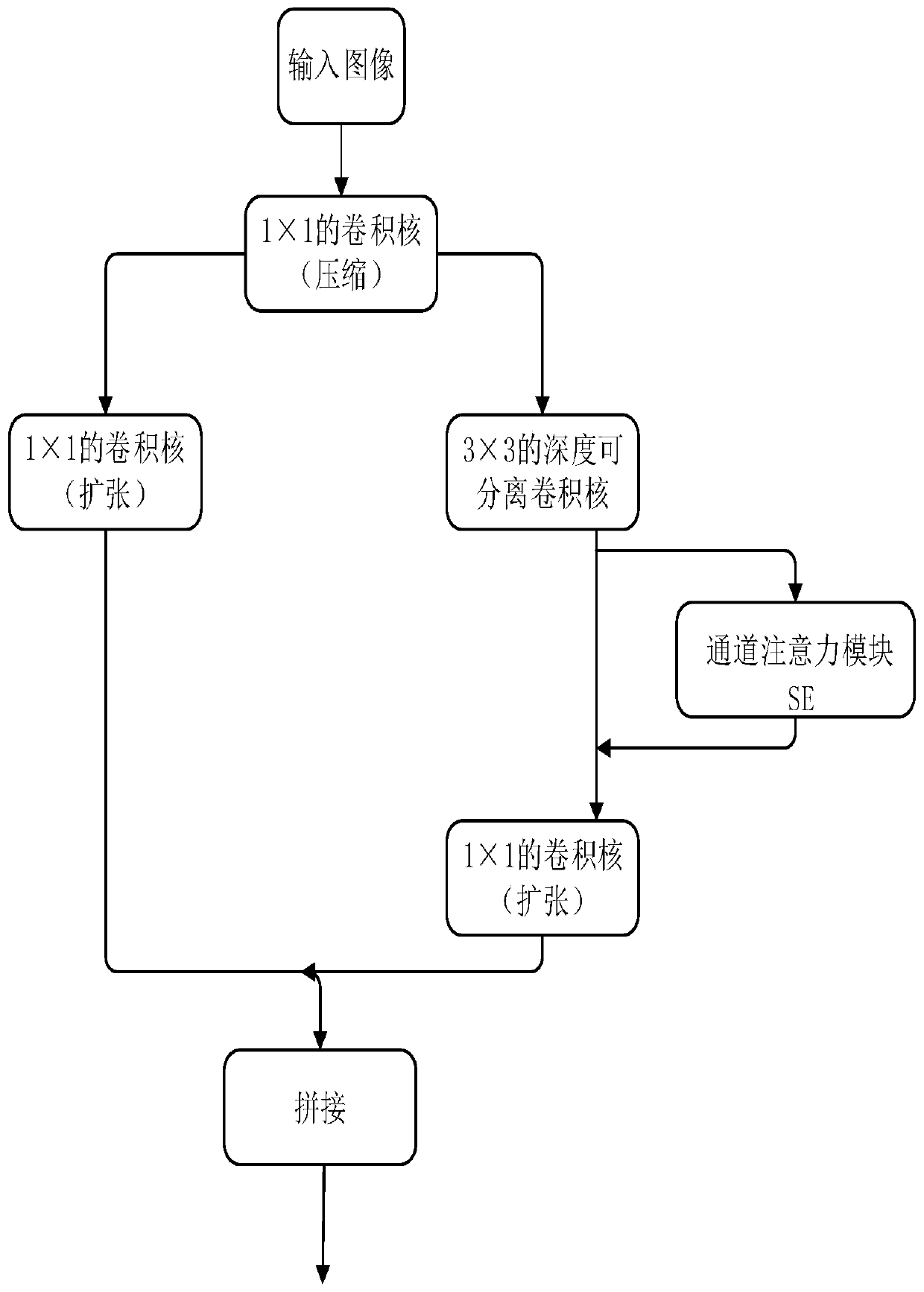

A vehicle image semantic segmentation system based on bilateral segmentation network

Active · CN109101907A · Expand the receptive field · No time consuming · Character and pattern recognition · Neural architectures · Computer vision · Segmentation system

The invention discloses a vehicle-mounted image semantic segmentation system based on a bilateral segmentation network. The system comprises a data storage module, which is used for storing a vehicle-mounted image training set and a vehicle-mounted image to be tested; a bilateral segmentation network consisting of a spatial channel and a context channel, wherein the spatial channel is used to extract the spatial information of the vehicle image, and the context channel is used to extract the context semantic information of the vehicle image; a training module for training a bilateral segmentation network using a vehicle-mounted image training set; a semantic segmentation module used for predicting the vehicle image to be tested by using the trained bilateral segmentation network, and obtaining the class to which each pixel in the vehicle image to be tested belongs. The invention discloses a bilateral segmentation network comprising a spatial channel and a context channel, wherein the spatial channel is used for extracting spatial information of an image while retaining enough spatial information, and the context channel is used for extracting context semantic information of the image while ensuring a large enough receptive field.

Owner:HUAZHONG UNIV OF SCI & TECH

Fabric surface defect detection method based on convolutional neural network

Pending · CN111402203A · Reduce classification difficulty · Efficient and accurate training · Image enhancement · Image analysis · Data set · Engineering

The invention discloses a fabric surface defect detection method based on a convolutional neural network. The fabric surface defect detection method comprises the following steps: S1, collecting and labeling a data set; S2, creating the ground truth for the defect sample images of the data set; S3, constructing a convolutional neural network model; S4, training the convolutional neural network model to obtain an optimal model; and S5, acquiring images of the fabric online, inputting the image of the fabric to be detected into the trained convolutional neural network model for image segmentation, and realizing online automatic detection through the convolutional neural network model so as to identify defects existing on the surface of the fabric. According to the method, the drawbacks of manually designed defect features can be overcome: the features are learned from the pre-labeled sample data set by the convolutional neural network, so that segmentation is performed quickly and accurately, fabric surface defects are accurately and automatically detected, manpower and material resources are saved, and the fabric product quality is improved.

Owner:HANGZHOU DIANZI UNIV

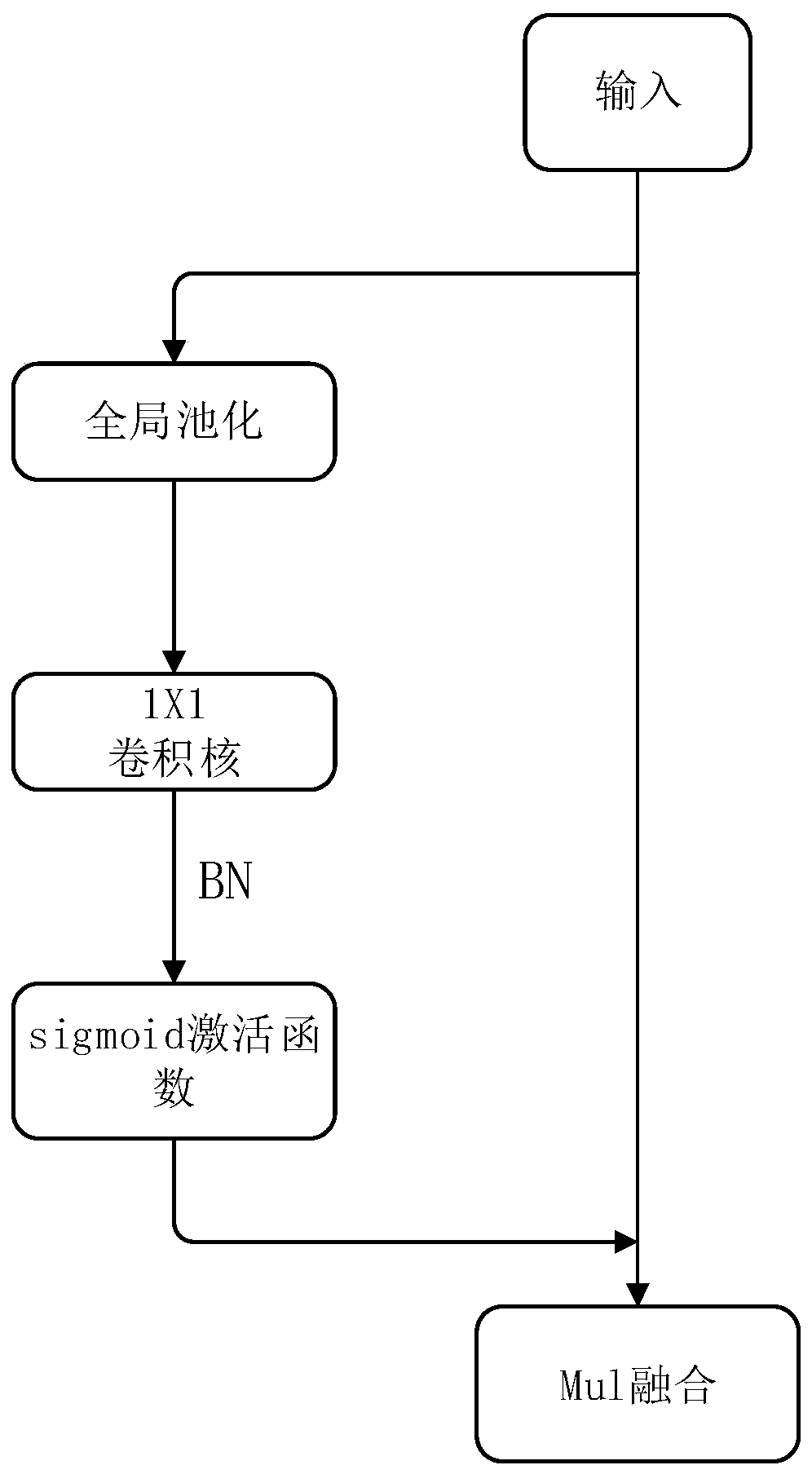

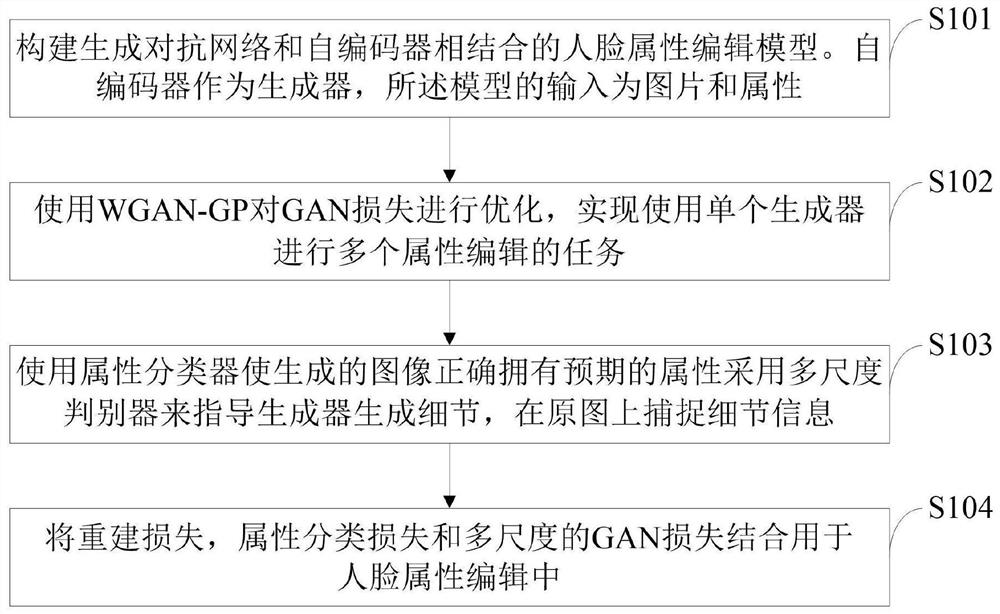

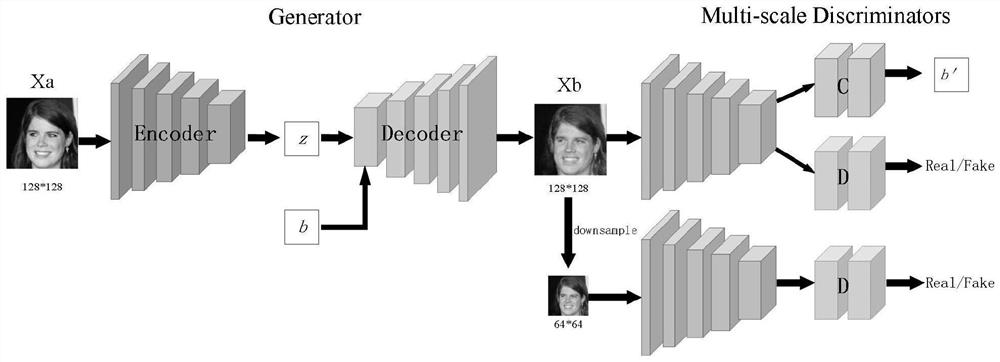

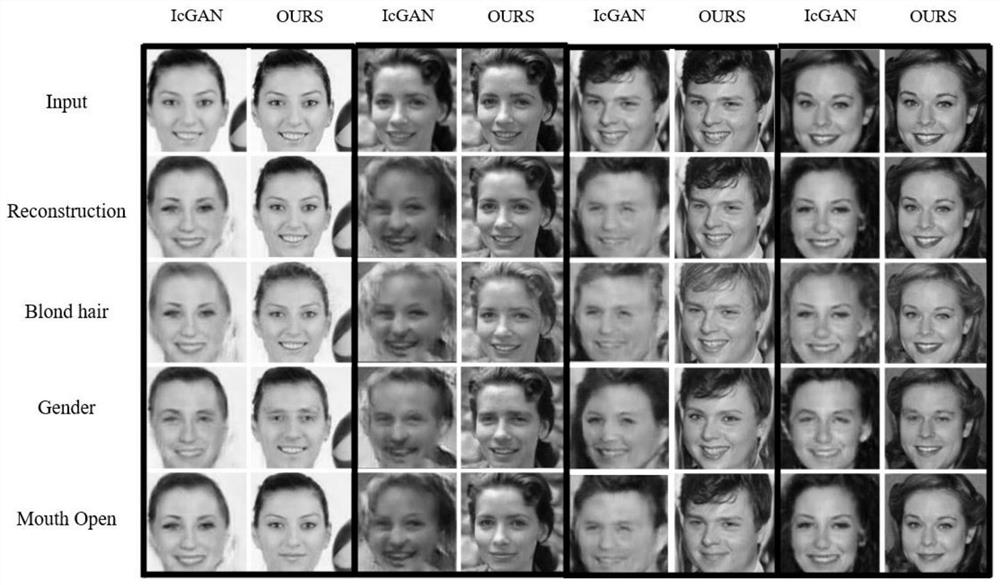

Face attribute editing method based on generative adversarial network and information processing terminal

Active · CN111932444A · Improve visual quality · Optimization of details · Geometric image transformation · Character and pattern recognition · Pattern recognition · Information processing

The invention belongs to the technical field of human face attribute editing, discloses a human face attribute editing method based on a generative adversarial network and an information processing terminal, and constructs a human face attribute editing model combining the generative adversarial network and an auto-encoder. The auto-encoder is used as the generator, and the inputs of the model are pictures and attributes; the GAN loss is optimized by using WGAN-GP so that a single generator can edit a plurality of attributes; an attribute classifier is used so that the generated image correctly has the expected attributes; a multi-scale discriminator is adopted to guide the generator to generate details, and detail information is captured on the original image; and the reconstruction loss, the attribute classification loss and the multi-scale GAN loss are combined for face attribute editing. Experiments on the CelebA data set show that a high-quality face image is generated while the expected attributes are correctly present, and the method has good performance in single-attribute face editing, multi-attribute face editing and attribute strength control.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA) +1

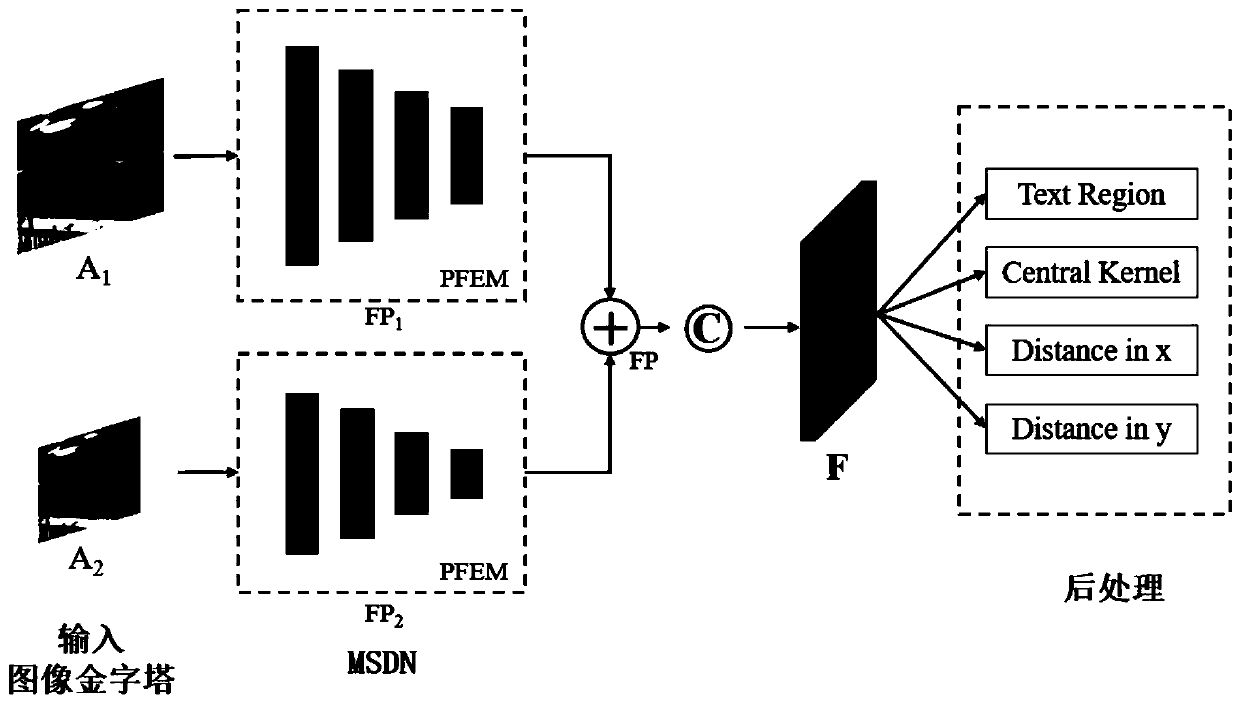

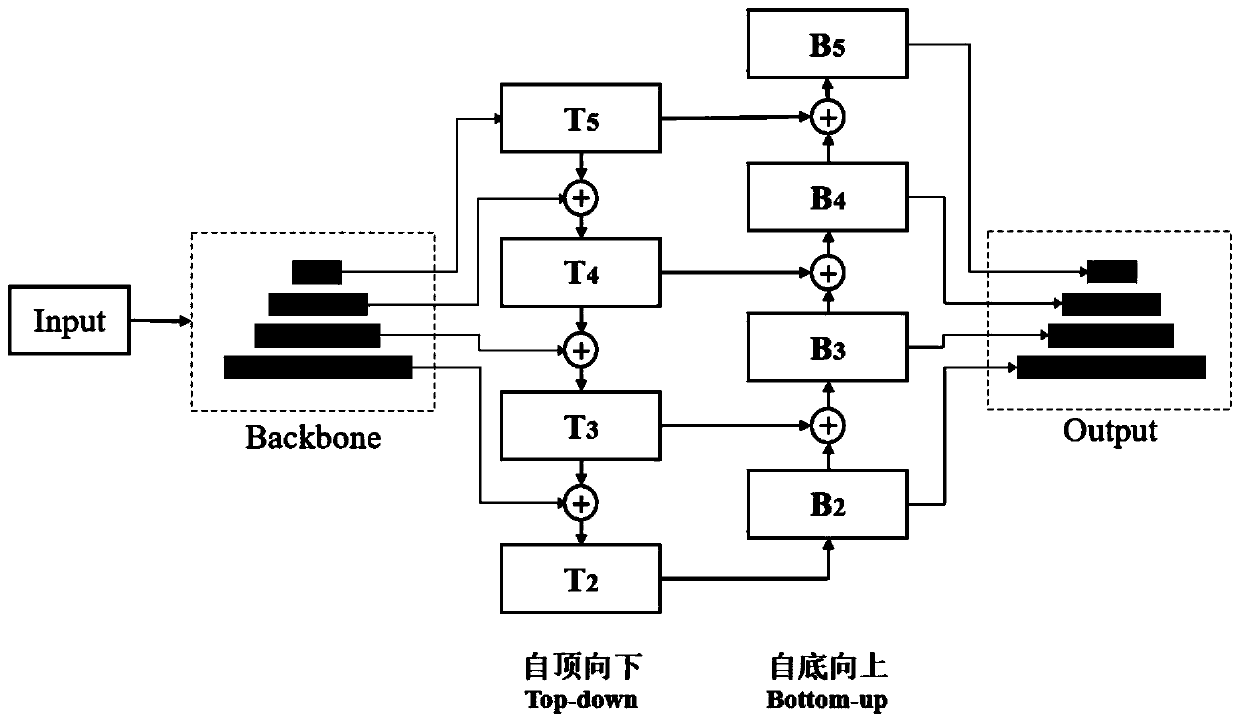

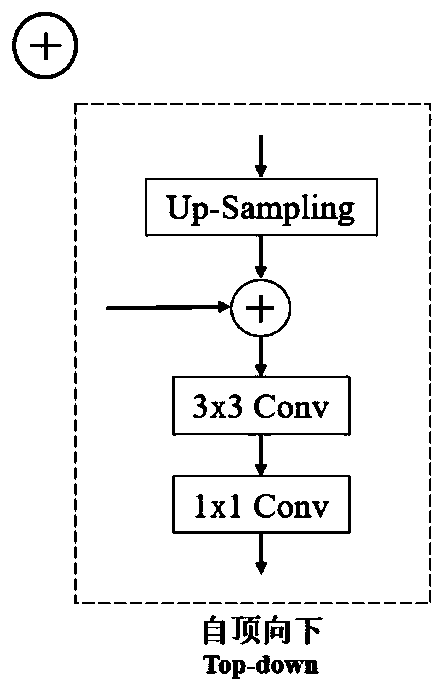

Segmentation-based multi-scale feature pyramid text detection method

Active · CN111461114A · Expand the receptive field · Easy to detect · Character and pattern recognition · Text detection · Feature extraction

The invention discloses a segmentation-based multi-scale feature pyramid text detection method. The method comprises the steps of: obtaining data; constructing a pyramid feature extraction model for extracting features from the acquired data; sampling the input data to obtain input images with different scales, respectively inputting the input images into the pyramid feature extraction model, and extracting text features; fusing and processing the text features of the input images with different scales through a multi-scale detection network to obtain a feature map, and predicting on the feature map; and processing the prediction result to obtain a contour boundary line of the text region. The method has high robustness, can be directly applied to detection of text of any shape in a natural scene, and achieves high accuracy, recall rate and F value.

Owner:SOUTH CHINA UNIV OF TECH +1
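
The text detection abstract above samples the input to obtain images at several scales before feature extraction. One simple way to build such a set of scaled inputs is repeated 2 x 2 average pooling, sketched below. The sampling scheme, the scale factor of 2, and the function names are assumptions for illustration only; the abstract does not state how the scales are produced.

```python
def downsample_2x(image):
    """Halve width and height by averaging each 2 x 2 block (grayscale image)."""
    h = len(image) // 2 * 2      # ignore a trailing odd row
    w = len(image[0]) // 2 * 2   # ignore a trailing odd column
    return [[(image[y][x] + image[y][x + 1]
              + image[y + 1][x] + image[y + 1][x + 1]) / 4
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]


def image_pyramid(image, levels):
    """Return [full resolution, 1/2 scale, 1/4 scale, ...] with `levels` entries."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


image = [[0, 1, 2, 3],
         [4, 5, 6, 7],
         [8, 9, 10, 11],
         [12, 13, 14, 15]]
pyramid = image_pyramid(image, 3)
for level in pyramid:
    print(len(level), "x", len(level[0]))
```

Each level would then be passed through the same feature extraction model, with the per-scale features fused afterwards as the abstract describes.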
