4216 results about "Acquiring/recognising facial features" patented technology

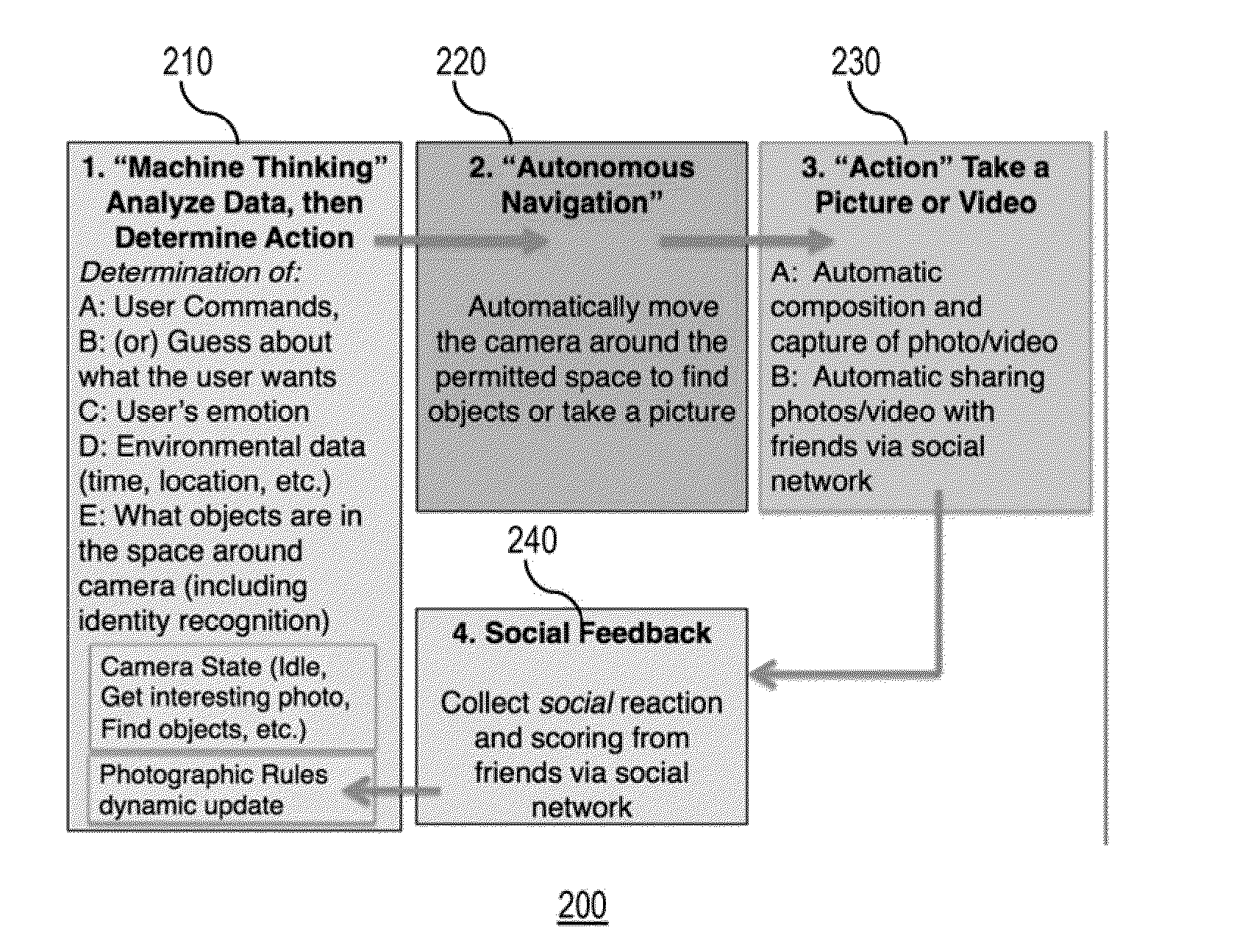

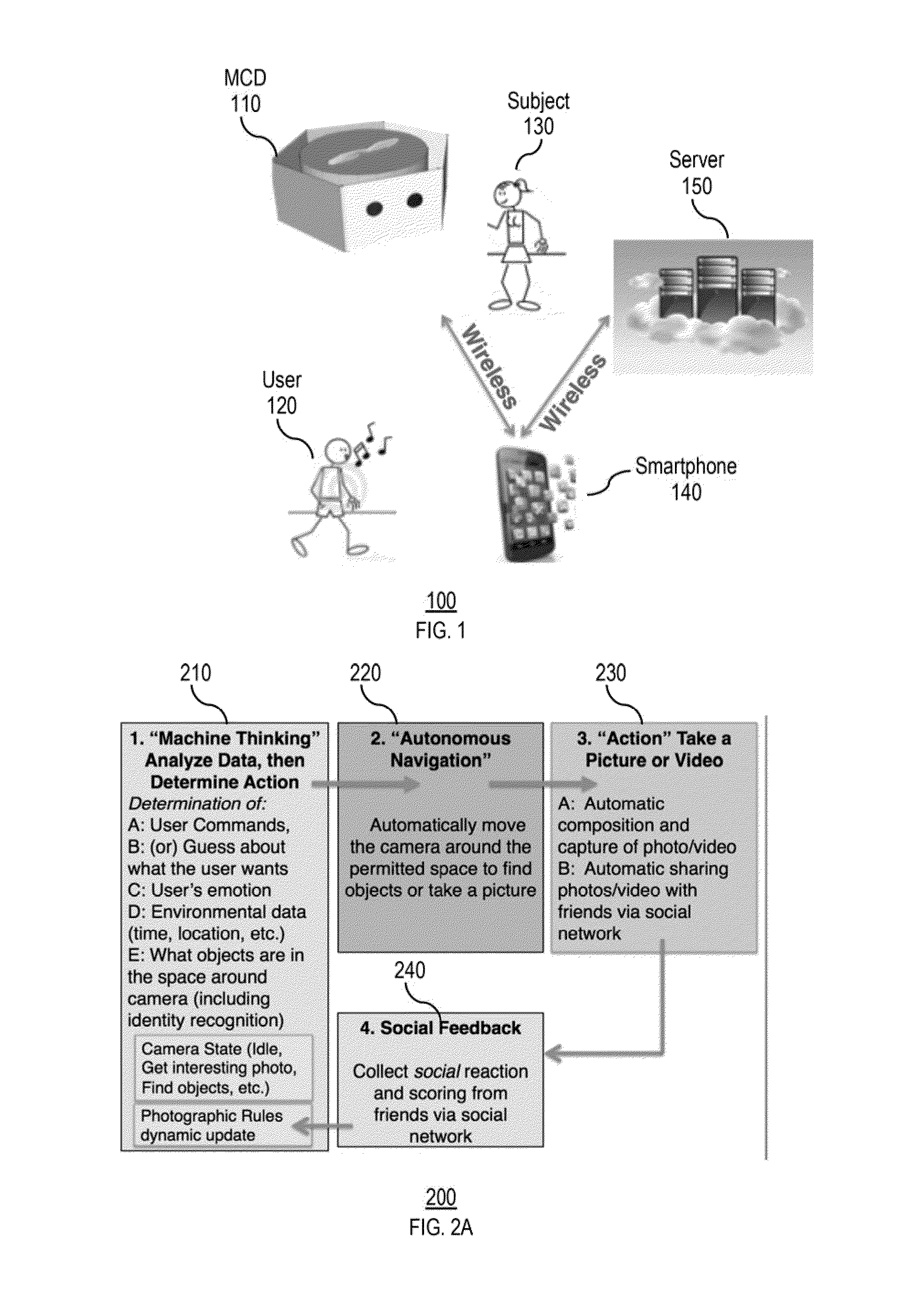

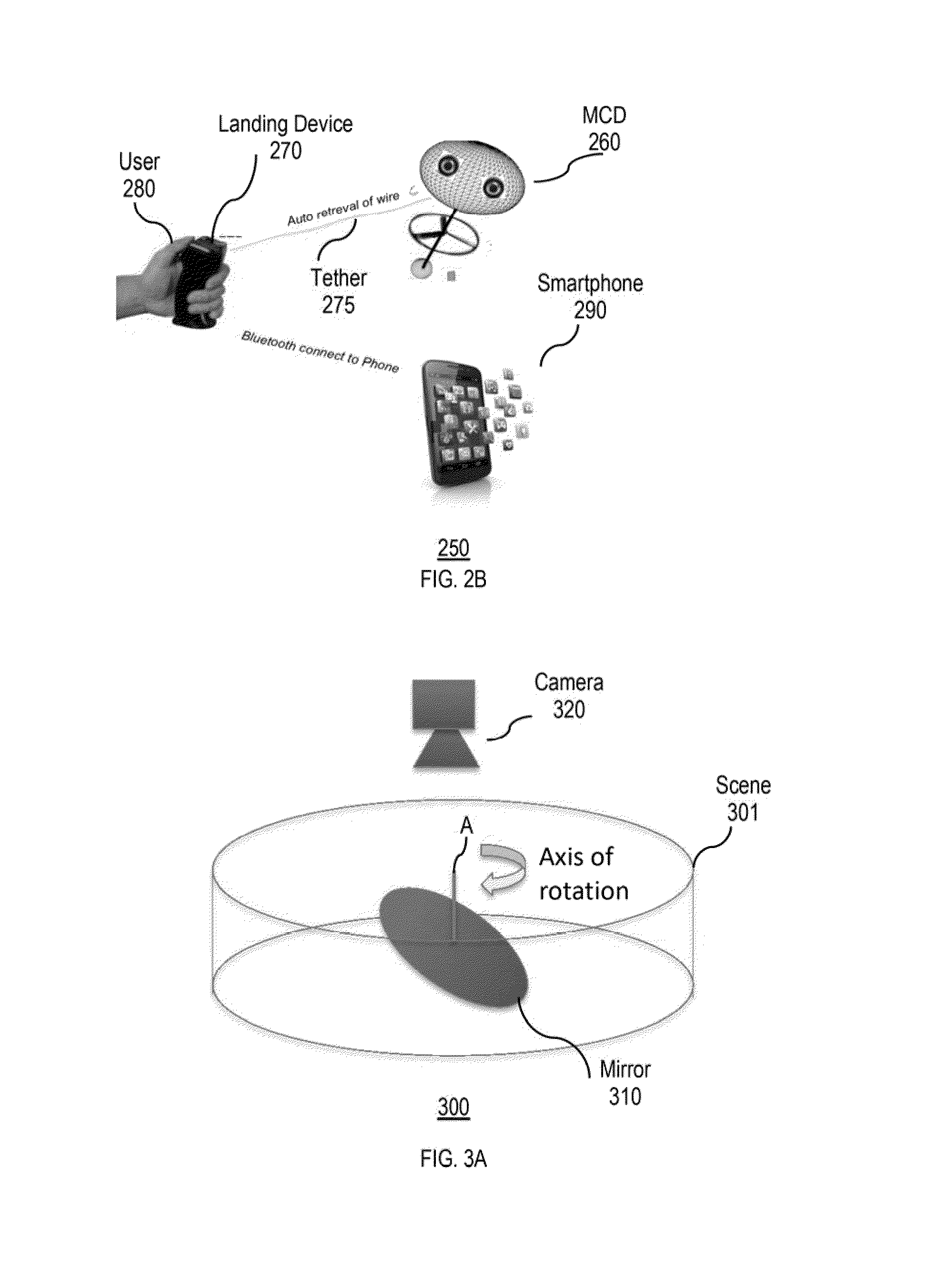

Autonomous media capturing

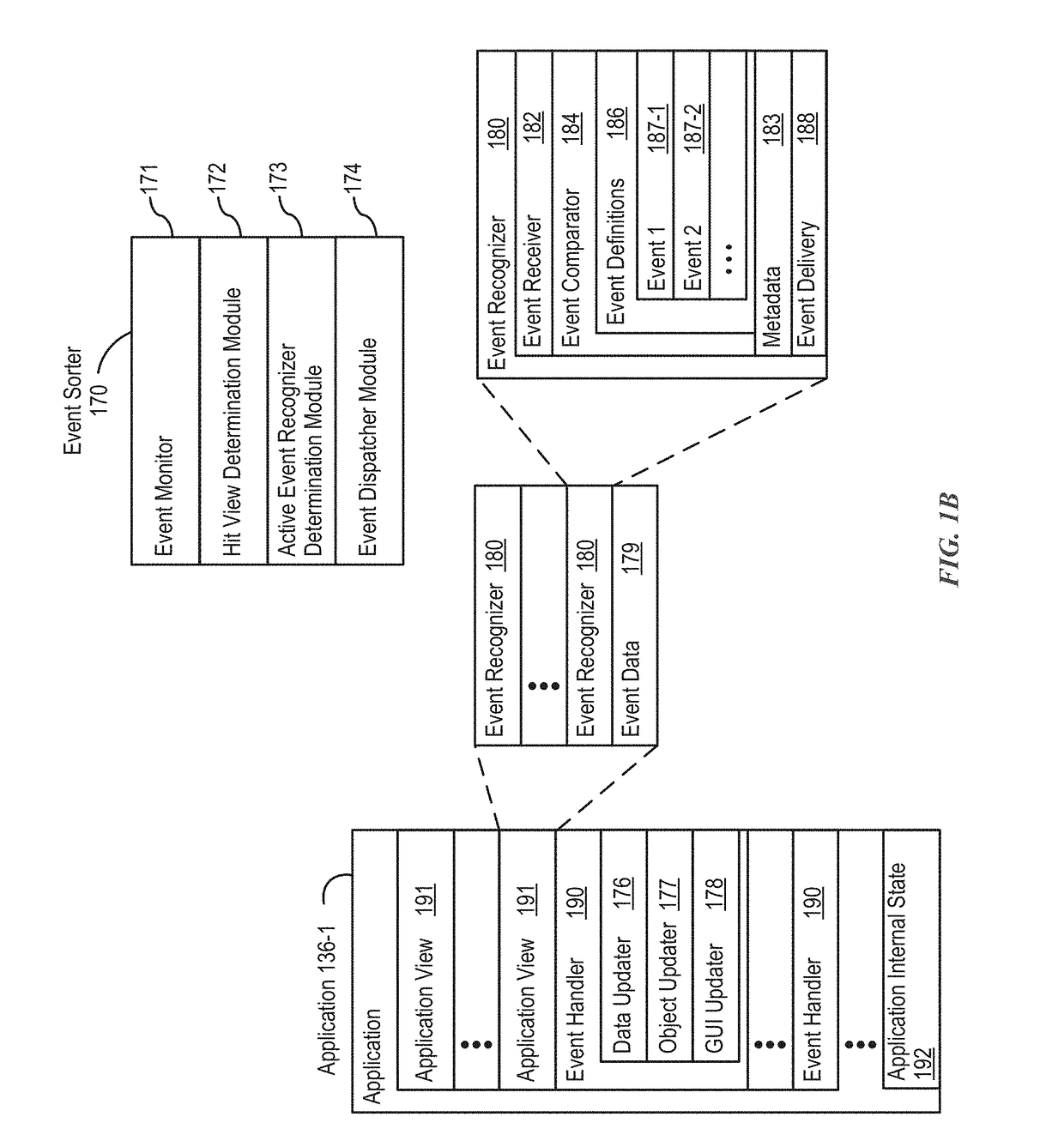

Active | US20160127641A1 | Simple process, Good photo, Input/output for user-computer interaction, Television system details, Social circle, Skill sets

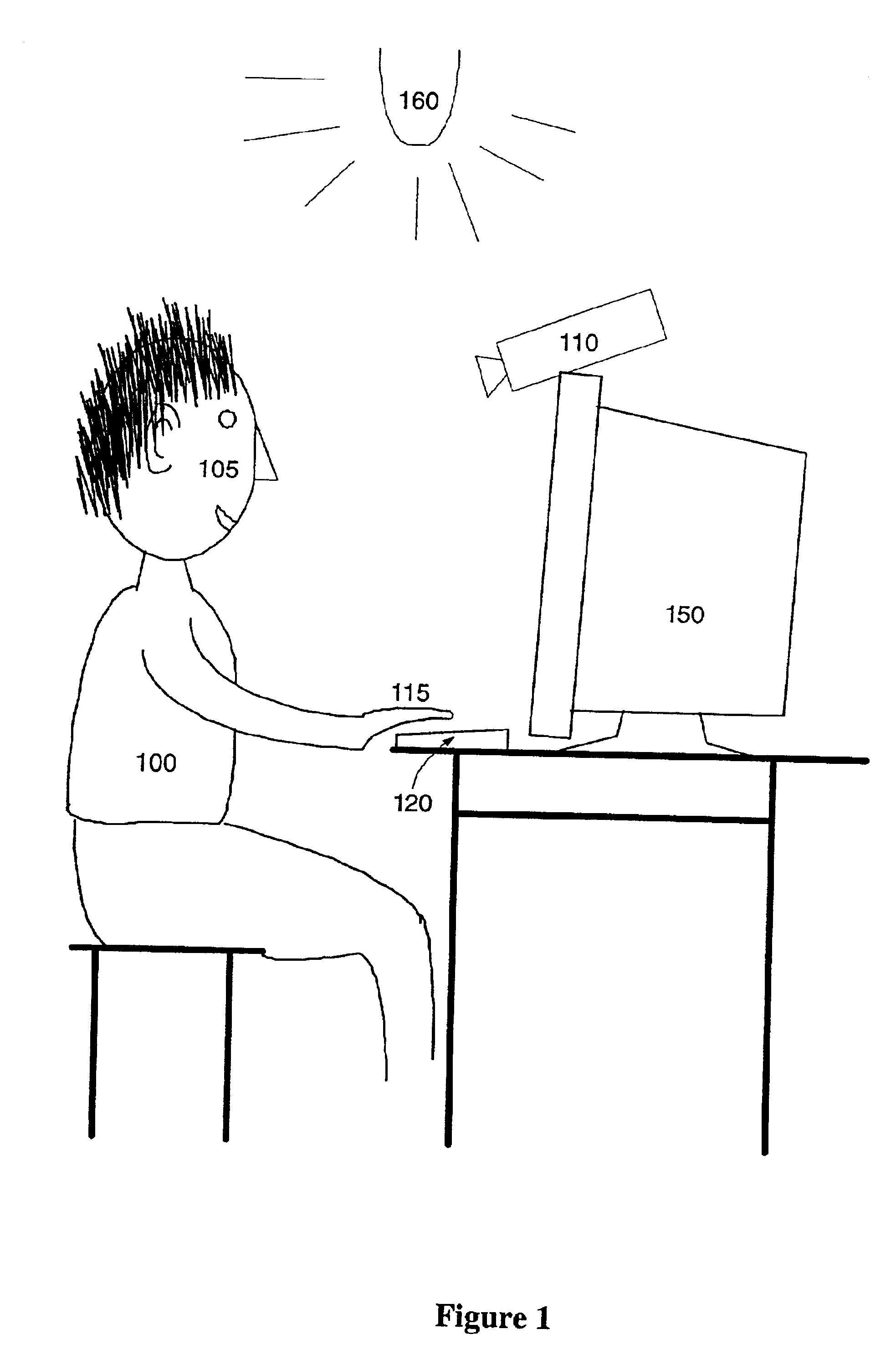

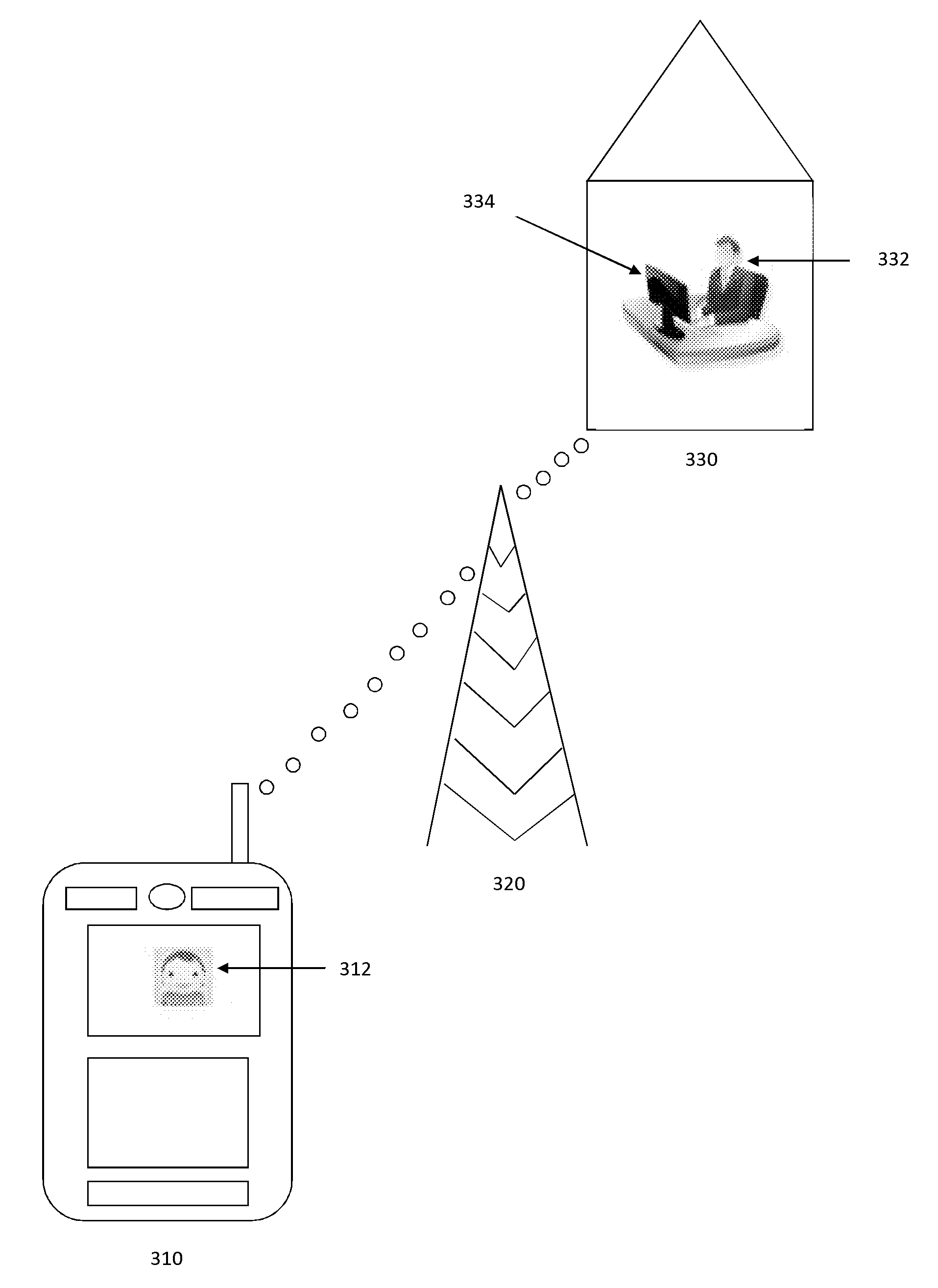

A media capture device (MCD) is provided that offers a multi-sensor, free-flight camera platform with advanced learning technology to replicate the desires and skills of its purchaser/owner. Advanced algorithms may enable many functions for autonomous photography. The device may learn about the user, the environment, and/or how to optimize a photographic experience, so that compelling events can be captured and composed into efficient, emotionally engaging content for sharing. The device may capture photos and videos that the user's social circle perceives as better, and/or may simplify using a camera to the point of fully autonomous operation.

Owner:GOVE ROBERT JOHN

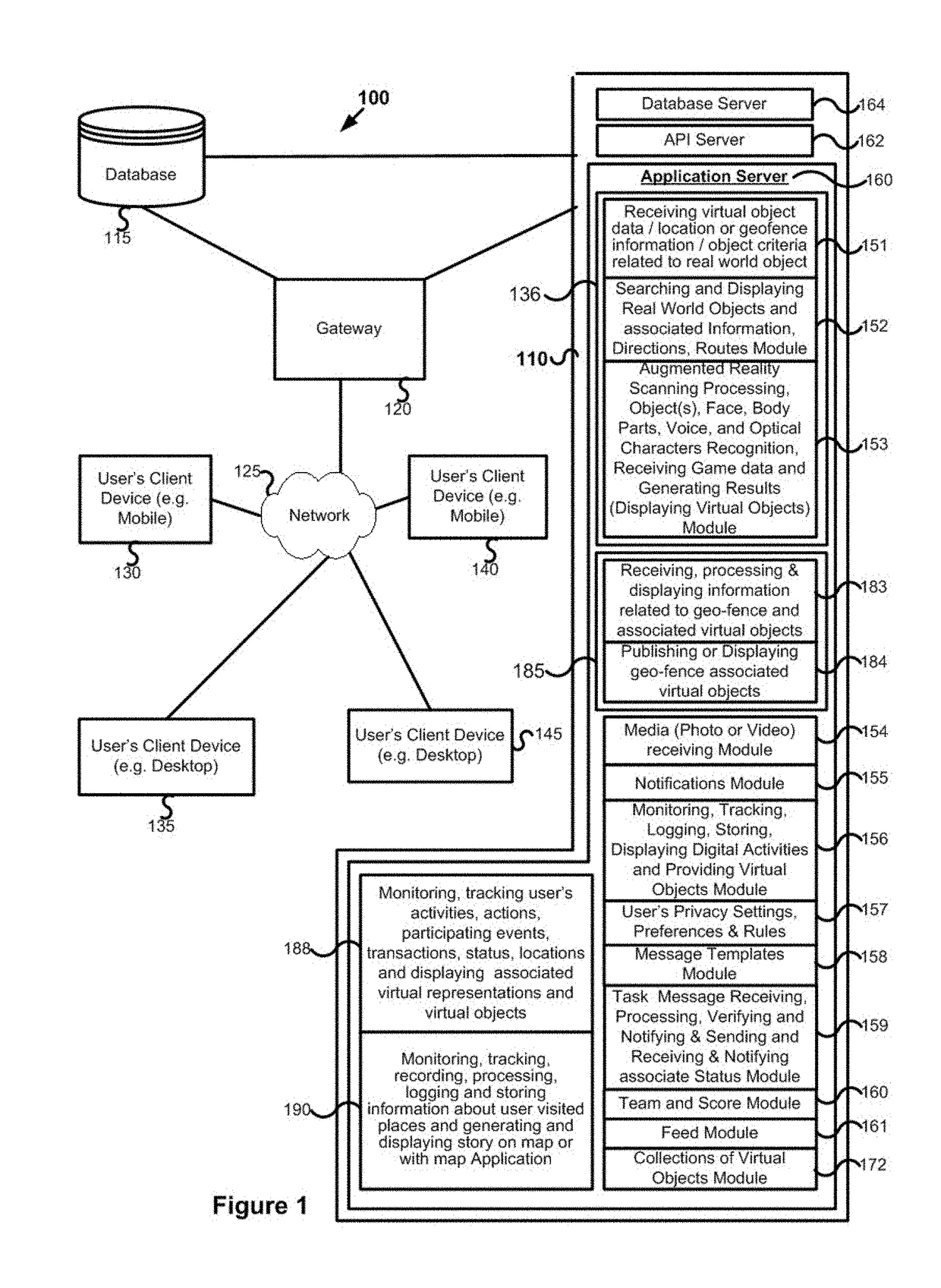

Generating, recording, simulating, displaying and sharing user related real world activities, actions, events, participations, transactions, status, experience, expressions, scenes, sharing, interactions with entities and associated plurality types of data in virtual world

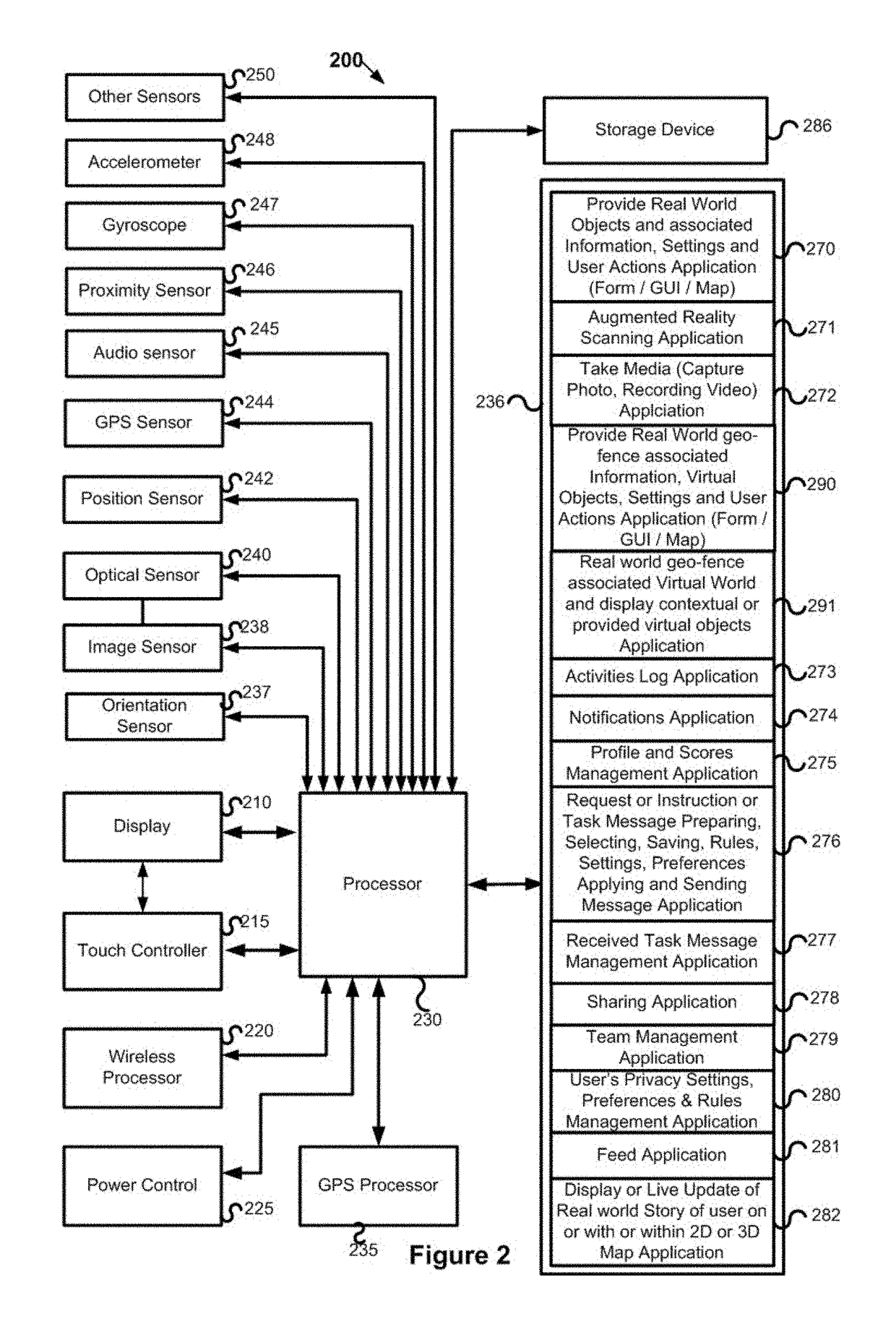

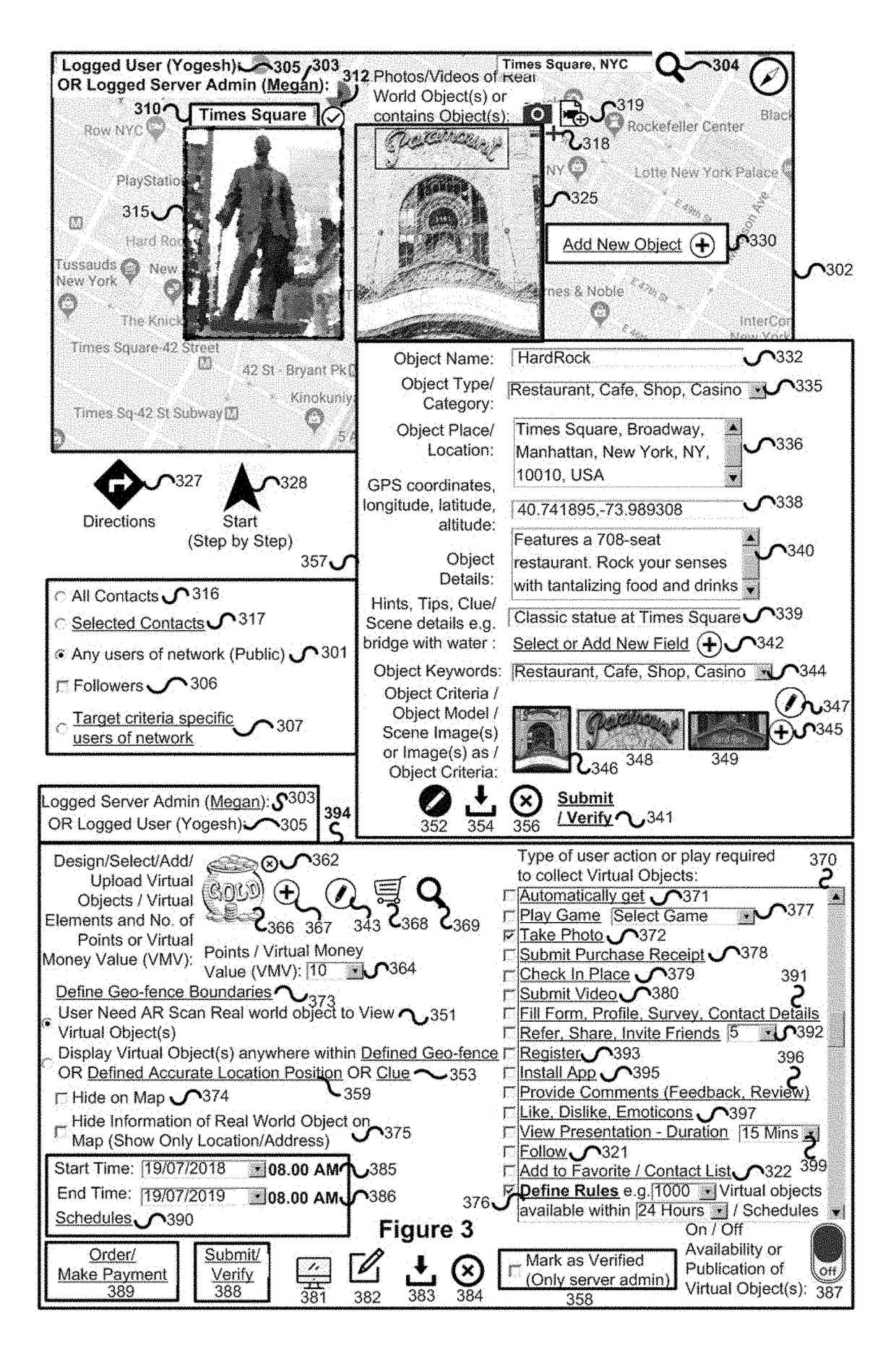

Systems and methods are provided for simulating real-world activities in a virtual world, or for generating a virtual world based on the real environment. A server hosts a virtual world geography or environment that corresponds to the real-world geography. As a result, as the user moves about or navigates a range of coordinates in the real world, the user also moves about the corresponding range of coordinates in the real-world map or virtual world. The server generates and accesses a first avatar or representation associated with a first user or entity in the virtual world. The server monitors, tracks, and stores multiple types of data associated with the user's real life. These data cover activities, actions, transactions, past or ongoing events, current or past locations, checked-in places, participations, expressions, reactions, relations, connections, status, behaviours, sharing, communications, collaborations, and interactions with various types of entities in the real world.

The server receives first data from the first user's mobile device relating to a first activity at a first set of geo-location coordinates or place. It determines one or more real-world activities of the first user based on that data. Based on the stored data, the server generates, records, simulates, and updates the virtual world, including updating the first avatar. It then causes the first avatar to engage in one or more virtual activities that are the same as, or substantially similar to, the determined real-world activities. A simulation engine generates, records, simulates, updates, and displays a simulation or graphical user interface that presents the user with a simulation of those real-life activities.

The server also displays contextual content, media, data, and metadata in the virtual world. This material relates to the real-world activity, interacted entity, location, place, or GPS coordinates, and may be generated, provided, shared, or identified by the user. It is drawn from sources including the server, providers, the user's contacts, other users of the network, and external databases, servers, networks, devices, websites, and applications. Throughout, the virtual world geography corresponds to the real-world geography. In one embodiment, the user can set privacy settings that limit viewing or sharing of the generated simulation of the user's real-world life or activities. Access can be limited to selected contacts, followers, all users, or users matching one or more criteria or filters, or the simulation can be made private.

Owner:RATHOD YOH

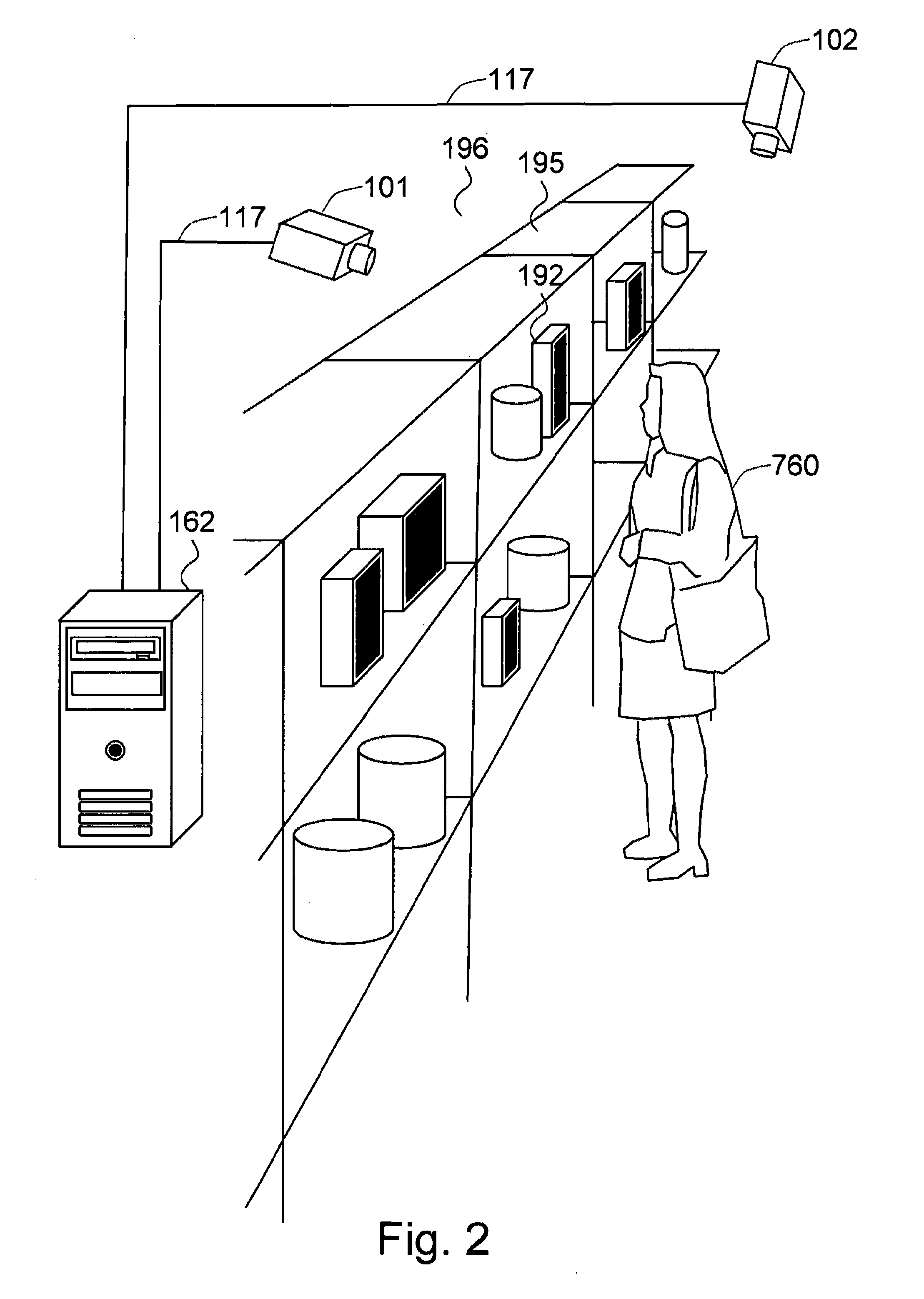

Method and system for measuring shopper response to products based on behavior and facial expression

Active | US8219438B1 | Reliable information, Accurate location, Market predictions, Acquiring/recognising eyes, Pattern recognition, Product base

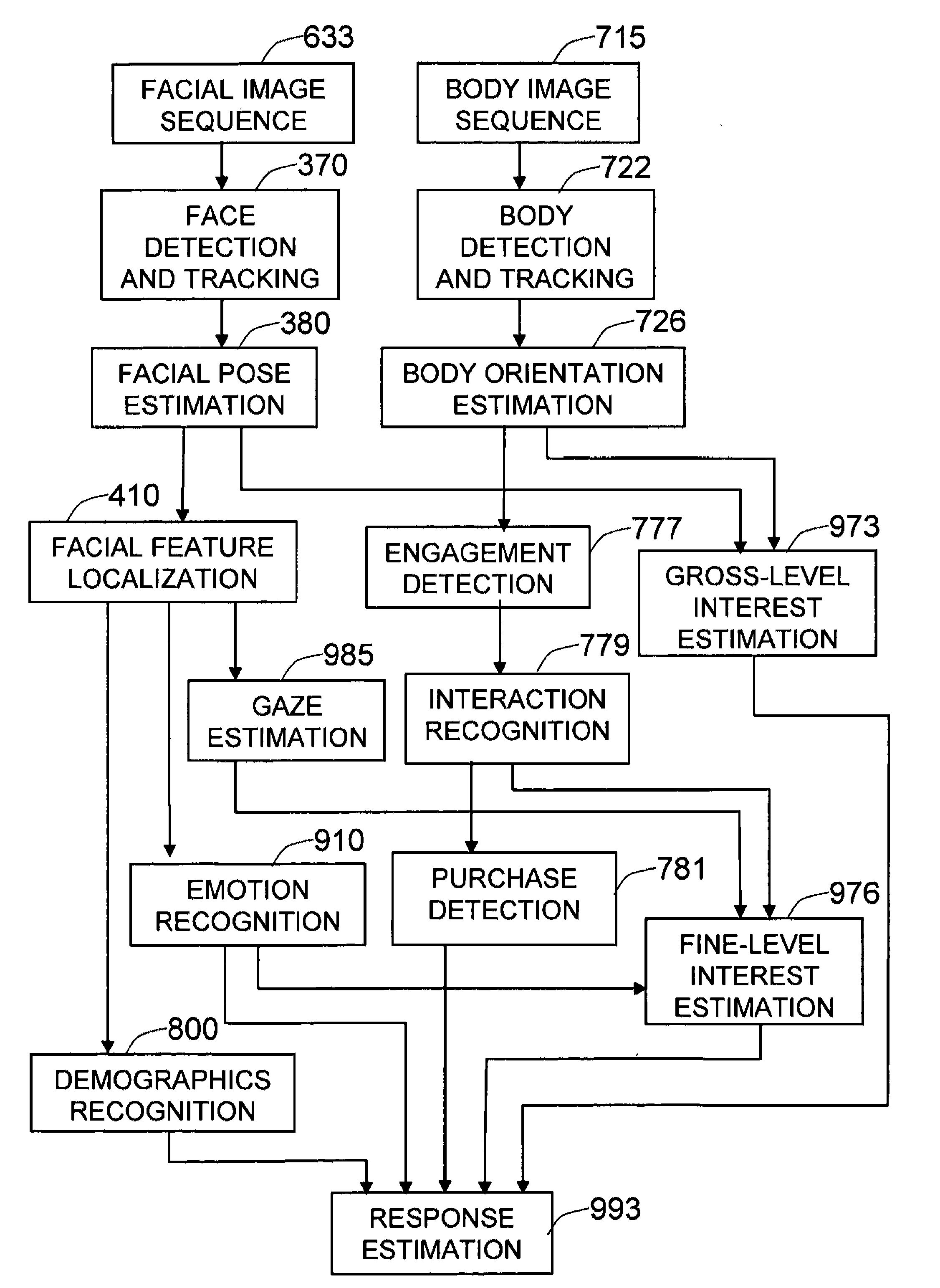

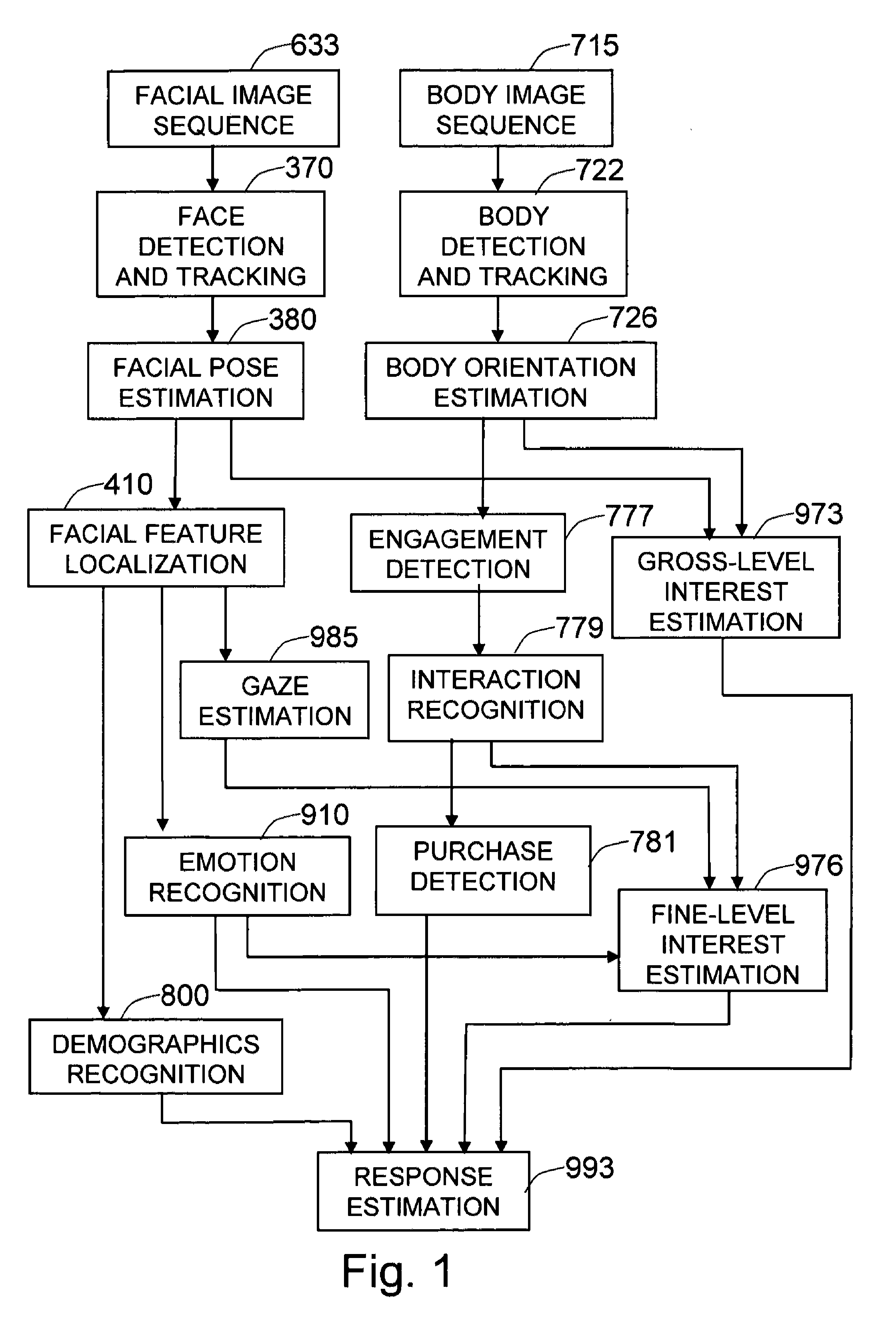

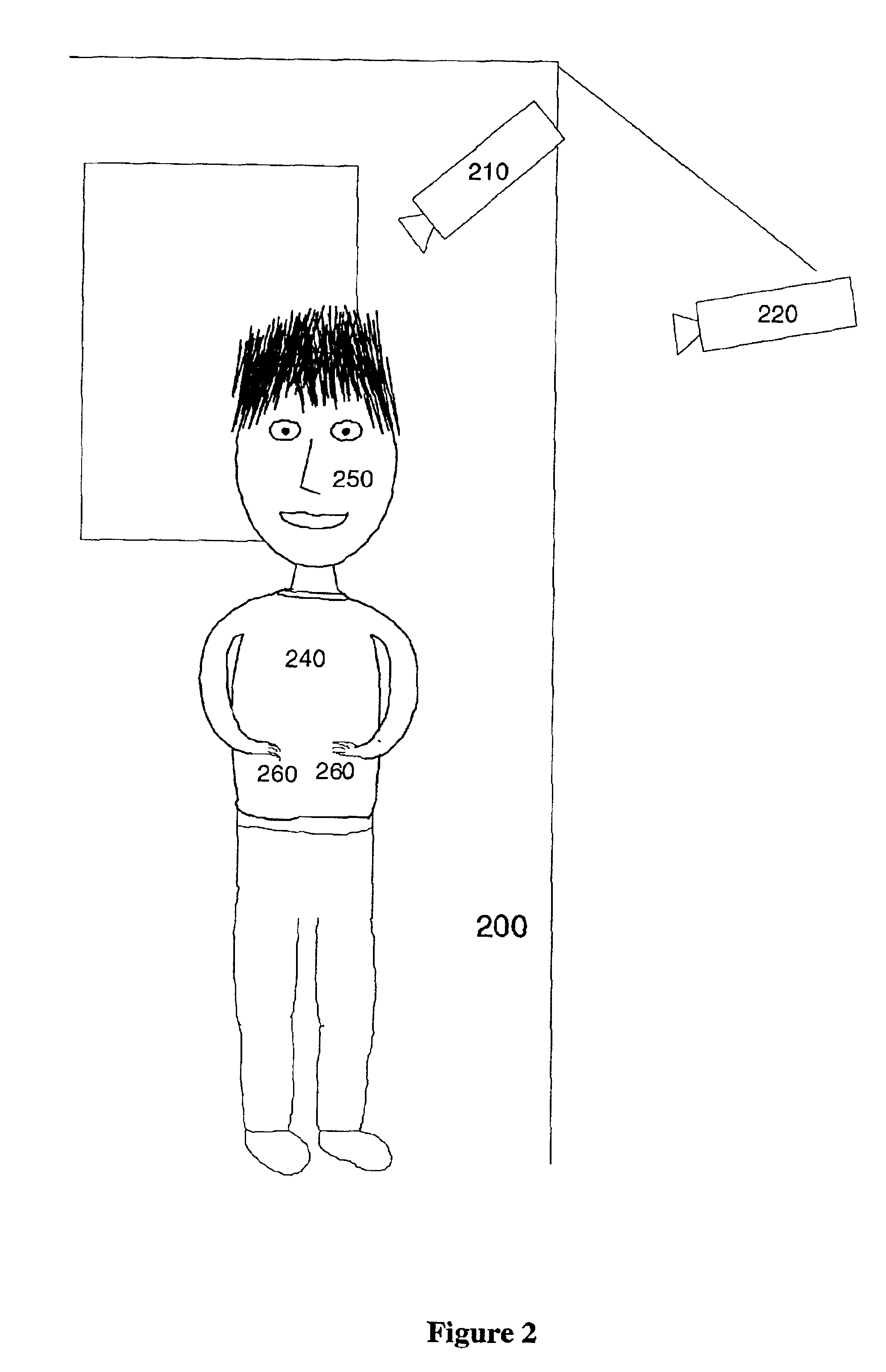

The present invention is a method and system for measuring human response to retail elements based on the shopper's facial expressions and behaviors. From a facial image sequence, the facial geometry (facial pose and facial feature positions) is estimated to facilitate the recognition of facial expressions, gaze, and demographic categories. The recognized facial expression is translated into an affective state of the shopper, and the gaze is translated into the target and level of the shopper's interest. The body image sequence is processed to identify the shopper's interaction with a given retail element, such as a product, a brand, or a category. The dynamic changes in affective state and interest toward the retail element are measured from the facial image sequence. These changes are analyzed in the context of the recognized interaction with the retail element and the demographic categories. This analysis estimates both the shopper's changes in attitude toward the retail element and the end response, such as a purchase decision or a product rating.

Owner:PARMER GEORGE A

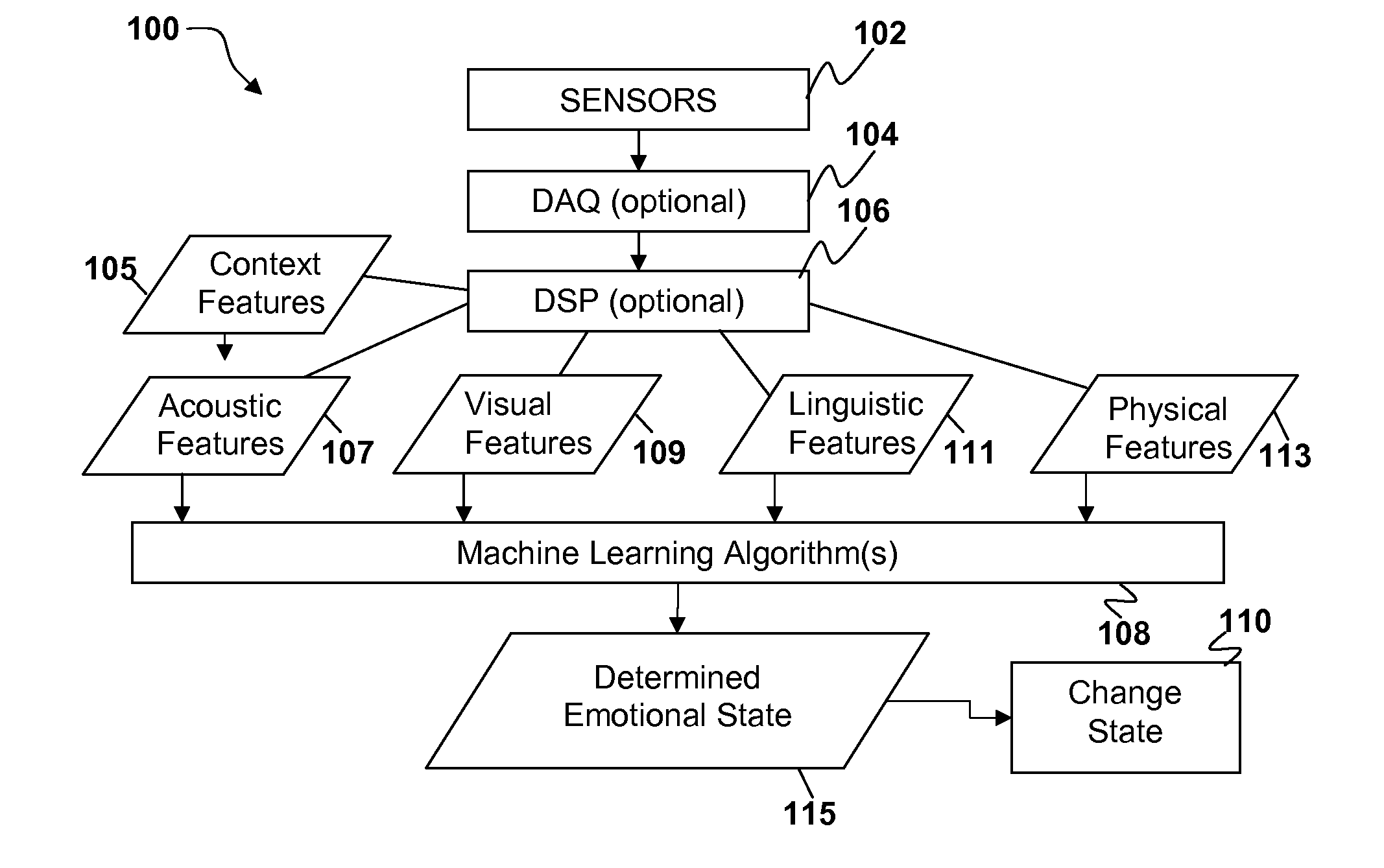

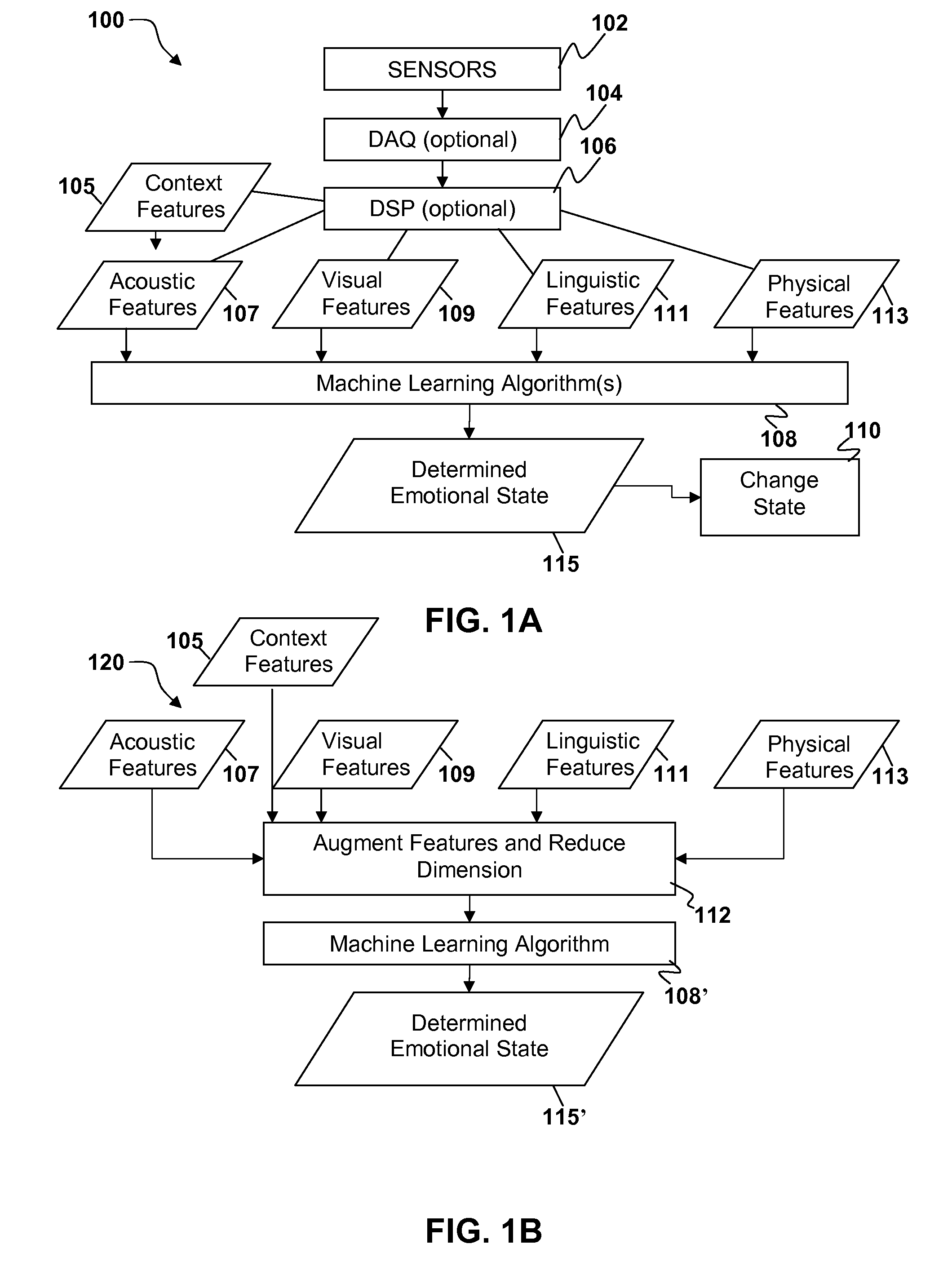

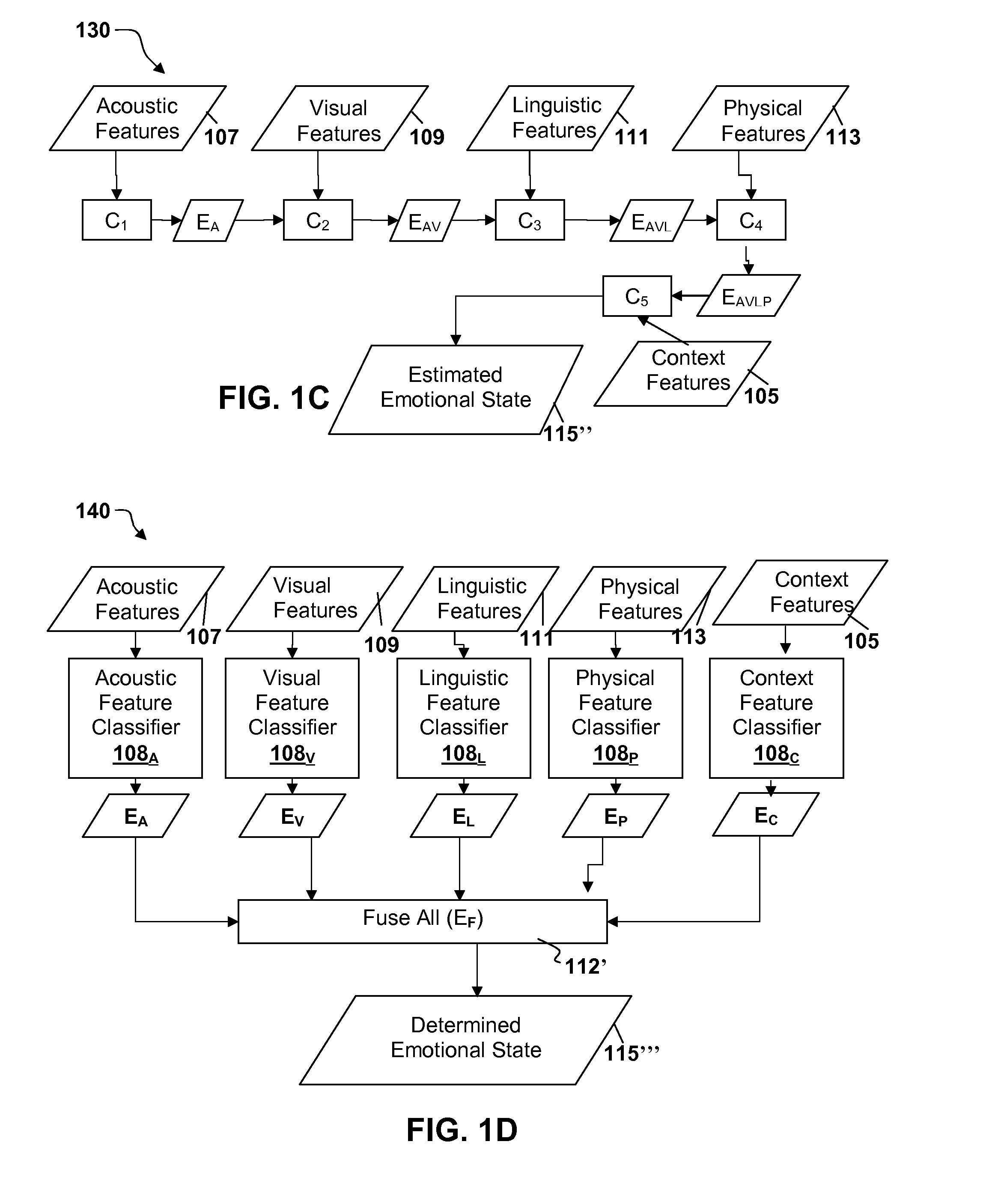

Multi-modal sensor based emotion recognition and emotional interface

Active | US20140112556A1 | Speech recognition, Acquiring/recognising facial features, Pattern recognition, Subject matter

Features, including one or more acoustic features, visual features, linguistic features, and physical features may be extracted from signals obtained by one or more sensors with a processor. The acoustic, visual, linguistic, and physical features may be analyzed with one or more machine learning algorithms and an emotional state of a user may be extracted from analysis of the features.

Owner:SONY COMPUTER ENTERTAINMENT INC

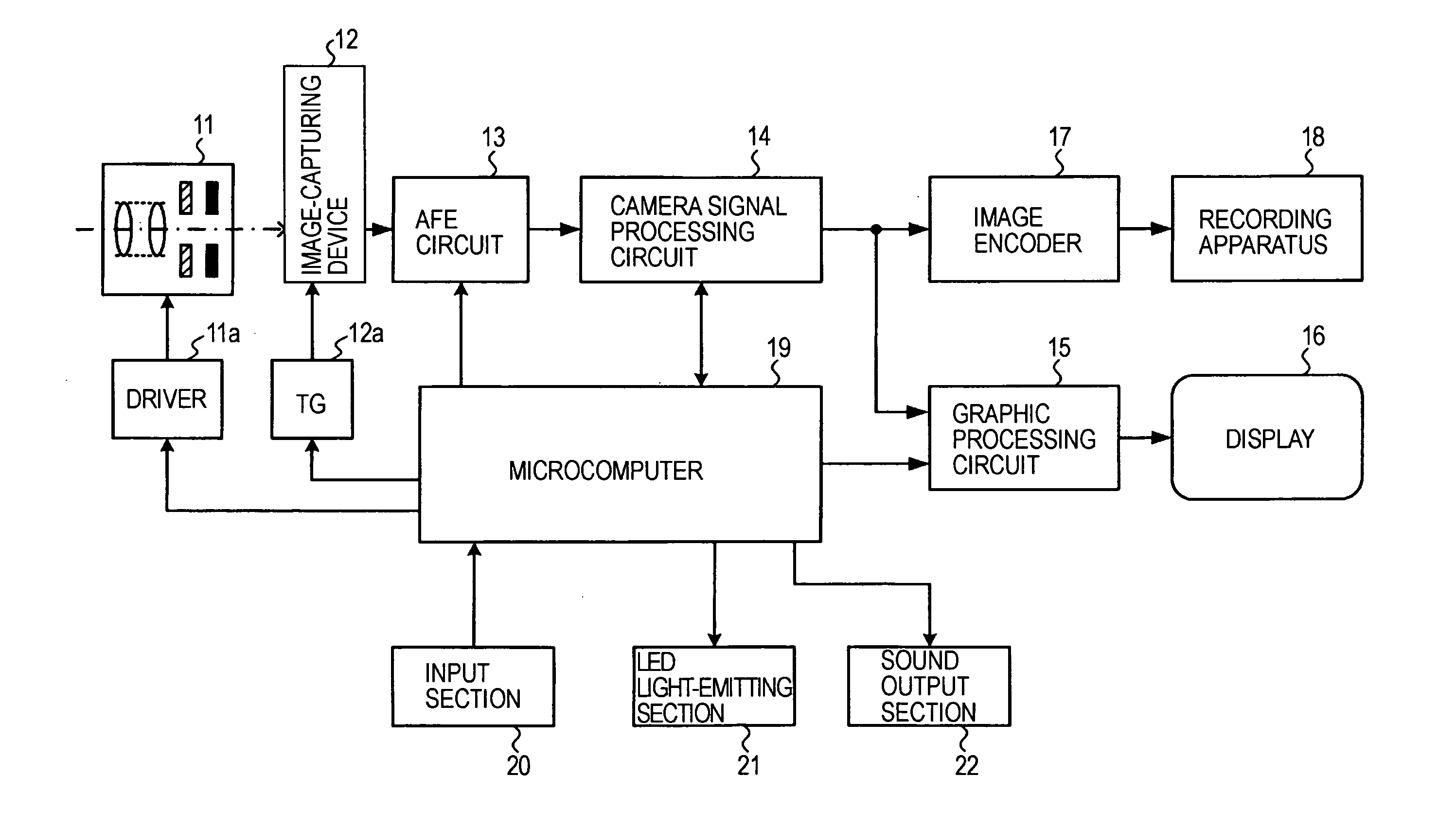

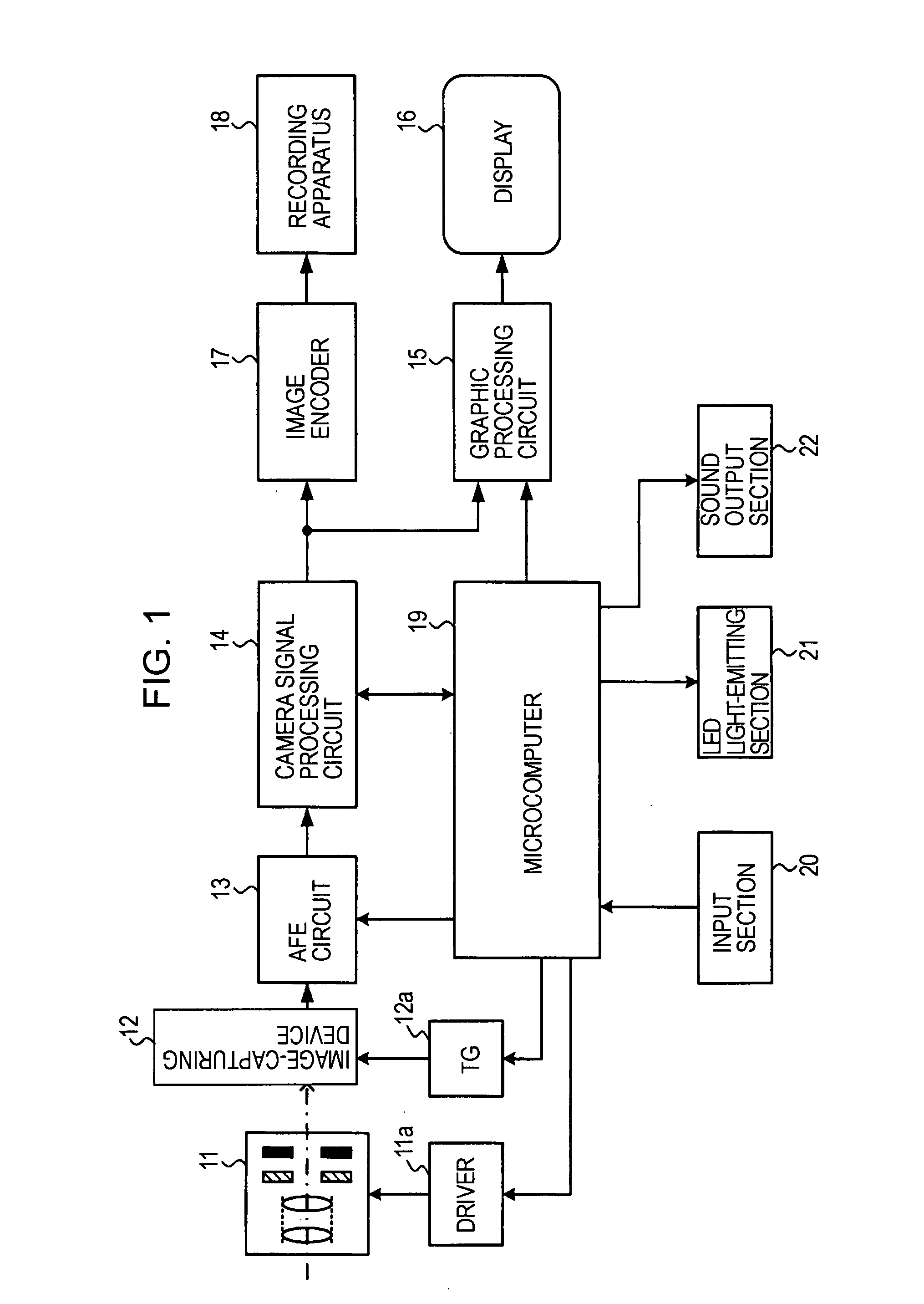

Image processing device, image device, image processing method

Active | US20060115157A1 | Sure easy, Accurately determine, Image enhancement, Image analysis, Pattern recognition, Imaging processing

Owner:CANON KK

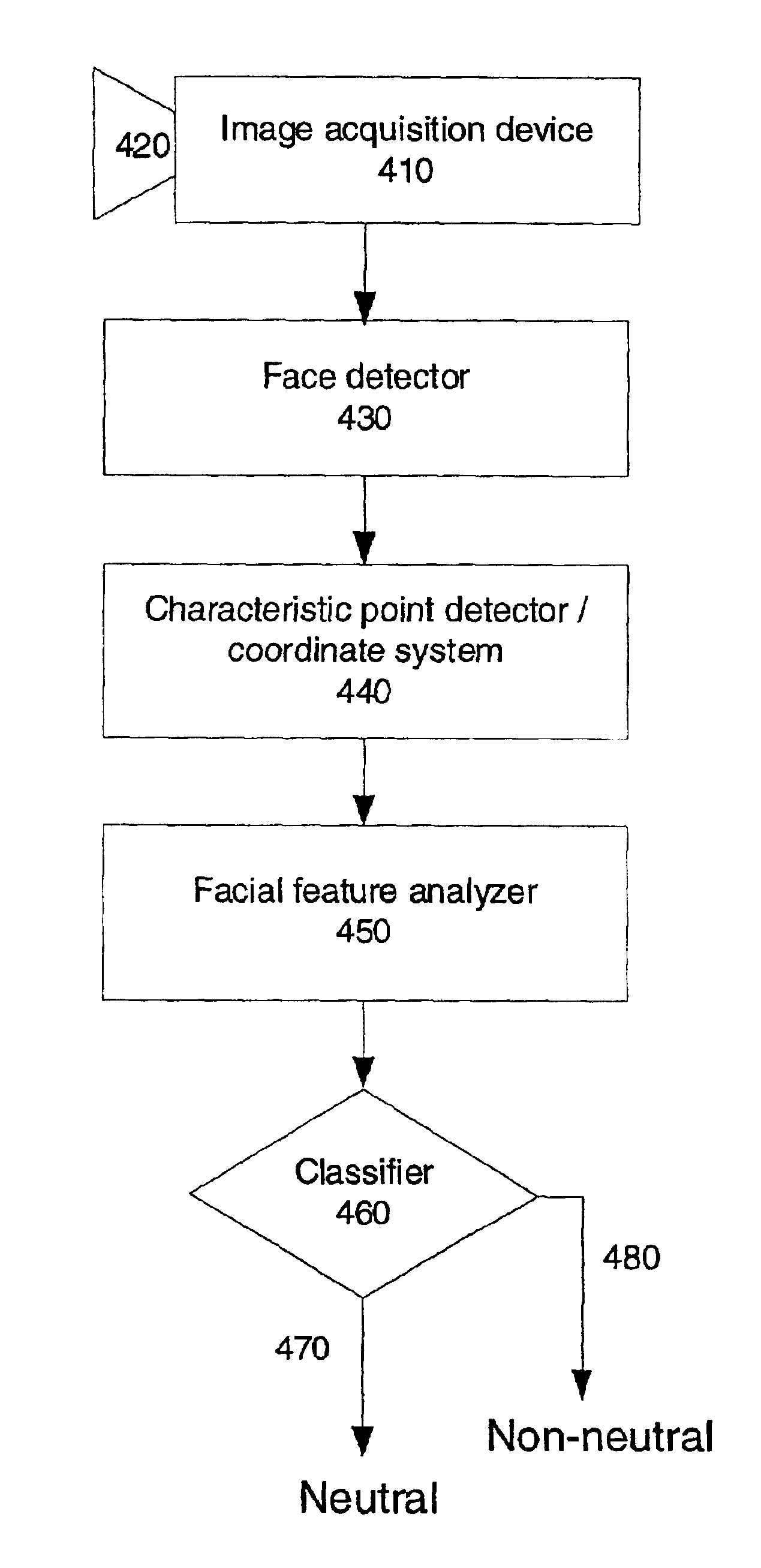

System and method for automatically detecting neutral expressionless faces in digital images

Active | US6879709B2 | Improve facial expression analysis, Easy to analyze, Programme control, Electric signal transmission systems, Face detection, Digital image

A system and method for automatically detecting neutral, expressionless faces in digital images and video is described. First, a face detector detects the pose and position of a face and locates the facial components. Second, the detected face is normalized to a standard size. Then a set of geometric facial features and three histograms in zones of the mouth are extracted. Finally, these features are fed to a classifier, which determines whether or not the face is neutral and expressionless.

Owner:SAMSUNG ELECTRONICS CO LTD
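
The normalize, extract and classify pipeline in the abstract above can be sketched as follows. This is a minimal illustration, not the patented implementation, and several choices are assumptions:

- The landmark names (`left_eye`, `mouth_center`, and so on) are hypothetical.
- Scale normalization is approximated by dividing distances by the inter-eye distance.
- The three mouth zones are taken as the left, middle and right thirds of a grayscale mouth patch.
- The abstract only says "a classifier", so a nearest-centroid rule stands in for it.

```python
import math


def geometric_features(lm):
    """Scale-invariant geometric features from (x, y) landmarks.

    Dividing by the inter-eye distance plays the role of normalizing the face
    to a standard size. The landmark names are hypothetical.
    """
    eye_dist = math.dist(lm["left_eye"], lm["right_eye"])
    mouth_width = math.dist(lm["mouth_left"], lm["mouth_right"])
    corners_y = (lm["mouth_left"][1] + lm["mouth_right"][1]) / 2
    # Image y grows downward, so a positive lift means the corners sit above the centre.
    corner_lift = (lm["mouth_center"][1] - corners_y) / eye_dist
    return [mouth_width / eye_dist, corner_lift]


def mouth_zone_histograms(patch, bins=4):
    """Normalized intensity histograms for the left, middle and right thirds of a
    grayscale mouth patch (a list of rows of 0-255 ints), concatenated."""
    width = len(patch[0])
    edges = [0, width // 3, 2 * width // 3, width]
    features = []
    for zone in range(3):
        counts = [0] * bins
        for row in patch:
            for value in row[edges[zone]:edges[zone + 1]]:
                counts[min(value * bins // 256, bins - 1)] += 1
        total = sum(counts) or 1  # avoid division by zero for an empty zone
        features.extend(c / total for c in counts)
    return features


def nearest_centroid(features, centroids):
    """Stand-in classifier: return the label whose centroid is closest."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))
```

In use, the full feature vector would be `geometric_features(lm) + mouth_zone_histograms(patch)`. The centroids would be learned from labelled neutral and non-neutral faces.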

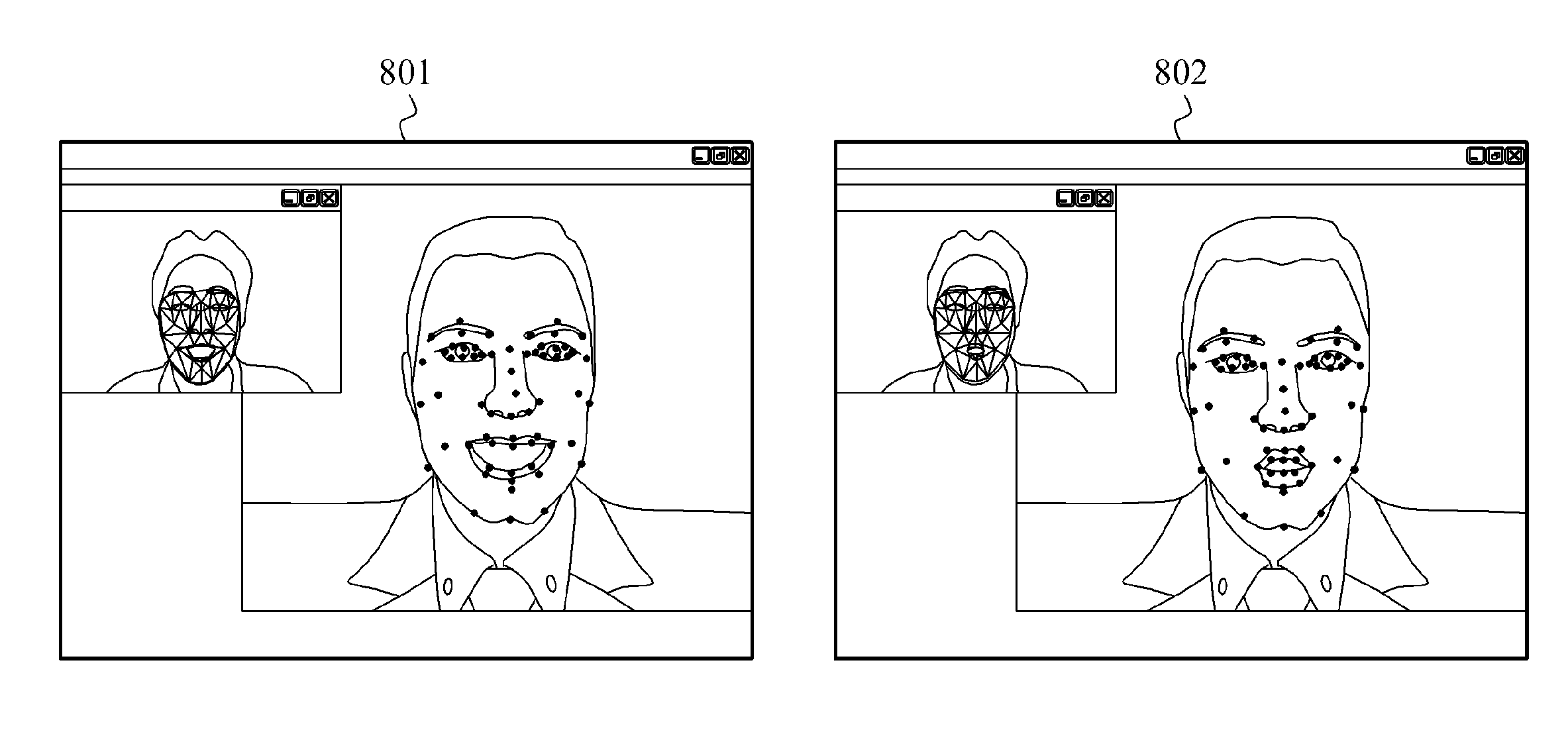

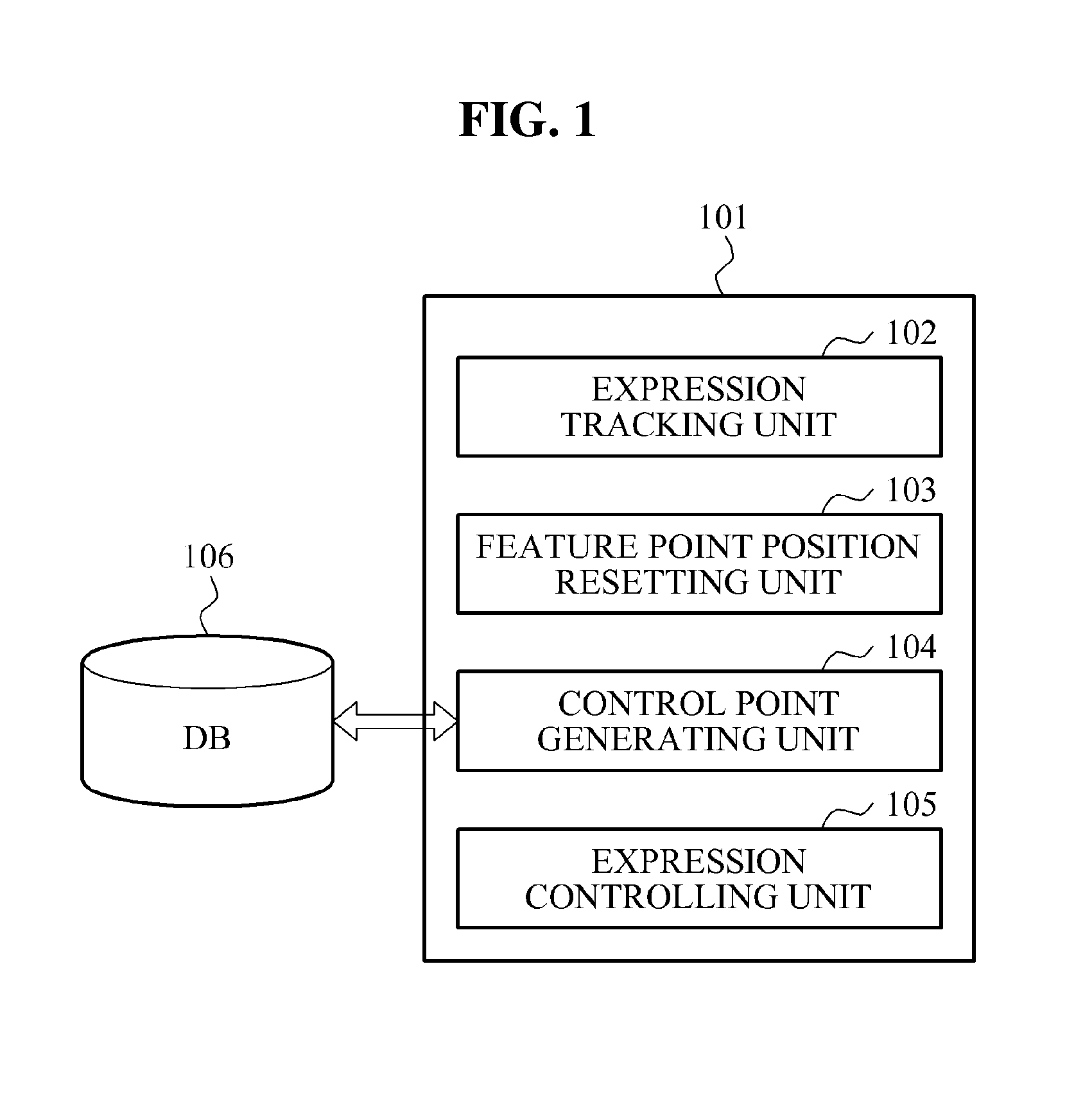

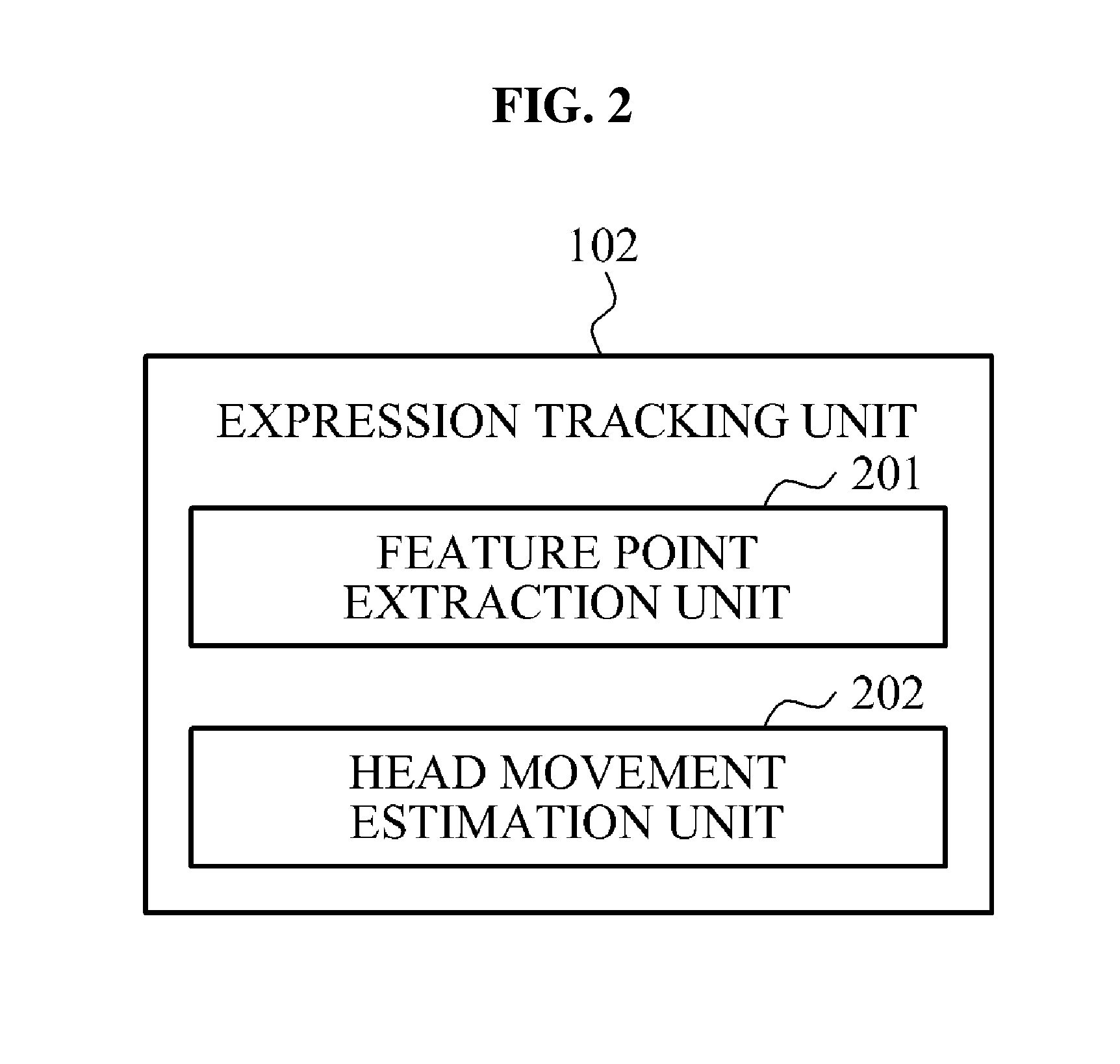

Apparatus and method for controlling avatar using expression control point

Active | US9298257B2 | Input/output for user-computer interaction, Image enhancement, Head movements, Human–computer interaction

Owner:SAMSUNG ELECTRONICS CO LTD

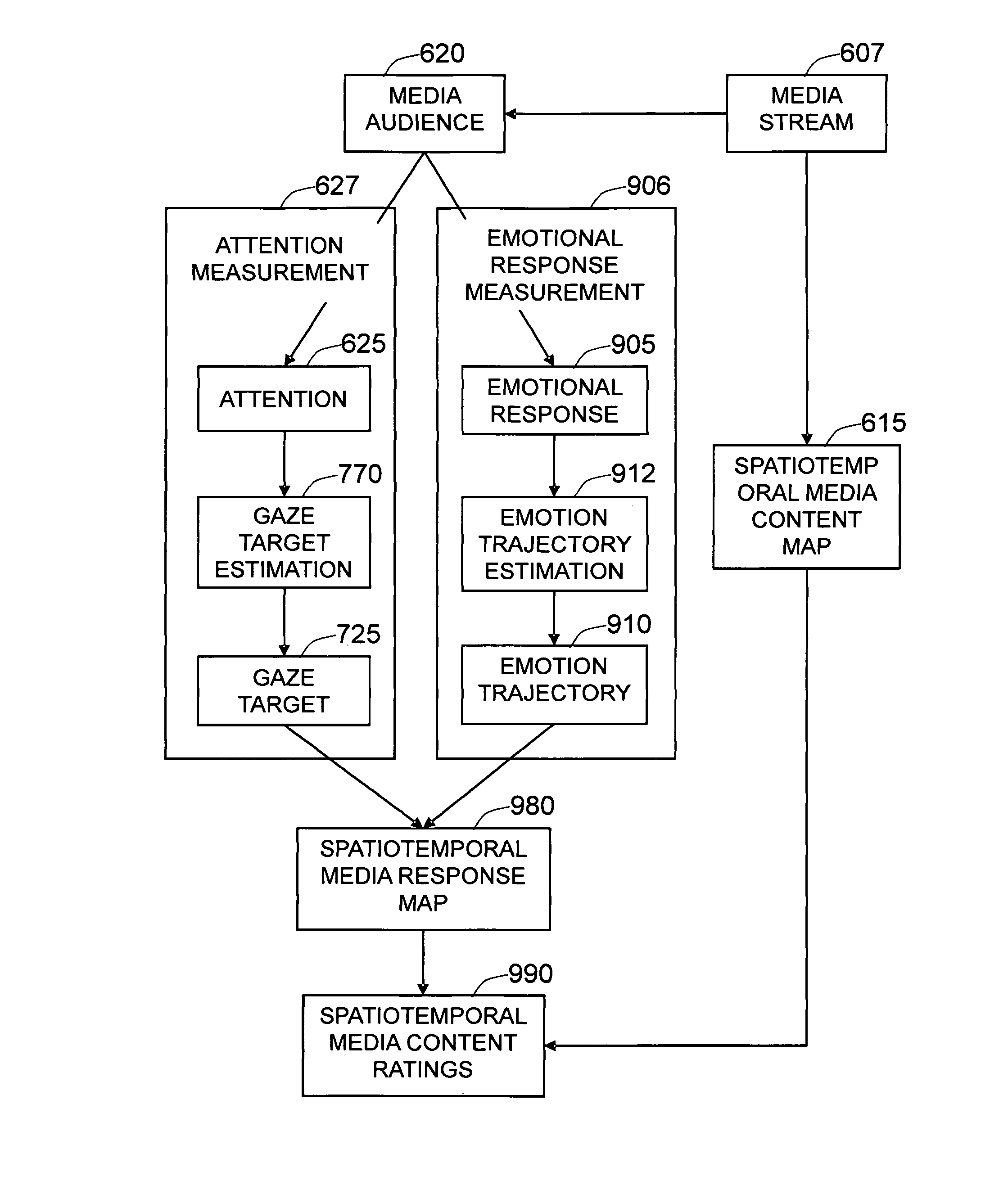

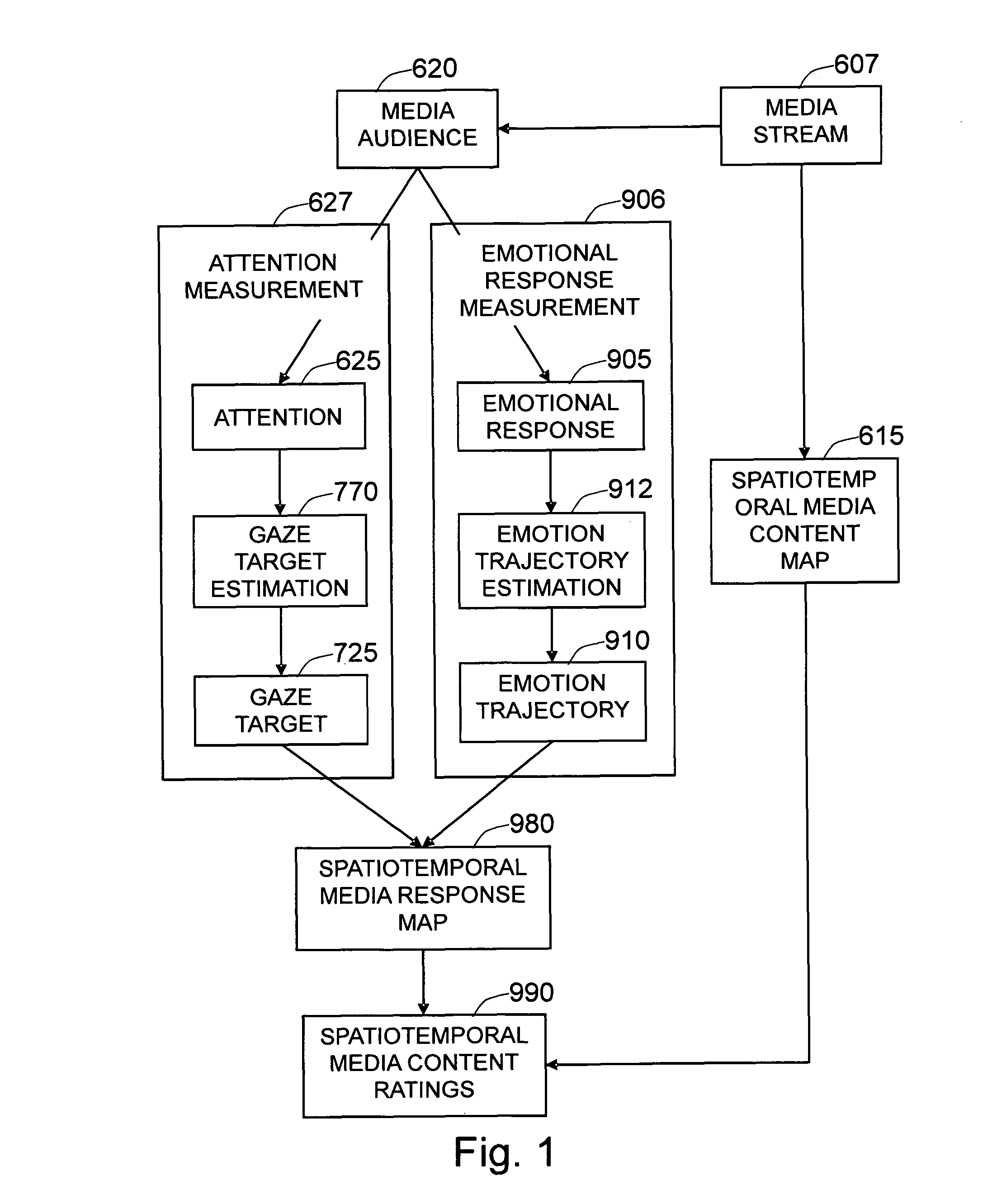

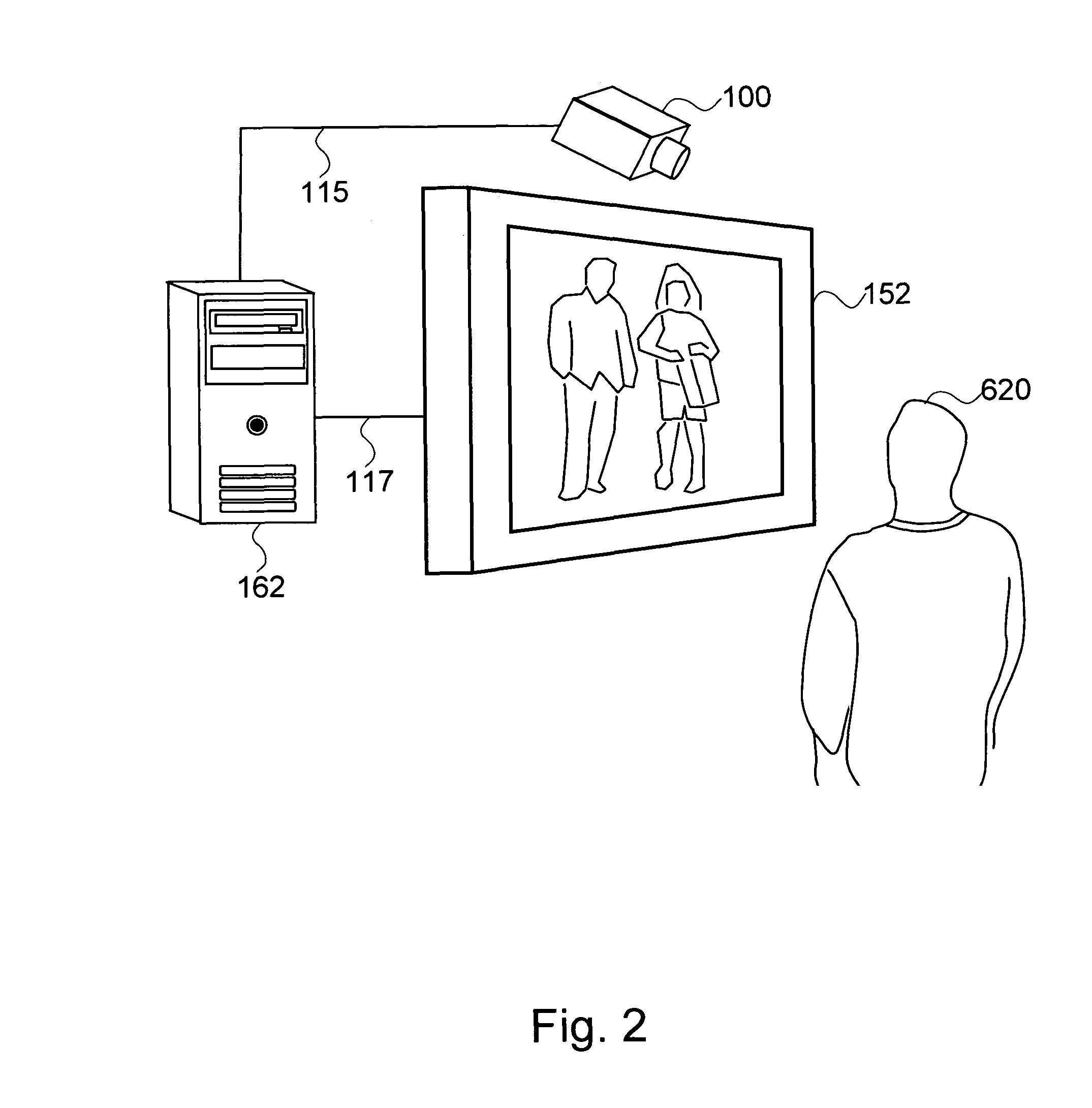

Method and system for measuring emotional and attentional response to dynamic digital media content

The present invention is a method and system that automatically measures people's responses to dynamic digital media, based on changes in their facial expressions and their attention to specific content. First, the method detects and tracks faces in the audience. It then localizes each face and its facial features and applies emotion-sensitive feature filters to extract emotion-sensitive features. From these features it determines the facial muscle actions of the face. The changes in facial muscle actions are then converted into changes in affective state, called an emotion trajectory. In parallel, the method estimates eye gaze from the extracted eye images and the three-dimensional pose of the face from the localized facial images. The person's gaze direction is estimated from the estimated eye gaze and the three-dimensional facial pose. The gaze target on the media display is then estimated from the gaze direction and the position of the person. Finally, the person's response to the dynamic digital media content is determined by analyzing the emotion trajectory against the time and screen position of the specific digital media sub-content that the person is watching.

Owner:MOTOROLA SOLUTIONS INC
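
The final step above relates the emotion trajectory to the time and screen position of each sub-content. A minimal sketch of that step follows, assuming the earlier stages have already produced per-frame samples of `(time_s, valence, gaze_x, gaze_y)`. The `SubContent` schedule type and the use of a single scalar "valence" per frame are illustrative assumptions, not details from the source.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SubContent:
    """Hypothetical on-screen item shown during [start, end) inside a screen rectangle."""
    label: str
    start: float
    end: float
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, t, gx, gy):
        return (self.start <= t < self.end
                and self.x0 <= gx < self.x1
                and self.y0 <= gy < self.y1)


def response_by_subcontent(samples, schedule):
    """Average emotion value per sub-content that the viewer was looking at.

    samples: iterable of (time_s, valence, gaze_x, gaze_y) tuples, i.e. an
    emotion trajectory already paired with estimated on-screen gaze targets.
    """
    sums, counts = defaultdict(float), defaultdict(int)
    for t, valence, gx, gy in samples:
        for item in schedule:
            if item.contains(t, gx, gy):
                sums[item.label] += valence
                counts[item.label] += 1
    return {label: sums[label] / counts[label] for label in counts}
```

Averaging per sub-content is only one possible summary. A real system might keep the full per-item trajectory so it can detect changes over time.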

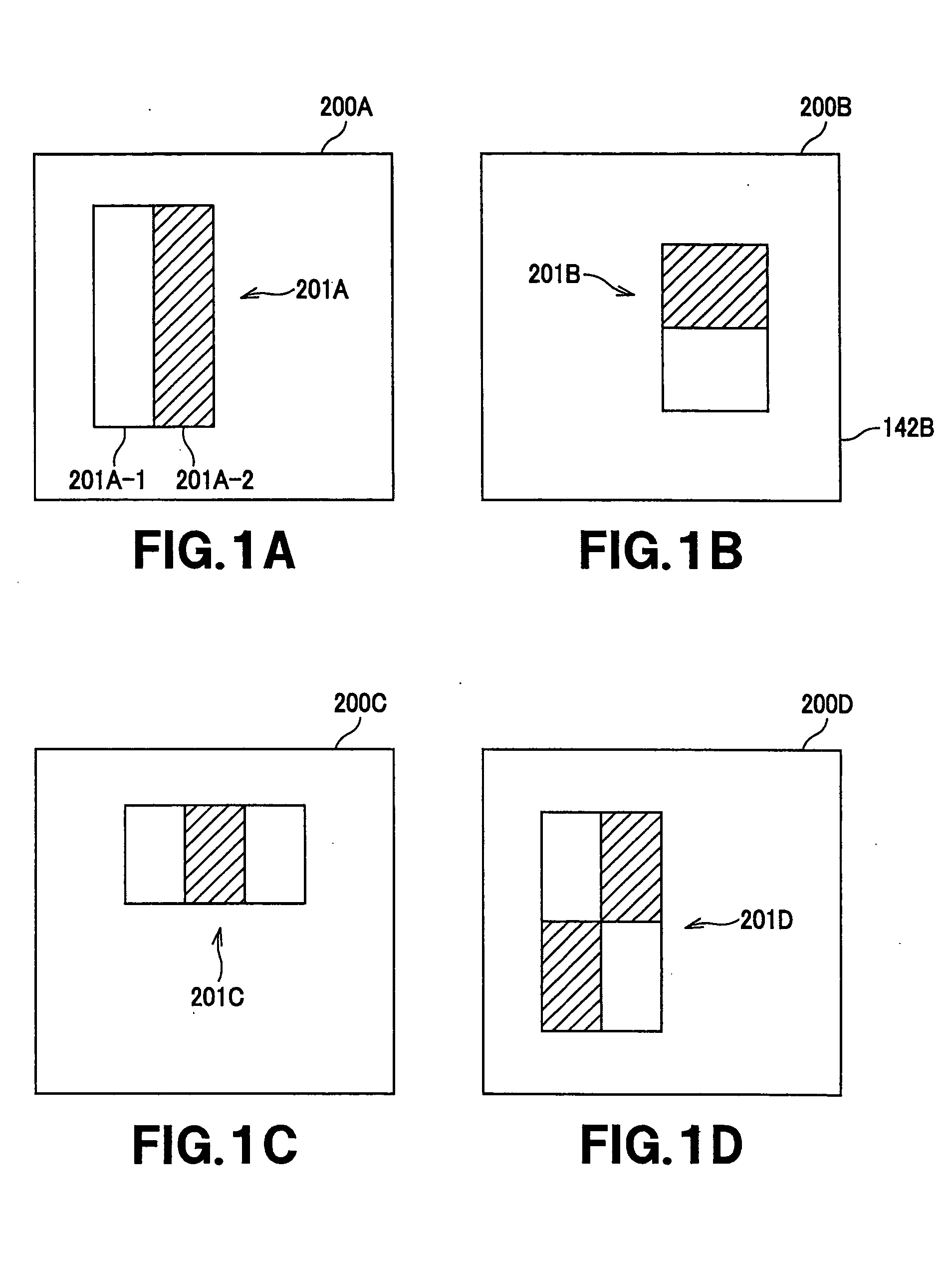

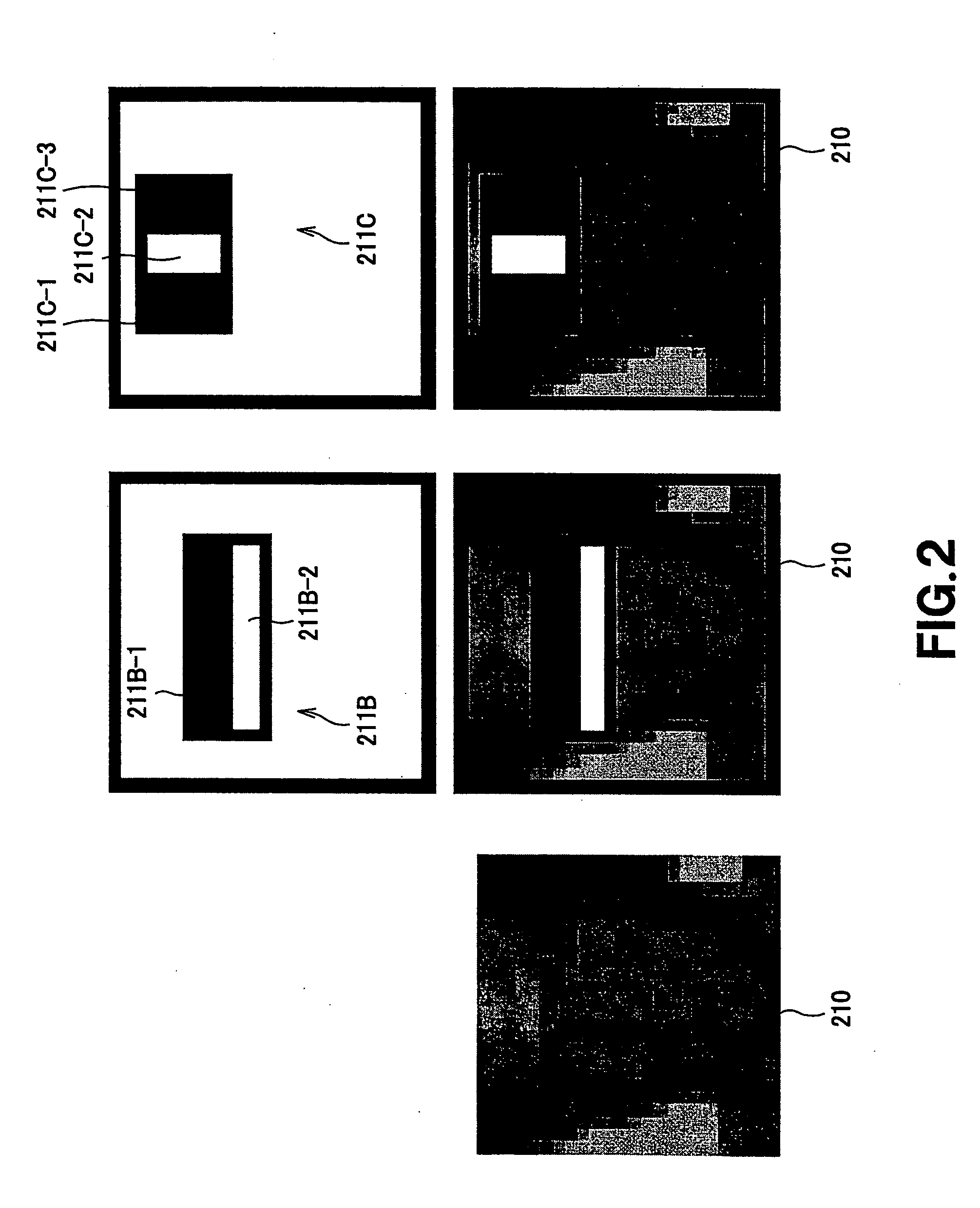

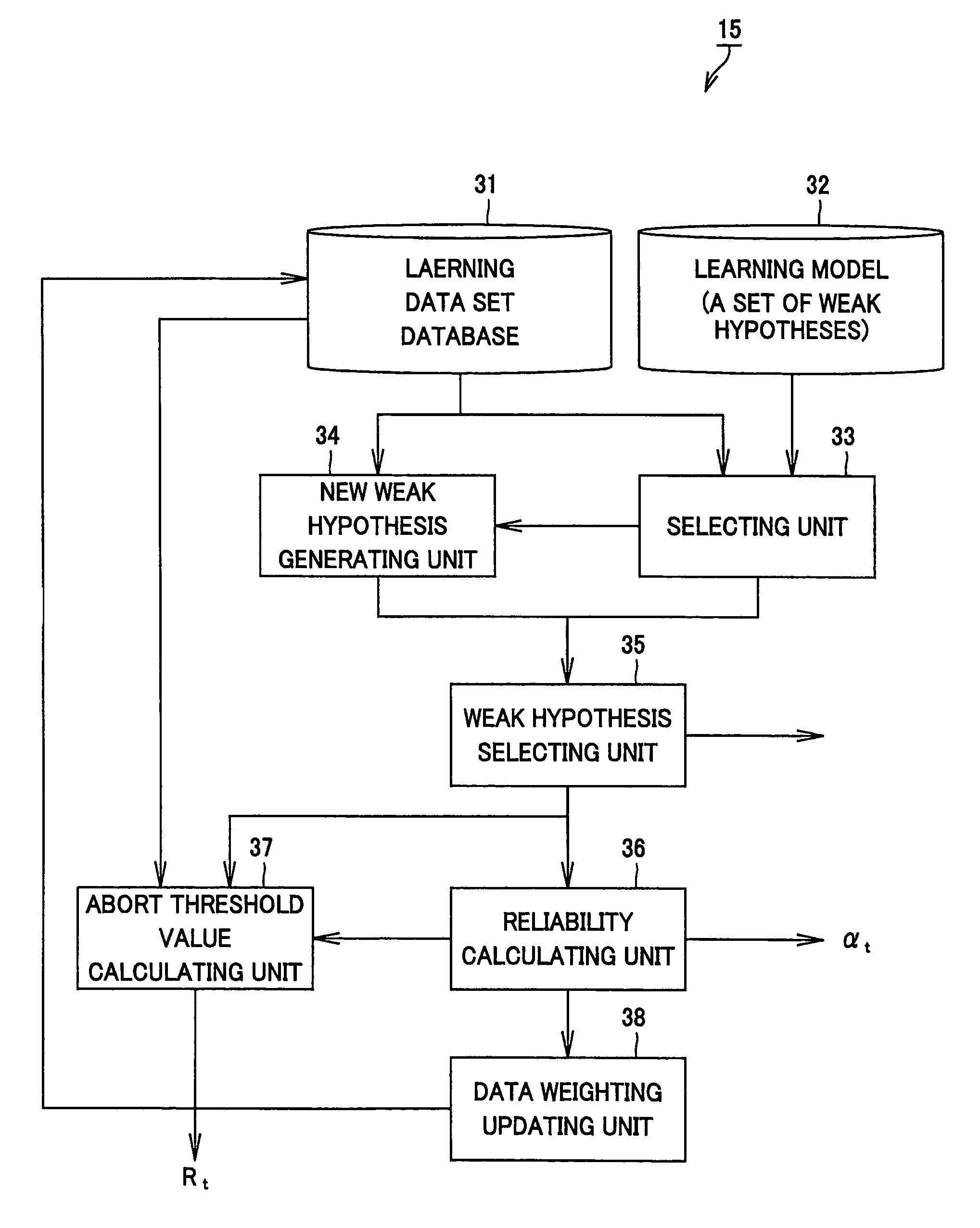

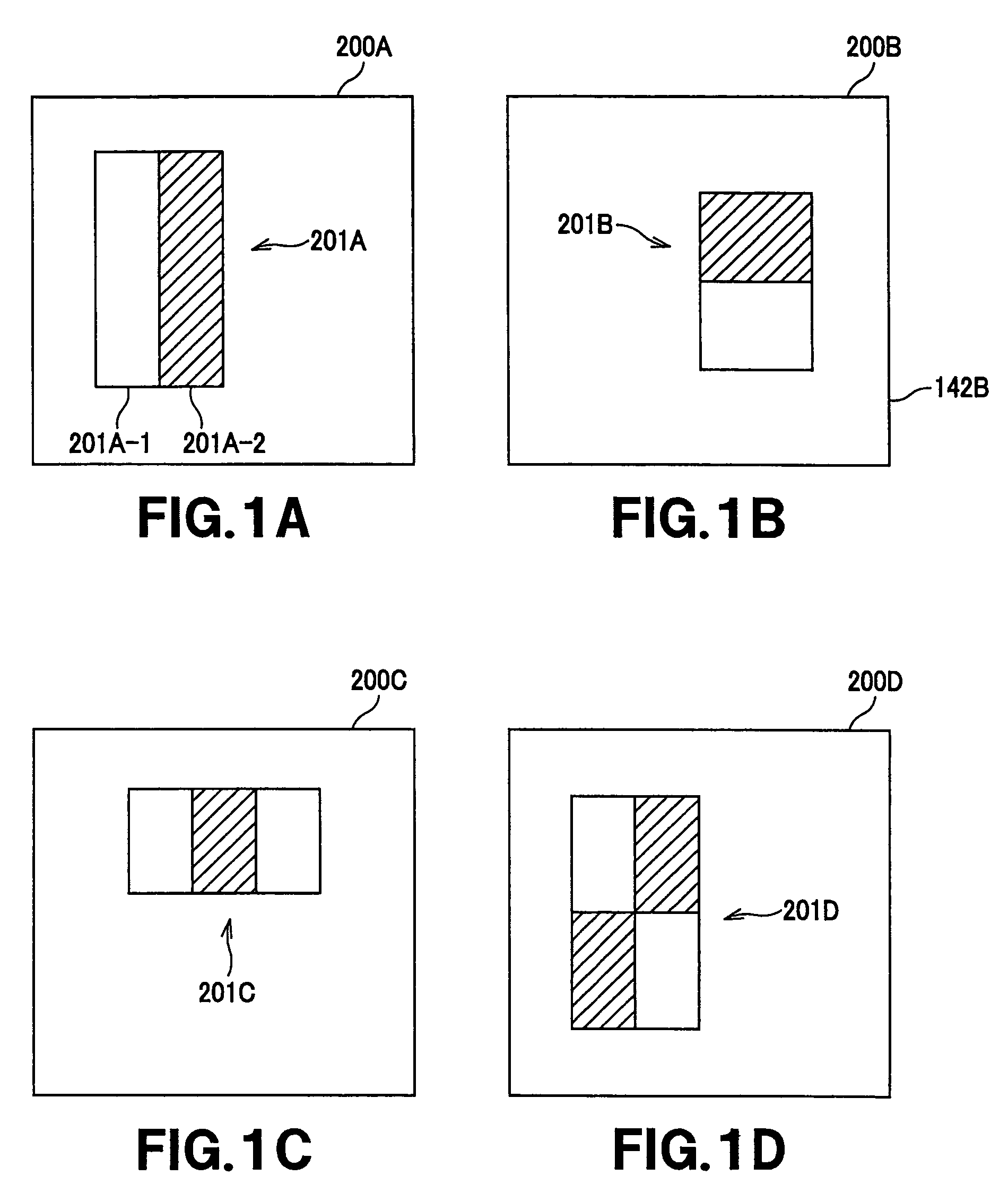

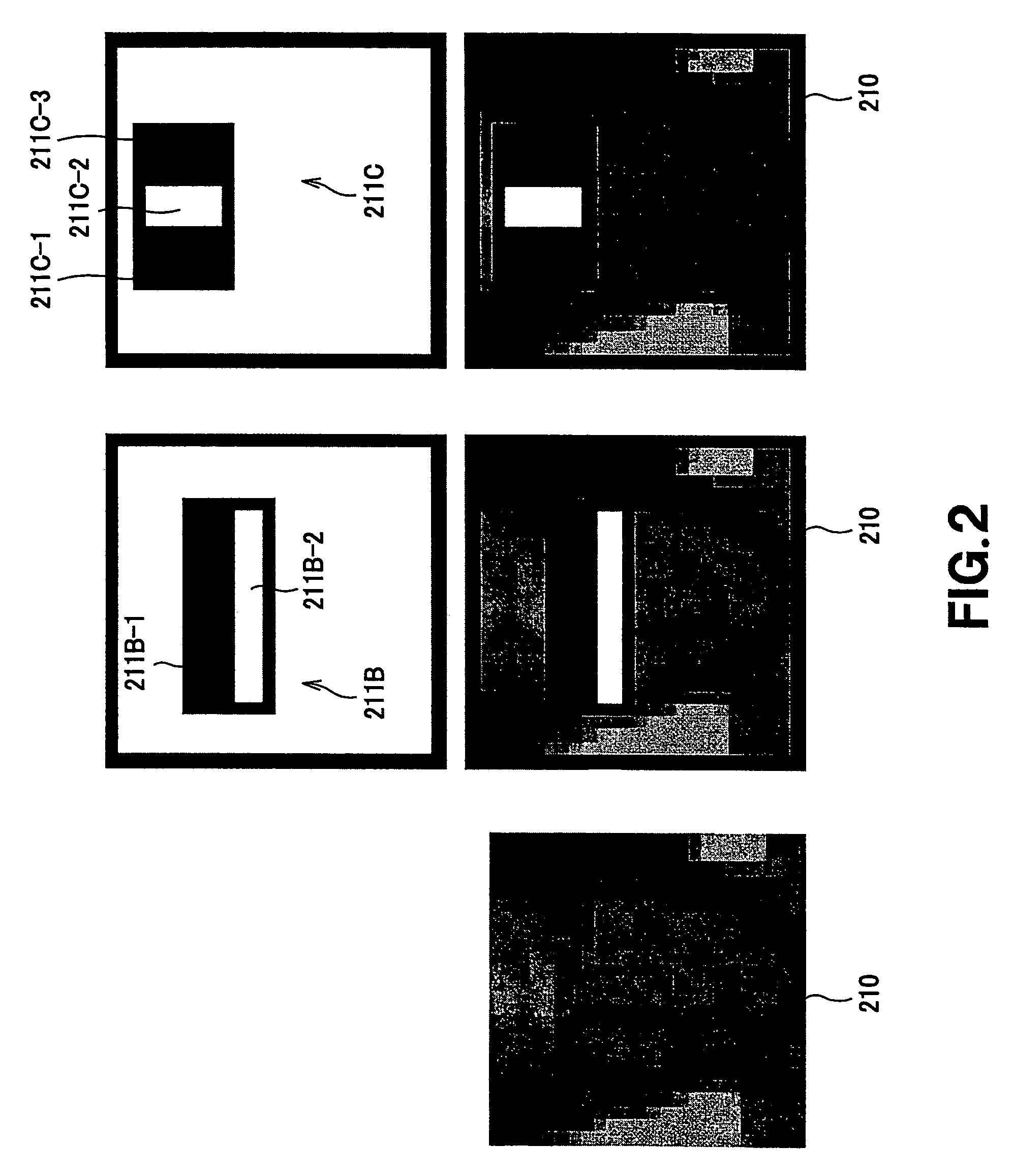

Weak hypothesis generation apparatus and method, learning apparatus and method, detection apparatus and method, facial expression learning apparatus and method, facial expression recognition apparatus and method, and robot apparatus

Active | US20050102246A1 | Lower performance requirements, Increase speed, Image analysis, Digital computer details, Face detection, Hypothesis

A facial expression recognition system, and a learning method for it, are provided. The system uses a face detection apparatus that achieves efficient learning and high-speed detection through ensemble learning when detecting an area representing a detection target. It is robust to shifts in face position within images and capable of highly accurate expression recognition. When the data used by the face detection apparatus is learned with AdaBoost, weak hypotheses are generated sequentially by repeating three steps. First, high-performance weak hypotheses are selected from all weak hypotheses. Second, new weak hypotheses are generated from them on the basis of their statistical characteristics. Third, the single weak hypothesis with the highest discrimination performance is selected. A final hypothesis is thus obtained. During detection, an abort threshold learned in advance is checked each time a weak hypothesis outputs a discrimination result, to decide whether the input can clearly be judged a non-face. If so, processing is aborted. For expression recognition, Gabor filters are selected from the detected face image using AdaBoost, and a support vector machine is trained only on the feature quantities extracted by the selected filters.

Owner:SAN DIEGO UNIV OF CALIFORNIA +1
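
The detection-time early rejection described above can be sketched as a boosted sum of decision stumps that is checked against a per-step abort threshold. This is a minimal sketch under stated assumptions:

- The stumps, their weights and the abort thresholds are hypothetical values, assumed to have been learned offline.
- The weak-hypothesis generation procedure and the Gabor/SVM expression stage are not shown.

```python
from dataclasses import dataclass


@dataclass
class Stump:
    """A decision-stump weak hypothesis (illustrative parameters)."""
    feature: int      # index into the feature vector
    threshold: float
    polarity: int     # +1 or -1
    alpha: float      # vote weight assigned by AdaBoost

    def predict(self, x):
        return 1 if self.polarity * (x[self.feature] - self.threshold) > 0 else -1


def detect(x, stumps, abort_thresholds, final_threshold=0.0):
    """Evaluate weak hypotheses one at a time, aborting as soon as the running
    score falls below that step's abort threshold.

    Returns (is_face, number_of_weak_hypotheses_evaluated).
    """
    score = 0.0
    for i, (stump, abort) in enumerate(zip(stumps, abort_thresholds), start=1):
        score += stump.alpha * stump.predict(x)
        if score < abort:
            return False, i  # clearly a non-face: skip the remaining hypotheses
    return score >= final_threshold, len(stumps)
```

Most image windows are non-faces. Rejecting them after a few weak hypotheses, instead of evaluating the whole ensemble, is where the speed-up comes from.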

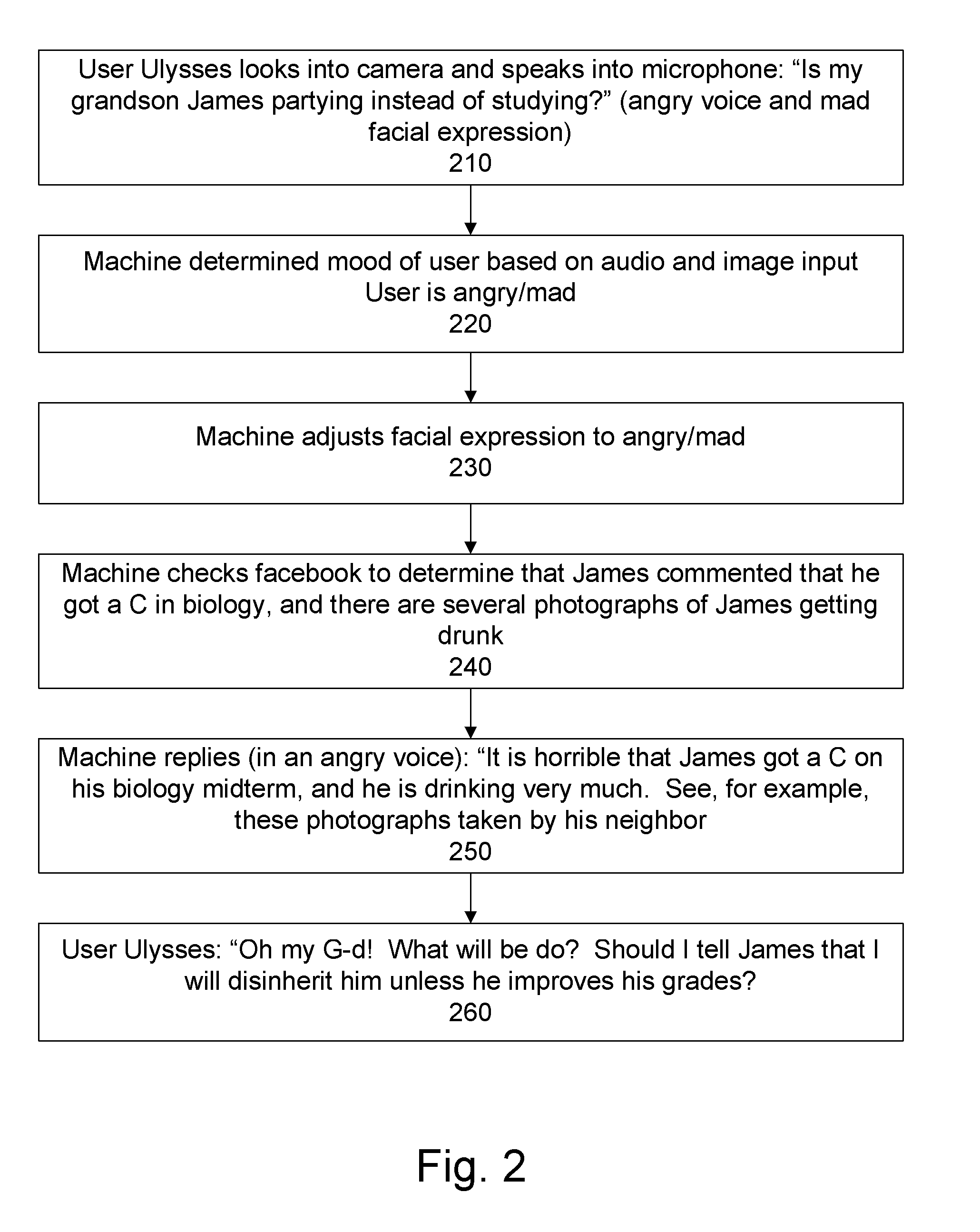

Electronic personal interactive device

Active | US20110283190A1 | Efficiently ascertain, Improve security, Input/output for user-computer interaction, Devices with GPS signal receiver, Interaction device, Spoken dialog

An interface device and method of use are provided. The device comprises:

- audio and image inputs;
- a processor that determines topics of interest and receives information of interest to the user from a remote resource;
- an audiovisual output that presents an anthropomorphic object conveying the received information, with a selectively defined and adaptively alterable mood;
- an external communication device adapted to remotely communicate at least a voice conversation with a human user of the personal interface device.

Also provided is a system and method adapted to receive conversational logic, and to synthesize and engage in conversation that depends on the received conversational logic and a personality.

Owner:POLTORAK TECH

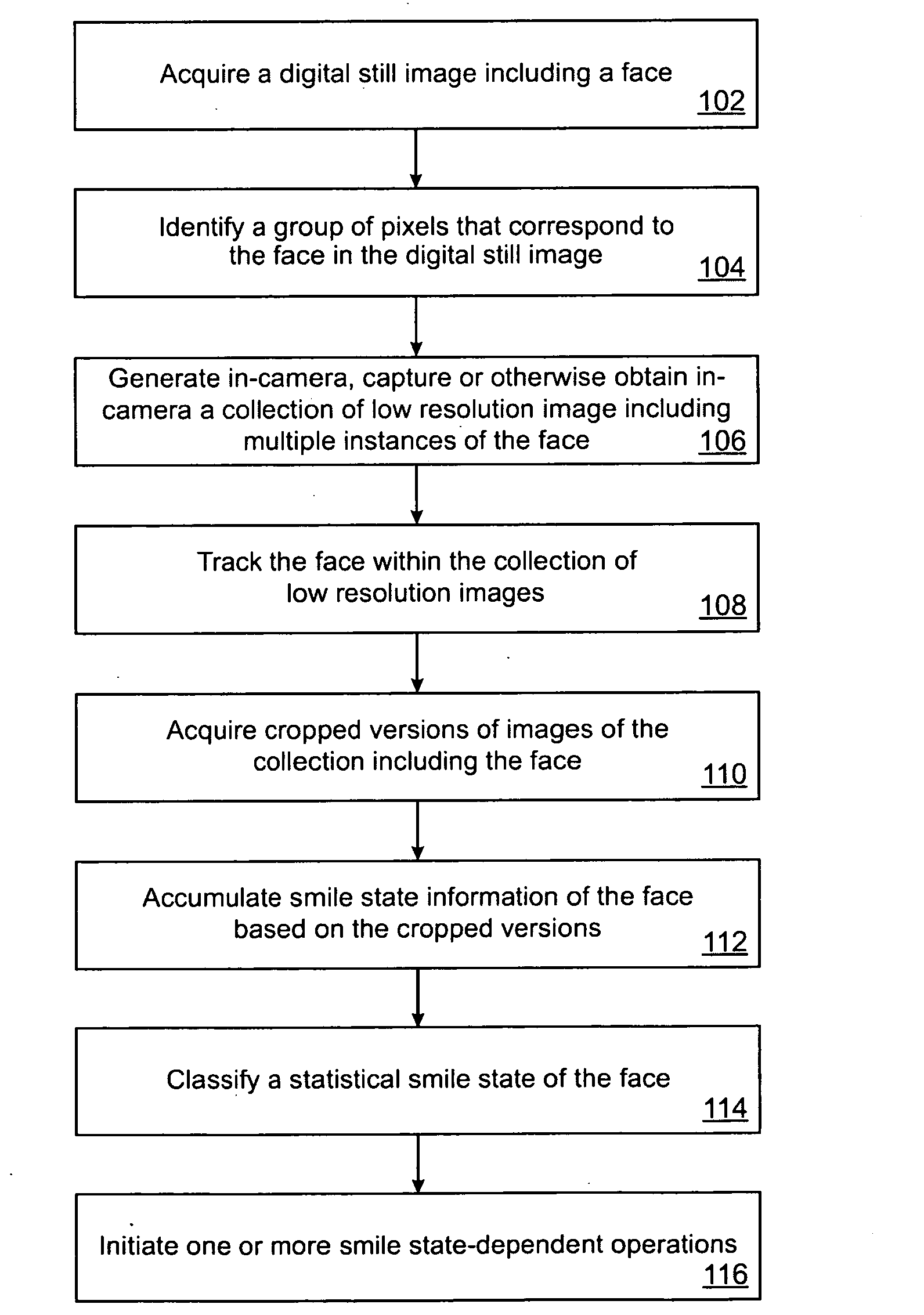

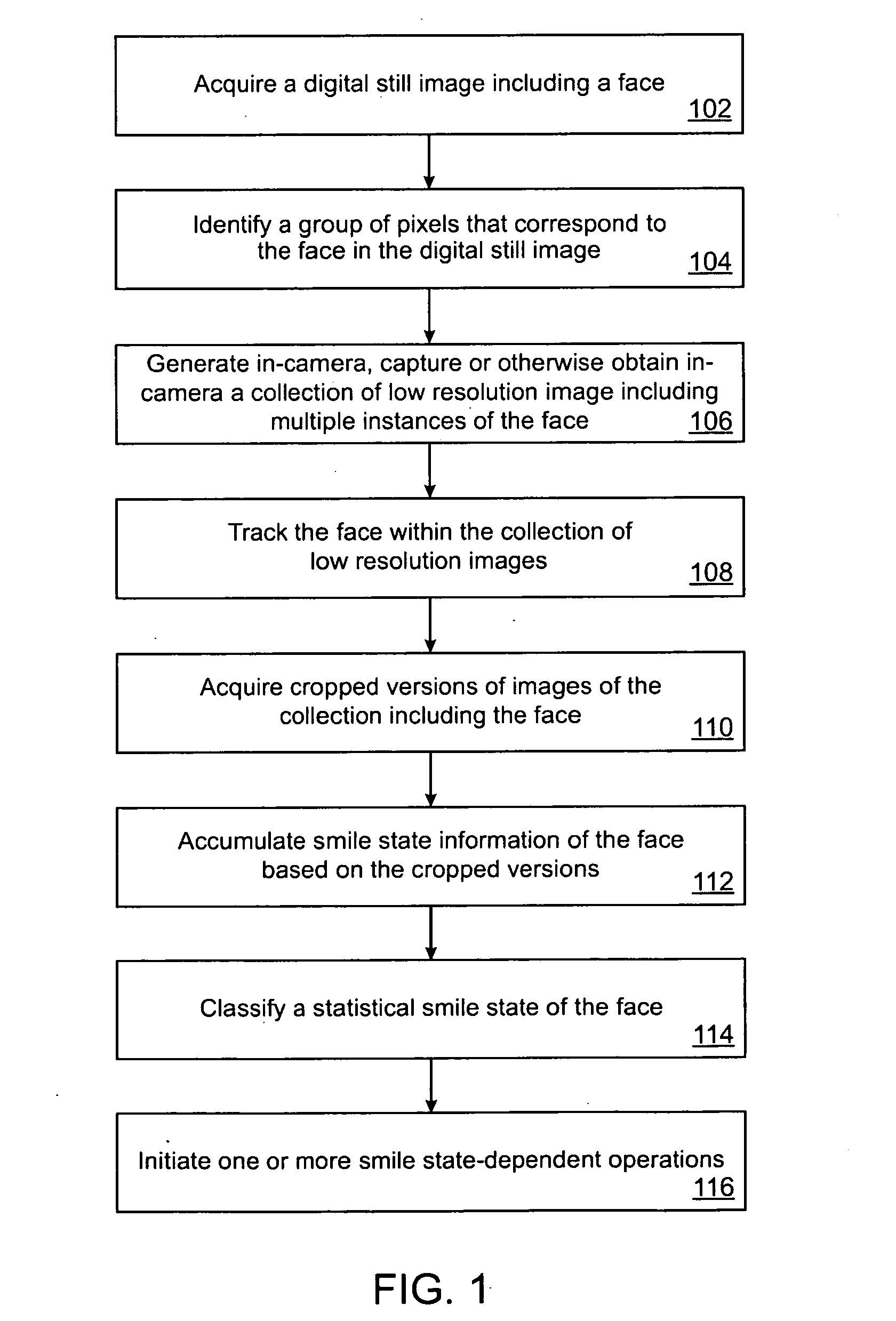

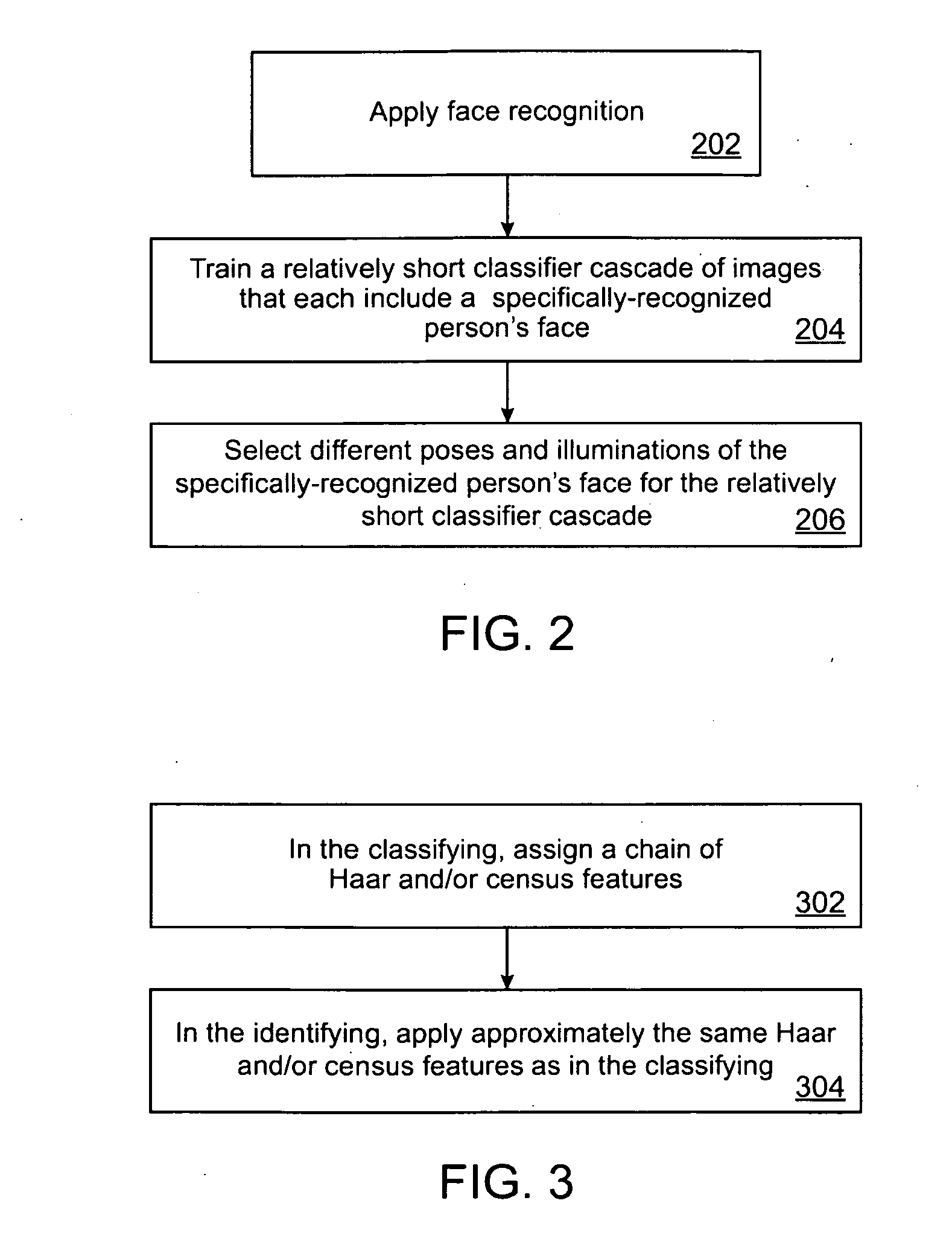

Detecting facial expressions in digital images

Active | US20090190803A1 | Reduce resolution, Television system details, Image analysis, Pattern recognition, Radiology

A method and system for detecting facial expressions in digital images, and applications thereof, are disclosed. Analysis of a digital image determines whether or not a smile and/or blink is present on a person's face. Face recognition, and/or a determination of pose or illumination conditions, permits the application of a specific, relatively small classifier cascade.

Owner:FOTONATION LTD

Vehicle mode activation by gesture recognition

Owner:GM GLOBAL TECH OPERATIONS LLC

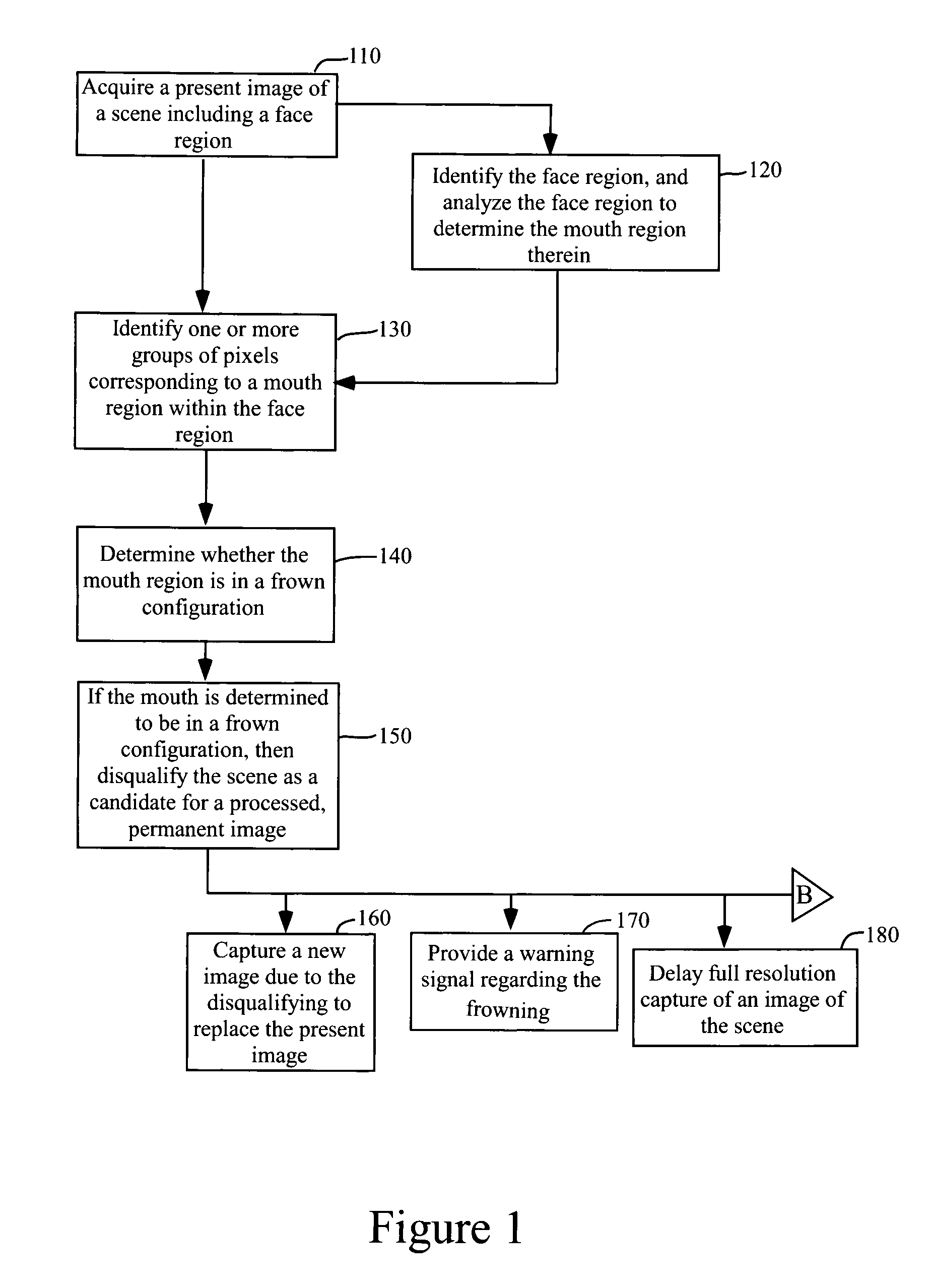

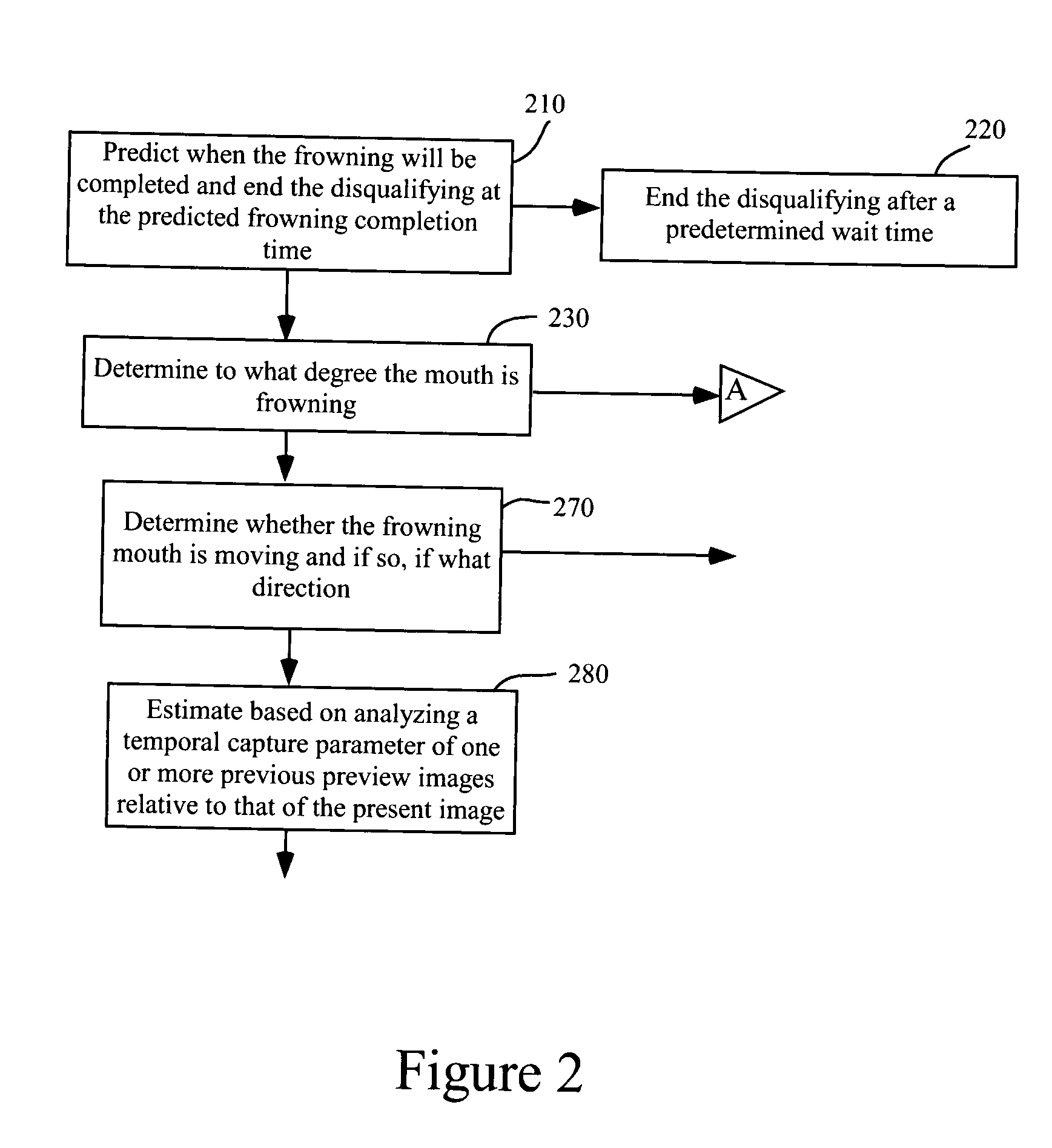

Digital Image Acquisition Control and Correction Method and Apparatus

Active | US20070201725A1 | Quick search, Television system details, Acquiring/recognising facial features, Digital image, Correction method

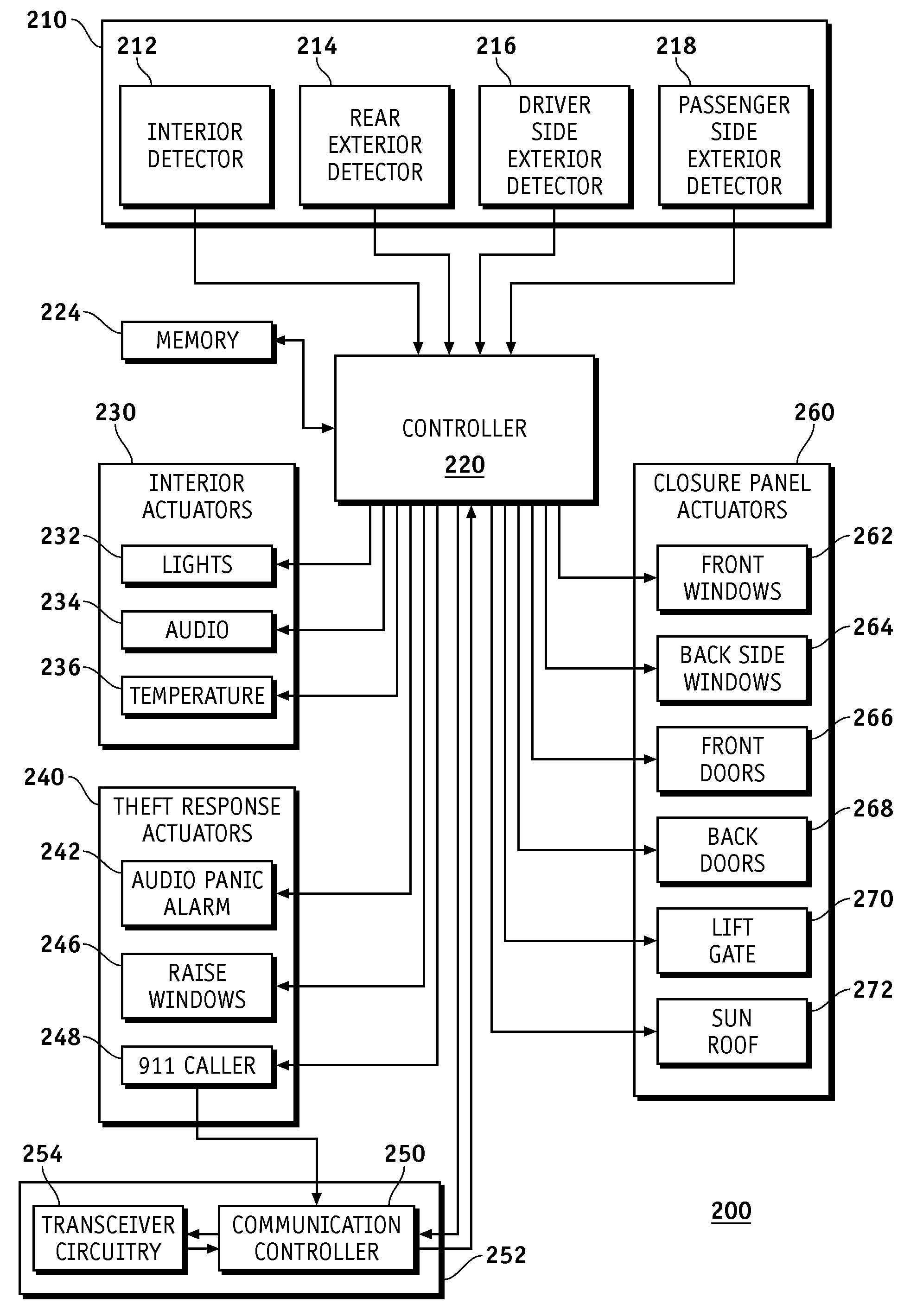

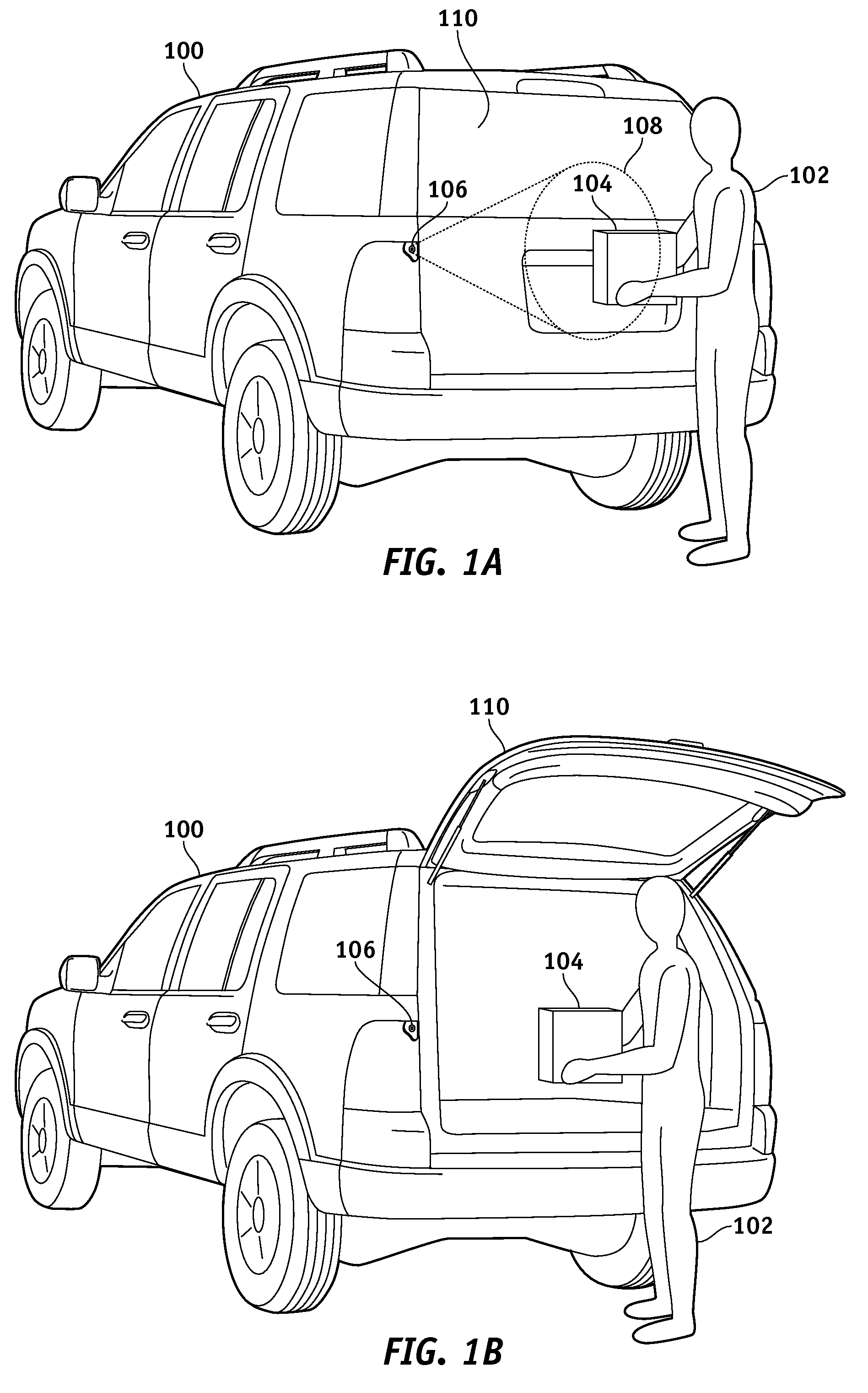

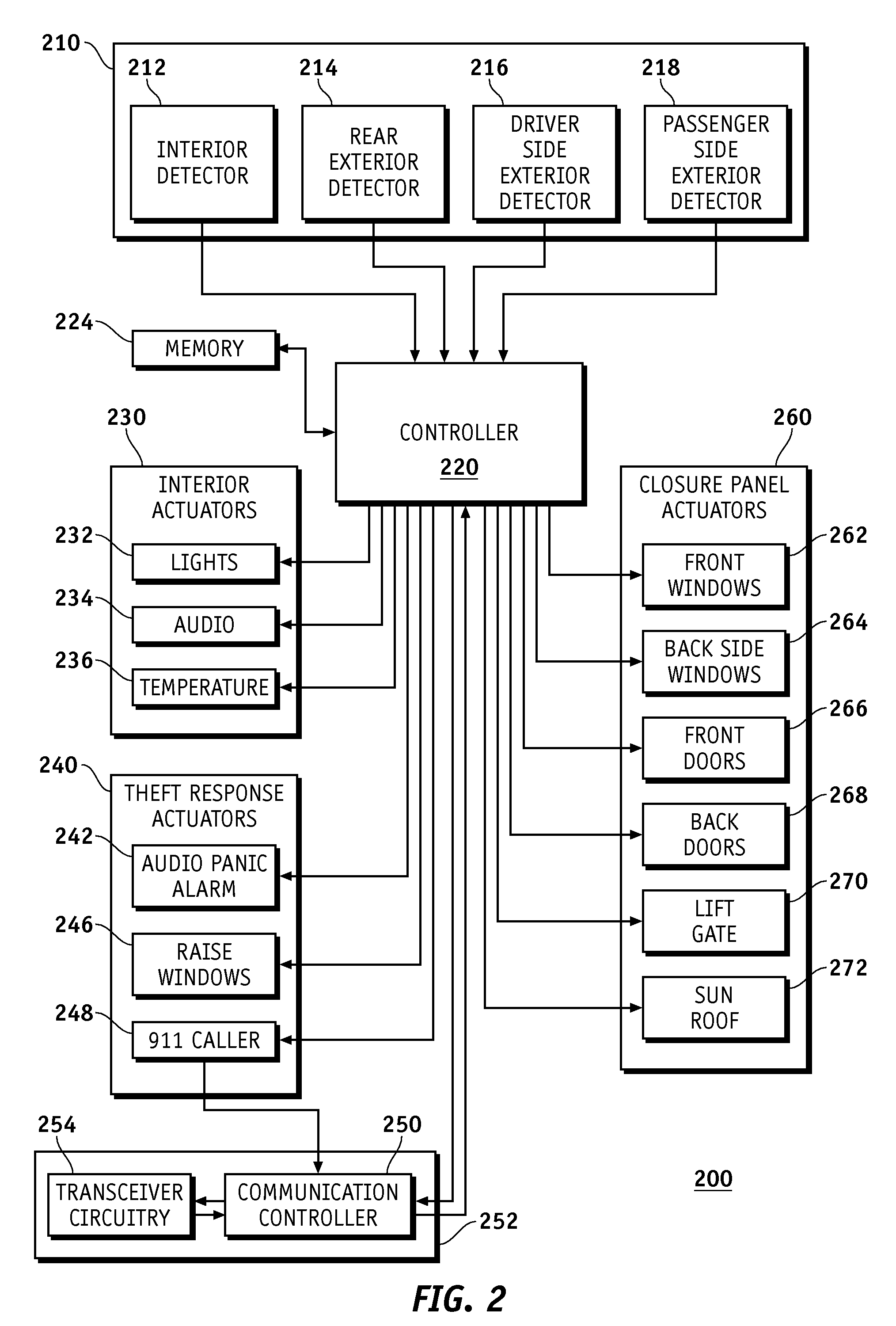

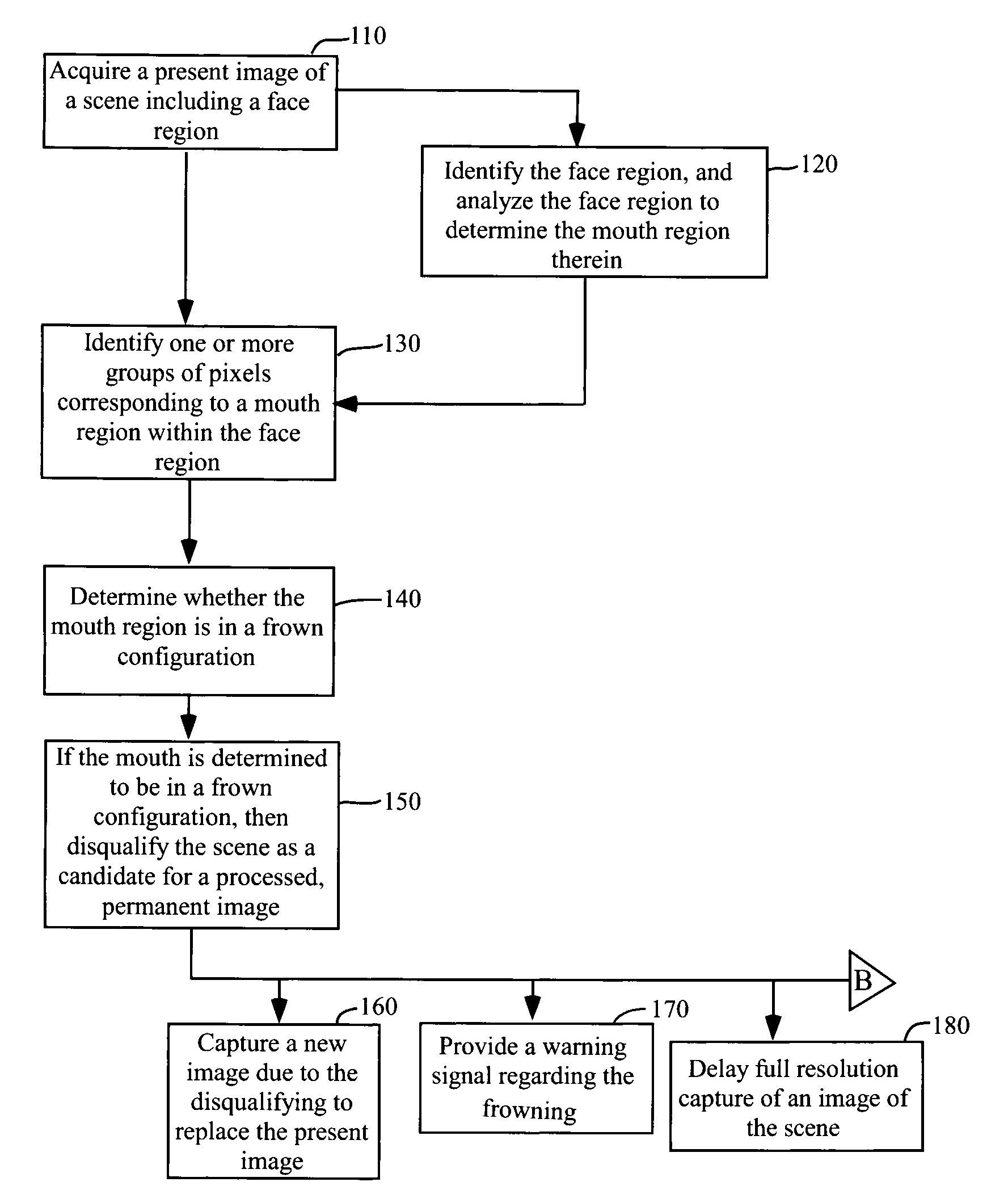

An unsatisfactory scene is disqualified as part of image acquisition control for a camera. An image is acquired, and one or more mouth regions are determined. The mouth regions are analyzed to determine whether they are frowning. If so, the scene is disqualified as a candidate for a processed, permanent image while the mouth completes the frown.

Owner:FOTONATION LTD
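
The disqualification logic in the abstract above can be sketched as a check over per-mouth frown scores, with capture deferred until no mouth is frowning. This is a minimal sketch, assuming an upstream analyzer produces a frown score in [0, 1] for each detected mouth region. The score itself and the 0.6 threshold are hypothetical.

```python
FROWN_THRESHOLD = 0.6  # hypothetical cut-off; a real system would tune this


def scene_disqualified(mouth_frown_scores, threshold=FROWN_THRESHOLD):
    """True if any detected mouth region is judged to be frowning."""
    return any(score >= threshold for score in mouth_frown_scores)


def first_acceptable_frame(frames, threshold=FROWN_THRESHOLD):
    """frames: one list of per-mouth frown scores per preview frame.

    Returns the index of the first frame in which no mouth is frowning,
    i.e. the earliest frame worth keeping as a permanent image, or None.
    """
    for index, scores in enumerate(frames):
        if not scene_disqualified(scores, threshold):
            return index
    return None
```

A frame with no detected mouths is not disqualified here. Whether that is the desired behavior depends on the camera's policy.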

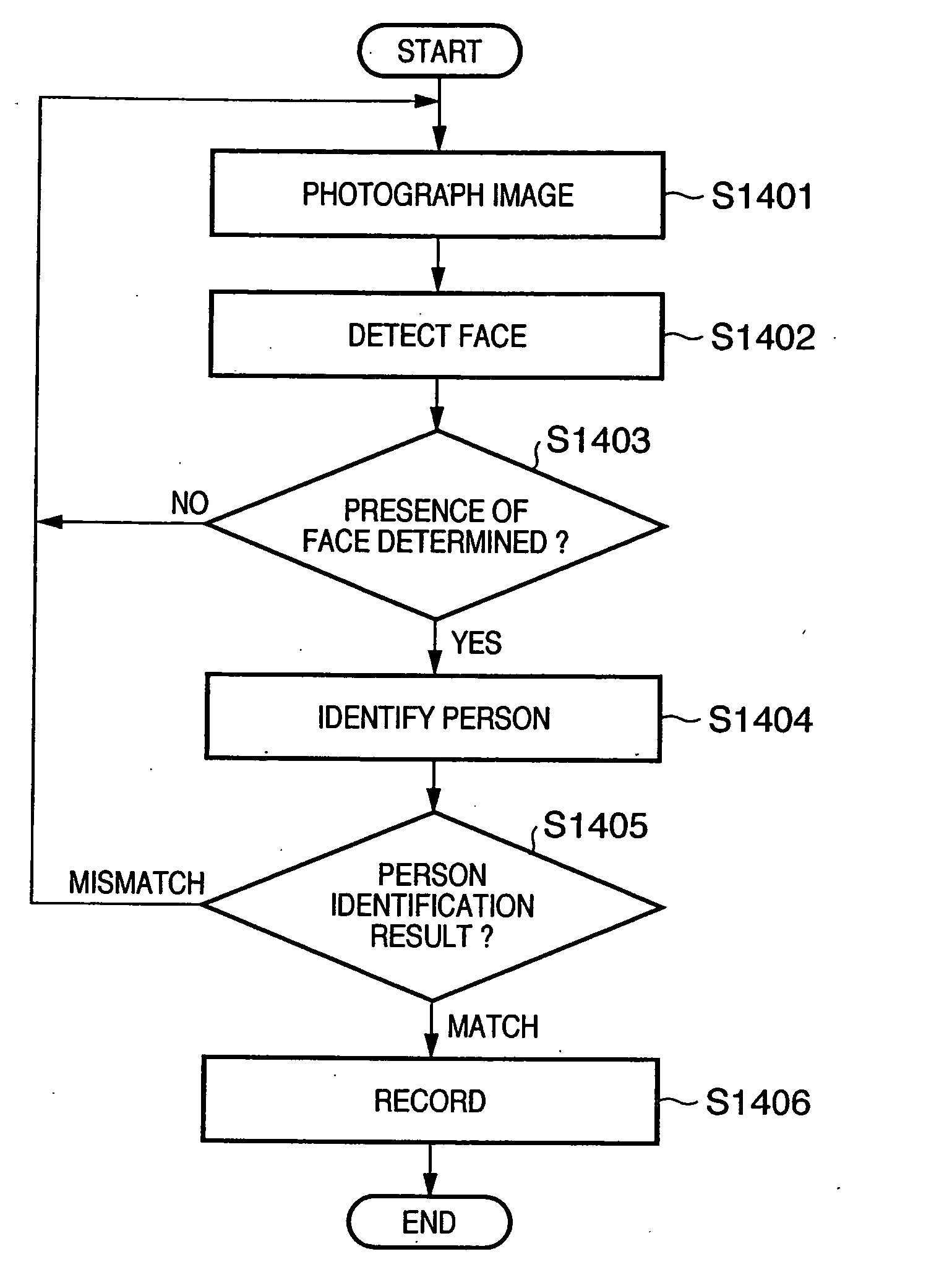

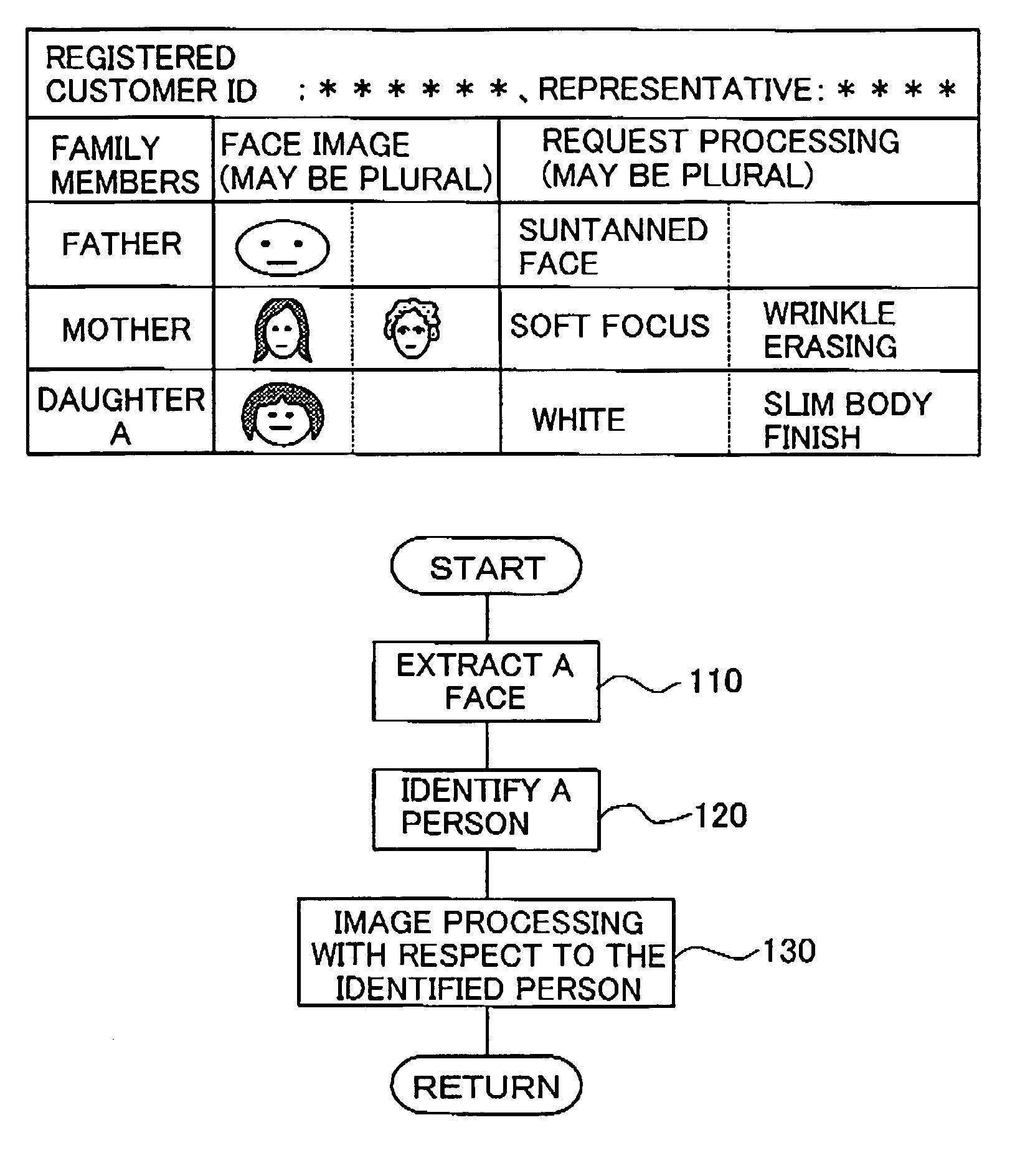

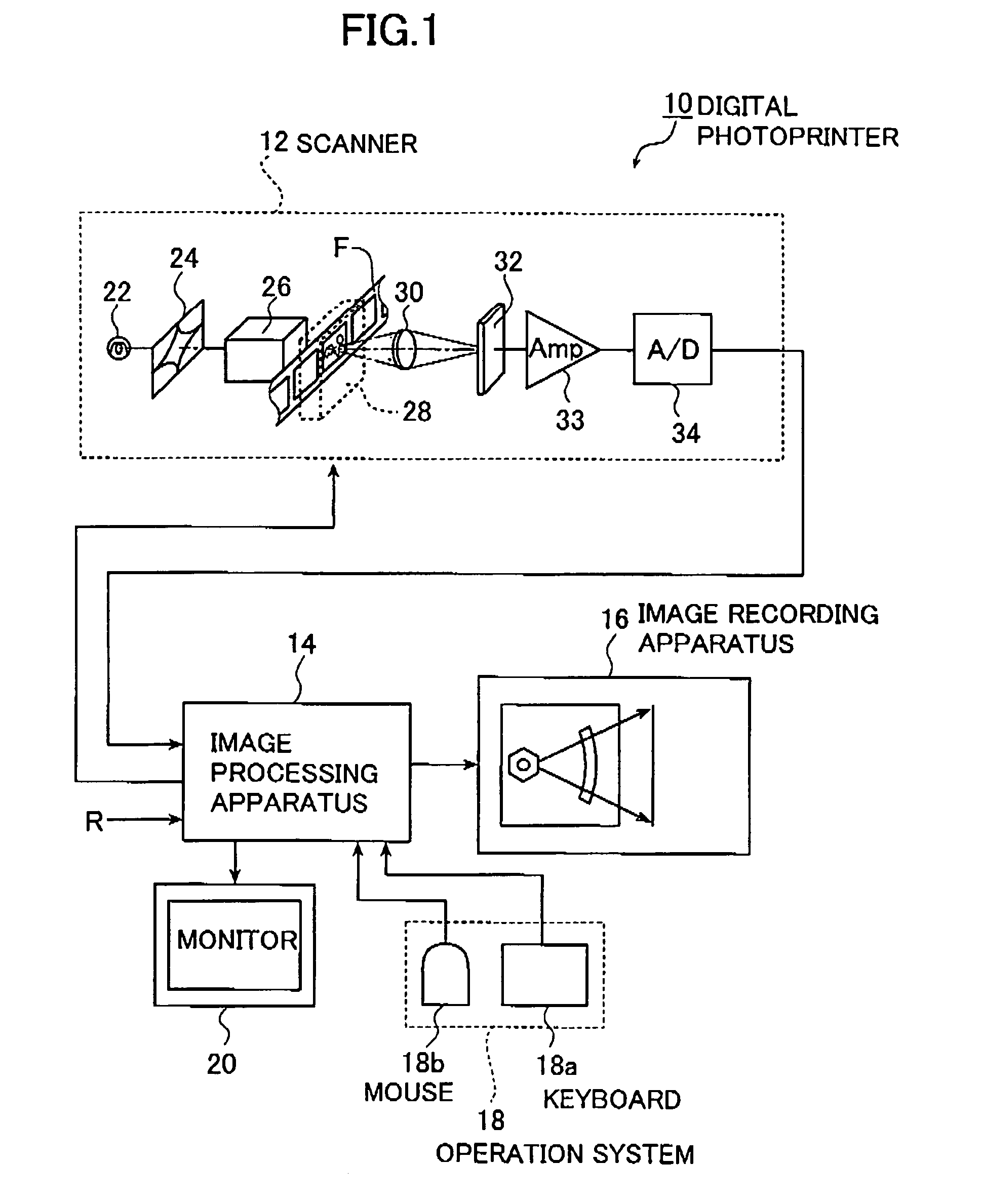

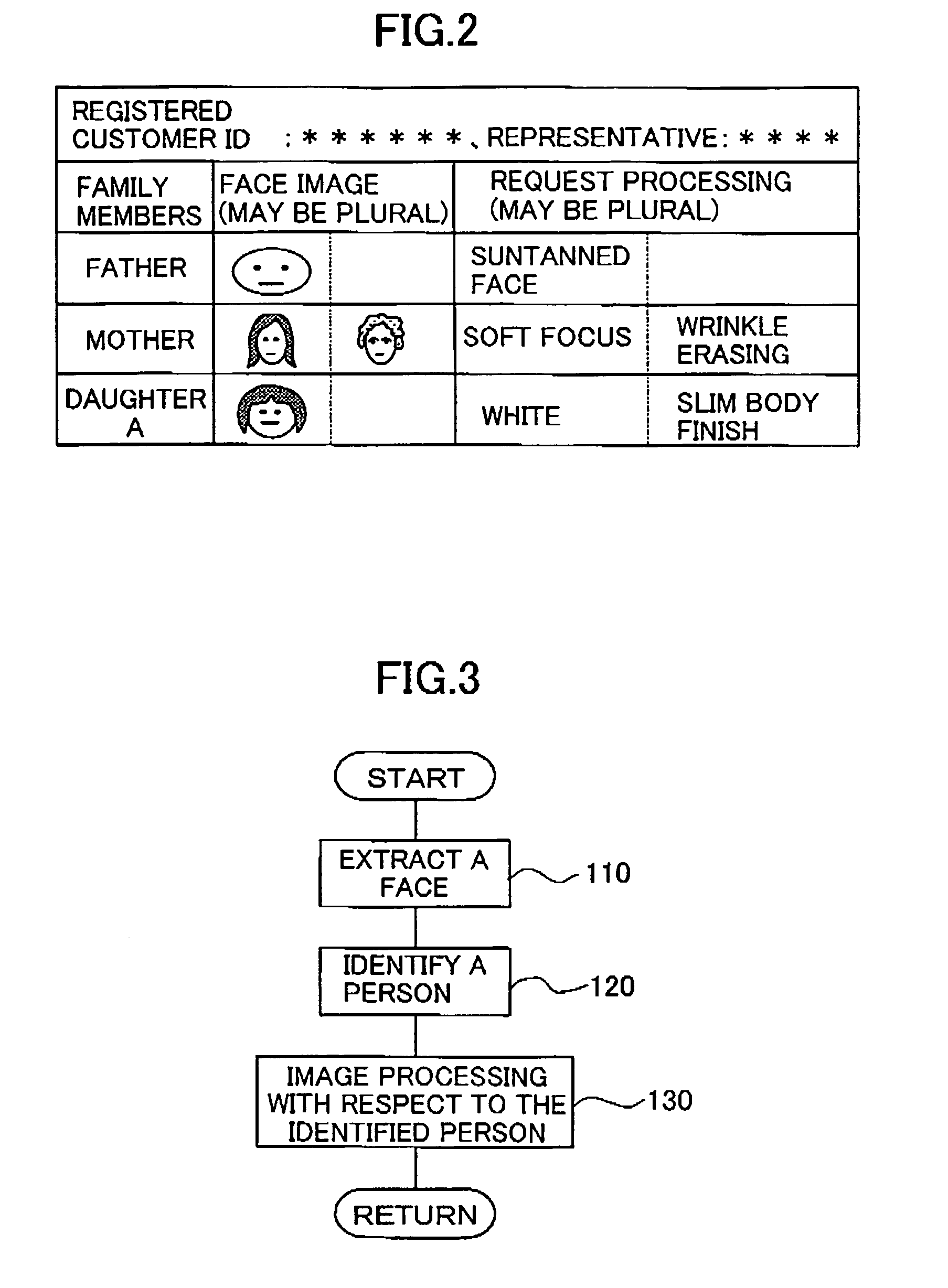

Image processing method using conditions corresponding to an identified person

Inactive | US7106887B2 | Remove unnatural feeling, Easy to correct, Acquiring/recognising facial features, Imaging processing, Image processing software

The image processing method applies image processing to input image data. The method first registers predetermined image processing conditions for each specific person. It then extracts a person from the input image data and checks whether the extracted person is one of the registered persons. Finally, it selects the image processing conditions for the identified person and processes the image with them. This can make photography and image presentation more entertaining. It also lets people who are inexperienced or unskilled with personal computers or image processing software correct images.

Owner:FUJIFILM CORP +1

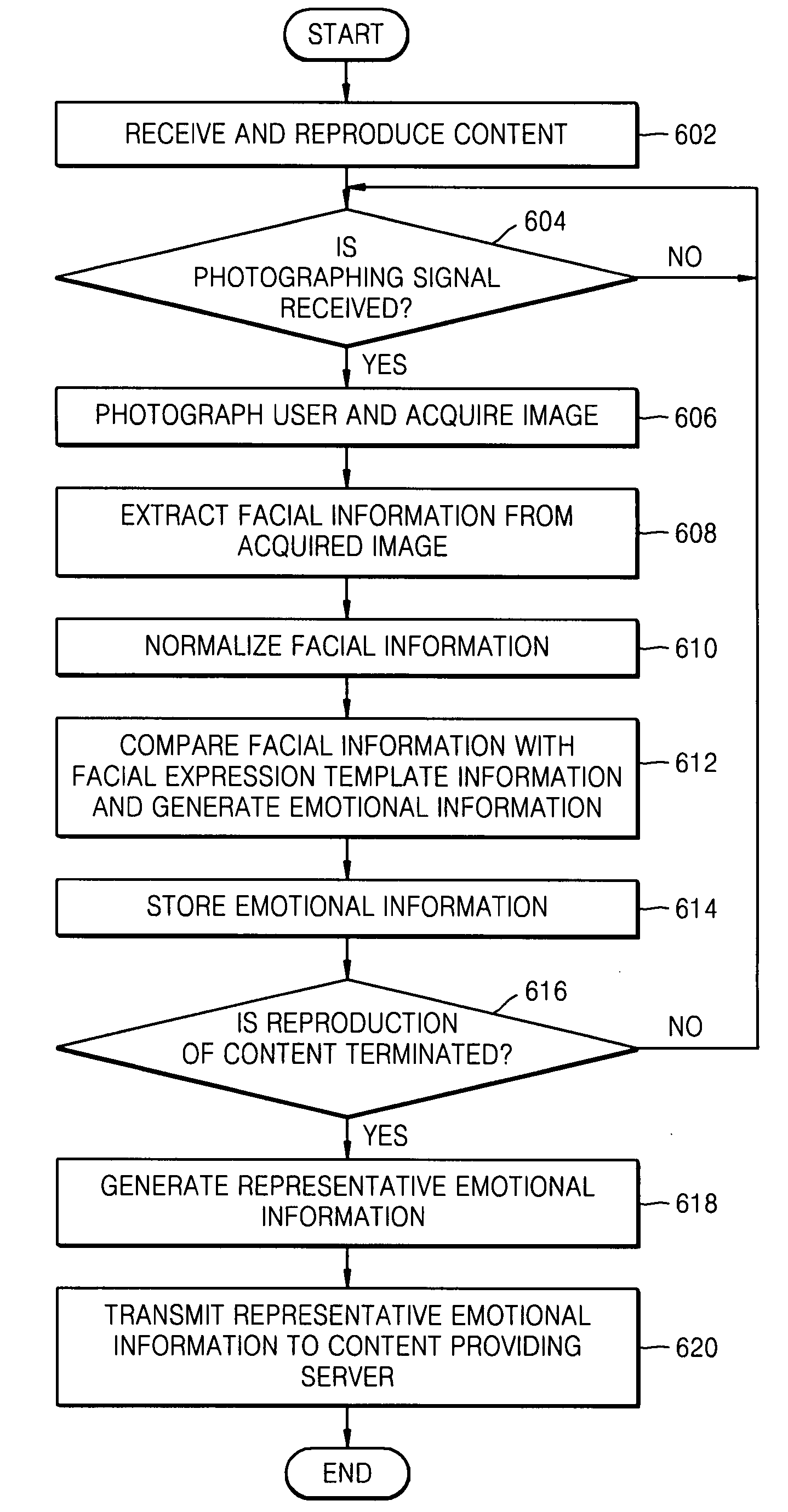

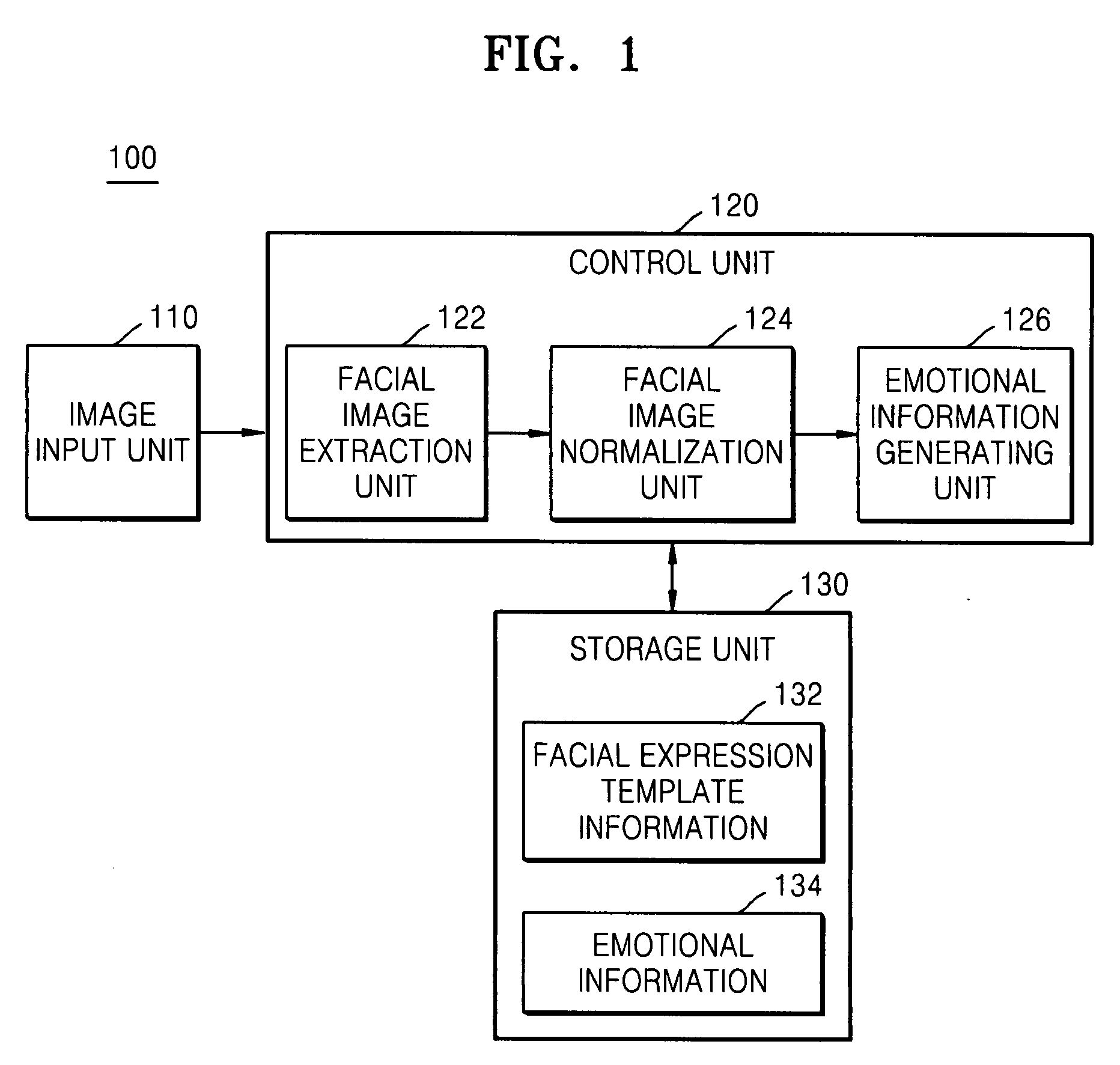

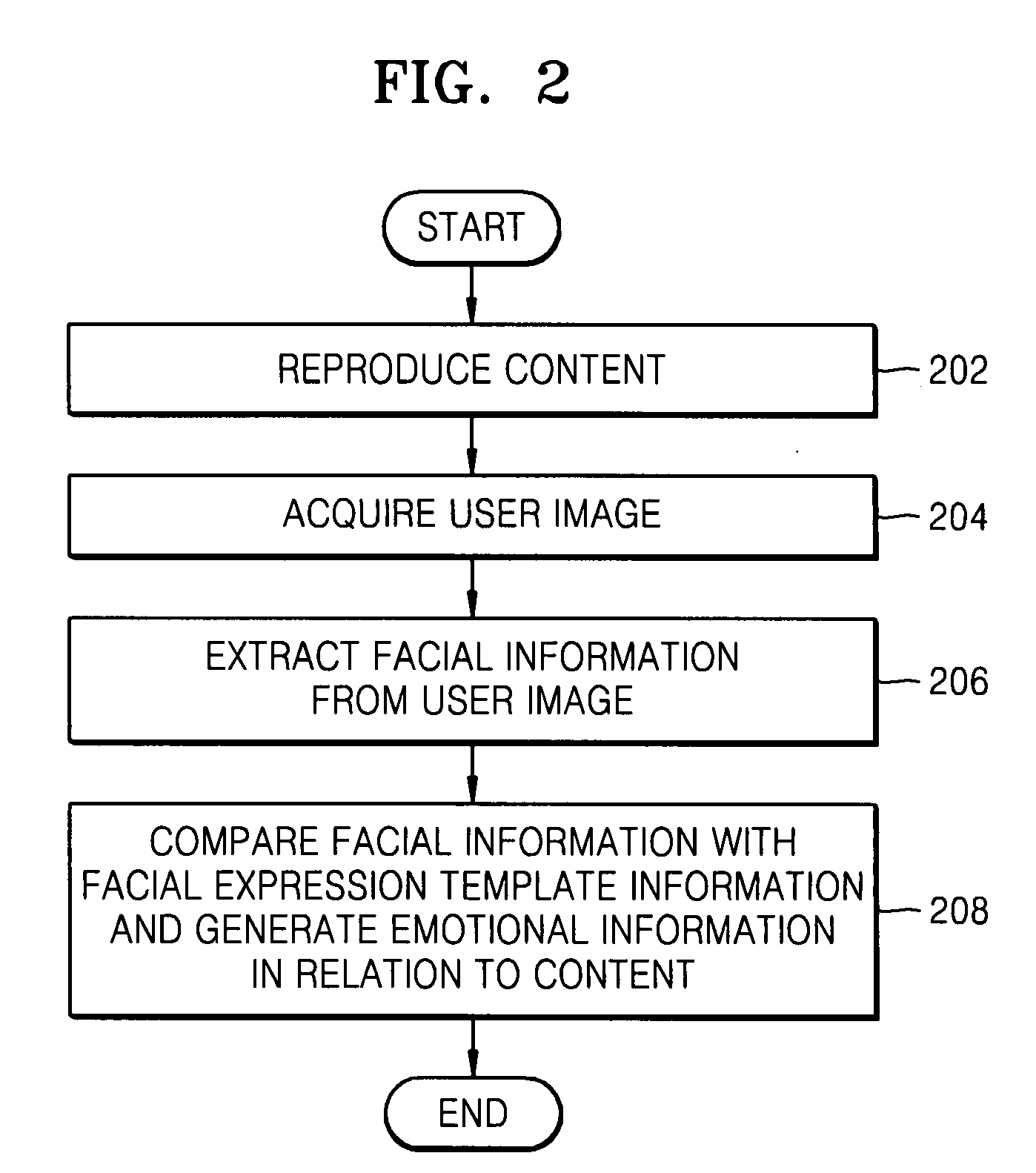

Method and apparatus for generating meta data of content

Inactive | US20080101660A1 | Data processing applications, Digital data information retrieval, Facial expression, Client-side

A method and apparatus are provided for generating emotional information, including a user's impressions of multimedia content, or metadata regarding that emotional information; a computer-readable recording medium storing the method is also provided. The metadata generating method includes three steps. First, emotional information about the content is received from at least one client system that receives and reproduces the content. Second, metadata for an emotion is generated from the emotional information. Third, the metadata for the emotion is coupled to the content. Accordingly, emotional information can be acquired automatically from the facial expression of a user who is viewing multimedia content and used as metadata.

Owner:SAMSUNG ELECTRONICS CO LTD

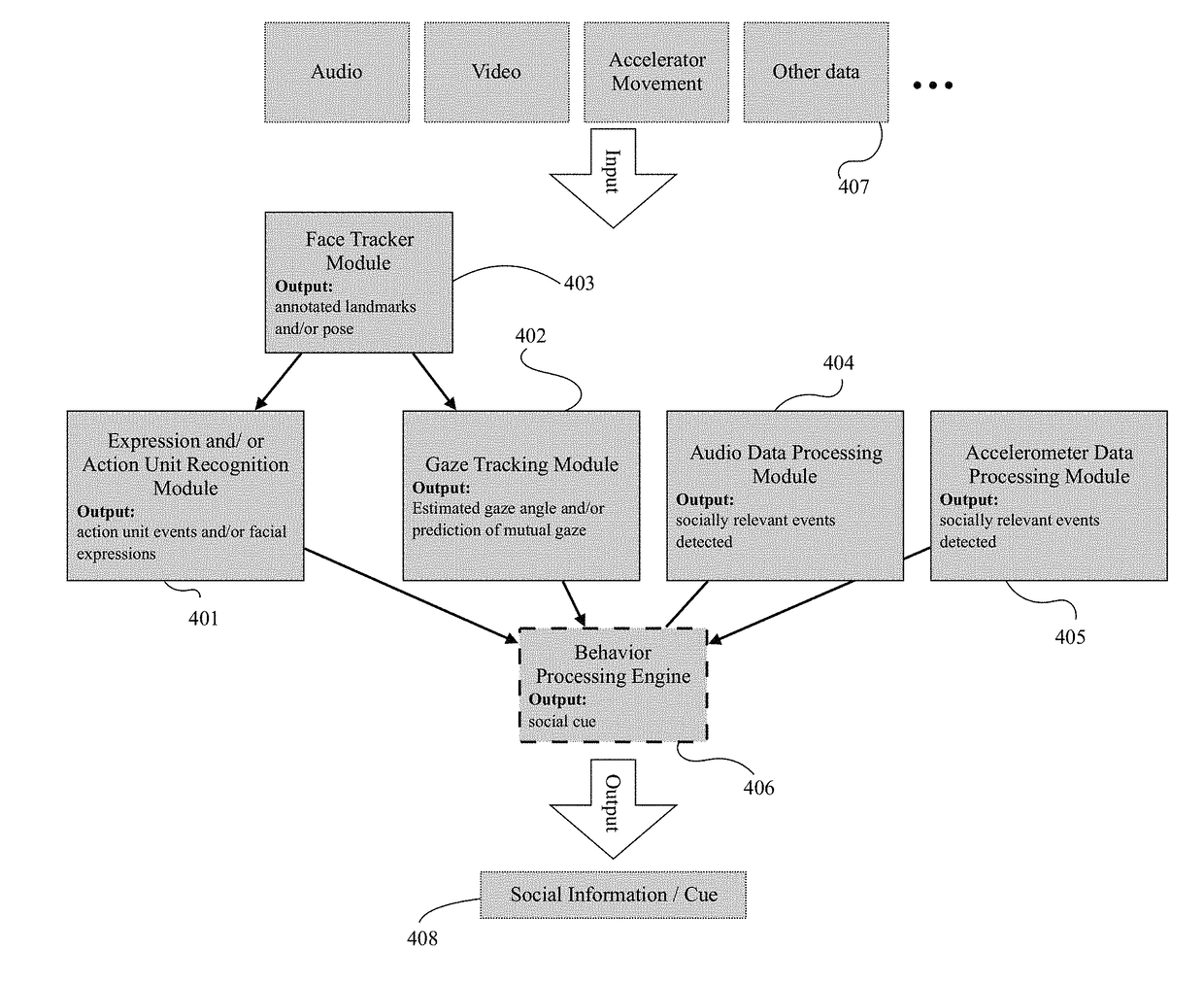

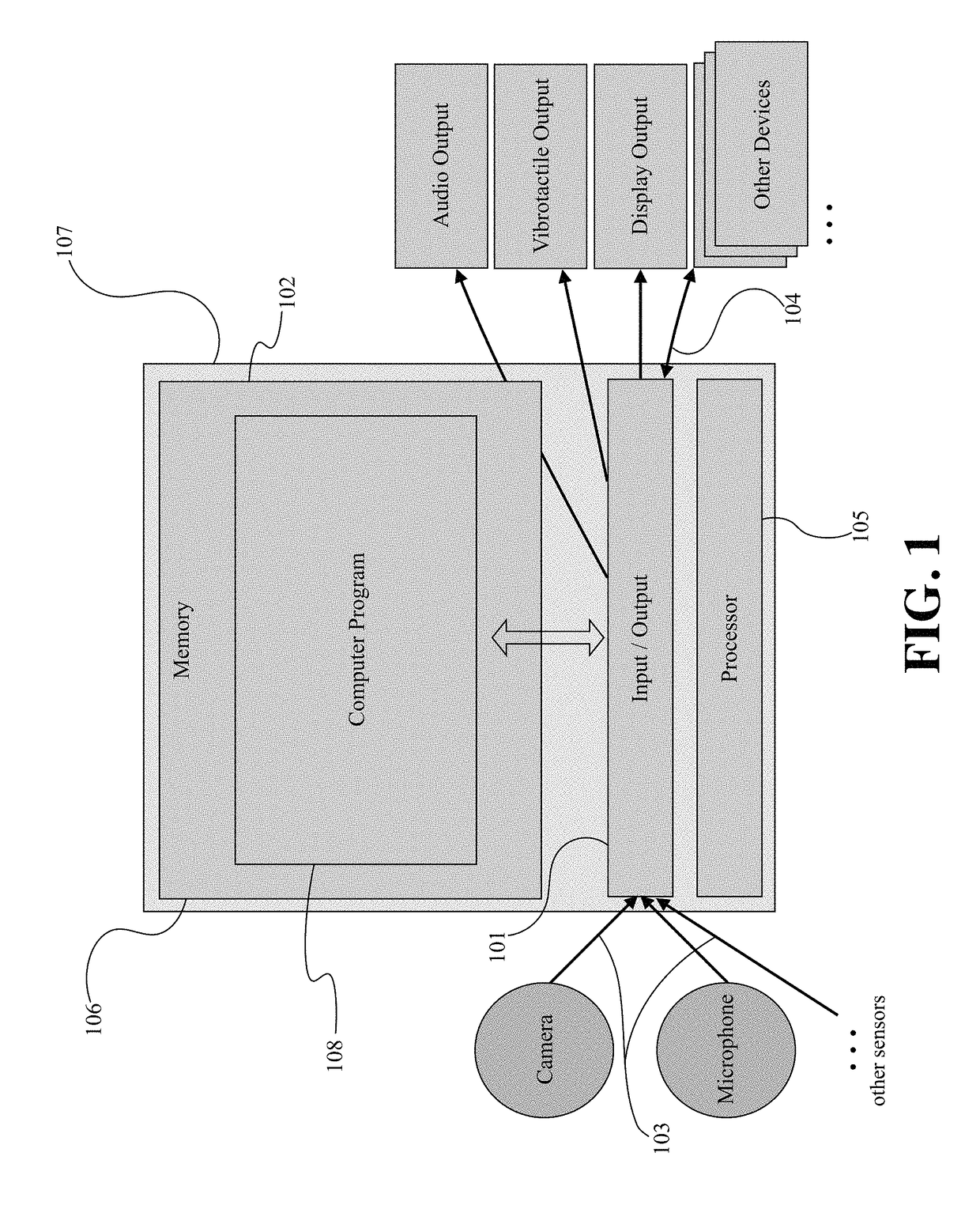

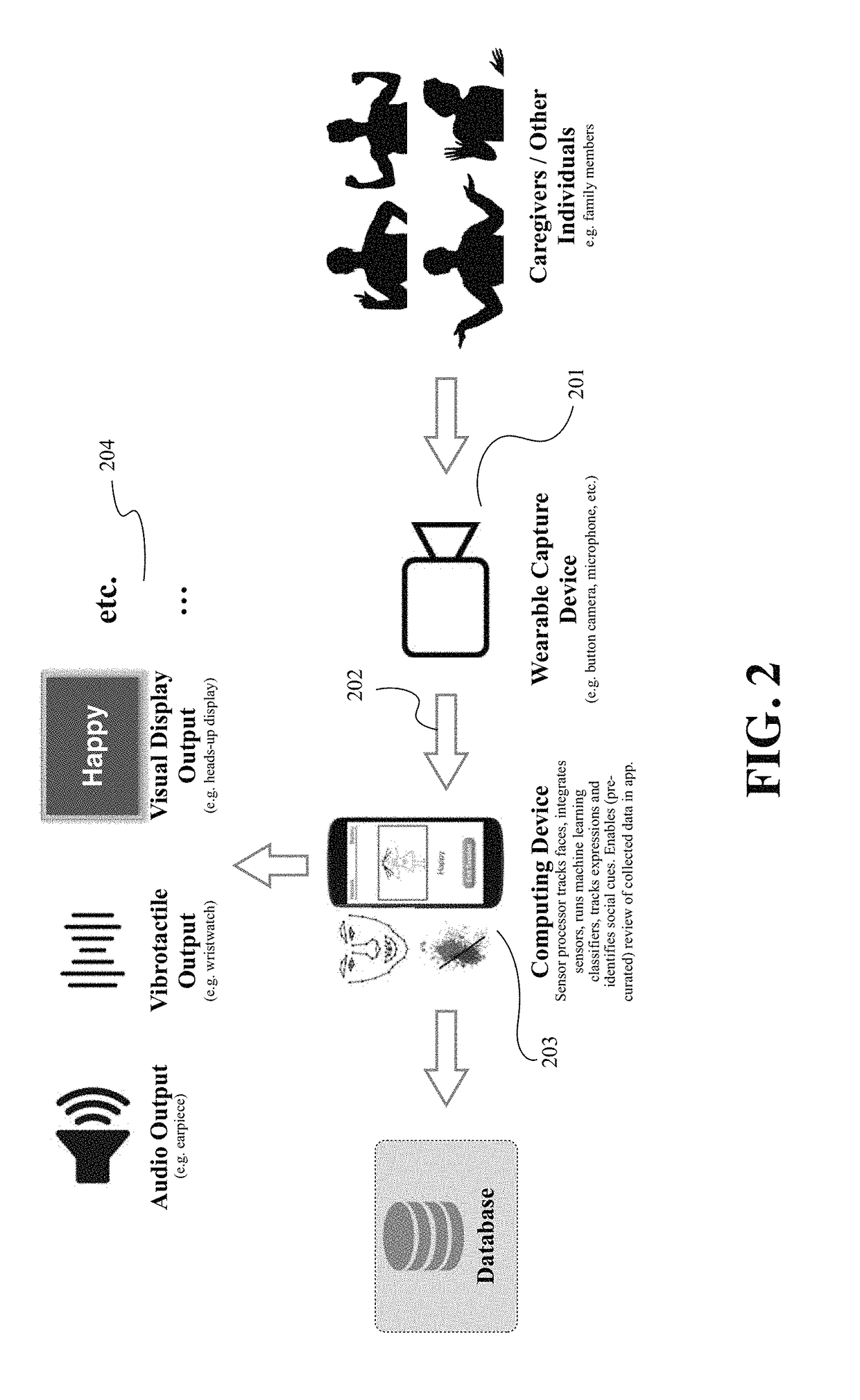

Systems and Methods for Using Mobile and Wearable Video Capture and Feedback Plat-Forms for Therapy of Mental Disorders

Active | US20170319123A1 | Improve performance, Health-index calculation, Medical automated diagnosis, Accelerometer, Physical medicine and rehabilitation

Behavioral and mental health therapy systems in accordance with several embodiments of the invention include a wearable camera and/or a variety of sensors (accelerometer and microphone, among others). These are connected to a computing system that includes a display, audio output, holographic output, and/or vibrotactile output. The system automatically recognizes social cues from images captured by at least one camera. It provides this information to the wearer via one or more outputs, such as (but not limited to) displaying an image, displaying a holographic overlay, generating an audible signal, and/or generating a vibration.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

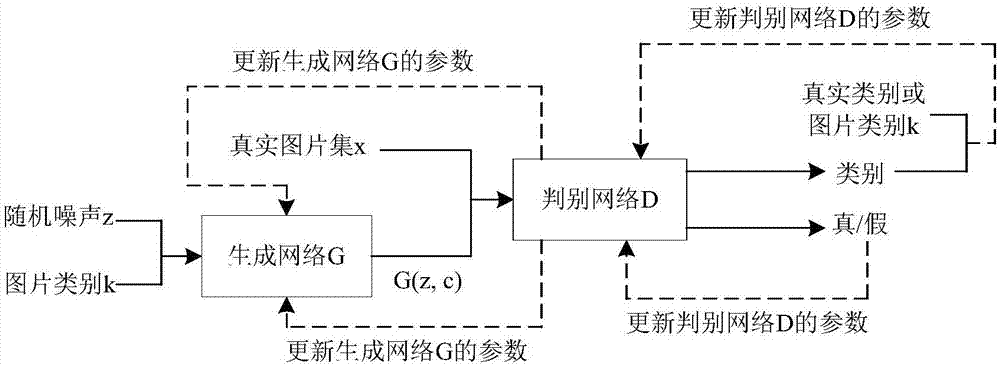

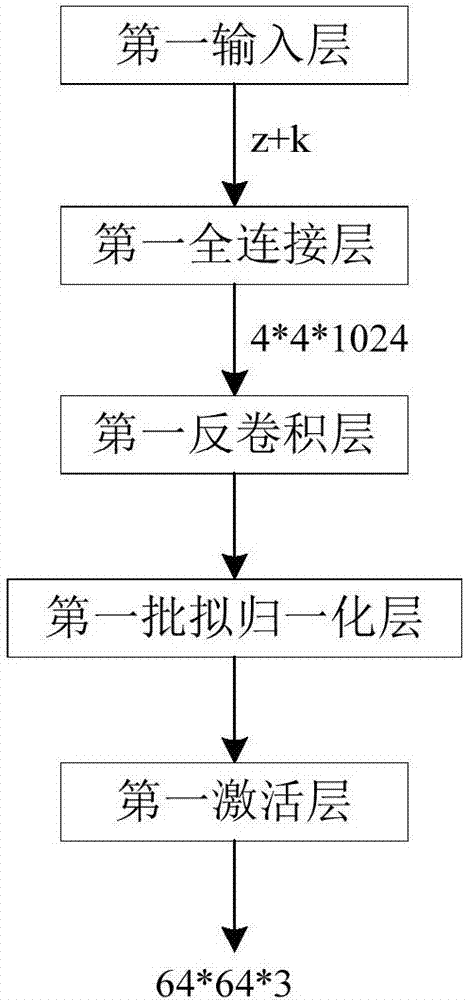

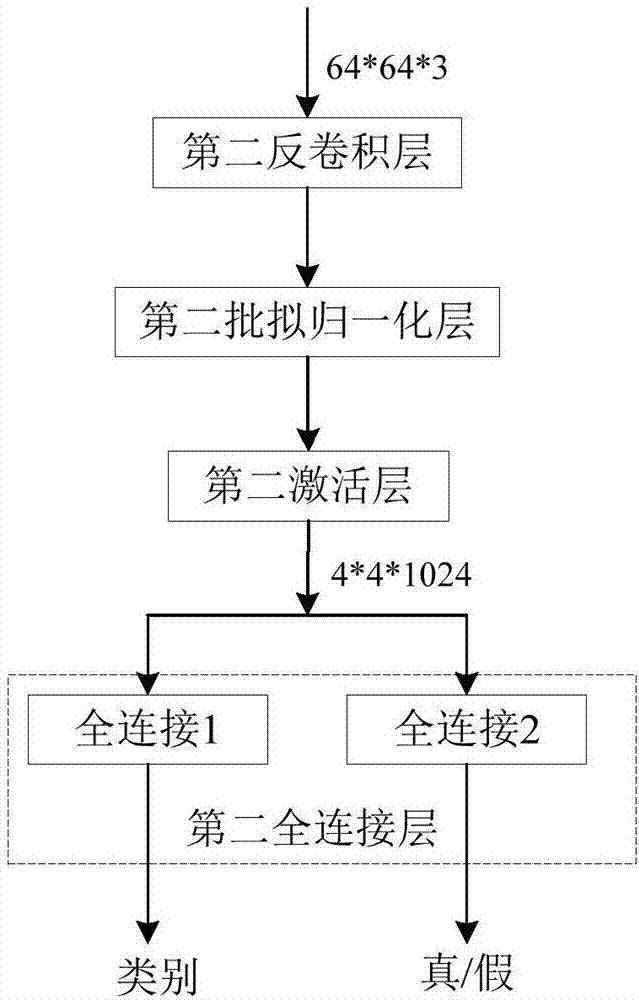

Picture generation method based on deep learning and generative adversarial network

The invention discloses a picture generation method based on deep learning and a generative adversarial network. The method comprises the following steps:

1. A picture database is established. Multiple real pictures are collected, classified, and labelled so that each picture has a unique class label k.
2. The generator network G is constructed. A vector combining a random noise signal z and the class label k is input to G, and the generated data is used as the input to the discriminator network D.
3. The discriminator network D is constructed. Its loss function comprises a first loss for distinguishing real from fake pictures and a second loss for determining picture classes.
4. The generator network is trained.
5. The required pictures are generated by inputting z and k to the generator network G trained in step 4, which yields pictures of a designated class.

The advantage of the method is that it can not only generate pictures but also generate pictures of a designated class.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
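
Steps 2 and 3 above can be illustrated without a deep learning framework by showing only the data flow. The generator input is noise concatenated with a one-hot class label. The discriminator loss is the sum of a real/fake term and a class term. This is a minimal sketch:

- The networks themselves are omitted; `p_real` and `class_probs` stand for the discriminator's outputs.
- Binary and categorical cross-entropy are assumed as the two losses. This mirrors the auxiliary-classifier GAN formulation, but the source does not name the exact loss functions.

```python
import math


def one_hot(k, num_classes):
    """Encode class label k as a one-hot vector."""
    v = [0.0] * num_classes
    v[k] = 1.0
    return v


def generator_input(z, k, num_classes):
    """Step (2): concatenate the noise vector z with the one-hot class label k."""
    return list(z) + one_hot(k, num_classes)


def discriminator_loss(p_real, class_probs, is_real, k, eps=1e-12):
    """Step (3): real/fake loss plus class loss for a single picture.

    p_real:      discriminator's probability that the picture is real
    class_probs: discriminator's predicted class distribution
    is_real:     True for a database picture, False for a generated one
    k:           the picture's class label
    """
    target = 1.0 if is_real else 0.0
    source_loss = -(target * math.log(p_real + eps)
                    + (1.0 - target) * math.log(1.0 - p_real + eps))
    class_loss = -math.log(class_probs[k] + eps)
    return source_loss + class_loss
```

For example, `generator_input([0.1, 0.2], 1, 3)` gives `[0.1, 0.2, 0.0, 1.0, 0.0]`. In practice z would be sampled, for instance with `random.gauss(0.0, 1.0)` per component.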

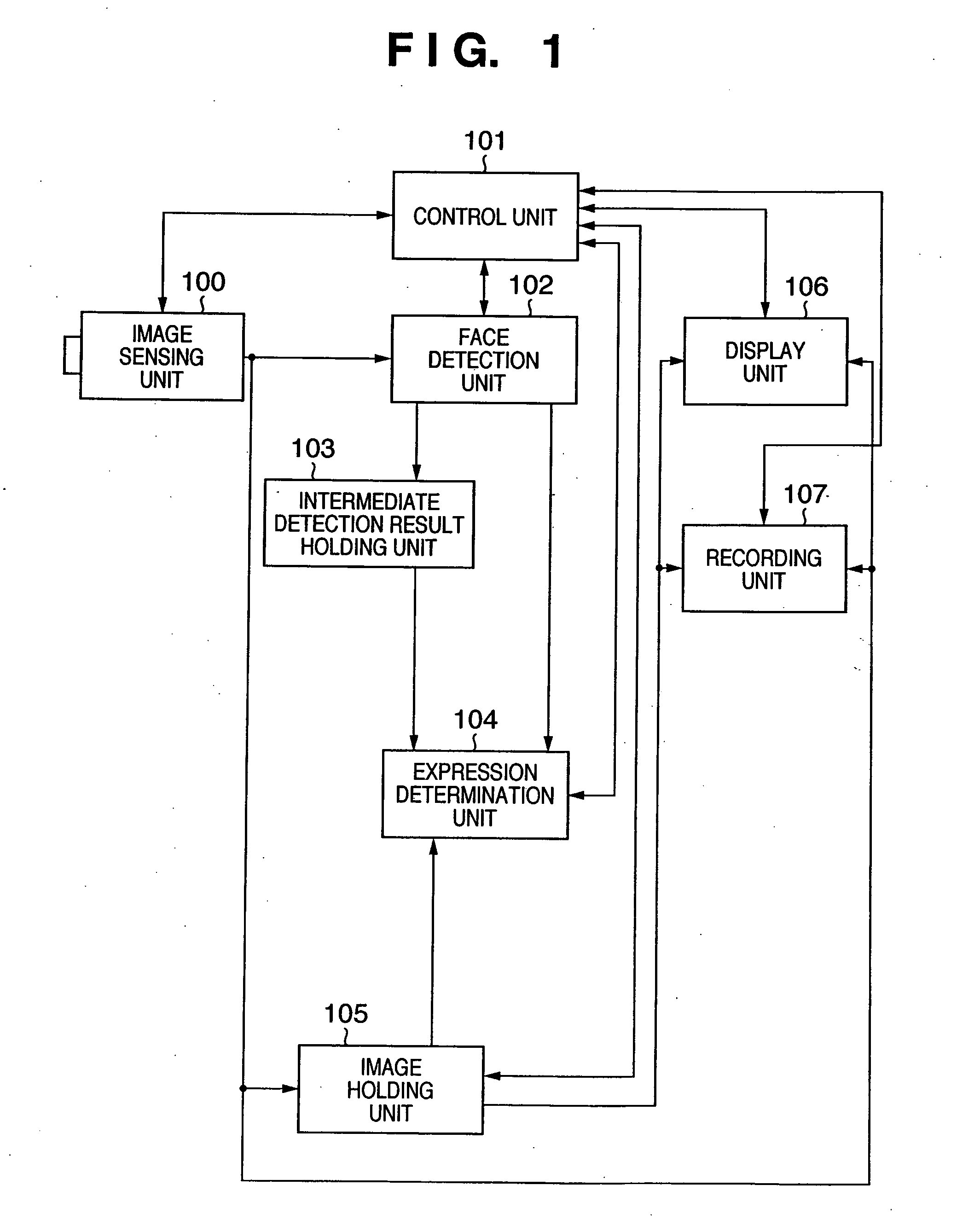

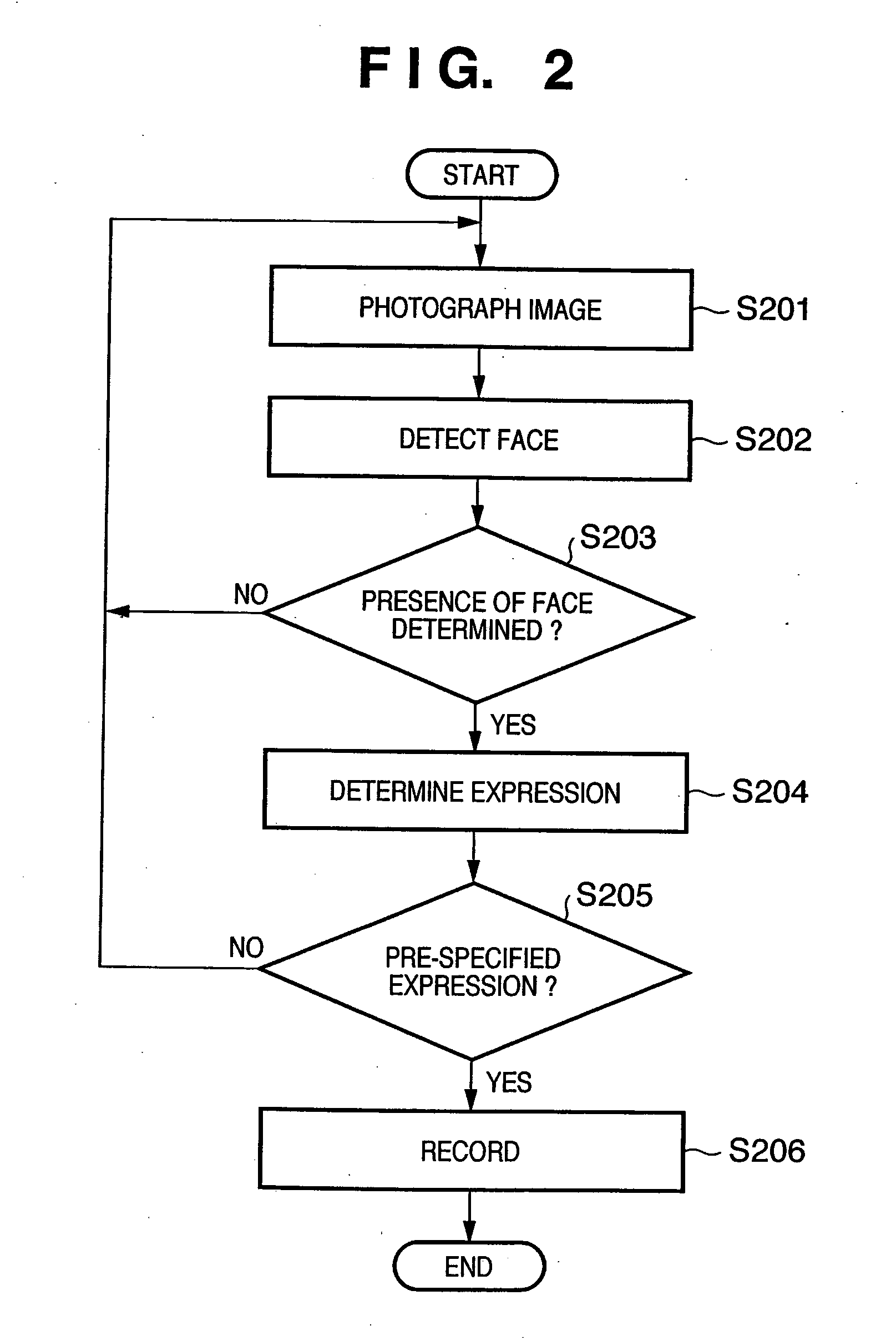

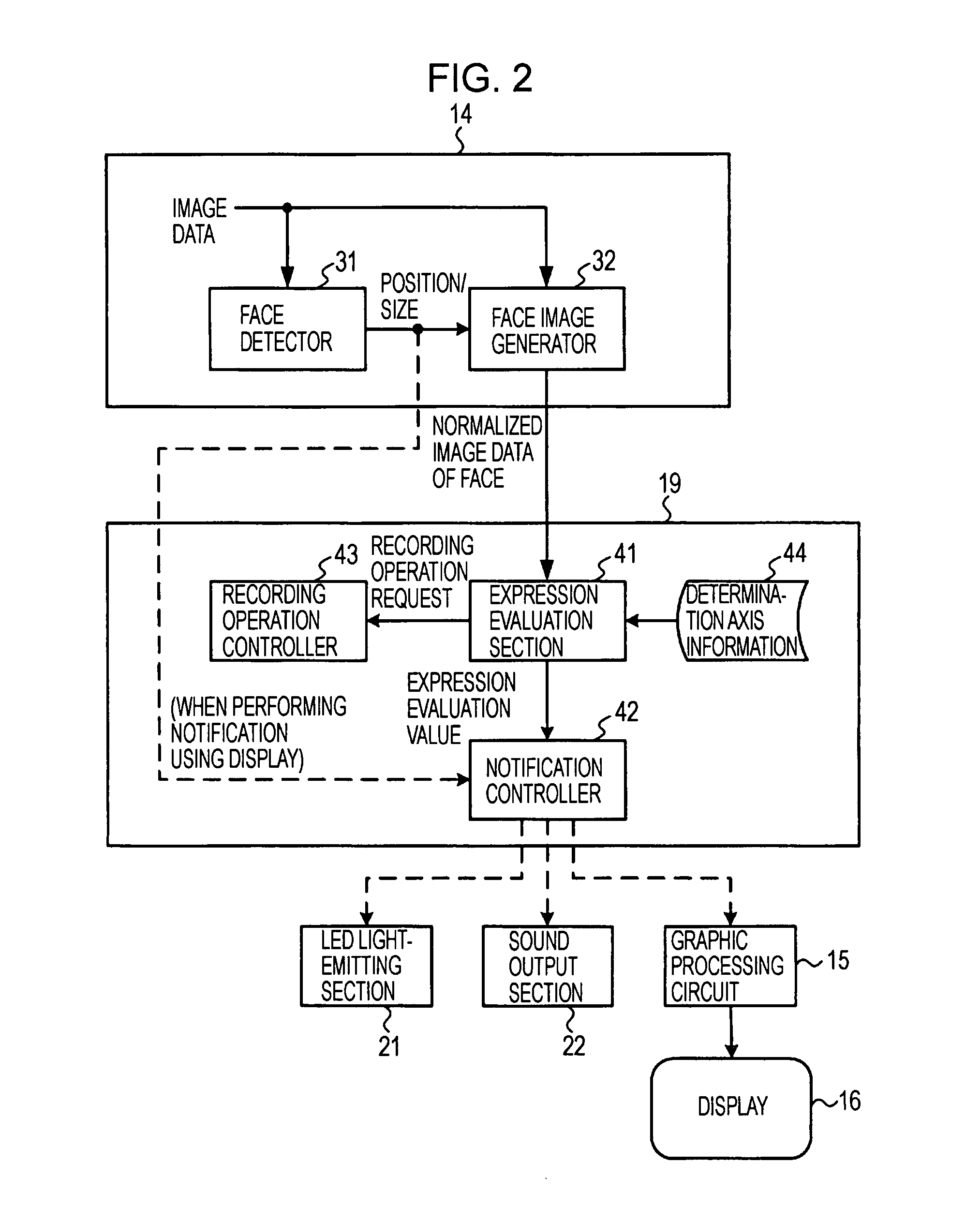

Image-capturing apparatus and method, expression evaluation apparatus, and program

Active | US20080037841A1 | Simple structure, Reliable record, Television system details, Solid-state devices, Image signal, Image capture

An image-capturing apparatus that captures images with a solid-state image-capturing device may include the following components:

- a face detector configured to detect a human face from an image signal during the period before the captured image signal is recorded on a recording medium;
- an expression evaluation section configured to evaluate the expression of the detected face and compute an expression evaluation value, which indicates how close the detected face is to a specific expression relative to other expressions;
- a notification section configured to present notification information corresponding to the computed expression evaluation value to the person being photographed.

Owner:SONY CORP
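
The "expression evaluation value" above measures how close a face is to one target expression relative to all others. It can be sketched as a score along a discriminant axis separating the target expression from the rest, squashed to a 0 to 100 range. The notification then becomes a bar count shown to the person being photographed. This is a minimal sketch under assumptions:

- The linear projection (`weights`, `bias`) is hypothetical and would be learned from labelled faces.
- The logistic squashing and the five-bar display are illustrative choices, not taken from the source.

```python
import math


def expression_evaluation_value(features, weights, bias):
    """Project face features onto a hypothetical discriminant axis separating the
    target expression from all other expressions, then map to a 0-100 score."""
    s = sum(w * f for w, f in zip(weights, features)) + bias
    return 100.0 / (1.0 + math.exp(-s))


def notification_level(score, levels=5):
    """Map a 0-100 evaluation value to 1..levels indicator bars."""
    return min(int(score / 100.0 * levels), levels - 1) + 1
```

A score exactly on the decision boundary (`s == 0`) maps to 50, which shows three of five bars. Moving toward the target expression raises the count.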

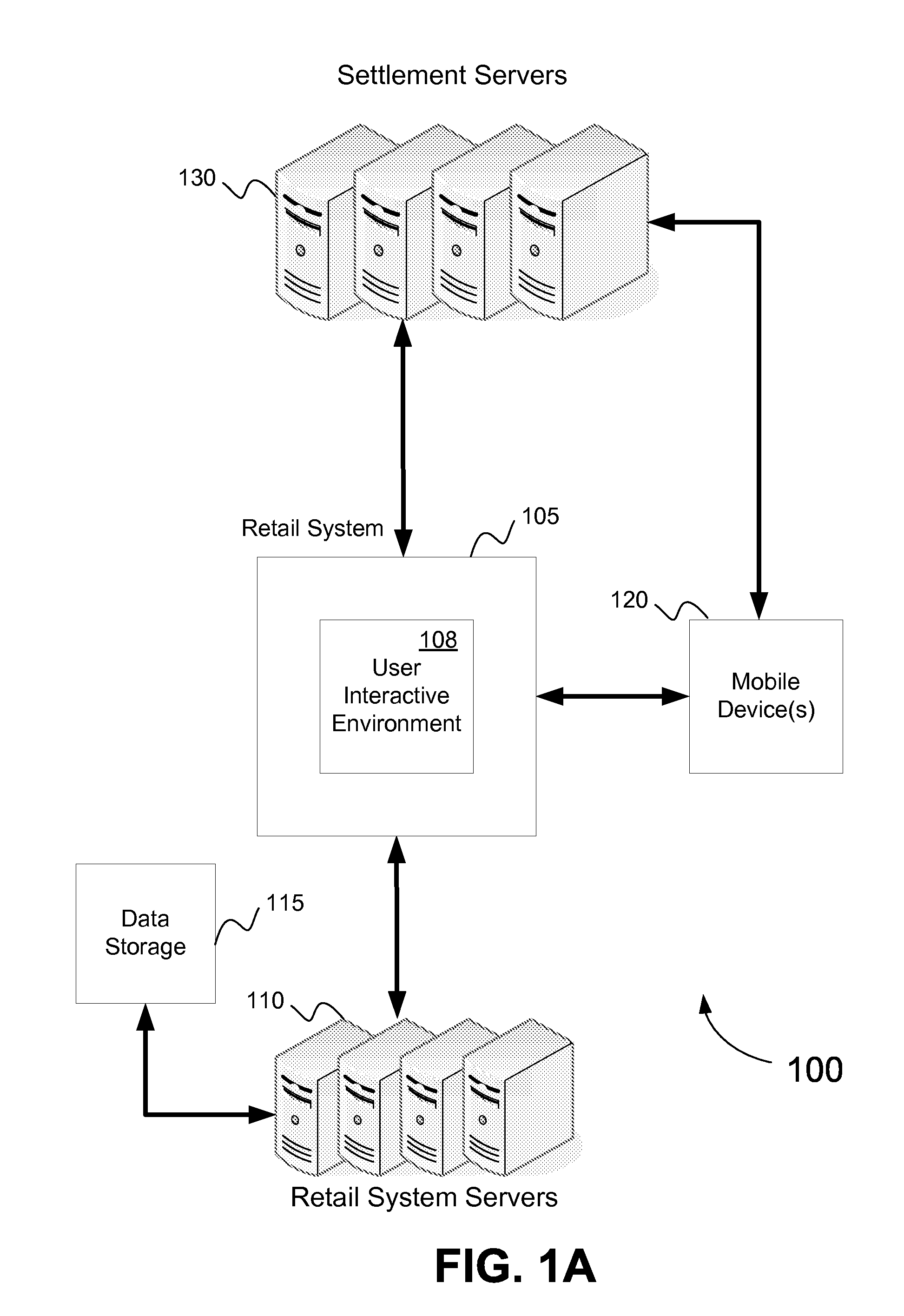

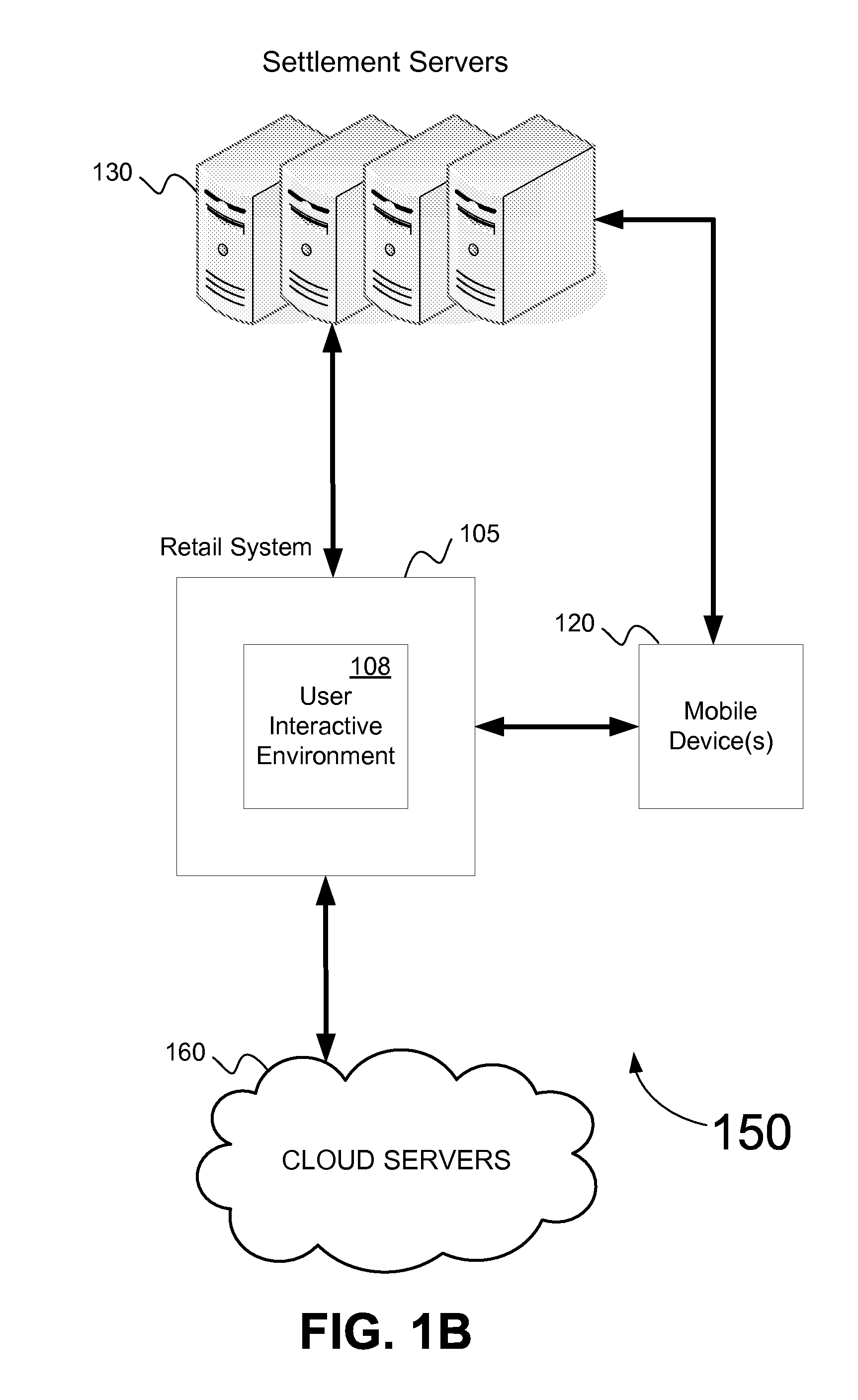

Interactive retail system

Inactive | US20130110666A1 | Great customization, Optimize space, Buying/selling/leasing transactions, Transmission, Display device, Multimedia

Owner:ADIDAS

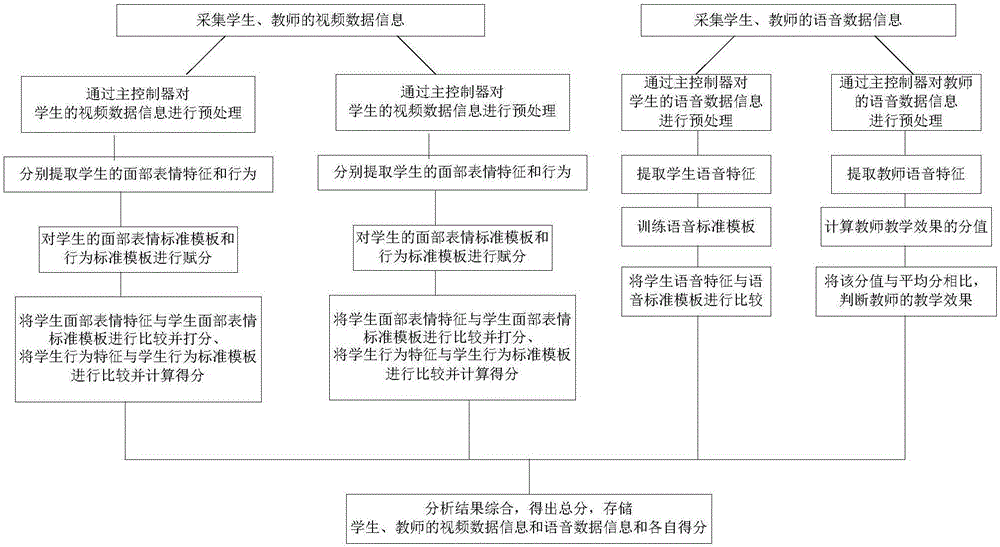

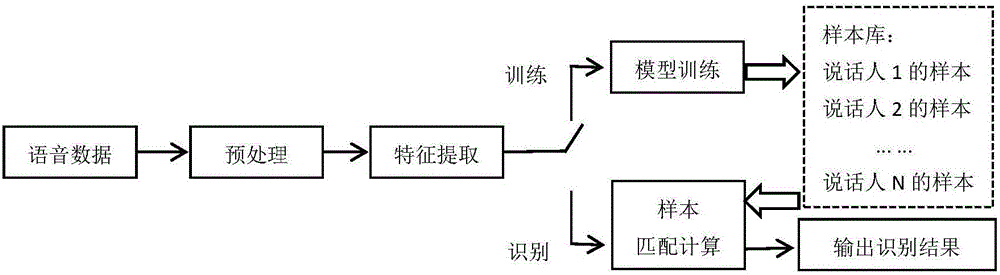

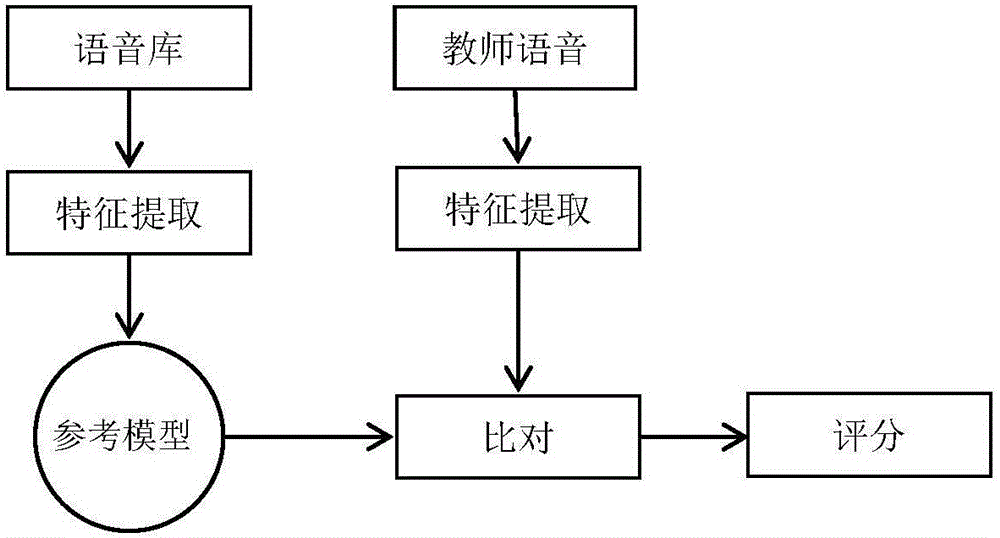

Classroom behavior monitoring system and method based on face and voice recognition

Active | CN106851216A | Improve development, Improve learning effect, Speech analysis, Closed circuit television systems, Facial expression, Speech sound

Owner:SHANDONG NORMAL UNIV

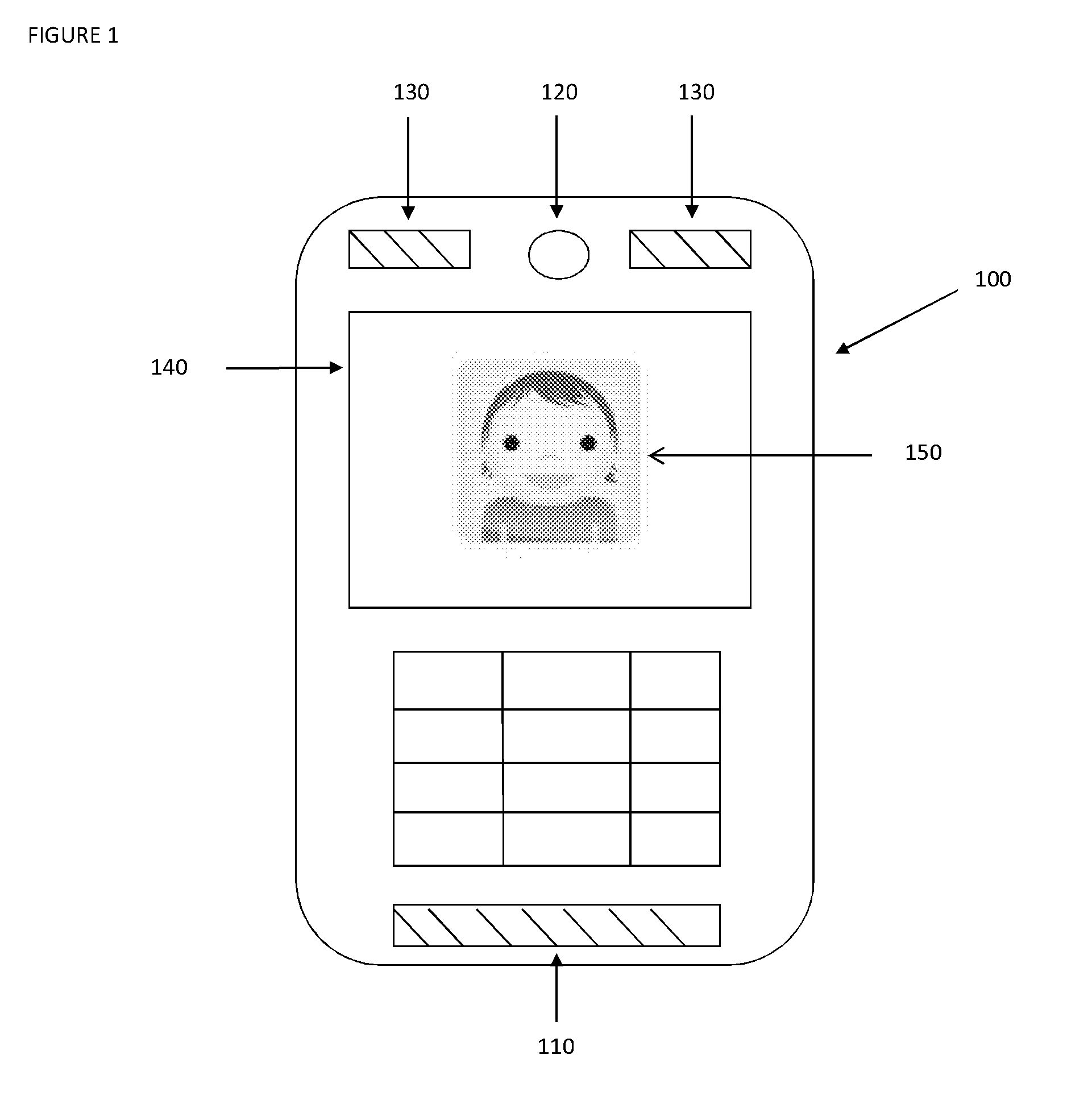

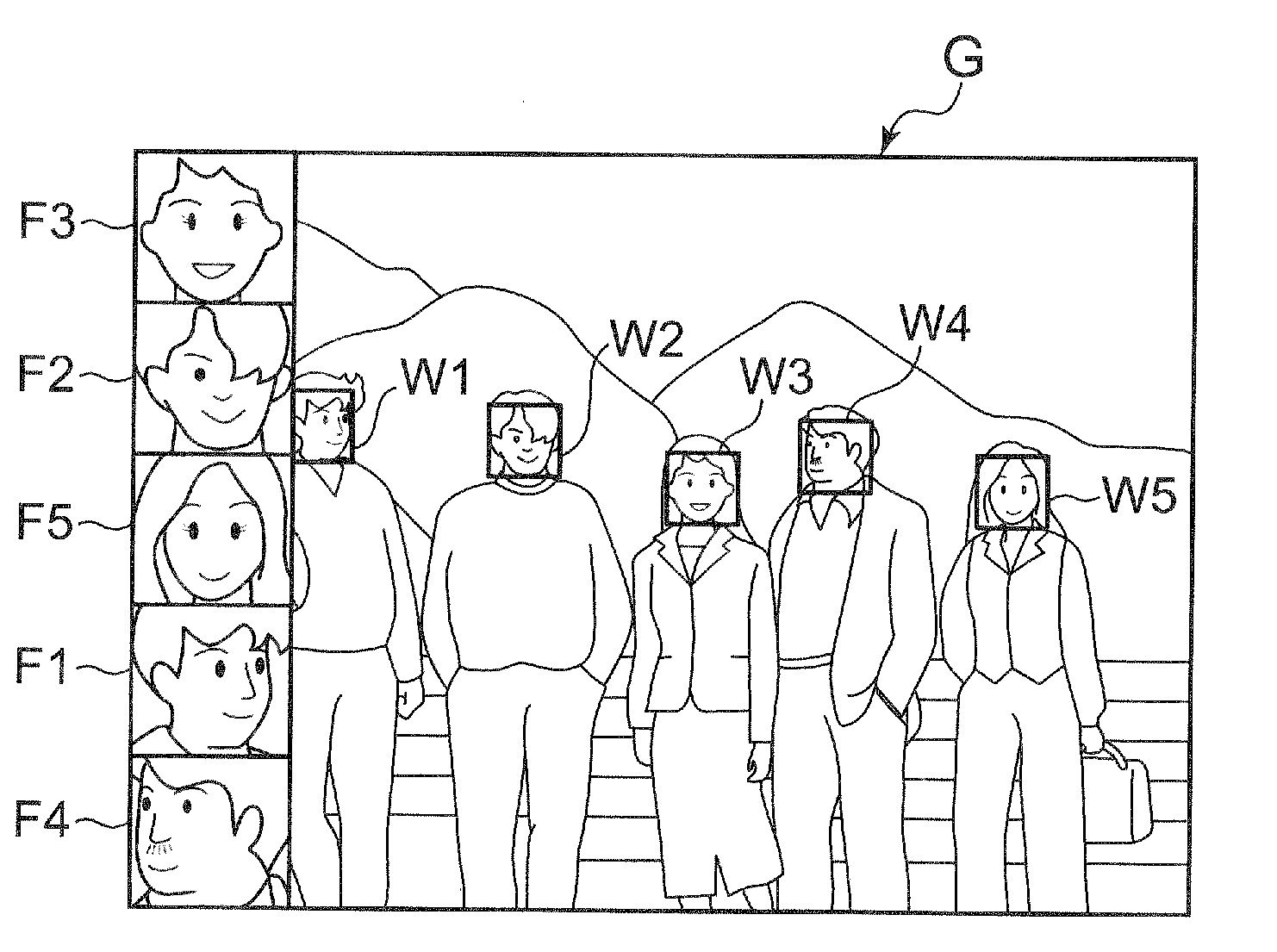

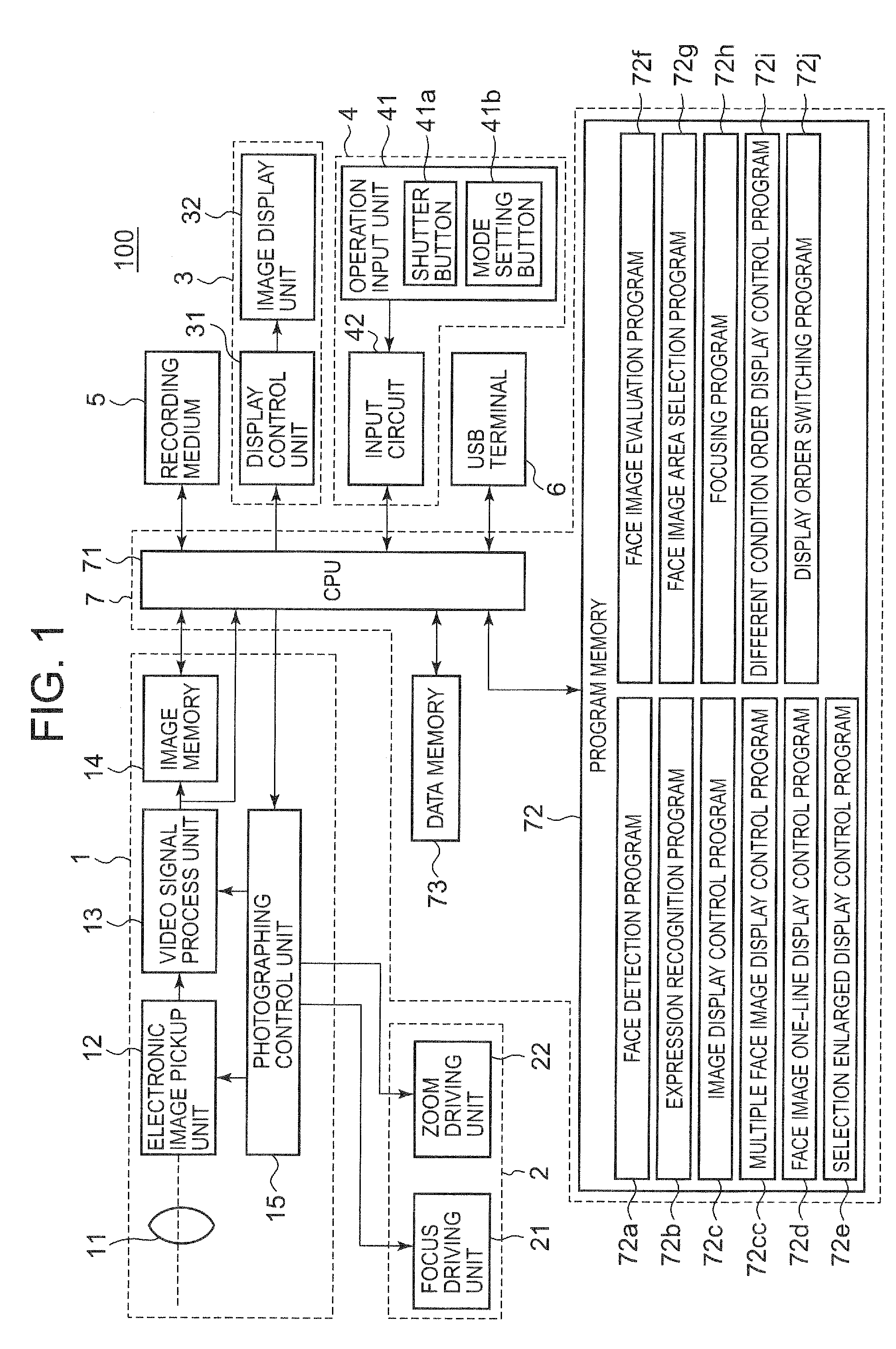

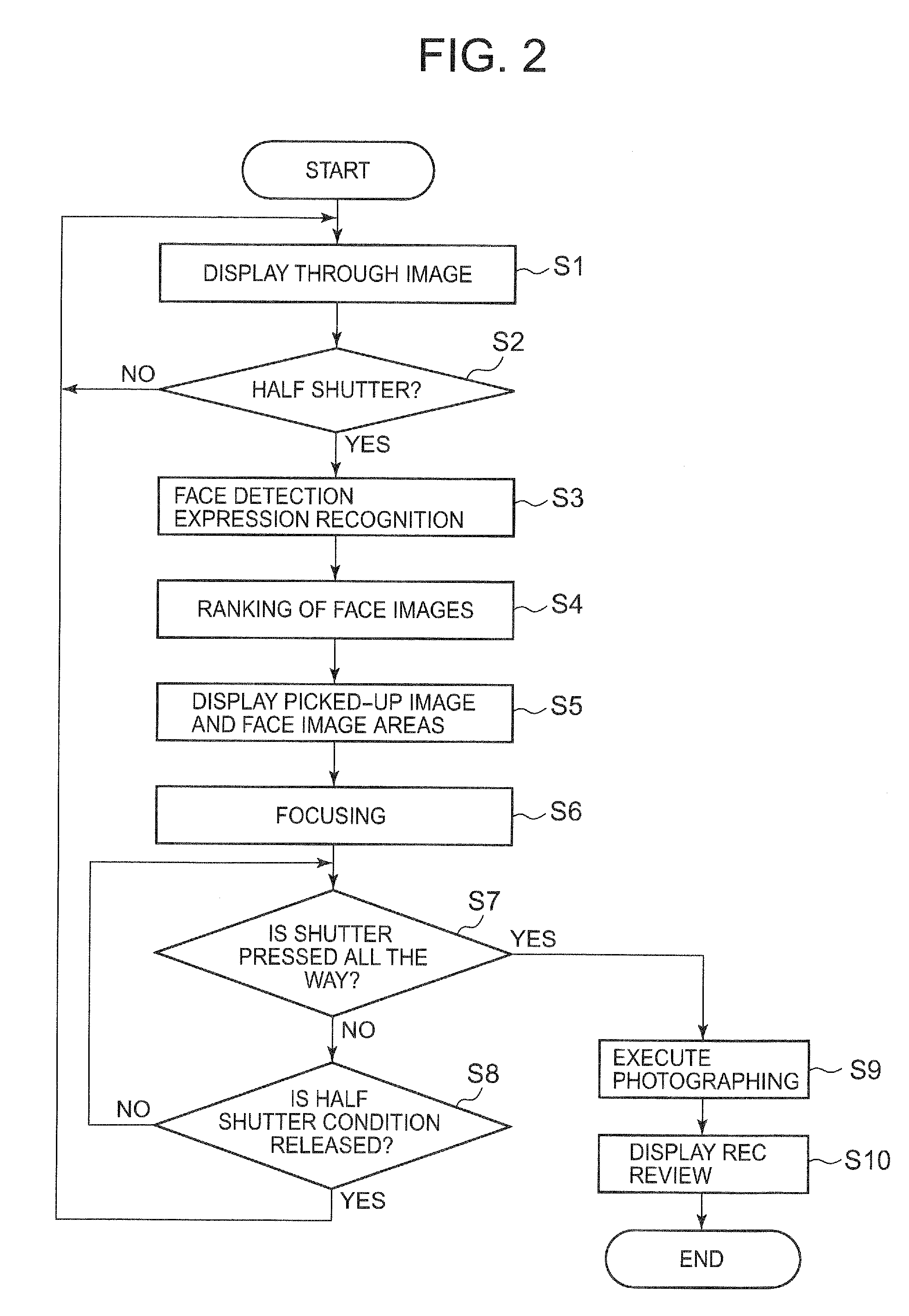

Image pickup apparatus equipped with face-recognition function

Inactive | US20080240563A1 | Easy to confirm, Television system details, 2D-image generation, Ranking, Facial expression

The image pickup apparatus comprises an image pickup unit that captures an image of the subject the user desires. It also provides functions to:

- detect the face image areas that contain the faces of subject persons in the captured image, based on the image information of that image;
- recognize the expression of the face in each detected face image area;
- rank the face image areas by how good the smile in the recognized expression is;
- display the ranked face image areas together with the entire captured image on the same screen.

Owner:CASIO COMPUTER CO LTD

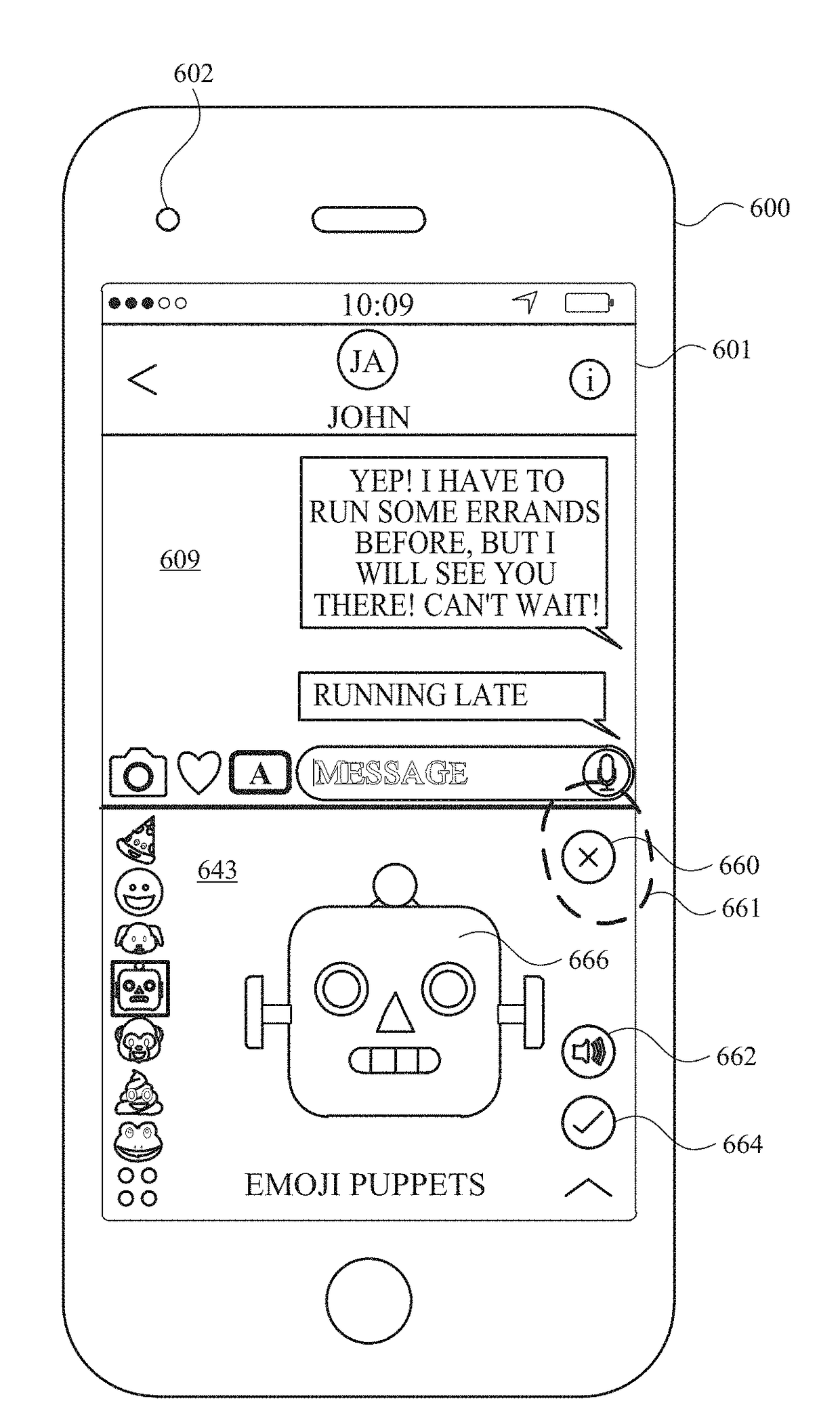

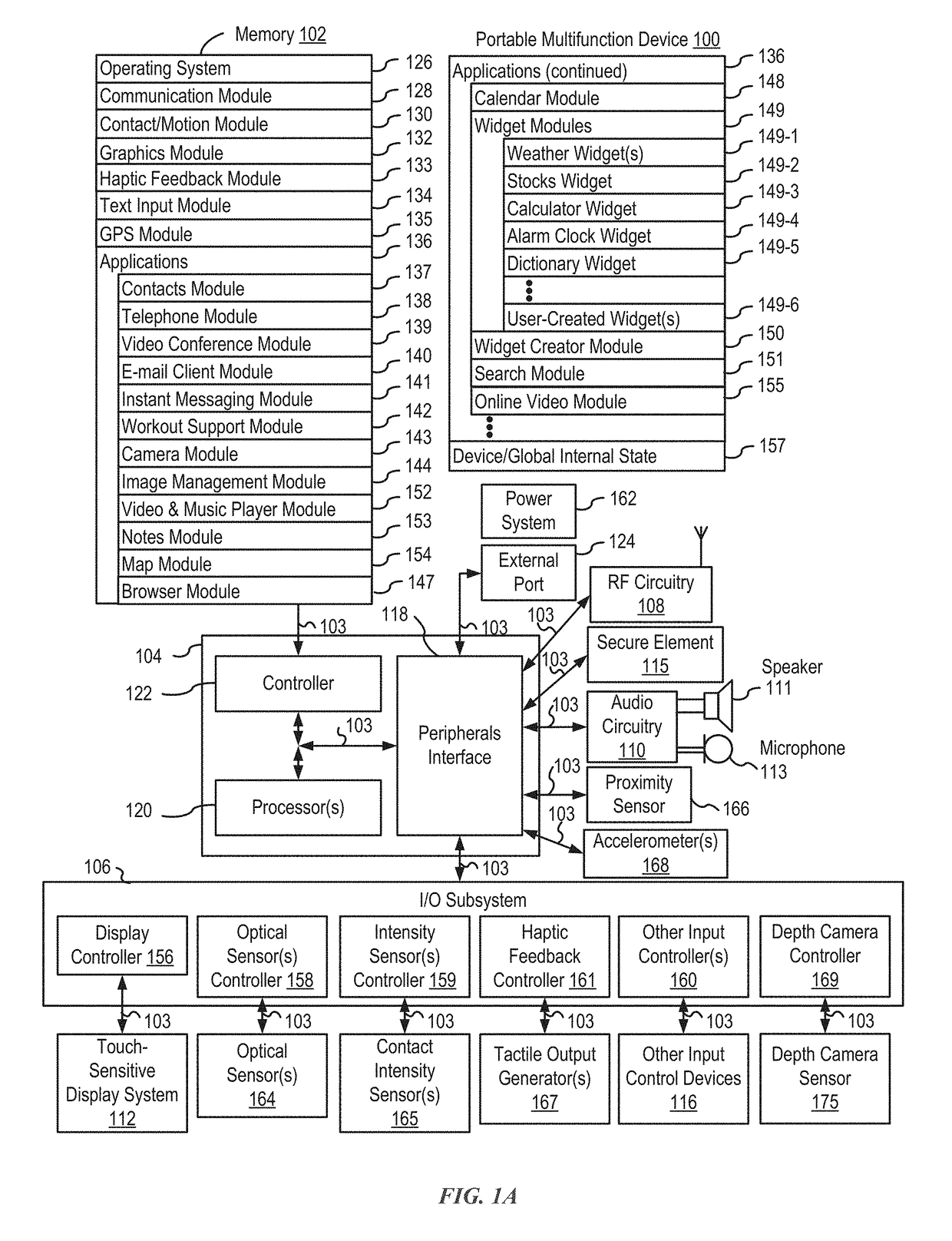

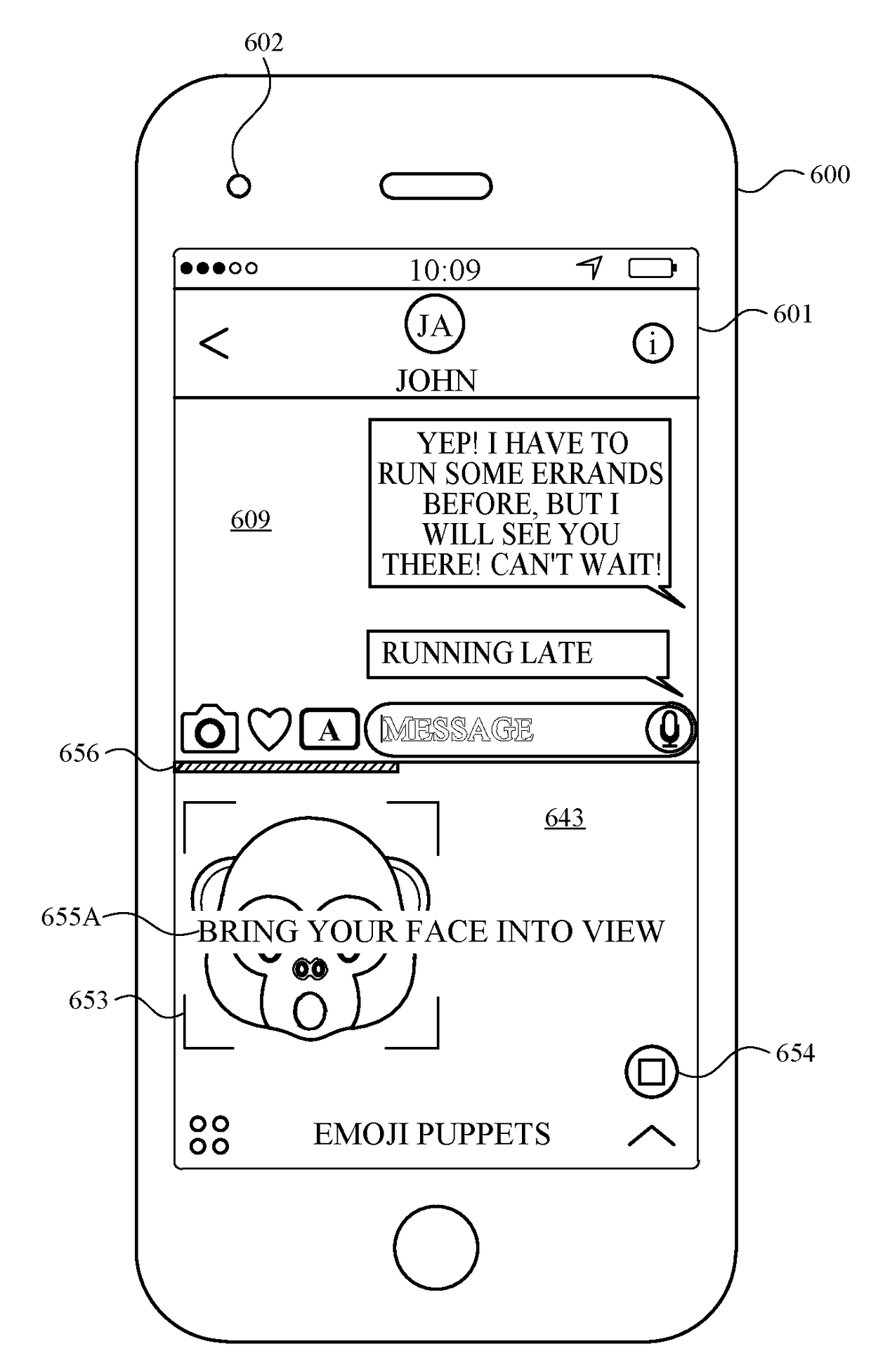

Emoji recording and sending

Active | US20180336715A1 | Faster and efficient method, Faster and efficient and interface, Television system details, Substation equipment, Computer graphics (images), Ophthalmology

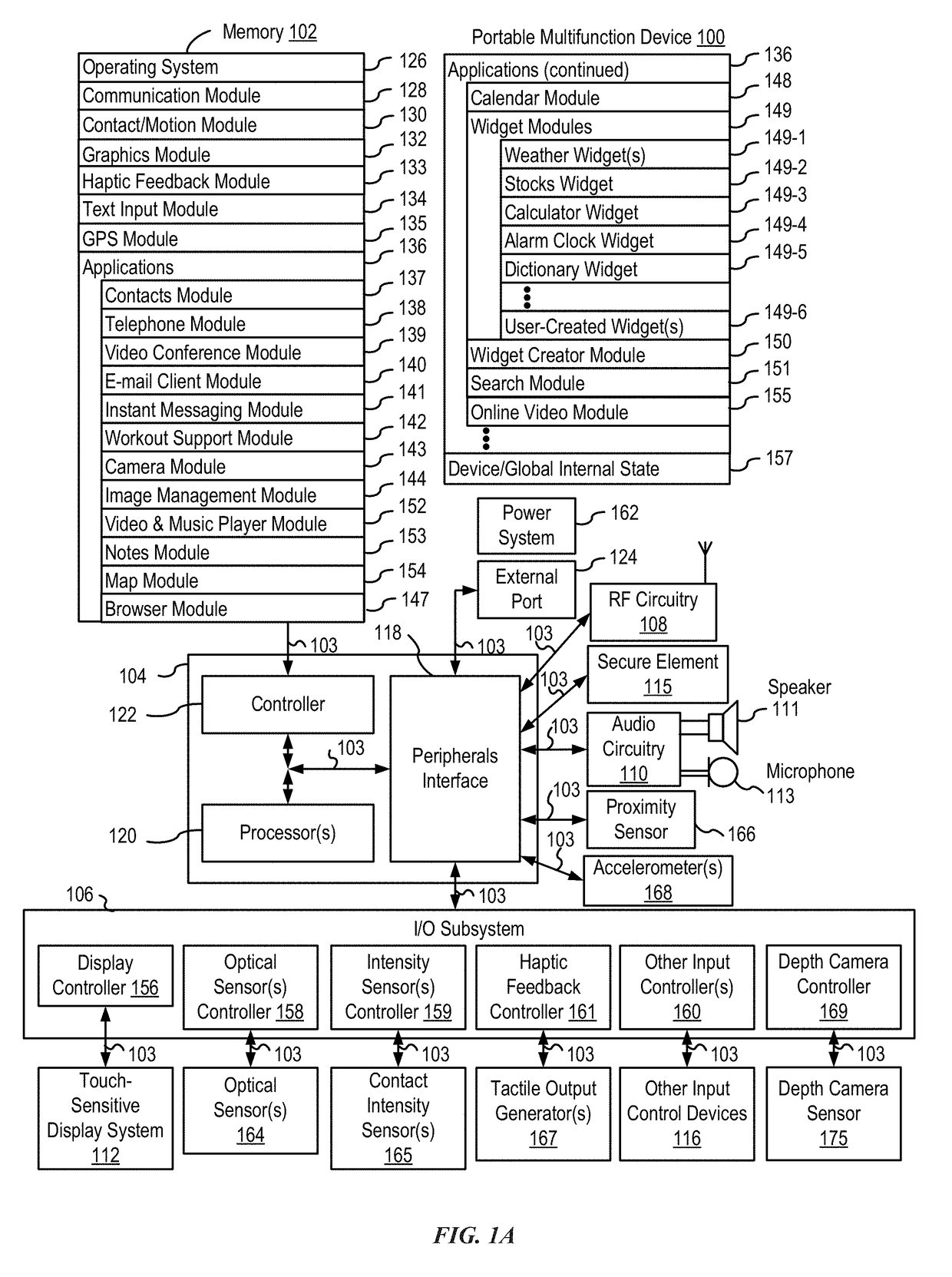

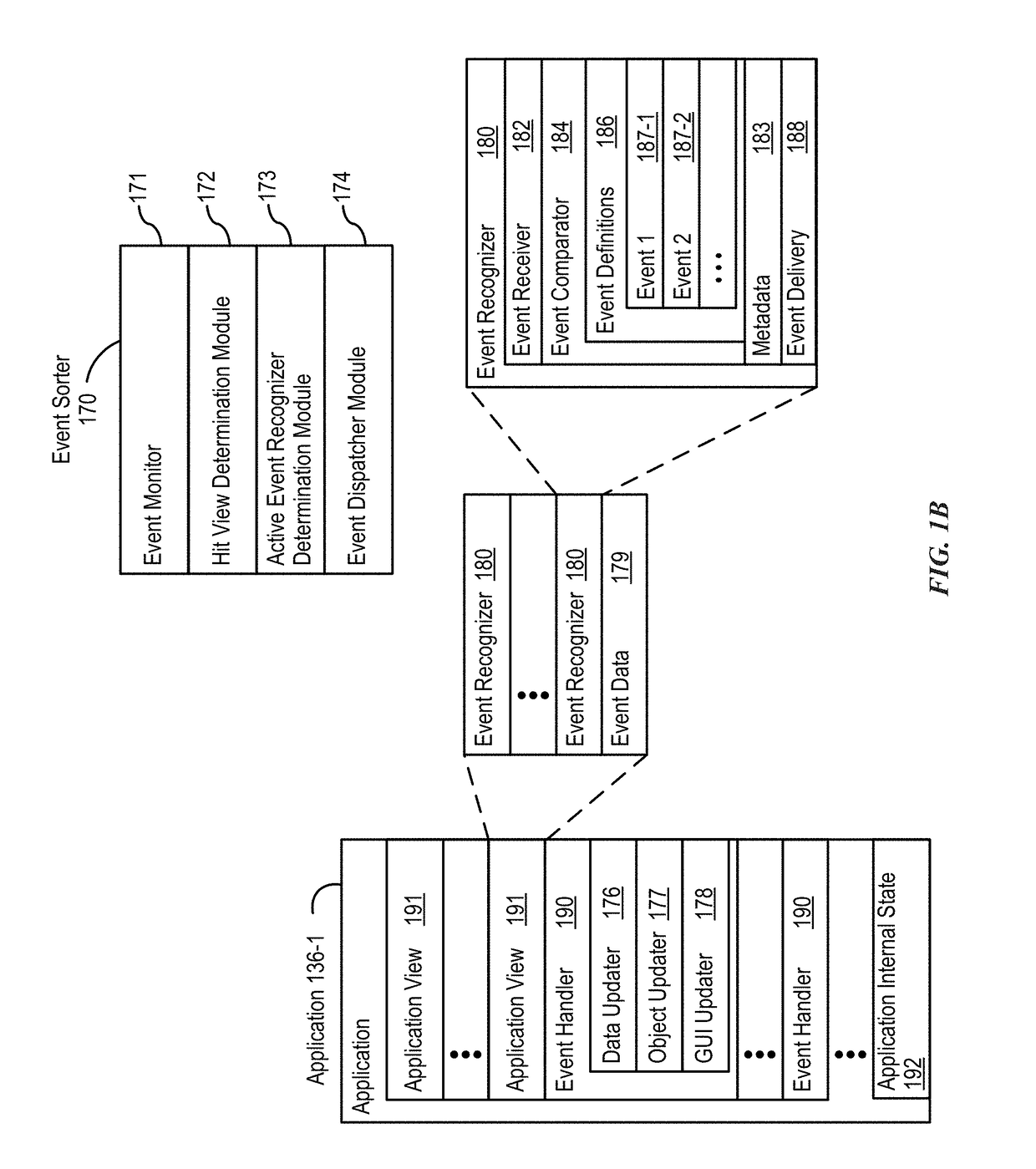

The present disclosure generally relates to generating and modifying virtual avatars. An electronic device having a camera and a display apparatus displays a virtual avatar that changes appearance in response to changes in a face in a field of view of the camera. In response to detecting changes in one or more physical features of the face in the field of view of the camera, the electronic device modifies one or more features of the virtual avatar.

Owner:APPLE INC

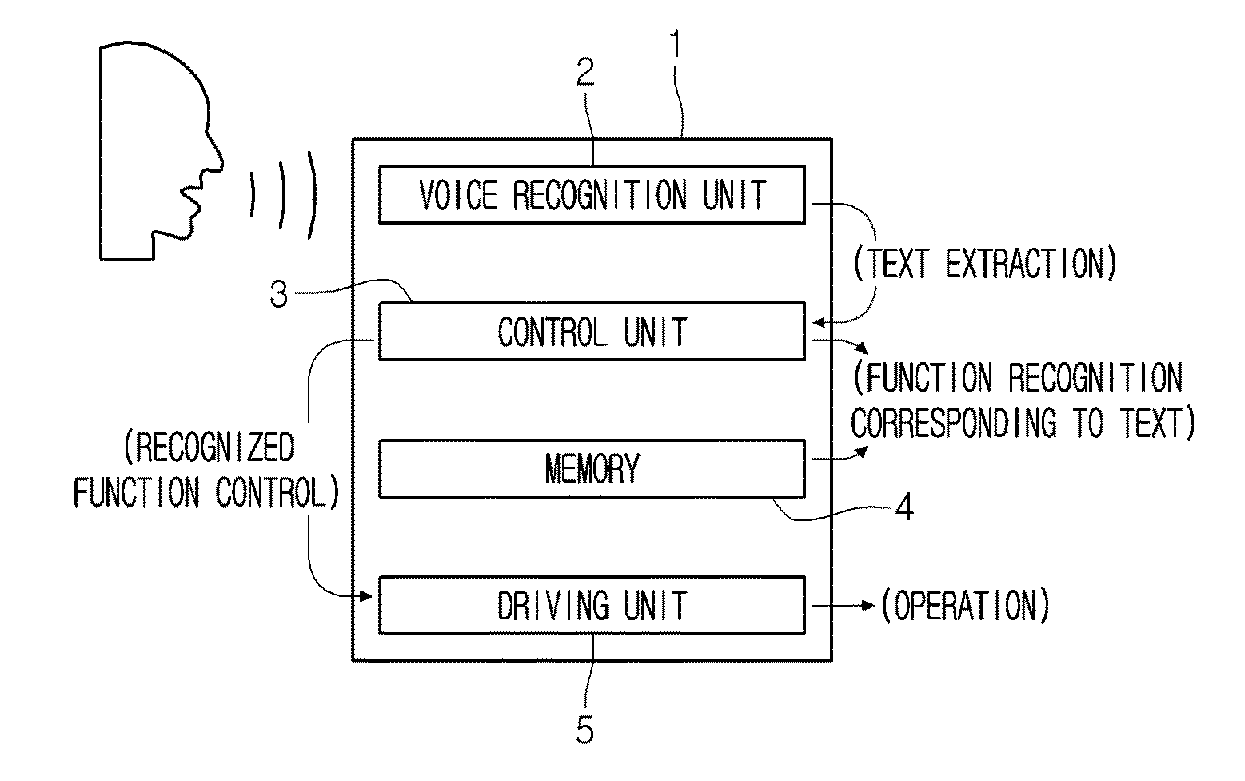

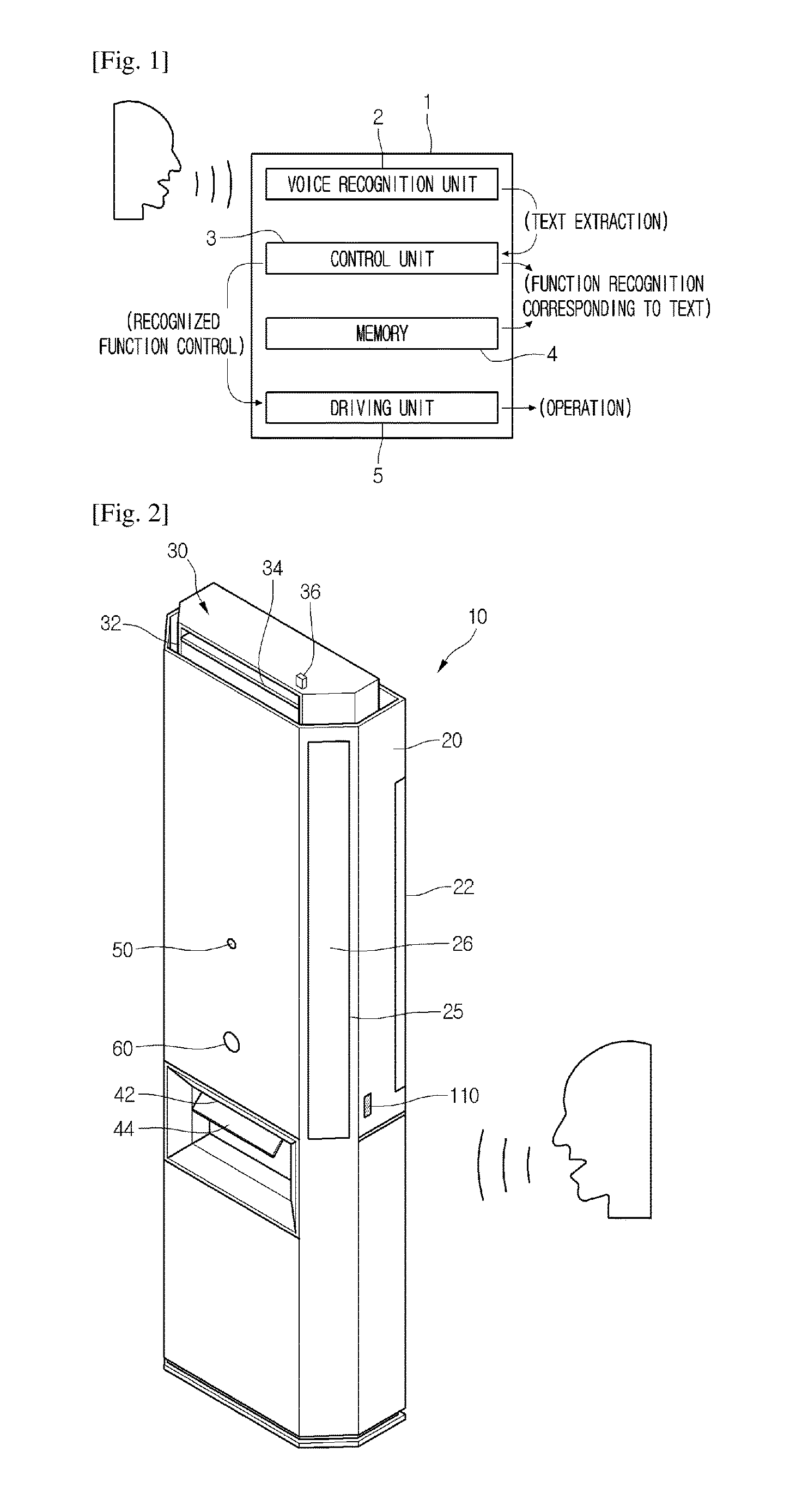

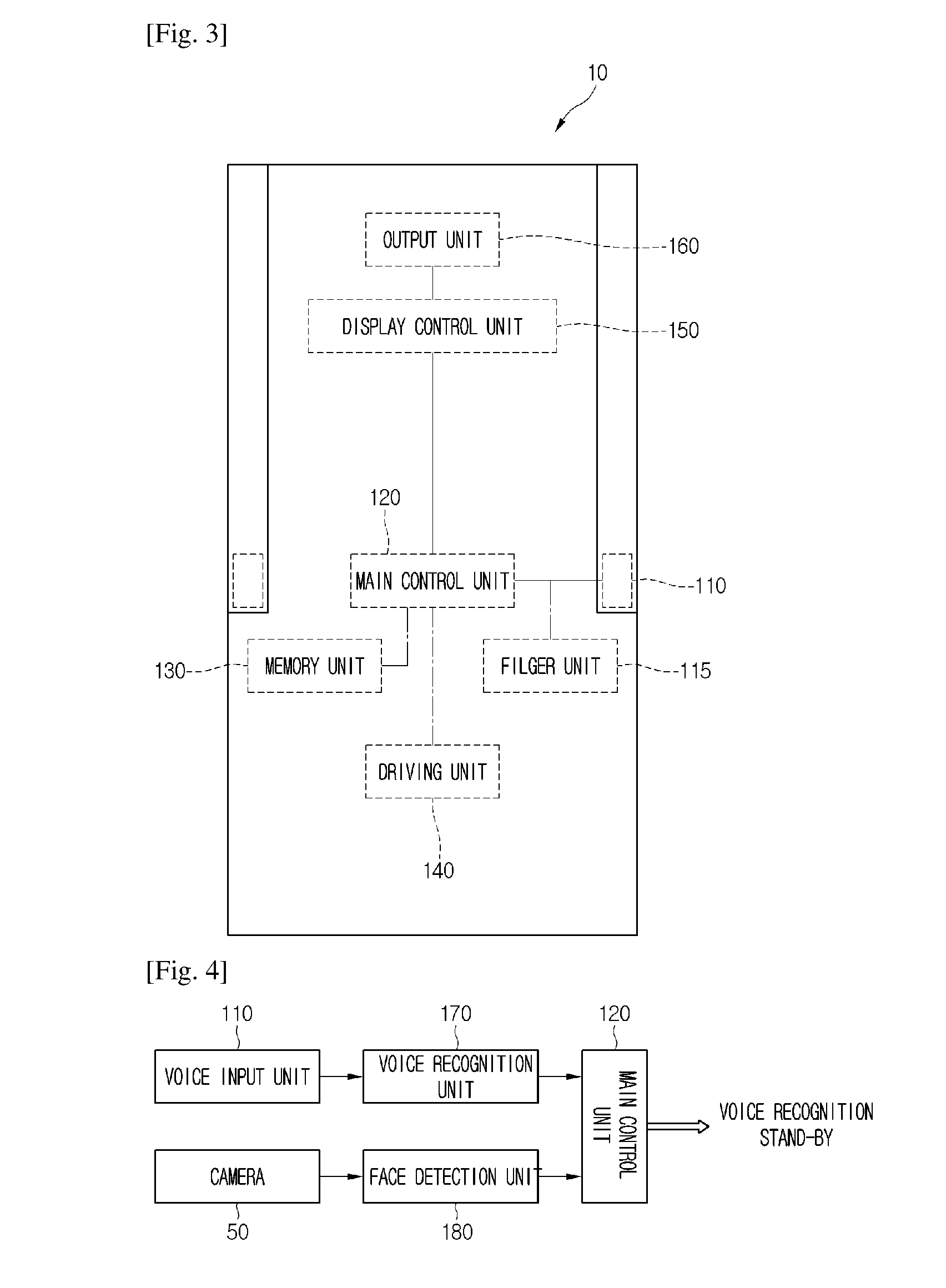

Smart home appliances, operating method thereof, and voice recognition system using the smart home appliances

Active | US20170004828A1 | Improve usability, Prevent misidentification, Input/output for user-computer interaction, Speech recognition, Information control, Home appliance

Provided is a smart home appliance. The smart home appliance includes:

- a voice input unit that collects a voice;
- a voice recognition unit that recognizes text corresponding to the voice collected through the voice input unit;
- a capturing unit that collects an image for detecting a user's visage or face;
- a memory unit that maps the text recognized by the voice recognition unit to a setting function and stores the mapped information;
- a control unit that determines whether to perform a voice recognition service on the basis of at least one of the image information collected by the capturing unit and the voice information collected by the voice input unit.

Owner:LG ELECTRONICS INC

Method for user function operation based on face recognition and mobile terminal supporting the same

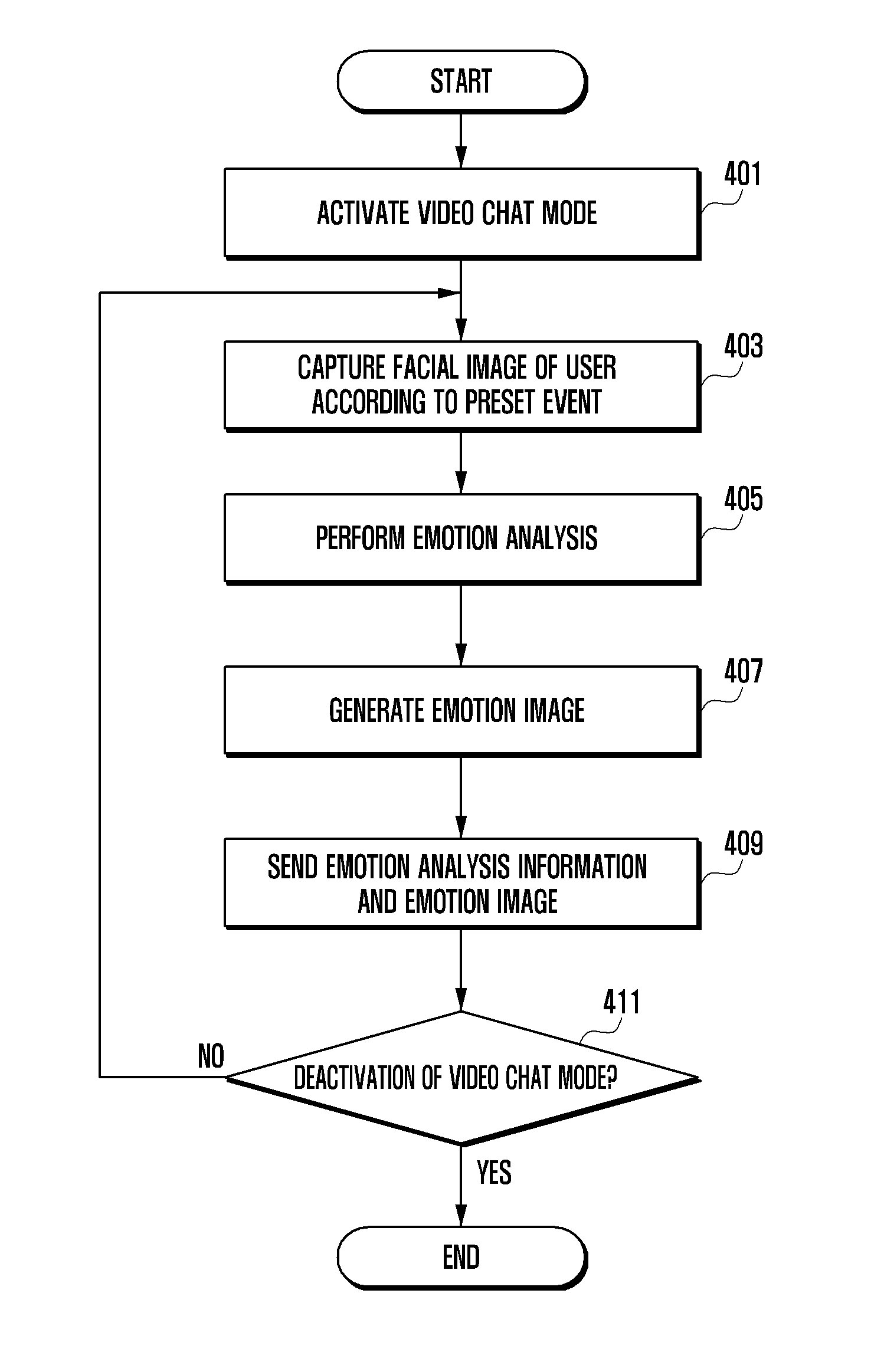

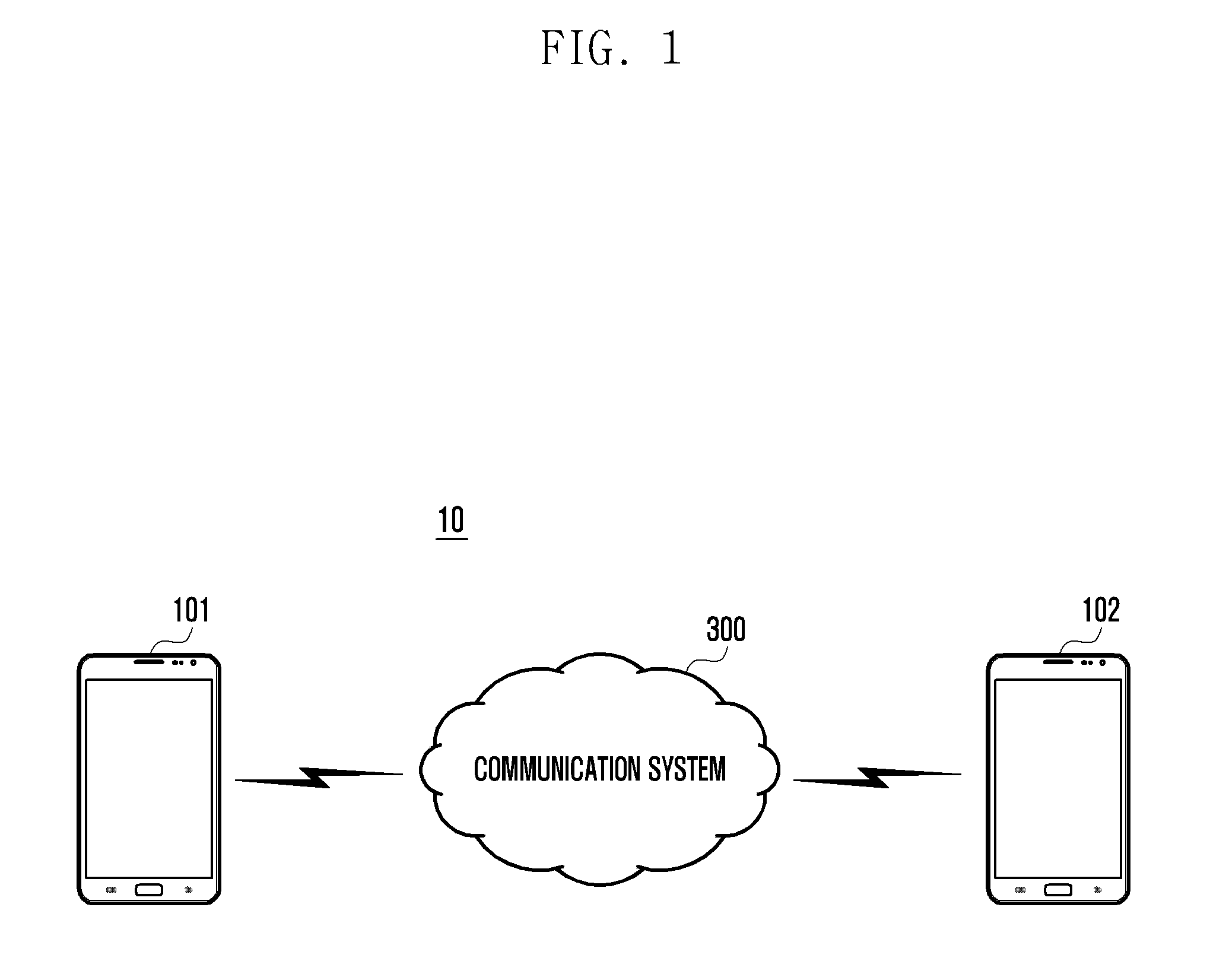

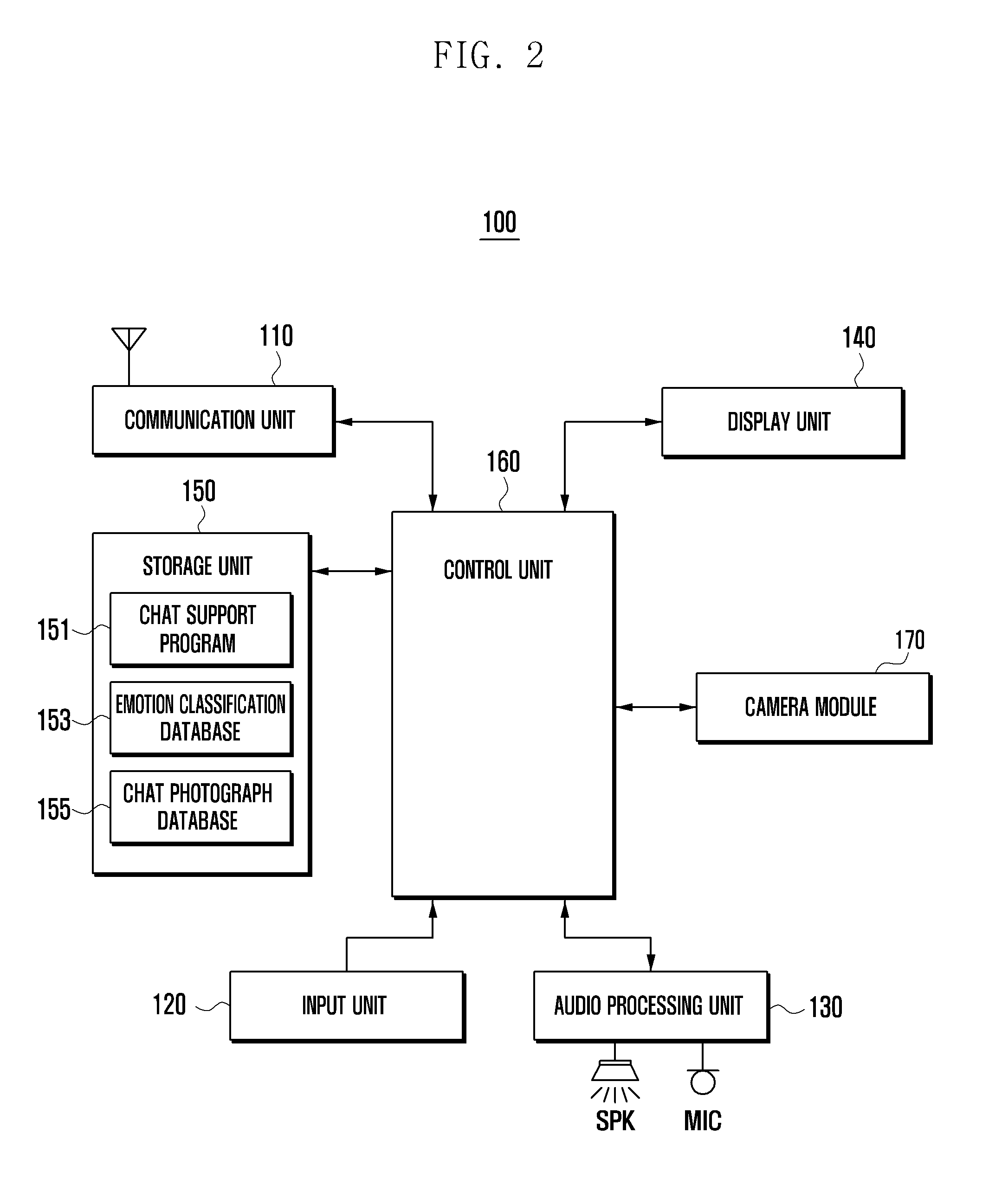

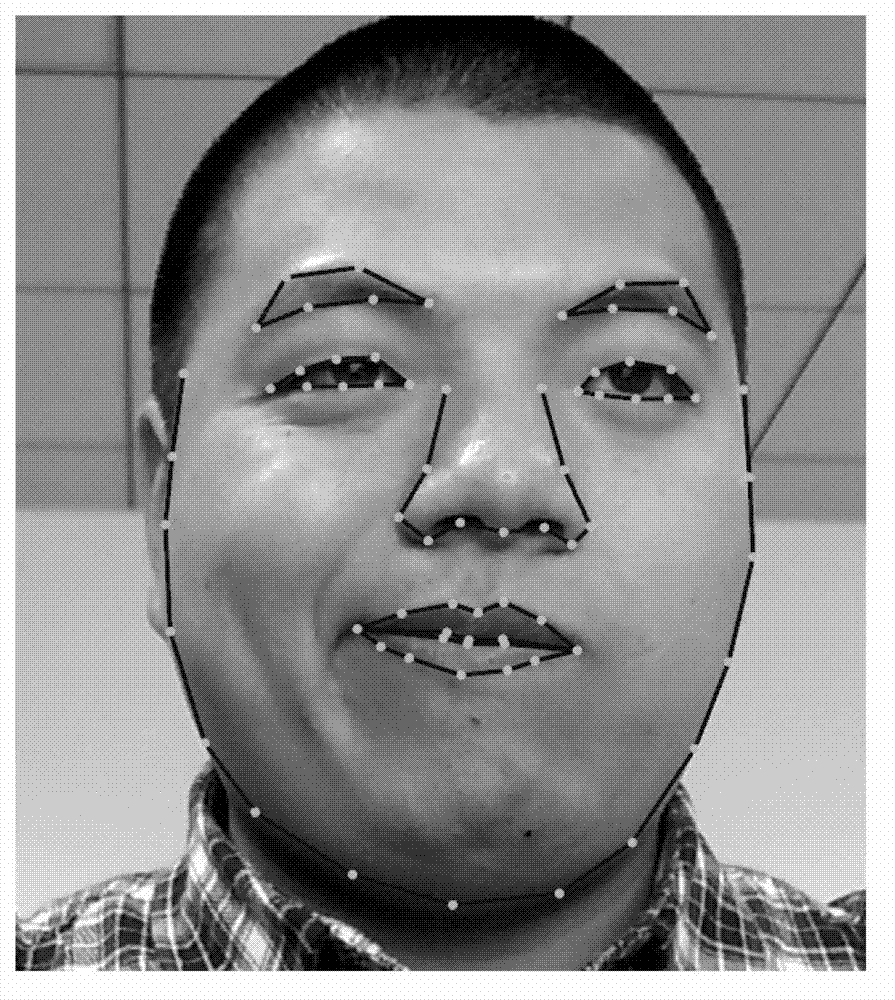

Active | US20140192134A1 | Facilitating video call function, Easy data management, Television system details, Color television details, Pattern recognition, Computer module

A mobile device user interface method activates a camera module to support a video chat function and acquires an image of a target object using the camera module. In response to detecting a face in the captured image, the facial image data is analyzed to identify an emotional characteristic of the face by identifying a facial feature and comparing the identified feature with a predetermined feature associated with an emotion. The identified emotional characteristic is compared with a corresponding emotional characteristic of previously acquired facial image data of the target object. In response to the comparison, an emotion indicative image is generated and the generated emotion indicative image is transmitted to a destination terminal used in the video chat.

Owner:SAMSUNG ELECTRONICS CO LTD

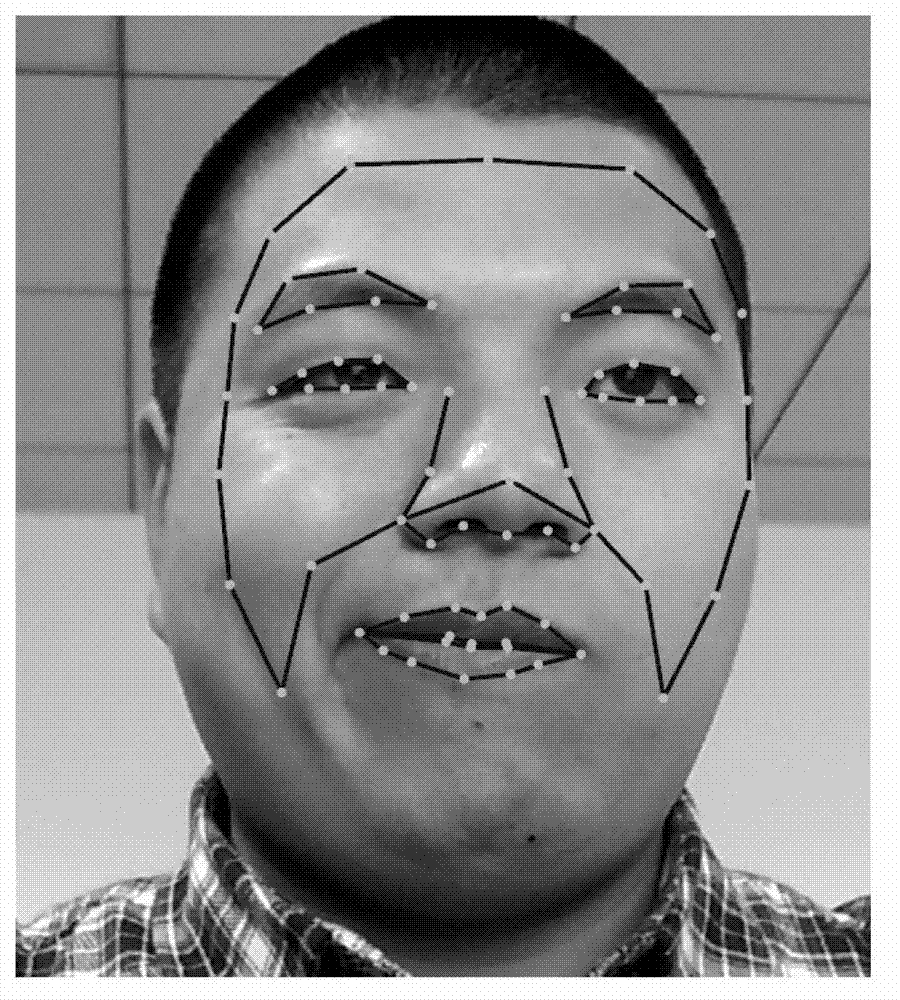

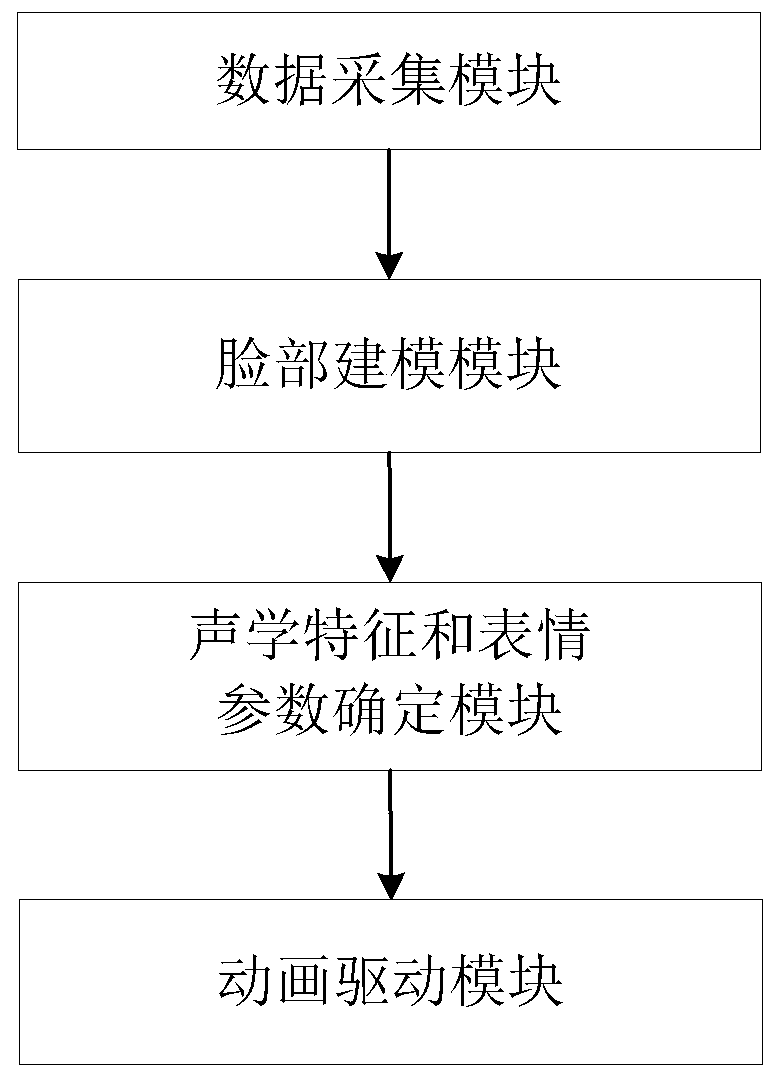

Real-time facial animation method based on single video camera

Active | CN103093490A | Easy to use, Improve experience, Image enhancement, Image analysis, Animation, Facial expression

The invention discloses a real-time facial animation method based on a single video camera. The method tracks the three-dimensional positions of facial feature points in real time using a single video camera. It parameterizes the head pose and facial expression from those positions. Finally, it maps the parameters onto a stand-in (avatar) to drive the facial animation of a cartoon character. Real-time speed is achieved with only the user's ordinary video camera, without the need for advanced capture equipment. The method can accurately handle large-angle facial rotations, facial translations, and exaggerated facial expressions. It also works under different illumination and background conditions, including indoor environments and sunny outdoor environments.

Owner:ZHEJIANG UNIV

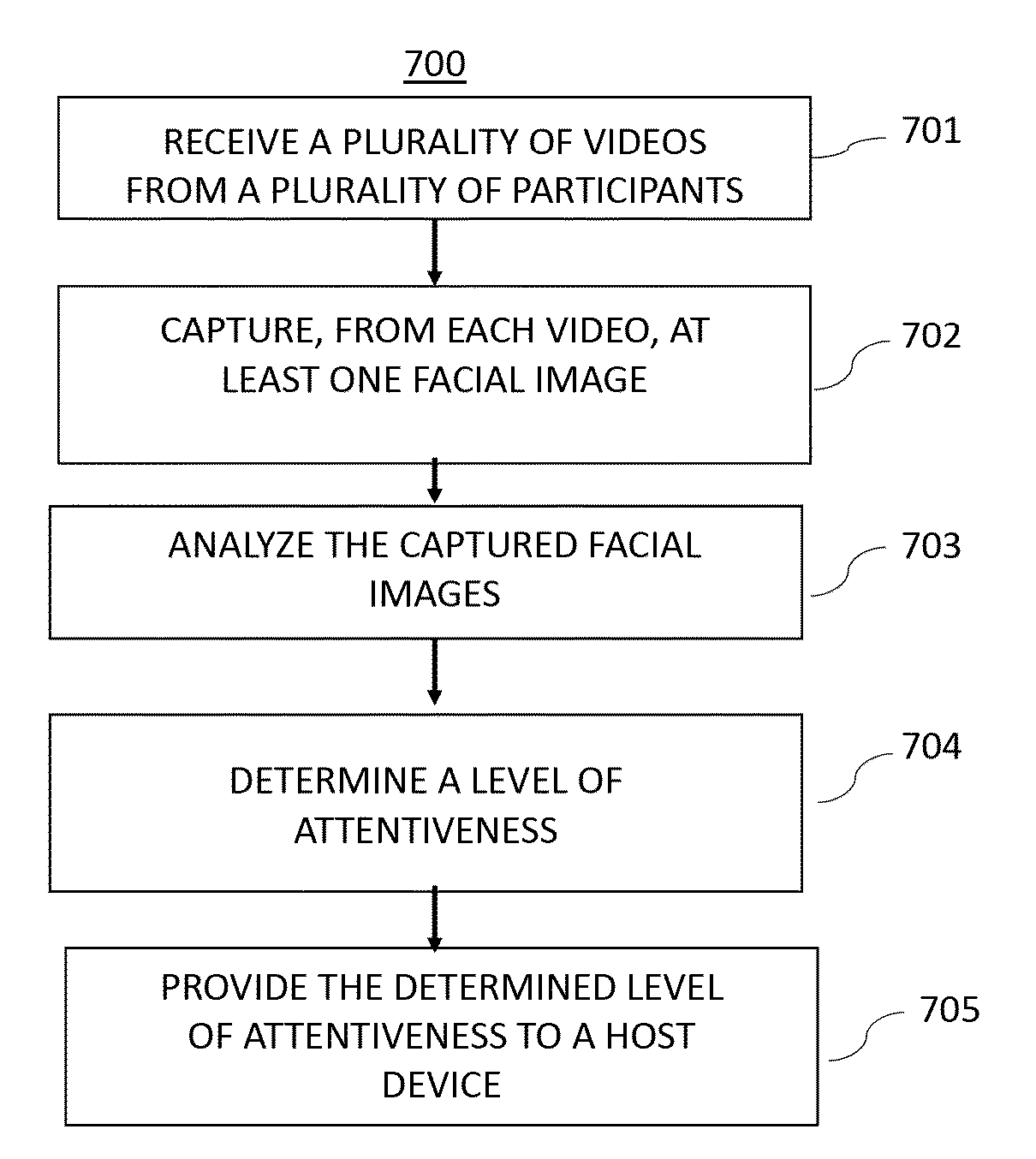

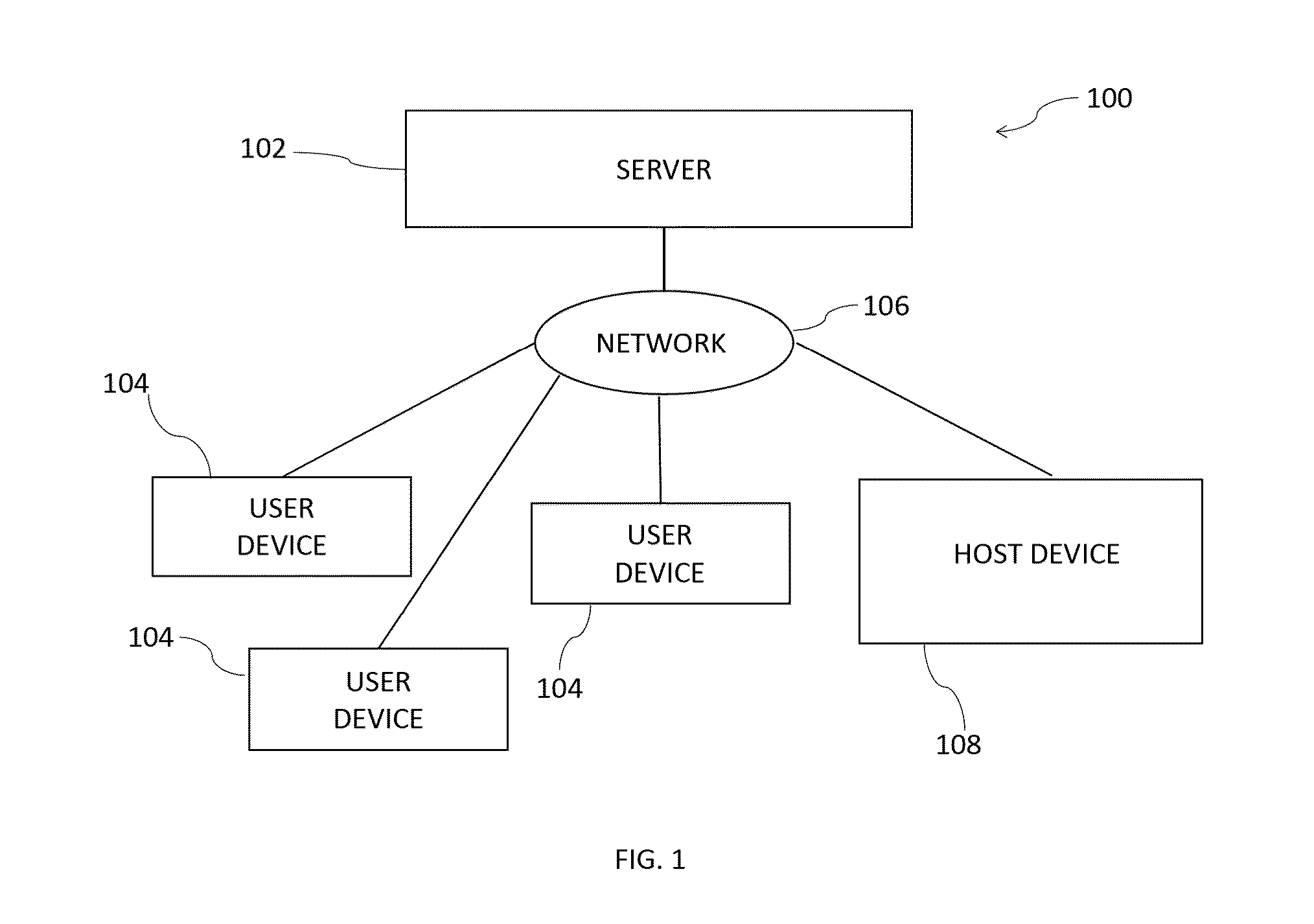

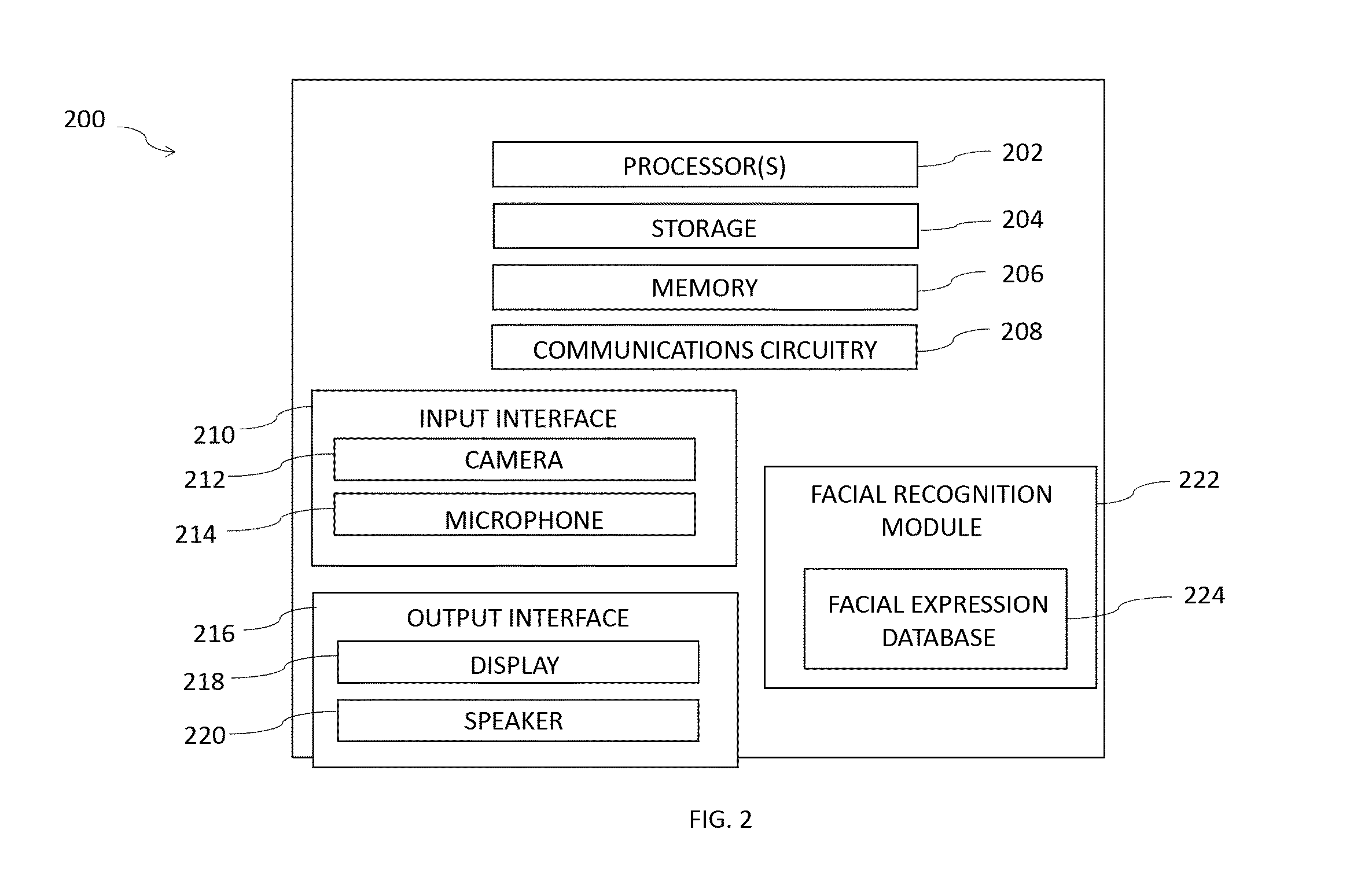

Systems and methods for analyzing facial expressions within an online classroom to gauge participant attentiveness

Active | US20160217321A1 | Monitoring participants' level of attentiveness, Acquiring/recognising facial features, Facial expression, Human–computer interaction

Systems, methods, and non-transitory computer-readable media for analyzing facial expressions within an interactive online event to gauge participants' level of attentiveness are provided. The facial expressions of a plurality of participants accessing the event may be analyzed to determine each participant's facial expression. The determined expressions may then be analyzed to determine an overall level of attentiveness. This level may be relayed to the host of the event to show how the participants are reacting. If participants are not paying attention or are confused, the host may modify the presentation to increase the students' attentiveness.

Owner:SHINDIG
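
The aggregation step above can be sketched as three operations: map each participant's recognized expression to an attentive or not-attentive judgement, report the attentive fraction, and list participants the host may want to address. This is a minimal sketch, assuming expression recognition has already produced one label per participant. The label vocabularies `ATTENTIVE` and `NEEDS_ATTENTION` are hypothetical.

```python
# Hypothetical expression labels; a real system would use its recognizer's classes.
ATTENTIVE = {"engaged", "neutral", "smiling"}
NEEDS_ATTENTION = {"confused", "distracted", "bored"}


def attentiveness_report(expressions: dict[str, str]) -> tuple[float, list[str]]:
    """expressions maps participant id -> recognized expression label.

    Returns (fraction of attentive participants, sorted ids the host may want
    to address because they look distracted or confused).
    """
    if not expressions:
        return 0.0, []
    attentive = sum(1 for label in expressions.values() if label in ATTENTIVE)
    flagged = sorted(p for p, label in expressions.items() if label in NEEDS_ATTENTION)
    return attentive / len(expressions), flagged
```

Returning the flagged participants alongside the overall fraction lets the host see both how the room is doing overall and whom to re-engage.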

Weak hypothesis generation apparatus and method, learning apparatus and method, detection apparatus and method, facial expression learning apparatus and method, facial expression recognition apparatus and method, and robot apparatus

Active | US7379568B2 | Lower performance requirements, Increase speed, Image analysis, Digital computer details, Face detection, Hypothesis

A facial expression recognition system, and a learning method for it, are provided. The system uses a face detection apparatus that achieves efficient learning and high-speed detection through ensemble learning when detecting an area representing a detection target. It is robust to shifts in face position within images and capable of highly accurate expression recognition. When the data used by the face detection apparatus is learned with AdaBoost, weak hypotheses are generated sequentially by repeating three steps. First, high-performance weak hypotheses are selected from all weak hypotheses. Second, new weak hypotheses are generated from them on the basis of their statistical characteristics. Third, the single weak hypothesis with the highest discrimination performance is selected. A final hypothesis is thus obtained. During detection, an abort threshold learned in advance is checked each time a weak hypothesis outputs a discrimination result, to decide whether the input can clearly be judged a non-face. If so, processing is aborted. For expression recognition, Gabor filters are selected from the detected face image using AdaBoost, and a support vector machine is trained only on the feature quantities extracted by the selected filters.

Owner:SAN DIEGO UNIV OF CALIFORNIA +1

Emoji recording and sending

Active | US20180335927A1 | Faster and efficient method, Faster and efficient and interface, Television system details, Substation equipment, Field of view, Electronic equipment

The present disclosure generally relates to generating and modifying virtual avatars. An electronic device having a camera and a display apparatus displays a virtual avatar that changes appearance in response to changes in a face in a field of view of the camera. In response to detecting changes in one or more physical features of the face in the field of view of the camera, the electronic device modifies one or more features of the virtual avatar.

Owner:APPLE INC

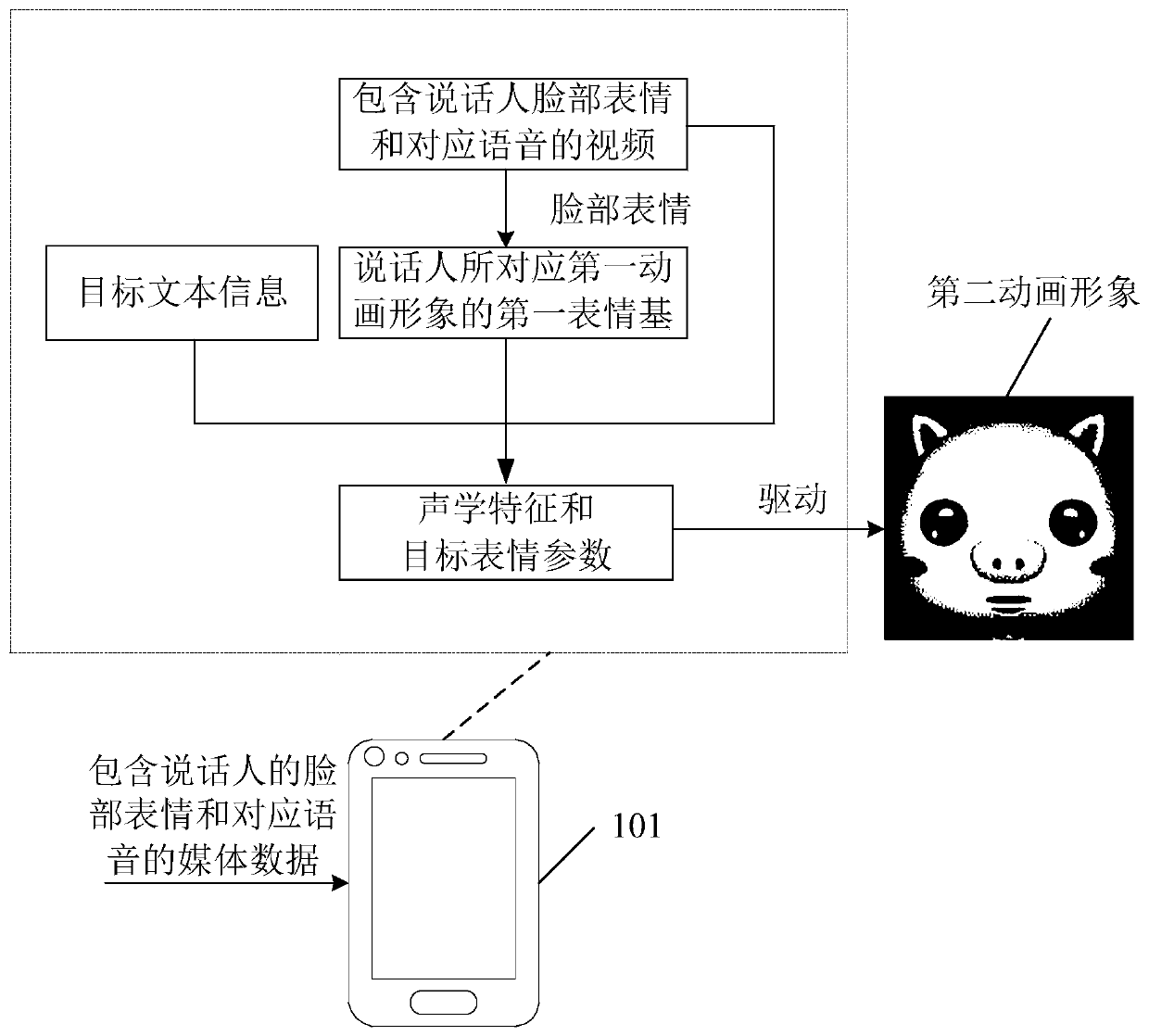

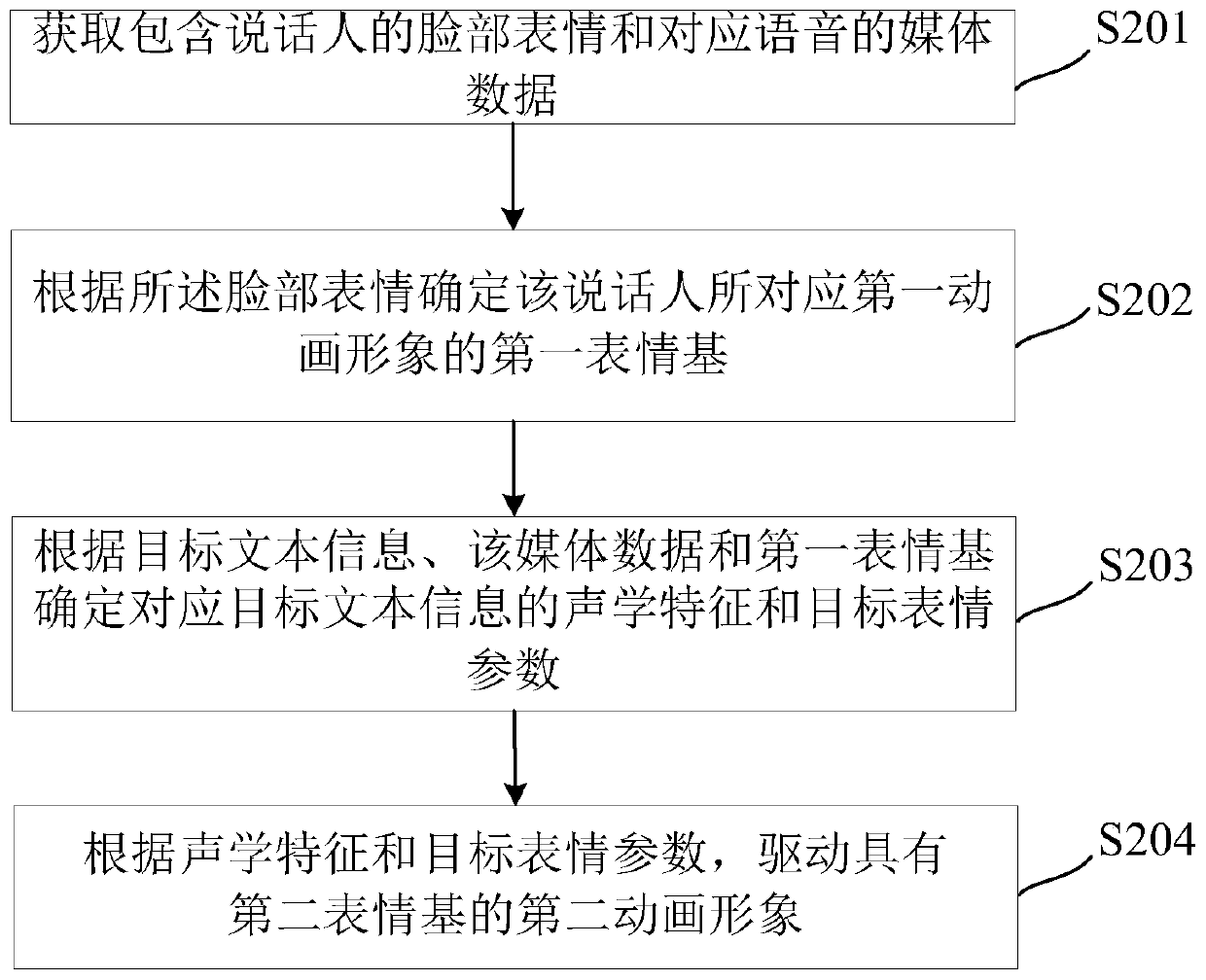

Animation image driving method and device based on artificial intelligence

Active | CN110531860A | Improve experience, Input/output for user-computer interaction, Animation, Target expression

Owner:TENCENT TECH (SHENZHEN) CO LTD

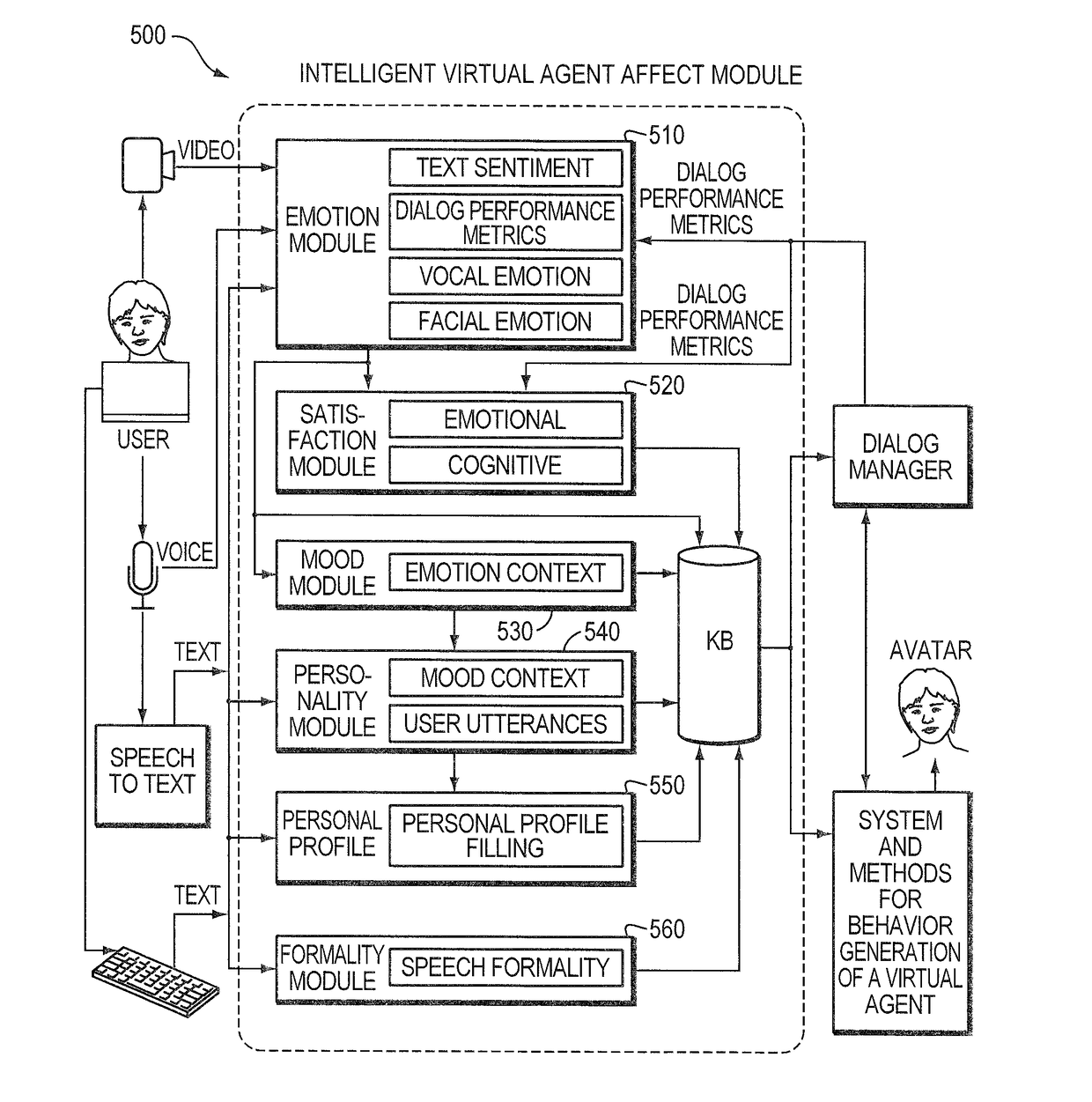

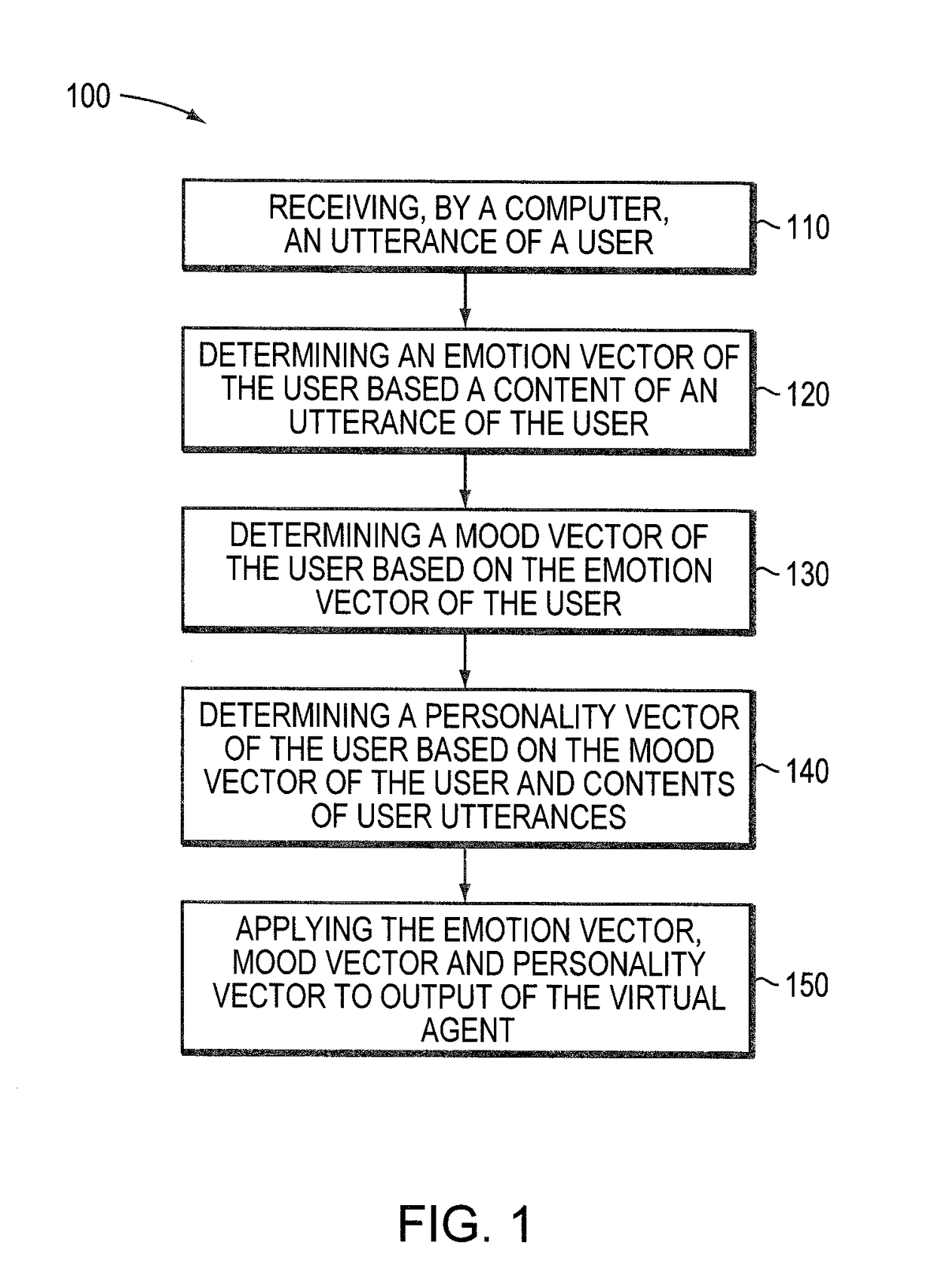

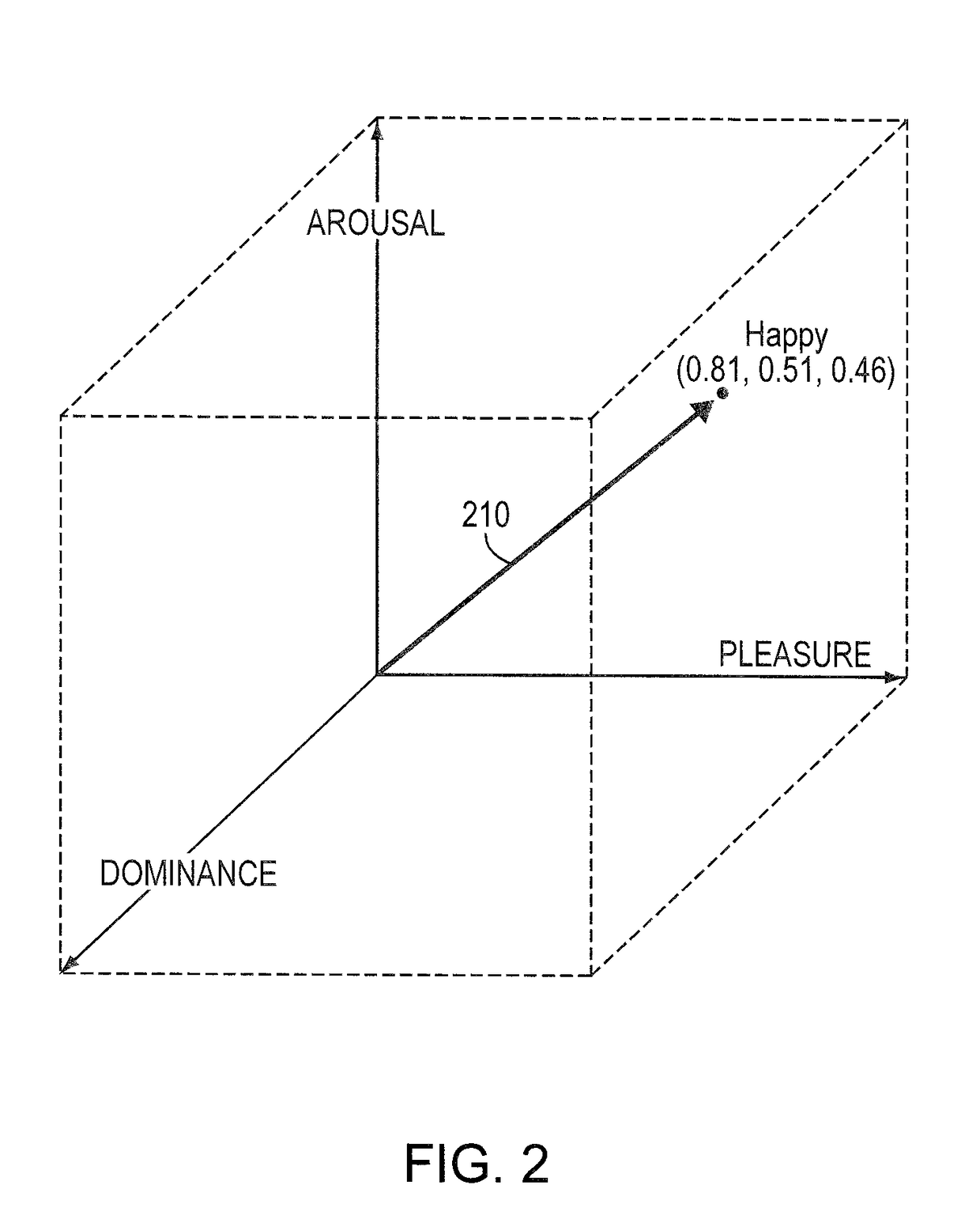

Generating communicative behaviors for anthropomorphic virtual agents based on user's affect

Inactive | US9812151B1 | Easy to understand, Natural language data processing, Animation, Facial expression, Virtual agent

Systems and methods are provided for automatically generating at least one of facial expressions, body gestures, vocal expressions, or verbal expressions for a virtual agent. The generated behavior is based on the emotion, mood, and/or personality of the user and/or the virtual agent. Systems and methods for determining a user's emotion, mood, and/or personality are also provided.

Owner:IPSOFT INC

© 2025 PatSnap. All rights reserved.