Method and system for measuring shopper response to products based on behavior and facial expression

This technology, applied to measuring shopper response to products based on behavior and facial expression, addresses two problems: emotion-sensitive filter responses cannot be connected directly to facial expressions, and it is almost impossible to accurately determine a person's mental response. The stated aims are accurate facial features and a more robust result.

Embodiment Construction

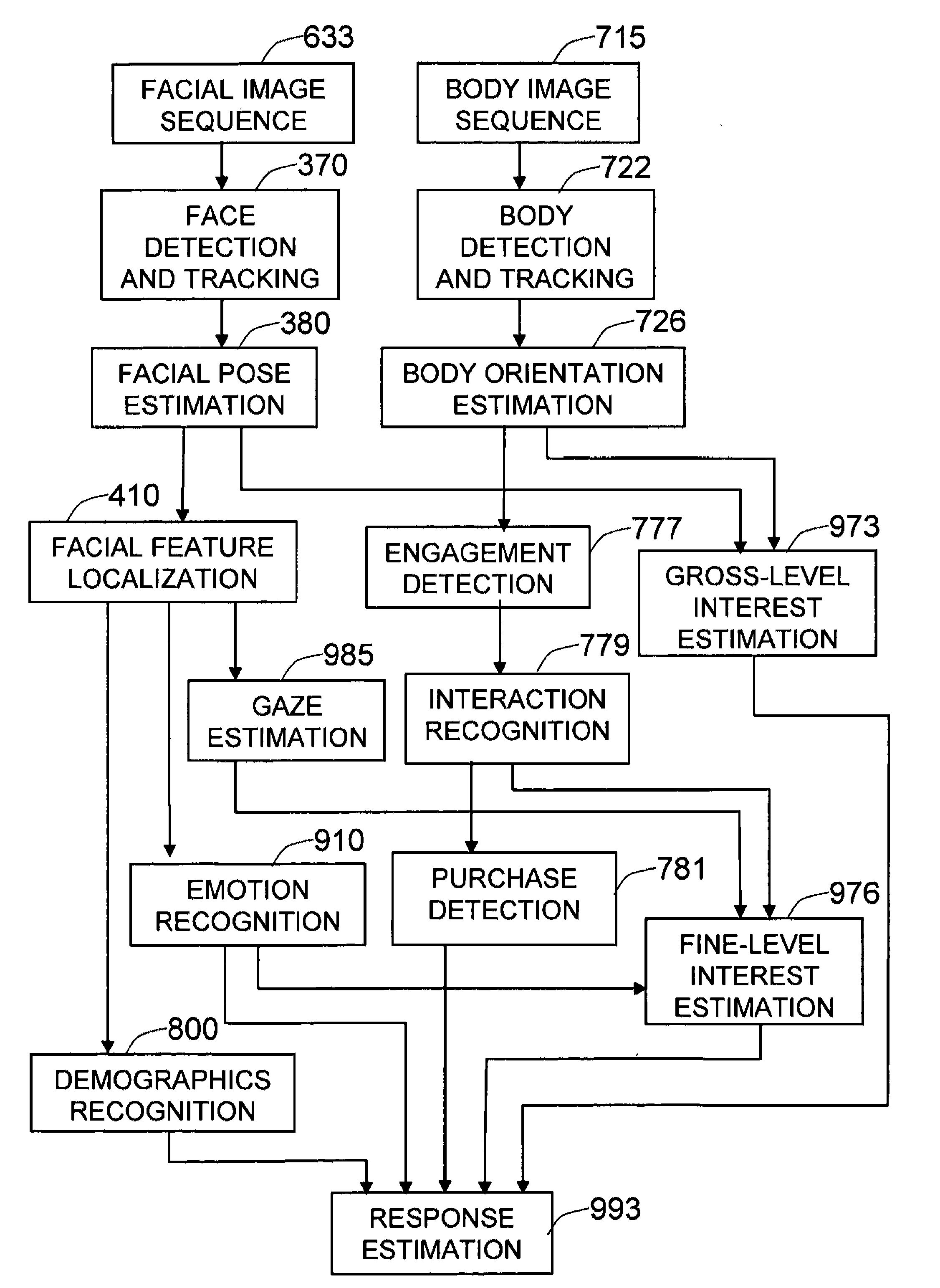

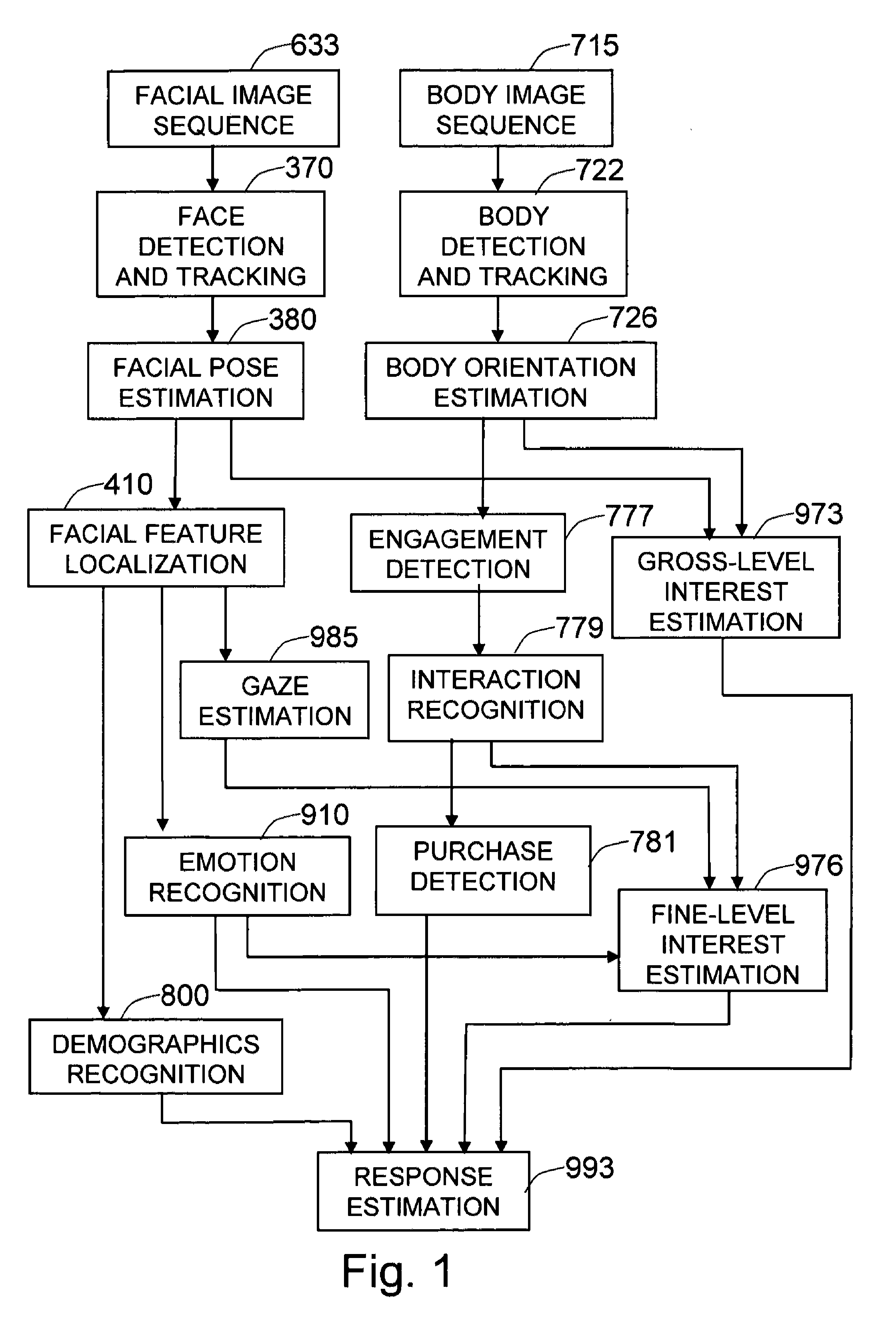

[0060] FIG. 1 shows the overall scheme of the system in a preferred embodiment of the invention. The system accepts two different sources of data for processing: the facial image sequence 633 and the body image sequence 715. Given a facial image sequence 633 that potentially contains human faces, the face detection and tracking 370 step detects any human faces and maintains their individual identities by tracking them. Using the learning machines trained from facial pose estimation training 820, the facial pose estimation 380 step then computes the (X, Y) shift, size variation, and orientation of the face inside the face detection window to normalize the facial image, as well as the three-dimensional pose (yaw, pitch) of the face. Employing the learning machines trained from the facial feature localization training 830, the facial feature localization 410 step then finds the accurate positions and boundaries of the facial features, such as eyes, eyebrows, nose, and mouth. Both the three-d...
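The normalization described for the facial pose estimation 380 step can be illustrated with a small sketch. The text does not say how the estimated (X, Y) shift, size variation, and orientation are applied, so the following assumes one common approach: composing them into a single 2D affine transform that maps detection-window coordinates to a normalized face frame. The names `PoseEstimate`, `normalizing_transform`, and `apply_transform` are hypothetical and do not come from the patent, and the learning machines that would produce the estimate are not modeled.

```python
import math
from dataclasses import dataclass


@dataclass
class PoseEstimate:
    """Hypothetical output of a pose estimation step (names are assumptions)."""
    dx: float         # X shift of the face from the window center, in pixels
    dy: float         # Y shift of the face from the window center, in pixels
    scale: float      # face size relative to a standard size (1.0 = standard)
    angle_deg: float  # in-plane orientation of the face, in degrees
    yaw_deg: float    # three-dimensional pose; not used by the 2D normalization
    pitch_deg: float  # three-dimensional pose; not used by the 2D normalization


def normalizing_transform(pose):
    """Build a 2x3 affine matrix mapping window coordinates (origin at the
    window center) to a normalized face frame.

    Assumed model: p_window = R(angle) * scale * p_norm + shift,
    so            p_norm   = R(-angle) * (p_window - shift) / scale.
    """
    inv_scale = 1.0 / pose.scale
    theta = math.radians(-pose.angle_deg)
    c, s = math.cos(theta), math.sin(theta)
    # Linear part: inverse rotation combined with inverse scaling.
    a, b = c * inv_scale, -s * inv_scale
    d, e = s * inv_scale, c * inv_scale
    # Translation part: moves the estimated face center to the origin.
    tx = -(a * pose.dx + b * pose.dy)
    ty = -(d * pose.dx + e * pose.dy)
    return ((a, b, tx), (d, e, ty))


def apply_transform(matrix, x, y):
    """Apply a 2x3 affine matrix to the point (x, y)."""
    (a, b, tx), (d, e, ty) = matrix
    return (a * x + b * y + tx, d * x + e * y + ty)


if __name__ == "__main__":
    pose = PoseEstimate(dx=4.0, dy=-2.0, scale=2.0, angle_deg=90.0,
                        yaw_deg=10.0, pitch_deg=-5.0)
    m = normalizing_transform(pose)
    print(apply_transform(m, pose.dx, pose.dy))  # face center in the normalized frame
```

Folding the three estimated quantities into one matrix means an image would be resampled only once. A real system would pass the matrix to an image warping routine before facial feature localization, and the yaw and pitch values would be carried alongside it rather than used in this step.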
