1363 results about "Facial characteristic" patented technology

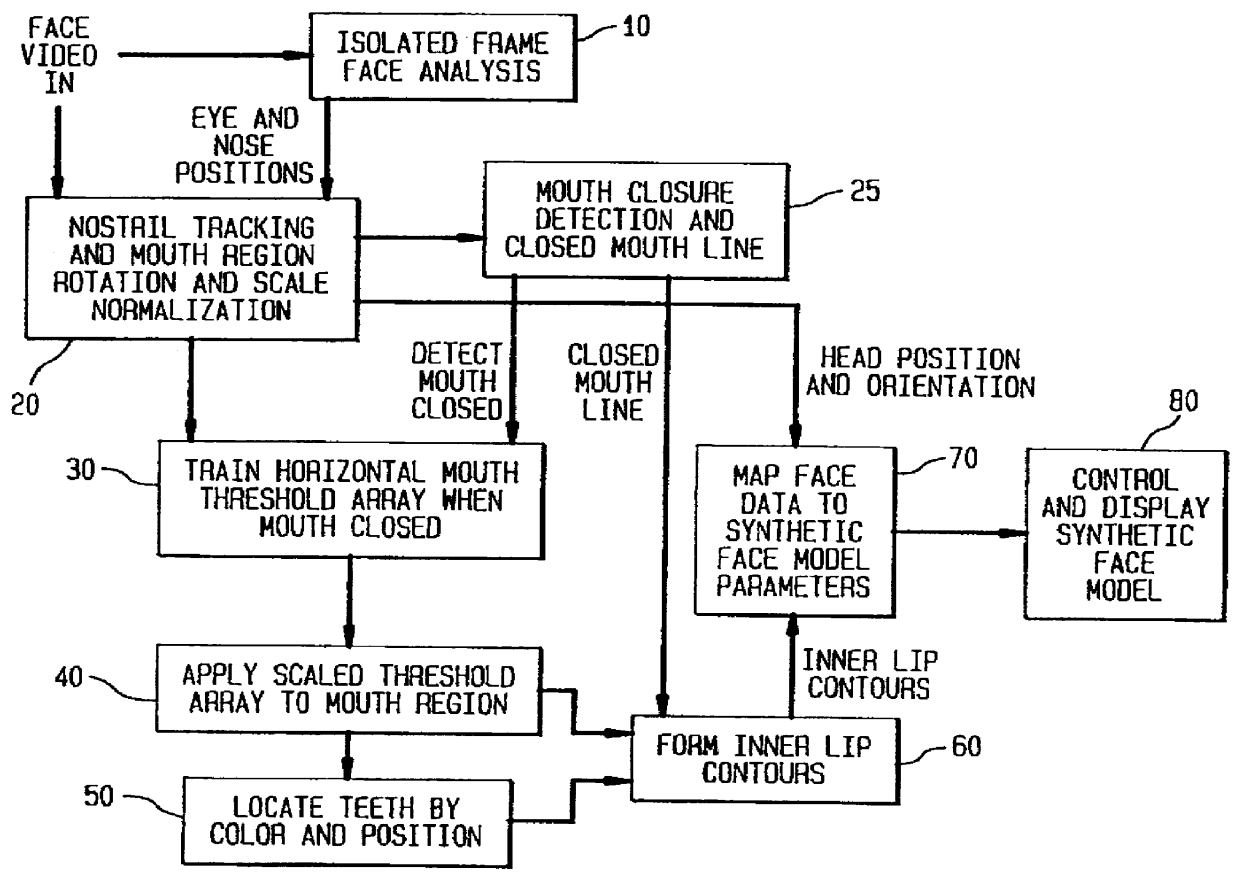

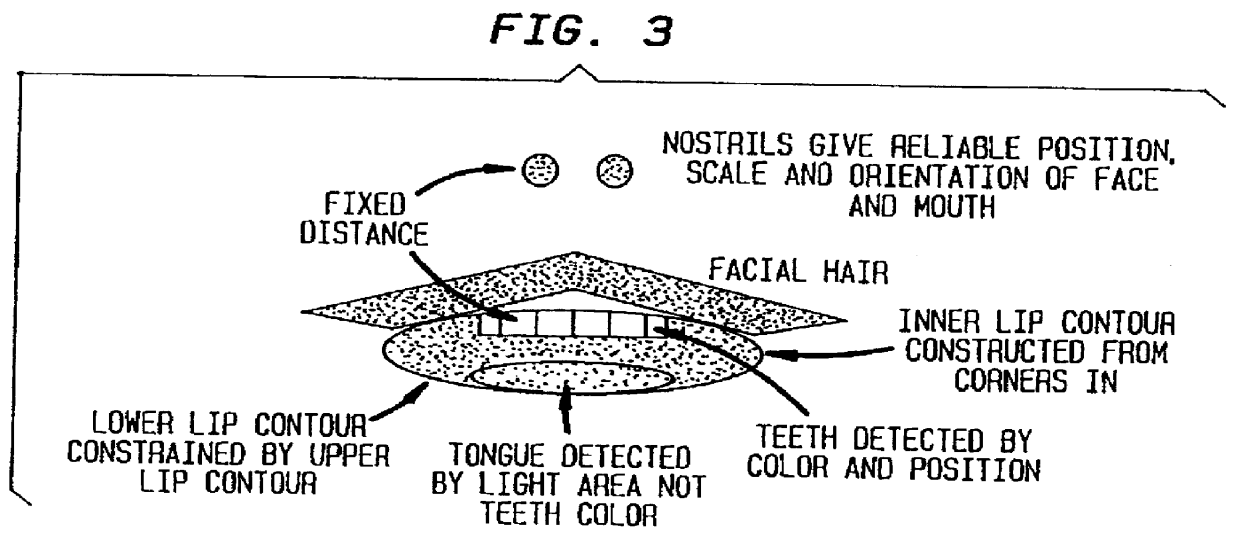

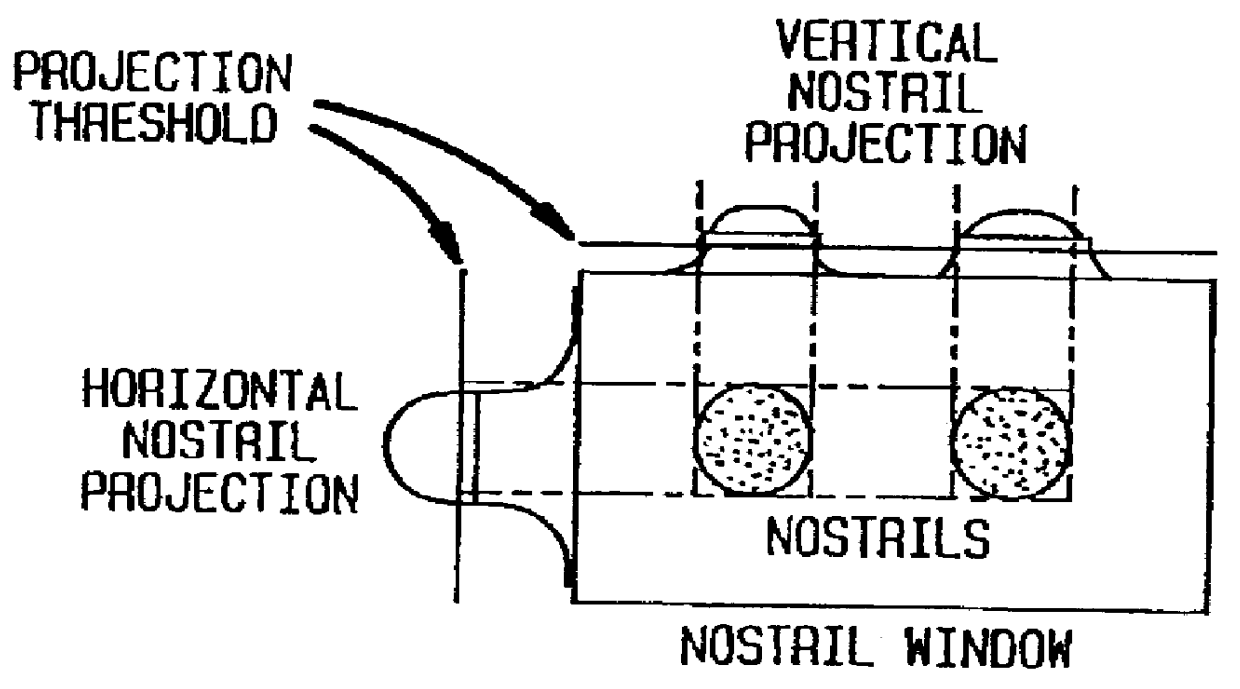

Face feature analysis for automatic lipreading and character animation

A face feature analysis begins by generating multiple face feature candidates, e.g., eye and nose positions, using an isolated frame face analysis. Then, a nostril tracking window is defined around a nose candidate and tests are applied to the pixels therein based on percentages of skin color area pixels and nostril area pixels to determine whether the nose candidate represents an actual nose. Once actual nostrils are identified, the size, separation and contiguity of the actual nostrils are determined by projecting the nostril pixels within the nostril tracking window. A mouth window is defined around the mouth region and mouth detail analysis is then applied to the pixels within the mouth window to identify inner mouth and teeth pixels and therefrom generate an inner mouth contour. The nostril position and inner mouth contour are used to generate a synthetic model head. A direct comparison is made between the inner mouth contour generated and that of a synthetic model head and the synthetic model head is adjusted accordingly. Vector quantization algorithms may be used to develop a codebook of face model parameters to improve processing efficiency. The face feature analysis is suitable regardless of noise, illumination variations, head tilt, scale variations and nostril shape.

Owner: ALCATEL-LUCENT USA INC
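The projection step in the abstract above (deriving nostril size, separation and contiguity from the pixels in the tracking window) can be illustrated with a minimal sketch. It assumes the window has already been reduced to a binary mask in which 1 marks a nostril pixel; the function names and the column-wise projection are illustrative assumptions, not details taken from the patent.

```python
def project_columns(window):
    """Sum nostril pixels down each column of the tracking window."""
    width = len(window[0])
    return [sum(row[x] for row in window) for x in range(width)]


def nostril_runs(profile):
    """Return (start, end) column ranges where the projection is non-zero."""
    runs, start = [], None
    for x, count in enumerate(profile + [0]):  # trailing 0 closes a final run
        if count > 0 and start is None:
            start = x
        elif count == 0 and start is not None:
            runs.append((start, x - 1))
            start = None
    return runs


def describe_nostrils(window):
    """Hypothetical summary: widths of each run, gap between the first two, contiguity."""
    runs = nostril_runs(project_columns(window))
    widths = [end - start + 1 for start, end in runs]
    # Separation: number of empty columns between the first two runs, if present.
    separation = runs[1][0] - runs[0][1] - 1 if len(runs) >= 2 else 0
    return {"widths": widths, "separation": separation, "contiguous": len(runs) == 1}


window = [
    [0, 1, 1, 0, 0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0],
]
print(describe_nostrils(window))
```

For this toy mask the sketch reports two runs of width 2 separated by 3 empty columns, so the nostrils are not contiguous.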

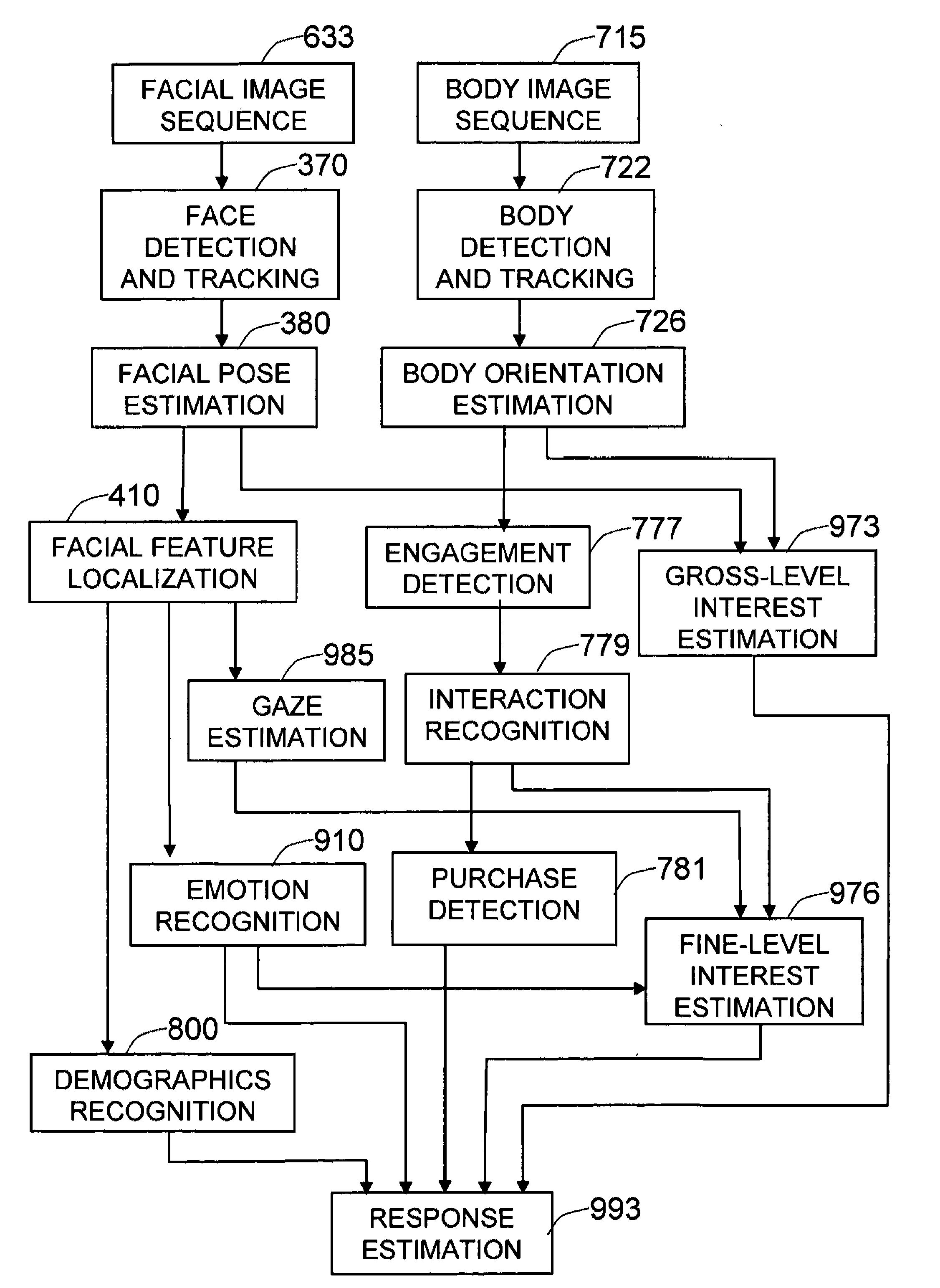

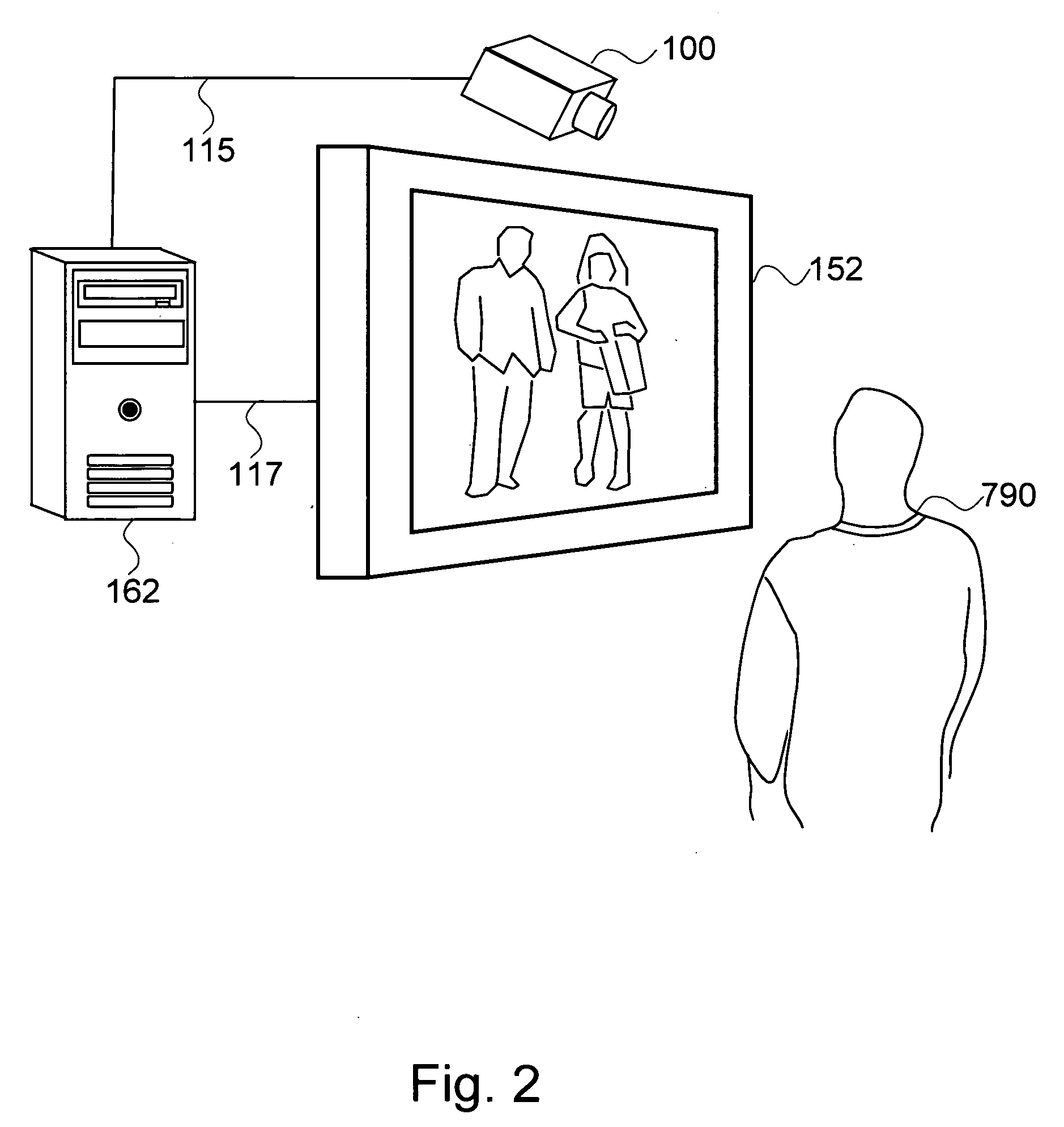

Method and system for measuring shopper response to products based on behavior and facial expression

Active | US8219438B1 | Reliable information | Accurate location | Market predictions | Acquiring/recognising eyes | Pattern recognition | Product base

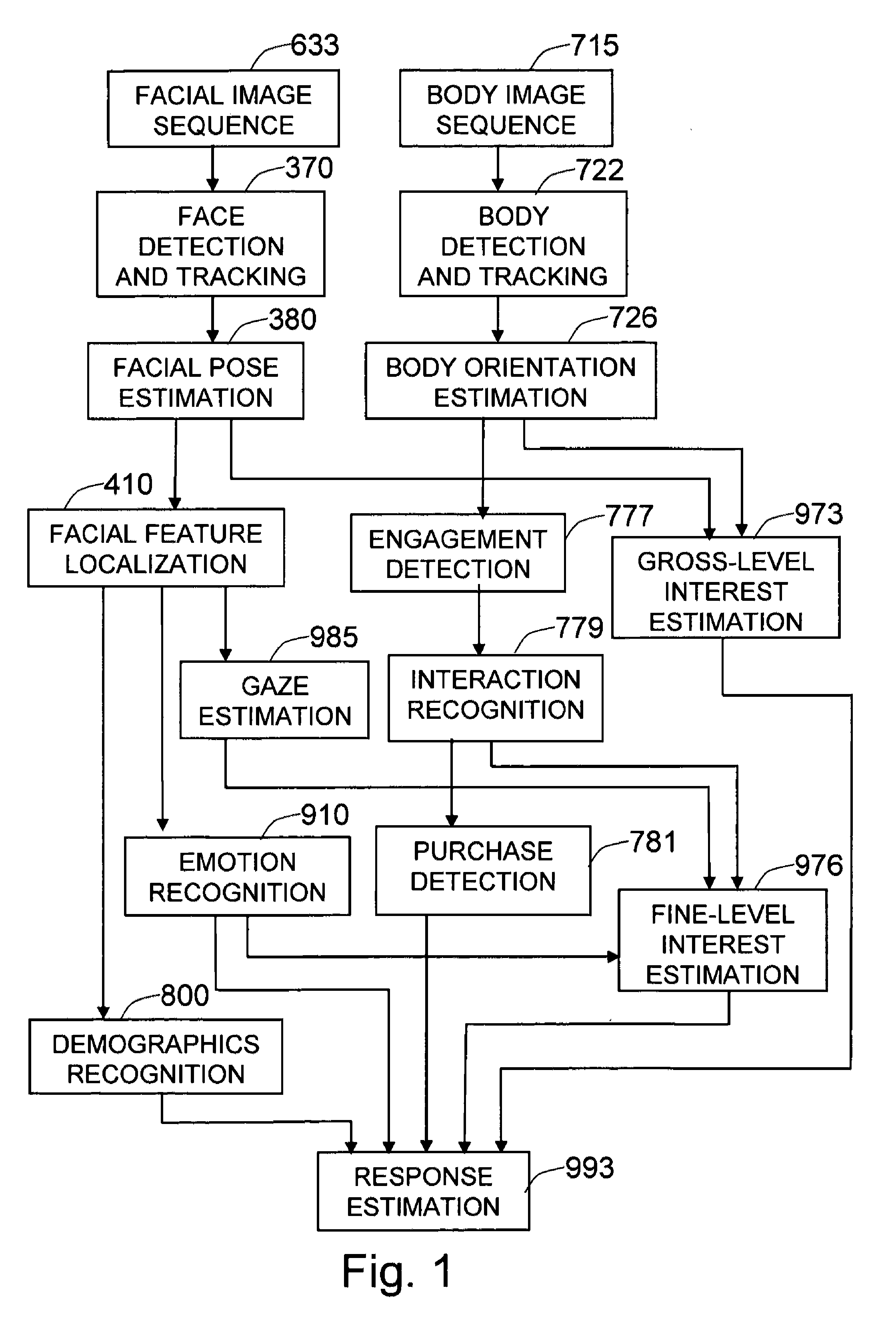

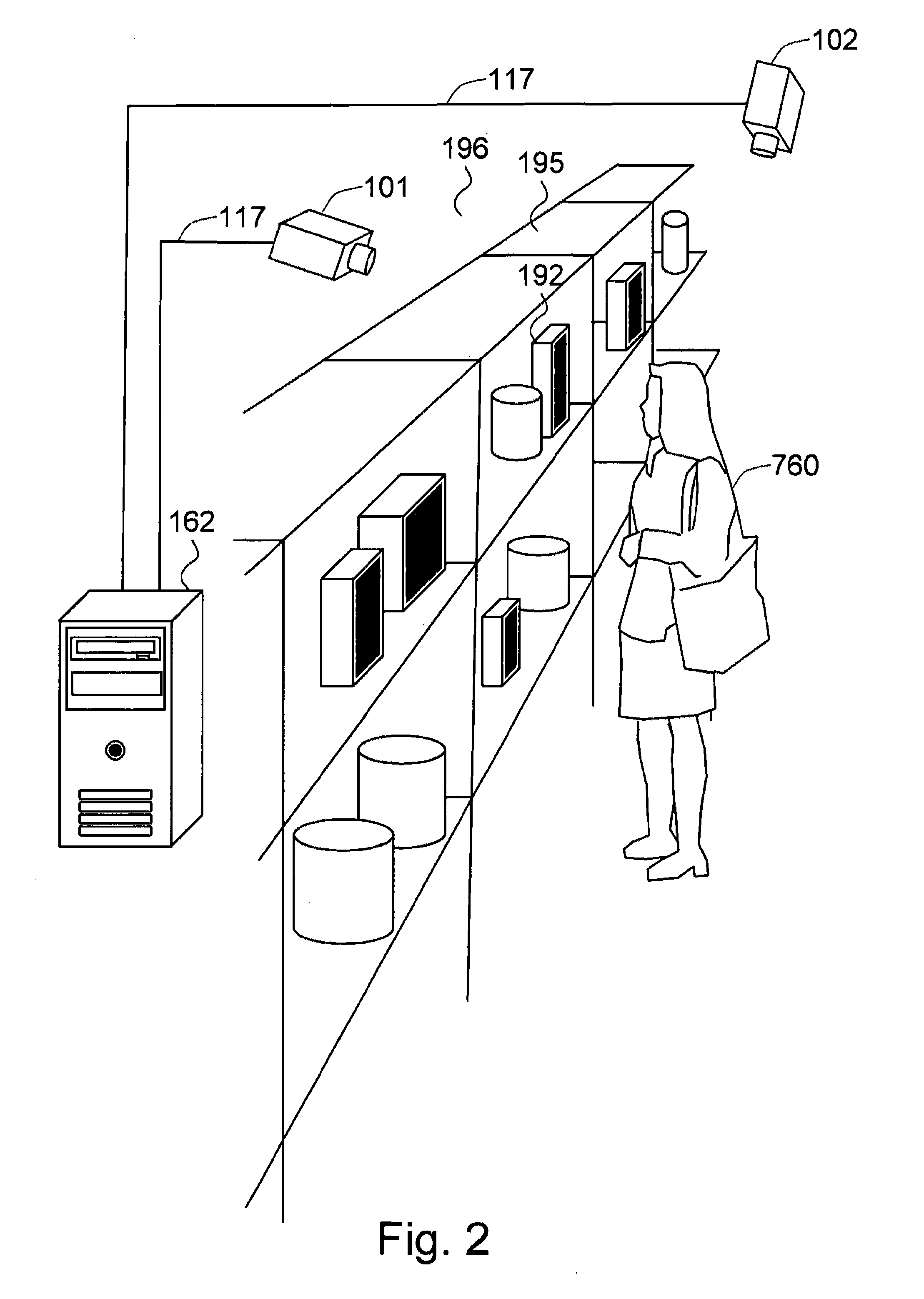

The present invention is a method and system for measuring human response to retail elements, based on the shopper's facial expressions and behaviors. From a facial image sequence, the facial geometry (facial pose and facial feature positions) is estimated to facilitate the recognition of facial expressions, gaze, and demographic categories. The recognized facial expression is translated into an affective state of the shopper and the gaze is translated into the target and the level of interest of the shopper. The body image sequence is processed to identify the shopper's interaction with a given retail element, such as a product, a brand, or a category. The dynamic changes of the affective state and the interest toward the retail element, measured from the facial image sequence, are analyzed in the context of the recognized shopper's interaction with the retail element and the demographic categories, to estimate both the shopper's changes in attitude toward the retail element and the end response, such as a purchase decision or a product rating.

Owner: PARMER GEORGE A

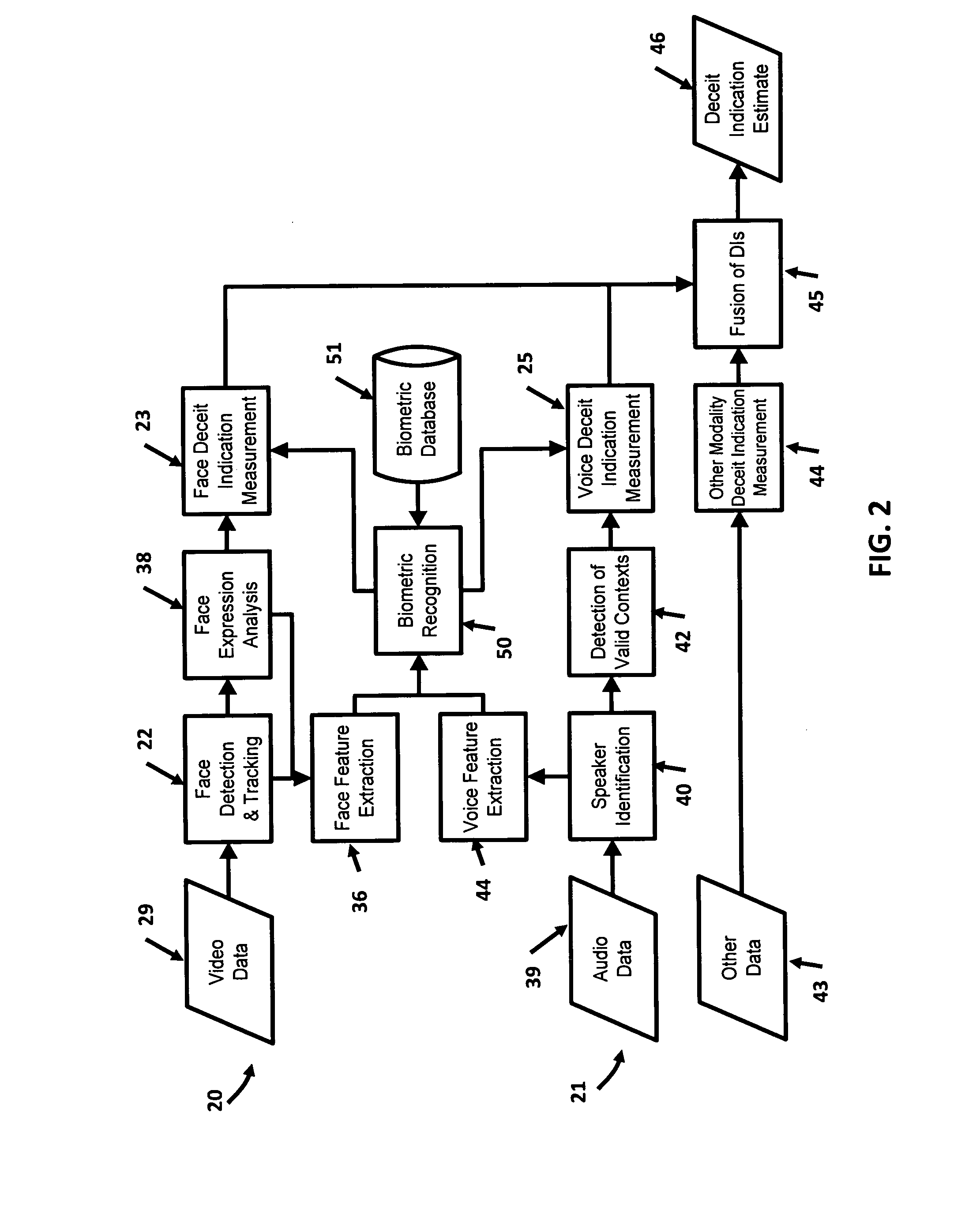

System for indicating deceit and verity

Inactive | US20080260212A1 | Simple method | Character and pattern recognition | Diagnostic recording/measuring | Pattern recognition | Improved method

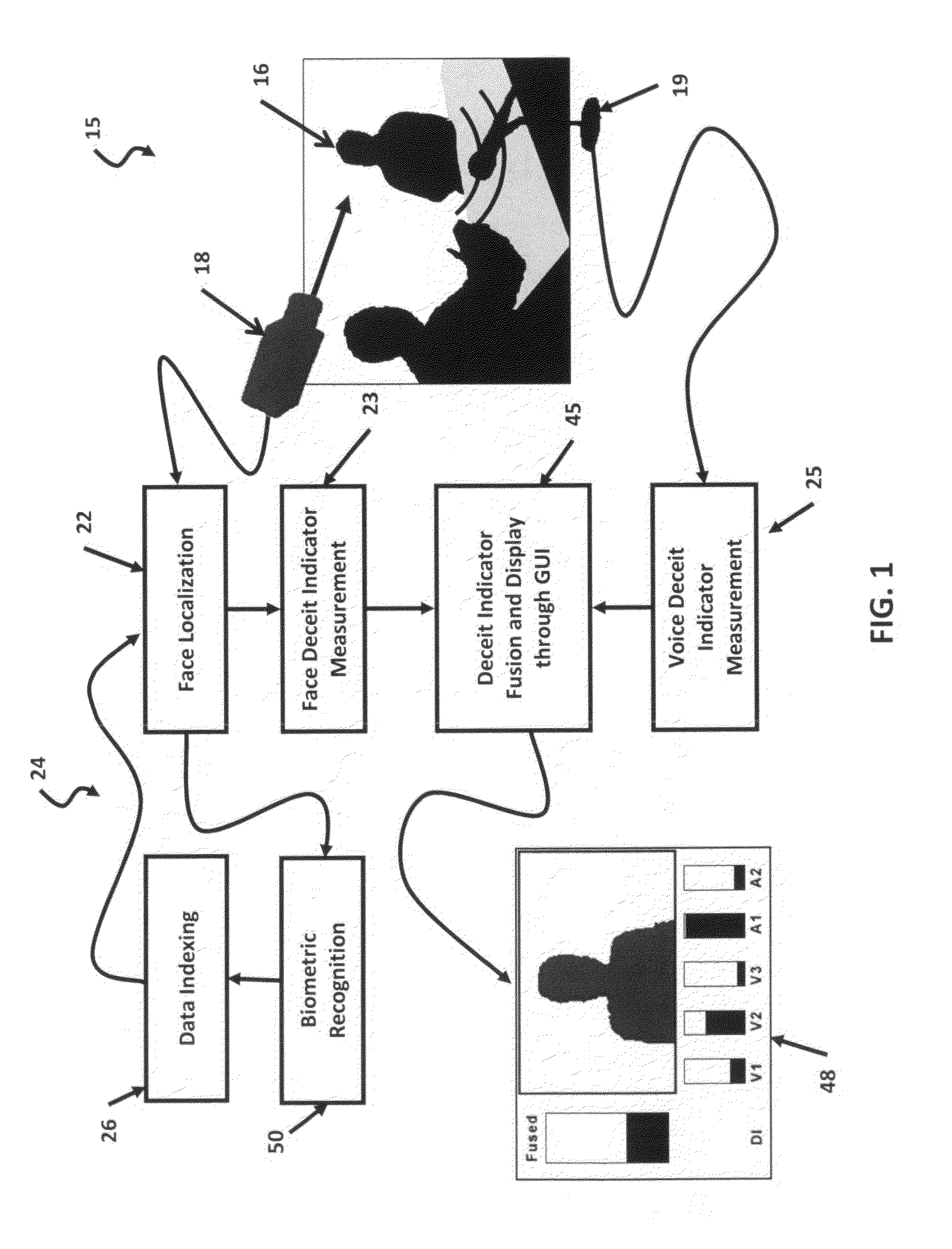

An improved method for detecting truth or deceit (15) comprising providing a video camera (18) adapted to record images of a subject's (16) face, recording images of the subject's face, providing a mathematical model (62) of a face defined by a set of facial feature locations and textures, providing a mathematical model of facial behaviors (78, 82, 98, 104) that correlate to truth or deceit, comparing (64) the facial feature locations to the image (29) to provide a set of matched facial feature locations (70), comparing (77, 90, 94, 100) the mathematical model of facial behaviors to the matched facial feature locations, and providing a deceit indication as a function of the comparison (78, 91, 95, 101 or 23).

Owner: MOSKAL MICHAEL D +4

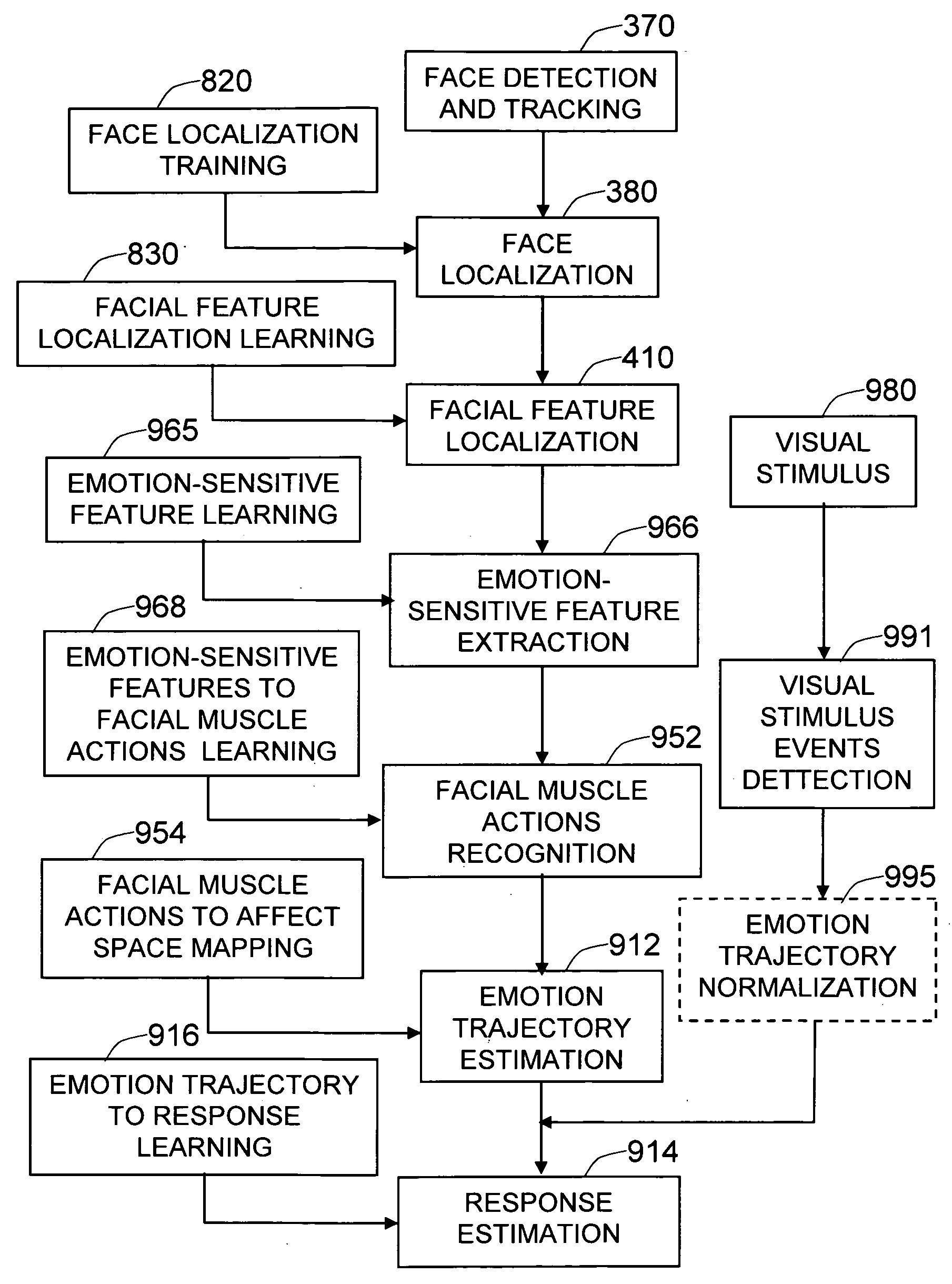

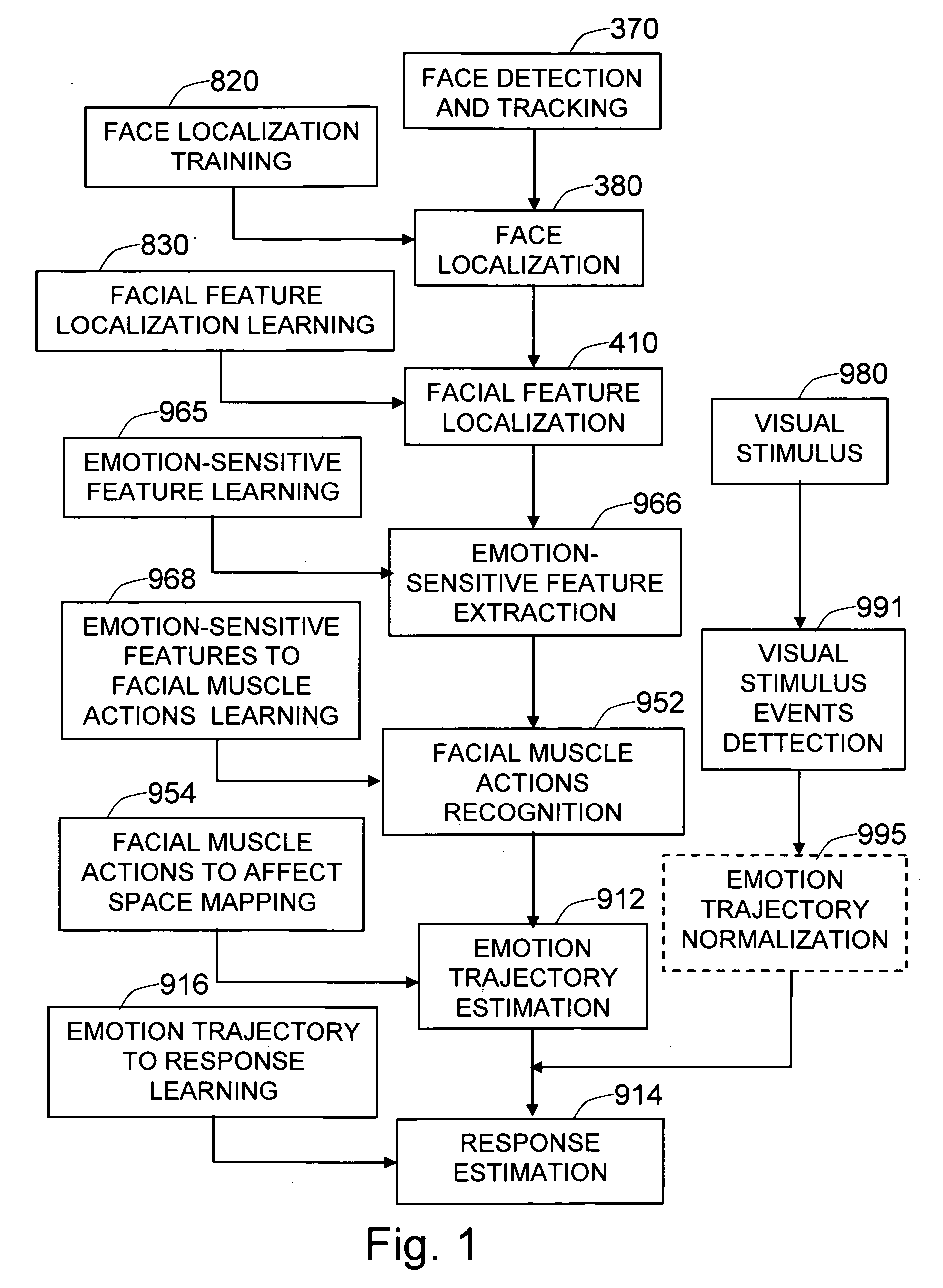

Method and system for measuring human response to visual stimulus based on changes in facial expression

Active | US20090285456A1 | Improve response | Character and pattern recognition | Eye diagnostics | Wrinkle skin | Learning machine

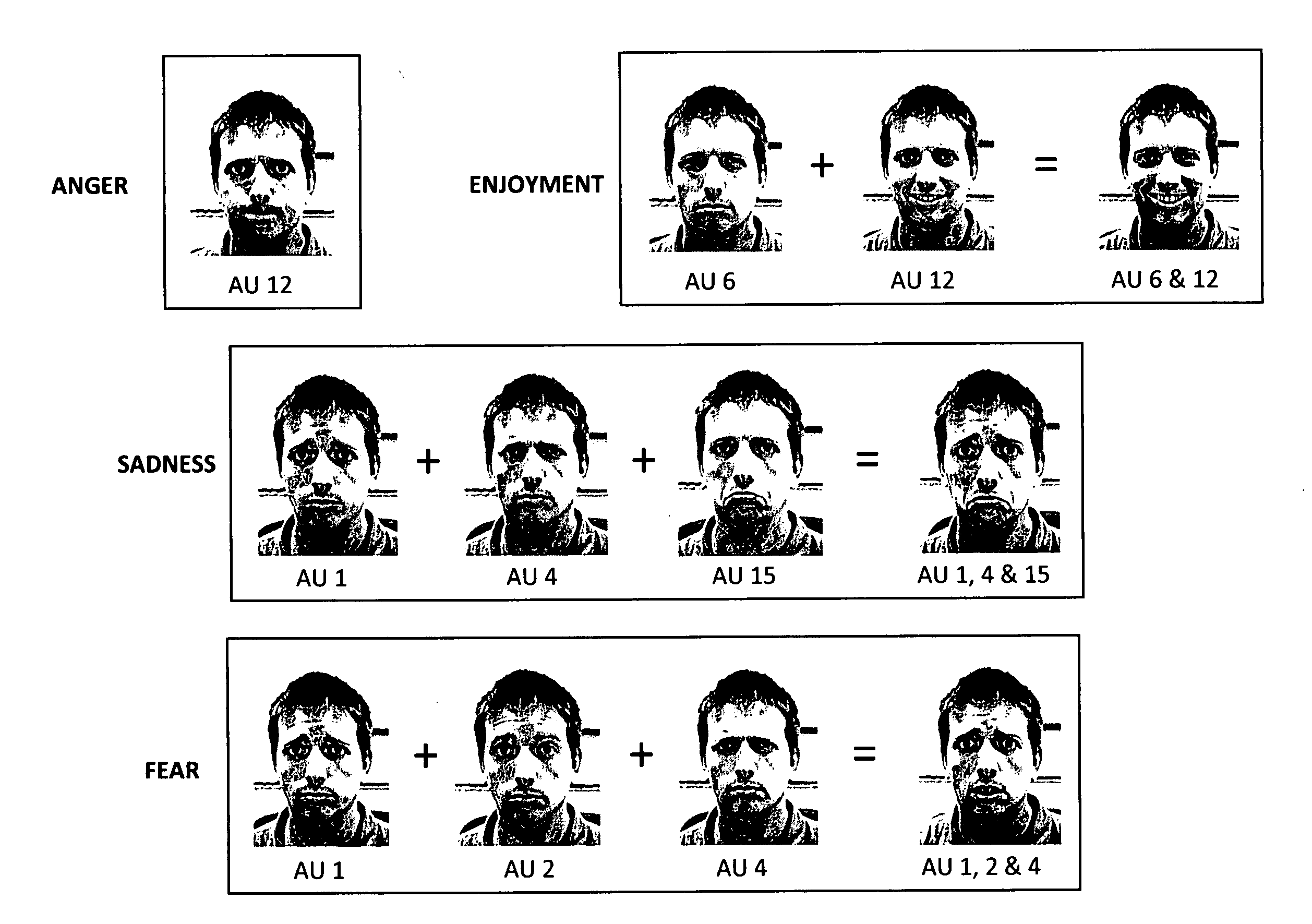

The present invention is a method and system for measuring human emotional response to visual stimulus, based on the person's facial expressions. Given a detected and tracked human face, it is accurately localized so that the facial features are correctly identified and localized. Face and facial features are localized using the geometrically specialized learning machines. Then the emotion-sensitive features, such as the shapes of the facial features or facial wrinkles, are extracted. The facial muscle actions are estimated using a learning machine trained on the emotion-sensitive features. The instantaneous facial muscle actions are projected to a point in affect space, using the relation between the facial muscle actions and the affective state (arousal, valence, and stance). The series of estimated emotional changes renders a trajectory in affect space, which is further analyzed in relation to the temporal changes in visual stimulus, to determine the response.

Owner: MOTOROLA SOLUTIONS INC

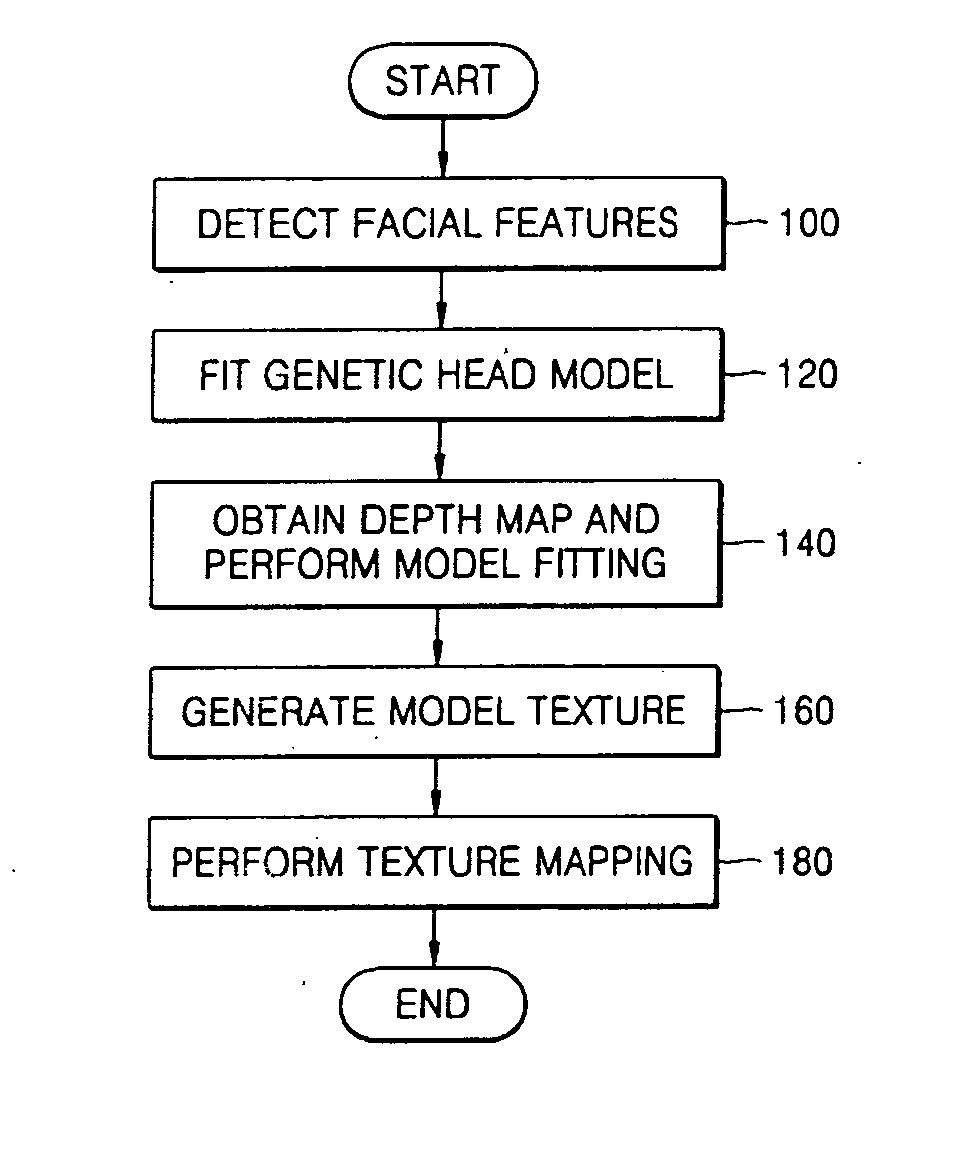

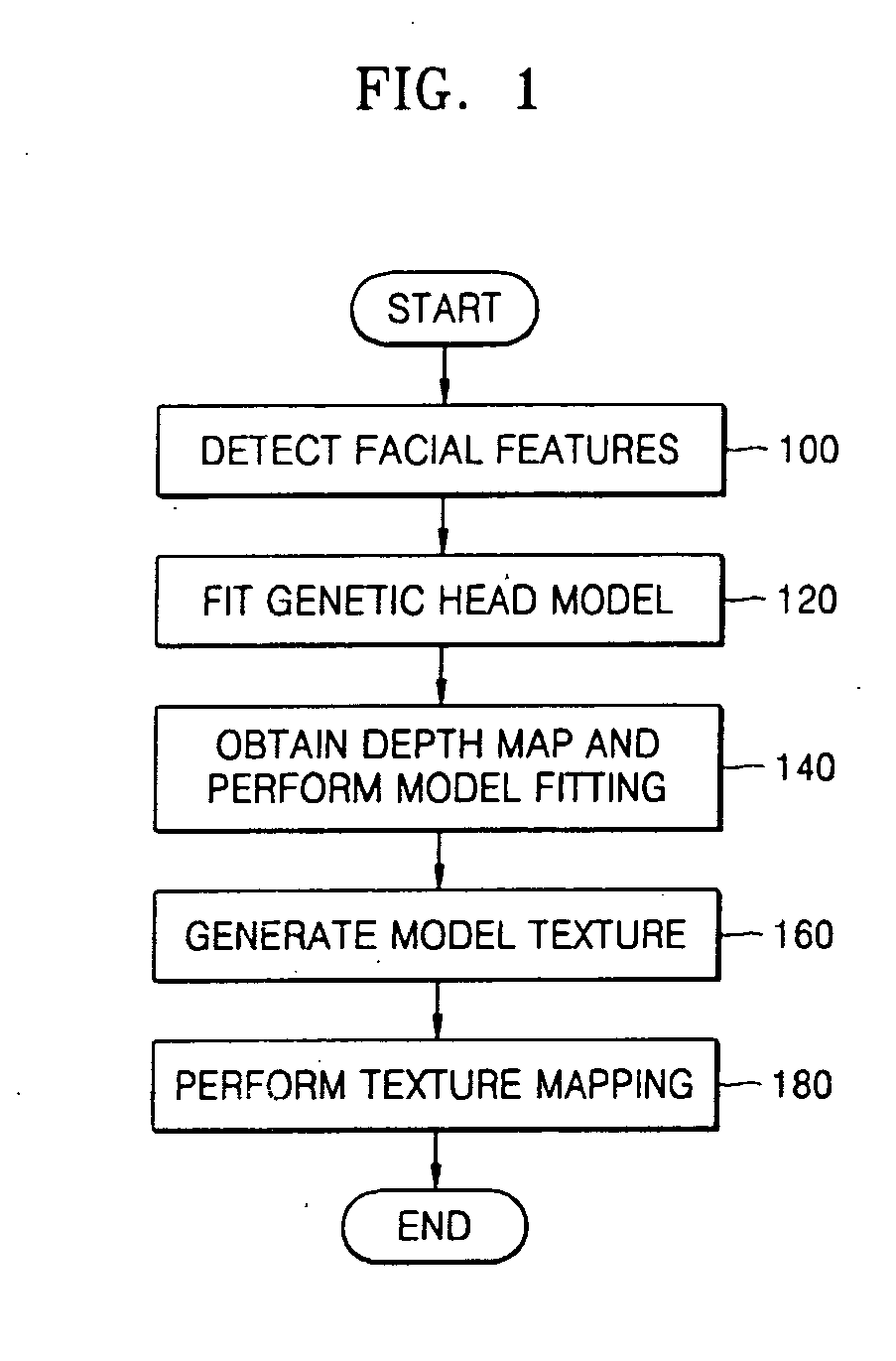

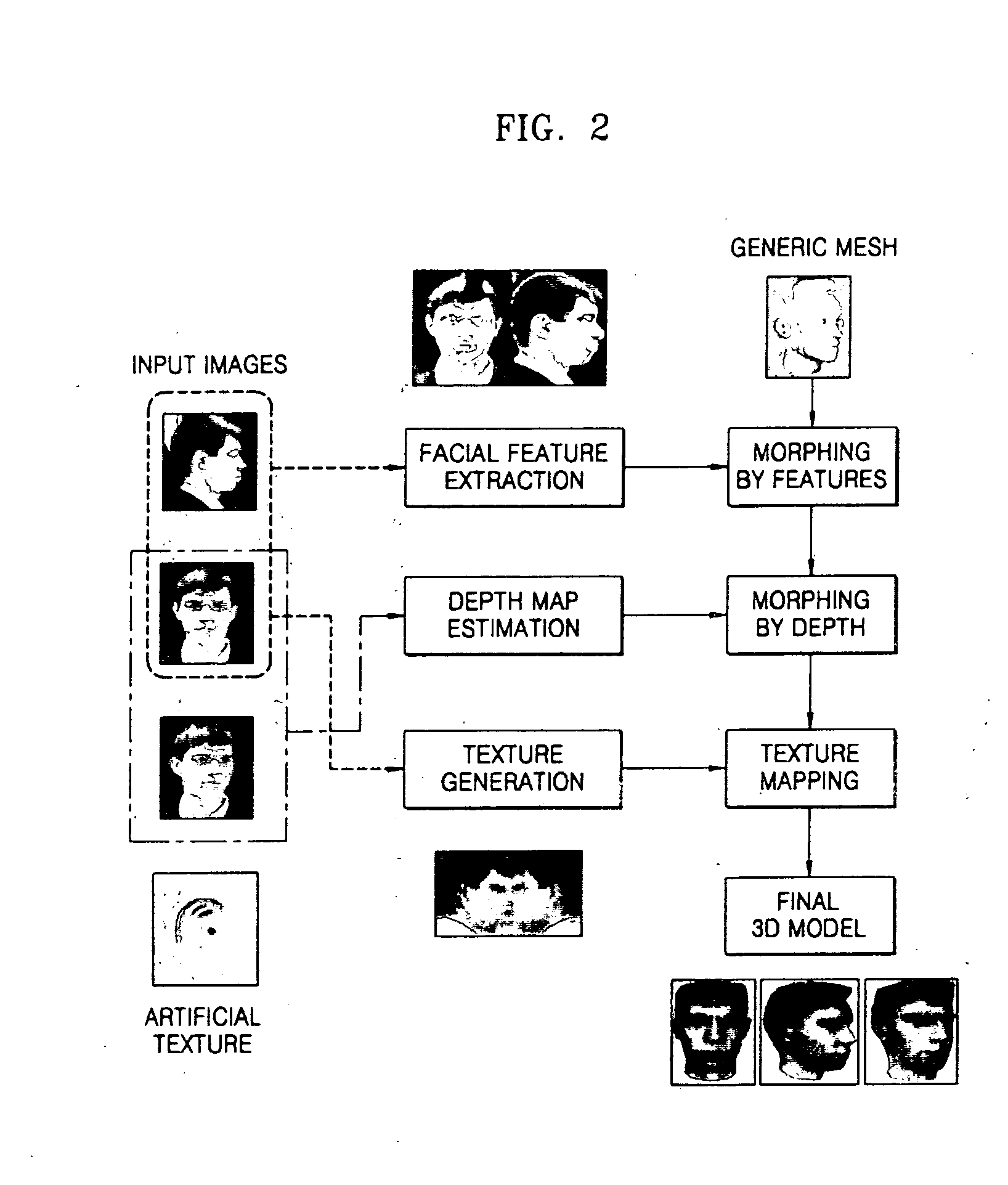

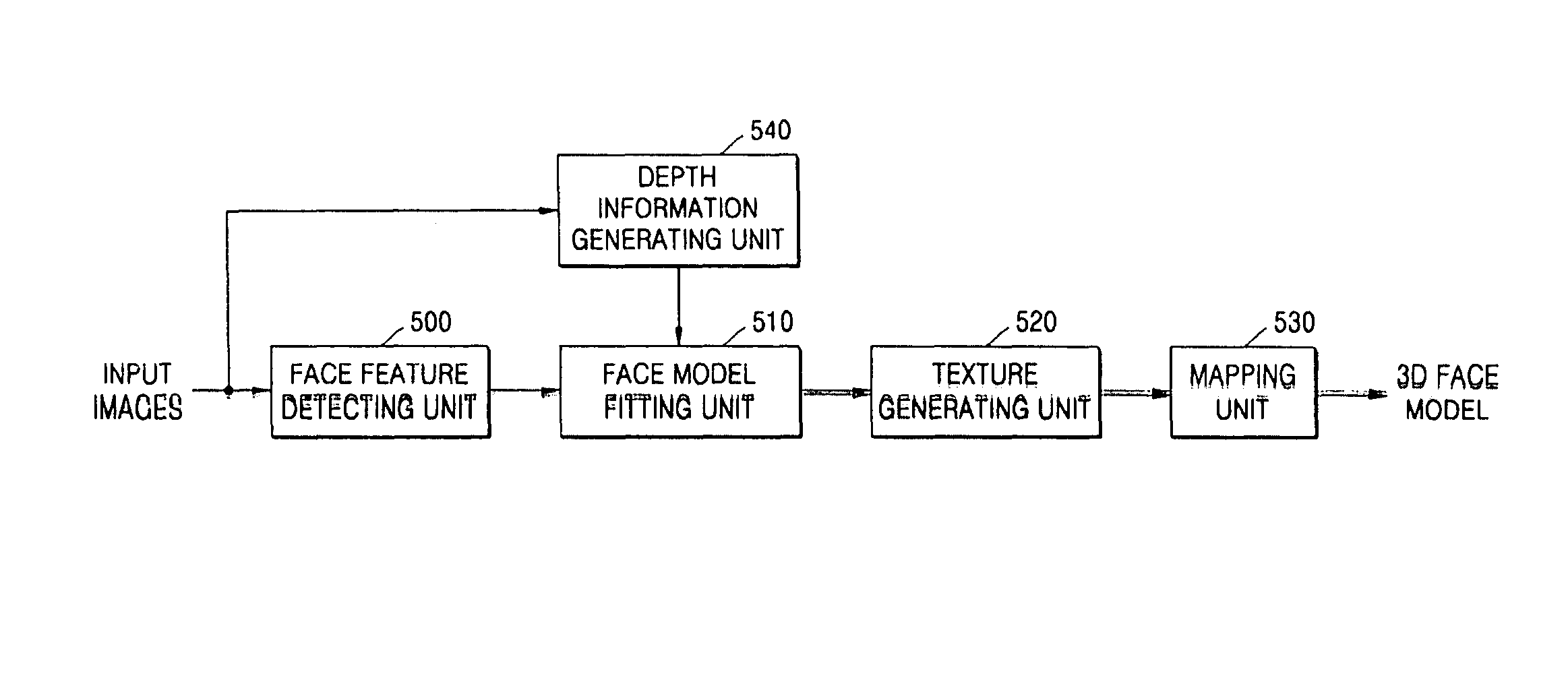

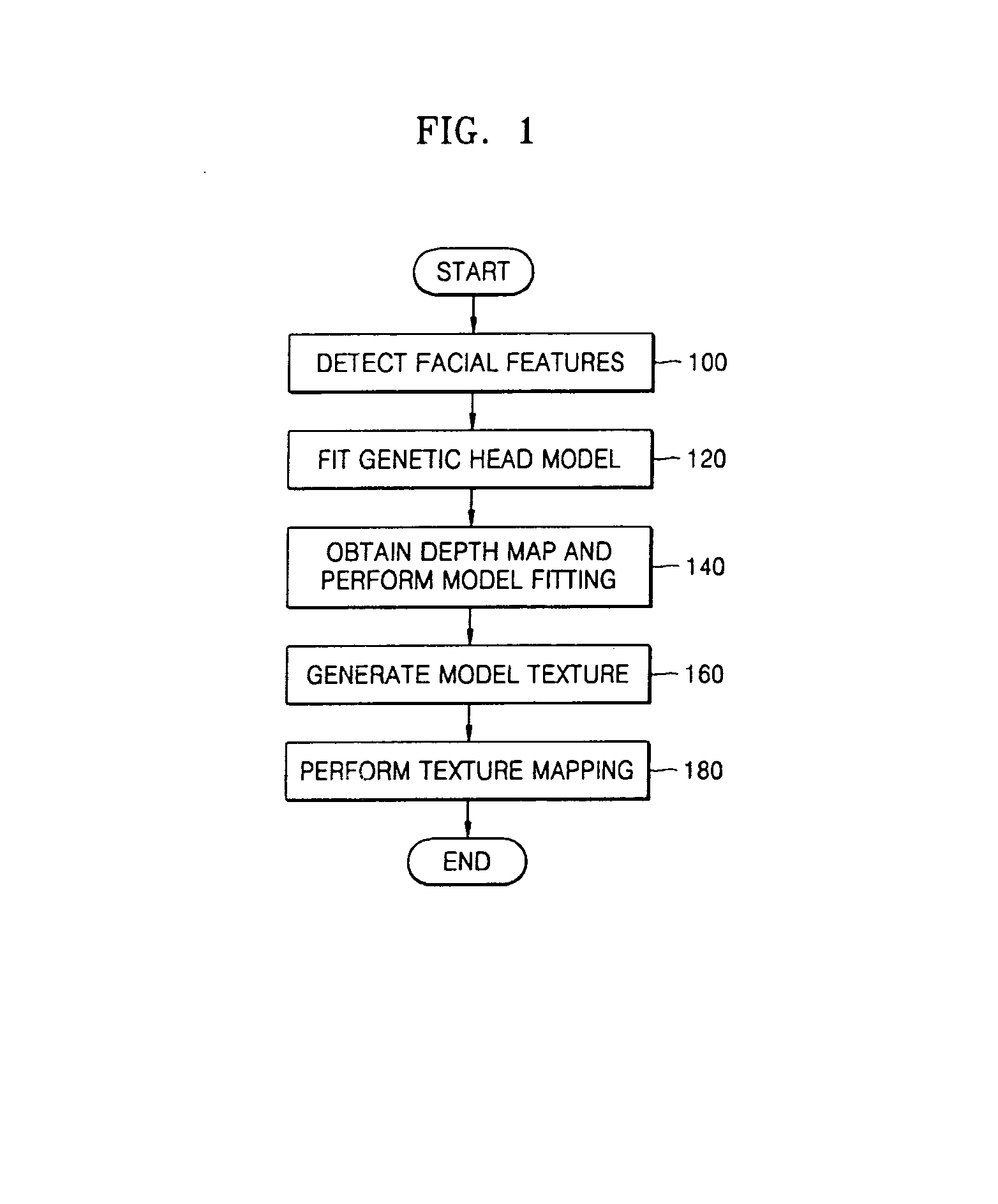

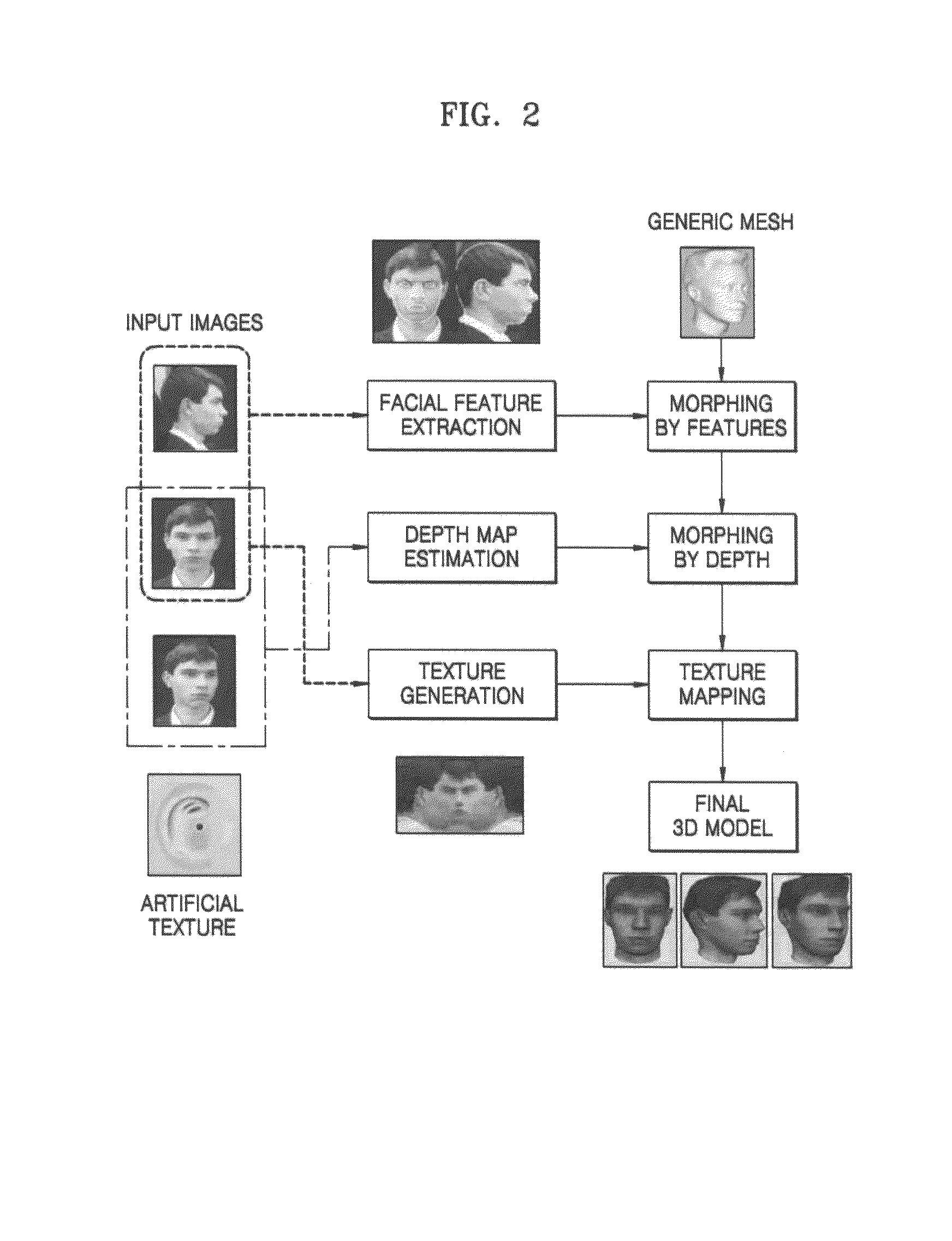

Method and apparatus for image-based photorealistic 3D face modeling

An apparatus and method for image-based 3D photorealistic head modeling are provided. The method for creating a 3D photorealistic head model includes: detecting frontal and profile features in input frontal and profile images; generating a 3D head model by fitting a 3D generic model using the detected facial features; generating a realistic texture from the input frontal and profile images; and mapping the texture onto the 3D head model. In the apparatus and method, data obtained using a relatively cheap device, such as a digital camera, can be processed in an automated manner, and satisfactory results can be obtained even from imperfect input data. In other words, facial features can be extracted in an automated manner, and a robust “human-quality” face analysis algorithm is used.

Owner: SAMSUNG ELECTRONICS CO LTD

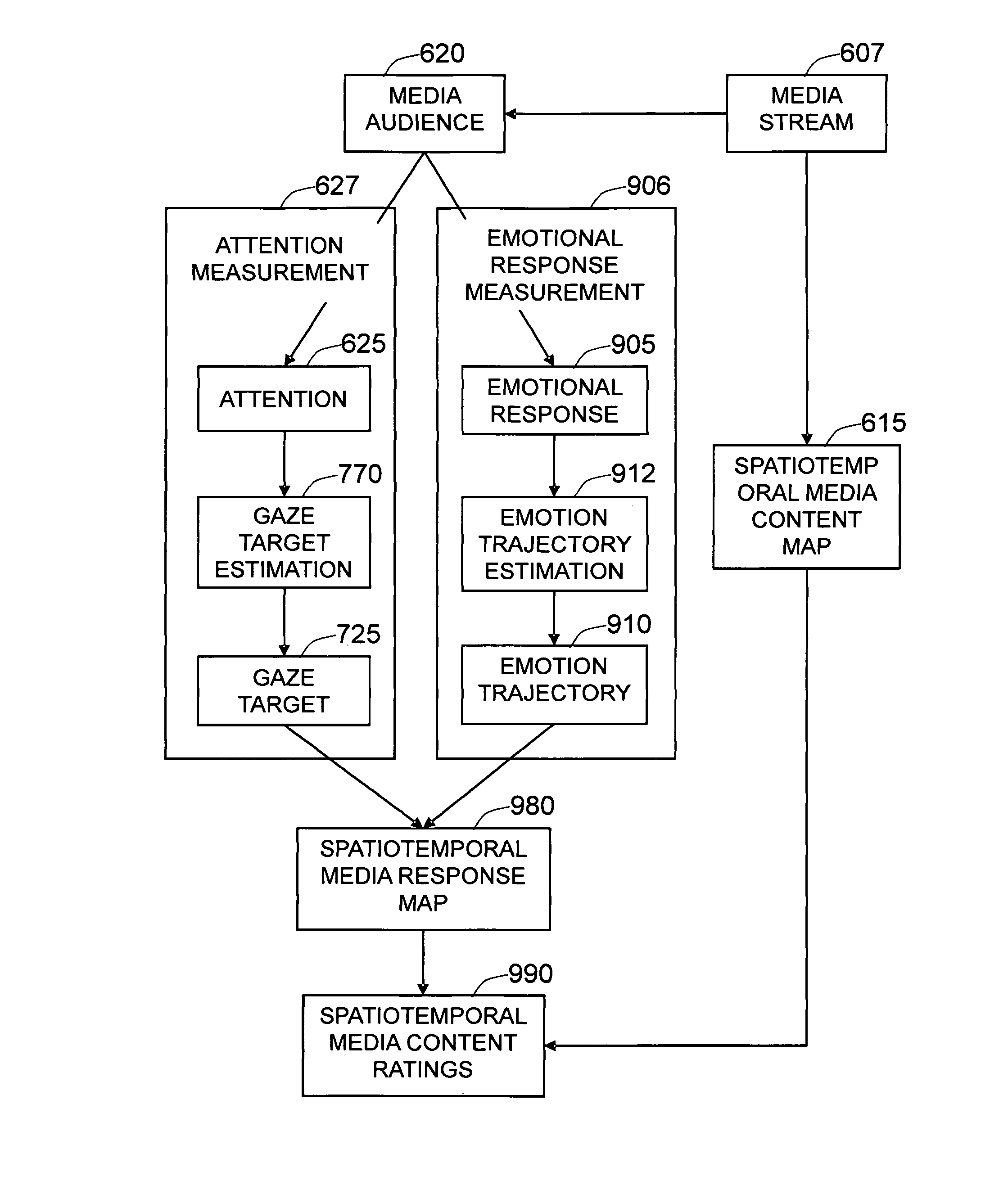

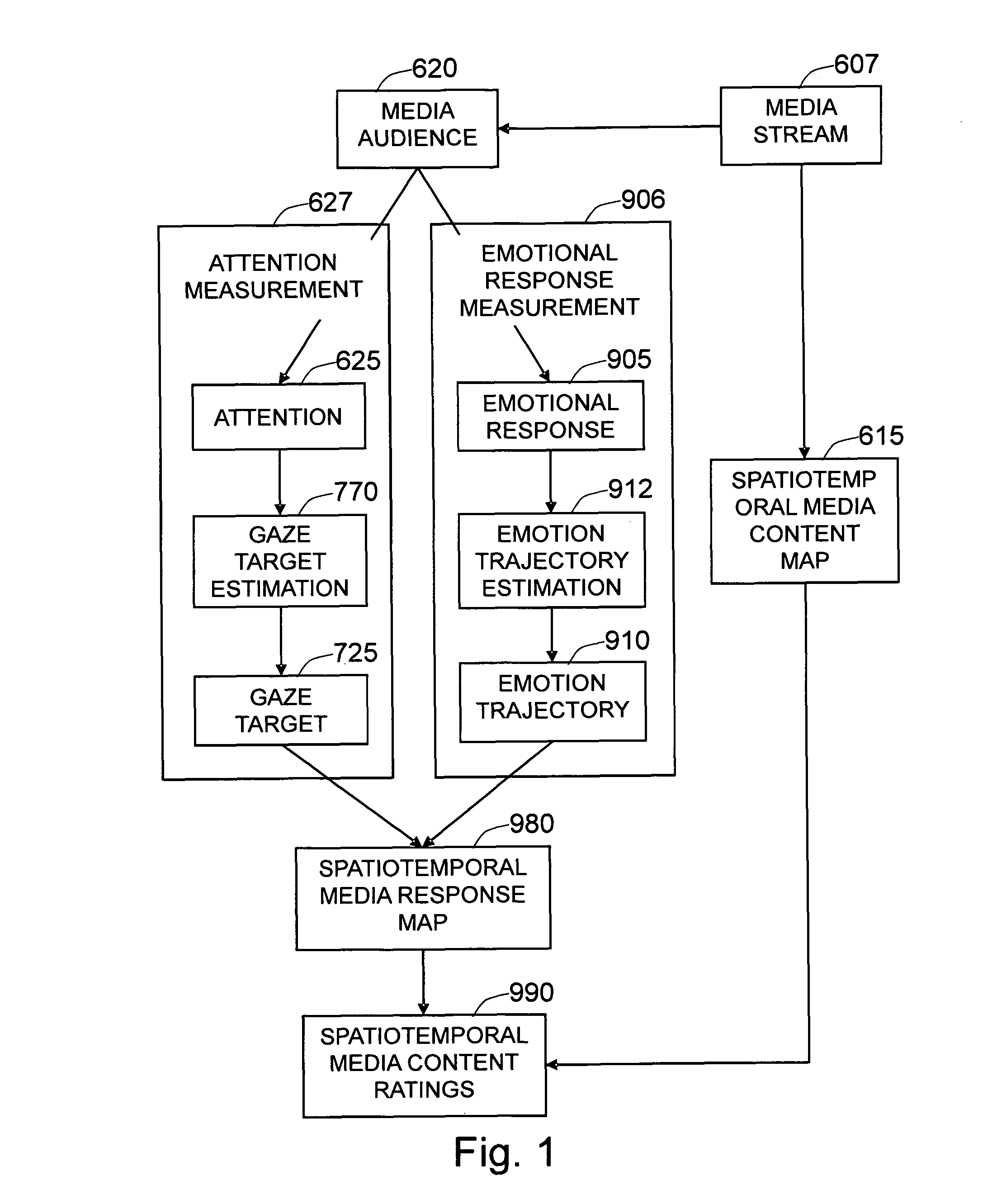

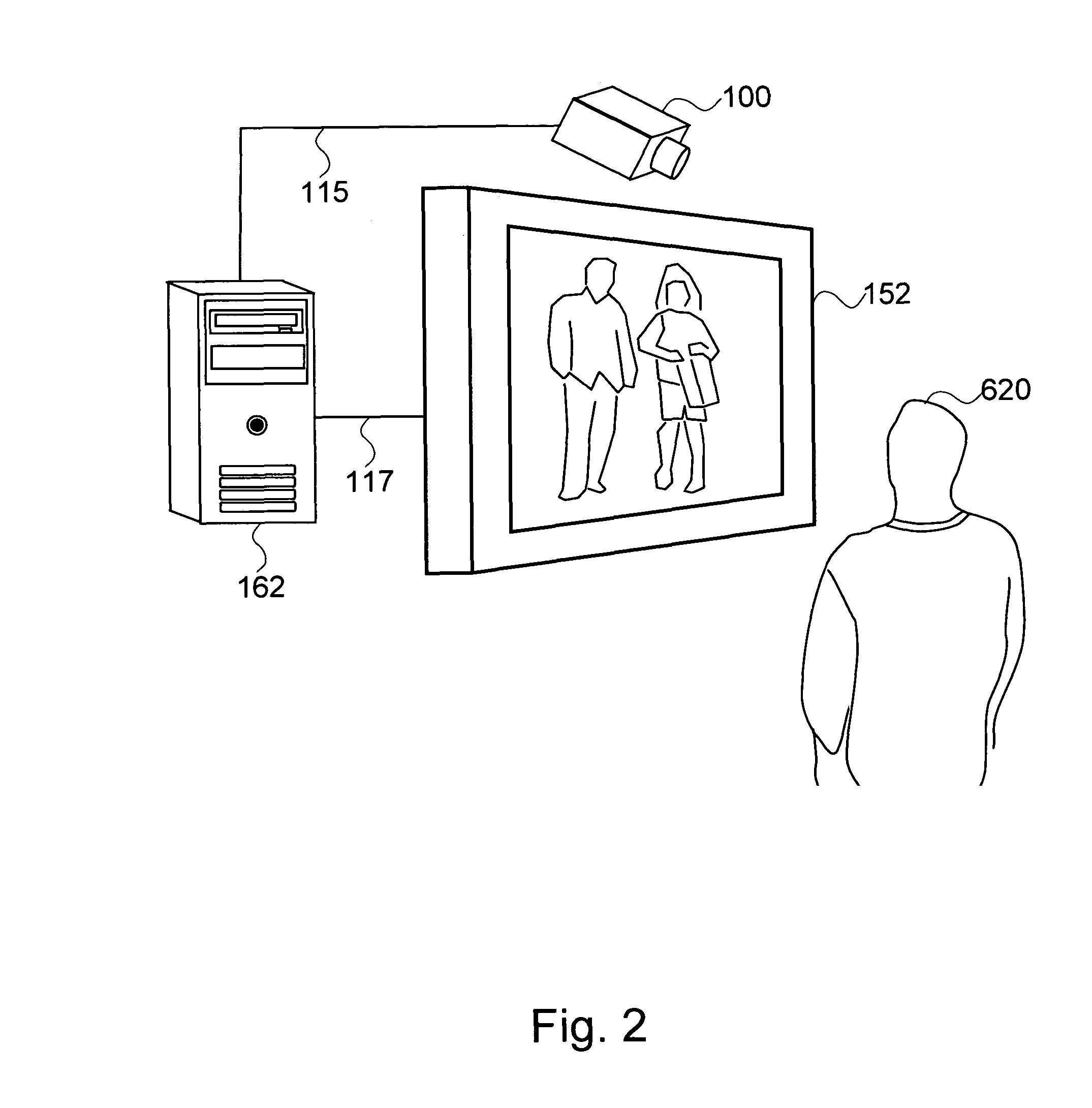

Method and system for measuring emotional and attentional response to dynamic digital media content

The present invention is a method and system to provide an automatic measurement of people's responses to dynamic digital media, based on changes in their facial expressions and attention to specific content. First, the method detects and tracks faces from the audience. It then localizes each of the faces and facial features to extract emotion-sensitive features of the face by applying emotion-sensitive feature filters, to determine the facial muscle actions of the face based on the extracted emotion-sensitive features. The changes in facial muscle actions are then converted to the changes in affective state, called an emotion trajectory. In parallel, the method estimates eye gaze based on extracted eye images and the three-dimensional facial pose of the face based on localized facial images. The gaze direction of the person is estimated based on the estimated eye gaze and the three-dimensional facial pose of the person. The gaze target on the media display is then estimated based on the estimated gaze direction and the position of the person. Finally, the response of the person to the dynamic digital media content is determined by analyzing the emotion trajectory in relation to the time and screen positions of the specific digital media sub-content that the person is watching.

Owner: MOTOROLA SOLUTIONS INC

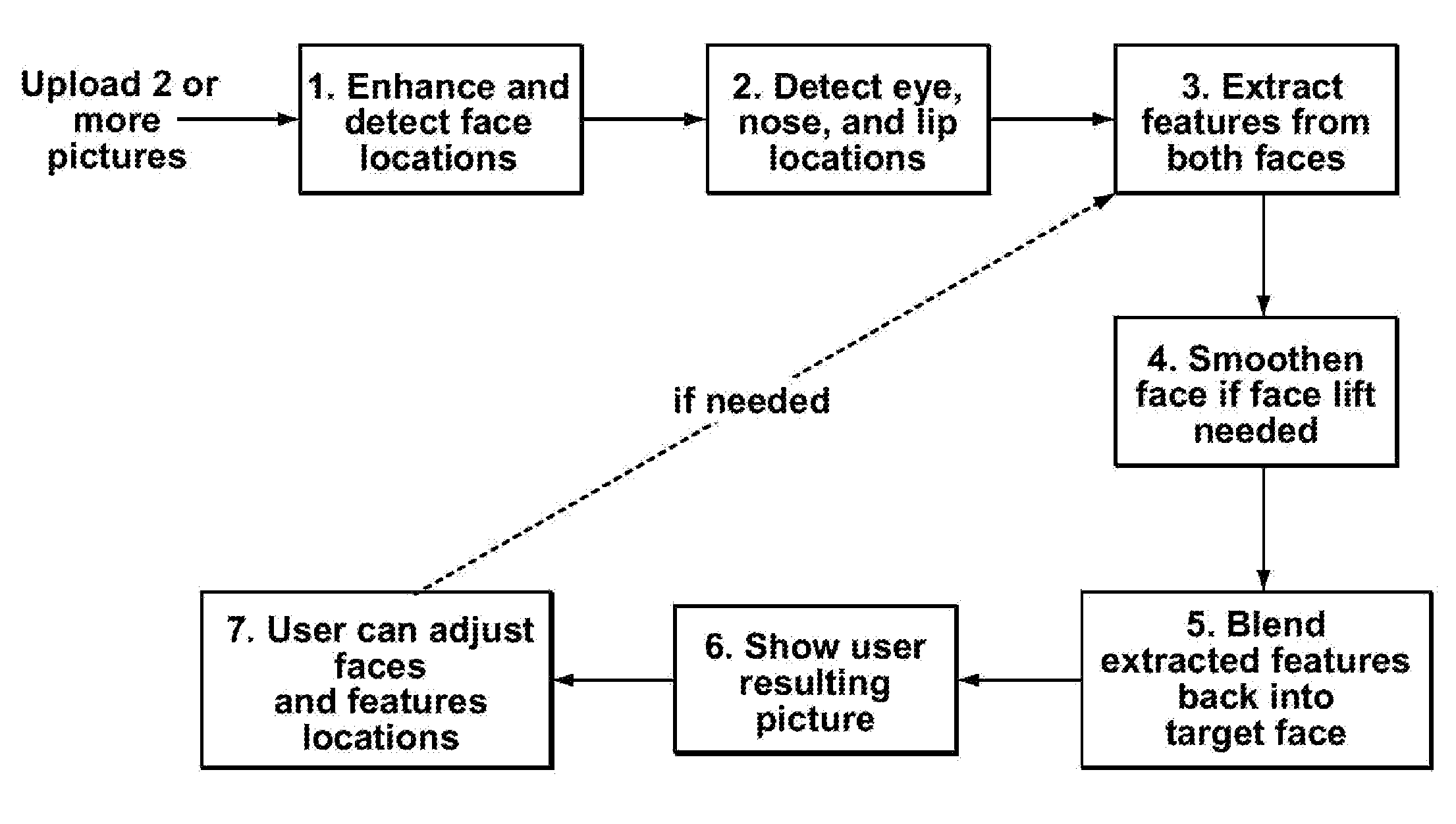

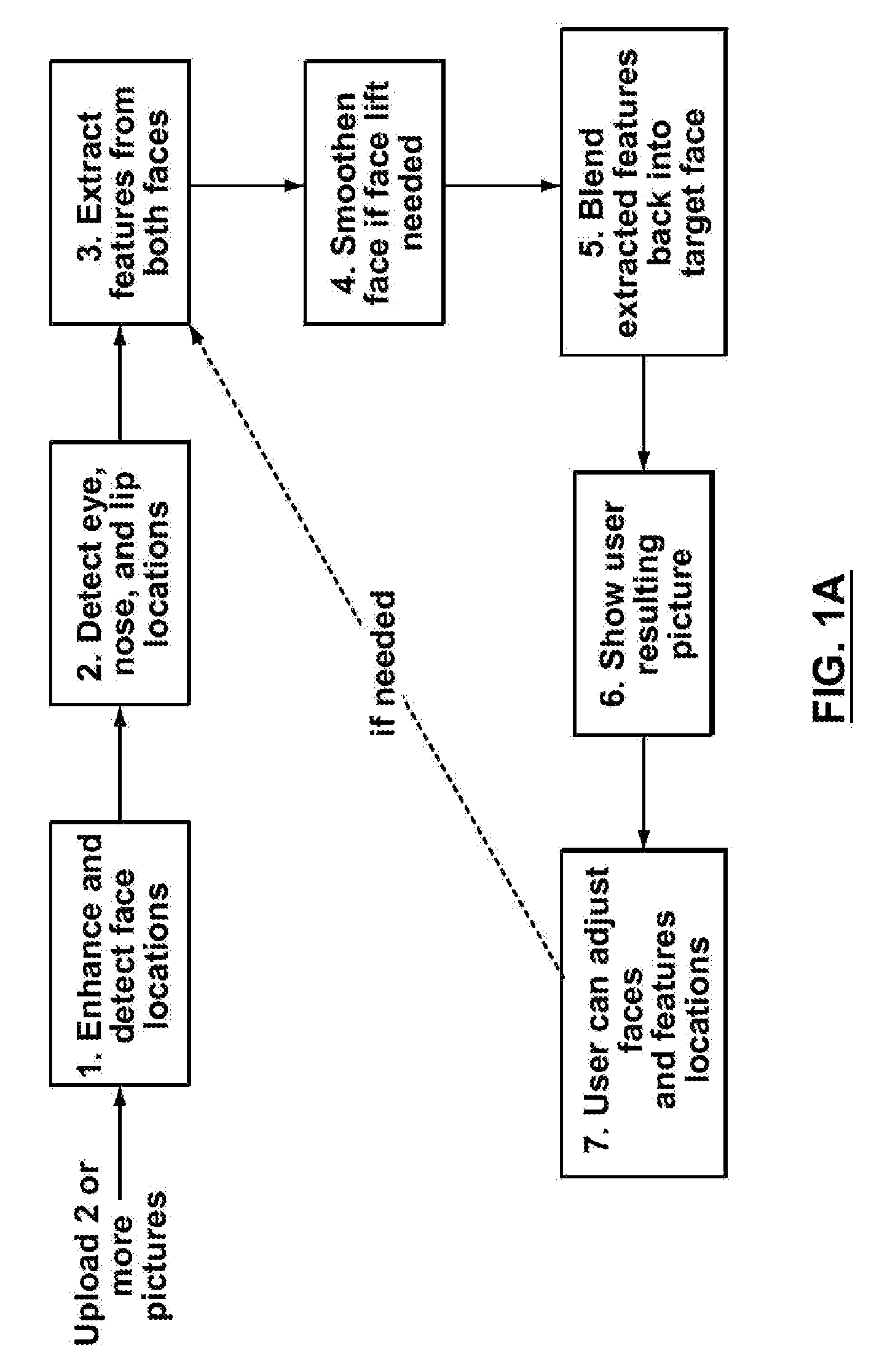

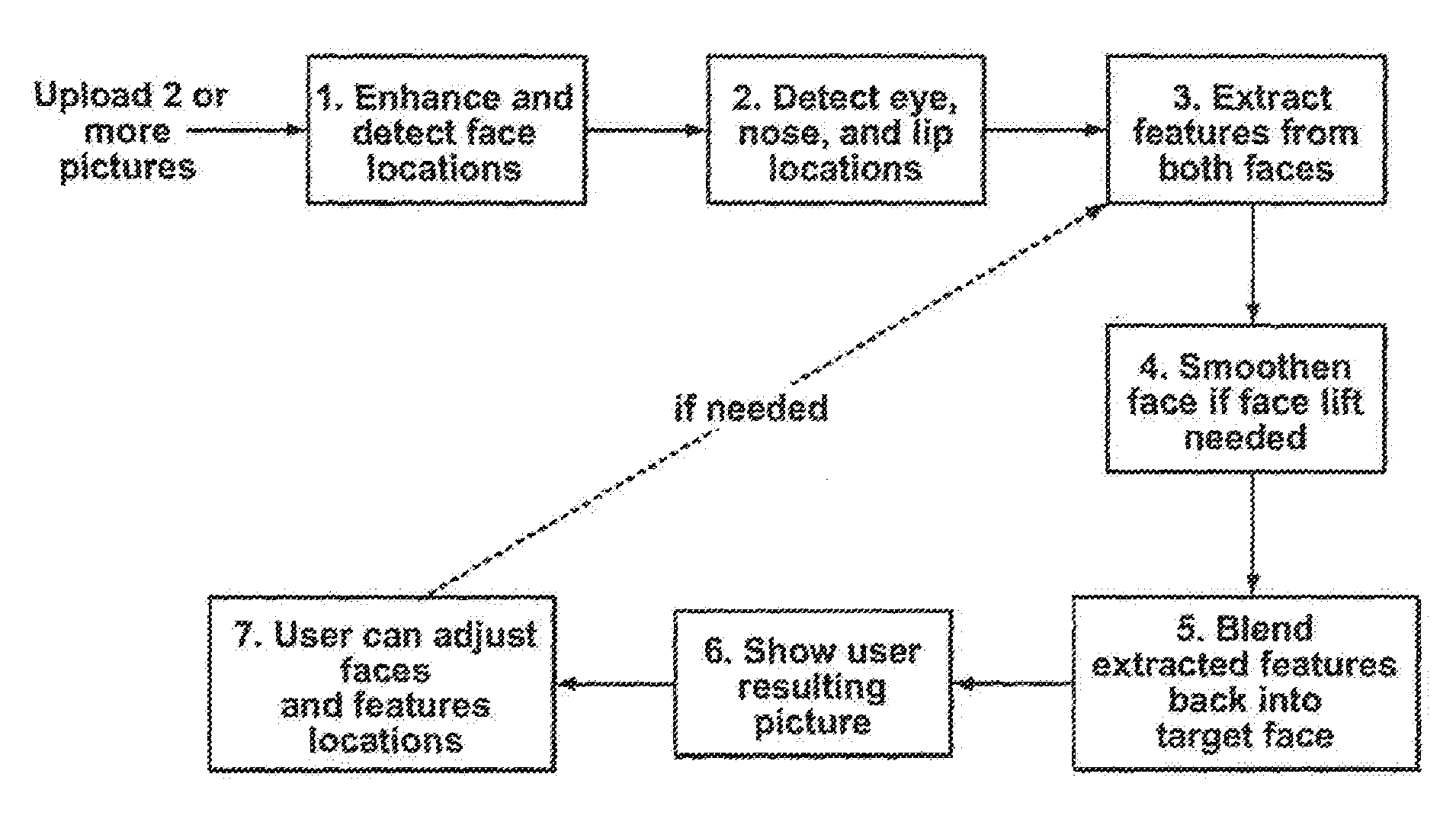

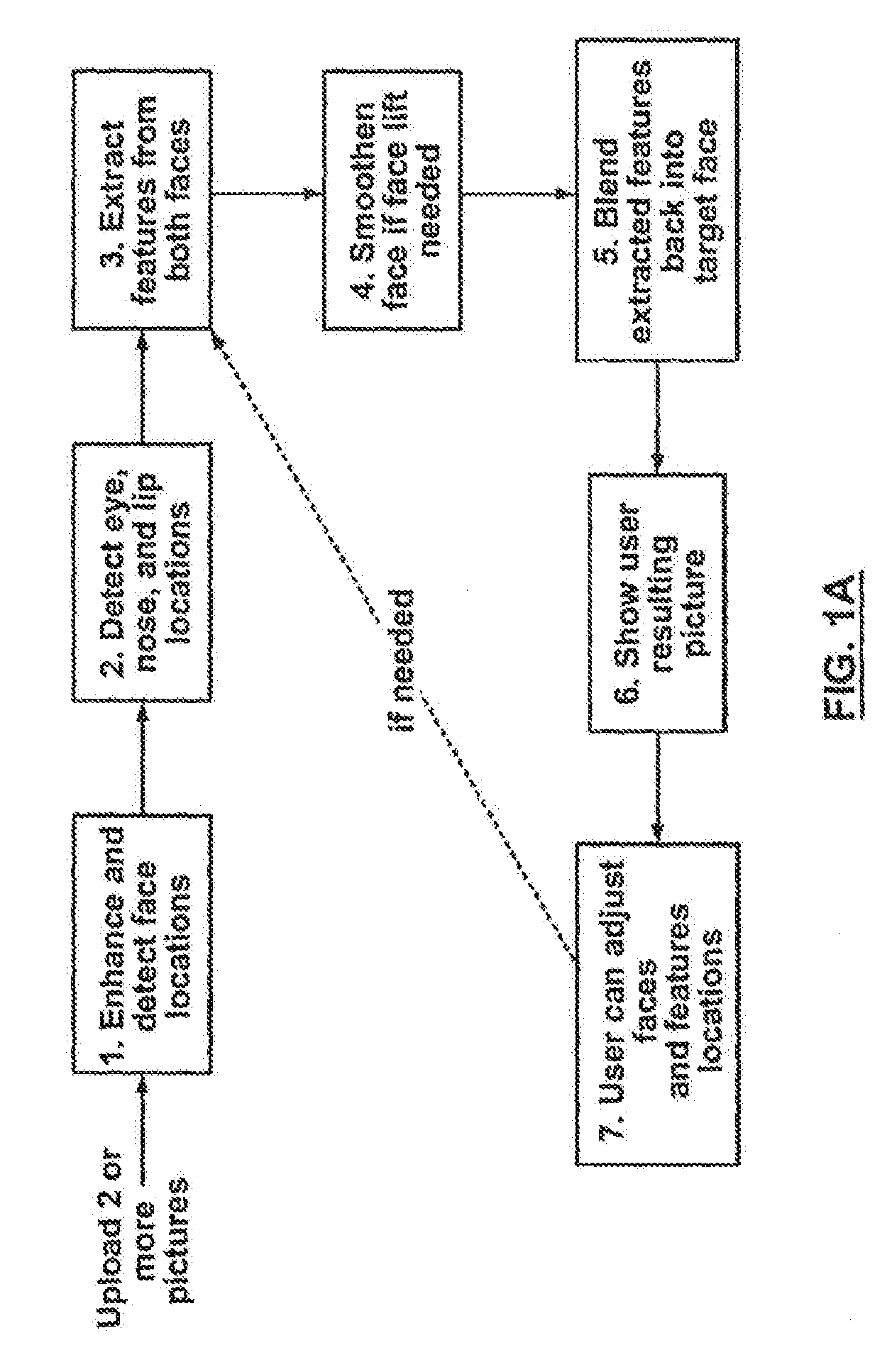

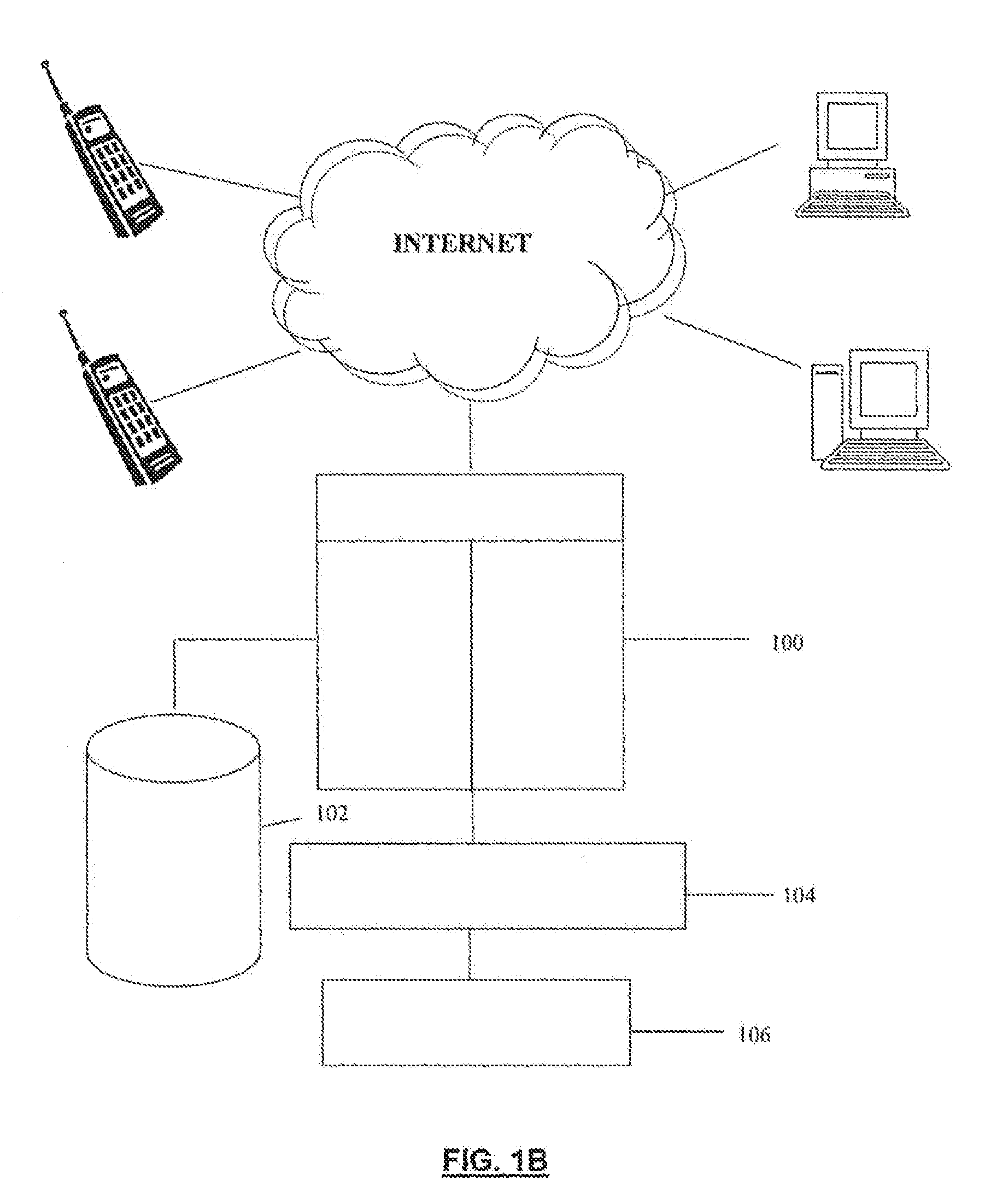

Method, system and computer program product for automatic and semi-automatic modification of digital images of faces

Active | US20070258656A1 | Increase perceived beauty | Improve accuracy | Character and pattern recognition | Pictorial communication | Web site | Computer graphics (images)

The present invention is directed at modifying digital images of faces automatically or semi-automatically. In one aspect, a method of detecting faces in digital images and matching and replacing features within the digital images is provided. Techniques for blending, recoloring, shifting and resizing of portions of digital images are disclosed. In other aspects, methods of virtual “face lifts” and methods of detecting faces within digital images are provided. Advantageously, the detection and localization of faces and facial features, such as the eyes, nose, lips and hair, can be achieved on an automated or semi-automated basis. User feedback and adjustment enables fine tuning of modified images. A variety of systems for matching and replacing features within digital images and detection of faces in digital images is also provided, including implementation as a website, through mobile phones, handheld computers, or a kiosk. Related computer program products are also disclosed.

Owner: LOREAL SA

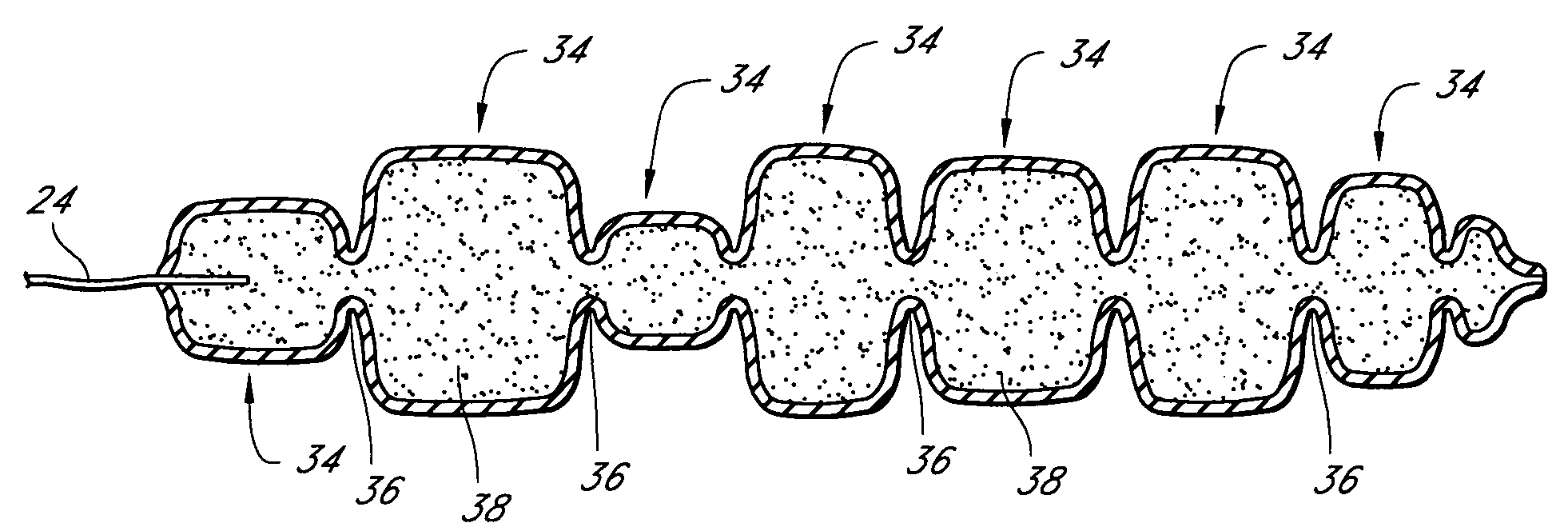

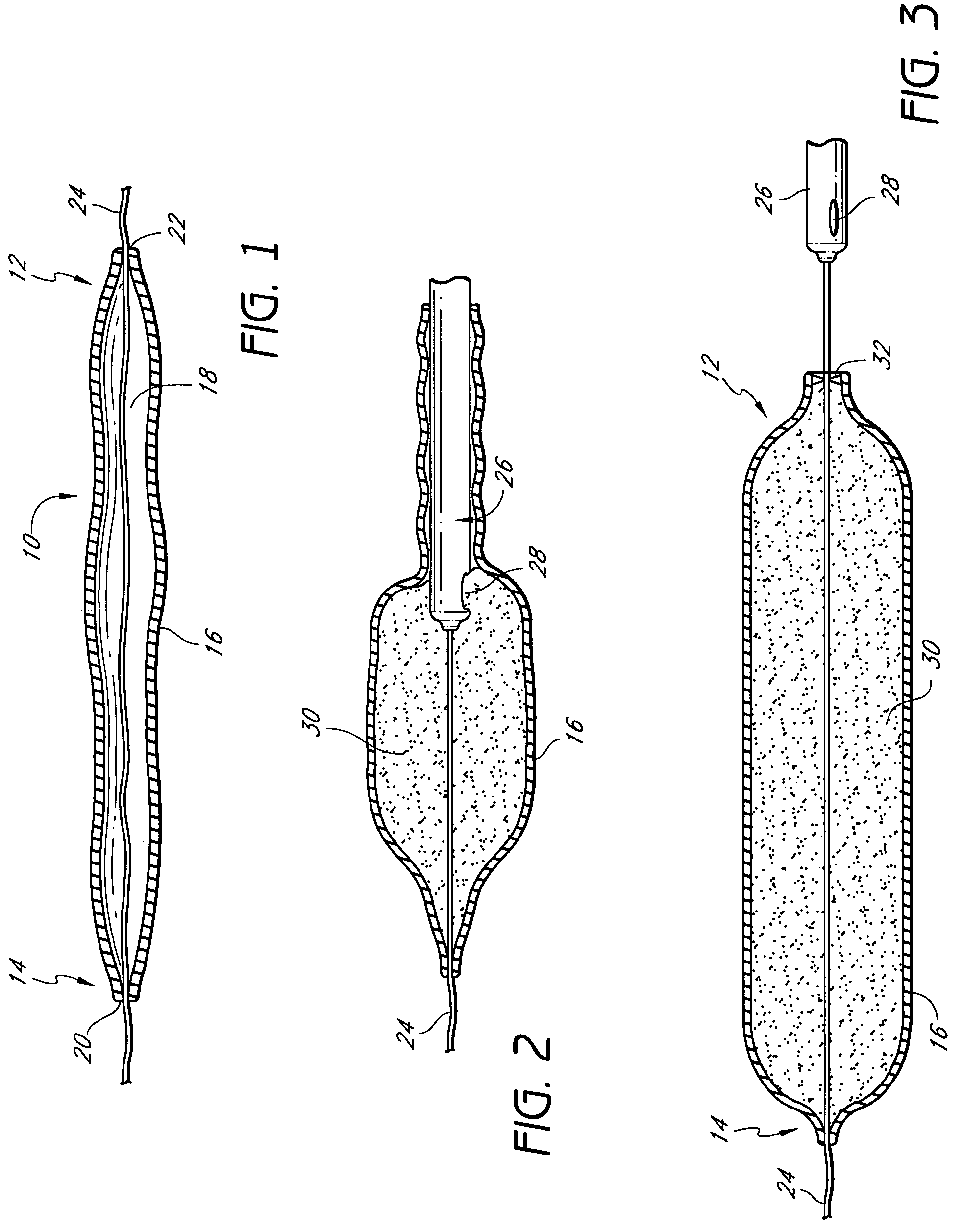

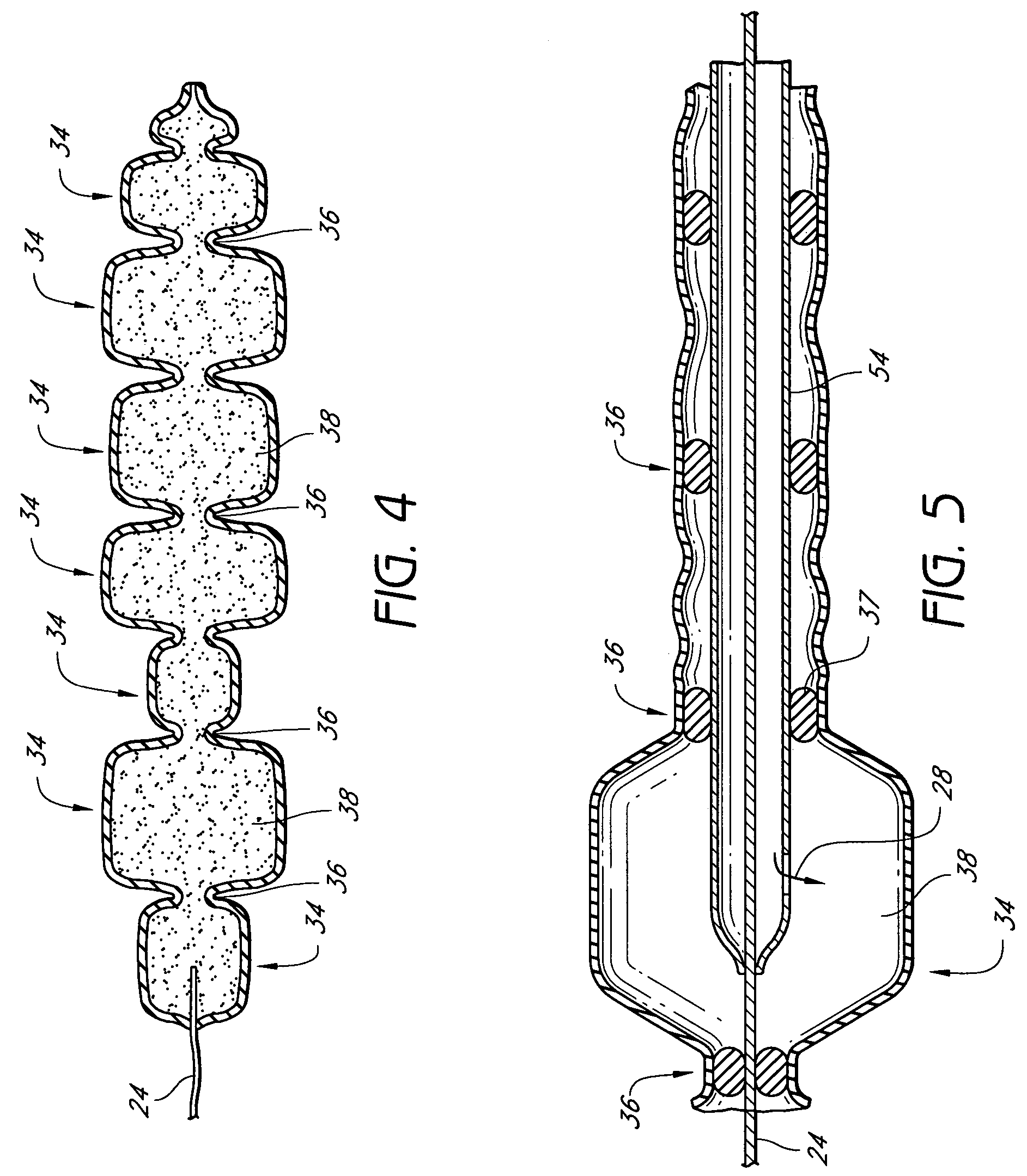

Systems and devices for soft tissue augmentation

Medical devices, systems and kits for filling tissue are disclosed. The device has a first configuration wherein the device can pass through a small catheter or needle placed in the tissue to be filled and a second configuration in which the device is expandable to a predetermined or customizable shape. In one application, the device is adapted to be placed into the skin to reduce facial wrinkles or augment facial features such as the lips. Kits and systems include multiple device sizes, filler tubes, and filler media.

Owner: EVERA MEDICAL

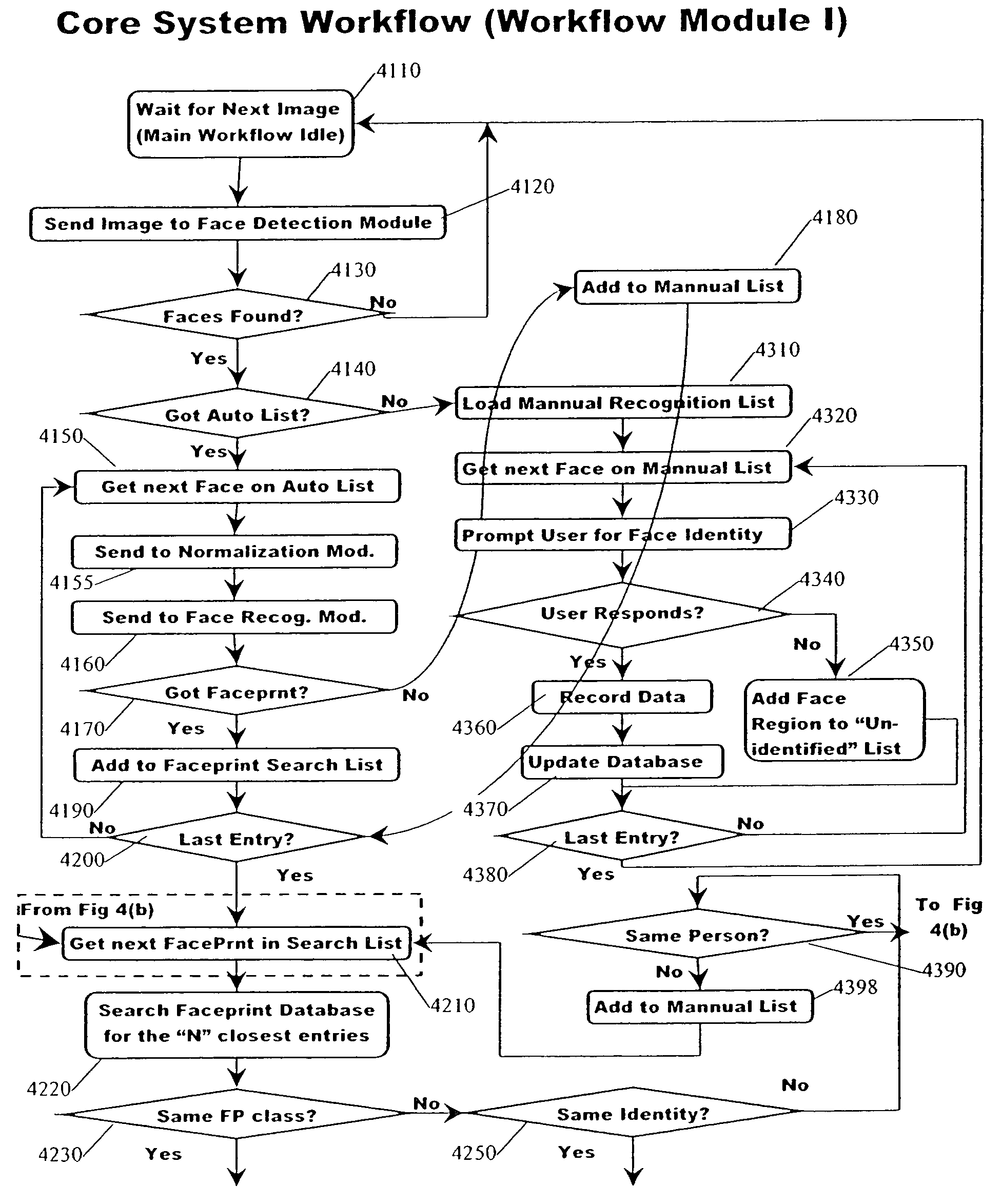

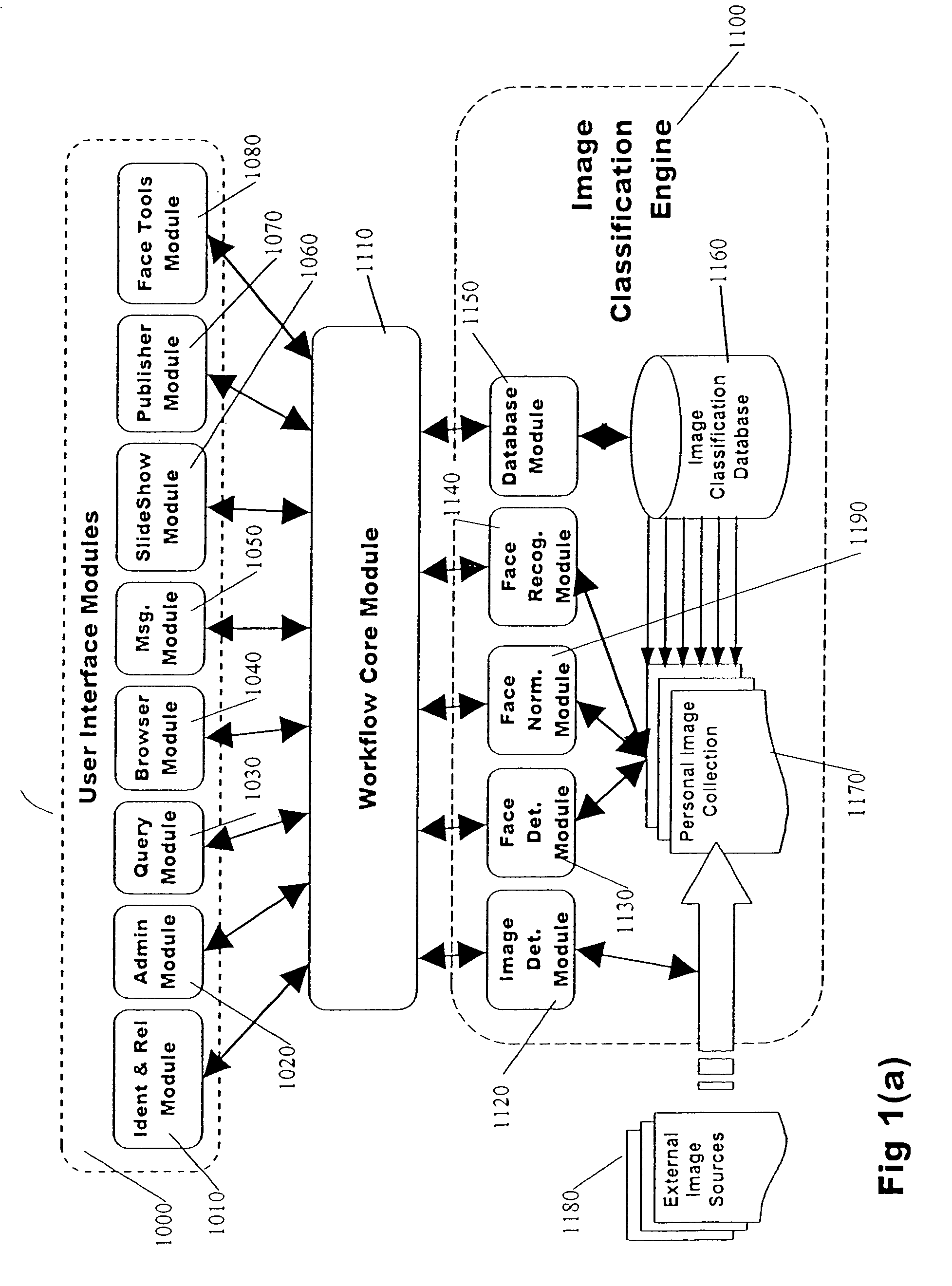

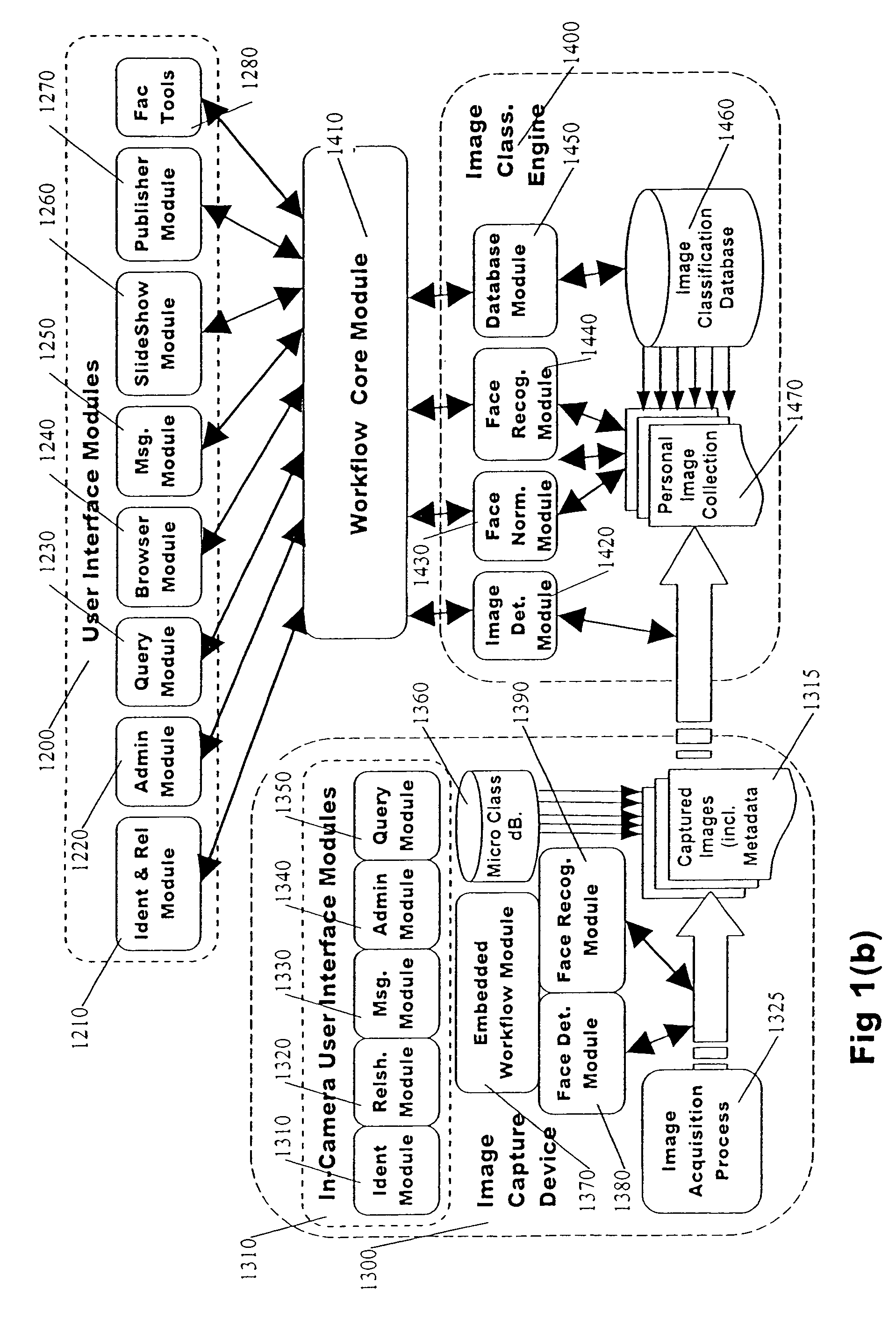

Classification system for consumer digital images using workflow, face detection, normalization, and face recognition

Active | US7555148B1 | Accurate identification | Electric signal transmission systems | Image analysis | Pattern recognition | Face detection

A processor-based system operating according to digitally-embedded programming instructions includes a face detection module for identifying face regions within digital images. A normalization module generates a normalized version of the face region that is at least pose normalized. A face recognition module extracts a set of face classifier parameter values from the normalized face region that are referred to as a faceprint. A workflow module compares the extracted faceprint to a database of archived faceprints previously determined to correspond to known identities. The workflow module determines based on the comparing whether the new faceprint corresponds to any of the known identities, and associates the new faceprint and normalized face region with a new or known identity within a database. A database module serves to archive data corresponding to the new faceprint and its associated parent image according to the associating by the workflow module within one or more digital data storage media.

Owner: FOTONATION LTD
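The workflow step described in the abstract above (compare a new faceprint to archived faceprints, then associate it with a known or a new identity) might look roughly like the sketch below. The Euclidean distance, the `threshold` value, the `identity-N` naming scheme and the dictionary-based archive are all assumptions made for illustration; the abstract does not specify the comparison metric or storage layout.

```python
import math


def match_or_enroll(faceprint, archive, threshold=0.5):
    """Associate a faceprint with the nearest known identity, or enroll a new one.

    archive maps an identity label to a list of previously stored faceprints
    (tuples of classifier parameter values). threshold is a hypothetical cutoff.
    """
    best_id, best_dist = None, math.inf
    for identity, known_prints in archive.items():
        for known in known_prints:
            dist = math.dist(faceprint, known)  # Euclidean distance (assumed metric)
            if dist < best_dist:
                best_id, best_dist = identity, dist

    if best_id is not None and best_dist <= threshold:
        archive[best_id].append(faceprint)  # archive under the known identity
        return best_id

    new_id = f"identity-{len(archive) + 1}"  # hypothetical naming scheme
    archive[new_id] = [faceprint]
    return new_id


archive = {"alice": [(0.10, 0.90, 0.30)]}
print(match_or_enroll((0.12, 0.88, 0.31), archive))  # close to the stored print
print(match_or_enroll((0.90, 0.10, 0.70), archive))  # far from every stored print
```

In a real system the threshold would be tuned against the false-match and false-non-match rates of the chosen face classifier.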

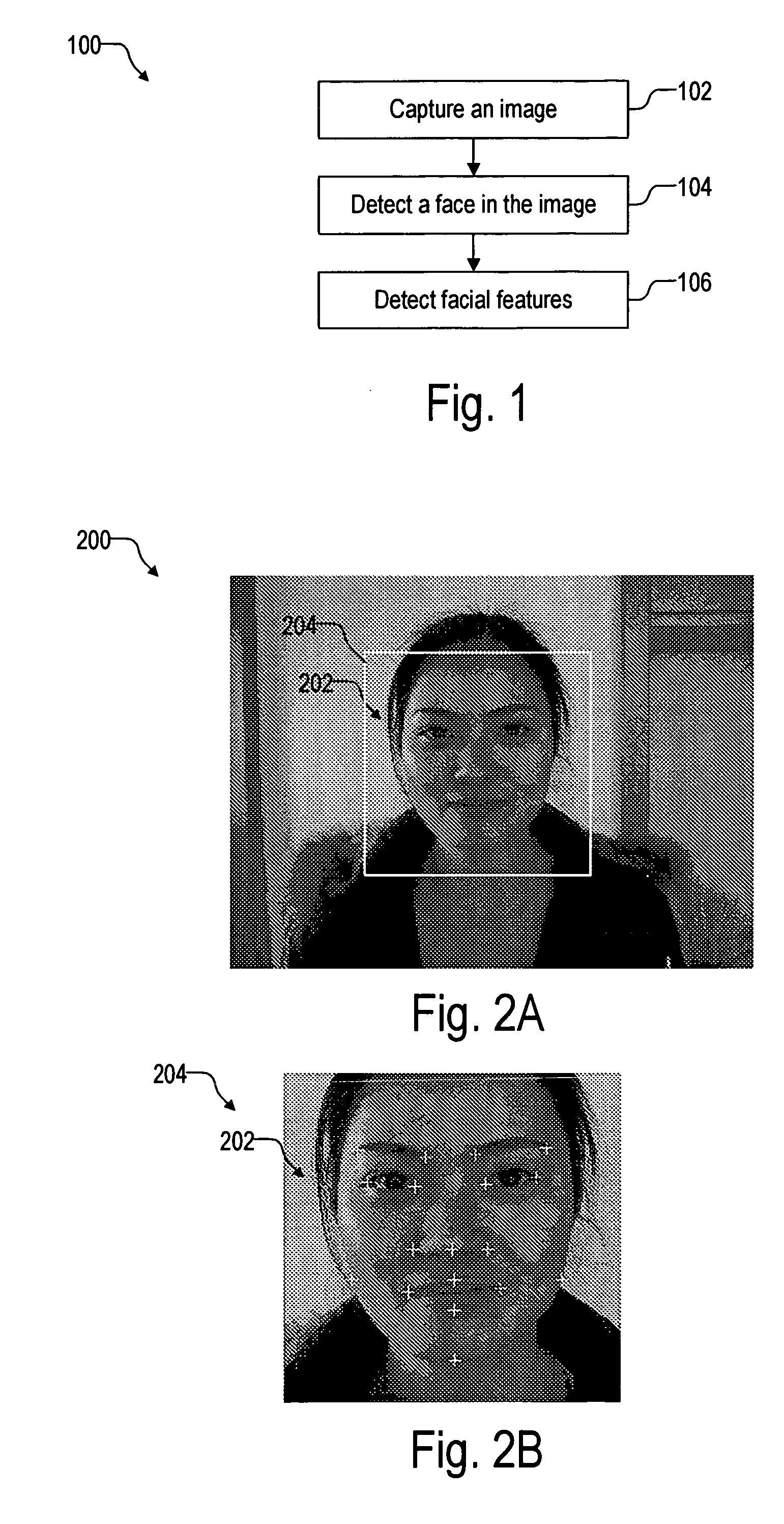

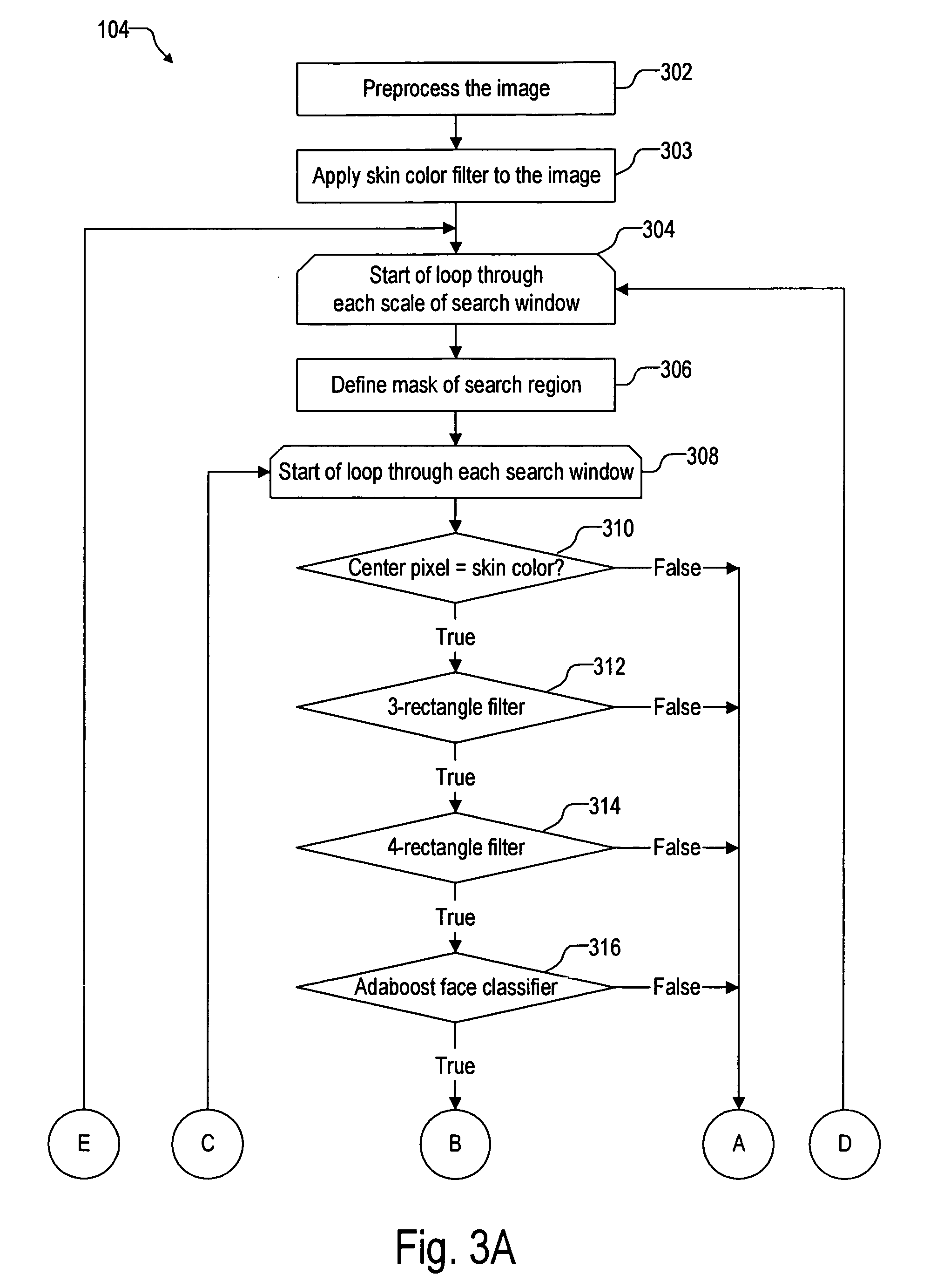

Facial feature detection on mobile devices

Locating an eye includes generating an intensity response map by applying a 3-rectangle filter and applying K-means clustering to the map to determine the eye. Locating an eye corner includes applying a logarithm transform and grayscale stretching to generate a grayscale eye patch, generating a binary map of the patch by using a threshold based on a histogram of the patch, and estimating the eye corner by averaging coordinates weighted by minimal eigenvalues of spatial gradient matrices in a search region based on the binary map. Locating a mouth corner includes generating another intensity response map and generating another binary map using another threshold based on another histogram of the intensity response map. Locating a chin or a cheek includes applying angle constrained gradient analysis to reject locations that cannot be the chin or cheek. Locating a cheek further includes removing falsely detected cheeks by parabola fitting curves through the cheeks.

Owner: ARCSOFT
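The eye-corner estimate in the abstract above (averaging coordinates weighted by the minimal eigenvalues of spatial gradient matrices) can be sketched as follows. This is a minimal illustration under stated assumptions: central-difference gradients, a 3x3 accumulation window, and a search region passed in as a plain list of `(x, y)` coordinates. None of these choices, nor the function names, come from the patent.

```python
import math


def gradients(img):
    """Central-difference gradients; border pixels are left at zero."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return ix, iy


def min_eigenvalue(ix, iy, cx, cy, radius=1):
    """Smaller eigenvalue of the 2x2 gradient matrix summed around (cx, cy)."""
    h, w = len(ix), len(ix[0])
    a = b = c = 0.0
    for y in range(max(0, cy - radius), min(h, cy + radius + 1)):
        for x in range(max(0, cx - radius), min(w, cx + radius + 1)):
            a += ix[y][x] ** 2
            b += ix[y][x] * iy[y][x]
            c += iy[y][x] ** 2
    root = math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return max(0.0, (a + c) / 2.0 - root)  # clamp tiny negative rounding errors


def estimate_corner(img, region):
    """Average the region's coordinates, weighted by the minimal eigenvalue."""
    ix, iy = gradients(img)
    total = sx = sy = 0.0
    for x, y in region:
        weight = min_eigenvalue(ix, iy, x, y)
        total += weight
        sx += weight * x
        sy += weight * y
    if total == 0.0:
        return None  # no corner-like structure in the region
    return sx / total, sy / total


# Toy patch: a bright block whose top-left corner sits at (4, 4).
patch = [[1.0 if x >= 4 and y >= 4 else 0.0 for x in range(9)] for y in range(9)]
search = [(x, y) for y in range(1, 8) for x in range(1, 8)]
print(estimate_corner(patch, search))
```

Pixels on a straight edge have a minimal eigenvalue near zero, so only locations where the gradient varies in two directions contribute to the average, which pulls the estimate toward the corner.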

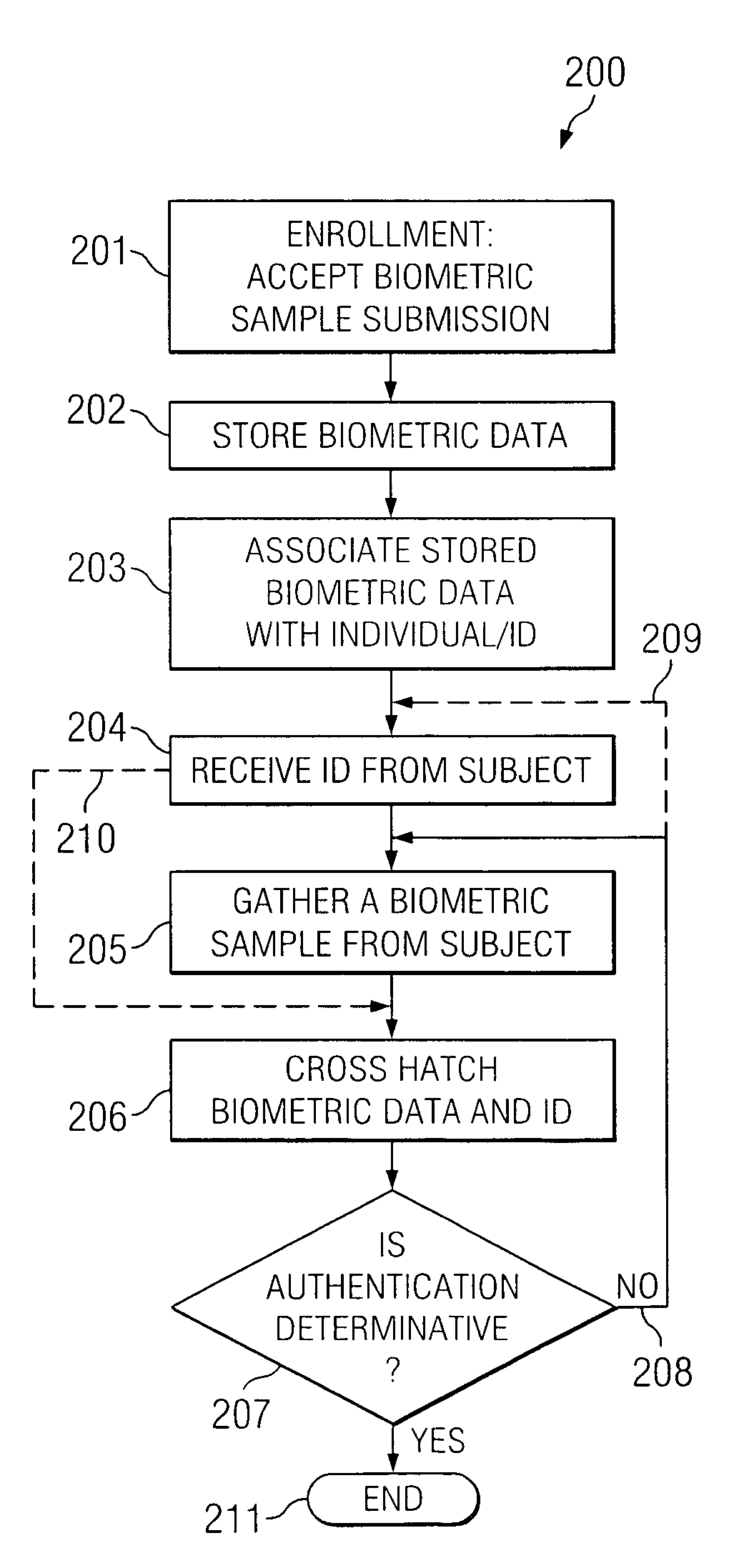

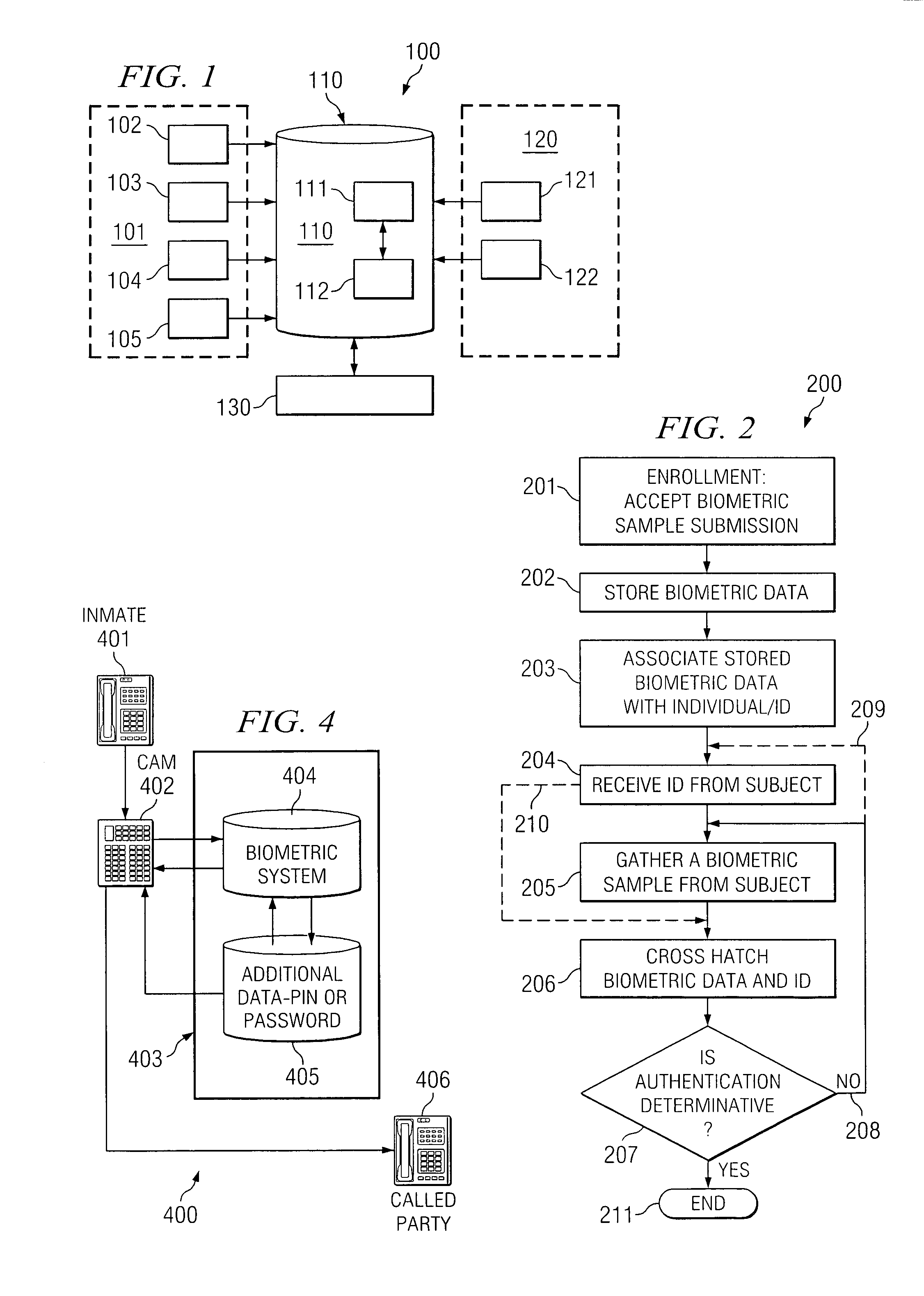

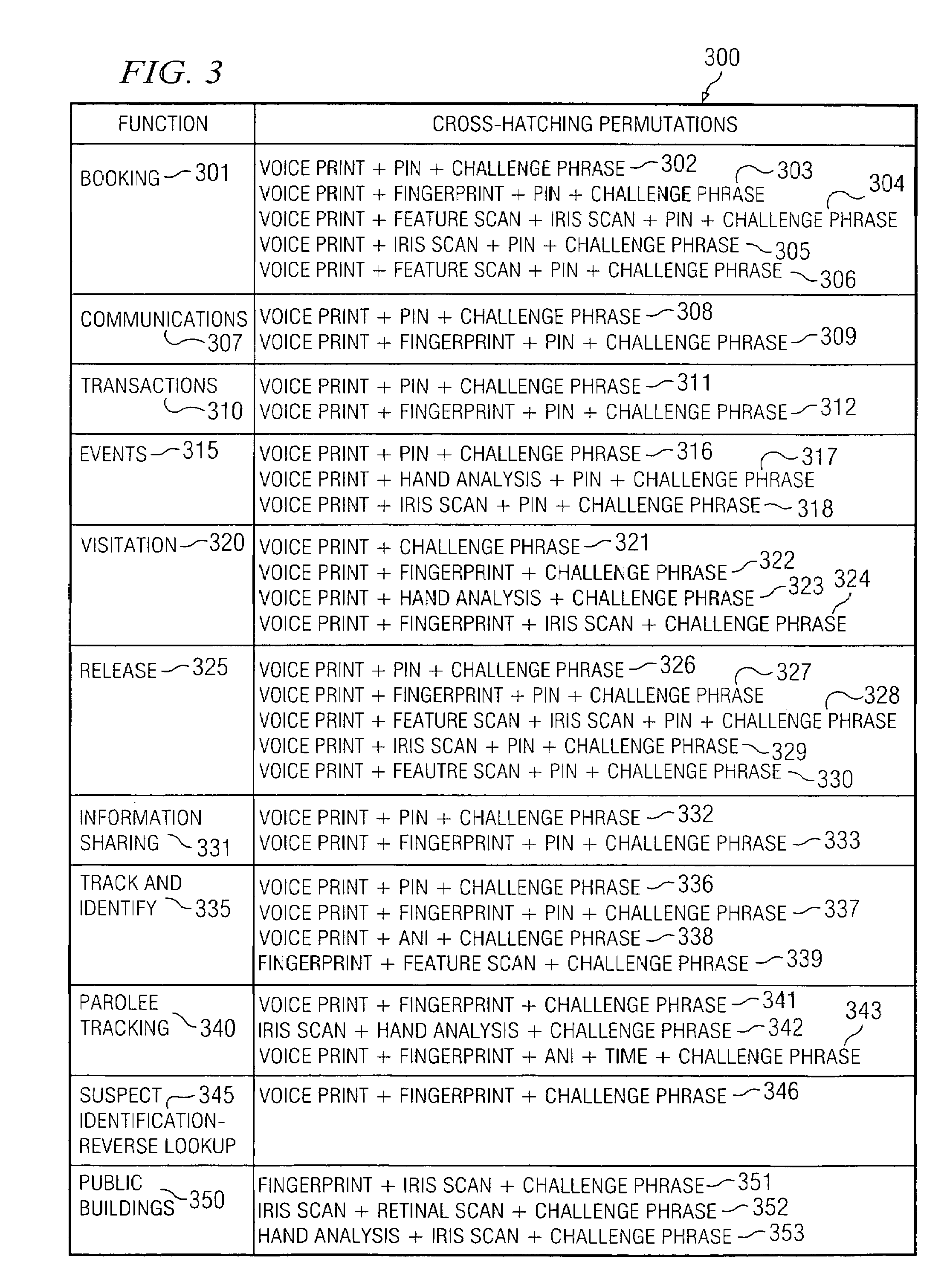

Systems and methods for cross-hatching biometrics with other identifying data

Active | US7278028B1 | Increase probability | Unauthorized memory use protection | Hardware monitoring | Pattern recognition | Authentication

In accordance with embodiments of the present invention, an authentication or identification process includes iterative and successive cross-hatching of biometric components such as voice print, fingerprint, hand analysis, retina scan, iris scan, and/or features (such as facial characteristics, scars, tattoos and/or birthmarks) with other identifying data such as a PIN, password phrase, barcode, or identification card.

Owner: SECURUS TECH HLDG

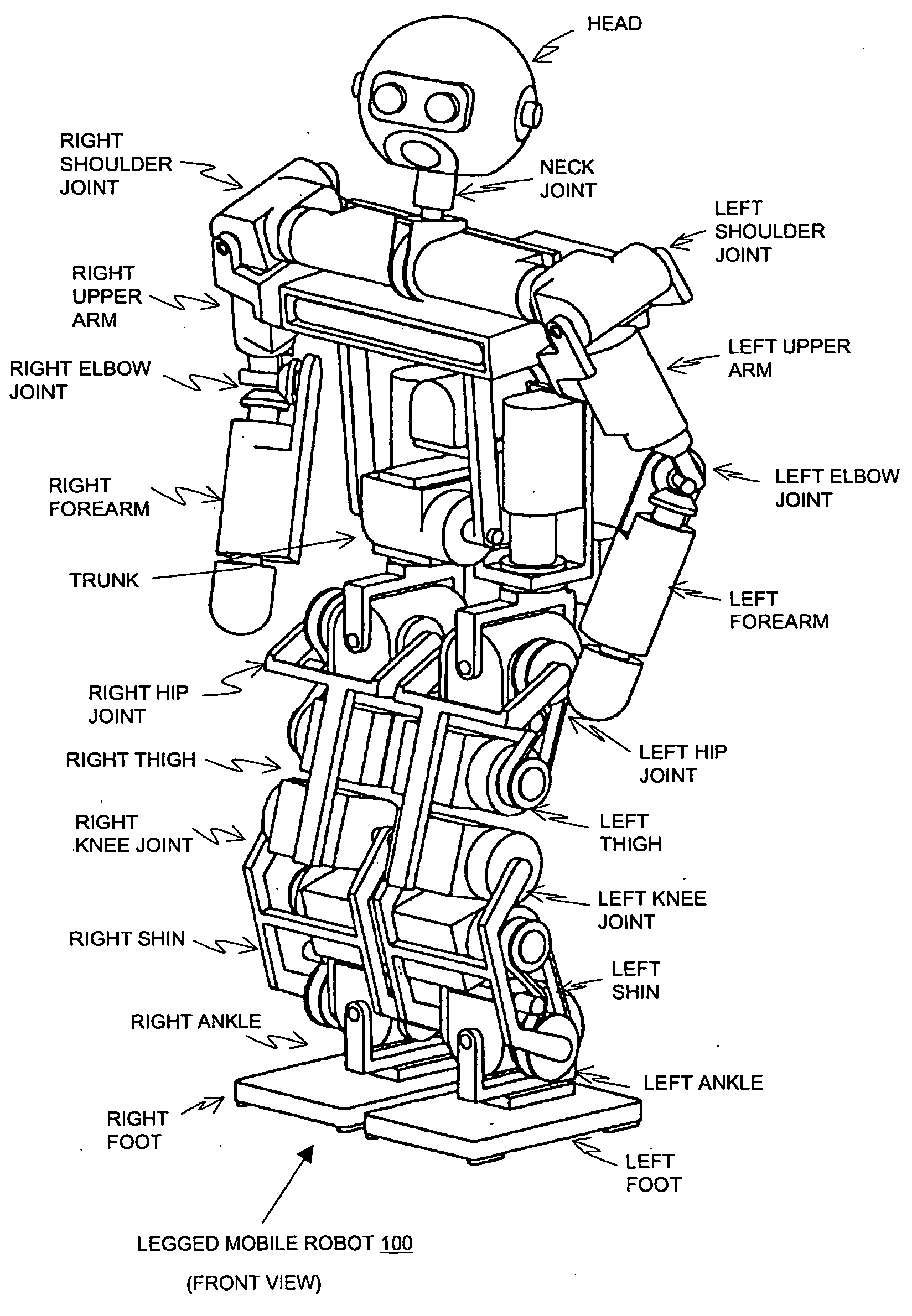

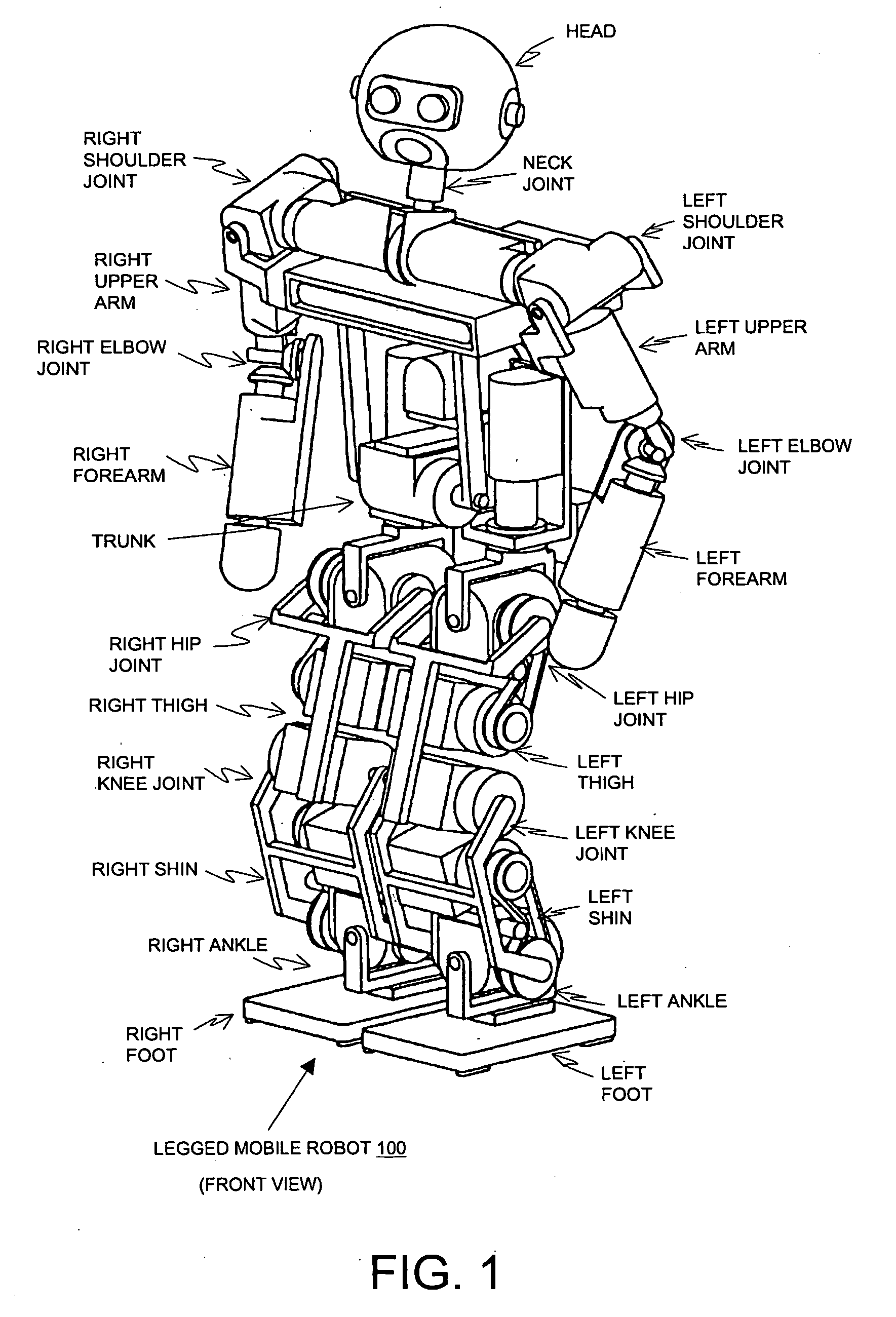

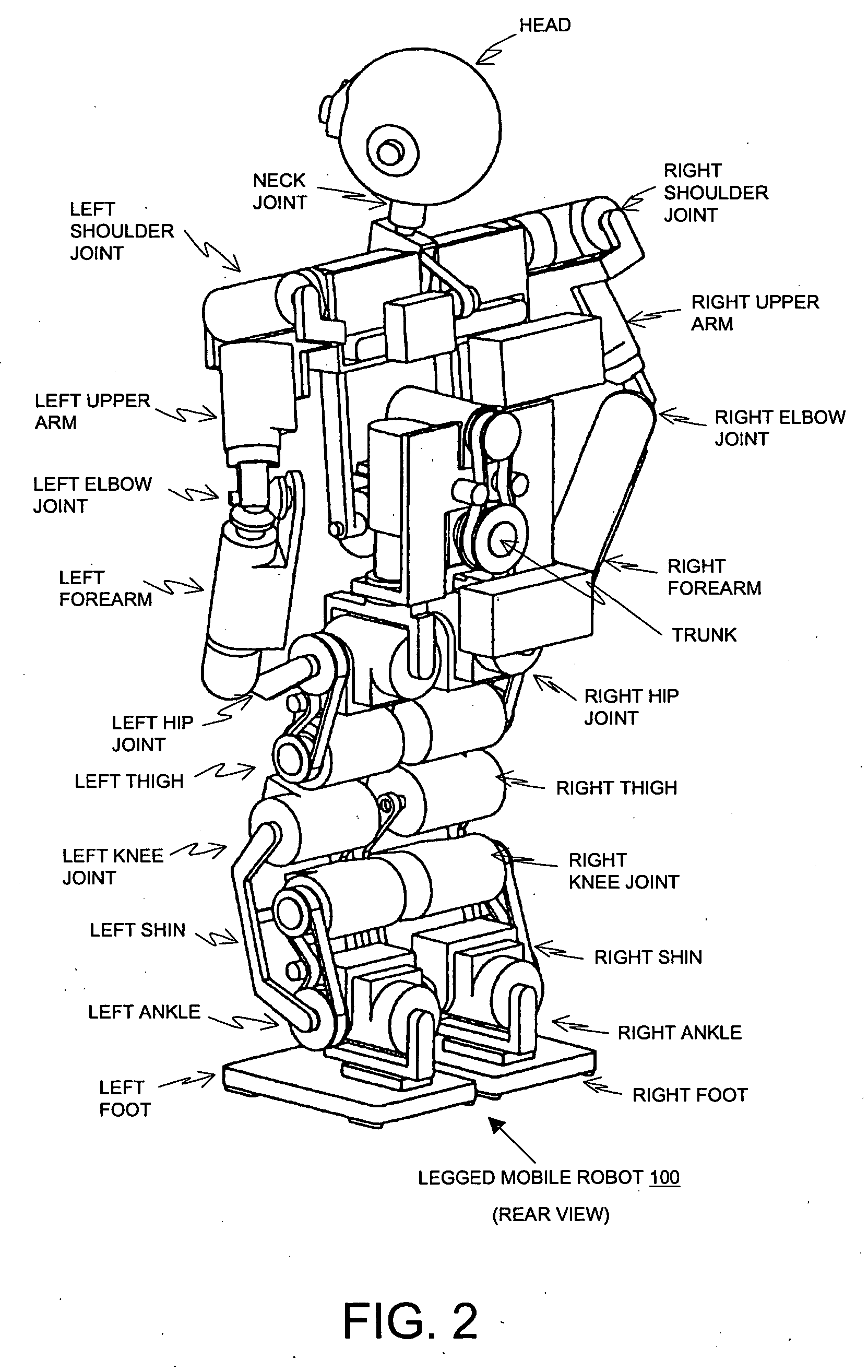

Robot apparatus, face recognition method, and face recognition apparatus

A robot includes a face extracting section for extracting features of a face included in an image captured by a CCD camera, and a face recognition section for recognizing the face based on a result of face extraction by the face extracting section. The face extracting section is implemented by Gabor filters that filter images using a plurality of filters that have orientation selectivity and that are associated with different frequency components. The face recognition section is implemented by a support vector machine that maps the result of face recognition to a non-linear space and that obtains a hyperplane that separates in that space to discriminate a face from a non-face. The robot is allowed to recognize a face of a user within a predetermined time under a dynamically changing environment.

Owner: SONY CORP
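The abstract above describes face extraction with Gabor filters of several orientations and frequency components. A minimal sketch of building such a filter bank, using the standard real-valued Gabor kernel formula, is shown below. The kernel size, wavelengths, `sigma` and `gamma` values are illustrative assumptions, and the support vector machine stage is not shown.

```python
import math


def gabor_kernel(size, wavelength, theta, sigma, gamma=0.5, psi=0.0):
    """Real part of a Gabor kernel on a size x size grid (size assumed odd)."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the carrier wave runs along angle theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr * xr + gamma * gamma * yr * yr) / (2.0 * sigma * sigma))
            carrier = math.cos(2.0 * math.pi * xr / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def gabor_bank(size, wavelengths, n_orientations, sigma):
    """One kernel per (wavelength, orientation) pair."""
    return [
        gabor_kernel(size, wavelength, k * math.pi / n_orientations, sigma)
        for wavelength in wavelengths
        for k in range(n_orientations)
    ]


bank = gabor_bank(size=7, wavelengths=[4.0, 8.0], n_orientations=4, sigma=2.0)
print(len(bank), "kernels of", len(bank[0]), "x", len(bank[0][0]))
```

Convolving a face image with every kernel in the bank yields one response map per orientation and wavelength; those responses would then form the feature vector passed to a classifier.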

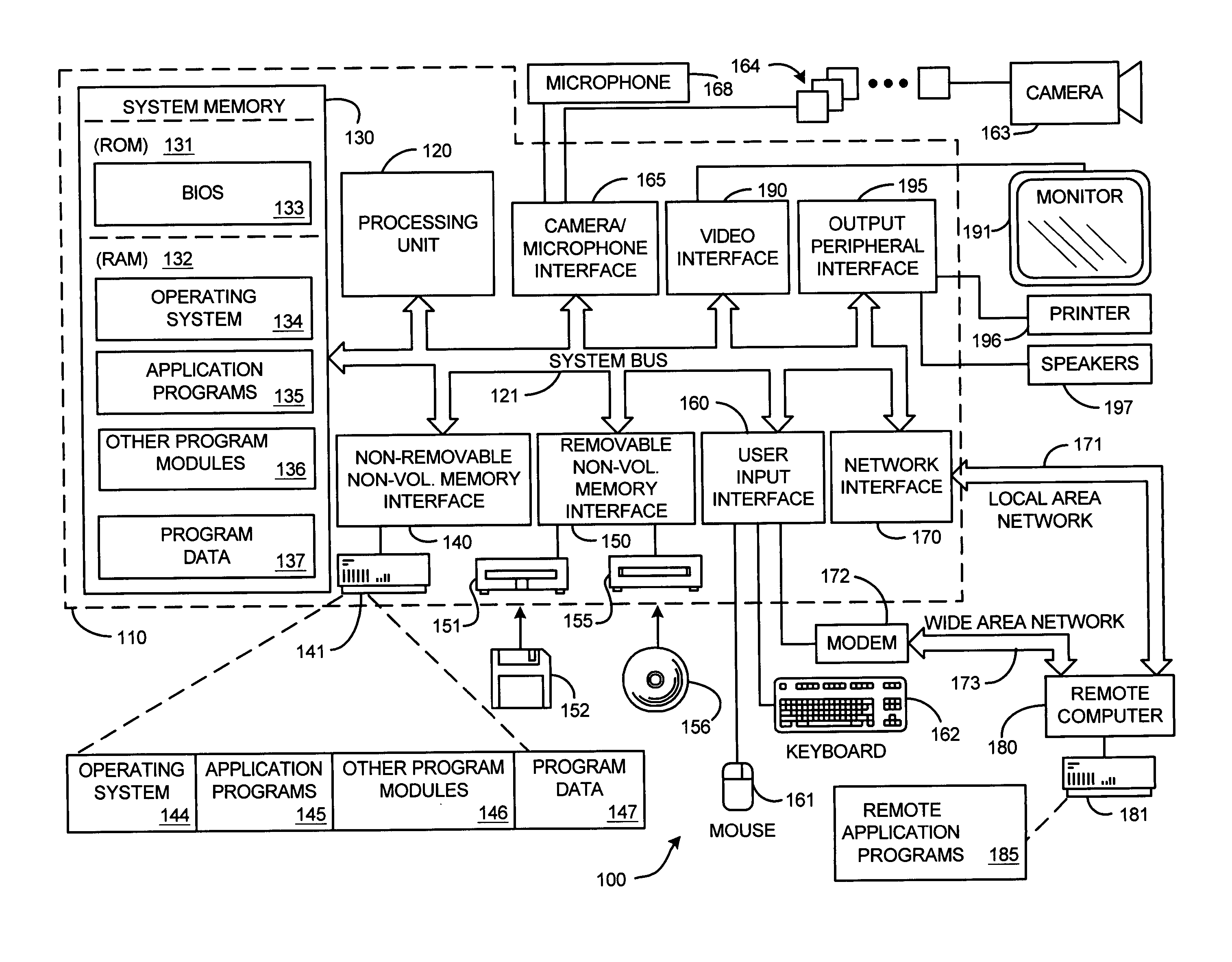

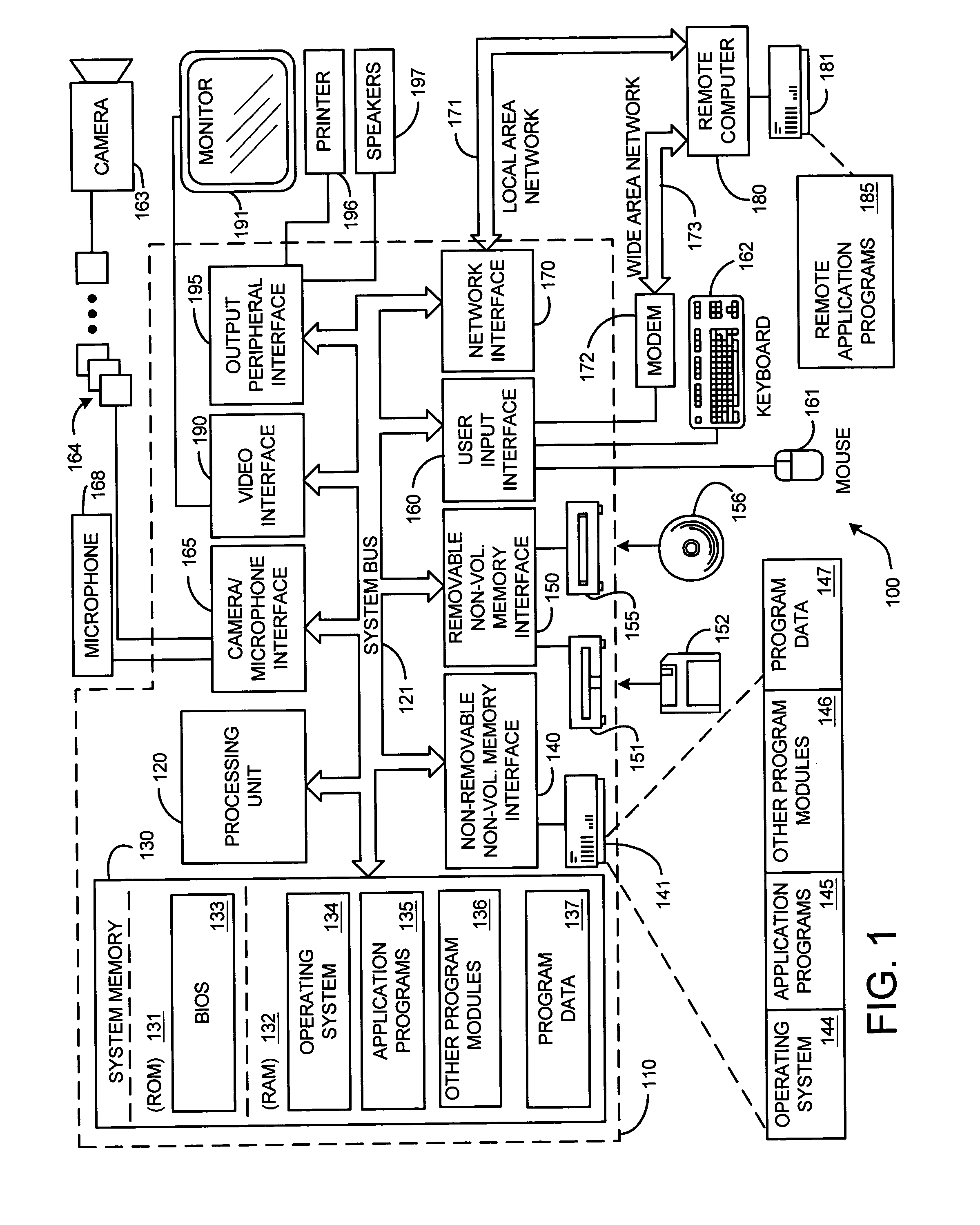

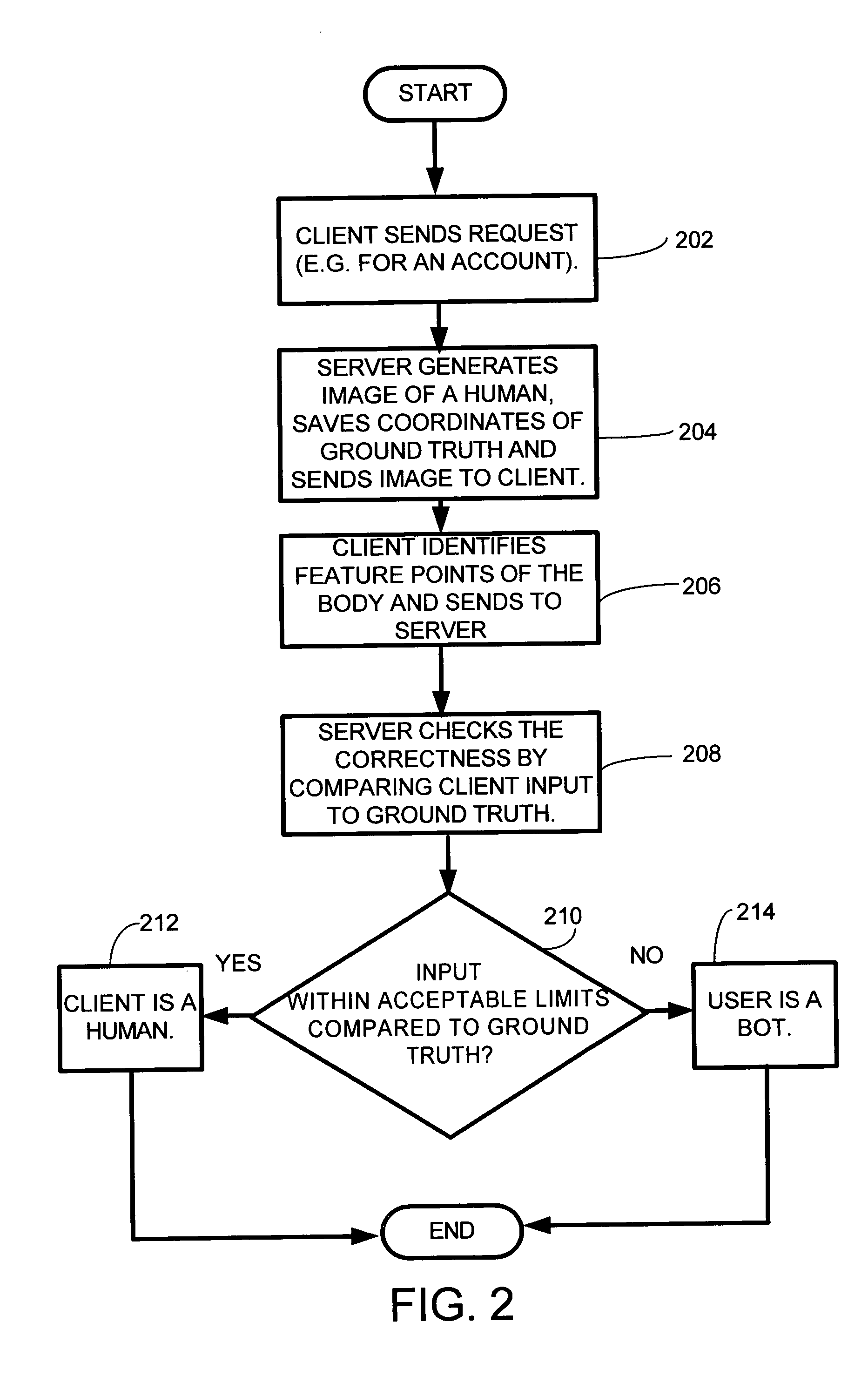

System and method for devising a human interactive proof that determines whether a remote client is a human or a computer program

Inactive | US20050065802A1 | Character and pattern recognition | Computer security arrangements | Guideline | Usability

A system and method for automatically determining if a remote client is a human or a computer. A set of HIP design guidelines which are important to ensure the security and usability of a HIP system are described. Furthermore, one embodiment of this new HIP system and method is based on human face and facial feature detection. Because the human face is the most familiar object to all human users, the embodiment of the invention employing a face is possibly the most universal HIP system so far.

Owner: MICROSOFT TECH LICENSING LLC

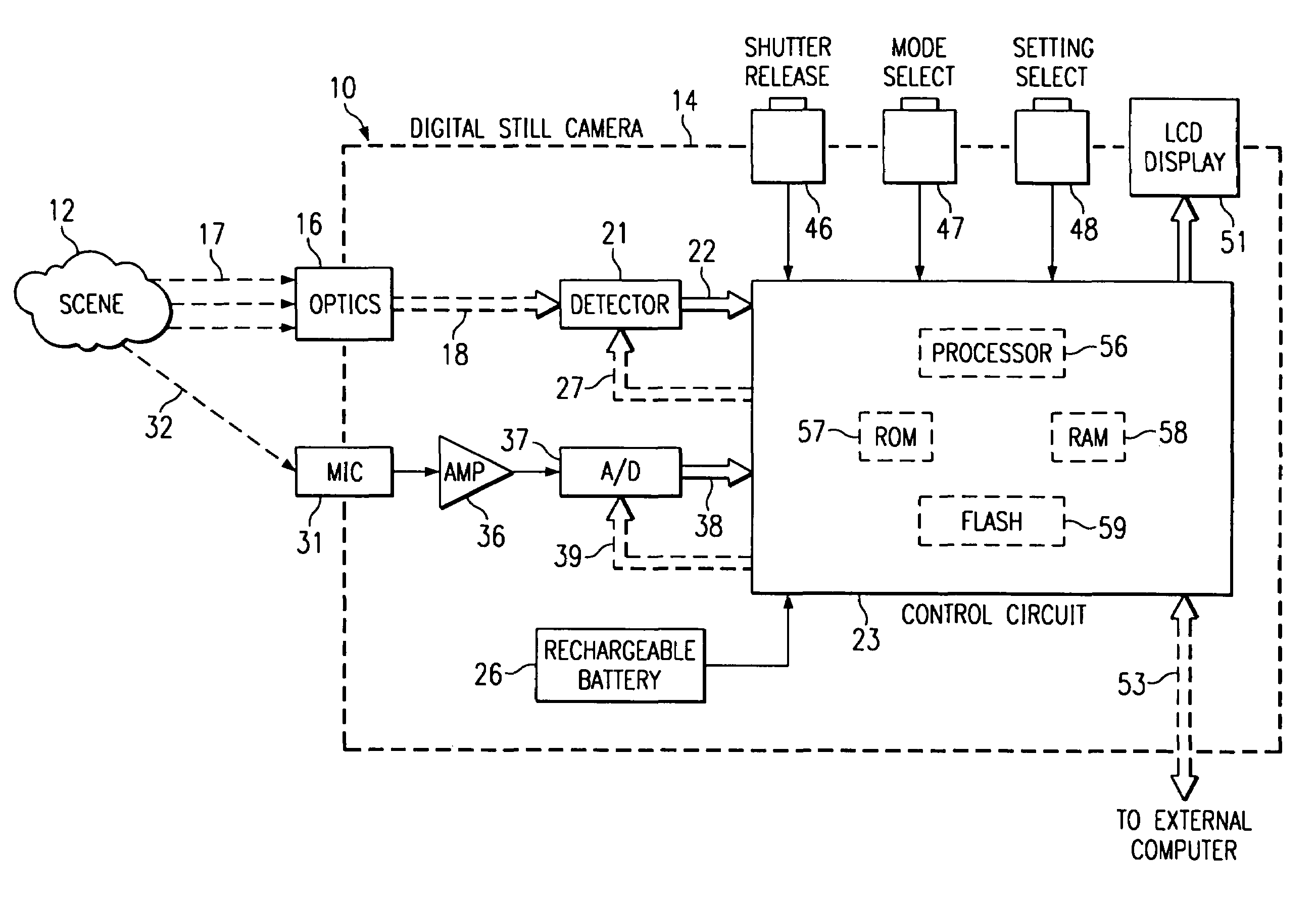

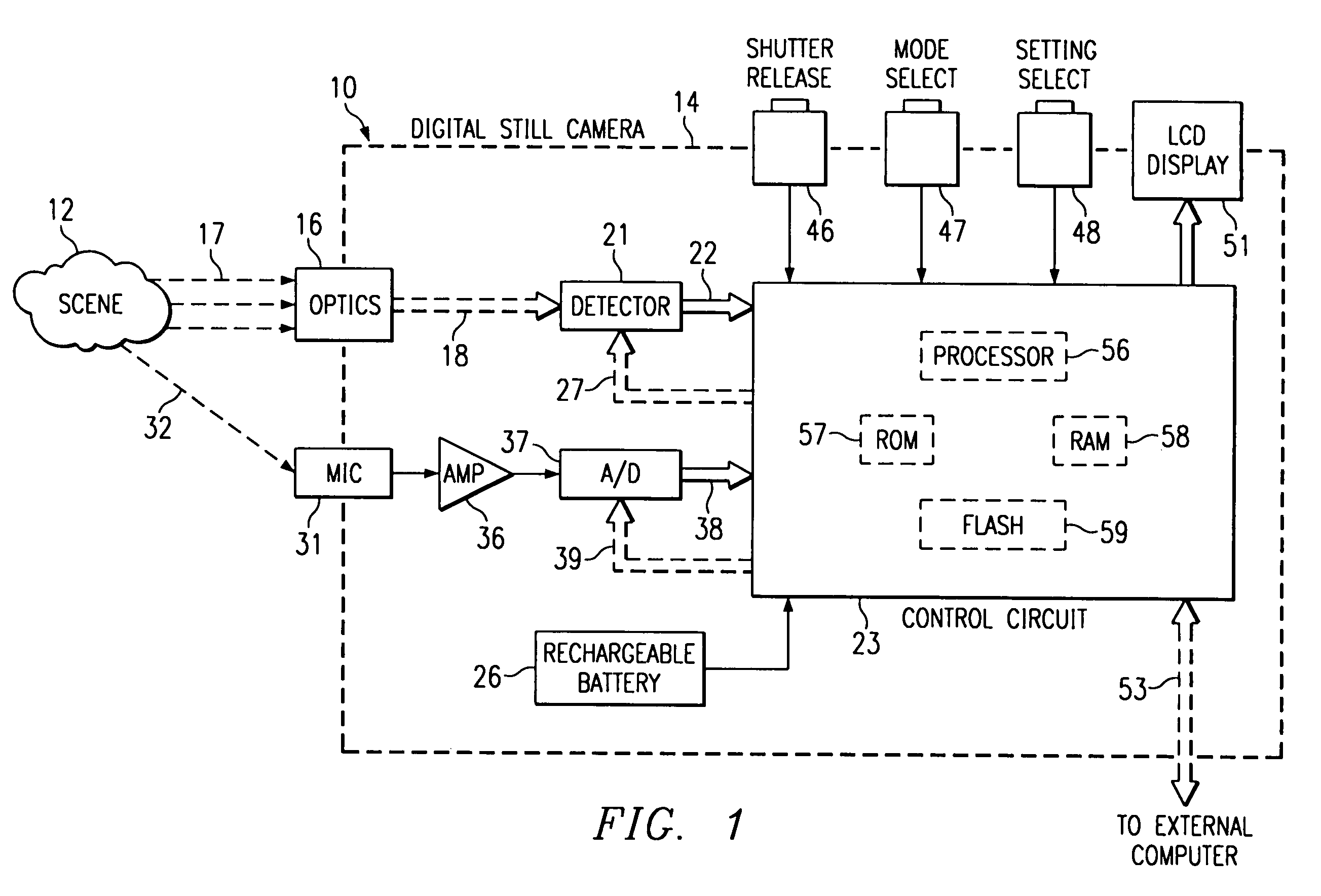

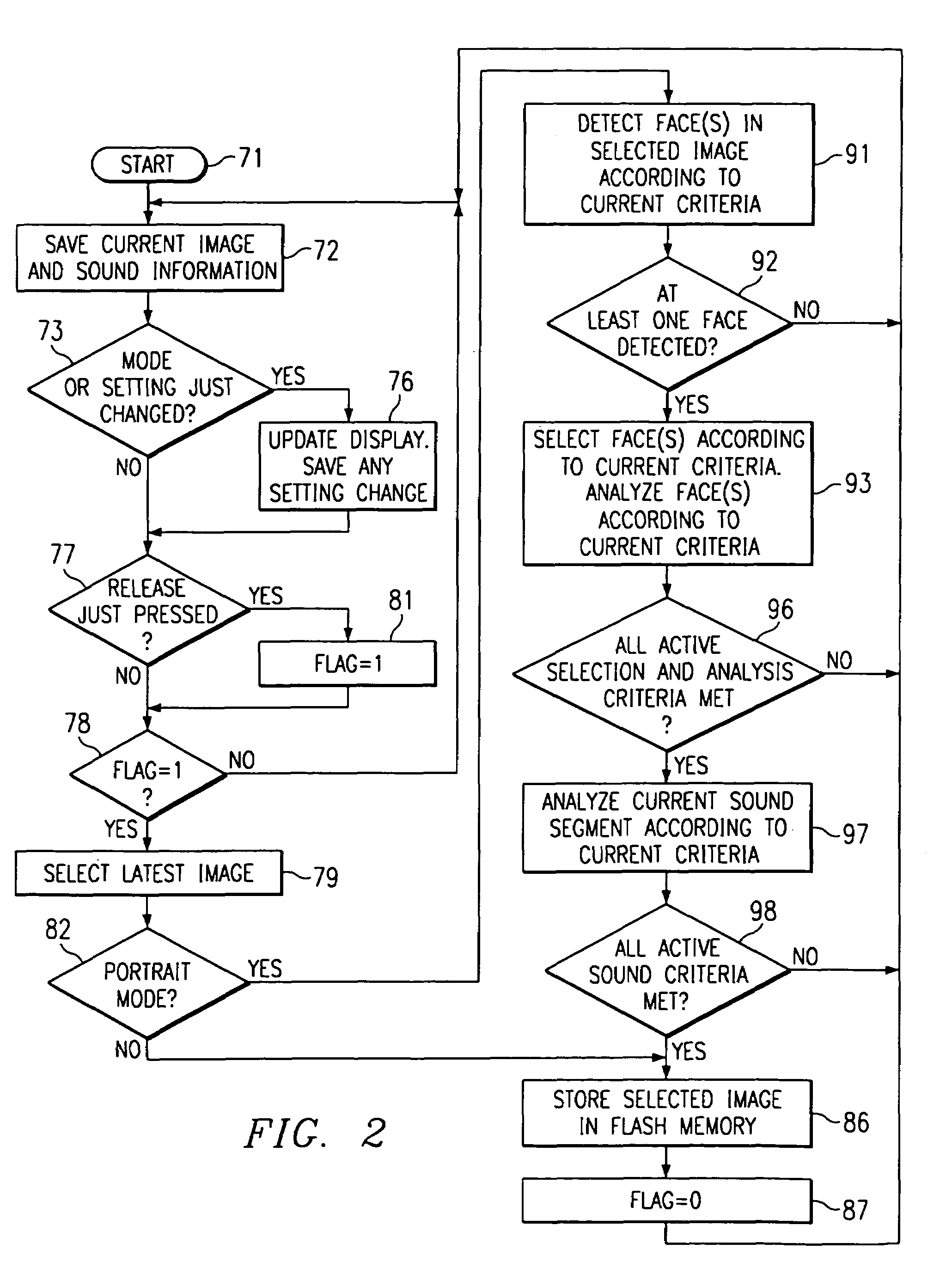

Digital still camera with high-quality portrait mode

Inactive | US7038715B1 | Reduction and elimination of image | Quality improvement | Television system details | Character and pattern recognition | Display device | Still camera

A digital still camera (10) receives and digitizes visible radiation (17) and sound waves (32) from a scene (12). When an operator actuates a shutter release (46), the camera detects and evaluates digitized information (22, 38) from the scene in a continuing manner, until a point in time when information representative of a human facial characteristic satisfies specified criteria set by the operator through use of switches (47, 48) and a display (51). The camera then records in a memory (59) a digital image of the scene, which corresponds to the point in time. The stored image is a high-quality image, which can avoid characteristics such as eyes that are closed or a mouth that is open.

Owner: TEXAS INSTR INC

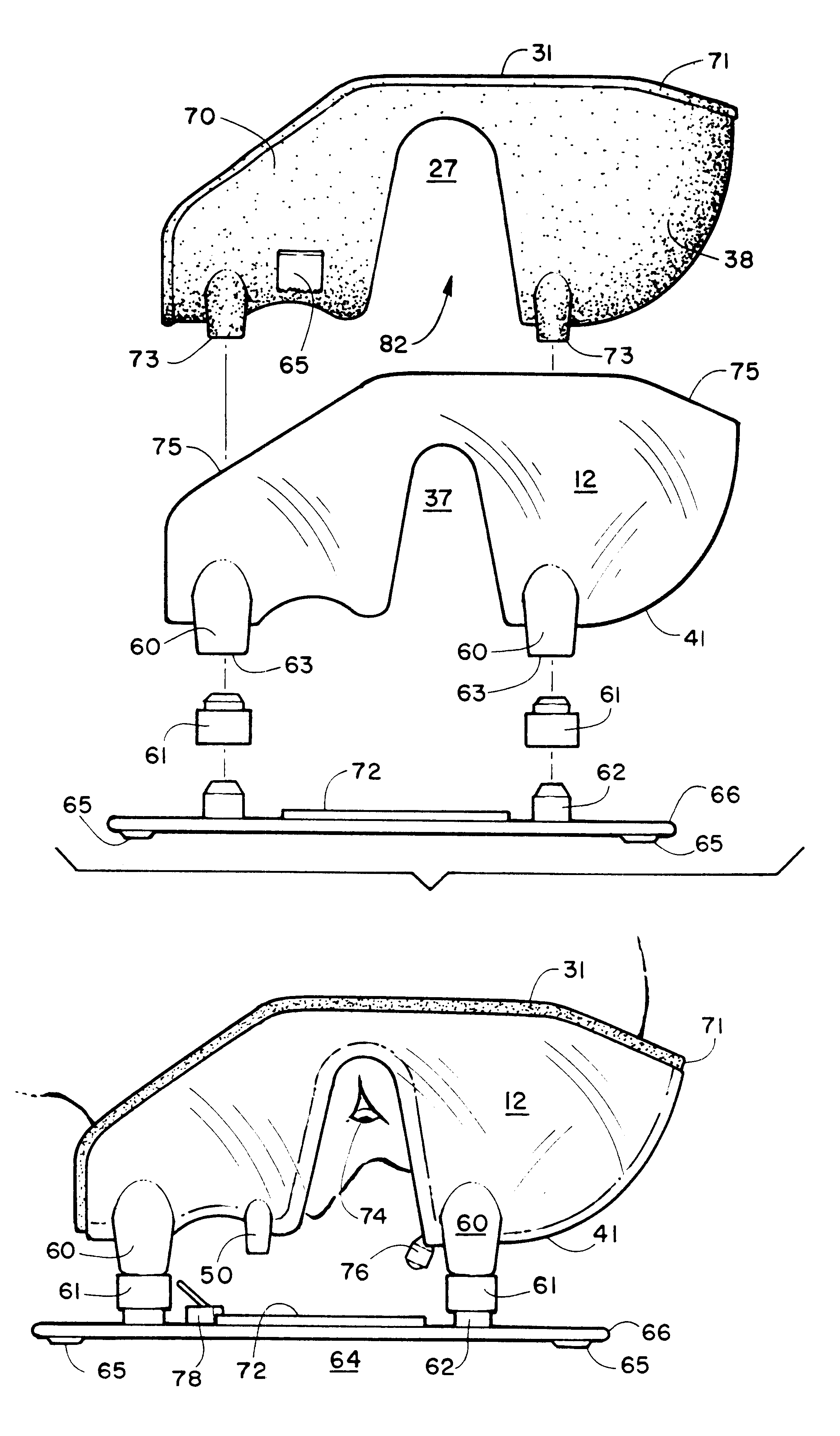

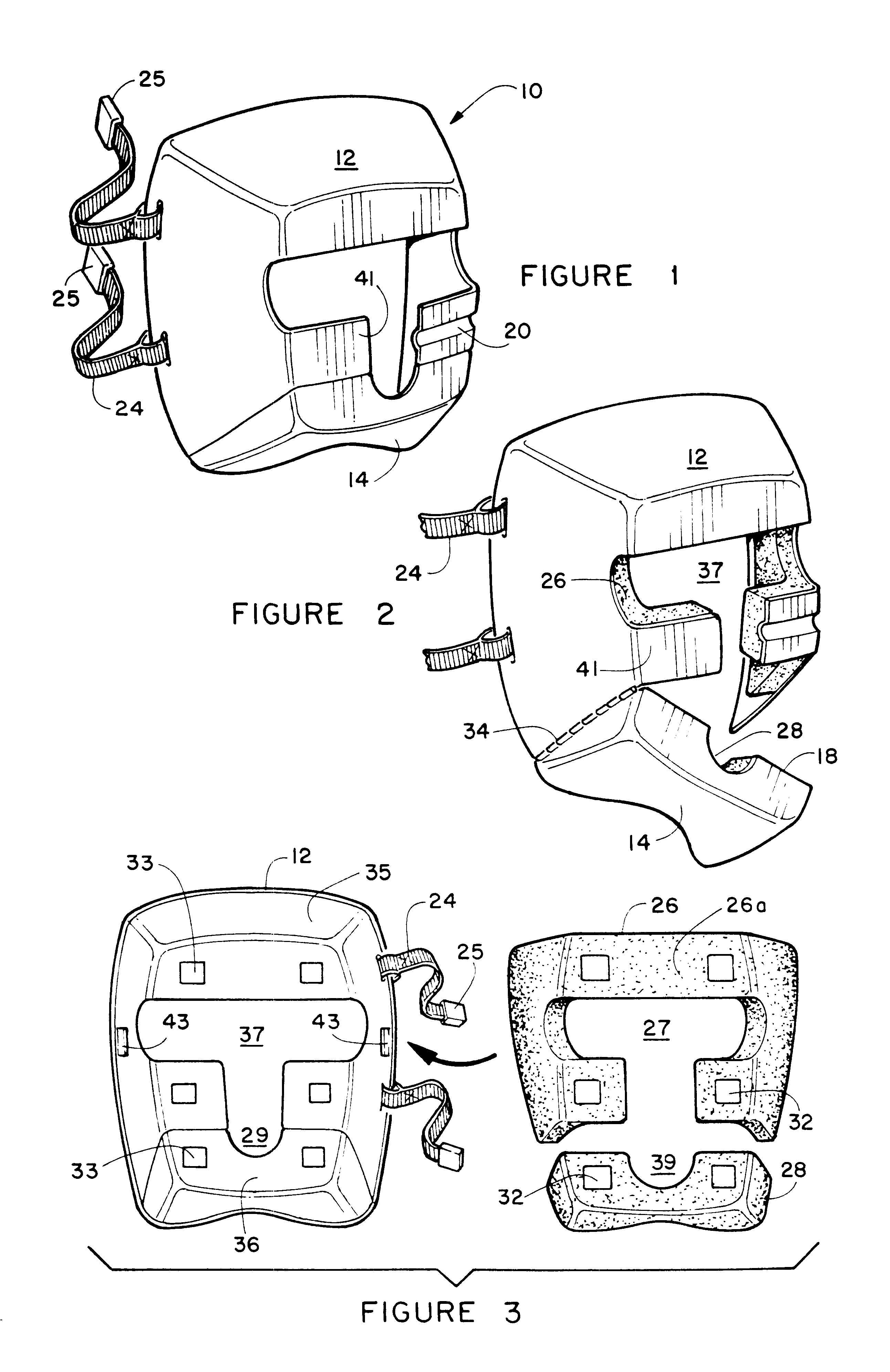

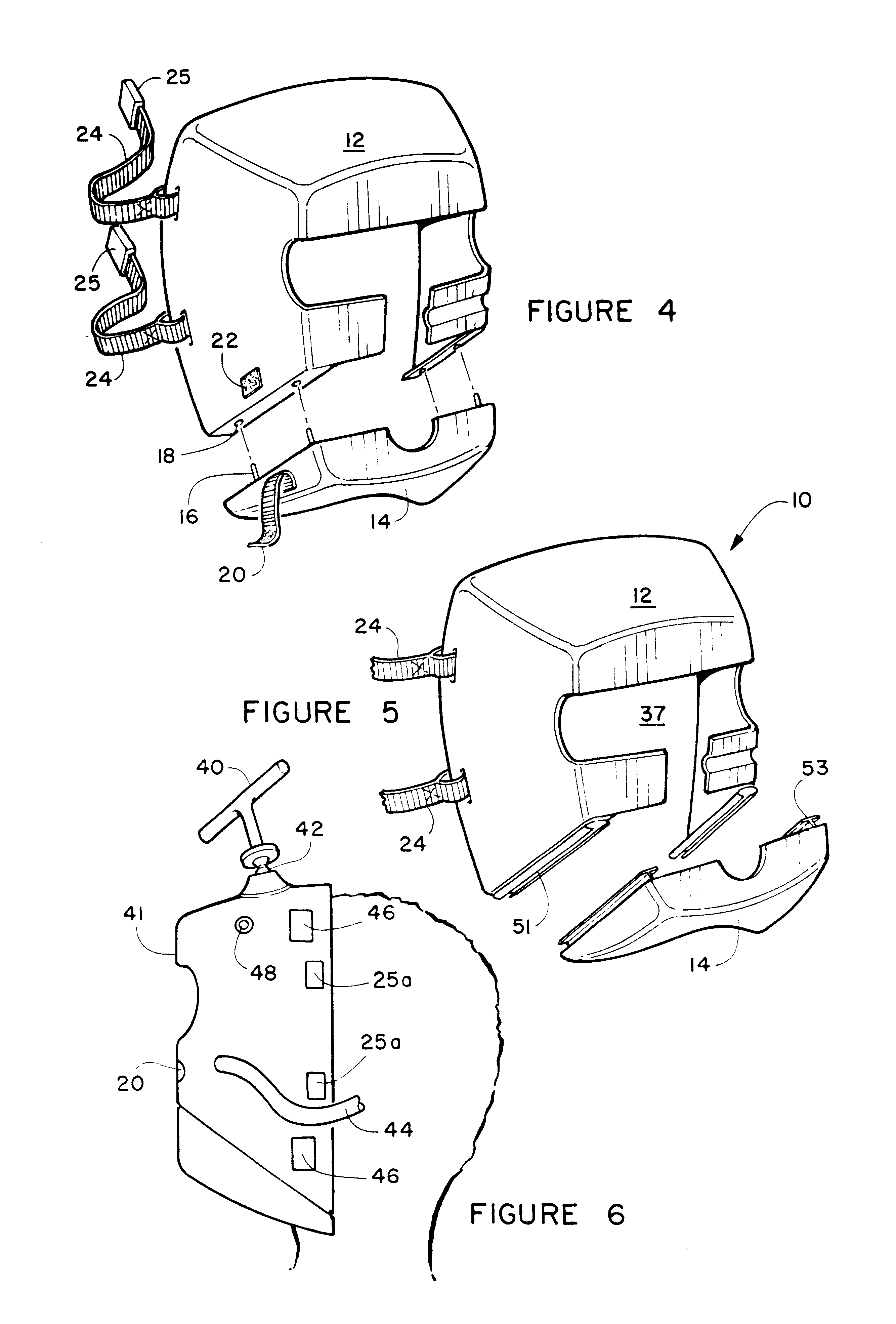

Protective cushion and cooperatively engageable helmet casing for anesthetized patient

Inactive | US6490737B1 | Aid in temperature control | Easy to view | Operating tables | Eye treatment | Temperature control | Chin

A protective helmet apparatus of modular construction to be worn by anesthetized patients for facial support during surgery. The helmet apparatus is assembled using one of a plurality of interchangeable, substantially transparent helmet casings, which are removably attachable to a plurality of dismountable facial cushions providing even support to the facial surface of a patient. The removable facial cushions are dimensioned on an interior surface to accommodate different sized facial structures of different patients to yield maximum pressure diffusion on the face and chin of the patient and are replaceable when worn. The exterior surface of the facial cushions is dimensioned for cooperative engagement with the interior surface of the helmet casing. A plurality of different facial cushions and helmet casings are modular in design and dimension to be interchangeable with each other, thus accommodating the broad differences in facial structure and size of patients using them for surgery. The cushions may be marked with printed or color coded indicia to designate size. A view of the patient's eyes and surrounding area is afforded through in line ocular apertures extending around a front surface area and up at least one sidewall. The ocular aperture is in line with a cushion ocular aperture when the cushion is engaged with the casing, thereby allowing a view of the patient's eye and surrounding face through the ocular aperture from the side of the device. Additional utility is provided by variable elevation above a registered engagement with a mount which also may provide a mirrored surface to reflect the patient's facial features for viewing by upright doctors and operating staff. An optional integral heating element aids in temperature control of the patient's head during surgery.

Owner: DUPACO

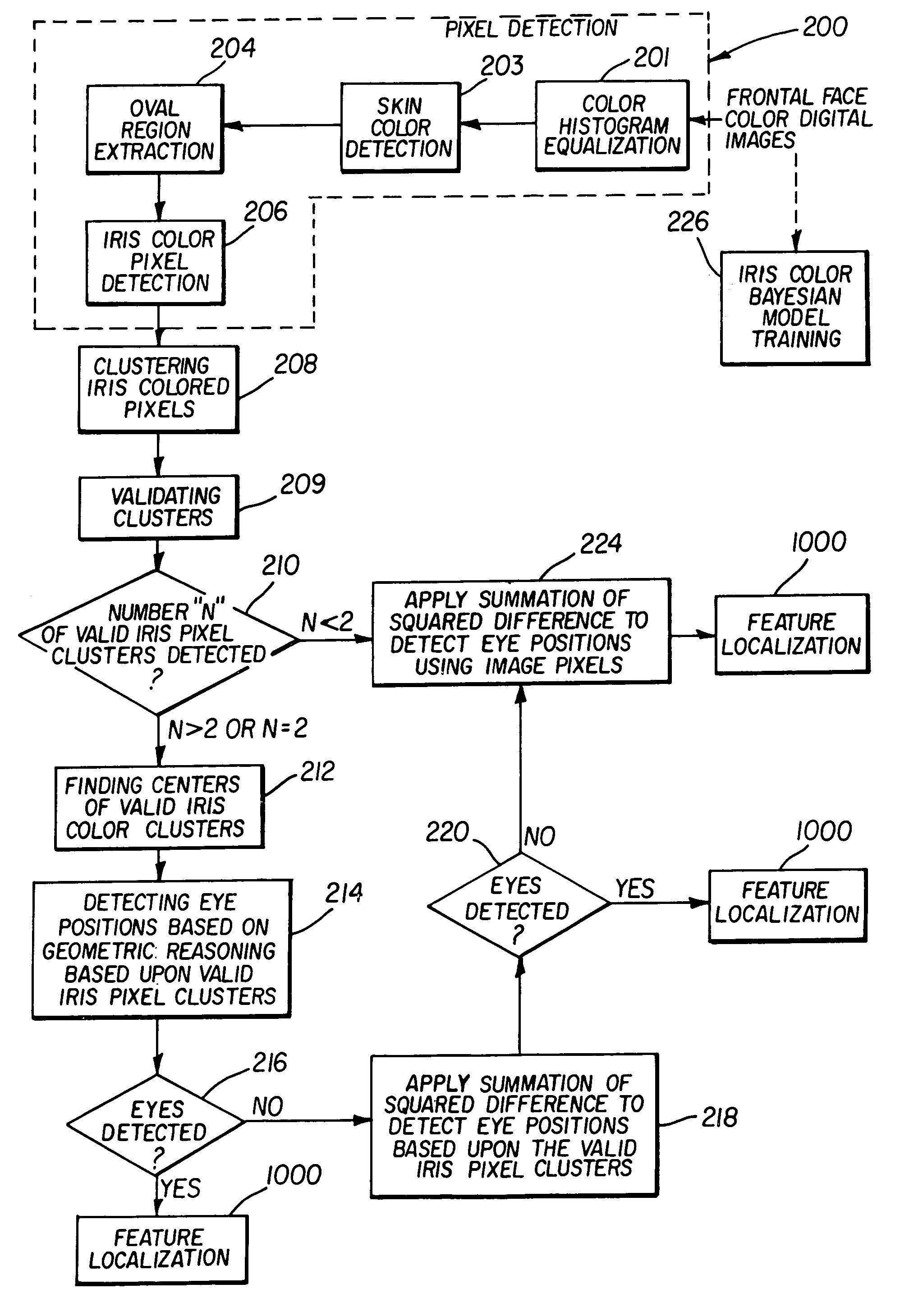

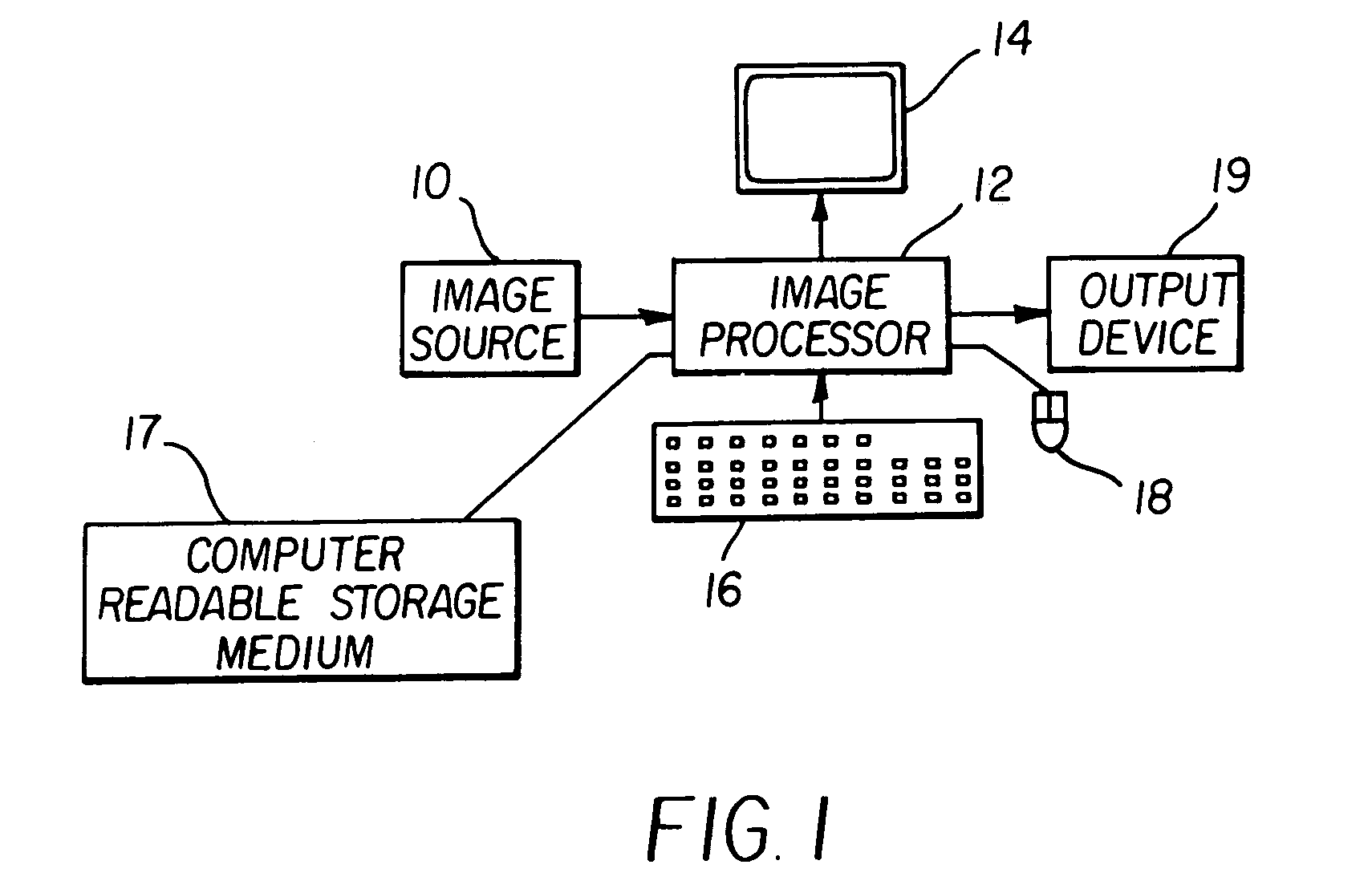

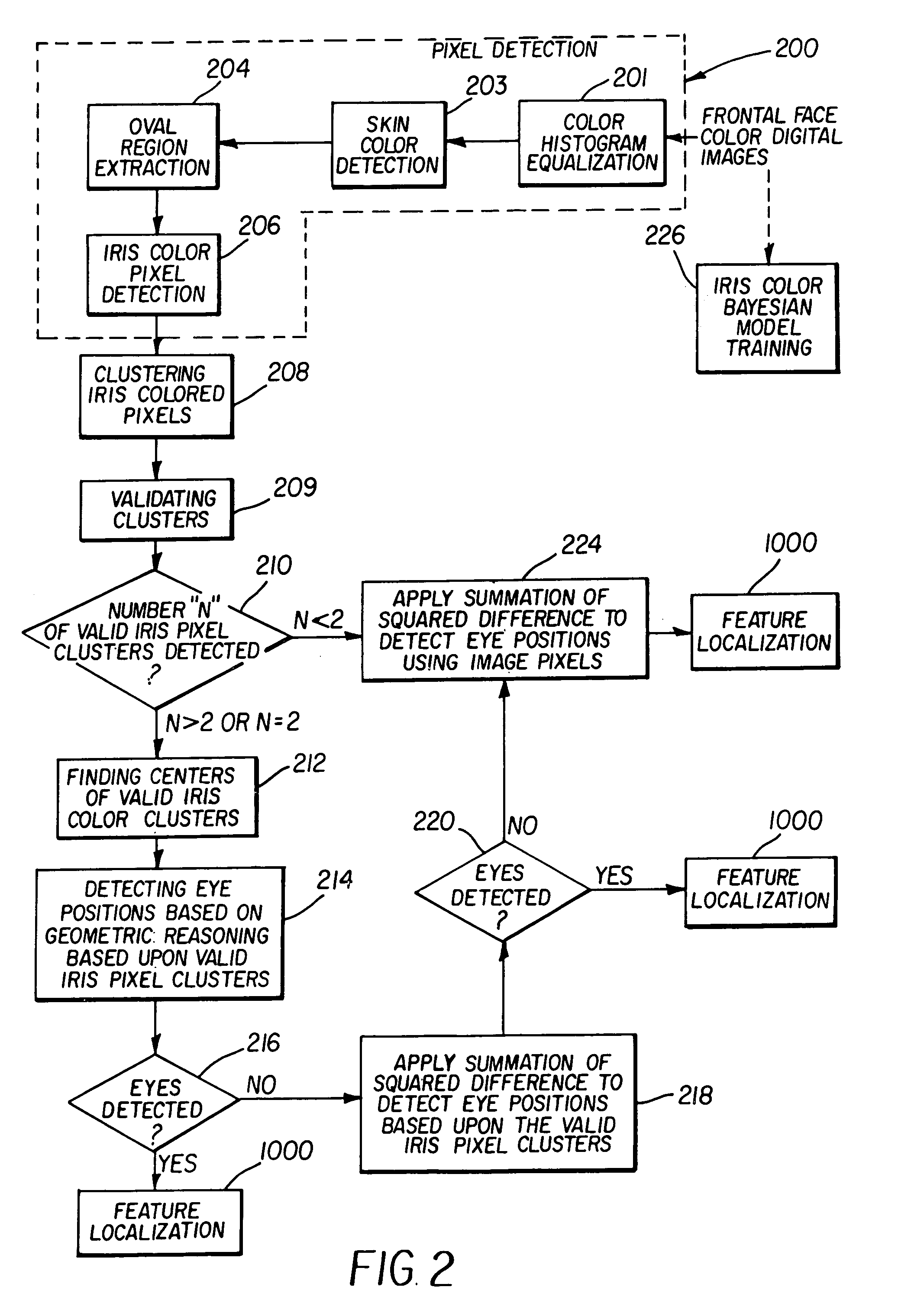

Method and computer program product for locating facial features

A digital image processing method detects facial features in a digital image. This method includes the steps of detecting iris pixels in the image, clustering the iris pixels, and selecting at least one of the following schemes to identify eye positions: applying geometric reasoning to detect eye positions using the iris pixel clusters; applying a summation of squared difference method using the iris pixel clusters to detect eye positions; and applying a summation of squared difference method to detect eye positions from the pixels in the image. The method applied to identify eye positions is selected on the basis of the number of iris pixel clusters, and the facial features are located using the identified eye positions.

Owner: MONUMENT PEAK VENTURES LLC
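Two of the schemes listed in the abstract above rely on a summation of squared difference comparison. A minimal sketch of that comparison, sliding a small template over a grayscale image and keeping the position with the lowest score, is given below. The exhaustive scan, the list-of-lists image layout and the function names are assumptions for illustration; the abstract does not say how the eye template is built or where the search is restricted.

```python
def ssd(image, template, left, top):
    """Sum of squared differences between the template and one image patch."""
    return sum(
        (image[top + dy][left + dx] - template[dy][dx]) ** 2
        for dy in range(len(template))
        for dx in range(len(template[0]))
    )


def best_match(image, template):
    """Scan every valid position and return (left, top, score) of the lowest SSD."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best = None
    for top in range(ih - th + 1):
        for left in range(iw - tw + 1):
            score = ssd(image, template, left, top)
            if best is None or score < best[2]:
                best = (left, top, score)
    return best


image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 1, 0],
    [0, 0, 1, 9, 0],
    [0, 0, 0, 0, 0],
]
eye_template = [[9, 1], [1, 9]]  # hypothetical 2x2 template
print(best_match(image, eye_template))
```

A score of zero means the patch reproduces the template exactly; in practice a cutoff on the score would decide whether the best position counts as a detected eye.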

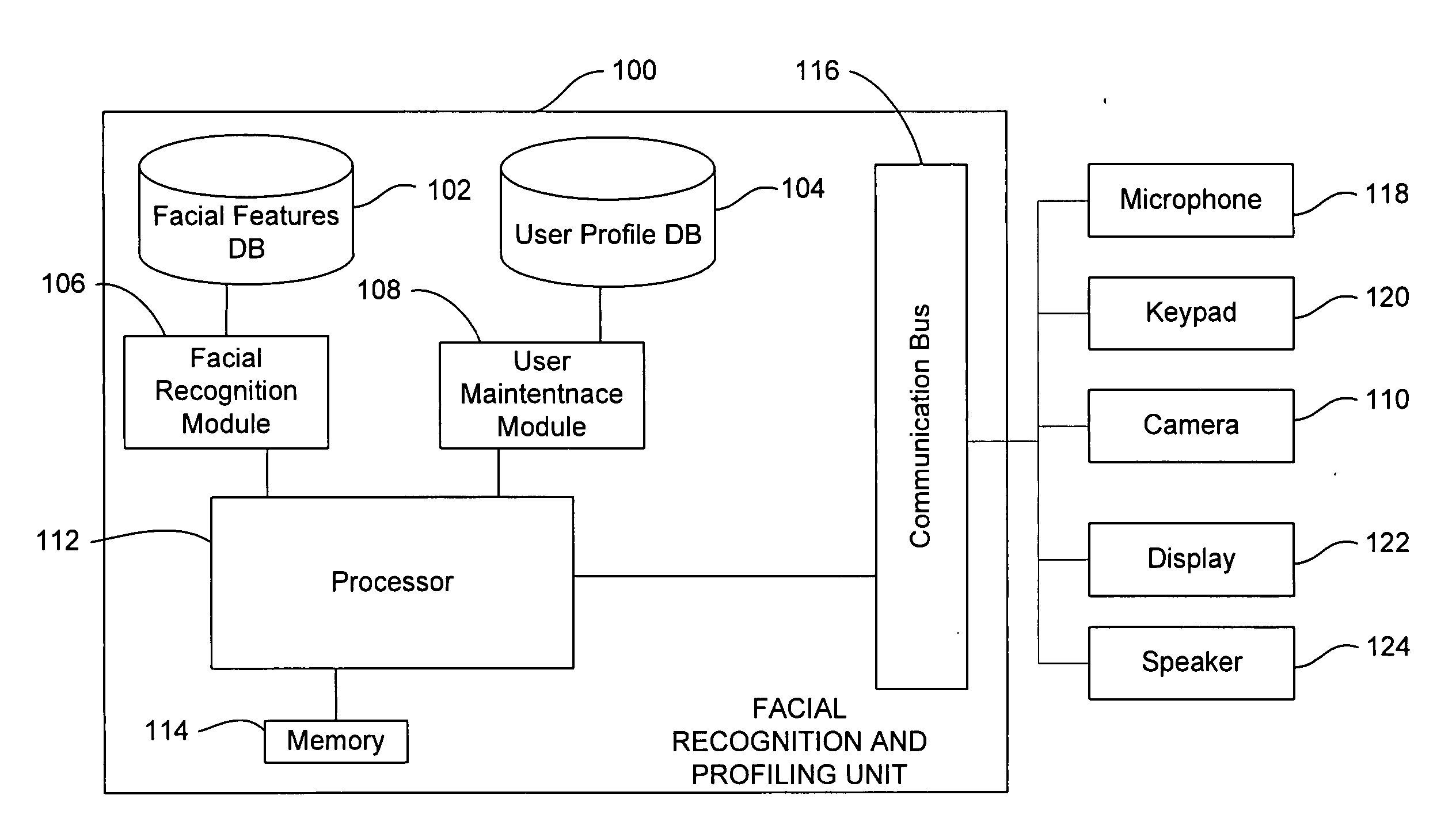

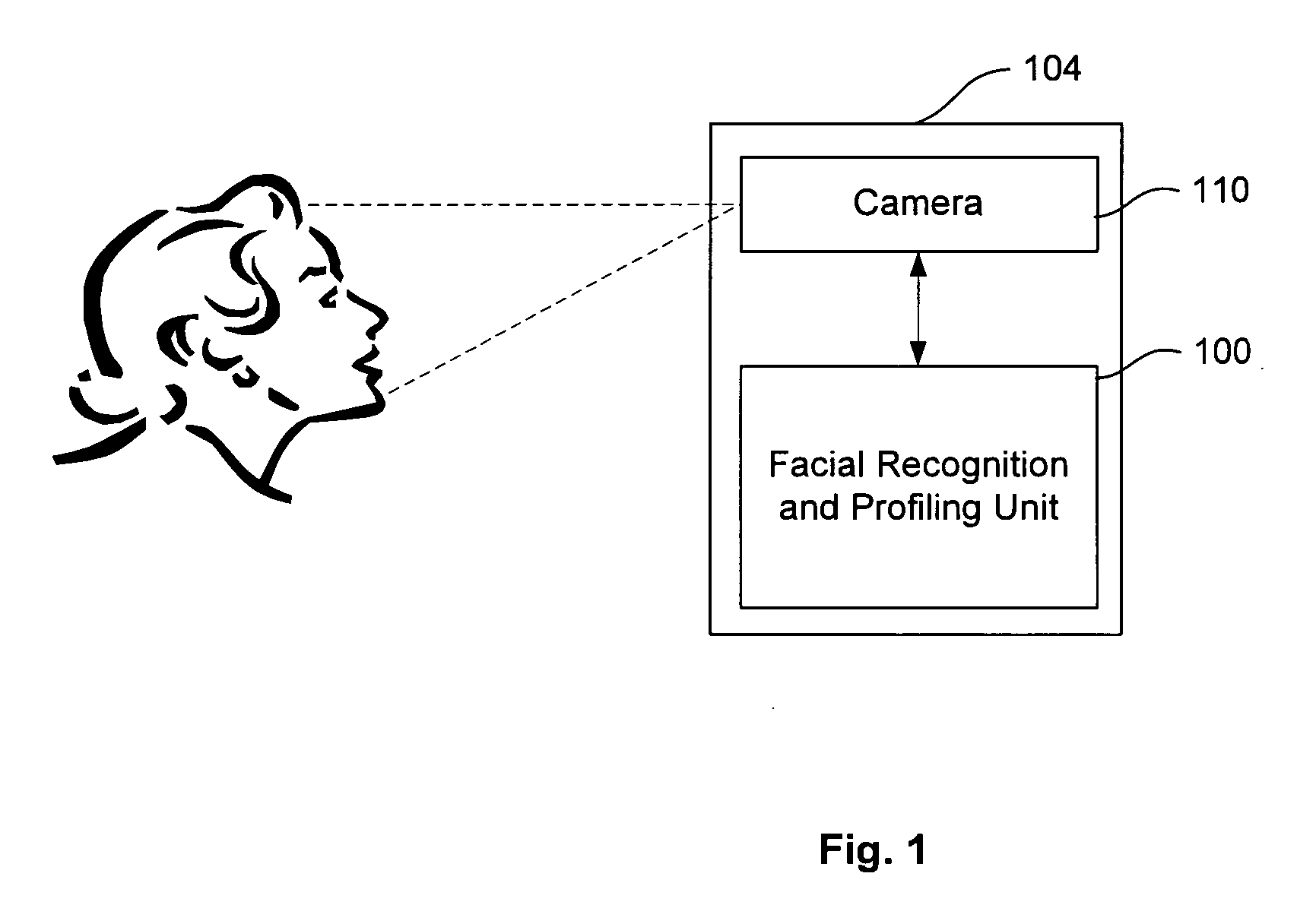

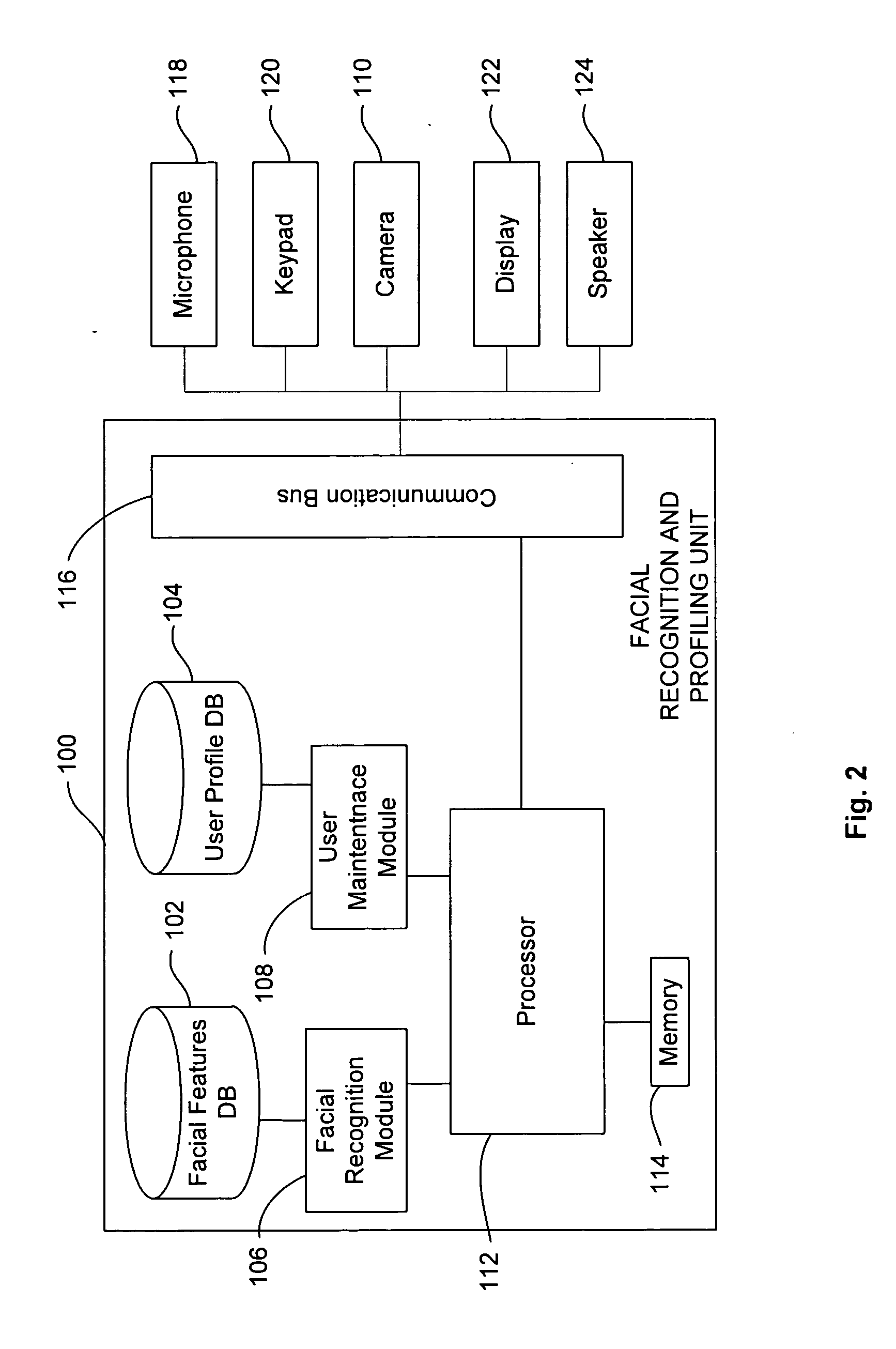

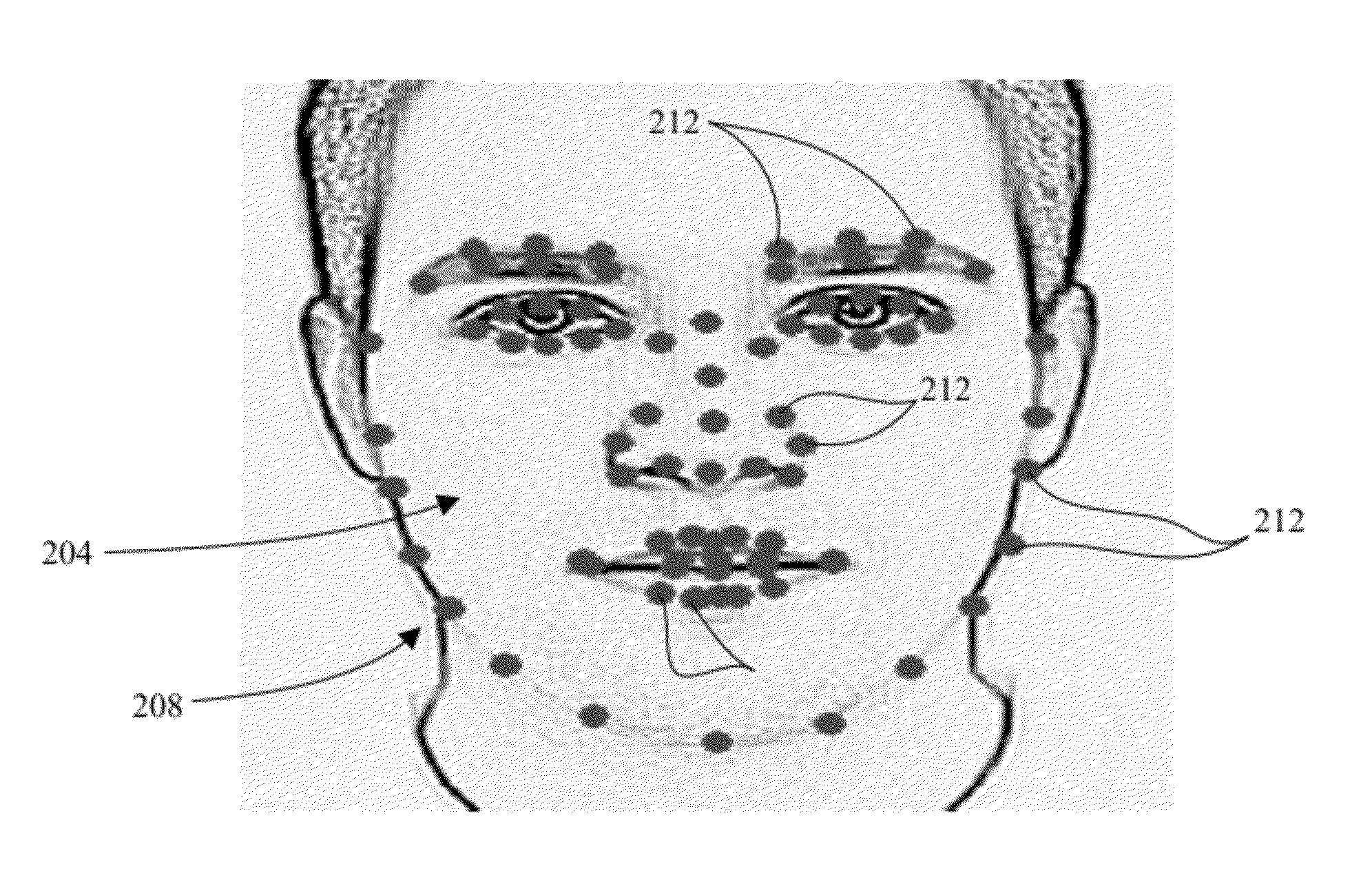

Method and apparatus for providing user profiling based on facial recognition

Inactive | US20070140532A1 | Electric signal transmission systems | Image analysis | Data matching | Electrical devices

A method and system of providing user profiling for an electrical device is disclosed. Face representation data is captured with an imaging device. The imaging device focuses on the face of the user to capture the face representation data. A determination is made as to whether a facial feature database includes user facial feature data that matches the face representation data. User preference data is loaded on a memory module of the electrical device when the face representation data matches user facial feature data in the facial feature database. A new user profile is added to the user profile database when the face representation data does not match user facial feature data in the facial feature database.

Owner: GENERAL INSTR CORP

Rapid 3D Face Reconstruction From a 2D Image and Methods Using Such Rapid 3D Face Reconstruction

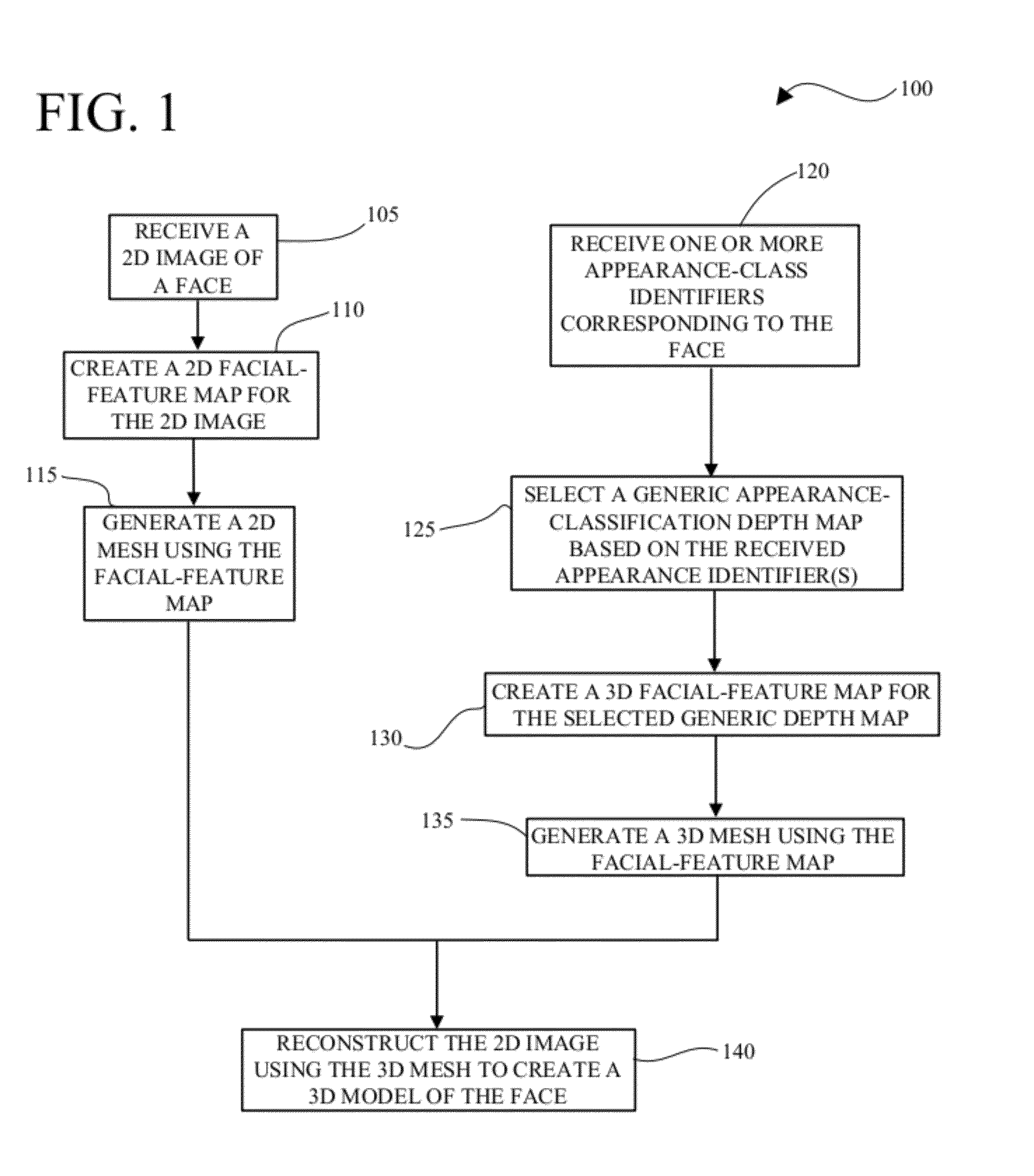

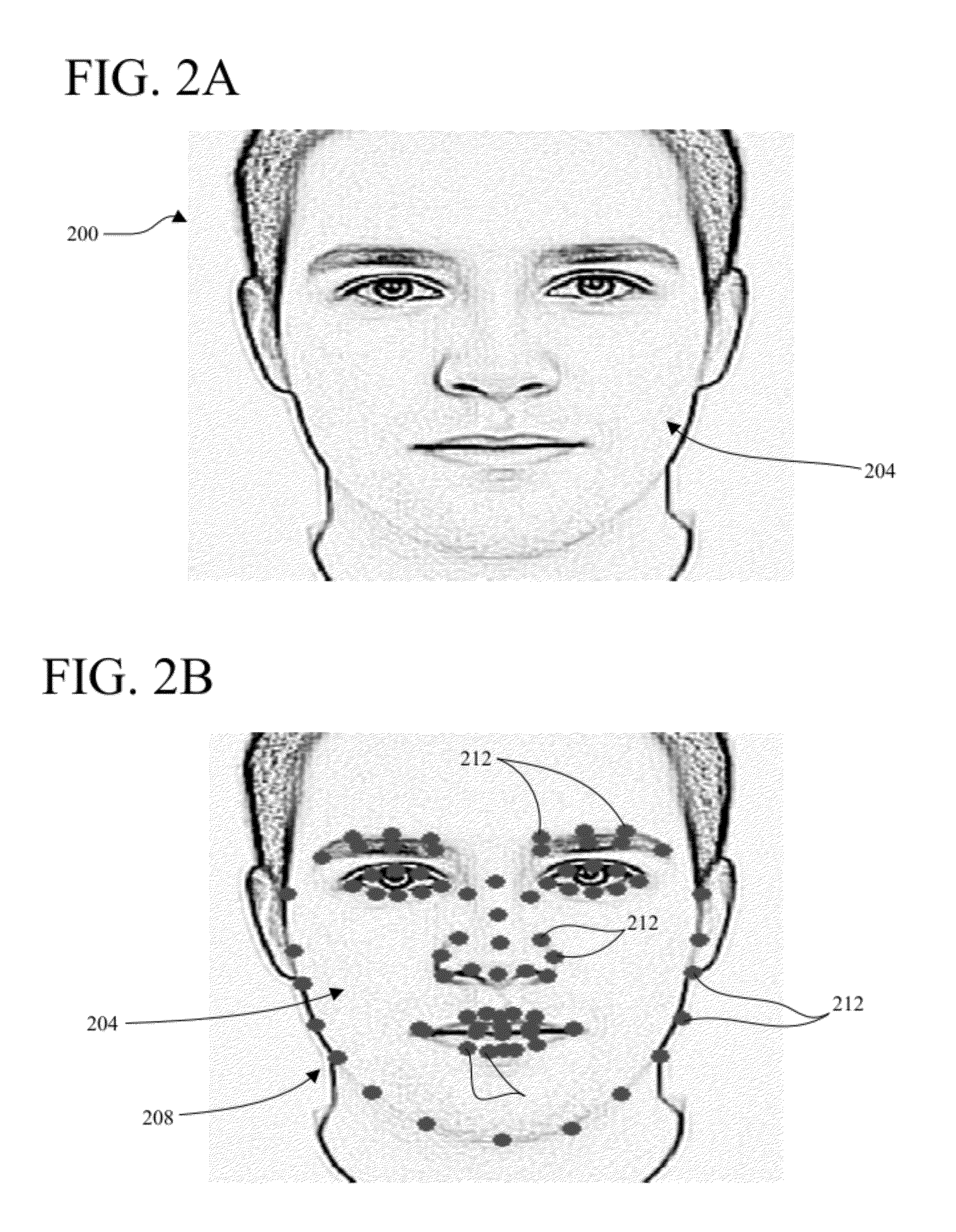

Creating a 3D face reconstruction model using a single 2D image and a generic facial depth map that provides depth information. In one example, the generic facial depth map is selected based on gender and ethnicity/race. In one embodiment, a set of facial features of the 2D image is mapped to create a facial-feature map, and a 2D mesh is created using the map. The same set of facial features is also mapped onto a generic facial depth map, and a 3D mesh is created therefrom. The 2D image is then warped by transposing depth information from the 3D mesh of the generic facial depth map onto the 2D mesh of the 2D image so as to create a reconstructed 3D model of the face. The reconstructed 3D model can be used, for example, to create one or more synthetic off-angle-pose images of the subject of the original 2D image.

Owner: CARNEGIE MELLON UNIV

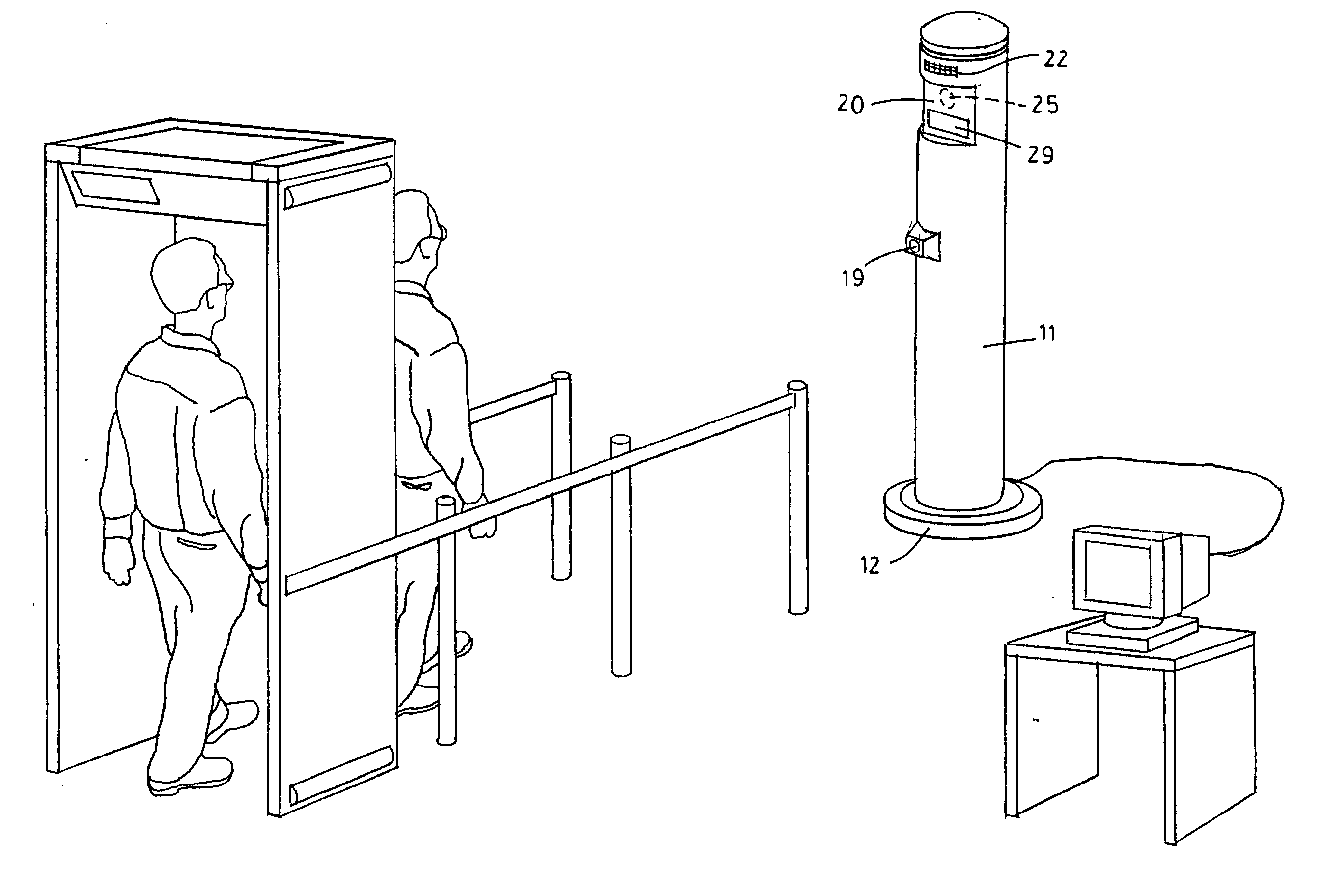

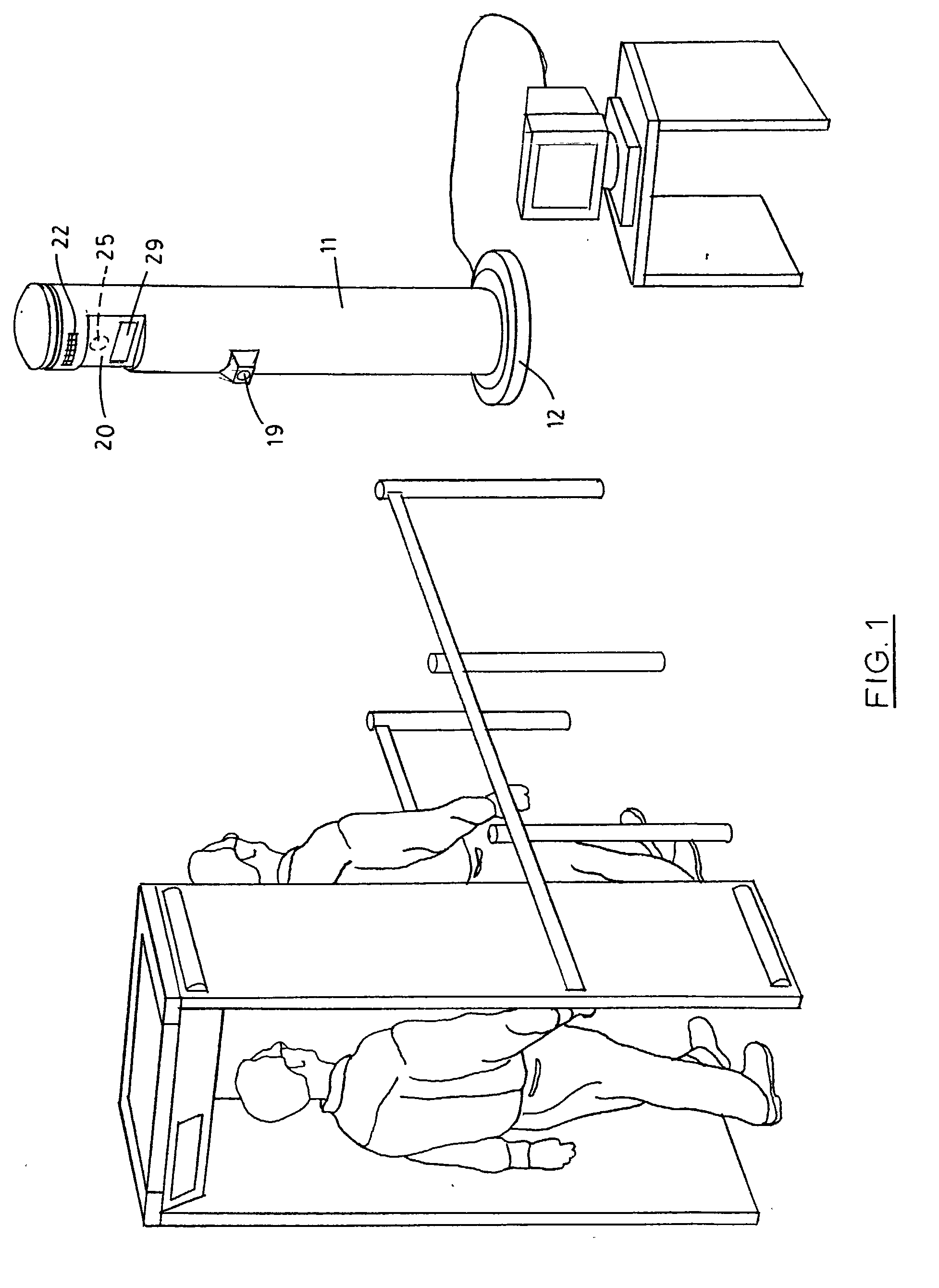

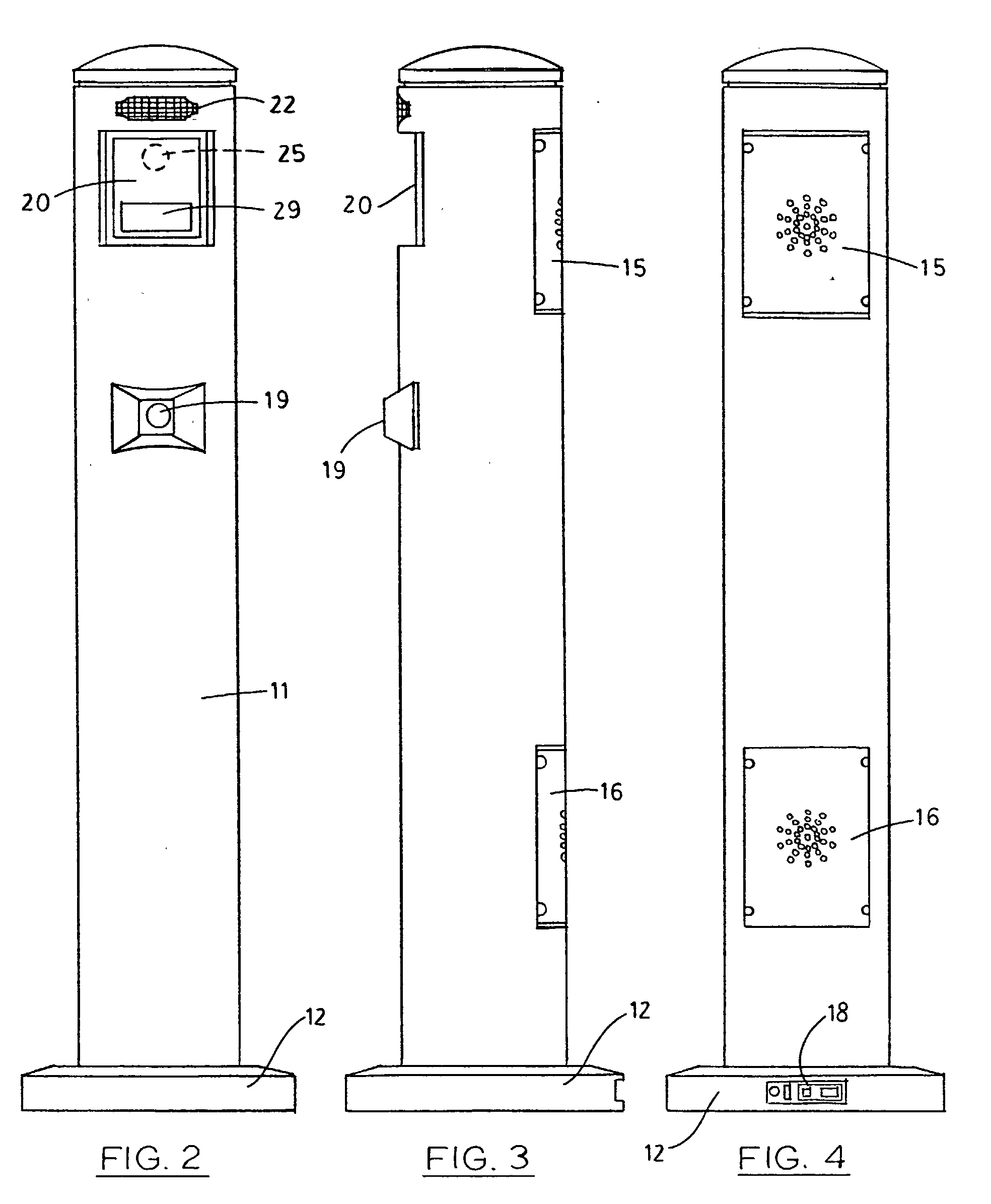

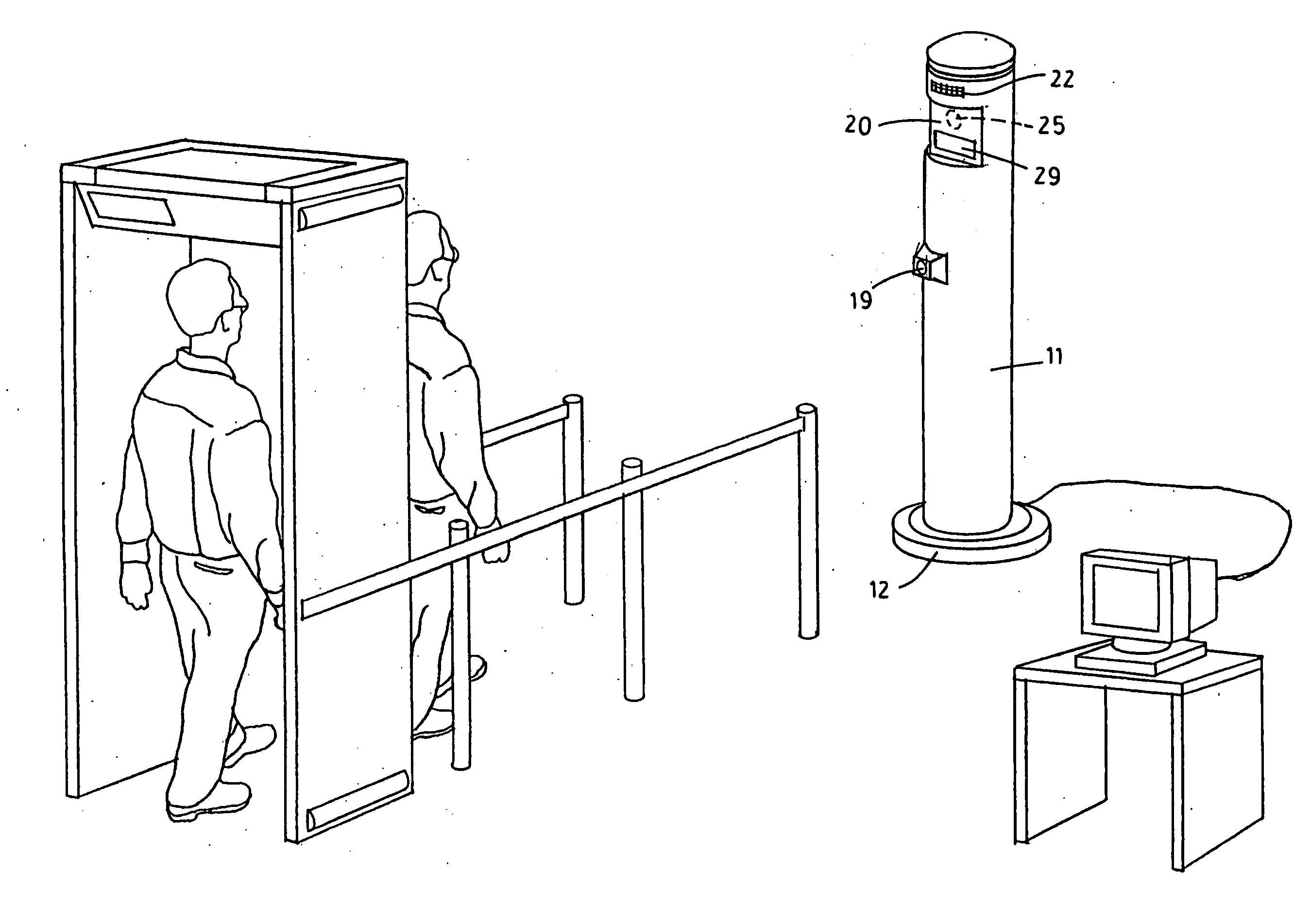

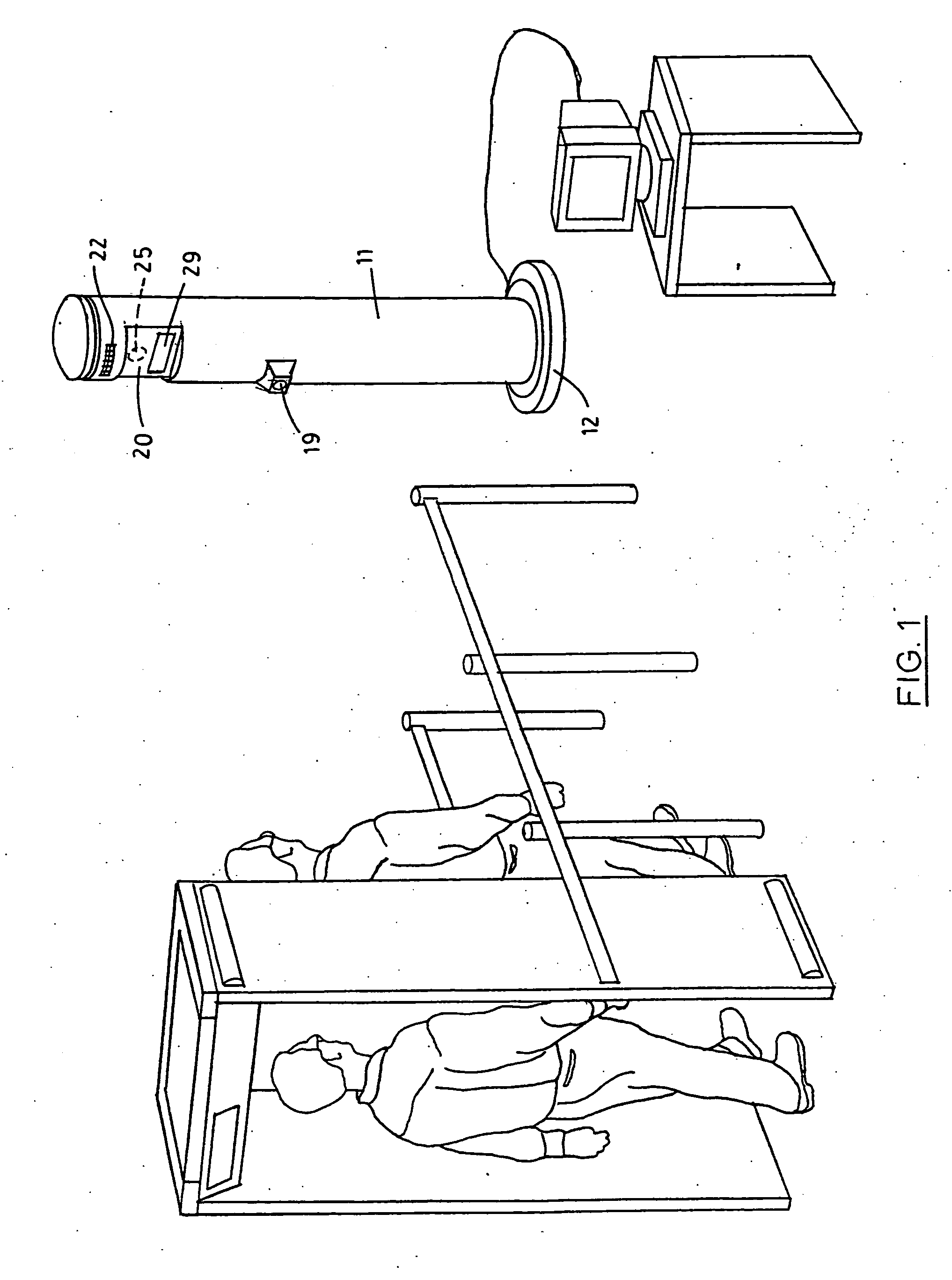

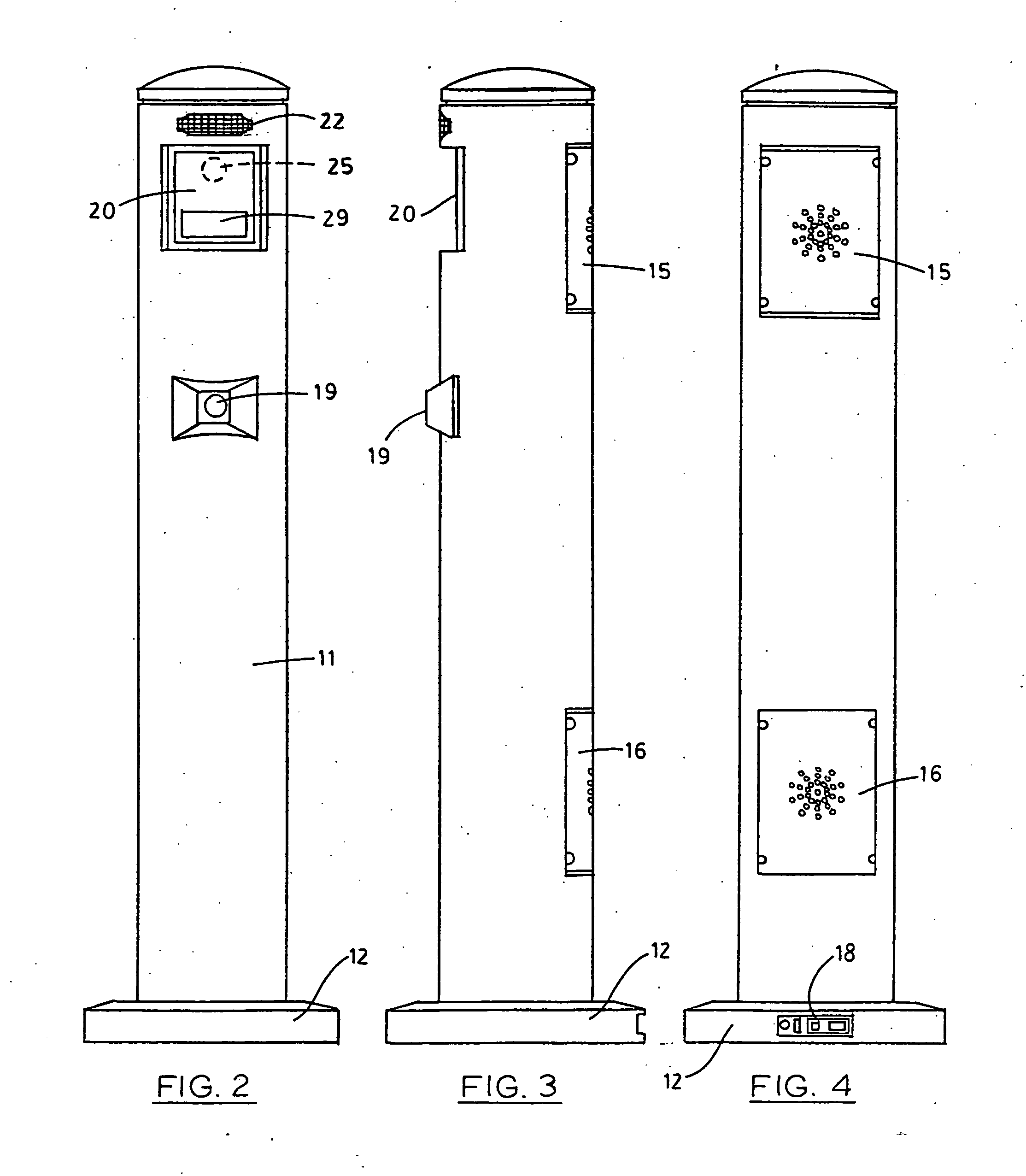

Security identification system

Active | US20030142853A1 | Improve image quality | Character and pattern recognition | Color television details | Re identification | Facial characteristic

This is a security and identification system designed to obtain improved images for use by real-time facial recognition and identification systems for screening individuals passing through secure entry or checkpoints such as airport passenger terminals, government offices, and other secure locations. The system detects the presence of a subject at the checkpoint, interactively instructs the subject to stop and move into position for proper identification and recognition, analyzes the facial features of the subject as (s)he passes through the checkpoint, and compares the features of the subject with those in a database. The system then generates different signals depending upon whether or not the subject is recognized. In one aspect of the invention, different methods and apparatus are provided for compensating for low ambient light so as to improve the quality of the facial image of the subject that is obtained.

Owner: PELCO INC

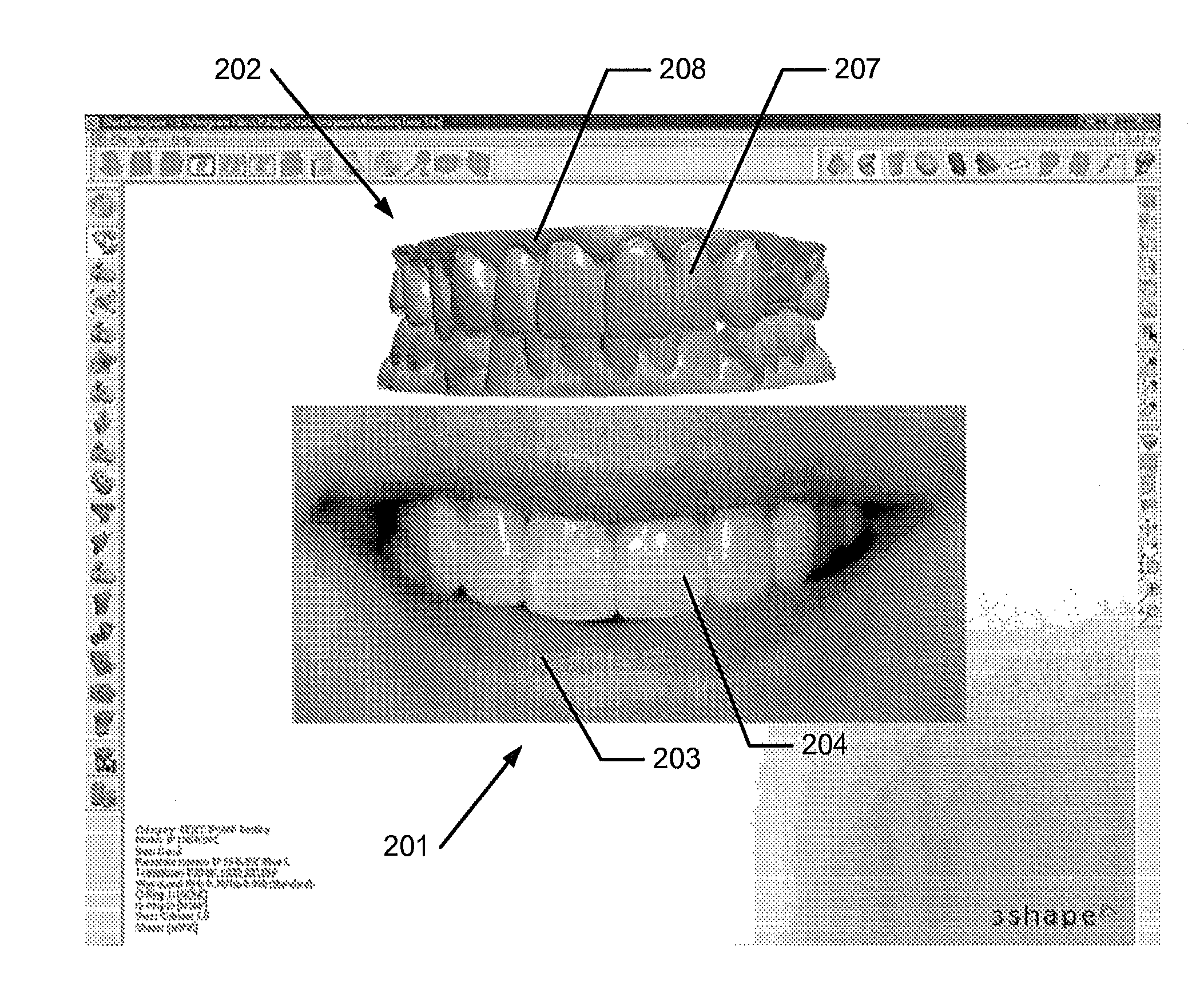

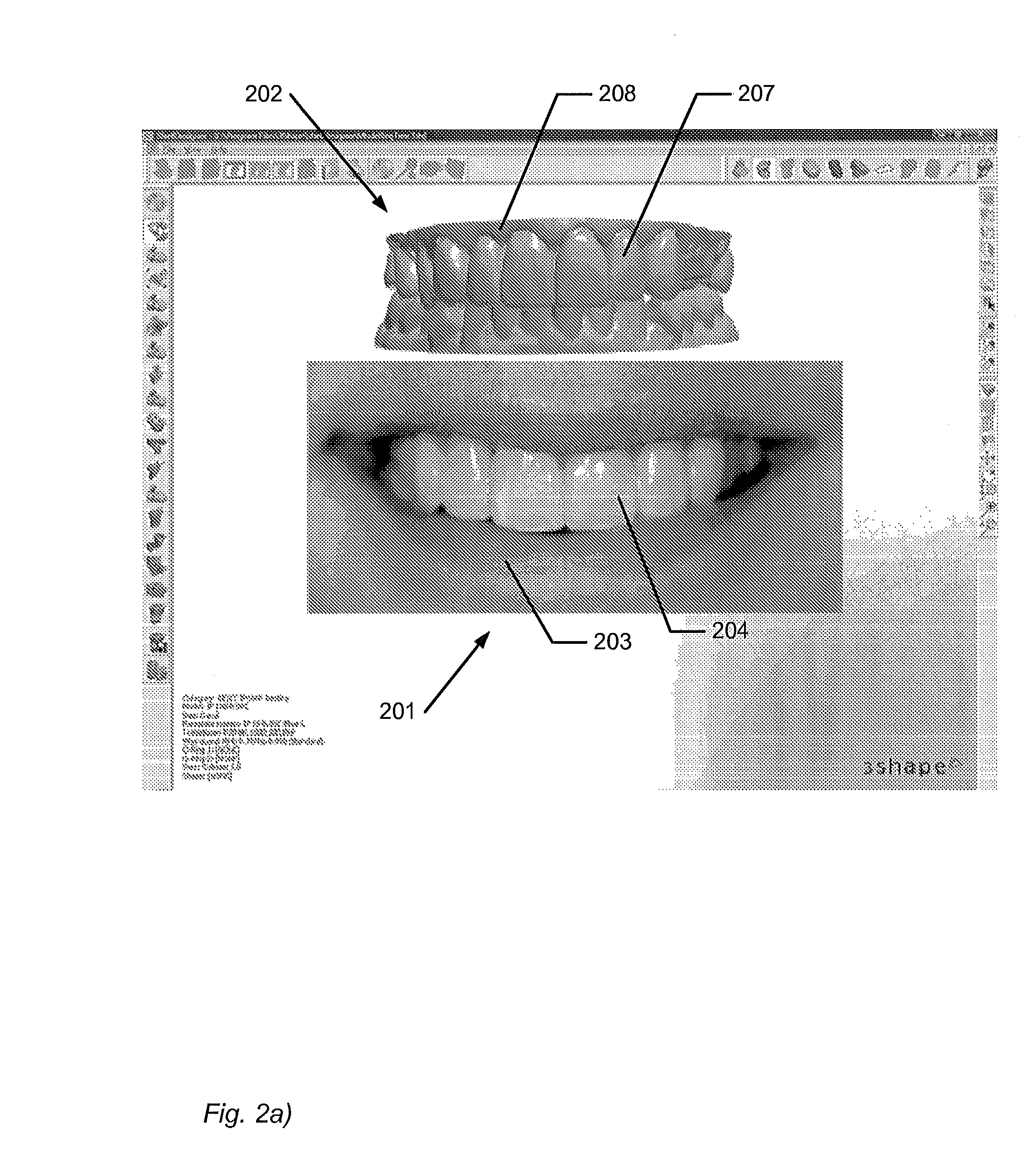

2D image arrangement

Active | US20130218530A1 | Good for comparison | Detection is slight | Impression caps | Additive manufacturing apparatus | Viewpoints | Computer graphics (images)

Disclosed is a method of designing a dental restoration for a patient, wherein the method includes providing one or more 2D images, where at least one 2D image includes at least one facial feature; providing a 3D virtual model of at least part of the patient's oral cavity; arranging at least one of the one or more 2D images relative to the 3D virtual model in a virtual 3D space such that the 2D image and the 3D virtual model are aligned when viewed from a viewpoint, whereby the 3D virtual model and the 2D image are both visualized in the 3D space; and modeling a restoration on the 3D virtual model, where the restoration is designed to fit the facial feature of the at least one 2D image.

Owner: 3SHAPE AS

Security identification system

Inactive | US20070133844A1 | Quality improvement | Programme control | Electric signal transmission systems | Recognition system | Facial characteristic

This is a security and identification system designed to obtain improved images for use by real-time facial recognition and identification systems for screening individuals passing through secure entry or checkpoints such as airport passenger terminals, government offices, and other secure locations. The system detects the presence of a subject at the checkpoint, interactively instructs the subject to stop and move into position for proper identification and recognition, analyzes the facial features of the subject as (s)he passes through the checkpoint, and compares the features of the subject with those in a database. The system then generates different signals depending upon whether or not the subject is recognized. In one aspect of the invention, different methods and apparatus are provided for compensating for low ambient light so as to improve the quality of the facial image of the subject that is obtained.

Owner: PELCO INC

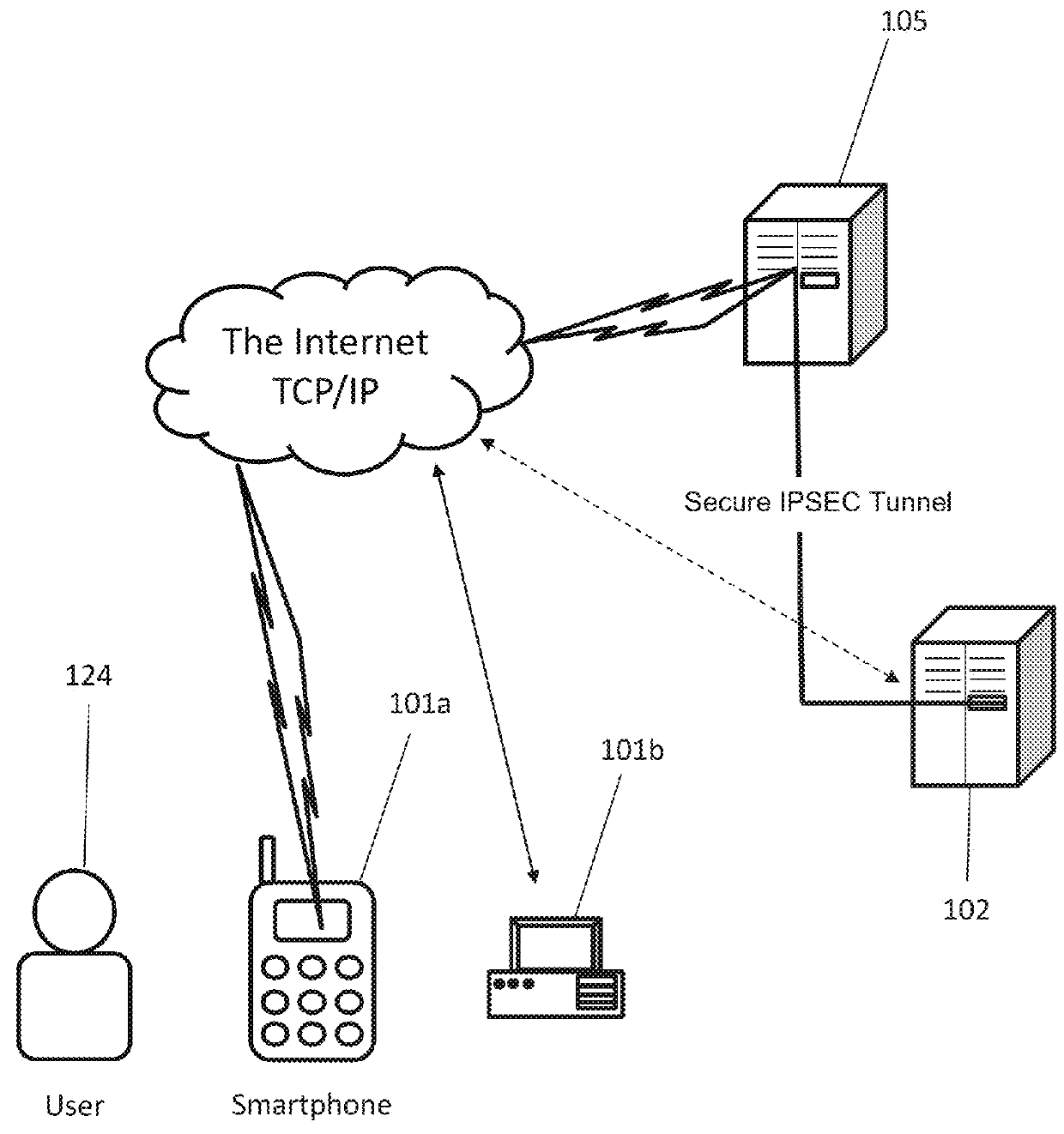

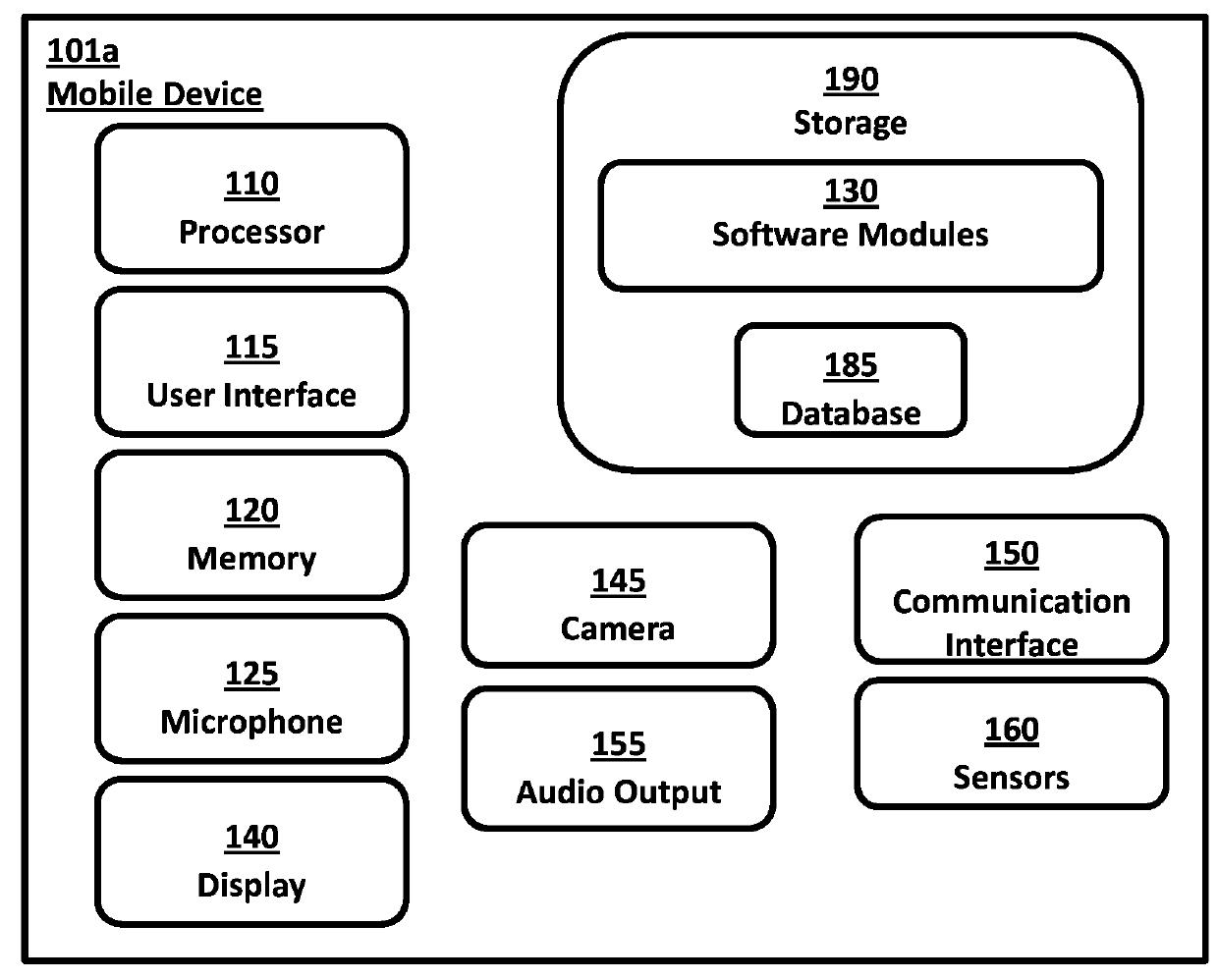

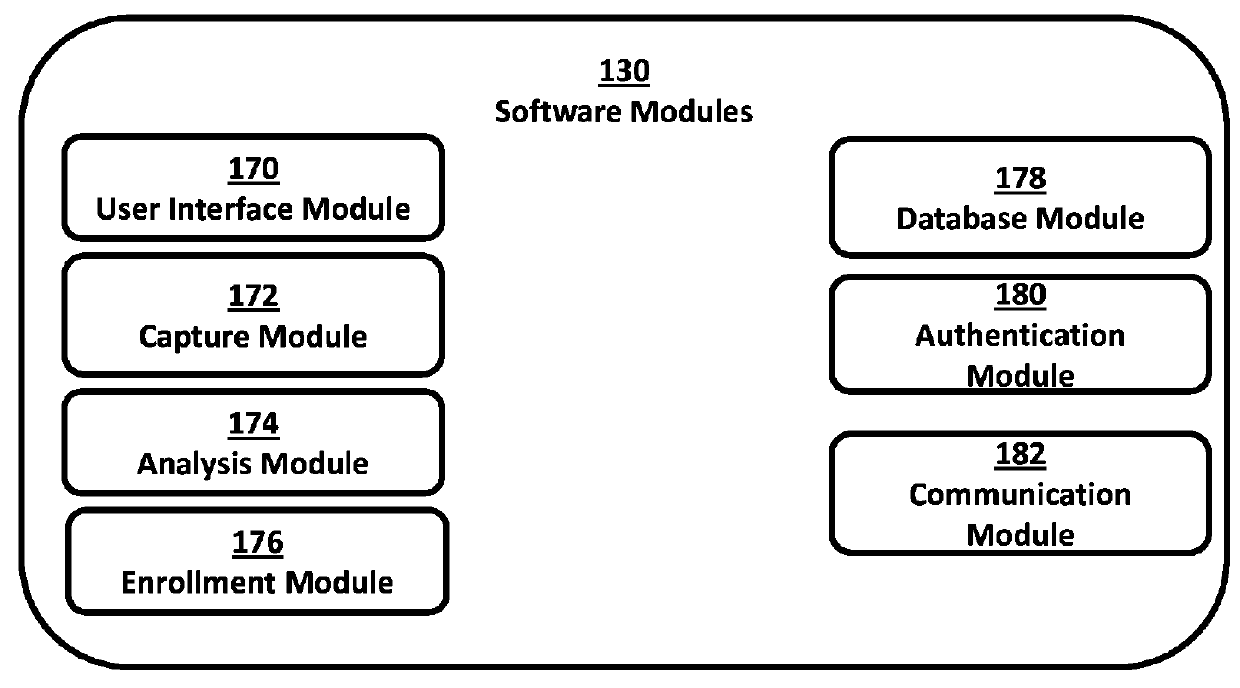

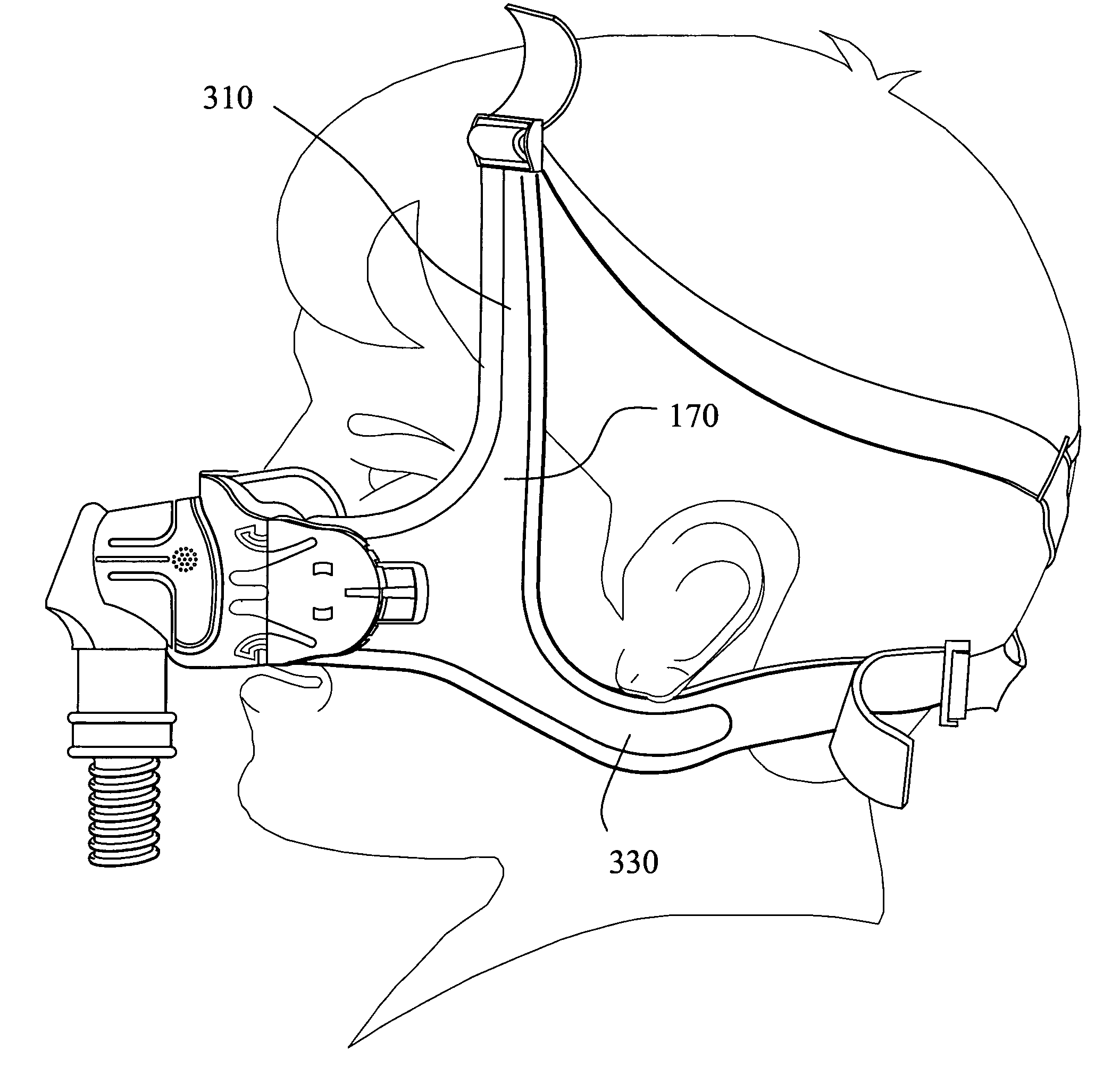

System and method for determining liveness

Inactive | US20160057138A1 | Image analysis | Digital data processing details | Liveness | Computer graphics (images)

Systems and methods are provided for recording a user's biometric features and determining whether the user is alive (“liveness”) using mobile devices such as a smartphone. The systems and methods described herein enable a series of operations whereby a user using a mobile device can capture a sequence of images of the user's face. The mobile device is also configured to analyze the imagery to identify and determine the position of facial features within the images and the changes in position of features throughout the sequence of images. Using the change in position of the features, the mobile device is further configured to determine whether the user is alive by identifying gestures and comparing the identified gestures to a prescribed combination of facial gestures that are uniquely defined for the particular user.

Owner: VERIDIUM IP LTD

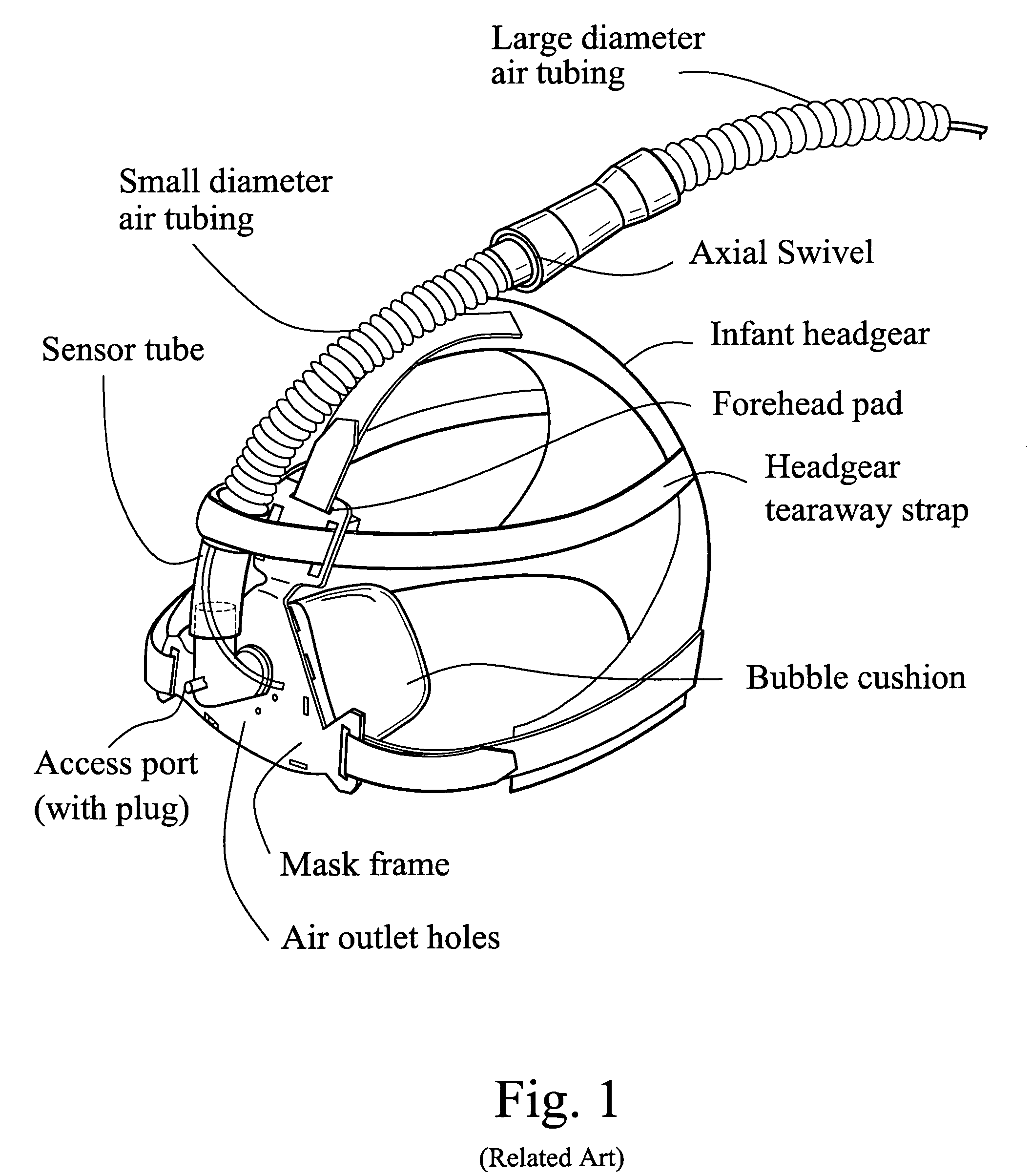

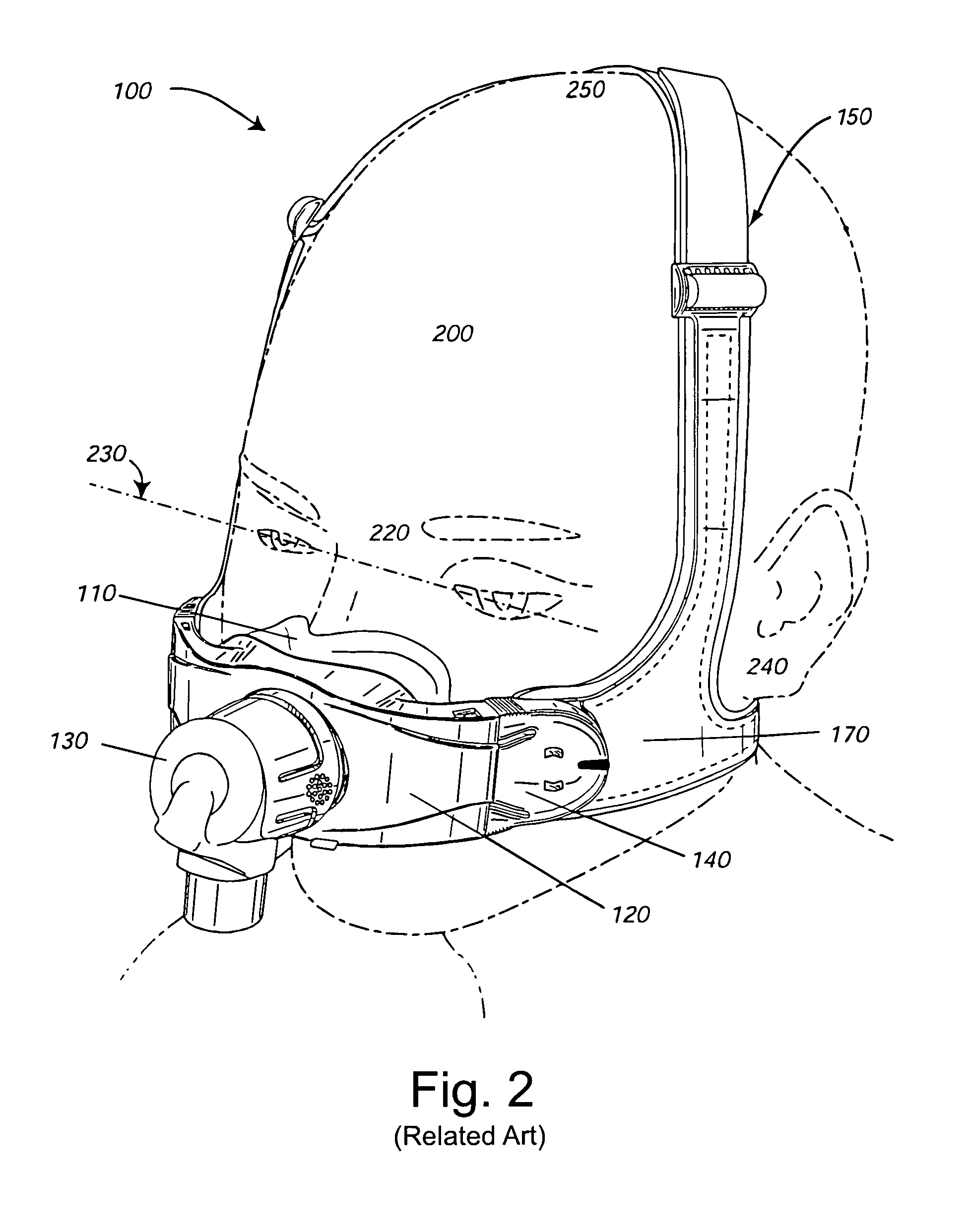

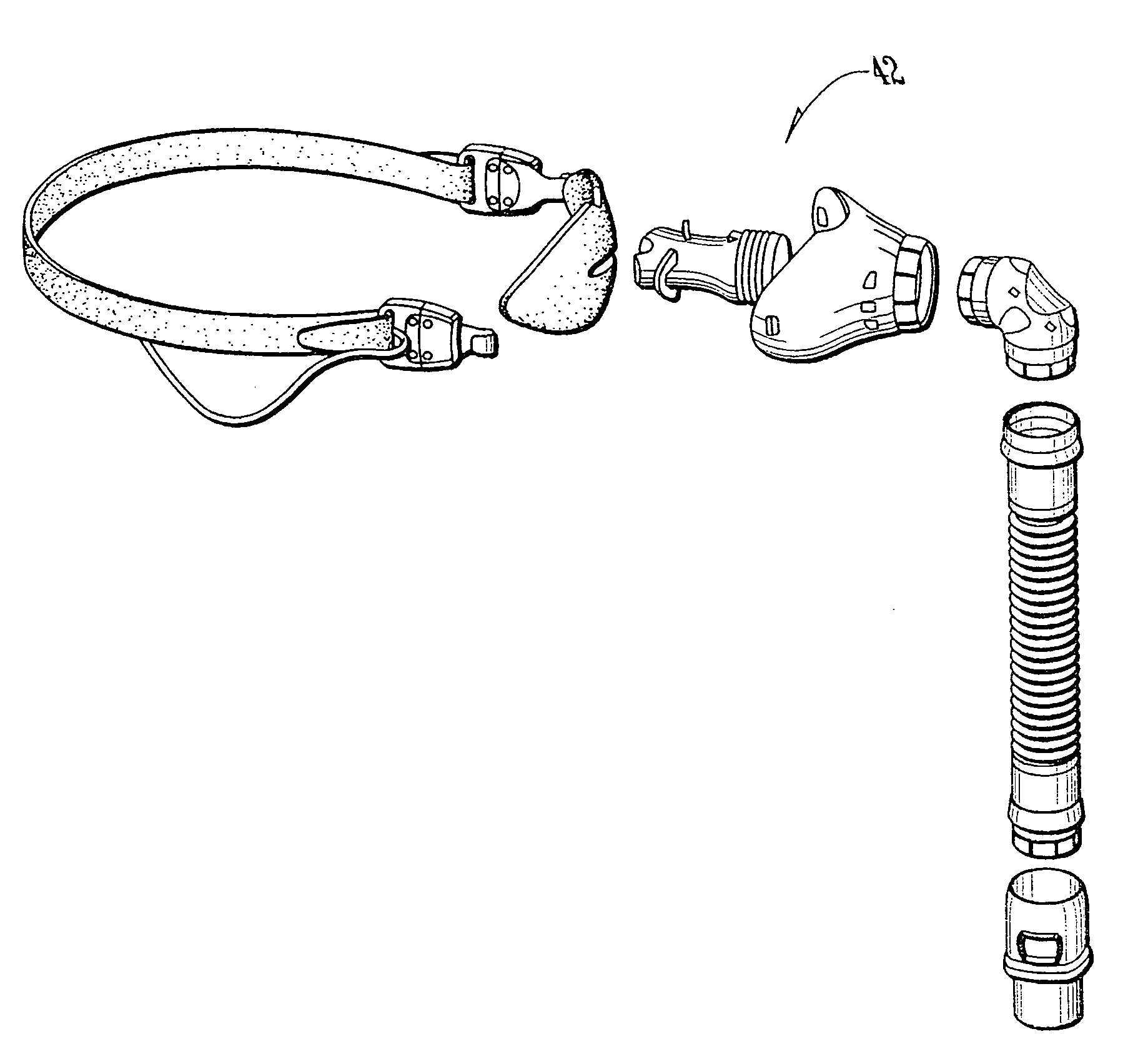

Nasal mask assembly

A mask system is provided to fit pre-adult patients, or patients having facial features that are very small or child-like, e.g., patients having dimensions in the lower 5%-10% of the population. For example, the headgear and/or cushion are dimensioned to accommodate this range of patients.

Owner: RESMED LTD

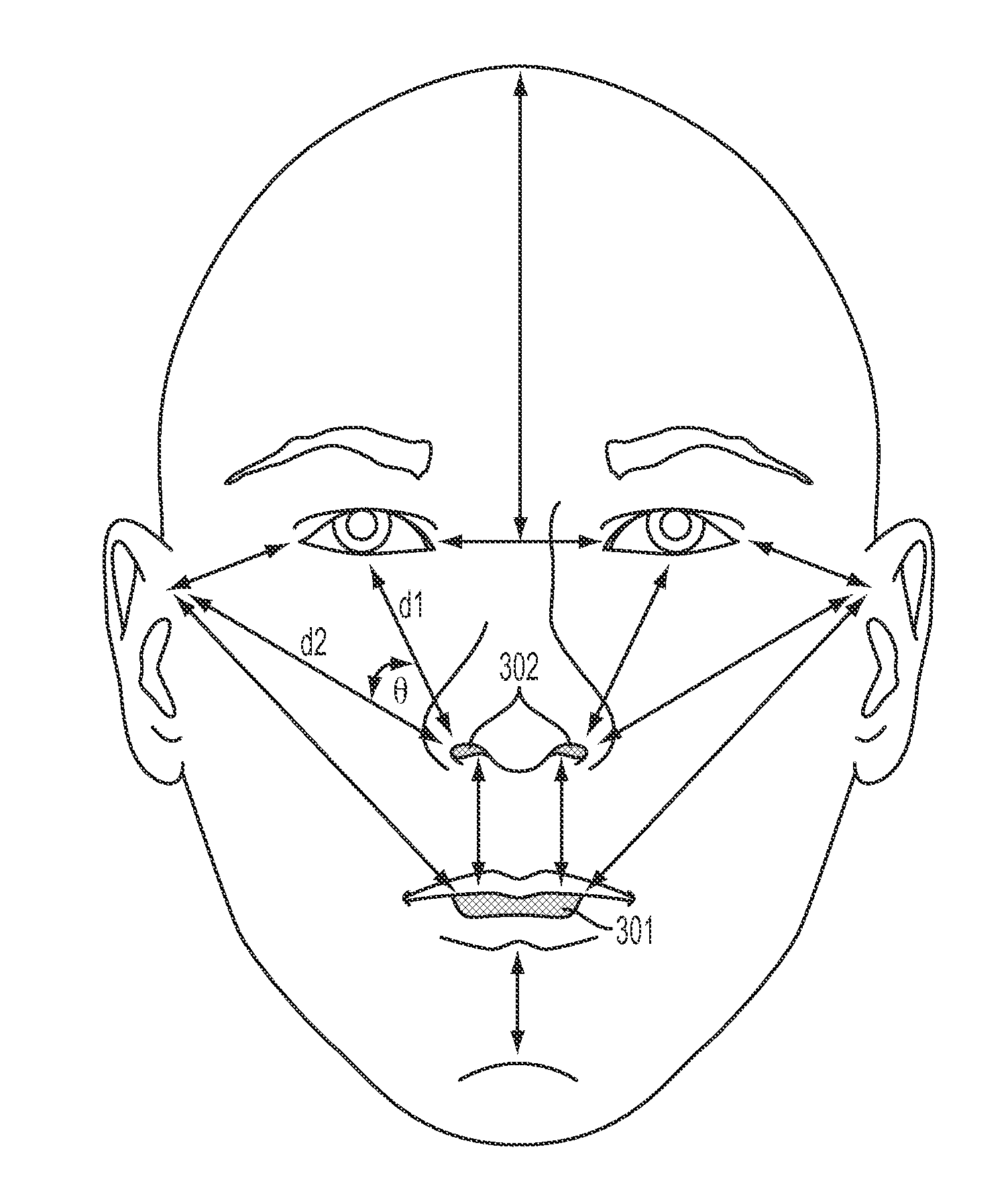

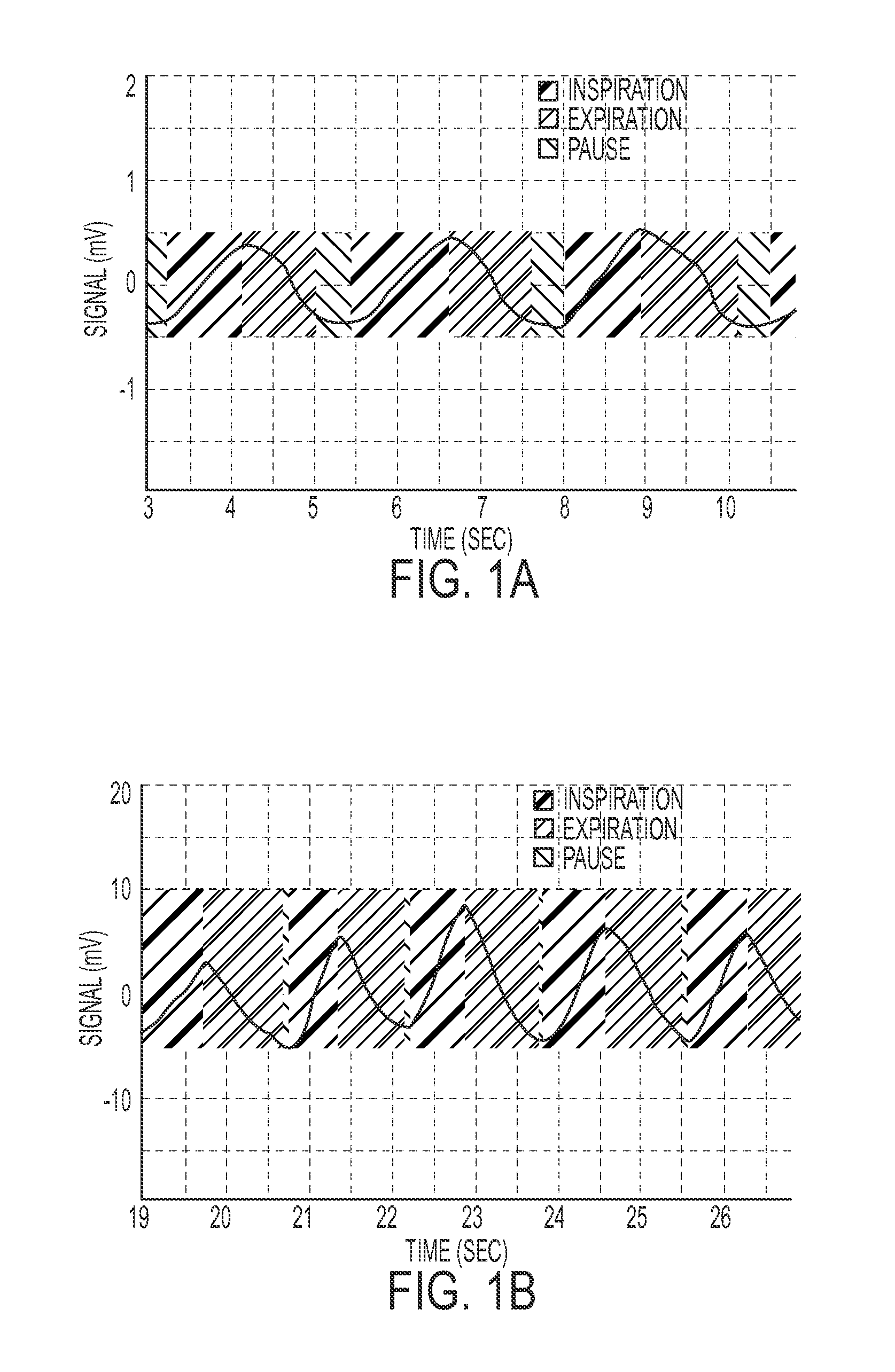

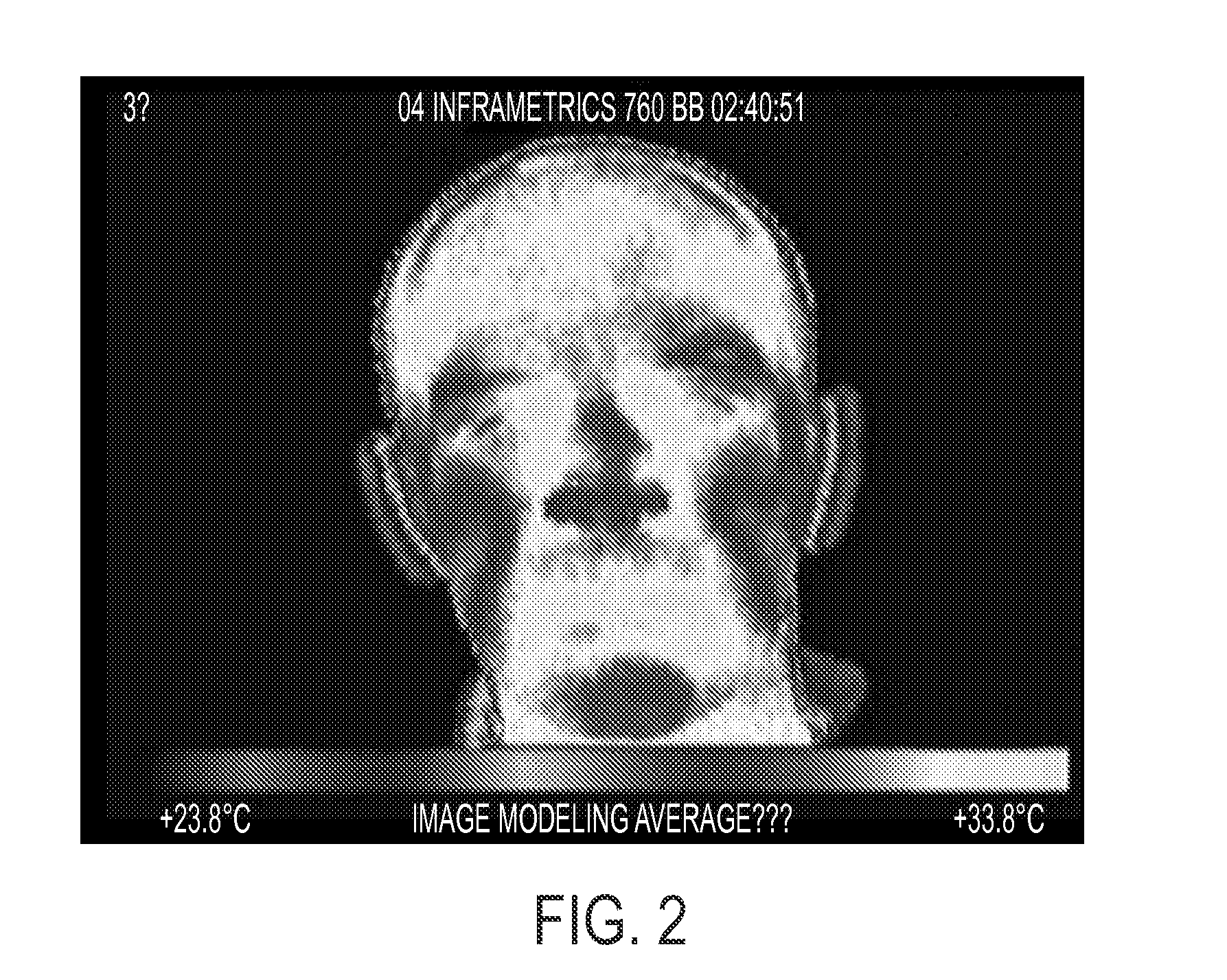

Monitoring respiration with a thermal imaging system

Active | US20120289850A1 | Reliable and accurate manner | Medical imaging | Respiratory organ evaluation | Communication interface | Spectral bands

What is disclosed is a system and method for monitoring respiration of a subject of interest using a thermal imaging system with single or multiple spectral bands set to a temperature range of a facial region of that subject. Temperatures of extremities of the head and face are used to locate facial features in the captured thermal images, i.e., nose and mouth, which are associated with respiration. The RGB signals obtained from the camera are plotted to obtain a respiration pattern. From the respiration pattern, a rate of respiration is obtained. The system includes display and communication interfaces wherein alerts can be activated if the respiration rate falls outside a level of acceptability. The teachings hereof find their uses in an array of devices such as, for example, devices which monitor the respiration of an infant to signal the onset of a respiratory problem or failure.

Owner: XEROX CORP
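The last two steps of the abstract above (obtaining a respiration rate from the respiration pattern and raising an alert when the rate is outside an acceptable level) can be sketched as follows. Counting upward crossings of the signal mean is an assumed, deliberately simple way to count breaths, and the `low` and `high` limits are hypothetical placeholders rather than values from the patent.

```python
def respiration_rate(samples, fps):
    """Breaths per minute, counted as upward crossings of the signal mean.

    samples: one value per frame from the nose/mouth region (assumed input).
    fps: frames per second of the capture.
    """
    if len(samples) < 2:
        return 0.0
    mean = sum(samples) / len(samples)
    crossings = sum(
        1 for prev, cur in zip(samples, samples[1:]) if prev <= mean < cur
    )
    duration_minutes = len(samples) / fps / 60.0
    return crossings / duration_minutes


def needs_alert(rate, low=10.0, high=30.0):
    """True when the rate is outside the (hypothetical) acceptable band."""
    return rate < low or rate > high


# Synthetic pattern: 15 identical breath cycles captured over 60 seconds.
cycle = [2.0, 1.0, -1.0, -2.0, -1.0, 1.0]
signal = cycle * 15
rate = respiration_rate(signal, fps=1.5)
print(rate, needs_alert(rate))
```

Real thermal signals are noisy, so a practical version would smooth or band-pass filter the samples before counting crossings.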

Method, system and computer program product for automatic and semi-automatic modification of digital images of faces

Active | US20120299945A1 | Increase perceived beauty | Improve accuracy | Character and pattern recognition | Cathode-ray tube indicators | Semi automatic | Hand held

The present invention is directed at modifying digital images of faces automatically or semi-automatically. In one aspect, a method of detecting faces in digital images and matching and replacing features within the digital images is provided. Techniques for blending, recoloring, shifting and resizing of portions of digital images are disclosed. In other aspects, methods of virtual “face lifts” and methods of detecting faces within digital images are provided. Advantageously, the detection and localization of faces and facial features, such as the eyes, nose, lips and hair, can be achieved on an automated or semi-automated basis. User feedback and adjustment enables fine tuning of modified images. A variety of systems for matching and replacing features within digital images is also provided, including implementation as a website, through mobile phones, handheld computers, or a kiosk. Related computer program products are also disclosed.

Owner: LOREAL SA

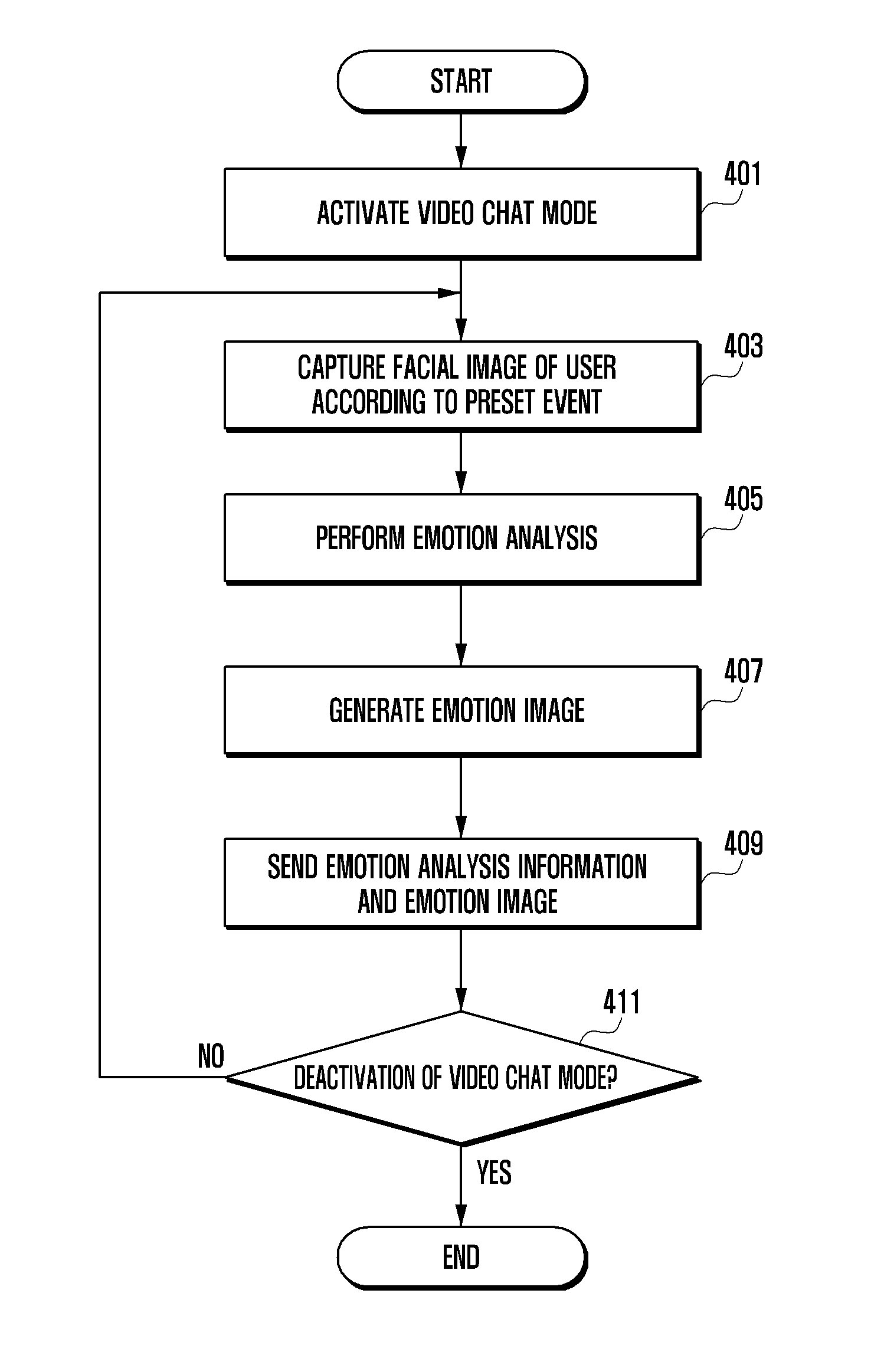

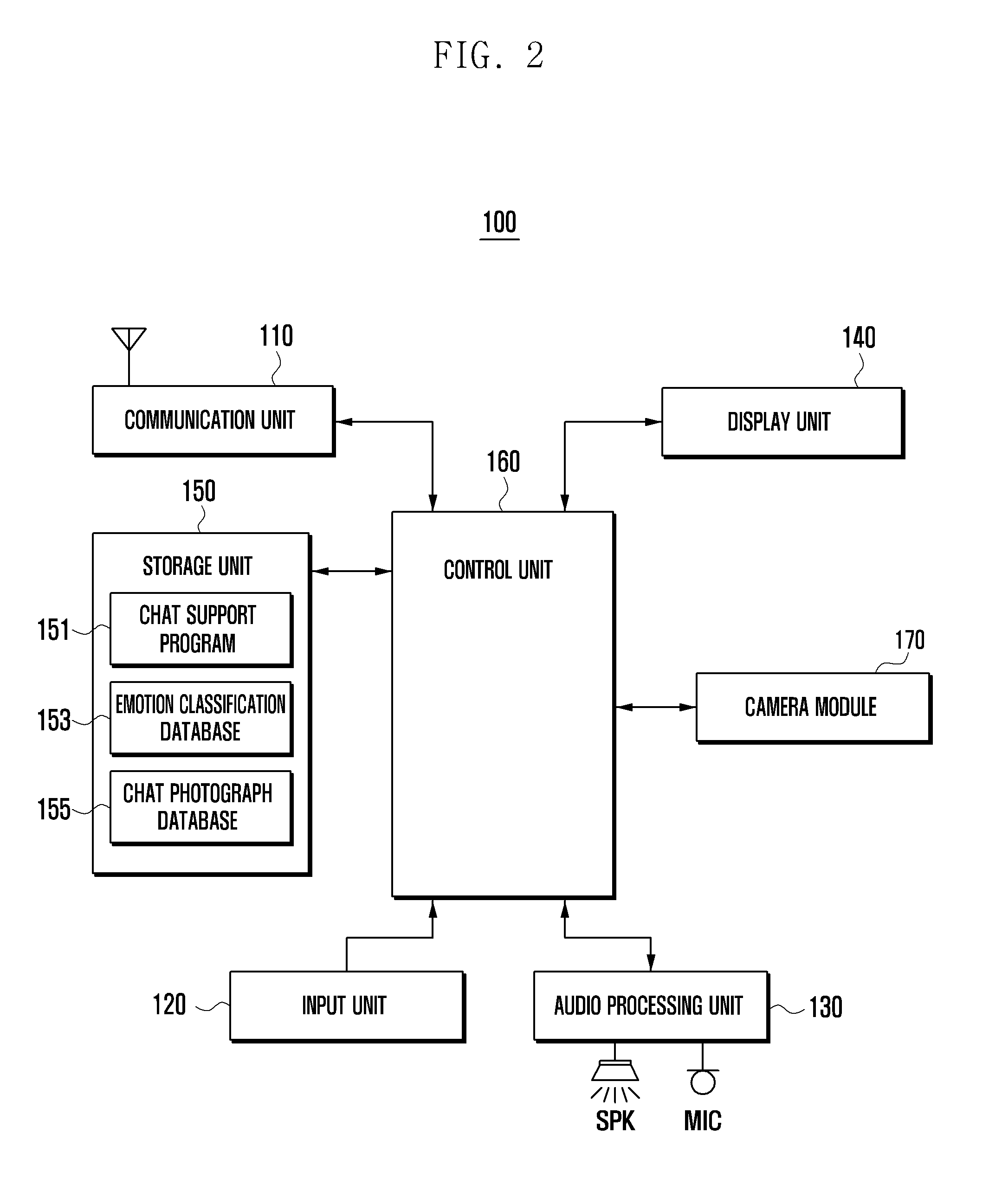

Method for user function operation based on face recognition and mobile terminal supporting the same

Active | US20140192134A1 | Facilitating video call function | Easy data management | Television system details | Color television details | Pattern recognition | Computer module

A mobile device user interface method activates a camera module to support a video chat function and acquires an image of a target object using the camera module. In response to detecting a face in the captured image, the facial image data is analyzed to identify an emotional characteristic of the face by identifying a facial feature and comparing the identified feature with a predetermined feature associated with an emotion. The identified emotional characteristic is compared with a corresponding emotional characteristic of previously acquired facial image data of the target object. In response to the comparison, an emotion indicative image is generated and the generated emotion indicative image is transmitted to a destination terminal used in the video chat.

Owner: SAMSUNG ELECTRONICS CO LTD

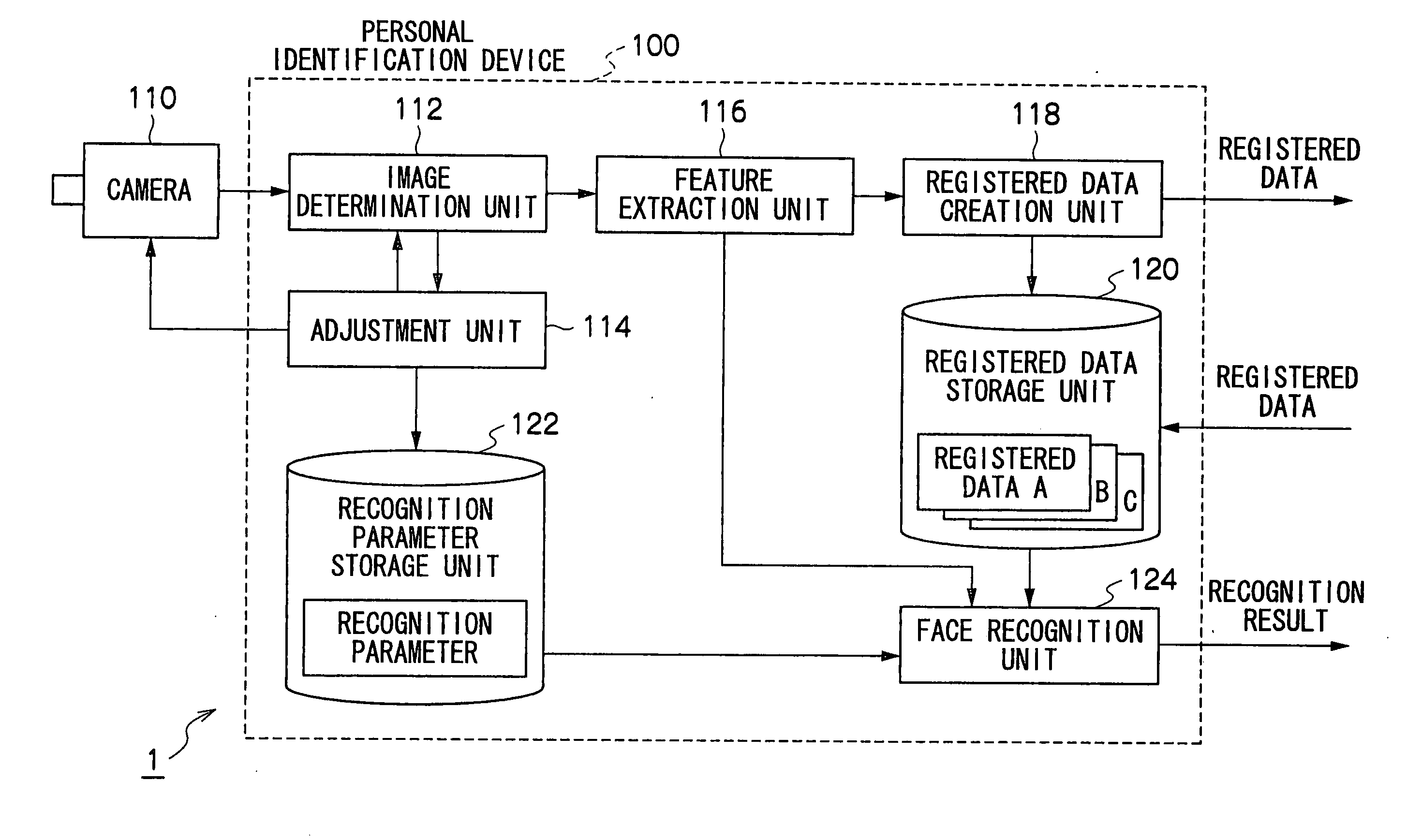

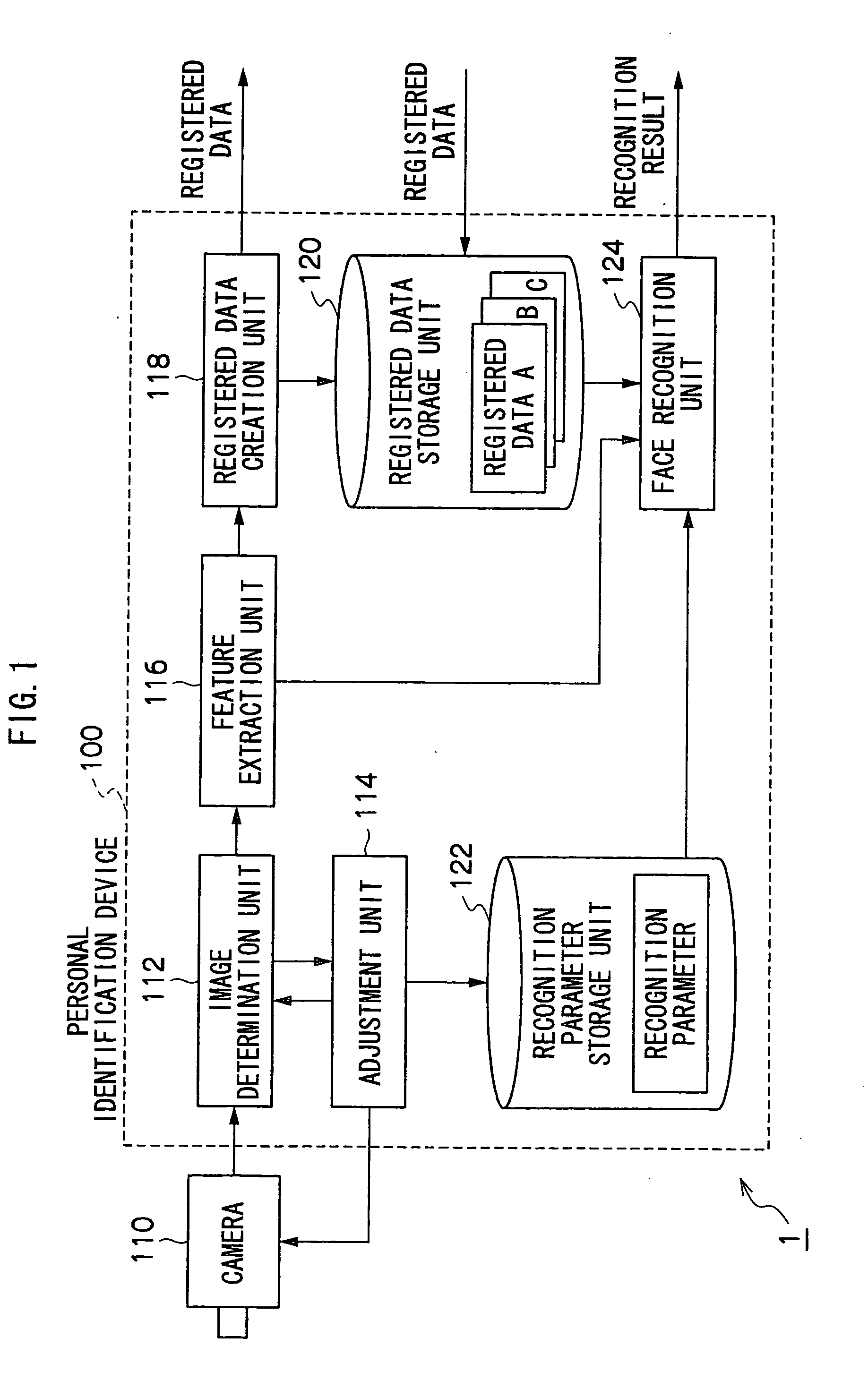

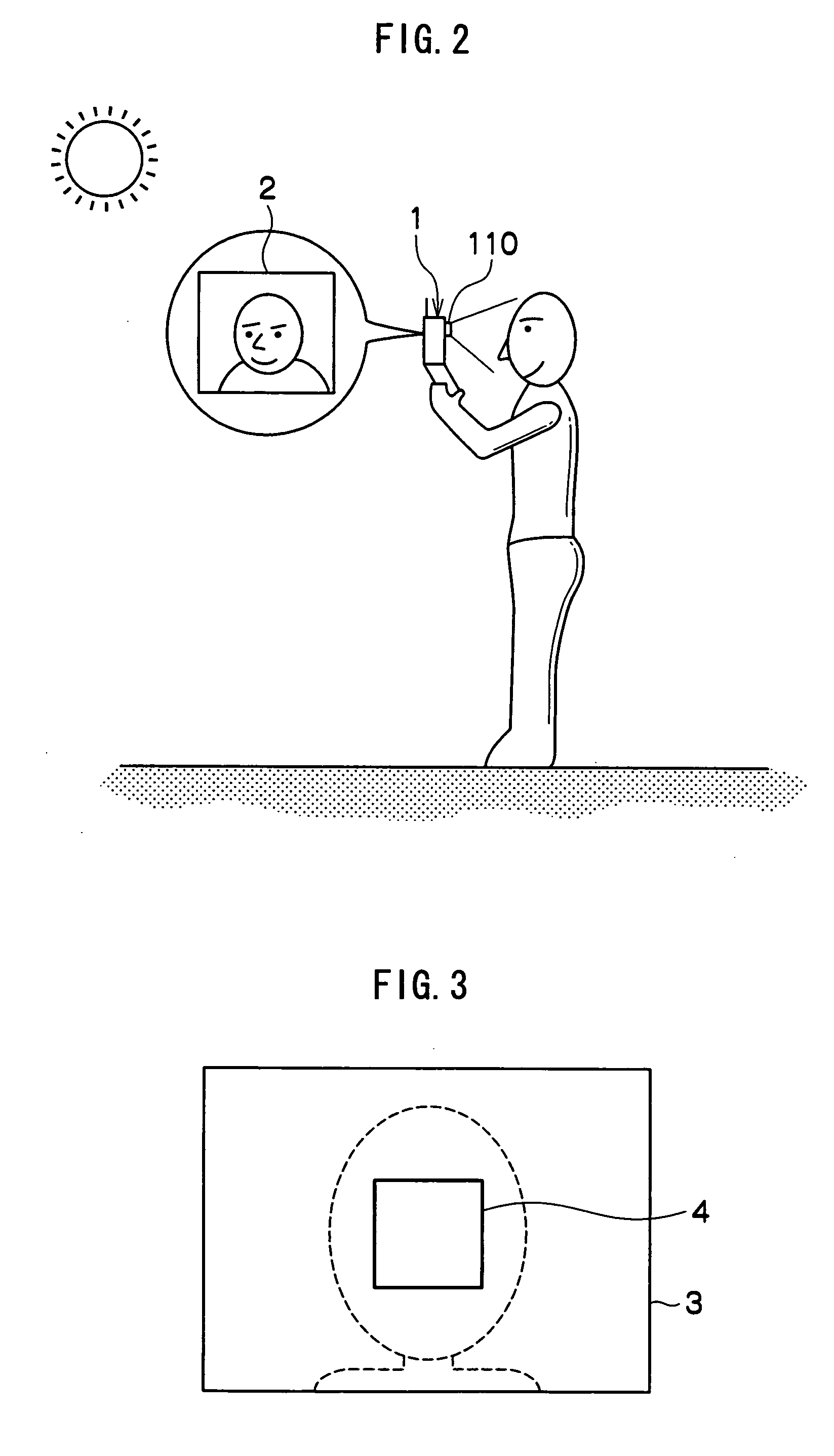

Personal Identification Device and Personal Identification Method

Inactive | US20090060293A1 | Improve performance | Improve image quality | Television system details | Character and pattern recognition | Image extraction | Feature extraction

A personal identification device of the present invention is provided with a registered data storage unit for storing registered data containing facial feature data for a registered user, a recognition parameter storage unit for storing recognition parameters, an image determination unit for determining whether or not the image quality of a user facial image input from an imaging device is appropriate, an adjustment unit for adjusting the settings of the imaging device or modifying the recognition parameters, in accordance with the result of determination carried out in the image determination unit, a feature extraction unit for extracting user facial feature data from a facial image, and a face recognition unit for comparing the extracted feature data with the registered data to determine whether or not the user is a registered user based on the result of comparison and the recognition parameters.

Owner: OKI ELECTRIC IND CO LTD

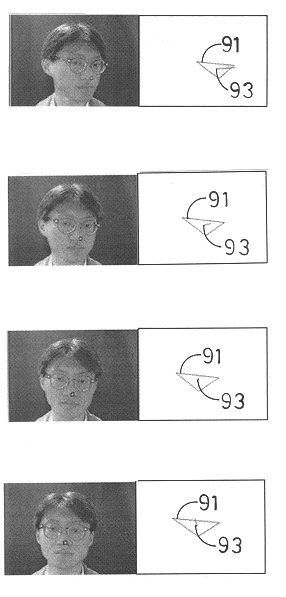

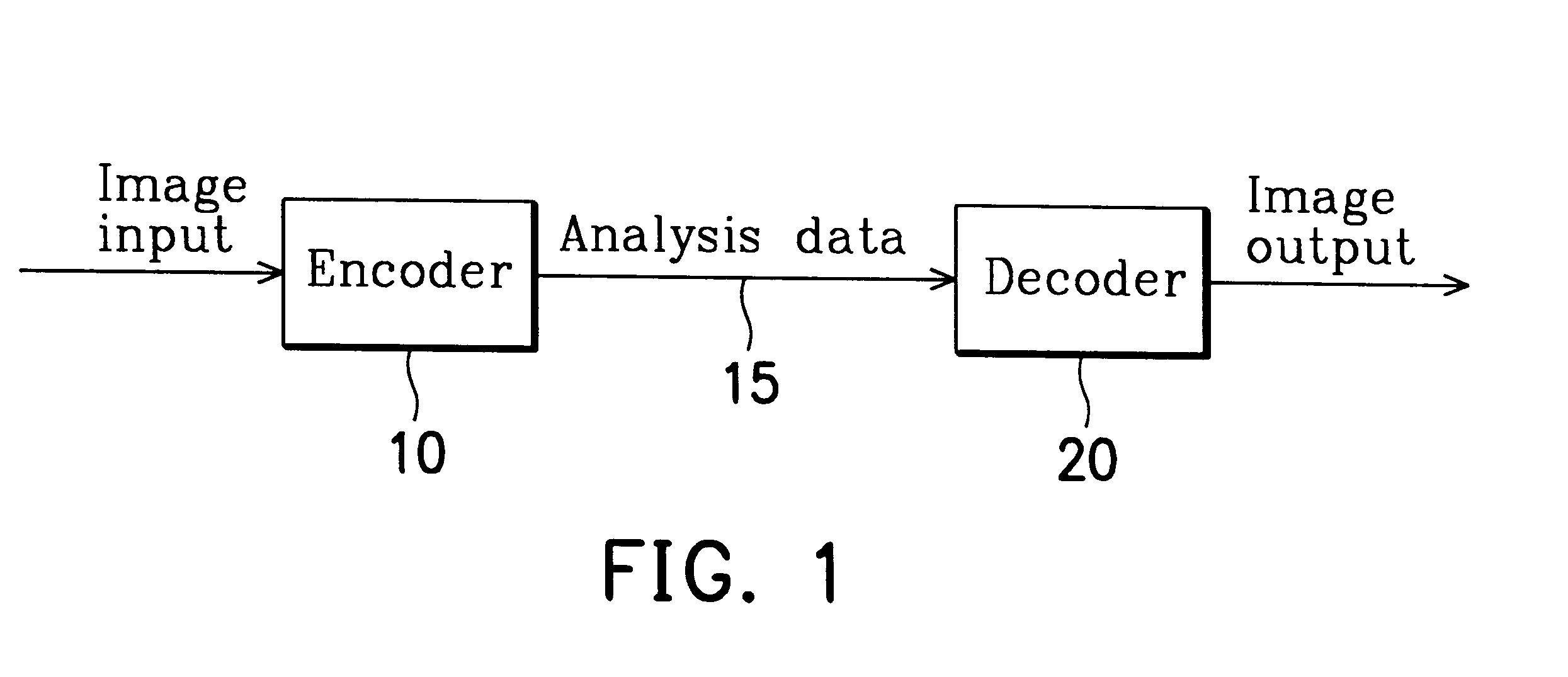

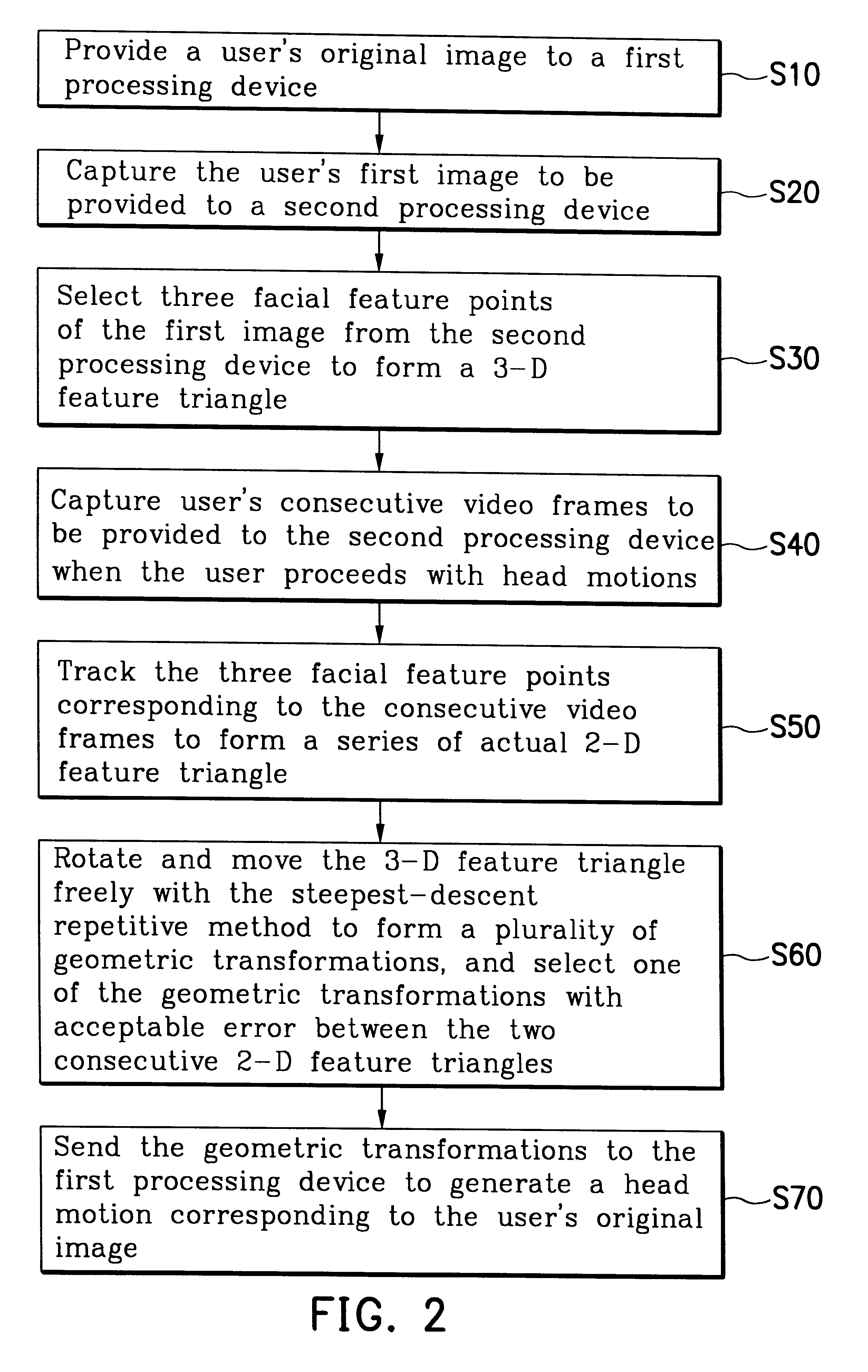

Method of image processing using three facial feature points in three-dimensional head motion tracking

A method of image processing in three-dimensional (3-D) head motion tracking is disclosed. The method includes: providing a user's source image to a first processing device; capturing the user's first image and providing it to a second processing device; selecting three facial feature points of the first image from the second processing device to form a 3-D feature triangle; capturing the user's consecutive video frames and providing them to the second processing device when the user proceeds with head motions; tracking the three facial feature points corresponding to the consecutive video frames to form a series of actual 2-D feature triangles; rotating and translating the 3-D feature triangle freely to form a plurality of geometric transformations, selecting one of the geometric transformations with acceptable error between the two consecutive 2-D feature triangles, repeating the step until the last frame of the consecutive video frames and geometric transformations corresponding to various consecutive video frames are formed; and providing the geometric transformations to the first processing device to generate a head motion corresponding to the user's source image.

Owner: CYBERLINK
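The abstract above describes rotating and translating a 3-D feature triangle and keeping a transformation whose error against the tracked 2-D triangle is acceptable. One way to picture that search is the grid-search sketch below. It assumes orthographic projection, a search over yaw and pitch only, translation handled by comparing centroid-centered triangles, and a squared-distance error; the function names, the candidate angle grids and the toy triangle are illustrative and not taken from the patent.

```python
import math
from itertools import product


def rotate_project(points, yaw_deg, pitch_deg):
    """Apply pitch (about x) then yaw (about y) and drop depth (orthographic)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    projected = []
    for x, y, z in points:
        y1 = y * math.cos(pitch) - z * math.sin(pitch)
        z1 = y * math.sin(pitch) + z * math.cos(pitch)
        x2 = x * math.cos(yaw) + z1 * math.sin(yaw)
        projected.append((x2, y1))
    return projected


def centered(triangle):
    """Subtract the centroid so that translation does not affect the error."""
    cx = sum(p[0] for p in triangle) / len(triangle)
    cy = sum(p[1] for p in triangle) / len(triangle)
    return [(x - cx, y - cy) for x, y in triangle]


def fit_pose(triangle_3d, observed_2d, yaws, pitches):
    """Return (yaw, pitch, error) of the candidate rotation with the lowest error."""
    target = centered(observed_2d)
    best = None
    for yaw, pitch in product(yaws, pitches):
        candidate = centered(rotate_project(triangle_3d, yaw, pitch))
        error = sum(
            (cx - tx) ** 2 + (cy - ty) ** 2
            for (cx, cy), (tx, ty) in zip(candidate, target)
        )
        if best is None or error < best[2]:
            best = (yaw, pitch, error)
    return best


# Toy triangle: two eye corners and a nose tip with some depth (arbitrary units).
triangle = [(-3.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, -4.0, 2.0)]
# Simulated tracked triangle: head turned 20 degrees, then shifted in the image.
tracked = [(x + 5.0, y - 2.0) for x, y in rotate_project(triangle, 20, 0)]
print(fit_pose(triangle, tracked, range(-40, 41, 10), range(-30, 31, 10)))
```

A three-point orthographic fit can admit a second, mirrored pose, so a tracker along these lines would normally limit the search to angles near the previous frame's result.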

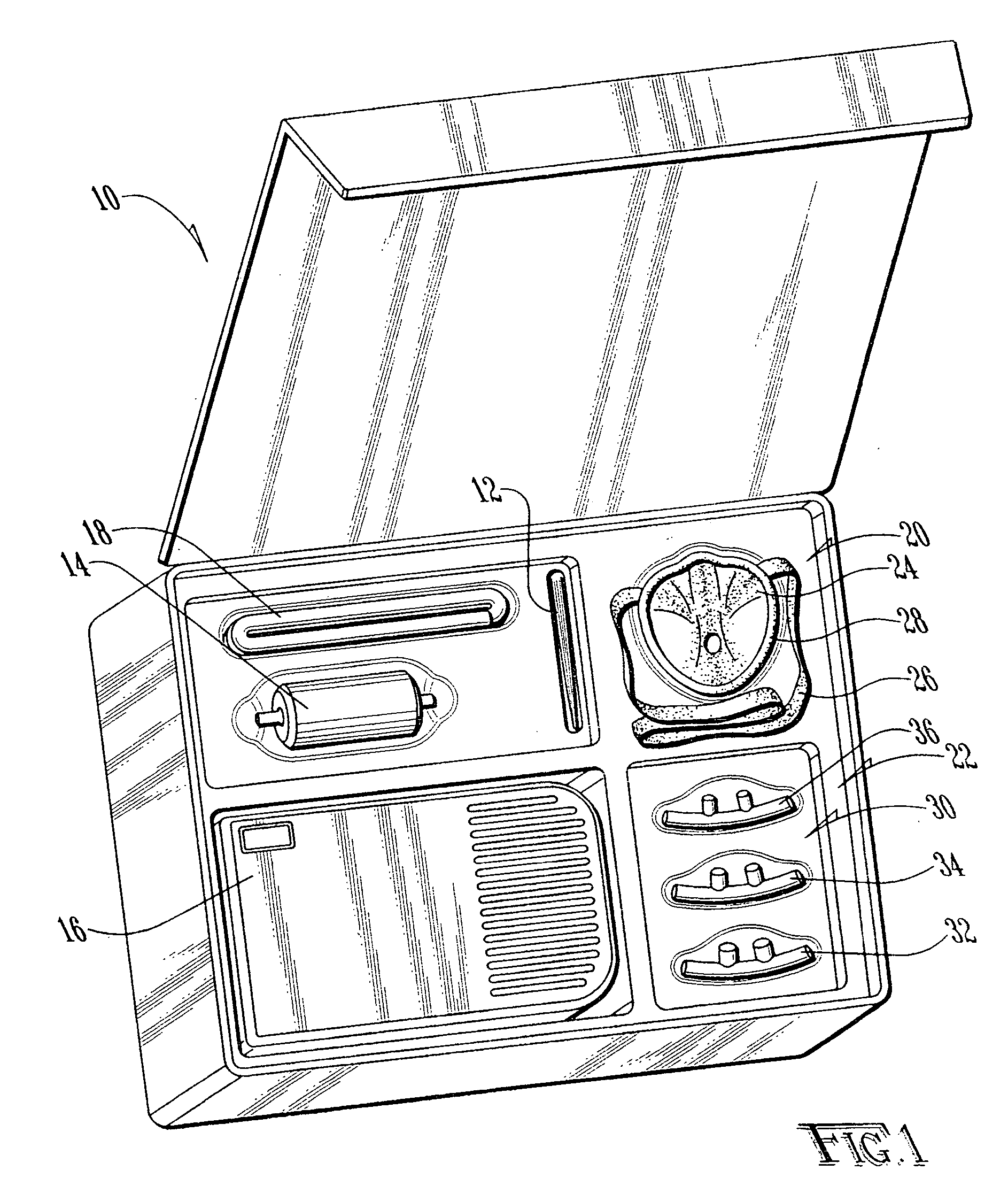

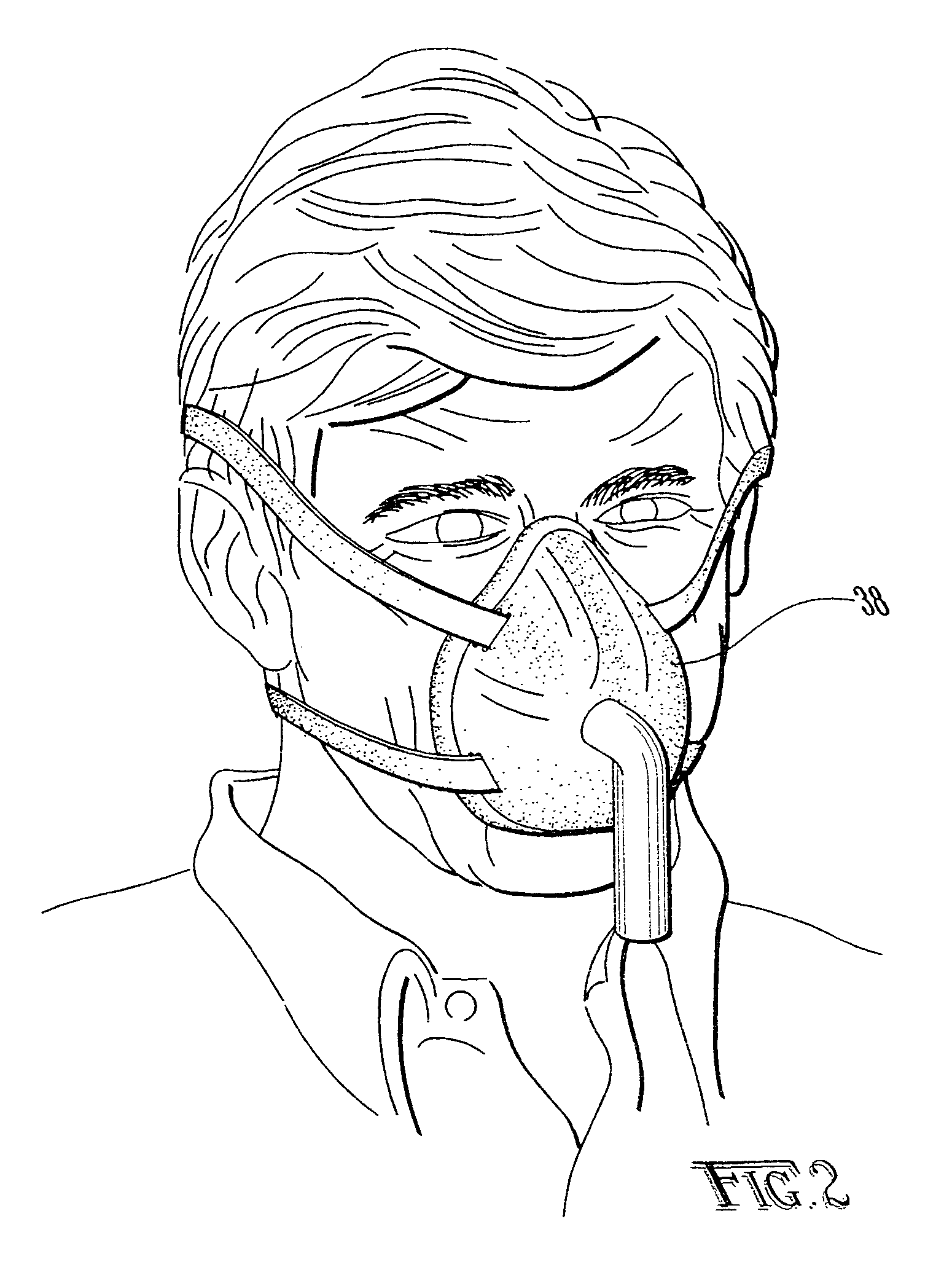

Method for dispensing a prescription product

Inactive | US20070000492A1 | Reduces initial | Low cost | Operating means/releasing devices for valves | Respiratory masks | Positive airway pressure | Alternative treatment

A method for dispensing alternative prescription products to a patient to provide the patient alternative treatment options and increase treatment protocol compliance. After a patient is diagnosed with a medical condition requiring treatment with a prescription product, the patient is provided with a kit containing multiple continuous positive airway pressure (CPAP) interfaces designed to adapt to a plurality of different facial features. Instead of being custom fitted by a CPAP interface specialist, the patient is given multiple CPAP interfaces to try and selects the CPAP interface most desirable to the patient. The patient indicates the most desirable CPAP interface and, after a predetermined period of time, the patient is provided with a new CPAP interface matching the most desirable CPAP interface selected by the patient.

Owner: MEDICAL INDS AMERICA
