137 results about "Character animation" patented technology

Character animation is a specialized area of the animation process, which involves bringing animated characters to life. The role of a character animator is analogous to that of a film or stage actor, and character animators are often said to be "actors with a pencil" (or a mouse). Character animators breathe life into their characters, creating the illusion of thought, emotion and personality. Character animation is often distinguished from creature animation, which involves bringing photo-realistic animals and creatures to life.

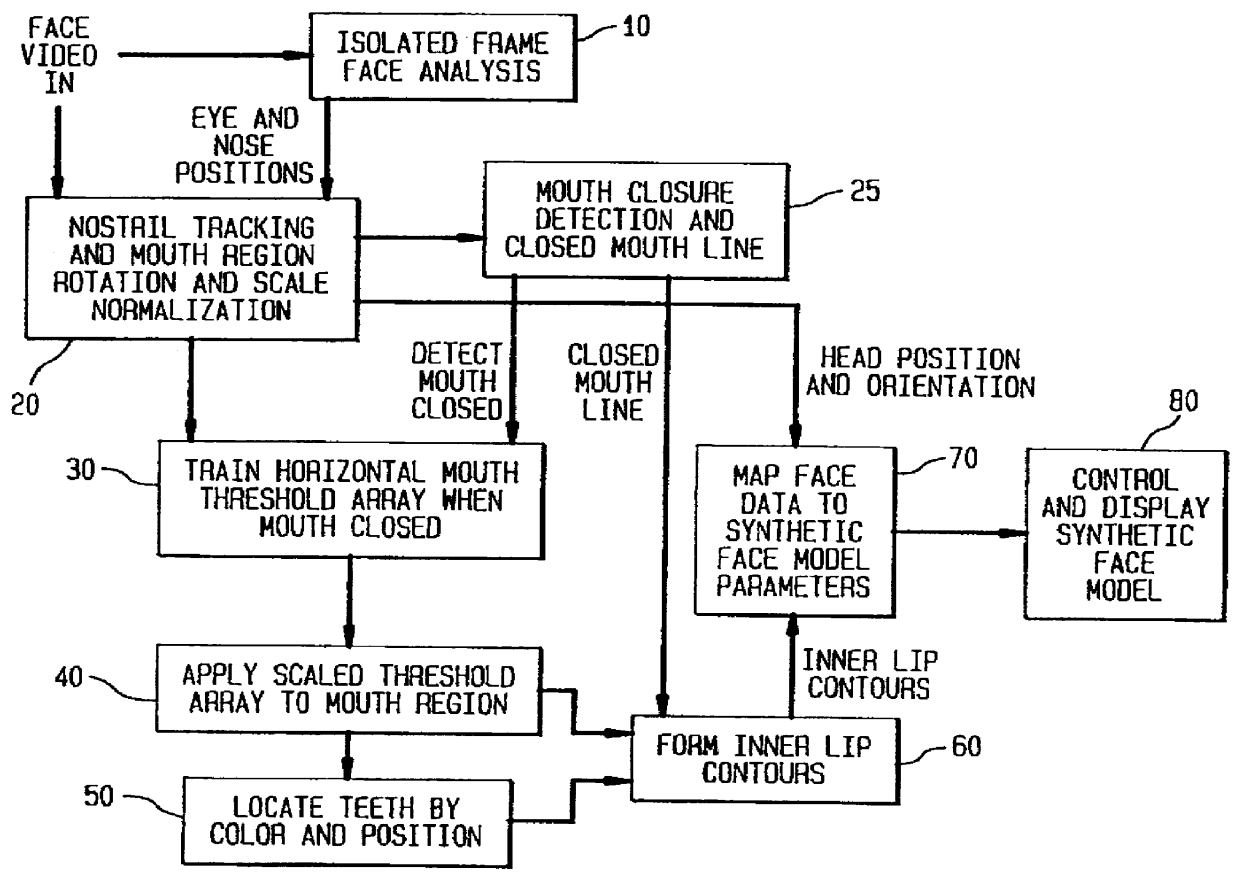

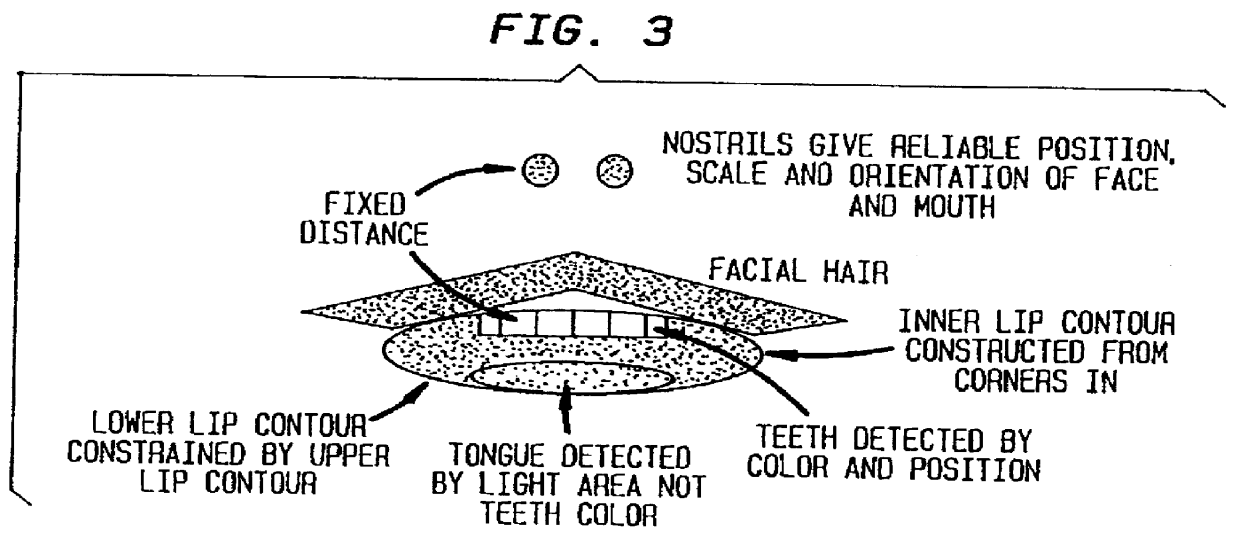

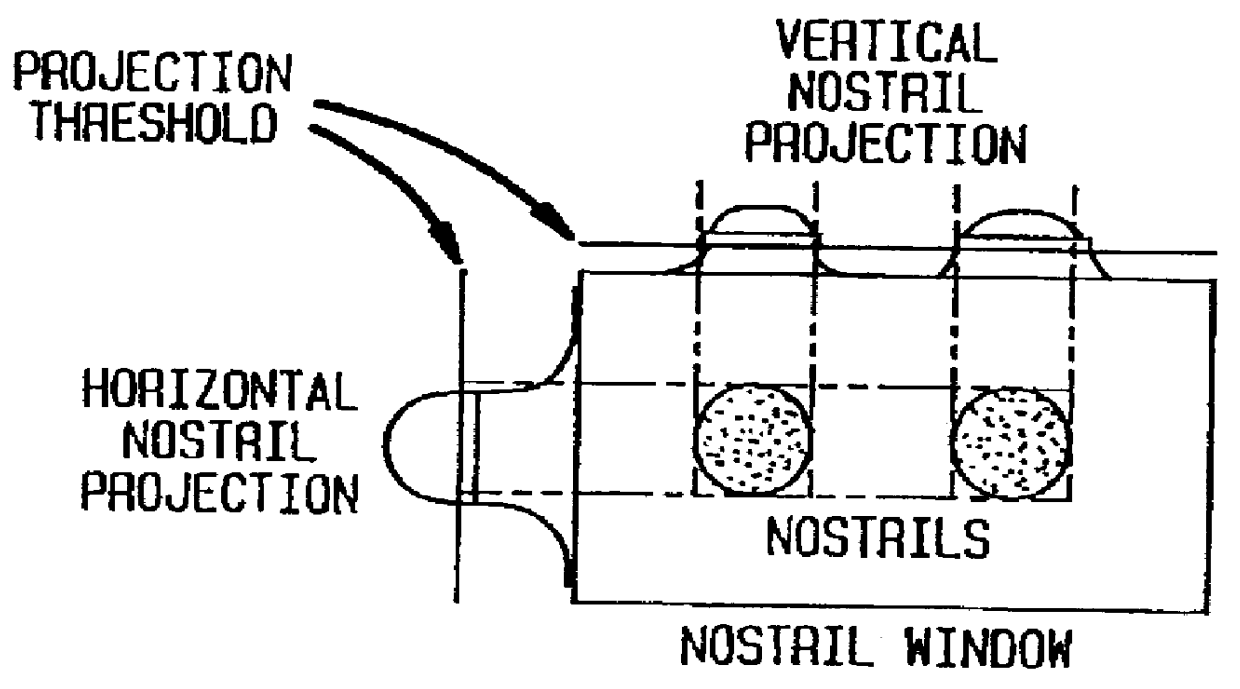

Face feature analysis for automatic lipreading and character animation

A face feature analysis begins by generating multiple face feature candidates, e.g., eye and nose positions, using an isolated frame face analysis. Then, a nostril tracking window is defined around a nose candidate and tests are applied to the pixels therein based on percentages of skin color area pixels and nostril area pixels to determine whether the nose candidate represents an actual nose. Once actual nostrils are identified, the size, separation and contiguity of the actual nostrils are determined by projecting the nostril pixels within the nostril tracking window. A mouth window is defined around the mouth region and mouth detail analysis is then applied to the pixels within the mouth window to identify inner mouth and teeth pixels and therefrom generate an inner mouth contour. The nostril position and inner mouth contour are used to generate a synthetic model head. A direct comparison is made between the inner mouth contour generated and that of a synthetic model head and the synthetic model head is adjusted accordingly. Vector quantization algorithms may be used to develop a codebook of face model parameters to improve processing efficiency. The face feature analysis is suitable regardless of noise, illumination variations, head tilt, scale variations and nostril shape.

Owner:ALCATEL-LUCENT USA INC

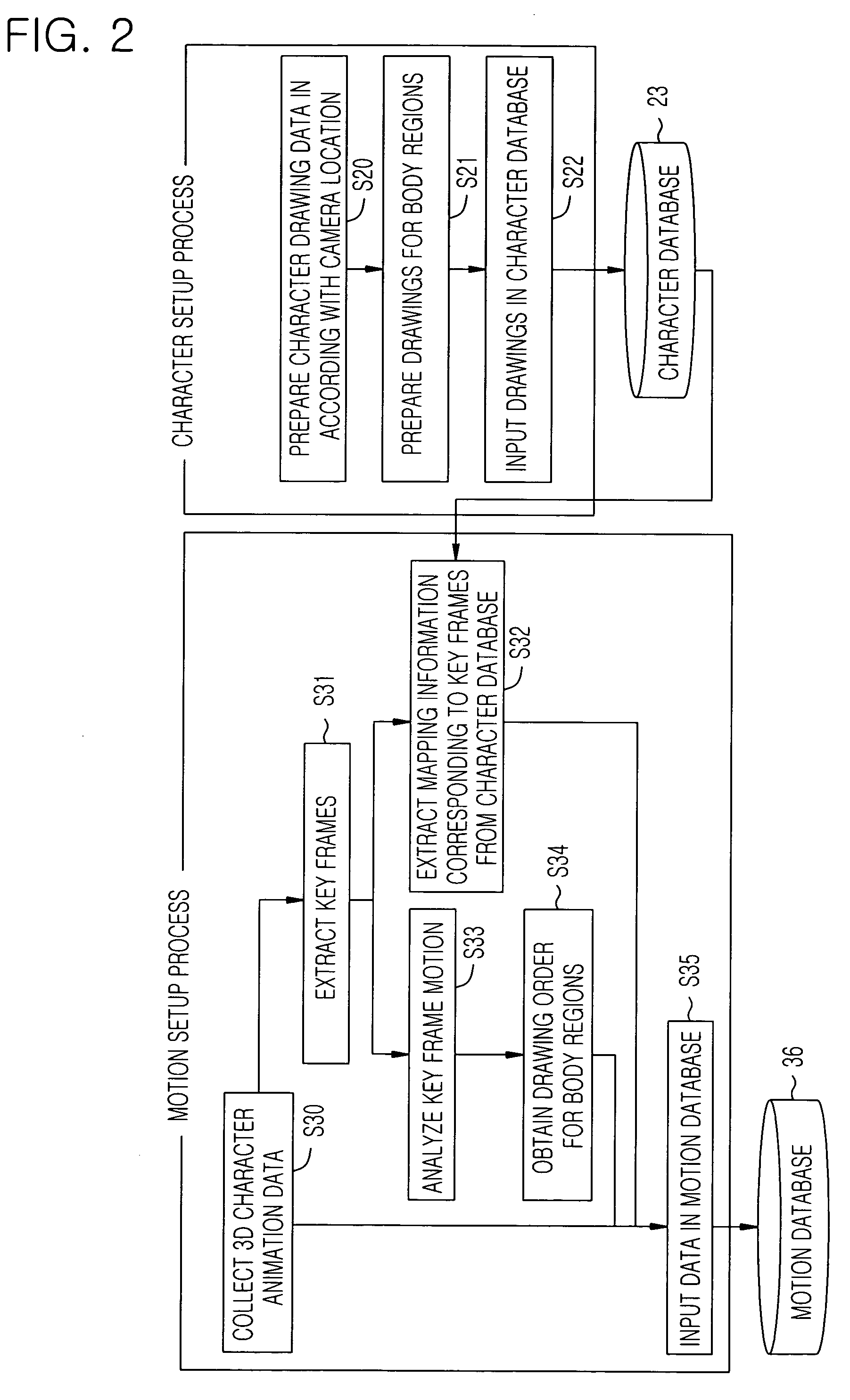

Generating action data for the animation of characters

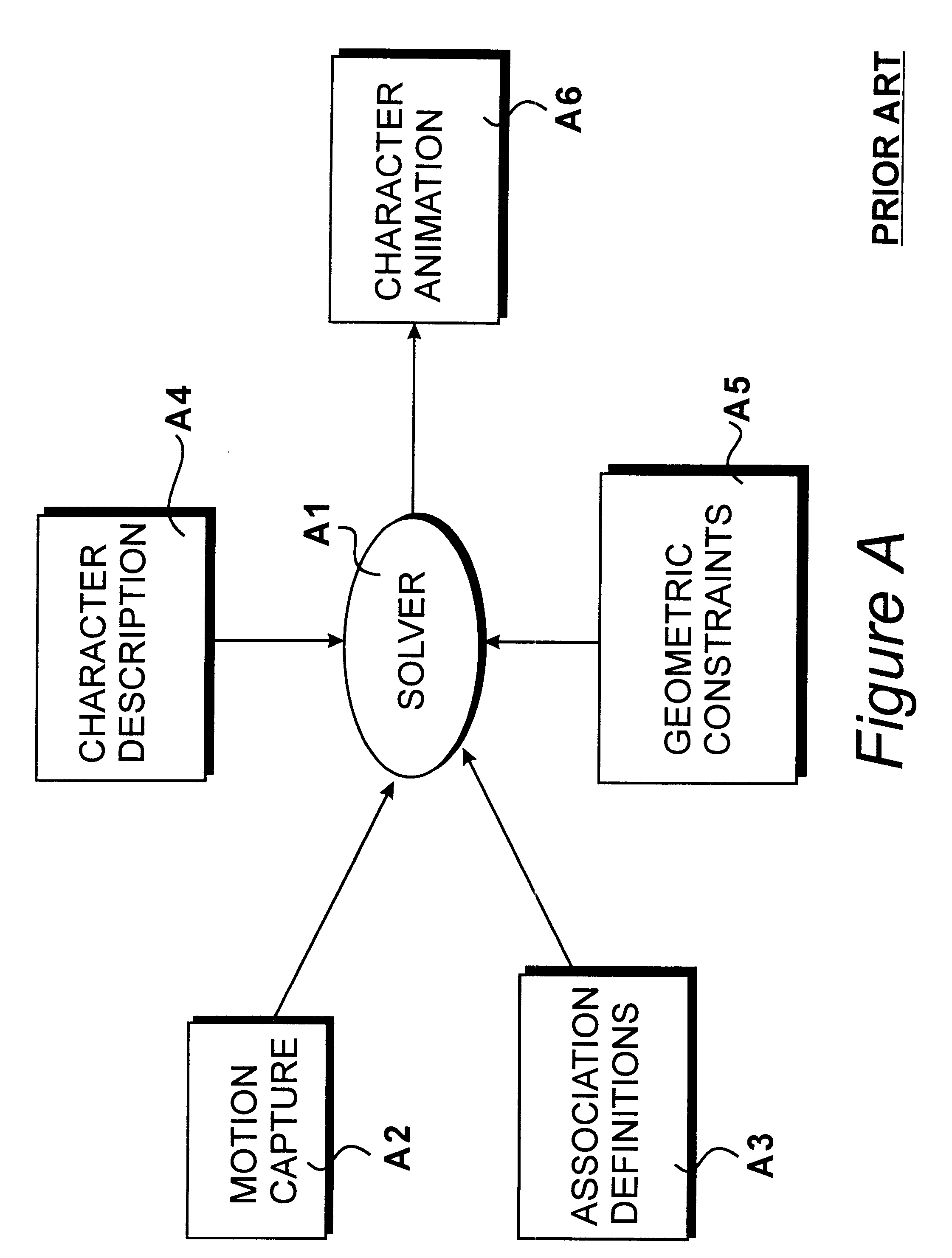

Action data for the animation of characters is generated in a computer animation system. Body part positions for a selected character are positioned in response to body part positions captured from performance data. The positions and orientations of body parts are identified for a generic actor in response to a performance in combination with a bio-mechanical model. As a separate stage of processing, positions and orientations of body parts for a character are identified in response to the position and orientation of body parts for the generic actor in combination with a bio-mechanical model. Registration data for the performance associates body parts of the performance and body parts of the generic actor. Similar registration data for the character associates body parts in the generic actor with body parts of the character. In this way, using the generic actor model, it is possible to combine any performance data that has been registered to the generic actor with any character definitions that have been associated to a similar generic actor.

Owner:KAYDARA +1
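
The two-stage mapping in the abstract above (performance to generic actor, then generic actor to character) can be illustrated with a minimal sketch. It assumes each registration is a plain name-to-name table; the body-part names, the tables and the `retarget` function are hypothetical, and the bio-mechanical model mentioned in the abstract is not modeled here.

```python
# Hypothetical registration tables; the names are illustrative only
# and do not come from the patent.
PERFORMANCE_TO_GENERIC = {"marker_head": "head", "marker_l_wrist": "left_hand"}
GENERIC_TO_CHARACTER = {"head": "robot_head", "left_hand": "robot_claw_l"}


def retarget(performance_frame, perf_to_generic, generic_to_char):
    """Map captured body-part positions onto a character via a generic actor."""
    # Stage 1: performance body parts -> generic actor body parts.
    generic_frame = {
        perf_to_generic[name]: position
        for name, position in performance_frame.items()
        if name in perf_to_generic
    }
    # Stage 2: generic actor body parts -> character body parts.
    return {
        generic_to_char[part]: position
        for part, position in generic_frame.items()
        if part in generic_to_char
    }


frame = {"marker_head": (0.0, 1.7, 0.0), "marker_l_wrist": (0.4, 1.1, 0.1)}
character_frame = retarget(frame, PERFORMANCE_TO_GENERIC, GENERIC_TO_CHARACTER)
```

Because both tables meet at the generic actor, any registered performance can in principle be paired with any registered character without a direct performance-to-character table.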

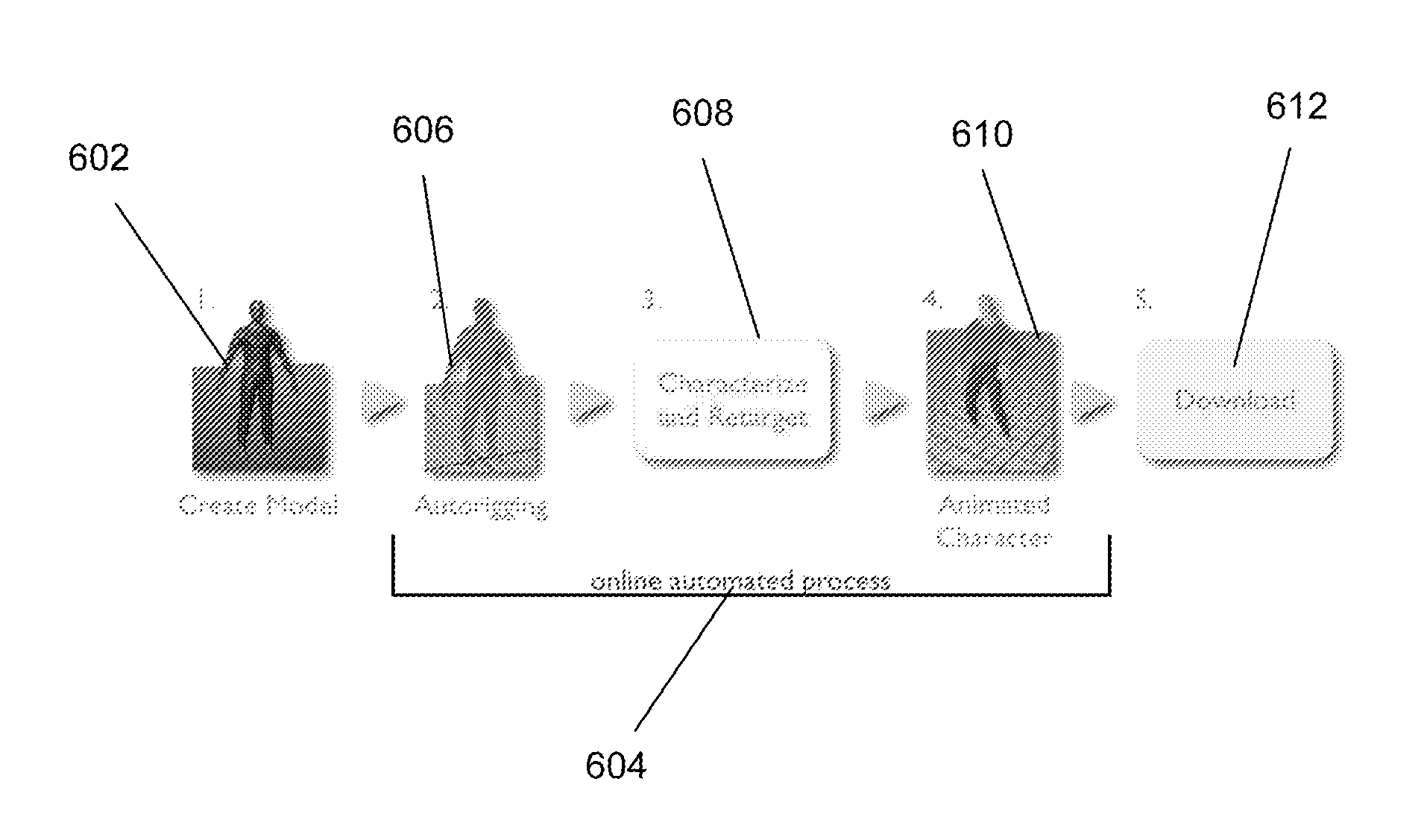

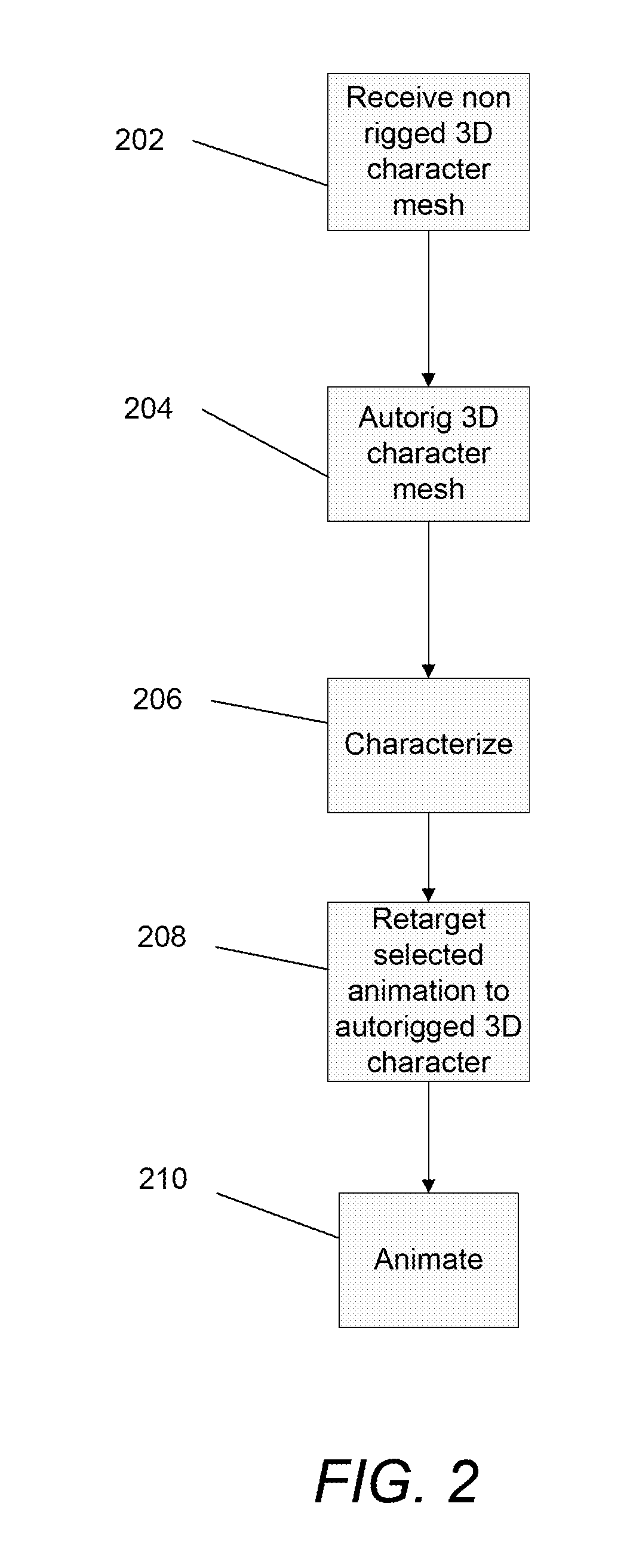

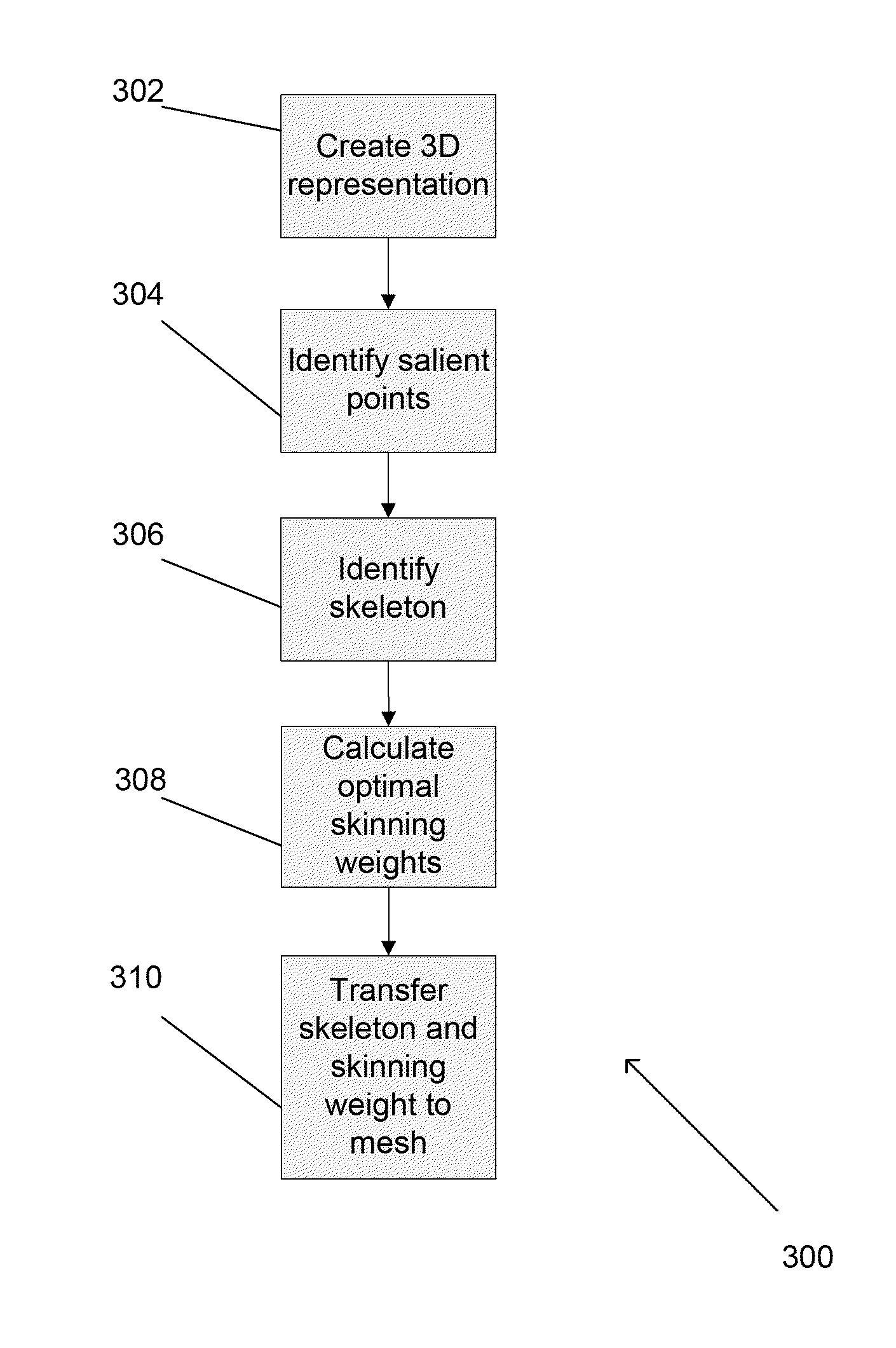

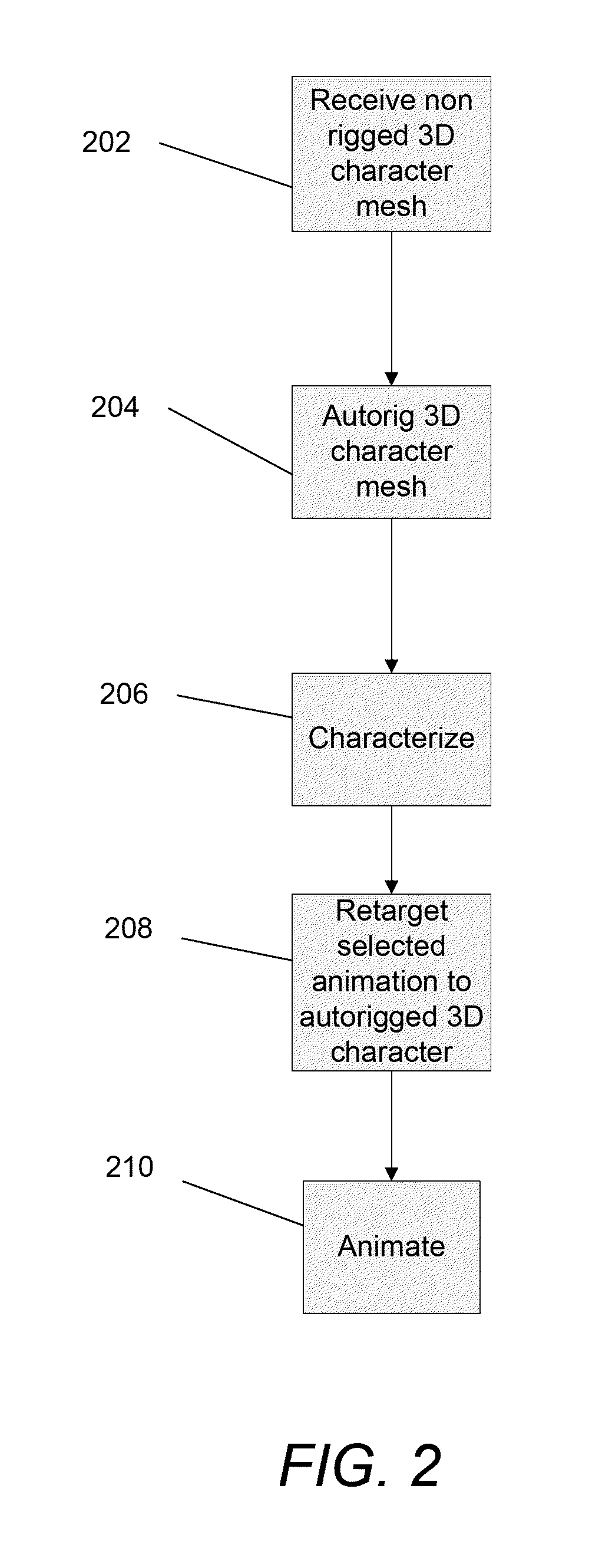

Automatic generation of 3D character animation from 3D meshes

Owner:ADOBE SYST INC

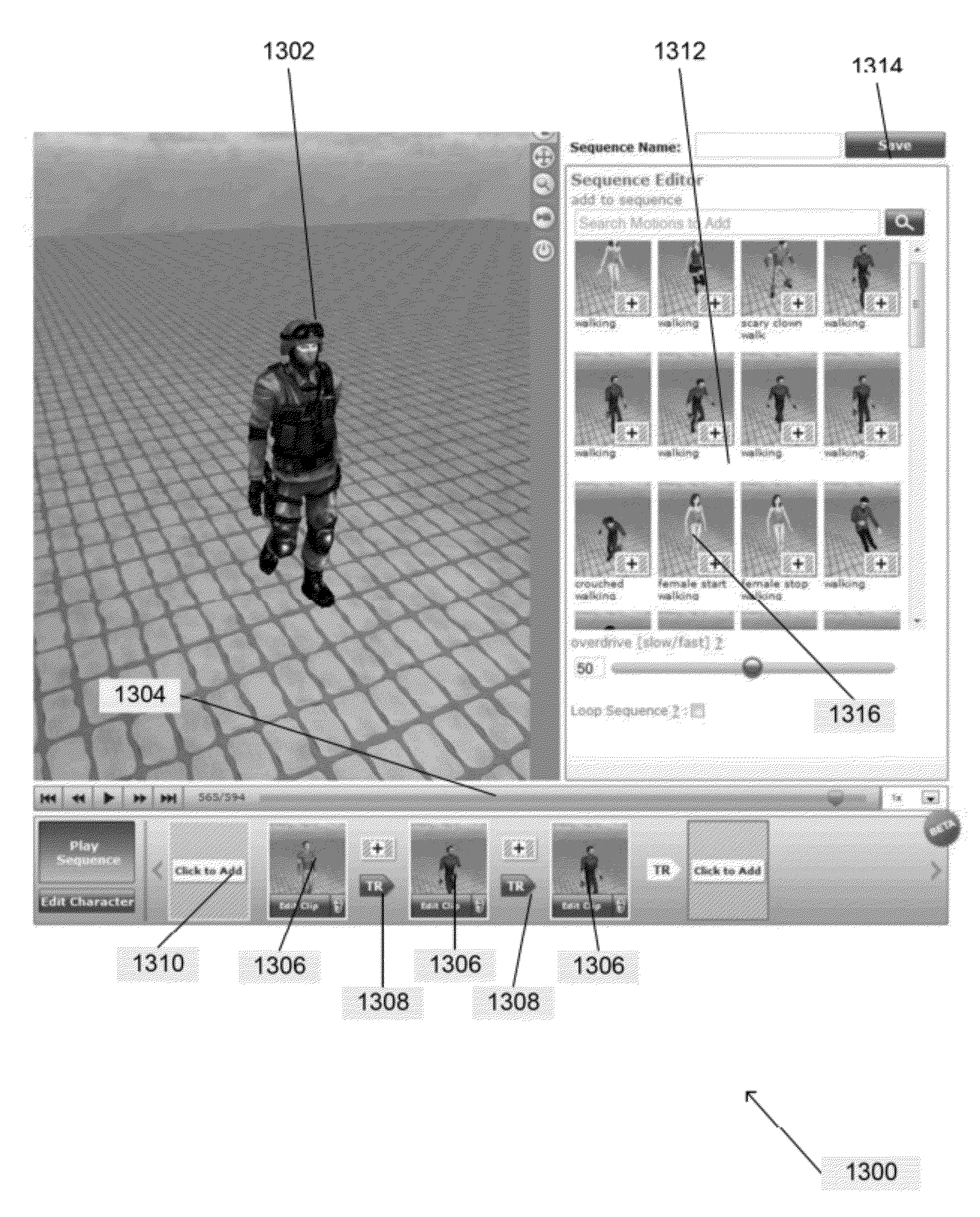

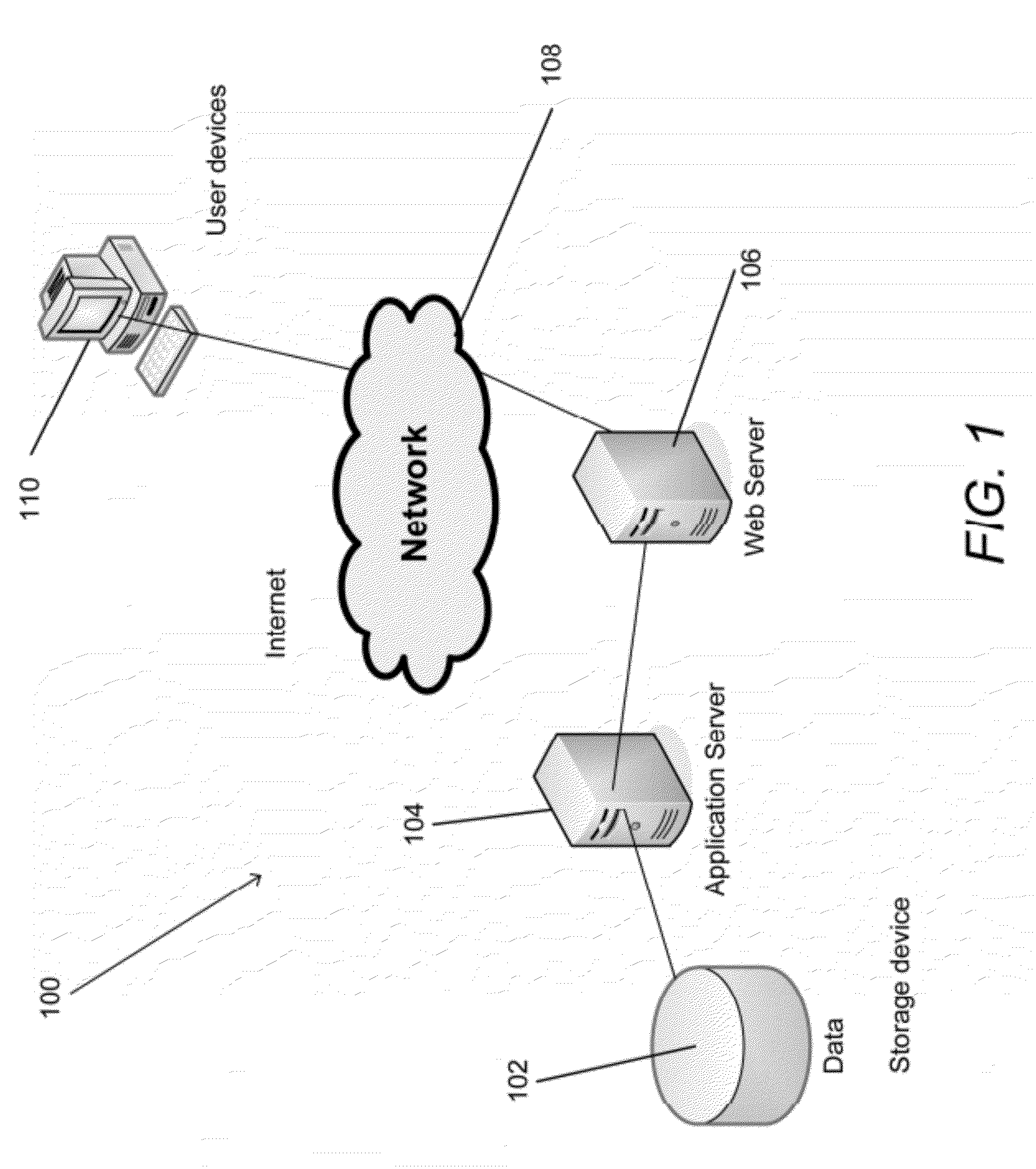

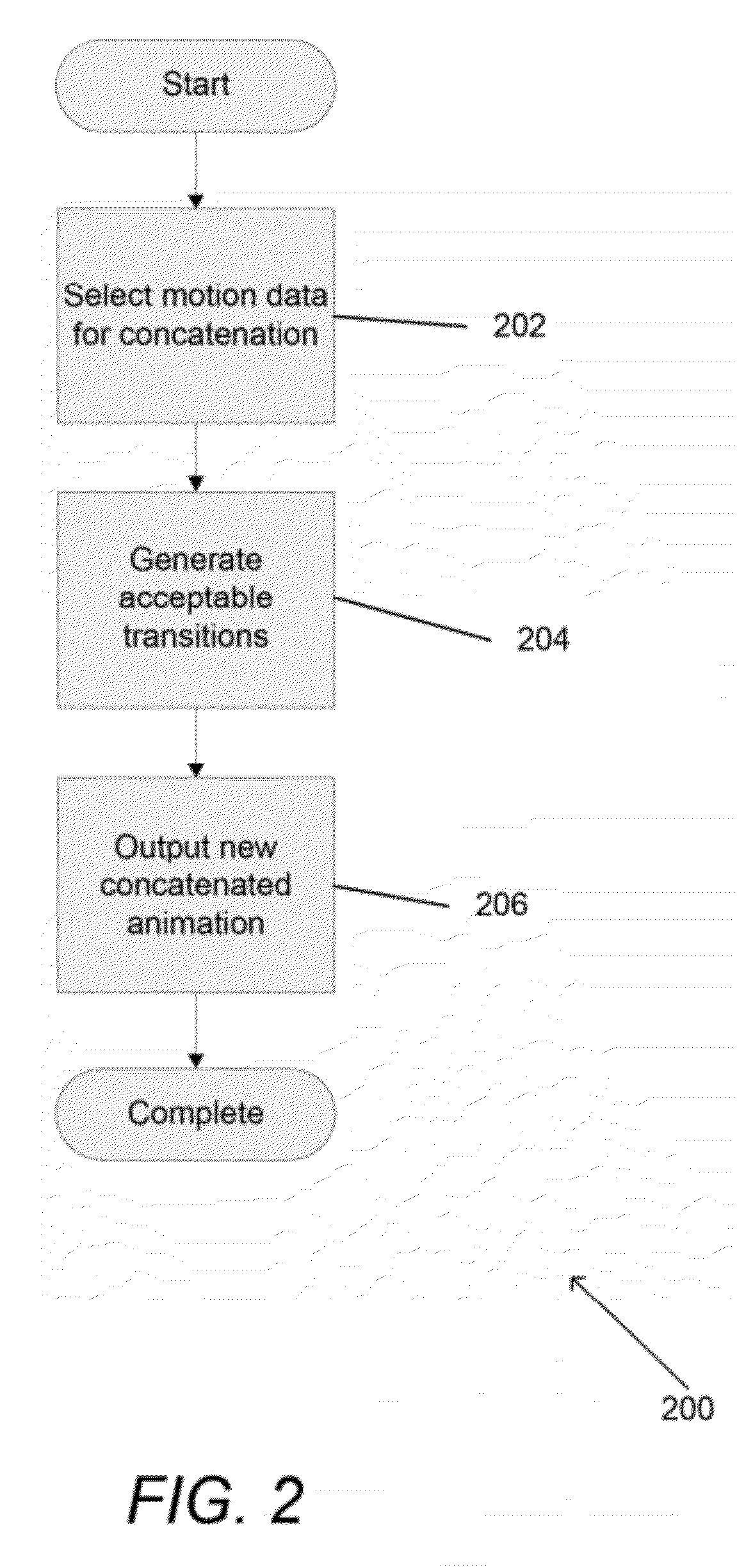

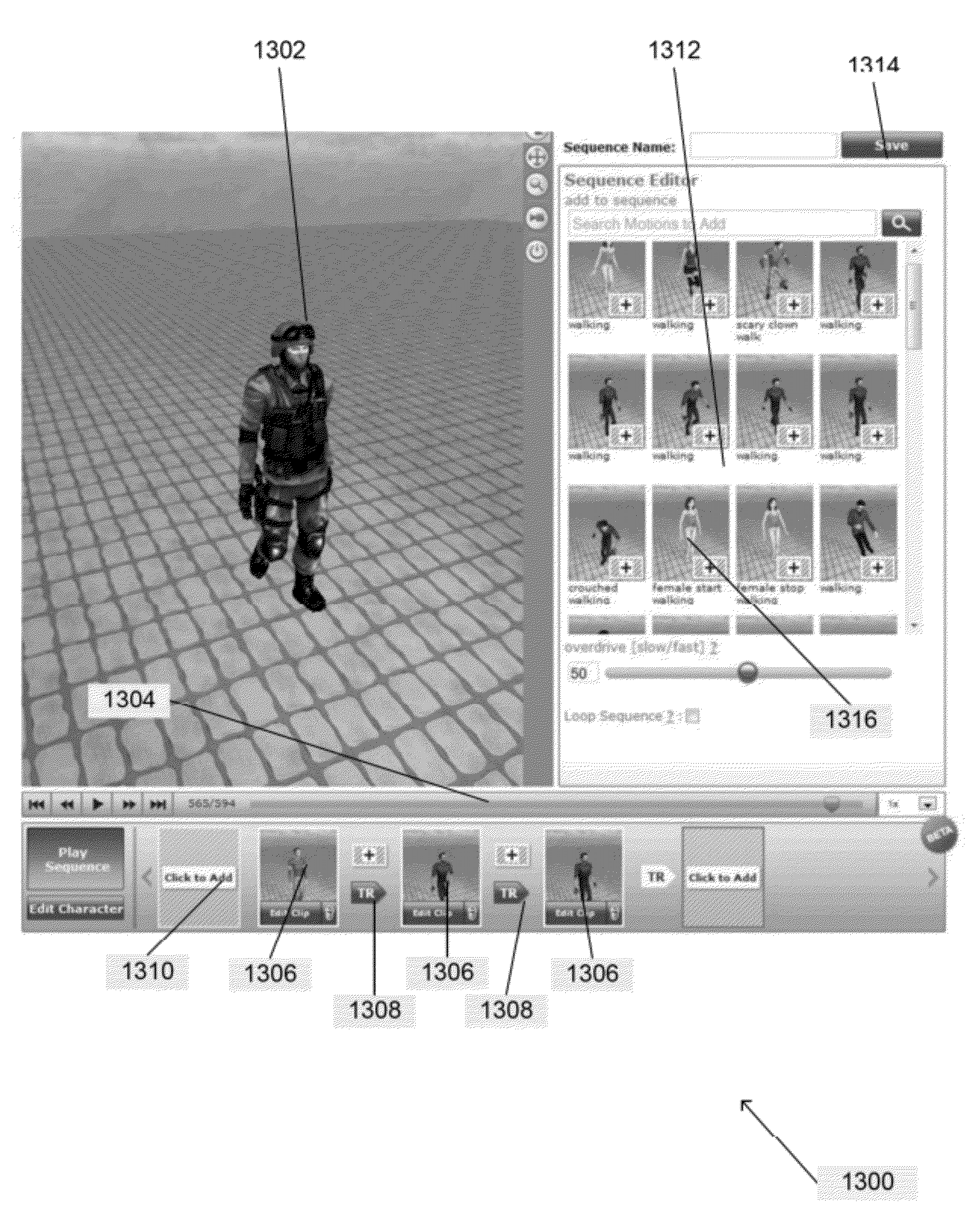

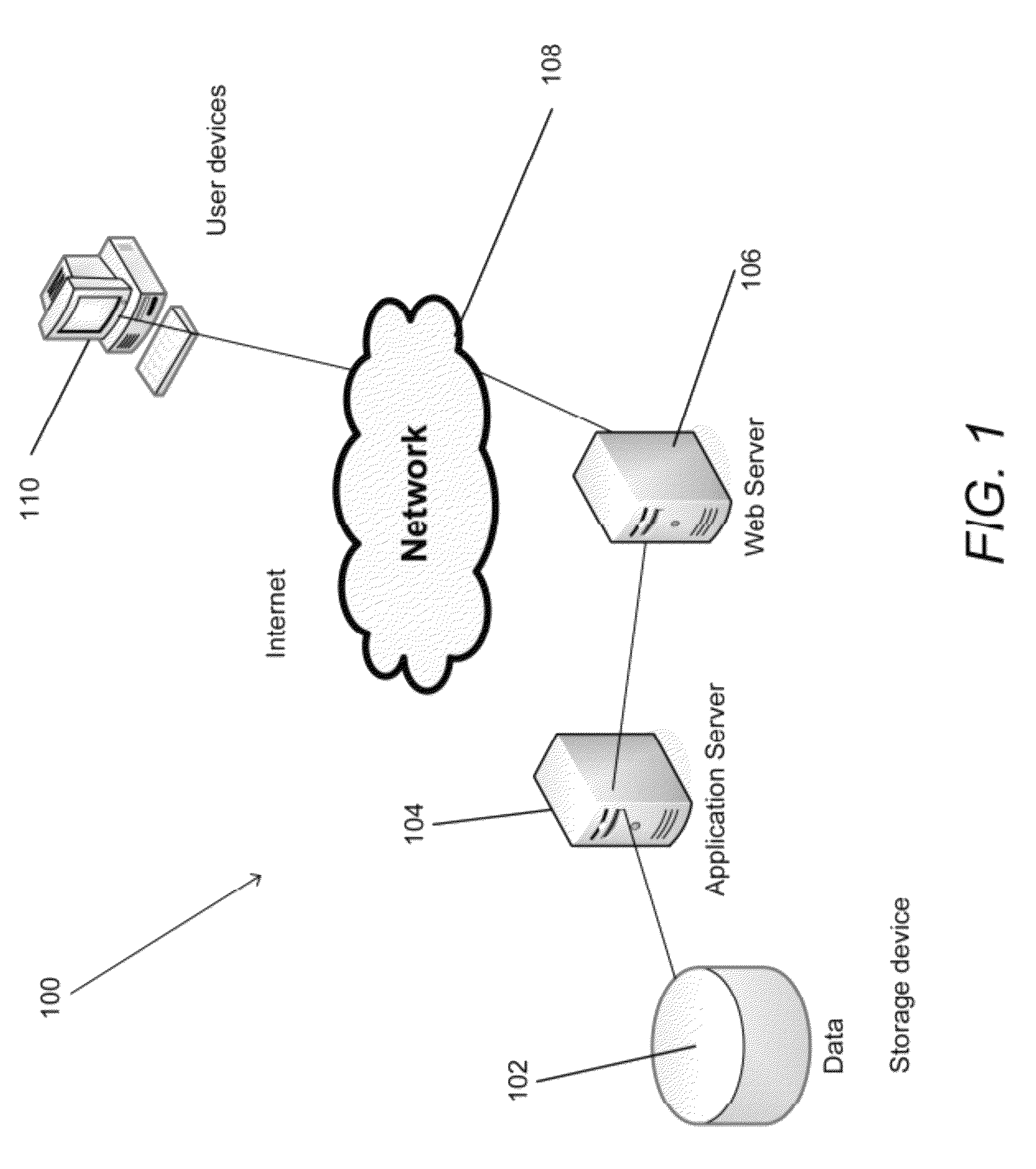

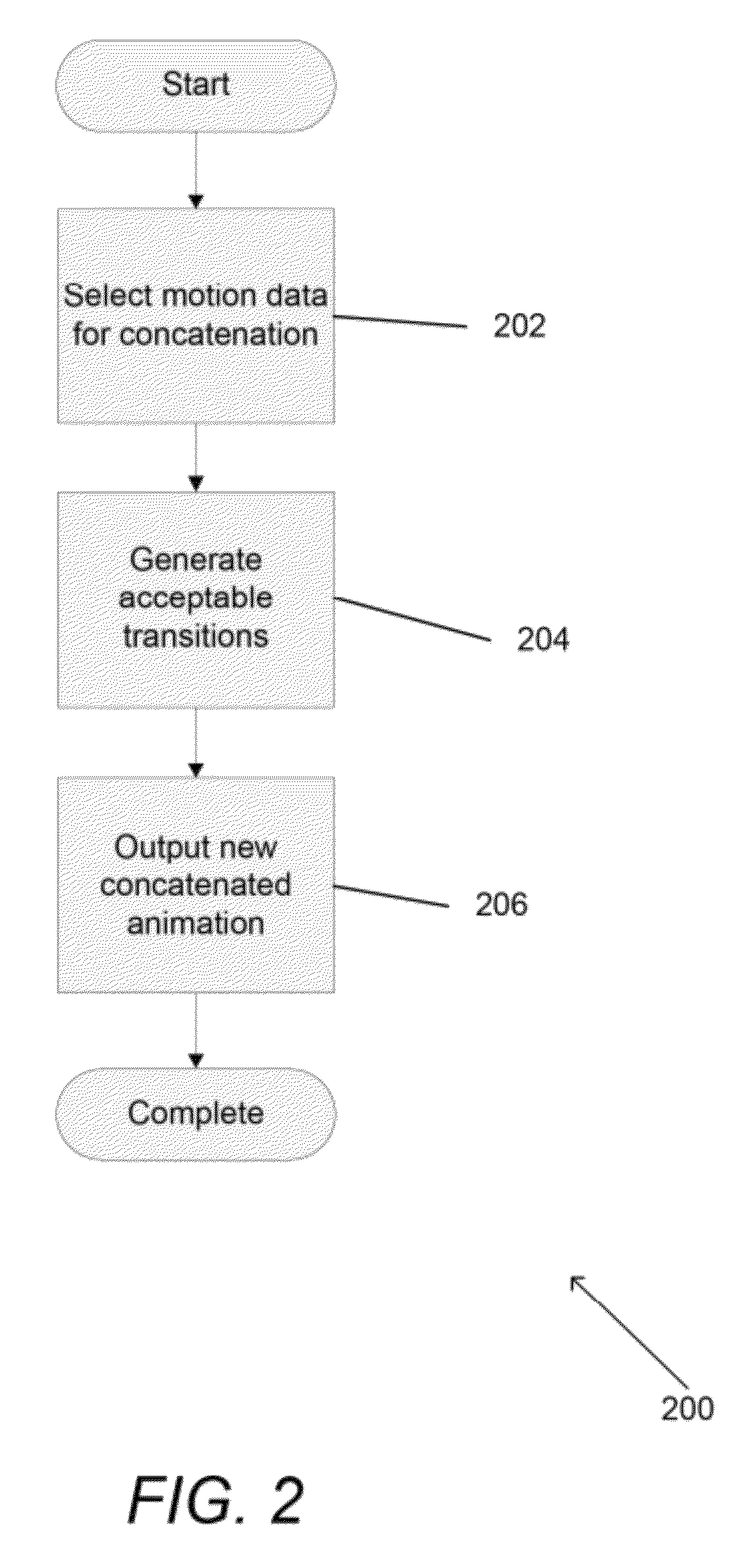

Real-time automatic concatenation of 3D animation sequences

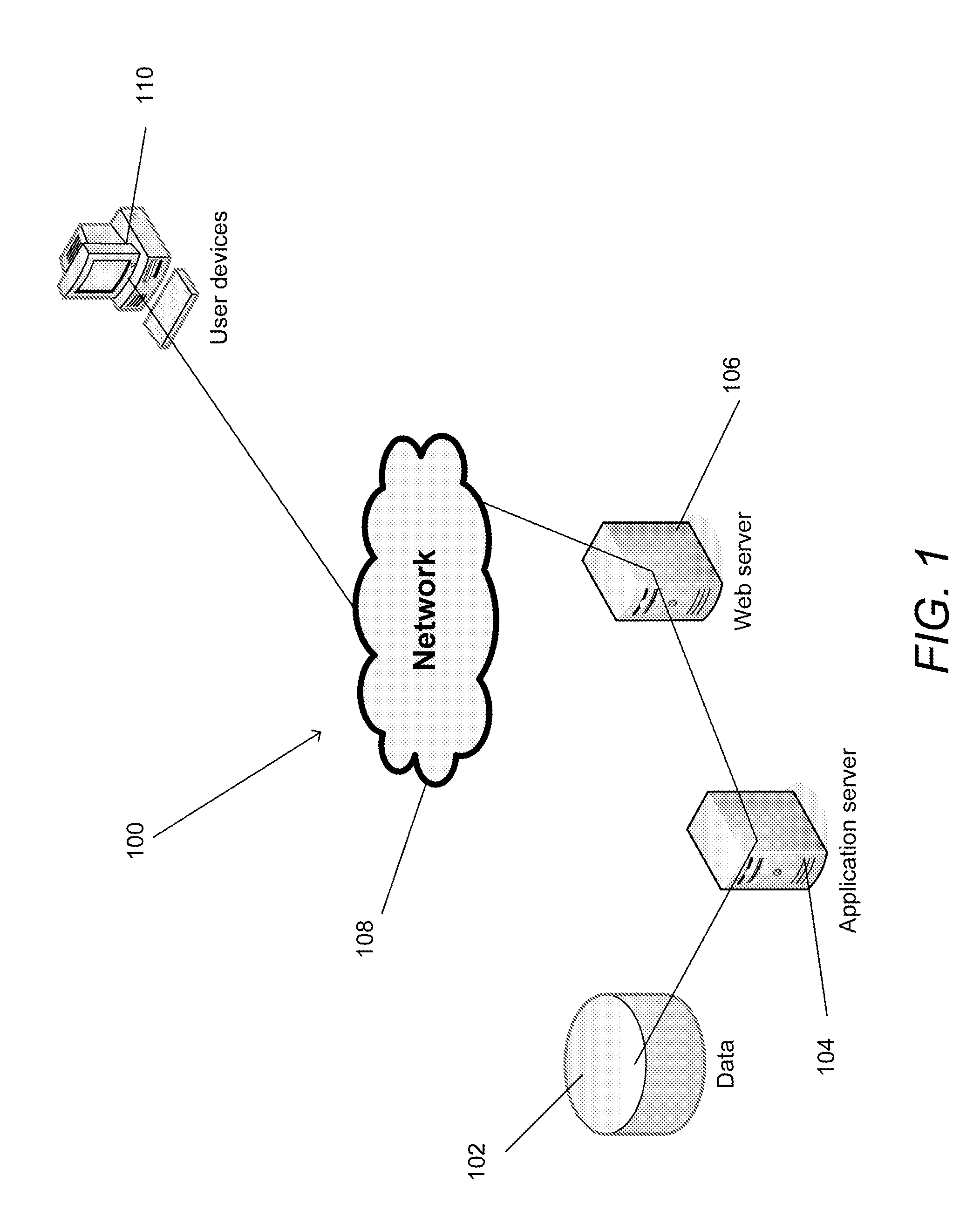

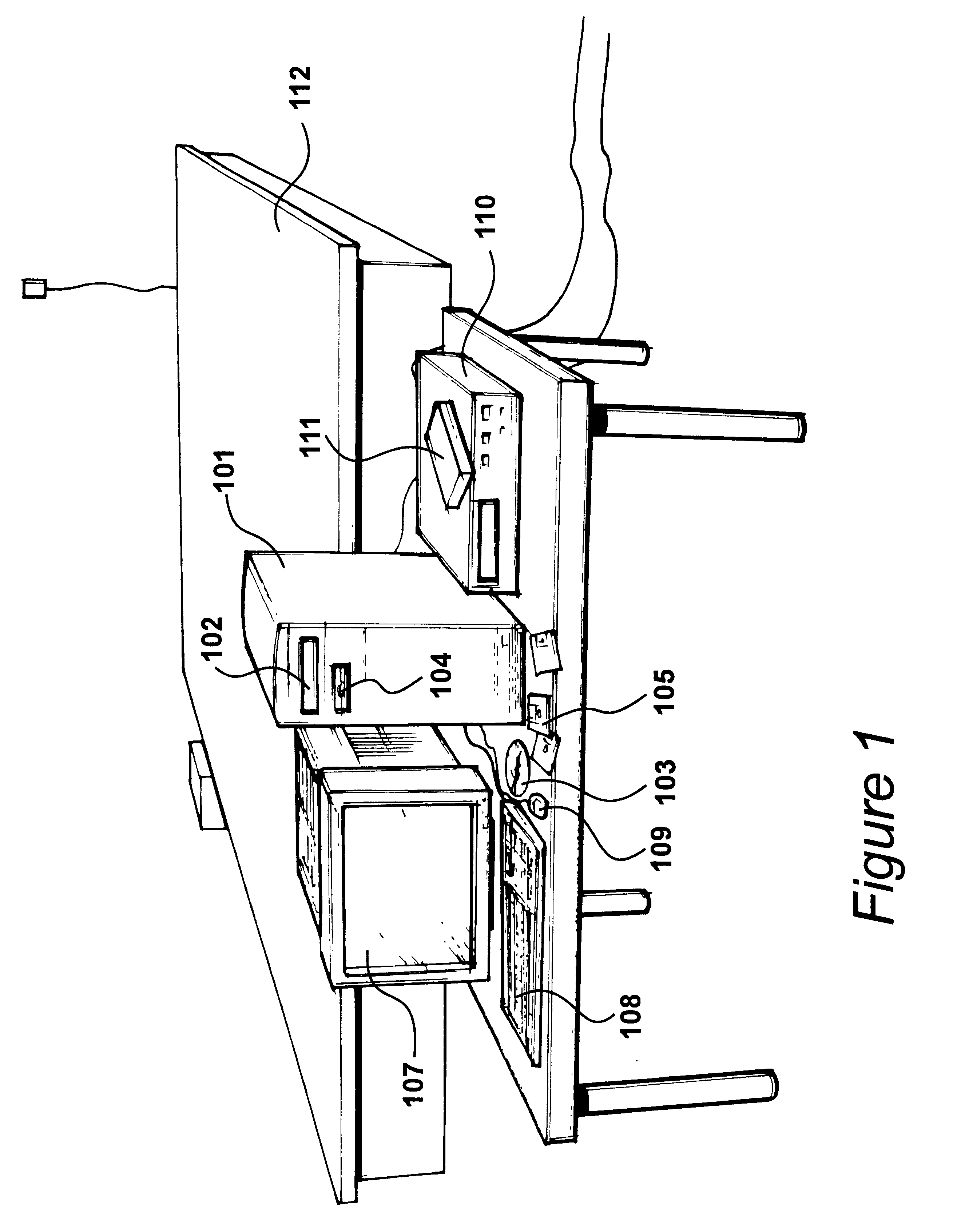

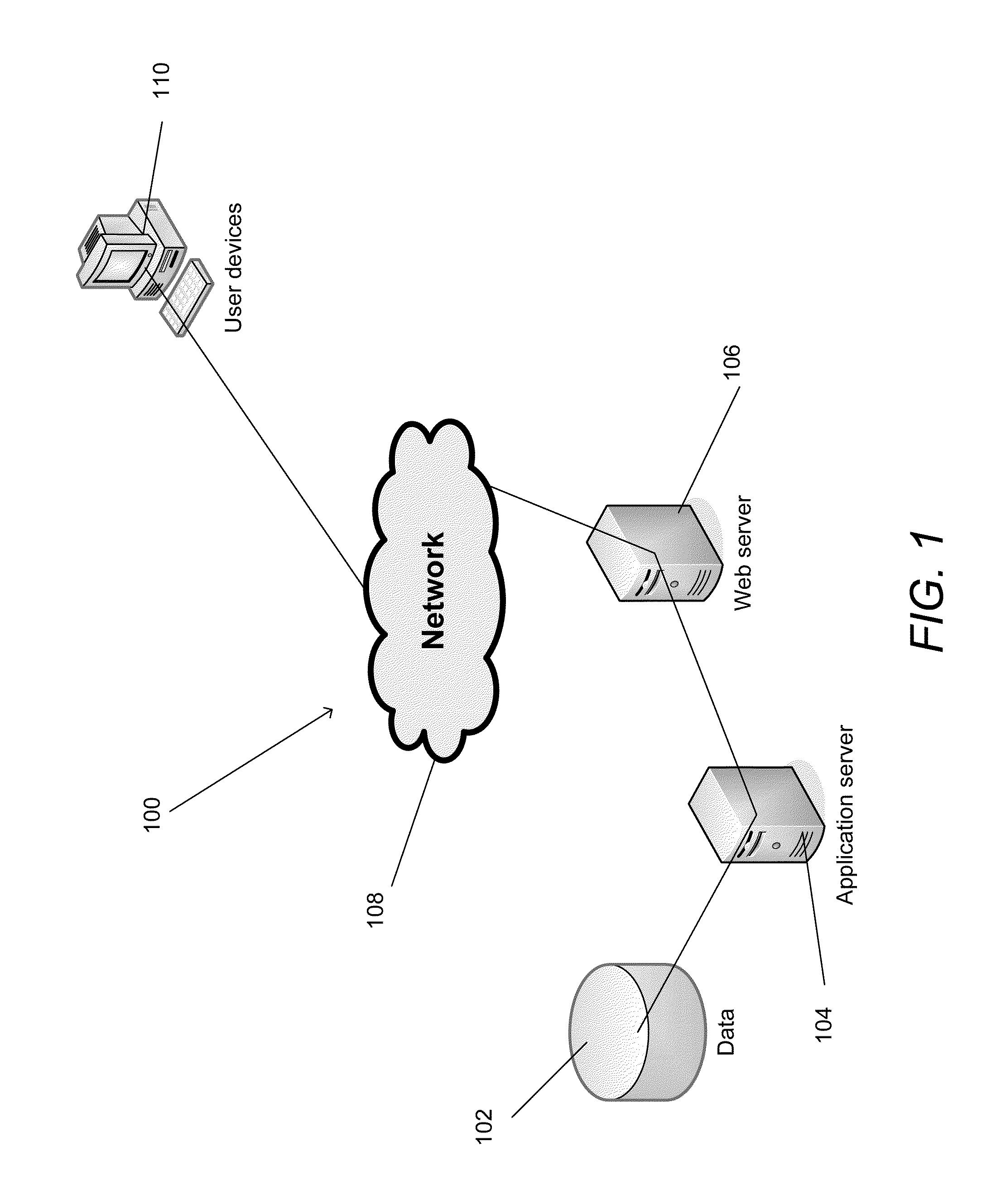

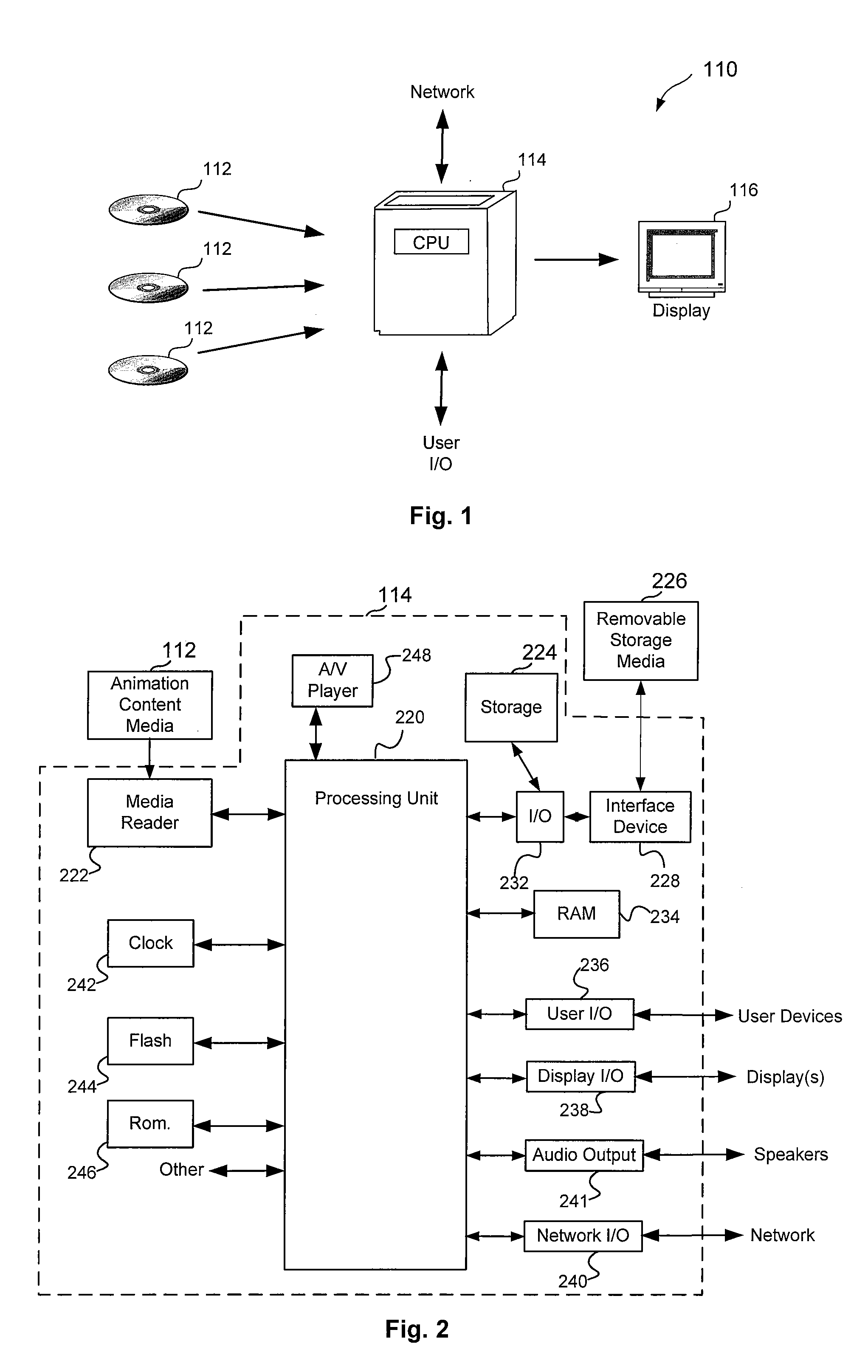

Systems and methods for generating and concatenating 3D character animations are described including systems in which recommendations are made by the animation system concerning motions that smoothly transition when concatenated. One embodiment includes a server system connected to a communication network and configured to communicate with a user device that is also connected to the communication network. In addition, the server system is configured to generate a user interface that is accessible via the communication network, the server system is configured to receive high level descriptions of desired sequences of motion via the user interface, the server system is configured to generate synthetic motion data based on the high level descriptions and to concatenate the synthetic motion data, the server system is configured to stream the concatenated synthetic motion data to a rendering engine on the user device, and the user device is configured to render a 3D character animated using the streamed synthetic motion data.

Owner:ADOBE INC

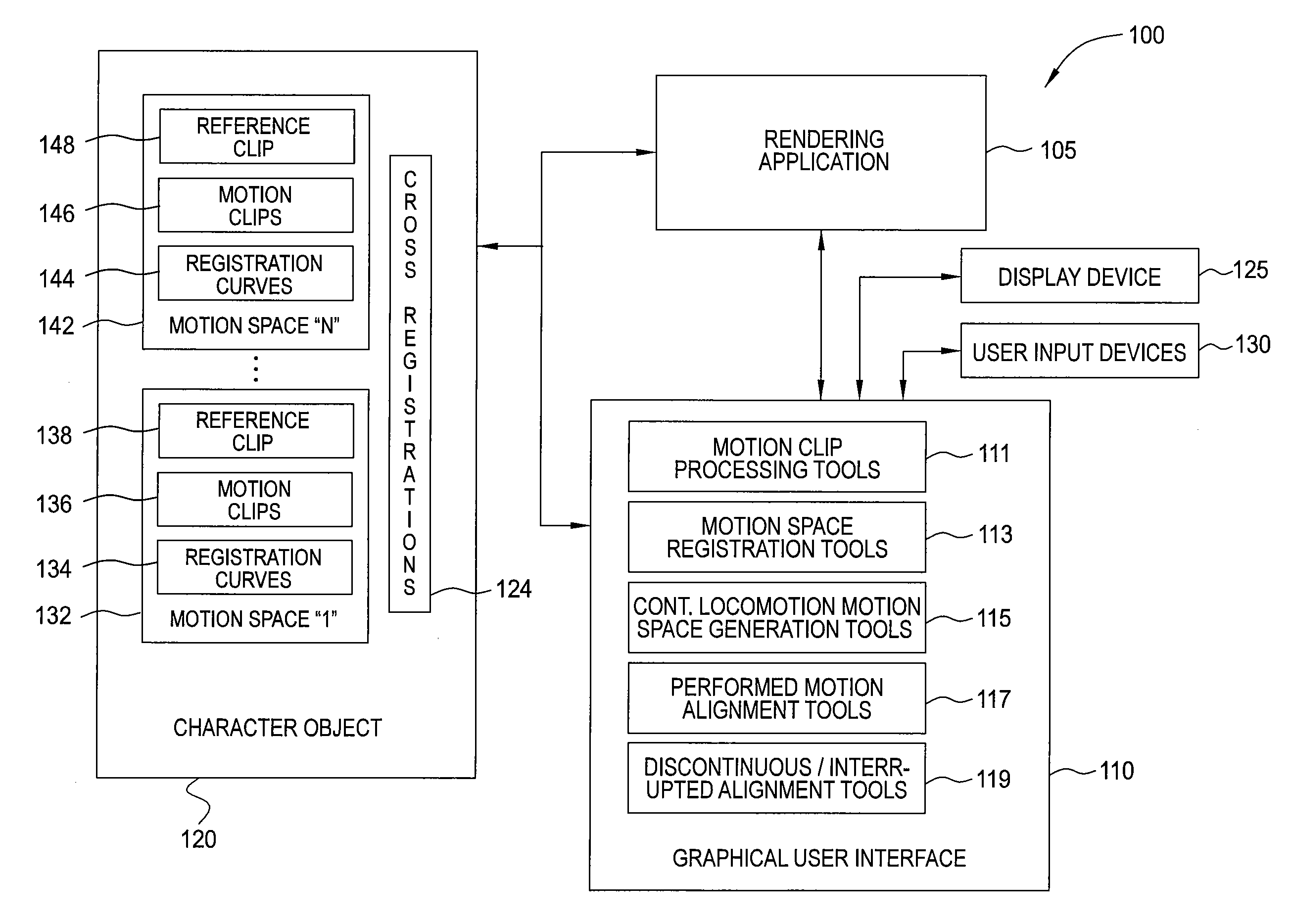

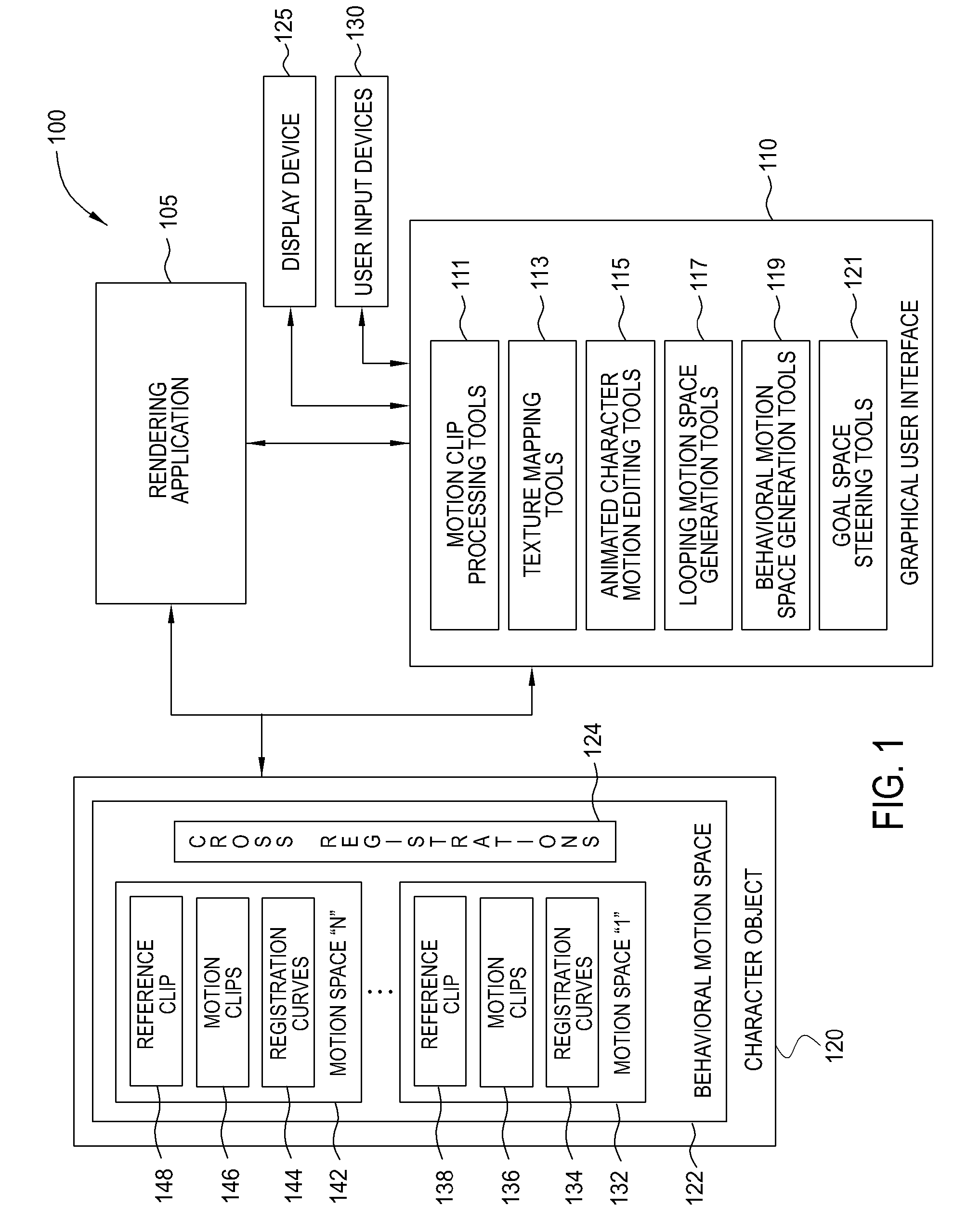

System and method for real-time character animation

Inactive; US20110012903A1; Minimizing and even eliminating motion artifact; Minimizing or even eliminating motion artifacts; Animation; 3D-image rendering; Animation; Transition point

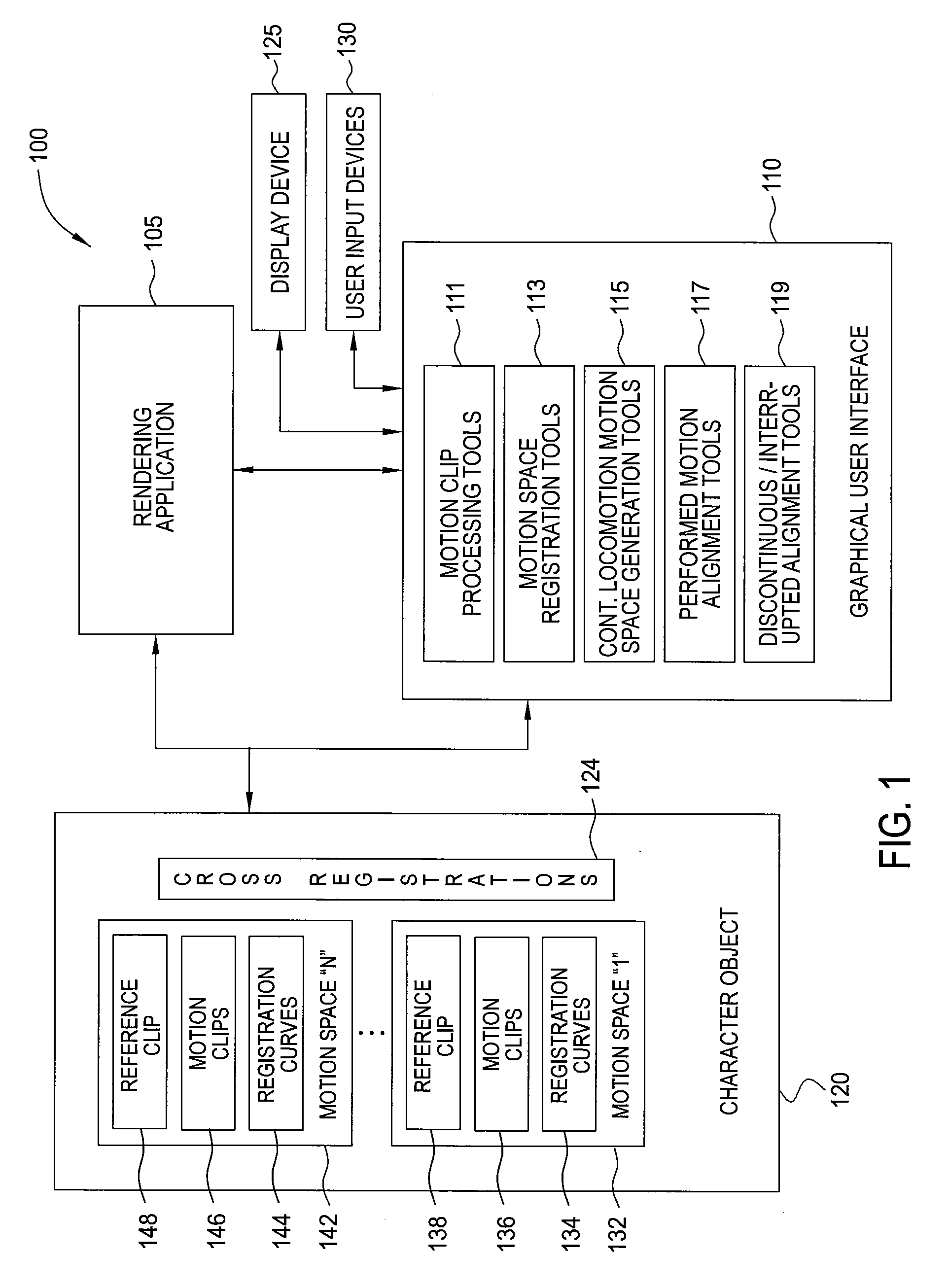

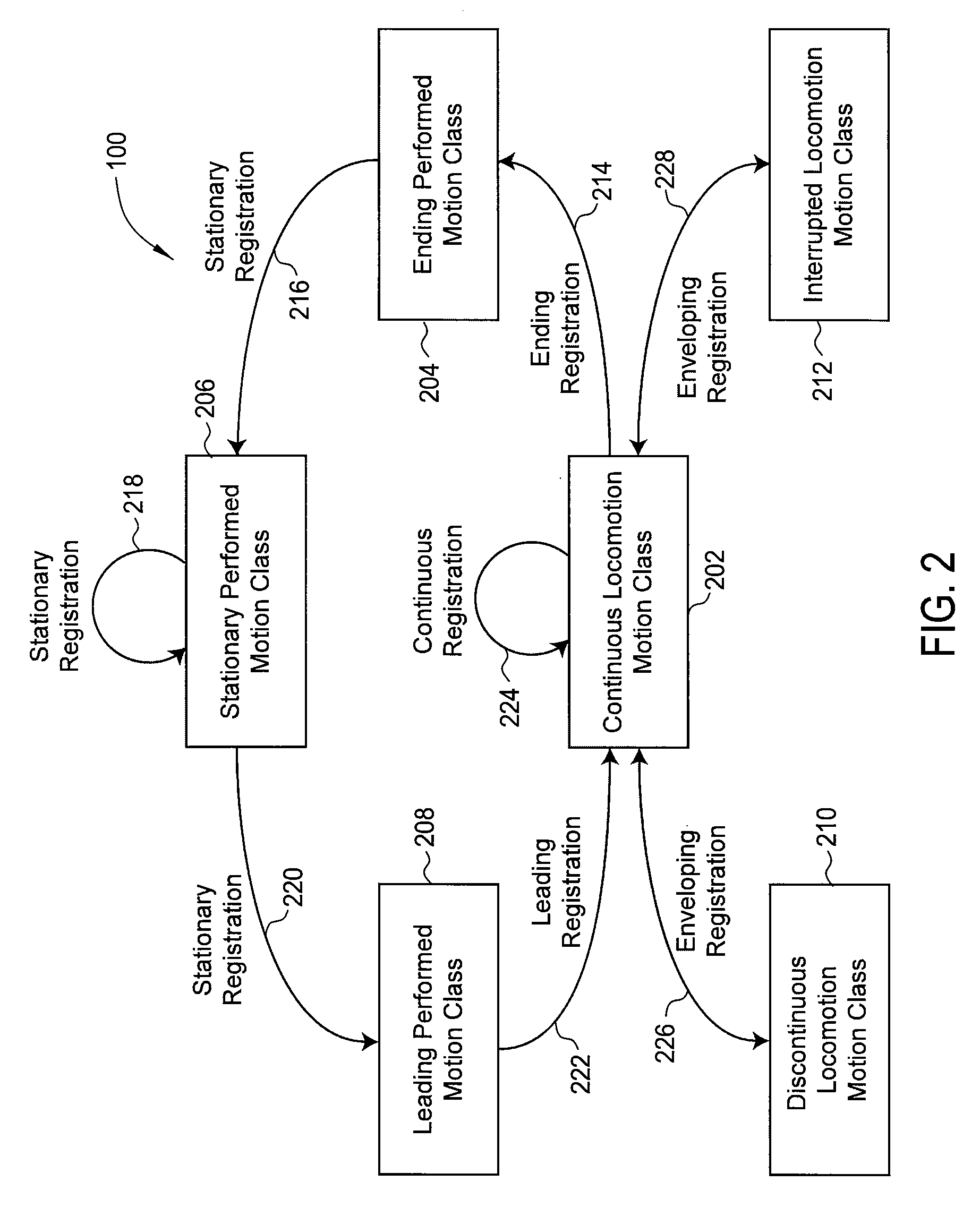

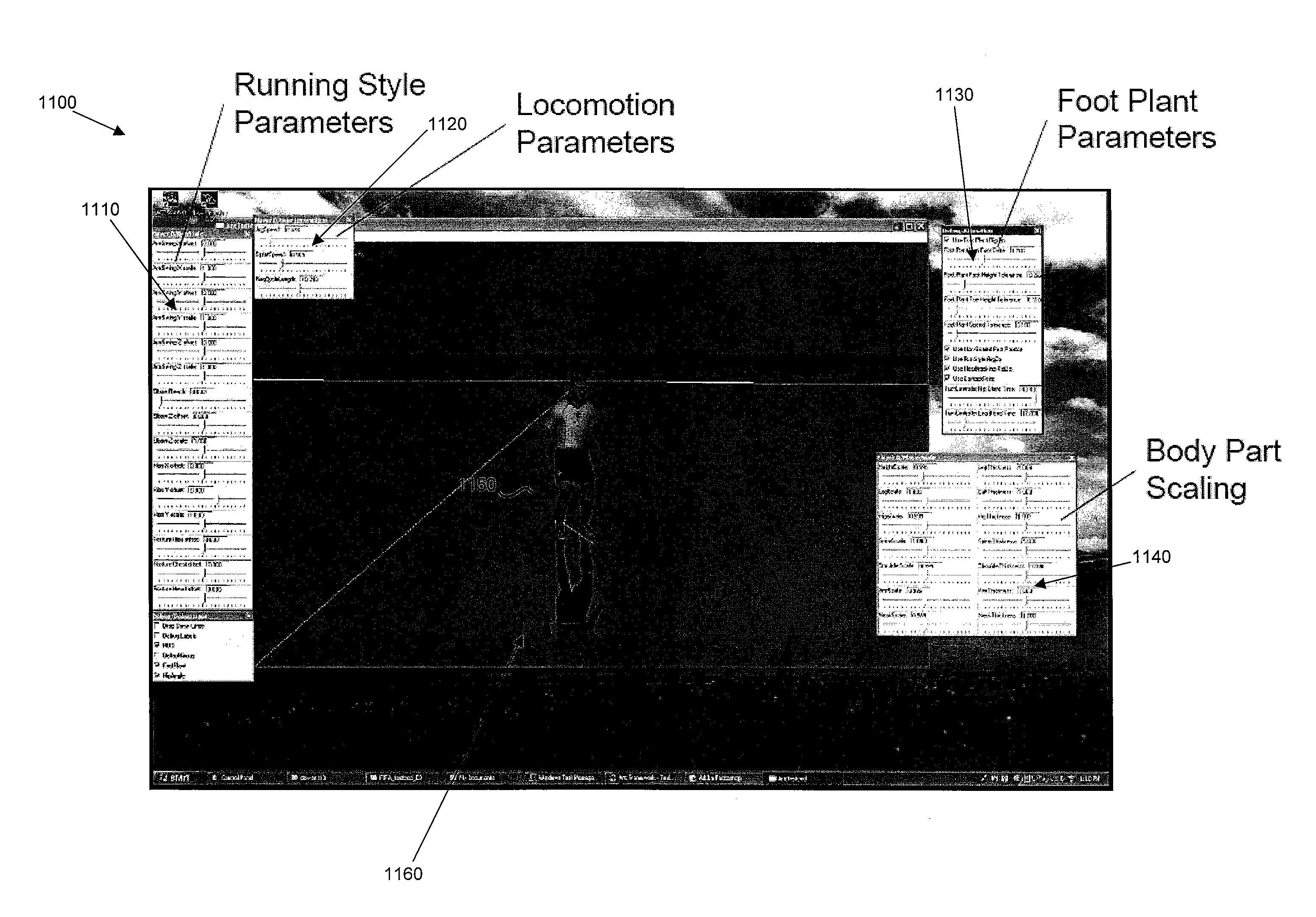

A method for generating a motion sequence of a character object in a rendering application. The method includes selecting a first motion clip associated with a first motion class and selecting a second motion clip associated with a second motion class, where the first and second motion clips are stored in a memory. The method further includes generating a registration curve that temporally and spatially aligns one or more frames of the first motion clip with one or more frames of the second motion clip, and rendering the motion sequence of the character object by blending the one or more frames of the first motion clip with one or more frames of second motion clip based on the registration curve. One advantage of techniques described herein is that they provide for creating motion sequences having multiple motion types while minimizing or even eliminating motion artifacts at the transition points.

Owner:AUTODESK INC
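
As a rough illustration of blending two clips along a registration curve, the sketch below assumes the curve is already given as a list of aligned frame-index pairs and that a pose is a flat list of numbers. The function name and the linear weight ramp are assumptions, not the patent's method; spatial alignment is omitted, and real systems would typically interpolate rotations rather than raw values.

```python
def blend_along_registration(clip_a, clip_b, registration):
    """Blend clip_a into clip_b over the aligned frame pairs.

    clip_a, clip_b: lists of poses (each pose is a list of floats).
    registration:   list of (index_a, index_b) pairs aligning the clips in time.
    """
    count = len(registration)
    blended = []
    for k, (index_a, index_b) in enumerate(registration):
        # Weight ramps linearly from 0 (all clip_a) to 1 (all clip_b).
        weight = k / (count - 1) if count > 1 else 1.0
        pose_a, pose_b = clip_a[index_a], clip_b[index_b]
        blended.append(
            [(1.0 - weight) * a + weight * b for a, b in zip(pose_a, pose_b)]
        )
    return blended


walk = [[0.0], [0.0], [0.0]]
run = [[10.0], [10.0], [10.0]]
transition = blend_along_registration(walk, run, [(0, 0), (1, 1), (2, 2)])
```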

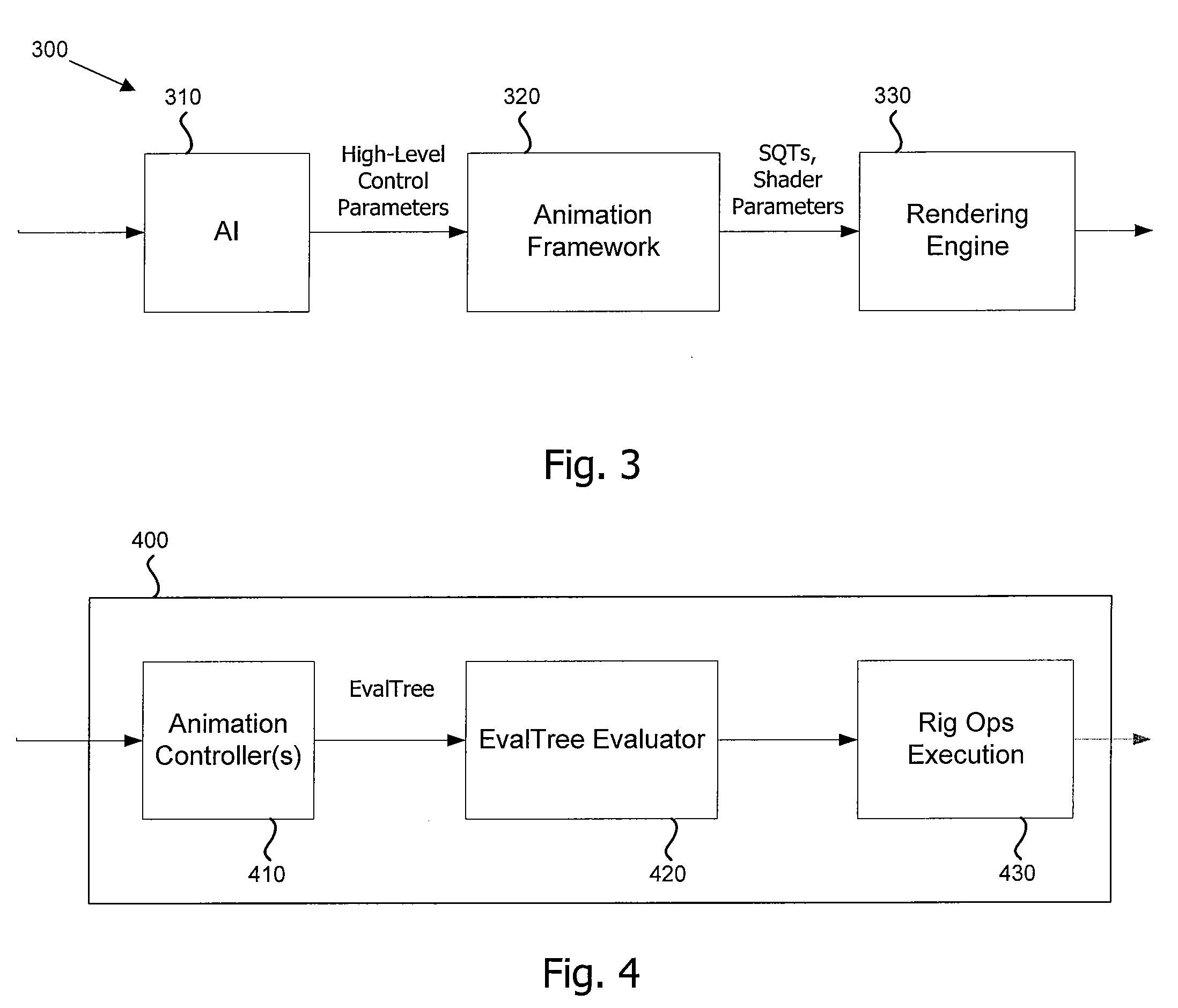

Character animation framework

Inactive; US20090091563A1; Change in data; Easy to reuse; Animation; 3D-image rendering; Application software; Character animation

An extensible character animation framework is provided that enables video game design teams to develop reusable animation controllers that are customizable for specific applications. According to embodiments, the animation framework enables animators to construct complex animations by creating hierarchies of animation controllers, and the complex animation is created by blending the animation outputs of each of the animation controllers in the hierarchy. The extensible animation framework also provides animators with the ability to customize various attributes of a character being animated and to view the changes to the animation in real-time in order to provide immediate feedback to the animators without requiring that the animators manually rebuild the animation data each time that the animators make a change to the animation data.

Owner:ELECTRONICS ARTS INC
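
A hierarchy of animation controllers whose outputs are blended can be sketched as a small tree. The class names `ClipController` and `BlendController`, the pose representation (a flat list of floats) and the weighted-average blend are all assumptions made for illustration; the framework's actual interfaces are not described in the abstract.

```python
class ClipController:
    """Leaf controller that outputs a fixed pose (stand-in for clip playback)."""

    def __init__(self, pose):
        self.pose = pose

    def evaluate(self, time):
        return self.pose


class BlendController:
    """Inner controller that blends the outputs of its child controllers."""

    def __init__(self, children):
        # children: list of (controller, weight) pairs; weights must sum to > 0.
        self.children = children

    def evaluate(self, time):
        total = sum(weight for _, weight in self.children)
        outputs = [(child.evaluate(time), weight / total)
                   for child, weight in self.children]
        size = len(outputs[0][0])
        # Weighted average of every pose channel across the children.
        return [sum(pose[i] * w for pose, w in outputs) for i in range(size)]


walk = ClipController([0.0, 10.0])
run = ClipController([10.0, 30.0])
locomotion = BlendController([(walk, 1.0), (run, 3.0)])
pose = locomotion.evaluate(0.0)
```

Since a `BlendController` exposes the same `evaluate` method as a leaf, blend nodes can be nested to form deeper hierarchies.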

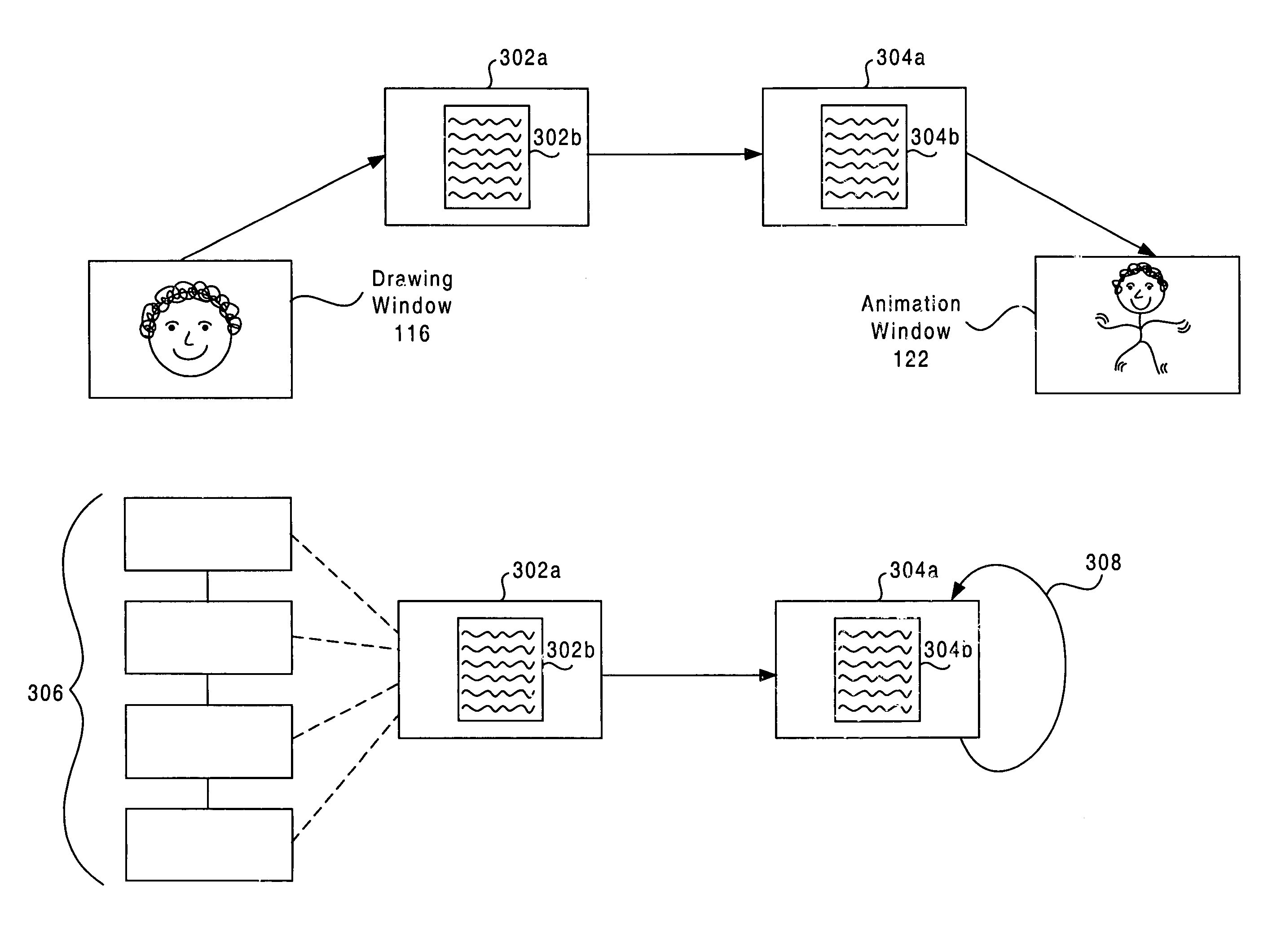

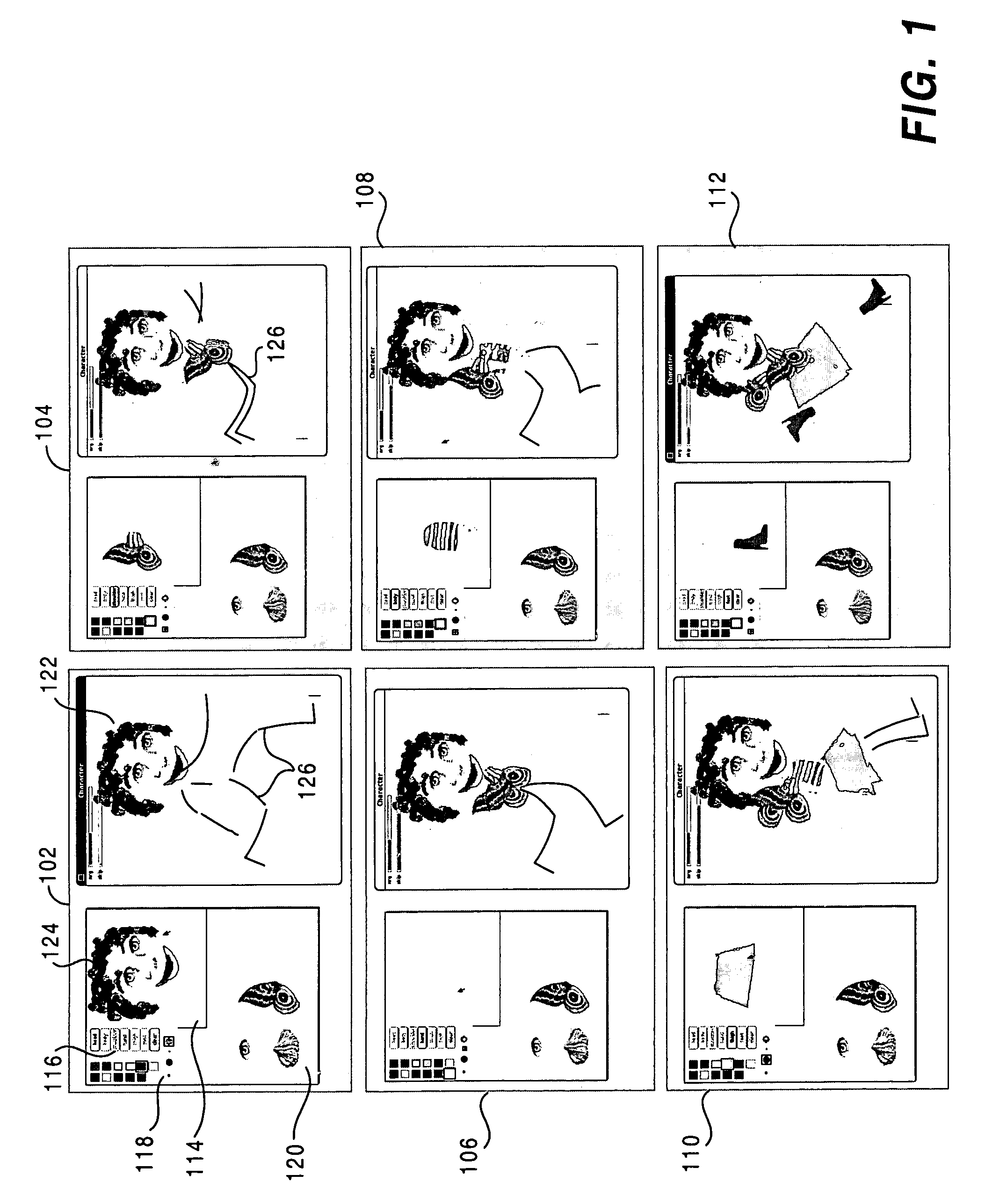

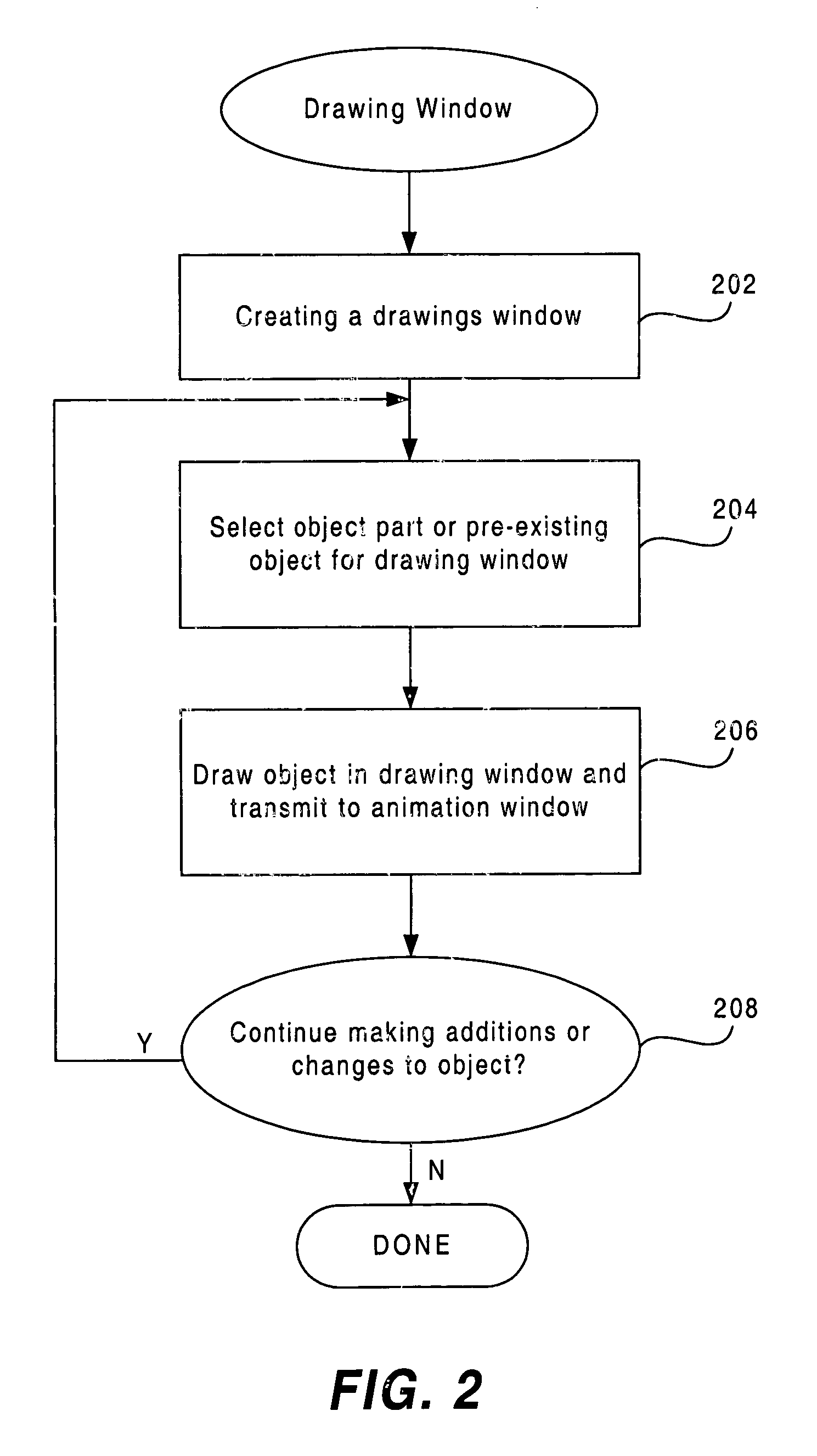

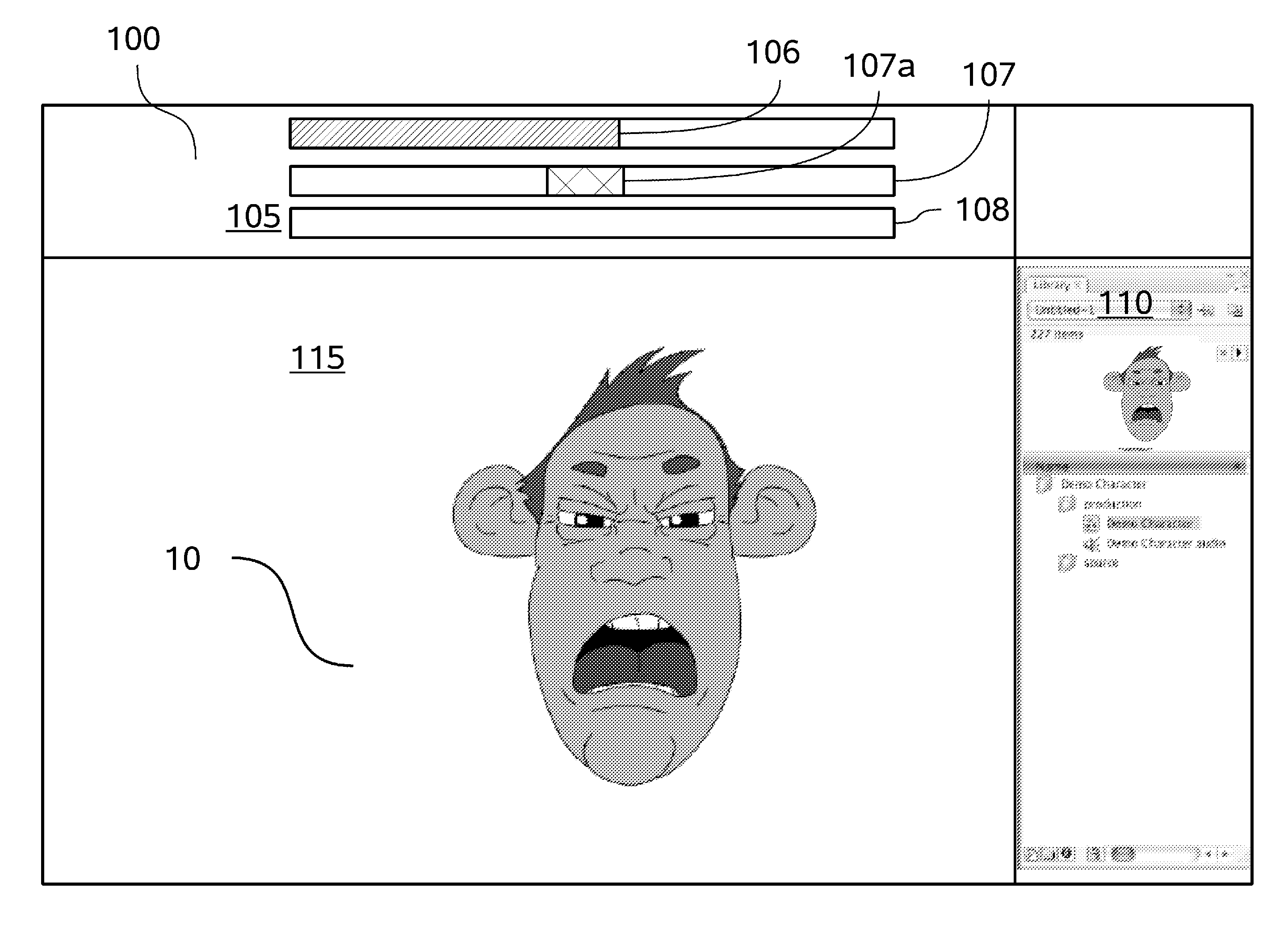

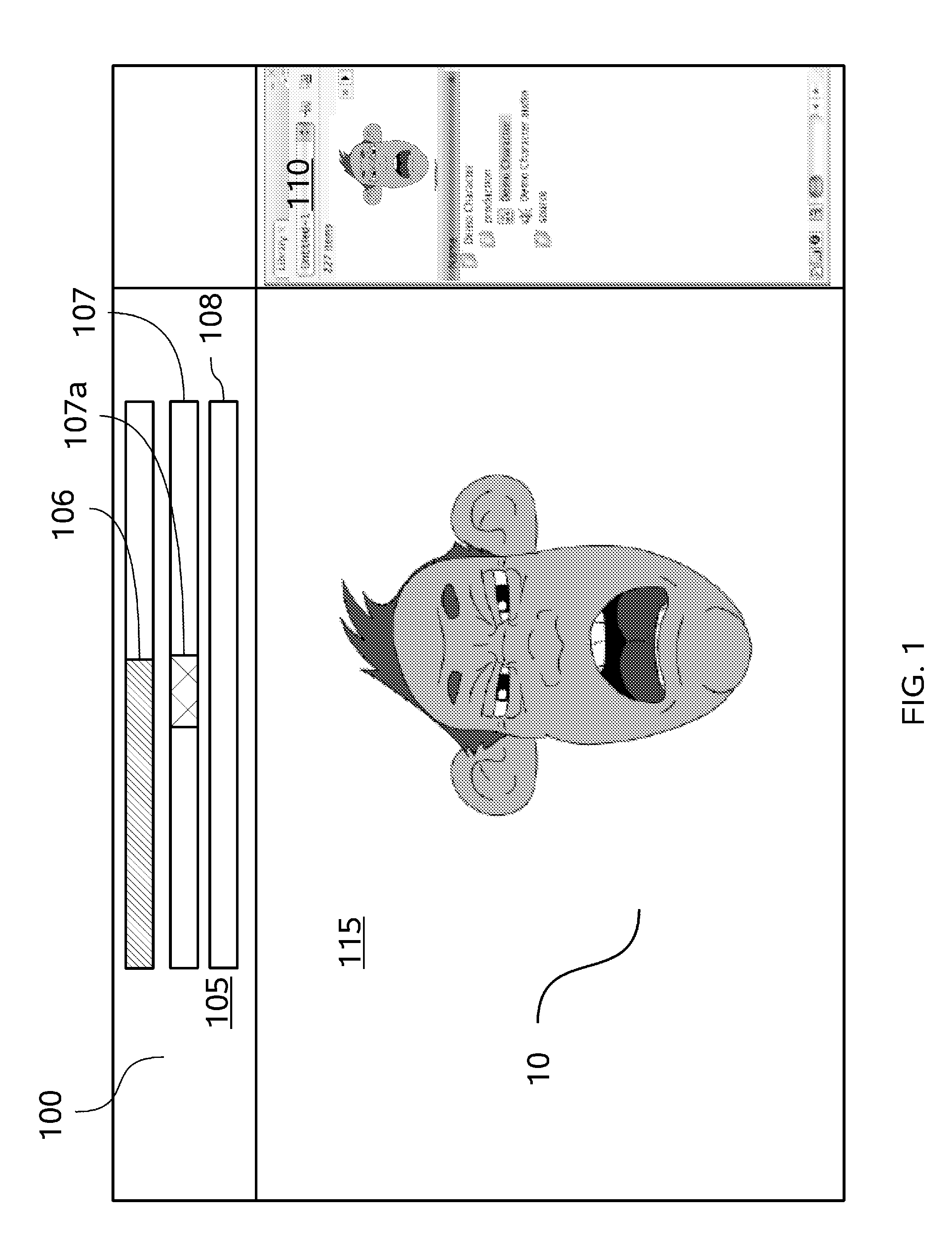

Methods and systems for a character motion animation tool

Inactive; US6924803B1; Instant sense of gratification; Improve interactive experience; Animation; 3D-image rendering; Community context; Single process

A method and system for creating an object or character in a drawing window and simultaneously displaying the object or character in animated form wherein the animated form is available for instant playback or feedback to the user is described. A single process is used for creating the object and animating the object. The user is able to draw the object in a drawing window and have the object animated in an animation window as the object is being drawn, thereby allowing the user to immediately see the results of the animation. A single process is used to create the object or character (i.e., the “drawing” stage) and to display the object in animated form. The drawing and animation can be shown side-by-side for the user thereby enabling the user to see the animation of a character that the user had created moments before. The animation can take place in an animation window or be placed in a pre-existing context, such as an ongoing story line or a collaborative community context created by multiple users.

Owner:VULCAN PATENTS

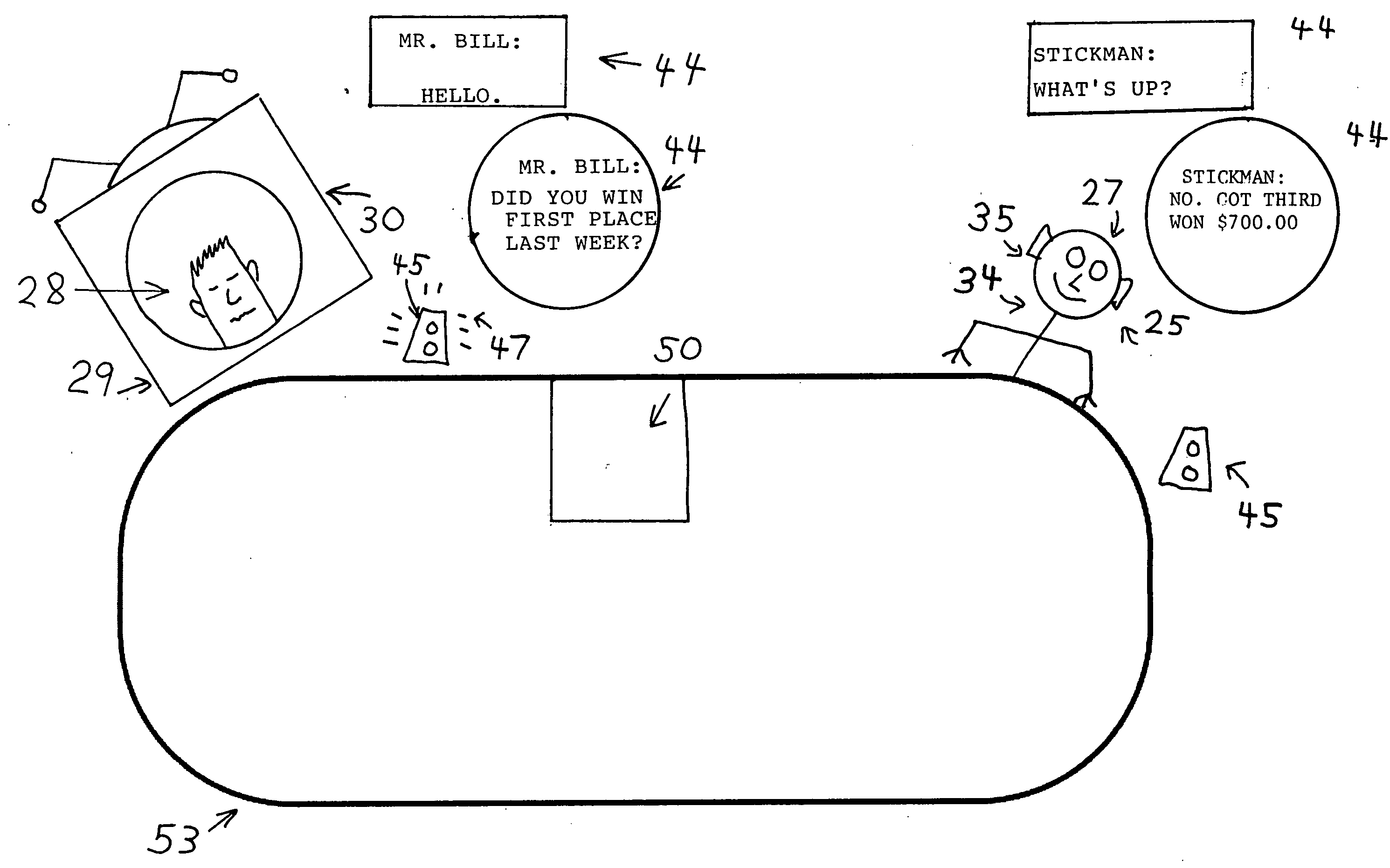

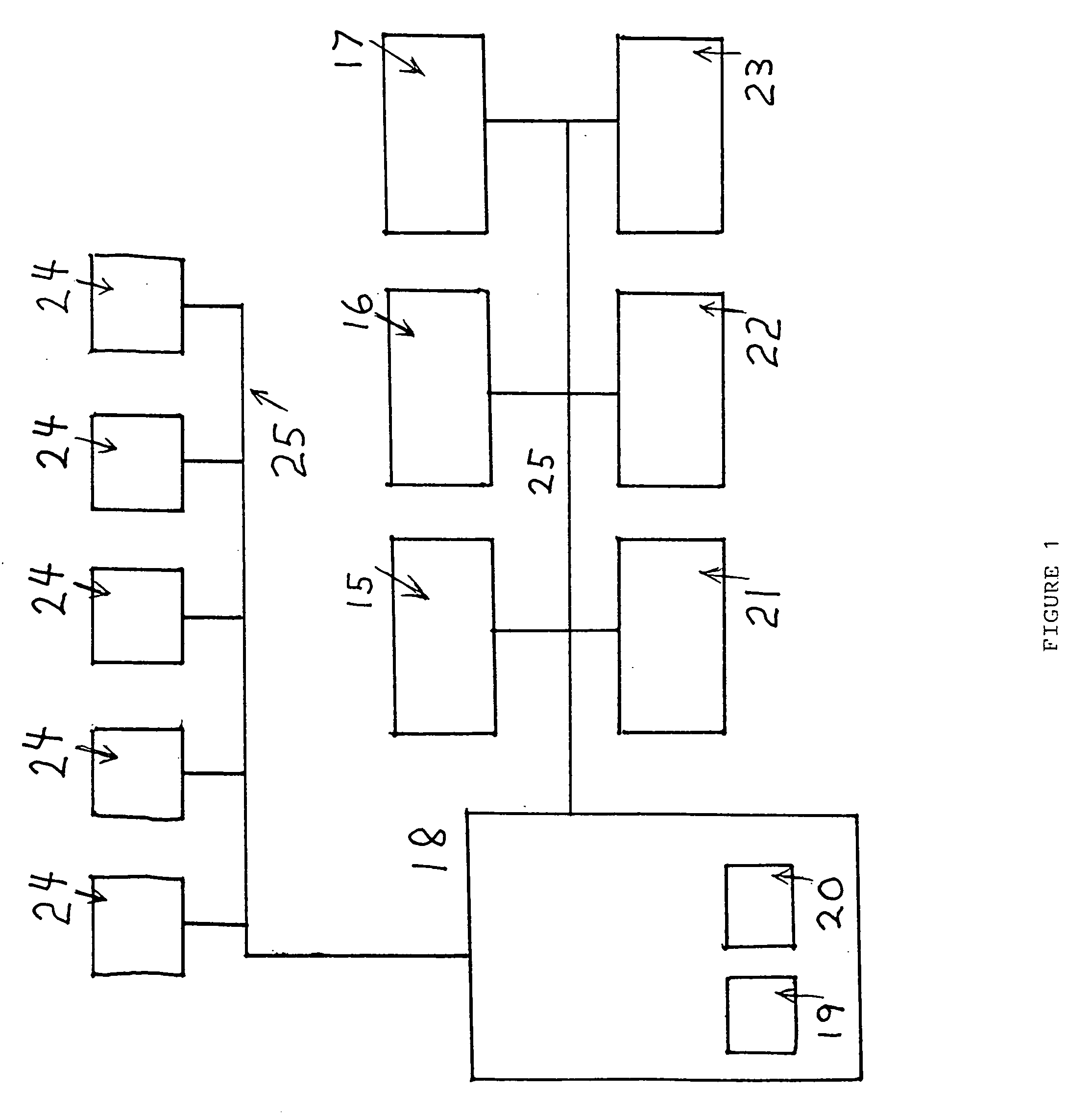

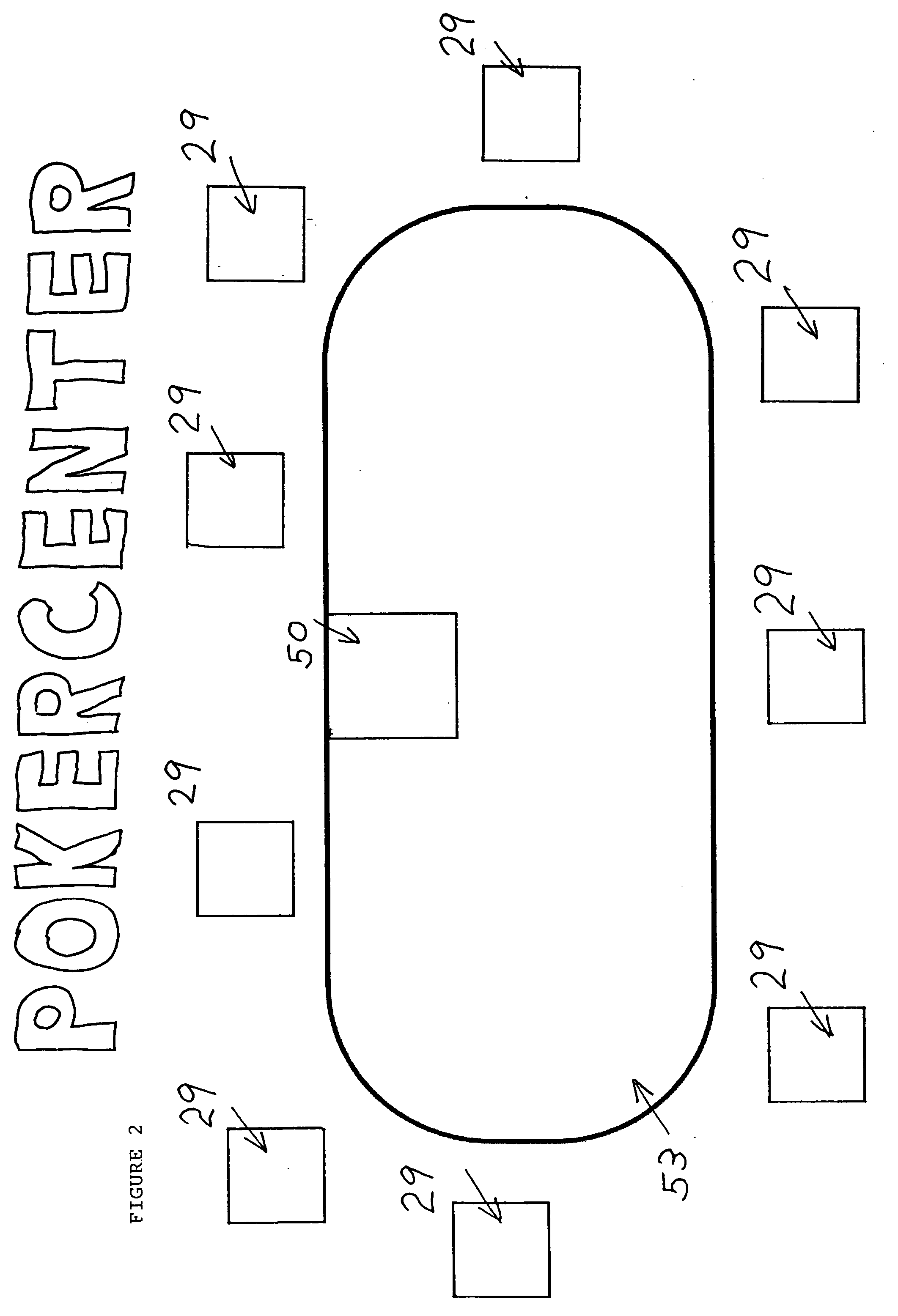

Interactive gaming system with animated, real-time characters

Inactive; US20070283265A1; Increase value; Enhanced interaction; Video games; Special data processing applications; Animation; Visual perception

A gaming system enables users to interact with one another during electronic game play with animated imagery. The system comprises a central processing unit having certain character-building menus and certain character-directing commands. Users thereof, via user-based hardware, communicate with one another by way of the central processing unit and a communication network. Animatable characters and character display units are displayed upon the user's hardware and provide an entertaining visual and / or auditory forum for interacting with other players. The character commands are operable to animate user-identifying characters for incorporating animated body language into game play for enhancing player interaction.

Owner:PORTANO MICHAEL D

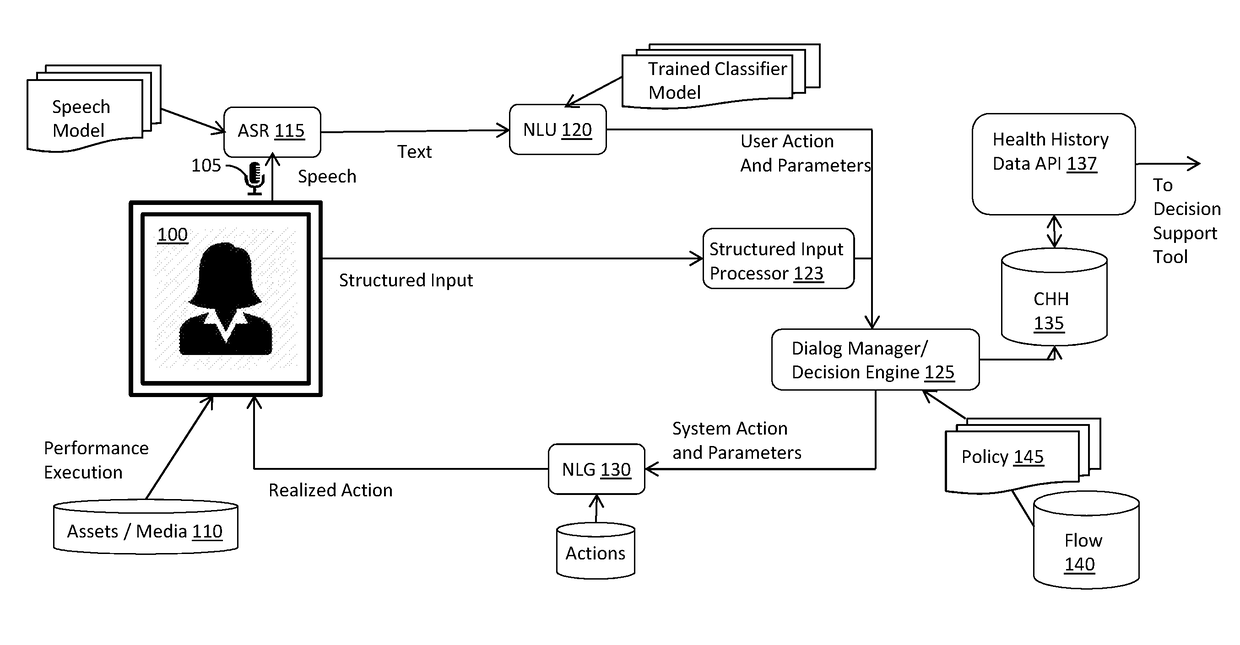

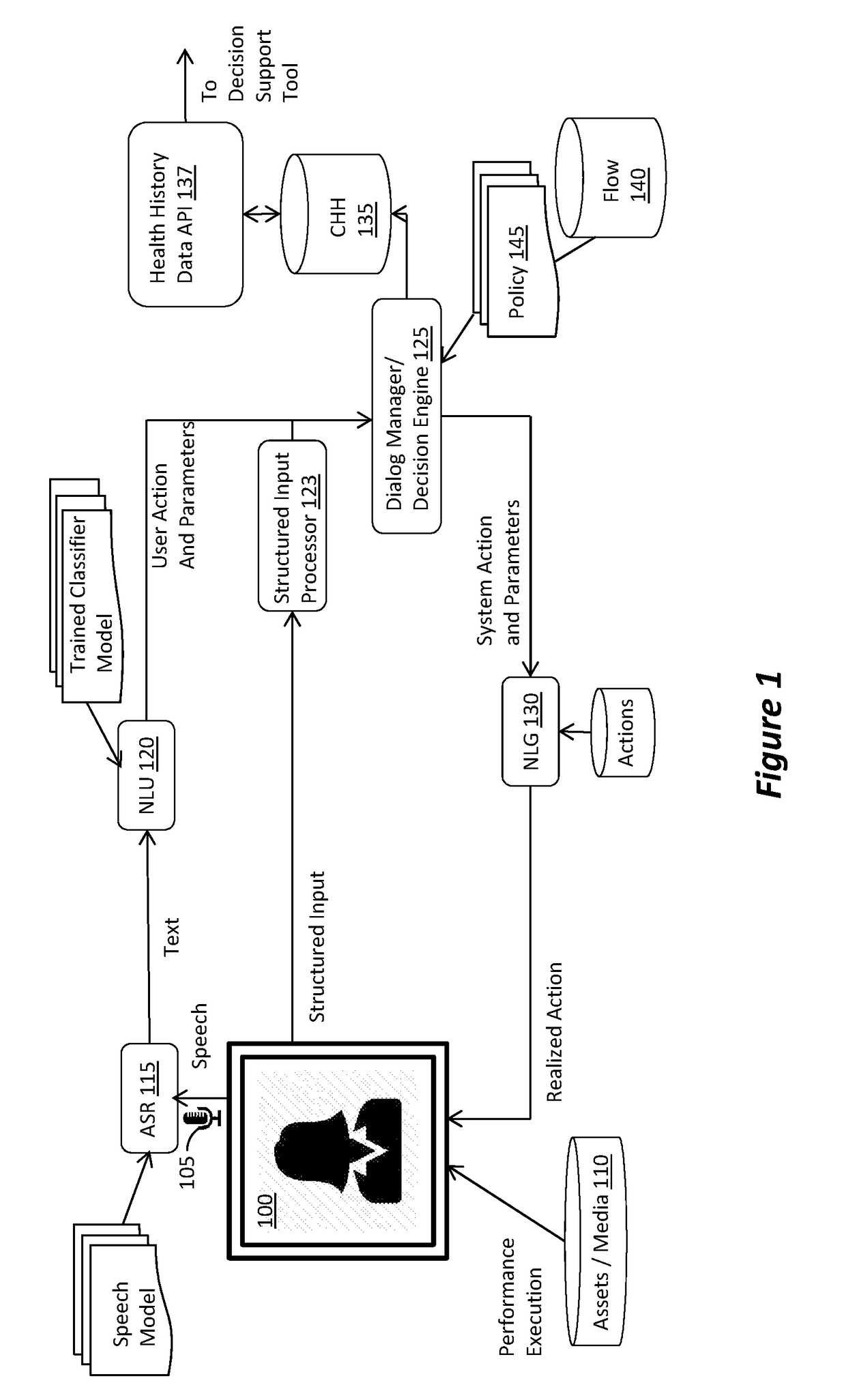

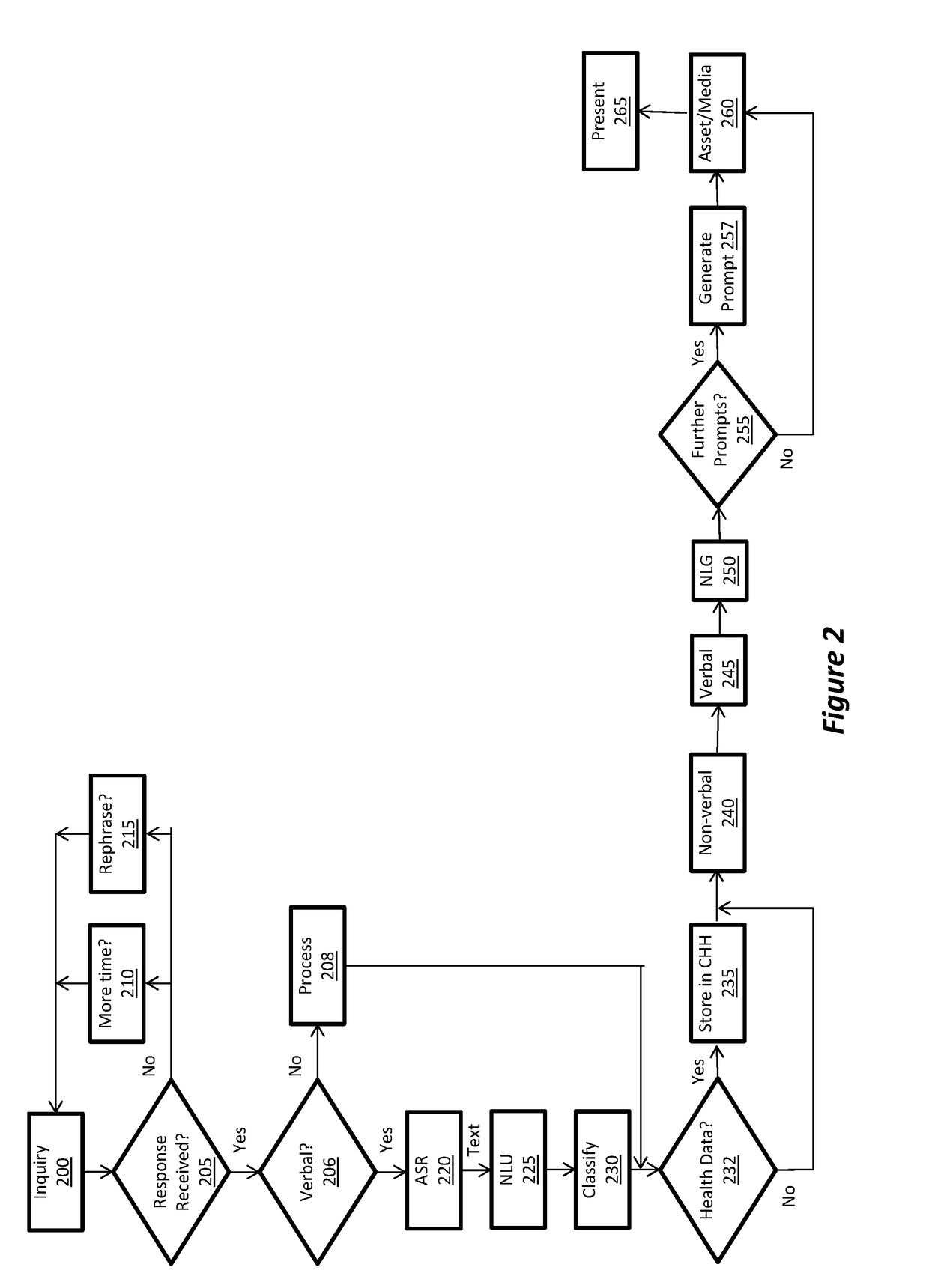

Method and system for patients data collection and analysis

Active; US20170300648A1; Increase genetic variant interpretation; Improve medical diagnosis; Medical communication; Health-index calculation; Animation; Information Harvesting

A conversational and embodied Virtual Assistant (VA) with Decision Support (DS) capabilities that can simulate and improve upon information gathering sessions between clinicians, researchers, and patients. The system incorporates a conversational and embodied VA and a DS and deploys natural interaction enabled by natural language processing, automatic speech recognition, and an animation framework capable of rendering character animation performances through generated verbal and nonverbal behaviors, all supplemented by on-screen prompts.

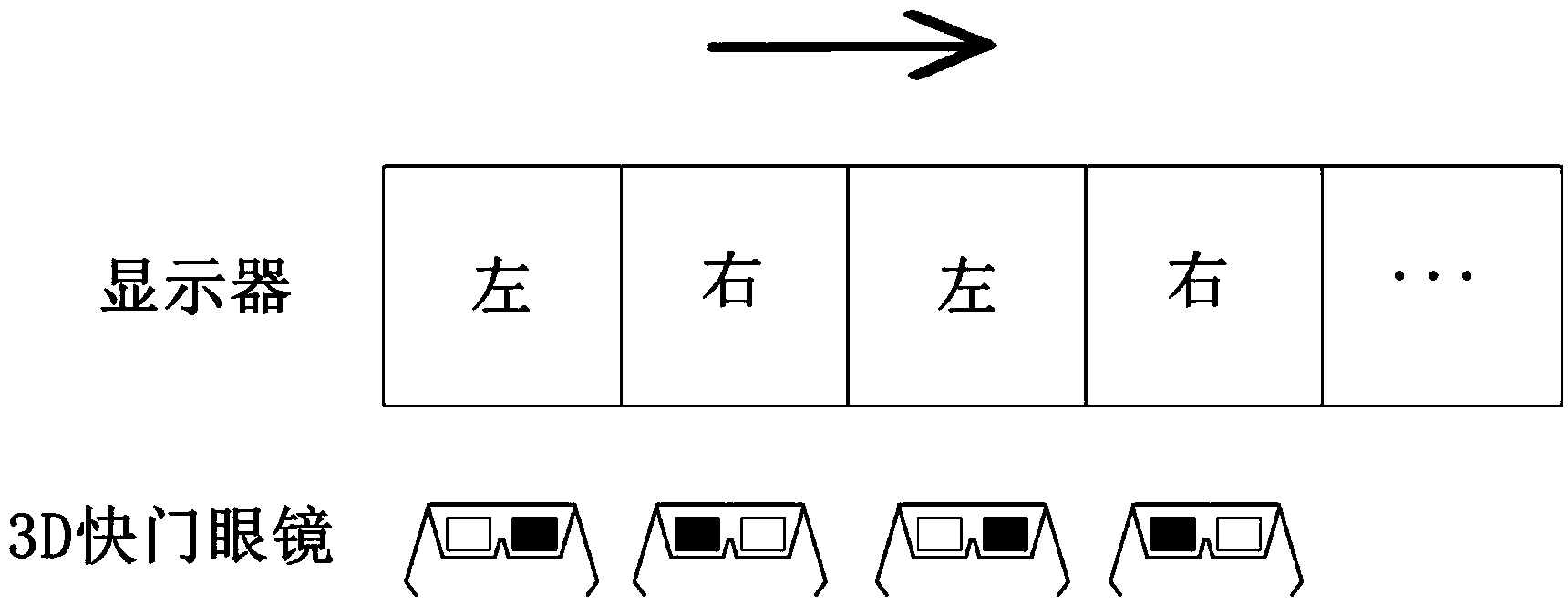

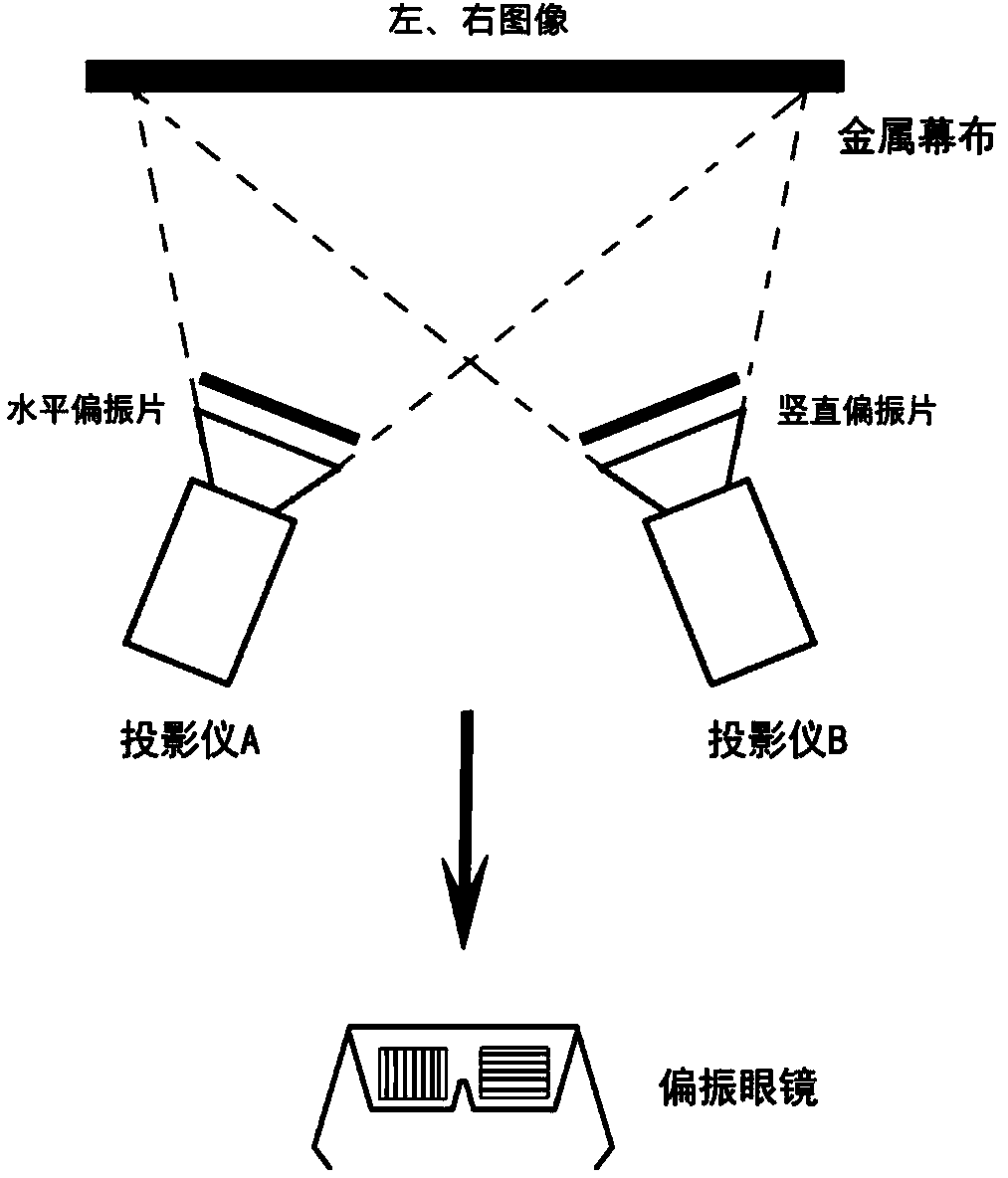

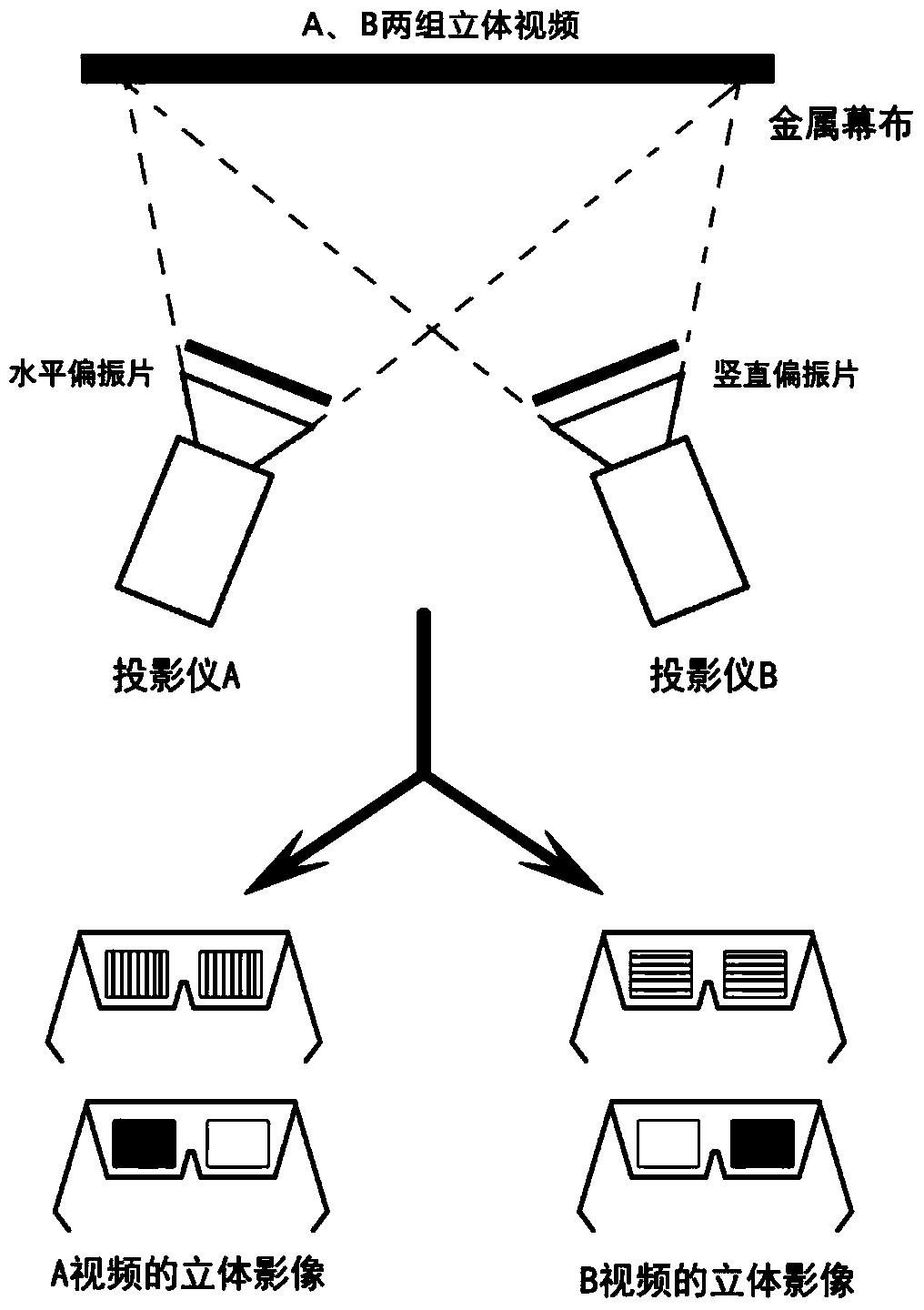

Virtual simulation system and method of antagonistic event with net based on three-dimensional multi-image display

Inactive; CN104394400A; Expand the scope of activities; Real interaction; Video games; Stereoscopic systems; Network connection; Display device

The invention discloses a virtual simulation system and method of an antagonistic event with a net based on three-dimensional multi-image display. The system comprises a network connection module, a game logic module, an interaction control module, a physical engine module, a three-dimensional rendering module and a dual-image projection display module, wherein the network connection module comprises a server sub-module and a client sub-module and is used for network communication and data transmission; the game logic module is used for storing game rules, controlling the play of character animation and performing mapping by position; the interaction control module is used for controlling corresponding game characters in a virtual tennis game scene to move and shooting three-dimensional images of different sight points; the physical engine module is used for efficiently and vividly simulating the physical effects of a tennis ball, such as rebound and collision through a physical engine and enabling the game scene to be relatively real and vivid. According to the system, the three-dimensional multi-image display operation can be performed on a same display screen, and the same game scene can be rendered in real time based on different sight angles.

Owner:SHANDONG UNIV

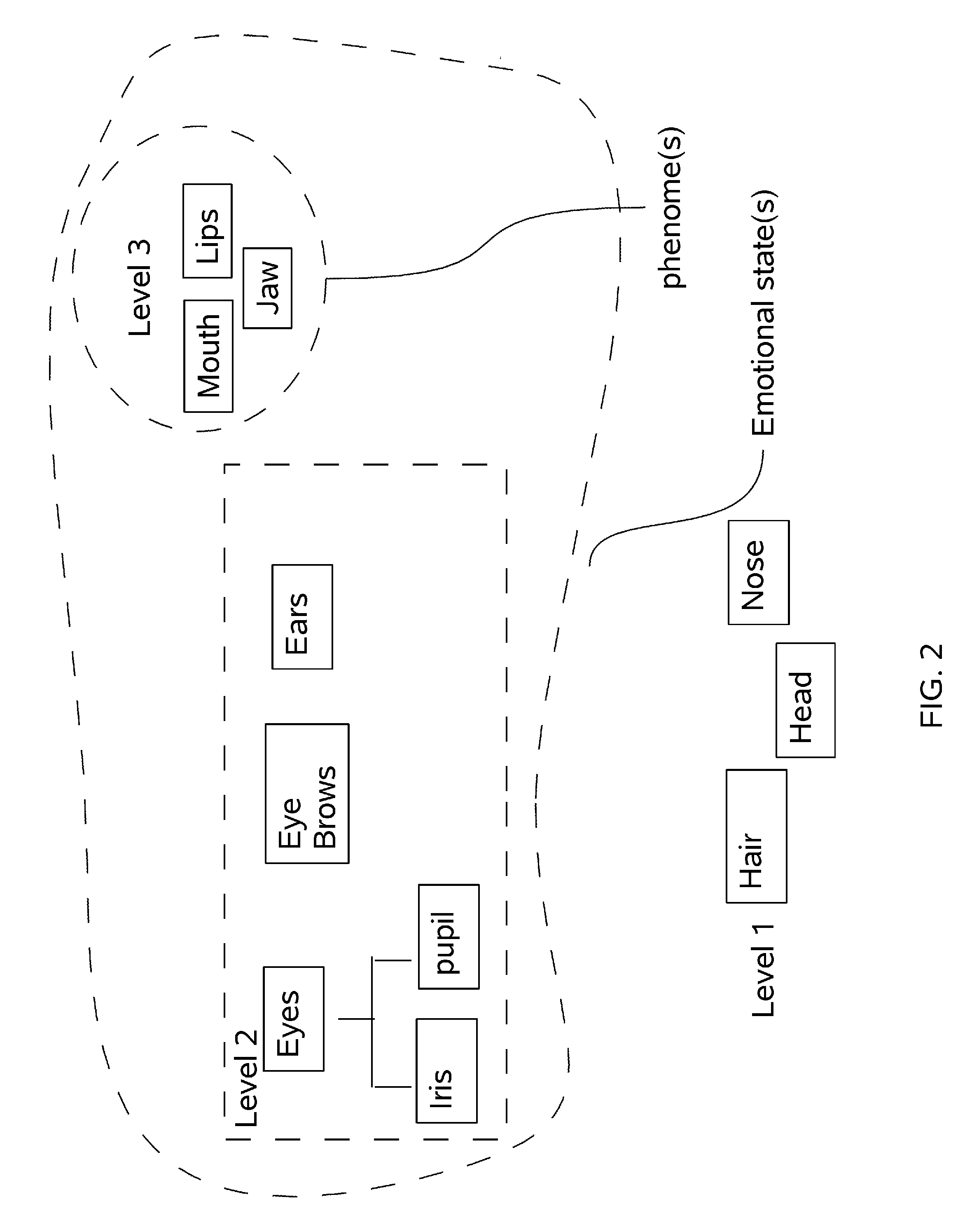

Method and Apparatus for Character Animation

The present invention provides various means for the animation of character expression in coordination with an audio sound track. The animator selects or creates characters and expressive characteristics from a menu, and then enters the characteristics, including lip and mouth morphology, in coordination with a running sound track.

Owner:SONOMA DATA SOLUTIONS

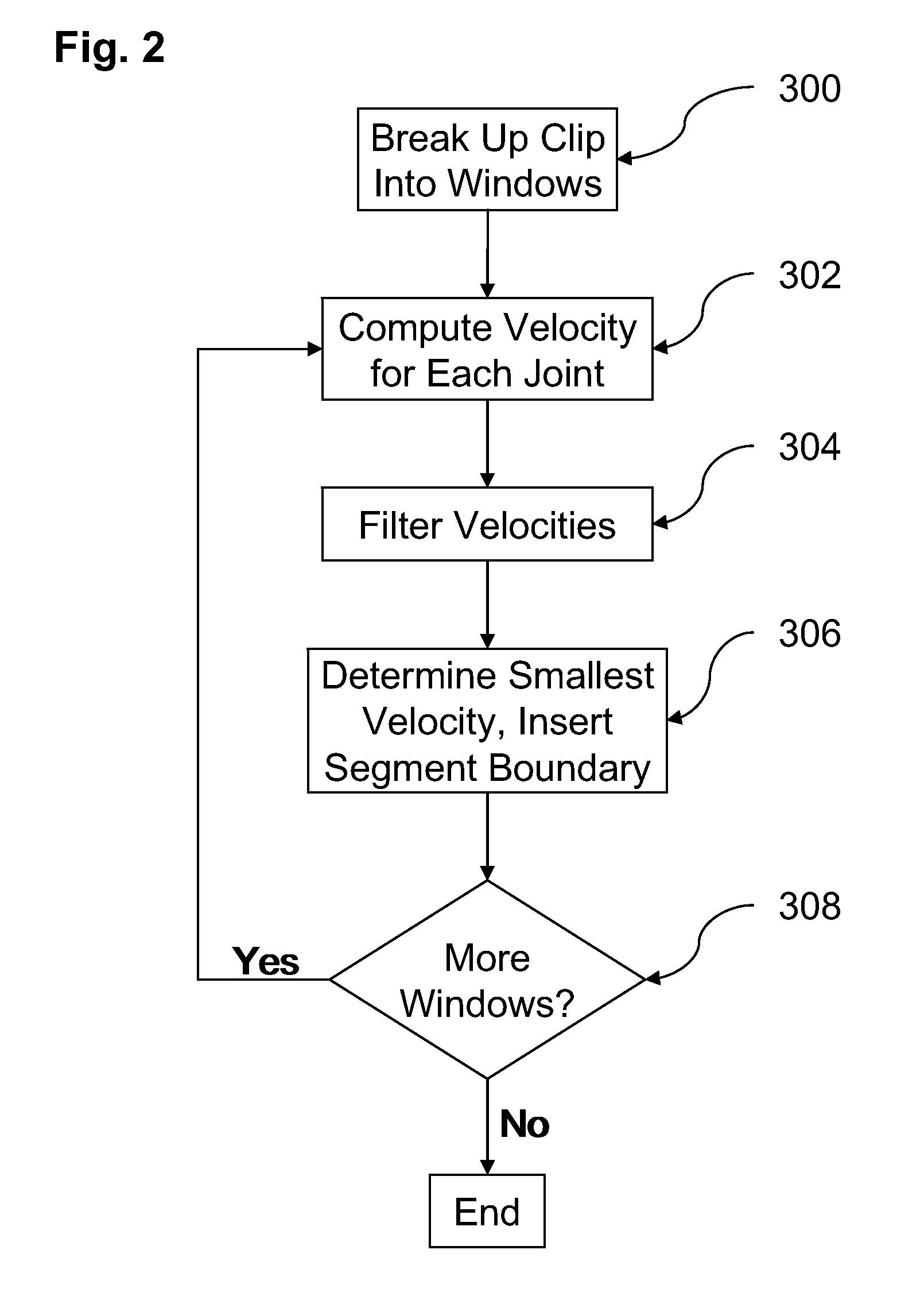

Method, system and storage device for creating, manipulating and transforming animation

An animation method, system, and storage device which takes animators' submissions of characters and animations and breaks the animations into segments where discontinuities will be minimized; allows users to assemble the segments into new animations; allows users to apply modifiers to the characters; provides a semantic restraint system for virtual objects; and provides automatic character animation retargeting.

Owner:ANIMATE ME

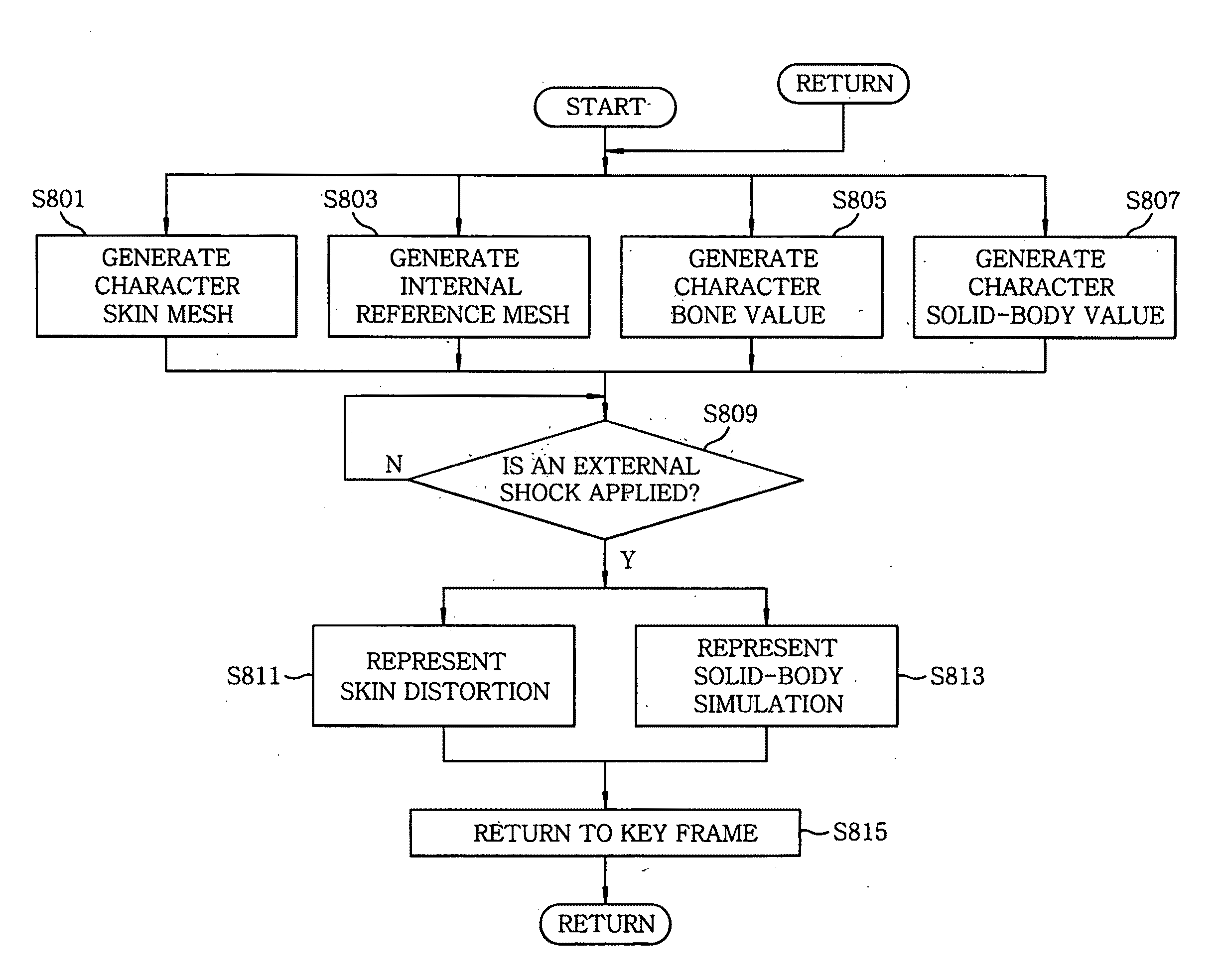

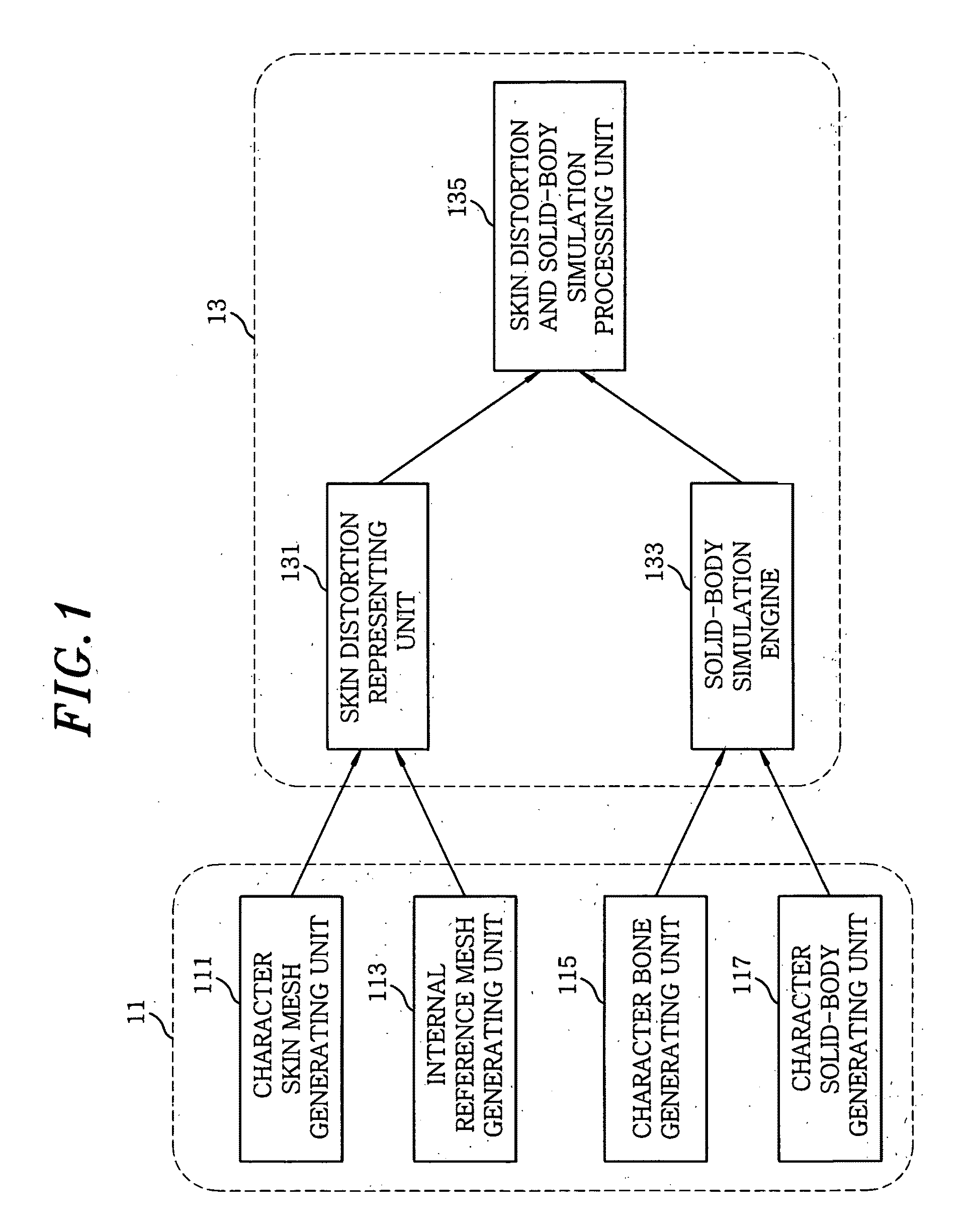

Character animation system and method

A character animation system includes a data generating unit for generating a character skin mesh and an internal reference mesh, a character bone value, and a character solid-body value, a skin distortion representing unit for representing skin distortion using the generated character skin mesh and the internal reference mesh when an external shock is applied to a character, and a solid-body simulation engine for applying the generated character bone value and the character solid-body value to a real-time physical simulation library and representing character solid-body simulation. The system further includes a skin distortion and solid-body simulation processing unit for processing to return to a key frame to be newly applied after the skin distortion and the solid-body simulation are represented.

Owner:ELECTRONICS & TELECOMM RES INST

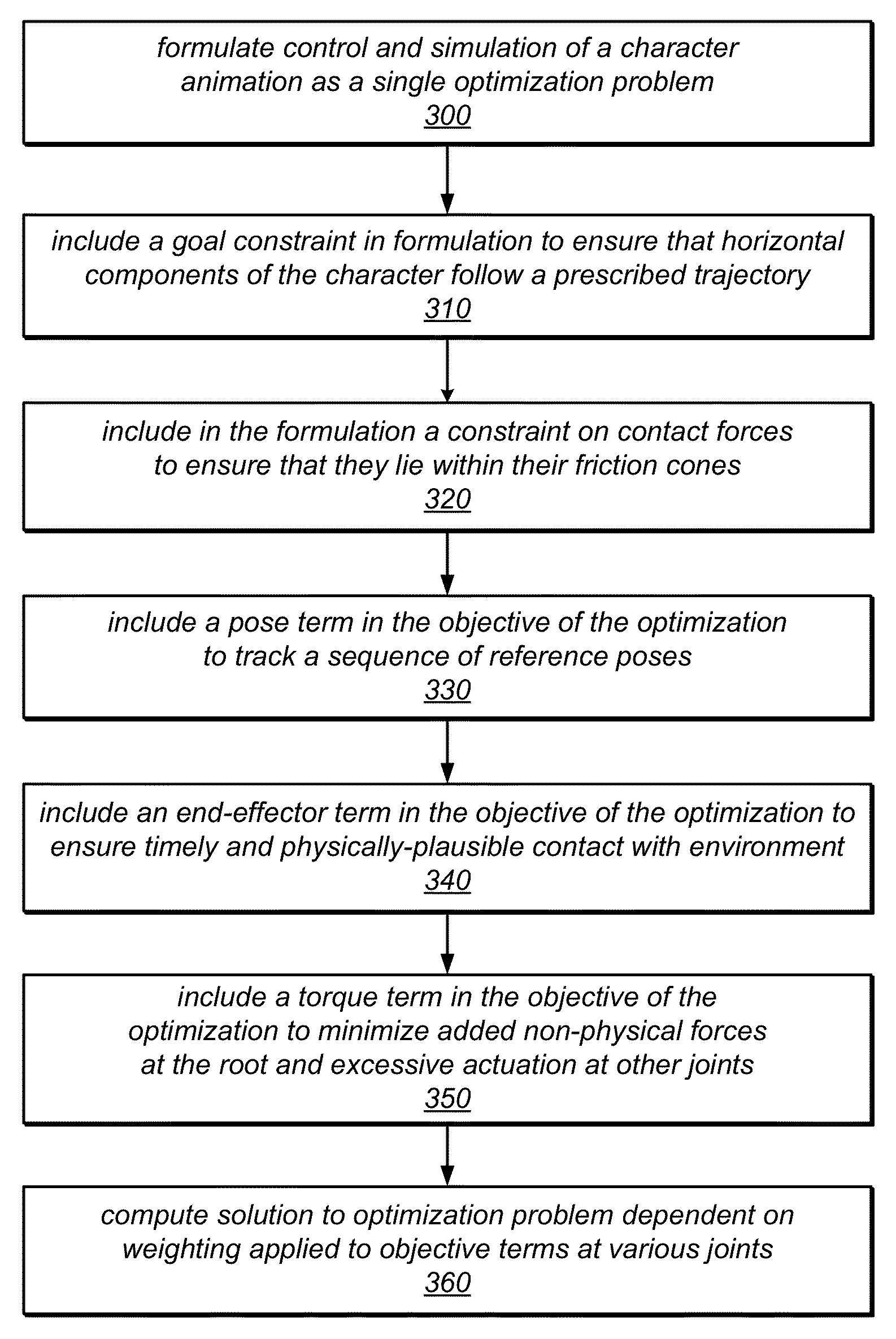

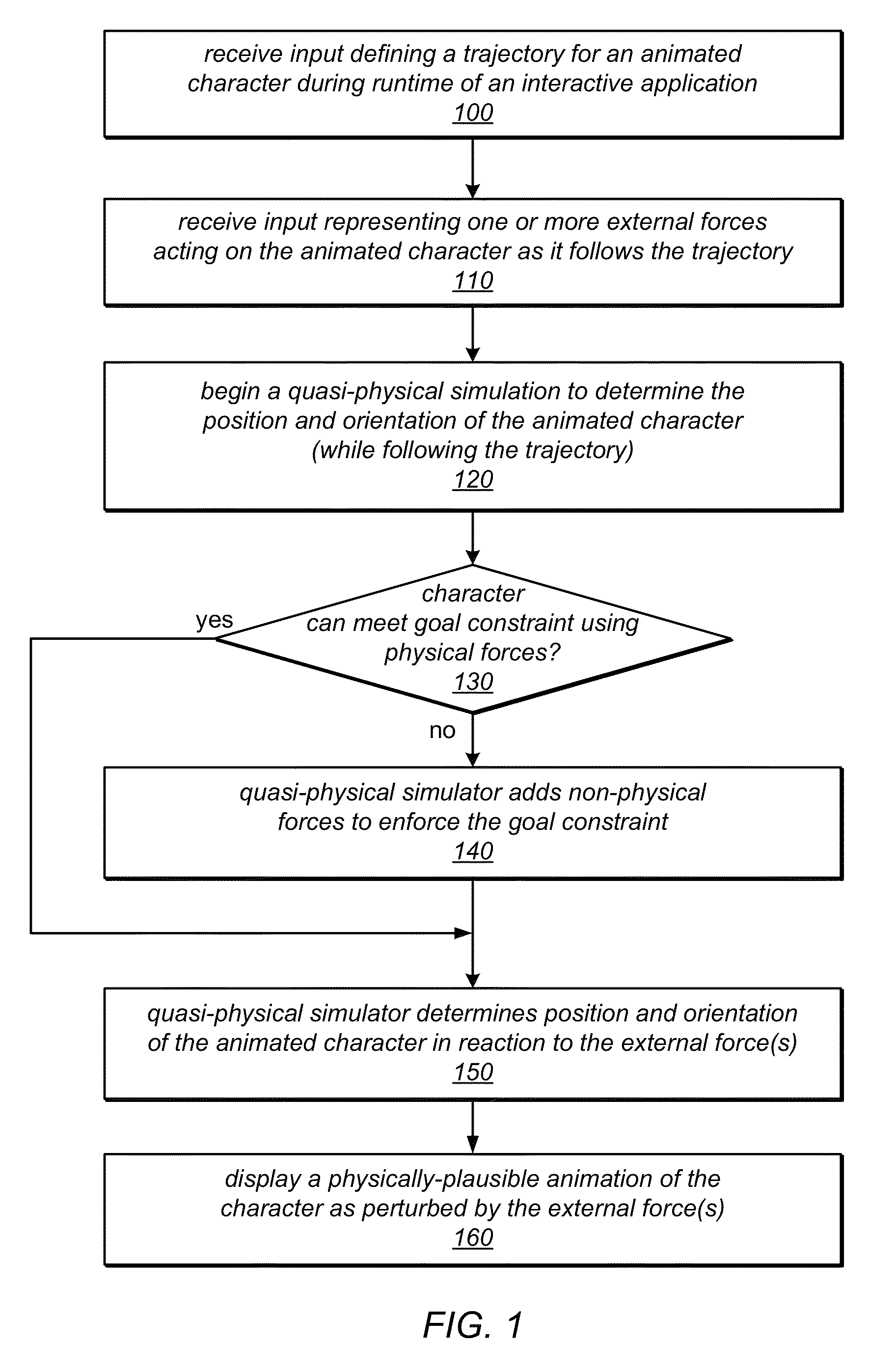

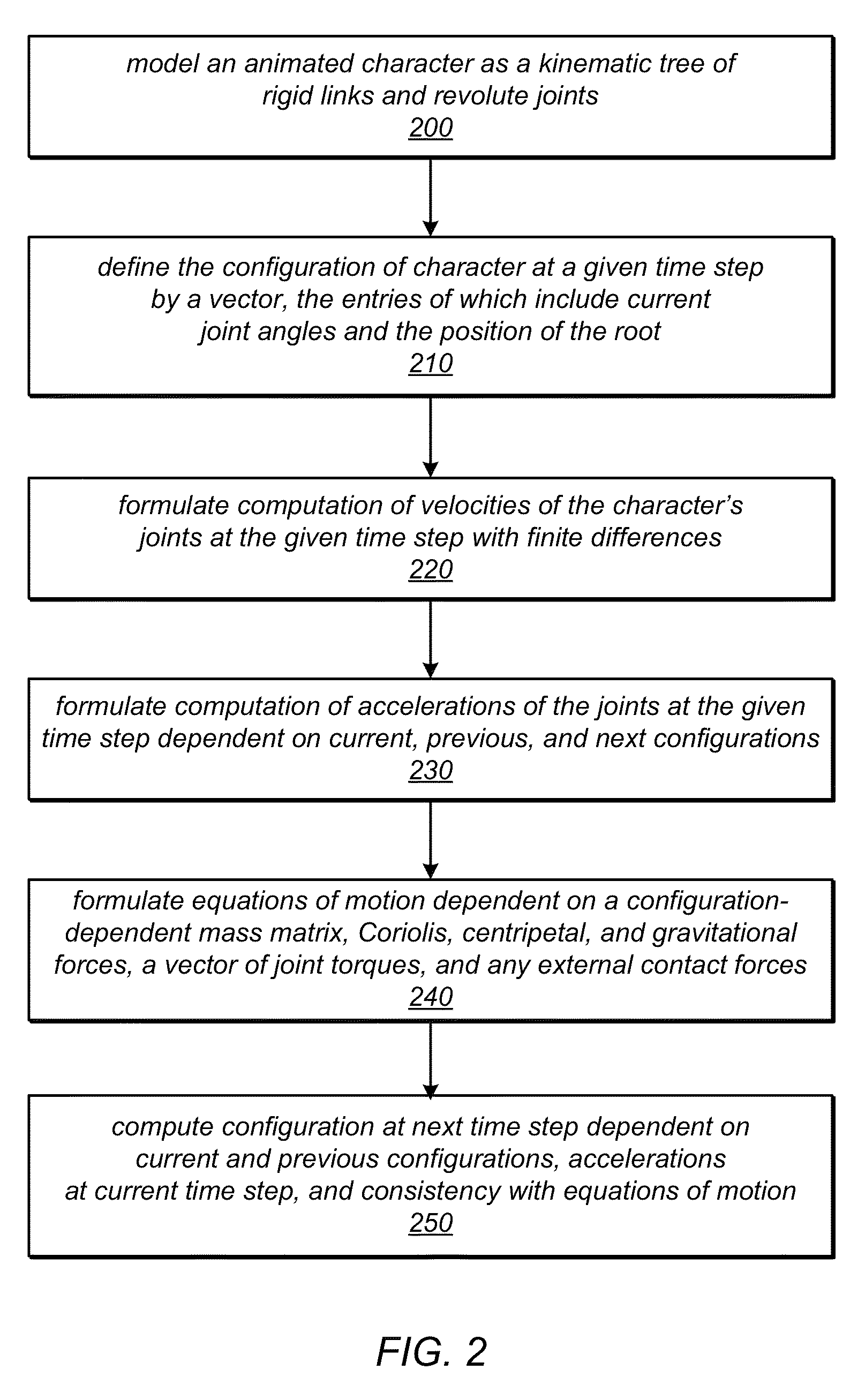

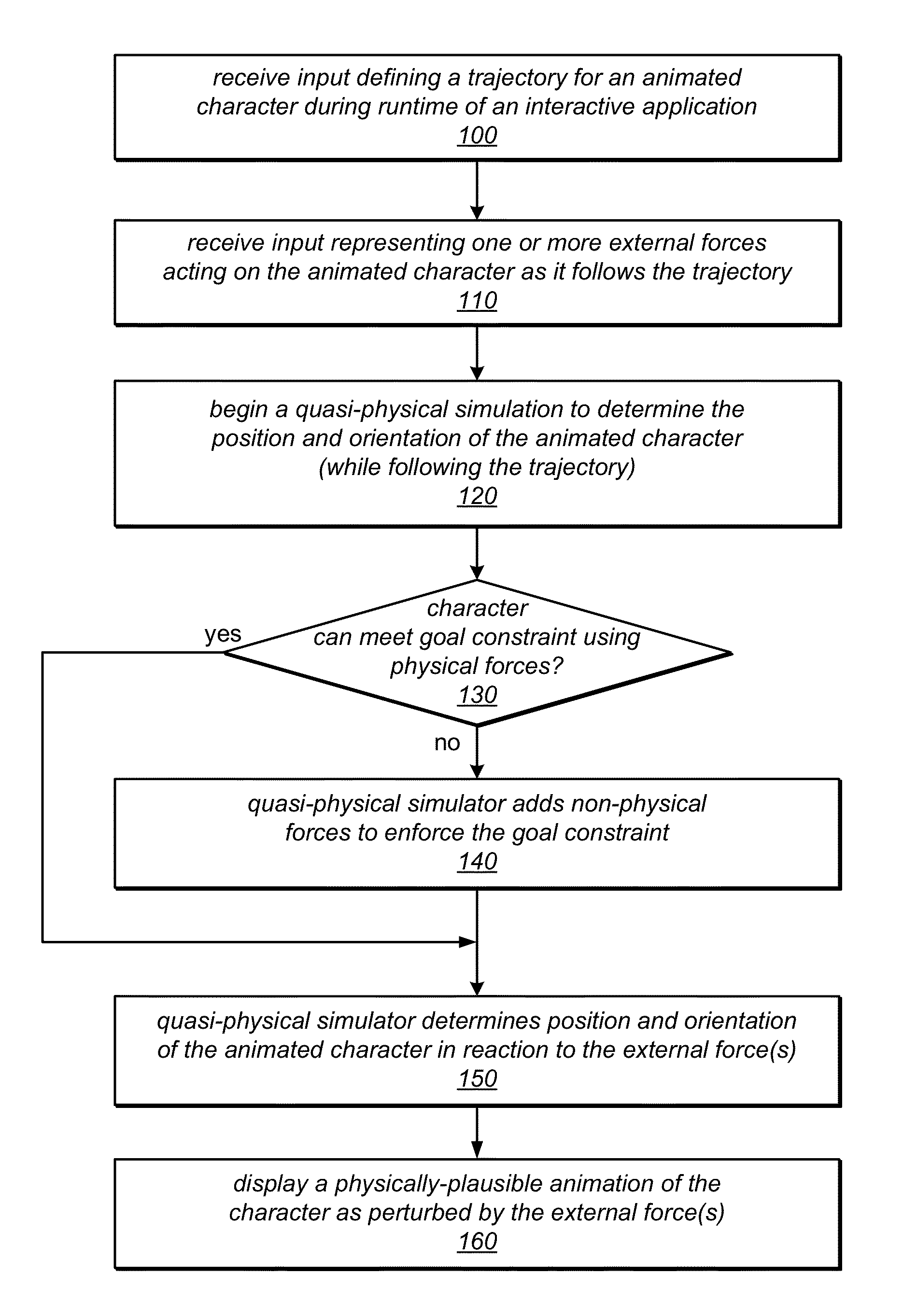

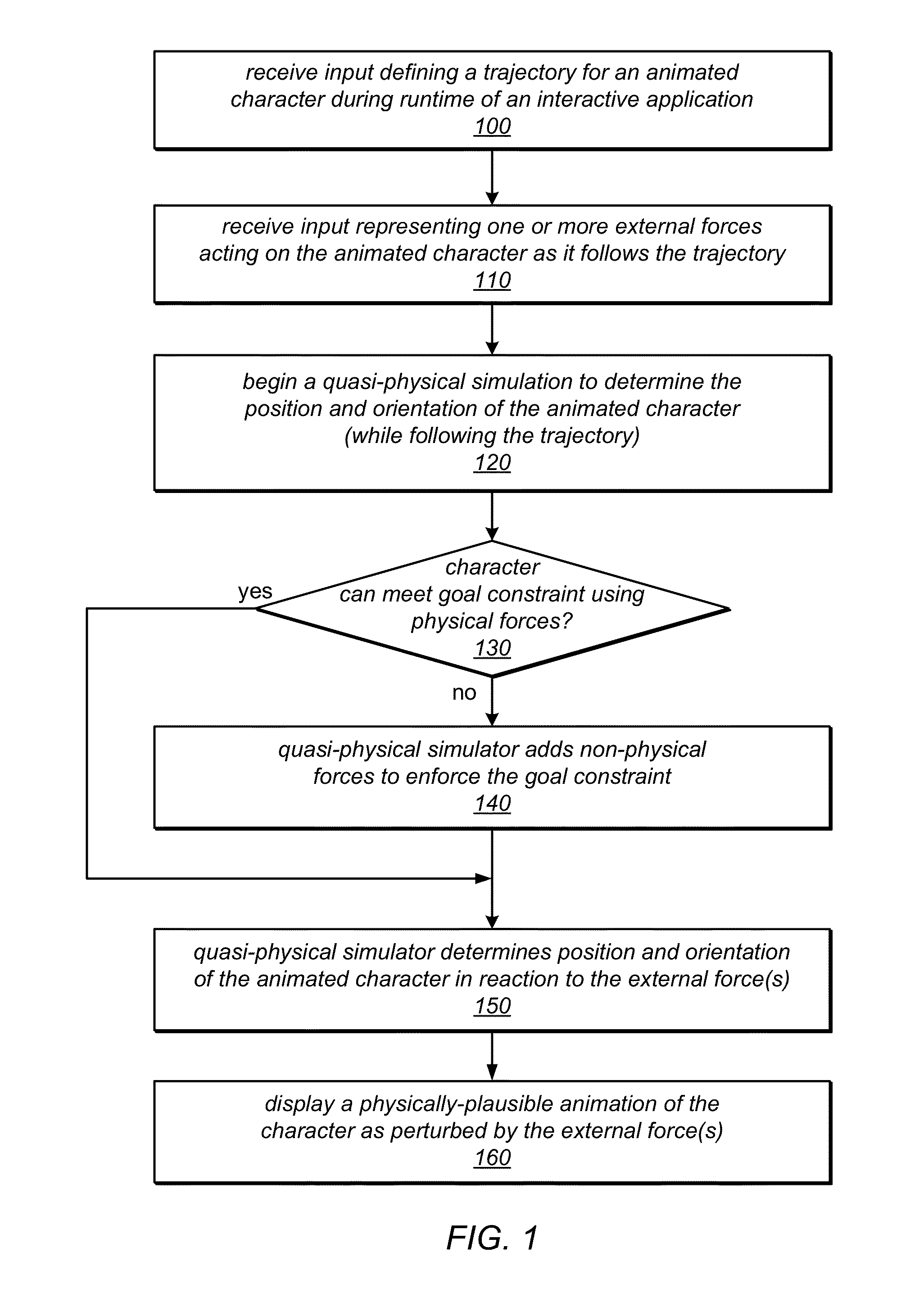

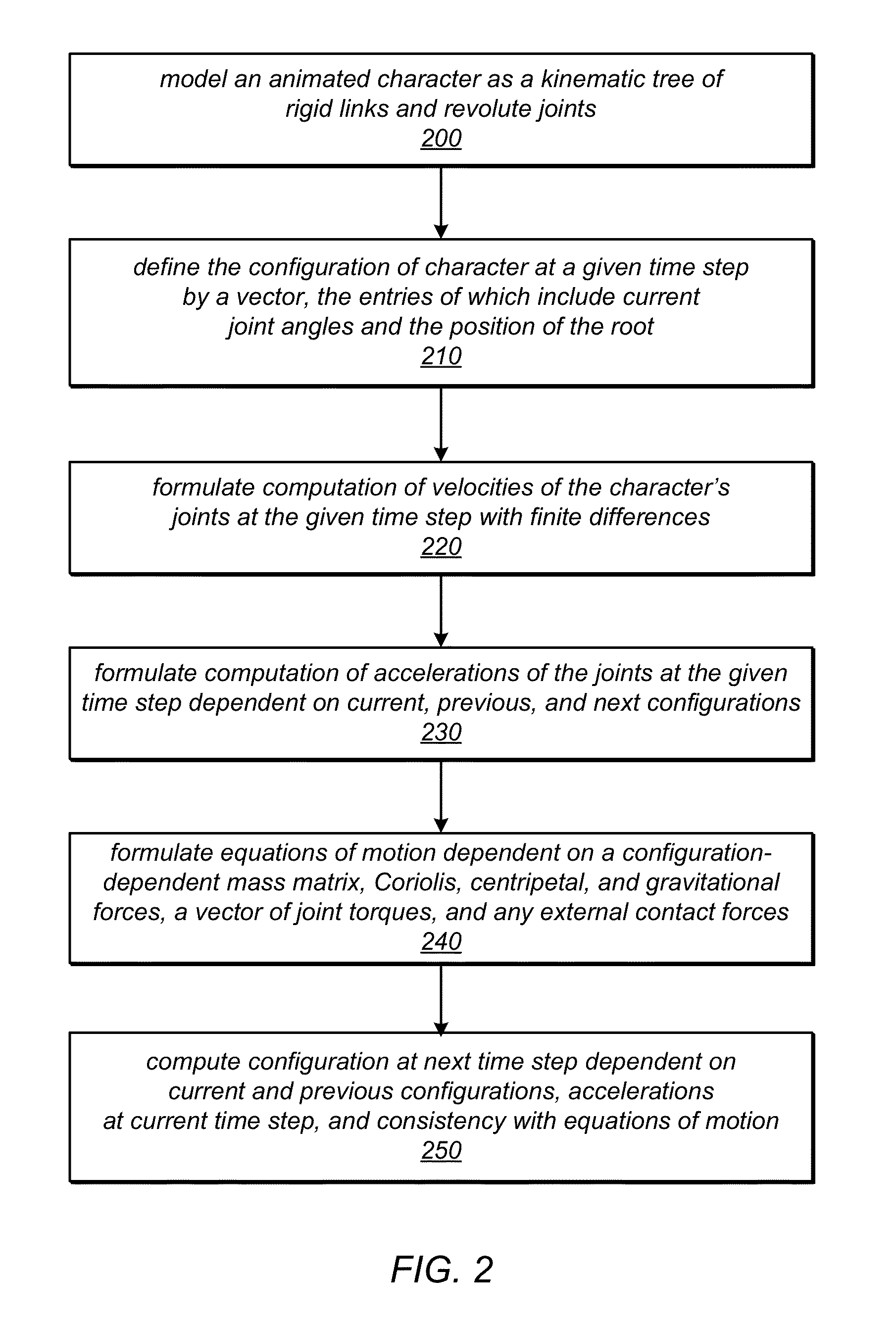

System and method for robust physically-plausible character animation

An interactive application may include a quasi-physical simulator configured to determine the configuration of animated characters as they move within the application and are acted on by external forces. The simulator may work together with a parameterized animation module that synthesizes and provides reference poses for the animation from example motion clips that it has segmented and parameterized. The simulator may receive input defining a trajectory for an animated character and input representing one or more external forces acting on the character, and may perform a quasi-physical simulation to determine a pose for the character in the current animation frame in reaction to the external forces. The simulator may enforce a goal constraint that the animated character follows the trajectory, e.g., by adding a non-physical force to the simulation, the magnitude of which may be dependent on a torque objective that attempts to minimize the use of such non-physical forces.

Owner:ADOBE SYST INC

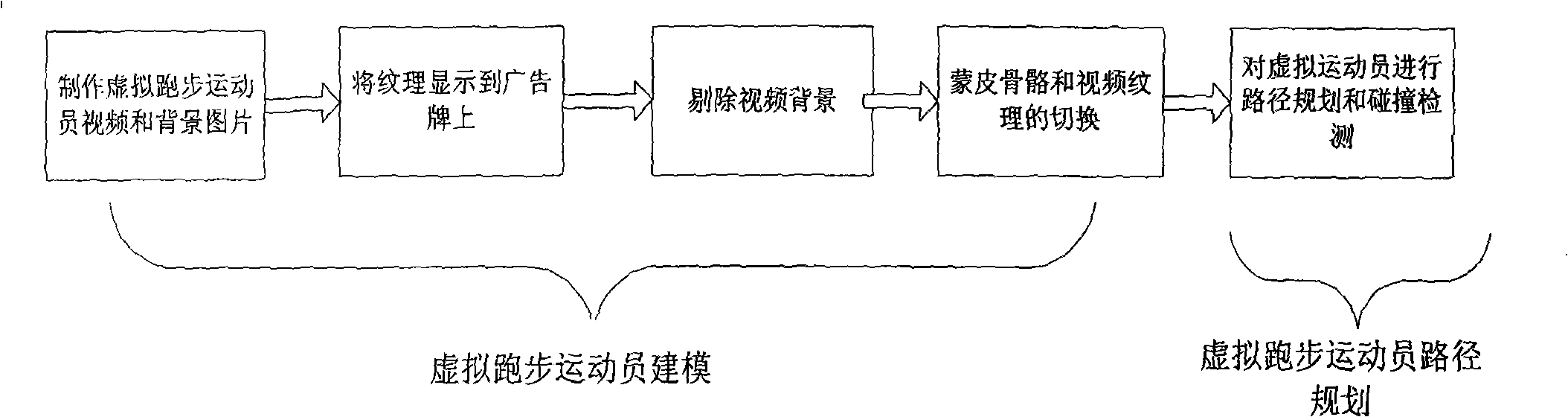

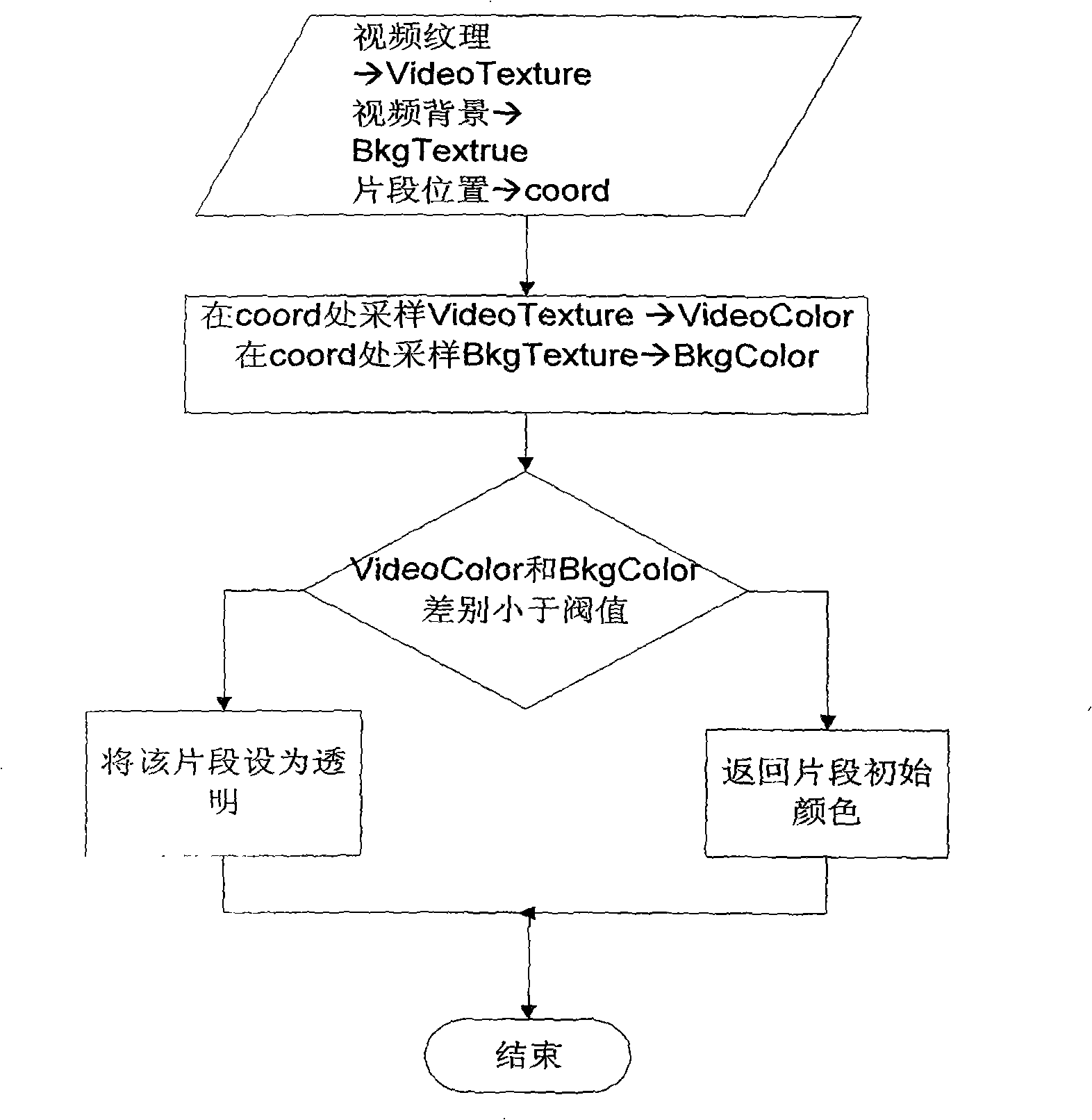

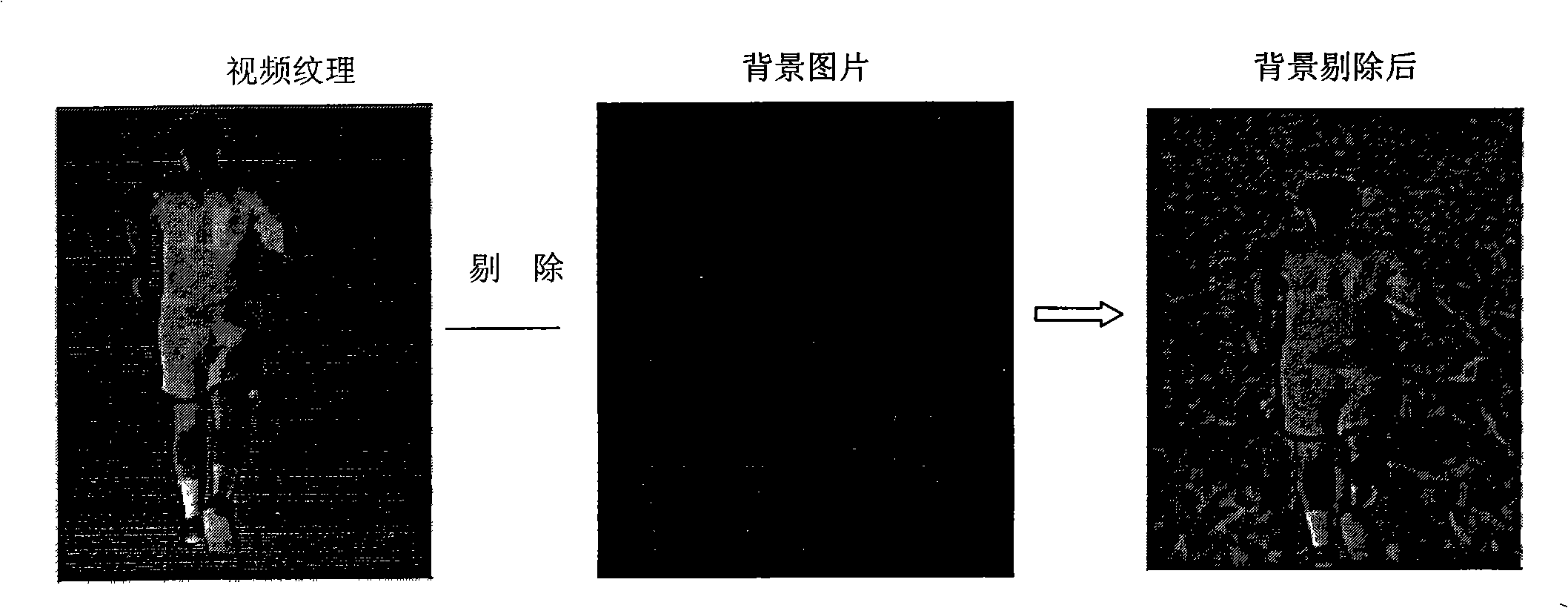

Drafting method for virtual running athlete based on video texture

Inactive; CN101320482A; Realize switching; Easy to manufacture; Special data processing applications; 3D-image rendering; Animation; Workload

The present invention discloses a drawing method for a video texture-based virtual runner. The method is mainly applied in games. First, a virtual runner video containing the animation effect and a video background picture are produced; then the video is displayed as a texture on a billboard in the game scene; the video texture and the background picture are passed into a pixel shader, and the video background is eliminated; switching between a character animation in normal skinned mesh form and the video texture is performed according to the distance from the viewpoint; finally, a series of key points is stored as the moving path of the virtual runner. The invention uses video-based character modeling in place of the commonly used skinned mesh, thereby greatly reducing game rendering cost; a video animation can draw its material from reality, thereby reducing the workload of 3D character modeling.

Owner:ZHEJIANG UNIV
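
Two steps of the method above lend themselves to a short sketch: choosing between the skinned mesh and the video billboard by viewpoint distance, and masking out the video background by comparing each pixel with the background picture. The function names, the switch distance and the color threshold below are assumptions for illustration; in the patent the background elimination runs in a pixel shader, whereas this sketch uses plain Python.

```python
import math


def choose_representation(viewpoint, runner_position, switch_distance=30.0):
    """Pick the skinned mesh near the camera and the video billboard far away."""
    distance = math.dist(viewpoint, runner_position)
    return "skinned_mesh" if distance < switch_distance else "video_billboard"


def background_alpha(frame_pixel, background_pixel, threshold=30):
    """Return alpha 0.0 where the video pixel matches the background, else 1.0.

    Pixels are (r, g, b) tuples; the threshold on the summed channel
    difference is a hypothetical tuning value.
    """
    difference = sum(abs(f - b) for f, b in zip(frame_pixel, background_pixel))
    return 0.0 if difference < threshold else 1.0


near = choose_representation((0.0, 0.0, 0.0), (0.0, 0.0, 10.0))
far = choose_representation((0.0, 0.0, 0.0), (0.0, 0.0, 100.0))
```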

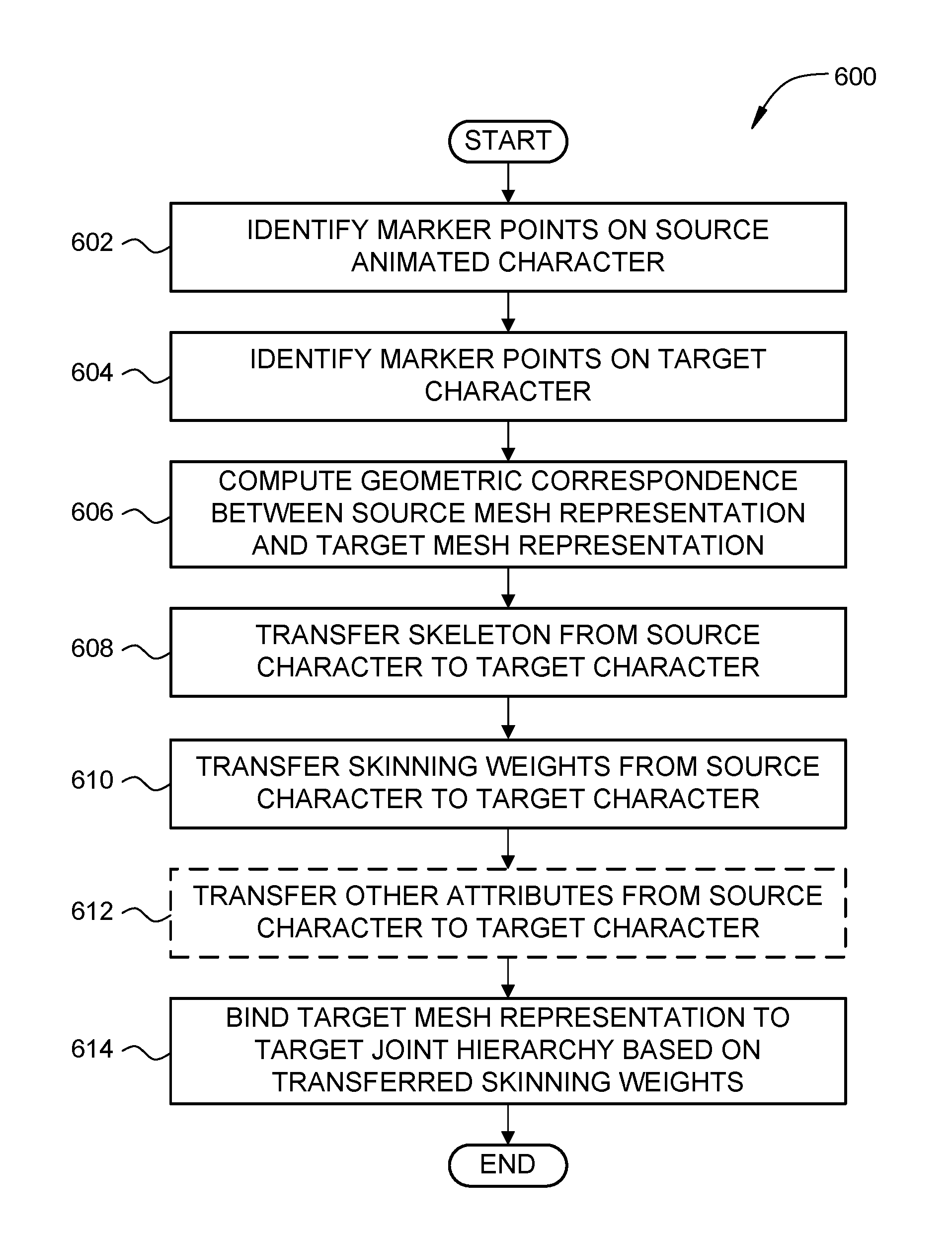

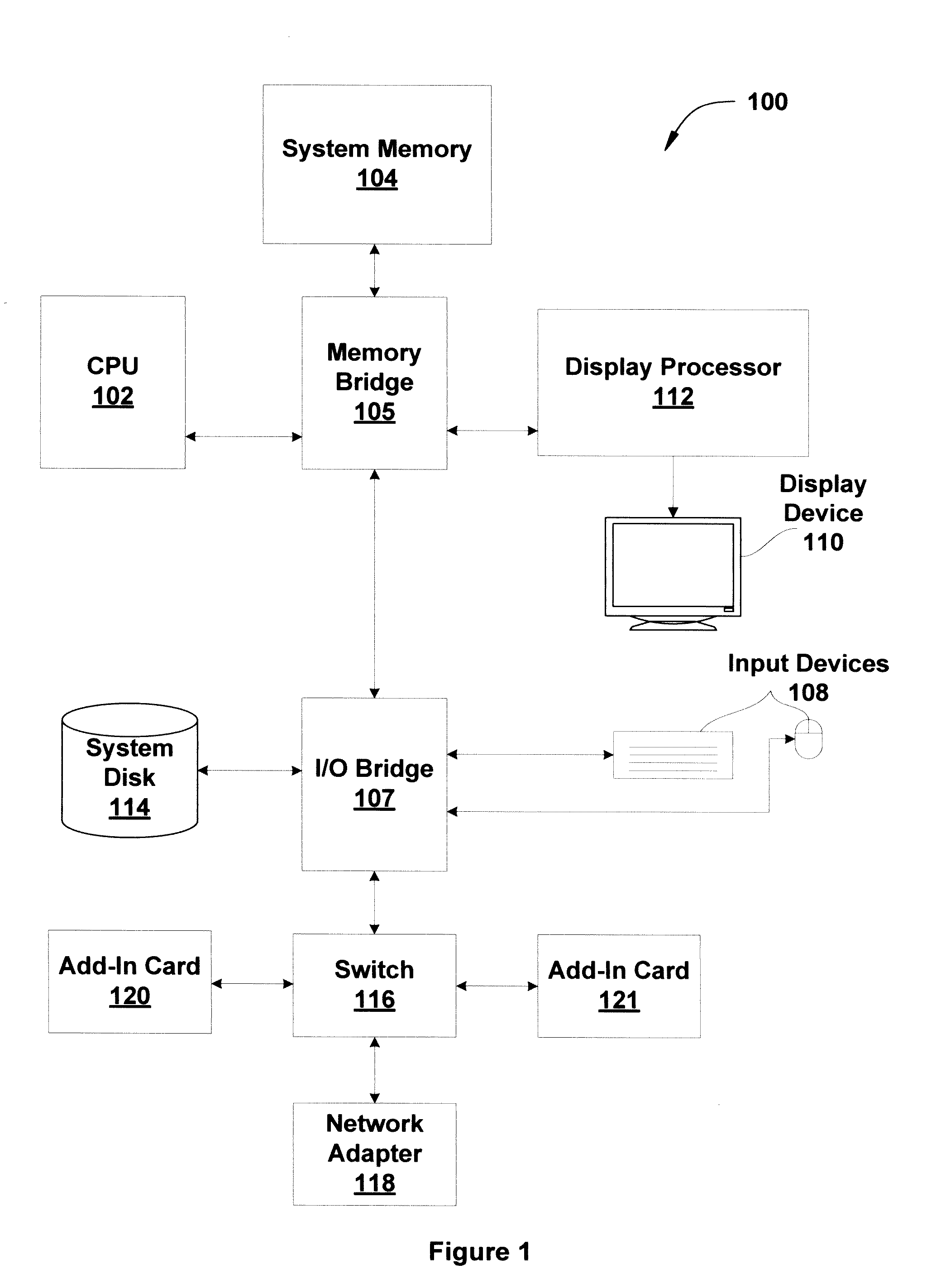

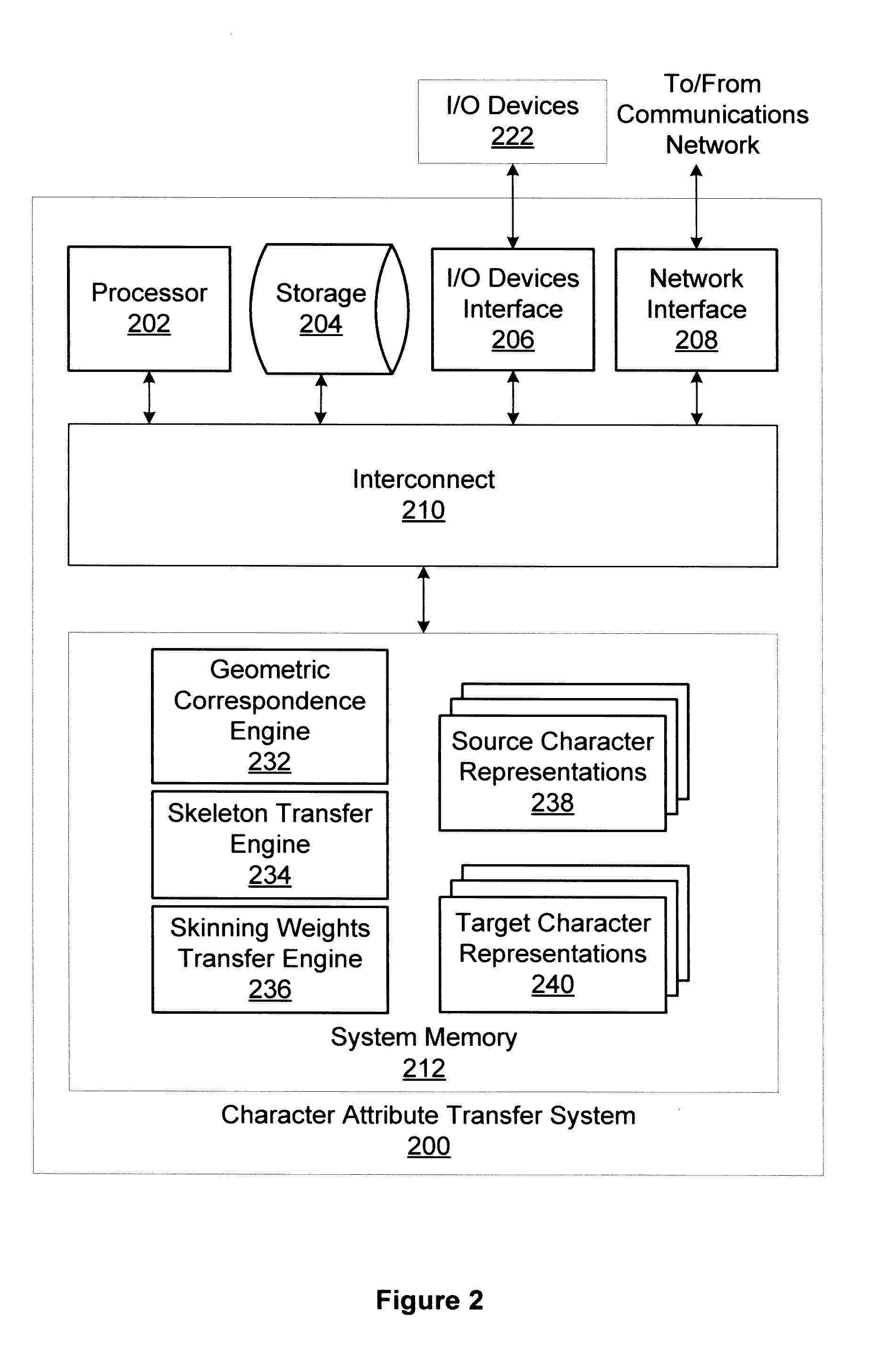

Robust attribute transfer for character animation

One embodiment of the invention disclosed herein provides techniques for transferring attributes from a source animated character to a target character. A character attribute transfer system identifies a first set of markers corresponding to the source animated character. The character attribute transfer system identifies a second set of markers corresponding to the target character. The character attribute transfer system generates a geometric correspondence between the source animated character and the target character based on the first set of markers and the second set of markers independent of differences in geometry between the source animated character and the target character. The character attribute transfer system transfers a first attribute from the source animated character to the target character based on the geometric correspondence.

Owner:AUTODESK INC

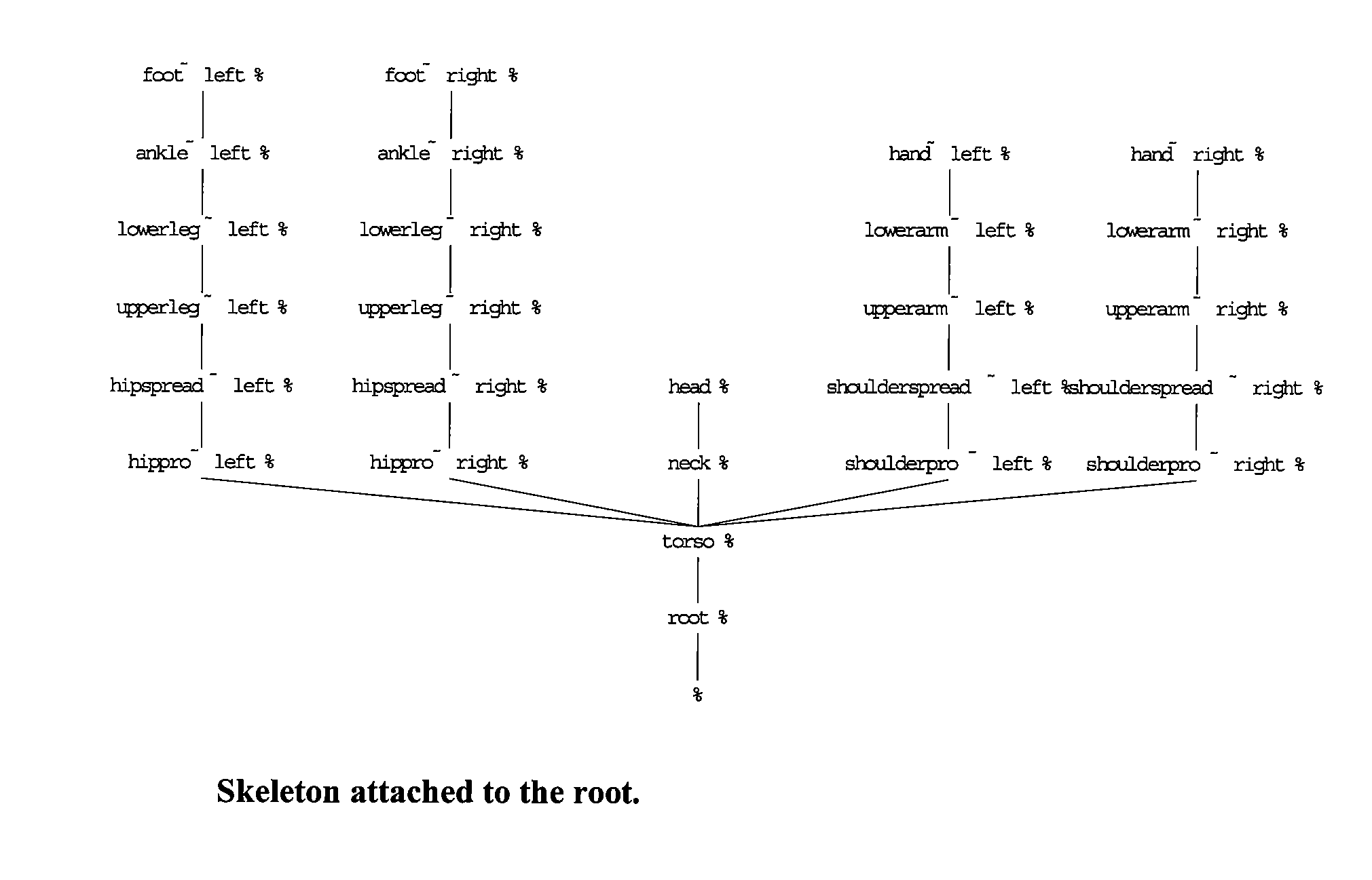

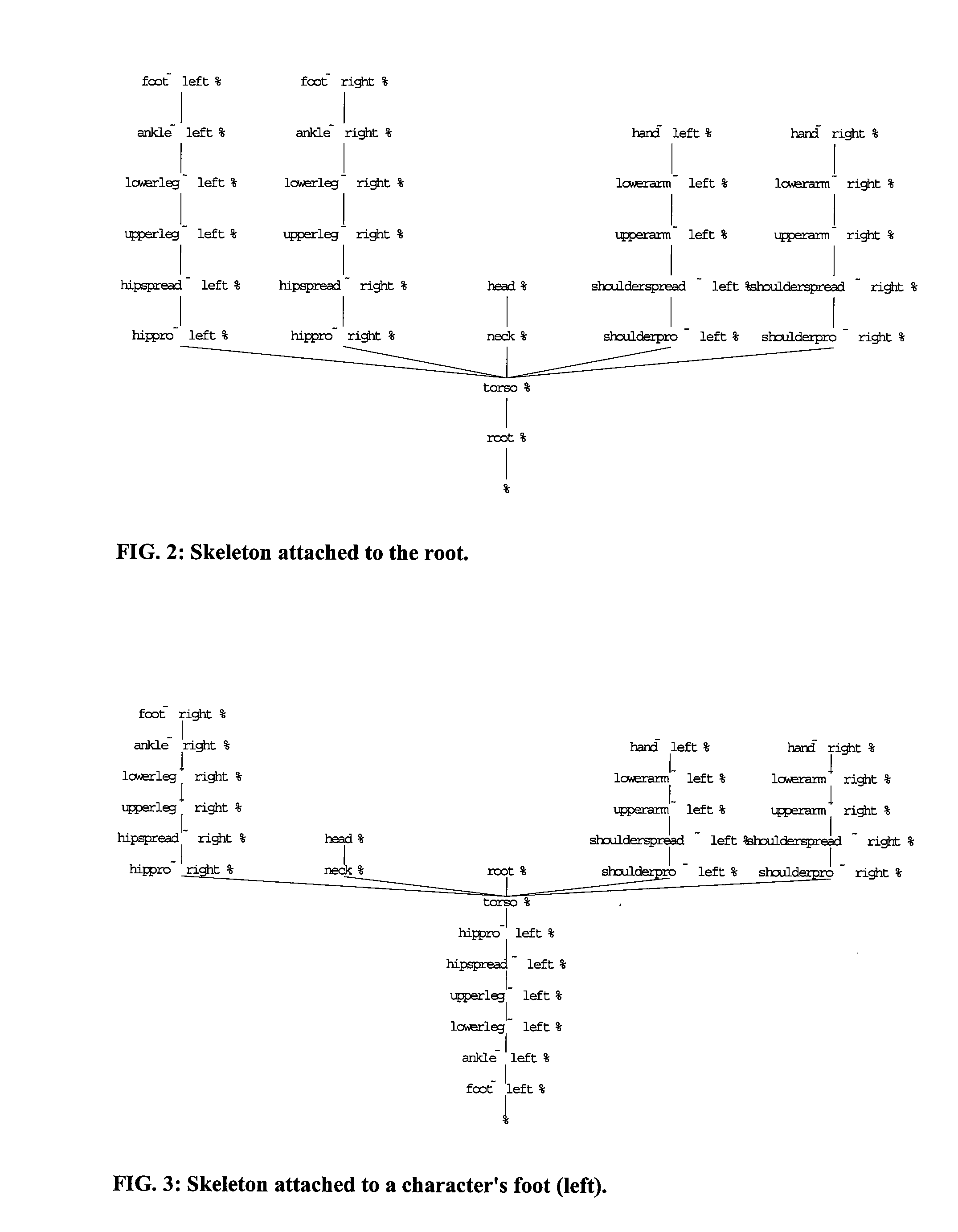

Adaptive contact based skeleton for animation of characters in video games

Equilibrium forces and momentum are calculated using character skeleton node graphs having variable root nodes determined in real time based on contacts with fixed objects or other constraints that apply external forces to the character. The skeleton node graph can be traveled backwards and in so doing it is possible to determine a physically possible reaction force. The tree can be traveled one way to apply angles and positions and then traveled back the other way ‘to calculate’ forces and moments. Unless the root is correct, however, reaction forces will not be able to be properly determined. The adaptive skeleton framework enables calculations of reactive forces on certain contact nodes to be made. Calculation of reactive forces enables more realistic displayed motion of a simulated character.

Owner:ELECTRONICS ARTS INC

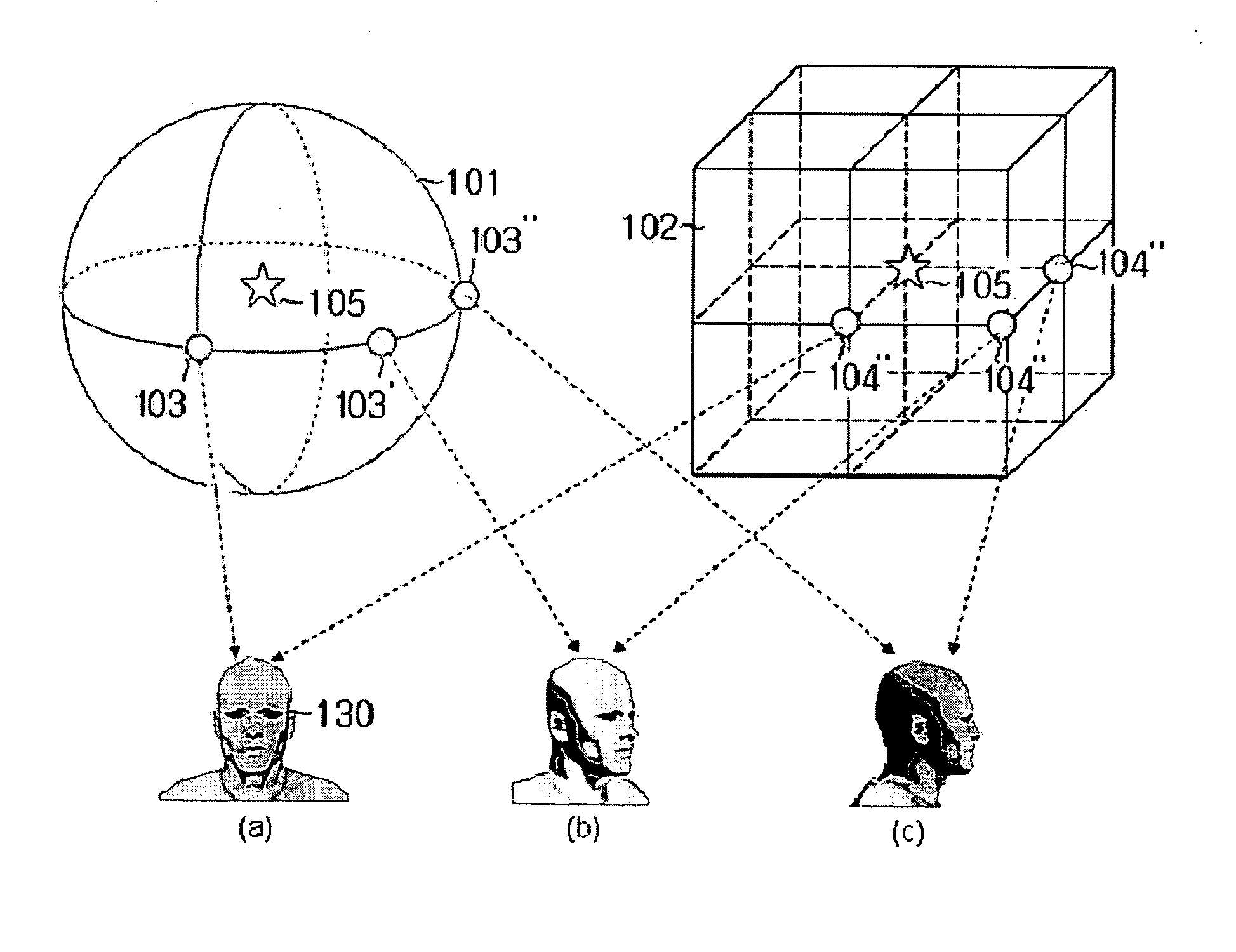

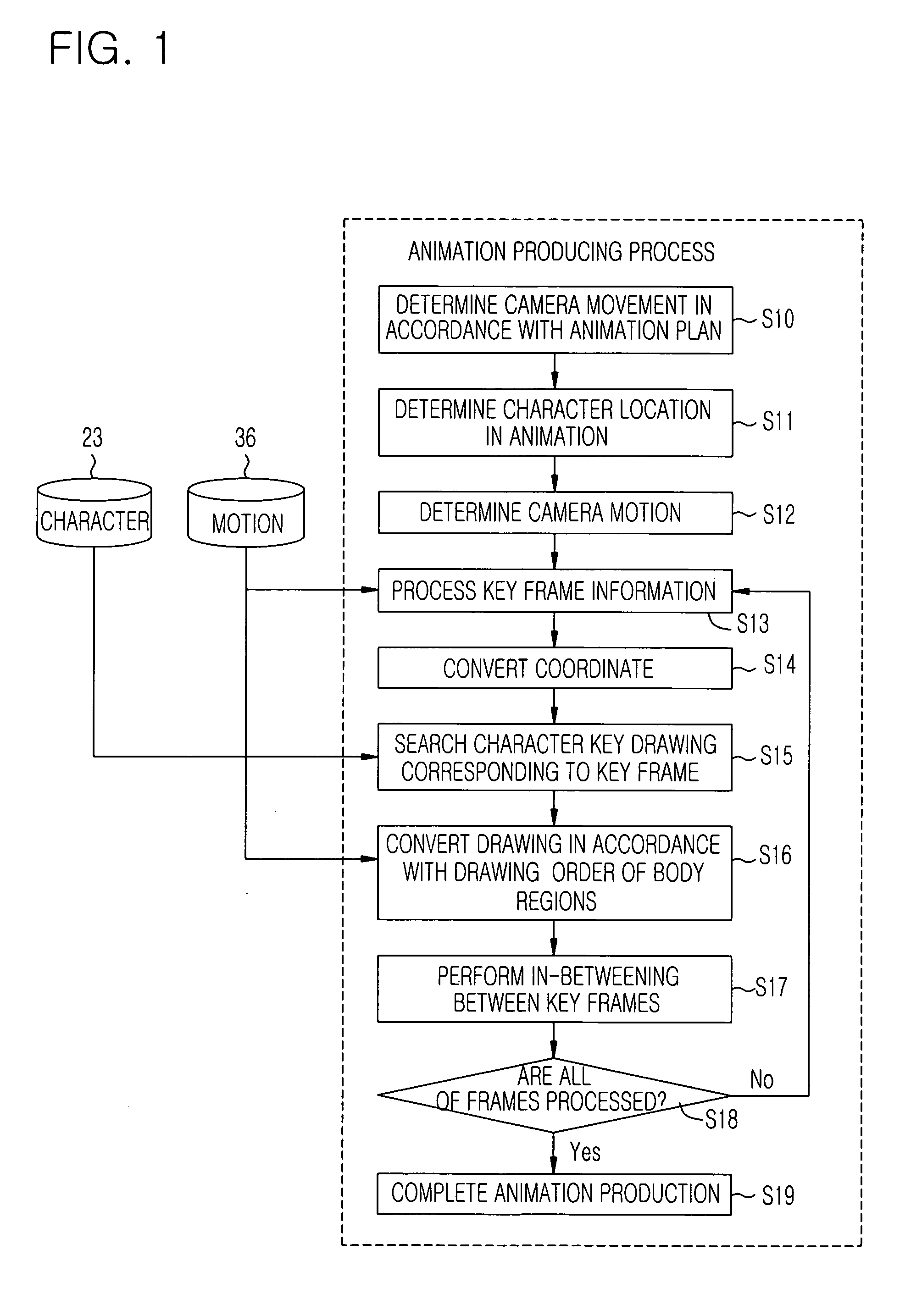

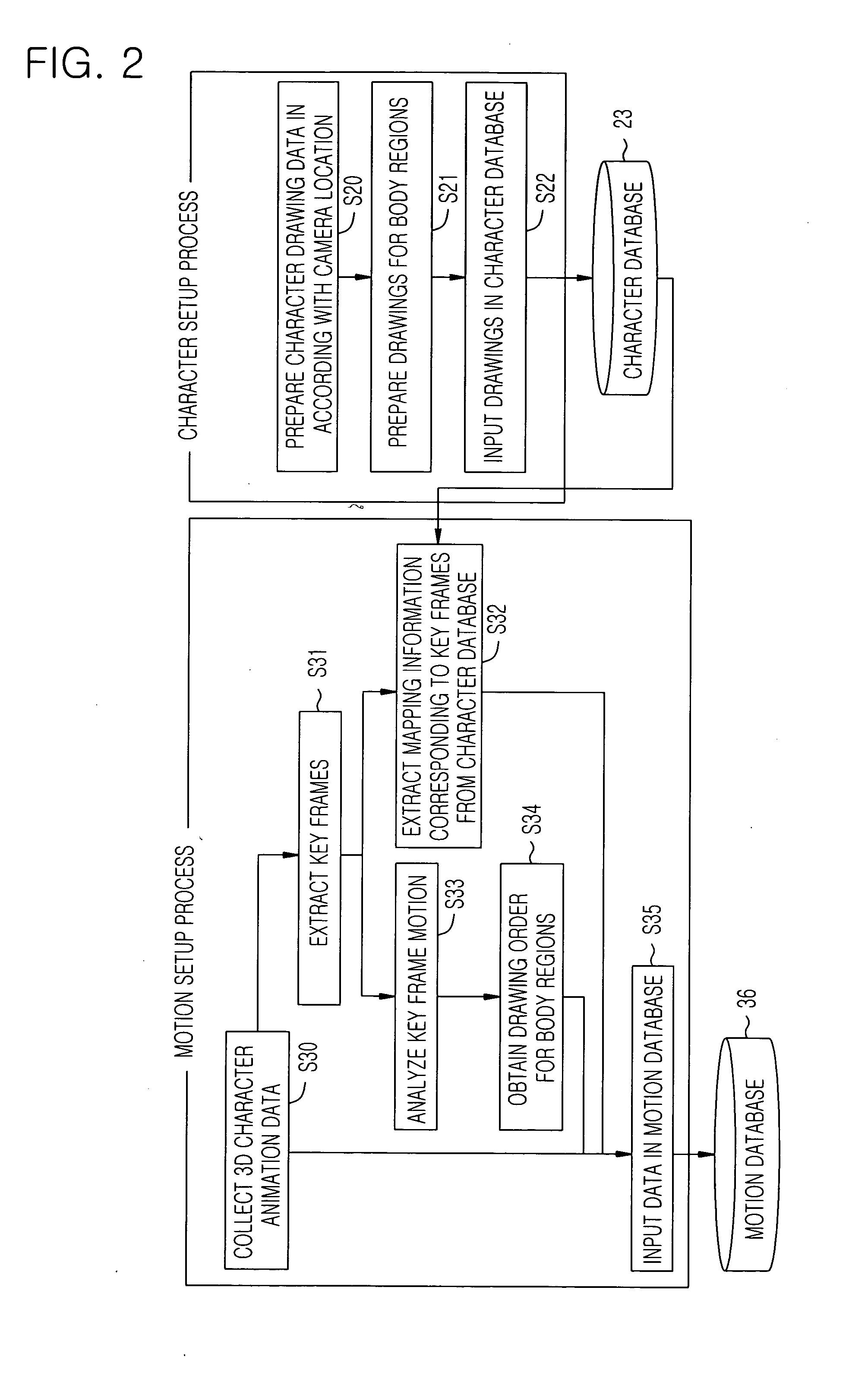

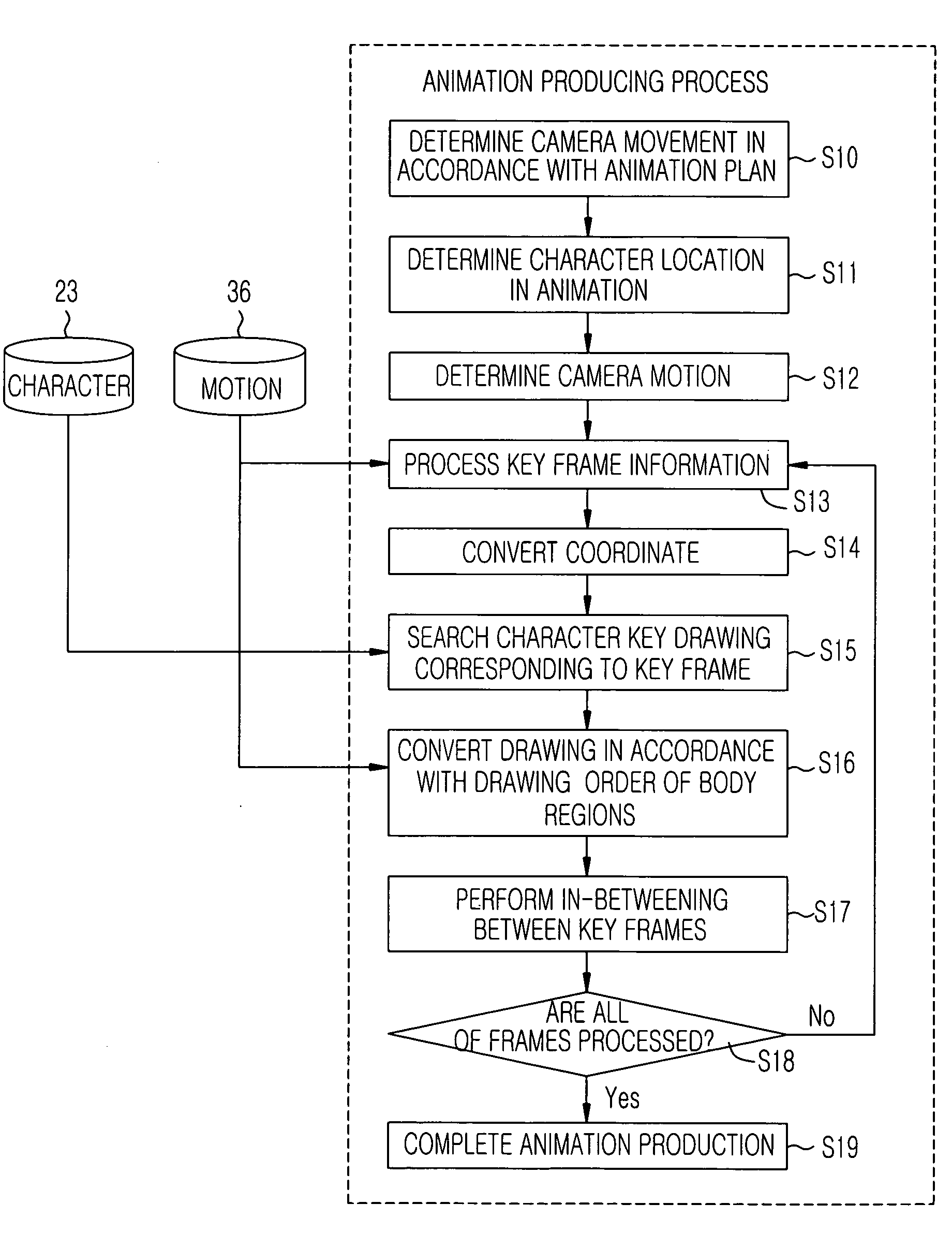

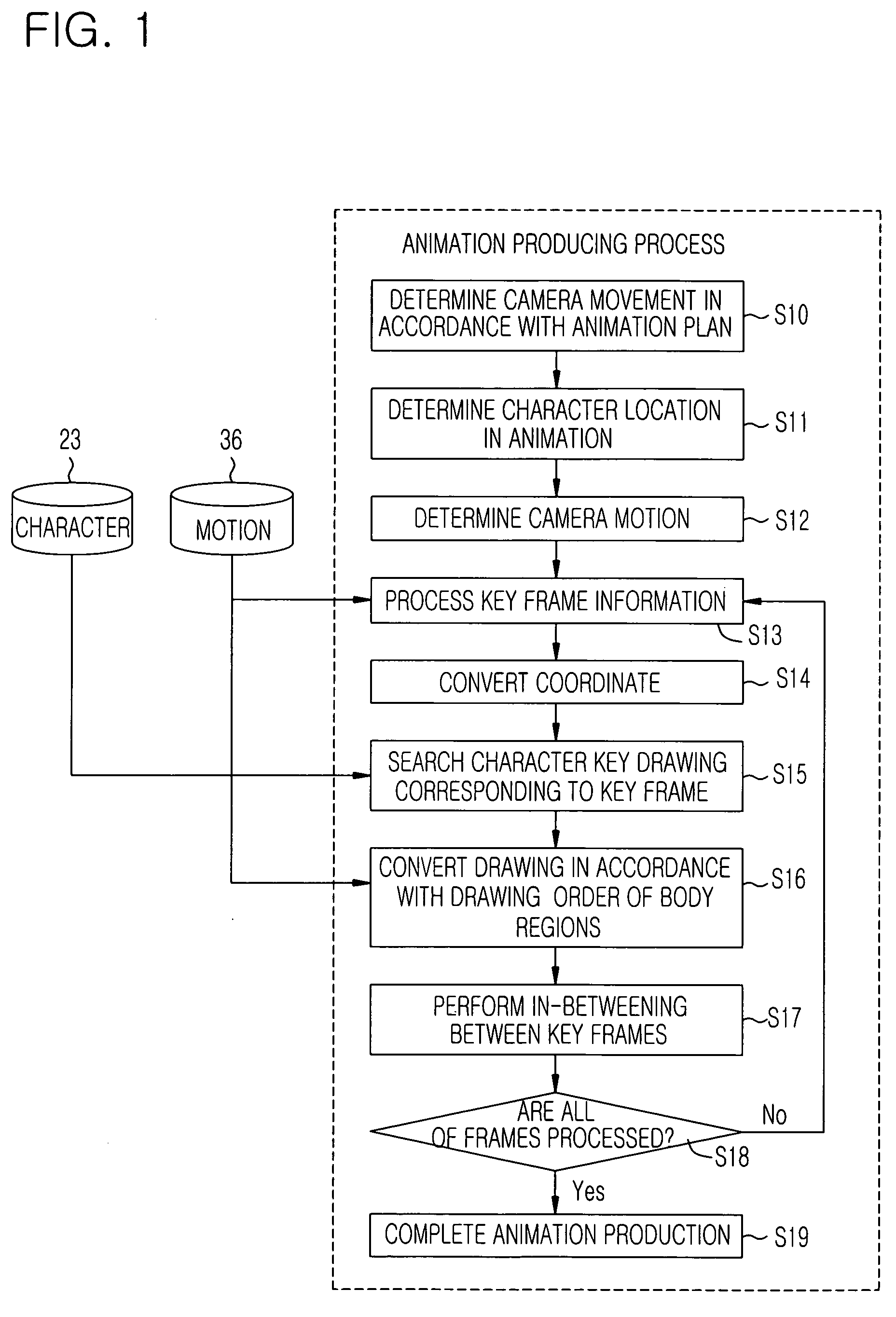

Method of representing and animating two-dimensional humanoid character in three-dimensional space

Inactive; US20070132765A1; Efficient production; Drawback can be solved; Visual presentation; Animation; Computer graphics (images); Three-dimensional space

There is provided a method of representing and animating a 2D (Two-Dimensional) character in a 3D (Three-Dimensional) space for a character animation. The method includes performing a pre-processing operation in which data of a character that is required to represent and animate the 2D character like a 3D character is prepared and stored and producing the character animation using the stored data.

Owner:ELECTRONICS & TELECOMM RES INST

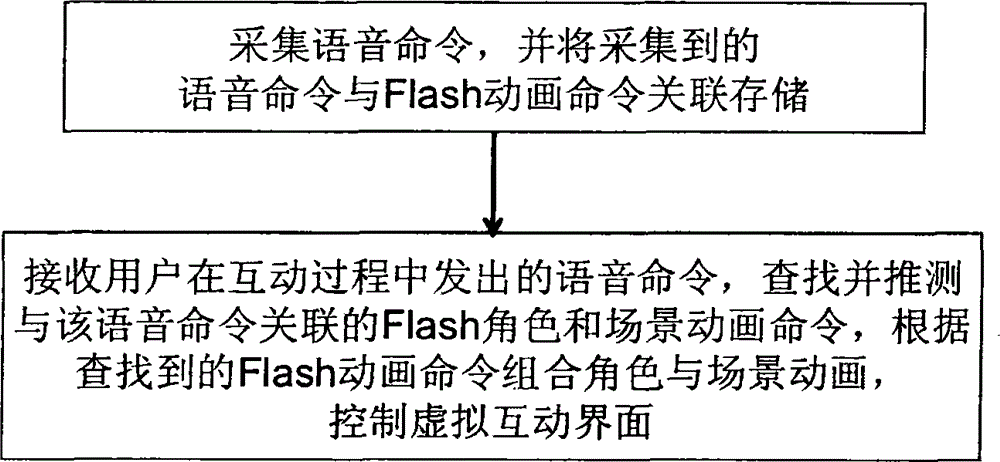

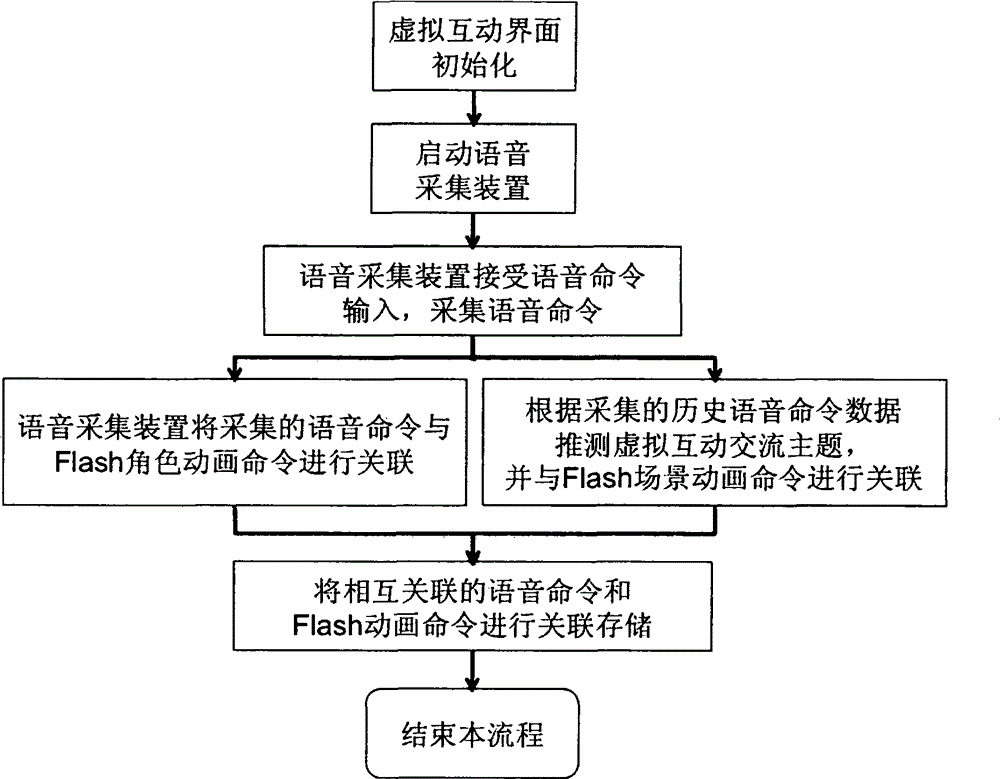

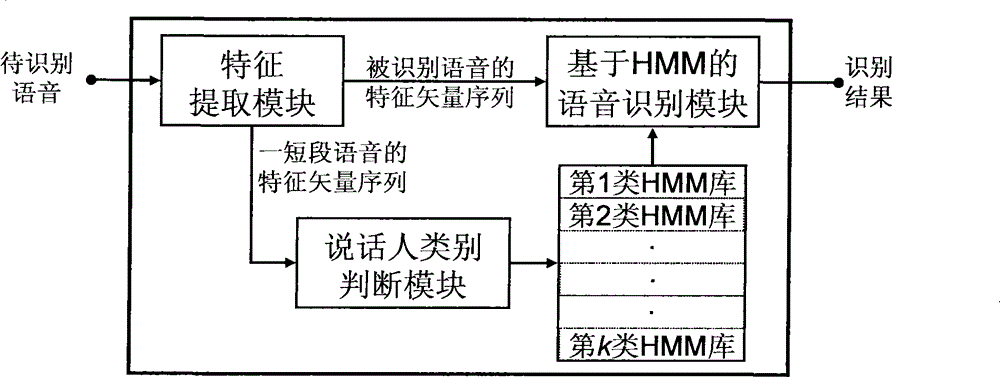

Voice-based control method and control system

Inactive; CN102750125A; Easy to control; Real life; Speech recognition; Sound input/output; Computer hardware; Animation

The invention relates to a voice-based control method and control system. The method comprises the following steps: collecting a voice command and storing it in association with a character animation command; predicting a scene for the voice command according to historical voice commands, and storing a communication theme in association with a scene animation command; receiving and recognizing a voice command sent by a user during interaction, predicting the communication theme according to the voice command, and finding the scene animation command associated with that communication theme; and combining the found character animation command and the scene animation command. With this method, a virtual interaction system can be controlled by voice, so that an internet user can have a more lifelike experience in a virtual environment.

Owner:WUXI PARADISE SOFTWARE TECH

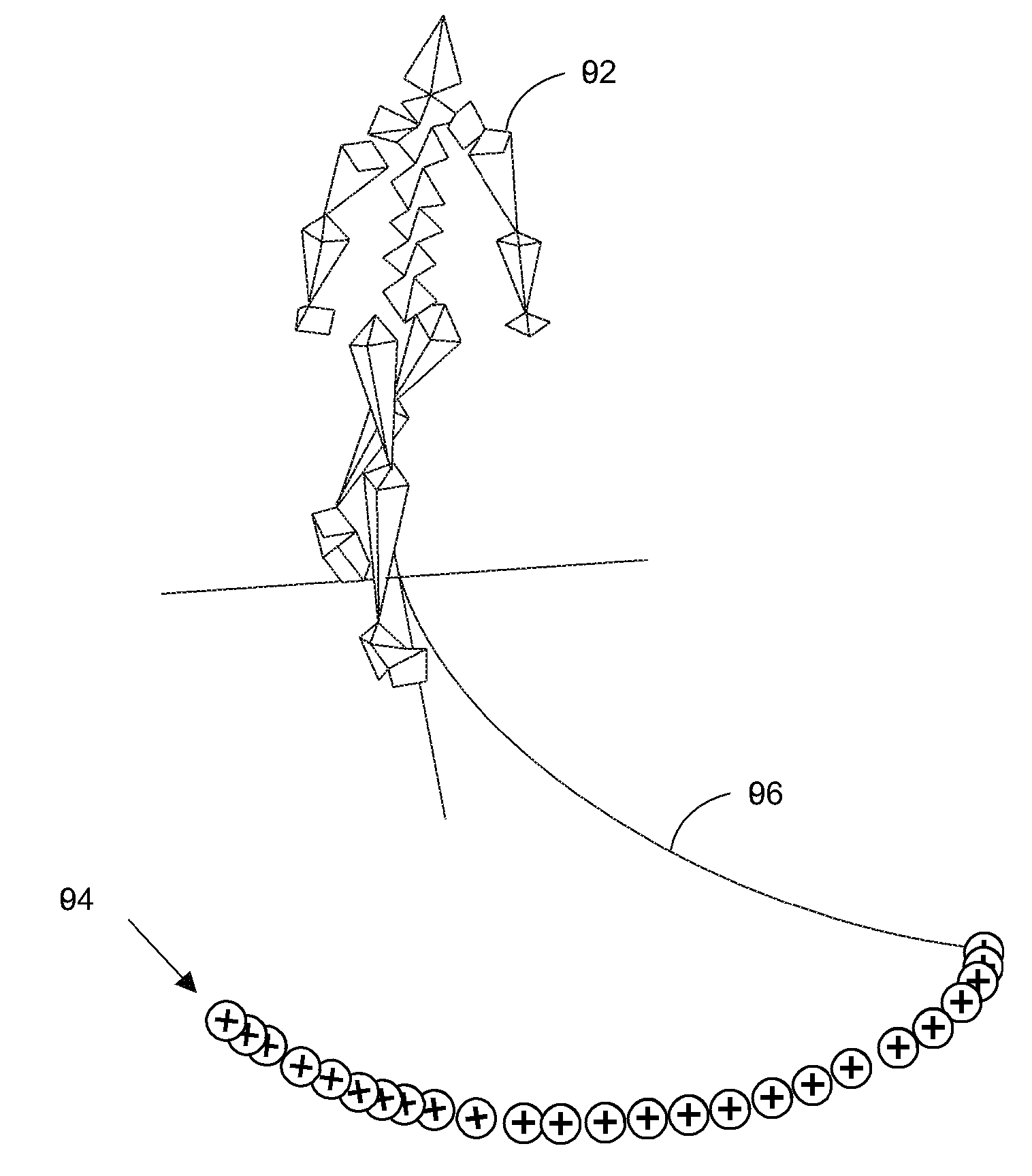

Behavioral motion space blending for goal-directed character animation

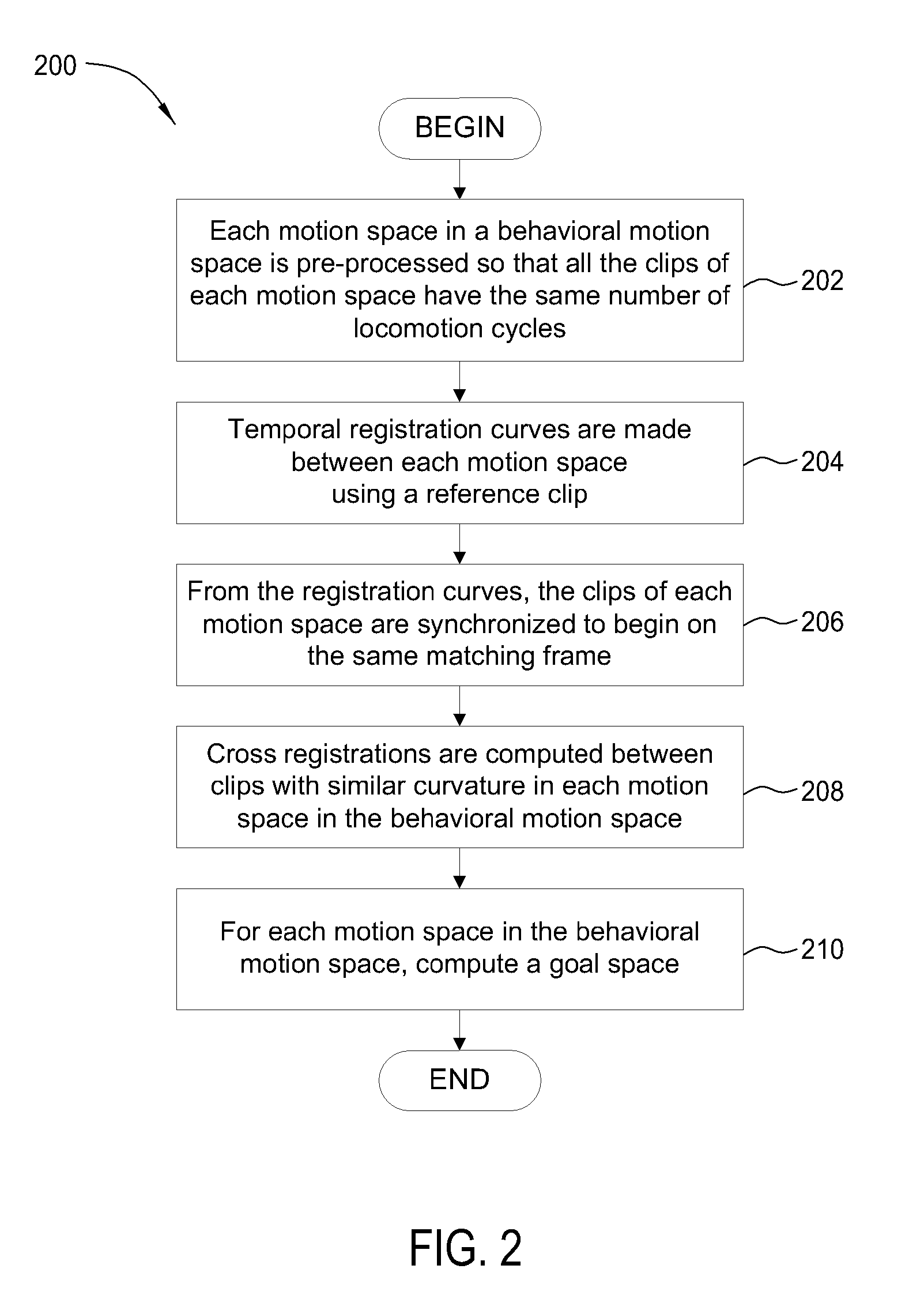

A method for rendering frames of an animation sequence using a plurality of motion clips included in a plurality of motion spaces that define a behavioral motion space. Each motion space in the behavioral motion space depicts a character performing a different type of locomotion, including running, walking, or jogging. Each motion space is pre-processed so that all the motion clips have the same number of periodic cycles. Registration curves are made between reference clips from each motion space to synchronize the motion spaces.

Owner:AUTODESK INC

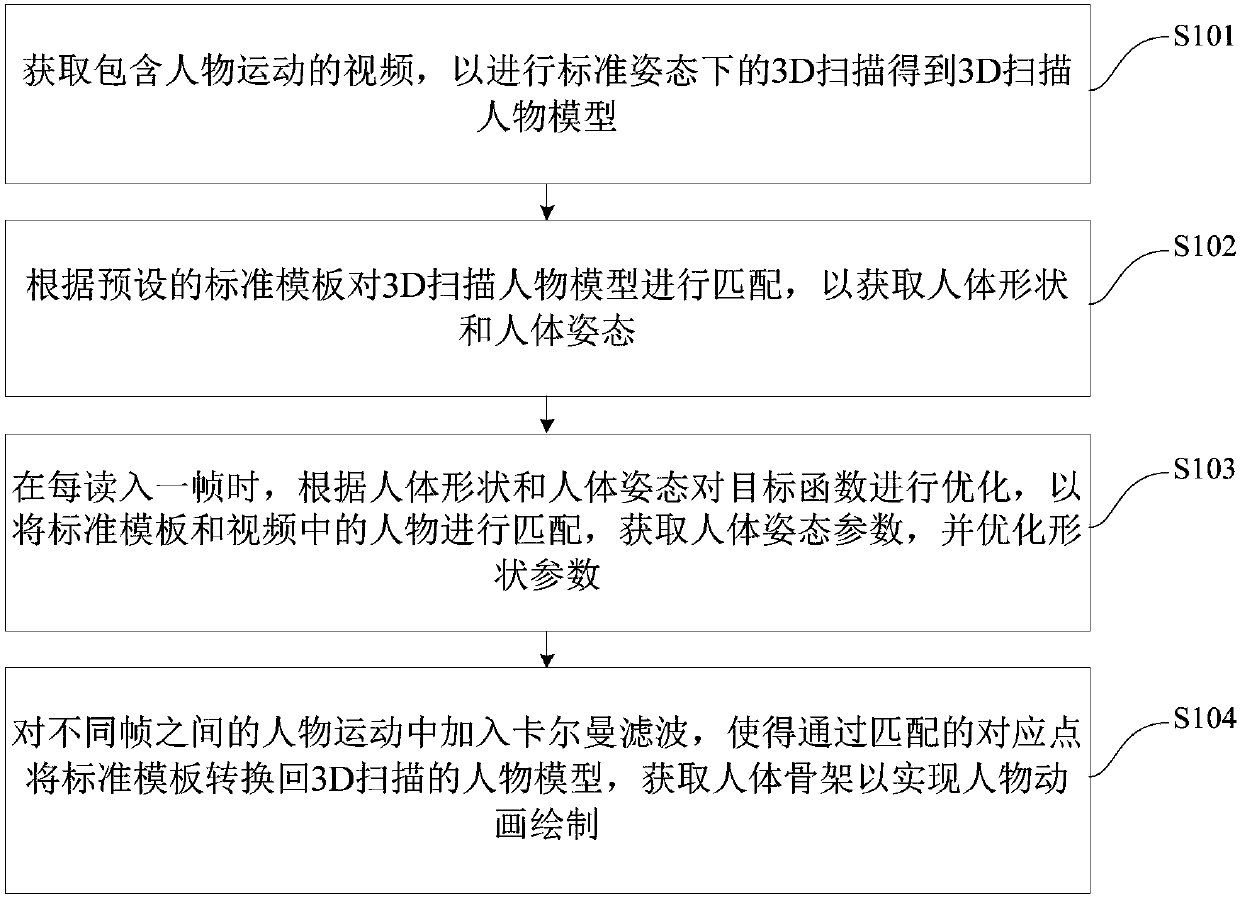

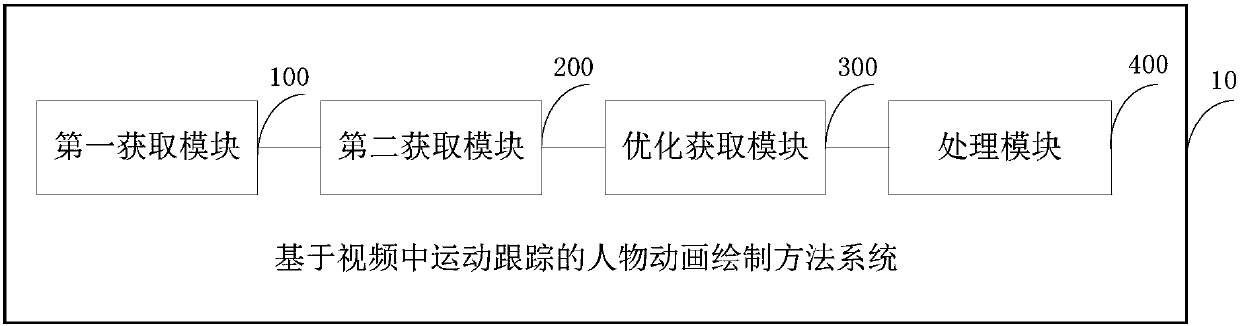

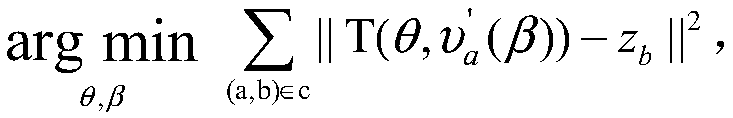

Character animation drawing method and system based on motion tracking in video

Active; CN108022278A; High precision; Improve accuracy; Image enhancement; Details involving processing steps; Human body; Animation

The invention discloses a character animation drawing method and system based on motion tracking in a video, wherein the method comprises the following steps: the video containing character movement is obtained, and 3D scanning character models are obtained via 3D scanning in a standard posture; the 3D scanning character models are matched with preset standard templates, and therefore human body shapes and human body postures are obtained. When each frame is read in, an objective function is optimized according to the human body shapes and human body postures to match the standard templates with characters in the video, human body posture parameters can be obtained, and optimization of shape parameters can be realized; Kalman filtering is added to the character motions between different frames, so that the standard templates are converted back to the 3D scanning character models via corresponding matched points, and human skeletons are obtained to realize character animation drawing. This method can help effectively improve accuracy and reliability of animation production and has broad application prospects; the method is simple and easy to implement.

Owner:TSINGHUA UNIV
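
The abstract above applies Kalman filtering to character motion between frames. A minimal sketch of that idea, assuming a single scalar pose parameter (for example one joint angle) and a constant-value motion model, is shown below. The function name and the variance values are hypothetical; the patent does not state its state model or noise settings.

```python
def kalman_smooth(measurements, process_var=1e-3, measurement_var=1e-1):
    """Filter a per-frame sequence of one pose parameter with a scalar Kalman filter."""
    estimate = measurements[0]   # initial state taken from the first frame
    error = 1.0                  # initial estimate variance (assumed)
    smoothed = [estimate]
    for measurement in measurements[1:]:
        # Predict: the state is assumed constant, so only uncertainty grows.
        error += process_var
        # Update: blend prediction and measurement using the Kalman gain.
        gain = error / (error + measurement_var)
        estimate += gain * (measurement - estimate)
        error *= 1.0 - gain
        smoothed.append(estimate)
    return smoothed


noisy_angles = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
filtered_angles = kalman_smooth(noisy_angles)
```

With the assumed variances the filter trusts early measurements heavily and damps later frame-to-frame jitter as its estimate variance shrinks.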

Method of representing and animating two-dimensional humanoid character in three-dimensional space

Inactive; US7423650B2; Efficient production; Drawback can be solved; Visual presentation; Animation; Animation; Three-dimensional space

There is provided a method of representing and animating a 2D (Two-Dimensional) character in a 3D (Three-Dimensional) space for a character animation. The method includes performing a pre-processing operation in which data of a character that is required to represent and animate the 2D character like a 3D character is prepared and stored and producing the character animation using the stored data.

Owner:ELECTRONICS & TELECOMM RES INST

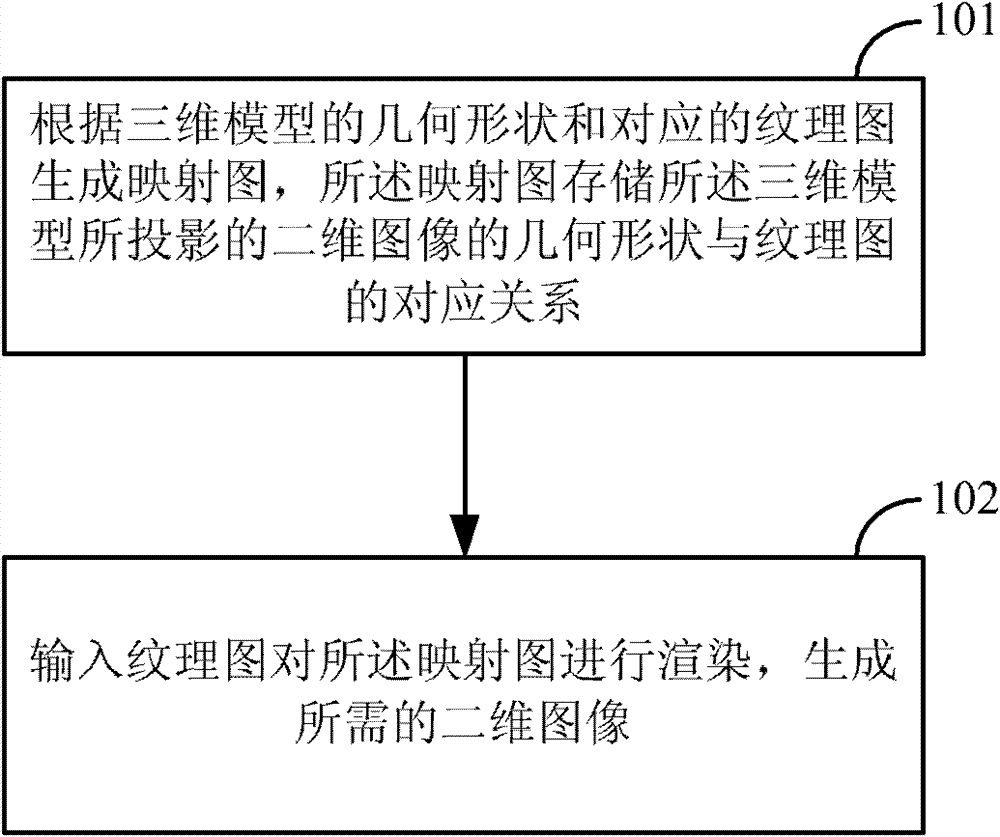

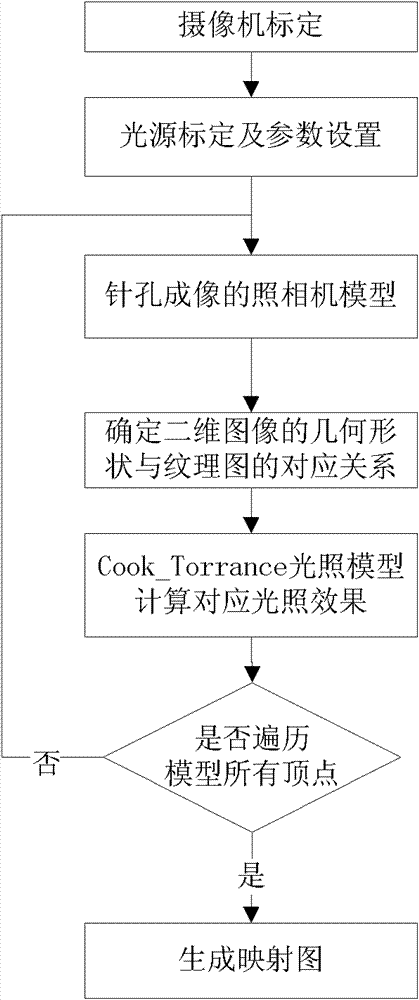

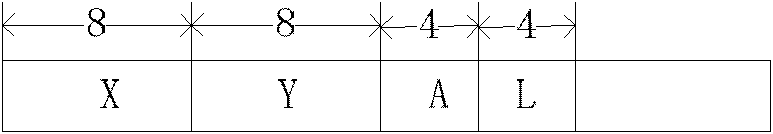

Method and device for generating two-dimensional images

Active; CN102819855A; Easy to render; Save bandwidth; Filling planer surface with attributes; Animation; 3D modeling

The invention discloses a method and a device for generating two-dimensional images. The method includes: generating a map according to the geometrical shape of a three-dimensional model and a corresponding texture map, wherein the map stores the corresponding relation between the texture map and the geometrical shape of a two-dimensional image projected by the three-dimensional model; and inputting the texture map to render the map so as to generate the two-dimensional image. When the map is generated, the three-dimensional model is projected by a camera model of pinhole imaging, the coordinate of the texture map is directly obtained during projecting, the intensity of corresponding texture points is processed by the aid of an illumination model, and transparency of the corresponding texture points is processed by designing a learning strategy of two-step projection. The corresponding relation between the geometrical shape of the two-dimensional mapped image and the texture map can be well maintained, different final images can be rendered conveniently by changing different texture maps, flexibility is improved greatly, and the network bandwidth is further saved by only transmitting the texture map as compared with that of the prior art by transmitting the whole character animation sequence.

Owner:BEIJING PEOPLE HAPPY INFORMATION TECH
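
The key idea in the abstract above is a precomputed map from each output pixel to a texture coordinate, so that a new image only needs a new texture. A minimal sketch follows, assuming the map is a 2D grid of `(u, v)` integer texel indices (or `None` where the model does not cover the pixel). The function name and data layout are hypothetical, and the illumination and transparency handling described in the abstract are left out.

```python
def render_with_map(lookup_map, texture, background=0):
    """Produce a 2D image by looking up each pixel's texel through the map.

    lookup_map: rows of (u, v) texel indices, or None for uncovered pixels.
    texture:    rows of texel values, indexed as texture[v][u].
    """
    image = []
    for map_row in lookup_map:
        image_row = []
        for entry in map_row:
            if entry is None:
                image_row.append(background)   # pixel not covered by the model
            else:
                u, v = entry
                image_row.append(texture[v][u])
        image.append(image_row)
    return image


lookup_map = [[(0, 0), None], [(1, 1), (0, 1)]]
skin_a = [[1, 2], [3, 4]]
skin_b = [[9, 8], [7, 6]]
image_a = render_with_map(lookup_map, skin_a)
image_b = render_with_map(lookup_map, skin_b)  # same map, different texture
```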

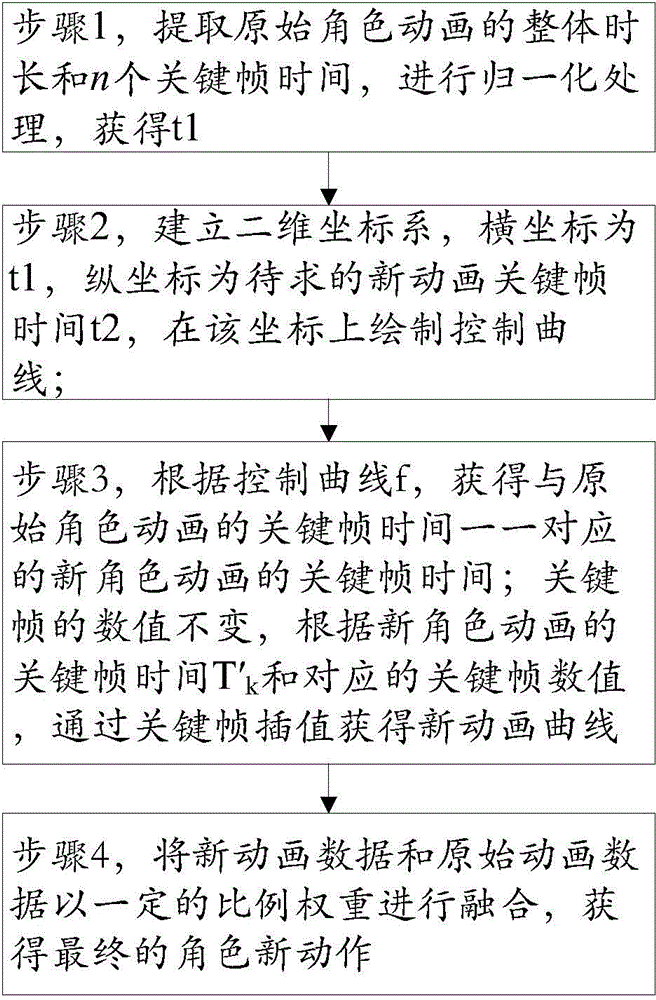

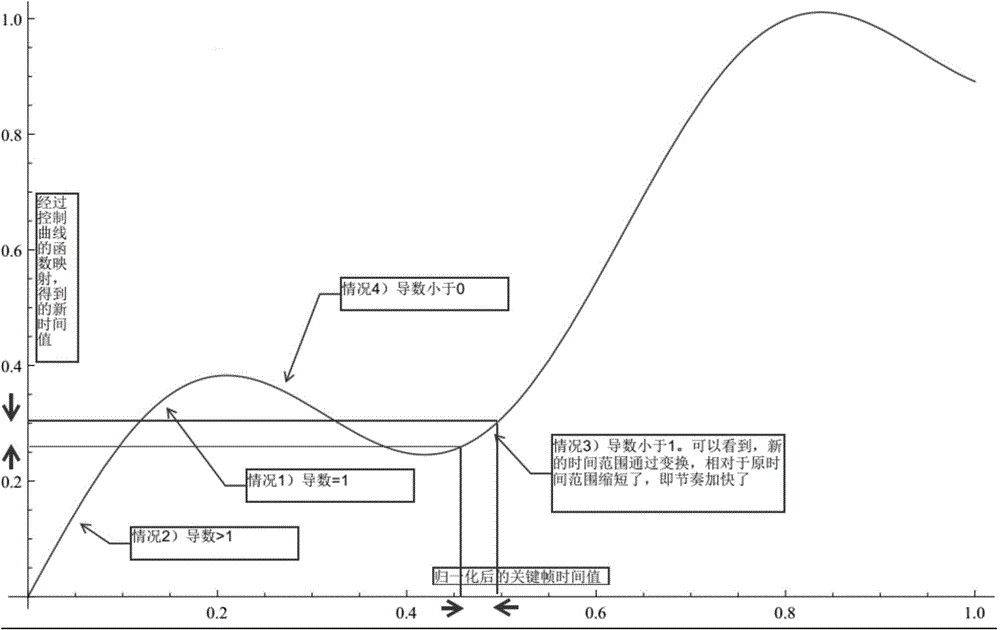

Nonlinear modification method for 3D character animation

Active; CN104574481A; Easy to implement; Reliable implementation; Details involving 3D image data; Animation; Algorithm; Animation

The invention discloses a nonlinear modification method for a 3D character animation. By using the nonlinear modification method, local acceleration / deceleration and other nonlinear rhythm changes can be performed on the basis of original character animation data, and even inverted sequencing of original actions can be achieved. According to time data of a keyframe sample and the relation between a control curve and the rhythm changes, character animation of which local rhythm is changeable can be obtained through the processes of normalization, mapping of the control curve and inverted normalization, and therefore the nonlinear modification method is easy to implement and reliable.

Owner:北京春天影视科技有限公司
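
The three steps named in the abstract above (normalization, control-curve mapping, inverse normalization) can be sketched on keyframe times as follows. The function name and the example curves are assumptions; the patent does not specify the form of its control curve.

```python
def retime_keyframes(key_times, control_curve):
    """Remap keyframe times through a control curve defined on [0, 1]."""
    start, end = key_times[0], key_times[-1]
    span = end - start
    if span == 0:
        raise ValueError("keyframes must span a non-zero time range")
    remapped = []
    for time in key_times:
        normalized = (time - start) / span          # 1. normalize to [0, 1]
        mapped = control_curve(normalized)          # 2. map through the curve
        remapped.append(start + mapped * span)      # 3. inverse normalization
    return remapped


keys = [0.0, 5.0, 10.0]
ease_in = retime_keyframes(keys, lambda u: u * u)      # slow start, fast end
reversed_keys = retime_keyframes(keys, lambda u: 1.0 - u)  # inverted ordering
```

A non-monotonic or decreasing curve, such as the second example, yields the reversed playback order that the abstract mentions.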

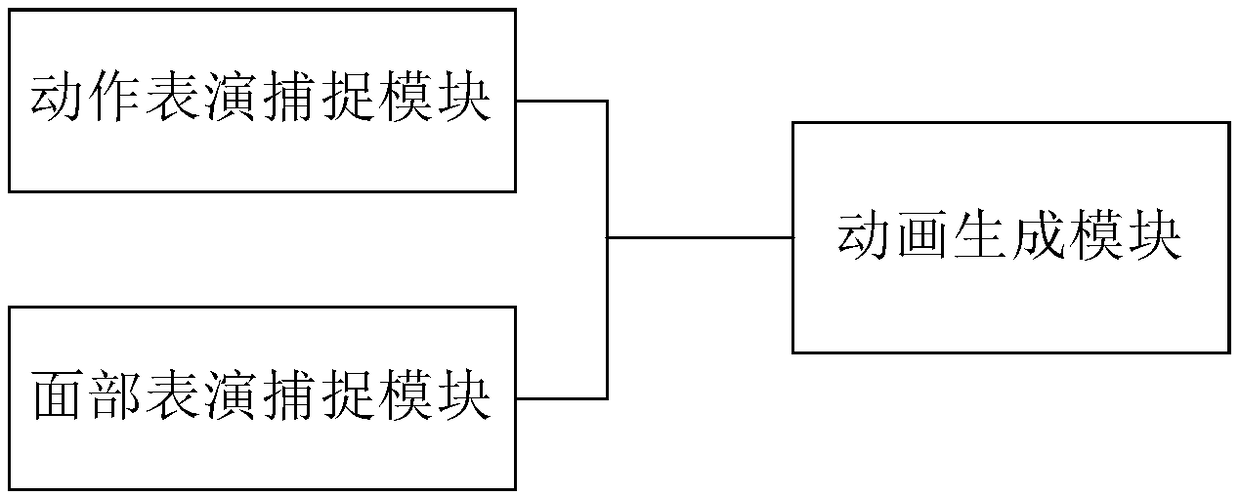

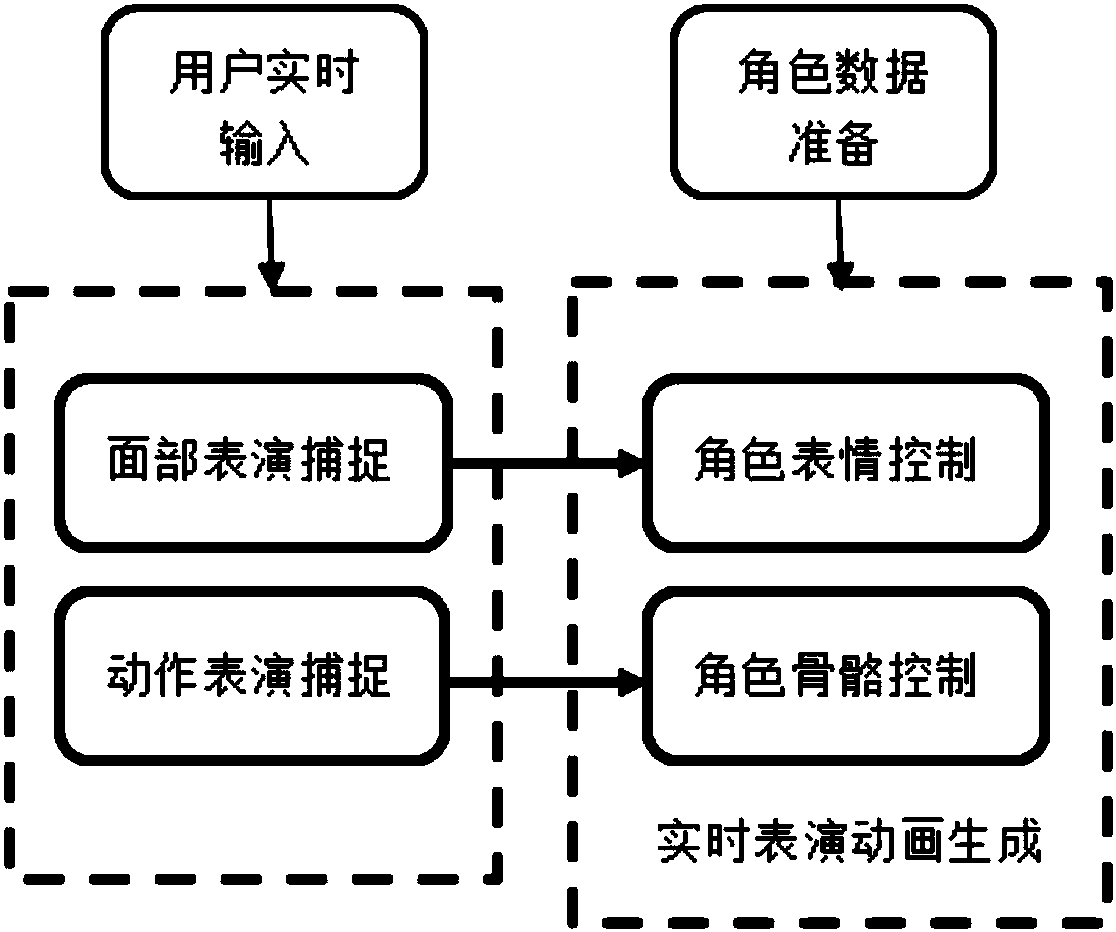

UE (Unreal Engine)-based performance capture system without marker

Inactive; CN108564642A; Solve the sense of intrusion; Animation; Acquiring/recognising facial features; Graphics; Human body

The invention relates to the field of image processing and provides a UE (Unreal Engine)-based performance capture system without markers. It aims to solve the problem that, in methods that simultaneously capture the actions and expressions of performers to generate character animations, marker points feel intrusive to the performers and interfere with the performance. The system includes: a facial-performance capturing module, which is configured to collect facial image data of a performer and calculate weight parameters of the performer's facial expression according to the facial image data; an action performance capturing module, which is configured to collect bone image data of the performer and determine human body posture parameters of the performer according to the bone image data; and an animation generation module, which is configured to use a UE graphics program to generate an action and an expression of a character 3D model according to the weight parameters of the facial expression and the human body posture parameters. The system captures performer actions and expressions, and gives a virtual character realistic and reasonable actions and vivid expressions according to the action and expression data.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

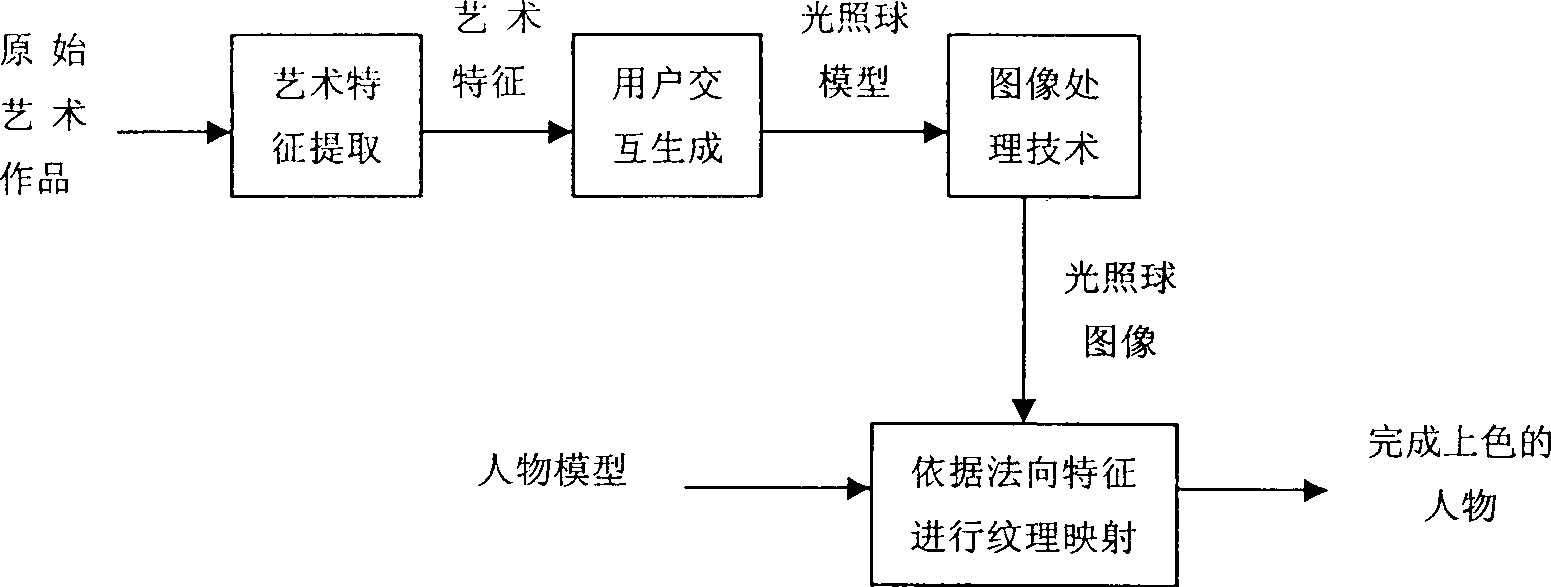

Computer assisted character animation drawing method based on light irradiated ball model

Inactive; CN101477705A; Globally consistent; Solve the problem of not being able to flexibly reflect the expressiveness of the artist's works; Animation; 3D-image rendering; Animation; Computer-aided

The invention discloses a method for rendering character animation in an artistic manner with the assistance of a computer. The method is based on an illumination spherical model. The invention is characterized in that the illumination spherical model which extracts and creates an artistic style from a work of art is applied to rendering of a character model, so that coloring of an artistic style is realized conveniently and rapidly, and character animation can be manufactured as if by non-photorealistic rendering. By drawing on a method for rendering information about illumination on a sphere, the invention ensures that when a complex object which is uniform in illumination material is rendered, the illumination is consistent in an all-round manner. Because of extraction and application of the illumination spherical model, character rendering of the artistic style can be realized conveniently and rapidly only through simple interactive operation, so that rendering efficiency is improved. The invention solves the problems that the prior computer assisted animation has low rendering efficiency, and the traditional image technology cannot give expression to works of an artist flexibly.

Owner:ZHEJIANG UNIV

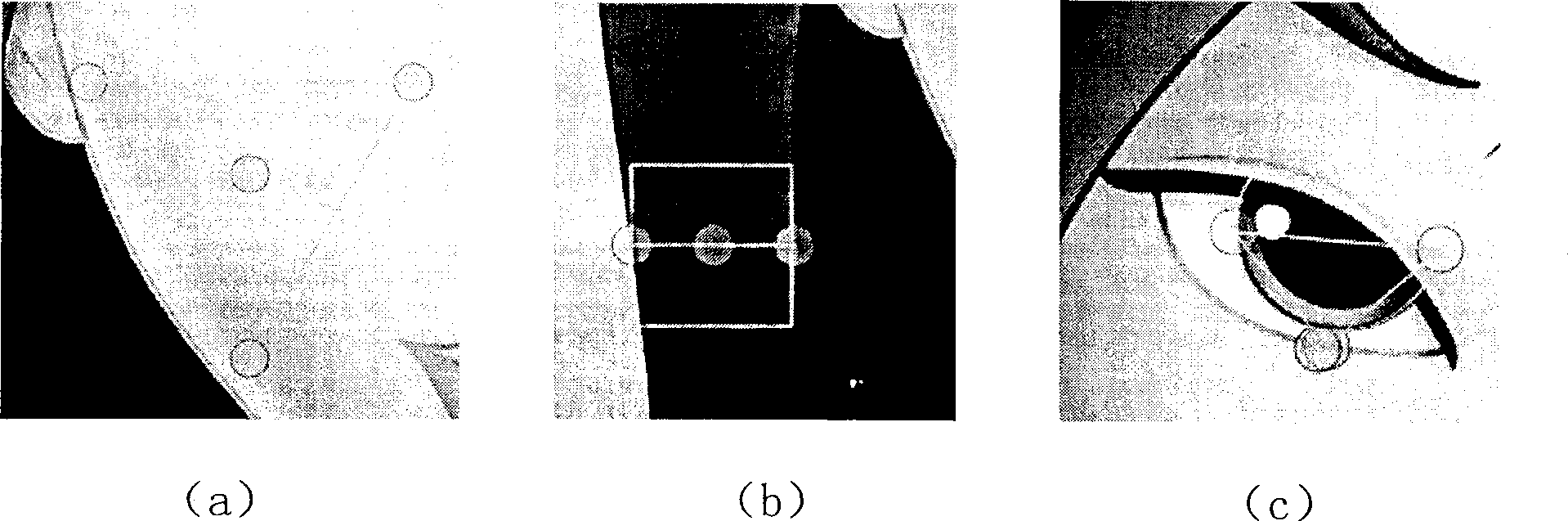

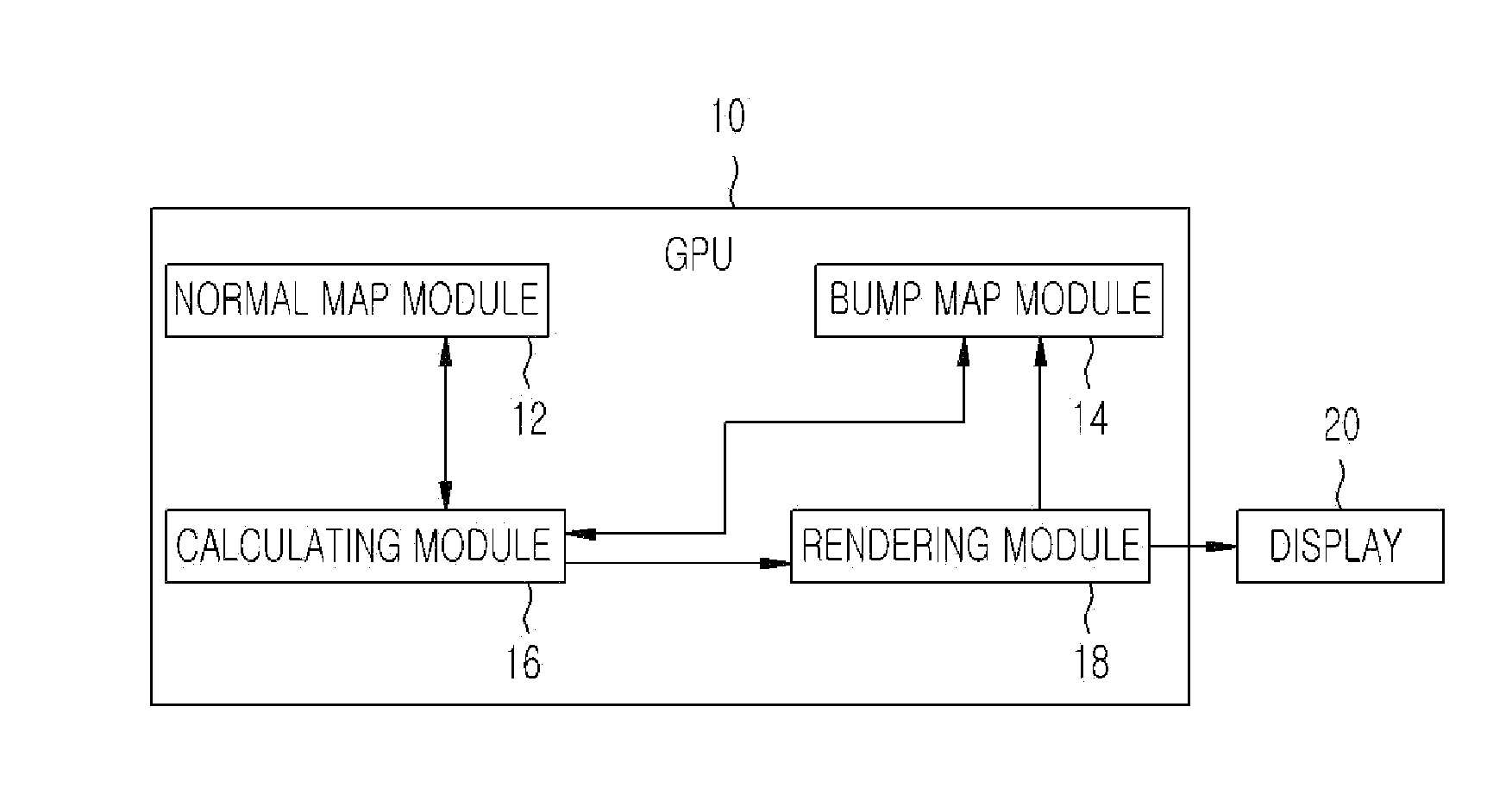

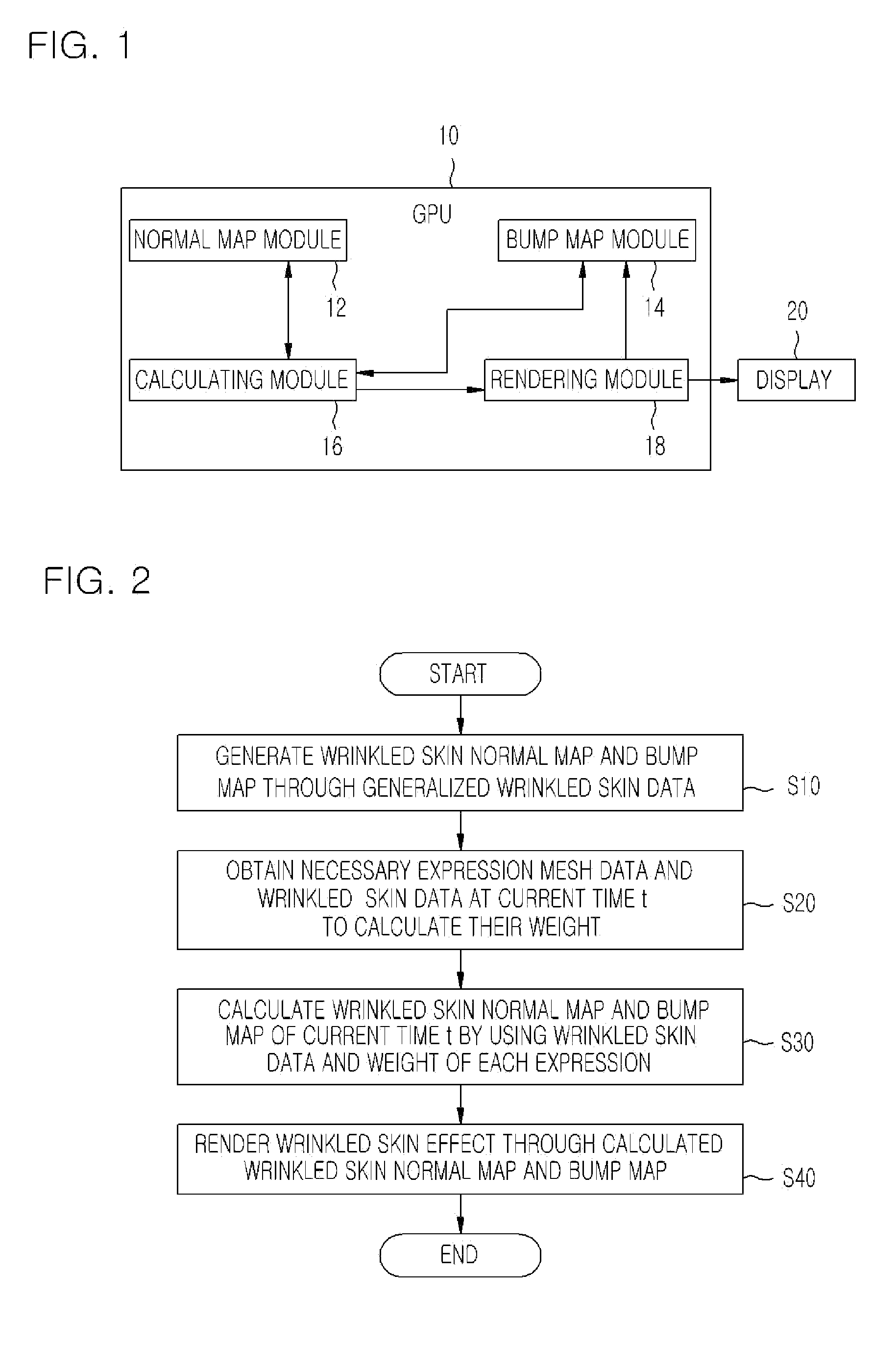

Method and apparatus for rendering efficient real-time wrinkled skin in character animation

Provided are an apparatus and method for providing the optimized speed and realistic expressions in real time while rendering wrinkled skin during character animation. The wrinkled skin at each expression is rendered using a normal map and a bump map. Generalized wrinkled skin data and weight data are generated by calculating a difference of the normal and bump maps and other normal and bump maps without expressions. Then, the wrinkled skin data of a desirable character is generated using the generalized wrinkle skin data at each expression, and then the normal and bump maps expressing a final wrinkled skin are calculated using the weight at each expression in a current animation time t. Therefore, the wrinkled skin in animation is displayed.

Owner:ELECTRONICS & TELECOMM RES INST
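
The weighted combination described in the abstract above (per-expression differences from the expressionless maps, scaled by a weight at the current animation time) can be sketched as below. The sketch assumes each map is flattened to a list of scalar texel values; the function name is hypothetical, and a real normal map would also need per-texel renormalization, which is omitted here.

```python
def blend_wrinkle_maps(neutral, expression_maps, weights):
    """Combine per-expression wrinkle deltas on top of the neutral map.

    neutral:         texel values of the map without expression.
    expression_maps: one map per expression, same length as neutral.
    weights:         one weight per expression at the current animation time.
    """
    # Wrinkle data per expression: difference from the expressionless map.
    deltas = [
        [texel - base for texel, base in zip(expression, neutral)]
        for expression in expression_maps
    ]
    # Final map: neutral plus the weighted sum of all expression deltas.
    return [
        base + sum(weight * delta[i] for weight, delta in zip(weights, deltas))
        for i, base in enumerate(neutral)
    ]


neutral_map = [0.0, 0.0]
smile_map = [1.0, 0.0]
frown_map = [0.0, 2.0]
current_map = blend_wrinkle_maps(neutral_map, [smile_map, frown_map], [0.5, 0.25])
```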
