73 results for "Video texture" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

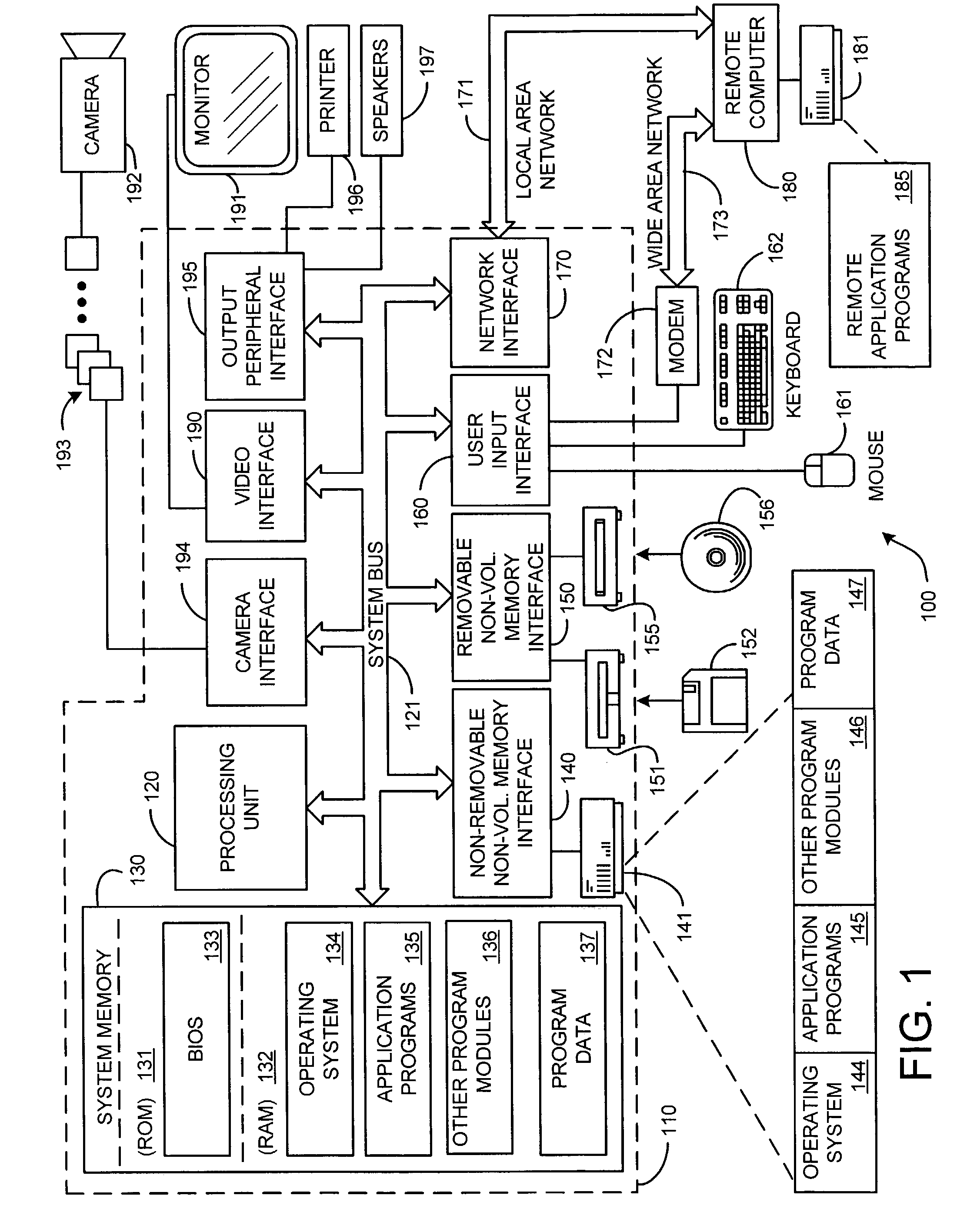

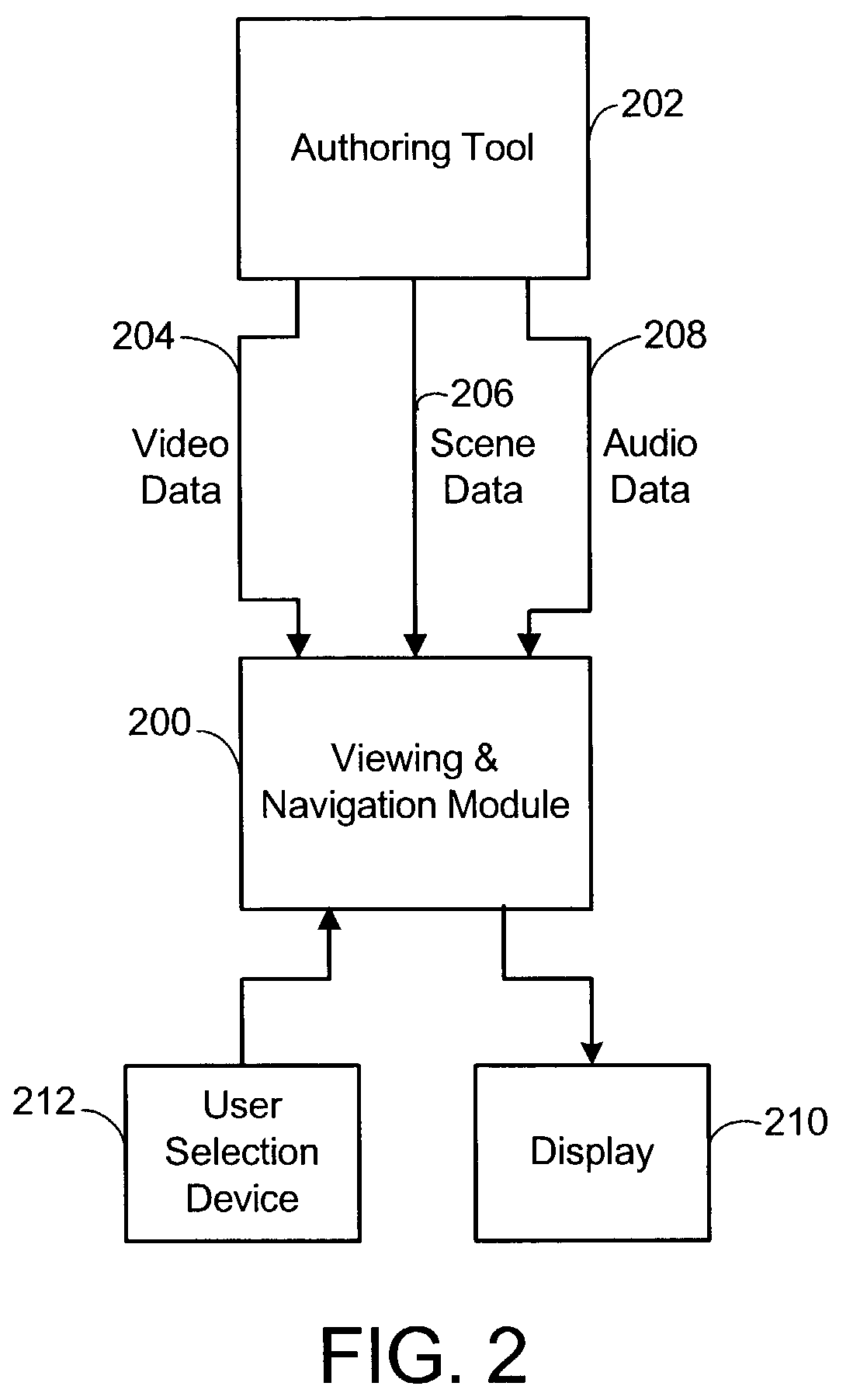

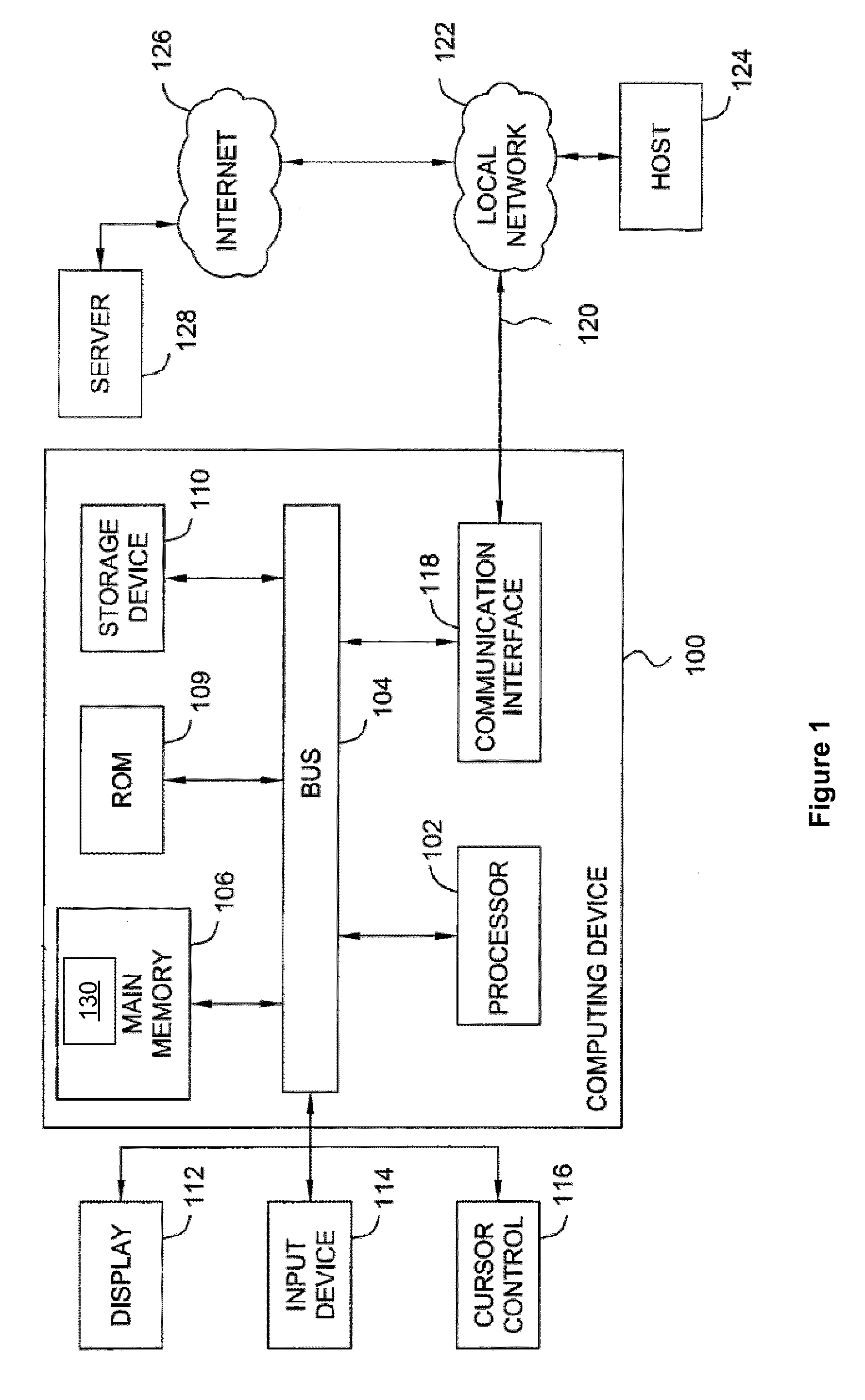

System and process for viewing and navigating through an interactive video tour

Inactive | US20050283730A1 | Tags: Unprecedented sense of presence; Improve experience; Television system details; Digital data information retrieval; Interactive video; Computer science

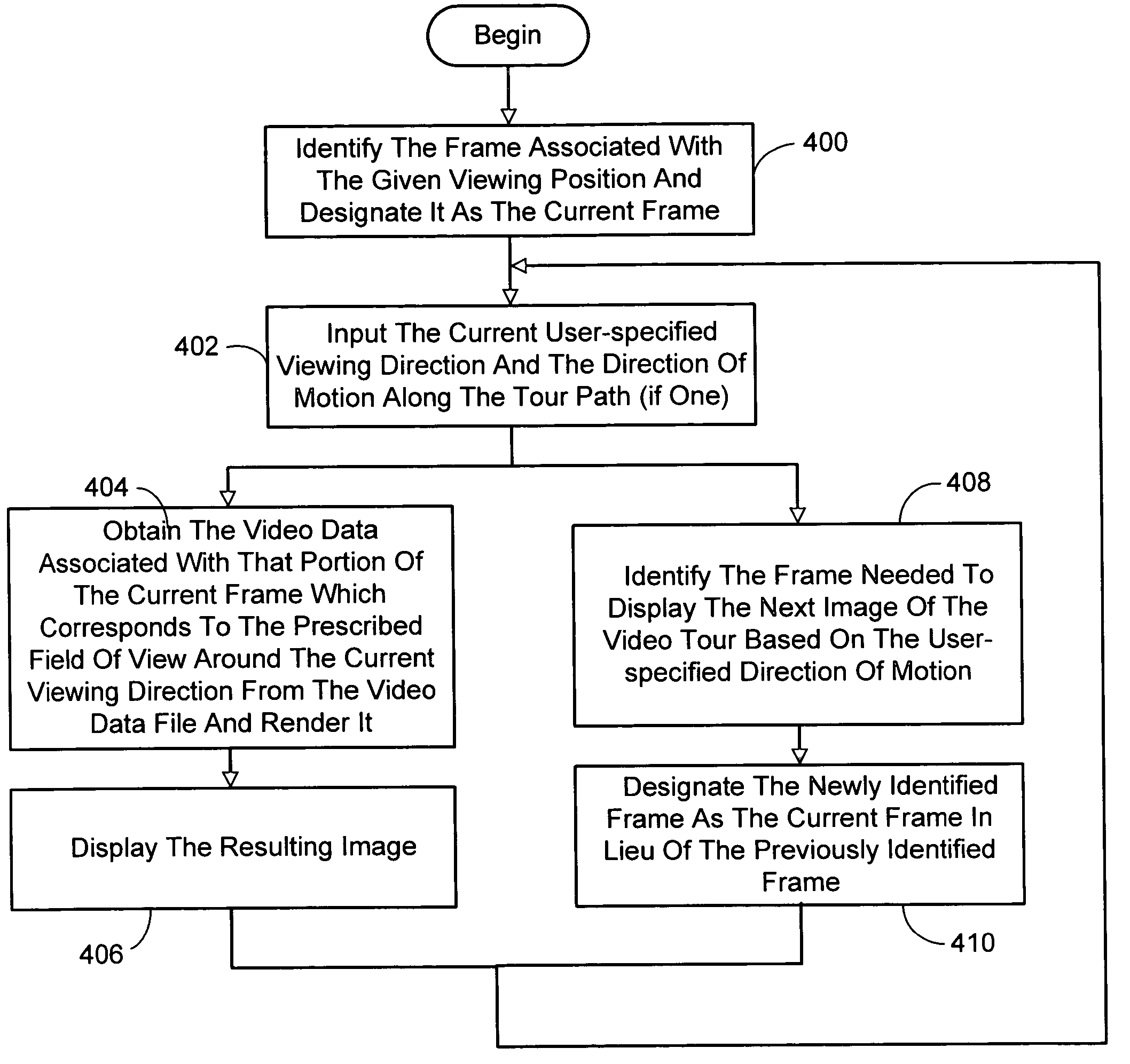

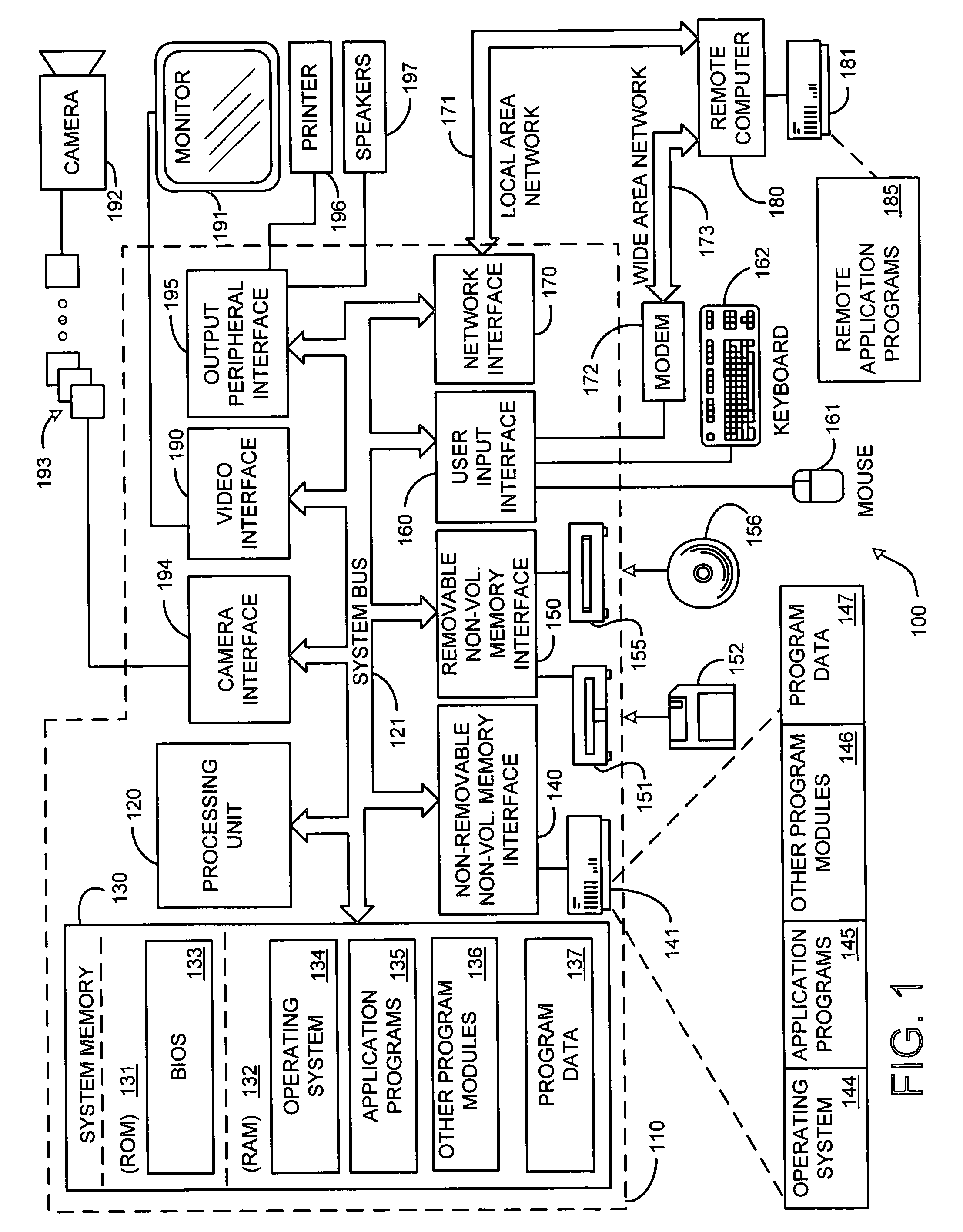

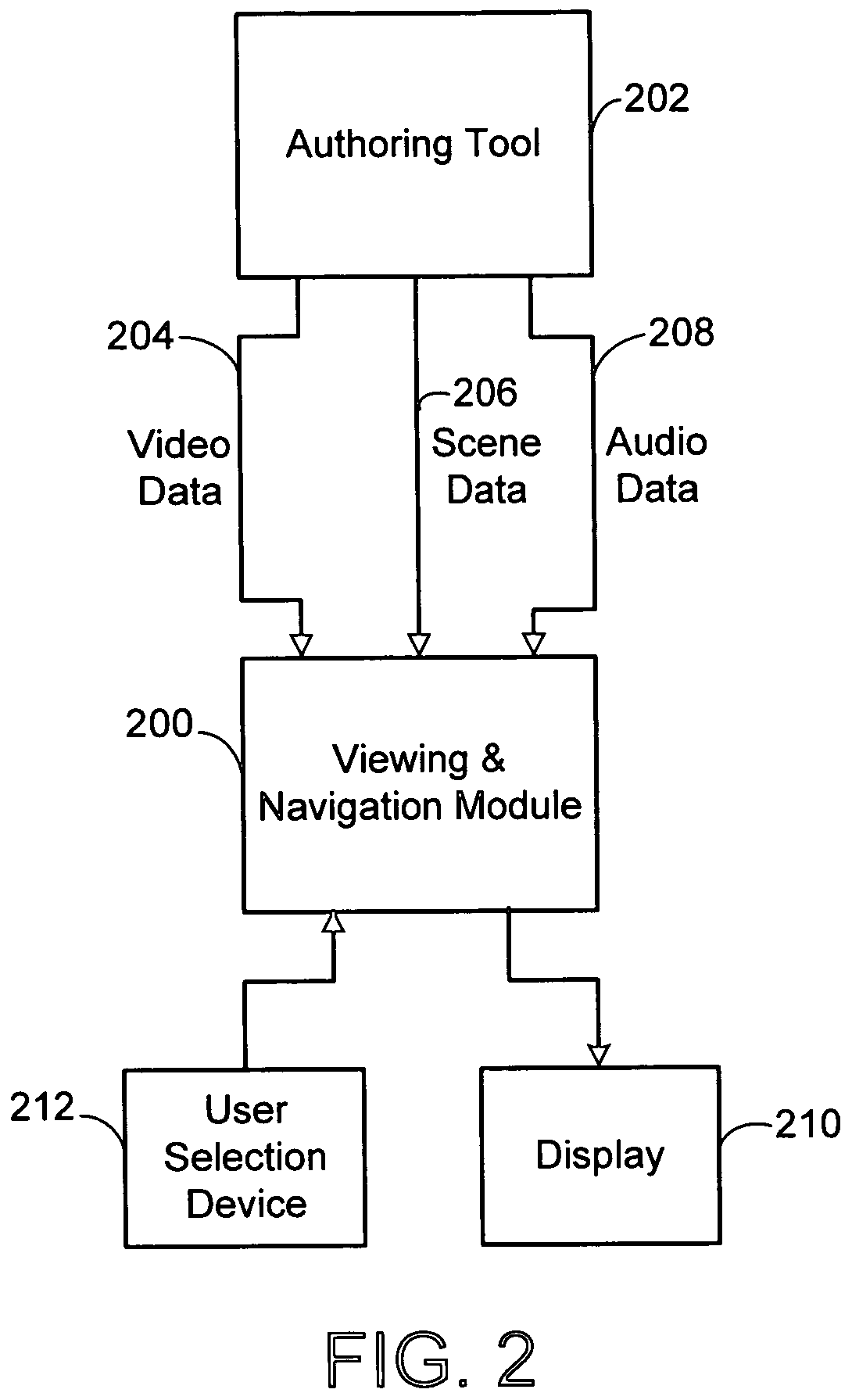

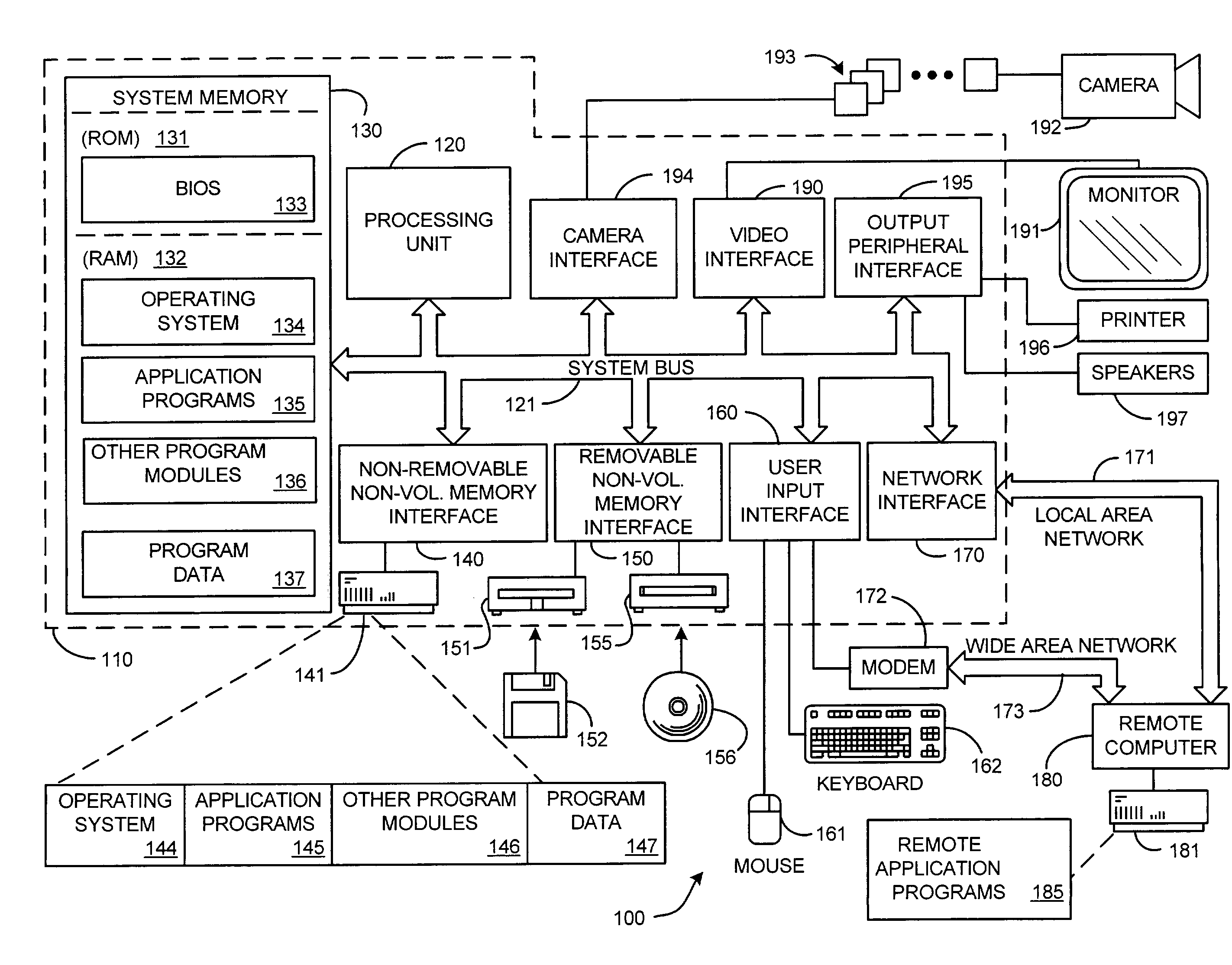

A system and process for providing an interactive video tour of a tour site to a user is presented. In general, the system and process provides an image-based rendering system that enables users to explore remote real world locations, such as a house or a garden. The present approach is based directly on filming an environment, and then using image-based rendering techniques to replay the tour in an interactive manner. As such, the resulting experience is referred to as Interactive Video Tours. The experience is interactive in that the user can move freely along a path, choose between different directions of motion at branch points in the path, and look around in any direction. The user experience is additionally enhanced with multimedia elements such as overview maps, video textures, and sound.

Owner:MICROSOFT TECH LICENSING LLC
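
The navigation model described in the interactive video tour abstract (move freely along a filmed path, choose a direction at branch points, look around in any direction) can be pictured as a graph of video segments. The following is a minimal Python sketch under that assumption; `Segment`, `TourPlayer`, the segment graph, and the frame-clamping behavior are hypothetical illustrations, not the patented implementation.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """A filmed path segment with frames 0..num_frames-1 (hypothetical model)."""
    name: str
    num_frames: int
    # Direction label -> next segment, offered at the end of this segment (a branch point).
    exits: dict = field(default_factory=dict)


class TourPlayer:
    def __init__(self, segments, start):
        self.segments = {s.name: s for s in segments}
        self.current = start
        self.frame = 0
        self.heading = 0.0  # viewing direction in degrees

    def step(self, delta):
        """Move forward (+) or backward (-) along the current segment, clamped to its ends."""
        seg = self.segments[self.current]
        self.frame = max(0, min(seg.num_frames - 1, self.frame + delta))
        return self.frame

    def at_branch(self):
        seg = self.segments[self.current]
        return self.frame == seg.num_frames - 1 and bool(seg.exits)

    def choose(self, direction):
        """At a branch point, continue on the segment in the chosen direction."""
        if not self.at_branch():
            raise ValueError("not at a branch point")
        self.current = self.segments[self.current].exits[direction]
        self.frame = 0
        return self.current

    def look(self, yaw_degrees):
        """Look around: with panoramic frames, any heading is valid."""
        self.heading = yaw_degrees % 360
        return self.heading
```

Because the tour is replayed from filmed frames, moving backward is simply stepping to earlier frame indices of the same segment.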

System and process for viewing and navigating through an interactive video tour

Active | US6968973B2 | Tags: Unprecedented sense of presence; Improve experience; Digital data information retrieval; Coin-freed apparatus details; Interactive video; System usage

Owner:MICROSOFT TECH LICENSING LLC

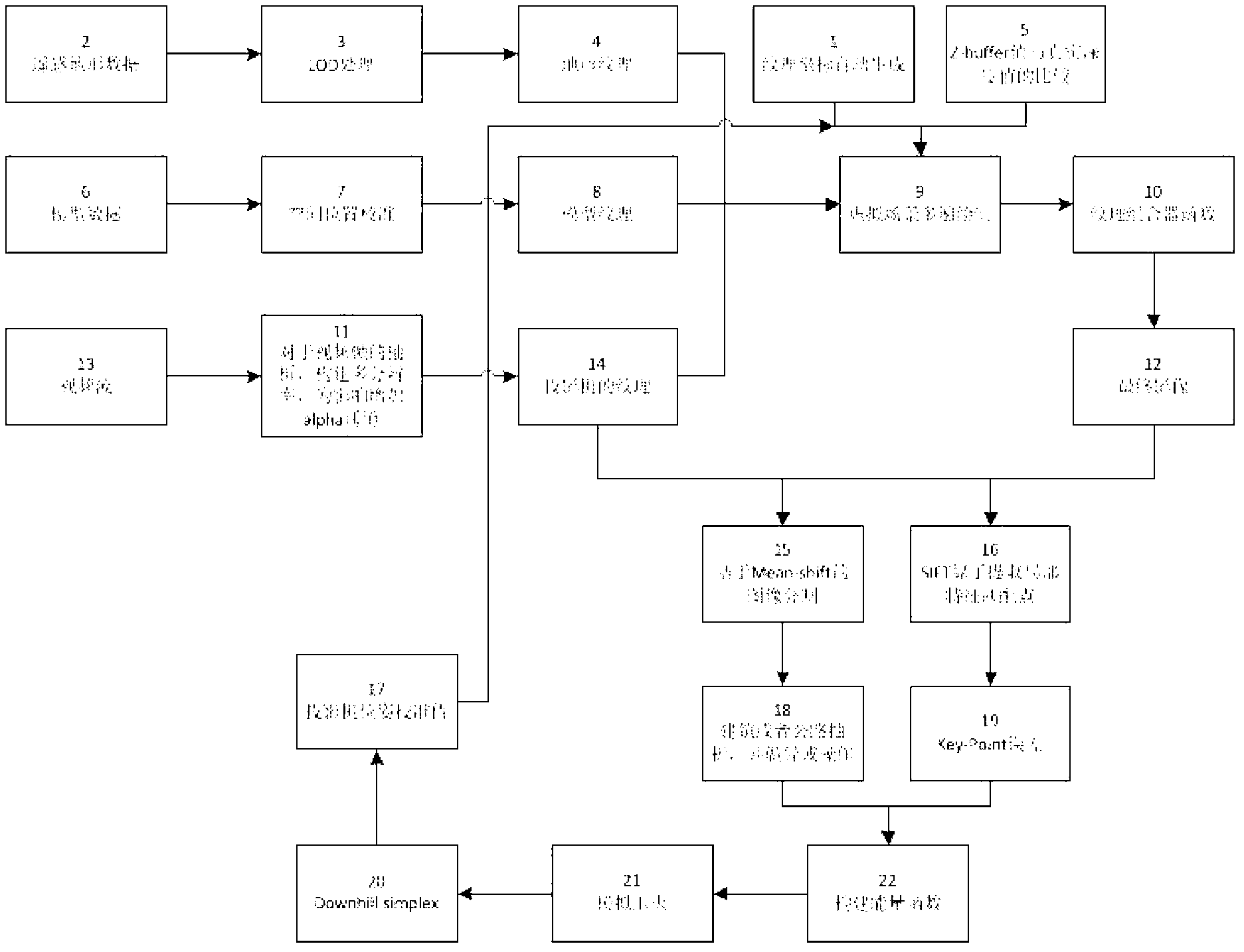

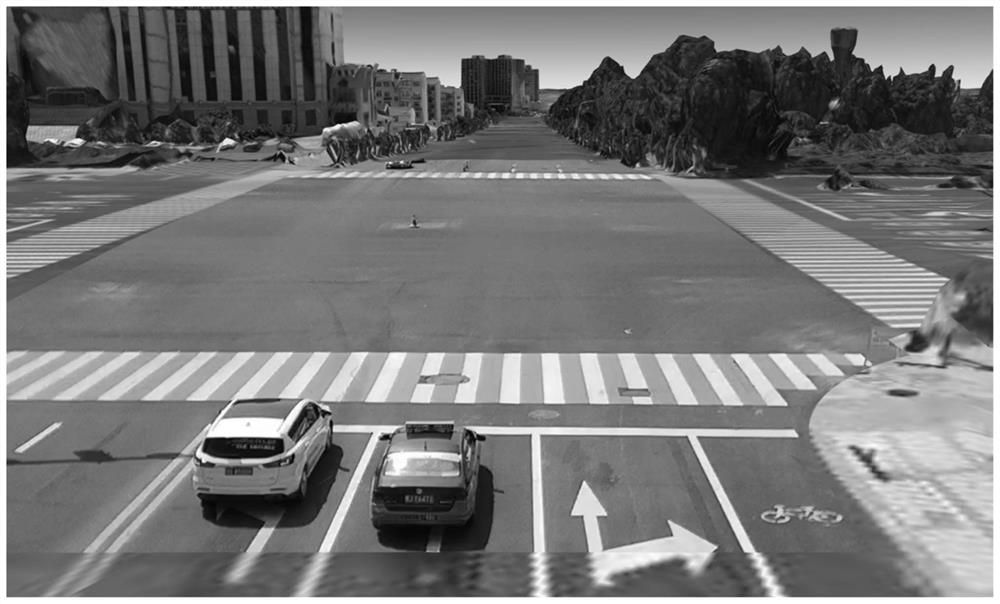

Automatic matching correction method of video texture projection in three-dimensional virtual-real fusion environment

Active | CN103226830A | Tags: Achieve integration; Increase the number of; Image analysis; Correction algorithm; Earth surface

The invention relates to an automatic matching correction method for video texture projection in a three-dimensional virtual-real fusion environment, and to a method of fusing real video images with a virtual scene. The method comprises the steps of constructing the virtual scene, obtaining video data, fusing video textures, and correcting the projector. Captured real video is fused onto complex scene surfaces, such as terrain and buildings, by texture projection, which improves the expression and display of dynamic scene information in the virtual-real environment and strengthens the scene's sense of depth. Increasing the number of videos shot from different angles extends dynamic video texture coverage across a large-scale virtual scene, giving a dynamic, realistic fusion of the virtual environment with the real scene. Applying color consistency processing to the video frames in advance eliminates obvious color jumps and improves the visual effect. An automatic correction algorithm allows the virtual scene and the real video to be fused more precisely.

Owner:北京微视威信息科技有限公司
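
The CN103226830A abstract mentions color consistency processing of video frames before fusion but does not say how it is done. One common way to remove color jumps between videos is to match each channel's mean and standard deviation to a reference frame (Reinhard-style statistics transfer). The sketch below assumes that technique and represents a frame as a list of `(r, g, b)` tuples; `match_color_stats` is a hypothetical helper, not the patent's method.

```python
import statistics


def match_color_stats(frame, reference):
    """Shift and scale each channel of `frame` so its mean and standard deviation
    match those of `reference`. Both are lists of (r, g, b) tuples; output is clamped to 0..255."""
    out_channels = []
    for c in range(3):
        src = [p[c] for p in frame]
        ref = [p[c] for p in reference]
        mu_s, sd_s = statistics.fmean(src), statistics.pstdev(src)
        mu_r, sd_r = statistics.fmean(ref), statistics.pstdev(ref)
        scale = sd_r / sd_s if sd_s > 0 else 1.0  # avoid dividing by zero on flat channels
        out_channels.append([min(255.0, max(0.0, (v - mu_s) * scale + mu_r)) for v in src])
    # Re-assemble per-pixel tuples from the three corrected channels.
    return list(zip(*out_channels))
```

In practice the reference would be one chosen camera's frame (or a pooled statistic), and the same correction would be applied to every frame of the other videos.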

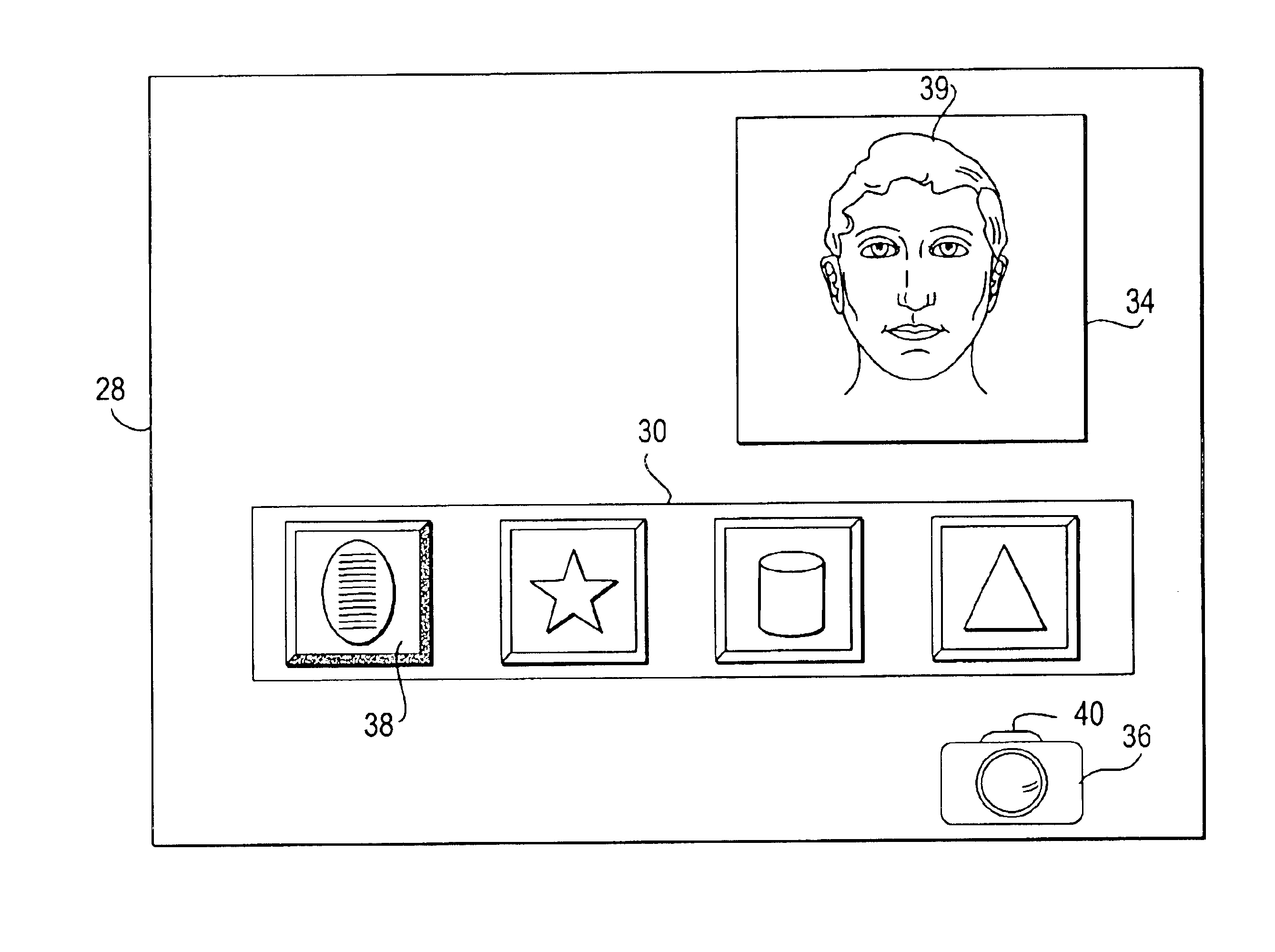

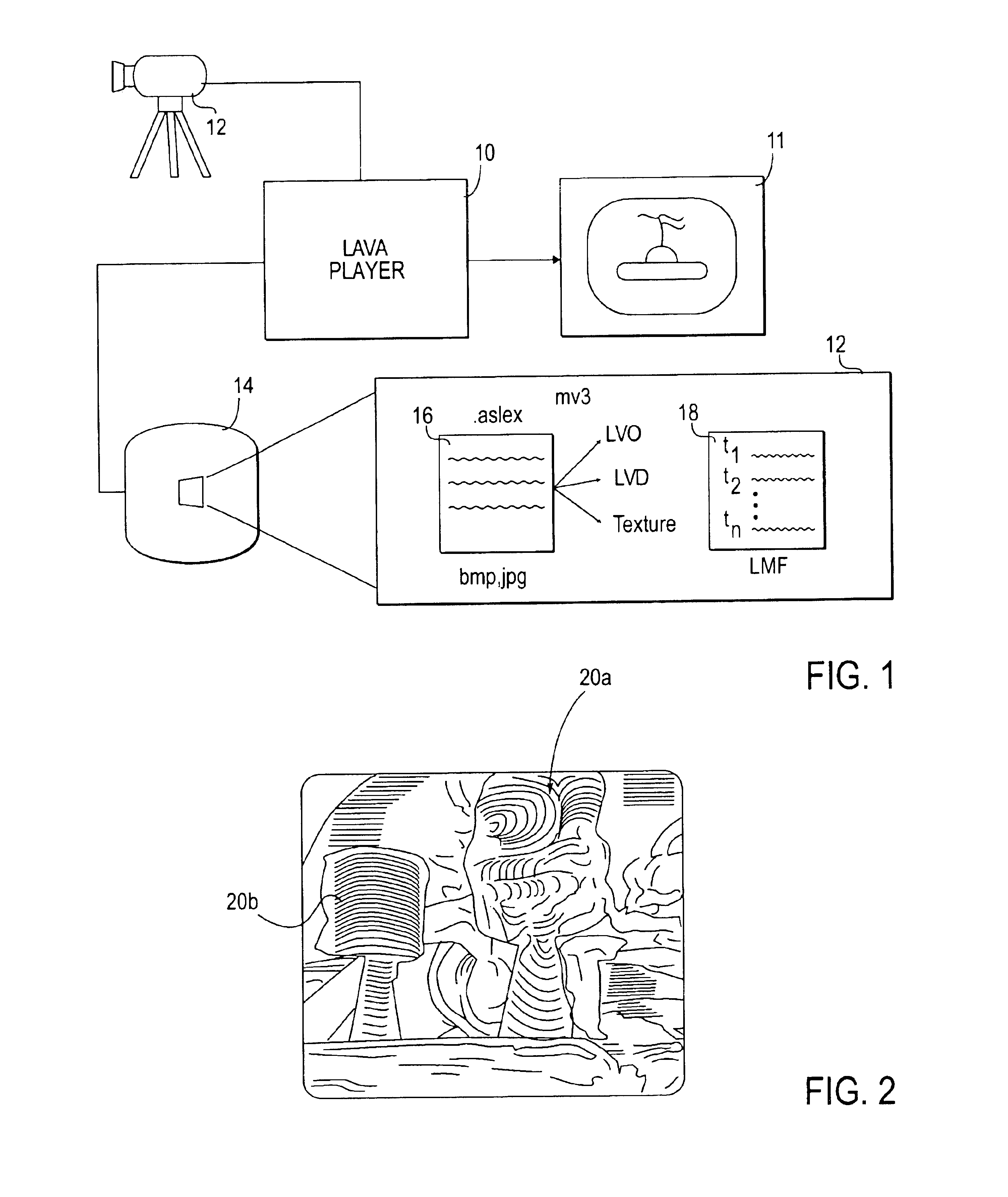

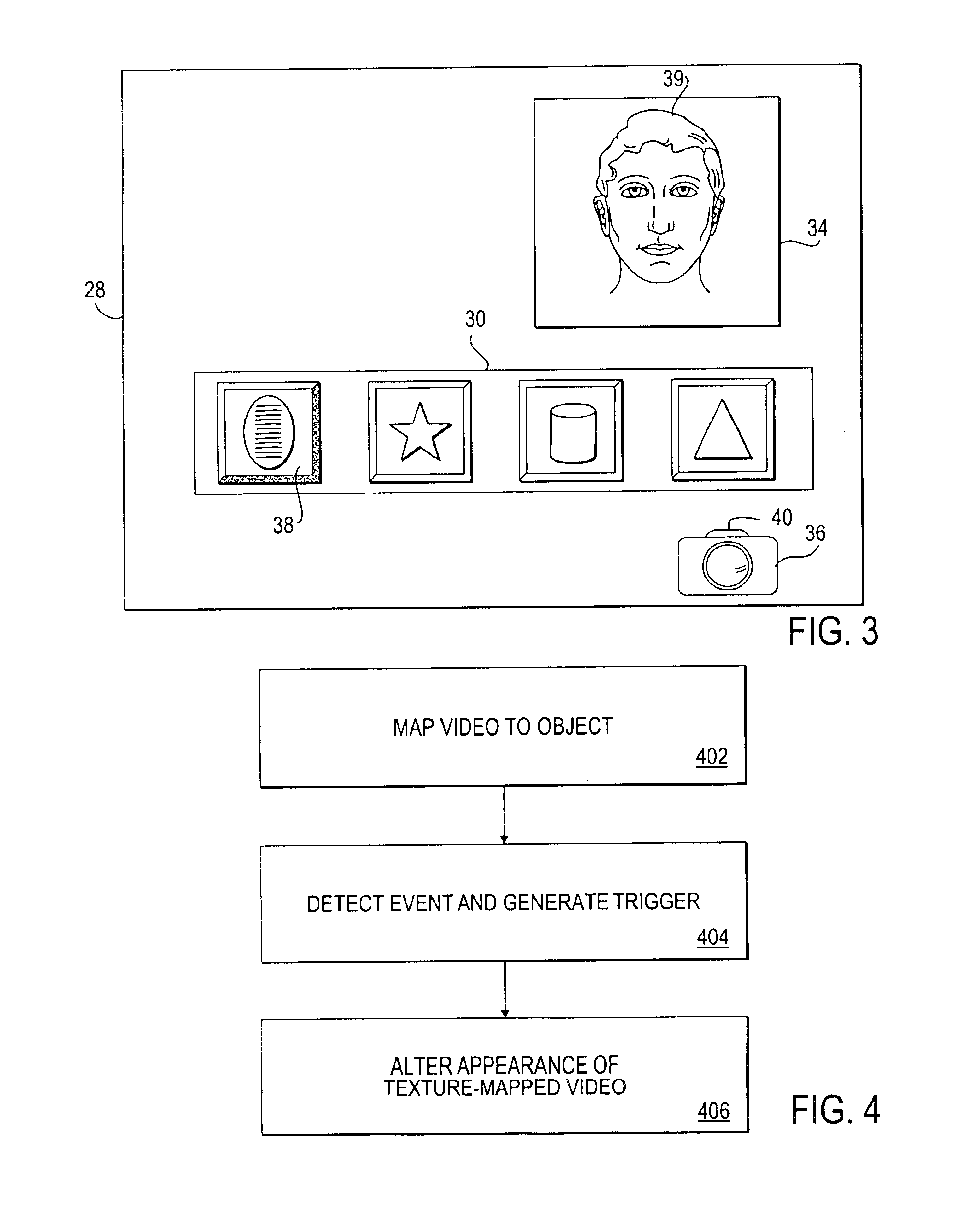

Automated acquisition of video textures acquired from a digital camera for mapping to audio-driven deformable objects

Inactive | US6856329B1 | Tags: Easy to change; Electrophonic musical instruments; Stage arrangements; Animation; Audio frequency

A technique for enhancing an audio-driven computer generated animation includes the step of mapping a video clip generated by a digital camera to an object displayed in the animation. Additionally, the object or the video clip can be deformed when selected events are detected during playback of the video clip.

Owner:CREATIVE TECH CORP
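
US6856329B1 deforms the video-textured object when "selected events" are detected, without specifying the detector here. A minimal sketch, assuming the events are loud audio frames found with a simple RMS-energy threshold and that the deformation is a uniform scale; the threshold ratio and the 20% bulge are hypothetical parameters.

```python
import math


def detect_events(samples, frame_size, threshold_ratio=1.5):
    """Return indices of audio frames whose RMS energy exceeds `threshold_ratio`
    times the mean RMS of all frames (a simple beat/onset heuristic)."""
    rms = []
    for i in range(0, len(samples) - frame_size + 1, frame_size):
        chunk = samples[i:i + frame_size]
        rms.append(math.sqrt(sum(s * s for s in chunk) / frame_size))
    if not rms:
        return []
    mean = sum(rms) / len(rms)
    return [i for i, e in enumerate(rms) if e > threshold_ratio * mean]


def deform_scale(frame_index, events, amount=0.2):
    """Scale factor for the video-textured object: bulge on event frames, rest size otherwise."""
    return 1.0 + amount if frame_index in events else 1.0
```

Each animation frame would look up its matching audio frame index and scale the textured mesh (or the video clip's quad) by `deform_scale`.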

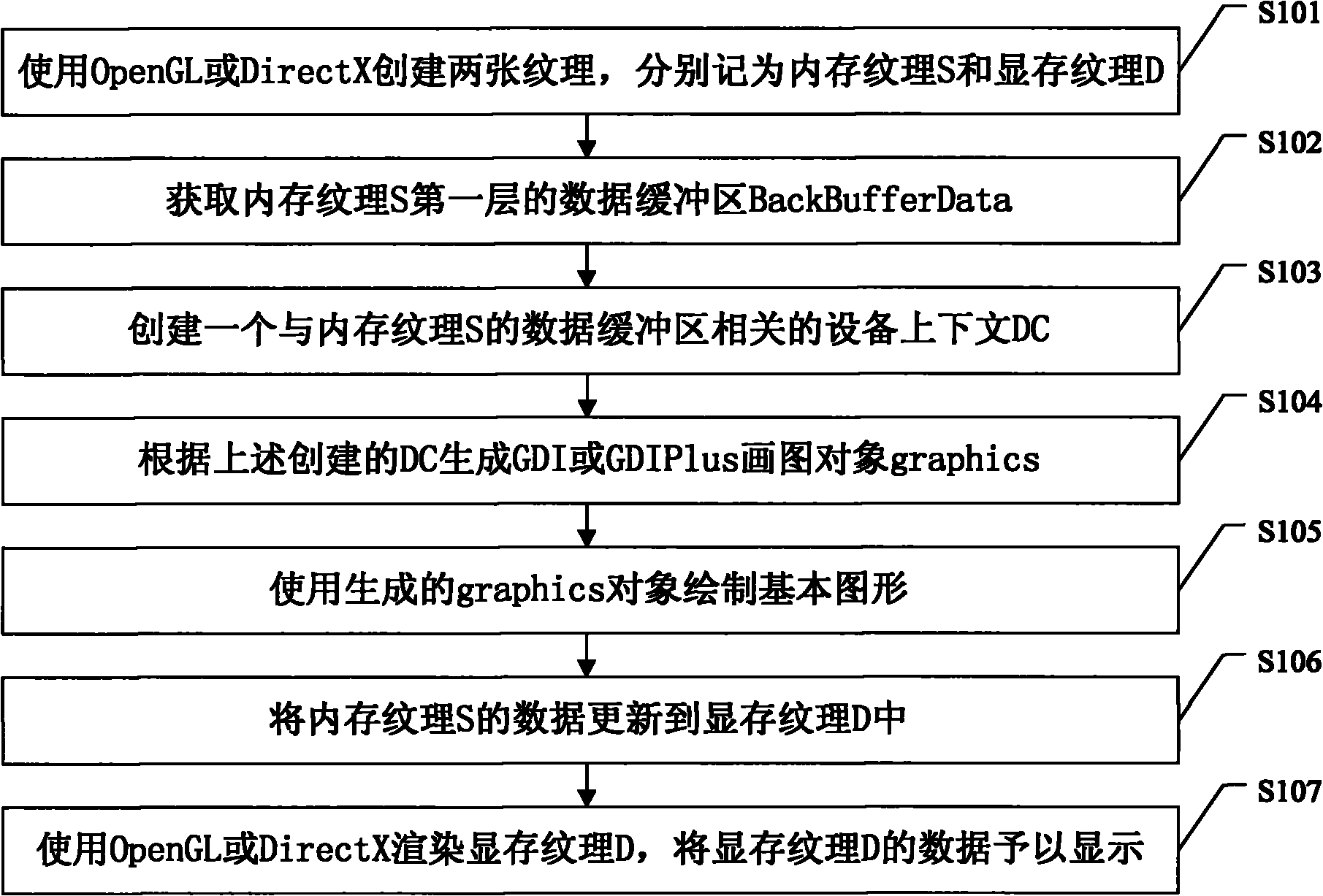

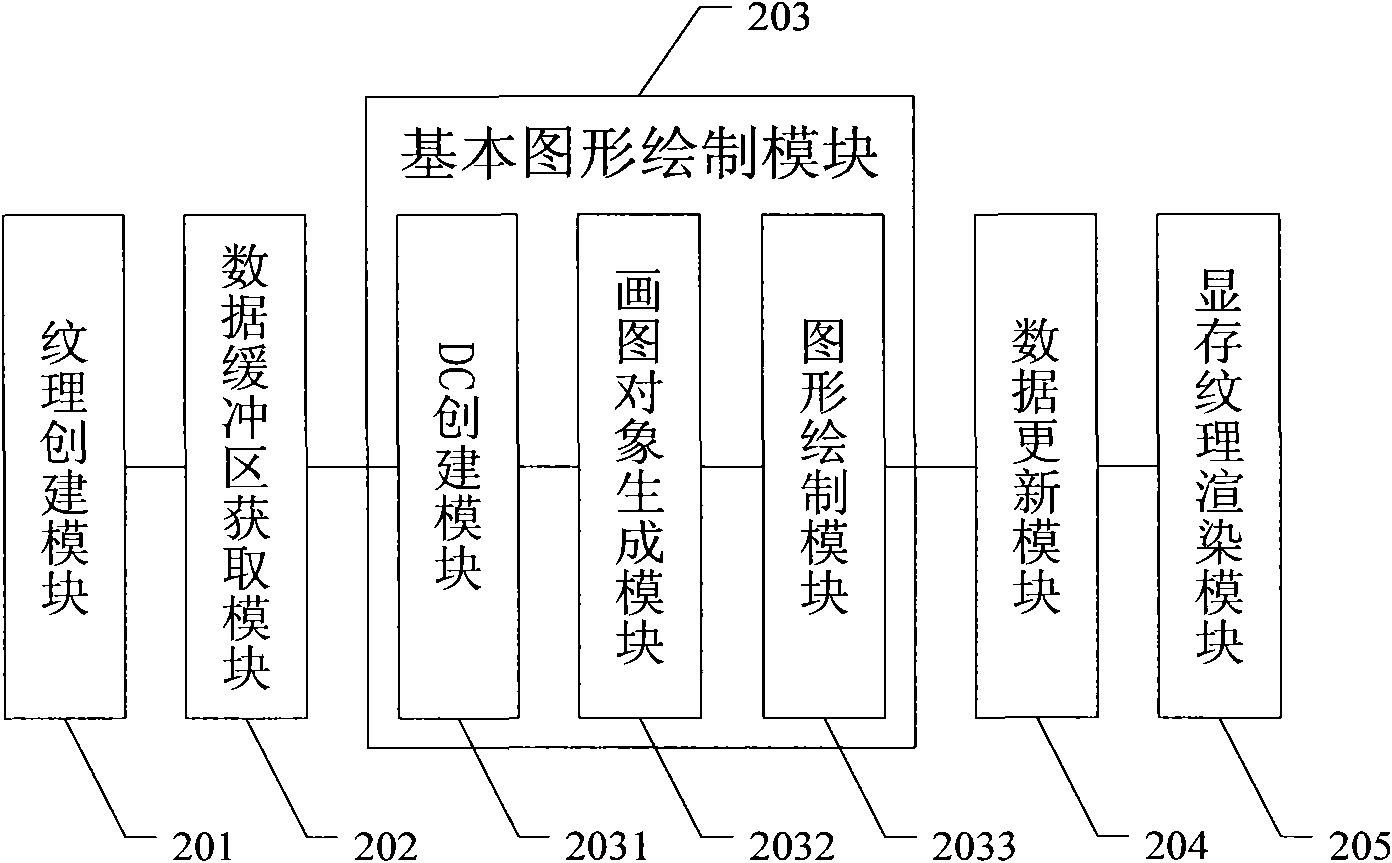

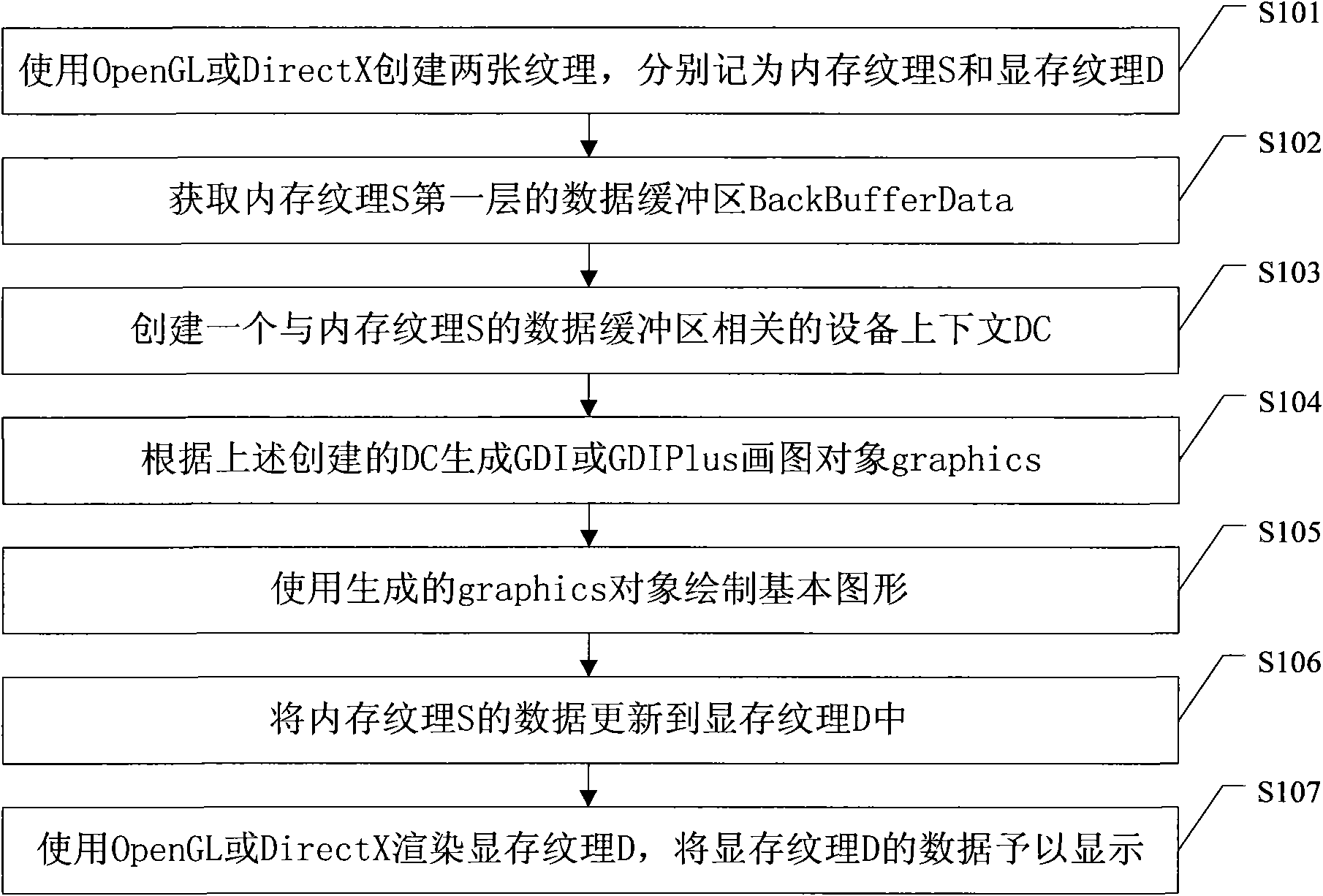

Method and device for efficiently drawing and rendering basic graphic

Inactive | CN101882077A | Tags: Realize the drawing function; Improve efficiency; Drawing from basic elements; Specific program execution arrangements; Graphics; Video texture

The invention relates to a method and a device for efficiently drawing and rendering basic graphics. The method comprises the following steps: creating a memory texture and a video texture with OpenGL or DirectX, obtaining the data buffer of the first level of the memory texture, and creating a device context (DC) associated with that data buffer; creating a GDI (Graphics Device Interface) or GDI+ drawing object from the DC and drawing the basic graphics with it; and updating the video texture with the data in the memory texture, rendering the video texture with OpenGL or DirectX, and displaying its contents. The scheme keeps the high rendering efficiency of OpenGL and DirectX while reusing the existing algorithms and function encapsulation of GDI and GDI+, makes basic graphics simple to draw, and lowers the OpenGL and DirectX expertise required of developers.

Owner:GUANGDONG VTRON TECH CO LTD
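
The two-stage flow in CN101882077A (draw into a CPU-side memory texture, then push that data into the GPU-side video texture) can be illustrated without a graphics API. In this sketch `RGBABuffer.fill_rect` stands in for a GDI/GDI+ drawing call and `upload` stands in for a texture update such as OpenGL's `glTexSubImage2D`; both classes are hypothetical stand-ins, not real GDI or OpenGL bindings.

```python
class RGBABuffer:
    """CPU-side pixel buffer standing in for the memory texture's data buffer
    that a GDI device context would draw into (hypothetical stand-in)."""

    def __init__(self, width, height):
        self.width, self.height = width, height
        self.pixels = bytearray(width * height * 4)  # RGBA, 4 bytes per pixel

    def fill_rect(self, x0, y0, x1, y1, rgba):
        """Stand-in for a GDI/GDI+ drawing call: fill [x0, x1) x [y0, y1), clipped to the buffer."""
        for y in range(max(0, y0), min(self.height, y1)):
            for x in range(max(0, x0), min(self.width, x1)):
                i = (y * self.width + x) * 4
                self.pixels[i:i + 4] = bytes(rgba)


def upload(memory_tex, video_tex):
    """Stand-in for the texture update step (e.g. glTexSubImage2D in OpenGL):
    copy the memory texture's pixels into the video texture used for rendering."""
    if (memory_tex.width, memory_tex.height) != (video_tex.width, video_tex.height):
        raise ValueError("texture sizes differ")
    video_tex.pixels[:] = memory_tex.pixels
```

A real implementation would upload only the changed region, but the ordering (draw on the CPU, then update the GPU texture, then render) is the point being illustrated.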

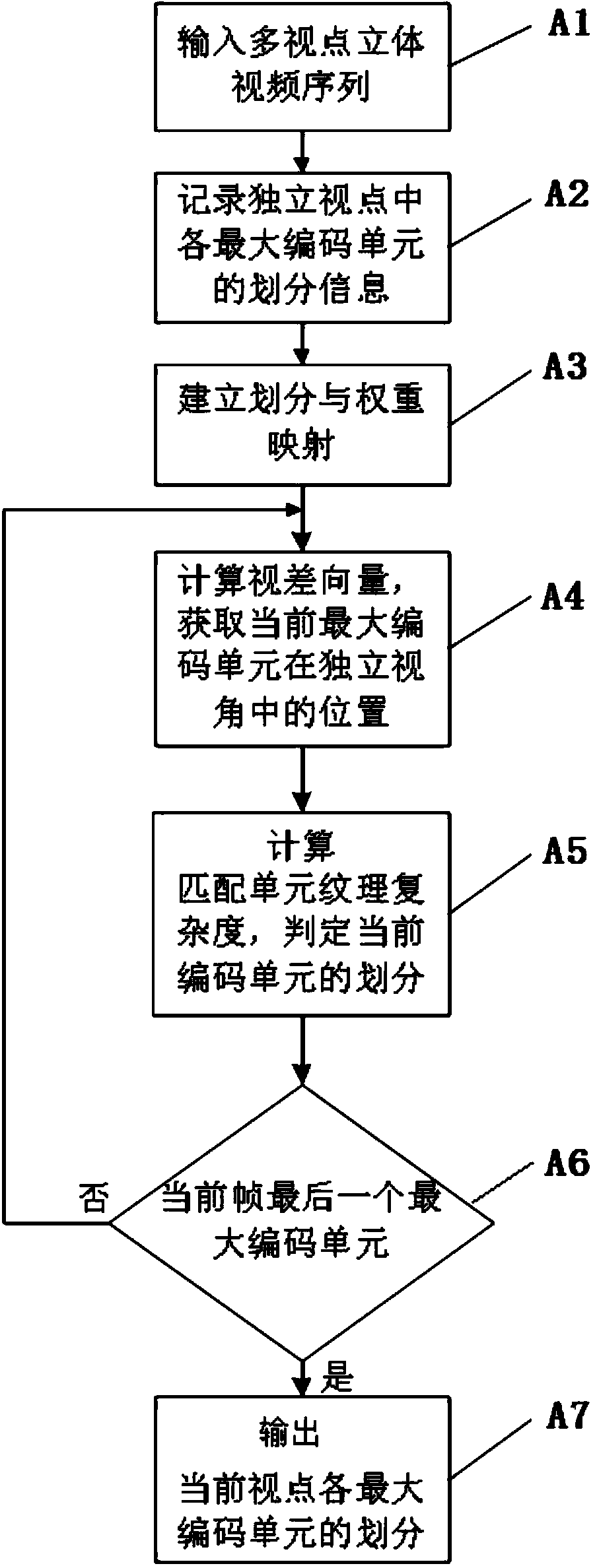

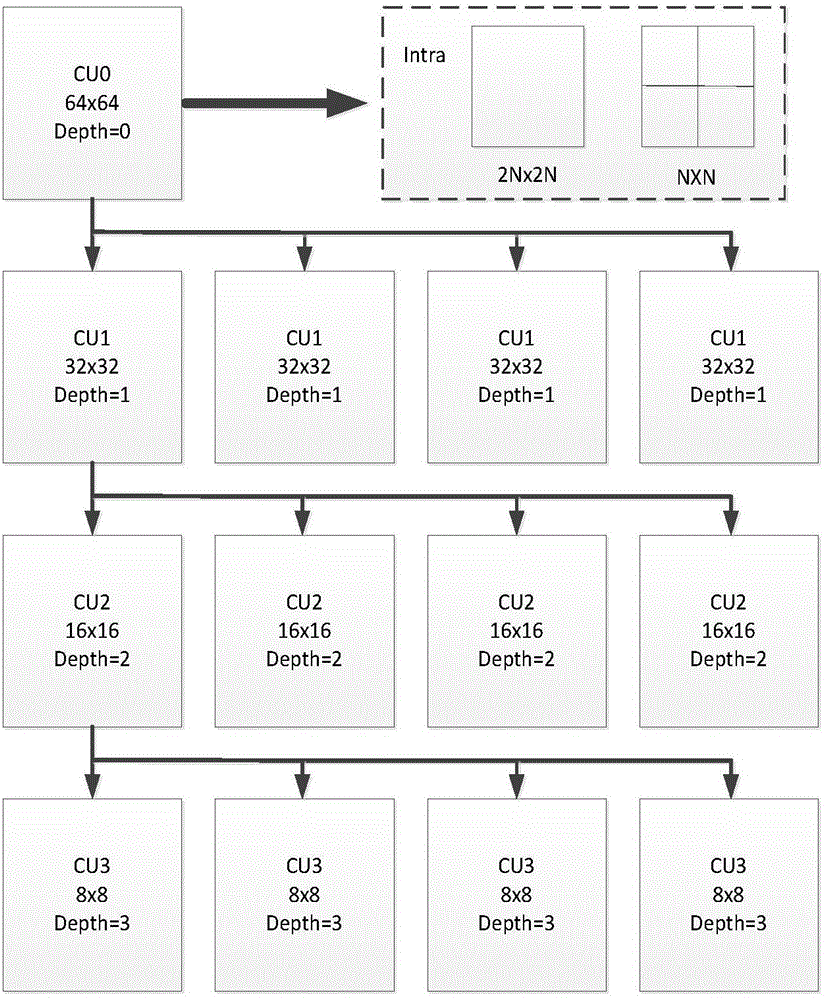

Coding unit partition method and multi-view video coding method using coding unit partition method

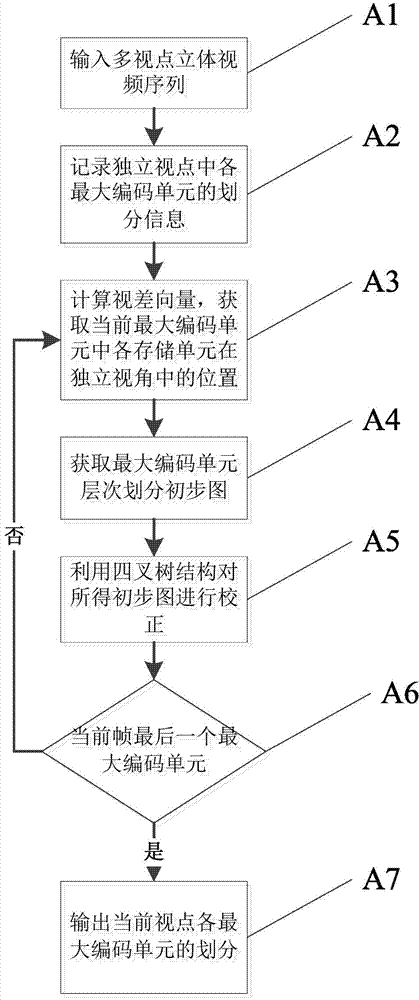

Active | CN103428499A | Tags: Avoid calculation; Accelerating the process of CU partitioning; Television systems; Stereoscopic systems; Computer architecture; Video texture

The invention provides a coding unit (CU) partition method comprising the following steps: A1, inputting video texture images and depth image sequences of two or more viewpoints; A2, recording the partition information of all largest CUs of the independent viewpoint; A3, establishing a mapping between the partition information of the largest CUs and weights; A4, acquiring the matching units of the largest CUs in the independent viewpoint; A5, determining the division of the sub-blocks corresponding to each largest CU to be coded in the texture image of the current dependent viewpoint; and A6, outputting the division of all largest CUs of the currently coded viewpoint. The method uses statistics on the CU divisions of the already coded, time-synchronous independent viewpoint to determine the CU divisions of the dependent viewpoint to be coded, so the exhaustive, iterative CU partition search for the dependent viewpoint is skipped; this accelerates the whole CU partition process and improves efficiency.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
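
Steps A3 to A5 of CN103428499A predict a dependent-view CU's division from weighted partition statistics of matched CUs in the independent view. The abstract does not give the weights or the decision rule, so the sketch below assumes a weighted vote over the matched CUs' depths (0 = 64x64 up to 3 = 8x8) and a narrow search window around the winner; `predict_split_depth`, `candidate_depths`, and the weights are hypothetical.

```python
def predict_split_depth(matched_depths, weights):
    """Predict the CU depth for a dependent-view largest CU from the depths of its
    matching CUs in the independent view.

    matched_depths: depth (0..3) of each matched CU in the independent view.
    weights: hypothetical per-depth weights (the patent's mapping is not given);
             depths missing from the dict count with weight 1.0.
    Returns the depth with the largest accumulated weight, preferring the
    shallower depth on ties."""
    score = {}
    for d in matched_depths:
        score[d] = score.get(d, 0.0) + weights.get(d, 1.0)
    return max(score, key=lambda d: (score[d], -d))


def candidate_depths(predicted, margin=1, max_depth=3):
    """Depths the encoder still evaluates instead of the full 0..3 exhaustive search."""
    return list(range(max(0, predicted - margin), min(max_depth, predicted + margin) + 1))
```

Restricting the rate-distortion search to `candidate_depths(...)` is where the time saving over exhaustive partitioning would come from.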

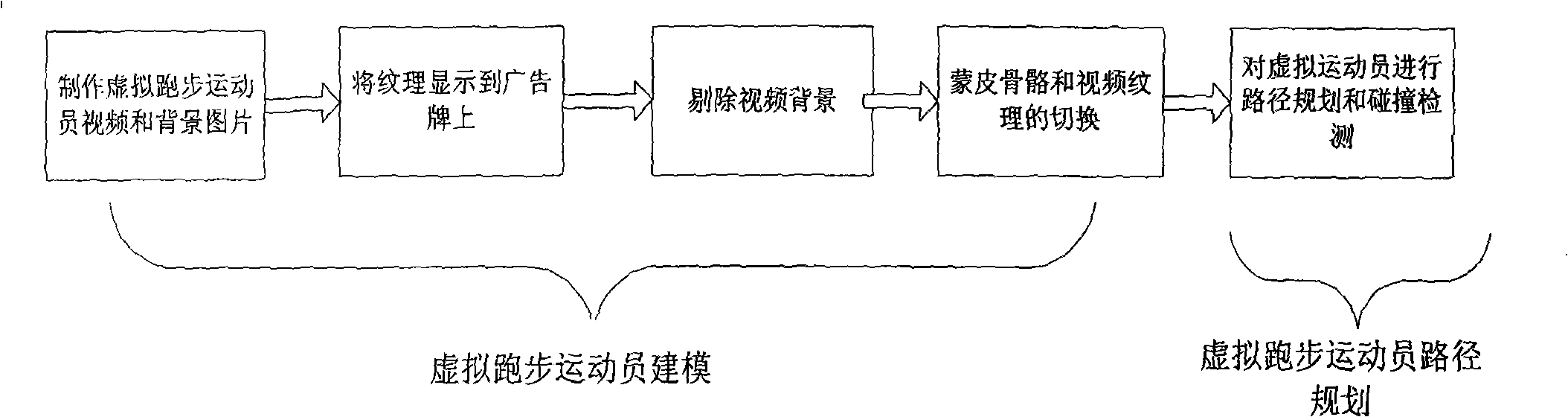

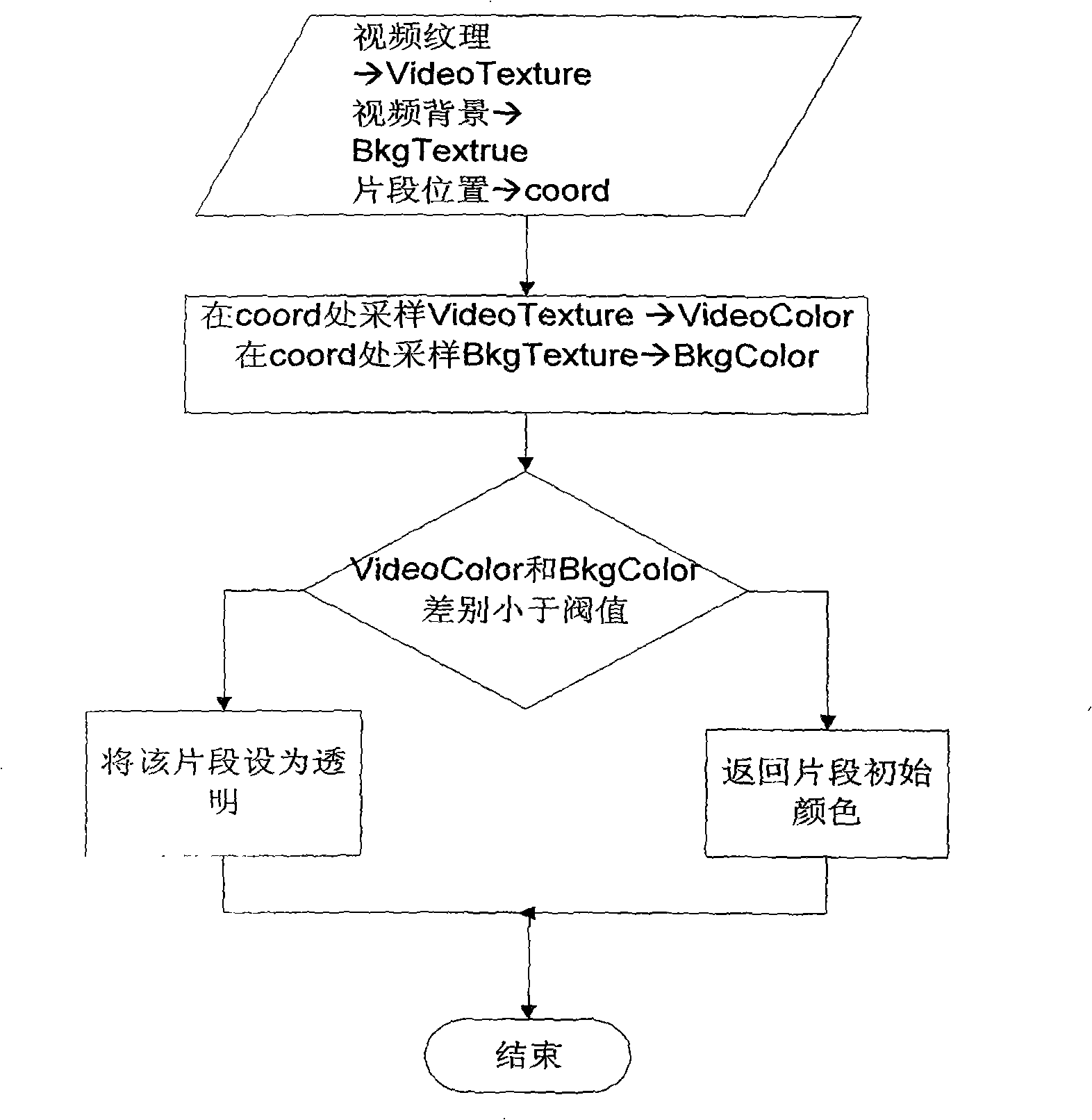

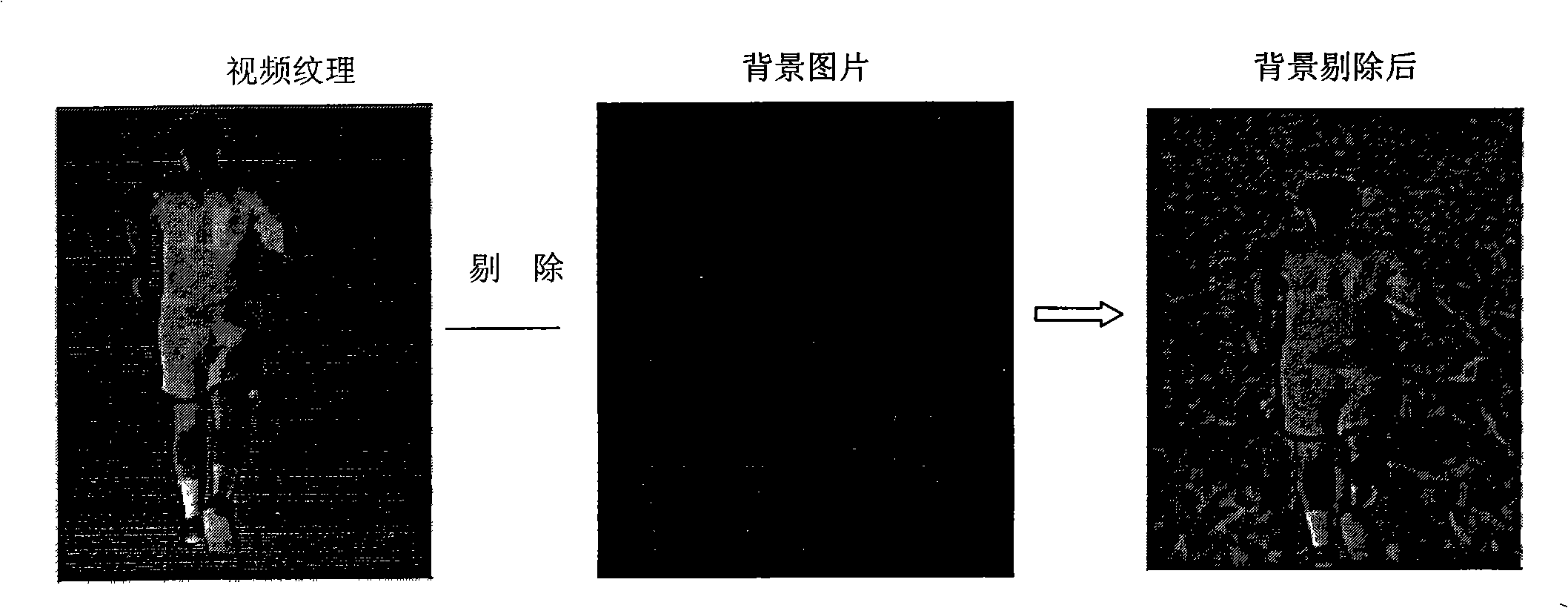

Drafting method for virtual running athlete based on video texture

Inactive | CN101320482A | Tags: Realize switching; Easy to manufacture; Special data processing applications; 3D-image rendering; Animation; Workload

The present invention discloses a drawing method for a video-texture-based virtual runner, mainly intended for games. First, a video of the virtual runner containing the animation and a video background picture are produced; the video is then displayed as a texture on a billboard in the game scene. The video texture and the background picture are passed to a pixel shader, which removes the video background. Depending on the distance from the viewpoint, the character switches between a conventional skinned-mesh animation and the video texture. Finally, a series of key points is stored as the virtual runner's movement path. By replacing the usual skinned mesh with video-based character modeling, the invention greatly reduces game rendering cost; and because the video animation can be taken from real footage, it also reduces the workload of 3D character modeling.

Owner:ZHEJIANG UNIV
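
Two per-frame decisions in CN101320482A are easy to show in isolation: switching between the skinned mesh and the video billboard by distance from the viewpoint, and the background removal a pixel shader would perform by comparing each video pixel with the pre-made background picture. This is a CPU-side Python sketch; the switch distance and color tolerance are hypothetical values, and a real game would run the second function per fragment on the GPU.

```python
def choose_representation(distance, switch_distance=30.0):
    """Near the viewpoint use the skinned mesh; farther away use the cheaper video billboard."""
    return "skinned_mesh" if distance < switch_distance else "video_billboard"


def remove_background(video_px, background_px, tolerance=12):
    """Per-pixel work a pixel shader would do: pixels close to the background
    picture become fully transparent (alpha 0); others stay opaque (alpha 255).
    Both inputs are lists of (r, g, b) tuples of equal length."""
    out = []
    for (r, g, b), (br, bg, bb) in zip(video_px, background_px):
        diff = abs(r - br) + abs(g - bg) + abs(b - bb)  # L1 color distance
        out.append((r, g, b, 0 if diff <= tolerance else 255))
    return out
```

Using a stored background picture instead of a fixed key color lets the runner video be shot against an arbitrary but static backdrop.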

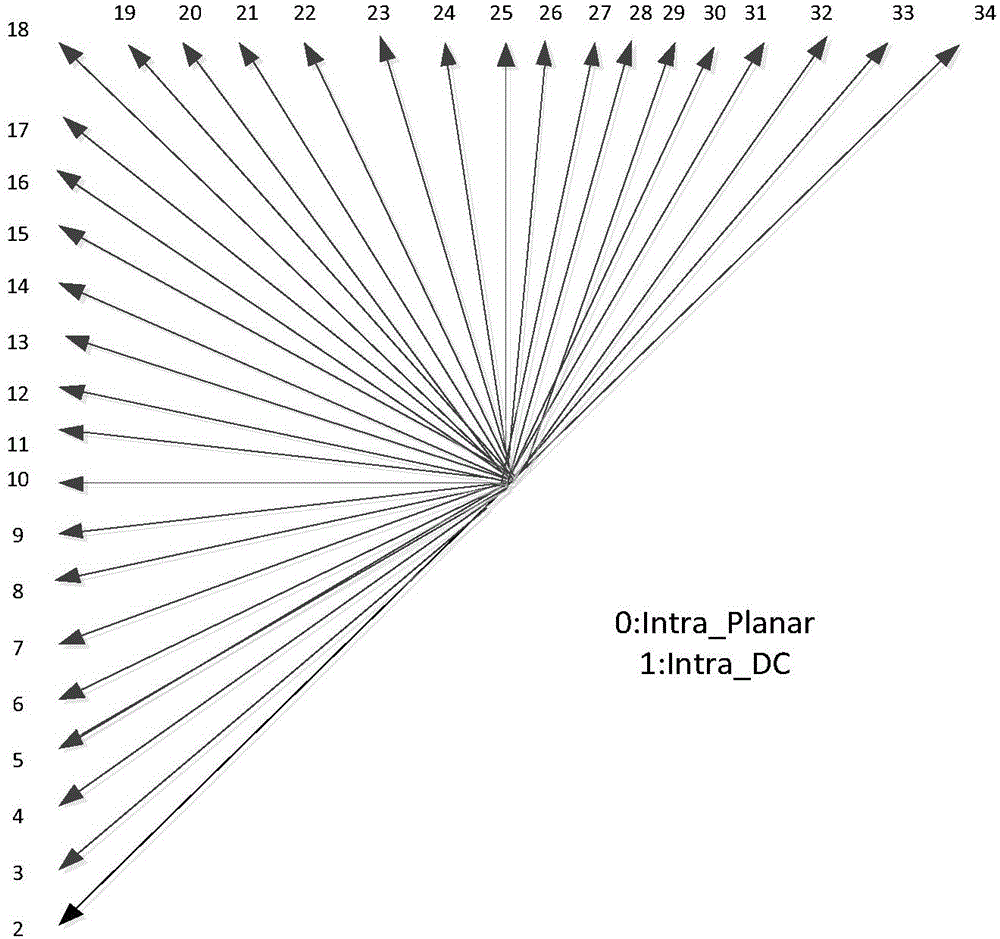

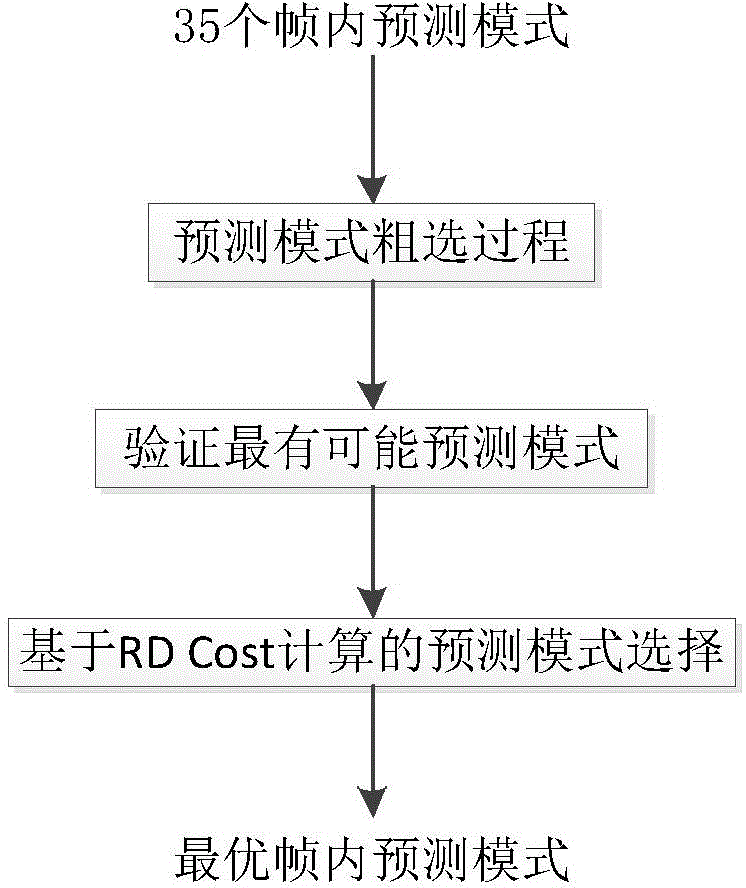

Quick HEVC (High Efficiency Video Coding) inter-frame prediction mode selection method

Active | CN104639940A | Tags: Guaranteed coding quality; Reduce coding complexity; Digital video signal modification; Hadamard transform; Algorithm

The invention discloses a fast HEVC (High Efficiency Video Coding) inter-frame prediction mode selection method. After the coarse selection of prediction modes, the method exploits the statistical properties of the Hadamard-transform-based costs of the coarsely selected modes and takes into account the correlation between the video texture direction and the prediction mode angle. For prediction units of different sizes, the coarsely selected modes are either screened quickly with a threshold method, or their continuity is computed to reflect the texture direction of the prediction unit, so unnecessary candidate modes are discarded without significant extra computation. When verifying the most probable mode, the method considers both the correlation between the coarsely selected modes and the most probable mode and the spatial correlation of the video image itself, so the optimal prediction mode is found quickly. Coding complexity is thereby reduced while coding quality is maintained.

Owner:NINGBO UNIV
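
The "Hadamard transform-based cost" in CN104639940A is typically the SATD (sum of absolute transformed differences) of the prediction residual. The sketch below computes a 4x4 SATD and then applies a threshold screen that keeps only candidates whose cost is within a ratio of the best one. The ratio of 1.2 is a hypothetical threshold, the halving normalization is one common convention, and mode numbers are just dictionary keys here.

```python
H4 = [[1, 1, 1, 1],
      [1, -1, 1, -1],
      [1, 1, -1, -1],
      [1, -1, -1, 1]]


def _matmul4(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def satd4x4(orig, pred):
    """Sum of absolute Hadamard-transformed differences of a 4x4 block (halved, rounded)."""
    diff = [[orig[i][j] - pred[i][j] for j in range(4)] for i in range(4)]
    t = _matmul4(_matmul4(H4, diff), H4)  # H4 is symmetric, so H4^T == H4
    total = sum(abs(v) for row in t for v in row)
    return (total + 1) // 2


def screen_modes(costs, ratio=1.2):
    """Keep coarse-selected modes whose cost is within `ratio` of the best cost,
    ordered from cheapest to most expensive. costs: dict mode -> SATD-based cost."""
    ranked = sorted(costs, key=costs.get)
    best = costs[ranked[0]]
    return [m for m in ranked if costs[m] <= ratio * best]
```

Only the surviving modes go on to full rate-distortion evaluation, which is where the complexity reduction comes from.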

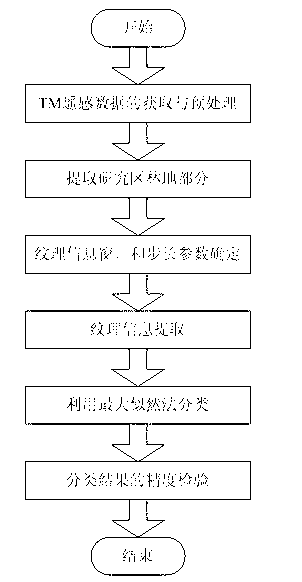

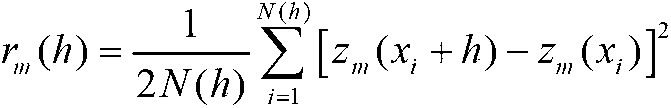

Forest type identification method based on texture information

Inactive | CN103020649A | Tags: Improve the accuracy of remote sensing recognition; Character and pattern recognition; Sensing data; Data source

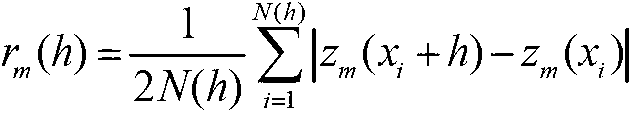

The invention discloses a forest type identification method based on texture information. It belongs to the technical field of remote sensing and relates in particular to a method for classifying forest types from TM (Thematic Mapper) data using texture information. The method comprises six steps: S1, selecting TM remote sensing data from an appropriate date as the data source according to bio-meteorological information, and preprocessing the TM data; S2, extracting the forest land portion of the study area; S3, determining the window size and step used for texture extraction; S4, extracting image texture information using geostatistics; S5, classifying the TM image with the maximum likelihood method; and S6, verifying the maximum likelihood classification result with geoclimatic verification data. The method improves the precision of remote sensing of forest types, especially when identifying forest types over large areas.

Owner:NORTHEAST FORESTRY UNIVERSITY
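
Step S4 of CN103020649A extracts texture "by means of the principle of geostatistics", and steps S3 and S4 together imply a moving window. The usual geostatistical texture measure is the experimental semivariance, gamma(h) = 1/(2N(h)) * sum of (z(x) - z(x+h))^2 over the N(h) pixel pairs at lag h. The sketch below assumes that measure, averaged over horizontal and vertical pairs; the lag, window size, and step are hypothetical parameters.

```python
def semivariance(window, lag):
    """Experimental semivariance gamma(h) = 1/(2N(h)) * sum (z(x) - z(x+h))^2,
    pooled over horizontal and vertical pixel pairs at distance `lag`."""
    rows, cols = len(window), len(window[0])
    total, n = 0.0, 0
    for i in range(rows):
        for j in range(cols):
            if j + lag < cols:  # horizontal pair
                total += (window[i][j] - window[i][j + lag]) ** 2
                n += 1
            if i + lag < rows:  # vertical pair
                total += (window[i][j] - window[i + lag][j]) ** 2
                n += 1
    return total / (2 * n) if n else 0.0


def texture_image(image, size, step, lag=1):
    """Slide a size x size window with the given step (step S3) and return
    a grid of semivariance texture values (step S4)."""
    out = []
    for i in range(0, len(image) - size + 1, step):
        row = []
        for j in range(0, len(image[0]) - size + 1, step):
            win = [r[j:j + size] for r in image[i:i + size]]
            row.append(semivariance(win, lag))
        out.append(row)
    return out
```

The resulting texture layer would then be stacked with the spectral bands as an extra input to the maximum likelihood classifier in step S5.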

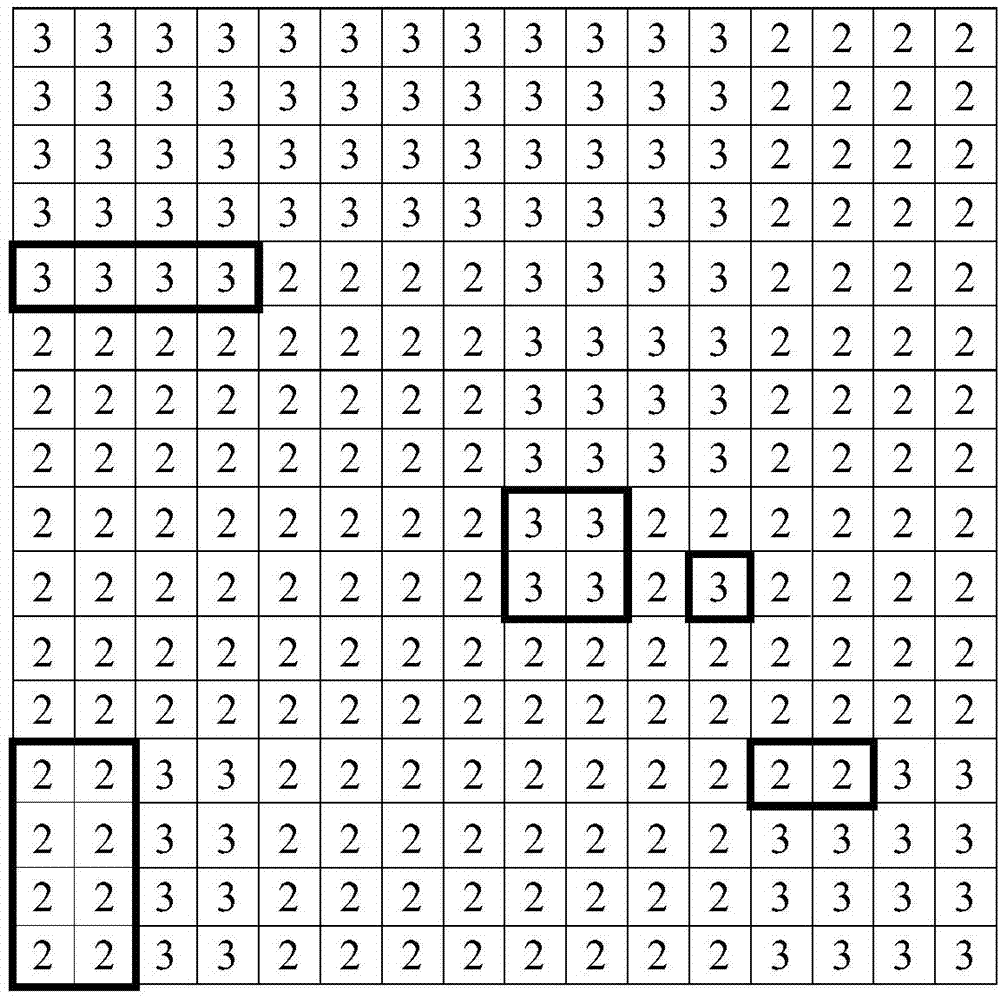

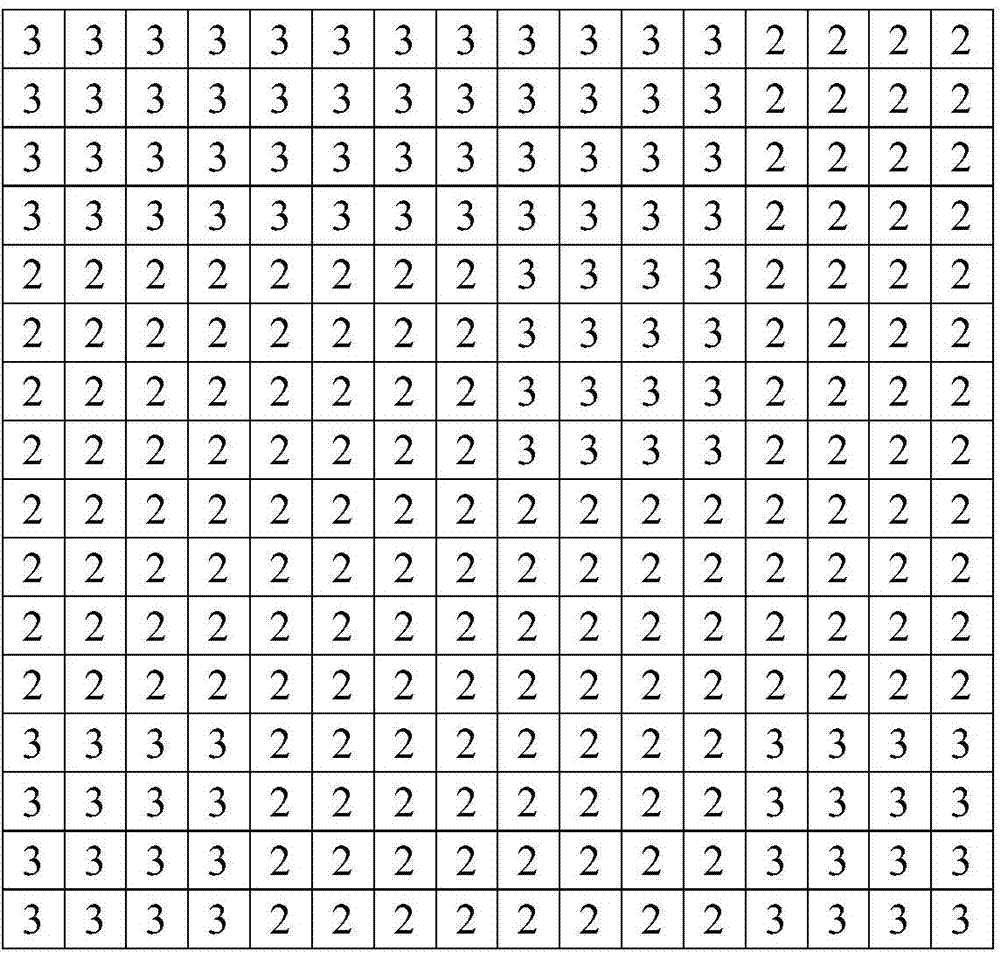

Coding unit dividing method and video coding method based on quad-tree constraint

Active | CN104202612A | Tags: Speed up division; Avoid calculation; Digital video signal modification; Computer architecture; Viewpoints

The invention discloses a coding unit dividing method and a video coding method based on a quad-tree constraint. The coding unit dividing method comprises: inputting video texture images and depth image sequences of two or more viewpoints; recording the division level information of the largest coding units of each independent viewpoint; obtaining, in the independent viewpoint, the matching unit of each storage unit in the largest coding unit; obtaining an initial division level map for the sub-blocks corresponding to the largest coding unit; correcting the initial division level map with the quad-tree constraint; and outputting the division of each largest coding unit of the current coding viewpoint. The video coding method uses this dividing method. By using the coding unit division information of the independent viewpoint to guide the division of the dependent viewpoint, and correcting the division level map with the quad-tree constraint, the method avoids the existing complex search, accelerates the whole coding unit division process, and improves efficiency.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
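
The correction step in CN104202612A turns an initial per-unit division level map (copied from matched units in the independent view) into one that a quad-tree can actually represent. The patent's exact constraint is not given, so this sketch assumes a 64x64 largest coding unit split into an 8x8 grid of 8x8 storage units and a hypothetical majority rule: a block at level k stays whole when at least half of its units ask for depth k or less; otherwise it is split into four children.

```python
def enforce_quadtree(depths, x=0, y=0, size=8, level=0, out=None):
    """Correct a per-unit depth map so it forms a valid quad-tree.

    depths: 8x8 grid of requested depths (0 = 64x64 block ... 3 = 8x8 block).
    Hypothetical majority rule: keep the block at `level` when at least half of
    its units request depth <= level, otherwise split it into four quadrants."""
    if out is None:
        out = [[0] * size for _ in range(size)]
    units = [depths[y + i][x + j] for i in range(size) for j in range(size)]
    unsplit = sum(1 for d in units if d <= level)
    if size == 1 or 2 * unsplit >= len(units):
        # Every unit of this block gets the same depth, which keeps the map quad-tree consistent.
        for i in range(size):
            for j in range(size):
                out[y + i][x + j] = level
        return out
    half = size // 2
    for dy in (0, half):
        for dx in (0, half):
            enforce_quadtree(depths, x + dx, y + dy, half, level + 1, out)
    return out
```

A single outlier unit no longer forces an inconsistent split, because each block's depth is decided for all of its units at once.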

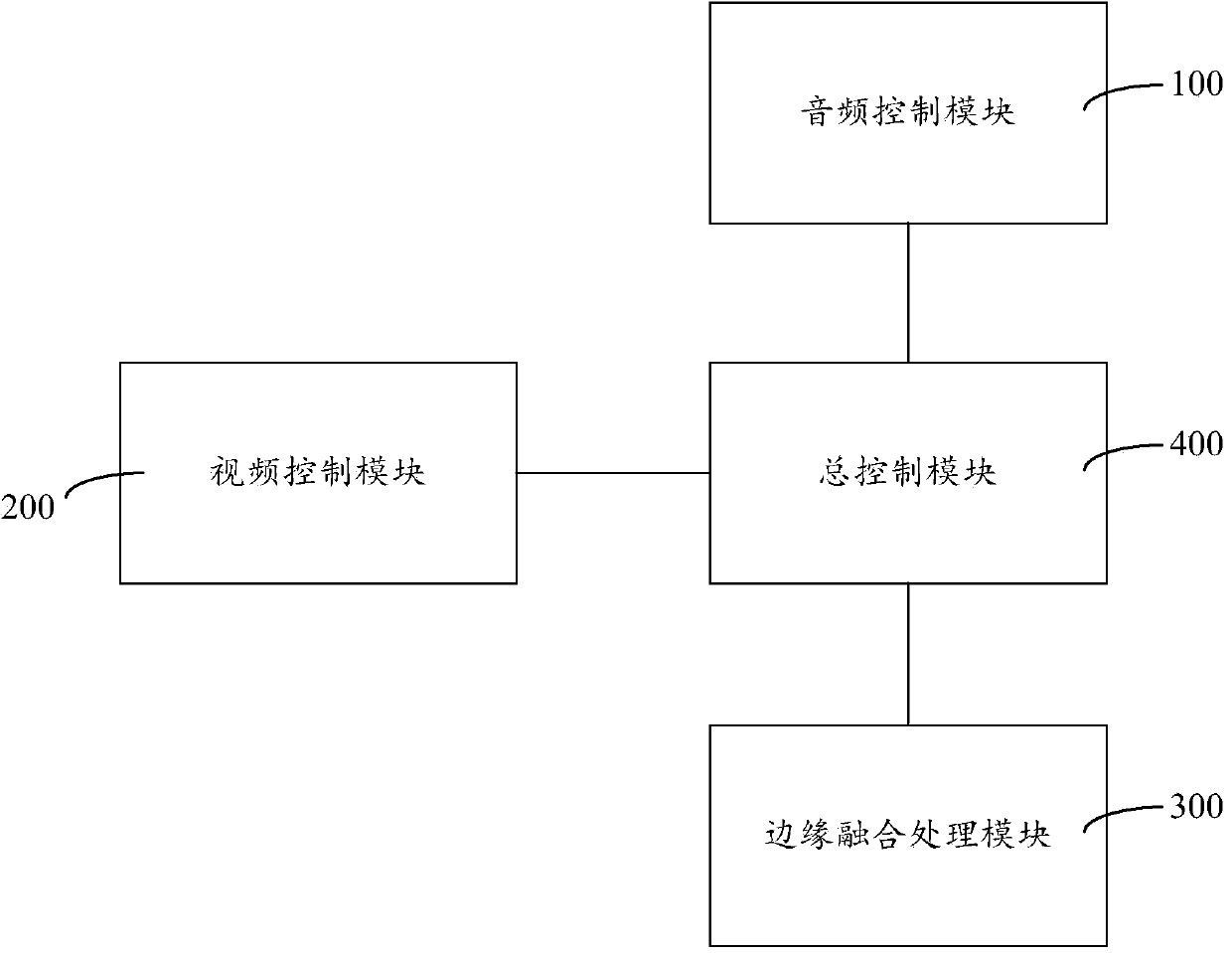

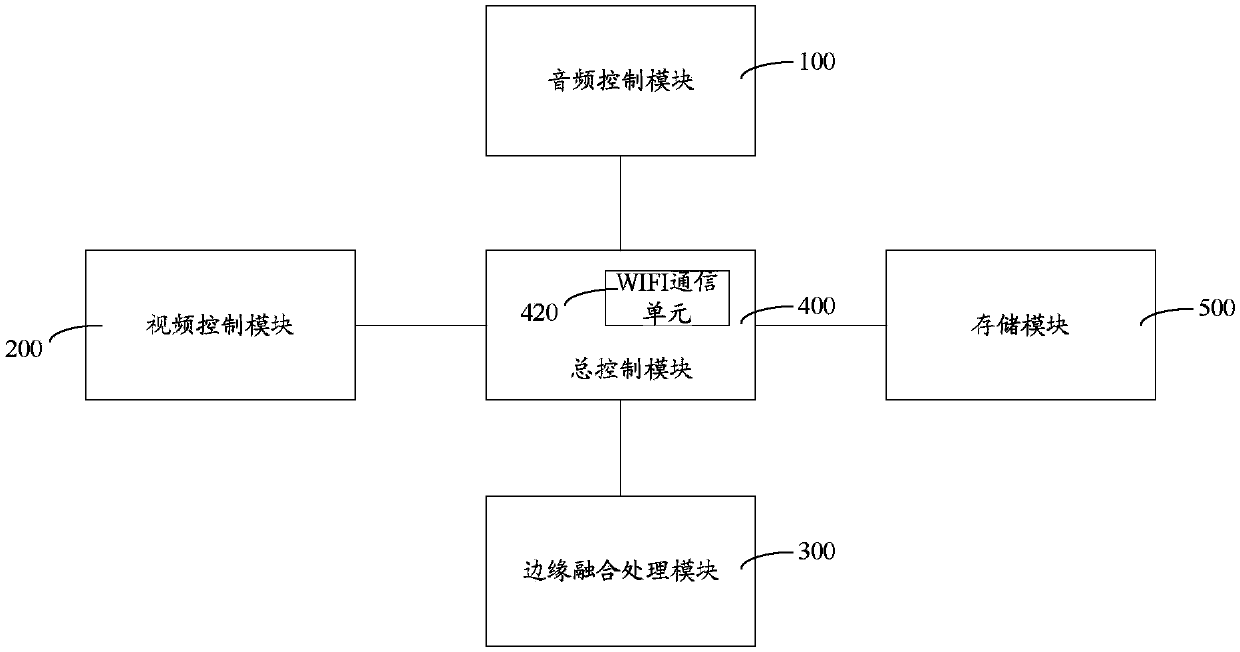

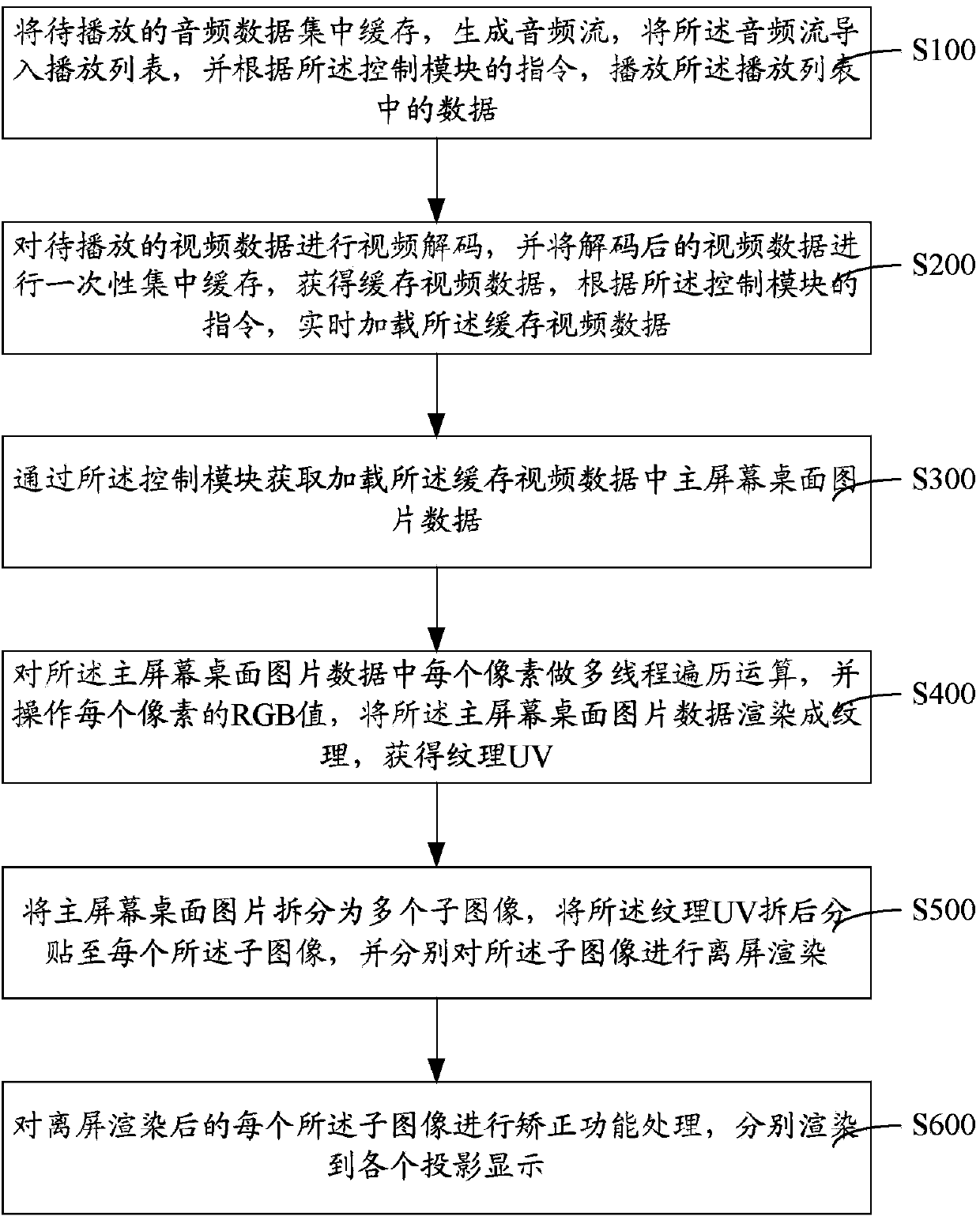

Immersive virtual display system and display method of CAVE (Cave Automatic Virtual Environment)

Active | CN104202546A | Tags: Increase content; Timely output; Television system details; Color television details; Cave automatic virtual environment; Large screen

The invention provides an immersive virtual display system and display method for a CAVE (Cave Automatic Virtual Environment). An audio control module caches the audio data to be played and generates an audio stream to form a playlist, so audio can be output in time; a video control module caches the video data in a single pass so that video plays smoothly and efficiently; and an edge blending module captures the desktop image data on the main screen through a master control module, which saves processing time. Multithreaded traversal with simultaneous multi-channel processing further improves processing efficiency. Several correction functions (vertex correction, geometric correction, and per-pixel RGB (Red Green Blue) color correction) both ensure the quality of edge blending and reduce the amount of data to be handled. As a result, the system is not limited to single-channel projection with video texture technology: by using the player's fusion technique for edge blending, it supports large-screen playback and multi-channel large-screen display.

Owner:SHANGHAI FINEKITE EXHIBITION ENG
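
The edge blending in CN104202546A attenuates each projector across the overlap zone so that the combined light looks seamless. The patent does not give its blend curve; the sketch below assumes a common choice: a symmetric smooth ramp (so the two projectors' ramps sum to 1) followed by an inverse display gamma, so the emitted linear light also sums to 1. The exponent `p` and `gamma` are hypothetical tuning values.

```python
def _ramp(t, p):
    """Symmetric S-curve on 0..1 with _ramp(t) + _ramp(1 - t) == 1."""
    return 0.5 * (2 * t) ** p if t < 0.5 else 1.0 - 0.5 * (2 * (1 - t)) ** p


def blend_weight(x, overlap_start, overlap_end, left_side=True, p=2.0, gamma=2.2):
    """Pixel-value multiplier for one projector at horizontal position x.

    The left projector fades out across [overlap_start, overlap_end] and the right
    one fades in; raising the ramp to 1/gamma compensates for the display gamma."""
    if x <= overlap_start:
        t = 1.0 if left_side else 0.0
    elif x >= overlap_end:
        t = 0.0 if left_side else 1.0
    else:
        s = (x - overlap_start) / (overlap_end - overlap_start)
        t = 1.0 - s if left_side else s
    return _ramp(t, p) ** (1.0 / gamma)
```

Precomputing these weights once per output column (a blend mask) keeps the per-frame cost to a single multiply per pixel.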

Robotic texture

Owner:ETH ZURICH EIDGENOESSISCHE TECHN HOCHSCHULE ZURICH +1

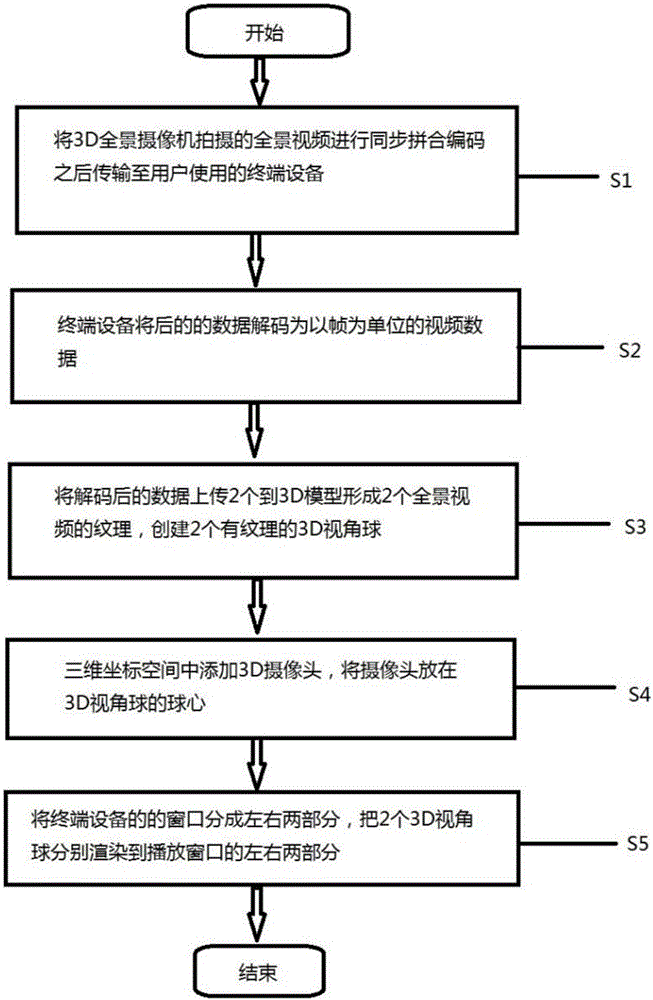

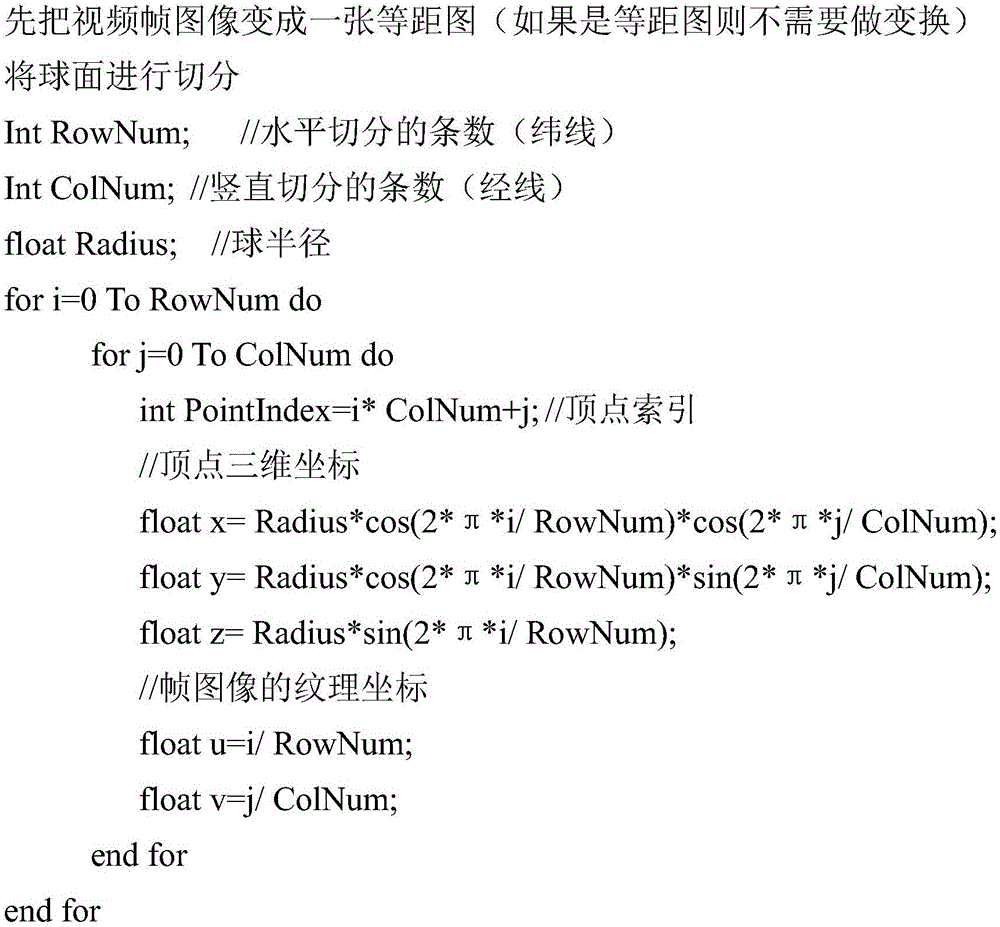

Method and system for watching 3D panoramic video based on network video live broadcast platform

Inactive | CN106851244A | Tags: Synchronous view switching; Gorgeous 3D experience; Stereoscopic systems; Terminal equipment; 3D camera

The invention discloses a method and system for watching 3D panoramic video on a network live-video platform. The method comprises: an establishment step of creating two 3D models in the panoramic player of a terminal device, splitting the video data into left and right parts corresponding to the left and right eyes, and applying them to the 3D models to form panoramic video textures, then establishing left and right three-dimensional coordinate spaces in the player and, in each space, a 3D view sphere onto which the panoramic video texture is mapped; an addition step of adding 3D cameras to the three-dimensional coordinate spaces and placing each camera's lens at the center of its 3D view sphere; and a rendering step of dividing the terminal device's display window into left and right parts and rendering the two 3D view spheres, with their mapped panoramic video textures, into those parts.

Owner:北京阿吉比科技有限公司
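
CN106851244A splits each video frame into left-eye and right-eye parts and maps each onto its own view sphere. A minimal sketch of the texture lookup, assuming a side-by-side equirectangular layout (left eye in the left half of the frame), y as the up axis, and -z as the forward direction; the layout and axis conventions are assumptions, since the abstract does not state them.

```python
import math


def direction_to_uv(x, y, z, eye):
    """Map a unit view direction to texture coordinates (u, v) in a side-by-side
    stereo equirectangular frame. u in 0..1 spans the whole frame; v = 0 is the top row."""
    lon = math.atan2(x, -z)                     # -pi..pi, 0 = straight ahead
    lat = math.asin(max(-1.0, min(1.0, y)))     # -pi/2..pi/2, clamped for safety
    u_eye = 0.5 + lon / (2 * math.pi)           # 0..1 within one eye's half
    v = 0.5 - lat / math.pi
    offset = 0.0 if eye == "left" else 0.5      # right eye reads from the right half
    return offset + 0.5 * u_eye, v
```

Each eye's 3D camera, placed at its sphere's center, effectively performs this lookup for every pixel of its half of the display window.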

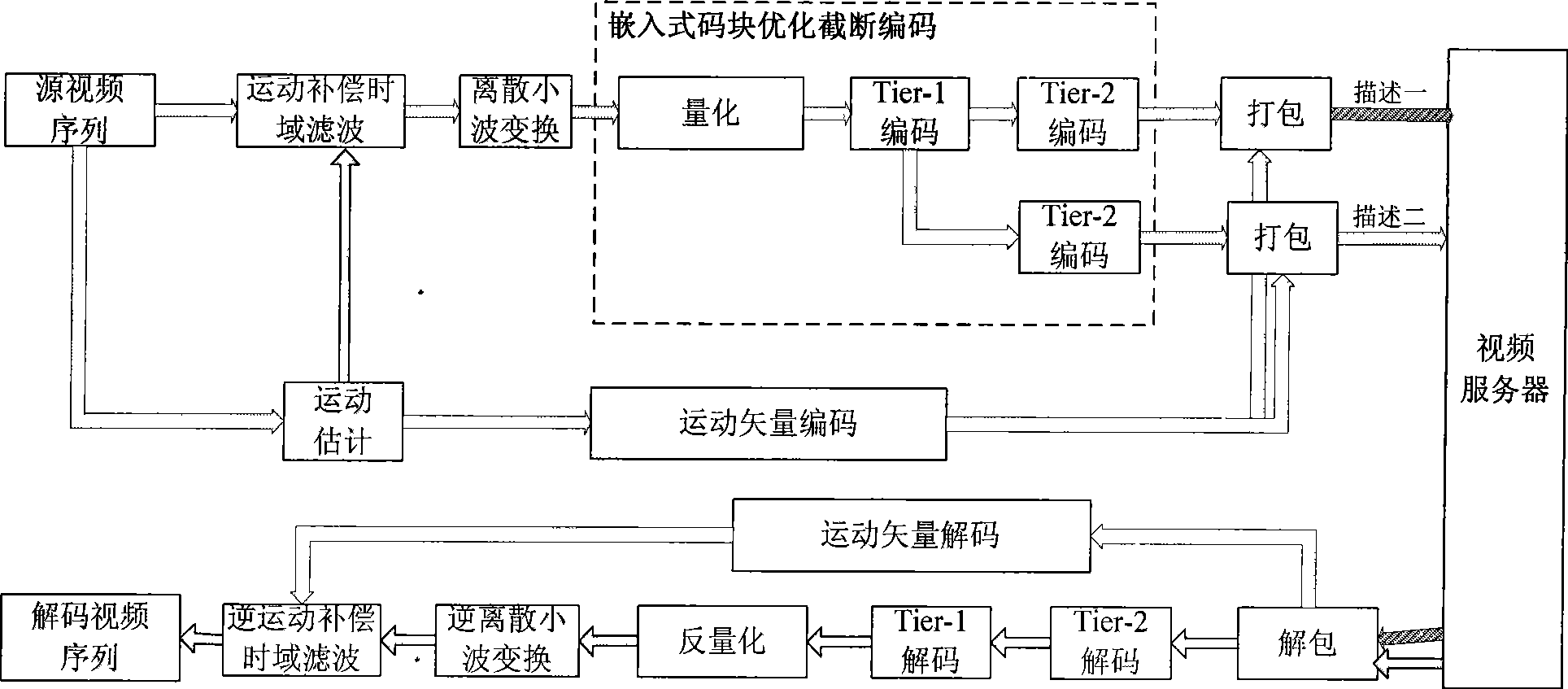

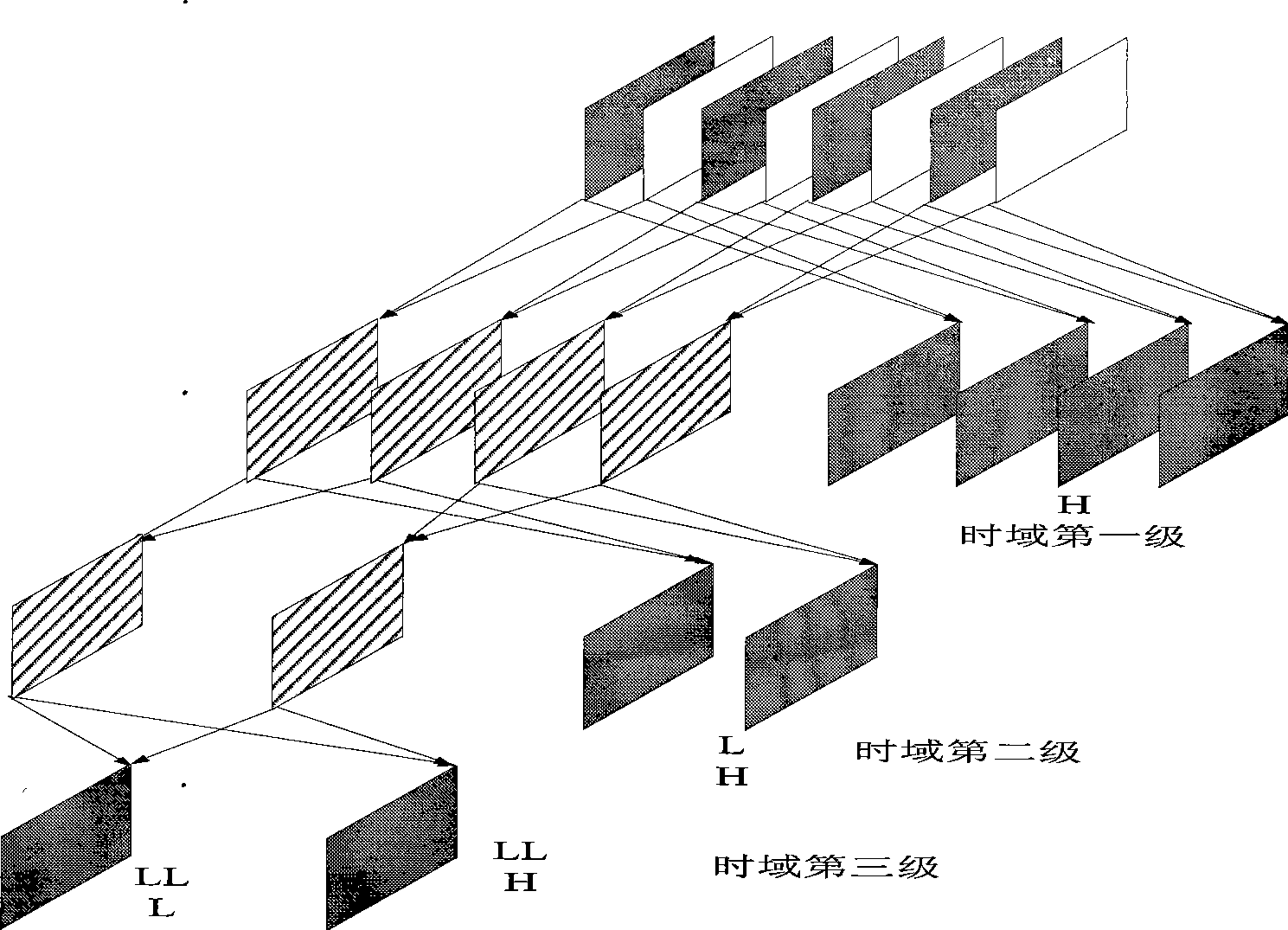

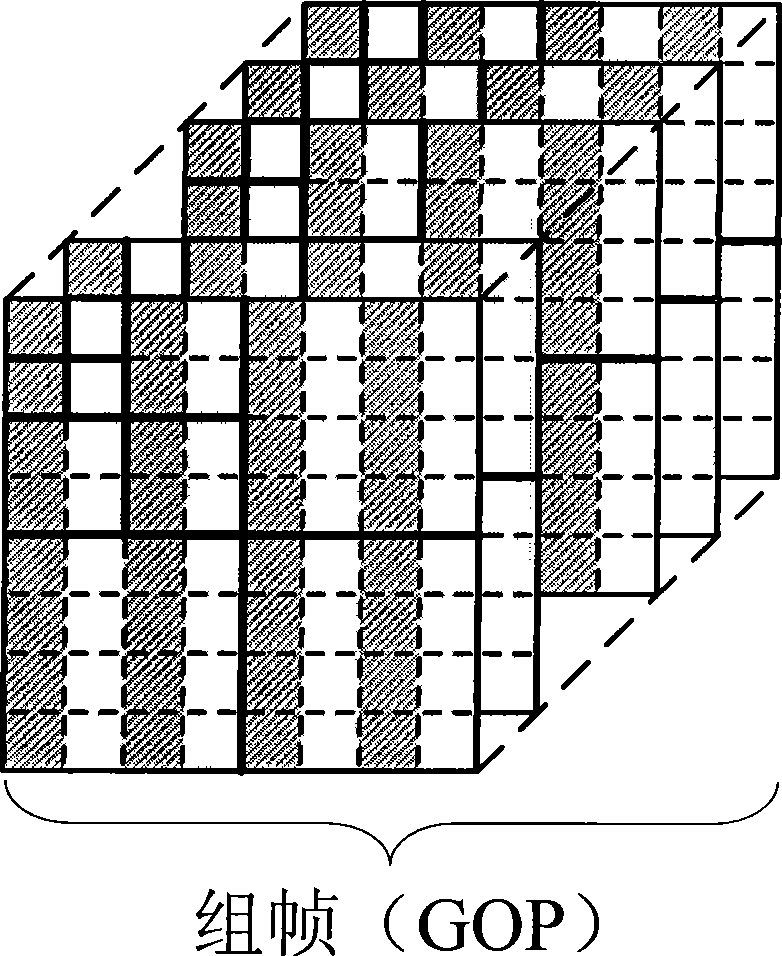

Scalable multi-description video encoding structure design method based on code rate control

Inactive | CN101478677A | Tags: Improved Stability Transmission; Stable viewing quality; Pulse modulation television signal transmission; Digital video signal modification; Computer architecture; Video decoding

The present invention discloses a scalable multiple-description video coding structure design method based on rate control. The same video is coded into two or more descriptions of equal importance. Temporal redundancy is removed with a "motion-compensated temporal filtering technique"; the multiple-description video texture information, in the form of three-dimensional wavelet coefficients, is coded efficiently with an "entropy coding technique"; the different video descriptions are formed with a "Lagrange-characteristic rate control technique"; arbitrary truncation of the bitstream, to produce decoded video of different qualities, is achieved with a "three-dimensional sectile embedded rate control technique"; and the descriptions are transmitted independently over multiple paths with a "multi-path transmission technique".

Owner:XI AN JIAOTONG UNIV
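
Motion-compensated temporal filtering, named in CN101478677A, is commonly built from the Haar wavelet in lifting form: a predict step produces a high-pass (difference) frame and an update step produces a low-pass (average) frame for each frame pair. The sketch below shows one temporal level with zero motion assumed; a real MCTF would first warp frames along motion vectors, and frames here are flat lists of pixel values.

```python
def haar_temporal_analysis(frames):
    """One level of temporal Haar lifting over frame pairs (zero motion assumed).
    frames: even-length list of equal-length pixel lists. Returns (lows, highs)."""
    lows, highs = [], []
    for a, b in zip(frames[0::2], frames[1::2]):
        h = [bi - ai for ai, bi in zip(a, b)]        # predict: residual of b from a
        l = [ai + hi / 2 for ai, hi in zip(a, h)]    # update: equals (a + b) / 2
        lows.append(l)
        highs.append(h)
    return lows, highs


def haar_temporal_synthesis(lows, highs):
    """Invert the lifting steps in reverse order to recover the original frames."""
    frames = []
    for l, h in zip(lows, highs):
        a = [li - hi / 2 for li, hi in zip(l, h)]
        b = [ai + hi for ai, hi in zip(a, h)]
        frames.extend([a, b])
    return frames
```

For static content the high-pass frames are all zeros, which is the energy compaction that makes the subsequent 3D wavelet and entropy coding efficient.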

Method and apparatus for displaying multi-party video in instant communication

Active | CN101179409A | Tags: Improve experience; Improve the three-dimensional effect; Special service provision for substation; Two-way working systems; Three dimensional shape; Video image

The invention discloses a method for displaying multi-party video in instant communication. The method comprises: determining a plurality of polygonal video display zones in which the multi-party video is displayed, the zones forming a three-dimensional shape in the display space; dividing each video to be displayed into triangles, dividing the corresponding display zone into the same number of triangles, and determining from the mapping relation the correspondence between the video texture vertices of the resulting triangles and the triangle vertices of the display zone; and mapping the video texture onto the corresponding zone according to this vertex correspondence, applying transformation or zooming to the video texture within the zone, and displaying the video images in the polygonal display zones. The invention also discloses a device for displaying multi-party video.

Owner:TENCENT TECH (SHENZHEN) CO LTD
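
Once CN101179409A has paired each video-texture triangle with a display-zone triangle, the three vertex pairs uniquely determine a 2D affine map that carries every point of one triangle onto the other. This sketch solves for that map with Cramer's rule; the function names are hypothetical, and a GPU would normally do the equivalent interpolation itself from per-vertex texture coordinates.

```python
def affine_from_triangles(src, dst):
    """Solve the 2D affine map with A(src[k]) == dst[k] for three vertex pairs.
    Returns (a, b, c, d, e, f) so that x' = a*x + b*y + c and y' = d*x + e*y + f."""
    (x0, y0), (x1, y1), (x2, y2) = src
    det = x0 * (y1 - y2) + x1 * (y2 - y0) + x2 * (y0 - y1)
    if det == 0:
        raise ValueError("source triangle is degenerate")

    def solve(v0, v1, v2):
        # Cramer's rule for p*x_k + q*y_k + r = v_k at the three vertices.
        p = (v0 * (y1 - y2) + v1 * (y2 - y0) + v2 * (y0 - y1)) / det
        q = (x0 * (v1 - v2) + x1 * (v2 - v0) + x2 * (v0 - v1)) / det
        r = (x0 * (y1 * v2 - y2 * v1) + x1 * (y2 * v0 - y0 * v2) + x2 * (y0 * v1 - y1 * v0)) / det
        return p, q, r

    (u0, w0), (u1, w1), (u2, w2) = dst
    return solve(u0, u1, u2) + solve(w0, w1, w2)


def apply_affine(m, x, y):
    a, b, c, d, e, f = m
    return a * x + b * y + c, d * x + e * y + f
```

Scaling or zooming the video within its zone then amounts to composing this map with an extra scale before rendering.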

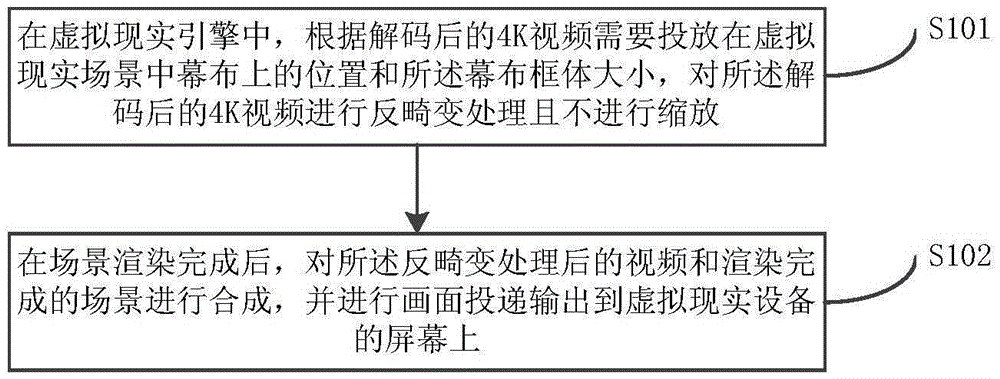

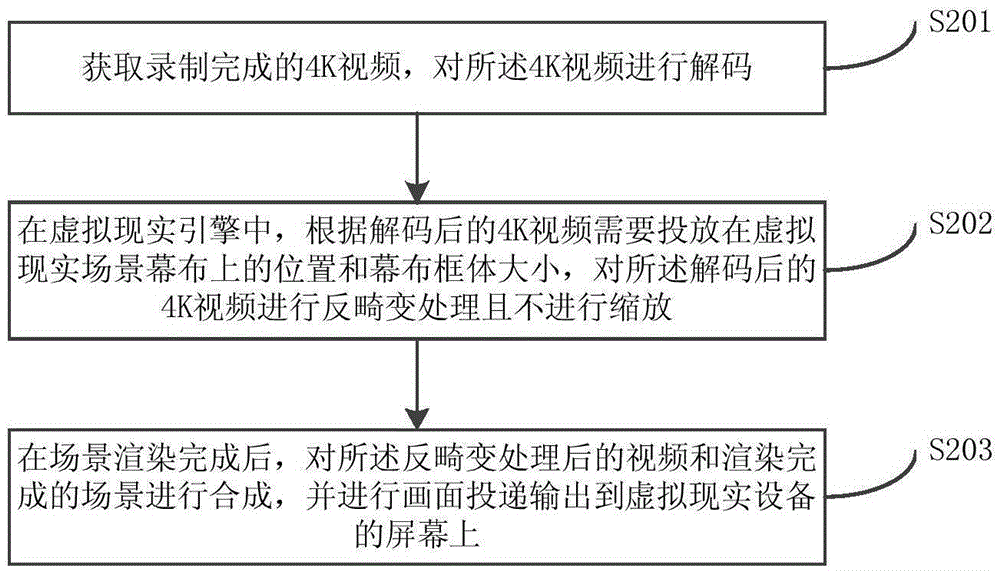

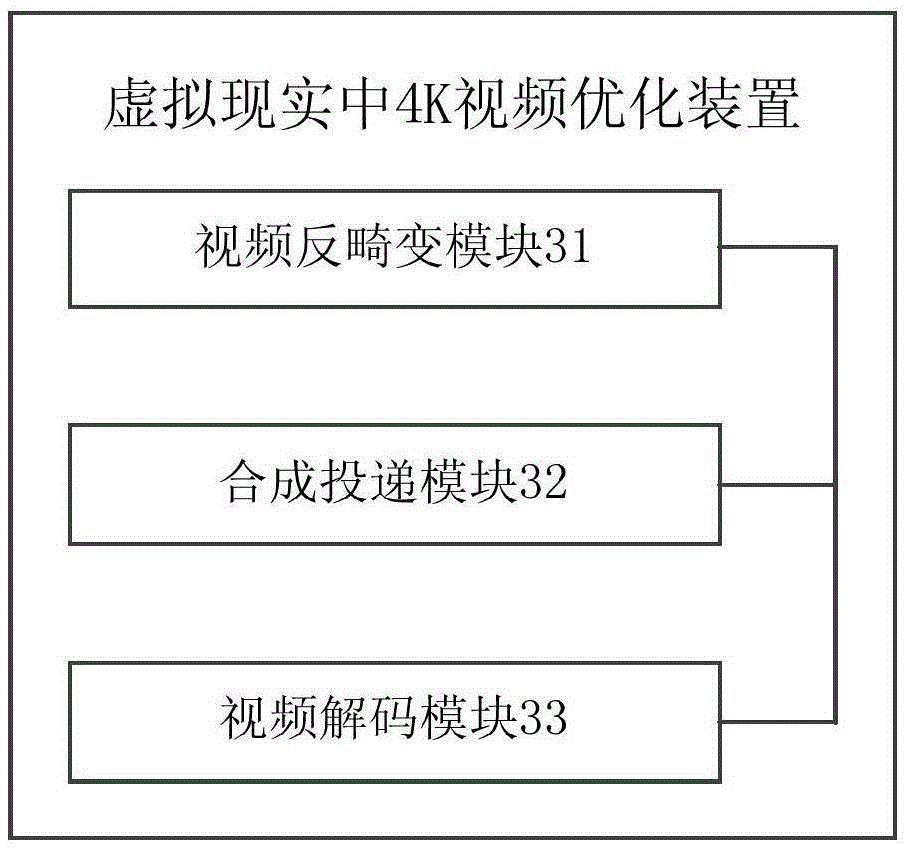

Virtual reality 4K video optimization method and device

Inactive | CN105915972A | Tags: Improve clarity; Convenience to work; Selective content distribution; Video optimization; Distortion

The invention discloses a virtual reality 4K video optimization method and device. The method comprises: step A, in the virtual reality engine, applying anti-distortion processing to the decoded 4K video, without scaling, according to the position at which the video is projected onto the screen in the virtual reality scene and the frame size of that screen; and step B, after scene rendering is complete, compositing the anti-distorted video with the rendered scene and delivering the frames to the screen of the virtual reality device. Because anti-distortion is applied to the video without scaling before the video texture is pasted onto the screen in the virtual reality scene, the video is deformed into a shape suited to that screen, and the video and scene are then composited directly for output. This avoids the blurry frames caused by first downscaling the 4K video and then scaling it up again.

Owner:LE SHI ZHI ZIN ELECTRONIC TECHNOLOGY (TIANJIN) LTD
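
The anti-distortion step in CN105915972A pre-warps the video so the headset lens cancels the warp. A widely used model is the radial polynomial r' = r * (1 + k1*r^2 + k2*r^4) applied about the lens center. This sketch assumes that model; the coefficients below are hypothetical (real values come from the headset's lens profile), and negative `k1` gives the barrel-type pre-distortion that offsets a lens's pincushion distortion.

```python
def predistort(u, v, k1=-0.22, k2=0.05, cx=0.5, cy=0.5):
    """Radially pre-distort a normalized texture coordinate (u, v in 0..1)
    about the lens center (cx, cy): r' = r * (1 + k1*r^2 + k2*r^4).
    k1 and k2 are hypothetical lens coefficients."""
    dx, dy = u - cx, v - cy
    r2 = dx * dx + dy * dy
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return cx + dx * scale, cy + dy * scale
```

Applying this mapping directly to the full-resolution 4K frame, rather than to a downscaled copy, is what lets the method avoid the extra resampling blur described above.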

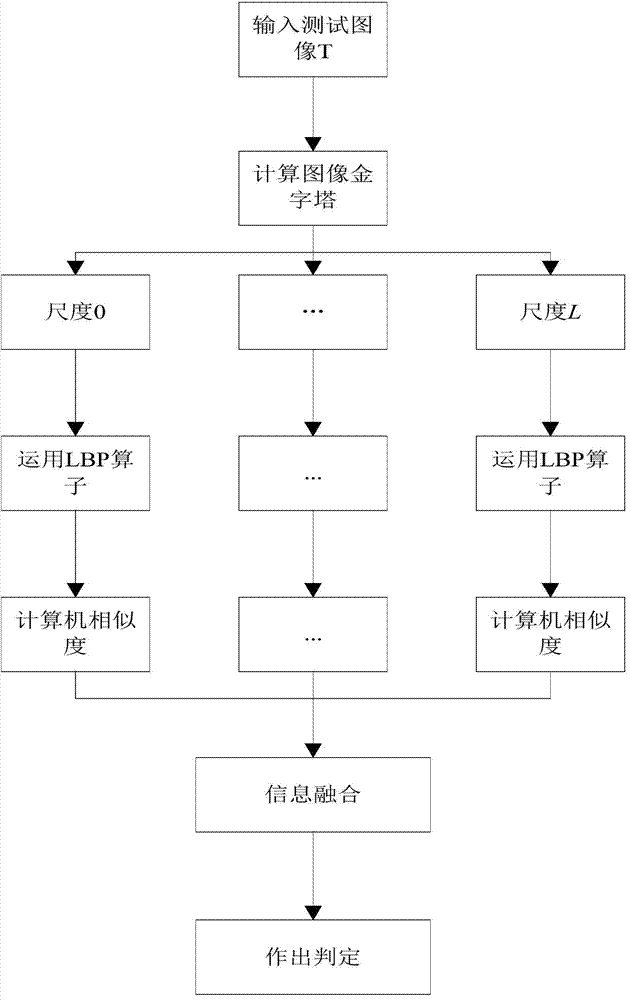

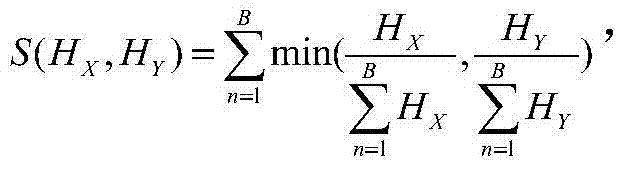

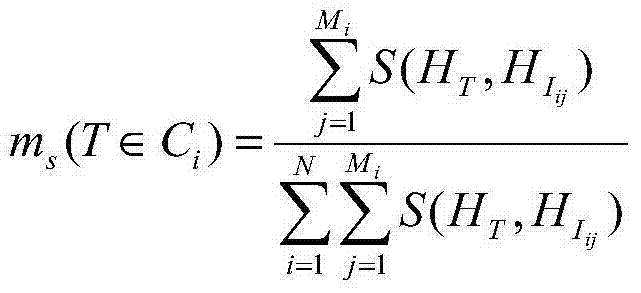

Effective multiscale texture recognition method

The invention discloses an effective multiscale texture recognition method. The method first computes an image pyramid of the input image and applies an LBP operator at each scale of the pyramid, so that each scale produces a feature vector. Multiscale information is then integrated by fusing the per-scale similarities according to Dempster-Shafer (D-S) evidence theory; specifically, the similarity between the test image and a target sample is computed by fusing their similarities at each scale. With this method, recognition accuracy on the public Brodatz album and MIT Vision Texture (VisTex) datasets reaches 96.43% and 91.67%, respectively. The method is also somewhat robust to image rotation and has practical application value.

Owner:WUHAN UNIV
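
The pipeline in the WUHAN UNIV abstract (image pyramid, LBP at each scale, per-scale similarity, fusion) can be sketched as follows. The basic 8-neighbor 3x3 LBP, 2x2-average downsampling, and histogram intersection are standard choices; the fusion step, however, uses a plain weighted average as a simpler stand-in for the patent's Dempster-Shafer combination, and images are lists of rows of gray values.

```python
def lbp_histogram(img):
    """Normalized 256-bin histogram of basic 3x3 LBP codes (neighbor >= center sets a bit)."""
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    hist = [0] * 256
    for i in range(1, len(img) - 1):
        for j in range(1, len(img[0]) - 1):
            c = img[i][j]
            code = 0
            for bit, (di, dj) in enumerate(offsets):
                if img[i + di][j + dj] >= c:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist) or 1
    return [h / total for h in hist]


def downsample(img):
    """Next pyramid level: average each 2x2 block."""
    return [[(img[i][j] + img[i][j + 1] + img[i + 1][j] + img[i + 1][j + 1]) / 4
             for j in range(0, len(img[0]) - 1, 2)]
            for i in range(0, len(img) - 1, 2)]


def pyramid_features(img, levels=3):
    feats = []
    for _ in range(levels):
        feats.append(lbp_histogram(img))
        img = downsample(img)
    return feats


def fused_similarity(feats_a, feats_b, weights=None):
    """Histogram intersection per scale, fused by a weighted average
    (a stand-in for Dempster-Shafer evidence combination)."""
    sims = [sum(min(p, q) for p, q in zip(ha, hb)) for ha, hb in zip(feats_a, feats_b)]
    weights = weights or [1.0] * len(sims)
    return sum(w * s for w, s in zip(weights, sims)) / sum(weights)
```

Classification would assign a test image to the sample (or class) with the highest fused similarity.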

Method for generating dynamic pattern texture and terminal

Active | CN102306076A | Tags: Easy to handle; Improve the effect processing ability; Input/output processes for data processing; Graphics; Multiple frame

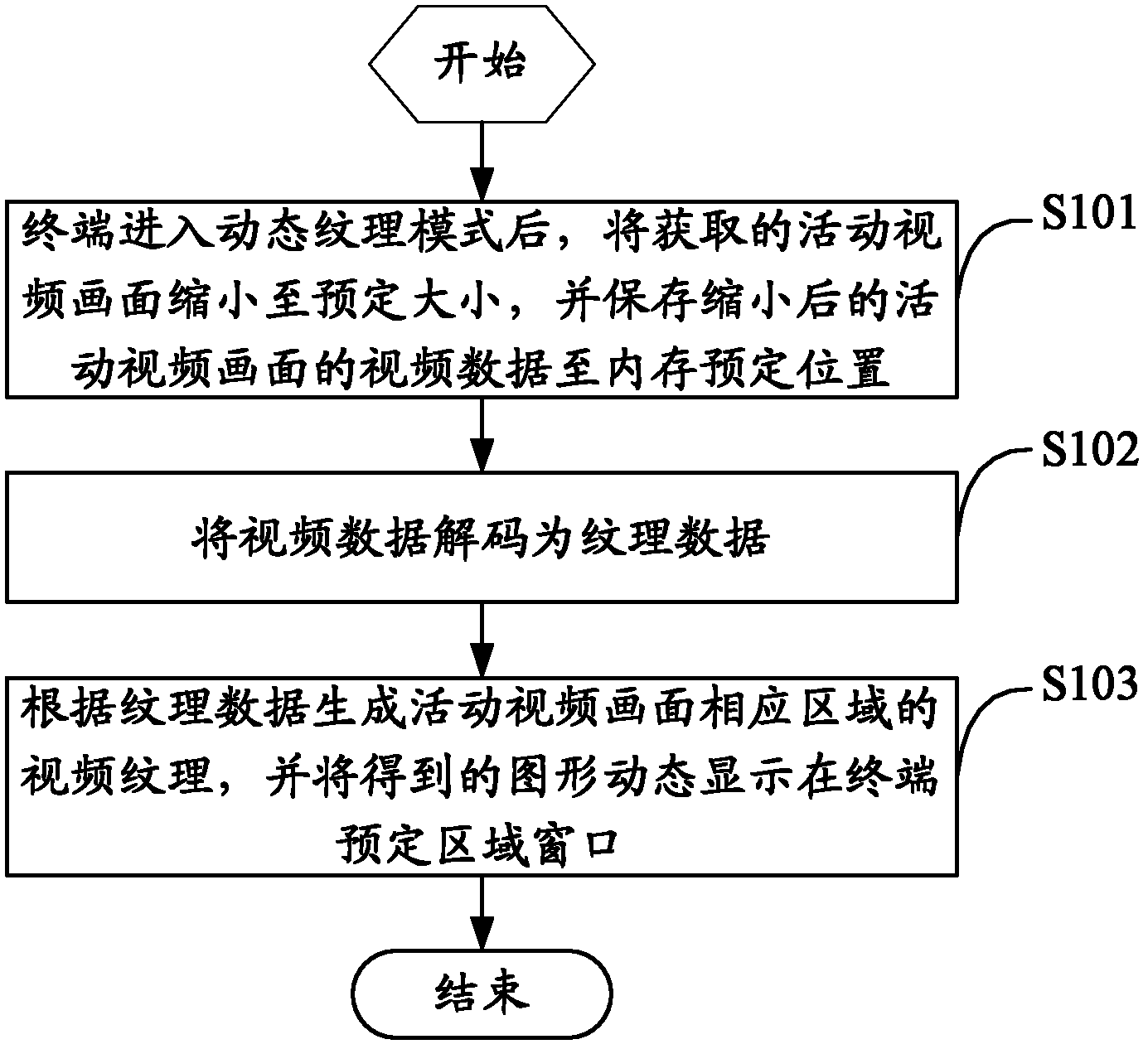

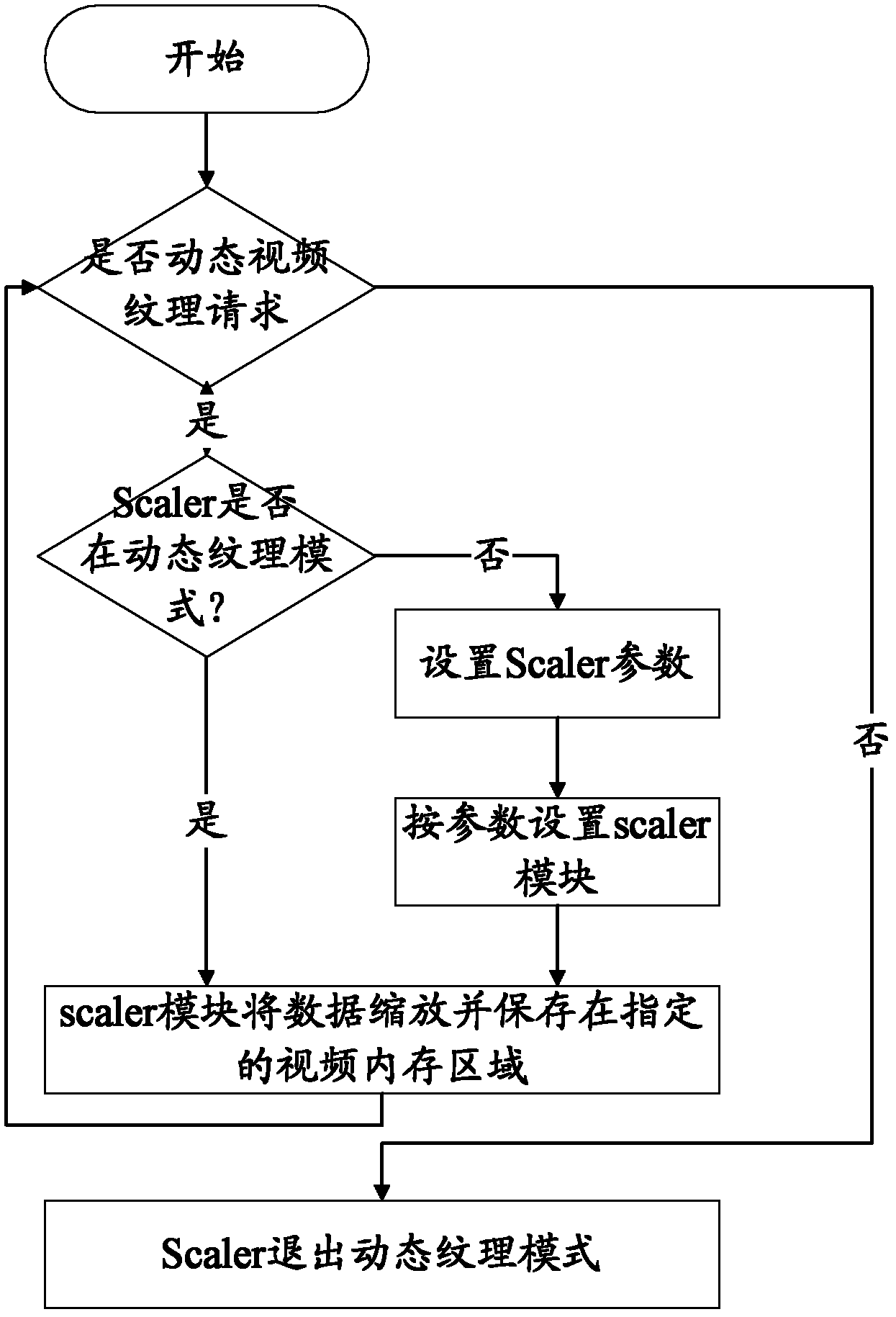

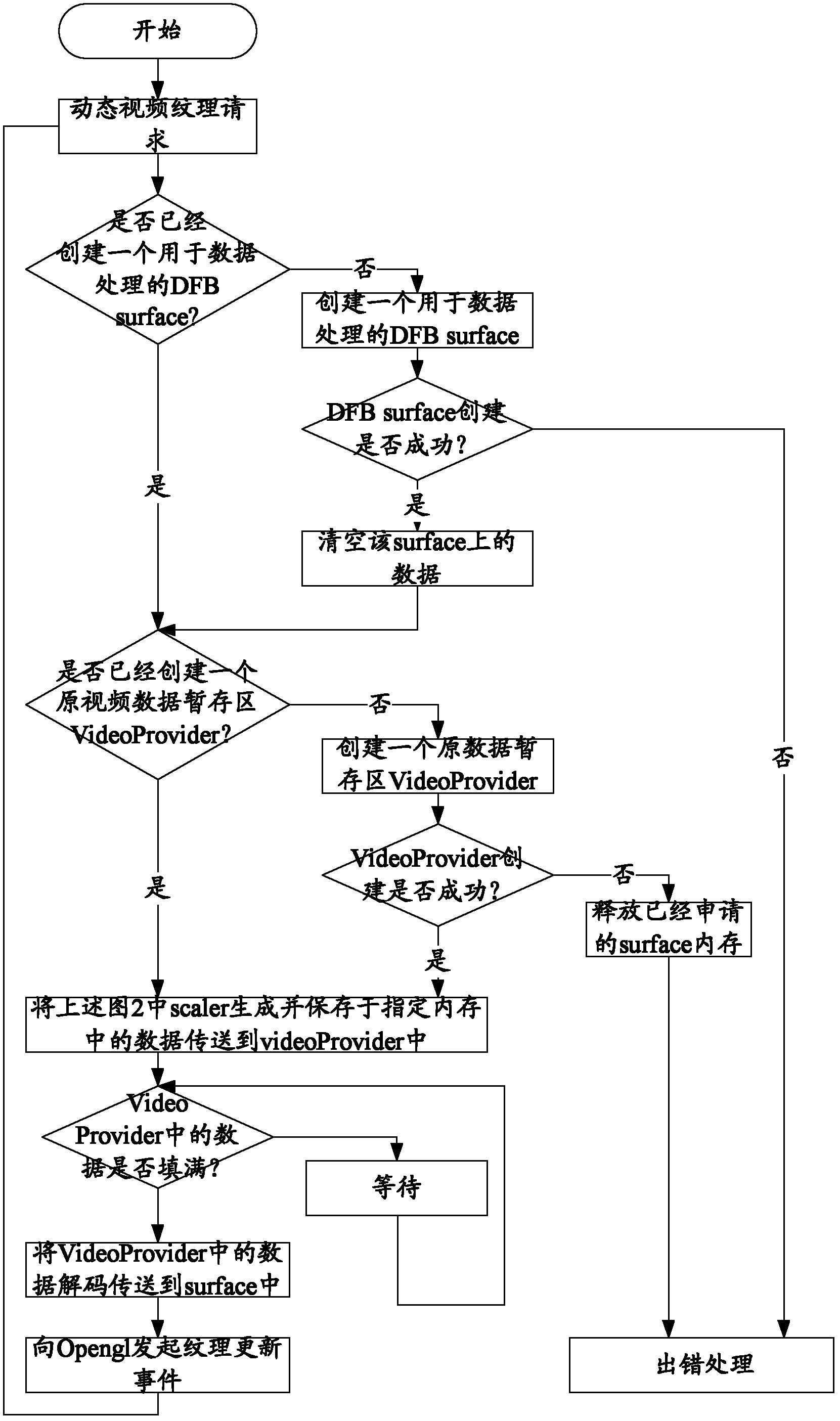

The invention relates to a method for generating a dynamic pattern texture, and a terminal. The method comprises: after the terminal enters a dynamic texture mode, reducing an obtained moving video picture to a predetermined size; storing the video data of the reduced picture at a predetermined position in memory; decoding the video data into texture data; generating the video texture for the corresponding area of the moving video picture from the texture data; and dynamically displaying the resulting pattern in a window in a predetermined area of the terminal. The moving video picture is converted into a multi-frame texture using scaler technology, frame buffering and decoding, and OpenGL graphics processing; the multi-frame texture is attached to a specified area of the graphical interface and processed dynamically with OpenGL to achieve various video transition effects. This strengthens the interface's handling of video effects, makes the interface friendlier, and enhances the product's effect-processing capability.

Owner:SHENZHEN TCL NEW-TECH CO LTD
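
Two pieces of the CN102306076A flow are easy to sketch: reducing the video picture to a predetermined size, and keeping a small multi-frame texture that a UI region cycles through. This assumes nearest-neighbour scaling (the abstract only says the picture is "reduced") and a fixed-capacity ring of frames; `FrameTextureRing` is a hypothetical helper, and frames are lists of rows.

```python
from collections import deque


def downscale_nearest(frame, out_w, out_h):
    """Nearest-neighbour reduction of a frame (list of rows) to out_w x out_h."""
    in_h, in_w = len(frame), len(frame[0])
    return [[frame[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
            for y in range(out_h)]


class FrameTextureRing:
    """Holds the most recent `capacity` reduced frames and cycles through them on
    each display tick, like a multi-frame texture bound to a region of the interface."""

    def __init__(self, capacity):
        self.frames = deque(maxlen=capacity)  # oldest frame is dropped when full
        self.cursor = 0

    def push(self, frame):
        self.frames.append(frame)

    def next_texture(self):
        if not self.frames:
            return None
        tex = self.frames[self.cursor % len(self.frames)]
        self.cursor += 1
        return tex
```

In the patent's setting each stored frame would be uploaded as an OpenGL texture; the ring only decides which one is drawn on the next tick.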

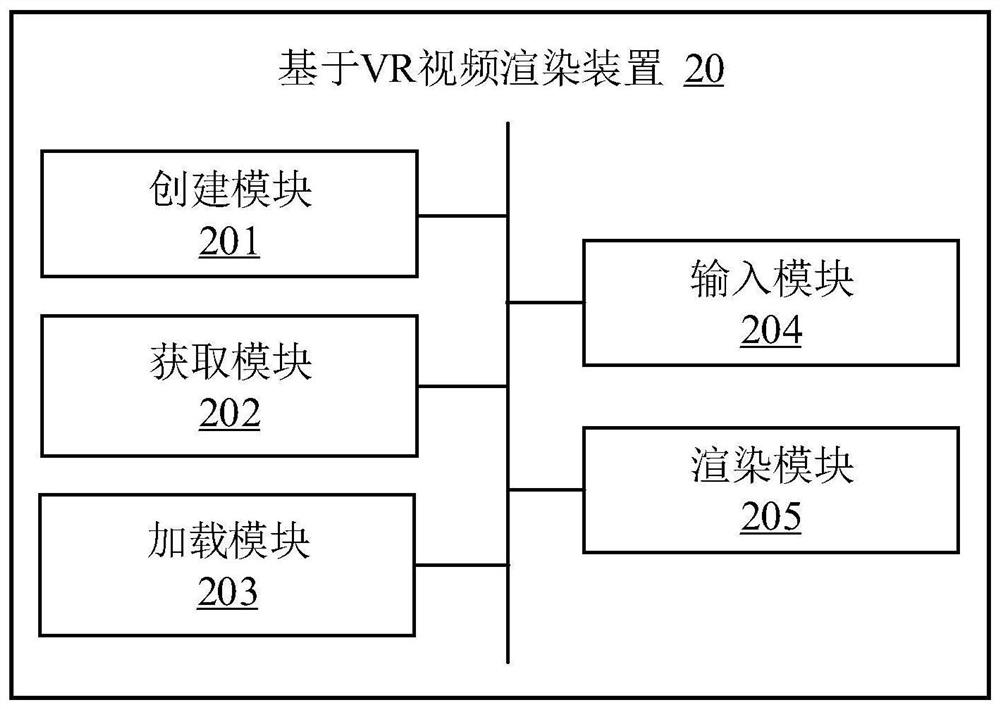

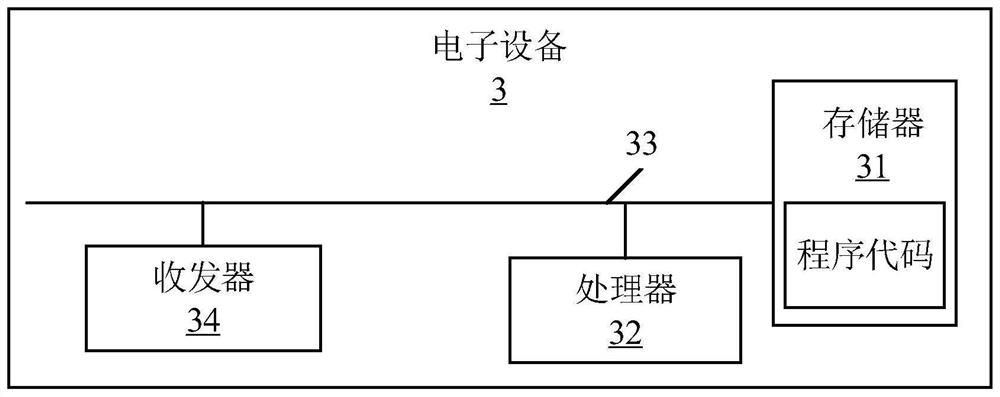

VR-based video rendering method and device, electronic equipment and storage medium

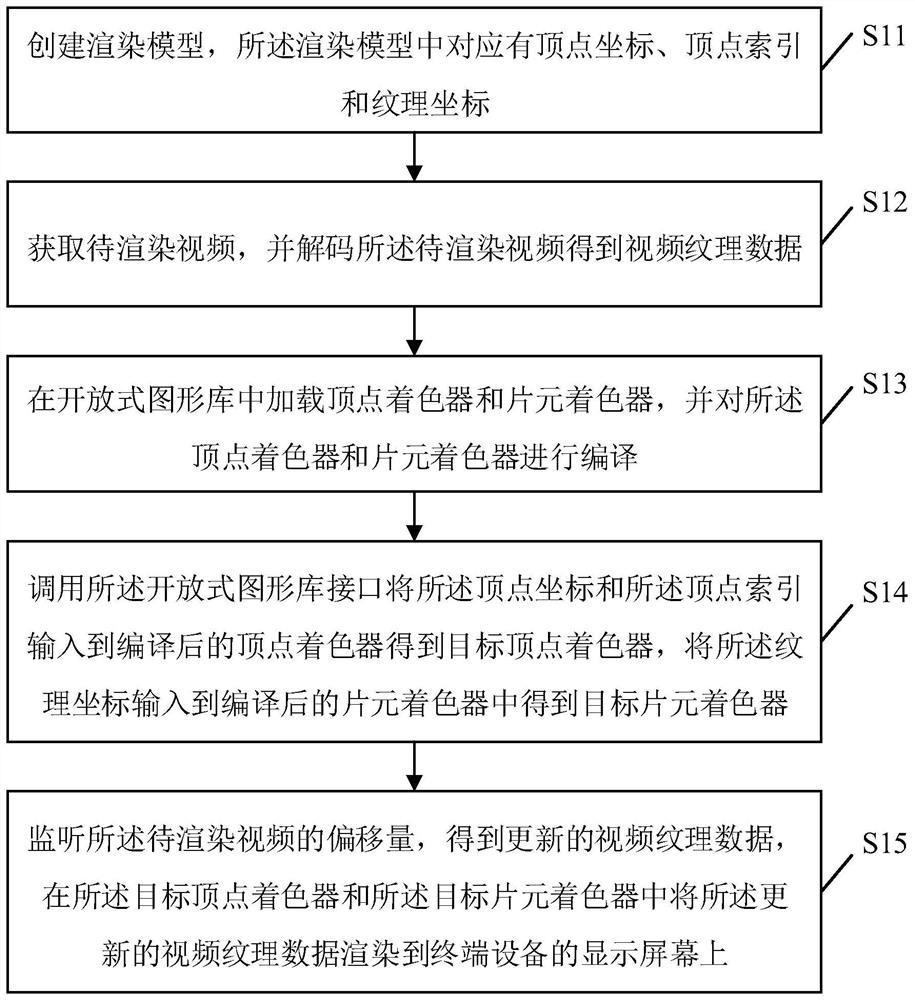

Pending | CN111754614A | Tags: Improve accuracy; Reduce device heating; 3D-image rendering; Computational science; Terminal equipment

The invention relates to the technical field of artificial intelligence and provides a VR-based video rendering method and device, electronic equipment, and a storage medium. The method obtains a video to be rendered and decodes it into video texture data; loads and compiles a vertex shader and a fragment shader; inputs the vertex coordinates and vertex indices into the vertex shader to obtain a target vertex shader, and the texture coordinates into the fragment shader to obtain a target fragment shader; and monitors the offset of the video to obtain updated video texture data, which is rendered to the display screen of the terminal device through the target vertex shader and target fragment shader. Rendering the updated video texture data in this preset way improves video rendering accuracy. The invention also relates to blockchain technology: the video to be rendered can be stored in a blockchain node.

Owner:PINGAN INT SMART CITY TECH CO LTD
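
The CN111754614A steps (compile a vertex and a fragment shader, feed vertex coordinates, indices, and texture coordinates, then update on video offset changes) correspond to a standard textured-quad setup. The sketch below holds minimal GLSL ES 1.00 shader sources as Python strings plus the quad geometry; the uniform and attribute names and the use of a `uOffset` uniform for the monitored offset are assumptions, and actually compiling the shaders would need a GL binding not shown here.

```python
VERTEX_SHADER = """
attribute vec3 aPosition;
attribute vec2 aTexCoord;
uniform vec2 uOffset;          // hypothetical: current offset of the video texture
varying vec2 vTexCoord;
void main() {
    vTexCoord = aTexCoord + uOffset;
    gl_Position = vec4(aPosition, 1.0);
}
"""

FRAGMENT_SHADER = """
precision mediump float;
uniform sampler2D uVideo;      // texture holding the latest decoded video frame
varying vec2 vTexCoord;
void main() {
    gl_FragColor = texture2D(uVideo, vTexCoord);
}
"""


def fullscreen_quad():
    """Vertex positions (x, y, z), texture coordinates (s, t) and triangle indices
    for a rectangle covering normalized device coordinates -1..1."""
    positions = [(-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (1.0, 1.0, 0.0), (-1.0, 1.0, 0.0)]
    # t is flipped because video rows are usually stored top-first.
    texcoords = [(0.0, 1.0), (1.0, 1.0), (1.0, 0.0), (0.0, 0.0)]
    indices = [0, 1, 2, 0, 2, 3]  # two triangles sharing the diagonal 0-2
    return positions, texcoords, indices
```

When the video offset changes, only the `uOffset` uniform and the texture contents need updating; the vertex and index buffers stay the same.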

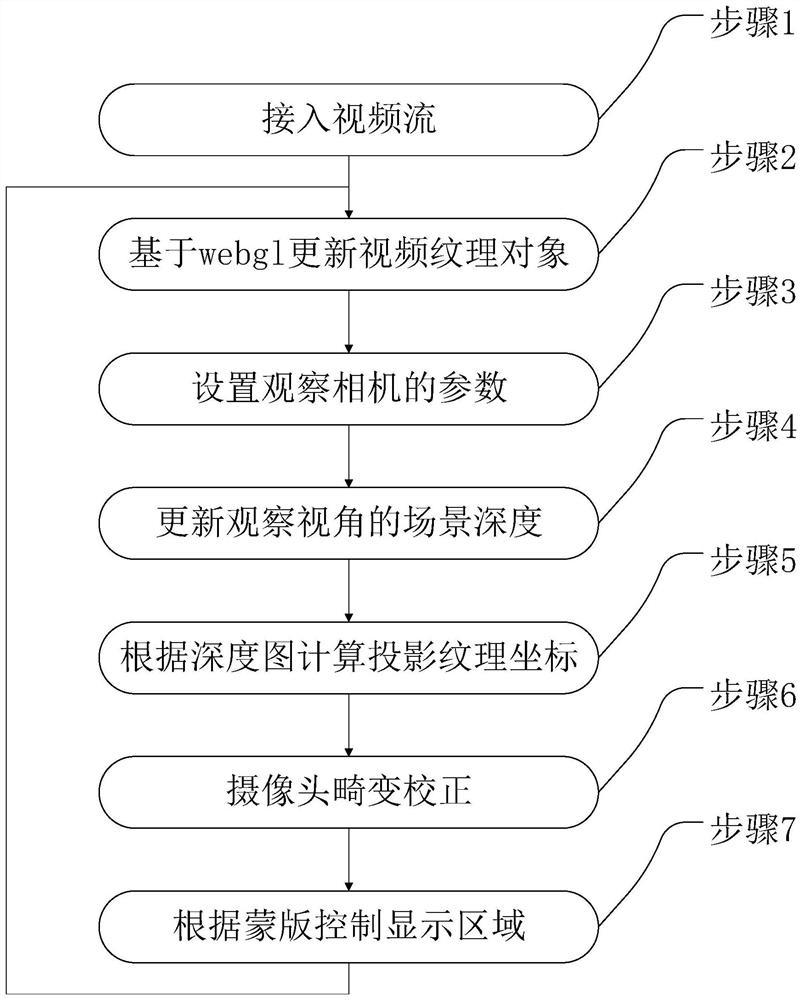

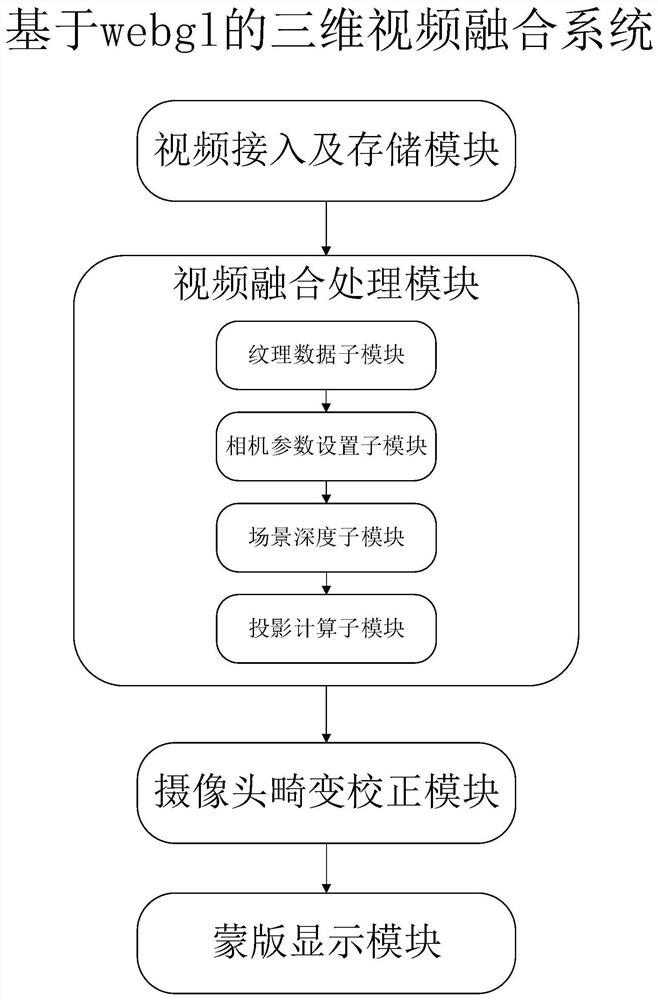

Three-dimensional video fusion method and system based on WebGL

Active | CN112437276A | Tags: Avoid overlapping display; Improve the display effect; Image enhancement; Television system details; Viewing frustum; Radiology

The invention provides a WebGL-based three-dimensional video fusion method and system that requires no processing of the video source. The method comprises: accessing an HTTP video stream and updating a WebGL video texture object; updating and setting the near clipping plane, far clipping plane, and camera position and orientation of the observation camera's view frustum; updating the scene depth from the observation viewpoint, projecting and restoring into the observer camera coordinate system, and fusing the video with the live-action model; performing distortion correction for the camera; and finally cropping the video area by masking. This solves problems in the prior art: three-dimensional video fusion is achieved with WebGL, the projection area is cropped so that adjacent videos are not displayed overlapping, and camera distortion is corrected, so a good display effect is obtained even for cameras with large distortion or low installation positions.

Owner:埃洛克航空科技(北京)有限公司
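
The core of video projection in CN112437276A is projective texture mapping: a point of the 3D model is projected through the video camera's frustum (built from its near and far clipping planes) to find which video pixel colors it, and points outside the frustum are masked out. This sketch uses a standard OpenGL-style perspective matrix and assumes the point is already in the video camera's view space (camera looking down -z), so the camera position and orientation step is omitted.

```python
import math


def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective matrix (row-major) for the video camera's frustum."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    return [[f / aspect, 0, 0, 0],
            [0, f, 0, 0],
            [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
            [0, 0, -1, 0]]


def _mat_vec(m, v):
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def project_to_video(point_cam, proj):
    """Project a view-space point to video texture coordinates.
    Returns (u, v, depth) with values in 0..1, or None when the point lies outside
    the frustum (this is how the projection area gets masked)."""
    x, y, z, w = _mat_vec(proj, [*point_cam, 1.0])
    if w <= 0:  # behind the camera
        return None
    nx, ny, nz = x / w, y / w, z / w
    if not (-1 <= nx <= 1 and -1 <= ny <= 1 and -1 <= nz <= 1):
        return None
    return (nx + 1) / 2, (1 - ny) / 2, (nz + 1) / 2
```

Comparing the returned depth with a depth map rendered from the same camera would decide whether the point is occluded, which is the role of the scene-depth update in the abstract.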

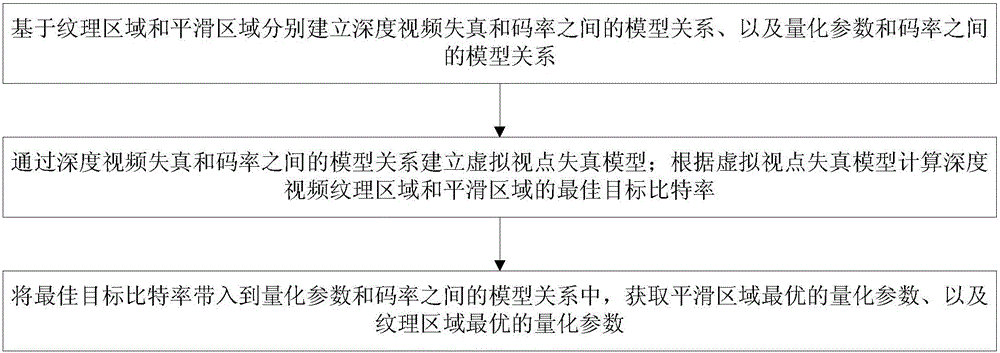

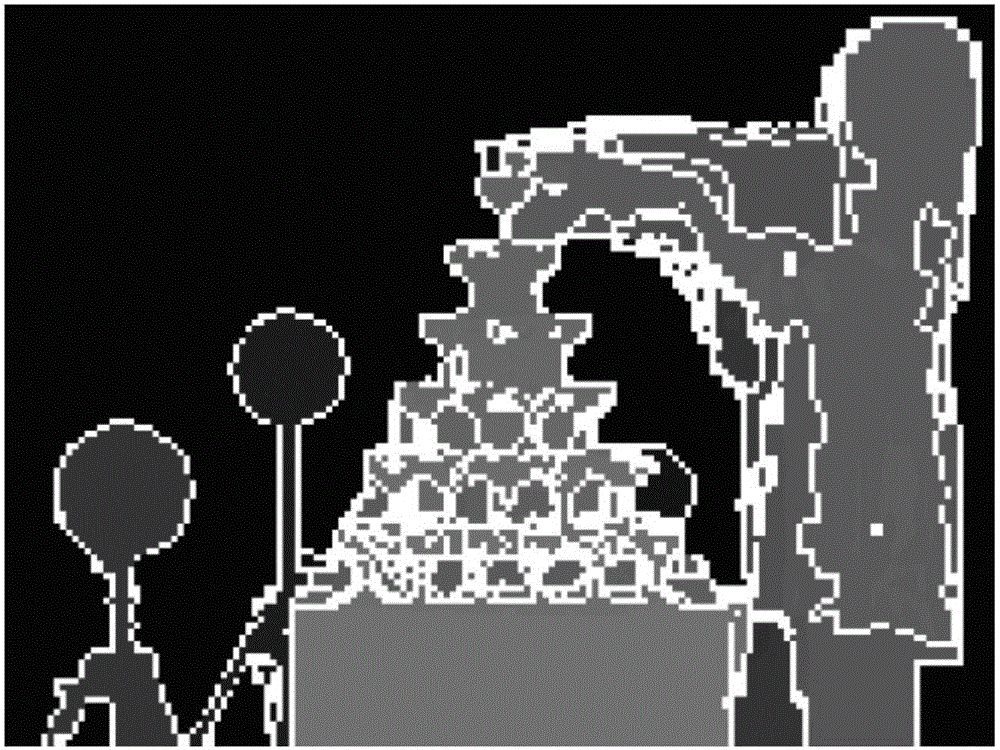

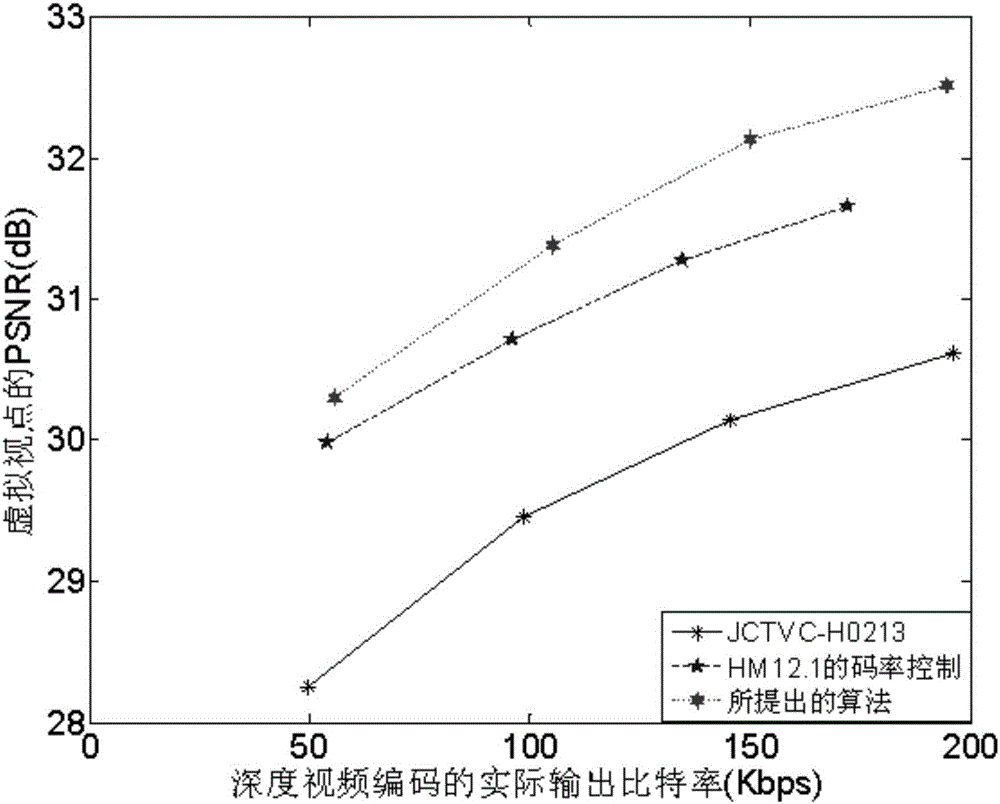

Bit allocation and rate control method for depth video coding

Inactive | CN105898331A | Tags: Improve accuracy; Improve encoding quality; Digital video signal modification; Stereoscopic systems; Pattern recognition; Viewpoints

The invention discloses a bit allocation and rate control method for depth video coding. The method comprises: establishing, separately for the texture region and the smooth region, a model relating depth video distortion to bit rate and a model relating the quantization parameter to bit rate; establishing a virtual viewpoint distortion model from the distortion-rate model; computing the optimal target bit rates of the texture and smooth regions of the depth video from the virtual viewpoint distortion model; and substituting these target bit rates into the quantization-parameter-rate model to obtain the optimal quantization parameters of the smooth and texture regions. By incorporating the regional characteristics of depth video into the rate control algorithm, the method improves the accuracy of bit allocation in depth video coding and the coding quality of the depth video's texture region, which in turn improves the quality of the synthesized virtual view and meets the requirements of 3D video systems.

Owner:TIANJIN UNIV
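
The last step of CN105898331A turns each region's target bit rate into a quantization parameter via a rate-QP model. The patent's fitted models are not given, so this sketch substitutes the quadratic R-Q model often used in rate control, R = a/Q + b/Q^2, solved for the quantization step Q, and then the H.264/HEVC relation Qstep = 2^((QP - 4) / 6). The region weights and the model parameters `a` and `b` are hypothetical.

```python
import math


def allocate_bits(total_bits, weights):
    """Split a frame's bit budget between regions in proportion to hypothetical
    importance weights, e.g. {"texture": 3, "smooth": 1}."""
    s = sum(weights.values())
    return {region: total_bits * w / s for region, w in weights.items()}


def qstep_from_bits(target_bits, a, b):
    """Invert the quadratic R-Q model R = a/Q + b/Q^2.
    R*Q^2 - a*Q - b = 0, so Q = (a + sqrt(a^2 + 4*R*b)) / (2*R) (positive root)."""
    return (a + math.sqrt(a * a + 4 * target_bits * b)) / (2 * target_bits)


def qp_from_qstep(qstep):
    """H.264/HEVC relation Qstep = 2^((QP - 4) / 6), rounded and clipped to 0..51."""
    return max(0, min(51, round(6 * math.log2(qstep) + 4)))
```

Giving the texture region a larger share of the budget lowers its quantization step, which matches the abstract's aim of protecting depth edges that matter for view synthesis.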

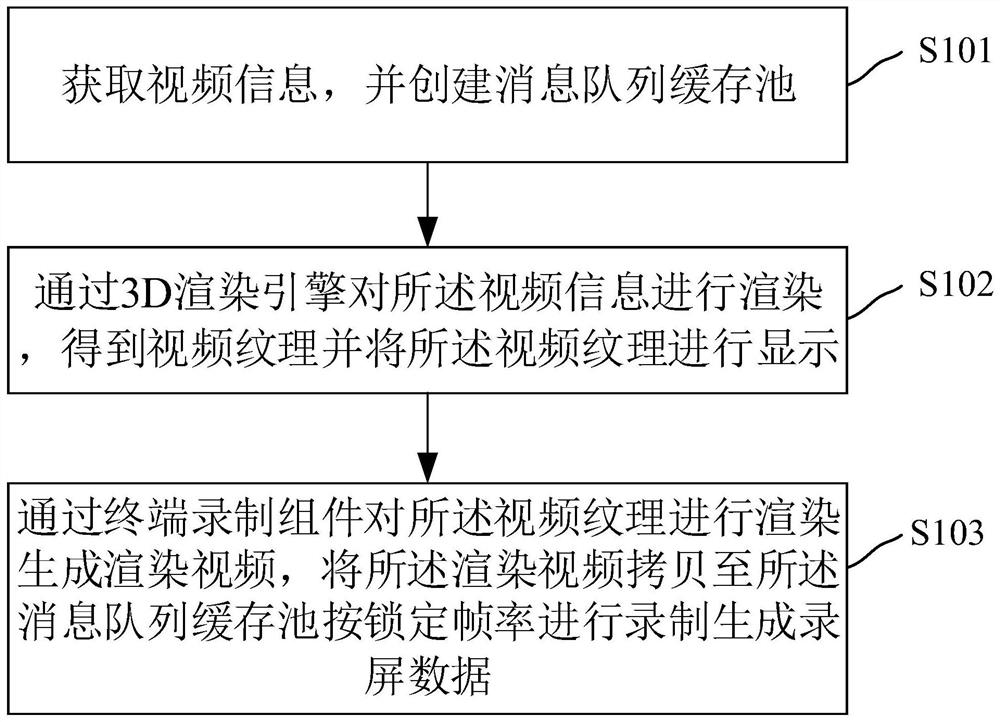

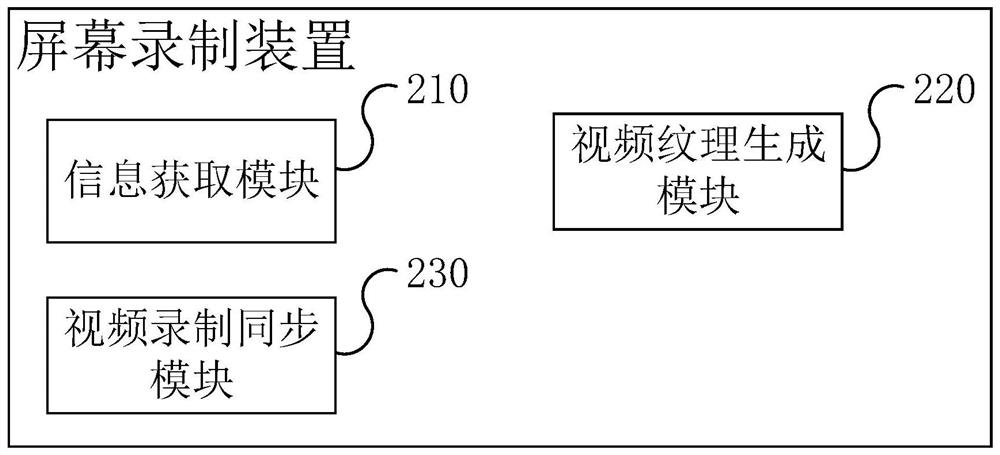

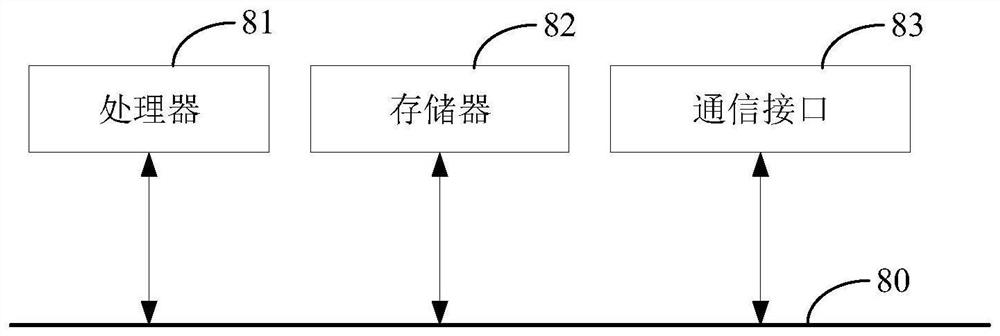

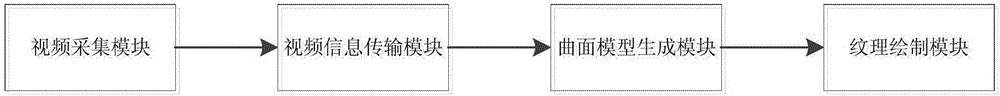

Screen recording method and device, computer equipment and computer readable storage medium

Pending | CN112218148A | Tags: Resolve resolution; Solve the problem that is easy to cause the screen to freeze; Selective content distribution; Computer hardware; Message queue

The invention relates to a screen recording method and device, computer equipment, and a computer-readable storage medium. The method comprises: obtaining video information and creating a message queue cache pool; rendering the video information with a 3D rendering engine to obtain a video texture and displaying it; and rendering the video texture with a terminal recording component to generate a rendered video, copying the rendered video into the message queue cache pool, and recording it at a locked frame rate to generate screen recording data. Because the 3D rendering engine processes the video information in advance into a video texture, which the terminal recording component then renders and records synchronously, the method solves the problems of low resolution and frequent frame freezes in terminal-recorded video and ensures the definition and frame rate of the recorded video.

Owner:杭州易现先进科技有限公司
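
The recording side of CN112218148A pairs a cache pool between renderer and recorder with recording at a locked frame rate. A minimal offline sketch, assuming a bounded queue that drops the oldest frames when full and a recorder that emits exactly one frame per 1/fps tick (repeating the latest frame when rendering falls behind); `FrameCachePool` and `record_locked` are hypothetical names, and a real recorder would run on its own thread.

```python
from collections import deque


class FrameCachePool:
    """Bounded queue between the renderer and the recorder; the oldest frames are
    dropped when full so the rendering side never blocks."""

    def __init__(self, capacity=8):
        self.queue = deque(maxlen=capacity)

    def put(self, timestamp, frame):
        self.queue.append((timestamp, frame))


def record_locked(pool, fps, start, end):
    """Emit one frame per 1/fps tick in [start, end) seconds, using the newest frame
    rendered at or before each tick (the previous frame is repeated if none arrived)."""
    frames = sorted(pool.queue, key=lambda p: p[0])
    out, last, i = [], None, 0
    for k in range(int(round((end - start) * fps))):
        t = start + k / fps
        while i < len(frames) and frames[i][0] <= t:
            last = frames[i][1]
            i += 1
        if last is not None:
            out.append(last)
    return out
```

Locking output to the tick schedule keeps the recorded file's frame rate constant even when the rendering rate fluctuates.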

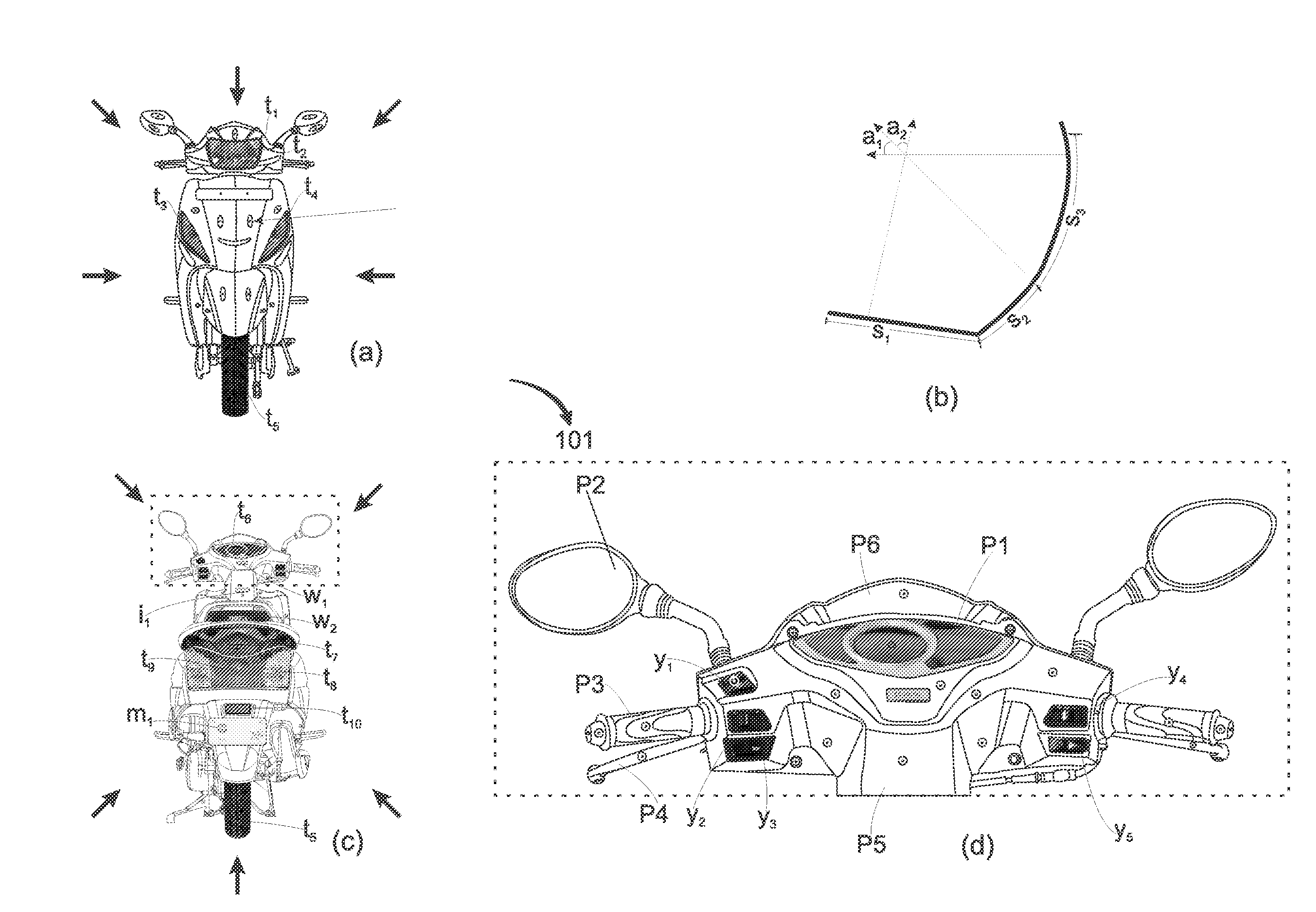

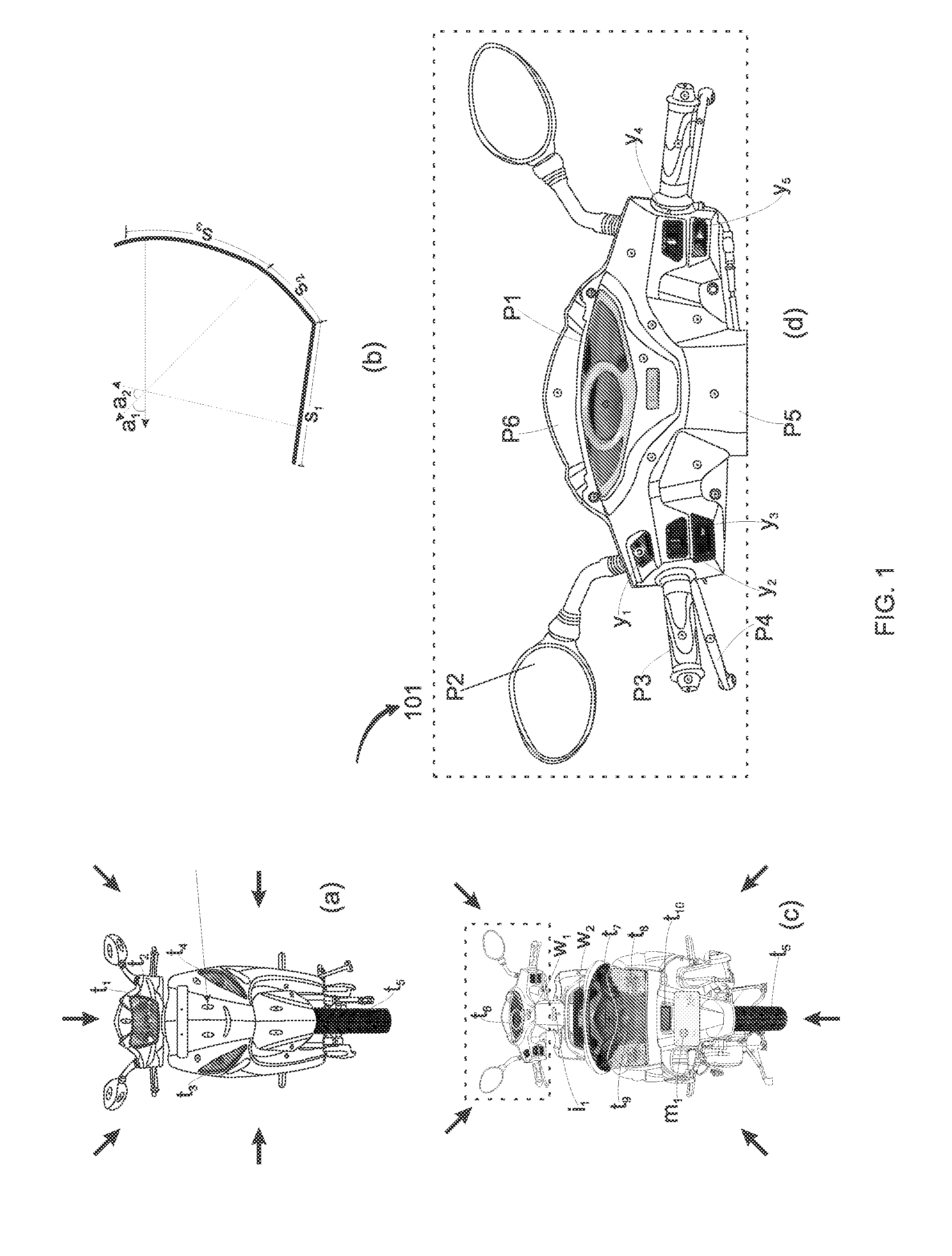

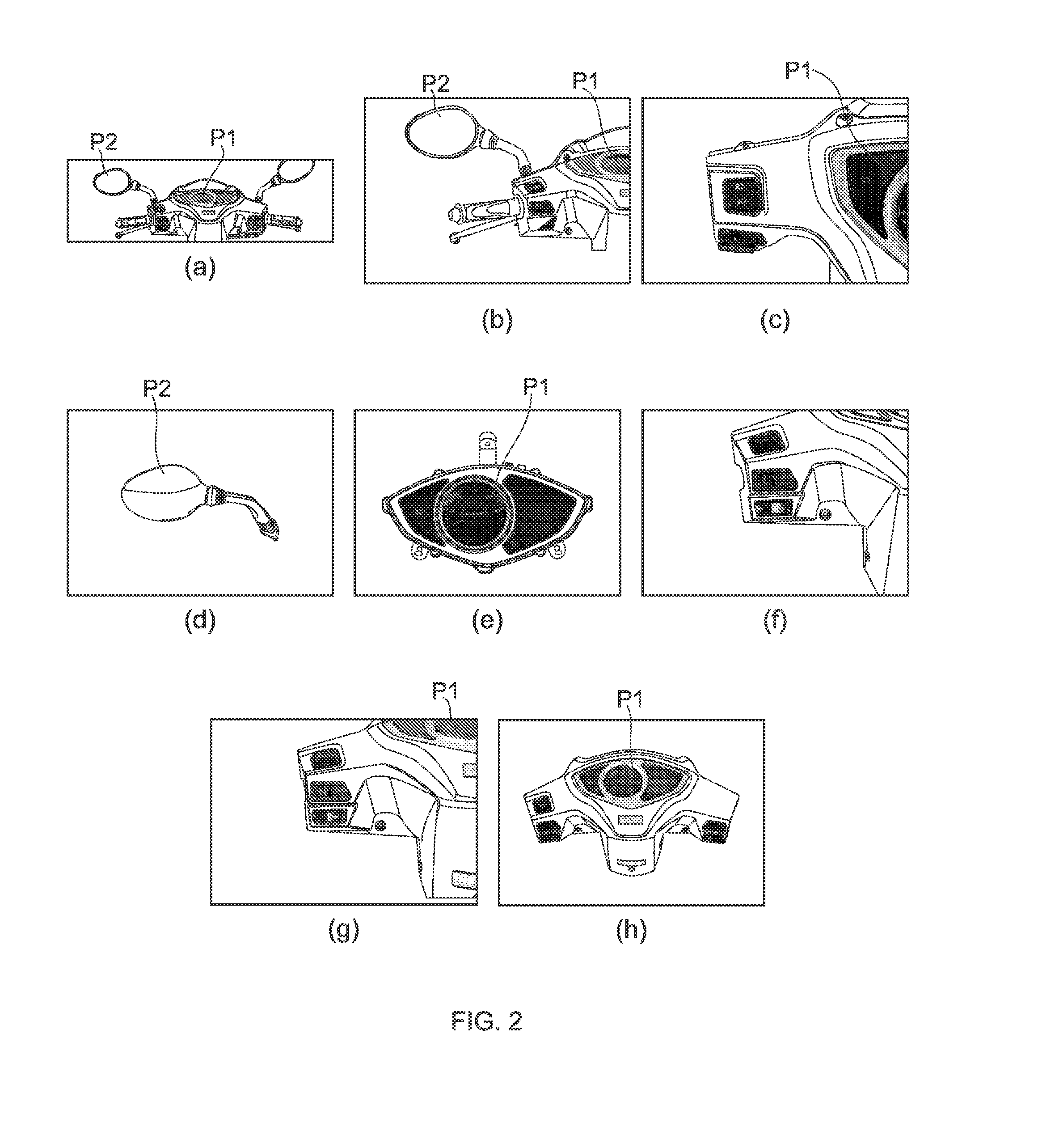

Texturing of 3d-models using photographs and/or video for use in user-controlled interactions implementation

Active | US20160307357A1 | Tags: Precise detailing; Small file size; Image rendering; 3D-image rendering; 3D computer graphics; Light emitting device

Texturing of external and/or internal surfaces, or of internal parts, of 3D models representing real objects is made possible using a plurality of real photographs and/or video of those objects, providing an extremely realistic, vivid, and detailed view on and/or within the 3D model. The 3D models are 3D computer graphics models used for implementing user-controlled interactions. The texture seen on a 3D model textured with real photographs and/or video replicates the texture of the real 3D object. Applying video as a texture on the 3D model surface also makes it possible to replicate the real appearance of light blinking from a physical light-emitting device of the real object, such as the headlight or rear light of an automotive vehicle.

Owner:VATS NITIN +1

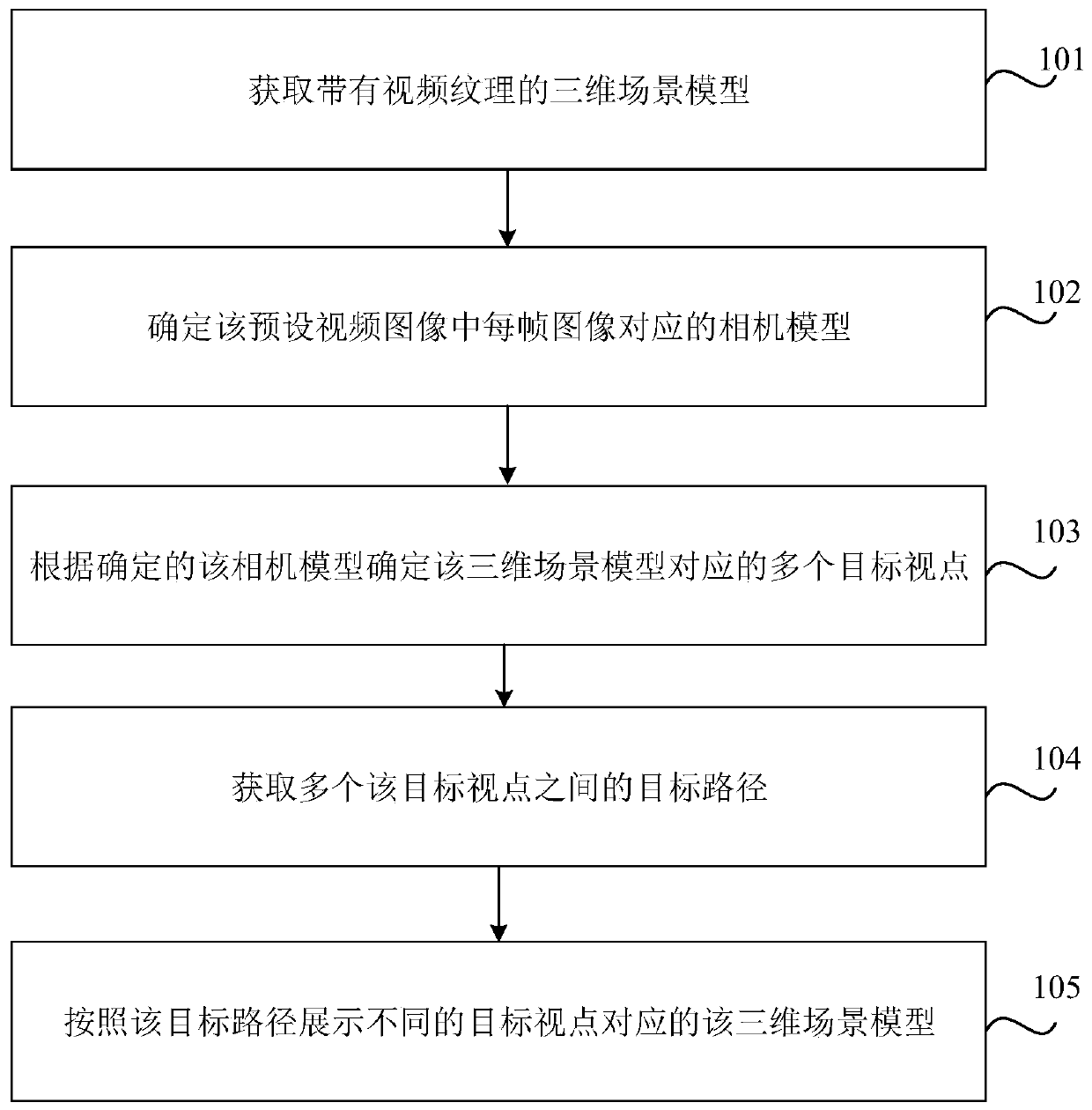

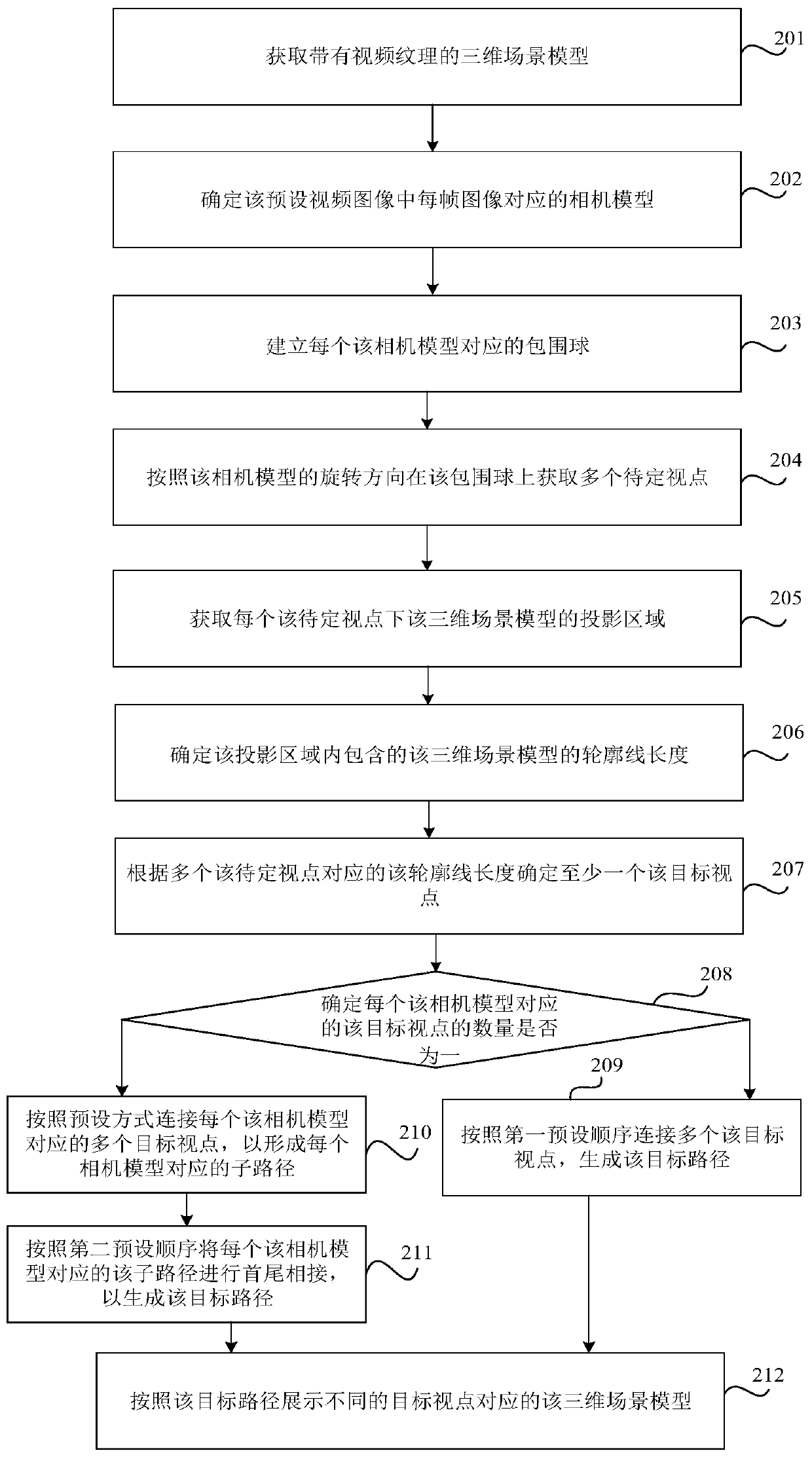

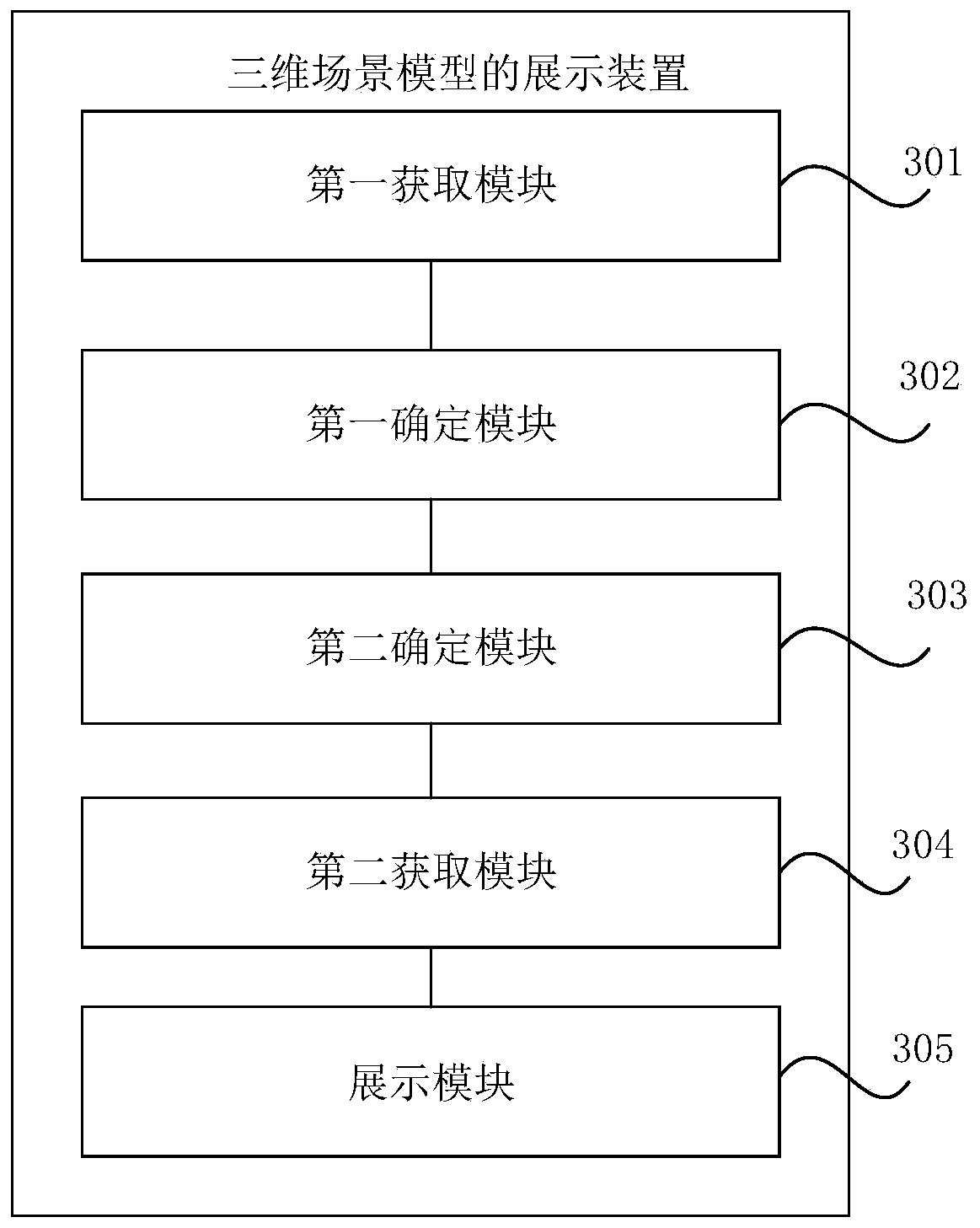

Three-dimensional scene model display method and device, storage medium and electronic equipment

Active | CN111294584A | Tags: Improve experience; Improve the three-dimensional effect; Closed circuit television systems; Stereoscopic systems; Engineering; Image pair

The invention relates to a three-dimensional scene model display method and device, a storage medium, and electronic equipment. The method comprises: obtaining a three-dimensional scene model with a video texture, generated by three-dimensional reconstruction from a preset video image; determining the camera model corresponding to each frame of the preset video image; determining a plurality of target viewpoints for the three-dimensional scene model from the determined camera models; obtaining a target path among the target viewpoints, the target path being the movement trajectory followed when the display view angle switches between target viewpoints; and displaying the three-dimensional scene model for the different target viewpoints along the target path. Monitoring content can thus be shown from different view angles, which improves the three-dimensional effect of the monitoring display and the user experience.

Owner:北京五一视界数字孪生科技股份有限公司
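
CN111294584A moves the display view along a target path between camera-derived viewpoints but does not describe how the path is generated. A common minimal choice, assumed here, is linear interpolation of camera position combined with spherical linear interpolation (slerp) of orientation quaternions `(w, x, y, z)`; `camera_path` and its step count are hypothetical.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0:  # take the shorter arc
        q1, dot = tuple(-c for c in q1), -dot
    if dot > 0.9995:  # nearly identical: normalized linear interpolation is stable
        q = [a + t * (b - a) for a, b in zip(q0, q1)]
        n = math.sqrt(sum(c * c for c in q))
        return tuple(c / n for c in q)
    theta = math.acos(dot)
    s0 = math.sin((1 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))


def camera_path(viewpoints, steps):
    """Interpolate between consecutive target viewpoints, each given as
    (position, quaternion); `steps` samples per leg, plus the final viewpoint."""
    path = []
    for (p0, q0), (p1, q1) in zip(viewpoints, viewpoints[1:]):
        for k in range(steps):
            t = k / steps
            pos = tuple(a + t * (b - a) for a, b in zip(p0, p1))
            path.append((pos, slerp(q0, q1, t)))
    path.append(viewpoints[-1])
    return path
```

Slerp keeps the rotation speed constant, so the view turns smoothly rather than snapping when it switches between viewpoints.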

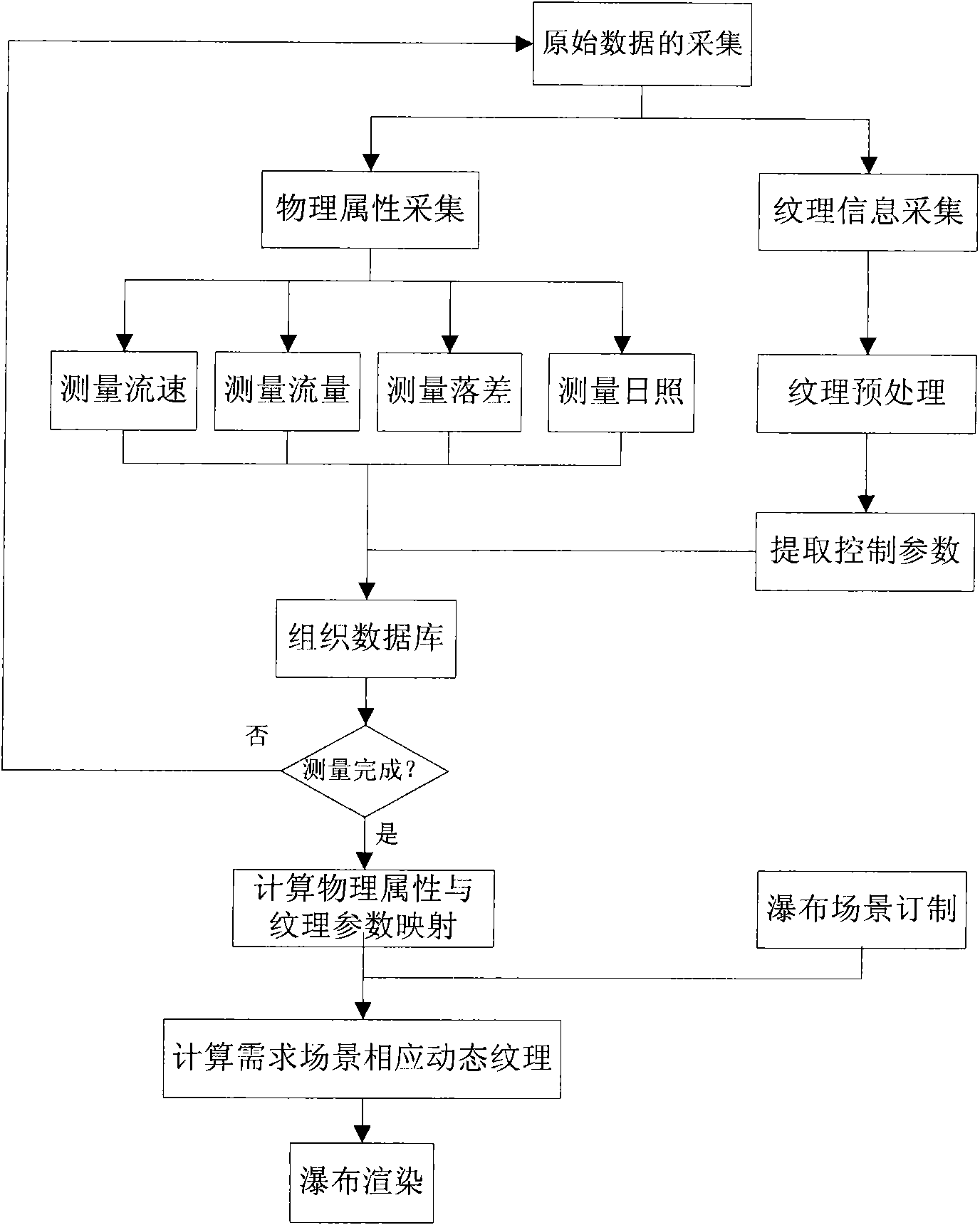

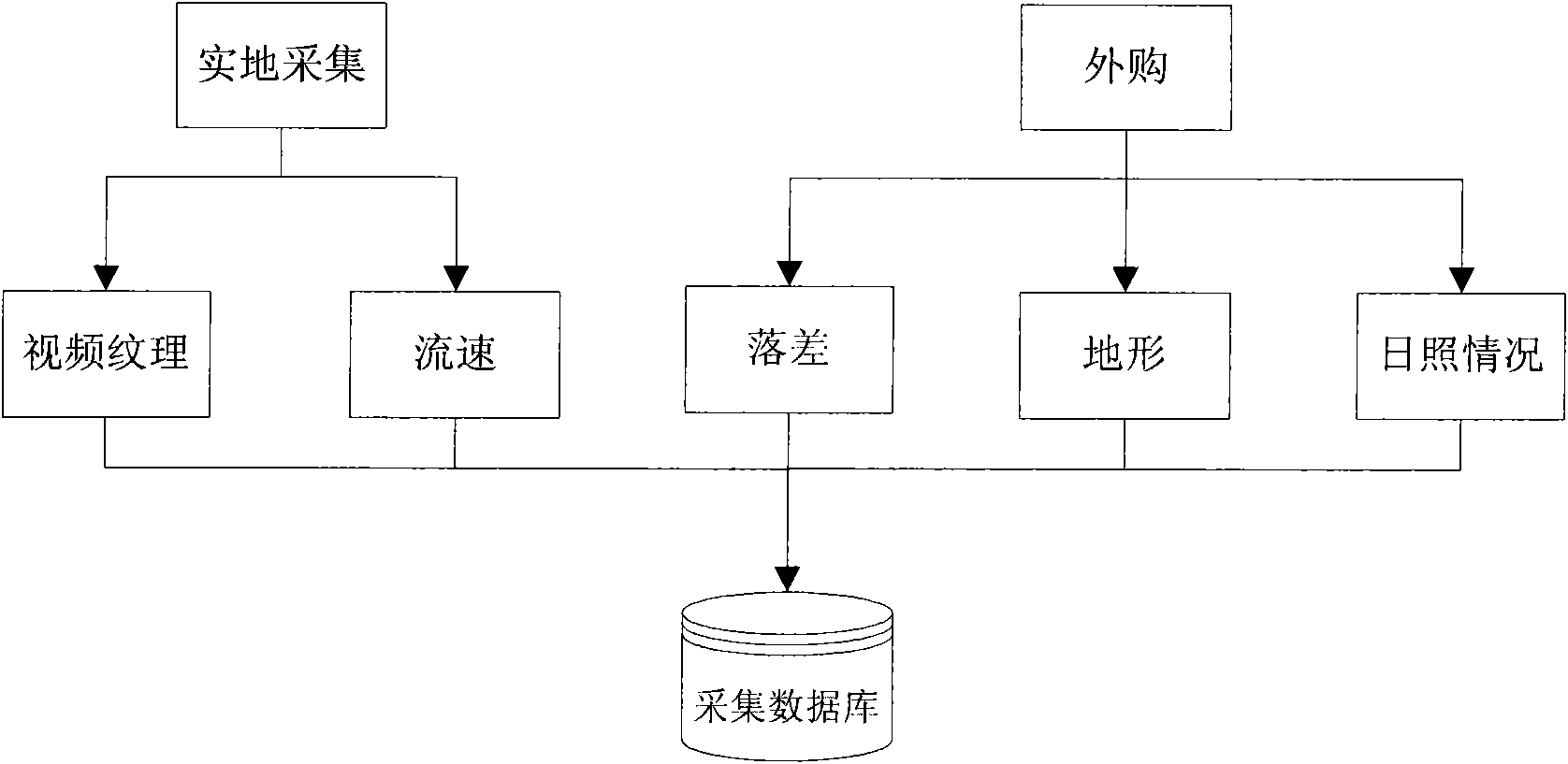

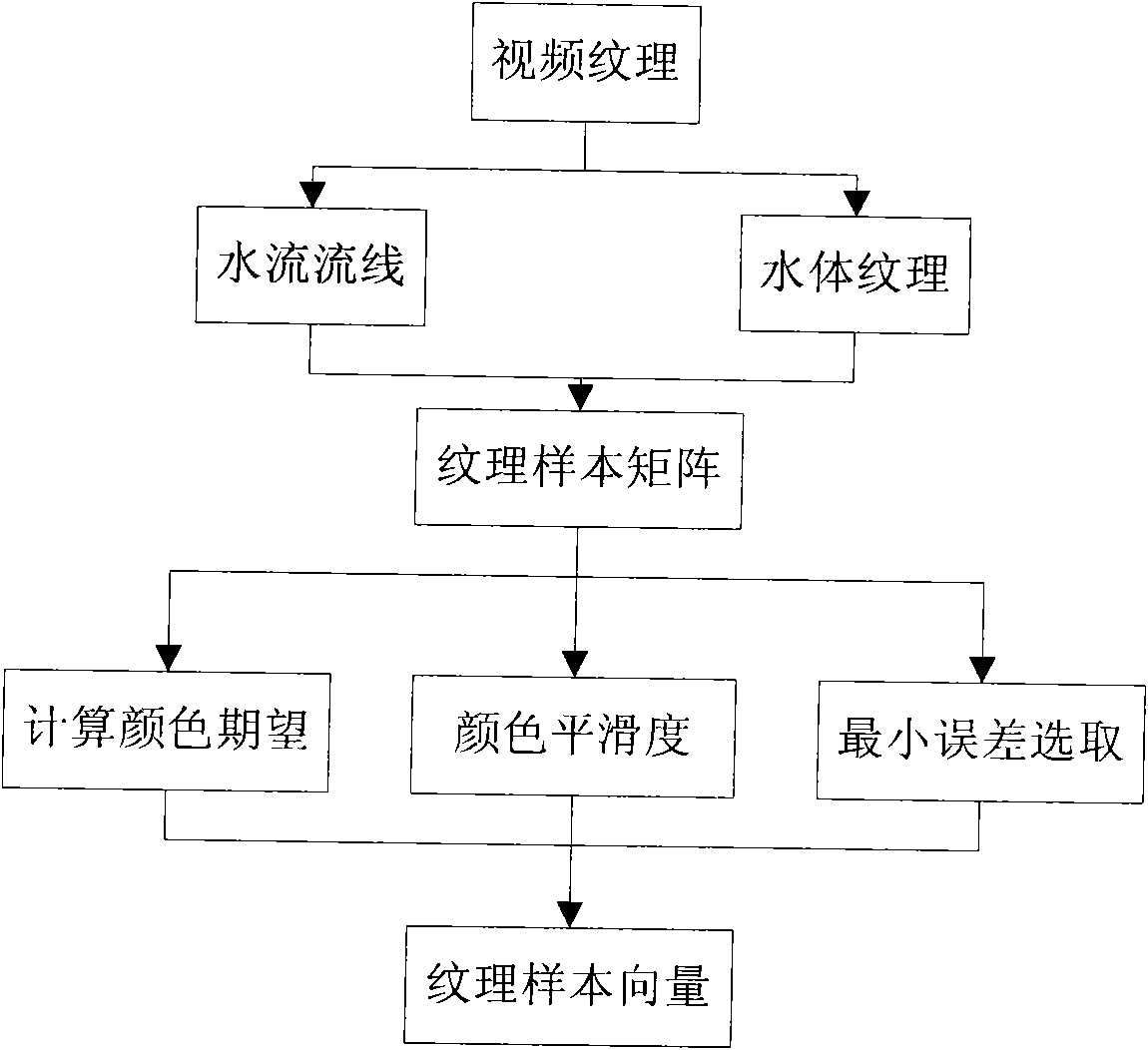

Dynamic texture waterfall modeling method combined with multiple physical attributes

Inactive · CN101937576A · Overcoming generational distortion · Overcome model untrustworthiness · 3D-image rendering · Terrain · Correlation analysis

The invention relates to a dynamic-texture waterfall modeling method that combines multiple physical attributes, in the technical field of virtual reality. The method comprises the following steps: (1) measuring on site the physical attribute parameters of real waterfalls in different environments that influence their form, such as flow velocity, flow rate, head drop, terrain and sunlight, and recording video textures of the corresponding states with a video camera; (2) extracting a plurality of control parameters from the dynamic textures of a waterfall model; (3) organizing the acquired data in a database and building distribution models of the physical attributes through correlation analysis; (4) determining requirements according to the scene, and computing from the real data in the database, by means of a mapping law, dynamic waterfall textures that approach the real effect; and (5) rendering the waterfall scene with the dynamic textures. The invention extracts the statistical relationship between multiple physical attributes and the dynamic textures of waterfalls, and uses statistical distributions together with existing data to build highly lifelike scene models of waterfalls in any environment. This overcomes the texture distortion that traditional waterfall modeling suffers by ignoring physical factors, and realizes a realistic simulation of waterfall surface textures driven by physical attribute parameters.

Owner:BEIHANG UNIV

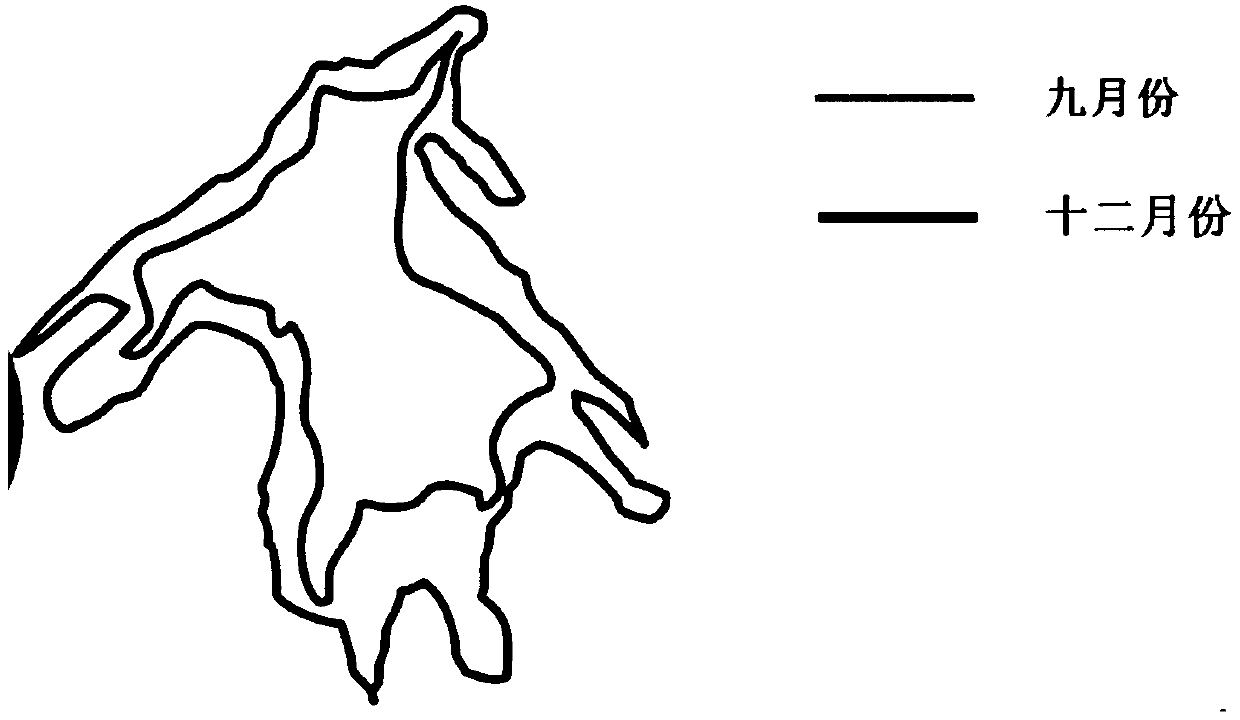

Mountain disaster early-warning method and system

The invention provides a mountain disaster early-warning method and system. The method comprises the following steps: collecting mountain disaster data from the same viewpoint; stitching and synthesizing static panoramas; generating video textures of the mountain disaster's motion; combining the static panoramas with the video textures to construct a dynamic panorama; and overlaying multi-period images to quantitatively analyze the changes in elevation and horizontal displacement before and after mountain disaster deformation. By combining video textures with panoramas, the method constructs a dynamic panorama of the deformation process of mountain disasters such as landslides and debris flows; by overlaying multi-period images, it obtains quantitative analysis results for the deformation data, on which the early warning is based.

Owner:CHENGDU UNIVERSITY OF TECHNOLOGY
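The last step of the mountain disaster method above quantifies elevation and horizontal displacement changes between overlaid multi-period images. A minimal sketch, assuming two co-registered elevation grids of the same shape and a set of matched ground points (the patent does not describe its data format), could compute per-cell elevation change, flag cells above a threshold, and measure horizontal displacement. All names and the threshold are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Grid = Sequence[Sequence[float]]


def elevation_change(before: Grid, after: Grid) -> List[List[float]]:
    """Per-cell elevation change (after minus before) for co-registered grids."""
    return [[a - b for b, a in zip(row_b, row_a)] for row_b, row_a in zip(before, after)]


def flag_cells(change: Grid, threshold: float) -> List[Tuple[int, int]]:
    """(row, col) of cells whose absolute elevation change reaches the threshold."""
    return [(r, c) for r, row in enumerate(change)
            for c, d in enumerate(row) if abs(d) >= threshold]


def horizontal_displacement(p_before: Tuple[float, float],
                            p_after: Tuple[float, float]) -> float:
    """Planar distance a matched ground point moved between the two periods."""
    return math.hypot(p_after[0] - p_before[0], p_after[1] - p_before[1])


# Usage: one cell subsided by 2 m, another rose by 3 m; a marker moved (3, 4) m.
delta = elevation_change([[10, 10], [10, 10]], [[10, 8], [10, 13]])
print(delta, flag_cells(delta, 2.0), horizontal_displacement((0, 0), (3, 4)))
```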

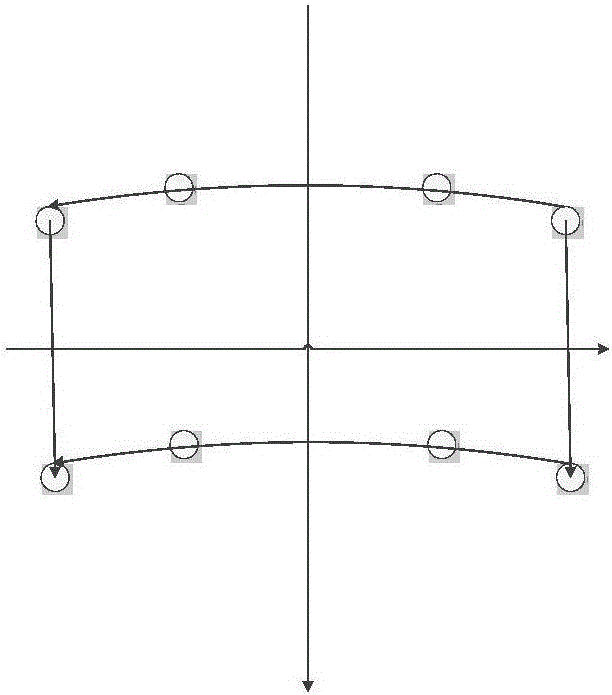

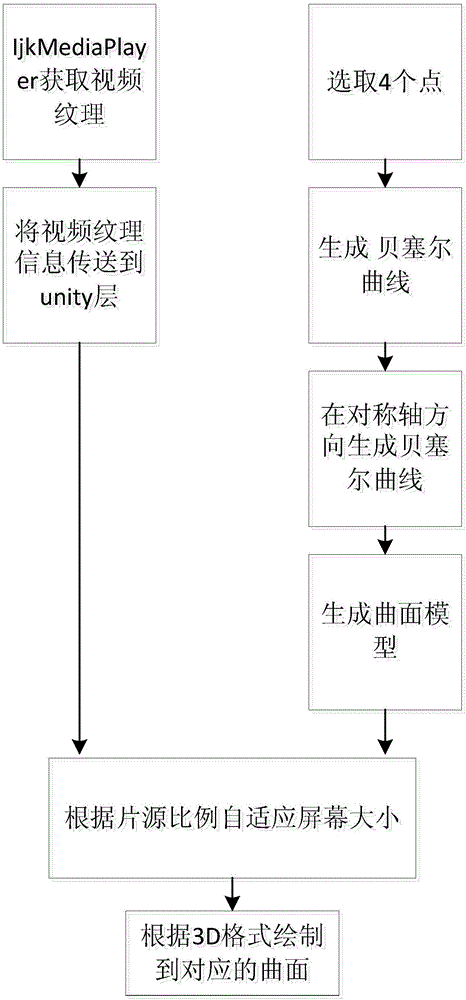

Method and device for playing 3D video in three-dimensional virtual scene by means of curved surface, and mobile phone

Pending · CN106600676A · Achieve playback · Improve compatibility · Substation equipment · 3D-image rendering · Mobile phone · Video texture

The invention discloses a method for playing a 3D video in a three-dimensional virtual scene by means of a curved surface. The method comprises the steps of: generating two curved surface models in a three-dimensional engine, giving the two models the same global coordinates and placing them in front of the camera in the virtual scene; having the three-dimensional engine call the texture information of the 3D video in the system layer through the Java reflection mechanism; and mapping the two partial textures of the 3D video onto the corresponding curved surface models by picture mapping. The method realizes 3D video playback on a curved surface in a virtual reality scene, using video texture information obtained through ijkMediaPlayer to draw the models generated in Unity, which provides better compatibility and a more immersive experience.

Owner:FLYING FOX INFORMATION TECH TIANJIN CO LTD
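The CN106600676A abstract maps "two partial textures" of a 3D video onto two identical curved surfaces. A minimal sketch, assuming a side-by-side layout (left-eye image in the left half of each frame, right-eye image in the right half) and a cylindrical-arc screen centered on a camera at the origin, generates each surface's vertices with UVs restricted to one eye's half. `Vertex`, `curved_screen` and all default dimensions are hypothetical; the patent's Unity/ijkMediaPlayer pipeline is not reproduced here.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Vertex:
    x: float
    y: float
    z: float
    u: float
    v: float


def curved_screen(eye: str, radius: float = 5.0, arc_deg: float = 90.0,
                  height: float = 3.0, segments: int = 16) -> List[Vertex]:
    """Vertices of a cylindrical-arc screen around a camera at the origin looking
    down +z. UVs cover only one eye's half of a side-by-side video frame."""
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    if segments < 1:
        raise ValueError("segments must be >= 1")
    u_start = 0.0 if eye == "left" else 0.5  # left or right half of the frame
    half_arc = math.radians(arc_deg) / 2.0
    vertices: List[Vertex] = []
    for i in range(segments + 1):
        t = i / segments  # 0..1 across the arc
        theta = -half_arc + t * 2.0 * half_arc
        x, z = radius * math.sin(theta), radius * math.cos(theta)
        u = u_start + 0.5 * t  # the whole arc samples half the texture width
        # One bottom and one top vertex per column; consecutive pairs form a triangle strip.
        vertices.append(Vertex(x, -height / 2.0, z, u, 0.0))
        vertices.append(Vertex(x, height / 2.0, z, u, 1.0))
    return vertices
```

Because both meshes share the same geometry and position, as the abstract describes, the only difference between them is the UV range; the engine then shows each mesh to one eye only.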

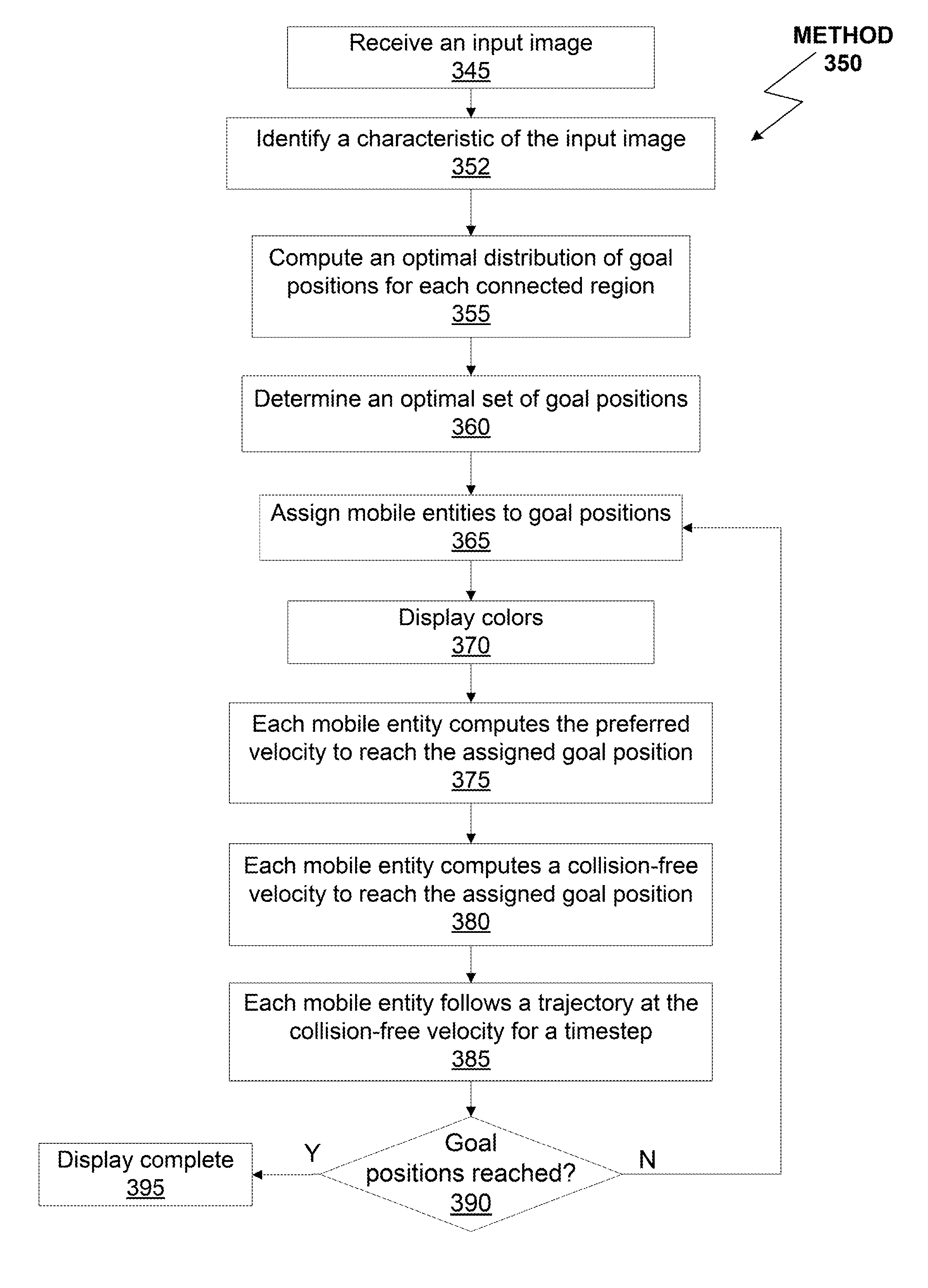

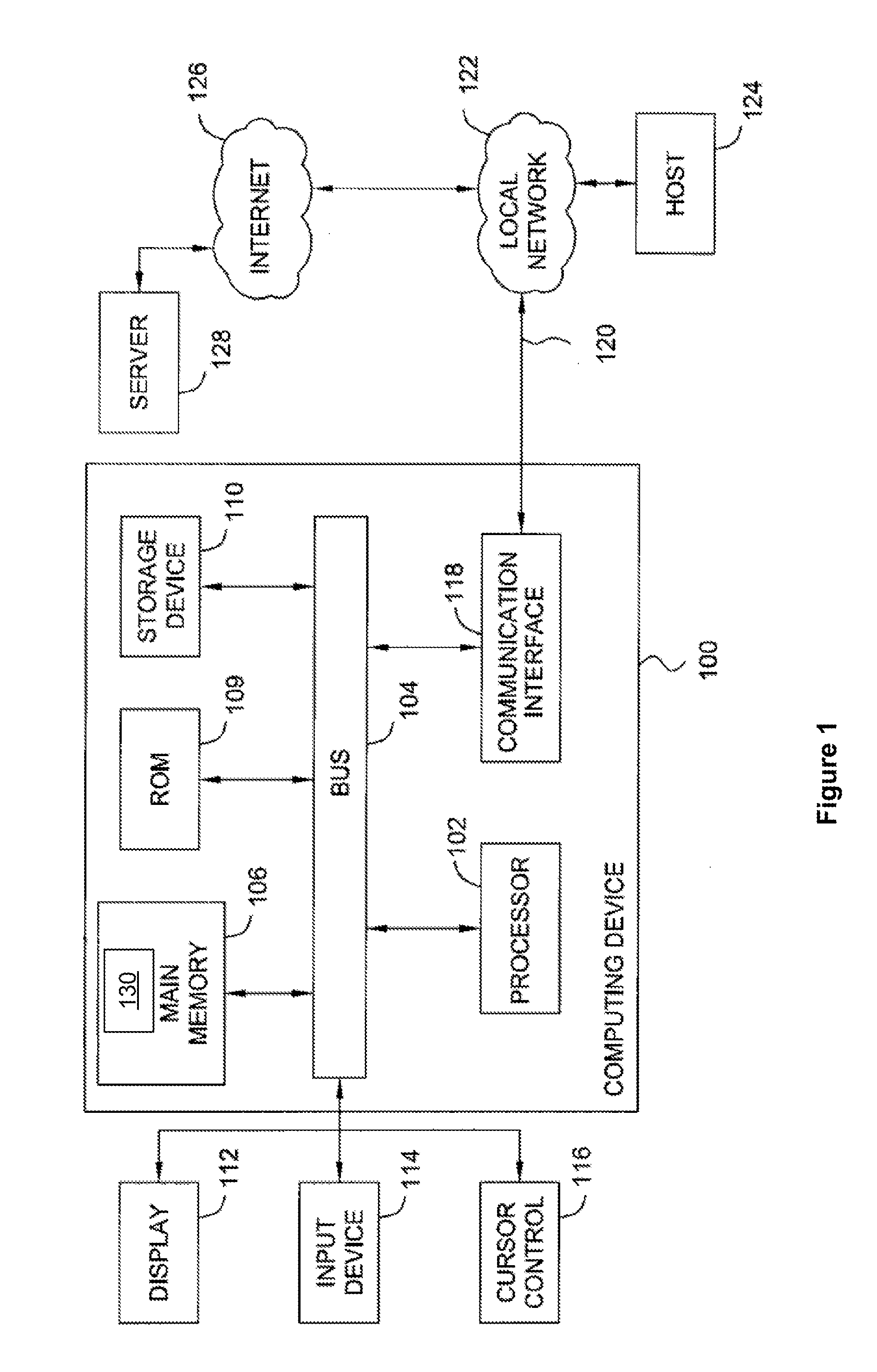

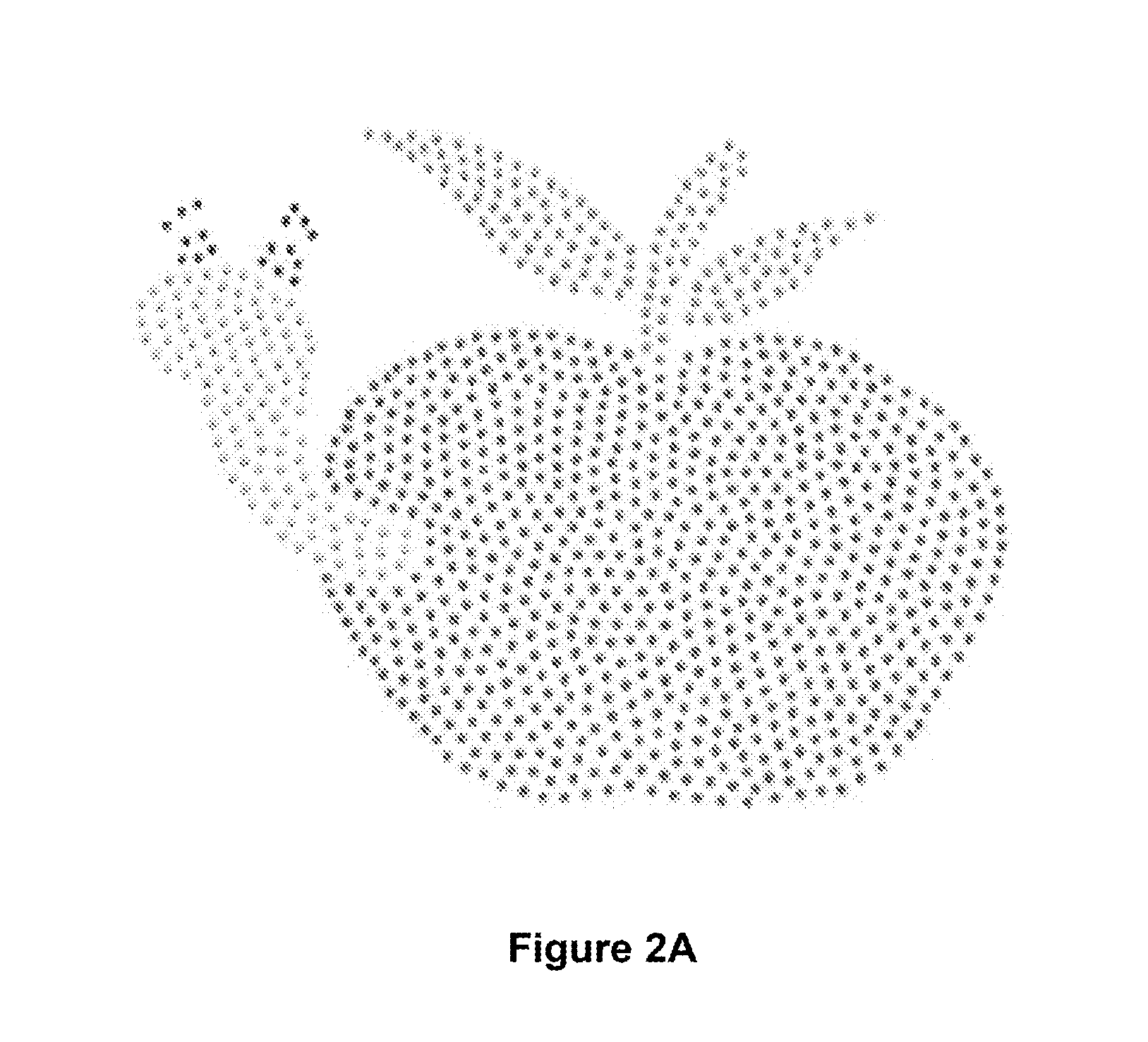

Robotic texture

Techniques are disclosed for controlling robot pixels to display a visual representation of a real-world video texture. Mobile robots with controllable color may generate visual representations of the real-world video texture to create an effect like fire, sunlight on water, leaves fluttering in sunlight, a wheat field swaying in the wind, crowd flow in a busy city, and clouds in the sky. The robot pixels function as a display device for a given allocation of robot pixels. Techniques are also disclosed for distributed collision avoidance among multiple non-holonomic and holonomic robots to guarantee smooth and collision-free motions.

Owner:ETH ZURICH EIDGENOESSISCHE TECHN HOCHSCHULE ZURICH +1
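In the "Robotic texture" abstract above, each color-controllable robot acts as one pixel of the displayed video texture. A minimal sketch of the color-assignment step only, assuming robot positions normalized to [0, 1] over the display area and nearest-pixel sampling of the current frame, is shown below; `robot_pixel_colors` is hypothetical, and the patent's allocation of robots and its distributed collision avoidance are not modeled.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]


def robot_pixel_colors(frame: Sequence[Sequence[Color]],
                       positions: Sequence[Tuple[float, float]]) -> List[Color]:
    """Give each robot the color of the video-texture pixel under it.
    Positions are normalized (x, y) in [0, 1]; values outside are clamped."""
    height, width = len(frame), len(frame[0])
    colors: List[Color] = []
    for x, y in positions:
        col = min(max(int(x * width), 0), width - 1)
        row = min(max(int(y * height), 0), height - 1)
        colors.append(frame[row][col])
    return colors


# Usage: a 2x2 frame and two robots at opposite corners.
frame = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
print(robot_pixel_colors(frame, [(0.0, 0.0), (0.99, 0.99)]))
```

Calling this once per video frame, as the robots move, updates the colors they display.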

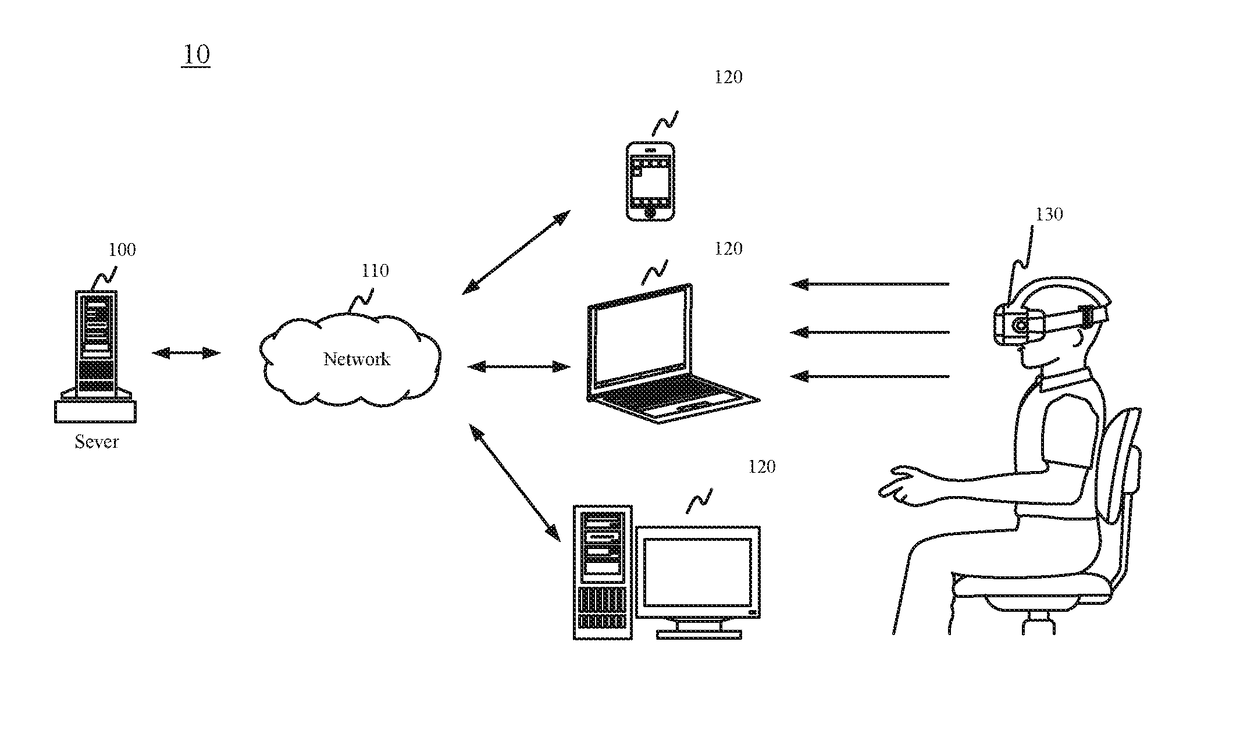

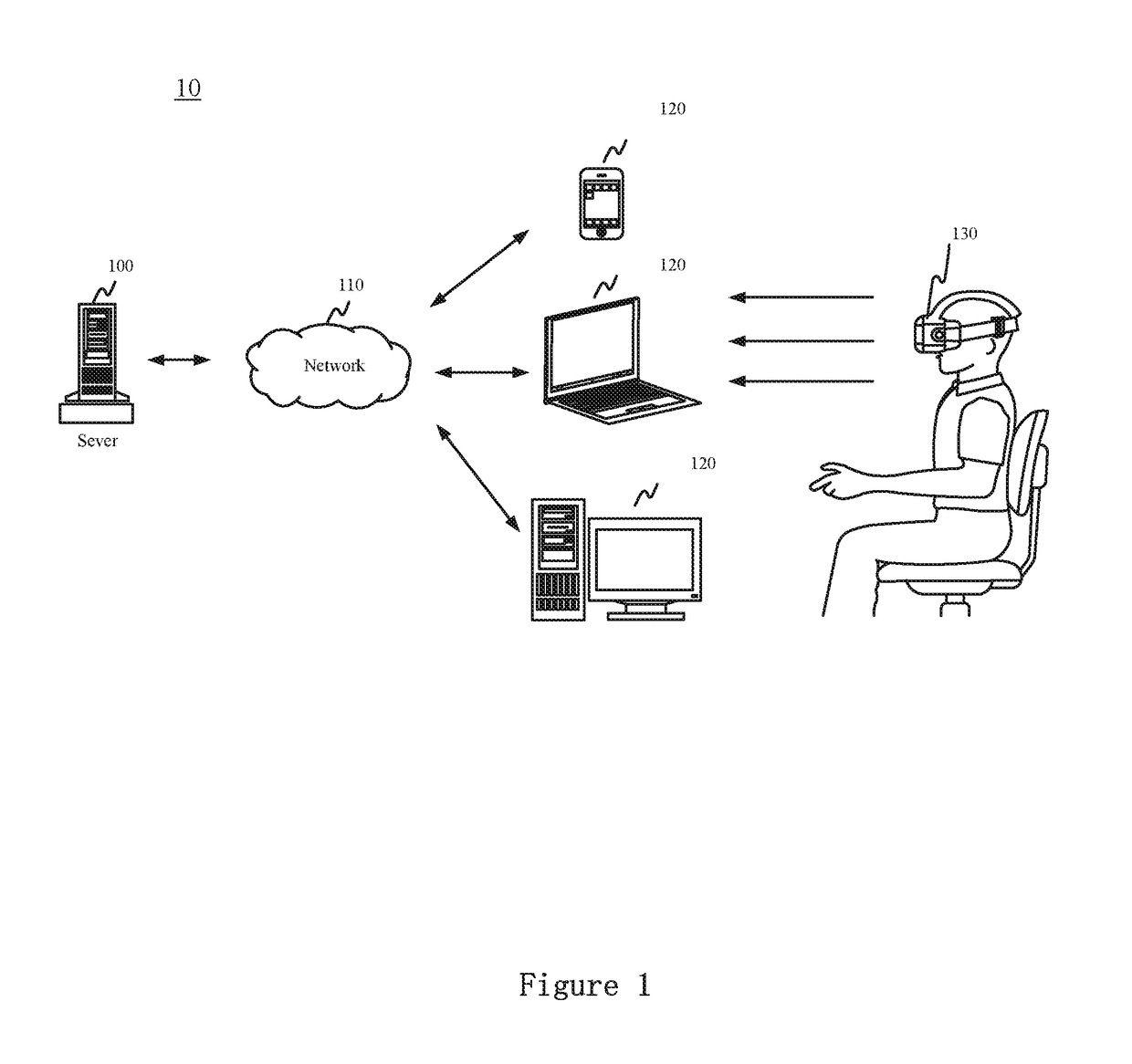

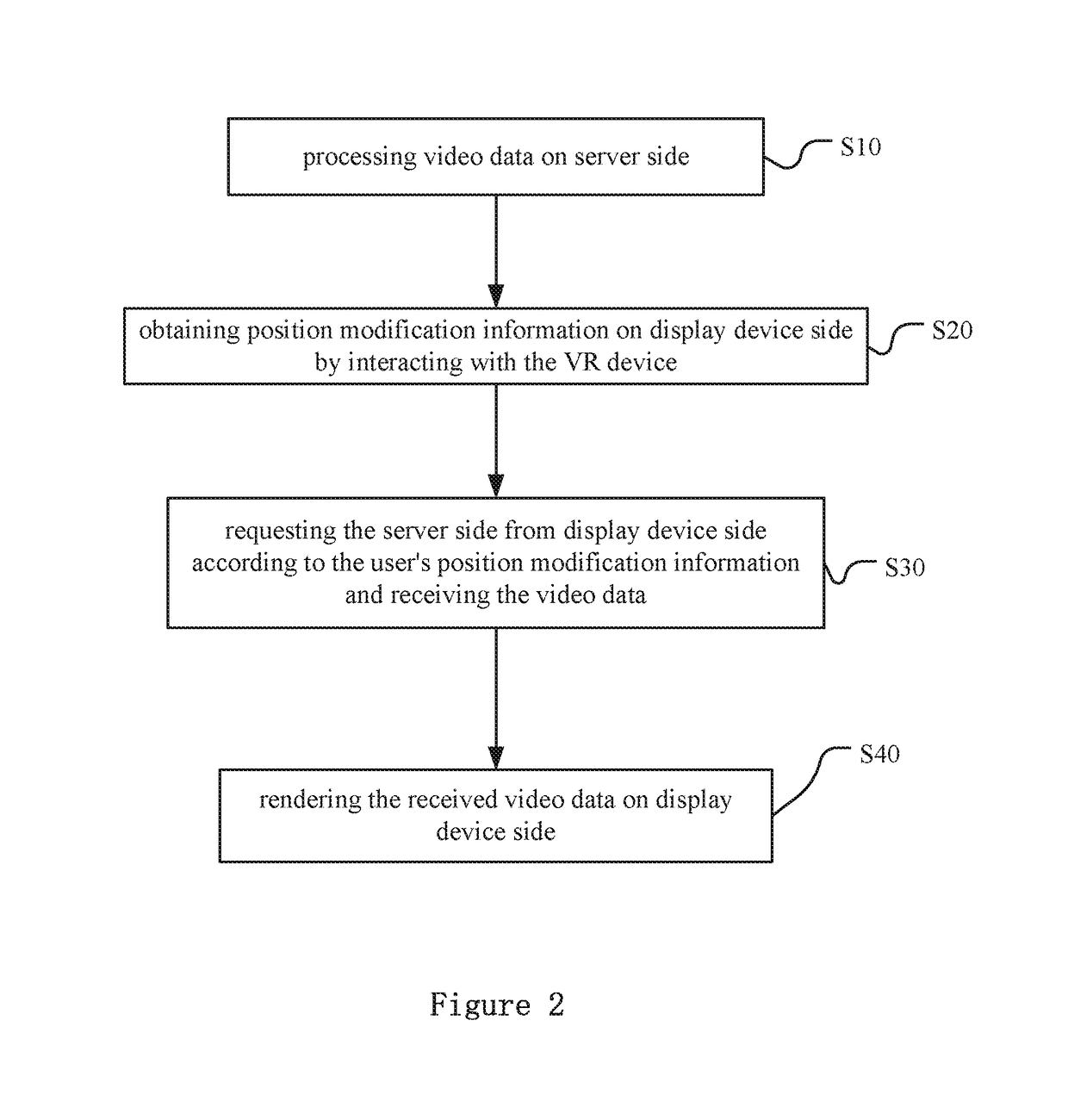

Method and System for Real-Time Rendering Displaying High Resolution Virtual Reality (VR) Video

Inactive · US20180192026A1 · Reduce data transfer · Improve efficiency · Television system details · Geometric image transformation · Data transmission · Video texture

A method and a system for rendering VR video are disclosed. In the method, a base video model and an enhancement video model are built, and their UV coordinates are initialized. Base video segments and enhancement video segments are obtained according to the user's viewport. A base video texture is generated from the pixel information of the base video segments and the UV coordinates of the base video model, and an enhancement video texture is generated from the pixel information of the enhancement video segments and the UV coordinates of the enhancement video model. Pixel information is reconstructed by adding the base video texture and the enhancement video texture together according to alignment coordinates, and an image is drawn from the reconstructed pixel information. The method reduces data transmission and improves rendering efficiency without affecting the user's viewing experience.

Owner:BLACK SAILS TECH INC
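The reconstruction step in the US20180192026A1 abstract adds the base and enhancement textures at alignment coordinates. A minimal sketch, assuming the base texture is already sampled at output resolution, the enhancement texture holds signed residuals for a viewport-sized tile, and the alignment coordinates are a (row, column) offset into the base, could be written as follows; `reconstruct_pixels` and the 8-bit clamp are assumptions, not details from the patent.

```python
from typing import List, Sequence, Tuple


def reconstruct_pixels(base: Sequence[Sequence[int]],
                       enhancement: Sequence[Sequence[int]],
                       align: Tuple[int, int] = (0, 0)) -> List[List[int]]:
    """Add a signed enhancement (residual) tile onto a base texture.
    `align` is the (row, col) in `base` where enhancement[0][0] lands.
    Results are clamped to the 8-bit range 0..255."""
    out = [list(row) for row in base]
    r0, c0 = align
    for r, row in enumerate(enhancement):
        for c, delta in enumerate(row):
            rr, cc = r + r0, c + c0
            if 0 <= rr < len(out) and 0 <= cc < len(out[rr]):  # ignore out-of-frame residuals
                out[rr][cc] = max(0, min(255, out[rr][cc] + delta))
    return out


# Usage: refine only the bottom-right pixel of a flat 2x2 base.
print(reconstruct_pixels([[100, 100], [100, 100]], [[10]], (1, 1)))
```

Only the viewport tile carries enhancement data, which is where the data-transfer savings the abstract claims would come from.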

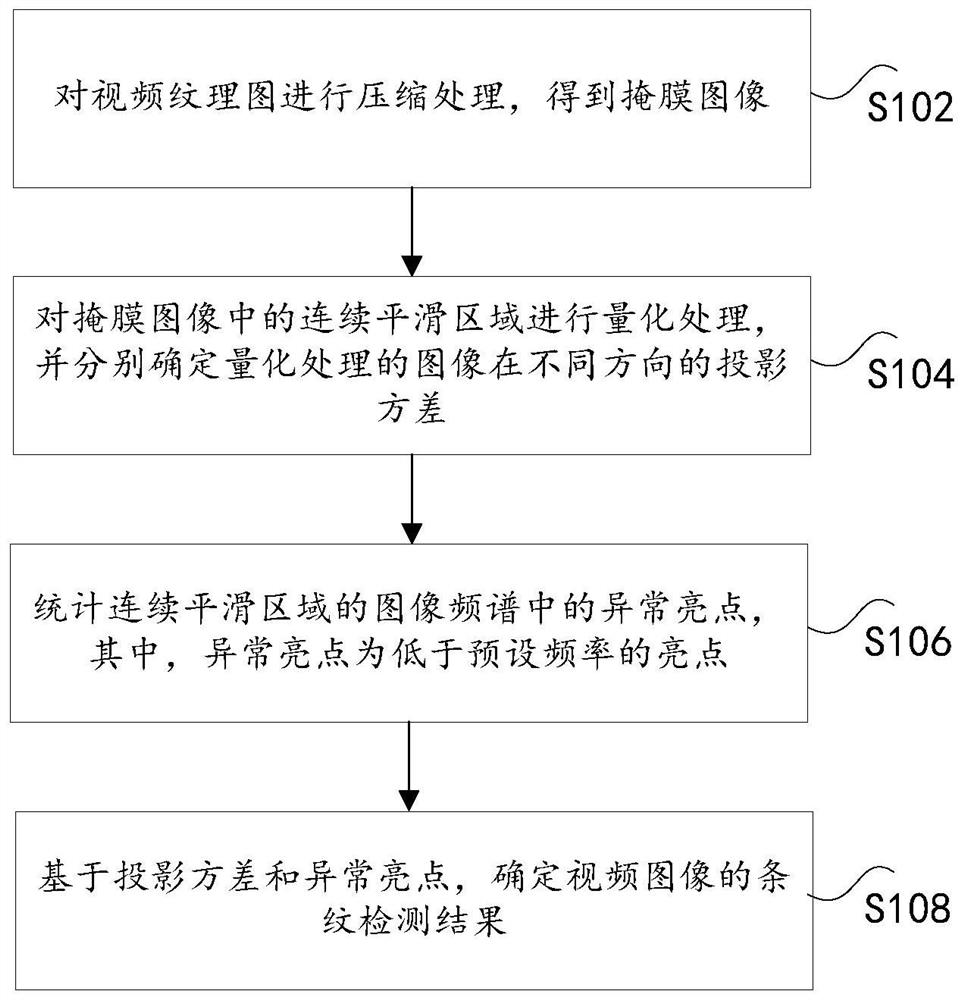

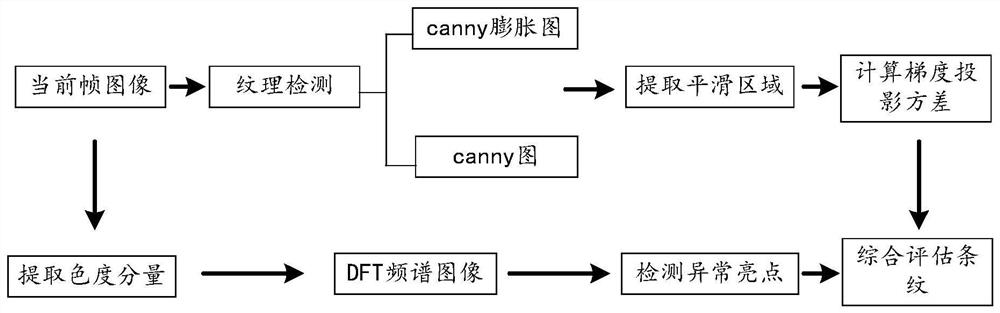

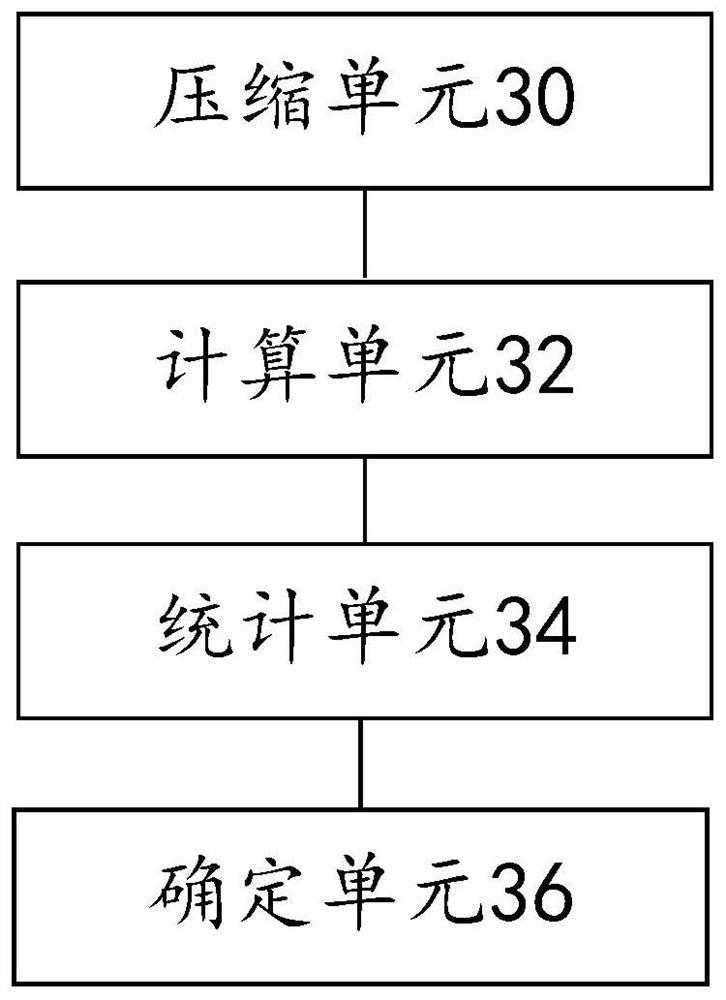

Video image stripe detection method and device, and computer readable storage medium

Pending · CN113569713A · Improve robustness · Ability to detect dynamic changes in video · Character and pattern recognition · Pattern recognition · Image pair

The invention discloses a stripe detection method and device for a video image, and a computer readable storage medium. The detection method comprises the steps of: compressing a video texture image, i.e. an image obtained by preprocessing a video image, to obtain a mask image; quantizing a continuous smooth region in the mask image and determining the projection variances of the quantized image in different directions; counting abnormal bright spots in the image spectrum of the continuous smooth region, an abnormal bright spot being a bright spot below a preset frequency; and determining the stripe detection result for the video image based on the projection variances and the abnormal bright spots. The invention addresses the poor robustness and low efficiency of prior-art video image stripe detection.

Owner:ZHEJIANG DAHUA TECH CO LTD
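The projection-variance part of the CN113569713A method can be sketched as follows, assuming 8-bit grayscale input, uniform quantization into a hypothetical number of levels, and "directions" meaning the horizontal and vertical projections. Horizontal stripes make row means differ from row to row, so the row-projection variance rises while the column-projection variance stays low. The spectral bright-spot test and the final decision rule are not modeled, and all names are hypothetical.

```python
from statistics import pvariance
from typing import List, Sequence, Tuple


def quantize(img: Sequence[Sequence[int]], levels: int = 8) -> List[List[int]]:
    """Uniformly quantize 8-bit values into `levels` bins (0..levels-1)."""
    return [[v * levels // 256 for v in row] for row in img]


def projection_variances(img: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Variance of the row-mean projection and of the column-mean projection."""
    row_proj = [sum(row) / len(row) for row in img]         # one value per row
    col_proj = [sum(col) / len(col) for col in zip(*img)]   # one value per column
    return pvariance(row_proj), pvariance(col_proj)


# Usage: alternating dark and bright rows, i.e. horizontal stripes.
striped = [[0, 0, 0], [200, 200, 200], [0, 0, 0], [200, 200, 200]]
print(projection_variances(quantize(striped)))
```

In a full detector these variances would be combined with the spectral evidence before declaring a stripe.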

© 2025 PatSnap. All rights reserved.