Automatic matching correction method of video texture projection in three-dimensional virtual-real fusion environment

A video texture and virtual-real fusion technique, applied in the field of virtual reality. It addresses problems such as limited reference value, and it improves visual quality, overcomes scene-condition limitations, and improves efficiency.



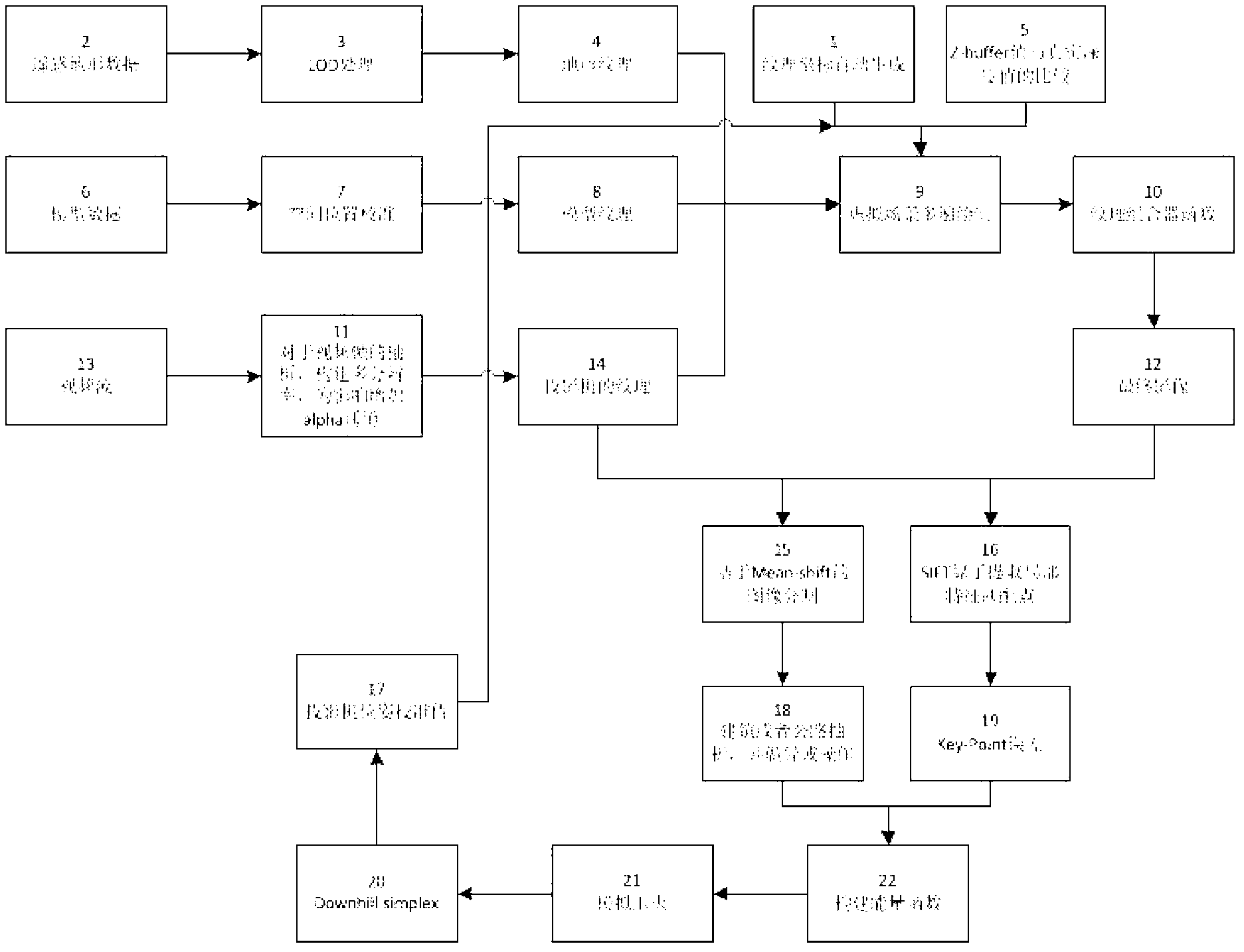


Embodiment Construction

[0057] The technical solutions in the embodiments of the present invention are described clearly and completely below with reference to the accompanying drawings. The described embodiments are only some of the embodiments of the present invention, not all of them. All other embodiments obtained by those skilled in the art on the basis of these embodiments, without creative effort, fall within the protection scope of the present invention.

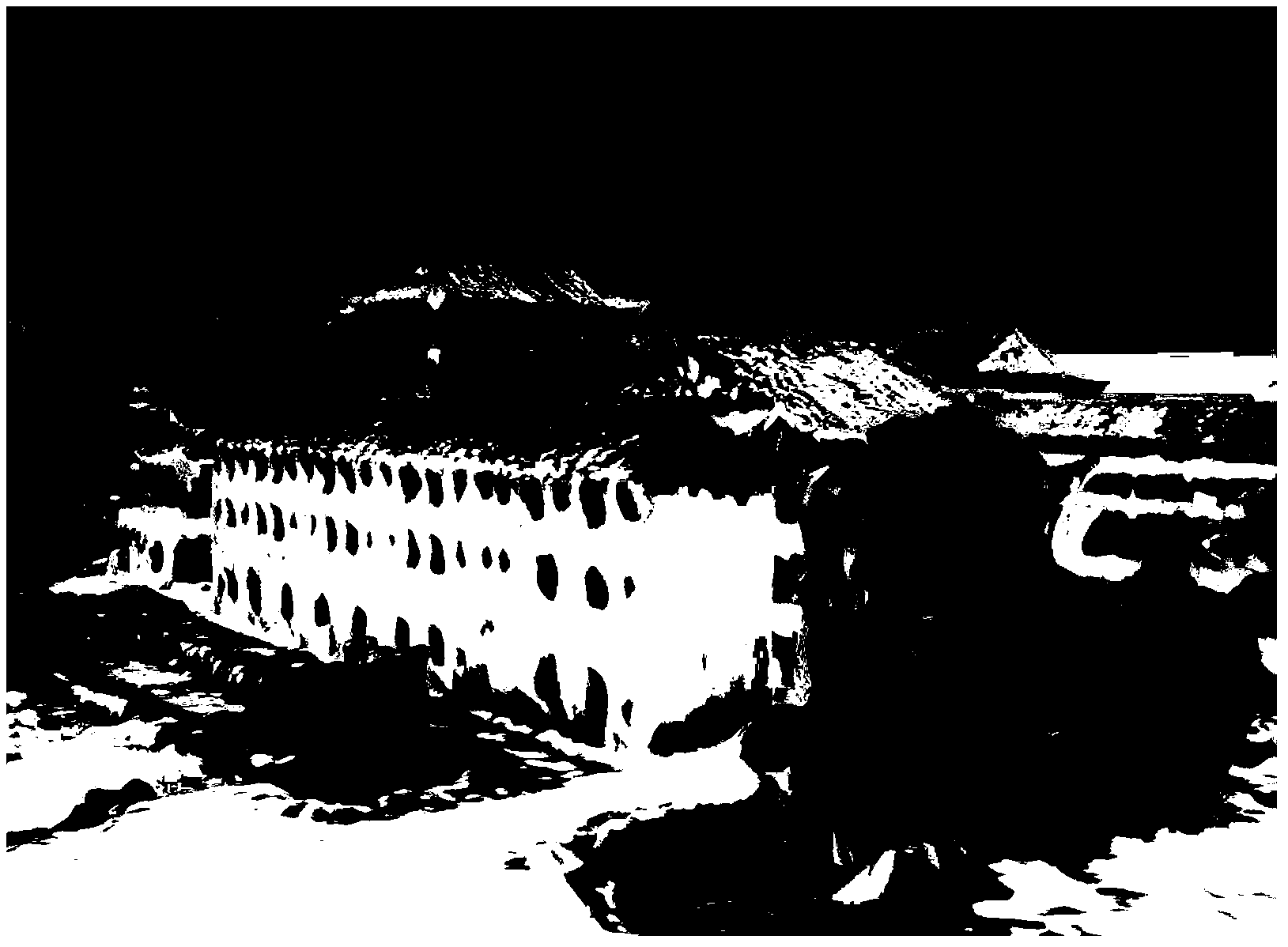

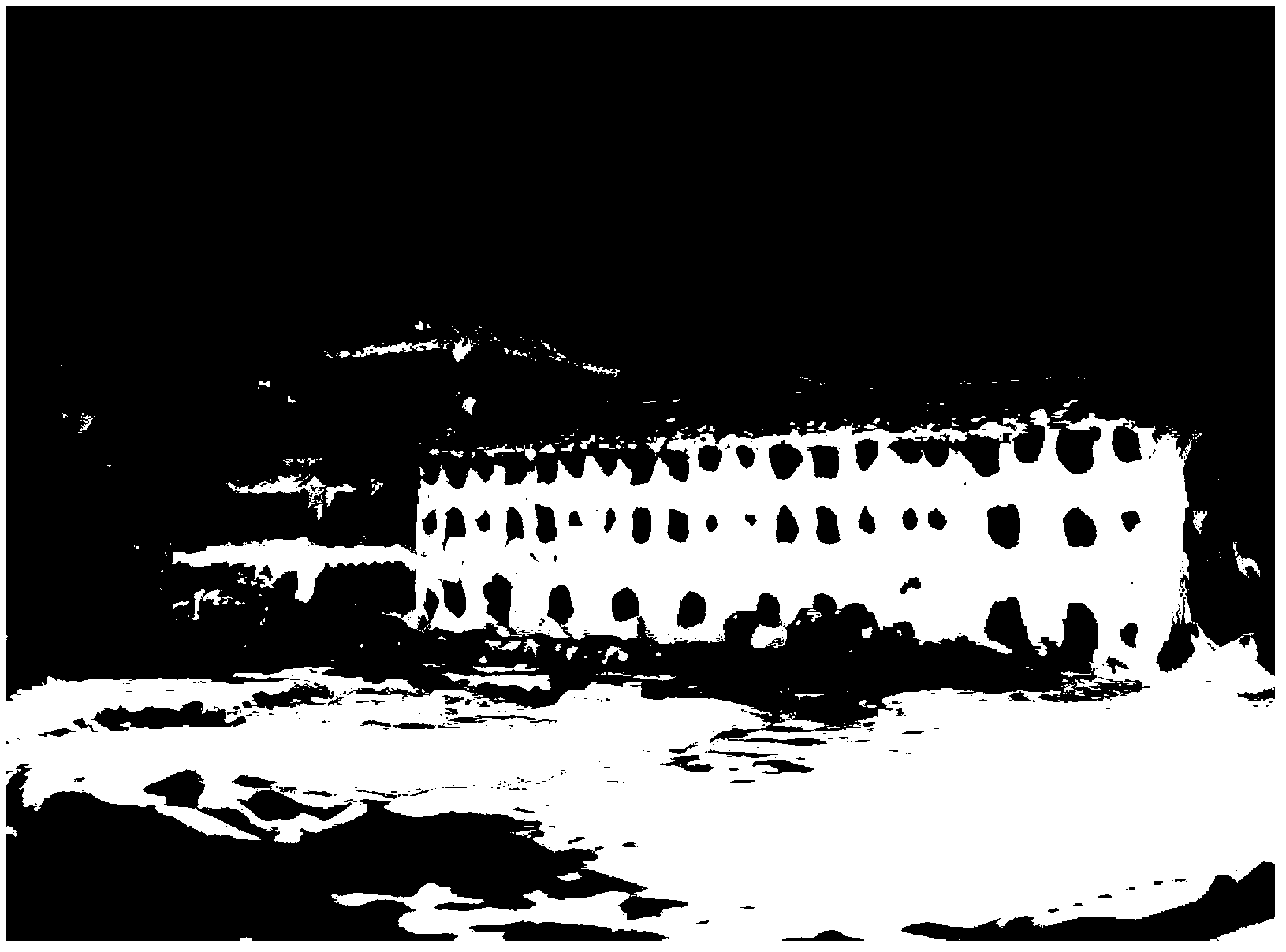

[0058] (1) Construct a virtual scene. Use pre-acquired remote sensing images to set the terrain textures, then construct models whose surfaces carry static texture images and assemble them into a virtual scene in virtual space. The spatial position of each model in the scene, and the relative position, orientation, size, and other attributes between models, should be as consistent as possible with the real scene.
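The consistency requirement in step (1) can be illustrated with a minimal sketch. It assumes each model is described by a position, a heading angle, and a size, and that surveyed values for the real scene are available. The names `Model`, `placement_error`, and `yaw_error` are hypothetical and do not come from the patent, which does not specify how consistency is measured.

```python
import math
from dataclasses import dataclass


@dataclass
class Model:
    """Hypothetical description of one scene model (not from the patent)."""
    name: str
    position: tuple  # (x, y, z) in metres, scene coordinates
    yaw_deg: float   # heading about the vertical axis, in degrees
    size: tuple      # (width, depth, height) in metres


def relative_offset(a: Model, b: Model) -> tuple:
    """Vector from model a to model b."""
    return tuple(q - p for p, q in zip(a.position, b.position))


def placement_error(virtual_a: Model, virtual_b: Model,
                    real_a: Model, real_b: Model) -> float:
    """Distance between the a-to-b offset in the virtual scene and in the real scene."""
    return math.dist(relative_offset(virtual_a, virtual_b),
                     relative_offset(real_a, real_b))


def yaw_error(virtual: Model, real: Model) -> float:
    """Absolute heading difference in degrees, wrapped to [0, 180]."""
    diff = (virtual.yaw_deg - real.yaw_deg + 180.0) % 360.0 - 180.0
    return abs(diff)


# Assumed example values: two buildings as modelled and as surveyed.
v_hall = Model("hall", (0.0, 0.0, 0.0), 350.0, (20.0, 10.0, 8.0))
v_tower = Model("tower", (30.0, 4.0, 0.0), 90.0, (5.0, 5.0, 25.0))
r_hall = Model("hall", (100.0, 50.0, 0.0), 10.0, (20.0, 10.0, 8.0))
r_tower = Model("tower", (130.0, 50.0, 0.0), 90.0, (5.0, 5.0, 25.0))

print(placement_error(v_hall, v_tower, r_hall, r_tower))  # offset mismatch in metres
print(yaw_error(v_hall, r_hall))                          # heading mismatch in degrees
```

Comparing relative offsets rather than absolute coordinates keeps the check independent of where the scene origin is placed, which matches the emphasis on relative position between models.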

[0059] (2) Acquire v...
