396 results about "Color consistency" patented technology

Color consistency refers to the average amount of variation in chromaticity among a batch of supposedly identical lamp samples. Generally speaking, the more complicated the physics and chemistry of the light source, the more difficult it is to manufacture with consistent color properties.
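The definition above leaves the variation metric open. A minimal sketch, assuming chromaticities are measured as CIE 1931 (x, y) and that variation is taken as the mean distance in the CIE 1976 u'v' diagram from the batch average (a common choice, not the only one), could look like this. The function names and sample numbers are illustrative only:

```python
import math

def xy_to_uv_prime(x, y):
    """Convert CIE 1931 (x, y) chromaticity to CIE 1976 (u', v')."""
    d = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / d, 9.0 * y / d

def mean_chromaticity_deviation(samples_xy):
    """Average distance of each lamp's (u', v') point from the batch mean.

    samples_xy: measured (x, y) chromaticities of nominally identical lamps.
    """
    uv = [xy_to_uv_prime(x, y) for x, y in samples_xy]
    cu = sum(u for u, _ in uv) / len(uv)
    cv = sum(v for _, v in uv) / len(uv)
    return sum(math.hypot(u - cu, v - cv) for u, v in uv) / len(uv)

# Illustrative batch of three lamps (made-up numbers, not measured data)
batch = [(0.3127, 0.3290), (0.3140, 0.3301), (0.3115, 0.3282)]
print(round(mean_chromaticity_deviation(batch), 5))
```

A smaller value means a more color-consistent batch.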

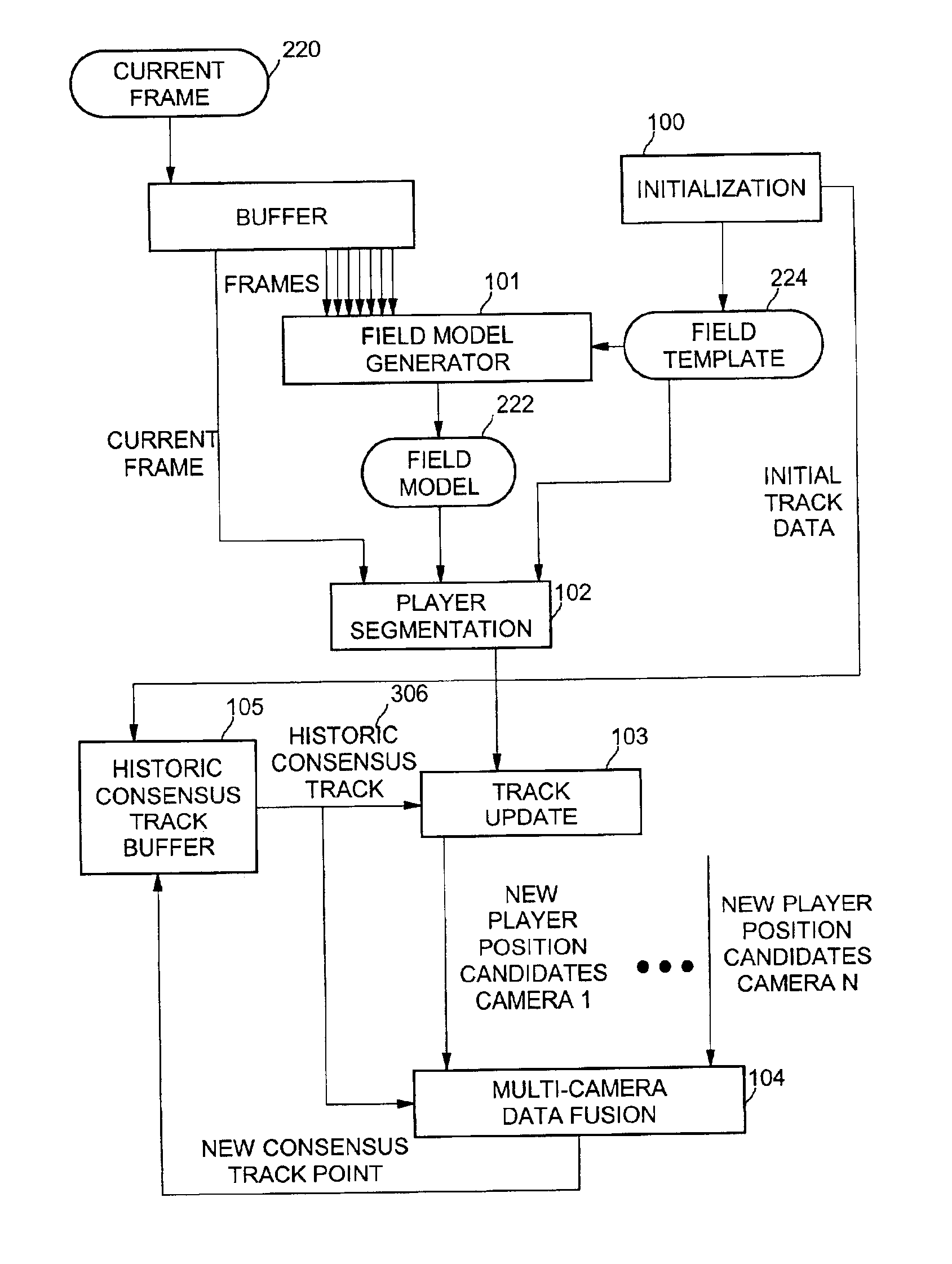

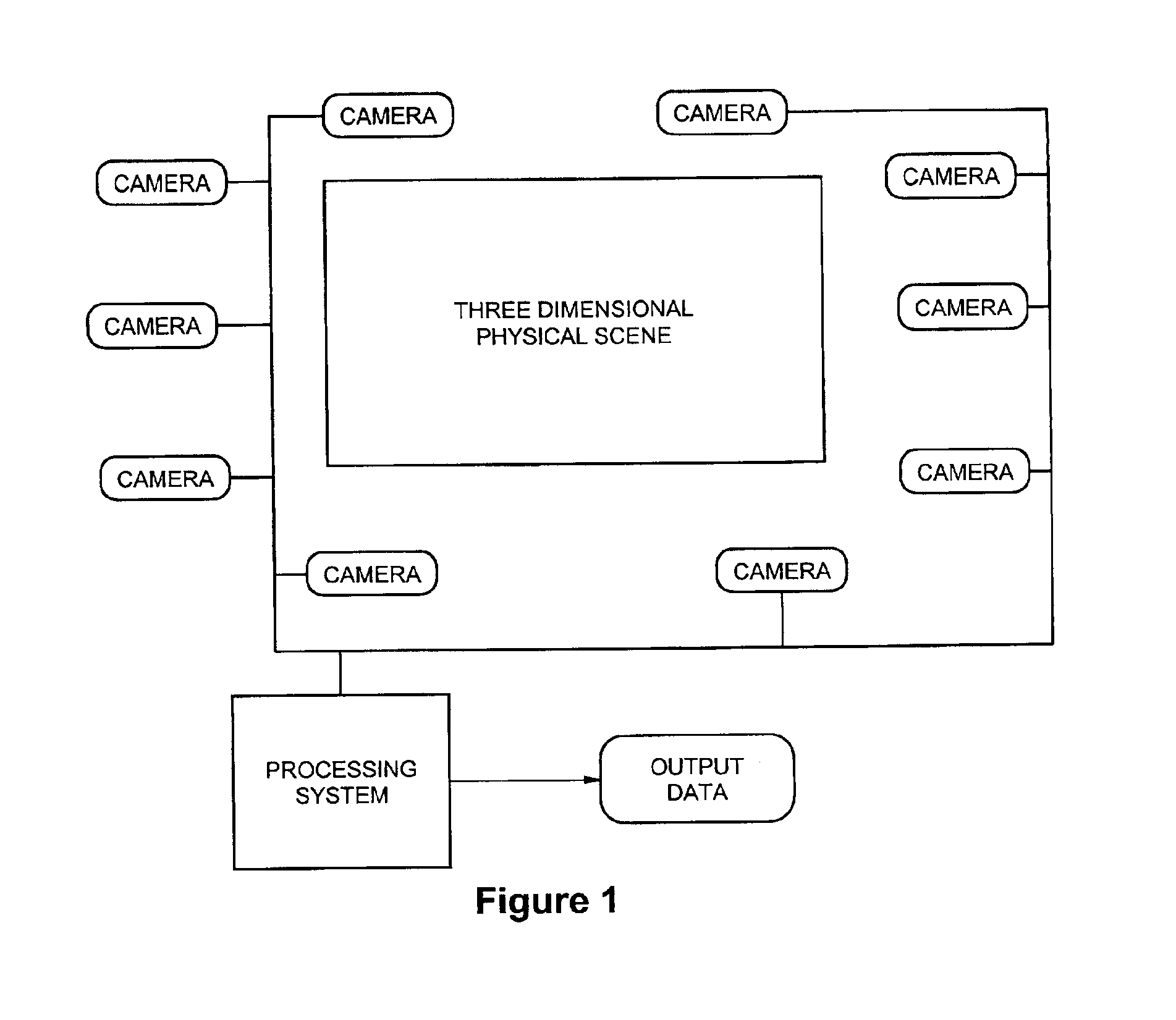

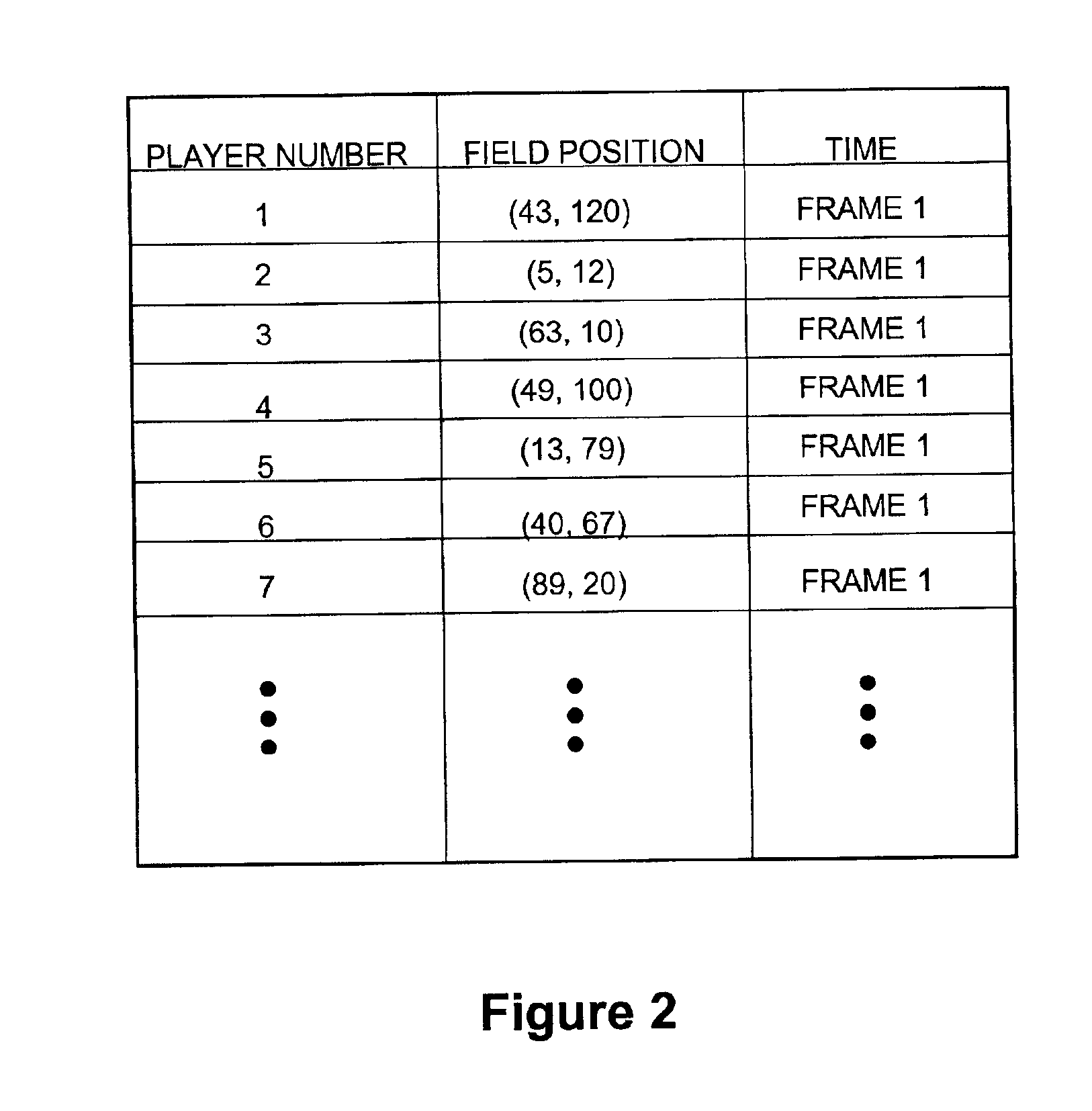

Method for simultaneous visual tracking of multiple bodies in a closed structured environment

Simultaneous tracking of multiple objects in a sequence of video frames captured by multiple cameras may be accomplished by extracting foreground elements from the background of a frame, segmenting objects from the foreground, tracking objects within the frame, globally tracking object positions over time across multiple frames, fusing the track data obtained from multiple cameras to infer object positions, and resolving conflicts to estimate the most likely object positions over time. Embodiments of the present invention improve substantially over existing trackers by including a technique for extracting the region of interest that corresponds to a playing field, a technique for segmenting players from the field under varying illumination, a template-matching criterion that relies not on specific shapes or on the color coherency of objects but on connected-component properties, and techniques for reasoning about occlusions and consolidating tracking data from multiple cameras.

Owner:UATC LLC

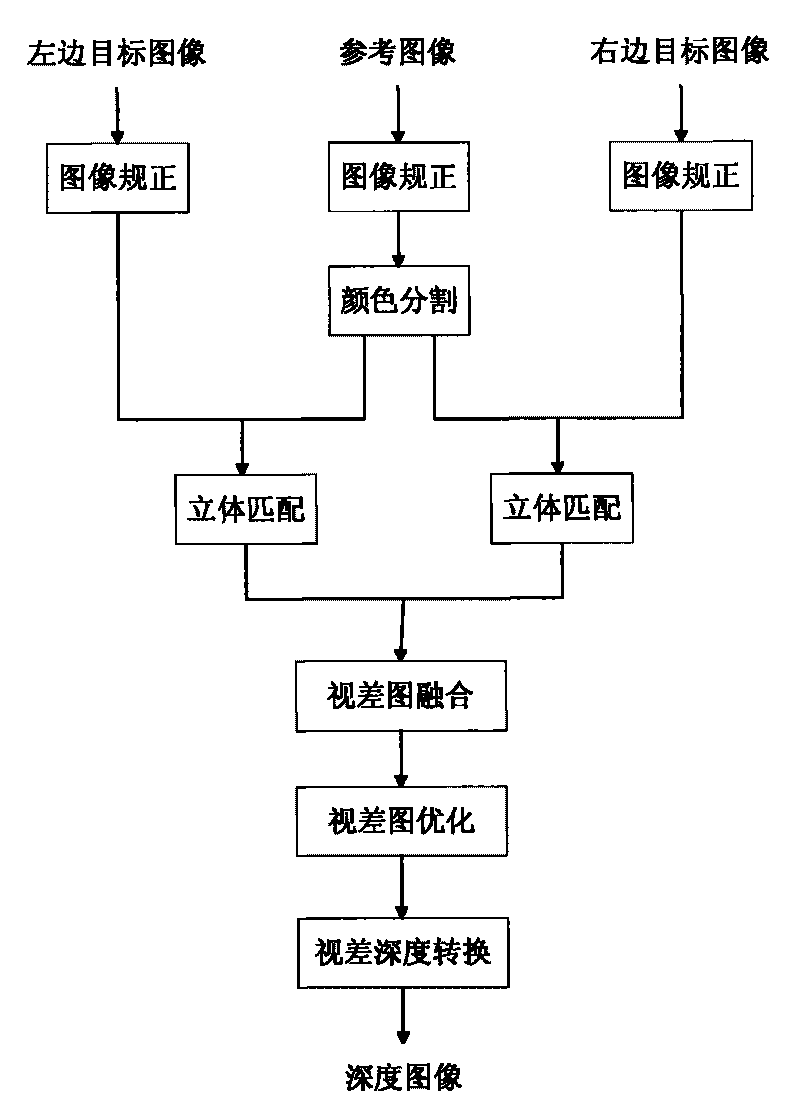

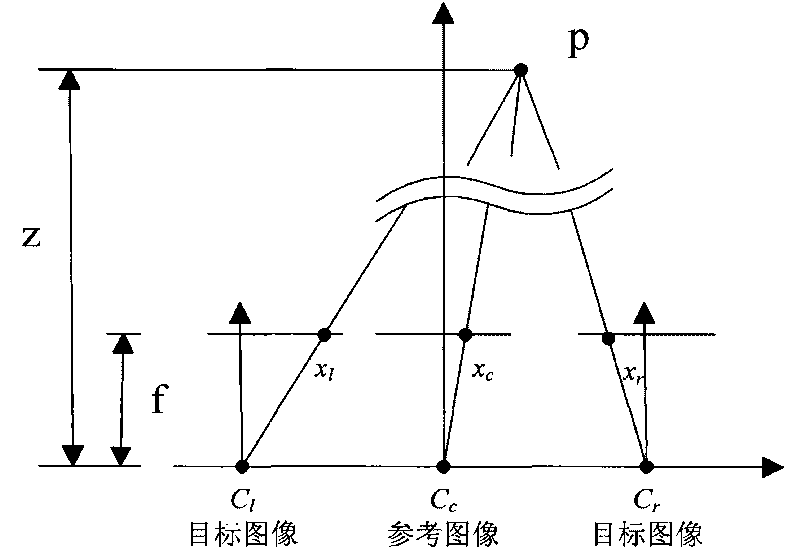

Method for acquiring range image by stereo matching of multi-aperture photographing based on color segmentation

Inactive | CN101720047A | Eliminate mismatch points | Improved Parallax Accuracy | Image analysis | Stereoscopic systems | Parallax | Stereo matching

The invention discloses a method for acquiring a range image by multi-aperture stereo matching based on color segmentation. The method comprises the following steps: (1) correcting (rectifying) all input images; (2) performing color segmentation on a reference image and extracting regions of consistent color from it; (3) performing local window matching on the multiple input images to obtain multiple parallax images; (4) removing mismatched points produced during matching by applying a bilateral matching strategy; (5) combining the multiple parallax images into a single parallax image and filling in parallax values at the mismatched points; (6) applying post-processing optimization to the parallax image to obtain a dense parallax image; and (7) converting the parallax image into a range image using the relationship between parallax and depth. By acquiring range information from multiple viewpoint images and exploiting the image information they provide, the invention both mitigates mismatches caused by periodically repeating textures, occlusion, and similar effects, and improves matching precision to yield an accurate range image.

Owner:SHANGHAI UNIV
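Steps (4) and (7) of the Shanghai University method above can be sketched briefly. The abstract does not define its "bilateral matching strategy"; this sketch assumes it is the usual left-right consistency check, and it converts disparity to depth with Z = f·B/d, which holds for a rectified, parallel camera pair. The function names and parameters are hypothetical:

```python
def left_right_check(disp_left, disp_right, tol=1):
    """Flag pixels whose left-to-right and right-to-left disparities agree.

    disp_left[y][x]: disparity of left-image pixel x (its match lies at x - d
    in the right image); disp_right is indexed by right-image pixel.
    Returns a boolean mask; False marks a likely mismatch to be refilled.
    """
    height, width = len(disp_left), len(disp_left[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            d = disp_left[y][x]
            xr = x - d
            if 0 <= xr < width and abs(disp_right[y][xr] - d) <= tol:
                mask[y][x] = True
    return mask

def disparity_to_depth(d, focal_px, baseline):
    """Depth Z = f * B / d for a rectified, parallel camera pair.

    focal_px: focal length in pixels; baseline: camera spacing (depth comes
    out in the same unit). Returns None for non-positive disparities.
    """
    if d <= 0:
        return None
    return focal_px * baseline / d

mask = left_right_check([[1, 1, 1, 1]], [[1, 1, 3, 1]])
print(mask, disparity_to_depth(10, 700, 0.5))
```

Pixels flagged False are the ones step (5) would fill from neighboring or other-view parallax values.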

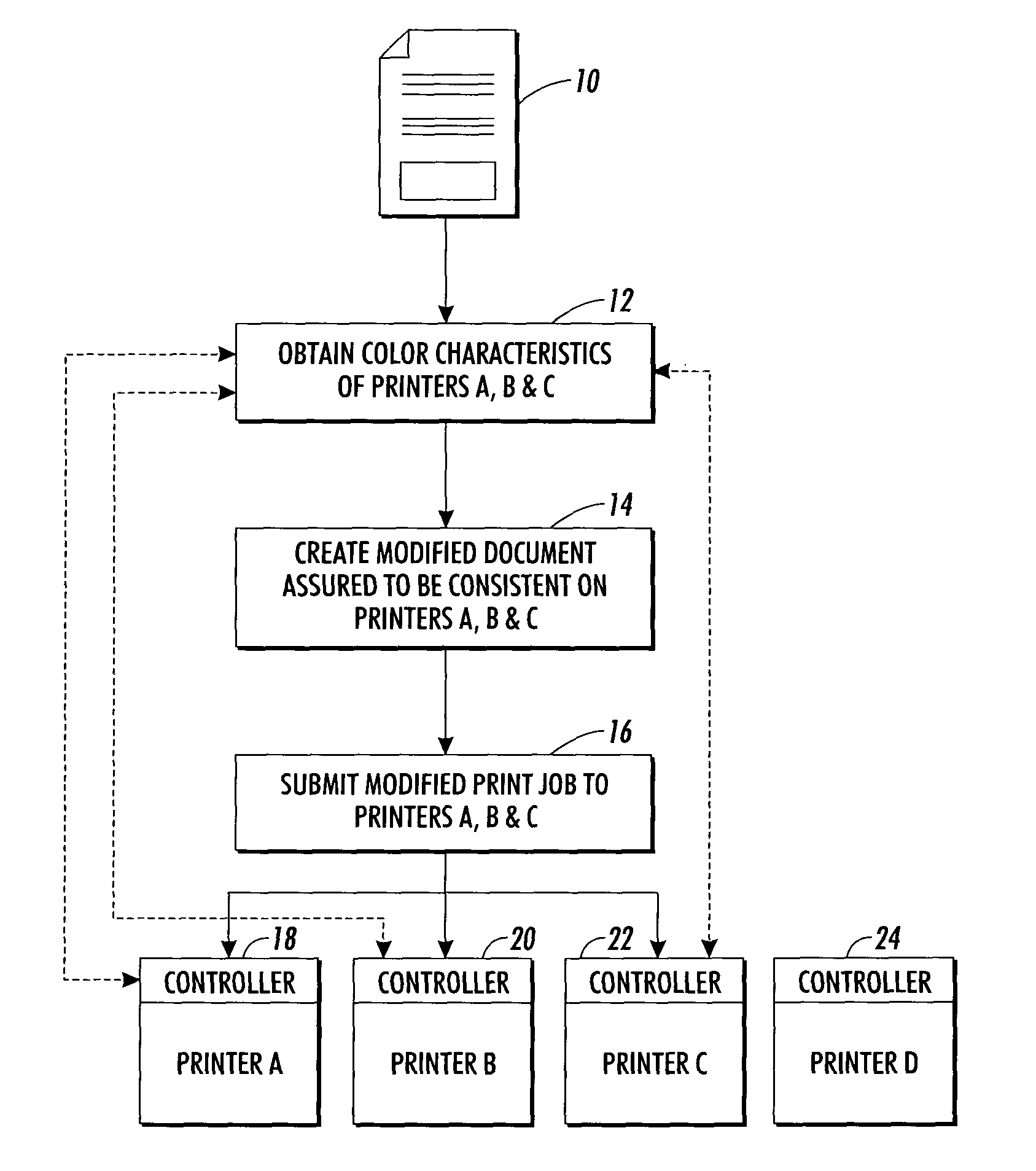

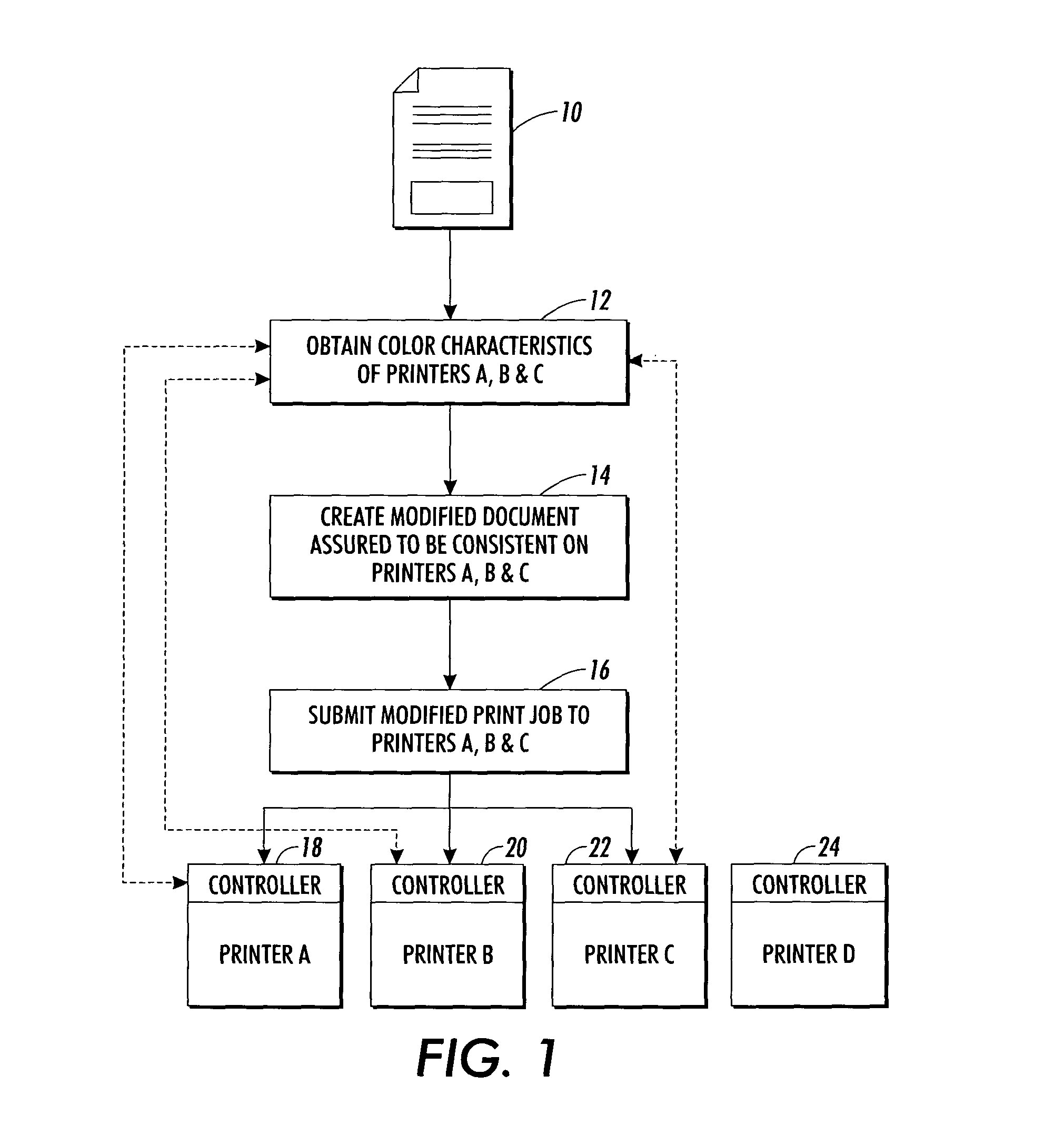

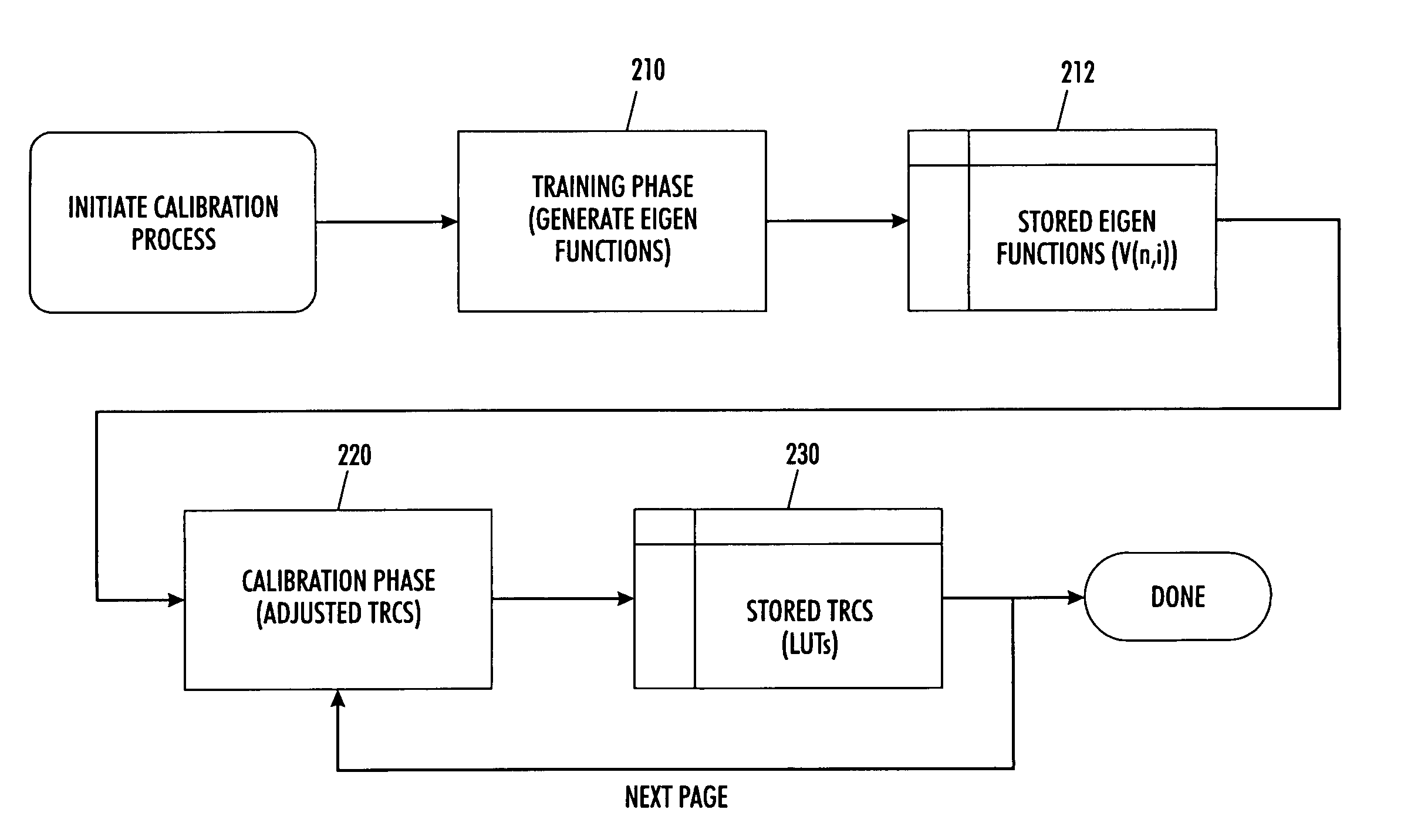

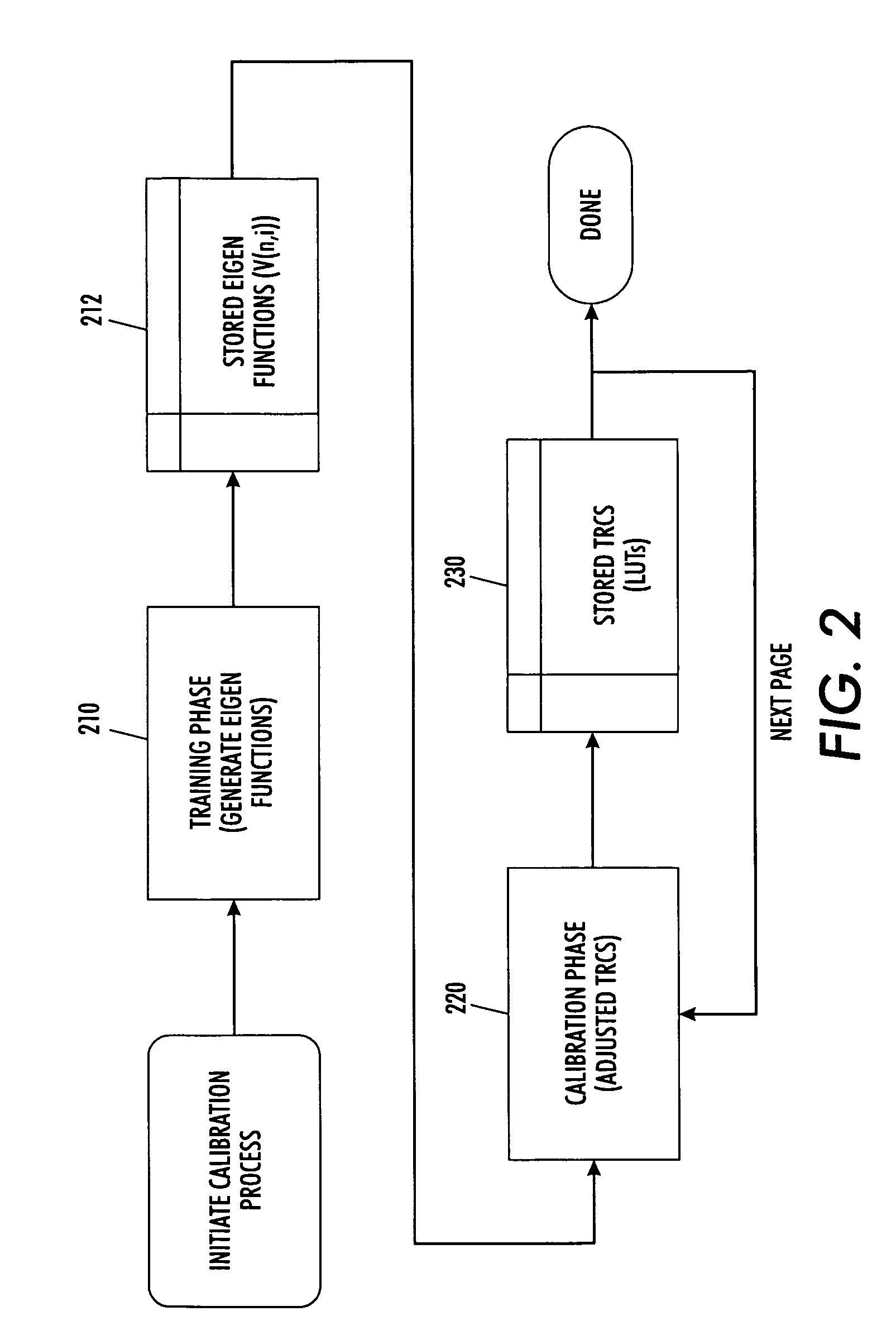

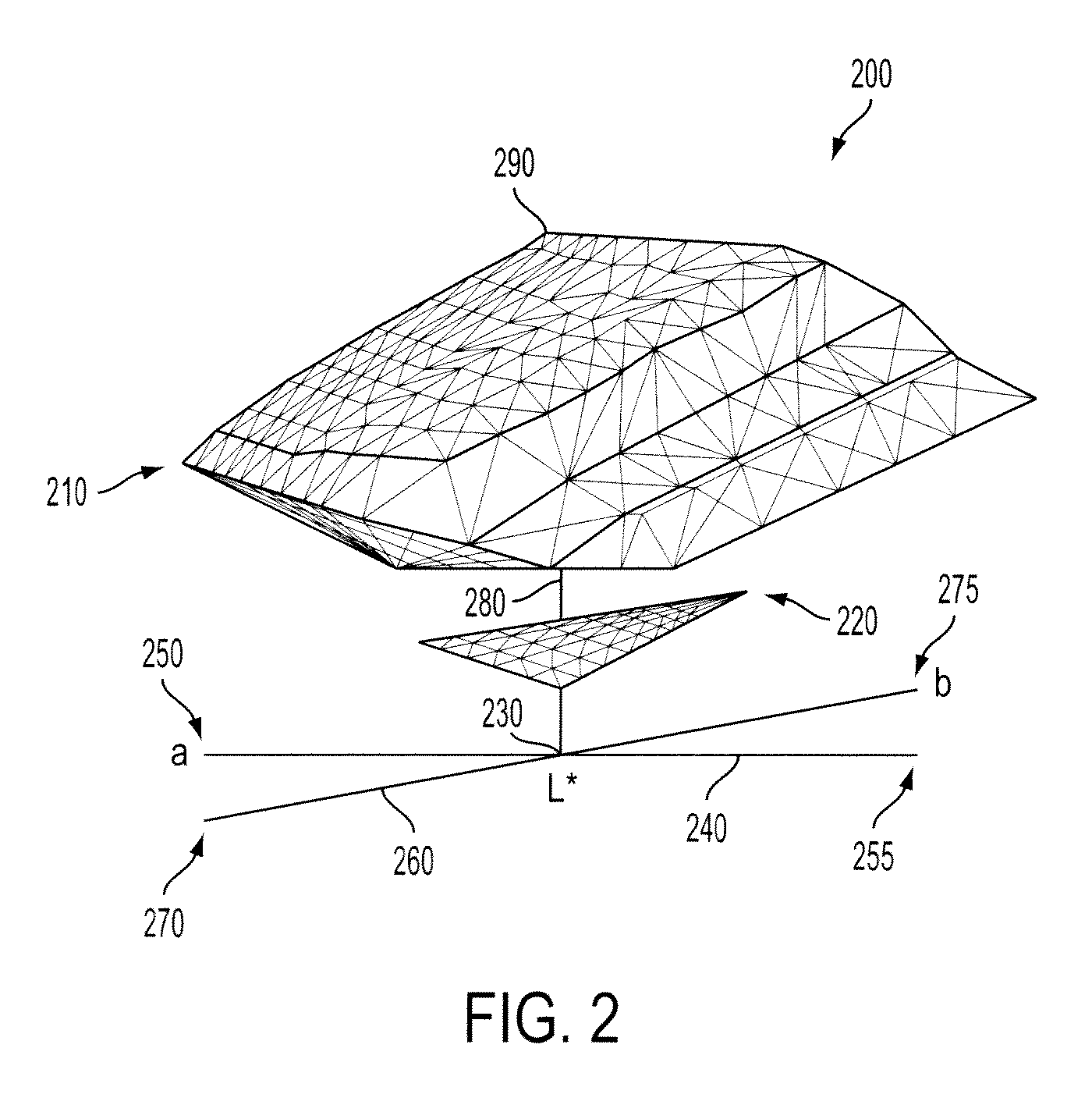

System and method for obtaining color consistency for a color print job across multiple output devices

Active | US20050036159A1 | Maintain color consistency | Digitally marking record carriers | Digital computer details | Gamut | Color mapping

A method for maintaining color consistency in an environment of networked devices is disclosed. The method involves identifying a group of devices on which a job is to be rendered; obtaining color characteristics from the devices in the identified group; modifying the job based on the obtained color characteristics; and rendering the job on one or more of the devices. More specifically, the device controller associated with each output device is queried to obtain color characteristics specific to that device. Preferably, the original job and the modified job employ device-independent color descriptions. Modifications are computed by a transform determined from the color characteristics of the output devices together with the content of the job itself. The method further comprises mapping colors in the original job to the output devices' common gamut, i.e., the intersection of the individual printers' gamuts, where each device's color gamut is obtained from its device characterization profile either by retrieving the gamut tag or by deriving it from the characterization data in the profile. The color gamut of each device is computed with knowledge of the transforms that relate device-independent color to device-dependent color, using a combination of device calibration and characterization information. Alternatively, transformations are determined dynamically based on the characteristics of the target group of output devices. From the individual device gamuts, a common intersection gamut is derived. This derivation generally comprises intersecting two three-dimensional volumes in color space. It may be performed geometrically, by intersecting the surfaces that bound the gamut volumes, which are typically represented as triangle meshes. Alternatively, the intersection may be computed by generating a grid of points known to enclose all of the device gamuts; the grid is mapped to each individual gamut in turn, yielding a set of points that lie within the common gamut, from which a connected gamut surface is produced. Once the common intersection gamut is derived, the input job colors are mapped to it. The optimal mapping technique generally depends on the characteristics of the input job and the user's rendering intent. Final color correction employs a standard colorimetric transform for each output device that does not involve any gamut mapping.

Owner:XEROX CORP
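The grid-based route to the common gamut in the Xerox abstract above can be illustrated in a few lines. This is a minimal sketch under stated assumptions: each device gamut is modeled as a hypothetical box in CIELAB (real gamuts come from characterization profiles), grid points are filtered against every gamut rather than mapped into each one in turn, and the final job-color mapping is plain minimum-distance clipping, only one of many possible rendering intents:

```python
import itertools
import math

def box_gamut(lo, hi):
    """Hypothetical device gamut: an axis-aligned box in CIELAB (L*, a*, b*).

    Real gamuts are irregular solids; a box keeps the sketch short.
    """
    return lambda p: all(l <= v <= h for v, l, h in zip(p, lo, hi))

def common_gamut_points(gamuts, step=10):
    """Sample a grid enclosing every gamut; keep points inside all of them."""
    grid = itertools.product(range(0, 101, step),
                             range(-120, 121, step),
                             range(-120, 121, step))
    return [p for p in grid if all(in_gamut(p) for in_gamut in gamuts)]

def map_to_common(color, gamuts, common_pts):
    """Minimum-distance clipping into the common gamut.

    In-gamut colors pass through unchanged; others snap to the nearest
    sampled common-gamut point.
    """
    if all(in_gamut(color) for in_gamut in gamuts):
        return color
    return min(common_pts, key=lambda p: math.dist(p, color))

printer_a = box_gamut((0, -50, -50), (100, 50, 50))
printer_b = box_gamut((10, -30, -80), (90, 80, 30))
pts = common_gamut_points([printer_a, printer_b])
print(len(pts), map_to_common((50, 70, 0), [printer_a, printer_b], pts))
```

A finer `step` gives a denser approximation of the common gamut at the cost of more points to search.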

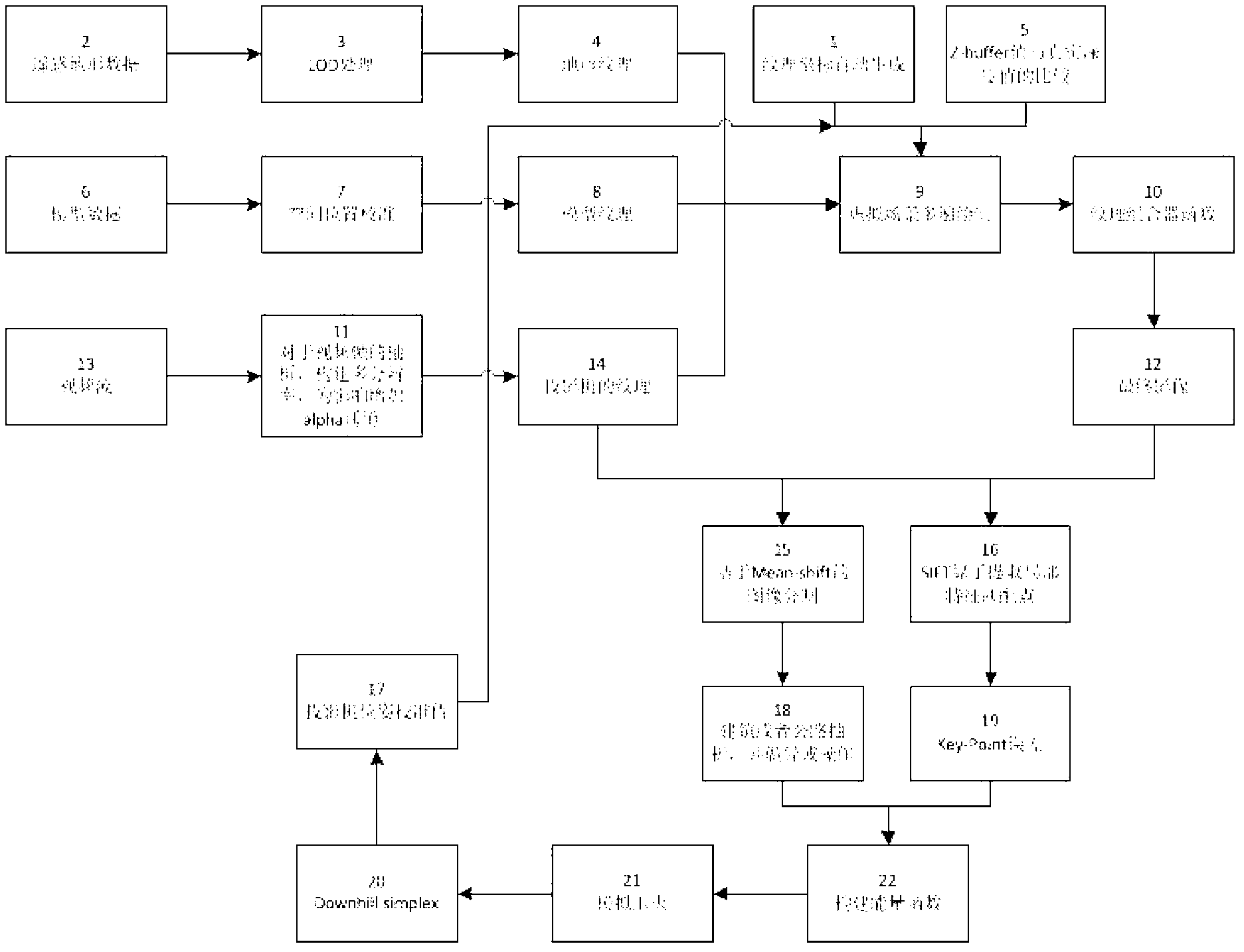

Automatic matching correction method of video texture projection in three-dimensional virtual-real fusion environment

Active | CN103226830A | Achieve integration | Increase the number of | Image analysis | Correction algorithm | Earth surface

The invention relates to an automatic matching correction method for video texture projection in a three-dimensional virtual-real fusion environment, and to a method of fusing real video images with a virtual scene. The method comprises constructing the virtual scene, acquiring video data, fusing video textures, and correcting the projector. Captured real video is fused onto complex scene surfaces, such as terrain and buildings, by texture projection, which improves the expression and presentation of dynamic scene information in the virtual-real environment and enhances the scene's sense of depth. Increasing the number of videos shot from different angles yields dynamic video-texture coverage of a large-scale virtual scene, giving a dynamic, realistic fusion of the virtual environment with the displayed scene. Performing color-consistency processing on the video frames beforehand eliminates obvious color jumps and improves the visual result. An automatic correction algorithm allows the virtual scene and the real video to be fused more precisely.

Owner:北京微视威信息科技有限公司 (Beijing Weishiwei Information Technology Co., Ltd.)
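The abstract above does not say how its frame-level color-consistency processing works. One common, simple approach, shown here purely as an assumption, shifts and scales each color channel of a frame so that its mean and standard deviation match a reference frame (statistics matching in RGB; Lab-space variants also exist). The function names are hypothetical:

```python
import math

def channel_stats(values):
    """Mean and population standard deviation of one color channel."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return mean, std

def match_channel(src, ref):
    """Linearly remap src so its mean and spread match ref (clamped to 0..255)."""
    ms, ss = channel_stats(src)
    mr, sr = channel_stats(ref)
    scale = sr / ss if ss > 0 else 1.0
    return [min(255.0, max(0.0, (v - ms) * scale + mr)) for v in src]

def match_frame(frame, ref_frame):
    """frame, ref_frame: lists of (r, g, b) pixels; returns the adjusted frame."""
    channels = [match_channel([p[c] for p in frame], [p[c] for p in ref_frame])
                for c in range(3)]
    return list(zip(*channels))

print(match_frame([(10, 20, 30), (30, 40, 50)], [(100, 100, 100), (140, 120, 110)]))
```

Matching every video to one reference frame before projection removes the global color jumps between adjacent textures, though not local differences such as shadows.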

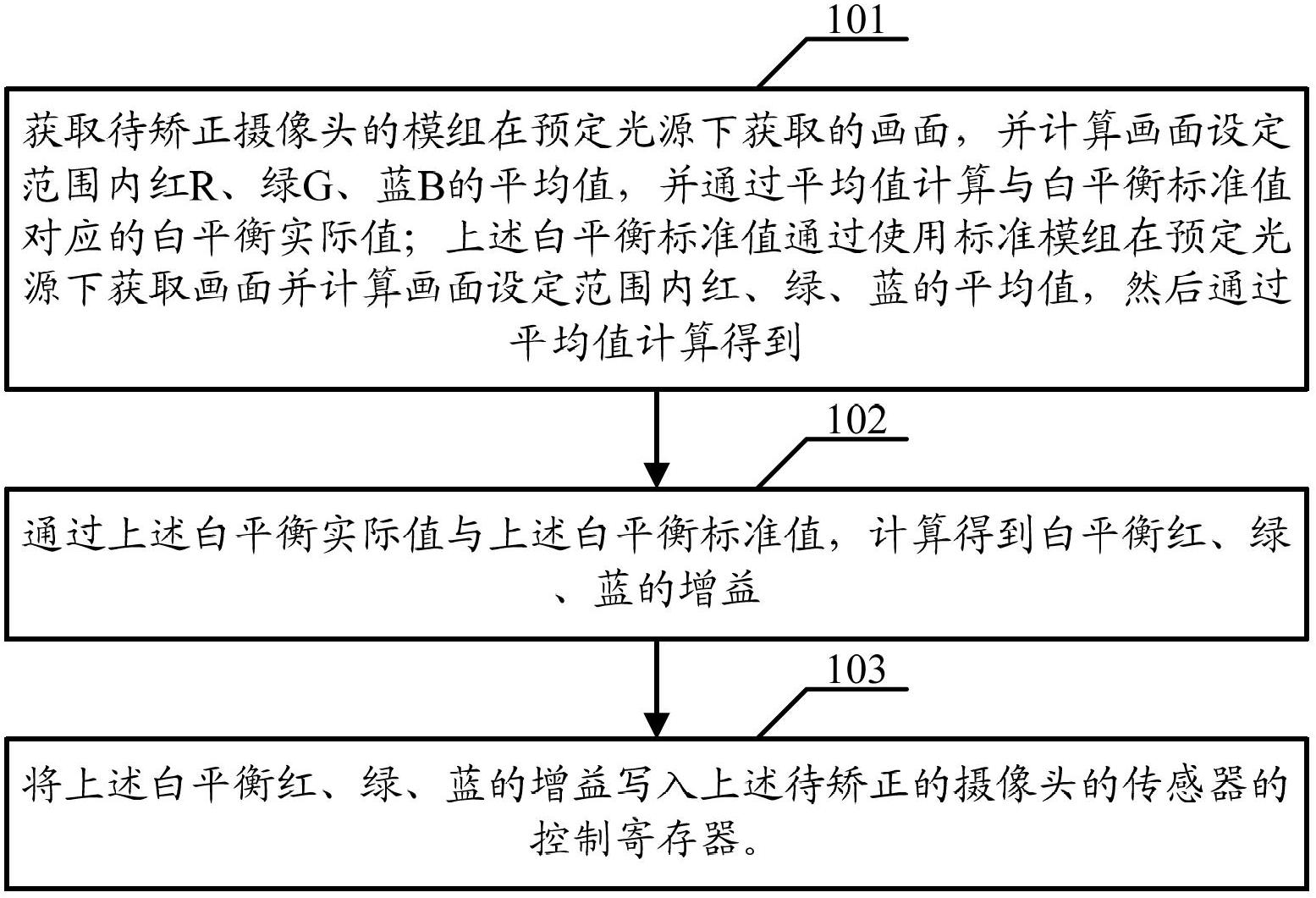

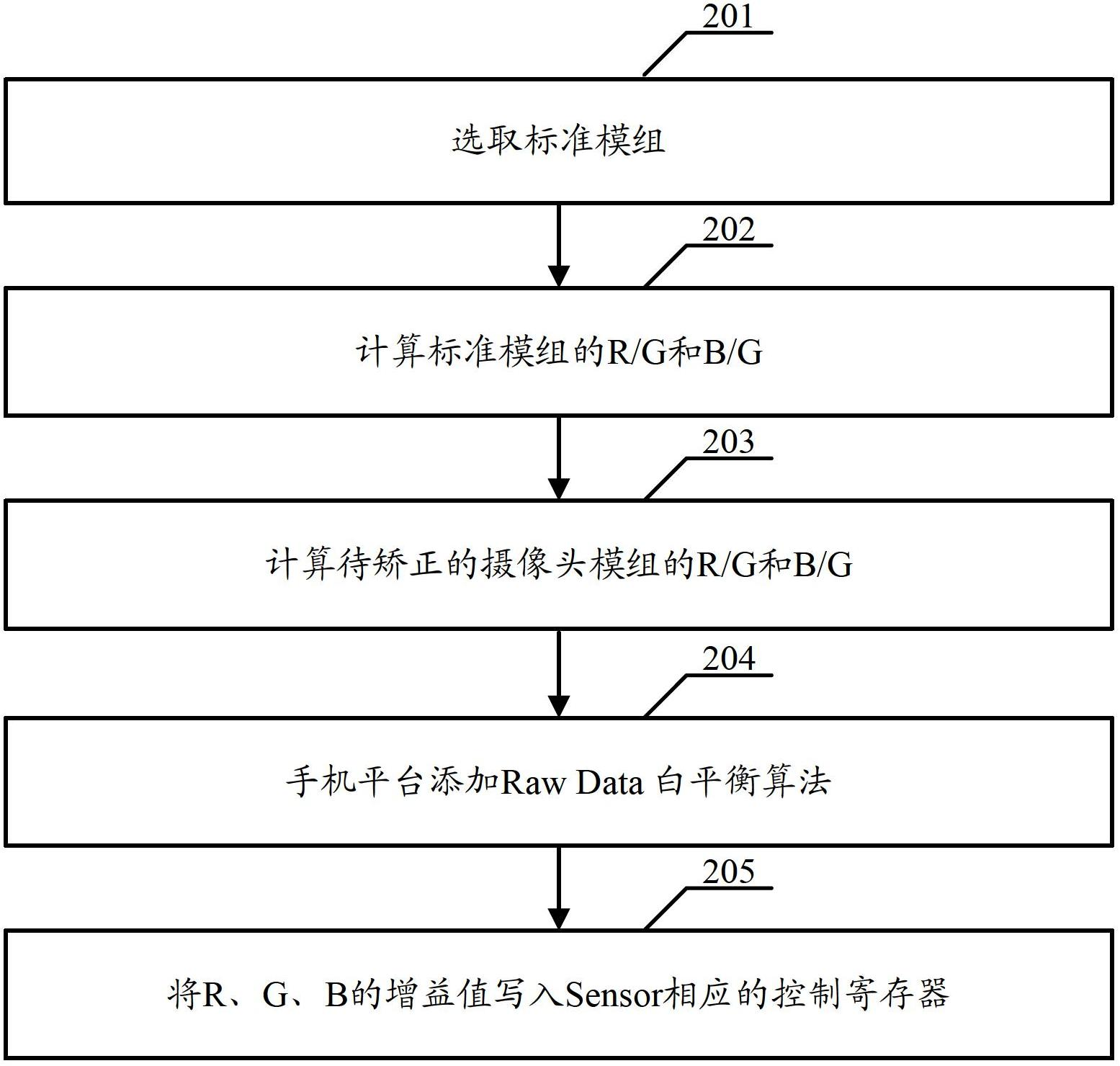

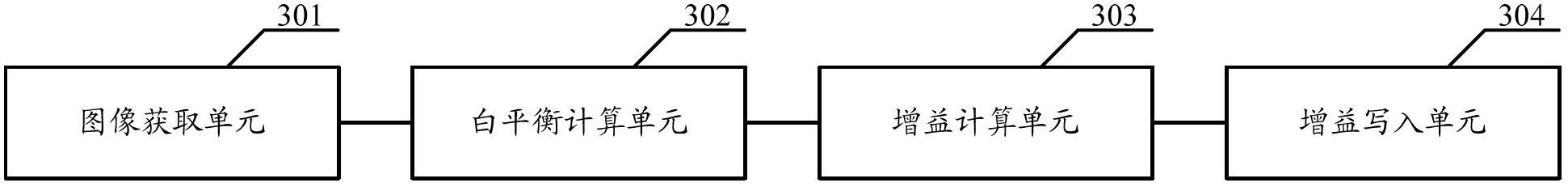

White balance processing method and device

Inactive | CN102685513A | Solve the problem of color cast | Solving Color Consistency Issues | Color signal processing circuits | Control register | Consistency problem

The embodiment of the invention discloses a white balance processing method and device. The method comprises: capturing a picture under a preset light source with the camera module to be corrected, averaging red (R), green (G), and blue (B) within a set region of the picture, and computing from these averages the actual white balance value corresponding to a white balance standard value, where the standard value is obtained in the same way from a picture of the same preset light source captured with a standard module; computing red, green, and blue white balance gains from the actual value and the standard value; and writing these gains to a control register of the sensor of the camera to be corrected. Applying appropriate white balance correction to the RAW data output by the camera solves color cast in individual modules, color inconsistency between modules, and image color cast.

Owner:TRULY OPTO ELECTRONICS
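The gain computation in the Truly Opto-Electronics method above can be sketched as follows, assuming (as is common in module calibration, though the abstract does not say so) that the white balance value is expressed as R/G and B/G ratios with green as the reference channel. Writing the gains to the sensor's control register is hardware-specific and omitted; all names are hypothetical:

```python
def channel_means(pixels):
    """Average R, G, B over the (r, g, b) pixels inside the set region."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))

def wb_ratios(pixels):
    """White balance value expressed as (R/G, B/G)."""
    r, g, b = channel_means(pixels)
    return r / g, b / g

def wb_gains(test_pixels, standard_pixels):
    """R, G, B gains that move the module under test onto the standard module.

    Green is the reference channel, so its gain is fixed at 1.0.
    """
    rg_t, bg_t = wb_ratios(test_pixels)
    rg_s, bg_s = wb_ratios(standard_pixels)
    return rg_s / rg_t, 1.0, bg_s / bg_t

# A module that reads warm (too much red, too little blue) under a gray target
print(wb_gains([(120, 100, 80)], [(100, 100, 100)]))
```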

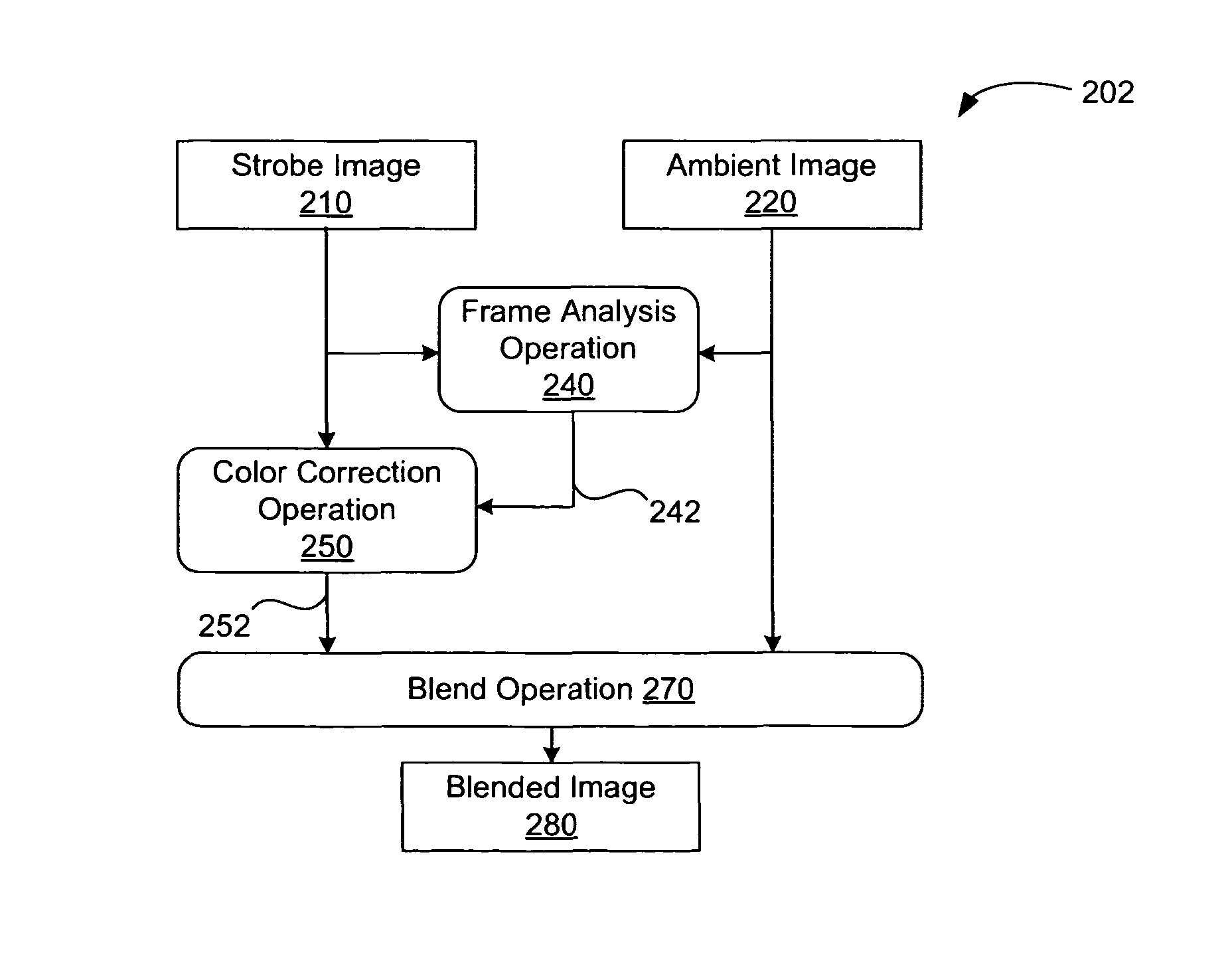

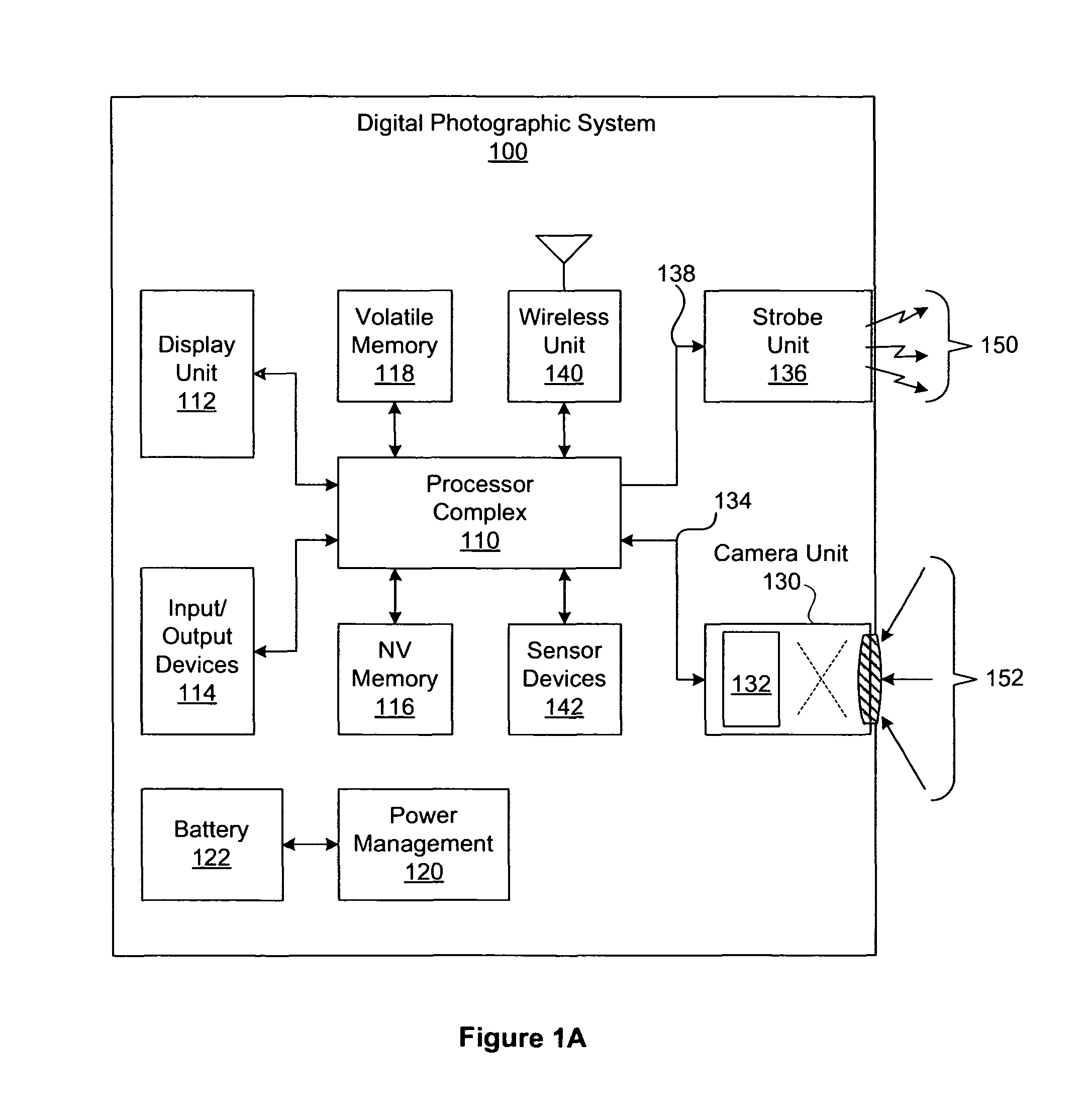

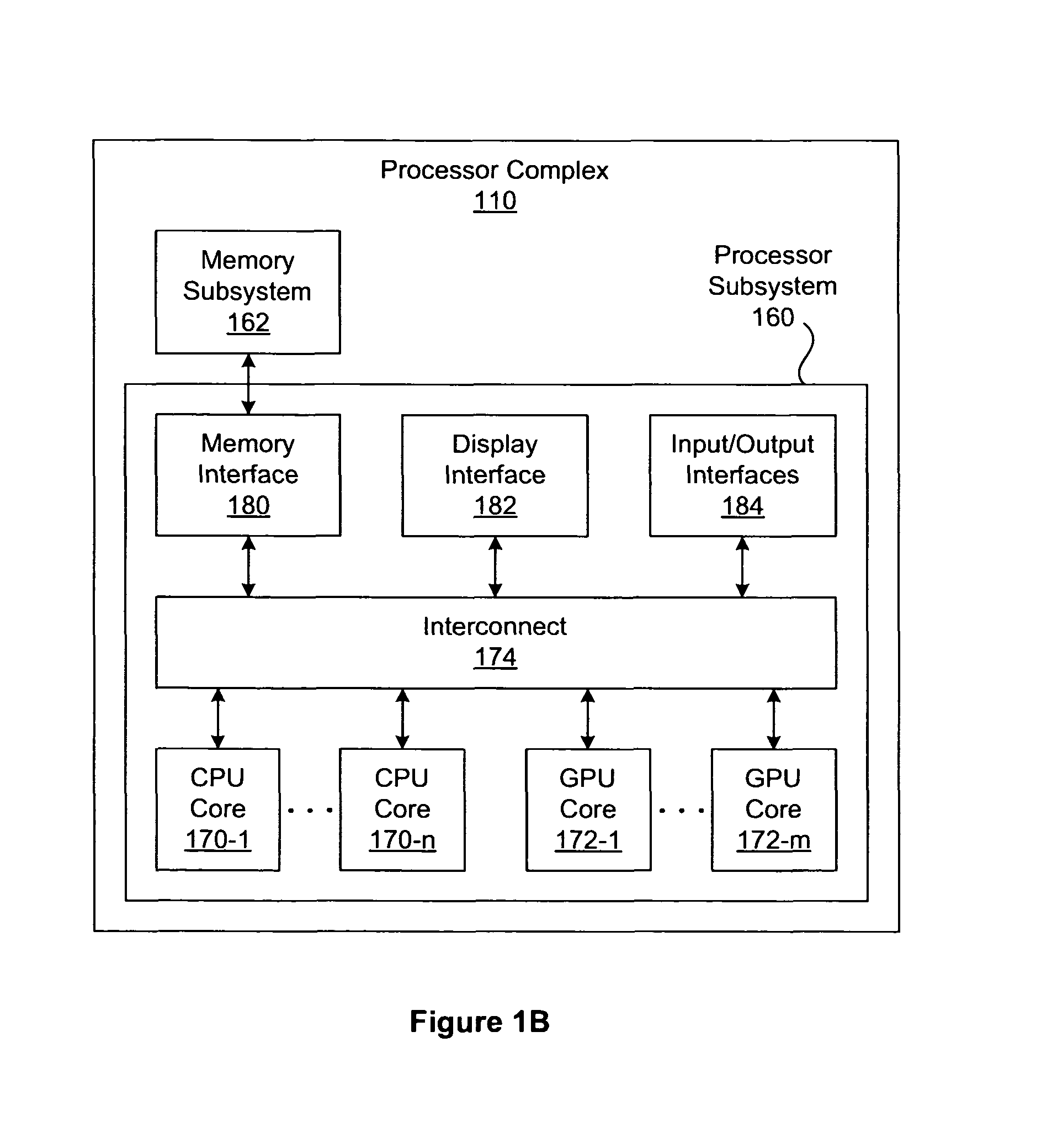

Color balance in digital photography

A technique for generating a digital photograph comprises blending two related images, each sampled according to a different illumination environment. The two related images are blended according to a blend surface function that includes a height discontinuity separating two different blend weight regions. Color consistency between the two related images is achieved by spatial color correction prior to blending. The technique enables a digital camera to generate a strobe image having an appearance of consistent color despite discordant strobe and ambient scene illumination.

Owner:DUELIGHT

Method for calibrating colors of multiple cameras in open environment

Inactive | CN102137272A | Color Calibration Implementation | Color signal processing circuits | Television systems | Color mapping | Monitoring system

The invention discloses a method for calibrating the colors of multiple cameras in an open environment, comprising the following steps. First, the white balance of each camera is calibrated using an X-Rite 24-patch standard color chart, to address inconsistent physical parameters among the cameras. Second, images of the X-Rite 24-patch chart are captured by the different cameras in different monitoring scenes; the chart position is located interactively, and the position and average color of each patch are calculated to obtain 24 color values per camera in the two scenes; the color mapping between cameras is computed by polynomial regression, and each camera is color-calibrated. Finally, regions with similar colors in the two scenes are found by H-S (hue-saturation) color matching, and the color calibration parameters are adjusted adaptively by detecting, online, changes in pixel color within the matched regions, thereby keeping the multiple cameras in a monitoring system consistent in color in an open environment.

Owner:XIAN UNIV OF TECH
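The polynomial-regression step in the Xi'an University of Technology method above can be sketched with ordinary least squares. This assumes the chart patch averages from two cameras are already paired and scaled to 0..1; the feature set (affine, or with second-order terms) is an illustrative choice, since the abstract does not give the polynomial's order:

```python
def poly_features(rgb, degree=1):
    """Regression terms: affine for degree 1, plus squares and cross terms for 2."""
    r, g, b = rgb
    feats = [1.0, r, g, b]
    if degree >= 2:
        feats += [r * r, g * g, b * b, r * g, r * b, g * b]
    return feats

def solve(a, y):
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [v] for row, v in zip(a, y)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_color_mapping(src, dst, degree=1):
    """Least-squares fit (normal equations) mapping src colors to dst colors.

    src, dst: matching lists of (r, g, b) patch averages, values in 0..1.
    Returns one coefficient list per output channel.
    """
    x = [poly_features(c, degree) for c in src]
    k = len(x[0])
    ata = [[sum(row[i] * row[j] for row in x) for j in range(k)] for i in range(k)]
    coeffs = []
    for ch in range(3):
        aty = [sum(row[i] * d[ch] for row, d in zip(x, dst)) for i in range(k)]
        coeffs.append(solve(ata, aty))
    return coeffs

def apply_mapping(coeffs, rgb, degree=1):
    """Map one camera color into the reference camera's color space."""
    feats = poly_features(rgb, degree)
    return tuple(sum(c * f for c, f in zip(cs, feats)) for cs in coeffs)
```

With 24 patches, both the 4-term and the 10-term models are over-determined, so the fit averages out measurement noise in the patch colors.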

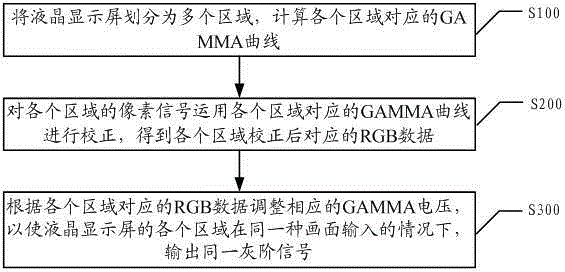

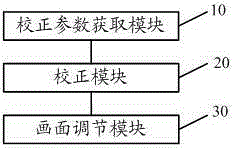

Liquid crystal display screen picture consistency adjusting method and system

Active | CN105259687A | Improve consistency | Low cost | Static indicating devices | Non-linear optics | Liquid-crystal display | Computer science

The invention discloses a method and system for adjusting the picture consistency of a liquid crystal display screen. The screen is divided into a plurality of areas, and a Gamma curve is calculated for each area; the pixel signal of each area is corrected with that area's Gamma curve to obtain corrected RGB data for the area; the Gamma voltage is then adjusted according to each area's RGB data, so that, for the same input picture, every area of the screen outputs the same gray-scale signal, which improves picture color consistency. Because the existing TV architecture is used, no hardware cost is added, which is convenient for users.

Owner:SHENZHEN SKYWORTH RGB ELECTRONICS CO LTD
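The Skyworth method above adjusts Gamma voltages in hardware; a rough digital analogue of its per-area Gamma correction is one lookup table per area. This sketch assumes each area's response follows a pure power law L = (v/255)^γ, with the area's measured γ and a common target γ as hypothetical inputs:

```python
def corrected_input(v, region_gamma, target_gamma, v_max=255):
    """Pre-distort v so an area responding as (v/v_max)**region_gamma
    displays (v/v_max)**target_gamma instead."""
    return v_max * (v / v_max) ** (target_gamma / region_gamma)

def build_lut(region_gamma, target_gamma, v_max=255):
    """Integer lookup table for one area (one such table per screen area)."""
    return [round(corrected_input(v, region_gamma, target_gamma, v_max))
            for v in range(v_max + 1)]

# An area that measures gamma 2.4, corrected to a shared target of 2.2
lut = build_lut(2.4, 2.2)
print(lut[64], lut[128], lut[192])
```

Since every area is driven toward the same target curve, the same input gray level produces the same output gray level across the panel, which is the consistency the abstract aims for.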

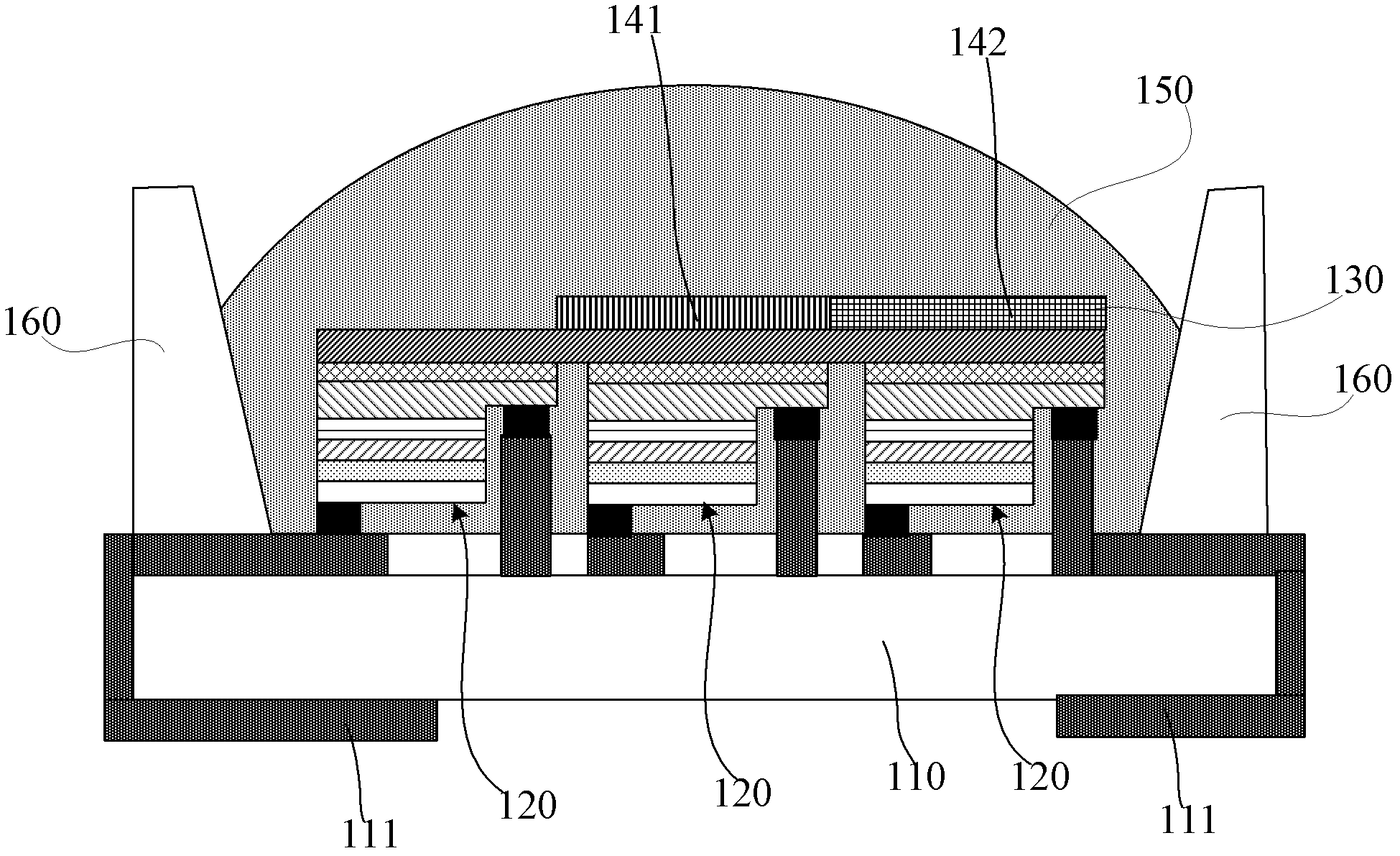

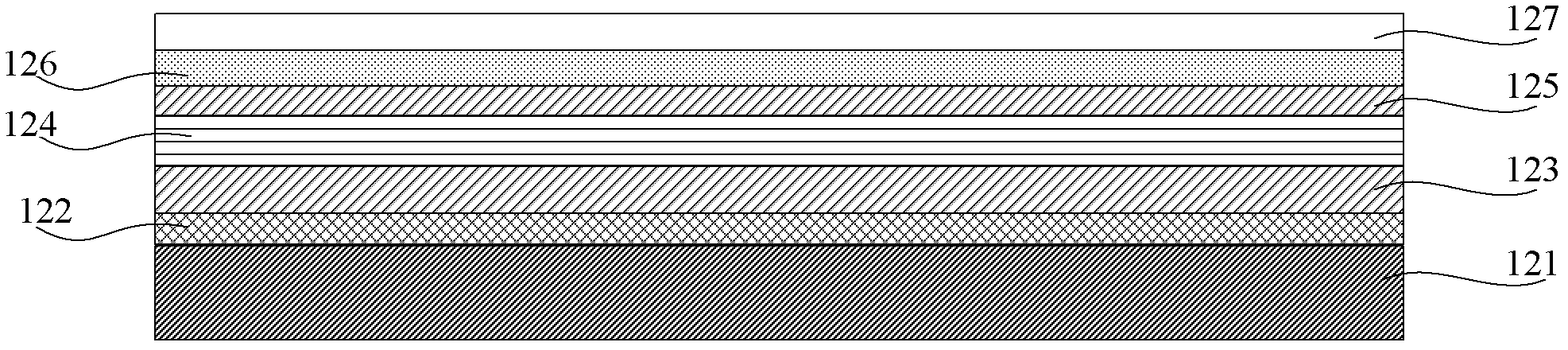

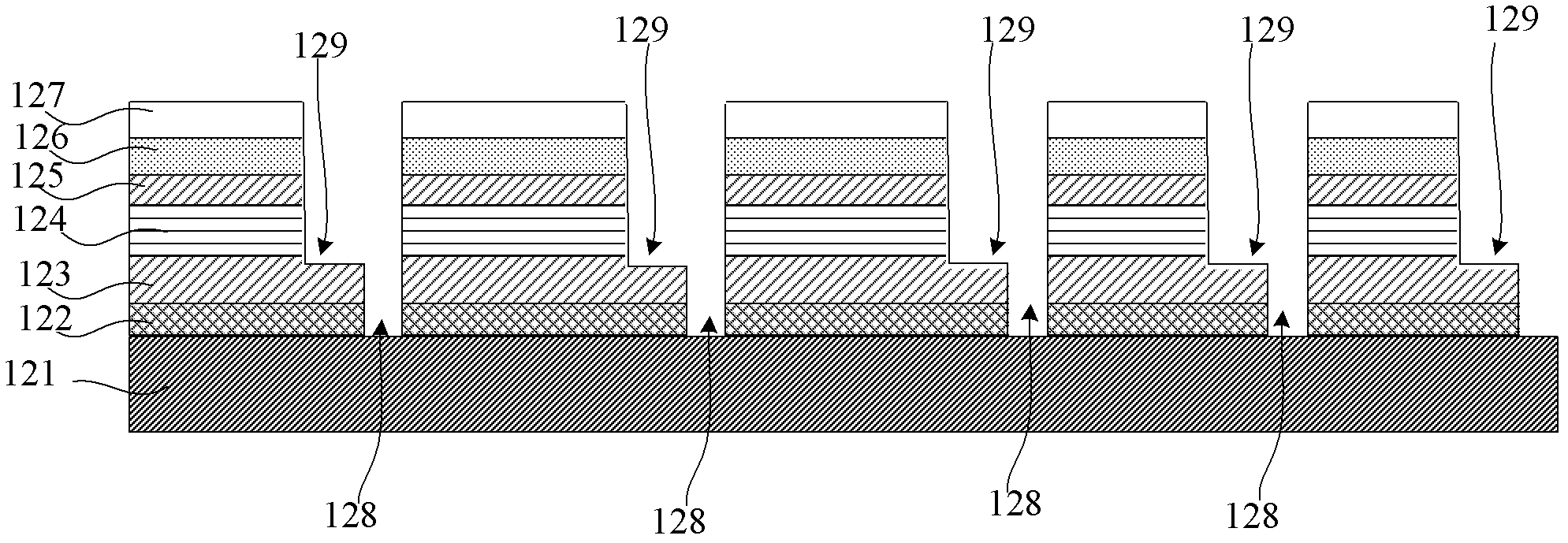

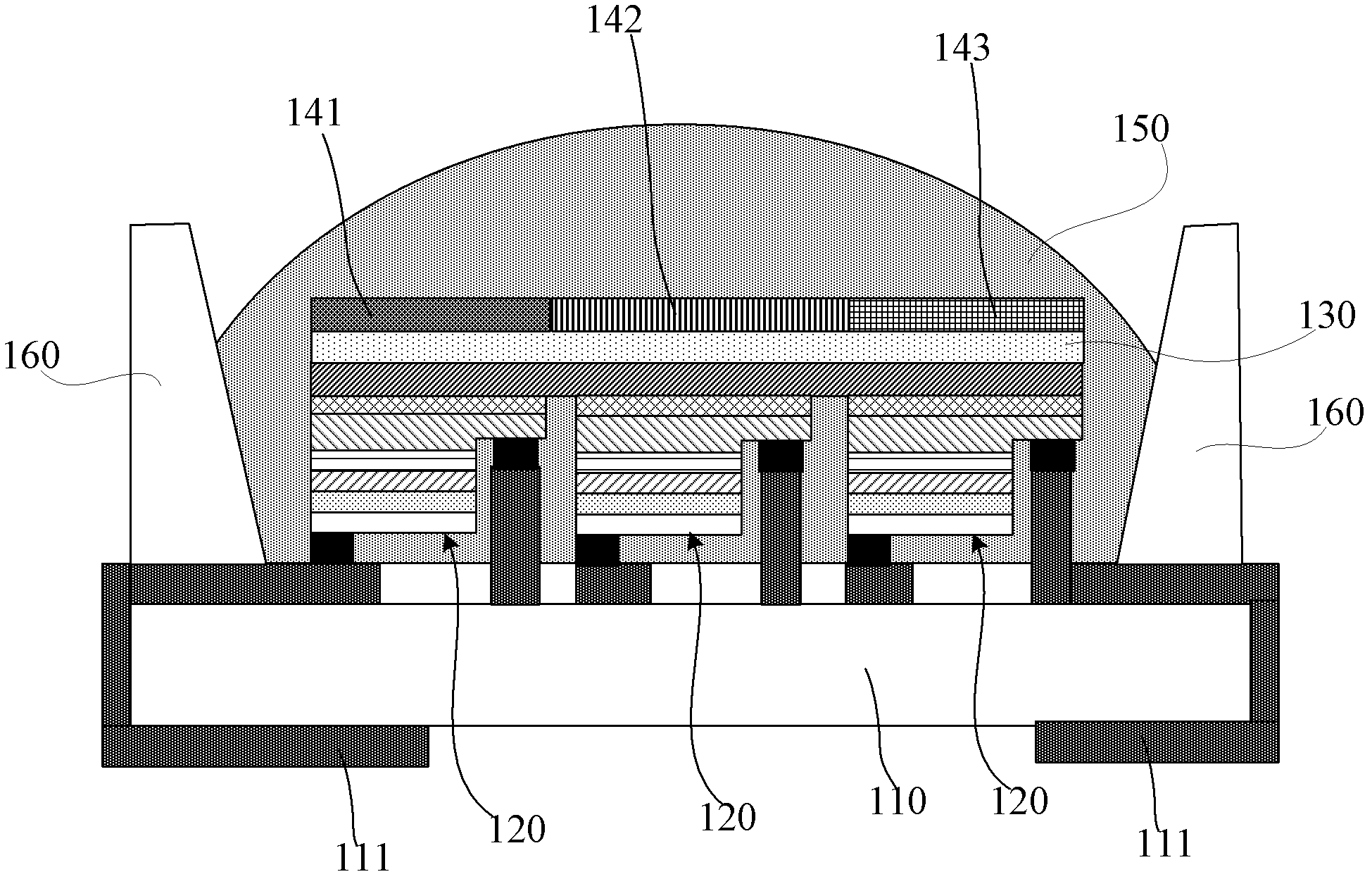

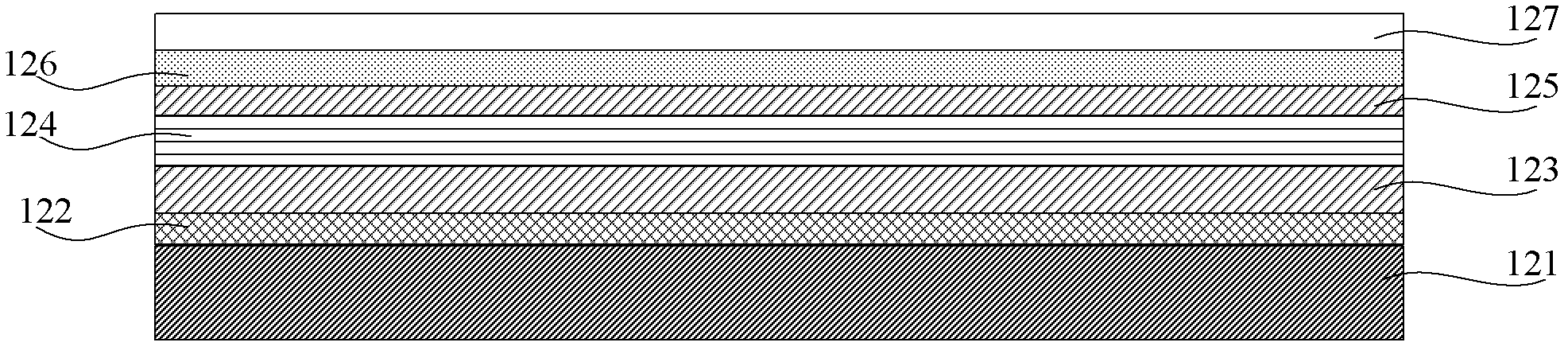

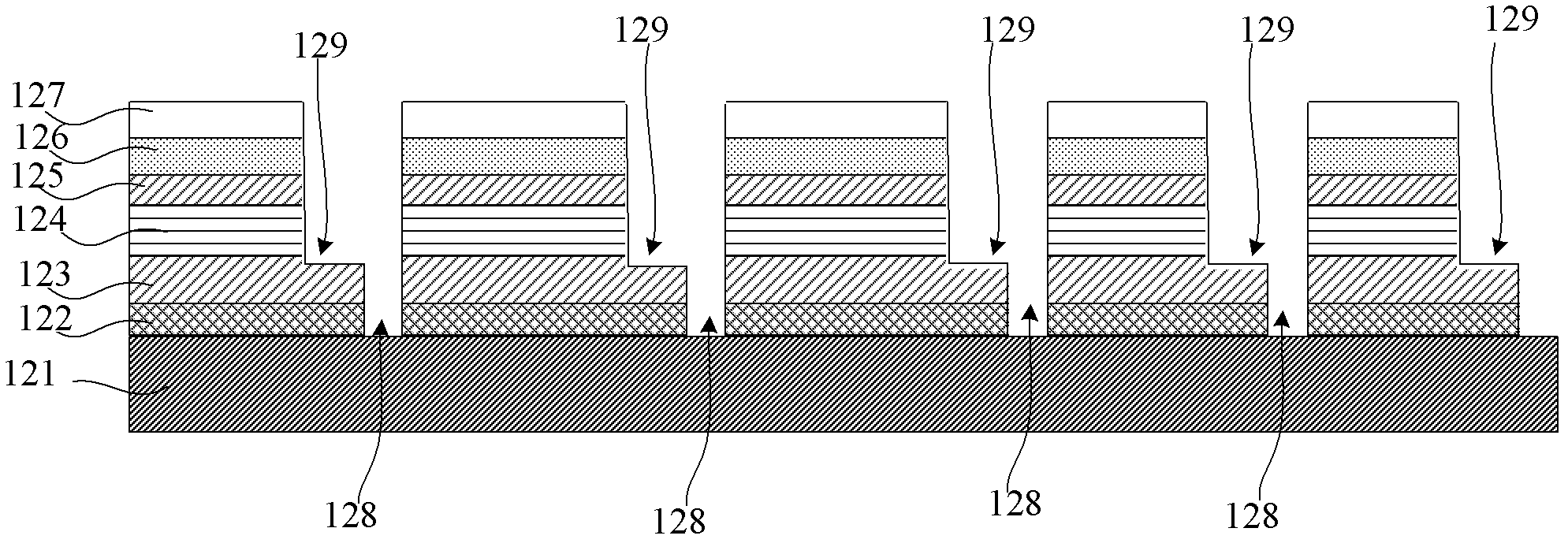

Light emitting diode (LED) pixel unit device structure and preparation method thereof

Inactive | CN102214650A | Attenuation consistent | Reduced package area | Solid-state devices | Semiconductor devices | Ultrasound attenuation | LED display

The invention discloses a light emitting diode (LED) pixel unit device structure. Three blue LED chip units that share the same substrate but are electrically isolated from one another are flip-chip mounted, which reduces the package area and increases the resolution of an LED display screen. The LED chip units have identical structures; green phosphor and red phosphor are coated on two of them respectively, so that those two units emit green light and red light. As a result, the LED chip units degrade at the same rate in use, and the color consistency of the display screen is improved. The invention also discloses a method for preparing the structure. Because the LED module is flip-mounted on a heat-conducting substrate, the die-bonding and gold-wire-bonding steps are eliminated, which reduces cost and improves manufacturing efficiency; it also removes the light blocking caused by bonding pads and lead wires in the LED package, greatly improves the light extraction efficiency of the LED, saves package space, and allows the LED package to be further miniaturized and integrated.

Owner:ENRAYTEK OPTOELECTRONICS

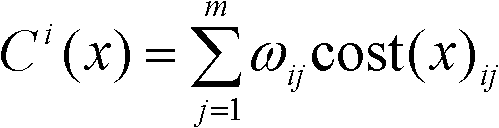

LED (light emitting diode) pixel unit device structure and preparation method thereof

Inactive | CN102214651A | Improve light extraction efficiency | Achieve integration | Solid-state devices | Semiconductor devices | Heat conducting | Engineering

The invention discloses an LED (light emitting diode) pixel unit device structure. In the structure, three LED chip units that share a substrate but are electrically separated are packaged face-down to reduce the package area of the device, so the resolution of an LED display screen can be improved. The LED chip units have identical structures, and an R, a G, and a B color filter film are formed on the respective units so that they emit red, green, and blue light; the units therefore degrade uniformly, and the color consistency of the display screen is improved. The invention also discloses a preparation method for the structure. The LED modules are mounted face-down on a heat-conducting substrate, so die bonding and gold wire bonding are eliminated; this reduces manufacturing cost, improves production efficiency, solves the problem of pads and leads blocking light in small LED packages, considerably improves the light extraction efficiency of the LED, saves package space, and enables further miniaturization and integration of LED packages.

Owner:ENRAYTEK OPTOELECTRONICS

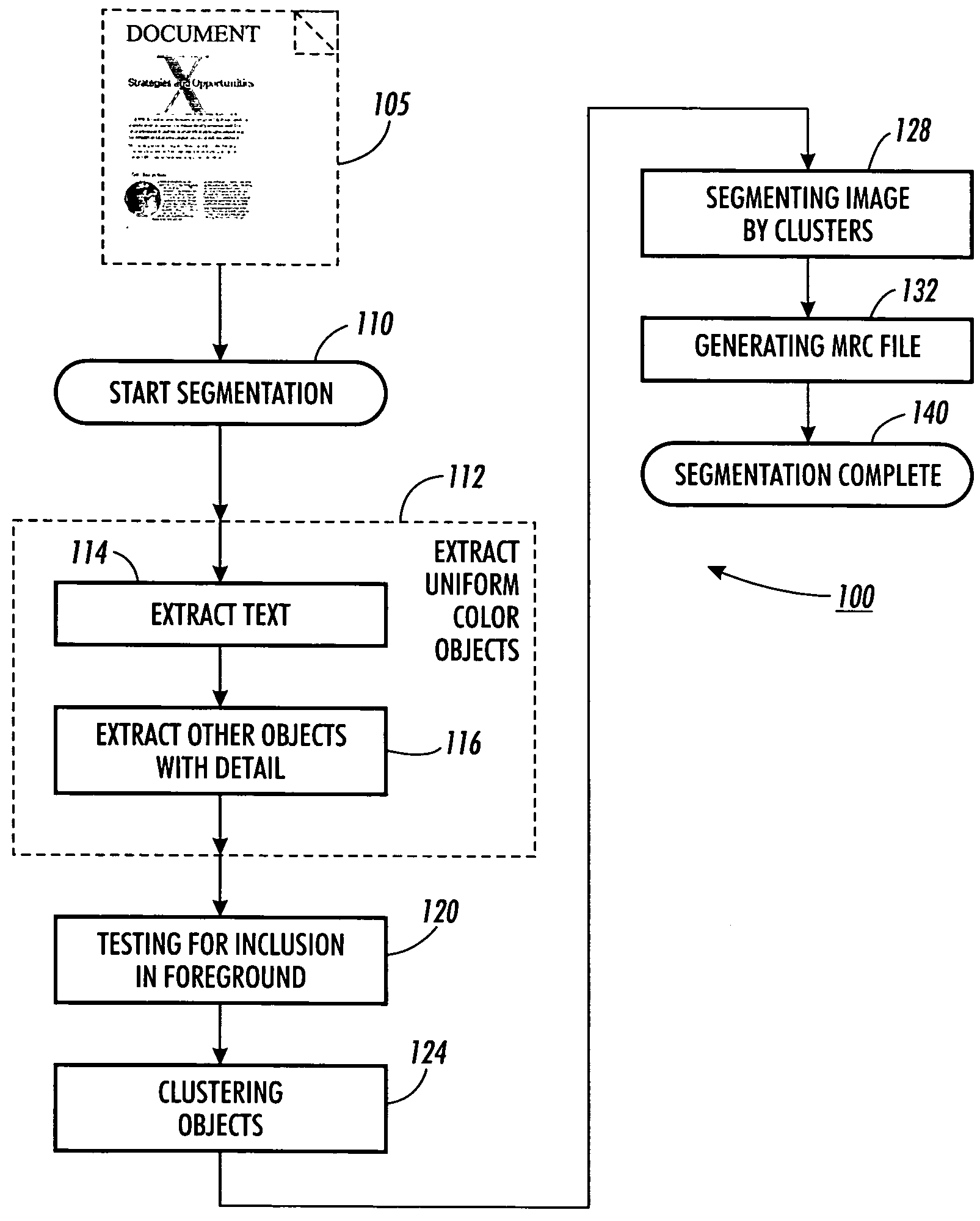

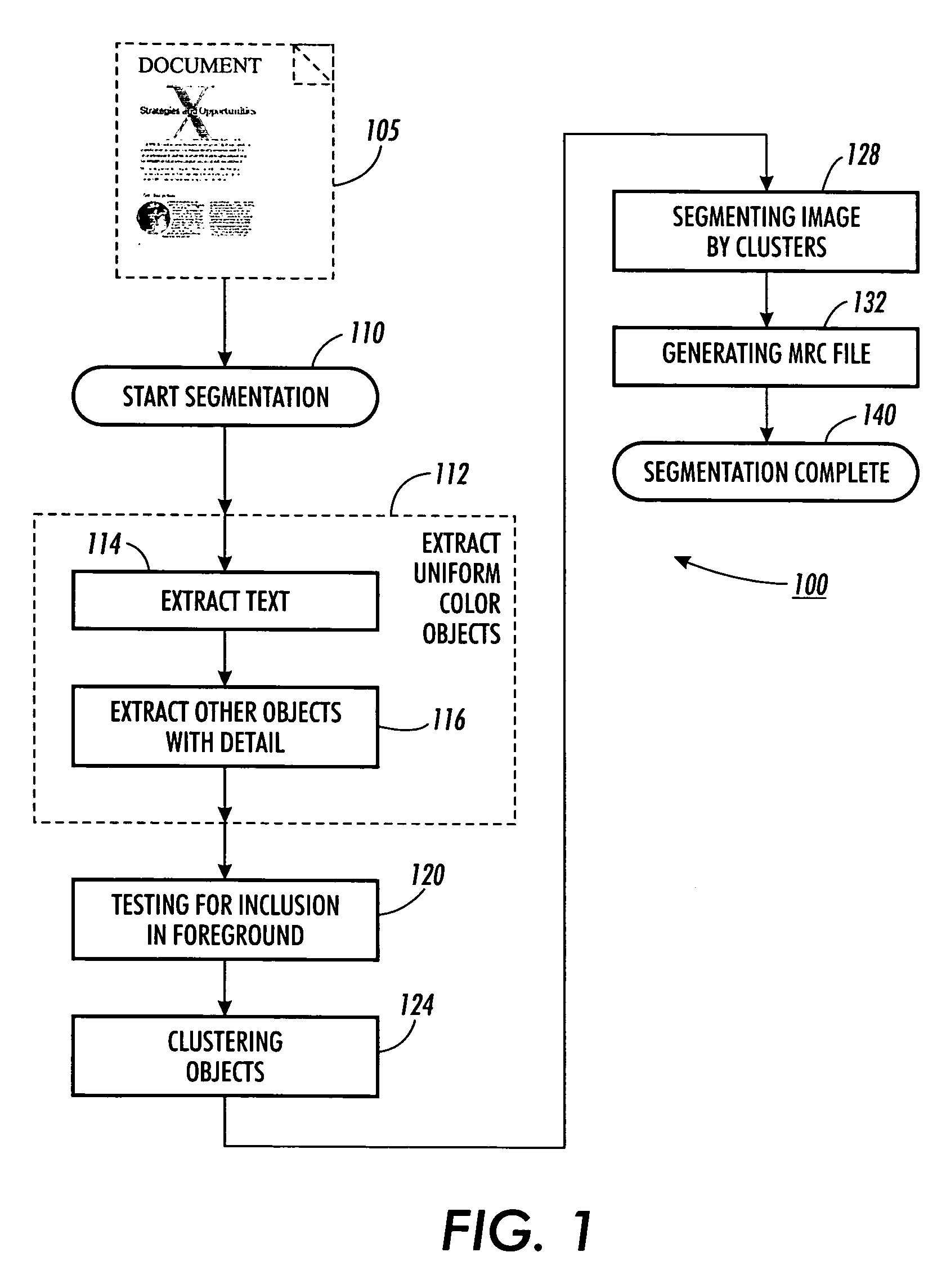

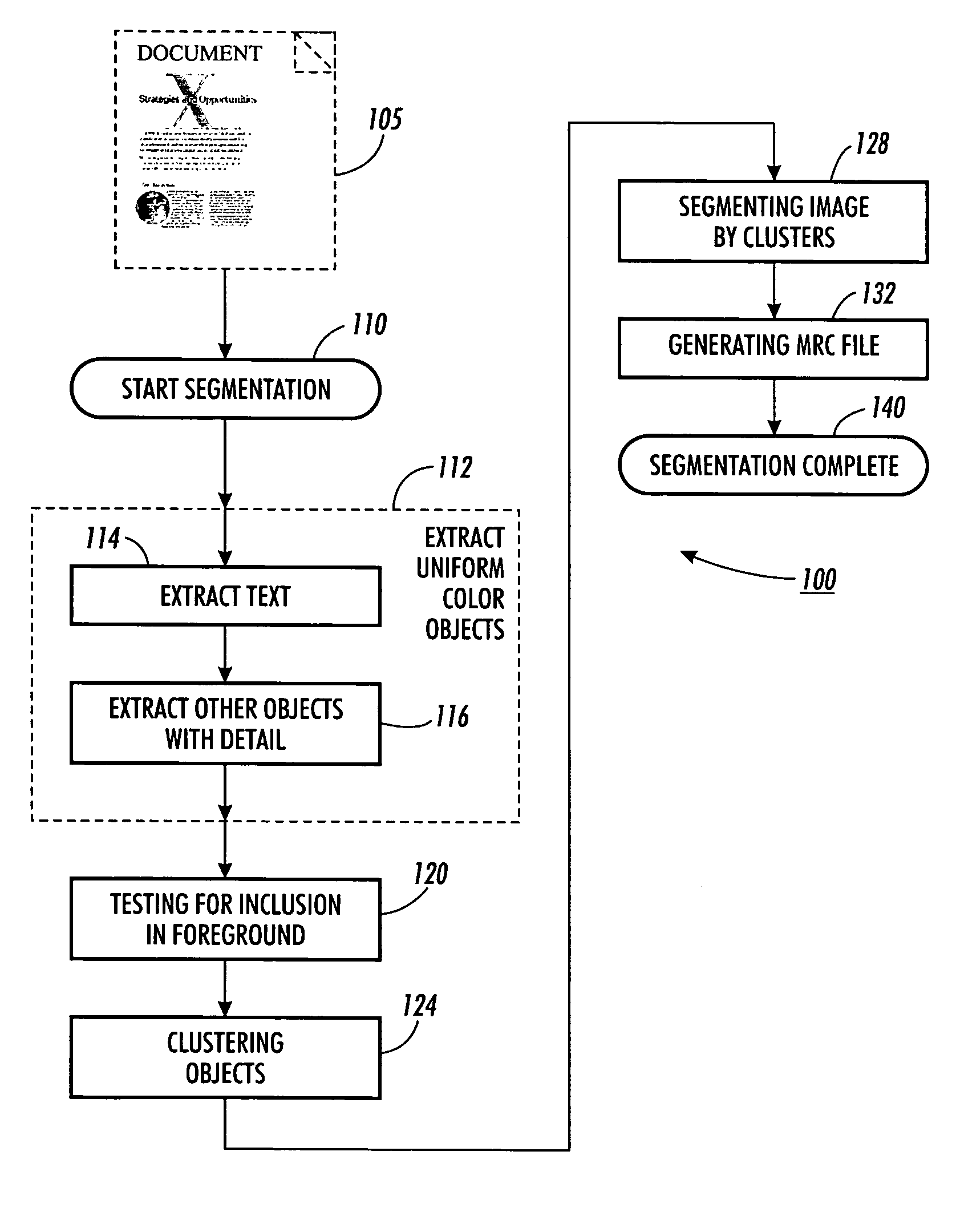

Method for image segmentation to identify regions with constant foreground color

Inactive | US20050275897A1 | Avoid problems | Visual presentation | Character recognition | Grating | Mixed raster content

The present invention is a method for image segmentation to produce a mixed raster content (MRC) image with constant foreground layers. The invention extracts uniform text and other uniform color objects that carry detail information. The method includes four primary steps. First, the objects are extracted from the image. Next, the objects are tested for color consistency and other features to decide if they should be chosen for coding to the MRC foreground layers. The objects that are chosen are then clustered in color space. The image is finally segmented such that each foreground layer codes the objects from the same color cluster.

Owner:XEROX CORP
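The second and third steps of the Xerox segmentation method above (testing objects for color consistency, then clustering them in color space) might look like this in outline. The per-channel standard-deviation test, both thresholds, and the greedy "leader" clustering are assumptions for illustration; the abstract names no specific algorithm:

```python
import math

def mean_color(pixels):
    """Average (r, g, b) of an object's pixels."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

def is_color_consistent(pixels, max_std=8.0):
    """True if every channel's standard deviation is at most max_std."""
    mean = mean_color(pixels)
    for c in range(3):
        var = sum((p[c] - mean[c]) ** 2 for p in pixels) / len(pixels)
        if math.sqrt(var) > max_std:
            return False
    return True

def cluster_objects(objects, max_dist=20.0):
    """Group color-consistent objects into foreground layers.

    objects: list of pixel lists, one per extracted object.
    Returns a list of clusters, each a list of object indices (one MRC
    foreground layer per cluster). Inconsistent objects are left out.
    """
    clusters = []
    for idx, pixels in enumerate(objects):
        if not is_color_consistent(pixels):
            continue
        color = mean_color(pixels)
        for cluster in clusters:
            if math.dist(cluster["center"], color) <= max_dist:
                cluster["members"].append(idx)
                break
        else:
            clusters.append({"center": color, "members": [idx]})
    return [cluster["members"] for cluster in clusters]
```

Each returned cluster would then be coded as one constant-color foreground layer, as in the final step of the abstract.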

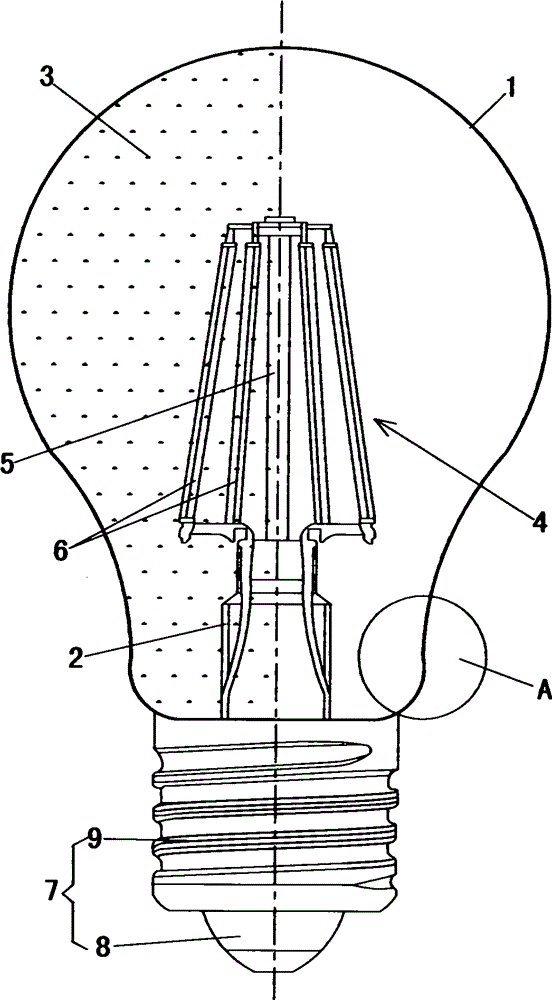

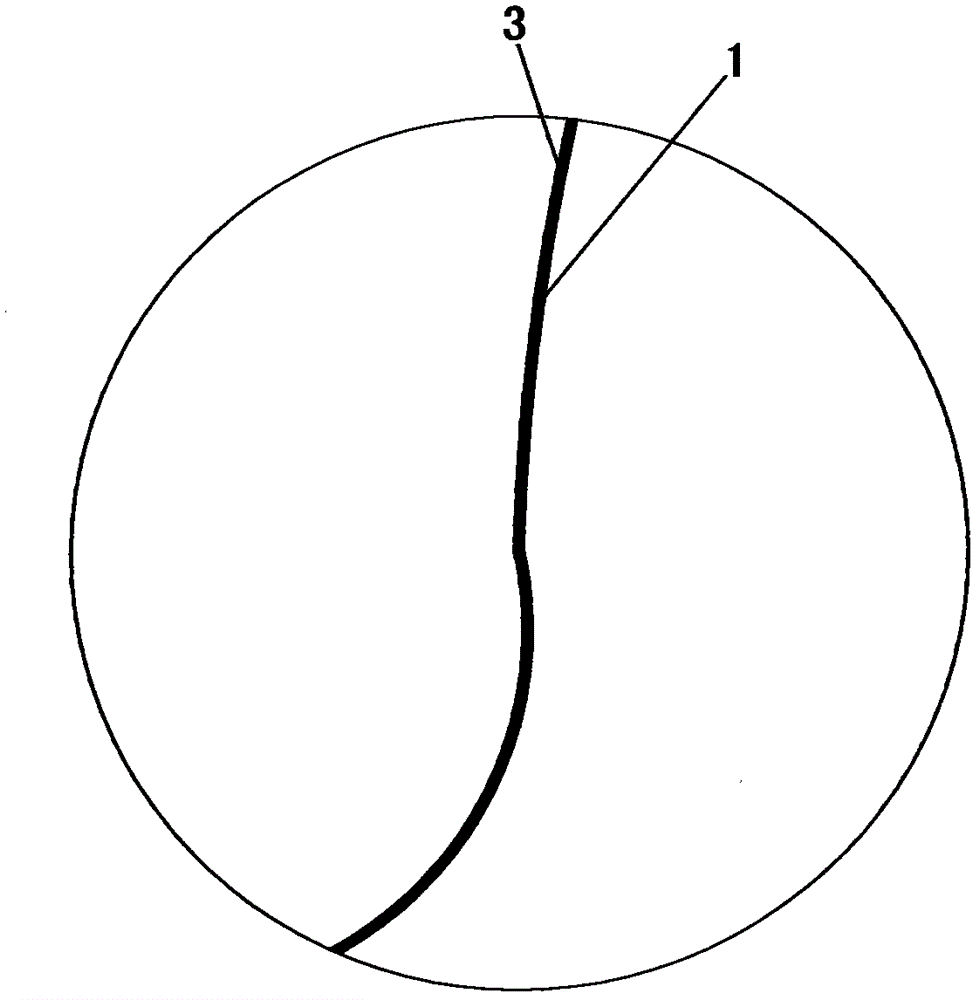

360-degree lighting bulb and processing method thereof

Inactive | CN105042354A | Good color consistency | There is no color difference problem | Point-like light source | Electric circuit arrangements | Effect light | Engineering

A 360-degree lighting bulb and a processing method thereof are provided. The bulb comprises a glass shield and a core rod; the mouth of the glass shield and the flared mouth of the core rod are fused to form a vacuum-sealed cavity filled with heat-radiating gas, and the inner wall of the glass shield is coated with a phosphor layer. A 360-degree lighting element arranged in the glass shield comprises a rack mounted on the core rod and a plurality of LED chips attached to the rack; the electrodes of each LED chip are connected by metal wires to conduction pins on the rack according to polarity, forming more than one circuit. The rack carrying the LED chips is coated with a glue layer, the conduction pins on the rack are welded to the conductive lead wires on the core rod according to polarity, and the glass shield is connected to a lamp holder. The bulb is illuminated by the LED chips of the 360-degree lighting element, and light reflected and refracted within the glue layer excites the phosphor layer on the glass shield, so the bulb lights uniformly with good color consistency and no color difference, and offers a high yield rate, low cost, and a simple process.

Owner:王志根 (Wang Zhigen)

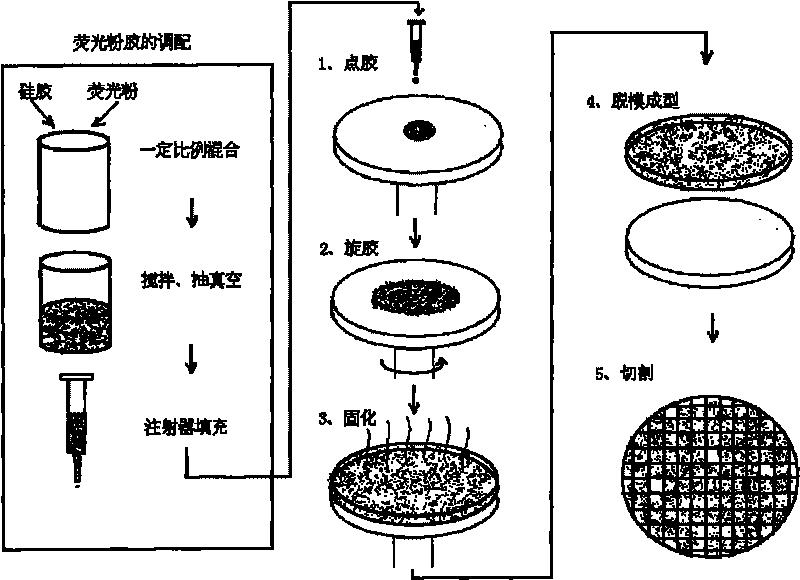

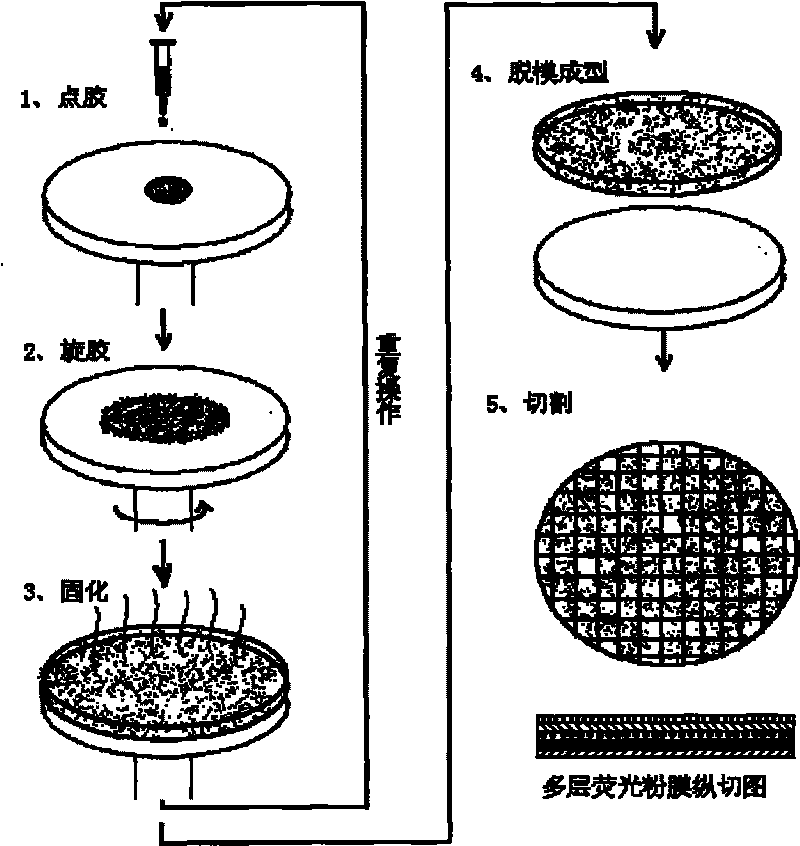

Phosphor powder film making method and obtained phosphor powder film encapsulating method

Inactive | CN101699638A | Stable job | Easy to prepare | Solid-state devices | Semiconductor/solid-state device manufacturing | Phosphor | White light

The invention discloses a method for making a phosphor film and a method for encapsulating with the resulting film. Phosphor glue is spin-coated to make the phosphor film separately during encapsulation; the film is then placed over one or more LED chips coated with heat-insulating material, and finally the heat-insulating material is cured to complete the phosphor coating of the white-light LED. The process is simple and inexpensive, allows the phosphor film thickness to be controlled accurately, greatly improves the light-color consistency of LED products, and is suitable for large-scale industrial production; the heat-insulating encapsulation also addresses LED heat dissipation and reduces lumen depreciation, so the light color is more stable.

Owner:SUN YAT SEN UNIV

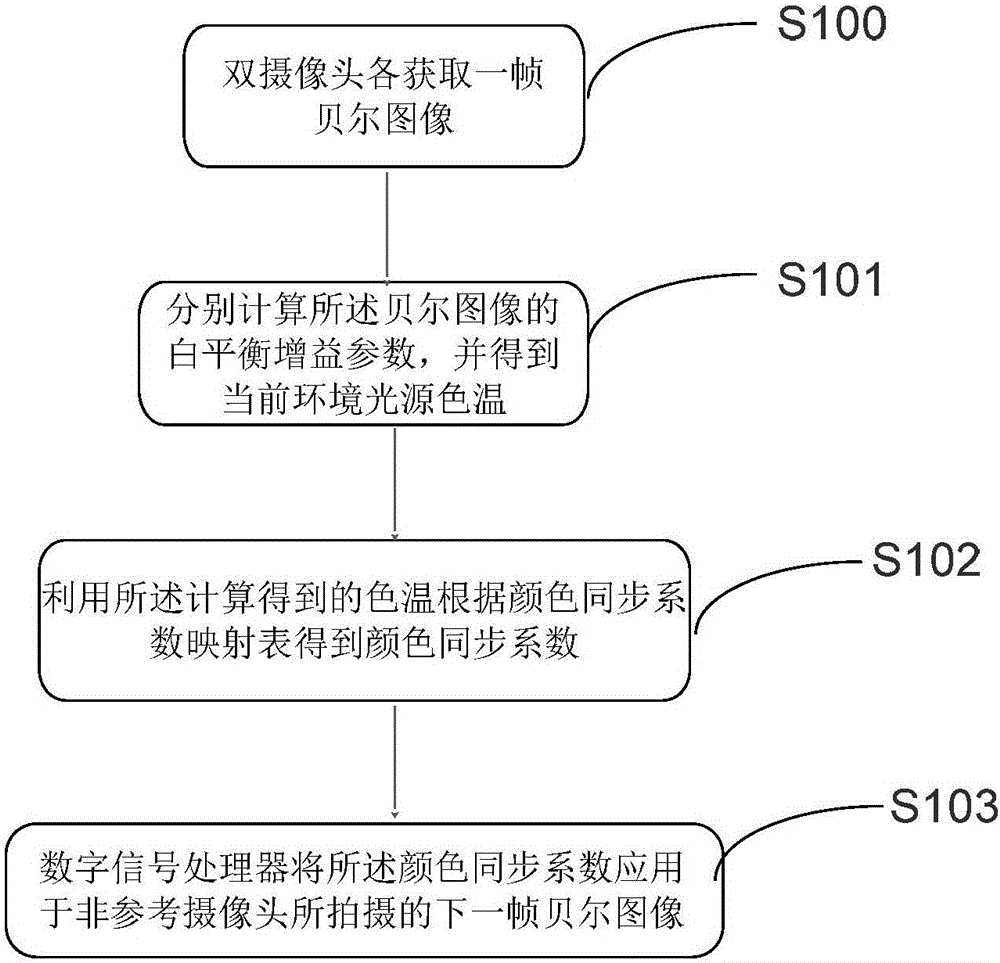

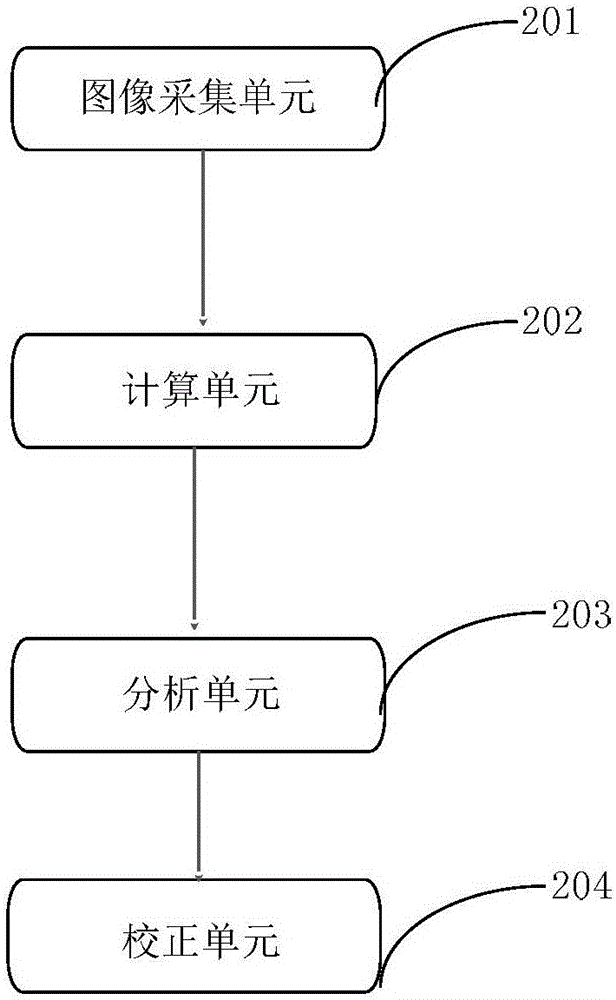

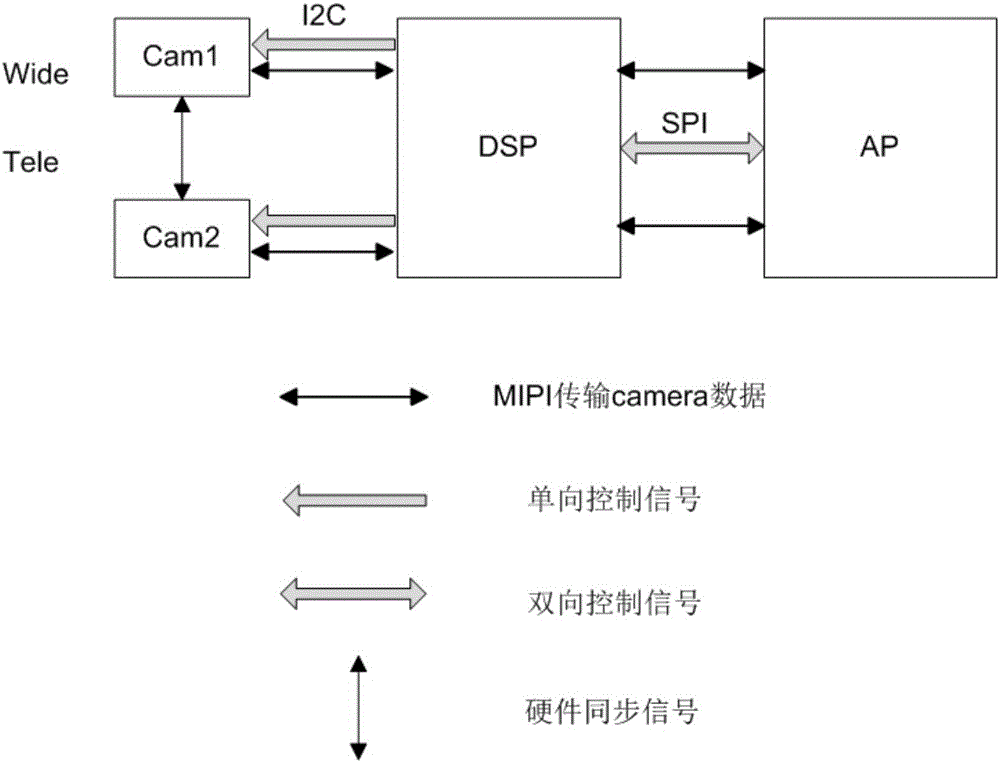

Double-camera color synchronization method and device, and terminal

Inactive | CN106131527A | Improve synthesis ability | Improve experience | Color signal processing circuits | Original data | Visual perception

The invention provides a dual-camera color synchronization method and device, and a terminal. In the method, both cameras capture Bayer images; white balance gain parameters of the Bayer images are calculated and the color temperature of the current ambient light source is obtained; a color synchronization coefficient is looked up from a color synchronization coefficient mapping table using the calculated color temperature; and a digital signal processor applies the coefficient to the next Bayer image captured by the non-reference camera. Because the method works on Bayer image data, and 10-bit Bayer images carry richer information than the 8-bit images processed by an image signal processor (ISP), images synthesized from the Bayer data are of better quality. By calibrating the color consistency of the two cameras' raw data, the method reduces visual differences caused by color inconsistency, improves dual-camera image synthesis, and improves the user experience.

Owner:ZEUSIS INC
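The table lookup and Bayer-domain application in the Zeusis method above could be sketched as follows. The table contents, the linear interpolation between tabulated color temperatures, the per-channel (R, G, B) form of the coefficient, and the RGGB pattern are all hypothetical; only the 10-bit range comes from the abstract:

```python
import bisect

# Hypothetical mapping table: color temperature (K) -> (R, G, B) coefficients
SYNC_TABLE = [
    (2800, (1.06, 1.00, 0.95)),
    (4000, (1.03, 1.00, 0.98)),
    (6500, (1.00, 1.00, 1.02)),
]

def sync_coefficients(cct, table=SYNC_TABLE):
    """Linearly interpolate the table at color temperature cct (clamped at the ends)."""
    temps = [t for t, _ in table]
    if cct <= temps[0]:
        return table[0][1]
    if cct >= temps[-1]:
        return table[-1][1]
    i = bisect.bisect_right(temps, cct)
    (t0, c0), (t1, c1) = table[i - 1], table[i]
    w = (cct - t0) / (t1 - t0)
    return tuple(a + w * (b - a) for a, b in zip(c0, c1))

def apply_to_bayer_rggb(raw, coeffs, max_value=1023):
    """Scale an RGGB mosaic from the non-reference camera; 1023 = 10-bit max."""
    r_gain, g_gain, b_gain = coeffs
    out = []
    for y, row in enumerate(raw):
        new_row = []
        for x, v in enumerate(row):
            if y % 2 == 0 and x % 2 == 0:
                gain = r_gain          # R site
            elif y % 2 == 1 and x % 2 == 1:
                gain = b_gain          # B site
            else:
                gain = g_gain          # Gr / Gb sites
            new_row.append(min(max_value, round(v * gain)))
        out.append(new_row)
    return out

coeffs = sync_coefficients(3400)   # color temperature estimated from AWB gains
print(apply_to_bayer_rggb([[512, 500], [498, 480]], coeffs))
```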

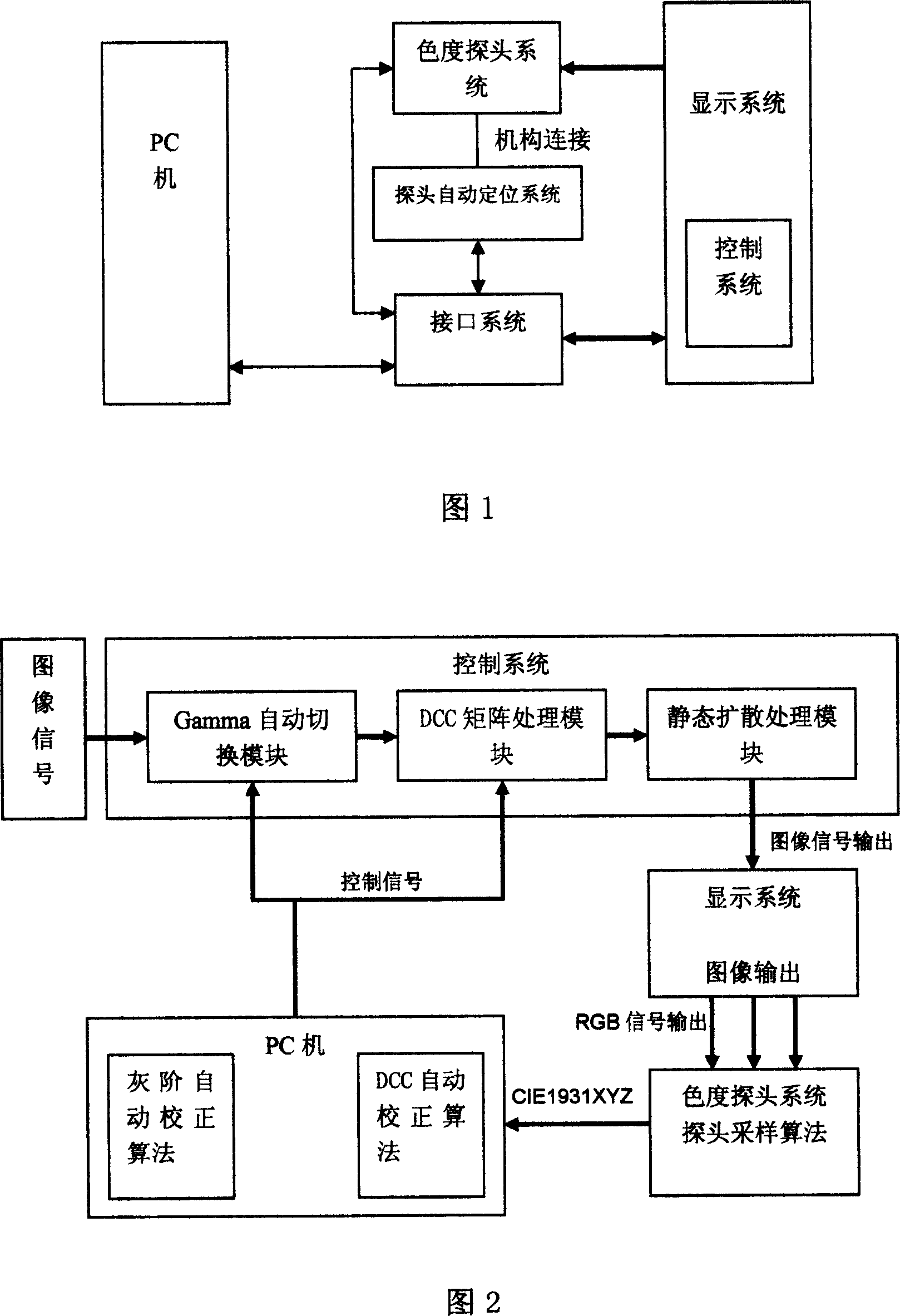

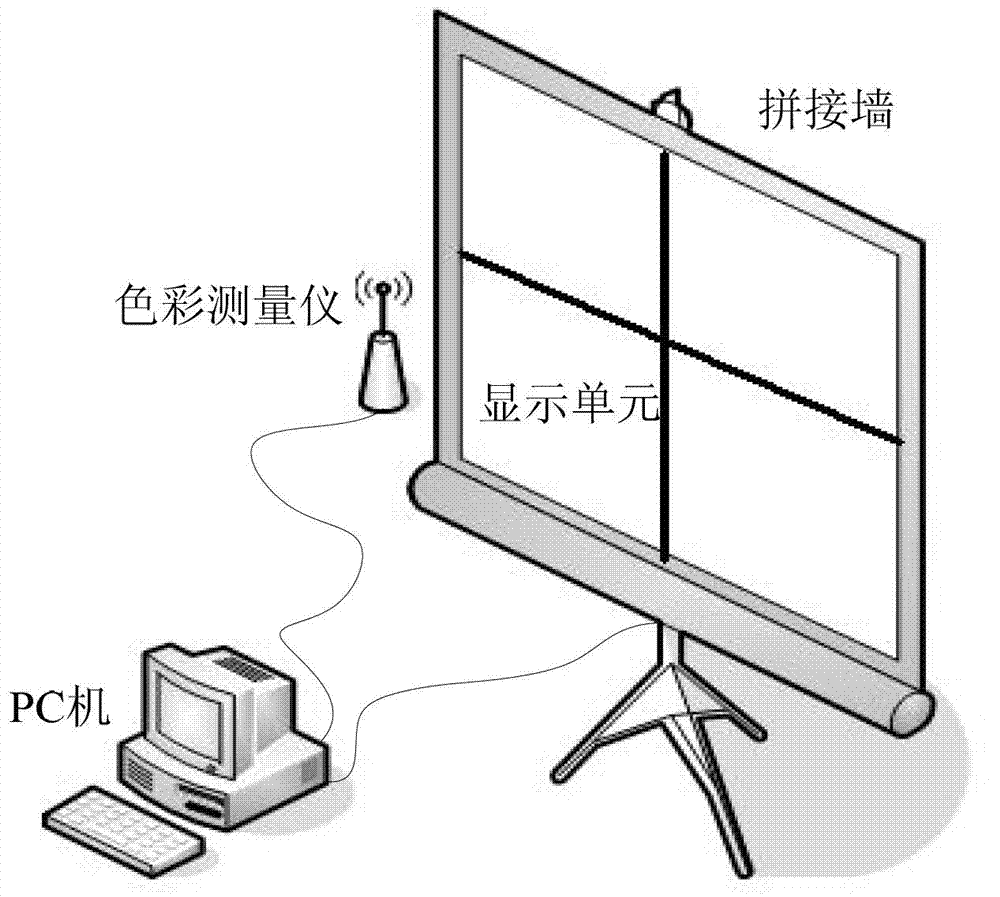

Color management system for mixed connection wall and its control method

Inactive | CN101009852A | Improve compatibility | High precision | Color signal processing circuits | Cathode-ray tube indicators | Control system | Computer science

The disclosed color management system for a tiled (spliced) display wall comprises a PC, a chroma probe system, a probe auto-positioning system mechanically connected to the probe system, a display system with a control system, and an interface system. The invention is precise and fast, and can correct many characteristics of current tiled display systems, such as primary-color consistency, gray scale, white field, and dark field.

Owner:GUANGDONG VTRON TECH CO LTD

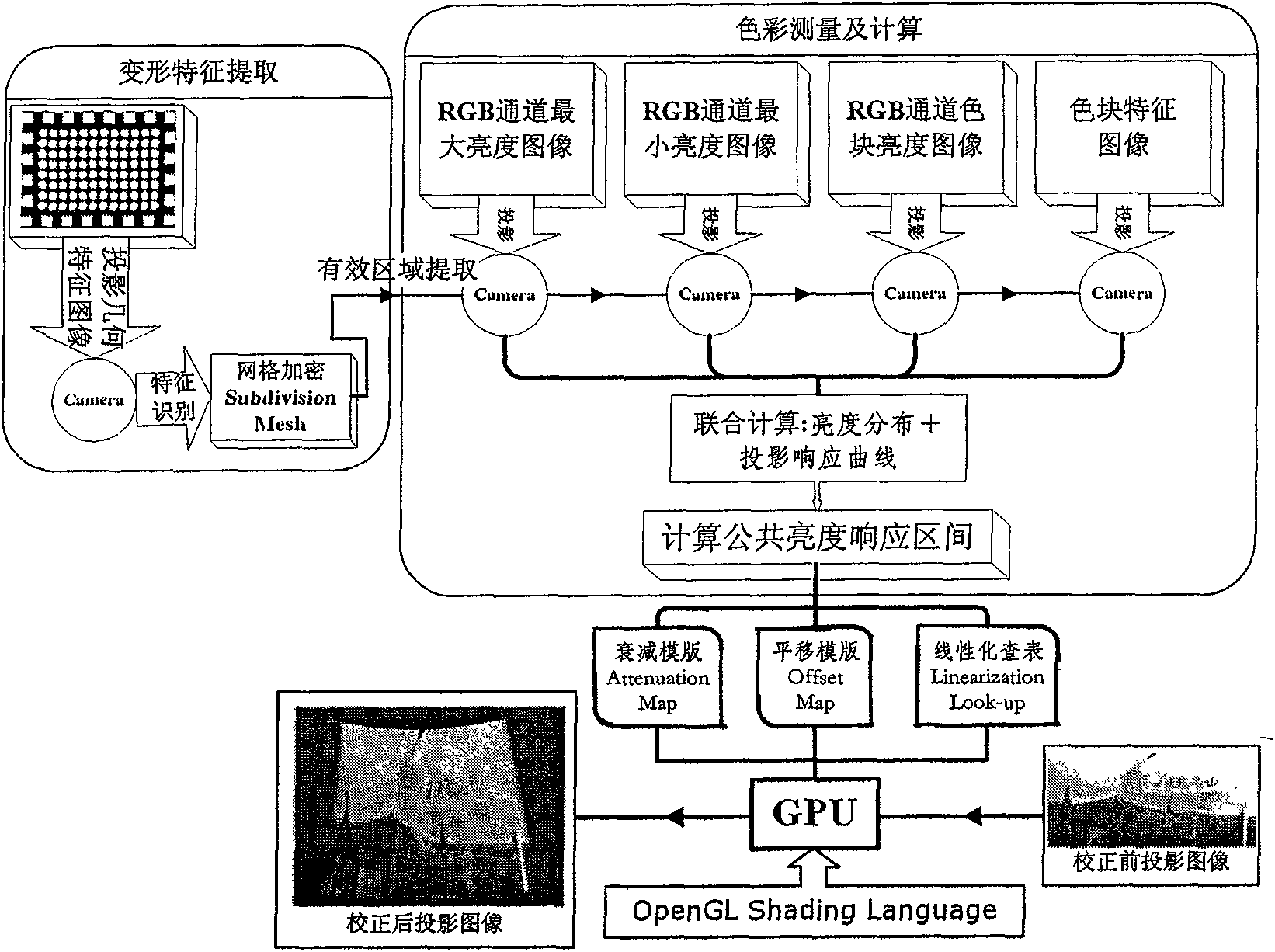

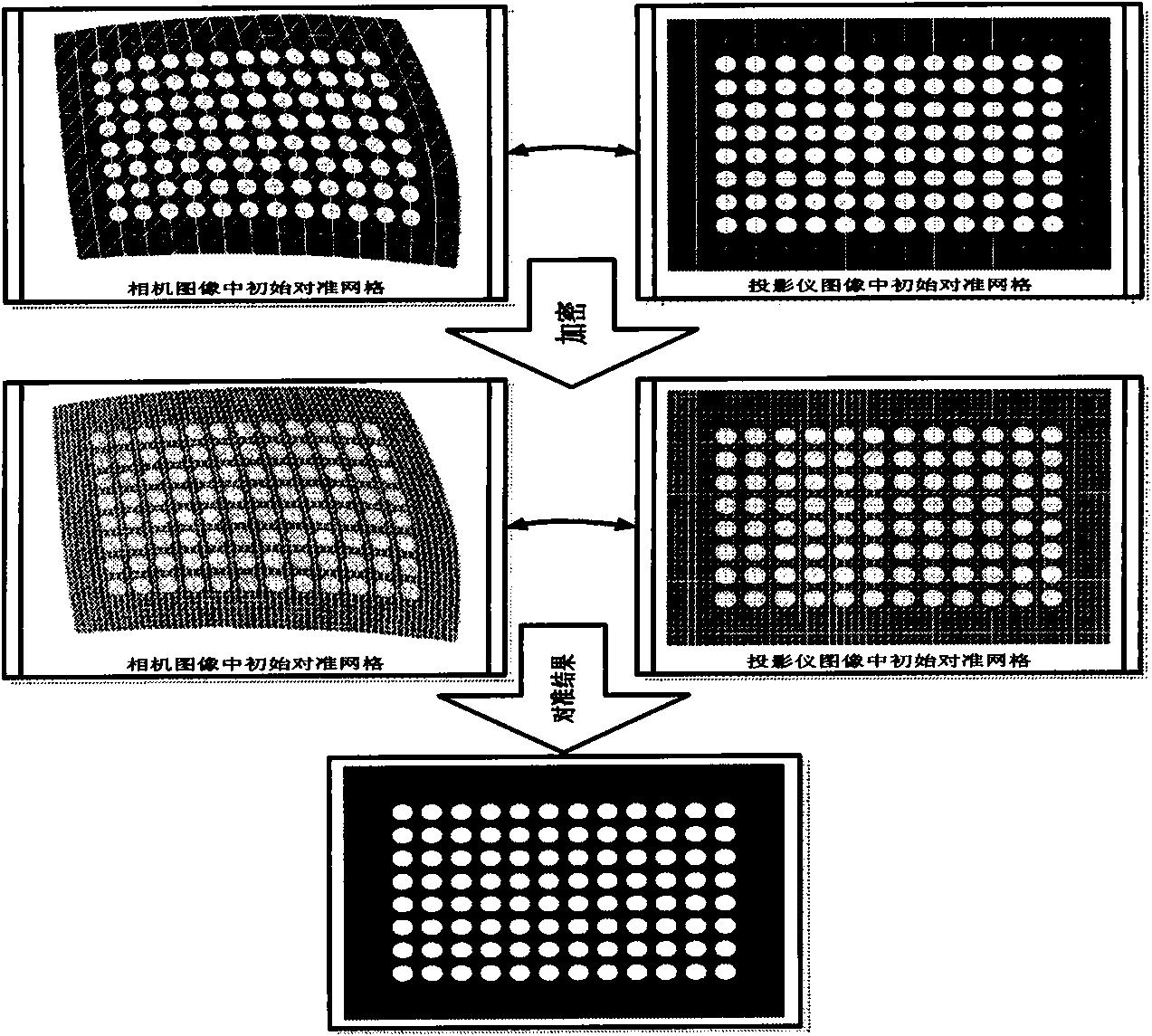

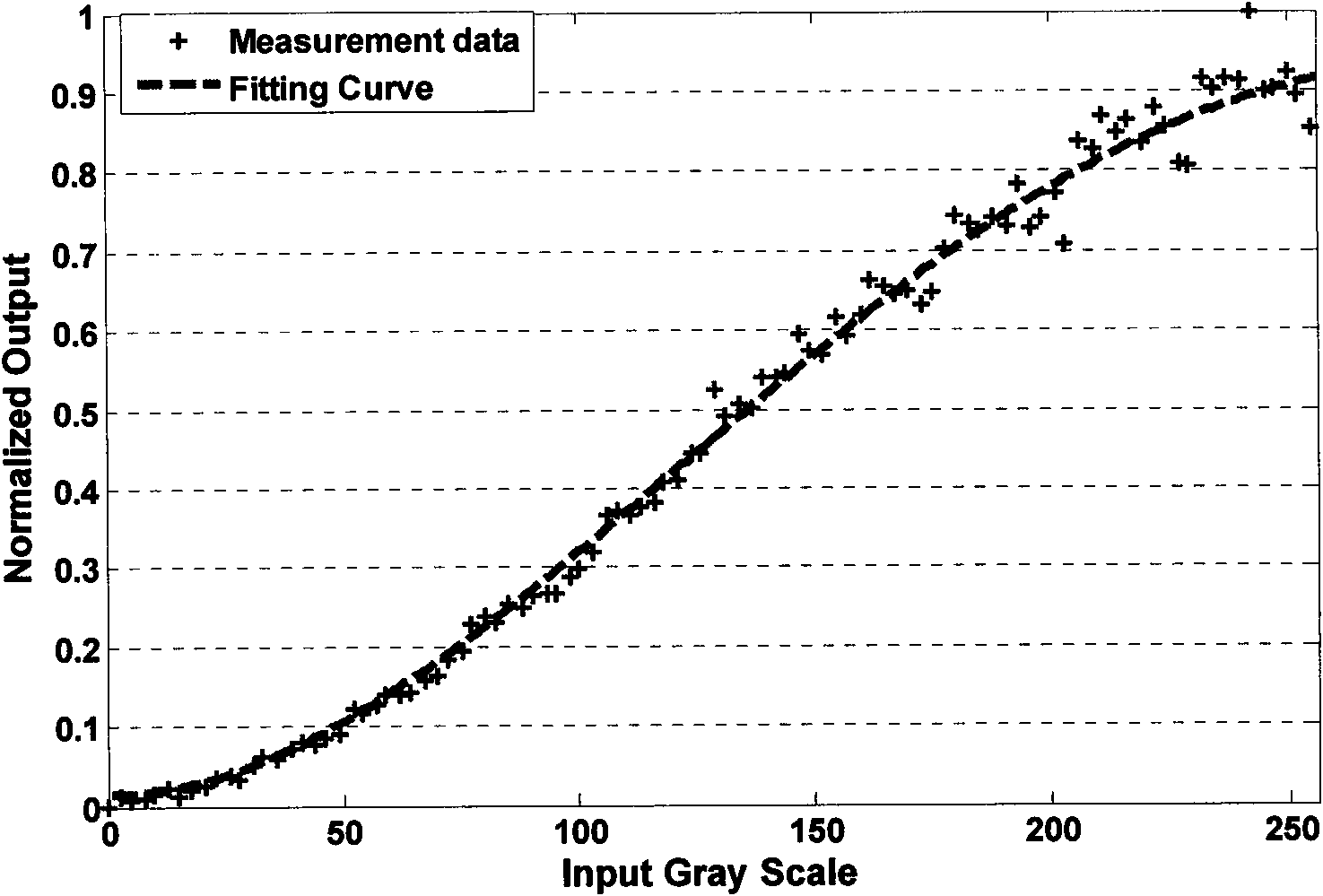

Correcting method of multiple projector display wall colors of arbitrary smooth curve screens independent of geometric correction

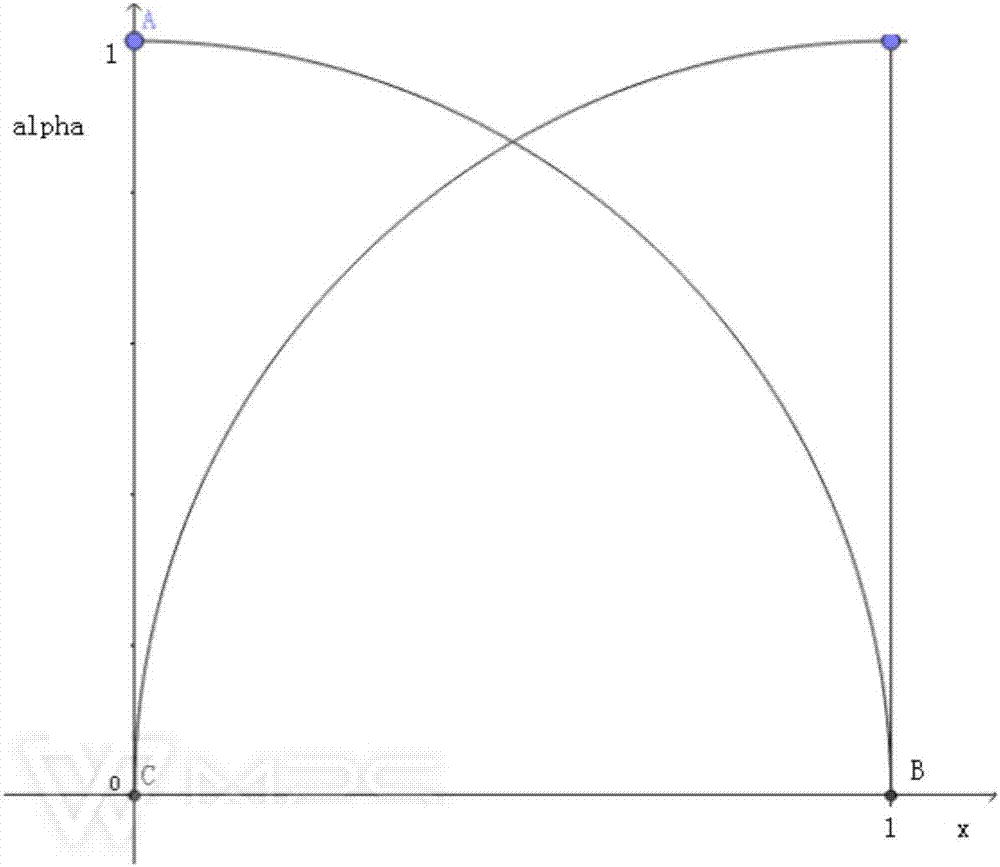

Active | CN101621701A | Obvious advantages | Good effect | Color signal processing circuits | Picture reproducers using projection devices | Camera image | Projection image

The invention relates to a method for correcting the colors of multi-projector display walls on arbitrary smooth curved screens, independent of geometric correction, in the field of computer image display processing. The method comprises the following steps: first, obtaining the correspondence between the projector image and the camera image using an image alignment algorithm; second, capturing four sets of projected images in each of the three RGB channels under different inputs; third, calculating the color information of each projector pixel from the alignment relation and the captured images; and finally, combining all results to obtain the luminance response range common to the projectors, and achieving color-consistency correction by adjusting the inputs in real time on the GPU. The method is decoupled from geometric correction results: it establishes a pixel-level alignment between the projector image and the CCD camera image with a self-adaptive image alignment algorithm, can measure the luminance of every projector pixel, and adapts to arbitrary smooth projection screens. The alignment algorithm requires no calibration of projector, camera, or screen-curvature parameters, and is simple, stable, self-adaptive, and fast.

Owner:WISESOFT CO LTD
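The common-luminance-response idea in the Wisesoft method above can be shown per pixel and per channel. This sketch assumes each projector pixel's response is L = L_min + (L_max - L_min)·v^γ with a shared, hypothetical γ; the method itself measures responses from camera captures rather than assuming a curve:

```python
def common_range(pixel_ranges):
    """Luminance range every projector pixel can reach (one channel)."""
    lo = max(l_min for l_min, _ in pixel_ranges)
    hi = min(l_max for _, l_max in pixel_ranges)
    if lo >= hi:
        raise ValueError("no common luminance range")
    return lo, hi

def input_for(target, l_min, l_max, gamma=2.2):
    """Invert L = l_min + (l_max - l_min) * v**gamma for v in [0, 1]."""
    t = (target - l_min) / (l_max - l_min)
    return min(1.0, max(0.0, t)) ** (1.0 / gamma)

def corrected_inputs(i, pixel_ranges, gamma=2.2):
    """Per-pixel inputs that make input level i (0..1) look the same everywhere."""
    lo, hi = common_range(pixel_ranges)
    target = lo + i * (hi - lo)
    return [input_for(target, l_min, l_max, gamma) for l_min, l_max in pixel_ranges]

# Two pixels (possibly on different projectors) with measured (L_min, L_max)
print(corrected_inputs(1.0, [(2.0, 100.0), (5.0, 80.0)]))
```

Restricting every pixel to the shared range trades some peak brightness and contrast for uniformity, which is why the abstract computes a common response before adjusting inputs.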

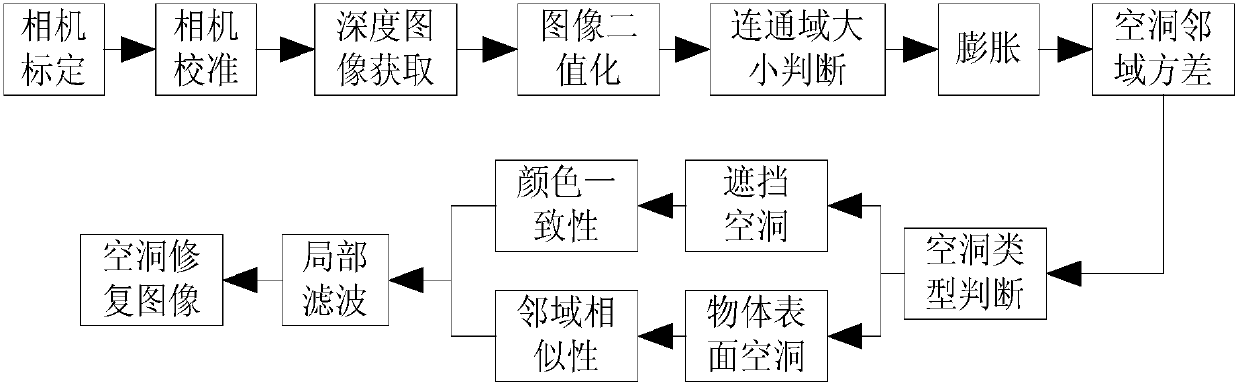

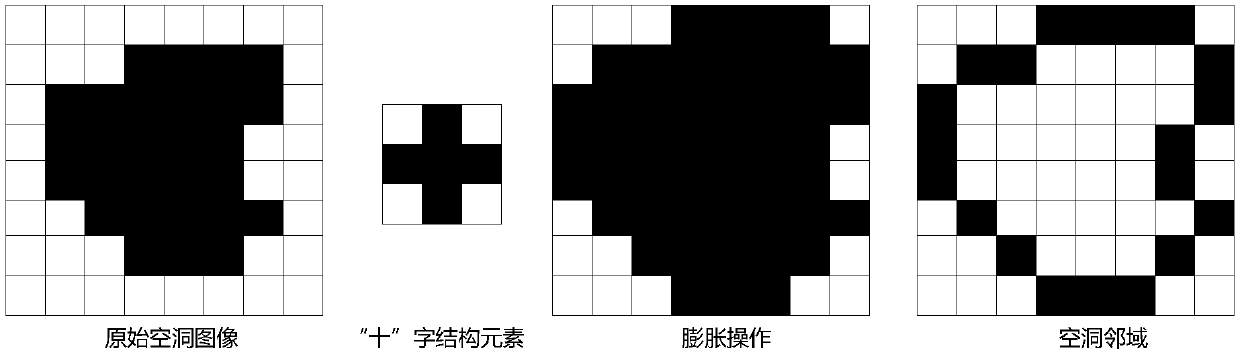

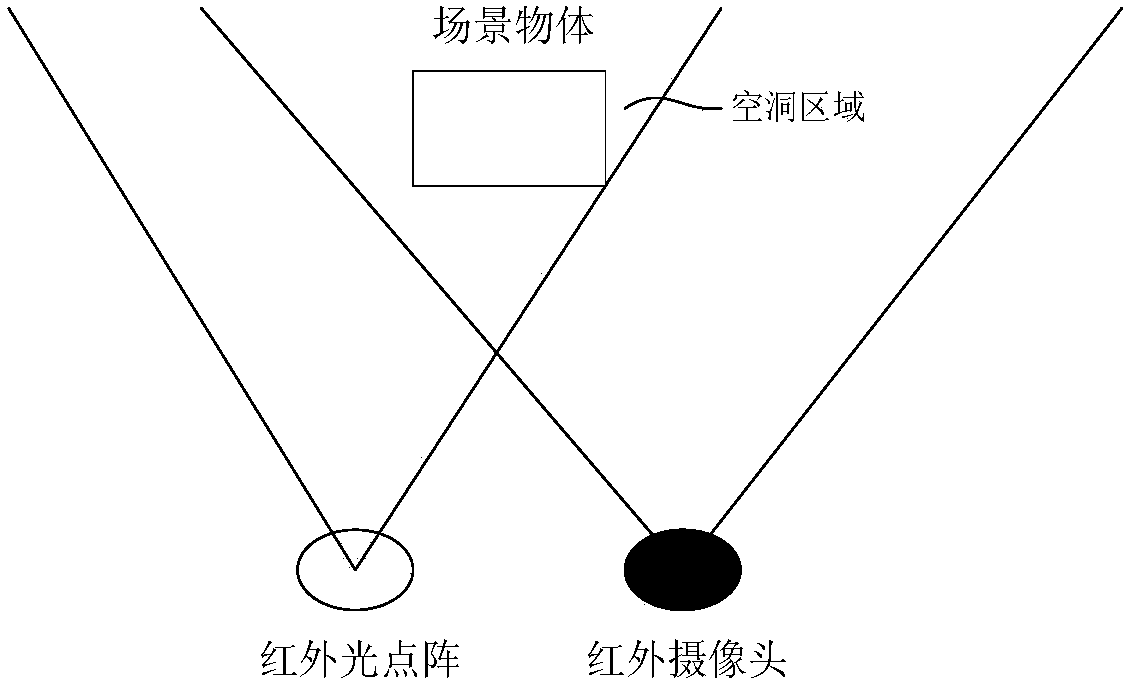

RGB-D camera depth image recovery method combining color images

Active | CN108399632A | Guaranteed validity | Guaranteed originality | Image enhancement | Image analysis | In plane | Color image

The invention relates to a method for recovering RGB-D camera depth images using color images, in the field of depth image recovery. Zhang's calibration method is used to calibrate the color and depth cameras and obtain their intrinsic and extrinsic parameters; the coordinates of the color and depth cameras are aligned according to the pinhole imaging model and coordinate transformation; color and depth images of a scene are acquired, and the images are binarized with a depth threshold; the size of each connected hole region is checked to determine whether holes exist; a dilation operation is applied to obtain each hole's neighborhood; the variance of the depth values of the pixels in each hole region is calculated, and, according to this variance, holes are classified as occlusion holes or in-plane holes; the two types of holes are recovered using color consistency and region similarity respectively; and the recovered images are filtered with a partial filtering method to remove noise. The method ensures effective depth image recovery, preserves the original depth information as far as possible, and improves recovery efficiency.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
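The hole classification and color-guided filling steps in the Chongqing method above might be sketched like this. The variance threshold, the 3×3 neighborhood, and the rule "copy the depth of the most similarly colored valid neighbor" are illustrative assumptions, and a depth of 0 is taken to mean "missing":

```python
import math

def classify_hole(neighborhood_depths, var_threshold=100.0):
    """Occlusion holes sit on depth edges, so their surroundings vary a lot."""
    n = len(neighborhood_depths)
    mean = sum(neighborhood_depths) / n
    var = sum((d - mean) ** 2 for d in neighborhood_depths) / n
    return "occlusion" if var > var_threshold else "in-plane"

def fill_by_color(depth, color, y, x, radius=1):
    """Fill depth[y][x] (0 = missing) from the valid neighbor whose color is
    closest to color[y][x]. Returns None if no valid neighbor exists."""
    h, w = len(depth), len(depth[0])
    best = None
    for ny in range(max(0, y - radius), min(h, y + radius + 1)):
        for nx in range(max(0, x - radius), min(w, x + radius + 1)):
            if (ny, nx) == (y, x) or depth[ny][nx] <= 0:
                continue
            dist = math.dist(color[ny][nx], color[y][x])
            if best is None or dist < best[0]:
                best = (dist, depth[ny][nx])
    return None if best is None else best[1]
```

The color rule follows the intuition that, at an occlusion boundary, a missing pixel most likely belongs to whichever side it resembles in the color image.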

System and method for image based control using inline sensors

Inactive | US7800779B2 | Digitally marking record carriers | Digital computer details | Computer graphics (images) | Color calibration

Disclosed are a system and method directed to efficient image-based color calibration and improved color consistency, and more particularly to continuous or dynamic calibration performed during printing, enabling adjustment on a page-by-page basis.

Owner:XEROX CORP

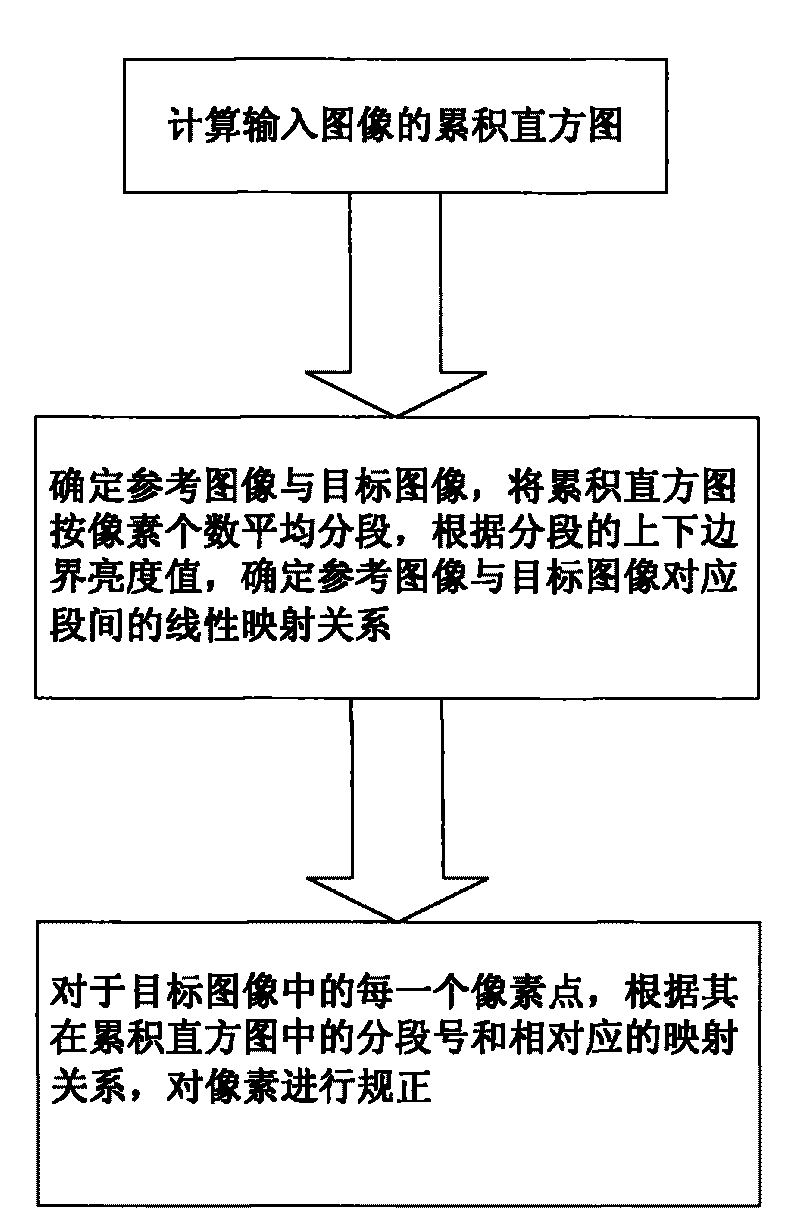

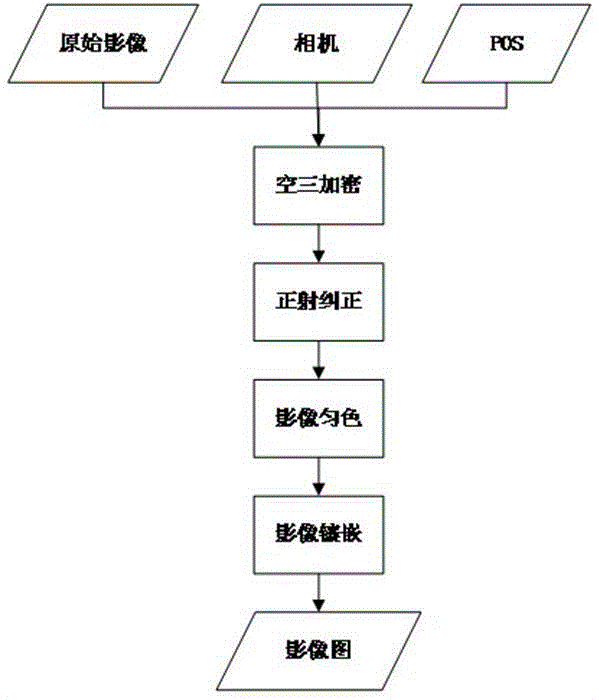

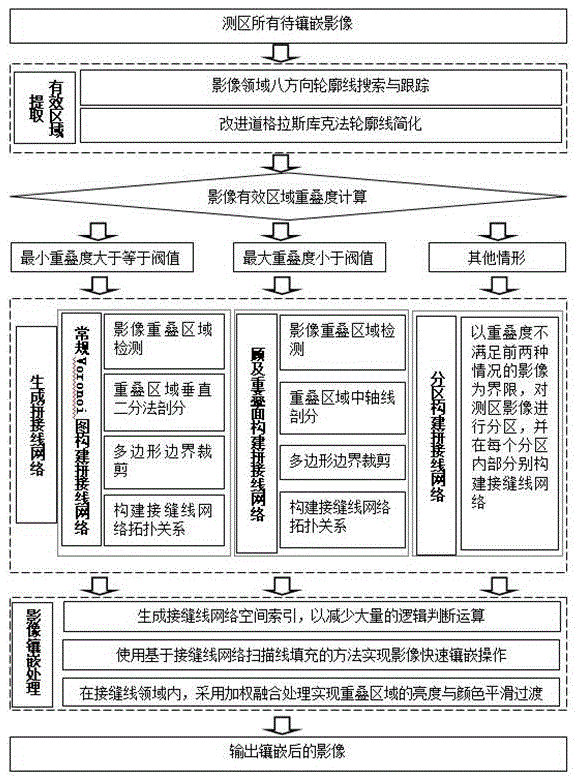

Optical image color consistency self-adaption processing and quick mosaic method

Active | CN105528797A | Implement automatic mosaic processing | Fix color inconsistencies | Image enhancement | Image analysis | Batch processing | Triangulation

The invention discloses a method for self-adaptive color-consistency processing and fast mosaicking of optical images, applied to optical images from micro and mini unmanned aerial vehicles (UAVs). The method comprises the following steps: A, aerial triangulation: aerial triangulation is performed on the raw images using POS data and camera data to obtain high-precision POS data and a DEM of the test area; B, orthorectification: digital differential rectification is applied to each image using the high-precision POS data and the test-area DEM to obtain individual orthoimages; C, color homogenization: a global color-homogenizing template is constructed, each image is color-homogenized in batch, and the overlapping regions of the images are color-blended; D, mosaicking: overlapping images are joined using a seamline algorithm, and a photo map is output. The method supports fully automatic processing of micro and mini UAV optical images, resolves color inconsistency within single images and across overlapping regions, and enables automatic mosaicking of images at any degree of overlap.

Owner:深圳飞马机器人科技有限公司 (Shenzhen Feima Robotics Technology Co., Ltd.)
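For the overlap color blending in step C of the Feima method above, a common technique (assumed here; the abstract does not name one) is feathering: inside the overlap, weights ramp linearly from one image to the other. A one-row grayscale sketch with hypothetical names:

```python
def feather_blend_row(left_row, right_row, overlap):
    """Join two rows whose last/first `overlap` pixels show the same ground.

    Inside the overlap, the right image's weight rises linearly so the seam
    has no abrupt color step.
    """
    if not 0 <= overlap <= min(len(left_row), len(right_row)):
        raise ValueError("overlap out of range")
    out = list(left_row[:len(left_row) - overlap])
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)          # weight of the right image
        a = left_row[len(left_row) - overlap + i]
        b = right_row[i]
        out.append((1 - w) * a + w * b)
    out.extend(right_row[overlap:])
    return out

print(feather_blend_row([10, 10, 10, 10], [40, 40, 40, 40], 2))
```

Feathering hides residual brightness differences that the global color template leaves behind; a full mosaic would apply it per row along the seamline, per channel.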

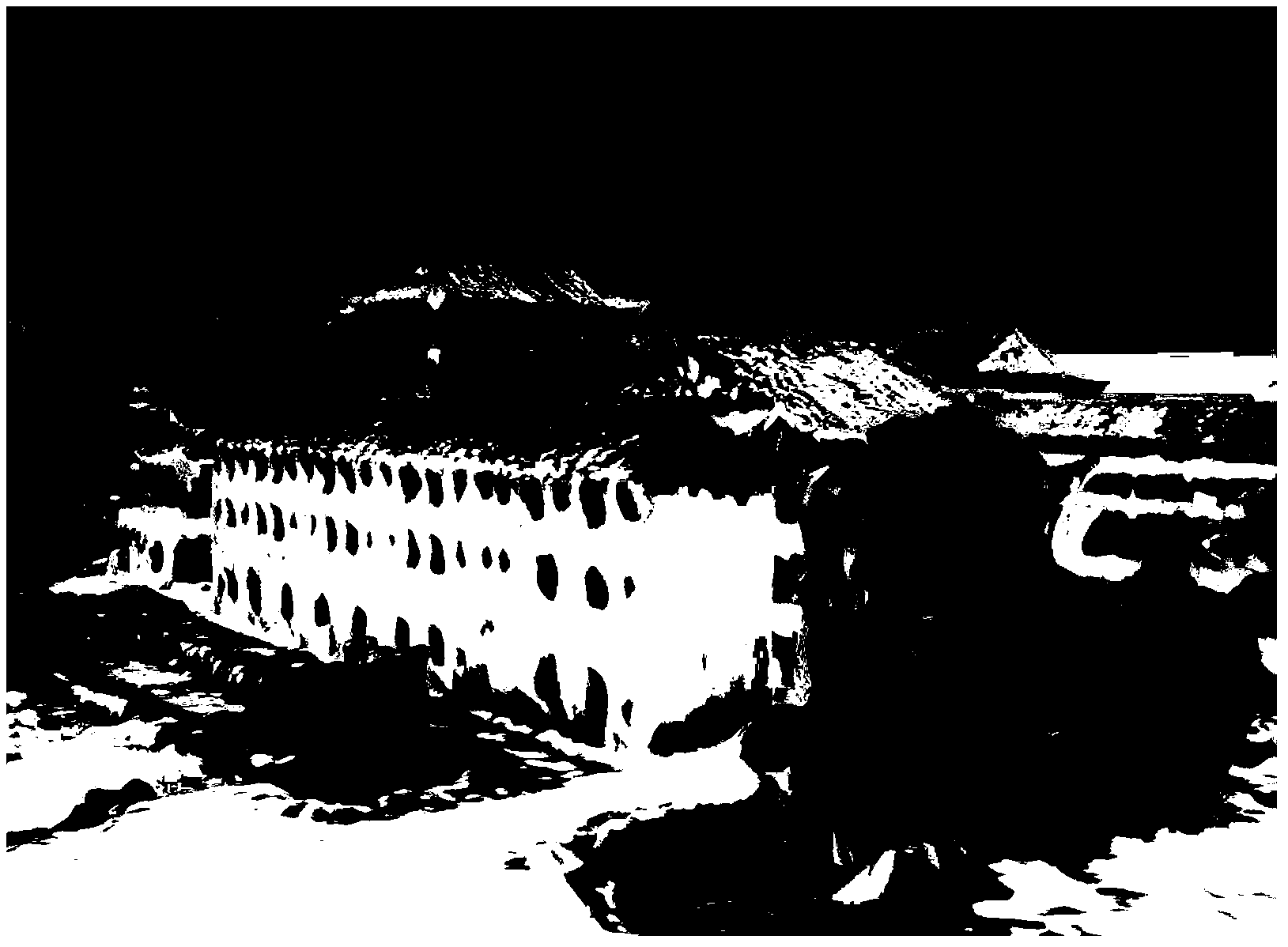

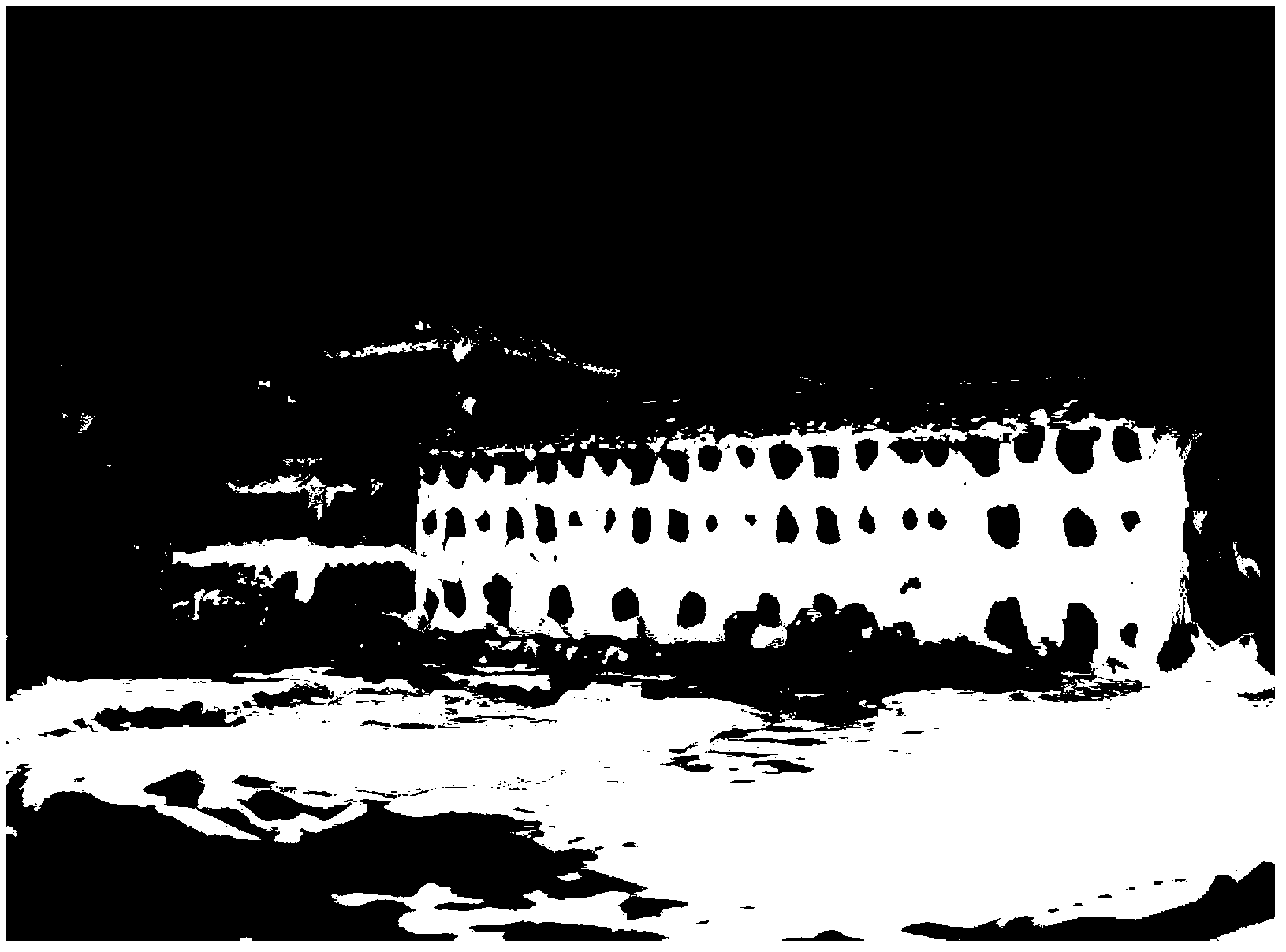

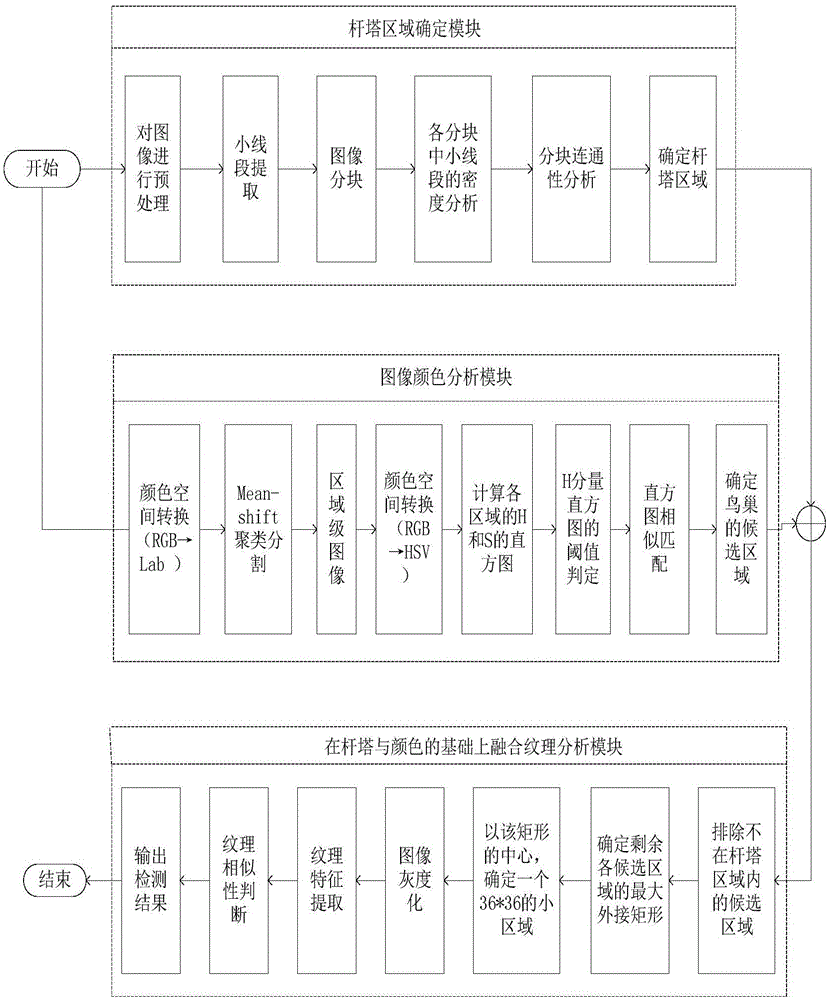

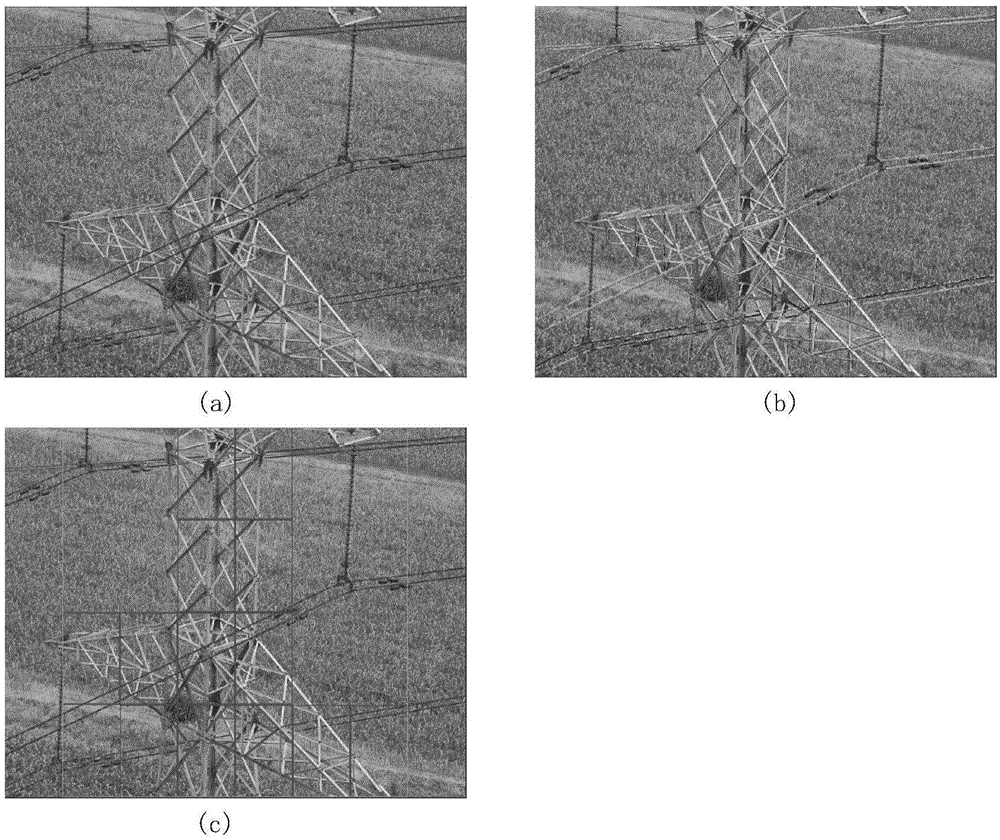

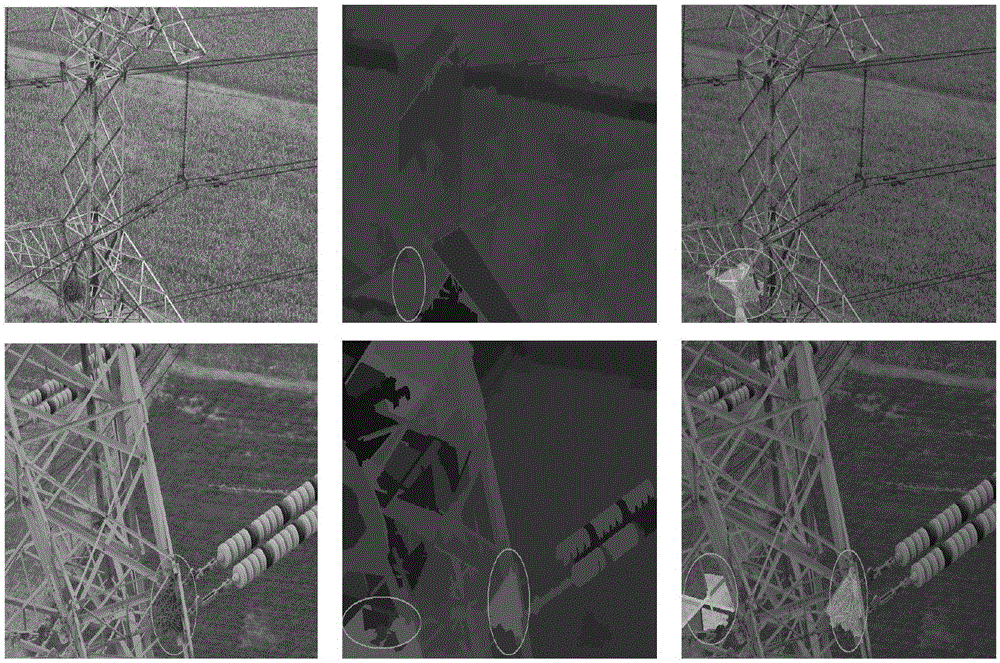

Method for detecting bird nests in power transmission line poles based on unmanned plane images

The invention provides a method for detecting bird nests on power transmission line poles from unmanned aerial vehicle (UAV) images, based on perceiving and analyzing the structural features of transmission lines. First, line segments in different directions are extracted from an inspection image, short broken segments are merged using Gestalt perception theory, and the merged segments are clustered into sets of parallel lines. Then, based on a structural feature of the pole in the image (its nearly symmetrical crossing structure), the image is divided into 8×4 blocks, the number of line segments in each of four directions is counted per block, and the region containing the pole is detected. The invention also provides a bird nest detection method that fuses color and texture. First, regions of consistent color are obtained by mean-shift clustering segmentation. Then, based on the H (hue) histogram of a bird nest sample, the regions most similar to the sample are selected as bird nest candidates by histogram intersection. Next, three gray-level co-occurrence matrix features that best characterize bird nests (entropy, inertia moment, and dissimilarity) are calculated for each candidate region. Finally, each candidate region is matched against the bird nest sample by texture similarity to detect bird nests.

Owner:上海深邃智能科技有限公司 (Shanghai Shensui Intelligent Technology Co., Ltd.)
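The candidate-selection step in the bird nest method above compares hue histograms. This sketch assumes hues in degrees, 16 bins, and histogram intersection as the similarity measure; the bin count and all names are hypothetical:

```python
def hue_histogram(hues, bins=16):
    """Normalized histogram of hue angles in degrees."""
    hist = [0] * bins
    for h in hues:
        hist[int((h % 360) * bins // 360)] += 1
    return [count / len(hues) for count in hist]

def histogram_intersection(h1, h2):
    """1.0 for identical normalized histograms, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))

def top_candidates(sample_hues, regions, k=3, bins=16):
    """Indices of the k regions whose hue histograms best match the sample."""
    ref = hue_histogram(sample_hues, bins)
    scored = sorted(((histogram_intersection(ref, hue_histogram(r, bins)), i)
                     for i, r in enumerate(regions)), reverse=True)
    return [i for _, i in scored[:k]]

# Sample: brownish hues; regions come from the mean-shift segmentation step
print(top_candidates([30] * 10, [[30] * 10, [200] * 10, [30] * 5 + [200] * 5], k=2))
```

The surviving candidates would then go on to the co-occurrence texture check described in the abstract.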

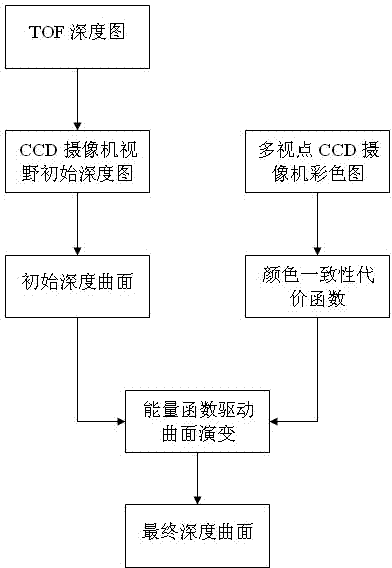

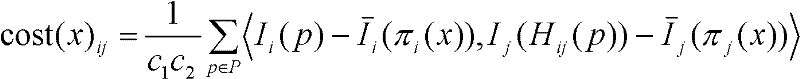

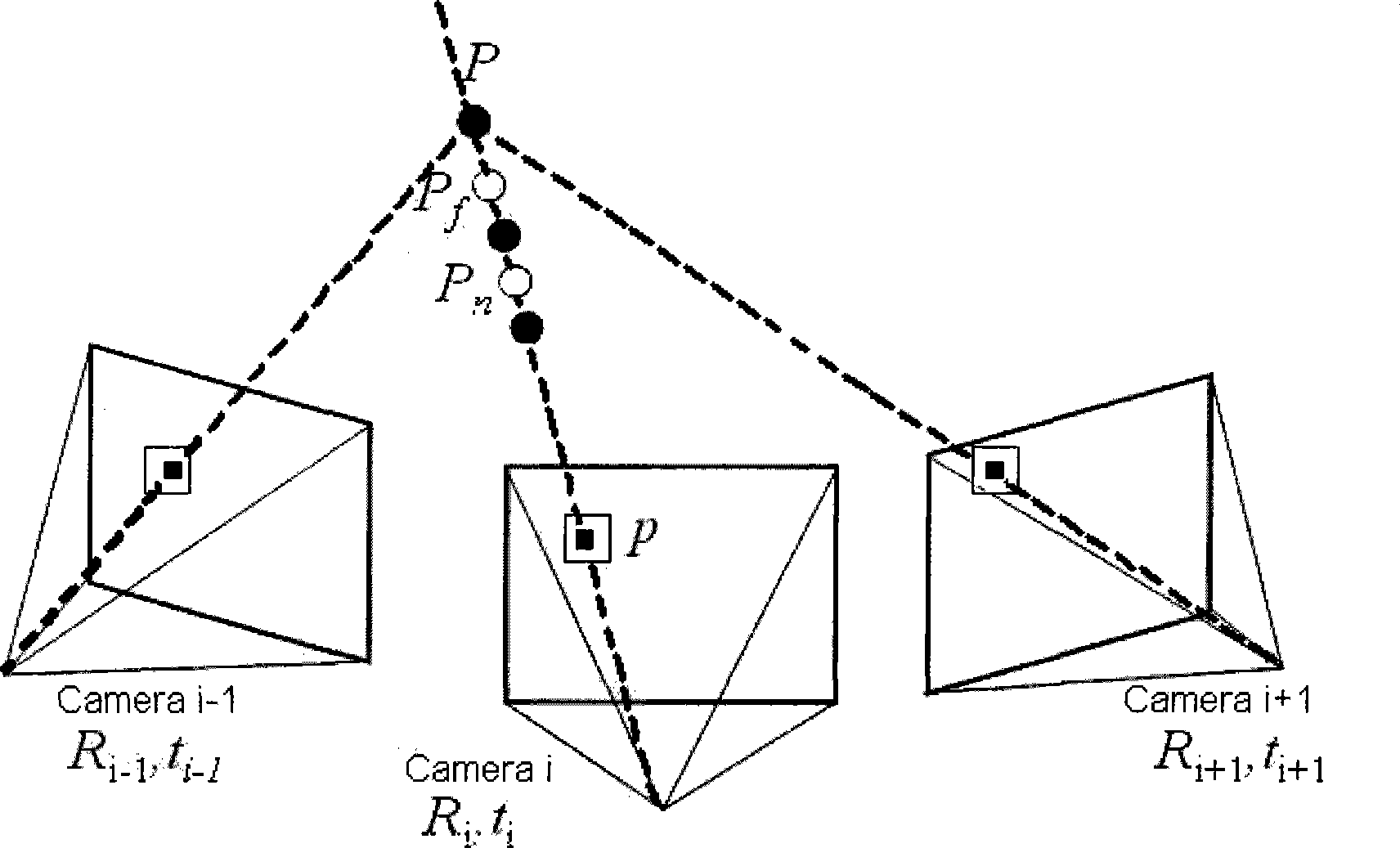

Depth integration and curved-surface evolution based multi-viewpoint three-dimensional reconstruction method

The invention discloses a multi-viewpoint three-dimensional reconstruction method based on depth fusion and surface evolution. The method comprises the following steps: first, calibrating a camera array consisting of depth cameras and visible-light cameras, projecting the depth maps obtained by the depth cameras onto a viewpoint in the array using the calibration parameters to obtain an initial depth map for that viewpoint, and obtaining an initial depth surface from that map; second, defining a surface-evolution energy function that includes a multi-camera color consistency cost, an uncertainty term for the initial depth surface, and a surface smoothness term; and finally, driving the evolution of the depth surface by convexifying and minimizing the energy function to obtain the final depth surface, i.e., the three-dimensional scene information. Because the method fuses the initial depth information with the multi-camera color consistency cost, it obtains scene geometry effectively and can meet the requirements of applications such as three-dimensional television and virtual reality.

Owner:ZHEJIANG UNIV
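The color consistency cost in the Zhejiang University energy function above is not spelled out in the abstract. A common form, assumed here, scores a candidate surface point by the variance of the colors it projects to in the visible-light cameras. Only this data term is shown, not the uncertainty or smoothness terms or the convexified minimization:

```python
def color_consistency_cost(samples):
    """Variance of the (r, g, b) colors one candidate 3D point projects to,
    one sample per camera that sees it. Lower means more photo-consistent."""
    n = len(samples)
    mean = [sum(s[c] for s in samples) / n for c in range(3)]
    return sum((s[c] - mean[c]) ** 2 for s in samples for c in range(3)) / n

def best_depth(candidates):
    """candidates: dict mapping a depth hypothesis to its sampled colors."""
    return min(candidates, key=lambda d: color_consistency_cost(candidates[d]))

# At the wrong depth the cameras see different surfaces, so colors disagree
print(best_depth({1.0: [(0, 0, 0), (100, 100, 100)], 2.0: [(50, 50, 50), (52, 50, 50)]}))
```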

Double-layer glue LED display screen and processing method thereof

Inactive | CN107731121A | Improve the display effect | Solve the display effect | Identification means | LED display | Engineering

The invention discloses a double-layer glue LED display screen and a processing method thereof. The screen includes a circuit board and LED light-emitting chips mounted on its surface, together with a black glue layer covering the circuit board surface and a transparent adhesive layer with a flat surface that covers both the black glue layer and the LED chips. The black glue fills the gaps between the LED chips, and the upper surfaces of the chips are flush with the black glue layer, forming a flat surface. The black glue layer protects the LED chips and ensures surface color consistency after display screens are spliced; because it is flush with the chip tops, it widens the viewing angle and avoids moiré patterns, improving the display effect; and the transparent adhesive layer provides protection against water, fire, dust, static, and pressure.

Owner:SHENZHEN SHOWHO TECH CO LTD

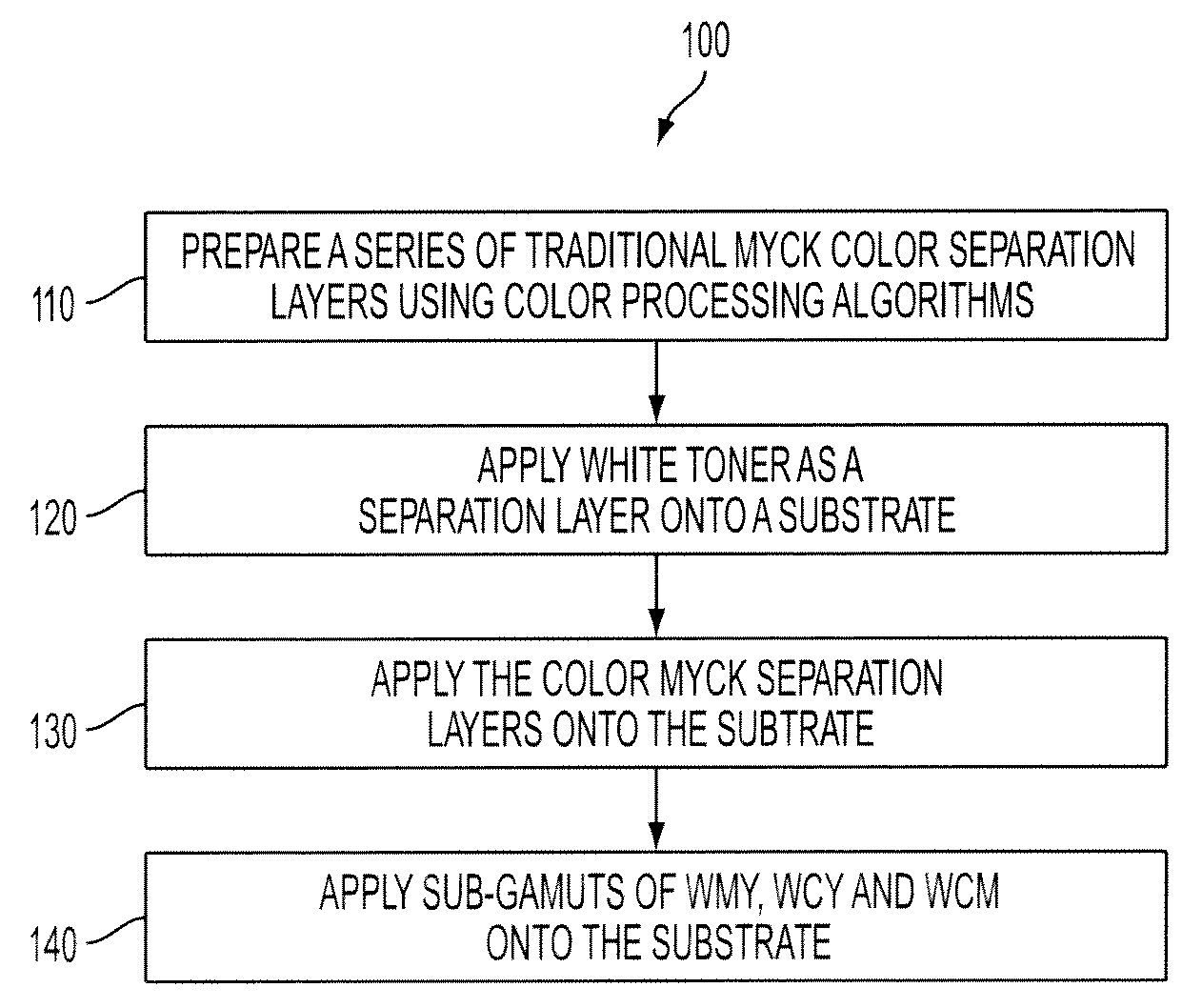

Method to create spot colors with white and CMYK toner and achieve color consistency

Active | US20090296173A1 | Digitally marking record carriers | Digital computer details | Color printing | Computer graphics (images)

A system and method for achieving process spot color consistency using white and CMYK toners is disclosed. The present application uses traditional CMYK with the automated spot color editing approach and extends it by applying white toner to the printing substrate before the color is applied. The method is intended to improve color printing on plastics, ceramics, wood, and other non-paper materials, either by printing the white directly or by applying it as a distinct separation layer beneath the color toner separations.
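The abstract says white toner is laid down before the color but not how the white separation is derived. A minimal sketch, assuming a common "white underbase" rule: white goes under every pixel that receives CMYK toner, optionally choked (shrunk) so it stays hidden at region edges. The function name and the `threshold` and `choke` parameters are hypothetical, and coverage values are assumed to run from 0.0 to 1.0.

```python
from typing import List, Tuple

CMYK = Tuple[float, float, float, float]  # toner coverage per channel, 0.0-1.0 (assumed)


def white_underbase(cmyk: List[List[CMYK]], threshold: float = 0.0,
                    choke: int = 0) -> List[List[float]]:
    """Return a white-toner separation with 0.0 or 1.0 per pixel.

    White is placed under every pixel whose total CMYK coverage exceeds
    `threshold`; `choke` shrinks the white area by that many pixels so the
    white does not peek out from under the color at region edges.
    """
    h = len(cmyk)
    w = len(cmyk[0]) if h else 0
    inked = [[sum(px) > threshold for px in row] for row in cmyk]
    white = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Keep white only if every in-image pixel within `choke` is inked.
            covered = all(
                inked[yy][xx]
                for yy in range(max(0, y - choke), min(h, y + choke + 1))
                for xx in range(max(0, x - choke), min(w, x + choke + 1))
            )
            white[y][x] = 1.0 if covered else 0.0
    return white
```

Treating pixels outside the image as "don't care" means a color region that runs to the page edge is not choked along that edge; a real workflow might instead scale white coverage with color density rather than using a binary mask.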

Owner:XEROX CORP

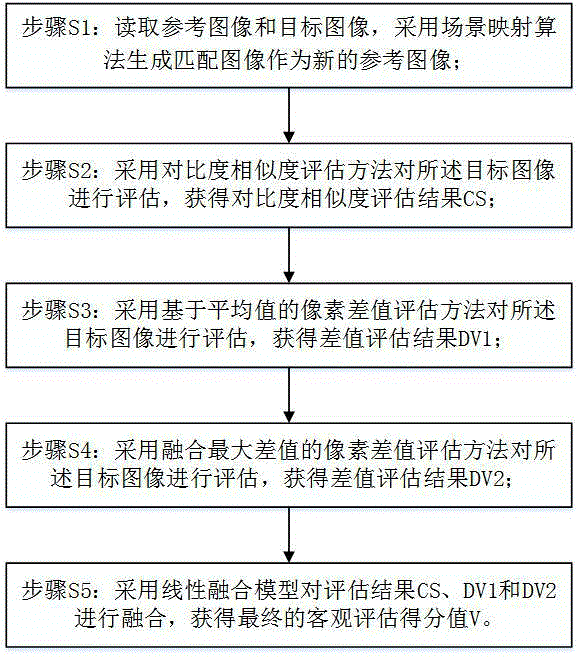

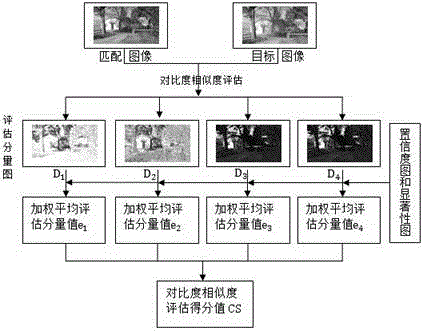

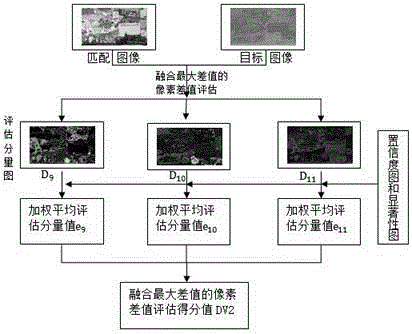

Color correction objective assessment method consistent with subjective perception

Active | CN105046708A | Improve accuracy | Improve use value | Image enhancement | Image analysis | Pattern recognition | Pixel value difference

The invention relates to an objective color correction assessment method consistent with subjective perception. The method comprises the following steps: Step S1, reading a reference image and a target image and generating a matched image with a scene mapping algorithm, which is then used as the new reference image; Step S2, assessing the target image with a contrast similarity method to obtain a contrast similarity result CS; Step S3, assessing the target image with a mean-based pixel-value difference method to obtain a difference result DV1; Step S4, assessing the target image with a maximum-difference-fusion pixel-value difference method to obtain a difference result DV2; and Step S5, fusing CS, DV1, and DV2 with a linear fusion model to obtain the final objective assessment score V. The method effectively assesses color consistency between images, is accurate, and correlates well with users' subjective scores; it can be applied to image mosaicking and to assessing the color consistency between the left and right views of stereoscopic 3D images.
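The abstract lists the components of the score but not their formulas. The sketch below is one hypothetical reading: CS is modeled on the contrast term of SSIM computed over the whole image, DV1 is taken as the mean absolute pixel difference, DV2 as the largest per-channel mean difference, and V as a linear combination whose weights are placeholders (in practice they would be fitted to subjective scores). Step S1 is skipped; the images are assumed to be already pixel-aligned.

```python
import math
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # 8-bit RGB values, 0-255


def _luma(p: Pixel) -> float:
    r, g, b = p
    return 0.299 * r + 0.587 * g + 0.114 * b  # ITU-R BT.601 luma weights


def contrast_similarity(ref: List[Pixel], tgt: List[Pixel],
                        c2: float = (0.03 * 255) ** 2) -> float:
    """SSIM-style contrast term over the whole image (1.0 means equal contrast)."""
    x = [_luma(p) for p in ref]
    y = [_luma(p) for p in tgt]
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sx = math.sqrt(sum((v - mx) ** 2 for v in x) / len(x))
    sy = math.sqrt(sum((v - my) ** 2 for v in y) / len(y))
    return (2 * sx * sy + c2) / (sx ** 2 + sy ** 2 + c2)


def mean_difference(ref: List[Pixel], tgt: List[Pixel]) -> float:
    """DV1 stand-in: mean absolute difference over all pixels and channels, in [0, 1]."""
    total = sum(abs(a - b) for p, q in zip(ref, tgt) for a, b in zip(p, q))
    return total / (3 * len(ref) * 255)


def max_channel_difference(ref: List[Pixel], tgt: List[Pixel]) -> float:
    """DV2 stand-in: the largest per-channel mean absolute difference, in [0, 1]."""
    per_channel = [sum(abs(p[k] - q[k]) for p, q in zip(ref, tgt)) / len(ref)
                   for k in range(3)]
    return max(per_channel) / 255


def fused_score(ref: List[Pixel], tgt: List[Pixel],
                weights: Tuple[float, float, float, float] = (0.5, 0.5, -0.25, -0.25)) -> float:
    """Linear fusion V = w0 + w1*CS + w2*DV1 + w3*DV2 (placeholder weights)."""
    w0, w1, w2, w3 = weights
    cs = contrast_similarity(ref, tgt)
    return (w0 + w1 * cs + w2 * mean_difference(ref, tgt)
            + w3 * max_channel_difference(ref, tgt))
```

With these placeholder weights, identical images score 1.0, and a uniform color shift leaves CS unchanged while DV1 and DV2 lower the score.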

Owner:FUZHOU UNIV

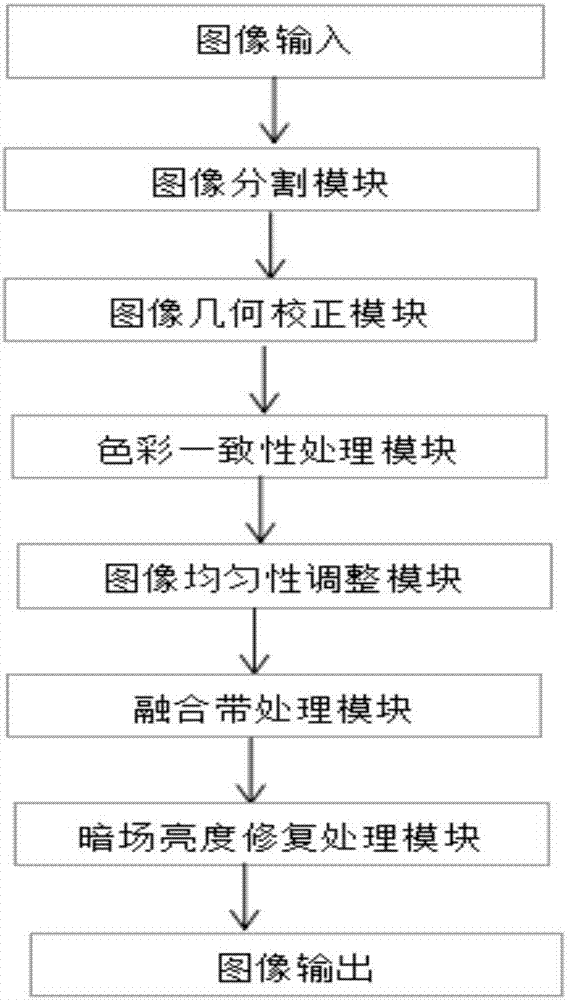

Projector image edge fusion system and method

Inactive | CN108012131A | Achieve integration | Realize seamless splicing | Picture reproducers using projection devices | Field conditions | Image segmentation

The invention discloses a projector image edge fusion system and method, relating to edge-fusion processing that merges multiple pictures in the field of projection devices. The system comprises: an image segmentation processing module, which divides images into equal parts; a color consistency processing module, which makes the colors of two or more images consistent; an image uniformity adjustment module, which adjusts the brightness and color uniformity of the images; a fusion band processing module, which performs image cutting and gradual brightness transitions on the fusion bands between two or more images; and a dark field brightness repair processing module, which, under dark field conditions, adjusts the brightness of the non-overlapping areas to match that of the overlapping area. In the disclosed system and method, the dark field brightness repair processing module resolves the dark field problem in fusion, and the fusion band processing module produces smooth brightness and color across the fusion band, further improving picture detail.
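The abstract does not give the ramp shape or the dark-field correction. A minimal sketch, assuming a simple projector model in which emitted light is `black_leak + (1 - black_leak) * signal**gamma`: the fusion band splits light linearly between two projectors and undoes the gamma to get signal gains, and the dark-field lift raises single-projector areas by one extra `black_leak` so they match the doubled black level in the overlap. The gamma of 2.2 and all names are illustrative assumptions.

```python
from typing import List, Tuple


def blend_ramps(overlap_px: int, gamma: float = 2.2) -> Tuple[List[float], List[float]]:
    """Signal multipliers for the left and right projector across the fusion band."""
    left, right = [], []
    for i in range(overlap_px):
        t = (i + 0.5) / overlap_px       # position across the band, 0 -> 1
        # Split the *light* linearly, then undo the projector gamma to get signal gains.
        left.append((1.0 - t) ** (1.0 / gamma))
        right.append(t ** (1.0 / gamma))
    return left, right


def dark_field_lift(black_leak: float, gamma: float = 2.2) -> float:
    """Signal level that brings a single-projector area up to the overlap's black level.

    Assumed model: light = black_leak + (1 - black_leak) * signal**gamma, so the
    overlap shows 2 * black_leak at black and the rest of the image needs an
    extra black_leak of light.
    """
    return (black_leak / (1.0 - black_leak)) ** (1.0 / gamma)
```

Blending in linear light rather than in signal values is what keeps the band from appearing as a darker stripe; a real system would also measure the gamma and black leak of each projector instead of assuming them.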

Owner:SICHUAN CHANGHONG ELECTRIC CO LTD

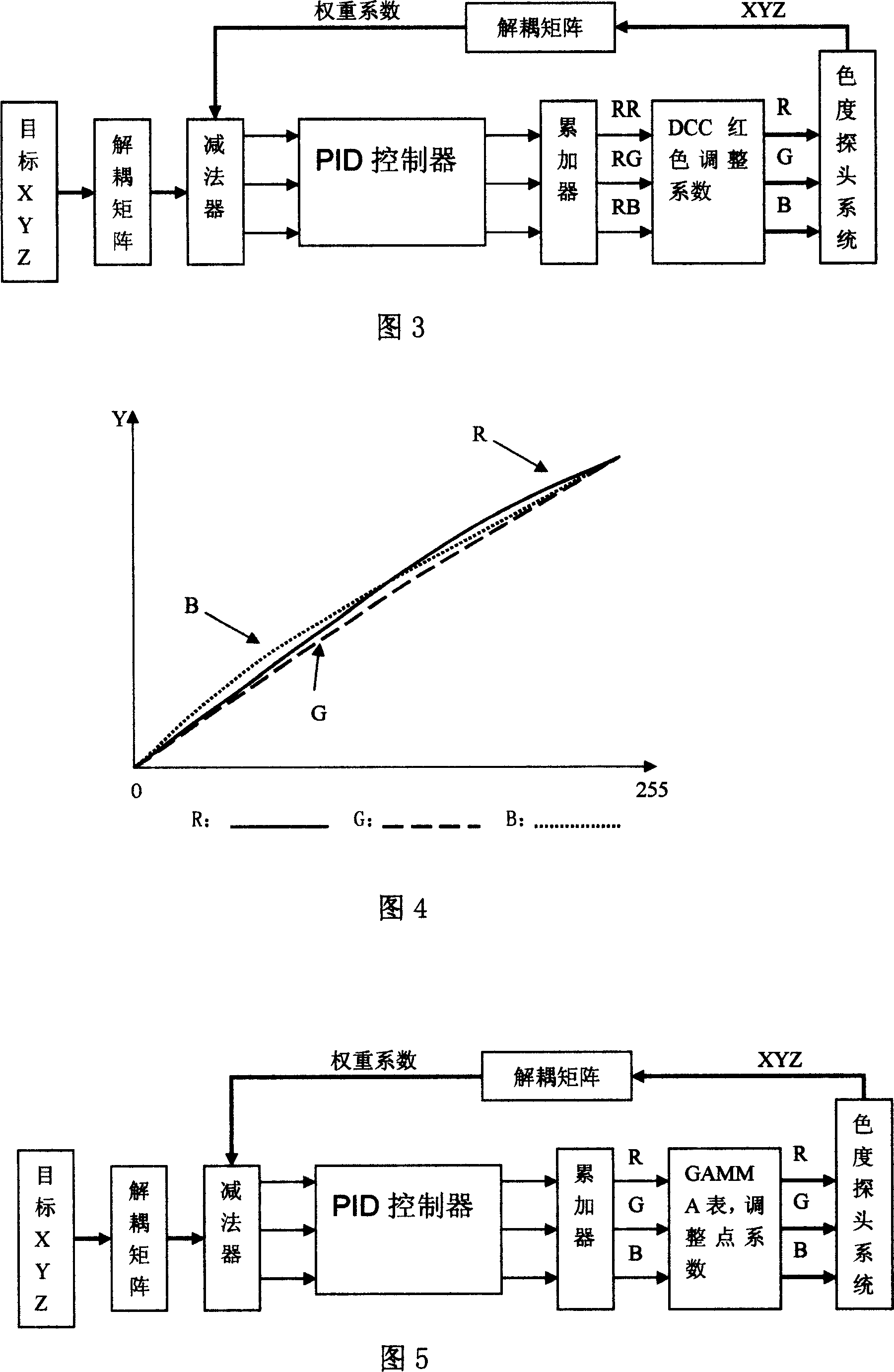

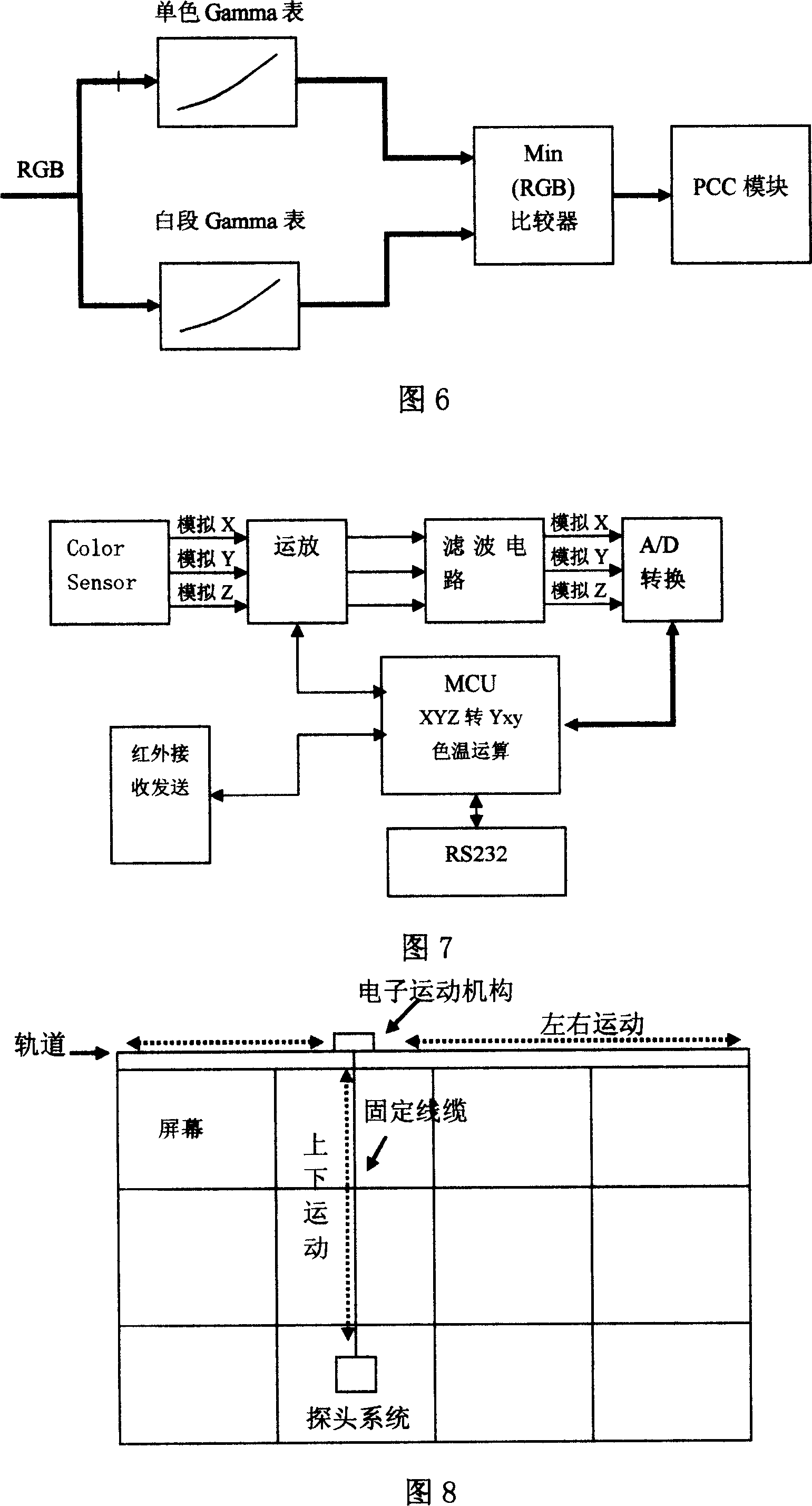

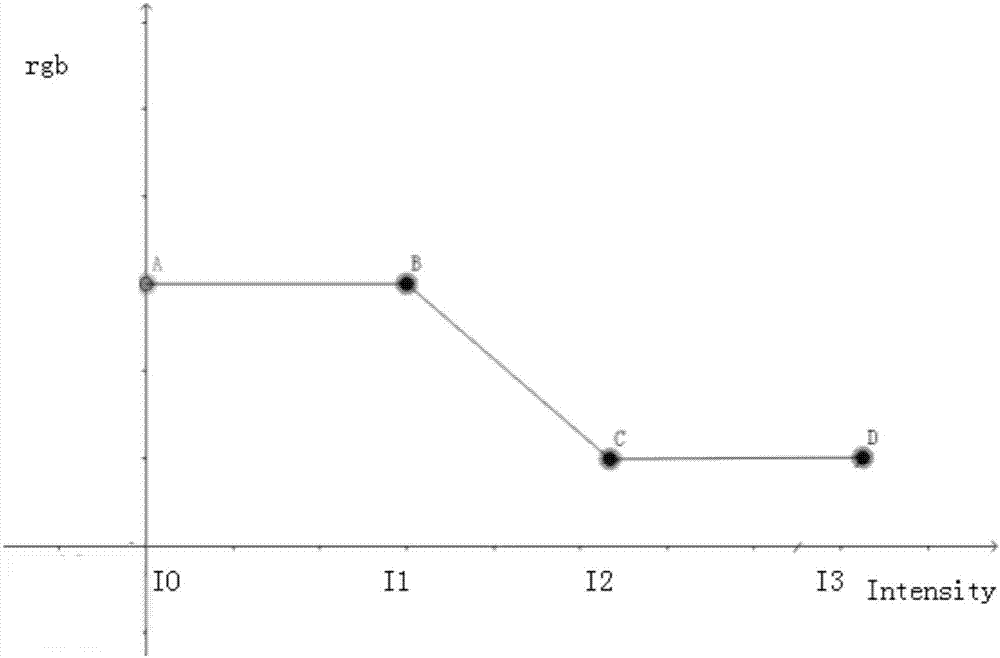

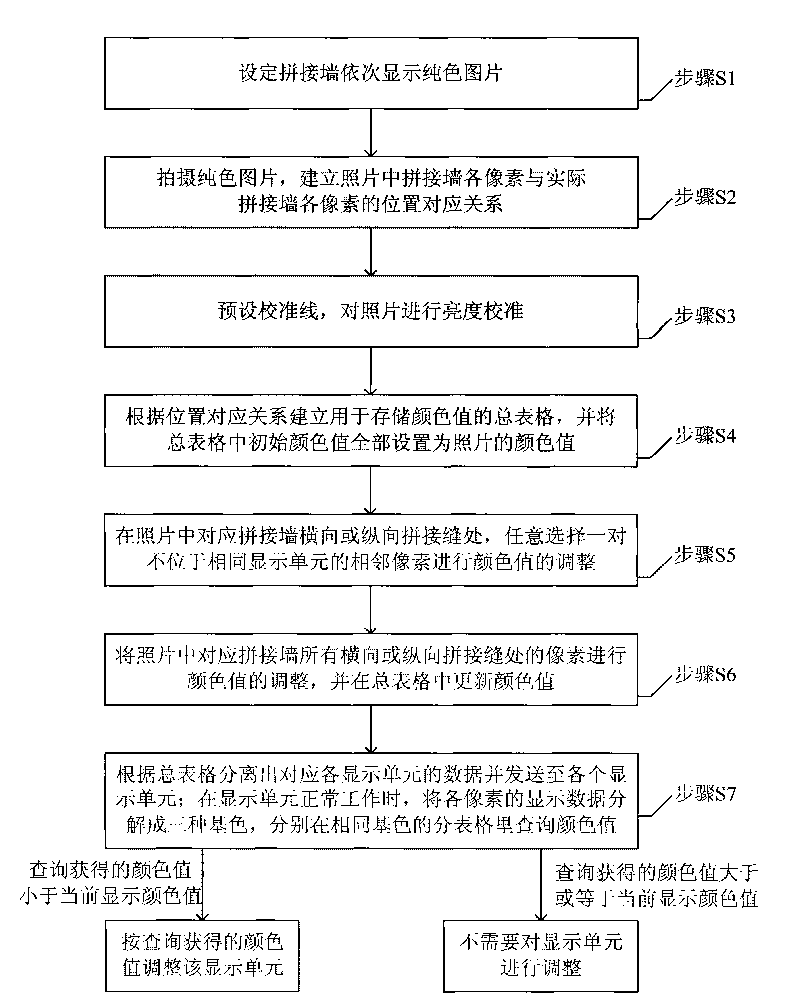

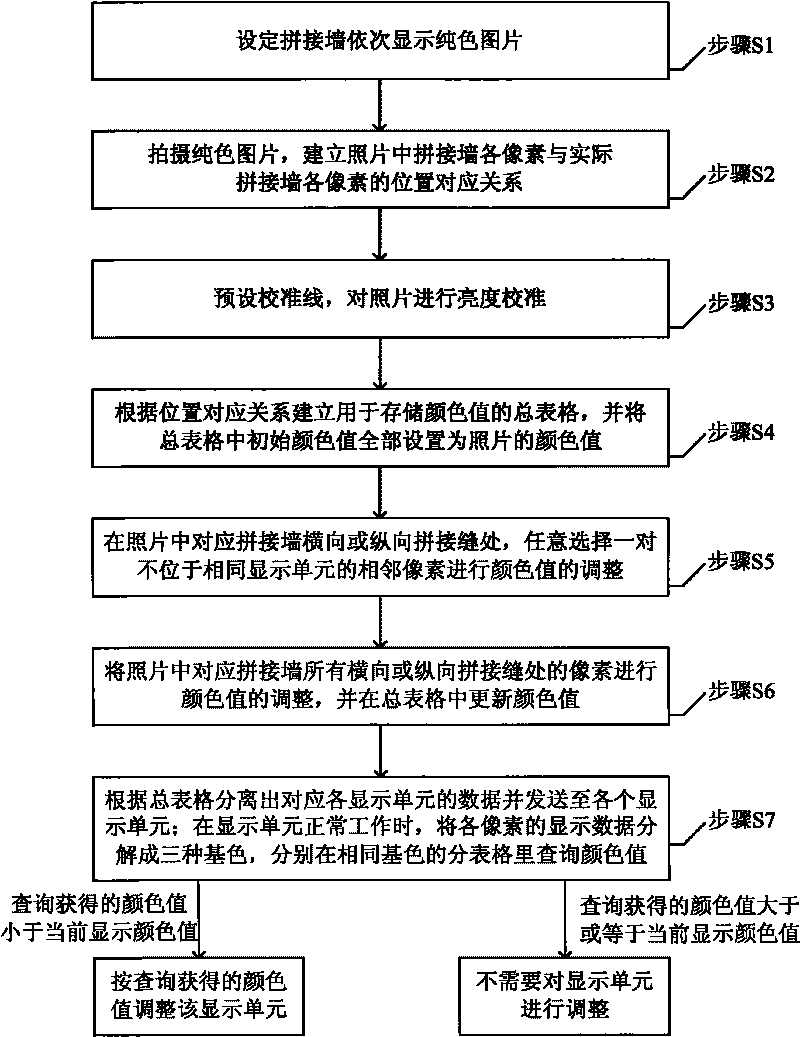

Colour calibration method of display unit

Active | CN101719362A | Achieve regulation | Achieve consistent results with color adjustments | Cathode-ray tube indicators | Color calibration | Color consistency

Owner:GUANGDONG VTRON TECH CO LTD

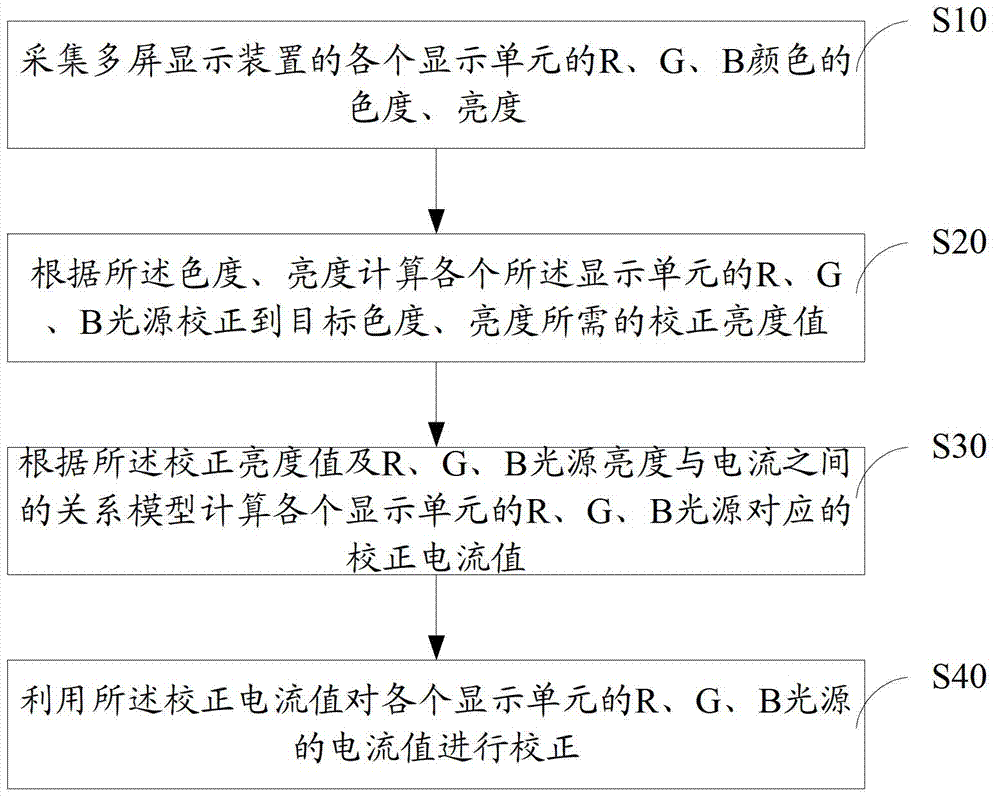

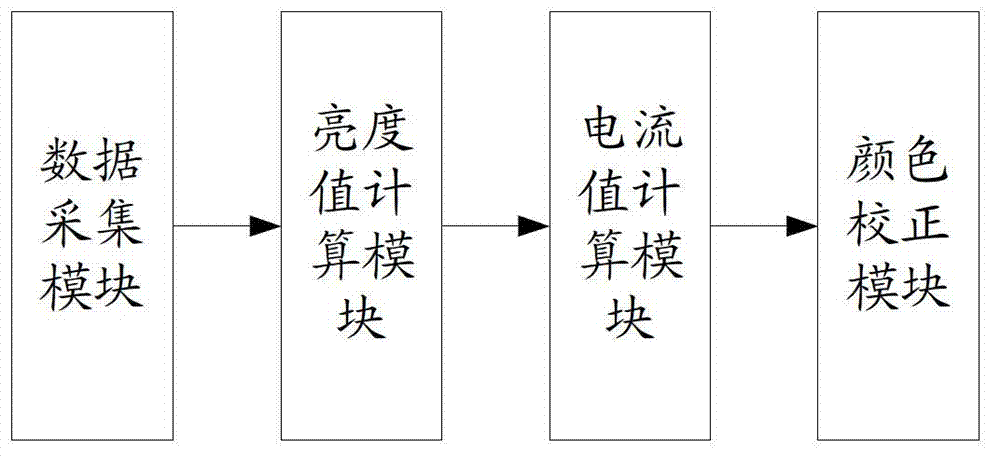

Color correction method and system for multi-screen display device

Inactive | CN103050109A | Color Correction Implementation | Maintain color hierarchy | Cathode-ray tube indicators | Relational model | Color correction

The invention discloses a color correction method and a color correction system for a multi-screen display device. The method comprises the following steps: acquiring the chromaticity and brightness of the red, green, and blue (R, G, and B) light sources of each display unit of the multi-screen display device; from these measurements, calculating the corrected brightness values that the R, G, and B light sources of each display unit require to reach the target chromaticity and brightness; calculating the corresponding corrected current values for the R, G, and B light sources of each display unit from the corrected brightness values and a relation model between light-source brightness and current; and setting the currents of the R, G, and B light sources of each display unit to the corrected values. After correction, the display units of the multi-screen display device show good color consistency; and compared with the traditional signal compression correction method, correcting the current values preserves the color gradation across display units and gives a better display effect.
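The brightness step can be illustrated with standard colorimetry: convert each measured primary and the target white from xyY to XYZ, then solve a 3x3 linear system for the scale factor of each primary. The sketch below does this for one display unit; the power-law brightness-current model `L = a * I**p` is a hypothetical stand-in for the patent's unspecified relation model, and all function names are illustrative.

```python
from typing import List, Sequence, Tuple

XyY = Tuple[float, float, float]  # chromaticity x, y and luminance Y


def xyy_to_xyz(x: float, y: float, Y: float) -> Tuple[float, float, float]:
    return (x * Y / y, Y, (1.0 - x - y) * Y / y)


def _det3(m: Sequence[Sequence[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m: Sequence[Sequence[float]], v: Sequence[float]) -> List[float]:
    """Solve m @ k = v with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("primaries are degenerate")
    return [_det3([[v[r] if c == col else m[r][c] for c in range(3)] for r in range(3)]) / d
            for col in range(3)]


def corrected_luminance(primaries: Sequence[XyY], target: XyY) -> List[float]:
    """Luminance each of R, G, B must emit so their sum hits the target white."""
    cols = [xyy_to_xyz(*p) for p in primaries]               # measured XYZ at full drive
    m = [[cols[c][r] for c in range(3)] for r in range(3)]   # primaries as matrix columns
    k = _solve3(m, xyy_to_xyz(*target))                      # drive fraction per primary
    if any(ki < 0 or ki > 1 for ki in k):
        raise ValueError("target is outside what this unit can reach")
    return [ki * p[2] for ki, p in zip(k, primaries)]


def current_for_luminance(lum: float, a: float, p: float) -> float:
    """Invert the hypothetical per-source model L = a * I**p."""
    return (lum / a) ** (1.0 / p)
```

For example, a unit whose primaries sit at the sRGB chromaticities with luminance 1.0 each, targeted at D65 with Y = 1.0, needs roughly 0.2126, 0.7152, and 0.0722 from R, G, and B; in a multi-screen wall the target would typically be chosen so that every unit can reach it.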

Owner:GUANGDONG VTRON TECH CO LTD

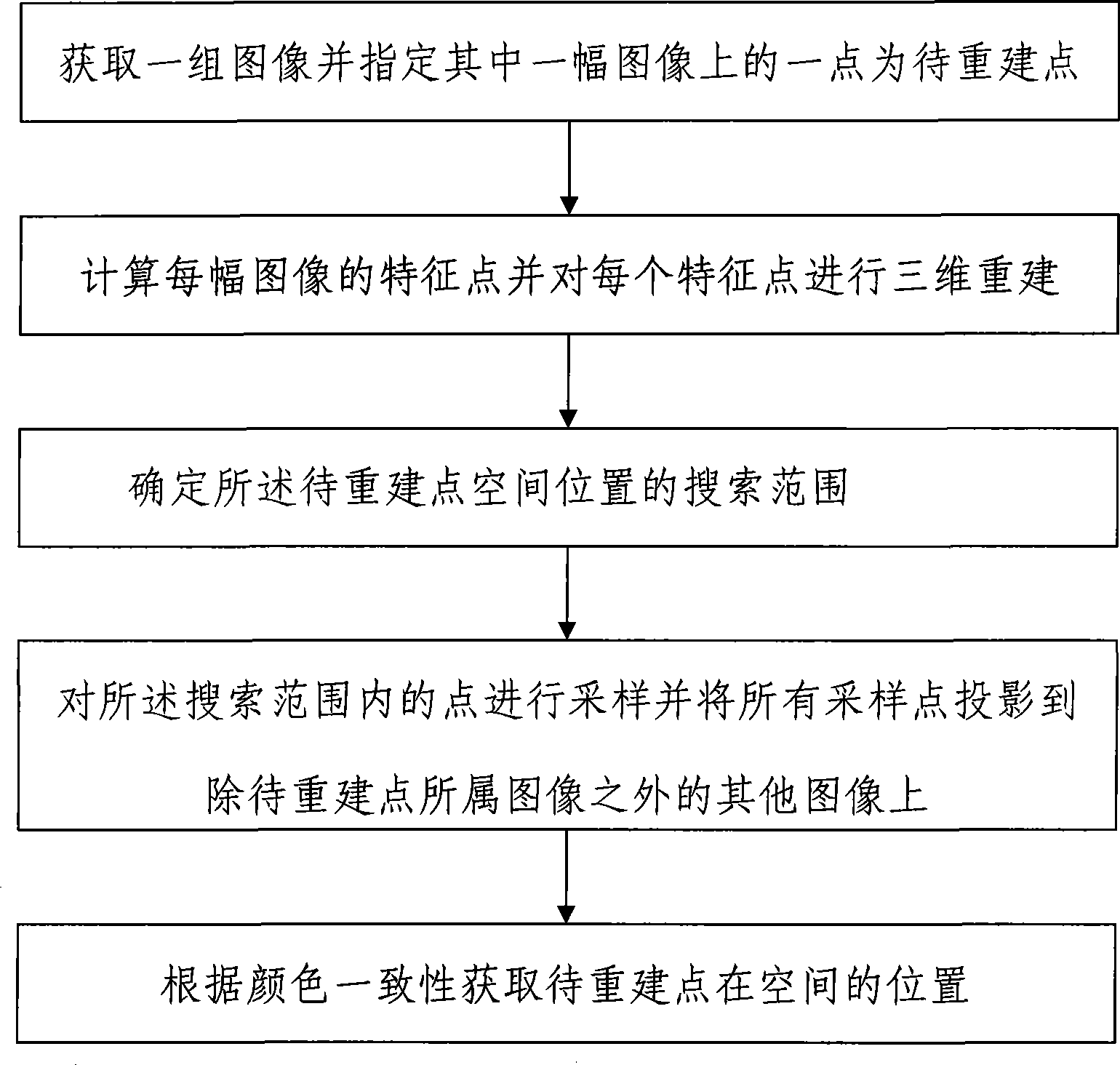

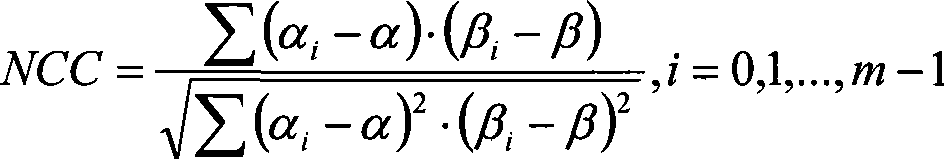

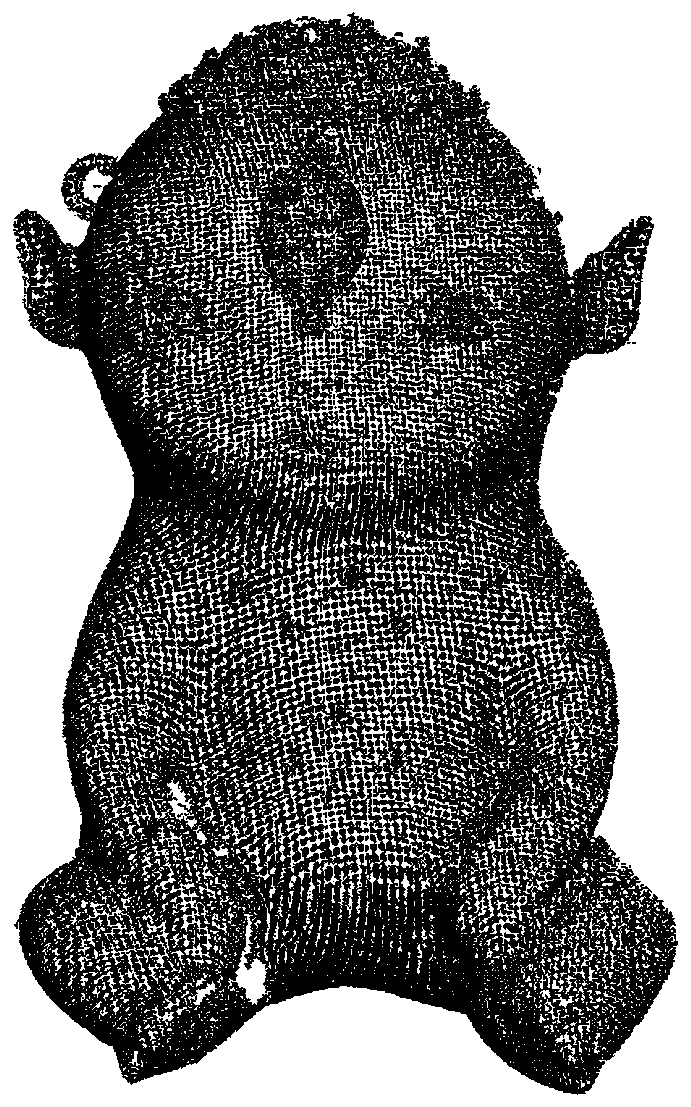

Three-dimensional reconstruction method on basis of image

Owner:PEKING UNIV

3D-RGB point cloud registration method based on local gray scale sequence model descriptor

Active | CN110490912A | Smooth interference | Improve registration accuracy | Image enhancement | Image analysis | Three dimensional measurement | Near neighbor

The invention belongs to the technical fields of computer vision, image processing, and three-dimensional measurement, and relates in particular to a 3D-RGB point cloud registration method based on a local gray scale sequence model descriptor. The method comprises the following steps: 1, calculating the four-neighborhood gray average of each point in the two point clouds; 2, dividing the neighboring points of each key point into six parts according to their gray values, and concatenating the feature vectors of the six parts to form the key point feature descriptor; 3, establishing point-to-point correspondences between the source point cloud and the target point cloud using a nearest neighbor ratio method and a Euclidean distance threshold, and removing incorrect correspondences using random sample consensus (RANSAC) and color consistency; and 4, solving the transformation matrix between the source point cloud and the target point cloud from the correspondences, and applying the spatial transformation to the source point cloud to complete the registration. The method effectively reduces the influence of weak geometric information and light intensity changes on point cloud registration, has a wider range of application, and improves the precision and robustness of three-dimensional point cloud registration.
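Step 3 can be sketched as a brute-force matcher: for each source descriptor, find the nearest and second-nearest target descriptors, keep the pair only if it passes an absolute Euclidean distance threshold and the nearest neighbor ratio test, then reject pairs whose gray values disagree. The descriptor format, the threshold values, and the function names are hypothetical; RANSAC filtering is omitted.

```python
import math
from typing import List, Sequence, Tuple


def gray(rgb: Sequence[float]) -> float:
    """Gray value from RGB (ITU-R BT.601 weights)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def match_keypoints(src_desc: Sequence[Sequence[float]], tgt_desc: Sequence[Sequence[float]],
                    src_gray: Sequence[float], tgt_gray: Sequence[float],
                    ratio: float = 0.8, max_dist: float = 0.5,
                    gray_tol: float = 20.0) -> List[Tuple[int, int]]:
    """Return (source index, target index) pairs that survive all three checks."""
    if len(tgt_desc) < 2:
        return []                     # the ratio test needs a second-nearest neighbor
    matches = []
    for i, d in enumerate(src_desc):
        # Rank every target descriptor by Euclidean distance (brute force).
        ranked = sorted((math.dist(d, t), j) for j, t in enumerate(tgt_desc))
        (d1, j1), (d2, _) = ranked[0], ranked[1]
        if d1 > max_dist:             # absolute Euclidean distance threshold
            continue
        if d1 >= ratio * d2:          # nearest neighbor ratio test
            continue
        if abs(src_gray[i] - tgt_gray[j1]) > gray_tol:   # color consistency check
            continue
        matches.append((i, j1))
    return matches
```

The surviving pairs would then go through RANSAC, and step 4 could be solved with a least-squares rigid fit such as the SVD-based Kabsch method; that is one common choice, and the abstract does not say which solver is used.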

Owner:HARBIN ENG UNIV


© 2025 PatSnap. All rights reserved.