168 results about "Pixel value difference" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

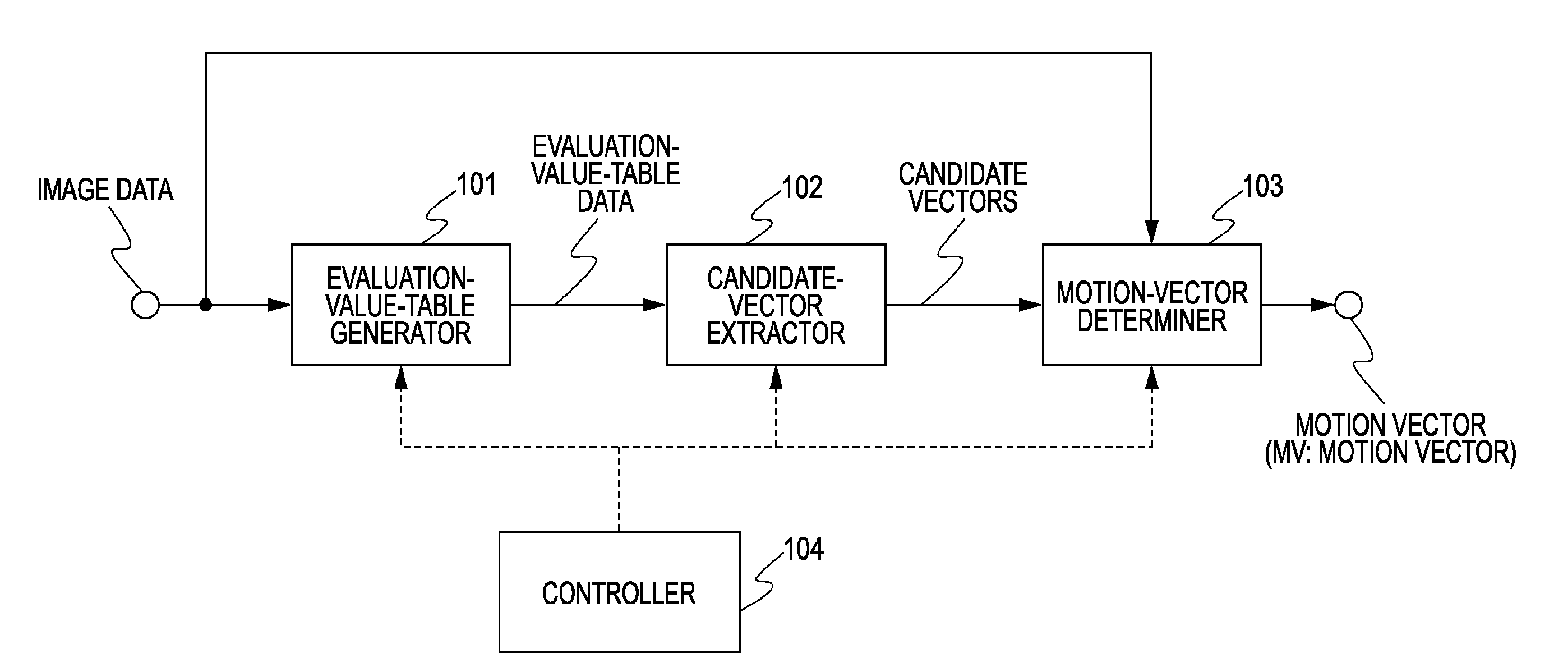

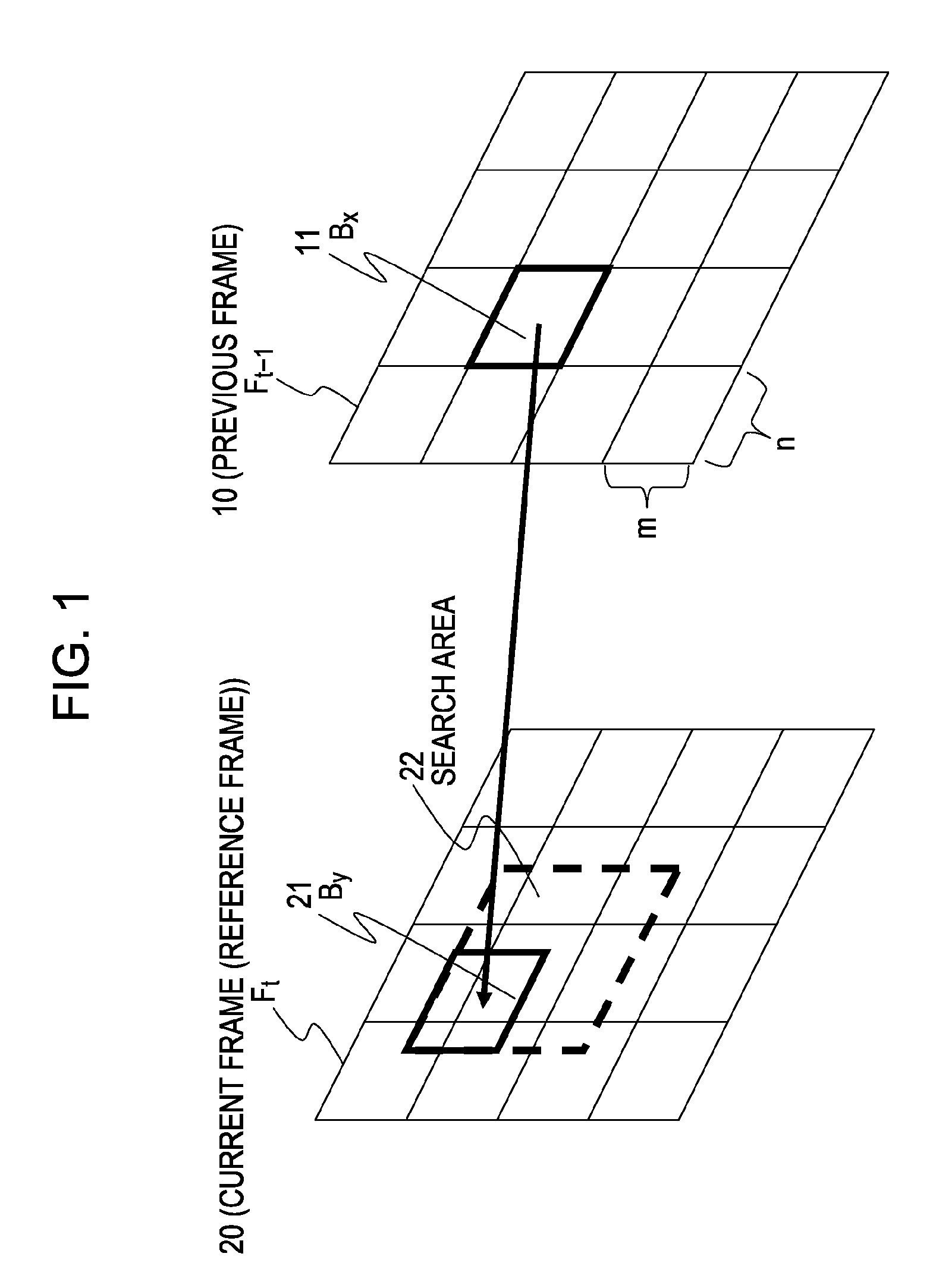

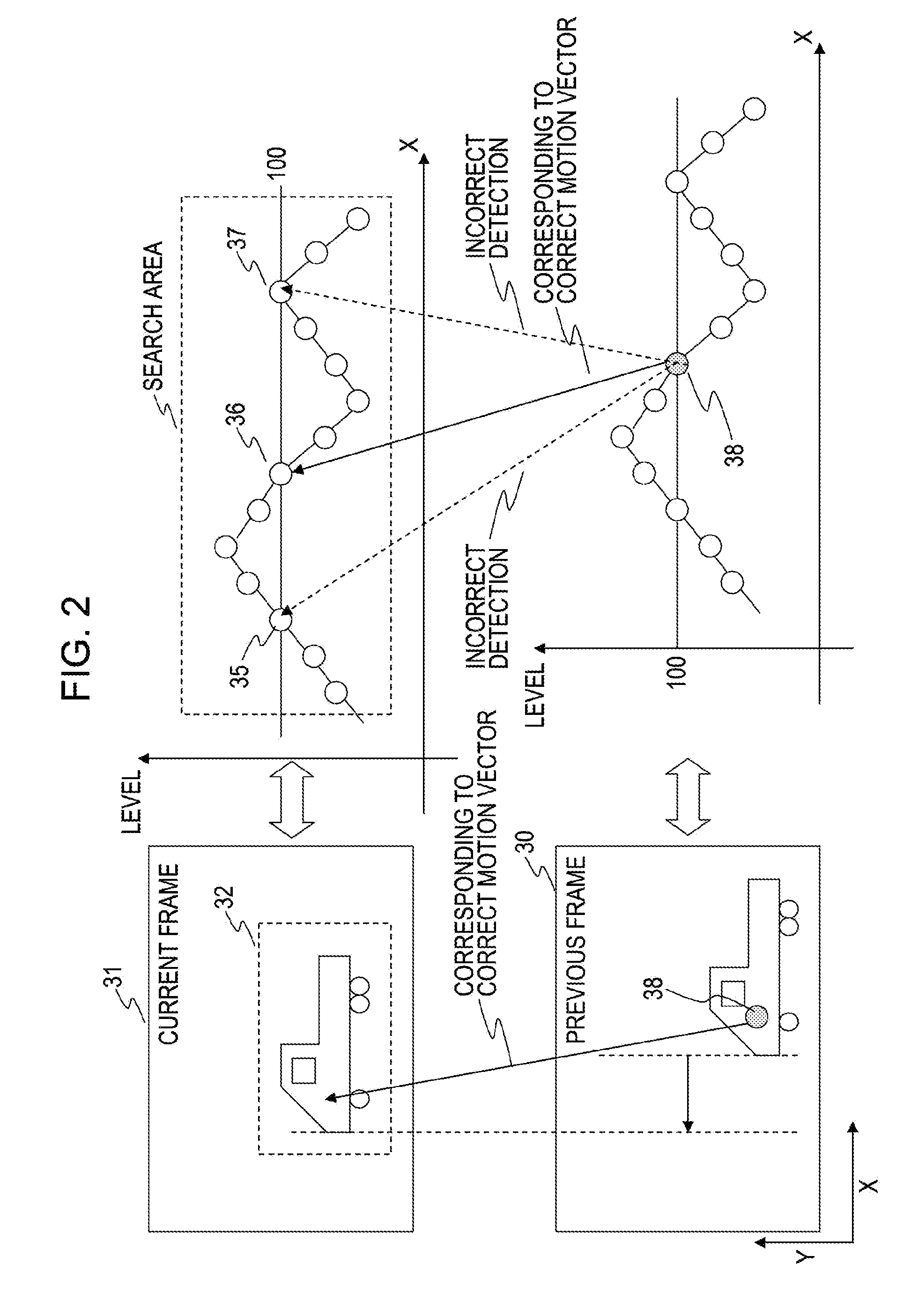

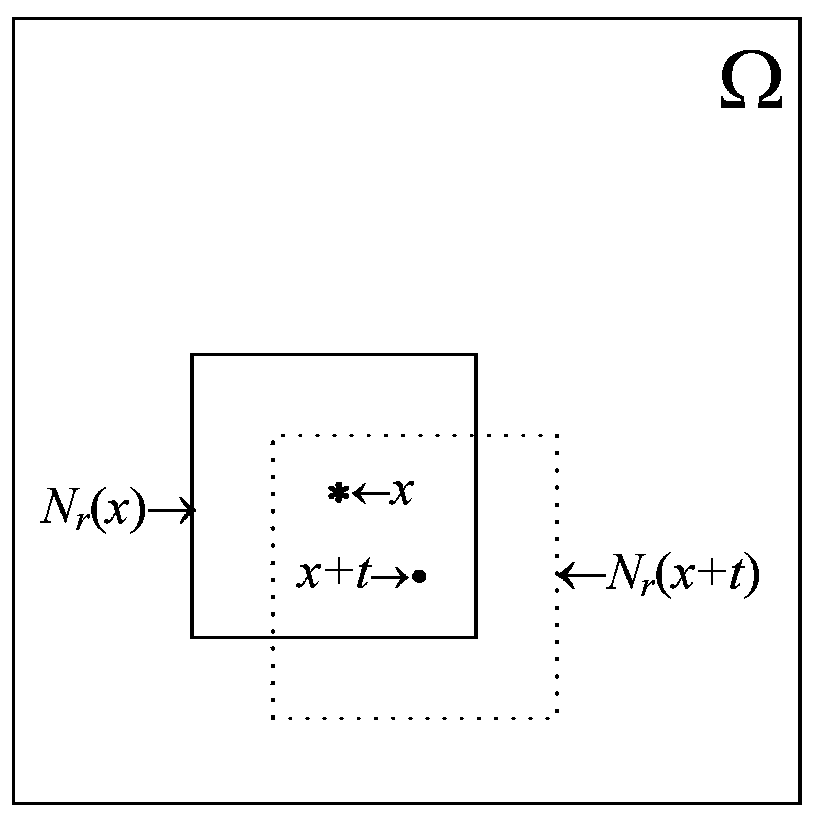

Motion-vector detecting device, motion-vector detecting method, and computer program

Inactive | US20060285596A1 | Accurate evaluation-value table | Accurate motion detection | Color television with pulse code modulation | Image analysis | Pattern recognition | Relevant information

A device and method that serve to generate an accurate evaluation-value table and to detect a correct motion vector are provided. A weight coefficient W is calculated on the basis of correlation information of a representative-point pixel and flag-correlation information based on flag data corresponding to pixel-value difference data between a subject pixel and a pixel in a neighboring region of the subject pixel. A confidence index is generated on the basis of the weight coefficient W calculated and an activity A as an index of complexity of image data, or a confidence index is generated on the basis of similarity of motion between the representative point and a pixel neighboring the representative point, and an evaluation value corresponding to the confidence index is accumulated to generate an evaluation-value table. Furthermore, correlation is checked on the basis of positions or pixel values of feature pixels neighboring the subject pixel to determine a motion vector.

Owner:SONY CORP

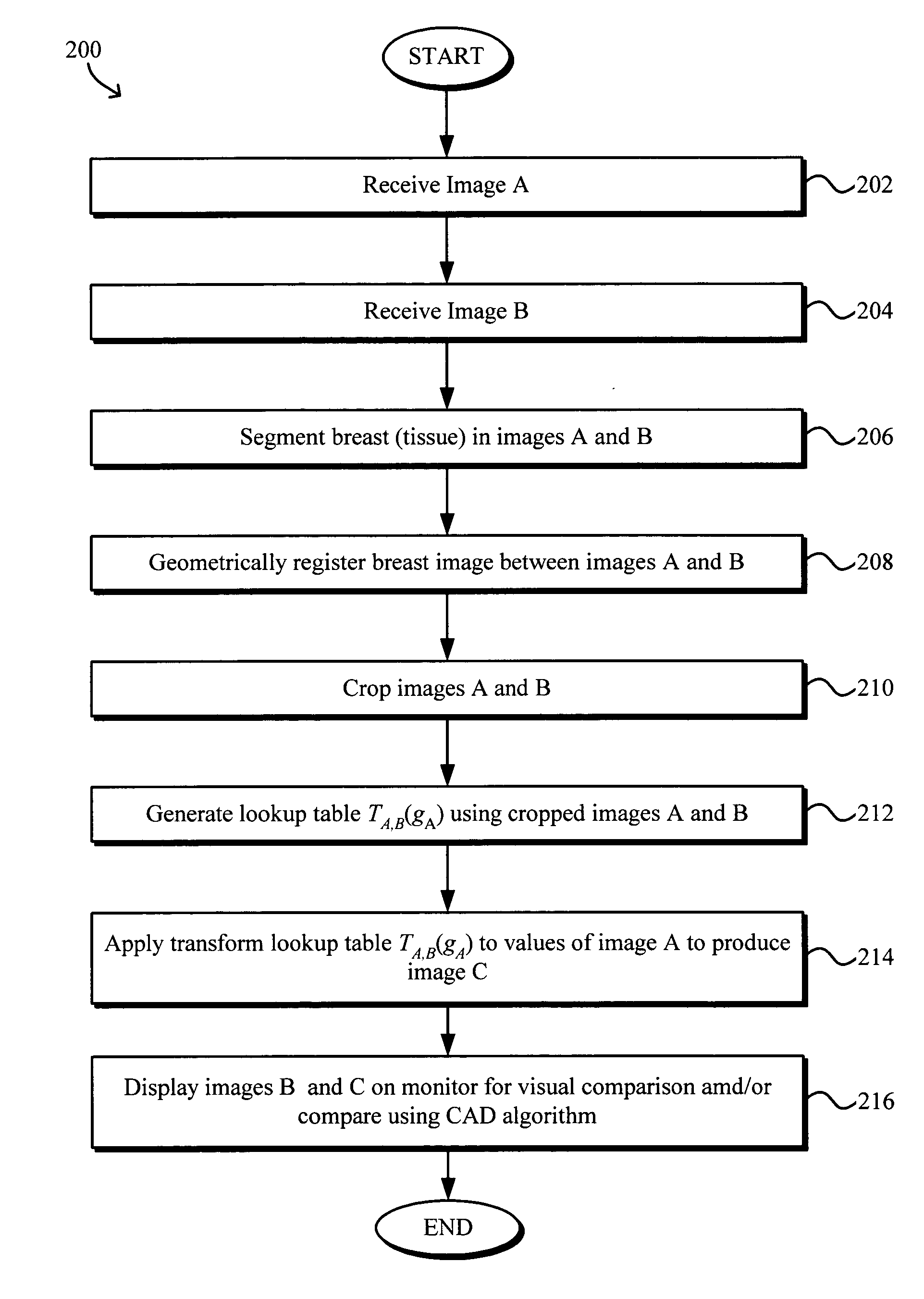

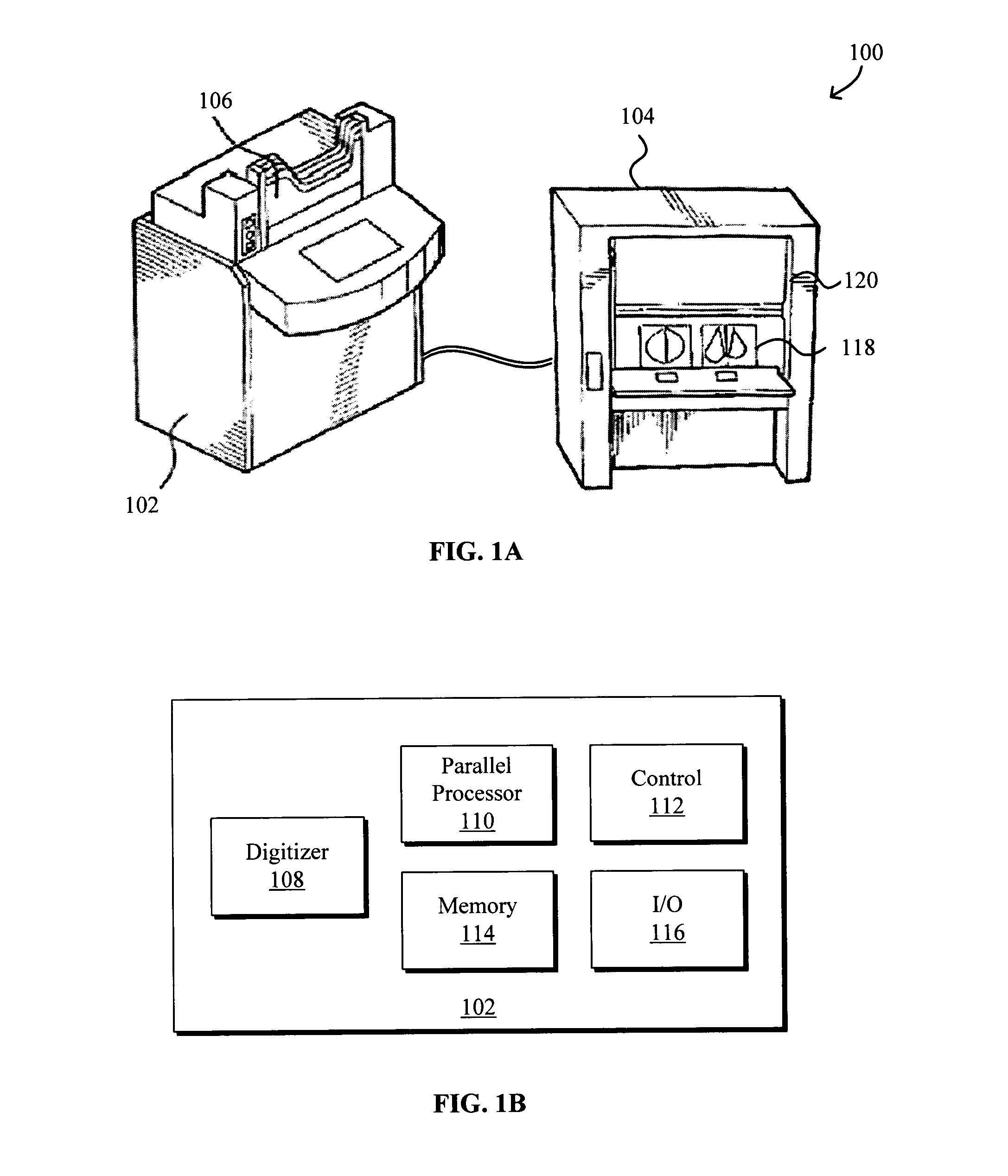

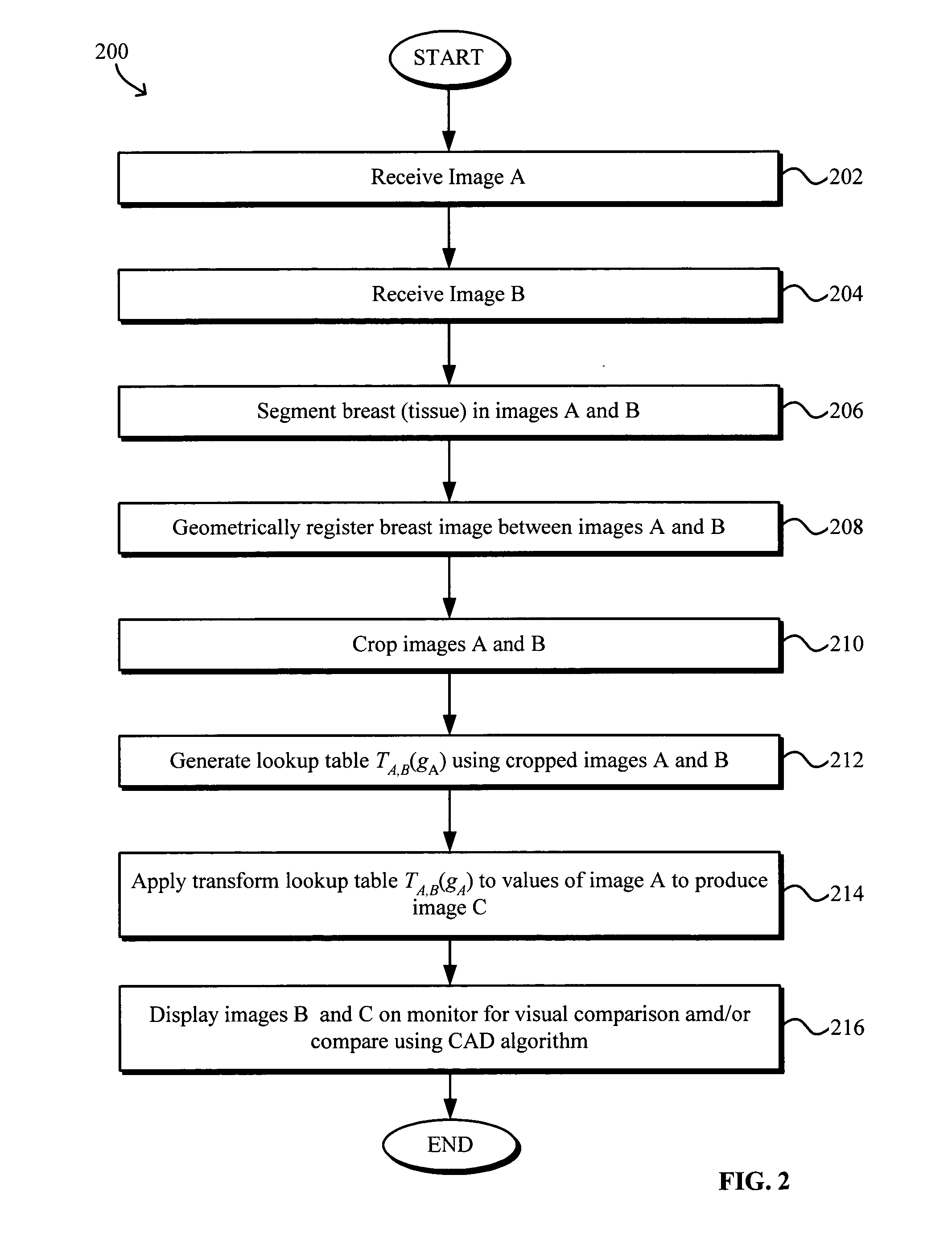

Model-based grayscale registration of medical images

Active | US20050013471A1 | Increase speed | Improve reliability | Image enhancement | Image analysis | Pixel value difference | Visual perception

Numerical image processing of two or more medical images to provide grayscale registration thereof is described, the numerical image processing algorithms being based at least in part on a model of medical image acquisition. The grayscale registered temporal images may then be displayed for visual comparison by a clinician and/or further processed by a computer-aided diagnosis (CAD) system for detection of medical abnormalities therein. A parametric method includes spatially registering two images and performing gray scale registration of the images. A parametric transform model, e.g., analog to analog, digital to digital, analog to digital, or digital to analog model, is selected based on the image acquisition method(s) of the images, i.e., digital or analog/film. Gray scale registration involves generating a joint pixel value histogram from the two images, statistically fitting parameters of the transform model to the joint histogram, generating a lookup table, and using the lookup table to transform and register pixel values of one image to the pixel values of the other image. The models take into account the most relevant image acquisition parameters that influence pixel value differences between images, e.g., tissue compression, incident radiation intensity, exposure time, film and digitizer characteristic curves for analog images, and digital detector response for digital images. The method facilitates temporal comparisons of medical images such as mammograms and/or comparisons of analog with digital images.

Owner:HOLOGIC INC

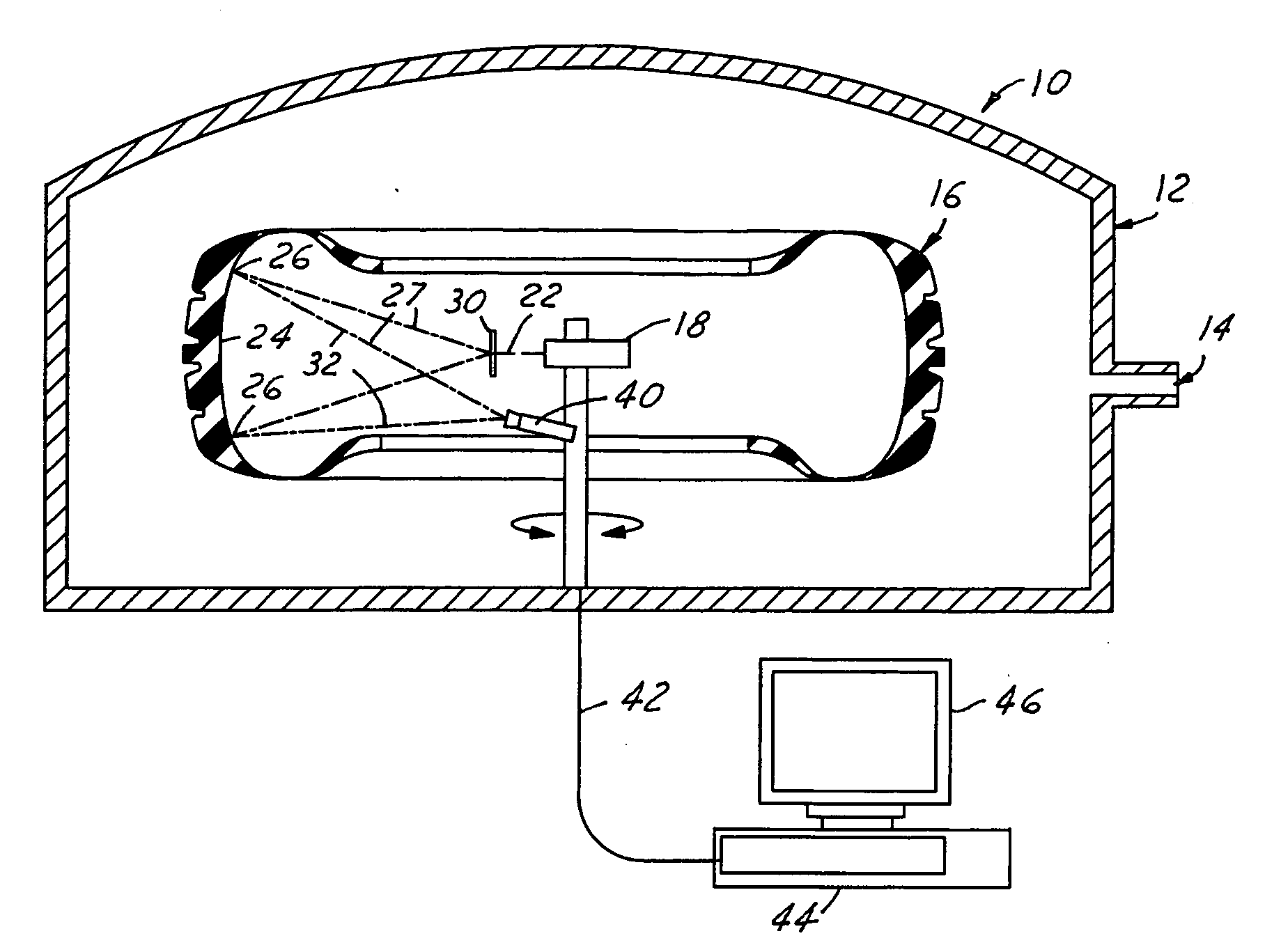

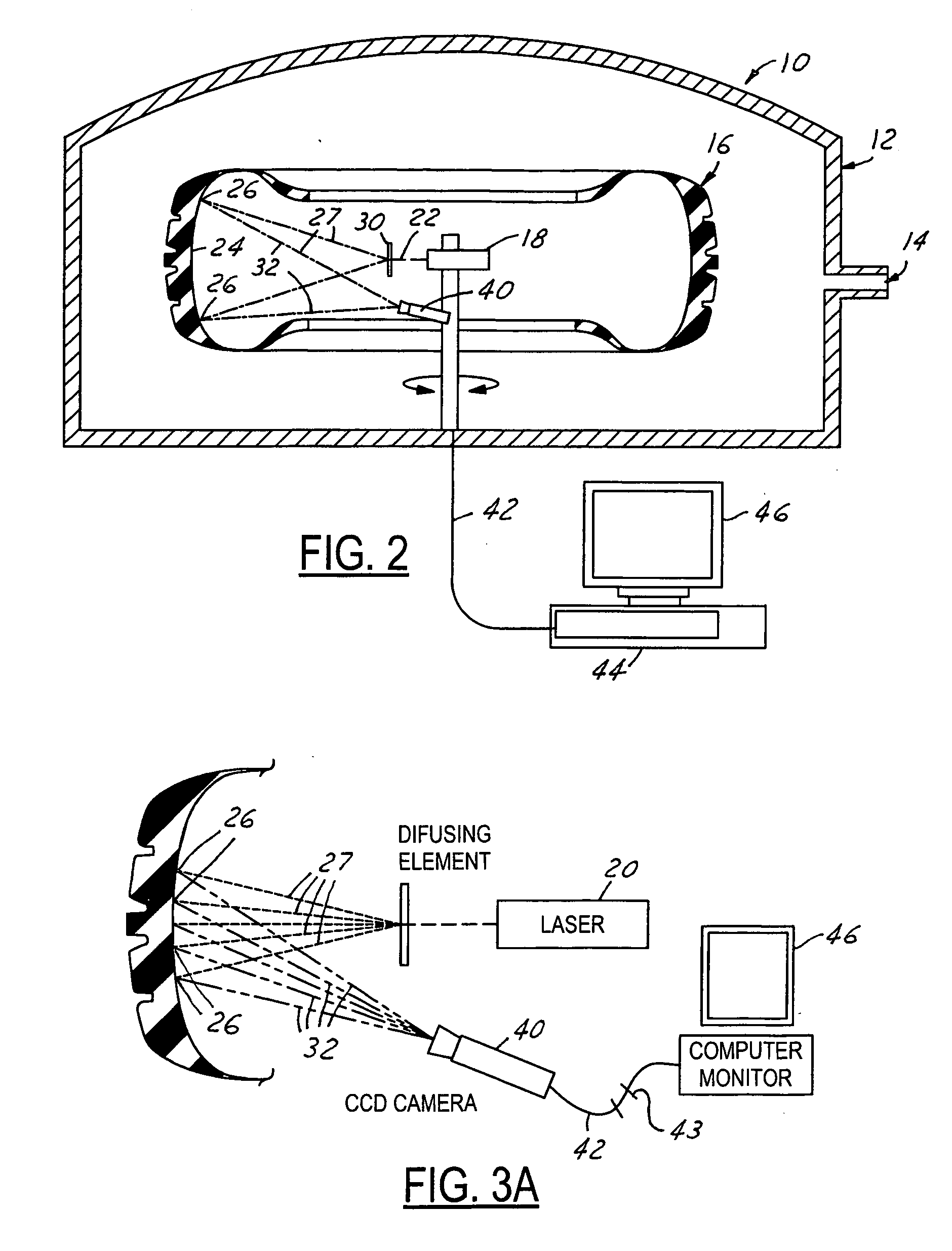

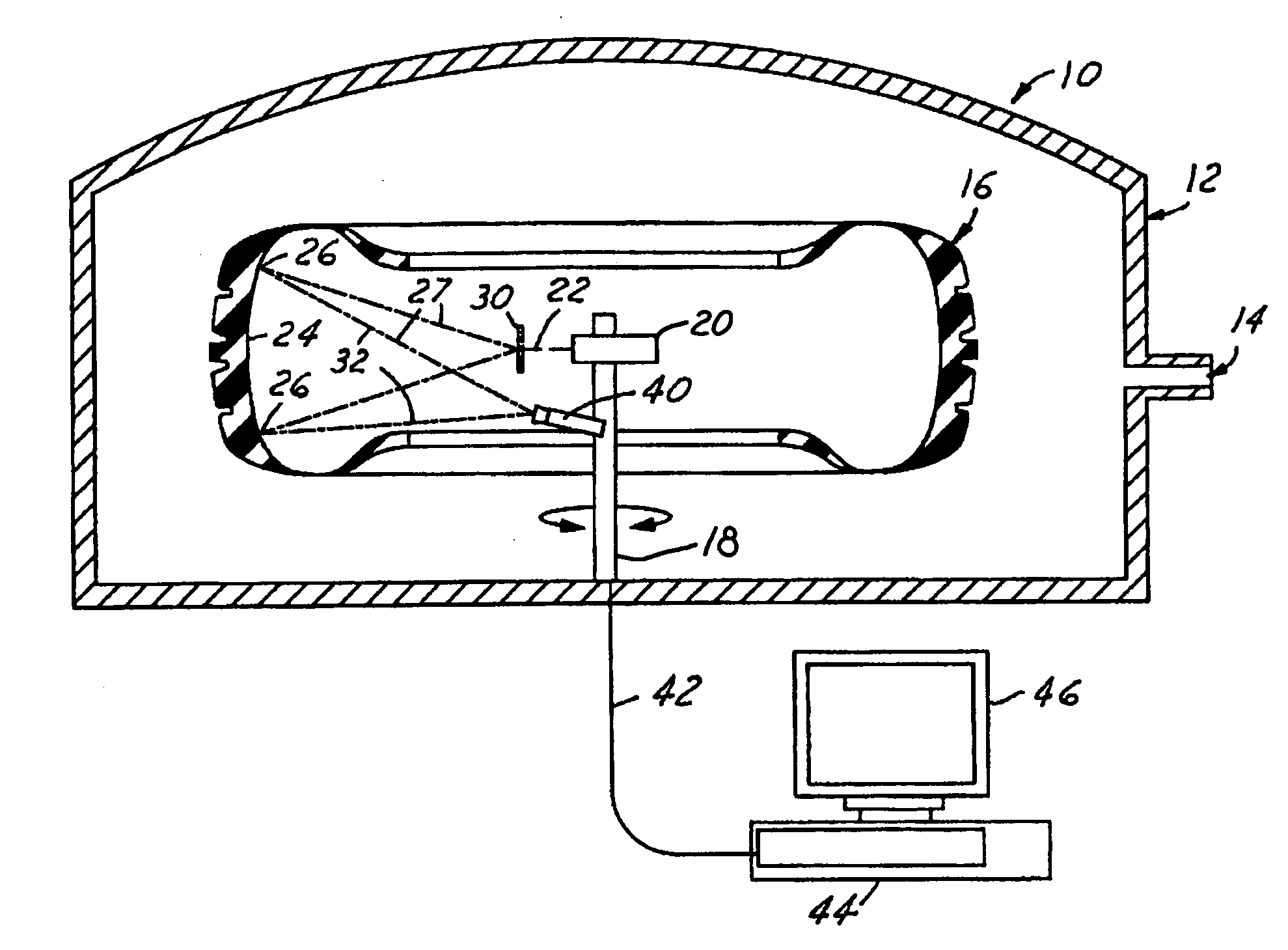

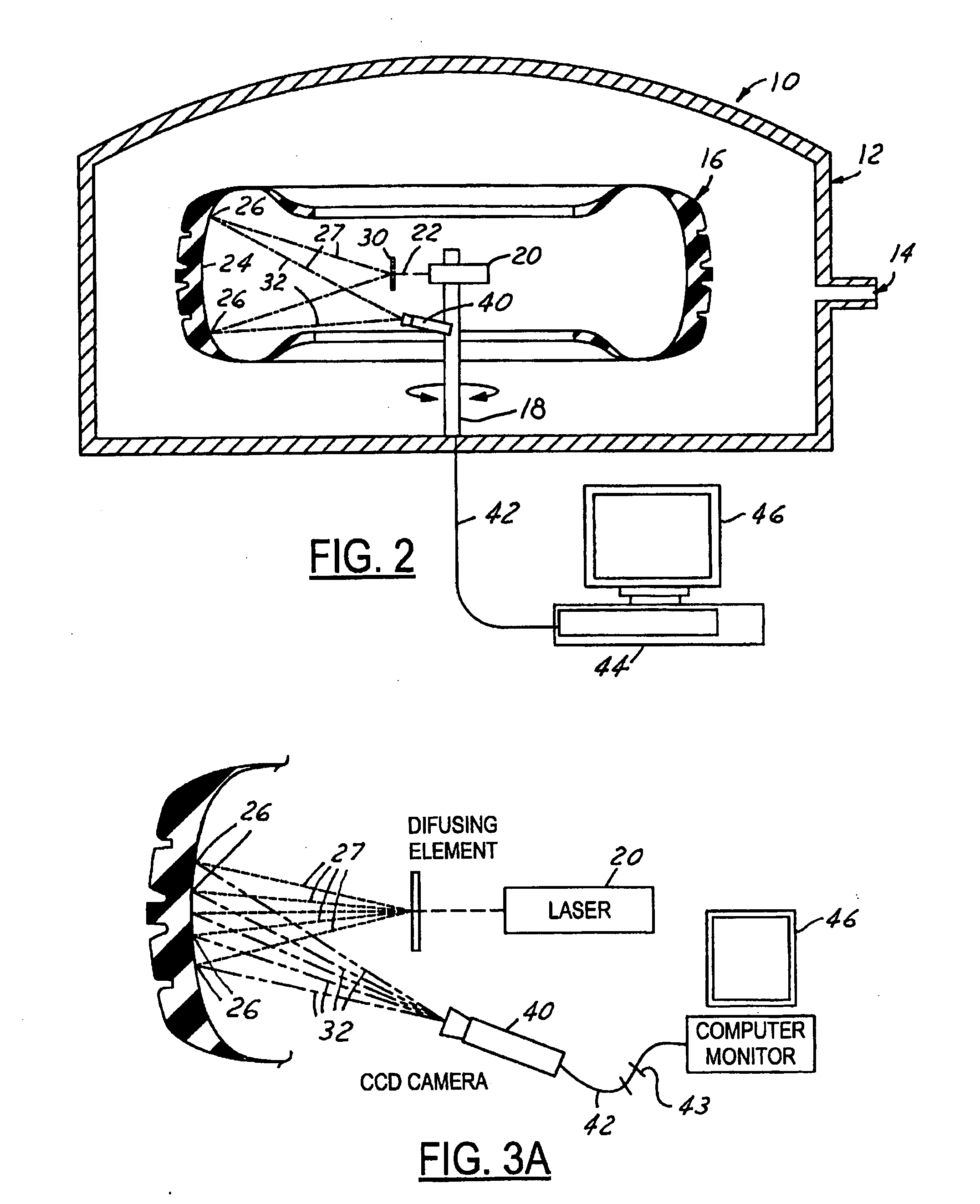

Non-destructive testing and imaging

Inactive | US20050264796A1 | Optically investigating flaws/contamination | Using optical means | Non destructive | Pixel value difference

A method of non-destructive testing includes non-destructively testing an object over a range of test levels, directing coherent light onto the object, directly receiving the coherent light substantially as reflected straight from the object, and capturing the reflected coherent light over the range of test levels as a plurality of digital images of the object. The method also includes calculating differences between pixel values of a plurality of pairs of digital images of the plurality of digital images, and adding the pixel value differences of the plurality of pairs of digital images to yield at least one cumulative differential image.

Owner:RAVEN ENG
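
The accumulation step in this abstract can be sketched in a few lines. This is a minimal illustration, not the patented procedure: it assumes the image pairs are consecutive captures, that each difference is taken as an absolute value, and that images are plain nested lists of integers. The name `cumulative_differential_image` is hypothetical.

```python
from typing import List, Sequence

Image = List[List[int]]


def cumulative_differential_image(images: Sequence[Image]) -> Image:
    """Add up per-pixel absolute differences over consecutive image pairs.

    Assumptions (not stated in the abstract): pairs are consecutive
    captures, and each difference is taken as an absolute value.
    """
    if len(images) < 2:
        raise ValueError("need at least two images")
    rows, cols = len(images[0]), len(images[0][0])
    total = [[0] * cols for _ in range(rows)]
    for prev, curr in zip(images, images[1:]):
        for r in range(rows):
            for c in range(cols):
                total[r][c] += abs(curr[r][c] - prev[r][c])
    return total


# Three tiny 2x2 captures taken at increasing test levels.
captures = [
    [[0, 0], [0, 0]],
    [[1, 0], [0, 2]],
    [[3, 0], [0, 2]],
]
print(cumulative_differential_image(captures))
```

Pixels that keep changing from one test level to the next accumulate large values, which is why a summed differential image can make a localized response stand out.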

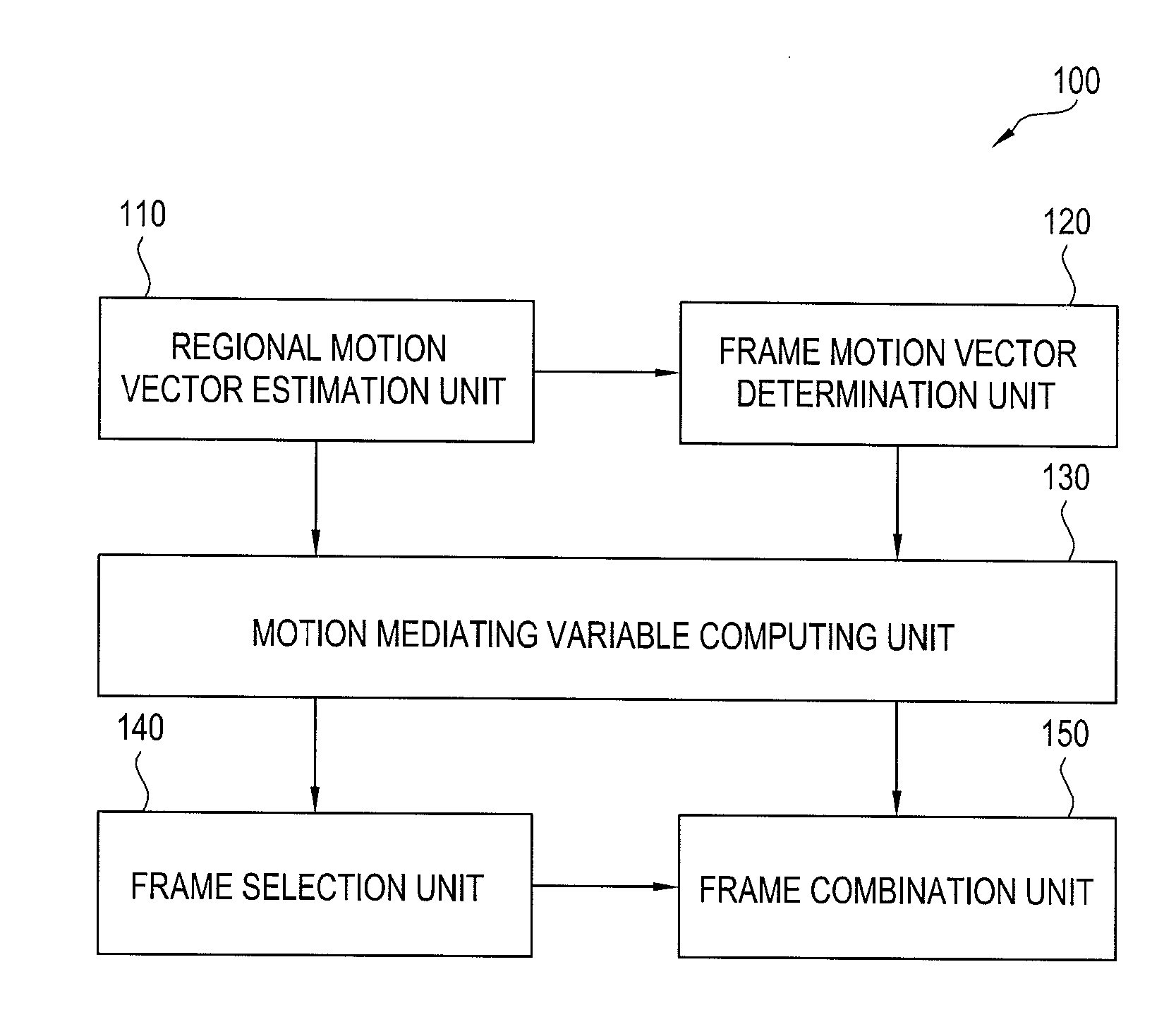

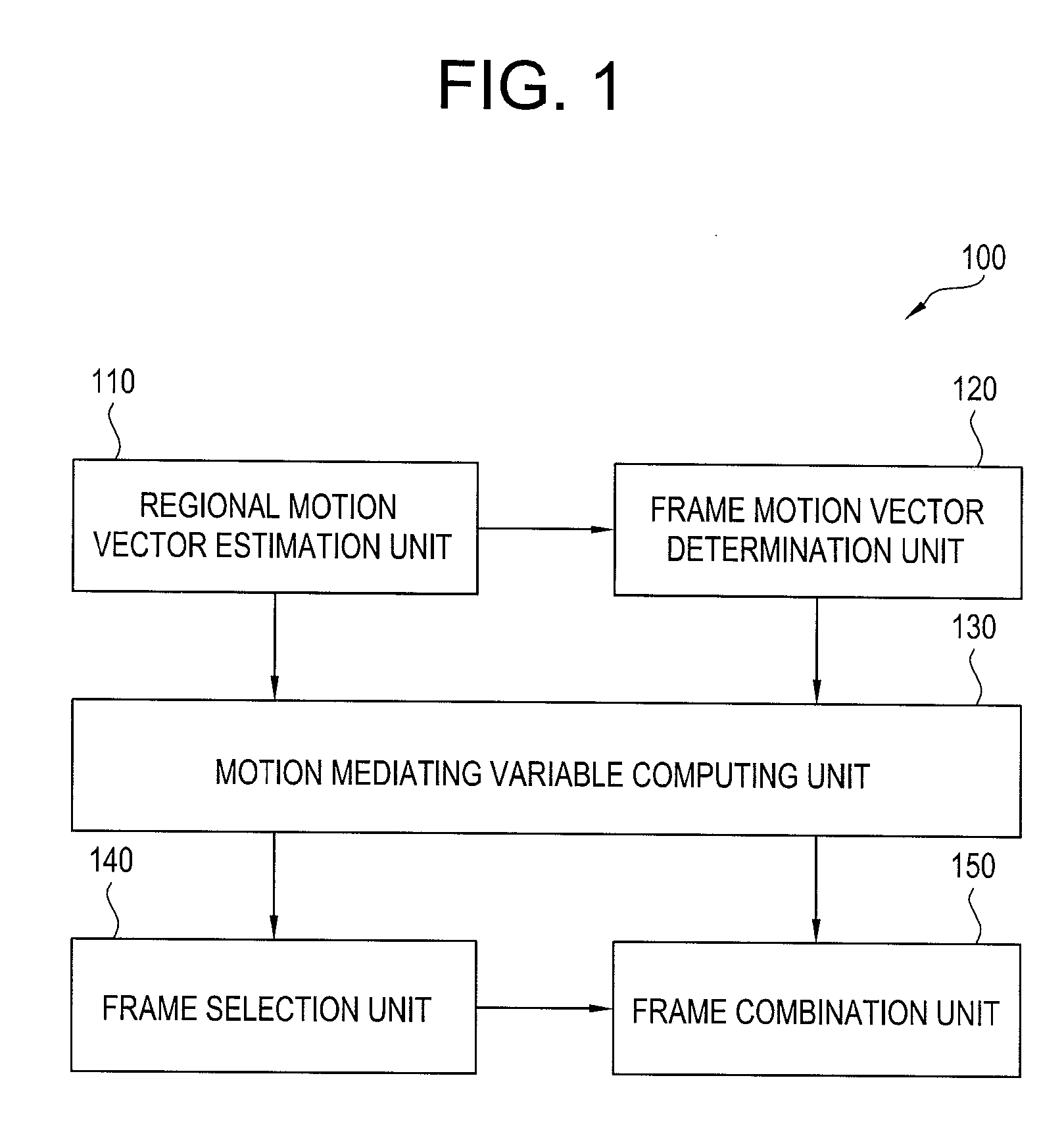

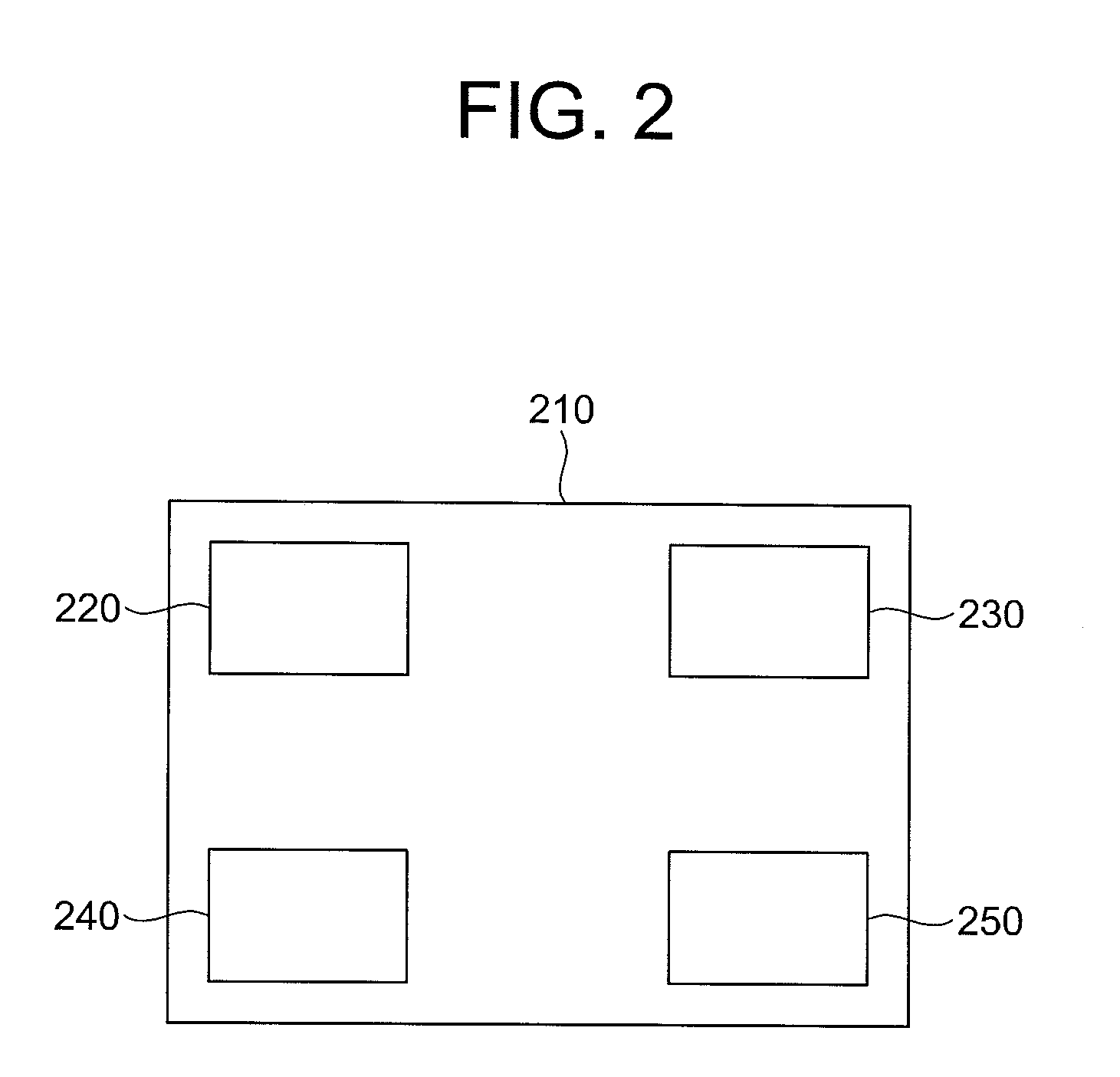

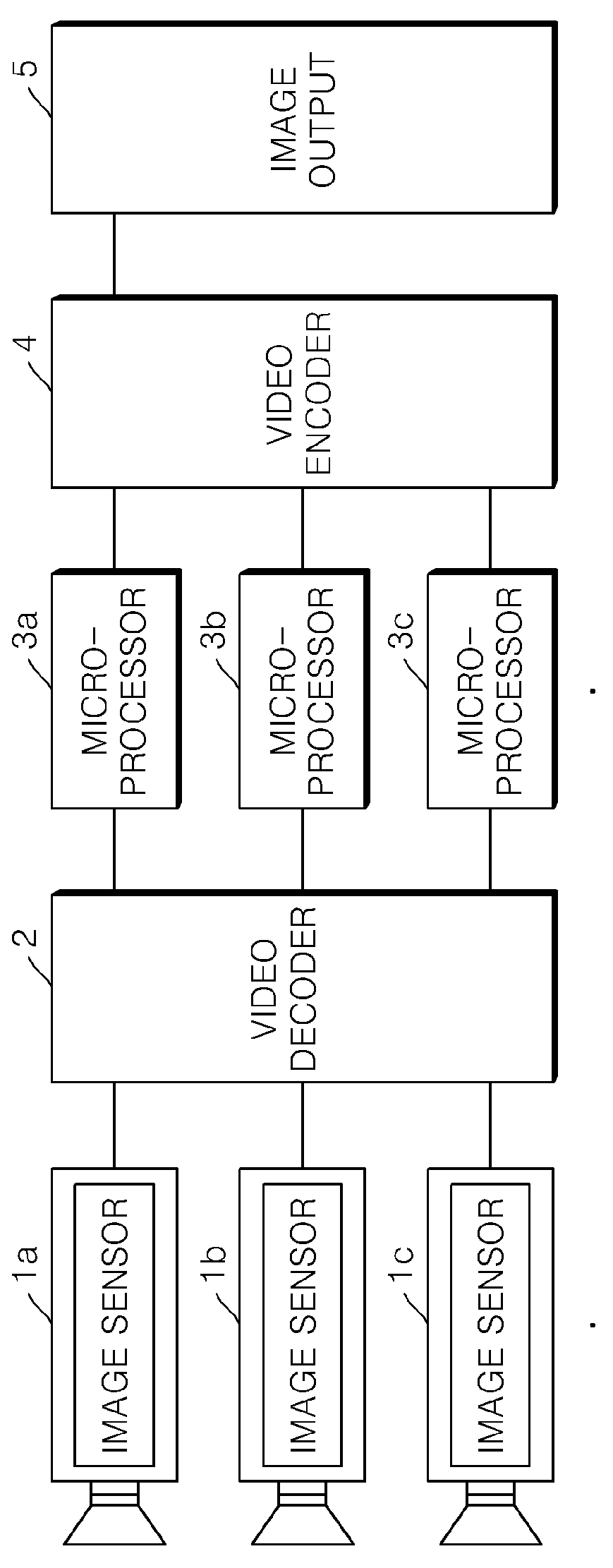

Apparatus and method for generating panorama images and apparatus and method for object-tracking using the same

Inactive | US20090231447A1 | Simple calculation | Minimize impact | Television system details | Image analysis | Pixel value difference | Motion vector

Provided are an apparatus and method for generating panorama images and an apparatus and method for tracking an object using the same. The apparatus for generating panorama images estimates regional motion vectors with respect to respective lower regions set in an image frame, determines a frame motion vector by using the regional motion vectors based on a codebook storing sets of normalized regional motion vectors corresponding to a camera's motion, then accumulates motion mediating variables computed with respect to respective continuous image frames to determine an overlapping region, and matches the overlapping regions to generate a panorama image. The apparatus for tracking an object sets the panorama image generated by the apparatus for generating panorama images as a background image, and determines an object region by averaging pixel value differences between pixels that constitute a captured input image and corresponding pixels in the panorama image. Regional motion vectors are estimated by using the coordinates of a pixel selected in the lower regions, so computation is simplified and processing speed is increased. In addition, the effects of wrongly estimated regional motion vectors can be minimized by using a codebook. Furthermore, noise is minimized and an object can be accurately extracted by using averaged pixel value differences.

Owner:CHUNG ANG UNIV IND ACADEMIC COOP FOUND

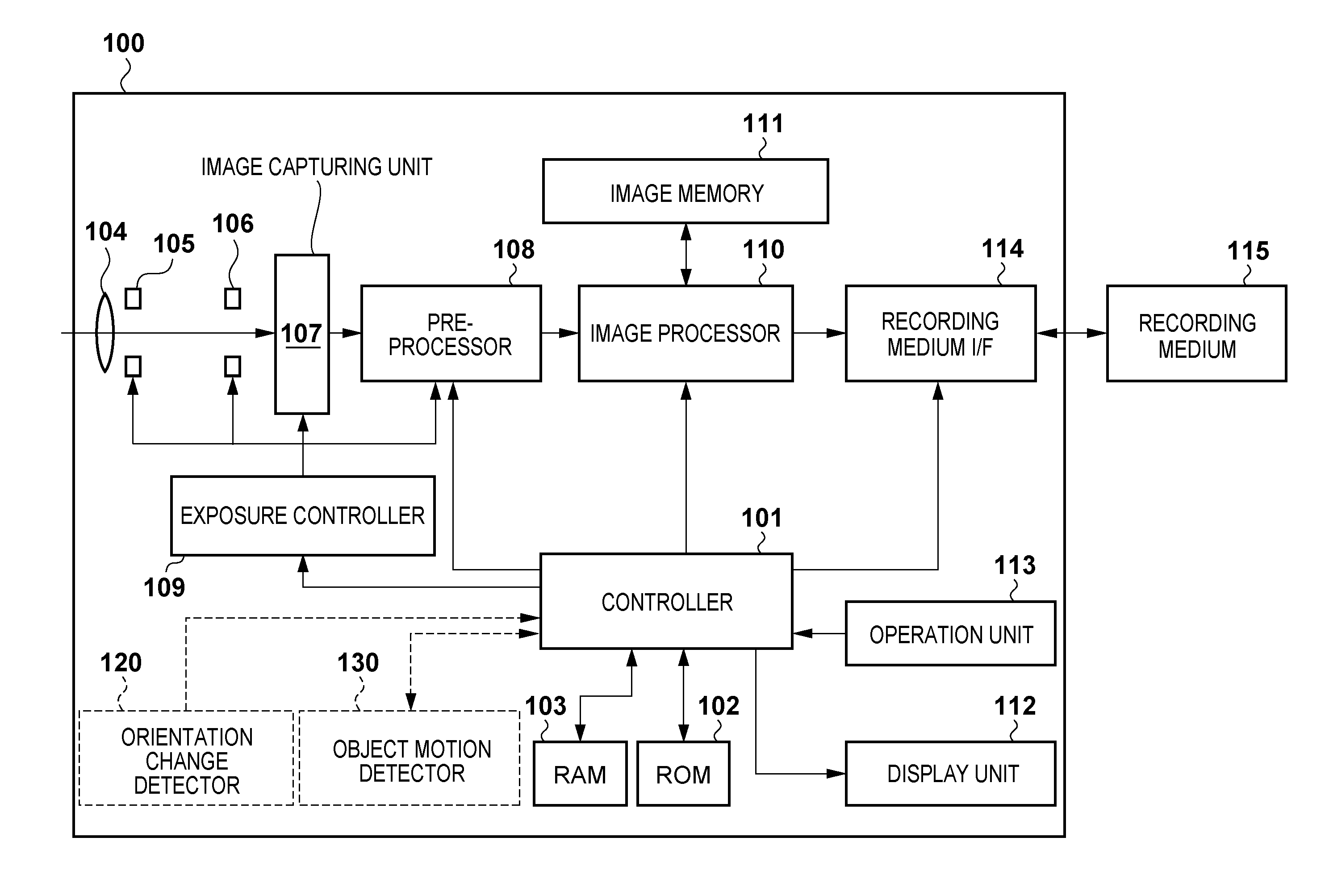

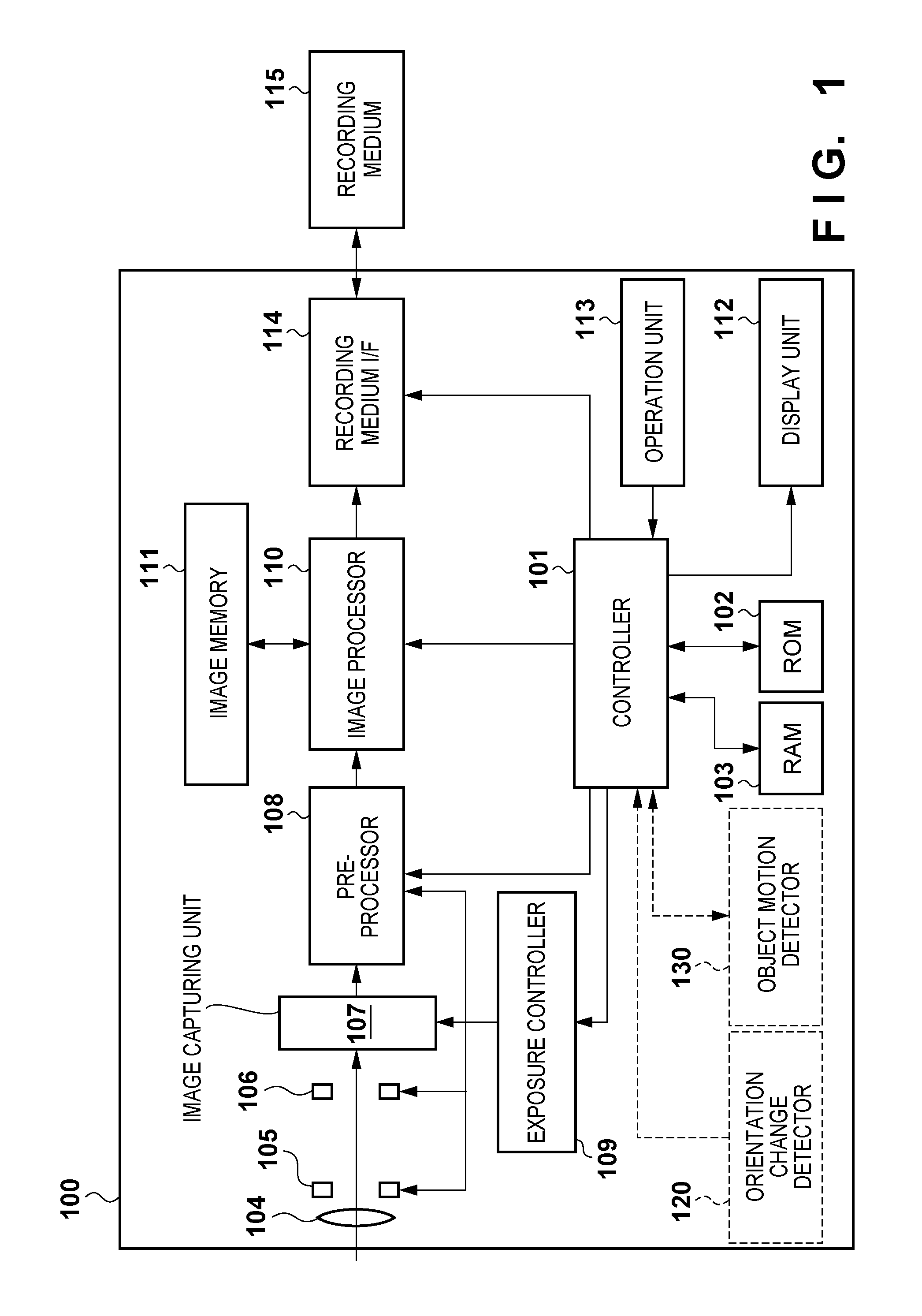

Image processing apparatus and control method thereof

Inactive | US20120249830A1 | Avoids image discontinuity | Image enhancement | Television system details | Imaging processing | Pixel value difference

Owner:CANON KK

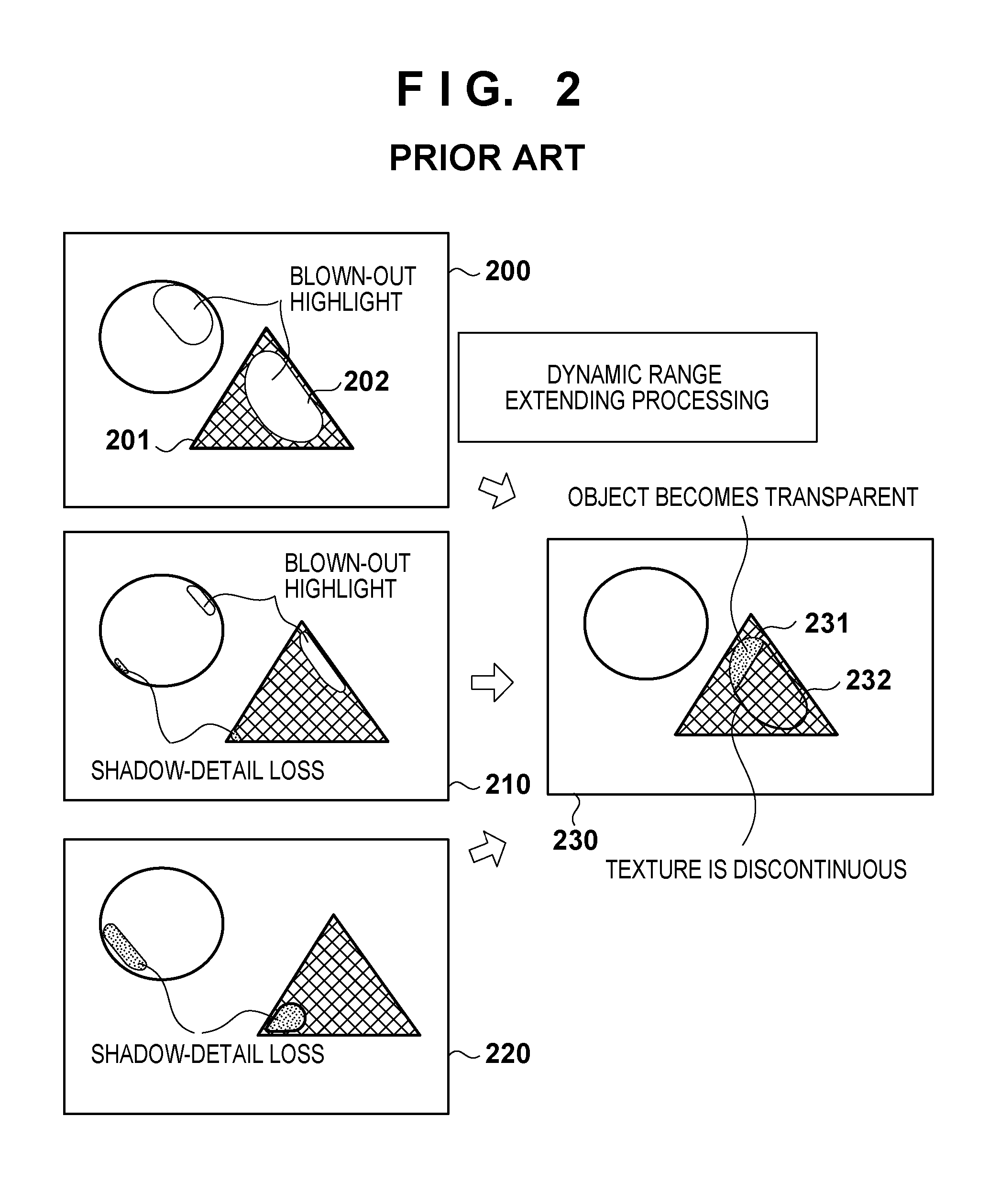

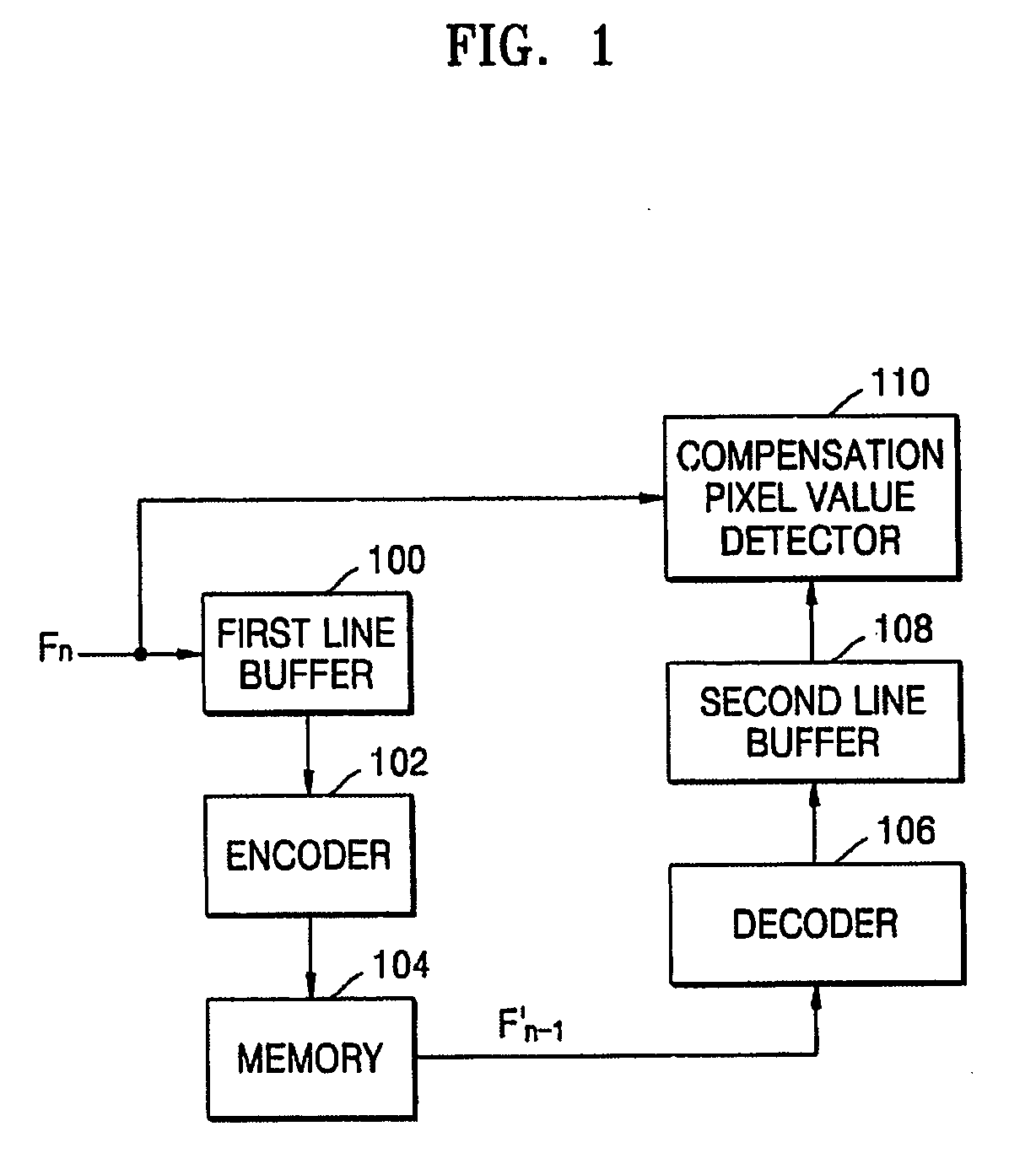

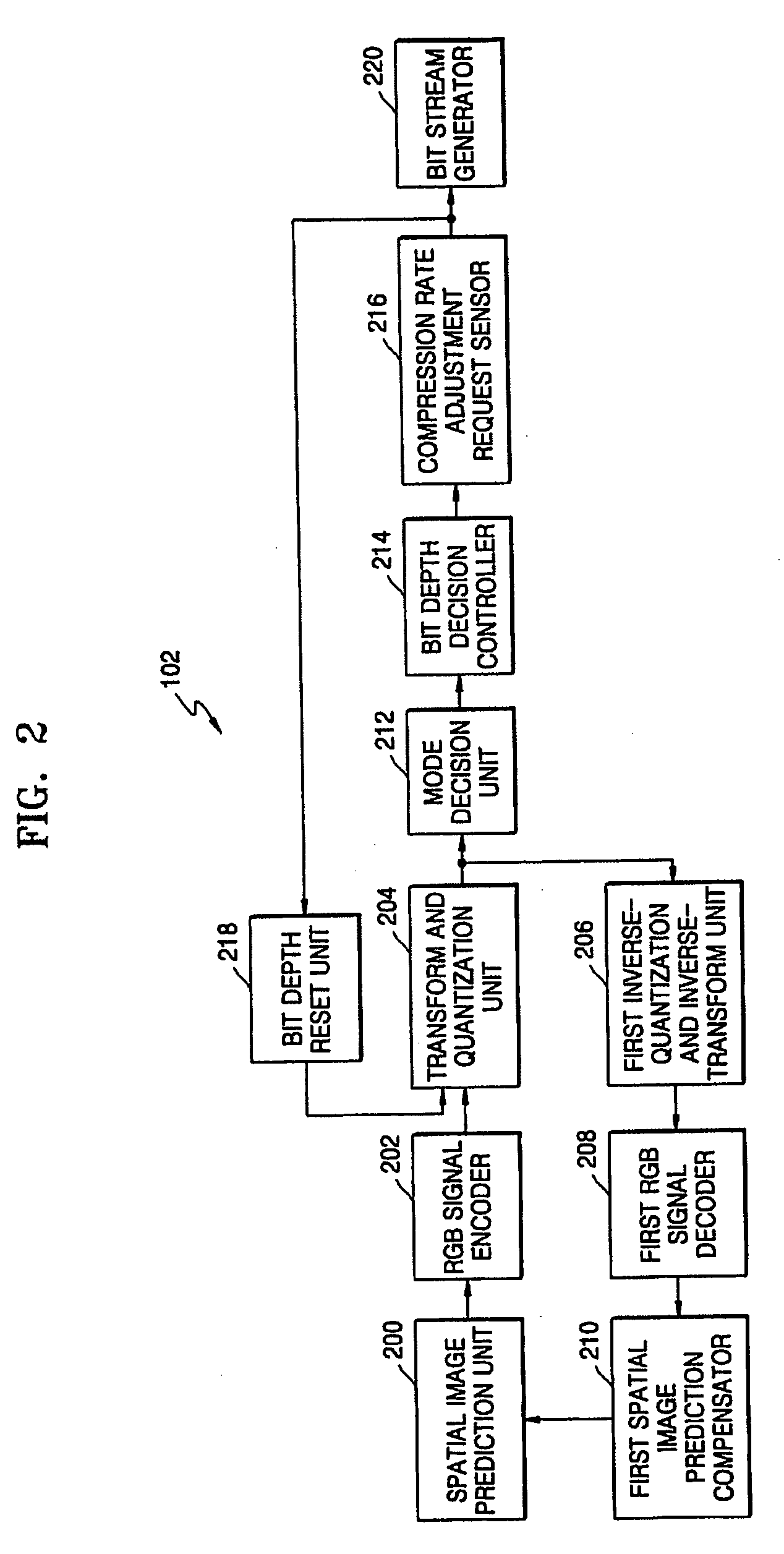

Apparatus and method for performing dynamic capacitance compensation (DCC) in liquid crystal display (LCD)

Active | US20060098879A1 | Minimizing chip size | Reducing picture-quality deterioration | Character and pattern recognition | Cathode-ray tube indicators | Capacitance | Liquid-crystal display

There are provided an apparatus and method for performing dynamic capacitance compensation (DCC) in a liquid crystal display (LCD). The DCC apparatus includes: a first line buffer reading and temporarily storing pixel values of an image for each line; an encoder transforming and quantizing, for each block, the pixel values stored for each line and generating bit streams; a memory storing the generated bit streams; a decoder decoding the bit streams stored in the memory for each block and outputting the decoded bit streams; a second line buffer reading and temporarily storing the decoded pixel values for each block; and a compensation pixel value detector detecting a compensation pixel value for each pixel, from pixel value differences between pixel values of a current frame stored in the first line buffer and pixel values of a previous frame stored in the second line buffer. Therefore, it is possible to reduce the number of pins of a memory interface by reducing the number of memory devices for storing pixel values of image data required for performing DCC of an LCD, thereby minimizing chip size, and to enhance compression efficiency without visual deterioration of images.

Owner:SAMSUNG ELECTRONICS CO LTD

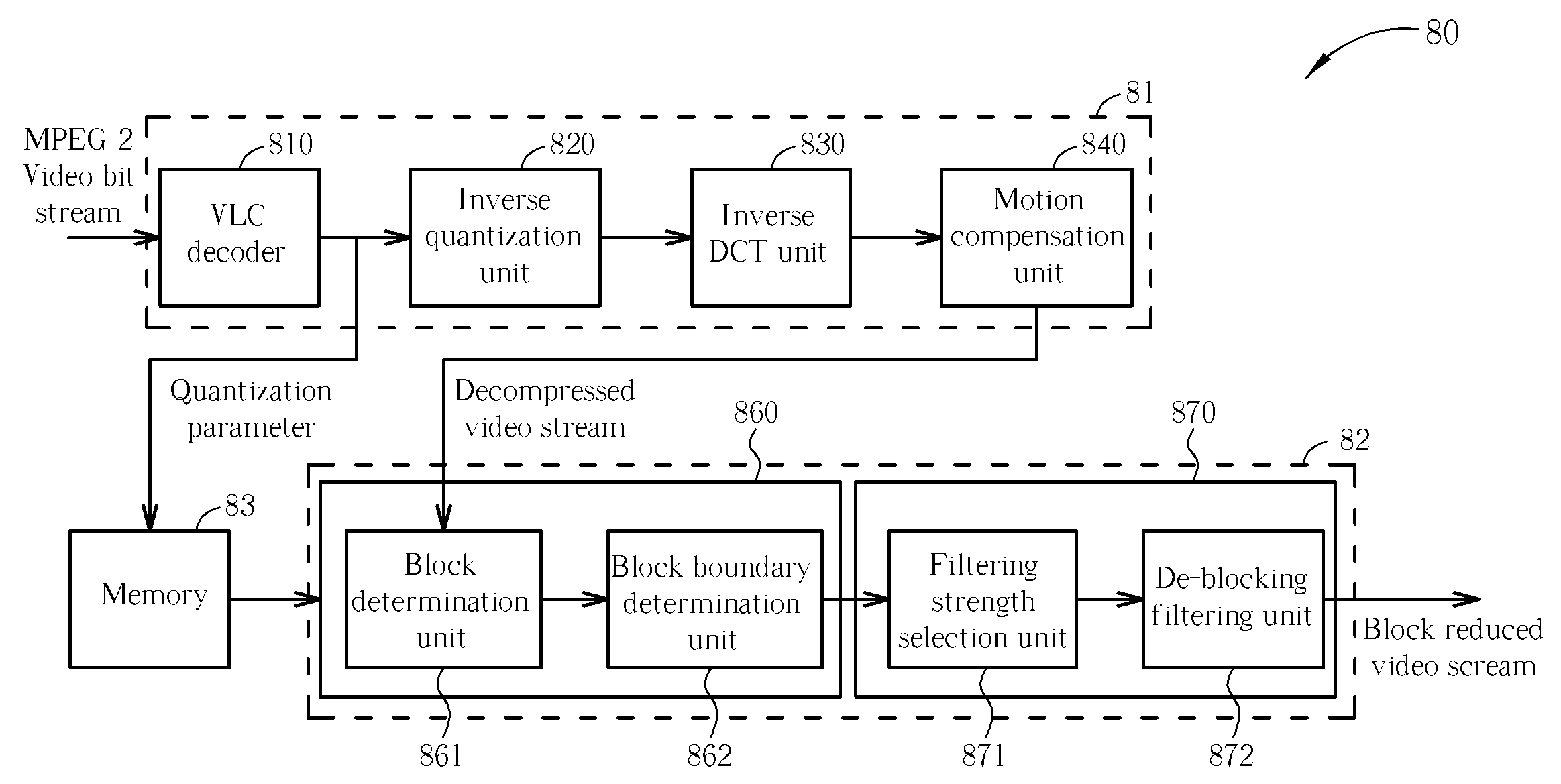

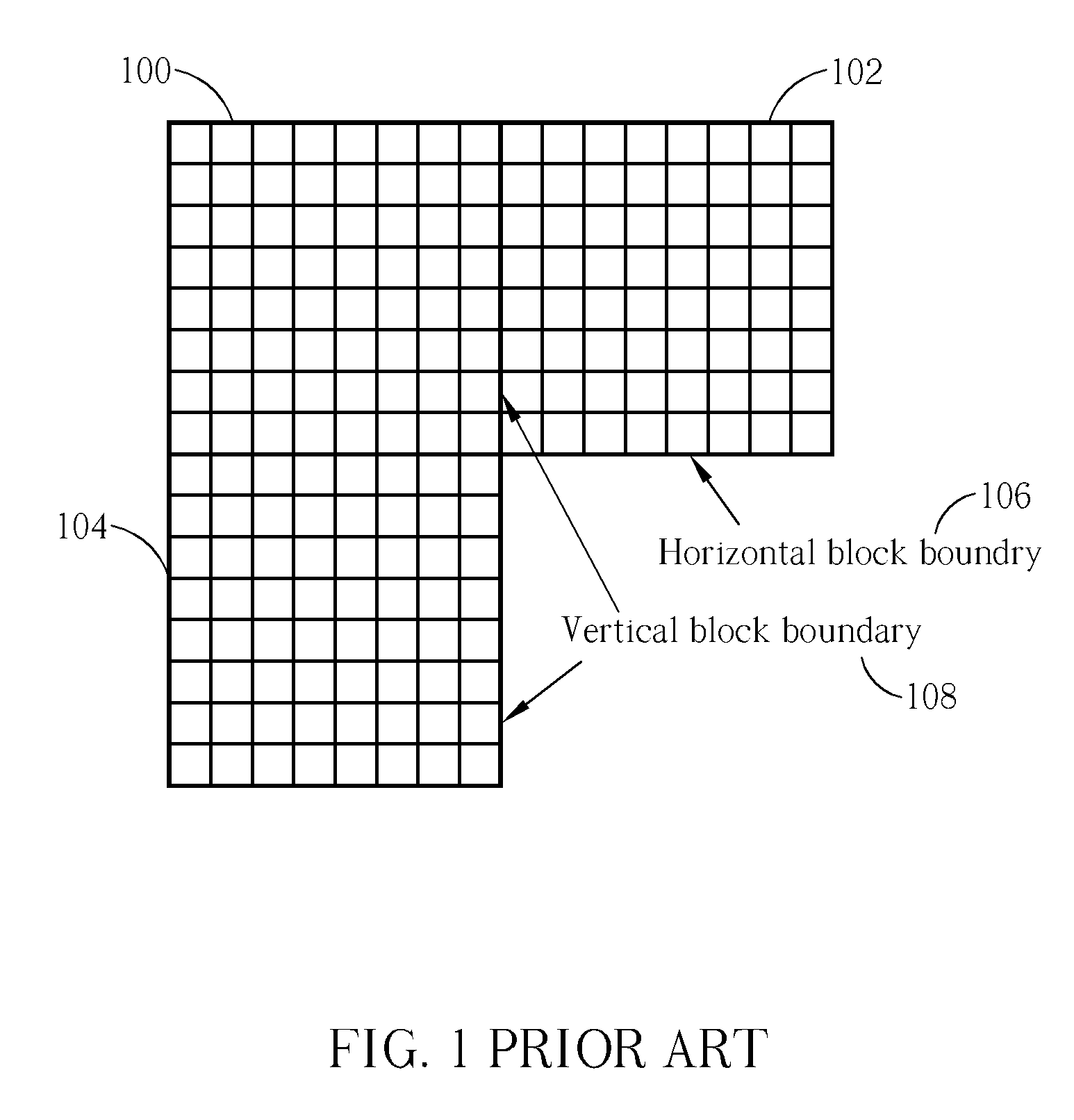

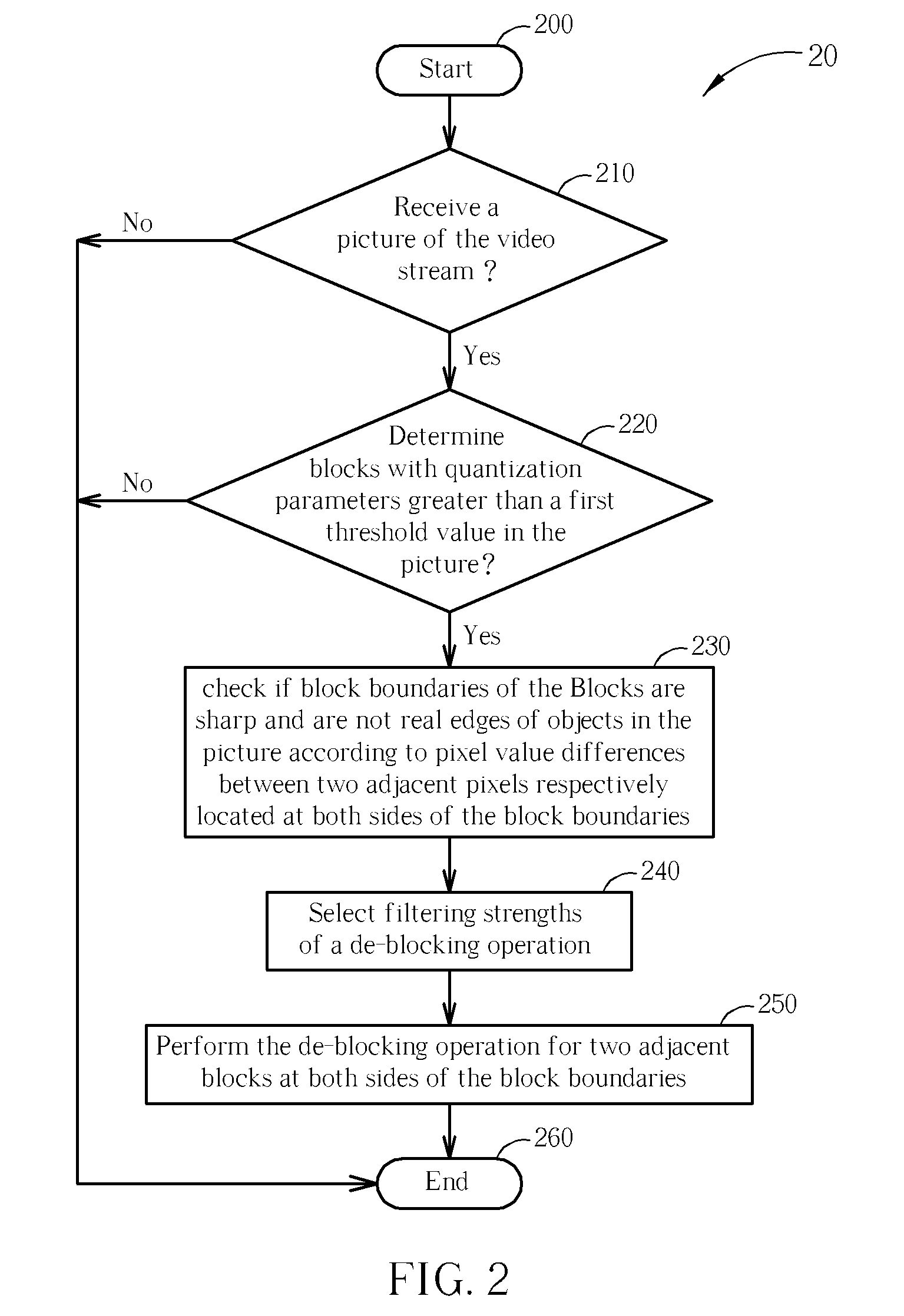

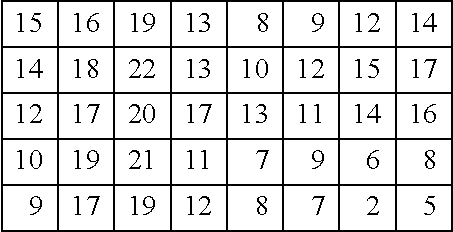

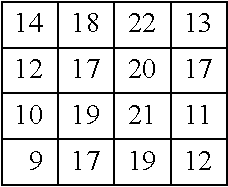

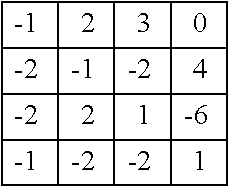

Method and Related Device for Reducing Blocking Artifacts in Video Streams

Inactive | US20090080517A1 | Reduce blockiness | Color television with pulse code modulation | Color television with bandwidth reduction | Pattern recognition | Pixel value difference

A method for reducing blocking artifacts in a video stream comprises receiving a picture of the video stream, wherein the picture includes a plurality of macroblocks and each of the plurality of macroblocks includes four blocks, determining blocks with quantization parameters greater than a first threshold value in the picture, checking if block boundaries of the blocks are sharp and are real edges of objects in the picture according to pixel value differences between two adjacent pixels respectively located at both sides of the block boundaries, selecting filtering strengths of a de-blocking operation according to the pixel value differences when the block boundaries are sharp and are not real edges of the objects in the picture, and performing the de-blocking operation for two adjacent blocks at both sides of the block boundaries.

Owner:SILICON INTEGRATED SYSTEMS

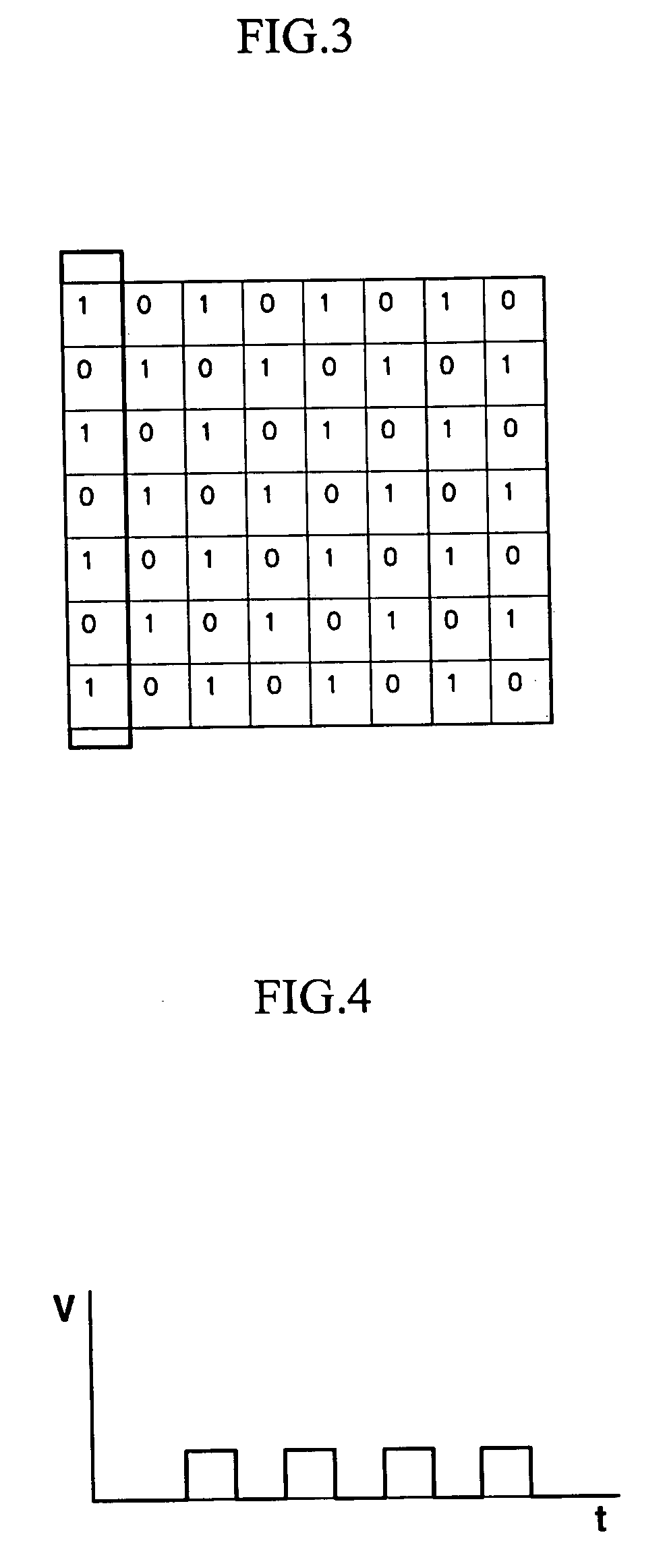

Fast loss less image compression system based on neighborhood comparisons

Inactive | US20040008896A1 | Reduce variance | Easy to compress | Code conversion | Character and pattern recognition | Pixel value difference | Image compression

A fast loss less image compression system based on neighborhood comparisons compares pixel value differences with neighboring pixels and replaces such pixel values with the minimum of the differences. A marker is attached to a block of pixels, such that all the pixels in that block are compared with neighbors of one direction. The marker indicates how all of the pixels in that block are compared. Intermittent Huffman-tree construction is used such that one tree is used for several frames. Huffman coding is used to compress the resulting frame. A single Huffman-tree is constructed once every predetermined number of frames. The frequency of Huffman-tree construction can be performed according to the instantaneous availability of processor time to perform the construction. When more processing time is available, the Huffman-trees are computed more frequently. Such frequency variation can be implemented by using an input video frame buffer. If the buffer is a certain size, then processor time for Huffman-tree construction is available.

Owner:ZAXEL SYST

Non-destructive testing and imaging

Inactive | US20080147347A1 | Material analysis by optical means | Using optical means | Non destructive | Pixel value difference

A method of non-destructive testing includes non-destructively testing an object over a range of test levels, directing coherent light onto the object, directly receiving the coherent light substantially as reflected straight from the object, and capturing the reflected coherent light over the range of test levels as a plurality of digital images of the object. The method also includes calculating differences between pixel values of a plurality of pairs of digital images of the plurality of digital images, and adding the pixel value differences of the plurality of pairs of digital images to yield at least one cumulative differential image.

Owner:SHEAROGRAPHICS

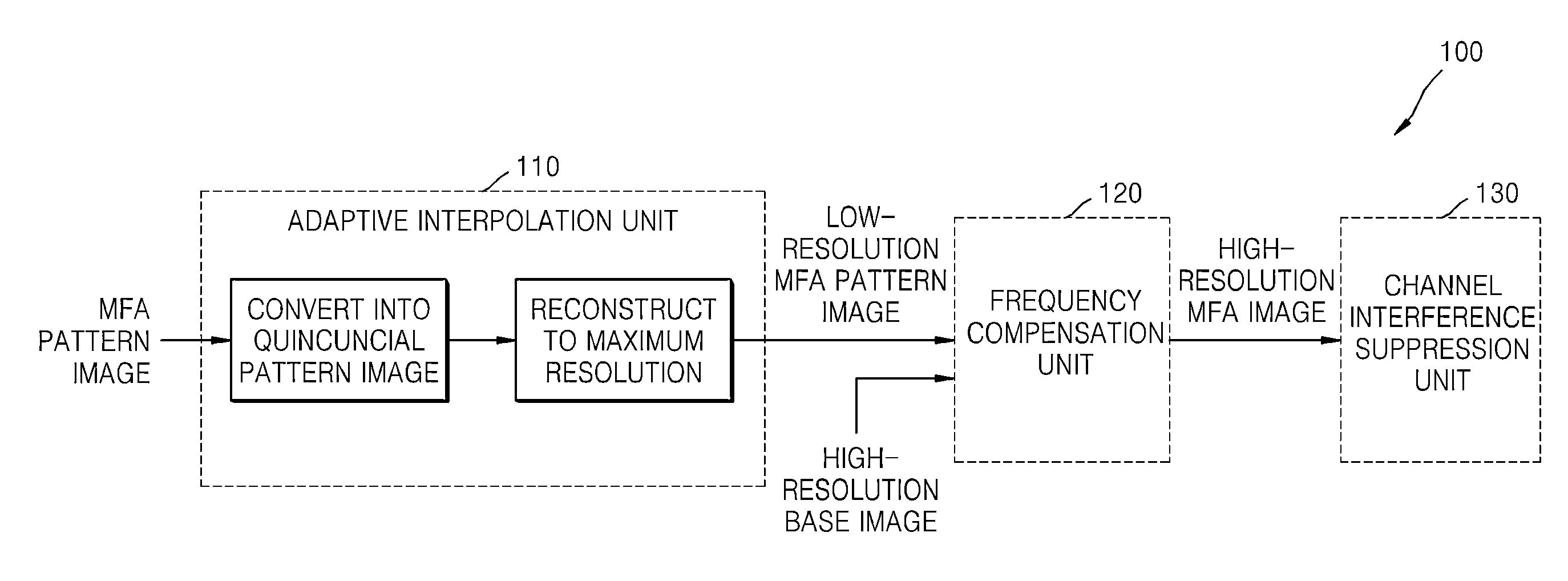

Method and apparatus for processing image

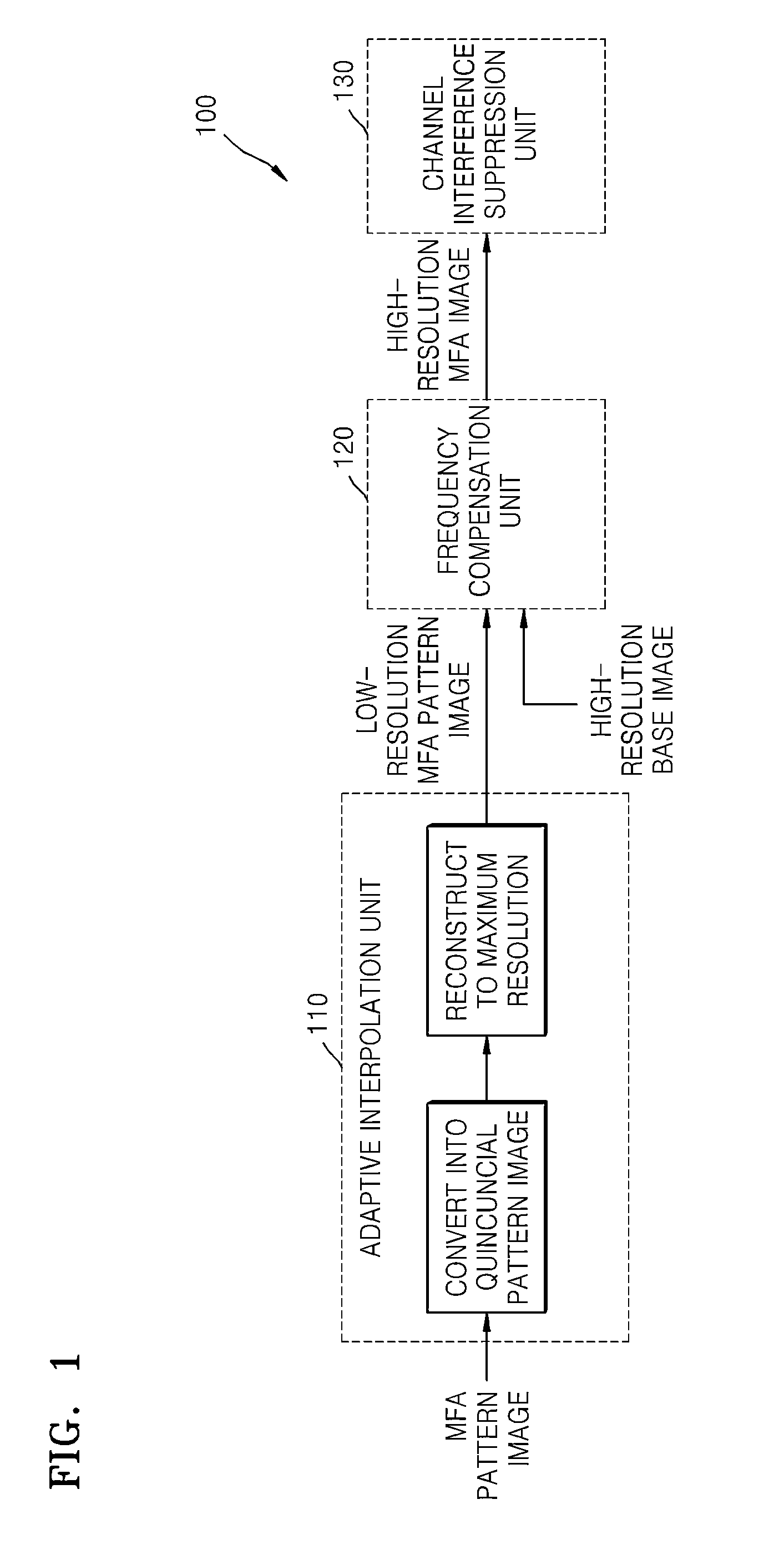

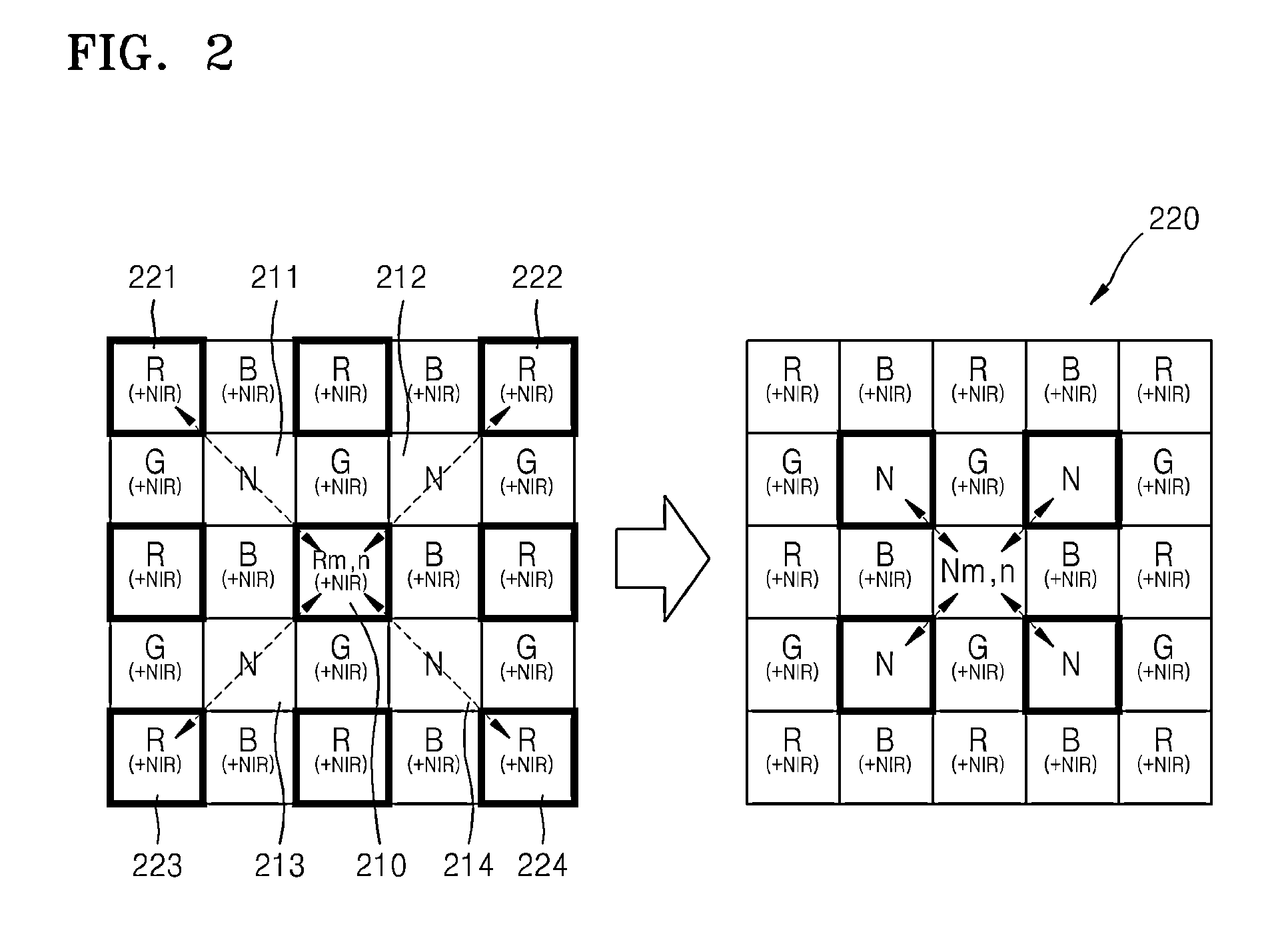

Active | US20140153823A1 | Eliminate generation | Remove color distortion | Image enhancement | Television system details | Imaging processing | Pixel value difference

An image processing apparatus includes an adaptive interpolation device which converts an MFA pattern image into a quincuncial pattern image based on difference values, and interpolates color channels and an NIR channel, based on difference values of the converted quincuncial pattern image in vertical and horizontal pixel directions; a frequency compensation device which obtains a high-resolution MFA image using high-frequency and medium-frequency components of a high-resolution base image, based on linear regression analysis and a comparison of energy levels of MFA channel images with an energy level of a base image; and a channel interference suppression device which removes color distortion generated between each channel of the high-resolution MFA image, and another channel of the high-resolution MFA image and a base channel using a weighted average of pixel value differences between each channel of the high-resolution MFA image, and the other channel of the high-resolution MFA image and the base channel.

Owner:IND ACADEMIC CORP FOUND YONSEI UNIV +1

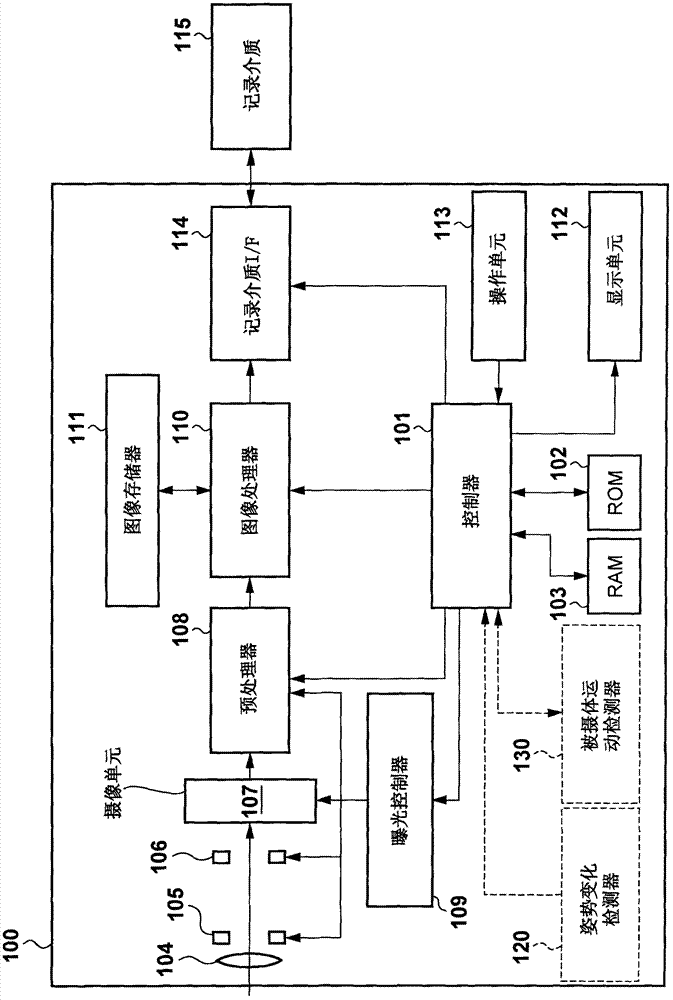

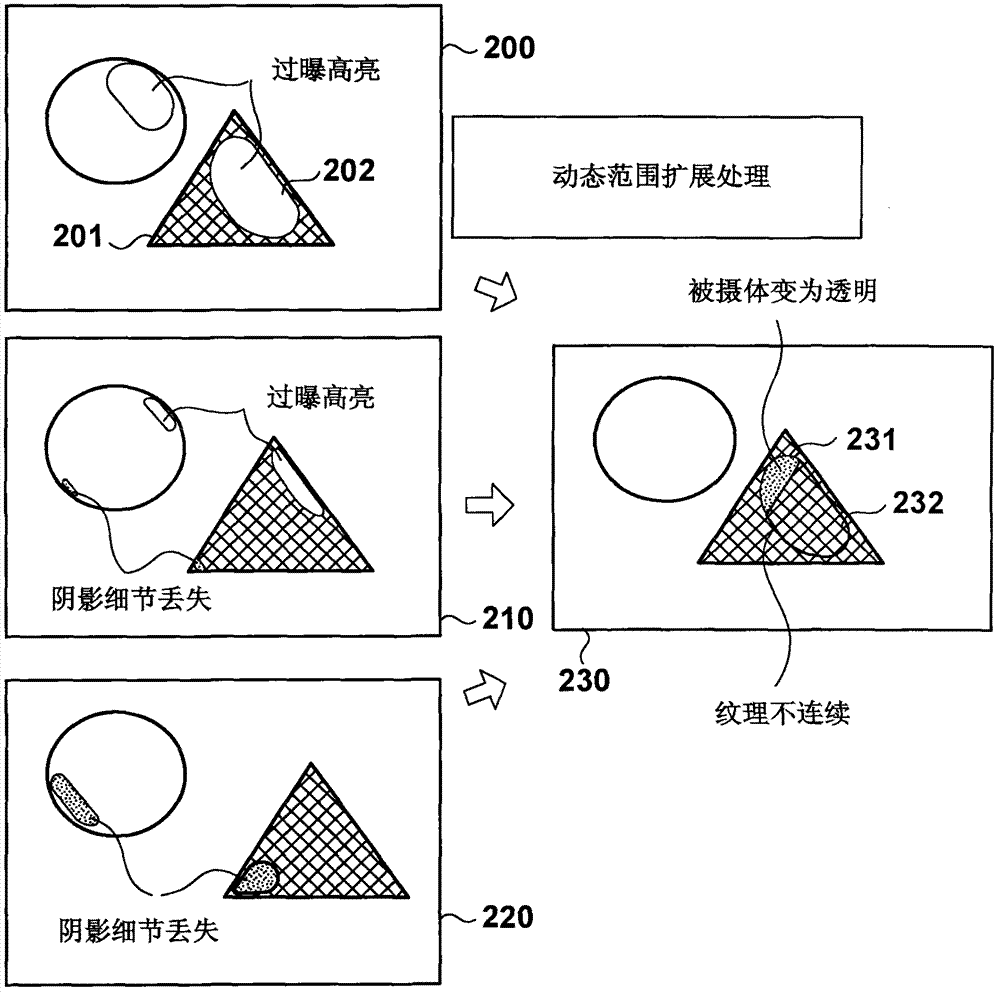

Image processing apparatus and control method thereof

Inactive | CN102740082A | Image enhancement | Television system details | Imaging processing | Pixel value difference

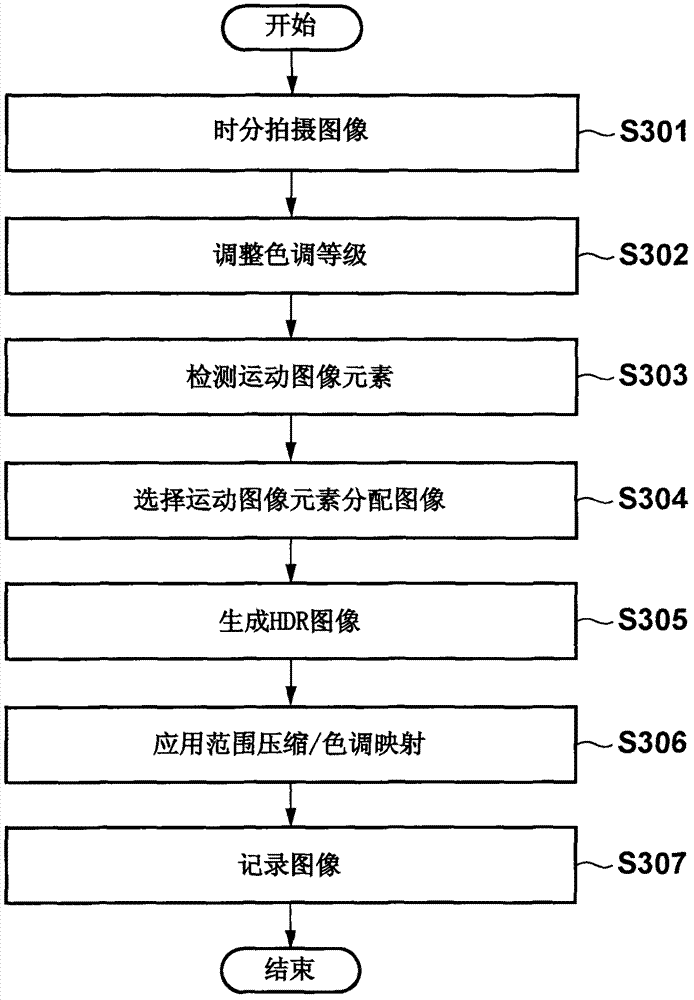

The invention provides an image processing apparatus and a control method thereof. After a plurality of differently exposed images are obtained, and tone levels of the plurality of images are adjusted, image elements corresponding to changes of objects are detected based on pixel value differences between the plurality of images. Then, the numbers of blown-out highlight and shadow-detail loss image elements in the image elements corresponding to the changes of the objects are counted for the plurality of images. Then, an image in which the total of the numbers of image elements is smallest is selected. Furthermore, image elements corresponding to the changes of the objects in the selected image are used as those corresponding to image elements corresponding to the changes of the objects in an HDR image to be generated.

Owner:CANON KK

Color correction objective assessment method consistent with subjective perception

Active | CN105046708A | Improve accuracy | Improve use value | Image enhancement | Image analysis | Pattern recognition | Pixel value difference

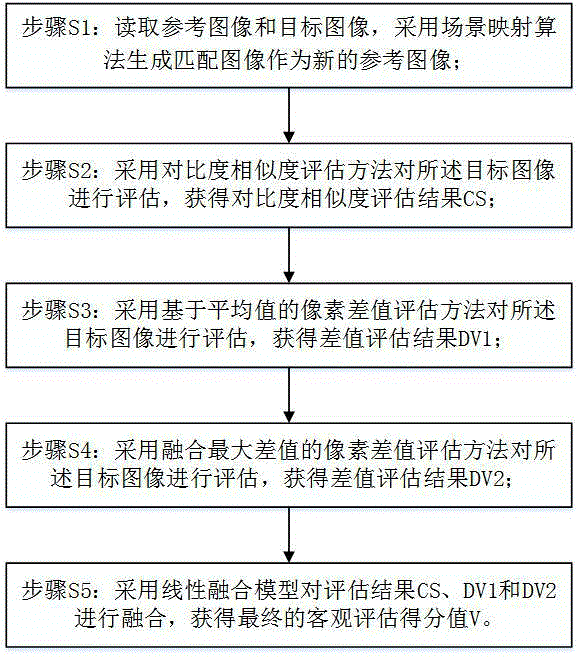

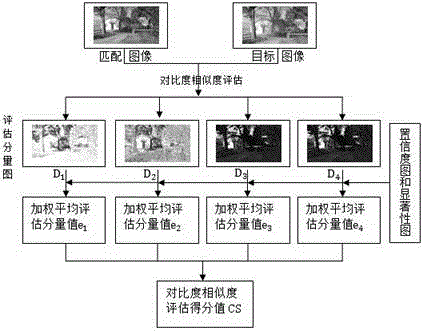

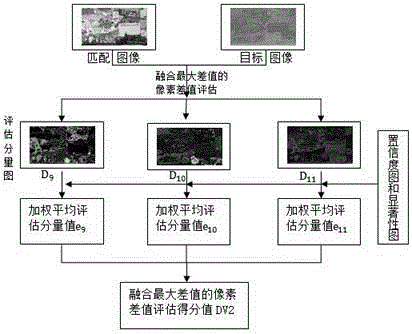

The invention relates to a color correction objective assessment method consistent with subjective perception. The method comprises the following steps: Step S1, reading a reference image and a target image and generating a matched image by a scenario mapping algorithm to be used as a new reference image; Step S2, assessing the target image by a contrast similarity assessment method to obtain a contrast similarity assessment result CS; Step S3, assessing the target image by a pixel-value difference assessment method based on average value to obtain a difference value assessment result DV1; Step S4, assessing the target image by a pixel-value difference assessment method of maximum difference fusion to obtain a difference value assessment result DV2; and Step S5, fusing the assessment results CS, DV1 and DV2 by a linear fusion model to obtain a final objective assessment score value V. The method can effectively assess color consistency between images, correlates well with users' subjective scores, is accurate, and can be applied to image mosaics and to assessing color consistency between the left and right views of three-dimensional images.

Owner:FUZHOU UNIV

Image processing apparatus and control method thereof

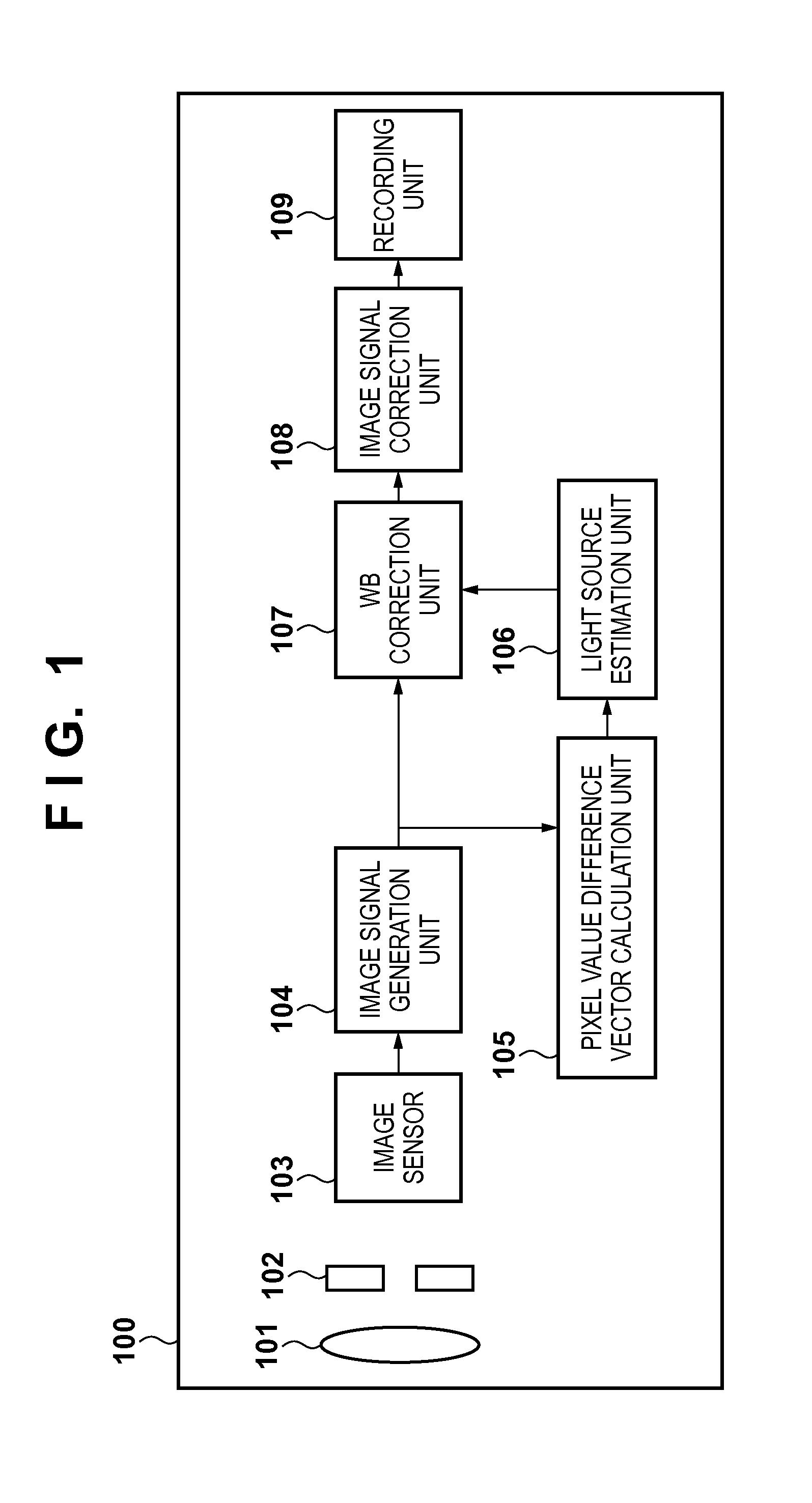

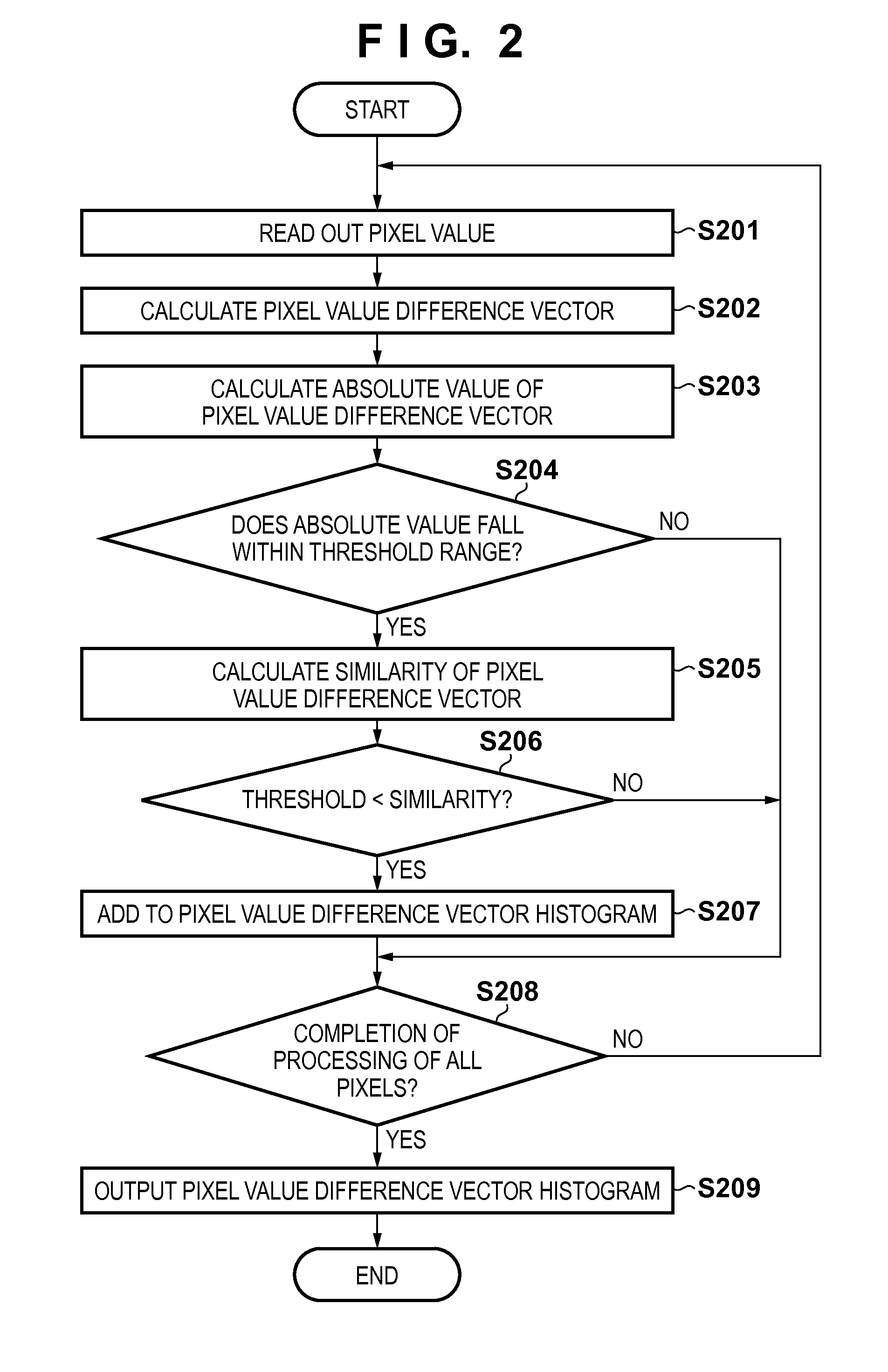

Inactive | US20130343646A1 | Accurate estimate | Image enhancement | Image analysis | Imaging processing | Pixel value difference

An image processing apparatus is provided which correctly extracts specular reflected light components of reflected light from an object and accurately estimates a light source color. The image processing apparatus calculates a pixel value difference distribution by repeatedly calculating, for each pixel, pixel value differences between a pixel of interest and adjacent pixels in an input image, and calculating similarities between the pixel value differences. A light source estimation unit estimates the color of a light source which illuminates an object in the input image based on the calculated distribution.

Owner:CANON KK

Adaptive histogram equalization for images with strong local contrast

Inactive | US7822272B2 | Increase contrast | Reduce artifacts | Television system details | Image enhancement | Pixel value difference | Contrast enhancement

Owner:SONY DEUT GMBH

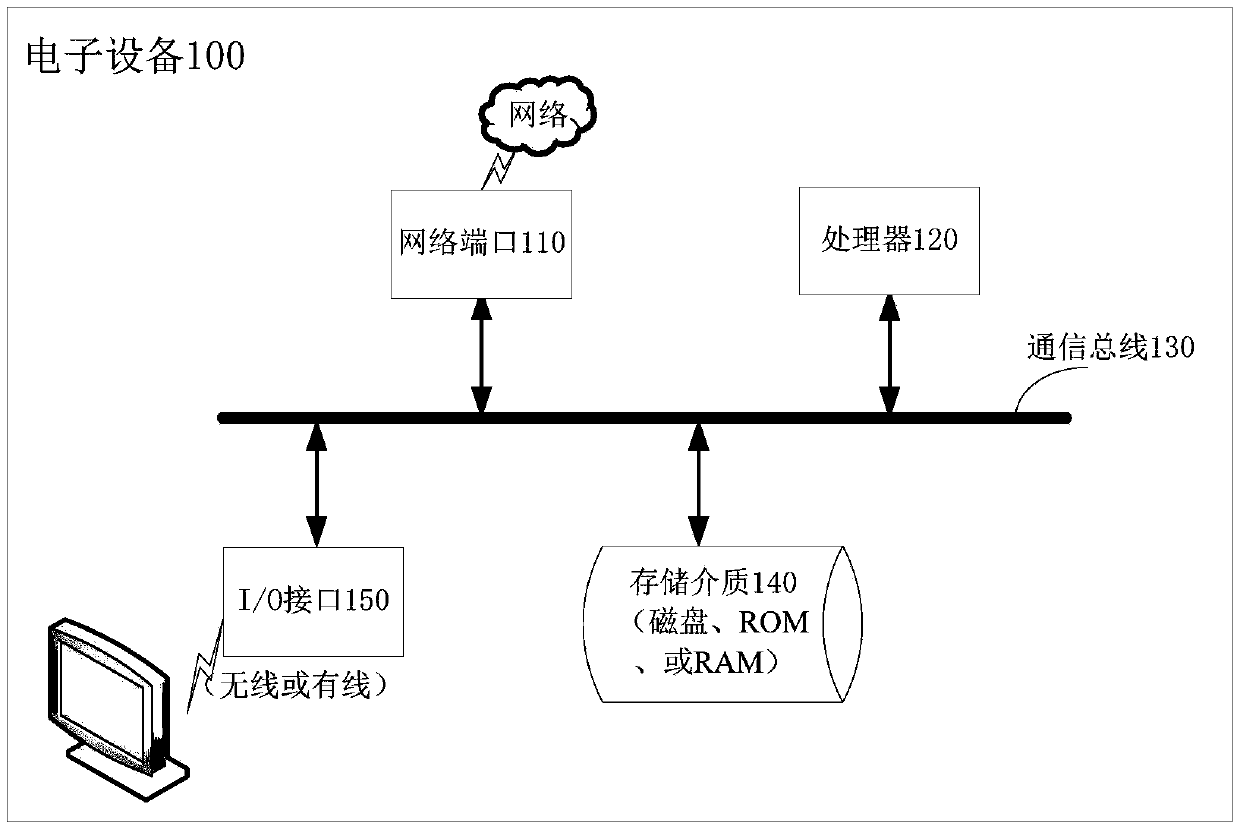

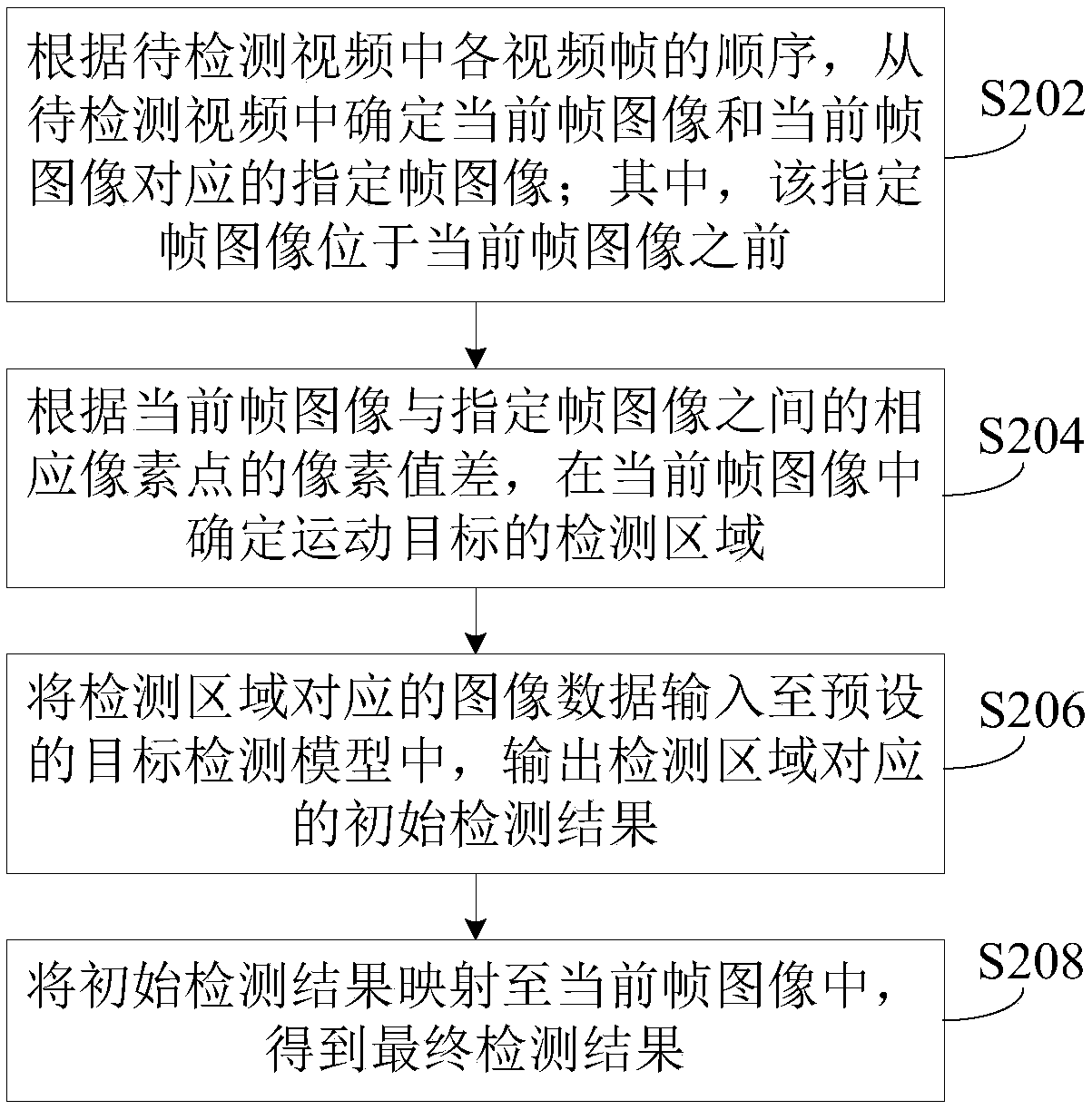

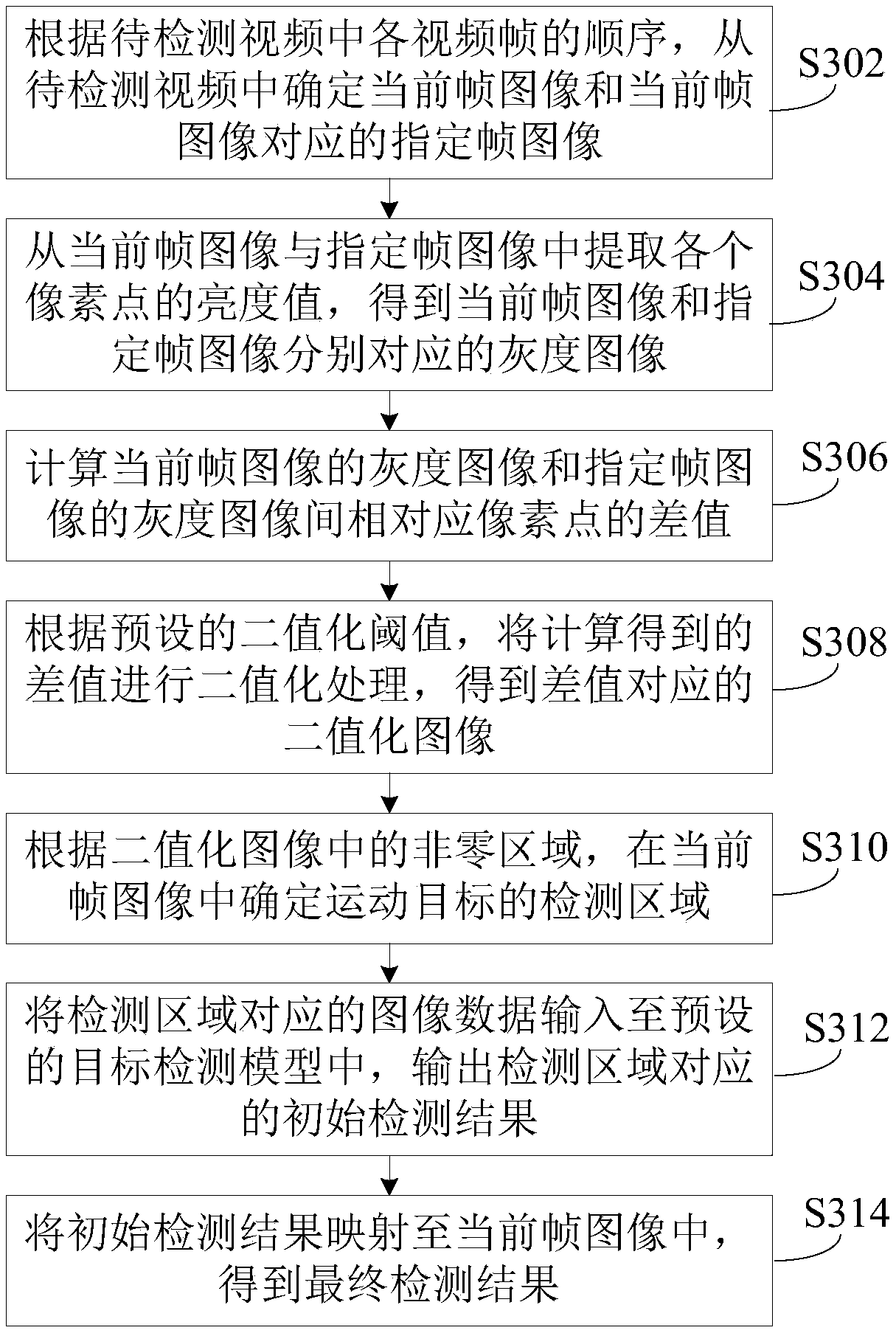

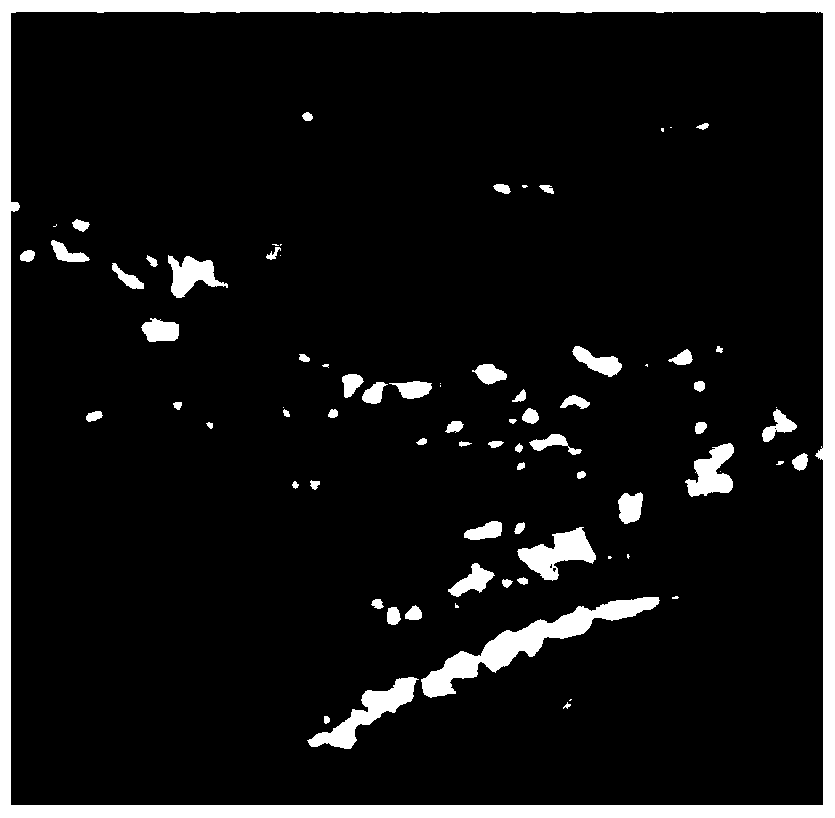

Moving target detection method and device and electronic equipment

Inactive | CN110751678A | Narrow detection range | Reduce distractions | Image enhancement | Image analysis | Pixel value difference | Engineering

The invention provides a moving target detection method and device, and electronic equipment. The method comprises the following steps: determining a current frame image and a specified frame image corresponding to the current frame image from a to-be-detected video according to the sequence of each video frame in the to-be-detected video; determining a detection area of the moving target according to the pixel value difference of the corresponding pixel points between the current frame image and the specified frame image; inputting the image data corresponding to the detection area into a preset target detection model, and outputting an initial detection result corresponding to the detection area; and mapping the initial detection result to the current frame image to obtain a final detection result. According to the embodiment of the invention, the rough detection area of the moving target can be obtained through the pixel value difference between the two frames of images, thereby reducing the detection range of the target detection model, and improving the detection efficiency. Meanwhile, most static targets are eliminated from the detection area, so that the interference on dynamic target detection of the model is reduced, and the detection accuracy is improved.

Owner:BEIJING DIDI INFINITY TECH & DEV
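
The step that derives a rough detection area from the pixel value difference between two frames might look like the sketch below. It is an illustration under stated assumptions: frames are grayscale nested lists, the area is a single bounding box around every changed pixel, and the threshold of 20 is arbitrary. The function name `motion_bounding_box` is hypothetical, and the downstream target detection model is not shown.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def motion_bounding_box(
    current: Frame, reference: Frame, threshold: int = 20
) -> Optional[Box]:
    """Return one box enclosing all pixels that changed by more than threshold.

    Assumption: a single bounding box is enough; a fuller version might
    split the changed pixels into several regions.
    """
    changed = [
        (r, c)
        for r, row in enumerate(current)
        for c, value in enumerate(row)
        if abs(value - reference[r][c]) > threshold
    ]
    if not changed:
        return None  # nothing moved, so there is nothing to hand to a detector
    rows = [r for r, _ in changed]
    cols = [c for _, c in changed]
    return (min(rows), min(cols), max(rows), max(cols))


reference_frame = [[0] * 4 for _ in range(4)]
current_frame = [row[:] for row in reference_frame]
current_frame[1][2] = 100
current_frame[2][3] = 100
print(motion_bounding_box(current_frame, reference_frame))
```

Only the crop inside the returned box would then be passed to the detector, and its results mapped back to full-frame coordinates by adding the box's top-left offset.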

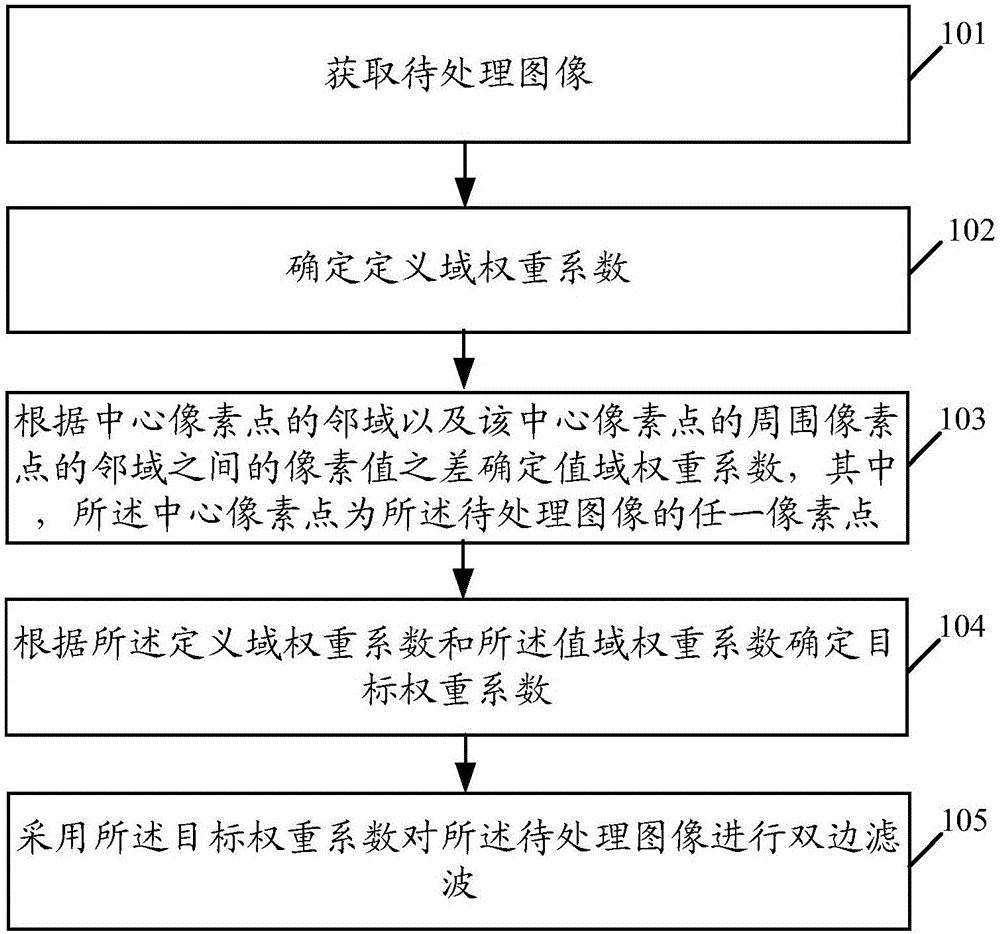

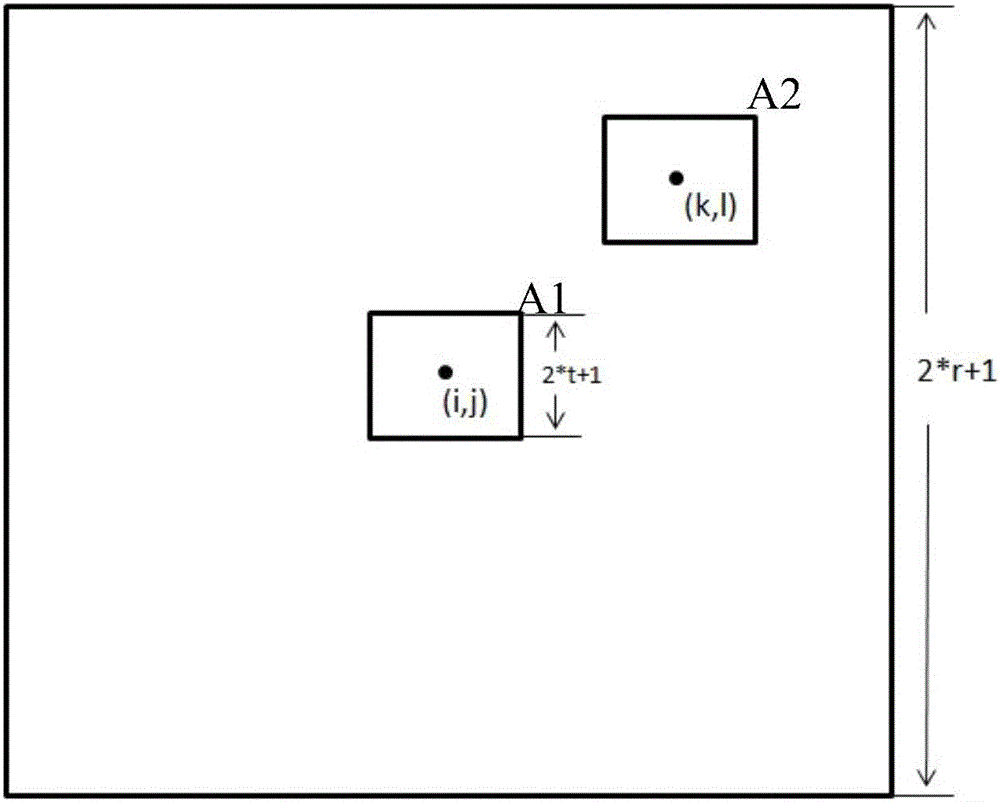

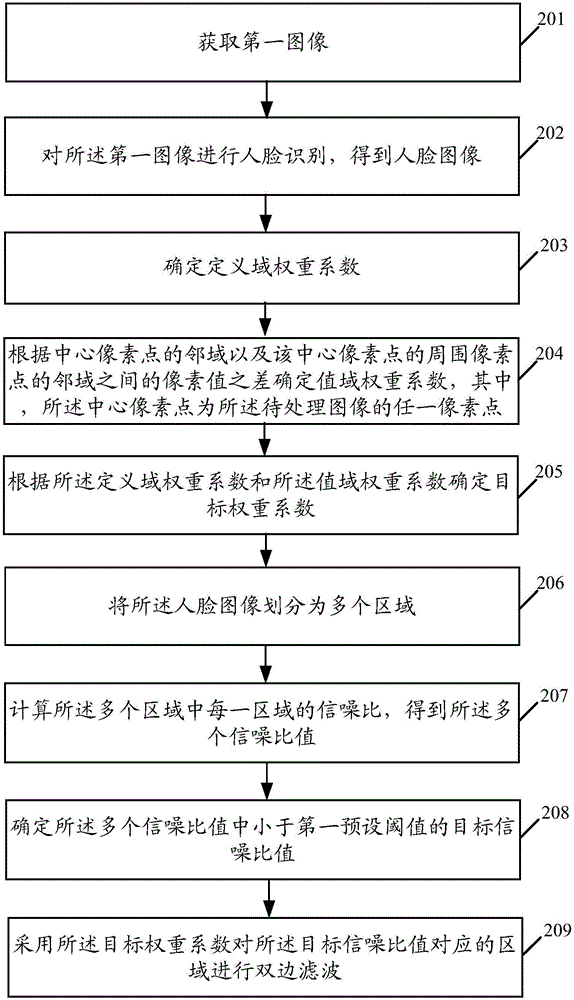

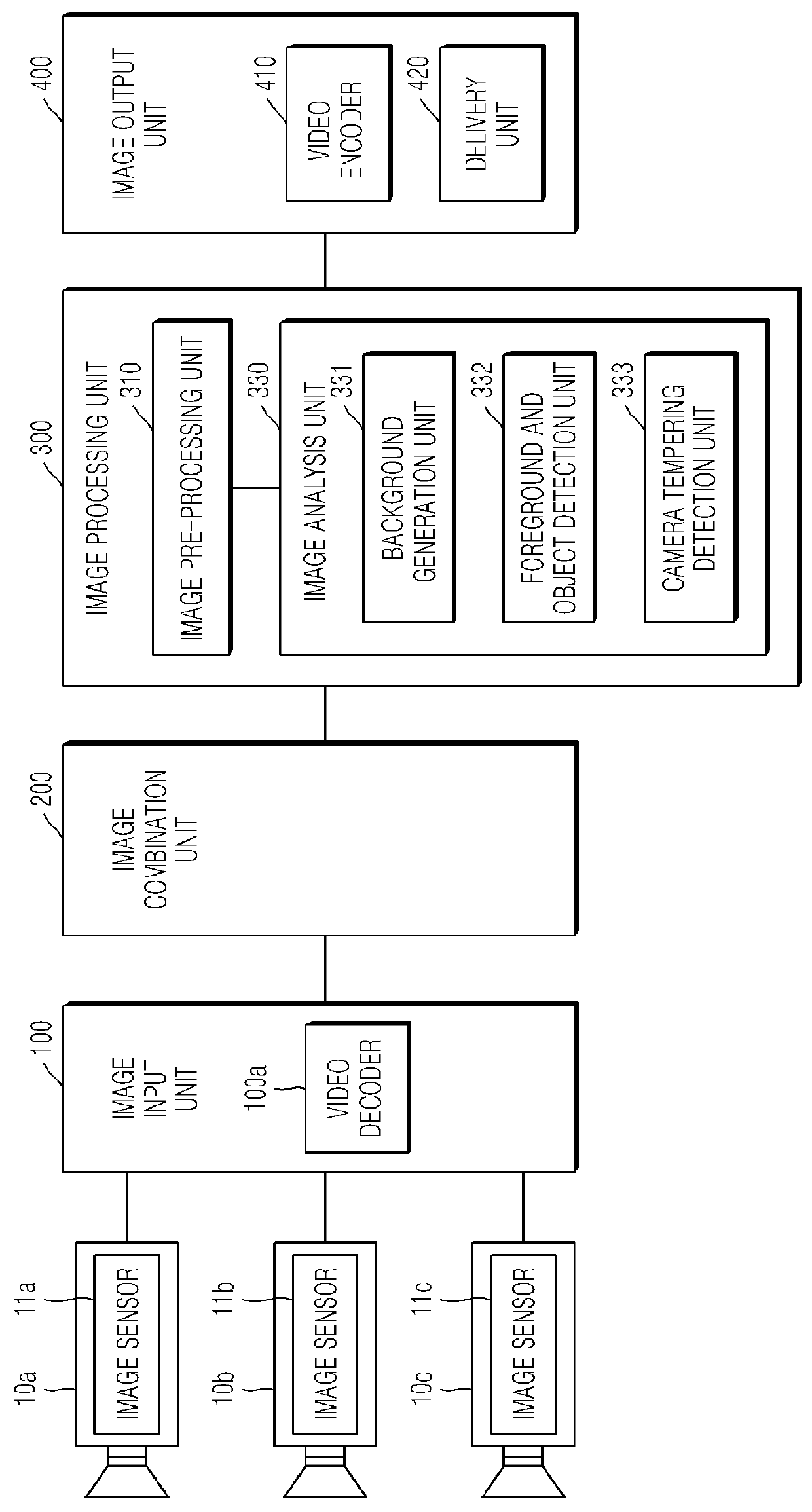

Image processing method and terminal

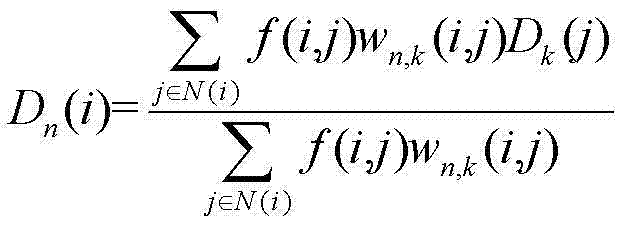

Active | CN106373095A | Preserve image detail | Natural beauty effect | Image enhancement | Television system details | Imaging processing | Pixel value difference

An embodiment of the invention provides an image processing method. The method comprises the steps of obtaining a to-be-processed image; determining a definitional domain weight coefficient; determining a value domain weight coefficient according to pixel value differences between a neighborhood of a central pixel point and neighborhoods of surrounding pixel points of the central pixel point, wherein the central pixel point is any pixel point of the to-be-processed image; determining a target weight coefficient according to the definitional domain weight coefficient and the value domain weight coefficient; and performing bilateral filtering on the to-be-processed image by adopting the target weight coefficient. An embodiment of the invention furthermore provides a terminal. Through the method and the terminal provided by the embodiments of the invention, the facial beautification effect can be more natural on the basis of keeping image details.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

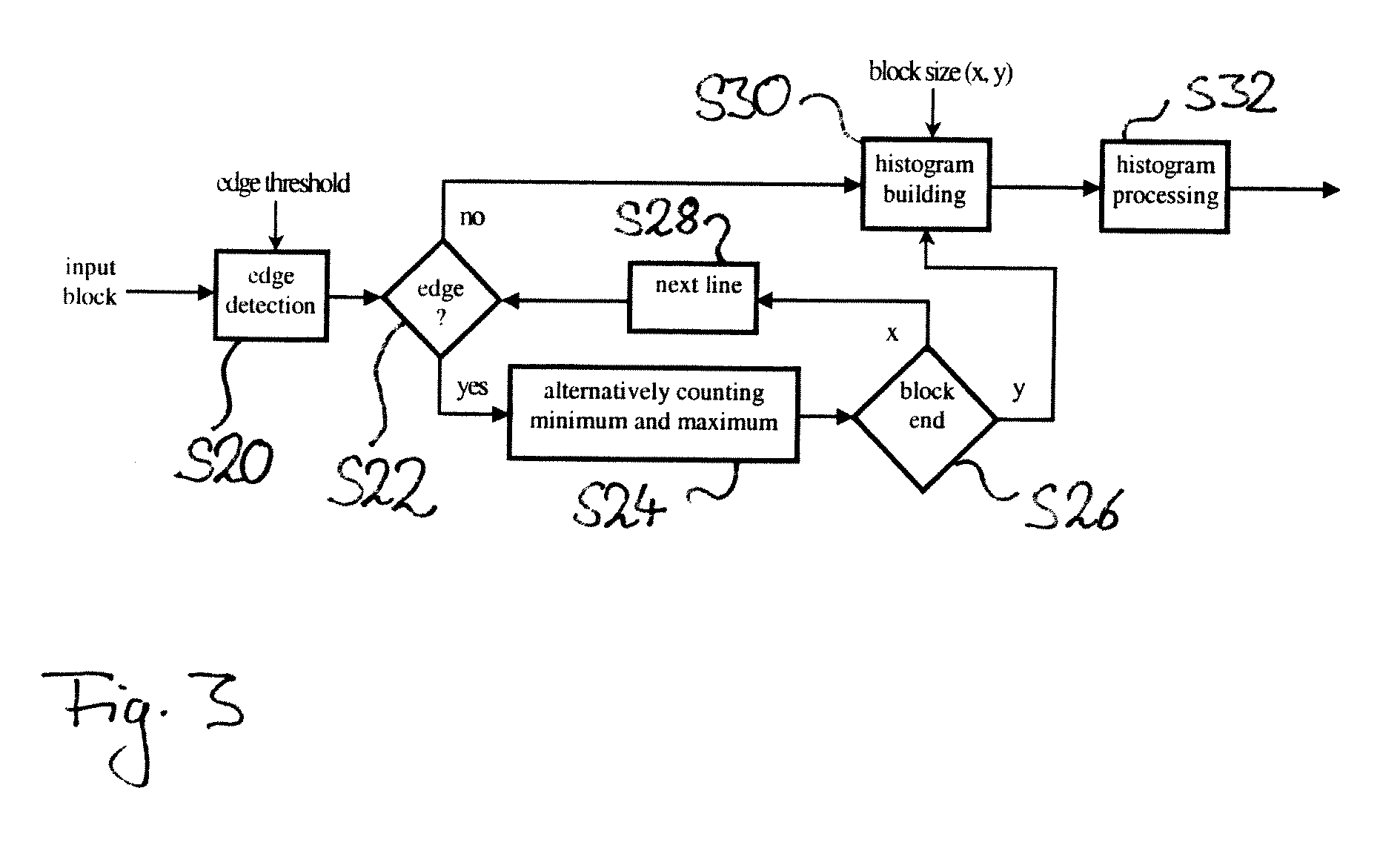

Adaptive histogram equalization for images with strong local contrast

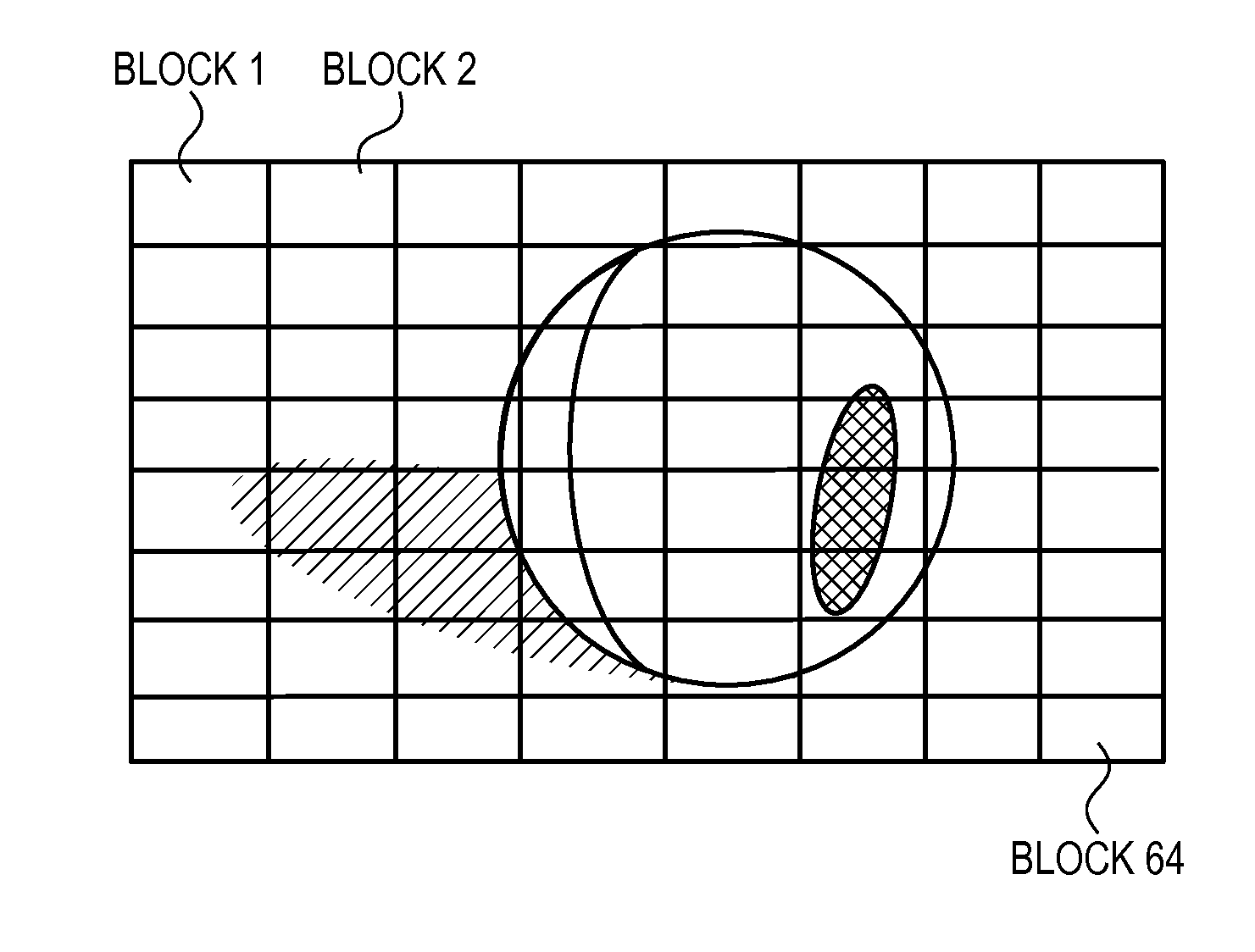

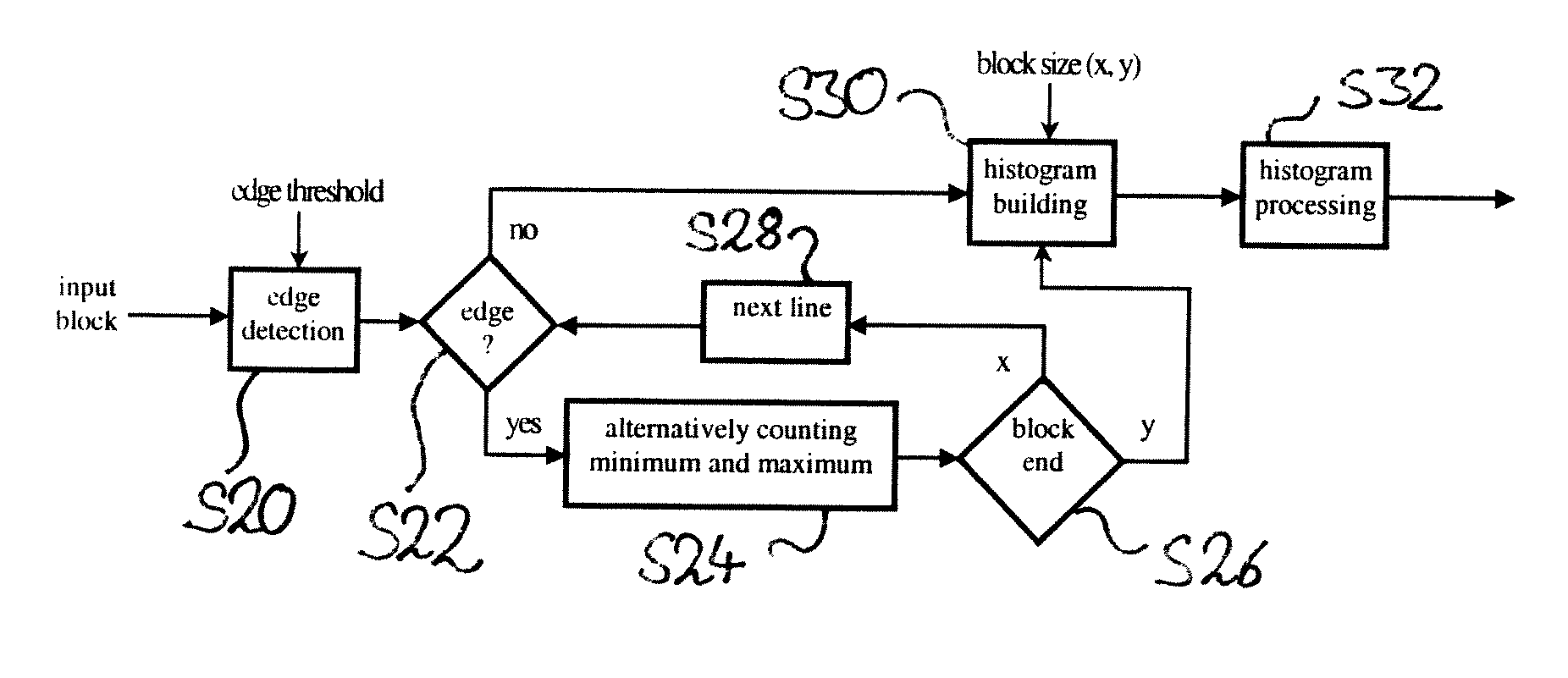

Inactive | US20070230788A1 | Increase contrast | Reduce artifacts | Image enhancement | Television system details | Pixel value difference | Contrast enhancement

The present invention relates to a method, apparatus and computer program product for contrast enhancement of images based on adaptive histogram equalization. In particular it relates to preventing adaptive histogram equalization from causing fading artifacts and object extension artifacts. An adaptive histogram equalization method is provided comprising the steps of dividing an image into regions of pixels, determining structures of local pixel value differences of a predefined strength of the image, building for every region a histogram of the pixel values based on the determined structures of local pixel value differences and mapping pixel values of each region based on the histogram corresponding to the region.

Owner:SONY DEUT GMBH

Parking event detecting method based on double tracking system

Inactive | CN103136514A | Good removal effect | Ensure safety | Image analysis | Detection of traffic movement | Traffic crash | Pixel value difference

The invention discloses a parking event detection method based on a double tracking system. The image is segmented into a plurality of block areas and a dynamic background is built; the sum of absolute values of pixel value differences between each area of the background and the corresponding area of the current frame is calculated; these steps are repeated; a suspicious area is marked and assigned the value 255; a connected-domain analysis is conducted; and whether a car is parked in the suspicious area is confirmed. The method is free from environmental constraints and supports real-time detection. By combining the analysis of tracing lines, it largely eliminates the effects of shadows, lights, thrown objects, and standing water on the road surface. The algorithm achieves real-time, accurate detection of abnormal parking events on roads, so that rescue and handling of a traffic accident can be carried out promptly and effectively, secondary accidents are prevented, and the safety of road operation is further ensured.

Owner:CHANGAN UNIV
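
The block-wise comparison against the background can be sketched as follows. This is a rough illustration only: the block size, the threshold, and the name `mark_suspicious_blocks` are assumptions, the background is taken as given rather than updated dynamically, and the connected-domain analysis and tracking stages are omitted.

```python
from typing import List

Image = List[List[int]]


def mark_suspicious_blocks(
    background: Image, frame: Image, block: int = 2, threshold: int = 50
) -> Image:
    """Set every pixel of a block to 255 when the block's sum of absolute
    differences (SAD) against the background exceeds threshold."""
    rows, cols = len(frame), len(frame[0])
    mask = [[0] * cols for _ in range(rows)]
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            cells = [
                (r, c)
                for r in range(r0, min(r0 + block, rows))
                for c in range(c0, min(c0 + block, cols))
            ]
            sad = sum(abs(frame[r][c] - background[r][c]) for r, c in cells)
            if sad > threshold:
                for r, c in cells:
                    mask[r][c] = 255
    return mask


background = [[0] * 4 for _ in range(4)]
frame = [row[:] for row in background]
for r in range(2):
    for c in range(2):
        frame[r][c] = 30  # top-left block: SAD = 120, above the threshold
frame[3][3] = 40          # bottom-right block: SAD = 40, below the threshold
for row in mark_suspicious_blocks(background, frame):
    print(row)
```

Summing over a block instead of testing single pixels makes the decision less sensitive to isolated noisy pixels, at the cost of spatial resolution.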

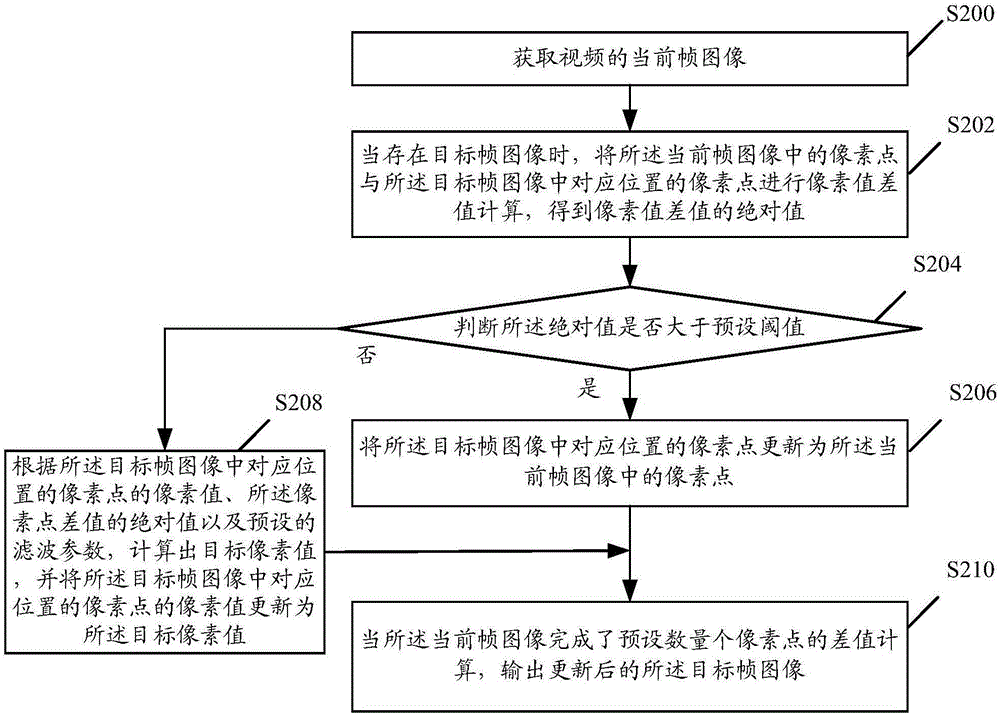

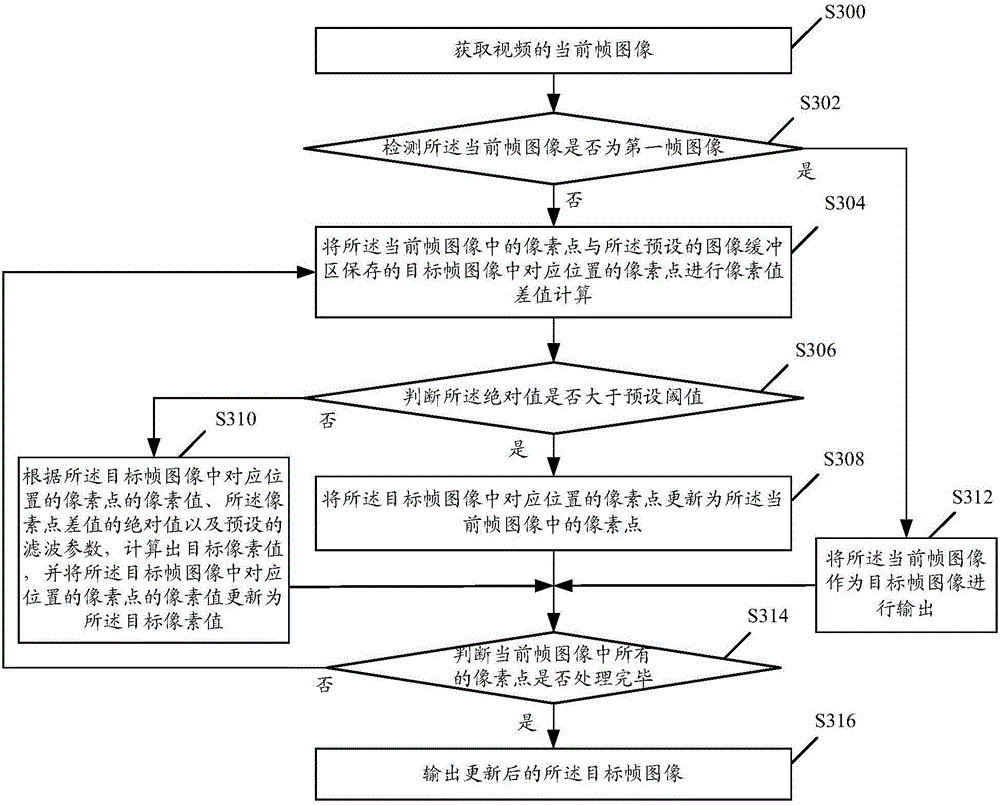

Video de-noising processing method and device

Active | CN106303157A | Reduce noise level | Reduce power consumption | Television system details | Color television details | Image denoising | Pixel value difference

The embodiment of the present invention discloses a video de-noising processing method. The method comprises: obtaining the current frame image of the video; when there is an object frame image, calculating the pixel value difference between a pixel point of the current frame image and the pixel point at the corresponding position in the object frame image, and obtaining the absolute value of the pixel value difference, wherein the object frame image is the frame image output after the frame preceding the current frame image was subjected to de-noising processing; determining whether the absolute value is larger than a preset threshold value; if the absolute value is larger than the preset threshold value, updating the pixel point at the corresponding position in the object frame image to the pixel point in the current frame image; and when the difference calculation has been completed for a preset number of pixel points of the current frame image, outputting the updated object frame image. The present invention further discloses a video de-noising processing device. The invention solves the technical problem that prior-art video de-noising algorithms cannot satisfy a mobile terminal's requirements of low computation and no delay for real-time video streams.

Owner:GUANGZHOU BAIGUOYUAN NETWORK TECH
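
The thresholded update described in this abstract can be sketched per frame as below. This is an interpretation, not the patented implementation: the object frame image is called `target` here, every pixel is processed rather than a preset number of them, and passing the first frame through unchanged when no target exists yet is an assumption. The name `denoise_step` is hypothetical.

```python
from typing import List, Optional

Frame = List[List[int]]


def denoise_step(
    current: Frame, target: Optional[Frame], threshold: int = 10
) -> Frame:
    """Keep the previous output pixel unless the new pixel differs from it
    by more than threshold, in which case take the new pixel."""
    if target is None:
        # Assumption: with no earlier output, the current frame is used as is.
        return [row[:] for row in current]
    return [
        [cur if abs(cur - tgt) > threshold else tgt
         for cur, tgt in zip(cur_row, tgt_row)]
        for cur_row, tgt_row in zip(current, target)
    ]


previous_output = [[100, 100]]
new_frame = [[103, 150]]
print(denoise_step(new_frame, previous_output))
```

Small fluctuations (103 against 100) are treated as noise and suppressed, while large changes (150 against 100) are treated as real content and passed through. Each pixel needs one subtraction and one comparison, which is in keeping with the low-computation goal stated in the abstract.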

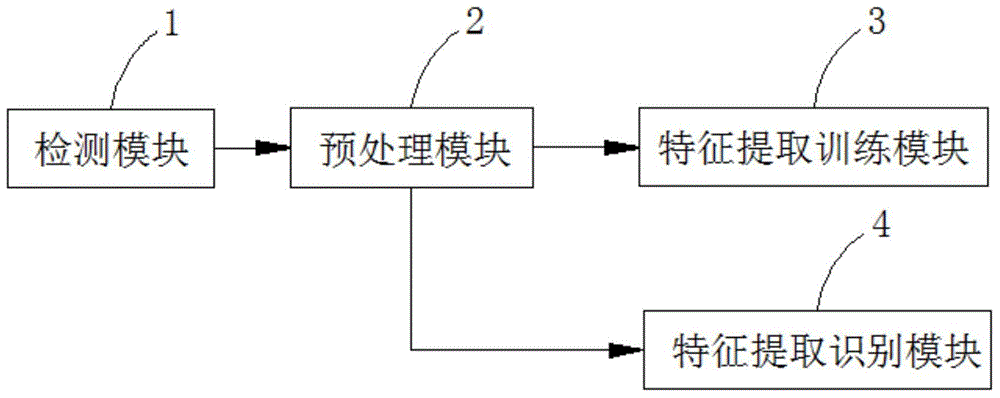

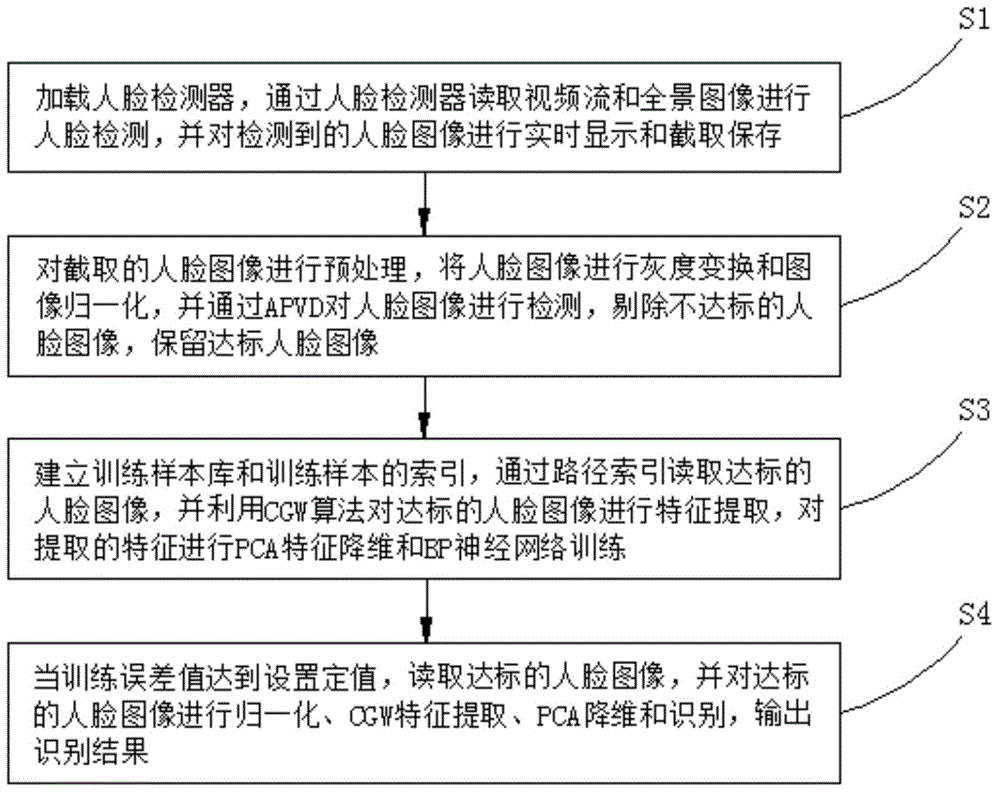

Dynamic face identification system and method

Inactive | CN105139003A | Reduce the impact | Avoid interference | Character and pattern recognition | Face detection | Feature extraction

The present invention relates to a dynamic face identification system and method. The system comprises a detection module, a pre-processing module, a feature extraction training module, and a feature extraction identification module. The detection module loads a face detector, reads a video stream or a panoramic image to detect faces, and displays, captures, and saves the detected face images in real time. The pre-processing module carries out grey level transformation and image normalization on the face images, evaluates them by the accumulation of pixel value differences in the wavelet domain (APVD), and retains the face images that reach a standard. The feature extraction training module establishes a training sample base and indexes of the training samples, reads the face images reaching the standard to extract features, and carries out PCA feature dimensionality reduction and BP neural network training. The feature extraction identification module reads the face images reaching the standard, carries out normalization, curve Gabor wavelet (CGW) feature extraction, and PCA dimensionality reduction and identification, and outputs an identification result. By calculating the degree of blur of the images, selecting the face images that meet the identification requirements, and using a curve Gabor wavelet to extract effective face features, the invention improves the identification rate.

Owner:桂林远望智能通信科技有限公司

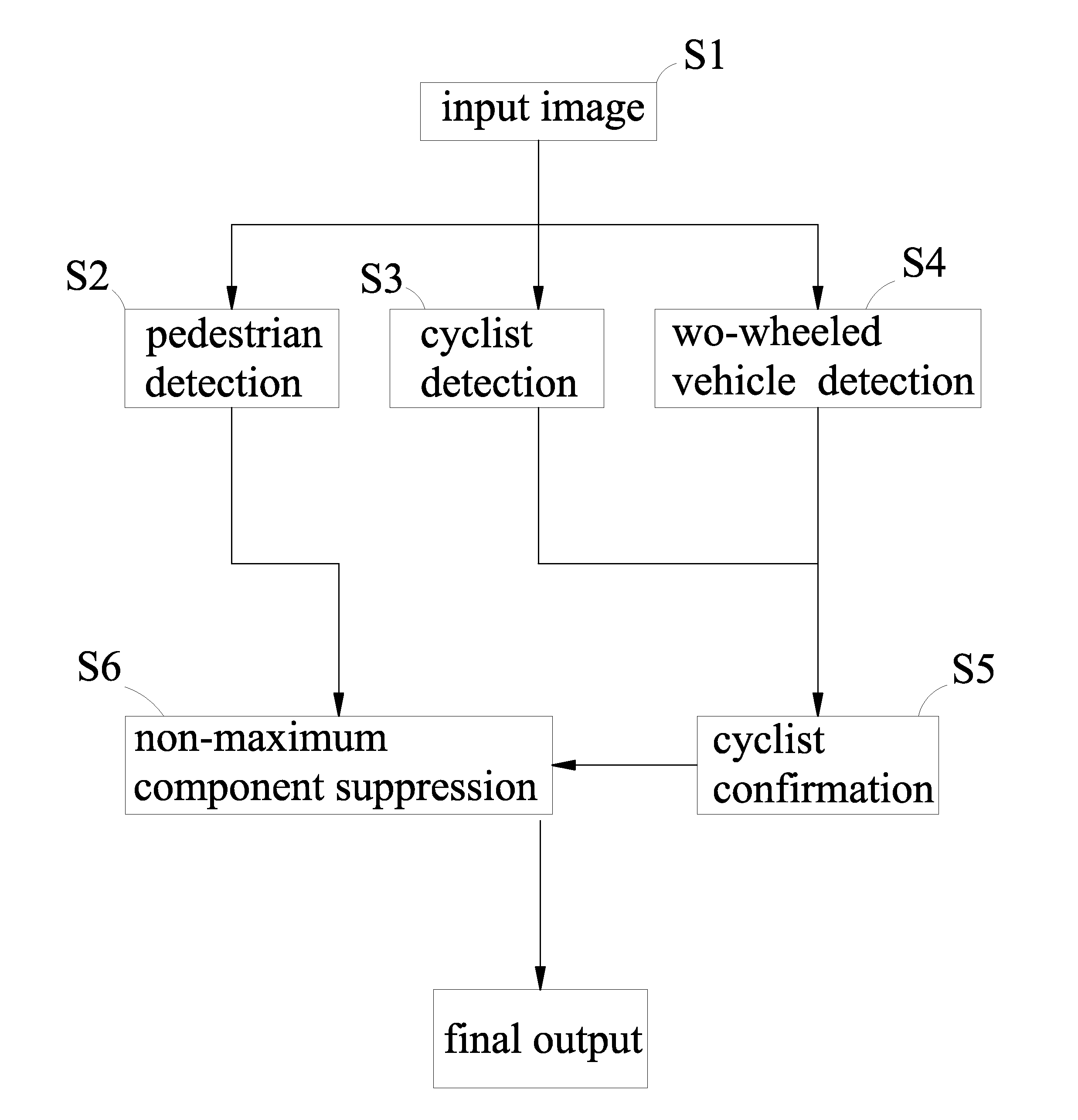

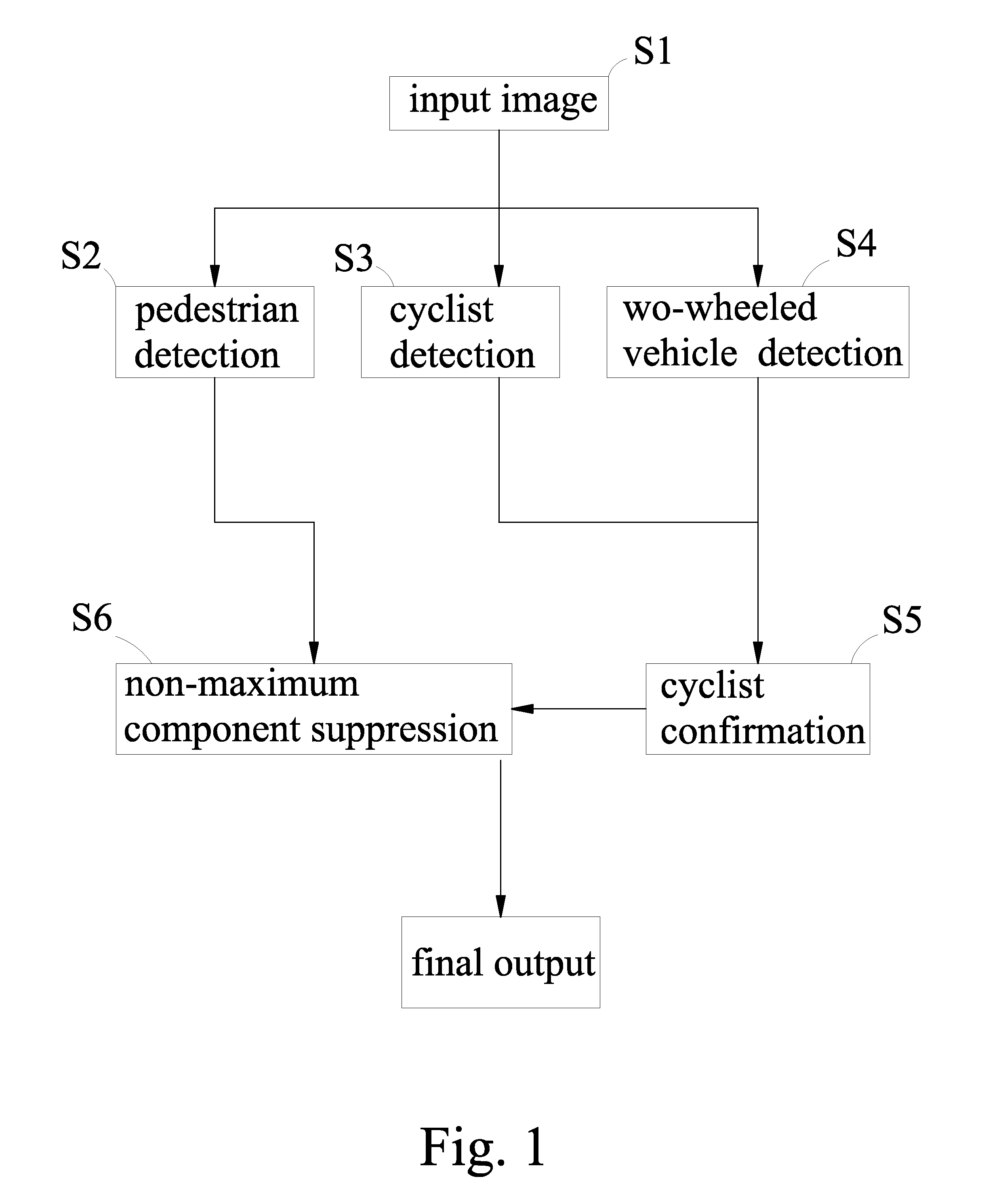

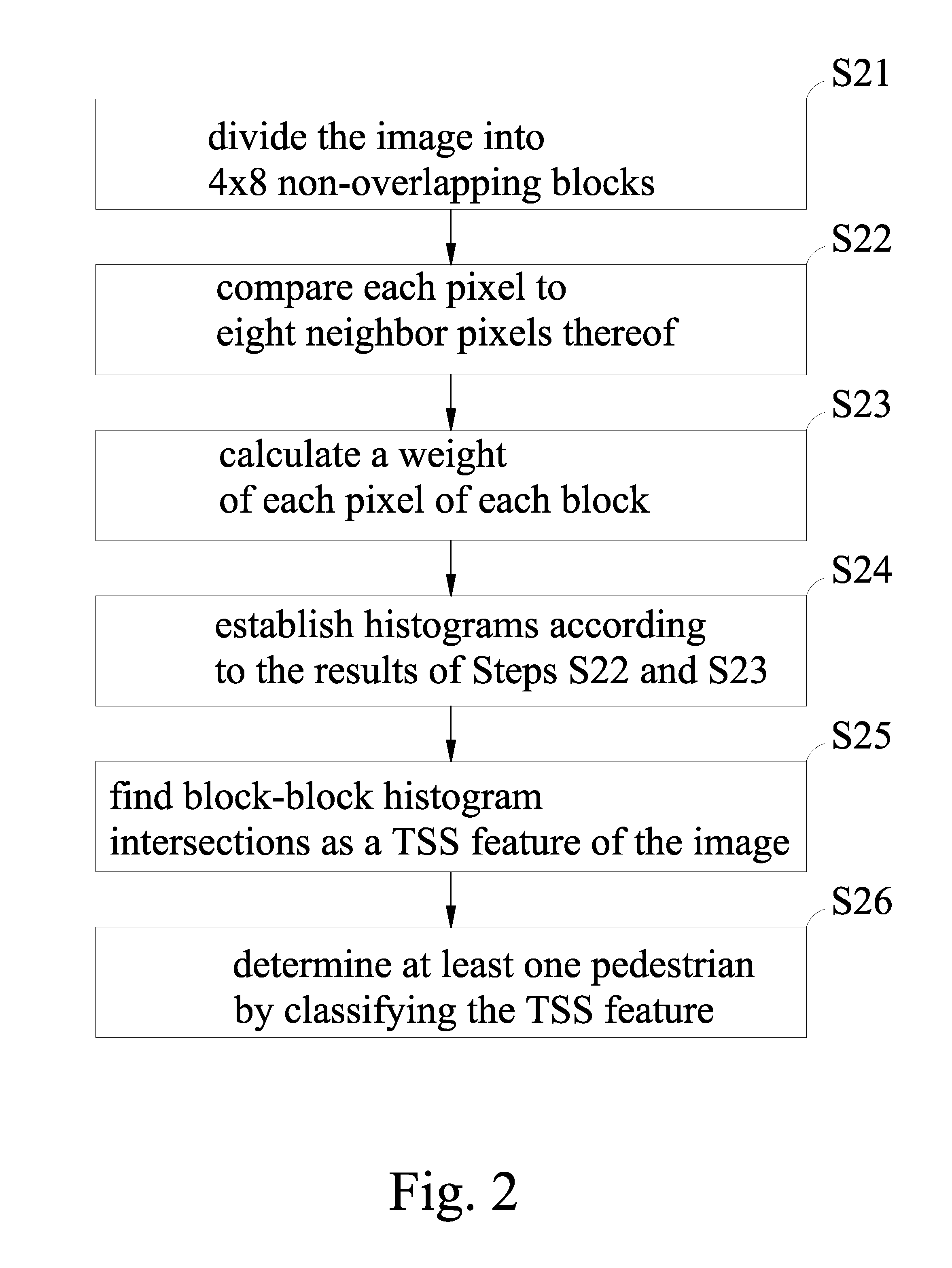

Vision based pedestrian and cyclist detection method

Active | US20150161447A1 | Improving roadway safety | Improve performance | Image enhancement | Image analysis | Pattern recognition | Pixel value difference

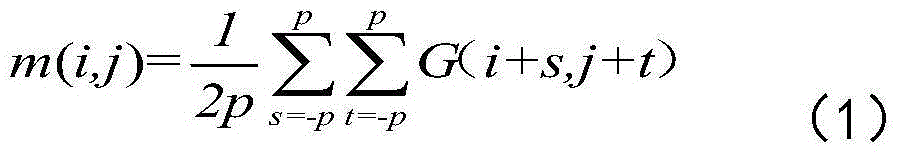

A vision based pedestrian and cyclist detection method includes receiving an input image, calculating a pixel value difference between each pixel and its neighboring pixels, quantifying the pixel value difference as a pixel weight, computing statistics of the pixel value differences and the weights, determining intersections of the statistics as a feature of the input image, classifying the feature as a human feature or a non-human feature, confirming that the human feature belongs to a cyclist according to the spatial relationship between the human feature and a detected two-wheeled vehicle, and retaining one detection result for each cyclist by suppressing other, weaker spatial relationships between the human feature and the detected two-wheeled vehicle.

Owner:NAT CHUNG SHAN INST SCI & TECH

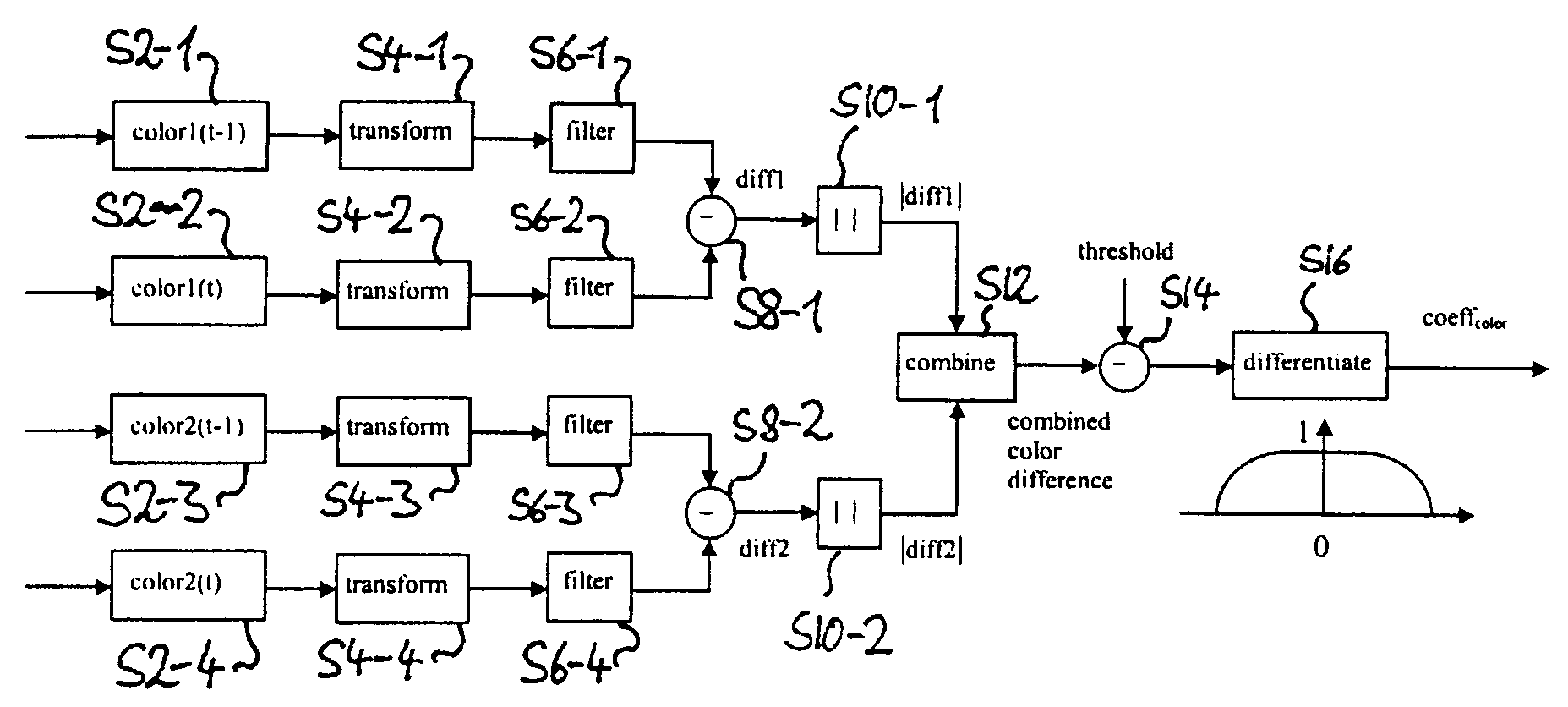

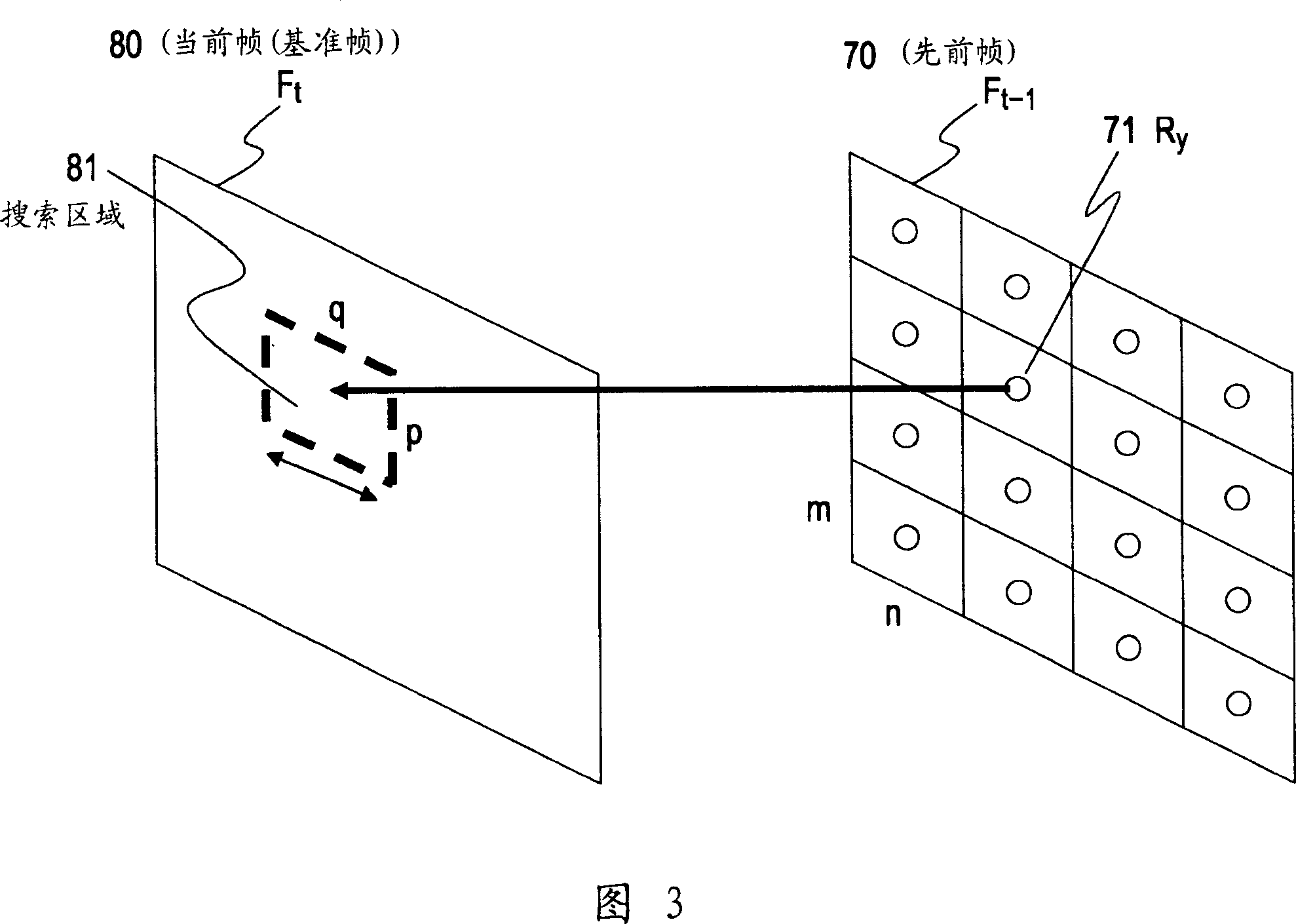

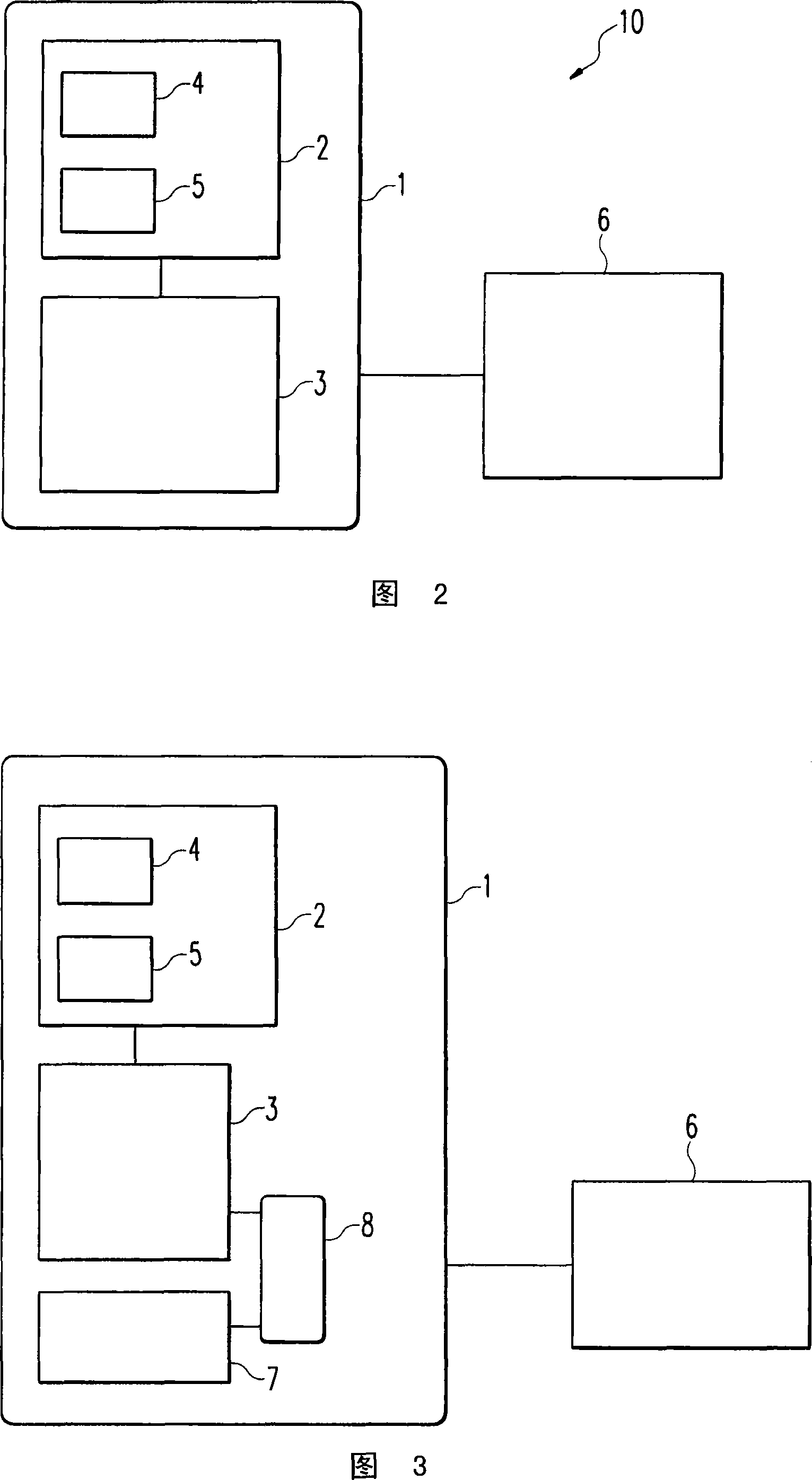

Motion and/or scene change detection using color components

Inactive | US20080129875A1 | Television system details | Color signal processing circuits | Pattern recognition | Time domain

The present invention relates to the fields of motion detection, scene change detection and temporal domain noise reduction. In particular, the present invention relates to a device, a method and software for detecting motion and/or scene change in a sequence of image frames. The device for detecting motion and/or scene change in a sequence of image frames comprises a difference generator adapted to calculate one or more differences between values of pixels of separate image frames, the pixel values comprising color information, and a discriminator for providing an indication whether the calculated one or more pixel value differences correspond to noise or correspond to image content based on the one or more pixel value differences.

Owner:SONY DEUT GMBH

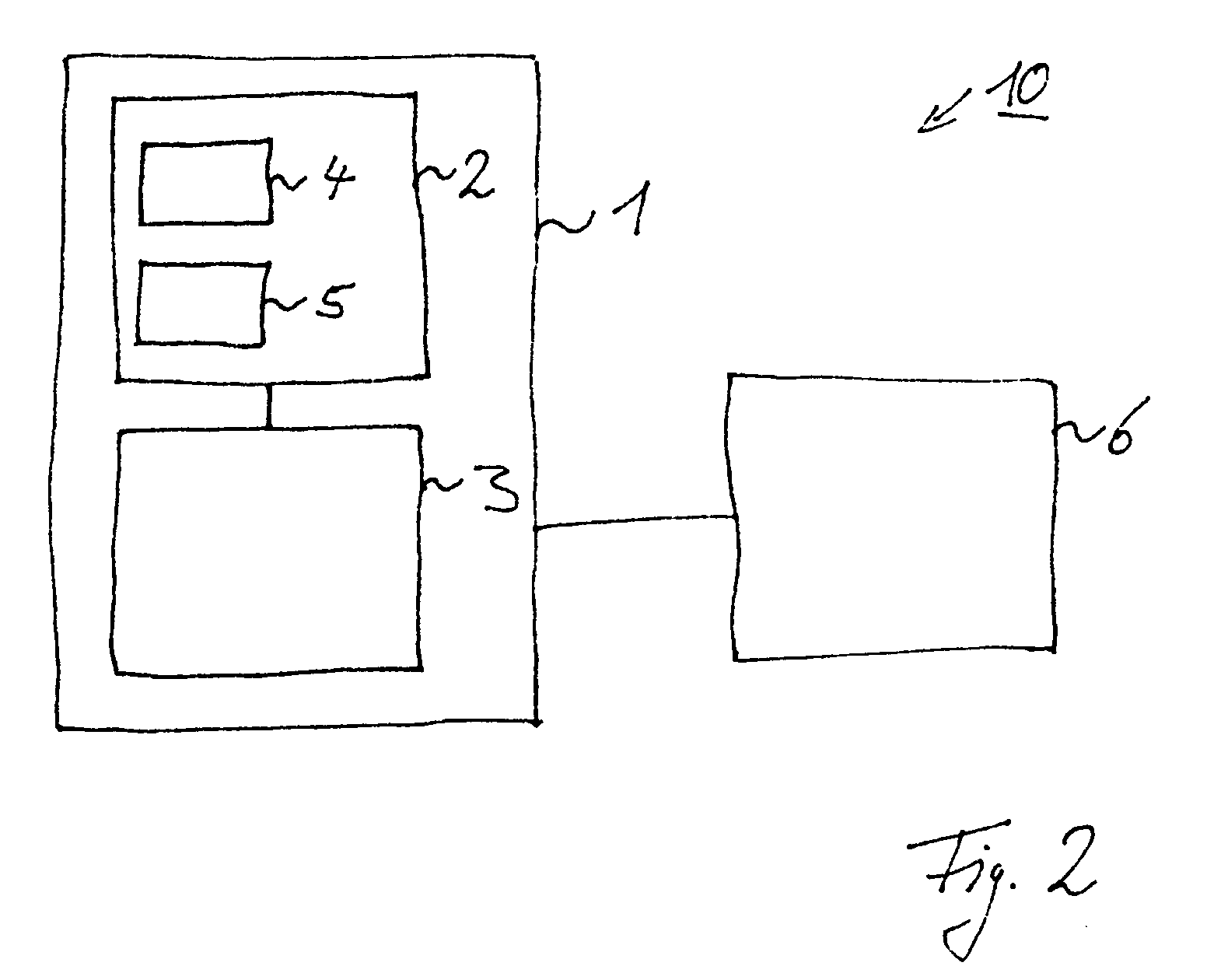

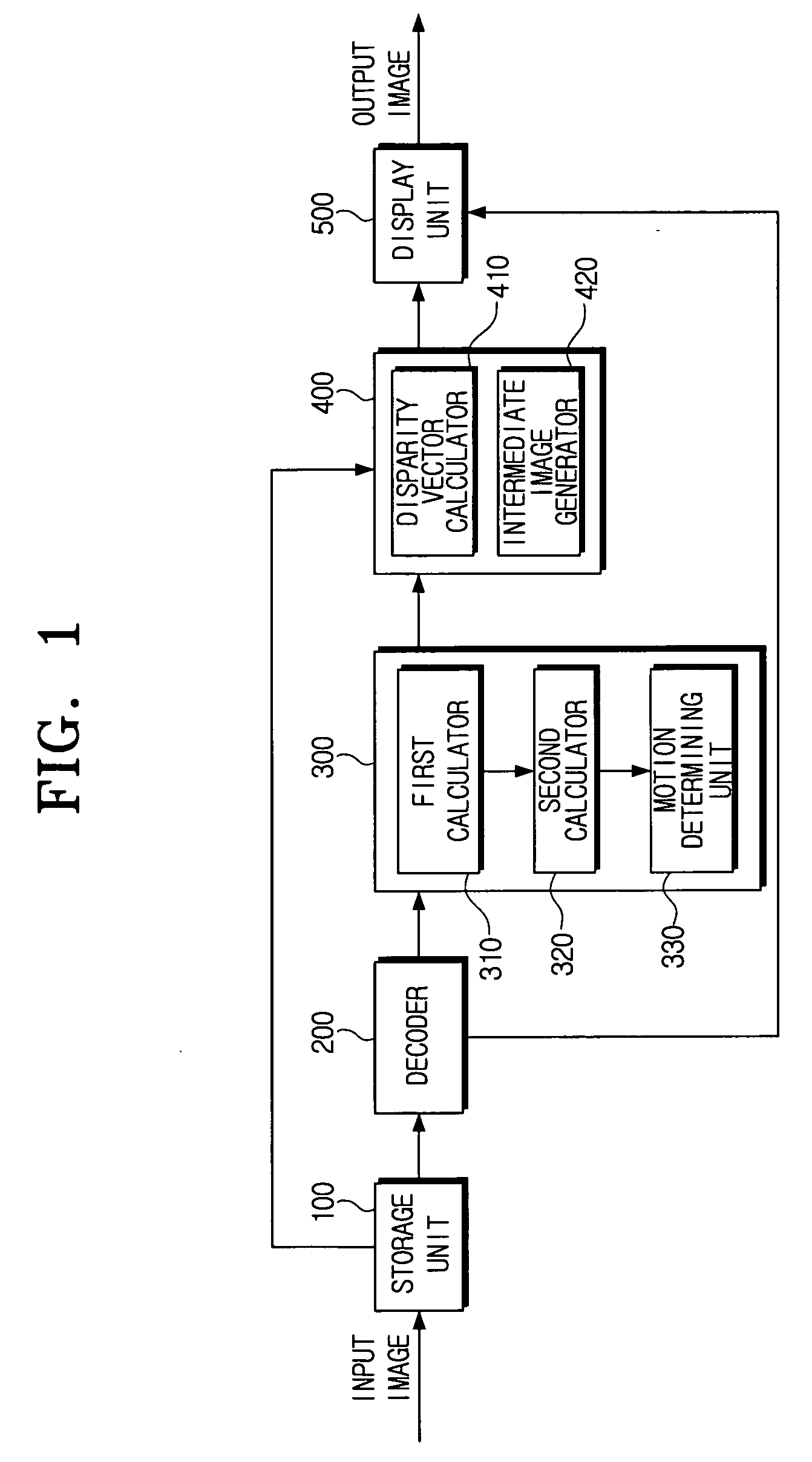

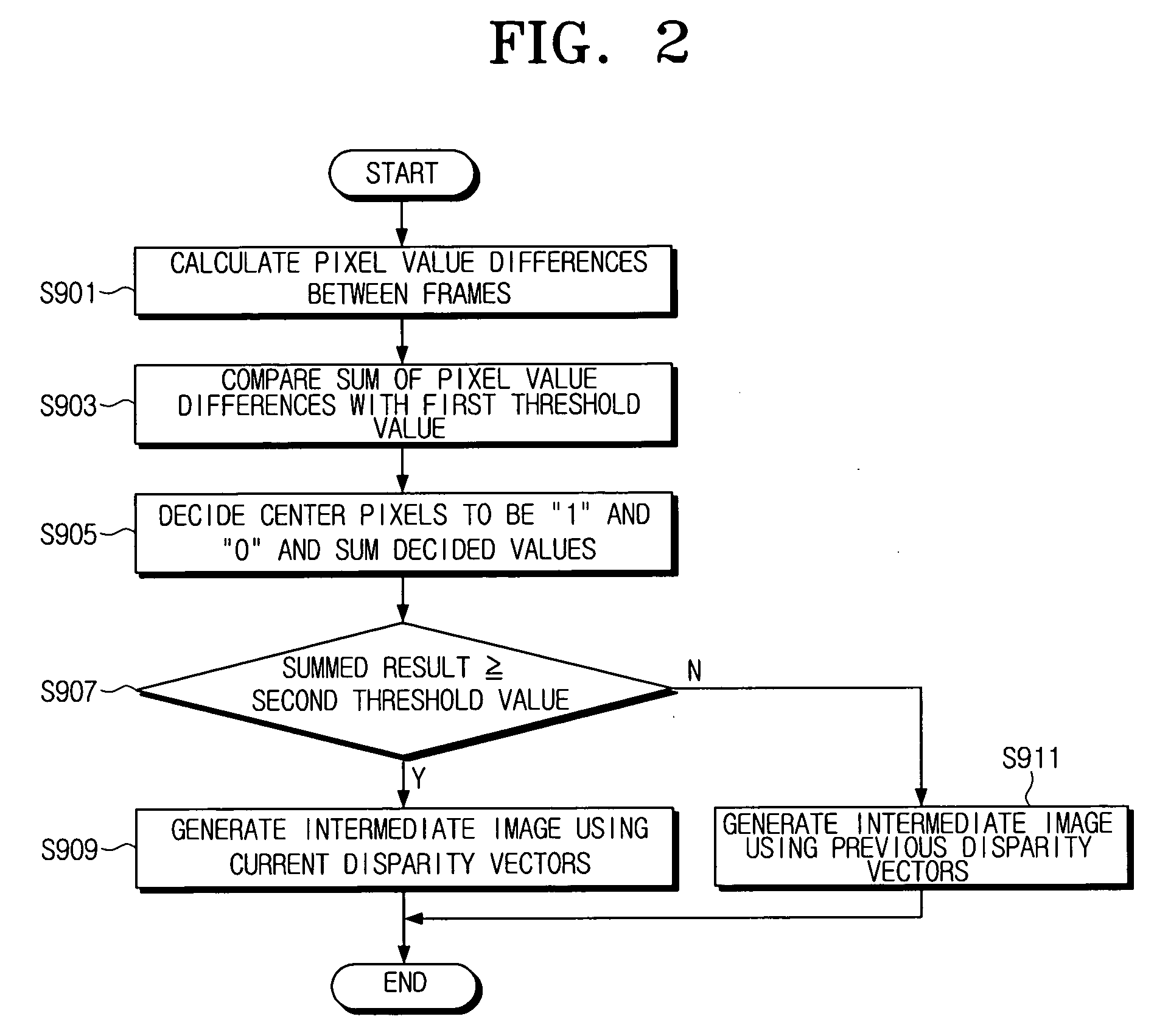

Intermediate vector interpolation method and three-dimensional (3D) display apparatus performing the method

Inactive | US20060285595A1 | Avoid flickering | Color television with pulse code modulation | Color television with bandwidth reduction | Parallax | Intermediate image

Owner:SAMSUNG ELECTRONICS CO LTD

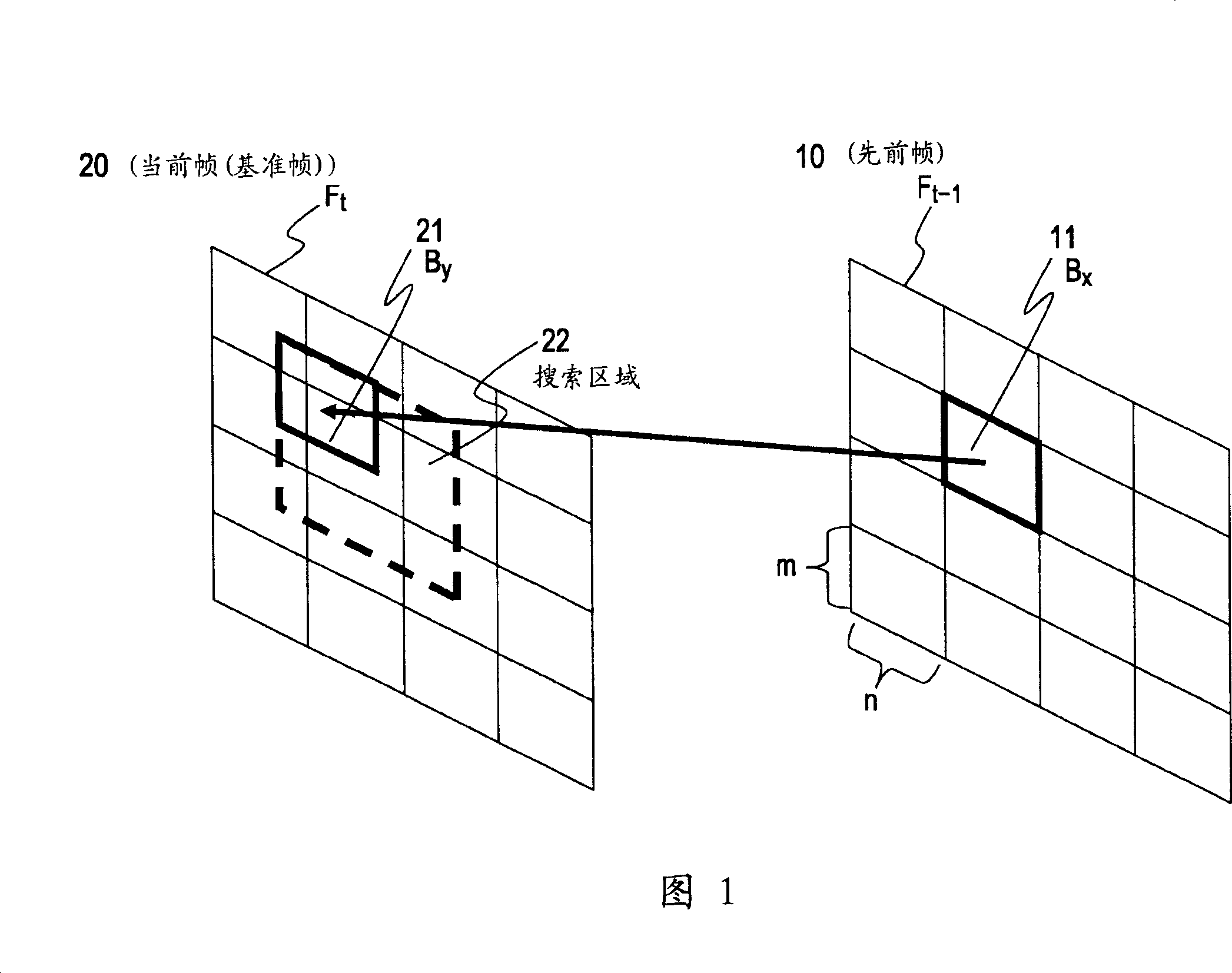

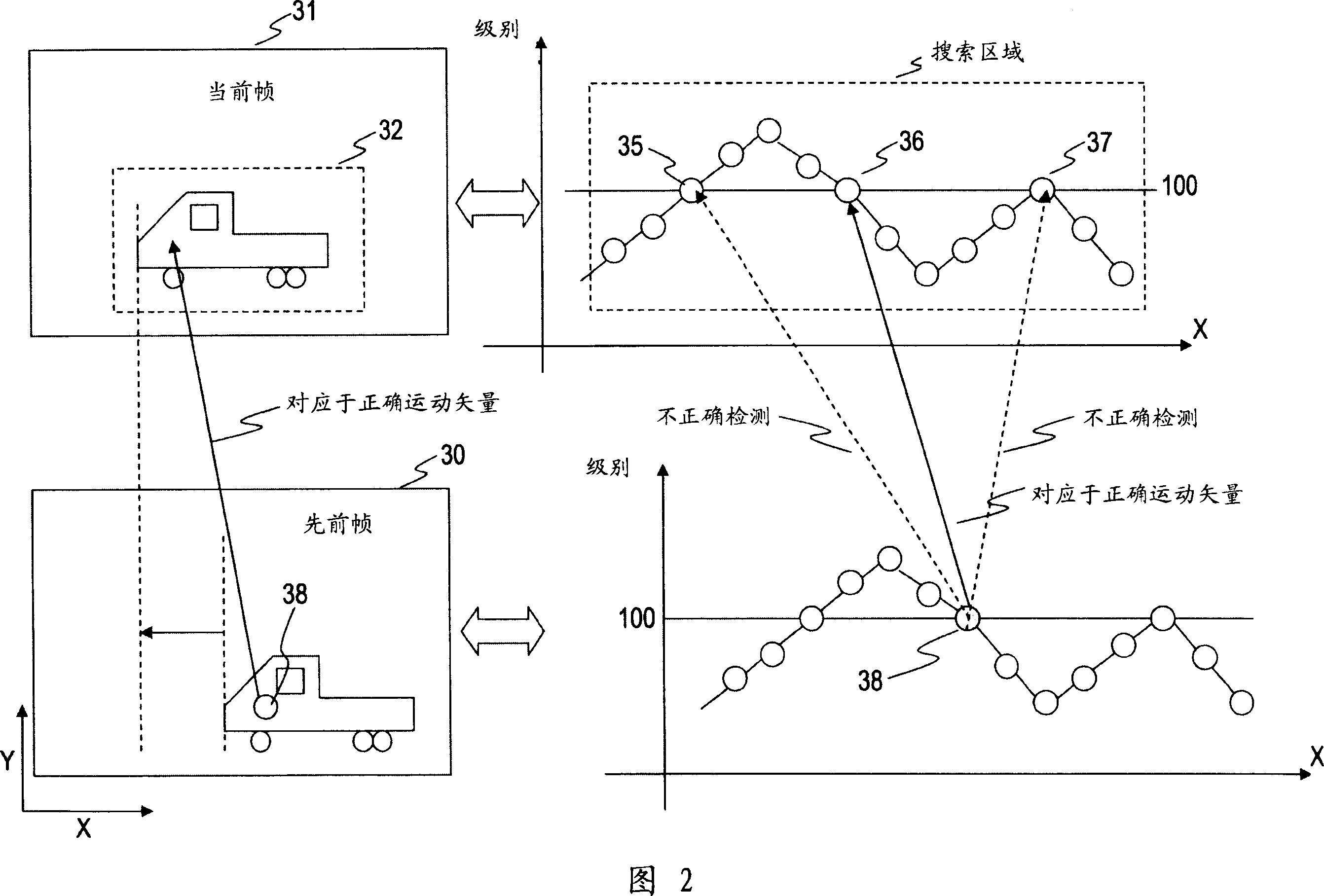

Motion vector detecting apparatus, motion vector detection method and computer program

Inactive | CN1926881A | Accurate detection | Estimates Table Exact | Image enhancement | Image analysis | Pattern recognition | Pixel value difference

An apparatus and method are provided which produce a precise evaluation value table to realize an exact motion vector detection process. A weighting factor (W) is calculated based on information of correlation between representative point pixels and on flag correlation information based on flag data corresponding to pixel value difference data between a pixel of interest and its neighboring area pixels. A reliability index is produced based on the calculated weighting factor (W) and on an activity (A) serving as an indicator value indicative of complexity of picture data. Alternatively, a reliability index is produced based on a motion similarity between a representative point pixel and its neighboring pixels. An evaluation value table is produced in which evaluation values corresponding to the reliability indices have been integrated. In addition, a correlation determination is executed based on the pixel values or positions of featuring pixels on the periphery of the pixel of interest to determine a motion vector.

Owner:SONY CORP

Motion and/or scene change detection using color components

Inactive | CN101188670A | Television system details | Color signal processing circuits | Discriminator | Time domain

The present invention relates to the fields of motion detection, scene change detection and temporal domain noise reduction. In particular, the present invention relates to a device, a method and software for detecting motion and/or scene change in a sequence of image frames. The device for detecting motion and/or scene change in a sequence of image frames comprises a difference generator adapted to calculate one or more differences between values of pixels of separate image frames, the pixel values comprising color information, and a discriminator for providing an indication whether the calculated one or more pixel value differences correspond to noise or correspond to image content based on the one or more pixel value differences.

Owner:SONY DEUT GMBH

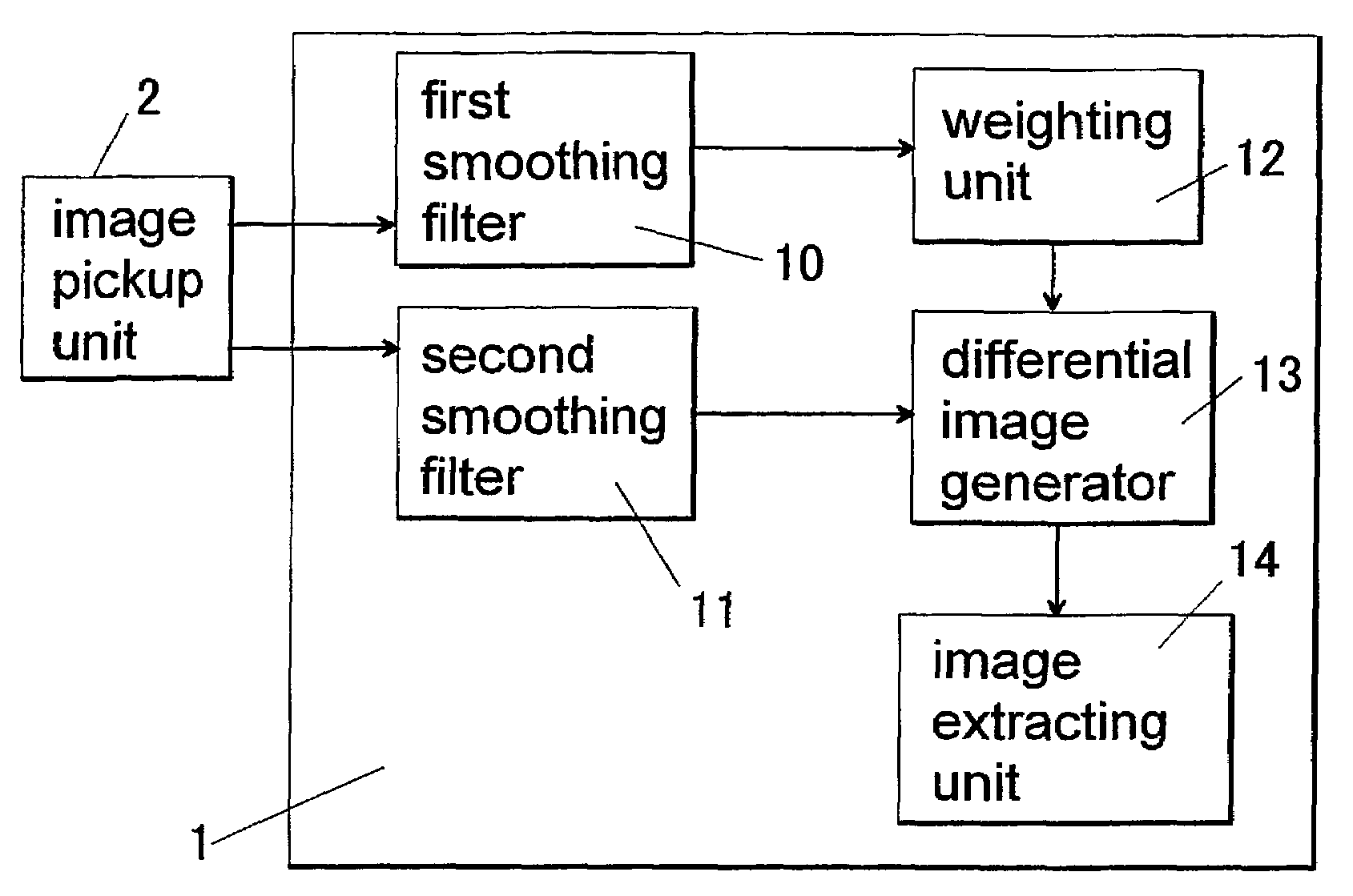

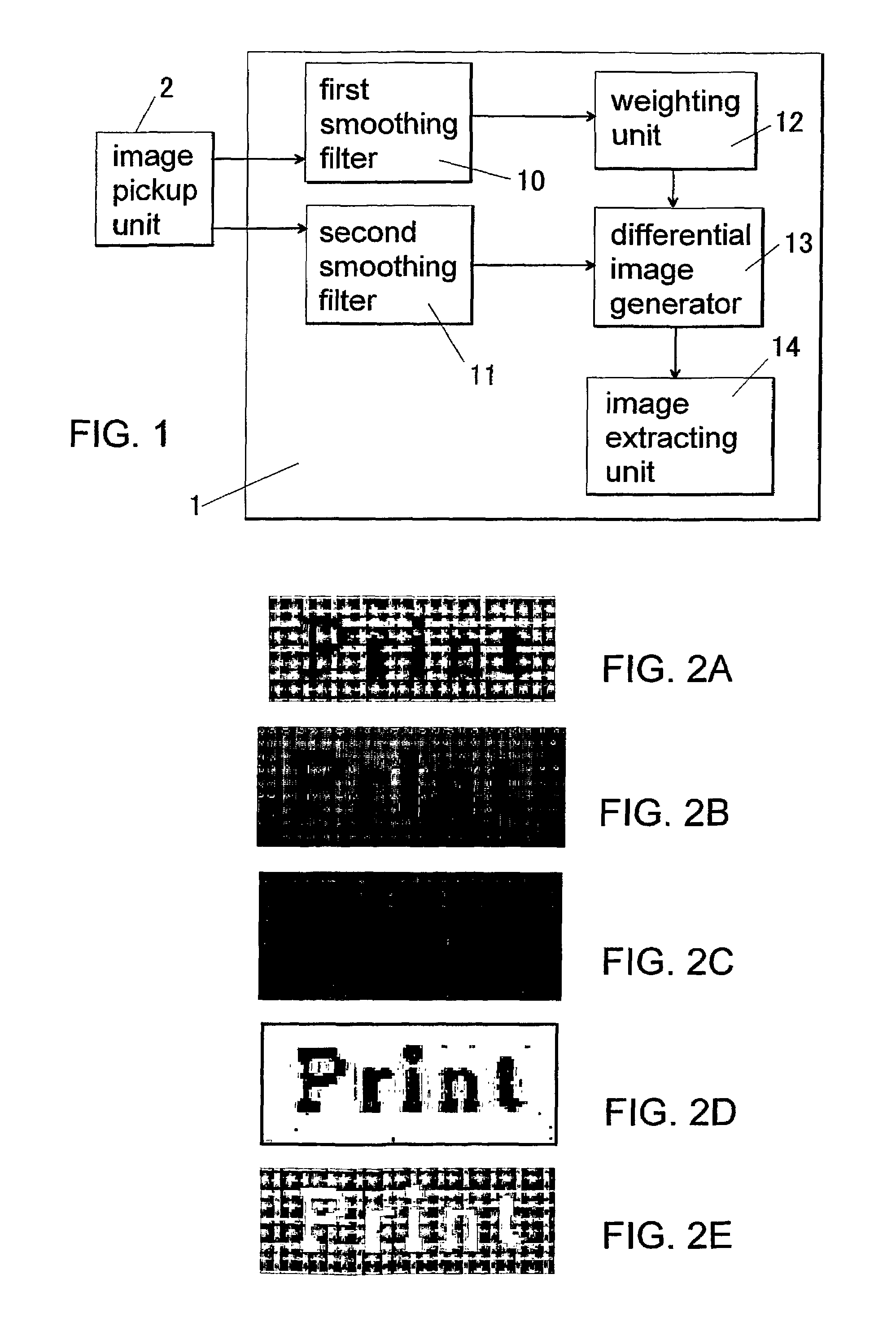

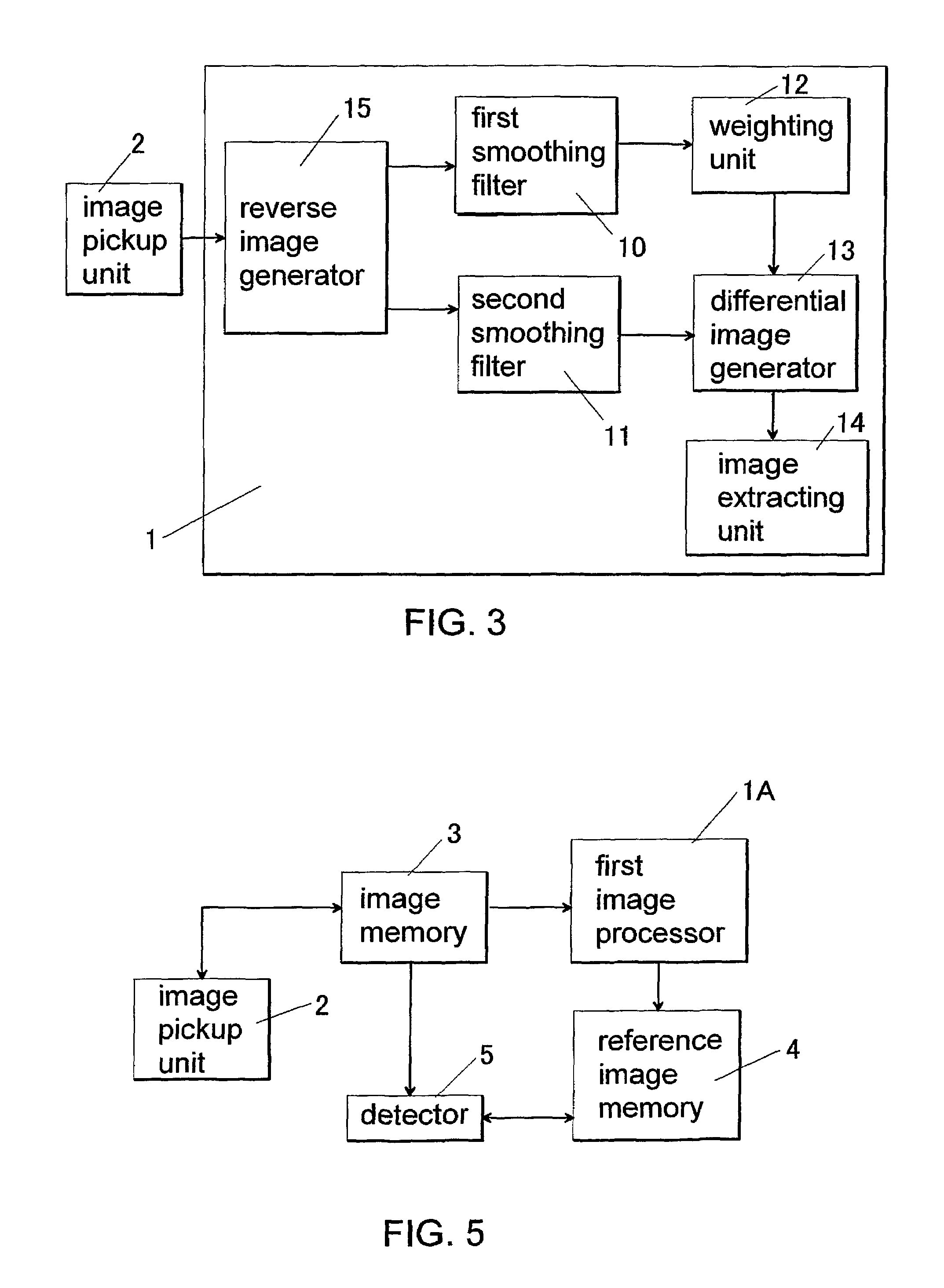

Image processor and pattern recognition apparatus using the image processor

Inactive | US7039236B2 | Accurate extraction | Emphasize contrast | Image enhancement | Image analysis | Pattern recognition | Image extraction

An image processor for generating, from a surface image of an object having a pattern, a processed image having a distinct contrast between the pattern and a background, is provided. This image processor comprises a first smoothing filter for generating a first smoothed image; a second smoothing filter for generating a second smoothed image; a weighting unit for multiplying each of pixel values of the first smoothed image by a weight coefficient of 1 or more to determine a weighted pixel value; a differential image generator for subtracting each of weighted pixel values provided by the weighting unit from a pixel value of a corresponding pixel of the second smoothed image to generate a differential image; and an image extracting unit for extracting pixel values having the positive sign from the differential image to obtain the processed image. For example, this image processor can be utilized in a pattern recognition apparatus.

Owner:MATSUSHITA ELECTRIC WORKS LTD
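
The pipeline of two smoothing filters, a weighting unit, a differential image, and extraction of positive values can be sketched as below. The abstract does not say which filters are used, so this sketch assumes simple box filters, with the first (weighted and subtracted) filter using the wider window as a background estimate. The names `box_smooth` and `extract_pattern`, the radii, and the default weight are all hypothetical.

```python
from typing import List

Image = List[List[float]]


def box_smooth(img: Image, radius: int) -> Image:
    """Mean filter over a (2*radius+1)^2 window, clipped at the borders."""
    rows, cols = len(img), len(img[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            vals = [
                img[y][x]
                for y in range(max(0, r - radius), min(rows, r + radius + 1))
                for x in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            out_row.append(sum(vals) / len(vals))
        out.append(out_row)
    return out


def extract_pattern(
    img: Image, radius_first: int = 2, radius_second: int = 1,
    weight: float = 1.0,
) -> Image:
    """second_smoothed - weight * first_smoothed, keeping positive values."""
    first = box_smooth(img, radius_first)    # assumed: wide, background-like
    second = box_smooth(img, radius_second)  # assumed: narrow, keeps pattern
    return [
        [max(0.0, s - weight * f) for f, s in zip(f_row, s_row)]
        for f_row, s_row in zip(first, second)
    ]


surface = [[0.0] * 5 for _ in range(5)]
surface[2][2] = 90.0  # a single bright mark on a dark background
result = extract_pattern(surface)
print(round(result[2][2], 3), result[0][0])
```

Raising `weight` above 1 subtracts more of the background estimate, so fewer pixels survive the positive-sign test and the remaining pattern stands out more sharply.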

Guided trilateral filtering ultrasonic image speckle noise removal method

Active | CN109767400A | Accurate description | Prevent deviation | Image enhancement | Normal density | Pixel value difference

A guided trilateral filtering method for removing speckle noise from ultrasonic images comprises: calculating the spatial-domain distance weight of a guide image with a Gaussian function, whose standard deviation is set to increase with noise intensity; performing histogram fitting on a local area of the guide image, with a Fisher-Tippett probability density function selected as the fitting function; estimating the distribution parameter of the Fisher-Tippett probability density function by the maximum likelihood method, and calculating a distribution similarity weight from the estimated parameter; calculating the pixel value difference weight of the guide image with an exponential function, whose scale parameter is set to vary in direct proportion to the estimated Fisher-Tippett distribution parameter; and performing local iterative filtering on the ultrasonic image with the three calculated weights, iterating to convergence to obtain the ultrasonic image with speckle noise removed. The method calculates the filtering weights from three kinds of information, namely the spatial-domain distance, the pixel value difference, and the distribution similarity, so that speckle noise is effectively reduced while image detail and edge information are better preserved, which enhances visual interpretation of the ultrasonic image.

Owner:CHINA THREE GORGES UNIV
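
As a rough illustration of how the three weights in the abstract above could act on one pixel, the sketch below filters the centre of a small square patch. Several things here are assumptions rather than details from the patent: the weights are combined by multiplication (as in a bilateral filter), the pixel value difference weight takes the form exp(-|difference| / scale), and the distribution similarity weights are passed in as a precomputed array `dist_w` instead of being derived from a Fisher-Tippett fit. The name `trilateral_pixel` is hypothetical.

```python
import math


def trilateral_pixel(patch, guide, dist_w, sigma_s, scale_r):
    """Filter the centre pixel of an odd-sized square patch.

    patch   : pixel values of the image being filtered
    guide   : guide-image values on the same window
    dist_w  : precomputed distribution similarity weights (assumed given)
    sigma_s : standard deviation of the spatial Gaussian
    scale_r : scale parameter of the exponential pixel value difference weight
    """
    n = len(patch)
    c = n // 2
    num = den = 0.0
    for y in range(n):
        for x in range(n):
            # Spatial-domain distance weight (Gaussian).
            w_s = math.exp(-((y - c) ** 2 + (x - c) ** 2) / (2 * sigma_s ** 2))
            # Pixel value difference weight (exponential, assumed form).
            w_r = math.exp(-abs(guide[y][x] - guide[c][c]) / scale_r)
            w = w_s * w_r * dist_w[y][x]
            num += w * patch[y][x]
            den += w
    return num / den


# Usage: a 3x3 patch with one outlier next to the centre.
patch = [[10, 10, 10], [10, 10, 200], [10, 10, 10]]
ones = [[1.0] * 3 for _ in range(3)]
value = trilateral_pixel(patch, patch, ones, sigma_s=1.0, scale_r=5.0)
```

Because the outlier differs strongly from the centre value, its pixel value difference weight is close to zero and it barely influences the result, which is how edges are preserved.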

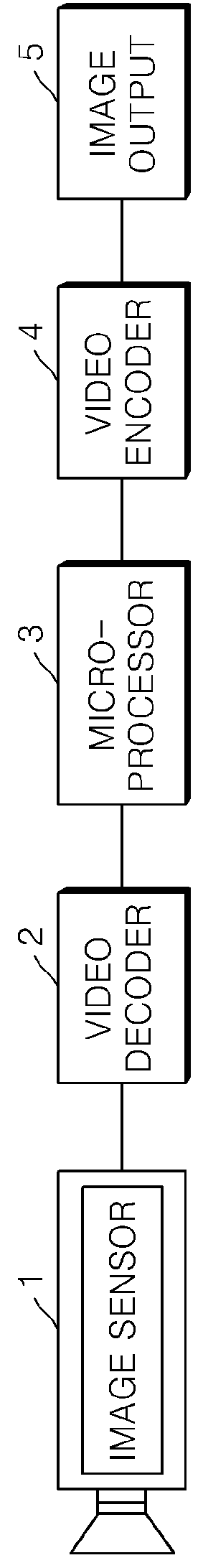

Method of detecting camera tampering and system thereof

A method of detecting camera tampering and a system therefor are provided. The method includes: performing at least one of the following operations: (i) detecting a size of a foreground in an image, and determining whether a first condition, that the size exceeds a first reference value, is satisfied, (ii) detecting a change over time in a sum of the largest pixel value differences among pixel value differences between adjacent pixels in selected horizontal lines of the image, and determining whether a second condition, that the change lasts for a predetermined time period, is satisfied, and (iii) adding up a plurality of global motion vectors with respect to a plurality of images, and determining whether a third condition, that a sum of the global motion vectors exceeds a second reference value, is satisfied; and determining occurrence of camera tampering if at least one of the corresponding conditions is satisfied.

Owner:HANWHA TECHWIN CO LTD
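
Condition (ii) in the abstract above can be sketched as a per-frame edge measure followed by a persistence check. This is only one reading of the abstract: the sketch takes the single largest adjacent-pixel difference per selected line, treats "change" as a drop below a fraction of the first frame's value, and counts frames instead of measuring time. The names `line_edge_sum` and `change_persists`, and the parameters `drop_ratio` and `min_frames`, are hypothetical.

```python
def line_edge_sum(frame, rows):
    """Sum, over the selected rows, of the largest adjacent-pixel difference."""
    return sum(
        max(abs(frame[r][x + 1] - frame[r][x]) for x in range(len(frame[r]) - 1))
        for r in rows
    )


def change_persists(frames, rows, drop_ratio=0.5, min_frames=3):
    """True if the edge sum stays below drop_ratio * reference for
    min_frames consecutive frames (the reference is the first frame)."""
    reference = line_edge_sum(frames[0], rows)
    run = 0
    for frame in frames[1:]:
        if line_edge_sum(frame, rows) < drop_ratio * reference:
            run += 1
            if run >= min_frames:
                return True
        else:
            run = 0  # the change did not last; start counting again
    return False


# Usage: a sharp scene followed by three featureless (covered or defocused) frames.
sharp = [[0, 100, 0, 100], [100, 0, 100, 0]]
covered = [[50, 50, 50, 50], [50, 50, 50, 50]]
tampered = change_persists([sharp, covered, covered, covered], rows=[0, 1])
```

Requiring the change to persist keeps a brief disturbance, such as a passing object, from being reported as tampering.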

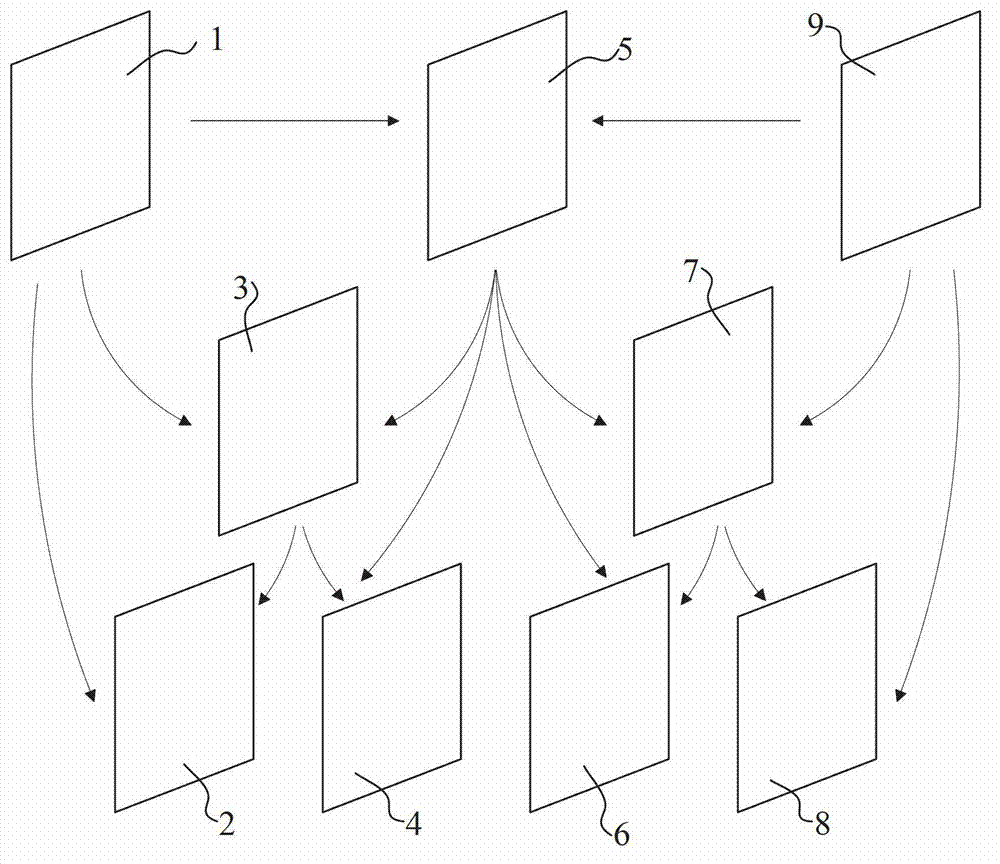

Method for generating depth maps of images

ActiveCN102881018AQuality improvementHigh precisionImage analysisSteroscopic systemsPattern recognitionPixel value difference

The invention discloses a method for generating depth maps of images. The method includes 1), selecting a plurality of reference blocks in corresponding regions of a reference-frame image for a current block of a current-frame image; 2), computing a depth block matching error and a color block matching error between the current block and each reference block; 3), weighting the corresponding depth block matching error and the corresponding color block matching error for the current block and a certain reference block to obtain a comprehensive matching error; and 4), selecting a certain reference block corresponding to the minimum comprehensive matching error and using a depth map of the reference block as a depth map of the current block. Each color block matching error is the sum of absolute values of color pixel value differences among all corresponding pixels between the current block and the corresponding reference block. The method has the advantages that the quality of the depth map obtained after the current-frame image is interpolated is improved, and accumulative errors caused by one-way depth interpolation propagation are effectively reduced.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
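
Steps 2) to 4) of the abstract above can be sketched as a weighted block-matching search. The color error is the sum of absolute differences, as the abstract states. The rest is assumed for illustration: the depth error is also computed as a sum of absolute differences against an initial depth estimate for the current block, the two errors are mixed with a single weight `alpha`, and the names `sad` and `pick_depth` are hypothetical.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized blocks."""
    return sum(abs(p - q)
               for row_a, row_b in zip(block_a, block_b)
               for p, q in zip(row_a, row_b))


def pick_depth(cur_color, cur_depth, references, alpha=0.5):
    """Return the depth block of the reference with the smallest combined error.

    references : list of (ref_color_block, ref_depth_block) pairs
    alpha      : weight of the depth error; (1 - alpha) weights the color error
    """
    def combined_error(ref):
        ref_color, ref_depth = ref
        return alpha * sad(cur_depth, ref_depth) + (1 - alpha) * sad(cur_color, ref_color)

    best_color, best_depth = min(references, key=combined_error)
    return best_depth


# Usage: the first reference block is close to the current block, the second is not.
cur_color = [[10, 20], [30, 40]]
cur_depth = [[5, 5], [5, 5]]
references = [
    ([[10, 21], [30, 40]], [[5, 5], [5, 6]]),
    ([[90, 90], [90, 90]], [[50, 50], [50, 50]]),
]
depth = pick_depth(cur_color, cur_depth, references)
```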

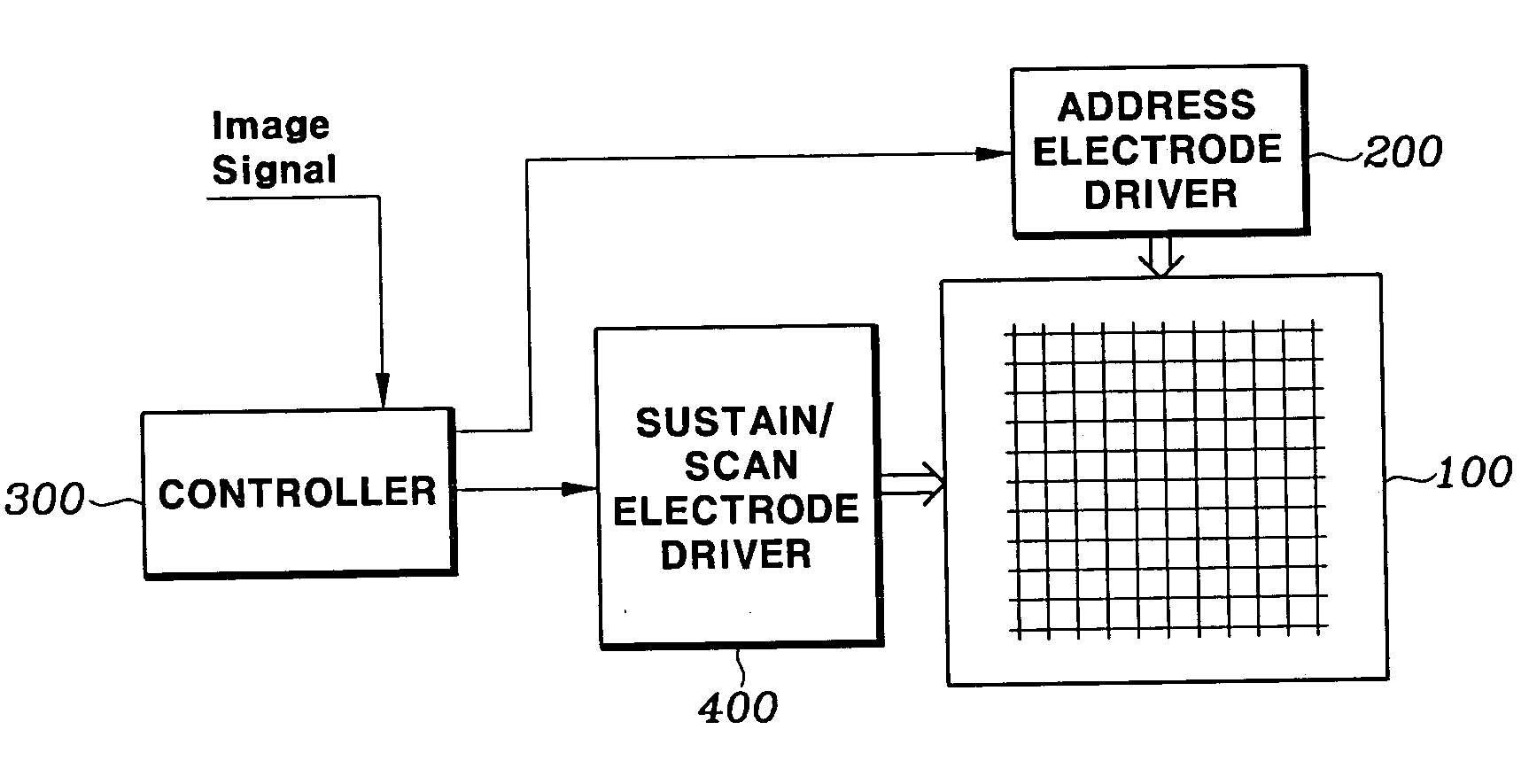

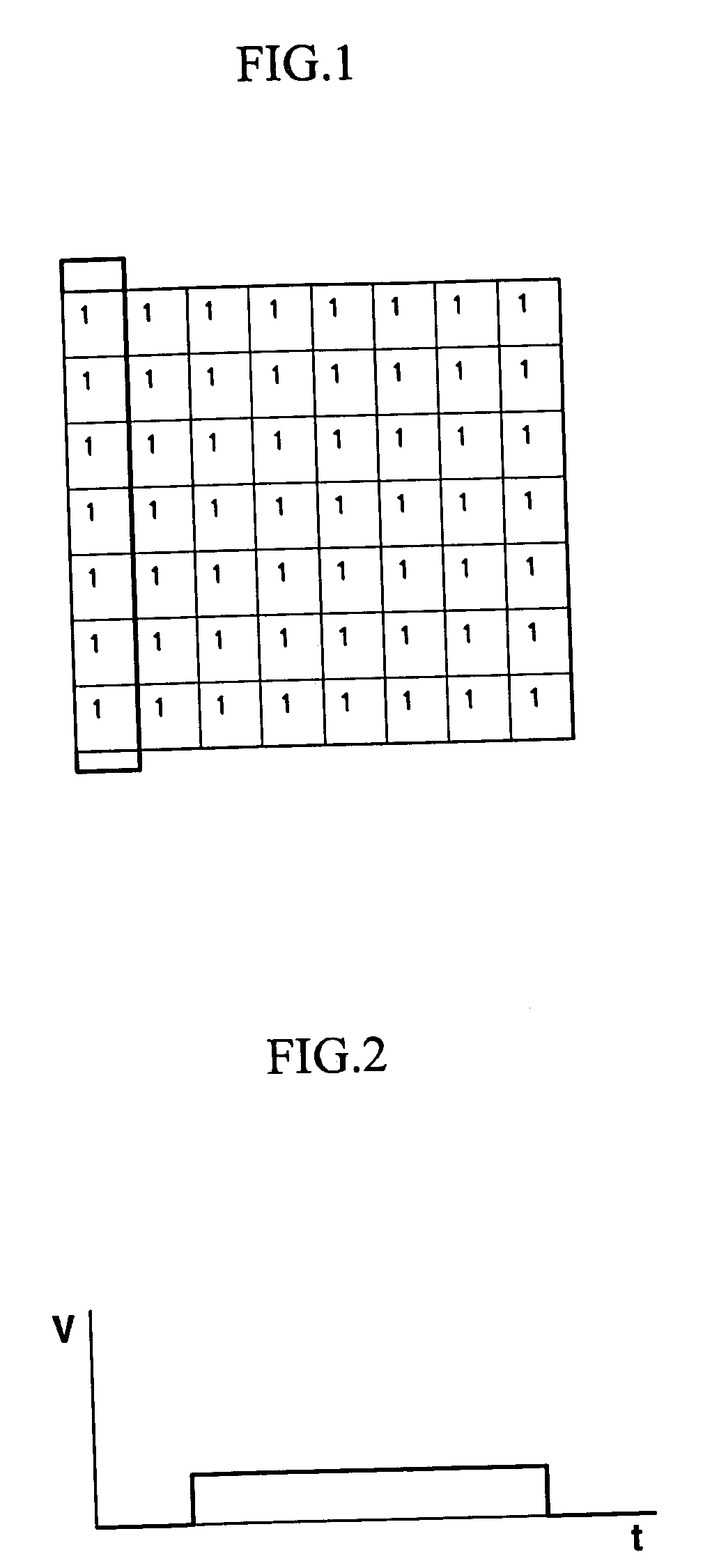

Method and apparatus to automatically control power of address data for plasma display panel, and plasma display panel including the apparatus

InactiveUS20050068265A1Reduce in quantityReduce power consumptionStatic indicating devicesCold-cathode tubesAutomatic controlPixel value difference

A method and apparatus to automatically control power of address data in a plasma display panel (PDP) that includes a plurality of address electrodes, a plurality of scan electrodes, and a plurality of sustain electrodes arranged in pairs with the scan electrodes, and a PDP including the apparatus, are provided. The sum of pixel value differences between adjacent ones of successive lines in input image data is calculated, and an address power control (APC) level corresponding to the calculated line pixel value difference sum is determined. The image data is then repeatedly multiplied by a gain initially corresponding to the start gain and sequentially decremented by a predetermined value from the start gain upon every multiplication until the gain corresponds to the end gain, to output corrected address data.

Owner:SAMSUNG SDI CO LTD
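
The first two steps of the abstract above (the line pixel value difference sum and the APC level lookup) can be sketched as follows. The abstract does not say whether differences are signed or how a sum maps to a level, so the absolute differences, the threshold table, and the names `line_diff_sum` and `apc_level` are assumptions. The gain schedule is left out because the abstract does not define the start gain, end gain, or decrement.

```python
def line_diff_sum(image):
    """Sum of absolute pixel value differences between vertically adjacent lines."""
    return sum(abs(a - b)
               for upper, lower in zip(image, image[1:])
               for a, b in zip(upper, lower))


def apc_level(diff_sum, thresholds):
    """Number of (hypothetical, ascending) thresholds the sum meets or exceeds."""
    return sum(1 for t in thresholds if diff_sum >= t)


# Usage: alternating black and white lines give a large difference sum.
image = [[0, 0], [255, 255], [0, 0]]
total = line_diff_sum(image)
level = apc_level(total, thresholds=[500, 1000, 2000])
```

Images whose lines alternate strongly produce a large sum, so they map to a higher APC level in this sketch.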


© 2025 PatSnap. All rights reserved.