125 results about "Polling" patented technology

Polling, or polled operation, in computer science, refers to actively sampling the status of an external device by a client program as a synchronous activity. Polling is most often used in terms of input/output (I/O), and is also referred to as polled I/O or software-driven I/O.
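As a minimal sketch of the definition above, the loop below synchronously samples a status flag until it reports ready or a timeout expires. `poll_until` and `FakeDevice` are hypothetical names used only for illustration; a real driver would read a hardware status register instead.

```python
import time
from typing import Callable


def poll_until(is_ready: Callable[[], bool],
               interval_s: float = 0.01,
               timeout_s: float = 1.0) -> bool:
    """Sample is_ready() repeatedly until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if is_ready():          # the client actively asks the device for its status
            return True
        time.sleep(interval_s)  # wait before sampling again
    return False


class FakeDevice:
    """Hypothetical device whose status becomes ready after a number of reads."""

    def __init__(self, reads_until_ready: int) -> None:
        self.remaining = reads_until_ready

    def ready(self) -> bool:
        self.remaining -= 1
        return self.remaining <= 0


if __name__ == "__main__":
    device = FakeDevice(reads_until_ready=3)
    print(poll_until(device.ready, interval_s=0.001))
```

The trade-off is the usual one for polled I/O: the loop is simple and deterministic, but the client spends time asking even when nothing has changed.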

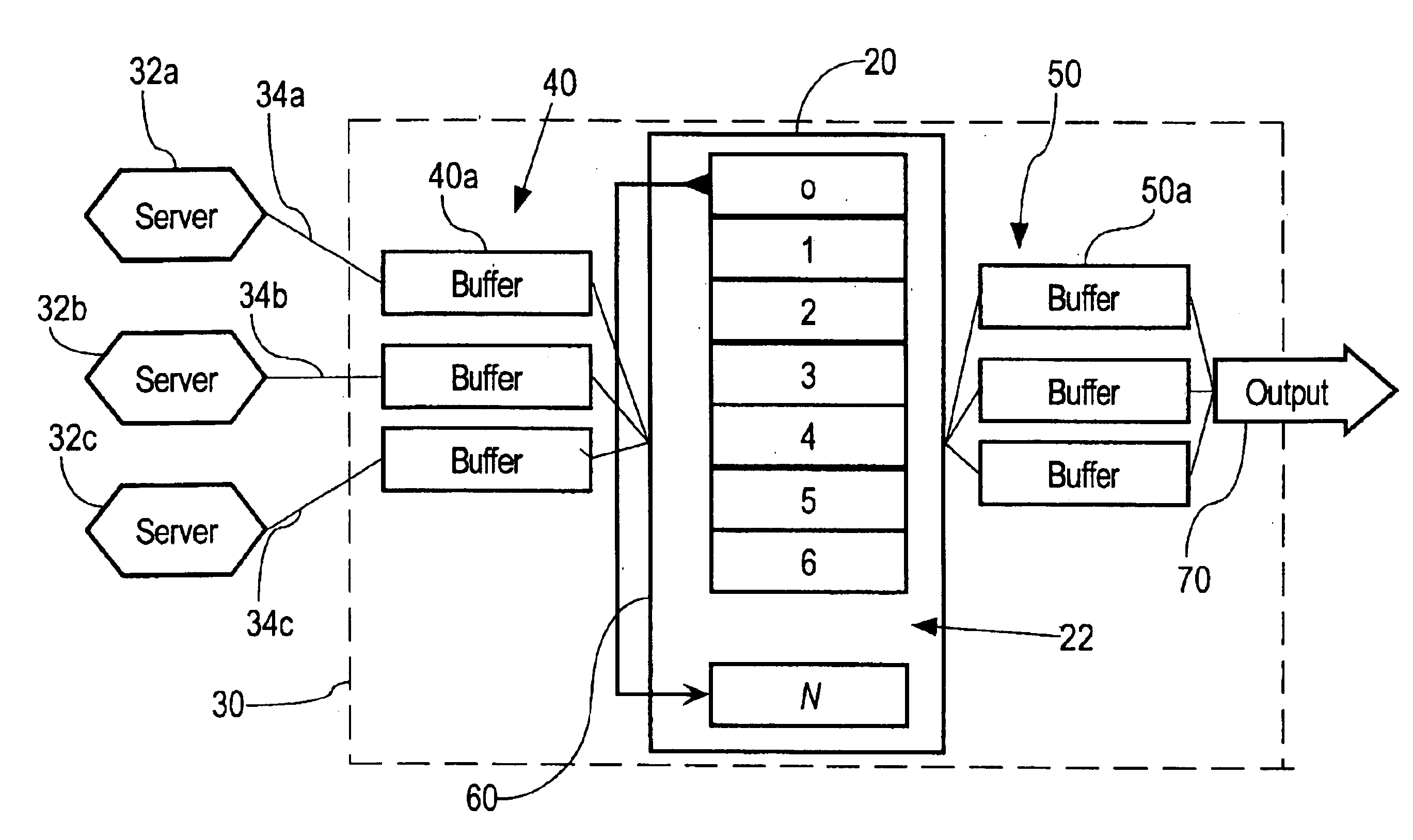

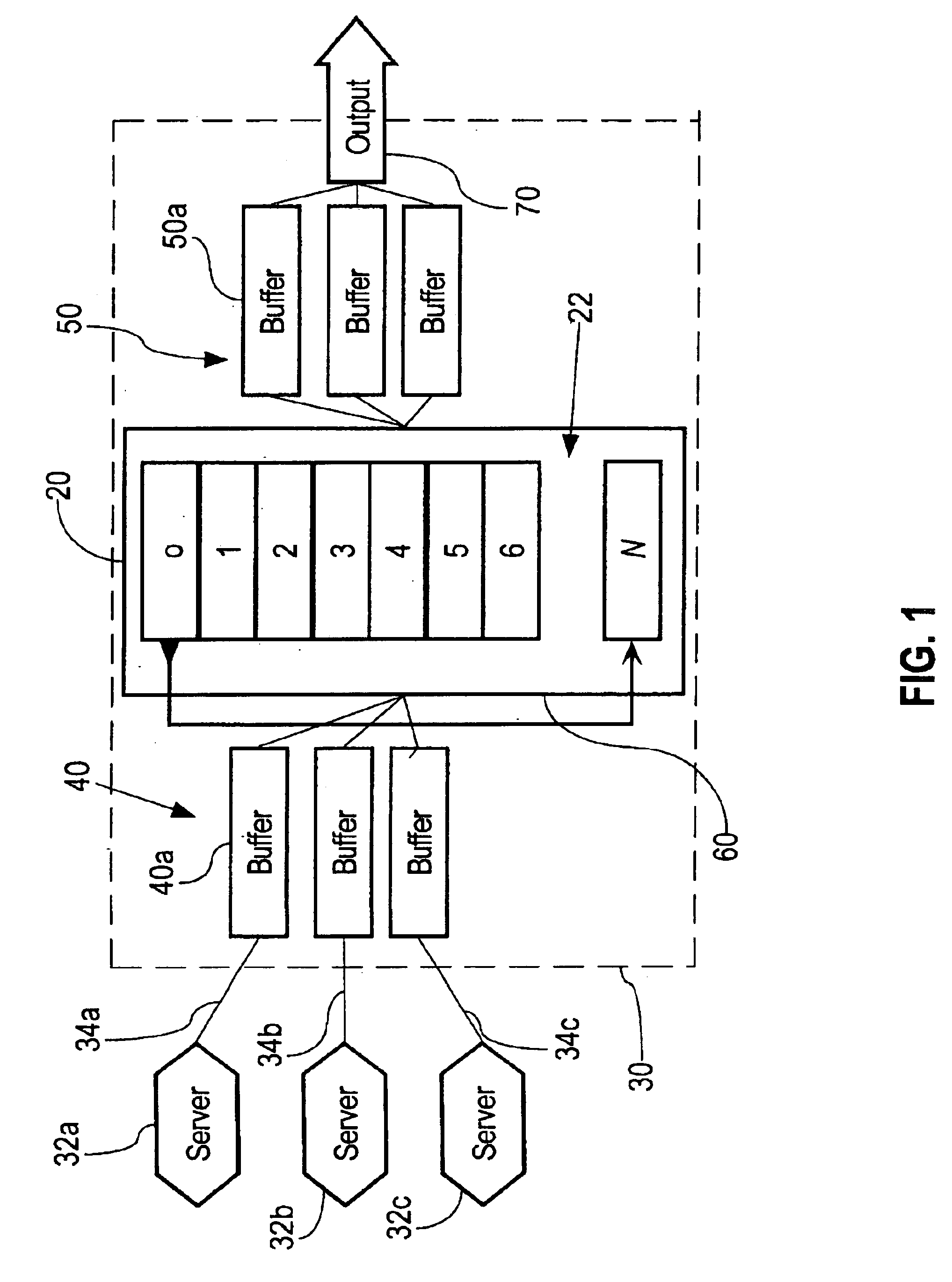

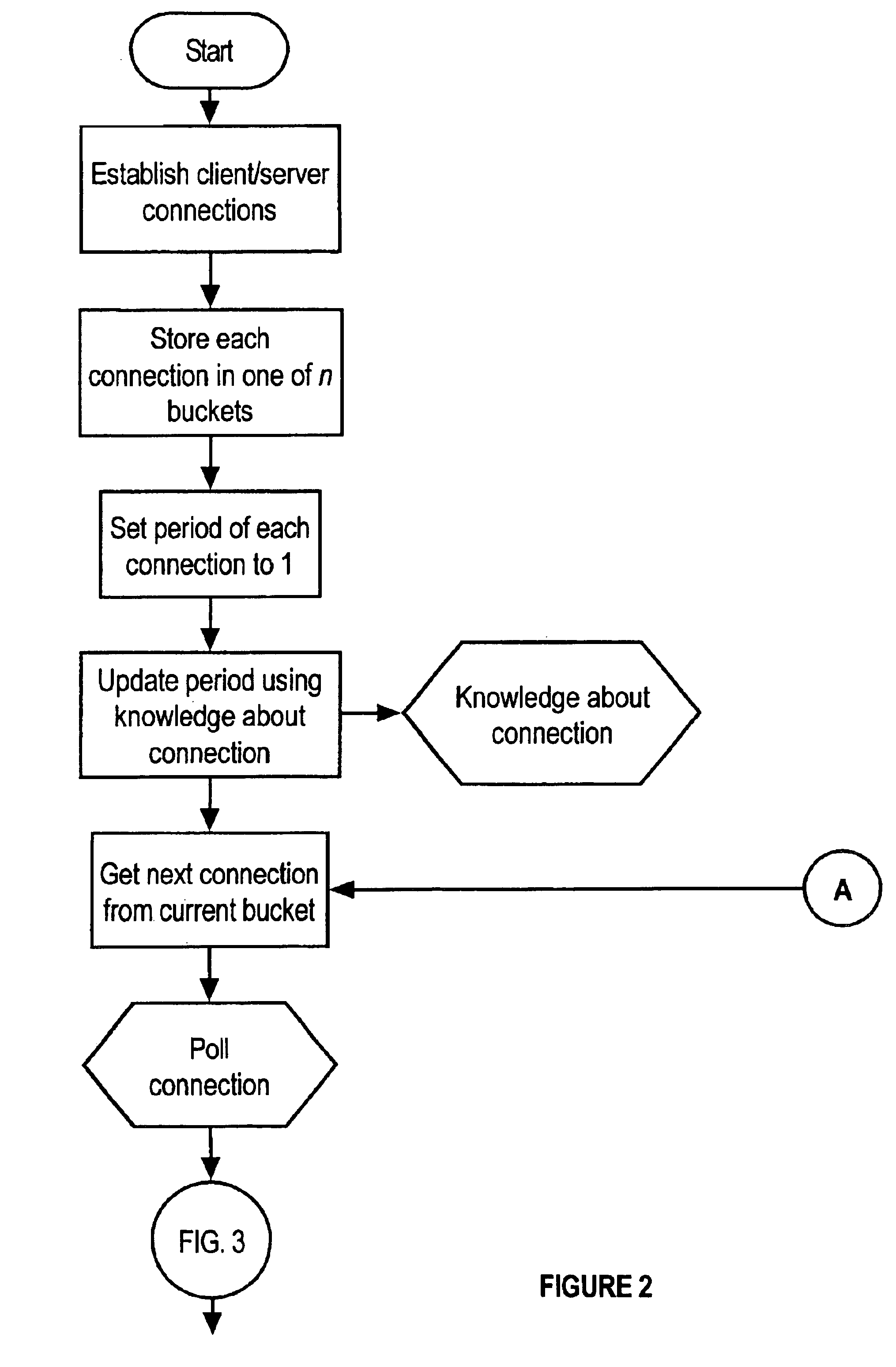

Self-tuning dataflow I/O core

Inactive · US6848005B1 · Multiple digital computer combinations · Data switching networks · Data stream · Engineering

A mechanism for managing data communications is provided. A circularly arranged set of buckets is disposed between input buffers and output buffers in a networked computer system. Connections among the system and clients are stored in the buckets. Each bucket in the set is successively examined, and each connection in the bucket is polled. During polling, the amount of information that has accumulated in a buffer associated with the connection since the last poll is determined. Based on the amount, a period value associated with the connection is adjusted. The connection is then stored in a different bucket that is generally identified by the sum of the current bucket number and the period value. Polling continues with the next connection and the next bucket. In this way, the elapsed time between successive polls of a connection automatically adjusts to the actual operating bandwidth or data communication speed of the connection.

Owner:R2 SOLUTIONS
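The bucket mechanism described in this abstract can be sketched roughly as follows. This is an illustrative reading, not the patented implementation: the thresholds `low` and `high`, the halving and incrementing rule for the period, and the `pending` counter that simulates buffered bytes are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    name: str
    period: int = 1   # number of buckets to skip before the next poll
    pending: int = 0  # simulated bytes accumulated since the last poll


class BucketWheel:
    """Circular set of buckets; each step polls every connection in one bucket."""

    def __init__(self, num_buckets: int, low: int = 64, high: int = 1024) -> None:
        self.buckets = [[] for _ in range(num_buckets)]
        self.current = 0
        self.low, self.high = low, high  # assumed tuning thresholds

    def add(self, conn: Connection) -> None:
        self.buckets[self.current].append(conn)

    def step(self) -> list:
        n = len(self.buckets)
        # Take the due connections out first so re-insertion cannot loop.
        due, self.buckets[self.current] = self.buckets[self.current], []
        polled = []
        for conn in due:
            amount, conn.pending = conn.pending, 0
            if amount > self.high:      # busy connection: poll sooner
                conn.period = max(1, conn.period // 2)
            elif amount < self.low:     # quiet connection: poll later
                conn.period = min(n - 1, conn.period + 1)
            # New bucket = current bucket number + period, wrapped around.
            self.buckets[(self.current + conn.period) % n].append(conn)
            polled.append(conn.name)
        self.current = (self.current + 1) % n
        return polled
```

Each call to `step()` advances one bucket, so a connection with period `p` is polled again `p` steps later.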

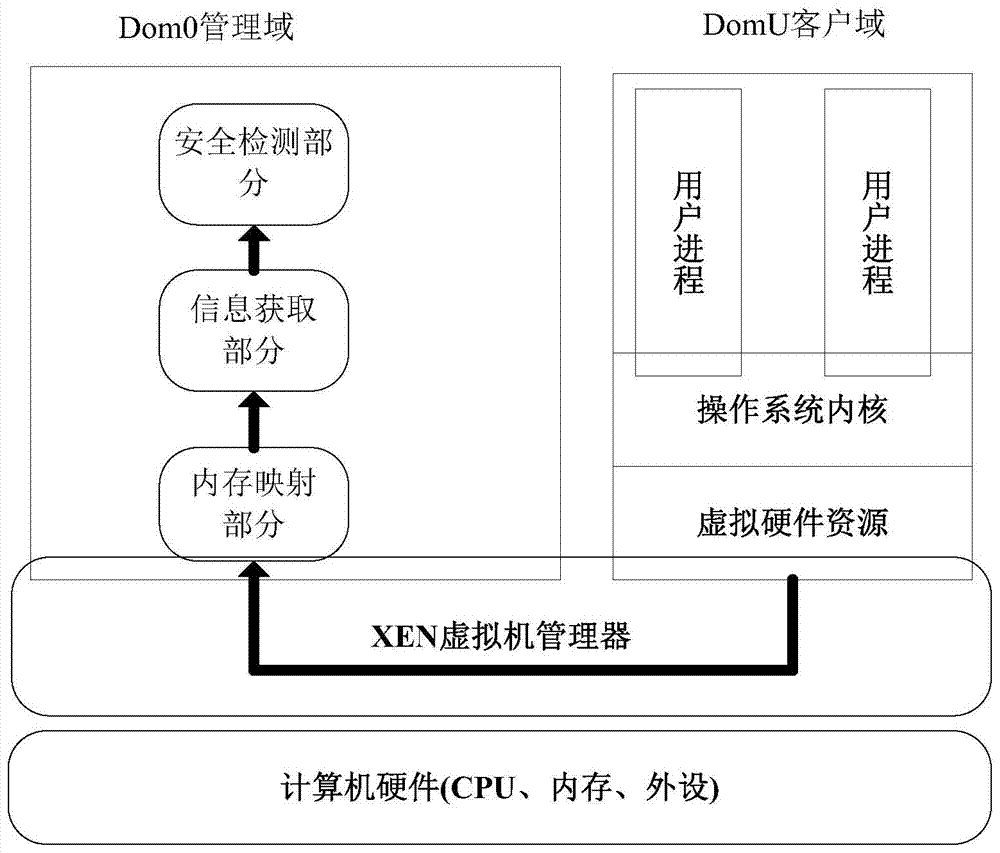

Kernel integrity detection method based on Xen virtualization

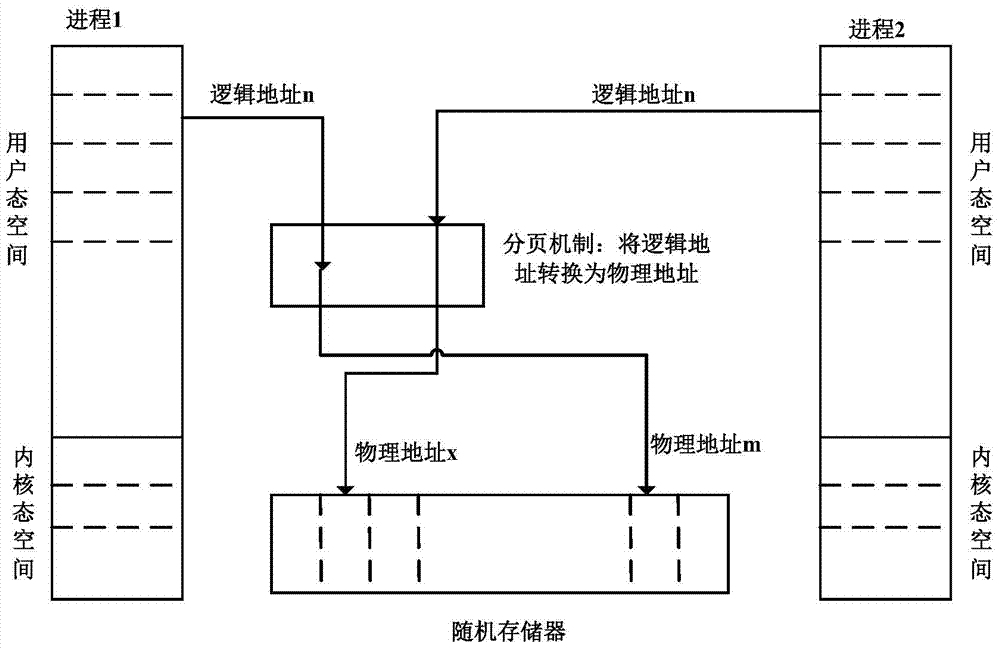

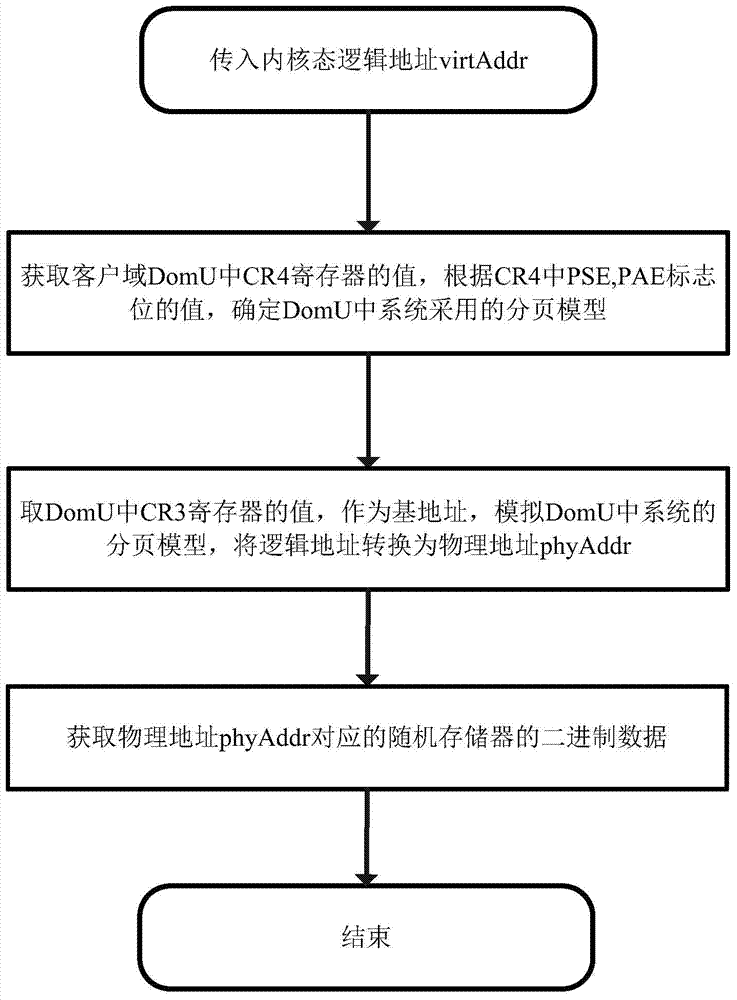

Inactive · CN103793651A · Ensure safety · Not to be deceived · Memory addressing/allocation/relocation · Platform integrity maintenance · Data virtualization · Memory virtualization

The invention discloses a kernel integrity detection method based on Xen virtualization. A kernel integrity detection system checks the kernel integrity of a virtual machine operating system running on a Xen virtualized platform. A memory mapping part, an information acquisition part and a safety detection part are arranged on Dom 0 of the system. The memory mapping part provides an interface for acquiring hardware-level machine byte data of a Dom U. The information acquisition part takes the hardware-level machine byte data of the Dom U obtained through that interface and converts it into operating-system-level information according to the version of the operating system in the Dom U. The safety detection part polls the kernel key data of the operating system in the Dom U acquired by the information acquisition part, and judges whether the kernel integrity of that operating system has been damaged according to the safety policies of the system. Because the detection system is deployed outside the monitored system, the safety of the detection system is guaranteed while the kernel integrity of the monitored system is detected.

Owner:XIDIAN UNIV

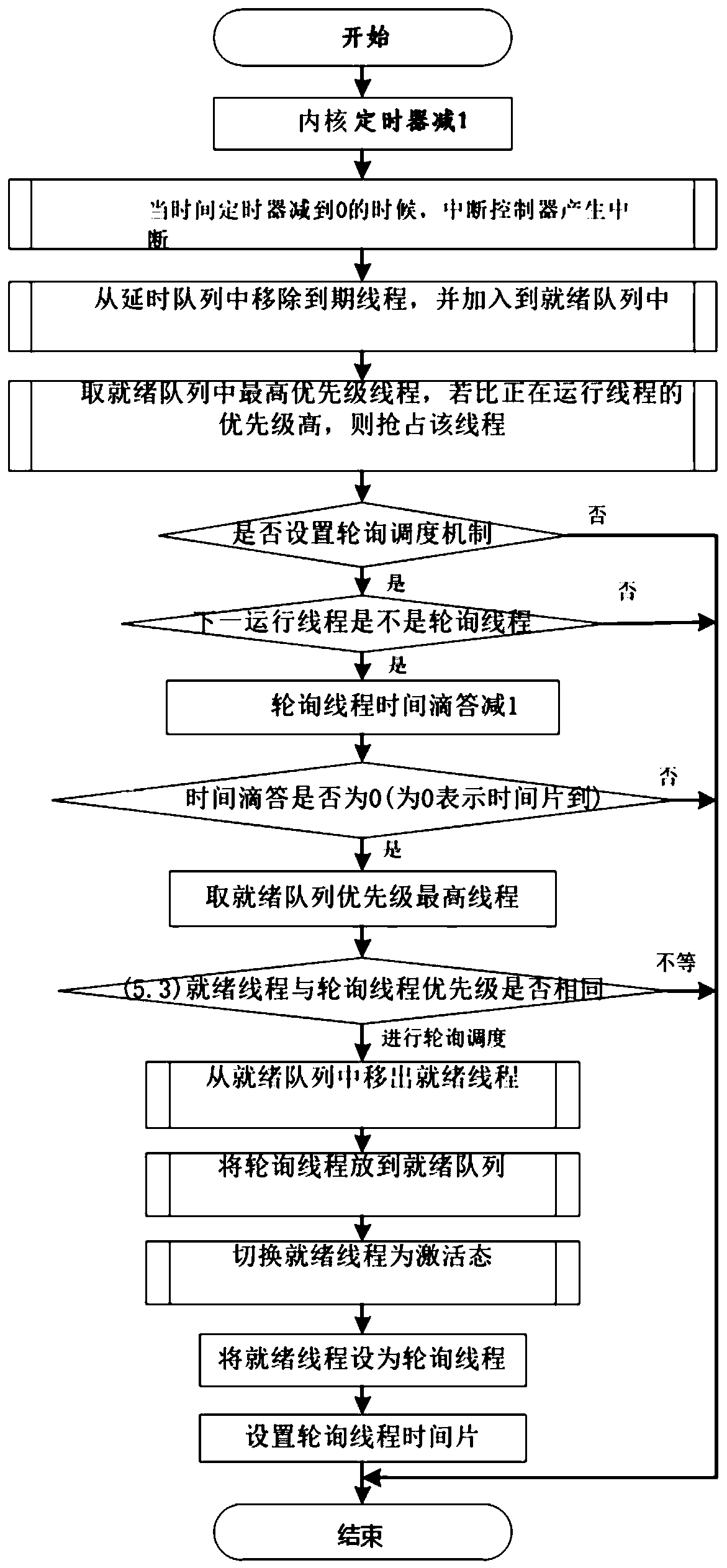

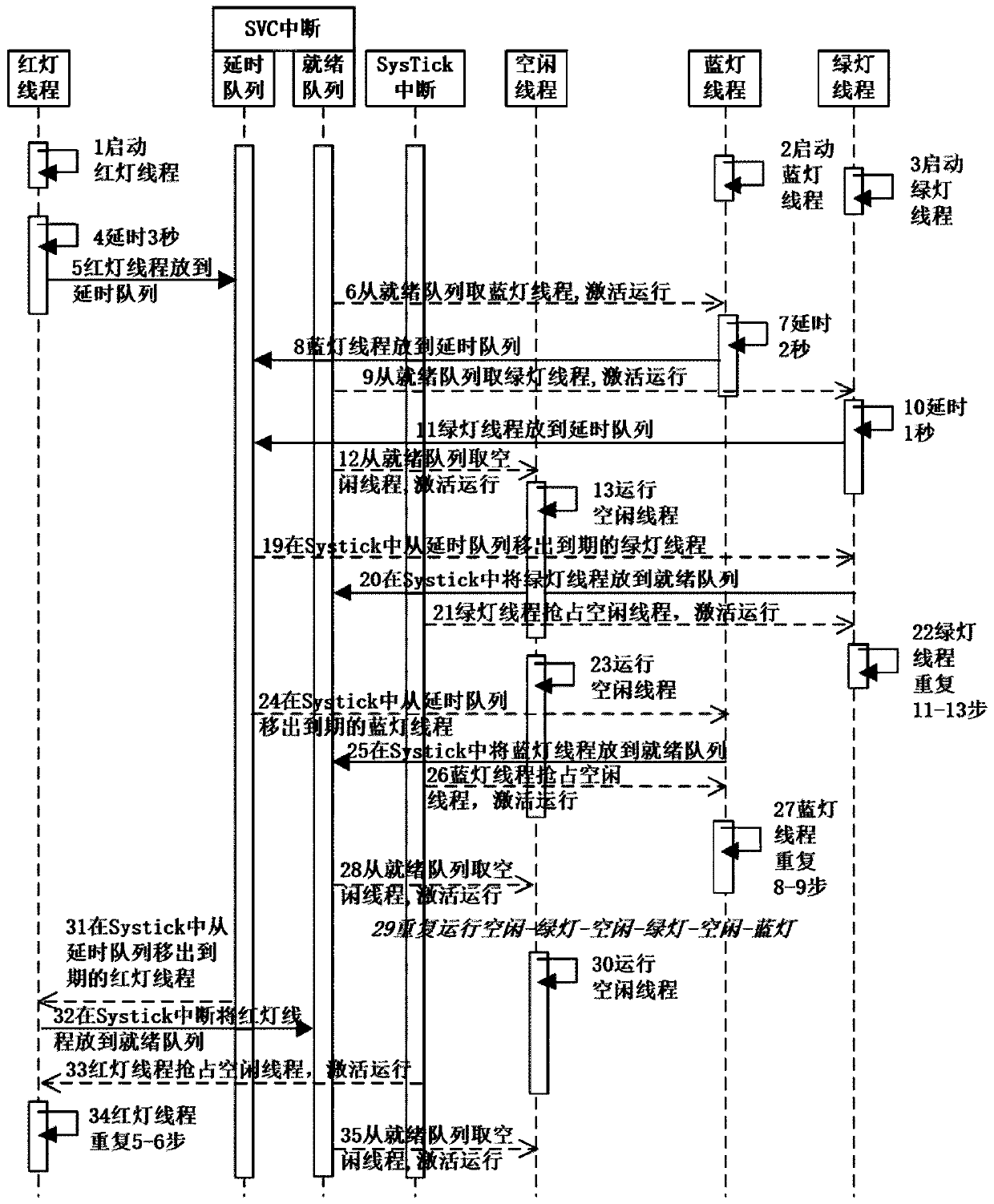

Task scheduling processing system and method of embedded real-time operating system

The invention provides a task scheduling processing system and method for an embedded real-time operating system. The system comprises a processor, a timer, an interrupt controller, a scheduler and an SVC switching module, wherein the timer, the interrupt controller, the scheduler and the SVC switching module are arranged in the processor. The scheduler comprises a priority scheduler and a time slice polling scheduler. A scheduling mode based on priority preemption is adopted: the right to use the CPU is always allocated to the currently ready task with the highest priority, and tasks with the same priority are scheduled in first-in first-out order. A time slice polling scheduling mode supplements the priority preemption mode, which mitigates the loss of real-time performance that occurs when a plurality of ready thread tasks with the same priority share a processor and improves the handling of a plurality of ready high-priority threads.

Owner:SUZHOU UNIV
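The combination of priority ordering with time-slice polling among equal-priority tasks might look like the sketch below. It is a simplified, static model: all tasks are assumed ready at the start, so preemption by newly arriving tasks is not shown, and the convention that a lower number means a higher priority is an assumption.

```python
from collections import deque


def schedule(tasks, time_slice):
    """Return the run trace [(name, ticks_run), ...].

    tasks: list of (name, priority, ticks_needed); lower number = higher priority.
    Tasks of equal priority start in FIFO order and share the CPU in time slices.
    """
    queues = {}
    for name, priority, ticks in tasks:
        queues.setdefault(priority, deque()).append([name, ticks])

    trace = []
    for priority in sorted(queues):          # highest priority level first
        ready = queues[priority]
        while ready:
            name, remaining = ready.popleft()
            run = min(time_slice, remaining)
            trace.append((name, run))
            if remaining > run:              # slice used up: go to the tail
                ready.append([name, remaining - run])
    return trace


if __name__ == "__main__":
    print(schedule([("A", 1, 3), ("B", 1, 2), ("C", 0, 1)], time_slice=2))
```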

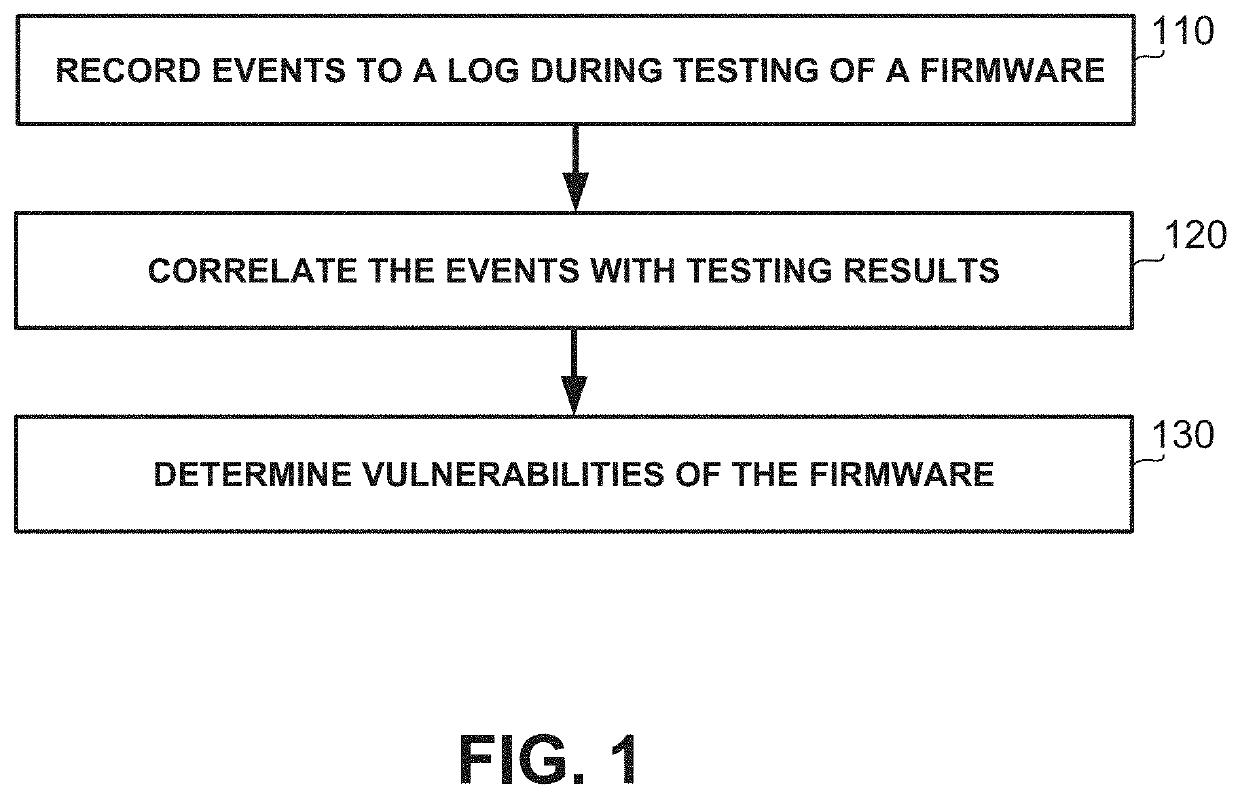

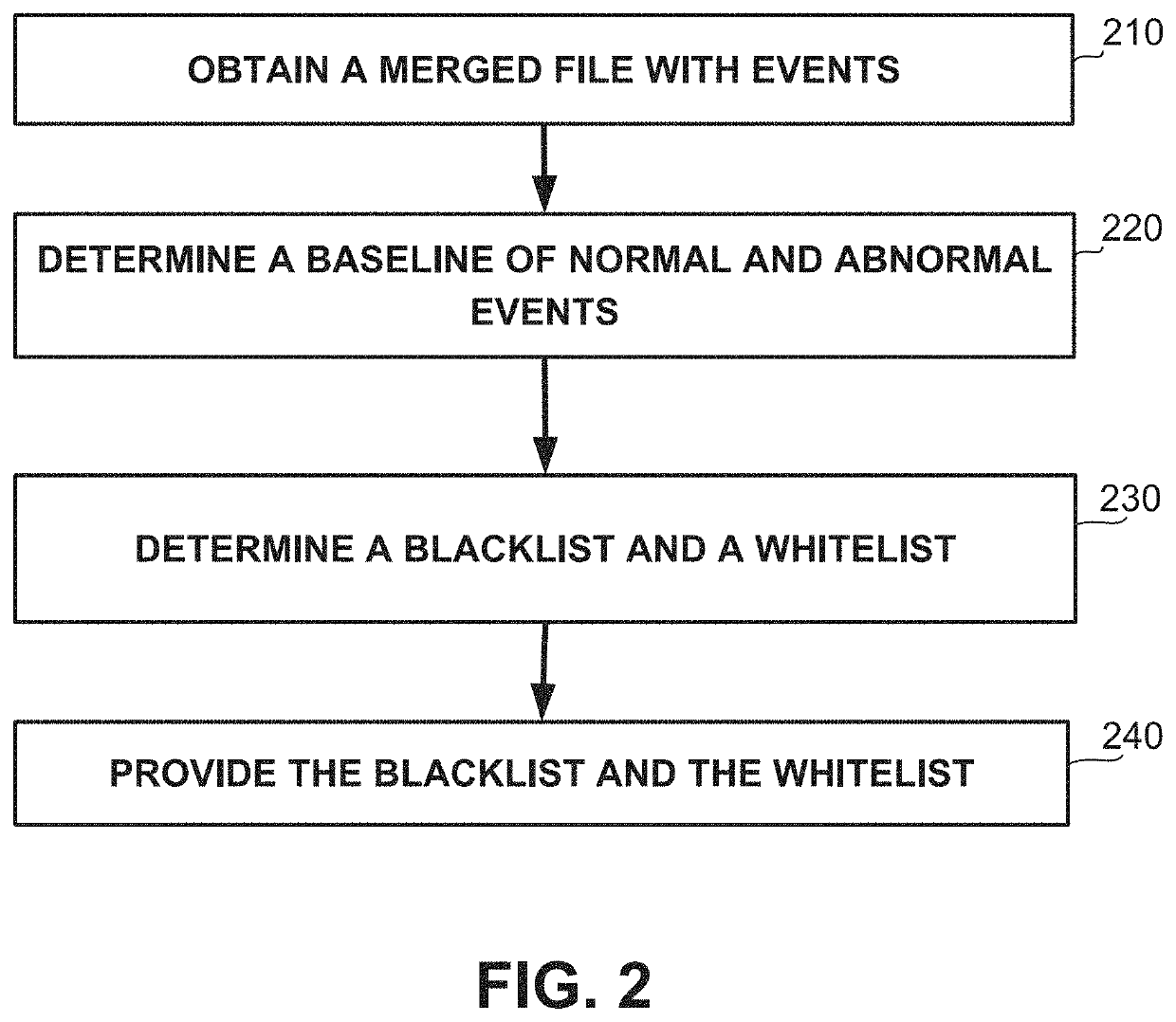

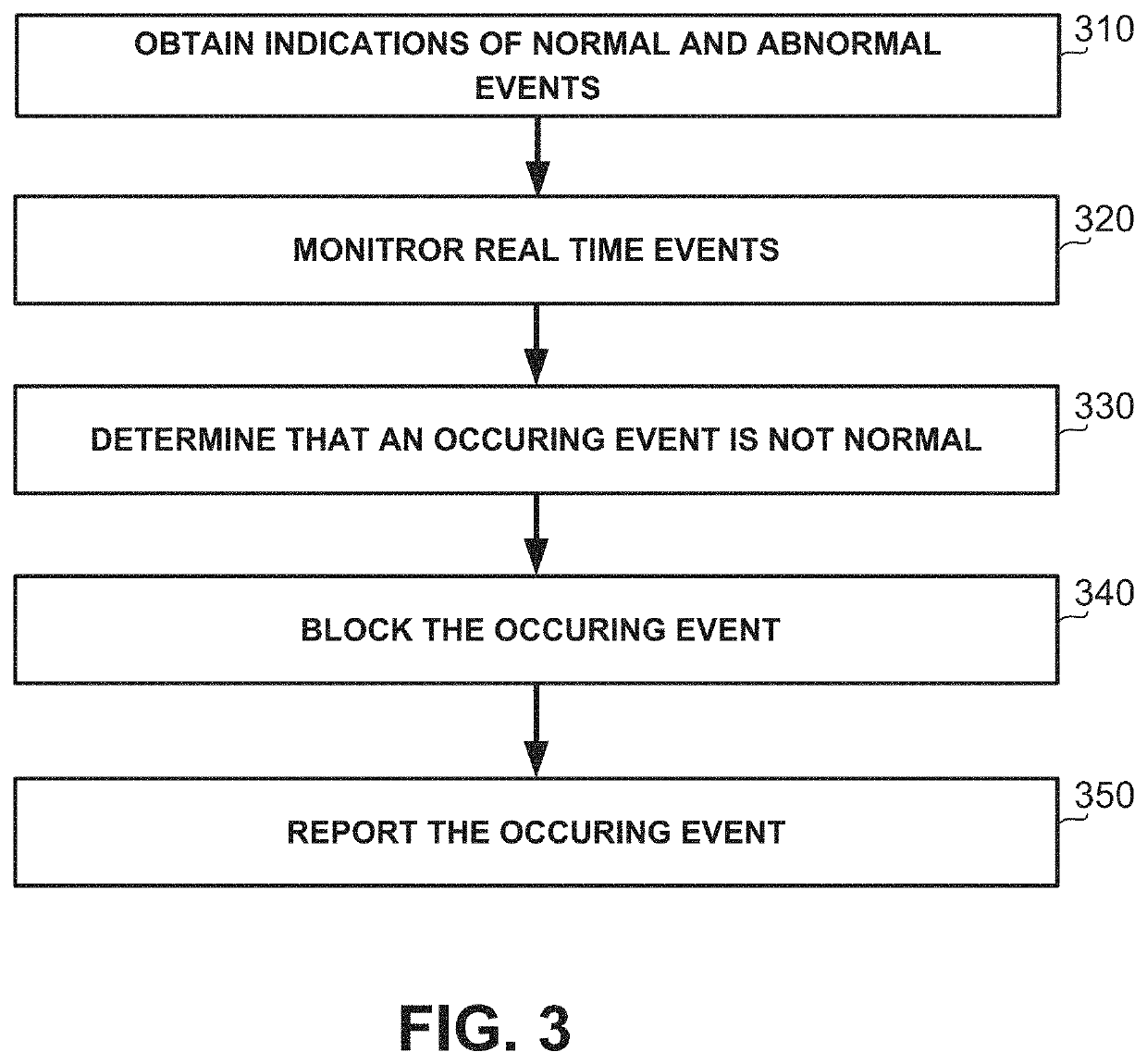

Detecting Firmware Vulnerabilities

A method, system and product for detecting firmware vulnerabilities, including, during a testing phase of a firmware of a device, continuously polling states and activities of the device, wherein said polling is at a testing agent that is functionally separate from the firmware; correlating between at least one event that is associated with the states or the activities of the device and test results of the testing phase; based on said correlating, determining for the firmware one or more normal events and one or more abnormal events; and after the testing phase, providing indications of the one or more normal events and one or more abnormal events from the testing agent to a runtime agent, whereby said providing enables the runtime agent to protect the firmware from vulnerabilities associated with the one or more abnormal events.

Owner:JFROG LTD

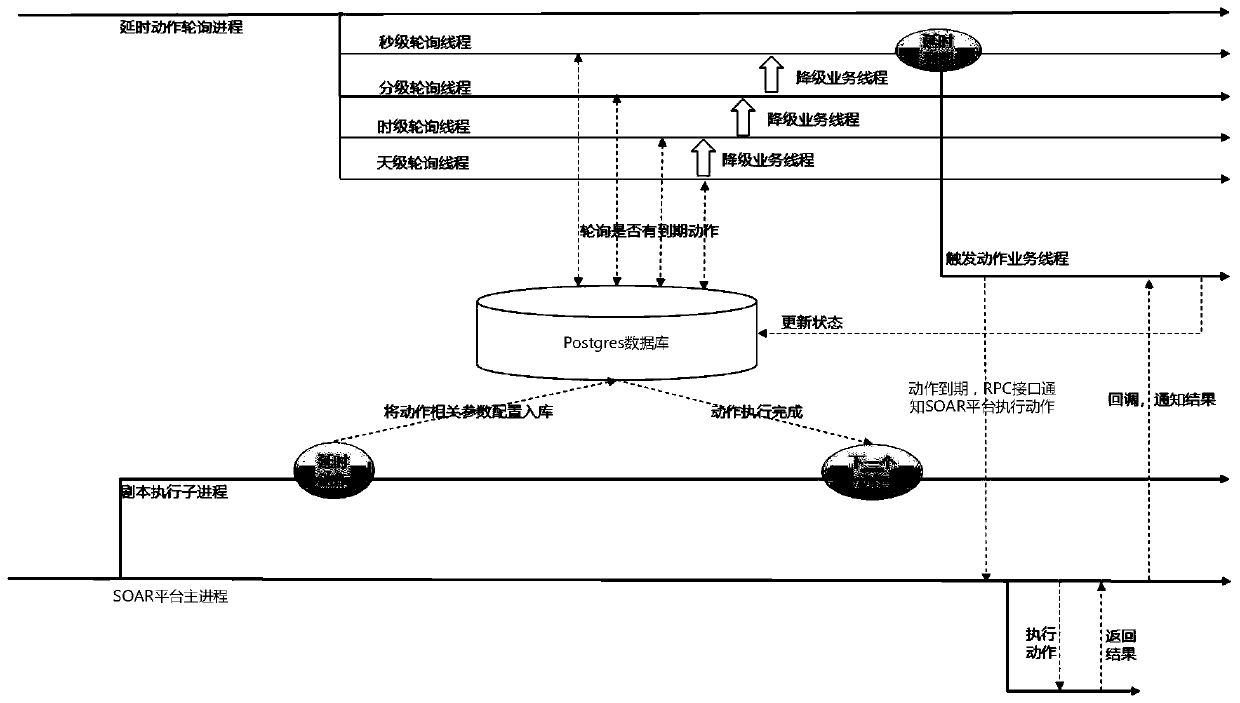

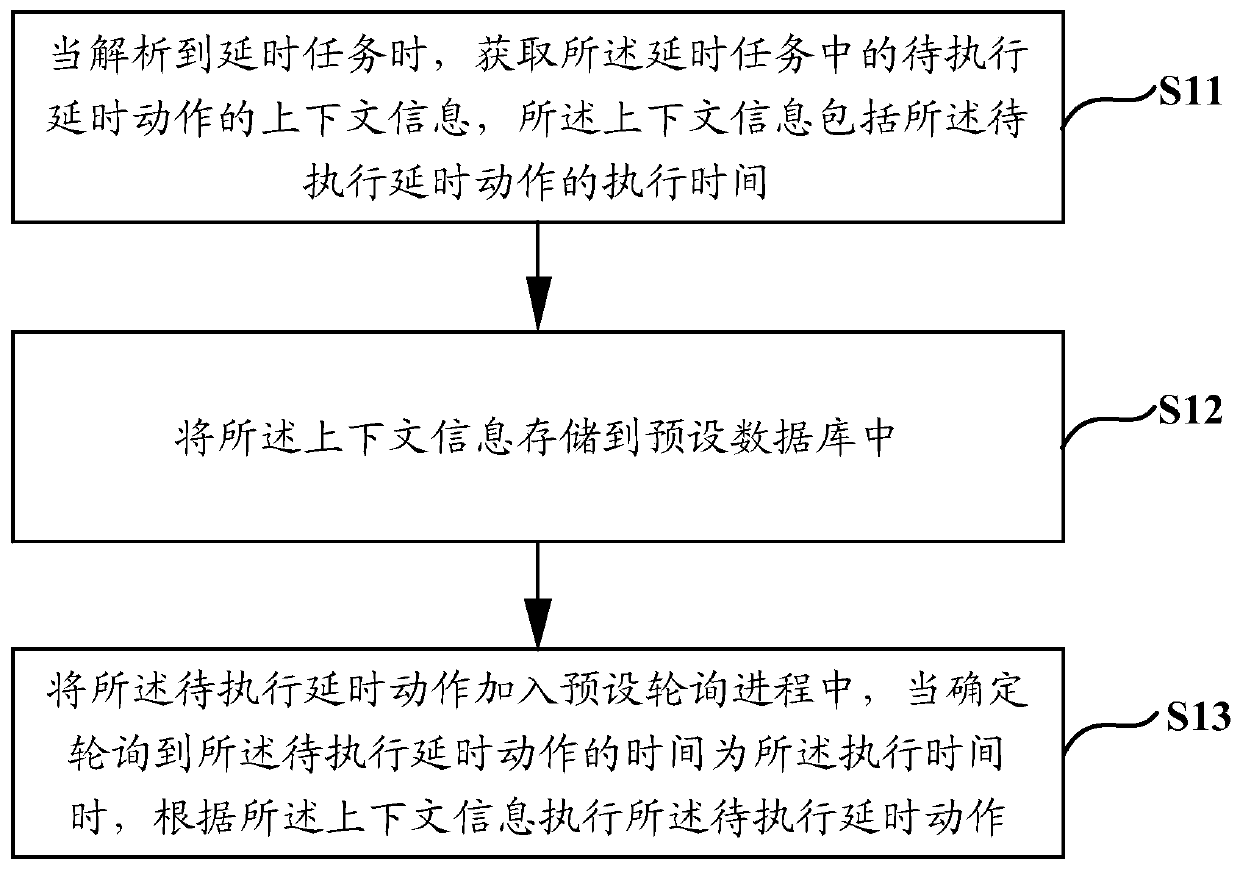

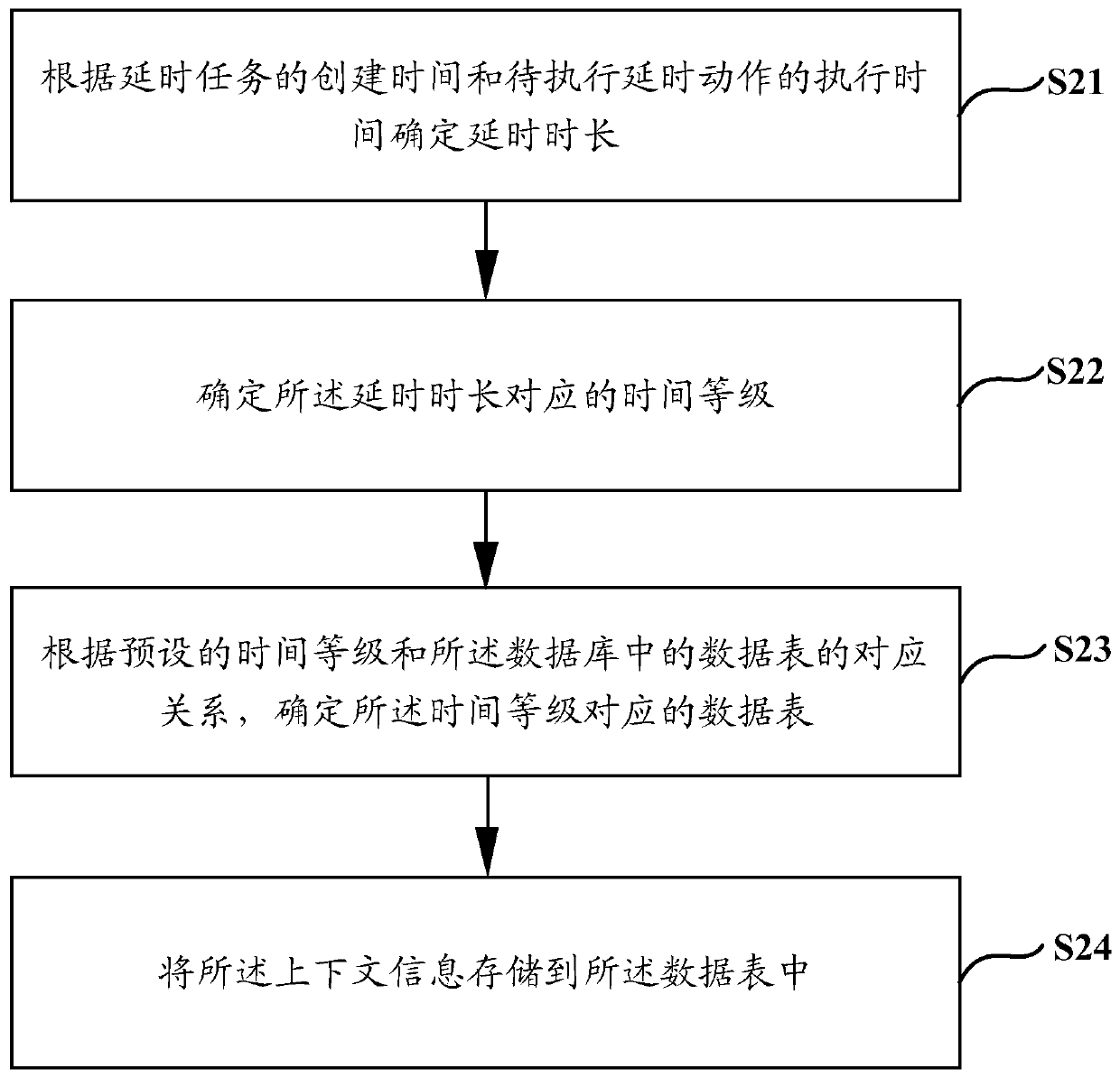

Delay task processing method and device

The invention discloses a delay task processing method and device. The method addresses a problem in existing SOAR platform script delay task processing, in which a delayed execution action blocks other actions behind it that need to be executed in real time, so that the execution efficiency of a script is low and occupied resources cannot be released in time. The delay task processing method comprises the steps of: when a delay task is analyzed, acquiring contextual information of a to-be-executed delay action in the delay task, the contextual information comprising the execution time of the to-be-executed delay action; storing the context information in a preset database; and adding the to-be-executed delay action to a preset polling process, and when polling determines that the execution time has been reached, executing the to-be-executed delay action according to the context information.

Owner:NSFOCUS INFORMATION TECHNOLOGY CO LTD +1
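A rough sketch of the idea: the delayed action and its context are stored with an execution time, and a separate polling pass runs whatever has come due, so later real-time actions are never blocked. The in-memory heap stands in for the "preset database" of the abstract, and `DelayQueue` is a hypothetical name.

```python
import heapq
import itertools


class DelayQueue:
    """Stores delayed actions with their context; poll(now) runs the due ones."""

    def __init__(self) -> None:
        self._heap = []
        self._seq = itertools.count()  # tie-breaker so actions are never compared

    def add(self, run_at, action, context) -> None:
        heapq.heappush(self._heap, (run_at, next(self._seq), action, context))

    def poll(self, now) -> list:
        """Execute every stored action whose execution time has been reached."""
        results = []
        while self._heap and self._heap[0][0] <= now:
            _, _, action, context = heapq.heappop(self._heap)
            results.append(action(context))
        return results


if __name__ == "__main__":
    q = DelayQueue()
    q.add(5, lambda ctx: ctx["msg"], {"msg": "later"})
    q.add(1, lambda ctx: ctx["msg"], {"msg": "soon"})
    for tick in (0, 1, 10):
        print(tick, q.poll(tick))
```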

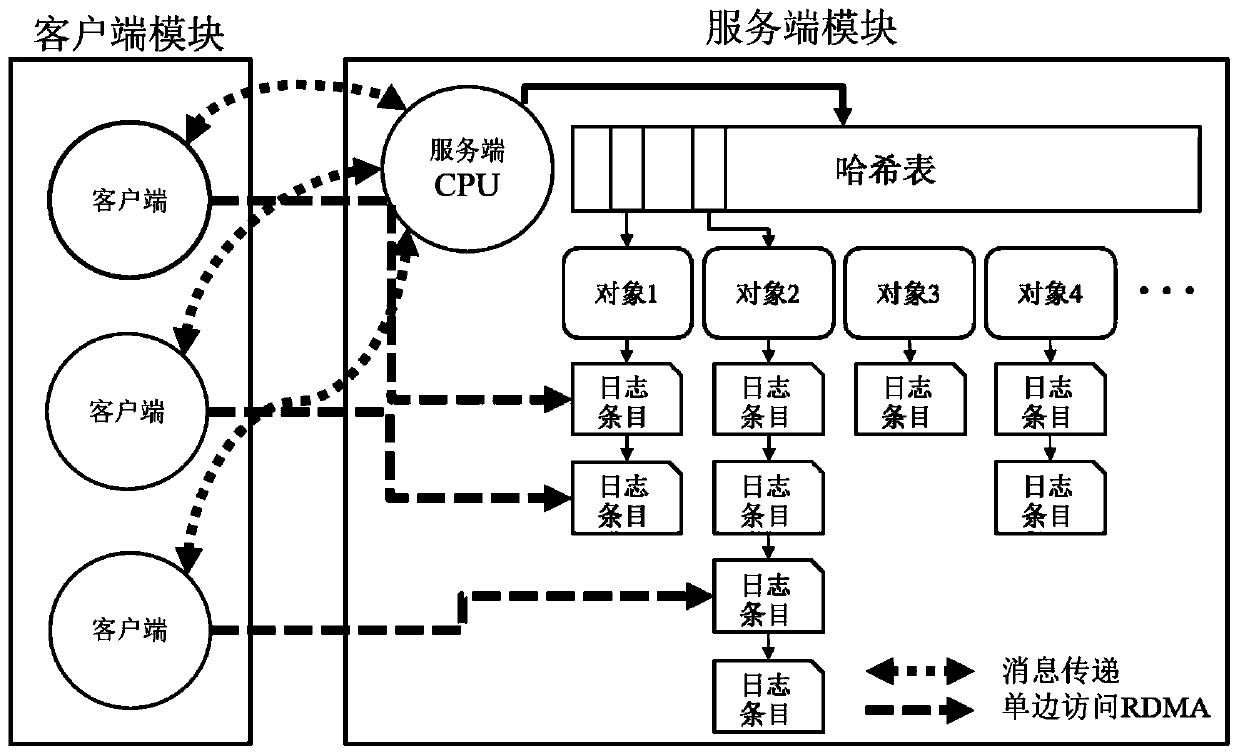

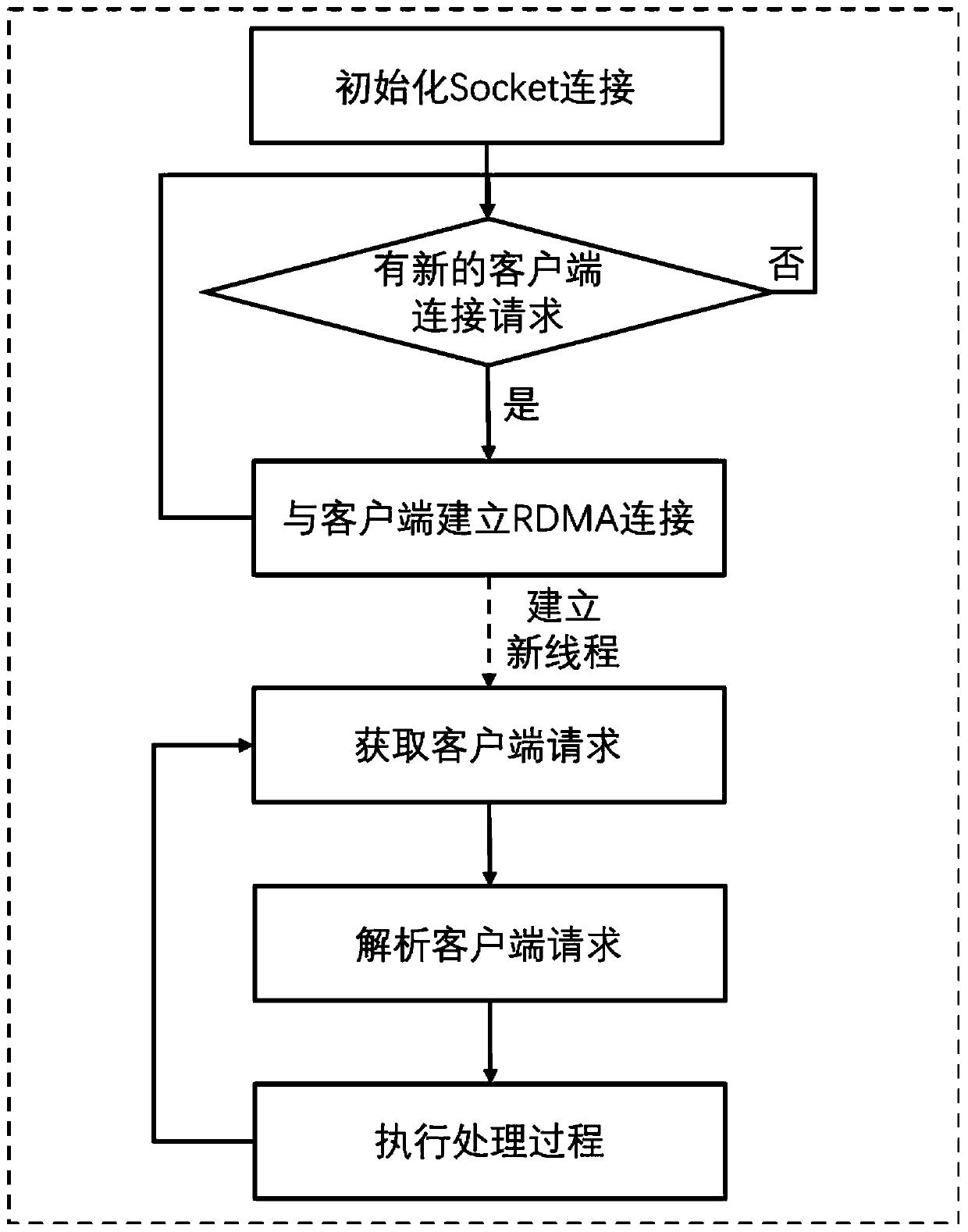

Network access programming framework deployment method and system oriented to RDMA and nonvolatile memory

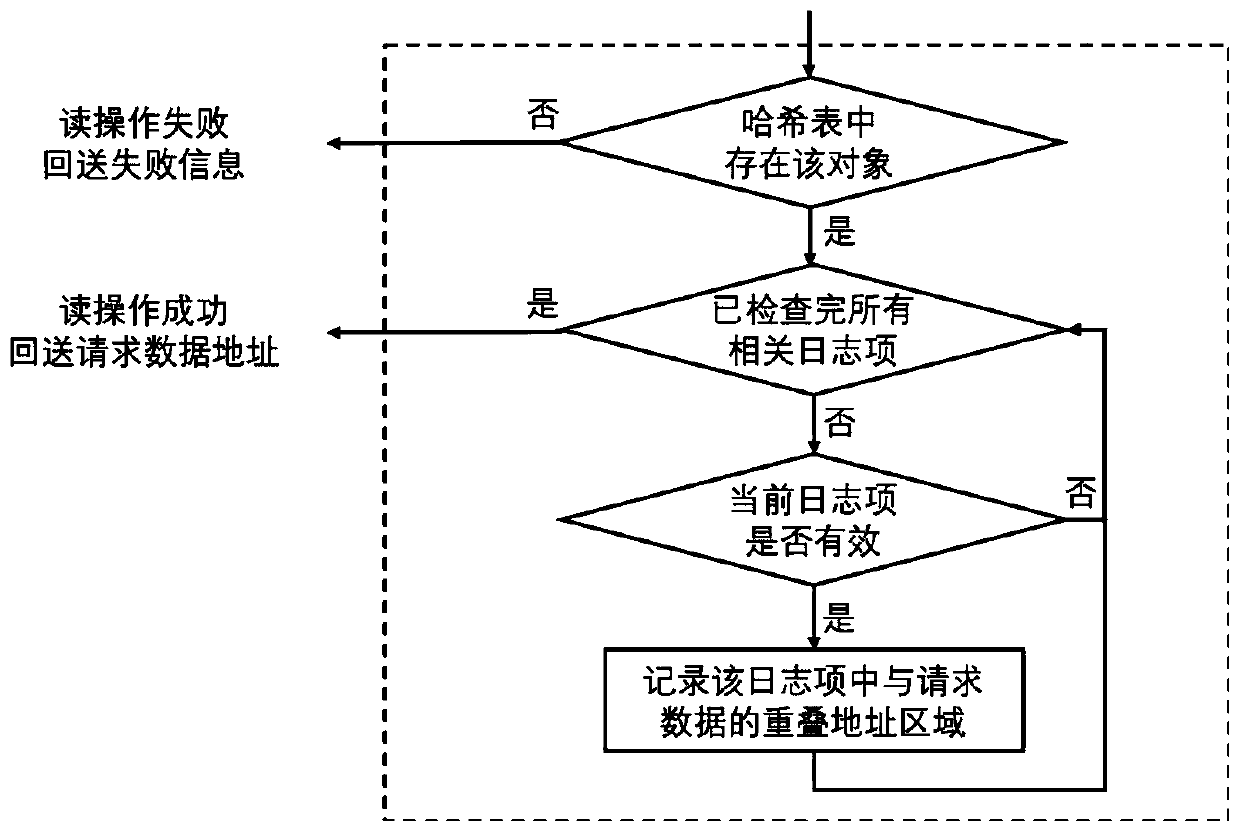

Active · CN111078607A · Improve concurrency · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Remote direct memory access · Term memory

The invention provides a network access programming framework deployment method and a system oriented to RDMA (Remote Direct Memory Access) and a nonvolatile memory. The network access programming framework deployment method comprises the following steps: M1, a client request comprises RDMA buffer area data filling and CRC32-based check code calculation for one time; M2, server request processing is performed, wherein the server request processing comprises RDMA buffer area polling and request processing triggering; and M3, in a client read-write stage, the client directly accesses the nonvolatile memory of the remote machine through the RDMA unilateral read-write unit in a read-write manner, and reads and writes a specific address. The method provides a universal functional interface for remotely accessing the nonvolatile memory by using the RDMA technology; it ensures high concurrency and remote atomicity through a log-structured data storage and access mechanism; and it supports user-customized service logic, giving it wide application value.

Owner:SHANGHAI JIAO TONG UNIV +1

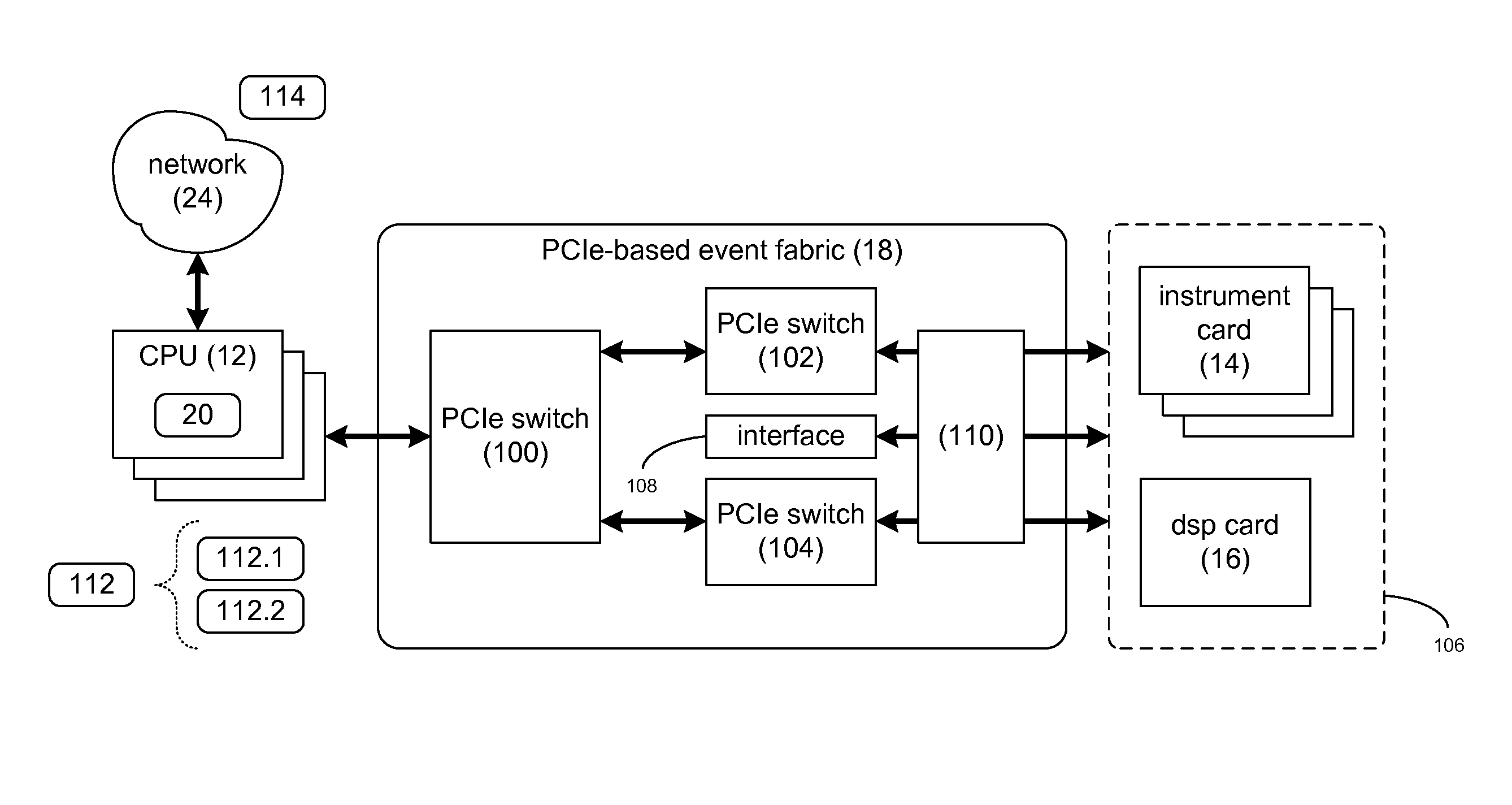

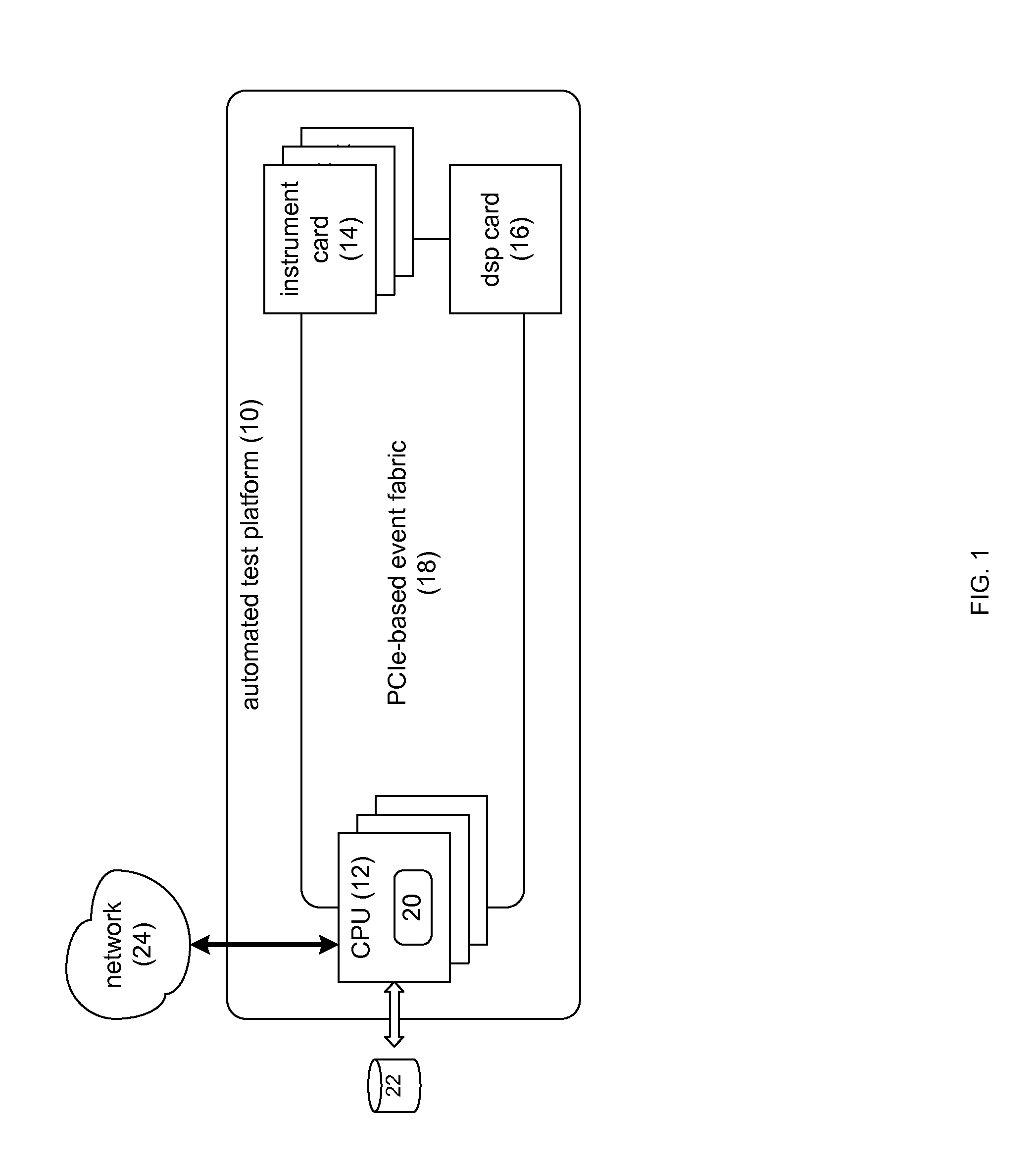

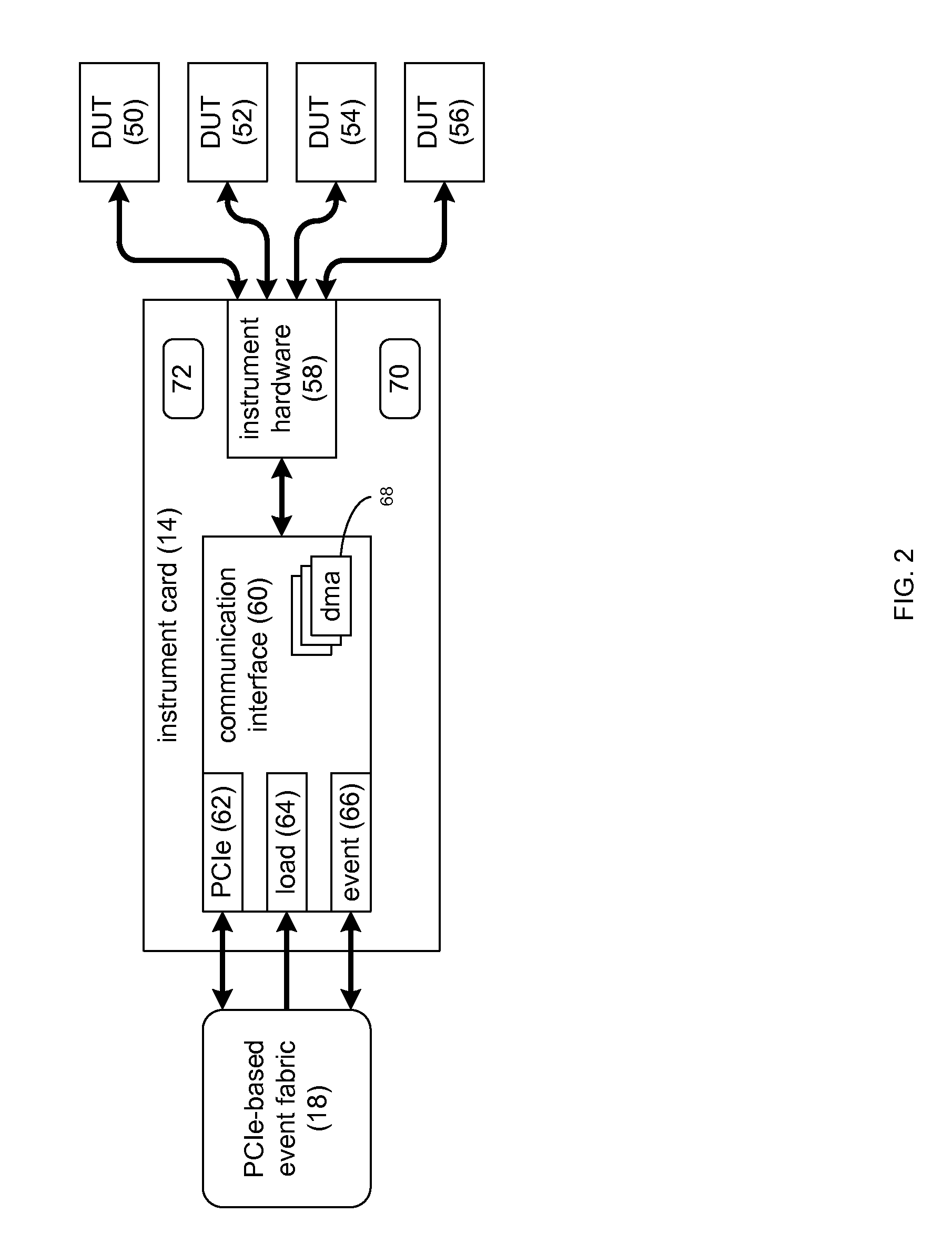

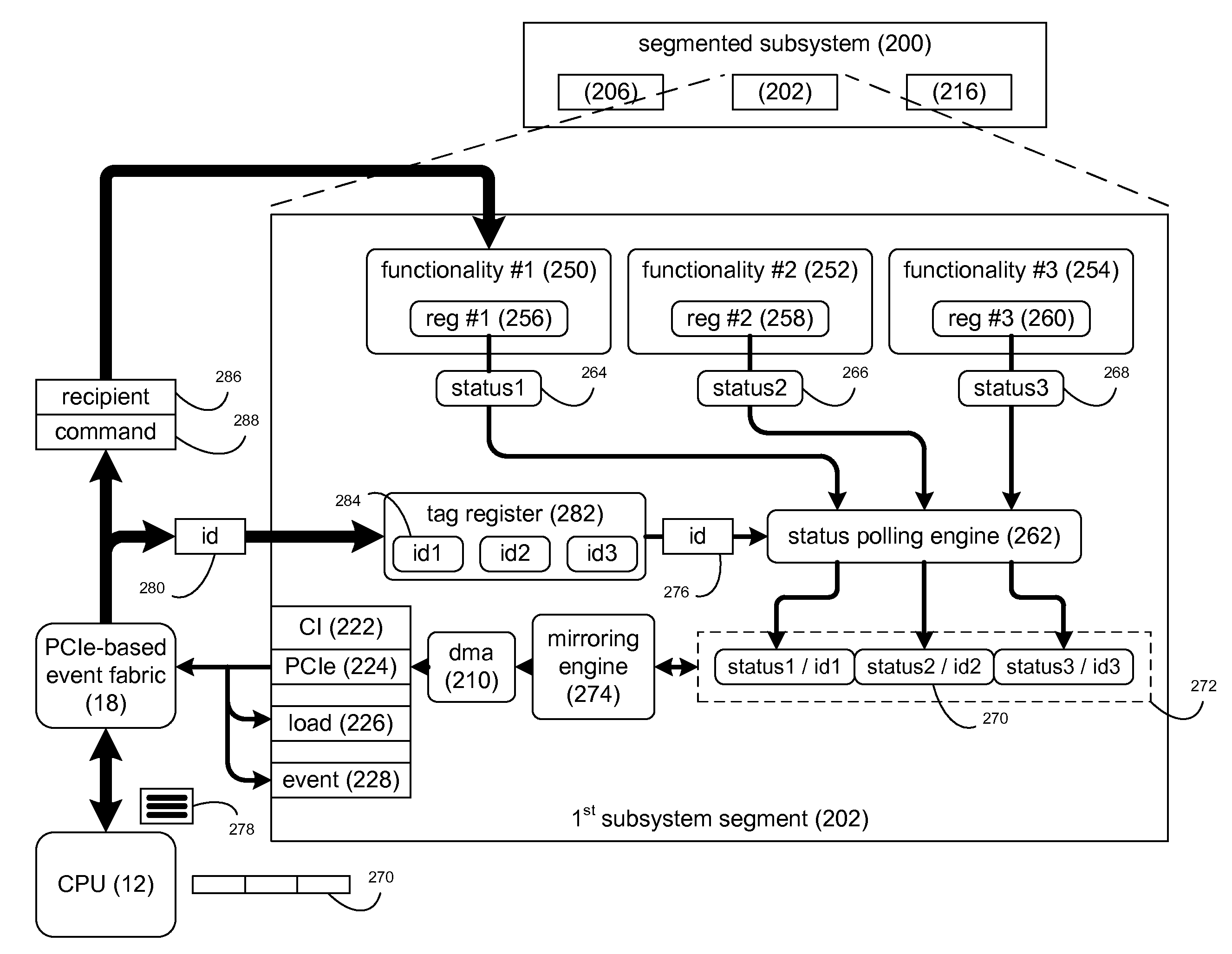

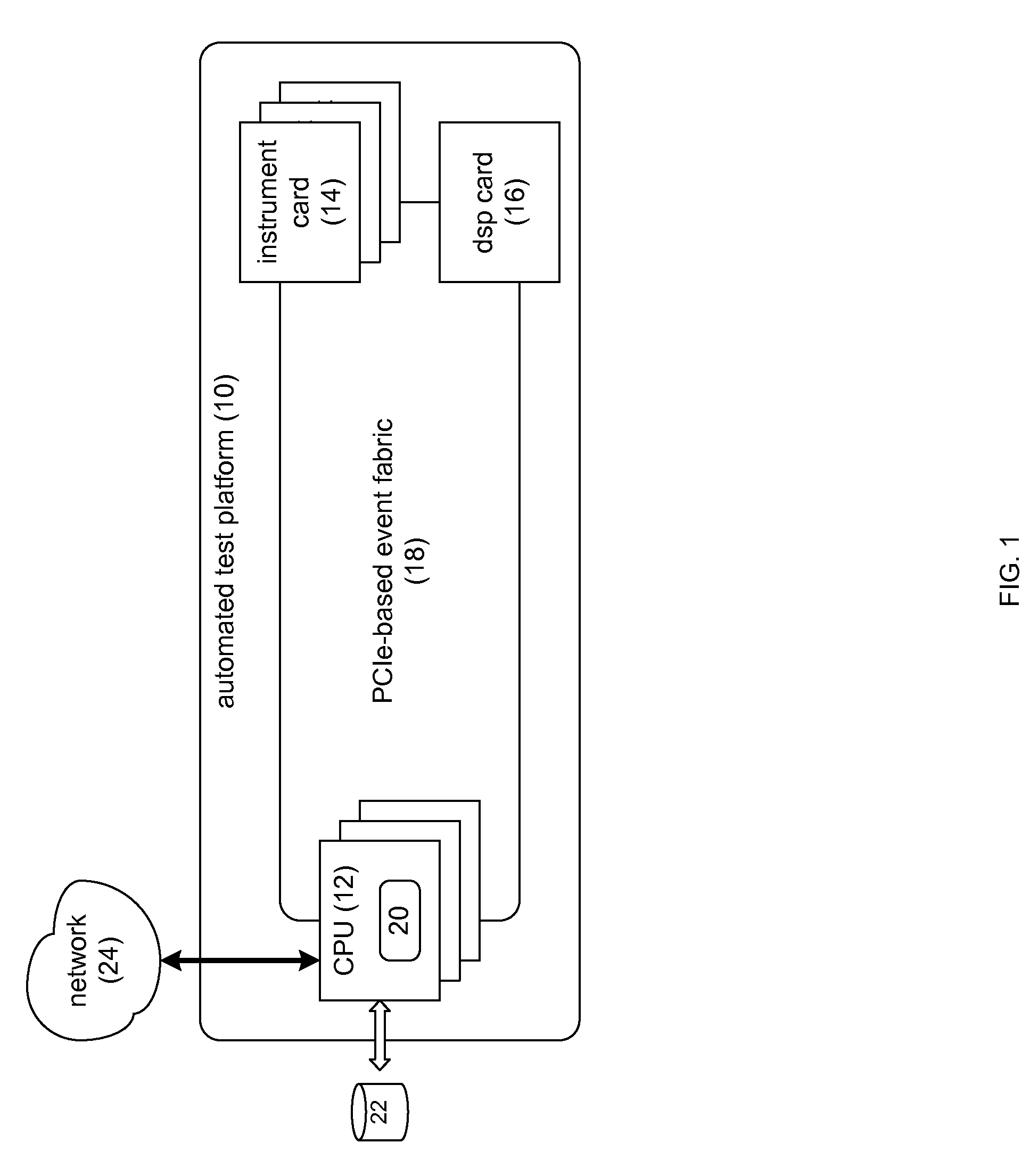

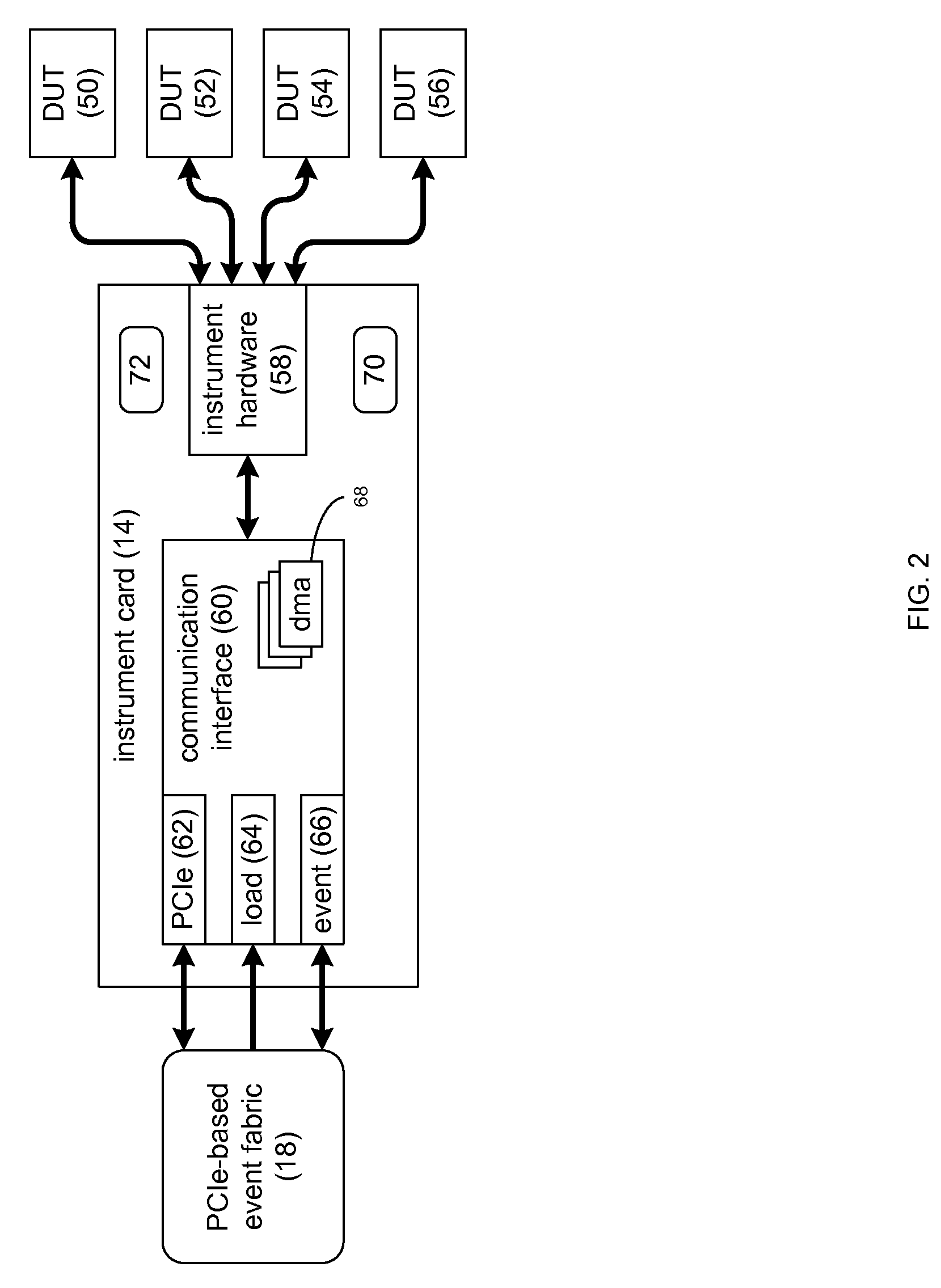

Automated test platform

Active · US20140208082A1 · Detecting faulty hardware by remote test · Digital computer details · Software engineering · Polling

A segmented subsystem, for use within an automated test platform, includes a first subsystem segment configured to execute one or more instructions within the first subsystem segment. A second subsystem segment is configured to execute one or more instructions within the second subsystem segment. The first subsystem segment includes: a first functionality, a second functionality, and a status polling engine. The status polling engine is configured to: determine a first status for the first functionality and a second status for the second functionality, and generate a consolidated status indicator for the first subsystem segment based, at least in part, upon the first status for the first functionality and the second status for the second functionality.

Owner:XCERRA

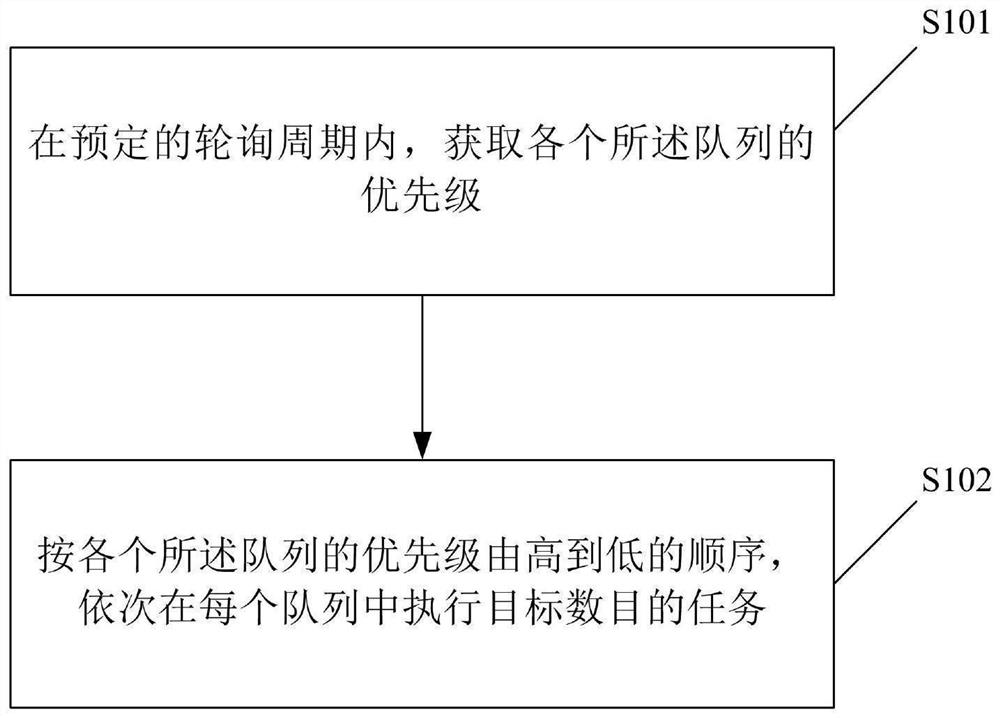

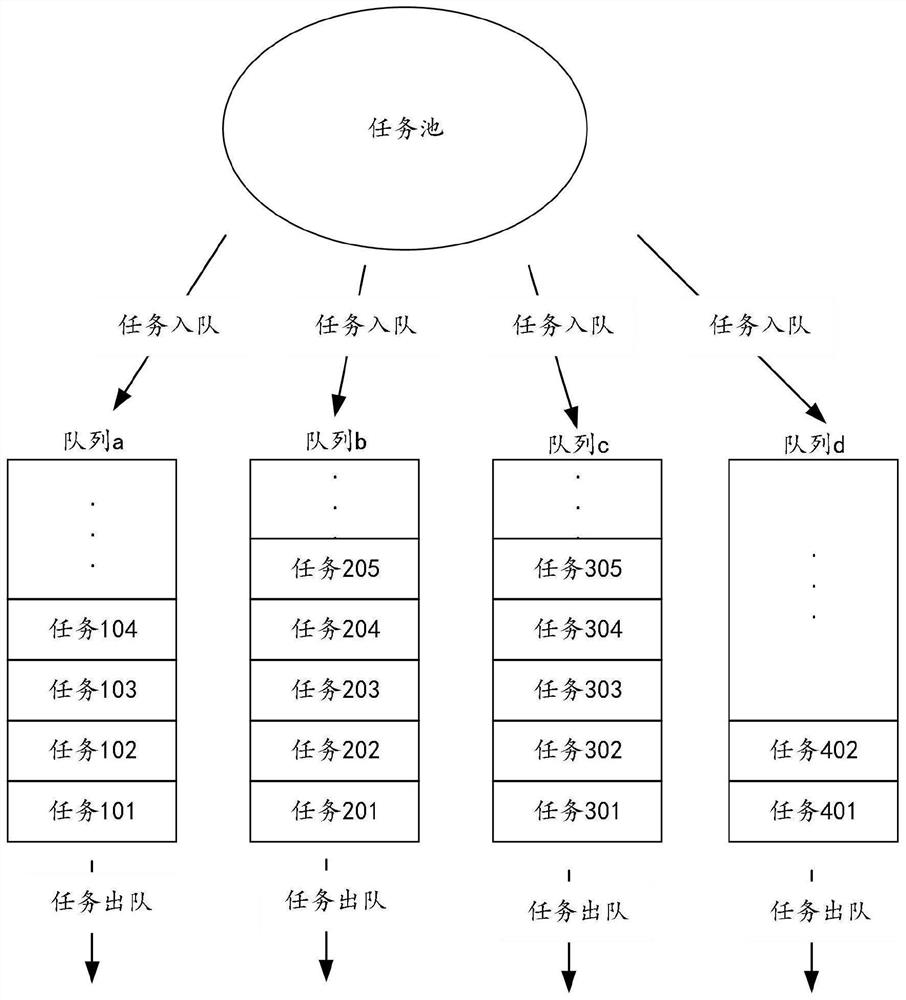

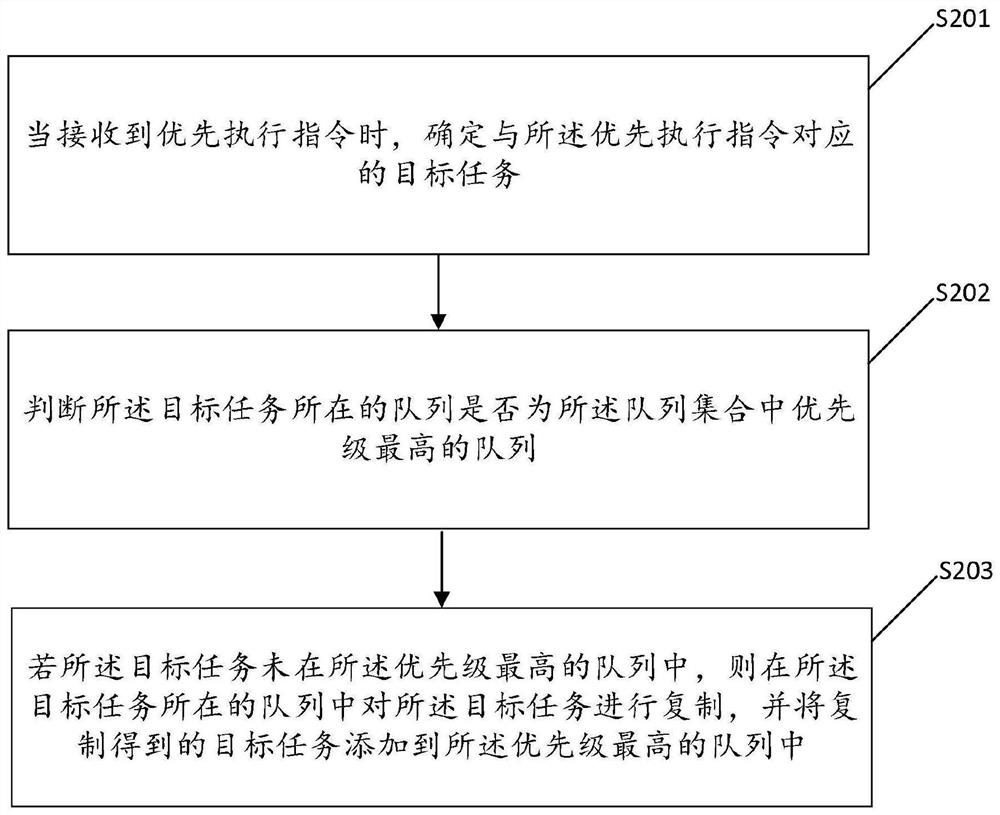

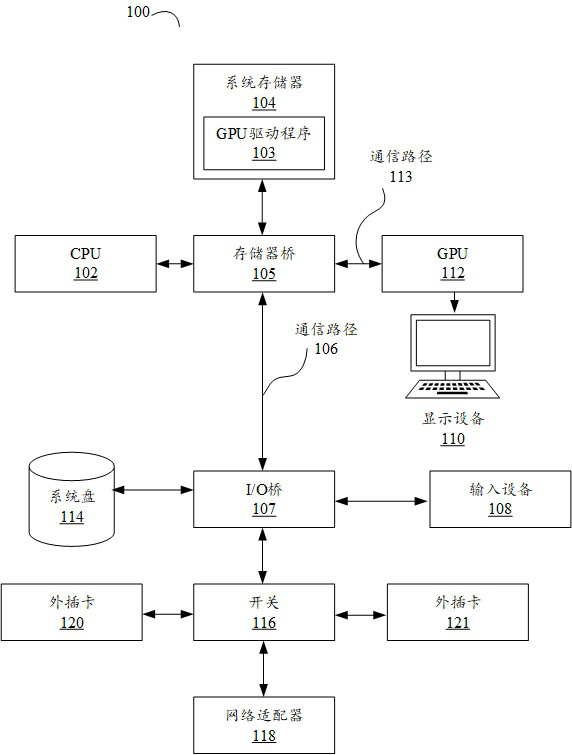

Task execution method and device, storage medium and electronic equipment

Pending · CN112579263A · Avoid congestion · Speed up execution · Program initiation/switching · Parallel computing · Engineering

The invention discloses a task execution method applied to a queue set. A plurality of queues are arranged in the queue set, each queue has a corresponding priority, and each task to be executed is distributed to the corresponding queue according to its task attribute. The method comprises the following steps: in a preset polling period, executing tasks according to the priority of the tasks to be executed; and, in order of queue priority from high to low, executing a target number of tasks in each queue in sequence, wherein the priority of a queue is positively correlated with its target number. By applying the method, a plurality of queues can be set in the queue set, the queues are started in a polling mode according to their priority order, and the number of tasks executed each time in the polling process is set for each queue according to its priority, so that queues with high priorities execute more tasks and tasks are executed in a multi-queue polling mode. Task execution is therefore accelerated, the queue congestion caused when a large number of tasks need to be executed is avoided, and task processing efficiency is improved.

Owner:BEIJING GRIDSUM TECH CO LTD
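One polling period of the multi-queue scheme could be sketched as below. The per-queue quotas are supplied by the caller here; how the patent derives the target number from the priority is not stated beyond a positive correlation, so the example values are assumptions.

```python
from collections import deque


def poll_round(queues, quotas):
    """Run one polling period and return the tasks executed, in order.

    queues: dict mapping priority -> deque of tasks (higher number = higher priority).
    quotas: dict mapping priority -> maximum tasks to execute per period.
    """
    executed = []
    for priority in sorted(queues, reverse=True):   # high priority first
        queue = queues[priority]
        for _ in range(min(quotas[priority], len(queue))):
            executed.append(queue.popleft())
    return executed


if __name__ == "__main__":
    queues = {2: deque(["h1", "h2", "h3"]), 1: deque(["l1", "l2"])}
    quotas = {2: 2, 1: 1}   # assumed: the higher-priority queue gets the larger quota
    while any(queues.values()):
        print(poll_round(queues, quotas))
```

Because every queue receives some quota in each period, low-priority tasks still make progress while high-priority queues drain faster.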

Rendering task scheduling method and device based on primitives and storage medium

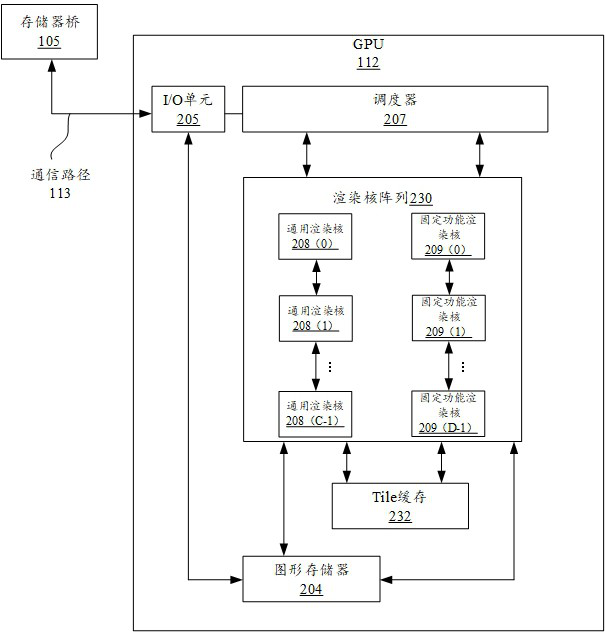

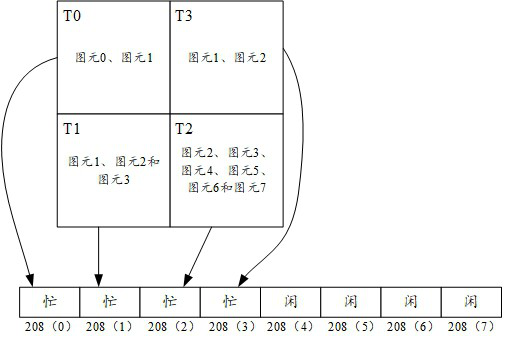

Active · CN112801855A · Task workload balance · Improve efficiency · Image memory management · Processor architectures/configuration · Parallel computing · Polling

The embodiment of the invention discloses a rendering task scheduling method and device based on primitives and a storage medium. The method can comprise the following steps: sequentially accessing a primitive list corresponding to a tile to be subjected to fragment coloring according to a set access sequence through a scheduler; traversing all the primitives in the primitive list corresponding to the currently accessed tile through the scheduler, and correspondingly distributing each traversed primitive to a currently idle general rendering core in a polling manner to execute a fragment coloring task; and executing a fragment coloring task through the general rendering core based on the primitives correspondingly allocated by the scheduler.

Owner:西安芯云半导体技术有限公司

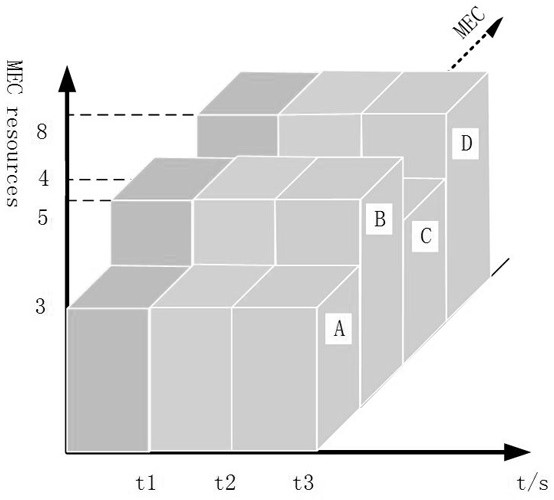

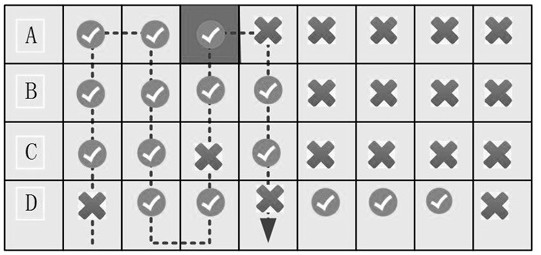

MEC-oriented distributed service scheduling method

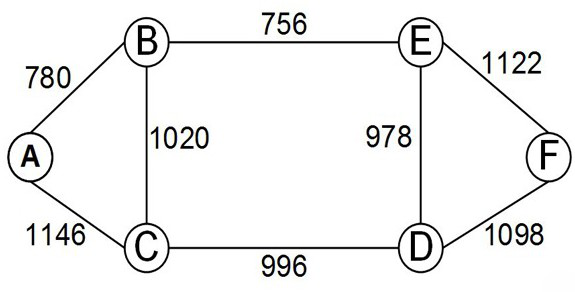

Active · CN112888005A · Load balancing · Reduce waiting time in line · Network traffic/resource management · Data switching networks · Completion time · Start time

The invention discloses an MEC-oriented distributed service scheduling method, which comprises the following steps of: for any service in a service set, determining M MEC servers closest to the service according to a shortest route algorithm, adding the M MEC servers into an available MEC server set; for any service in the available MEC server set, determining, by using a polling mode, whether the jth computing resource on each server is idle or not at an i moment; if the jth computing resource is idle, allocating the computing resource to the service; and in the same way, performing the same process on the j+1th computing resource to the Jth computing resource, obtaining the resource allocated to the service on each MEC server, namely the size of task segmentation, determining the starting time and the ending time of the service, and calculating the completion time of all the services. According to the method, the service segmentation problem and the MEC resource allocation problem are fused together for joint optimization, the computing resources of the servers are fully utilized, the load between the servers is balanced, and the queuing waiting time of the terminal is reduced.

Owner:ZHONGTIAN COMM TECH CO LTD +2

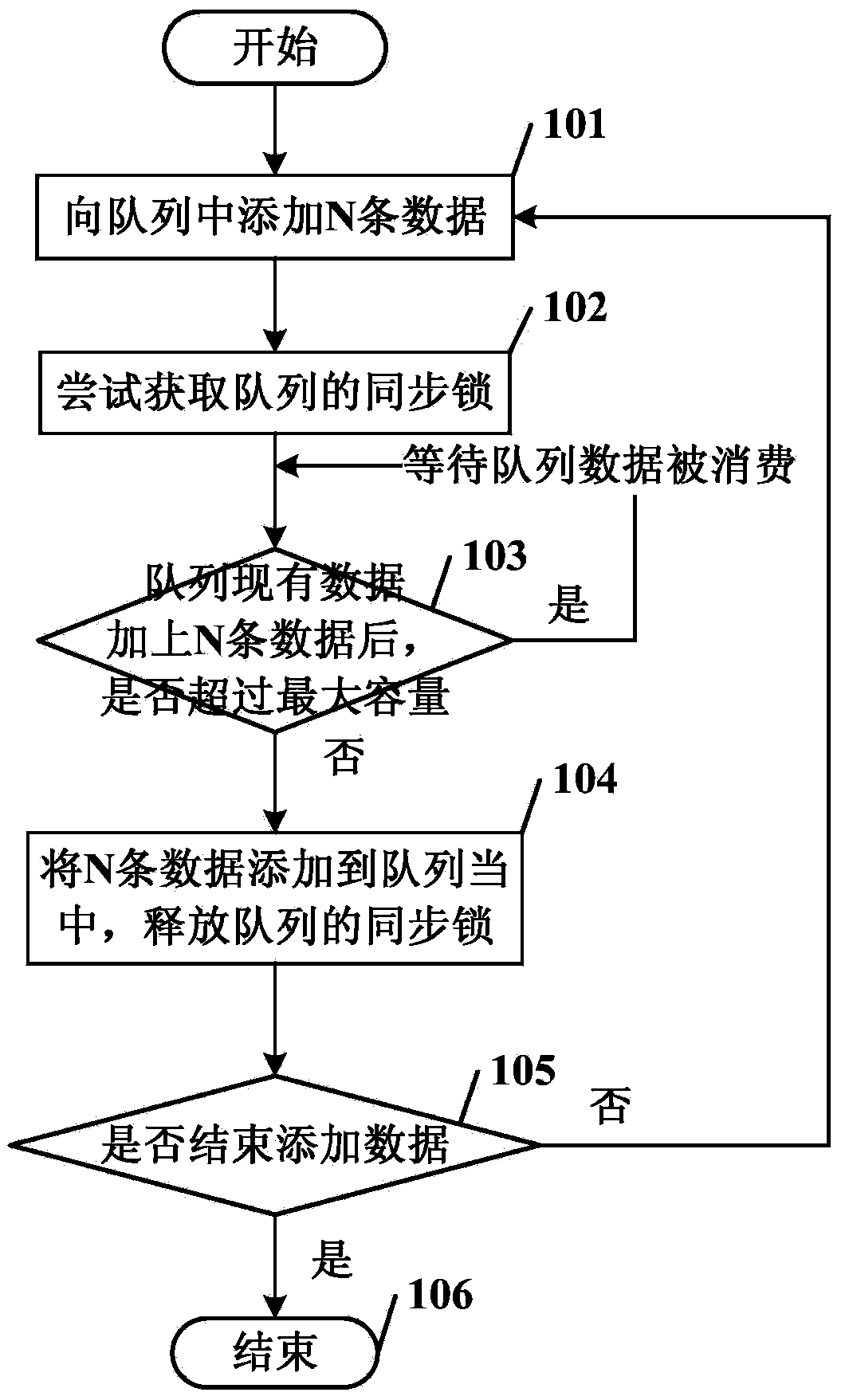

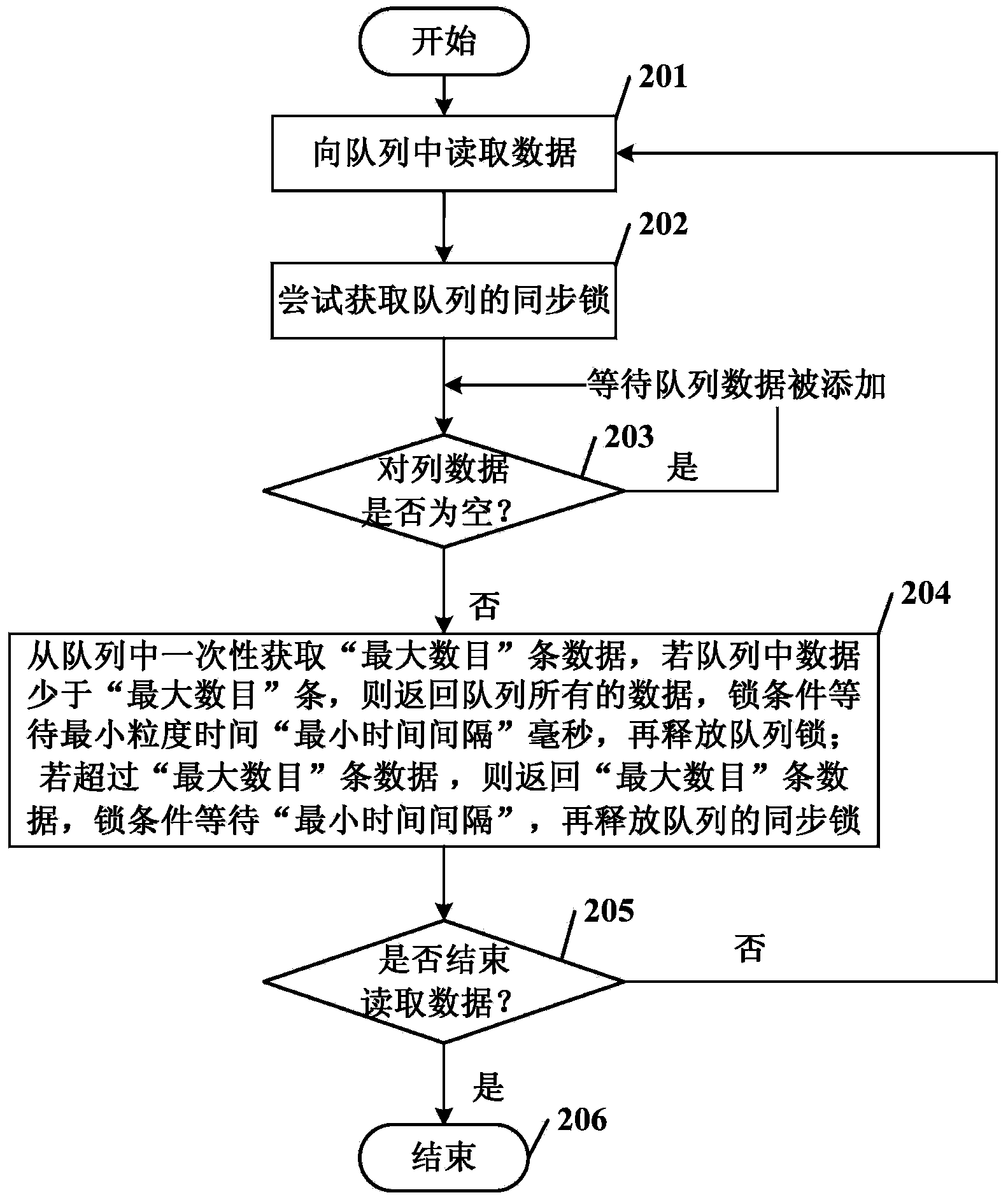

Read-write balanced blocking queue implementation method and device

Active · CN103970597A · Relieve pressure · Alleviate suddenness · Multiprogramming arrangements · Large scale data · Computer software

The invention discloses a read-write balanced blocking queue implementation method and device, and relates to the field of computer software programming. The method comprises the following steps: to add N data items to a queue, an attempt is made to obtain the synchronization lock of the queue; whether the N data items can be added to the synchronous blocking queue is determined, and if adding them would exceed the maximum configured size of the queue, the caller keeps waiting until the queue can accept N records; the queue throws an exception if N exceeds the maximum length of the queue; after the N data items are added to the synchronous blocking queue, the adding procedure is complete and the synchronization lock of the queue is released; the caller then either continues adding, repeating the procedure, or stops, which ends the adding procedure. The method and device effectively relieve the pressure of sudden, non-continuous and non-quantitative large-scale data processing, simplify the calling logic of the data queue, remove the risks of multithreaded data polling, and improve the stability of application programs that process sudden large data volumes.

Owner:FENGHUO COMM SCI & TECH CO LTD

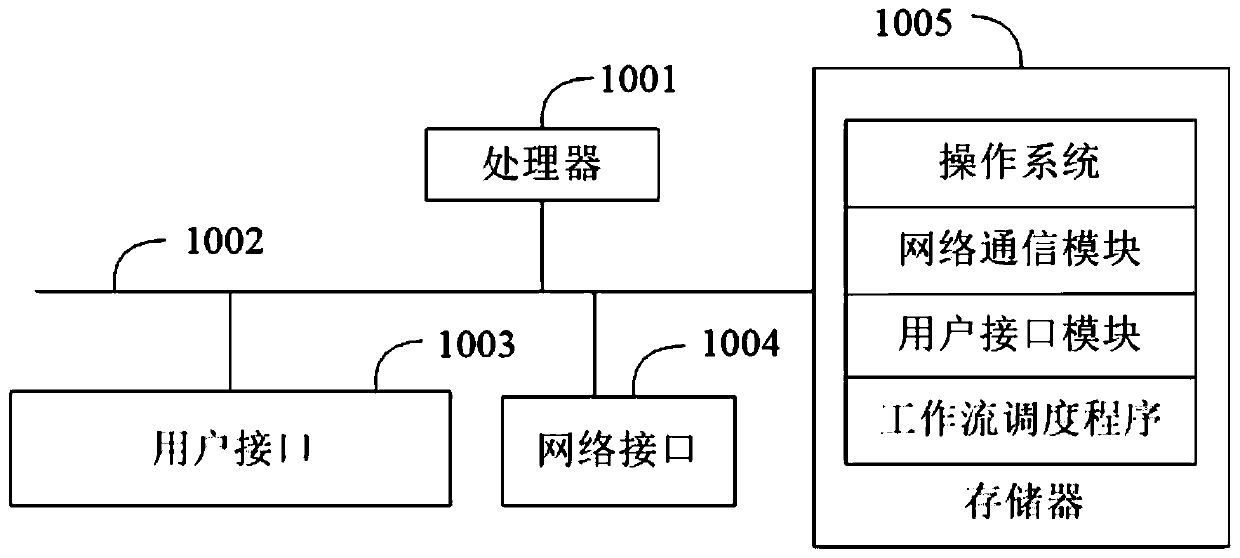

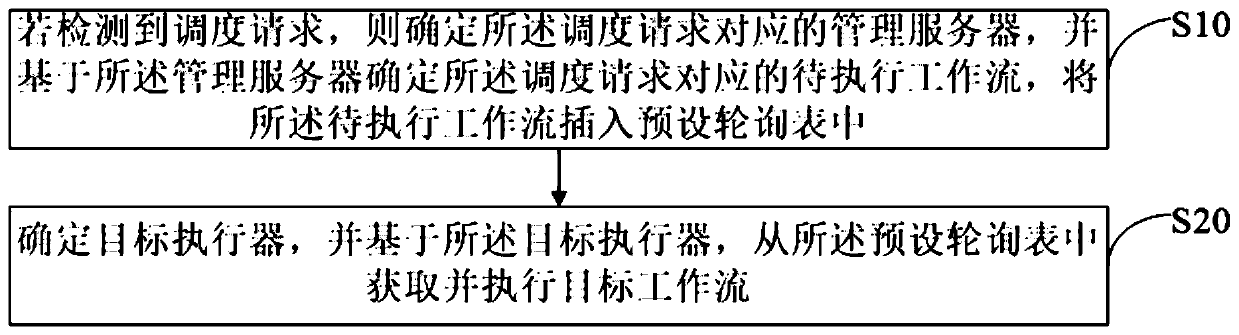

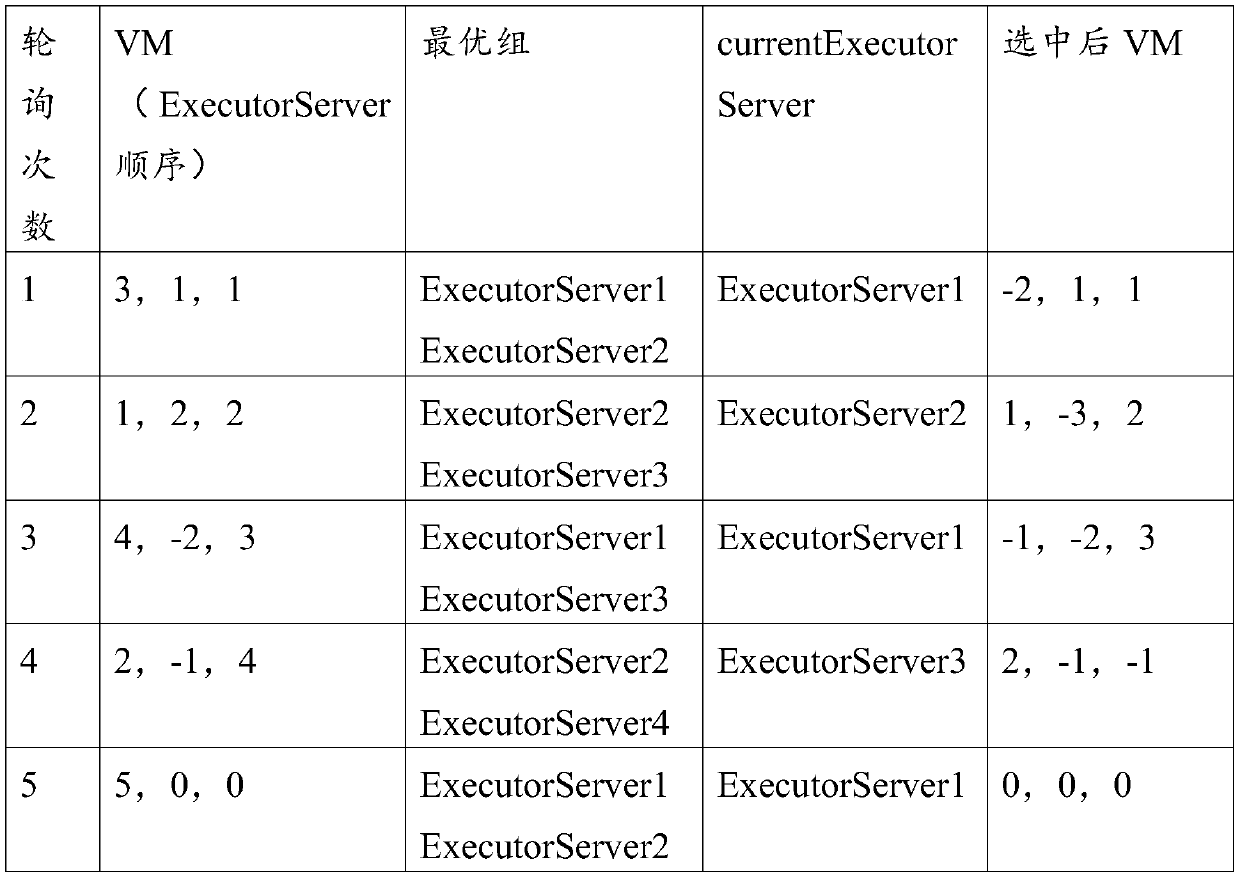

Workflow scheduling method, device and system and computer readable storage medium

Pending · CN111506406A · Reduce complexity · Achieve horizontal expansion · Program initiation/switching · Interprogram communication · Scheduling function · Polling

The invention discloses a workflow scheduling method comprising the following steps: if a scheduling request is detected, determining a management server corresponding to the scheduling request, determining a workflow to be executed corresponding to the scheduling request based on the management server, and inserting the workflow to be executed into a preset polling table; and determining a target actuator, and obtaining and executing a target workflow from the preset polling table based on the target actuator. The invention further discloses a workflow scheduling device and system and a computer readable storage medium. The scheduling function of the management server is delegated to the actuator: when a scheduling request is detected, the corresponding management server inserts the workflow to be executed into a polling table, and the target actuator actively obtains and executes the target workflow from the polling table. The complexity of the management server is thereby reduced, and the management server does not need to cache a series of data, which achieves horizontal scaling of the management server and improves scheduling intelligence.

Owner:WEBANK (CHINA)

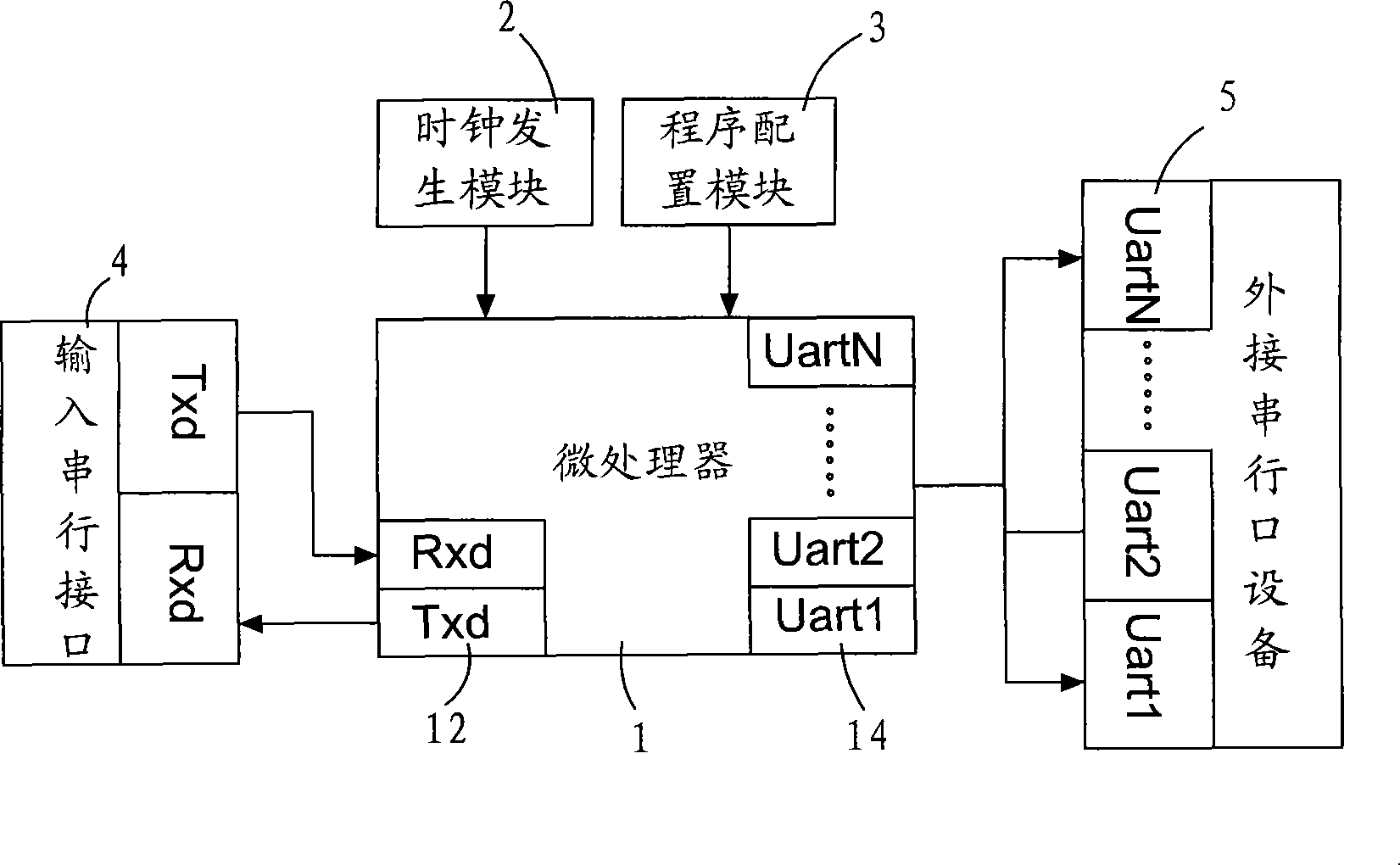

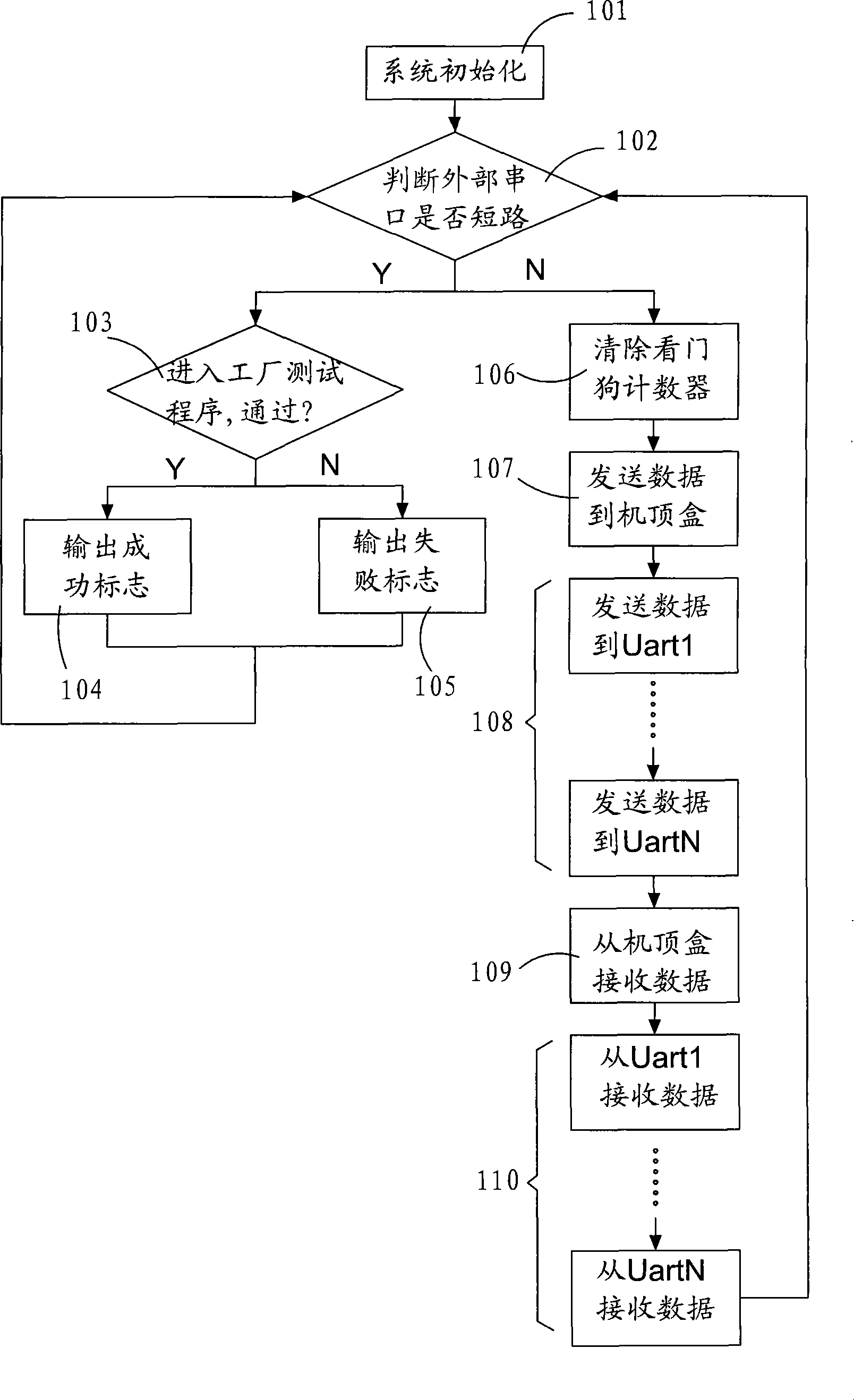

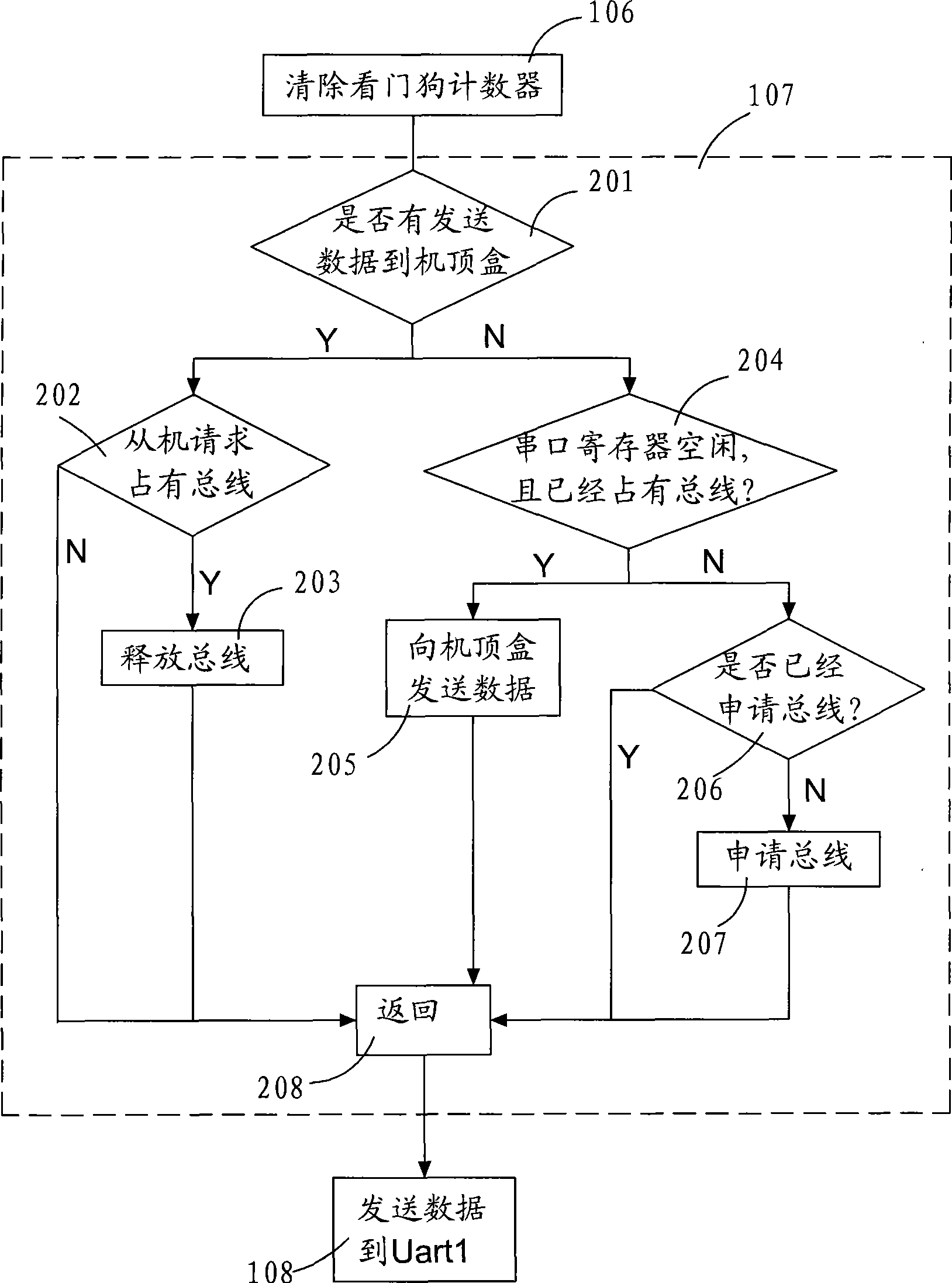

Technology for expanding single serial port of set-top box

Inactive · CN101415065A · Increase the number of · Low cost · Television system details · Color television details · Test procedures · Computer science

The invention discloses a single-serial-port expanding technology for a set-top box, based on expansion in the software of a microprocessor. The technology comprises: the system is initialized; the microprocessor then judges whether an external serial port is shorted; if yes, a factory testing procedure is carried out, and a success mark is output if the test passes or a failure mark if it does not; after the mark is output, the procedure returns to re-judge whether the external serial port is shorted; if not, the normal working mode is entered, in which a polling mode is used to judge whether data is to be sent or received, and sending or receiving is executed according to the result, thus finishing one round of port communication. The advantages of the technology are that the cost is lower; the core part is a microprocessor chip commonly available on the market; the interface is simple and can be simulated with a common IO port; the application scheme is flexible; and adding serial ports requires essentially no hardware cost, only a software change.

Owner:FUJIAN STAR NET COMM

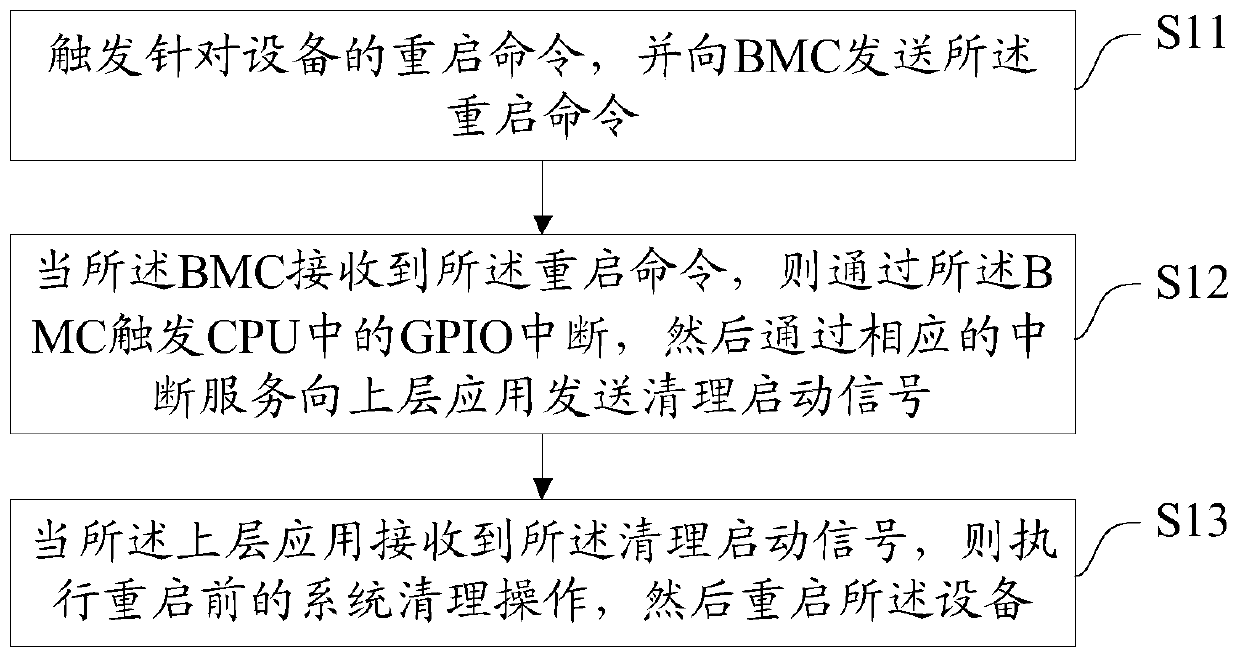

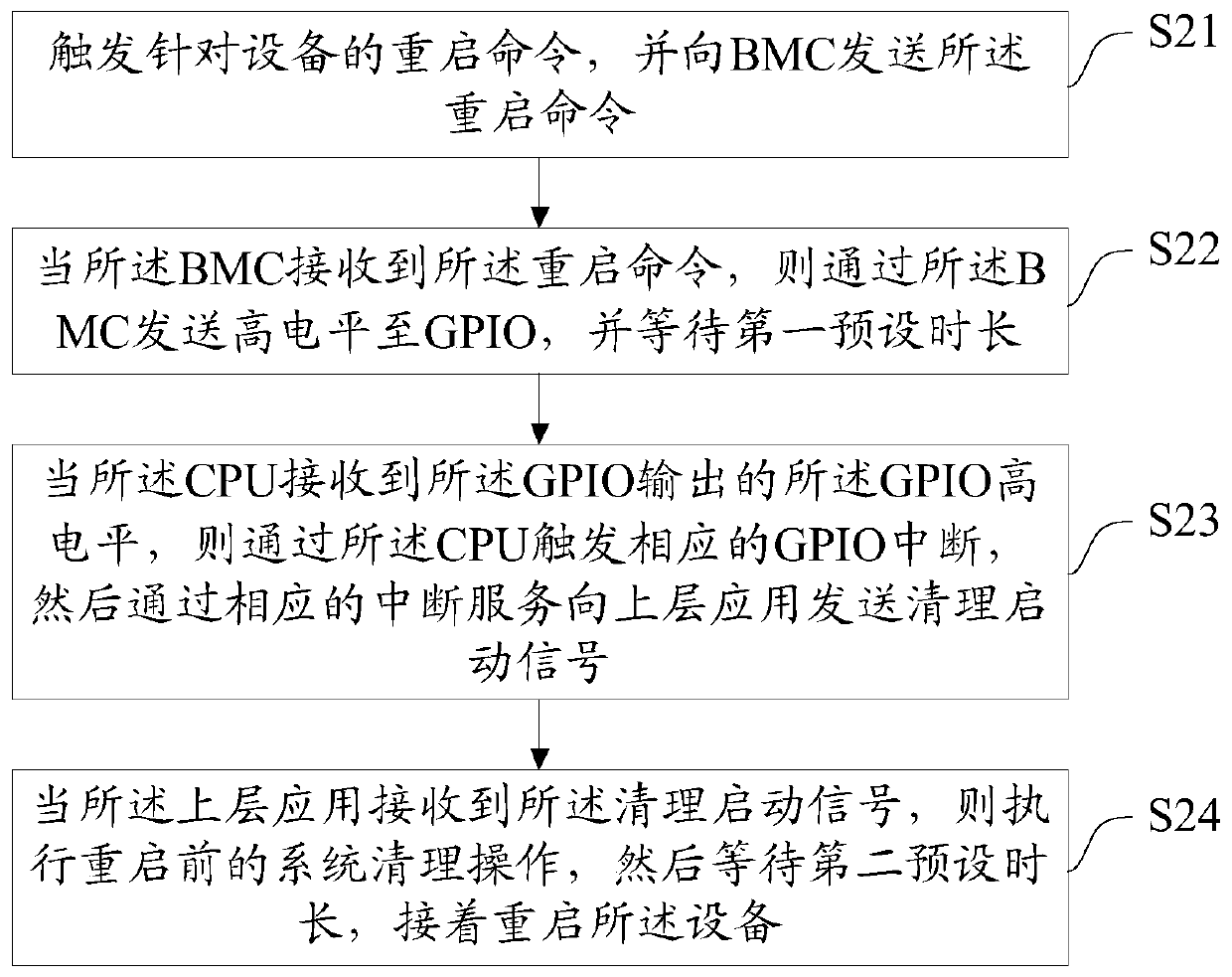

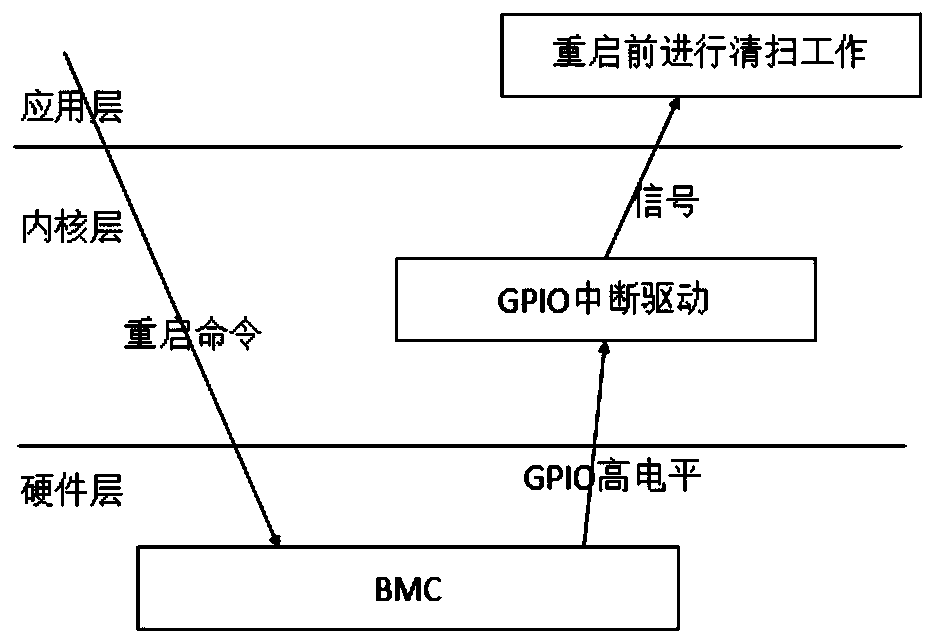

Equipment restarting method and device, equipment and medium

Active · CN111124761A · Reduce occupancy · Avoid repeated polling · Redundant operation error correction · Polling · Embedded system

The invention discloses an equipment restarting method and device, equipment and a medium. The method comprises the steps of: triggering a restart command for the equipment, and transmitting the restart command to a BMC; when the BMC receives the restart command, triggering a GPIO interrupt in a CPU through the BMC, and then sending a cleaning start signal to an upper-layer application through a corresponding interrupt service; and when the upper-layer application receives the cleaning start signal, executing a system cleaning operation before restarting, and then restarting the equipment. Because the GPIO interrupt is triggered through the BMC, the cleaning start signal can be sent to the upper-layer application through the interrupt service, so the system is cleaned quickly, the repeated polling required during restart in the prior art is avoided, occupation of system resources is reduced, and high real-time performance is achieved.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

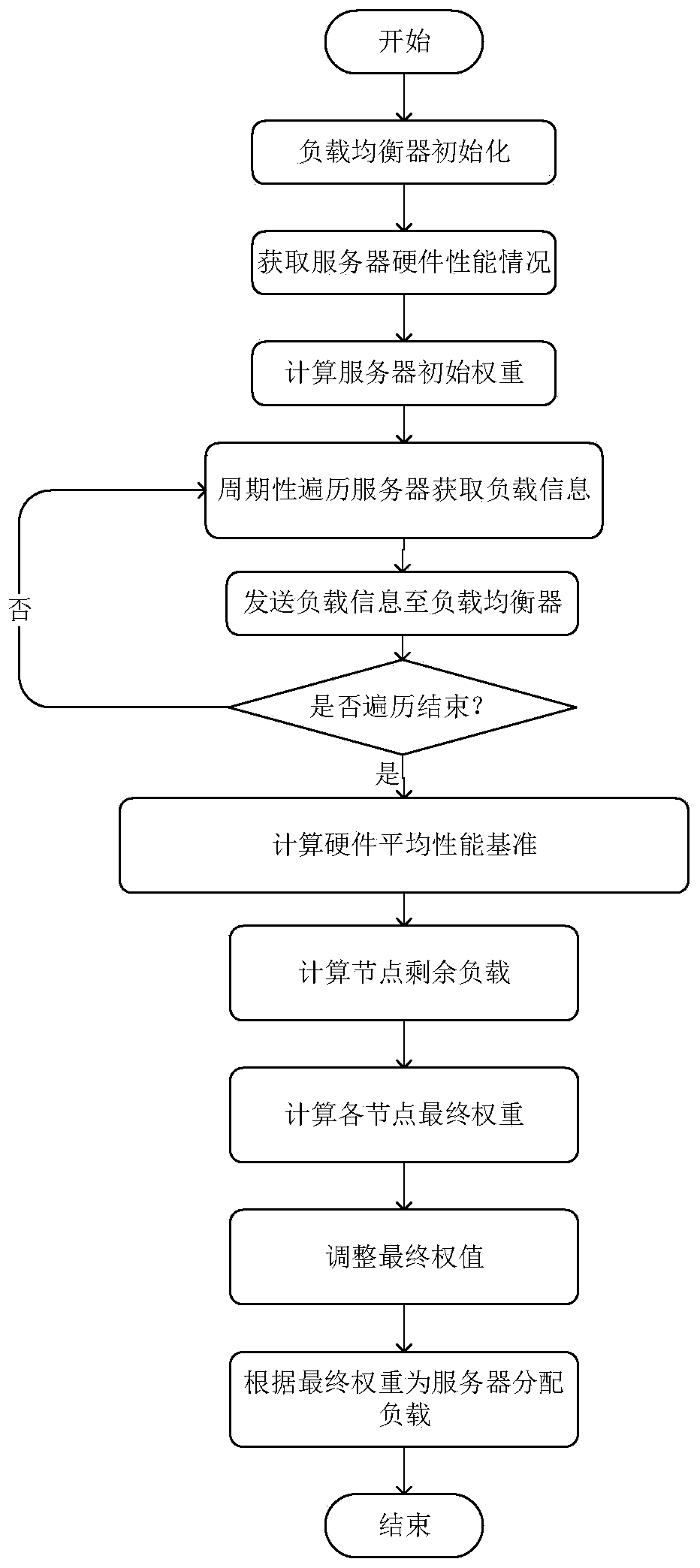

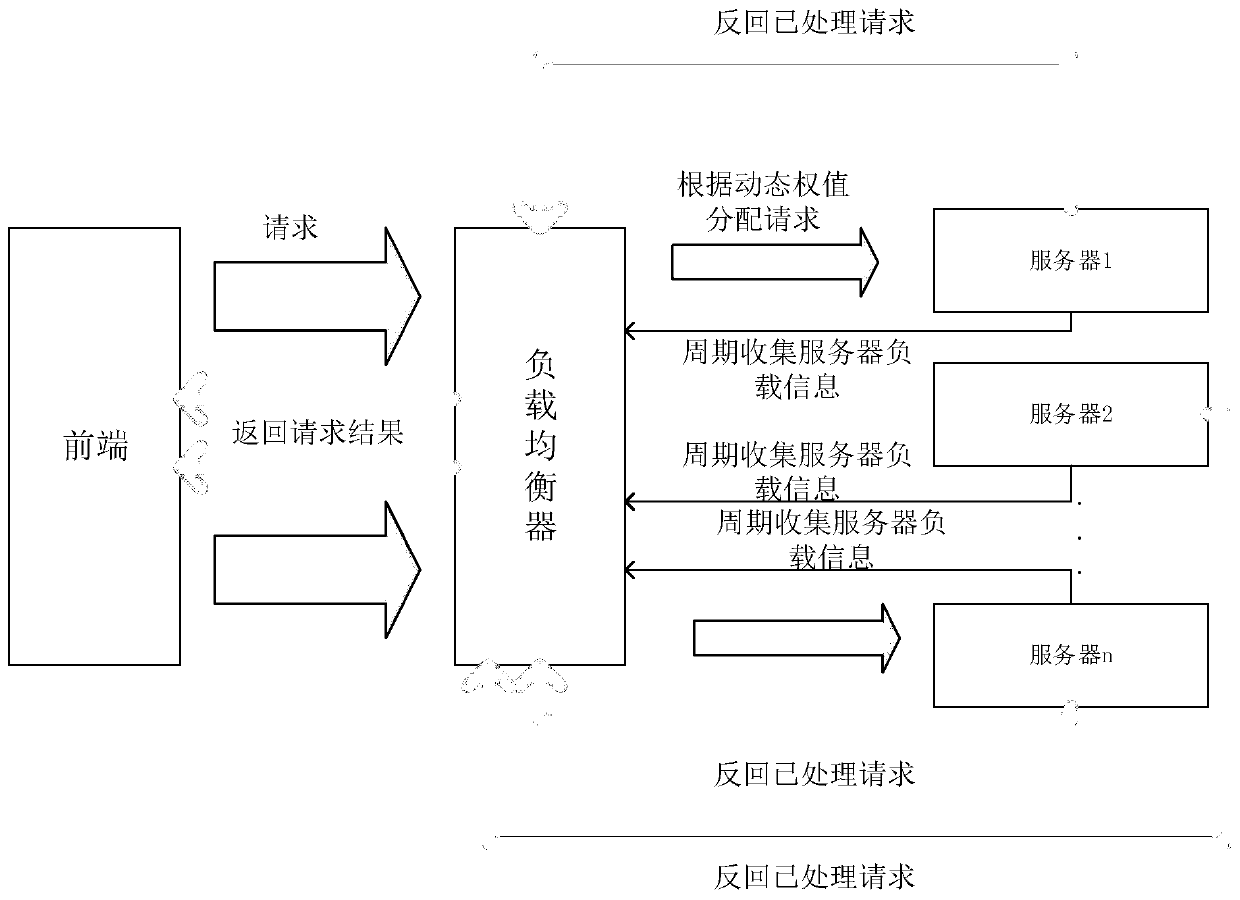

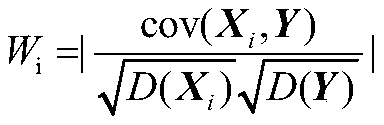

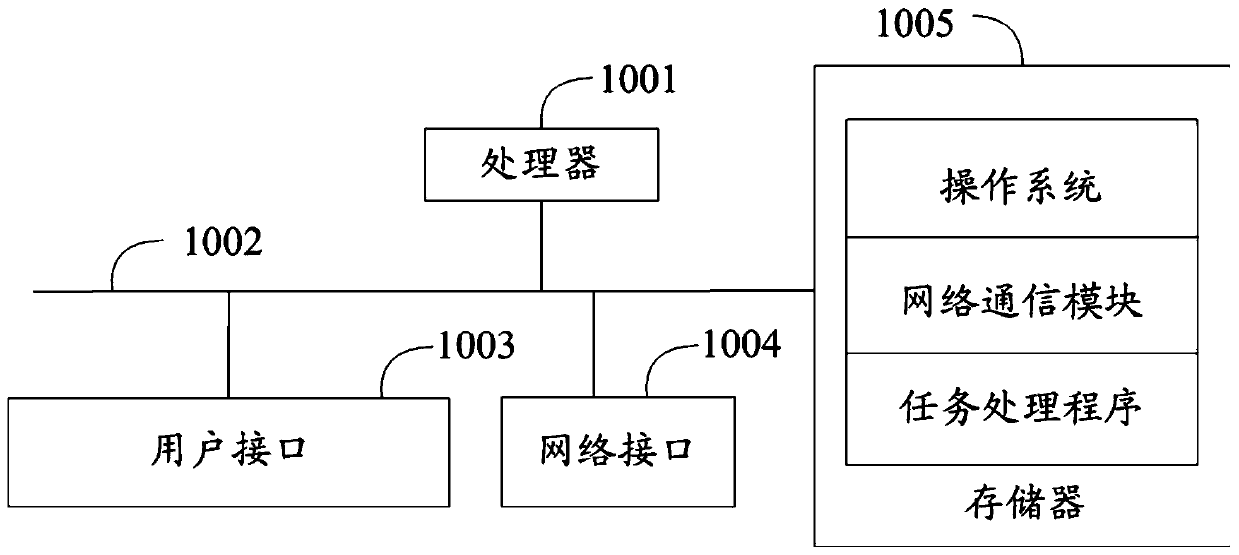

Dynamic weight load balancing method based on Nginx

Pending · CN111381971A · Improve efficiency · Improve service efficiency · Resource allocation · Server allocation · Polling

The invention relates to a dynamic weight load balancing method based on Nginx. The method comprises the following steps: acquiring hardware performance of each back-end server node in a no-load state; calculating a hardware influence weight; solving the weight of each server node in a no-load state according to the hardware performance and the hardware influence weight, and taking the weight as the initial weight of a dynamic weight load balancing algorithm; periodically acquiring load information of each back-end server and calculating the use ratio of each piece of hardware; calculating a hardware average performance reference according to the hardware performance; calculating the residual load of each node according to the use ratio of each hardware and the average performance benchmark of the hardware; solving a final weight; allocating a corresponding load to the server according to the final weight; according to the method, the dynamic weight load balancing algorithm based on the weighted polling algorithm is established, so that the dynamic change of the weight is realized, and the problem that the traditional load balancing algorithm cannot change the weight according to the load of the back-end server to cause load imbalance is solved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
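The abstract does not give its weight formulas, so the sketch below only illustrates the general shape of weighted polling with weights that can change at run time. It uses the smooth weighted round-robin selection rule that Nginx applies to upstream servers; `set_weight` is a hypothetical hook where periodically recomputed weights would be fed in.

```python
class SmoothWeightedRoundRobin:
    """Weighted polling over servers, with weights that may be updated at run time."""

    def __init__(self, weights) -> None:
        self.weights = dict(weights)                  # server -> effective weight
        self.current = {name: 0 for name in self.weights}

    def set_weight(self, name, weight) -> None:
        """Hypothetical hook: apply a weight recomputed from fresh load data."""
        self.weights[name] = weight
        self.current.setdefault(name, 0)

    def next(self):
        total = sum(self.weights.values())
        for name, weight in self.weights.items():
            self.current[name] += weight              # every server gains its weight
        chosen = max(self.weights, key=lambda n: self.current[n])
        self.current[chosen] -= total                 # the chosen one pays the total
        return chosen


if __name__ == "__main__":
    lb = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
    print([lb.next() for _ in range(7)])
    lb.set_weight("a", 1)   # e.g. server "a" became heavily loaded
    print([lb.next() for _ in range(3)])
```

With weights 5, 1, 1 the picks are spread out rather than sending five consecutive requests to the heaviest server.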

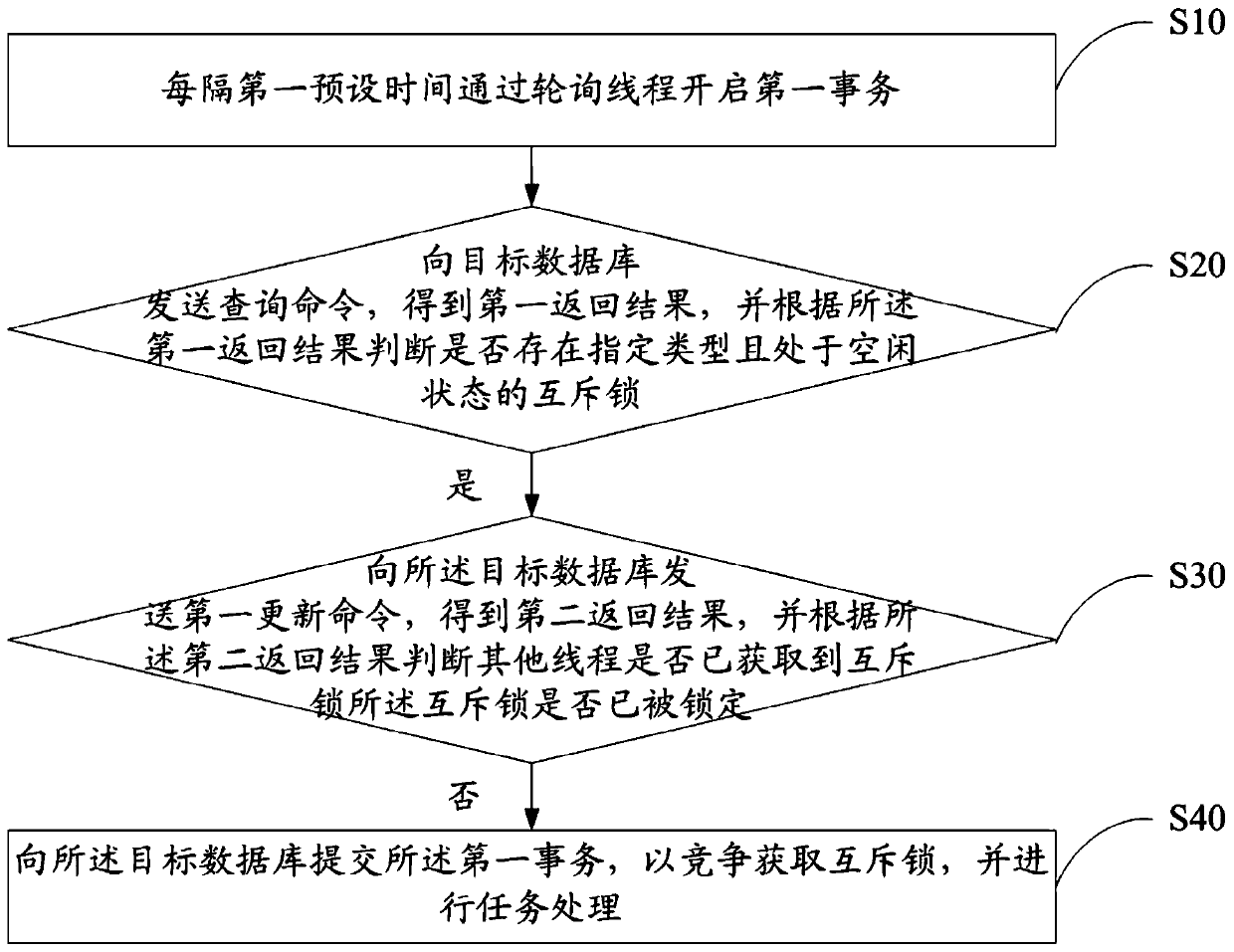

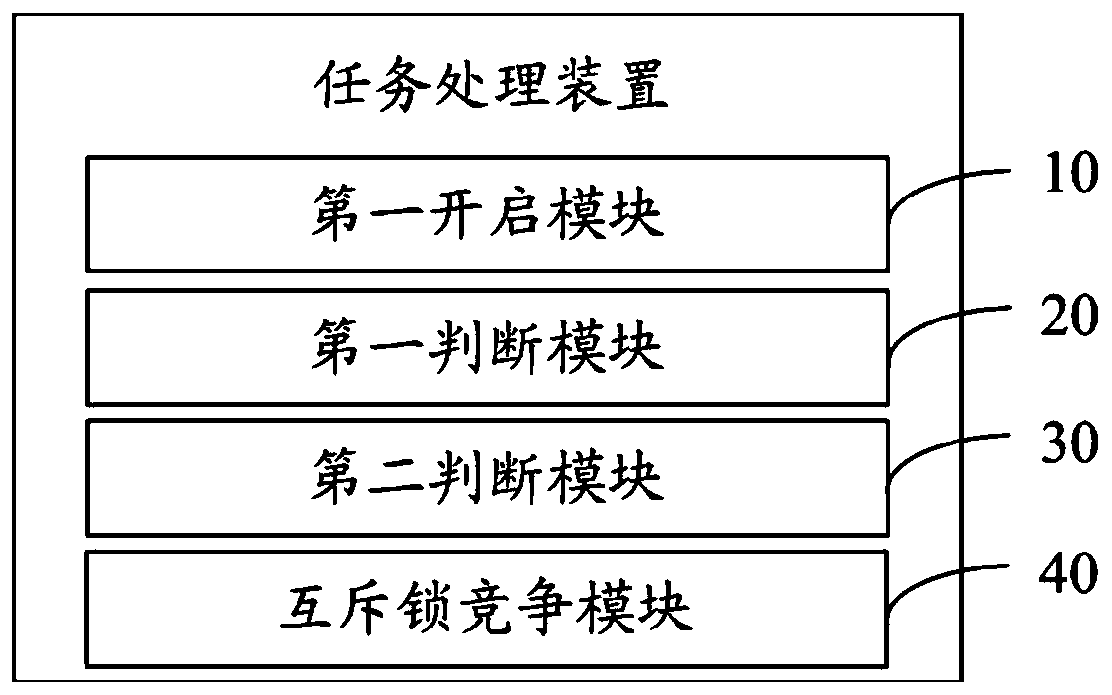

Task processing method, device and equipment and computer readable storage medium

Pending · CN111400330A · Reduce coding costs · Lower deployment costs · Database updating · Program synchronisation · Polling · Target database

The invention discloses a task processing method, device and equipment and a computer readable storage medium, and relates to the technical field of financial science and technology. The task processing method comprises the steps of: starting a first transaction through a polling thread at every first preset time; sending a query command to a target database to obtain a first return result, and judging whether a mutual exclusion lock of a specified type and in an idle state exists according to the first return result; if such a mutual exclusion lock exists, sending a first updating command to the target database to obtain a second return result, and judging whether the mutual exclusion lock is locked according to the second return result; and if it is judged that the mutual exclusion lock is not locked, submitting the first transaction to the target database to compete for the mutual exclusion lock, and performing task processing. The method realizes competition and mutual exclusion of tasks based on the TiDB optimistic lock without introducing extra infrastructure software, so cost can be saved.

Owner:WEBANK (CHINA)
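The query-then-update pattern can be illustrated with a conditional `UPDATE` whose affected-row count tells the caller whether it won the lock. This sketch uses Python's built-in `sqlite3` as a stand-in; it does not reproduce TiDB's optimistic transaction behavior, and the `locks` table layout and function names are assumptions.

```python
import sqlite3


def make_lock_table(lock_types):
    """Create an in-memory table with one idle lock row per lock type."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE locks (id INTEGER PRIMARY KEY, lock_type TEXT, holder TEXT)"
    )
    conn.executemany(
        "INSERT INTO locks (lock_type) VALUES (?)", [(t,) for t in lock_types]
    )
    conn.commit()
    return conn


def try_acquire(conn, lock_type, worker):
    """One polling attempt: find an idle lock, then claim it only if still idle."""
    with conn:  # commits on success, rolls back on an exception
        row = conn.execute(
            "SELECT id FROM locks WHERE lock_type = ? AND holder IS NULL",
            (lock_type,),
        ).fetchone()
        if row is None:
            return False
        cur = conn.execute(
            "UPDATE locks SET holder = ? WHERE id = ? AND holder IS NULL",
            (worker, row[0]),
        )
        return cur.rowcount == 1  # exactly one row changed means this worker won


def release(conn, lock_type, worker):
    with conn:
        conn.execute(
            "UPDATE locks SET holder = NULL WHERE lock_type = ? AND holder = ?",
            (lock_type, worker),
        )
```

A polling thread would call `try_acquire` at a fixed interval and run its task only when the call returns `True`.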

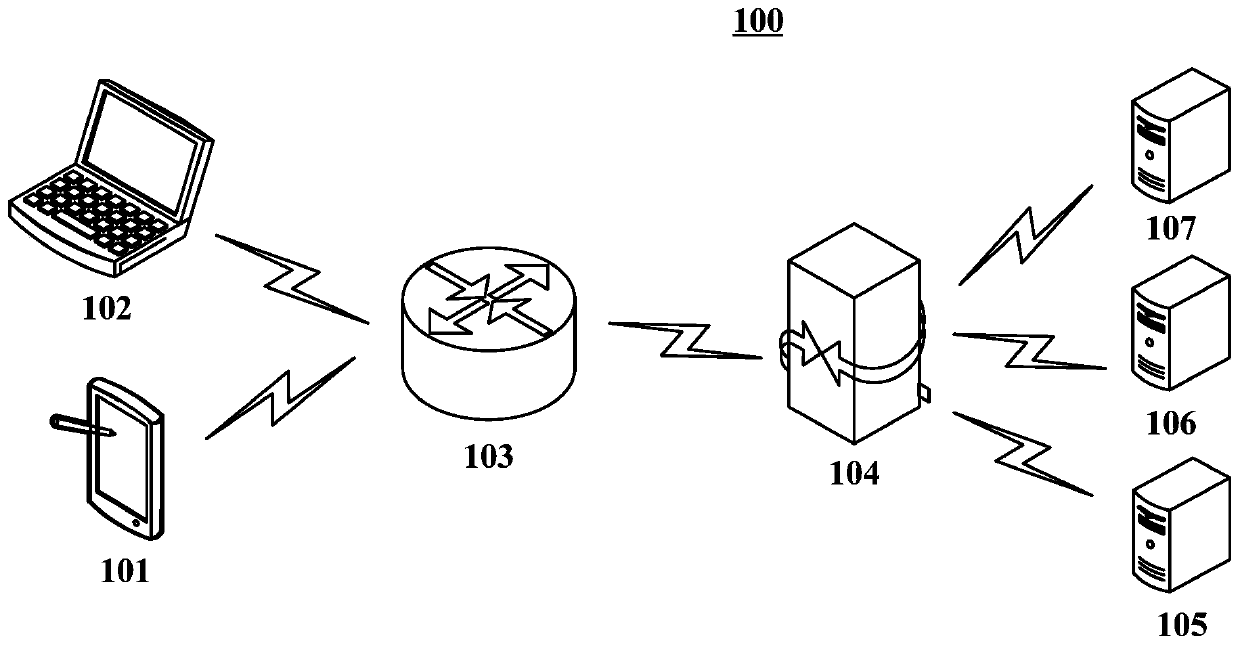

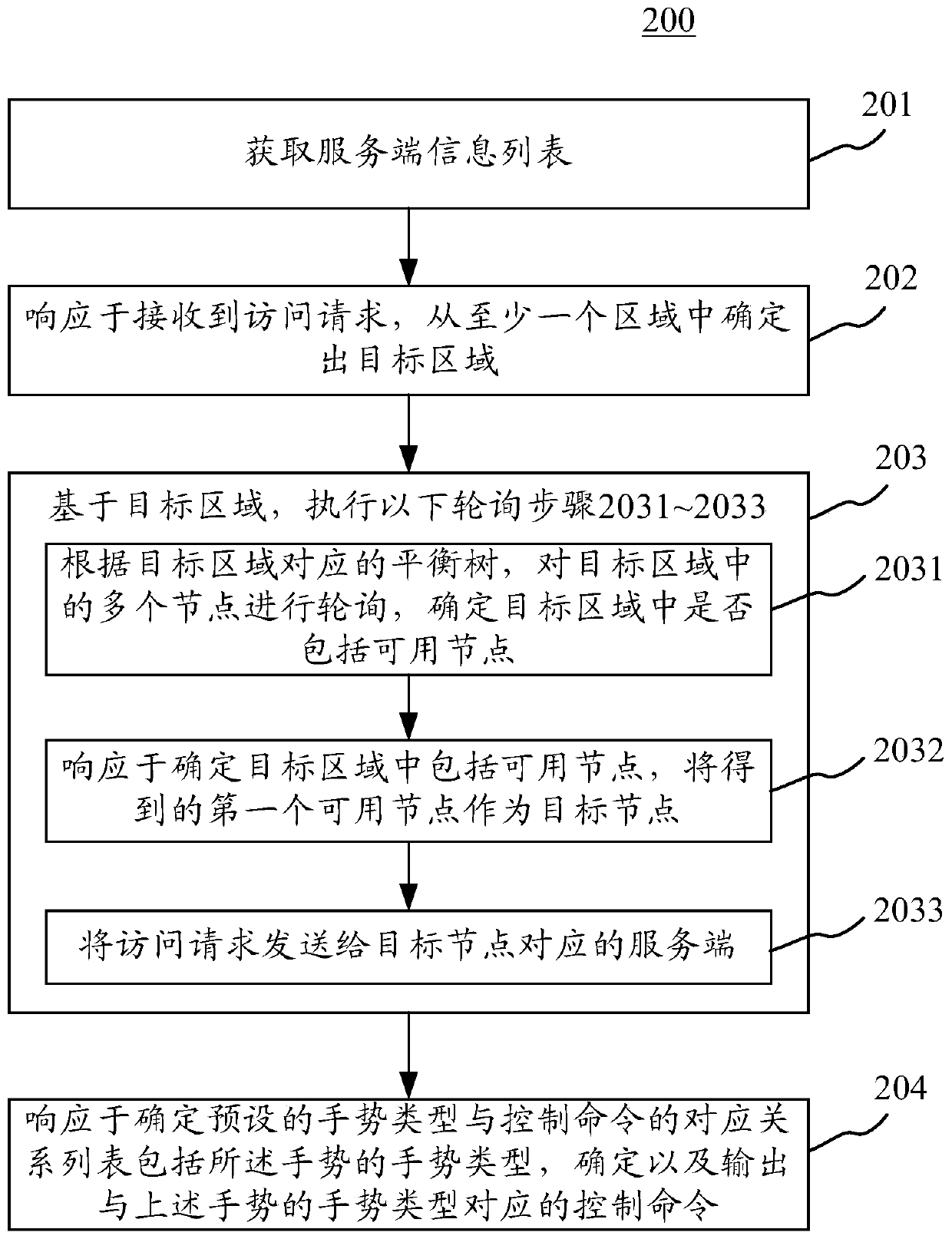

Method and device for realizing load balancing

Active · CN110650209A · Reduce forwarding delay · Improve forwarding efficiency · Transmission · Engineering · Polling

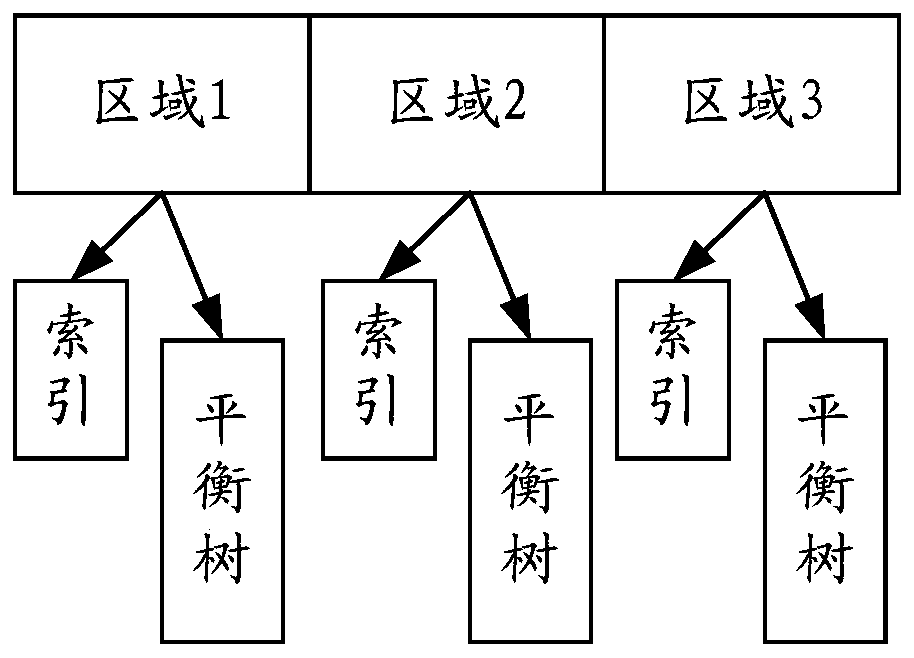

The embodiment of the invention discloses a method and a device for realizing load balancing. A specific embodiment of the method comprises the following steps: acquiring a server information list; in response to a received access request, determining a target area from the at least one area; based on the target area, executing the following polling steps: polling a plurality of nodes in the target area according to a balance tree corresponding to the target area, and determining whether the target area comprises available nodes; in response to determining that the target area comprises available nodes, taking the first available node obtained as a target node; sending the access request to a server corresponding to the target node; and in response to determining that the target area does not include an available node, determining a new target area according to the server information list, and continuing to execute the polling step. According to the embodiment, the forwarding delay is reduced, and the forwarding efficiency is improved.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

GPU thread load balancing method and device

Active · CN111078394A · Load balancing · Extended service life · Resource allocation · Processor architectures/configuration · Computer architecture · Parallel computing

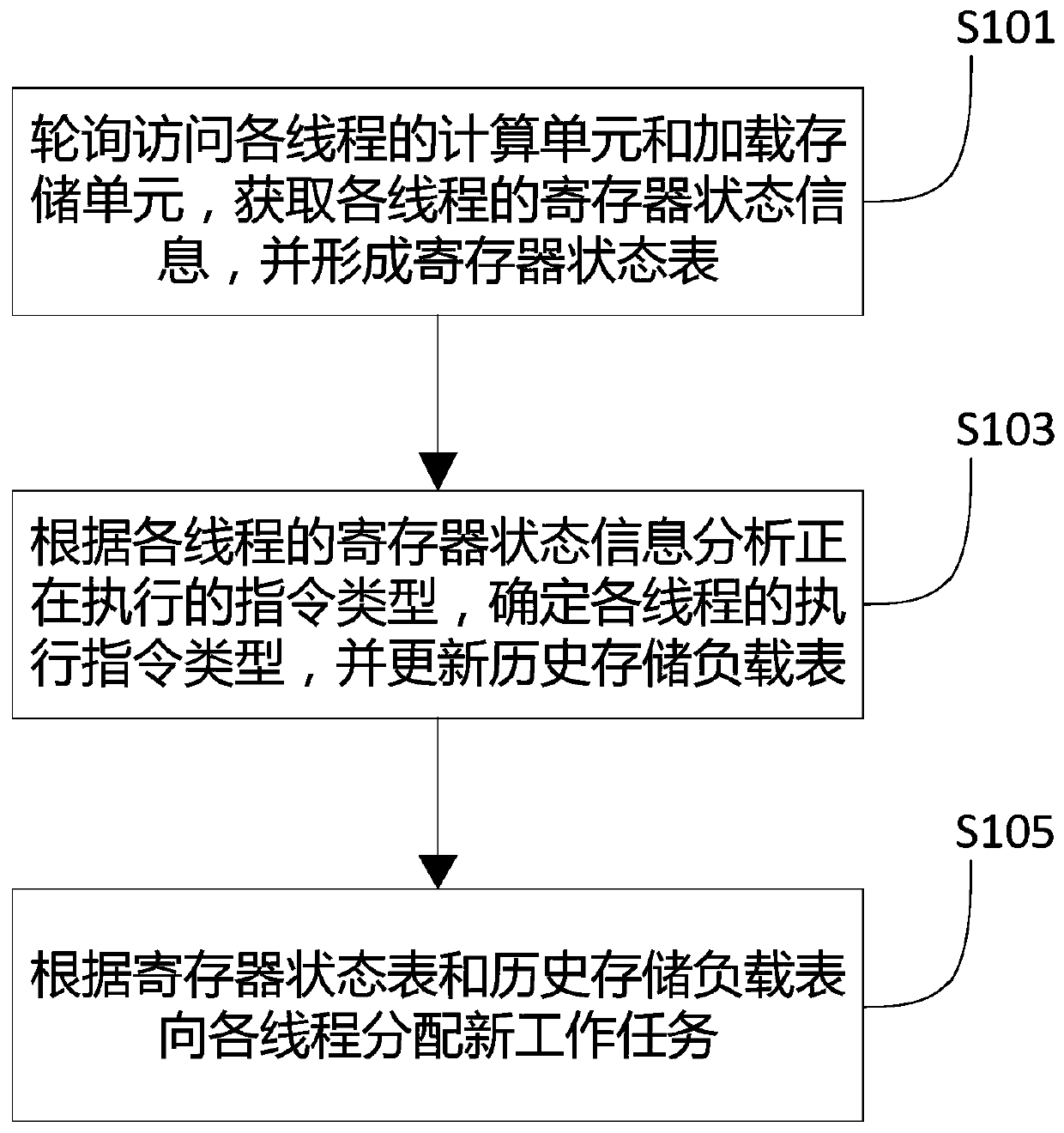

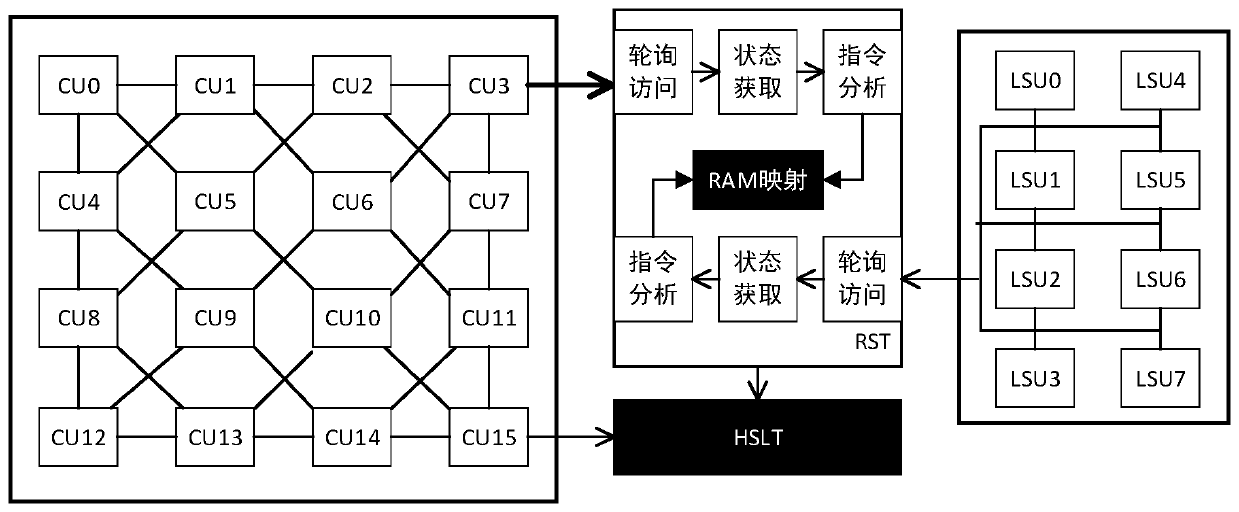

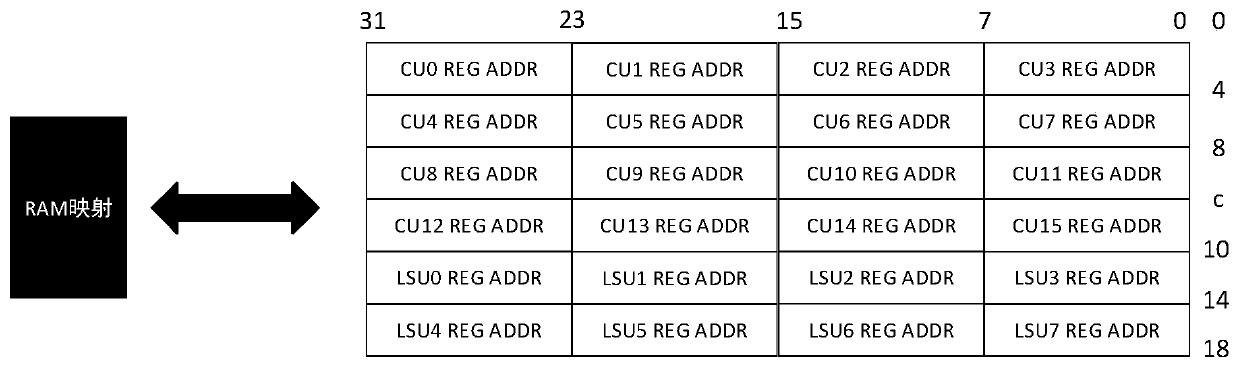

The invention discloses a GPU thread load balancing method and device. The GPU thread load balancing method comprises the following steps: polling and accessing a computing unit and a loading storage unit of each thread to obtain register state information of each thread and form a register state table; analyzing the type of the instruction being executed according to the register state information of each thread, determining the type of the execution instruction of each thread, and updating a historical storage load table; and allocating a new work task to each thread according to the register state table and the historical storage load table. The invention balances the load across the threads of the GPU, improves working efficiency and stability, and prolongs the service life of the hardware.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD

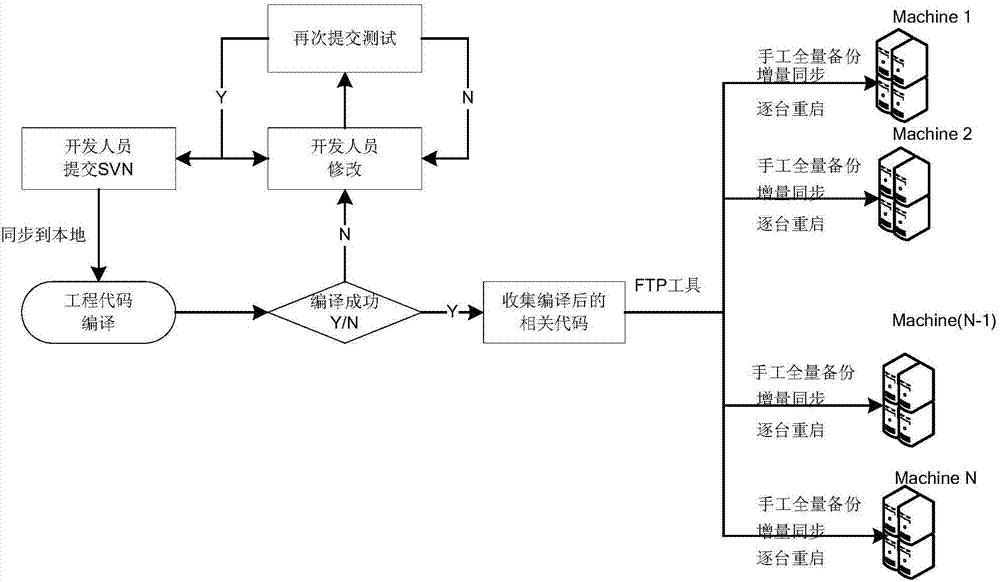

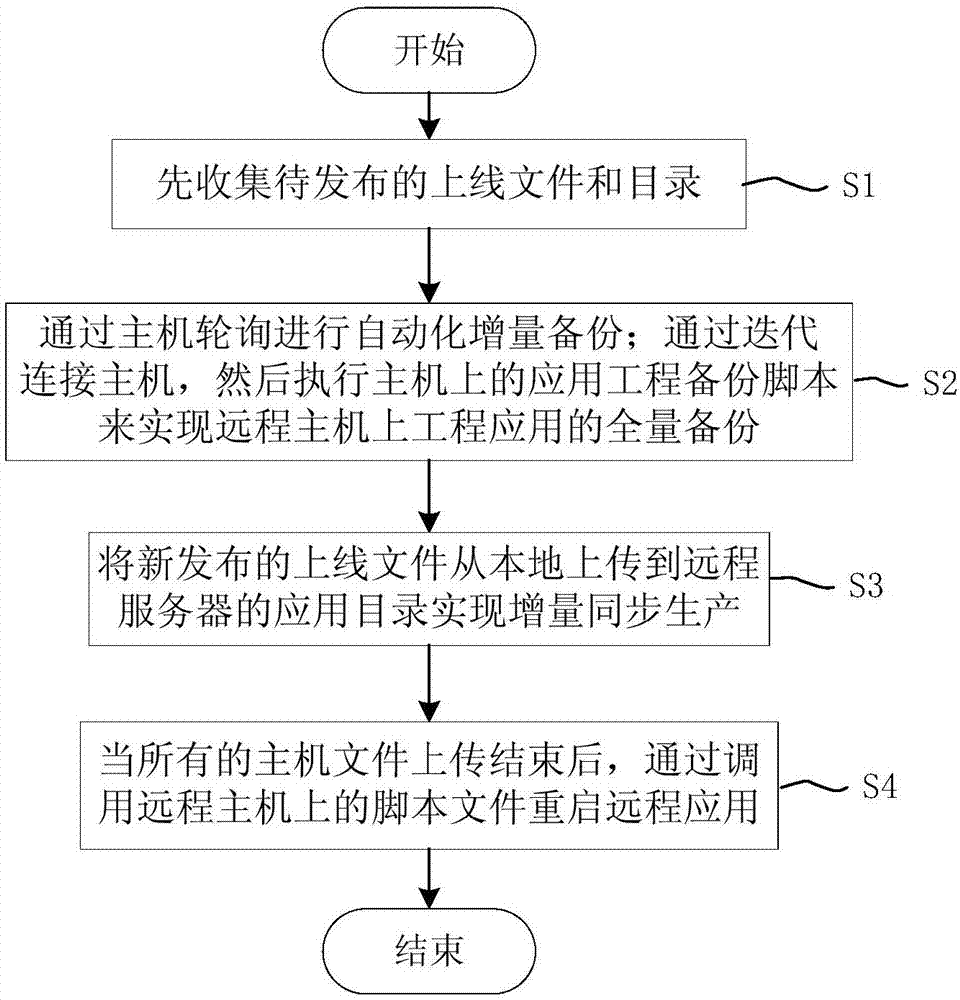

Java automatic code issuing method based on Struts2 frame

Active · CN107992326A · Avoid misinformation · Prevent leakage · Version control · Application engineering · Incremental backup

The invention discloses a Java automatic code issuing method based on a Struts2 frame. The method comprises the following steps: S1) first collecting the online files and catalogues to be issued; S2) executing automated incremental backup through host polling, connecting to each host in turn and executing an application engineering backup script on the host to realize the total backup of the engineering application on the remote host; S3) uploading the newly issued online files from the local machine to an application catalogue of a remote server, realizing incremental synchronous production; and S4) when the uploading of all host files is over, restarting the remote application by calling a script file on the remote host. The provided method automates the issuing link with a Java program, effectively prevents wrong or missed uploads during the online process, and greatly reduces the workload.

Owner:上海新炬网络技术有限公司

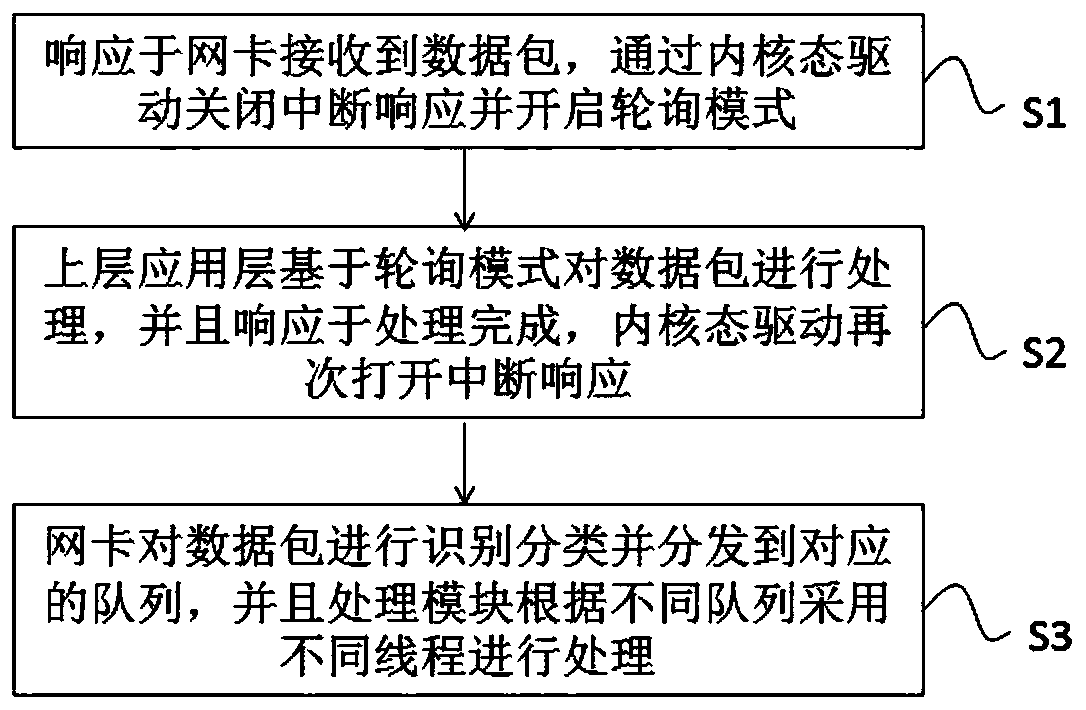

Data packet transceiving method and device, and medium

Inactive · CN111211942A · Easy to handle · Fast data sending and receiving · Data switching networks · Data pack · Operational system

The invention discloses a data packet transceiving method comprising the following steps: in response to a data packet received by a network card, the kernel-mode driver closes the interrupt response and starts a polling mode; the upper application layer processes the data packet based on the polling mode, and in response to completion of processing, the kernel-mode driver opens the interrupt response again; and the network card identifies and classifies the data packets and distributes them to corresponding queues, and the processing module processes the data packets with different threads according to the different queues. The invention further discloses a computer device and a readable storage medium. According to the data packet transceiving method and device and the medium, the kernel protocol stack of the operating system is bypassed through the mixed interrupt mode, the flow classification and multi-queue technology and the hardware acceleration technology; network data packets no longer pass through the kernel protocol stack, rapid data transceiving is achieved, and the processing performance for network data packets is improved.

Owner:SHANDONG CHAOYUE DATA CONTROL ELECTRONICS CO LTD

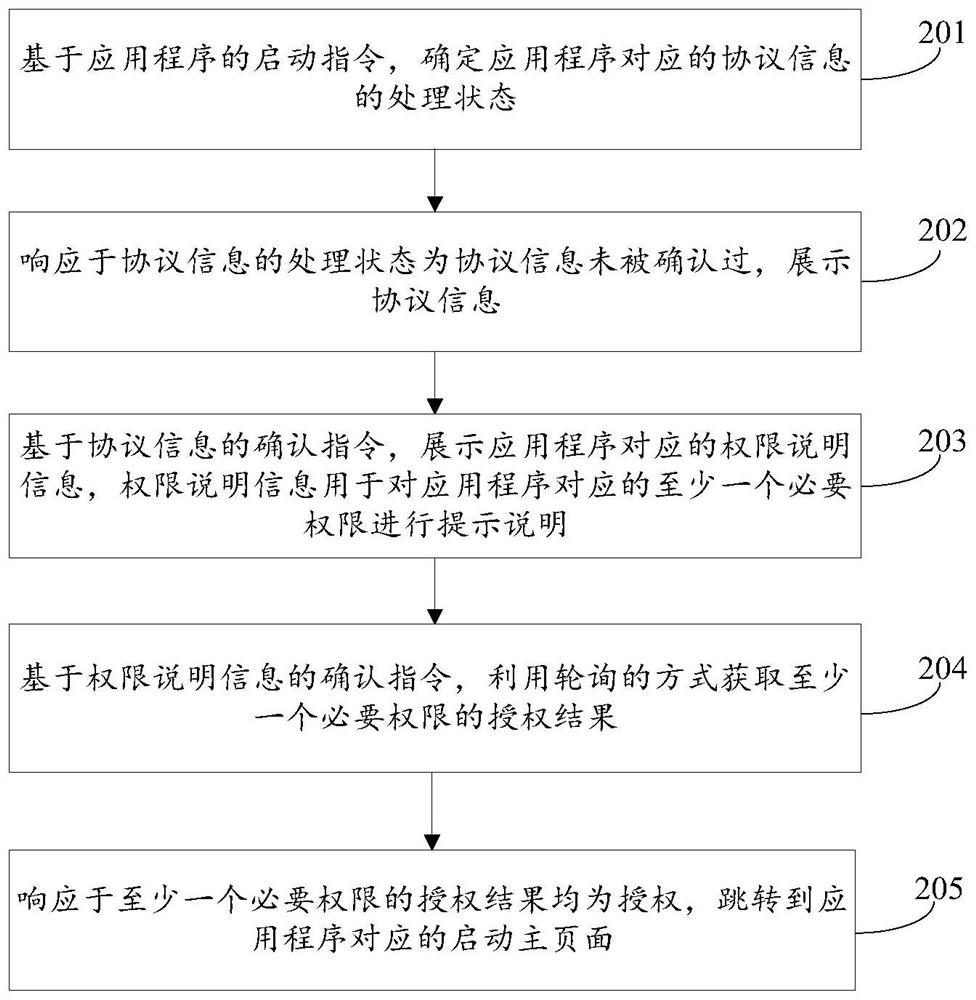

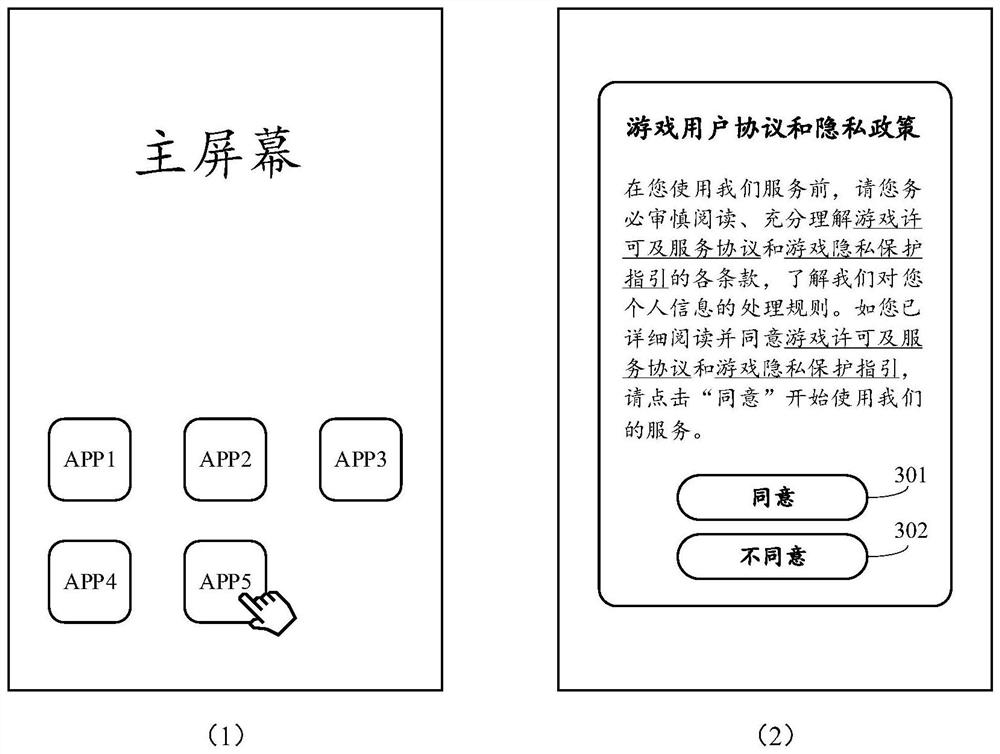

Permission control method, device and equipment and storage medium

Pending · CN112131556A · Improve protection · Circumvention of operations that violate user privacy · Digital data authentication · Software engineering · User privacy

The invention discloses a permission control method, device and equipment and a storage medium. The method comprises the steps of determining a processing state of protocol information corresponding to an application based on a starting instruction of the application; in response to the processing state indicating that the protocol information is not confirmed, displaying the protocol information; displaying permission description information corresponding to the application program based on a confirmation instruction of the protocol information; based on a confirmation instruction of the permission description information, obtaining an authorization result of at least one necessary permission in a polling mode; and in response to the authorization result of the at least one necessary permission being authorization, skipping to a starting main page corresponding to the application program. In this way, permission control is carried out before the application program is successfully started, which prevents operations that invade user privacy from being executed as soon as the application program starts; permission control is more standard and effective, the protection of user privacy is improved, and safety is high.

Owner:TENCENT TECH (SHENZHEN) CO LTD

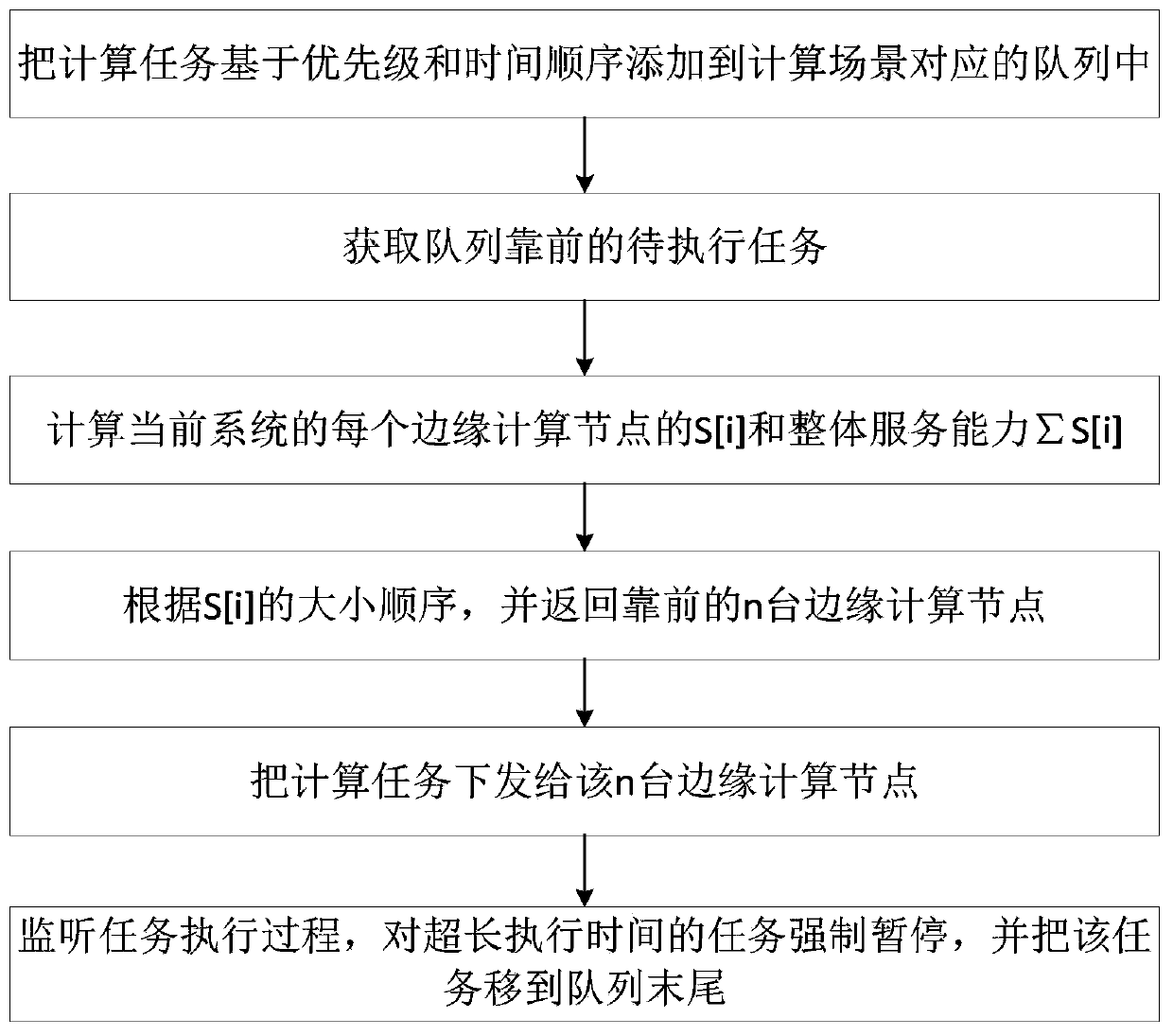

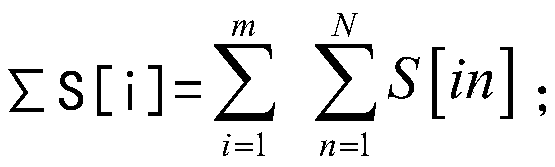

Edge computing scheduling algorithm and system

Pending · CN111597025A · Will not block · Guaranteed normal execution · Program initiation/switching · Resource allocation · Edge computing · Parallel computing

The invention discloses an edge computing scheduling algorithm and system. The edge computing scheduling algorithm comprises the following steps: acquiring X computing tasks and determining the priority of each computing task; distributing the X computing tasks to queues with different priority levels according to the priority levels to form Y ready queues with different priority levels; executing the tasks in the priority ready queues for different optimal level queue execution time Ty, pausing the tasks if the tasks are not completely executed in the Ty, putting the tasks at the tails of the priority ready queues to queue again, and selecting n optimal edge computing nodes when each task is executed. Tasks with different priorities are located in different queues and are executed in a polling mode, and the tasks cannot be blocked for a long time, thus guaranteeing that all the tasks are normally executed. According to the edge computing scheduling algorithm and system, the service capability of the edge computing nodes is quantified, and it is guaranteed that the user task selects the optimal node to be executed.

Owner:行星算力(深圳)科技有限公司

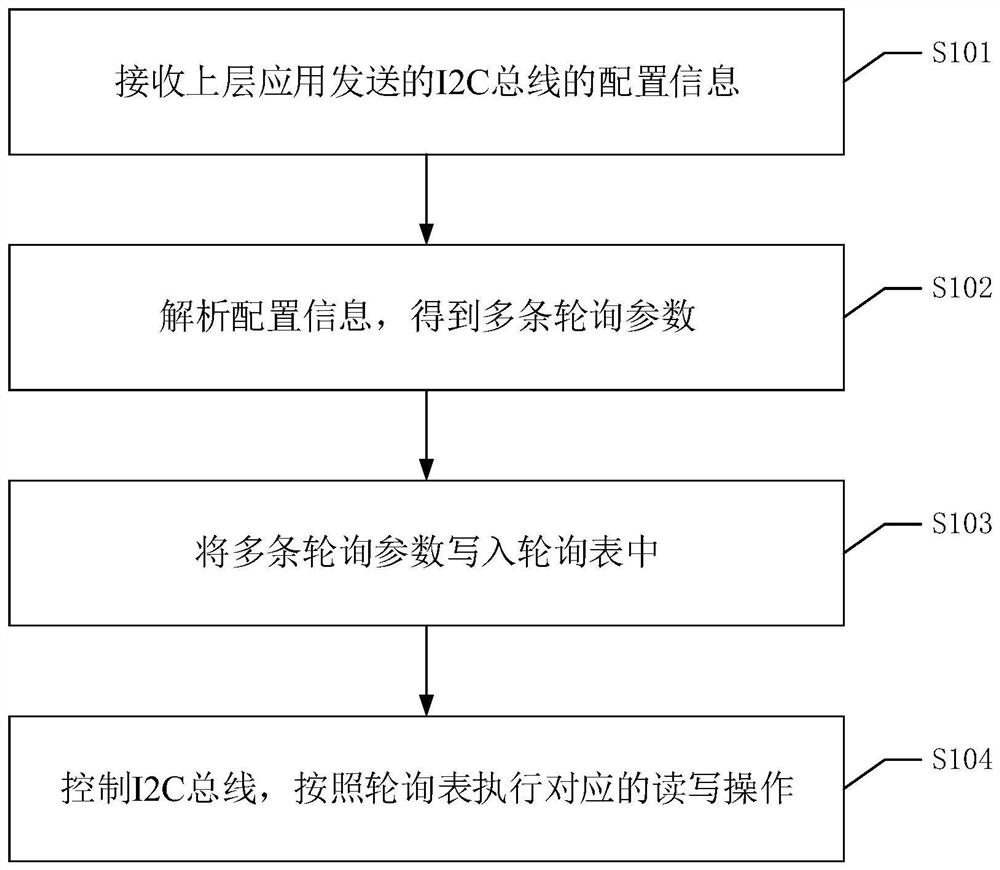

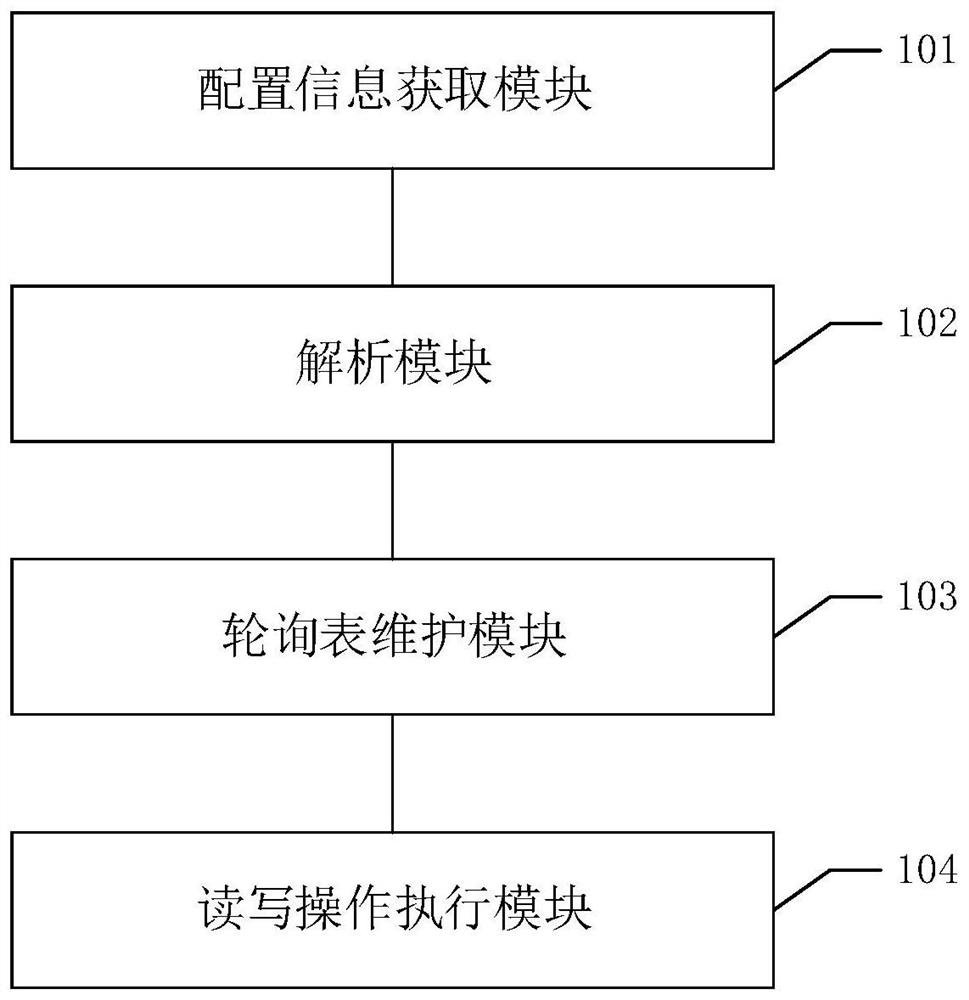

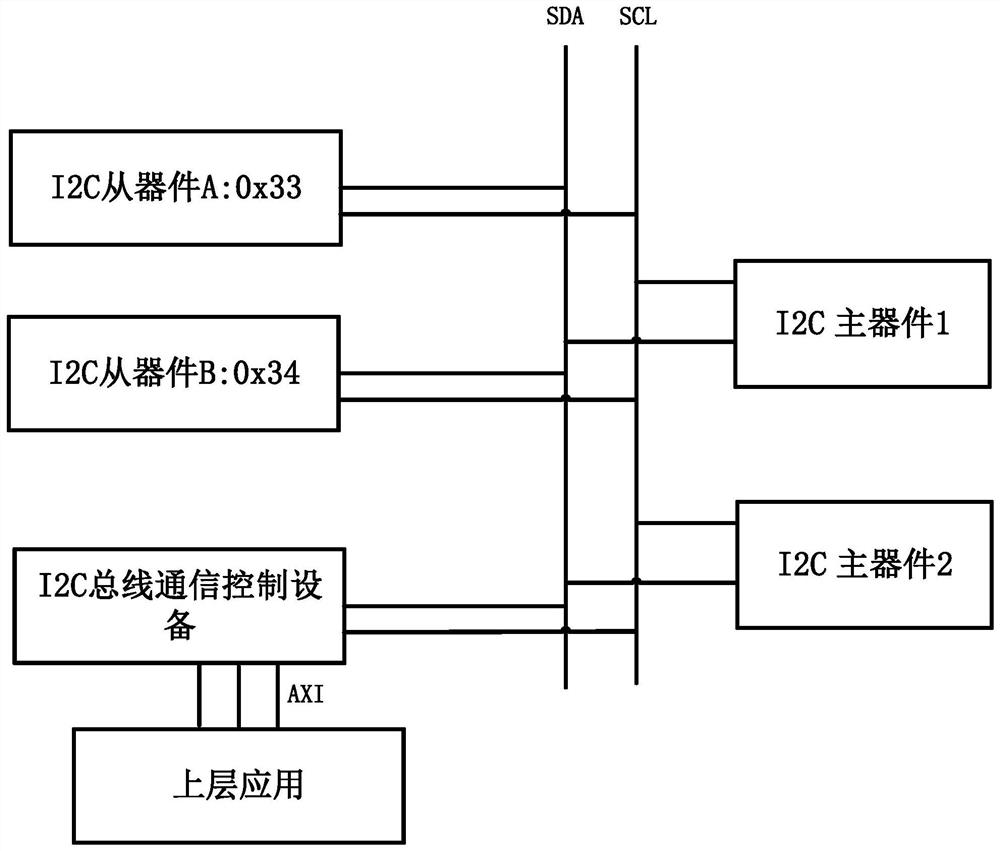

I2C bus communication control method, device and system and readable storage medium

The invention discloses an I2C bus communication control method, device and system and a readable storage medium. The method comprises the following steps: receiving configuration information of an I2C bus sent by an upper-layer application; analyzing the configuration information to obtain a plurality of polling parameters; writing the plurality of polling parameters into a polling table; and controlling the I2C bus, and executing a corresponding read-write operation according to the polling table. According to the method, the read-write operation executed on the I2C bus is carried out according to the polling table, so that the accurate communication condition of the I2C bus can be obtained directly based on the polling table without polling the access bus state; congestion risks can be reduced, and when a plurality of main devices exist, the access efficiency of a single main device can also be achieved. Management and maintenance are convenient; and if hardware or function change occurs, only the polling table needs to be updated, a program does not need to be modified, and function update or hardware replacement can be quickly adapted.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

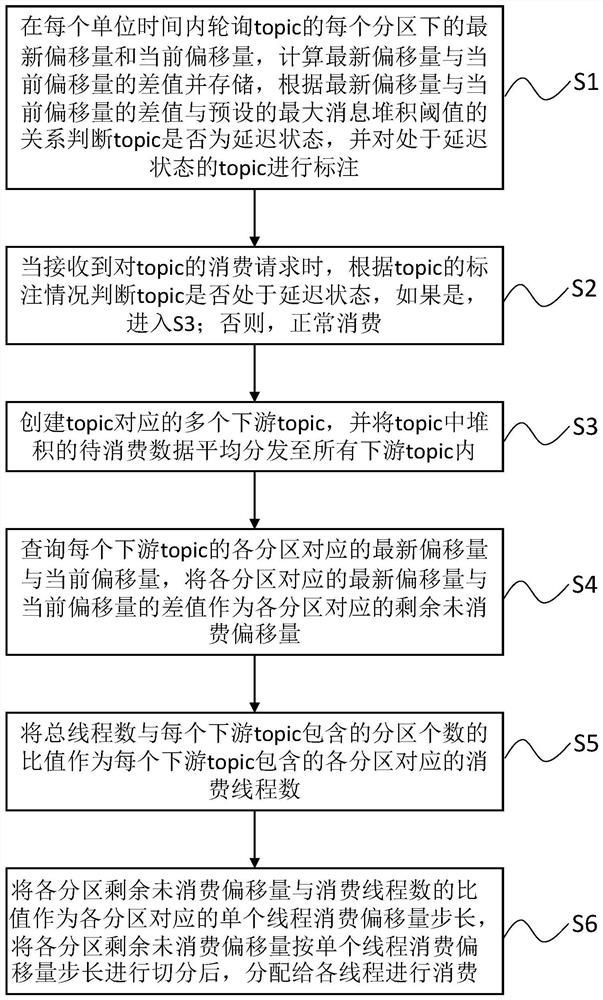

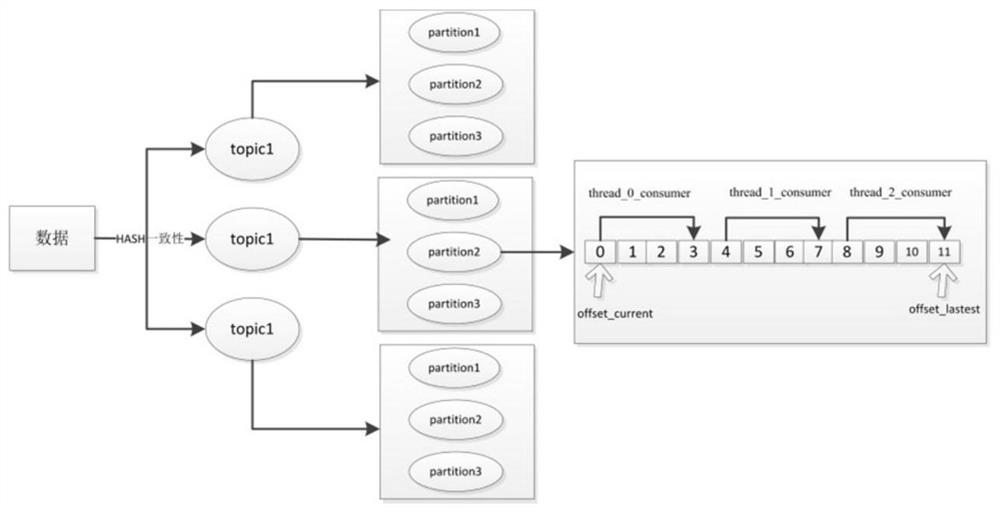

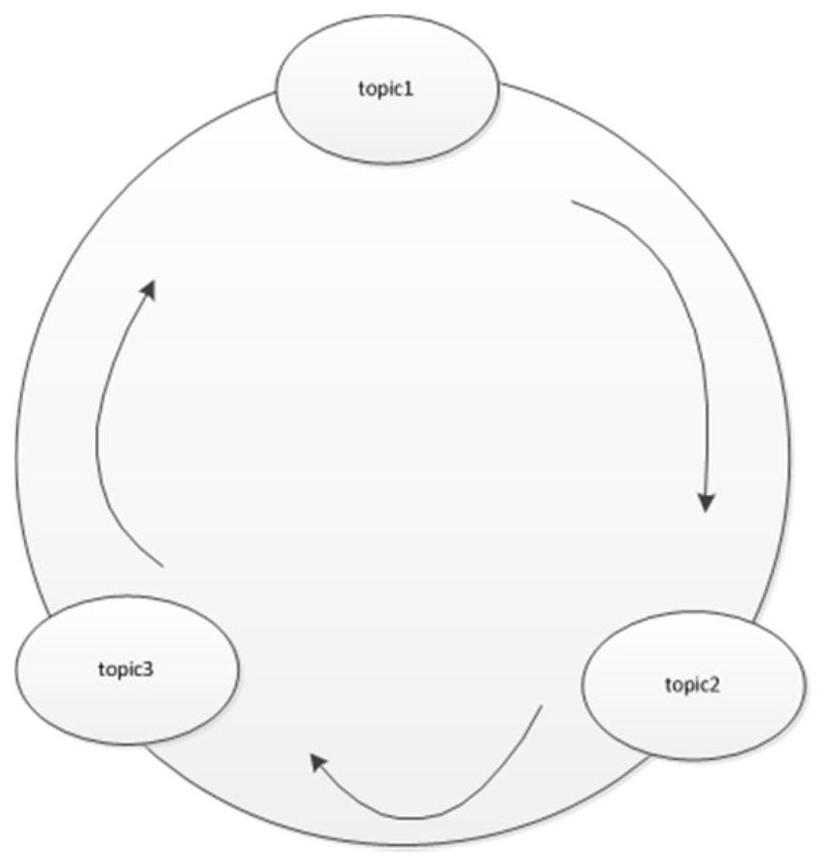

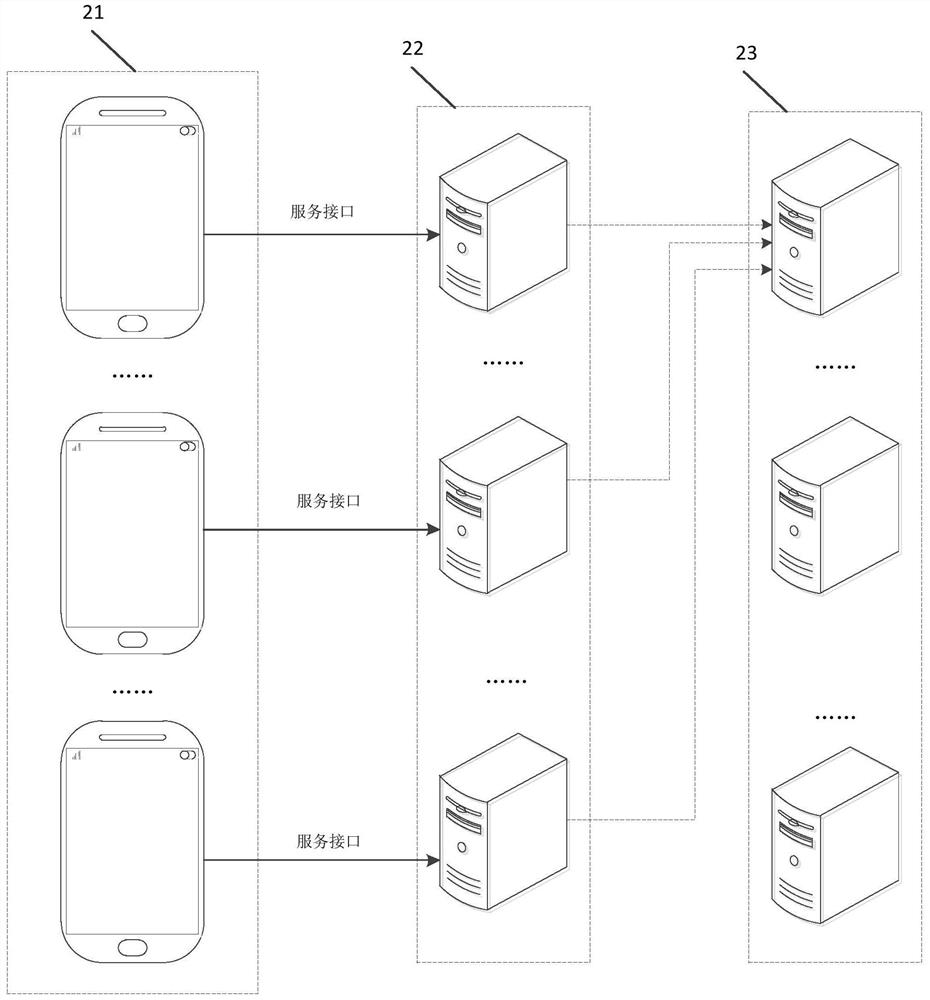
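A table-driven design like the one in the I2C abstract above might look like the sketch below. Everything here is assumed for illustration: the row fields (`addr`, `reg`, `op`, `value`), the `FakeI2CBus` stand-in and the function names are hypothetical, and no real I2C driver API is used.

```python
def build_polling_table(config):
    """Turn upper-layer configuration entries into polling-table rows."""
    return [
        {
            "addr": entry["addr"],
            "reg": entry["reg"],
            "op": entry.get("op", "read"),   # default operation is a read
            "value": entry.get("value"),
        }
        for entry in config
    ]

class FakeI2CBus:
    """In-memory stand-in for a bus driver, used only for this sketch."""
    def __init__(self):
        self.registers = {}

    def write(self, addr, reg, value):
        self.registers[(addr, reg)] = value

    def read(self, addr, reg):
        return self.registers.get((addr, reg), 0)

def run_polling_table(bus, table):
    """Execute each row in table order and collect the read results."""
    results = []
    for row in table:
        if row["op"] == "write":
            bus.write(row["addr"], row["reg"], row["value"])
        else:
            results.append((row["addr"], row["reg"], bus.read(row["addr"], row["reg"])))
    return results
```

Changing which devices or registers are accessed then only means changing the configuration that produces the table, not `run_polling_table` itself.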

Kafka-based stacked data consumption method, terminal equipment and storage medium

Active | CN114827049A | Improve data throughput | Avoid influence | Transmission | Energy efficient computing | Terminal equipment | Polling

The invention relates to a Kafka-based stacked data consumption method, terminal equipment and a storage medium. The method comprises the following steps: in each unit of time, polling to calculate the difference between the latest offset and the current offset of a topic in each partition, storing the difference, judging whether the topic is in a delay state, and marking it accordingly; when a consumption request for the topic is received, judging whether the topic is in a delay state and, if so, creating a plurality of downstream topics and distributing the accumulated data to be consumed evenly across them; and, based on the total thread count and the number of partitions, dividing the remaining unconsumed offsets of each partition evenly by the total thread count and allocating the segments to the threads for consumption. The method allows the number of topic partitions to be increased flexibly and raises data throughput during service peak periods.

Owner:XIAMEN FUYUN INFORMATION TECH CO LTD
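The lag calculation and the even split of unconsumed offsets described in the Kafka abstract above can be illustrated in plain Python. This sketch makes several assumptions: offsets are passed in as dictionaries rather than read from a Kafka client, the delay test is a simple total-lag threshold (the abstract does not say how the delay state is decided), and all function names are hypothetical.

```python
def partition_lag(latest, current):
    """Per-partition difference between latest and current offsets."""
    return {p: latest[p] - current.get(p, 0) for p in latest}

def is_delayed(lag, threshold):
    """Assumed rule: the topic is delayed when total lag exceeds a threshold."""
    return sum(lag.values()) > threshold

def split_offsets(start, end, threads):
    """Split the offset range [start, end) into near-equal contiguous parts."""
    base, extra = divmod(end - start, threads)
    ranges, low = [], start
    for i in range(threads):
        high = low + base + (1 if i < extra else 0)
        ranges.append((low, high))
        low = high
    return ranges
```

Each returned range could then be handed to one consumer thread; the creation of downstream topics is not modelled here.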

Configuration separation device based on galaxy framework and implementation method

Inactive | CN113031979A | Achieve separation | Implement refresh cache | Program loading/initiating | Software deployment | PathPing | Configfs

The invention relates to the technical field of computer information, provides a configuration separation device based on a galaxy framework and an implementation method, and aims to reduce the time consumed in the manual intervention stage. The main scheme comprises the following steps: defining a timed polling method G, which obtains an environment variable E containing the path and file name of a stored configuration file D; using method G to compare the historical modification time T1 of the configuration file with the modification time T2 of the stored configuration file D; synchronizing the information of configuration file D to the local cache file F according to the comparison result; and, after the local cache file F is refreshed, calling and starting the script method B. Script method B obtains and updates the information of the latest local cache file F and loads the configuration information in the local cache file F into system A, completing the cache refresh of the configuration file.

Owner:武汉众邦银行股份有限公司

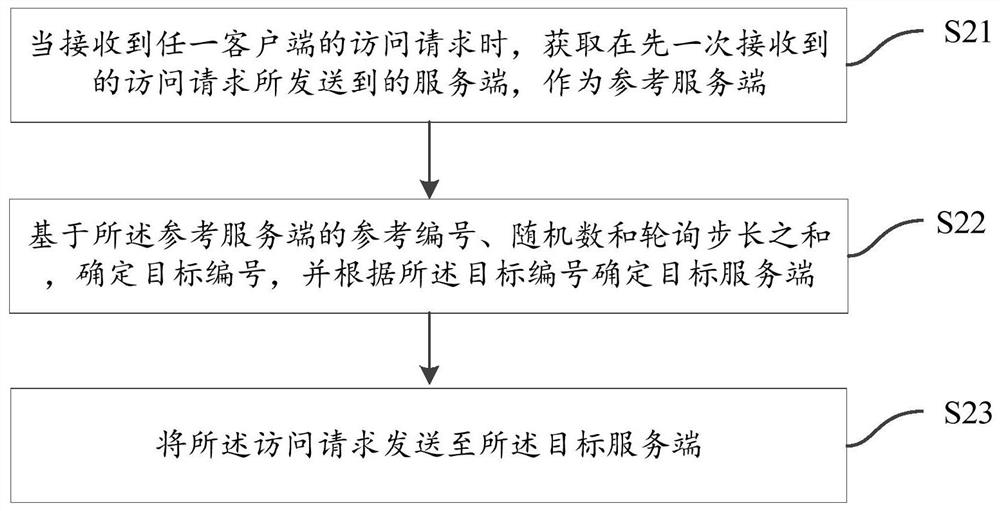

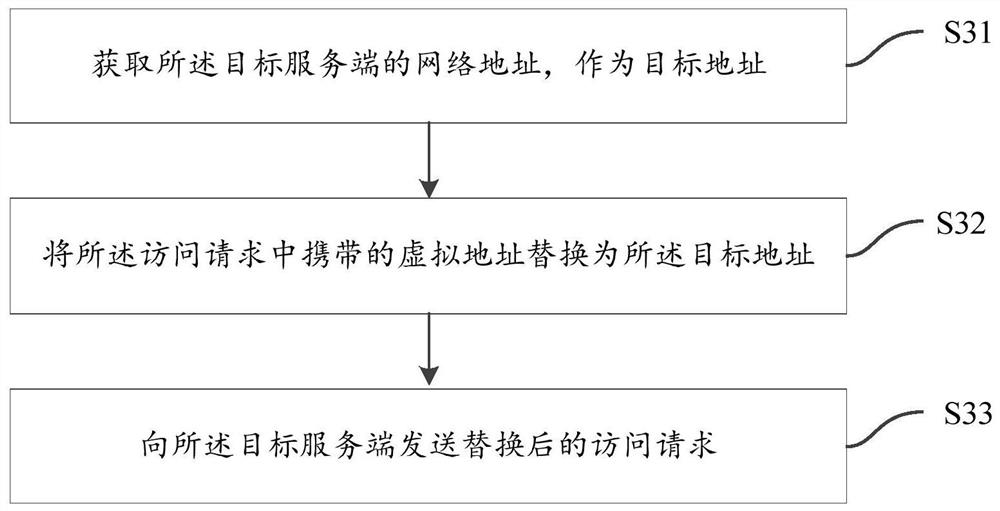
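One polling pass of the kind described in the configuration separation abstract above could be sketched as follows. The names are assumptions made for illustration: `poll_config_once` plays the role of method G, the `on_refresh` callback stands in for script method B, and `CONFIG_PATH` is a hypothetical name for environment variable E.

```python
import os
import shutil

def poll_config_once(env, last_mtime, cache_path, on_refresh, var="CONFIG_PATH"):
    """Compare modification times and refresh the local cache on change.

    env:        mapping that holds the config file path (e.g. os.environ)
    last_mtime: previously seen modification time (T1), or None on first run
    Returns (current_mtime, refreshed).
    """
    config_path = env[var]
    mtime = os.path.getmtime(config_path)     # T2 of the stored file D
    if last_mtime is not None and mtime == last_mtime:
        return last_mtime, False              # unchanged: nothing to do
    shutil.copyfile(config_path, cache_path)  # sync D into cache file F
    on_refresh(cache_path)                    # let the loader reread F
    return mtime, True
```

A timer would call this function periodically and carry the returned modification time into the next call.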

Request scheduling method and device and storage medium

Active | CN113268329A | Avoid heavy load situations | Program initiation/switching | Energy efficient computing | Stochastic algorithms | Engineering

The invention relates to a request scheduling method and device and a storage medium, which are applied to scheduling equipment. In this scheme, the reference server to which the previous access request was sent is obtained; a target number is determined from the sum of the reference server's number (the reference number), a random number and the polling step length, in a way that ensures the target number differs from the reference number; and the target server is determined according to the target number. The scheme combines a polling algorithm with a random algorithm: the polling step length guarantees that the target number changes relative to the reference number, and the random number avoids the problems of determining the target number with a fixed step length alone. This solves the problem that, under a method relying only on a polling algorithm, access requests that reach the same server and are forwarded again by its scheduling device are all still sent to one other server, and it avoids the excessive load that arises when a hotspot server appears.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD
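A minimal sketch of combining a polling step with a random number, as the request scheduling abstract above describes, is shown below. The abstract does not state how the target is kept different from the reference; this sketch assumes a step of 1, a random value in `[0, n - 2]`, and a modulo over the server count. The function name is hypothetical.

```python
import random

def next_server(reference, n_servers, rng=random):
    """Pick a target server number that always differs from `reference`."""
    if n_servers < 2:
        raise ValueError("need at least two servers")
    step = 1                                # polling step length (assumed)
    jitter = rng.randrange(n_servers - 1)   # random number in [0, n - 2]
    # step + jitter lies in [1, n - 1], so the sum can never wrap back
    # onto the reference server.
    return (reference + step + jitter) % n_servers
```

Under these assumptions, requests forwarded from one server are spread over all other servers instead of all landing on its immediate successor.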

Automated test platform utilizing status register polling with temporal ID

Active | US9213616B2 | Detecting faulty hardware by remote test | Functional testing | Test platform | Automatic testing

A segmented subsystem, for use within an automated test platform, includes a first subsystem segment configured to execute one or more instructions within the first subsystem segment. A second subsystem segment is configured to execute one or more instructions within the second subsystem segment. The first subsystem segment includes: a first functionality, a second functionality, and a status polling engine. The status polling engine is configured to: determine a first status for the first functionality and a second status for the second functionality, and generate a consolidated status indicator for the first subsystem segment based, at least in part, upon the first status for the first functionality and the second status for the second functionality.

Owner:XCERRA

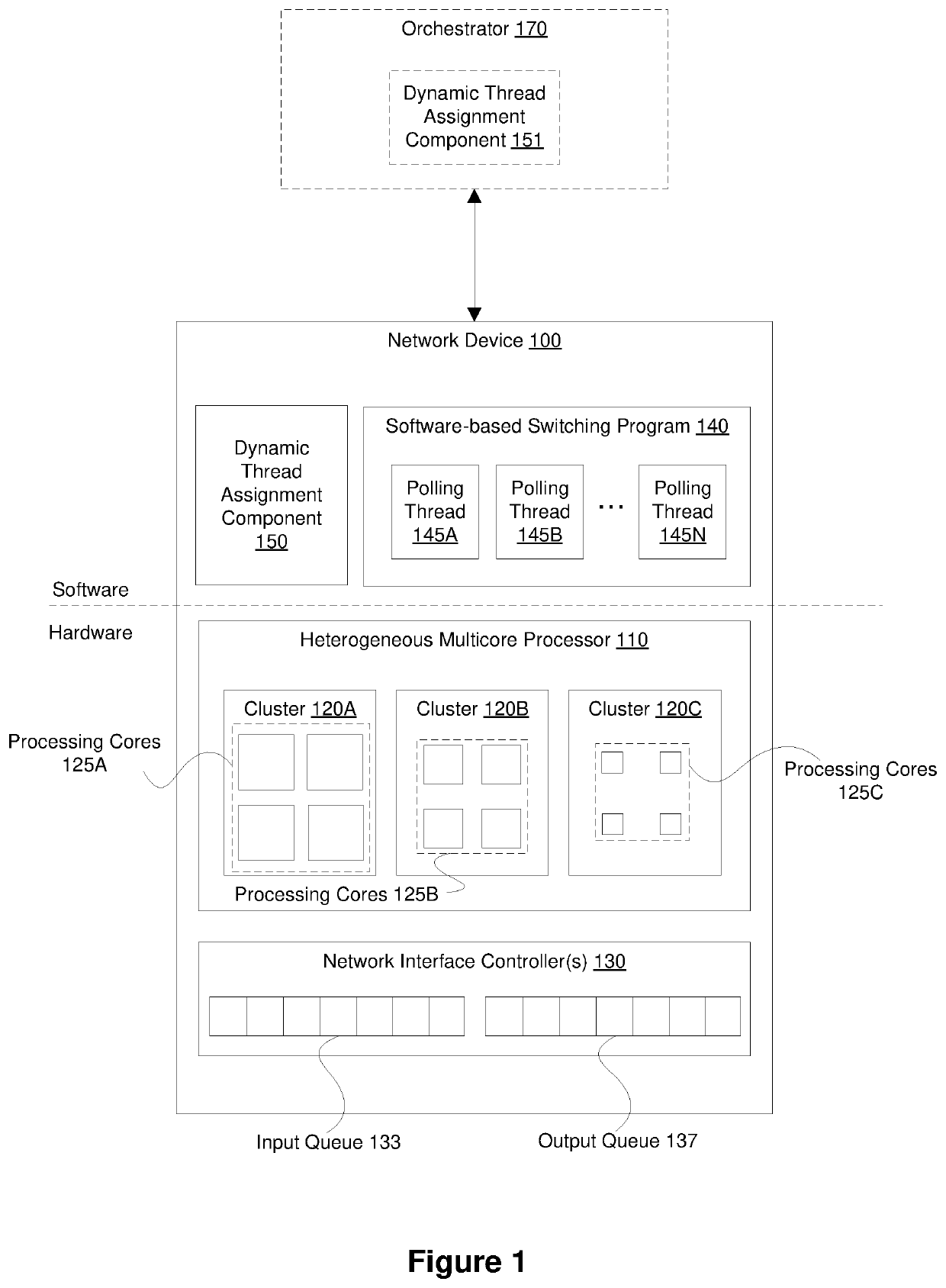

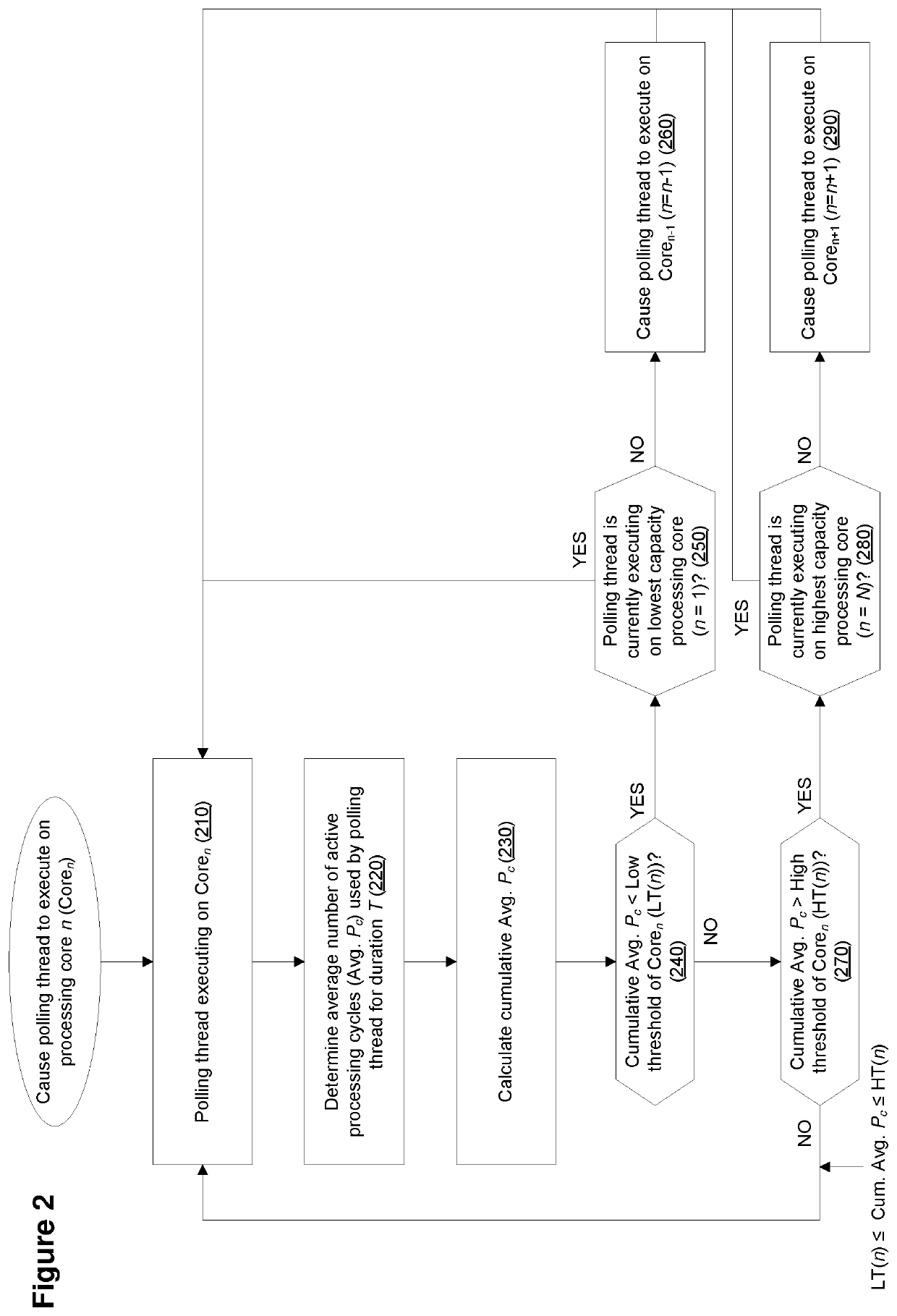

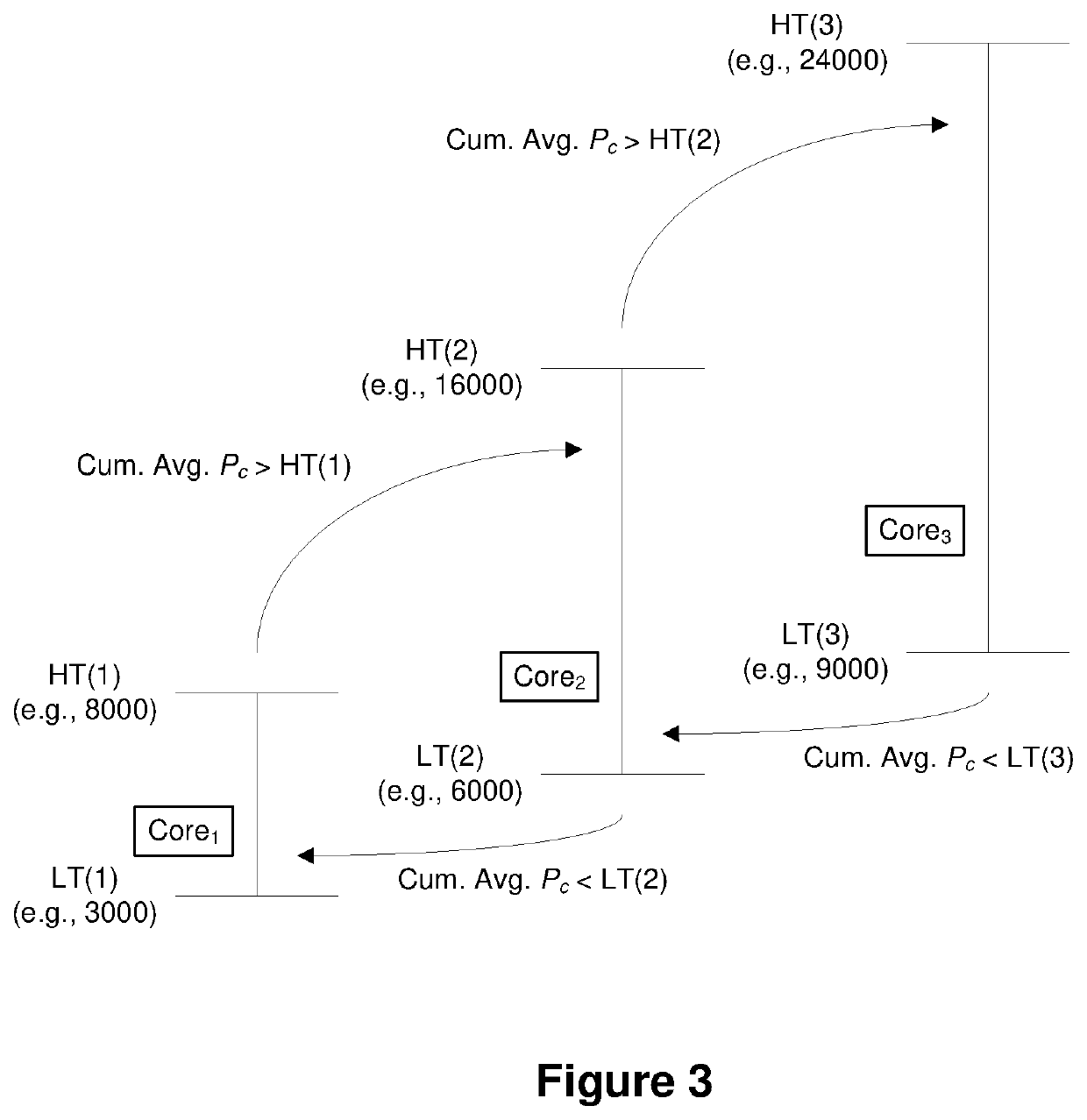
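The consolidated status indicator in the automated test platform abstract above can be pictured with a very small sketch. The abstract does not say how the two statuses are combined; the status values and the "report the more severe status" rule below are assumptions, as are all names.

```python
from enum import IntEnum

class Status(IntEnum):
    """Hypothetical status values, ordered by severity."""
    OK = 0
    BUSY = 1
    ERROR = 2

def consolidated_status(first, second):
    """Assumed rule: the segment reports the more severe of its two statuses."""
    return max(first, second)
```

A status polling engine would call something like `consolidated_status` after sampling each functionality, so a caller only has to read one indicator per subsystem segment.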

Efficient mechanism for executing software-based switching programs on heterogenous multicore processors

Active | US20220012108A1 | Program initiation/switching | Interprogram communication | Processing core | Parallel computing

A method is implemented by a network device for orchestrating execution of a polling thread of a software-based switching program on a heterogeneous multicore processor. The method includes causing the polling thread to be executed on a first processing core in a first cluster of a plurality of clusters of processing cores, determining a value indicative of a number of active processing cycles used by the polling thread, determining whether the value is higher than a high threshold associated with the first processing core or lower than a low threshold associated with the first processing core, and if so causing the polling thread to be moved to a second processing core in a second cluster of the plurality of clusters, where the second processing core has a different processing capacity than the first processing core.

Owner:TELEFON AB LM ERICSSON (PUBL)

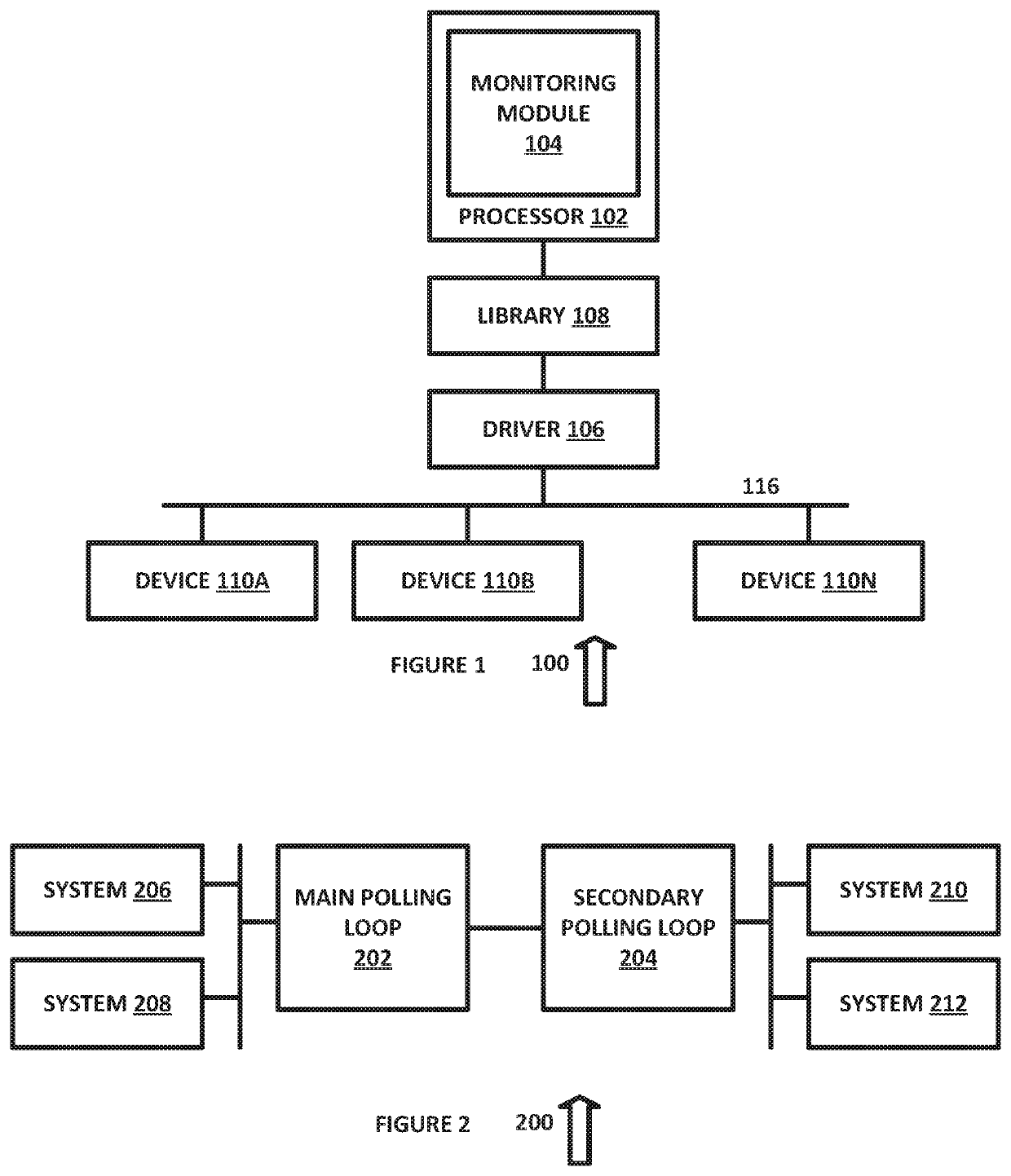

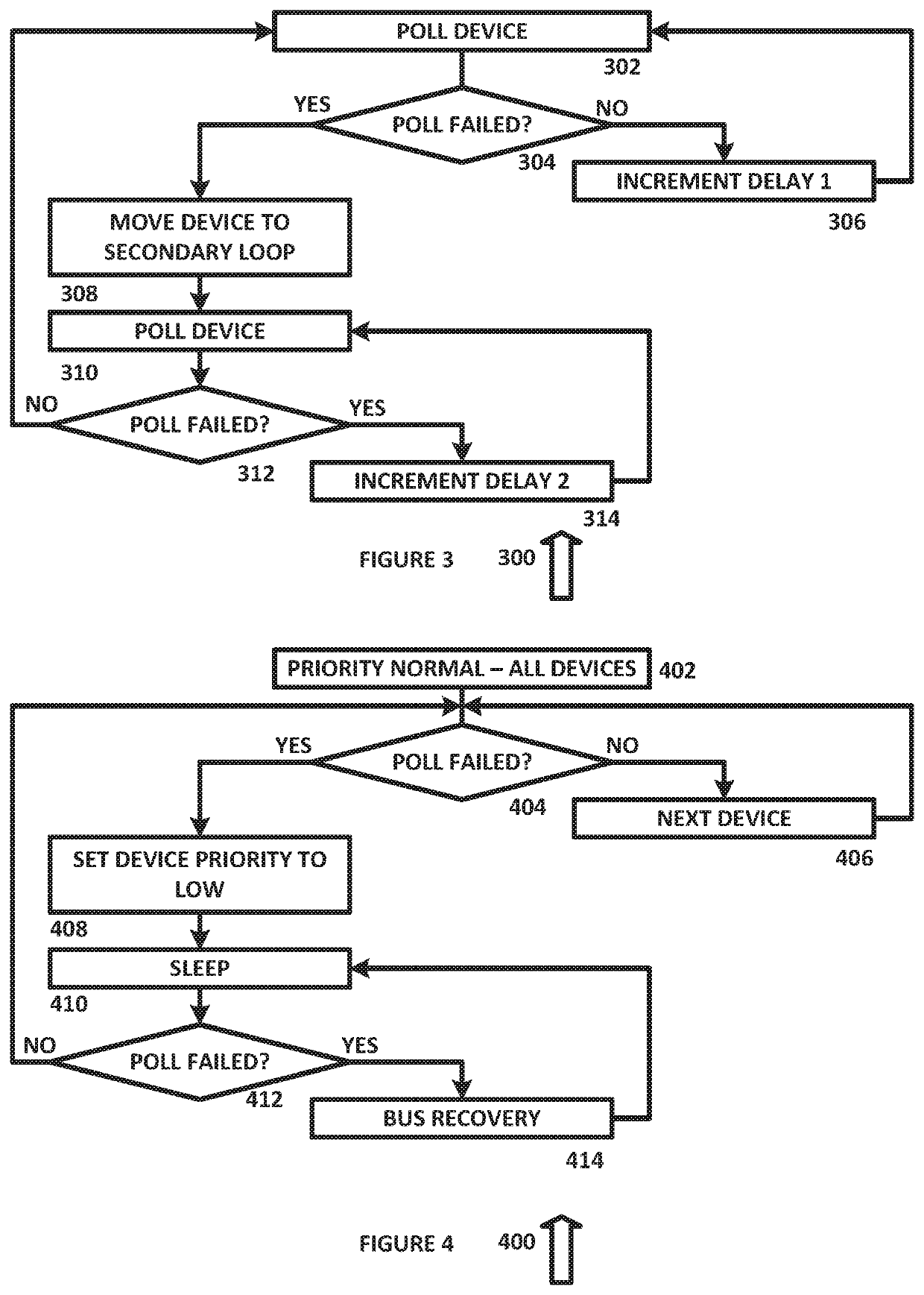

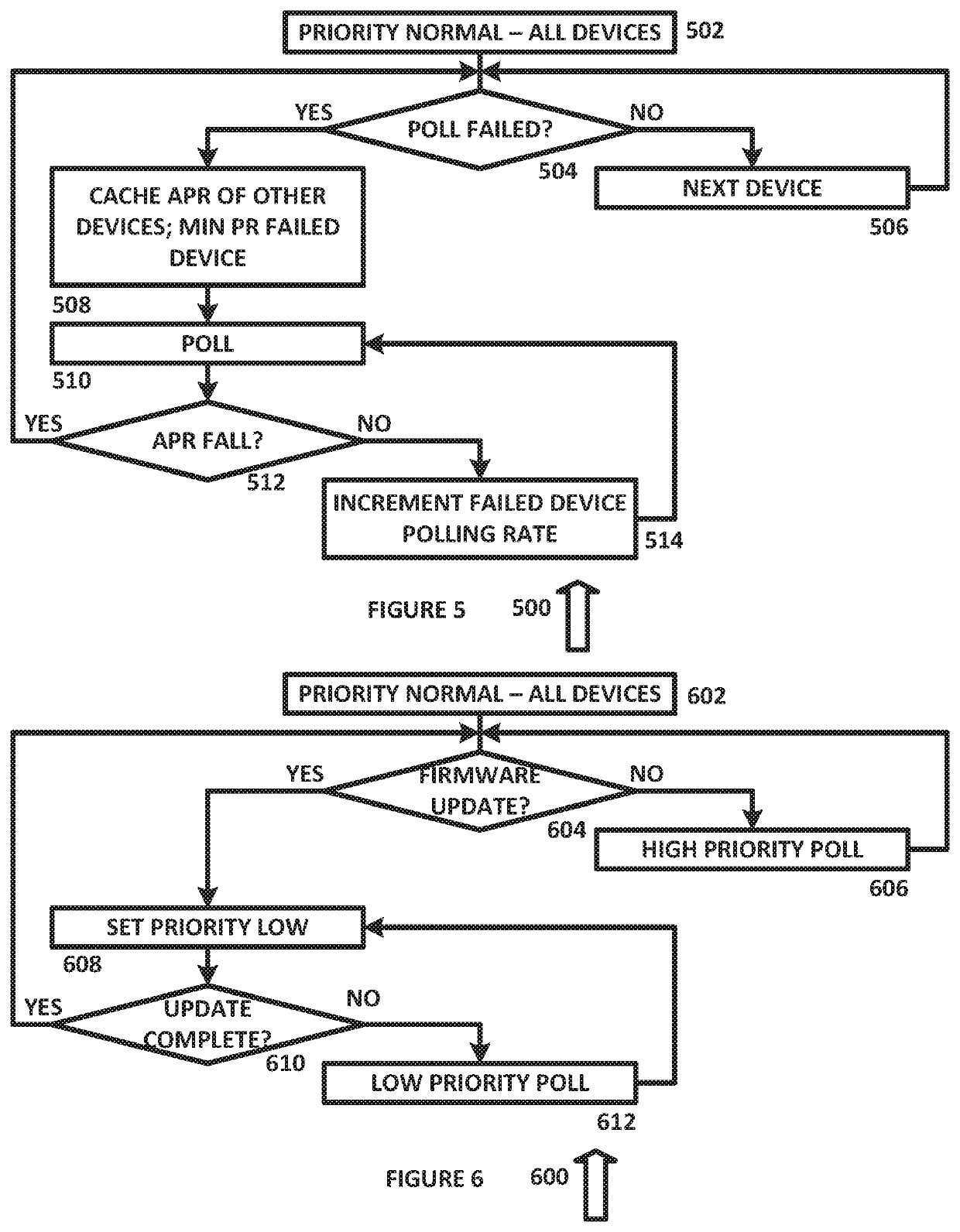
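The threshold check in the heterogeneous multicore abstract above could be sketched as below. The abstract only says the thread moves to a core with a different processing capacity; the direction of the move (up when above the high threshold, down when below the low one), the use of a busy ratio as the measured value, and the list-of-dicts layout are assumptions for illustration.

```python
def choose_core(current, busy_ratio, cores):
    """Return the index of the core the polling thread should run on.

    cores: list ordered from lowest to highest capacity; each entry holds
           that core's "low" and "high" thresholds.
    busy_ratio: share of cycles the polling thread spent doing real work.
    """
    thresholds = cores[current]
    if busy_ratio > thresholds["high"] and current + 1 < len(cores):
        return current + 1      # overloaded: move to a more capable core
    if busy_ratio < thresholds["low"] and current > 0:
        return current - 1      # mostly idle: move to a smaller core
    return current              # within thresholds: stay put
```

An orchestrator would call this periodically and migrate the thread only when the returned index differs from the current one.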

System and method to provide optimal polling of devices for real time data

A system for polling components is disclosed that includes a plurality of processors and a control system configured to interface with each of the plurality of processors, and to 1) poll each of the plurality of processors using a first polling loop and 2) transfer one of the plurality of processors to a second polling loop if the one of the plurality of processors is non-responsive to the poll.

Owner:DELL PROD LP

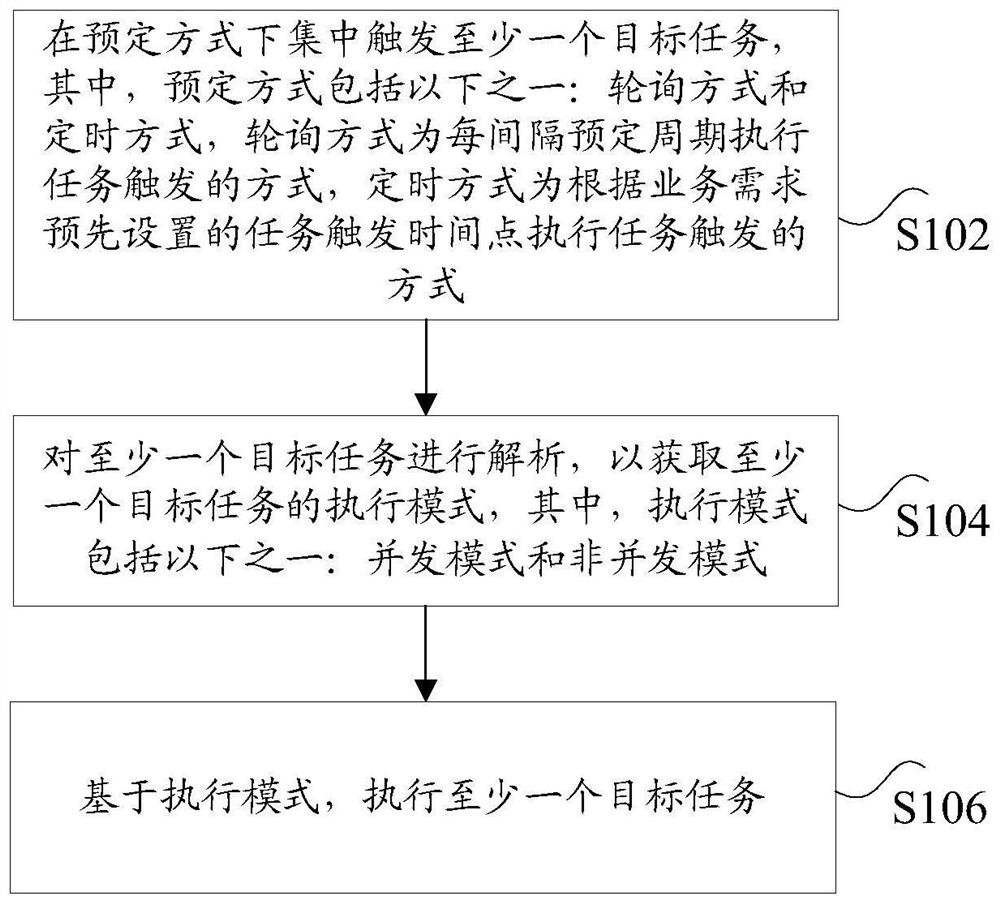

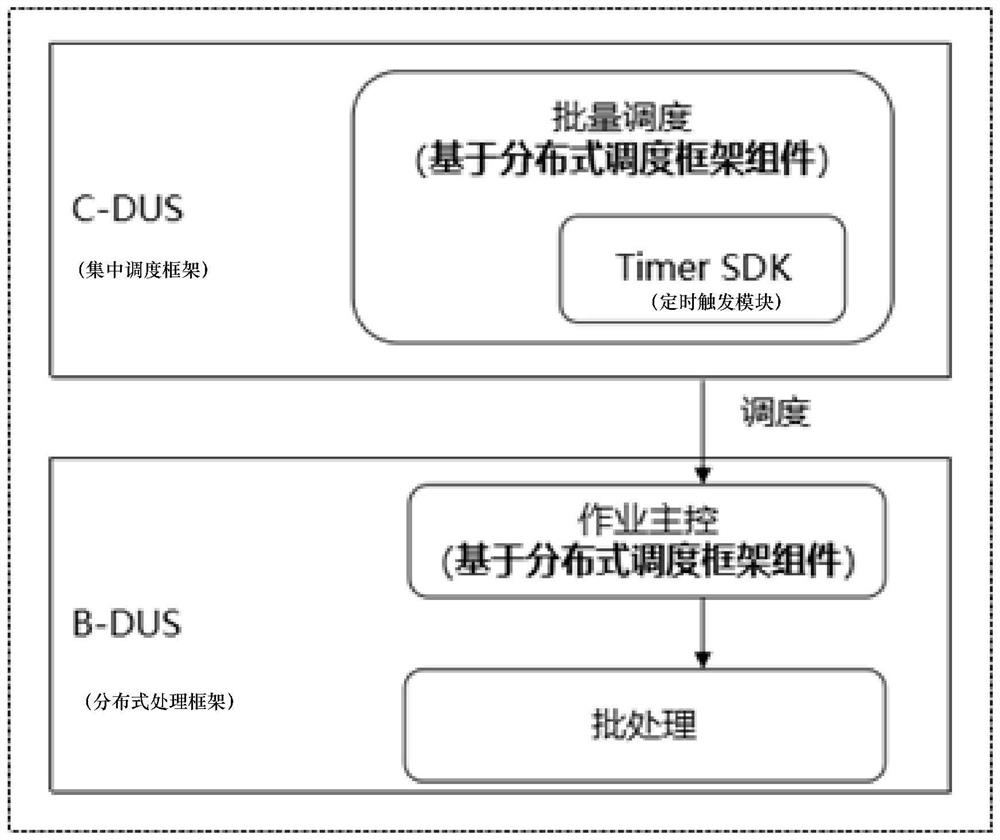

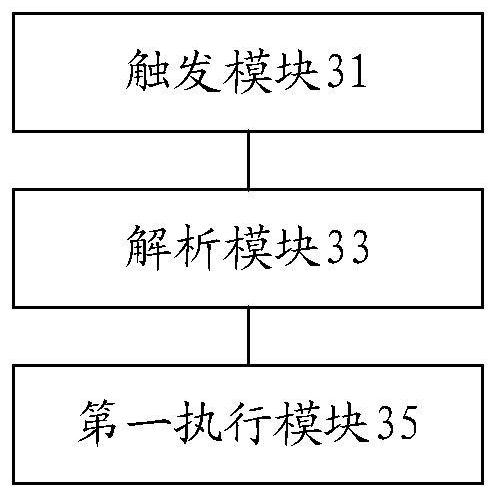
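The two-loop idea in the device polling abstract above can be sketched as follows. The `probe` callback and the function name are hypothetical, and how the second loop is serviced (for instance, at a lower rate) is not specified in the abstract and is not modelled here.

```python
def poll_primary(primary, secondary, probe):
    """Poll every device in the first loop once.

    Devices that do not respond are moved to the second loop so they no
    longer slow down polling of the responsive ones.
    Returns the list of devices that responded.
    """
    responsive = []
    for device in list(primary):      # copy: the list is modified below
        if probe(device):
            responsive.append(device)
        else:
            primary.remove(device)
            secondary.append(device)
    return responsive
```

For example, with `primary = ["a", "b", "c"]` and a probe that fails only for `"b"`, one pass leaves `["a", "c"]` in the first loop and `["b"]` in the second.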

Batch task processing method and device and computer readable storage medium

Pending | CN114327906A | Improve the efficiency of batch processing tasks | Solve technical problems that reduce the efficiency of task processing | Resource allocation | Computer hardware | Engineering

The invention discloses a batch task processing method and device and a computer readable storage medium. The method comprises the following steps: triggering at least one target task centrally in a preset mode, where the preset mode is either a polling mode, in which task triggering is executed once every preset period, or a timing mode, in which task triggering is executed at time points preset according to service requirements; analyzing the at least one target task to obtain its execution mode, which is either concurrent or non-concurrent; and executing the at least one target task based on that execution mode. This solves the technical problem in the prior art that task processing efficiency is reduced because centralized timed scheduling across multiple nodes cannot be supported at the same time.

Owner:POSBANK
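The two trigger modes and two execution modes in the batch task abstract above can be illustrated with the sketch below. Times are plain integers, tasks are zero-argument callables, and the function names are hypothetical; multi-node coordination is not modelled.

```python
from concurrent.futures import ThreadPoolExecutor

def trigger_times(mode, start, end, period=None, time_points=()):
    """List the trigger times that fall in [start, end)."""
    if mode == "polling":
        # Polling mode: trigger once every preset period.
        return list(range(start, end, period))
    if mode == "timing":
        # Timing mode: trigger only at preset time points.
        return sorted(t for t in time_points if start <= t < end)
    raise ValueError(f"unknown mode: {mode}")

def execute(tasks, concurrent):
    """Run tasks concurrently or one after another; results keep task order."""
    if concurrent:
        with ThreadPoolExecutor() as pool:
            return list(pool.map(lambda task: task(), tasks))
    return [task() for task in tasks]
```

For instance, `trigger_times("polling", 0, 10, period=3)` yields a trigger every three time units, while the timing mode ignores any preset points outside the window.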


© 2025 PatSnap. All rights reserved.