1373 results about "Processing core" patented technology

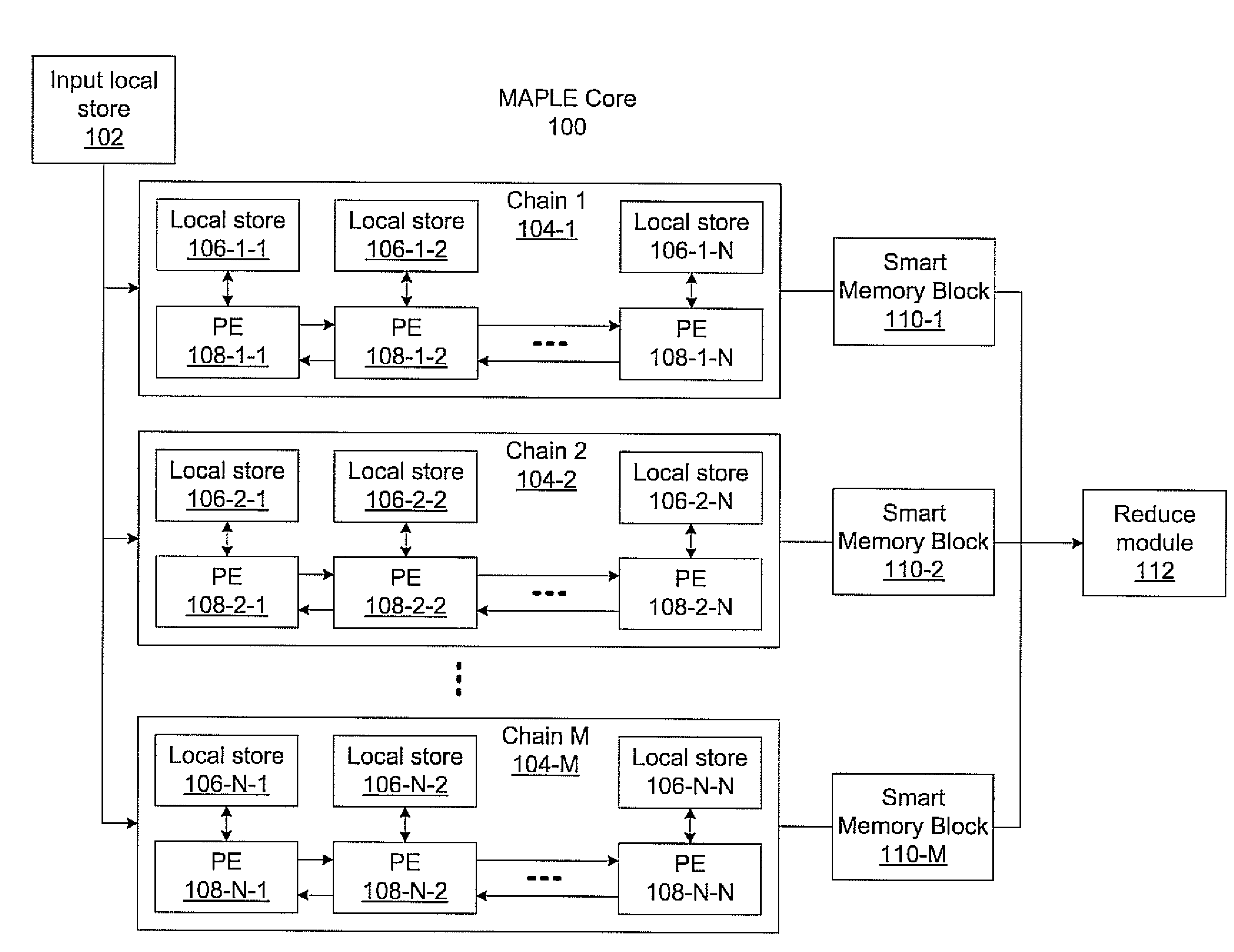

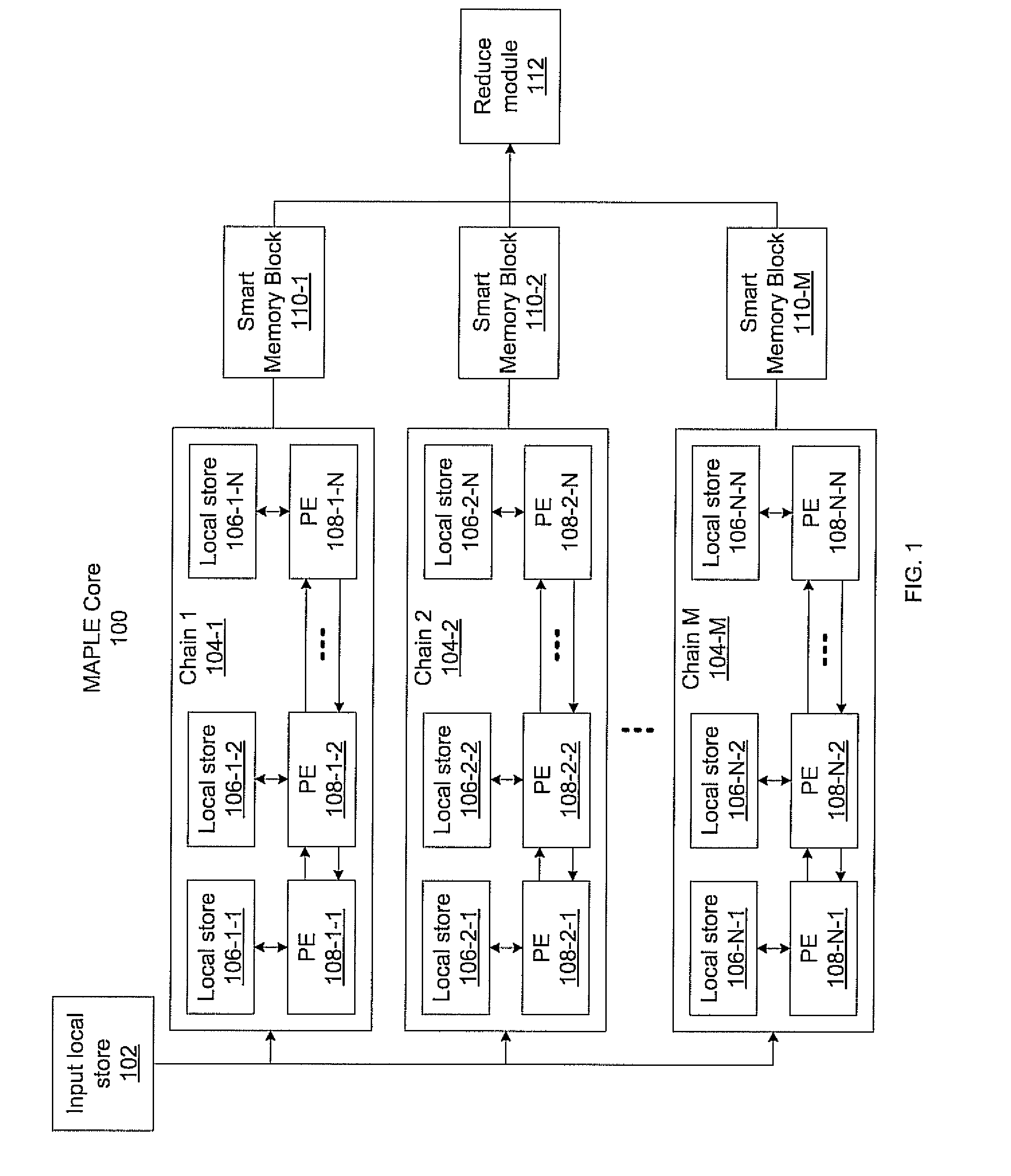

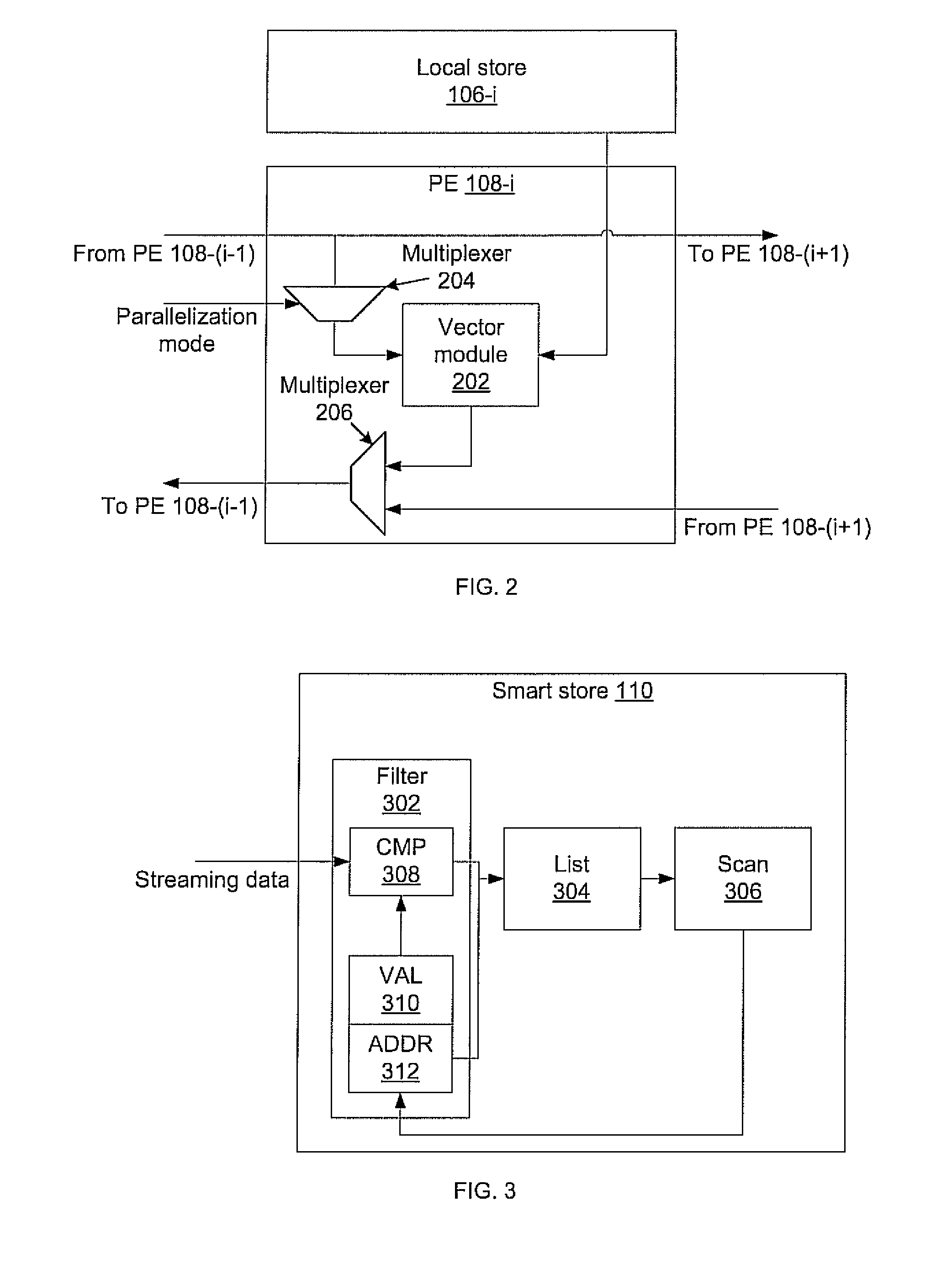

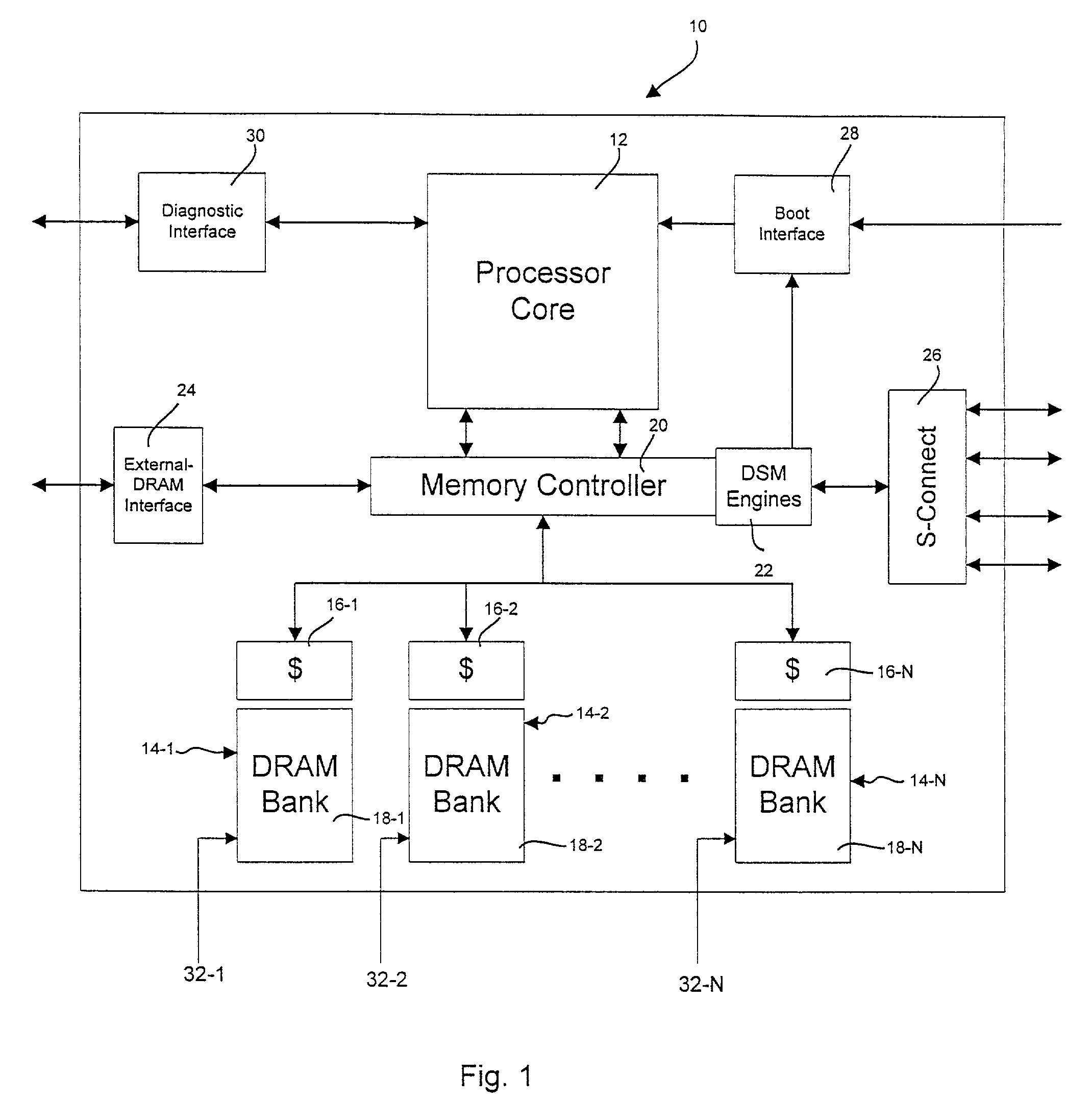

Massively parallel, smart memory based accelerator

Systems and methods for massively parallel processing on an accelerator that includes a plurality of processing cores. Each processing core includes multiple processing chains configured to perform parallel computations, each of which includes a plurality of interconnected processing elements. The cores further include multiple smart memory blocks configured to store and process data, each memory block accepting the output of one of the plurality of processing chains. The cores communicate with at least one off-chip memory bank.

Owner:NEC CORP

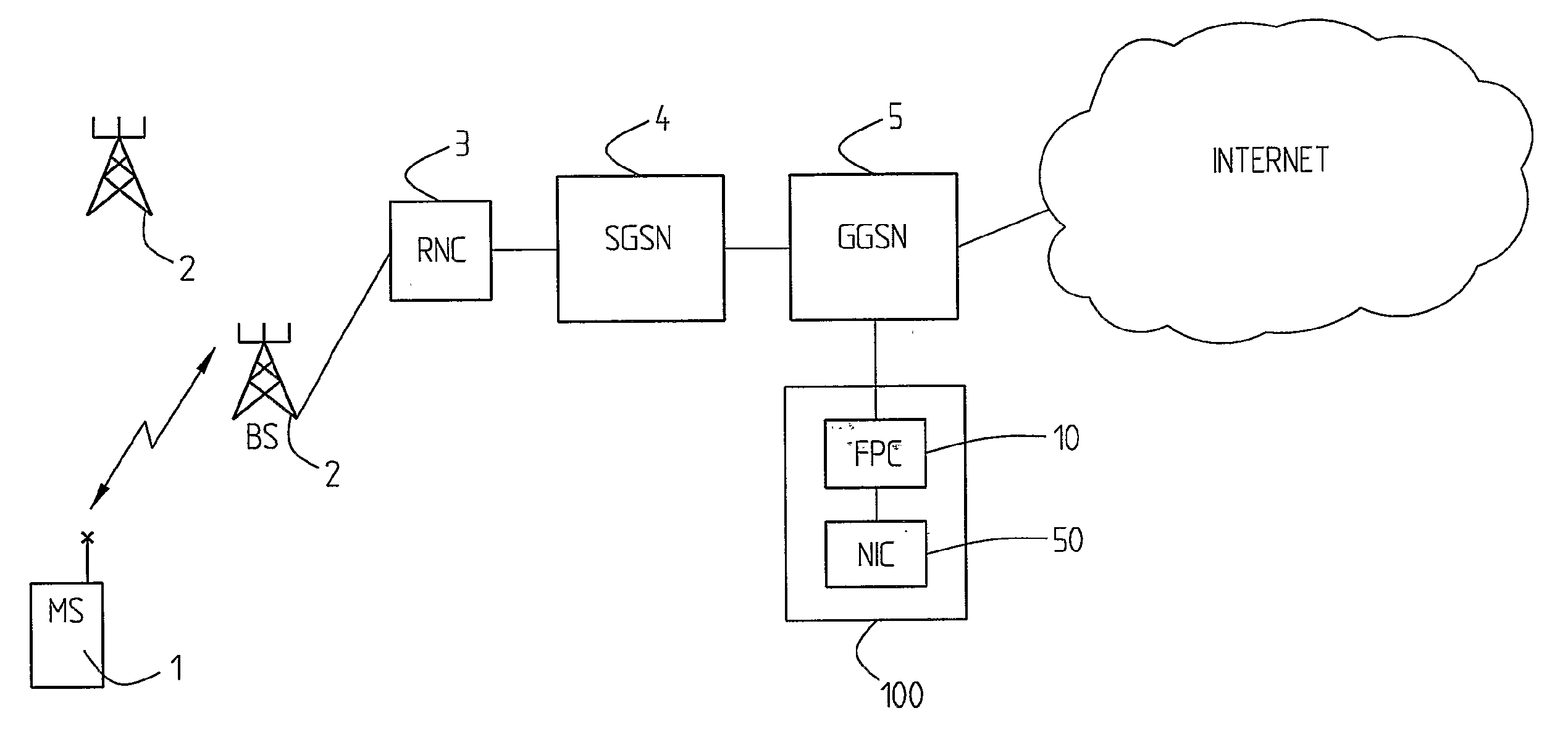

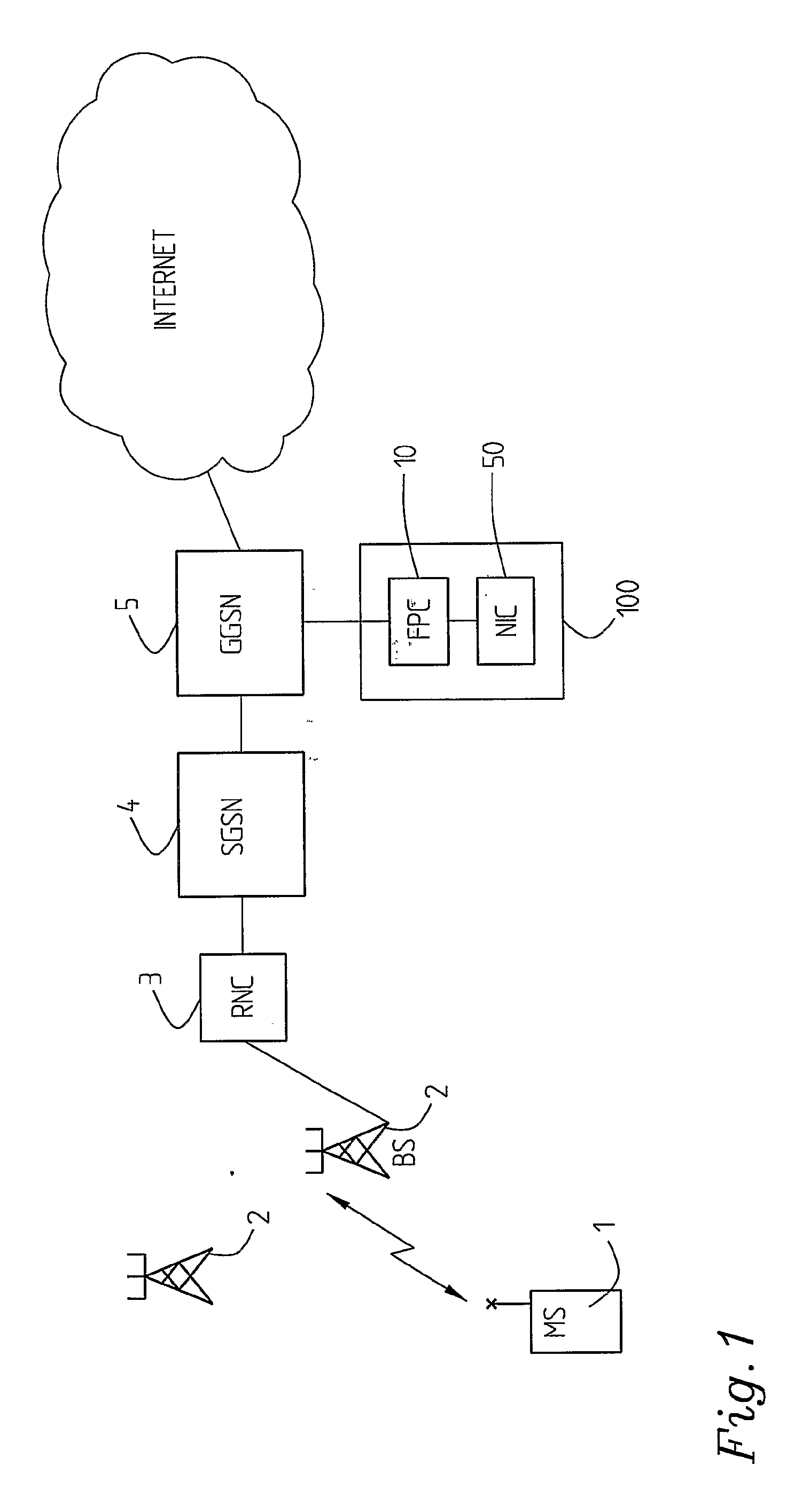

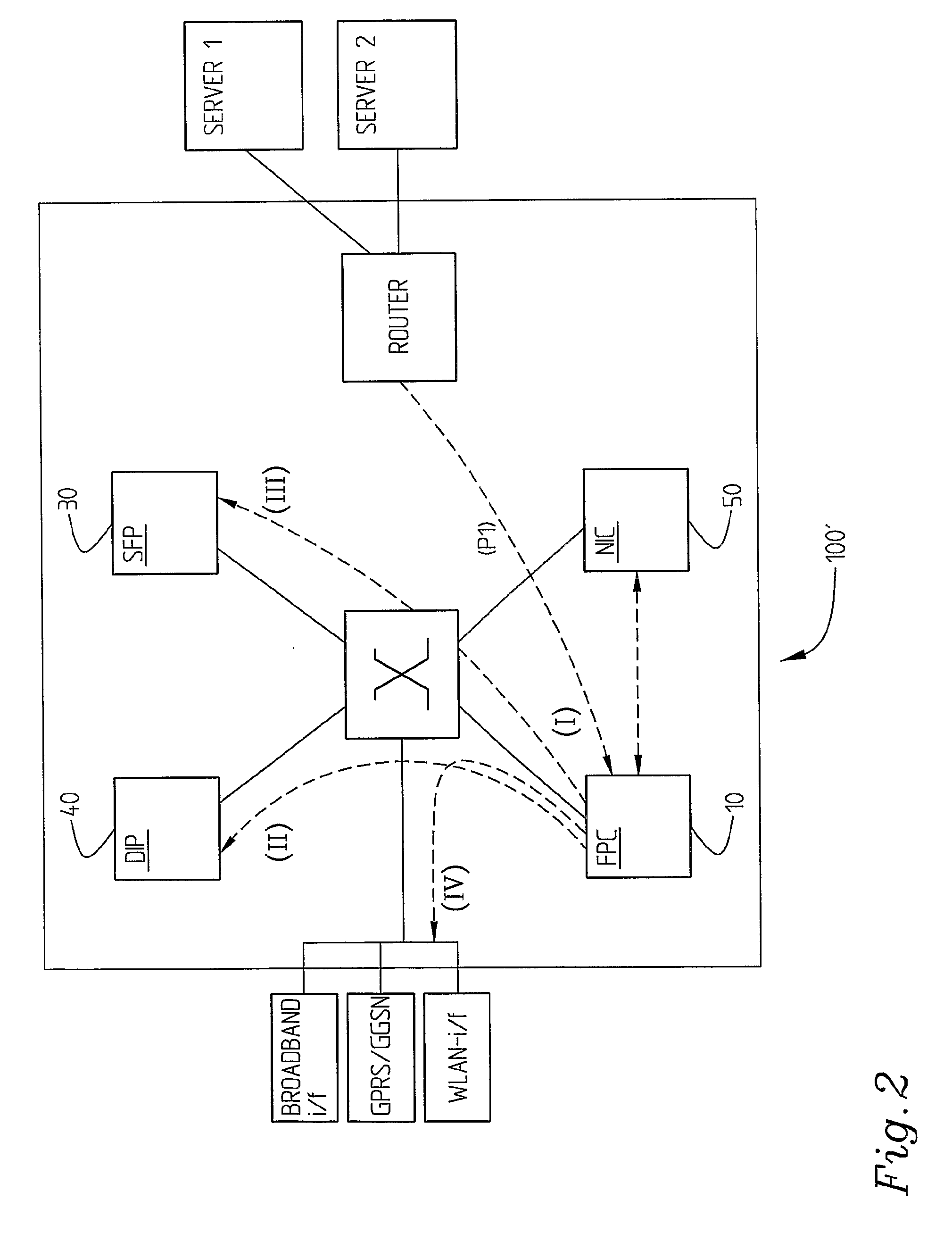

Arrangement and a Method Relating to Flow of Packets in Communication Systems

Active | US20080002579A1 | Precise maintenance | Error prevention | Transmission systems | Proper function | Processing core

An arrangement, system, and method for switching data packet flows in a communication system. A flow processing core classifies packet flows and defines processing flow sequences applicable to the packet flows. A distributing arrangement directs the packet flows to appropriate functional units or processors according to each packet flow's applicable processing flow sequence. The current position of each packet flow in its respective processing flow sequence is indicated. Packet flow sequence information may be determined so that reclassification of already classified packets is avoided.

Owner:TELEFON AB LM ERICSSON (PUBL)

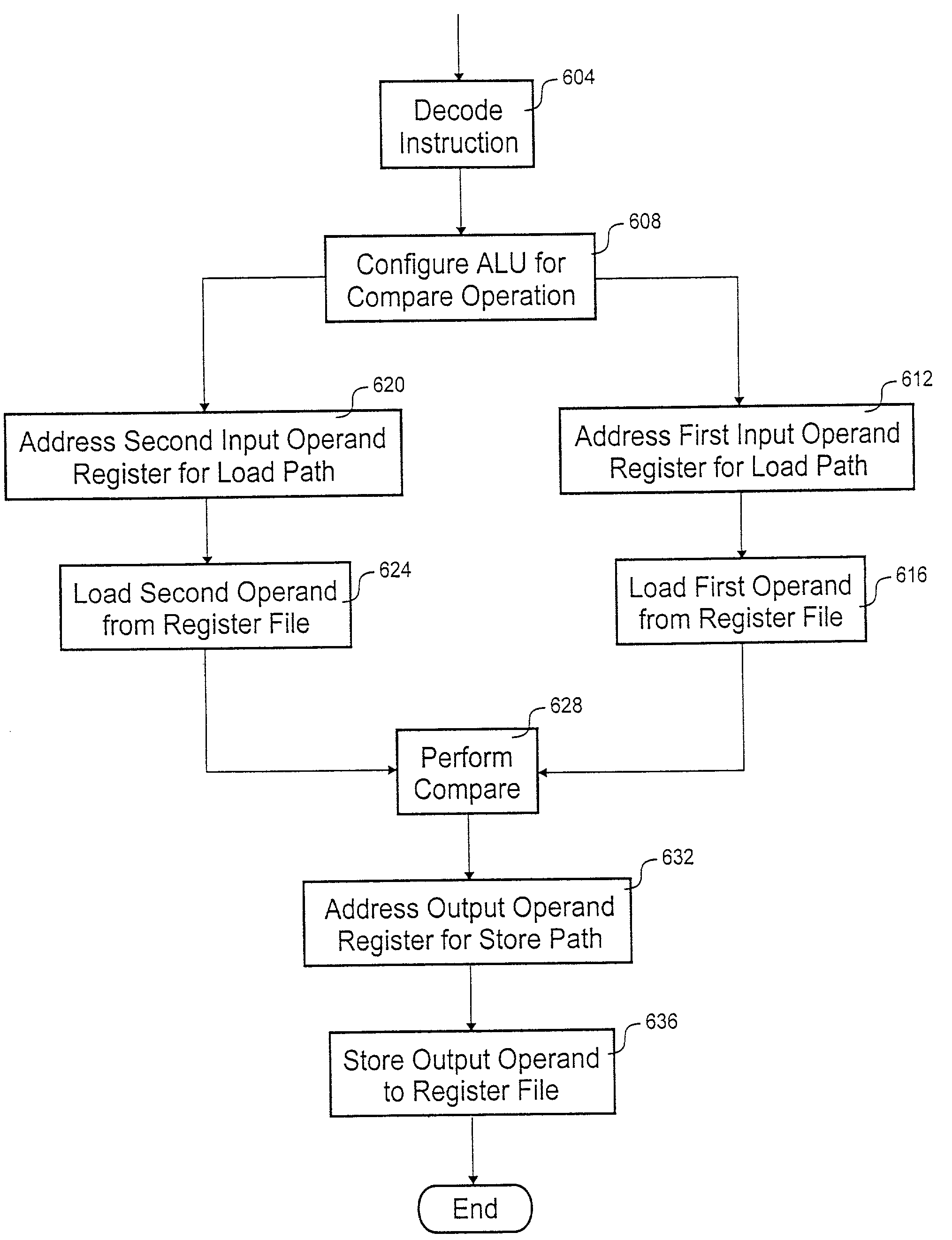

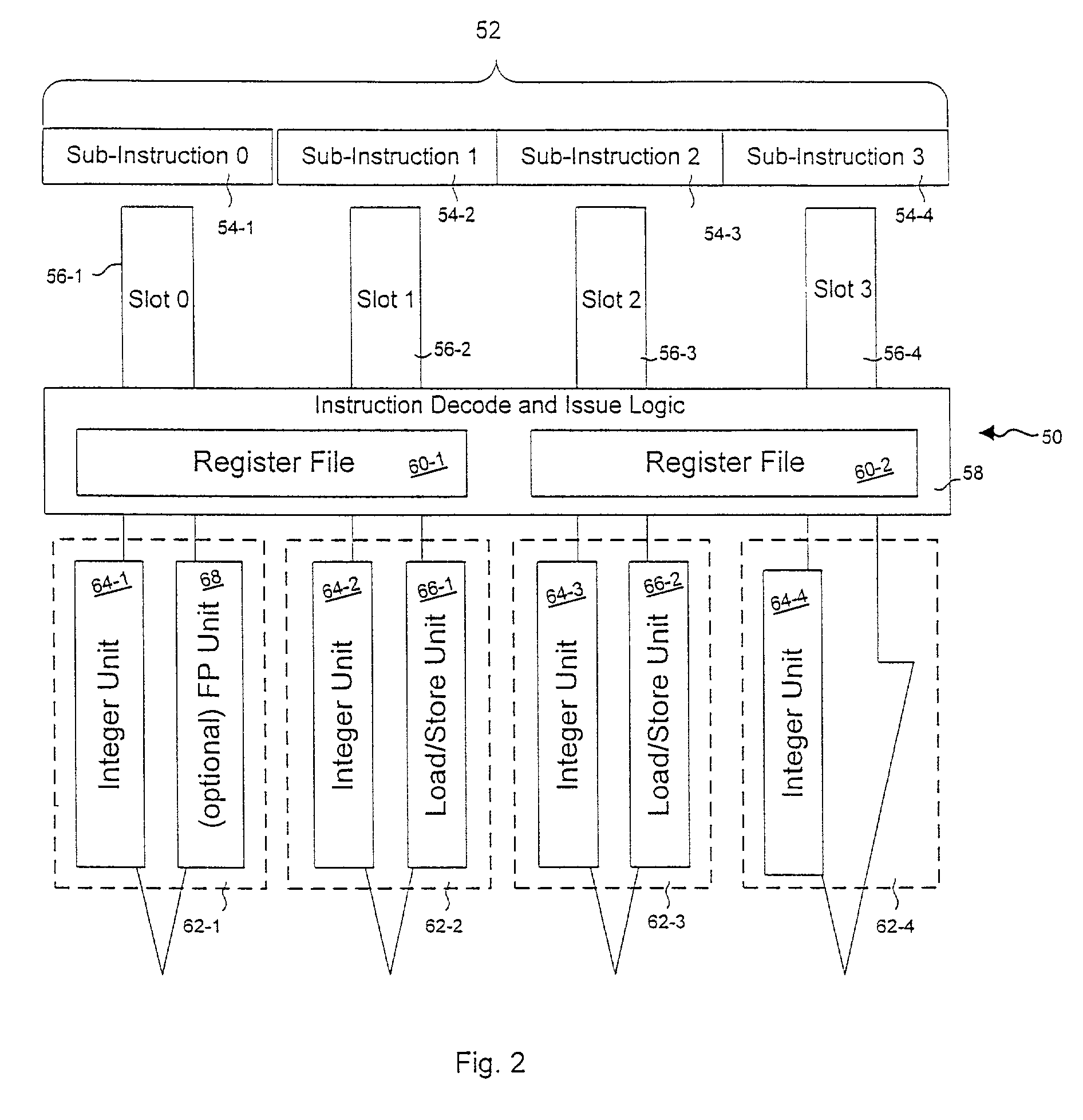

Processing architecture having a compare capability

According to the invention, a processing core that executes a compare instruction is disclosed. The processing core includes a register file, comparison logic, decode logic, and a store path. Included in the register file are a number of general-purpose registers. The general-purpose registers include a first input operand register, a second input operand register and an output operand register. Comparison logic is coupled to the register file. The comparison logic tests for at least two of the following relationships: less than, equal to, greater than and no valid relationship. The decode logic selects the output operand register from the plurality of general-purpose registers. The store path extends between the comparison logic and the selected output operand register.

Owner:ORACLE INT CORP
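The compare behavior described in this abstract can be illustrated with a small software model. This is only a sketch under stated assumptions: the register file is a plain Python list, the result codes (`LESS`, `EQUAL`, `GREATER`, `UNORDERED`) are hypothetical, and "no valid relationship" is assumed to arise when either operand is NaN. None of these details come from the patent.

```python
import math

# Hypothetical result codes; the abstract does not specify an encoding.
LESS, EQUAL, GREATER, UNORDERED = -1, 0, 1, 2


def compare(regs, src1, src2, dst):
    """Compare regs[src1] with regs[src2] and store the relationship in regs[dst]."""
    a, b = regs[src1], regs[src2]
    if math.isnan(a) or math.isnan(b):
        result = UNORDERED  # assumed meaning of "no valid relationship"
    elif a < b:
        result = LESS
    elif a > b:
        result = GREATER
    else:
        result = EQUAL
    # Models the store path from the comparison logic to the selected output register.
    regs[dst] = result
    return result
```

Because the output register is chosen by index, any general-purpose register can receive the result, which is the point the abstract makes about the decode logic.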

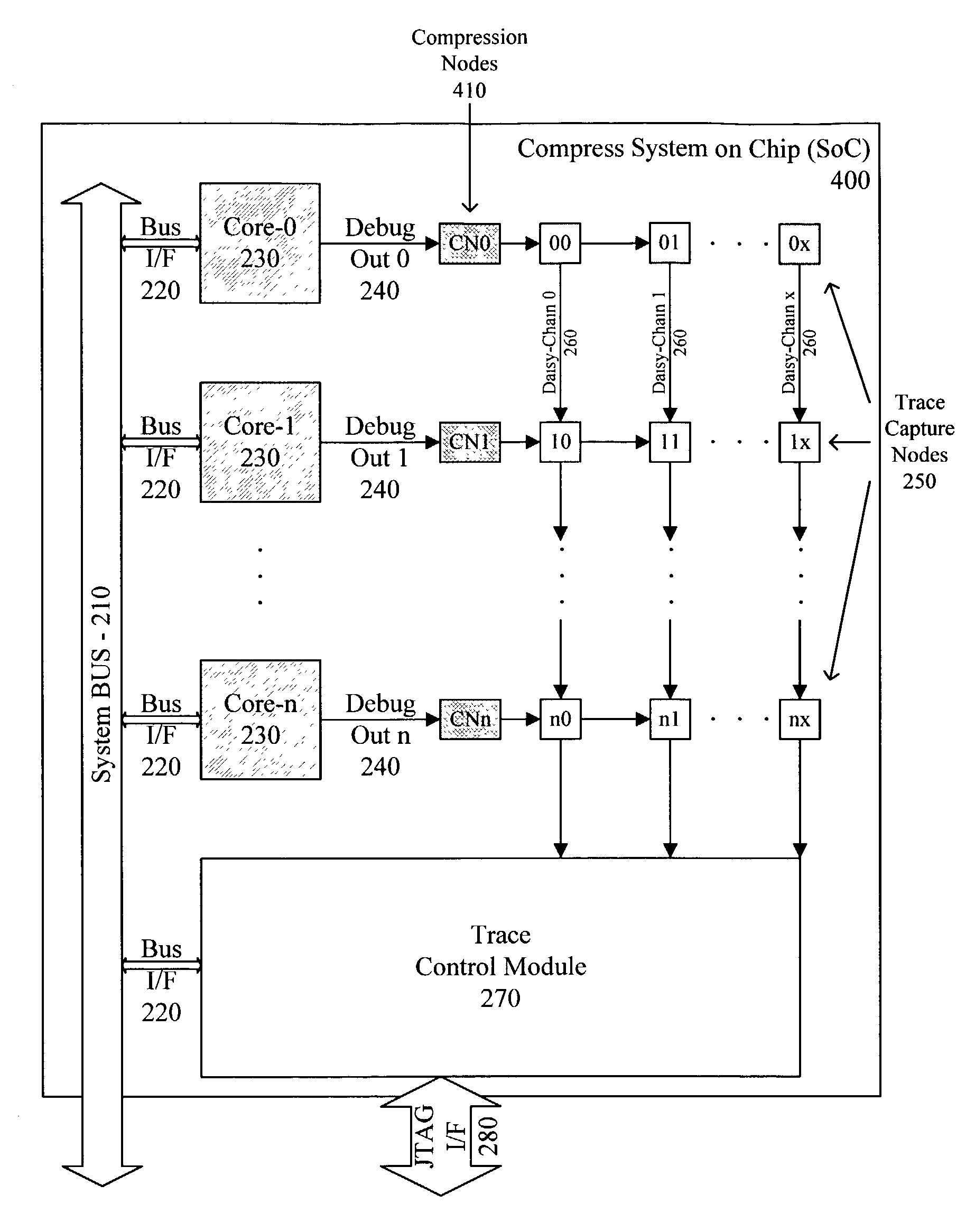

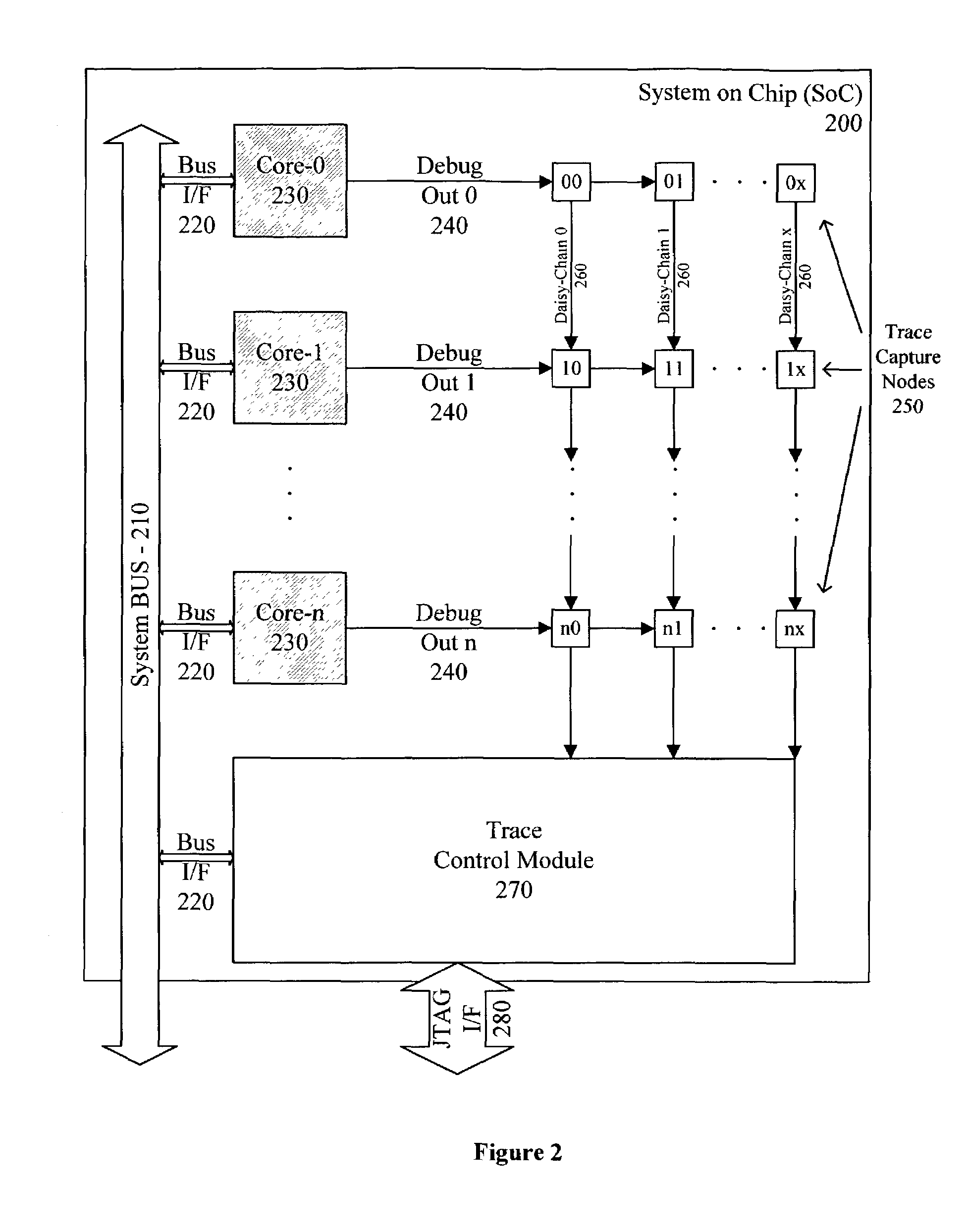

Simultaneous real-time trace and debug for multiple processing core systems on a chip

A system for providing simultaneous, real-time trace and debug of a multiple processing core system on a chip (SoC) is described. Coupled to each processing core is a debug output bus. Each debug output bus passes a processing core's operation to trace capture nodes connected together in daisy-chains. Trace capture node daisy-chains terminate at the trace control module. The trace control module receives and filters processing core trace data and decides whether to store processing core trace data into trace memory. The trace control module also contains a shadow register for capturing the internal state of a traced processing core just prior to its tracing. Stored trace data, along with the corresponding shadow register contents, are transferred out of the trace control module and off the SoC into a host agent and system running debugger hardware and software via a JTAG interface.

Owner:TENSILICA

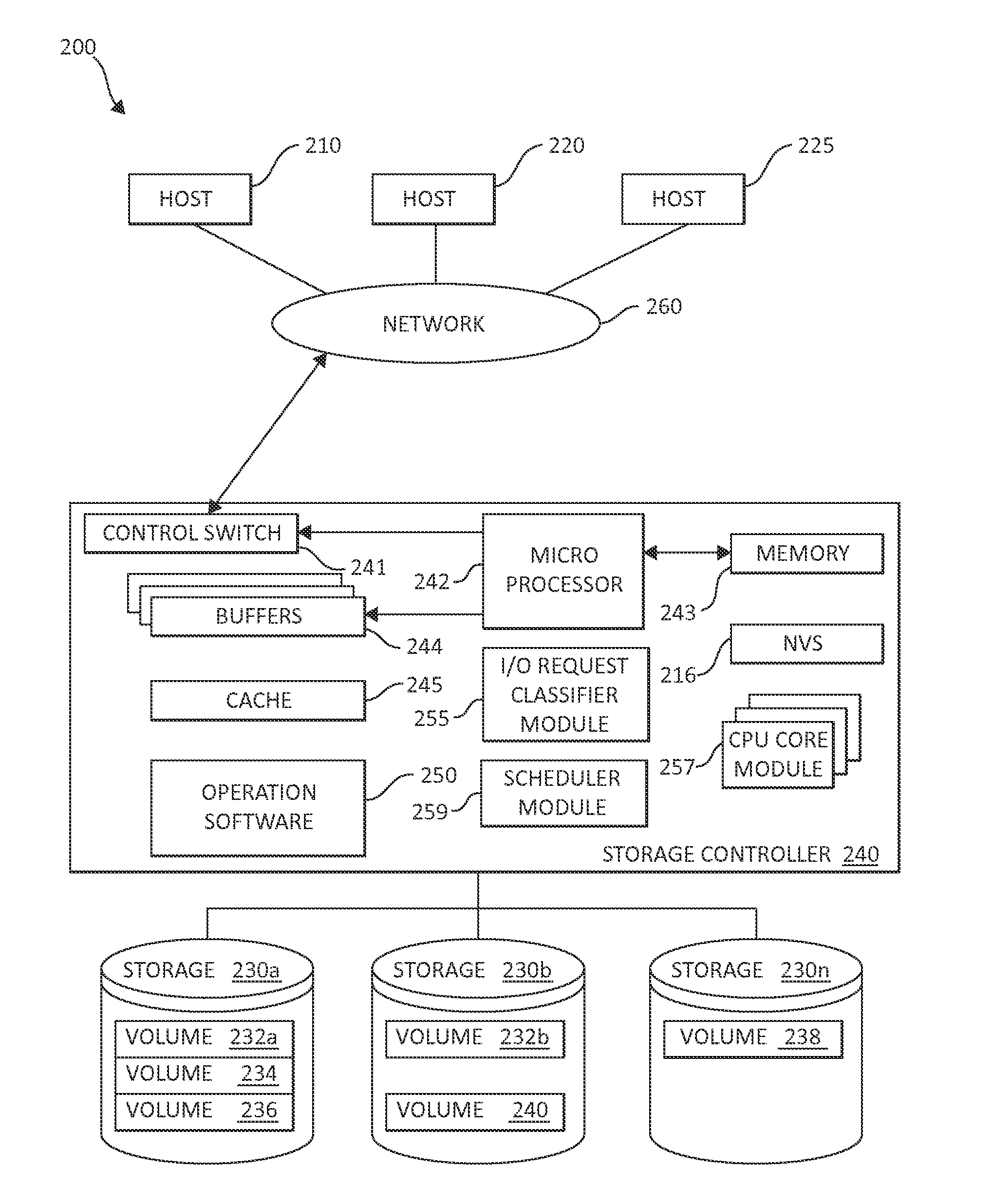

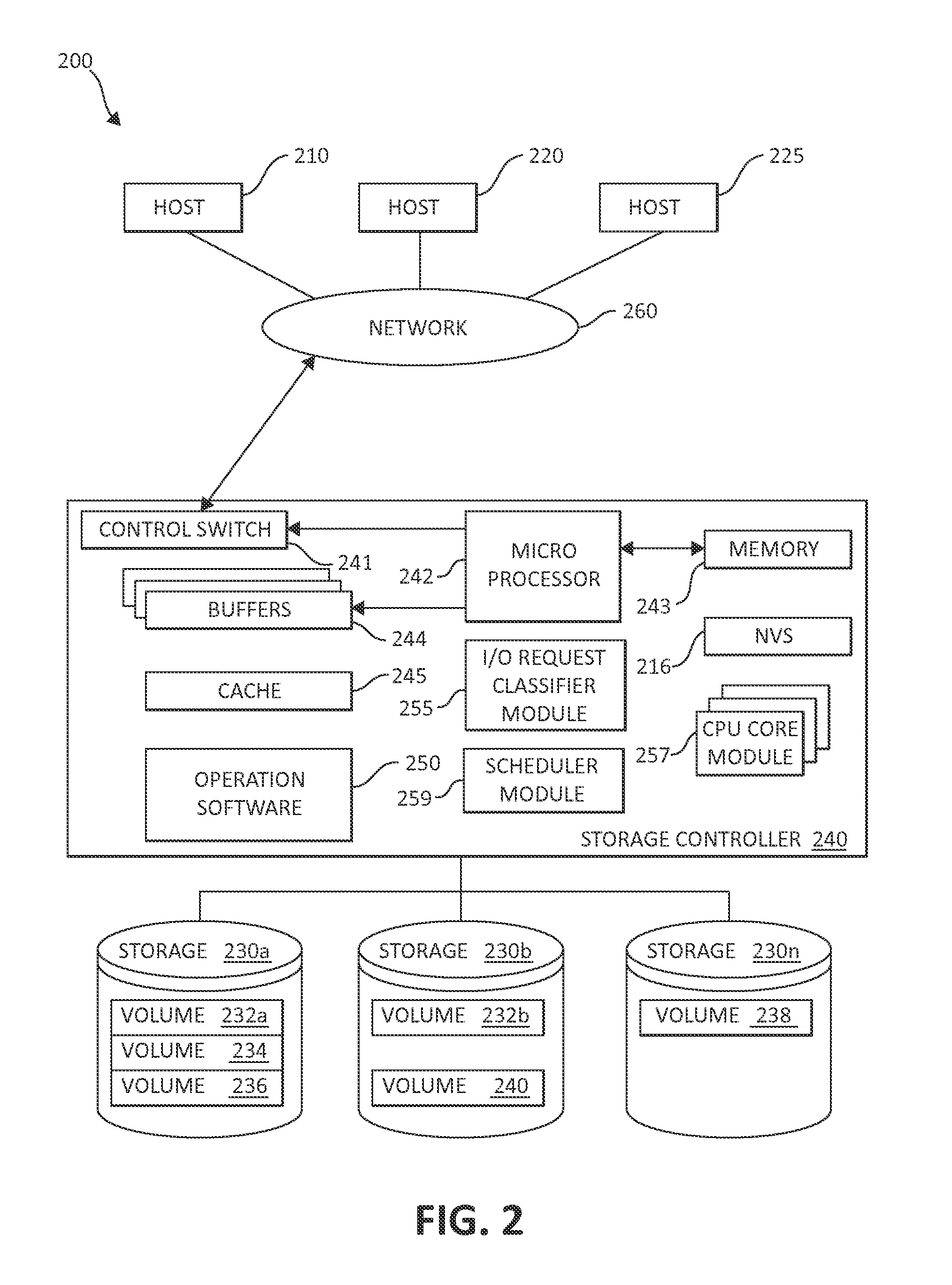

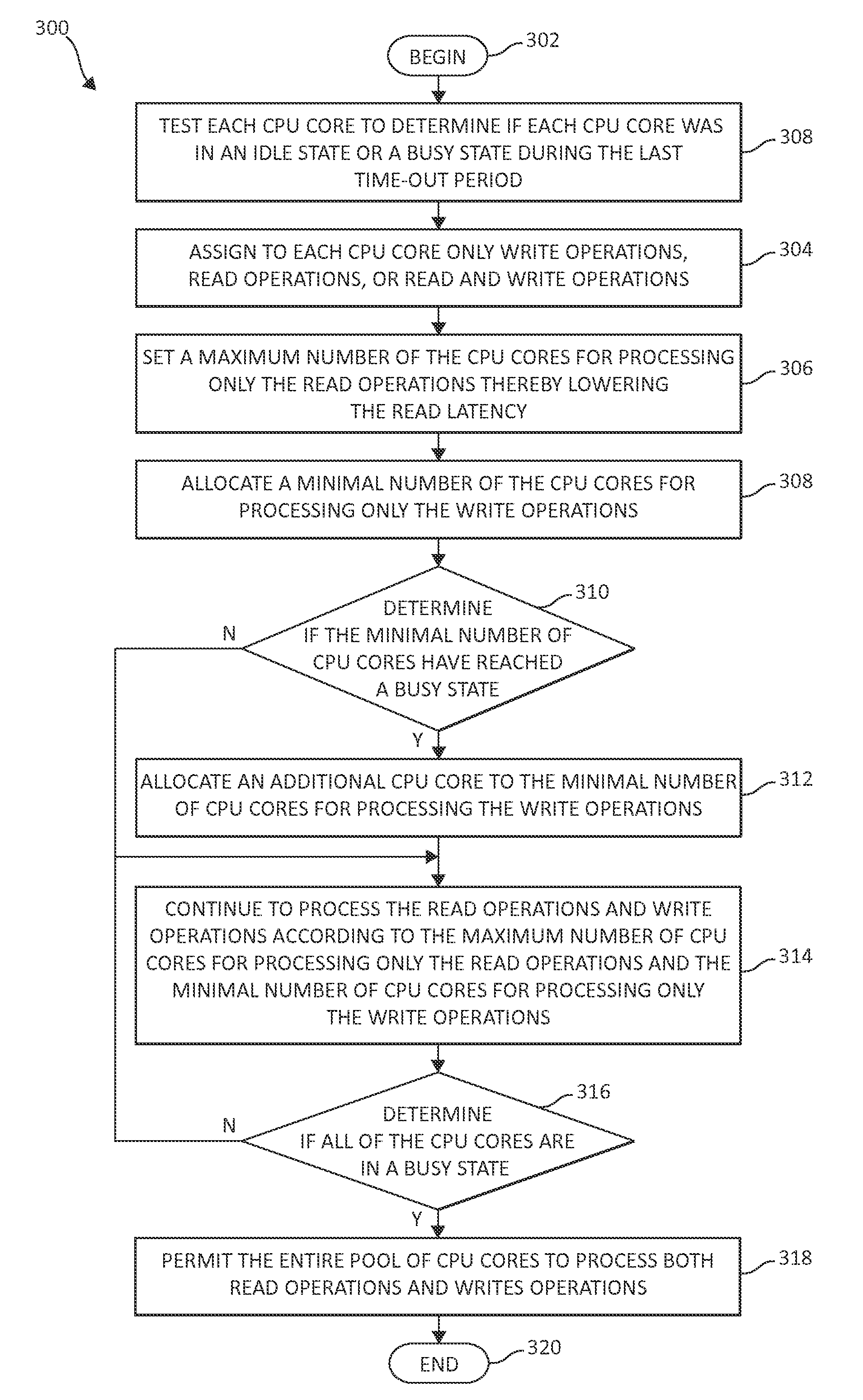

Reducing read latency using a pool of processing cores

Active | US20130339635A1 | Minimize write latency | Avoid read latency | Error detection/correction | Program control | Processing core | Throughput

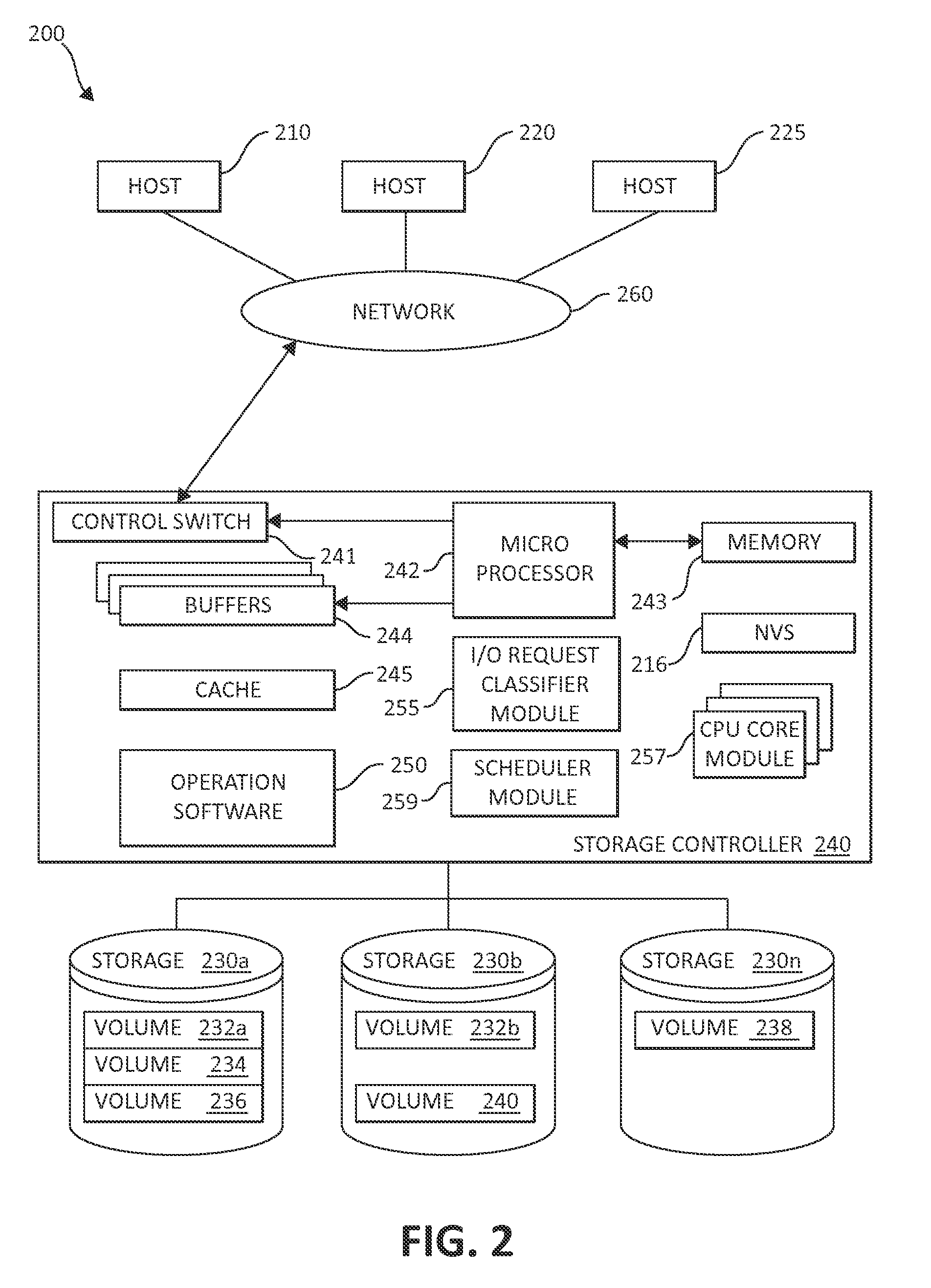

In a read processing storage system that uses a pool of CPU cores, the CPU cores are assigned to process write operations, read operations, or both read and write operations that are scheduled for processing. A maximum number of the CPU cores are set for processing only the read operations, thereby lowering a read latency. A minimal number of the CPU cores are allocated for processing the write operations, thereby increasing write latency. Upon reaching a throughput limit for the write operations that causes the minimal number of the plurality of CPU cores to reach a busy status, the minimal number of the plurality of CPU cores for processing the write operations is increased.

Owner:IBM CORP
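The allocation policy in this abstract can be sketched as a small bookkeeping class. The sketch is an illustration under assumptions: the class name `CorePool`, the one-core growth step, and the rule of always leaving at least one read core are hypothetical, and mixed read/write cores are left out for brevity.

```python
class CorePool:
    """Tracks how many cores of a fixed pool serve writes; the rest serve reads."""

    def __init__(self, total_cores, min_write_cores=1):
        if not 0 < min_write_cores < total_cores:
            raise ValueError("need at least one write core and one read core")
        self.total = total_cores
        self.write_cores = min_write_cores  # start with the minimal write allocation

    @property
    def read_cores(self):
        # Every core not assigned to writes is dedicated to reads.
        return self.total - self.write_cores

    def report_busy_write_cores(self, busy):
        """Grow the write allocation by one core when all write cores are busy."""
        if busy >= self.write_cores and self.read_cores > 1:
            self.write_cores += 1
        return self.write_cores
```

Starting with a minimal write allocation keeps most cores free for reads, and the allocation grows only when the write cores saturate.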

Reducing read latency using a pool of processing cores

Active | US8930633B2 | Avoid read latency | Lower read latency | Input/output to record carriers | Hardware monitoring | Processing core | Computer science

Owner:INT BUSINESS MASCH CORP

Parallel data processing systems and methods using cooperative thread arrays and thread identifier values to determine processing behavior

Active | US7861060B1 | Eliminate need | Improve performance | General purpose stored program computer | Multiple digital computer combinations | Data processing system | Data set

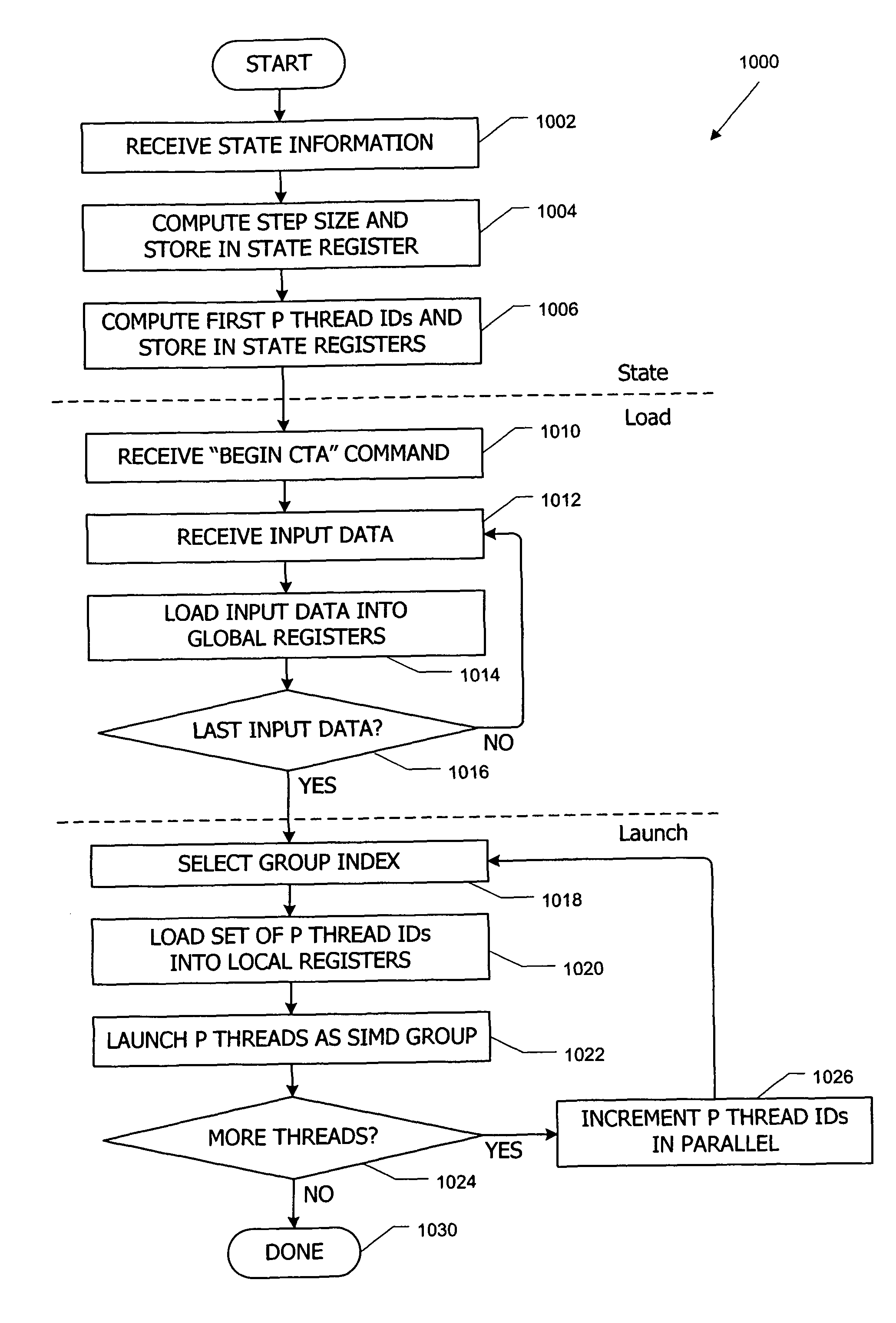

Parallel data processing systems and methods use cooperative thread arrays (CTAs), i.e., groups of multiple threads that concurrently execute the same program on an input data set to produce an output data set. Each thread in a CTA has a unique identifier (thread ID) that can be assigned at thread launch time. The thread ID controls various aspects of the thread's processing behavior such as the portion of the input data set to be processed by each thread, the portion of an output data set to be produced by each thread, and/or sharing of intermediate results among threads. Mechanisms for loading and launching CTAs in a representative processing core and for synchronizing threads within a CTA are also described.

Owner:NVIDIA CORP
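The role of the thread ID can be shown with a sequential stand-in for a CTA. This is a sketch only: `run_cta` and the strided partitioning are assumptions chosen for illustration, and a real CTA would run its threads concurrently on hardware rather than in a Python loop.

```python
def run_cta(program, data, num_threads):
    """Run `program` once per element, with each thread ID owning a strided slice."""
    out = [None] * len(data)
    for tid in range(num_threads):  # sequential stand-in for concurrent threads
        # The thread ID selects both the inputs read and the outputs written.
        for i in range(tid, len(data), num_threads):
            out[i] = program(tid, data[i])
    return out


# Usage: two "threads" square five values; thread 0 handles indices 0, 2, 4.
squares = run_cta(lambda tid, x: x * x, [1, 2, 3, 4, 5], 2)
```

Every thread runs the same program, and only the ID differs, which is what lets one launch cover the whole data set.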

Systems and methods for rapid processing and storage of data

Active | US9552299B2 | Faster bandwidth | Improve latency | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Massively parallel | Processing core

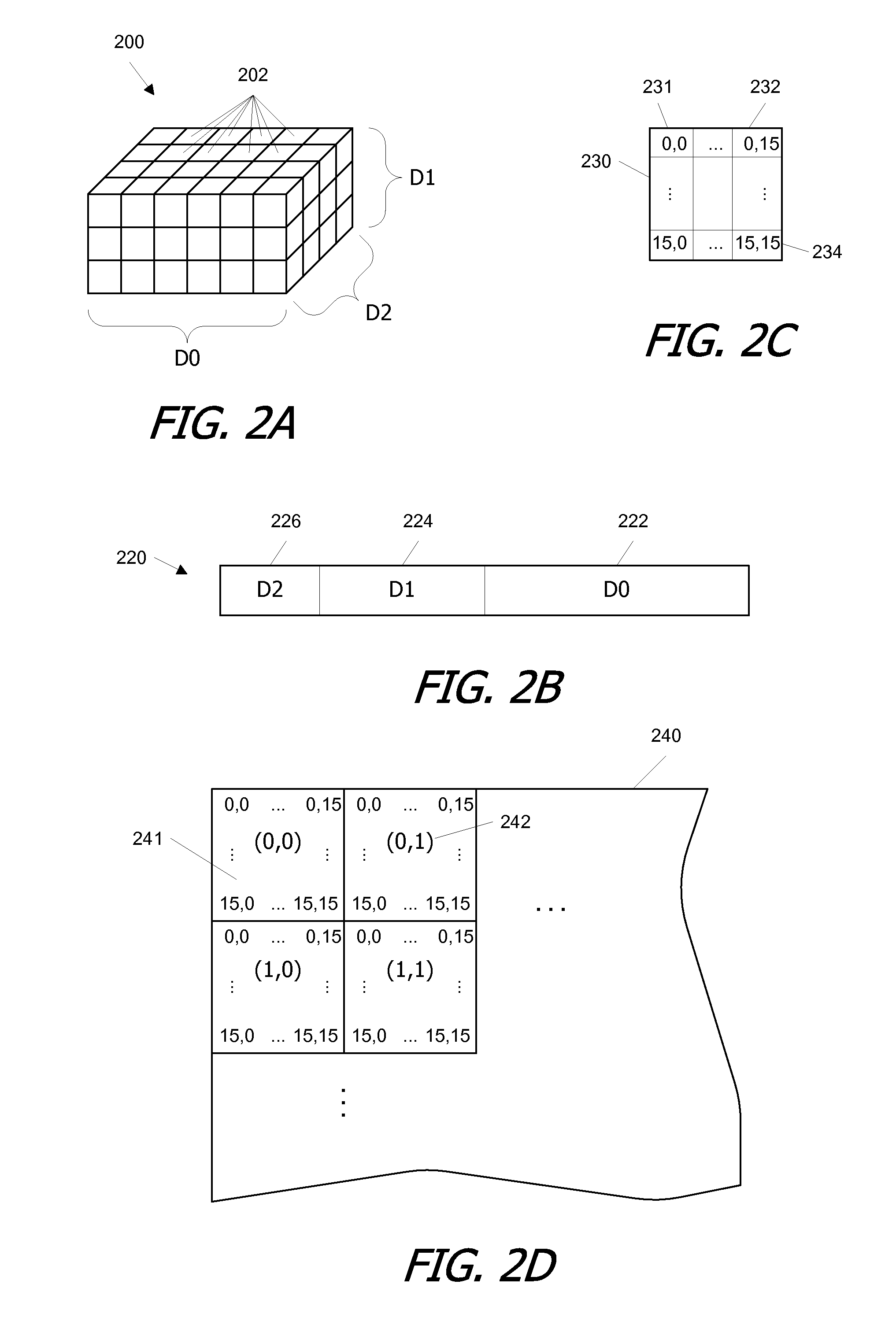

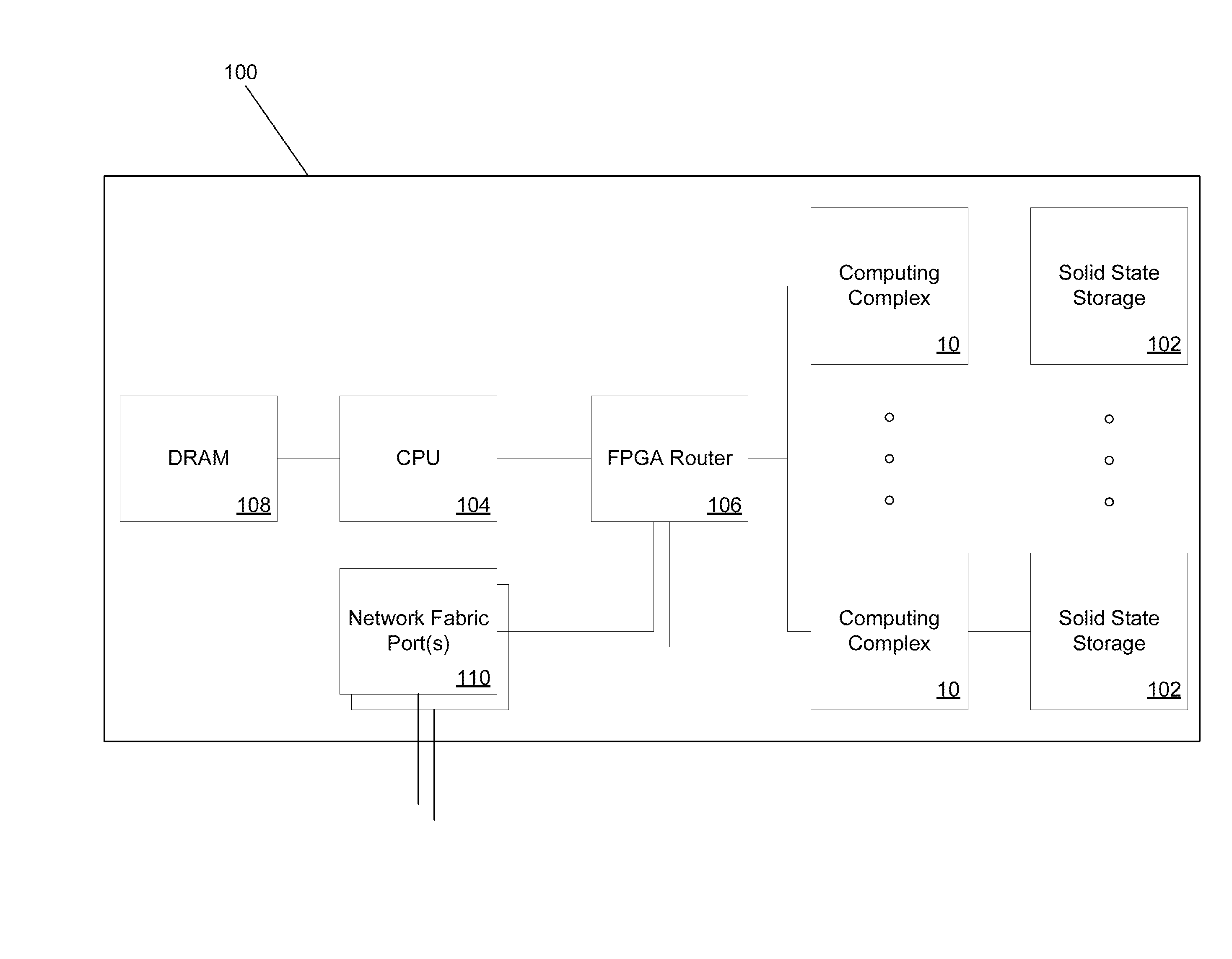

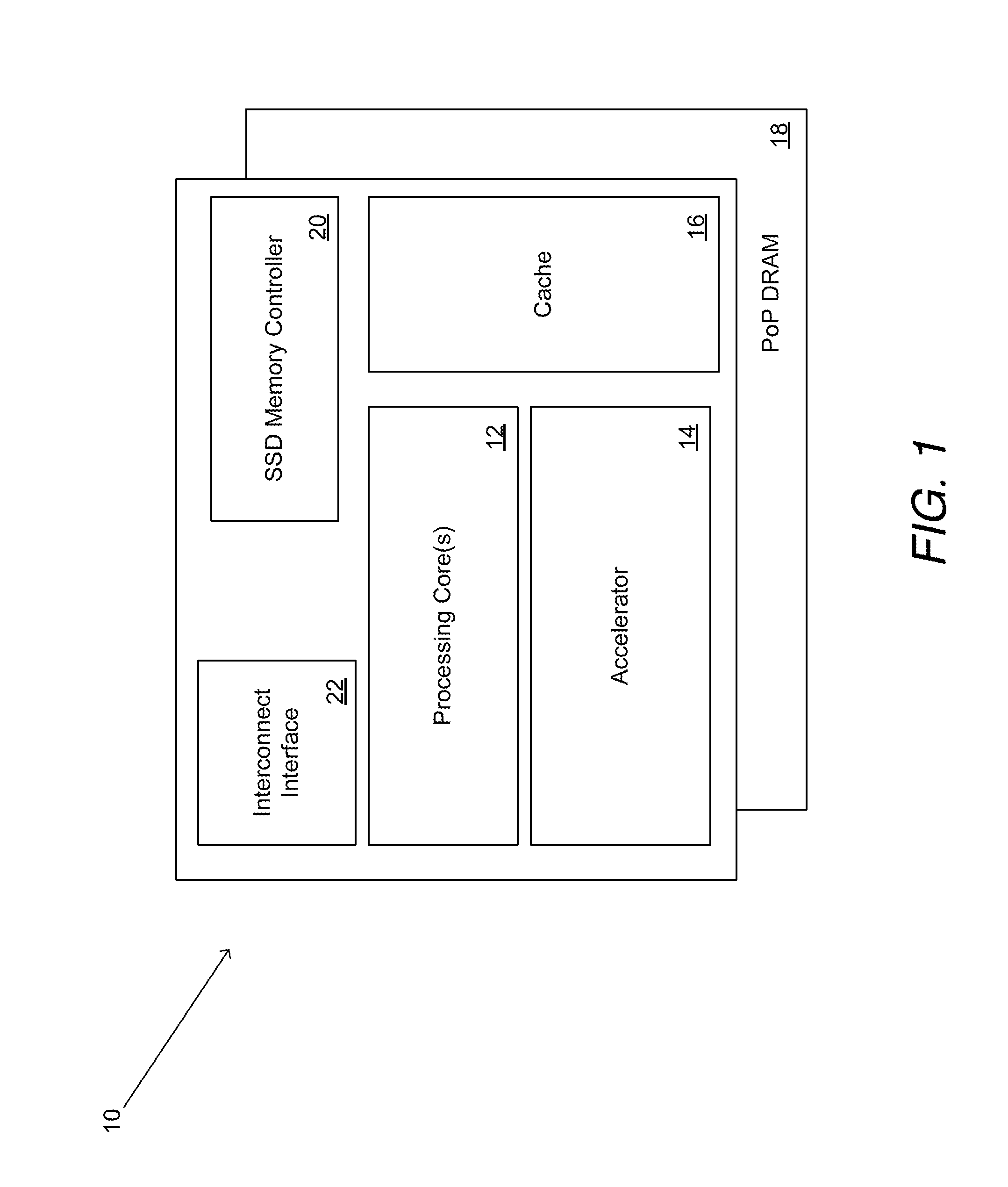

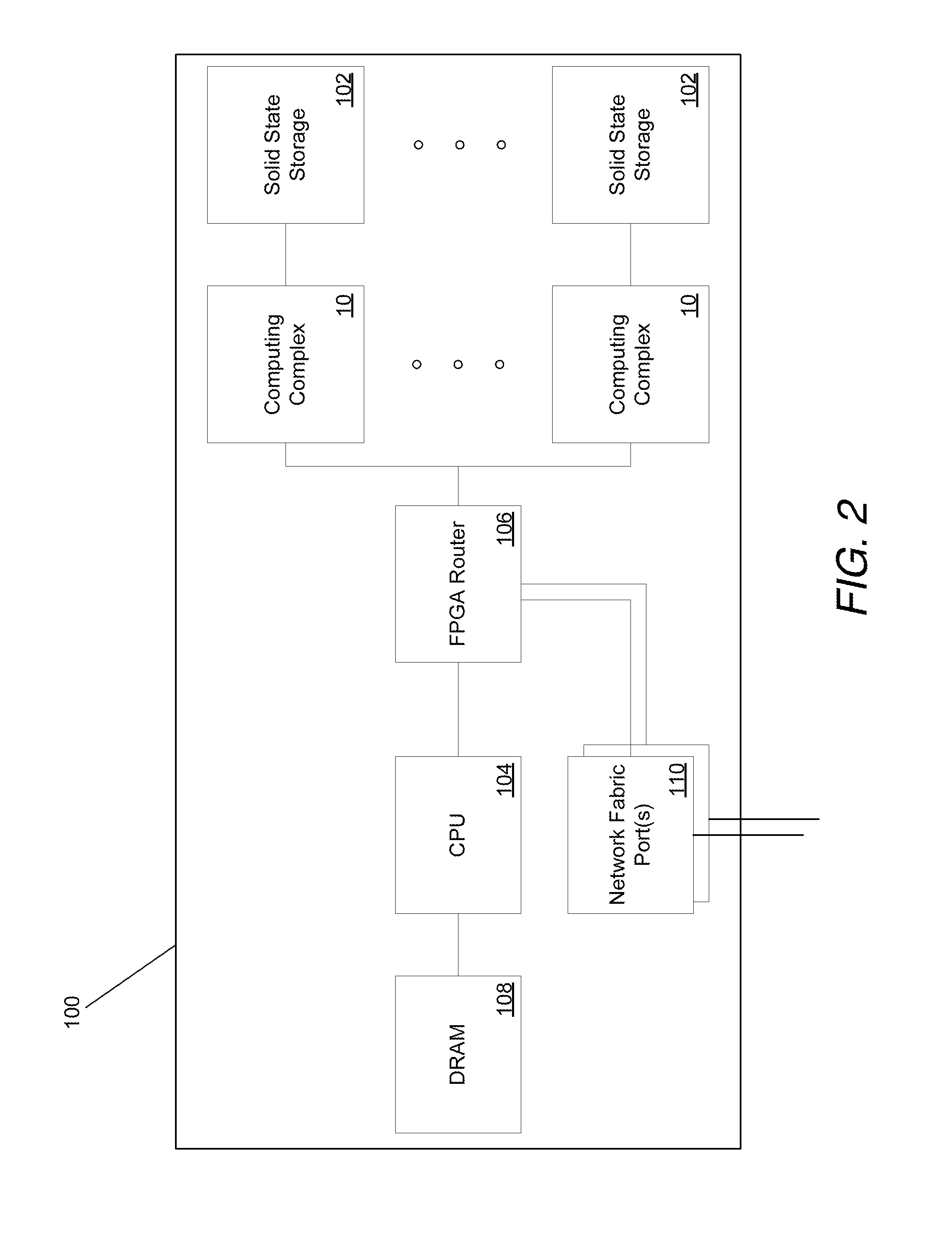

Systems and methods of building massively parallel computing systems using low power computing complexes in accordance with embodiments of the invention are disclosed. A massively parallel computing system in accordance with one embodiment of the invention includes at least one Solid State Blade configured to communicate via a high performance network fabric. In addition, each Solid State Blade includes a processor configured to communicate with a plurality of low power computing complexes interconnected by a router, and each low power computing complex includes at least one general processing core, an accelerator, an I/O interface, and cache memory and is configured to communicate with non-volatile solid state memory.

Owner:CALIFORNIA INST OF TECH

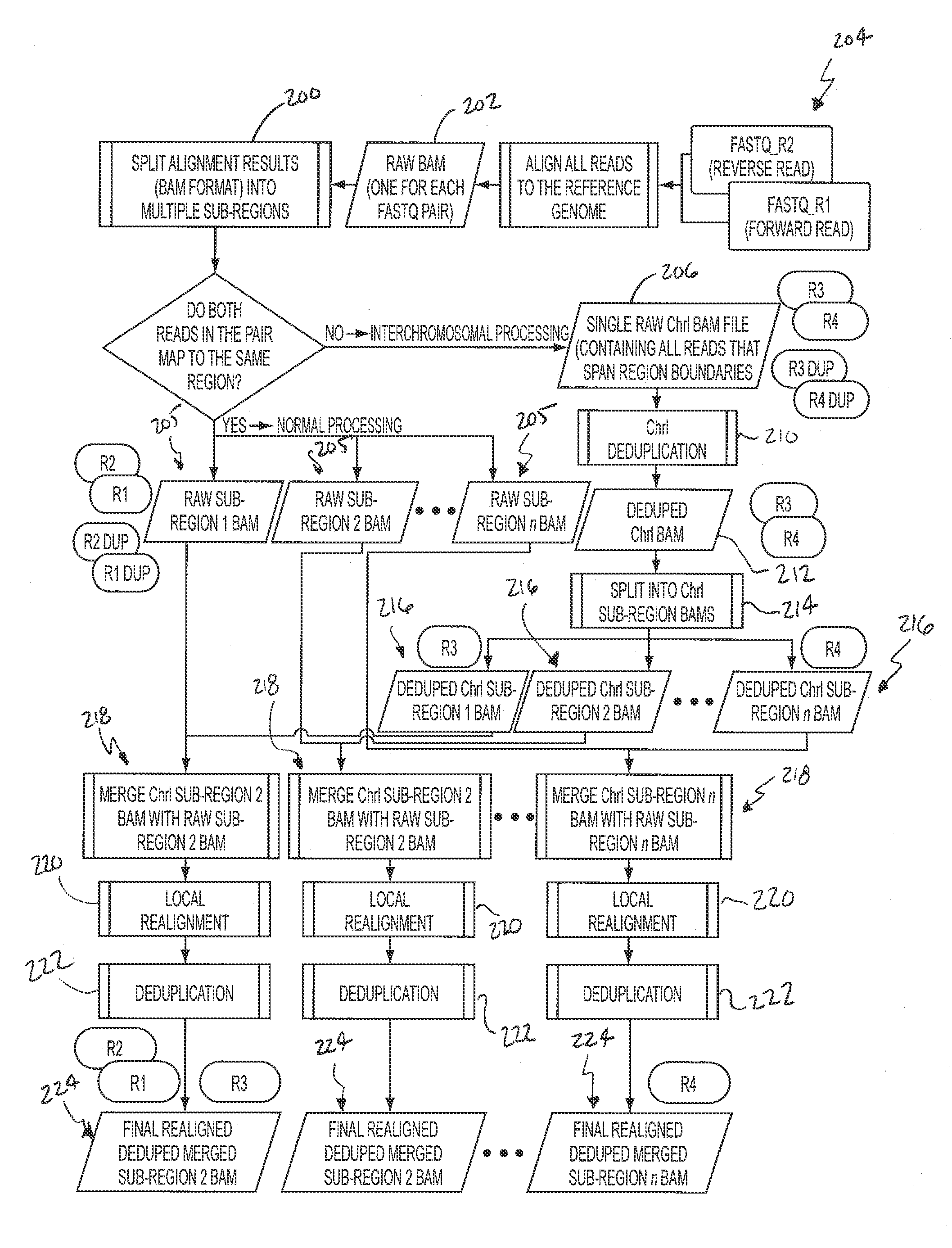

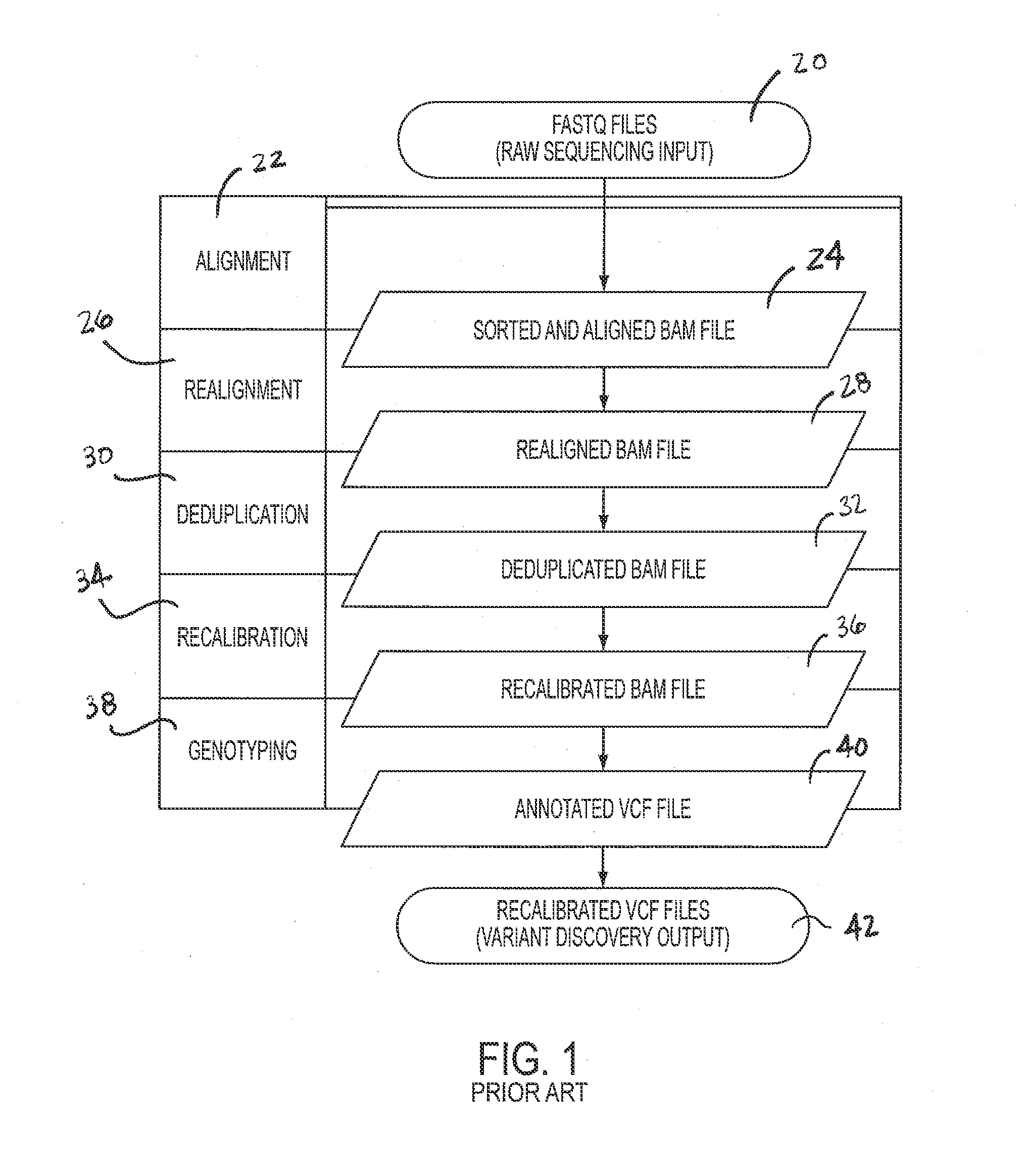

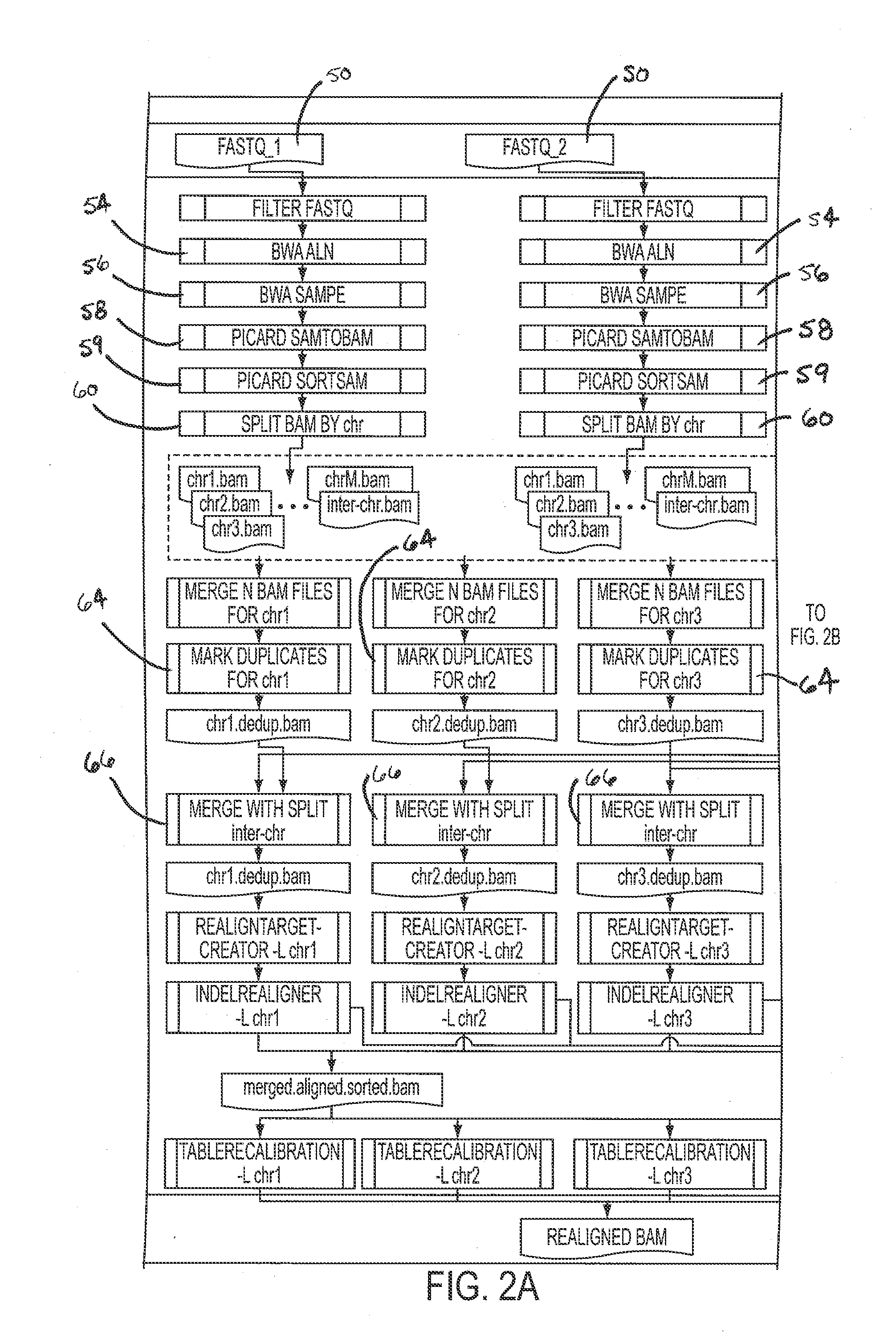

Comprehensive Analysis Pipeline for Discovery of Human Genetic Variation

Active | US20130311106A1 | Shorten analysis time | Quick identification | Microbiological testing/measurement | Proteomics | Data set | Processing core

Systems and methods for analyzing genetic sequence data involve: (a) obtaining, by a computer system, genetic sequencing data pertaining to a subject; (b) splitting the genetic sequencing data into a plurality of segments; (c) processing the genetic sequencing data such that intra-segment reads, read pairs with both mates mapped to the same data set, are saved to a respective plurality of individual binary alignment map (BAM) files corresponding to that respective segment; (d) processing the genetic sequencing data such that inter-segment reads, read pairs with both mates mapped to different segments, are saved into at least a second BAM file; and (e) processing at least the first plurality of BAM files along parallel processing paths. The plurality of segments may correspond to any given number of genomic subregions and may be selected based upon the number of processing cores used in the parallel processing.

Owner:RES INST AT NATIONWIDE CHILDRENS HOSPITAL
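Steps (b) through (d) of this abstract can be sketched with plain Python containers standing in for BAM files. The sketch makes several assumptions: segments are fixed-size windows on a single coordinate axis, a read pair is a hypothetical `(name, pos1, pos2)` tuple, and "intra-segment" is read as both mates falling in the same segment.

```python
from collections import defaultdict


def split_read_pairs(pairs, segment_size):
    """Sort read pairs into per-segment buckets and one inter-segment bucket."""
    intra = defaultdict(list)  # stand-in for one BAM file per segment
    inter = []                 # stand-in for the separate inter-segment BAM file
    for name, pos1, pos2 in pairs:
        seg1, seg2 = pos1 // segment_size, pos2 // segment_size
        if seg1 == seg2:
            intra[seg1].append(name)
        else:
            inter.append(name)
    return dict(intra), inter


# Usage: with 100-base segments, r2 straddles segments 0 and 1.
intra, inter = split_read_pairs(
    [("r1", 10, 50), ("r2", 90, 120), ("r3", 150, 180)], segment_size=100
)
```

Each per-segment bucket can then be handed to its own worker, which is why the abstract ties the number of segments to the number of processing cores.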

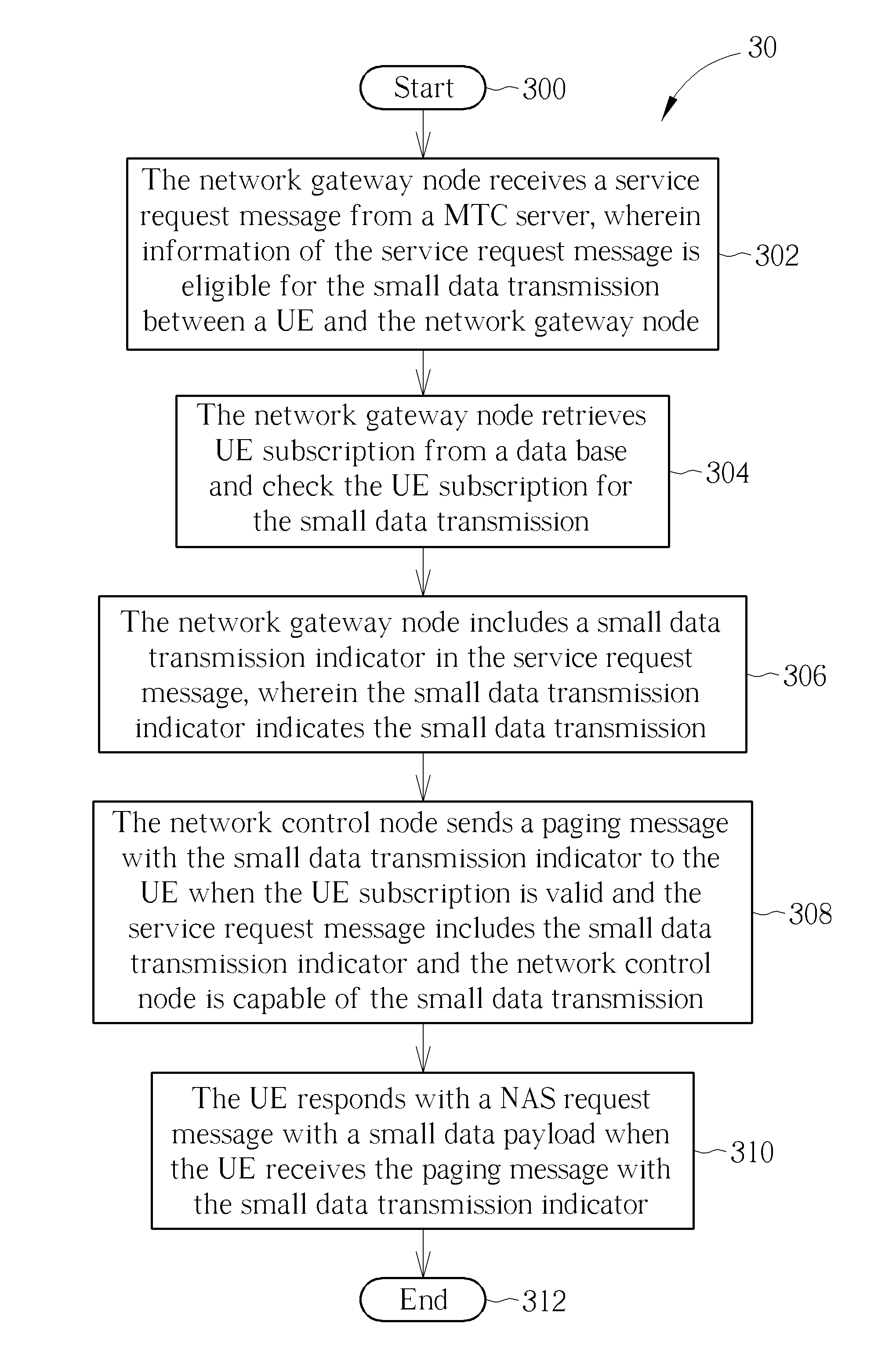

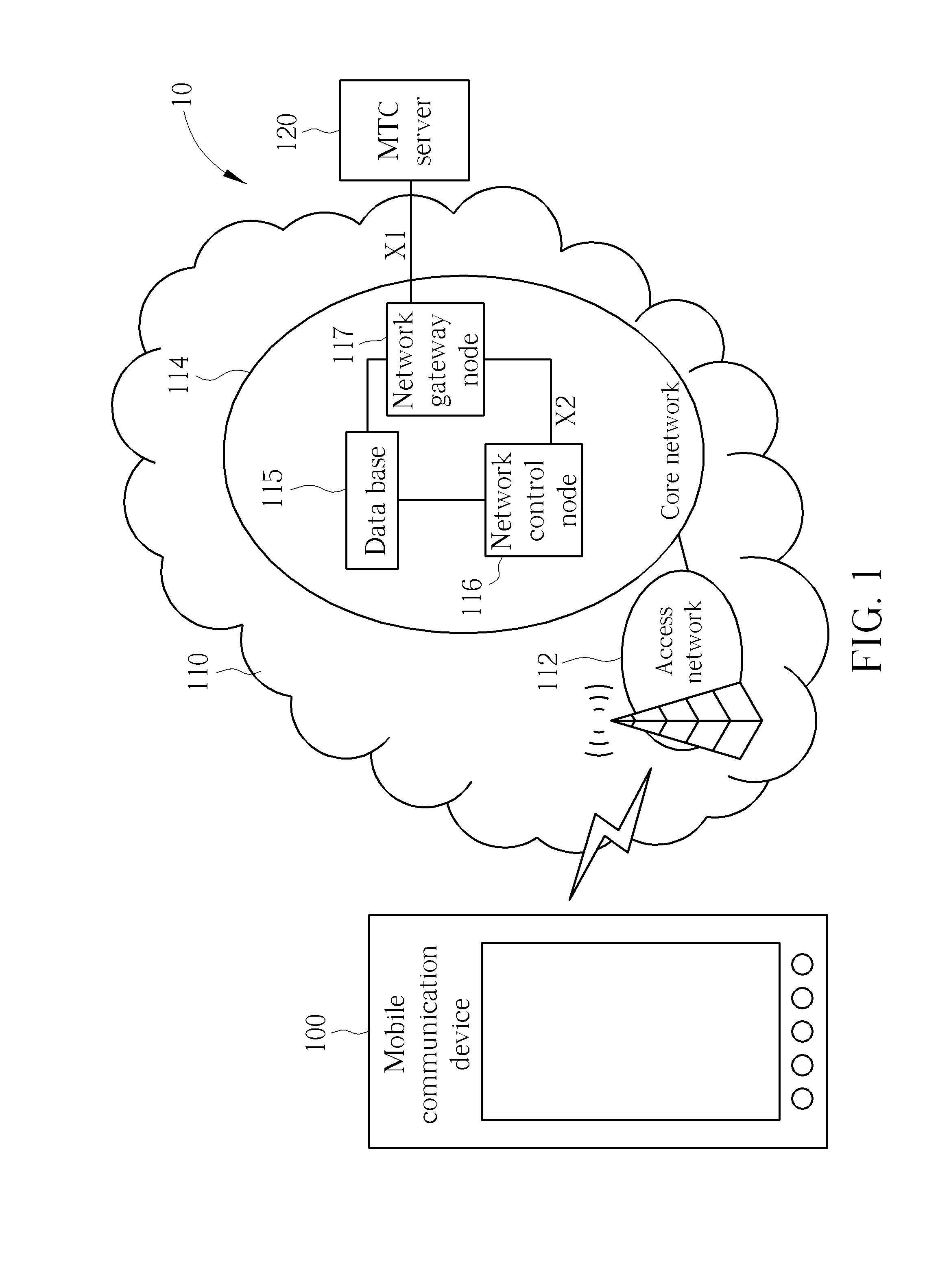

Method of Handling Small Data Transmission

Active | US20130080597A1 | Transmission path division | Multiple digital computer combinations | Processing core | Network control

A method of handling small data transmission for a core network is disclosed, wherein the core network comprises a data base, a network gateway node and a network control node. The method comprises the network gateway node receiving a service request message from a machine-type communication (MTC) server, wherein information of the service request message is eligible for the small data transmission between a mobile device and the network gateway node; and the network gateway node including a small data transmission indicator in the service request message, wherein the small data transmission indicator indicates the small data transmission.

Owner:HTC CORP

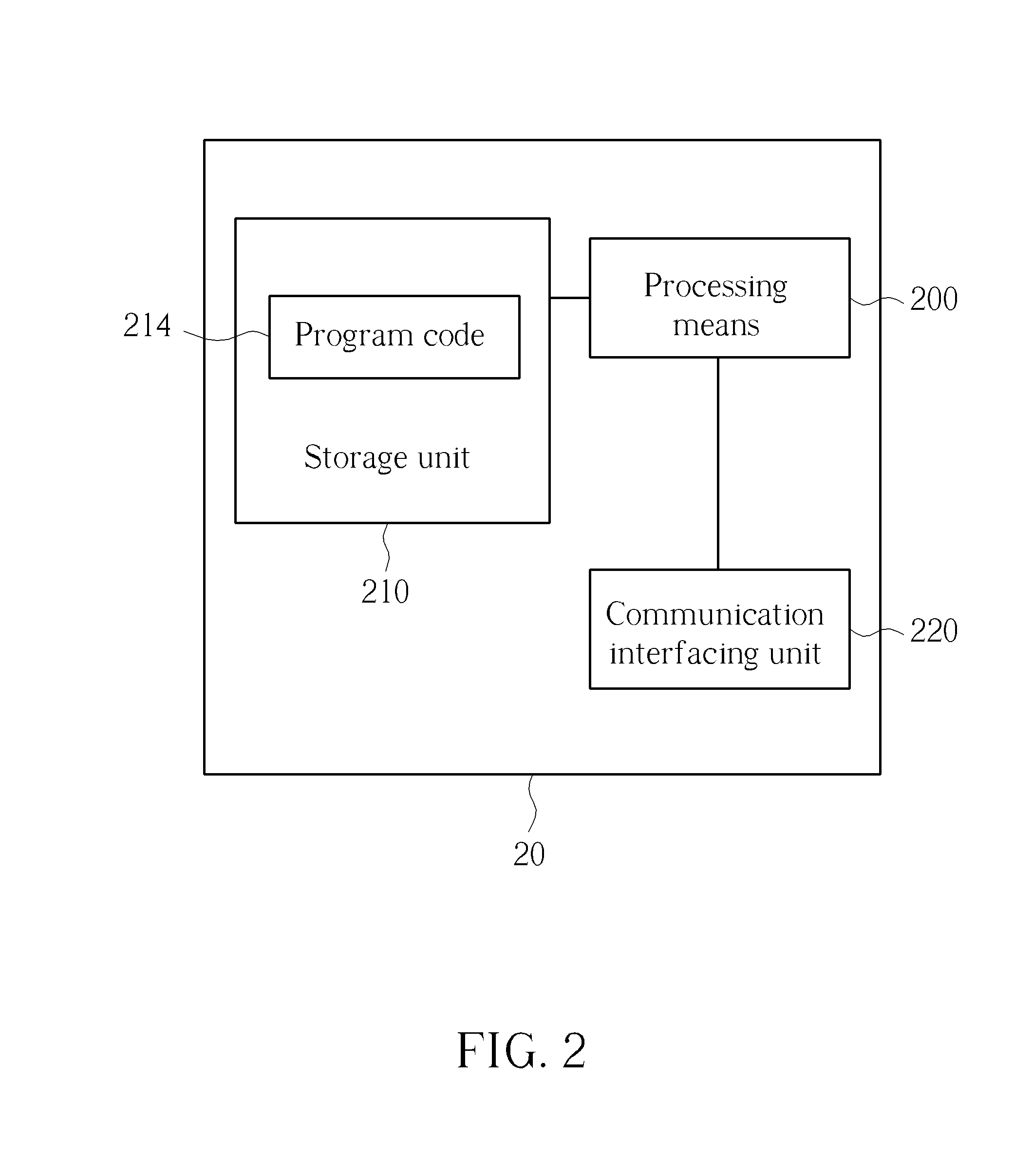

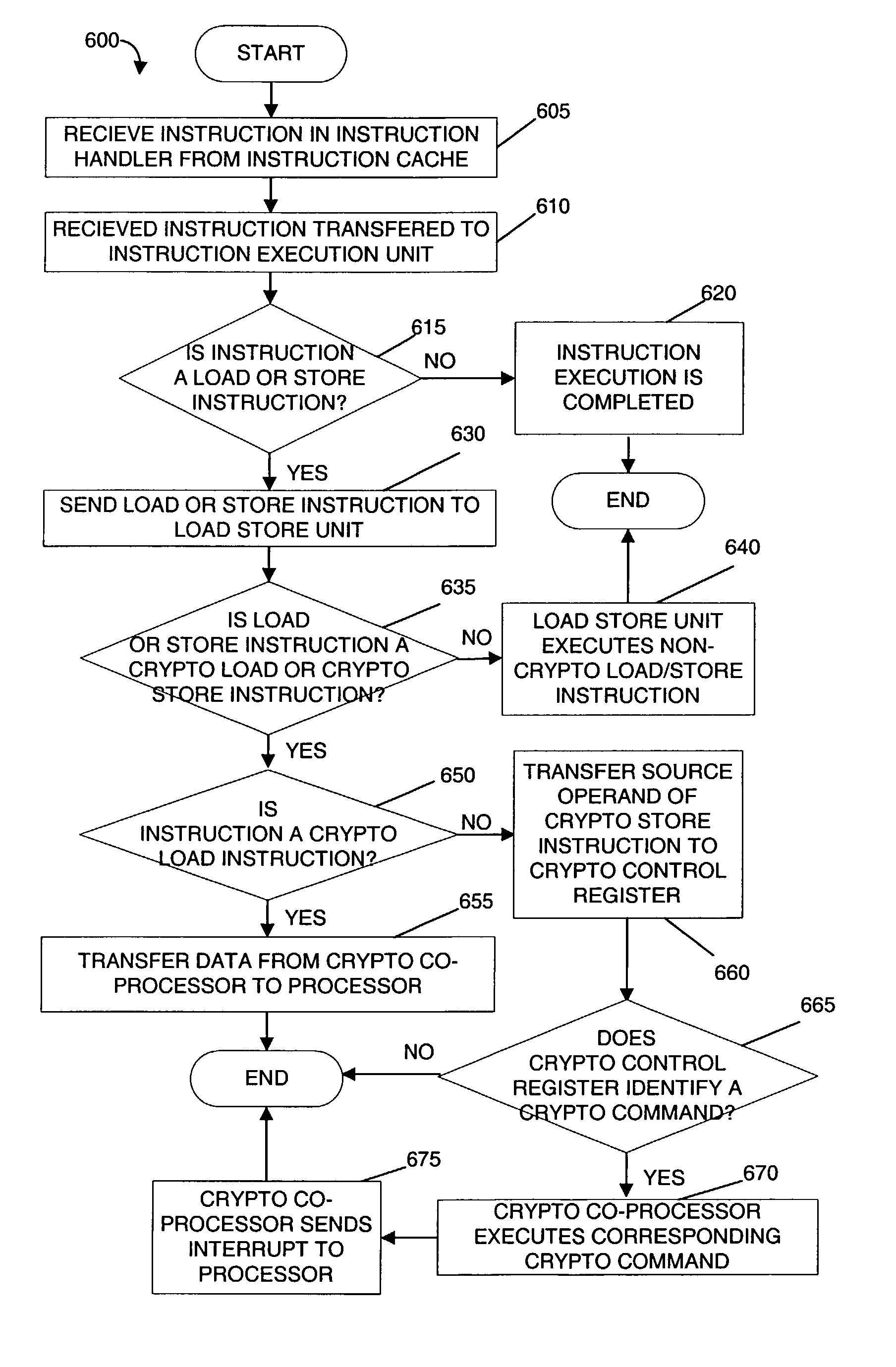

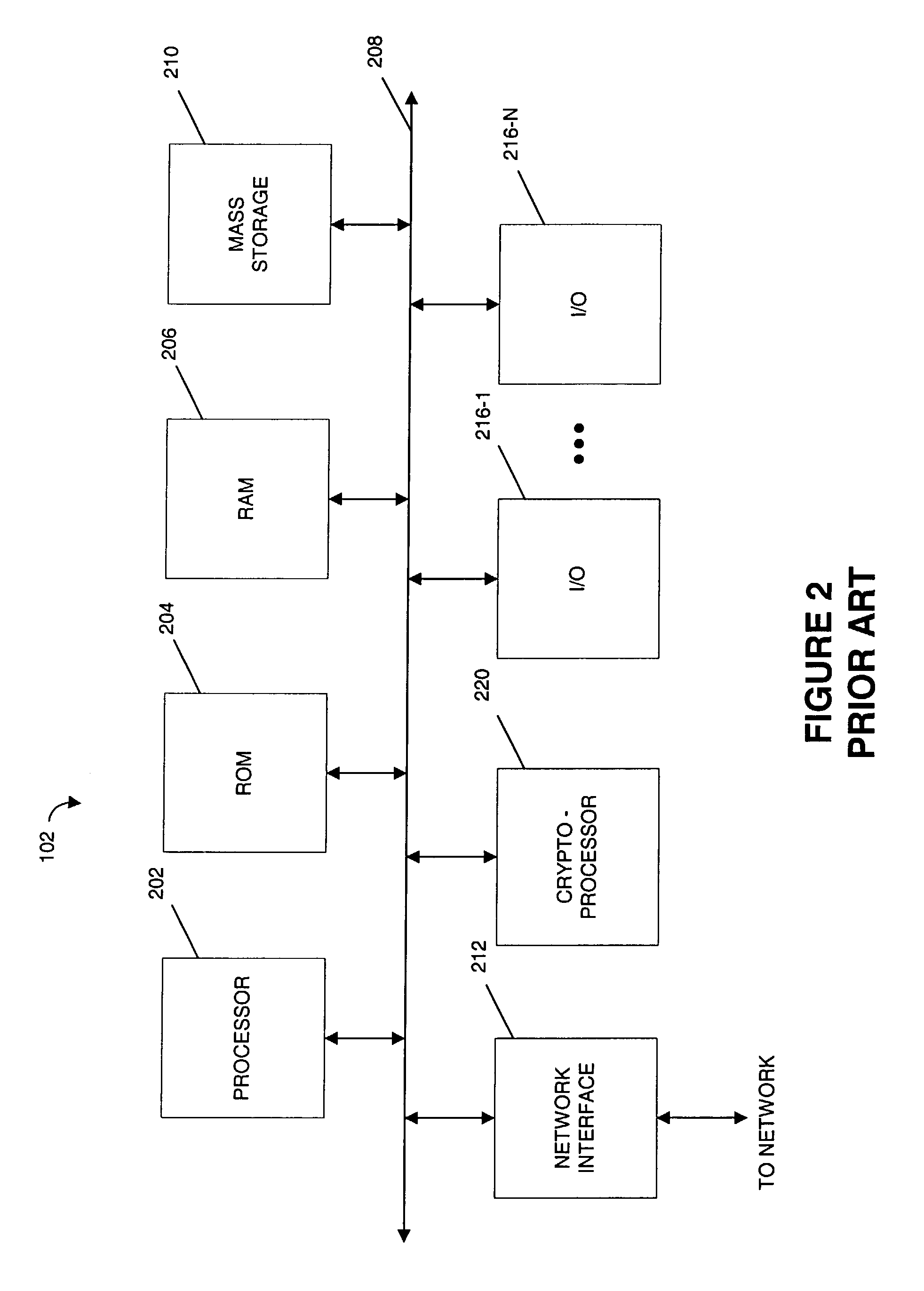

Stream processor with cryptographic co-processor

Inactive | US20030084309A1 | Energy efficient ICT | Static indicating devices | Processing core | Direct memory access

A microprocessor includes a first processing core, a first cryptographic co-processor, and an integer multiplier unit that is coupled to the first processing core and the first cryptographic co-processor. The first processing core includes an instruction decode unit, an instruction execution unit, and a load/store unit. The first cryptographic co-processor is located on a first die with the first processing core. The first cryptographic co-processor includes a cryptographic control register, a direct memory access engine that is coupled to the load/store unit in the first processing core, and a cryptographic memory.

Owner:SUN MICROSYSTEMS INC

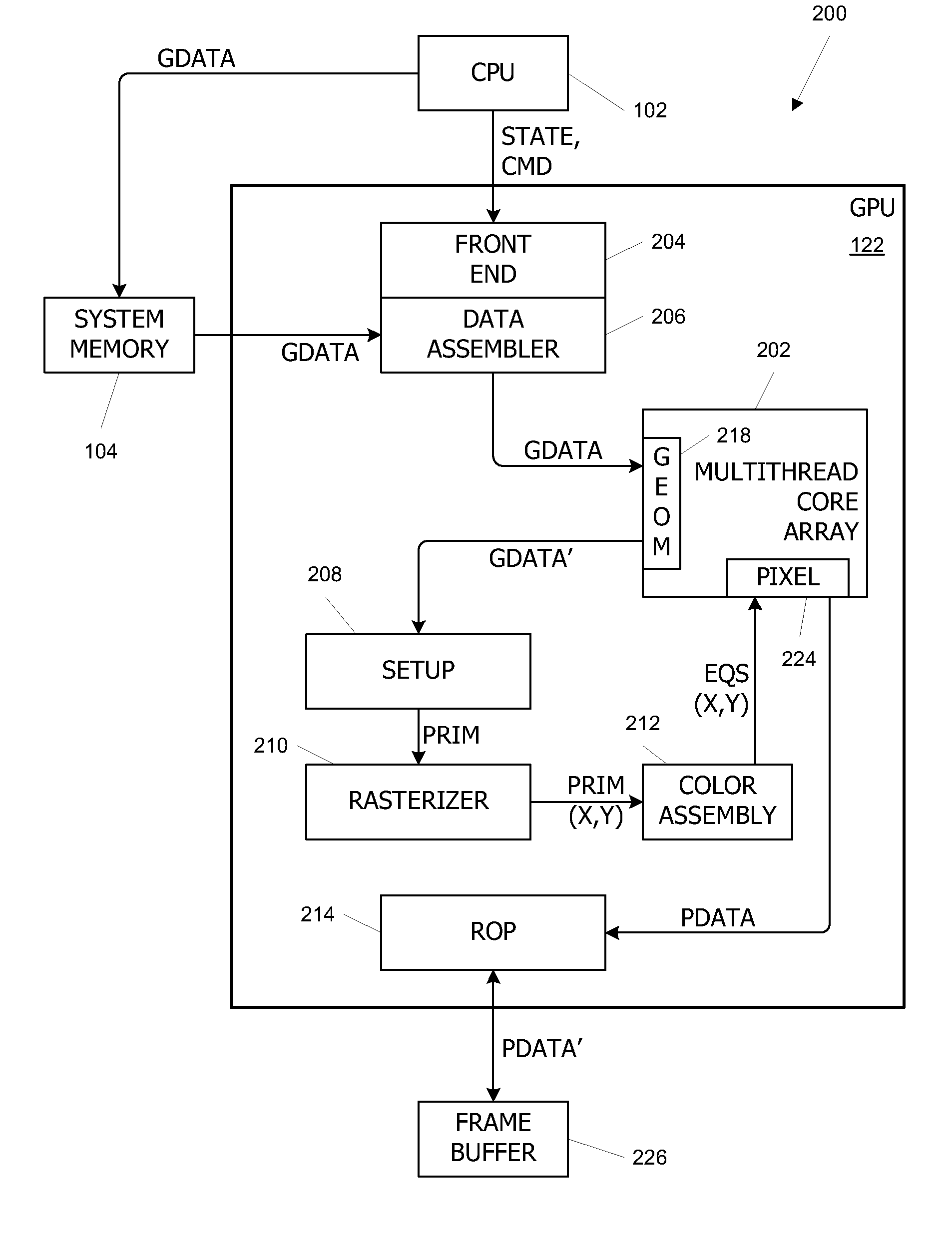

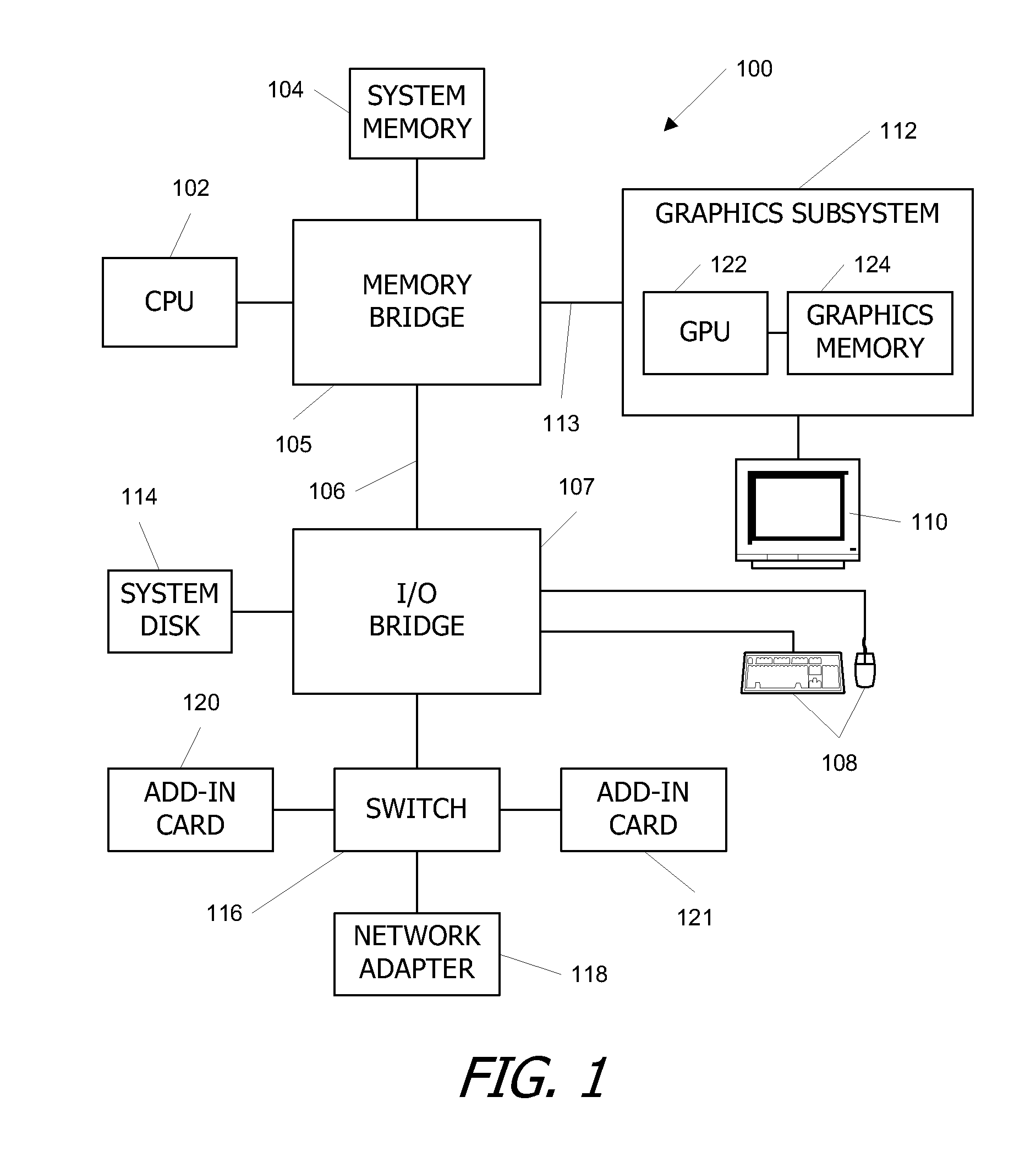

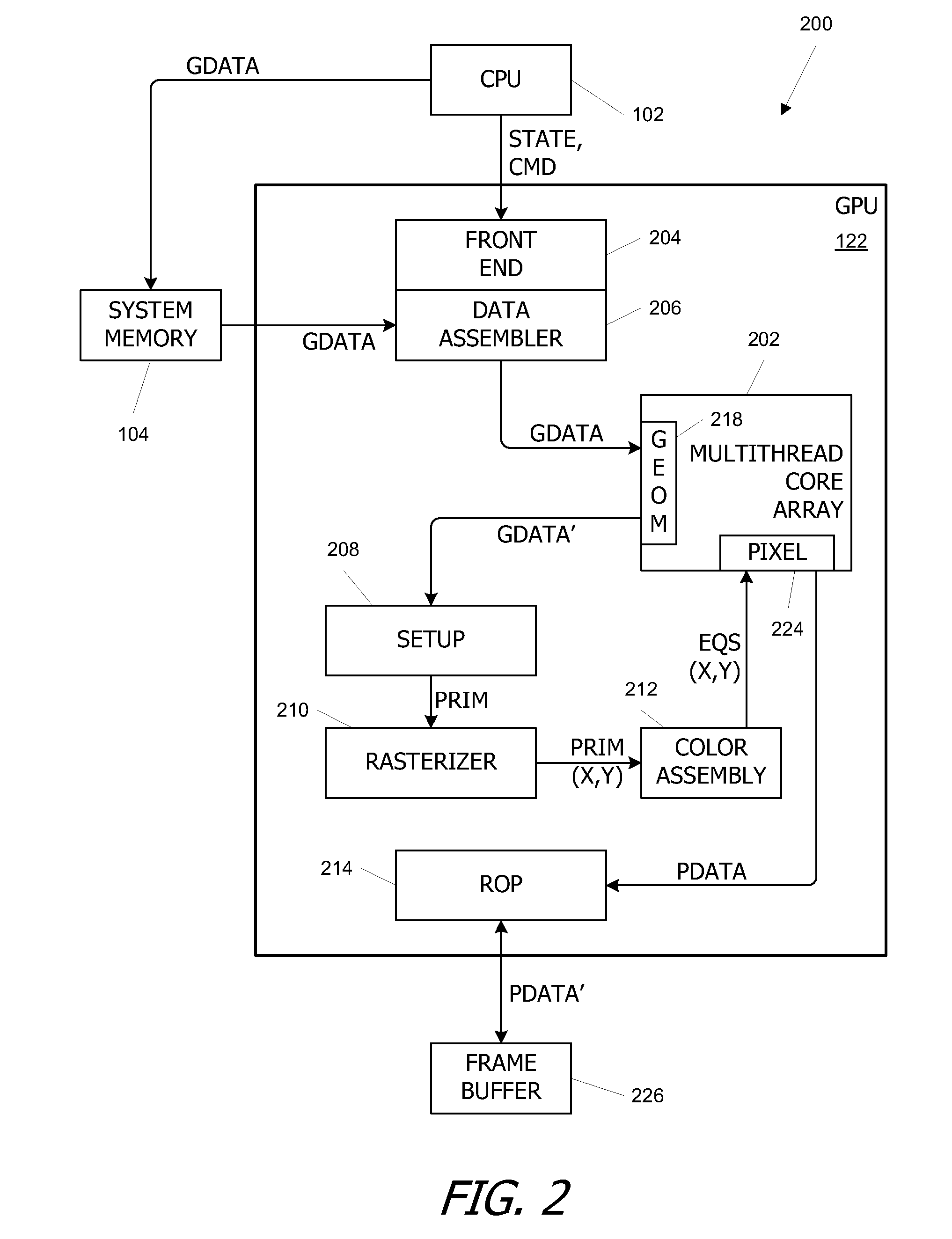

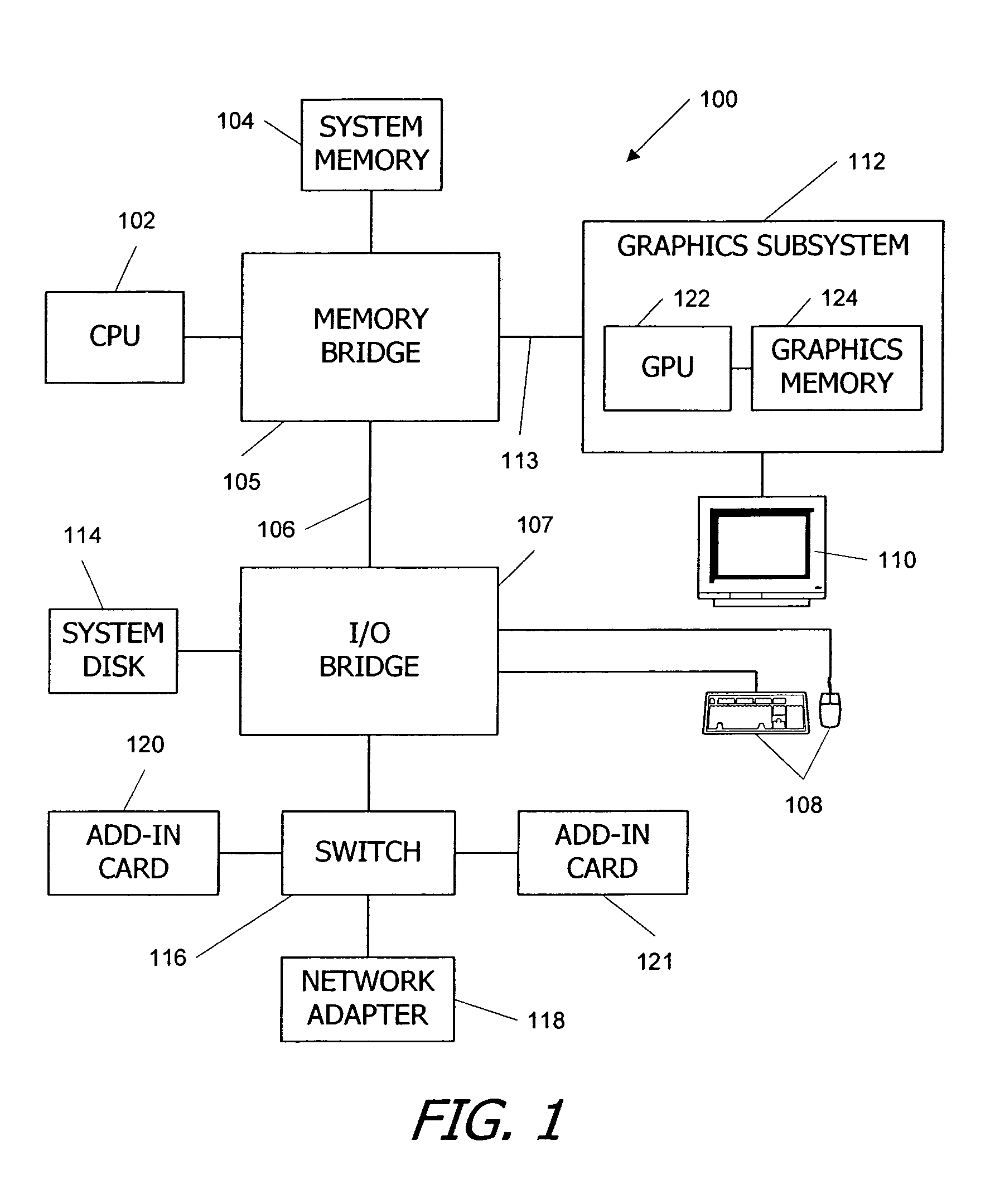

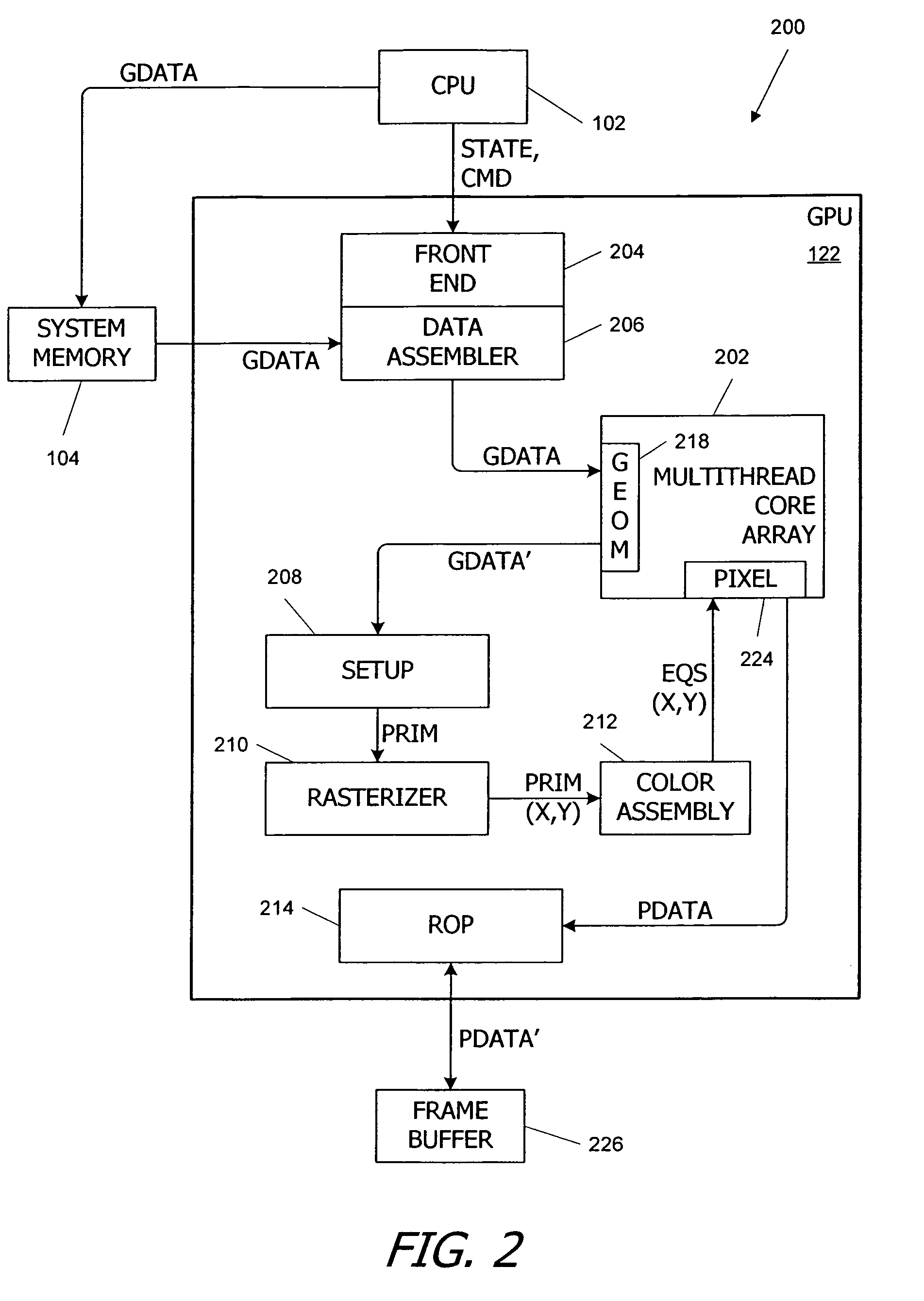

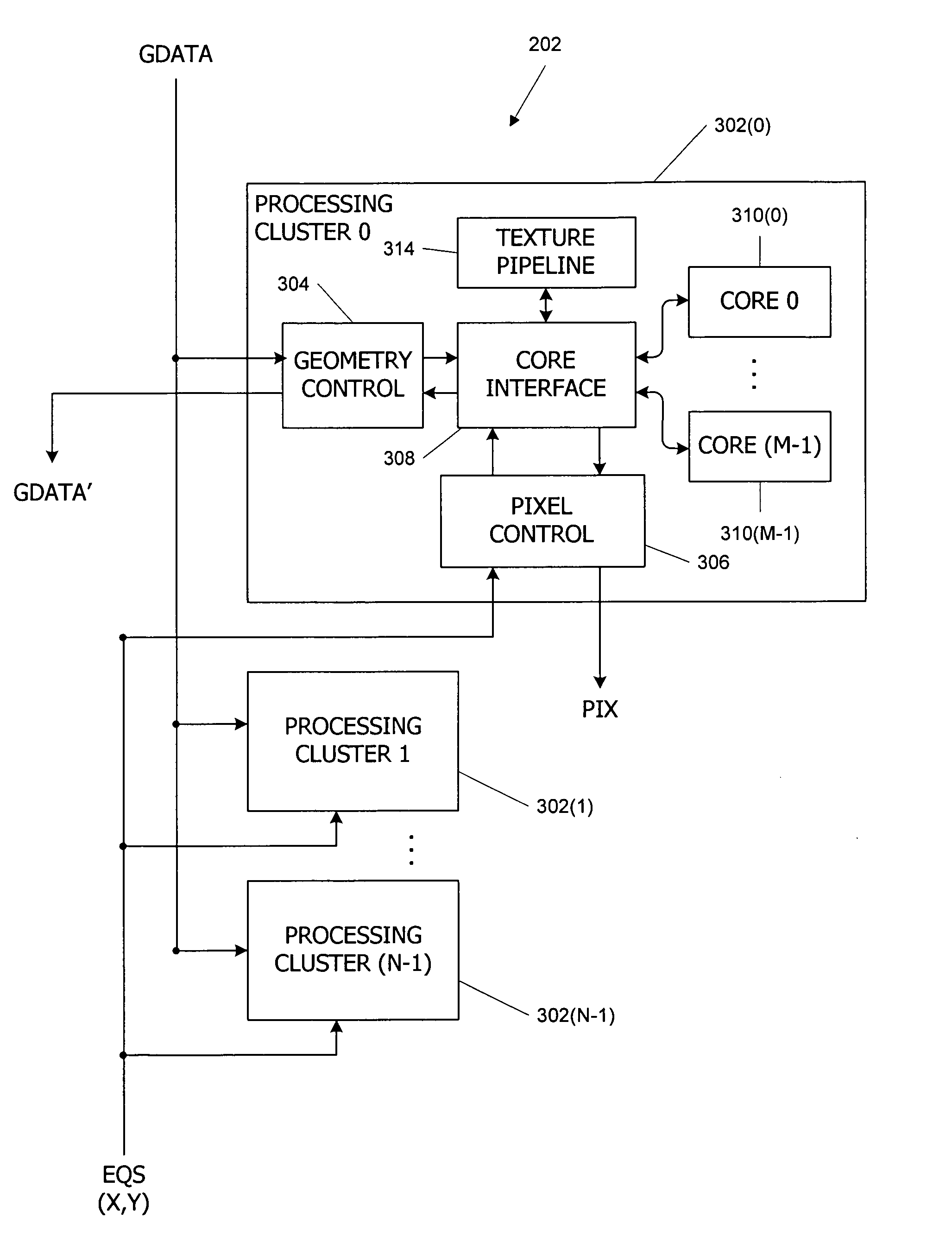

Parallel Array Architecture for a Graphics Processor

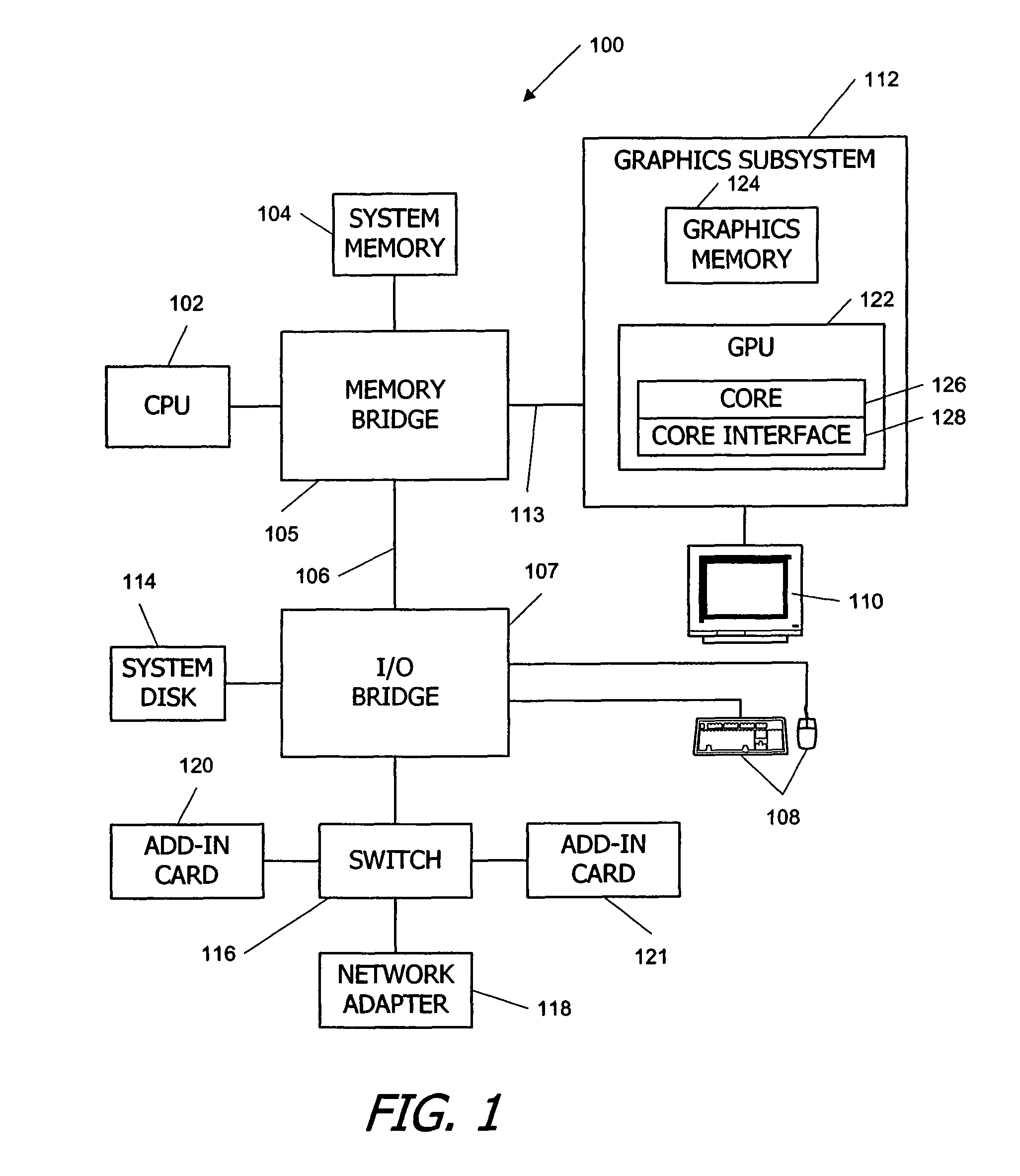

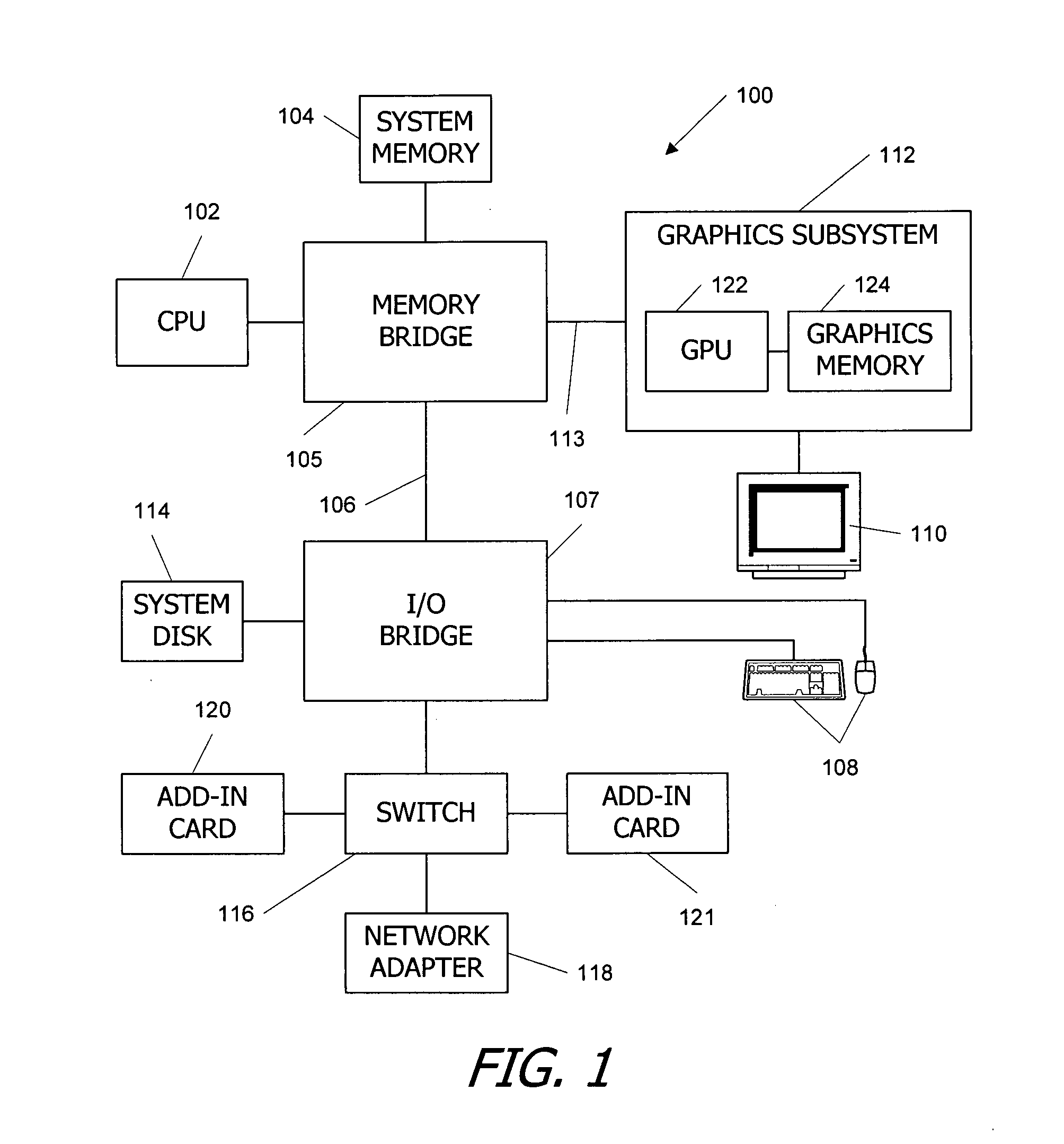

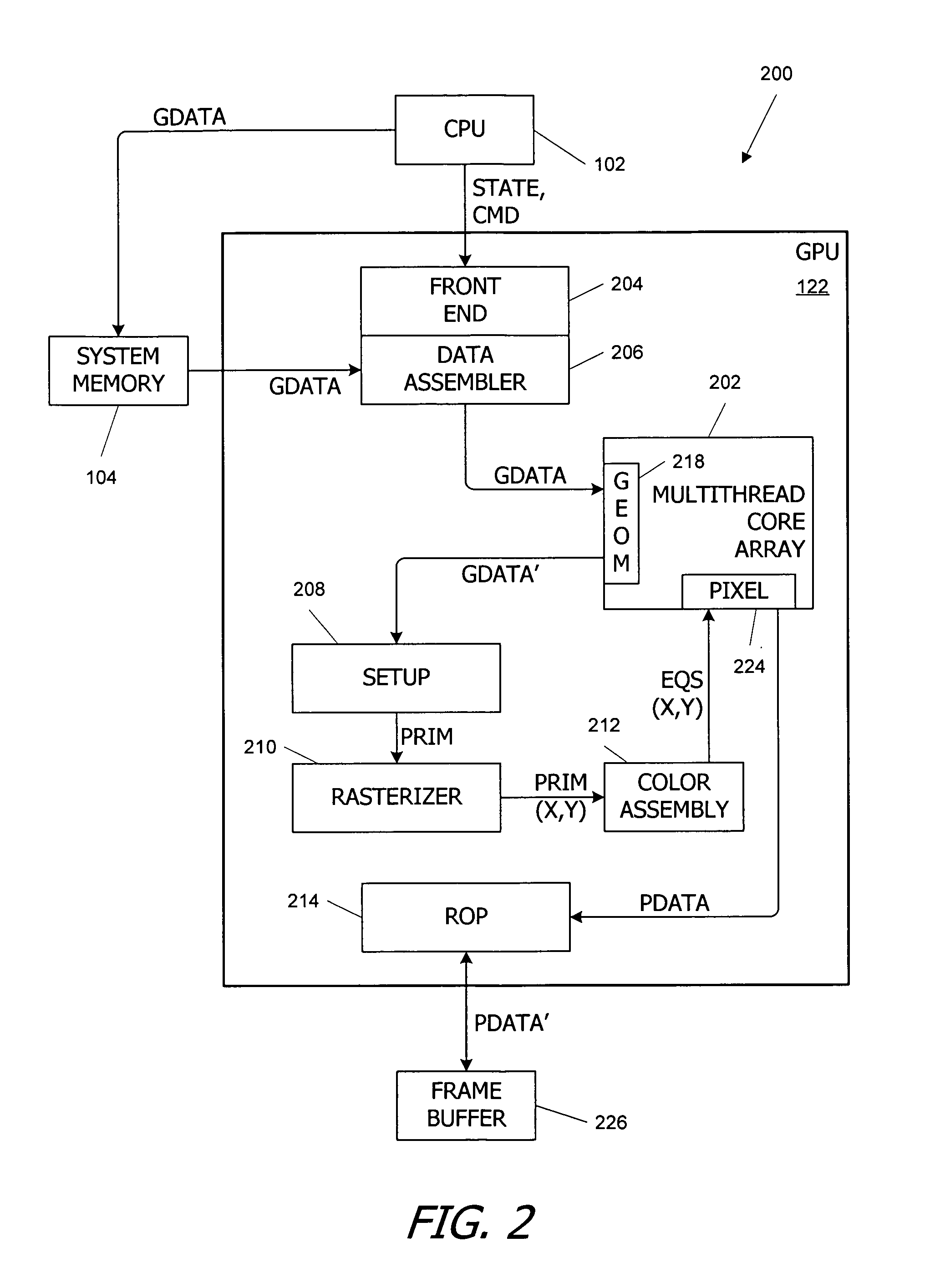

Inactive | US20070159488A1 | Improving memory locality | Improve locality | Single instruction multiple data multiprocessors | Cathode-ray tube indicators | Processing core | Parallel computing

A parallel array architecture for a graphics processor includes a multithreaded core array including a plurality of processing clusters, each processing cluster including at least one processing core operable to execute a pixel shader program that generates pixel data from coverage data; a rasterizer configured to generate coverage data for each of a plurality of pixels; and pixel distribution logic configured to deliver the coverage data from the rasterizer to one of the processing clusters in the multithreaded core array. The pixel distribution logic selects one of the processing clusters to which the coverage data for a first pixel is delivered based at least in part on a location of the first pixel within an image area. The processing clusters can be mapped directly to the frame buffer partitions without a crossbar so that pixel data is delivered directly from the processing cluster to the appropriate frame buffer partitions. Alternatively, a crossbar coupled to each of the processing clusters is configured to deliver pixel data from the processing clusters to a frame buffer having a plurality of partitions. The crossbar is configured such that pixel data generated by any one of the processing clusters is deliverable to any one of the frame buffer partitions.

Owner:NVIDIA CORP

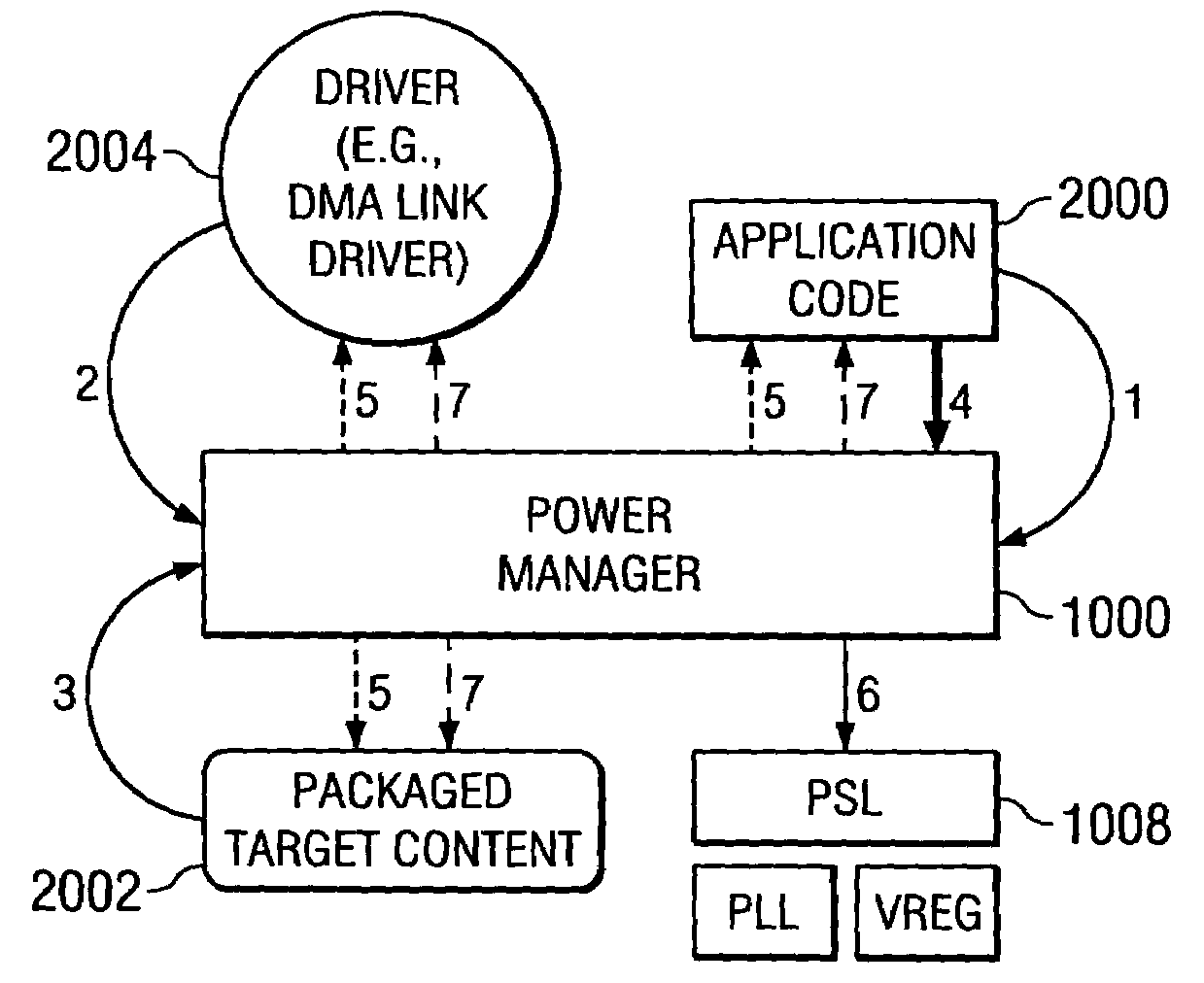

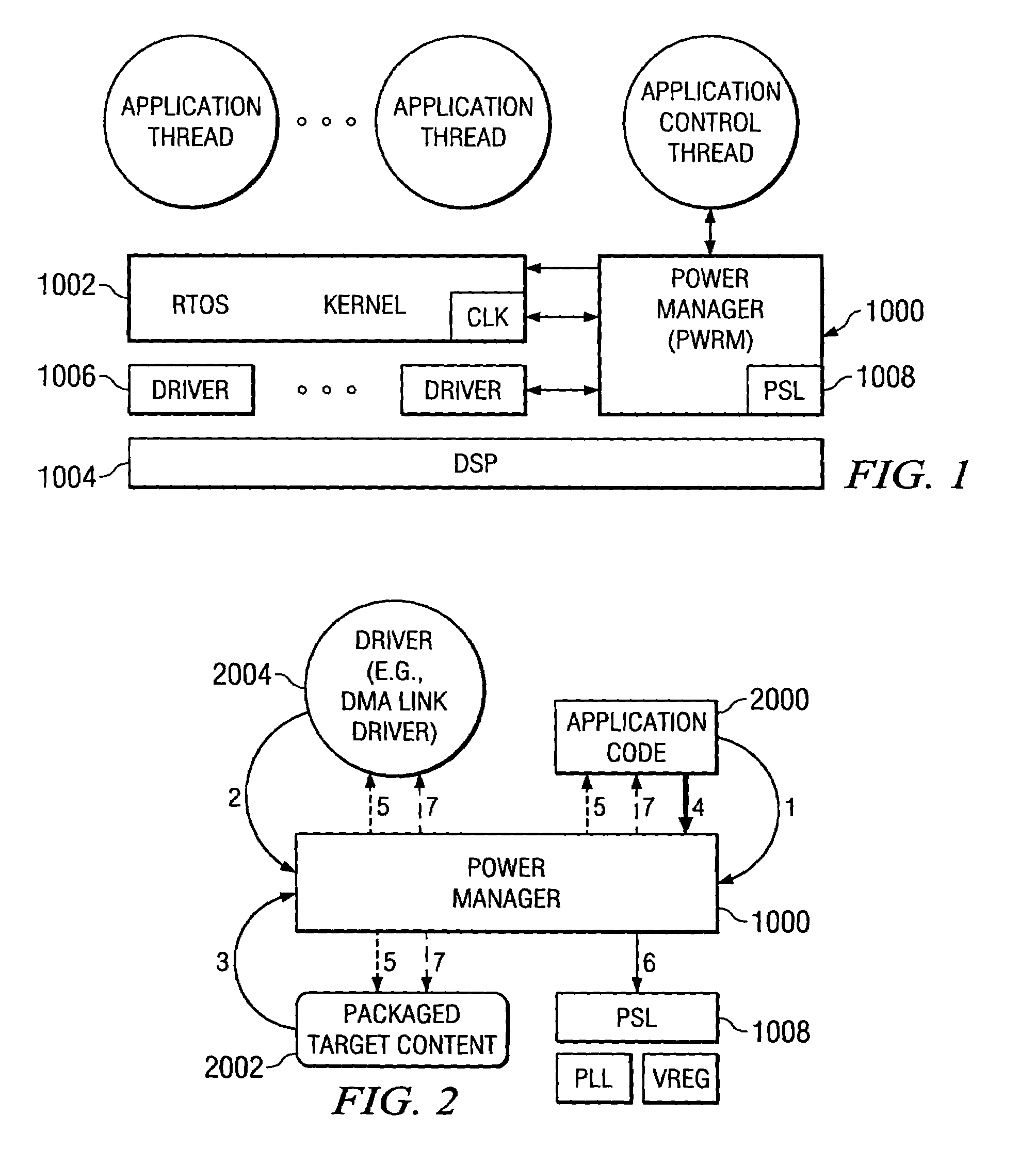

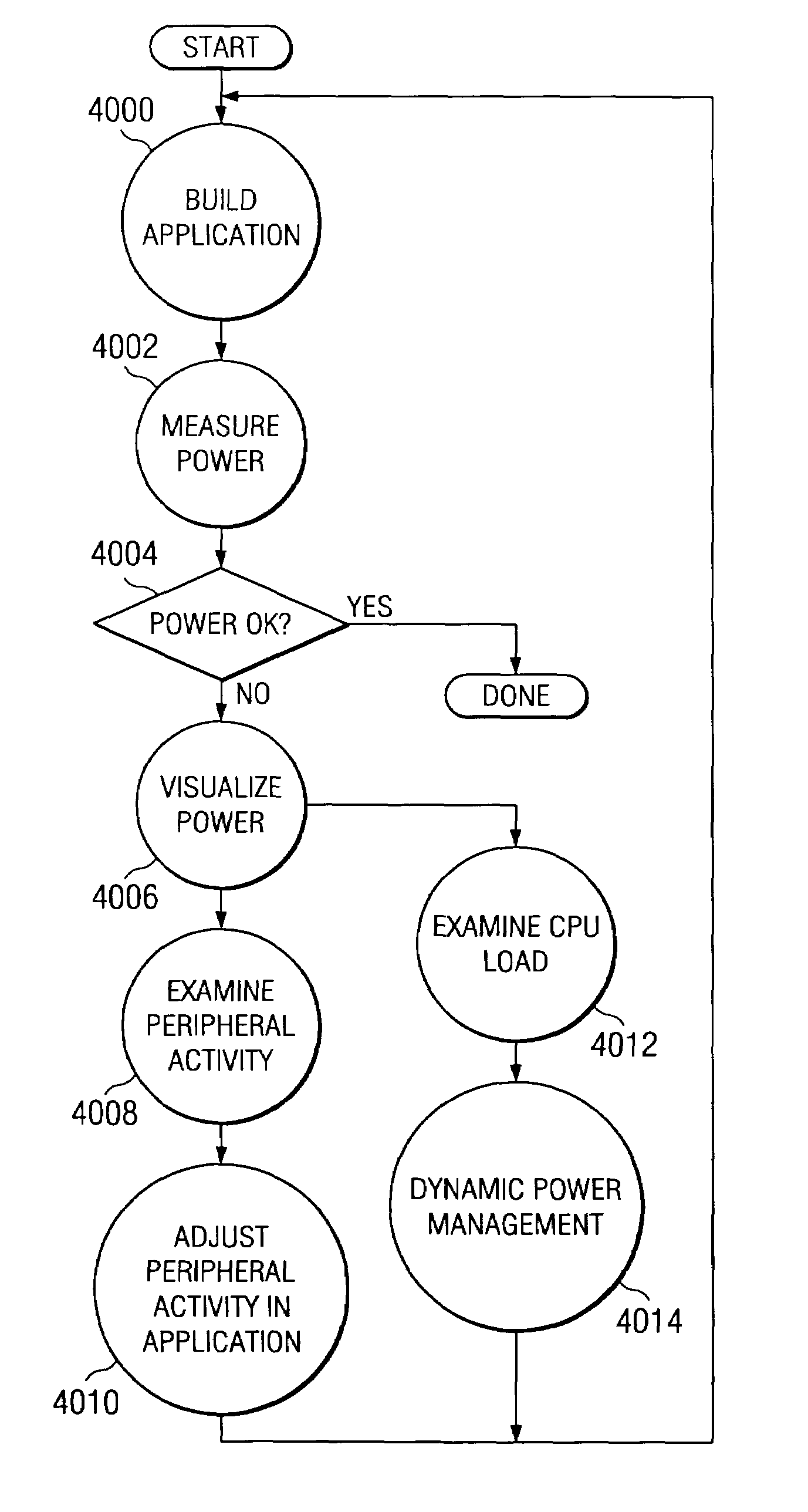

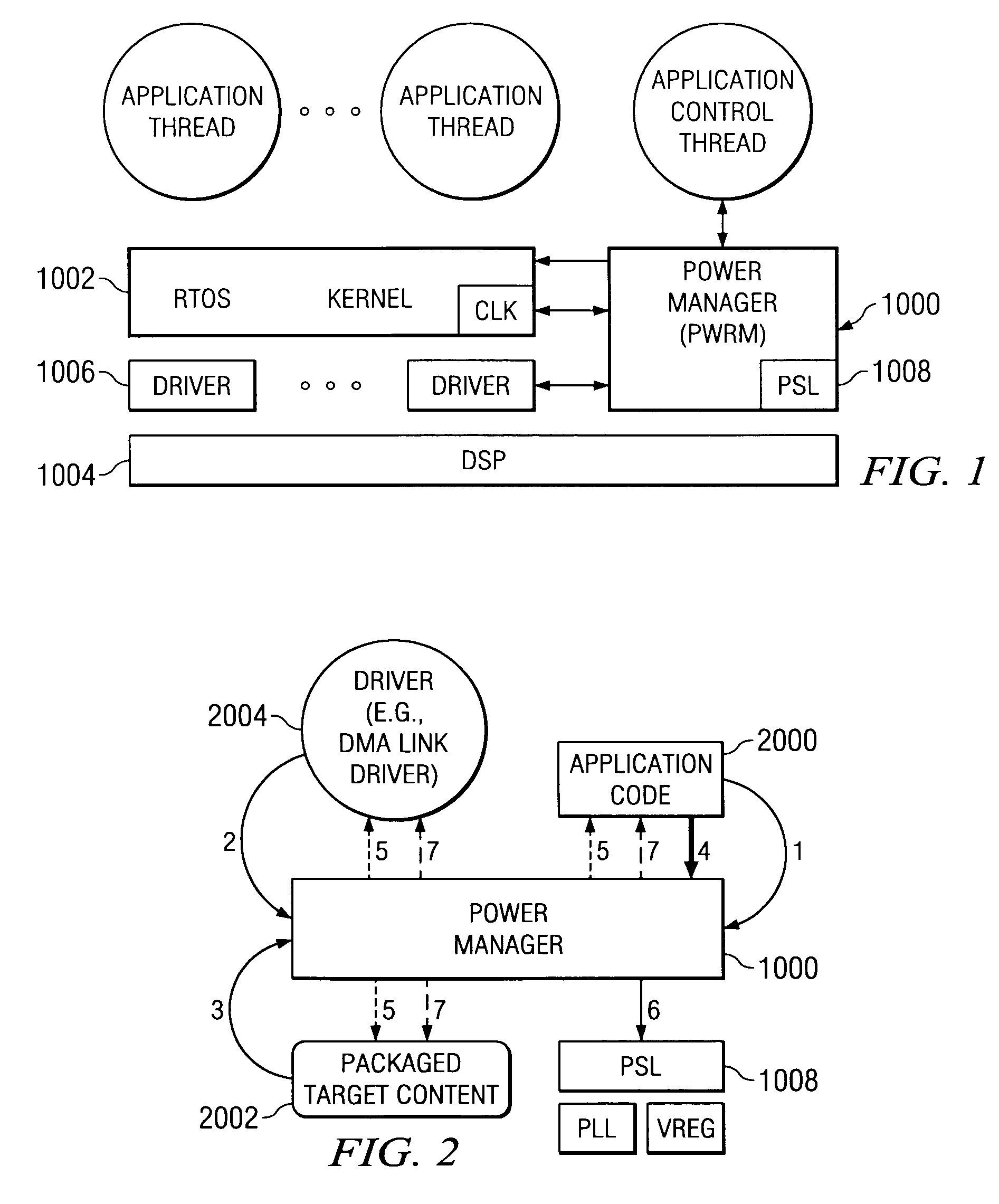

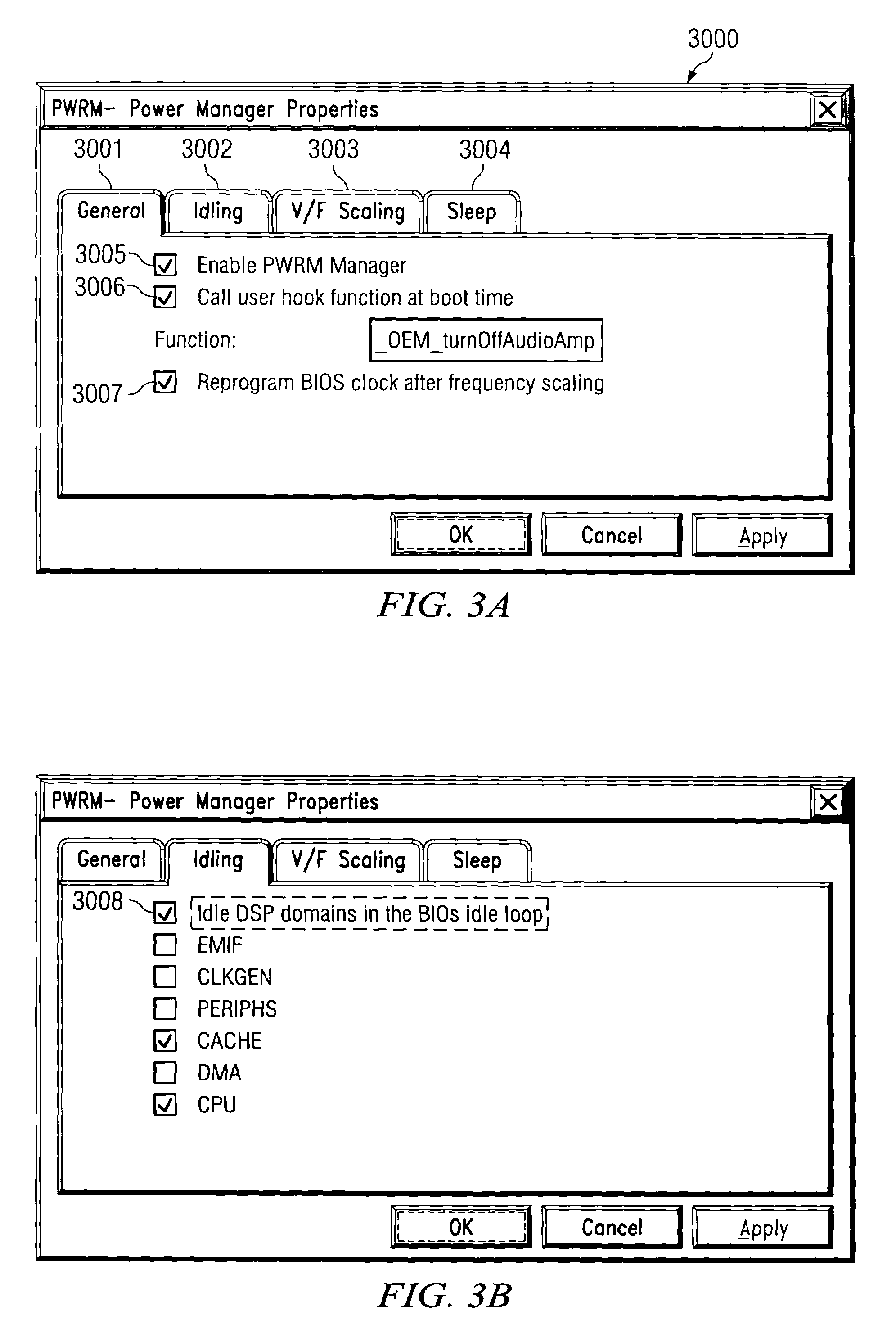

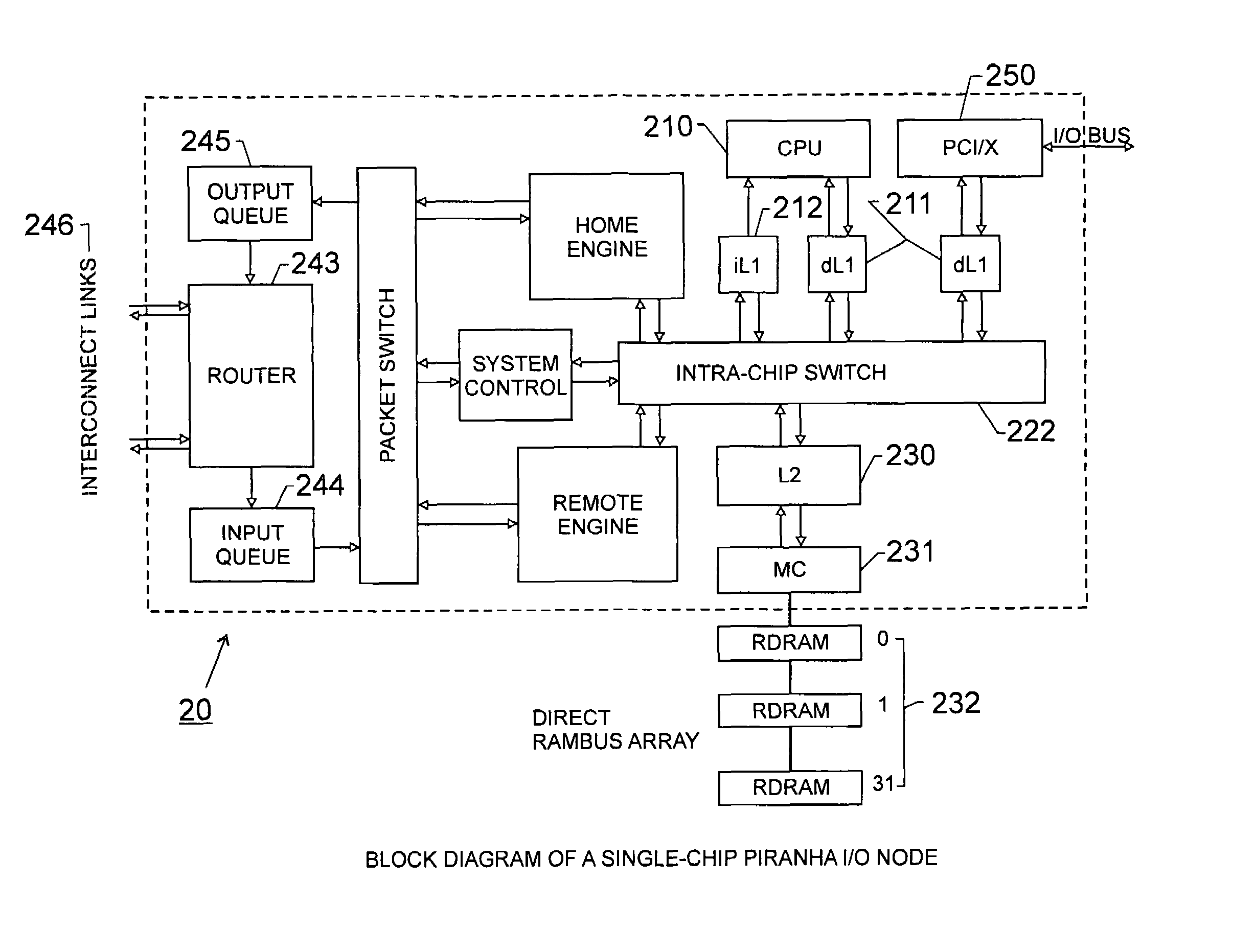

Methodology for managing power consumption in an application

Methods and systems are provided for dynamically managing power consumption in a digital system. These methods and systems broadly provide for permitting clients executing on a digital system to register for notification of power events and to request that power events occur. Registered clients are notified when a power event is requested and the requested power event is caused to occur. Power events are selected from a group comprising setpoint change, enter deep sleep mode, enter snooze mode, and change to power supply status. There may also be user-defined custom power events. If the requested power event is a setpoint change, a check is made to verify that each of the registered clients can operate at the requested setpoint. The digital system may be comprised of a processor with a single processing core with a single clock or a processor with multiple processing cores and multiple clocks.

Owner:TEXAS INSTR INC

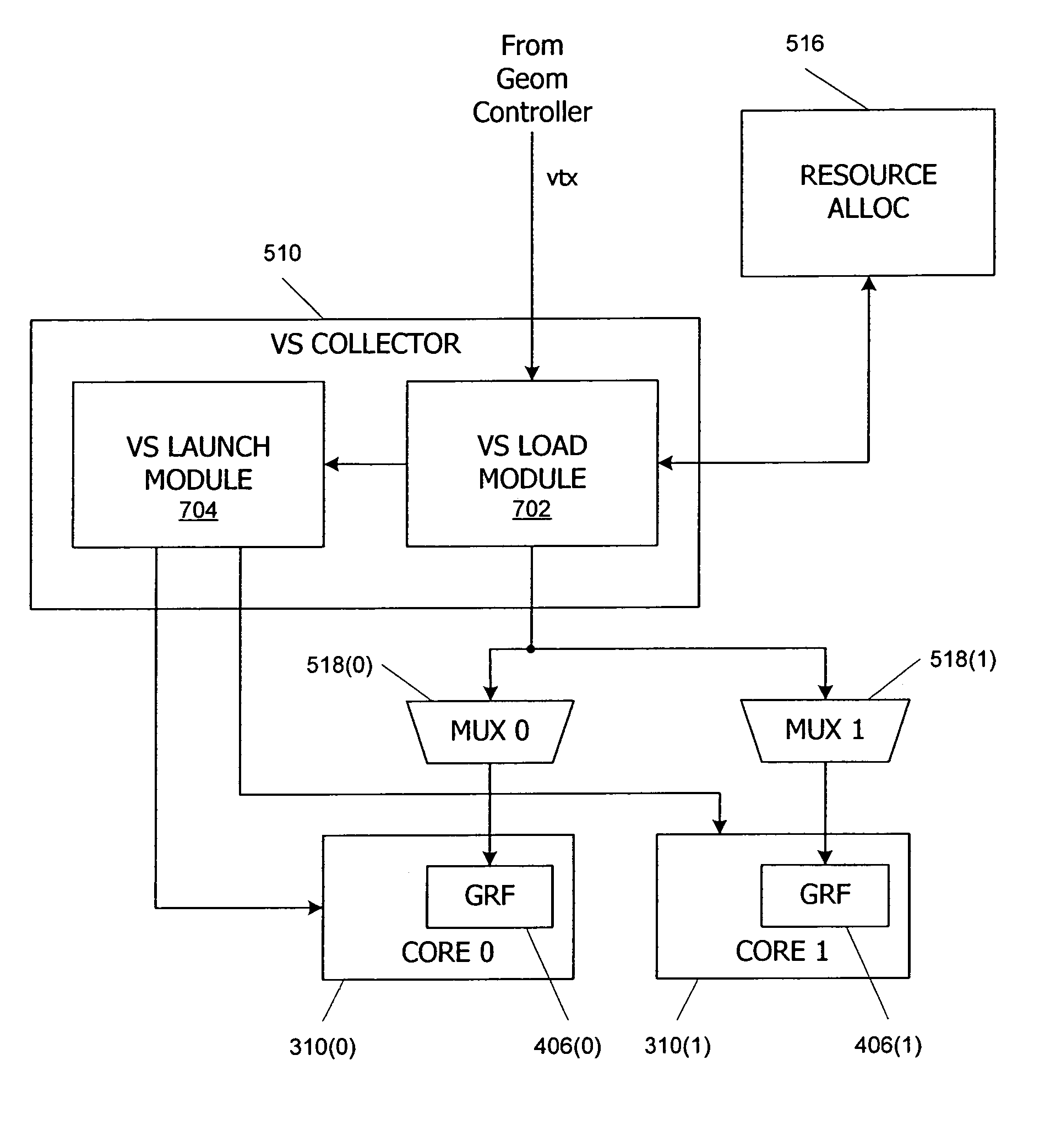

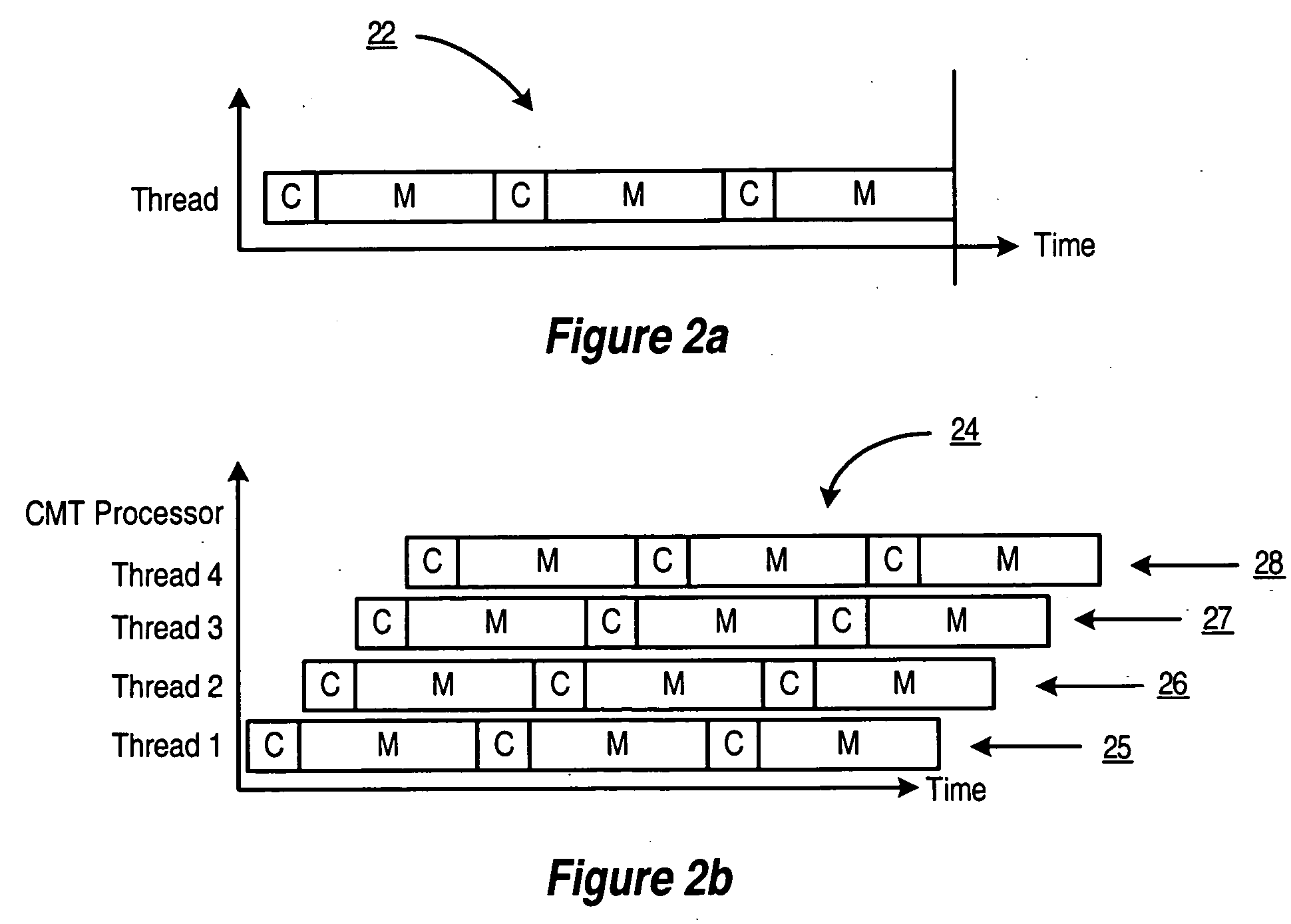

Multithreaded SIMD parallel processor with loading of groups of threads

Active | US7447873B1 | Management load | Register arrangements | General purpose stored program computer | Processing core | Processor register

In a multithreaded processing core, groups of threads are executed using single instruction, multiple data (SIMD) parallelism by a set of parallel processing engines. Input data defining objects to be processed is received as a stream of input data blocks, and the input data blocks are loaded into a local register file in the core such that all of the data for one of the input objects is accessible to one of the processing engines. The input data can be loaded directly into the local register file, or the data can be accumulated in a buffer and loaded after accumulation, for instance during a launch operation for a SIMD group. Shared input data can also be loaded into a shared memory in the processing core.

Owner:NVIDIA CORP

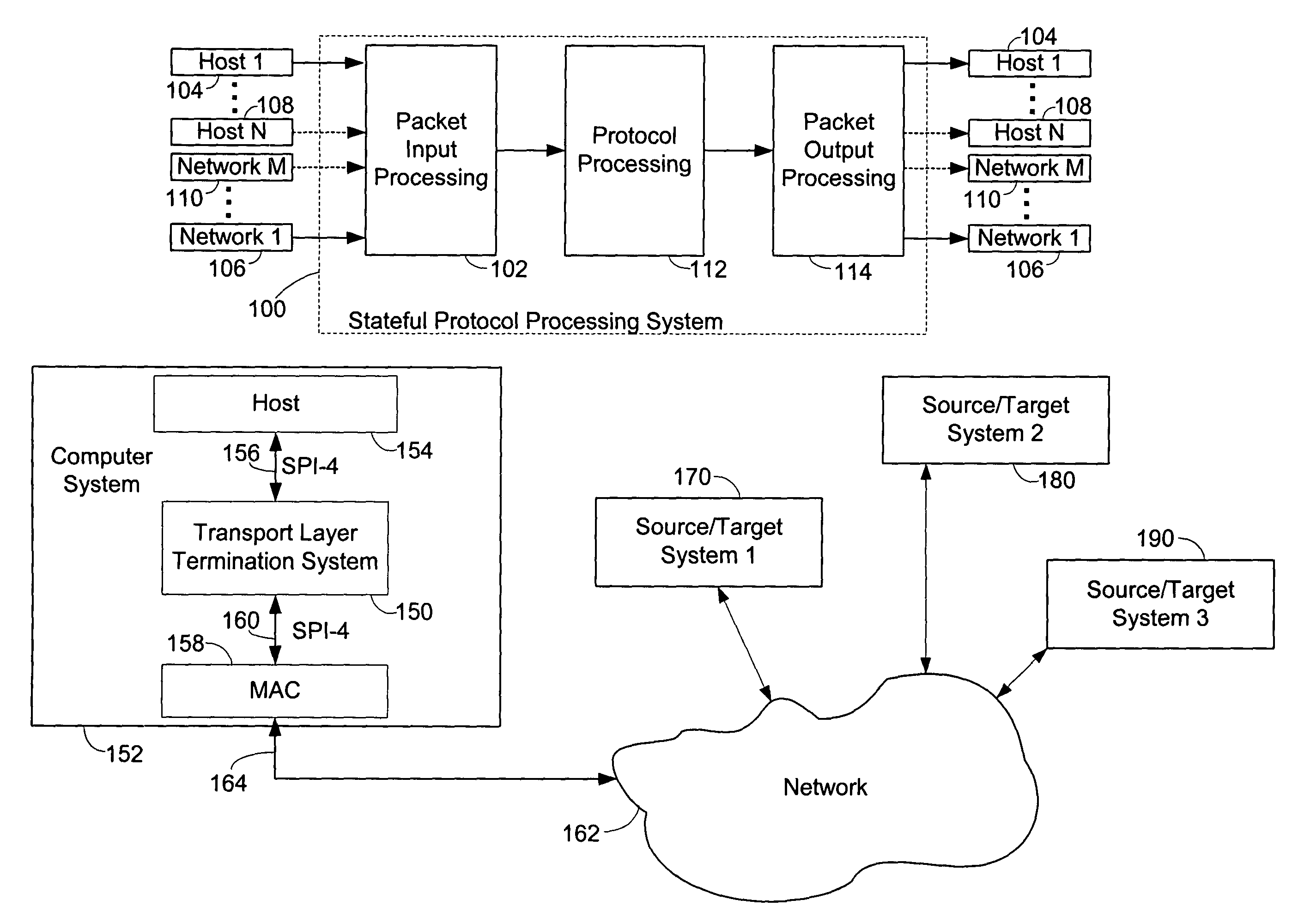

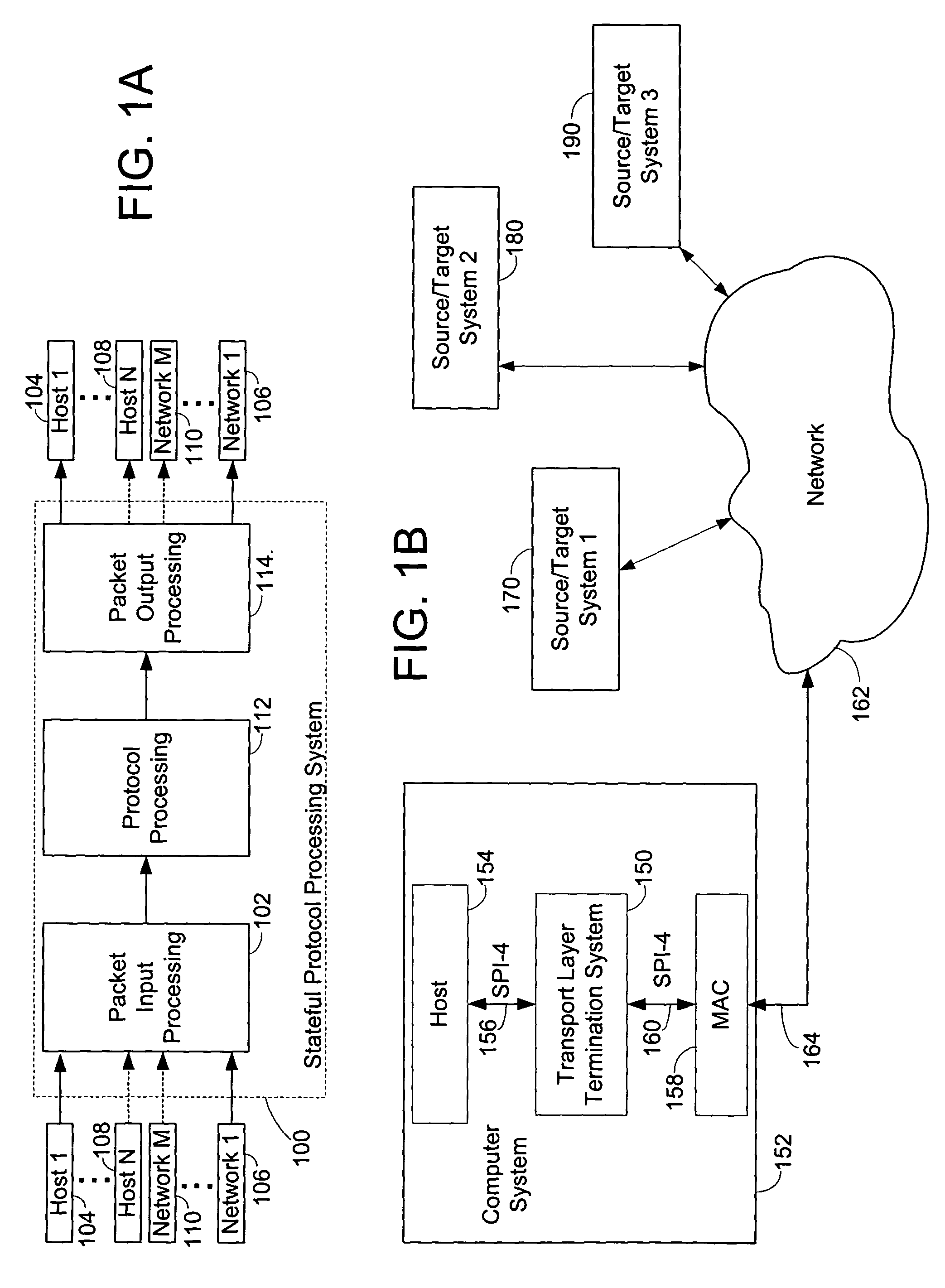

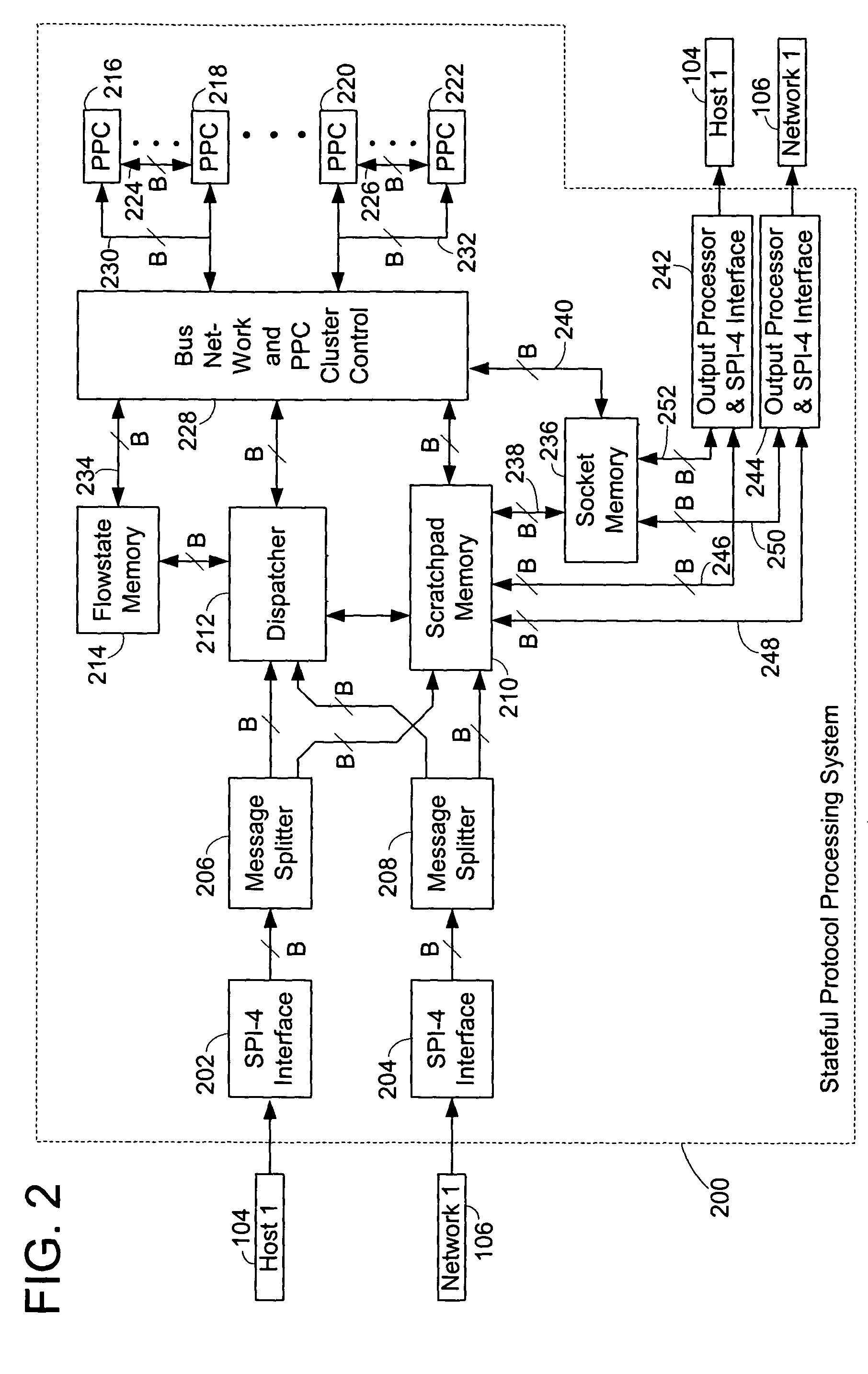

Multi-protocol and multi-format stateful processing

Inactive | US7814218B1 | Multiple digital computer combinations | Transmission | Protocol processing | Processing core

A system and method of processing data in a stateful protocol processing system (“SPPS”) configured to process a multiplicity of flows of messages is disclosed herein. The method includes receiving a first plurality of messages belonging to a first of the flows comporting with a first stateful protocol. In addition, a second plurality of messages belonging to a second of the flows comporting with a second stateful protocol are also received. Various events of at least first and second types associated with the first flow are then derived from the first plurality of received messages. The method further includes assigning a first protocol processing core to process the events of the first type in accordance with the first stateful protocol. A second protocol processing core is also assigned to process the events of the second type in accordance with the first stateful protocol.

Owner:IKANOS COMMUNICATIONS

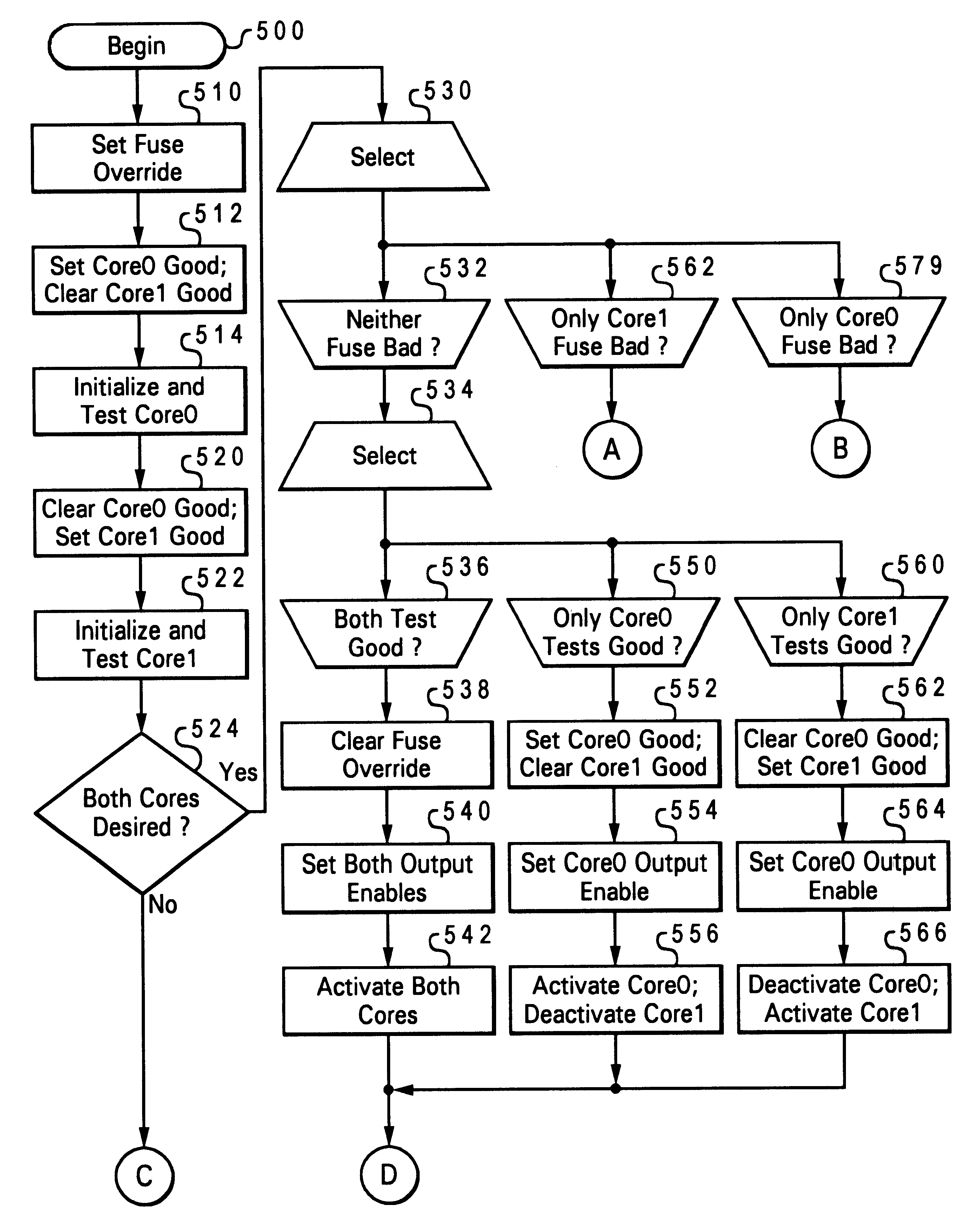

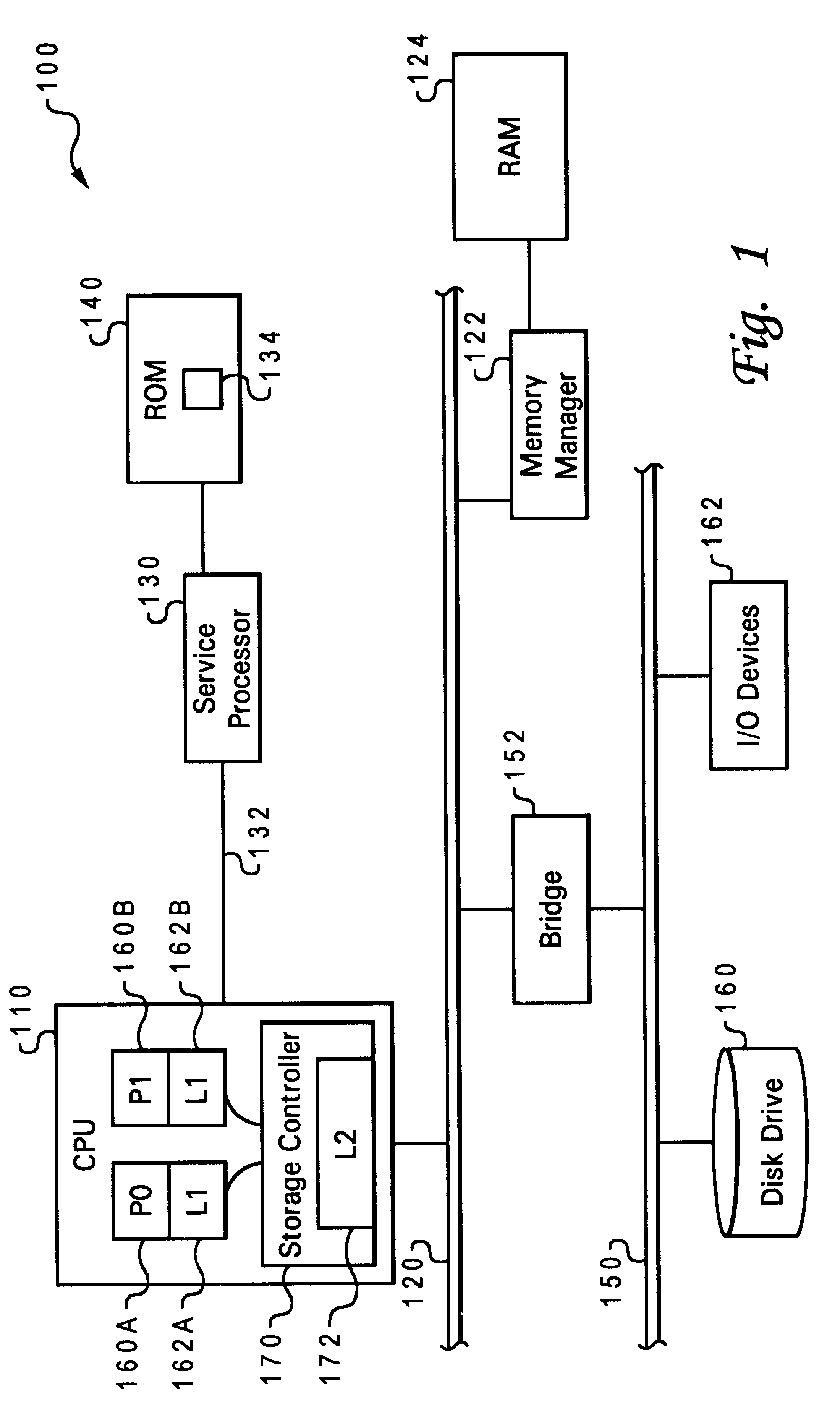

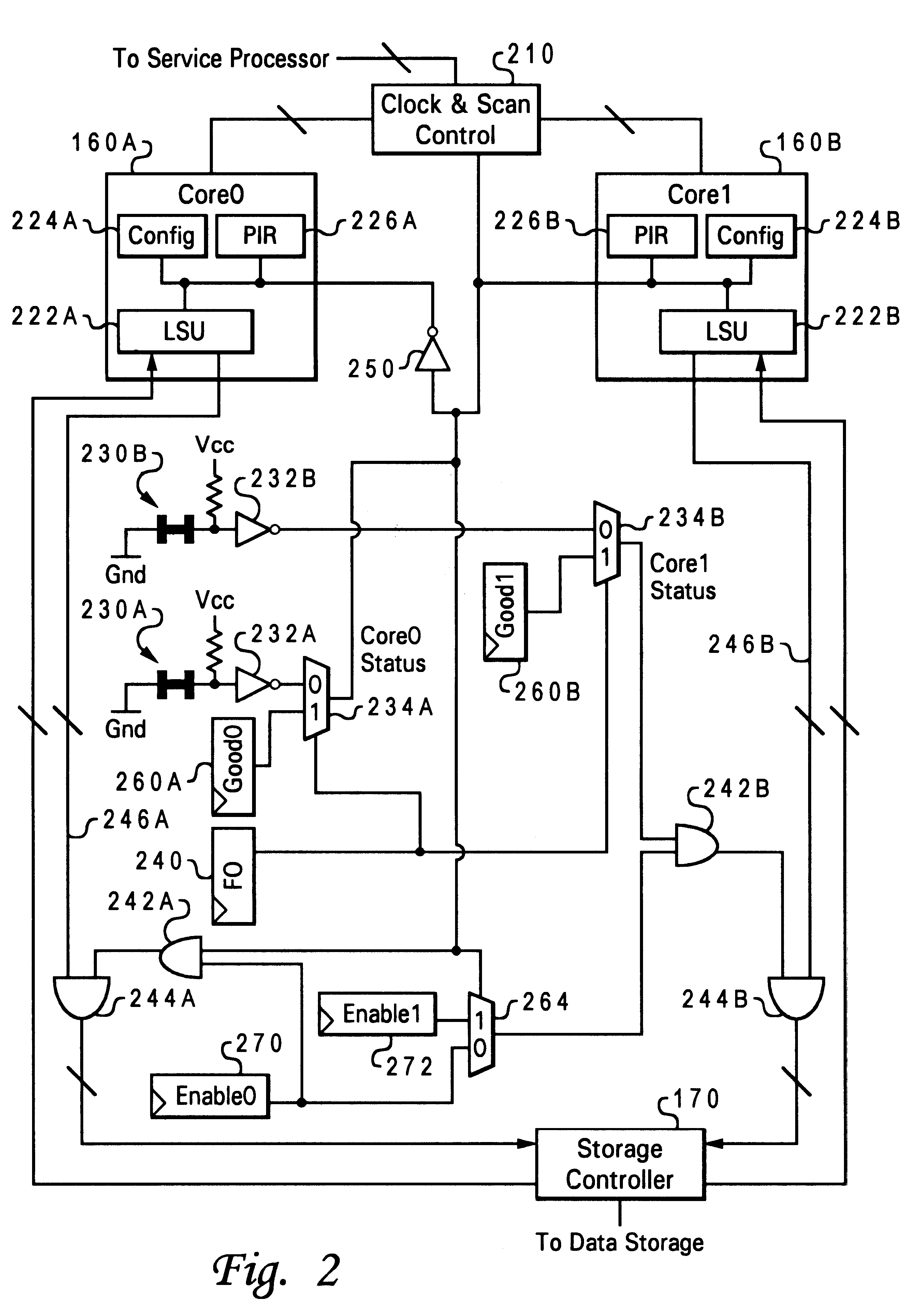

Method and system for dynamically configuring a central processing unit with multiple processing cores

Inactive | US6550020B1 | Detecting faulty computer hardware | Architecture with single central processing unit | Data processing system | Processing core

A data processing system has at least one integrated circuit containing a central processing unit (CPU) that includes at least first and second processing cores. The integrated circuit also includes input facilities that receive control input specifying which of the processing cores is to be utilized. In addition, the integrated circuit includes configuration logic that decodes the control input and, in response, selectively controls reception of input signals and transmission of output signals of one or more of the processing cores in accordance with the control input. In an illustrative embodiment, the configuration logic is partial-good logic that configures the integrated circuit to utilize the second processing core, in lieu of a defective or inactive first processing core, as a virtual first processing core.

Owner:IBM CORP

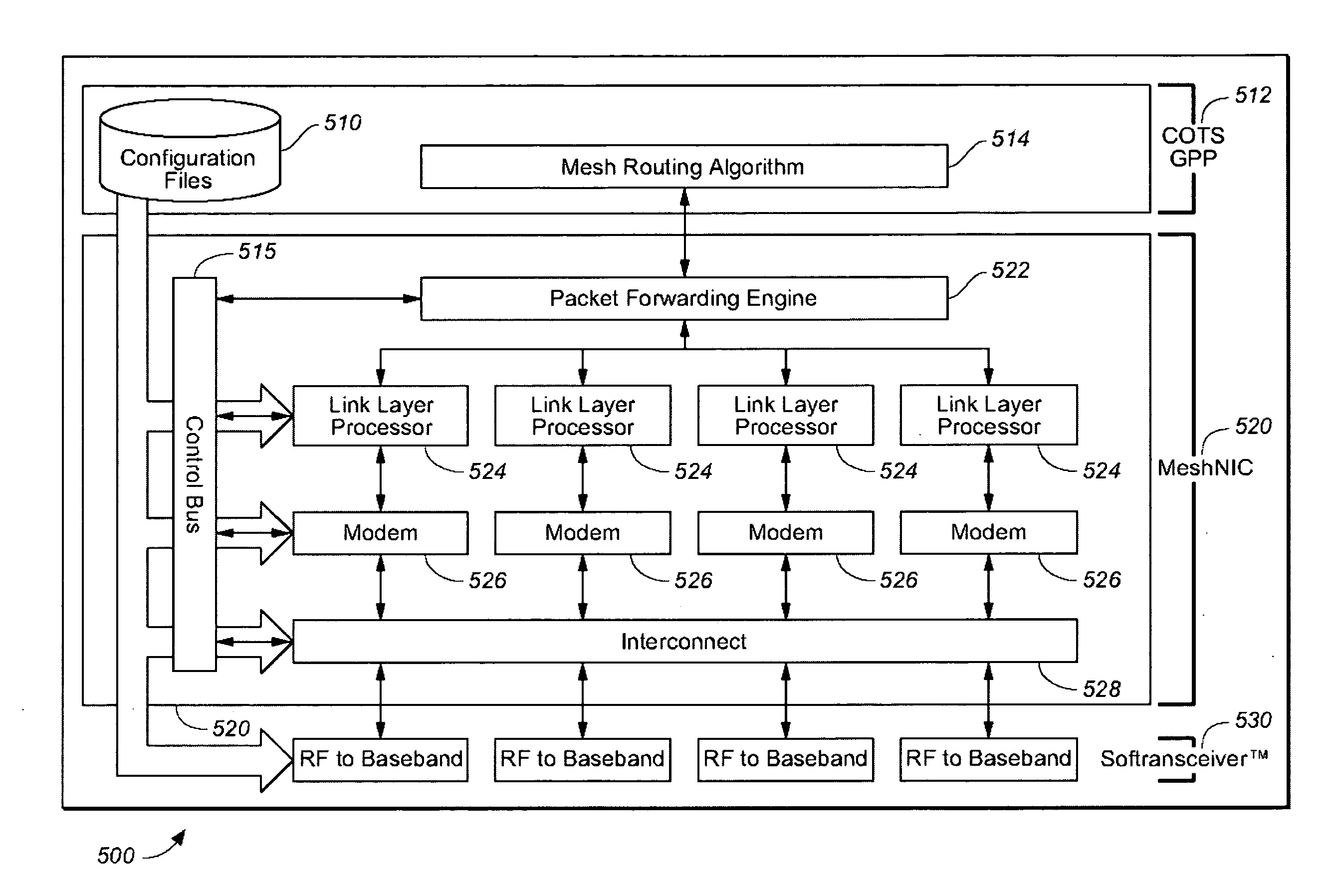

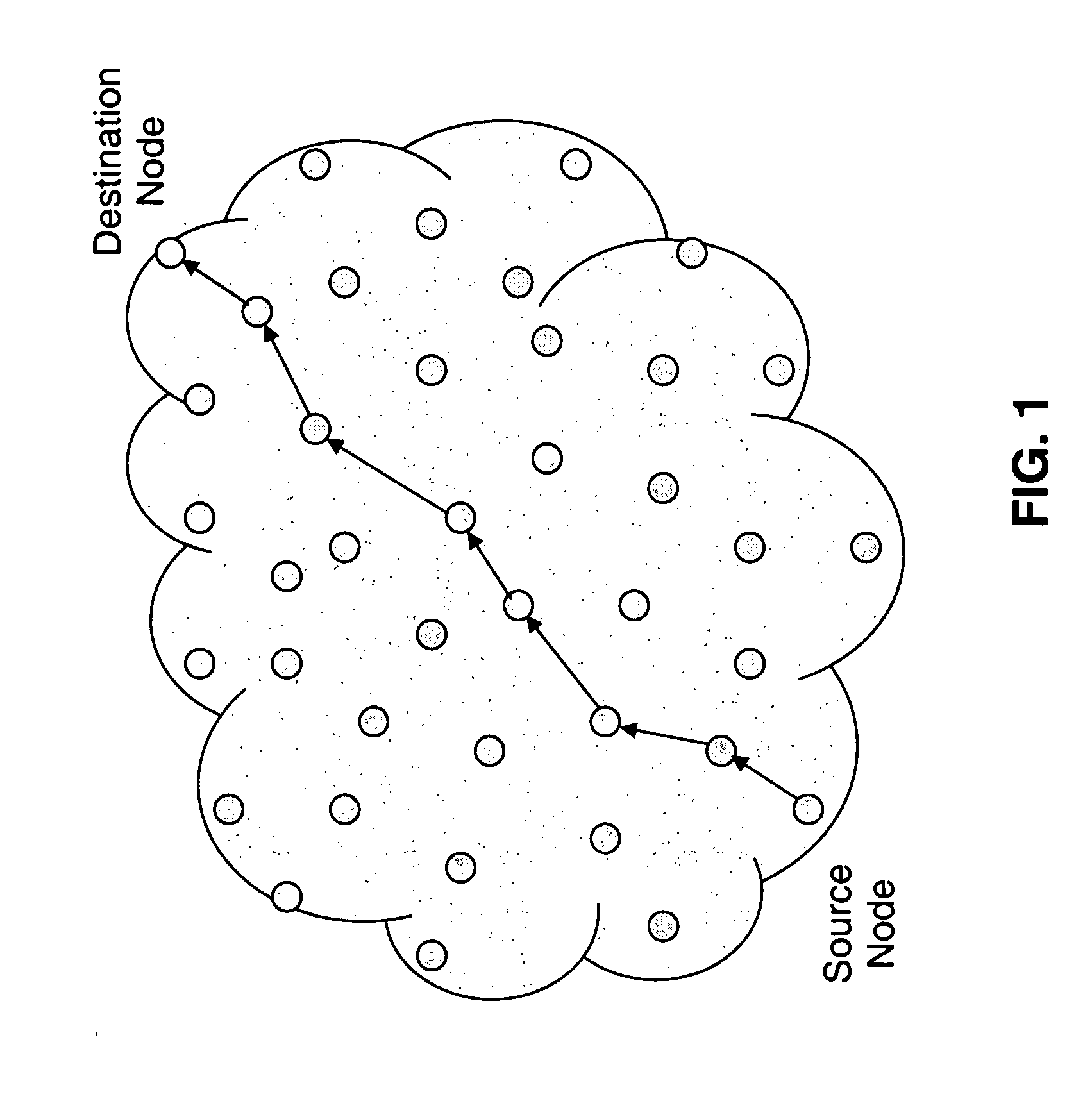

Mobile nodal based communication system, method and apparatus

Active | US20090225751A1 | Consumes power | Sufficiently fast for effective routing | Error prevention | Transmission systems | Communications system | Software define radio

An improved micro architectural approach for a network microprocessor has low power consumption, and employs two specialized processing cores, a MAC processing core and a network processor core. Each of these processing cores has facilities designed for a specific set of functions, to handle ISO layer 2 and layer 3 functionality in a packet switched Software Defined Radio mobile network.

Owner:ROCKWELL COLLINS INC

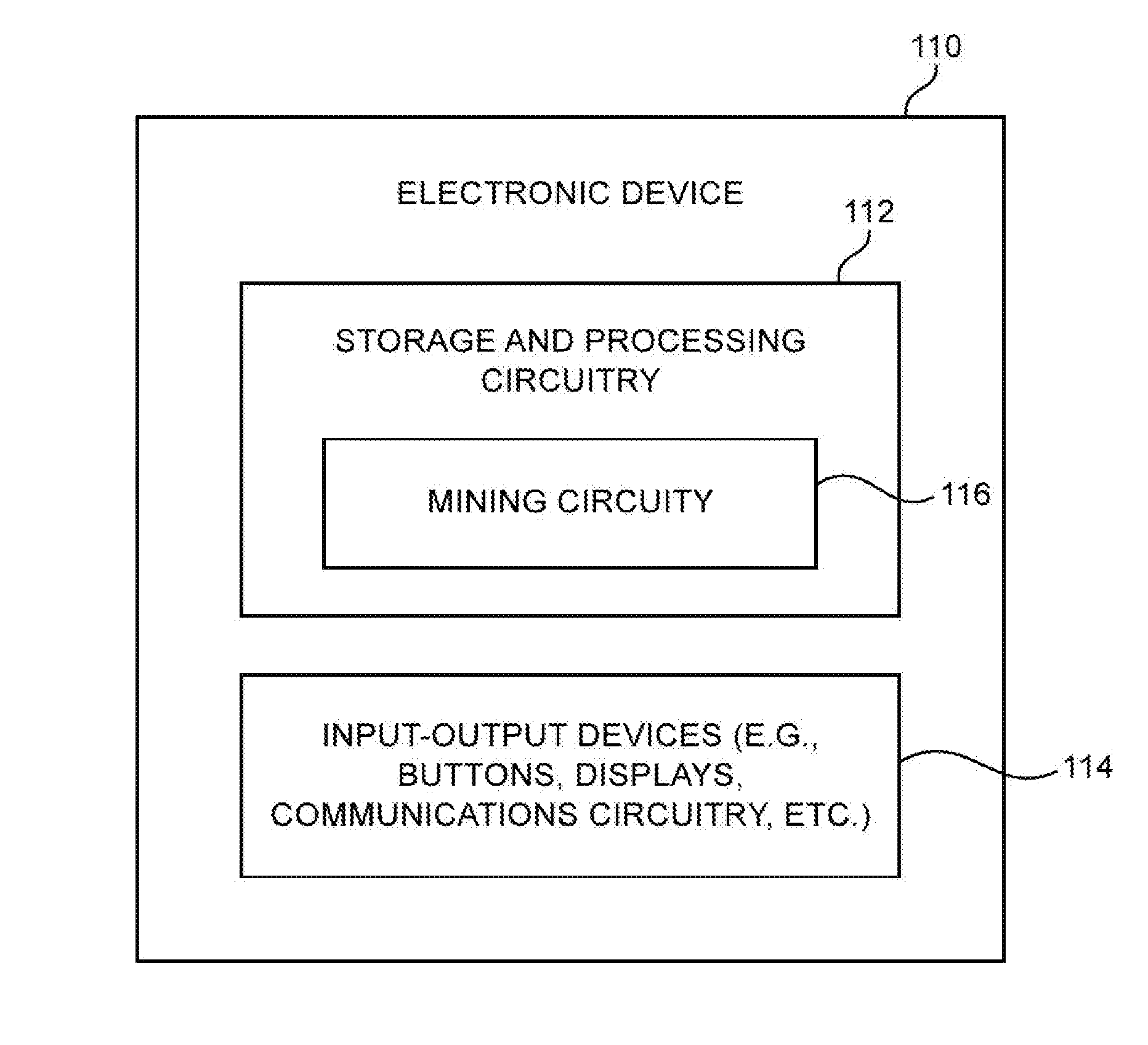

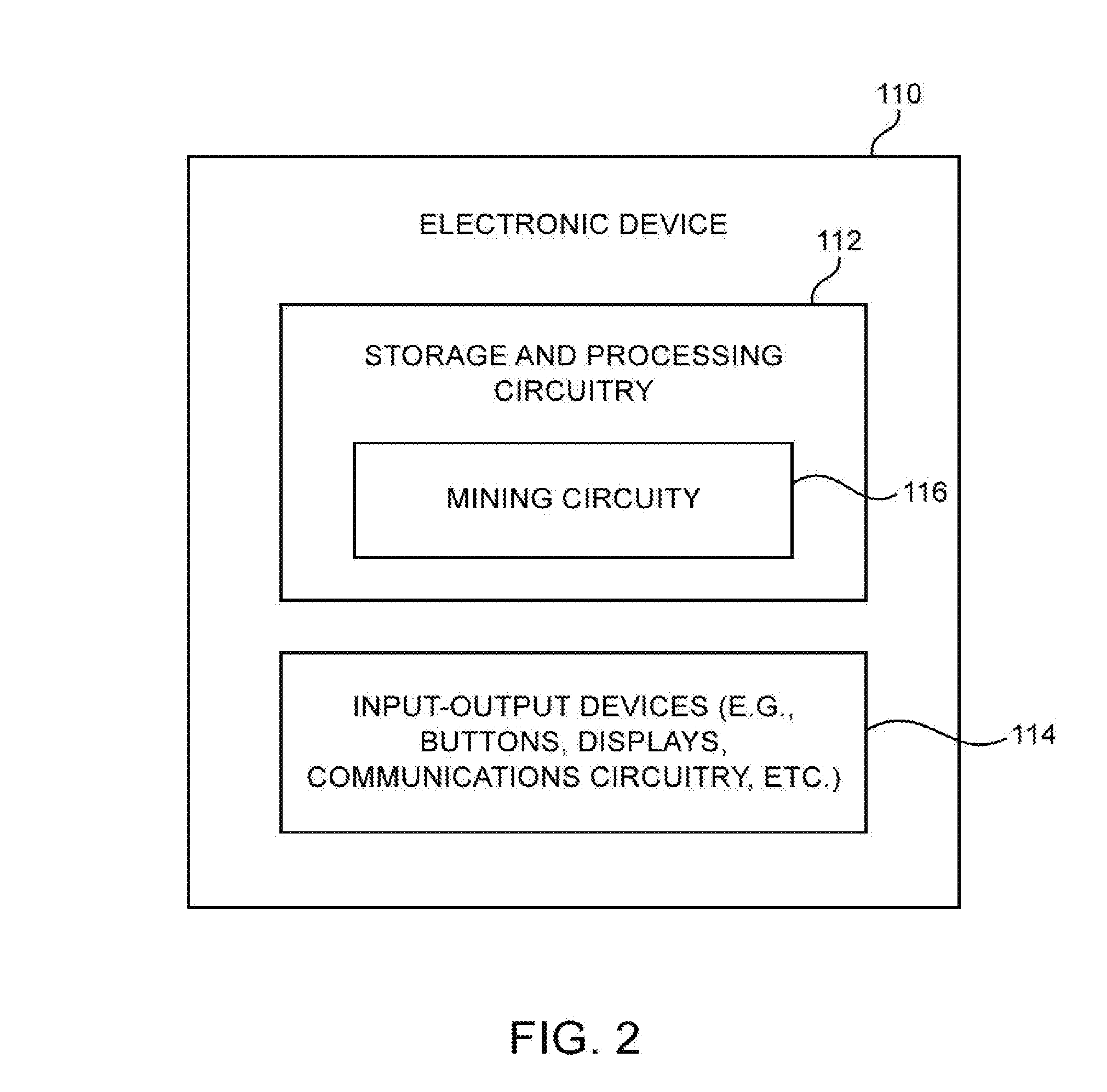

Digital currency mining circuitry having shared processing logic

Active | US20160125040A1 | Increase consumption | Increase power | Finance | Web data indexing | Digital currency | Processing core

An integrated circuit may be provided with cryptocurrency mining capabilities. The integrated circuit may include control circuitry and a number of processing cores that complete a Secure Hash Algorithm 256 (SHA-256) function in parallel. Logic circuitry may be shared between multiple processing cores. Each processing core may perform sequential rounds of cryptographic hashing operations based on a hash input and message word inputs. The control circuitry may control the processing cores to complete the SHA-256 function over different search spaces. The shared logic circuitry may perform a subset of the sequential rounds for multiple processing cores. If desired, the shared logic circuitry may generate message word inputs for some of the sequential rounds across multiple processing cores. By sharing logic circuitry across cores, chip area consumption and power efficiency may be improved relative to scenarios where the cores are formed using only dedicated logic.

Owner:COINBASE

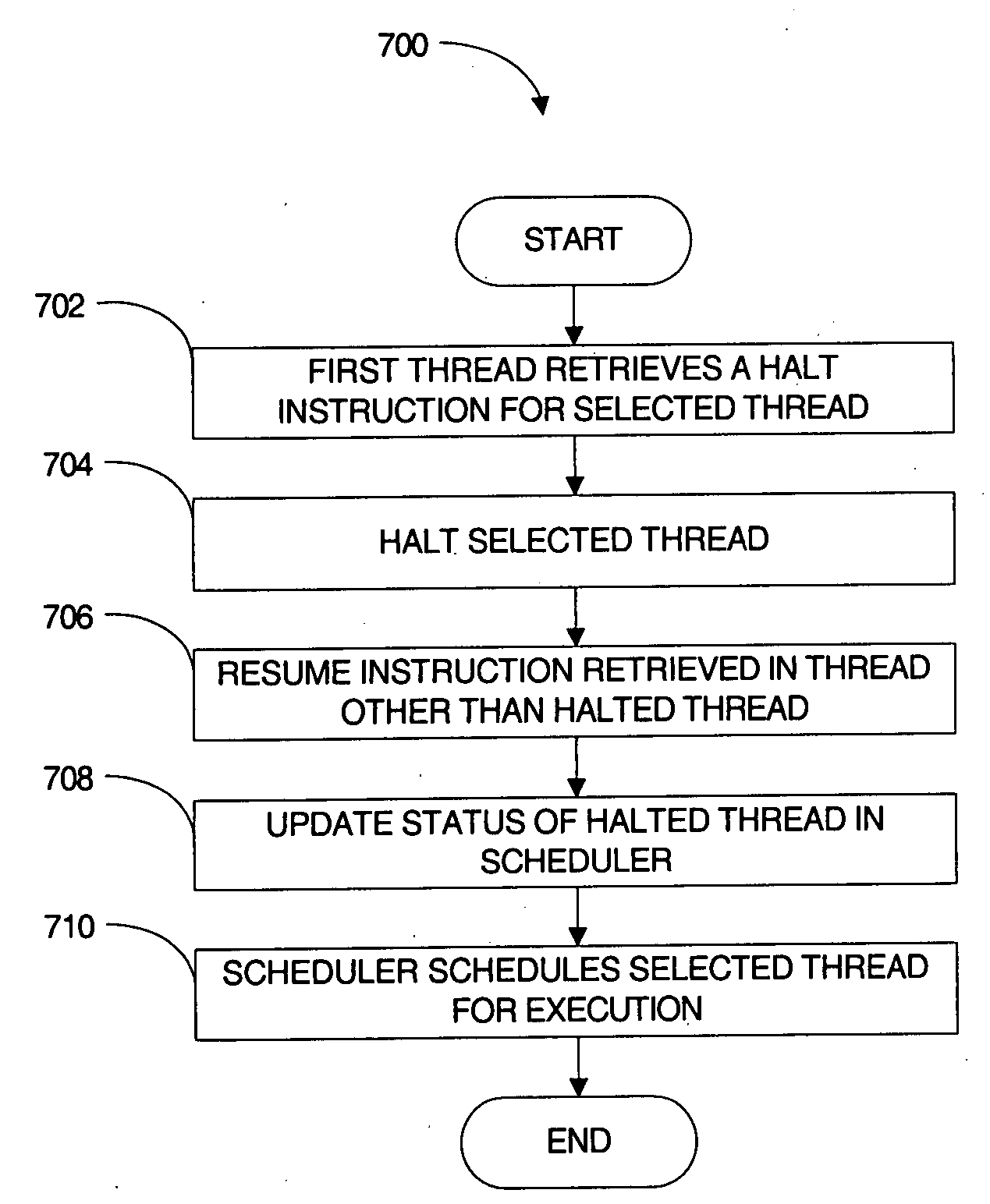

System and method for controlling thread suspension in a multithreaded processor

Inactive | US20060136919A1 | Energy efficient ICT | Multiprogramming arrangements | Processing core | Parallel computing

A multi-thread processor includes a processing core. The processing core includes multiple threads and a scheduler. The scheduler includes a thread state register, which is capable of storing a selective wait state for a selected one of the threads. A method of scheduling threads in a multi-thread processor is also disclosed.

Owner:SUN MICROSYSTEMS INC

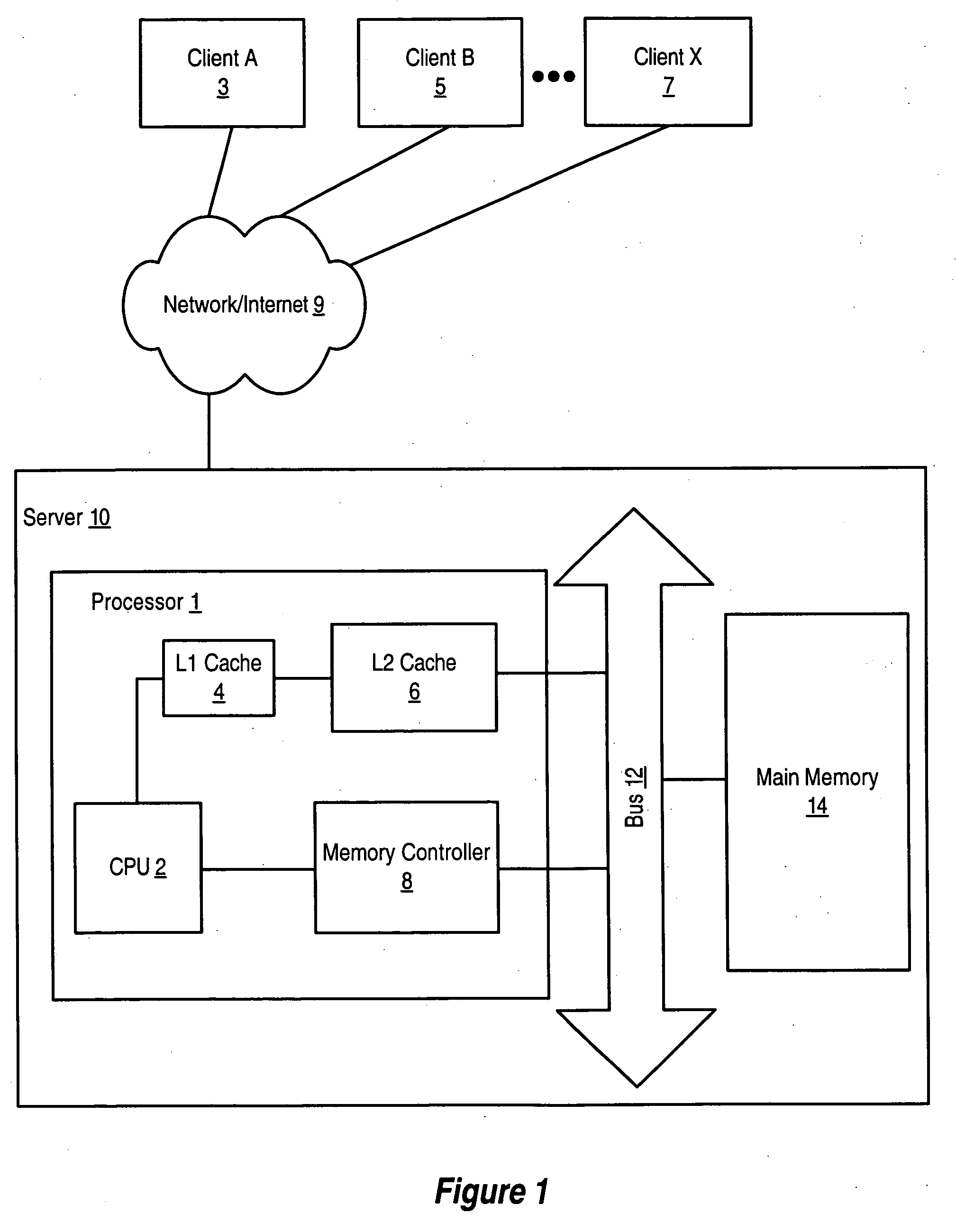

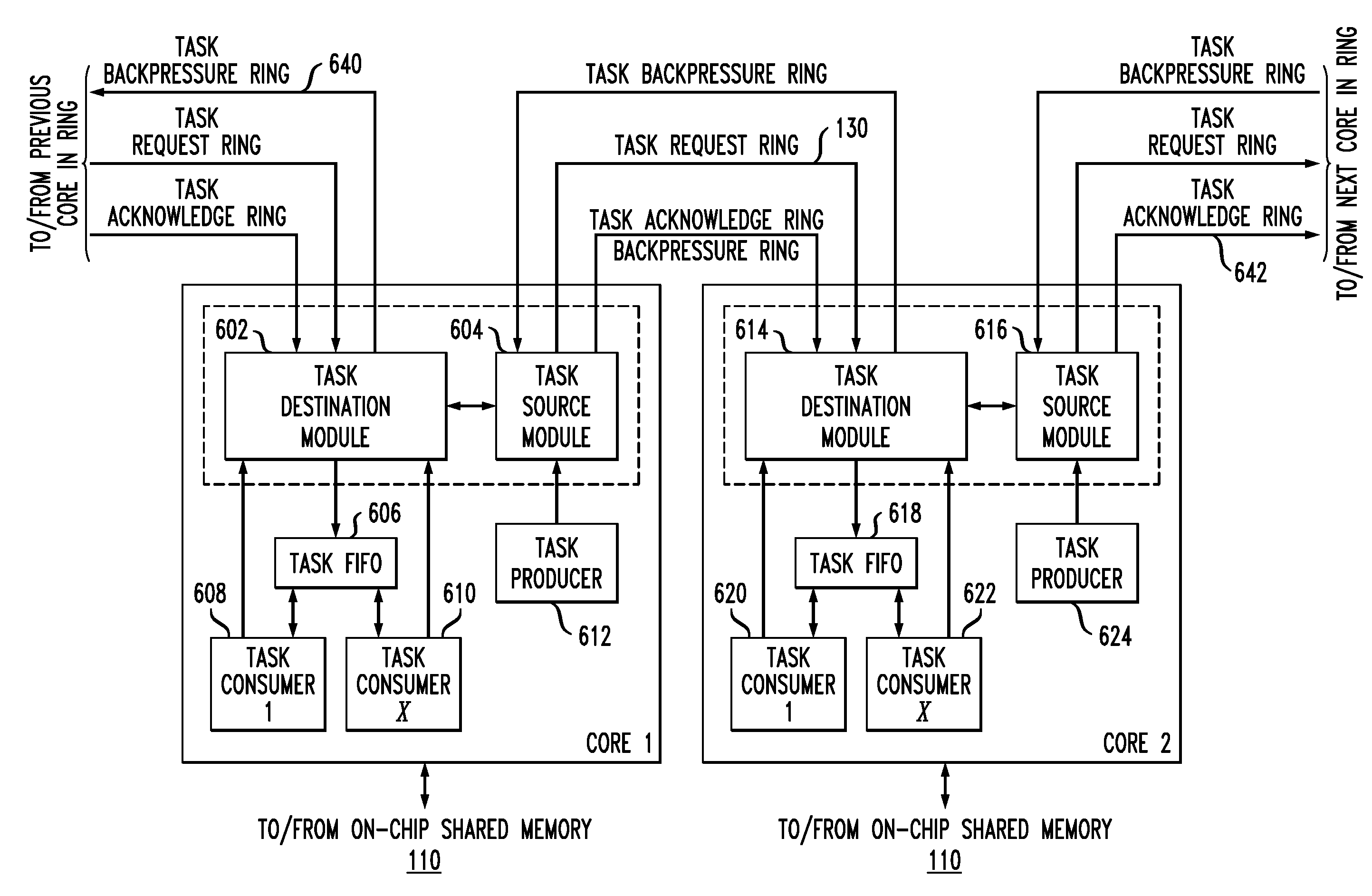

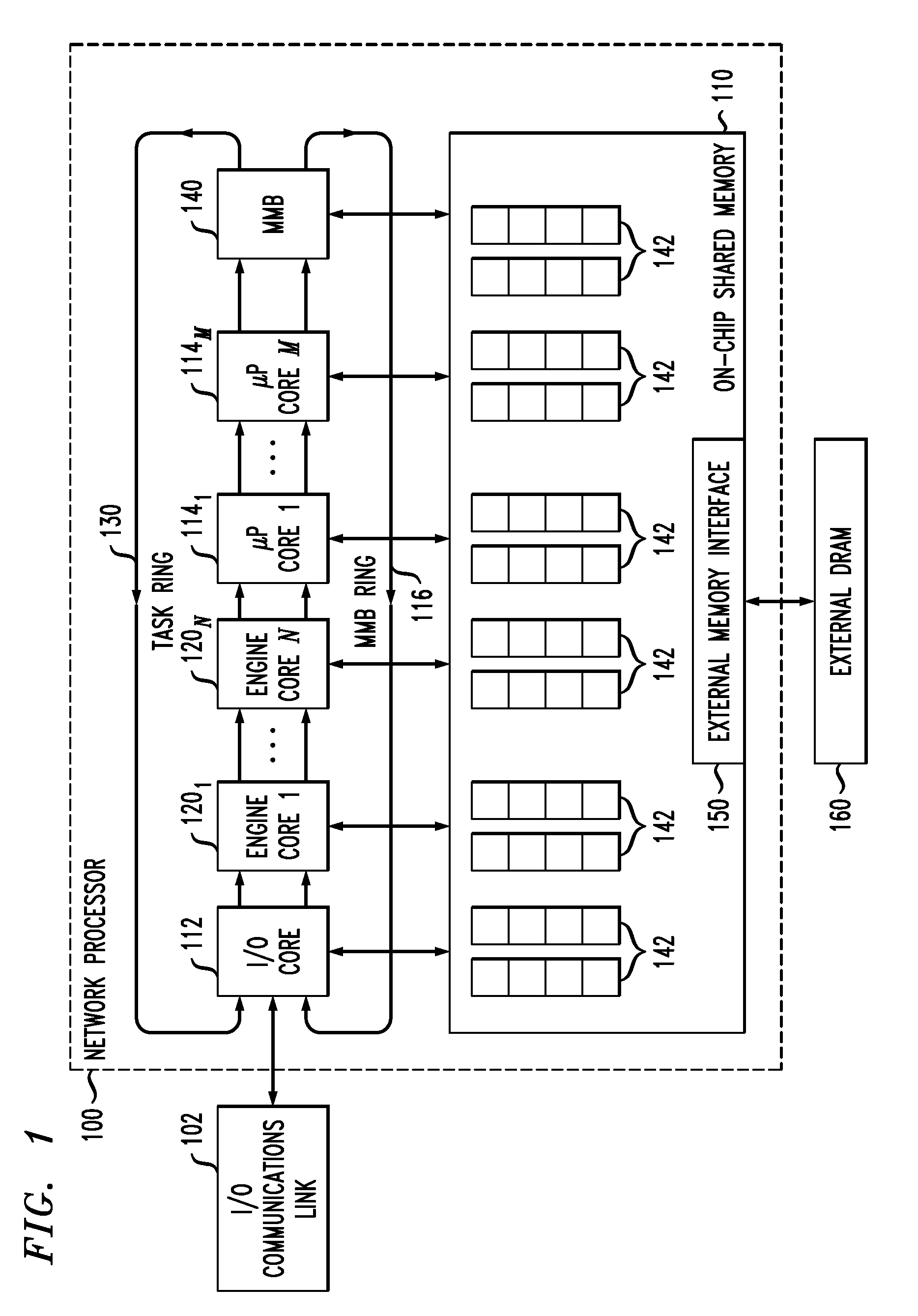

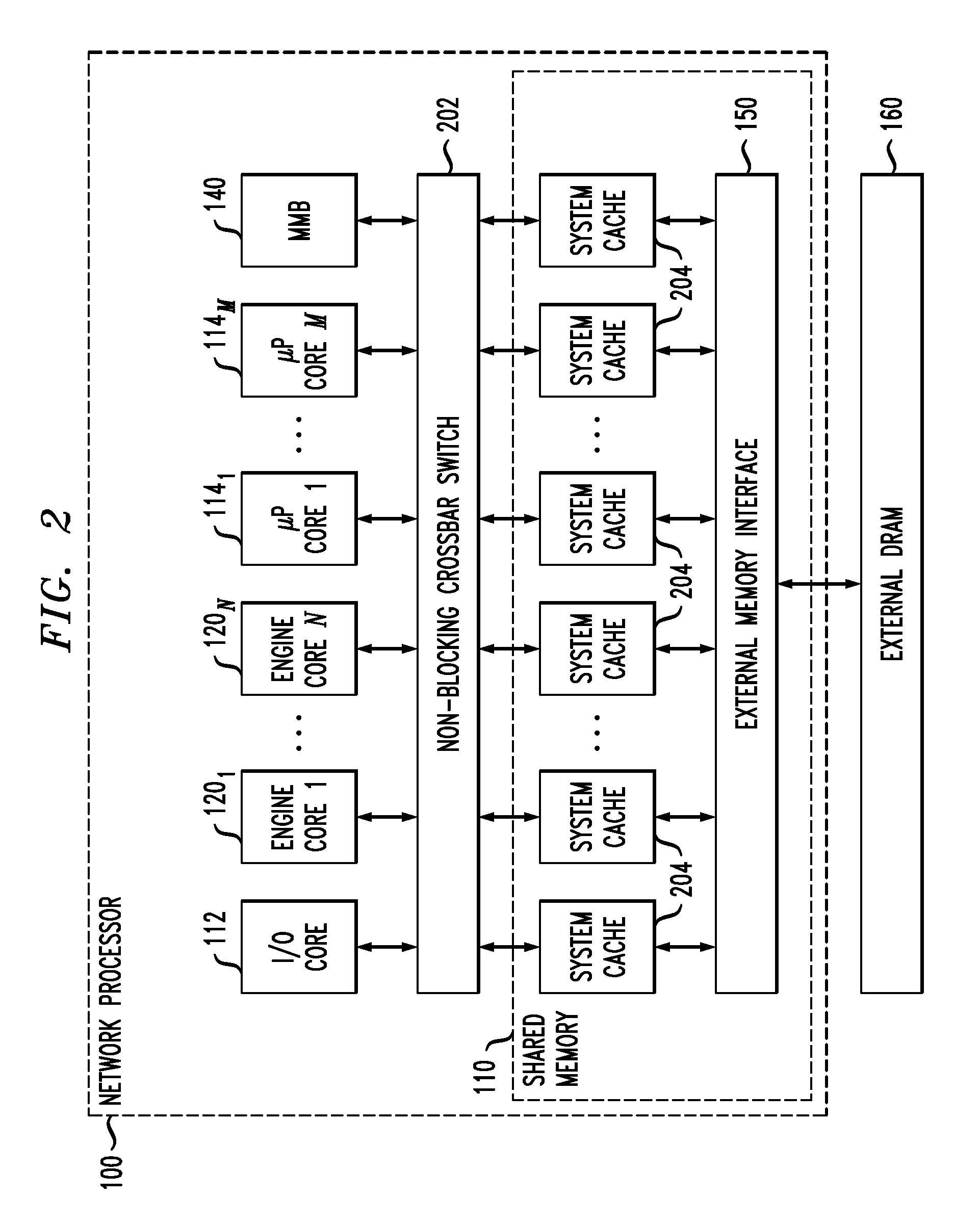

Task queuing in a network communications processor architecture

Inactive | US20100293353A1 | Well formed | Memory addressing/allocation/relocation | Digital computer details | Processing core | Network communication

Described embodiments provide a method of assigning tasks to queues of a processing core. Tasks are assigned to a queue by sending, by a source processing core, a new task having a task identifier. A destination processing core receives the new task and determines whether another task having the same identifier exists in any of the queues corresponding to the destination processing core. If another task with the same identifier as the new task exists, the destination processing core assigns the new task to the queue containing a task with the same identifier as the new task. If no task with the same identifier as the new task exists in the queues, the destination processing core assigns the new task to the queue having the fewest tasks. The source processing core writes the new task to the assigned queue. The destination processing core executes the tasks in its queues.

Owner:INTEL CORP
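The queue-selection rule in this abstract translates almost directly into code. The following is a minimal sketch, assuming queues are plain lists of task identifiers and that ties for "fewest tasks" go to the lowest-numbered queue; the function name `assign_task` is hypothetical.

```python
def assign_task(queues, task_id):
    """Append task_id to the right queue and return that queue's index."""
    # A task whose identifier is already queued joins the same queue.
    for idx, queue in enumerate(queues):
        if task_id in queue:
            queue.append(task_id)
            return idx
    # Otherwise pick the queue holding the fewest tasks (first one on ties).
    idx = min(range(len(queues)), key=lambda i: len(queues[i]))
    queues[idx].append(task_id)
    return idx


# Usage: "c" follows the existing "c"; "d" goes to the empty queue.
queues = [["a", "b"], ["c"], []]
first = assign_task(queues, "c")
second = assign_task(queues, "d")
```

Keeping tasks with the same identifier in one queue preserves their relative order, while the fewest-tasks fallback balances load across the remaining queues.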

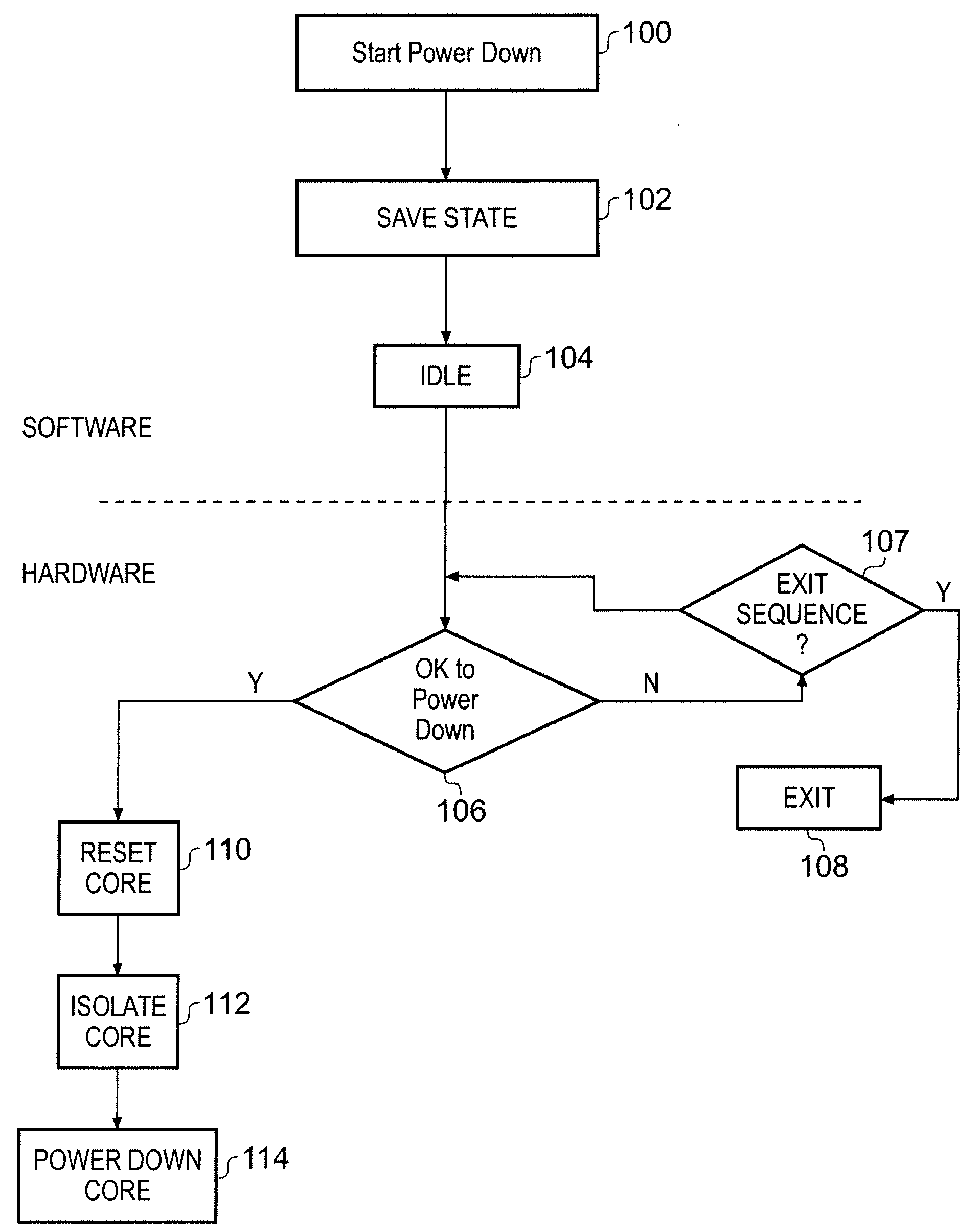

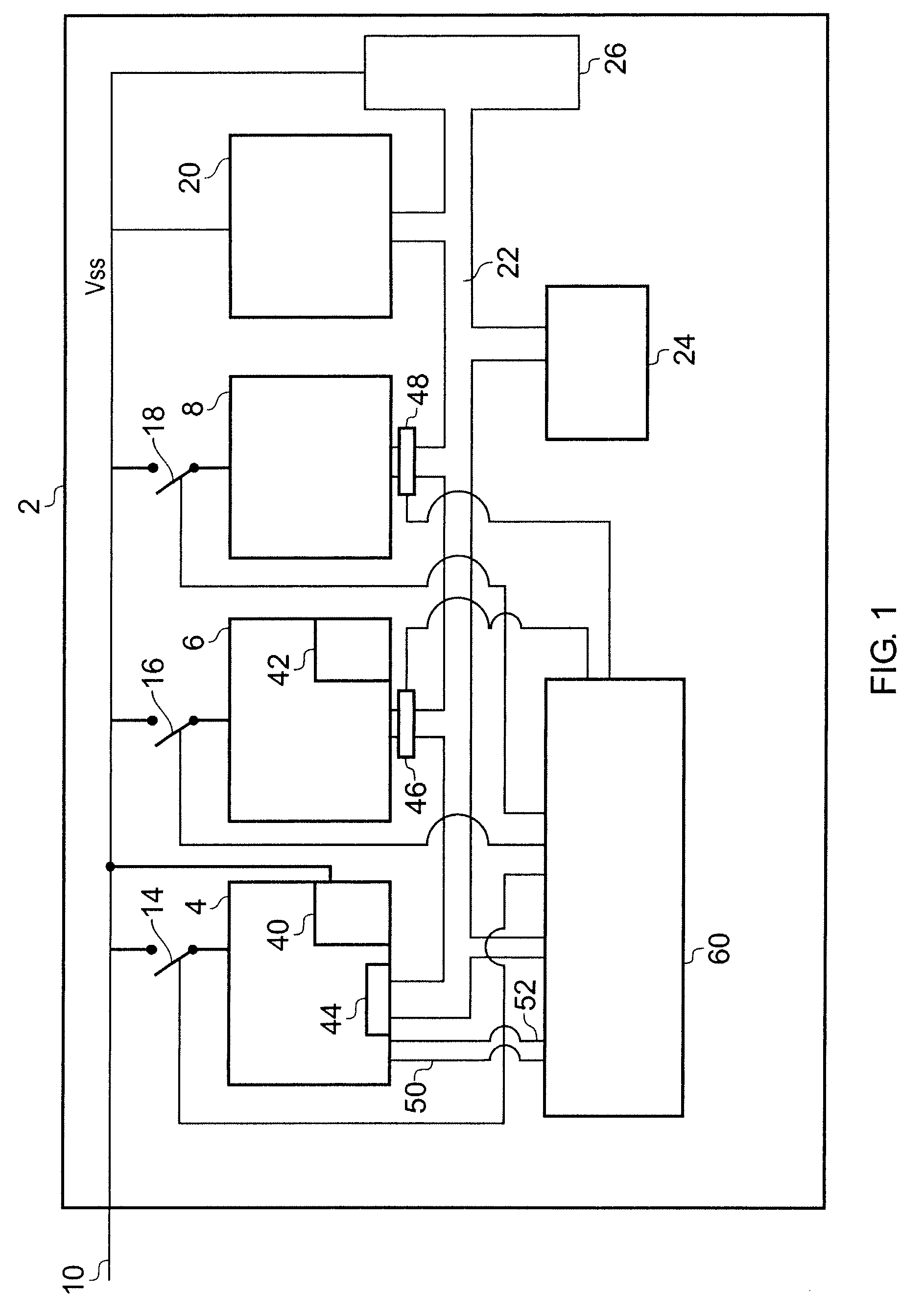

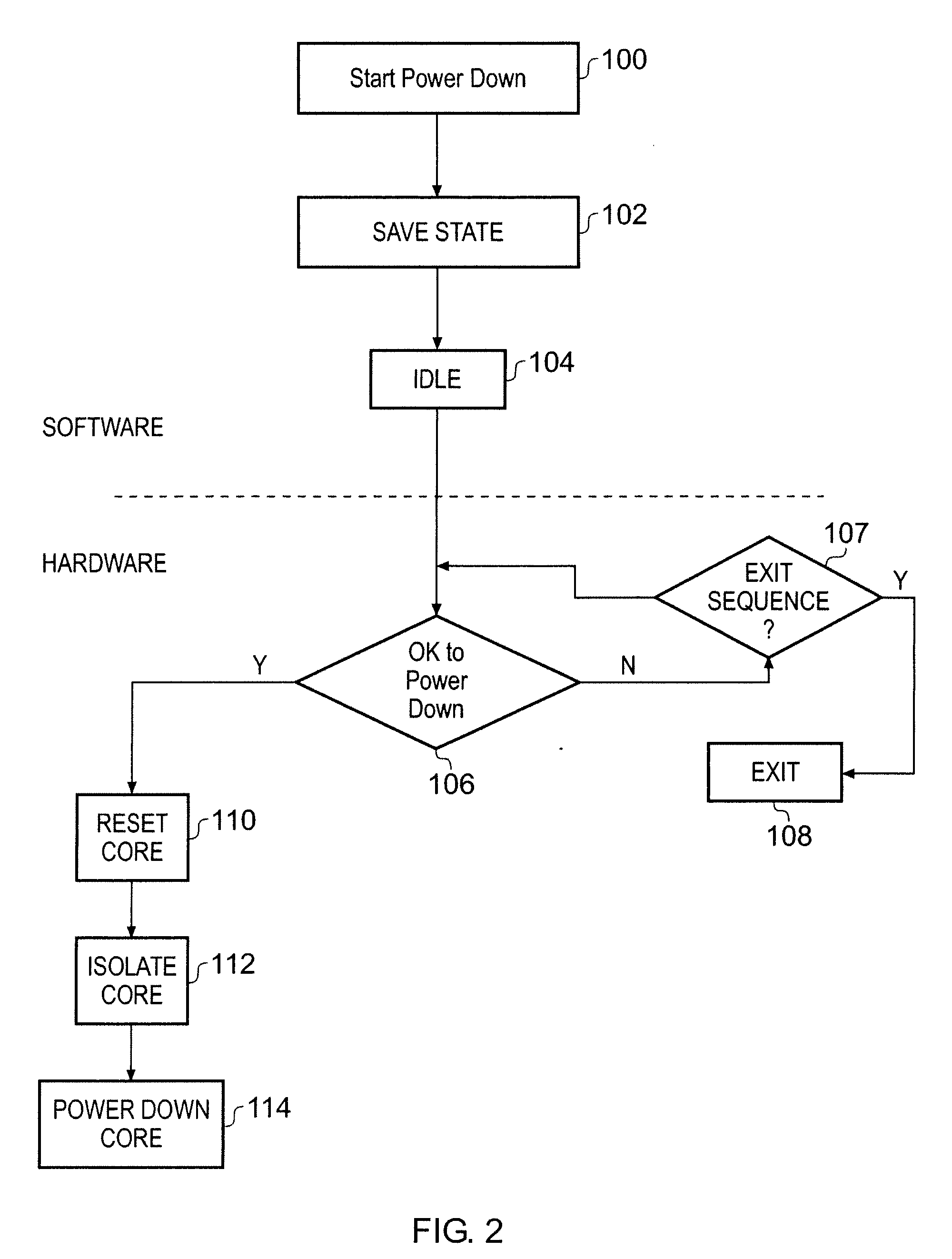

Method of and Apparatus for Reducing Power Consumption within an Integrated Circuit

Active | US20080307244A1 | Avoid perturbing operation | Total current drop | Energy efficient ICT | Volume/mass flow measurement | Processing core | Engineering

An integrated circuit comprising a plurality of processing cores, characterised by comprising electrically controllable switches for controlling the supply of power to one or more of the processing cores, a memory for saving state data from at least one of the processing cores and a controller adapted to control the supply of power to one or more of the processing cores such that processing cores can be de-powered.

Owner:MEDIATEK INC

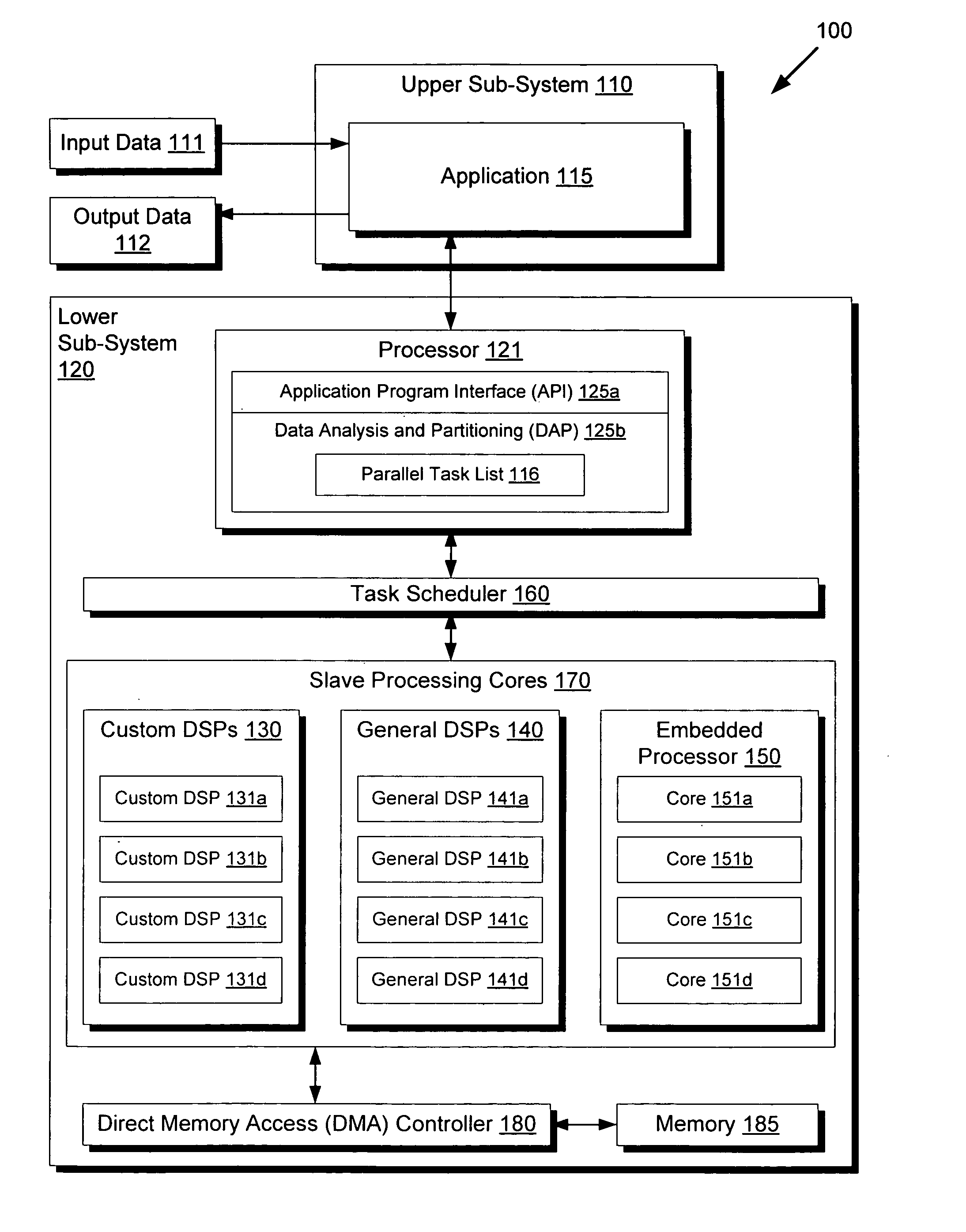

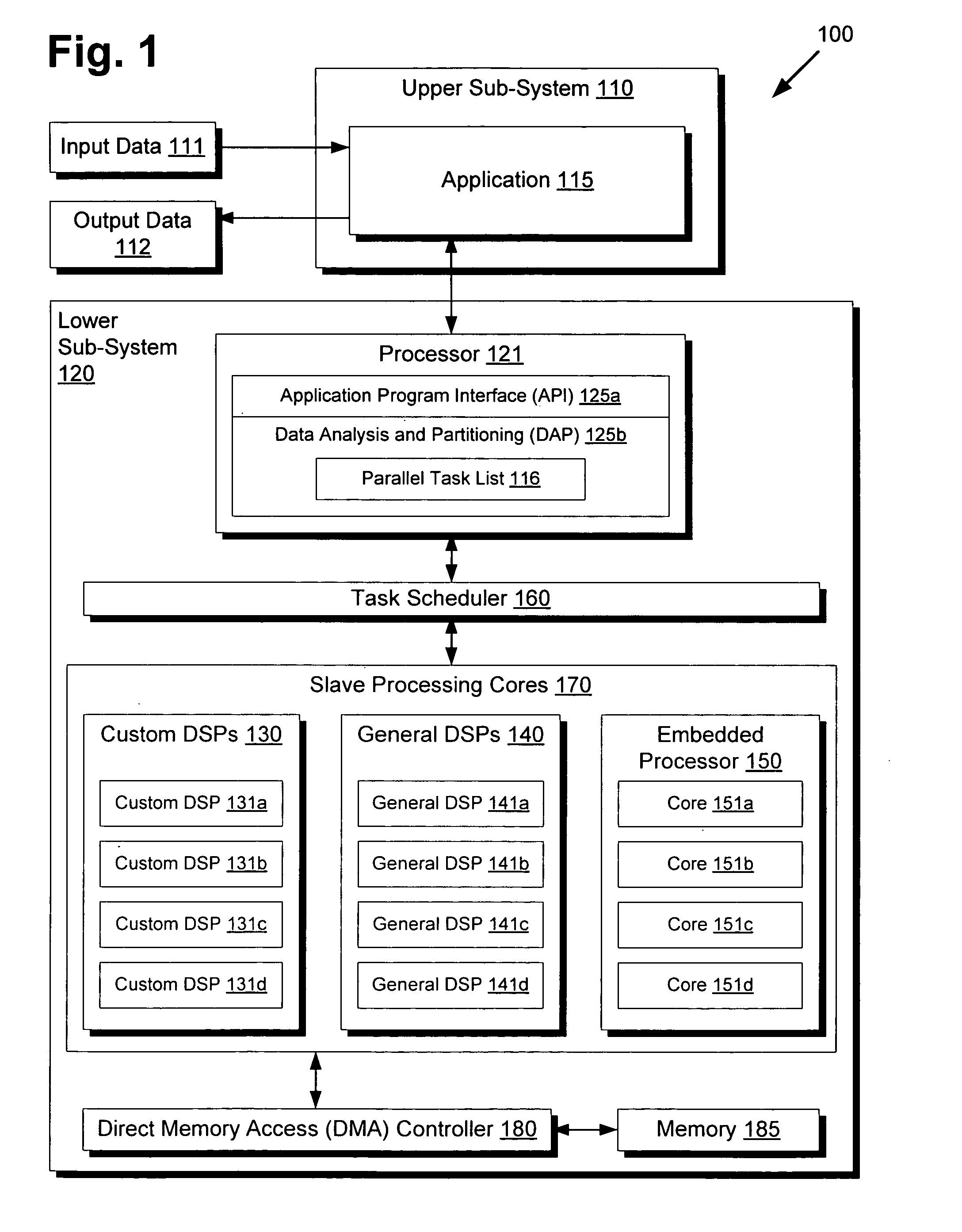

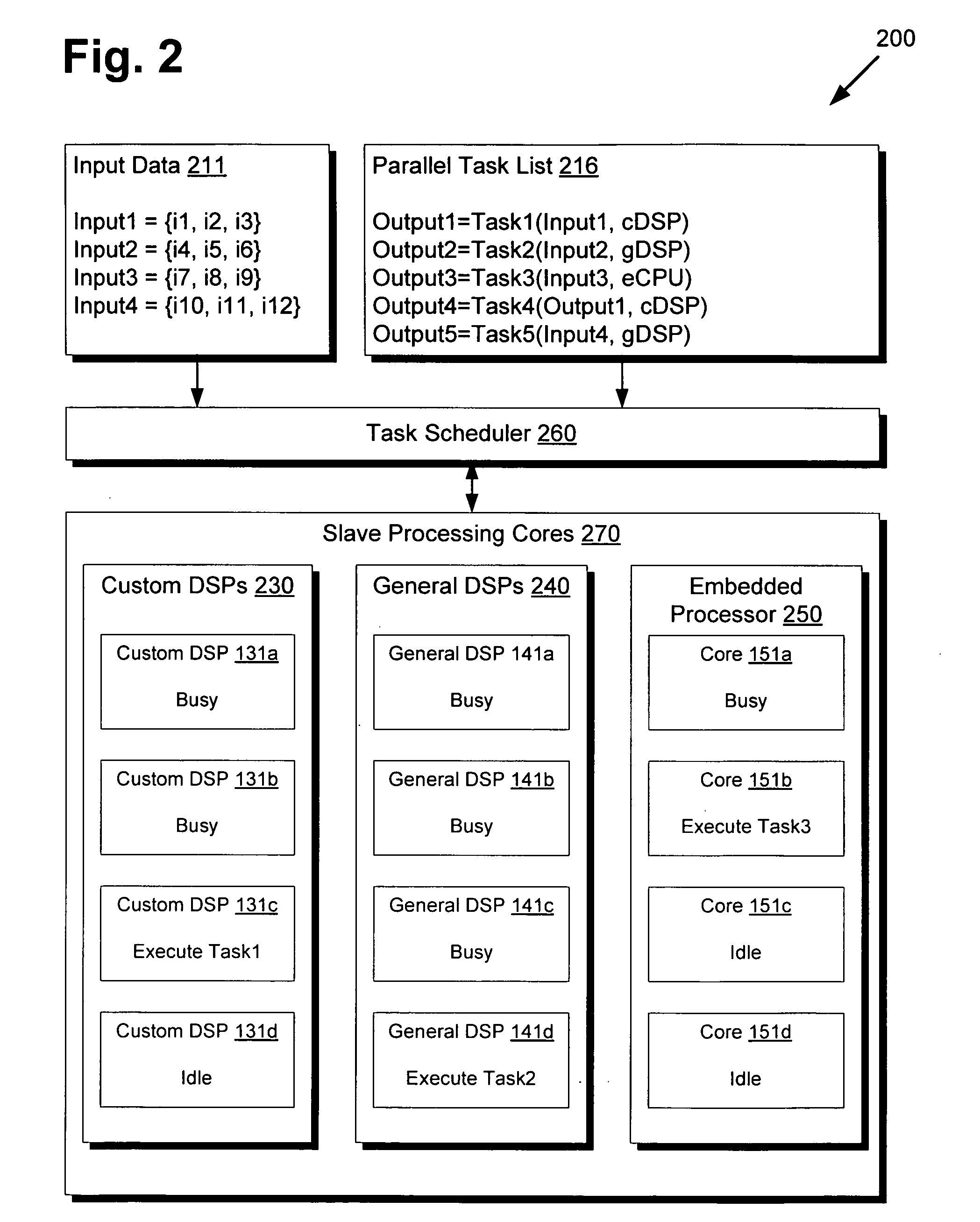

Highly distributed parallel processing on multi-core device

Inactive | US20100131955A1 | Multiprogramming arrangements | Memory systems | System requirements | Software development

There is provided a highly distributed multi-core system with an adaptive scheduler. By resolving data dependencies in a given list of parallel tasks and selecting a subset of tasks to execute based on provided software priorities, applications can be executed in a highly distributed manner across several types of slave processing cores. Moreover, by overriding provided priorities as necessary to adapt to hardware or other system requirements, the task scheduler may provide for low-level hardware optimizations that enable the timely completion of time-sensitive workloads, which may be of particular interest for real-time applications. Through this modularization of software development and hardware optimization, the conventional demand on application programmers to micromanage multi-core processing for optimal performance is thus avoided, thereby streamlining development and providing a higher quality end product.

Owner:MINDSPEED TECH LLC

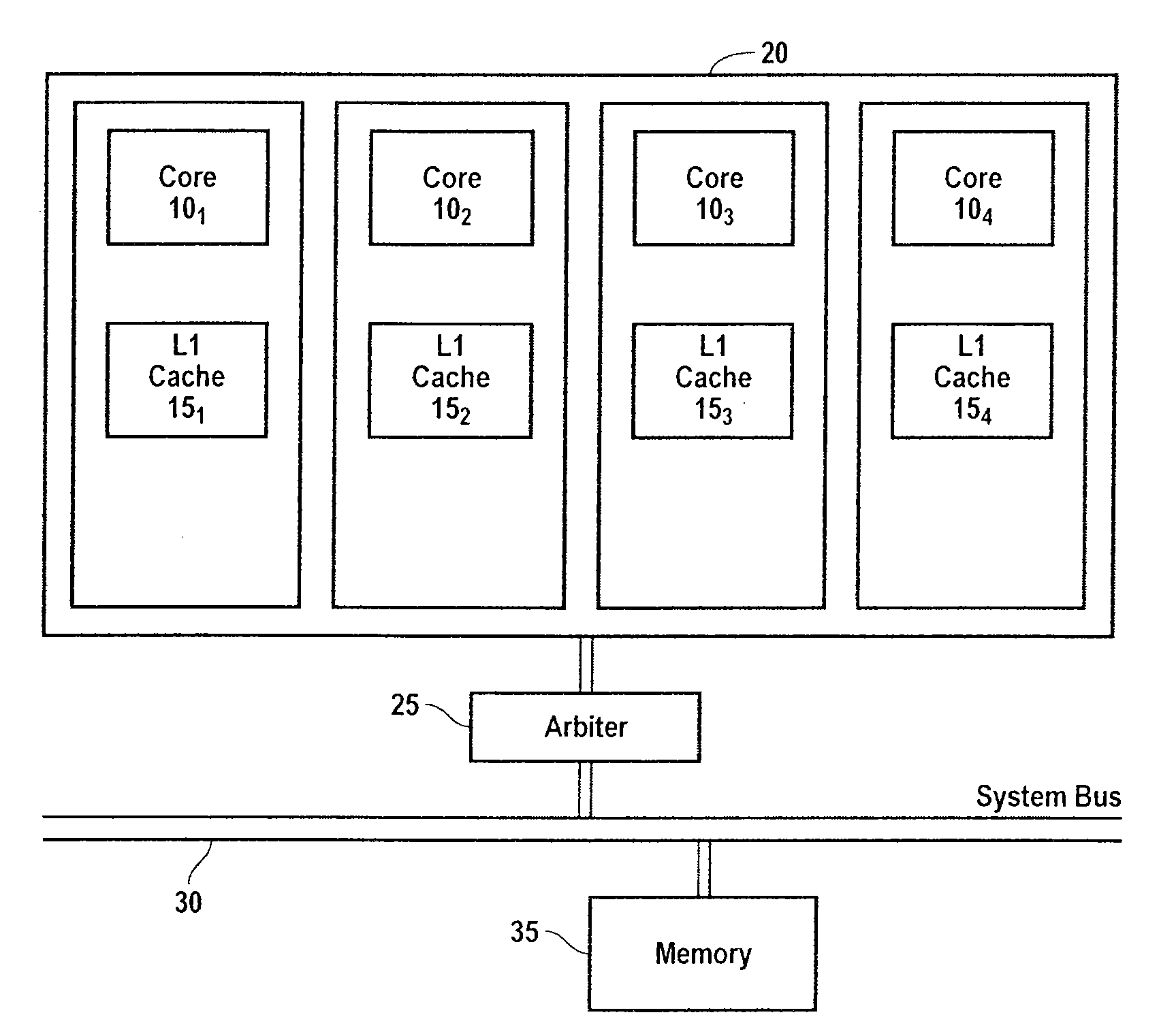

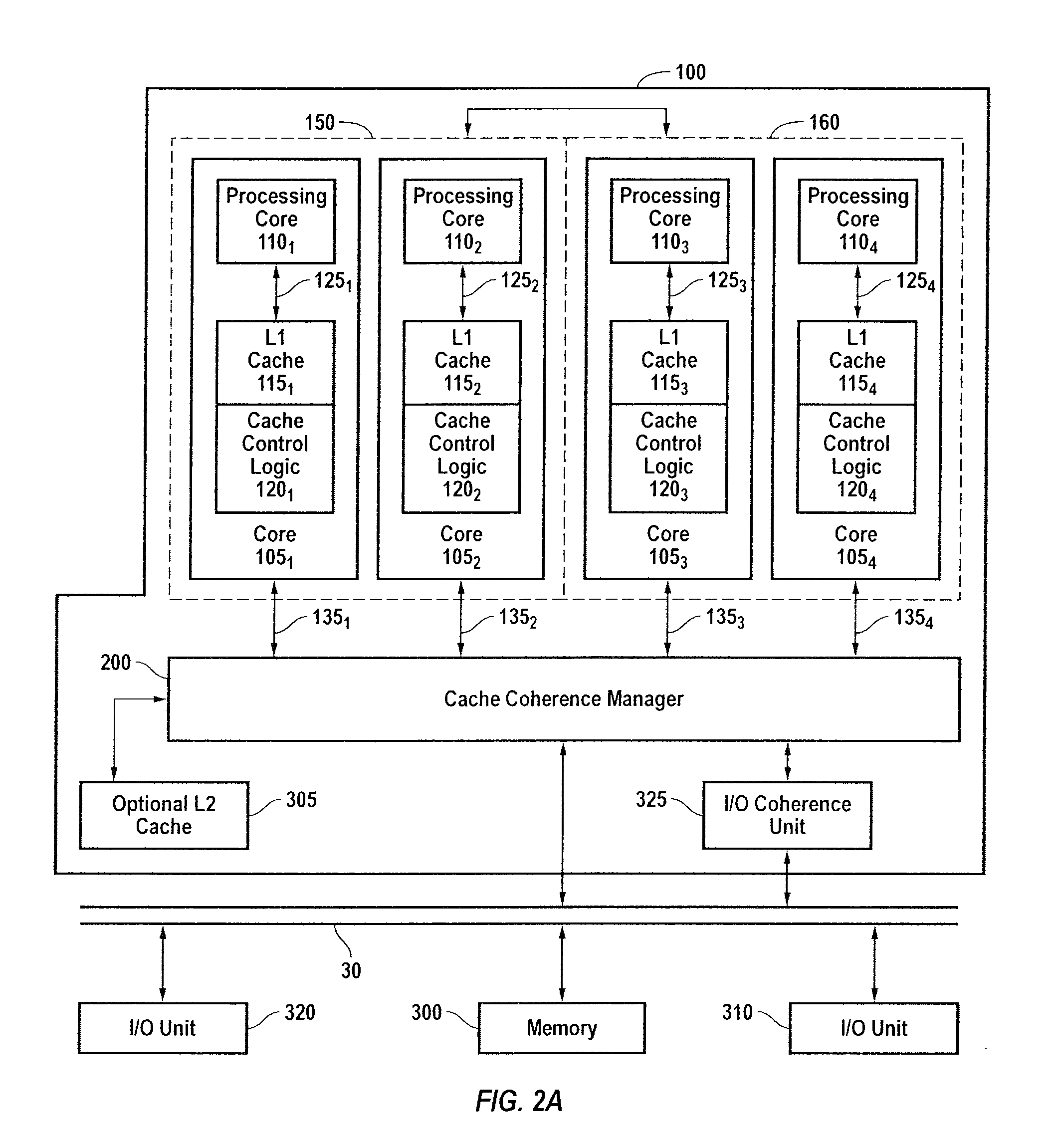

Support for multiple coherence domains

A number of coherence domains are maintained among the multitude of processing cores disposed in a microprocessor. A cache coherency manager defines the coherency relationships such that coherence traffic flows only among the processing cores that are defined as having a coherency relationship. The data defining the coherency relationships between the processing cores is optionally stored in a programmable register. For each source of a coherent request, the processing core targets of the request are identified in the programmable register. In response to a coherent request, an intervention message is forwarded only to the cores that are defined to be in the same coherence domain as the requesting core. If a cache hit occurs in response to a coherent read request and the coherence state of the cache line resulting in the hit satisfies a condition, the requested data is made available to the requesting core from that cache line.

Owner:ARM FINANCE OVERSEAS LTD
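The lookup of intervention targets can be modeled with one bitmask per requesting core. This is a sketch under assumptions: the programmable register is represented as a list of integers, bit `n` of a core's mask is assumed to mean "core `n` is in my coherence domain", and the function name is hypothetical.

```python
def intervention_targets(domain_masks, requester):
    """Return the cores that must receive an intervention for this requester."""
    mask = domain_masks[requester]  # stand-in for the programmable register entry
    return [
        core
        for core in range(len(domain_masks))
        if core != requester and (mask >> core) & 1
    ]


# Usage: cores 0-1 form one domain and cores 2-3 form another.
masks = [0b0011, 0b0011, 0b1100, 0b1100]
targets_for_core0 = intervention_targets(masks, 0)
```

Cores outside the requester's mask never see the intervention message, which is how coherence traffic stays confined to a domain.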

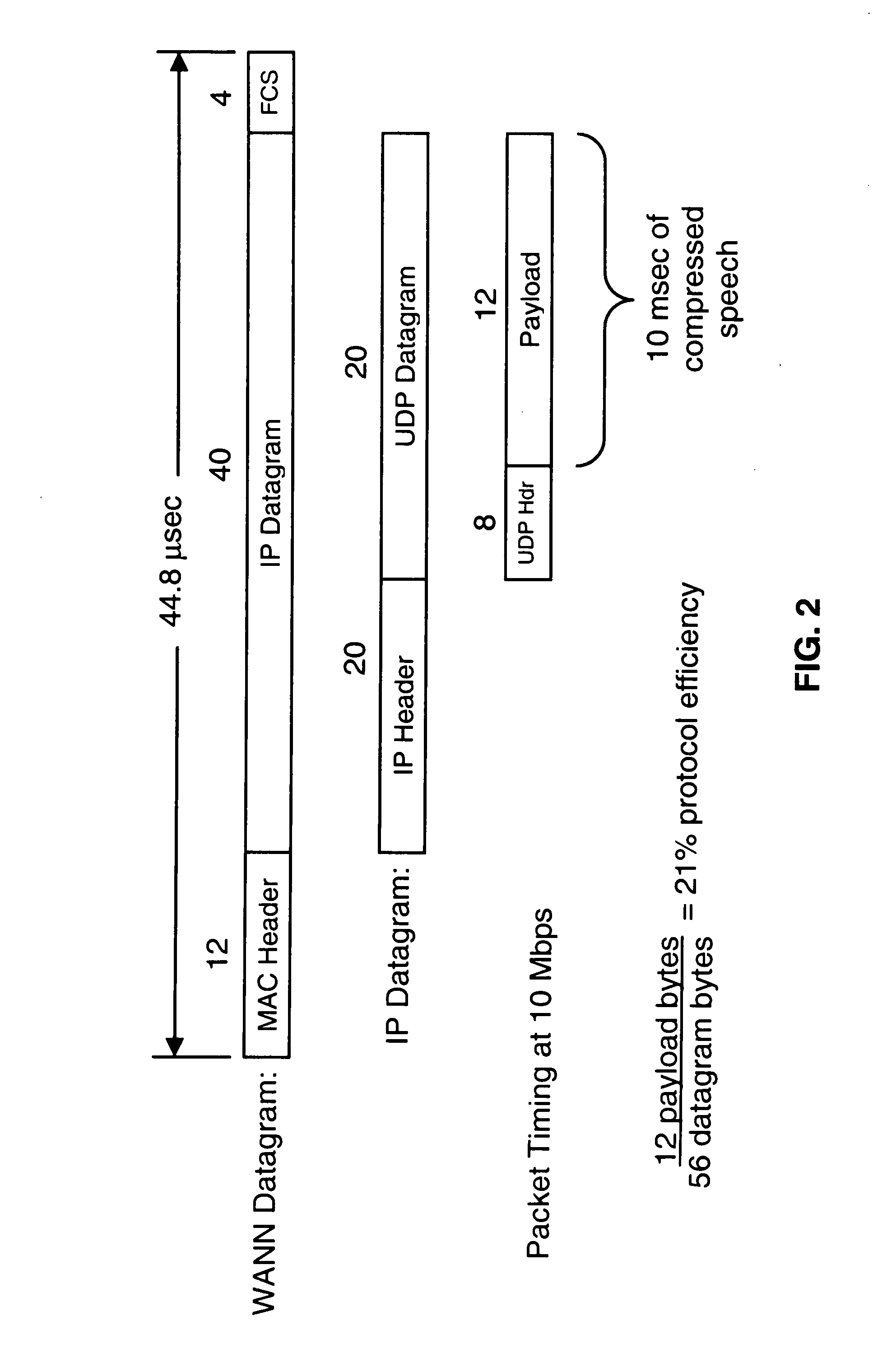

System and Method for Multicore Communication Processing

Inactive | US20080181245A1 | Effective latency | Sustaining media speed | Time-division multiplex | Data switching by path configuration | Direct memory access | Processing core

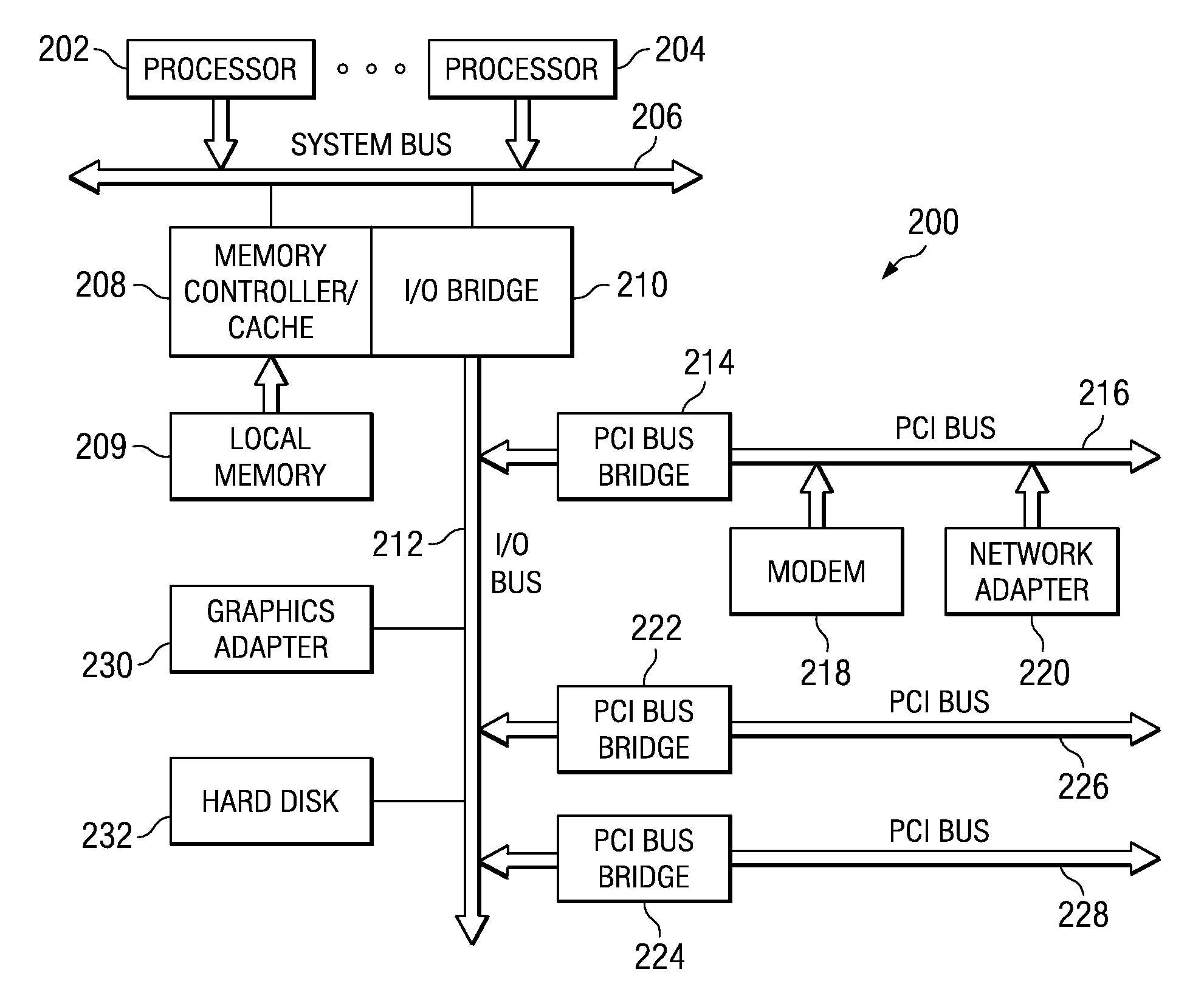

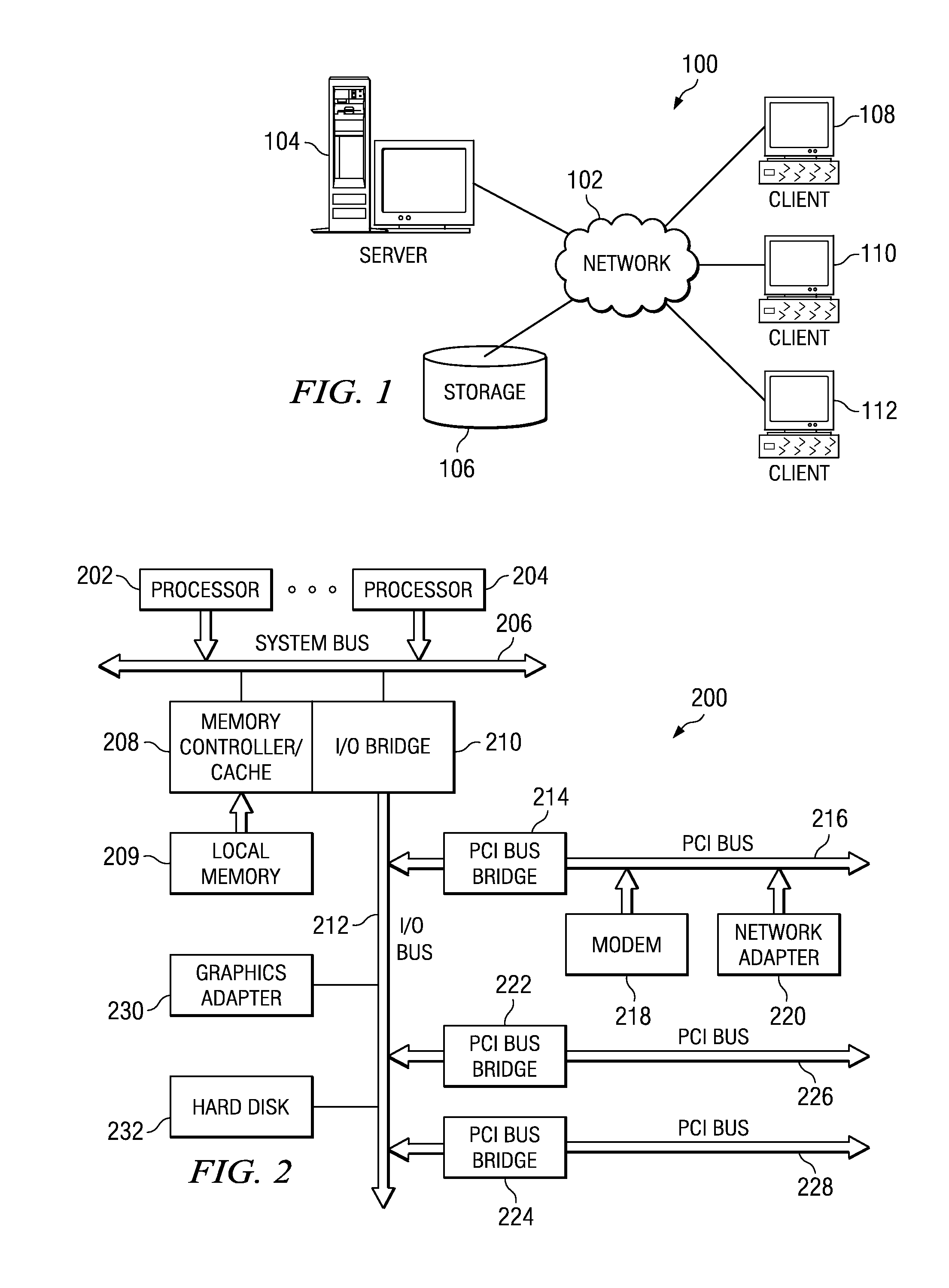

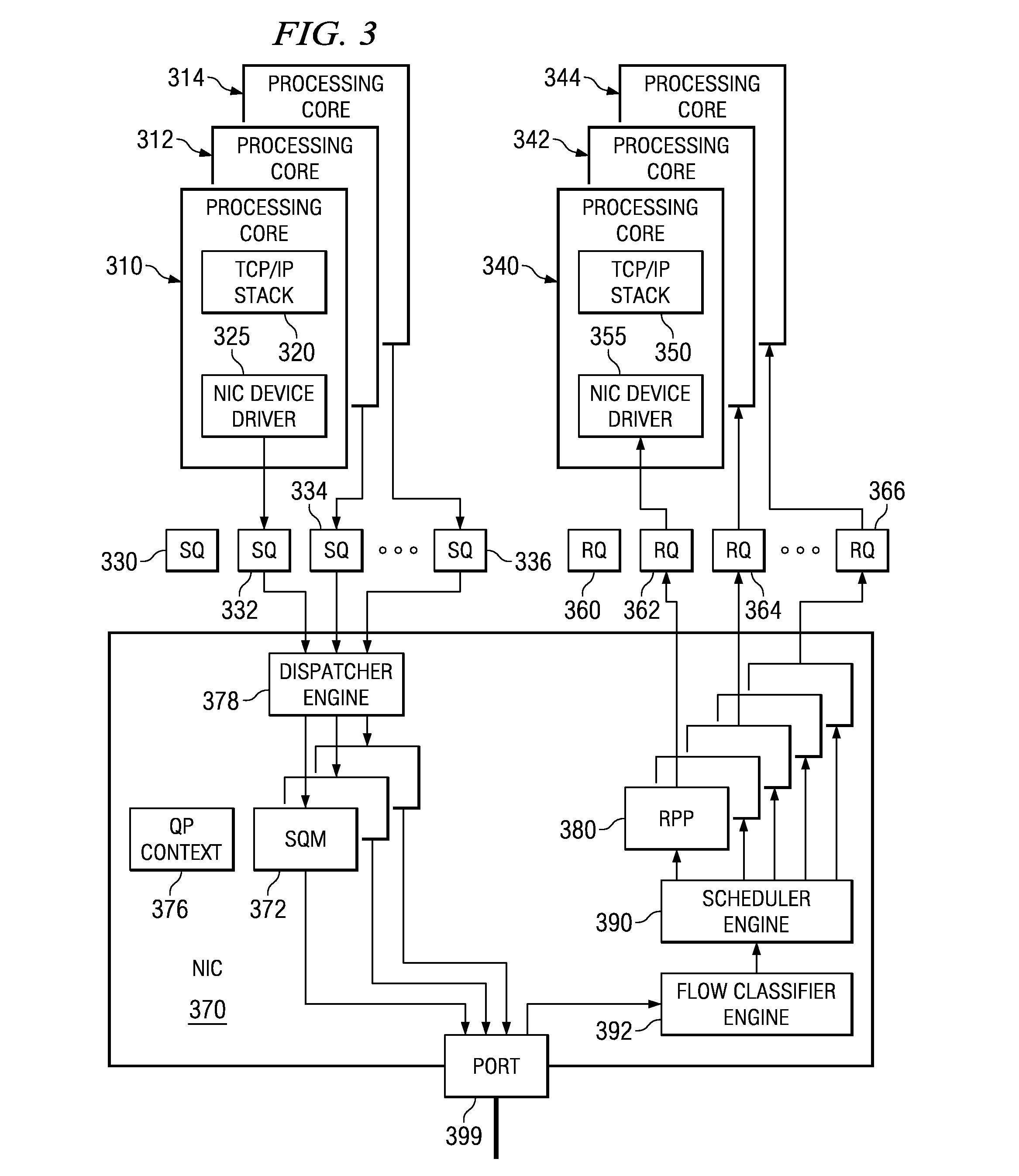

A system and method for multicore processing of communications between data processing devices are provided. With the mechanisms of the illustrative embodiments, a set of techniques that enables sustaining media speed by distributing transmit and receive-side processing over multiple processing cores is provided. In addition, these techniques also enable designing multi-threaded network interface controller (NIC) hardware that efficiently hides the latency of direct memory access (DMA) operations associated with data packet transfers over an input/output (I/O) bus. Multiple processing cores may operate concurrently using separate instances of a communication protocol stack and device drivers to process data packets for transmission with separate hardware implemented send queue managers in a network adapter processing these data packets for transmission. Multiple hardware receive packet processors in the network adapter may be used, along with a flow classification engine, to route received data packets to appropriate receive queues and processing cores for processing.

Owner:IBM CORP

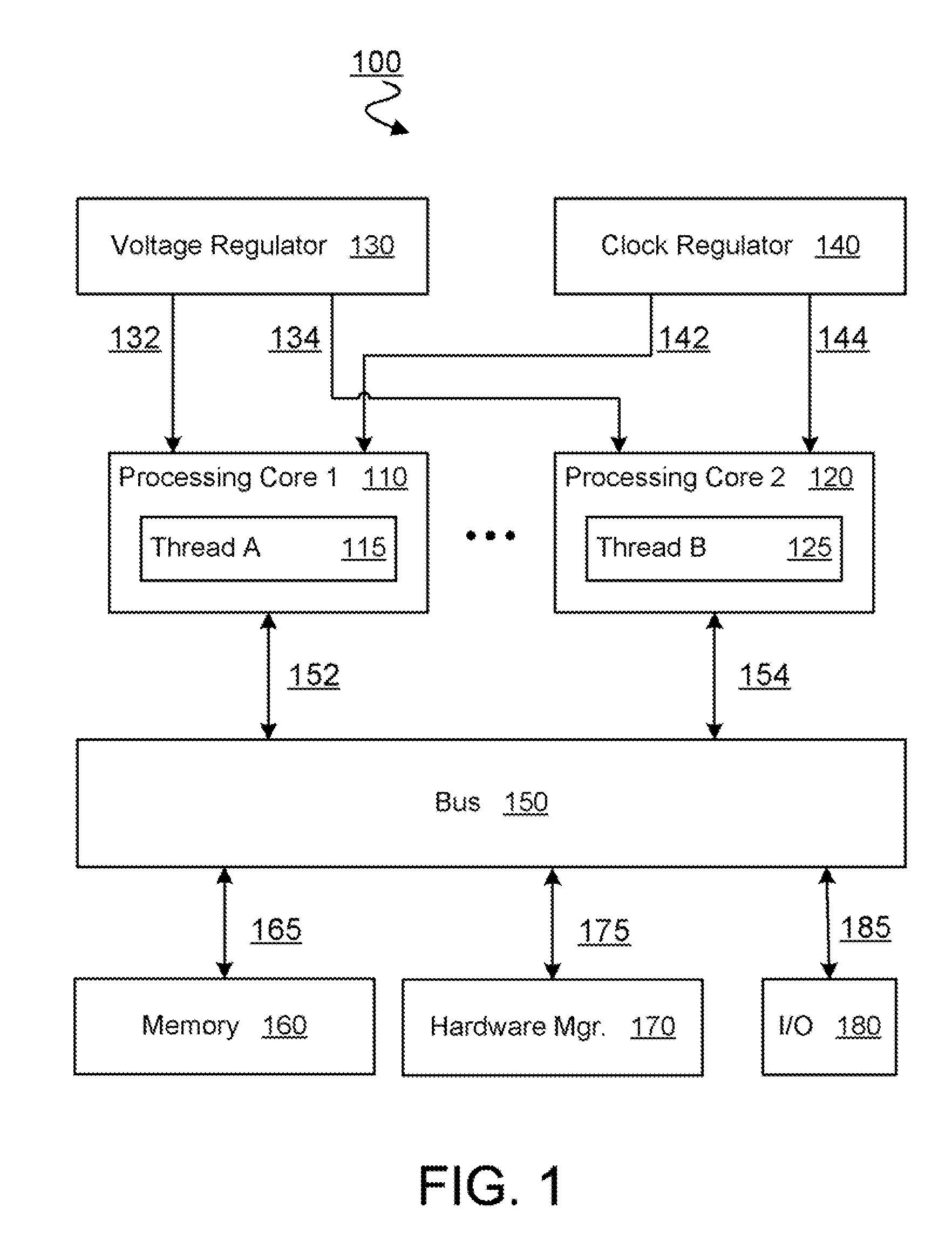

Methods and systems for performing dynamic power management via frequency and voltage scaling

Active | US7155617B2 | Significant latency | Energy efficient ICT | Volume/mass flow measurement | Processing core | Dynamic power management

Methods and systems are provided for dynamically managing the power consumption of a digital system. These methods and systems broadly provide for varying the frequency and voltage of one or more clocks of a digital system upon request by an entity of the digital system. An entity may request that the frequency of a clock of the processor of the digital system be changed. After the frequency is changed, the voltage point of the voltage regulator of the digital system is automatically changed to the lowest voltage point required for the new frequency if there is a single clock on the processor. If the processor is comprised of multiple processing cores with associated clocks, the voltage point is changed to the lowest voltage point required by all frequencies of all clocks.

Owner:TEXAS INSTR INC
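One reading of the voltage rule in this abstract is that the regulator must supply the smallest voltage that still satisfies every clock. The sketch below illustrates that reading; the `MIN_VOLTAGE` table and its values are invented for the example and do not come from the patent.

```python
# Hypothetical table: minimum supply voltage (volts) per supported frequency (MHz).
MIN_VOLTAGE = {100: 0.9, 200: 1.0, 400: 1.2}


def required_voltage(clock_freqs):
    """Lowest voltage point that satisfies the frequency of every clock."""
    # Each clock needs at least its own minimum, so the shared rail needs the maximum.
    return max(MIN_VOLTAGE[freq] for freq in clock_freqs)


# Usage: a single 100 MHz clock versus two cores at 100 MHz and 400 MHz.
single = required_voltage([100])
multi = required_voltage([100, 400])
```

With a single clock this reduces to a direct table lookup, matching the single-clock case in the abstract.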

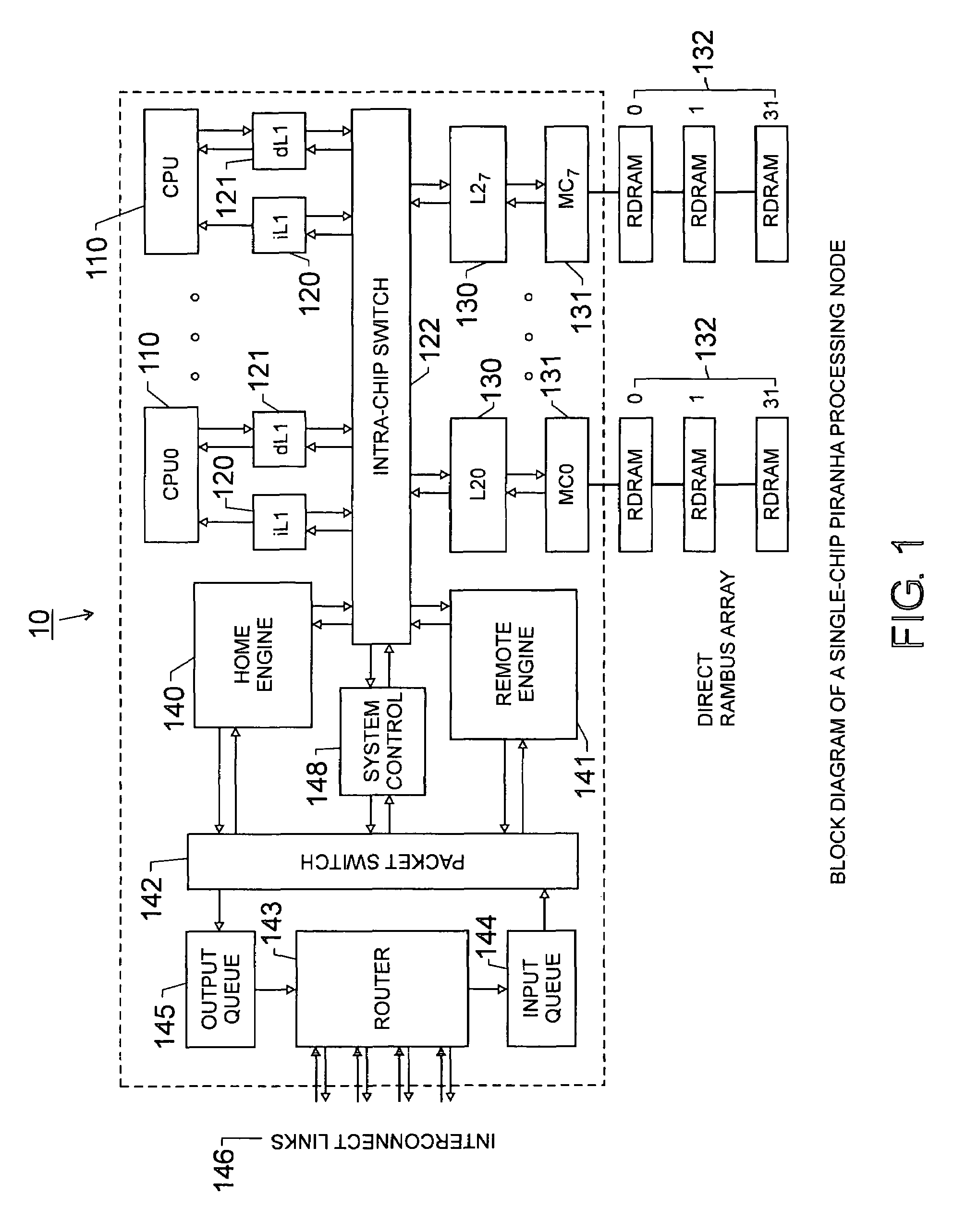

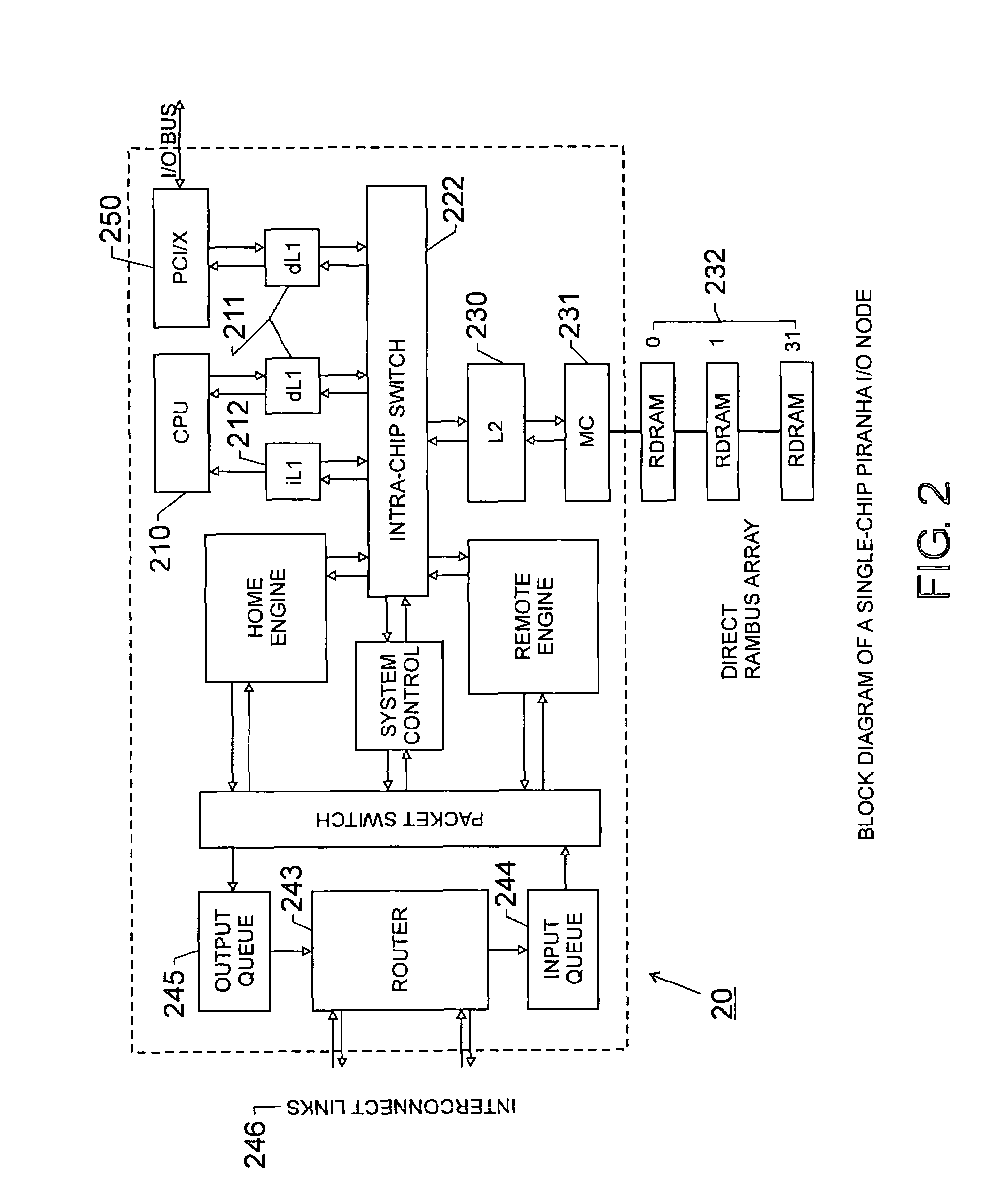

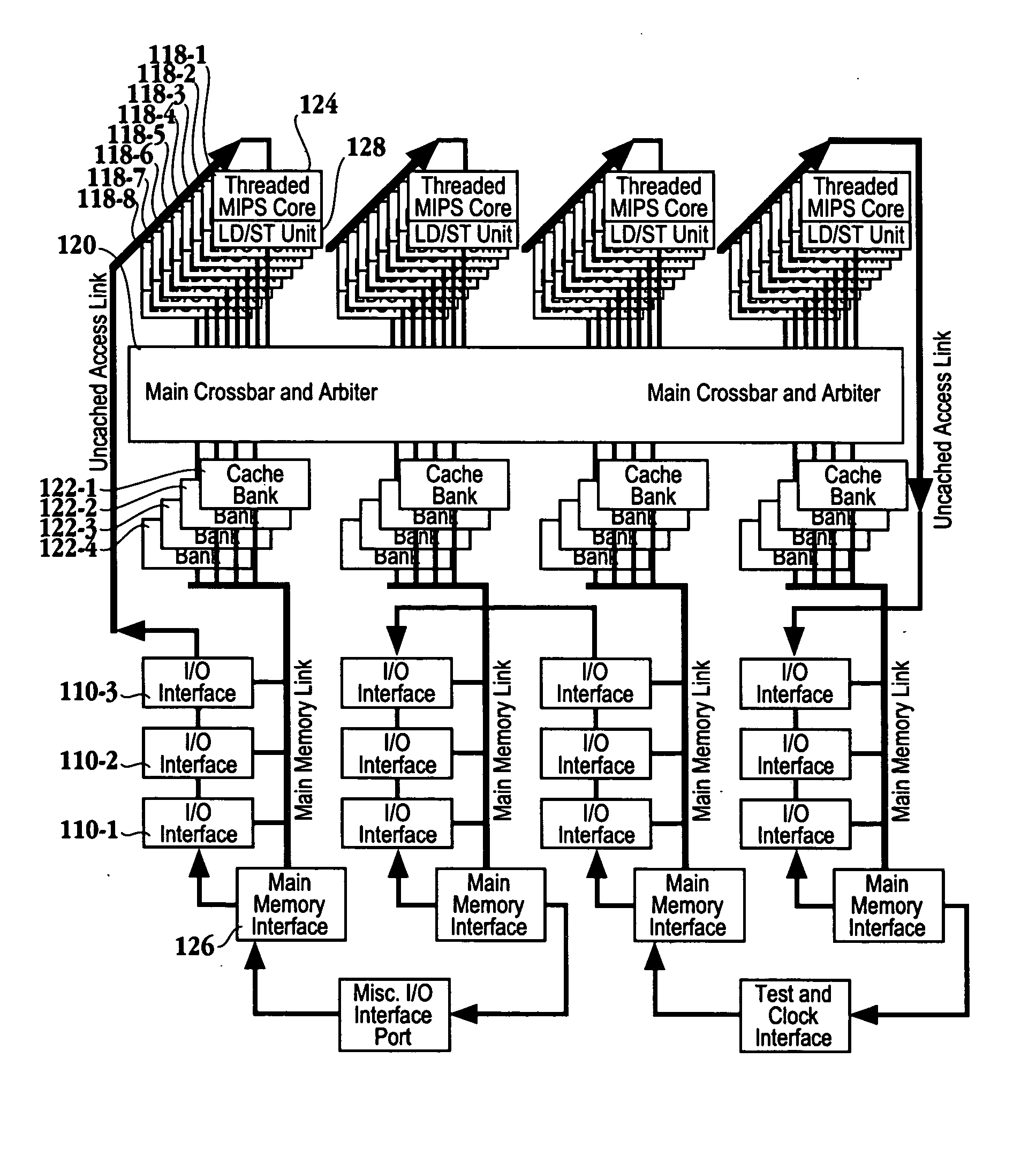

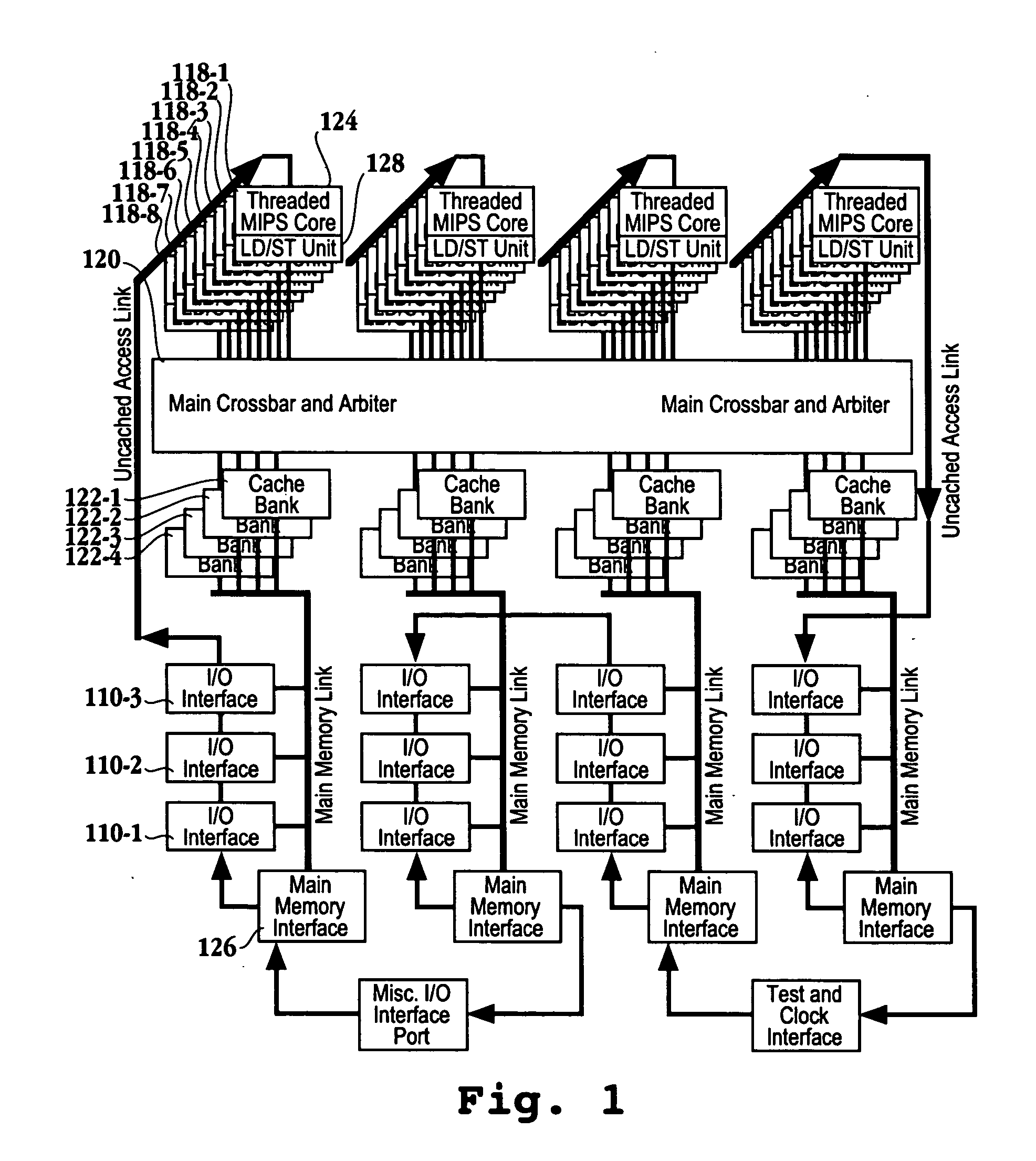

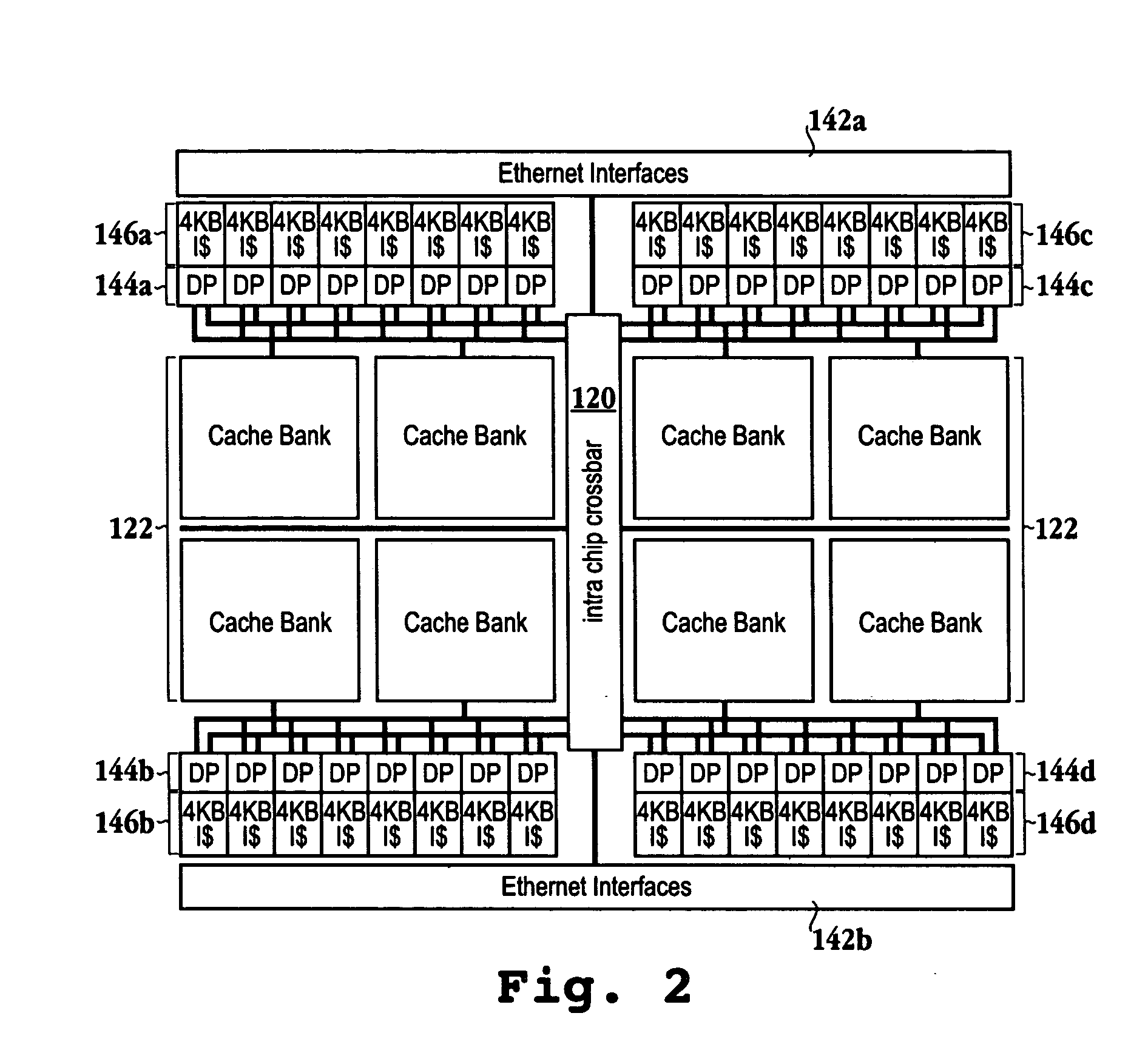

Scalable architecture based on single-chip multiprocessing

Inactive | US6988170B2 | Short time | Small investment | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Processing core

A chip-multiprocessing system with scalable architecture, including on a single chip: a plurality of processor cores; a two-level cache hierarchy; an intra-chip switch; one or more memory controllers; a cache coherence protocol; one or more coherence protocol engines; and an interconnect subsystem. The two-level cache hierarchy includes first level and second level caches. In particular, the first level caches include a pair of instruction and data caches for, and private to, each processor core. The second level cache has a relaxed inclusion property, the second-level cache being logically shared by the plurality of processor cores. Each of the plurality of processor cores is capable of executing an instruction set of the ALPHA™ processing core. The scalable architecture of the chip-multiprocessing system is targeted at parallel commercial workloads. A showcase example of the chip-multiprocessing system, called the PIRANHA™ system, is a highly integrated processing node with eight simpler ALPHA™ processor cores. A method for scalable chip-multiprocessing is also provided.

Owner:SK HYNIX INC

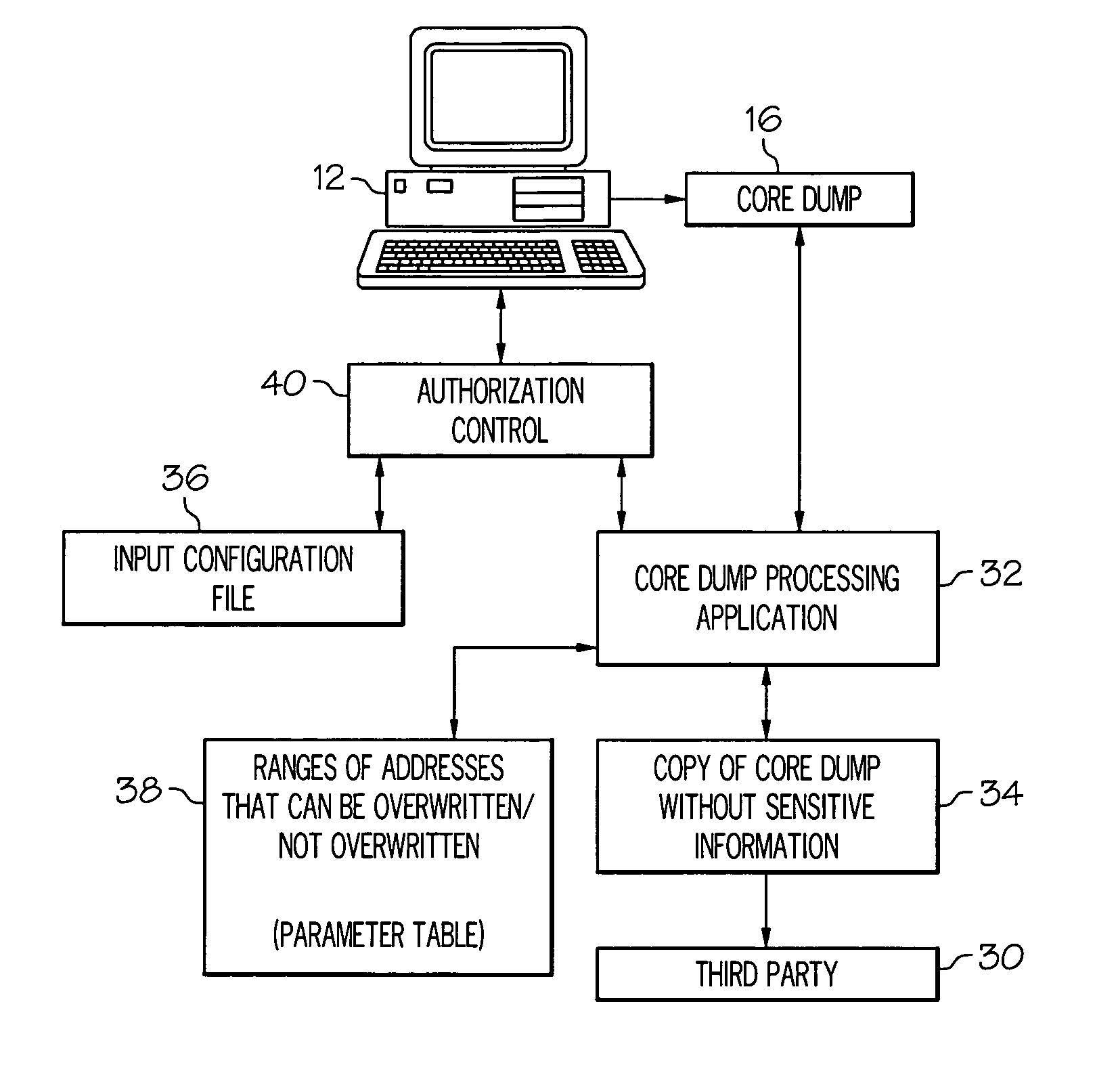

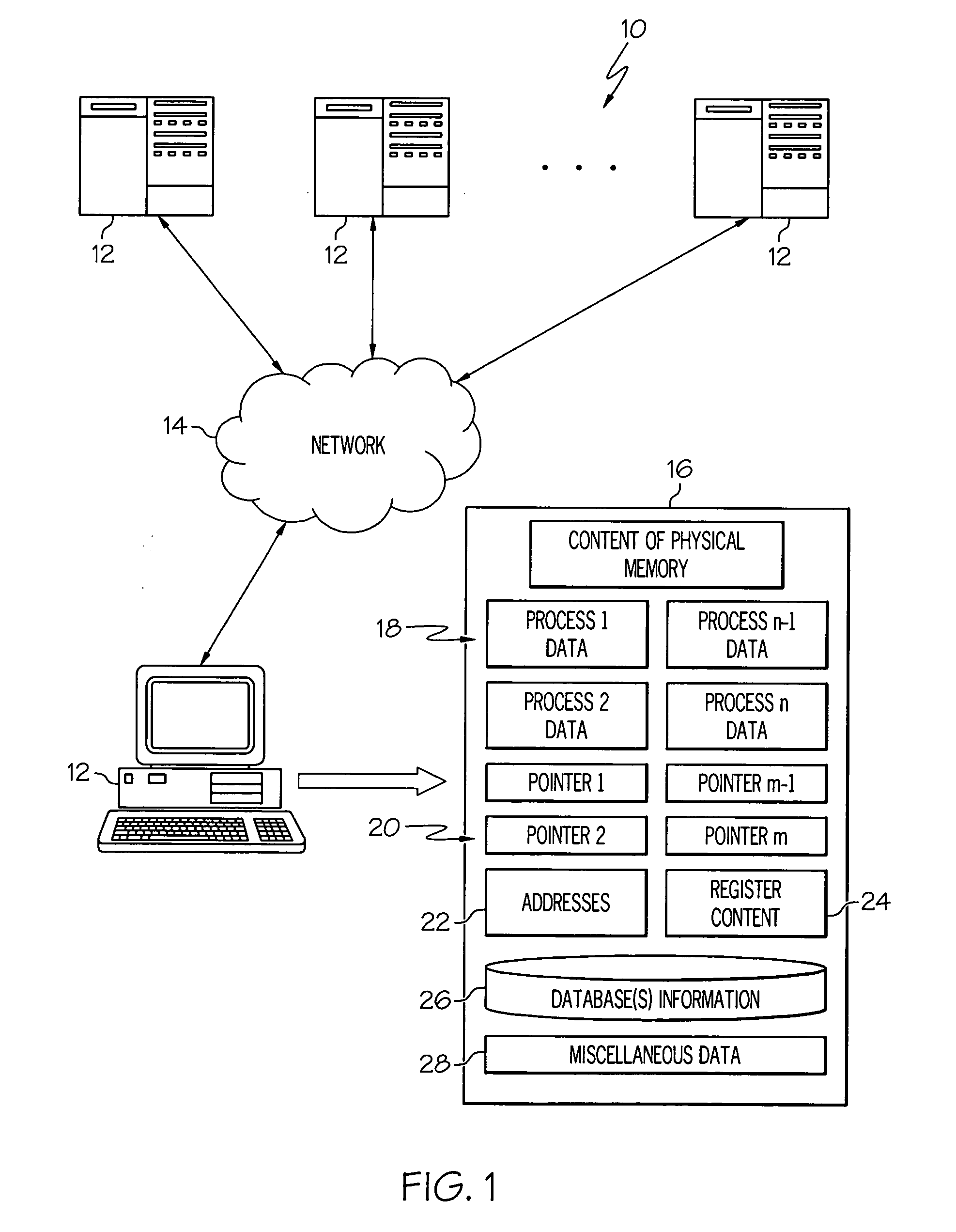

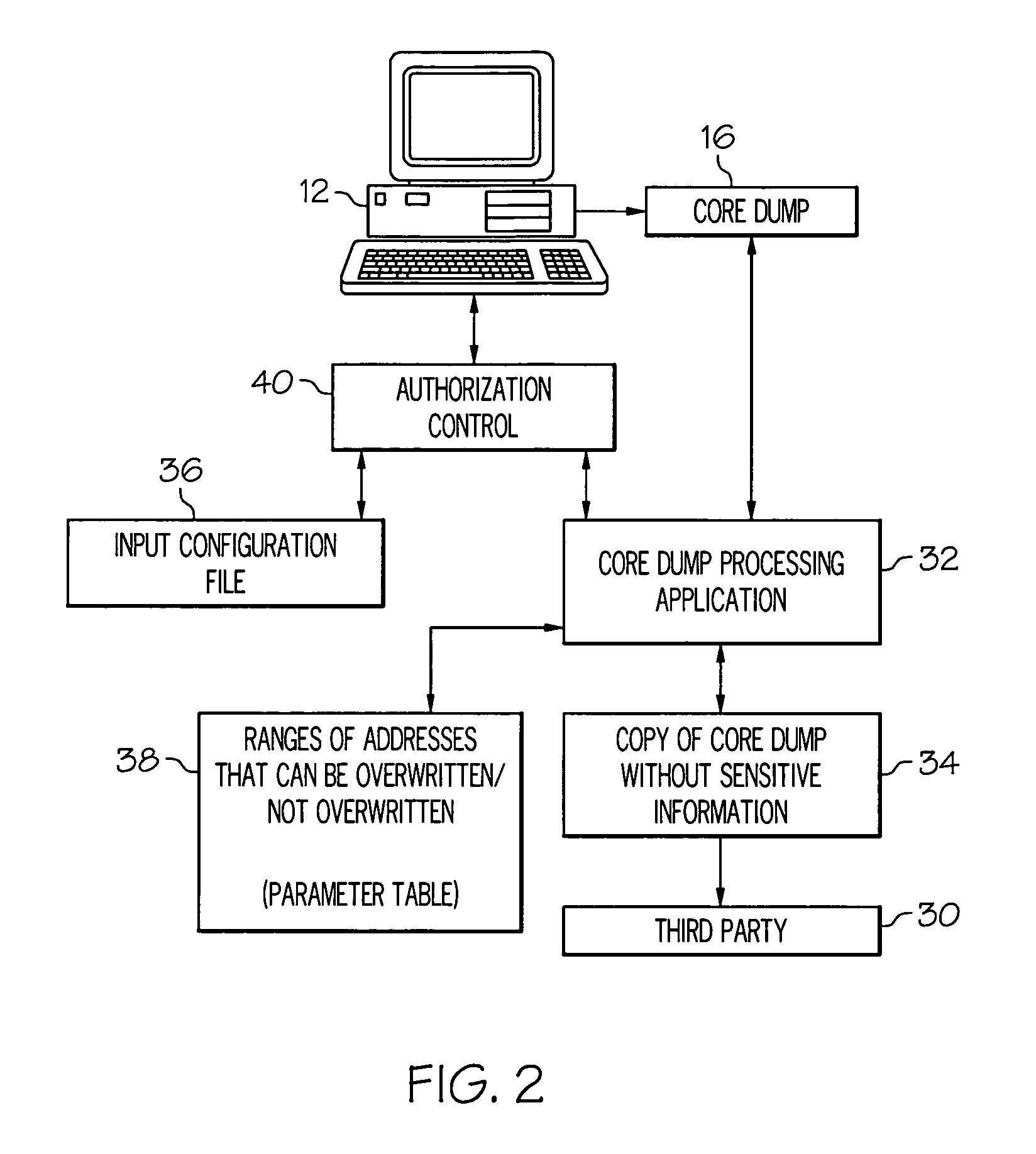

Locating and altering sensitive information in core dumps

Active | US20080126301A1 | Error detection/correction | Special data processing applications | Core dump | Processing core

A core dump is processed to locate and optionally alter sensitive information. A core dump copy is created from at least a portion of an original core dump. Also, at least one input parameter is provided that corresponds to select information to be identified in the core dump copy and address information associated with the core dump copy is defined that corresponds to at least one of addresses where the select information can be altered and addresses where the select information should not be altered. Each occurrence of the select information located within the core dump copy is identified and optionally replaced with predetermined replacement data if the occurrence of the select information is within the addresses where the select information can be altered.

Owner:IBM CORP
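The locate-and-alter flow in this abstract can be sketched on a byte string. The assumptions here are illustrative only: the dump is an in-memory `bytes` object, alterable regions are half-open `(start, end)` offset ranges, and the replacement must match the length of the select information so that offsets stay stable.

```python
def scrub(dump, secret, replacement, alterable):
    """Return (scrubbed copy, offsets of every occurrence of `secret`)."""
    if len(replacement) != len(secret):
        raise ValueError("replacement must keep the dump layout unchanged")
    copy = bytearray(dump)  # work on a copy; the original dump is untouched
    found = []
    start = dump.find(secret)
    while start != -1:
        found.append(start)
        end = start + len(secret)
        # Alter only occurrences lying fully inside an alterable address range.
        if any(lo <= start and end <= hi for lo, hi in alterable):
            copy[start:end] = replacement
        start = dump.find(secret, start + 1)
    return bytes(copy), found


# Usage: both occurrences are located, but only the first is in an alterable range.
cleaned, offsets = scrub(b"xxPWD--PWDyy", b"PWD", b"***", [(0, 6)])
```

Every occurrence is reported even when it is not altered, mirroring the abstract's distinction between identifying and optionally replacing.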

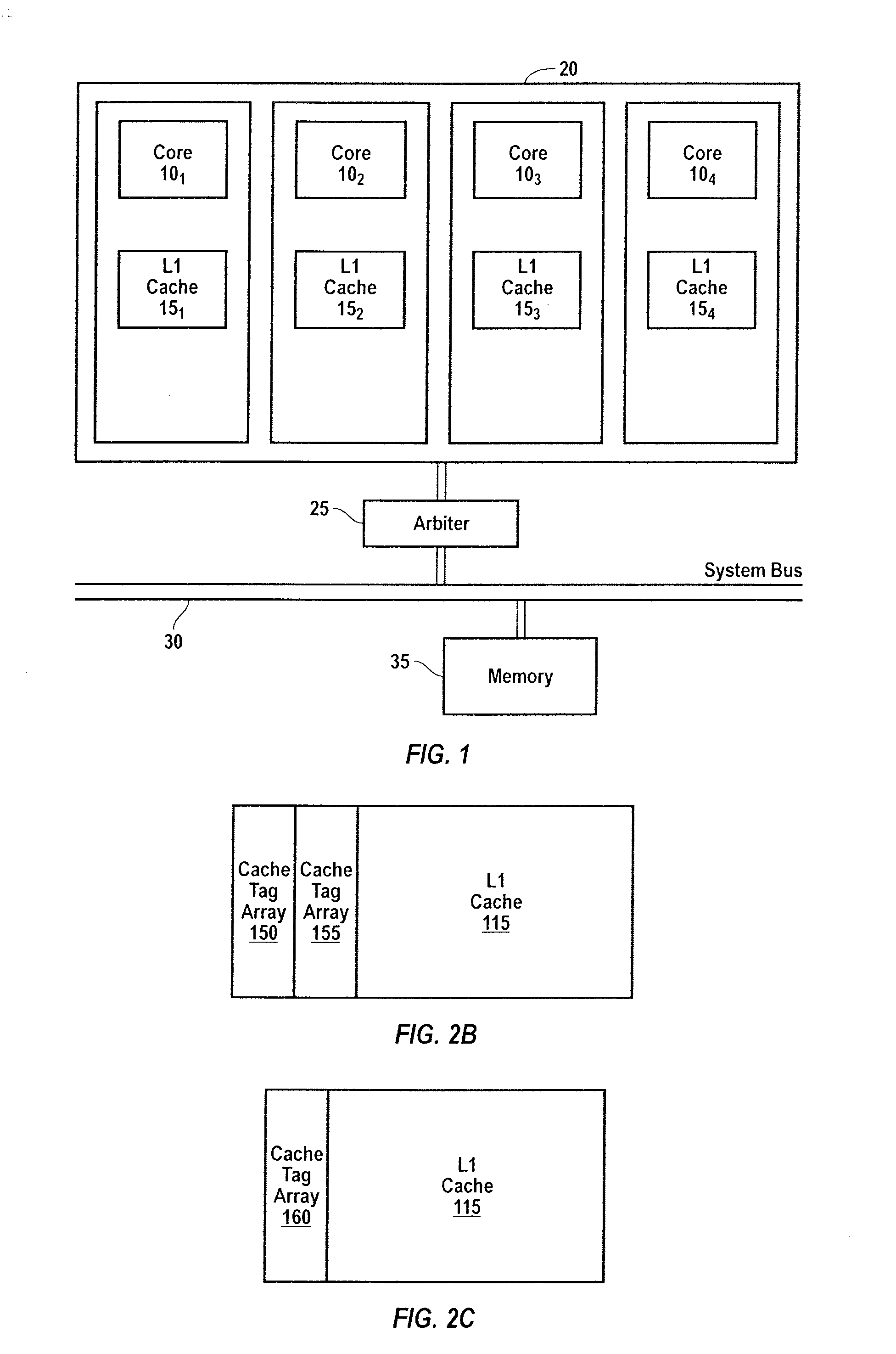

Cache crossbar arbitration

Active | US20050060457A1 | Efficient processing | Memory addressing/allocation/relocation | Concurrent instruction execution | Processing core | Parallel computing

A processor chip is provided. The processor chip includes a plurality of processing cores, where each of the processing cores is multi-threaded. The plurality of processing cores are located in a center region of the processor chip. A plurality of cache bank memories are included. A crossbar enabling communication between the plurality of processing cores and the plurality of cache bank memories is provided. The crossbar includes an arbiter configured to arbitrate multiple requests received from the plurality of processing cores with available outputs. The arbiter includes a barrel shifter configured to rotate the multiple requests for dynamic prioritization, and priority encoders associated with each of the available outputs. Each of the priority encoders has logic gates configured to disable priority encoder outputs. A method for arbitrating requests within a multi-core multi-thread processor is included.

Owner:ORACLE INT CORP
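The combination of a rotating shifter and a priority encoder can be illustrated for a single output. This is a software sketch, not the patented circuit: list slicing stands in for the barrel shifter, a linear scan stands in for the priority encoder, and how `offset` advances between cycles is left to the caller.

```python
def arbitrate(requests, offset):
    """Grant one requesting core, giving top priority to index `offset`."""
    n = len(requests)
    # Barrel-shifter stand-in: rotate so that `offset` becomes position 0.
    rotated = requests[offset:] + requests[:offset]
    # Priority-encoder stand-in: the first asserted request wins.
    for position, asserted in enumerate(rotated):
        if asserted:
            return (position + offset) % n  # map back to the original core index
    return None  # no core is requesting this output


# Usage: cores 0 and 2 request; moving the offset changes who wins.
requests = [True, False, True, False]
grant_a = arbitrate(requests, 0)
grant_b = arbitrate(requests, 1)
```

Advancing the offset after each grant would give round-robin behavior, which is one way to obtain the dynamic prioritization the abstract mentions.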

Multithreaded SIMD parallel processor with launching of groups of threads

Active | US7594095B1 | General purpose stored program computer | Program control | Processing core | Processor register

In a multithreaded processing core, groups of threads are launched in parallel for single-instruction, multiple-data (SIMD) execution by a set of parallel processing engines. Thread-specific input data for threads in a new SIMD group can be loaded directly into the local register files used by the parallel processing engines, or the data can be accumulated in a buffer until a launch condition is satisfied. When the launch condition is satisfied, the entire group is launched. Various launch conditions can be defined, including but not limited to full population of the SIMD group, a change in data processing conditions, or a timeout.

Owner:NVIDIA CORP
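Two of the launch conditions named in this abstract, full population and timeout, can be sketched with a small buffer class. The class name `GroupLauncher`, the explicit `now` timestamps, and measuring the timeout from the first buffered thread are all assumptions made for illustration.

```python
class GroupLauncher:
    """Buffers per-thread input until a launch condition is satisfied."""

    def __init__(self, group_size, timeout):
        self.group_size = group_size
        self.timeout = timeout
        self.buffer = []
        self.first_arrival = None

    def add(self, thread_input, now):
        """Buffer one thread's input; return the launched group or None."""
        if not self.buffer:
            self.first_arrival = now  # timeout runs from the first buffered thread
        self.buffer.append(thread_input)
        return self.poll(now)

    def poll(self, now):
        """Launch the whole group if it is full or has waited too long."""
        if not self.buffer:
            return None
        full = len(self.buffer) >= self.group_size
        timed_out = now - self.first_arrival >= self.timeout
        if full or timed_out:
            group, self.buffer = self.buffer, []
            return group
        return None
```

A partially filled group still launches once the timeout expires, so a slow input stream cannot stall buffered threads indefinitely.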

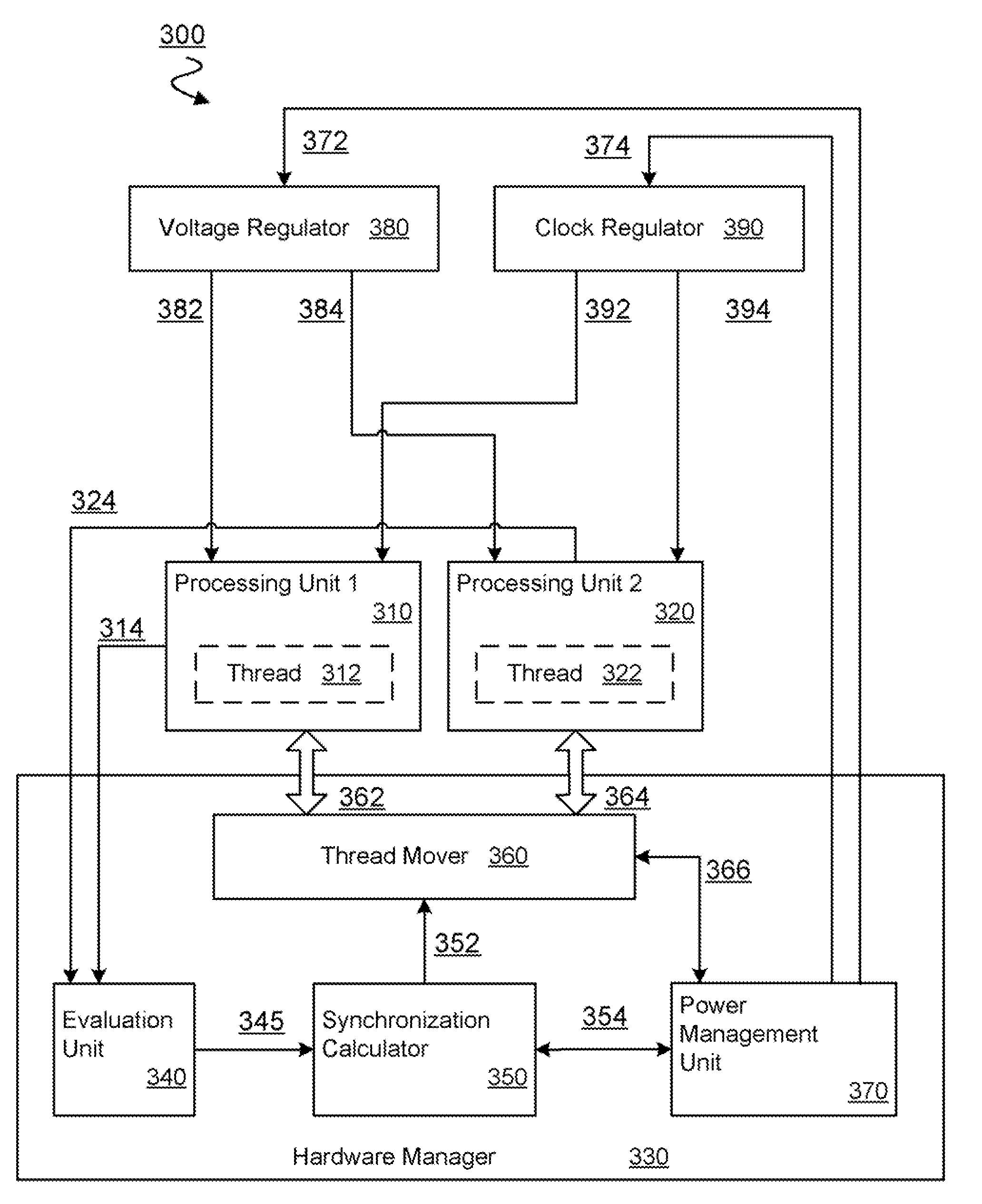

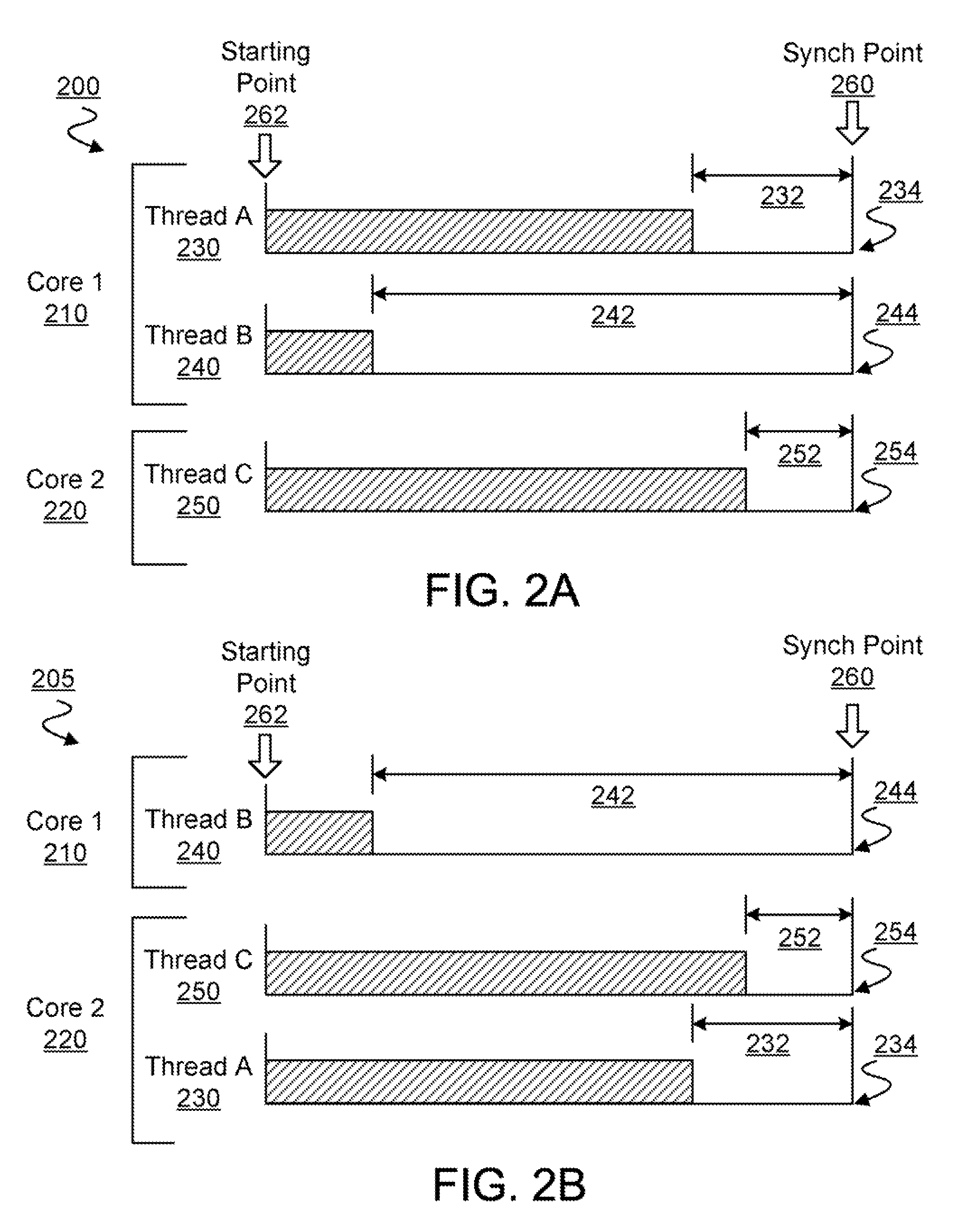

Thread migration to improve power efficiency in a parallel processing environment

Inactive | US20090172424A1 | Energy efficient ICT | Volume/mass flow measurement | Processing core | Parallel processing

A method and system to selectively move one or more of a plurality of threads which are executing in parallel by a plurality of processing cores. In one embodiment, a thread may be moved from executing in one of the plurality of processing cores to executing in another of the plurality of processing cores, the moving based on a performance characteristic associated with the plurality of threads. In another embodiment of the invention, a power state of the plurality of processing cores may be changed to improve a power efficiency associated with the executing of the multiple threads.

Owner:INTEL CORP


© 2025 PatSnap. All rights reserved.