1046 results about How to "Improve latency" patented technology

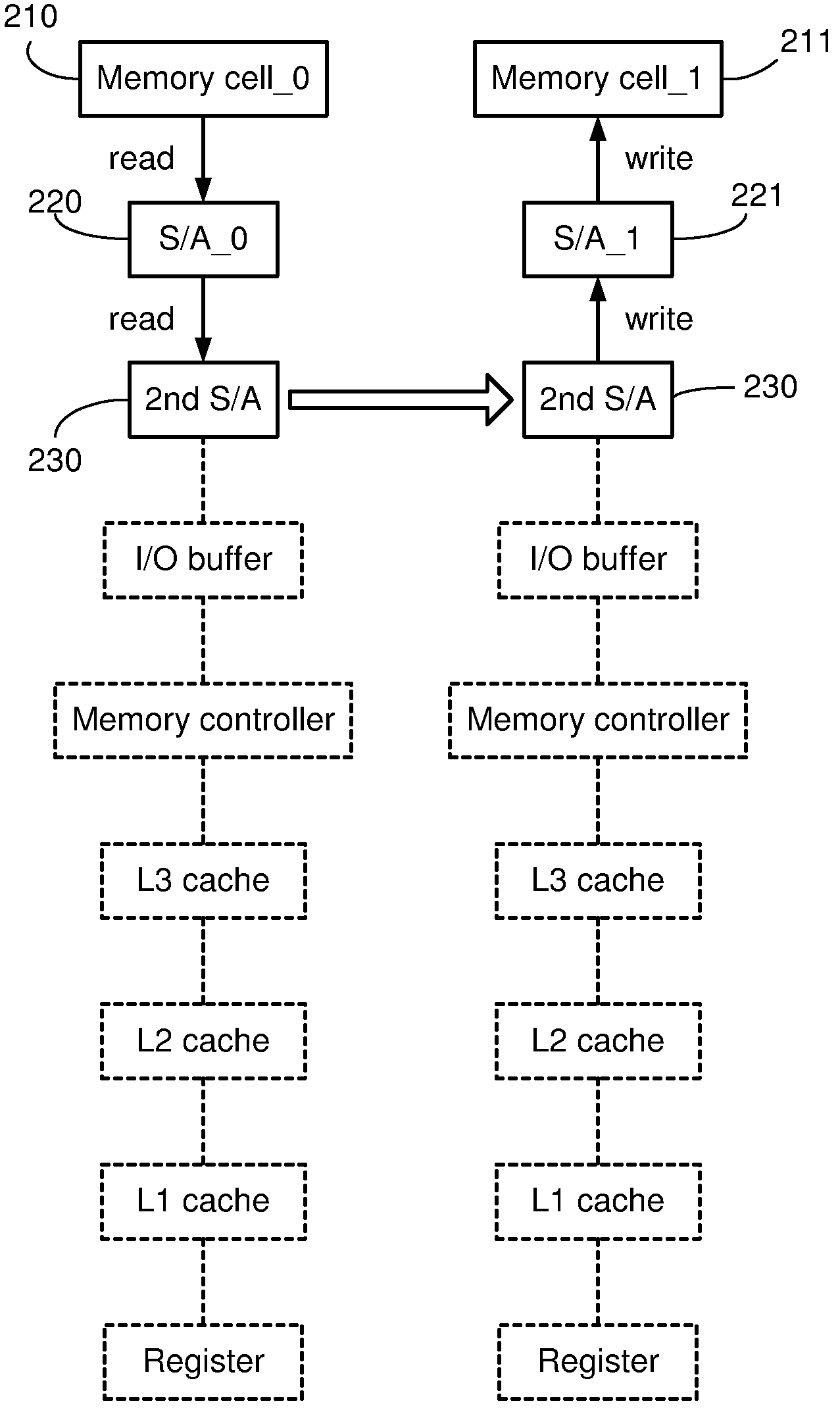

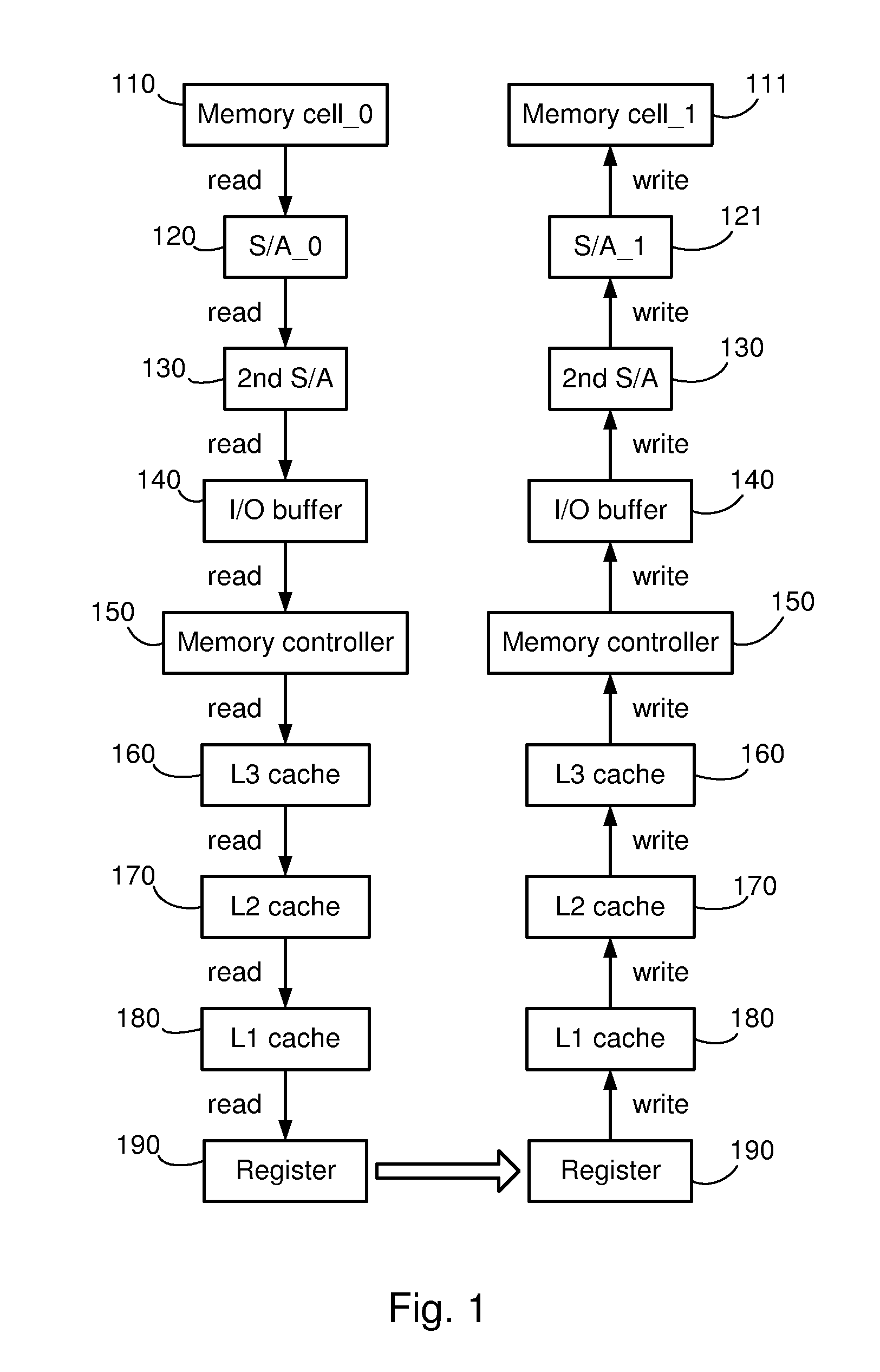

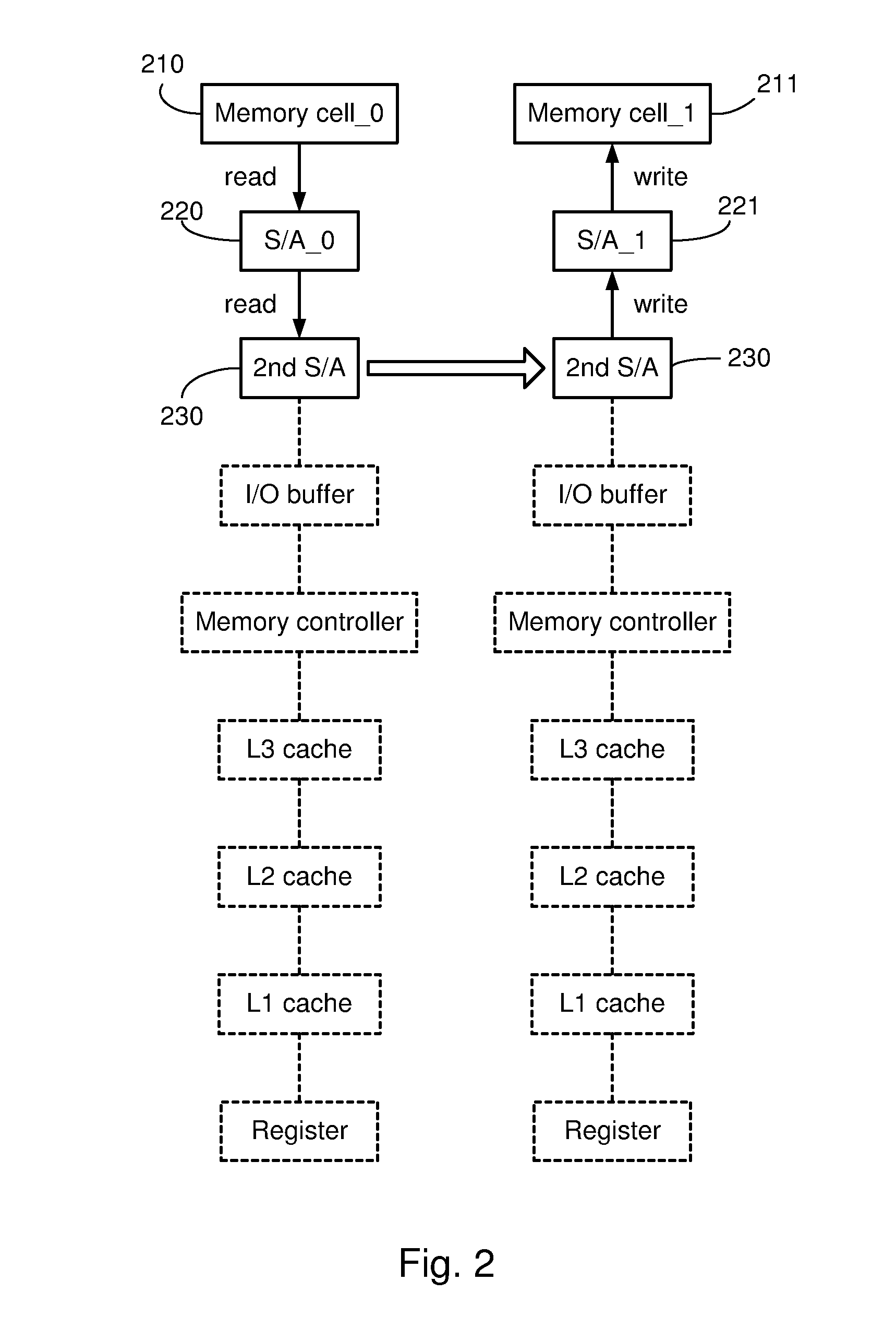

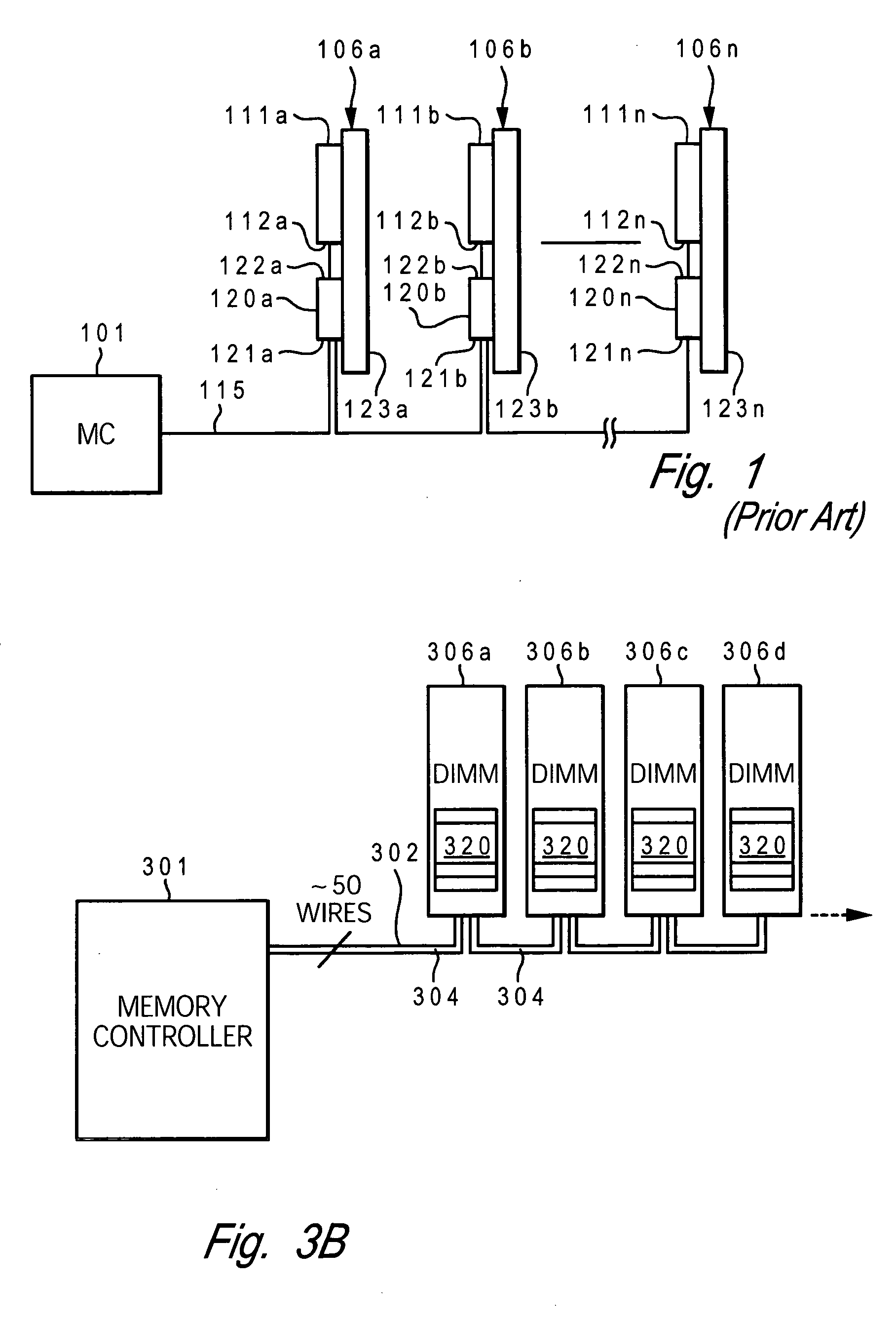

Systems and methods for data transfers between memory cells

Systems and methods for reducing the latency of data transfers between memory cells by enabling data to be transferred directly between sense amplifiers in the memory system. In one embodiment, a memory system uses a conventional DRAM memory structure having a pair of first-level sense amplifiers, a second-level sense amplifier and control logic for the sense amplifiers. Each of the sense amplifiers is configured to be selectively coupled to a data line. In a direct data transfer mode, the control logic generates control signals that cause the sense amplifiers to transfer data from a first one of the first-level sense amplifiers (a source sense amplifier) to the second-level sense amplifier, and from there to a second one of the first-level sense amplifiers (a destination sense amplifier). The structure of these sense amplifiers is conventional, and the operation of the system is enabled by modified control logic.

Owner:TOSHIBA AMERICA ELECTRONICS COMPONENTS

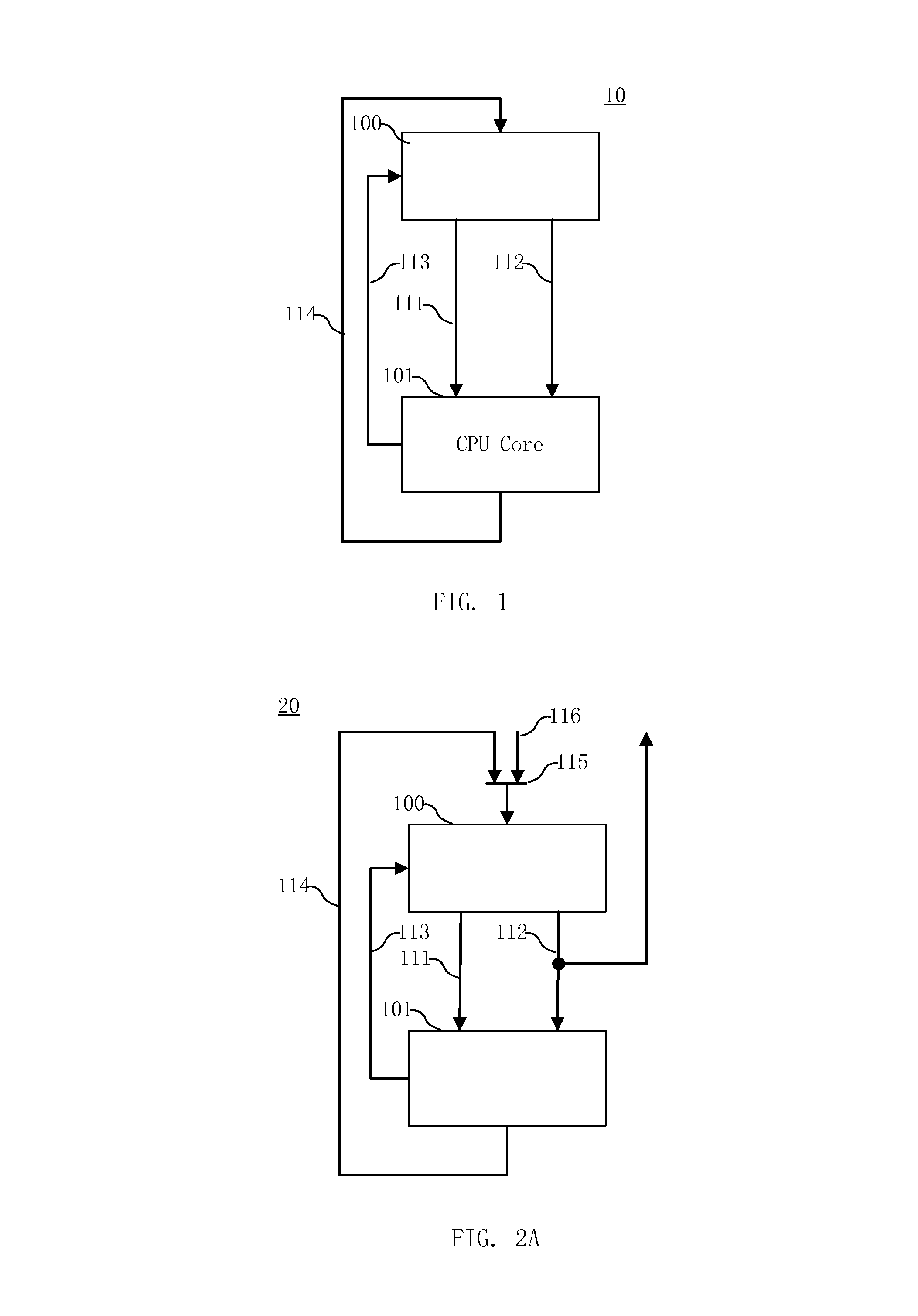

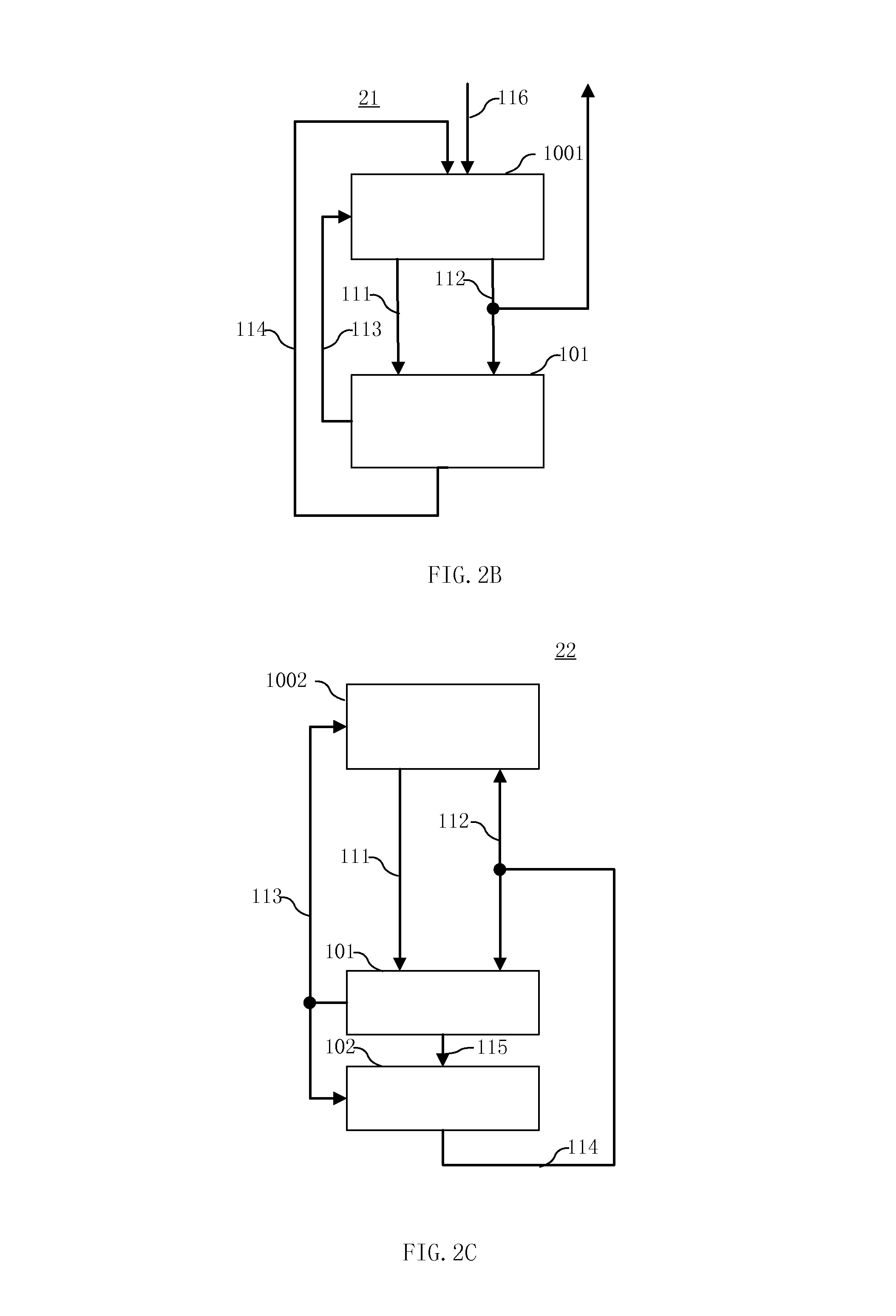

Processor-cache system and method

Active | US9047193B2 | Shorten the counting process | Efficient and uniform structure | Energy efficient ICT | Register arrangements | Address generation unit | Processor register

A digital system is provided. The digital system includes an execution unit, a level-zero (L0) memory, and an address generation unit. The execution unit is coupled to a data memory containing data to be used in operations of the execution unit. The L0 memory is coupled between the execution unit and the data memory and configured to receive a part of the data in the data memory. The address generation unit is configured to generate address information for addressing the L0 memory. Further, the L0 memory provides at least two operands of a single instruction from the part of the data to the execution unit directly, without loading the at least two operands into one or more registers, using the address information from the address generation unit.

Owner:SHANGHAI XINHAO MICROELECTRONICS

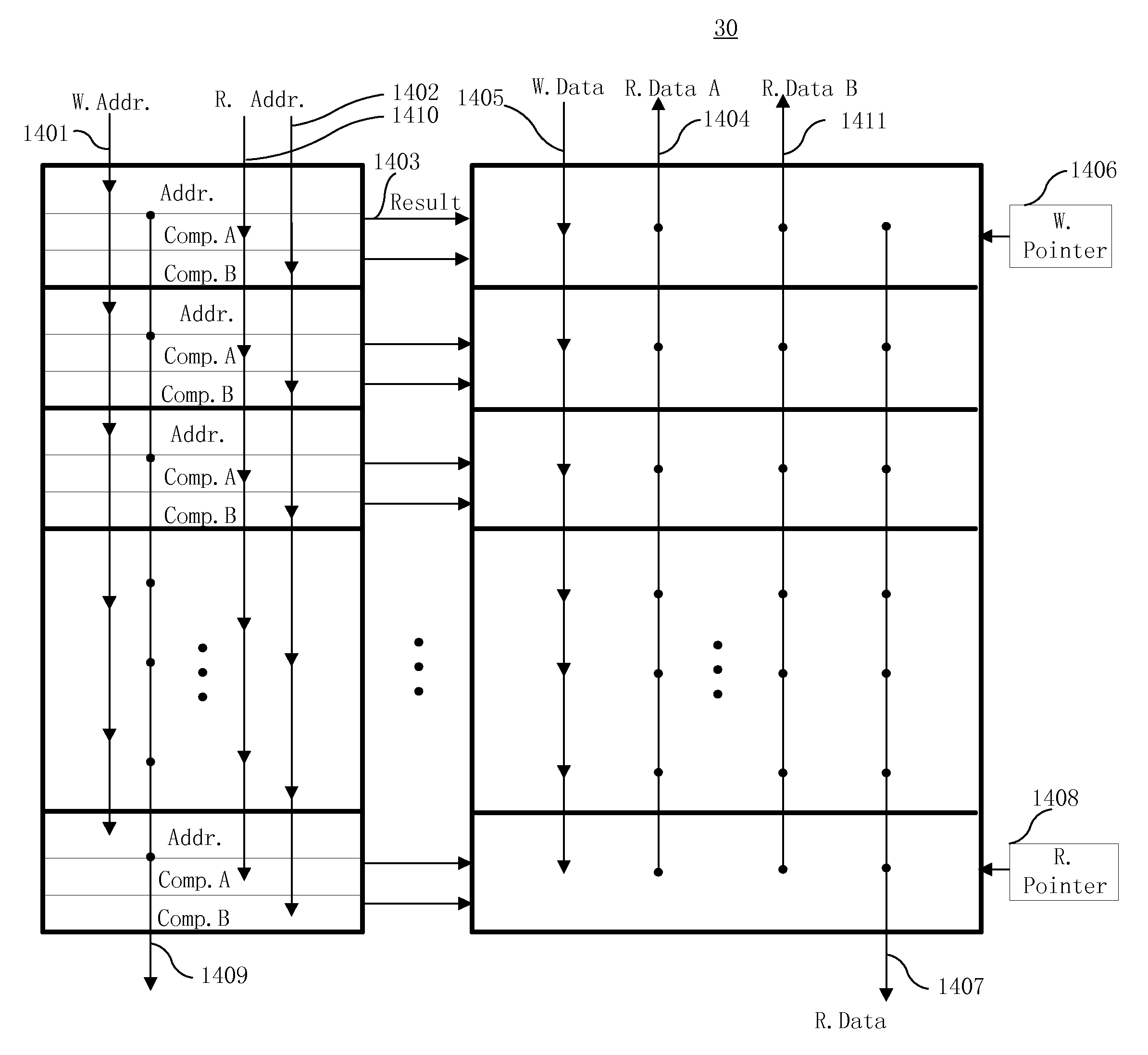

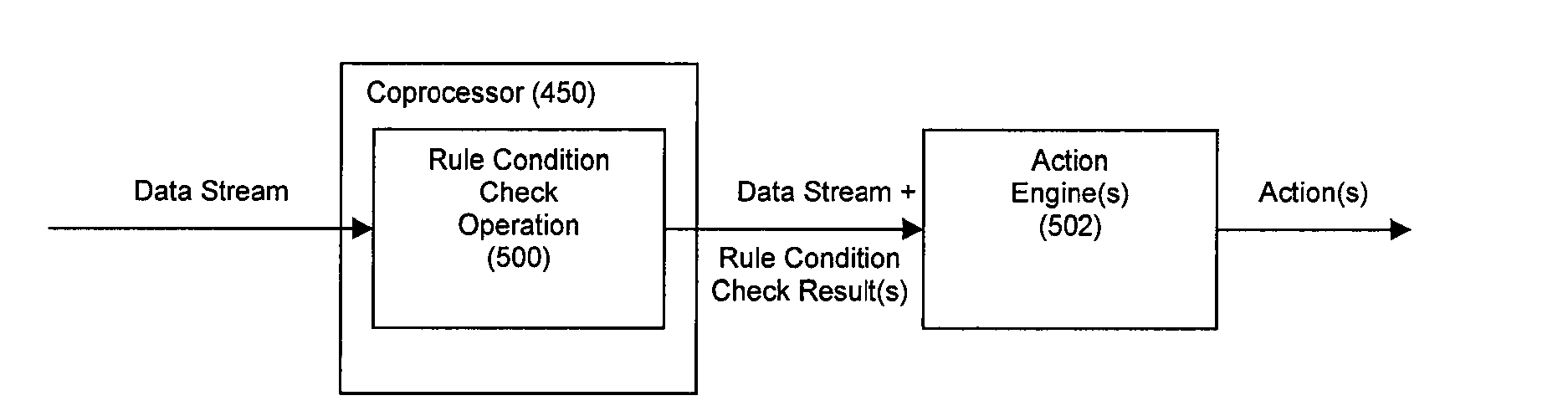

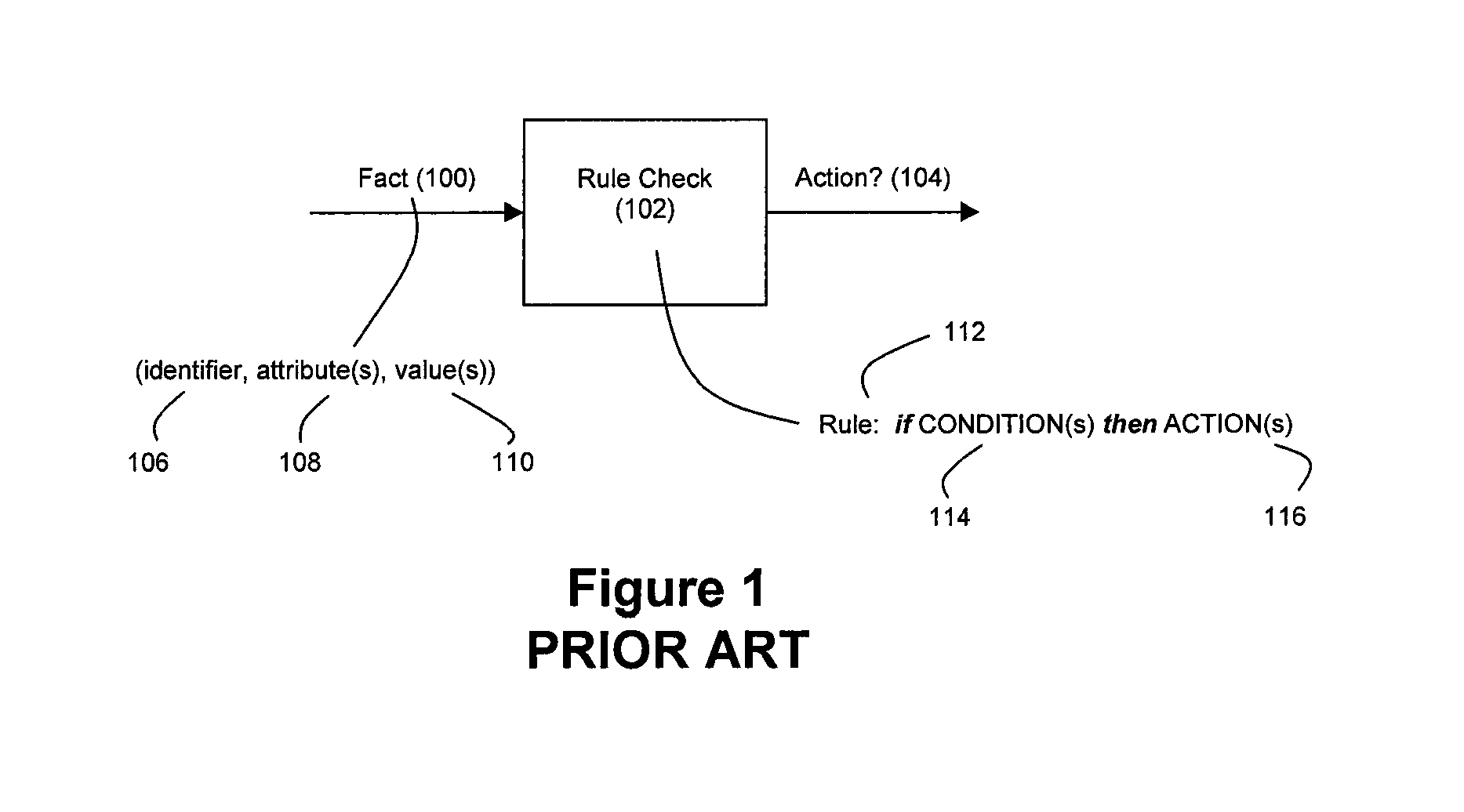

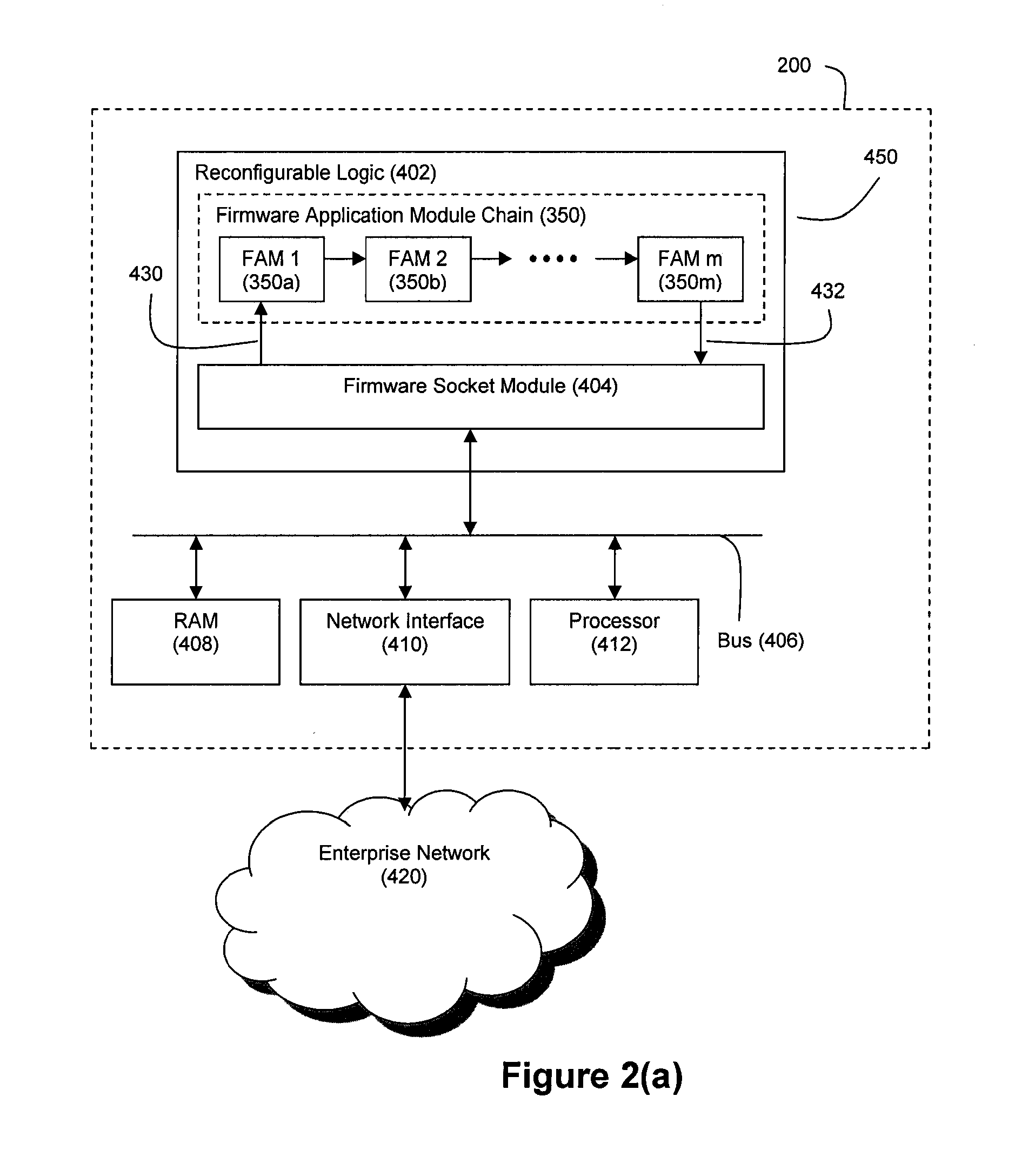

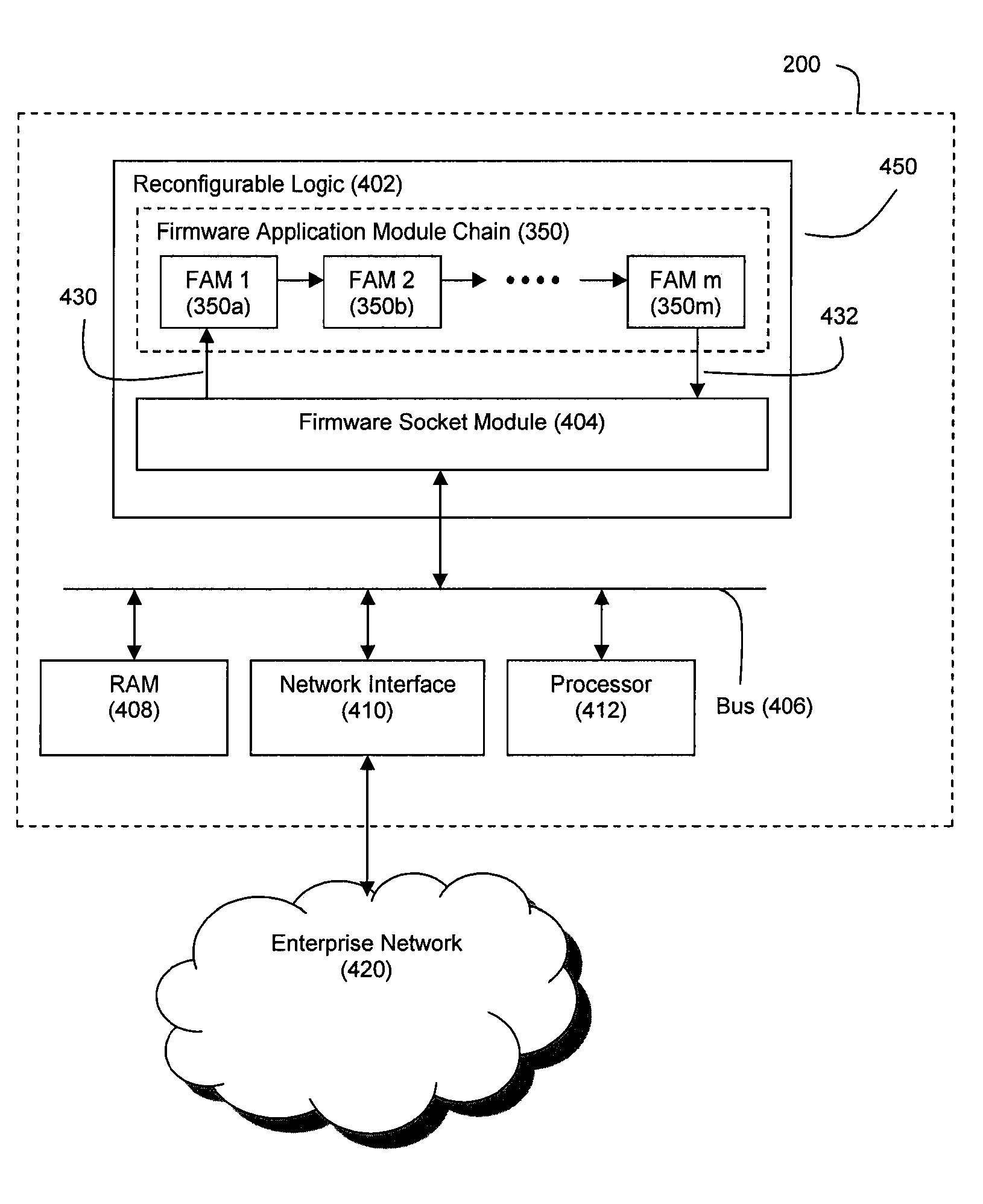

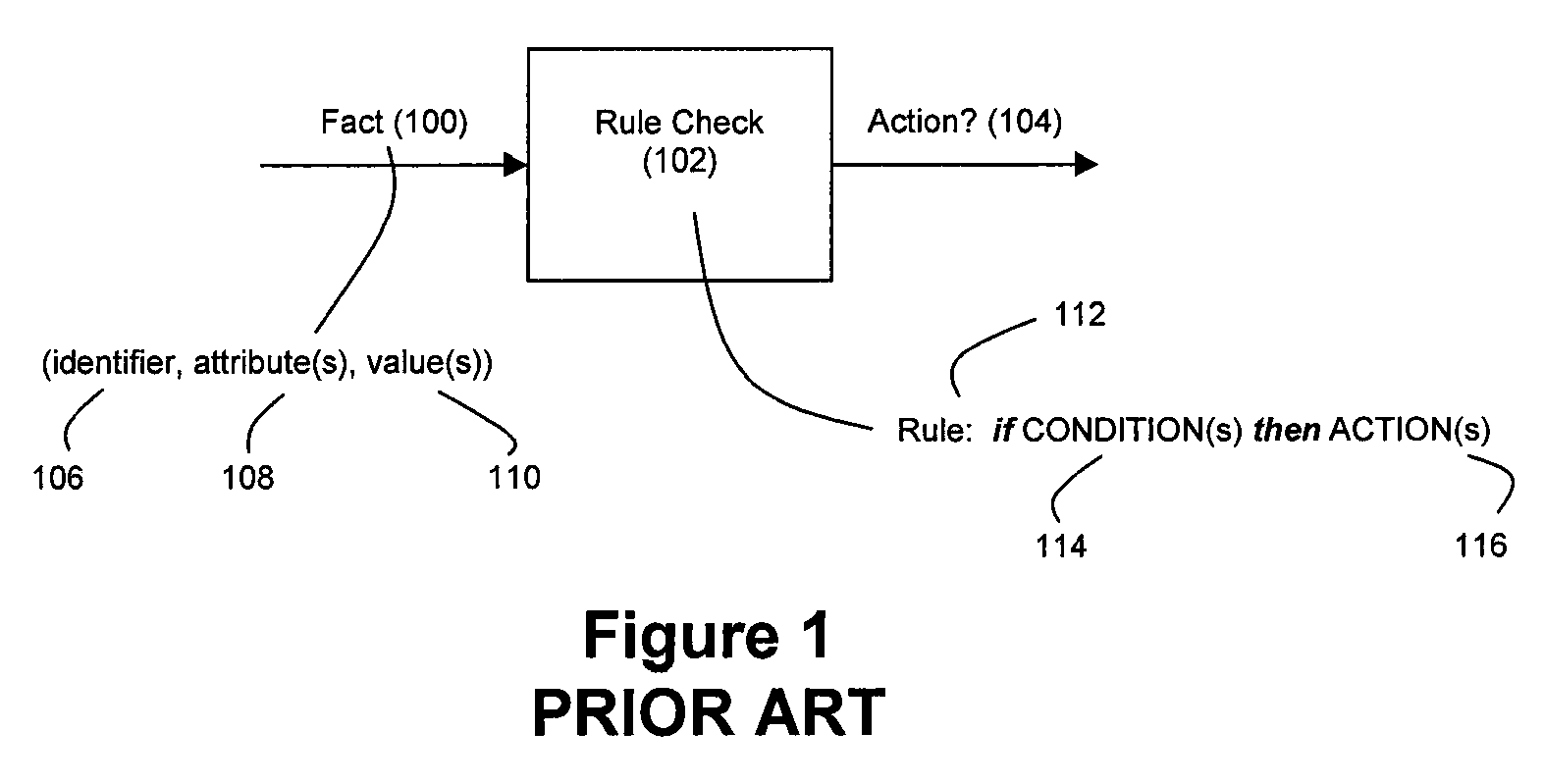

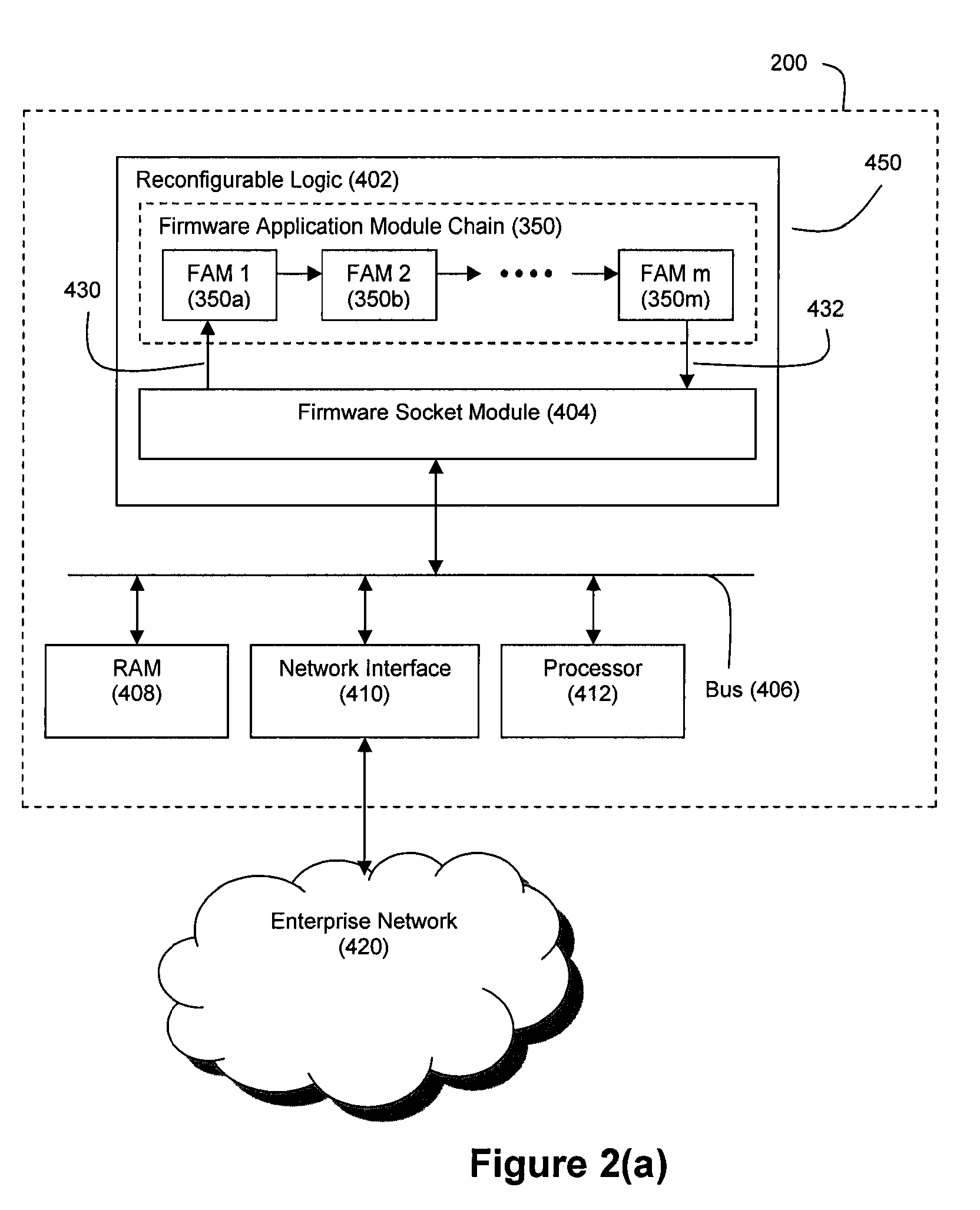

Method and System for Accelerated Stream Processing

Active | US20090287628A1 | Lower latency | Improve latency | Digital computer details | Code conversion | Business rule | Event stream

Disclosed herein is a method and system for hardware-accelerating various data processing operations in a rule-based decision-making system such as a business rules engine, an event stream processor, and a complex event stream processor. Preferably, incoming data streams are checked against a plurality of rule conditions. The data processing operations that are hardware-accelerated include rule condition check operations, filtering operations, and path merging operations. The rule condition check operations generate rule condition check results for the processed data streams, wherein the rule condition check results are indicative of any rule conditions which have been satisfied by the data streams. The generation of such results with a low degree of latency provides enterprises with the ability to perform timely decision-making based on the data present in received data streams.

Owner:IP RESERVOIR

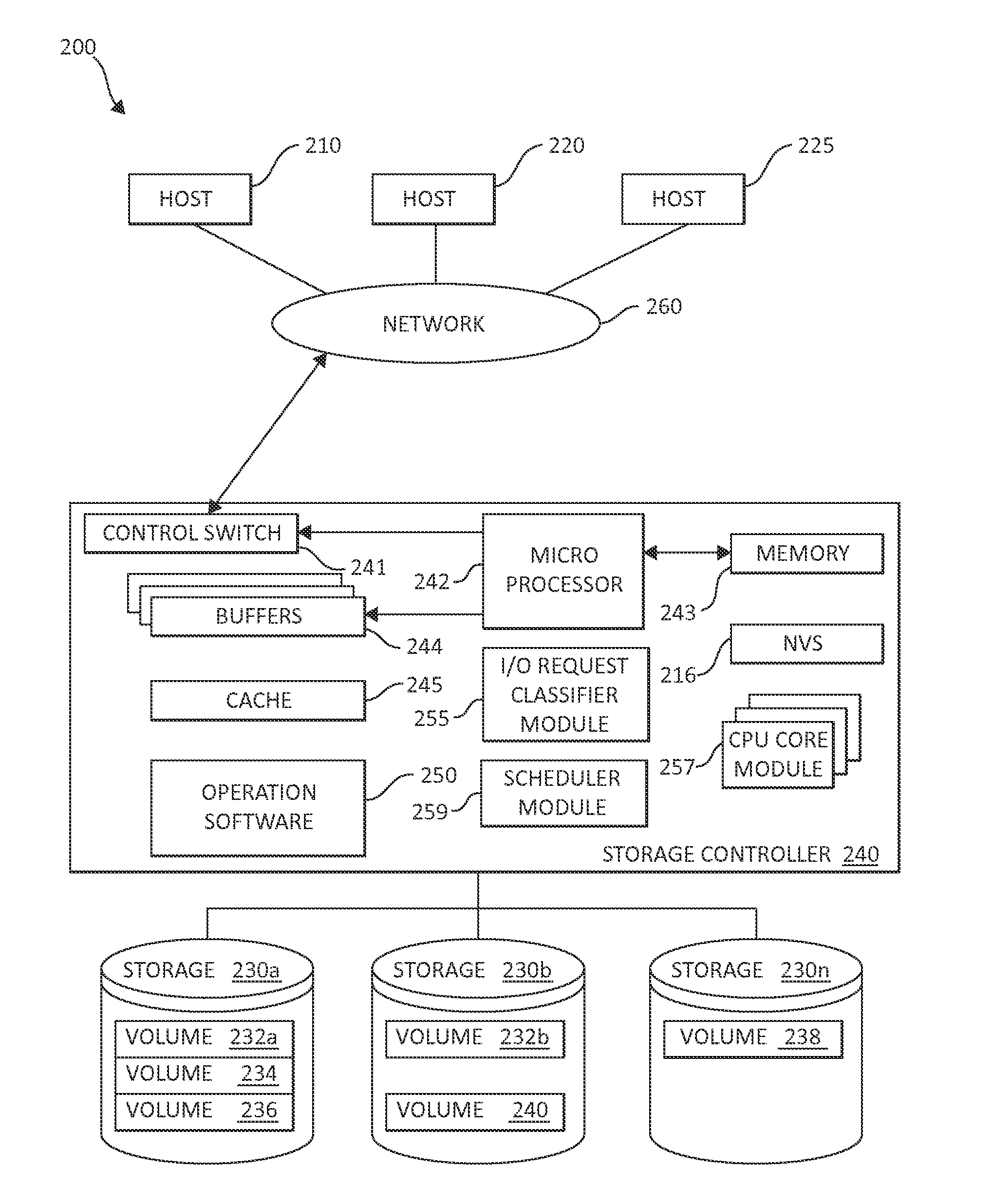

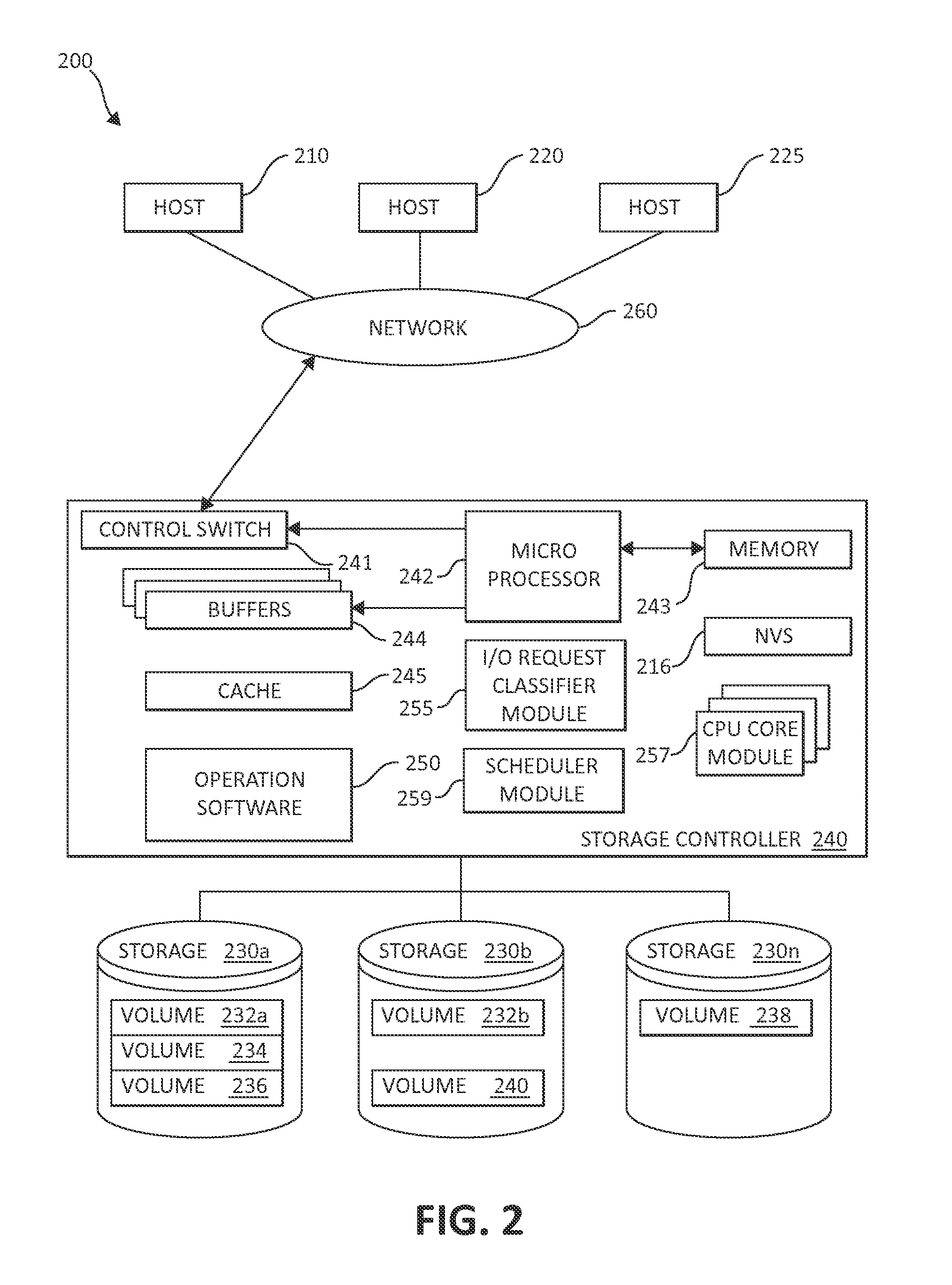

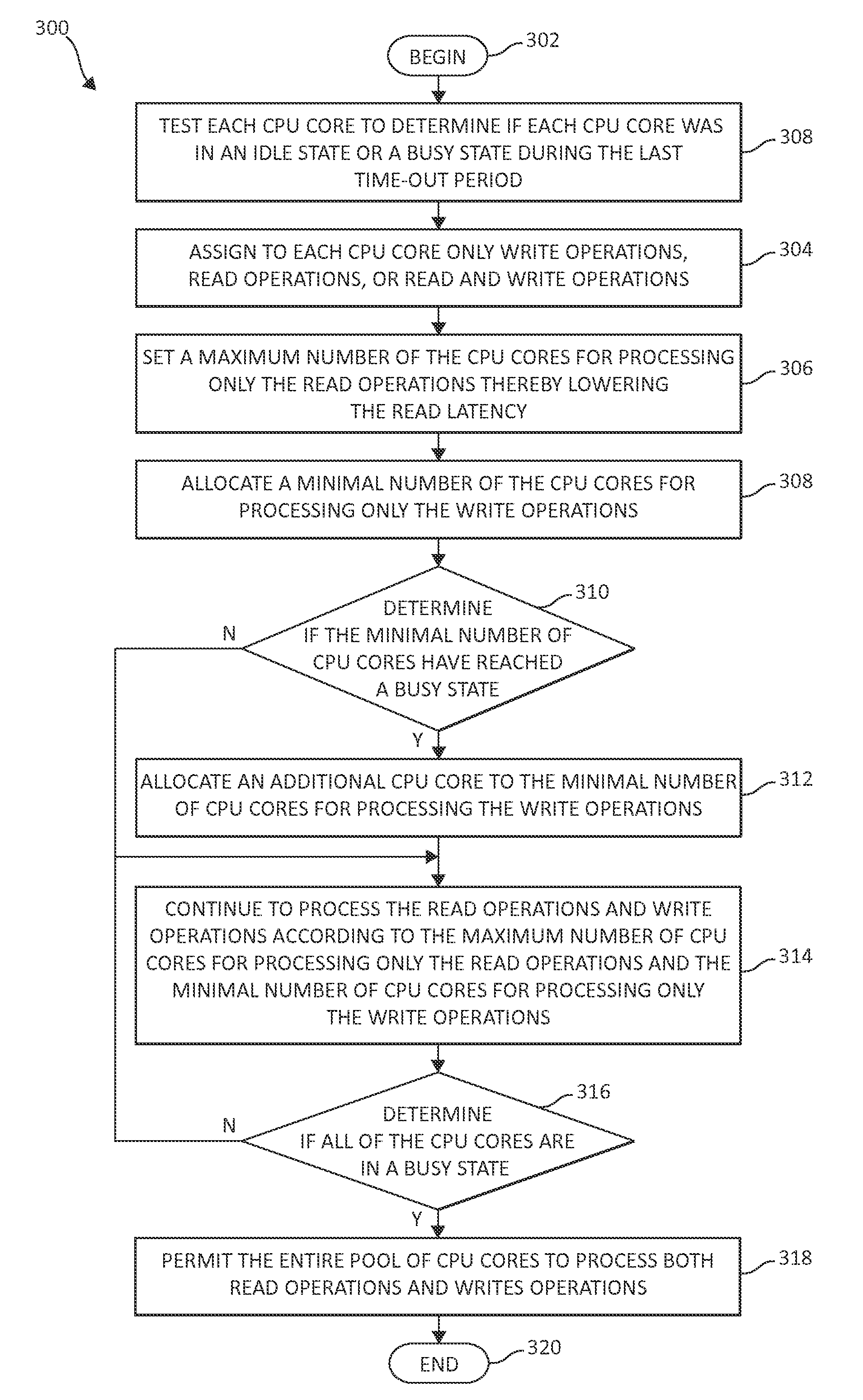

Reducing read latency using a pool of processing cores

Active | US20130339635A1 | Minimize write latency | Avoid read latency | Error detection/correction | Program control | Processing core | Throughput

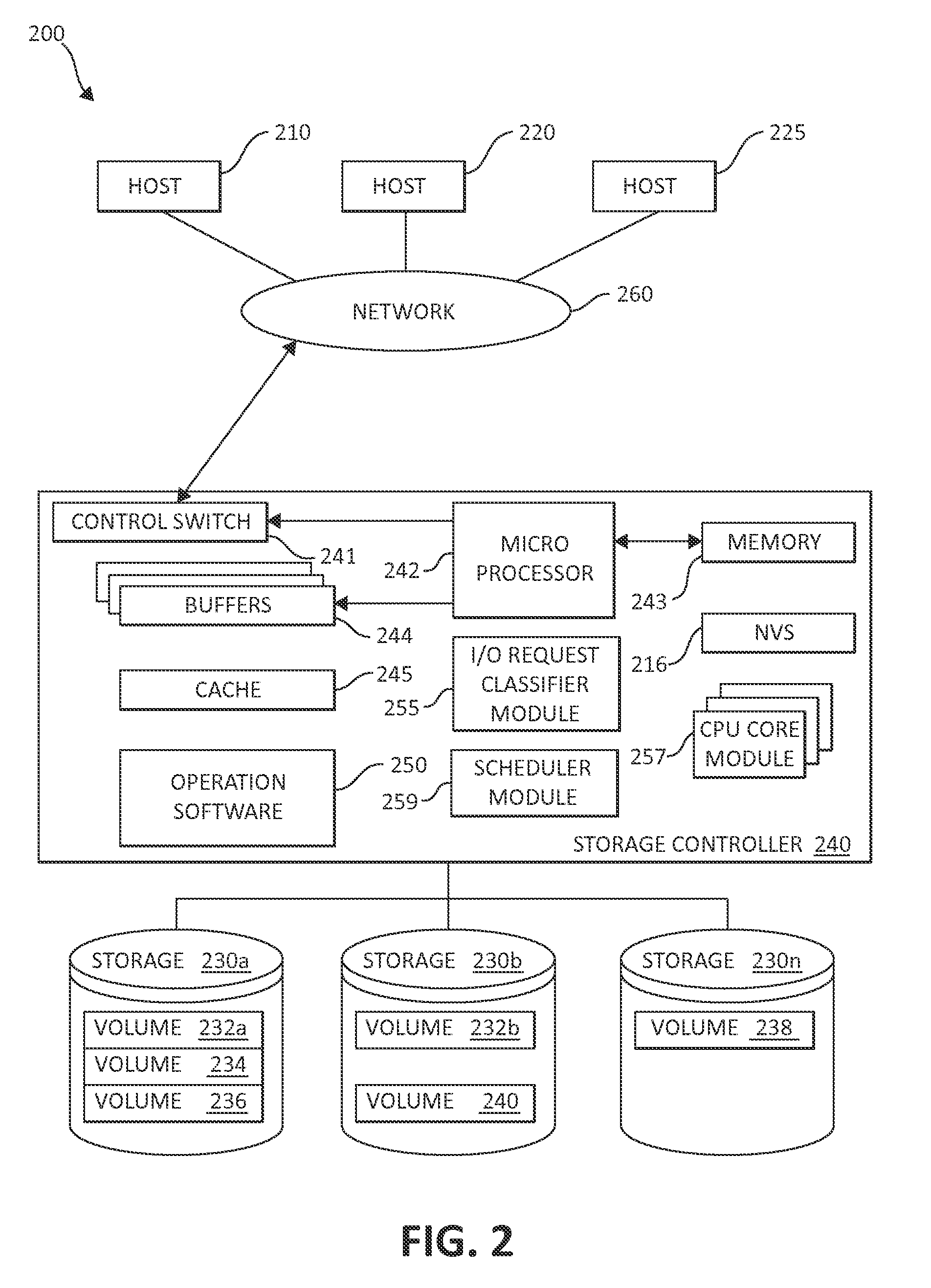

In a read processing storage system that uses a pool of CPU cores, the CPU cores are assigned to process write operations, read operations, or both read and write operations that are scheduled for processing. A maximum number of the CPU cores are set for processing only the read operations, thereby lowering read latency. A minimal number of the CPU cores are allocated for processing the write operations, thereby increasing write latency. Upon reaching a throughput limit for the write operations that causes the minimal number of the plurality of CPU cores to reach a busy status, the minimal number of the plurality of CPU cores for processing the write operations is increased.

Owner:IBM CORP
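
The allocation rule in the abstract above can be illustrated with a minimal sketch. The `CorePool` class, its field names, and the one-core growth step are assumptions made for illustration and are not taken from the patent; cores that serve both reads and writes are left out to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class CorePool:
    """Hypothetical pool of CPU cores split between read and write work."""
    total_cores: int
    write_cores: int = 1  # assumed minimal starting allocation for writes

    @property
    def read_cores(self) -> int:
        # Every core not reserved for writes serves reads.
        return self.total_cores - self.write_cores

    def on_write_load(self, busy_write_cores: int) -> None:
        """Grow the write allocation by one core once all write cores are busy."""
        all_busy = busy_write_cores >= self.write_cores
        if all_busy and self.read_cores > 1:  # always keep one read core
            self.write_cores += 1


pool = CorePool(total_cores=8)
pool.on_write_load(busy_write_cores=1)  # the single write core is saturated
print(pool.write_cores, pool.read_cores)
```

Keeping the write allocation small by default favours read latency, and the allocation grows only when write throughput is exhausted.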

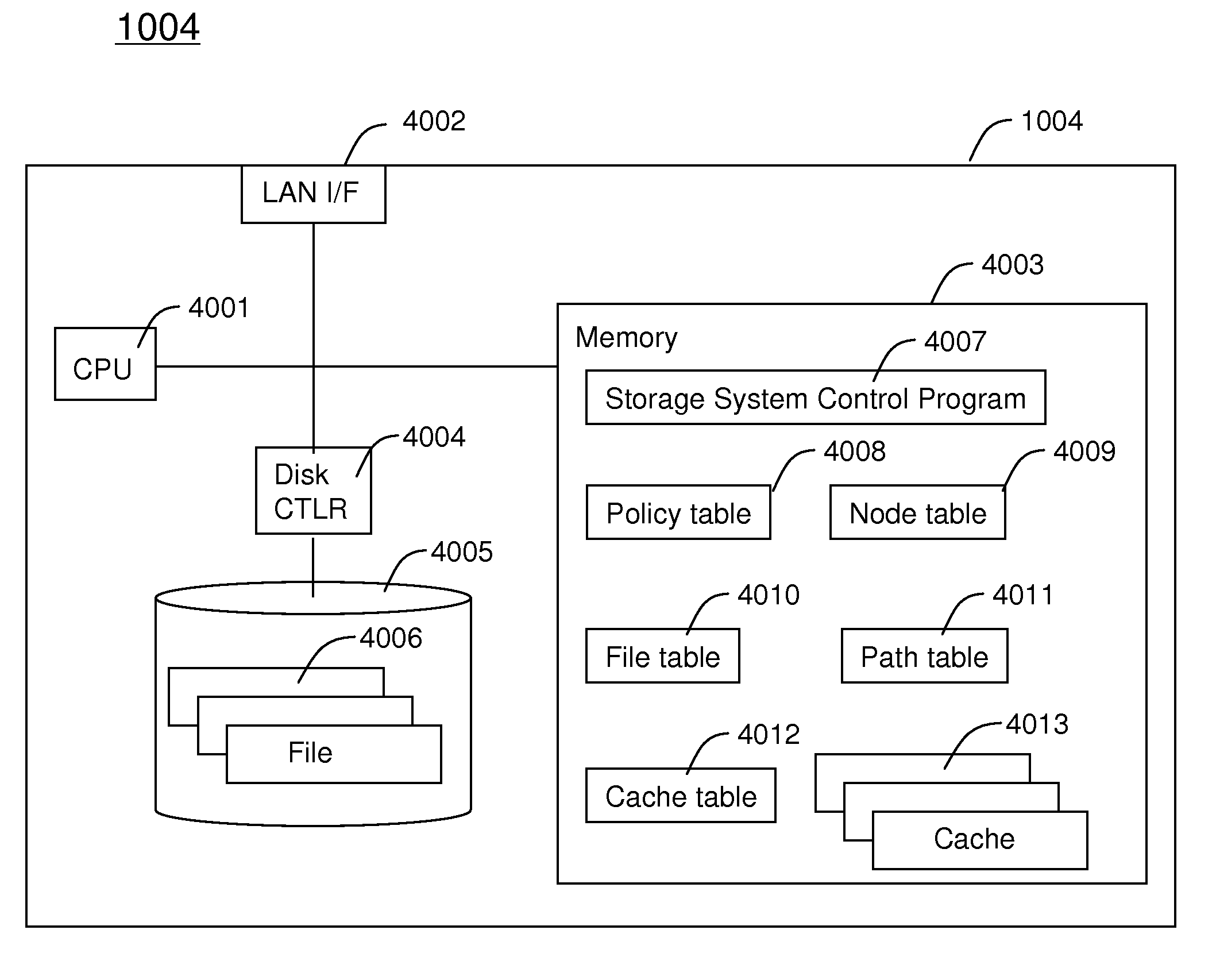

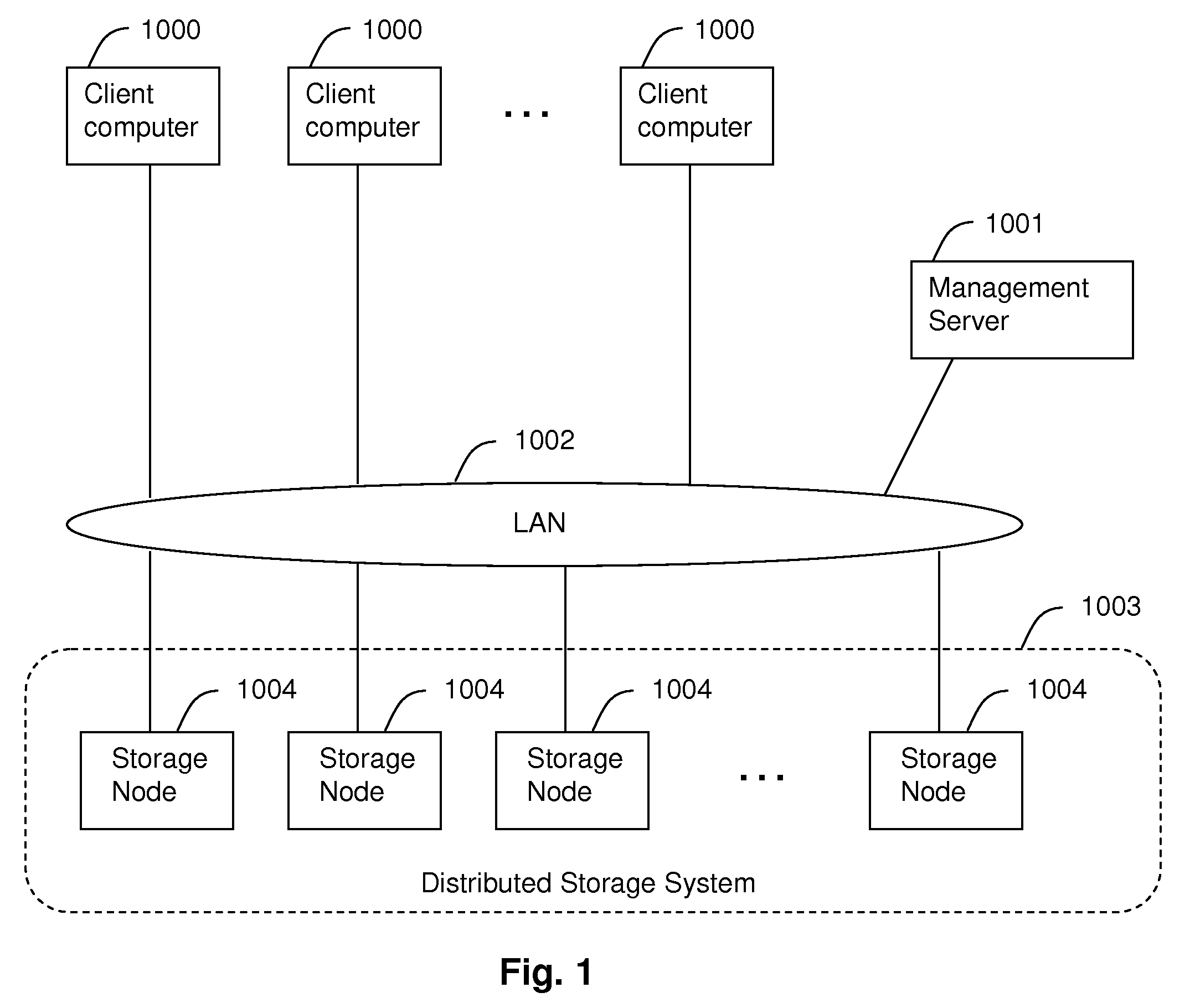

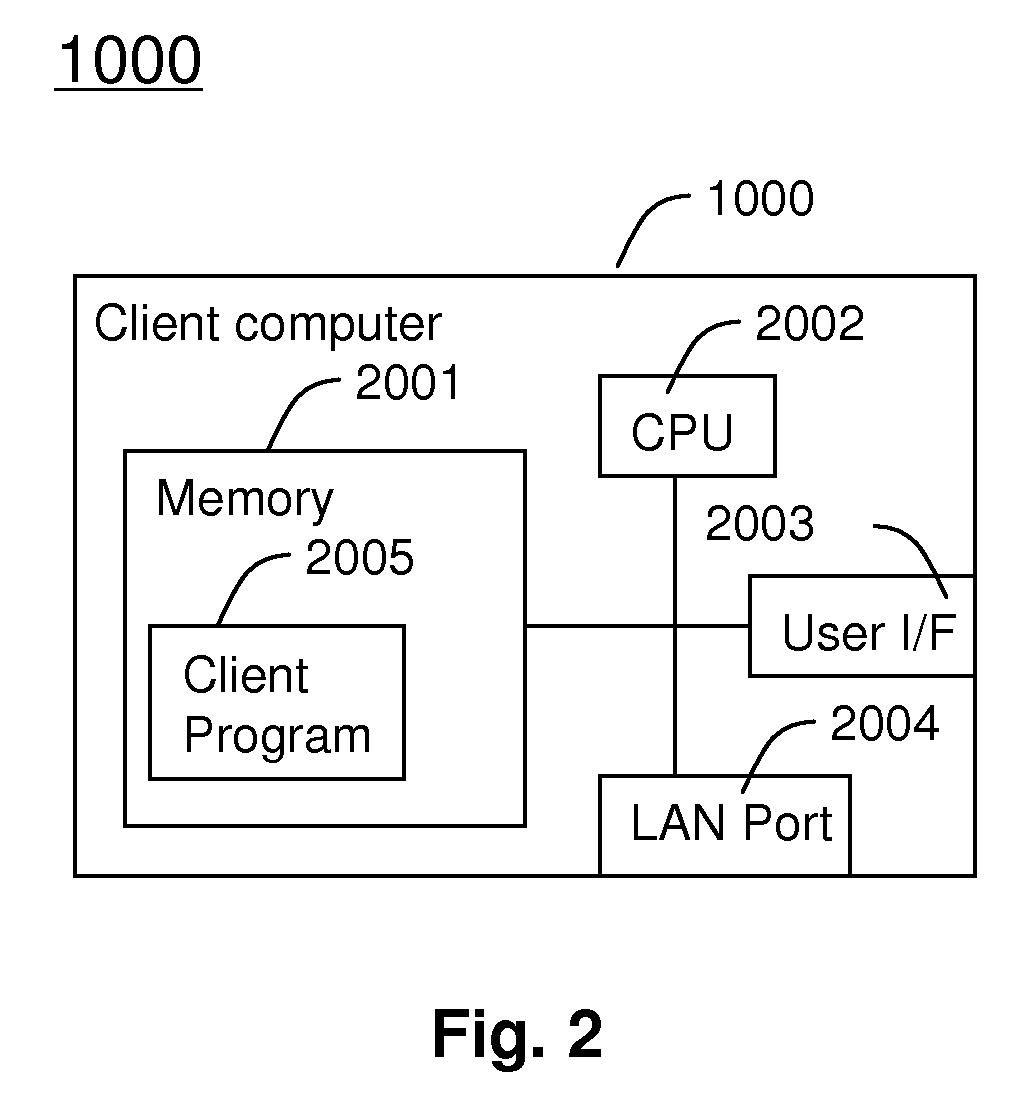

Method and apparatus for improving file access performance of distributed storage system

Inactive | US8086634B2 | Improve performance | Reduce overhead | Digital data information retrieval | Digital data processing details | Network connectivity | Distributed memory systems

Embodiments of the invention provide methods and apparatus for improving the performance of file transfer to a client from a distributed storage system which provides single name space to clients. In one embodiment, a system for providing access to files in a distributed storage system comprises a plurality of storage nodes and at least one computer device connected via a network. Each storage node is configured, upon receiving a file access request for a file from one of the at least one computer device as a receiver storage node, to determine whether or not to inform the computer device making the file access request to redirect the file access request to an owner storage node of the file according to a preset policy. The preset policy defines conditions for whether to redirect the file access request based on at least one of file type or file size of the file.

Owner:HITACHI LTD
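
The redirect decision described in the abstract above might look like the following sketch. The `RedirectPolicy` fields, the use of a file extension as the "file type", and the specific thresholds are hypothetical; the abstract only states that the policy depends on at least one of file type or file size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RedirectPolicy:
    """Hypothetical preset policy: redirect large files or selected types."""
    min_size_bytes: int
    file_types: frozenset


def should_redirect(policy: RedirectPolicy, file_name: str,
                    size_bytes: int, receiver_is_owner: bool) -> bool:
    """Decide whether the receiver node tells the client to go to the owner node."""
    if receiver_is_owner:
        return False  # the receiver already holds the file; serve it directly
    ext = file_name.rsplit(".", 1)[-1].lower() if "." in file_name else ""
    return size_bytes >= policy.min_size_bytes or ext in policy.file_types


policy = RedirectPolicy(min_size_bytes=1 << 20, file_types=frozenset({"iso"}))
print(should_redirect(policy, "notes.txt", 512, receiver_is_owner=False))
```

Small files are cheaper to relay through the receiver node, while large transfers benefit from going straight to the owner node.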

Reducing read latency using a pool of processing cores

Active | US8930633B2 | Avoid read latency | Lower read latency | Input/output to record carriers | Hardware monitoring | Processing core | Computer science

Owner:INT BUSINESS MASCH CORP

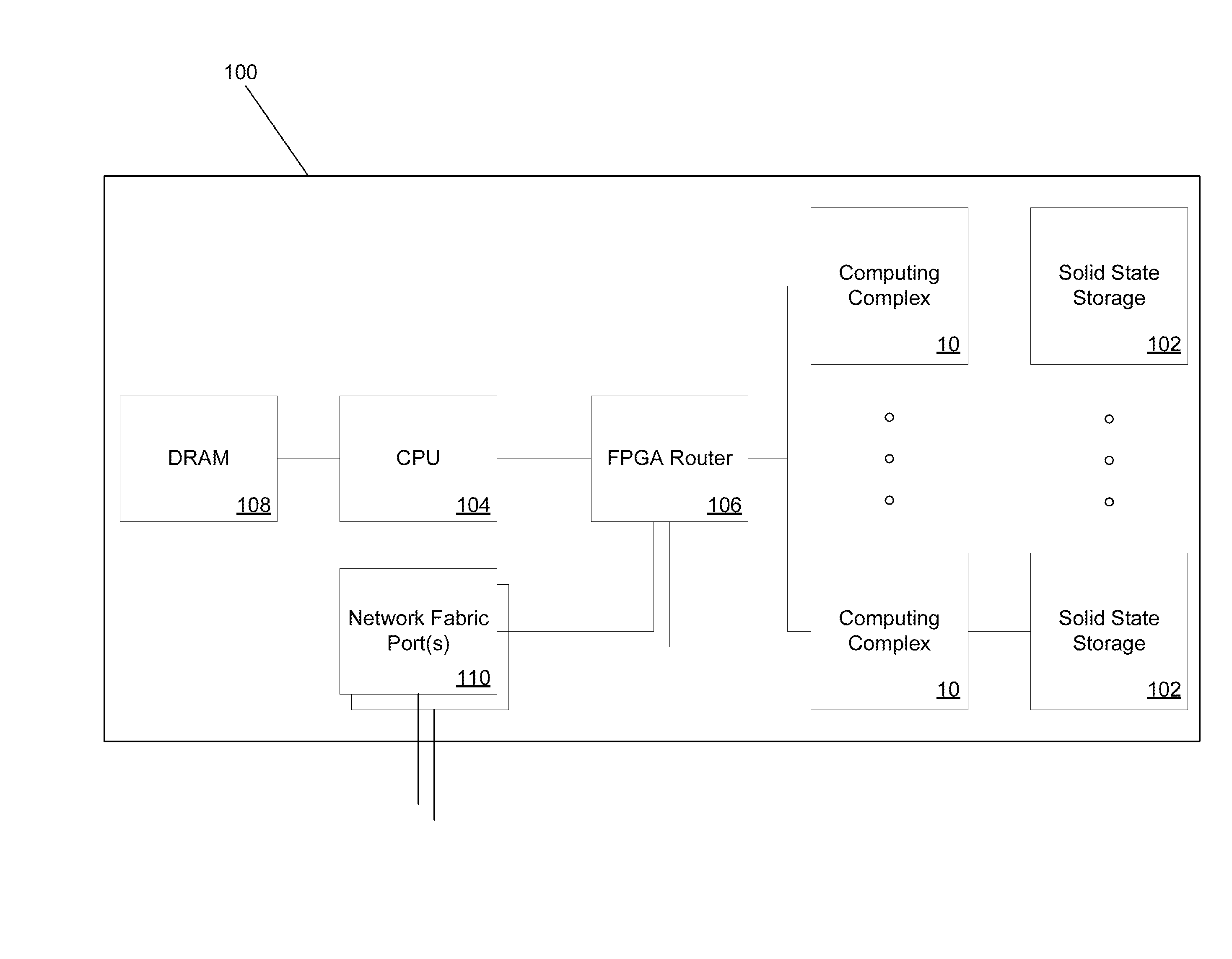

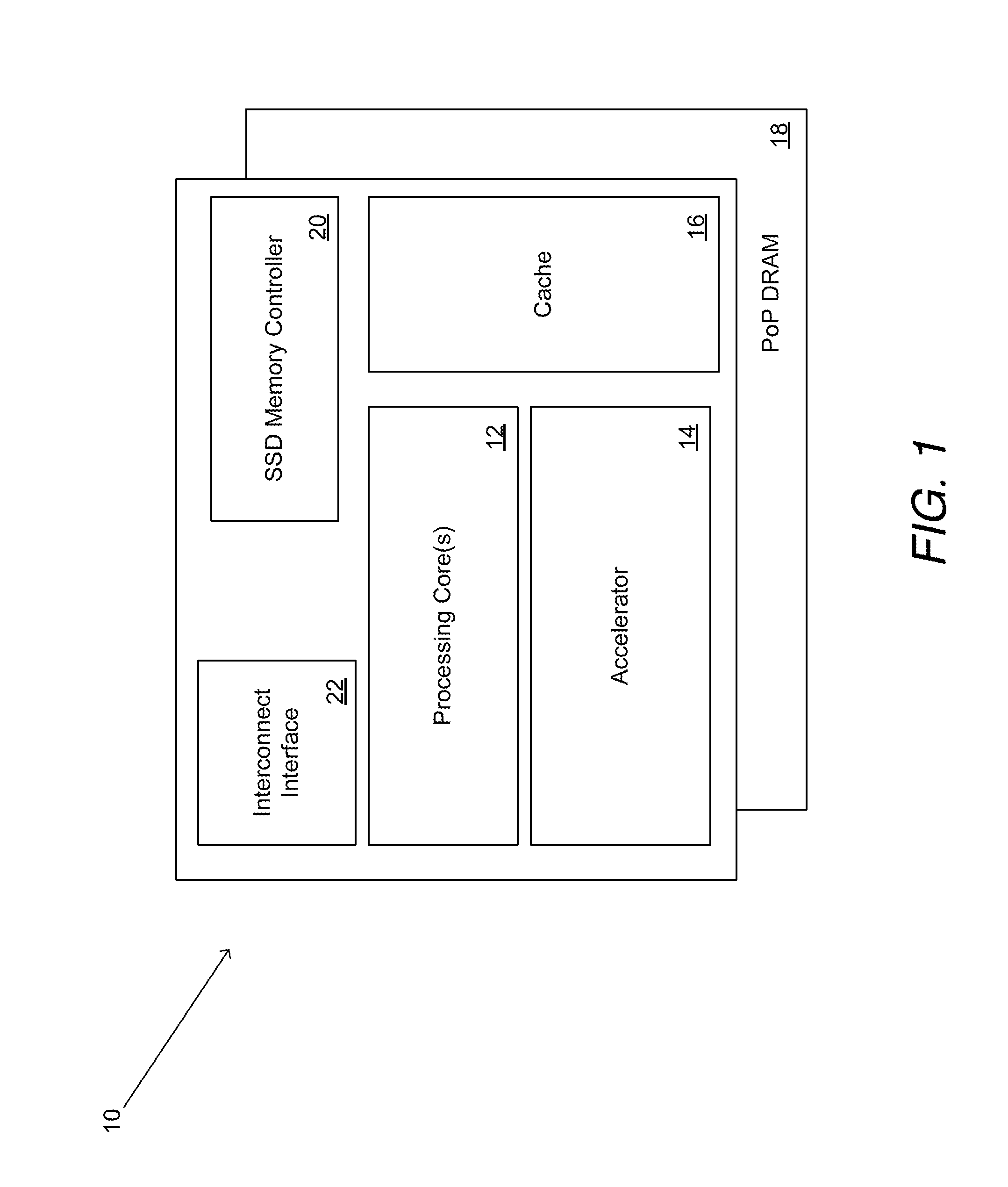

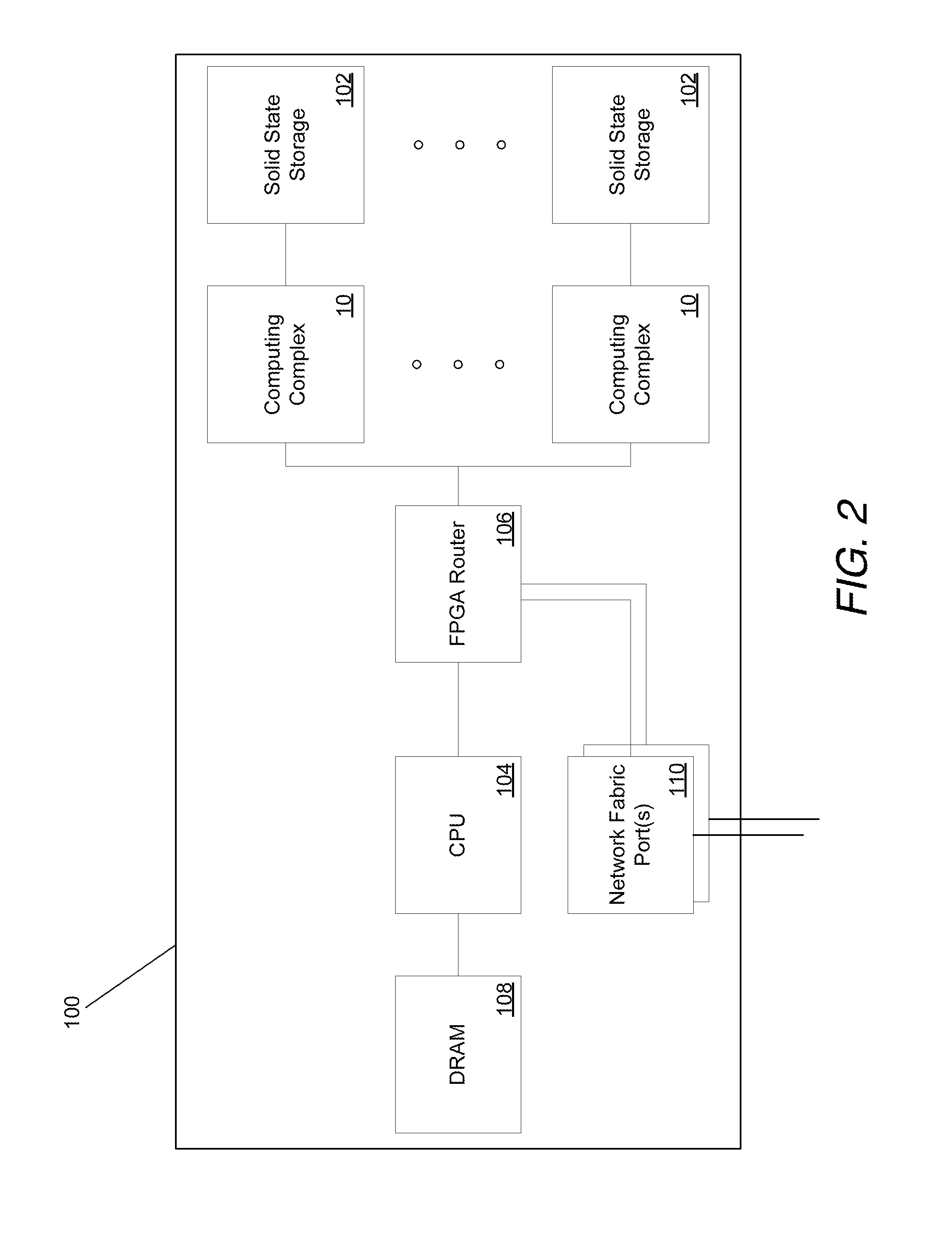

Systems and methods for rapid processing and storage of data

Active | US9552299B2 | Faster bandwidth | Improve latency | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Massively parallel | Processing core

Systems and methods of building massively parallel computing systems using low power computing complexes in accordance with embodiments of the invention are disclosed. A massively parallel computing system in accordance with one embodiment of the invention includes at least one Solid State Blade configured to communicate via a high performance network fabric. In addition, each Solid State Blade includes a processor configured to communicate with a plurality of low power computing complexes interconnected by a router, and each low power computing complex includes at least one general processing core, an accelerator, an I/O interface, and cache memory and is configured to communicate with non-volatile solid state memory.

Owner:CALIFORNIA INST OF TECH

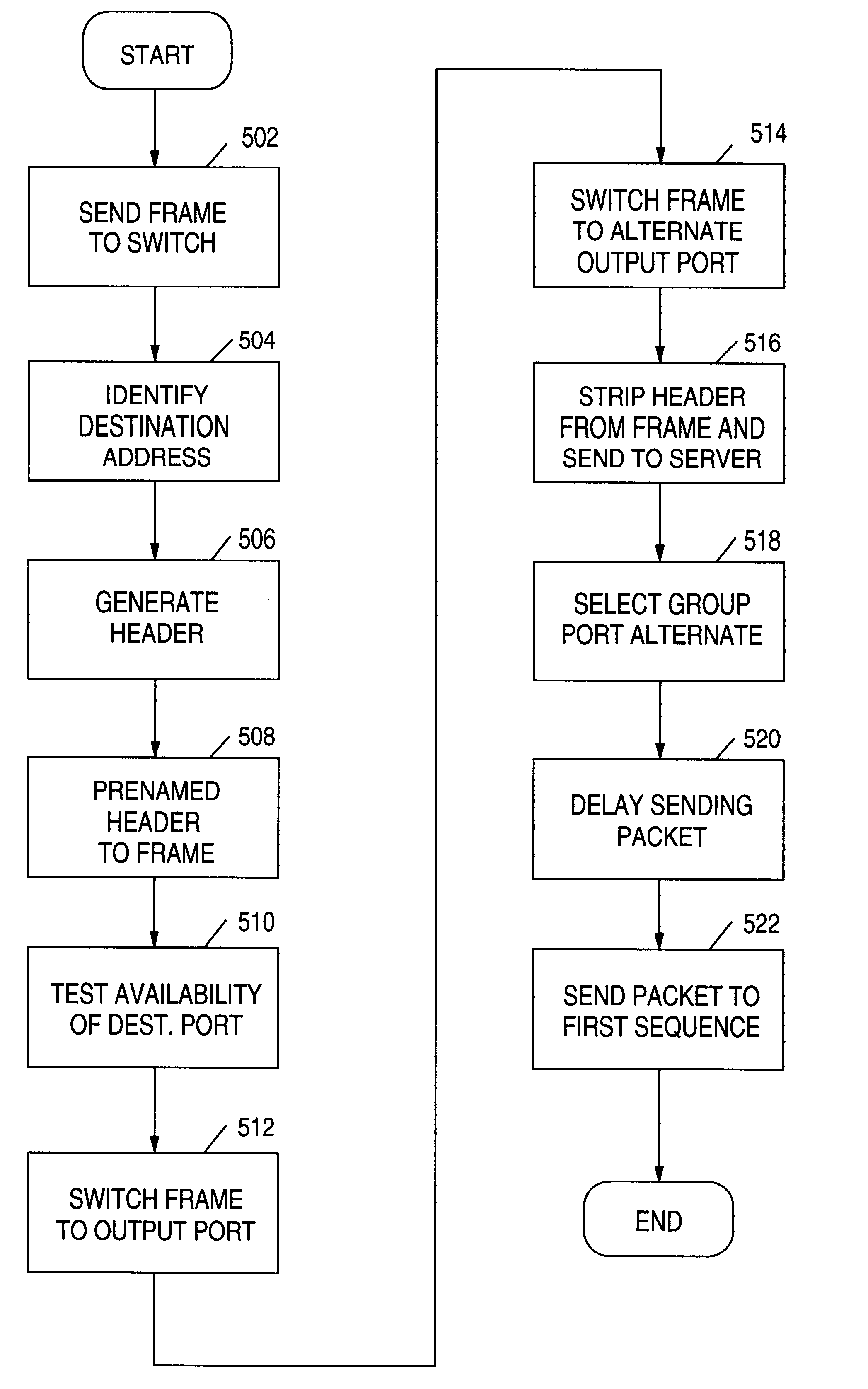

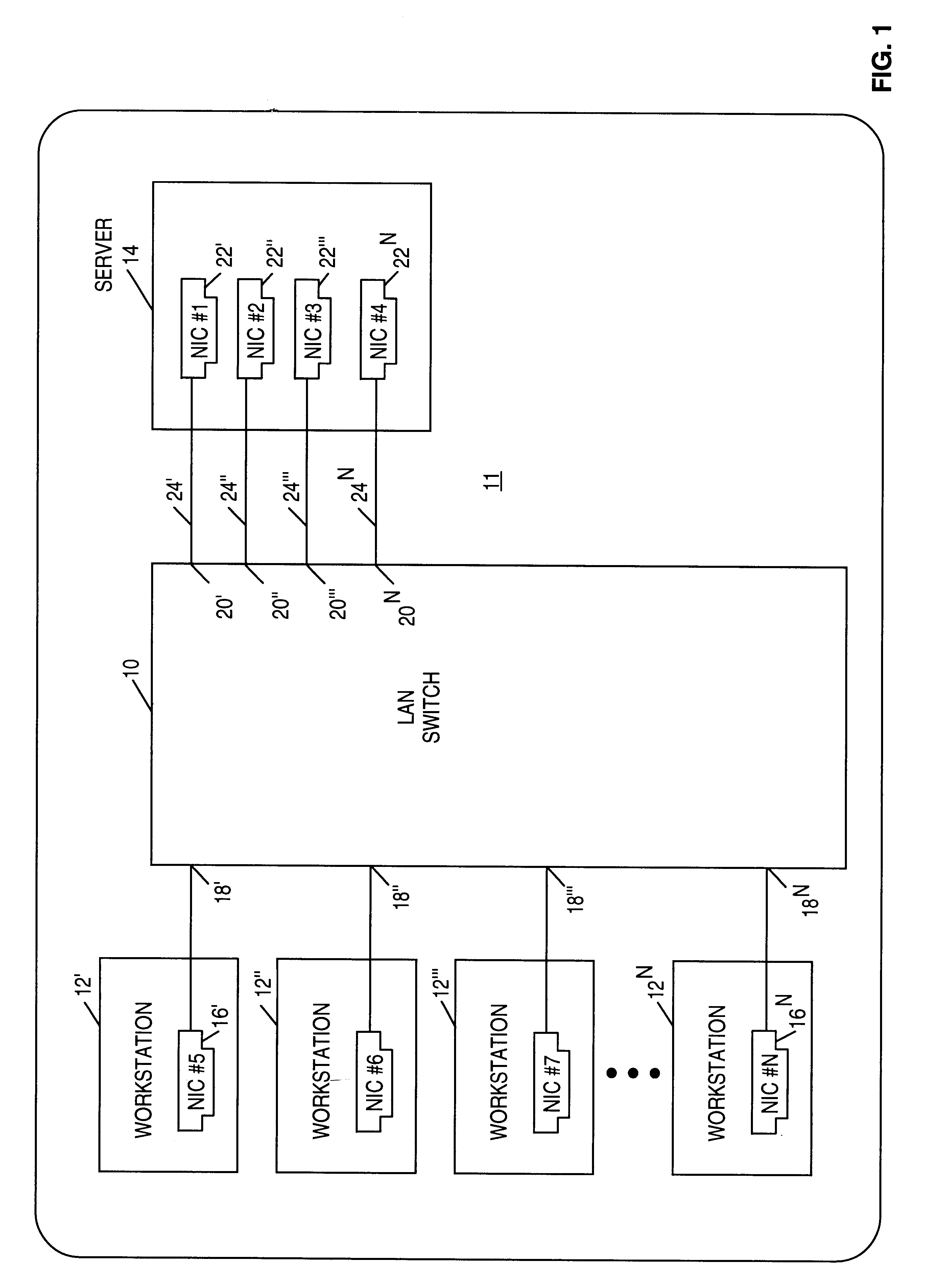

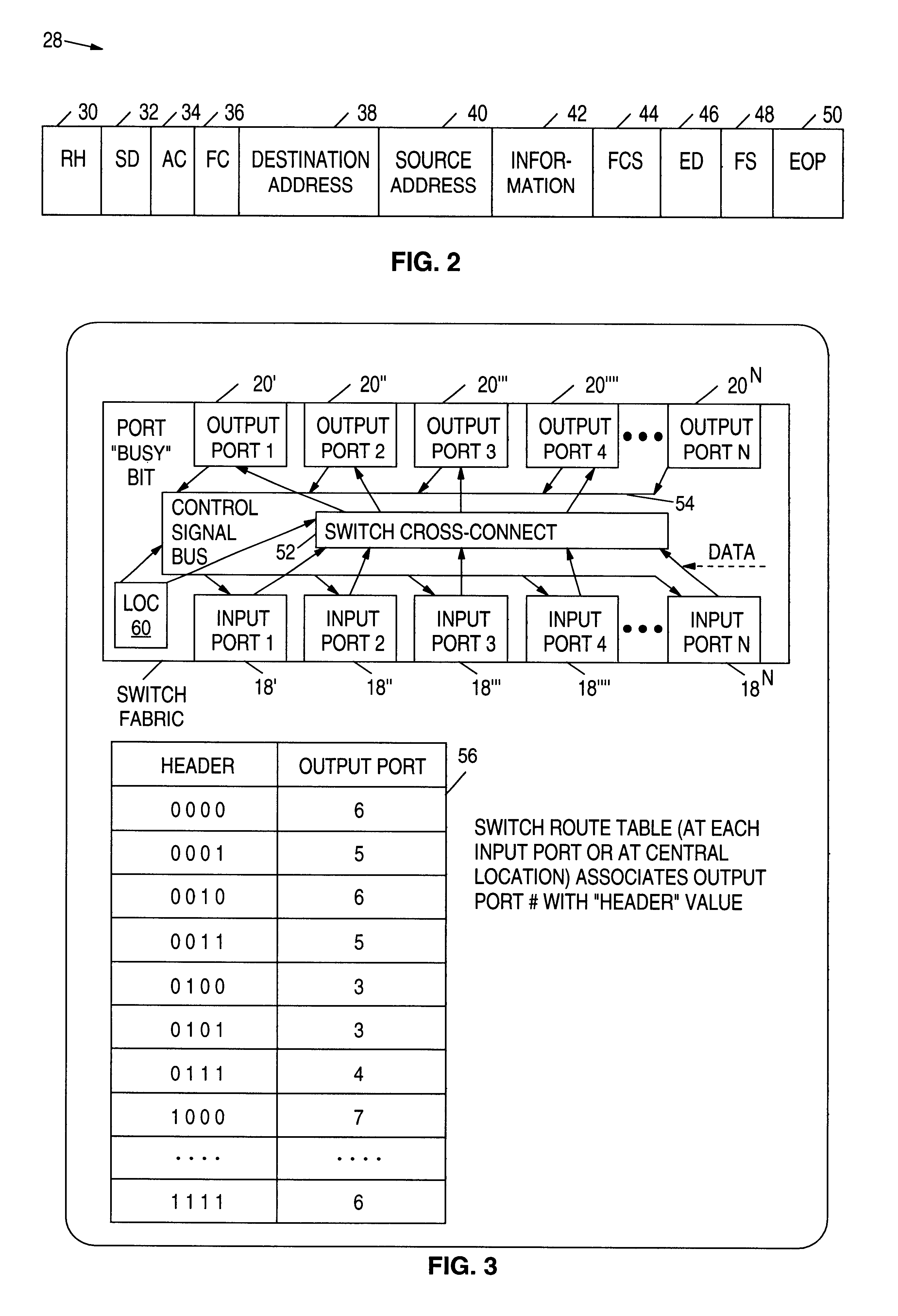

Network server having dynamic load balancing of messages in both inbound and outbound directions

Inactive | US6243360B1 | Improve performance | Promote recovery | Error prevention | Transmission systems | Dynamic load balancing | Network packet

A communications network including a server having multiple entry ports and a plurality of workstations dynamically balances inbound and outbound messages to/from the server, thereby reducing network latency and increasing network throughput. Workstations send data packets including network destination addresses to a network switch. A header is prepended to the data packet, the header identifying a switch output or destination port for transmitting the data packet to the network destination address. The network switch transfers the data packet from the switch input port to the switch destination output port, whereby whenever the switch receives a data packet with the server address, the data packet is routed to any available output port of the switch that is connected to a Network Interface Card in the server. The switch includes circuitry for removing the routing header prior to the data packet exiting the switch. The server includes circuitry for returning to the workstation the address of the first entry port into the server or other network device that has more than one Network Interface Card installed therein.

Owner:IBM CORP

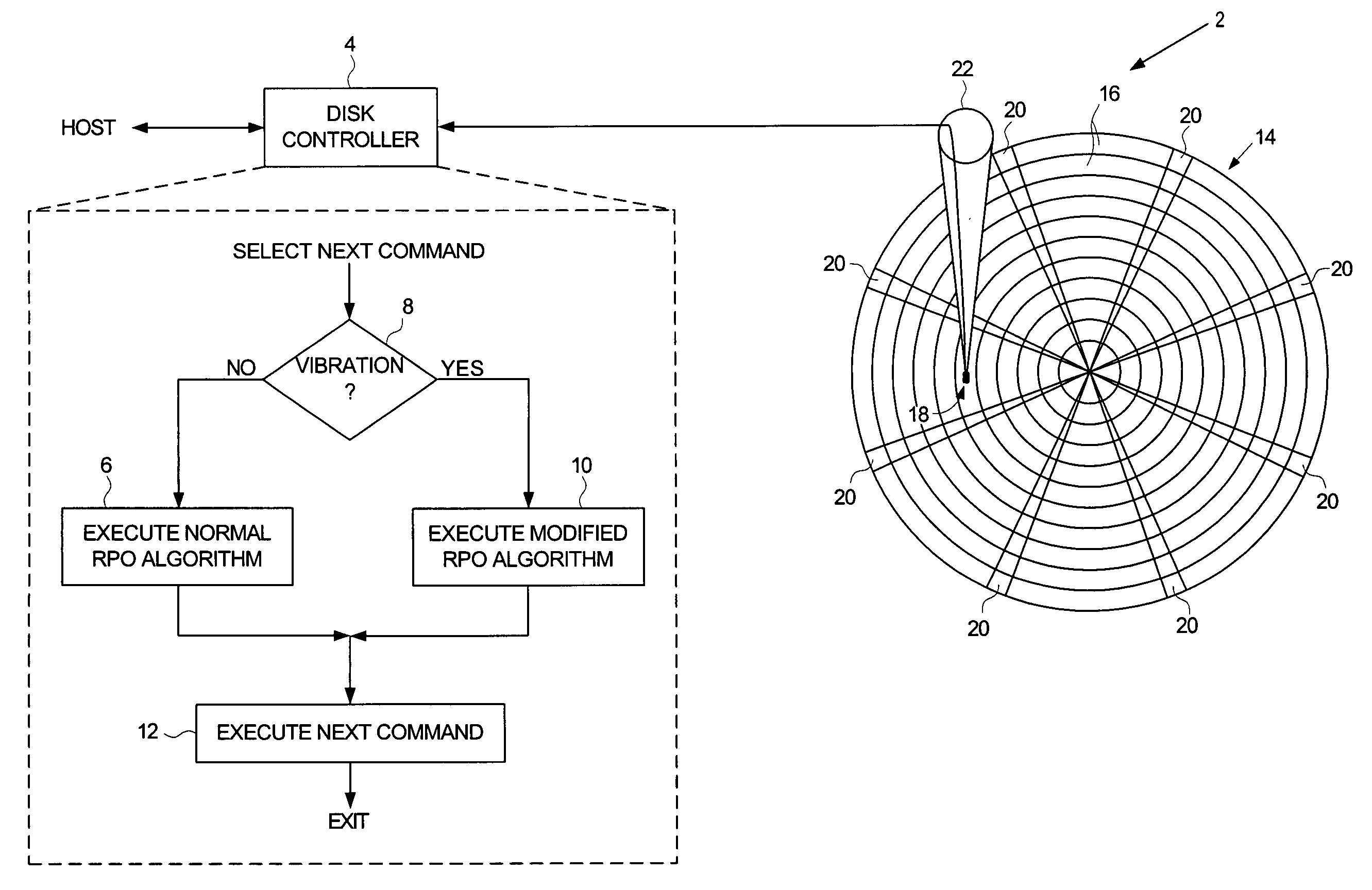

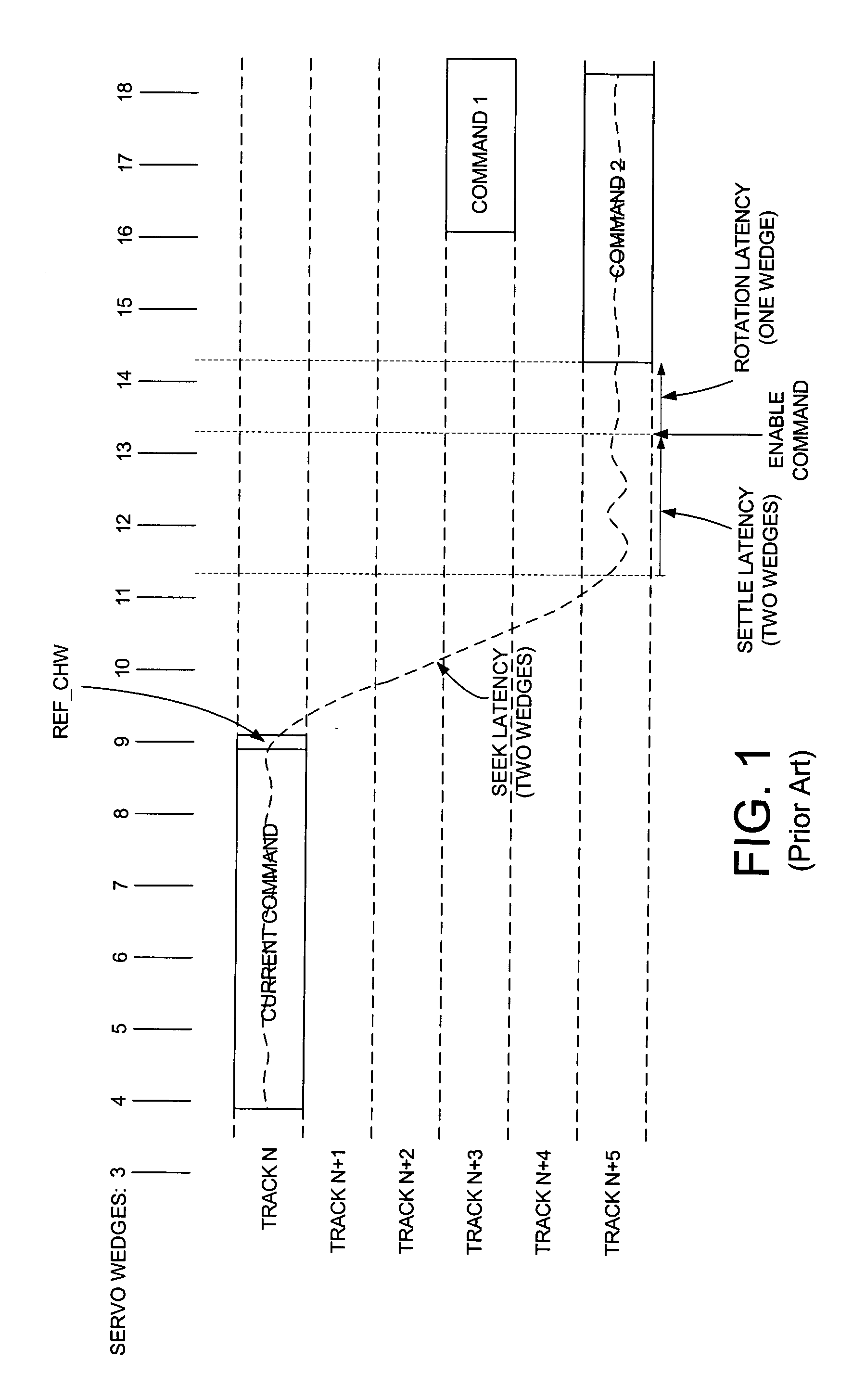

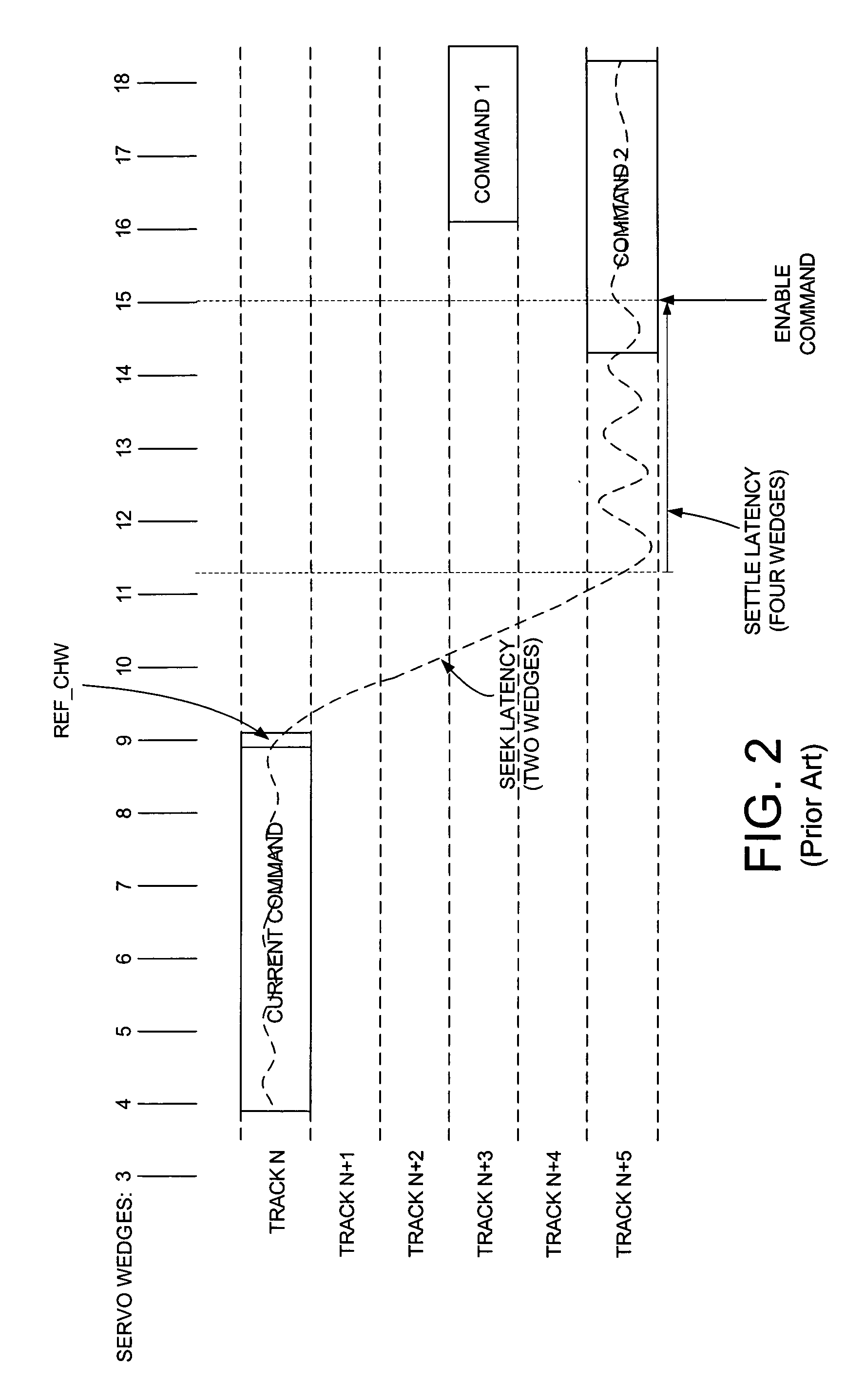

Disk drive employing a modified rotational position optimization algorithm to account for external vibrations

Inactive | US6968422B1 | Increases estimated rotational latency | Improve latency | Disposition/mounting of recording heads | Input/output to record carriers | Access time | Computer science

A disk drive is disclosed which executes a rotational position optimization (RPO) algorithm to select a next command to execute from a command queue relative to an estimated access time. If an external vibration is detected, the RPO algorithm increases at least one of an estimated seek latency, an estimated settle latency, and an estimated rotational latency for each command in the command queue.

Owner:WESTERN DIGITAL TECH INC
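
A rough sketch of how a vibration penalty can change the command chosen by an RPO-style scheduler follows. The timing model (a fixed settle penalty, a 7200 RPM revolution time, and a missed-revolution cost) and all names are assumptions for illustration; the abstract above only says that at least one latency estimate is increased when vibration is detected.

```python
import math
from dataclasses import dataclass

REV_MS = 60_000 / 7200  # one revolution at an assumed 7200 RPM


@dataclass
class Command:
    name: str
    seek_ms: float      # estimated seek latency
    settle_ms: float    # estimated settle latency
    arrival_ms: float   # when the target sector next passes under the head


def access_time(cmd: Command, vibration: bool,
                settle_penalty_ms: float = 1.0) -> float:
    """Estimated time until the command's data can be accessed."""
    ready = cmd.seek_ms + cmd.settle_ms
    if vibration:
        ready += settle_penalty_ms  # inflate the settle estimate (assumed rule)
    # If the head is not ready when the sector arrives, wait whole revolutions.
    missed_revs = max(0, math.ceil((ready - cmd.arrival_ms) / REV_MS))
    return cmd.arrival_ms + missed_revs * REV_MS


def pick_next(queue: list, vibration: bool) -> Command:
    """Select the queued command with the smallest estimated access time."""
    return min(queue, key=lambda c: access_time(c, vibration))


queue = [Command("A", 3.0, 0.5, 4.0), Command("B", 4.0, 0.5, 6.0)]
print(pick_next(queue, vibration=False).name, pick_next(queue, vibration=True).name)
```

In this toy queue, command A has only 0.5 ms of margin, so the inflated settle estimate predicts a missed revolution and the scheduler prefers B while vibration is present.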

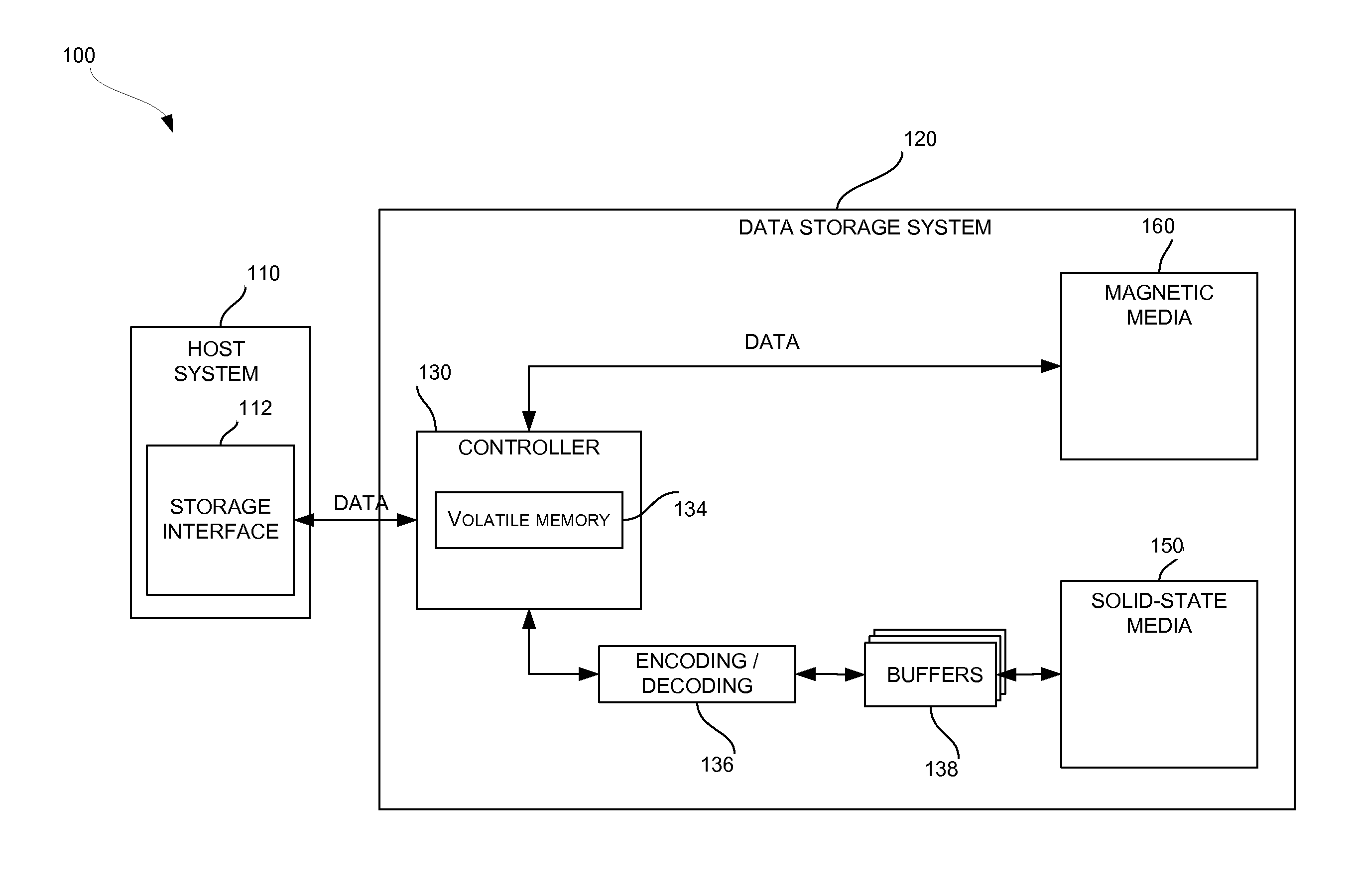

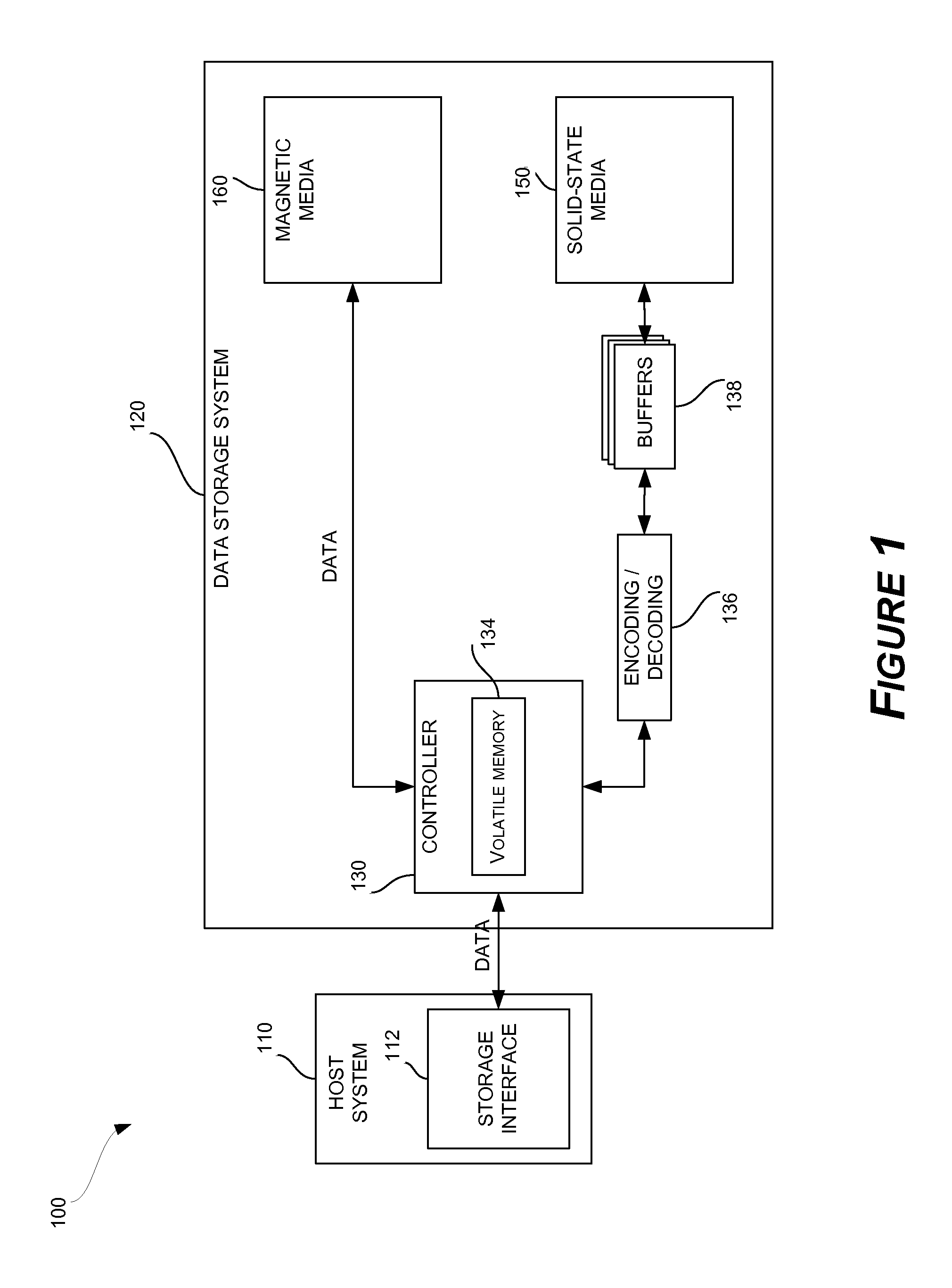

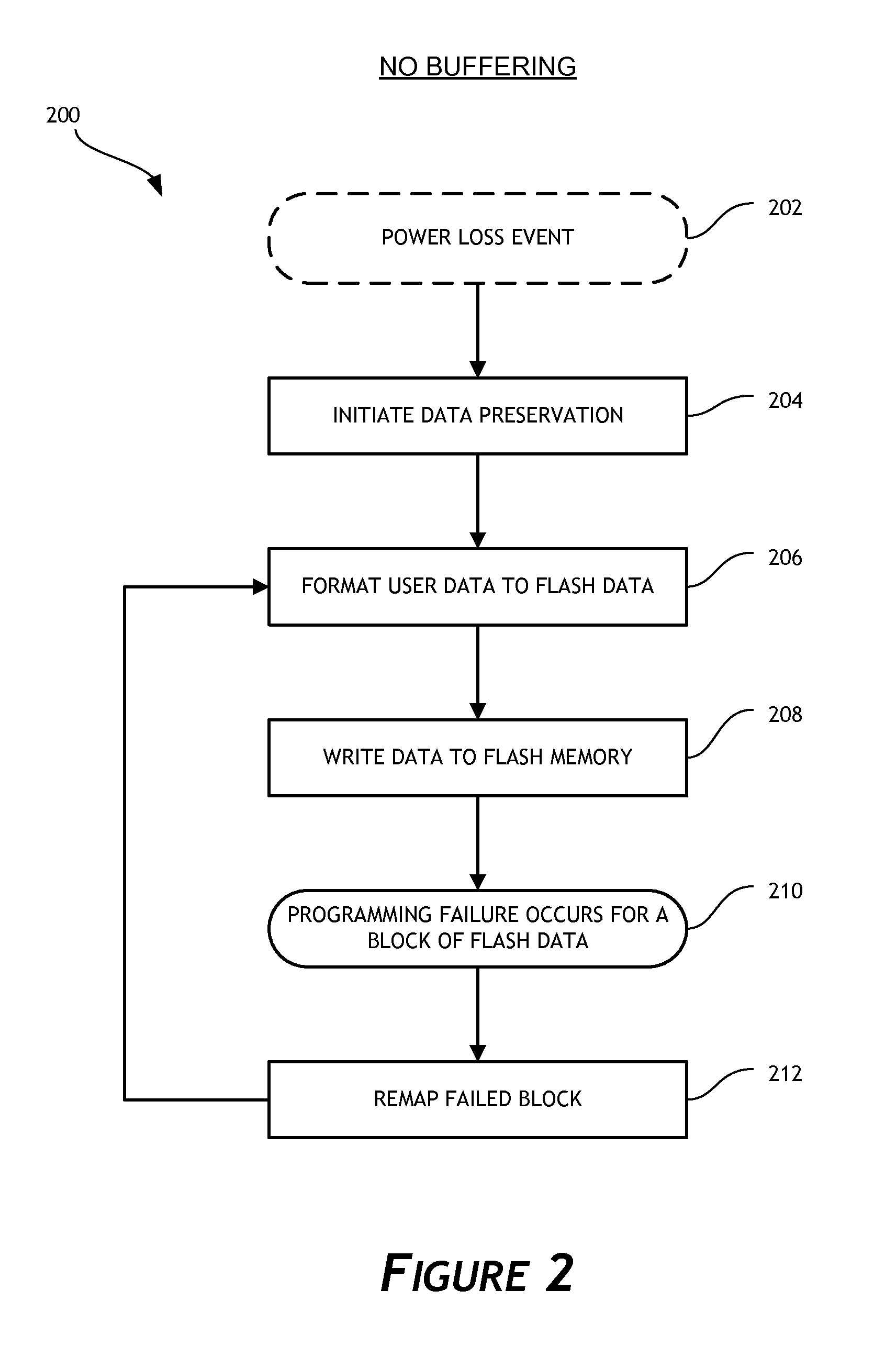

Efficient retry mechanism for solid-state memory failures

Active | US8788880B1 | Improve performance | Reduce allocation | Error detection/correction | Read-only memories | Solid-state storage | Theoretical computer science

A data storage subsystem is disclosed that implements a solid-state data buffering scheme. Prior to completion of programming in solid-state storage media, data that is formatted for storage in solid-state media is maintained in one or more buffers. The system is able to retry failed programming operations directly from the buffers, rather than reprocessing the data. The relevant programming pipeline may therefore be preserved by skipping over a failed write operation and reprocessing the data at the end of the current pipeline processing cycle.

Owner:WESTERN DIGITAL TECH INC

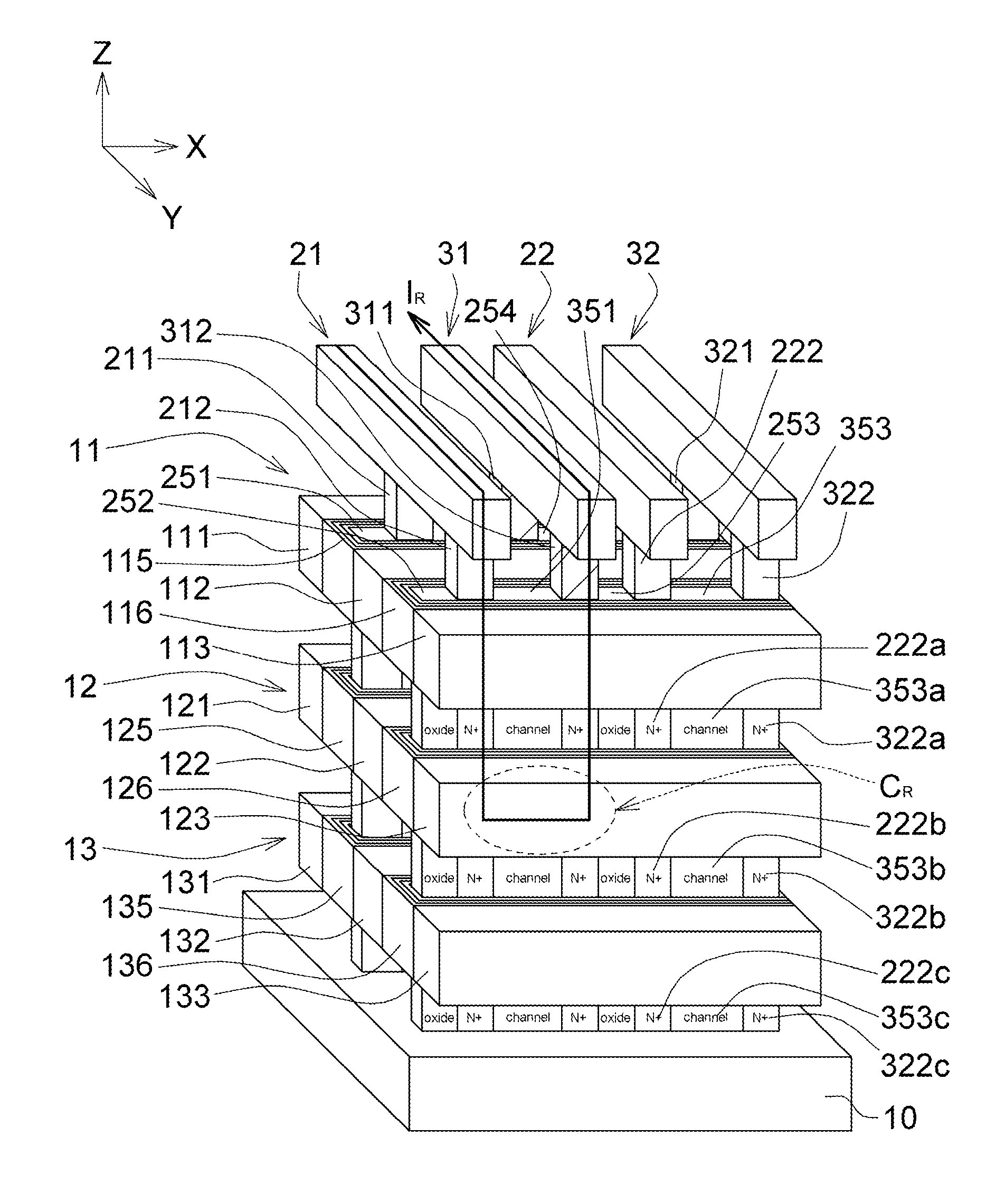

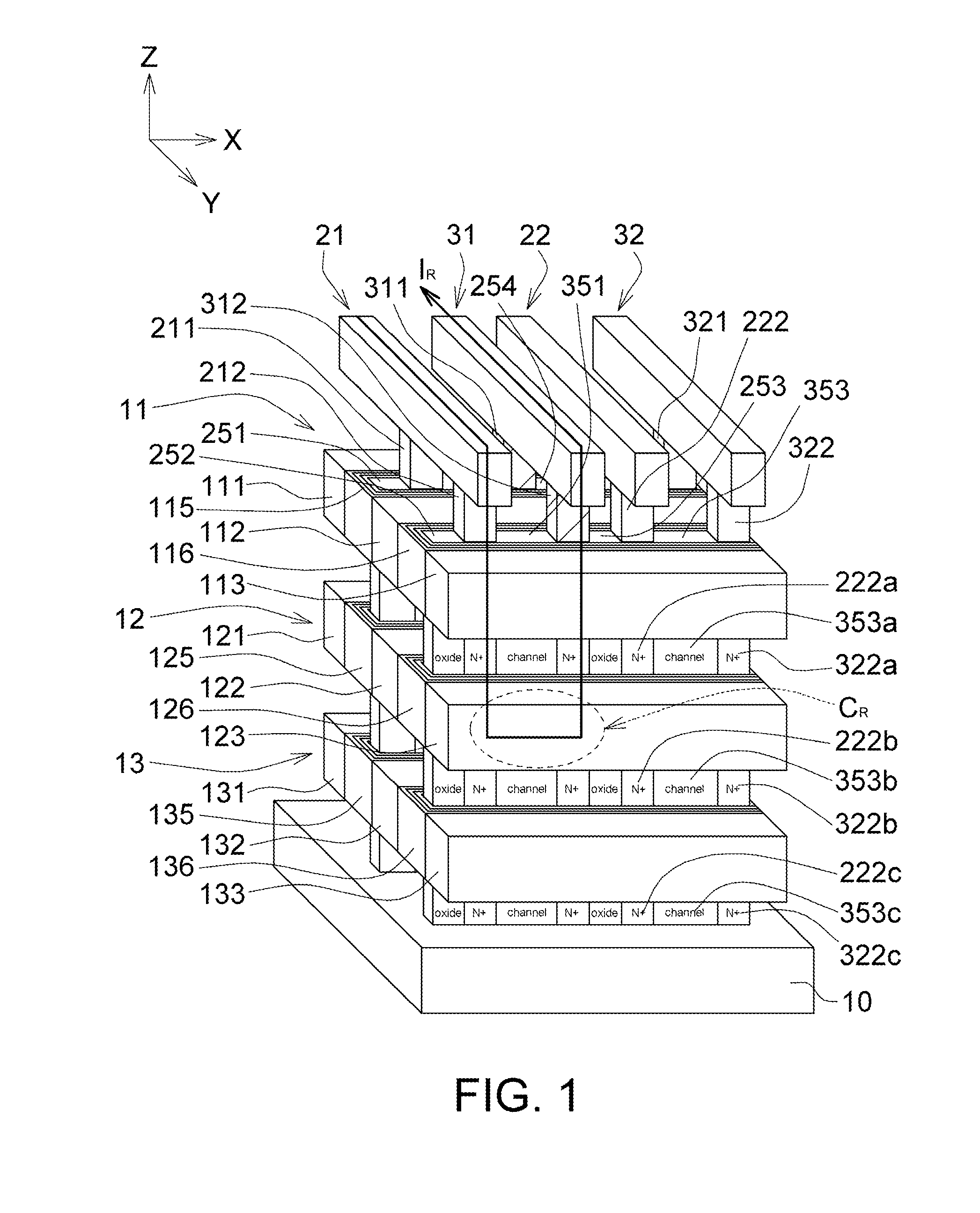

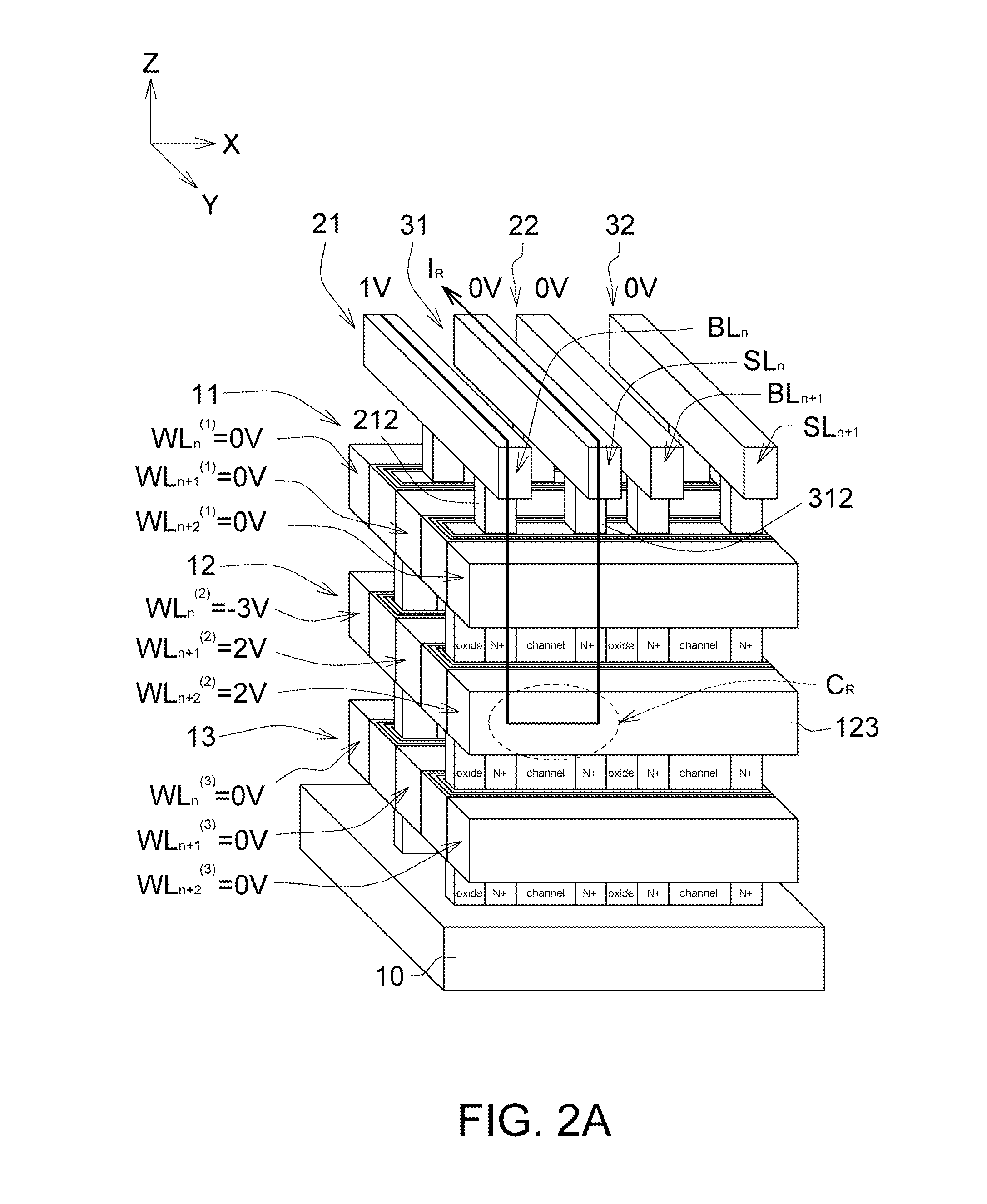

Three-dimensional stacked AND-type flash memory structure and methods of manufacturing and operating the same

A 3D stacked AND-type flash memory structure comprises several horizontal planes of memory cells arranged in a three-dimensional array, each horizontal plane comprising several word lines and several charge trapping multilayers arranged alternately, with adjacent word lines spaced apart from each other and a charge trapping multilayer interposed between them; a plurality of sets of bit lines and source lines arranged alternately and disposed vertically to the horizontal planes; and a plurality of sets of channels and sets of insulation pillars arranged alternately and disposed perpendicularly to the horizontal planes, wherein one set of channels is sandwiched between the adjacent sets of bit lines and source lines.

Owner:MACRONIX INT CO LTD

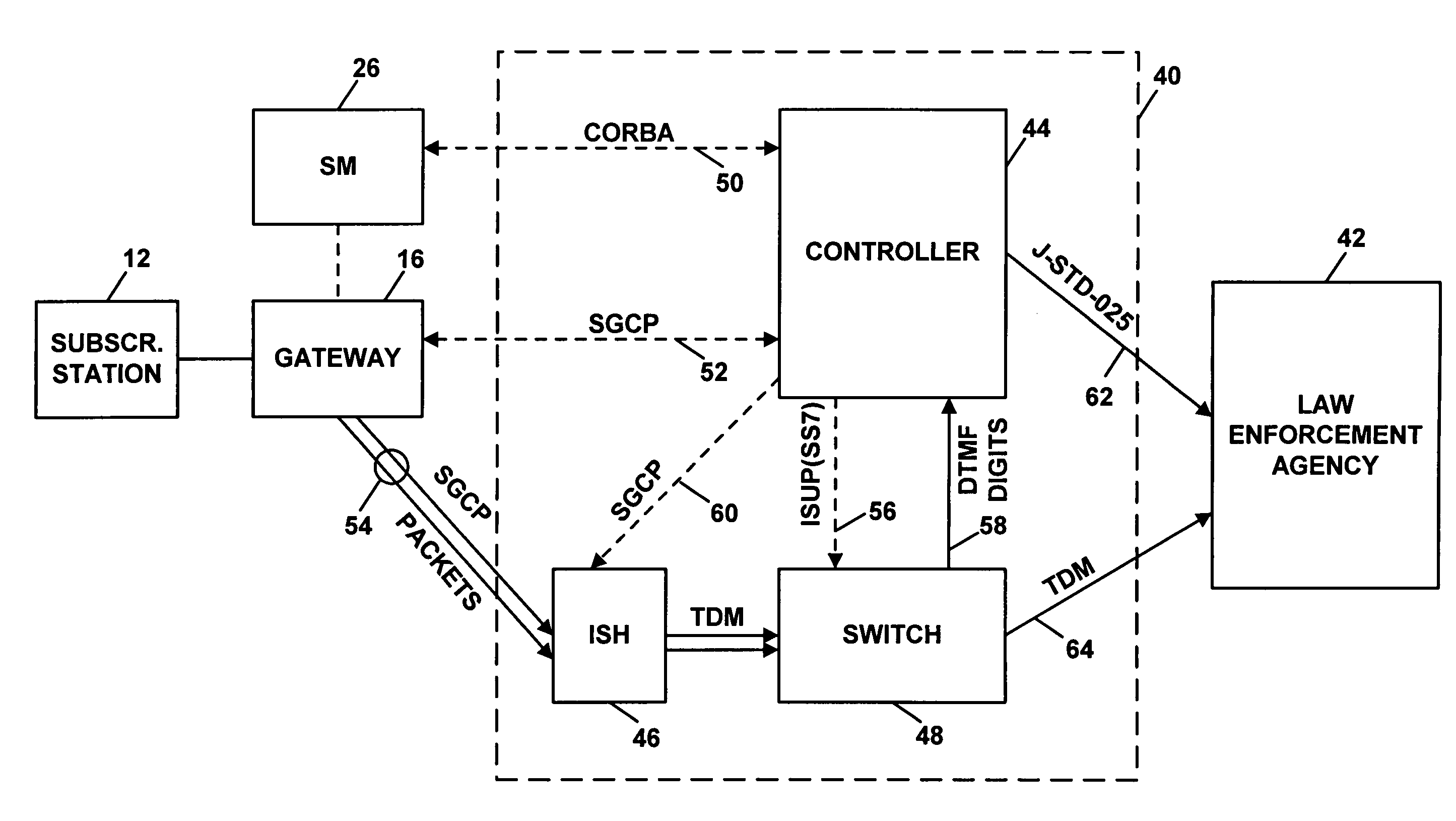

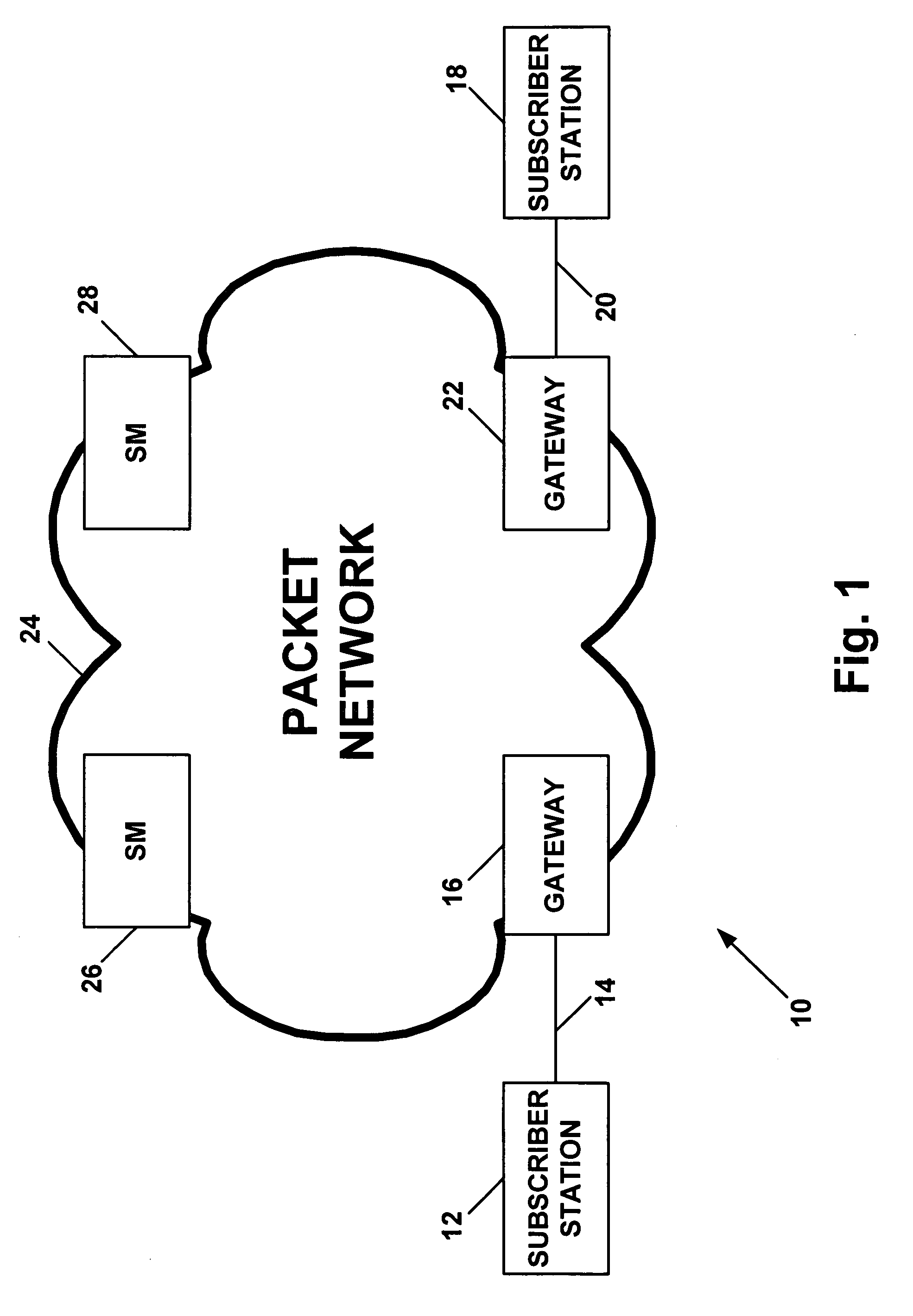

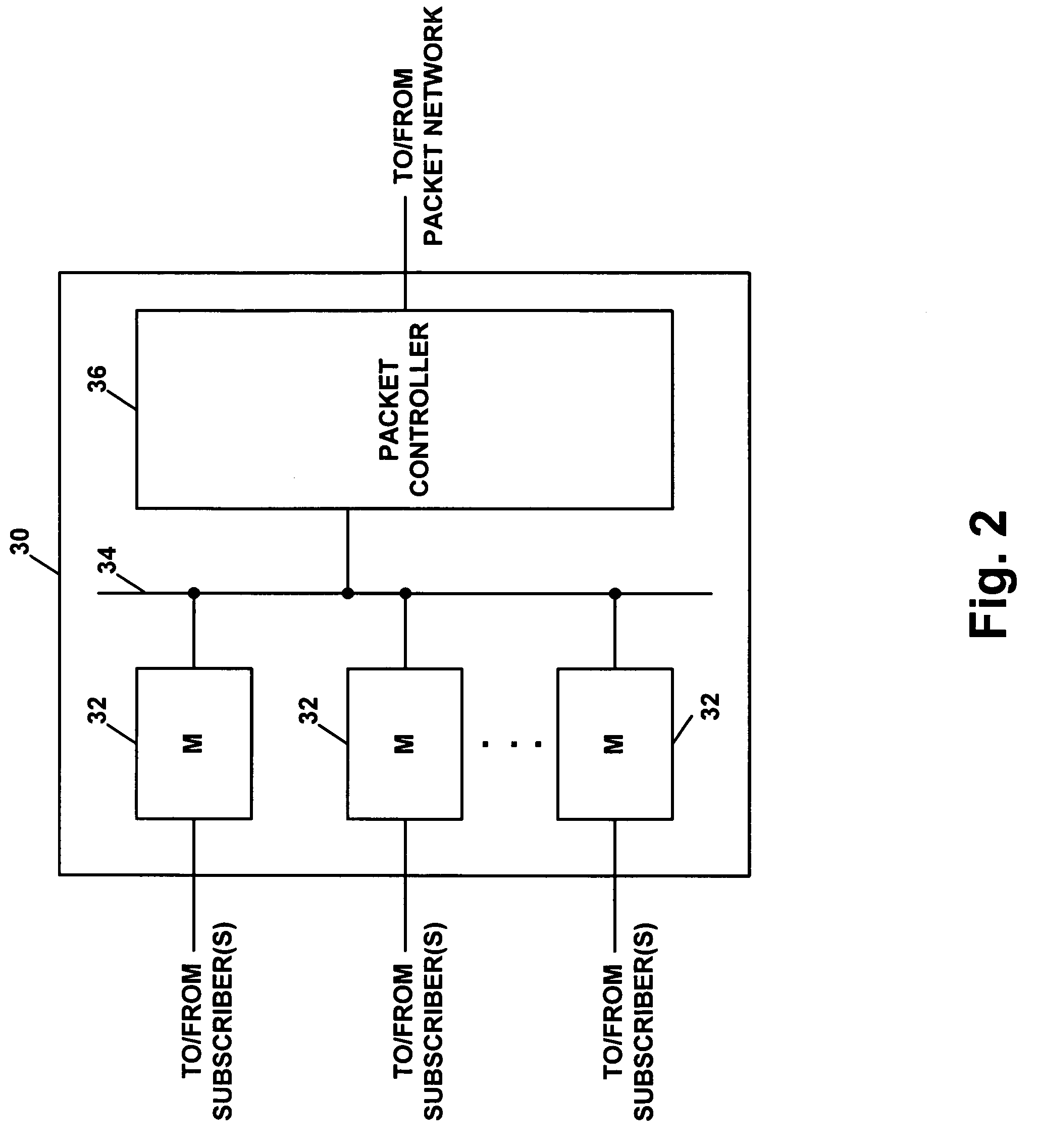

Method and system for wiretapping of packet-based communications

Active | US7055174B1 | Facilitate efficient compliance | Overcome deficiencies | Interconnection arrangements | Memory loss protection | Enforcement | Session management

A method and system for wiretapping a packet-based communication, such as a voice communication. When a node in the normal path of packet-transmission between a source and destination receives packets of the communication, the node can conventionally route the packets to the intended destination. At the same time, a session manager can notify a wiretap server that the session is being established or is underway, and the wiretap server can then direct the node to bi-cast the packets of the communication to the wiretap server node. At the wiretap server, the packetized communication can be depacketized, and a TDM signal representing the communication can be sent via a conventional circuit-switch to a law enforcement agency or other authorized entity.

Owner:SPRINT SPECTRUM LP

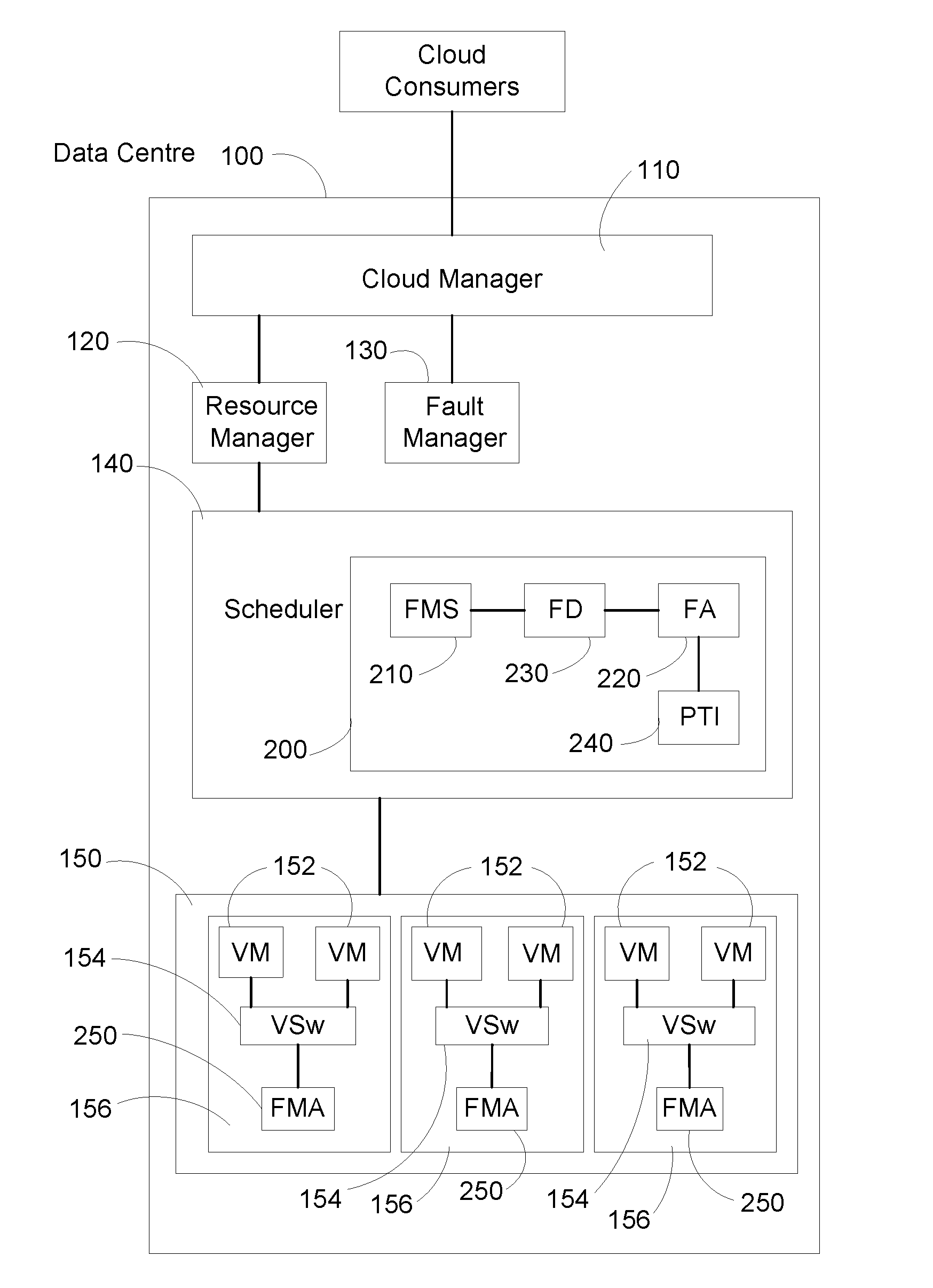

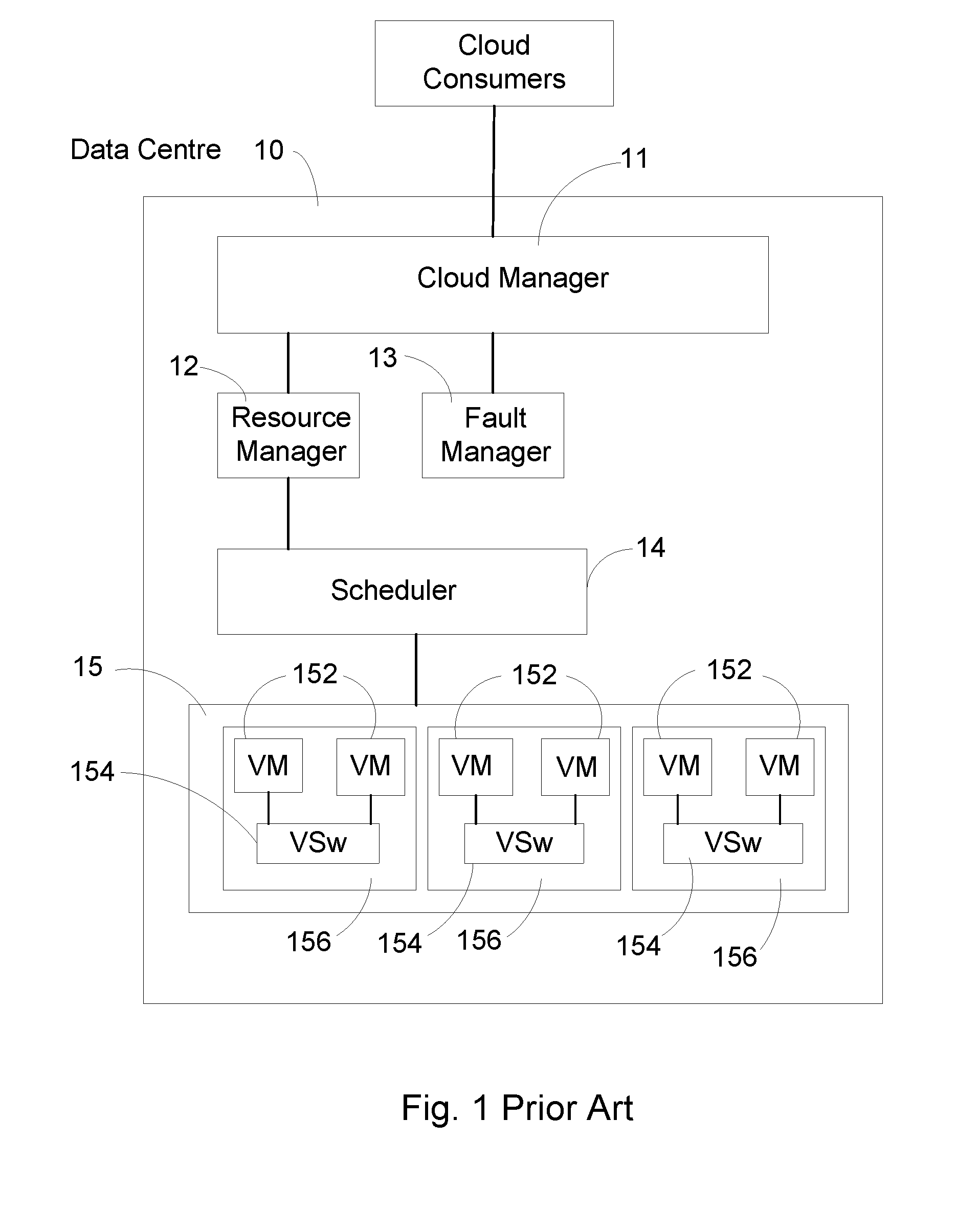

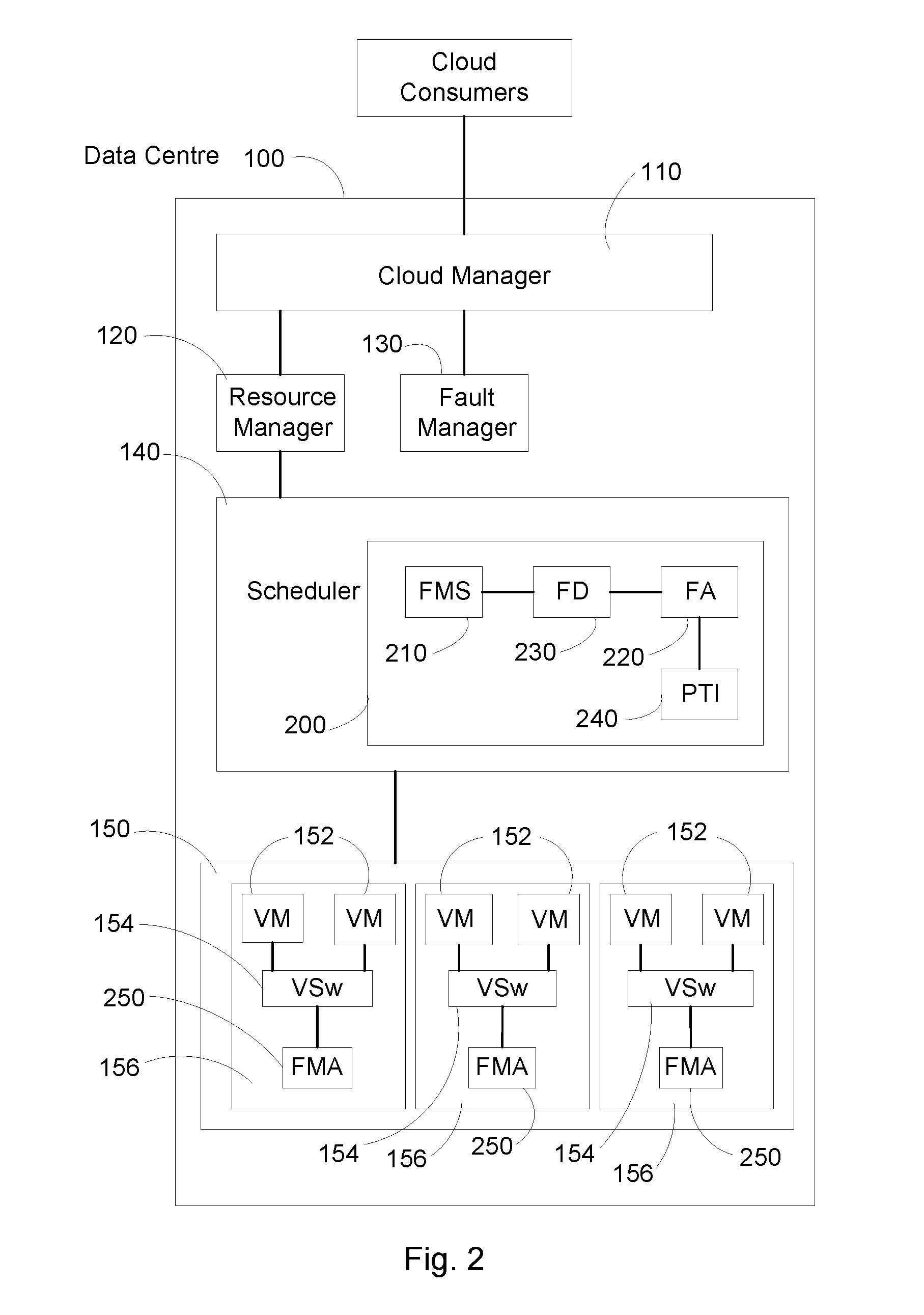

Method, system, computer program and computer program product for monitoring data packet flows between virtual machines, vms, within a data centre

Inactive | US20160216994A1 | Improve overall utilization | Reduce network latency | Error detection/correction | Data switching networks | Data pack | Data center

The following invention relates to methods, systems, computer programs and computer program products for supporting said method, and different embodiments thereof, for monitoring data packet flows between Virtual Machines, VMs, within a data centre. The method comprises collecting flow data for data packet flows between VMs in the data centre, and mapping the flow data of data packet flows between VMs onto data centre topology to establish flow costs for said data packet flows. The method further comprises calculating an aggregated flow cost for all the flows associated with a VM for each VM within the data centre, and determining whether to reschedule any VM, or not, based on the aggregated flow cost.

Owner:TELEFON AB LM ERICSSON (PUBL)
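
The flow-cost aggregation in the abstract above can be sketched as follows. The cost model (traffic volume multiplied by a topology-dependent hop cost), the threshold rule, and every name here are assumptions chosen for illustration rather than the method claimed in the patent.

```python
from collections import defaultdict


def aggregated_flow_costs(flows, host_of, hop_cost):
    """Sum, per VM, the cost of every flow that the VM takes part in.

    flows:    iterable of (src_vm, dst_vm, volume) tuples
    host_of:  mapping from VM name to the host it runs on
    hop_cost: function (host_a, host_b) -> cost per unit of traffic
    """
    totals = defaultdict(float)
    for src, dst, volume in flows:
        cost = volume * hop_cost(host_of[src], host_of[dst])
        totals[src] += cost
        totals[dst] += cost
    return dict(totals)


def vms_to_reschedule(totals, threshold):
    """Return the VMs whose aggregated flow cost exceeds the threshold."""
    return sorted(vm for vm, cost in totals.items() if cost > threshold)


flows = [("vm1", "vm2", 10), ("vm1", "vm3", 5)]
host_of = {"vm1": "h1", "vm2": "h1", "vm3": "h2"}
same_host_is_free = lambda a, b: 0 if a == b else 2  # toy topology cost
totals = aggregated_flow_costs(flows, host_of, same_host_is_free)
print(vms_to_reschedule(totals, threshold=5))
```

Traffic between VMs on the same host costs nothing in this toy topology, so only the cross-host flow between `vm1` and `vm3` pushes those two VMs over the threshold.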

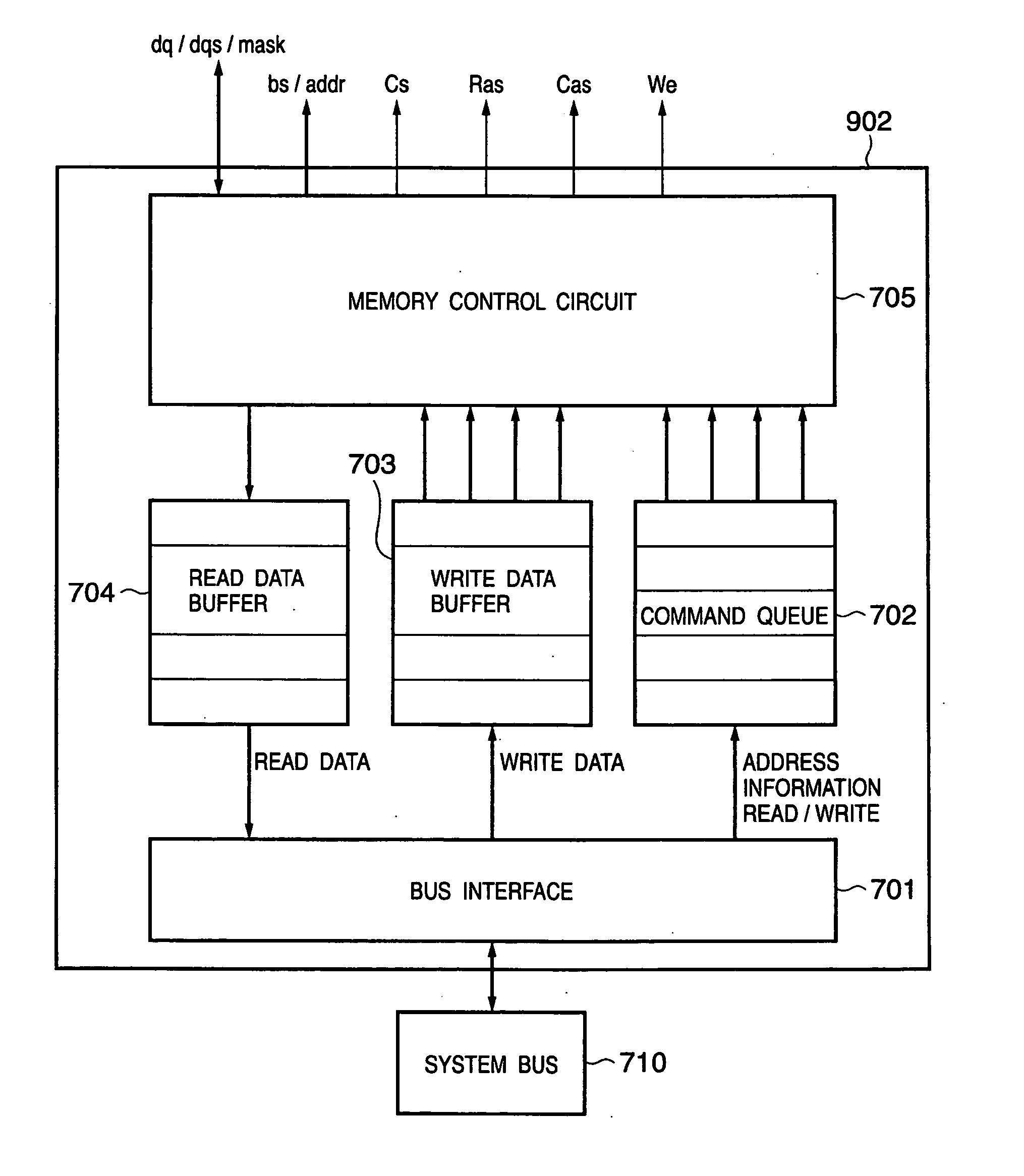

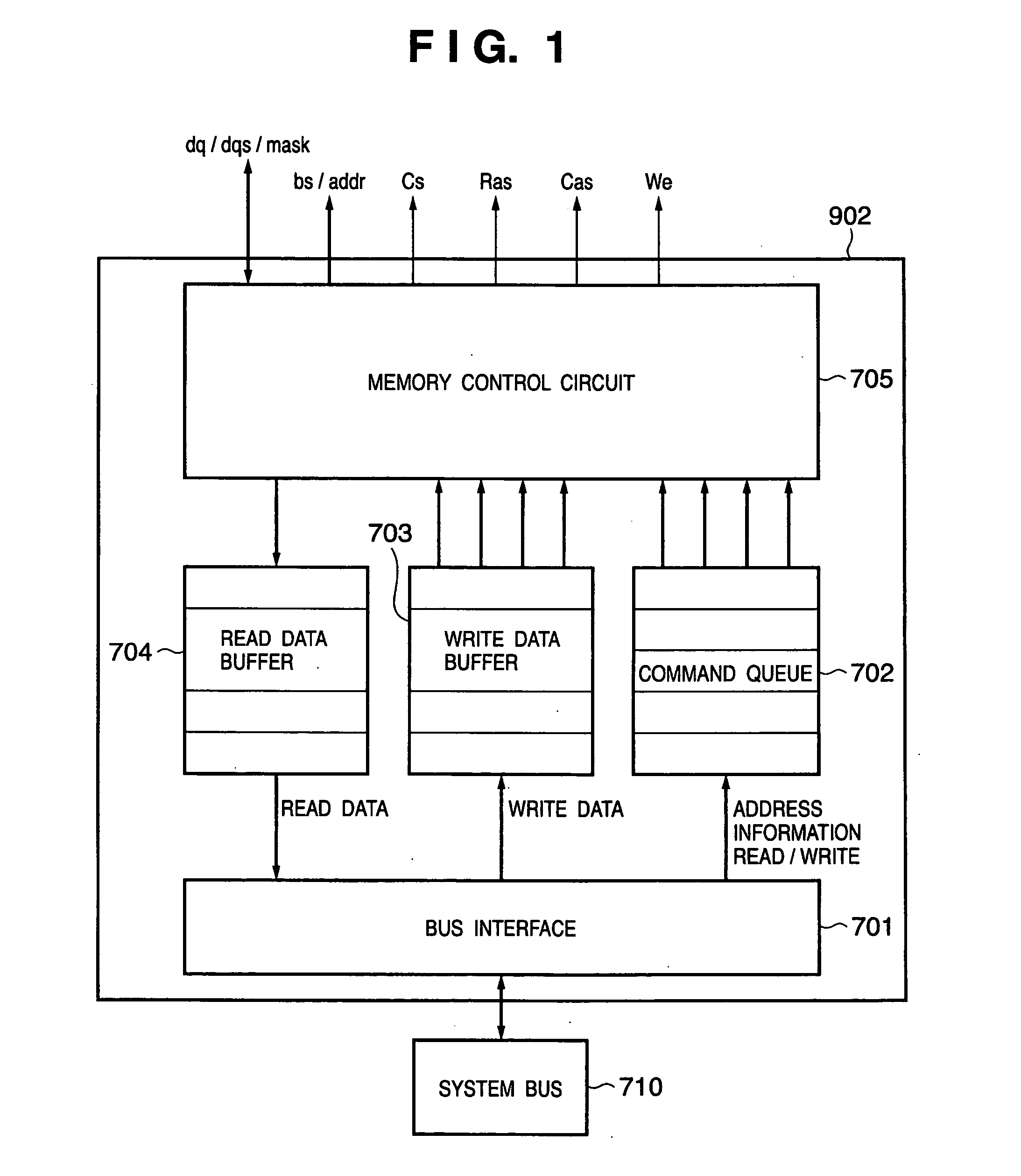

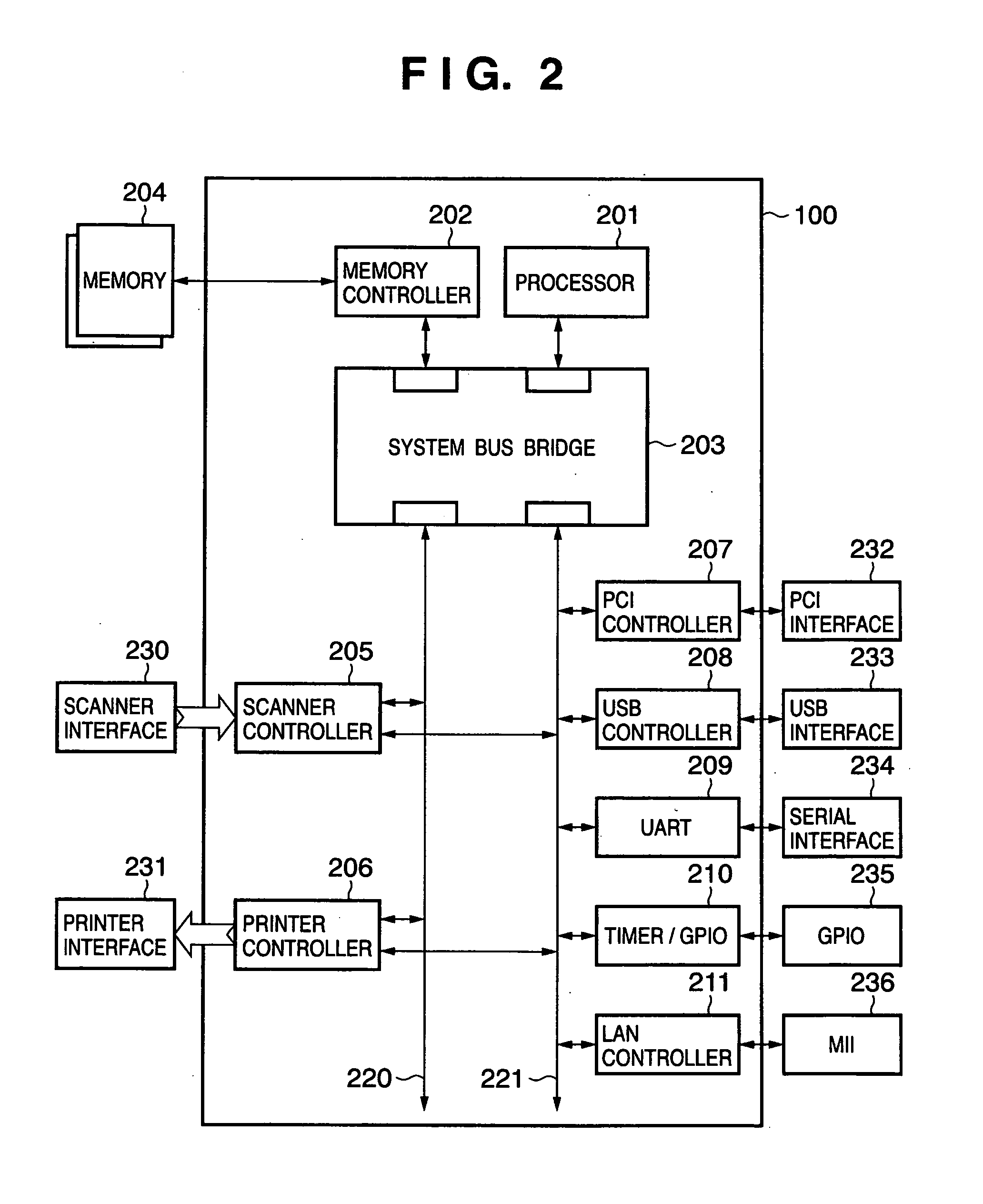

Memory control apparatus and method

Active | US20070016699A1 | Reduce capacity | Suppressing increase in memory access latency | Memory systems | Input/output processes for data processing | Engineering | Mechanical engineering

Owner:CANON KK

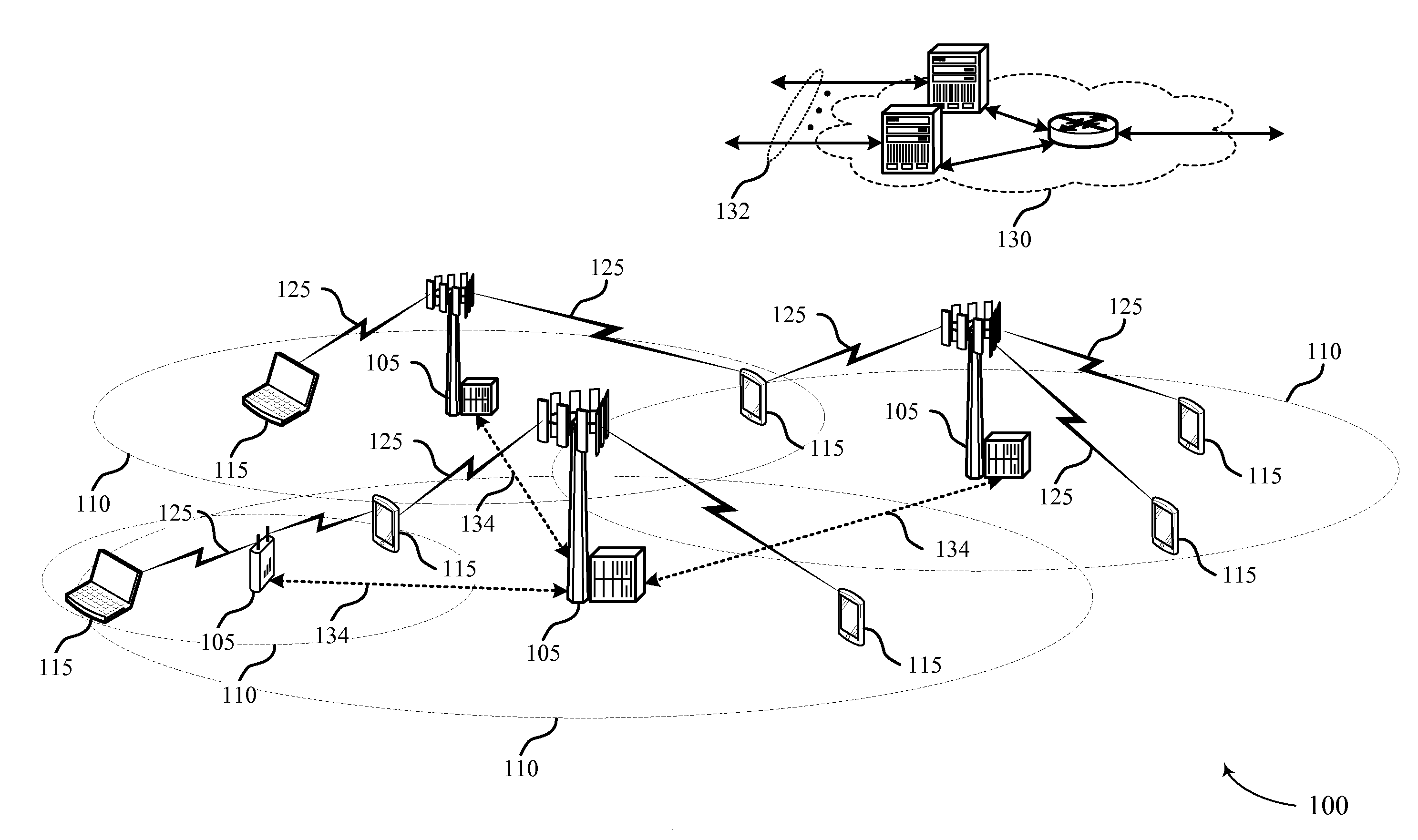

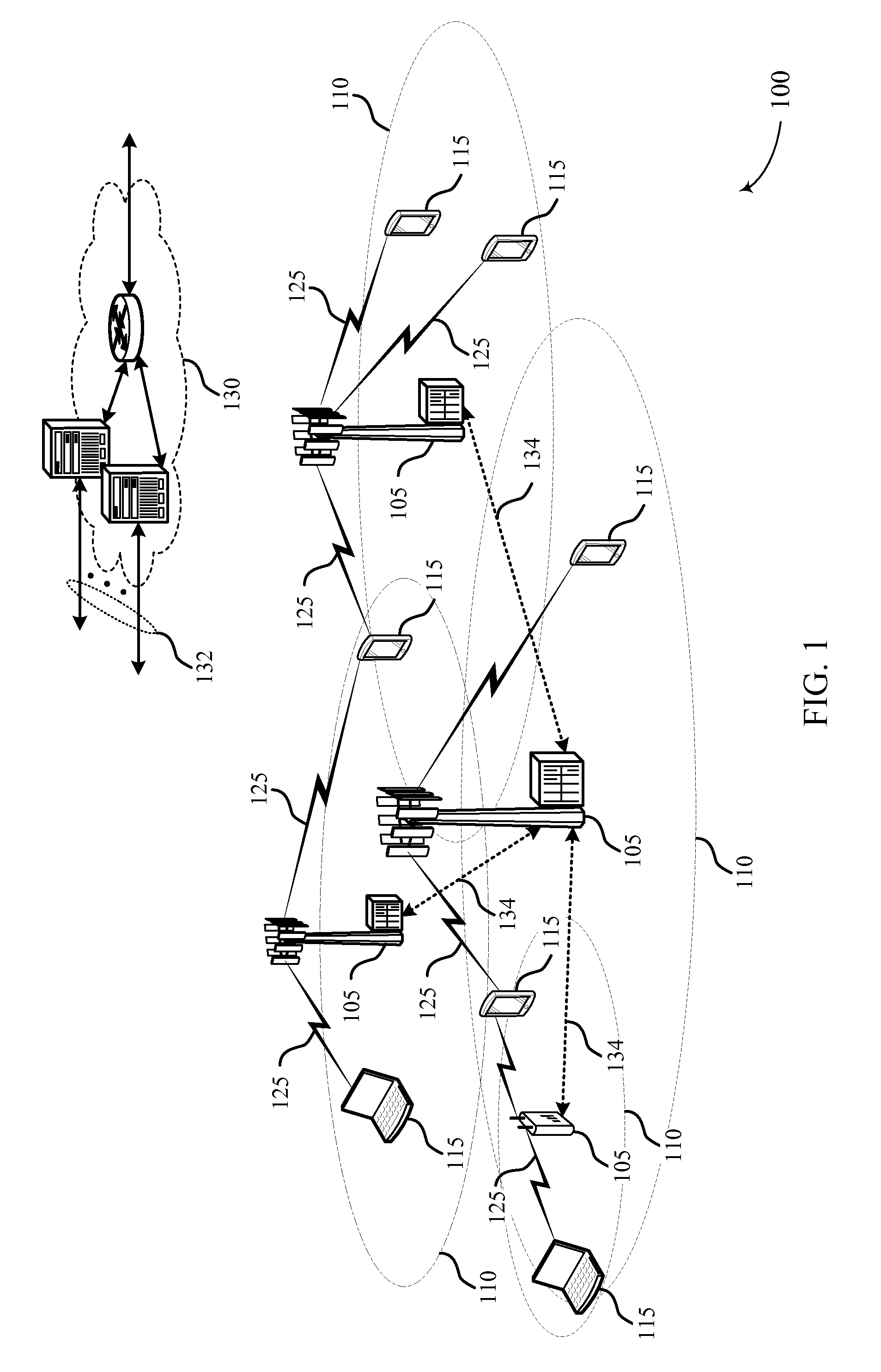

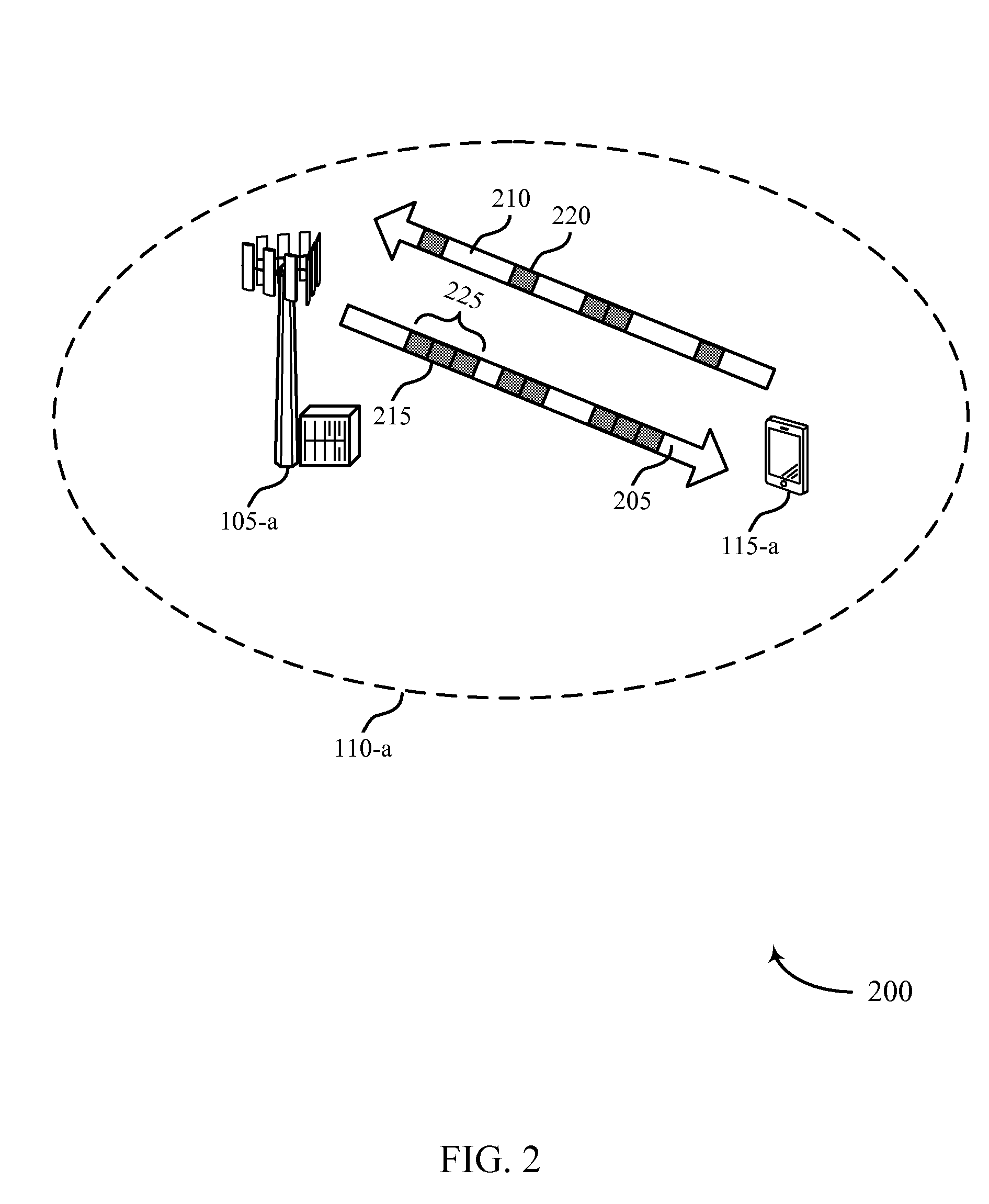

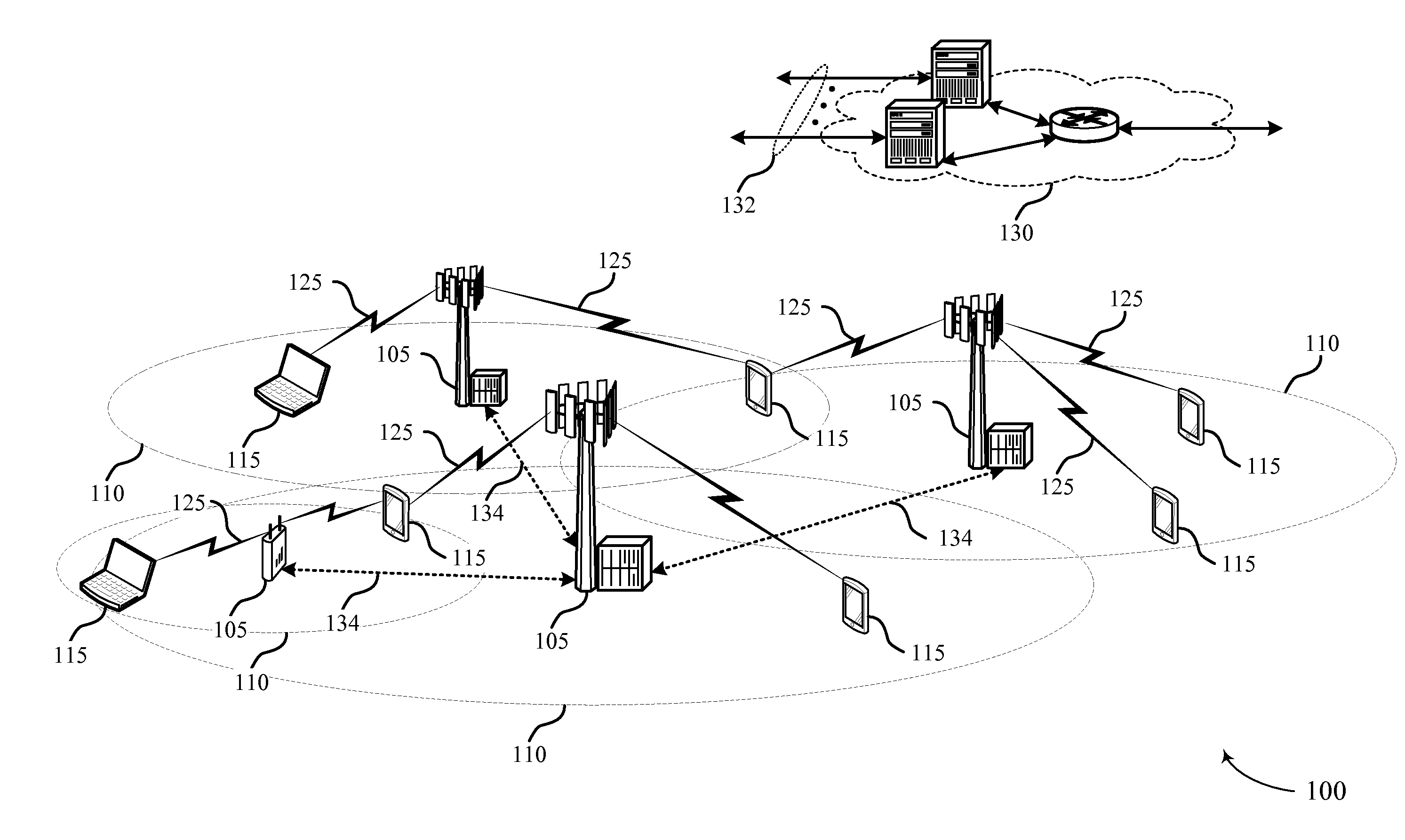

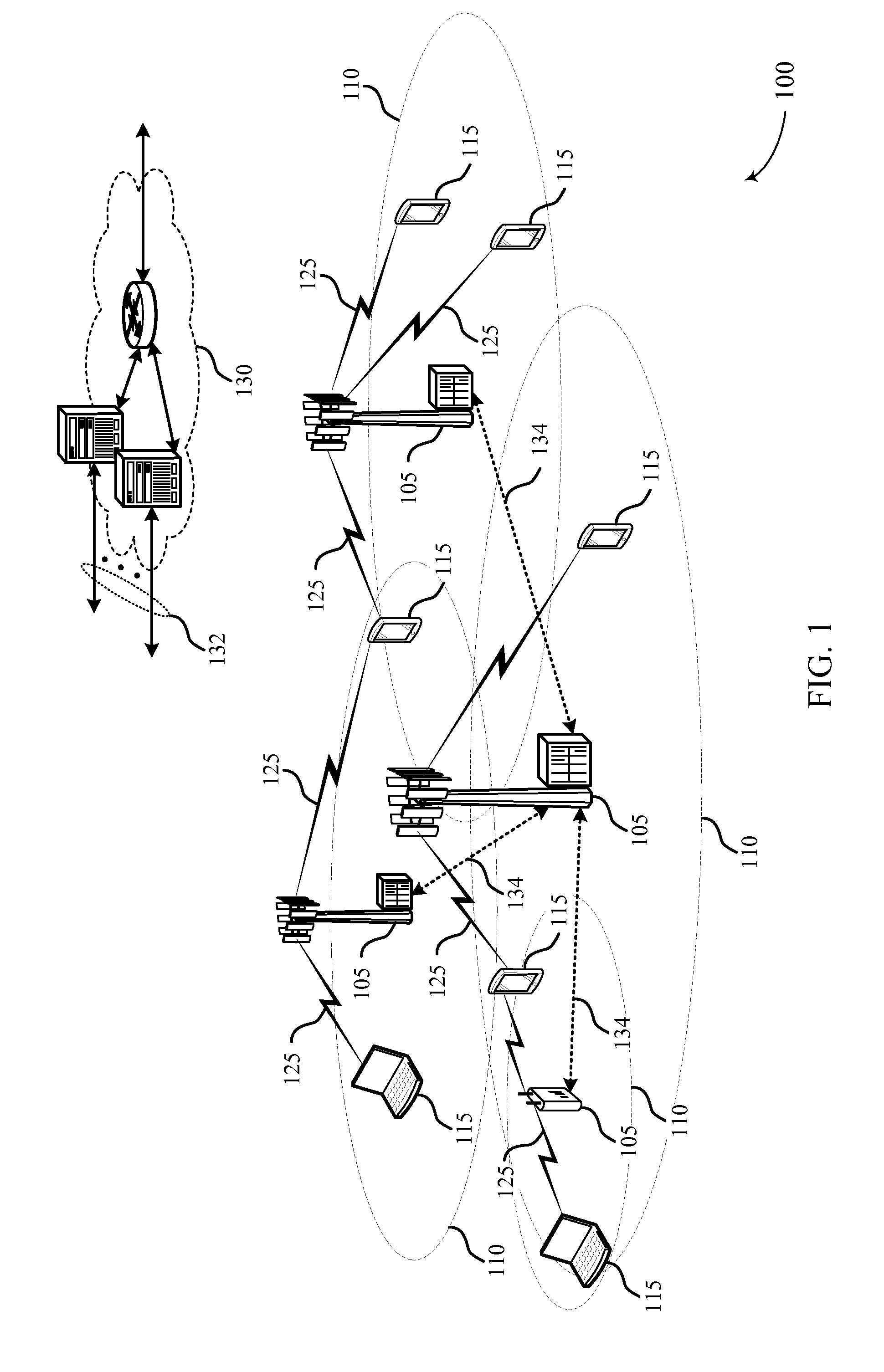

Flexible multiplexing and feedback for variable transmission time intervals

Active | US20160119948A1 | Improve latency | Error prevention/detection by using return channel | Signal allocation | Multiplexing | Hybrid automatic repeat request

Methods, systems, and devices for wireless communication are described. A base station may employ a multiplexing configuration based on latency and efficiency considerations. The base station may transmit a resource grant, a signal indicating the length of a downlink (DL) transmission time interval (TTI), and a signal indicating the length of a subsequent uplink (UL) TTI to one or more user equipment (UEs). The base station may dynamically select a new multiplexing configuration by, for example, setting the length of an UL TTI to zero or assigning multiple UEs resources in the same DL TTI. Latency may also be reduced by employing block feedback, such as block hybrid automatic repeat request (HARQ) feedback. A UE may determine and transmit HARQ feedback for each transport block (TB) of a set of TBs, which may be based on a time duration of a downlink TTI.

Owner:QUALCOMM INC

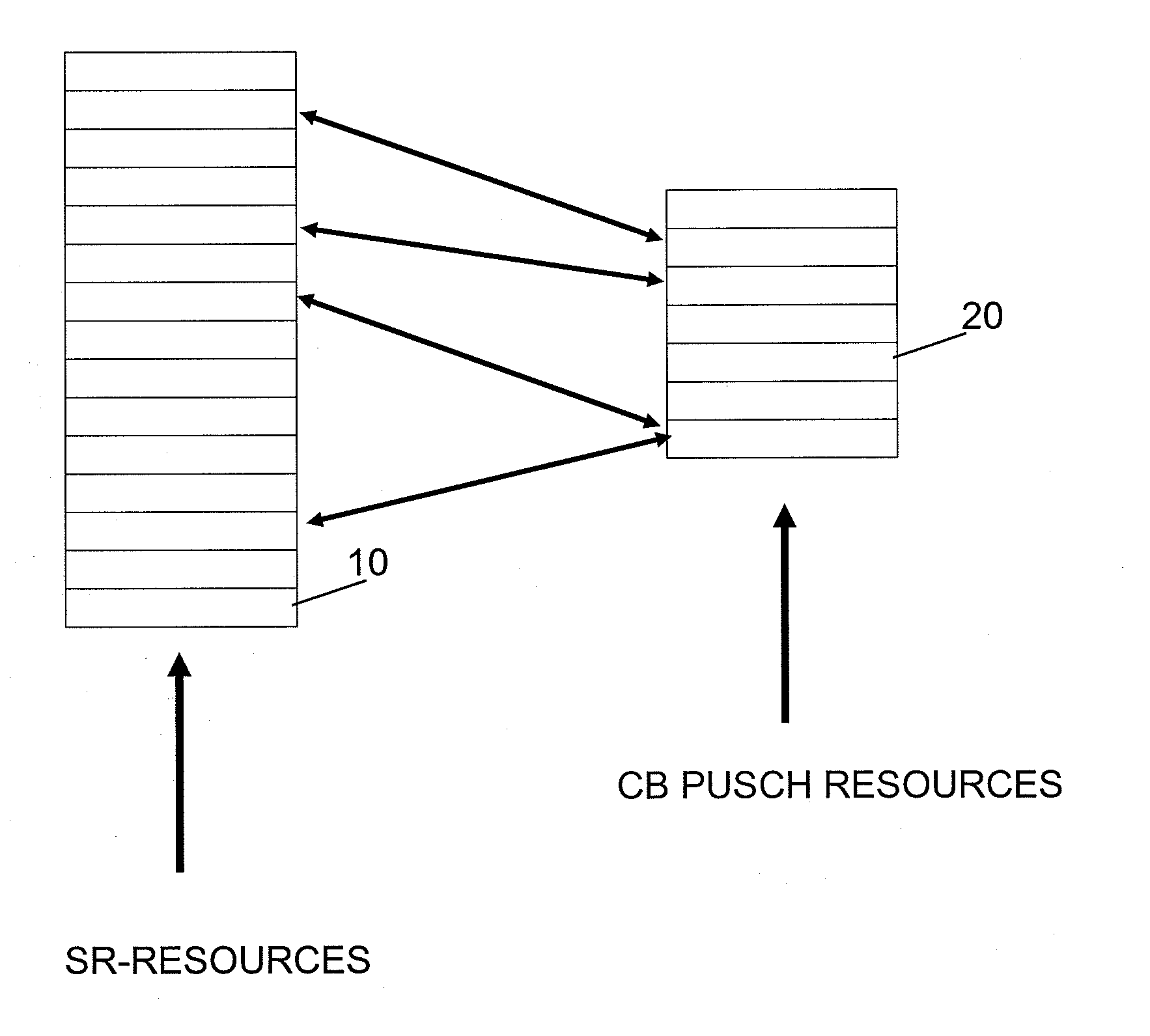

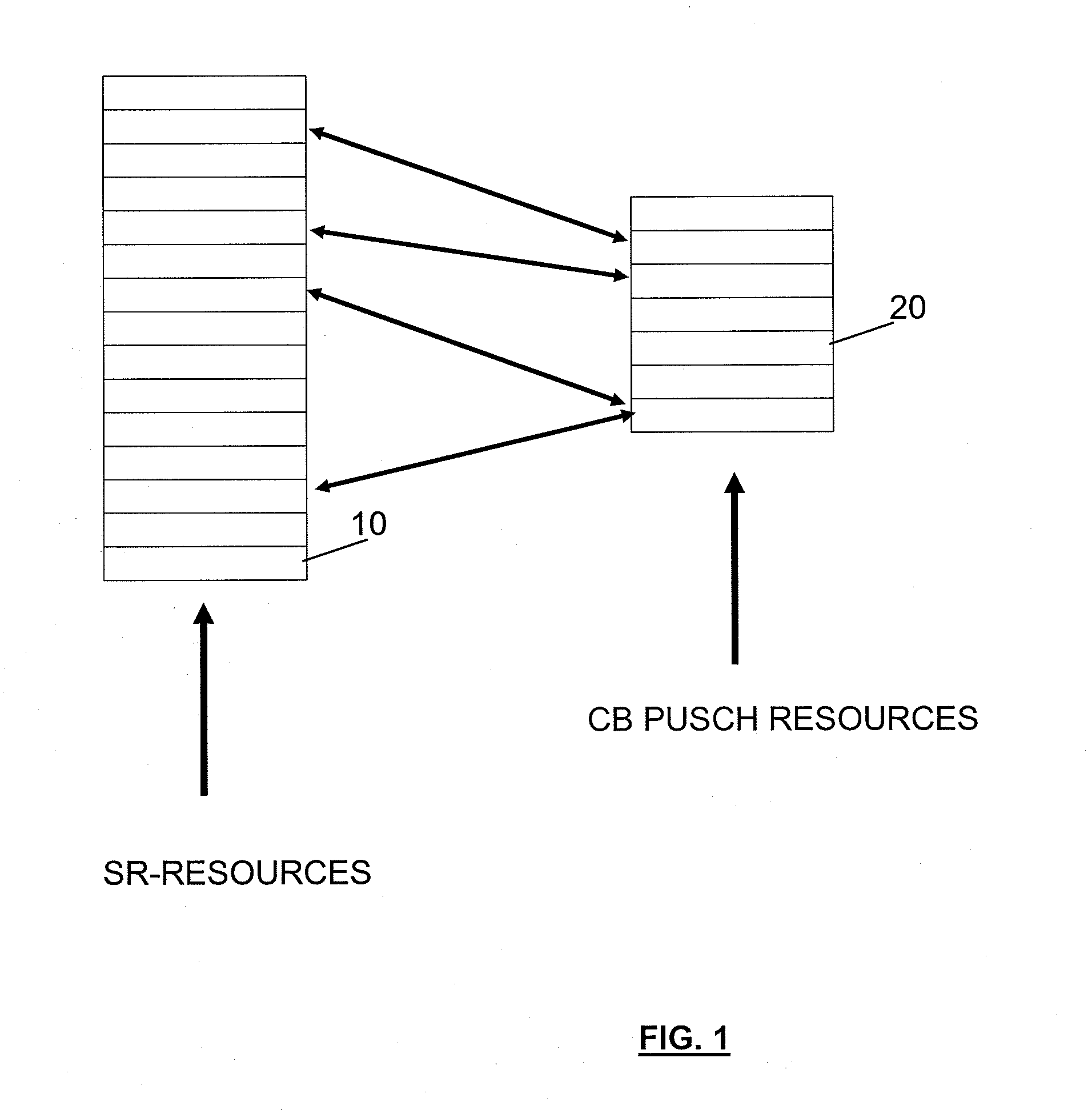

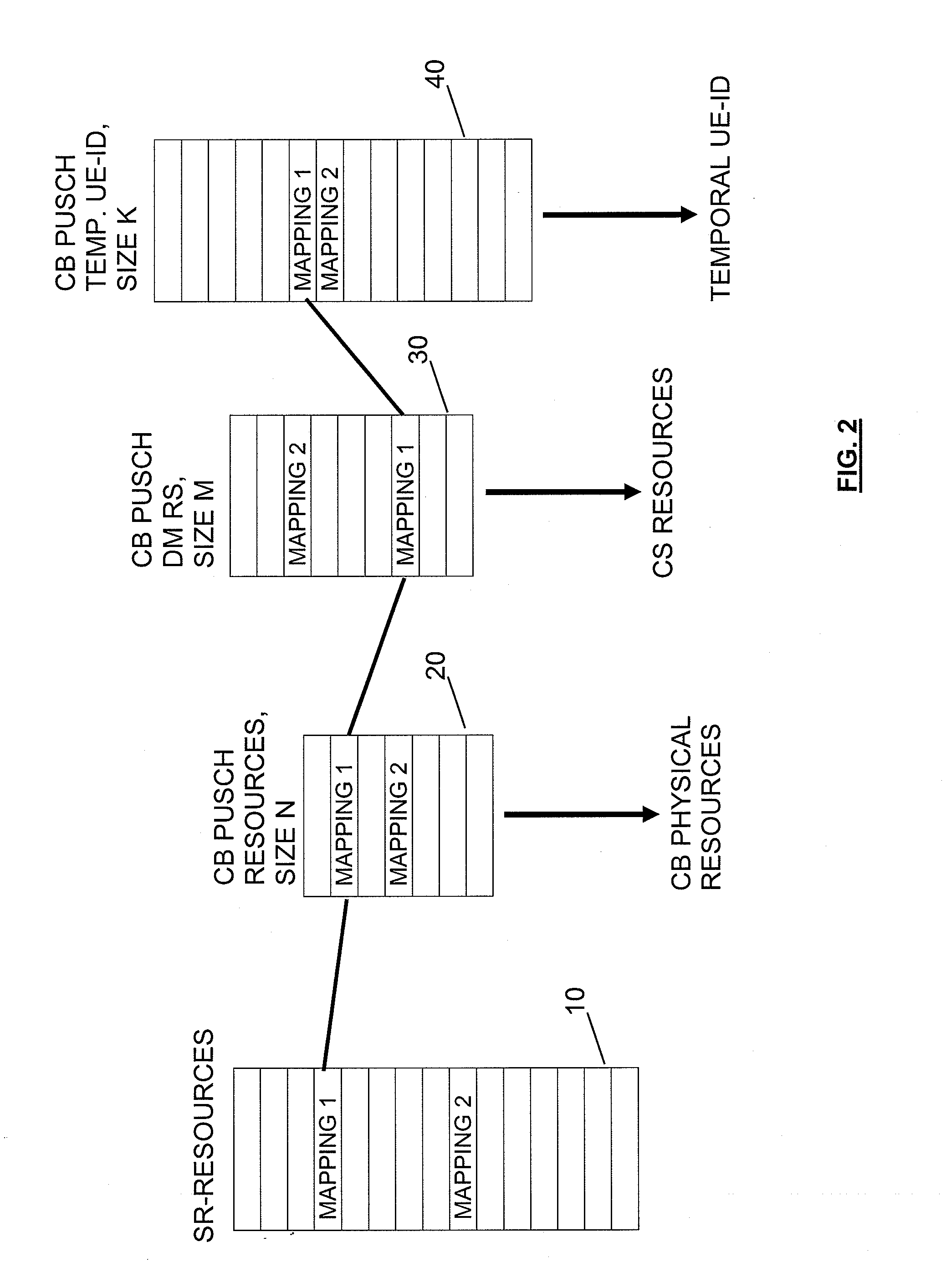

Resource Setting Control for Transmission Using Contention Based Resources

Active | US20120182977A1 | Increase delay | Improve latency | Network traffic/resource management | Time-division multiplex | Resource element | Data transmission

There is proposed a mechanism by means of which resources for a data transmission between a user equipment and a base transceiver station are set. For this purpose, a resource dedicated to the user equipment (like an SR resource) is combined with at least one contention based resource allocated to a contention based transmission by the user equipment (like a CB-PUSCH resource). The at least one contention based resource to be combined is selected by executing a mapping according to a predetermined rule and based on an information indicating a specific resource element dedicated to the user equipment to at least one set of available contention based resources, and by determining an information identifying at least one resource element of the at least one contention based resource.

Owner:NOKIA TECHNOLOGIES OY

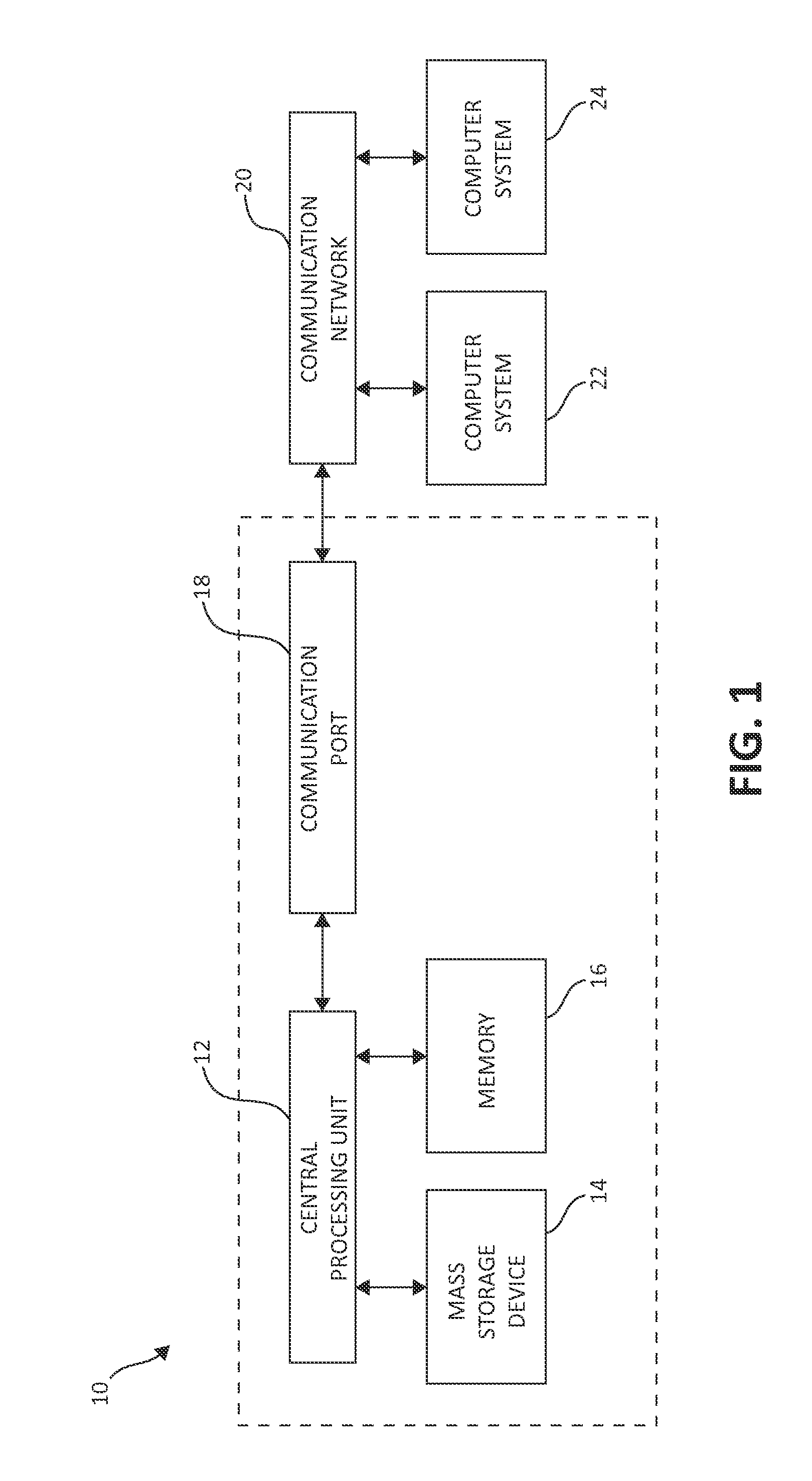

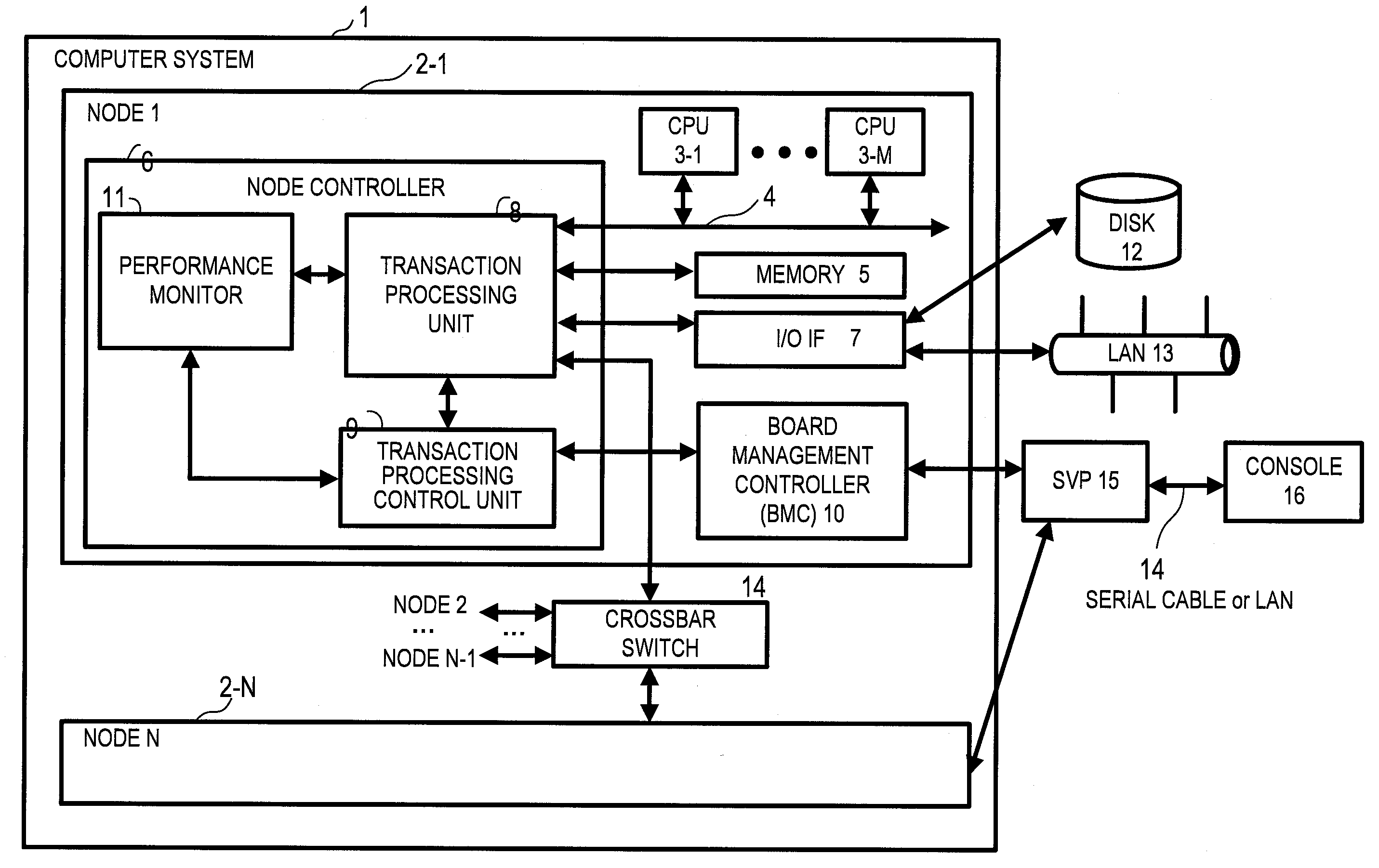

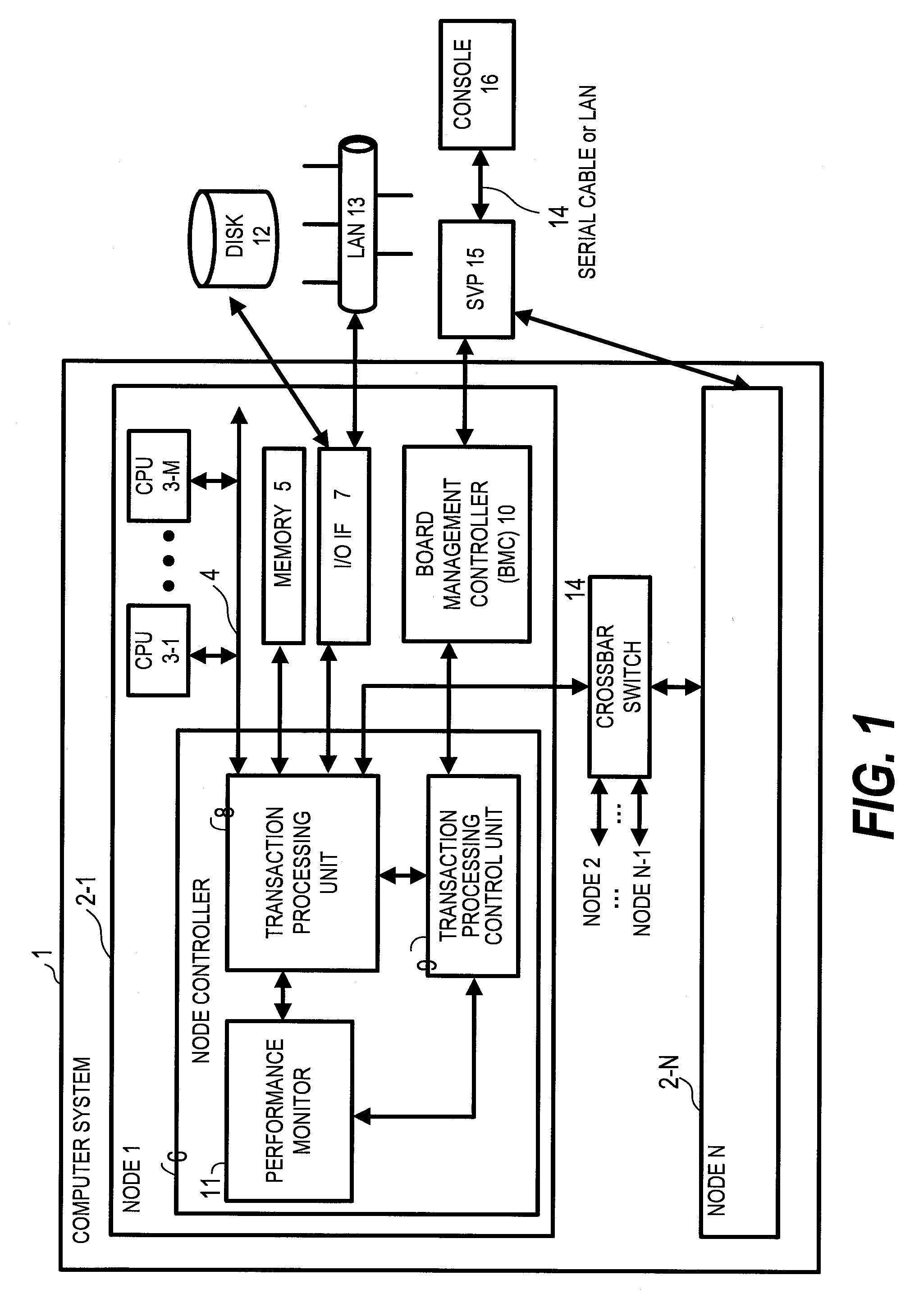

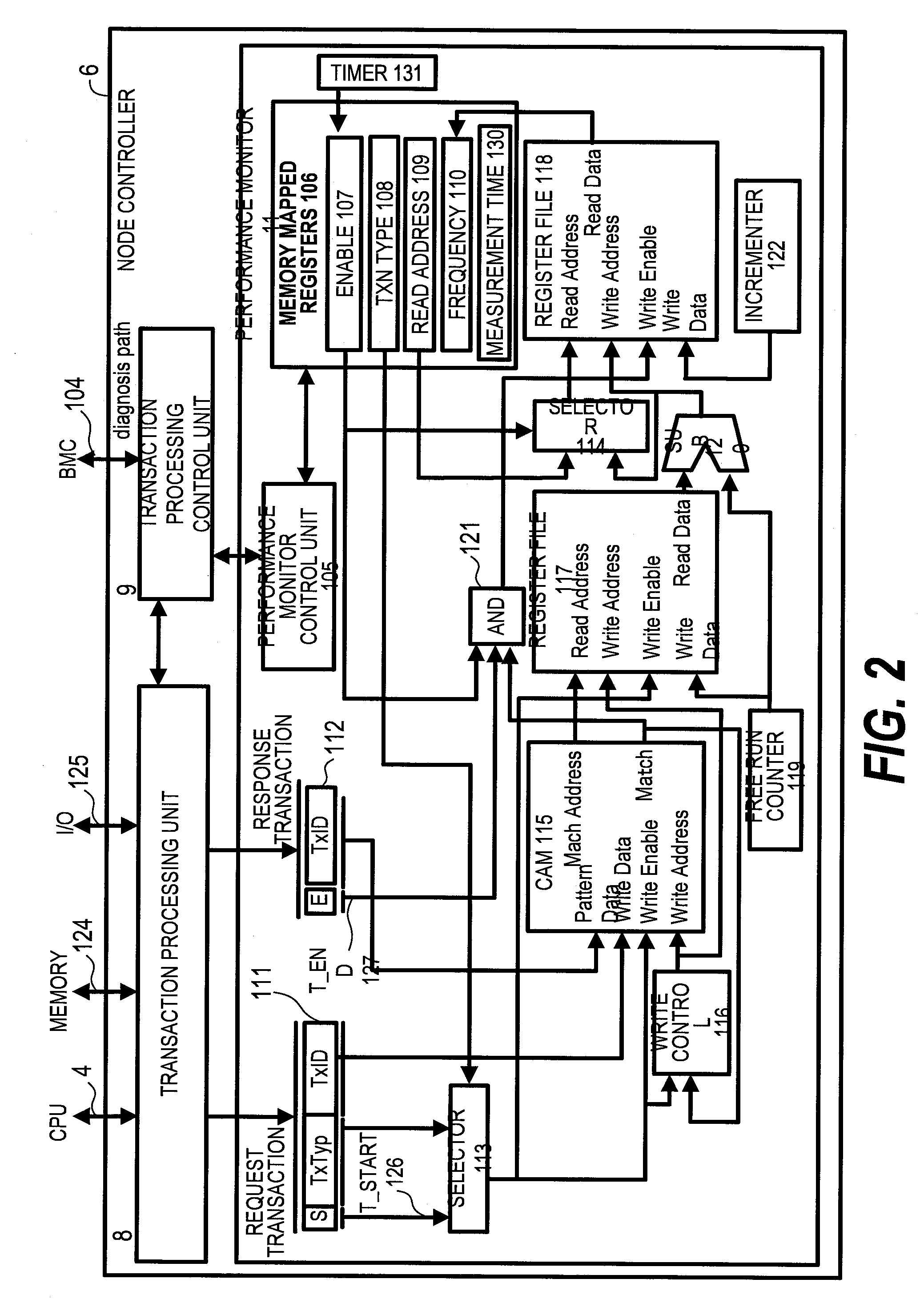

System and method for performance monitoring and reconfiguring computer system with hardware monitor

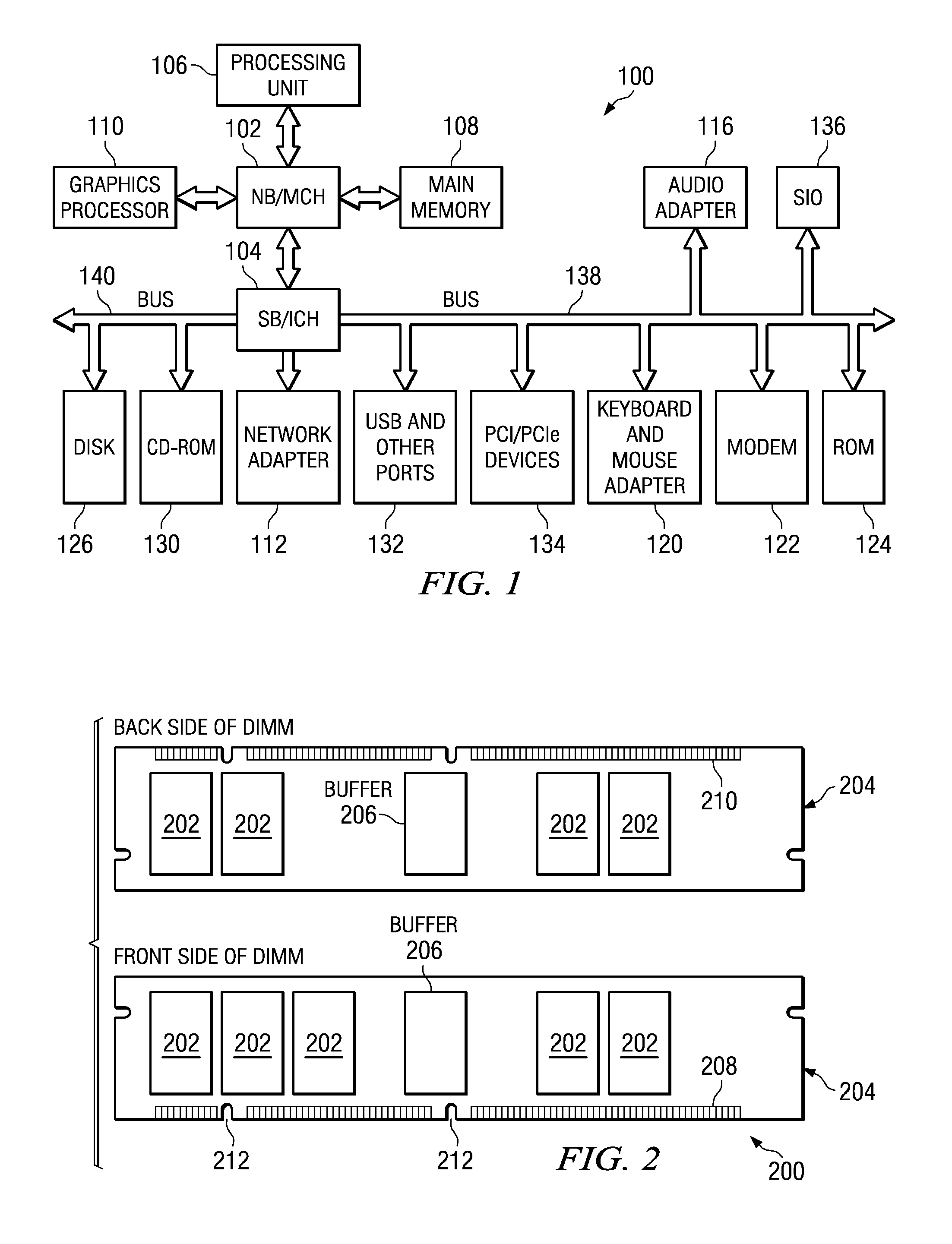

Inactive | US20080071939A1 | Improve performance | High performance | Error detection/correction | Multiprogramming arrangements | Frequency count | Chipset

A judgment is made quickly about whether or not it is a memory or a chipset that is causing a performance bottleneck in an application program. A computer system of this invention includes at least one CPU and a controller that connects the CPU to a memory and to an I/O interface, in which the controller includes a response time measuring unit, which receives a request to access the memory and measures a response time taken to respond to the memory access request, a frequency counting unit, which measures an issue count of the memory access request, a measurement result storing unit, which stores a measurement result associating the response time with the corresponding issue count, and a measurement result control unit, which outputs the measurement result stored in the measurement result storing unit when receiving a measurement result read request.

Owner:HITACHI LTD

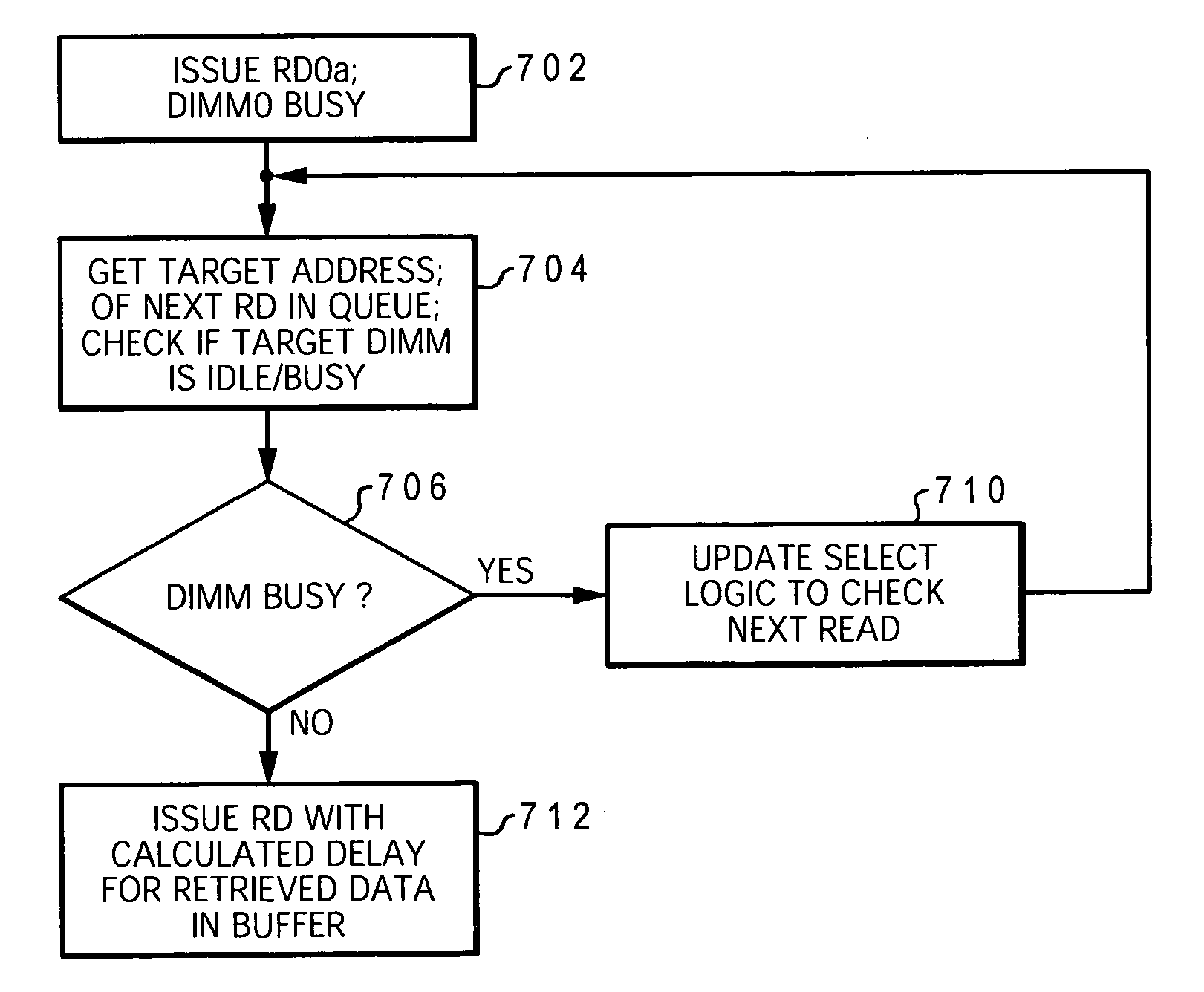

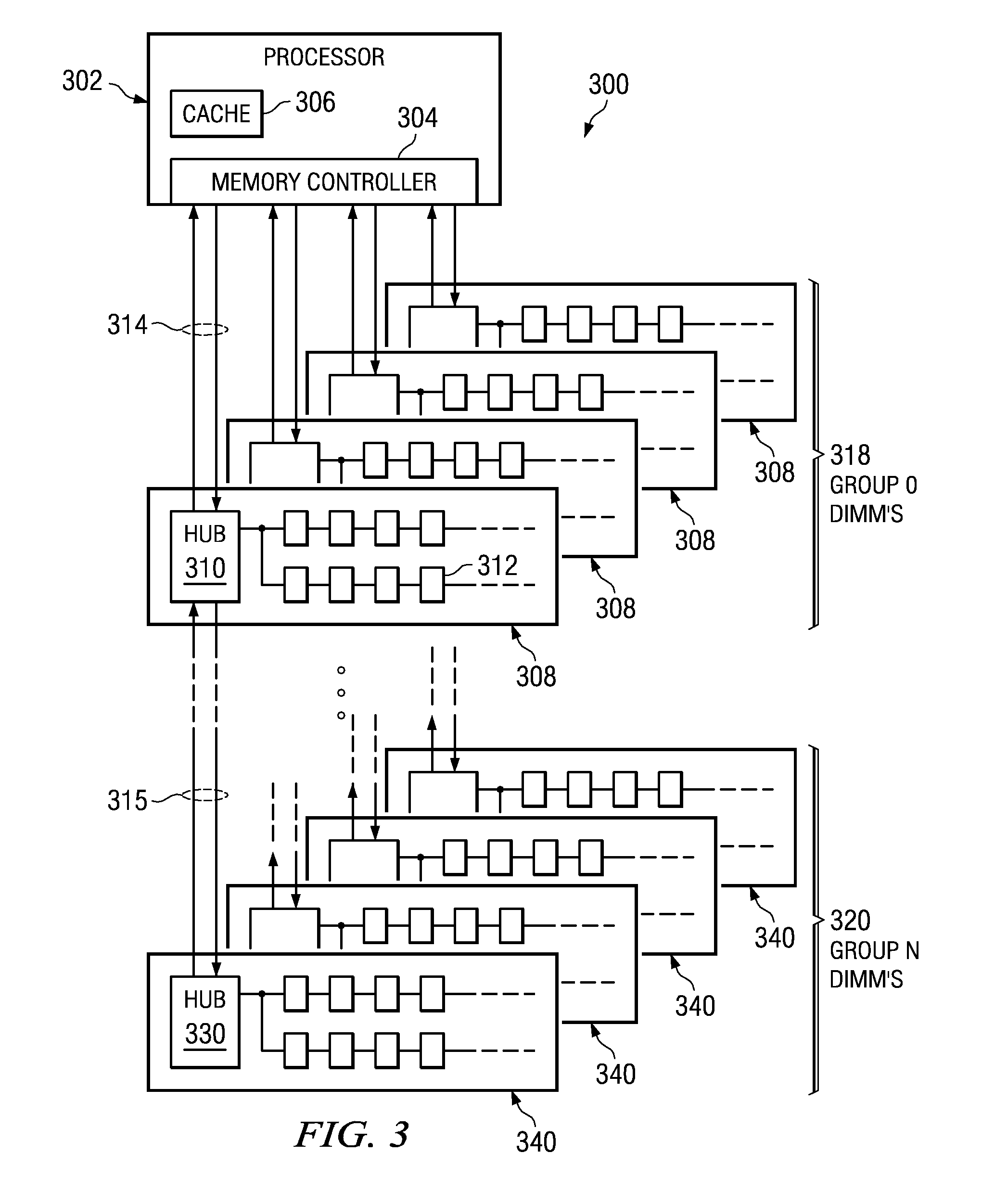

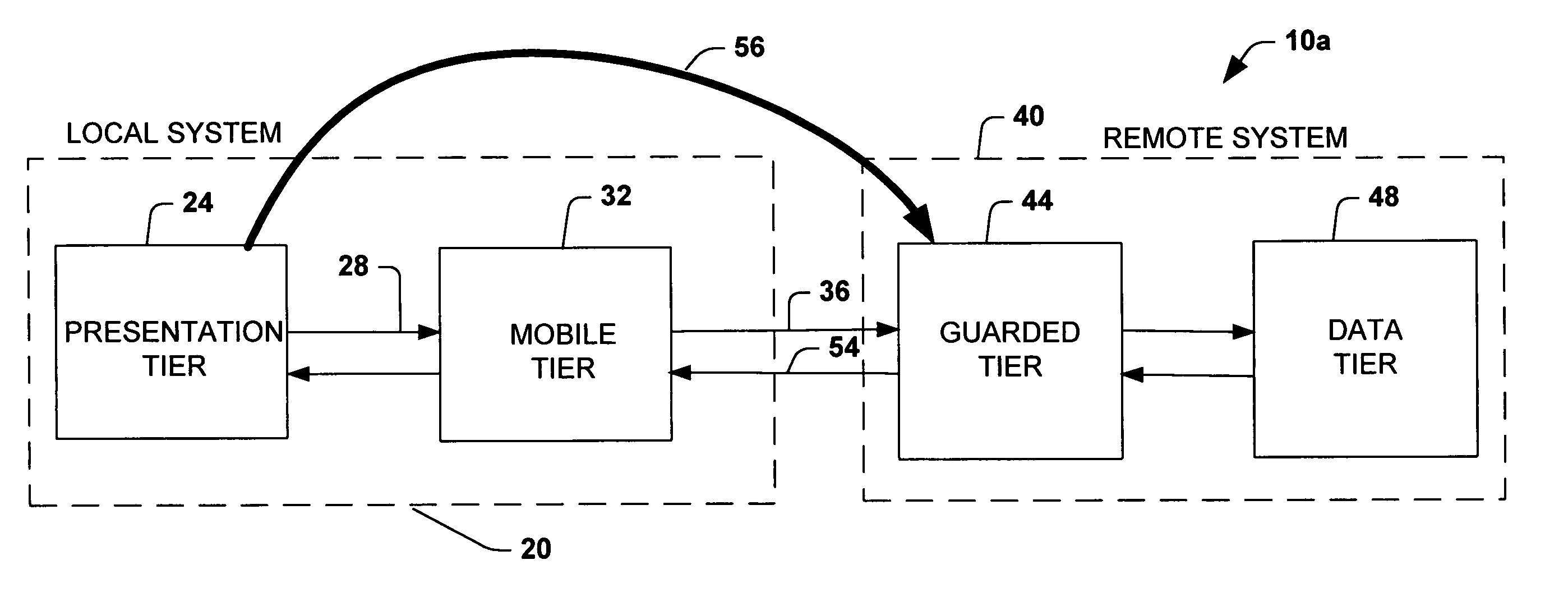

Streaming reads for early processing in a cascaded memory subsystem with buffered memory devices

Inactive | US20060179262A1 | Complete efficiently | Significant utility | Memory systems | Control store | Term memory

A memory subsystem completes multiple read operations in parallel, utilizing the functionality of buffered memory modules in a daisy chain topology. A variable read latency is provided with each read command to enable memory modules to run independently in the memory subsystem. Busy periods of the memory device architecture are hidden by allowing data buses on multiple memory modules attached to the same data channel to run in parallel rather than in series and by issuing reads earlier than required to enable the memory devices to return from a busy state earlier. During scheduling of reads, the earliest received read whose target memory module is not busy is immediately issued at a next command cycle. The memory controller provides a delay parameter with each issued read. The number of cycles of delay is calculated to allow maximum utilization of the memory modules' data bus bandwidth without causing collisions on the memory channel.

Owner:IBM CORP

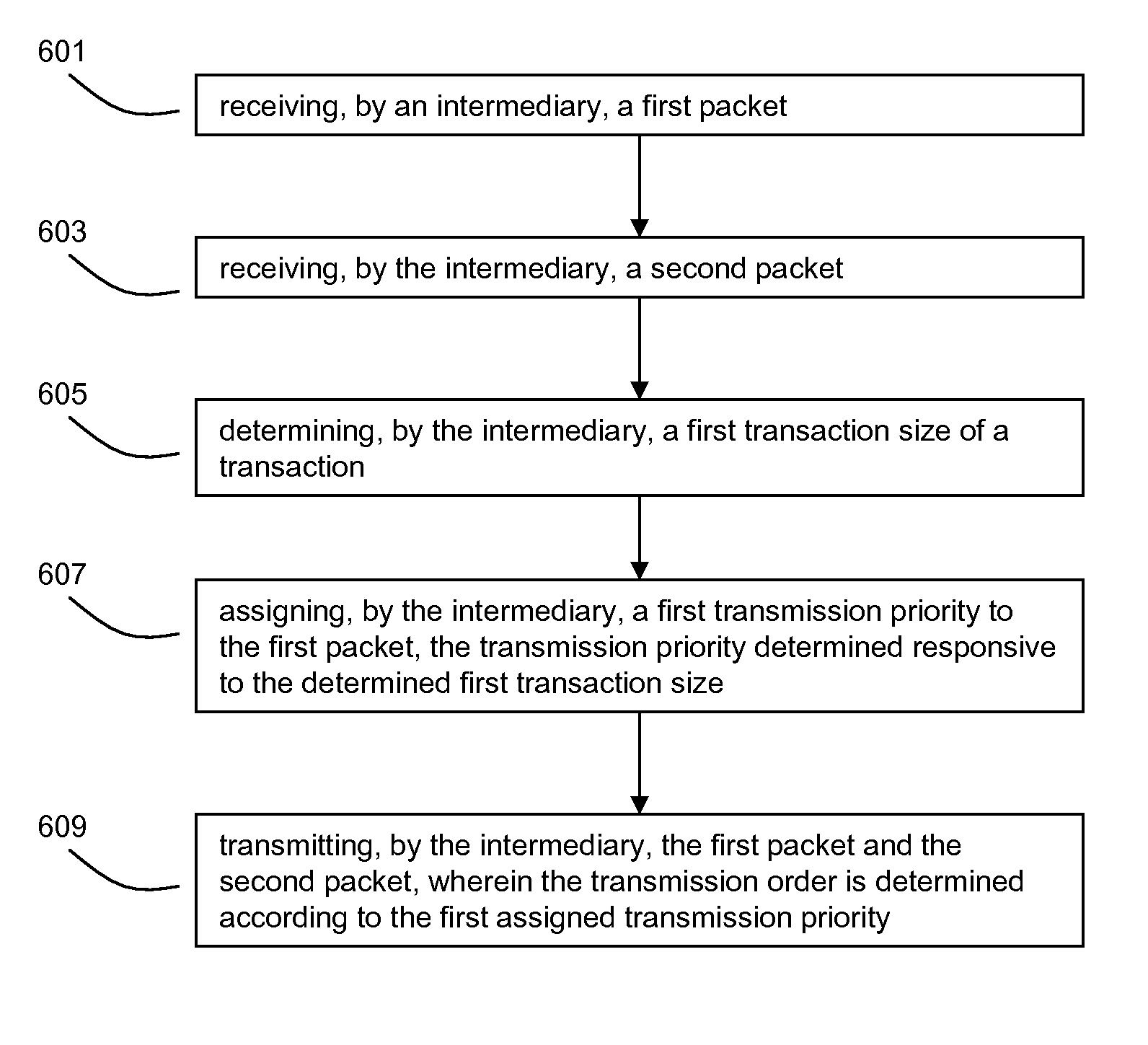

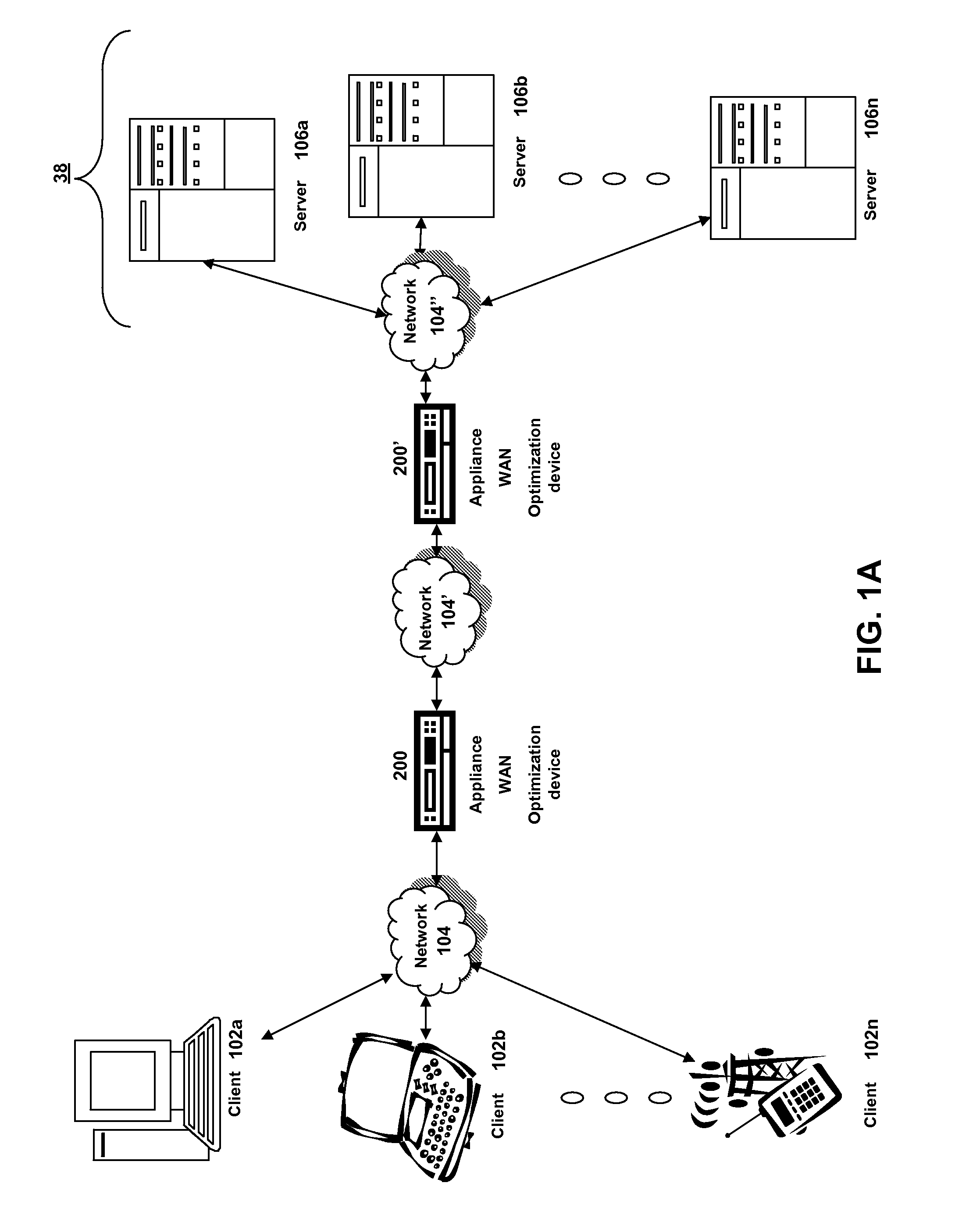

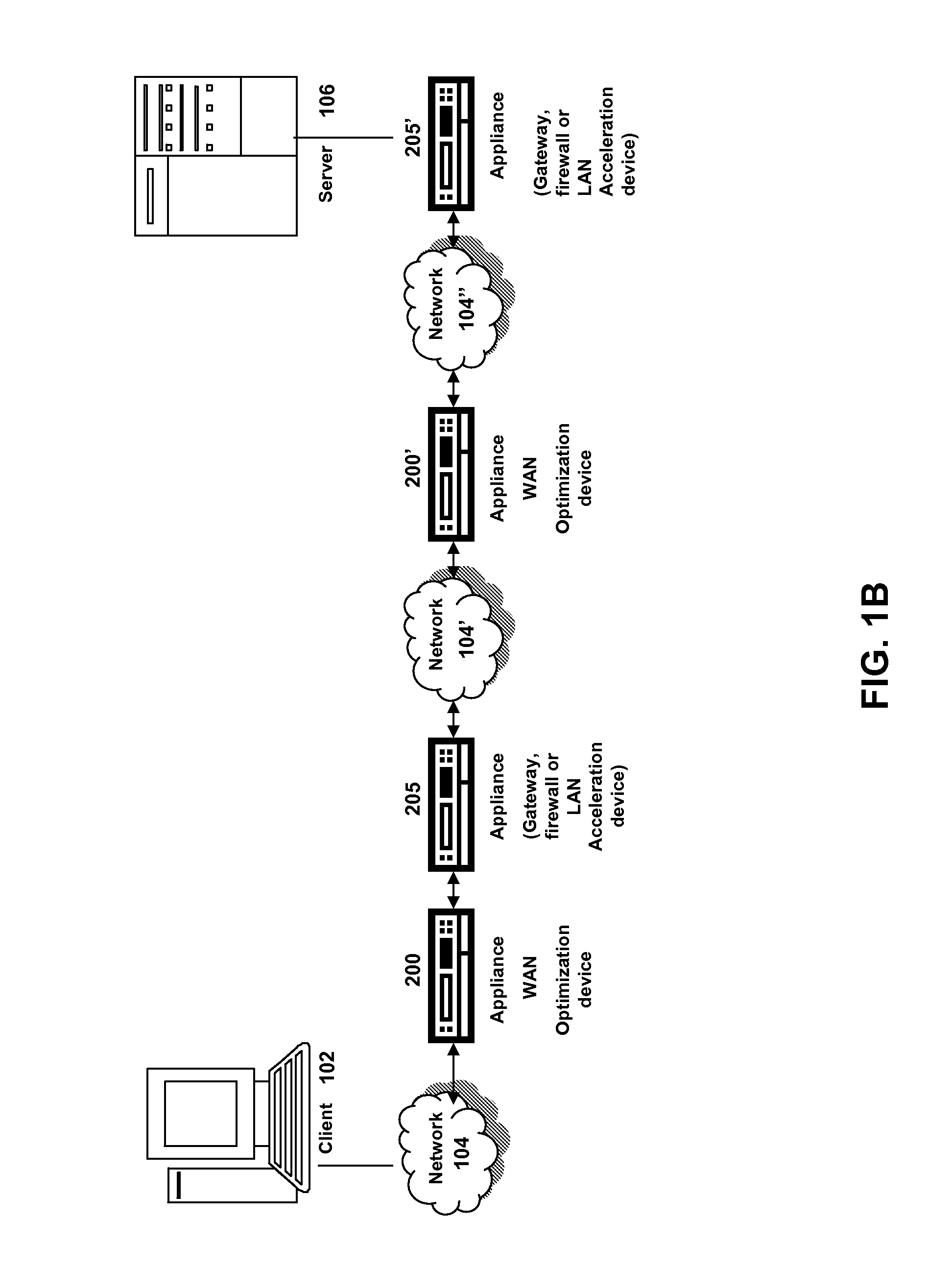

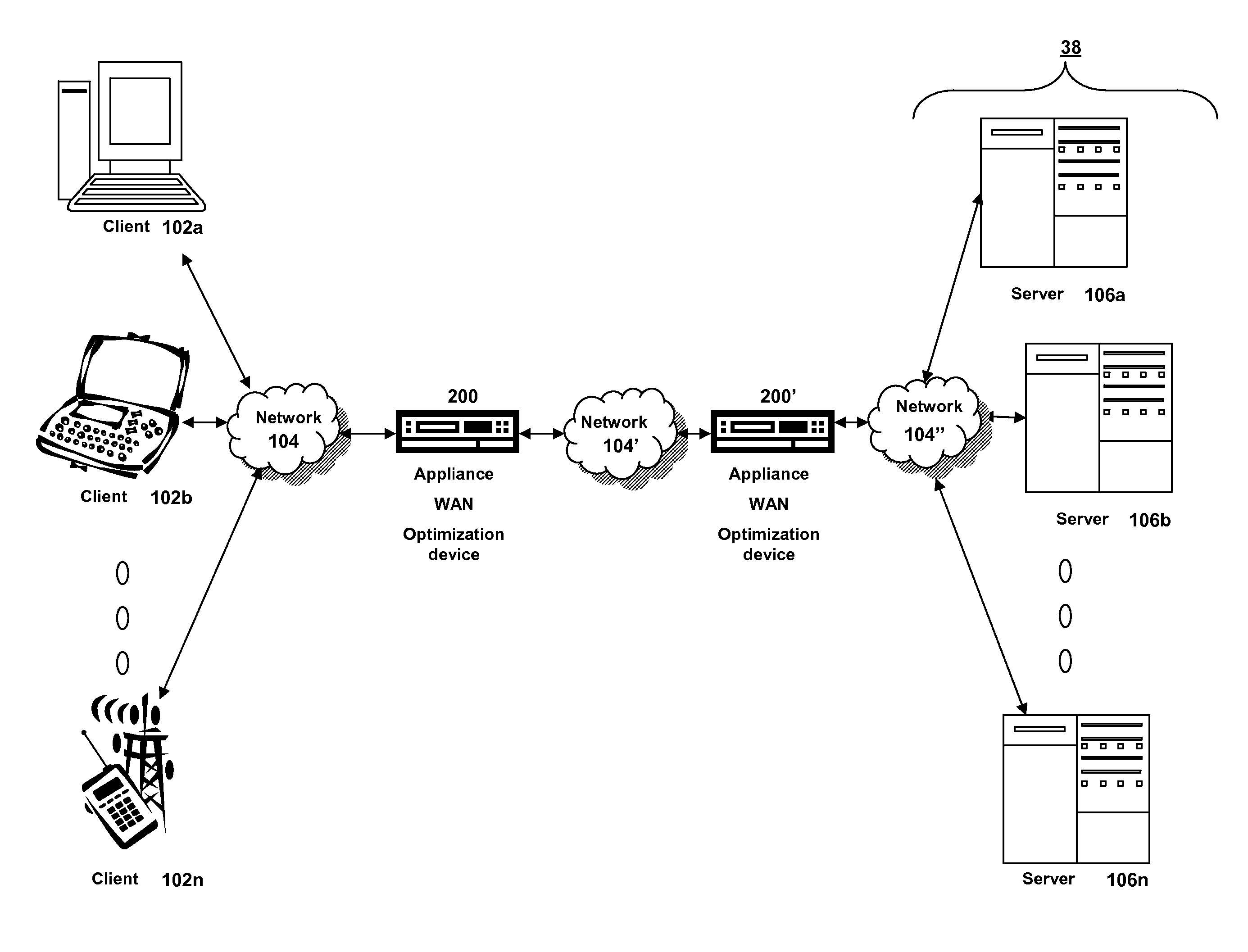

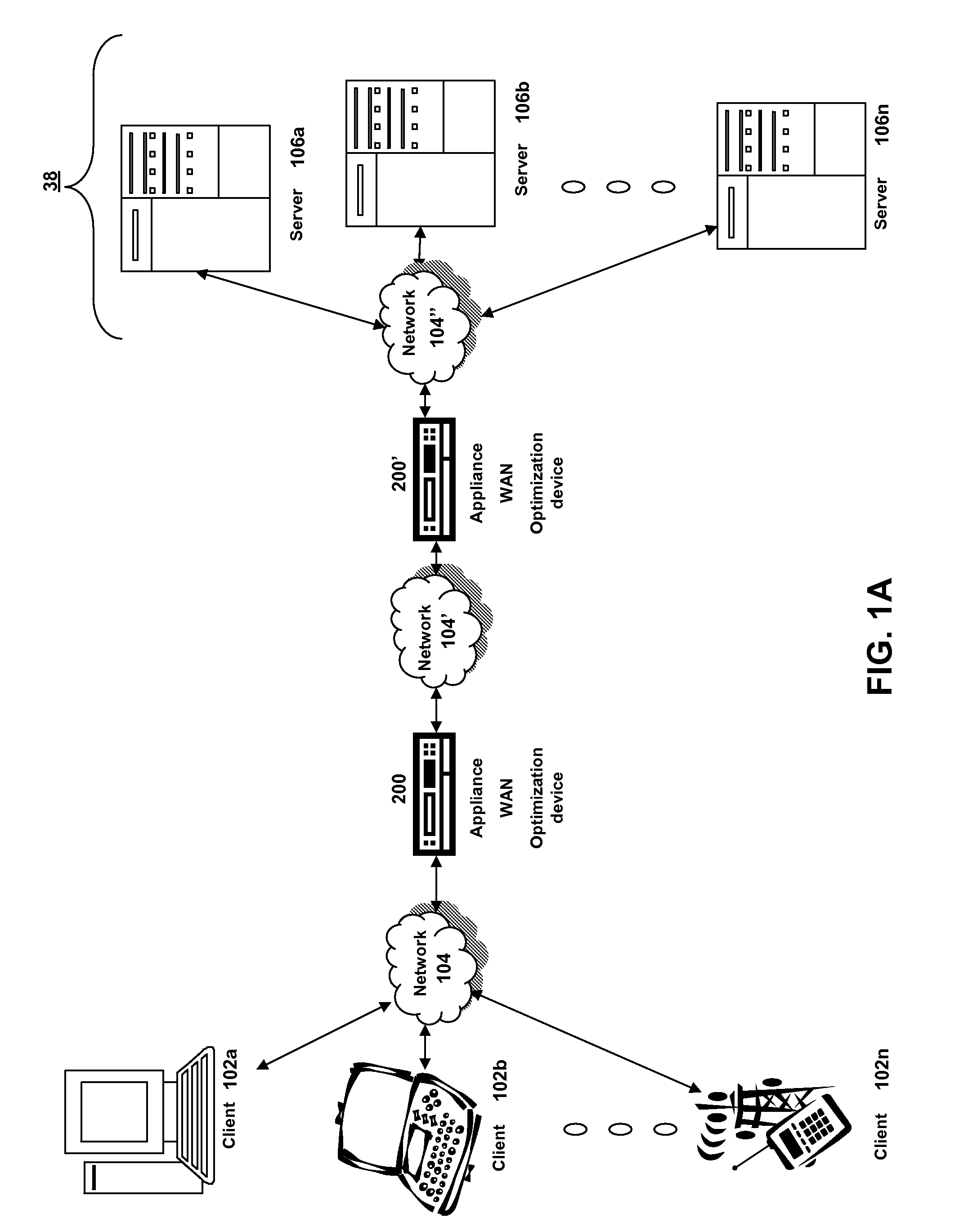

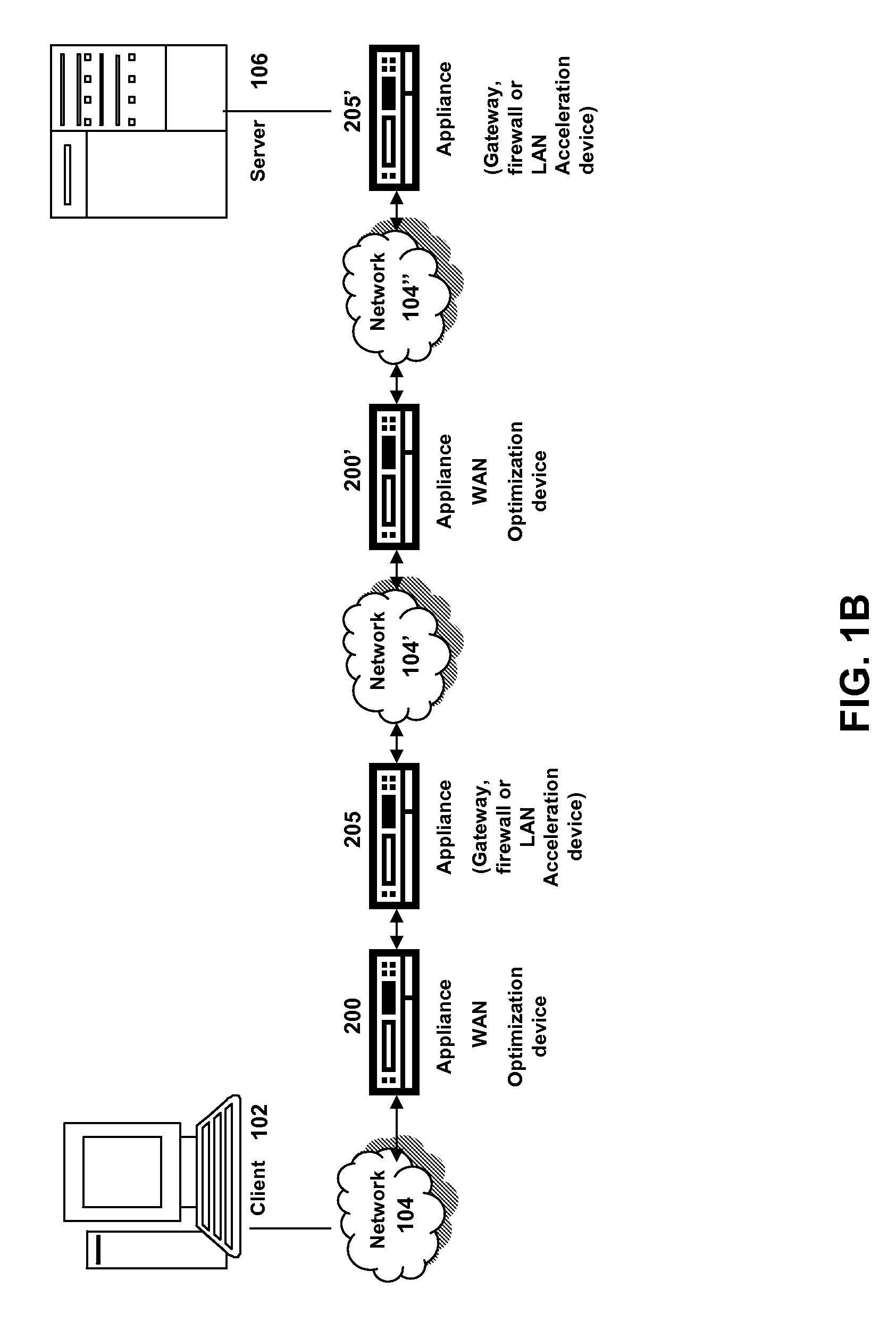

Systems and methods of using packet boundaries for reduction in timeout prevention

Active | US20070206621A1 | Increase network latency | Avoid delay | Data switching by path configuration | Store-and-forward switching systems | Internet traffic | Boundary detection

Systems and methods for utilizing transaction boundary detection methods in queuing and retransmission decisions relating to network traffic are described. By detecting transaction boundaries and sizes, a client, server, or intermediary device may prioritize based on transaction sizes in queuing decisions, giving precedence to smaller transactions which may represent interactive and/or latency-sensitive traffic. Further, after detecting a transaction boundary, a device may retransmit one or more additional packets prompting acknowledgements, in order to ensure timely notification if the last packet of the transaction has been dropped. Systems and methods for potentially improving network latency, including retransmitting a dropped packet twice or more in order to avoid incurring additional delays due to a retransmitted packet being lost, are also described.

Owner:CITRIX SYST INC
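
The size-based queuing idea in the abstract above can be sketched with a priority queue. This is a simplified illustration under assumed names (`TransactionQueue`, `push`, `pop`); it covers only the "smaller transactions first" ordering, not boundary detection or retransmission.

```python
import heapq
import itertools


class TransactionQueue:
    """Hypothetical queue that serves smaller transactions first."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order for equal sizes

    def push(self, transaction_id: str, size_bytes: int) -> None:
        heapq.heappush(self._heap, (size_bytes, next(self._seq), transaction_id))

    def pop(self) -> str:
        """Remove and return the id of the smallest queued transaction."""
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


q = TransactionQueue()
q.push("bulk-download", 1_000_000)
q.push("keystroke", 64)
q.push("page-load", 20_000)
print([q.pop() for _ in range(len(q))])
```

Small transactions such as a single keystroke jump ahead of bulk transfers, which is the behaviour the abstract associates with interactive, latency-sensitive traffic.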

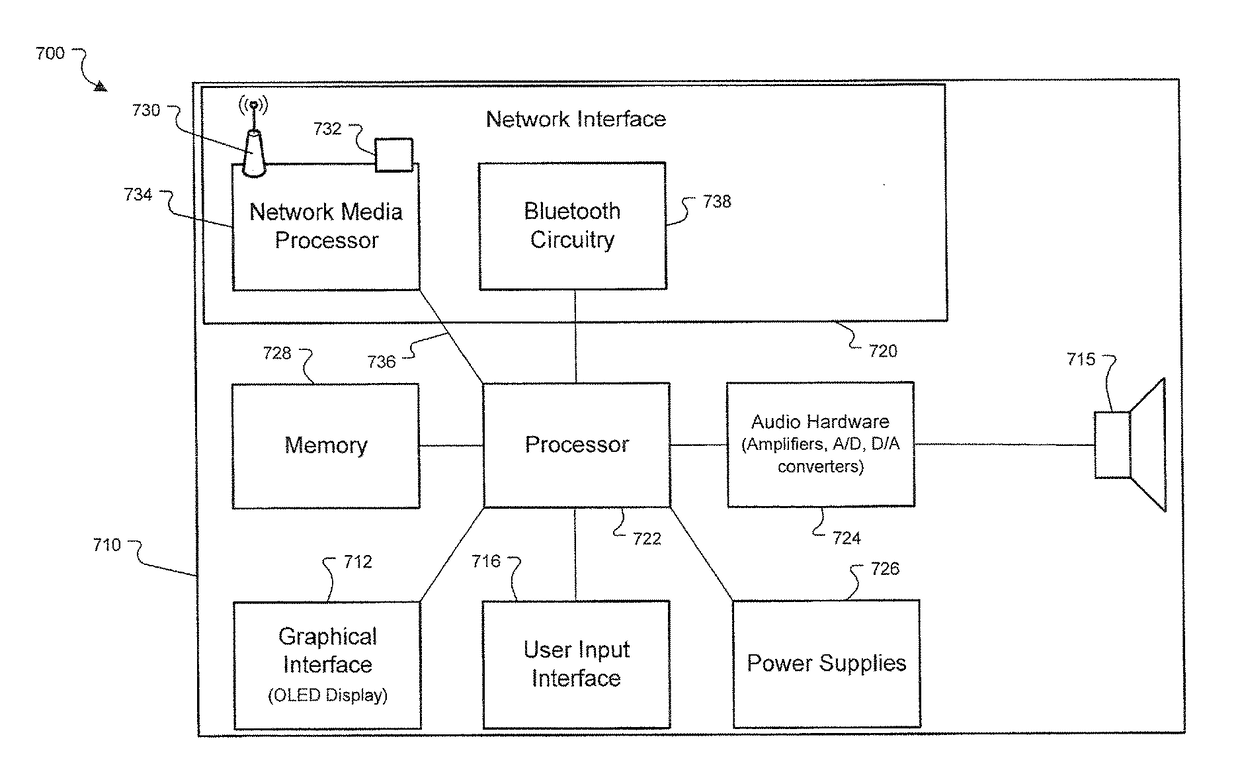

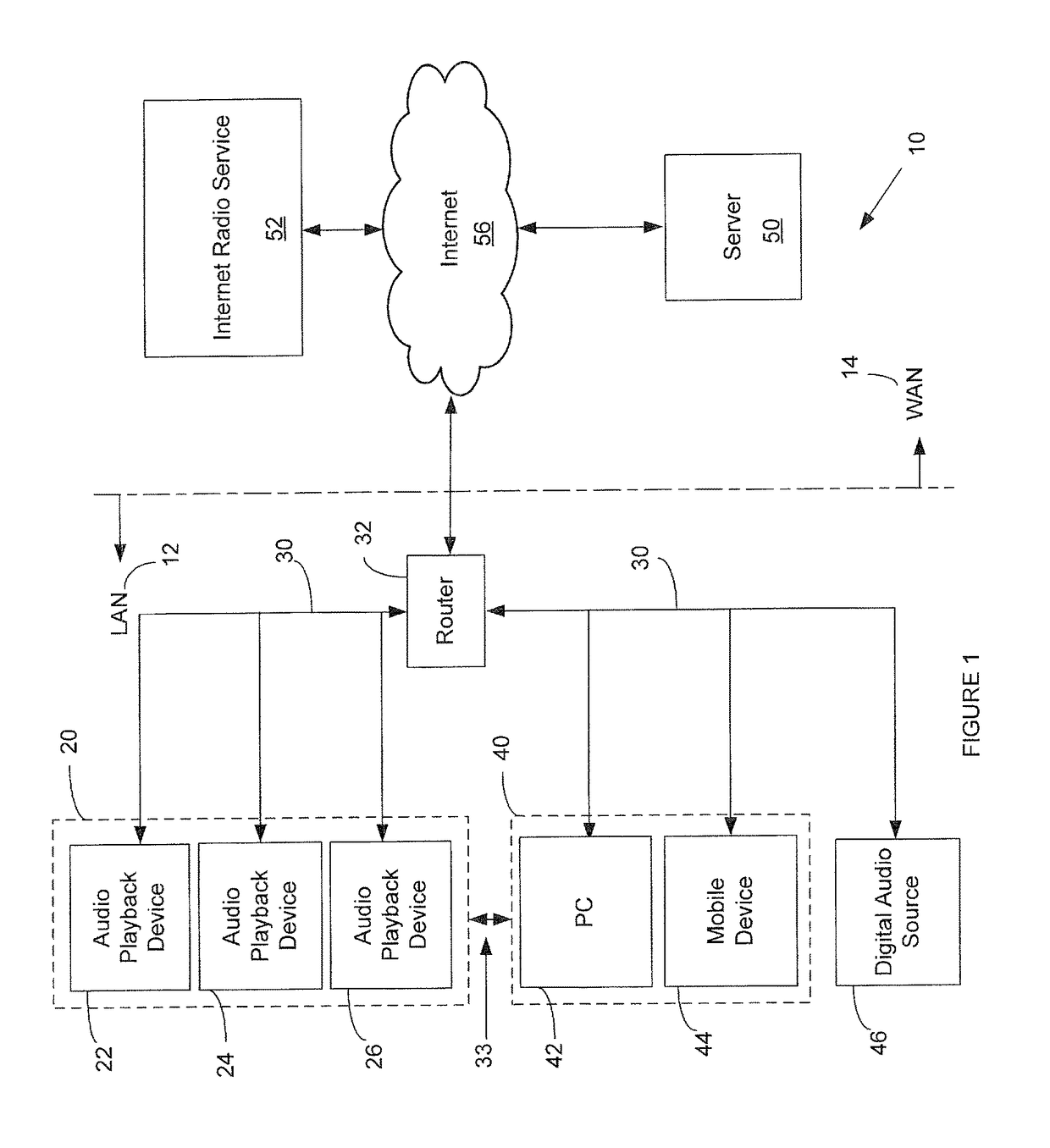

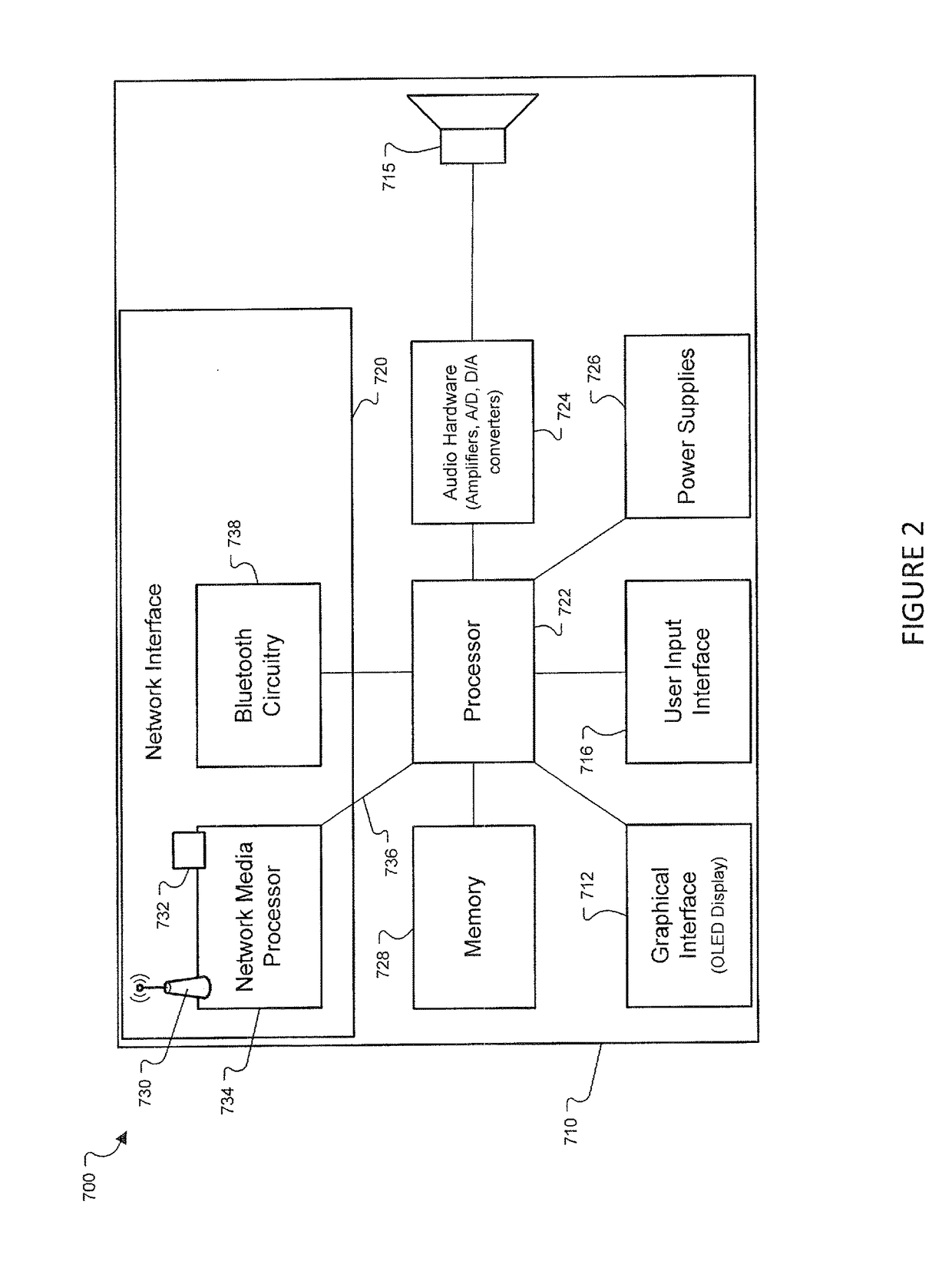

Wireless Audio Synchronization

Active | US20170069338A1 | Delay minimization | Lower latency | Speech analysis | Selective content distribution | Time segment | Loudspeaker

A method of synchronizing playback of audio data sent over a first wireless network from an audio source to a wireless speaker package that is adapted to play the audio data. The method includes comparing a first time period over which audio data was sent over the first wireless network to a second time period over which the audio data was received by the wireless speaker package, and playing the received audio data on the wireless speaker package over a third time period that is related to the comparison of the first and second time periods.

Owner:BOSE CORP

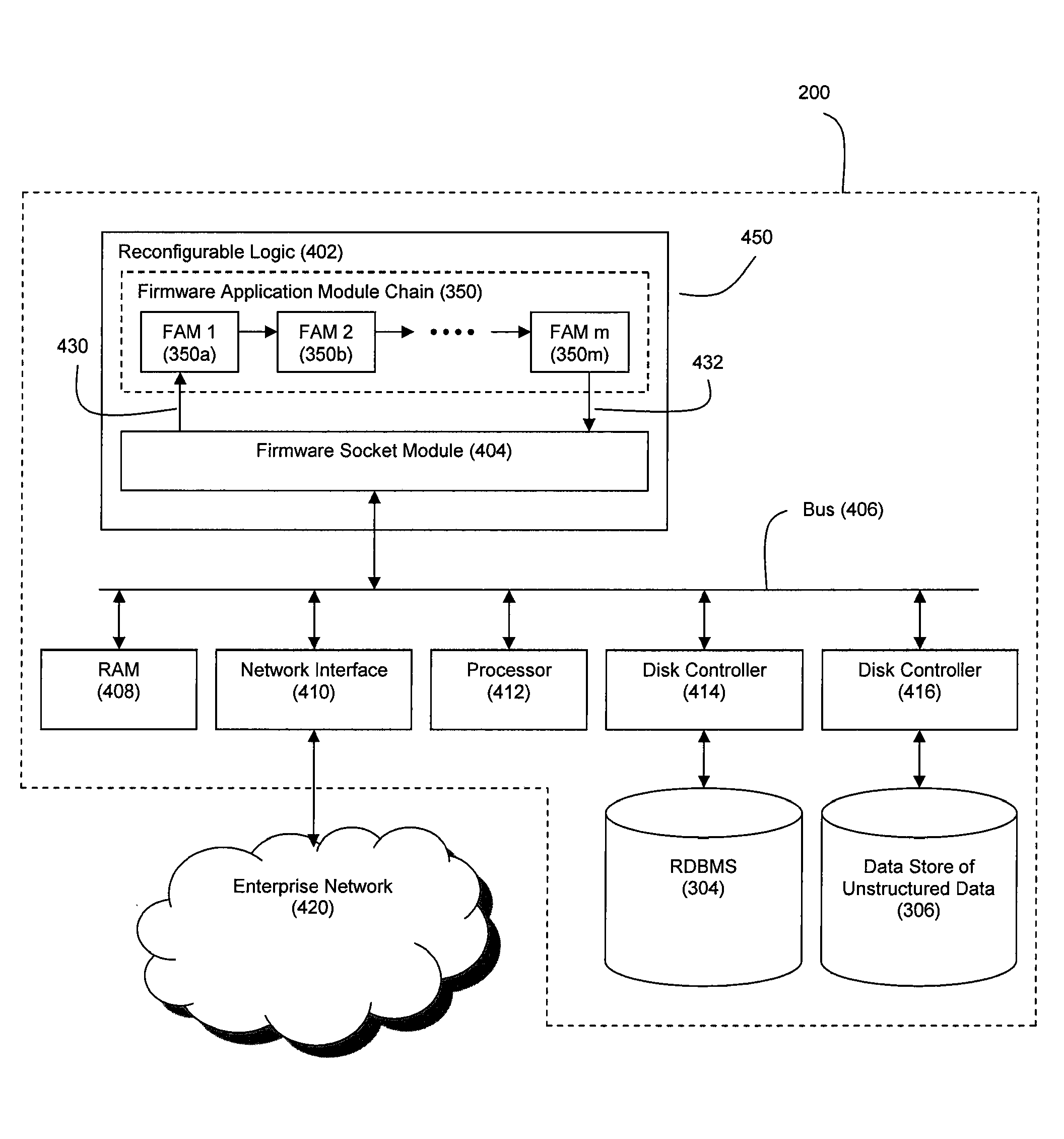

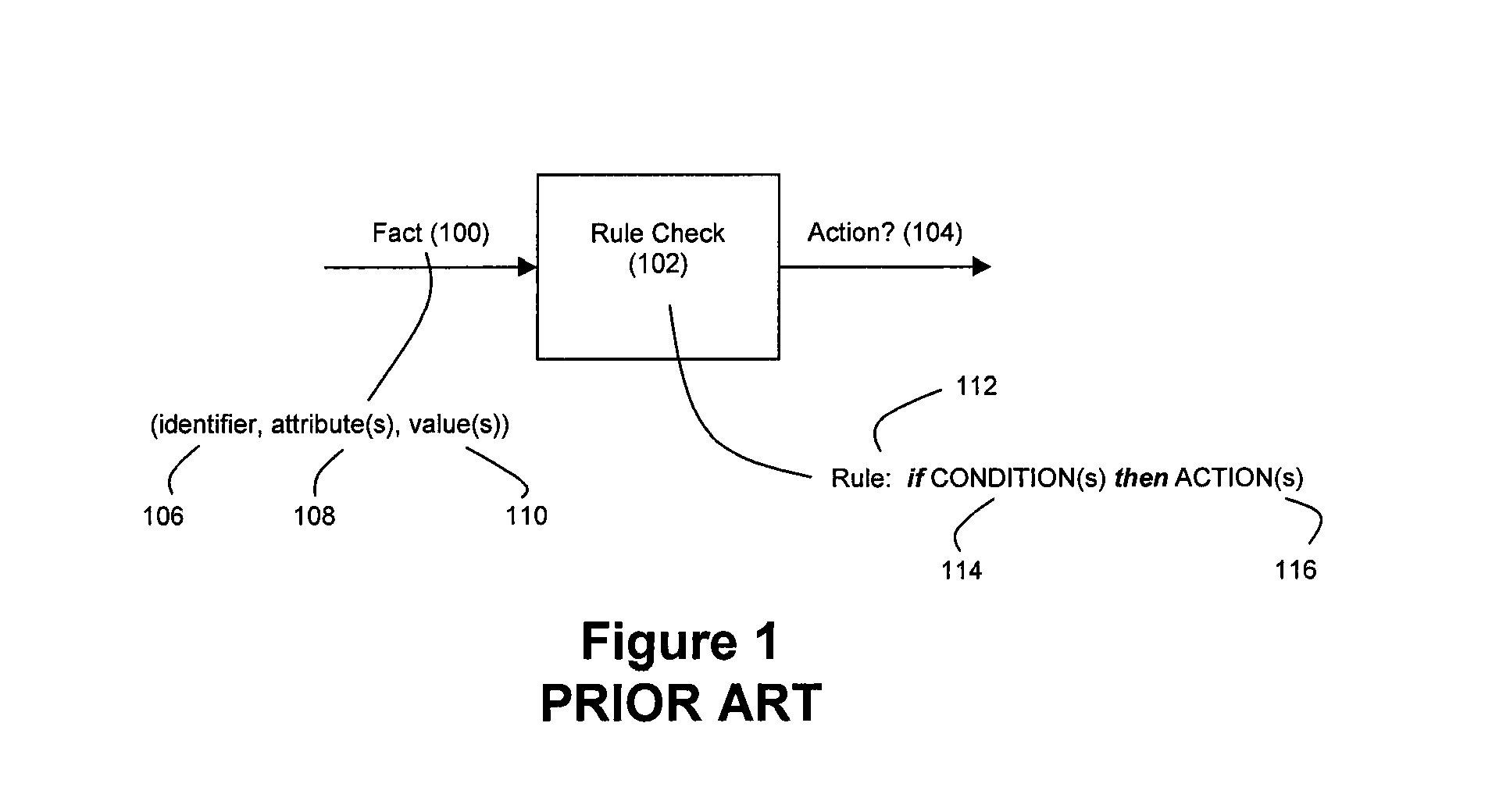

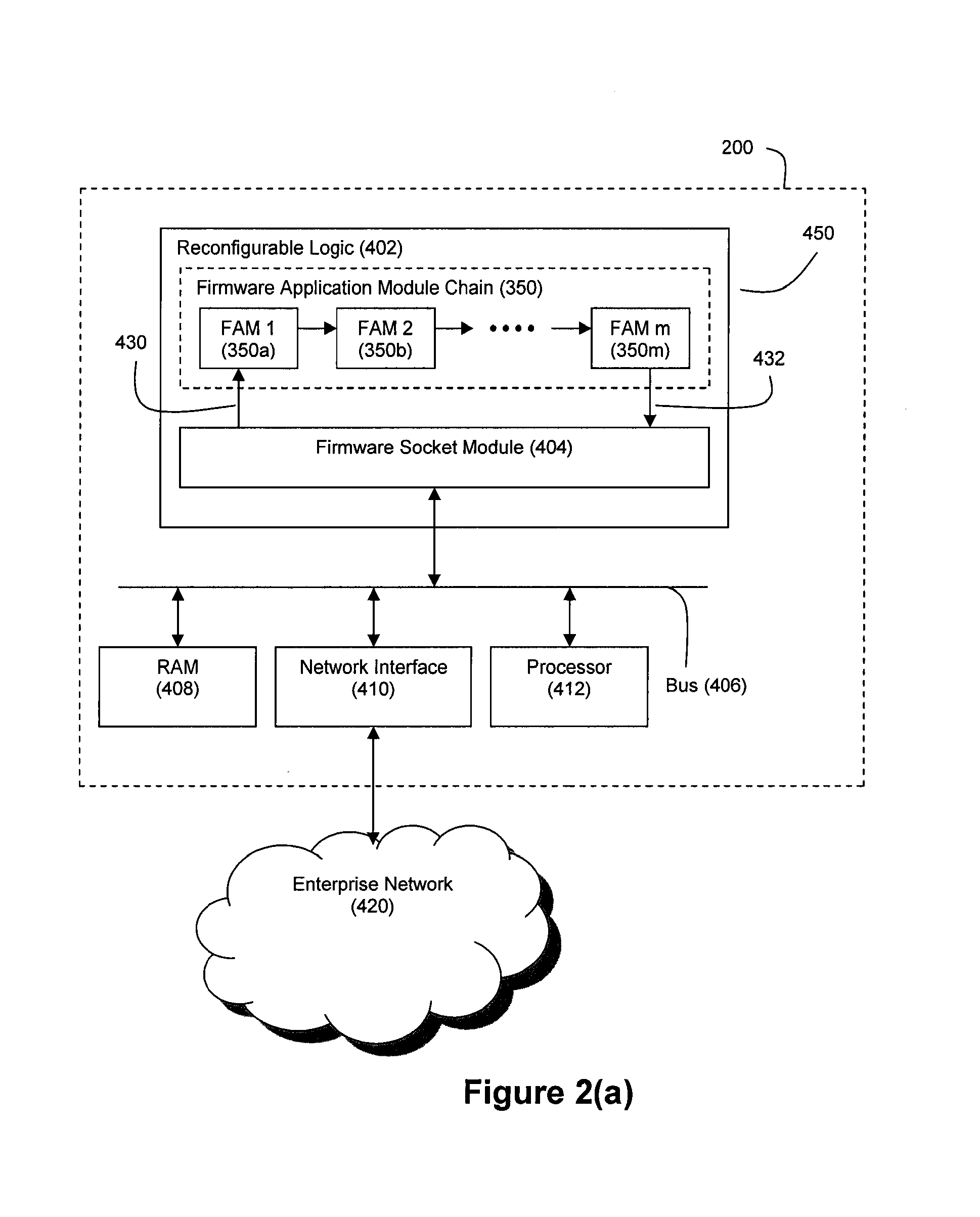

Method and system for accelerated stream processing

Active | US8374986B2 | Low degree | Improve latency | Digital computer details | Code conversion | Programming language | Data stream

Disclosed herein is a method and system for hardware-accelerating various data processing operations in a rule-based decision-making system such as a business rules engine, an event stream processor, and a complex event stream processor. Preferably, incoming data streams are checked against a plurality of rule conditions. The data processing operations that are hardware-accelerated include rule condition check operations, filtering operations, and path merging operations. The rule condition check operations generate rule condition check results for the processed data streams, wherein the rule condition check results are indicative of any rule conditions which have been satisfied by the data streams. The generation of such results with a low degree of latency provides enterprises with the ability to perform timely decision-making based on the data present in received data streams.

Owner:IP RESERVOIR

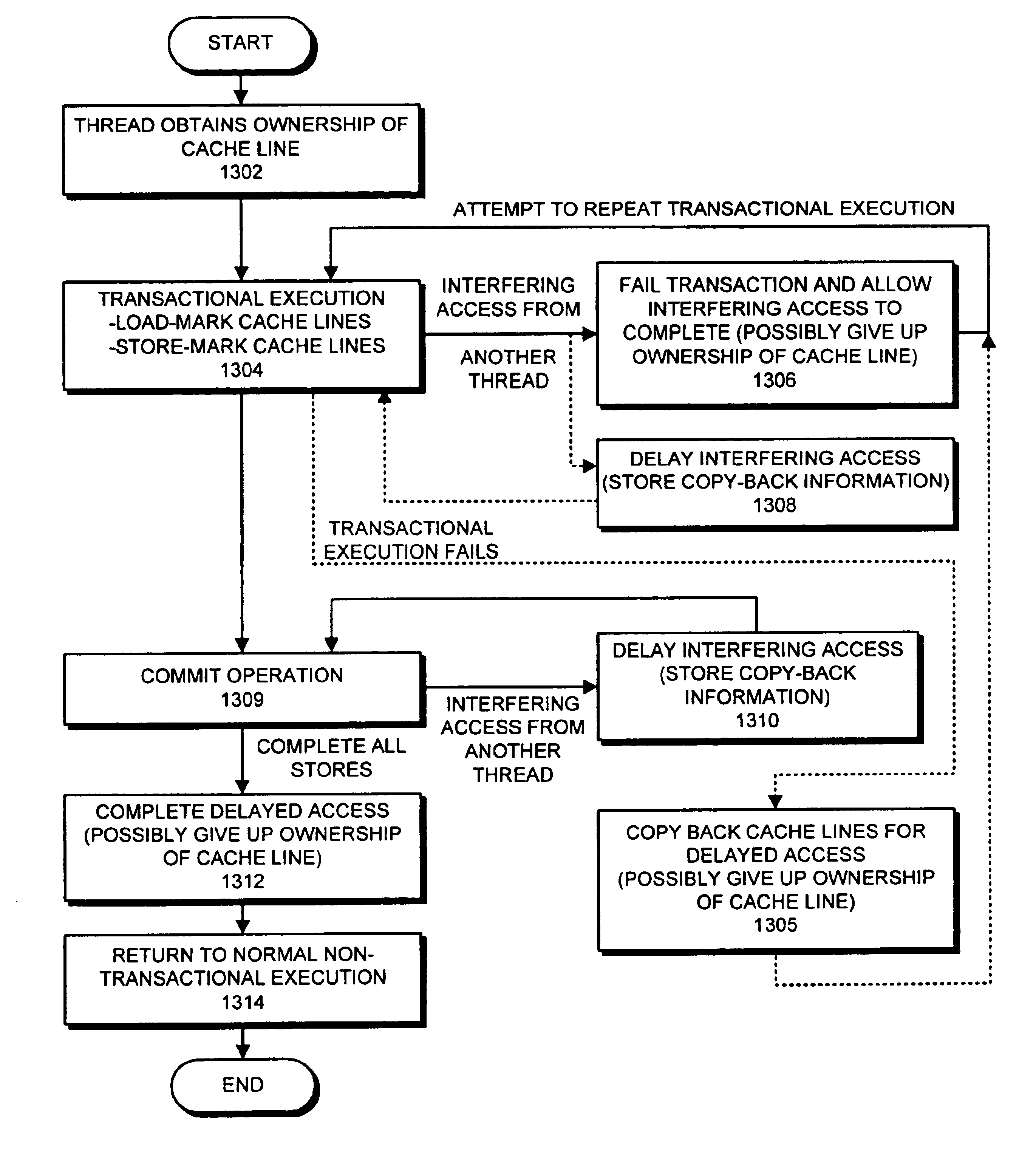

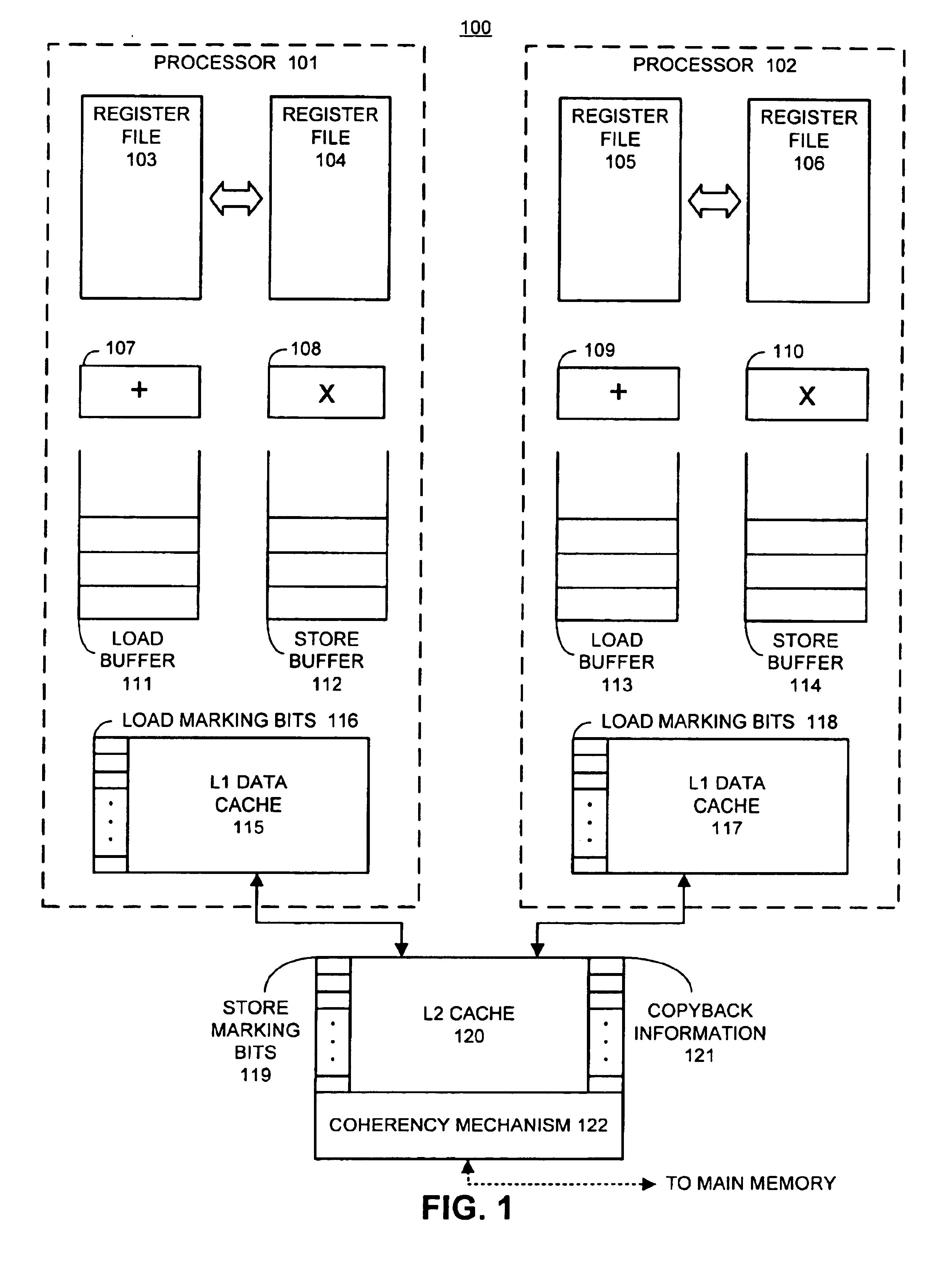

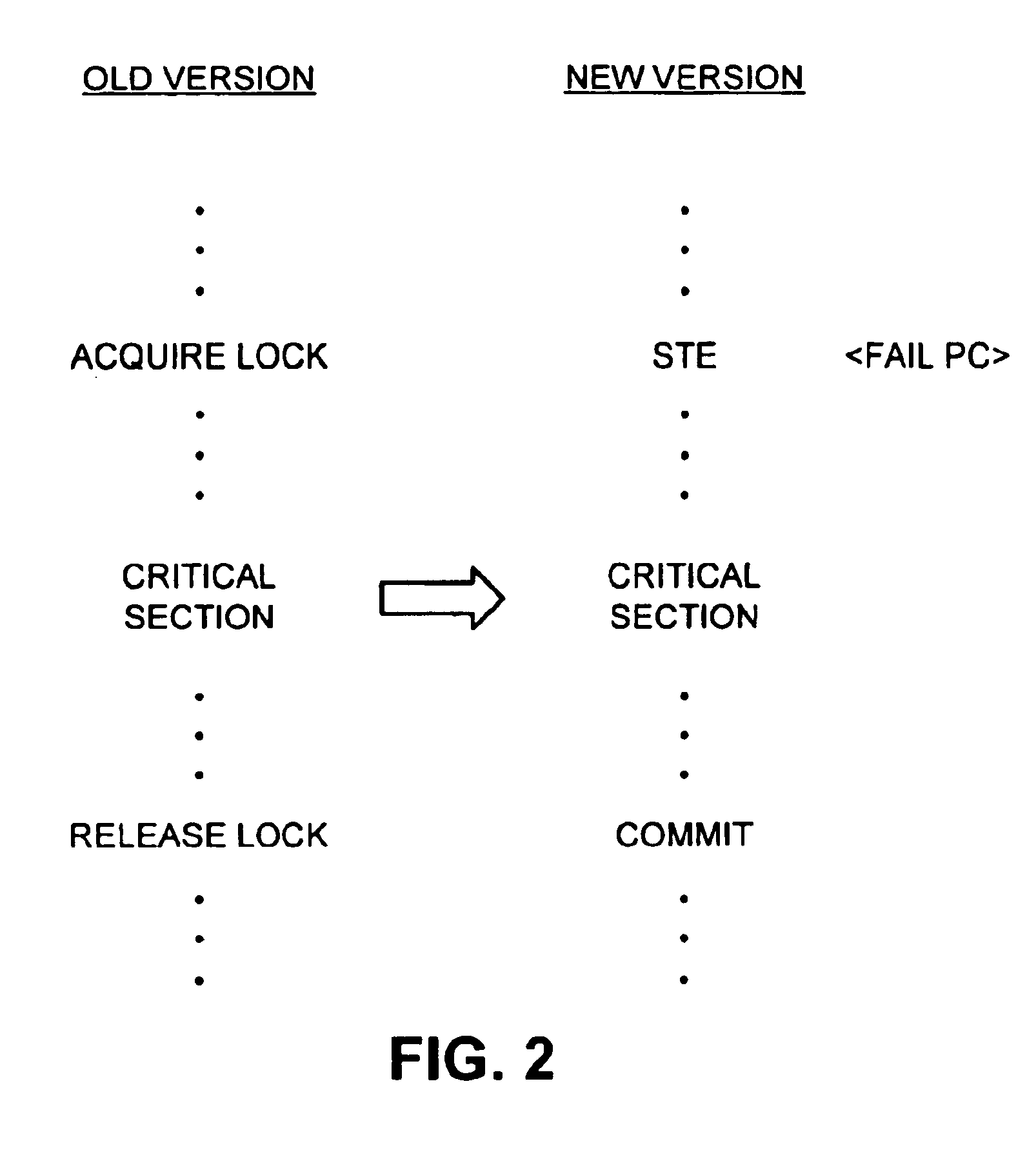

Method and apparatus for delaying interfering accesses from other threads during transactional program execution

Active | US6938130B2 | Improve latency | Memory addressing/allocation/relocation | Concurrent instruction execution | Operating system

One embodiment of the present invention provides a system that facilitates delaying interfering memory accesses from other threads during transactional execution. During transactional execution of a block of instructions, the system receives a request from another thread (or processor) to perform a memory access involving a cache line. If performing the memory access on the cache line will interfere with the transactional execution and if it is possible to delay the memory access, the system delays the memory access and stores copy-back information for the cache line to enable the cache line to be copied back to the requesting thread. At a later time, when the memory access will no longer interfere with the transactional execution, the system performs the memory access and copies the cache line back to the requesting thread.

Owner:ORACLE INT CORP

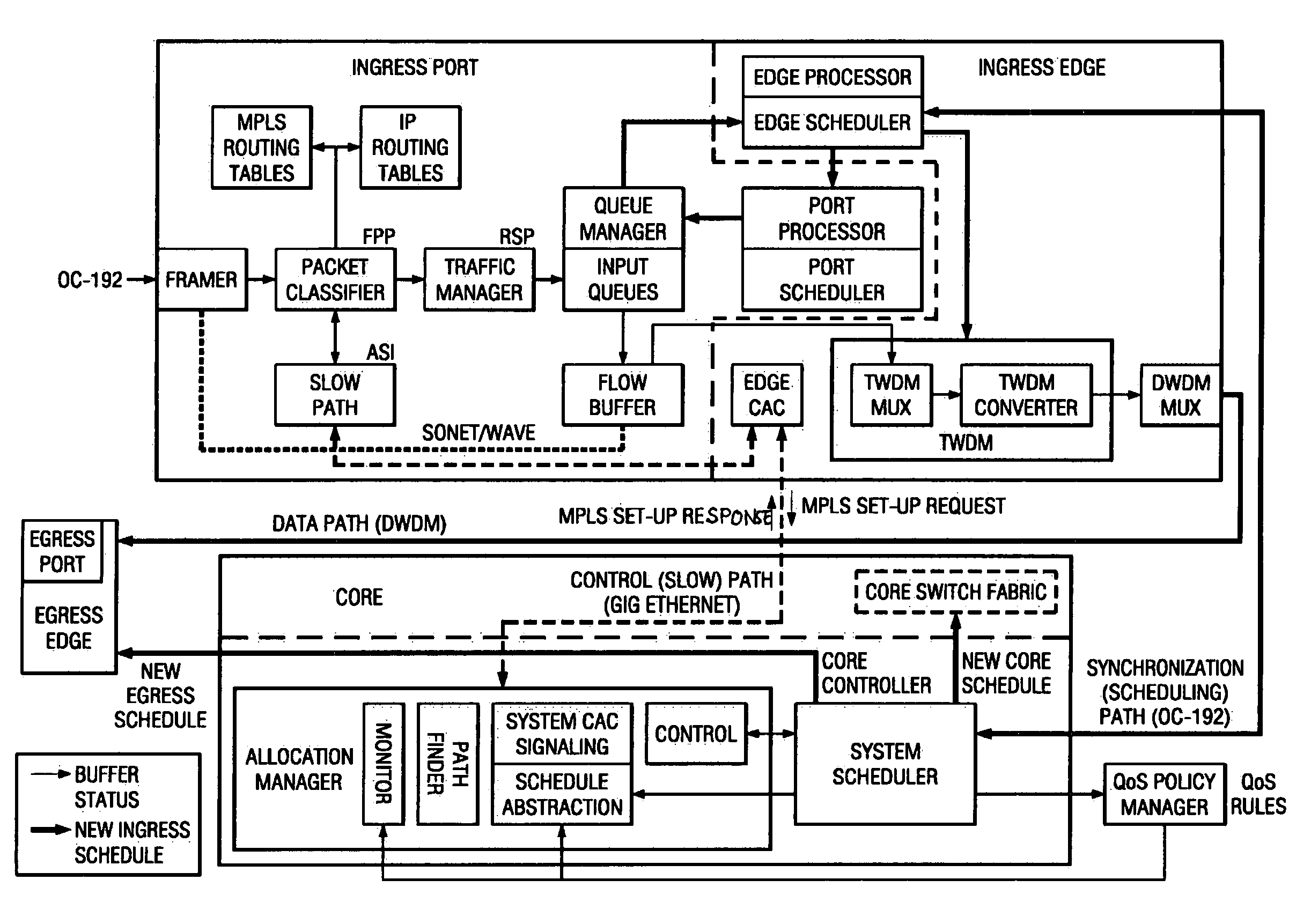

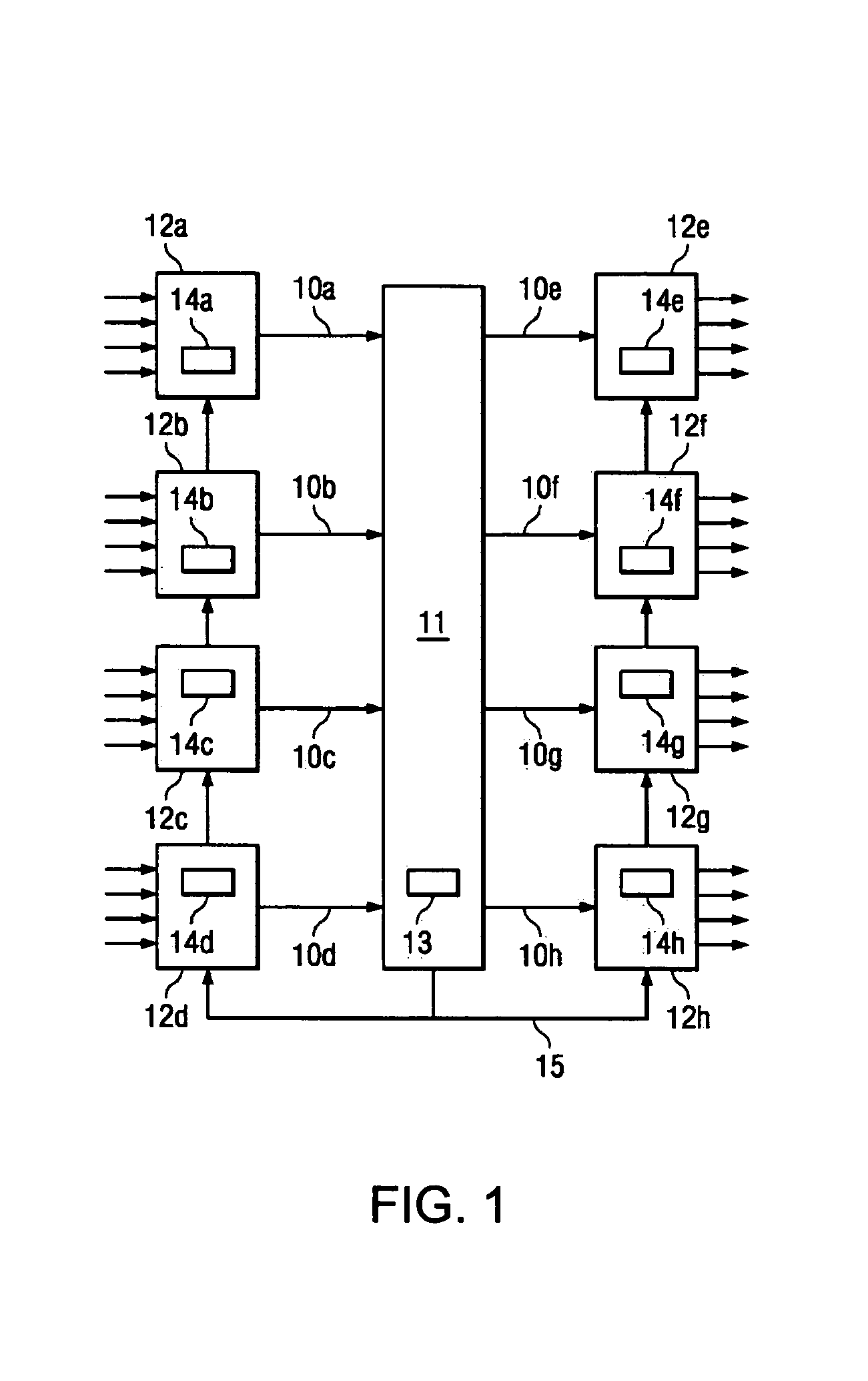

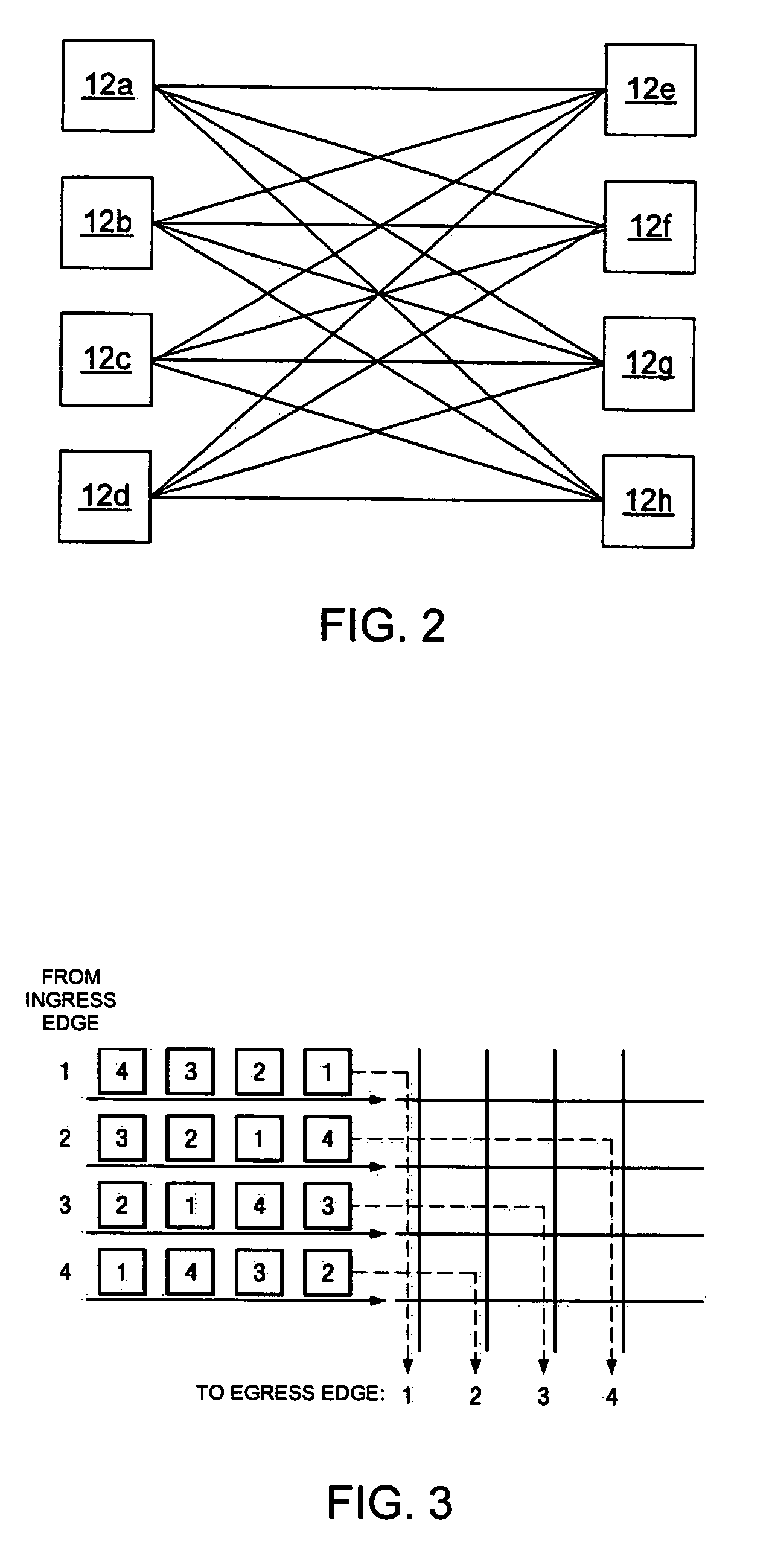

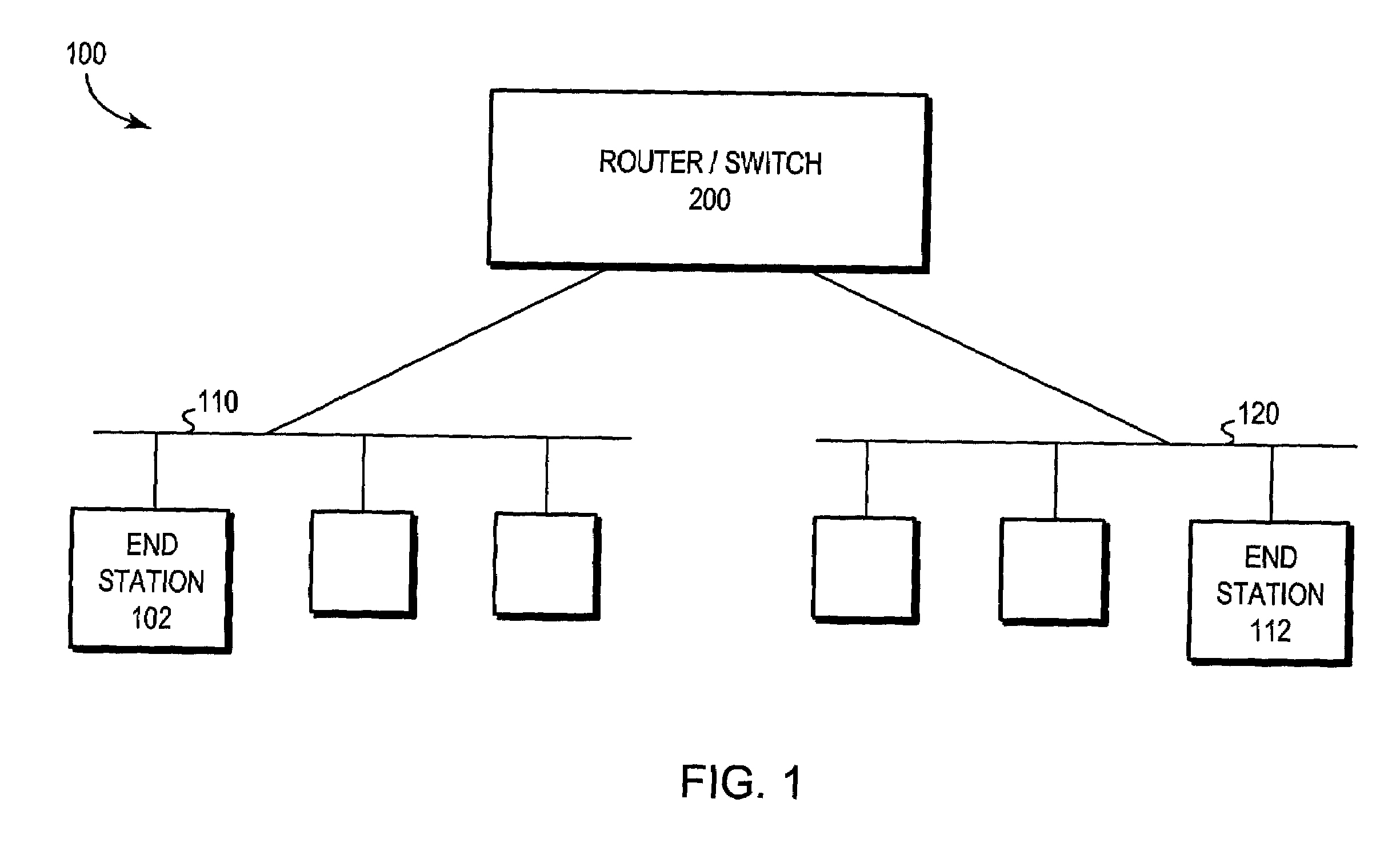

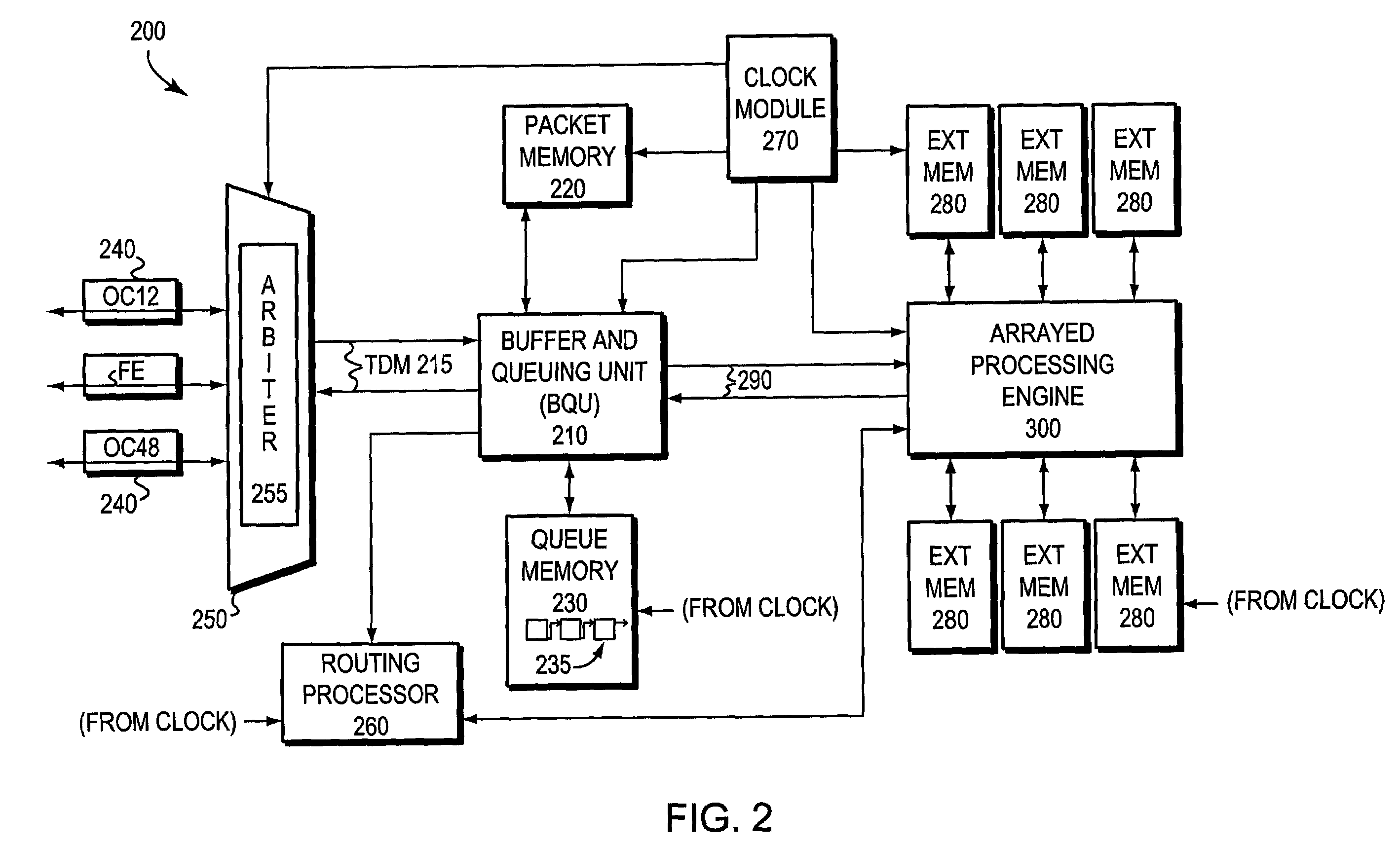

System for switching data using dynamic scheduling

Active | US7218637B1 | Lower latency | Improve performance | Data switching by path configuration | Time schedule | Data interchange

An architecture and related systems for improving the performance of non-blocking data switching systems. In one embodiment, a switching system includes an optical switching core coupled to a plurality of edge units, each of which has a set of ingress ports and a set of egress ports. The switching system also contains a scheduler that maintains two non-blocking data transfer schedules, only one of which is active at a time. Data is transferred through the switching system according to the active schedule. The scheduler monitors the sufficiency of data transferred according to the active schedule and, if the currently active schedule is insufficient, the scheduler recomputes the alternate schedule based on demand data received from the edges/ports and activates the alternate schedule. A timing mechanism is employed to ensure that the changeover to the alternate schedule is essentially simultaneous among the components of the system.

Owner:UNWIRED BROADBAND INC

Method and Apparatus for Accelerated Data Quality Checking

Active | US20130151458A1 | Low degree | Improve latency | Digital computer details | Code conversion | Data stream | Data quality

Disclosed herein is a method and apparatus for hardware-accelerating various data quality checking operations. Incoming data streams can be processed with respect to a plurality of data quality check operations using offload engines (e.g., reconfigurable logic such as field programmable gate arrays (FPGAs)). Accelerated data quality checking can be highly advantageous for use in connection with Extract, Transfer, and Load (ETL) systems.

Owner:IP RESERVOIR

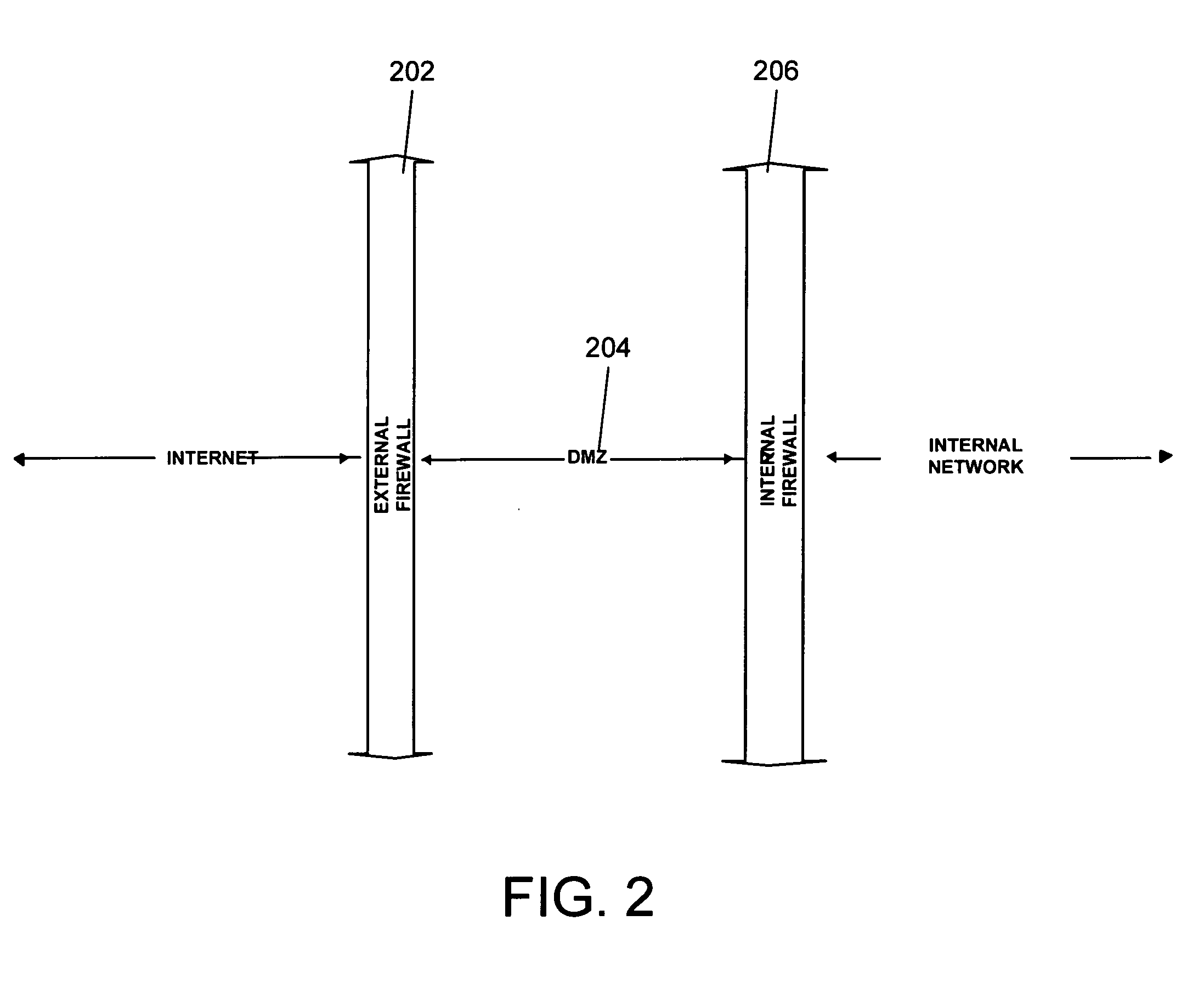

System and method for efficiently transferring media across firewalls

Inactive | US20050198499A1 | Secure network environment | Environment safety | Multiple digital computer combinations | Program control | Time Protocol | Netsniff-ng

Enabling media (audio/video) scenarios across firewalls typically requires opening up multiple UDP ports in an external firewall. This is because RTP (Real-time Transport Protocol, RFC 1889), the protocol used to carry media packets over IP networks, requires a separate UDP receive port for each media source. Administrators are not comfortable opening up multiple media ports on the external firewall because they consider it a security vulnerability. The system and method according to the invention provide an alternate mechanism that slightly changes the RTP protocol and traverses firewalls for media packets using a fixed number of UDP ports, namely two.

Owner:MICROSOFT TECH LICENSING LLC

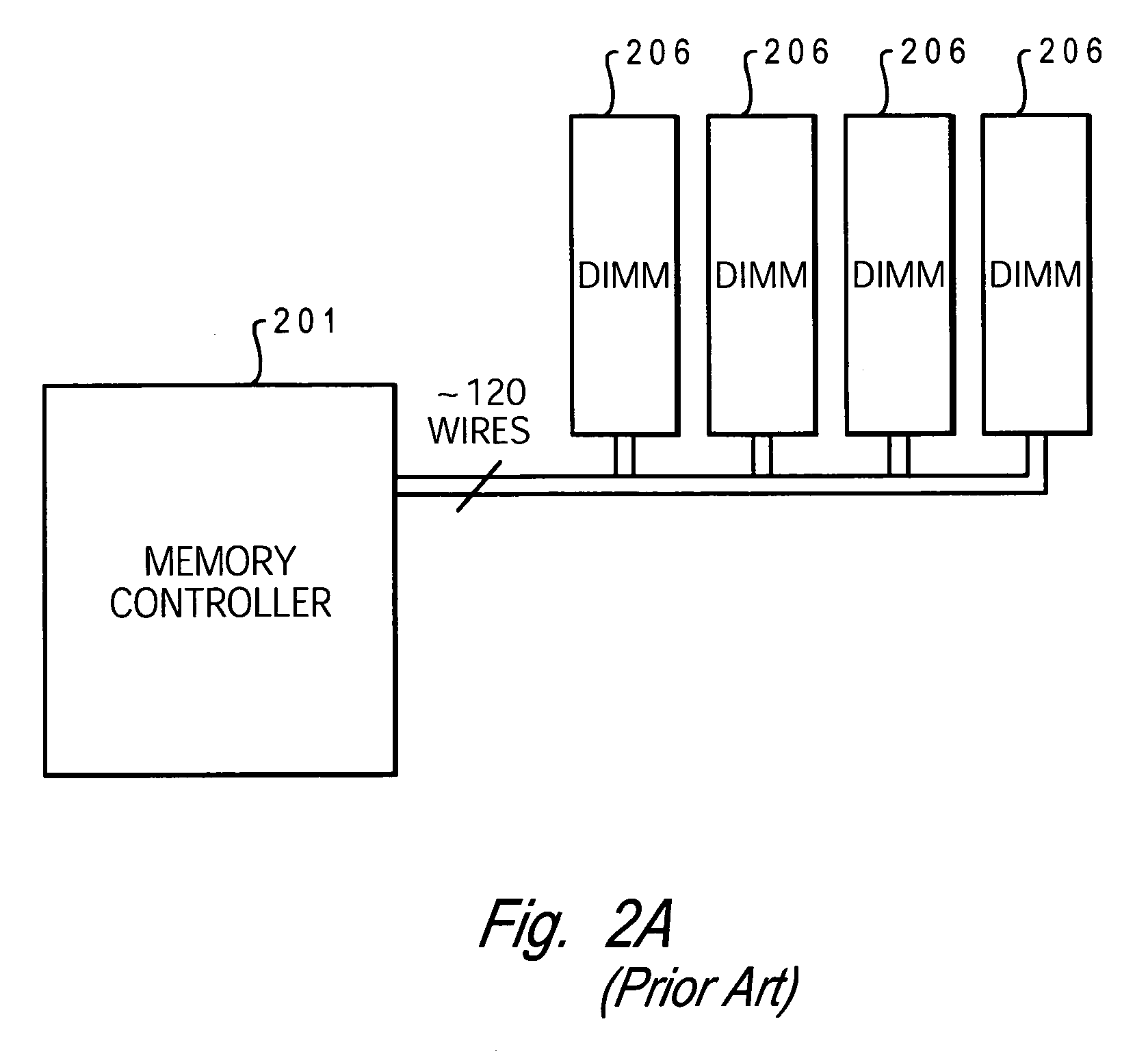

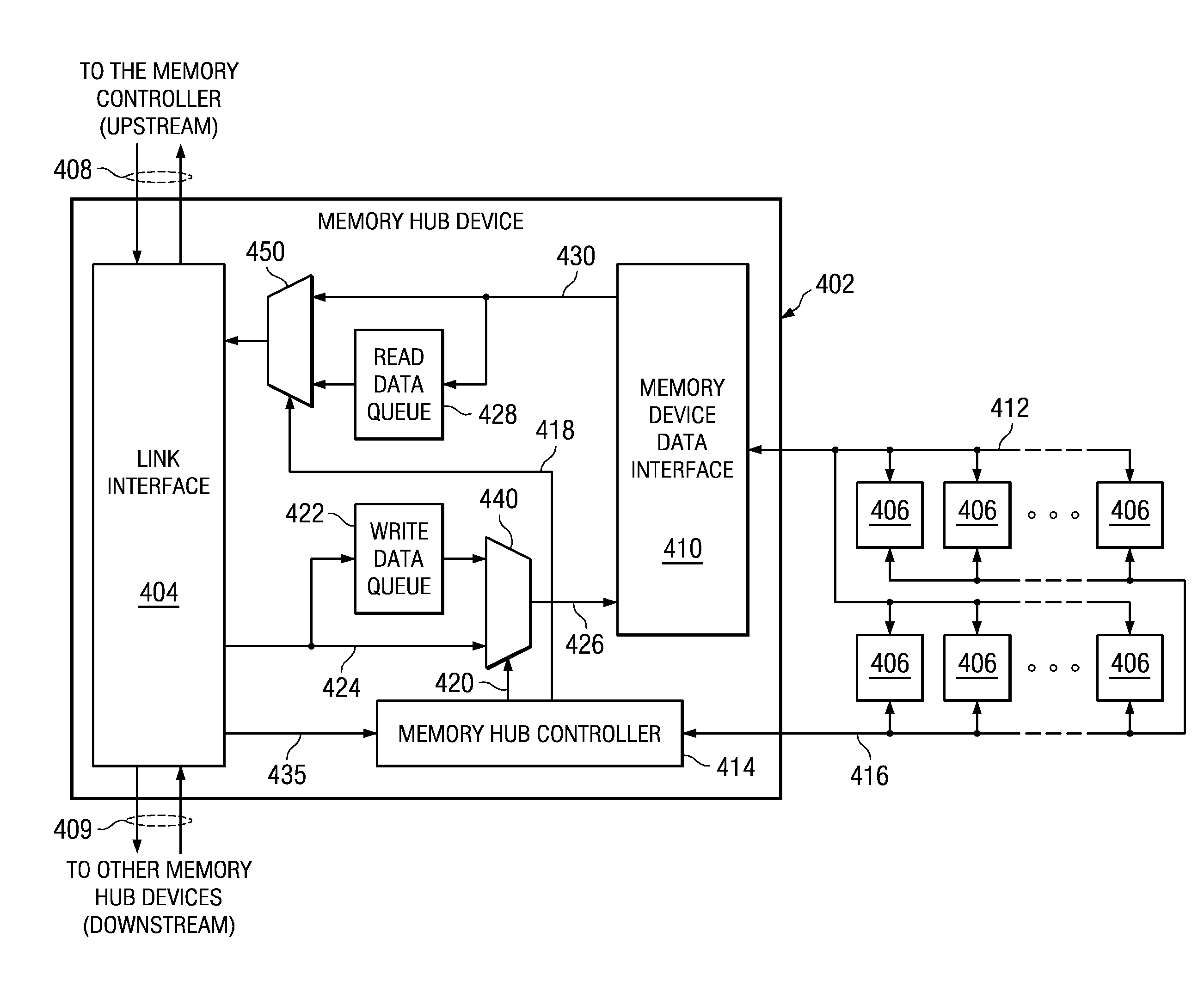

System to Increase the Overall Bandwidth of a Memory Channel By Allowing the Memory Channel to Operate at a Frequency Independent from a Memory Device Frequency

Inactive | US20090193201A1 | High bandwidth | Reduce operating frequency | Digital data processing details | Memory systems | External storage | Memory controller

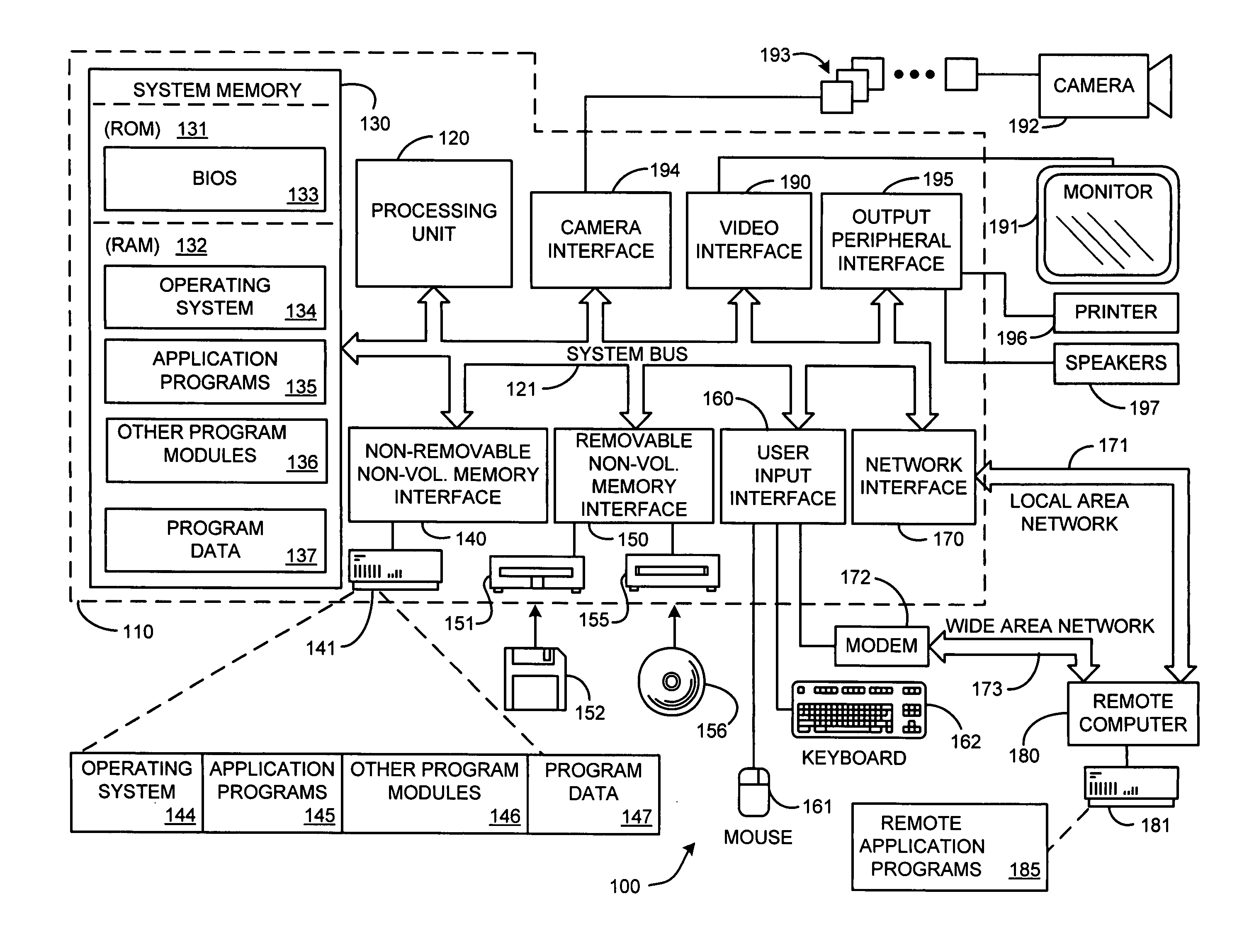

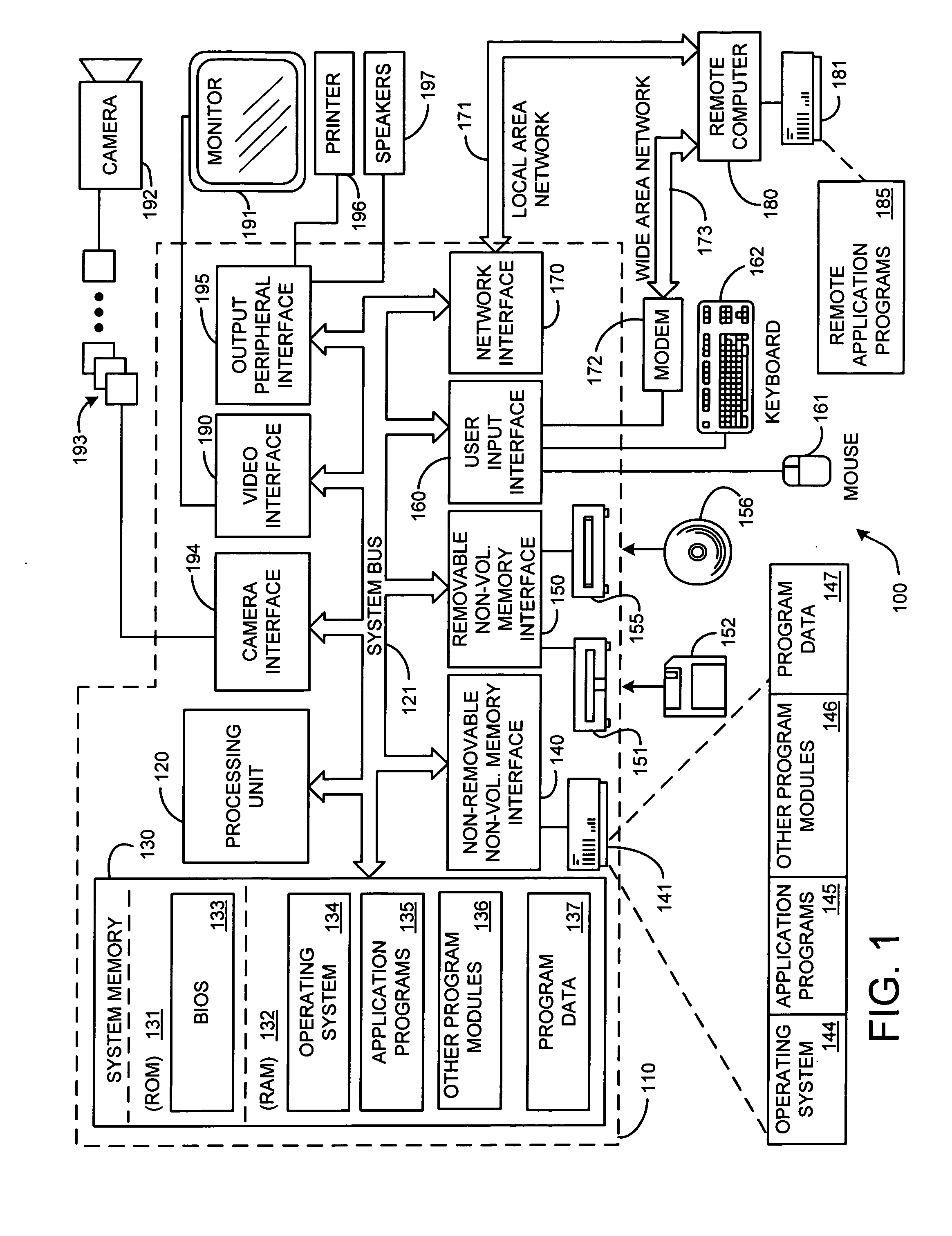

A memory system is provided that increases the overall bandwidth of a memory channel by operating the memory channel at an independent frequency. The memory system comprises a memory hub device integrated in a memory module. The memory hub device comprises a command queue that receives a memory access command from an external memory controller via a memory channel at a first operating frequency. The memory system also comprises a memory hub controller integrated in the memory hub device. The memory hub controller reads the memory access command from the command queue at a second operating frequency. By receiving the memory access command at the first operating frequency and reading the memory access command at the second operating frequency, an asynchronous boundary is implemented. Using the asynchronous boundary, the memory channel operates at a maximum designed operating bandwidth, which is independent of the second operating frequency.

Owner:IBM CORP

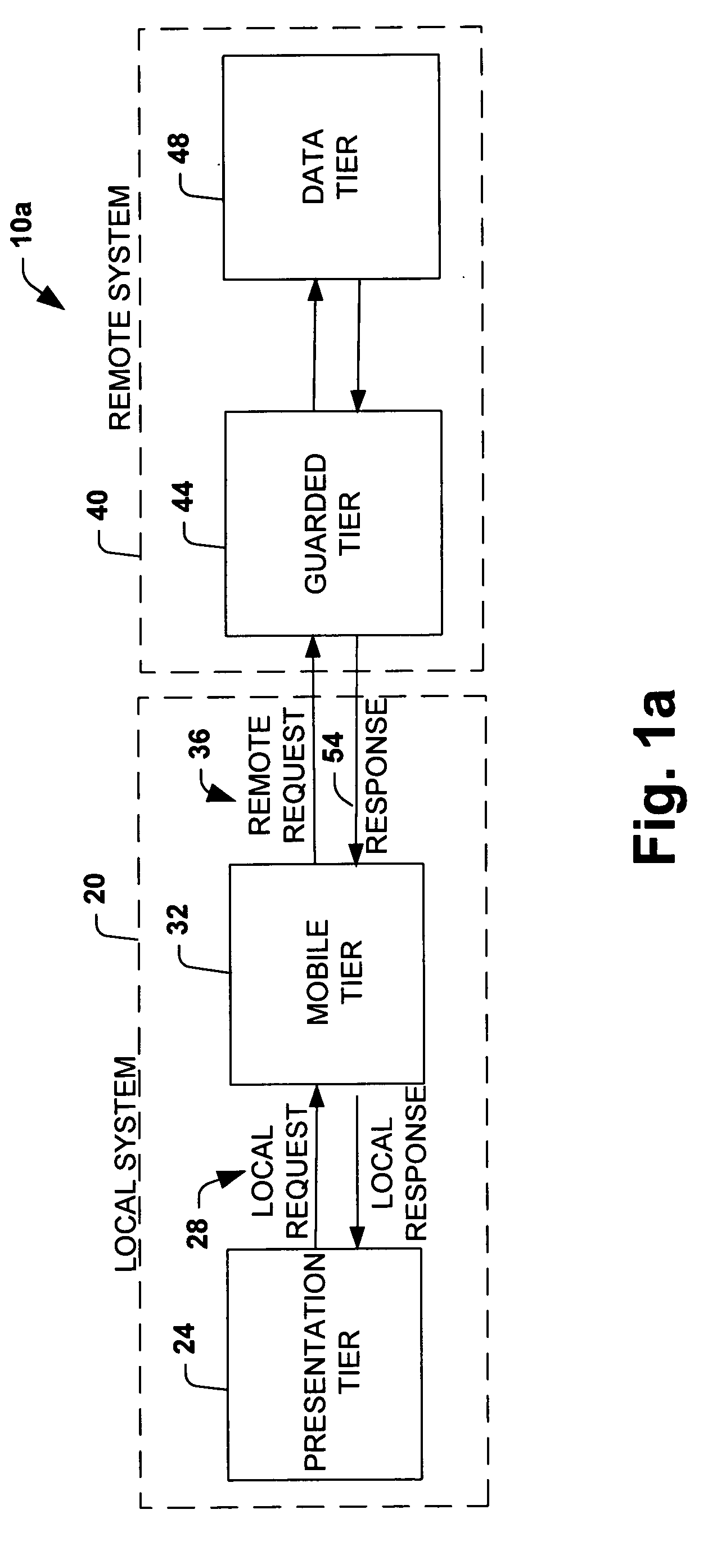

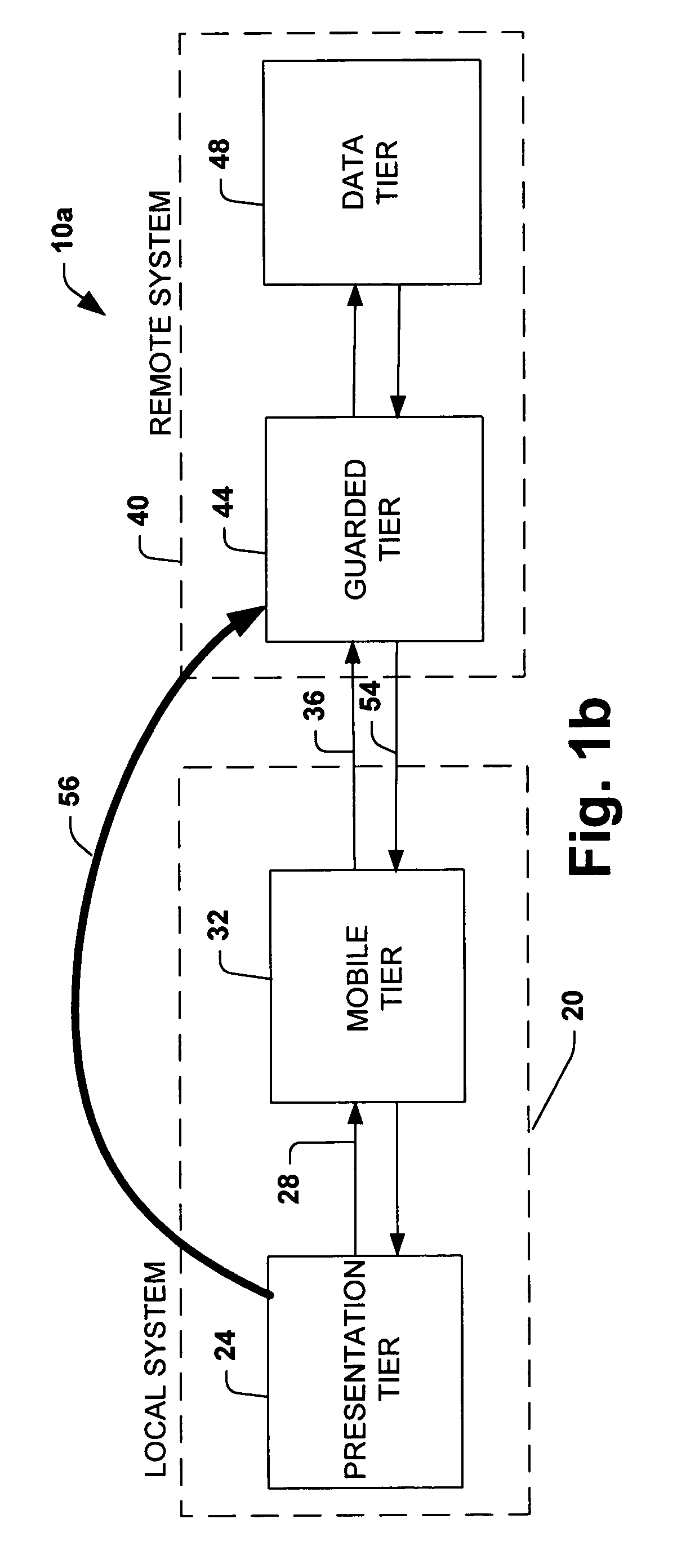

System and method providing multi-tier applications architecture

Inactive | US6996599B1 | Capability facilitated | Improve performance | Multiple digital computer combinations | Program loading/initiating | Application software | Client-side

A network-based distributed application system is provided in accordance with the present invention for enabling services to be established locally on a client system. The system may include an application and presentation logic, at least a portion of which is interchangeably processed by a server or a client without modification to the portion. The core functionality provided by the application may be preserved between the client and the server, wherein improved network performance may be provided along with improved offline service capabilities.

Owner:MICROSOFT TECH LICENSING LLC

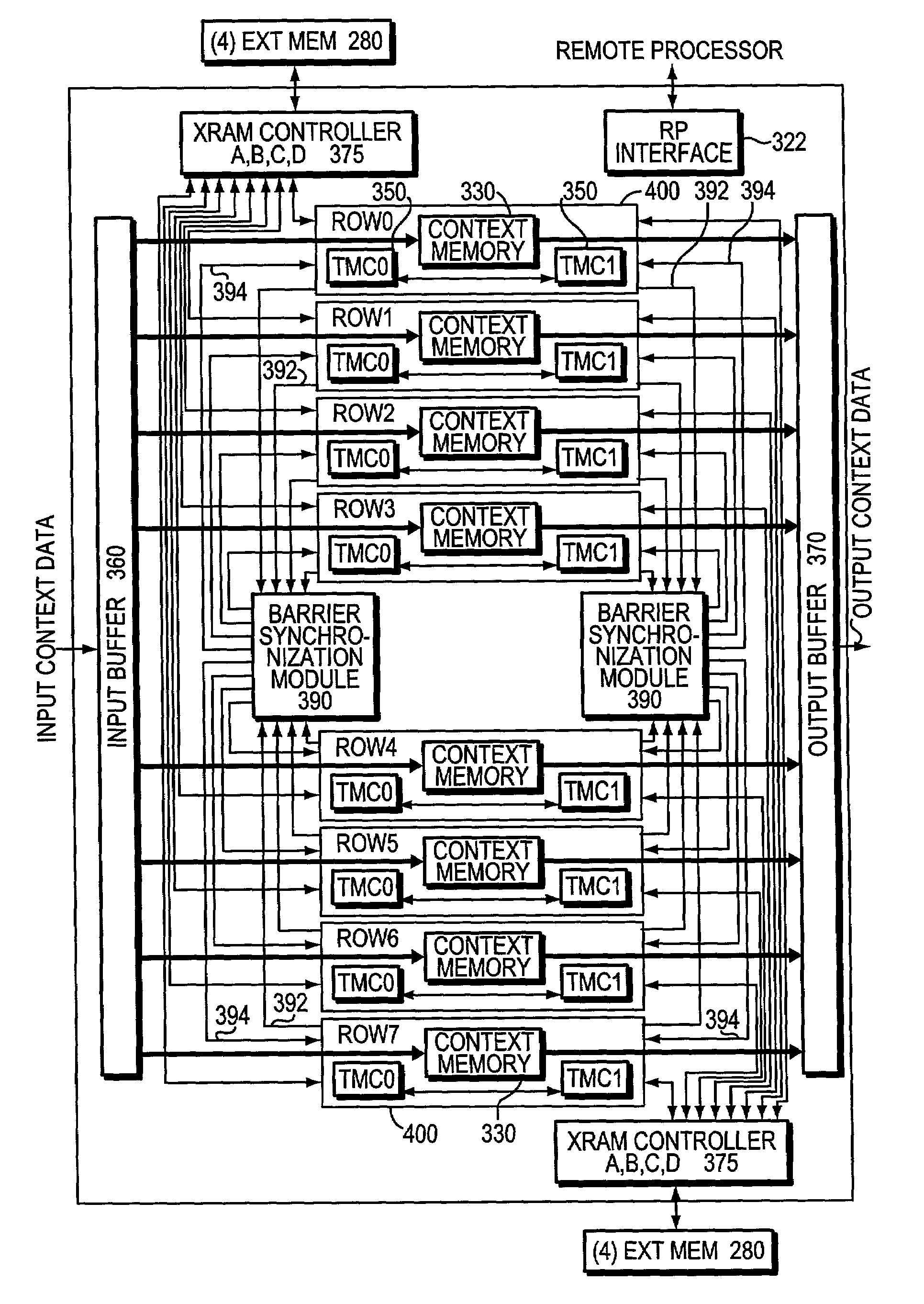

Barrier synchronization mechanism for processors of a systolic array

Inactive | US7100021B1 | Without consuming substantial memory resource | Improve latency | Program synchronisation | General purpose stored program computer | Systolic array | Common point

A mechanism synchronizes among processors of a processing engine in an intermediate network station. The processing engine is configured as a systolic array having a plurality of processors arrayed as rows and columns. The mechanism comprises a barrier synchronization mechanism that enables synchronization among processors of a column (i.e., different rows) of the systolic array. That is, the barrier synchronization function allows all participating processors within a column to reach a common point within their instruction code sequences before any of the processors proceed.

Owner:CISCO TECH INC
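
The barrier behaviour described in the abstract above (no processor proceeds until all participants in a column reach a common point) can be illustrated in software with Python's `threading.Barrier`. This is only an analogy: the patent concerns a hardware mechanism in a systolic array, and the `run_column` function and its event log are hypothetical.

```python
import threading


def run_column(num_processors: int) -> list:
    """Run one thread per 'processor' and record events around a barrier."""
    barrier = threading.Barrier(num_processors)
    log = []
    log_lock = threading.Lock()

    def worker(index: int) -> None:
        with log_lock:
            log.append(("before", index))  # work done ahead of the common point
        barrier.wait()                     # block until every participant arrives
        with log_lock:
            log.append(("after", index))   # work done past the common point

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(num_processors)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


events = run_column(4)
print([phase for phase, _ in events])
```

Because the barrier releases only after the last participant arrives, every "before" entry is logged ahead of any "after" entry, regardless of thread scheduling.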

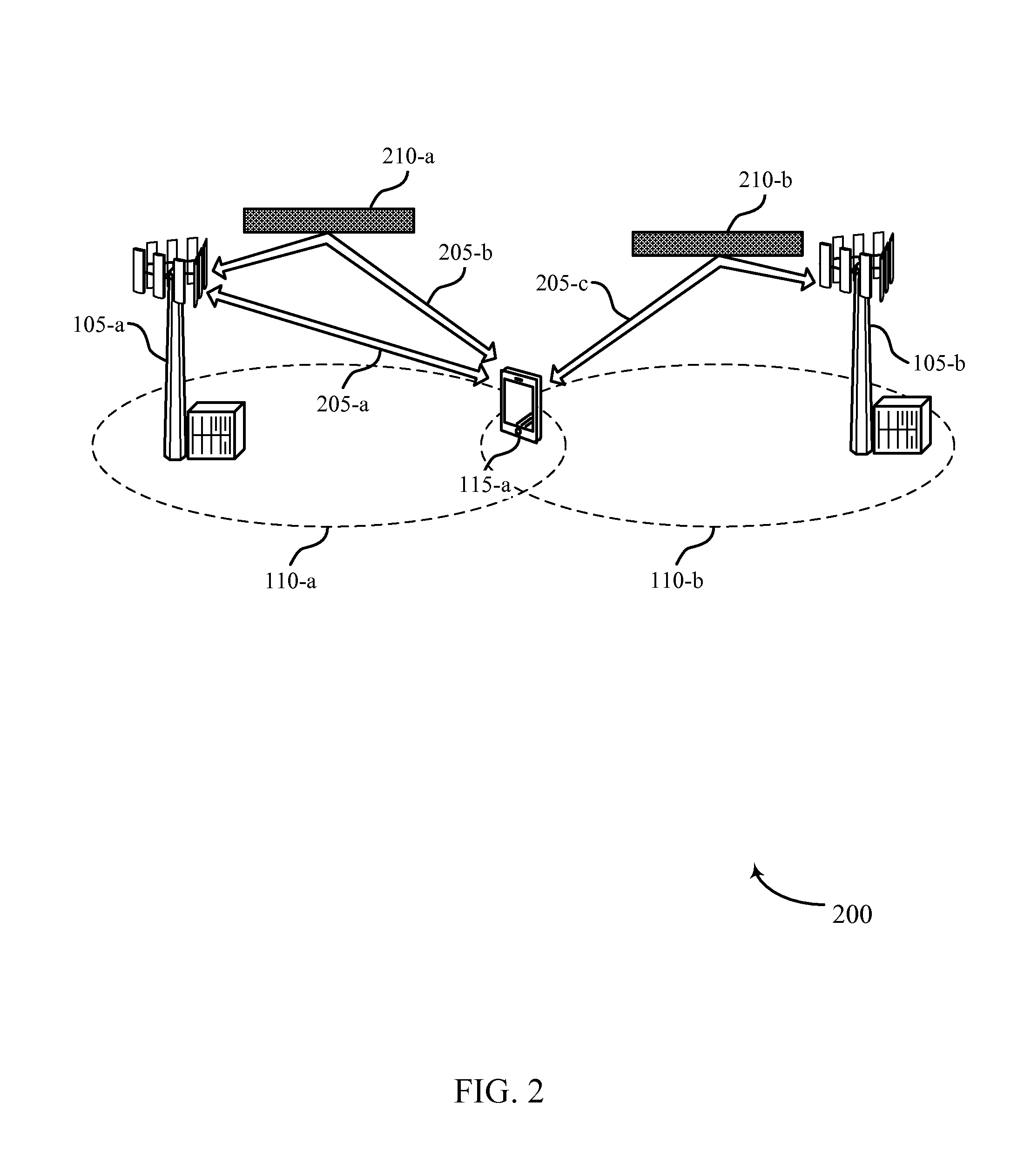

Techniques for beam shaping at a millimeter wave base station and a wireless device and fast antenna subarray selection at a wireless device

Active | US20160198474A1 | Improve latency | Quick selection | Spatial transmit diversity | Wireless communication | Communications system | Link margin

Methods, systems, and devices are described for wireless communication at a user equipment (UE). A wireless communications system may improve UE discovery latency by dynamically selecting and switching beam forming codebooks at the millimeter wave base station and the wireless device. Selecting an optimal beam forming codebook may allow the wireless communication system to improve link margins between the base station and the wireless device without compromising resources. In some examples, a wireless device may determine whether the received signals from the millimeter wave base station satisfy established signal-to-noise ratio (SNR) thresholds, and select an optimal beam codebook to establish communication. Additionally or alternatively, the wireless device may further signal the selected beam codebook to the millimeter wave base station and direct the millimeter wave base station to adjust its codebook based on the selection.

Owner:QUALCOMM INC

Systems and methods for additional retransmissions of dropped packets

Active | US20070206497A1 | Increase network latency | Avoid delay | Transmission systems | Frequency-division multiplex details | Traffic capacity | Boundary detection

Systems and methods for utilizing transaction boundary detection methods in queuing and retransmission decisions relating to network traffic are described. By detecting transaction boundaries and sizes, a client, server, or intermediary device may prioritize based on transaction sizes in queuing decisions, giving precedence to smaller transactions which may represent interactive and/or latency-sensitive traffic. Further, after detecting a transaction boundary, a device may retransmit one or more additional packets prompting acknowledgements, in order to ensure timely notification if the last packet of the transaction has been dropped. Systems and methods for potentially improving network latency, including retransmitting a dropped packet twice or more in order to avoid incurring additional delays due to a retransmitted packet being lost, are also described.

Owner:CITRIX SYST INC

© 2025 PatSnap. All rights reserved.