139 results about "High performance network" patented technology

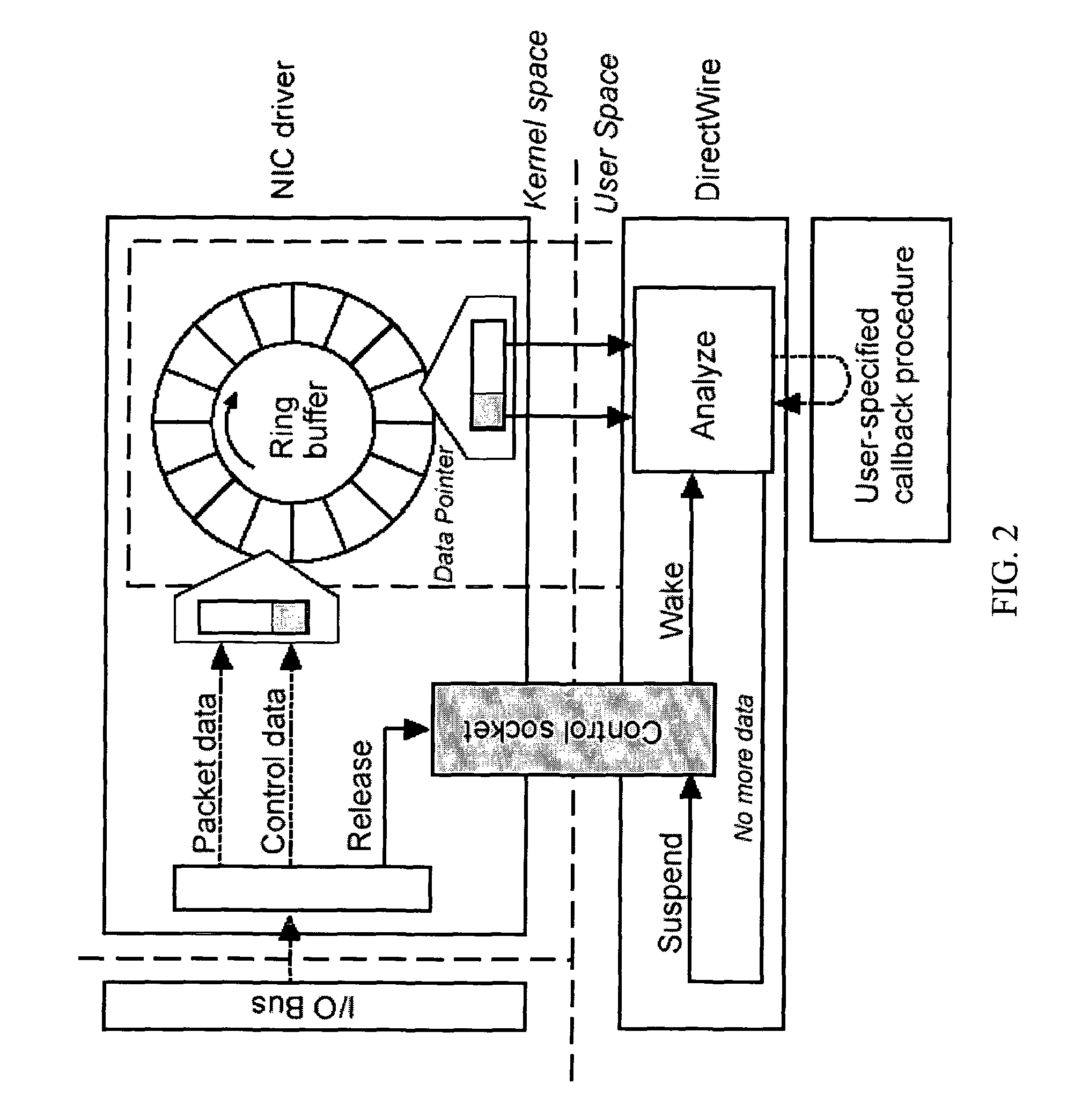

High-performance network content analysis platform

Active | US20050055399A1 | Prevent leakage | Digital data processing details | Analogue secrecy/subscription systems | Data stream | Email attachment

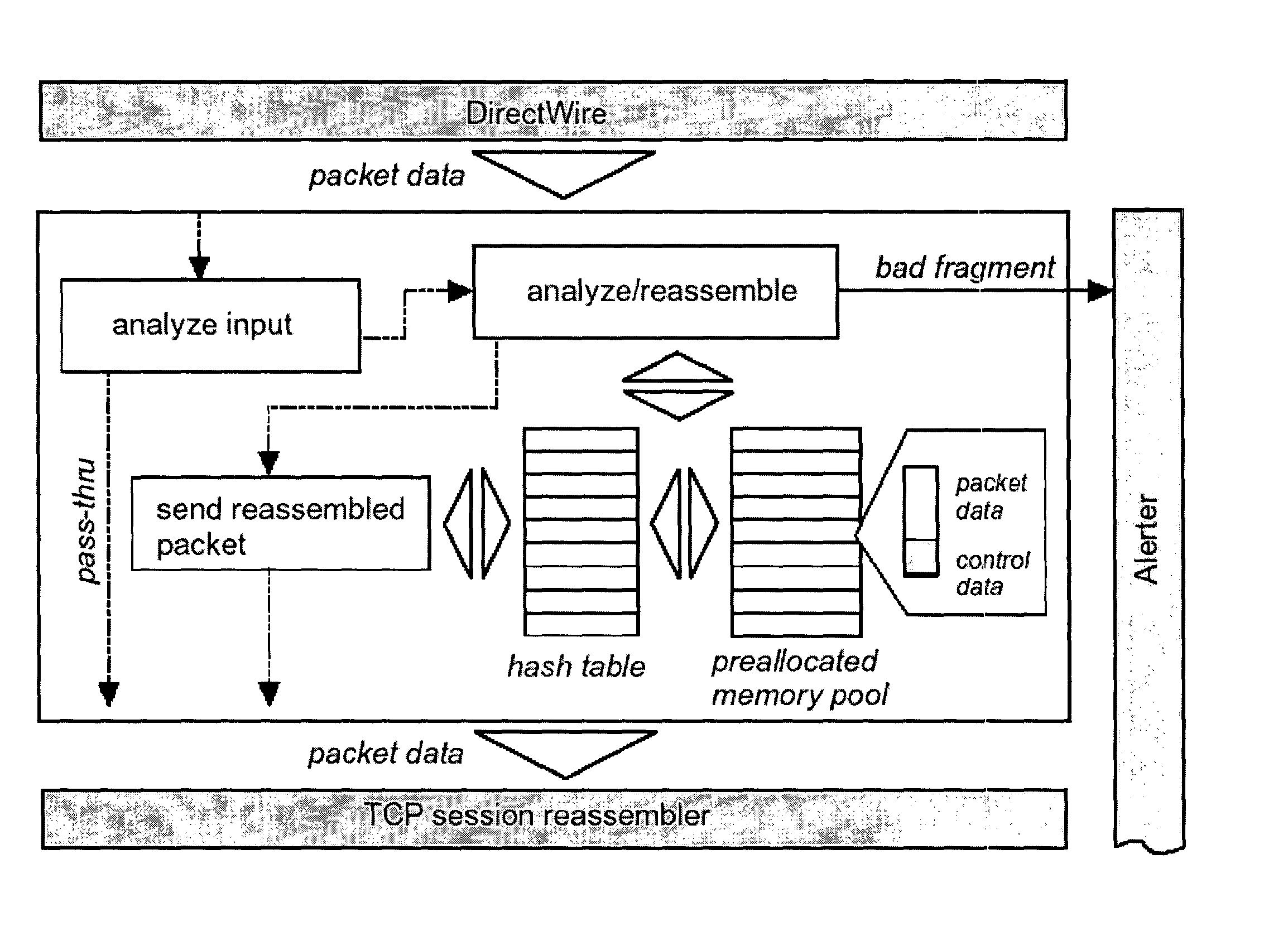

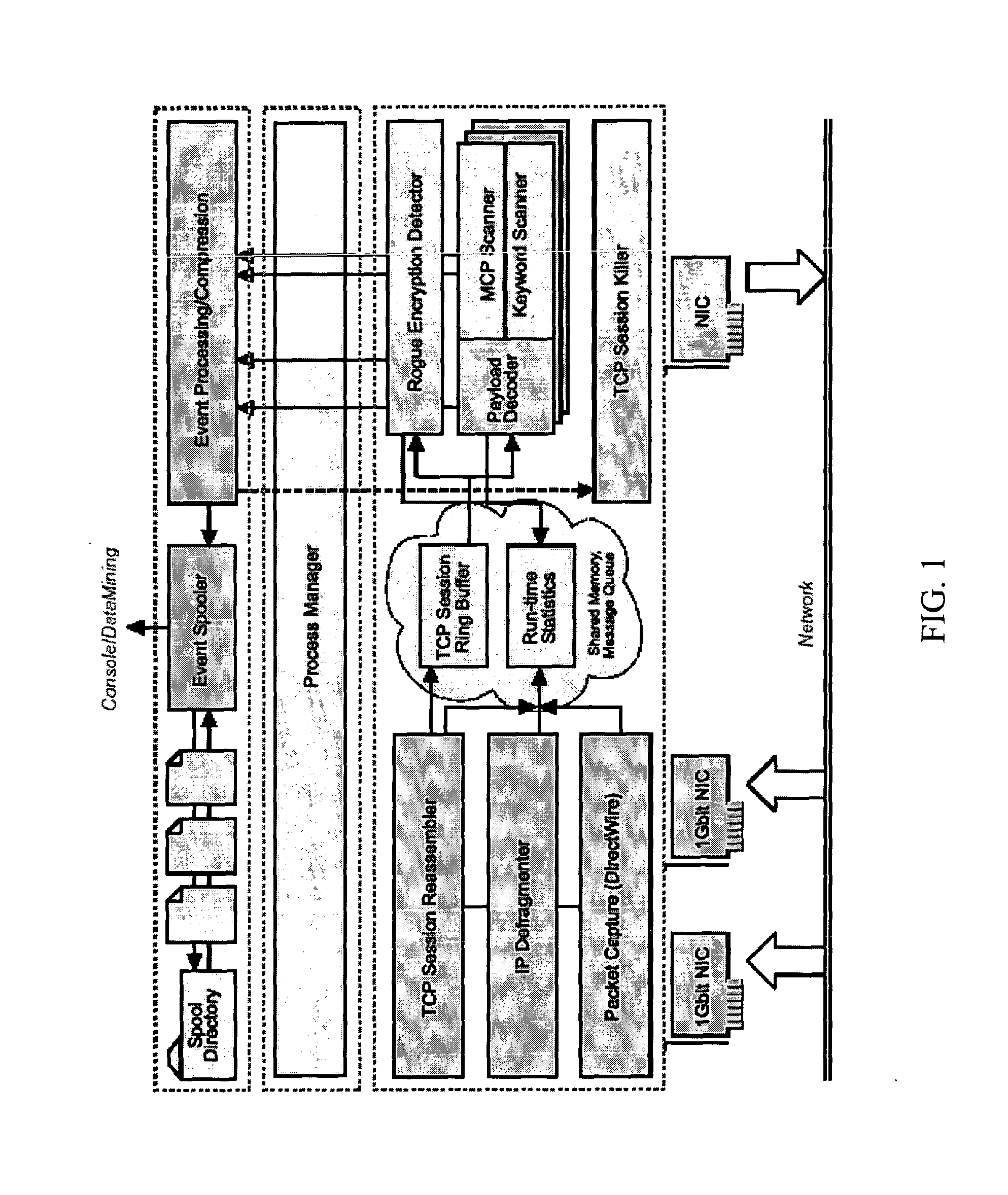

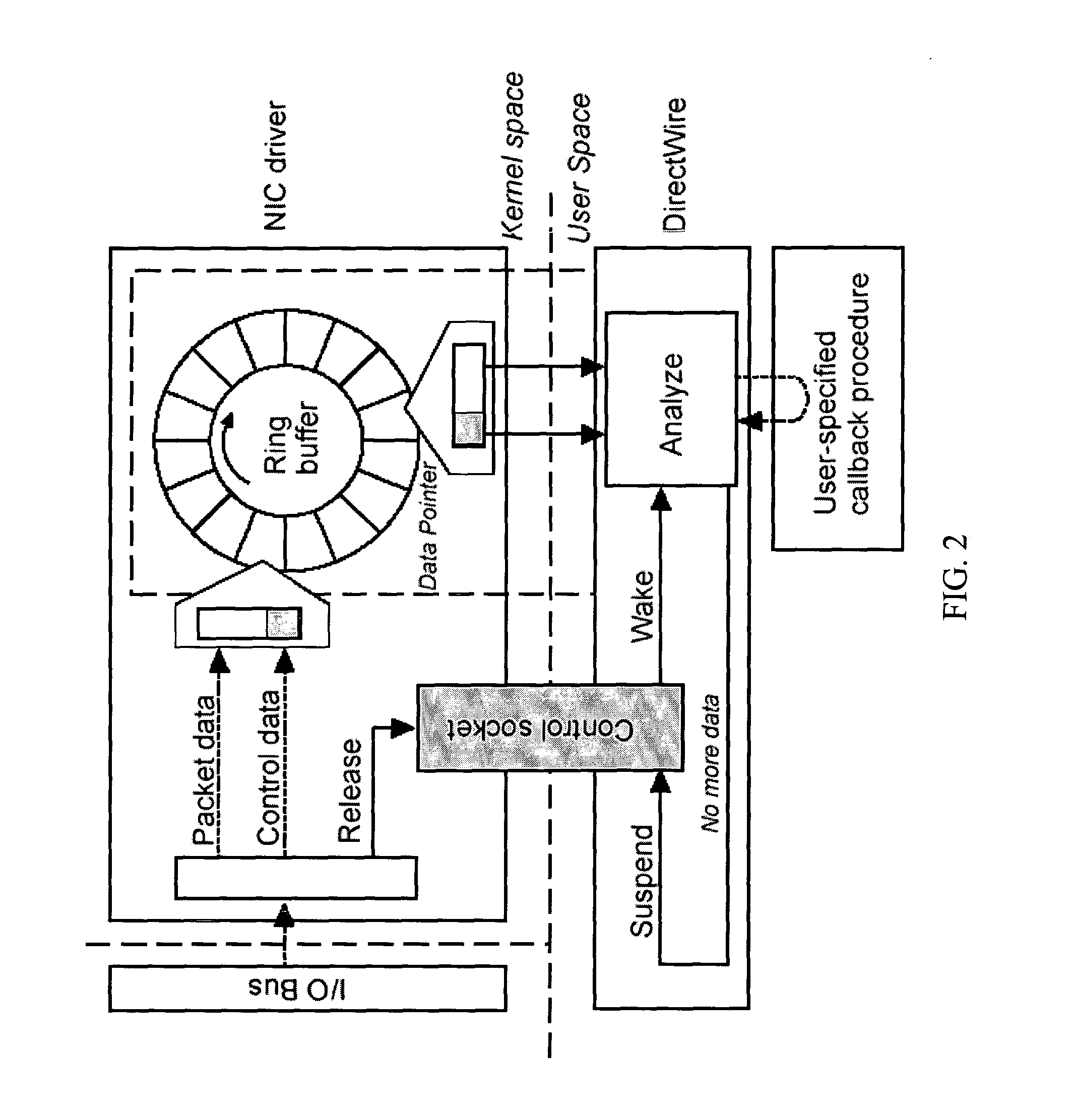

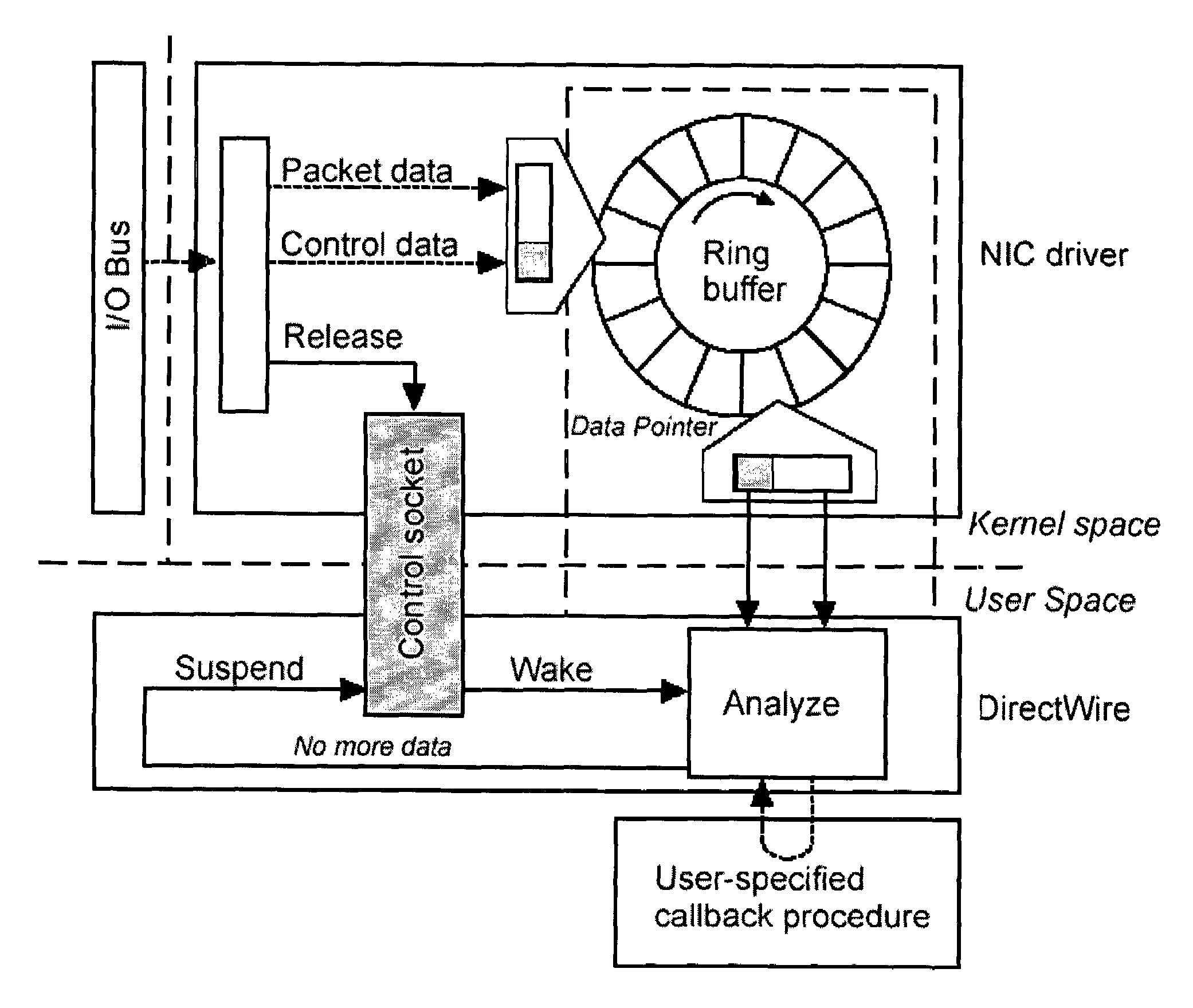

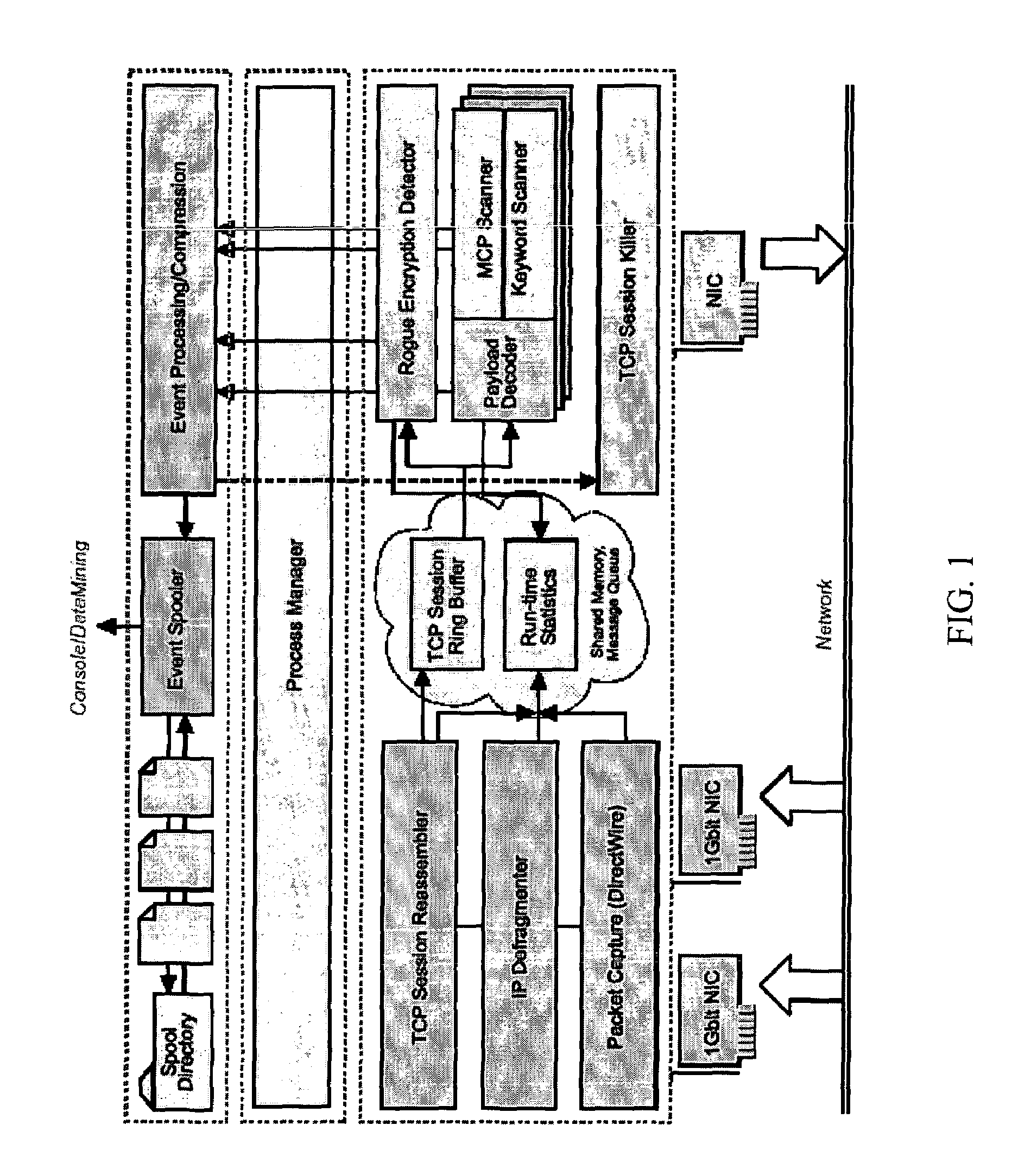

One implementation of a method reassembles complete client-server conversation streams, applies decoders and/or decompressors, and analyzes the resulting data stream using multi-dimensional content profiling and/or weighted keyword-in-context. The method may detect the extrusion of the data, for example, even if the data has been modified from its original form and/or document type. The decoders may also uncover hidden transport mechanisms such as, for example, e-mail attachments. The method may further detect unauthorized (e.g., rogue) encrypted sessions and stop data transfers deemed malicious. The method allows, for example, for building 2 Gbps (Full-Duplex)-capable extrusion prevention machines.

Owner:FIDELIS SECURITY SYSTEMS

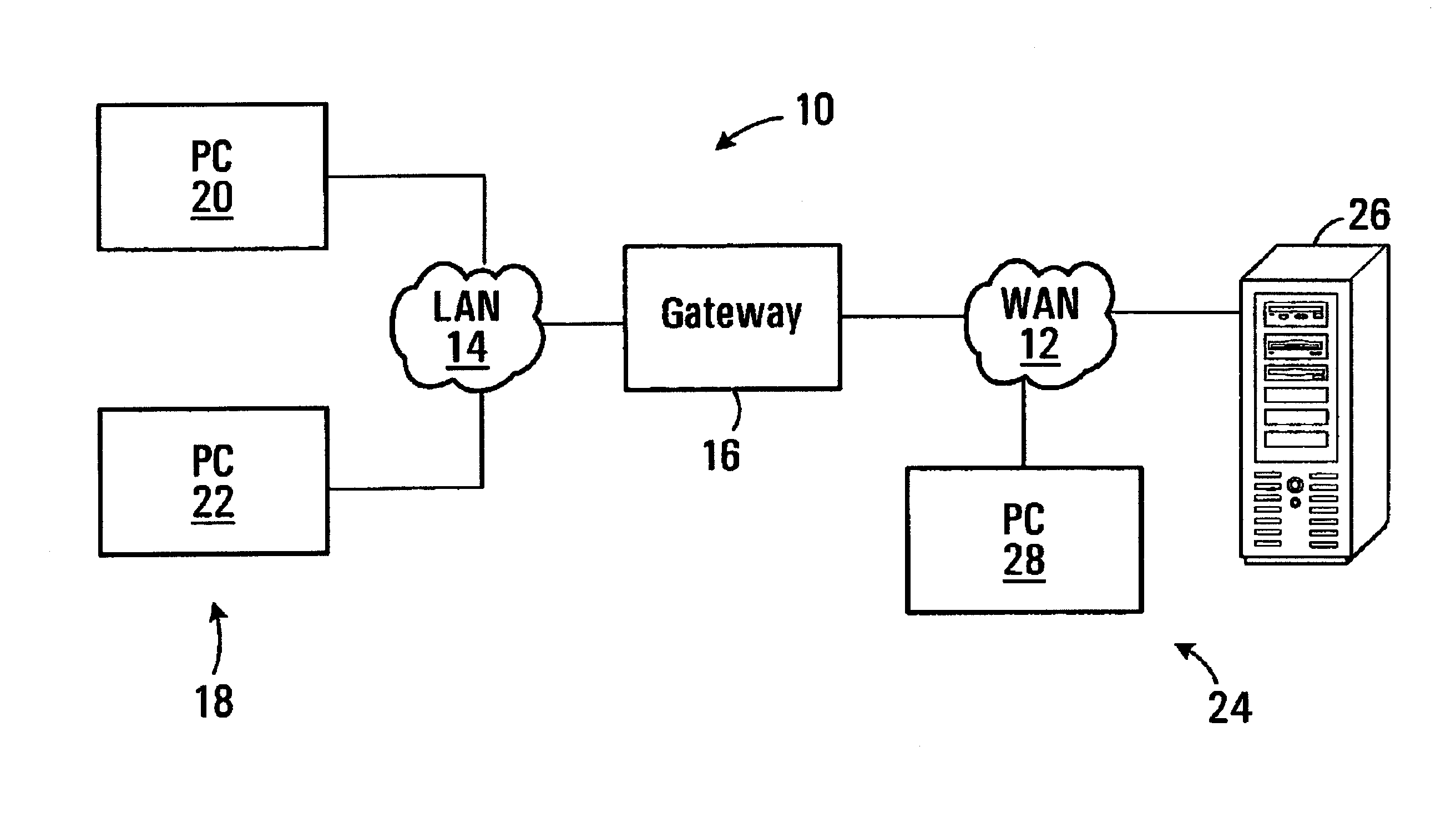

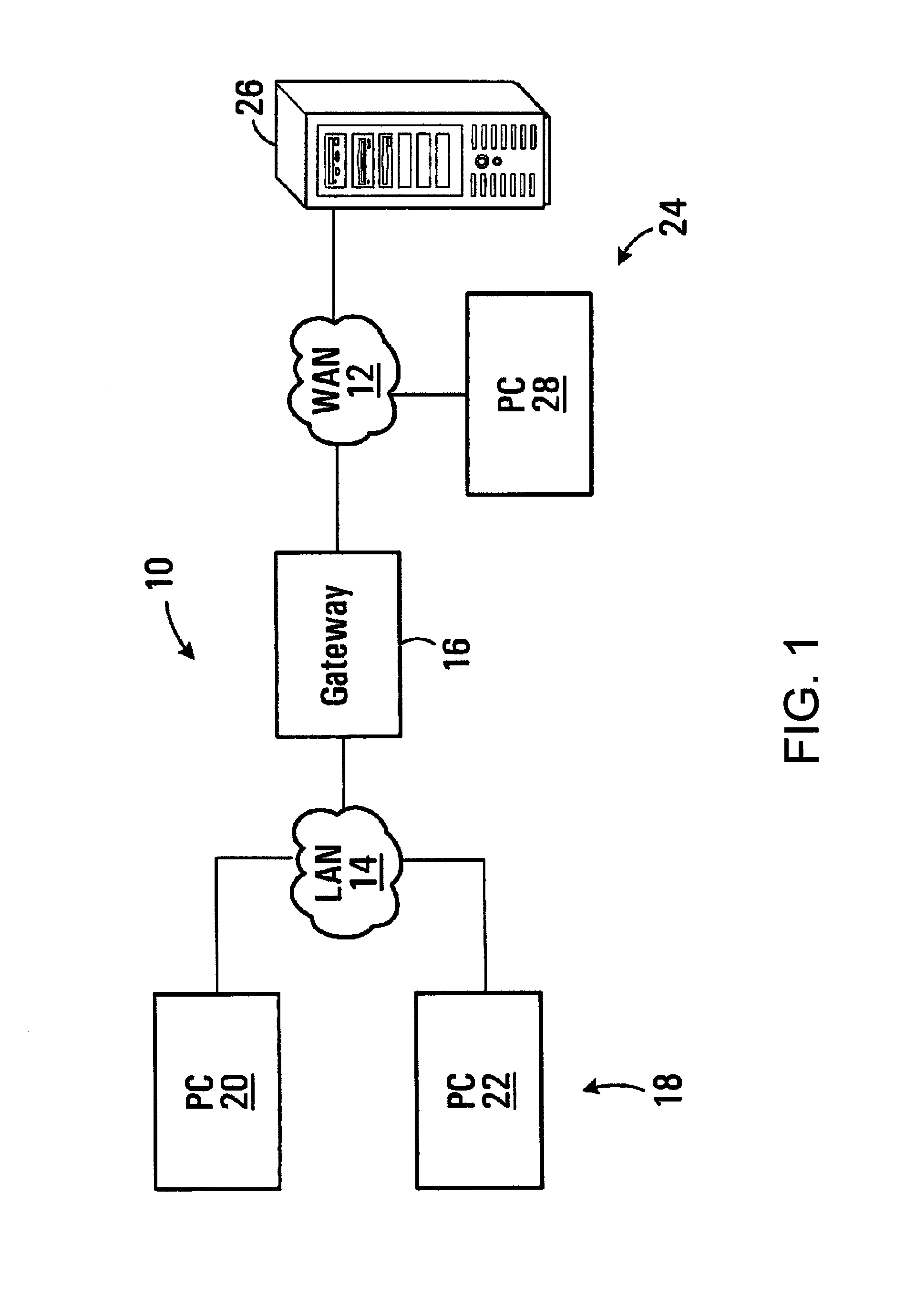

Method and system for providing high performance Web browser and server communications

Inactive | US6397253B1 | Avoid unnecessary | Improve performance | Multiple digital computer combinations | Special data processing applications | Web browser | Client-side

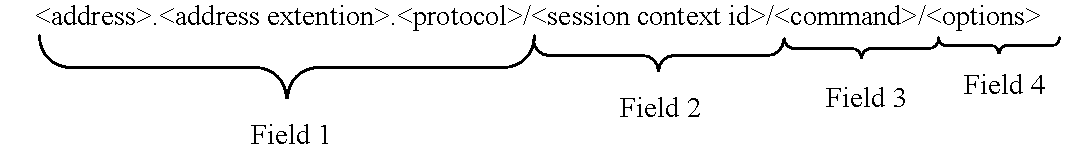

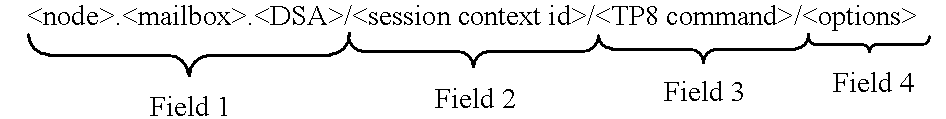

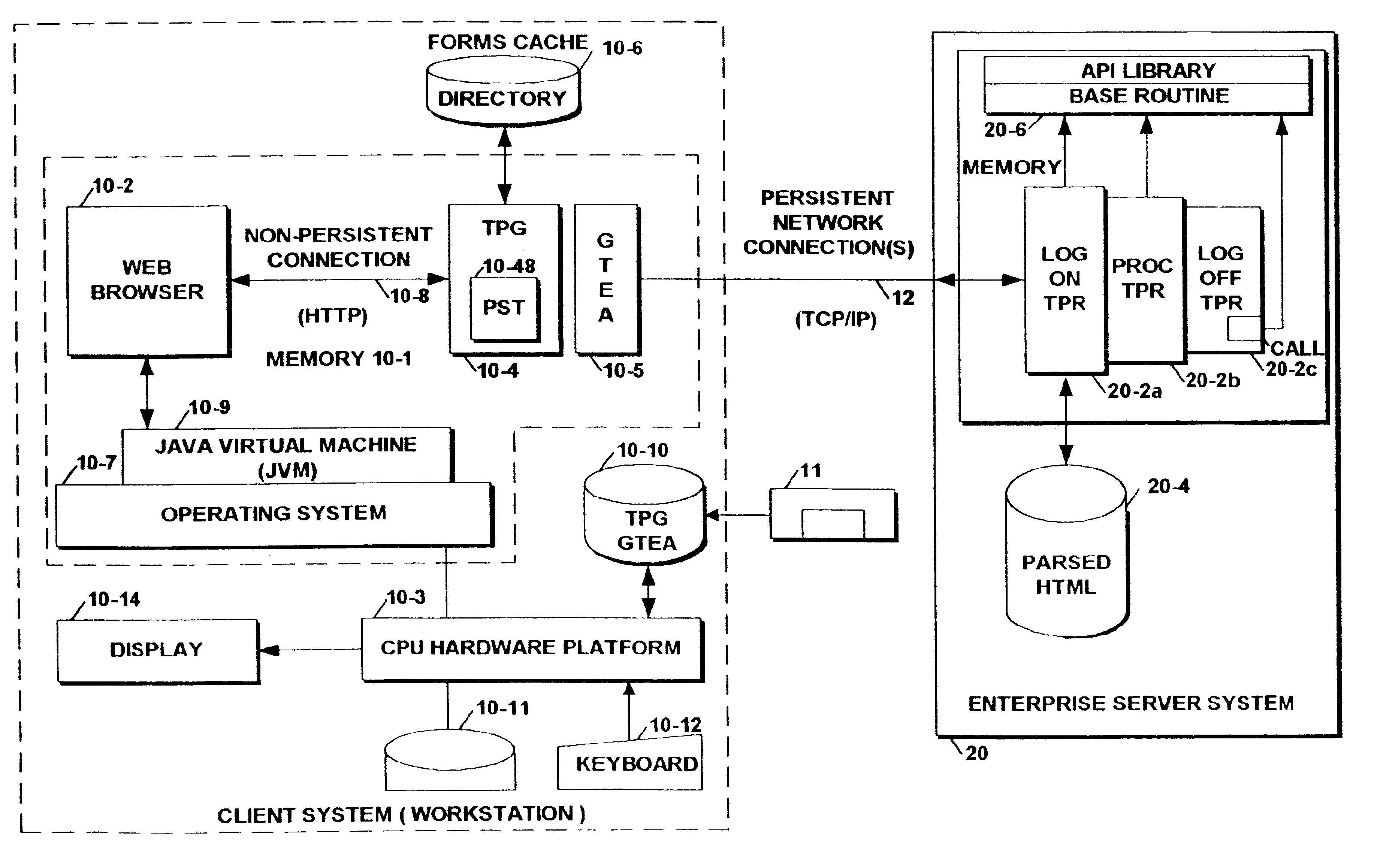

A client system utilizes a standard browser component and a transaction protocol gateway (TPG) component that operatively couples to the standard browser component. The browser component initiates the utilization of new session connections and reuse of existing session connections as a function of the coding of the universal resource locators (URLs) contained in each issued request. Each URL is passed to the TPG component that examines a context field included within the URL. If the context field has been set to a first value, the TPG component opens a new session connection to the server system and records the session connection information in a persistent session table (PST) component maintained by the TPG component. If the context field has been set to a second value, then the TPG component obtains the session connection information in the PST component for the established session connection and passes the data from the browser component to the server system over the existing persistent session connection.

Owner:BULL HN INFORMATION SYST INC
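
As a rough illustration of the context-field dispatch described in the abstract above, the following Python sketch models the persistent session table (PST) as a dictionary. The class names, the `NEW_SESSION` and `REUSE_SESSION` values, and the `ctx` and `sid` query parameters are all hypothetical; the abstract does not say how the context field is encoded in the URL.

```python
from urllib.parse import urlparse, parse_qs

NEW_SESSION = "new"      # hypothetical "first value" of the context field
REUSE_SESSION = "reuse"  # hypothetical "second value" of the context field


class FakeConnection:
    """Stand-in for a persistent session connection to the server system."""

    def __init__(self, host):
        self.host = host
        self.sent = []

    def send(self, data):
        self.sent.append(data)


class TransactionProtocolGateway:
    def __init__(self):
        # Persistent session table (PST): session id -> open connection.
        self.pst = {}

    def handle(self, url, data):
        parsed = urlparse(url)
        query = parse_qs(parsed.query)
        context = query.get("ctx", [None])[0]
        session_id = query.get("sid", [None])[0]

        if context == NEW_SESSION:
            # Open a new session connection and record it in the PST.
            conn = FakeConnection(parsed.netloc)
            self.pst[session_id] = conn
        elif context == REUSE_SESSION:
            # Look up the established connection (KeyError if unknown).
            conn = self.pst[session_id]
        else:
            raise ValueError(f"unknown context field: {context!r}")

        conn.send(data)
        return conn
```

Two requests that carry the same session id, the first marked as new and the second as reuse, end up on the same connection object, which is the behaviour the abstract attributes to the TPG component.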

High-performance network content analysis platform

Active | US7467202B2 | Prevent leakage | Digital data processing details | Analogue secrecy/subscription systems | Data stream | Keyword analysis

One implementation of a method reassembles complete client-server conversation streams, applies decoders and/or decompressors, and analyzes the resulting data stream using multi-dimensional content profiling and/or weighted keyword-in-context. The method may detect the extrusion of the data, for example, even if the data has been modified from its original form and/or document type. The decoders may also uncover hidden transport mechanisms such as, for example, e-mail attachments. The method may further detect unauthorized (e.g., rogue) encrypted sessions and stop data transfers deemed malicious. The method allows, for example, for building 2 Gbps (Full-Duplex)-capable extrusion prevention machines.

Owner:FIDELIS SECURITY LLC

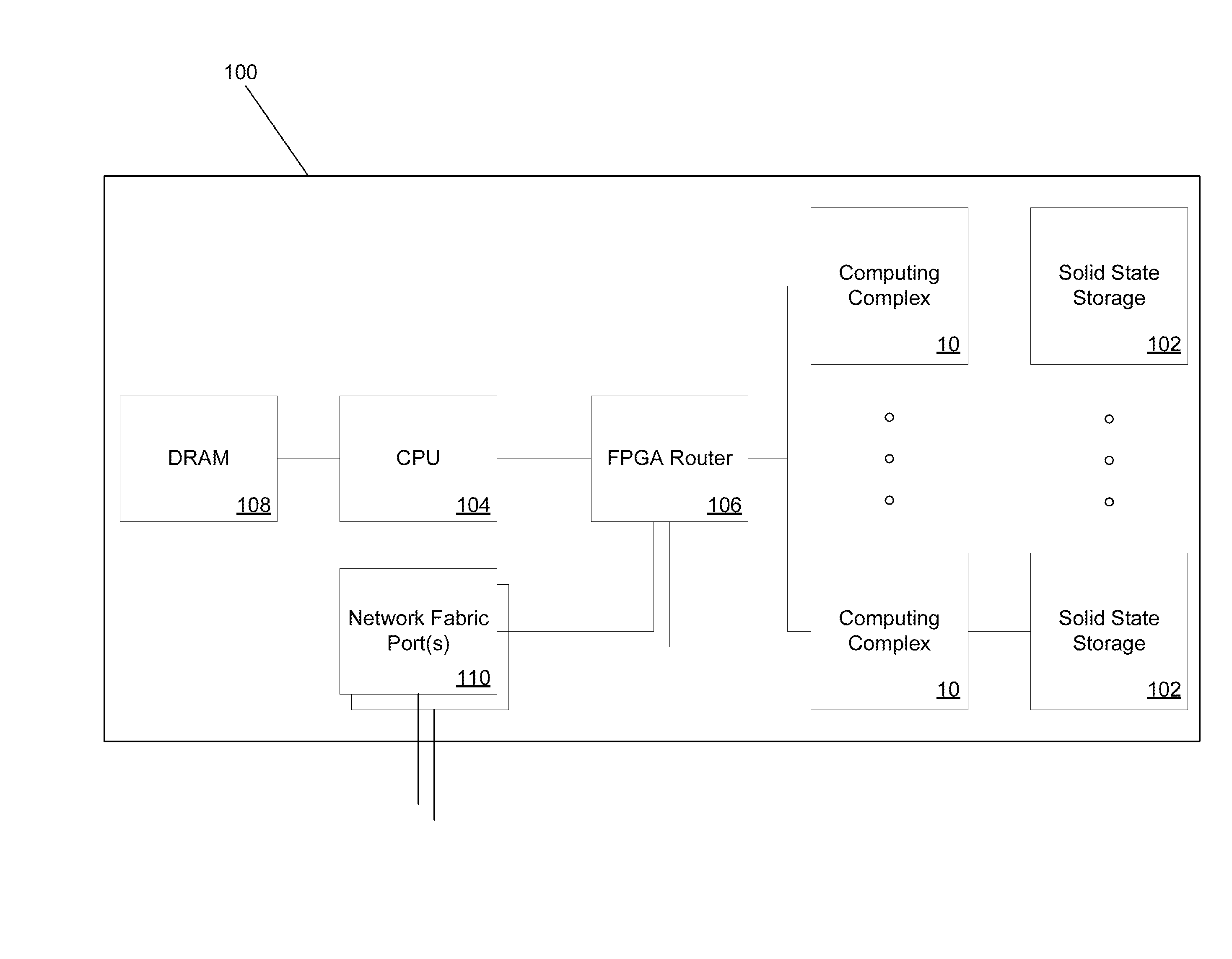

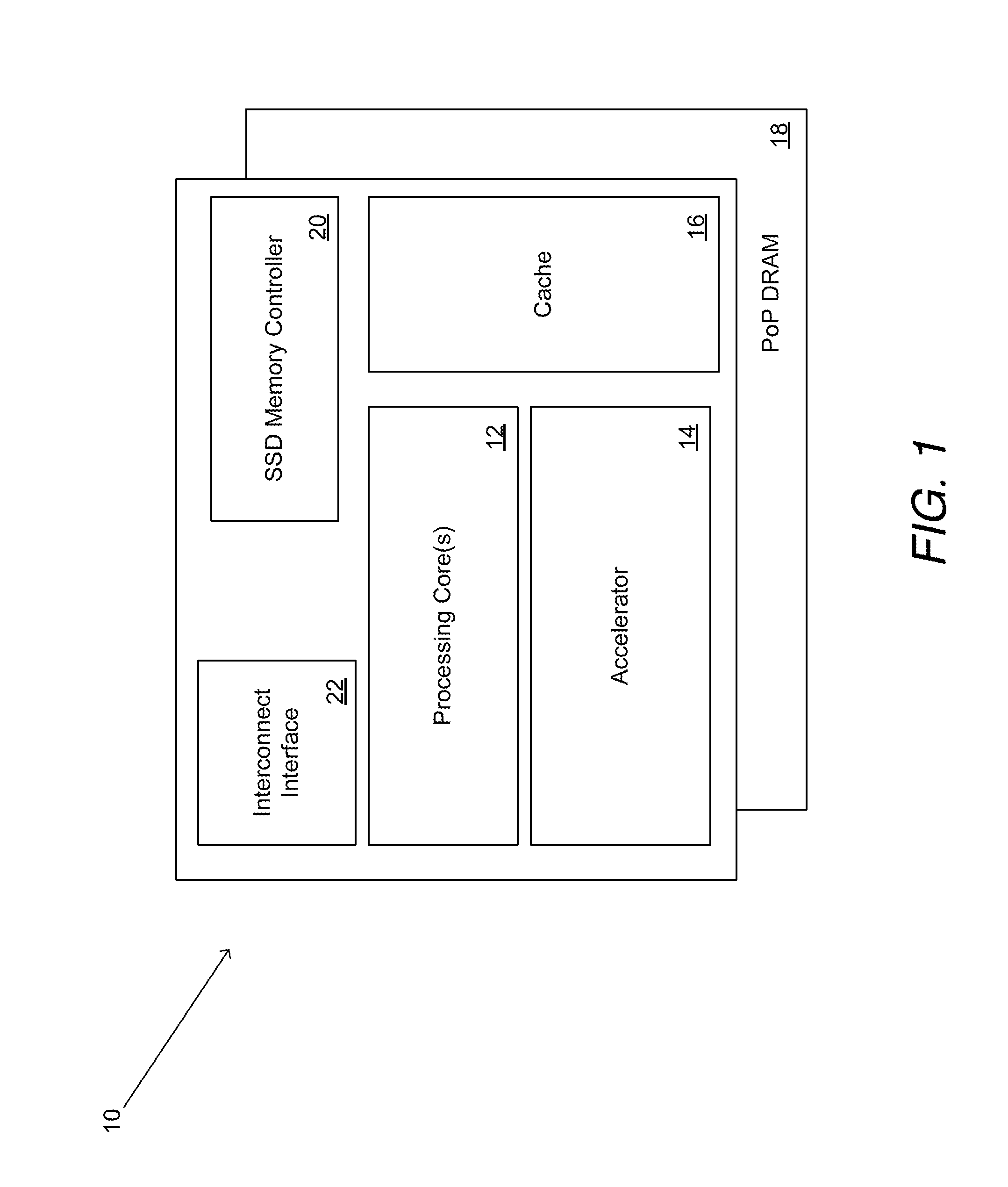

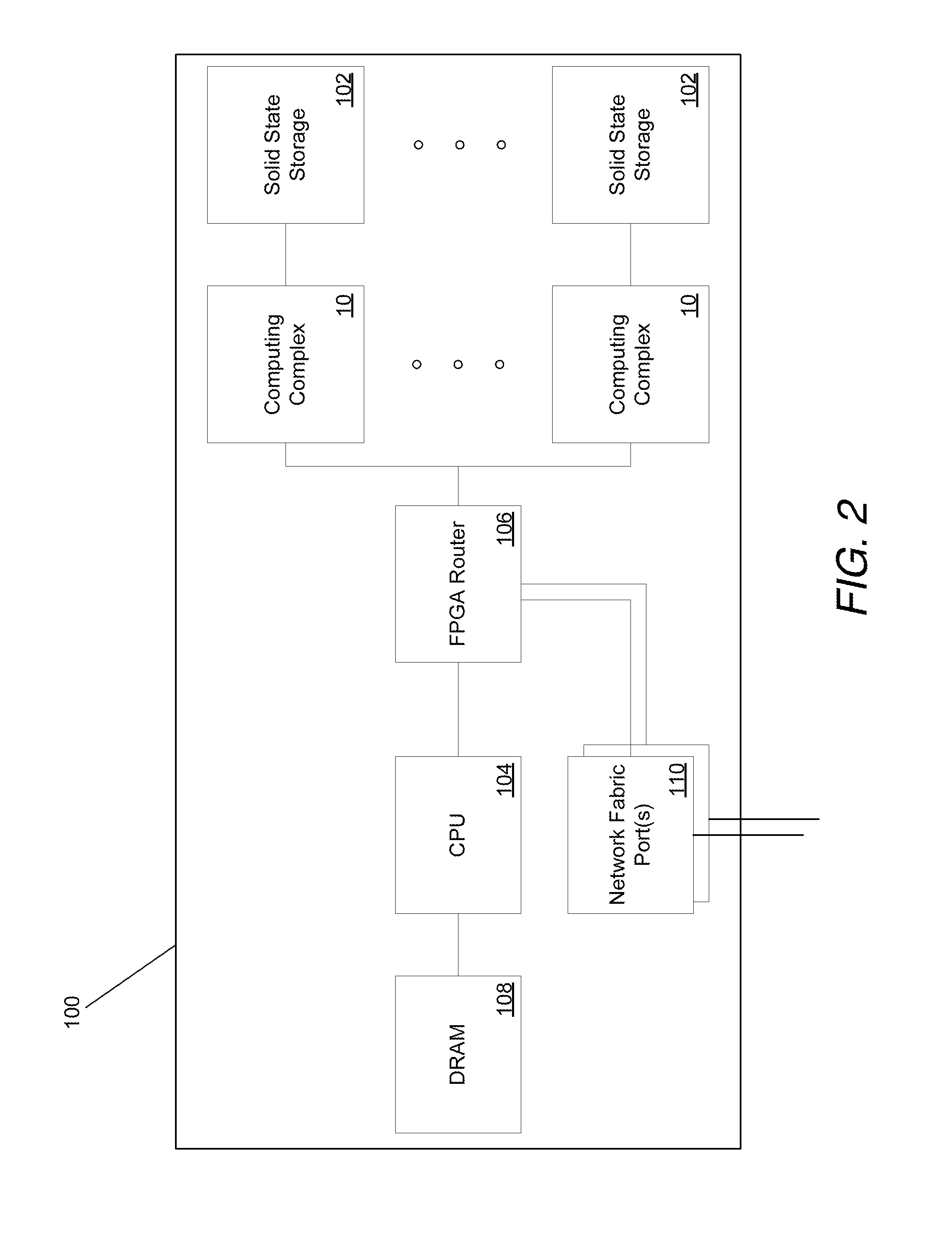

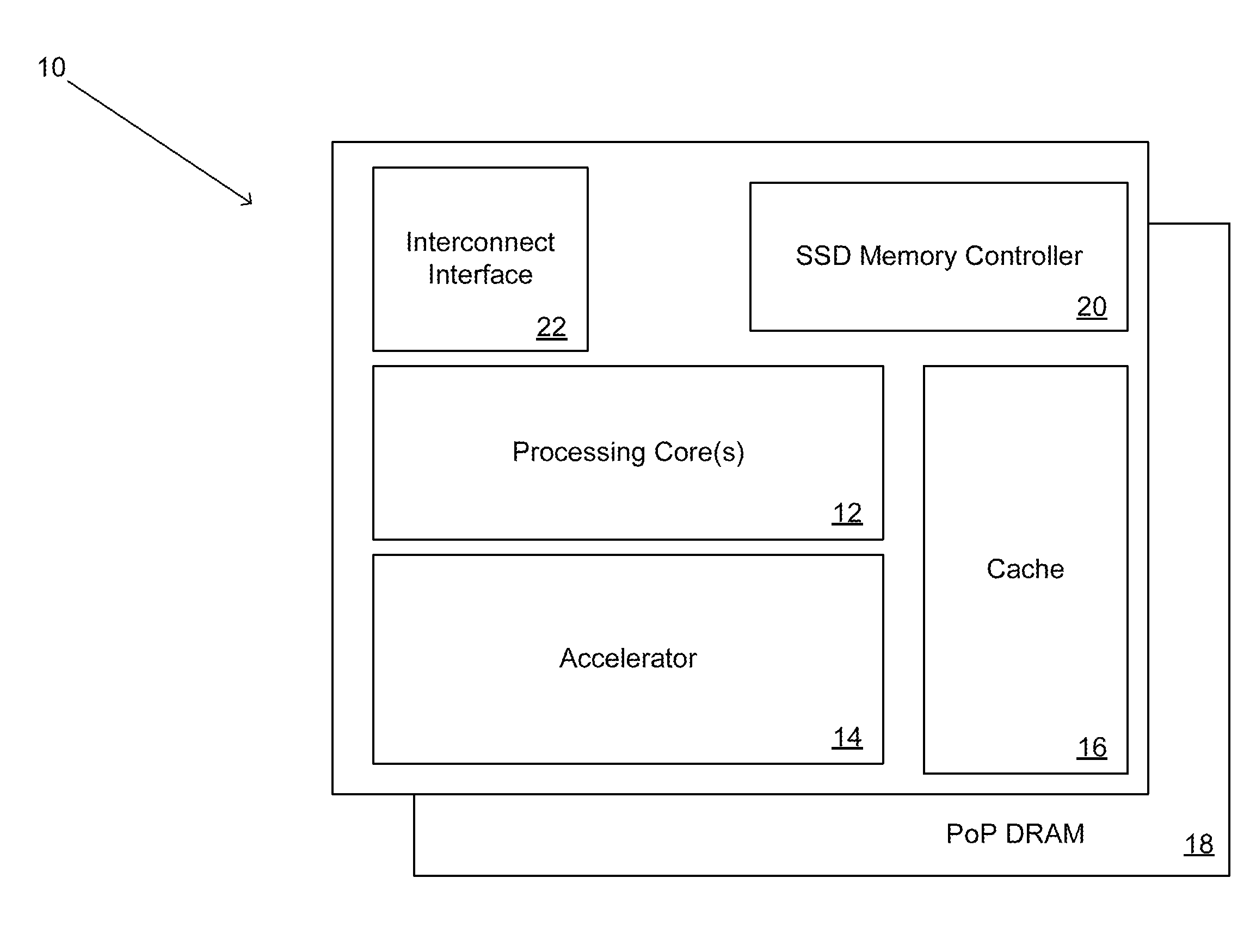

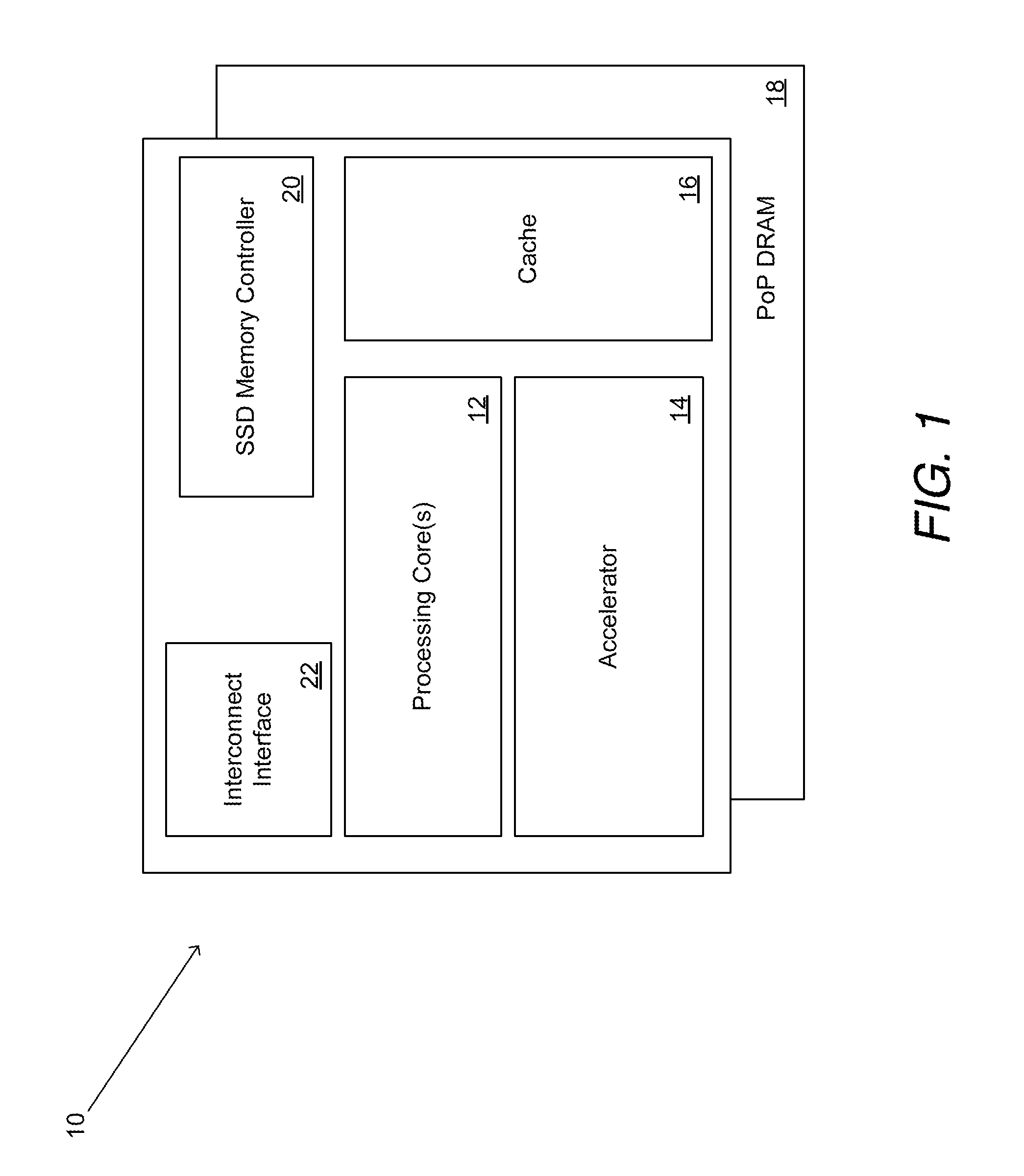

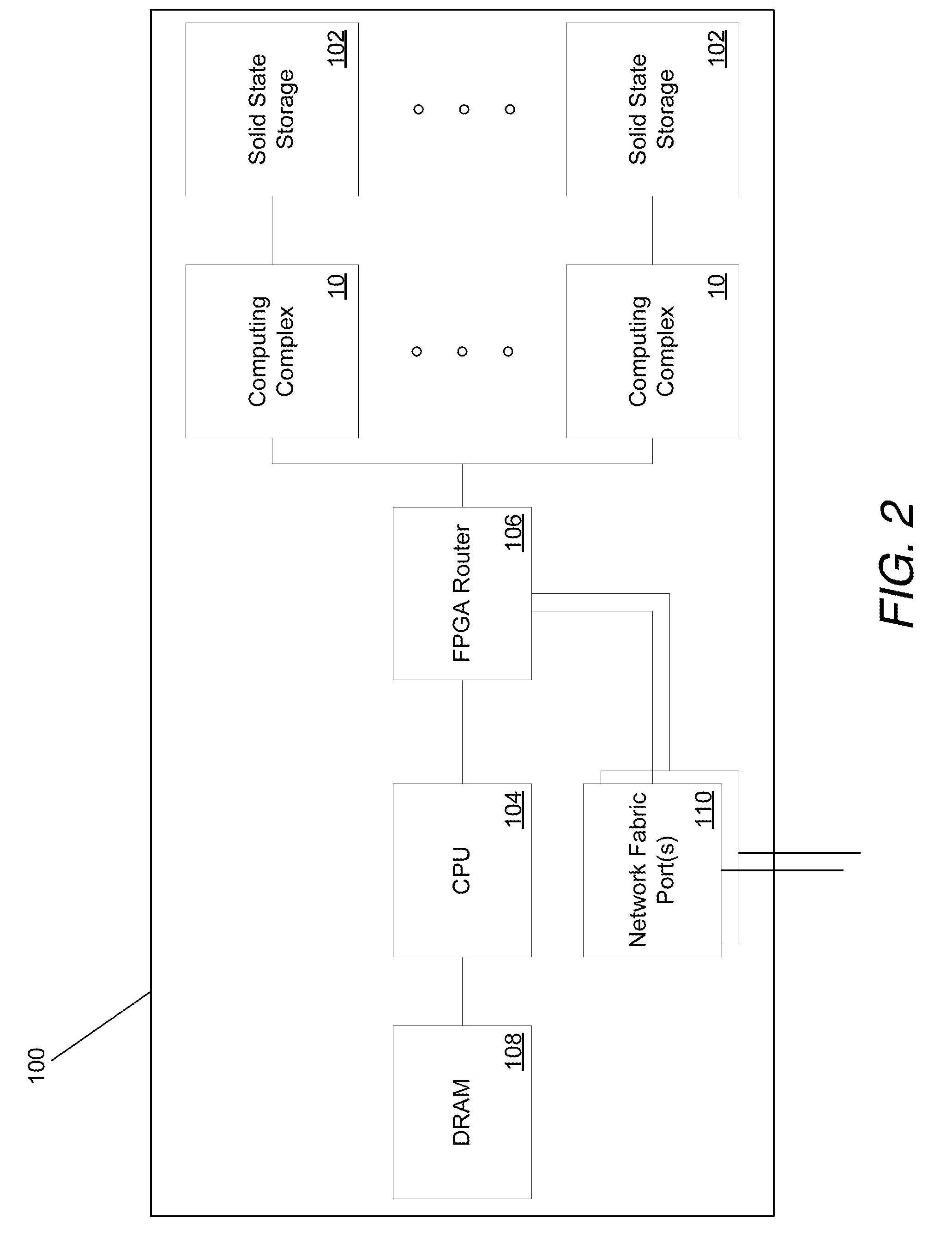

Systems and methods for rapid processing and storage of data

Active | US9552299B2 | Faster bandwidth | Improve latency | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Massively parallel | Processing core

Systems and methods of building massively parallel computing systems using low power computing complexes in accordance with embodiments of the invention are disclosed. A massively parallel computing system in accordance with one embodiment of the invention includes at least one Solid State Blade configured to communicate via a high performance network fabric. In addition, each Solid State Blade includes a processor configured to communicate with a plurality of low power computing complexes interconnected by a router, and each low power computing complex includes at least one general processing core, an accelerator, an I/O interface, and cache memory and is configured to communicate with non-volatile solid state memory.

Owner:CALIFORNIA INST OF TECH

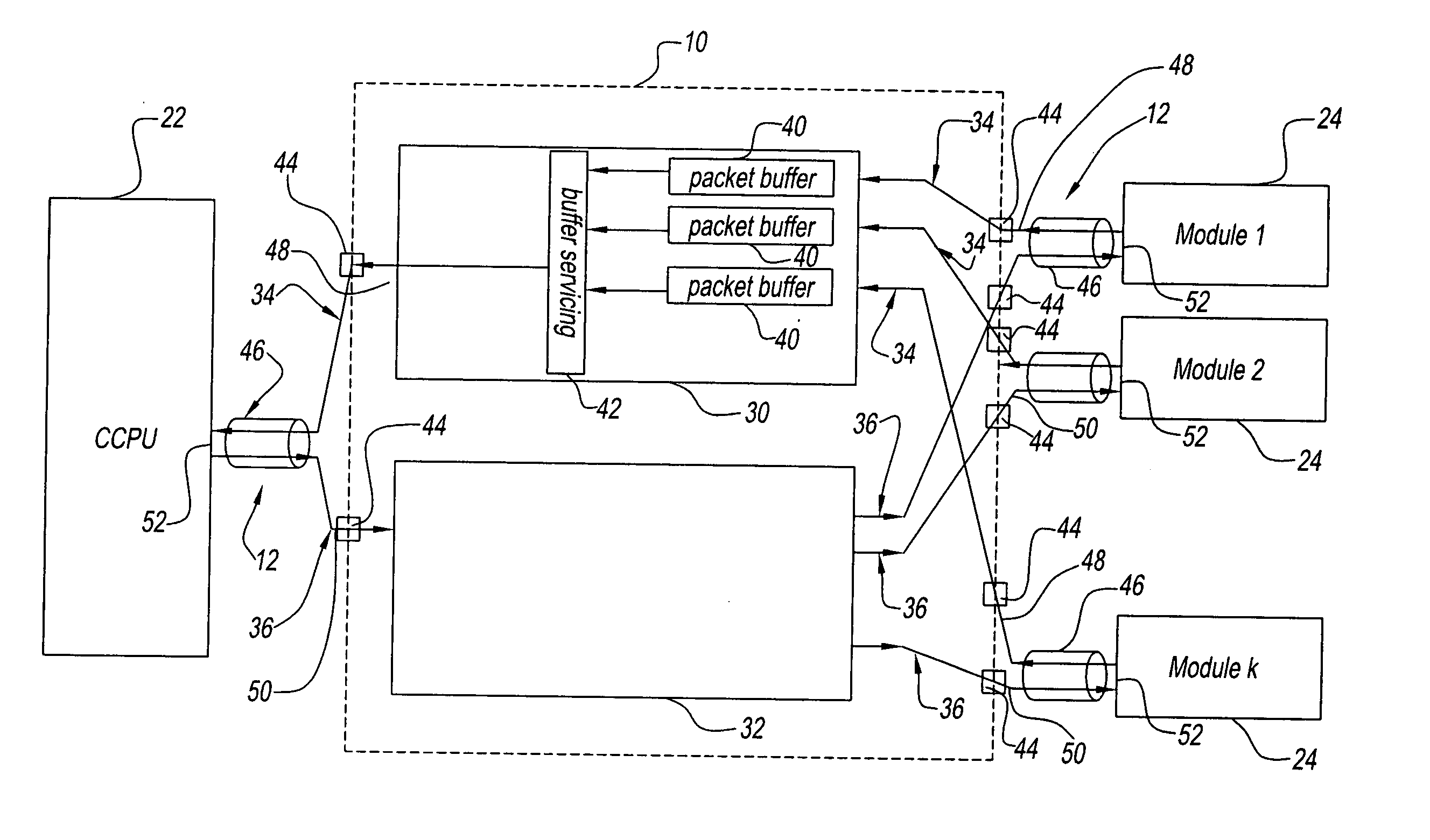

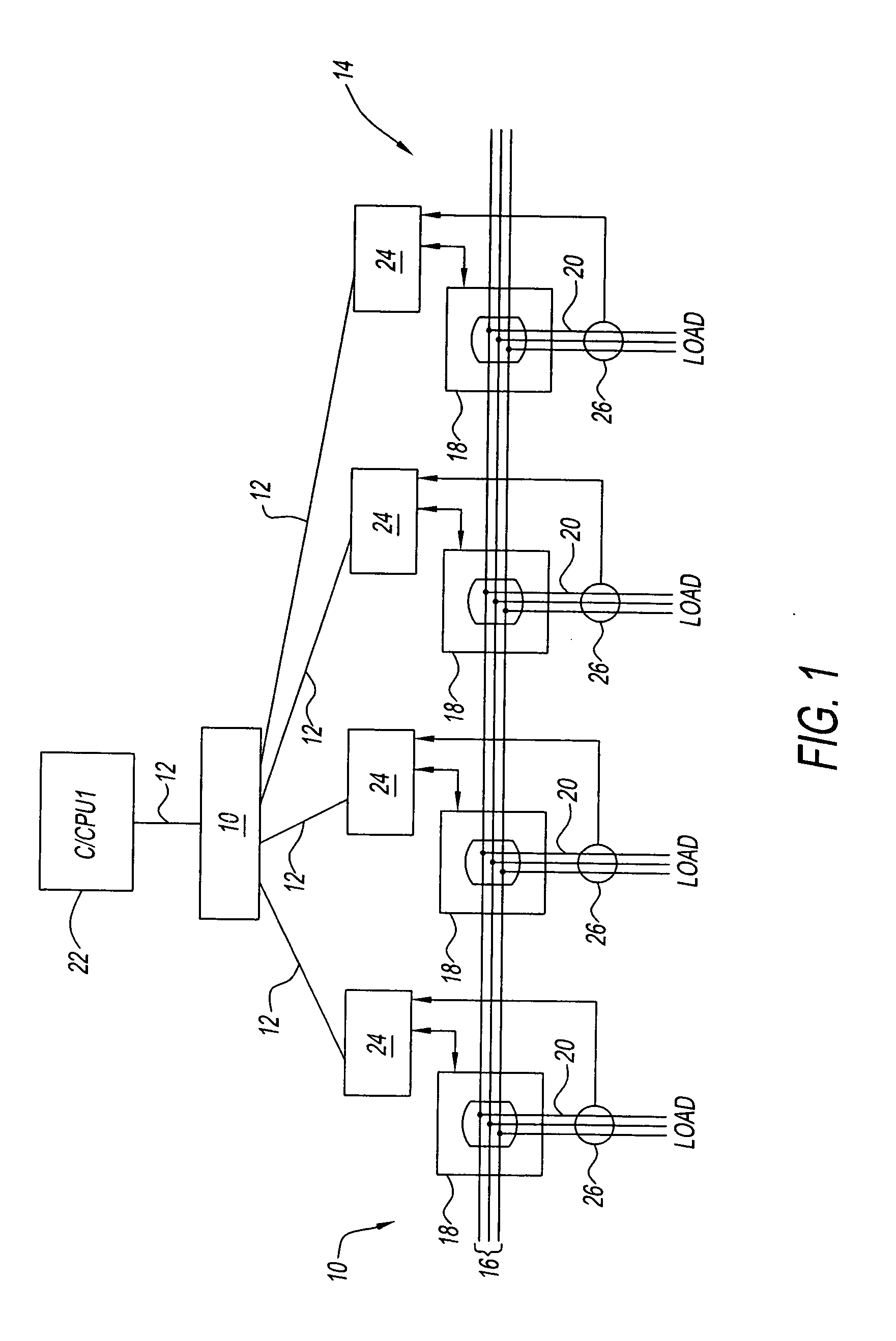

Method and apparatus for data re-assembly with a high performance network interface

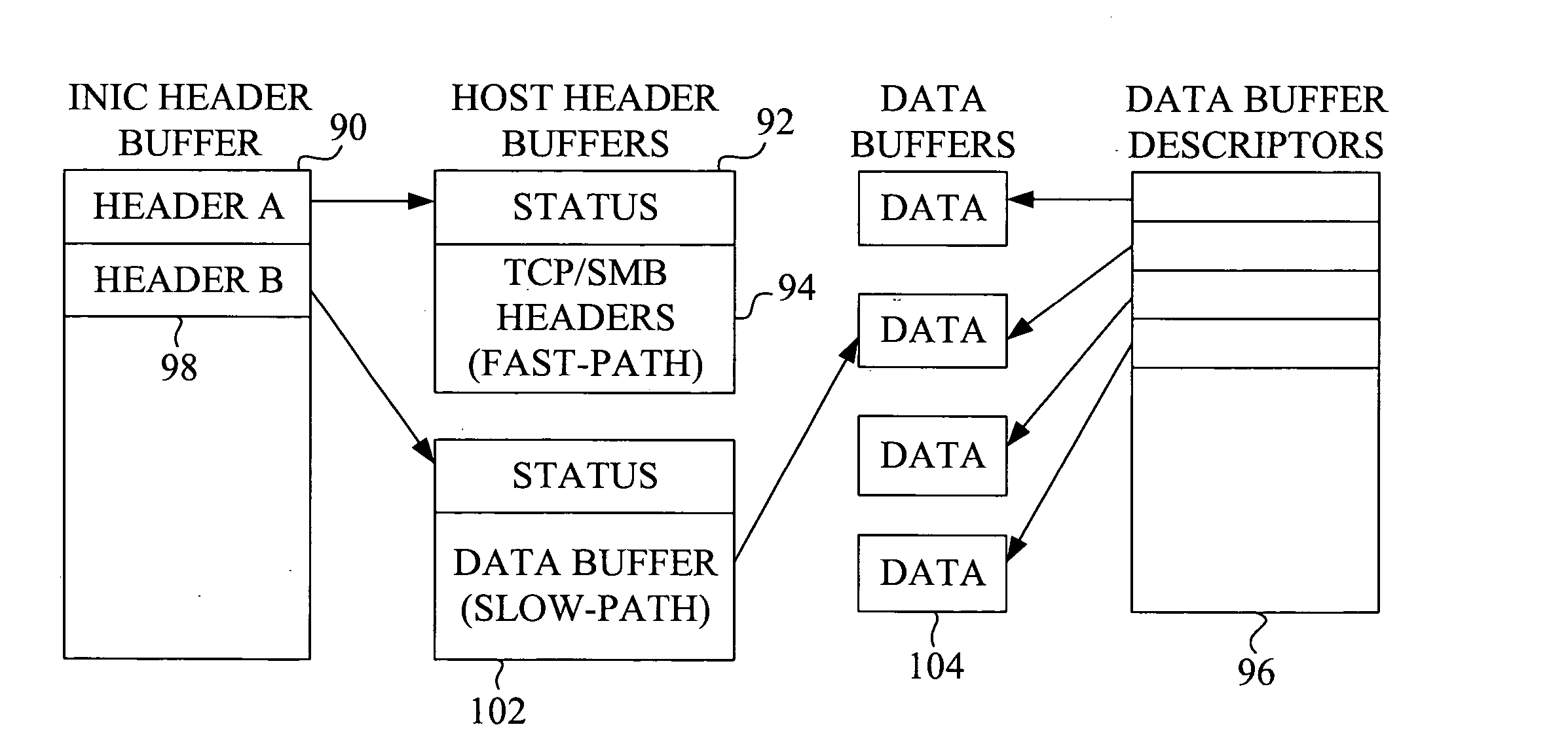

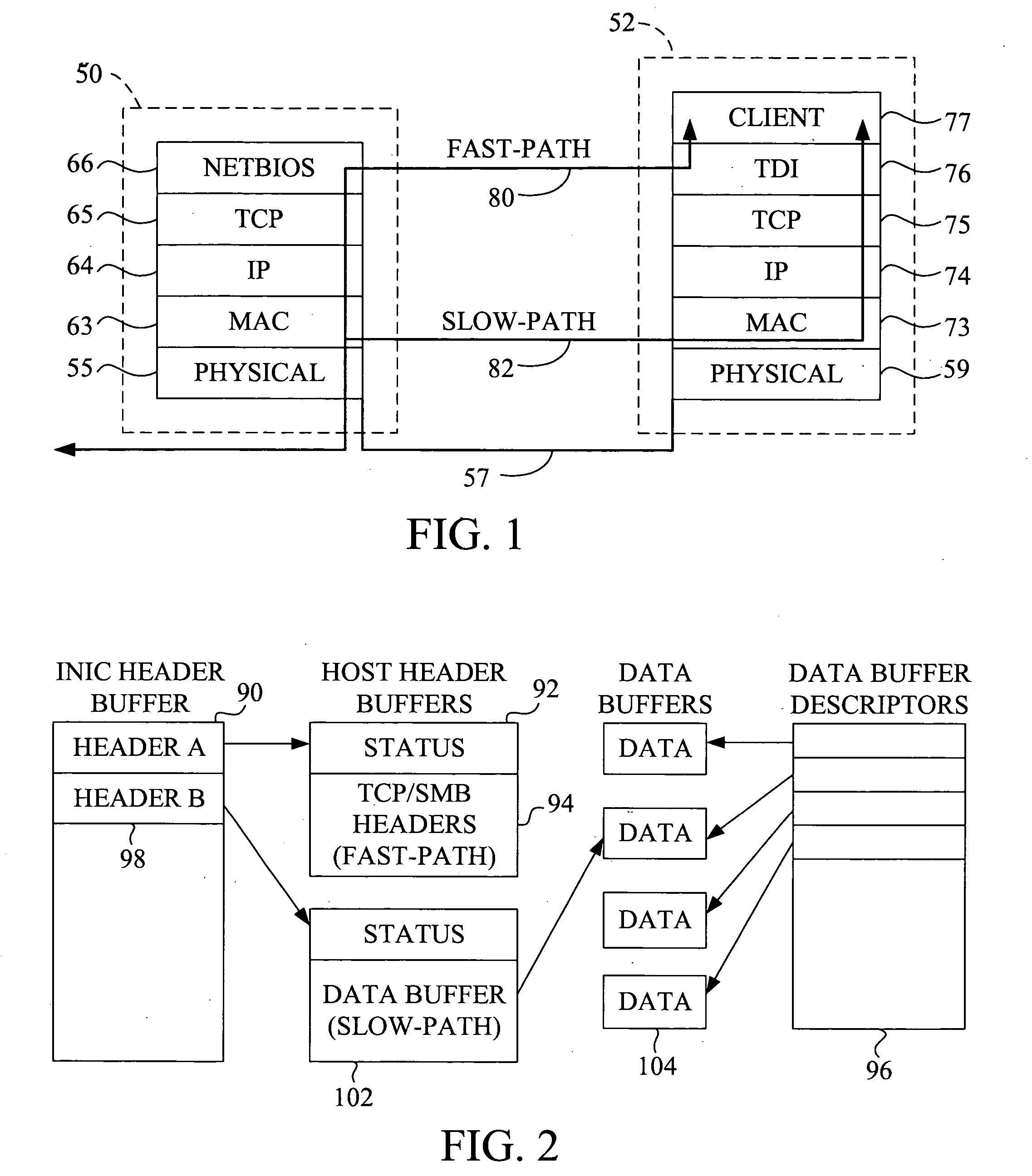

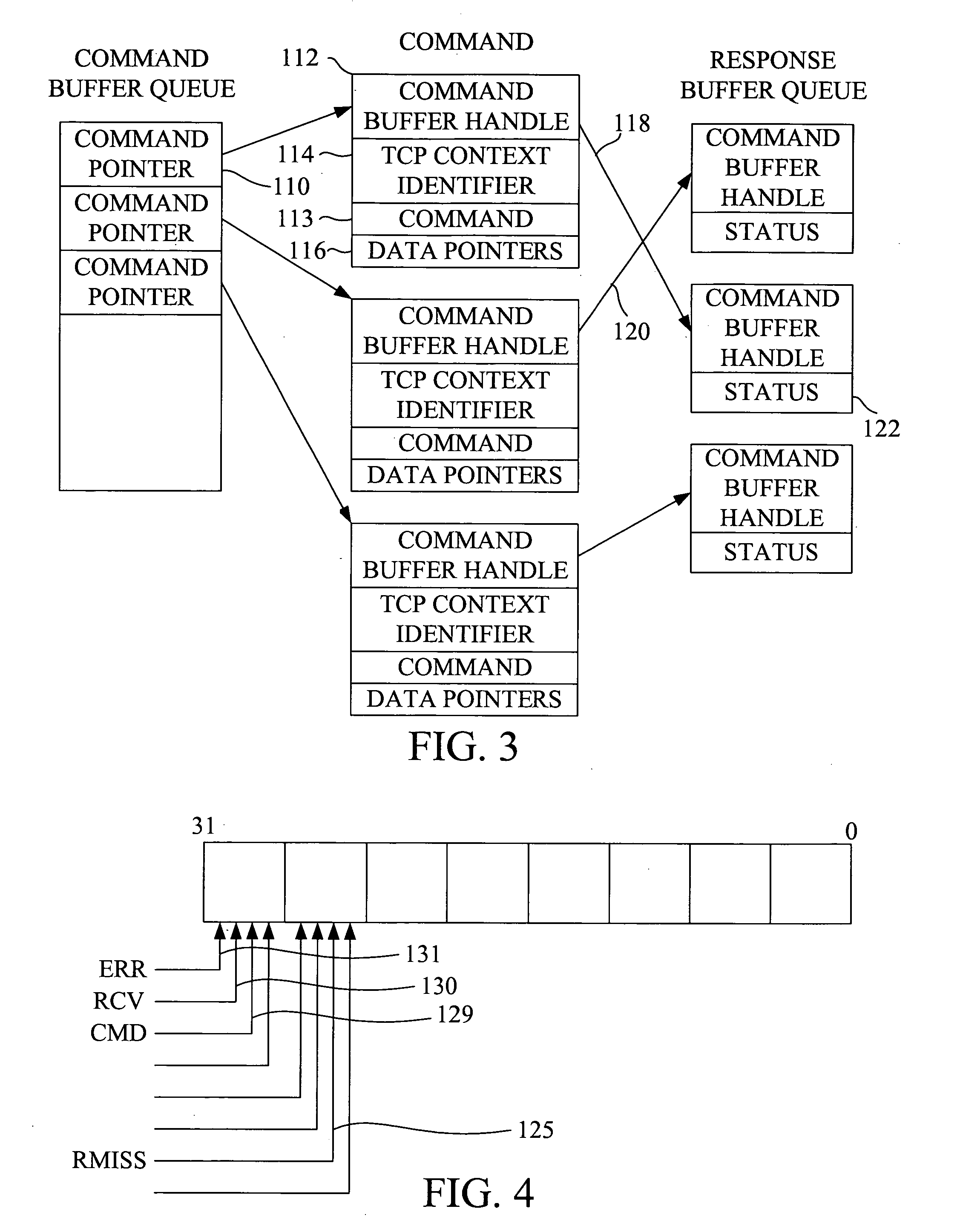

Inactive | US20050204058A1 | Avoid header processing and data copying and checksumming | Cost-effective | Multiple digital computer combinations | Data switching networks | Fast path | General purpose

An intelligent network interface card (INIC) or communication processing device (CPD) works with a host computer for data communication. The device provides a fast-path that avoids protocol processing for most messages, greatly accelerating data transfer and offloading time-intensive processing tasks from the host CPU. The host retains a fallback processing capability for messages that do not fit fast-path criteria, with the device providing assistance such as validation even for slow-path messages, and messages being selected for either fast-path or slow-path processing. A context for a connection is defined that allows the device to move data, free of headers, directly to or from a destination or source in the host. The context can be passed back to the host for message processing by the host. The device contains specialized hardware circuits that are much faster at their specific tasks than a general purpose CPU. A preferred embodiment includes a trio of pipelined processors devoted to transmit, receive and utility processing, providing full duplex communication for four Fast Ethernet nodes.

Owner:ALACRITECH

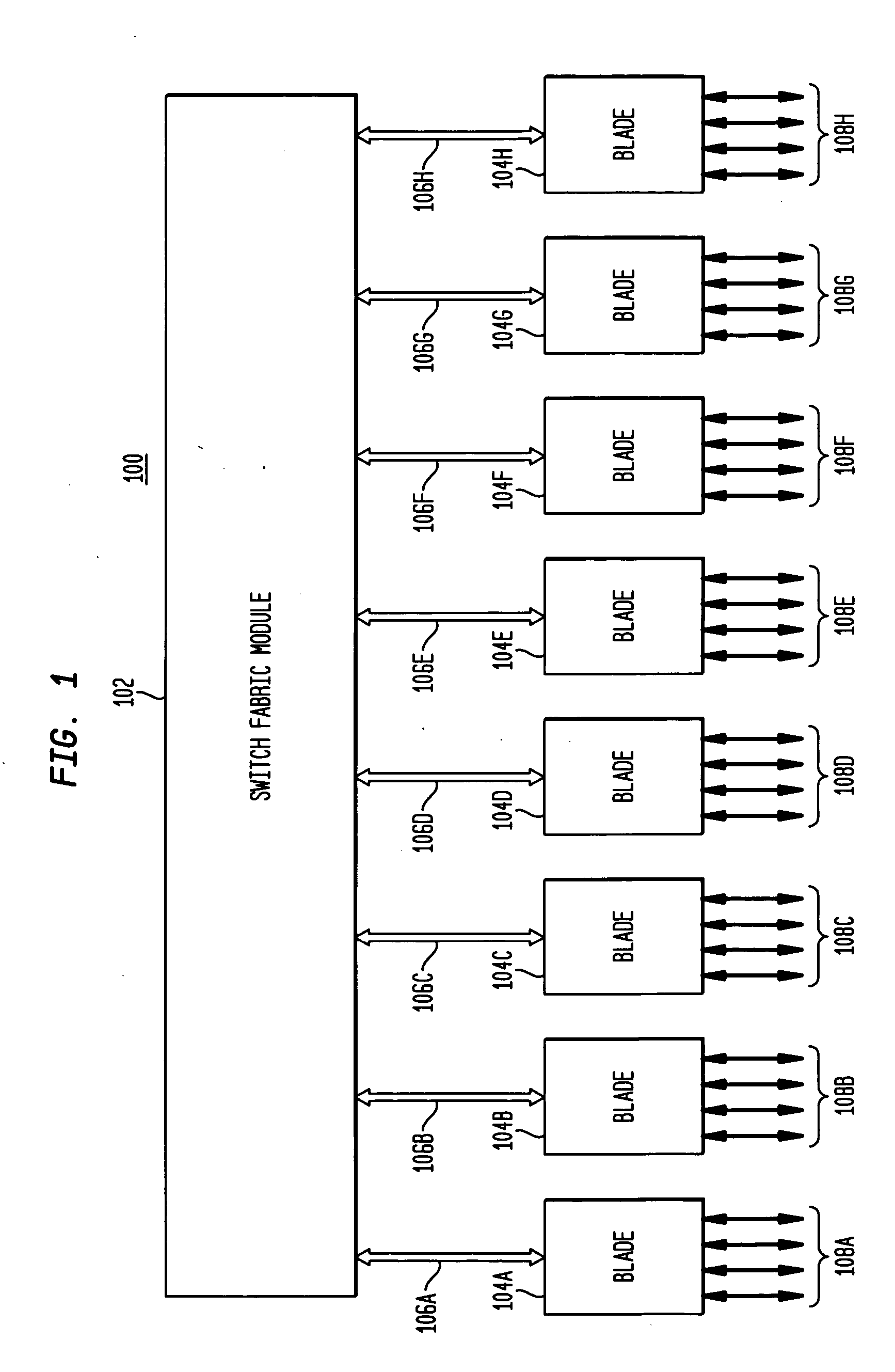

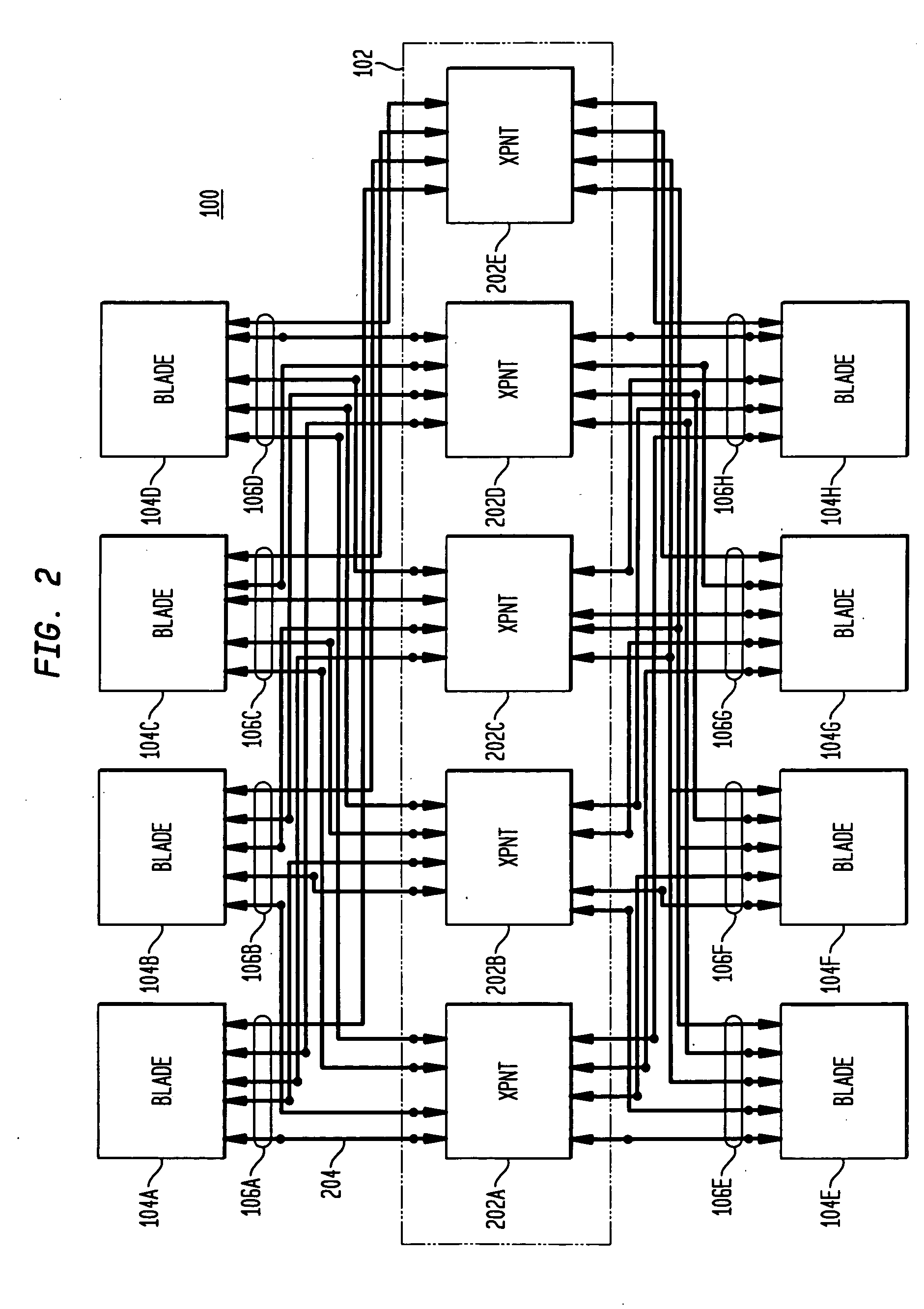

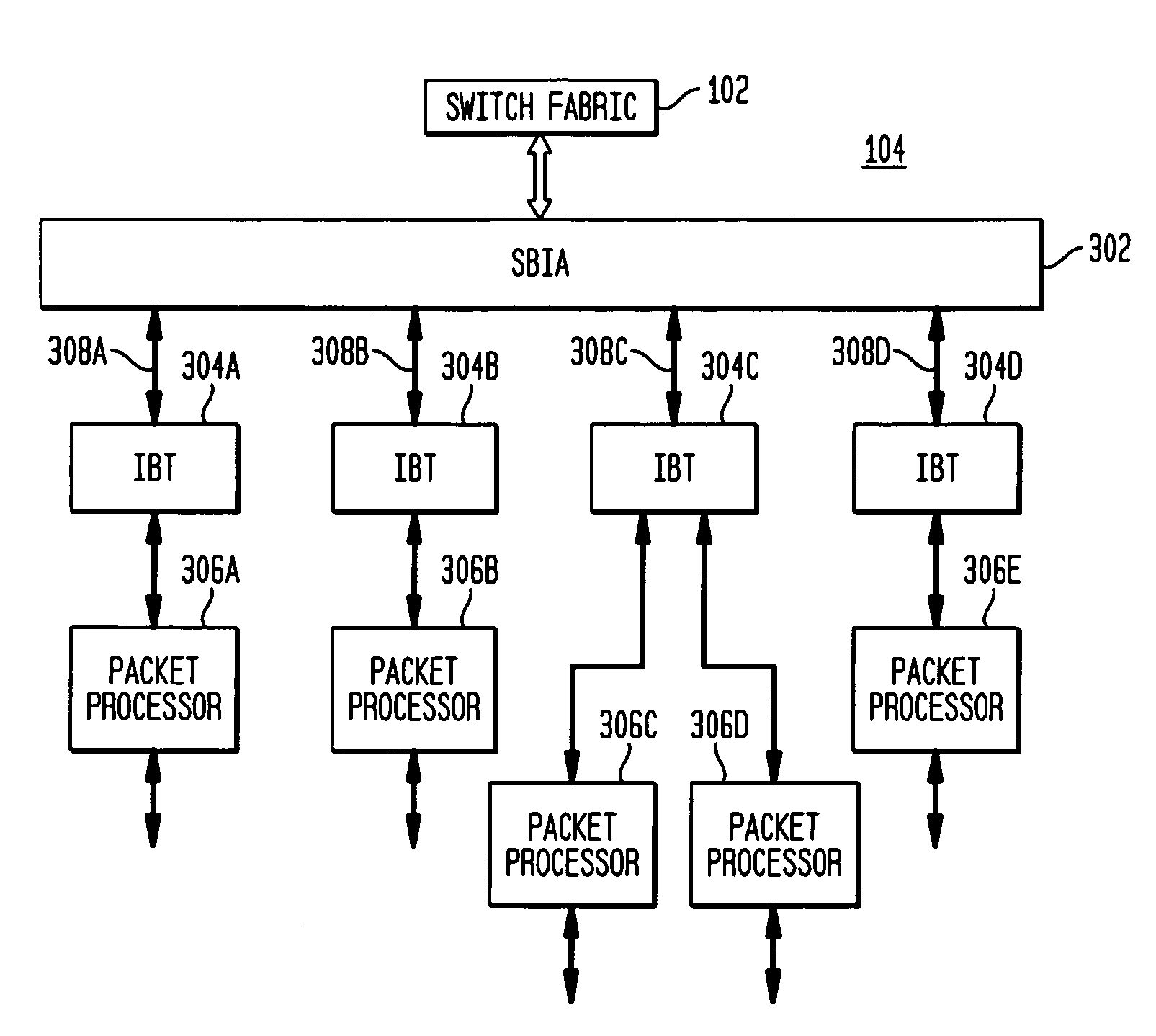

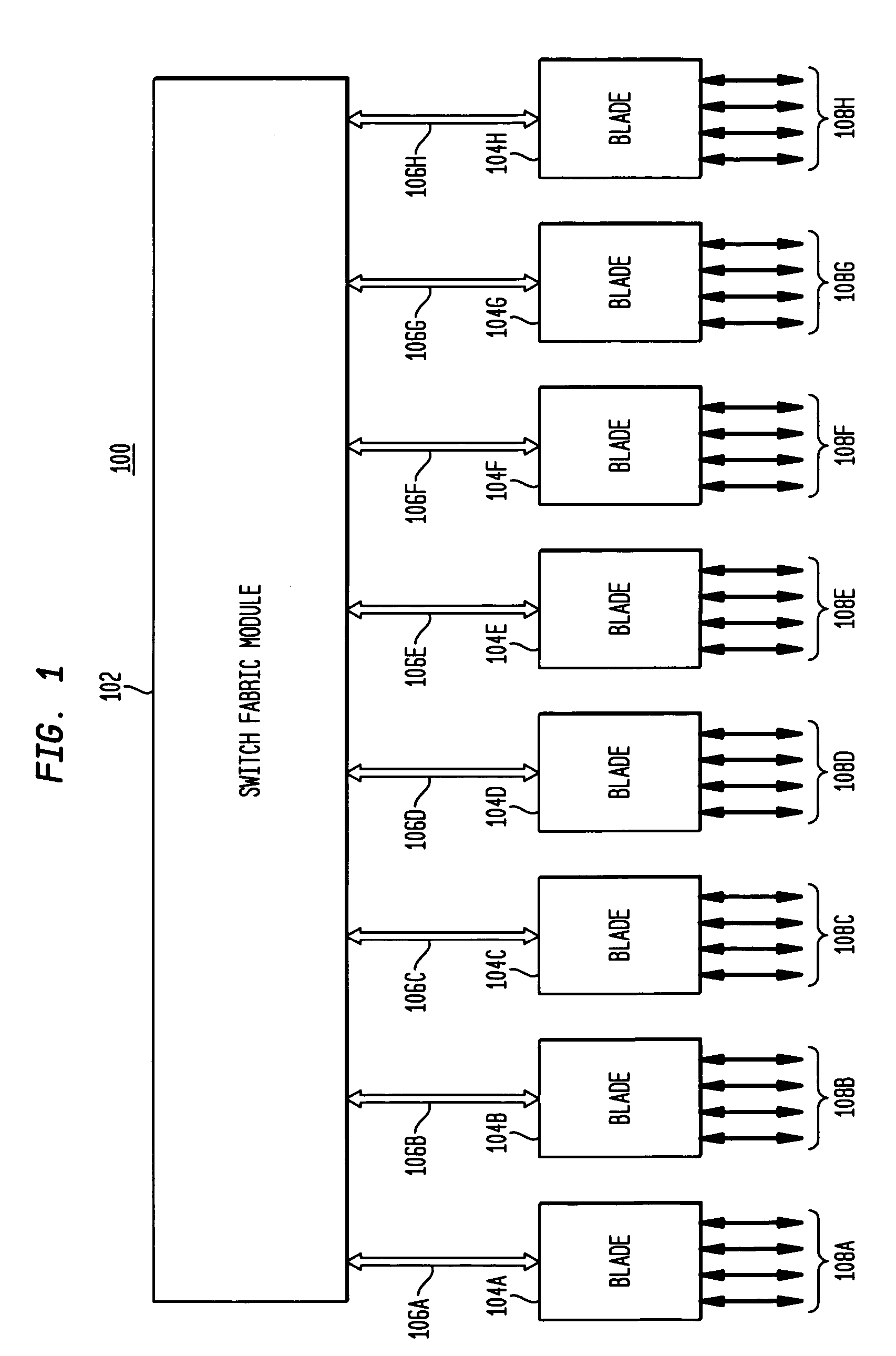

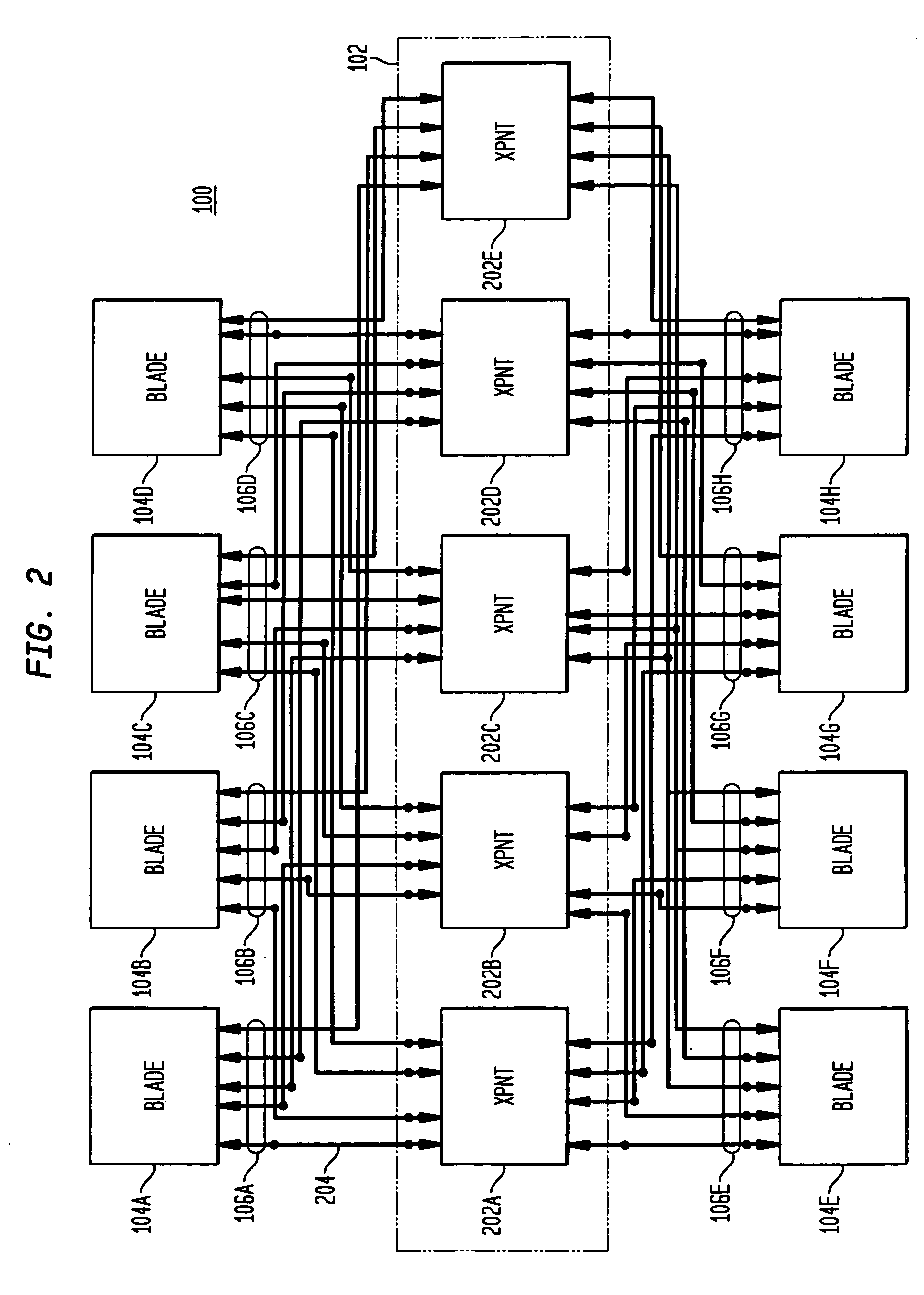

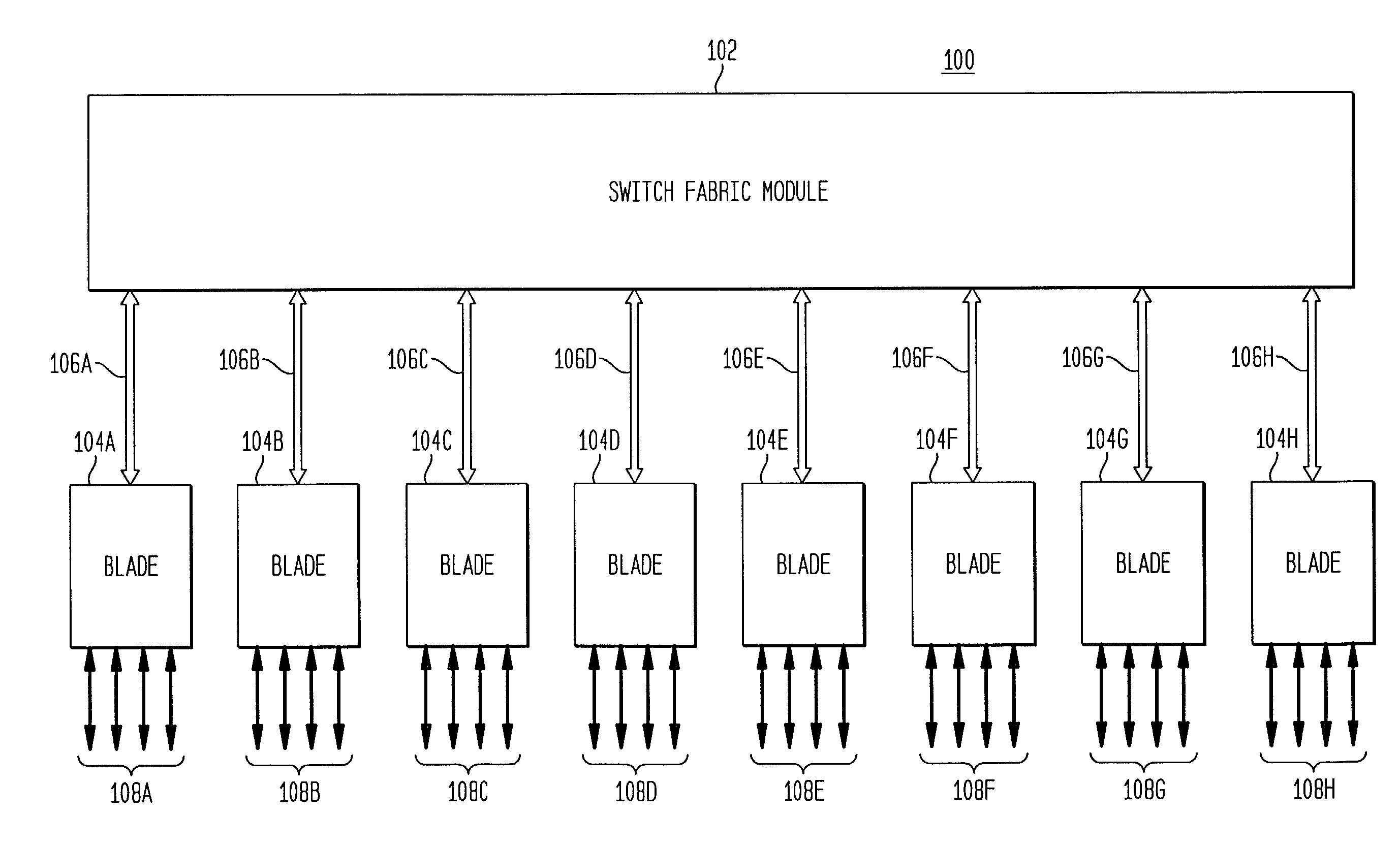

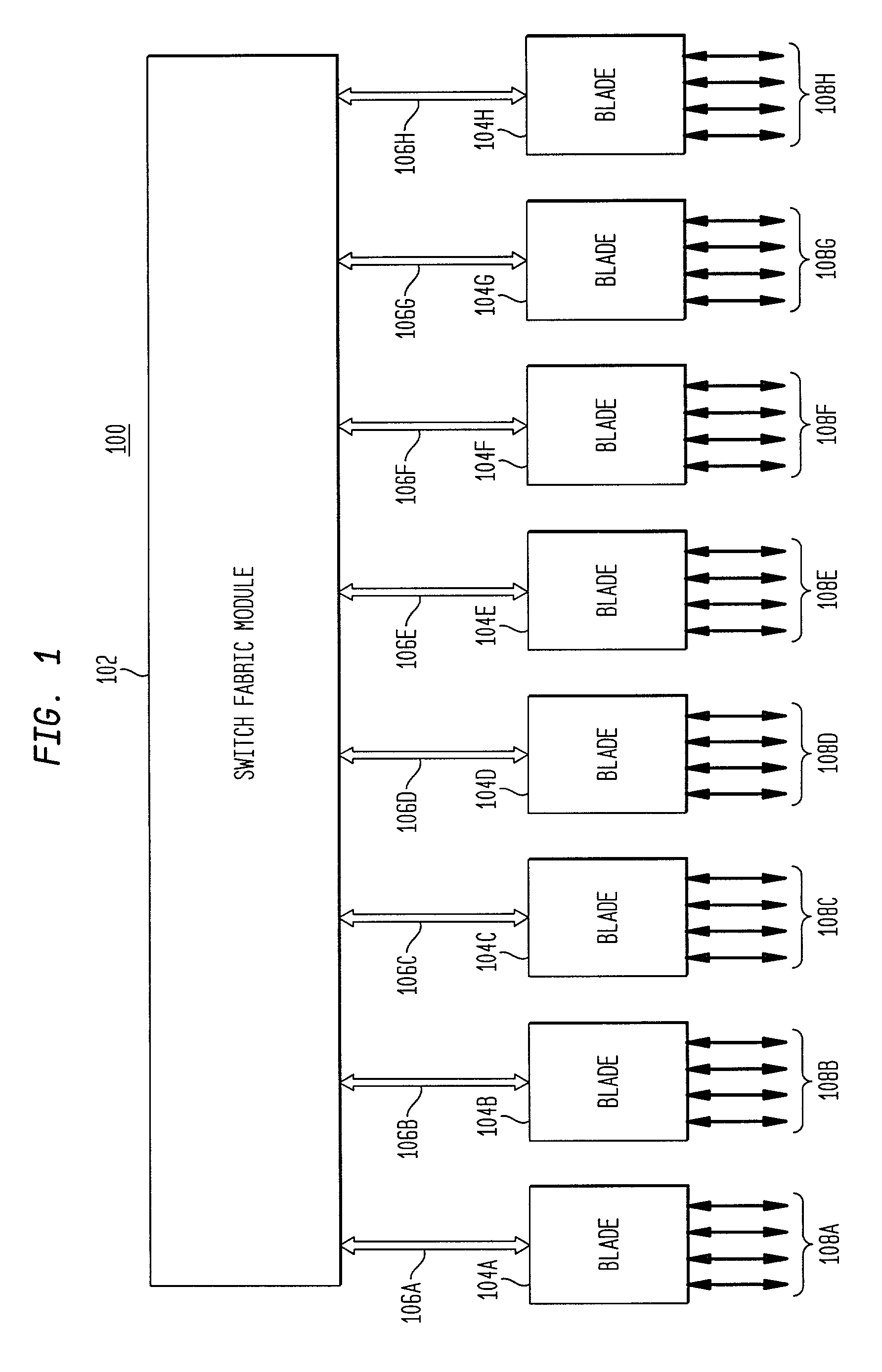

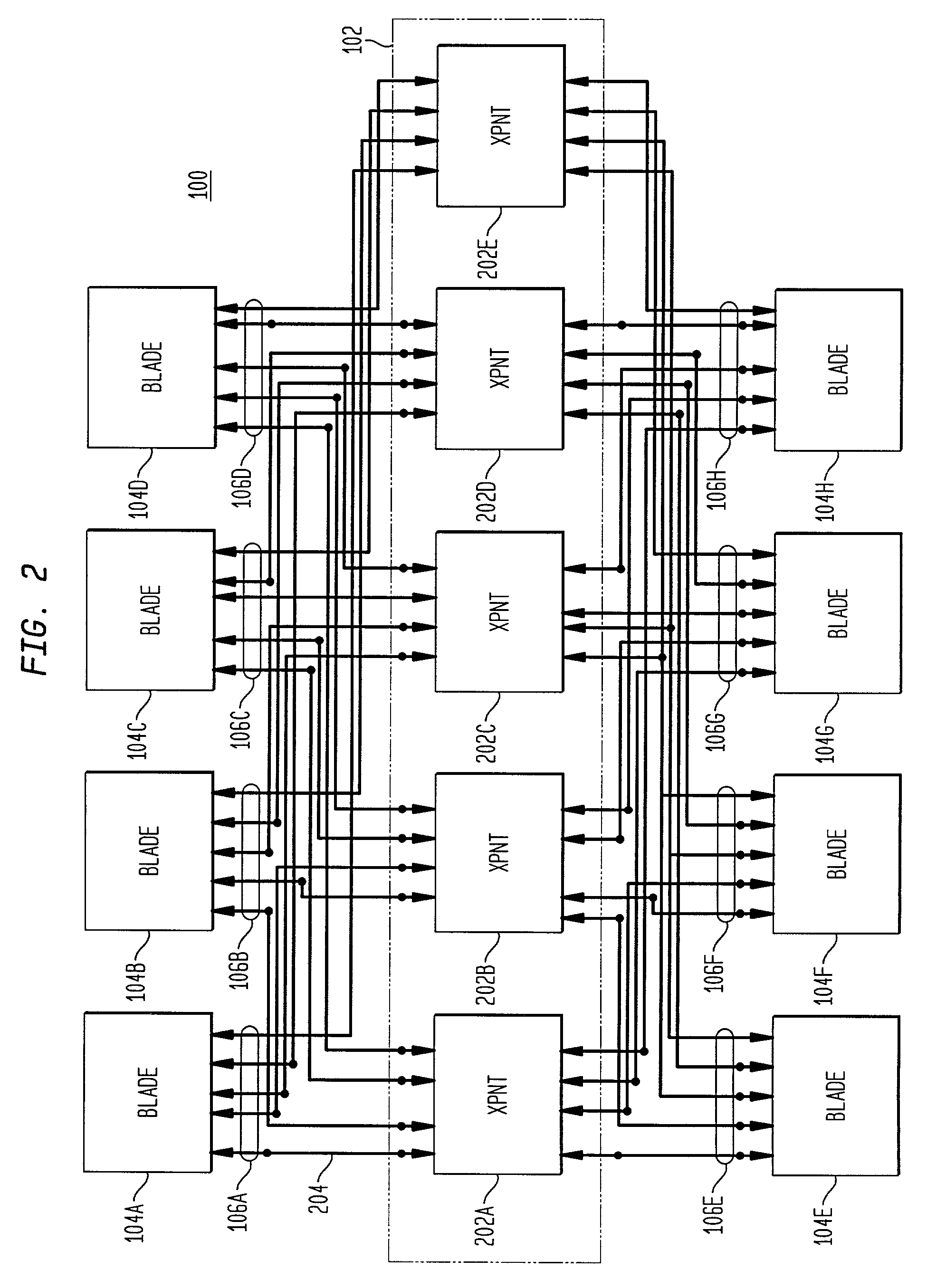

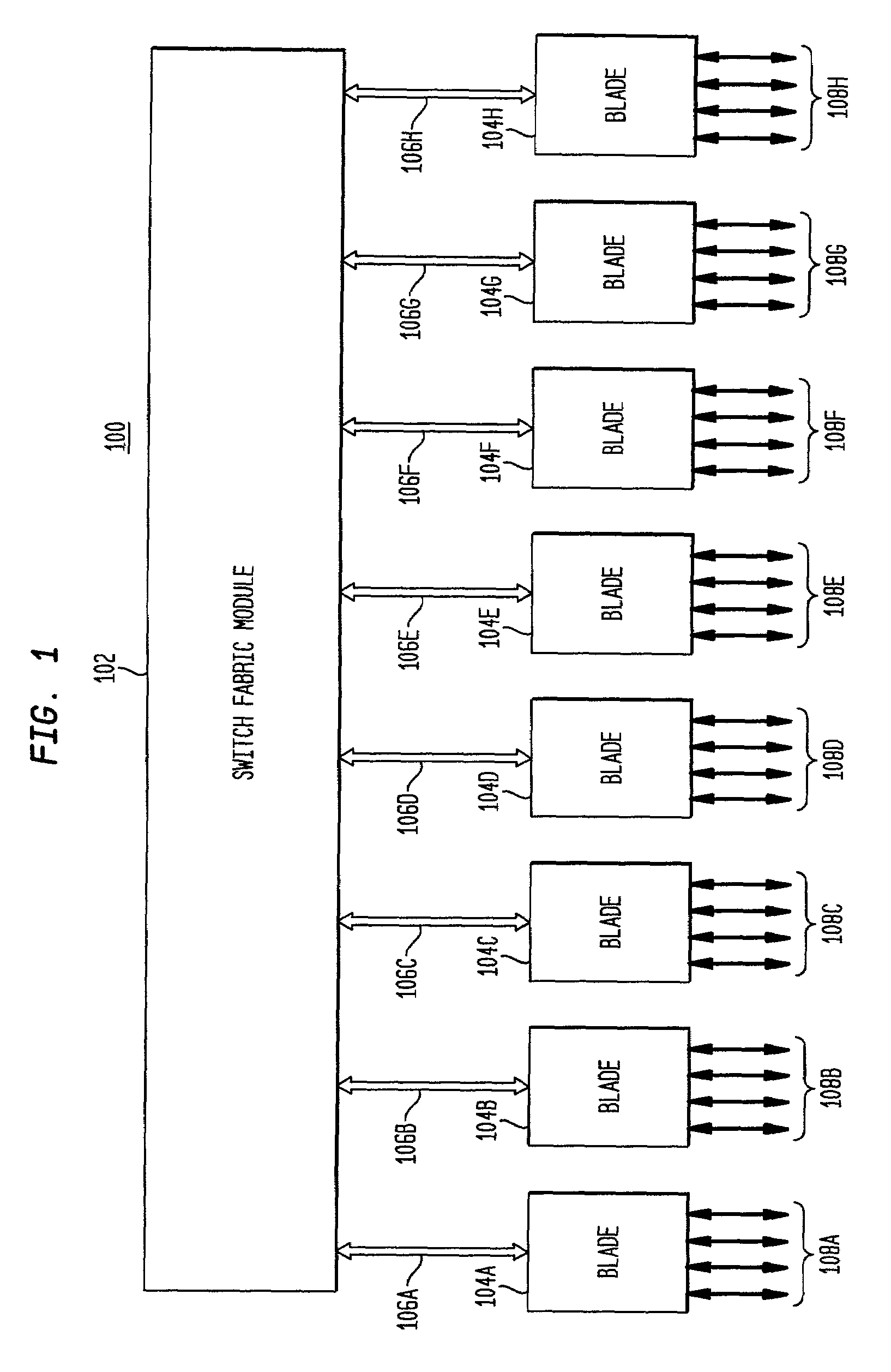

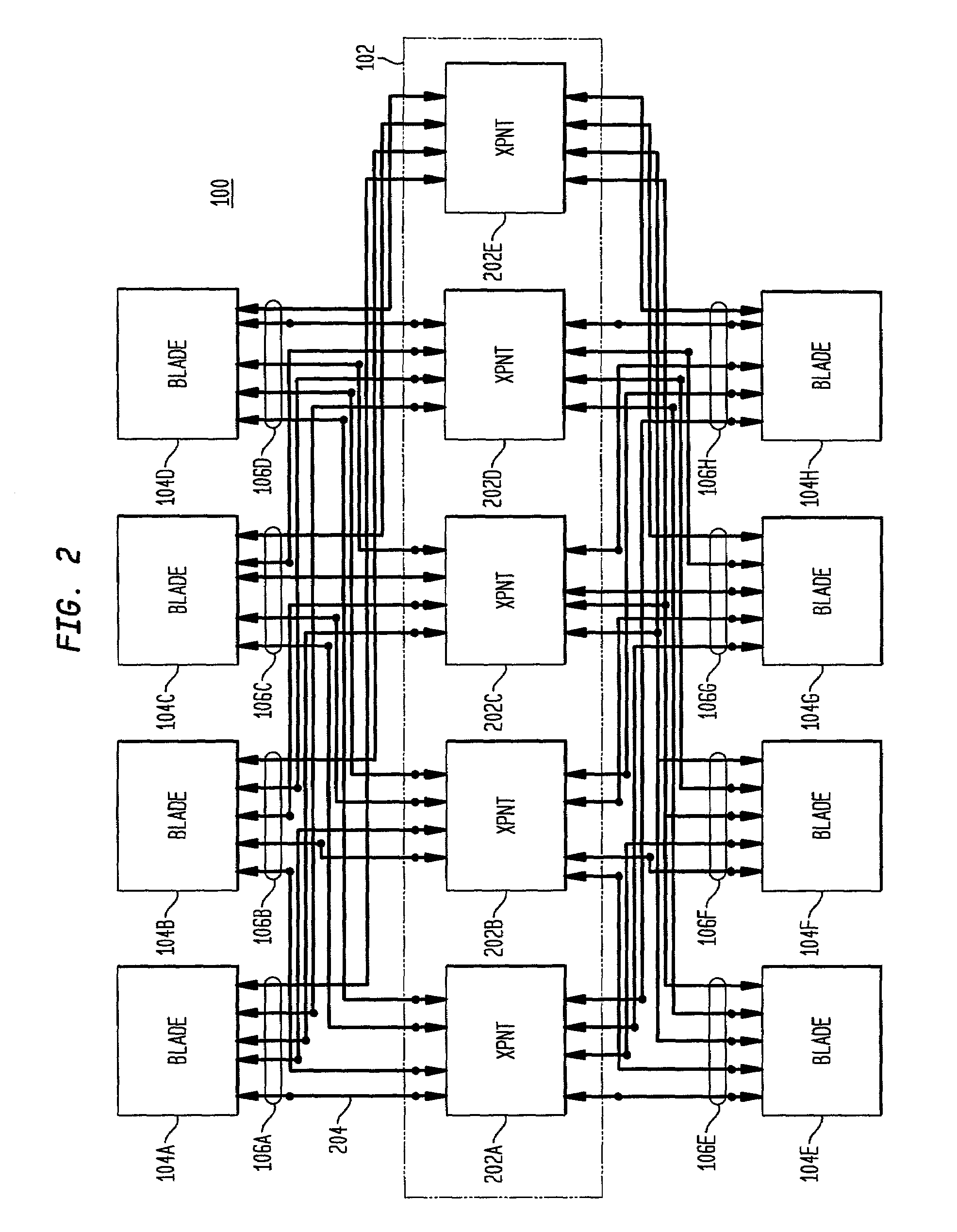

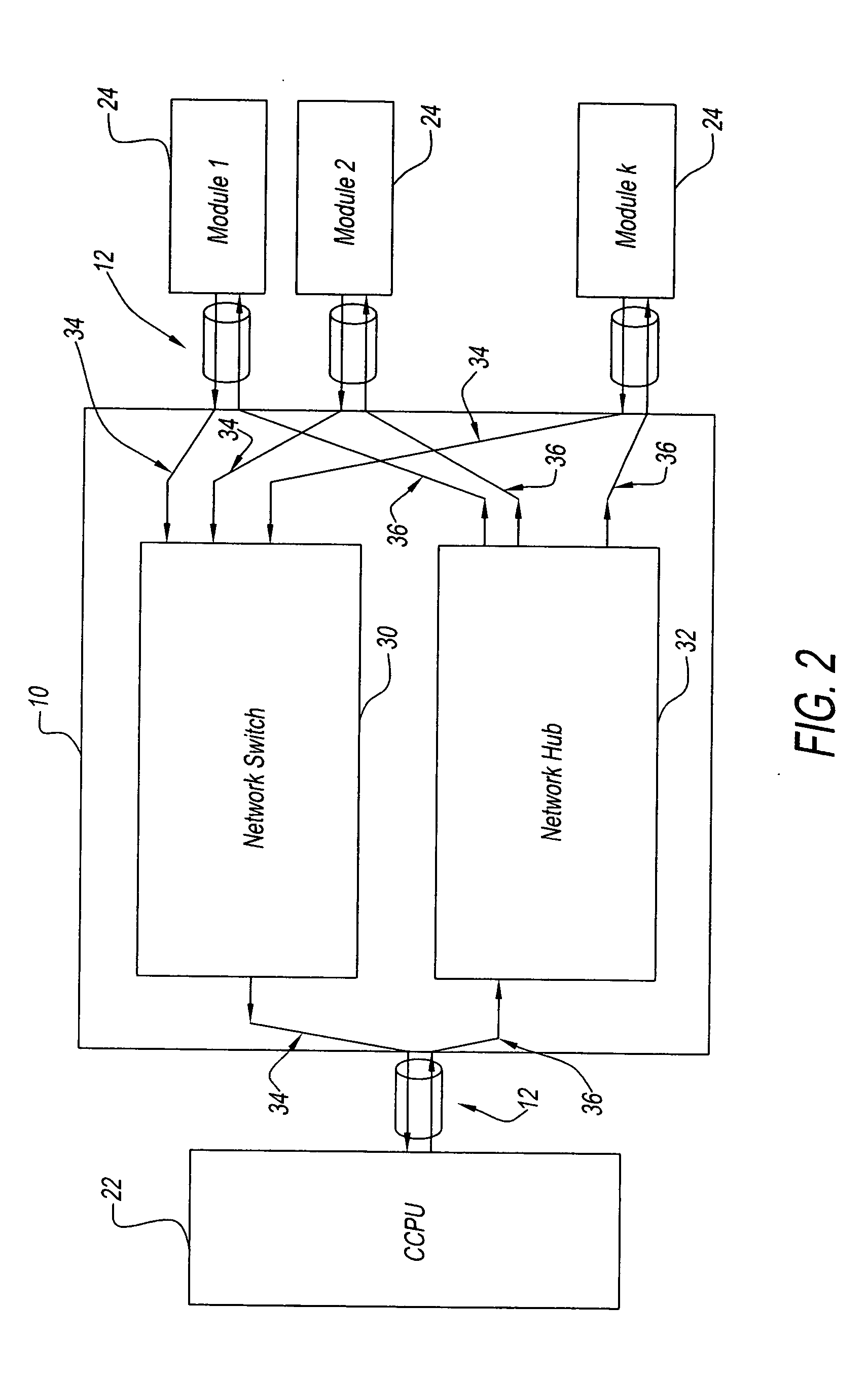

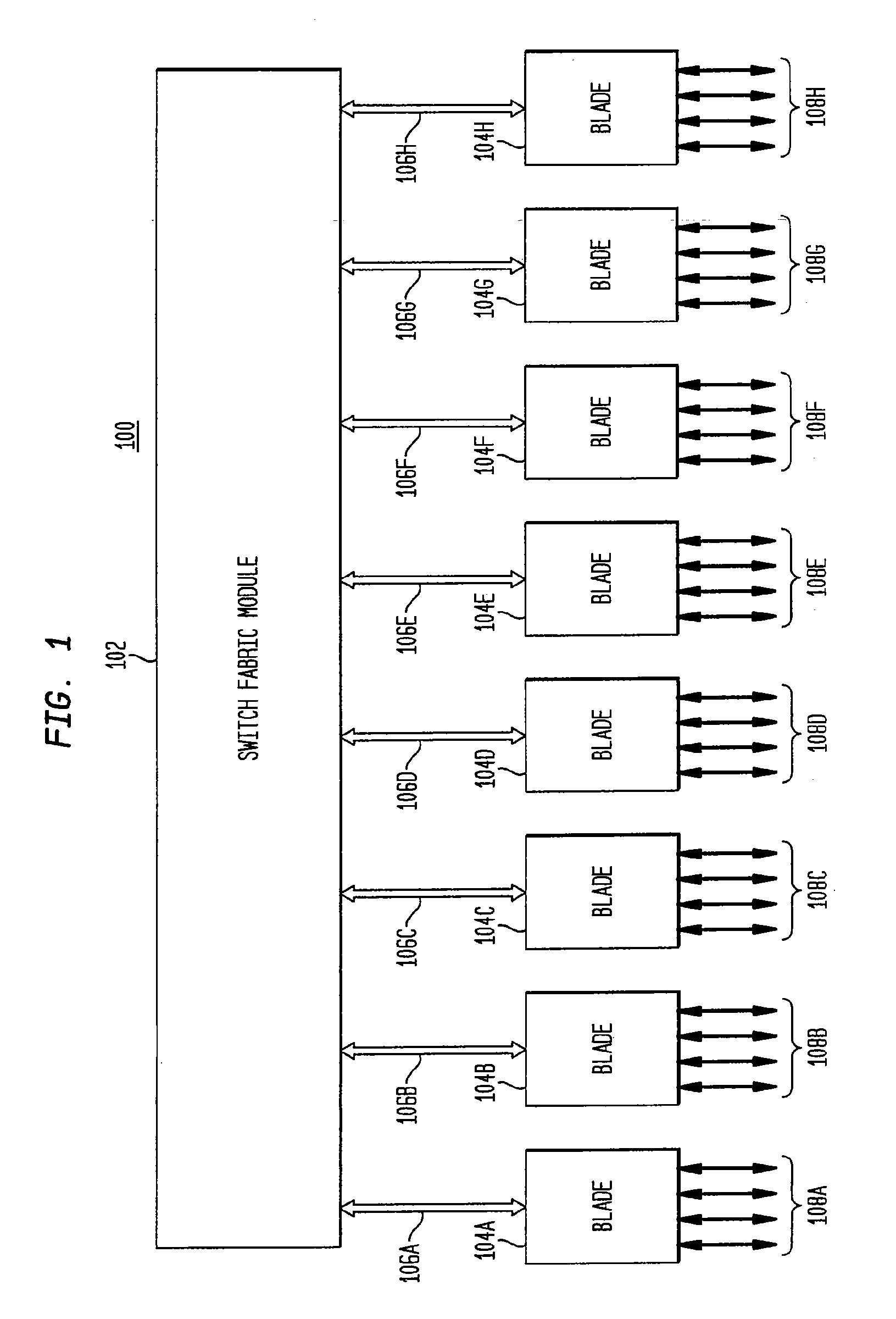

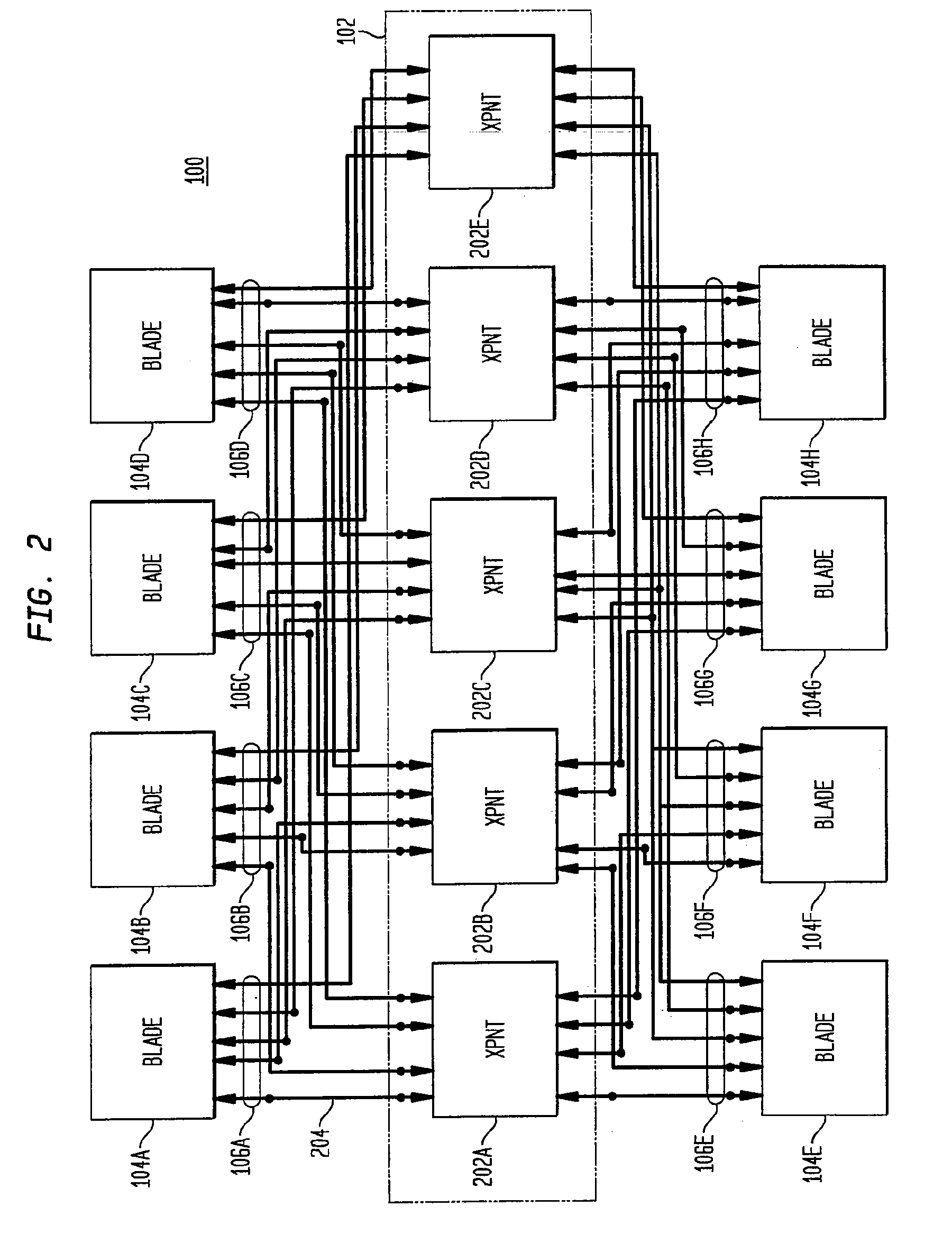

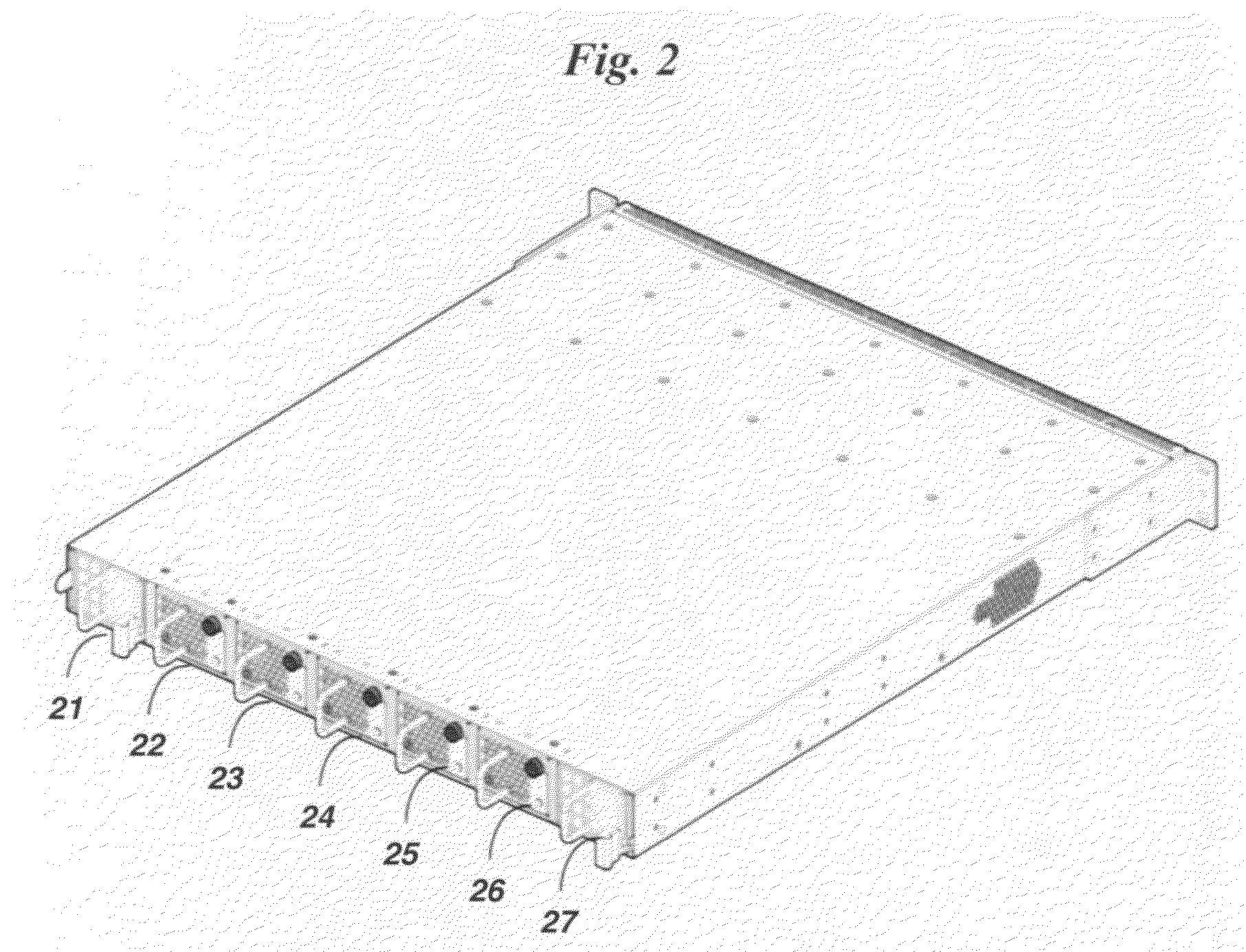

High-performance network switch

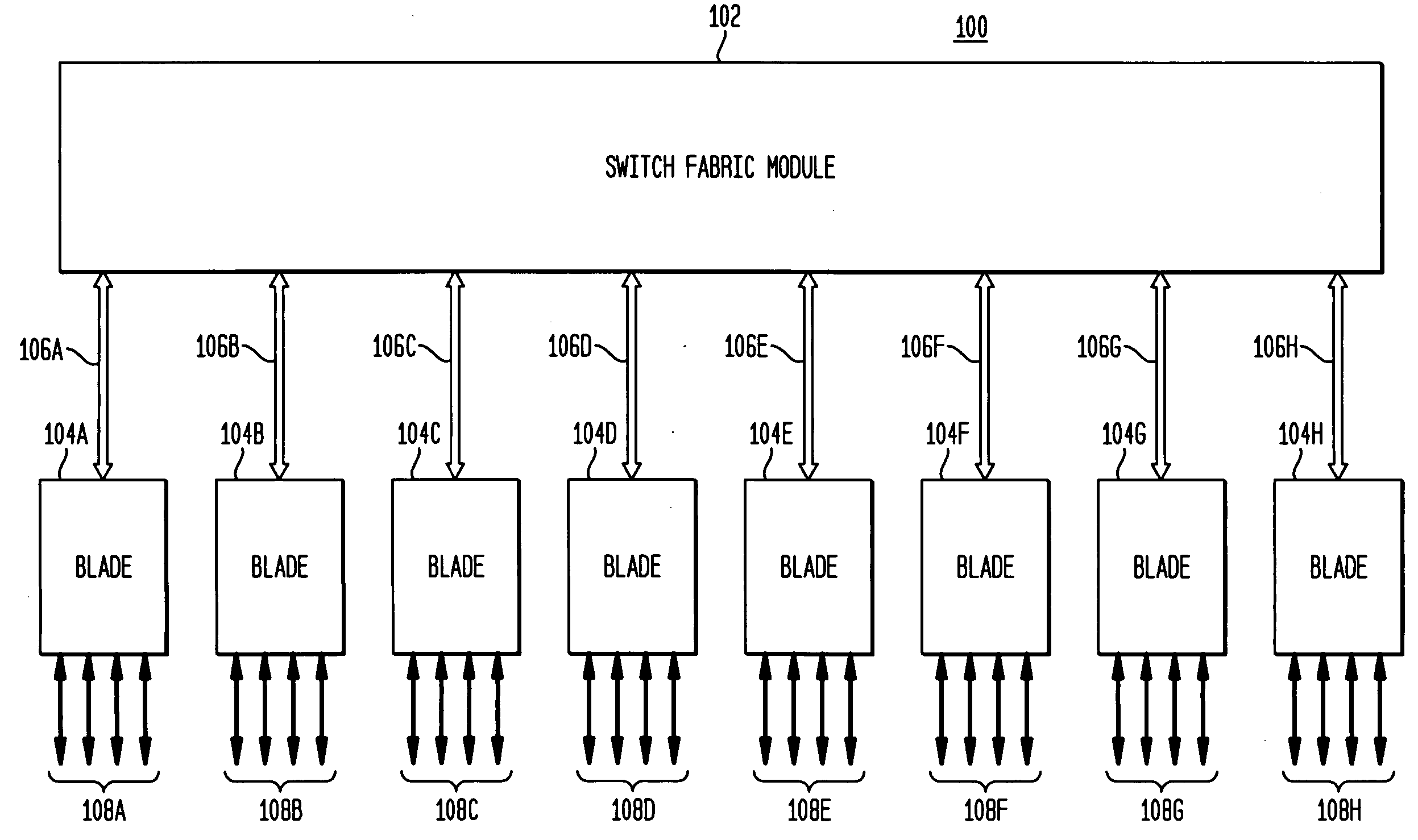

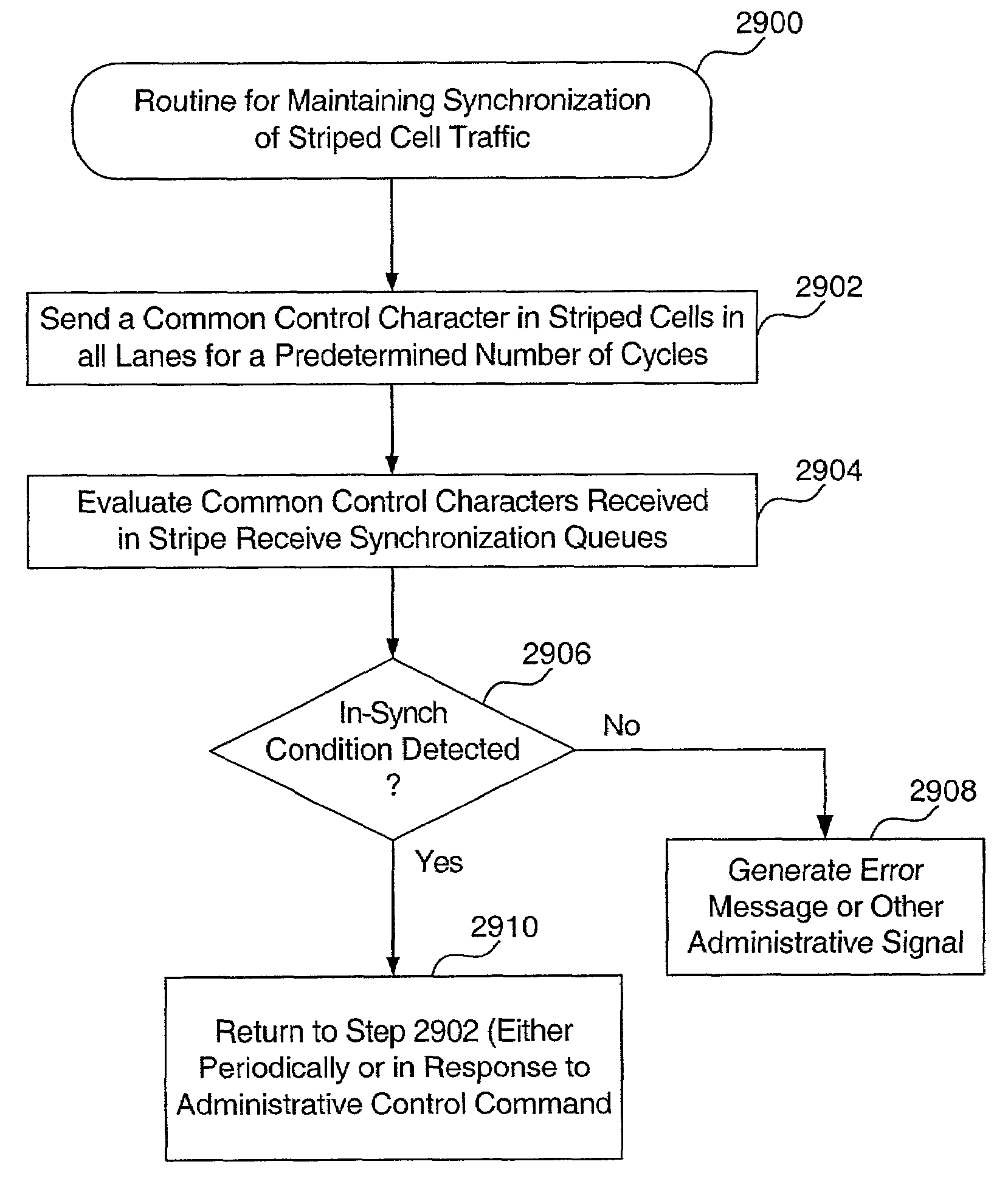

Inactive | US20050089049A1 | Improve performance | Multiplex system selection arrangements | Error prevention | Data stream | Network switch

The present invention provides a high-performance network switch. A digital switch has a plurality of blades coupled to a switching fabric via serial pipes. Serial link technology is used in the switching fabric. Each blade outputs serial data streams with in-band control information in multiple stripes to the switching fabric. The switching fabric includes a plurality of cross points corresponding to the multiple stripes. In one embodiment five stripes and five cross points are used. Each blade has a backplane interface adapter (BIA). One or more integrated bus translators (IBTs) and/or source packet processors are coupled to a BIA. An encoding scheme for packets of data carried in wide striped cells is provided.

Owner:AVAGO TECH INT SALES PTE LTD

High-performance network switch

Inactive | US7206283B2 | Multiplex system selection arrangements | Error prevention | Computer hardware | Data stream

The present invention provides a high-performance network switch. A digital switch has a plurality of blades coupled to a switching fabric via serial pipes. Serial link technology is used in the switching fabric. Each blade outputs serial data streams with in-band control information in multiple stripes to the switching fabric. The switching fabric includes a plurality of cross points corresponding to the multiple stripes. In one embodiment five stripes and five cross points are used. Each blade has a backplane interface adapter (BIA). One or more integrated bus translators (IBTs) and/or source packet processors are coupled to a BIA. An encoding scheme for packets of data carried in wide striped cells is provided.

Owner:AVAGO TECH INT SALES PTE LTD

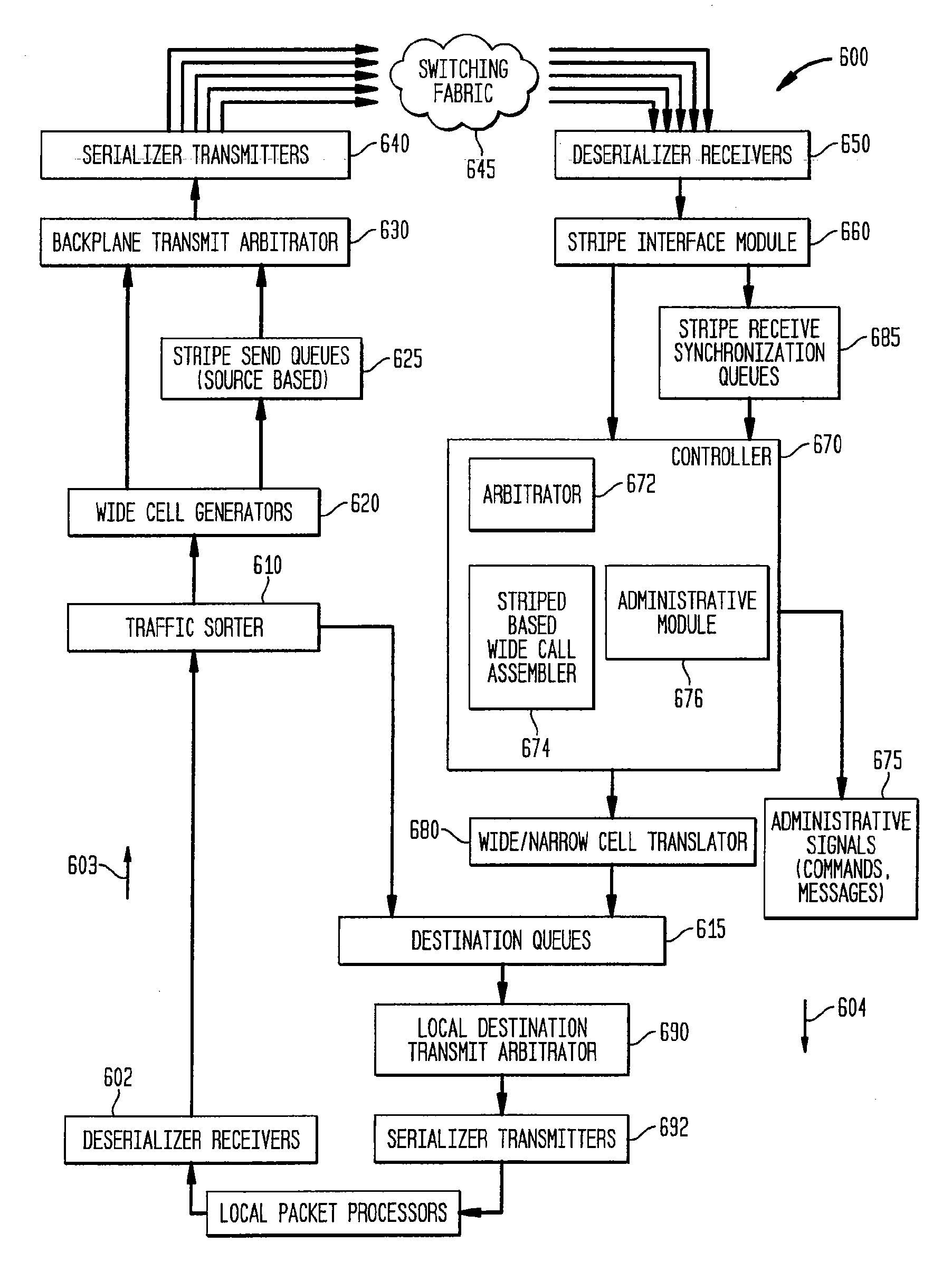

Backplane interface adapter

Inactive | US7236490B2 | Multiplex communication | Data switching by path configuration | Network switch | High performance network

A backplane interface adapter for a high-performance network switch. The backplane interface adapter receives narrow input cells carrying packets of data and outputs wide striped cells to a switching fabric. One traffic processing path through the backplane interface adapter includes deserializer receivers, a traffic sorter, wide cell generators, stripe send queues, a backplane transmit arbitrator, and serializer transmitters. Another traffic processing path through the backplane interface adapter includes deserializer receivers, a stripe interface, stripe receive synchronization queues, a controller, a wide/narrow cell translator, destination queues, and serializer transmitters. An encoding scheme for packets of data carried in wide striped cells is provided.

Owner:AVAGO TECH INT SALES PTE LTD

Backplane interface adapter with error control and redundant fabric

A backplane interface adapter with error control and redundant fabric for a high-performance network switch. The error control may be provided by an administrative module that includes a level monitor, a stripe synchronization error detector, a flow controller, and a control character presence tracker. The redundant fabric transceiver of the backplane interface adapter improves the adapter's ability to properly and consistently receive narrow input cells carrying packets of data and output wide striped cells to a switching fabric.

Owner:AVAGO TECH INT SALES PTE LTD

High performance network communication device and method

A network communication device for bi-directional communication networks is provided having a first portion and a second portion. The first portion is connectable to a first point and a second point on the bi-directional communication network. Similarly, the second portion is connectable to the first and second points. The first portion manages collisions among a first set of messages transmittable from the first point to the second point. However, the second portion transmits free of collision management a second set of messages transmittable from the second point to the first point.

Owner:GENERAL ELECTRIC CO

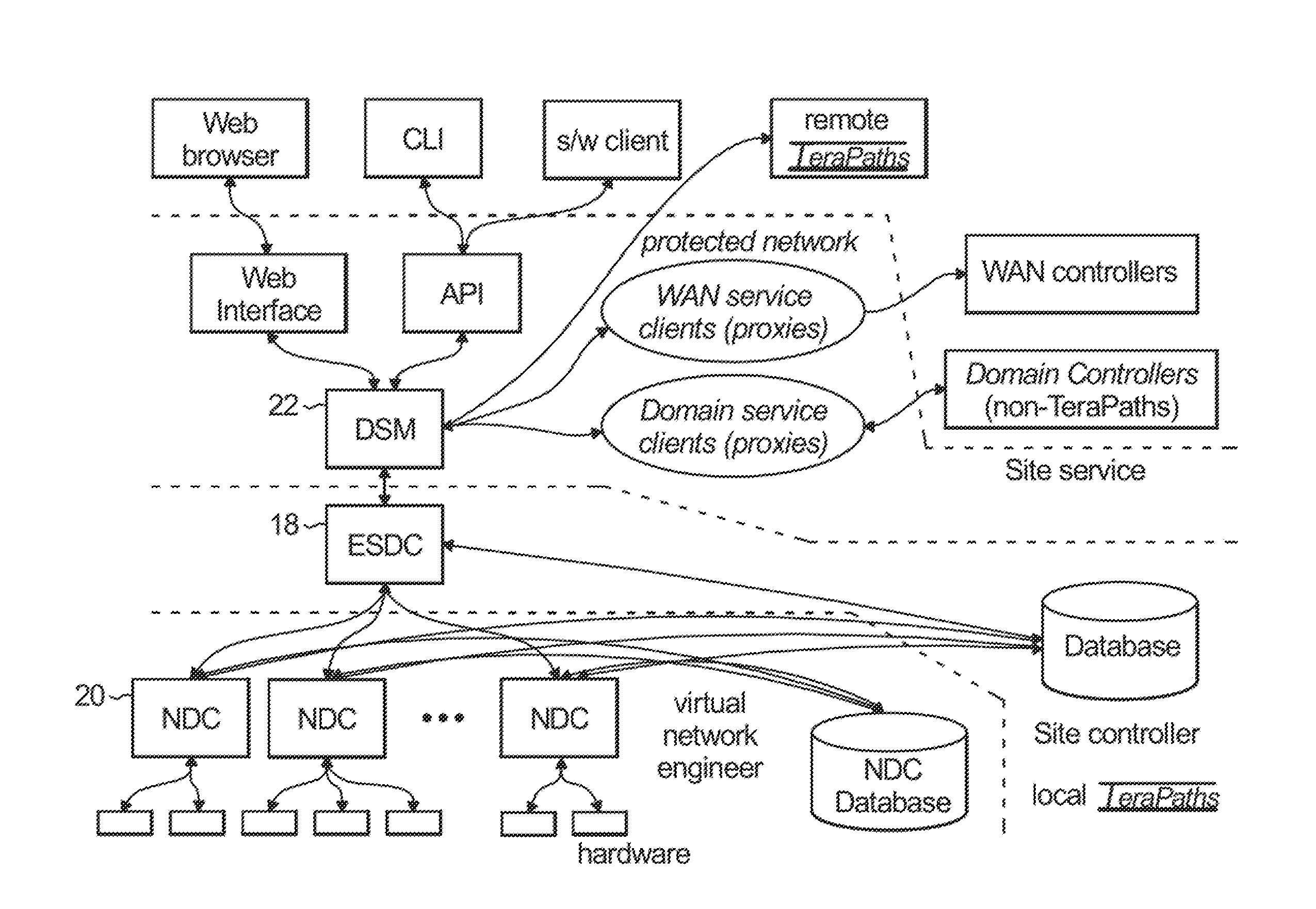

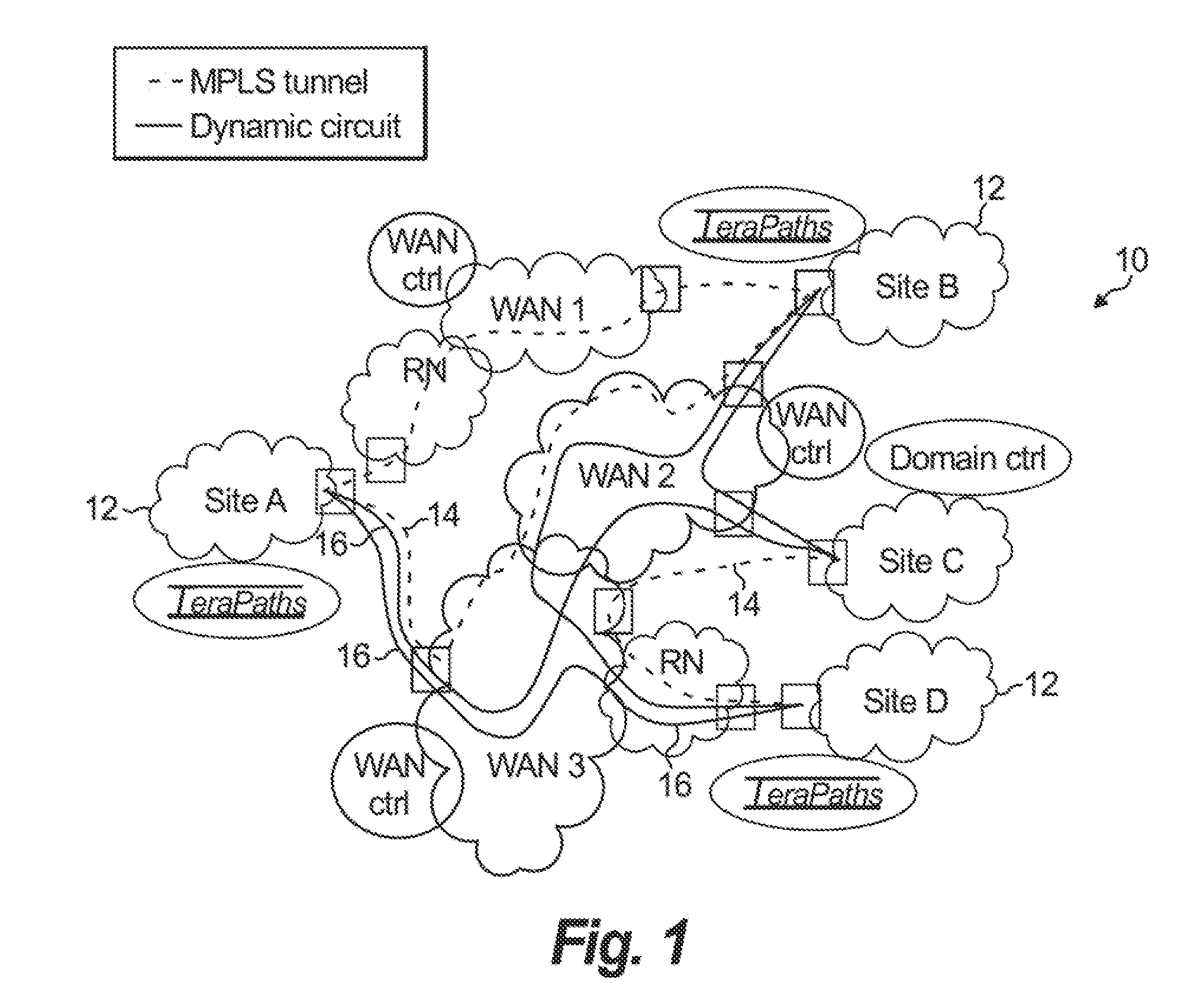

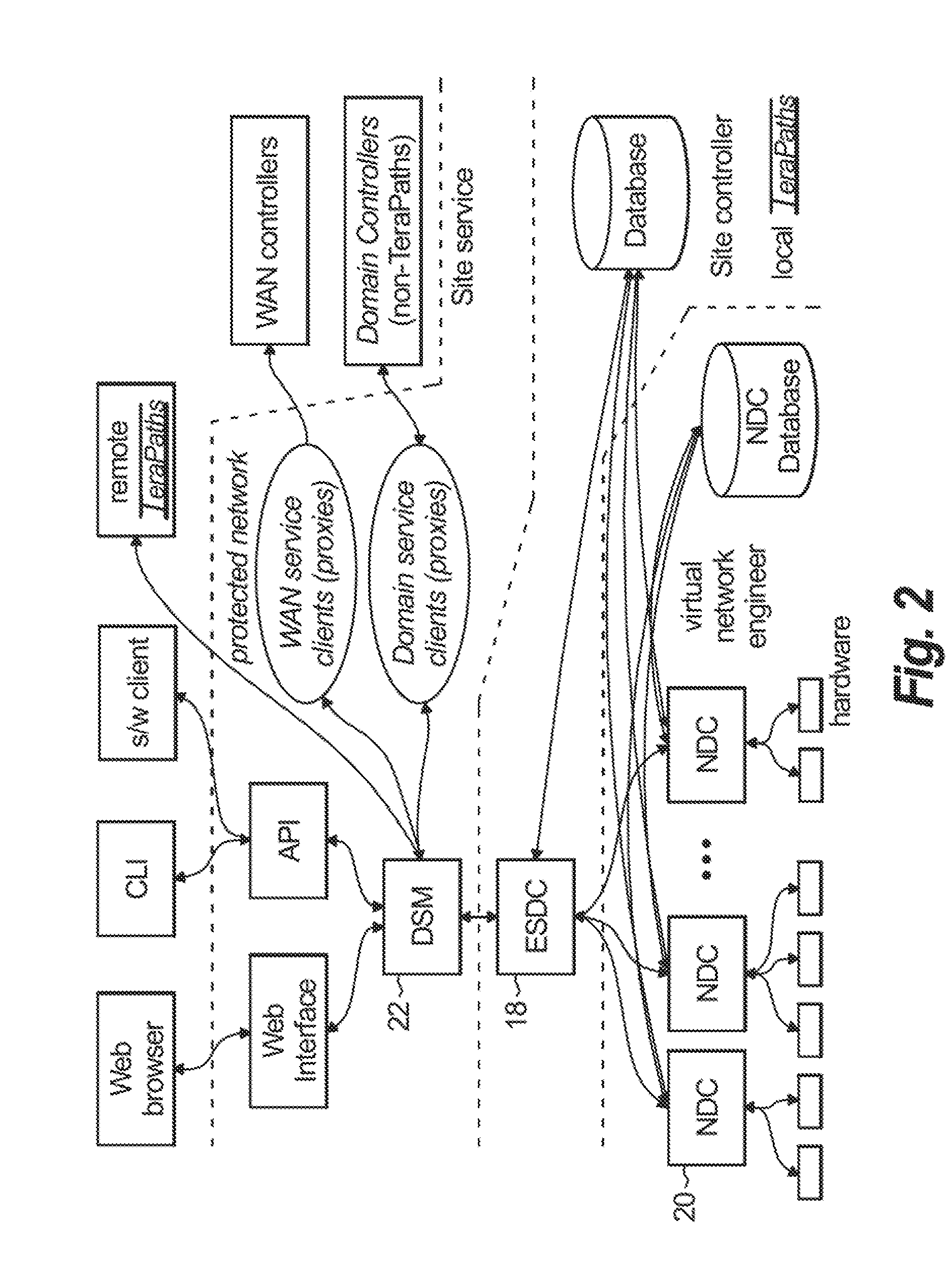

Co-Scheduling of Network Resource Provisioning and Host-to-Host Bandwidth Reservation on High-Performance Network and Storage Systems

A cross-domain network resource reservation scheduler configured to schedule a path from at least one end-site includes a management plane device configured to monitor and provide information representing at least one of functionality, performance, faults, and fault recovery associated with a network resource; a control plane device configured to at least one of schedule the network resource, provision local area network quality of service, provision local area network bandwidth, and provision wide area network bandwidth; and a service plane device configured to interface with the control plane device to reserve the network resource based on a reservation request and the information from the management plane device. Corresponding methods and computer-readable medium are also disclosed.

Owner:RGT UNIV OF CALIFORNIA THROUGH THE ERNEST ORLANDO LAWRENCE BERKELEY NAT LAB +1

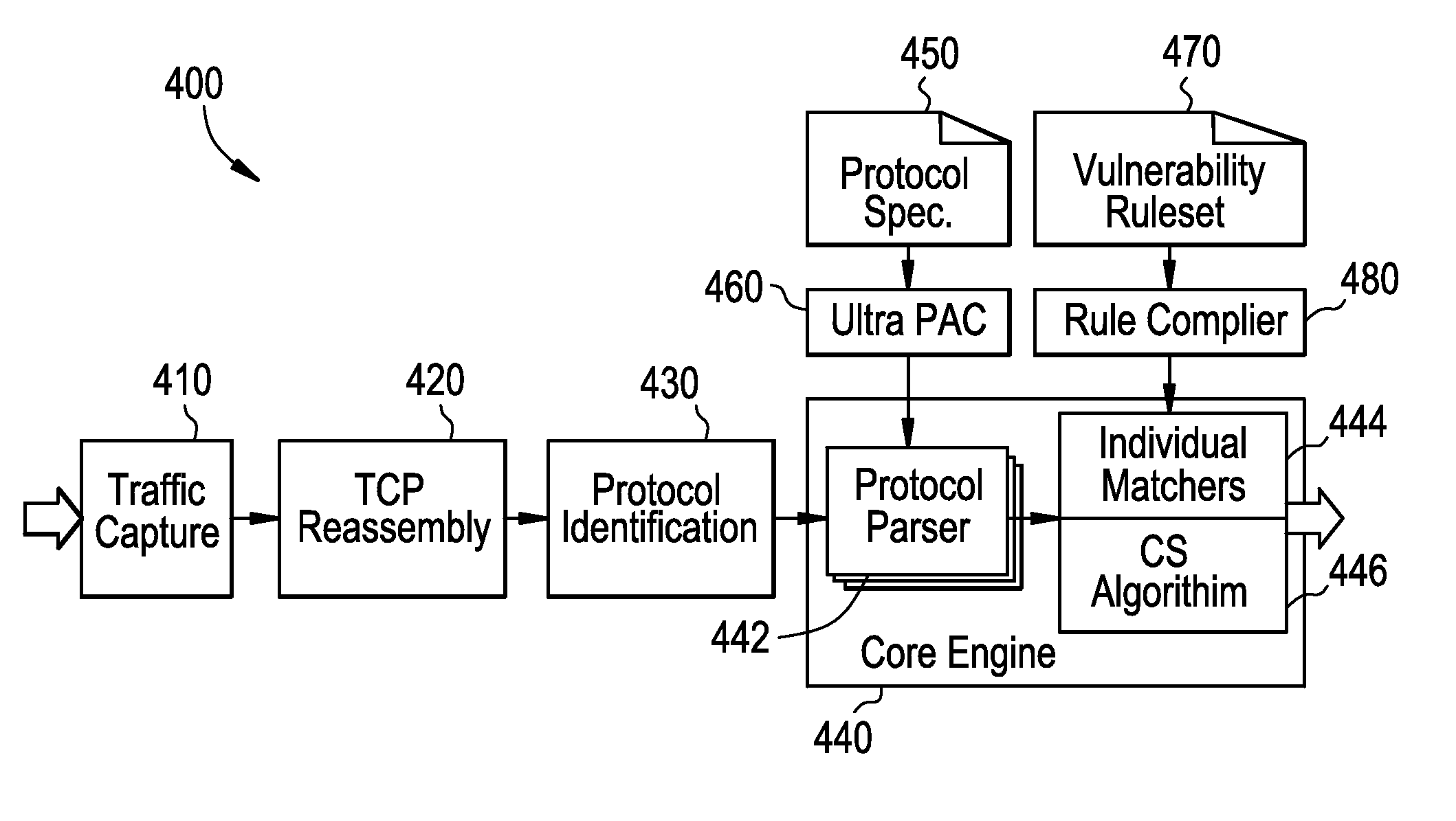

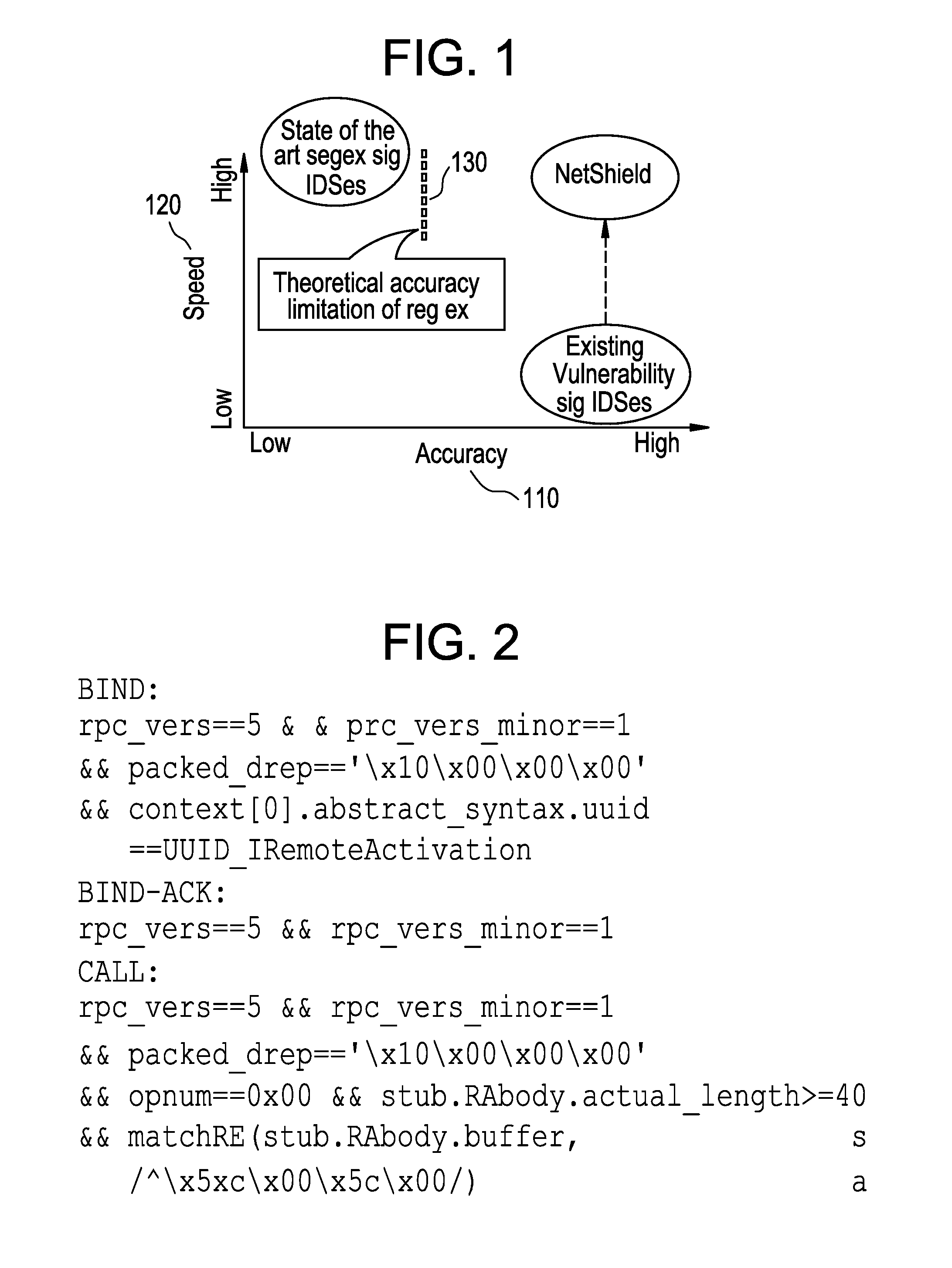

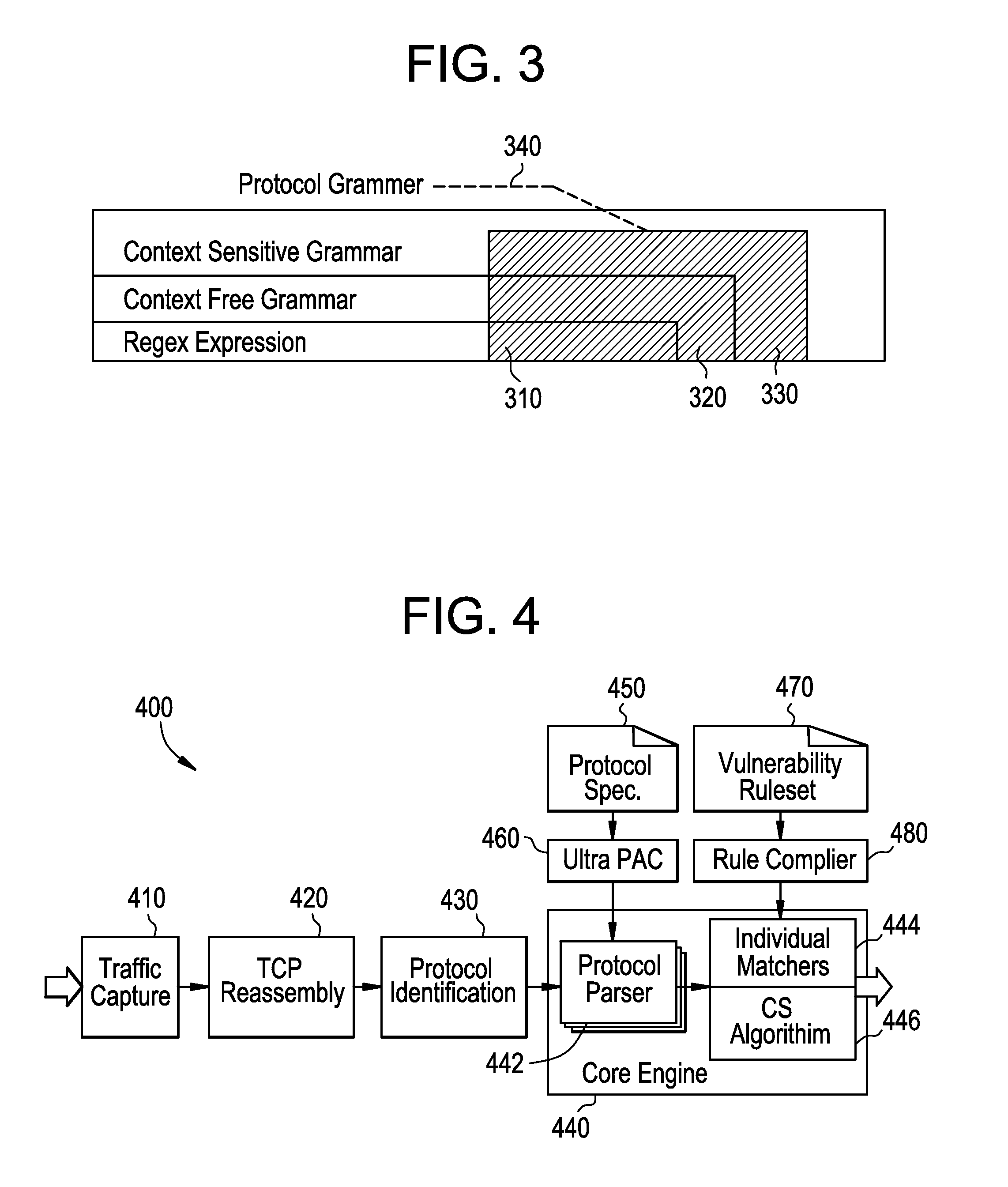

Matching with a large vulnerability signature ruleset for high performance network defense

Inactive | US20110030057A1 | Memory loss protection | Error detection/correction | Regular expression | High performance network

Systems, methods, and apparatus are provided for vulnerability signature based Network Intrusion Detection and/or Prevention which achieves high throughput comparable to that of the state-of-the-art regex-based systems while offering improved accuracy. A candidate selection algorithm efficiently matches thousands of vulnerability signatures simultaneously using a small amount of memory. A parsing transition state machine achieves fast protocol parsing. Certain examples provide a computer-implemented method for network intrusion detection. The method includes capturing a data message and invoking a protocol parser to parse the data message. The method also includes matching the parsed data message against a plurality of vulnerability signatures in parallel using a candidate selection algorithm and detecting an unwanted network intrusion based on an outcome of the matching.

Owner:NORTHWESTERN UNIV

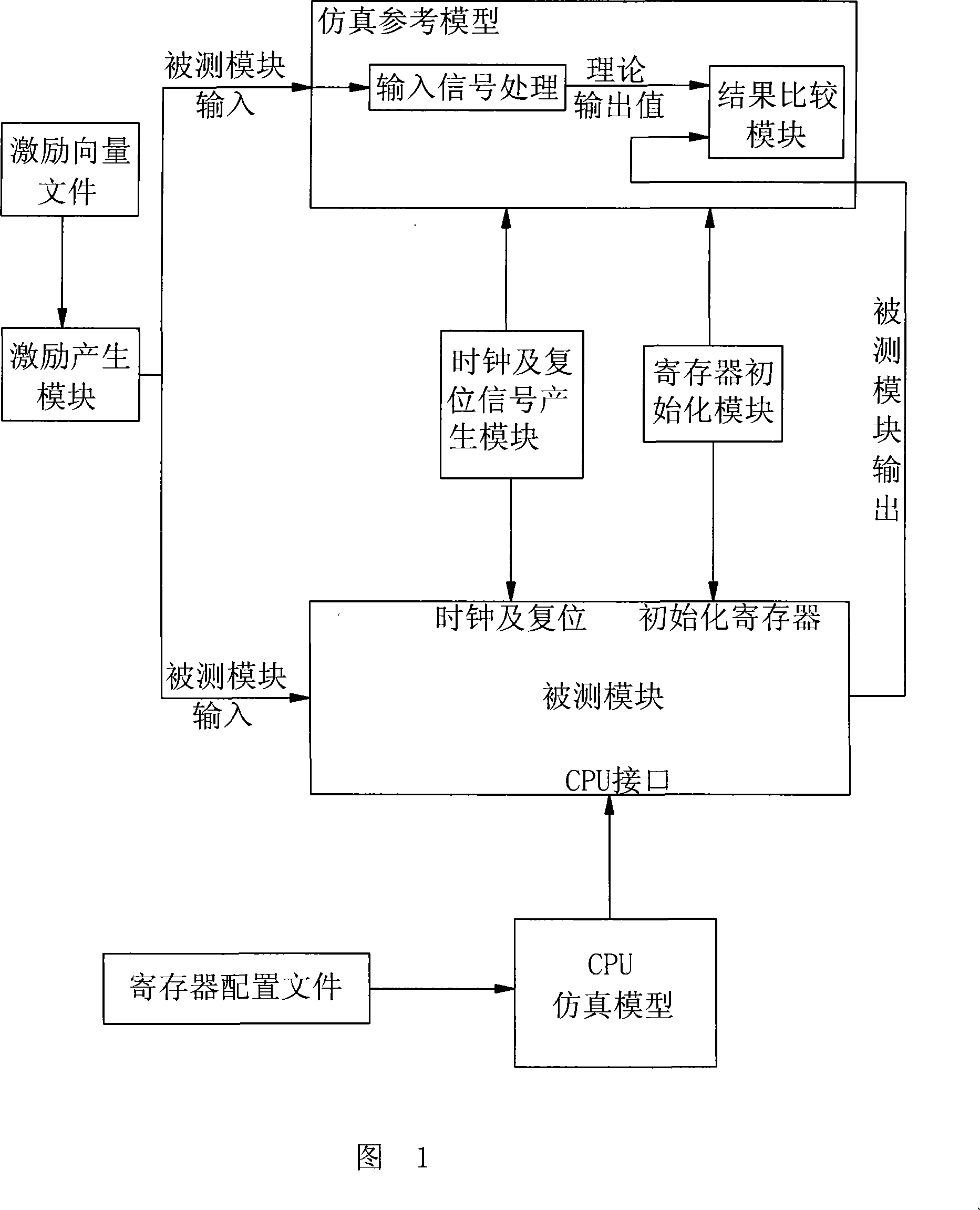

Method for establishing network chip module level function checking testing platform

Active | CN101183406A | Simple and straightforward way to build | Clear structure | Special data processing applications | Reference model | Processor register

The invention relates to a method for constructing a module-level functional verification and test platform for a network chip, including construction of a simulation and reference model of the module under test. The method comprises constructing all modules and documents. The output of a stimulus generation model is connected to the inputs of the module under test and of the simulation and reference model; a clock and reset generation module is connected to the clock and reset signals of the module under test and of the simulation and reference model; a register initialization module is connected to the registers of the module under test and of the simulation and reference model; and a CPU simulation module is connected to the CPU of the module under test. The output of the module under test is connected to the simulation and reference model, which completes the module-level functional verification and test platform. The platform can be constructed easily and directly, which greatly shortens the time needed to build a module-level functional verification and test platform when verifying a high-performance network chip; the resulting platform also has a clear, easily understood structure and improved reliability.

Owner:苏州盛科科技有限公司

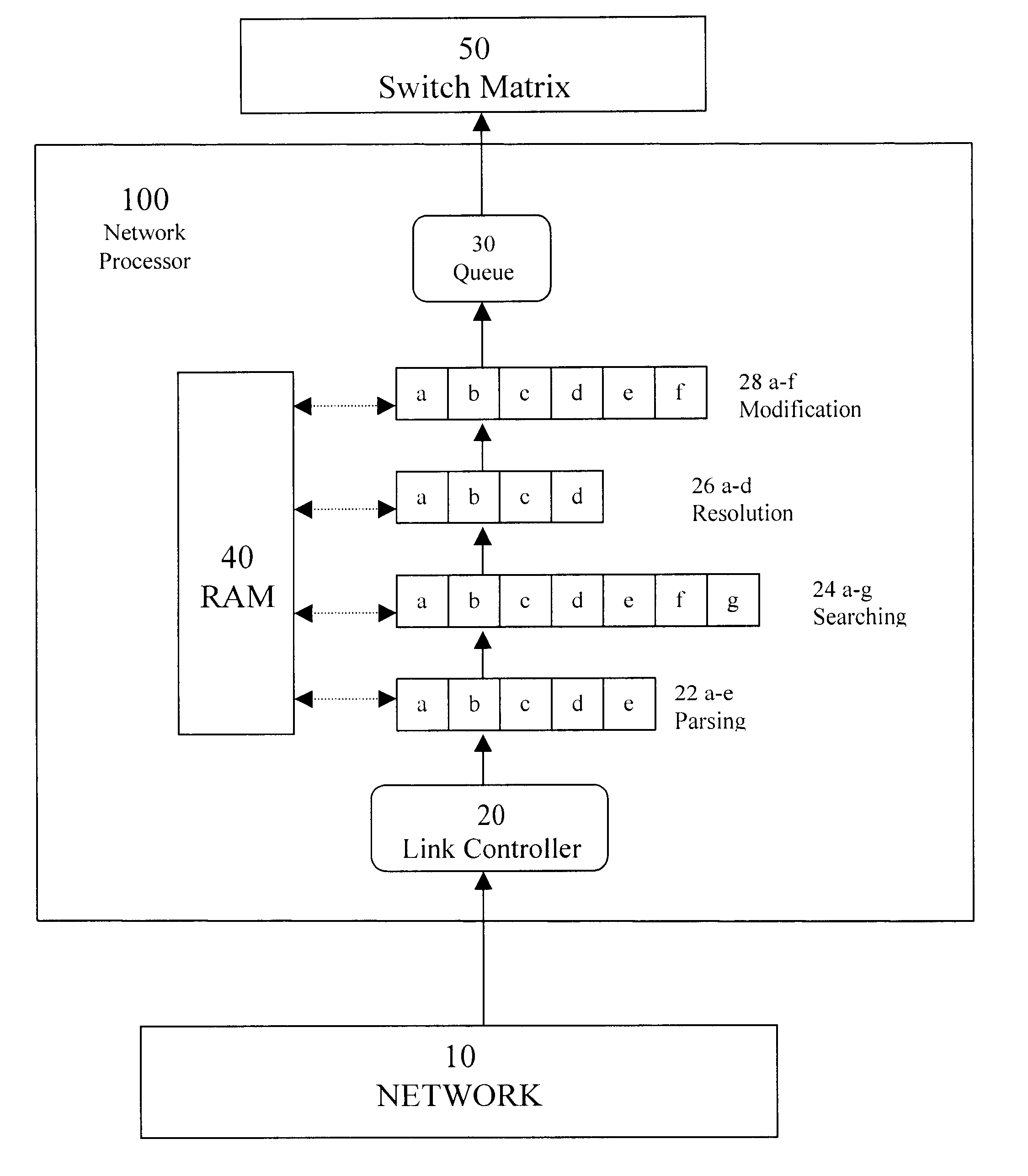

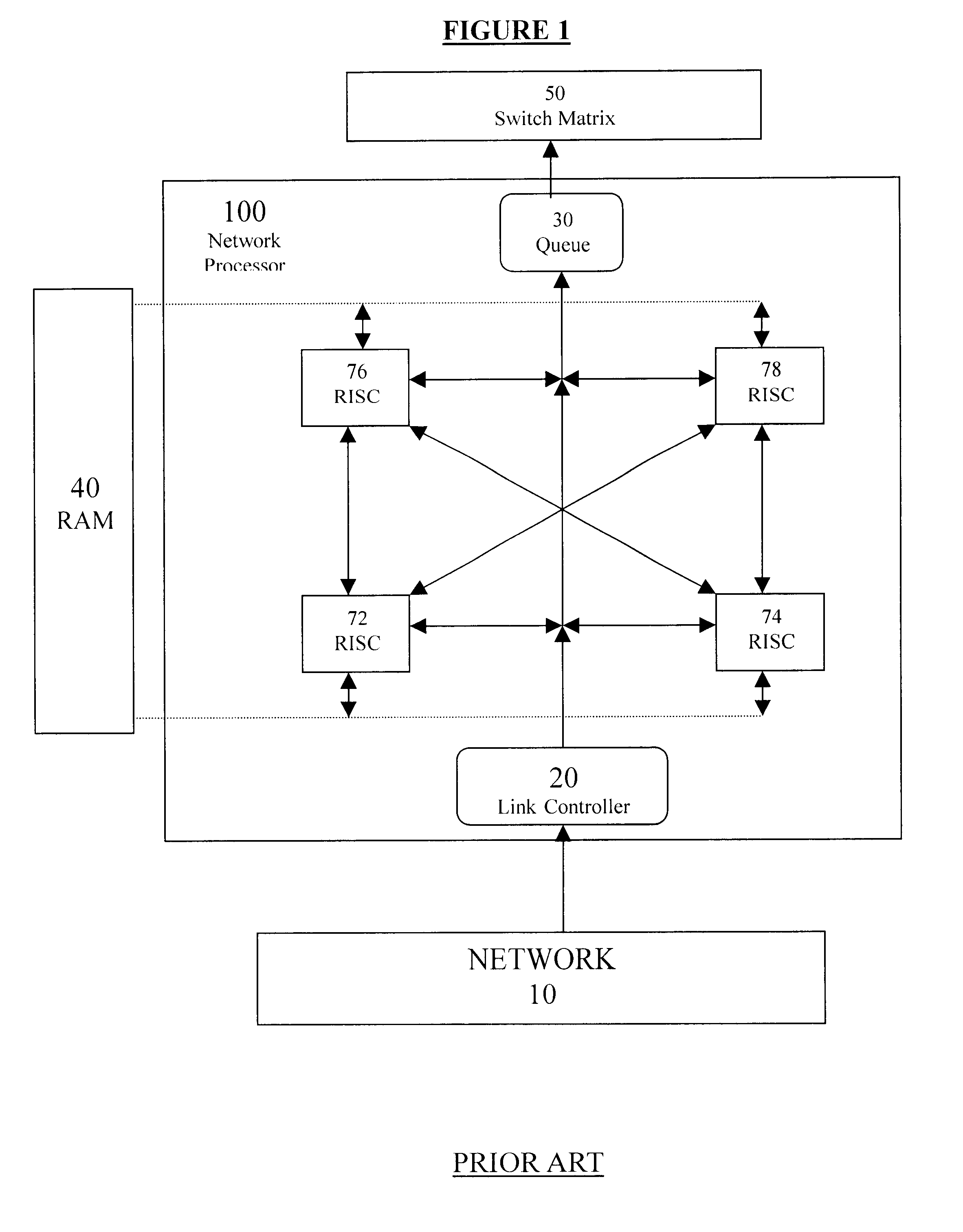

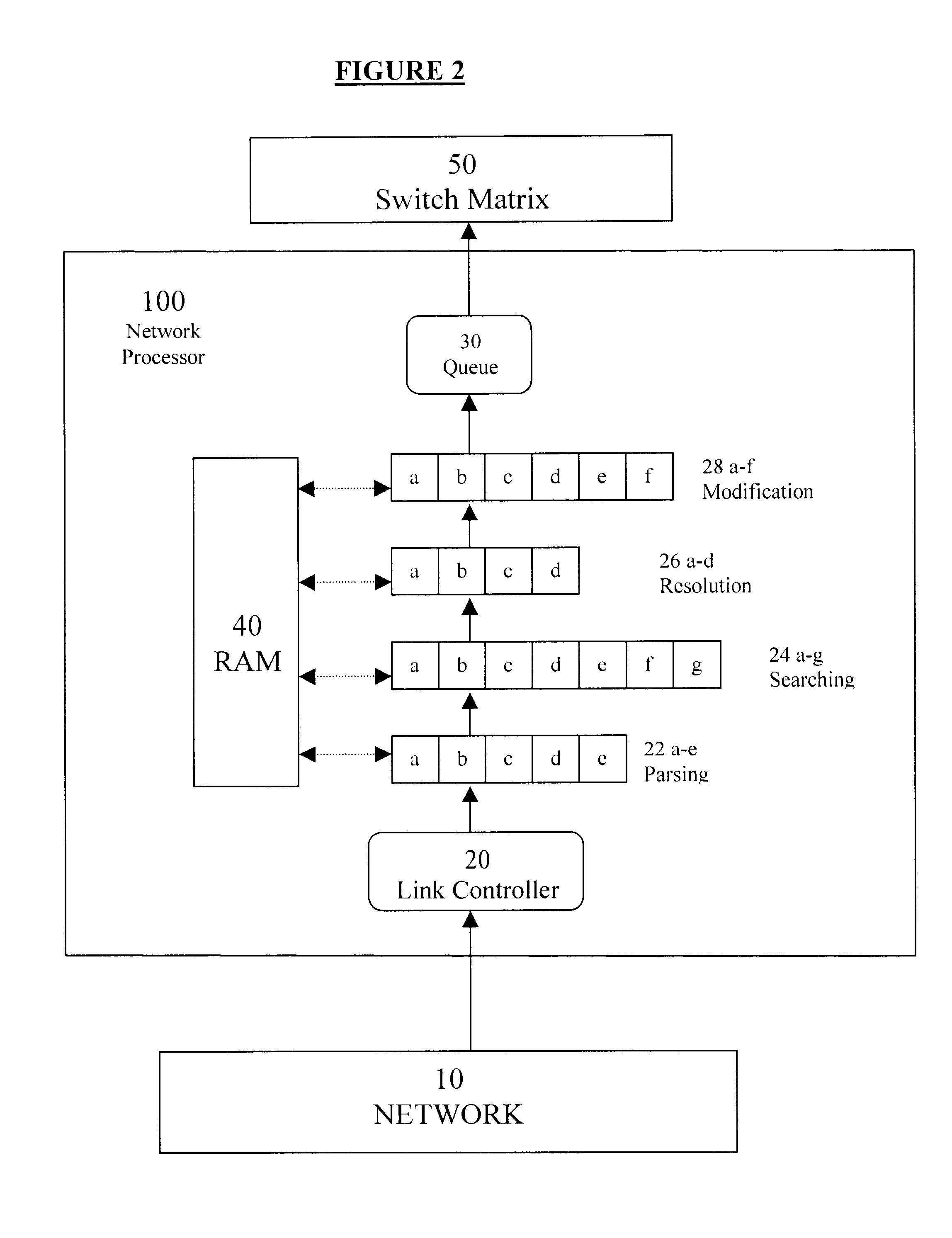

High-performance network processor

Inactive | US6778534B1 | Time-division multiplex | Data switching by path configuration | Image resolution | High performance network

A high-speed system for processing a packet and routing the packet to a packet destination port, the system comprising: (a) a memory block for storing tabulated entries, (b) a parsing subsystem containing at least one microcode machine for parsing the packet, thereby obtaining at least one search key, (c) a searching subsystem containing at least one microcode machine for searching for a match between said at least one search key and said tabulated entries, (d) a resolution subsystem containing at least one microcode machine for resolving the packet destination port, and (e) a modification subsystem containing at least one microcode machine for making requisite modifications to the packet; wherein at least one of said microcode machines is a customized microcode machine.

Owner:MELLANOX TECHNOLOGIES LTD

Backplane Interface Adapter with Error Control and Redundant Fabric

A backplane interface adapter with error control and redundant fabric for a high-performance network switch. The error control may be provided by an administrative module that includes a level monitor, a stripe synchronization error detector, a flow controller, and a control character presence tracker. The redundant fabric transceiver of the backplane interface adapter improves the adapter's ability to properly and consistently receive narrow input cells carrying packets of data and output wide striped cells to a switching fabric.

Owner:AVAGO TECH INT SALES PTE LTD

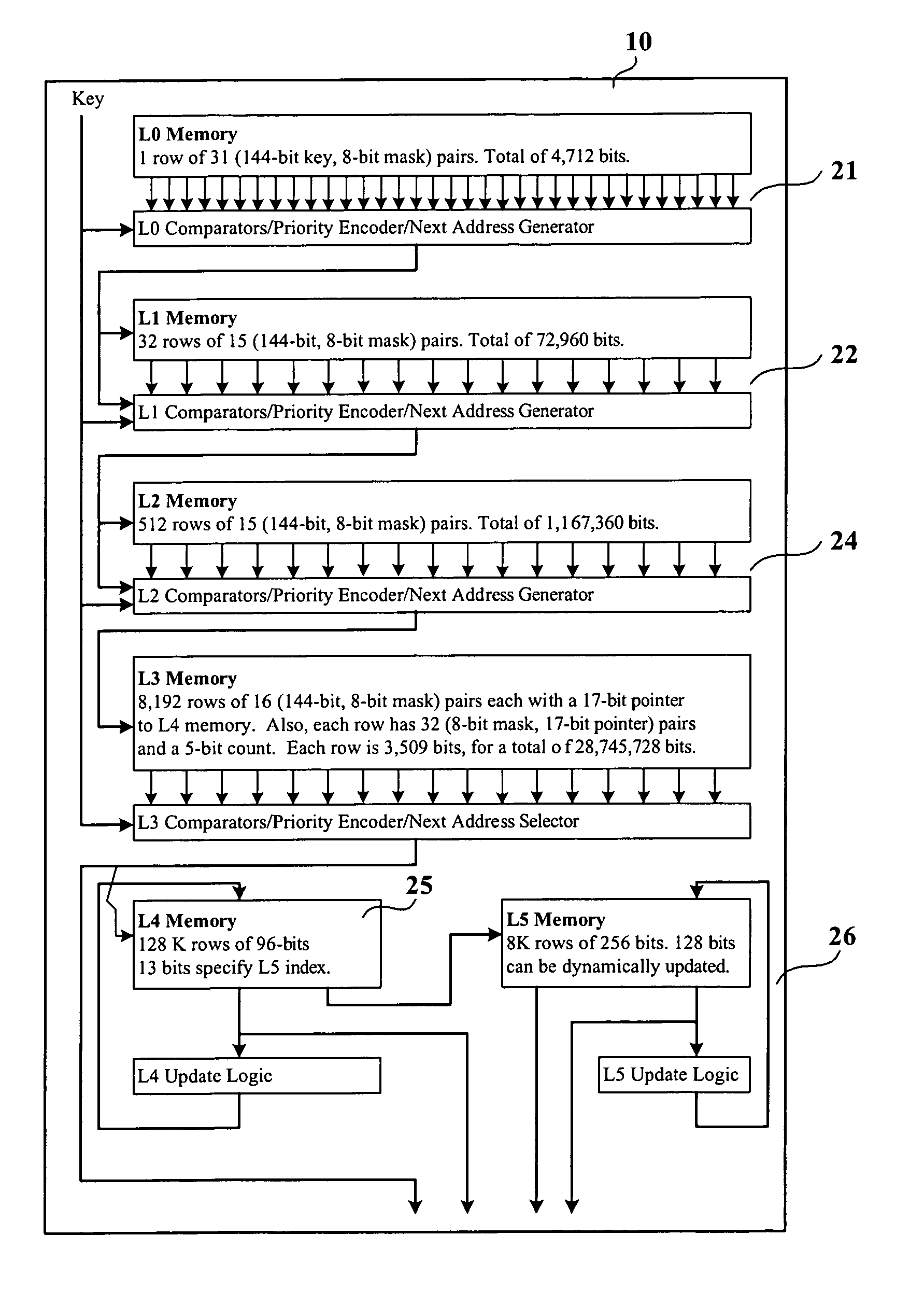

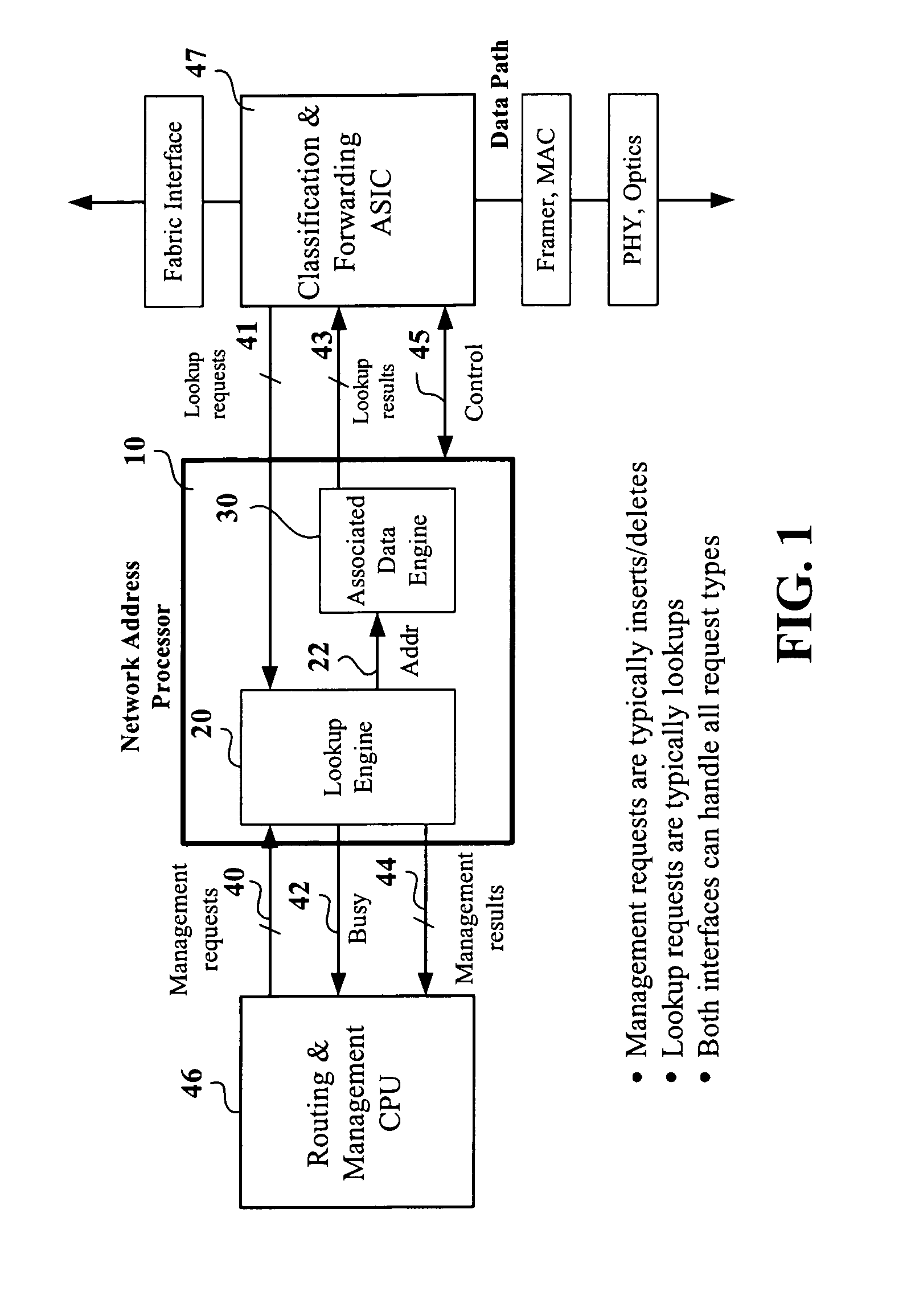

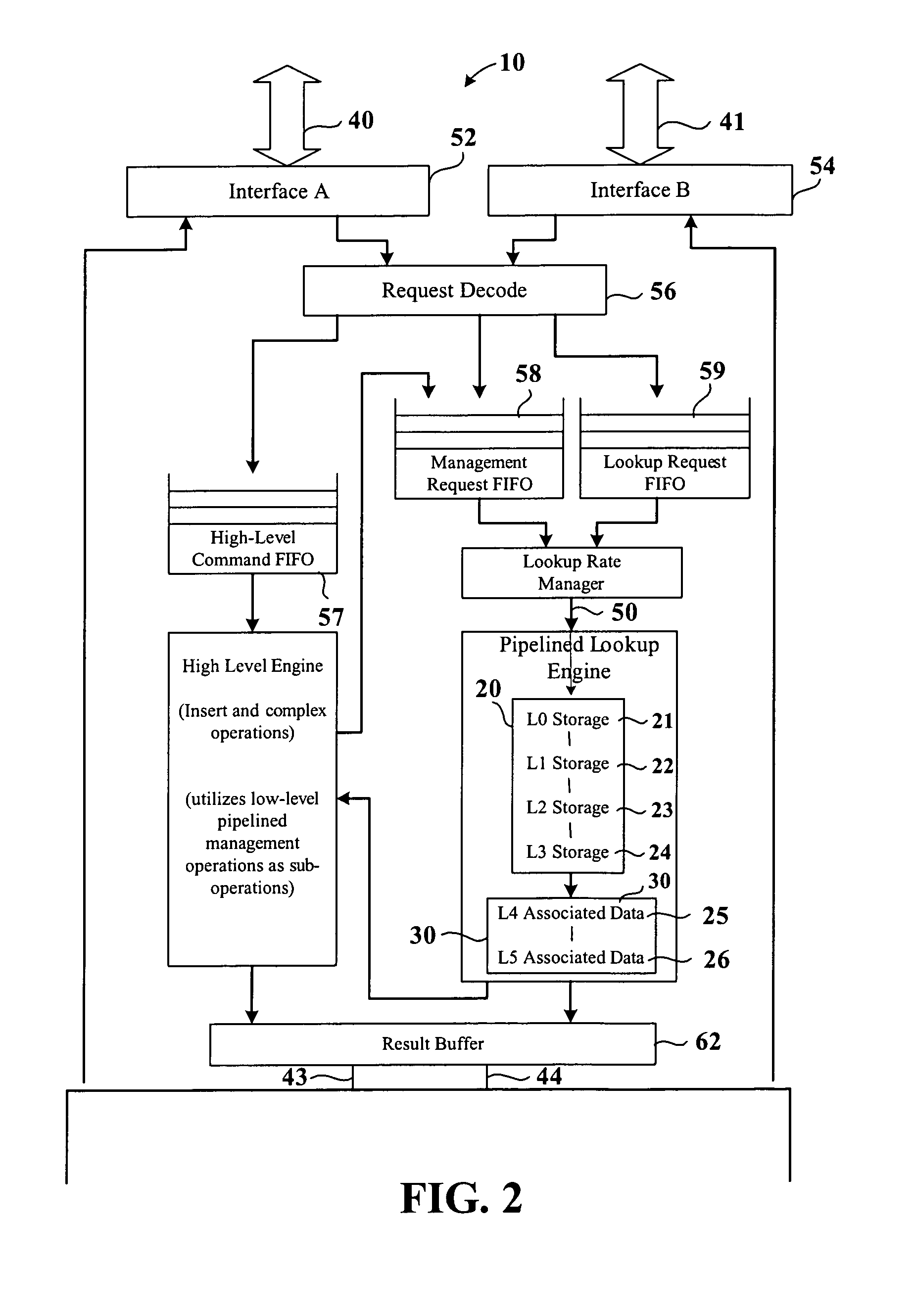

High performance network address processor system

Inactive | US7047317B1 | High density | Reduce power consumption | Multiple digital computer combinations | Transmission | Longest prefix match | Network addressing

A high performance network address processor is provided comprising a longest prefix match lookup engine for receiving a request for data from a designated network destination address. An associated data engine is also provided to couple to the longest prefix match lookup engine for receiving a longest prefix match lookup engine output address from the longest prefix match lookup engine and providing a network address processor data output corresponding to the designated network destination address requested. The high performance network address processor longest prefix match lookup engine comprises a plurality of pipelined lookup tables. Each table provides an index to a given row within the next higher stage lookup table, wherein the last stage, or the last table, in the set of tables comprises an associated data pointer provided as input to the associated data engine. The associated data engine also comprises one or more tables and generates a designated data output associated with the designated network address provided to the high performance NAP.

Owner:ALTERA CORP
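
The staged lookup described in the abstract above can be approximated in software as a chain of tables, where each stage yields a row index into the next stage and a pointer into an associated data table. The Python sketch below is a simplified illustration and not the patented design: the 8-bit stride, the restriction to IPv4 prefixes of length /8, /16, /24 and /32, the `PrefixPipeline` name, and the choice to remember the best pointer at every stage (rather than only at the last one) are all assumptions made to keep the example short.

```python
import ipaddress

STRIDE = 8  # bits consumed per stage (assumption)
STAGES = 4  # 4 stages x 8 bits cover one IPv4 address


class PrefixPipeline:
    def __init__(self):
        # One table per stage. Each table is a list of rows; each row maps an
        # 8-bit chunk to [next_row_index, data_pointer].
        self.tables = [[{}]] + [[] for _ in range(STAGES - 1)]
        self.associated_data = []  # indexed by data_pointer

    def insert(self, prefix, data):
        net = ipaddress.ip_network(prefix)
        if net.prefixlen == 0 or net.prefixlen % STRIDE:
            raise ValueError("sketch only supports /8, /16, /24 and /32")
        chunks = net.network_address.packed[: net.prefixlen // STRIDE]
        row = 0
        for stage, chunk in enumerate(chunks):
            entry = self.tables[stage][row].setdefault(chunk, [None, None])
            if stage == len(chunks) - 1:
                # Final chunk of this prefix: store the associated data pointer.
                self.associated_data.append(data)
                entry[1] = len(self.associated_data) - 1
            else:
                if entry[0] is None:
                    # Allocate a fresh row in the next stage's table.
                    self.tables[stage + 1].append({})
                    entry[0] = len(self.tables[stage + 1]) - 1
                row = entry[0]

    def lookup(self, address):
        chunks = ipaddress.ip_address(address).packed
        row, best = 0, None
        for stage, chunk in enumerate(chunks):
            entry = self.tables[stage][row].get(chunk)
            if entry is None:
                break
            if entry[1] is not None:
                best = entry[1]  # longest matching prefix seen so far
            if entry[0] is None:
                break
            row = entry[0]
        return None if best is None else self.associated_data[best]
```

With prefixes `10.0.0.0/8`, `10.1.0.0/16` and `10.1.2.0/24` inserted, a lookup of `10.1.2.3` walks three stages and returns the data stored for the /24, while `10.9.9.9` stops after the first stage and returns the data for the /8.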

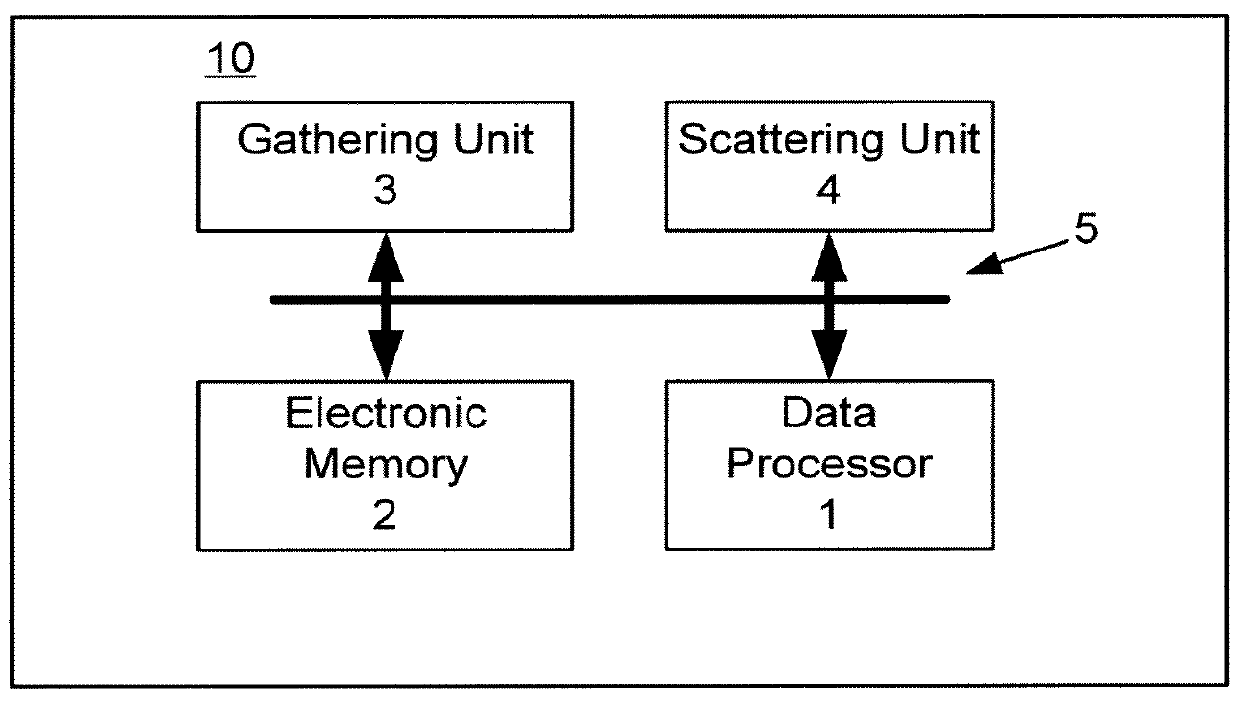

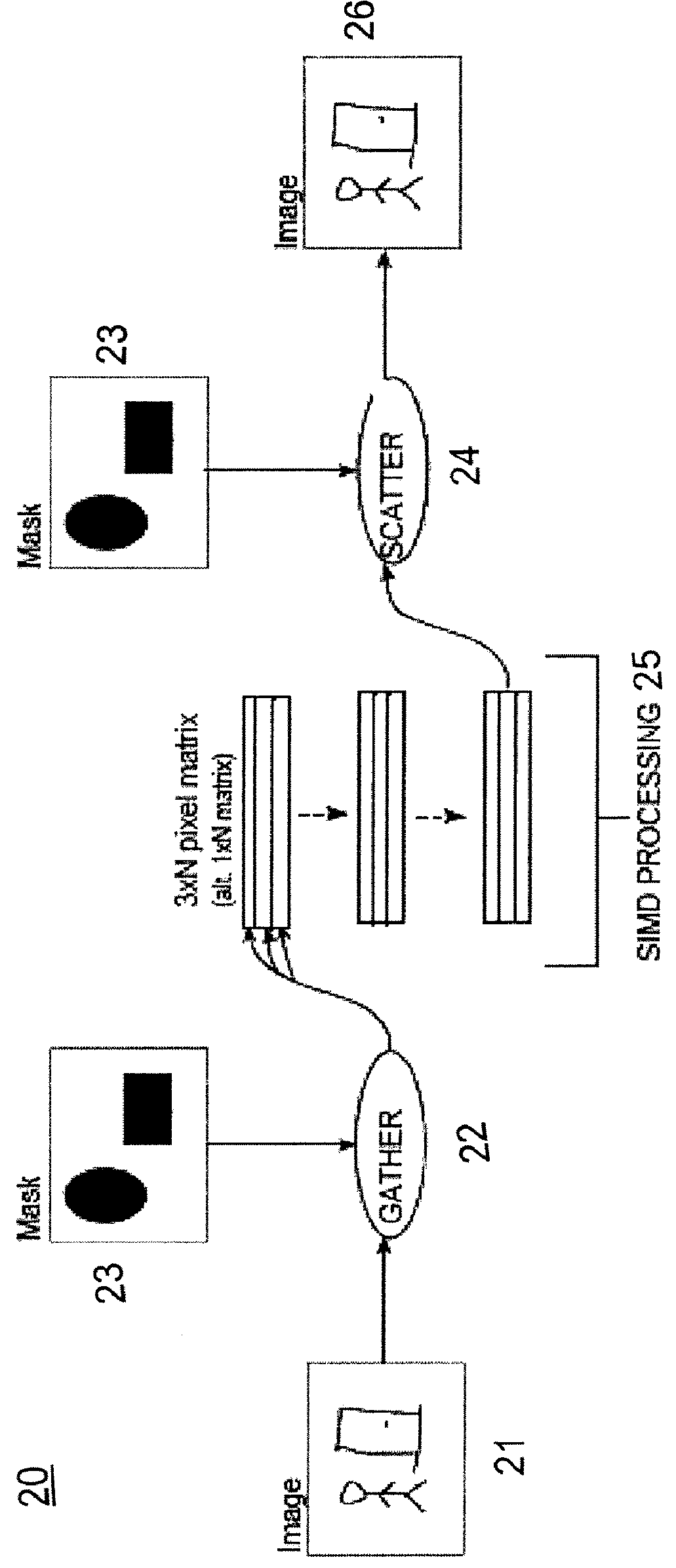

Video analytics system, computer program product, and associated methodology for efficiently using SIMD operations

Active | US8260002B2 | Image analysis | Character and pattern recognition | Traffic capacity | Computer graphics (images)

A video analytics system and associated methodology for performing low-level video analytics processing divides the processing into three phases in order to efficiently use SIMD instructions of many modern data processors. In the first phase, pixels of interest are gathered using a predetermined mask and placed into a pixel matrix. In the second phase, video analytics processing is performed on the pixel matrix, and in the third phase the pixels are scattered using the same predetermined mask. This allows many pixels to be processed simultaneously, increasing overall performance. A DMA unit may also be used to offload the processor during the gathering and scattering of pixels, further increasing performance. A network camera integrates the video analytics system to reduce network traffic.

Owner:AXIS
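
The three phases in the abstract above (gather with a mask, process the dense pixel matrix, scatter with the same mask) can be sketched in plain Python. This is only a structural illustration: plain Python lists do not use SIMD instructions, the thresholding in `process` is a hypothetical stand-in for the real analytics step, and the function names are not taken from the patent.

```python
def gather(frame, mask):
    """Phase 1: collect the pixels of interest into a dense list."""
    return [pixel for pixel, keep in zip(frame, mask) if keep]


def process(pixels, threshold=128):
    """Phase 2: stand-in analytics step (binary threshold, hypothetical).

    In a real system this dense buffer is what would be handed to
    vectorized instructions.
    """
    return [255 if pixel >= threshold else 0 for pixel in pixels]


def scatter(frame, mask, processed):
    """Phase 3: write the results back using the same mask."""
    out = list(frame)
    results = iter(processed)
    for i, keep in enumerate(mask):
        if keep:
            out[i] = next(results)
    return out


def analyze(frame, mask):
    return scatter(frame, mask, process(gather(frame, mask)))
```

Pixels where the mask is false pass through unchanged, so the dense middle phase only ever touches the pixels of interest.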

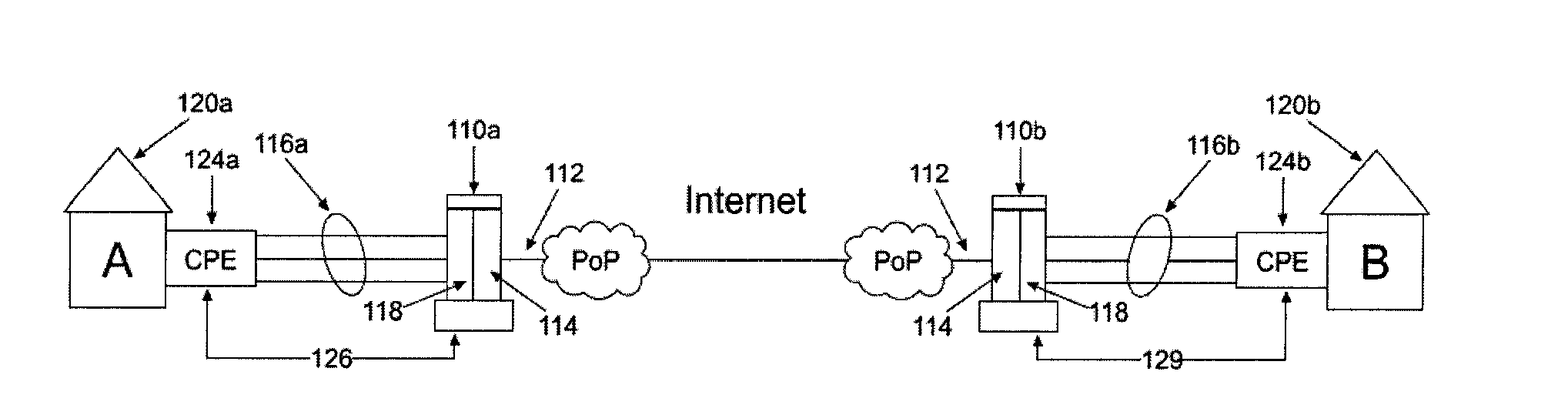

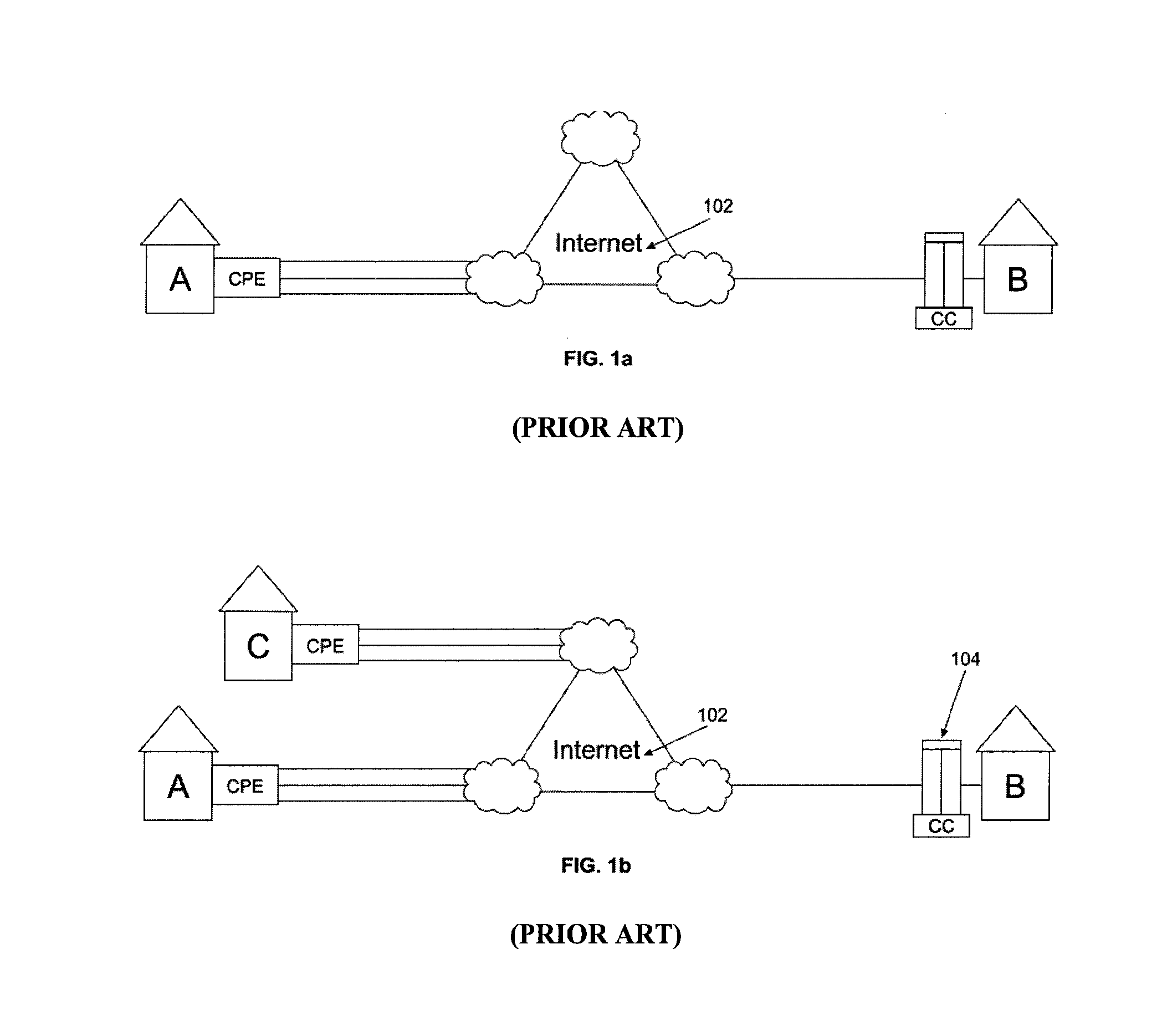

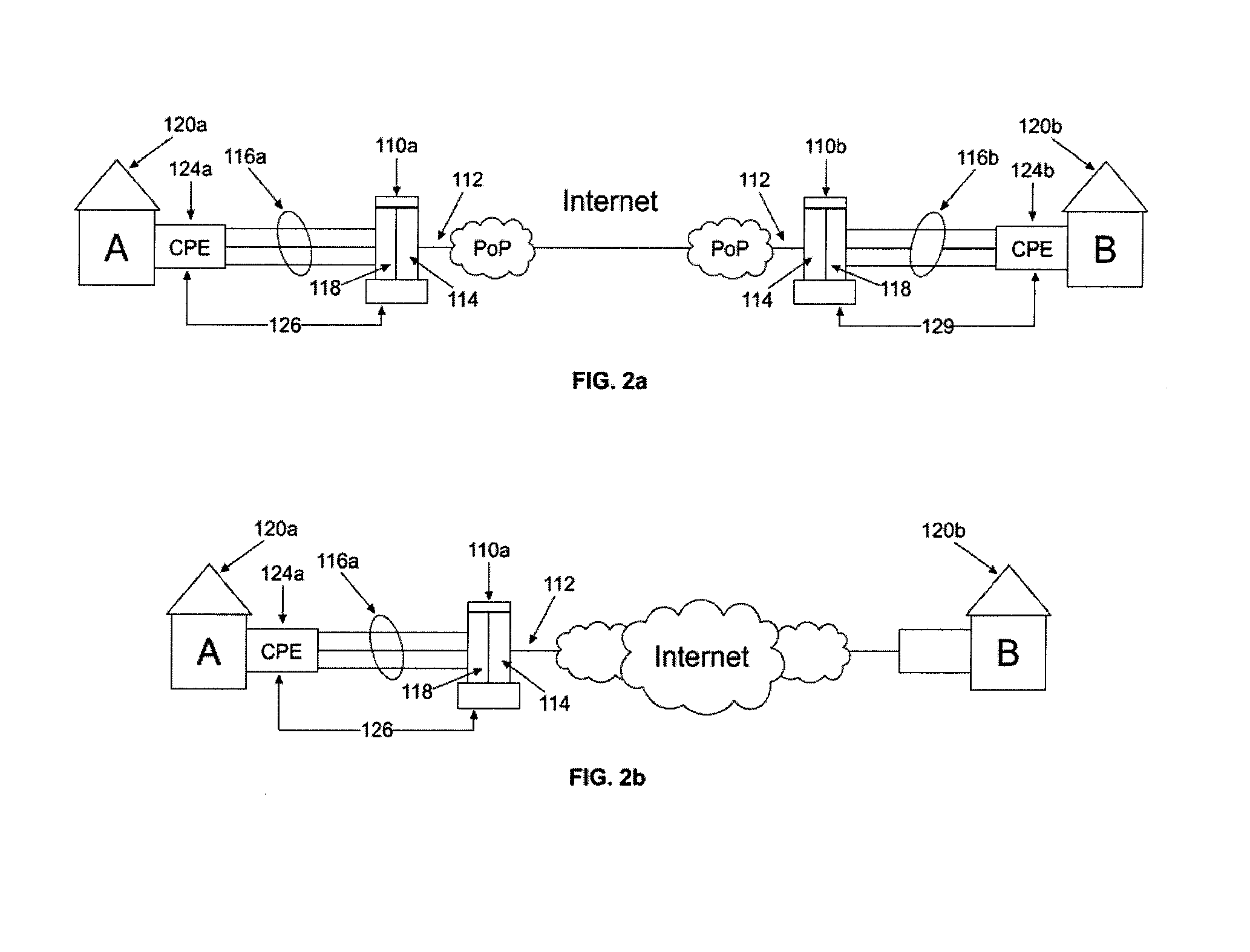

System, apparatus and method for providing improved performance of aggregated/bonded network connections between remote sites

Active | US20140040442A1 | Improve communication performance | Improve performance | Digital computer details | Data switching networks | Traffic capacity | Network connection

A networking system, method, and device are provided for improving network communication performance between client sites that are far enough apart that they would usually require long-haul network communication. The networking system includes at least one network bonding/aggregation computer system for bonding or aggregating one or more diverse network connections so as to configure a bonded/aggregated connection that has increased throughput; and at least one network server component implemented at an access point to a high performing network. Data traffic is carried over the bonded/aggregated connection. The network server component automatically terminates the bonded/aggregated connection and passes the data traffic to the network backbone, while providing a managed network path that incorporates both the bonded/aggregated connection and a network path carried over the high performing network, thereby providing improved performance in long-haul network connections.

Owner:ADAPTIV NETWORKS INC

Systems and methods for rapid processing and storage of data

Active | US20110307647A1 | Faster bandwidth | Improve latency | Memory architecture accessing/allocation | Transmission | Massively parallel | Processing core

Systems and methods of building massively parallel computing systems using low power computing complexes in accordance with embodiments of the invention are disclosed. A massively parallel computing system in accordance with one embodiment of the invention includes at least one Solid State Blade configured to communicate via a high performance network fabric. In addition, each Solid State Blade includes a processor configured to communicate with a plurality of low power computing complexes interconnected by a router, and each low power computing complex includes at least one general processing core, an accelerator, an I/O interface, and cache memory and is configured to communicate with non-volatile solid state memory.

Owner:CALIFORNIA INST OF TECH

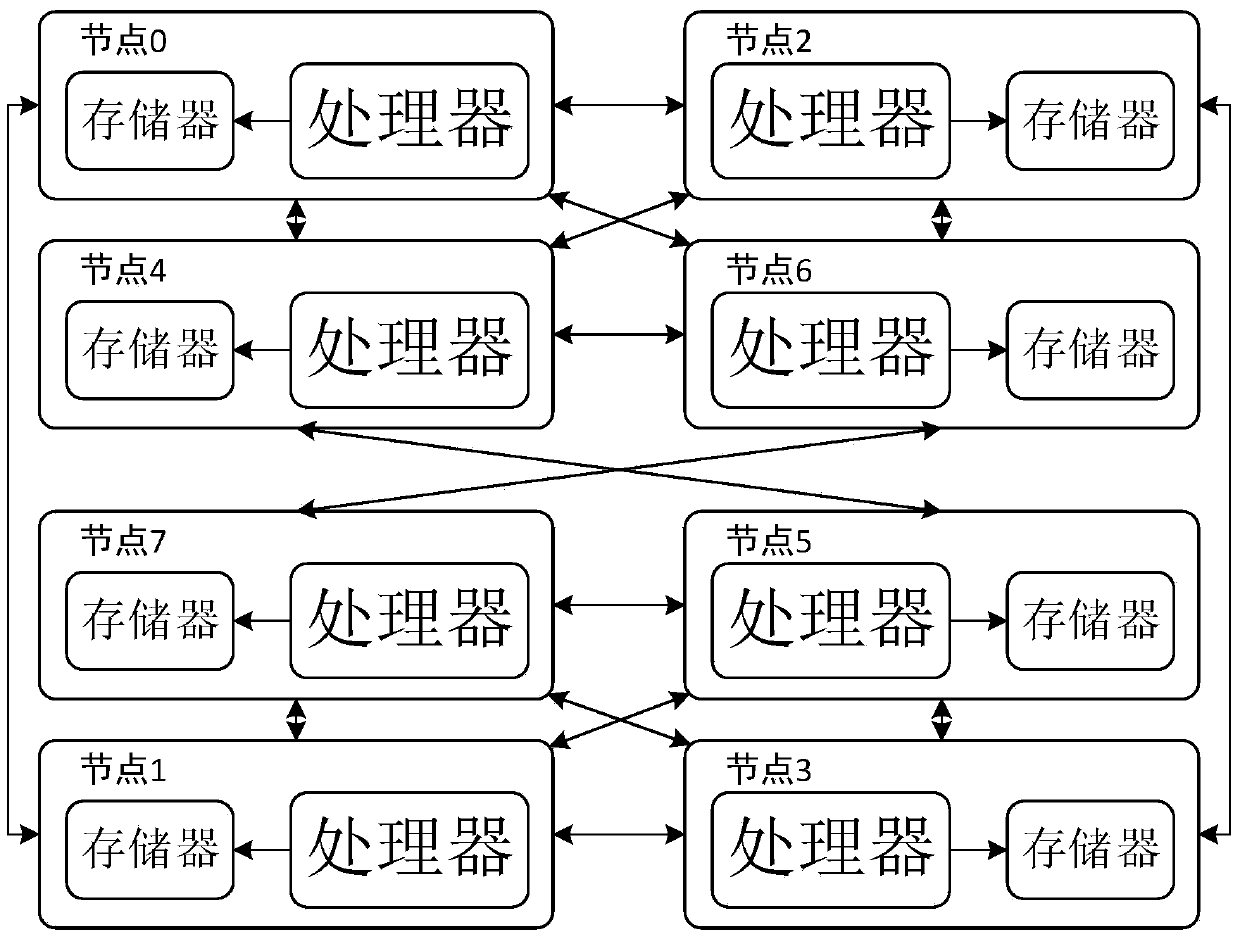

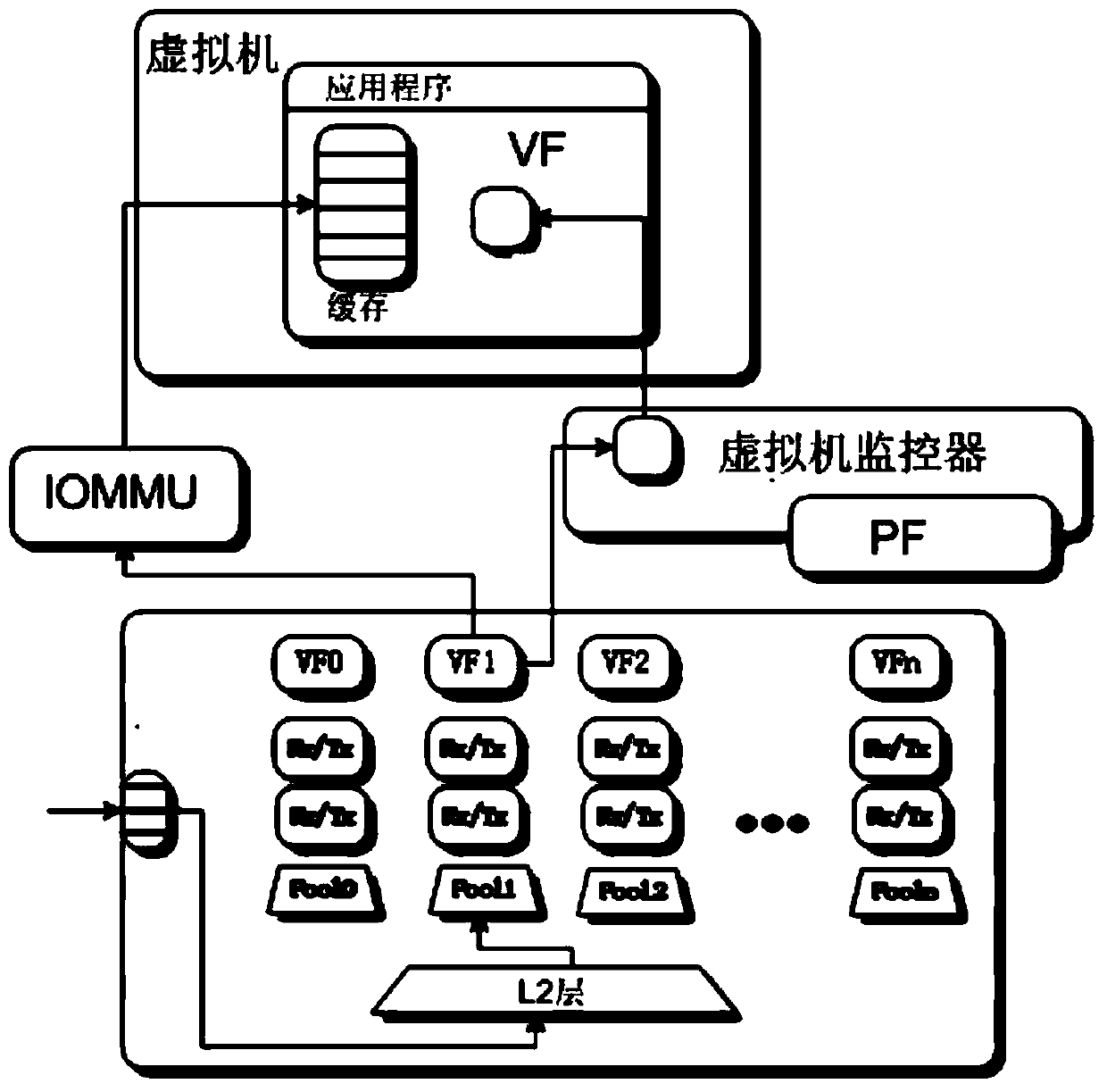

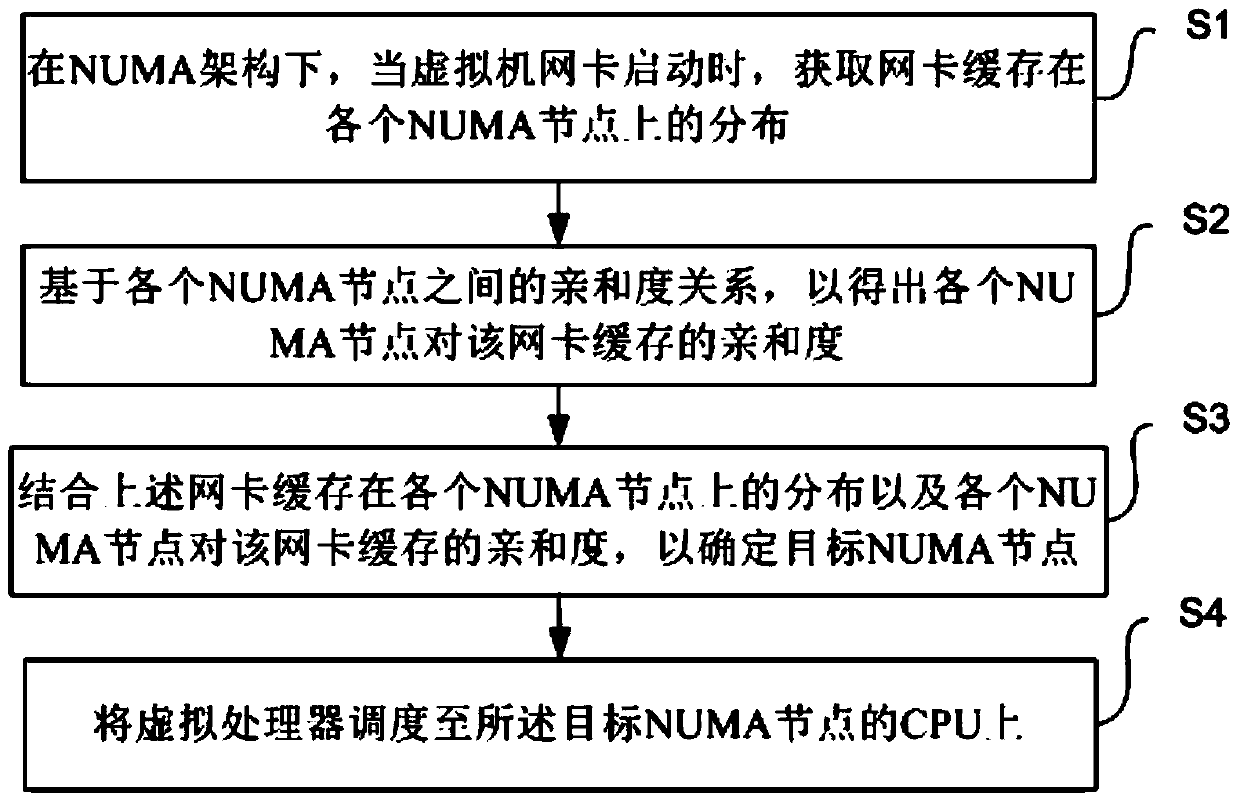

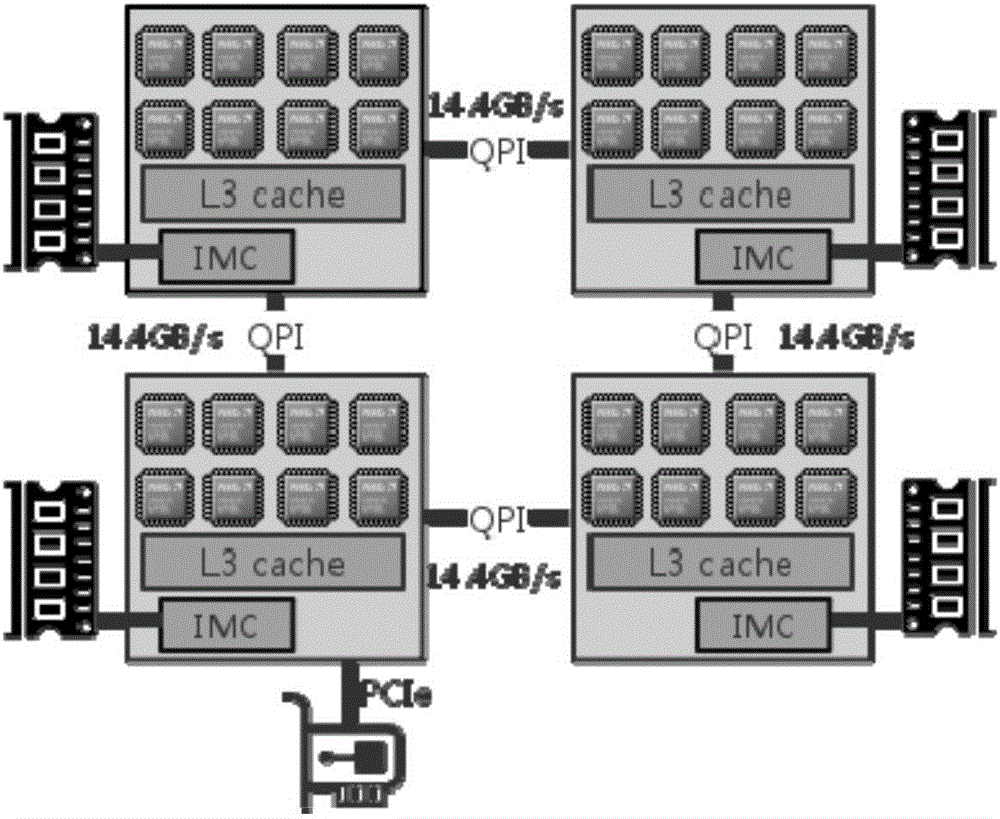

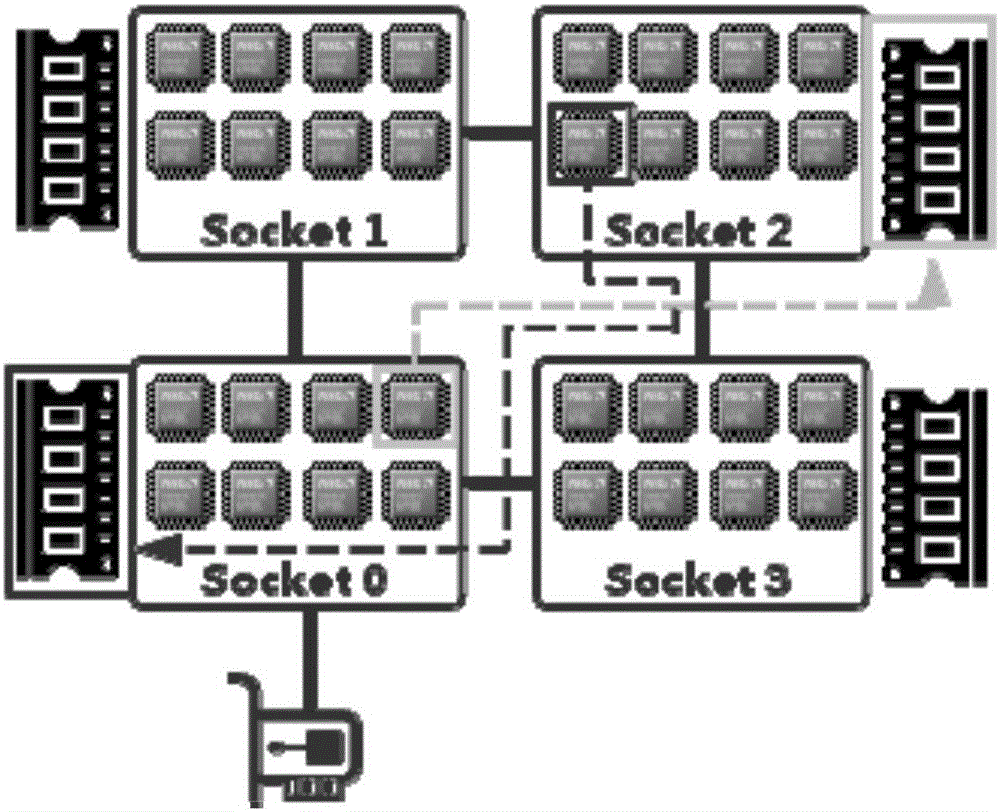

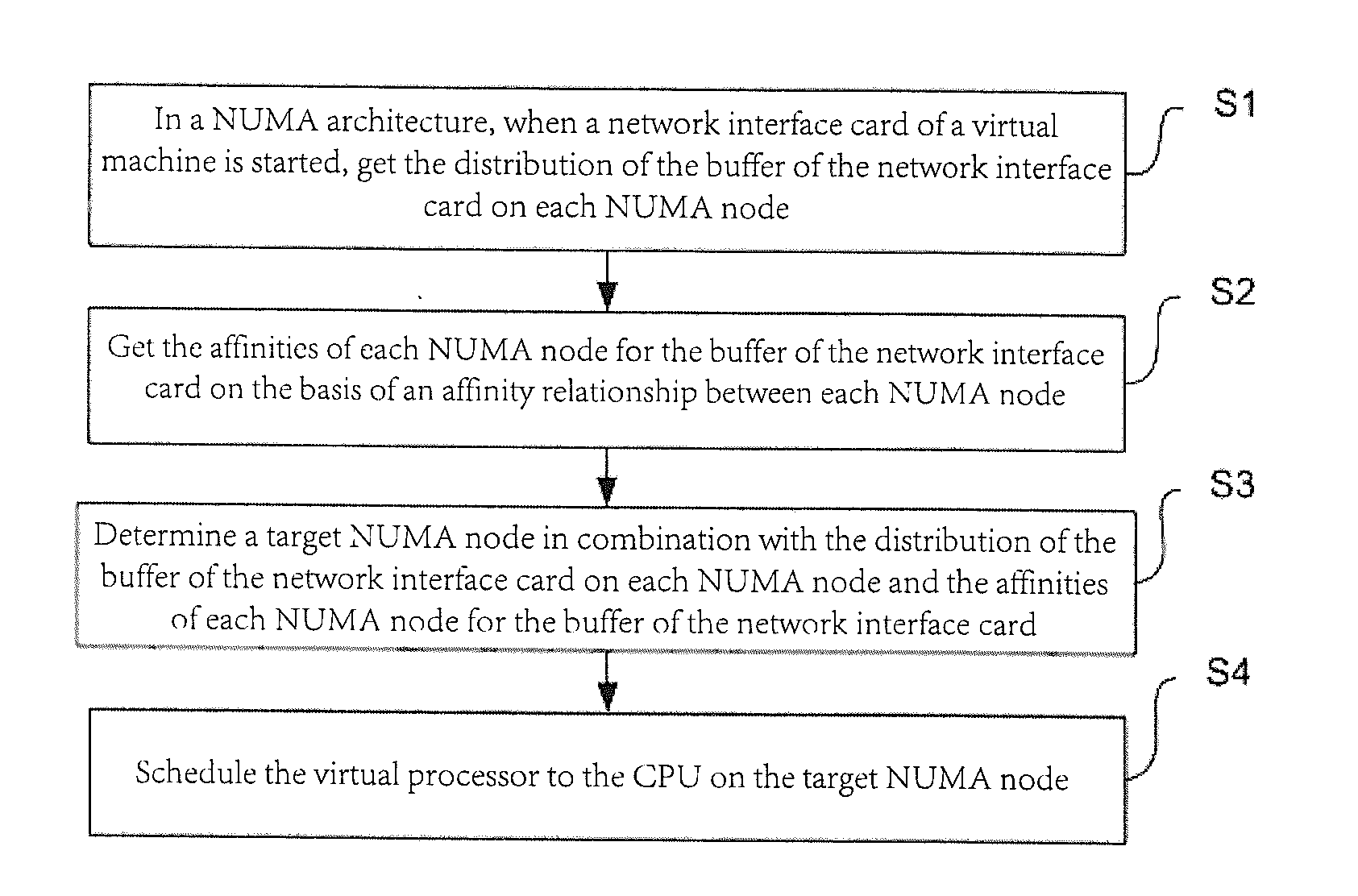

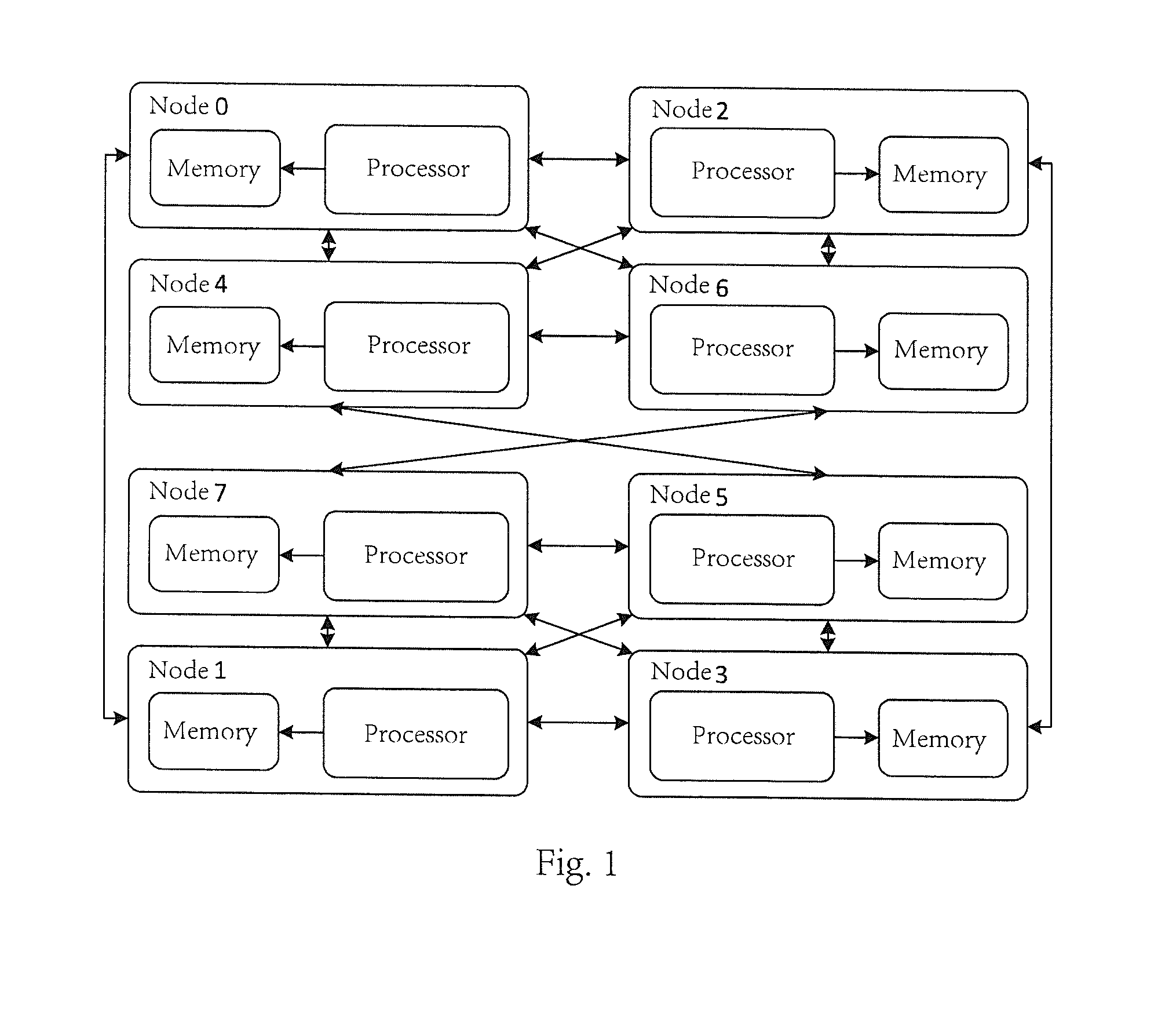

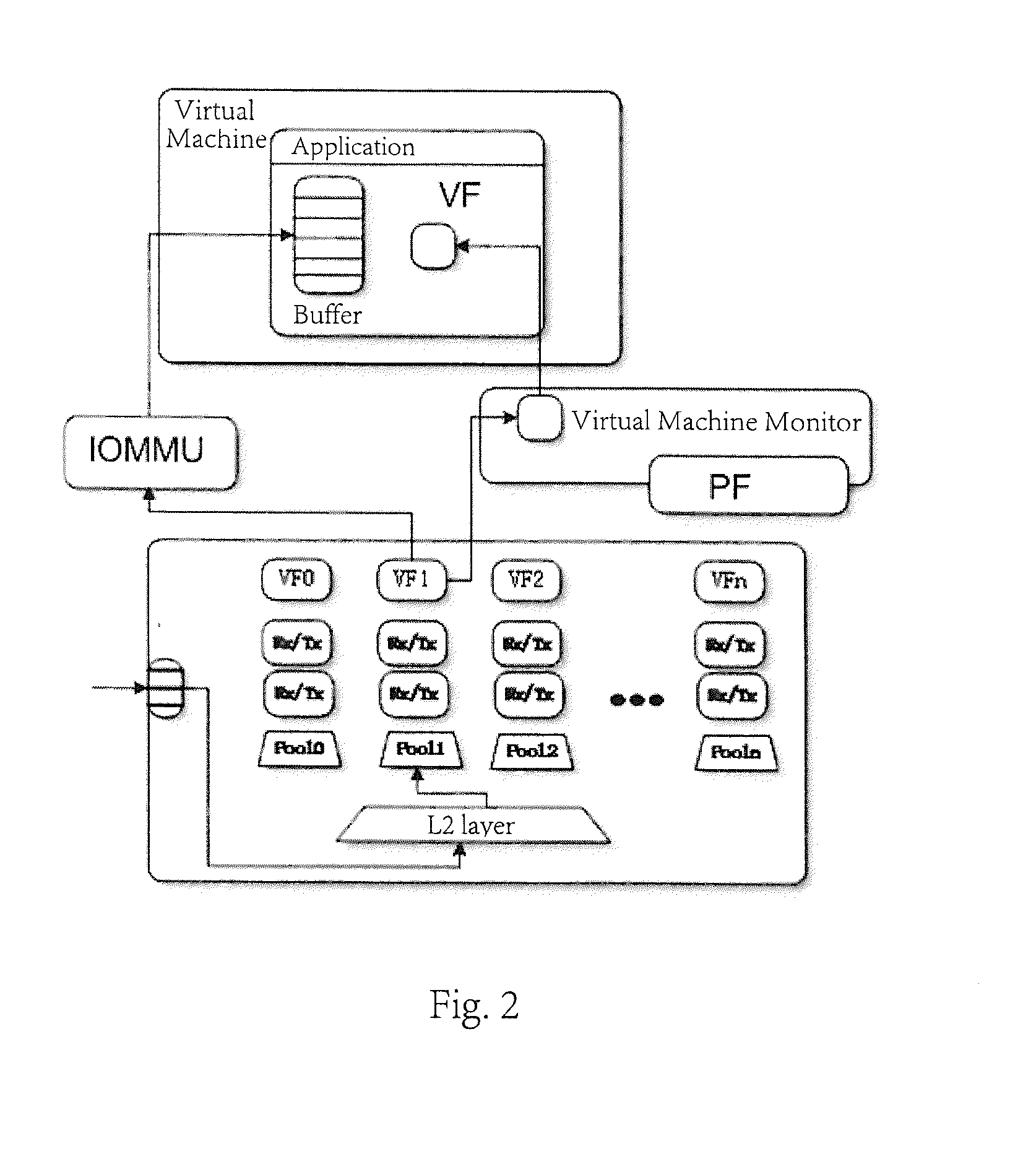

Dispatching method of virtual processor based on NUMA high-performance network cache resource affinity

Active | CN104199718A | Processing speed | Take advantage of | Resource allocation | Software simulation/interpretation/emulation | Network packet | Speed of processing

The invention discloses a dispatching method of a virtual processor based on NUMA high-performance network cache resource affinity. The method comprises the following steps: under a NUMA architecture, when a virtual machine network card is started, the distribution of the network card cache across the NUMA nodes is obtained; based on the affinity relationship among the NUMA nodes, the affinity of each NUMA node towards the network card cache is obtained; a target NUMA node is determined by combining the distribution of the network card cache across the NUMA nodes with the affinity of each NUMA node towards the network card cache; and the virtual processor is dispatched to a CPU of the target NUMA node. The method addresses the problem that, under a NUMA architecture, the affinity between a virtual machine's VCPU and the network card cache is not optimal, which makes the virtual machine network card slow at processing network data packets.

Owner:SHANGHAI JIAO TONG UNIV
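
One way to read the target-node selection in the abstract above is as a weighted score per NUMA node. The Python sketch below is an assumption-laden illustration: the abstract does not give a formula, so the weighted sum, the `pick_target_node` name, and the convention that a higher affinity value means a closer node are all hypothetical.

```python
def node_scores(buffer_share, affinity):
    """Score each candidate node.

    buffer_share[j]: fraction of the network card cache located on node j.
    affinity[i][j]:  hypothetical affinity of node i towards memory on node j
                     (higher means closer).
    """
    return [
        sum(a * share for a, share in zip(row, buffer_share))
        for row in affinity
    ]


def pick_target_node(buffer_share, affinity):
    """Return the index of the node with the highest combined score."""
    scores = node_scores(buffer_share, affinity)
    return max(range(len(scores)), key=scores.__getitem__)
```

For two nodes with most of the network card cache on node 1, the scoring favours node 1, and the virtual processor would then be dispatched to a CPU belonging to that node.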

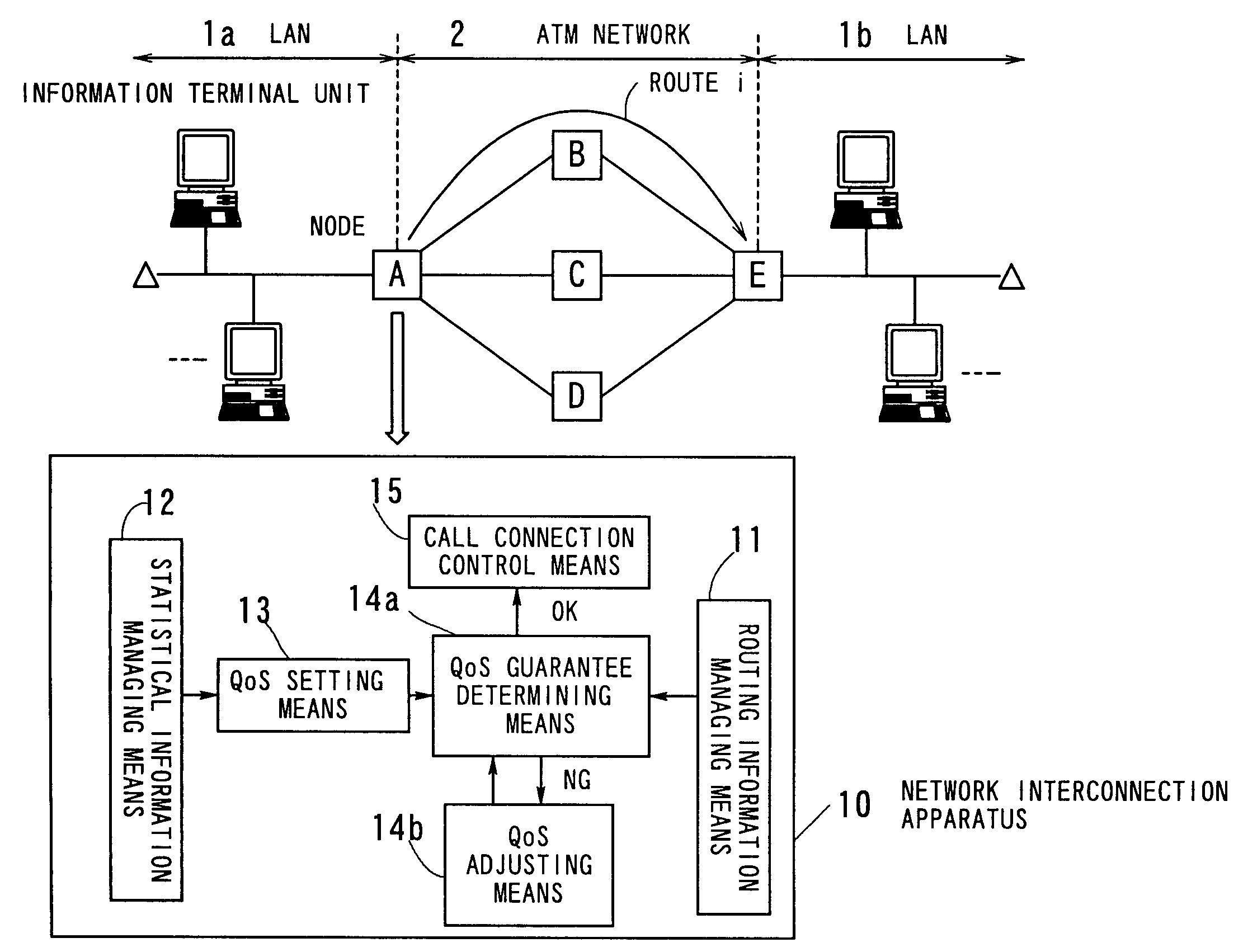

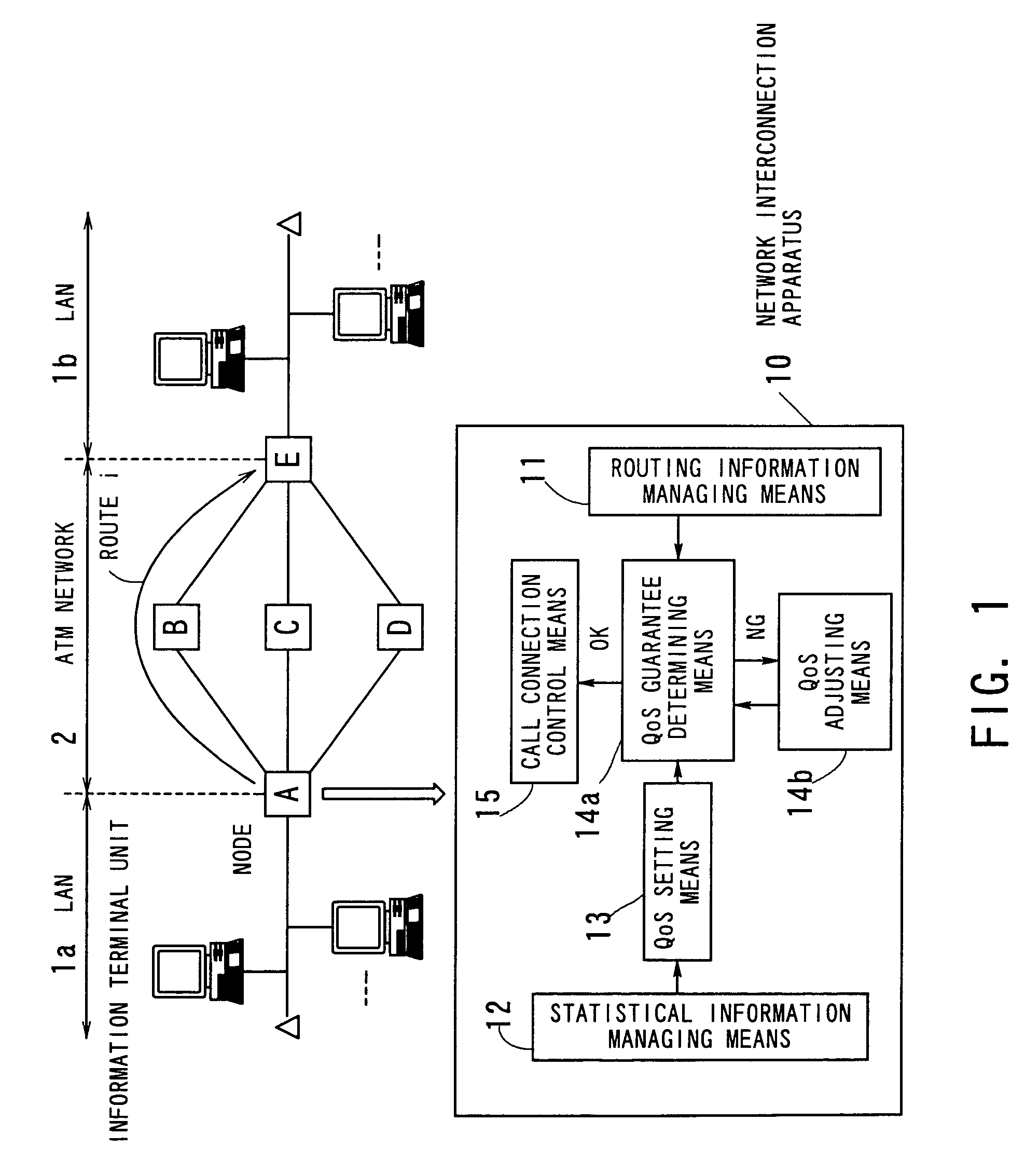

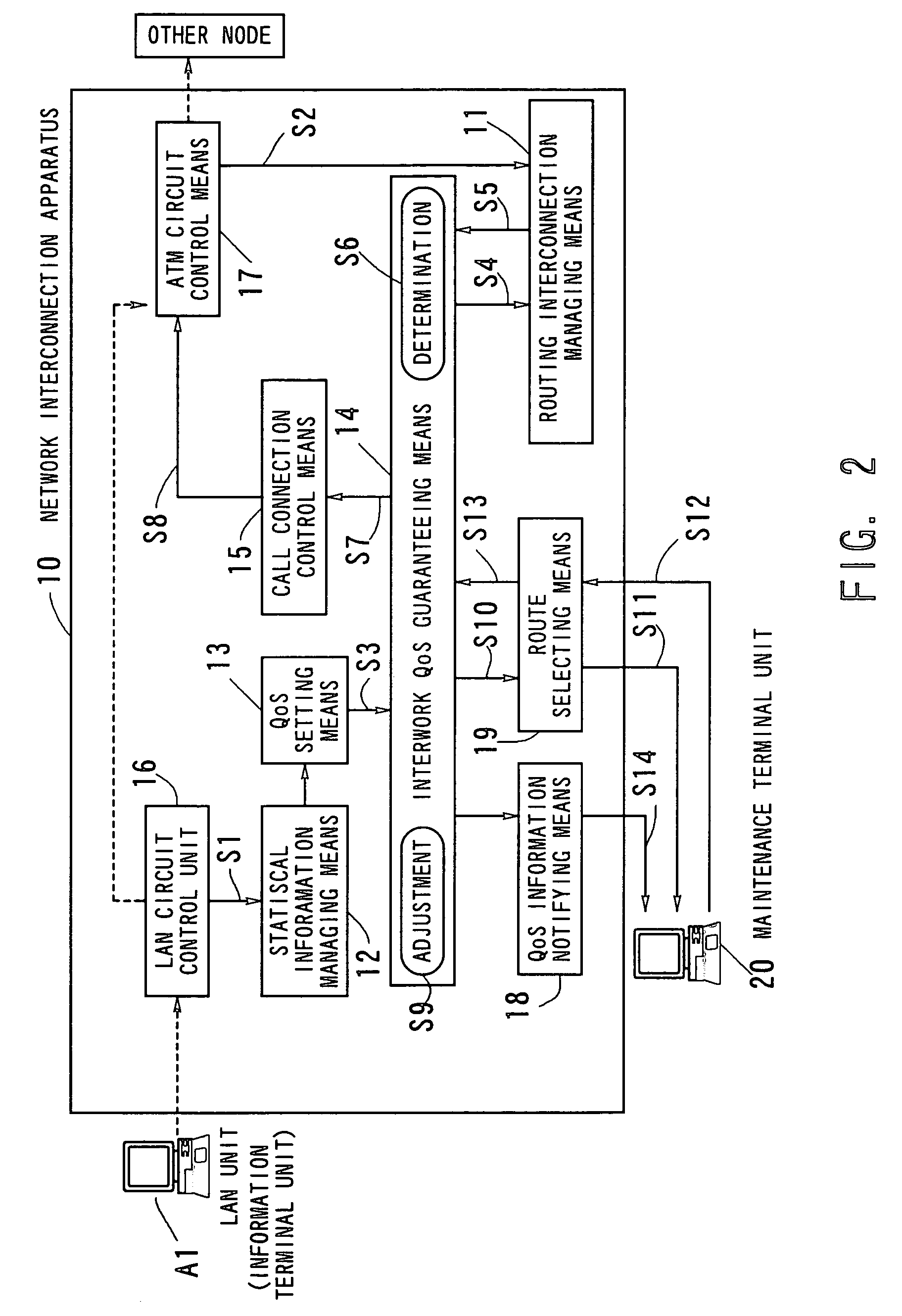

Network interconnection apparatus for interconnecting a LAN and an ATM network using QoS adjustment

Inactive | US7185112B1 | Guaranteed efficiency | Improve performance | Error prevention | Transmission systems | Interconnection | Connection control

A network interconnection apparatus and method are capable of efficiently guaranteeing optimum QoS suited to the actual status of the traffic and the network, whereby high-performance network interconnection is achieved without entailing lost calls. Routing information managing means manages routing information of an ATM network, and statistical information managing means manages statistical information on the traffic of a LAN. QoS setting means sets the QoS which the ATM network ought to guarantee, based on the statistical information, and QoS guarantee determining means determines, based on the routing information, whether or not the set QoS can be guaranteed. If it is judged that the QoS cannot be guaranteed, QoS adjusting means adjusts the QoS so that it can be guaranteed. Call connection control means connects the call according to the QoS which can be guaranteed.

Owner:FUJITSU LTD
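
The set, check, adjust sequence in the abstract above can be sketched as a small negotiation loop. In this Python illustration QoS is reduced to a single bandwidth figure in Mbps, the requested value is taken as the peak of the observed LAN traffic, and adjustment lowers the request in fixed steps; all three choices, and the function names, are assumptions rather than details from the patent.

```python
def set_qos(traffic_samples_mbps):
    """Derive the requested QoS from LAN traffic statistics (peak rate here)."""
    return max(traffic_samples_mbps)


def can_guarantee(requested_mbps, route_available_mbps):
    """A route can carry the request only if every hop has enough capacity."""
    return requested_mbps <= min(route_available_mbps)


def negotiate(traffic_samples_mbps, route_available_mbps, step_mbps=1.0):
    """Lower the requested QoS until the route can guarantee it."""
    qos = set_qos(traffic_samples_mbps)
    while qos > 0 and not can_guarantee(qos, route_available_mbps):
        qos = max(0.0, qos - step_mbps)
    return qos
```

The call would then be connected with the returned value, so a request that is too high is adjusted downward instead of being rejected as a lost call.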

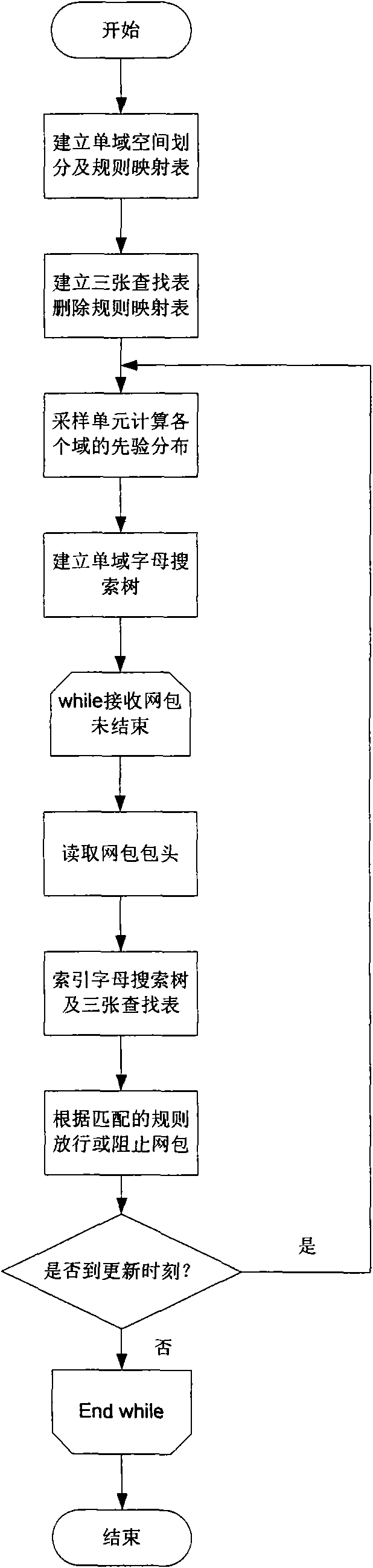

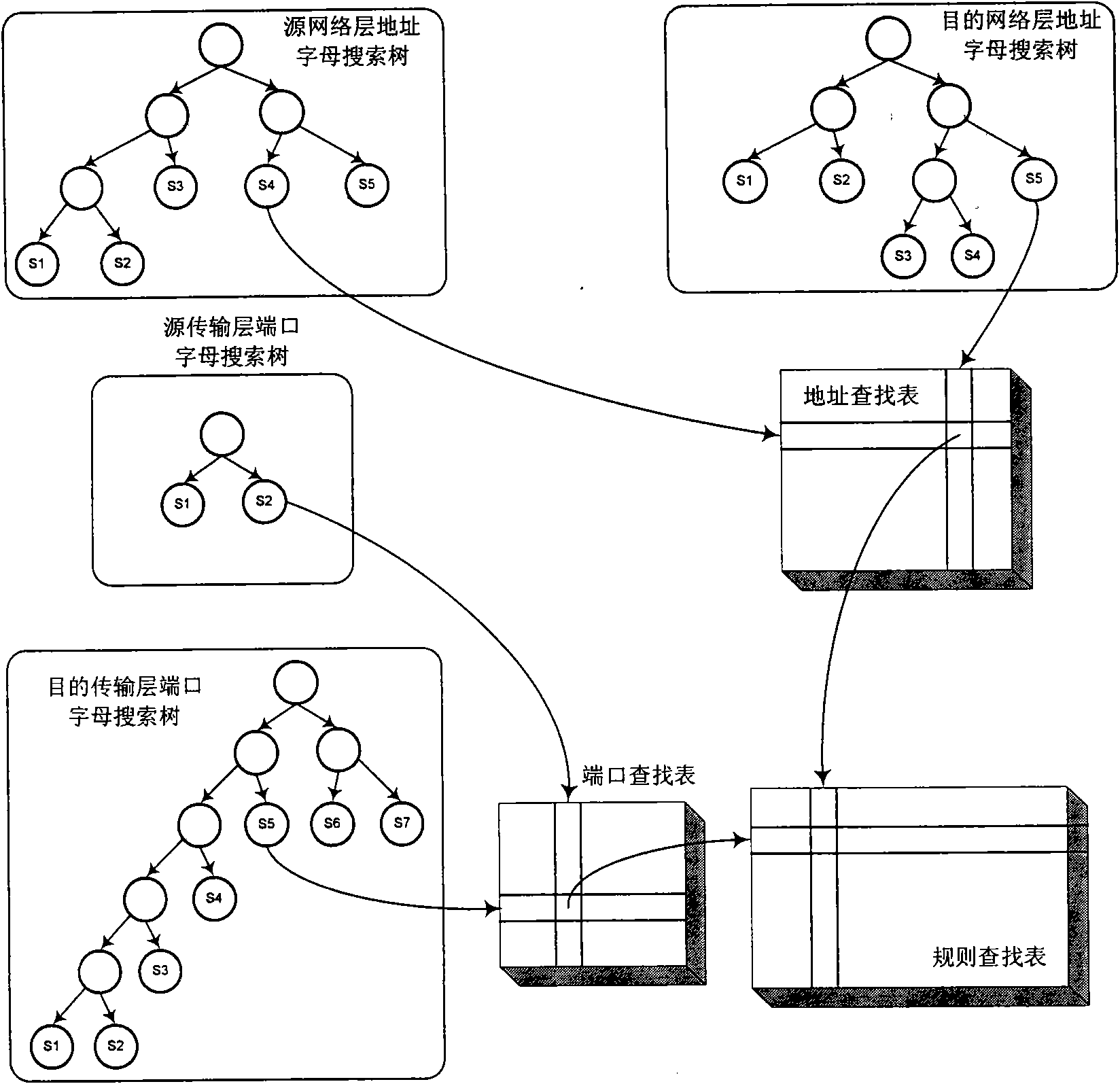

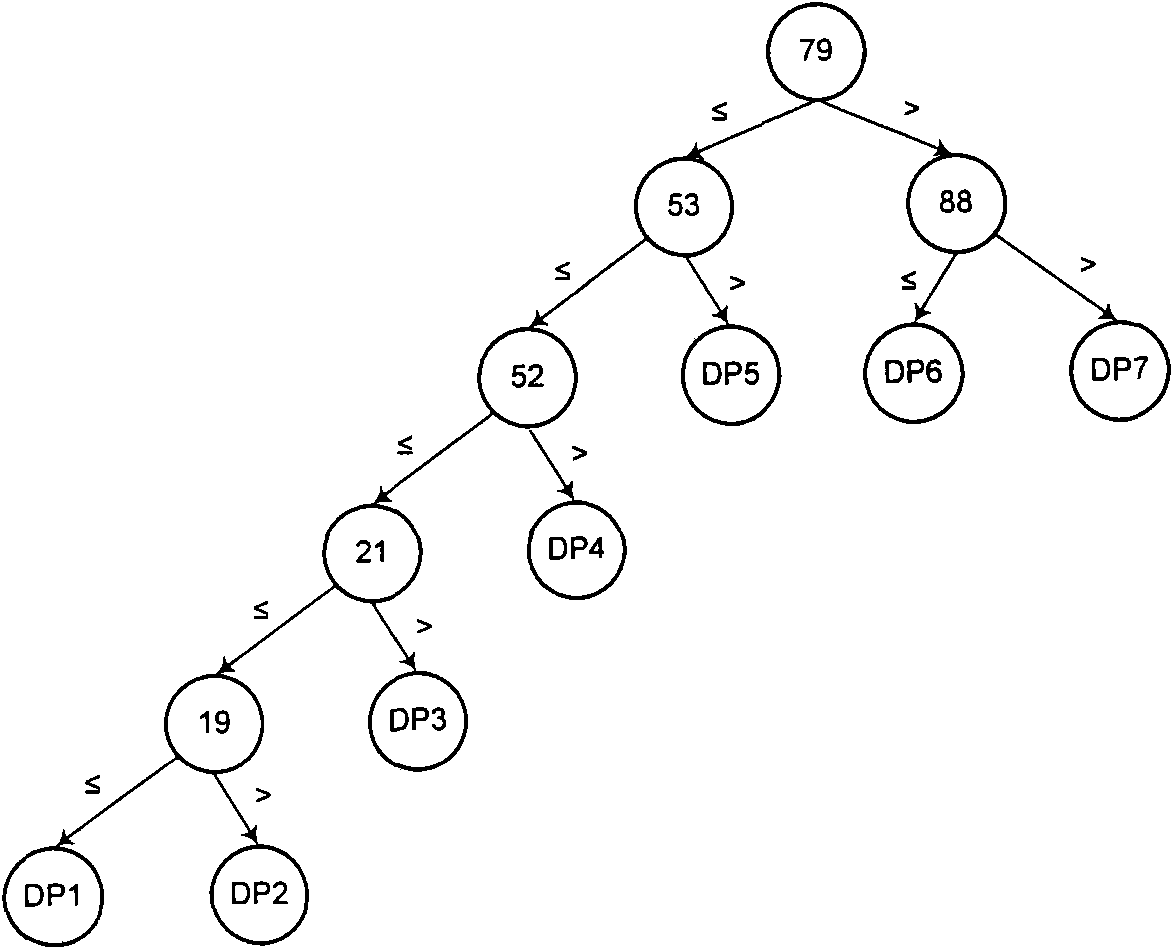

Rapid network packet classification method based on network traffic statistic information

Active | CN101594303A | Optimize data structure | Avoid the pitfalls caused by performance dips | Data switching networks | Traffic capacity | Filtration

The invention relates to a rapid network packet classification method based on network traffic statistic information, which belongs to the technical field of filtration and monitoring of network traffic. The method comprises the steps of: determining the space partition of the various domains of a header and the corresponding rule mapping table according to a classification rule set, and establishing an address lookup table, a port lookup table and a rule lookup table; recording the number of times that the header of a network packet appears in each space partition of the domains and calculating the prior distribution; establishing a letter search tree of the domains according to the prior distribution; continuously matching the headers of received network packets according to the letter search tree and the lookup tables; and, at update time, re-calculating the prior distribution, updating the letter search tree, and continuing to match the received network traffic. The method draws on two different levels of information for network packet classification, the classification rule set and heuristic information about the network traffic, which strengthens the adaptability of the classification method and improves average classification efficiency. The method has quick lookup speed and strong adaptability, can be realized on a plurality of platforms, and is applicable to the filtration and monitoring of high-performance network traffic.

Owner:CERTUS NETWORK TECHNANJING

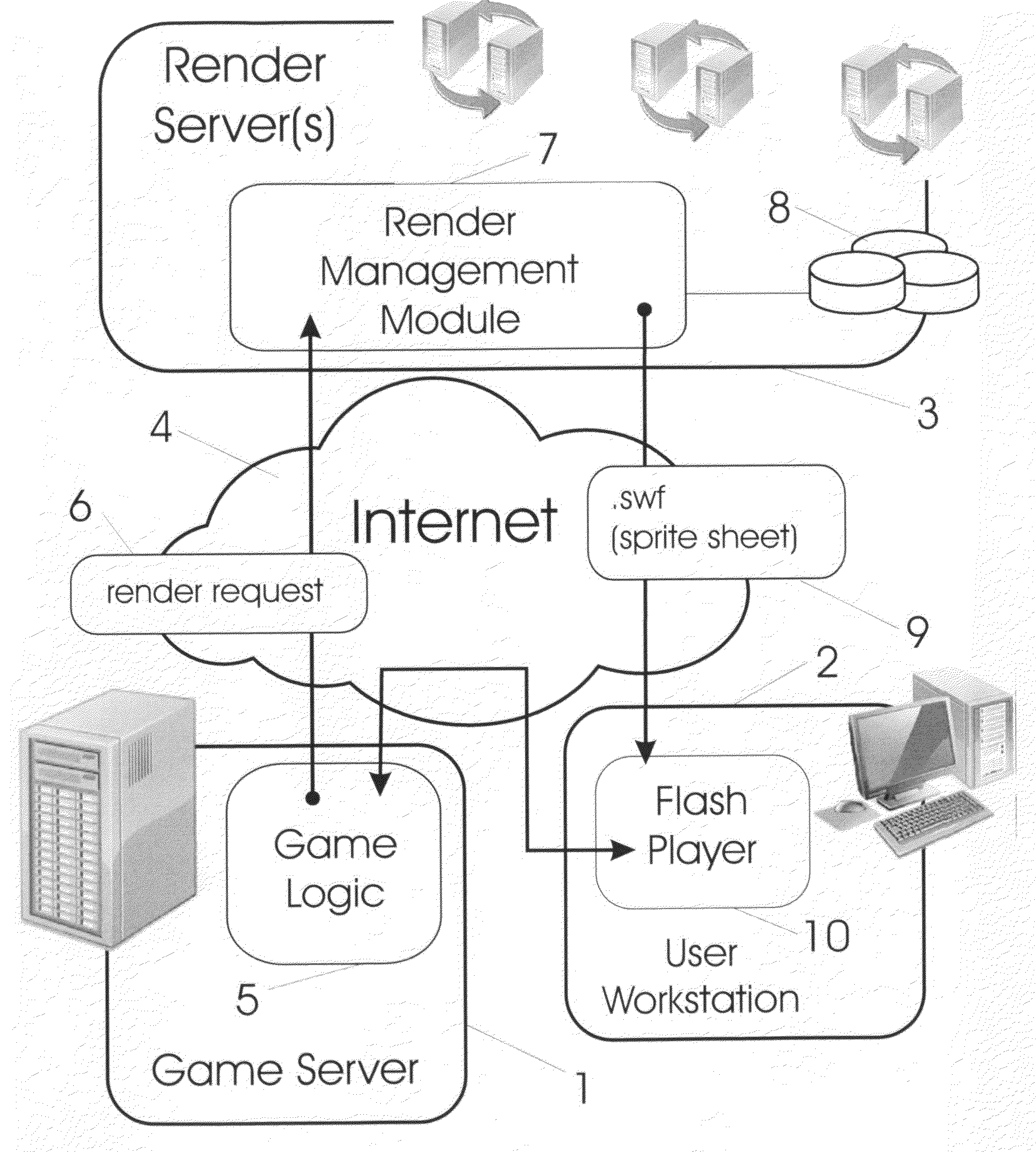

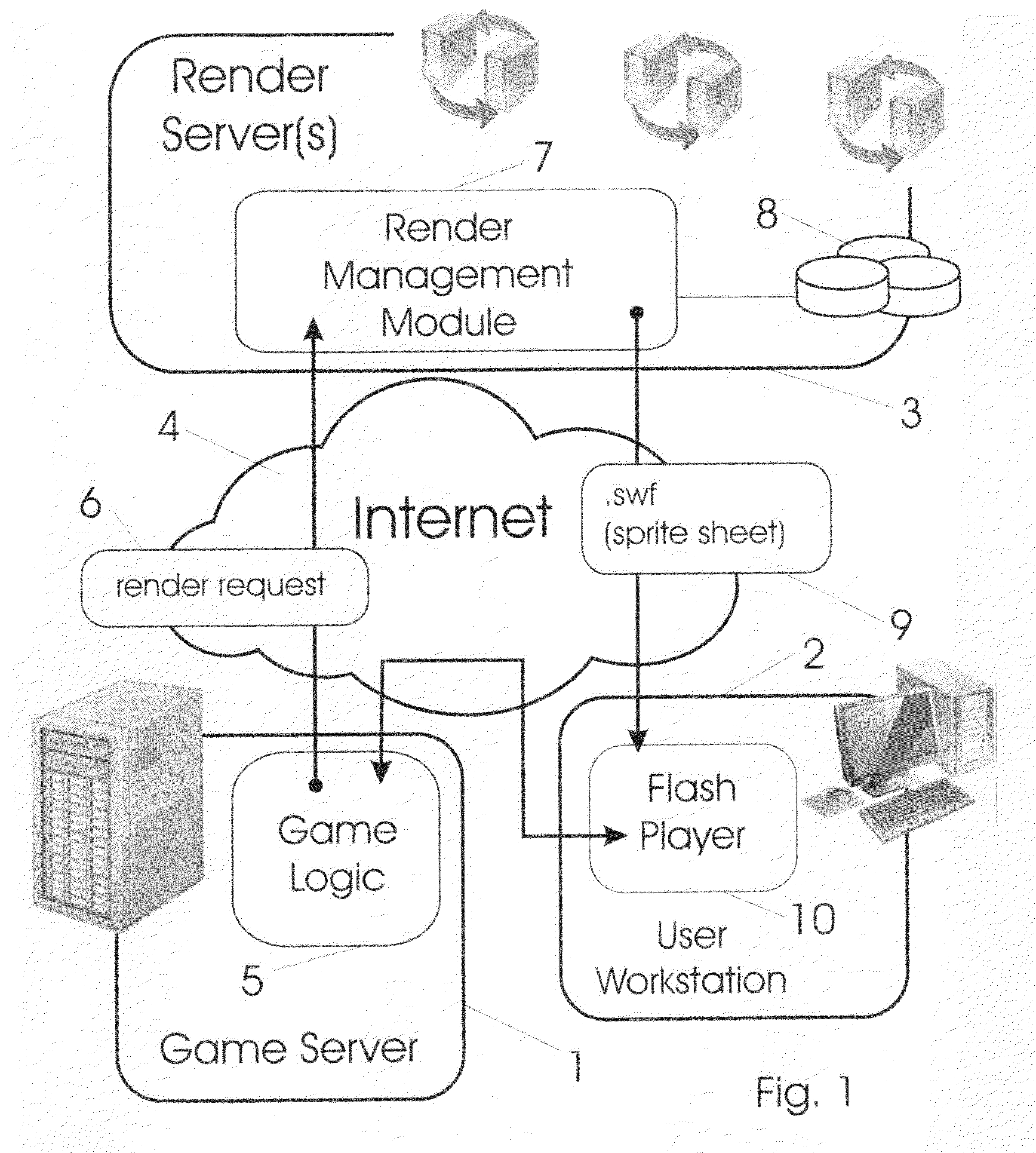

High performance network art rendering systems

Inactive | US20100285884A1 | Highly dynamic and powerful automated art rendering service | Video games | Special data processing applications | Graphics | The Internet

Computer games deployed over communications networks such as the Internet are arranged with graphics rendering services to effect a real-time, automated fast rendering facility responsive to requests generated in view of game execution state. High-performance graphics authoring tools are used to produce graphics elements in agreement with a particular game design and scenario specification ‘on-the-fly’. These graphics are uploaded and stored in a database having a cooperating prescribed schema. A media player executing game logic is coupled to a render manager whereby render requests are passed thereto. The render manager forms scene files to be processed at a rendering application and finally to an assembler which converts all rendered graphics to a form usable at the media player. In this way, very high-quality real-time automated graphics generation is provided for bandwidth and memory limited platforms such as user workstations coupled to the Internet.

Owner:GAZILLION

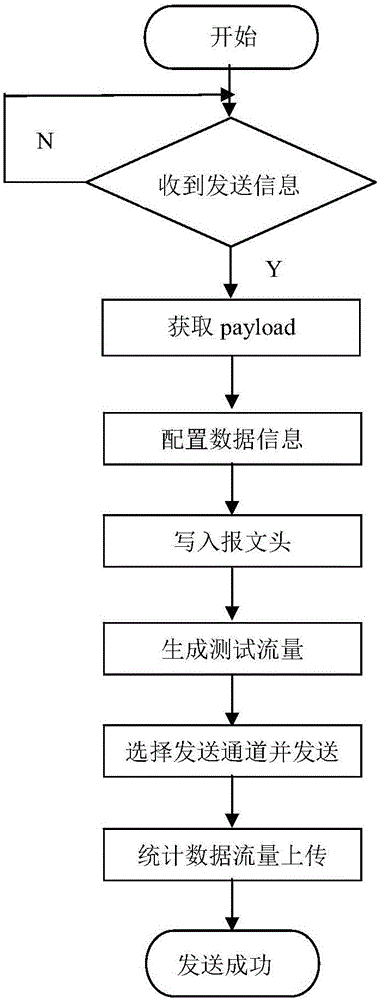

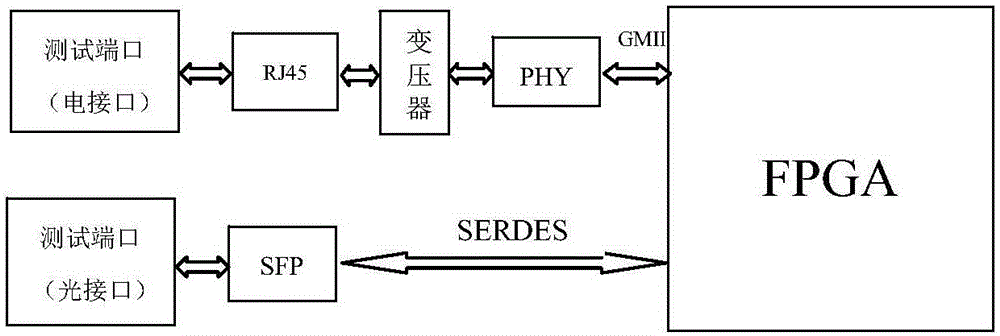

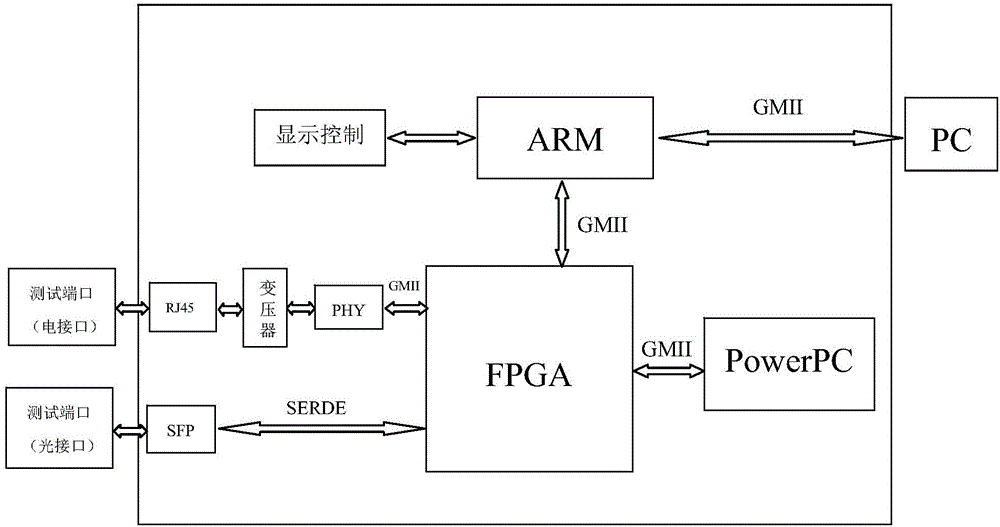

High performance network tester and the testing method thereof

Active | CN105099828A | Support parsing | Easy compatibility | Data switching networks | Traffic capacity | Networking protocol

The invention discloses a high performance network tester, and a testing method for it, based on an FPGA+PowerPC+ARM architecture. The network tester comprises an RJ45 interface, a transformer, a physical interface (PHY), an optical interface SFP, an FPGA, a PowerPC, an ARM and a display control device. The FPGA completes the generation and reception of testing flows, conducts analysis of a first layer message and a second layer message, controls the FPGA and surveys the first layer message and the second layer message; the PowerPC analyzes network protocols at the third layer and above; the ARM communicates with a computer to realize the display and control of the entire equipment. The network tester combines the advantages of the three processors. It is highly flexible and cost-effective to make, is compatible with a variety of interfaces, and is readily expandable.

Owner:NANJING UNIV OF SCI & TECH +1

Network switch cooling system

Active | US20100110632A1 | Avoid the need | Digital data processing details | Cooling/ventilation/heating modifications | Network switch | Embedded system

A high-performance network switch chassis has multiple network ports and air openings on the front end of the chassis, and multiple fan modules mounted on the back end of the chassis. The fan modules are hot-swap replaceable so that replacement of one of the fan modules does not require interruption of network switch operation. Air-blockers associated with each fan module prevent recirculation of air when fan modules are removed. Different types of fan modules may be used to provide either front-to-rear or rear-to-front airflow through the chassis. A fan speed controller determines fan speed based on temperature using one of two profiles. The two profiles correspond to the two different airflow directions.

Owner:ARISTA NETWORKS
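
The two-profile fan speed control mentioned in the abstract above can be illustrated with a lookup keyed by airflow direction. In this Python sketch the threshold temperatures, the duty-cycle percentages, and the names `PROFILES` and `fan_duty` are invented for illustration; the abstract only states that one of two profiles is used, one per airflow direction.

```python
from bisect import bisect_right

# Hypothetical profiles: (temperature threshold in deg C, fan duty in percent).
PROFILES = {
    "front-to-rear": [(0, 30), (40, 50), (55, 75), (70, 100)],
    "rear-to-front": [(0, 40), (35, 60), (50, 85), (65, 100)],
}


def fan_duty(temperature_c, airflow):
    """Pick the fan duty for a temperature using the profile for this airflow."""
    profile = PROFILES[airflow]
    thresholds = [threshold for threshold, _ in profile]
    # Index of the highest threshold that is <= temperature_c (clamped to 0).
    idx = max(bisect_right(thresholds, temperature_c) - 1, 0)
    return profile[idx][1]
```

The same temperature reading can therefore produce different fan speeds depending on which type of fan module is installed.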

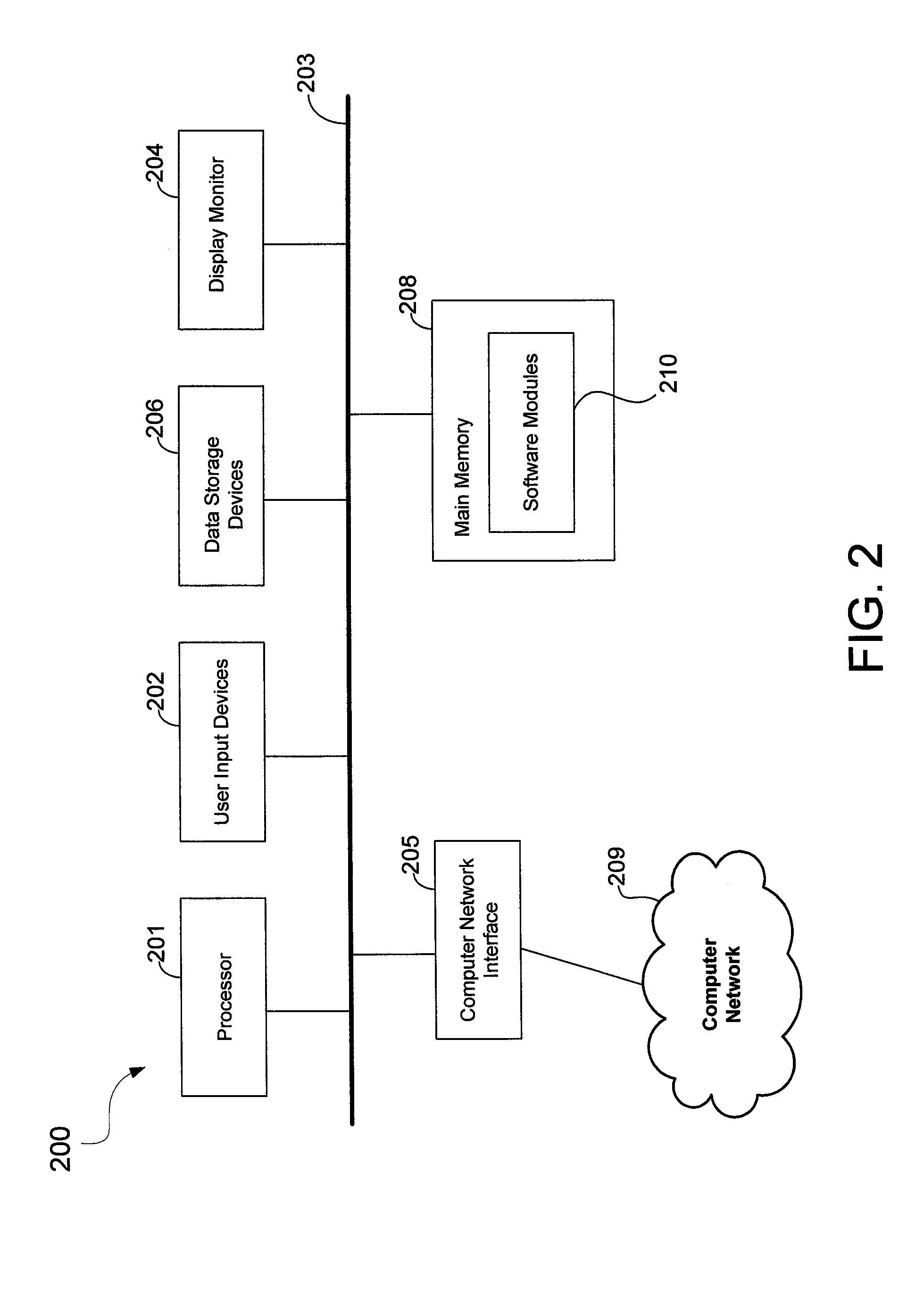

Apparatus and method for high-performance network content processing

Active | US8289981B1 | Data switching by path configuration | Store-and-forward switching systems | Data memory | High performance network

One embodiment relates to a network gateway apparatus configured for high-performance network content processing. The apparatus includes data storage configured to store computer-readable code and data, and a processor configured to execute computer-readable code and to access said data storage. Computer-readable code implements a plurality of packet processors, each packet processor being configured with different processing logic. Computer-readable code further implements a packet handler which is configured to send incoming packets in parallel to the plurality of packet processors. Another embodiment relates to a method for high-performance network content processing. Other embodiments, aspects and features are also disclosed.

Owner:TREND MICRO INC
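
The packet handler in the abstract above, which sends each incoming packet in parallel to several differently configured packet processors, can be sketched with a thread pool. The two processors below (`url_filter` and `signature_scan`), their matching rules, and the `PacketHandler` class are hypothetical examples of "different processing logic"; the abstract does not name specific processors.

```python
from concurrent.futures import ThreadPoolExecutor


def url_filter(packet):
    """Hypothetical processor: flag packets mentioning a blocked host."""
    return ("url_filter", b"blocked.example" in packet)


def signature_scan(packet):
    """Hypothetical processor: flag packets containing a byte signature."""
    return ("signature_scan", b"\x90\x90\x90\x90" in packet)


class PacketHandler:
    def __init__(self, processors):
        self.processors = processors
        self.pool = ThreadPoolExecutor(max_workers=len(processors))

    def dispatch(self, packet):
        """Submit the same packet to every processor, then collect verdicts."""
        futures = [self.pool.submit(proc, packet) for proc in self.processors]
        return dict(future.result() for future in futures)

    def close(self):
        self.pool.shutdown()
```

Each processor sees the same packet independently, so adding a new kind of inspection means adding one more function to the list passed to the handler.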

Dynamic extensible method for increasing virtual machine resources

Active | CN103593243A | Meeting dynamic memory requirements | Realize dynamic migration | Resource allocation | Software simulation/interpretation/emulation | Client-side | Storage pool

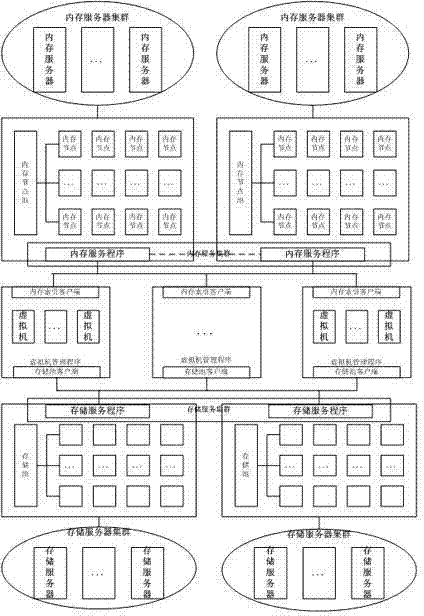

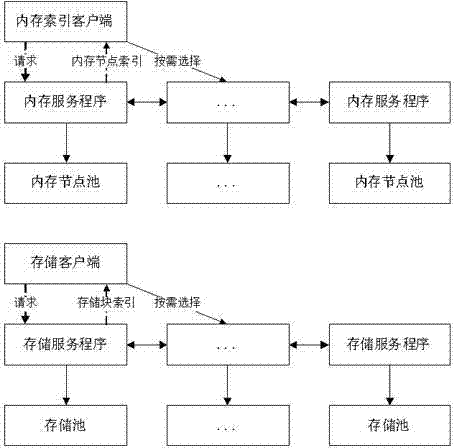

The invention provides a dynamic extensible method for increasing virtual machine resources. An internal storage index client side (1), an internal storage service program (2), an internal storage node pool (3), a storage client side (4), a storage service program (5) and a storage pool (6) are integrated with the high-performance network as a core so that the requirements for dynamically increasing internal storage, storage resources and dynamic transferring of a virtual machine can be met by a system, the internal storage service program can change the internal storage space provided by an internal storage server cluster to be the internal storage pool in a virtual mode, internal storage nodes can be dynamically divided according to the request of the internal storage index client side, and when an unoccupied adjacent internal storage node exists, the internal storage nodes can be combined to meet the dynamic internal storage demands of different client sides. The internal storage service program can operate in a cluster mode, and under a cluster mode, the internal storage pool also needs to record internal storage nodes managed by other internal service programs, and accordingly the client sides can select services from the internal storage service program with many unoccupied internal storage nodes or with a small load.

Owner:SHANDONG LANGCHAO YUNTOU INFORMATION TECH CO LTD
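
The dynamic dividing and combining of memory nodes described in this abstract resembles a classic split-and-coalesce pool. The sketch below is a toy model under that assumption: `MemoryPool`, its tuple layout and the method names are hypothetical, and sizes are abstract units rather than real memory.

```python
class MemoryPool:
    """Toy pool: an ordered list of (start, size, owner); owner None means free."""

    def __init__(self, total: int):
        self.nodes = [(0, total, None)]

    def allocate(self, client: str, size: int):
        """Split the first free node that is large enough; return its start or None."""
        for i, (start, length, owner) in enumerate(self.nodes):
            if owner is None and length >= size:
                pieces = [(start, size, client)]
                if length > size:
                    # Keep the remainder as a smaller free node.
                    pieces.append((start + size, length - size, None))
                self.nodes[i:i + 1] = pieces
                return start
        return None

    def release(self, start: int) -> bool:
        """Free the node beginning at `start`, then merge adjacent free nodes."""
        for i, (s, length, owner) in enumerate(self.nodes):
            if s == start and owner is not None:
                self.nodes[i] = (s, length, None)
                self._merge_free()
                return True
        return False

    def _merge_free(self) -> None:
        merged = []
        for node in self.nodes:
            if merged and merged[-1][2] is None and node[2] is None:
                prev = merged.pop()
                merged.append((prev[0], prev[1] + node[1], None))
            else:
                merged.append(node)
        self.nodes = merged
```

For example, after allocating 30 and 50 units from a 100-unit pool and releasing both, the pool collapses back to a single free node that can serve one 100-unit request.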

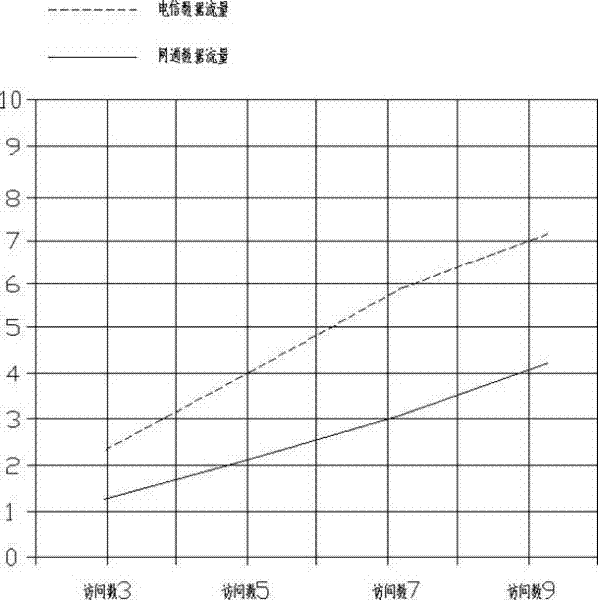

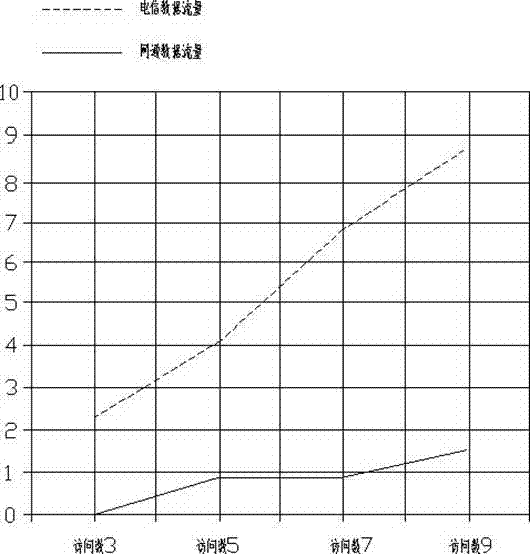

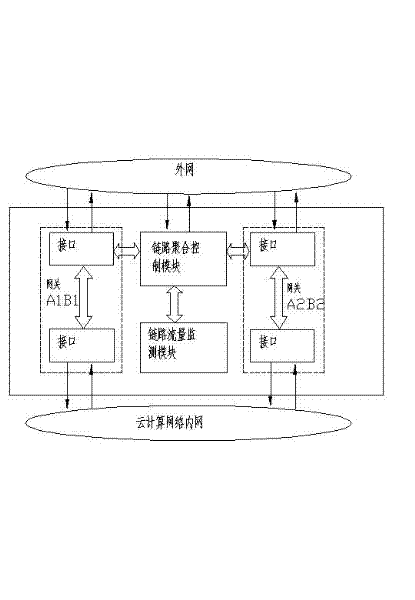

Multilink aggregation control device for cloud computing network

Inactive | CN102347876A | Lower access costs | Improve efficiency | Data switching by path configuration | Network addressing | Network address

The invention discloses a multilink aggregation control device for a cloud computing network. The device comprises a plurality of gateways connected with an external network and an internal network of the cloud computing network, together with a link aggregation control unit that adjusts a network load flow direction list and a gateway external network address list so as to dynamically dispatch the number of access loads across the different access links. This multilink dynamic aggregation technology can effectively reduce the access cost of a high-performance network and improve the efficiency of the access links, and the device is highly portable and stable.

Owner:鞠洪尧
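
One way to picture the dynamic dispatching in this abstract is a controller that records each flow in a flow direction table and sends new flows to the least-loaded gateway. The abstract does not state the dispatch rule, so the least-loaded policy, the `assign_flow` function and the gateway names below are all assumptions made for illustration.

```python
def assign_flow(flow_id: str, link_load: dict, flow_table: dict) -> str:
    """Send a new flow to the gateway that currently carries the fewest flows.

    link_load maps gateway name -> number of active flows (assumed metric).
    flow_table plays the role of the "flow direction list" and is updated in place.
    """
    gateway = min(link_load, key=link_load.get)
    flow_table[flow_id] = gateway
    link_load[gateway] += 1
    return gateway

if __name__ == "__main__":
    loads = {"gw-a": 2, "gw-b": 0}
    table = {}
    for flow in ("f1", "f2", "f3"):
        print(flow, "->", assign_flow(flow, loads, table))
```

A real controller would also have to remove finished flows and update the external address list, neither of which the sketch covers.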

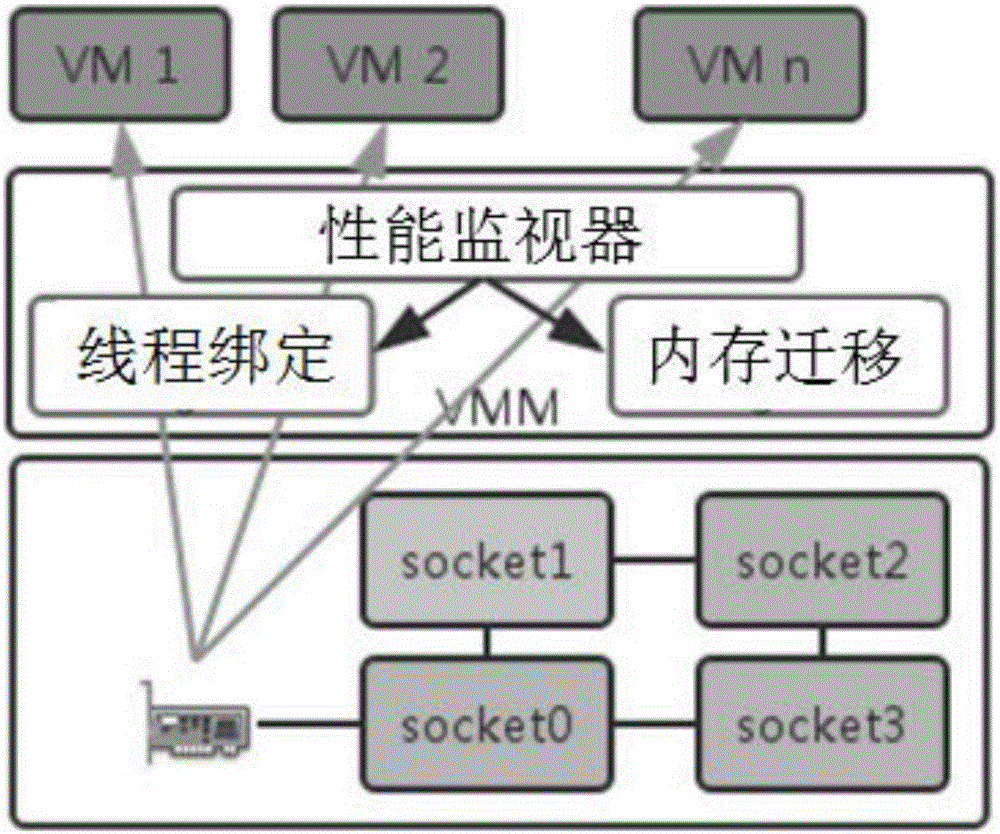

Nonuniformity-based I/O access system in a virtual multicore environment and optimizing method

Active | CN106293944A | Reflect the importance | Reduce data communication | Resource allocation | Virtualization | Low load

The invention discloses a nonuniformity-based I/O access system in a virtual multicore environment and an optimizing method, and relates to the field of computer virtualization. The system comprises a performance detection module, a thread binding module and a memory migration module. The performance detection module monitors hardware information of the virtual machine and the physical host in real time through a modified performance monitoring tool. The thread binding module uses the hardware information collected by the performance detection module to judge whether the current system is under low or high load and, when the load is high, binds virtual machine threads from heavily loaded nodes onto lightly loaded nodes. The memory migration module migrates the related threads onto the node closest to the network adapter when the load is low. An affinity optimization model of I/O performance is built for the virtual multicore environment, and real-time, dynamically optimized placement strategies with high throughput and low delay are provided, so that multicore resources and the high-performance network adapter are used efficiently and the system load is effectively lowered.

Owner:SHANGHAI JIAO TONG UNIV
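
The two-branch placement policy in this abstract can be sketched as a small decision function. The abstract does not say how "high load" is measured, so the average-utilization test, the `threshold` value and all names below are assumptions for illustration only.

```python
def plan_placement(node_loads: dict, nic_distance: dict, threshold: float = 0.7):
    """Return a placement action for the current monitoring sample.

    node_loads:   NUMA node id -> utilization in [0, 1] (assumed metric).
    nic_distance: NUMA node id -> distance to the network adapter (smaller is closer).
    threshold:    assumed cut-off between "low load" and "high load".
    """
    average = sum(node_loads.values()) / len(node_loads)
    if average > threshold:
        # High load: move threads from the busiest node to the least busy one.
        source = max(node_loads, key=node_loads.get)
        target = min(node_loads, key=node_loads.get)
        return ("bind_threads", source, target)
    # Low load: place the related threads on the node closest to the adapter.
    nearest = min(nic_distance, key=nic_distance.get)
    return ("migrate_near_nic", nearest)

if __name__ == "__main__":
    print(plan_placement({0: 0.95, 1: 0.9, 2: 0.4}, {0: 10, 1: 20, 2: 20}))
    print(plan_placement({0: 0.2, 1: 0.1}, {0: 20, 1: 10}))
```

Returning an action tuple rather than performing the binding keeps the sketch independent of any particular hypervisor or operating-system interface.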

A scheduling method for virtual processors based on the affinity of NUMA high-performance network buffer resources

Active | US20160062802A1 | Reduce the impact | Optimal network processing speed | Memory architecture accessing/allocation | Program initiation/switching | Virtual Processor | High performance network

The present invention discloses a scheduling method for virtual processors based on the affinity of NUMA high-performance network buffer resources. In a NUMA architecture, when the network interface card of a virtual machine is started, the method obtains the distribution of the network interface card's buffer across the NUMA nodes; derives each NUMA node's affinity for that buffer from the affinity relationships between the NUMA nodes; determines a target NUMA node by combining the buffer distribution with those affinities; and schedules the virtual processor onto a CPU of the target NUMA node. The invention addresses the problem that, in a NUMA architecture, the affinity between the VCPU of the virtual machine and the buffer of the network interface card is not optimal, which slows the VCPU's processing of network packets.

Owner:SHANGHAI JIAO TONG UNIV
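
The target-node selection in this abstract combines two inputs: how the network interface card's buffer is spread over the NUMA nodes, and how strongly each node is tied to every other node. A minimal sketch, assuming the combination is a weighted sum (the abstract does not give the formula) and using hypothetical names throughout:

```python
def choose_target_node(buffer_share: dict, affinity: dict):
    """Pick the NUMA node with the highest affinity to the NIC buffer.

    buffer_share[j]: fraction of the NIC buffer located on node j.
    affinity[i][j]:  affinity of node i for memory on node j (assumed scale,
                     1.0 for local memory and lower for remote memory).
    Returns (target_node, per-node scores).
    """
    scores = {
        i: sum(affinity[i][j] * share for j, share in buffer_share.items())
        for i in affinity
    }
    return max(scores, key=scores.get), scores

if __name__ == "__main__":
    share = {0: 0.25, 1: 0.75}
    aff = {0: {0: 1.0, 1: 0.5}, 1: {0: 0.5, 1: 1.0}}
    print(choose_target_node(share, aff))
```

With three quarters of the buffer on node 1, node 1 scores higher, so the virtual processor would be scheduled onto a CPU of that node.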


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com