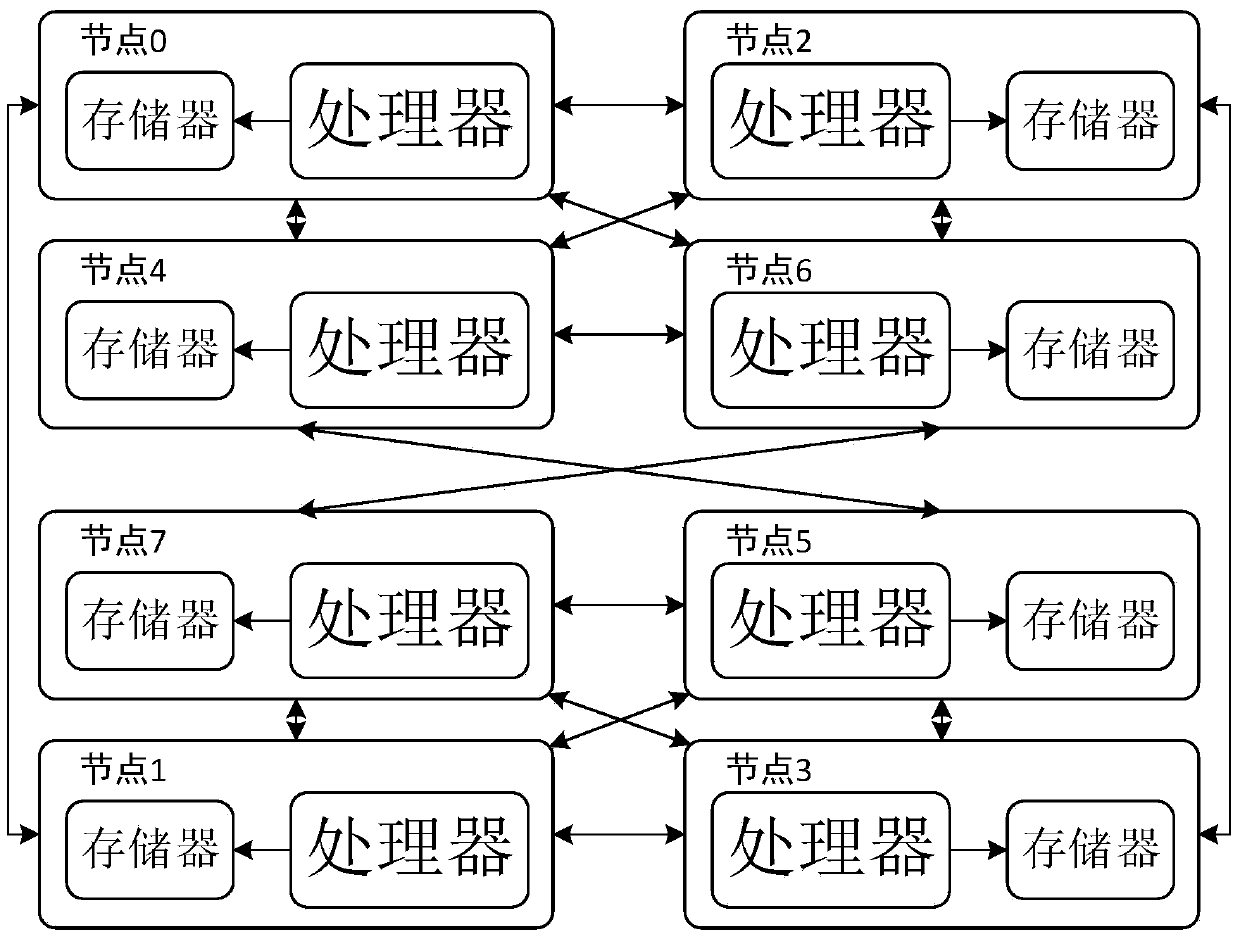

Virtual processor scheduling method based on NUMA high-performance network cache resource affinity

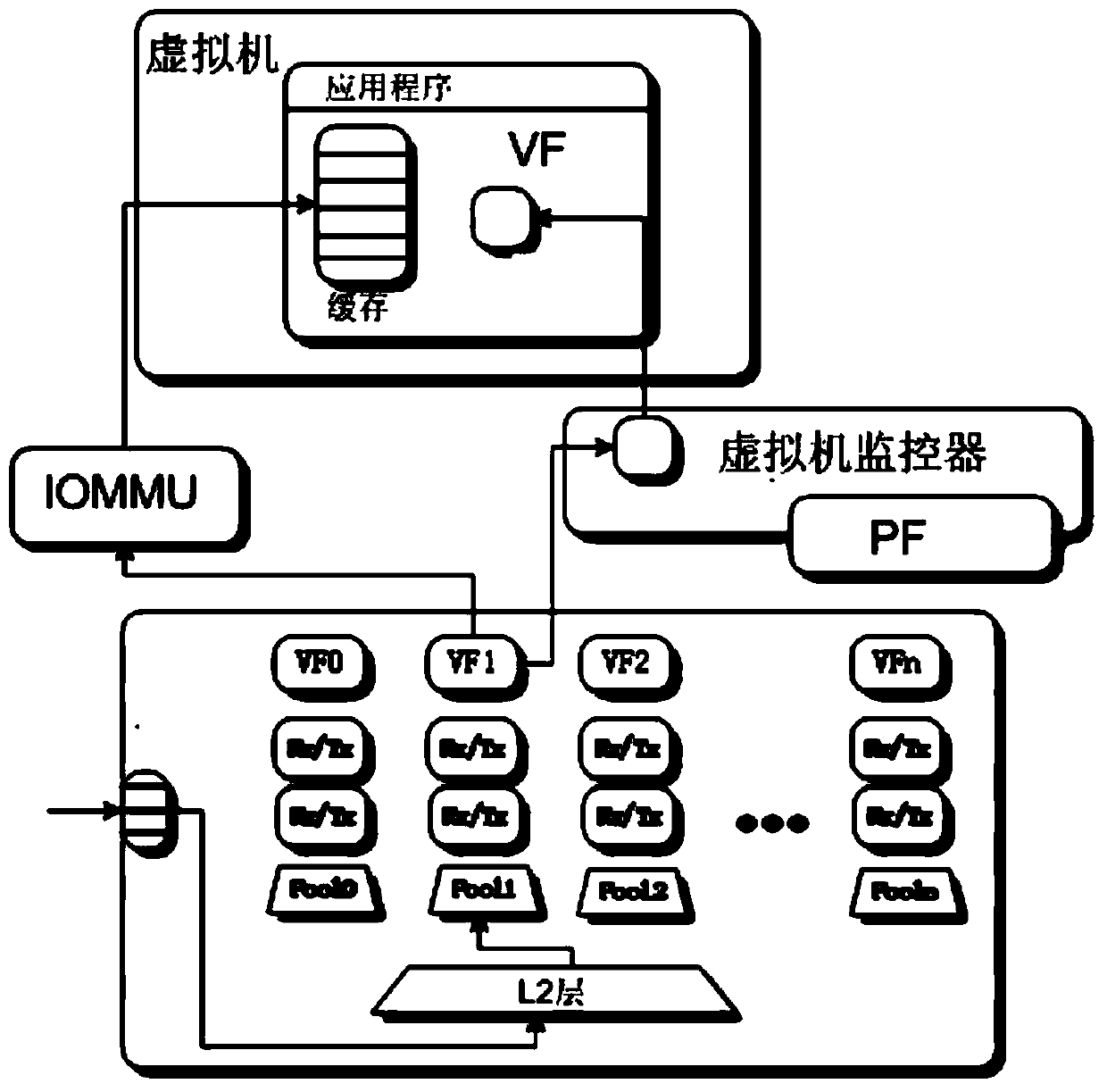

A virtual processor and network caching technology, applied in resource allocation, software simulation/interpretation/emulation, multiprogramming arrangements, etc. It addresses the problems that existing approaches do not analyze virtual machine memory resources or the affinity between the VCPU and memory, and that the speed at which the VCPU handles data packets is not optimal. The stated effects are to improve the processing speed and reduce the impact.

Embodiment Construction

[0040] The embodiments of the present invention are described in detail below with reference to the accompanying drawings. This embodiment is implemented on the premise of the technical solution of the present invention, and detailed implementation methods and specific operating procedures are provided, but the protection scope of the present invention is not limited to the embodiment described below.

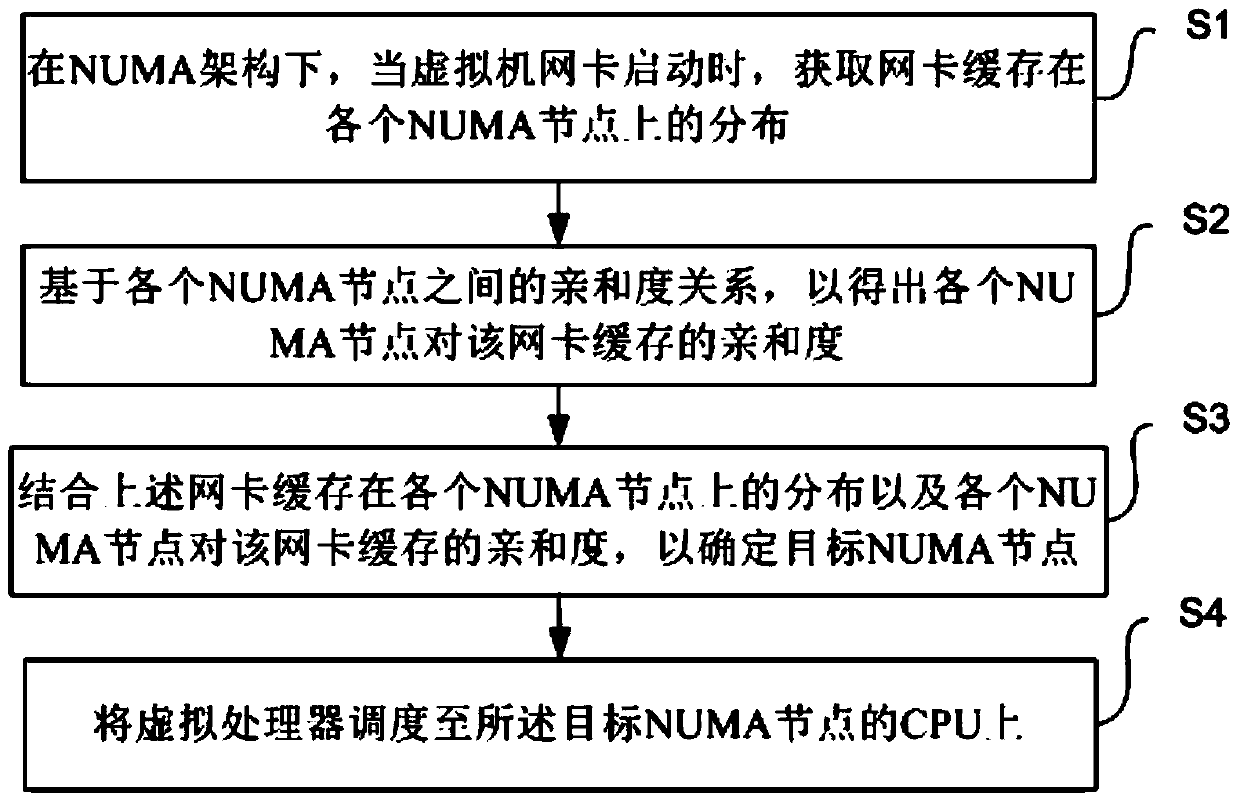

[0041] Image 3 shows a schematic flowchart of a virtual processor scheduling method based on NUMA high-performance network cache resource affinity according to the present invention. Referring to image 3, the scheduling method includes the following steps:

[0042] Step S1: Under the NUMA architecture, when the network card of the virtual machine is started, obtain the distribution of the network card cache on each NUMA node;

[0043] Step S2: Based on the affinity relationships among the NUMA nodes, obtain the affinity of each NUMA node to the net...
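As a rough illustration of the bookkeeping implied by step S1 only, the following minimal sketch computes how a set of network card cache pages is distributed across NUMA nodes. It is not the patented implementation: the function name `buffer_distribution`, the input format (one NUMA node id per buffer page) and the sample values are all assumptions made for illustration, and how the node ids are actually obtained from the hypervisor or driver is not covered here.

```python
from collections import Counter


def buffer_distribution(page_nodes, num_nodes):
    """Return the fraction of buffer pages located on each NUMA node.

    page_nodes: list of NUMA node ids, one per buffer page (assumed input;
                the source does not specify how this information is gathered).
    num_nodes:  total number of NUMA nodes on the host.
    """
    if not page_nodes:
        # No buffer pages known yet: report an all-zero distribution.
        return [0.0] * num_nodes
    counts = Counter(page_nodes)
    total = len(page_nodes)
    # One entry per node, including nodes that hold no buffer pages.
    return [counts.get(node, 0) / total for node in range(num_nodes)]


# Hypothetical sample: 8 buffer pages spread over a 4-node machine.
sample_page_nodes = [0, 0, 0, 1, 1, 2, 0, 1]
distribution = buffer_distribution(sample_page_nodes, num_nodes=4)
print(distribution)
```

A per-node fraction is one convenient representation of "the distribution of the network card cache on each NUMA node"; later steps could weight it by inter-node affinity, but the source text for those steps is truncated, so that part is left out.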
