709 results about "Single instruction multiple data multiprocessors" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

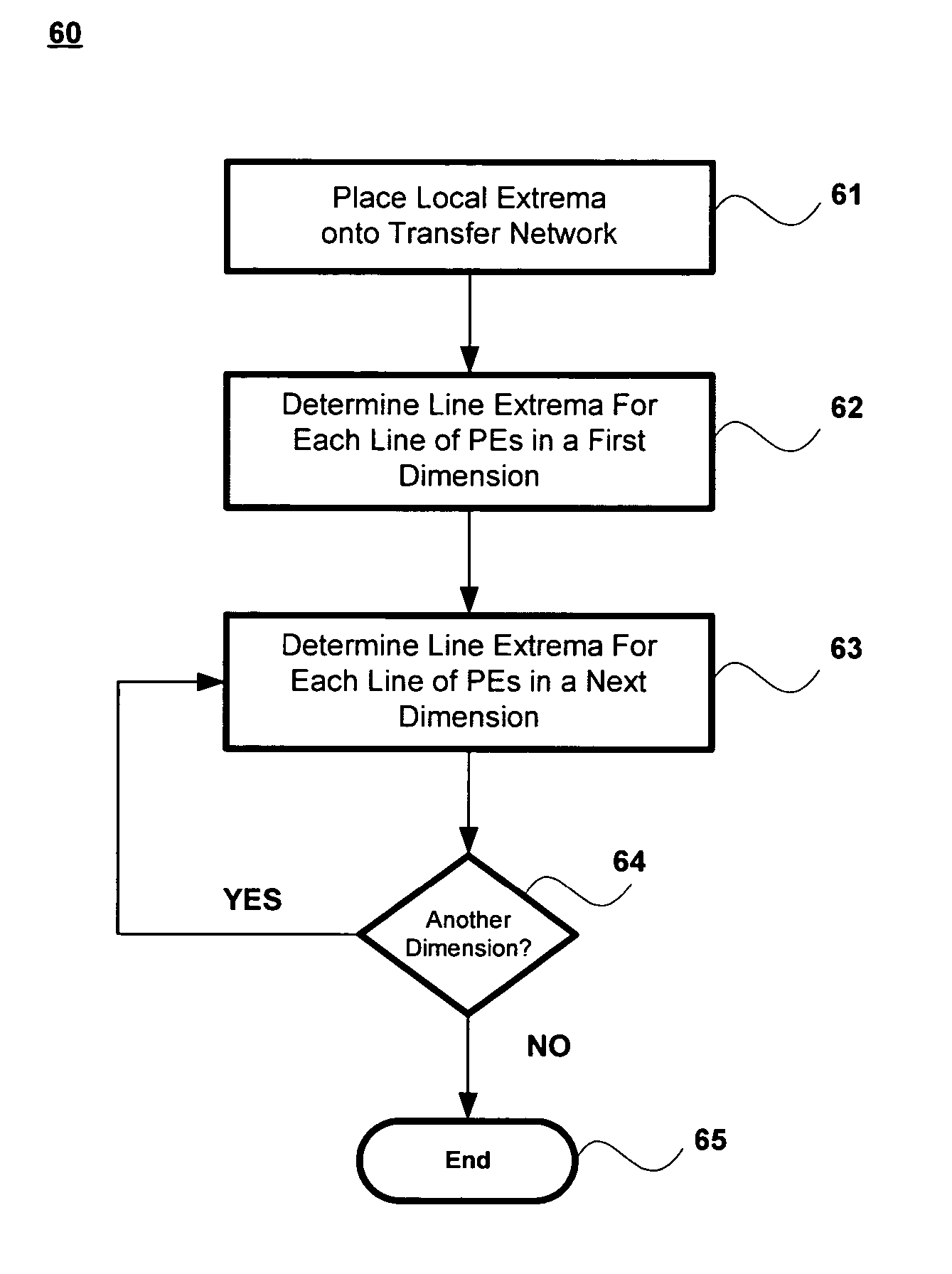

Method for finding global extrema of a set of shorts distributed across an array of parallel processing elements

Active | US7574466B2 | Single instruction multiple data multiprocessors; Digital data processing details; Processing element; Parallel processing

A method for finding an extremum of an n-dimensional array having a plurality of processing elements. The method includes: determining, within each processing element, a first-dimensional extremum for a first dimension, where the first-dimensional extremum is related to the local extrema of the processing elements in the first dimension and has a most significant byte and a least significant byte; determining, within each processing element, a next-dimensional extremum for a next dimension of the n-dimensional array, where the next-dimensional extremum is related to the first-dimensional extremum and has a most significant byte and a least significant byte; and repeating the determination of a next-dimensional extremum for each of the n dimensions, where each next-dimensional extremum is related to the extremum from a previously selected dimension.

Owner:MICRON TECH INC
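
The dimension-by-dimension reduction in the US7574466B2 abstract can be illustrated in software. This sketch makes several assumptions. It uses a 2-D array of processing elements (PEs), each holding one signed 16-bit value. Each pass is modelled as a whole-row or whole-column reduction, rather than the neighbour-to-neighbour transfers real hardware would use. Splitting each short into a (most significant byte, least significant byte) pair shows why byte-wise comparison is enough: comparing the signed high byte first and the unsigned low byte second orders the pairs exactly like the original shorts. The function names are hypothetical.

```python
from typing import List, Tuple

# (signed most significant byte, unsigned least significant byte)
ByteKey = Tuple[int, int]


def split_short(value: int) -> ByteKey:
    """Split a signed 16-bit value into (MSB, LSB).

    Lexicographic comparison of the pair orders values exactly like the
    original shorts, so a PE can compare the high byte first and only
    look at the low byte on a tie.
    """
    if not -32768 <= value <= 32767:
        raise ValueError("value does not fit in a signed short")
    return (value >> 8, value & 0xFF)


def join_short(key: ByteKey) -> int:
    msb, lsb = key
    return msb * 256 + lsb


def global_max_2d(grid: List[List[int]]) -> List[List[int]]:
    """Return the value each PE holds after both reduction passes."""
    keys = [[split_short(v) for v in row] for row in grid]
    # Pass 1 (first dimension): every PE learns the maximum of its row.
    keys = [[max(row)] * len(row) for row in keys]
    # Pass 2 (next dimension): every PE reduces the row maxima down its column.
    n_rows, n_cols = len(keys), len(keys[0])
    col_max = [max(keys[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [[join_short(col_max[c]) for c in range(n_cols)] for _ in range(n_rows)]
```

For the 2-by-3 grid `[[3, -5, 700], [-300, 12, 699]]`, every PE ends up holding 700. Replacing `max` with `min` gives the global minimum, and each additional dimension adds one more pass.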

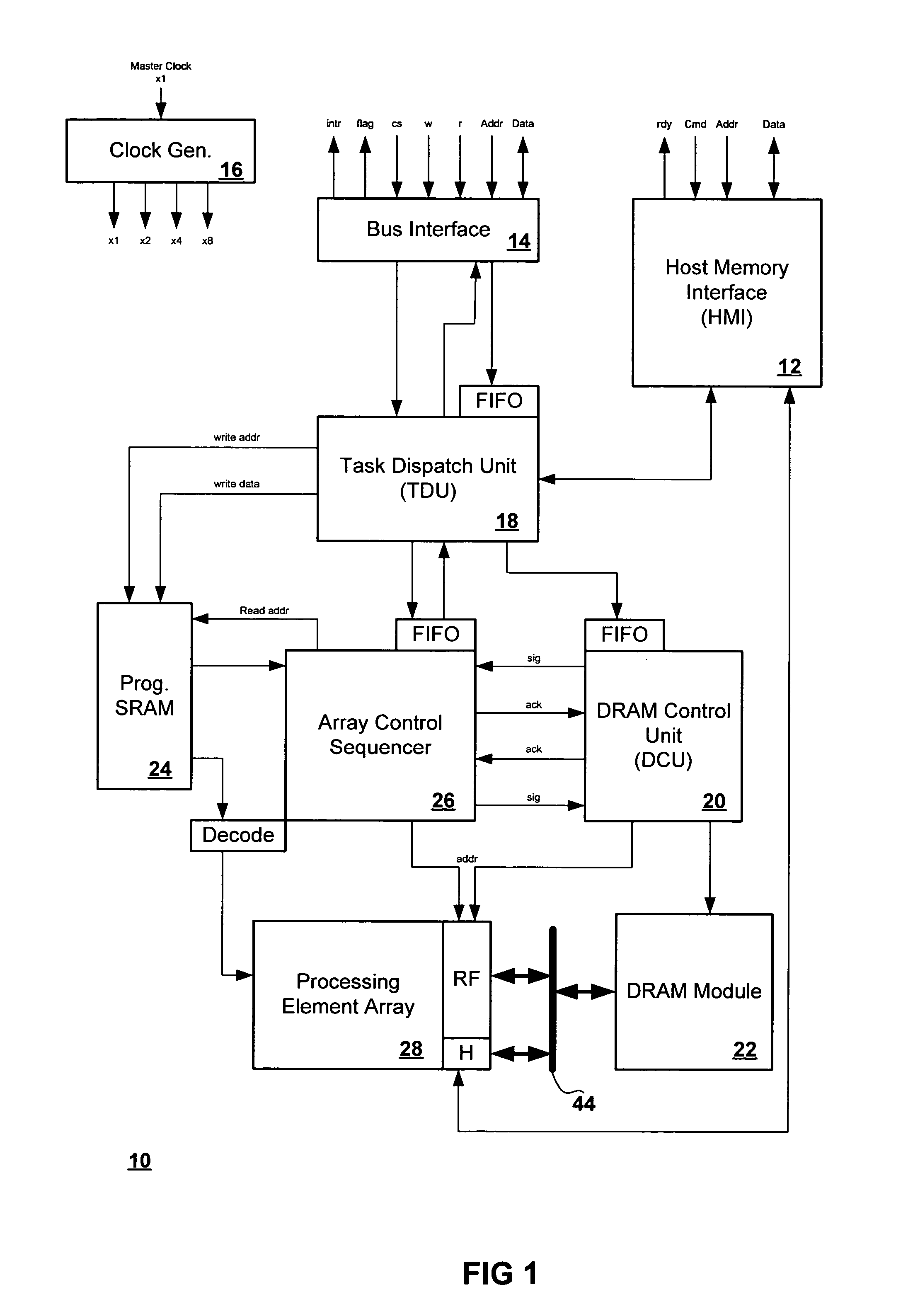

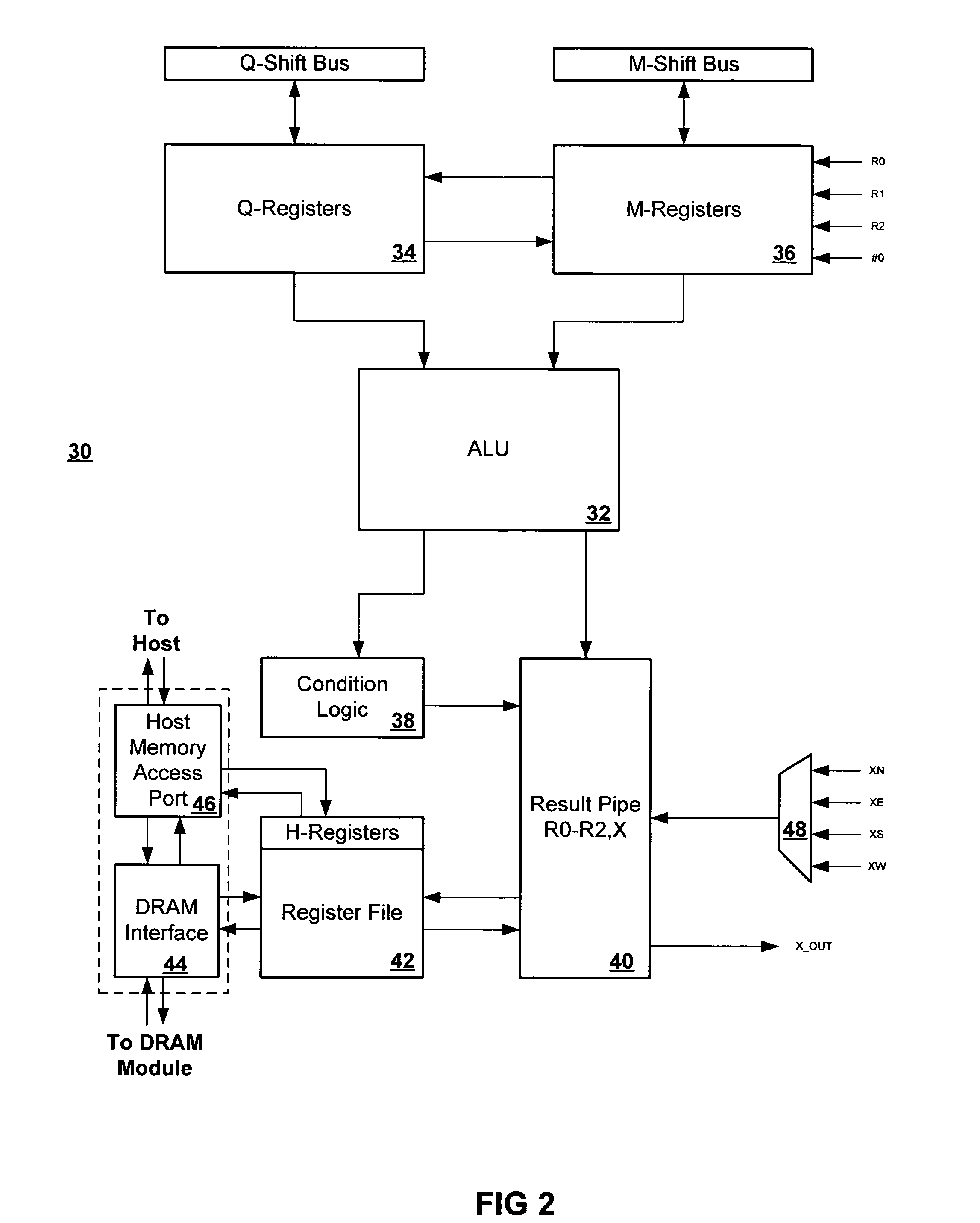

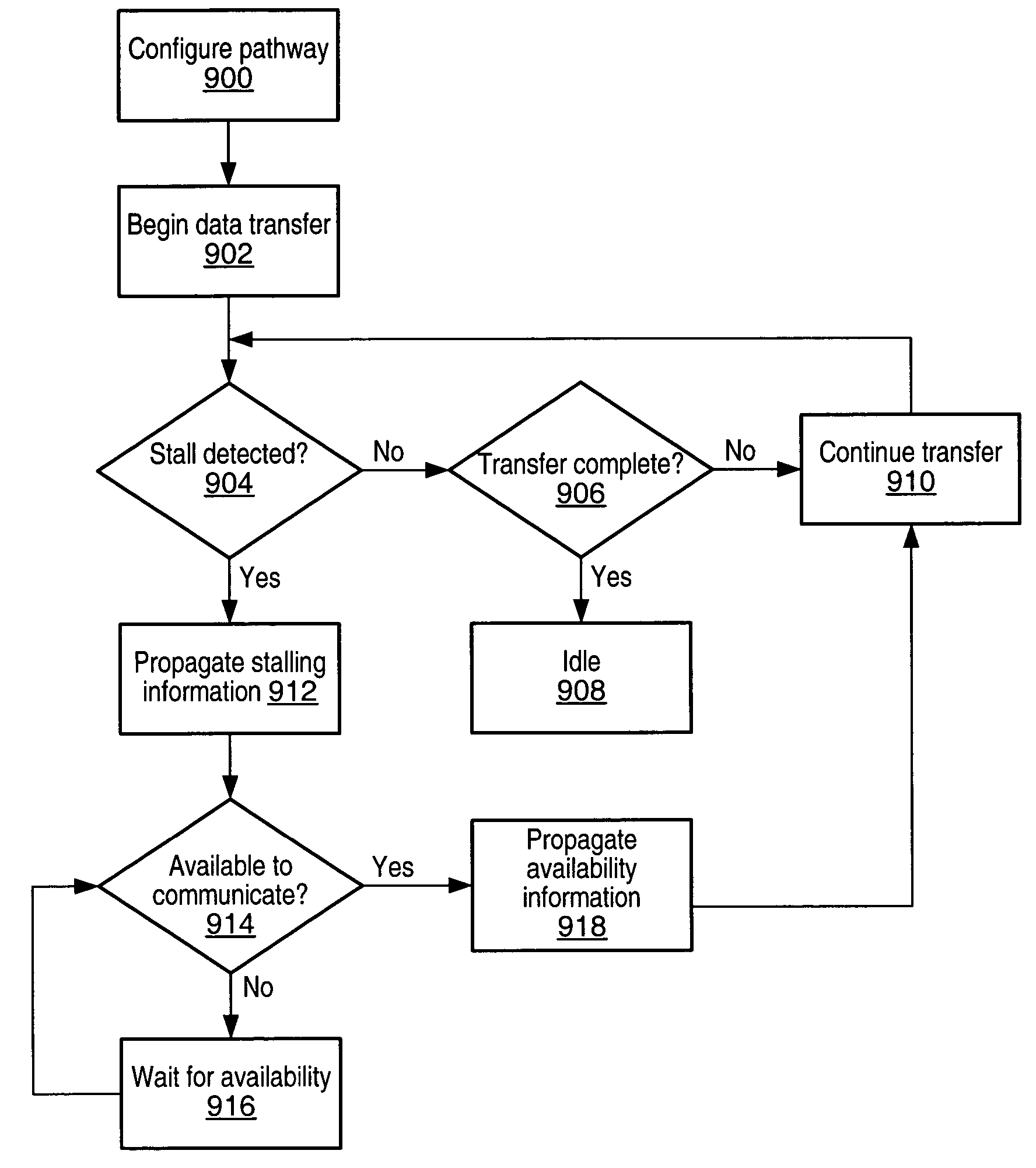

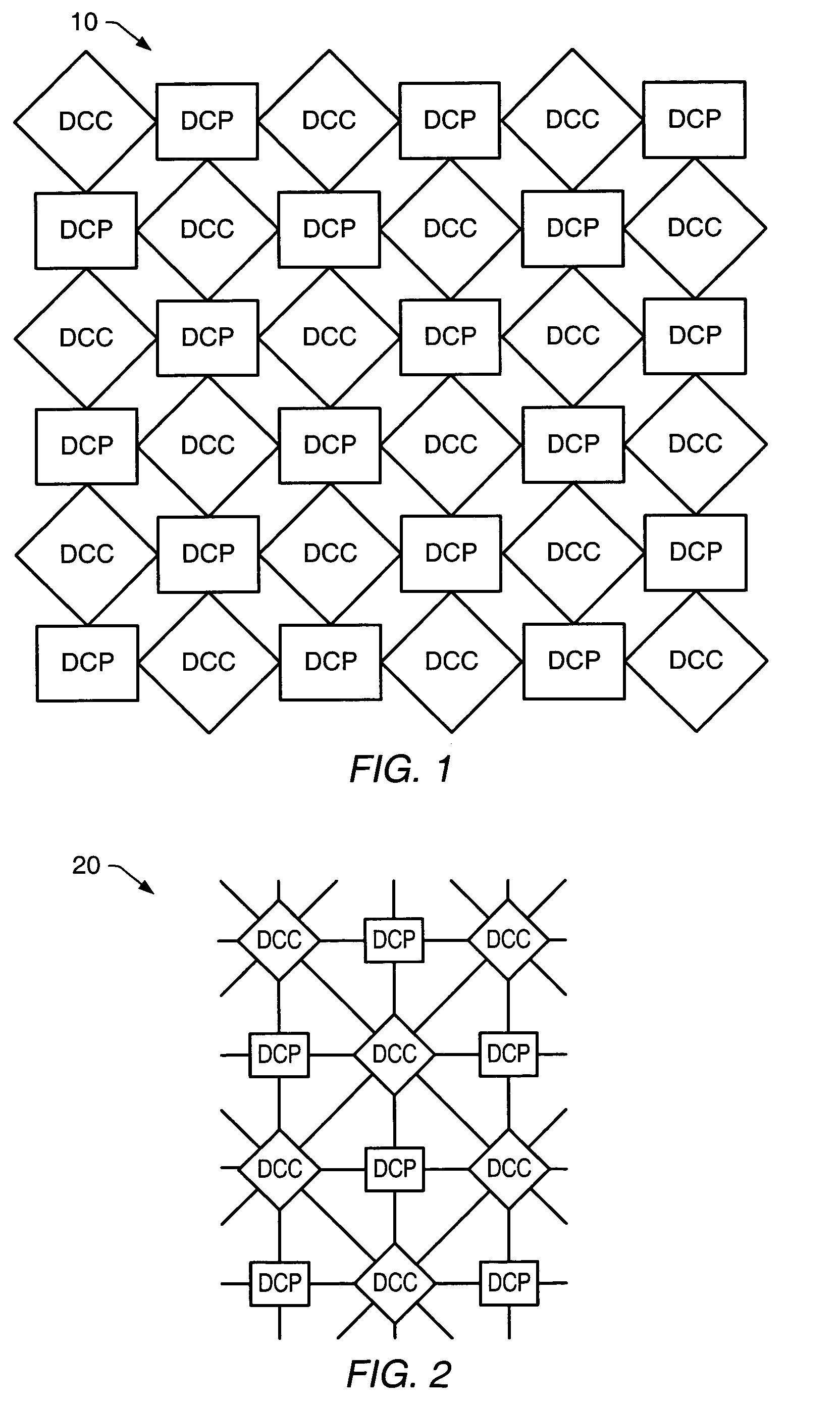

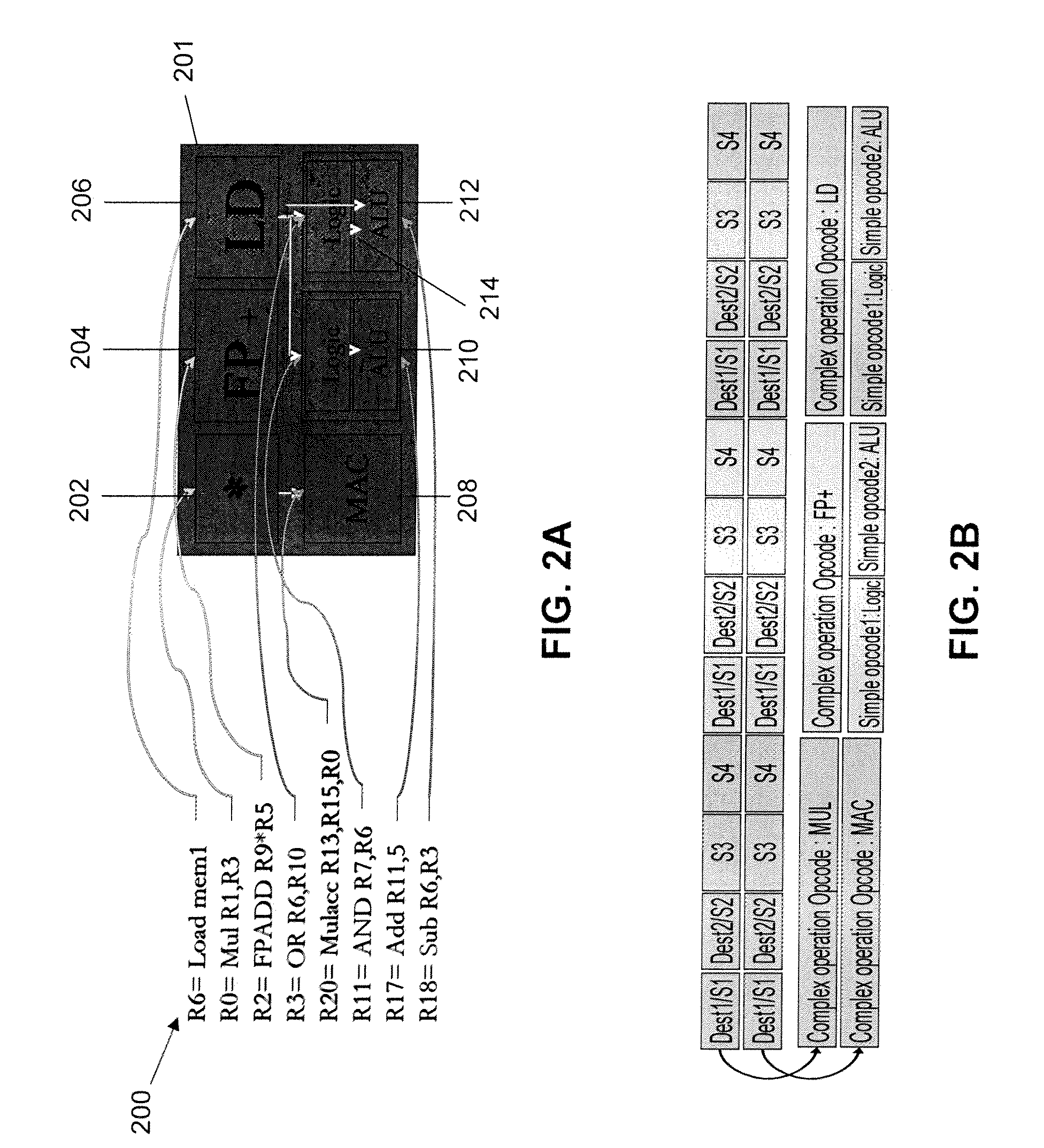

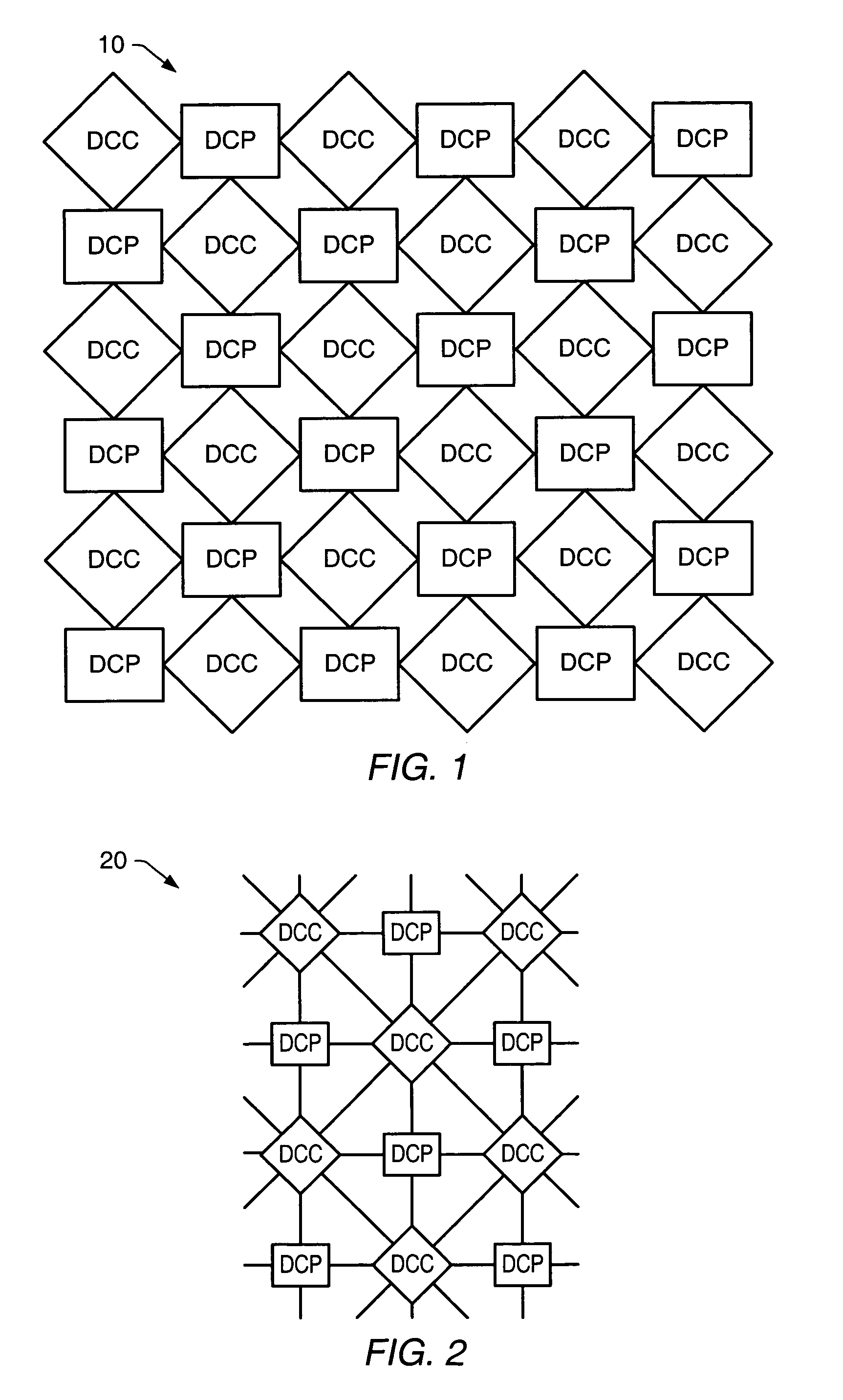

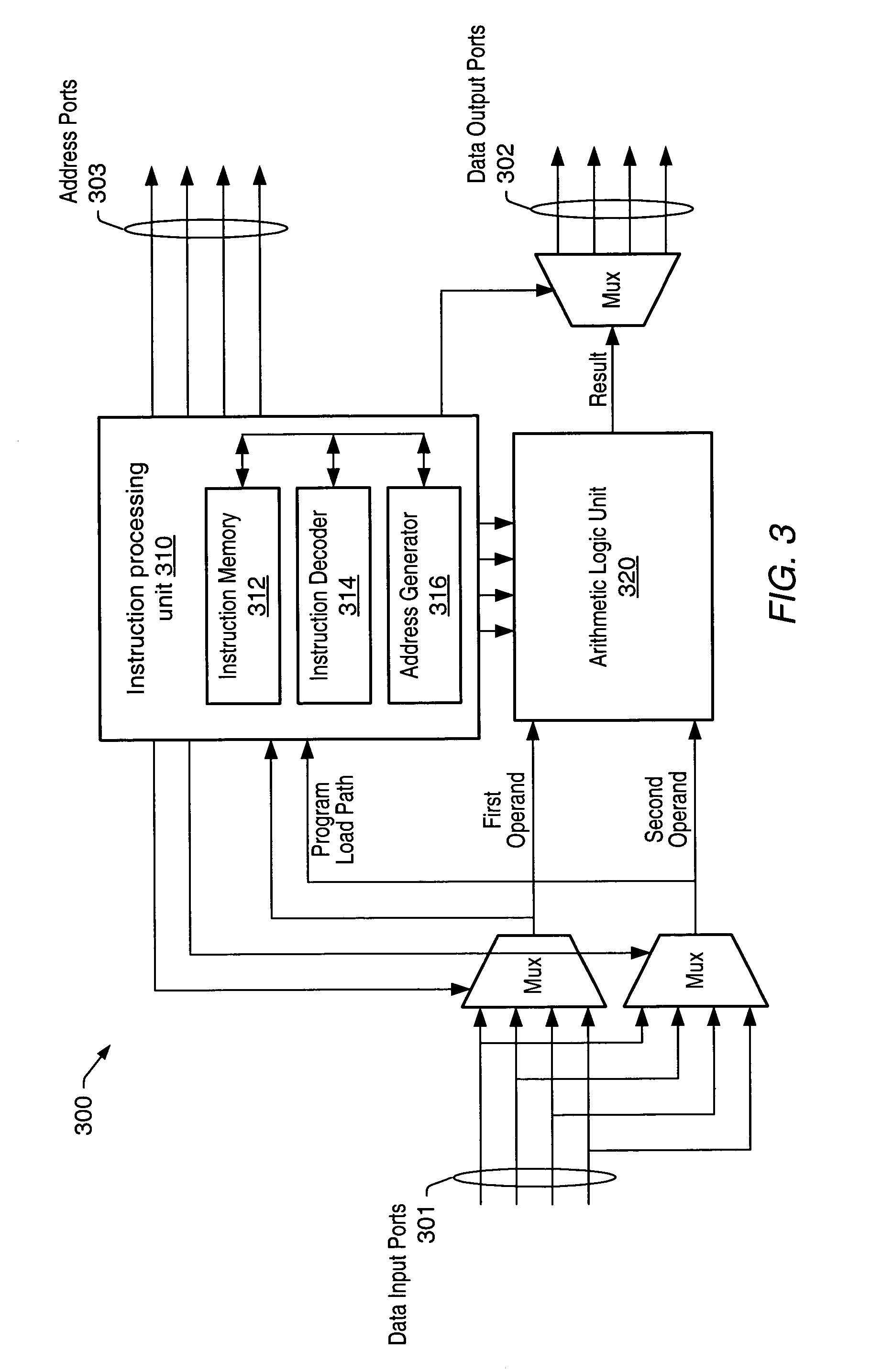

Processing system with interspersed stall propagating processors and communication elements

Active | US7415594B2 | Energy efficient ICT; Single instruction multiple data multiprocessors; Arithmetic logic unit; Instruction processing unit

A processing system comprising processors and dynamically configurable communication elements coupled together in an interspersed arrangement. The processors each comprise at least one arithmetic logic unit, an instruction processing unit, and a plurality of processor ports. The dynamically configurable communication elements each comprise a plurality of communication ports, a first memory, and a routing engine. For each of the processors, the plurality of processor ports is configured for coupling to a first subset of the plurality of dynamically configurable communication elements. For each of the dynamically configurable communication elements, the plurality of communication ports comprises a first subset of communication ports configured for coupling to a subset of the plurality of processors and a second subset of communication ports configured for coupling to a second subset of the plurality of dynamically configurable communication elements.

Owner:COHERENT LOGIX

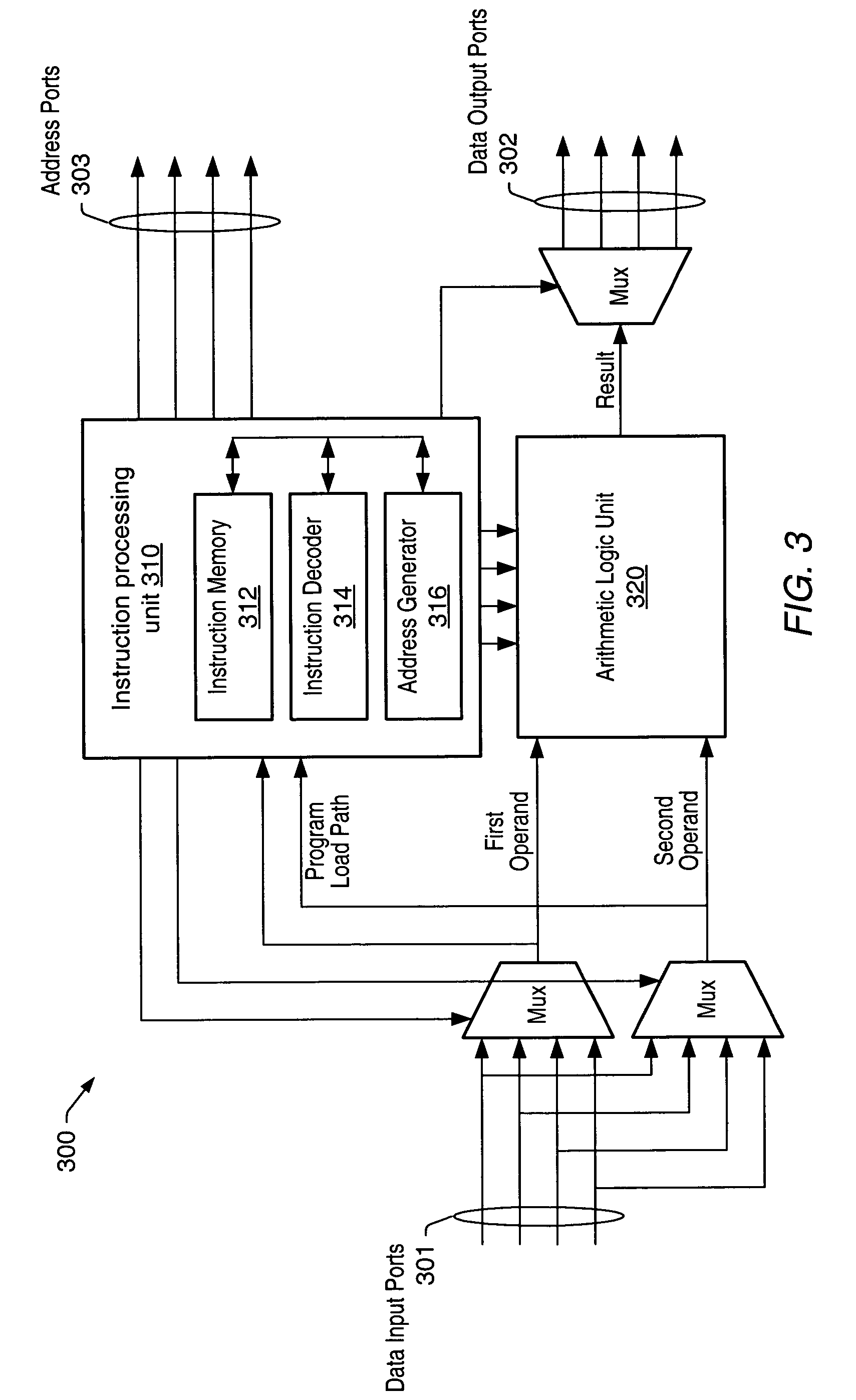

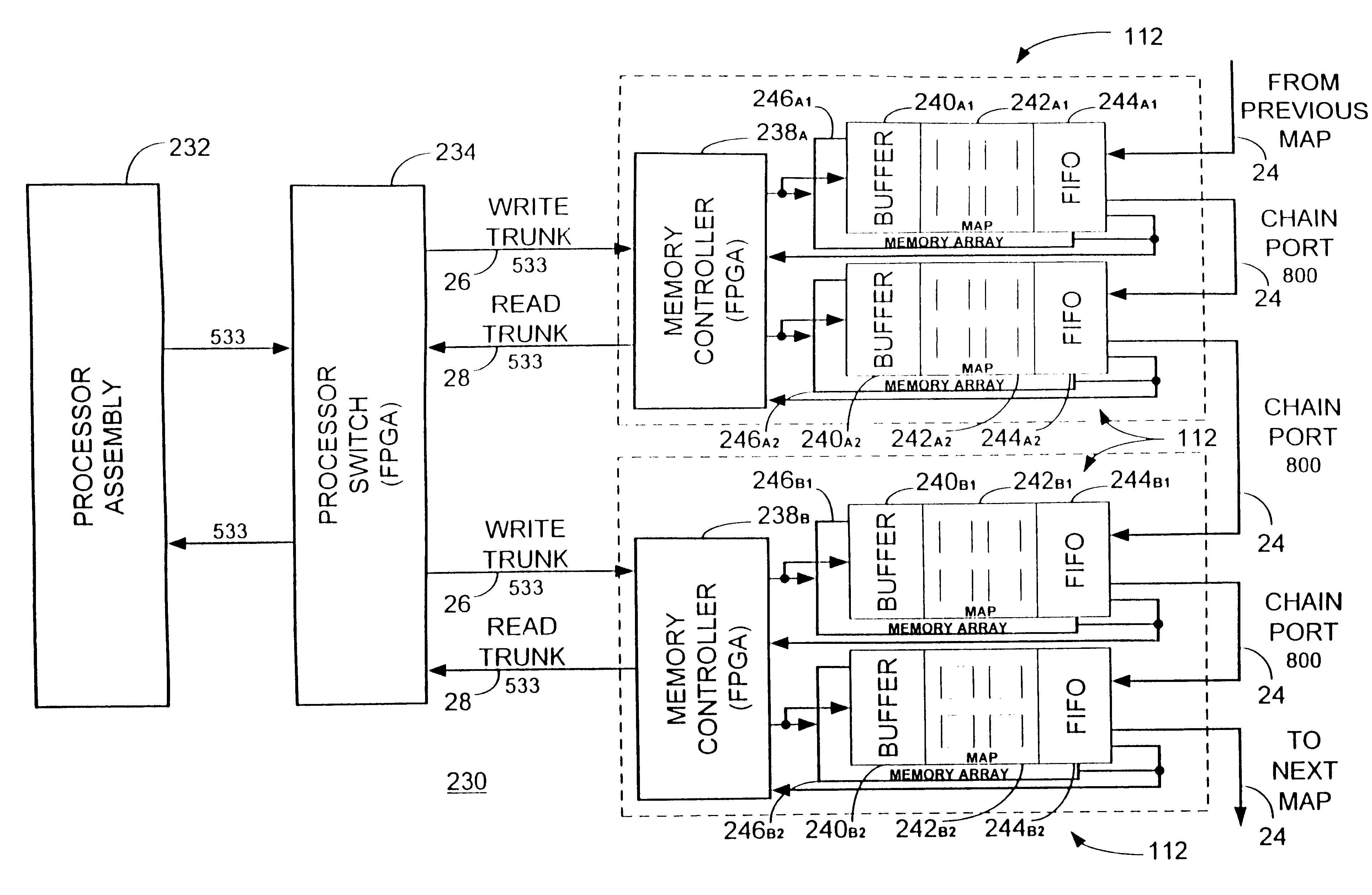

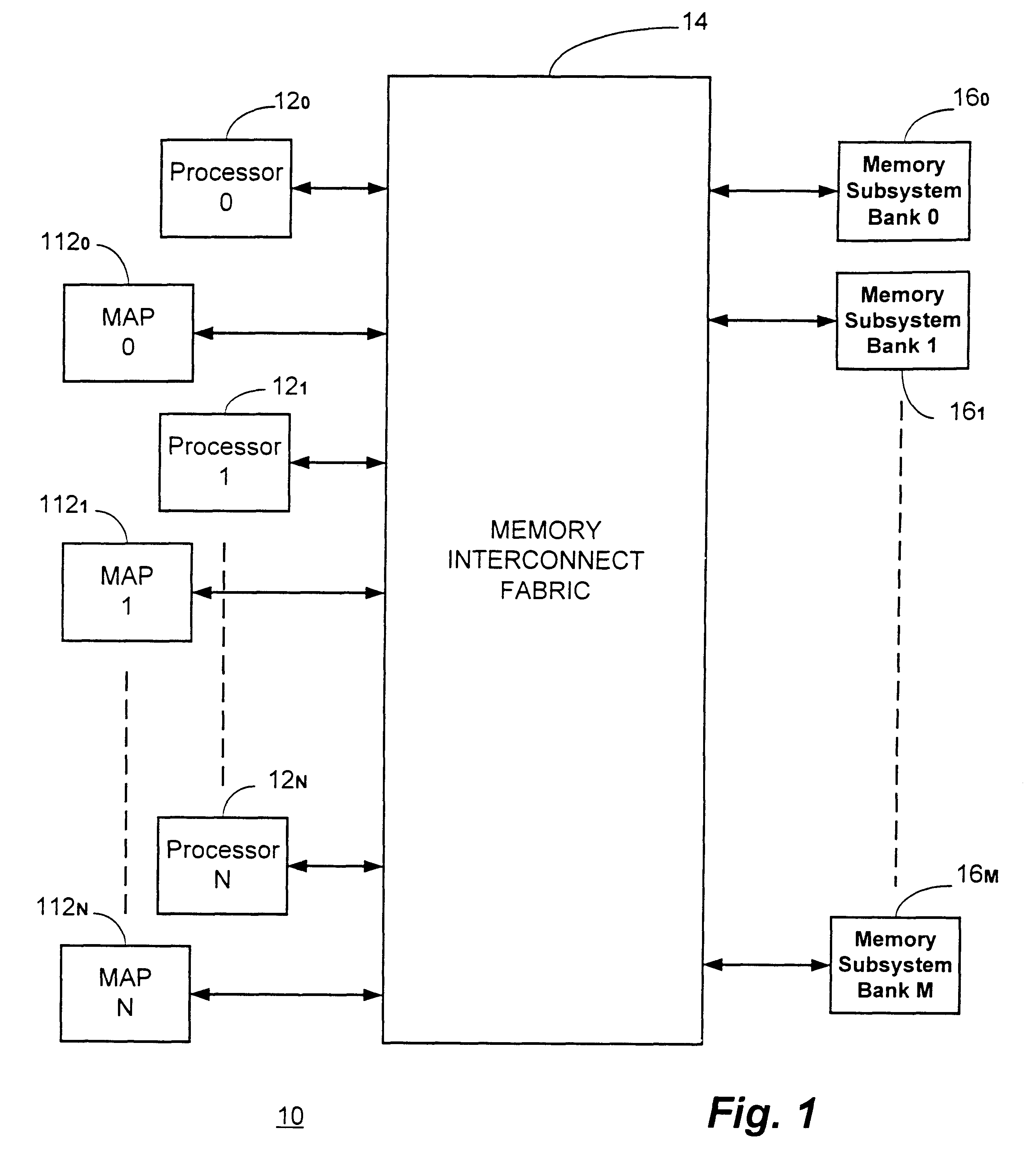

Multiprocessor with each processor element accessing operands in loaded input buffer and forwarding results to FIFO output buffer

Inactive | US6339819B1 | Perform operation; Potential utility; Single instruction multiple data multiprocessors; Memory addressing/allocation/relocation; Processor element; Multi processor

An enhanced memory algorithmic processor ("MAP") architecture for multiprocessor computer systems comprises an assembly that may include, for example, field programmable gate arrays ("FPGAs") functioning as the memory algorithmic processors. The MAP elements may further include operand storage, intelligent address generation, on-board function libraries, result storage, and multiple input/output ("I/O") ports. The MAP elements are intended to augment, not necessarily replace, the high-performance microprocessors in the system. In a particular embodiment of the present invention, they may be connected through the memory subsystem of the computer system, which makes them very tightly coupled to the system and globally accessible from any processor in a multiprocessor computer system.

Owner:SRC COMP

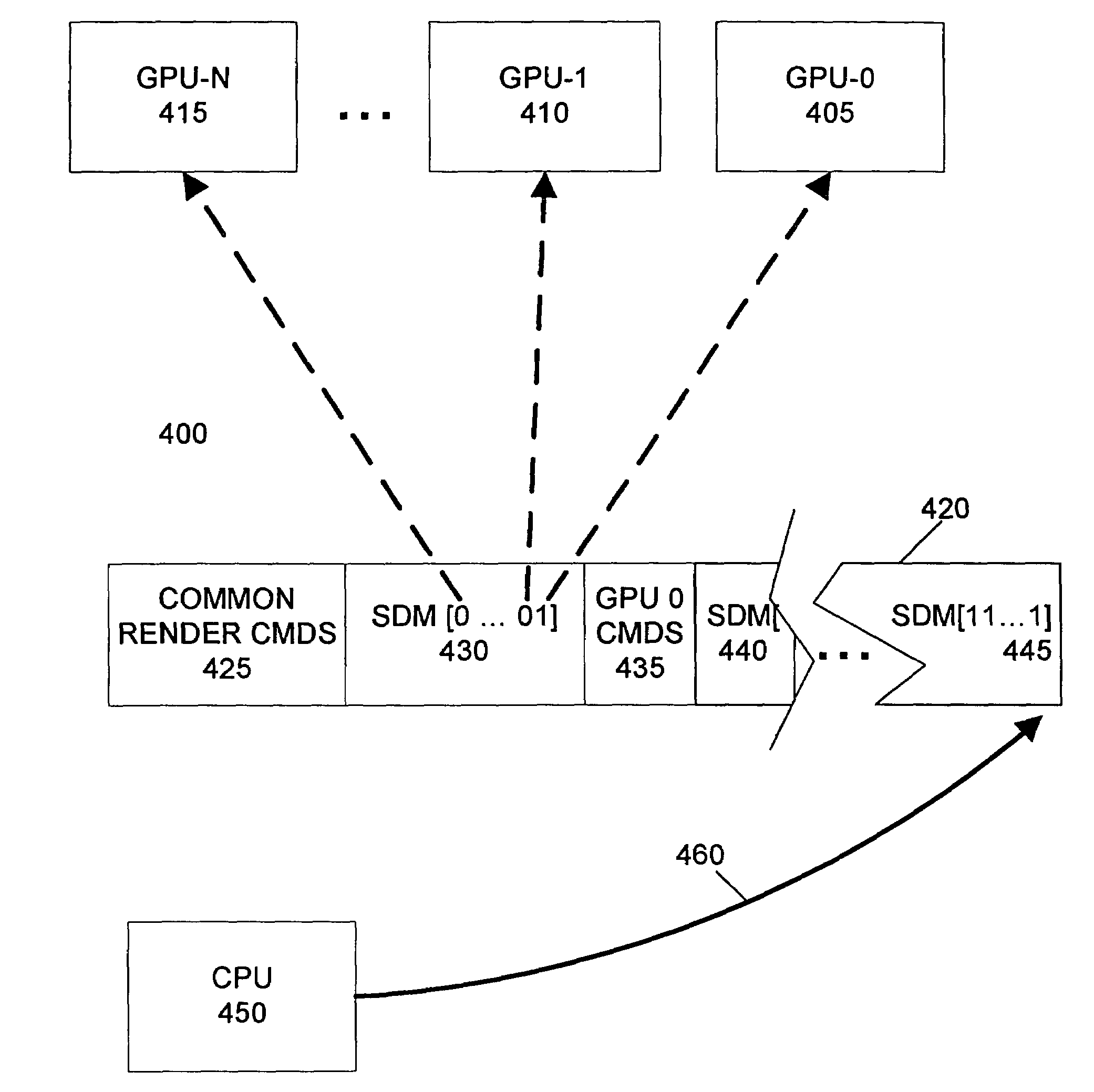

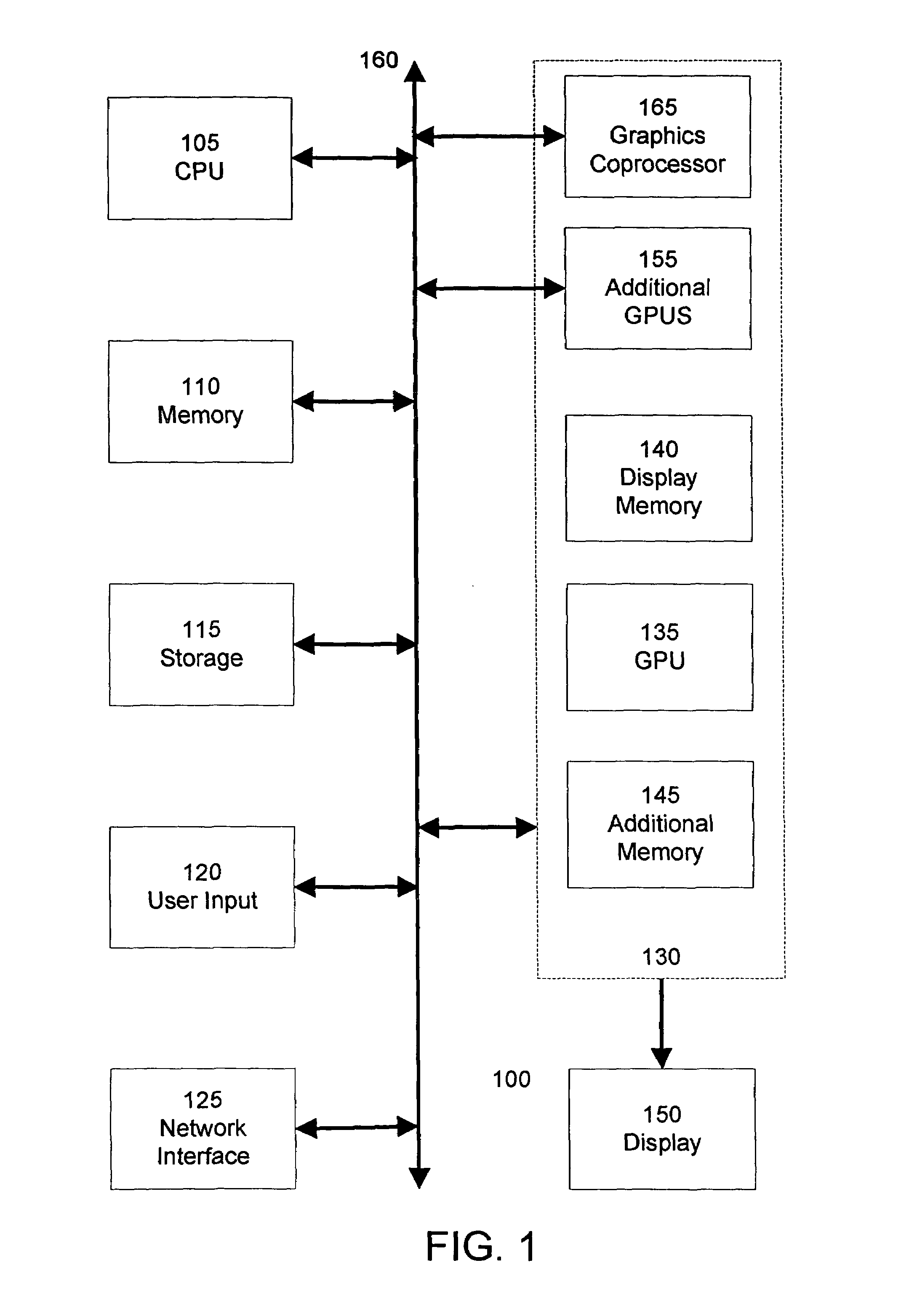

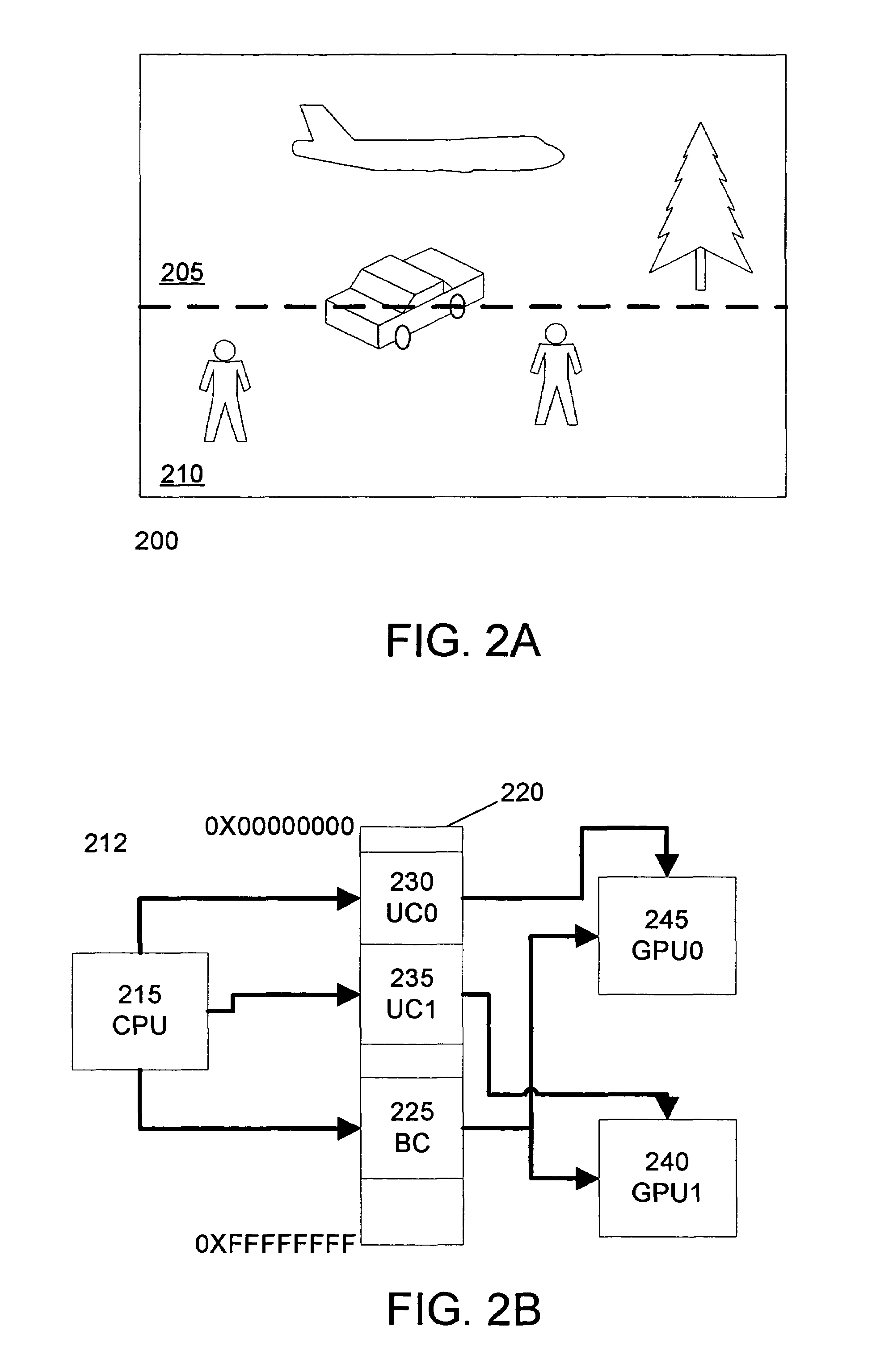

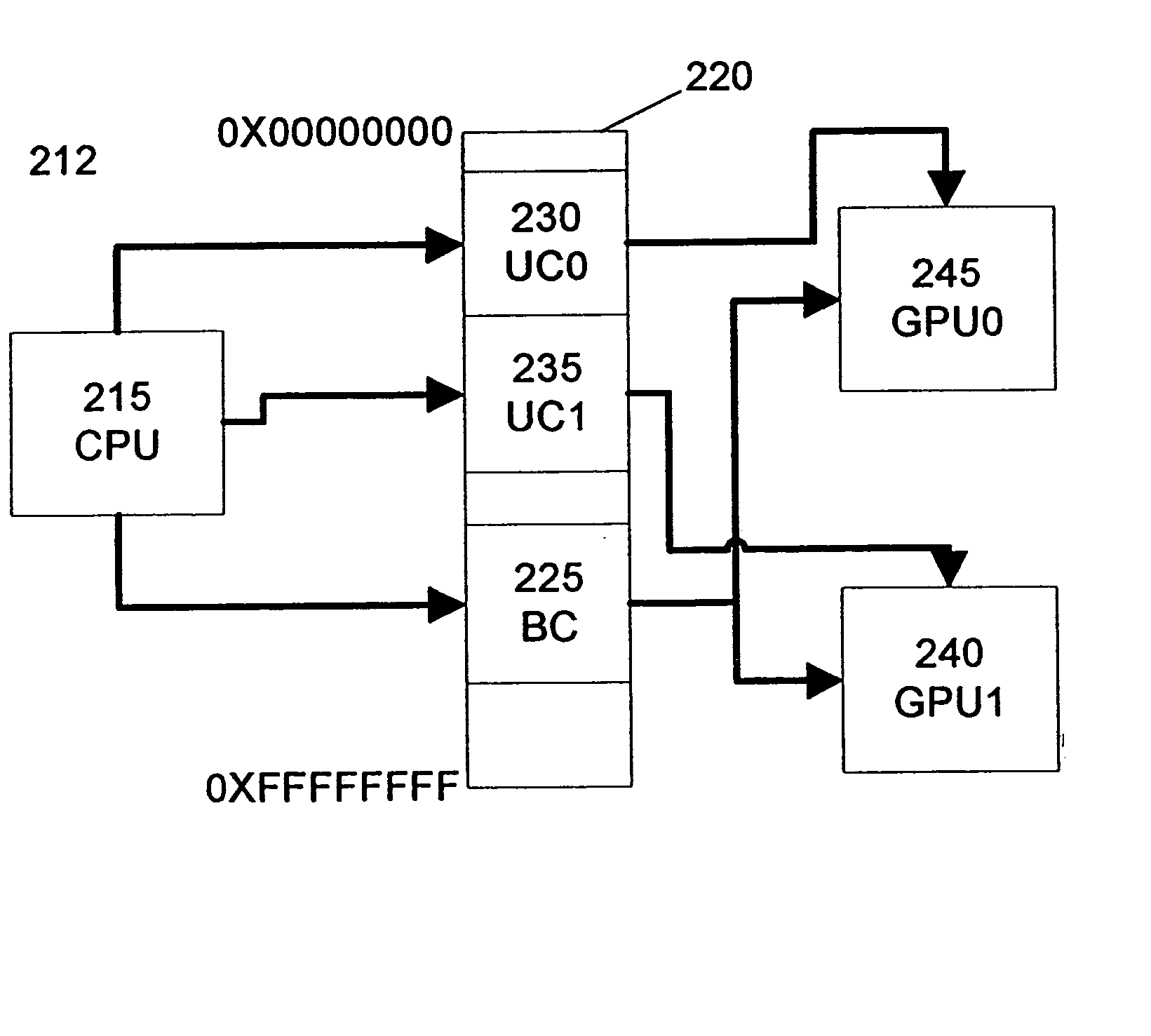

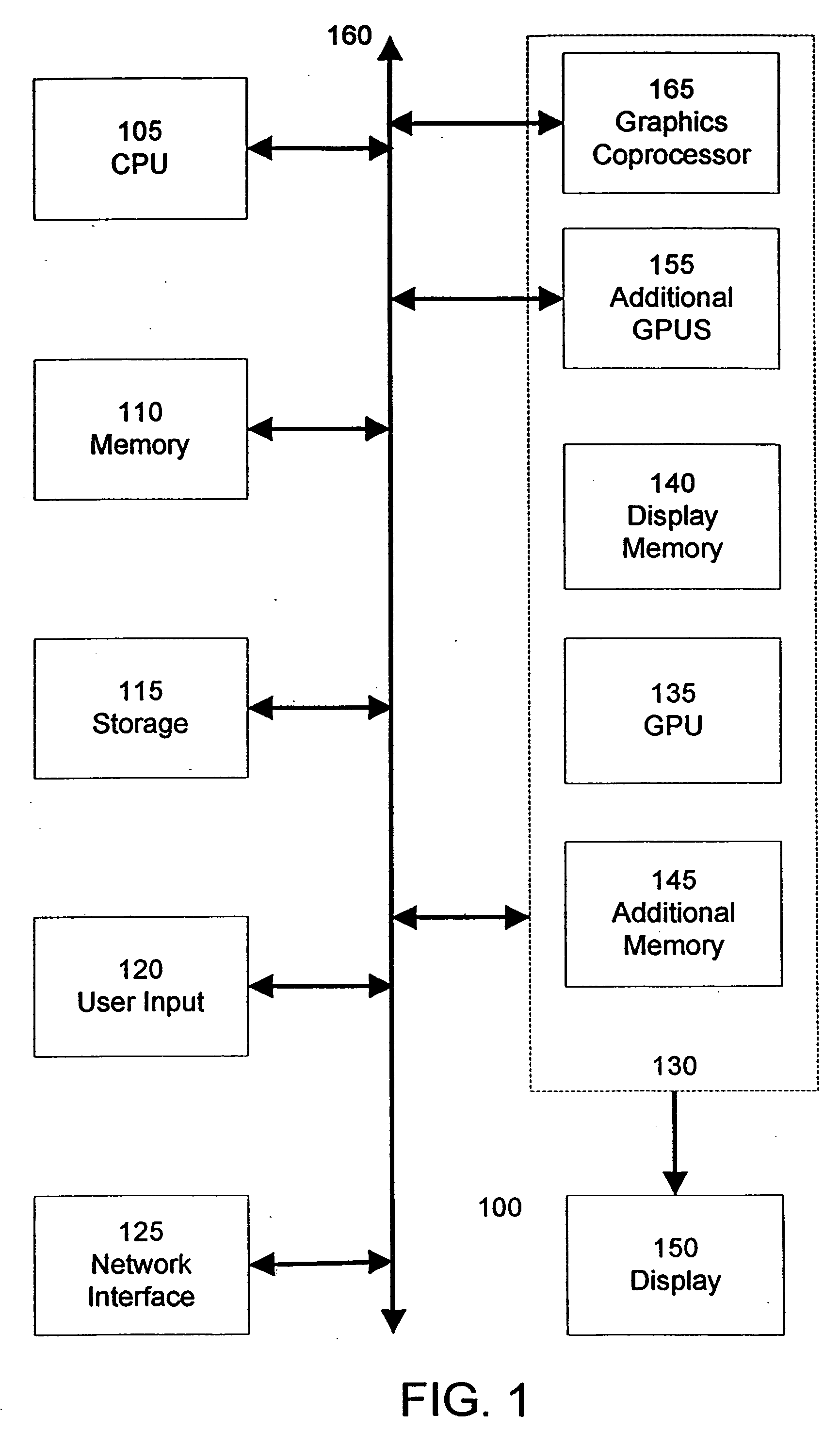

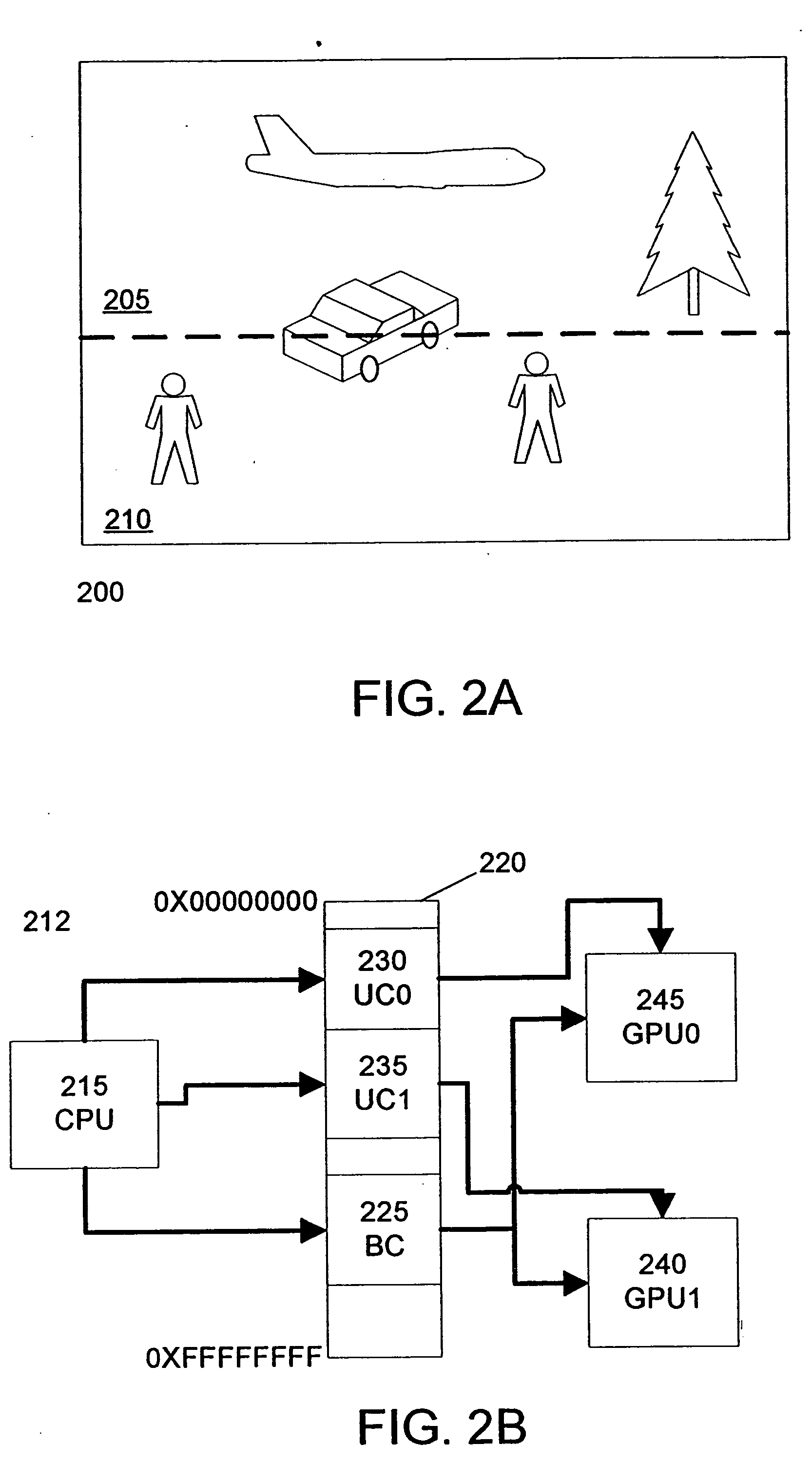

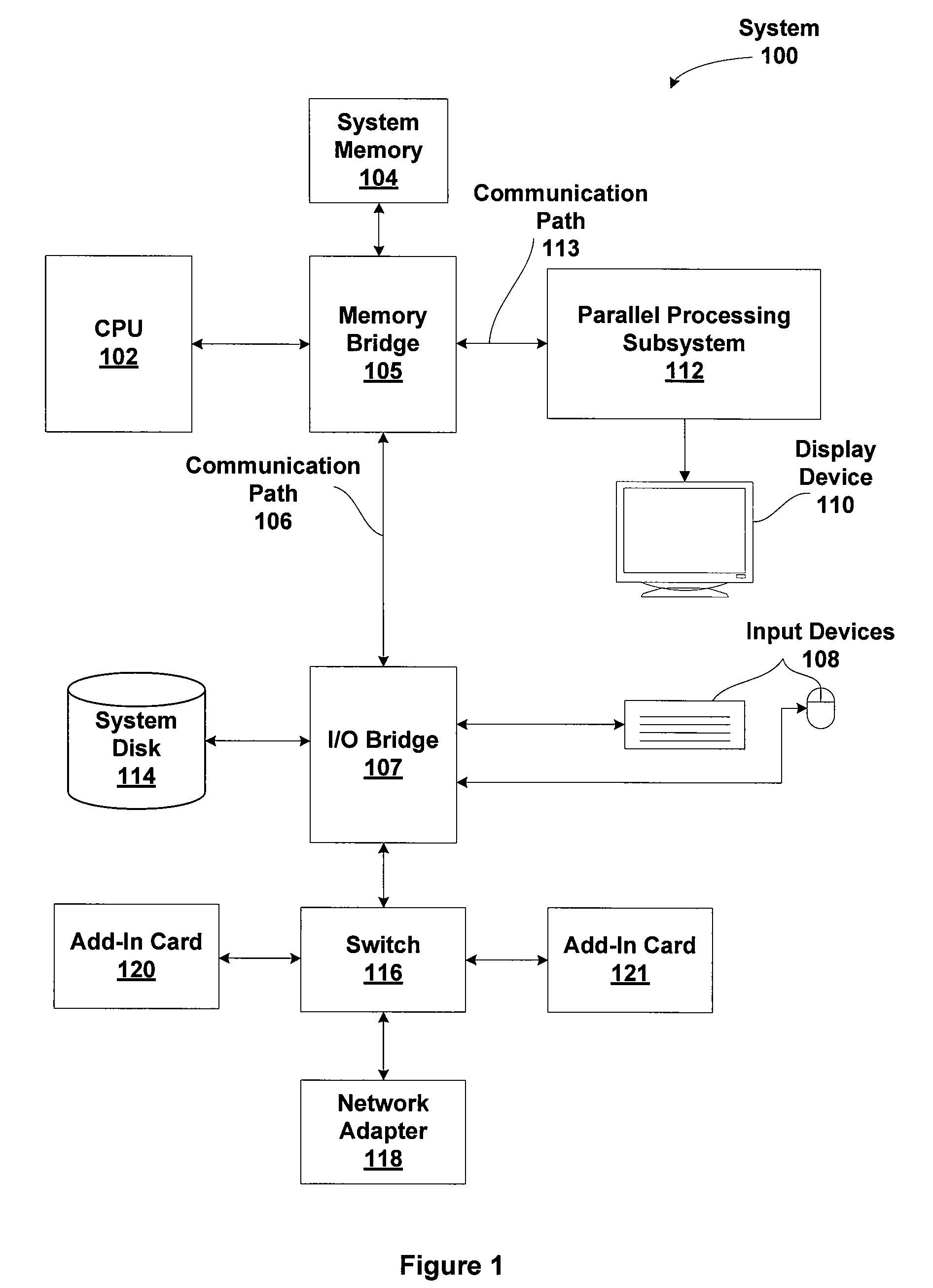

Programming multiple chips from a command buffer

Active | US7015915B1 | Keep in sync; Single instruction multiple data multiprocessors; Processor architectures/configuration; Graphics; Coprocessor

A CPU selectively programs one or more graphics devices by writing a control command to the command buffer that designates a subset of graphics devices to execute subsequent commands. Graphics devices not designated by the control command will ignore the subsequent commands until re-enabled by the CPU. The non-designated graphics devices will continue to read from the command buffer to maintain synchronization. Subsequent control commands can designate different subsets of graphics devices to execute further subsequent commands. Graphics devices include graphics processing units and graphics coprocessors. A unique identifier is associated with each of the graphics devices. The control command designates a subset of graphics devices according to their respective unique identifiers. The control command includes a number of bits. Each bit is associated with one of the unique identifiers and designates the inclusion of one of the graphics devices in the first subset of graphics devices.

Owner:NVIDIA CORP
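
The device-selection scheme in the US7015915B1 abstract can be modelled with a short simulation. This is a sketch under stated assumptions: the command classes, the use of `device_id` as the bit position, the `consume` method, and the initial "all devices enabled" state are all hypothetical, and "executing" a command only records its name. The simulation shows that every device reads every command, so the read pointers stay in step. Only devices whose bit is set in the most recent control command act on the commands that follow it.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class SetDeviceMask:
    mask: int  # bit i set => the device whose unique ID is i executes what follows


@dataclass
class Draw:
    name: str


Command = Union[SetDeviceMask, Draw]


@dataclass
class GraphicsDevice:
    device_id: int
    enabled: bool = True  # assumed initial state: every device selected
    executed: List[str] = field(default_factory=list)
    read_pointer: int = 0

    def consume(self, buffer: List[Command]) -> None:
        """Read every new command; execute only while selected by the latest mask."""
        while self.read_pointer < len(buffer):
            cmd = buffer[self.read_pointer]
            self.read_pointer += 1  # advance even for ignored commands, to stay in sync
            if isinstance(cmd, SetDeviceMask):
                self.enabled = bool((cmd.mask >> self.device_id) & 1)
            elif self.enabled:
                self.executed.append(cmd.name)
```

A single shared buffer therefore drives both devices identically, except where a mask deliberately diverts commands to one of them.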

Data processing architectures

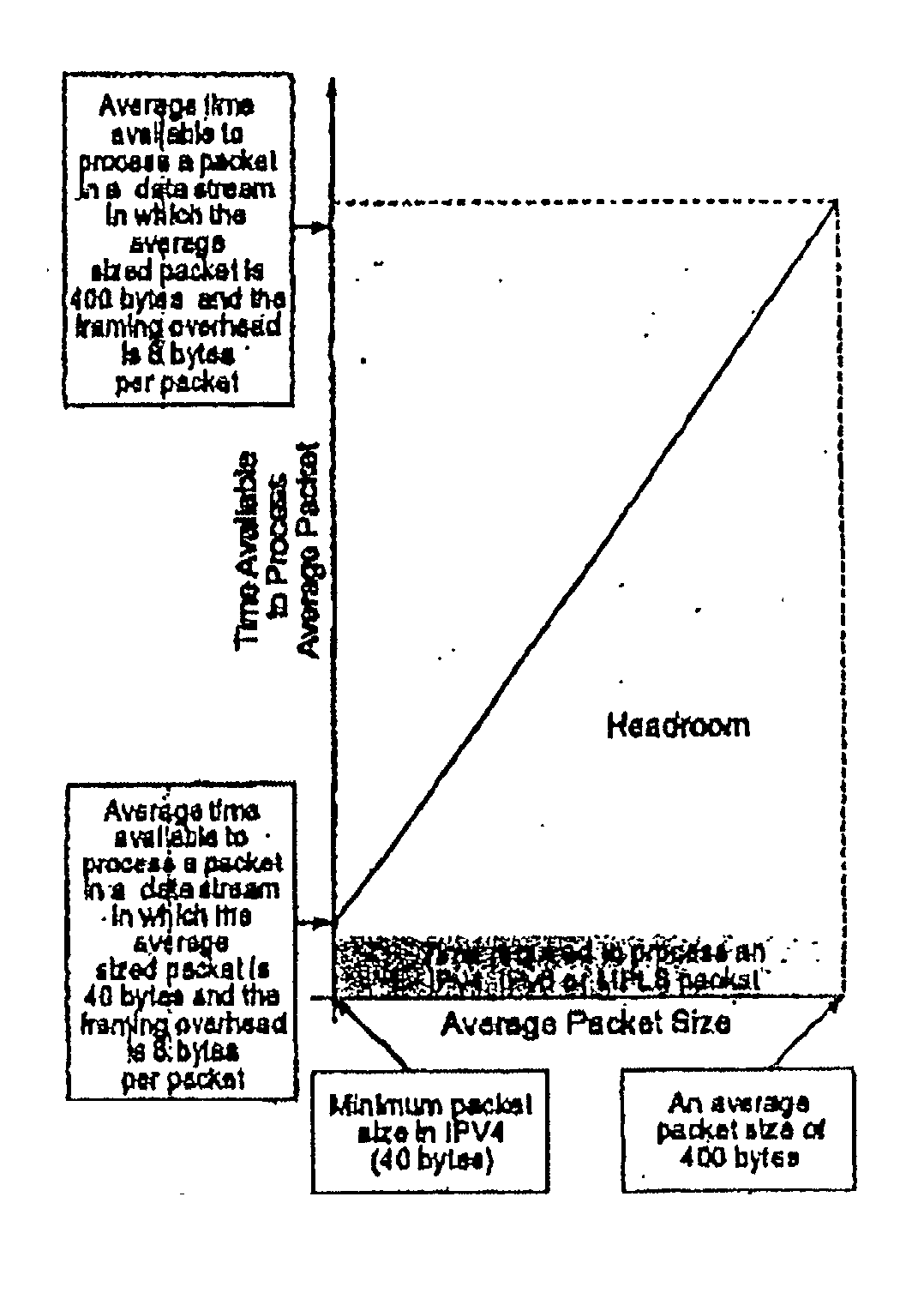

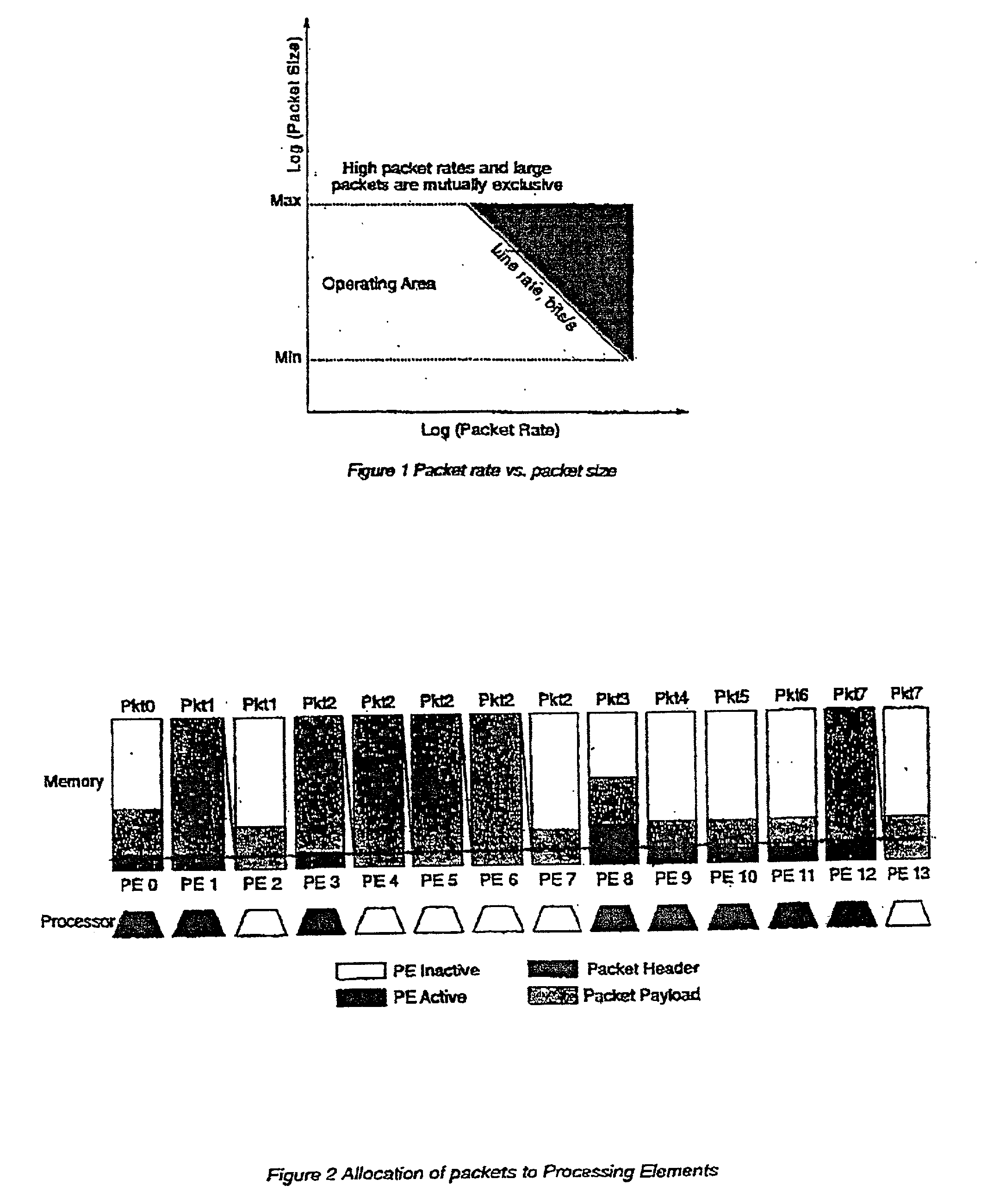

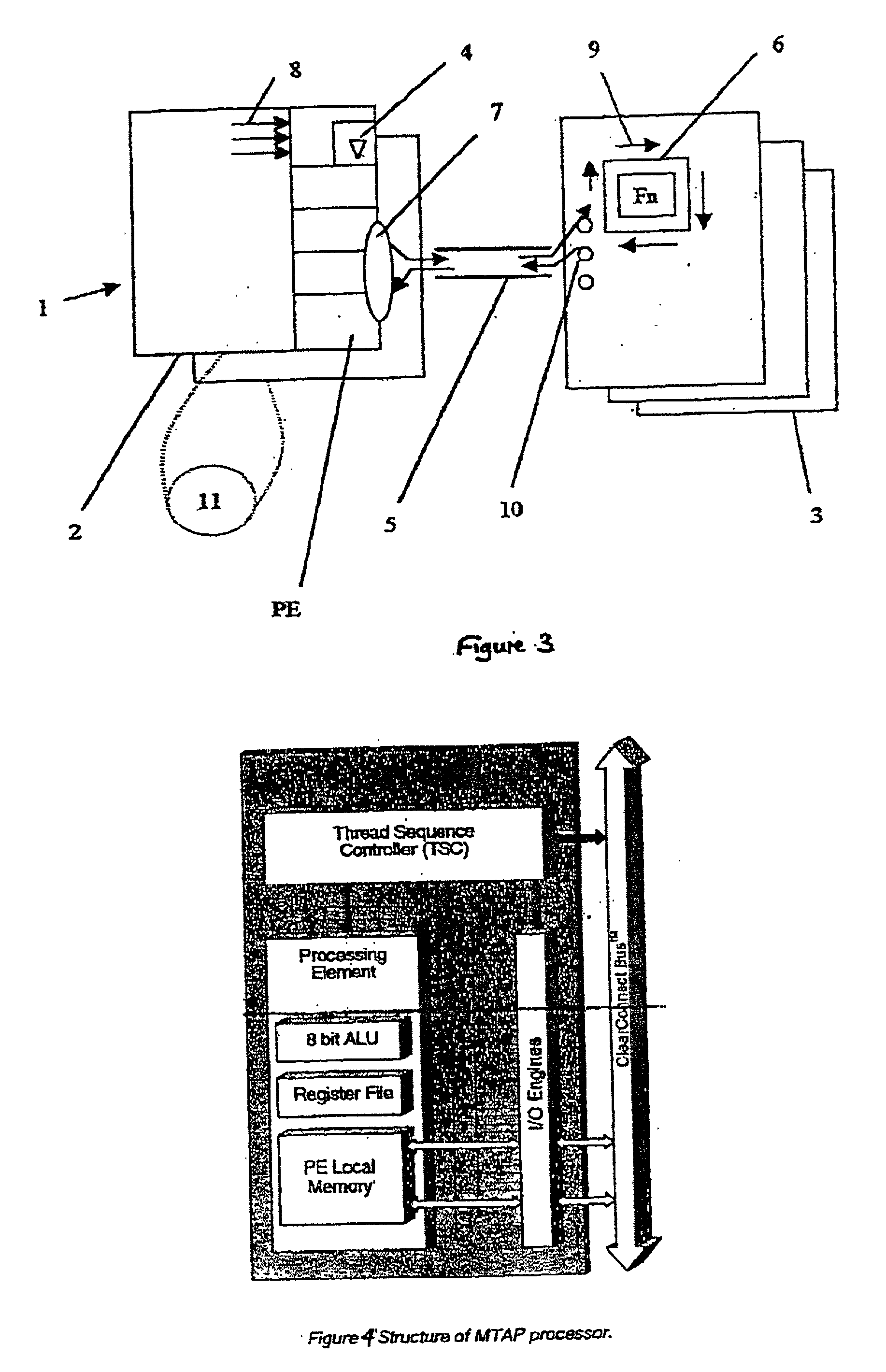

Inactive | US20030041163A1 | Flexibility maximised; Maximize reuse; Single instruction multiple data multiprocessors; Data switching by path configuration; Data processing; Input device

A data processing architecture comprising: an input device for receiving an incoming stream of data packets; and a plurality of processing elements which are operable to process data received thereby; wherein the input device is operable to distribute data packets in whole or in part to the processing elements in dependence upon the data processing bandwidth of the processing elements.

Owner:RAMBUS INC
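
The US20030041163A1 abstract says packets are distributed "in dependence upon the data processing bandwidth" of each element, but it does not give a policy. One plausible policy, shown here purely as an assumption, is greedy earliest-finish assignment. Each packet goes to the element whose queue would drain soonest, so over time each element receives work roughly in proportion to its bandwidth. The function name and units are hypothetical.

```python
from typing import List


def distribute(packet_sizes: List[int], bandwidths: List[float]) -> List[int]:
    """Return, for each packet, the index of the processing element it is sent to.

    Assumed policy: send each packet to the element whose queued work
    (including this packet) would finish first at that element's bandwidth.
    """
    if not bandwidths or any(b <= 0 for b in bandwidths):
        raise ValueError("bandwidths must be positive")
    queued = [0] * len(bandwidths)  # bytes waiting at each element
    assignment: List[int] = []
    for size in packet_sizes:
        pe = min(range(len(bandwidths)),
                 key=lambda i: (queued[i] + size) / bandwidths[i])
        queued[pe] += size
        assignment.append(pe)
    return assignment
```

With bandwidths `[3.0, 1.0]` and eight equal packets, the faster element receives six and the slower one receives two, matching the 3:1 bandwidth ratio.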

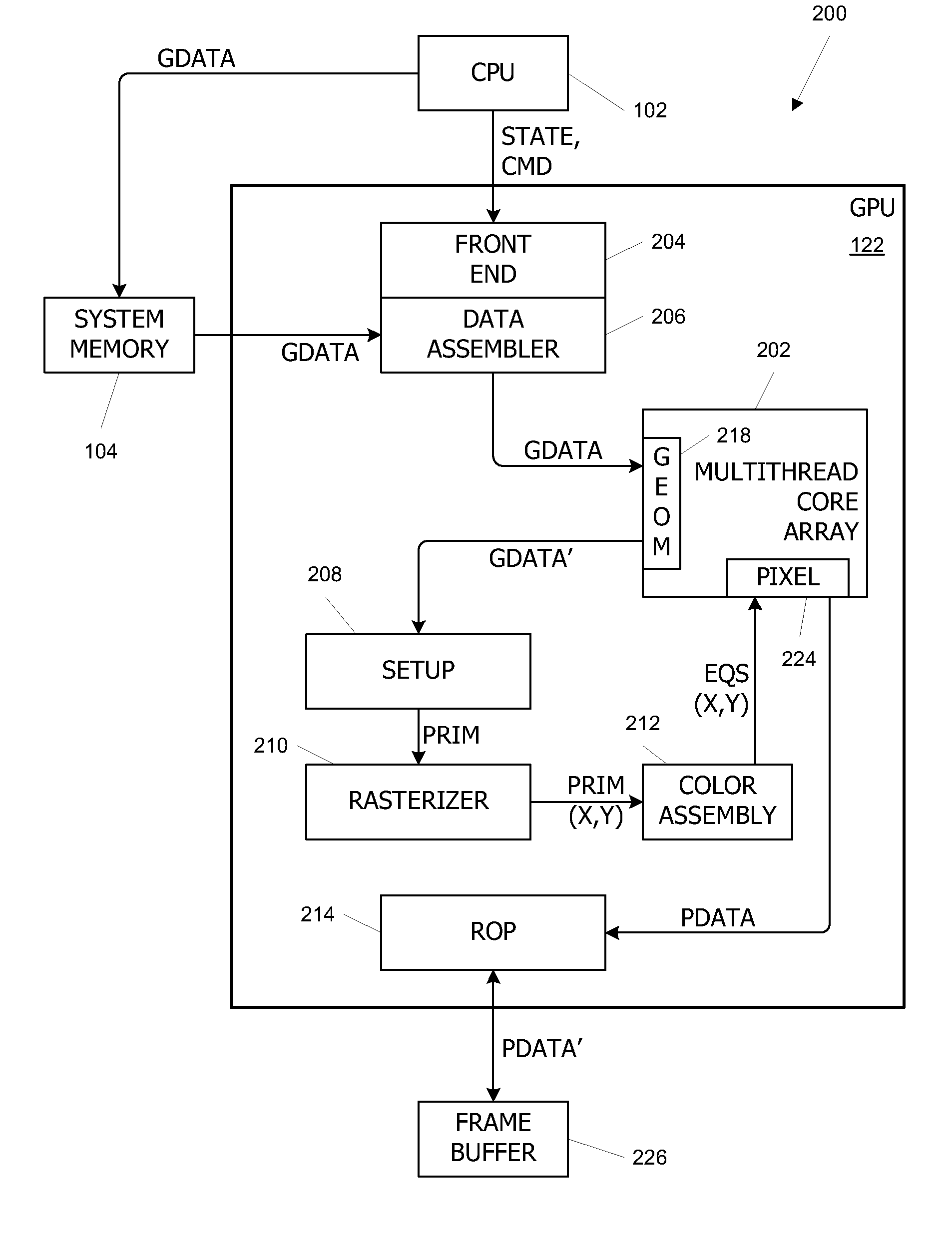

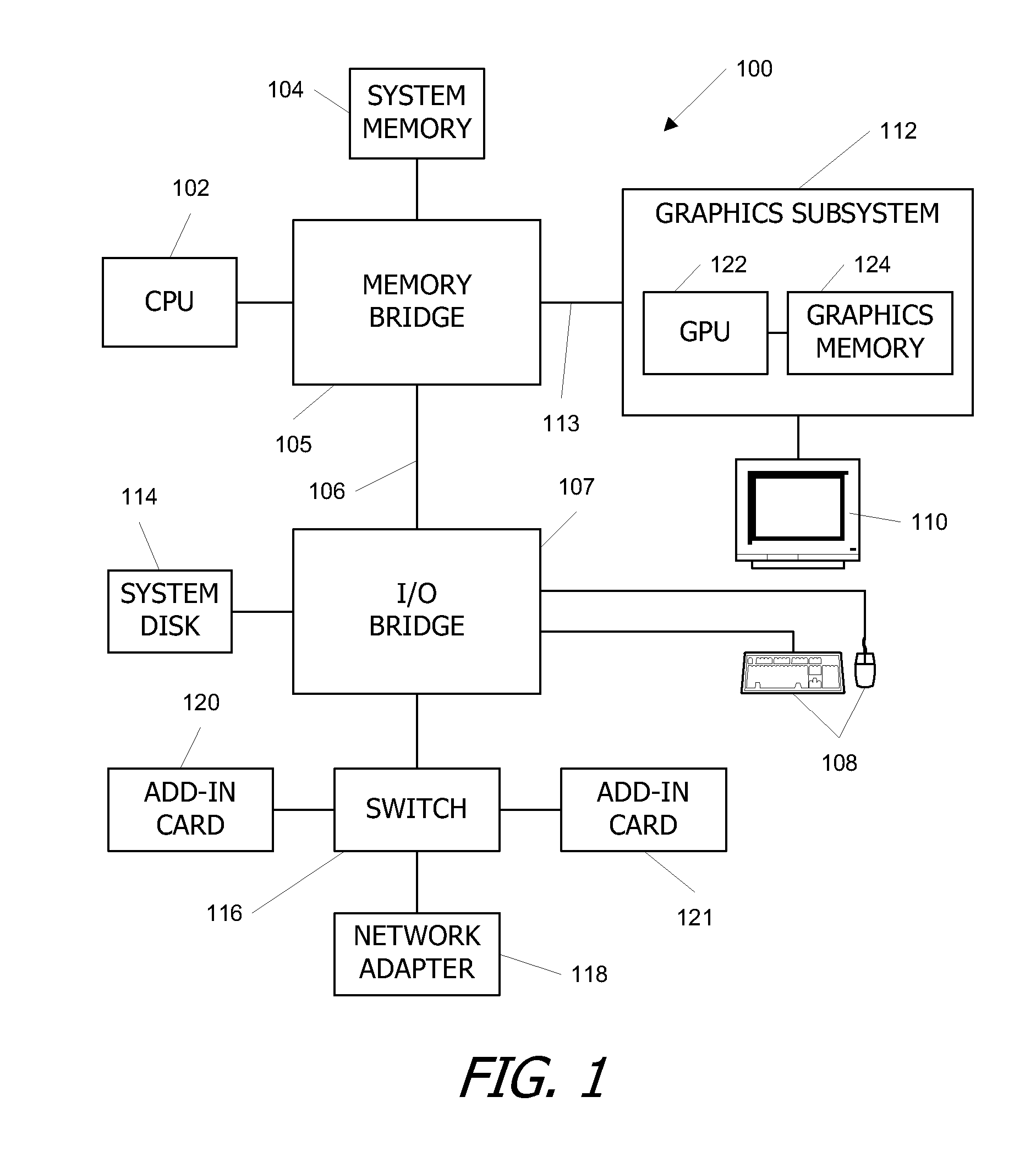

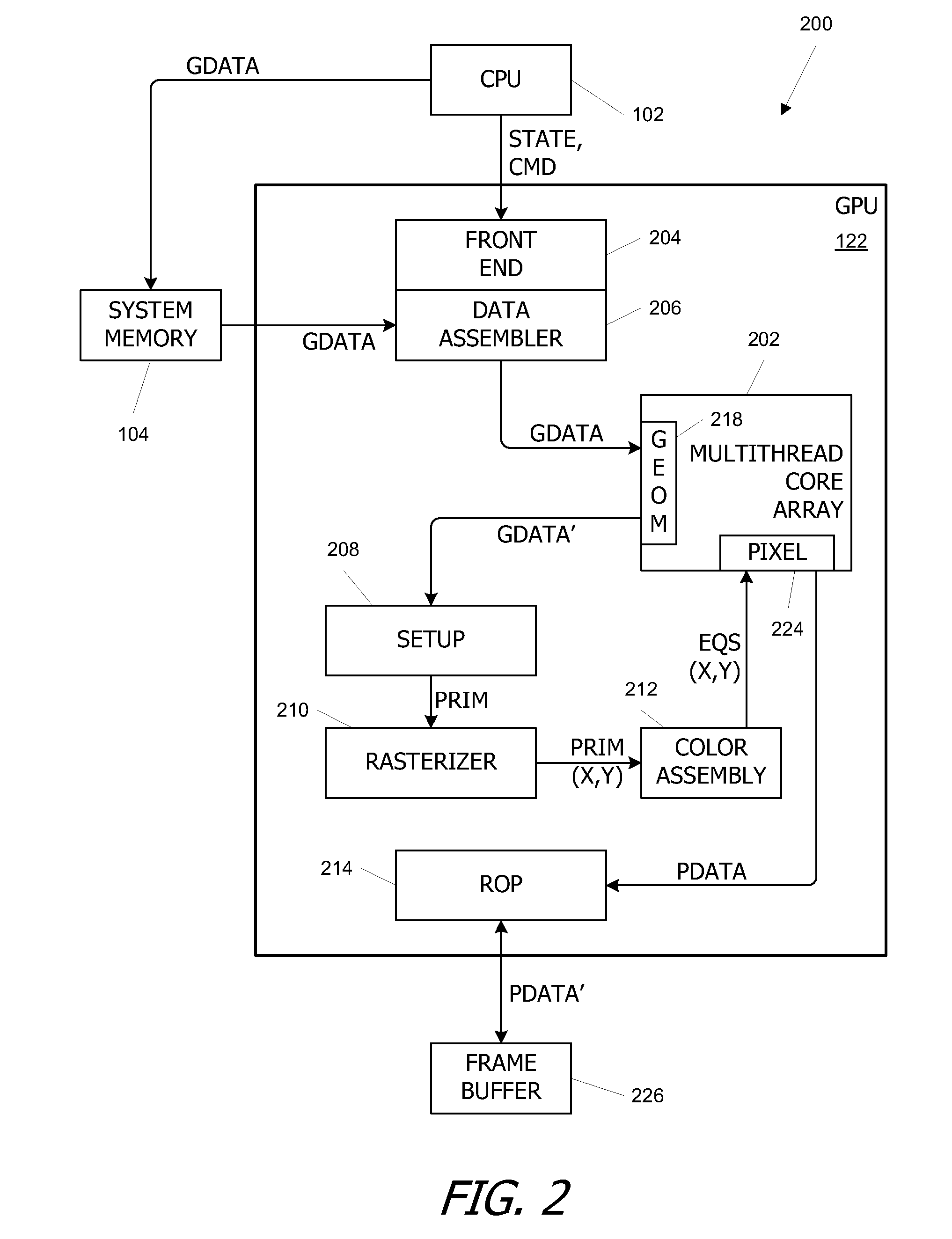

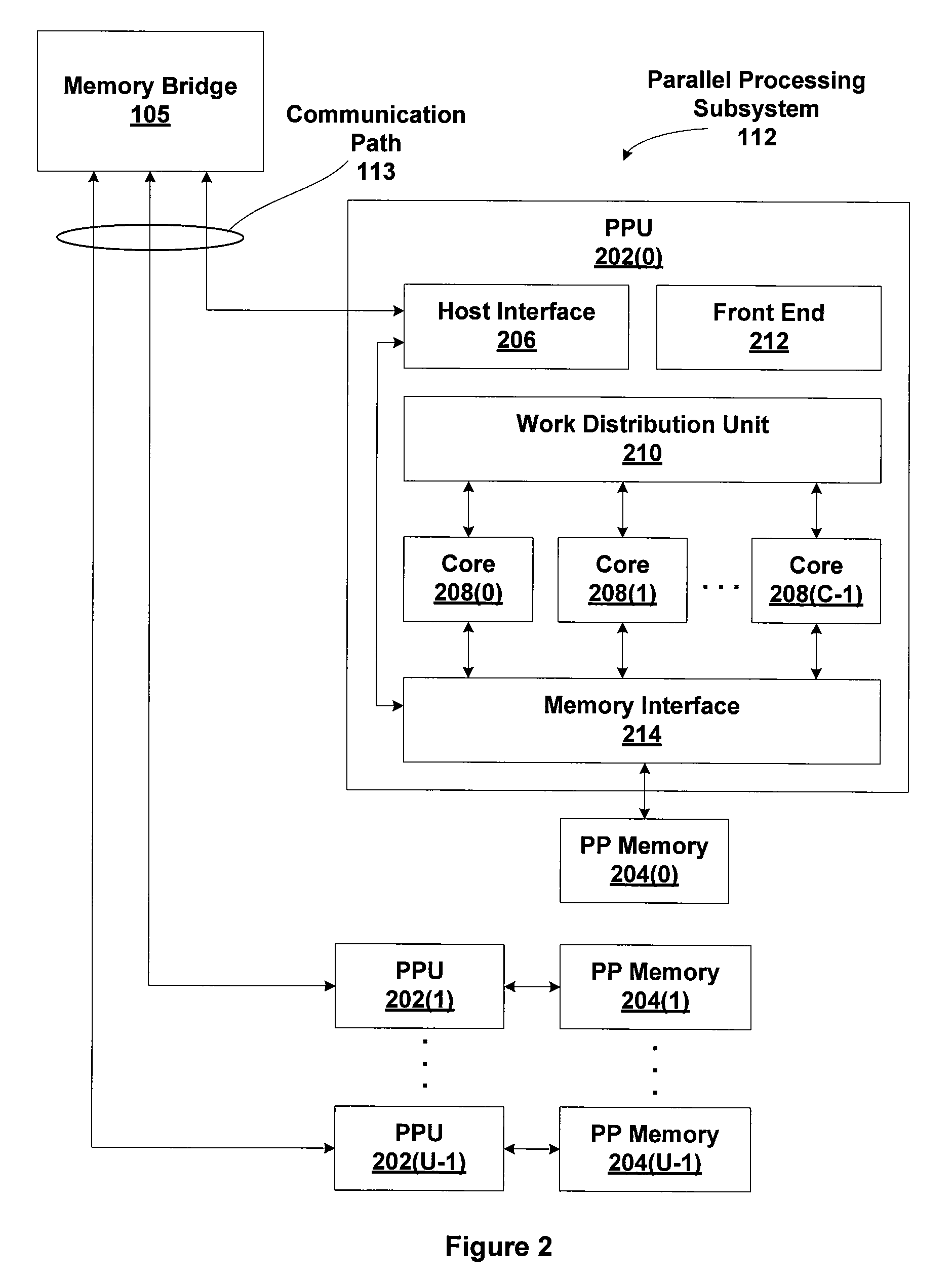

Parallel Array Architecture for a Graphics Processor

Inactive | US20070159488A1 | Improving memory locality; Improve locality; Single instruction multiple data multiprocessors; Cathode-ray tube indicators; Processing core; Parallel computing

A parallel array architecture for a graphics processor includes: a multithreaded core array including a plurality of processing clusters, each processing cluster including at least one processing core operable to execute a pixel shader program that generates pixel data from coverage data; a rasterizer configured to generate coverage data for each of a plurality of pixels; and pixel distribution logic configured to deliver the coverage data from the rasterizer to one of the processing clusters in the multithreaded core array. The pixel distribution logic selects the processing cluster to which the coverage data for a first pixel is delivered based at least in part on the location of the first pixel within an image area. The processing clusters can be mapped directly to the frame buffer partitions without a crossbar, so that pixel data is delivered directly from each processing cluster to the appropriate frame buffer partition. Alternatively, a crossbar coupled to each of the processing clusters is configured to deliver pixel data from the processing clusters to a frame buffer having a plurality of partitions. The crossbar is configured such that pixel data generated by any one of the processing clusters can be delivered to any one of the frame buffer partitions.

Owner:NVIDIA CORP
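
The location-based pixel distribution in the US20070159488A1 abstract can be illustrated with a screen-tiling function. The tile size and the diagonal interleaving below are assumptions chosen for illustration, not the patent's mapping. They show how a pixel's position alone can select a processing cluster, so that all pixels in one tile stay on the same cluster (good locality) while neighbouring tiles are spread across clusters. In the direct, crossbar-free variant, the returned cluster index could also serve as the frame buffer partition index.

```python
def cluster_for_pixel(x: int, y: int, num_clusters: int,
                      tile_w: int = 16, tile_h: int = 16) -> int:
    """Choose a processing cluster from a pixel's screen position.

    Hypothetical mapping: the screen is divided into tile_w x tile_h tiles,
    and tiles are dealt to clusters along diagonals, so every pixel of a tile
    goes to the same cluster while horizontally or vertically adjacent tiles
    go to different clusters (when num_clusters > 1).
    """
    if x < 0 or y < 0:
        raise ValueError("pixel coordinates must be non-negative")
    if num_clusters < 1:
        raise ValueError("need at least one cluster")
    tile_x, tile_y = x // tile_w, y // tile_h
    return (tile_x + tile_y) % num_clusters
```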

Programming multiple chips from a command buffer

Active | US20060114260A1 | Single instruction multiple data multiprocessors; Processor architectures/configuration; Computational science; Graphics

Owner:NVIDIA CORP

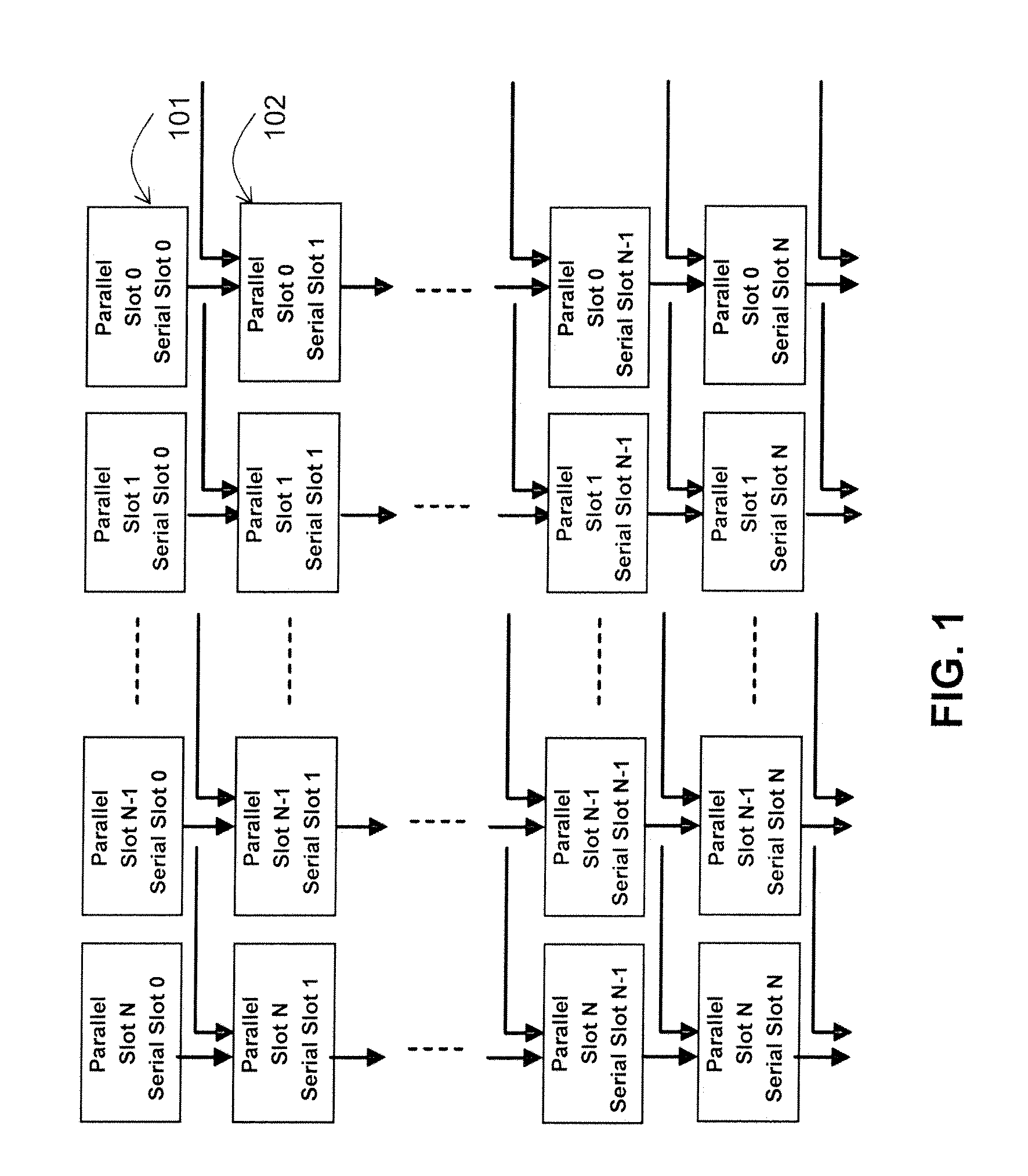

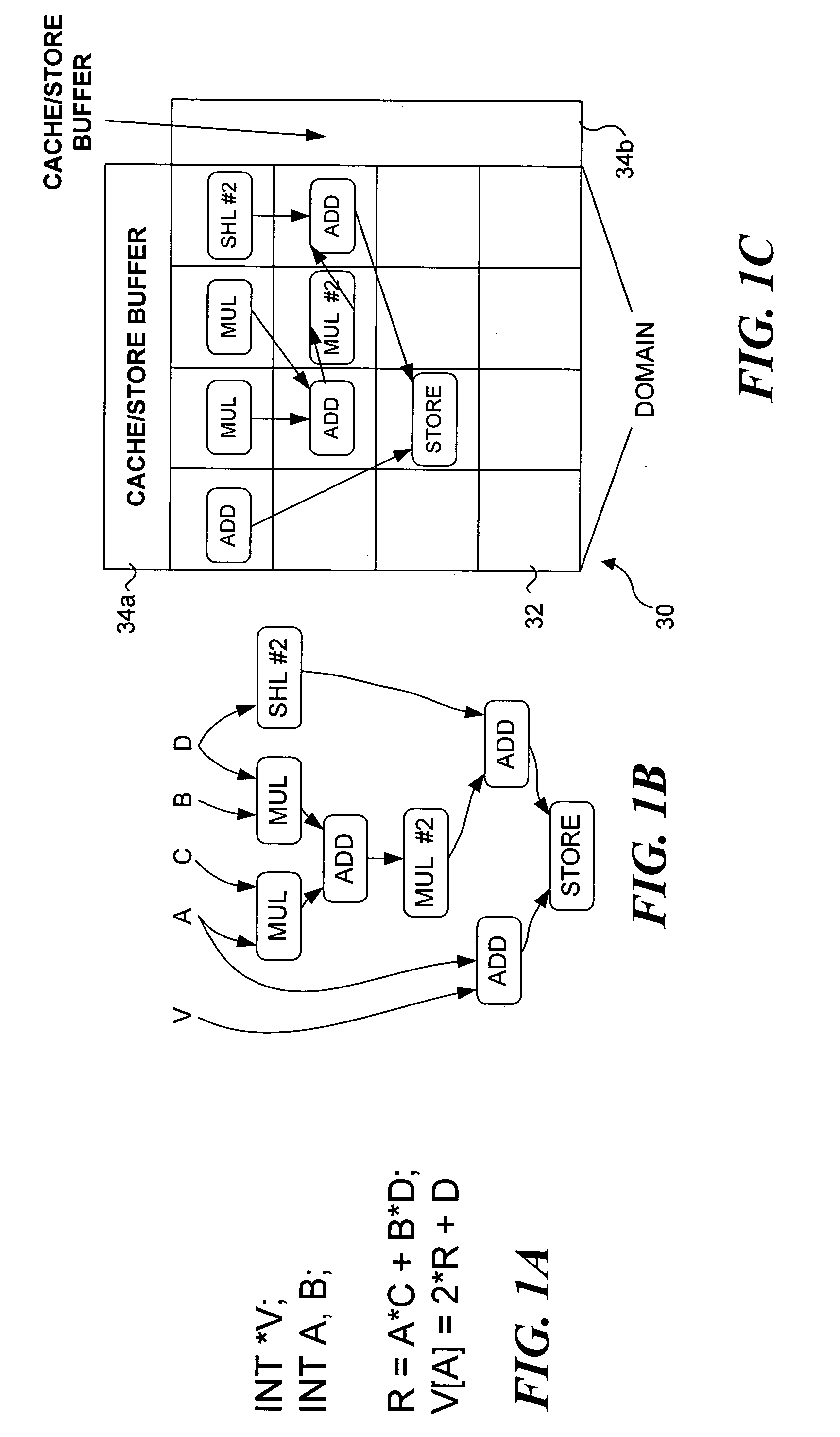

Apparatus and Method for Processing an Instruction Matrix Specifying Parallel and Dependent Operations

Active | US20090113170A1 | Single instruction multiple data multiprocessors; Register arrangements; Parallel computing; Matrix manipulation

Owner:INTEL CORP

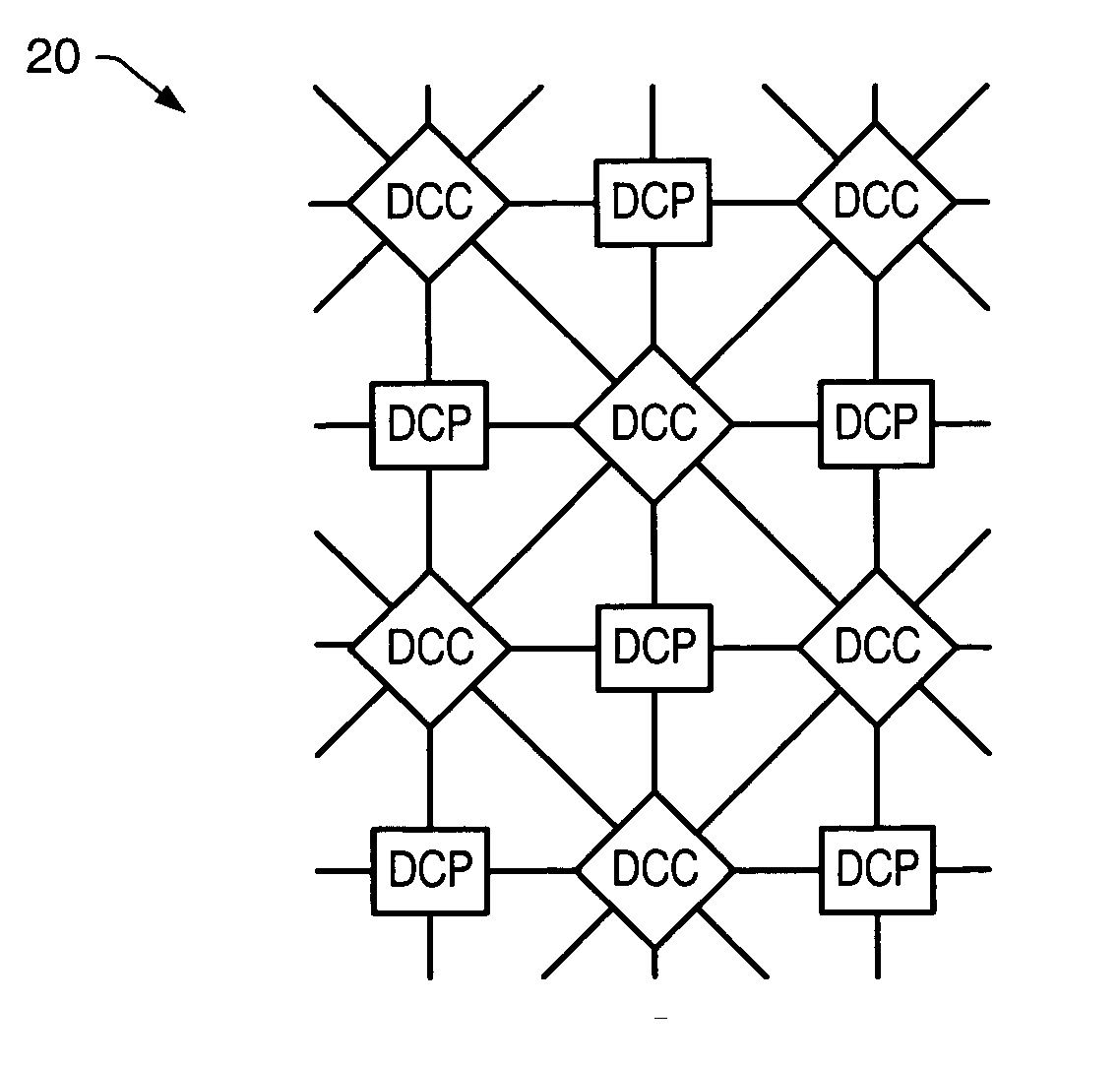

Processing system with interspersed processors and communication elements

Active | US20040030859A1 | Energy efficient ICT; Single instruction multiple data multiprocessors; Arithmetic logic unit; Instruction processing unit

A processing system comprising processors and dynamically configurable communication elements coupled together in an interspersed arrangement. The processors each comprise at least one arithmetic logic unit, an instruction processing unit, and a plurality of processor ports. The dynamically configurable communication elements each comprise a plurality of communication ports, a first memory, and a routing engine. For each of the processors, the plurality of processor ports is configured for coupling to a first subset of the plurality of dynamically configurable communication elements. For each of the dynamically configurable communication elements, the plurality of communication ports comprises a first subset of communication ports configured for coupling to a subset of the plurality of processors and a second subset of communication ports configured for coupling to a second subset of the plurality of dynamically configurable communication elements.

Owner:COHERENT LOGIX

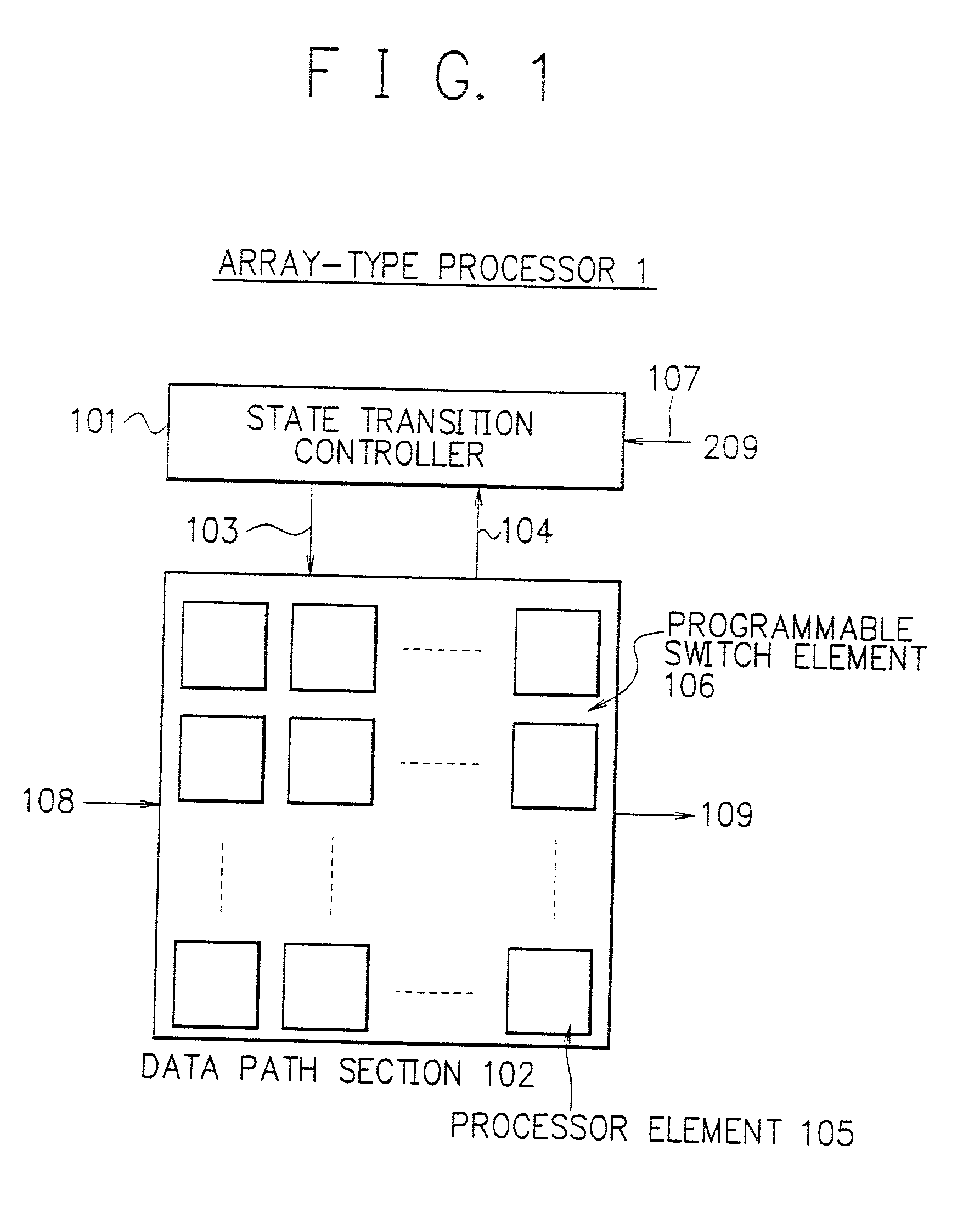

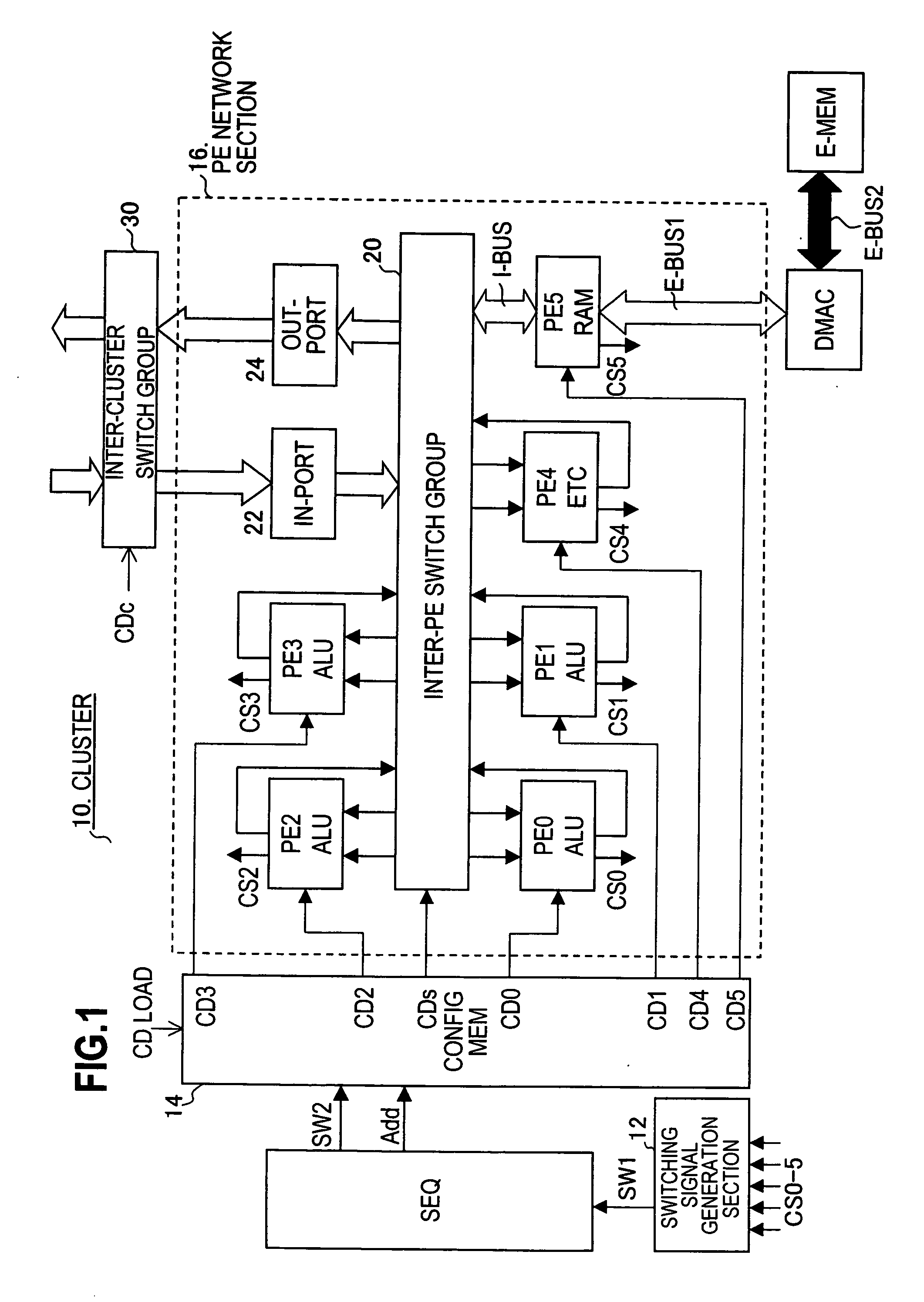

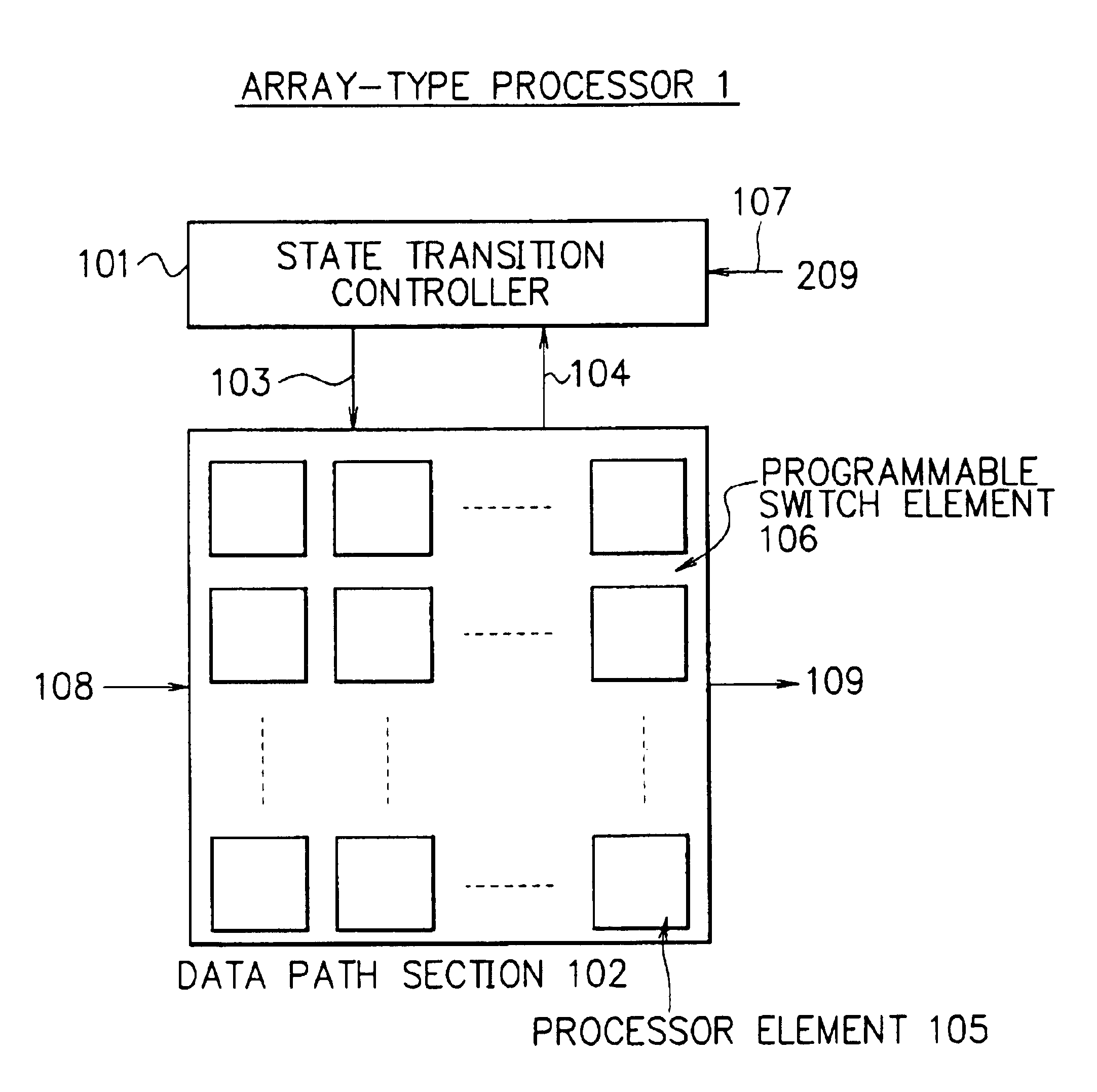

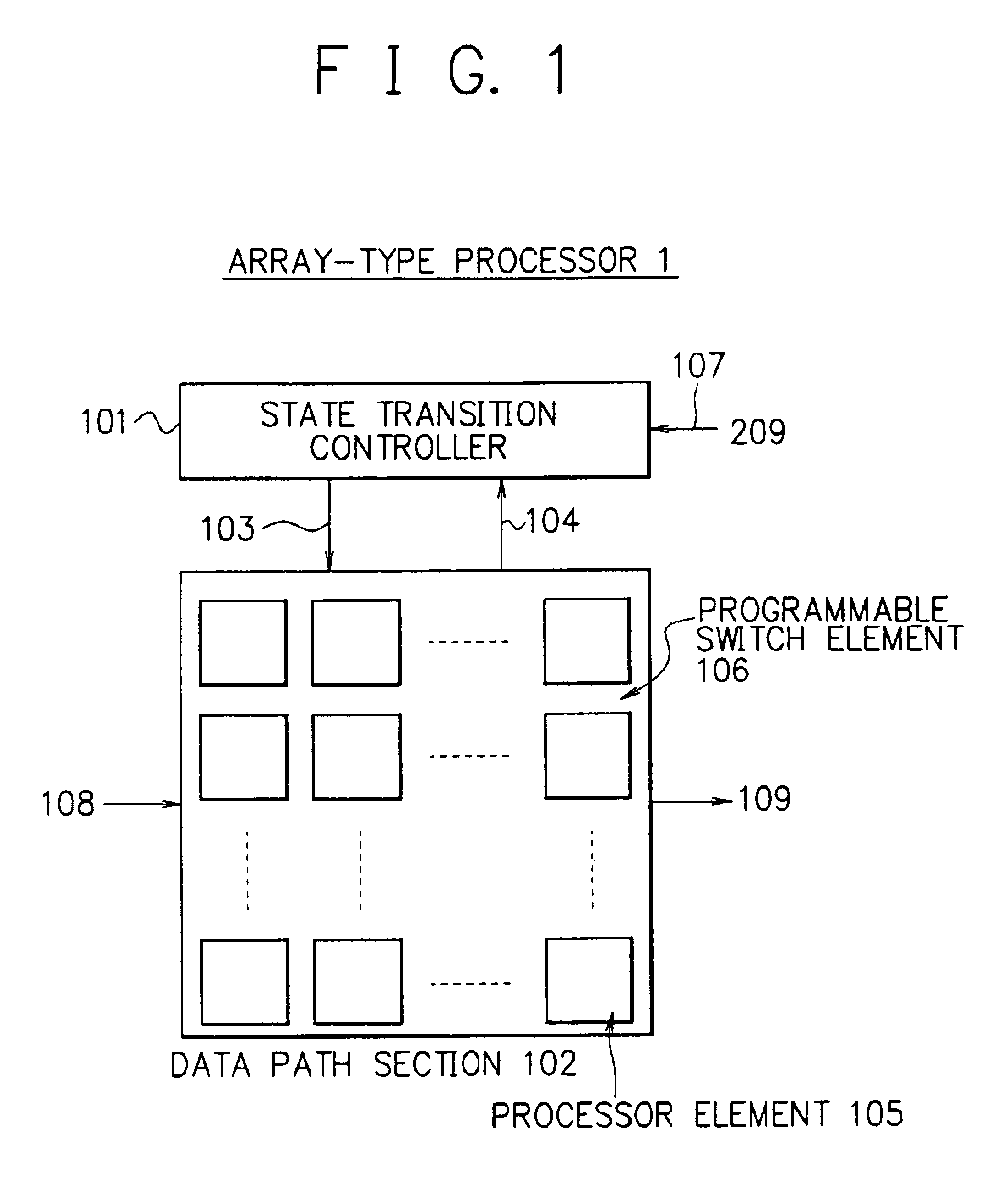

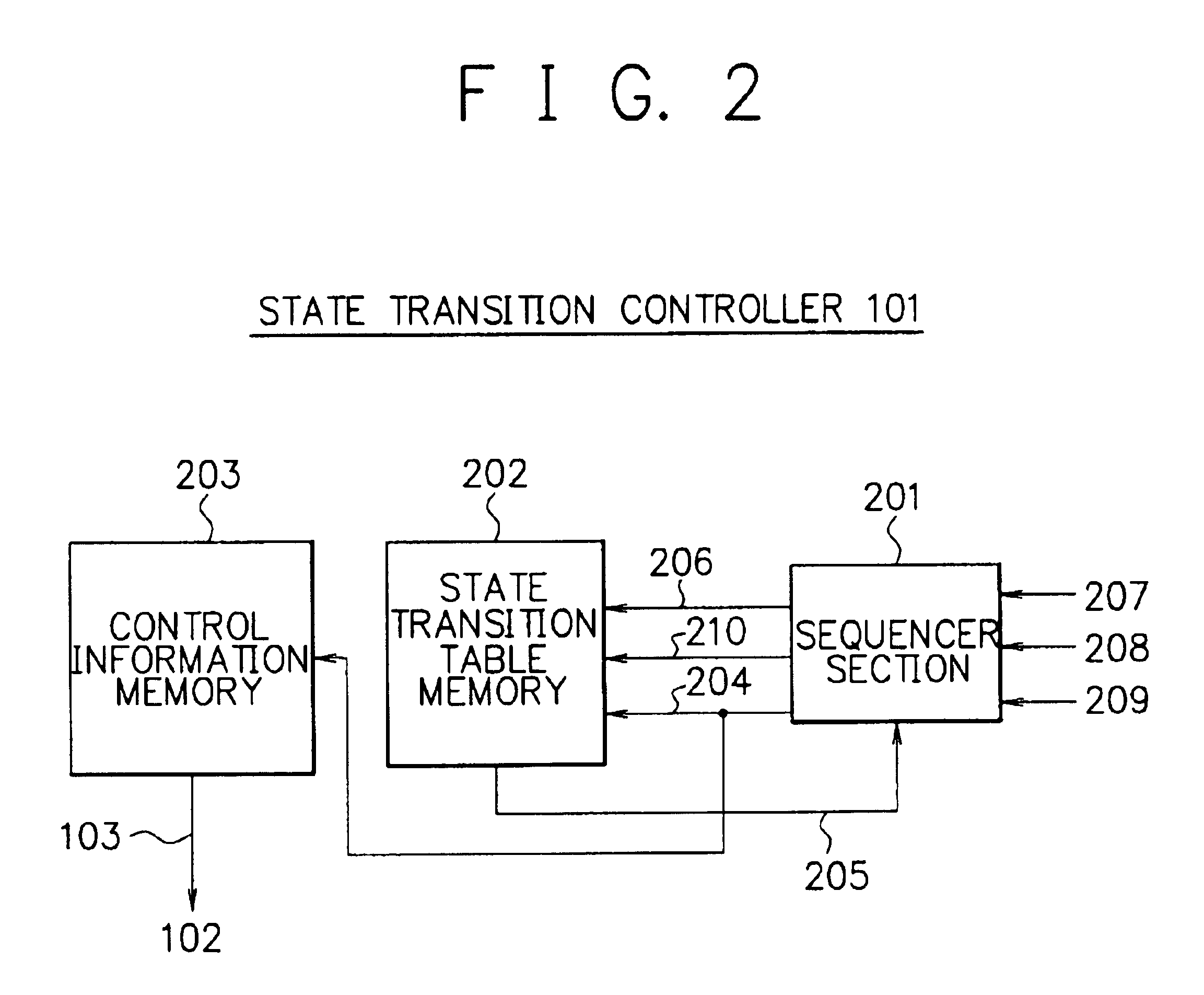

Array-type processor

Inactive | US20010018733A1 | Efficient implementation; Efficient execution; Single instruction multiple data multiprocessors; Specific program execution arrangements; Datapath; State switching

If all processing is executed in the array section of an array-type processor, each processor must handle processing of different types, i.e., the processing of an operating unit and the processing of a random logic circuit, which limits its size and processing performance. Instead, two sections are provided independently. The first is a data path section, in which processors arranged in an array are connected via programmable switches and which primarily executes computational operations. The second is a state transition controller, which is configured to readily implement a state transition function for controlling state transitions. Each section has a structure customized for its own purpose, so that both the computational processing and the control operation are implemented and executed efficiently.

Owner:NEC CORP

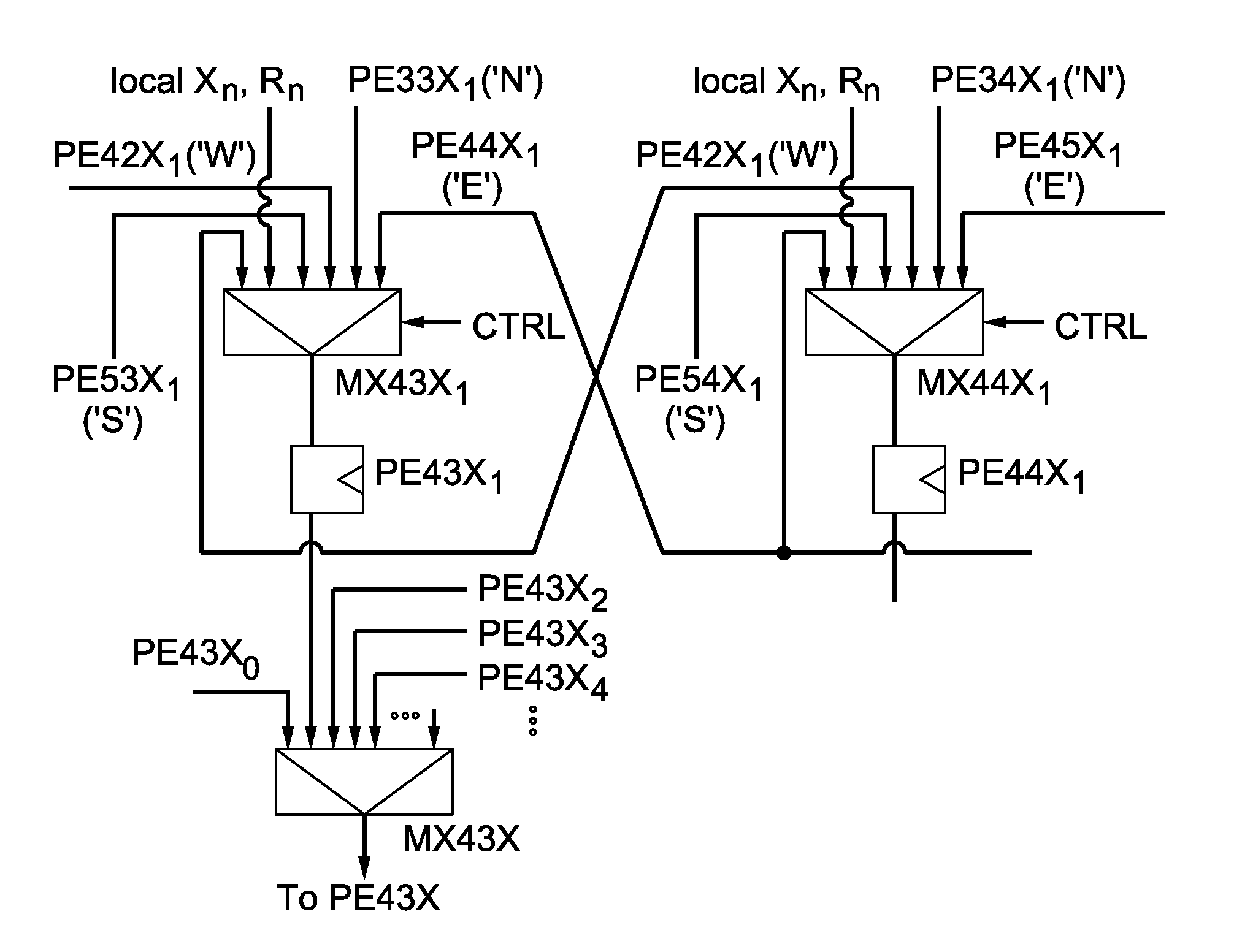

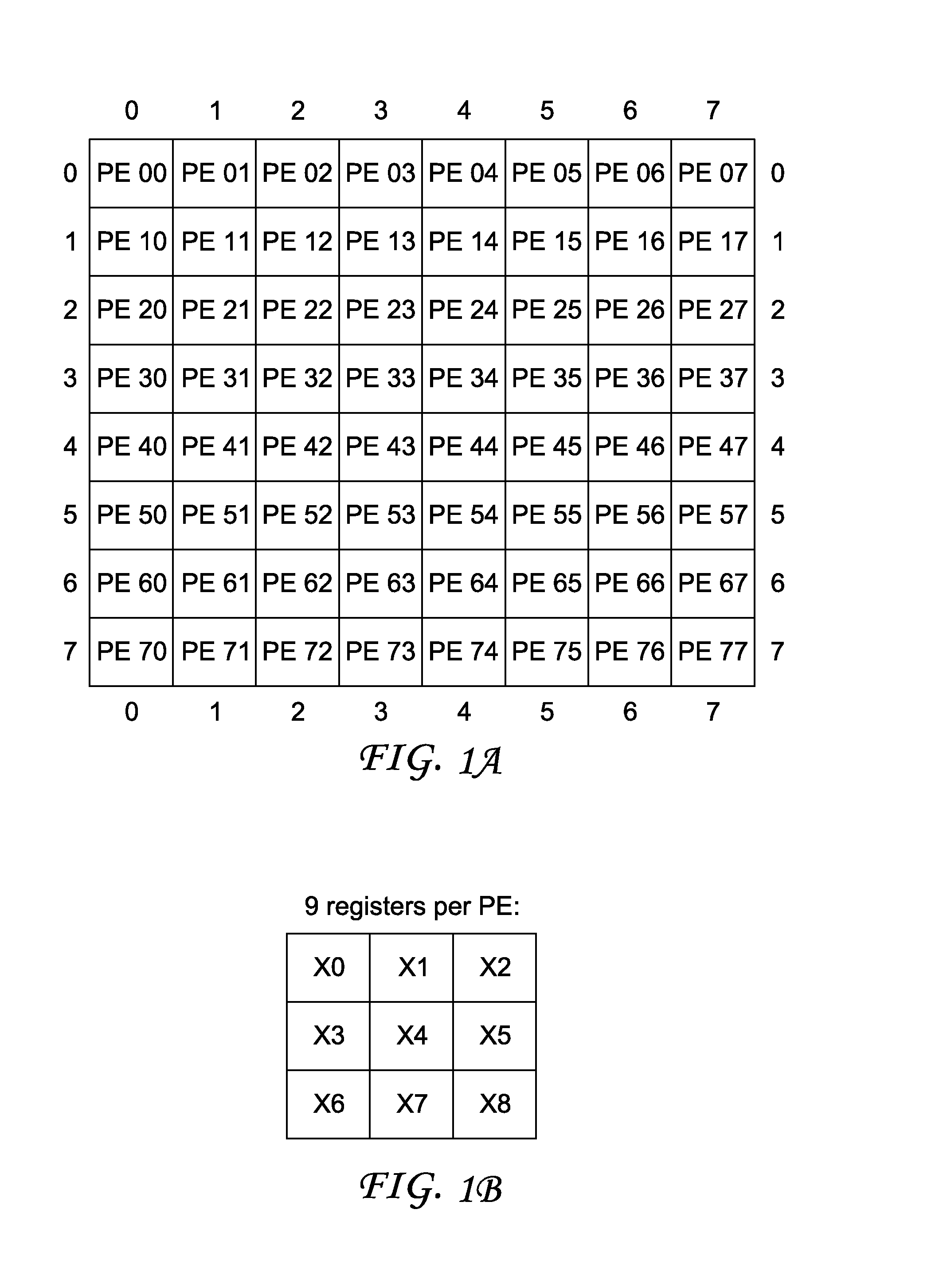

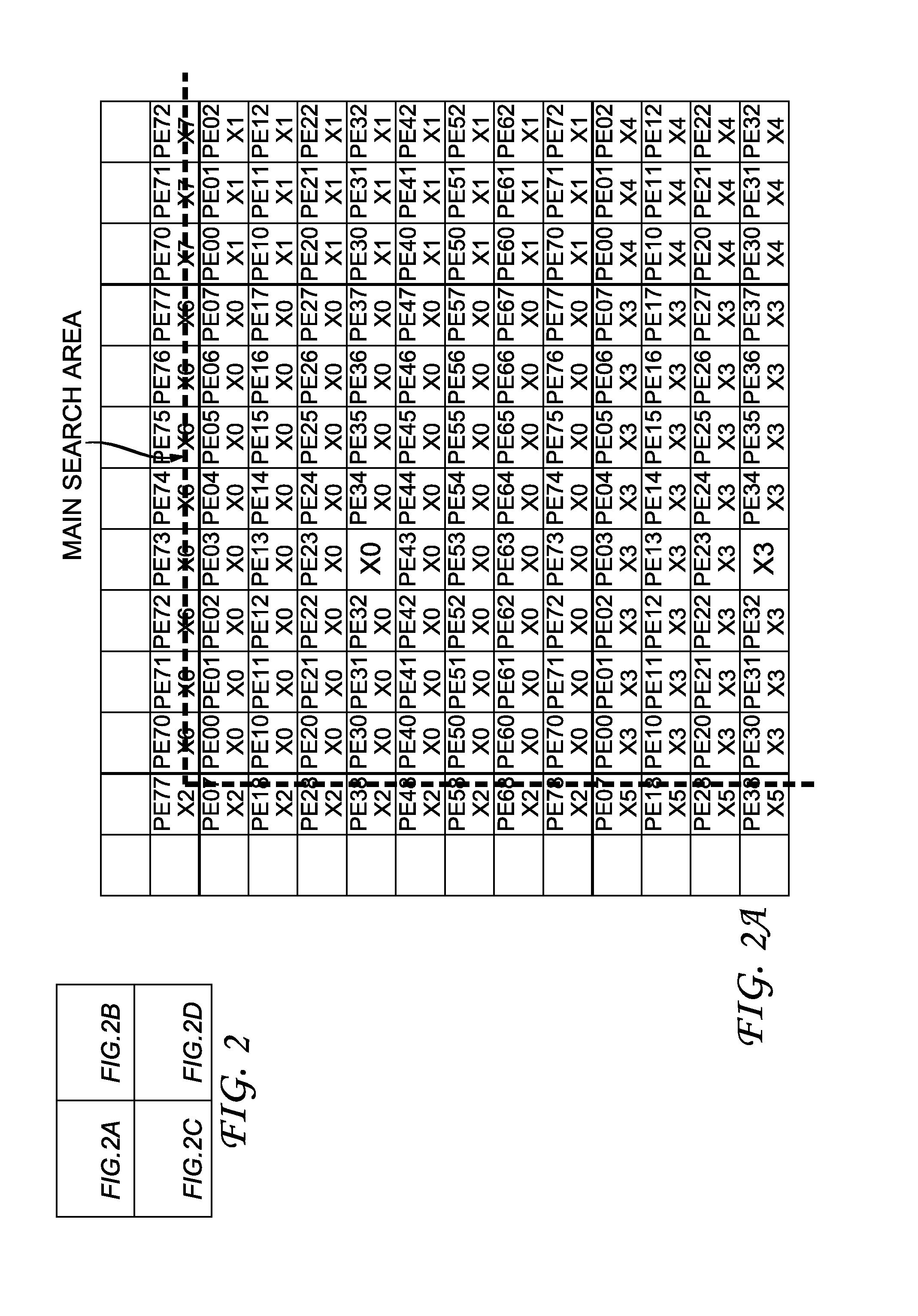

Array of processing elements with local registers

Inactive | US7941634B2 | Reduce load; Single instruction multiple data multiprocessors; Image memory management; Array data structure; Imaging processing

Specialized image processing circuitry is usually implemented in hardware in a massively parallel way, as a single instruction multiple data (SIMD) architecture. The invention avoids long and complicated connection paths between a processing element and the memory subsystem and improves the maximum operating frequency. In an architecture optimized for image processing, the processing elements are arranged in a two-dimensional structure, and each processing element has local storage containing a plurality of reference pixels that are not neighbors in the reference image. Instead, the reference pixels belong to different blocks of the reference image, which may vary for different encoding schemes. Each processing element has a plurality of local first registers for holding the reference image data: one of the first registers holds reference input data of a first search block, and some of the remaining first registers hold reference input data of further search blocks that have specified positions relative to the first search block.

Owner:INTERDIGITAL VC HLDG INC

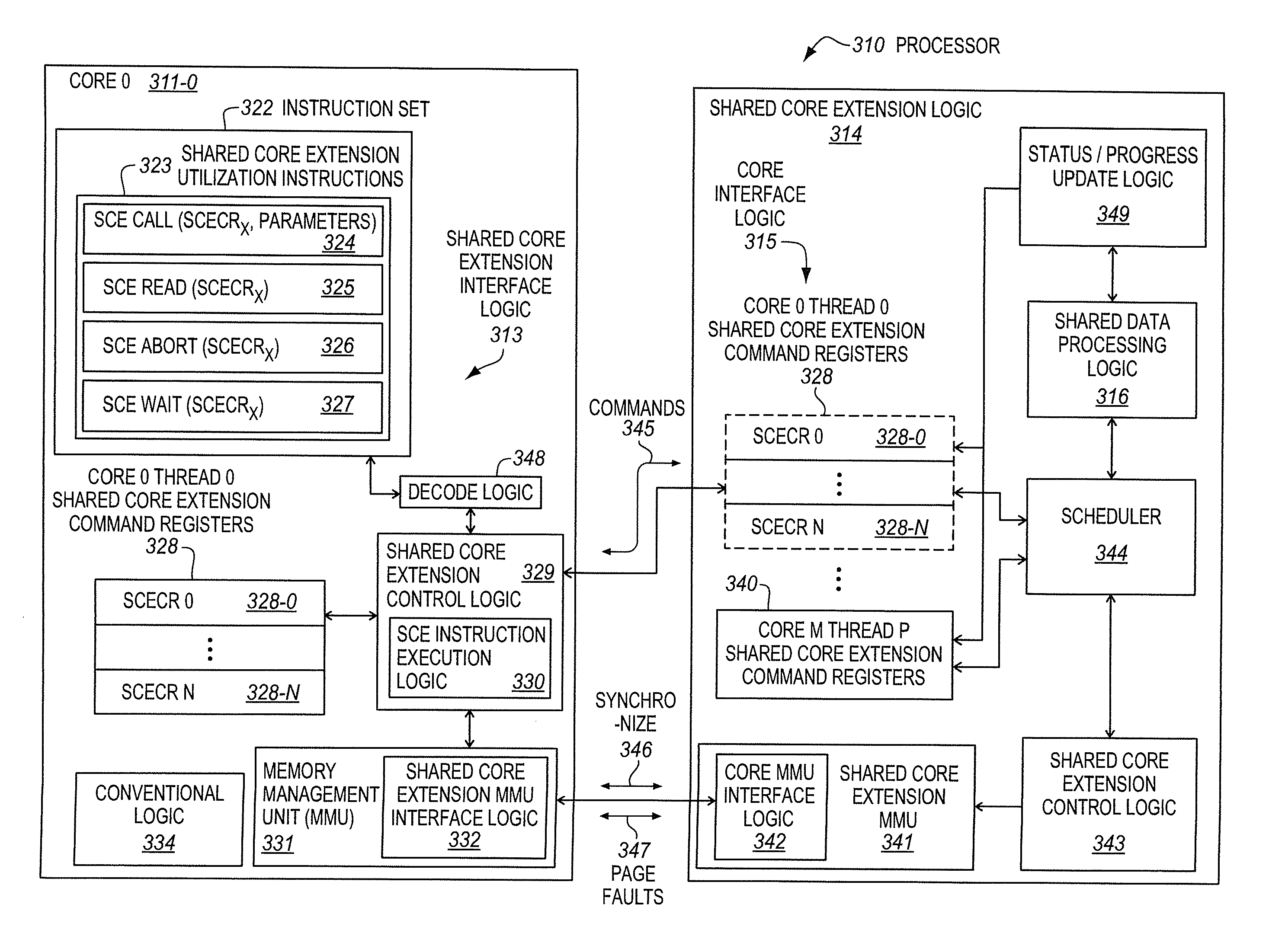

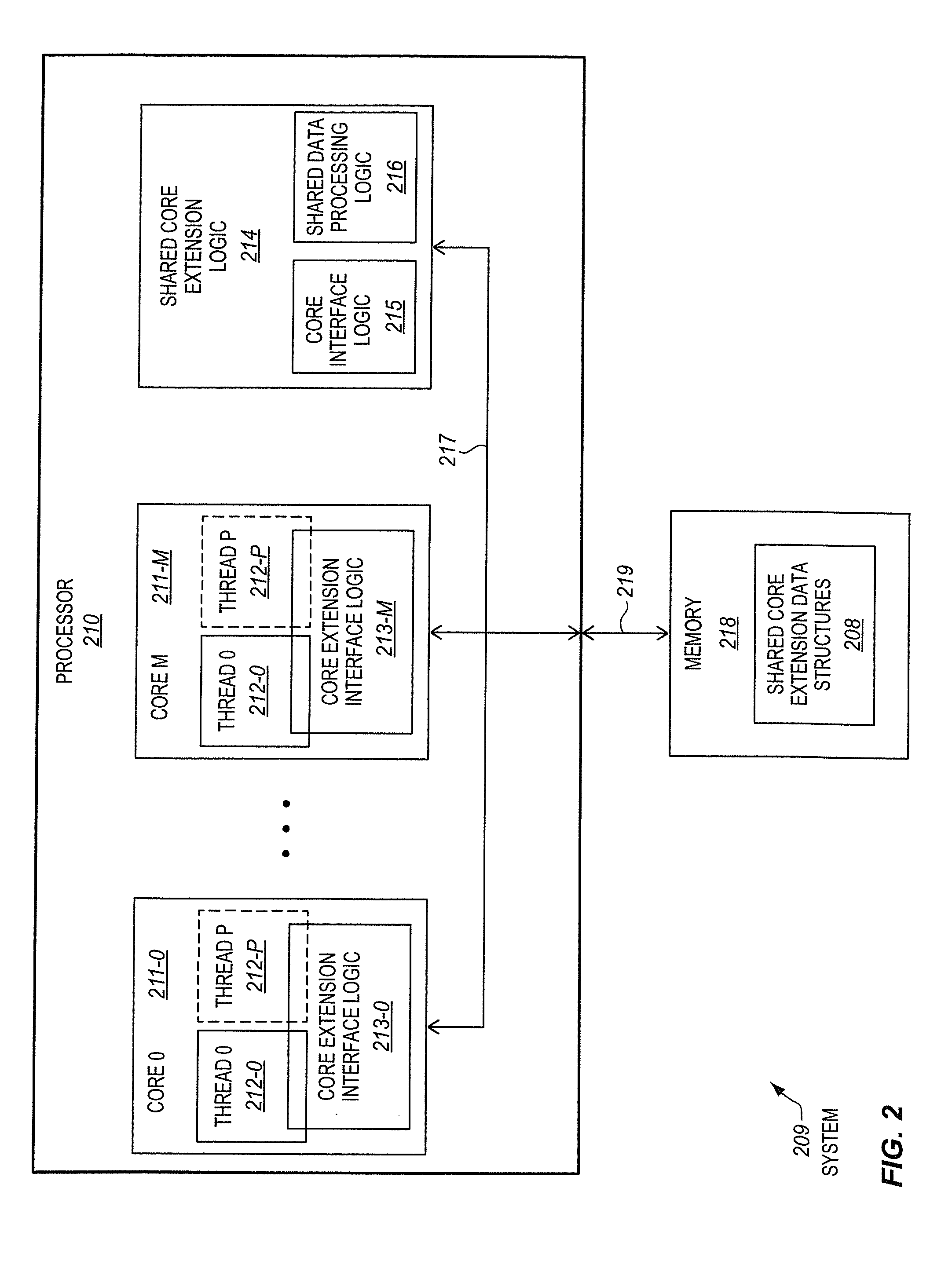

Processor having multiple cores, shared core extension logic, and shared core extension utilization instructions

Active | US20140089635A1 | Single instruction multiple data multiprocessors; Program control; Parallel computing; Data processing

An apparatus of an aspect includes a plurality of cores and shared core extension logic coupled with each of the plurality of cores. The shared core extension logic has shared data processing logic that is shared by each of the plurality of cores. Instruction execution logic, for each of the cores, in response to a shared core extension call instruction, is to call the shared core extension logic. The call is to have data processing performed by the shared data processing logic on behalf of a corresponding core. Other apparatus, methods, and systems are also disclosed.

Owner:INTEL CORP

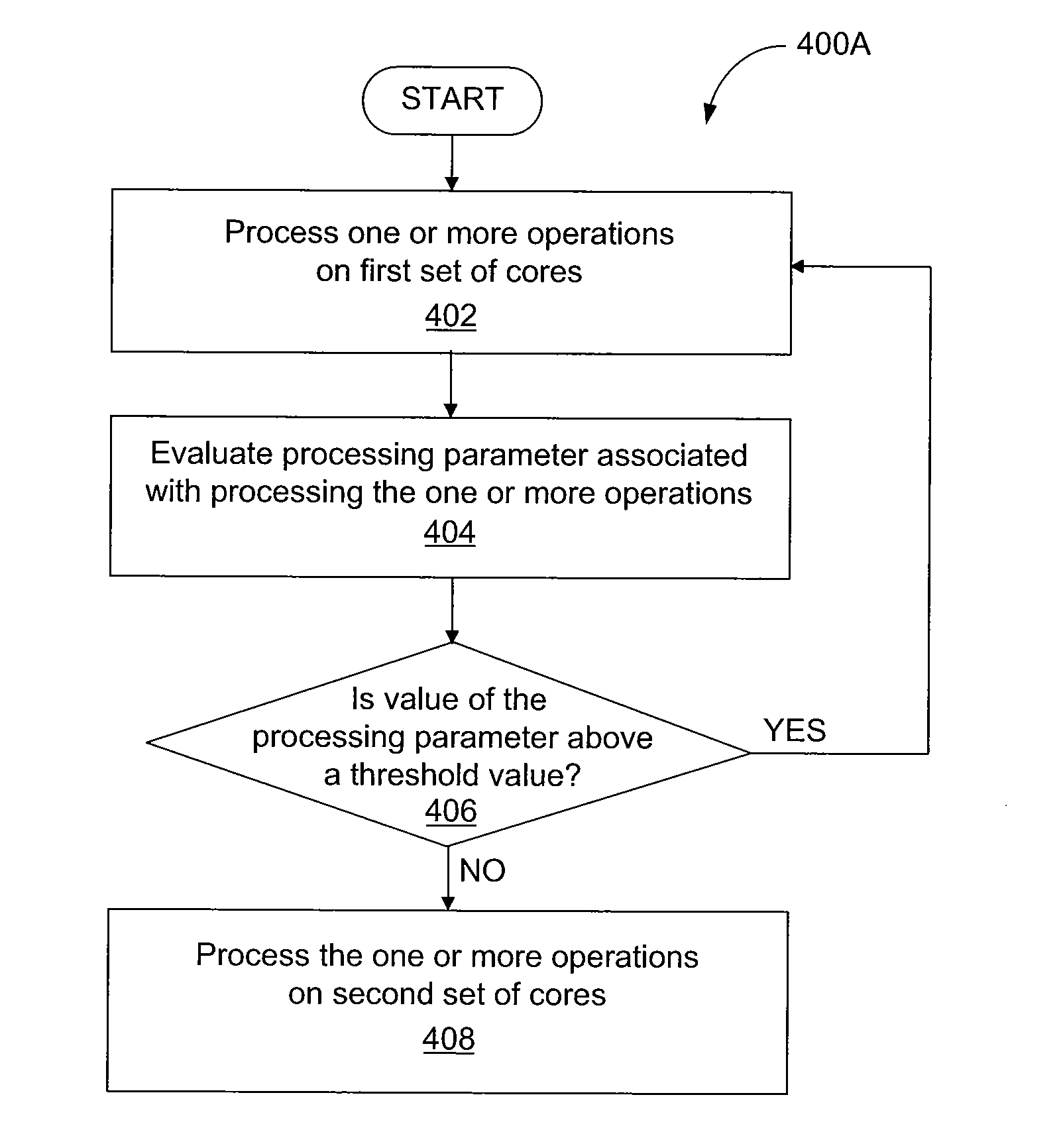

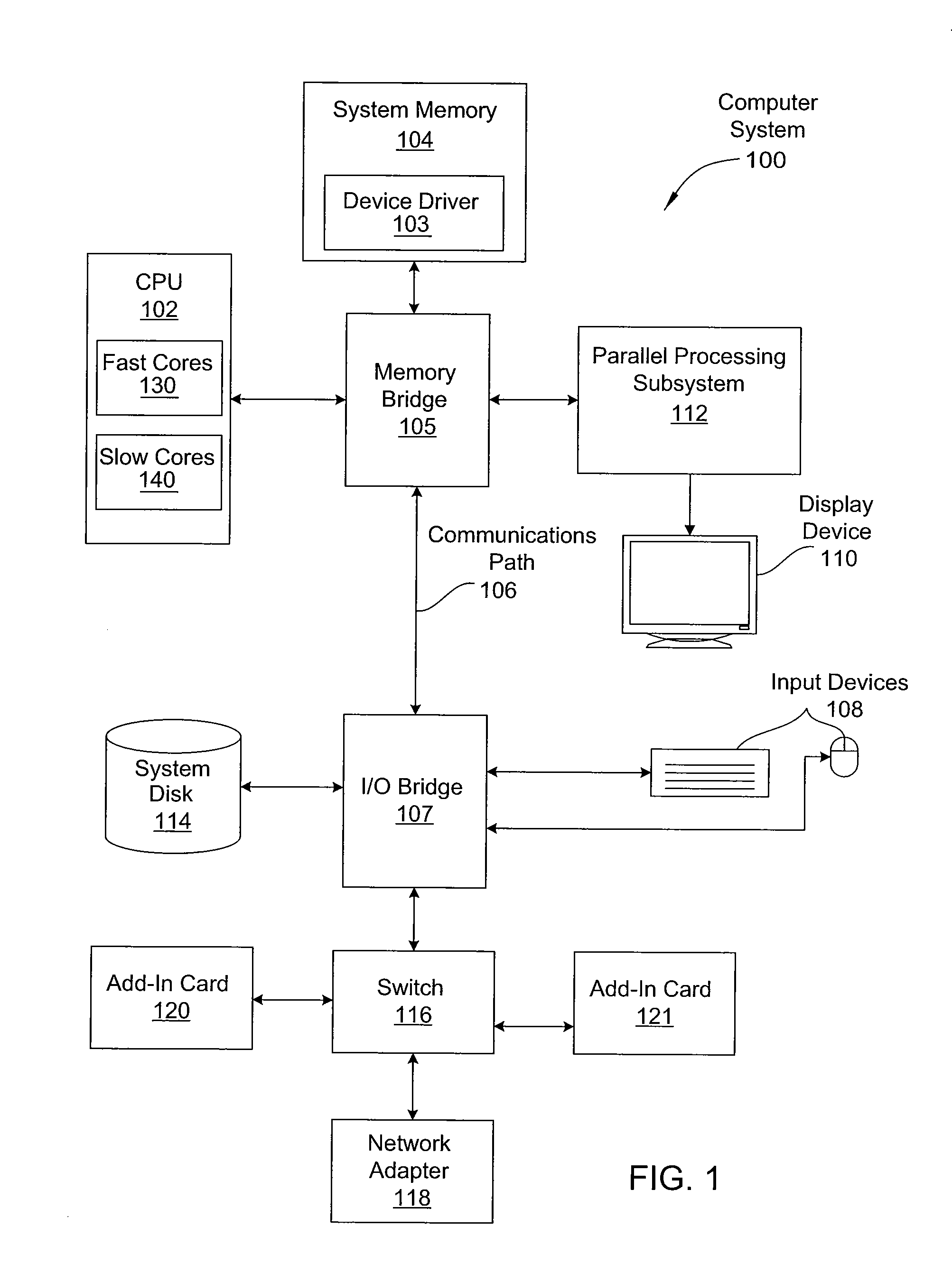

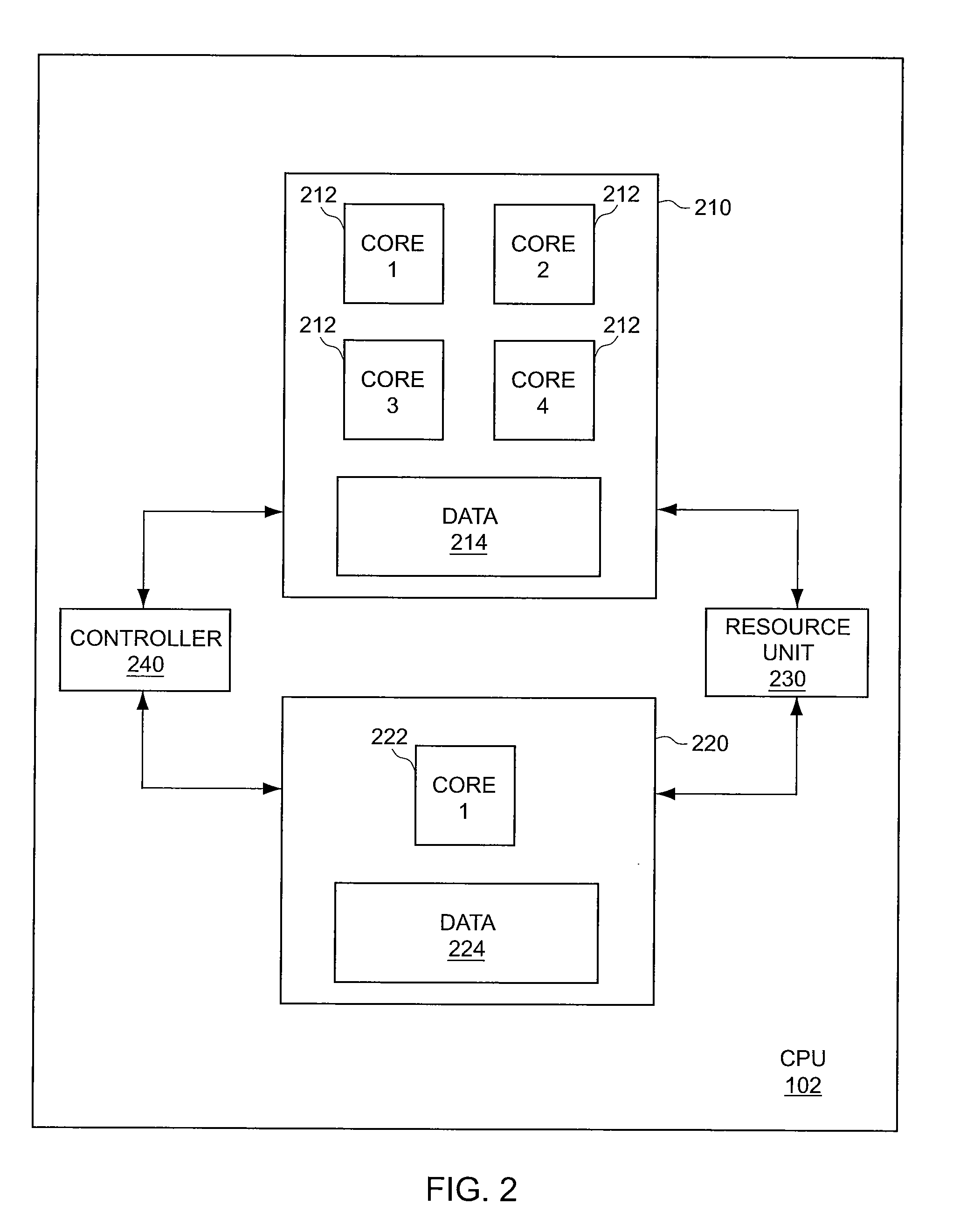

System and Method for Power Optimization

Inactive | US20110213950A1 | Reduce power consumption; Energy efficient ICT; Single instruction multiple data multiprocessors; Parallel computing; Operation mode

A technique for reducing the power consumption required to execute processing operations. A processing complex, such as a CPU or a GPU, includes a first set of cores comprising one or more fast cores and a second set of cores comprising one or more slow cores. The processing complex can switch between a first mode of operation and a second mode of operation based on one or more of: workload characteristics, performance characteristics of the first and second sets of cores, power characteristics of the first and second sets of cores, and operating conditions of the processing complex. A controller causes the processing operations to be executed by either the first set of cores or the second set of cores so as to achieve the lowest total power consumption.

Owner:NVIDIA CORP
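
The mode decision described in the US20110213950A1 abstract can be sketched as an energy comparison. The model below is an assumption, not the patent's controller. It treats each core set as having a fixed throughput and active power on the workload, estimates energy as power multiplied by run time, and keeps only the sets that meet a deadline. Operating conditions such as temperature, which the real controller may weigh, are ignored here. The names `CoreSet` and `pick_core_set` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreSet:
    name: str
    ops_per_second: float  # sustained throughput on this workload
    active_watts: float    # power drawn while this set runs the workload


def pick_core_set(work_ops: float, deadline_s: float,
                  fast: CoreSet, slow: CoreSet) -> CoreSet:
    """Return the core set that meets the deadline with the least energy.

    Energy model (an assumption): energy = active_watts * run time, where
    run time = work_ops / ops_per_second. Idle and switching costs are ignored.
    """
    def run_time(c: CoreSet) -> float:
        return work_ops / c.ops_per_second

    candidates = [c for c in (fast, slow) if run_time(c) <= deadline_s]
    if not candidates:
        return fast  # nothing meets the deadline: fall back to the fast cores
    return min(candidates, key=lambda c: c.active_watts * run_time(c))
```

Under this model, the slow set can win even though it runs four times longer, provided it draws proportionally even less power and the deadline still allows it.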

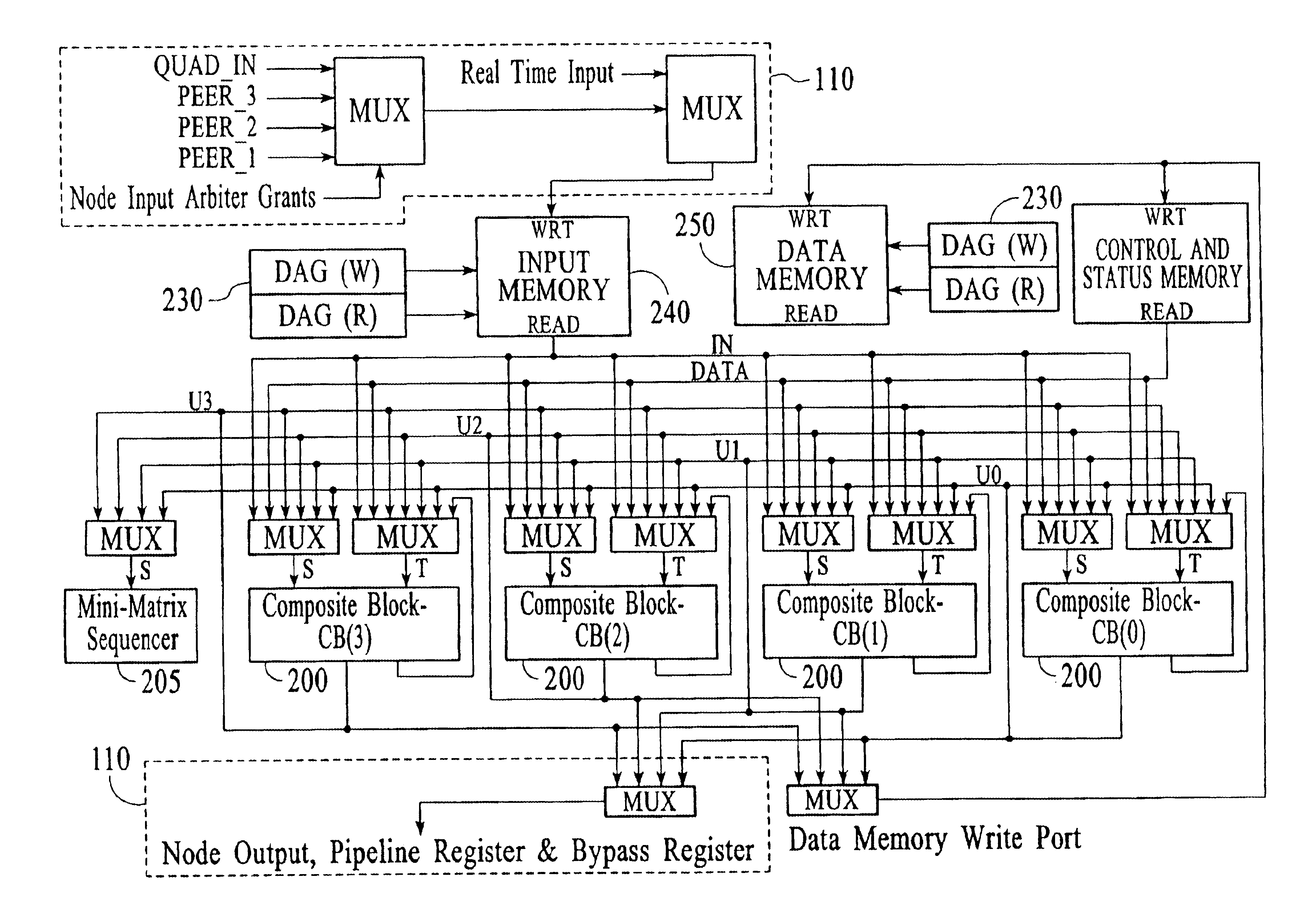

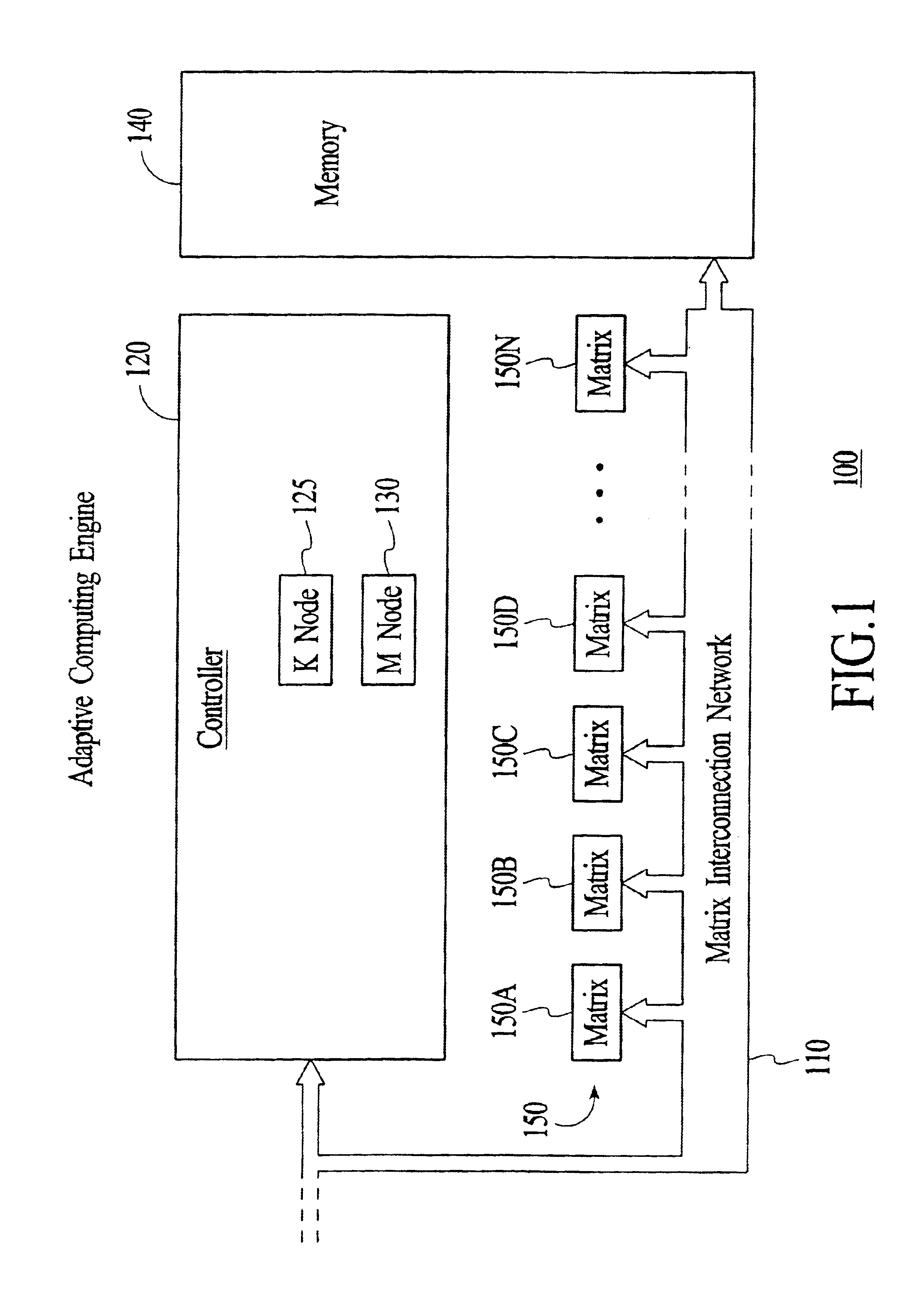

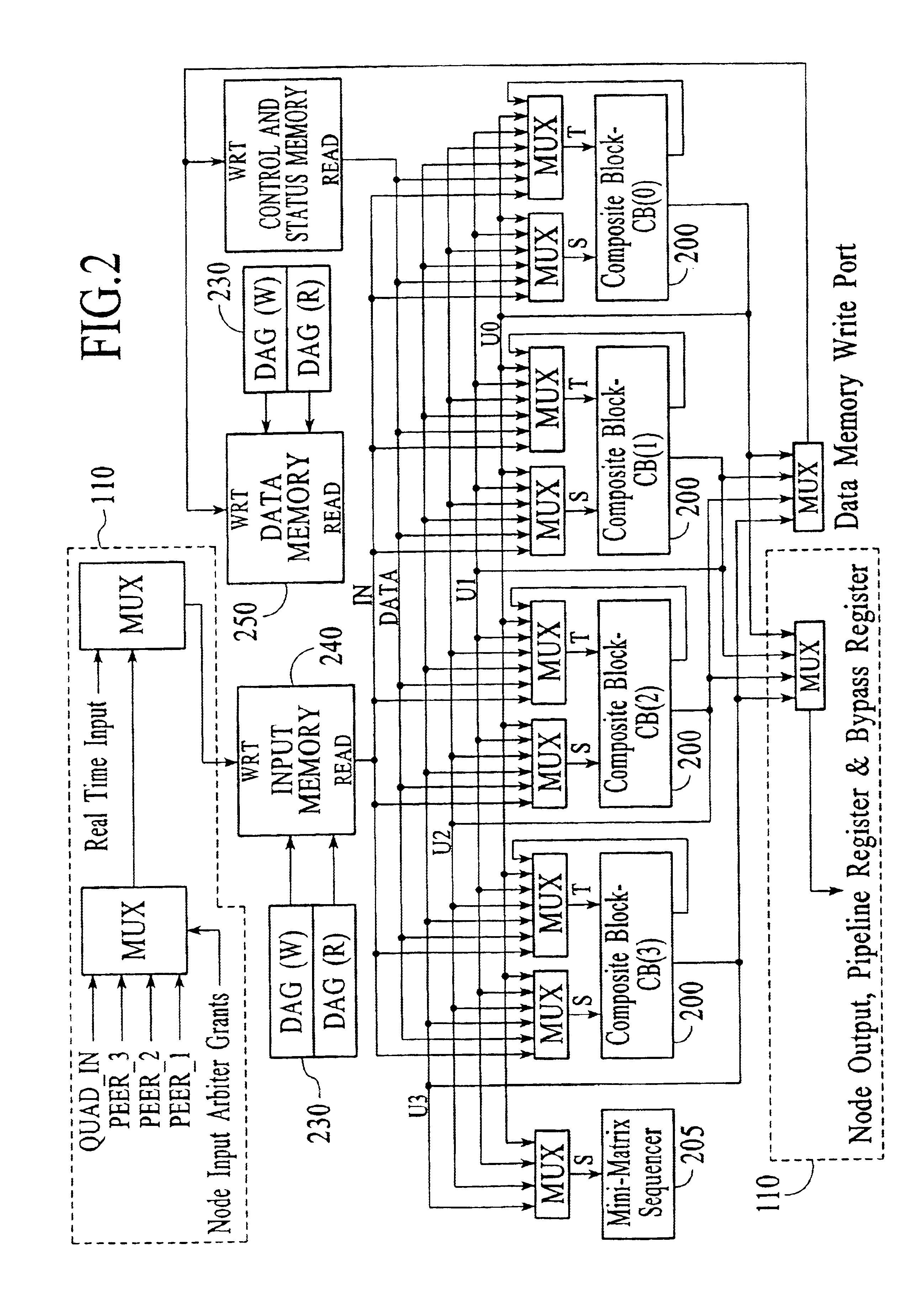

Adaptive computing engine with dataflow graph based sequencing in reconfigurable mini-matrices of composite functional blocks

Inactive | US6874079B2 | Single instruction multiple data multiprocessors; Next instruction address formation; Digital signal processing; Parallel computing

Aspects of a method and system for digital signal processing within an adaptive computing engine are described. These aspects include a mini-matrix, the mini-matrix comprising a set of composite blocks, each composite block capable of executing a predetermined set of instructions. A sequencer is included for controlling the set of composite blocks and directing instructions among the set of composite blocks based on a data-flow graph. Further, a data network is included and transmits data to and from the set of composite blocks and to the sequencer, while a status network routes status word data resulting from instruction execution in the set of composite blocks. With the present invention, an effective combination of hardware resources is provided in a manner that provides multi-bit digital signal processing capabilities for an embedded system environment, particularly in an implementation of an adaptive computing engine. These and other advantages will become readily apparent from the following detailed description and accompanying drawings.

Owner:CORNAMI INC

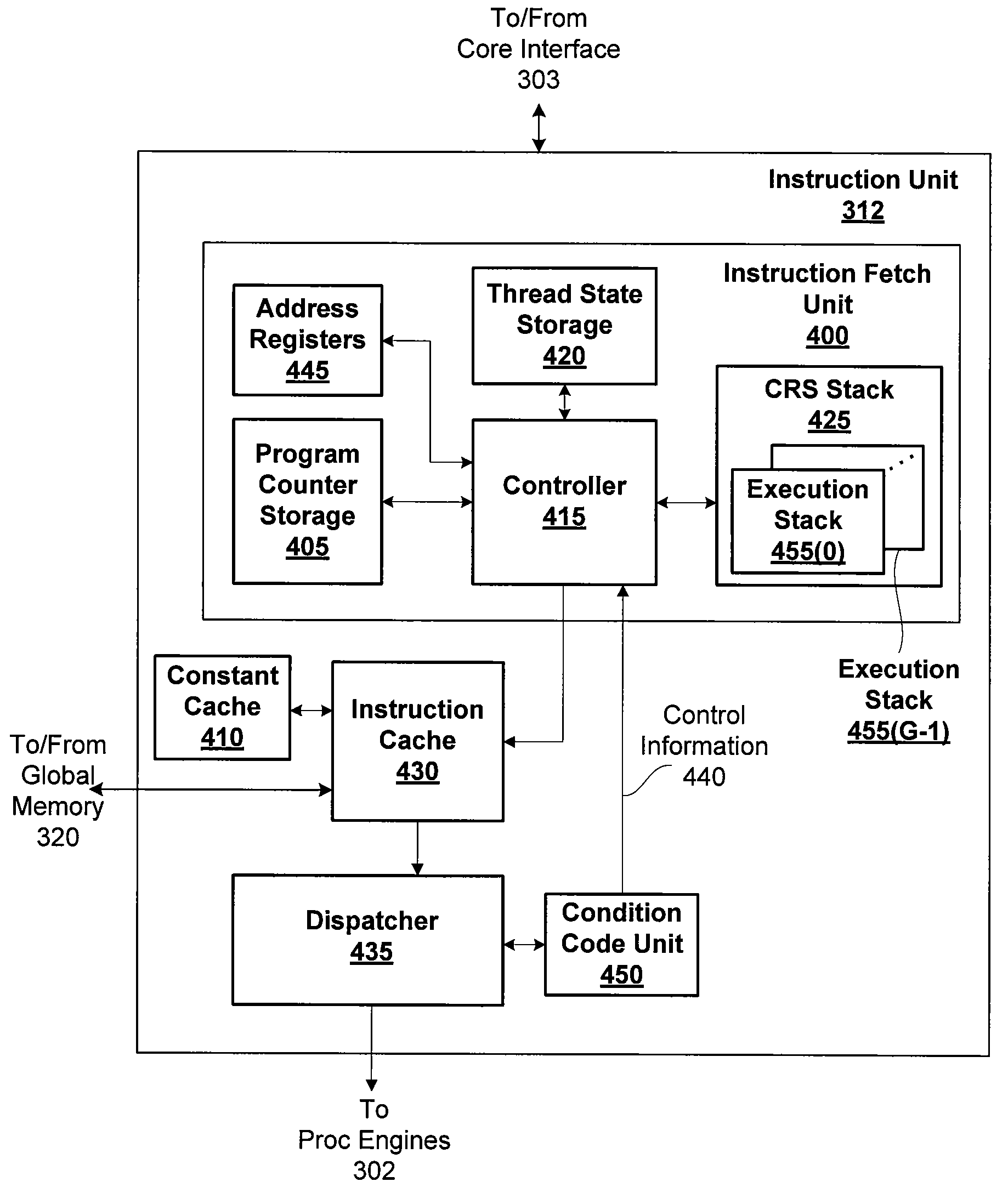

Indirect Function Call Instructions in a Synchronous Parallel Thread Processor

Active | US20090240931A1 | Easy to process; Provide capability; Single instruction multiple data multiprocessors; Concurrent instruction execution; Single instruction, multiple threads; Processor register

An indirect branch instruction takes an address register as an argument in order to provide indirect function call capability for single-instruction multiple-thread (SIMT) processor architectures. The indirect branch instruction is used to implement indirect function calls, virtual function calls, and switch statements to improve processing performance compared with using sequential chains of tests and branches.

Owner:NVIDIA CORP
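
The US20090240931A1 abstract contrasts a chain of tests with an indirect branch, and that difference can be shown in ordinary code. The sketch below is an analogy, not the patented instruction. `run_chain` lowers a switch to sequential compare-and-branch steps, while `run_indirect` looks the target up in a table and calls it once. `run_warp` is a simplified model of SIMT lanes that each hold their own target: lanes sharing a target execute together, and distinct targets are serviced one after another. All function names are hypothetical.

```python
from typing import Callable, List, Tuple

Op = Callable[[int, int], int]


def op_add(a: int, b: int) -> int:
    return a + b


def op_sub(a: int, b: int) -> int:
    return a - b


def op_mul(a: int, b: int) -> int:
    return a * b


# Table of branch targets indexed by opcode (stands in for the address register).
JUMP_TABLE: List[Op] = [op_add, op_sub, op_mul]


def run_chain(opcode: int, a: int, b: int) -> int:
    # Sequential chain of tests: cost grows with the number of cases.
    if opcode == 0:
        return op_add(a, b)
    elif opcode == 1:
        return op_sub(a, b)
    elif opcode == 2:
        return op_mul(a, b)
    raise ValueError(f"unknown opcode {opcode}")


def run_indirect(opcode: int, a: int, b: int) -> int:
    # One table lookup plus one indirect call, regardless of the number of cases.
    if not 0 <= opcode < len(JUMP_TABLE):
        raise ValueError(f"unknown opcode {opcode}")
    return JUMP_TABLE[opcode](a, b)


def run_warp(opcodes: List[int], a: List[int], b: List[int]) -> Tuple[List[int], int]:
    """Each lane branches through its own target; returns (results, passes)."""
    results = [0] * len(opcodes)
    passes = 0
    for target in sorted(set(opcodes)):
        passes += 1  # one pass per distinct target taken by the warp
        for lane, op in enumerate(opcodes):
            if op == target:
                results[lane] = JUMP_TABLE[op](a[lane], b[lane])
    return results, passes
```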

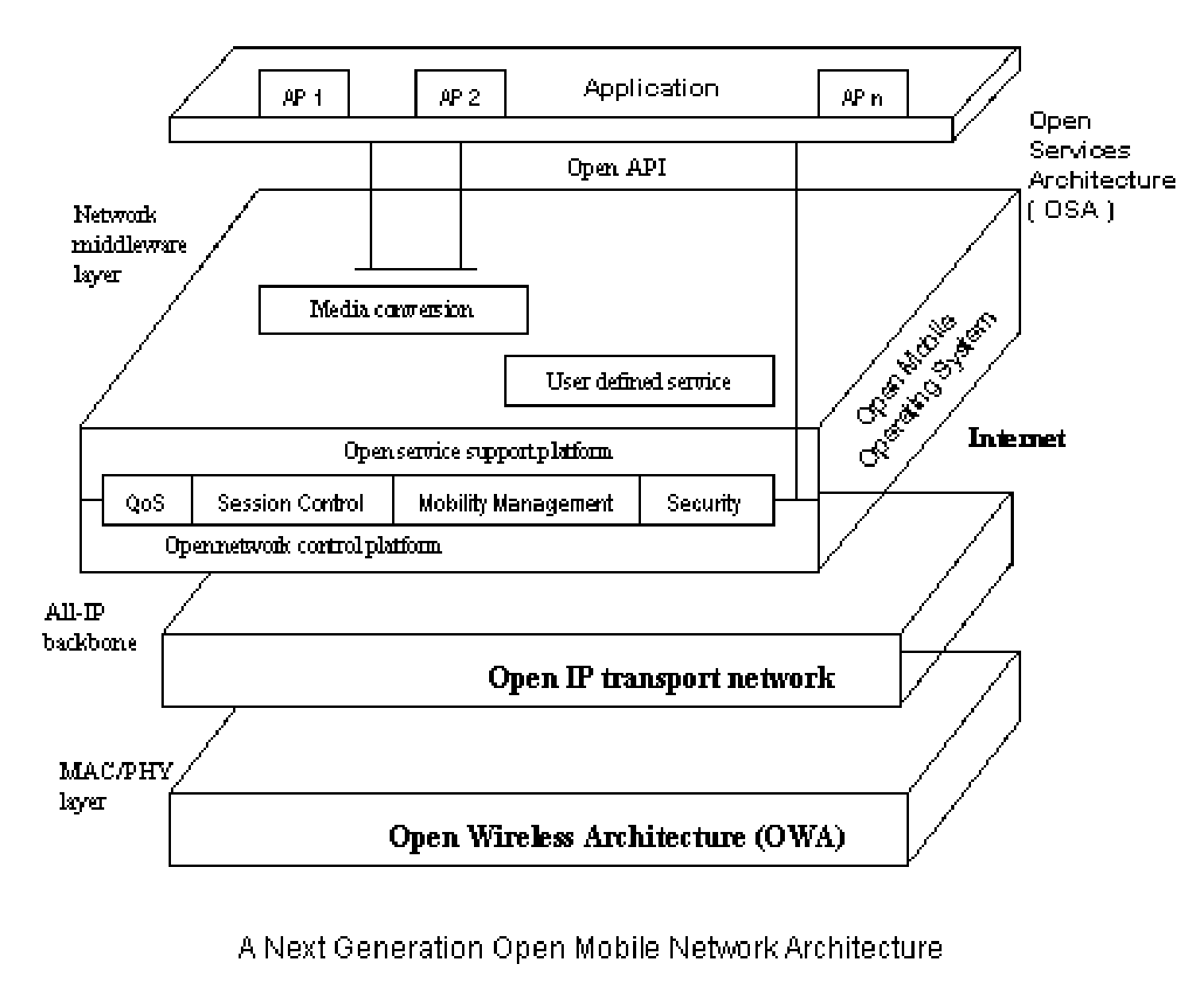

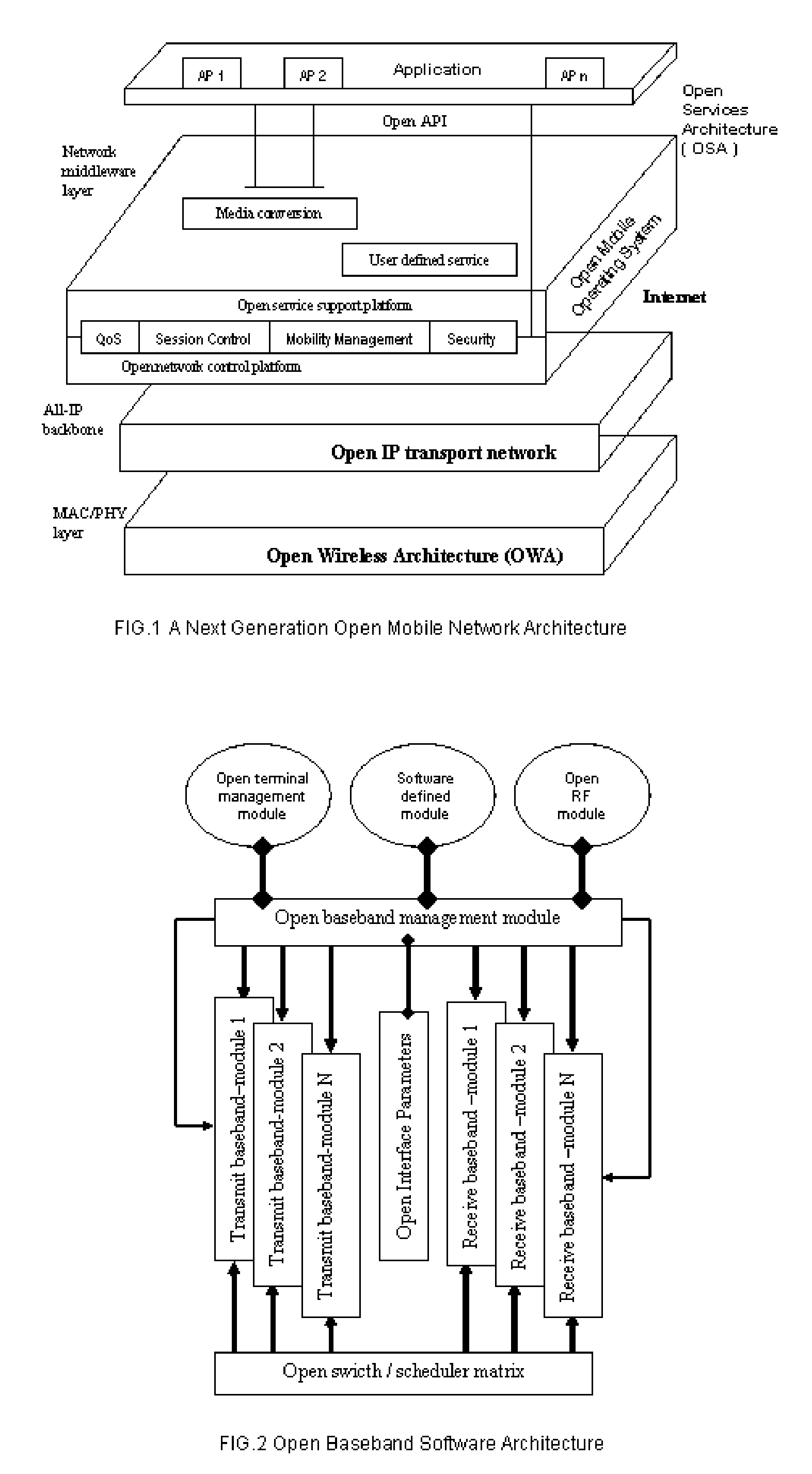

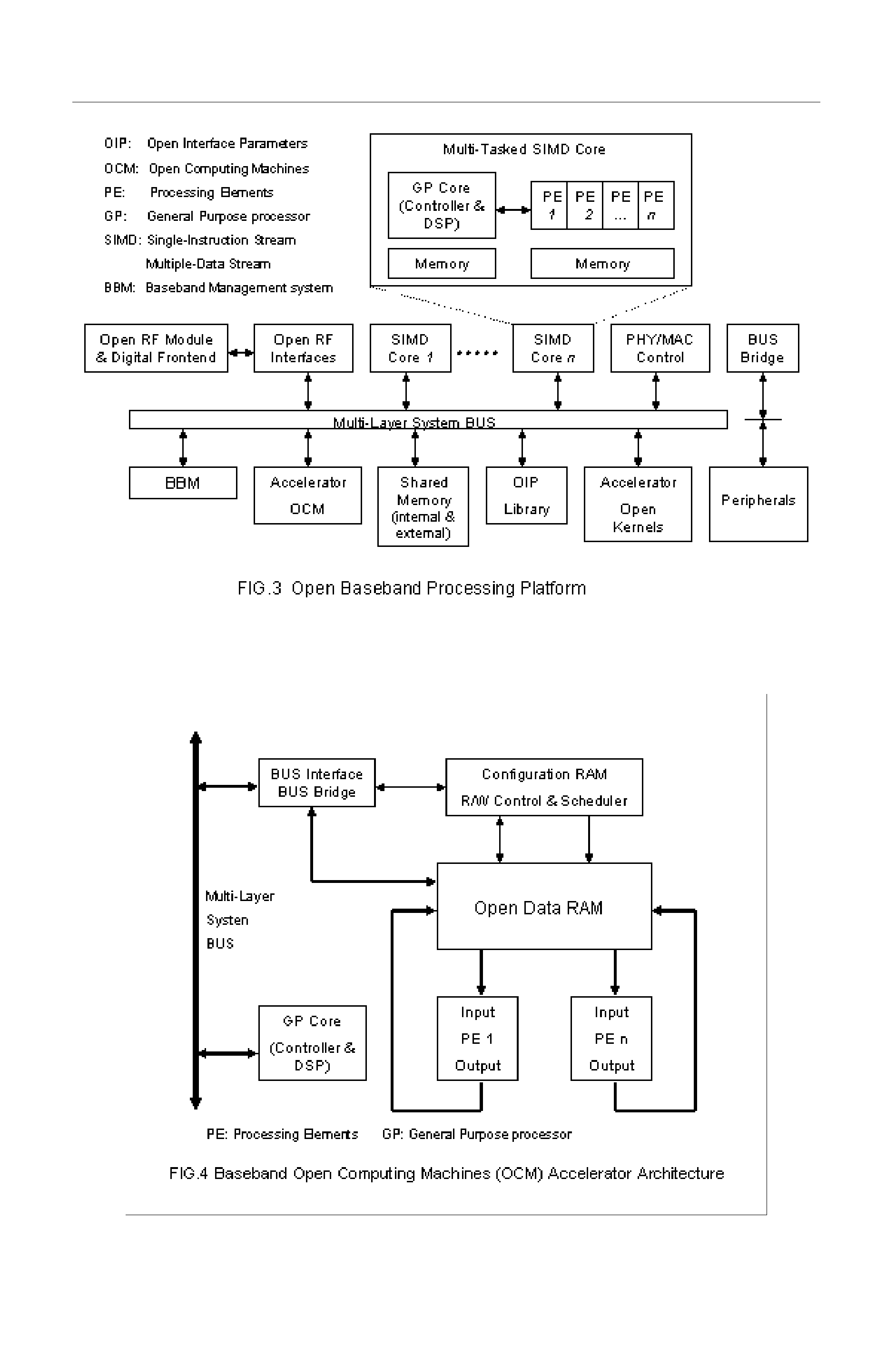

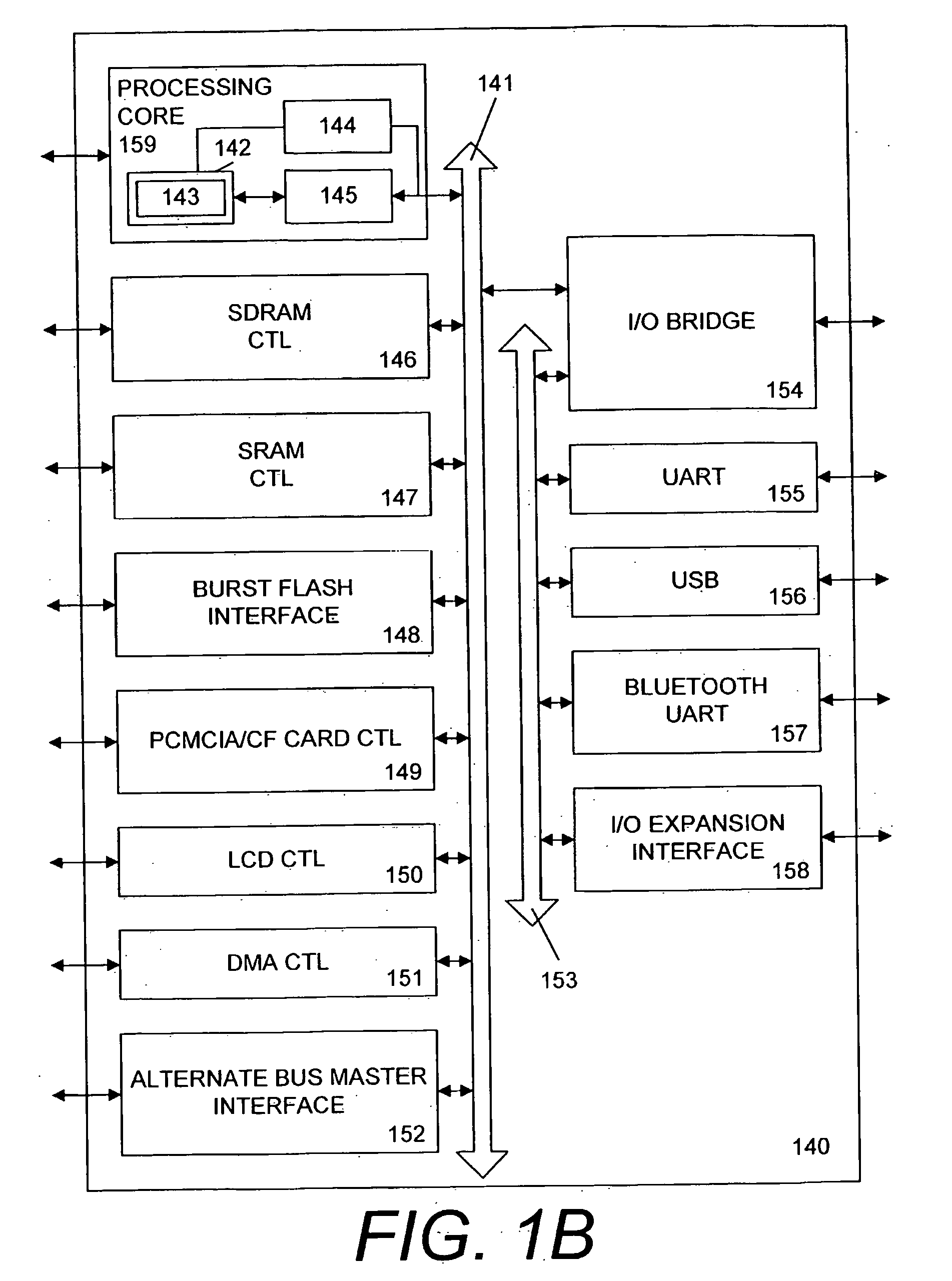

Open baseband processing architecture for next generation wireless and mobile communication terminal design

Inactive | US7283838B2 | Efficient and powerful; Save power; Power management; Single instruction multiple data multiprocessors; Network resource management; Computer science

Owner:PALO ALTO RES

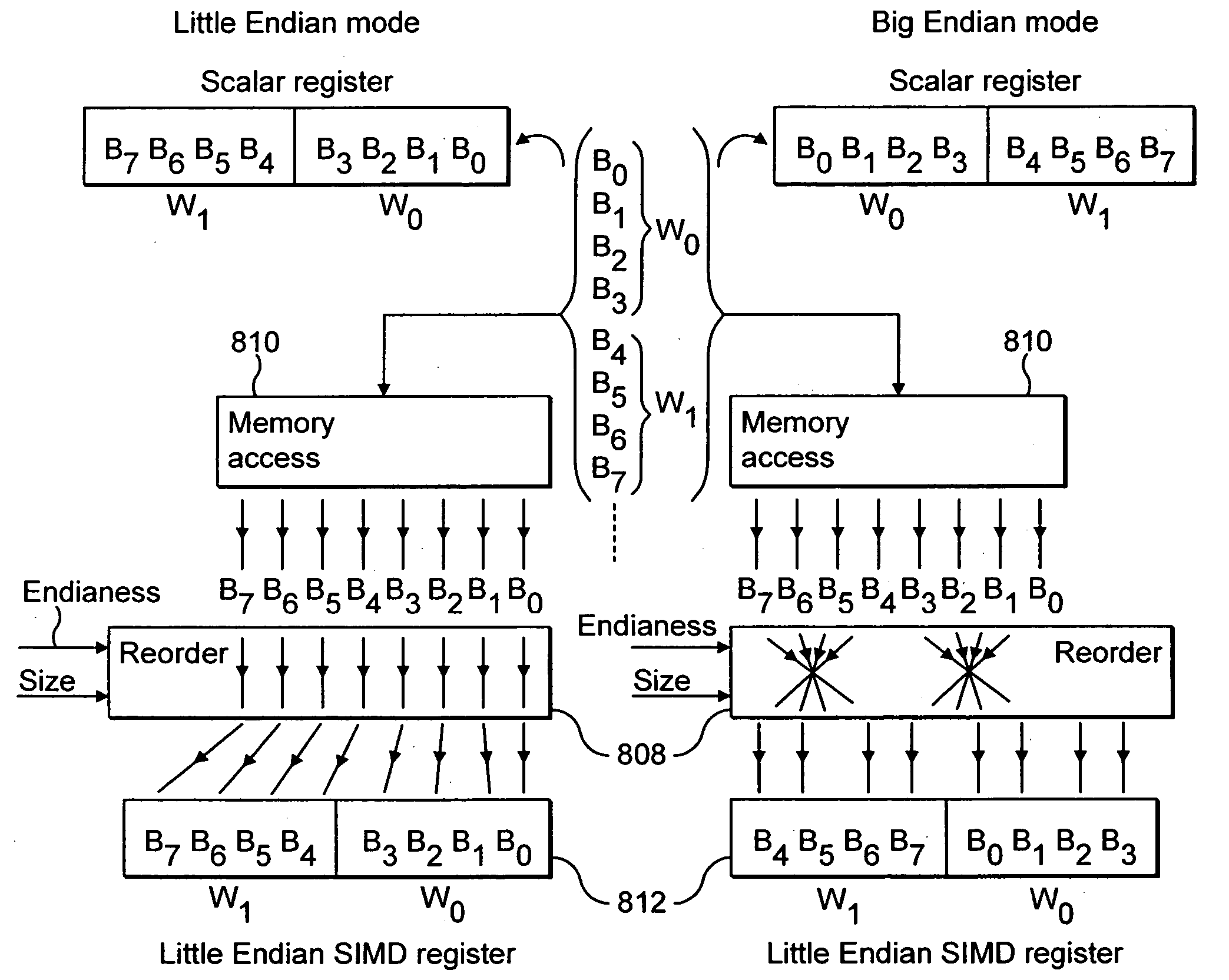

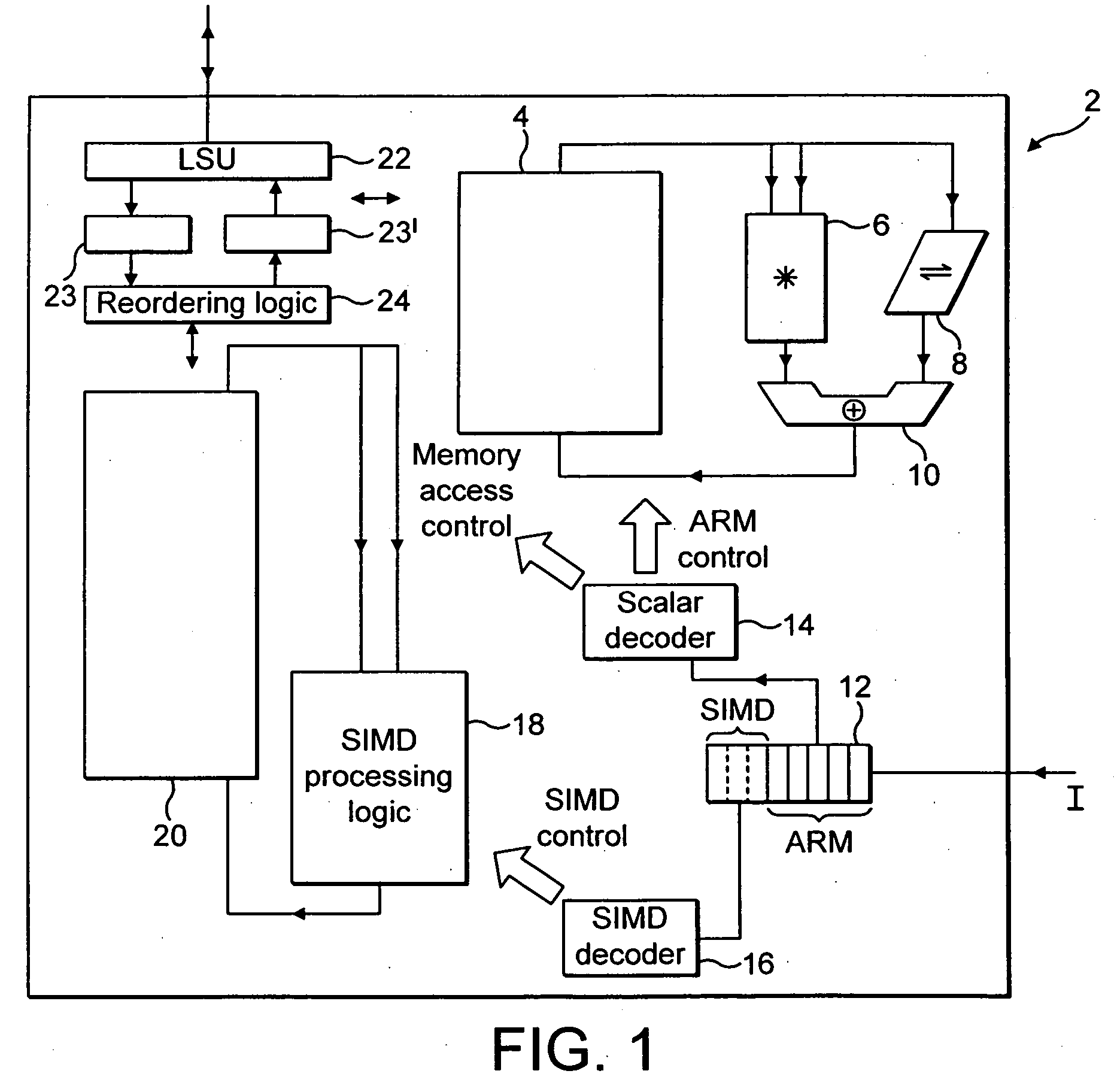

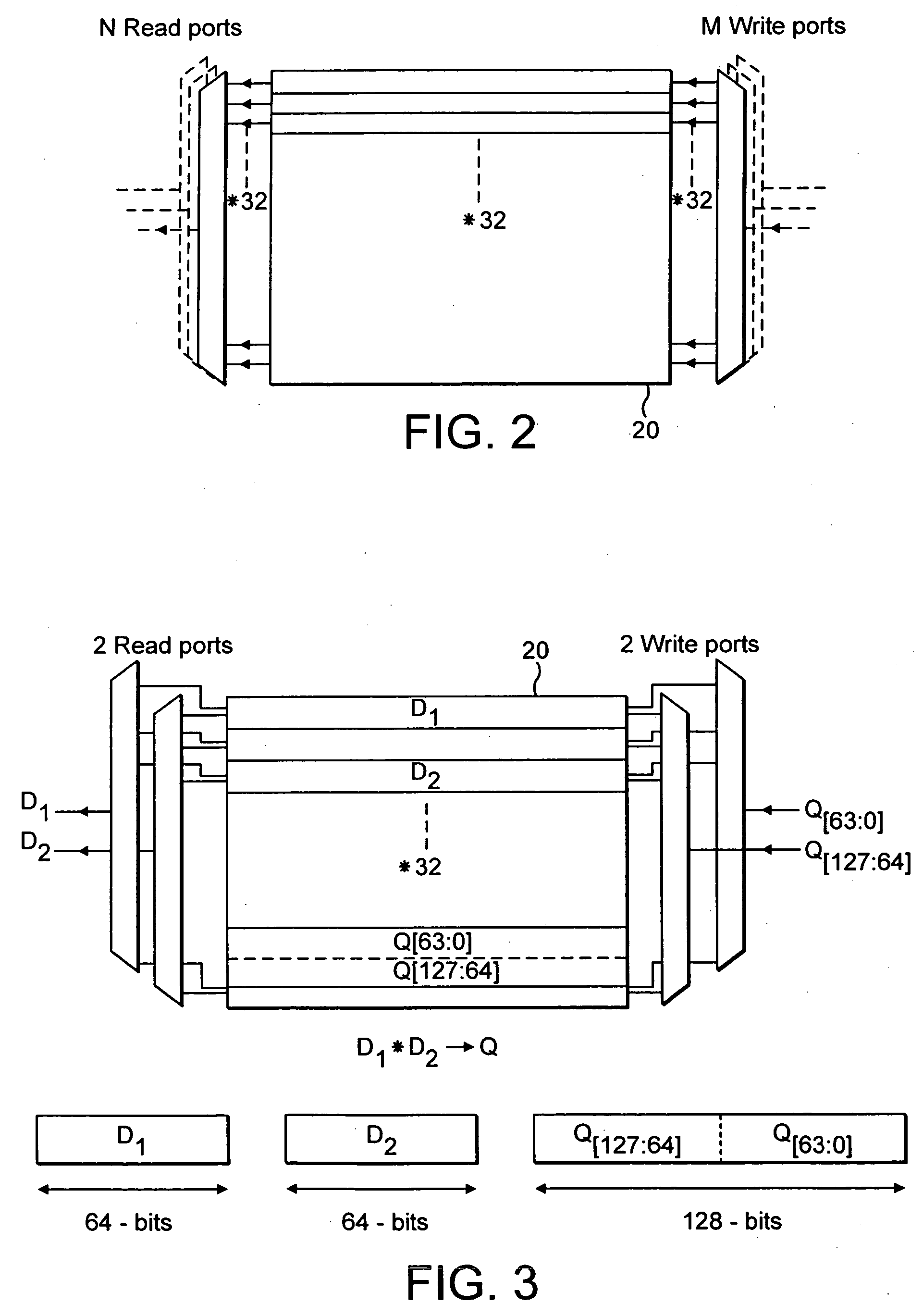

Endianess compensation within a SIMD data processing system

Inactive | US20050125647A1 | Single instruction multiple data multiprocessors; Handling data according to predetermined rules; Data processing system; Endianness

A memory system can store data in either big-endian or little-endian mode. Memory accessing logic 810 utilises byte-invariant addressing to retrieve multiple data elements from that memory for storage in a SIMD register 812. Data element reordering logic 808 responds to an endianness-mode signal and a data-element-size signal by reordering the retrieved bytes, so that the data elements stored in the SIMD registers 812 are the same regardless of the endianness mode used by the memory.

Owner:ARM LTD
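
The reordering step in the US20050125647A1 abstract can be modelled on byte strings. In this sketch, the function name and the little-endian lane convention are assumptions. Memory is read with byte-invariant addressing, so register byte i always comes from address `addr + i`. When the memory holds big-endian data, the bytes within each element are reversed according to the element size, so the resulting lane values are the same in either mode.

```python
from typing import List


def load_simd(memory: bytes, addr: int, reg_bytes: int,
              elem_size: int, big_endian: bool) -> List[int]:
    """Load reg_bytes bytes into a register and return its lanes as integers."""
    if reg_bytes % elem_size != 0:
        raise ValueError("register size must be a multiple of the element size")
    # Byte-invariant addressing: register byte i comes from memory[addr + i].
    raw = memory[addr:addr + reg_bytes]
    if len(raw) != reg_bytes:
        raise IndexError("load runs past the end of memory")
    if big_endian:
        # Reordering logic: reverse the bytes inside each element, using the
        # element size, so lanes end up in the register's (little-endian) order.
        raw = b"".join(raw[i:i + elem_size][::-1]
                       for i in range(0, reg_bytes, elem_size))
    # Interpret each lane little-endian, the register convention assumed here.
    return [int.from_bytes(raw[i:i + elem_size], "little")
            for i in range(0, reg_bytes, elem_size)]
```

The same big-endian bytes loaded with a different element size produce different lanes. This is why the reordering needs the element-size signal as well as the endianness mode.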

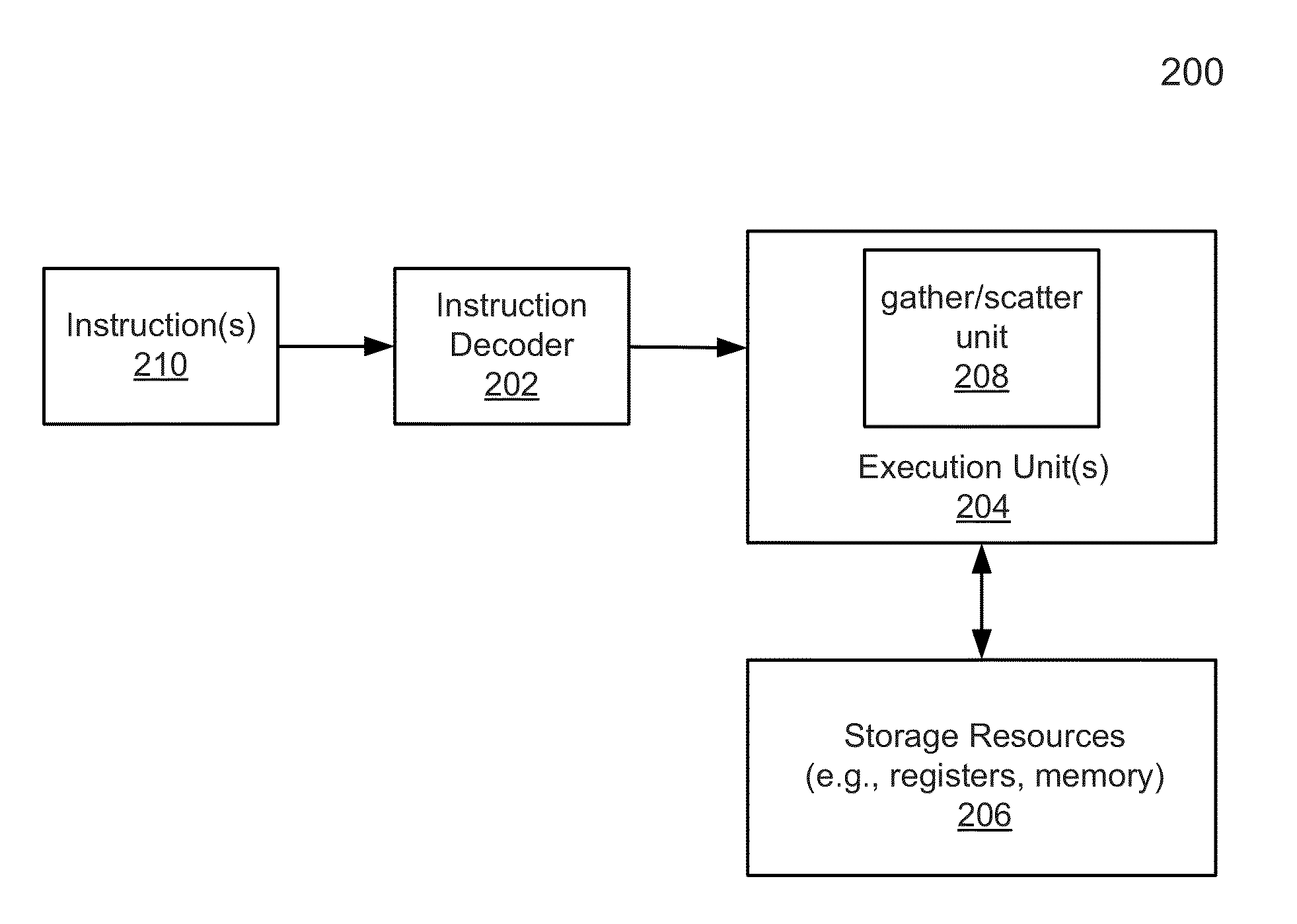

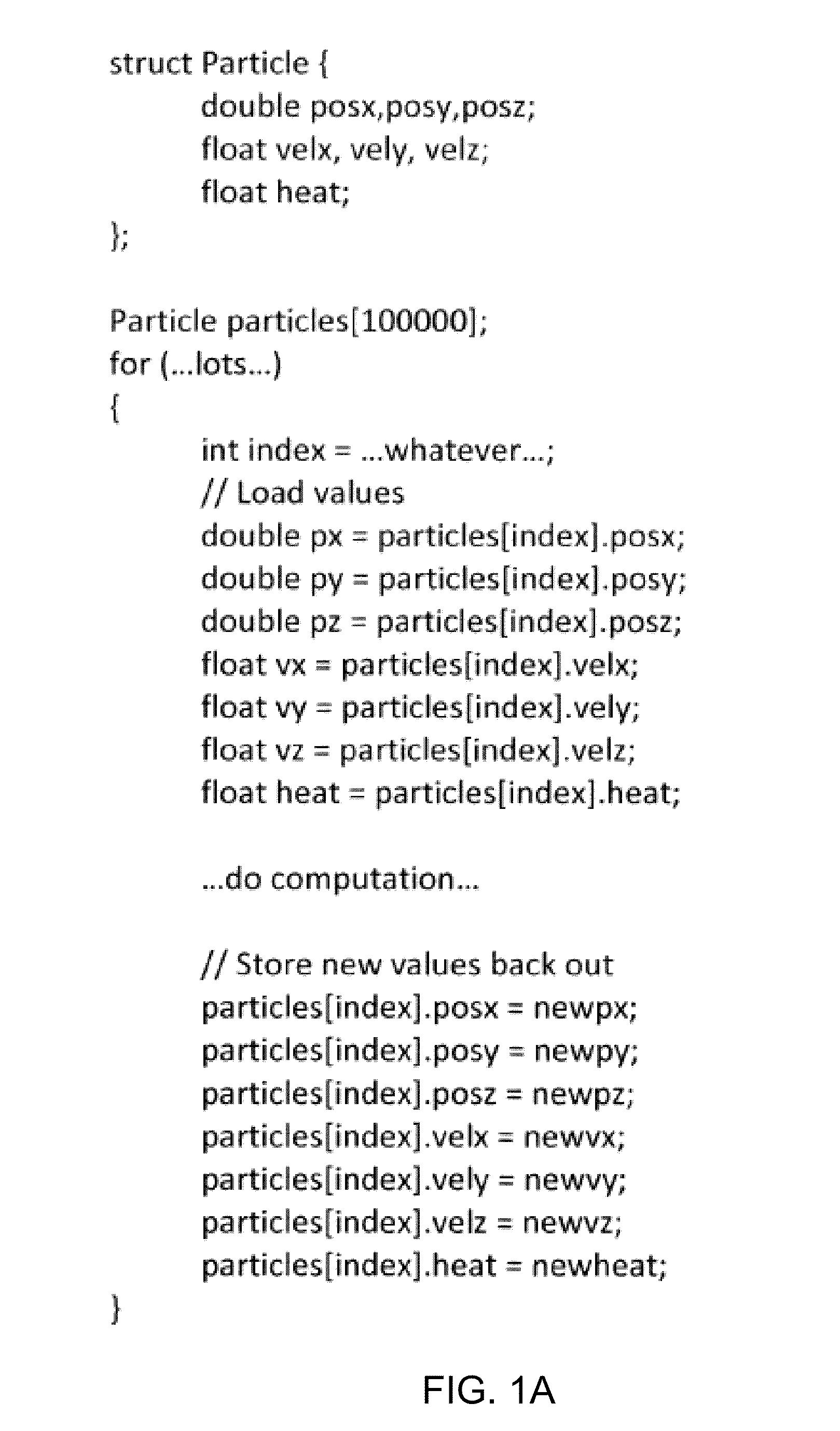

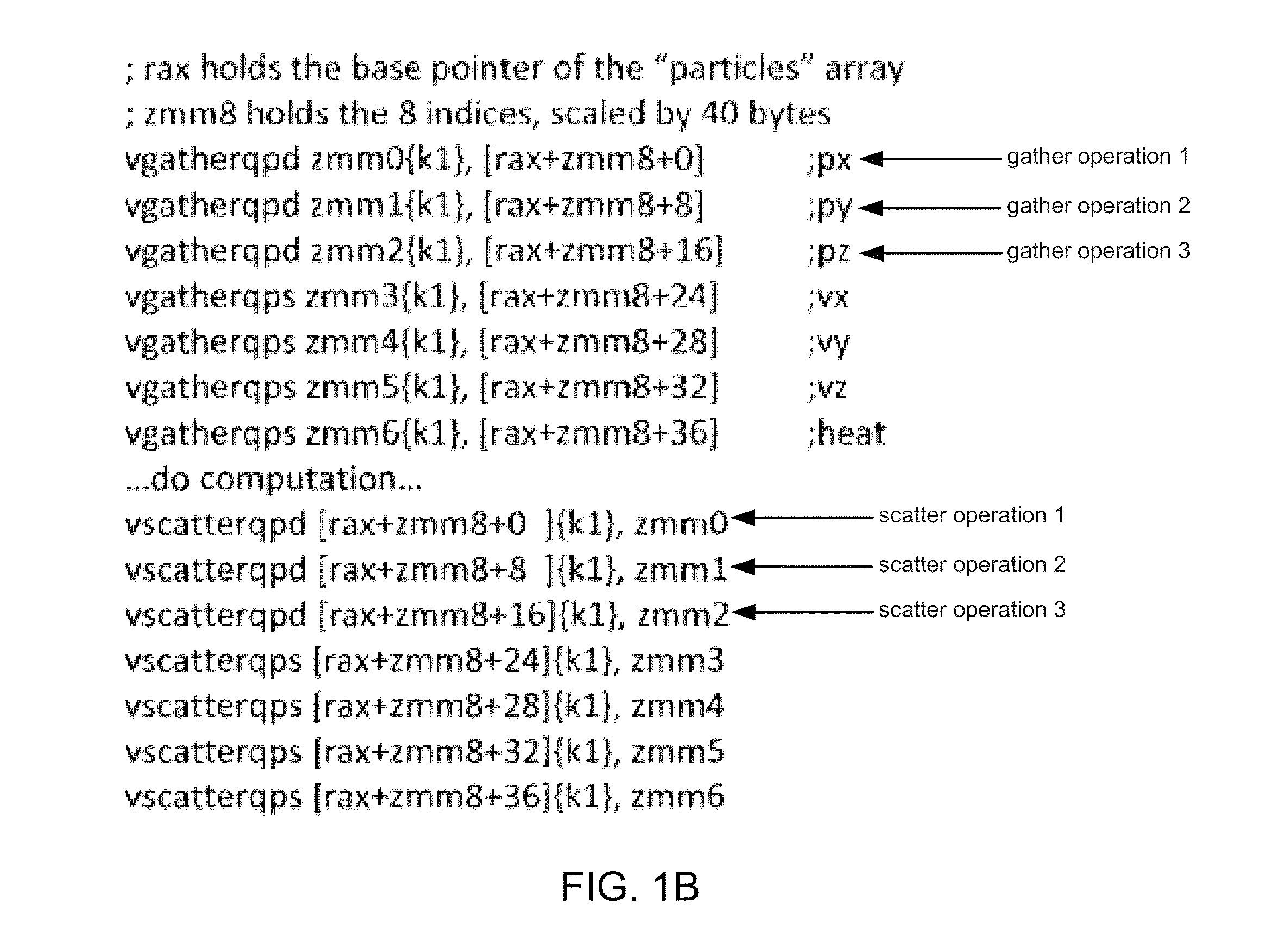

Coalescing adjacent gather/scatter operations

Active | US20140181464A1 | Memory architecture accessing/allocation; Single instruction multiple data multiprocessors; Memory address; Execution unit

According to one embodiment, a processor includes an instruction decoder to decode a first instruction that gathers data elements from memory, the first instruction having a first operand specifying a first storage location and a second operand specifying a first memory address storing a plurality of data elements. The processor further includes an execution unit coupled to the instruction decoder. In response to the first instruction, the execution unit reads a first and a second contiguous data element from a memory location based on the first memory address indicated by the second operand. It stores the first data element in a first entry of the first storage location and the second data element in a second entry of a second storage location corresponding to the first entry of the first storage location.

Owner:INTEL CORP
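
A typical use of the coalesced gather in the US20140181464A1 abstract is loading two adjacent fields, such as x and y of an array of points, for several structures at once. The sketch below uses hypothetical names and a flat Python list as memory. It performs one contiguous two-element read per index and splits the pair across two destination registers at the same lane, instead of issuing two separate gathers.

```python
from typing import List, Tuple


def gather_pairs(memory: List[int], indices: List[int]) -> Tuple[List[int], List[int], int]:
    """Coalesced gather of adjacent element pairs.

    Returns (dst_a, dst_b, reads): lane i of dst_a holds memory[indices[i]],
    lane i of dst_b holds memory[indices[i] + 1], and reads counts memory accesses.
    """
    dst_a: List[int] = []
    dst_b: List[int] = []
    reads = 0
    for idx in indices:
        if not 0 <= idx <= len(memory) - 2:
            raise IndexError(f"pair at index {idx} is out of bounds")
        first, second = memory[idx:idx + 2]  # one contiguous two-element read
        reads += 1
        dst_a.append(first)   # first element -> entry i of the first storage location
        dst_b.append(second)  # second element -> corresponding entry of the second
    return dst_a, dst_b, reads
```

For the interleaved points `[10, 11, 20, 21, 30, 31, 40, 41]` and indices `[4, 0, 6]`, this performs three reads. Two independent gathers would need six element reads for the same three lanes.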

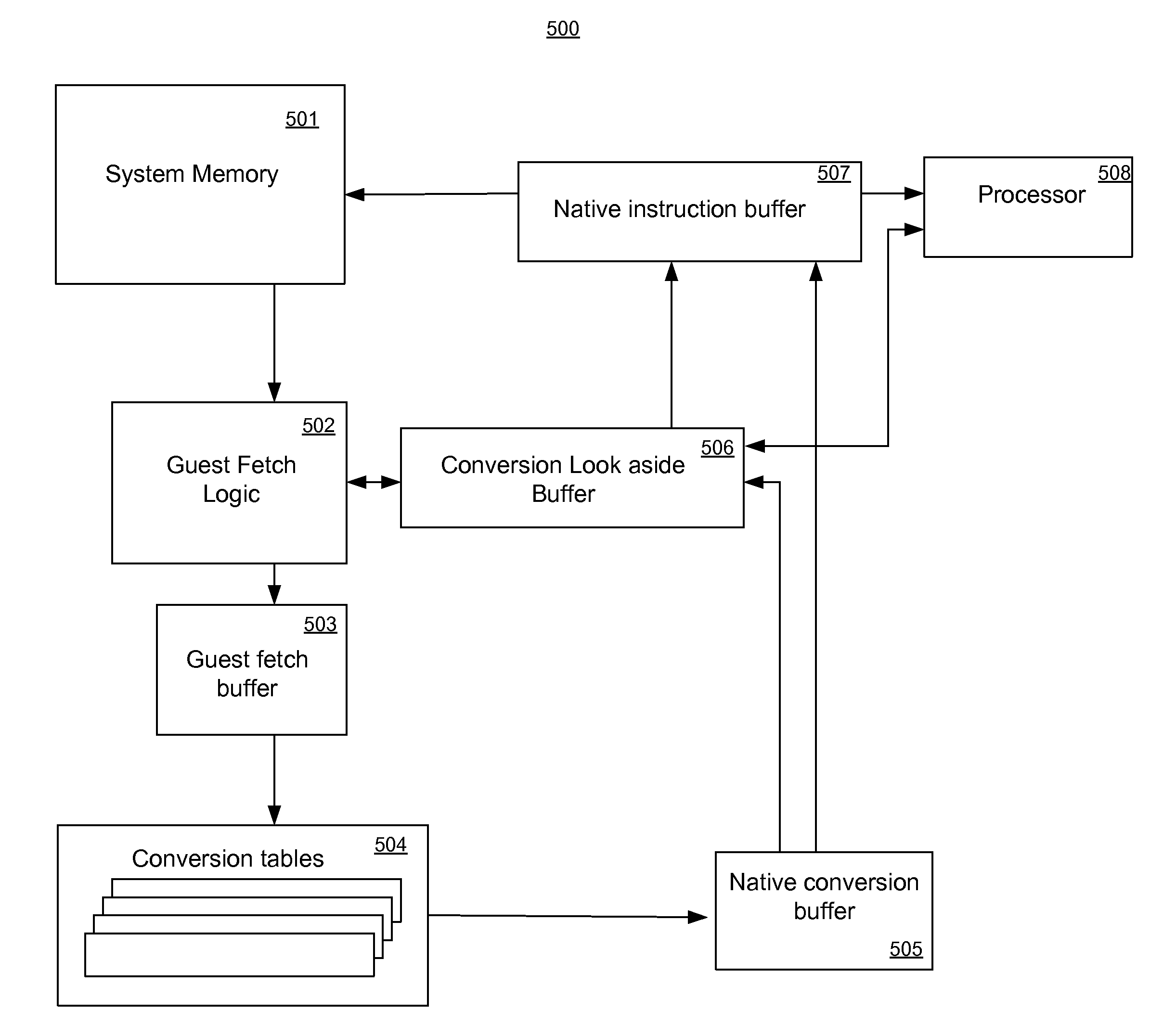

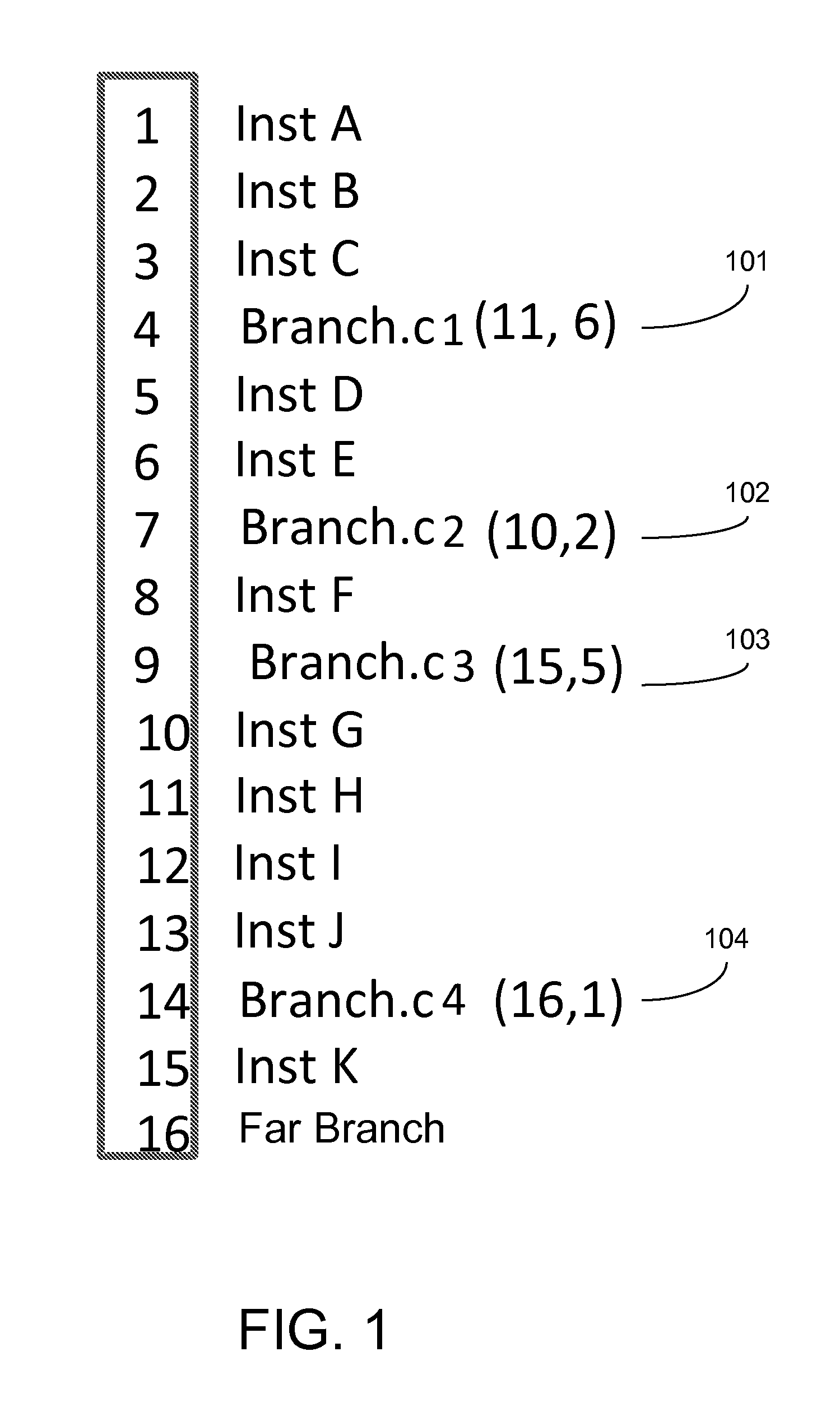

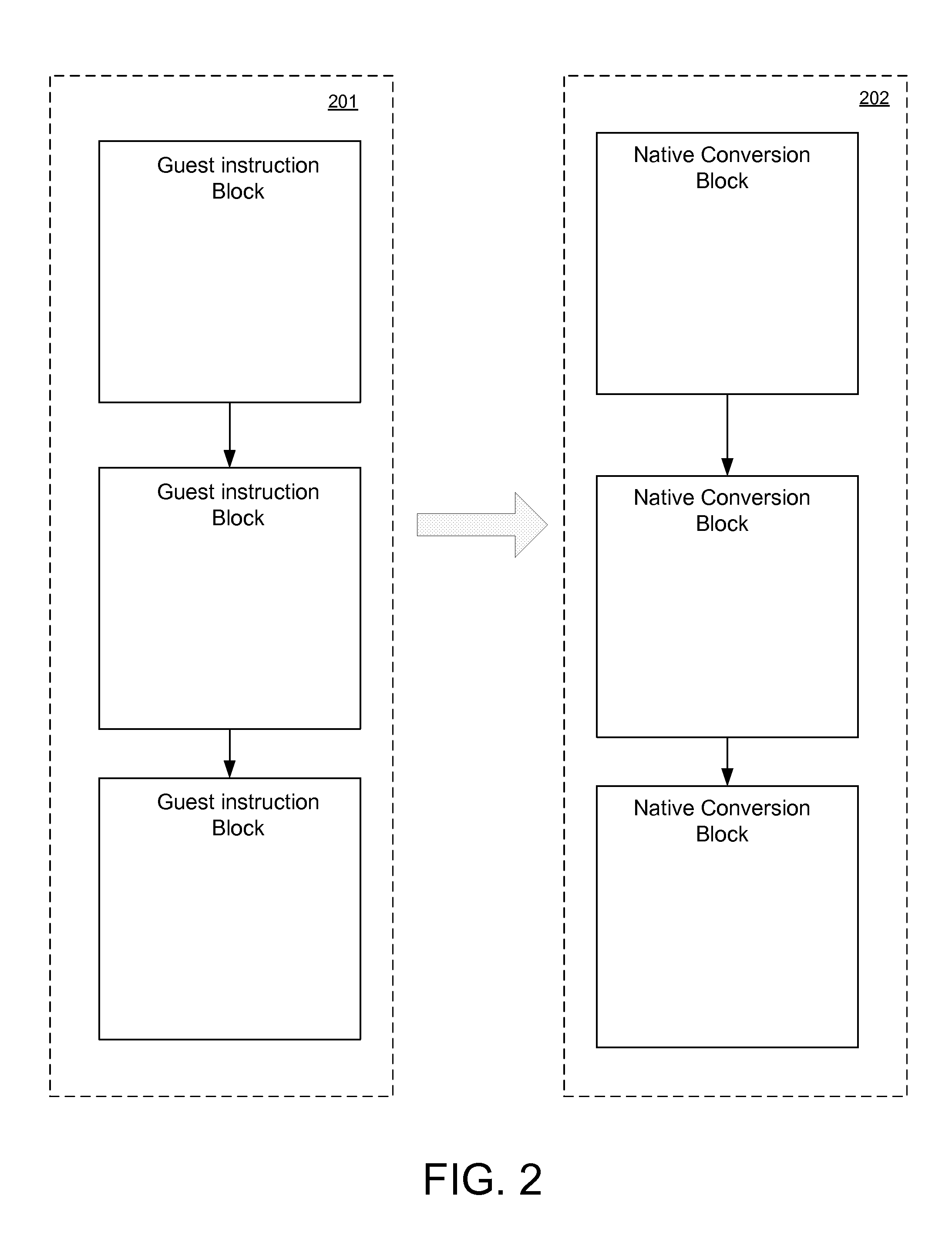

Hardware acceleration components for translating guest instructions to native instructions

Active | US20130024661A1 | Memory architecture accessing/allocation; Single instruction multiple data multiprocessors; Parallel computing; Hardware acceleration

A hardware based translation accelerator. The hardware includes a guest fetch logic component for accessing guest instructions; a guest fetch buffer coupled to the guest fetch logic component and a branch prediction component for assembling guest instructions into a guest instruction block; and conversion tables coupled to the guest fetch buffer for translating the guest instruction block into a corresponding native conversion block. The hardware further includes a native cache coupled to the conversion tables for storing the corresponding native conversion block, and a conversion look aside buffer coupled to the native cache for storing a mapping of the guest instruction block to corresponding native conversion block, wherein upon a subsequent request for a guest instruction, the conversion look aside buffer is indexed to determine whether a hit occurred, wherein the mapping indicates the guest instruction has a corresponding converted native instruction in the native cache.

Owner:INTEL CORP
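
The hit/miss path through the conversion lookaside buffer in the US20130024661A1 abstract can be sketched as a dictionary in front of a block store. This is a software model under simplifying assumptions. Guest blocks are keyed by address, and `translate_block` is a caller-supplied stand-in for the fetch logic and conversion tables. The cache also never evicts, which real hardware with fixed-size structures must do. The class name is hypothetical.

```python
from typing import Callable, Dict, List

NativeBlock = List[str]


class TranslationCache:
    """Guest-to-native block cache with a conversion lookaside buffer (CLB)."""

    def __init__(self, translate_block: Callable[[int], NativeBlock]) -> None:
        self._translate = translate_block  # stands in for the conversion tables
        self._native_cache: List[NativeBlock] = []
        self._clb: Dict[int, int] = {}     # guest block address -> native cache slot
        self.hits = 0
        self.misses = 0

    def lookup(self, guest_addr: int) -> NativeBlock:
        slot = self._clb.get(guest_addr)
        if slot is not None:
            # CLB hit: a converted native block already exists in the native cache.
            self.hits += 1
            return self._native_cache[slot]
        # CLB miss: translate the guest block, store it, and record the mapping.
        self.misses += 1
        native = self._translate(guest_addr)
        self._native_cache.append(native)
        self._clb[guest_addr] = len(self._native_cache) - 1
        return native
```

When a hot guest block executes repeatedly, it pays the translation cost only once.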

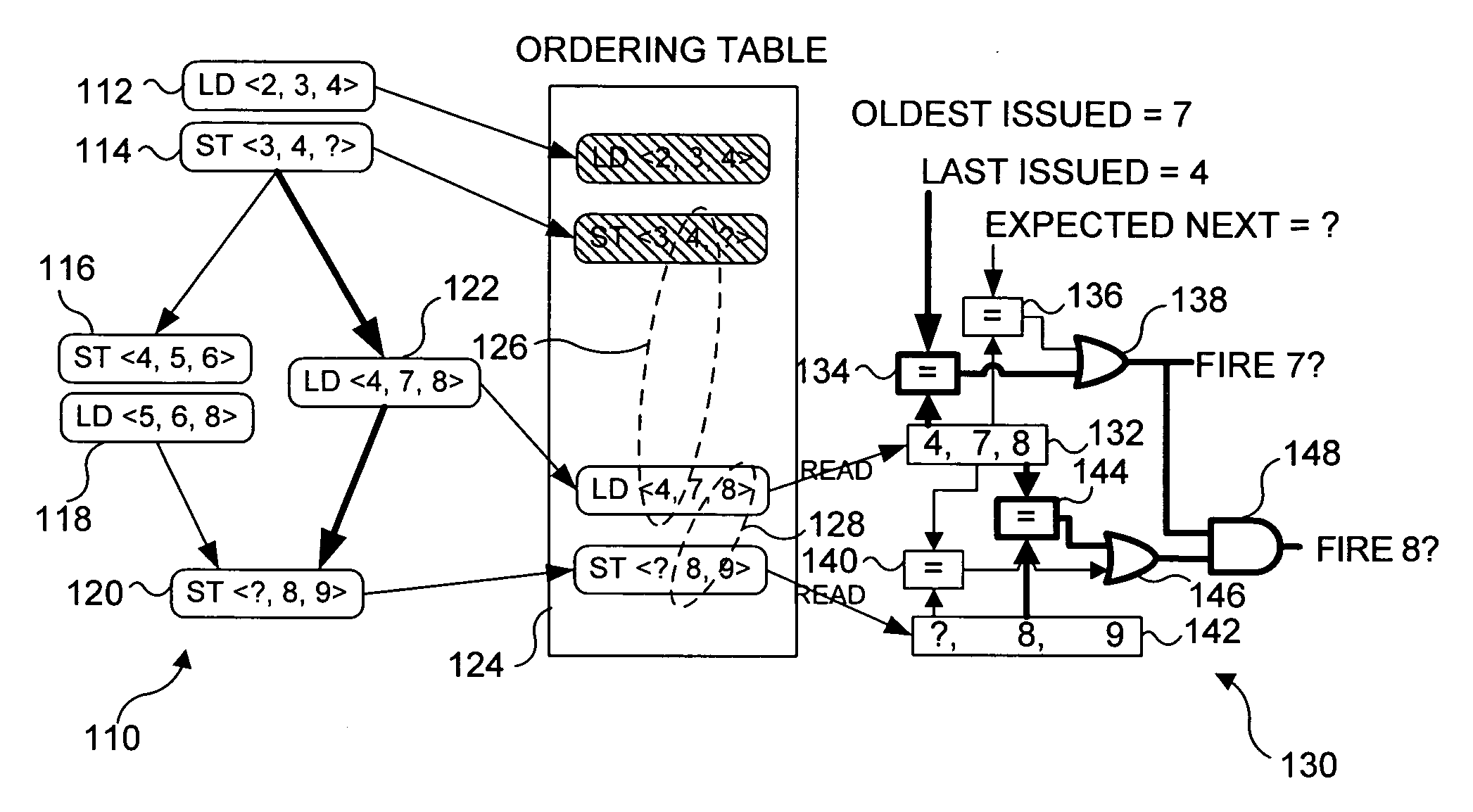

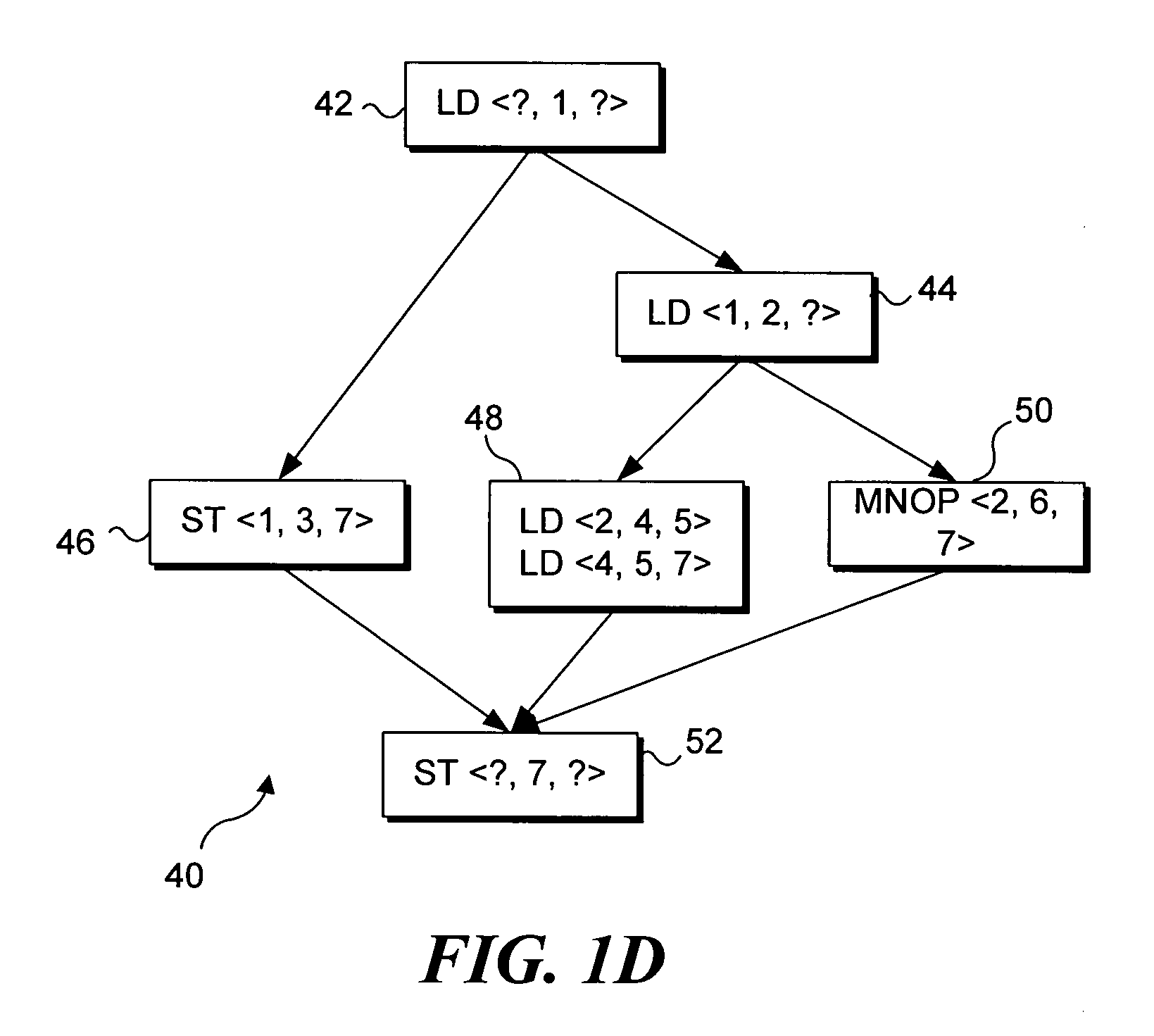

Wavescalar architecture having a wave order memory

Inactive | US20050166205A1 | Reduce communication costs; Maximizing processor utilization; Single instruction multiple data multiprocessors; Program synchronisation; Term memory; Low complexity

WaveScalar is a dataflow instruction set architecture and execution model designed for scalable, low-complexity, high-performance processors. It efficiently provides traditional memory semantics through a mechanism called wave-ordered memory. Wave-ordered memory enables “real-world” programs, written in any language, to run on the WaveScalar architecture, as well as on any out-of-order execution unit. Because it is software-controlled, wave-ordered memory can be disabled to obtain greater parallelism. WaveScalar also includes a software-controlled tag management system.

Owner:UNIV OF WASHINGTON

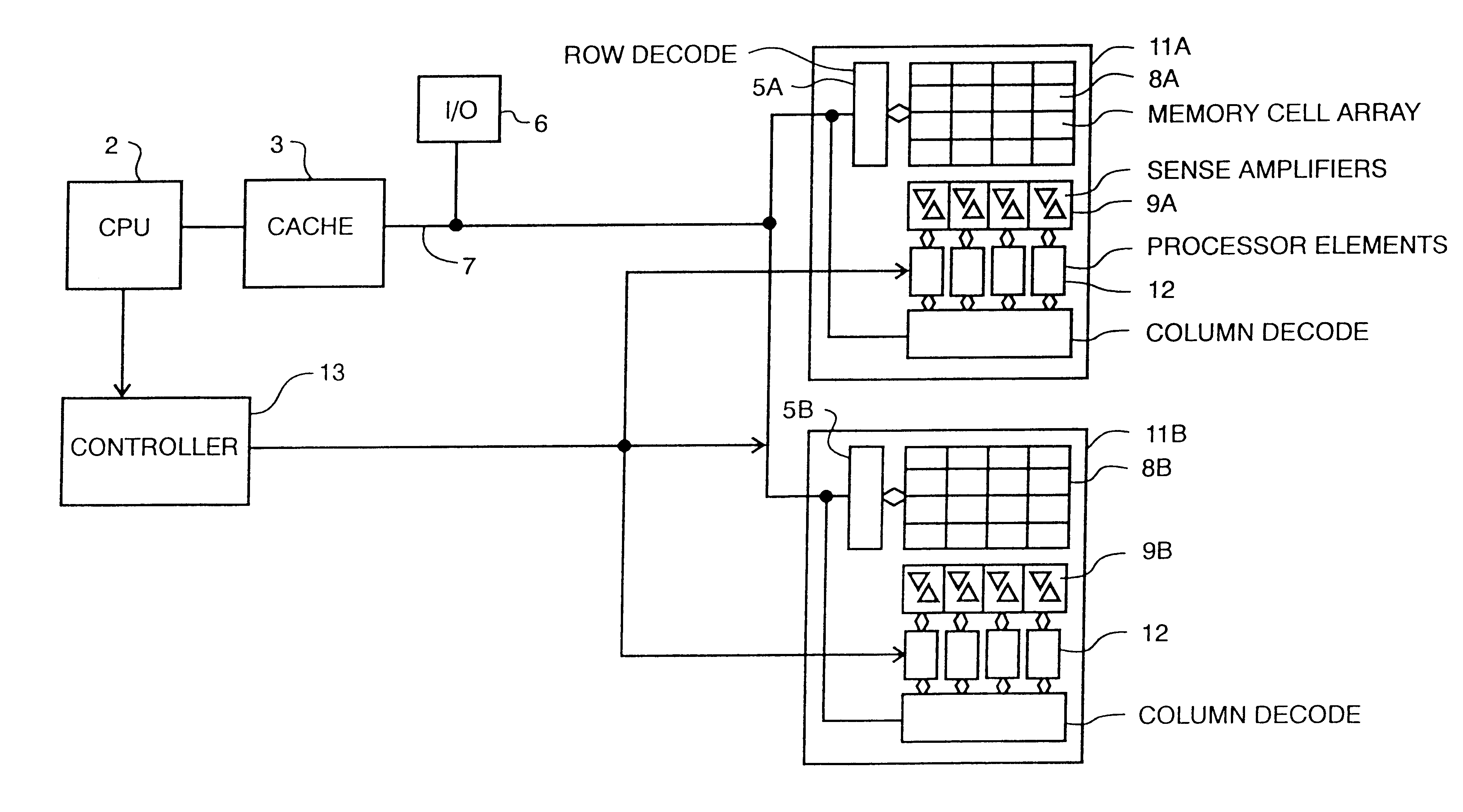

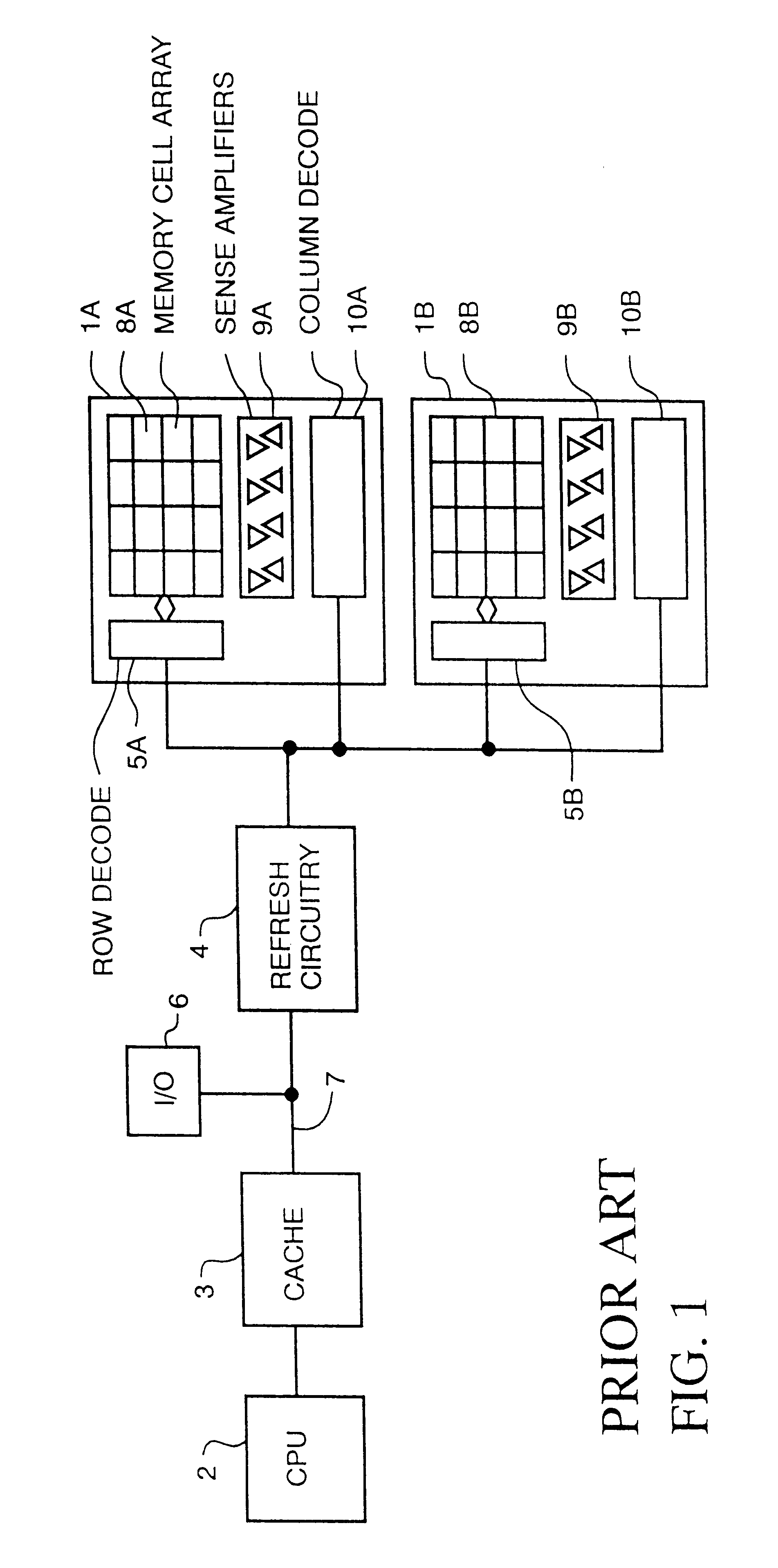

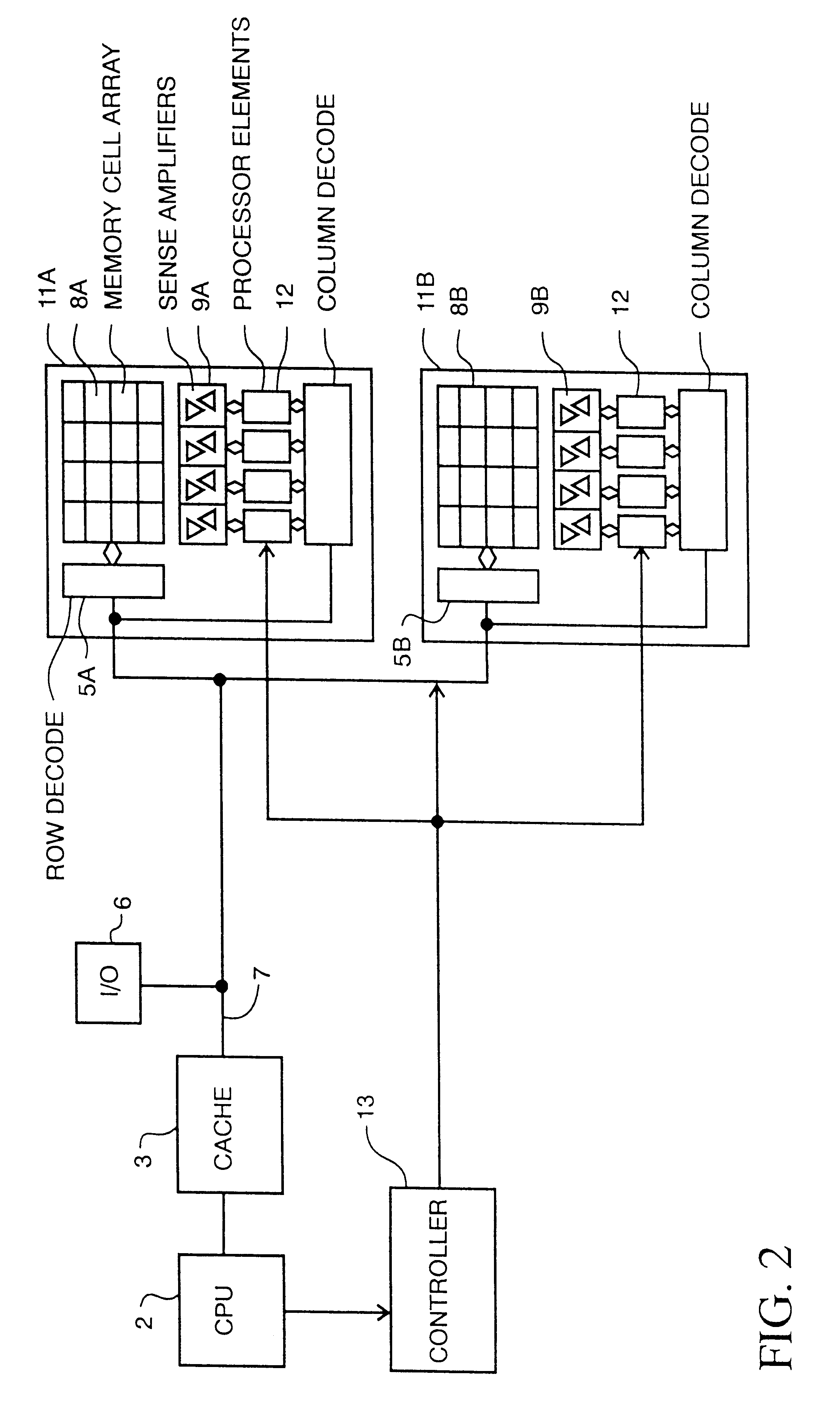

Memory device with multiple processors having parallel access to the same memory area

Inactive | US6279088B1 | Energy efficient ICT; Operational speed enhancement; Arithmetic logic unit; Read-modify-write

A digital computer performs read-modify-write (RMW) processing on each bit of a row of memory in parallel, in one operation cycle, comprising: (a) addressing a memory, (b) reading each bit of a row of data from the memory in parallel, (c) performing the same computational operation on each bit of the data in parallel, using an arithmetic logic unit (ALU) in a dedicated processing element, and (d) writing the result of the operation back into the original memory location for each bit in the row.

Owner:SATECH GRP A B LLC
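
Steps (a) to (d) in the US6279088B1 abstract can be traced in a small model. In this sketch, each loop iteration stands in for one dedicated one-bit processing element. The loop is sequential only because this is a simulation; the hardware performs all bits in a single cycle. The second operand row and the class name are assumptions, since the abstract does not say where the other input of the operation comes from.

```python
from typing import Callable, List

BitOp = Callable[[int, int], int]


class BitRowMemory:
    """Memory whose rows are updated bit-parallel by per-bit processing elements."""

    def __init__(self, rows: int, width: int) -> None:
        self.width = width
        self.rows: List[int] = [0] * rows

    def rmw(self, addr: int, operand: int, bit_op: BitOp) -> None:
        row = self.rows[addr]                # (a) address the memory, (b) read the row
        result = 0
        for i in range(self.width):          # (c) one iteration = one 1-bit PE's ALU
            a = (row >> i) & 1
            b = (operand >> i) & 1
            result |= (bit_op(a, b) & 1) << i
        self.rows[addr] = result             # (d) write back to the same location
```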

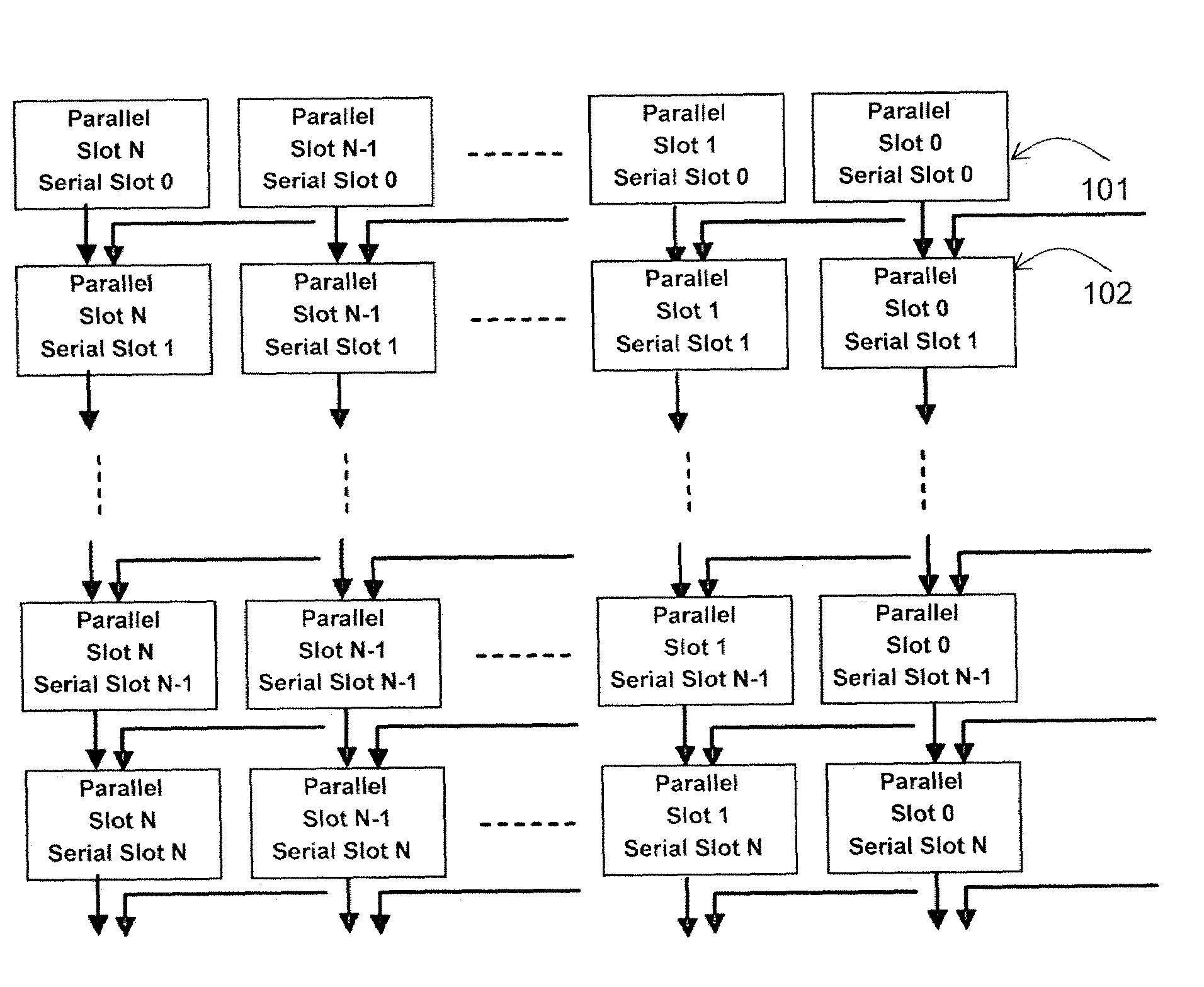

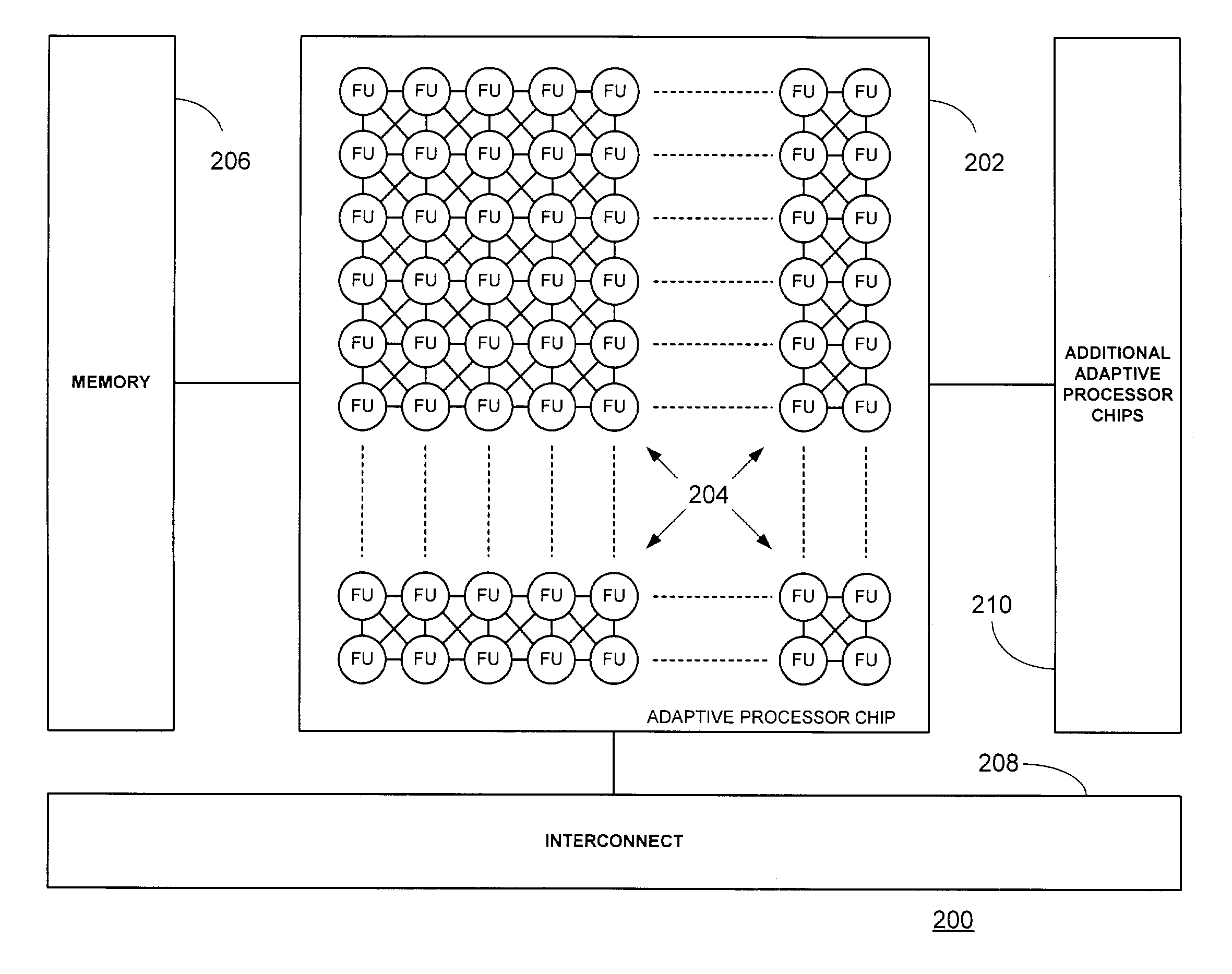

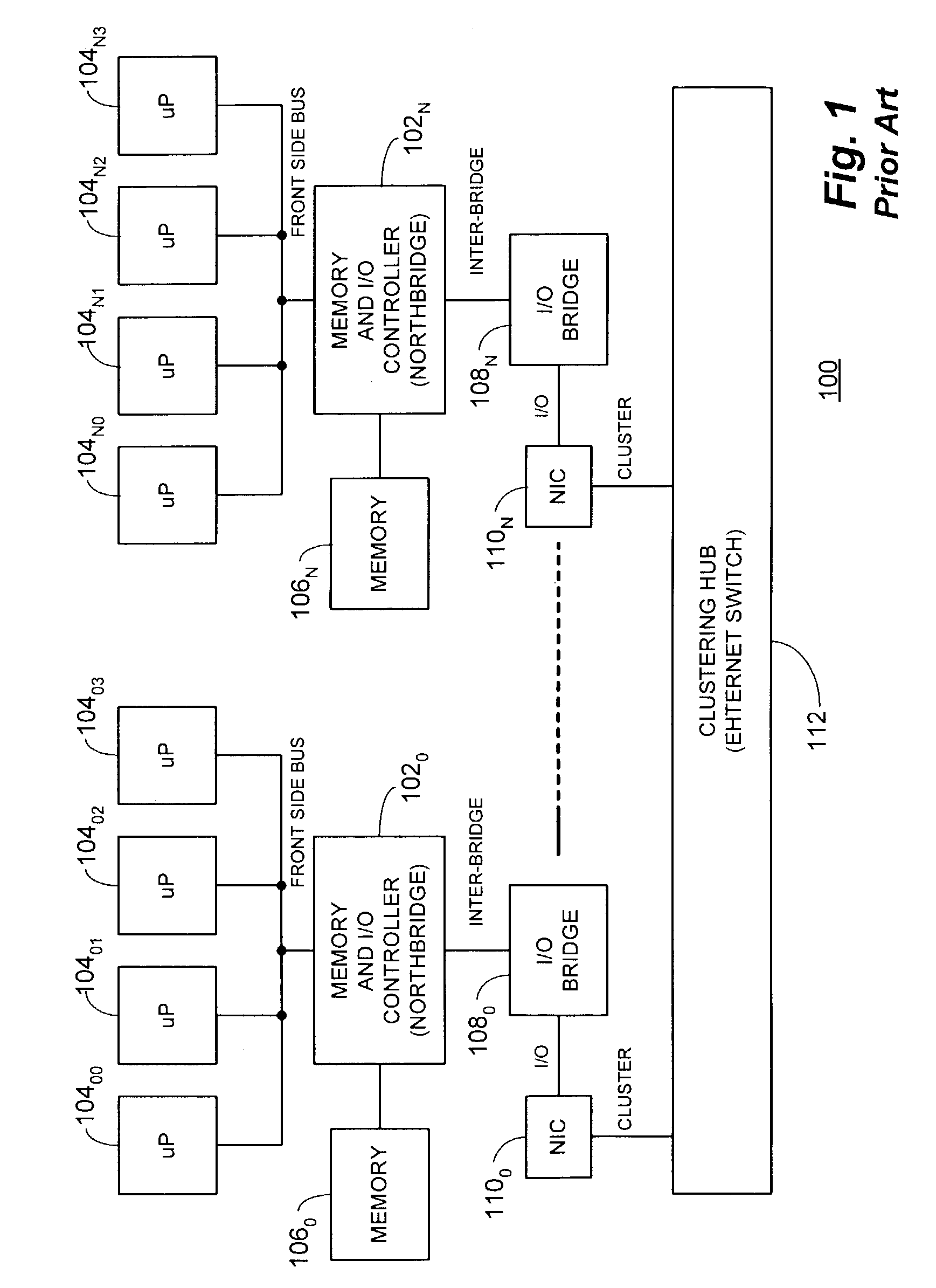

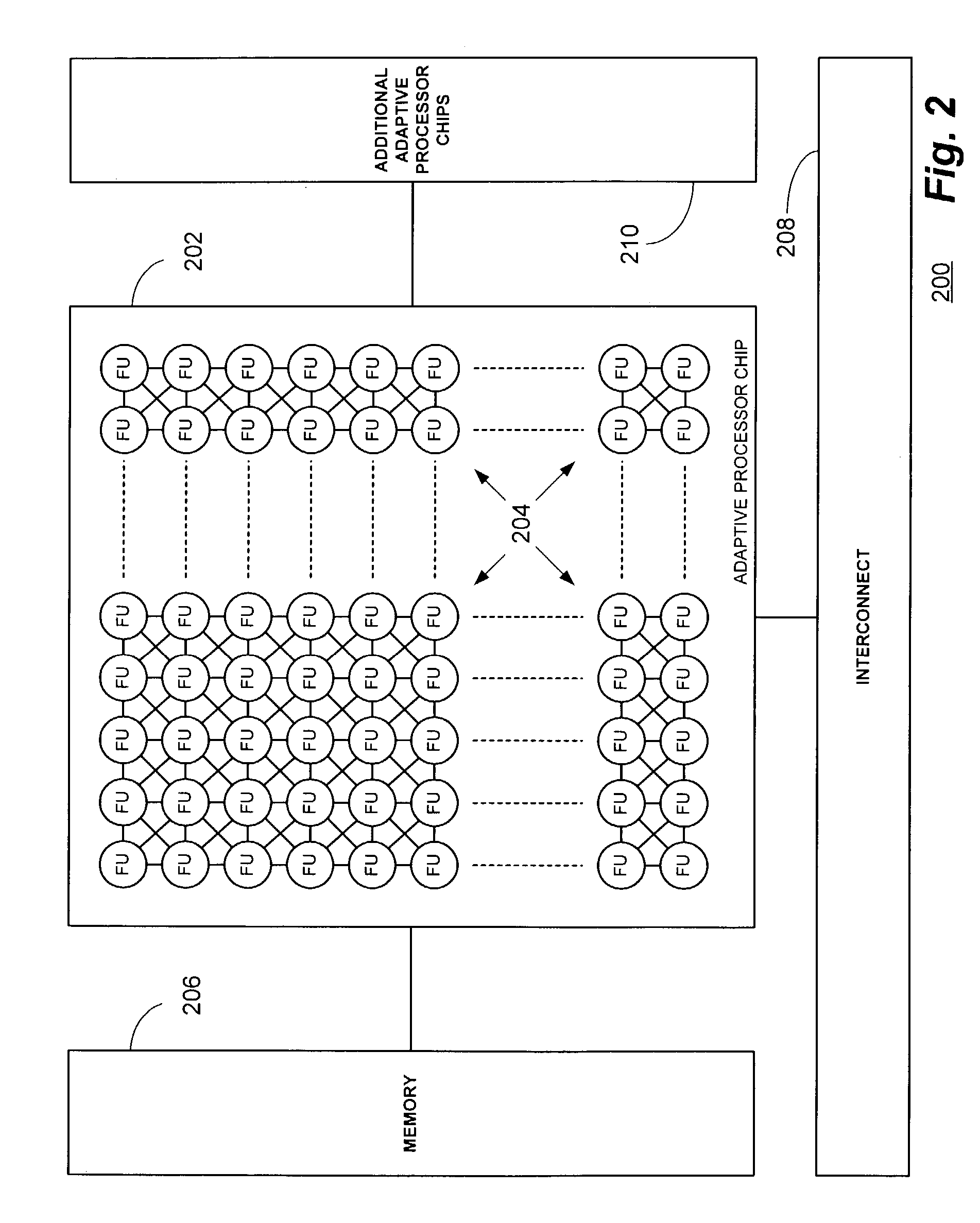

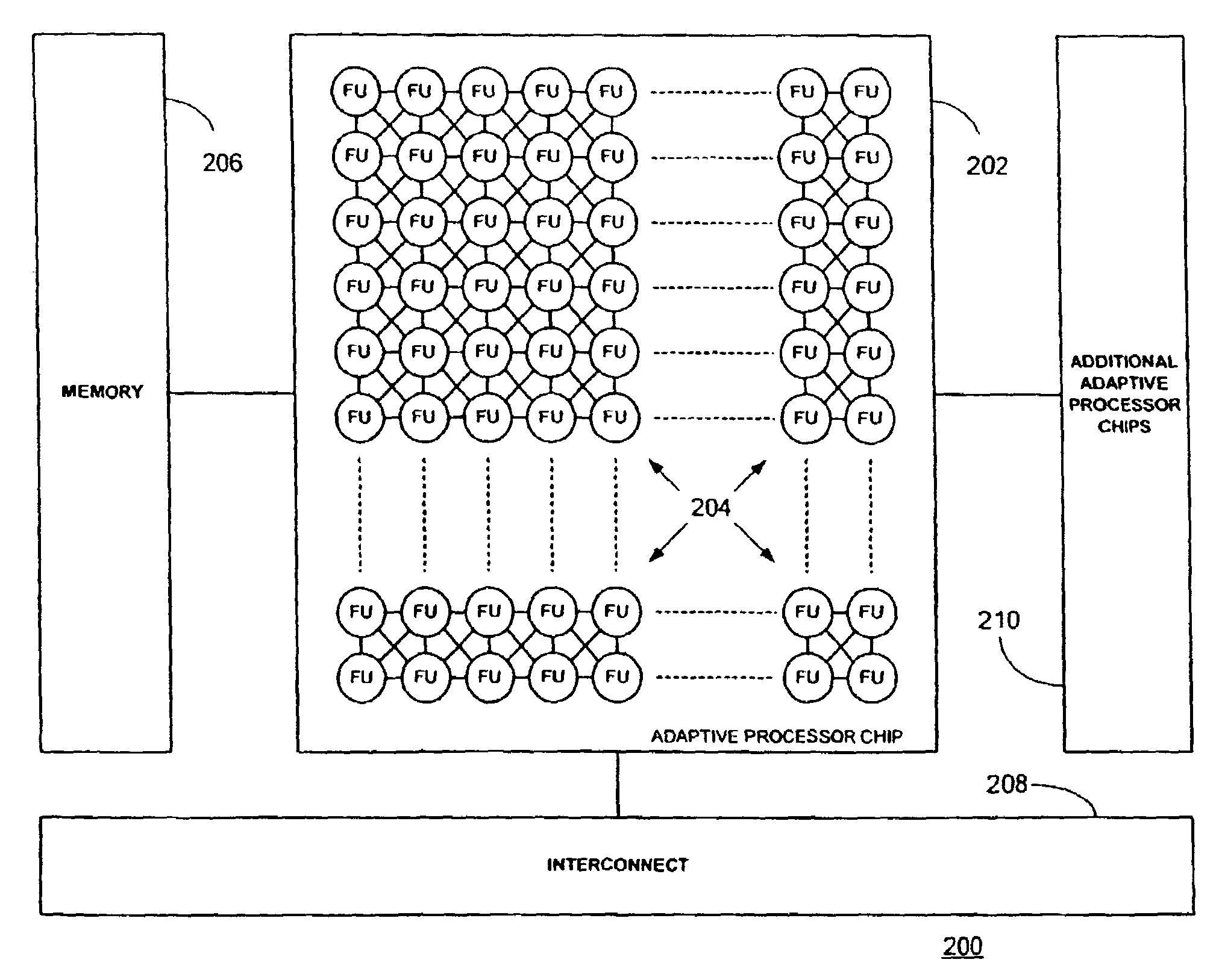

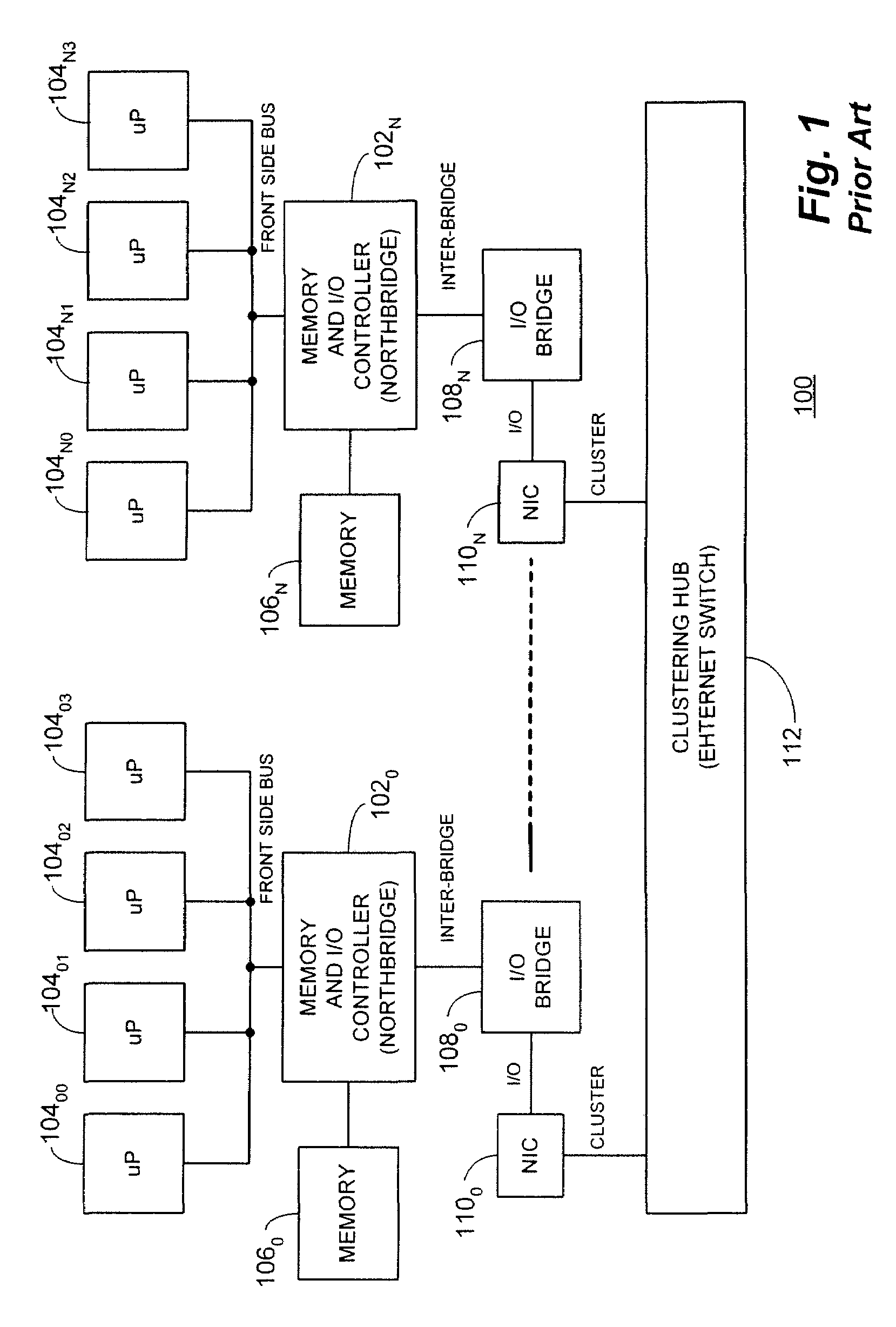

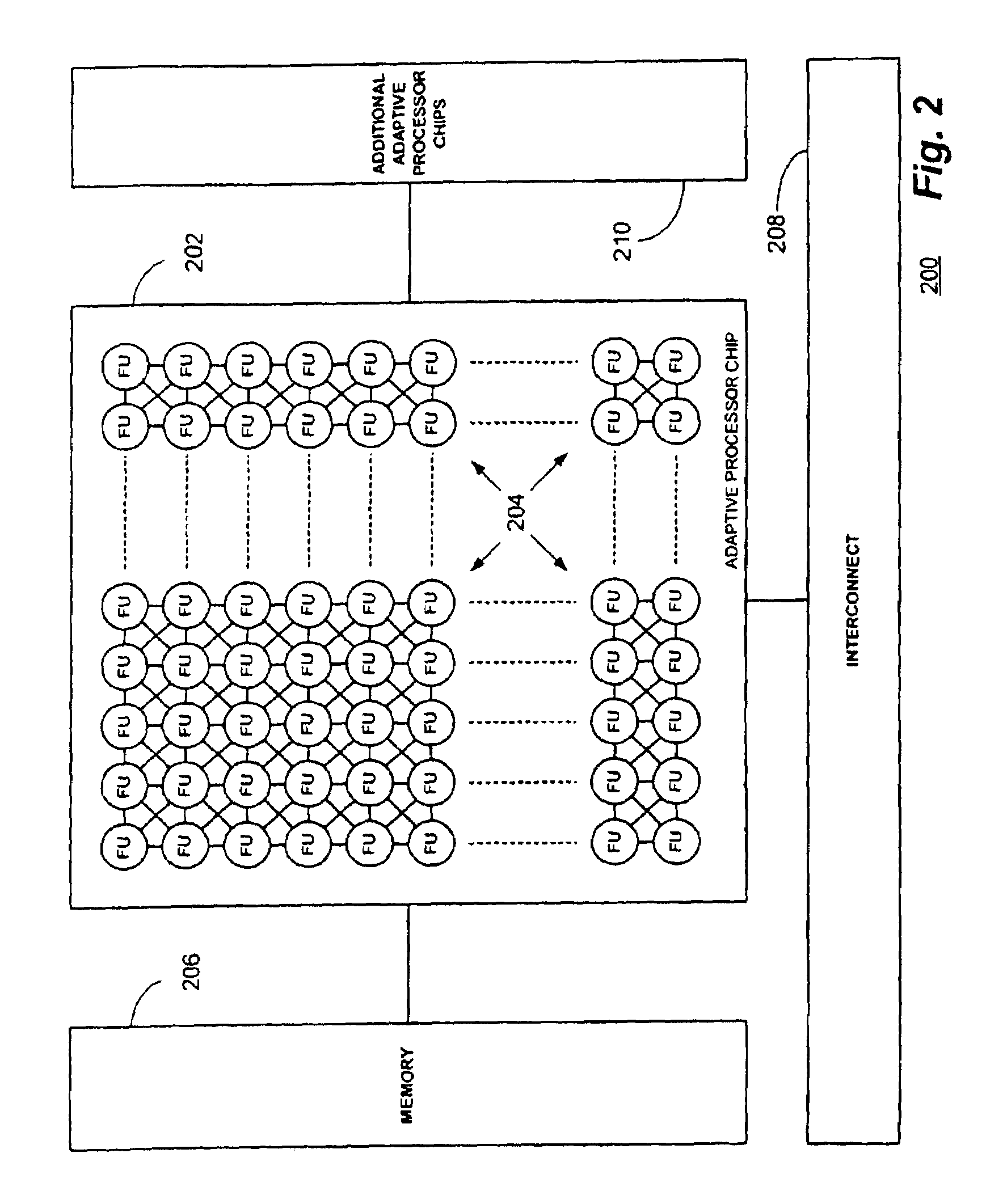

Multi-adaptive processing systems and techniques for enhancing parallelism and performance of computational functions

Active | US7225324B2 | Improve performance; Small and less-expensive; Single instruction multiple data multiprocessors; Architecture with single central processing unit; Data processing system; Wavefront

Multi-adaptive processing systems and techniques for enhancing parallelism and performance of computational functions are disclosed which can be employed in a myriad of applications including multi-dimensional pipeline computations for seismic applications, search algorithms, information security, chemical and biological applications, filtering and the like as well as for systolic wavefront computations for fluid flow and structures analysis, bioinformatics etc. Some applications may also employ both the multi-dimensional pipeline and systolic wavefront methodologies disclosed.

Owner:SRC COMP

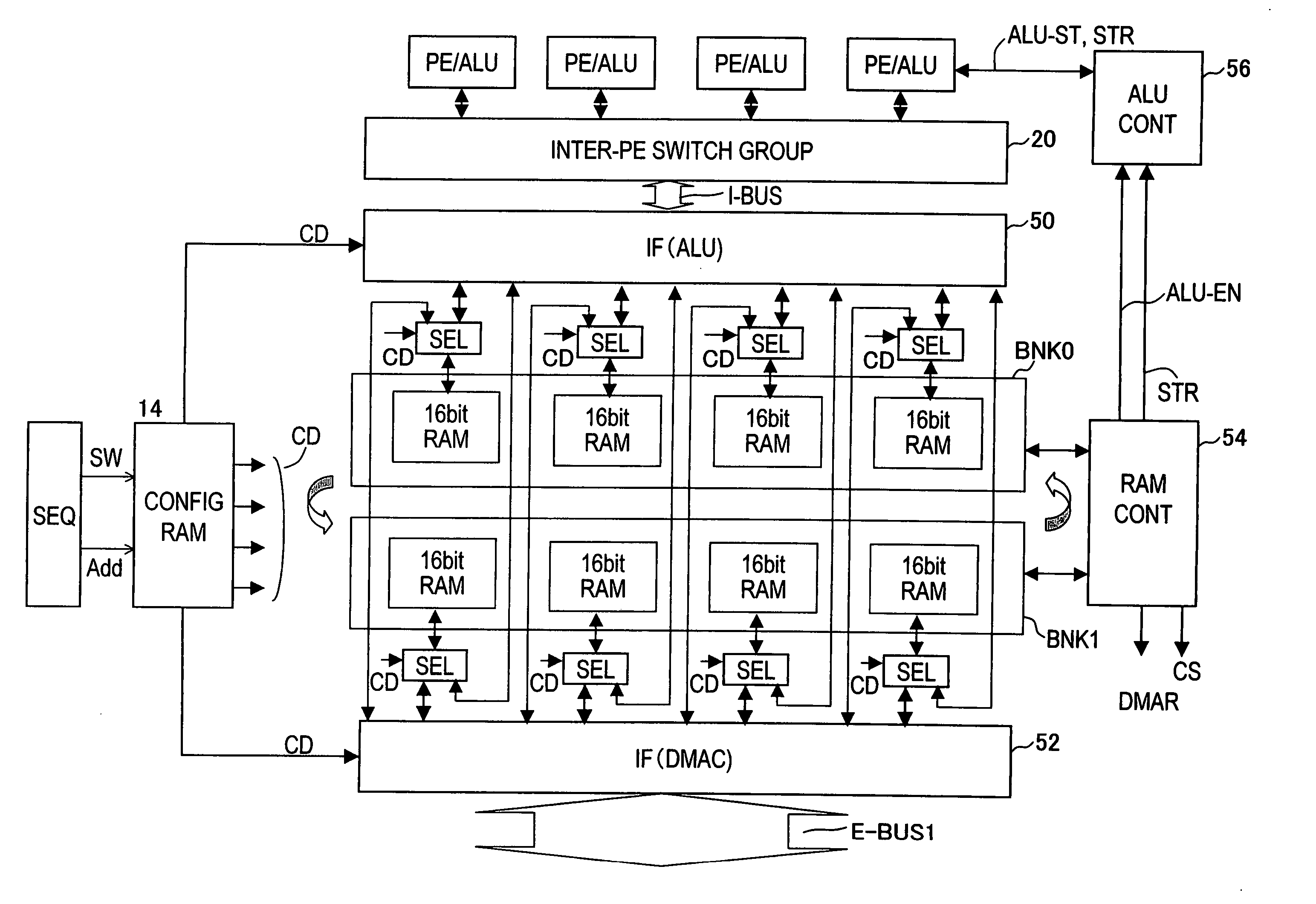

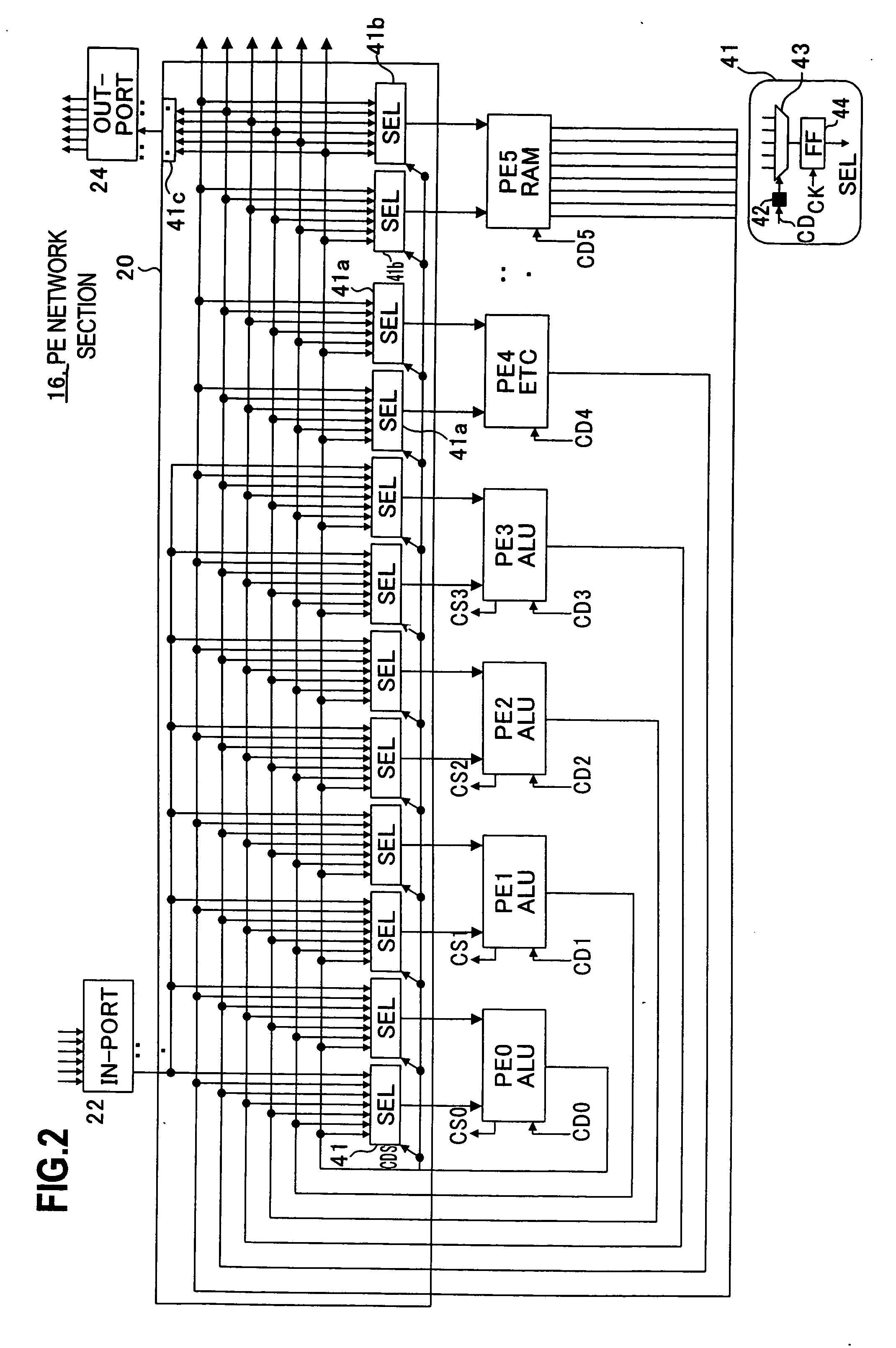

Reconfigurable integrated circuit device

Inactive | US20070033369A1 | Configuration high; High operating requirements; Single instruction multiple data multiprocessors; Memory systems; External storage; Direct memory access

A reconfigurable integrated circuit device is dynamically configured into an arbitrary operating state based on configuration data. It has a plurality of clusters, each including operation processor elements, a memory processor element, and an inter-processor-element switch group for connecting the elements in an arbitrary configuration; an inter-cluster switch group for constructing data paths between the clusters in an arbitrary configuration; and an external memory bus. The device also provides a direct memory access control section. This section executes data transfers between the memory processor elements and the external memory by direct memory access, in response to access requests from the memory processor elements of the plurality of clusters.

Owner:FUJITSU SEMICON LTD

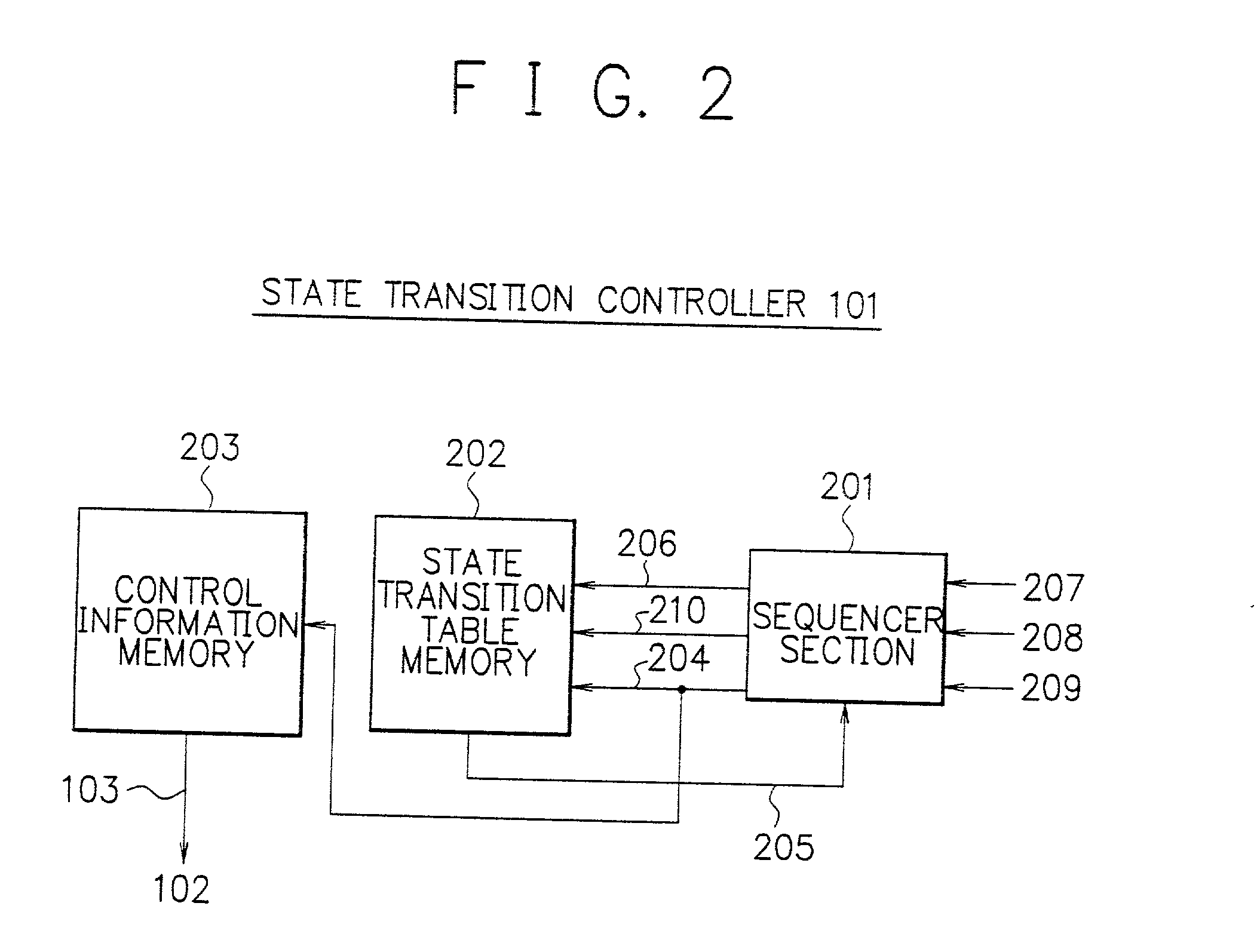

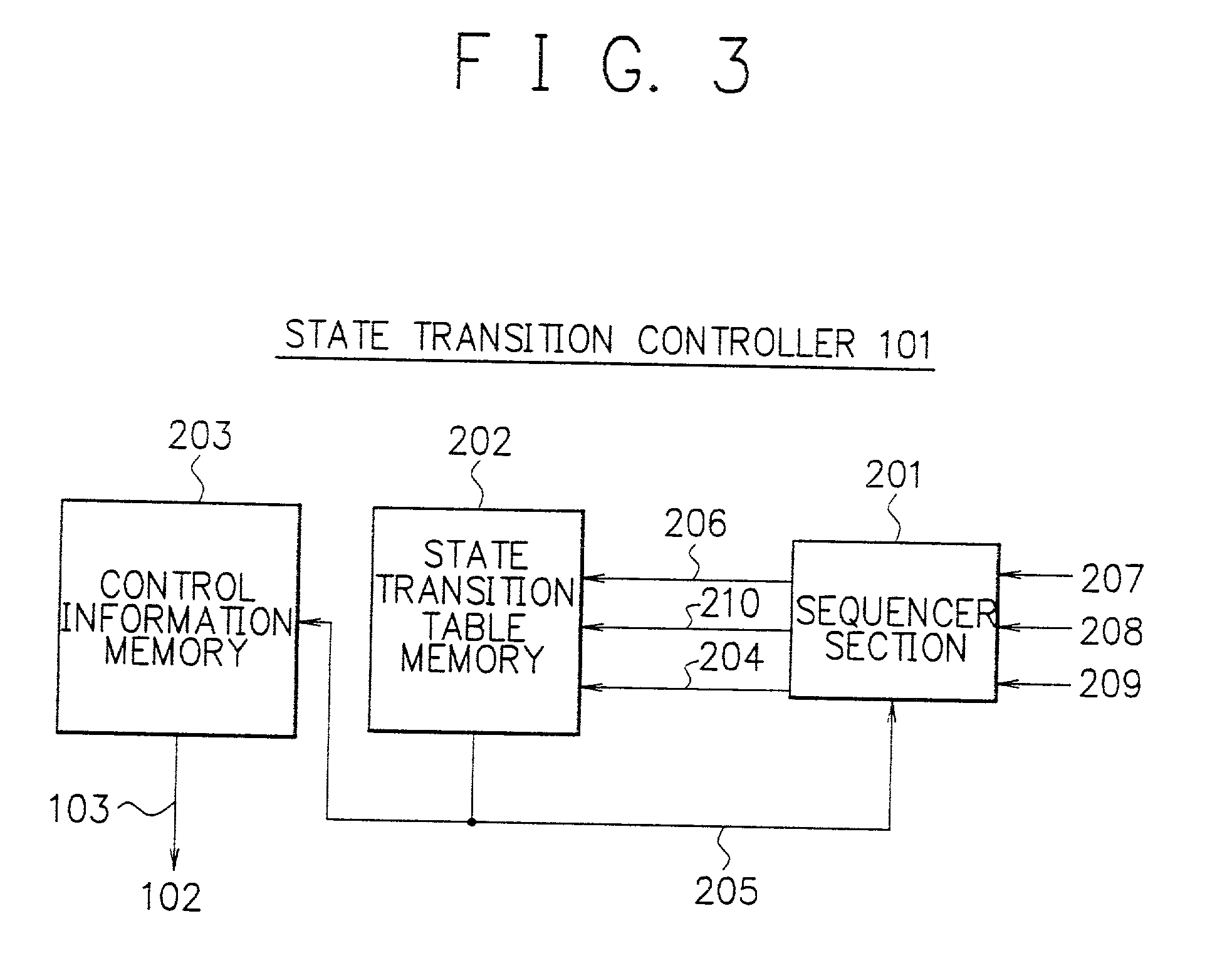

Array type processor with state transition controller identifying switch configuration and processing element instruction address

Inactive | US6738891B2 | Efficient implementation; Efficient execution; Single instruction multiple data multiprocessors; Specific program execution arrangements; Processing element; System configuration

If all processing is executed in the array section of an array-type processor, each processor must handle processing of different types, i.e., the processing of an operating unit and the processing of a random logic circuit, which limits its size and processing performance. Instead, two sections are provided independently. The first is a data path section, in which processors arranged in an array are connected via programmable switches and which primarily executes computational operations. The second is a state transition controller, which is configured to readily implement a state transition function for controlling state transitions. Each section has a structure customized for its own purpose, so that both the computational processing and the control operation are implemented and executed efficiently.

Owner:NEC CORP

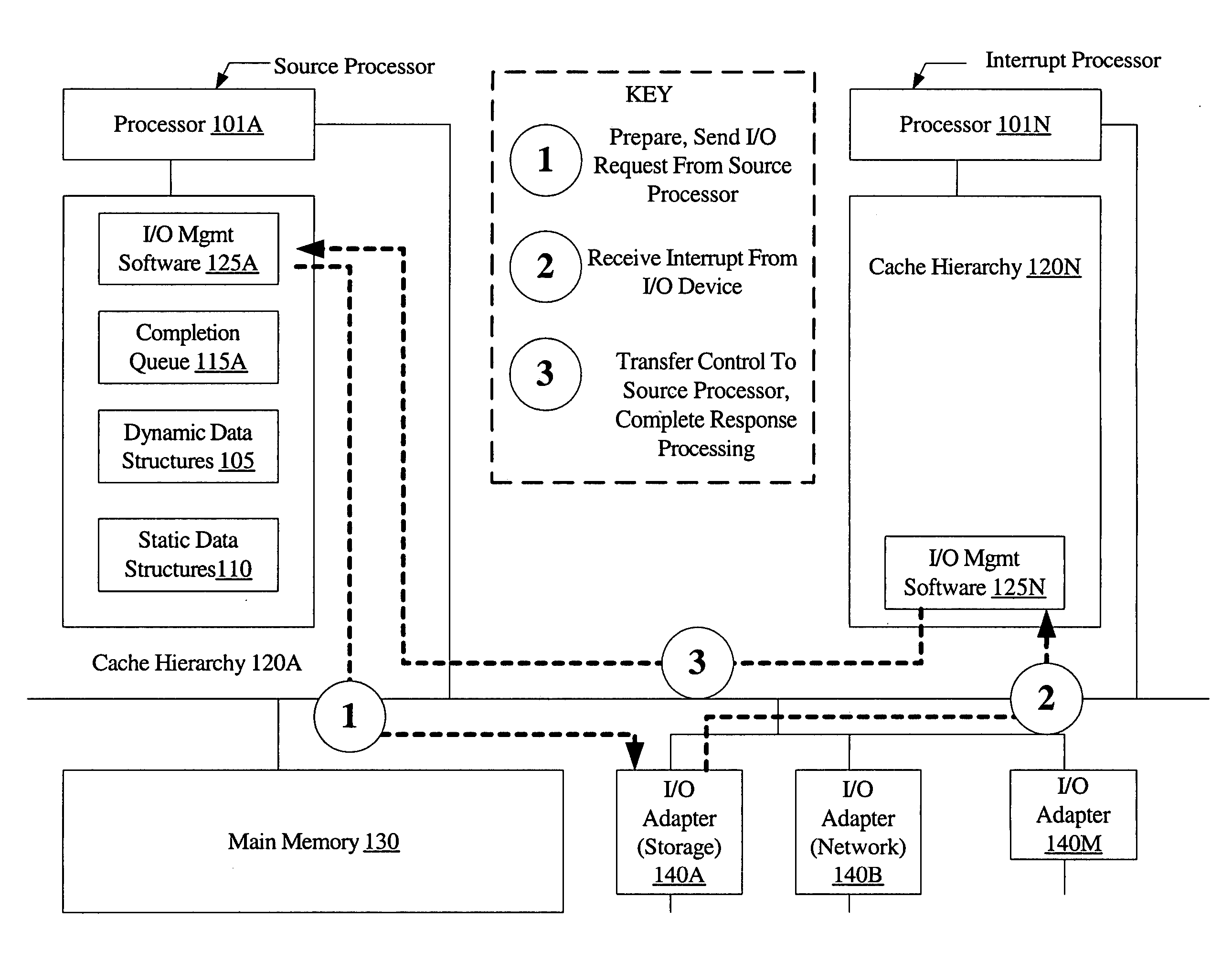

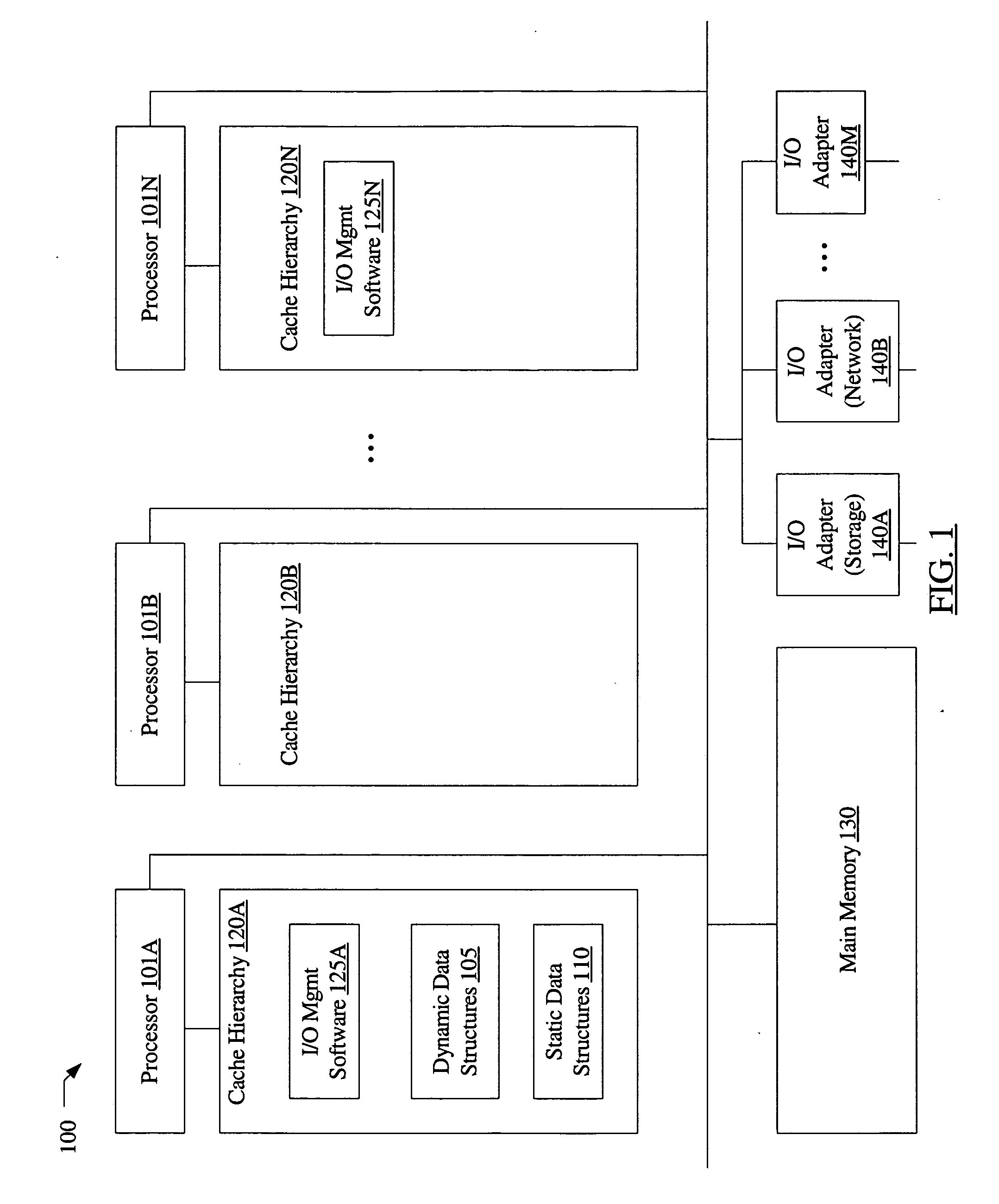

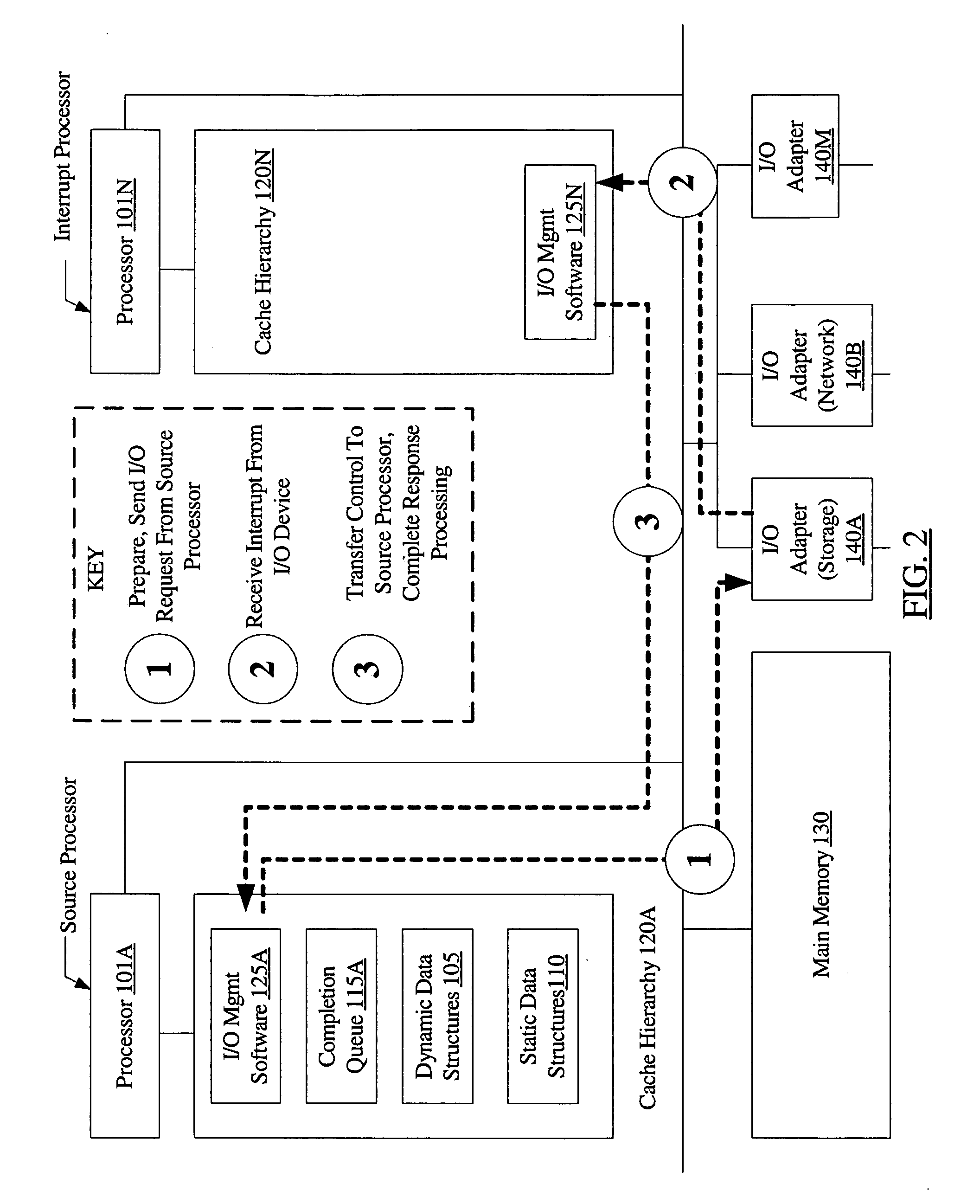

Tagged interrupt forwarding

Active | US20060212607A1 | Expensive memory access; Single instruction multiple data multiprocessors; Specific program execution arrangements; Multi processor; Software

A system for tagged interrupt forwarding comprises a multiprocessor including a first and a second processor, an I/O device, and I/O management software. In response to an application I/O request, the I/O management software may be configured to prepare a request descriptor at the first processor that includes a tag identifying the first processor. The I/O management software may then send a hardware or device-level I/O request to the I/O device. When the I/O device completes the requested hardware I/O operation, it may send a response, which may be received at the second processor. I/O management software at the second processor may be configured to transfer control for processing the response back to the first processor, at which the I/O request was initiated, using the tag to identify the first processor. Processing of the response may then be completed at the first processor.

Owner:ORACLE INT CORP
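
The control flow in the US20060212607A1 abstract can be traced with a small simulation. The class names and the log format are hypothetical. "Forwarding" is modelled as appending to the originating CPU's log, where a real system would use an inter-processor interrupt or a similar mechanism. The tag lets completion work return to the CPU that started the request, which is plausibly where the request's data is still cached.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class RequestDescriptor:
    request_id: int
    origin_cpu: int  # the tag: which processor issued the request


@dataclass
class Cpu:
    cpu_id: int
    log: List[str] = field(default_factory=list)


class IoManager:
    """Toy I/O management layer that forwards completions to the tagged CPU."""

    def __init__(self, cpus: List[Cpu]) -> None:
        self.cpus: Dict[int, Cpu] = {c.cpu_id: c for c in cpus}
        self.pending: Dict[int, RequestDescriptor] = {}
        self._next_id = 0

    def submit(self, cpu_id: int) -> int:
        """Prepare a tagged request descriptor on cpu_id and 'send' it to the device."""
        desc = RequestDescriptor(self._next_id, cpu_id)
        self._next_id += 1
        self.pending[desc.request_id] = desc
        self.cpus[cpu_id].log.append(f"submit {desc.request_id}")
        return desc.request_id

    def on_completion_interrupt(self, receiving_cpu: int, request_id: int) -> None:
        """Handle a device completion that arrived on receiving_cpu."""
        desc = self.pending.pop(request_id)
        self.cpus[receiving_cpu].log.append(f"interrupt {request_id}")
        if desc.origin_cpu != receiving_cpu:
            # Hand the rest of the work back to the processor named by the tag.
            self.cpus[receiving_cpu].log.append(
                f"forward {request_id} -> cpu{desc.origin_cpu}")
        self.cpus[desc.origin_cpu].log.append(f"complete {request_id}")
```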

Bitstream buffer manipulation with a SIMD merge instruction

Active | US20050108312A1 | Single instruction multiple data multiprocessors; Register arrangements; Variable length; SIMD

Method, apparatus, and program means for performing bitstream buffer manipulation with a SIMD merge instruction. The method of one embodiment comprises determining whether any unprocessed data bits for a partial variable-length symbol exist in a first data block. A shift merge operation is performed to merge the unprocessed data bits from the first data block with a second data block, forming a merged data block. A merged variable-length symbol, comprising the unprocessed data bits and a plurality of data bits from the second data block, is extracted from the merged data block.

Owner:INTEL CORP
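
The shift-merge step in the US20050108312A1 abstract can be modelled with Python integers used as bit buffers. This sketch makes several assumptions: the bitstream is MSB-first, blocks are 32 bits wide, and the symbol length is supplied by the caller. In a real variable-length decoder, the symbol length would come from a code-table lookup. The function names are hypothetical, and this is scalar code rather than a SIMD instruction.

```python
from typing import Tuple

BLOCK_BITS = 32  # assumed block width


def shift_merge(leftover: int, leftover_len: int, next_block: int) -> Tuple[int, int]:
    """Append next_block after the unprocessed tail bits (MSB-first bitstream)."""
    if not 0 <= next_block < (1 << BLOCK_BITS):
        raise ValueError("next_block must fit in BLOCK_BITS bits")
    if not 0 <= leftover < (1 << leftover_len):
        raise ValueError("leftover has more bits than leftover_len")
    return (leftover << BLOCK_BITS) | next_block, leftover_len + BLOCK_BITS


def extract_symbol(merged: int, merged_len: int, symbol_len: int) -> Tuple[int, int, int]:
    """Take symbol_len bits from the front of the merged buffer.

    Returns (symbol, remaining_bits, remaining_len).
    """
    if symbol_len > merged_len:
        raise ValueError("not enough bits in the merged block")
    rest_len = merged_len - symbol_len
    symbol = merged >> rest_len
    rest = merged & ((1 << rest_len) - 1)
    return symbol, rest, rest_len
```

Suppose a 7-bit symbol whose first three bits `101` are left over at the end of one block and whose last four bits `1100` start the next. Merging and extracting 7 bits yields `0b1011100`, and the remaining 28 bits of the second block are kept for the next symbol.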

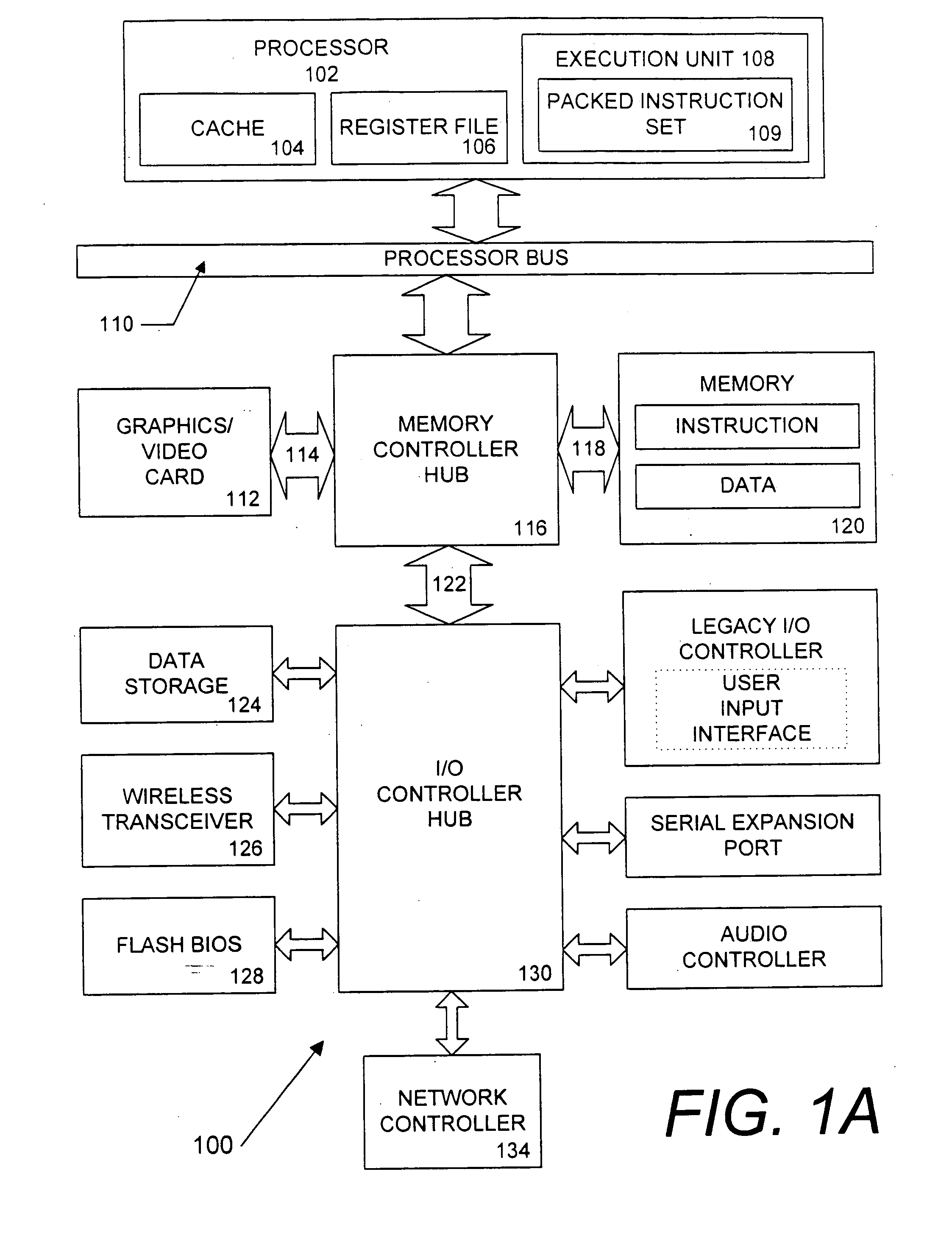

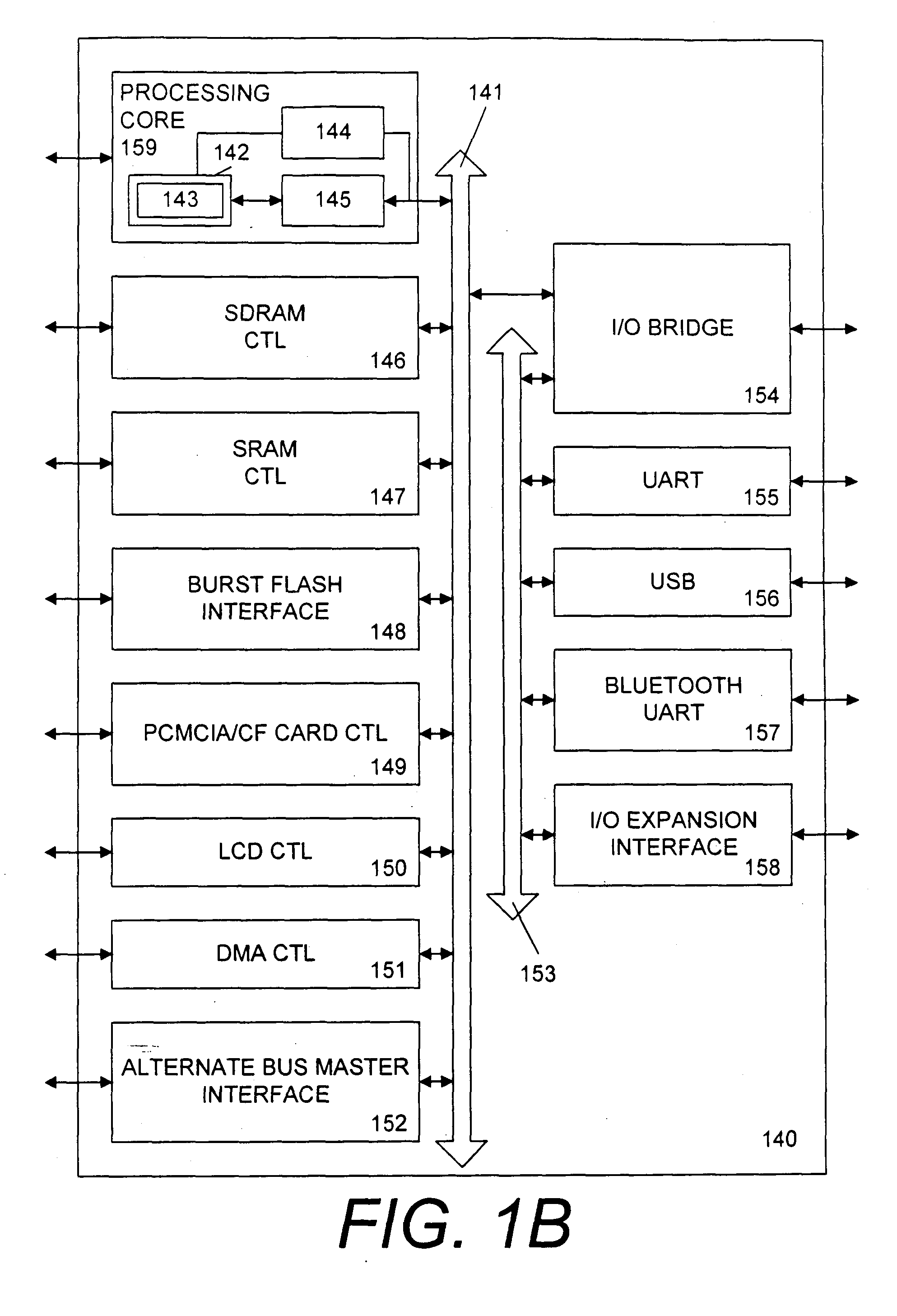

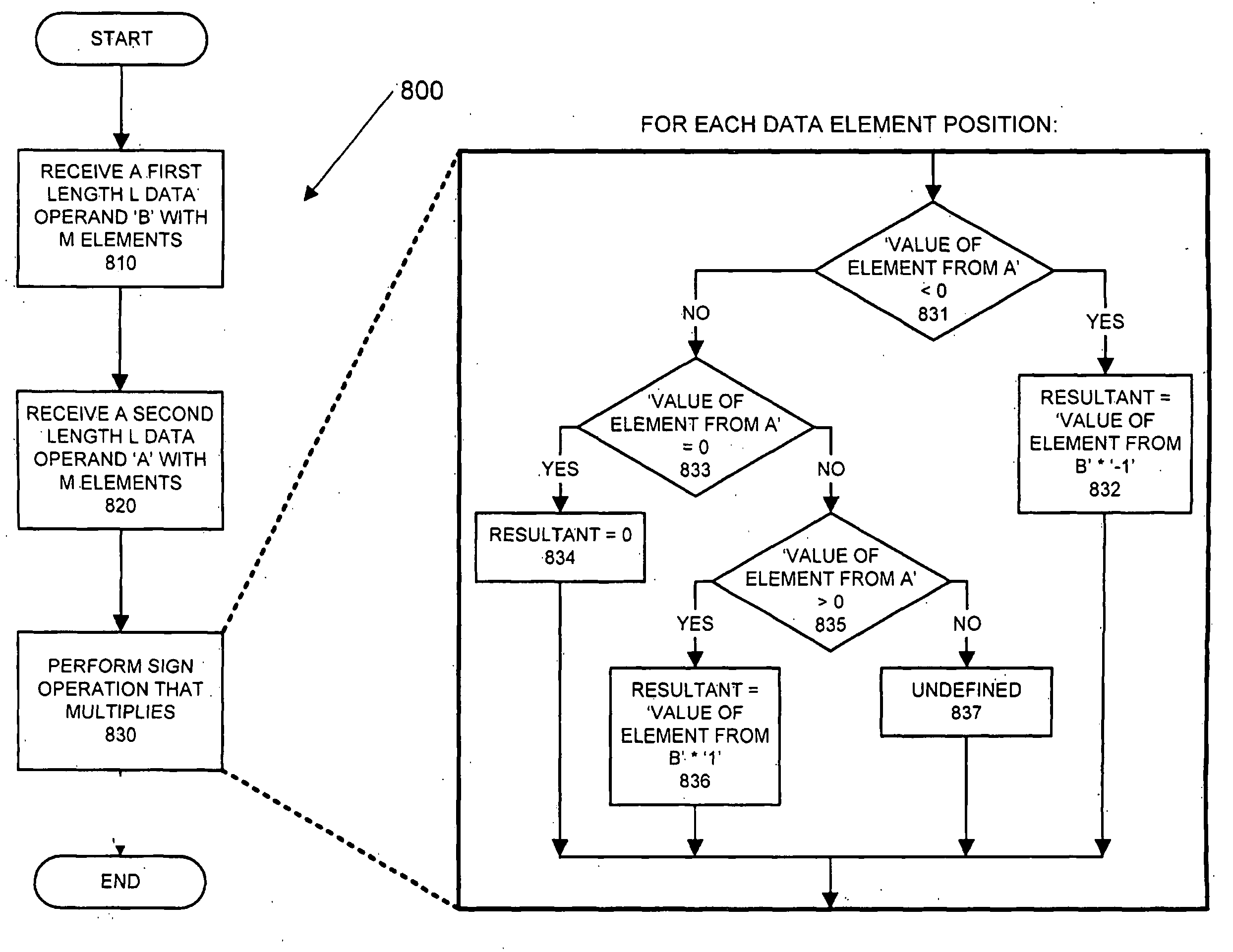

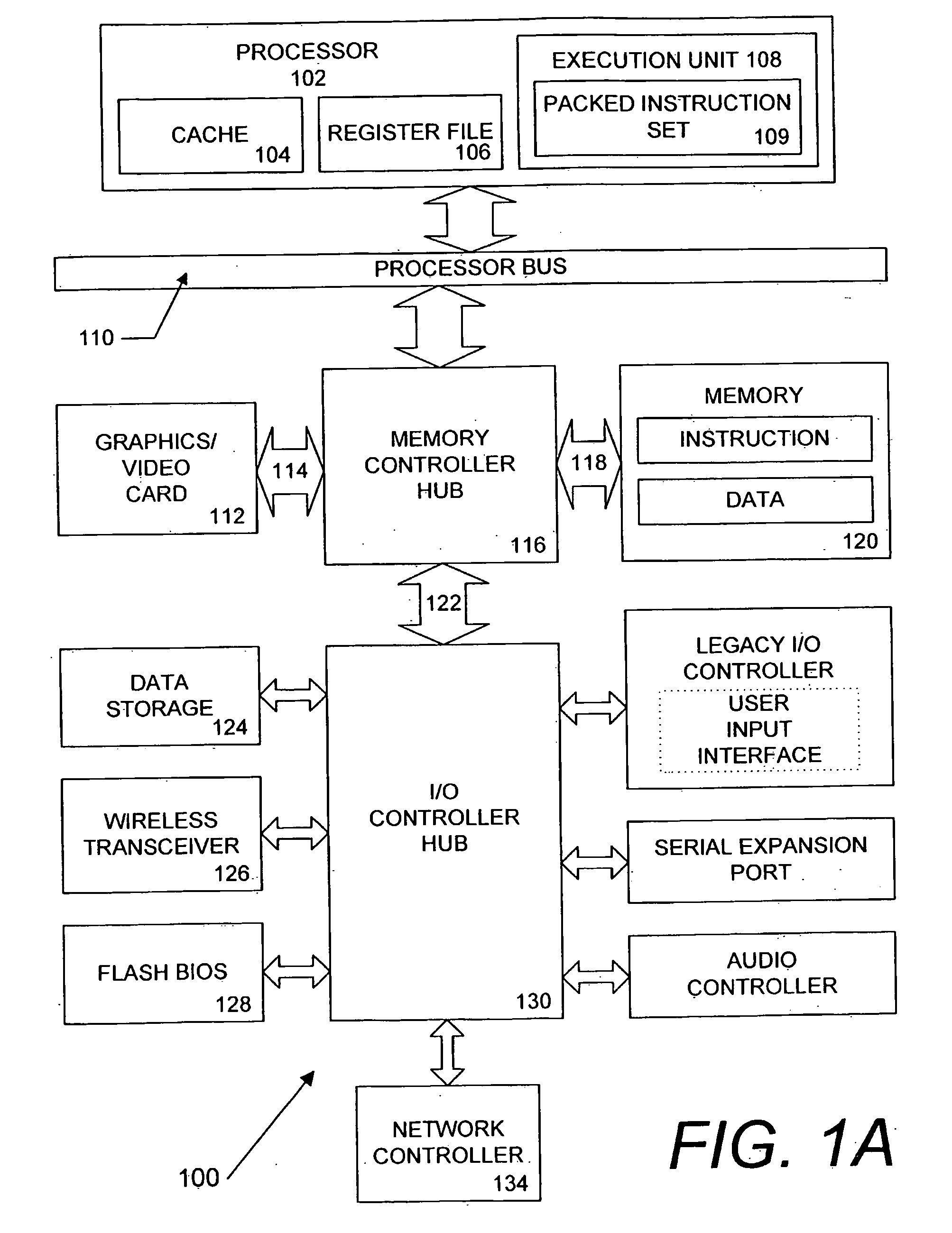

Nonlinear filtering and deblocking applications utilizing SIMD sign and absolute value operations

Active | US20090077143A1 | Single instruction multiple data multiprocessors; Instruction analysis; Nonlinear filter; Nonlinear filtering

Method, apparatus, and program means for nonlinear filtering and deblocking applications utilizing SIMD sign and absolute value operations. The method of one embodiment comprises receiving first data for a first block and second data for a second block. The first data and the second data comprise a plurality of rows and columns of pixel data. A block boundary between the first block and the second block is characterized. A correction factor for a deblocking algorithm is calculated with a first instruction for a sign operation that multiplies and a second instruction for an absolute value operation. Data for pixels located along the block boundary between the first and second blocks are corrected.

Owner:INTEL CORP
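
The roles of the two instructions in US20090077143A1 can be seen in a lane-wise model. `simd_sign` follows the semantics of the x86 SSSE3 PSIGN instructions: it keeps, negates, or zeroes each lane according to the sign of a second operand. `simd_abs` follows PABS. The filter itself is a hypothetical simplification, not the H.264 deblocking filter or the patent's exact correction factor. Computing the magnitude with abs, clipping it, and then restoring the direction with sign avoids a per-lane branch.

```python
from typing import List, Tuple

Lanes = List[int]


def simd_sign(a: Lanes, b: Lanes) -> Lanes:
    """Lane-wise sign operation: a if b > 0, -a if b < 0, 0 if b == 0."""
    return [x if y > 0 else -x if y < 0 else 0 for x, y in zip(a, b)]


def simd_abs(a: Lanes) -> Lanes:
    """Lane-wise absolute value."""
    return [abs(x) for x in a]


def deblock_edge(p0: Lanes, q0: Lanes, alpha: int, clip: int) -> Tuple[Lanes, Lanes]:
    """Smooth the two pixels on either side of a vertical block boundary.

    p0 is the last column of the left block and q0 the first column of the
    right block, one lane per pixel row. Hypothetical filter: a step smaller
    than alpha is treated as a blocking artifact, and each pixel moves toward
    the other by a quarter of the step, clipped to clip; larger steps are
    treated as real edges and left alone.
    """
    diff = [q - p for p, q in zip(p0, q0)]
    magnitude = simd_abs(diff)
    corr_mag = [min(m // 4, clip) if m < alpha else 0 for m in magnitude]
    # Re-apply the direction of the step to the unsigned correction.
    delta = simd_sign(corr_mag, diff)
    return ([p + d for p, d in zip(p0, delta)],
            [q - d for q, d in zip(q0, delta)])
```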

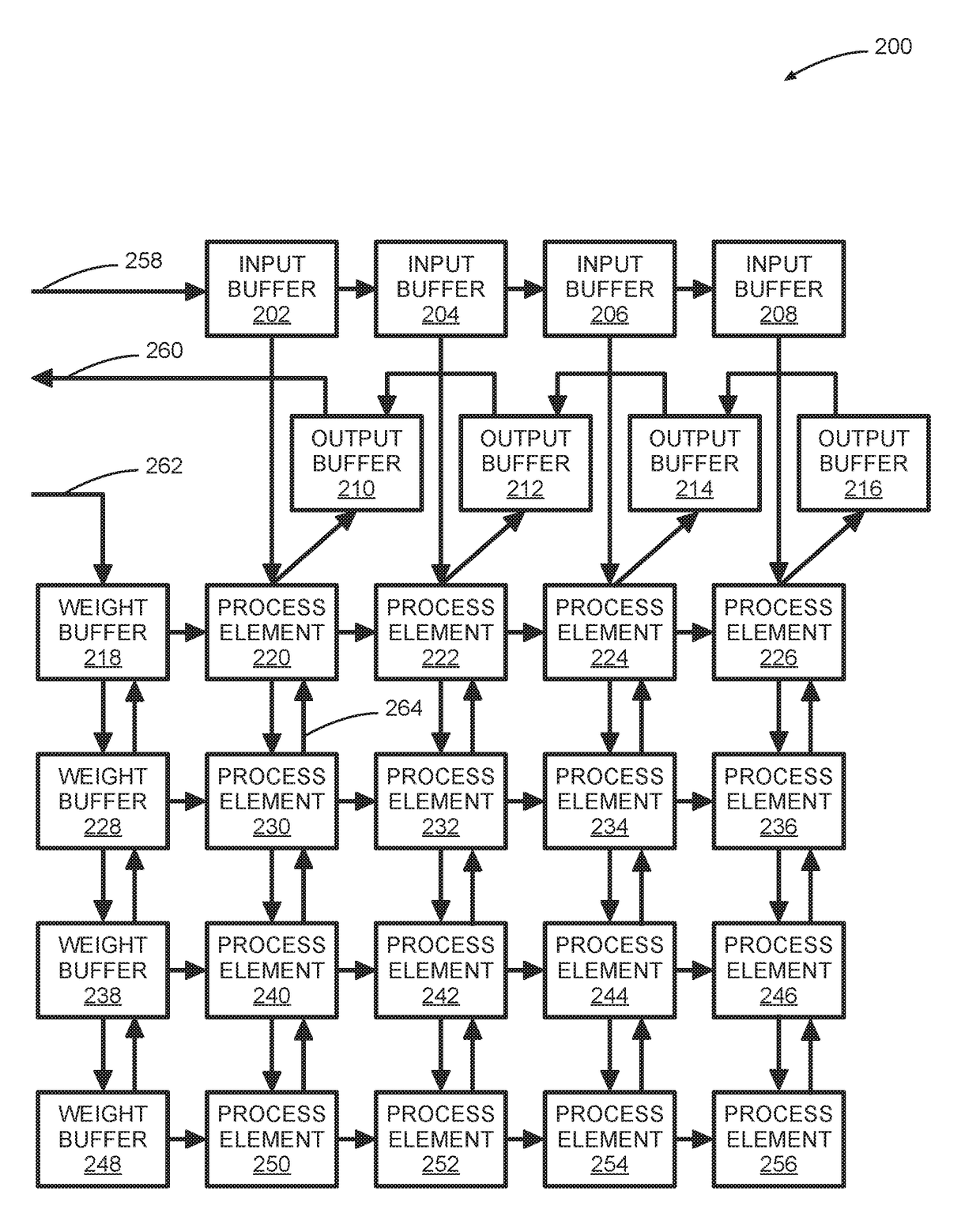

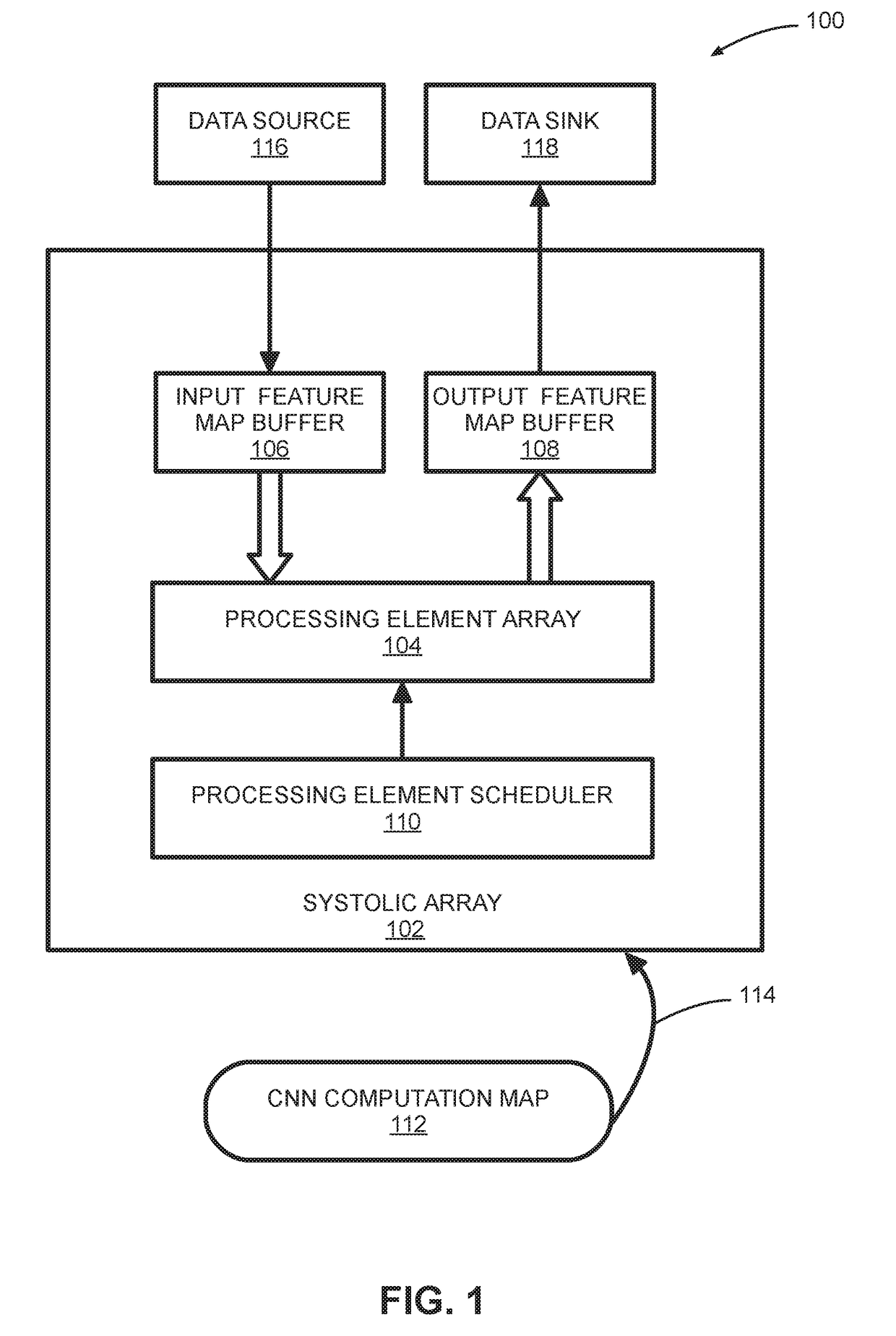

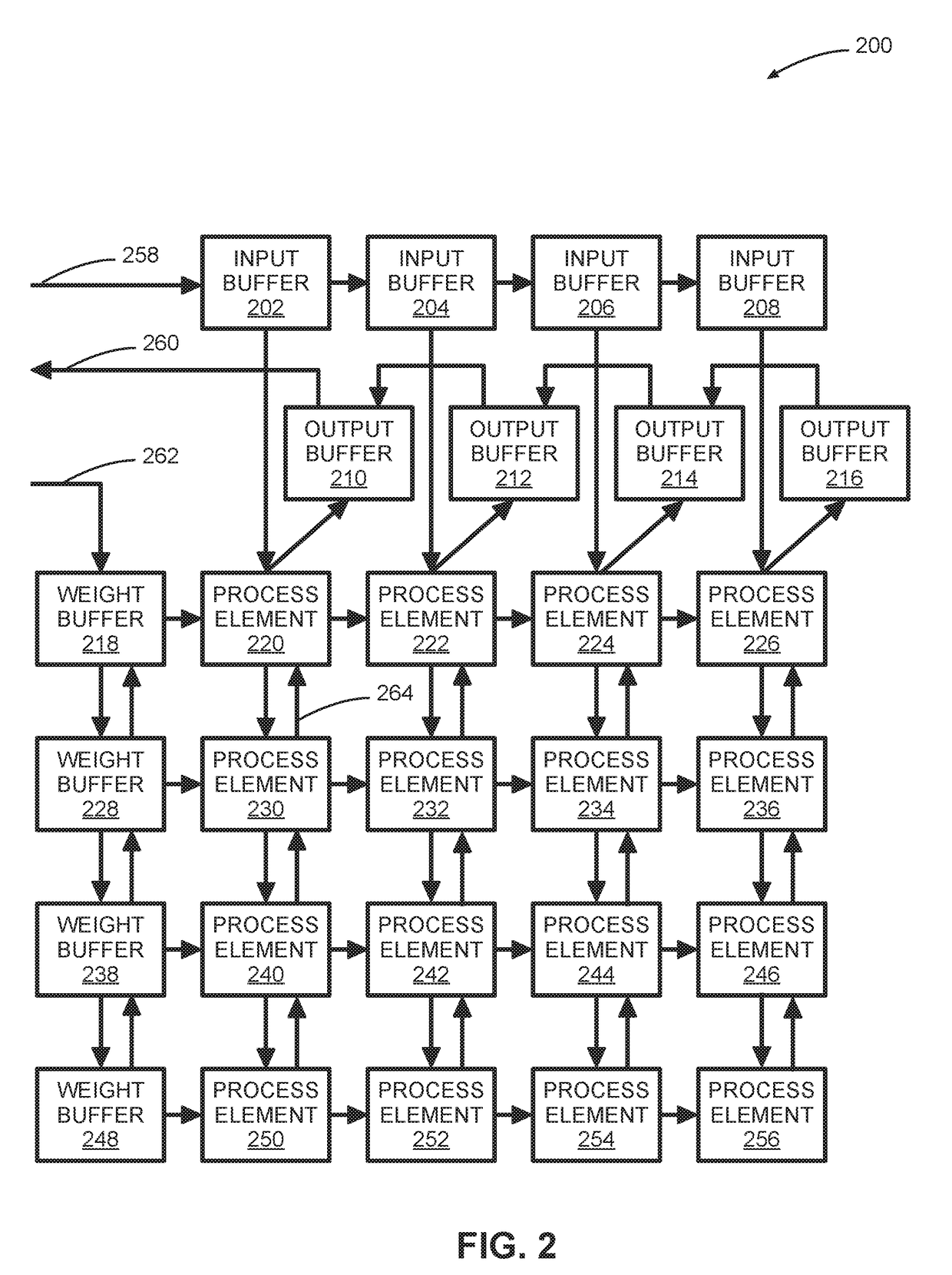

Systems And Methods For Systolic Array Design From A High-Level Program

Active | US20180314671A1 | Improve design throughput; Single instruction multiple data multiprocessors; Systolic arrays; Parallel computing; Processing element

Systems and methods for automated systolic array design from a high-level program are disclosed. One implementation of a systolic array design supporting a convolutional neural network includes a two-dimensional array of reconfigurable processing elements arranged in rows and columns. Each processing element has an associated SIMD vector and is connected through a local connection to at least one other processing element. An input feature map buffer having a double buffer is configured to store input feature maps, and an interconnect system is configured to pass data to neighboring processing elements in accordance with a processing element scheduler. A CNN computation is mapped onto the two-dimensional array of reconfigurable processing elements using an automated system configured to determine suitable reconfigurable processing element parameters.

Owner:XILINX INC
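
The systolic-array idea behind US20180314671A1, in which processing elements communicate only with neighbours through local connections, can be illustrated with the classic output-stationary matrix multiply. A CNN layer is commonly lowered to matrix multiplication, so the example is relevant to the patent's use case. This simulation is a generic textbook scheme, not the automatically generated design. Each PE holds a scalar accumulator rather than a SIMD vector, and there is no feature-map double buffer.

```python
from typing import List

Matrix = List[List[int]]


def systolic_matmul(A: Matrix, B: Matrix) -> Matrix:
    """Cycle-level simulation of an n x m output-stationary systolic array computing A @ B."""
    n, k, m = len(A), len(A[0]), len(B[0])
    if len(B) != k:
        raise ValueError("inner dimensions do not match")
    acc = [[0] * m for _ in range(n)]    # each PE keeps its own partial sum
    a_reg = [[0] * m for _ in range(n)]  # value each PE forwards to its right neighbour
    b_reg = [[0] * m for _ in range(n)]  # value each PE forwards to the PE below
    for t in range(k + n + m - 2):
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Edge PEs receive skewed inputs: row i of A is delayed by i cycles,
                # column j of B by j cycles, so matching operands meet in PE (i, j).
                if j == 0:
                    s = t - i
                    a_in = A[i][s] if 0 <= s < k else 0
                else:
                    a_in = a_reg[i][j - 1]  # local connection from the left neighbour
                if i == 0:
                    s = t - j
                    b_in = B[s][j] if 0 <= s < k else 0
                else:
                    b_in = b_reg[i - 1][j]  # local connection from the neighbour above
                acc[i][j] += a_in * b_in
                new_a[i][j], new_b[i][j] = a_in, b_in
        a_reg, b_reg = new_a, new_b  # all PEs latch their outputs at the clock edge
    return acc
```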

Multi-adaptive processing systems and techniques for enhancing parallelism and performance of computational functions

Inactive | US7620800B2 | Improve performance; Smaller and less expensive computer systems; Single instruction multiple data multiprocessors; Architecture with single central processing unit; Data processing system; Structure analysis

Multi-adaptive processing systems and techniques for enhancing parallelism and performance of computational functions are disclosed which can be employed in a myriad of applications including multi-dimensional pipeline computations for seismic applications, search algorithms, information security, chemical and biological applications, filtering and the like as well as for systolic wavefront computations for fluid flow and structures analysis, bioinformatics etc. Some applications may also employ both the multi-dimensional pipeline and systolic wavefront methodologies disclosed.

Owner:SRC COMP

Gather using index array and finite state machine

Active | US20130326160A1 | Single instruction multiple data multiprocessors; Instruction analysis; Micro-operation; Theoretical computer science

Methods and apparatus are disclosed for using an index array and a finite state machine for scatter/gather operations. Embodiments of the apparatus may comprise decode logic to decode a scatter/gather instruction and generate a set of micro-operations, and an index array to hold a set of indices and a corresponding set of mask elements. A finite state machine facilitates the gather operation. Address generation logic generates an address from an index of the set of indices for at least each of the corresponding mask elements having a first value. The address is accessed to load a corresponding data element if the mask element has the first value. The data element is written at an in-register position in a destination vector register according to the respective in-register position of the index. Values of corresponding mask elements are changed from the first value to a second value in response to completion of their respective loads.

Owner:INTEL CORP
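
The mask-driven gather in the US20130326160A1 abstract can be modelled with a two-state machine. This sketch rests on several assumptions: the state names, the use of 1 as the "first value" and 0 as the "second value", and the simulated page fault are all hypothetical. It suggests why clearing each mask element on completion is useful. If a load faults partway through, the mask records which lanes are finished, so re-executing the same gather loads only the remaining lanes.

```python
from typing import List, Optional


class PageFault(Exception):
    """Raised when a simulated load touches a faulting address."""


def masked_gather(memory: List[int], base: int, indices: List[int], mask: List[int],
                  dest: List[int], fault_at: Optional[int] = None) -> int:
    """Gather memory[base + indices[i]] into dest[i] for every lane whose mask is 1.

    A small finite state machine issues one load per step. Each completed
    lane has its mask flipped from 1 to 0, so after a fault the same call
    can be repeated and only the unfinished lanes are loaded again.
    Returns the number of loads performed by this call.
    """
    state, lane, loads = "SCAN", 0, 0
    while state != "DONE":
        if state == "SCAN":
            # Skip lanes that are masked off or already completed.
            while lane < len(indices) and mask[lane] == 0:
                lane += 1
            state = "LOAD" if lane < len(indices) else "DONE"
        elif state == "LOAD":
            addr = base + indices[lane]  # address generation from the index array
            if addr == fault_at:
                raise PageFault(f"fault at address {addr}")
            dest[lane] = memory[addr]    # write at the lane's in-register position
            mask[lane] = 0               # first value (1) -> second value (0)
            loads += 1
            state = "SCAN"
    return loads
```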

© 2025 PatSnap. All rights reserved.