37 results about "Single instruction, multiple threads" patented technology

Single instruction, multiple thread (SIMT) is an execution model used in parallel computing where single instruction, multiple data (SIMD) is combined with multithreading.

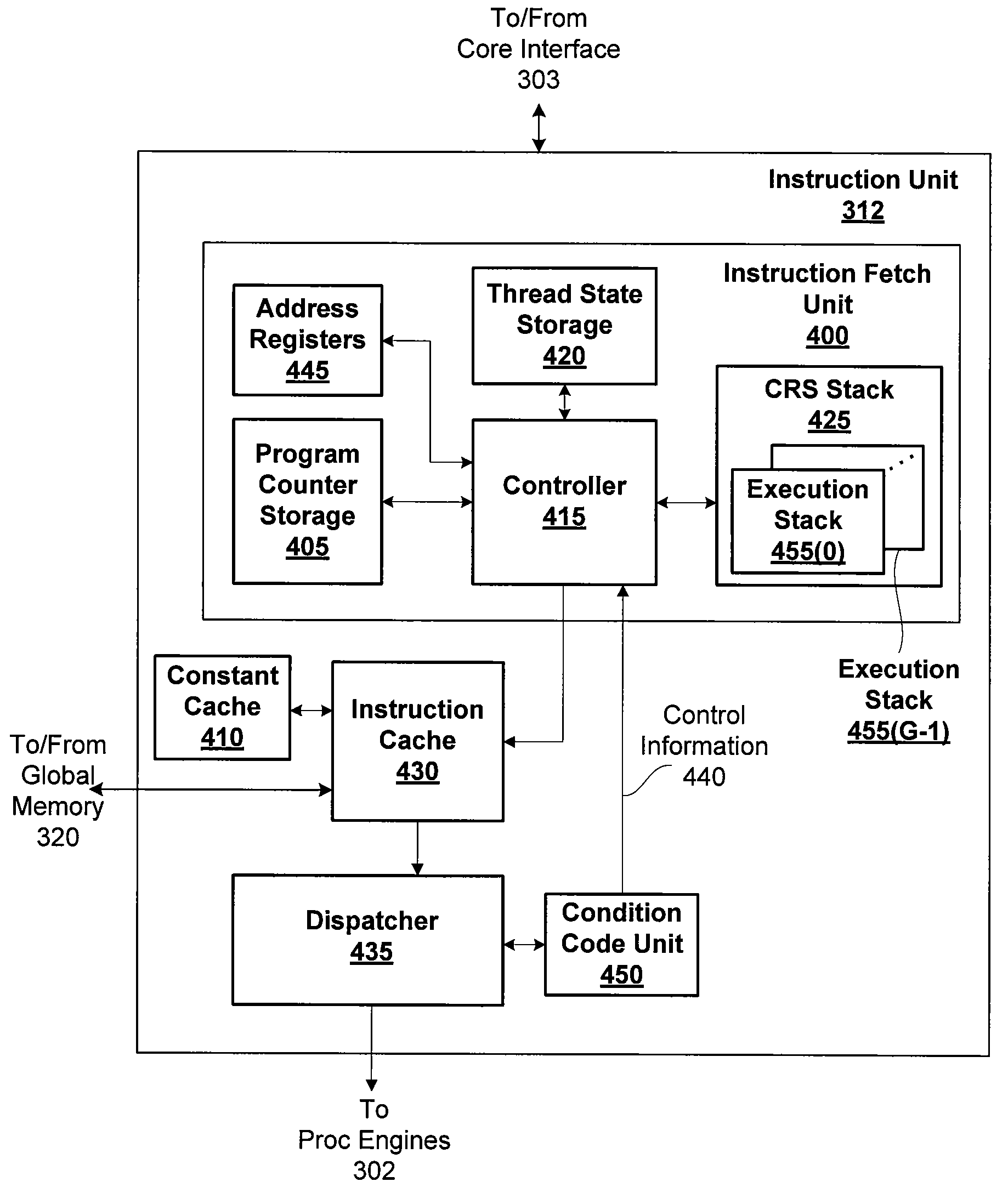

Indirect Function Call Instructions in a Synchronous Parallel Thread Processor

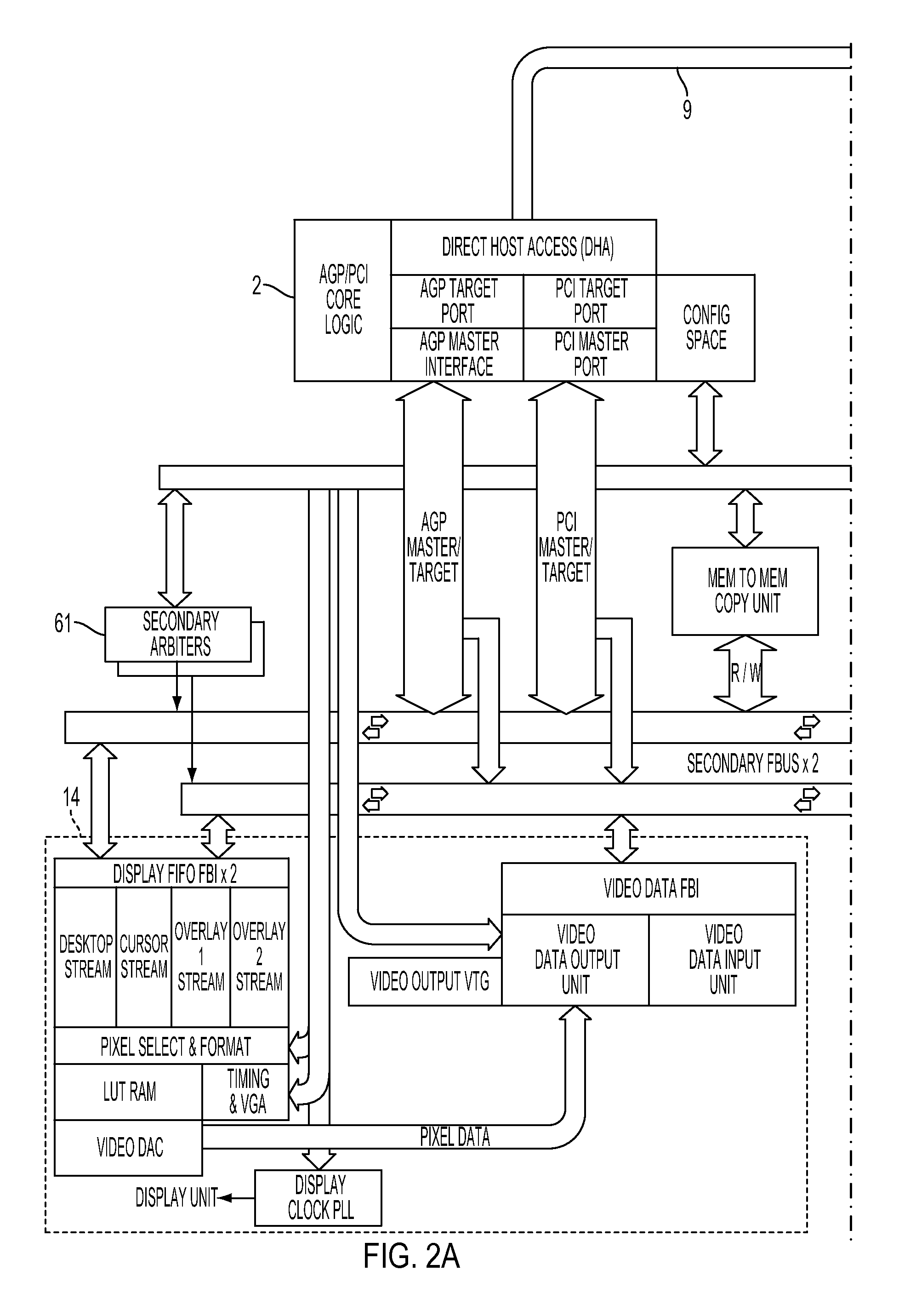

Status: Active | Patent: US20090240931A1 | Tags: Easy to process; Provide capability; Single instruction multiple data multiprocessors; Concurrent instruction execution; Single instruction, multiple threads; Processor register

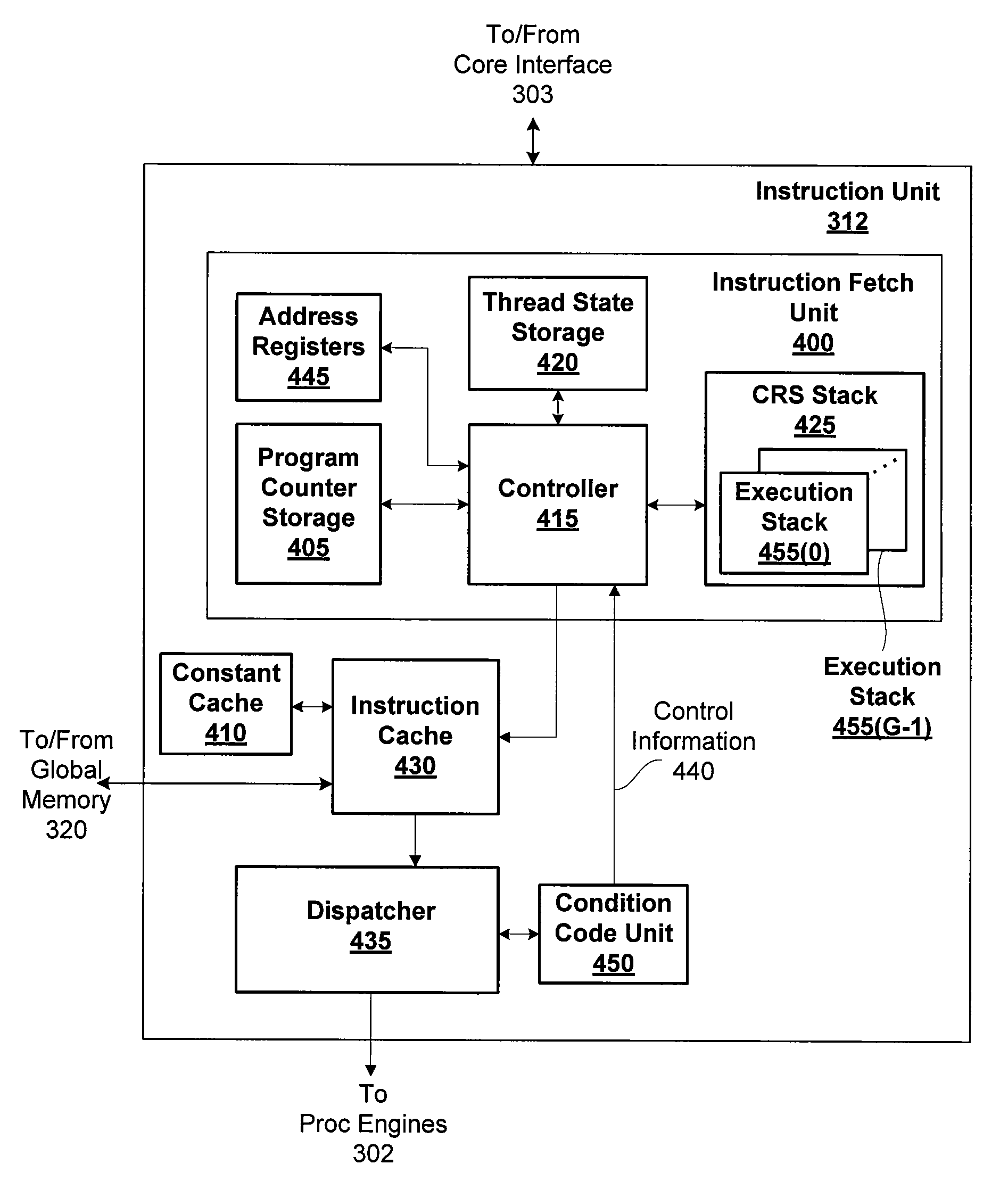

An indirect branch instruction takes an address register as an argument in order to provide indirect function call capability for single-instruction multiple-thread (SIMT) processor architectures. The indirect branch instruction is used to implement indirect function calls, virtual function calls, and switch statements to improve processing performance compared with using sequential chains of tests and branches.

Owner:NVIDIA CORP
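
The abstract does not say what happens when the threads of one warp hold different addresses in the register. One plausible model, assumed here and not taken from the patent, is that threads sharing a target execute together under an active mask while distinct targets are serialized. The Python sketch below uses a hypothetical `FUNC_TABLE` and `simt_indirect_call` to show how a table lookup replaces a sequential chain of tests and branches.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical function table: an indirect call selects an entry by index
# instead of walking a chain of "if target == k" tests and branches.
FUNC_TABLE: List[Callable[[int], int]] = [
    lambda x: x + 1,  # entry 0
    lambda x: x * 2,  # entry 1
    lambda x: -x,     # entry 2
]


def simt_indirect_call(targets: List[int], args: List[int]) -> Tuple[List[int], int]:
    """Model one warp executing an indirect call (assumed divergence model).

    targets[tid] plays the role of each thread's address register. Threads
    with the same target run in one pass under an active mask; distinct
    targets are executed one after another. Returns the per-thread results
    and the number of serialized passes.
    """
    groups: Dict[int, List[int]] = {}
    for tid, tgt in enumerate(targets):
        groups.setdefault(tgt, []).append(tid)

    results = [0] * len(targets)
    for tgt in sorted(groups):
        fn = FUNC_TABLE[tgt]       # one indirect jump per distinct target
        for tid in groups[tgt]:    # active-mask lanes for this pass
            results[tid] = fn(args[tid])
    return results, len(groups)


if __name__ == "__main__":
    res, passes = simt_indirect_call([0, 1, 1, 2], [5, 5, 6, 7])
    print(res, passes)  # [6, 10, 12, -7] 3
```

A uniform warp, where every thread has the same target, needs a single pass; that is the case in which an indirect branch gains the most over a test-and-branch chain.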

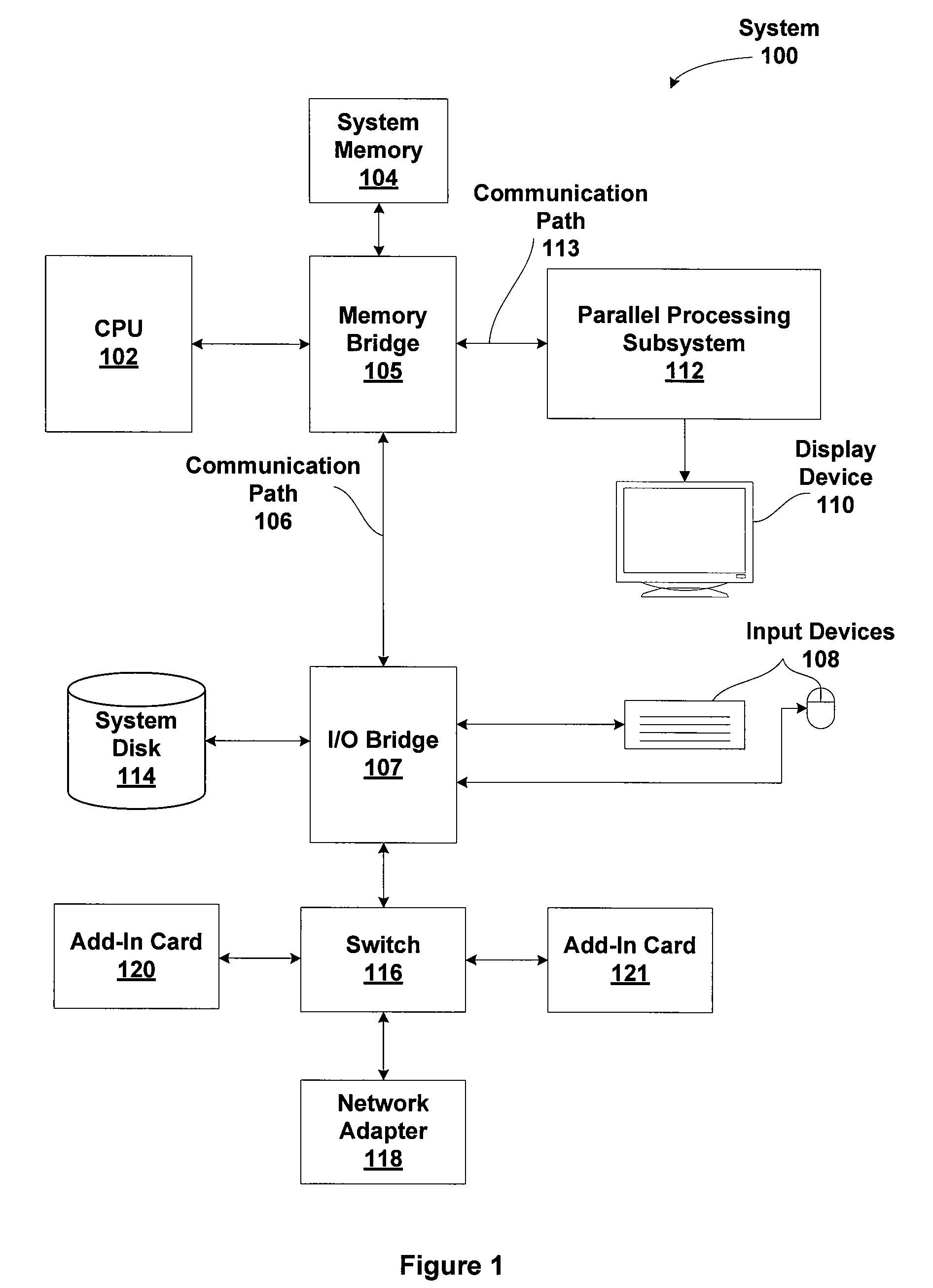

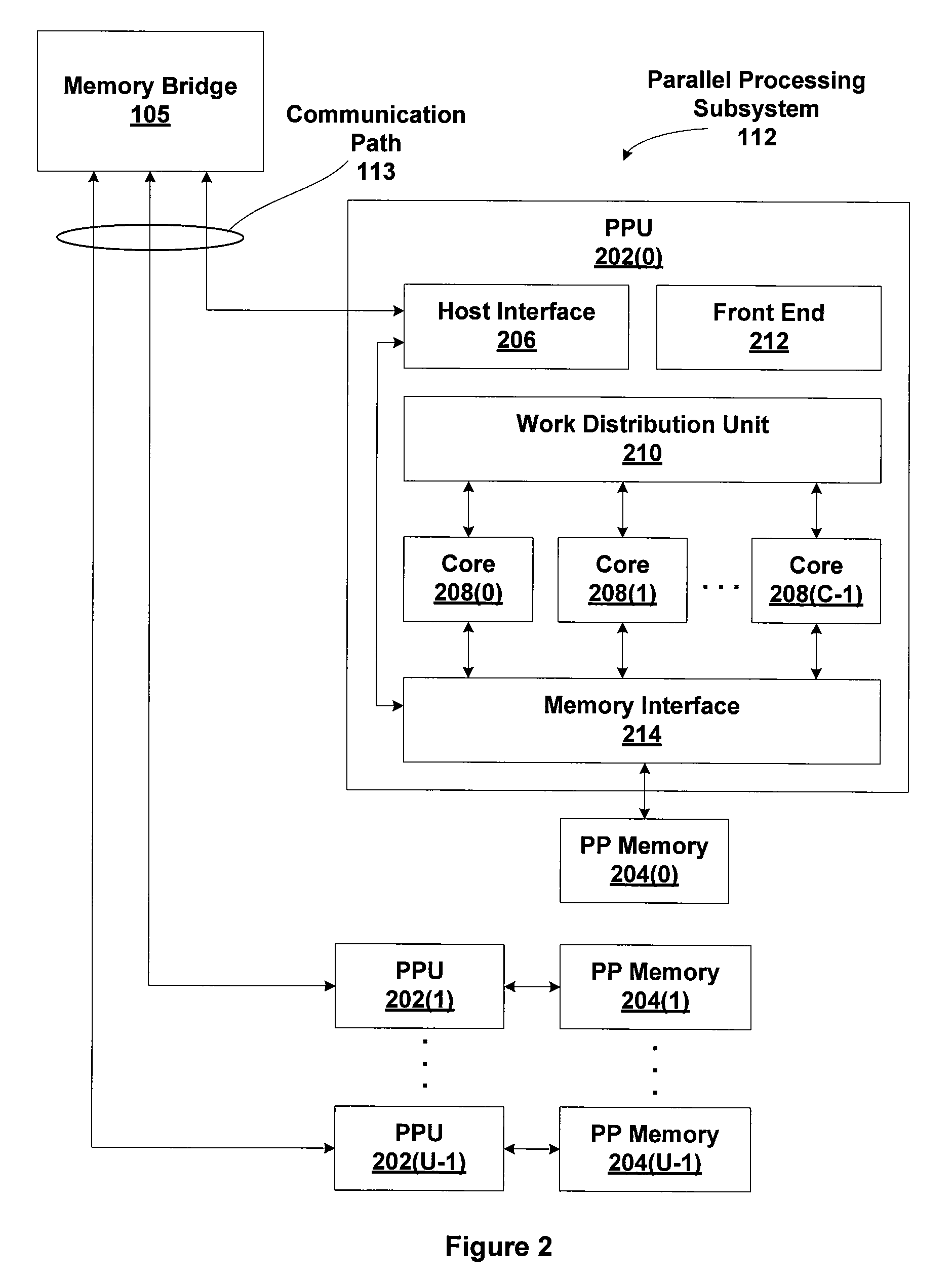

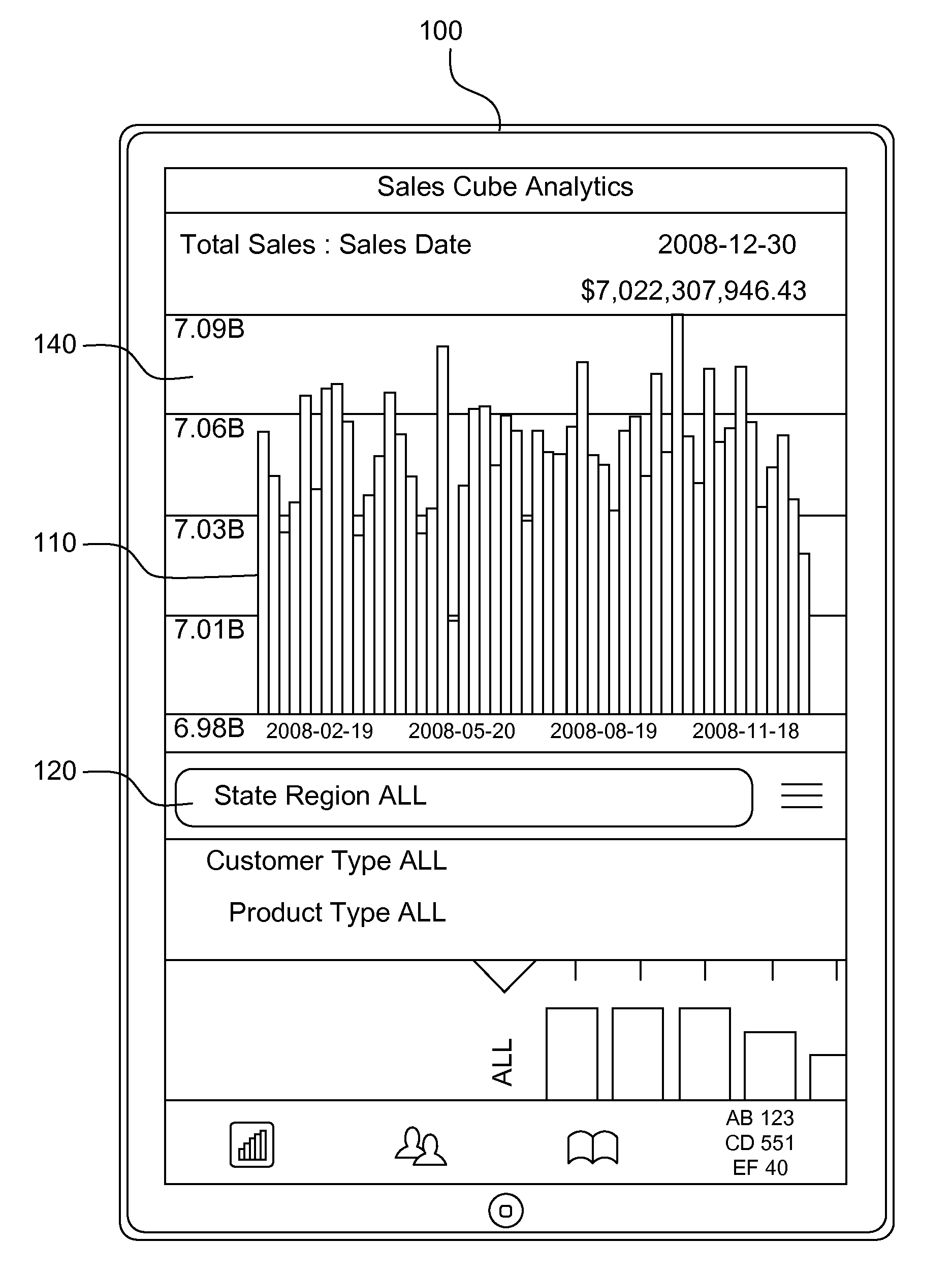

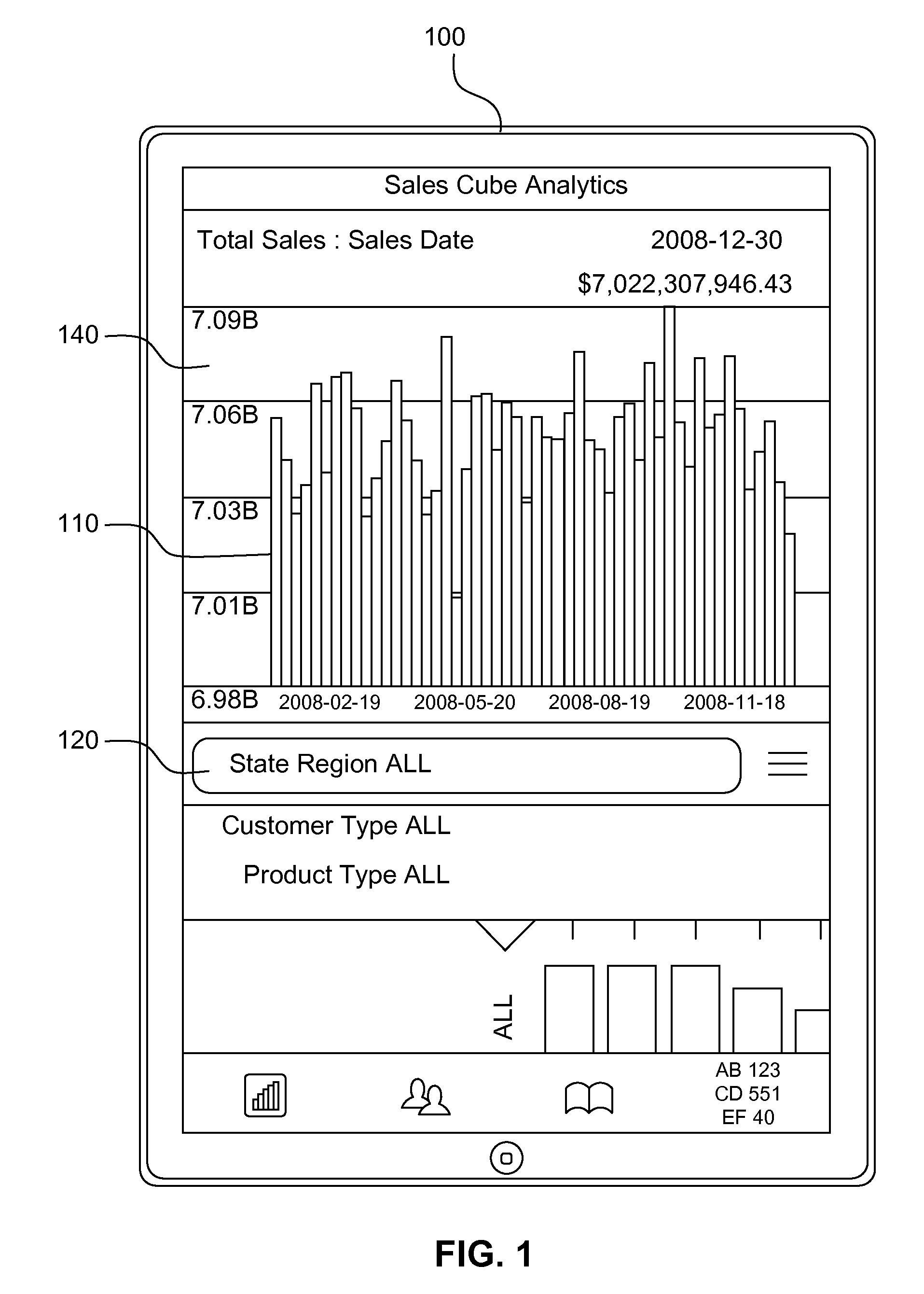

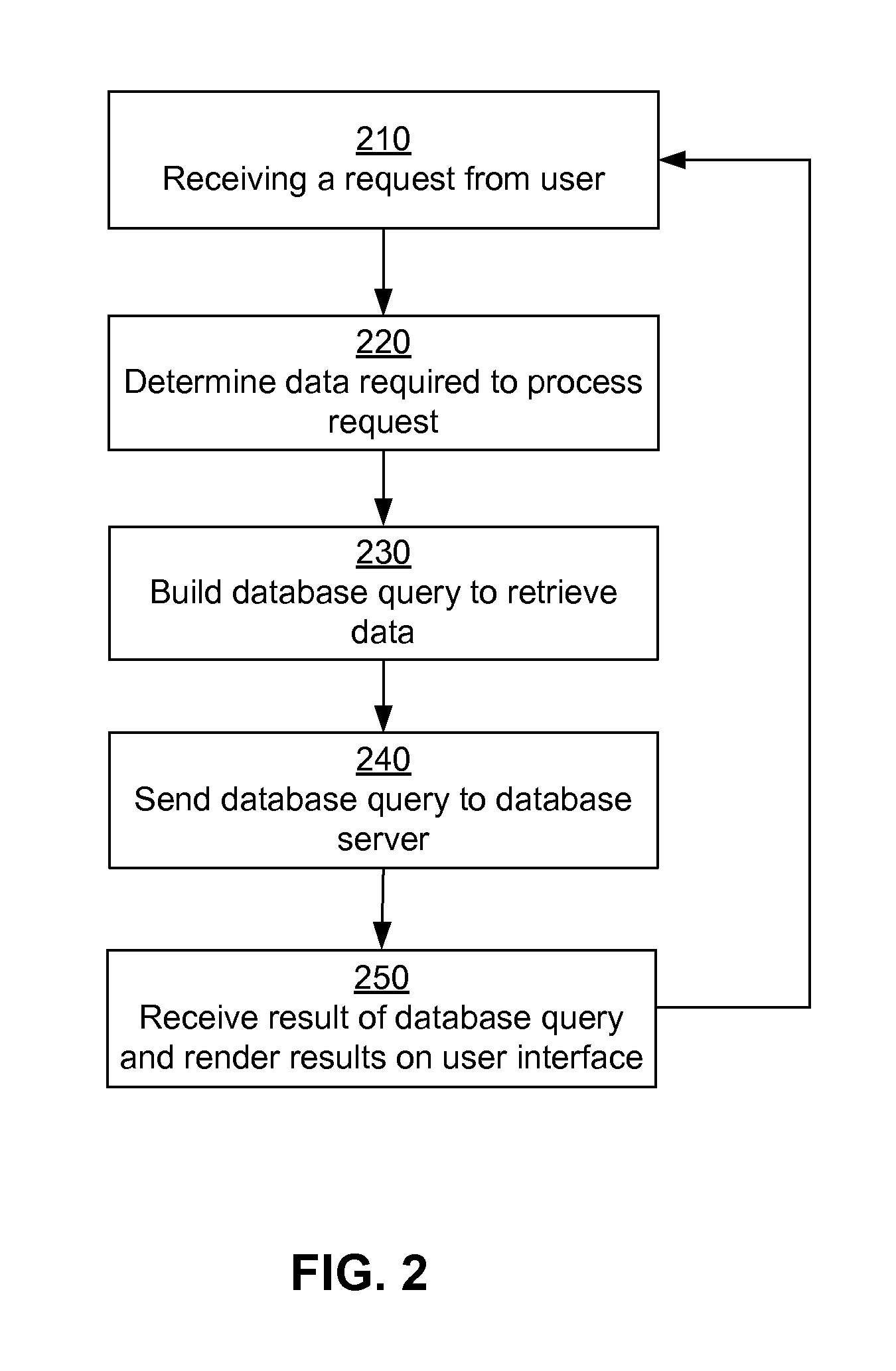

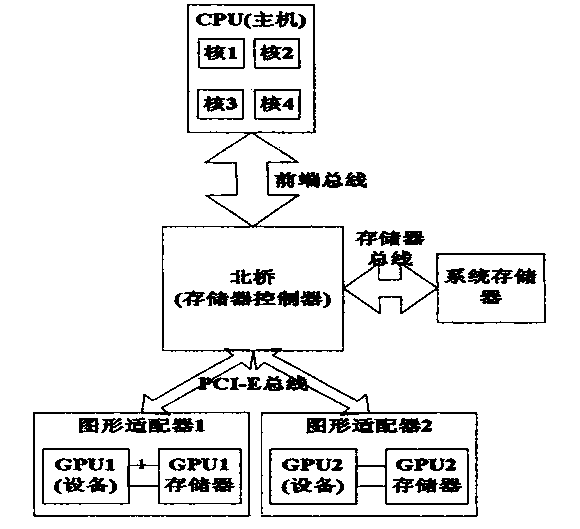

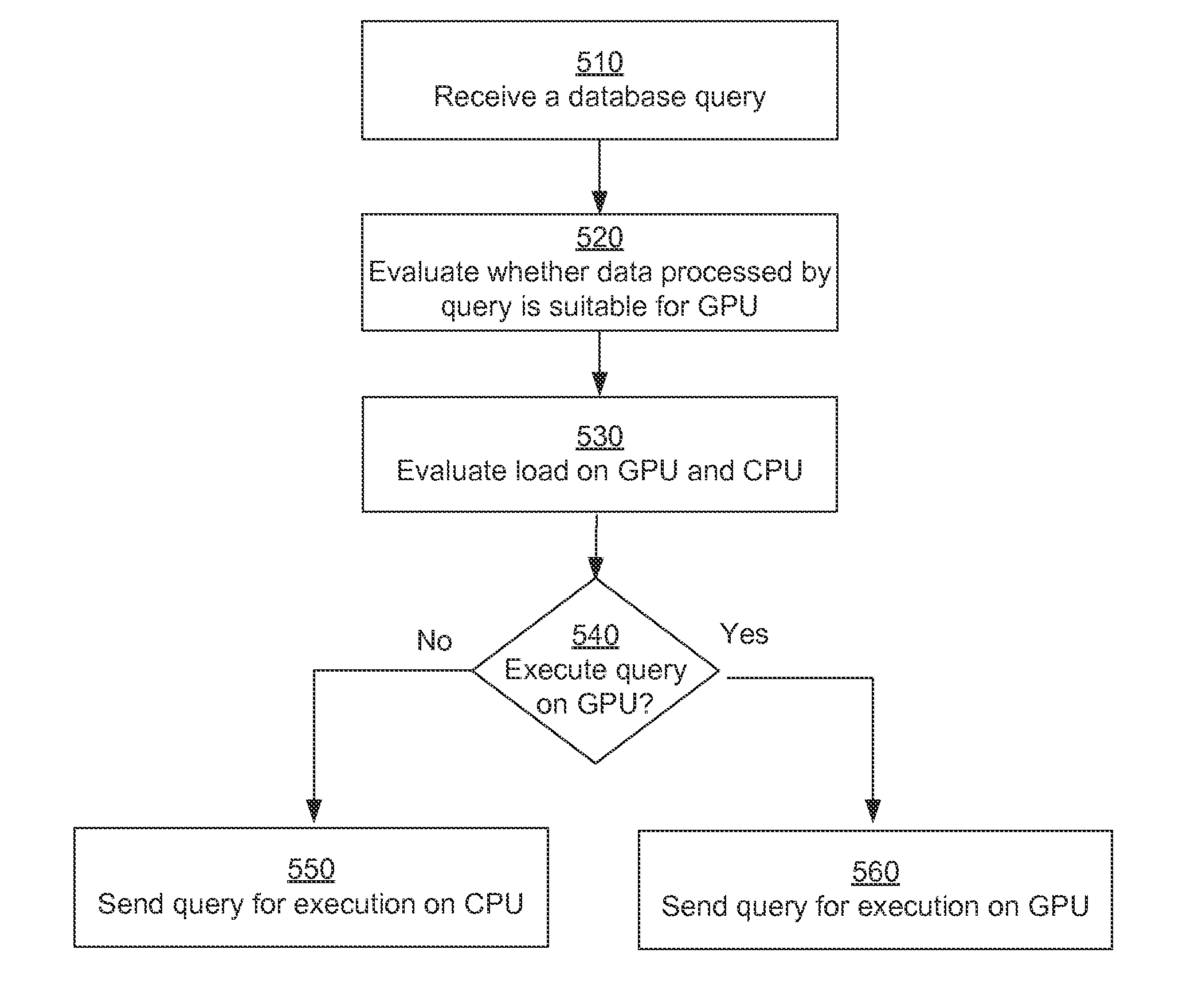

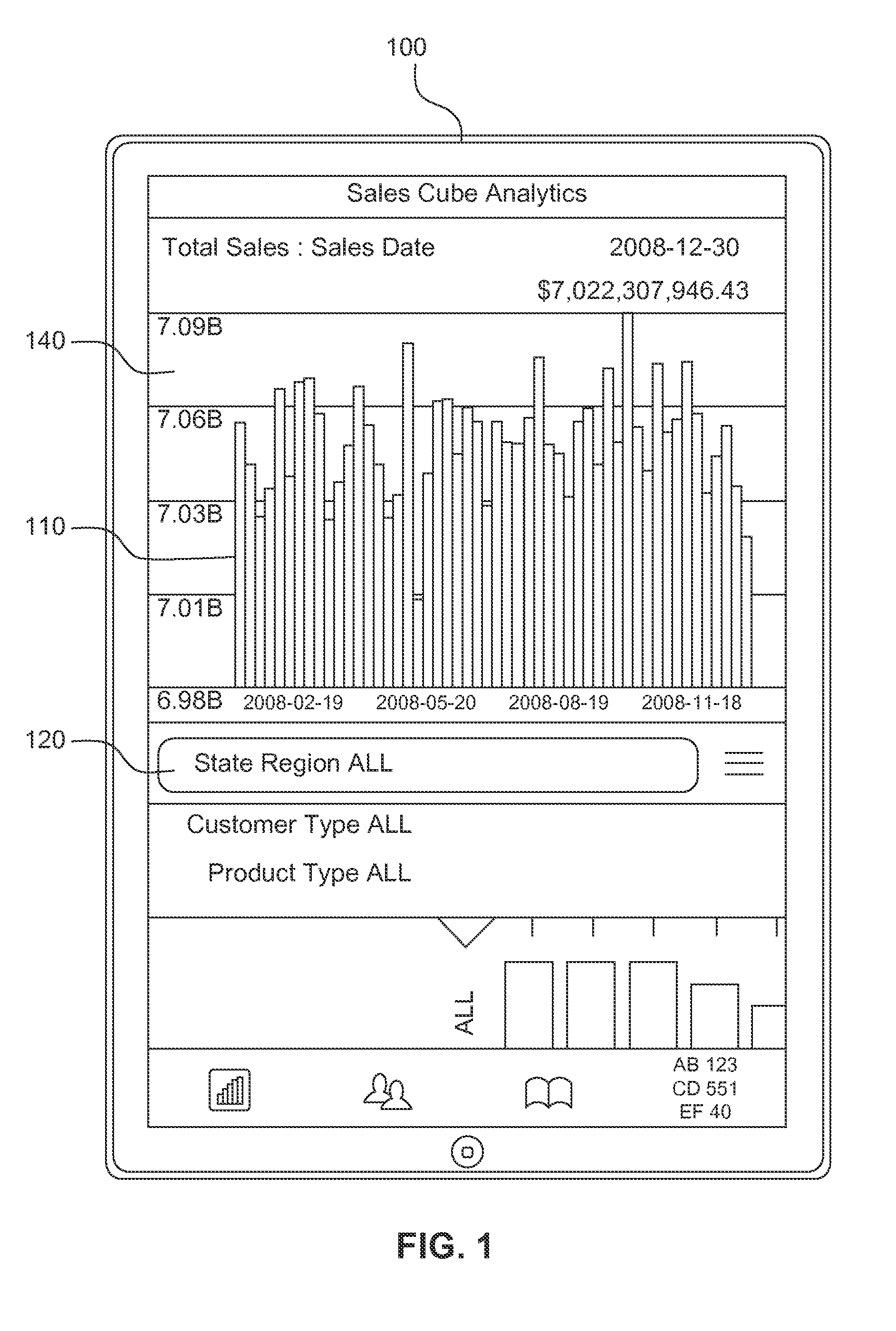

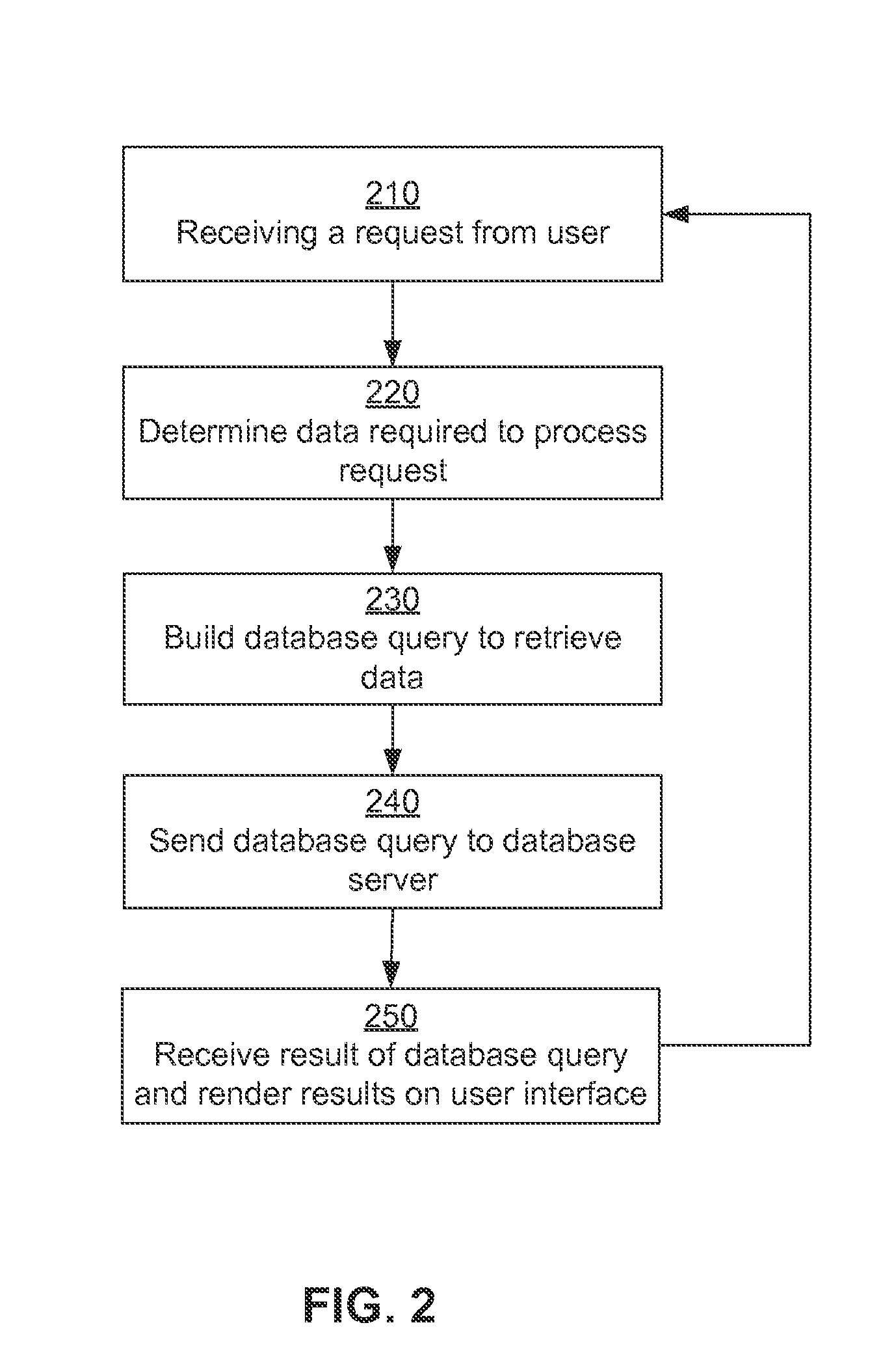

Executing database queries using multiple processors

Status: Active | Patent: US8762366B1 | Tags: Efficient execution; Digital data information retrieval; Digital data processing details; Current load; Database query

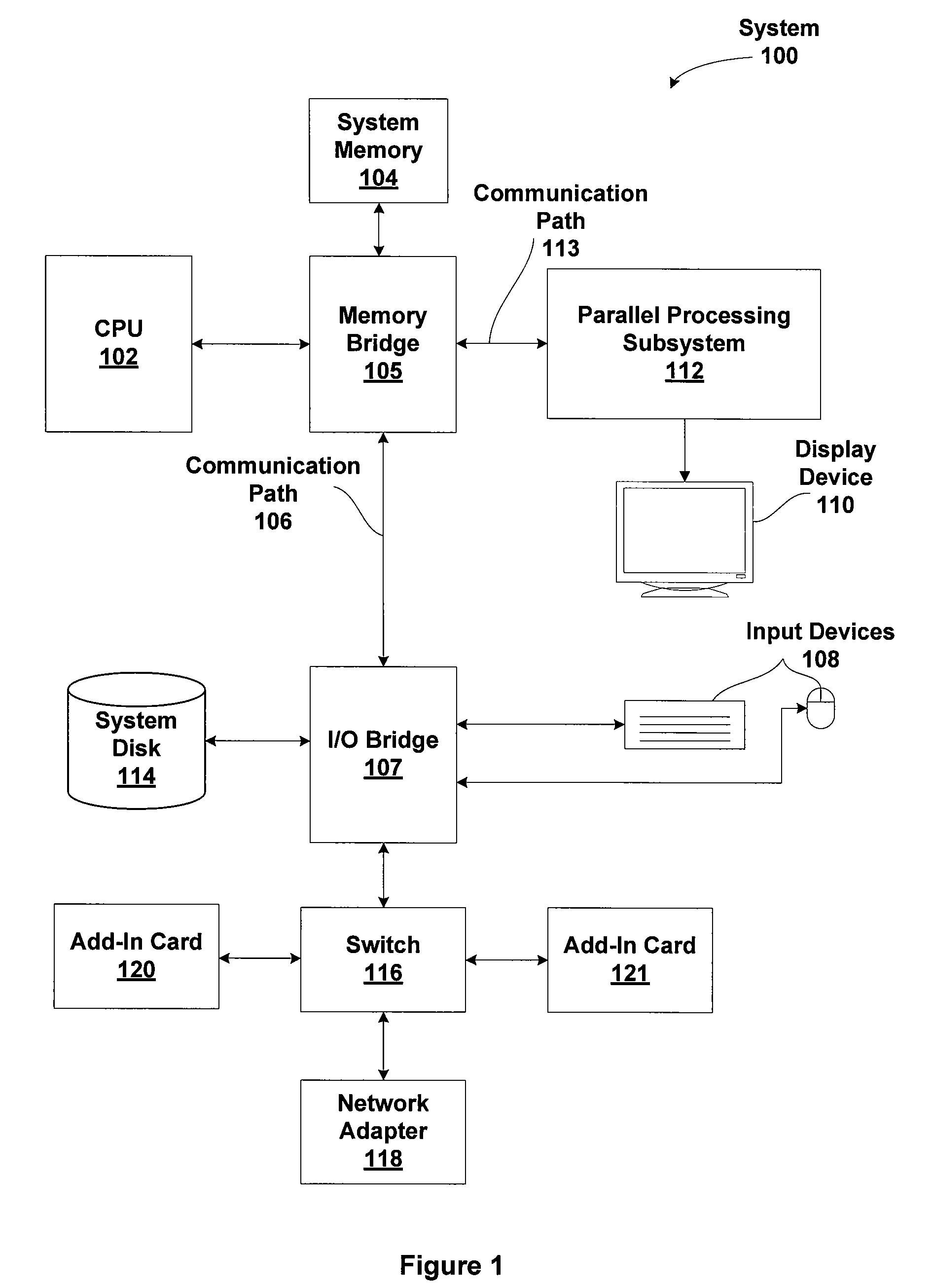

A system and a method are disclosed for efficiently executing database queries using a computing device that includes a central processing unit (CPU) and a processing unit based on single instruction multiple thread (SIMT) architecture, for example, a GPU. A query engine determines a target processing unit to execute a database query based on factors including the type and amount of data processed by the query, the complexity of the query, and the current load on the processing units. An intermediate executable representation generator generates an intermediate executable representation for executing a query on a database virtual machine. If the query engine determines that the database query should be executed on an SIMT based processing unit, a native code generator generates native code from the intermediate executable representation. The native code is optimized for execution using a particular processing unit.

Owner:SAP AG
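
The abstract lists the factors the query engine weighs (data type and amount, query complexity, current load) but not how they are combined. The sketch below is a hypothetical scoring rule; `QueryProfile`, `choose_target`, the thresholds and the weights are all assumptions made for illustration, not SAP's method.

```python
from dataclasses import dataclass


@dataclass
class QueryProfile:
    rows: int         # amount of data the query processes
    columnar: bool    # data type/layout suited to wide SIMT execution (assumed)
    complexity: int   # e.g. number of operators in the plan (assumed metric)


def choose_target(q: QueryProfile, cpu_load: float, gpu_load: float,
                  min_rows: int = 100_000, max_gpu_load: float = 0.8) -> str:
    """Return "GPU" or "CPU" using a hypothetical scoring rule."""
    # Small or poorly suited inputs rarely amortize transfer and launch costs.
    if q.rows < min_rows or not q.columnar:
        return "CPU"
    # A heavily loaded GPU is skipped regardless of query shape.
    if gpu_load > max_gpu_load:
        return "CPU"
    # Prefer the less-loaded unit, giving the GPU a bonus for complex queries.
    gpu_score = (1.0 - gpu_load) * (1.0 + q.complexity / 10.0)
    cpu_score = 1.0 - cpu_load
    return "GPU" if gpu_score >= cpu_score else "CPU"
```

Only when the result is "GPU" would the native code generator described above be invoked on the intermediate representation.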

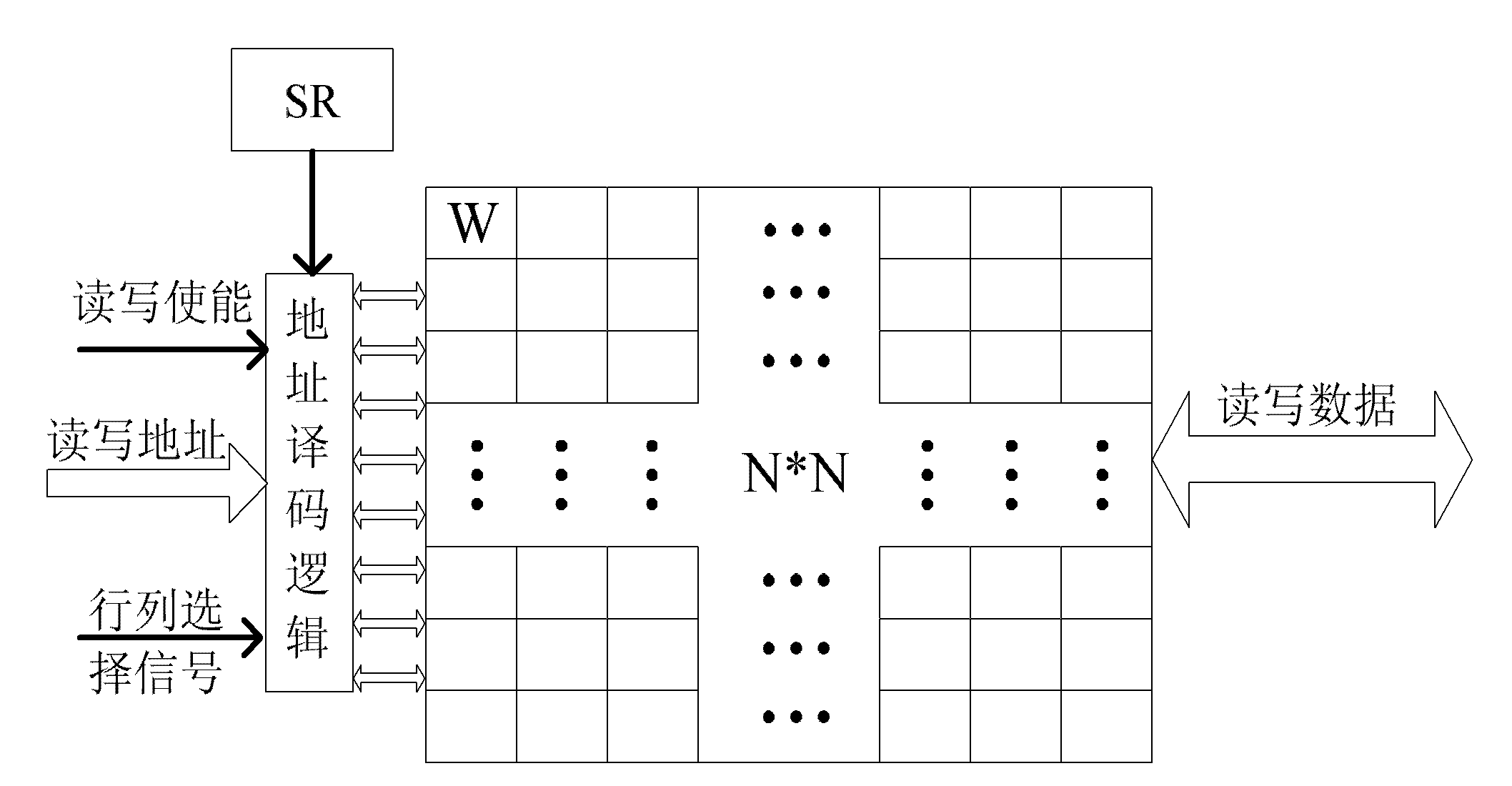

Configurable matrix register unit for supporting multi-width SIMD and multi-granularity SIMT

Status: Active | Patent: CN102012803A | Tags: Flexible configuration; Improve Parallel Processing Efficiency; Concurrent instruction execution; Single instruction, multiple threads; Processor register

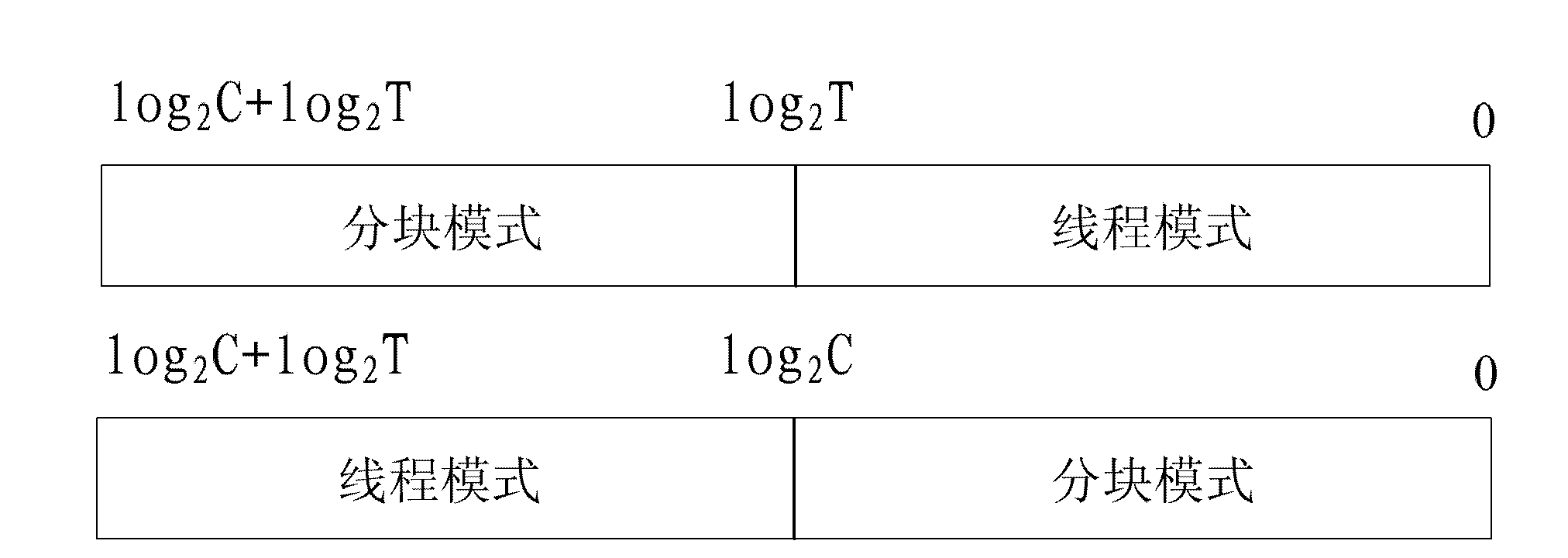

The invention relates to a configurable matrix register unit that supports both multi-width single instruction multiple data stream (SIMD) and multi-granularity single instruction multiple threads (SIMT) operation. The unit comprises a matrix register and a control register SR. The N×N matrix register is divided into M×M blocks, where N is a positive integer that is a power of 2, and M is an integer greater than or equal to 0 that is also a power of 2. The control register records the block mode of the matrix register and the number of threads processed simultaneously by the vector processing unit; its width is log2(C) + log2(T) bits, where C is the number of block modes of the matrix register and T is the number of multithreading modes the vector processor can handle. The advantages of the unit are that its principle is simple, it is easy to operate, its block size and thread count can be configured flexibly, and it supports access to vector data in both multi-width SIMD and multi-granularity SIMT modes at the same time.

Owner:NAT UNIV OF DEFENSE TECH
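
The width formula log2(C) + log2(T) follows from packing one block-mode field and one thread-mode field into SR. The sketch below assumes C and T are powers of 2 and places the block mode in the high bits; that field layout and the helper names (`sr_width`, `encode_sr`, `decode_sr`, `block_side`) are hypothetical.

```python
from typing import Tuple


def log2_pow2(v: int) -> int:
    """Exact log2 of a power of two; raises for anything else."""
    if v <= 0 or v & (v - 1):
        raise ValueError(f"{v} is not a power of 2")
    return (v - 1).bit_length()


def sr_width(c: int, t: int) -> int:
    """SR width in bits: log2(C) block-mode bits + log2(T) thread-mode bits."""
    return log2_pow2(c) + log2_pow2(t)


def encode_sr(block_mode: int, thread_mode: int, c: int, t: int) -> int:
    # Hypothetical layout: block mode in the high field, thread mode in the low.
    if not (0 <= block_mode < c and 0 <= thread_mode < t):
        raise ValueError("mode index out of range")
    return (block_mode << log2_pow2(t)) | thread_mode


def decode_sr(sr: int, t: int) -> Tuple[int, int]:
    return sr >> log2_pow2(t), sr & (t - 1)


def block_side(n: int, m: int) -> int:
    """Side length of each block when an N x N register is split into M x M blocks."""
    log2_pow2(n)
    log2_pow2(m)
    if m > n:
        raise ValueError("more blocks than rows")
    return n // m
```

For example, with 4 block modes and 8 thread modes SR needs 2 + 3 = 5 bits, and a 64×64 register split into 4×4 blocks yields 16×16 blocks.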

Method for handling data dependence in the GPU (Graphics Processing Unit)-based parallel solution of the cloud elimination equation

Status: Inactive | Patent: CN102508820A | Tags: Eliminate data correlation; Guaranteed correctness; Complex mathematical operations; Single instruction, multiple threads; Reusability

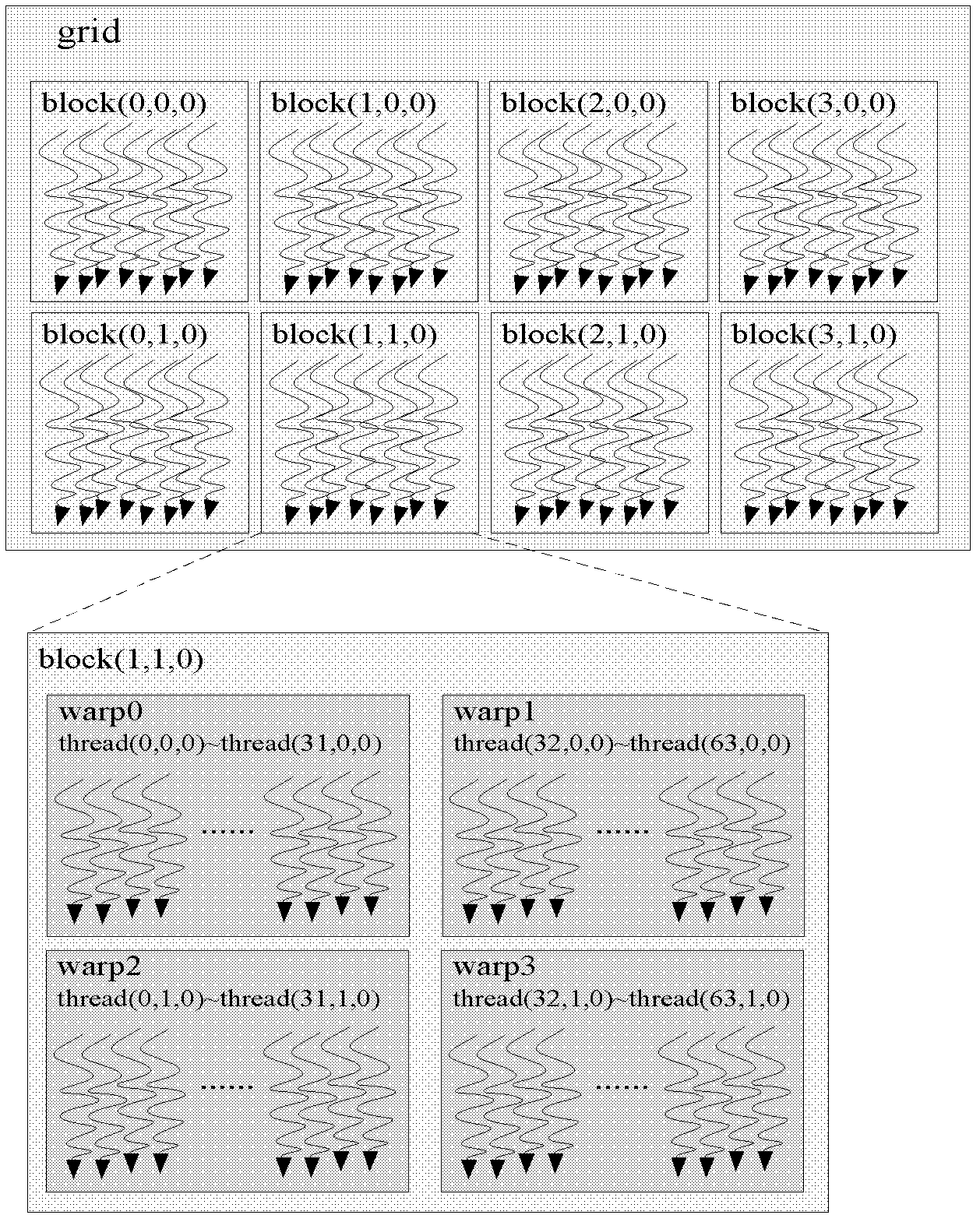

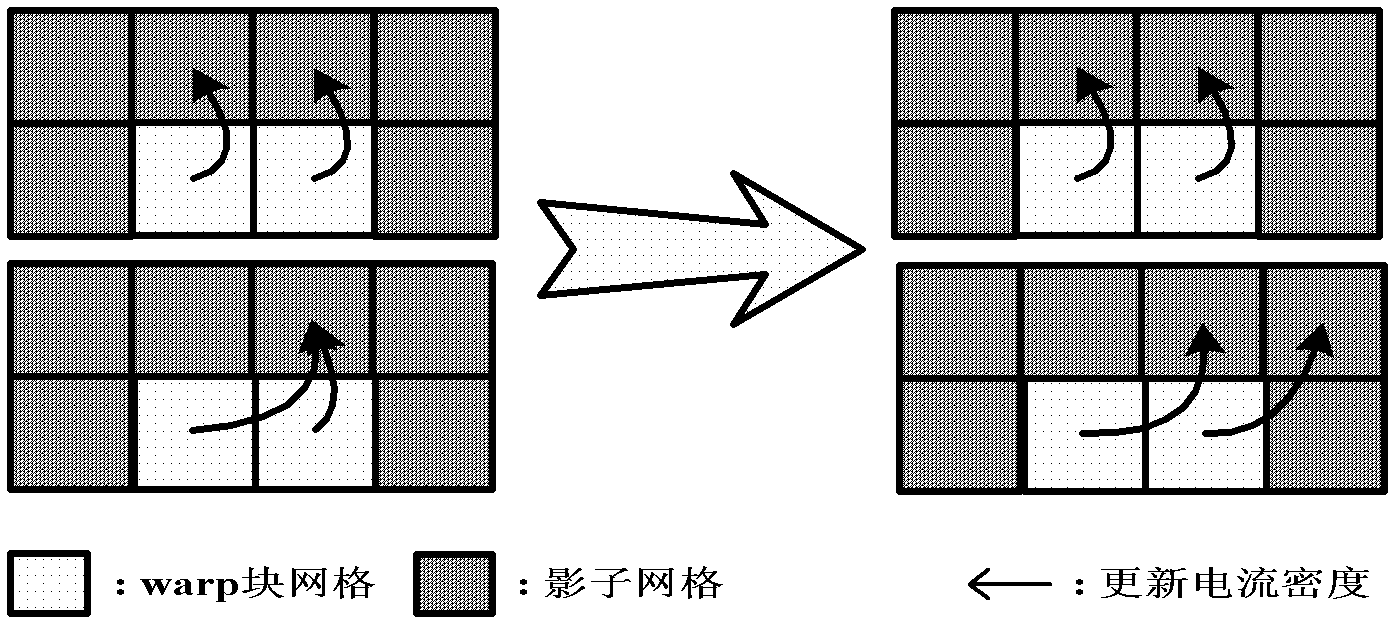

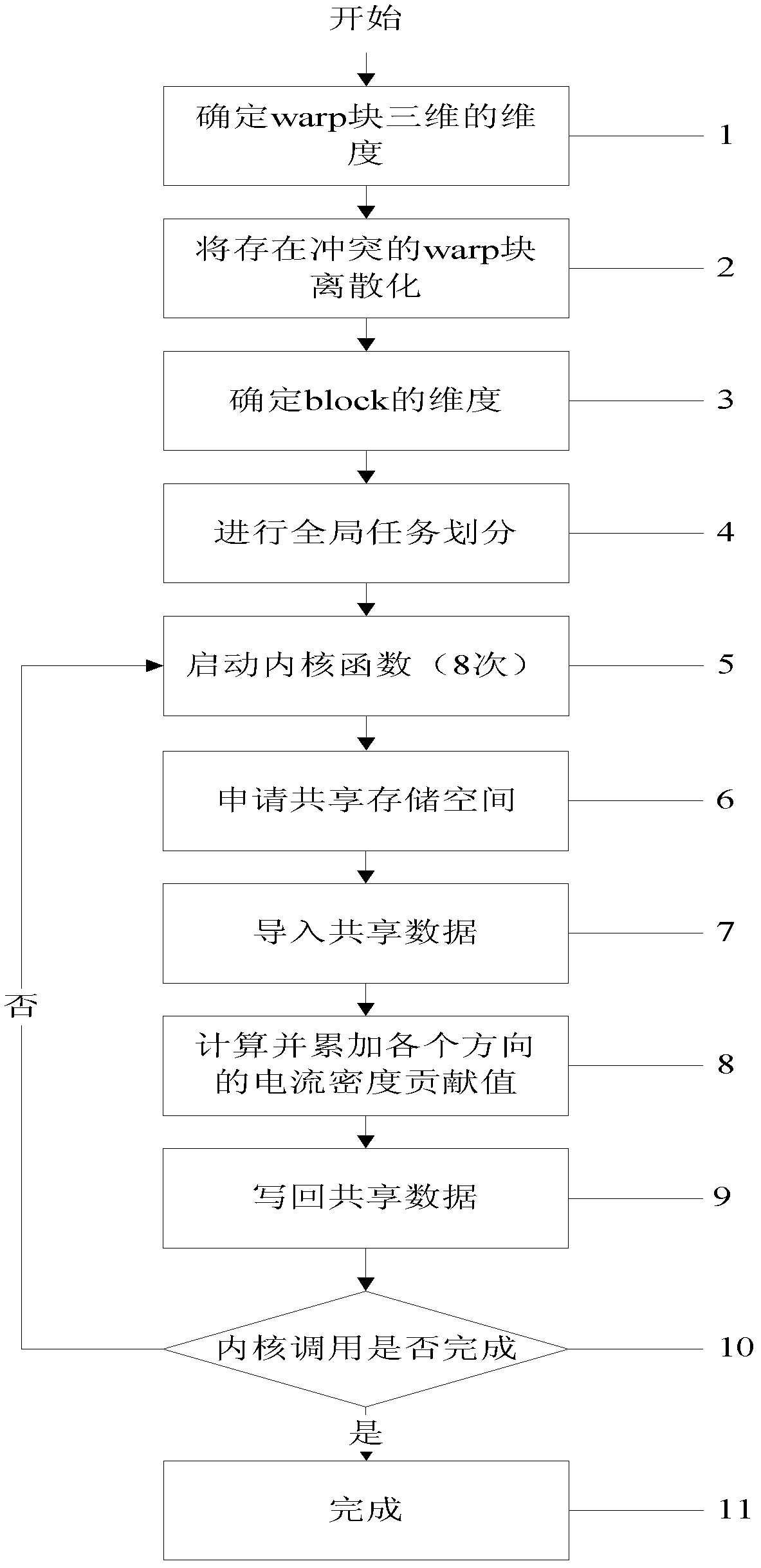

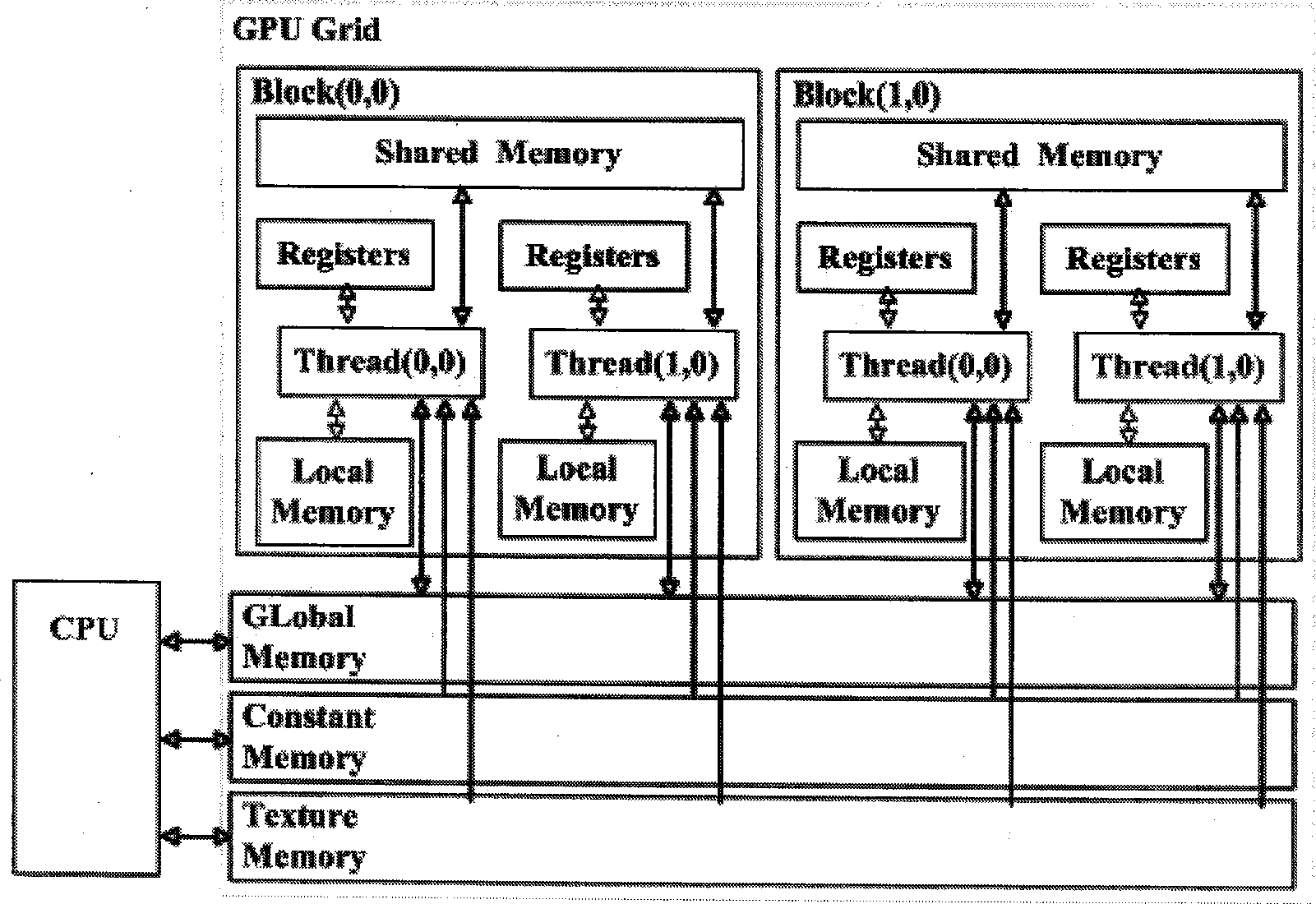

The invention discloses a method for handling data dependence in the GPU (Graphics Processing Unit)-based parallel solution of the cloud elimination equation, with the aim of improving data reuse and access efficiency. The technical scheme uses the parallel mechanism of SIMT (Single-Instruction Multiple-Thread) execution to eliminate data dependence between threads within a warp, as follows: the grid cells processed by the 32 threads of a warp form a warp block; the organization of the warp block is determined; the three dimensions of the thread block and grid are constrained according to the shared-memory capacity; the whole simulation region is discretized with the warp block as the basic unit; the global task is divided into 8 groups so that no two warp blocks in the same group have a data dependence; and the kernel is launched 8 times, each launch updating the current density of 1/8 of the grid cells in the whole simulation region. The method guarantees that no two threads update the current density of the same grid cell at the same time, eliminates the data dependence between adjacent grid cells, achieves data reuse and efficient access, and increases the execution speed of the CUDA (Compute Unified Device Architecture) program.

Owner:NAT UNIV OF DEFENSE TECH
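
The abstract says the global task is split into 8 groups with no dependence inside a group, but not how. A common way to get exactly 8 conflict-free groups in 3D is to colour blocks by the parity of their (x, y, z) indices; the sketch below assumes that scheme, and assumes each warp block's writes extend at most half a block width past its own boundary. The names `group_of`, `partition`, `adjacent` and `launch_all` are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Block = Tuple[int, int, int]  # warp-block coordinates in the simulation region


def group_of(b: Block) -> int:
    """Assumed 8-colouring: one group per parity pattern of (bx, by, bz)."""
    bx, by, bz = b
    return (bx % 2) | ((by % 2) << 1) | ((bz % 2) << 2)


def partition(nx: int, ny: int, nz: int) -> Dict[int, List[Block]]:
    groups: Dict[int, List[Block]] = {g: [] for g in range(8)}
    for b in product(range(nx), range(ny), range(nz)):
        groups[group_of(b)].append(b)
    return groups


def adjacent(a: Block, b: Block) -> bool:
    """Blocks touch (face, edge or corner) if no coordinate differs by more than 1."""
    return a != b and all(abs(p - q) <= 1 for p, q in zip(a, b))


def launch_all(nx: int, ny: int, nz: int, update: Callable[[Block], None]) -> int:
    """One 'kernel launch' per group; returns the number of launches."""
    launches = 0
    for blocks in partition(nx, ny, nz).values():
        # Same-group blocks are at least two blocks apart along some axis,
        # so footprints reaching at most half a block past each boundary
        # cannot overlap: no two threads write the same cell in one launch.
        for b in blocks:
            update(b)
        launches += 1
    return launches
```

Every block is updated exactly once across the 8 launches, and each launch covers 1/8 of the blocks when every dimension is even.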

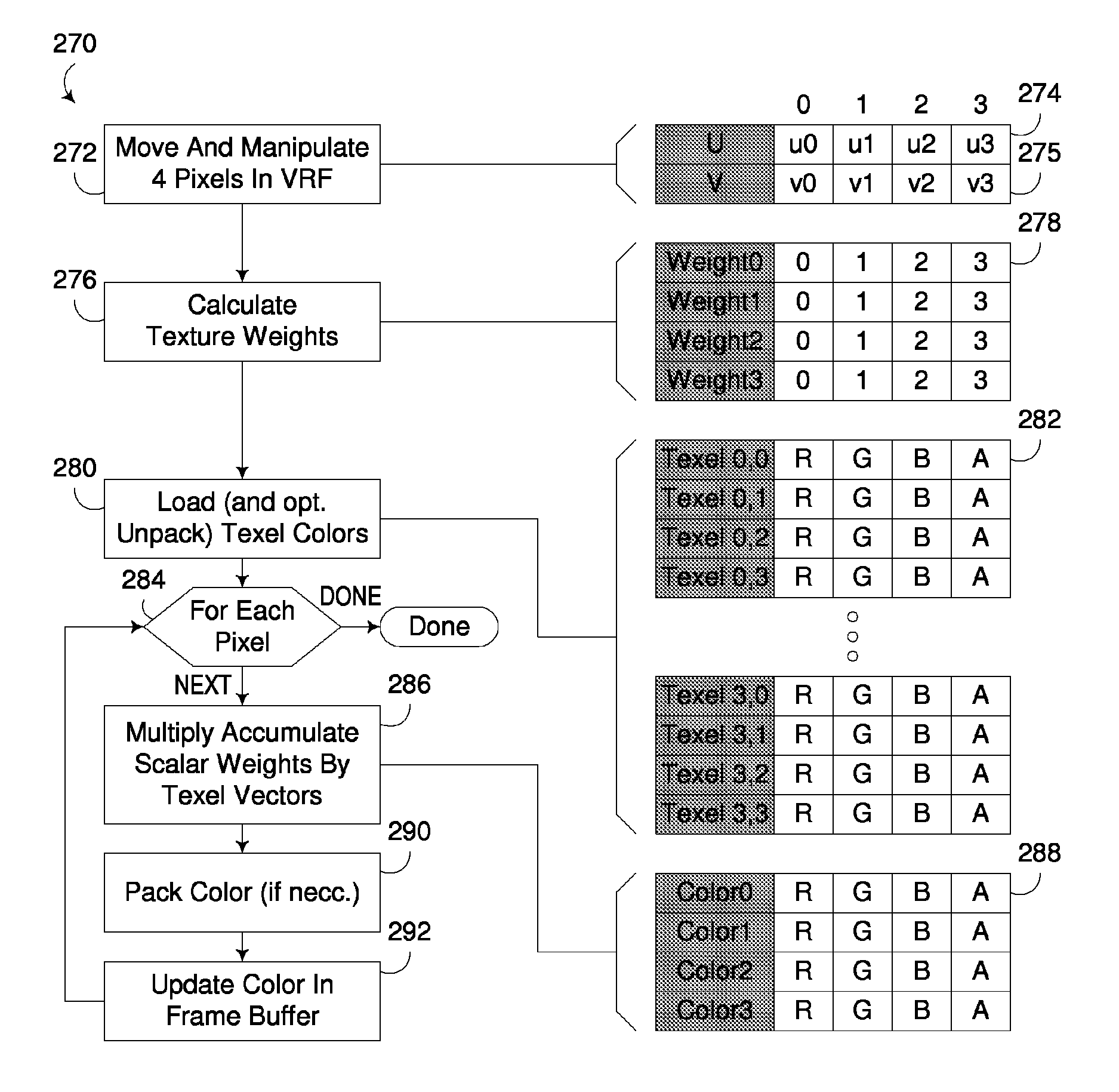

Efficient texture processing of pixel groups with SIMD execution unit

Status: Active | Patent: US8248422B2 | Tags: Increase profit; Maximize utilization; Cathode-ray tube indicators; Processor architectures/configuration; Single instruction, multiple threads; Execution unit

A circuit arrangement and method perform concurrent texture processing of groups of pixels with a single instruction multiple data (SIMD) execution unit to improve the utilization of the SIMD execution unit when performing scalar operations associated with a texture processing algorithm. In addition, when utilized in connection with a multi-threaded SIMD execution unit, groups of pixels may be concurrently processed in different threads executed by the SIMD execution unit to further maximize the utilization of the SIMD execution unit by reducing the adverse effects of dependencies in scalar and / or vector operations incorporated into a texture processing algorithm.

Owner:RAKUTEN GRP INC

Harris corner detecting software system based on GPU

The invention discloses a parallel Harris corner detection software system designed around the Graphics Processing Unit (GPU). The time-consuming Gaussian convolution smoothing step is converted to run in Single Instruction Multiple Thread (SIMT) mode across many threads, and the entire corner detection pipeline runs under the Compute Unified Device Architecture (CUDA), using the GPU's shared memory and constant memory together with page-locked (pinned) host memory. The corners detected by the system are evenly distributed, and both corner extraction and precise localization give good results. Compared with a serial CPU implementation, the GPU-based parallel Harris algorithm achieves a speedup of up to 60×, markedly improving execution efficiency and providing good real-time performance on large-scale data.

Owner:ZHENGZHOU UNIV
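
The per-pixel structure of Harris detection is what makes it a good fit for SIMT: every response value depends only on a small neighbourhood, so one thread can own one pixel. The plain-Python sketch below computes central-difference gradients, a 3×3 binomial (Gaussian-approximating) weighted structure tensor, and the standard response R = det(M) − k·trace(M)² with k = 0.04. The window size, k, and the relative threshold are assumptions; shared/constant/pinned memory use is not modelled.

```python
from typing import List, Tuple

Image = List[List[float]]

# 3x3 binomial approximation of a Gaussian window (weights sum to 16).
GAUSS3 = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]


def gradients(img: Image) -> Tuple[Image, Image]:
    """Central differences; border pixels get zero gradient."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return ix, iy


def harris_response(img: Image, k: float = 0.04) -> Image:
    h, w = len(img), len(img[0])
    ix, iy = gradients(img)
    r = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):          # on a GPU: one thread per (y, x)
        for x in range(1, w - 1):
            sxx = syy = sxy = 0.0
            for dy in range(3):
                for dx in range(3):
                    g = GAUSS3[dy][dx] / 16.0
                    gx = ix[y + dy - 1][x + dx - 1]
                    gy = iy[y + dy - 1][x + dx - 1]
                    sxx += g * gx * gx
                    syy += g * gy * gy
                    sxy += g * gx * gy
            r[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return r


def detect_corners(img: Image, rel_thresh: float = 0.01) -> List[Tuple[int, int]]:
    """Local maxima of R above rel_thresh * max(R), as (y, x) pairs."""
    r = harris_response(img)
    h, w = len(r), len(r[0])
    peak = max(max(row) for row in r)
    if peak <= 0:
        return []
    corners = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = r[y][x]
            if v > rel_thresh * peak and all(
                    v >= r[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)):
                corners.append((y, x))
    return corners
```

On a 9×9 image whose lower-right quadrant (x ≥ 4, y ≥ 4) is bright, the only reported corner is (4, 4); pixels along the straight edges get a negative response and flat areas get zero.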

Indirect function call instructions in a synchronous parallel thread processor

Status: Active | Patent: US8312254B2 | Tags: Easy to process; Provide capability; Single instruction multiple data multiprocessors; Concurrent instruction execution; Single instruction, multiple threads; Parallel computing

An indirect branch instruction takes an address register as an argument in order to provide indirect function call capability for single-instruction multiple-thread (SIMT) processor architectures. The indirect branch instruction is used to implement indirect function calls, virtual function calls, and switch statements to improve processing performance compared with using sequential chains of tests and branches.

Owner:NVIDIA CORP

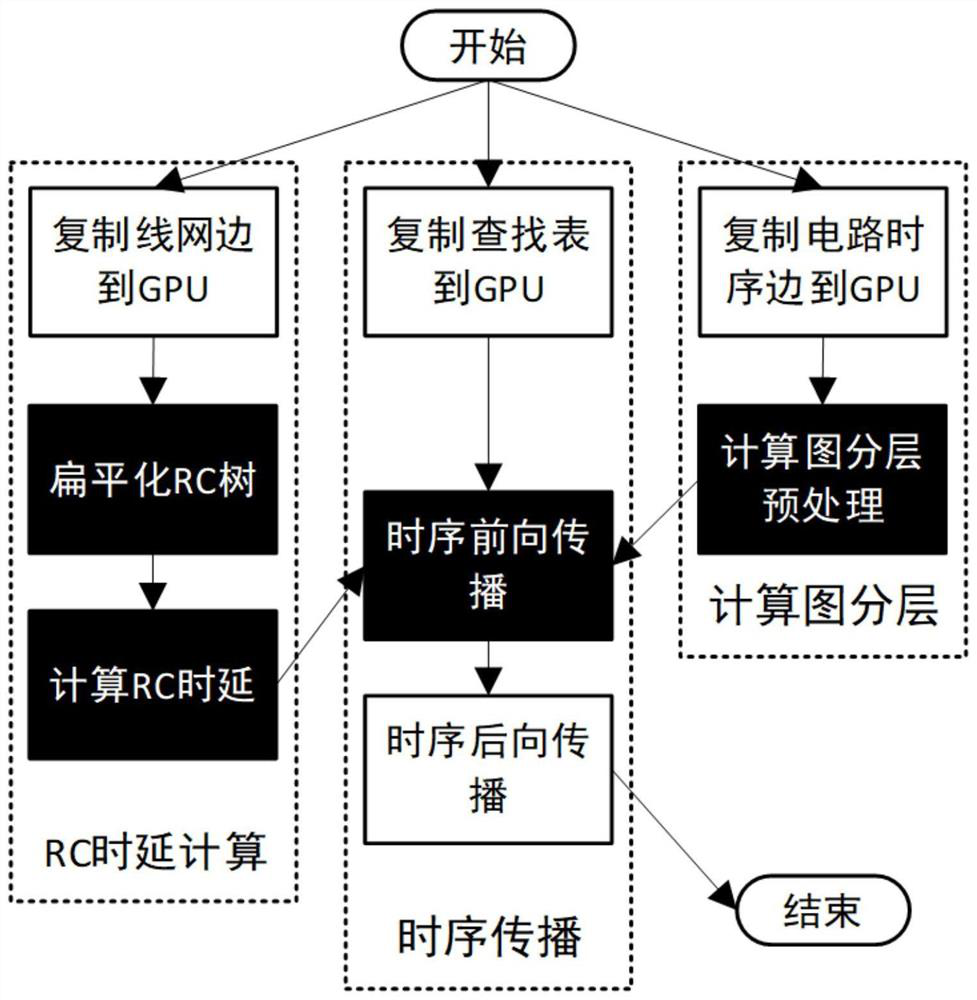

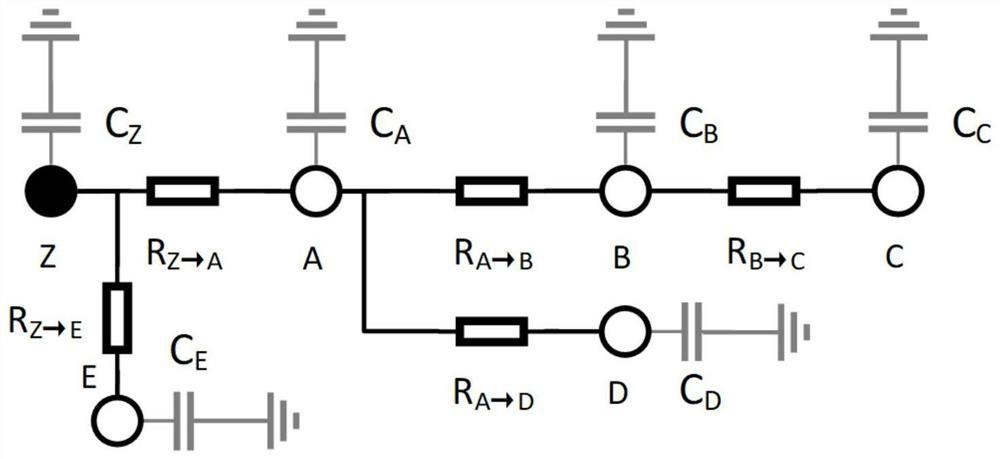

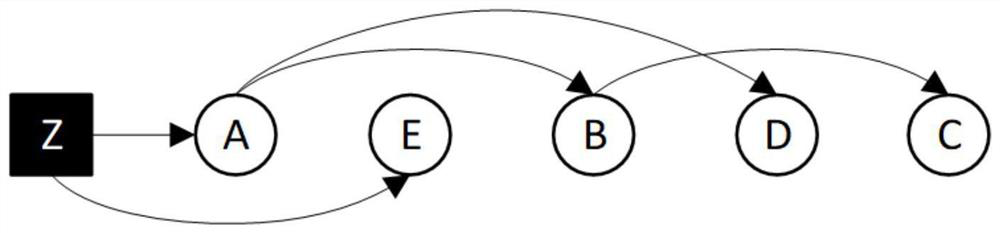

Integrated circuit static timing analysis method for GPU-accelerated computation

Status: Active | Patent: CN112257364A | Tags: Improve performance; Minimize the adverse impact of total time consumption; CAD circuit design; Special data processing applications; Static timing analysis; Sorting algorithm

The invention discloses a static timing analysis method for integrated circuits using GPU-accelerated computation. The method computes RC delays and performs delay updates: the input circuit information is represented as a circuit graph, which is flattened; the edge relations in the graph are stored as parent-node pointers or a compressed adjacency list; dynamic programming and topological sorting algorithms over the circuit graph are designed, yielding a GPU algorithm for integrated-circuit static timing analysis. Because the GPU algorithm conforms to a single-instruction multi-thread architecture, the CPU and GPU computing tasks are merged in time. The technical scheme reduces the cost of integrated-circuit static timing analysis and further improves the performance of timing-driven chip design automation algorithms.

Owner:PEKING UNIV
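
Topological sorting combined with dynamic programming over a compressed adjacency list can be sketched as follows. This is a generic levelized arrival-time propagation, not the patent's algorithm: RC delay calculation is replaced by given edge delays, and the names `to_csr`, `levelize` and `arrival_times` are hypothetical. Nodes in one level are independent, which is what a SIMT kernel would process in parallel.

```python
from typing import List, Tuple

Edge = Tuple[int, int, float]  # (driver pin, load pin, delay)


def to_csr(n: int, edges: List[Edge], by_source: bool = True):
    """Compressed adjacency list: neighbours of node i are nbr[off[i]:off[i + 1]]."""
    key = 0 if by_source else 1
    other = 1 - key
    off = [0] * (n + 1)
    for e in edges:
        off[e[key] + 1] += 1
    for i in range(n):
        off[i + 1] += off[i]
    nbr, dly = [0] * len(edges), [0.0] * len(edges)
    fill = off[:-1]  # slice copy: next free slot per node
    for e in edges:
        k = e[key]
        nbr[fill[k]], dly[fill[k]] = e[other], e[2]
        fill[k] += 1
    return off, nbr, dly


def levelize(n: int, off: List[int], nbr: List[int]) -> List[List[int]]:
    """Kahn topological sort, grouped into levels of independent nodes."""
    indeg = [0] * n
    for v in nbr:
        indeg[v] += 1
    level = [u for u in range(n) if indeg[u] == 0]
    levels = []
    while level:
        levels.append(level)
        nxt = []
        for u in level:
            for v in nbr[off[u]:off[u + 1]]:
                indeg[v] -= 1
                if indeg[v] == 0:
                    nxt.append(v)
        level = nxt
    return levels


def arrival_times(n: int, edges: List[Edge]):
    fo_off, fo_nbr, _ = to_csr(n, edges, by_source=True)
    fi_off, fi_nbr, fi_dly = to_csr(n, edges, by_source=False)
    levels = levelize(n, fo_off, fo_nbr)
    if sum(len(lvl) for lvl in levels) != n:
        raise ValueError("timing graph has a cycle")
    at = [0.0] * n
    for lvl in levels:
        # Each node reads fan-in arrivals from earlier levels and writes only
        # its own entry (dynamic programming), so one GPU thread per node is race-free.
        for v in lvl:
            lo, hi = fi_off[v], fi_off[v + 1]
            if hi > lo:
                at[v] = max(at[u] + d for u, d in zip(fi_nbr[lo:hi], fi_dly[lo:hi]))
    return at, levels
```

The pull-style update (reading fan-in rather than pushing to fan-out) is chosen so that no two threads in a level write the same arrival time.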

Executing database queries using multiple processors

Status: Active | Patent: US20140337313A1 | Tags: Efficient execution; Digital data information retrieval; Digital data processing details; Current load; Main processing unit

A system and a method are disclosed for efficiently executing database queries using a computing device that includes a central processing unit (CPU) and a processing unit based on single instruction multiple thread (SIMT) architecture, for example, a GPU. A query engine determines a target processing unit to execute a database query based on factors including the type and amount of data processed by the query, the complexity of the query, and the current load on the processing units. An intermediate executable representation generator generates an intermediate executable representation for executing a query on a database virtual machine. If the query engine determines that the database query should be executed on an SIMT based processing unit, a native code generator generates native code from the intermediate executable representation. The native code is optimized for execution using a particular processing unit.

Owner:SAP AG

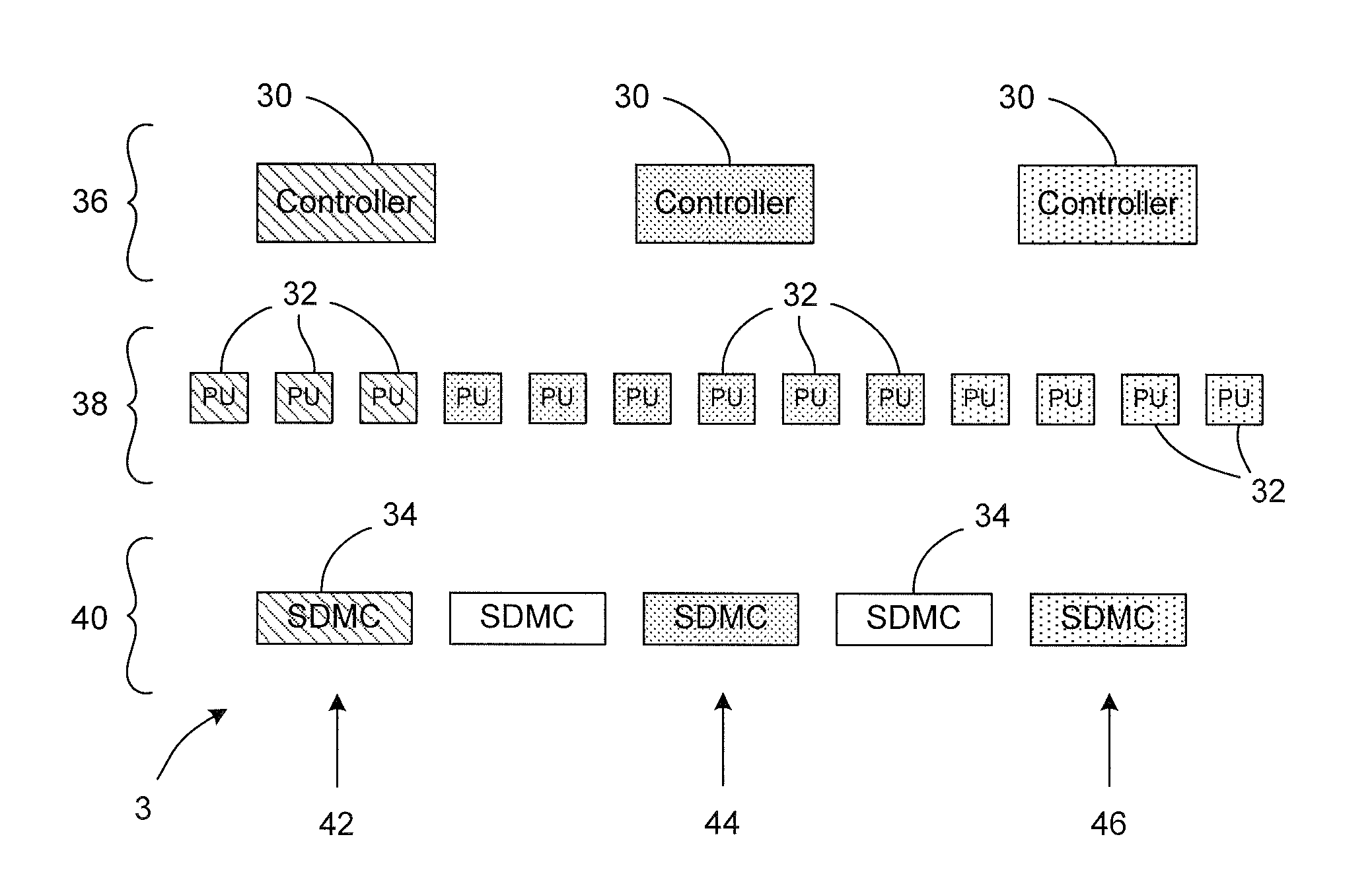

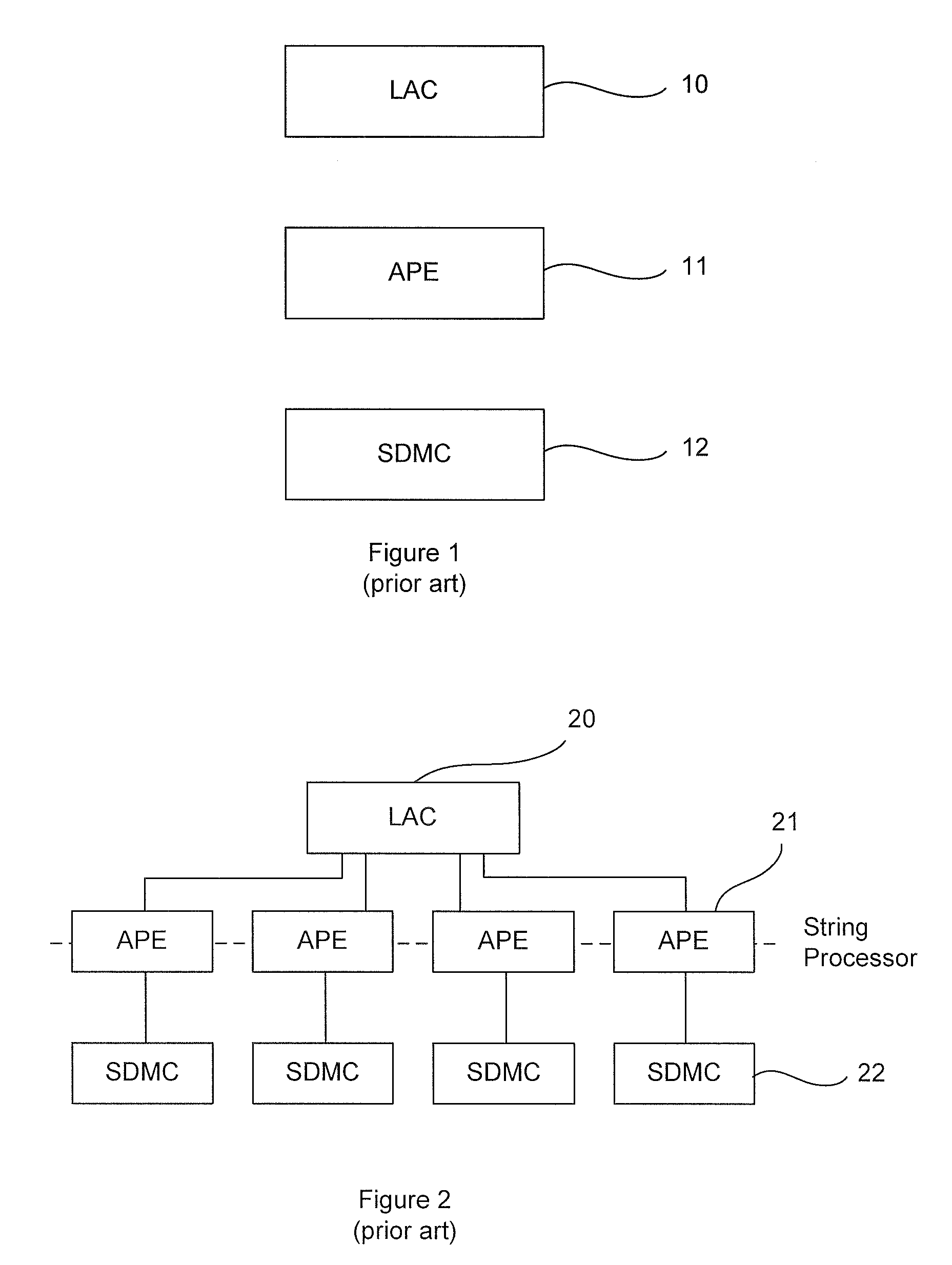

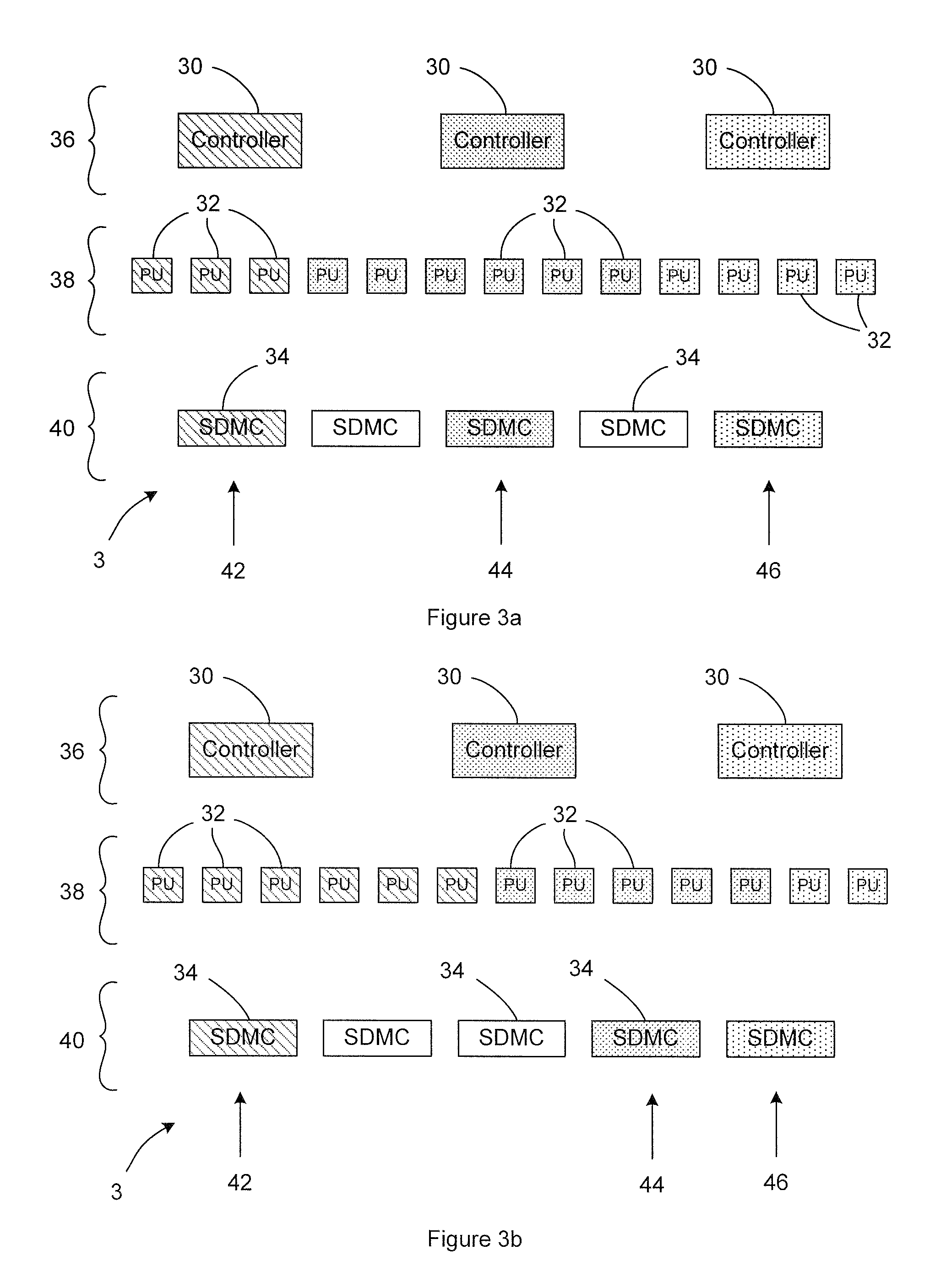

Improvements Relating to Single Instruction Multiple Data (SIMD) Architectures

Status: Active | Patent: US20110191567A1 | Tags: Minimize power consumption; Efficient configuration; Single instruction multiple data multiprocessors; Instruction analysis; Processing Instruction; Single instruction, multiple threads

A parallel processor for processing a plurality of different processing instruction streams in parallel is described. The processor comprises a plurality of data processing units and a plurality of SIMD (Single Instruction Multiple Data) controllers, each connectable to a group of the data processing units and each arranged to handle an individual processing task with a subgroup of actively connected data processing units selected from that group. Under control of the received processing instruction streams, the parallel processor dynamically varies the size of the subgroup to which each SIMD controller is actively connected, so that each SIMD controller can be actively connected to a different number of processing units for different processing tasks.

Owner:TELEFON AB LM ERICSSON (PUBL)

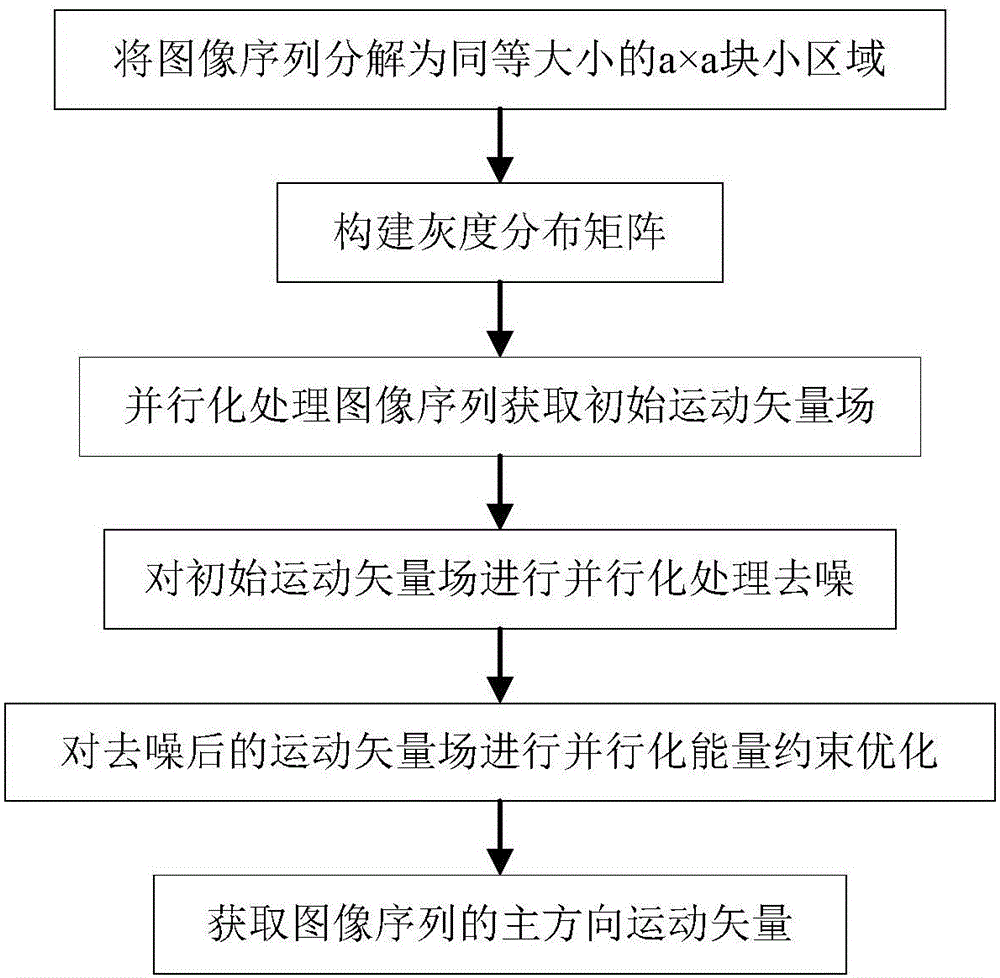

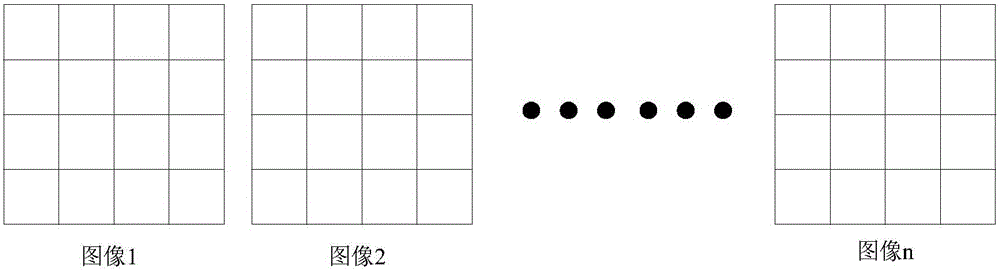

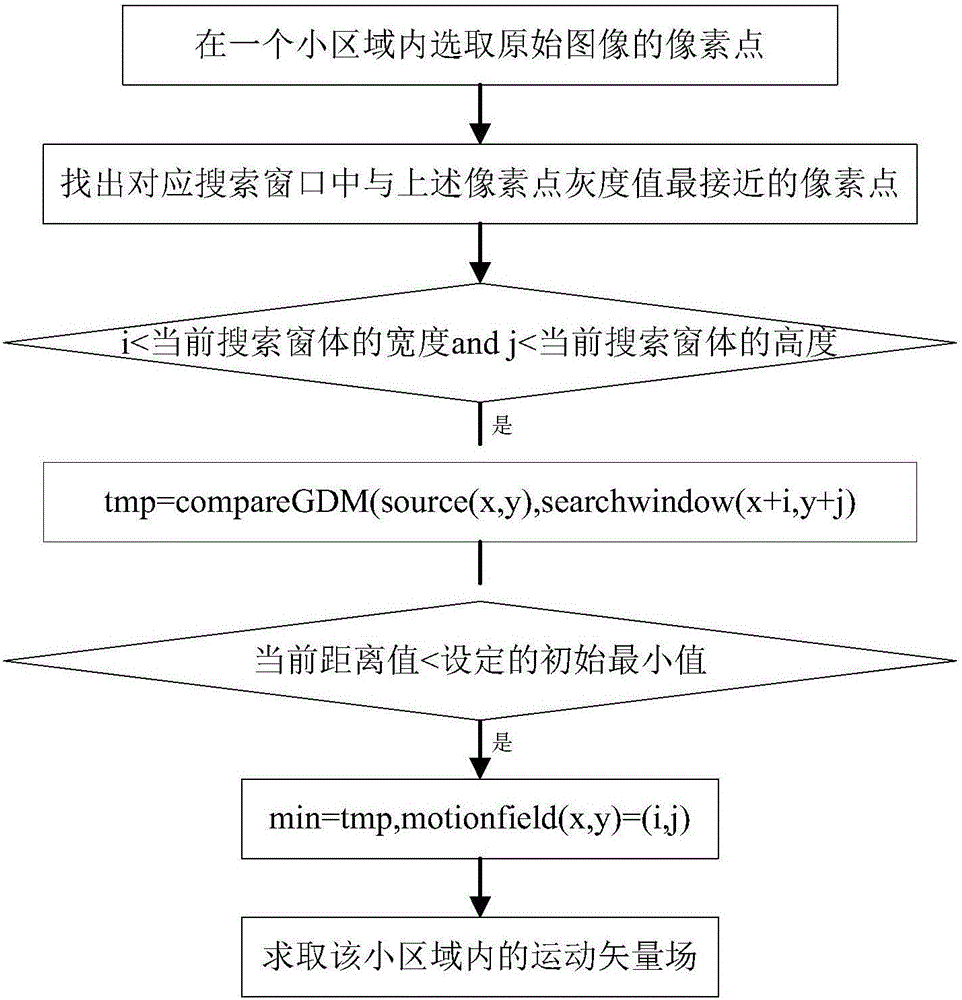

Parallel analysis method based on fluid motion vector field

Status: Inactive | Patent: CN106097396A | Tags: Improve performance and efficiency; High precision; Image enhancement; Image analysis; Motion vector; Analysis method

The invention provides a parallel analysis method based on the fluid motion vector field. The method comprises the following steps: dividing each image of an image sequence into several small regions of equal size; constructing a grayscale distribution matrix; computing an initial motion vector field of the image sequence in parallel; denoising the initial motion vector field in parallel using a new smoothness constraint term; optimizing the denoised motion vector field in parallel using a new energy constraint function; and obtaining the principal-direction motion vectors of the fluid motion image sequence. By combining energy optimization with the single-instruction multi-thread (SIMT) characteristics of the graphics processing unit (GPU), the method converts fluid analysis from serial to parallel processing, which improves the performance, efficiency and precision of image processing and reduces the time the system needs to process the image sequence.

Owner:EAST CHINA NORMAL UNIVERSITY

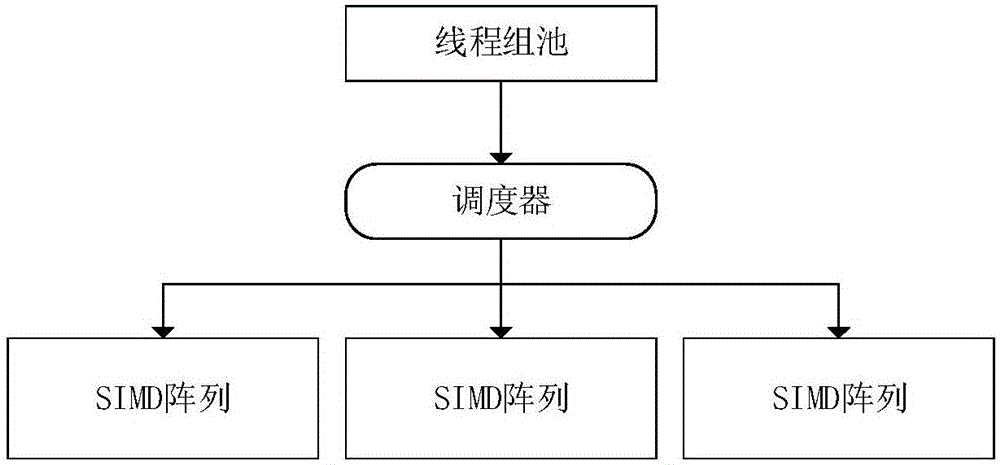

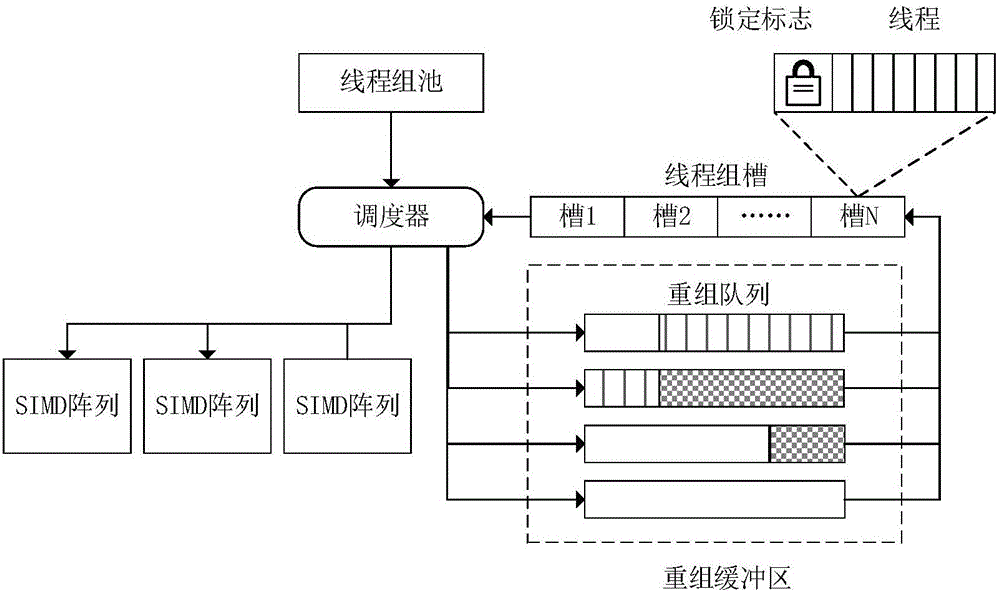

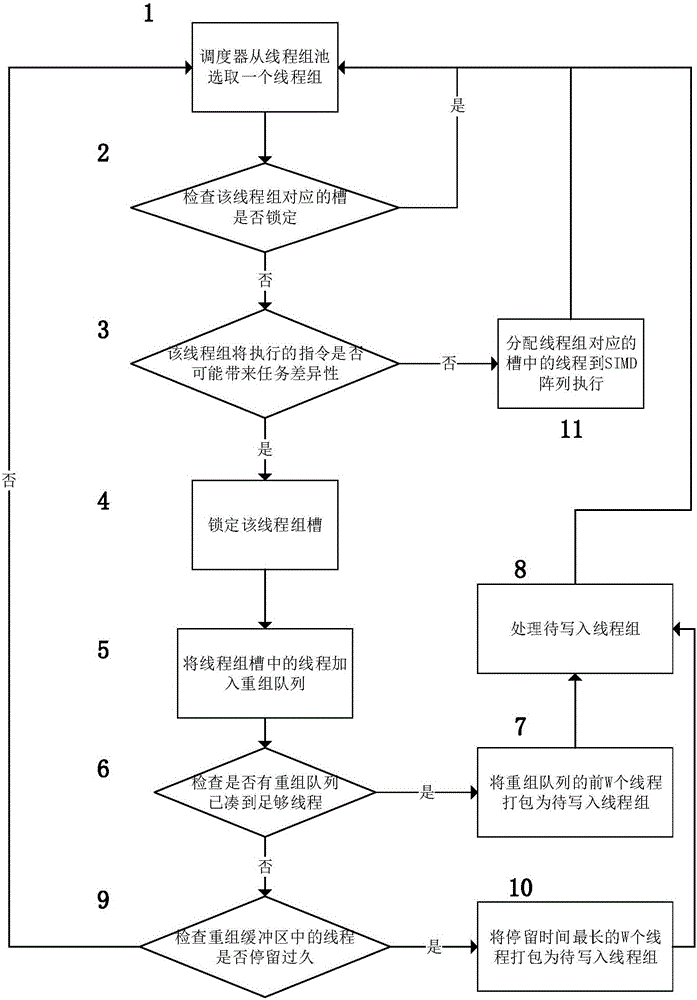

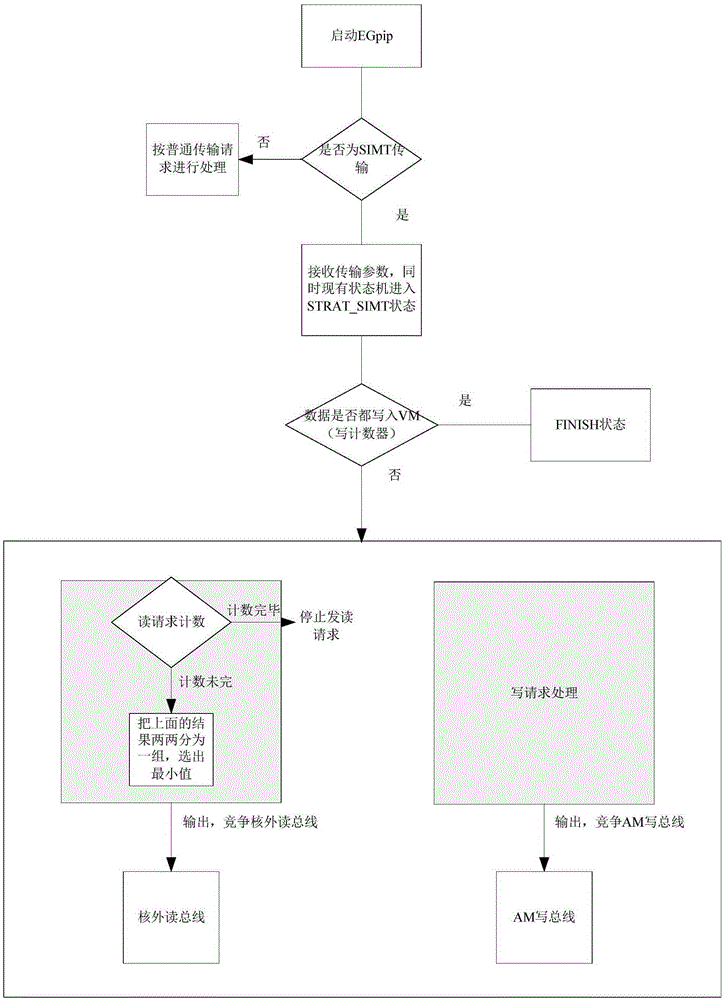

Asynchronous thread regrouping method and SIMT (single instruction multiple thread) processor based on the method

Status: Active | Patent: CN106484519A | Tags: Improve performance; Eliminate Task Variance; Program initiation/switching; Interprogram communication; Single instruction, multiple threads; Assembly line

The invention discloses an asynchronous thread regrouping method and a SIMT (single instruction multiple thread) processor based on the method. By asynchronously exchanging threads between different thread groups, the method eliminates task divergence within a thread group, which keeps processing units in the SIMD array from sitting idle and improves GPU (graphics processing unit) performance. The SIMT processor adds a regrouping buffer and thread group slots: regrouping schemes are generated asynchronously in the regrouping buffer and stored in the thread group slots. Unlike existing thread regrouping methods, the asynchronous method does not stall the SIMD pipeline through synchronous thread-group operations, so the SIMT processor achieves higher performance.

Owner:RES INST OF SOUTHEAST UNIV IN SUZHOU
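
The benefit of regrouping can be seen by comparing issue slots before and after threads with the same next task are gathered into new thread groups. The sketch below ignores the asynchronous buffer/slot machinery and any lane-position constraints real hardware may impose; `regroup` and `issue_slots_without_regrouping` are hypothetical names.

```python
from typing import Dict, List, Tuple

Thread = Tuple[int, int]  # (original thread group id, lane id)


def regroup(groups: List[List[int]], width: int) -> List[Tuple[int, List[Thread]]]:
    """groups[g][lane] is the PC (task) each thread executes next.

    Threads with the same PC are packed into new groups of at most `width`
    threads, so each new group is divergence-free.
    """
    buckets: Dict[int, List[Thread]] = {}
    for g, pcs in enumerate(groups):
        for lane, pc in enumerate(pcs):
            buckets.setdefault(pc, []).append((g, lane))
    new_groups = []
    for pc in sorted(buckets):
        threads = buckets[pc]
        for i in range(0, len(threads), width):
            new_groups.append((pc, threads[i:i + width]))
    return new_groups


def issue_slots_without_regrouping(groups: List[List[int]]) -> int:
    # A divergent group issues once per distinct PC among its threads.
    return sum(len(set(pcs)) for pcs in groups)
```

With two 4-wide groups that each split evenly between tasks A and B, the original groups need 4 half-empty issues, whereas the regrouped threads need only 2 full ones.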

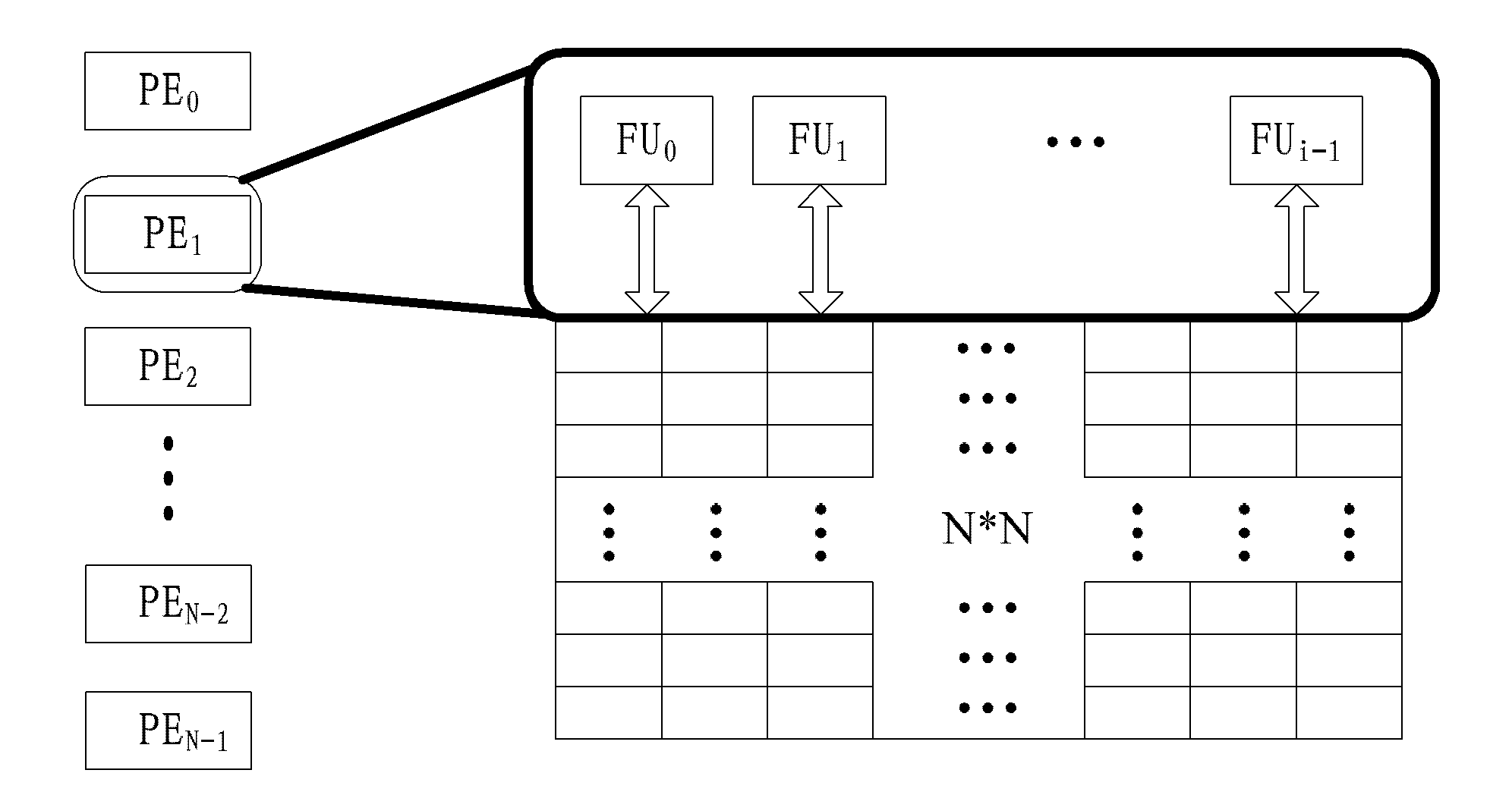

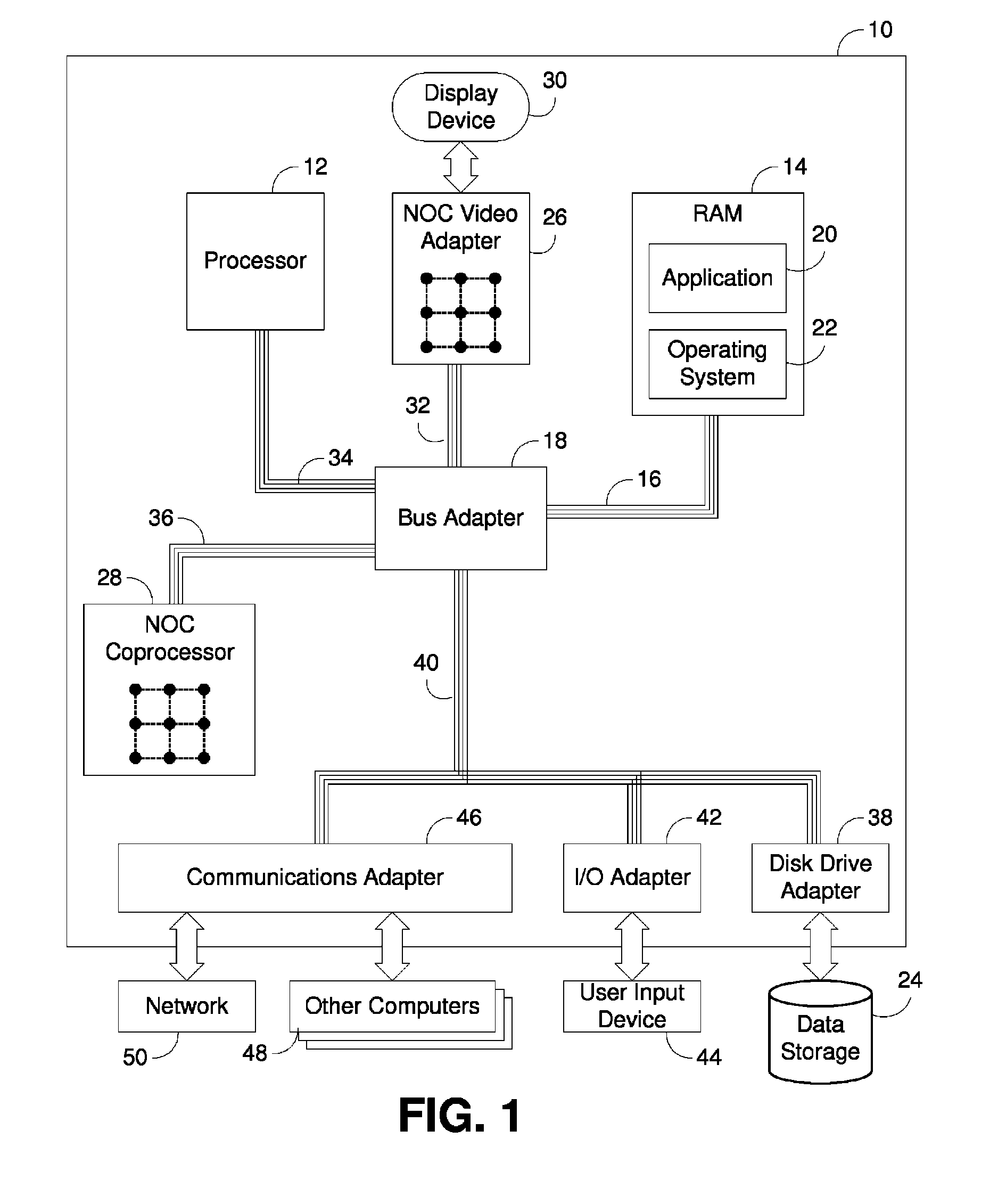

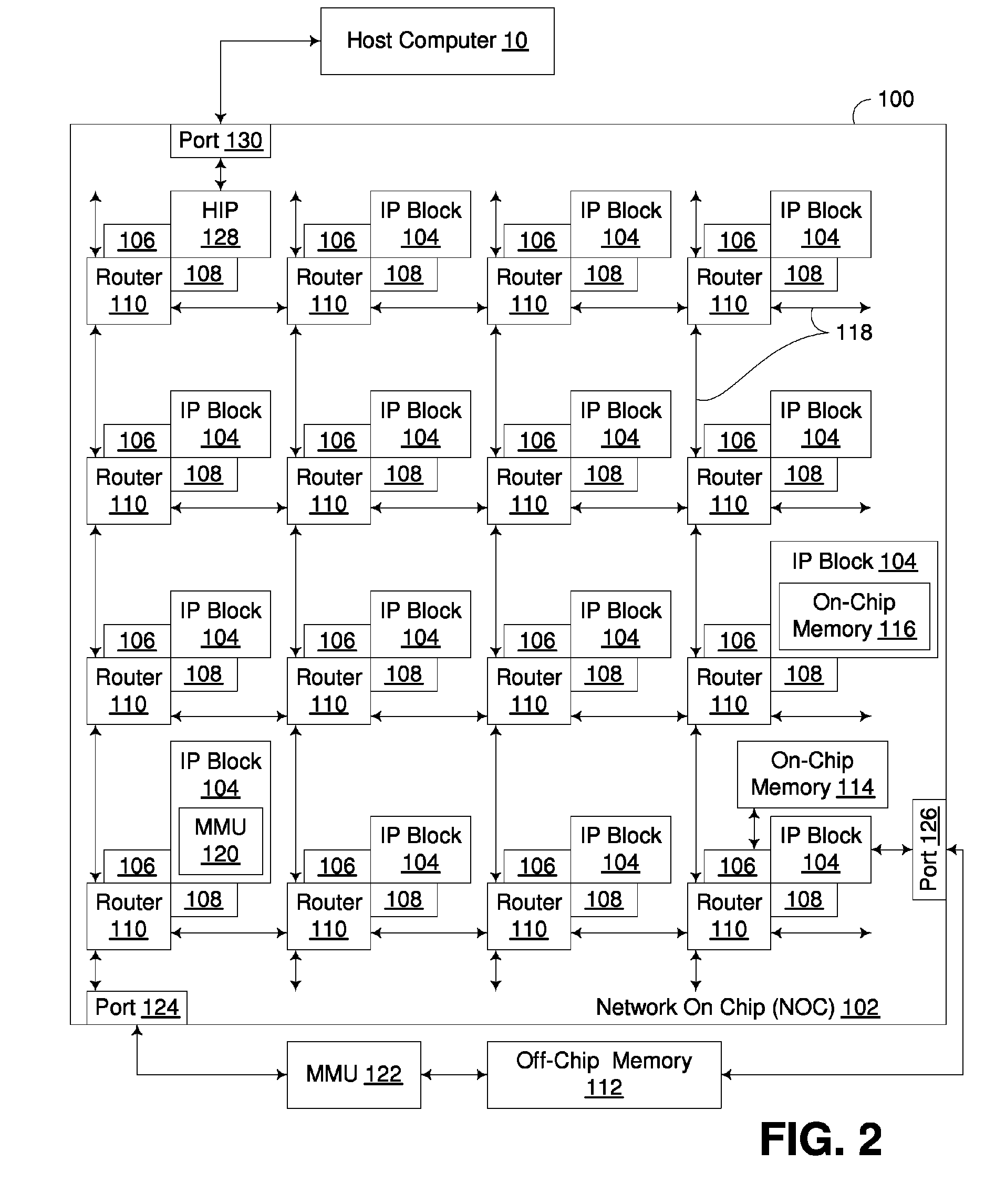

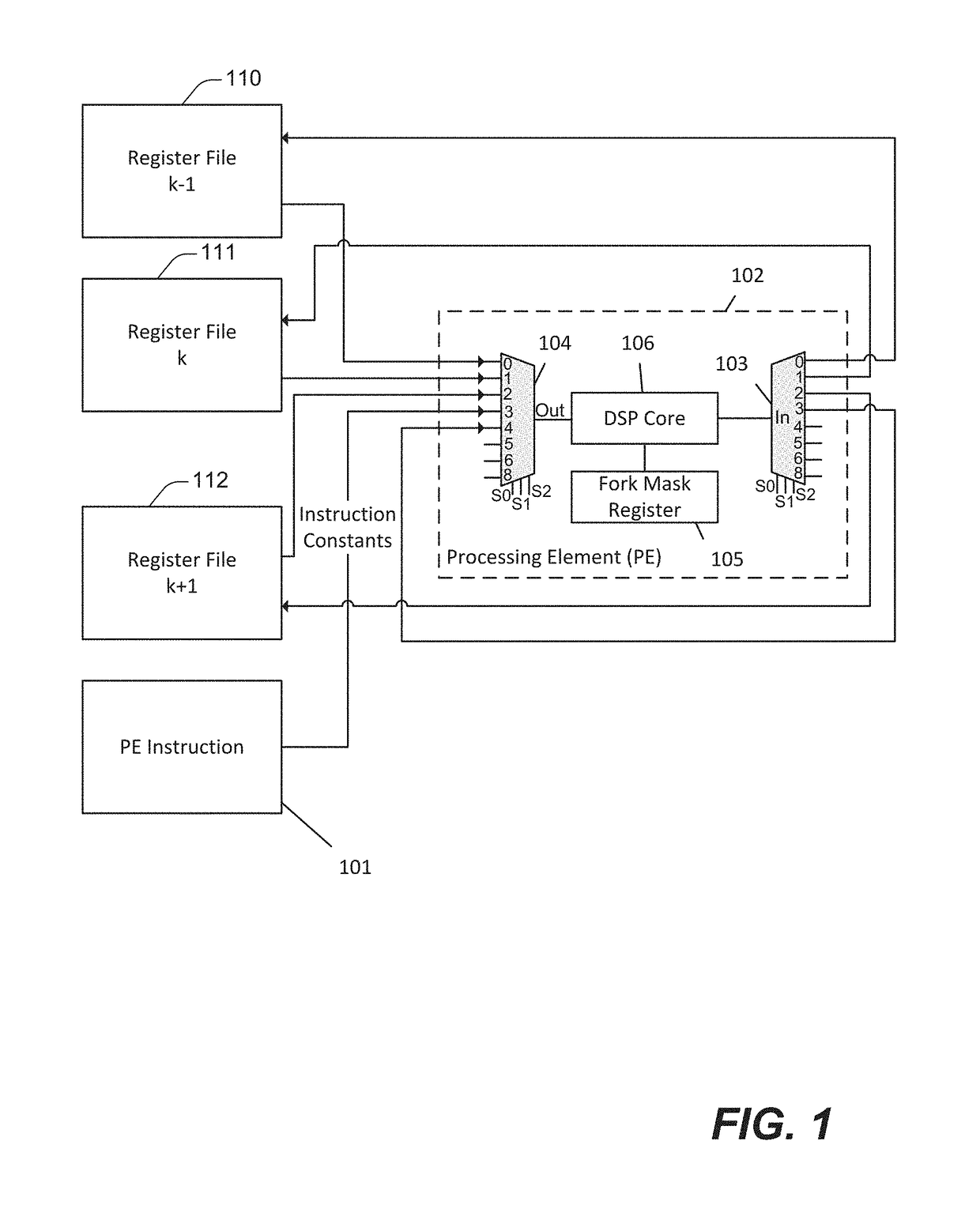

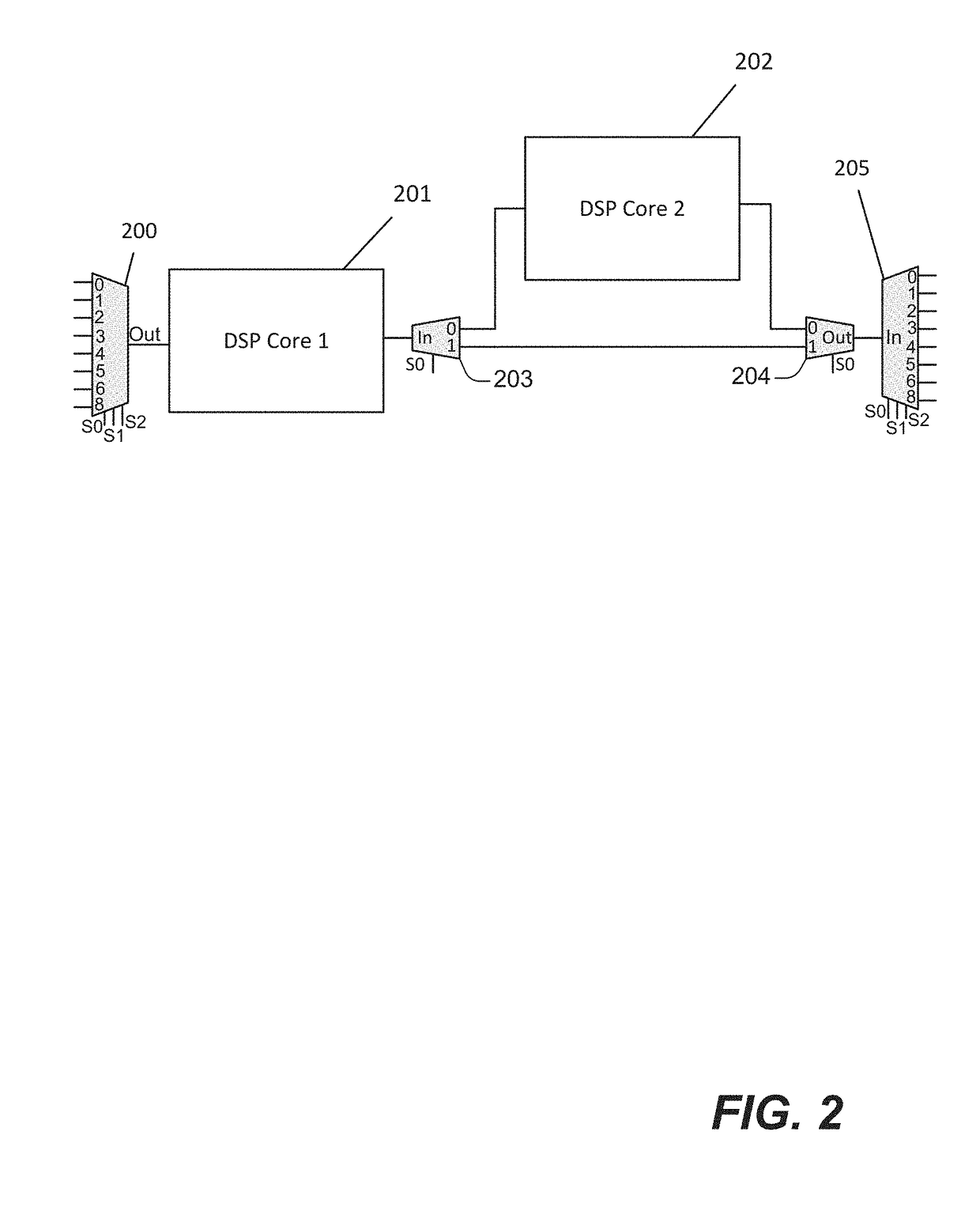

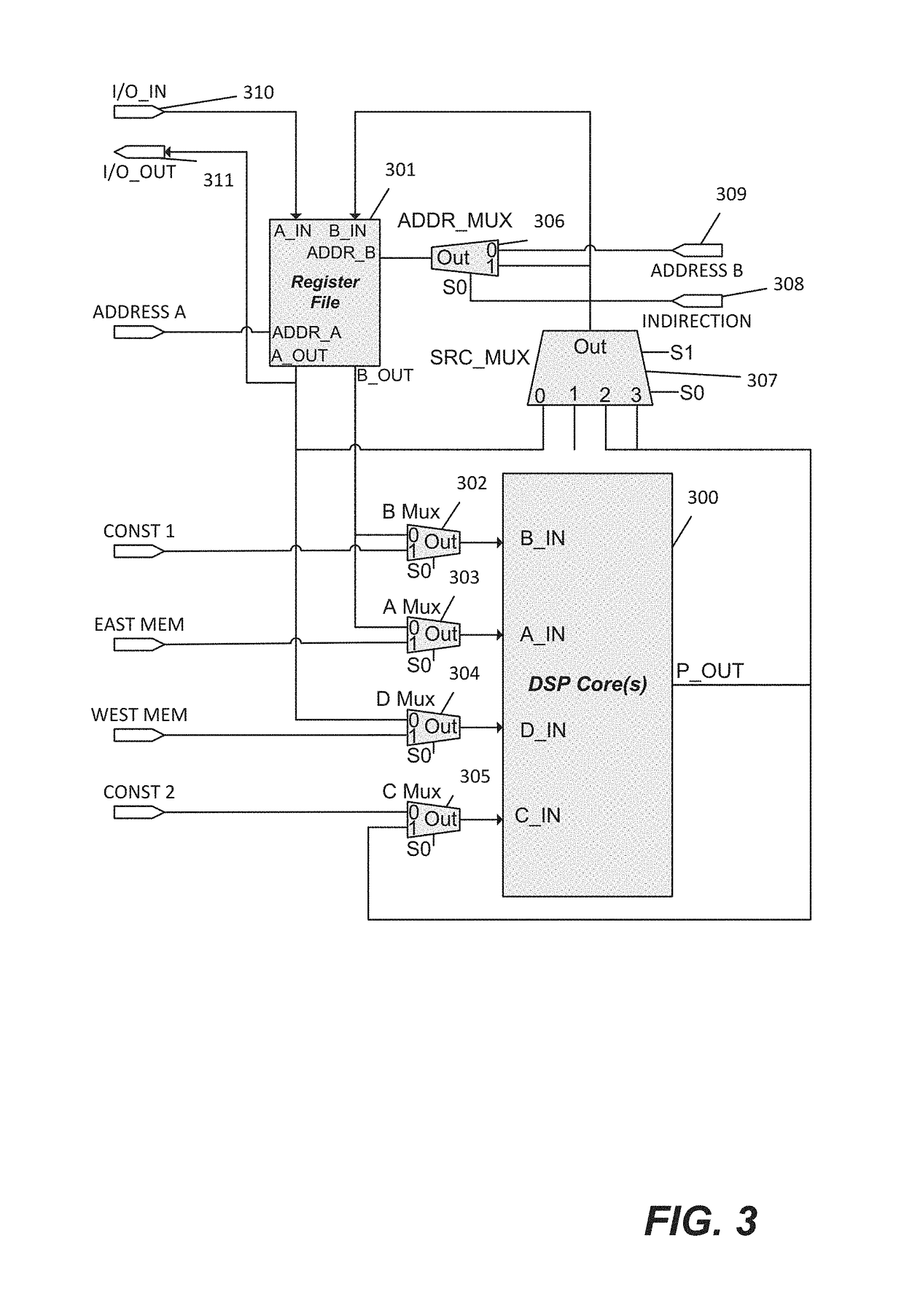

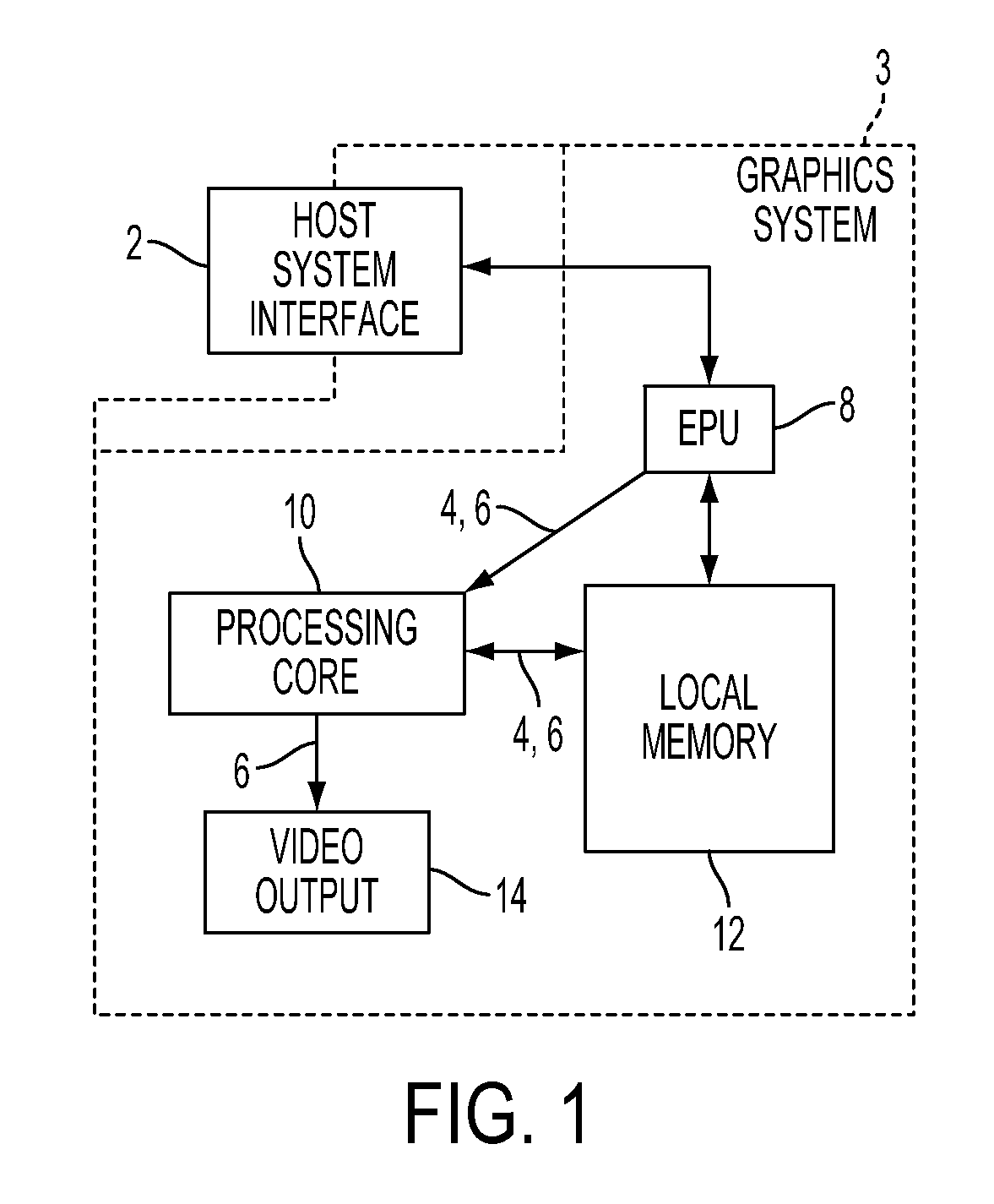

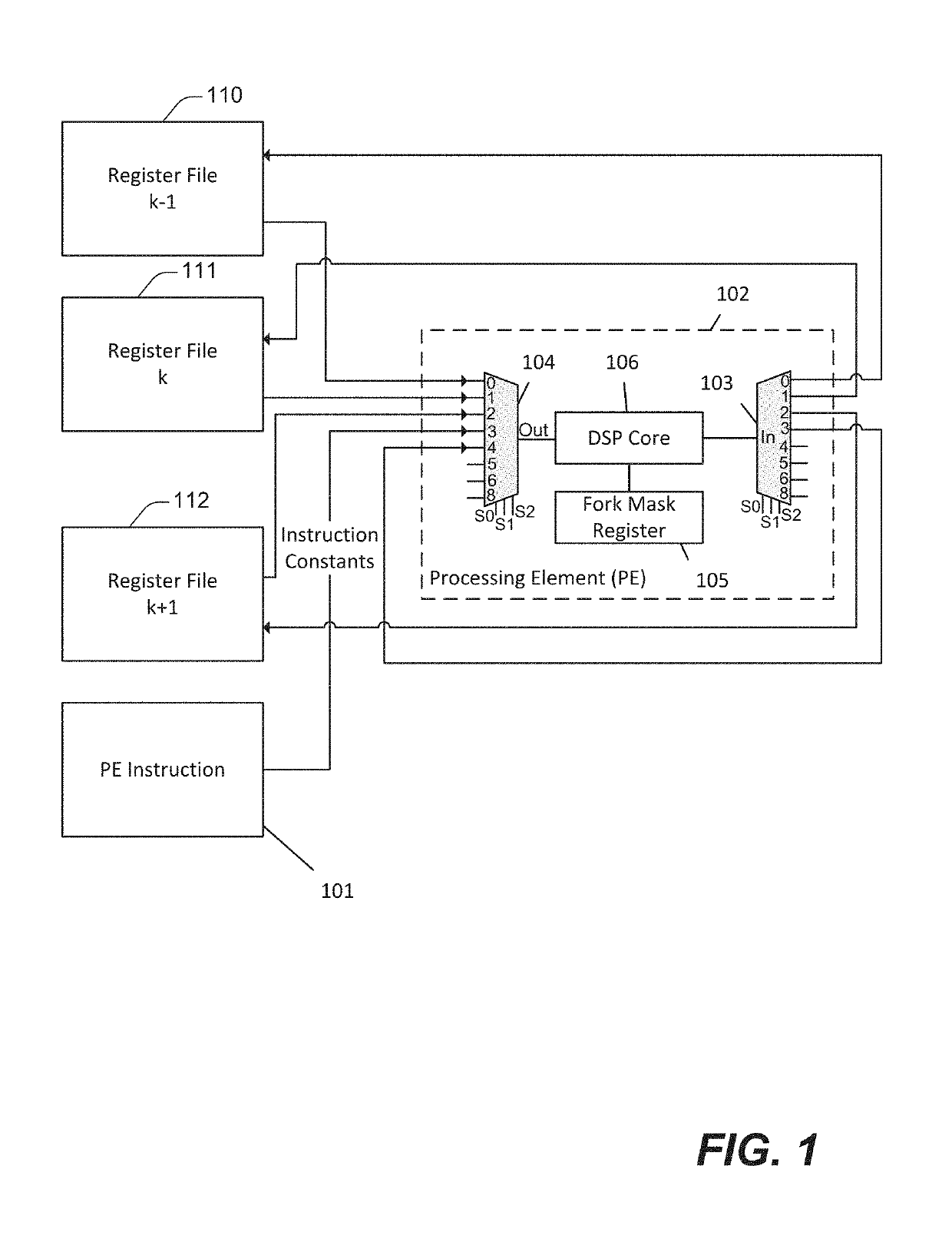

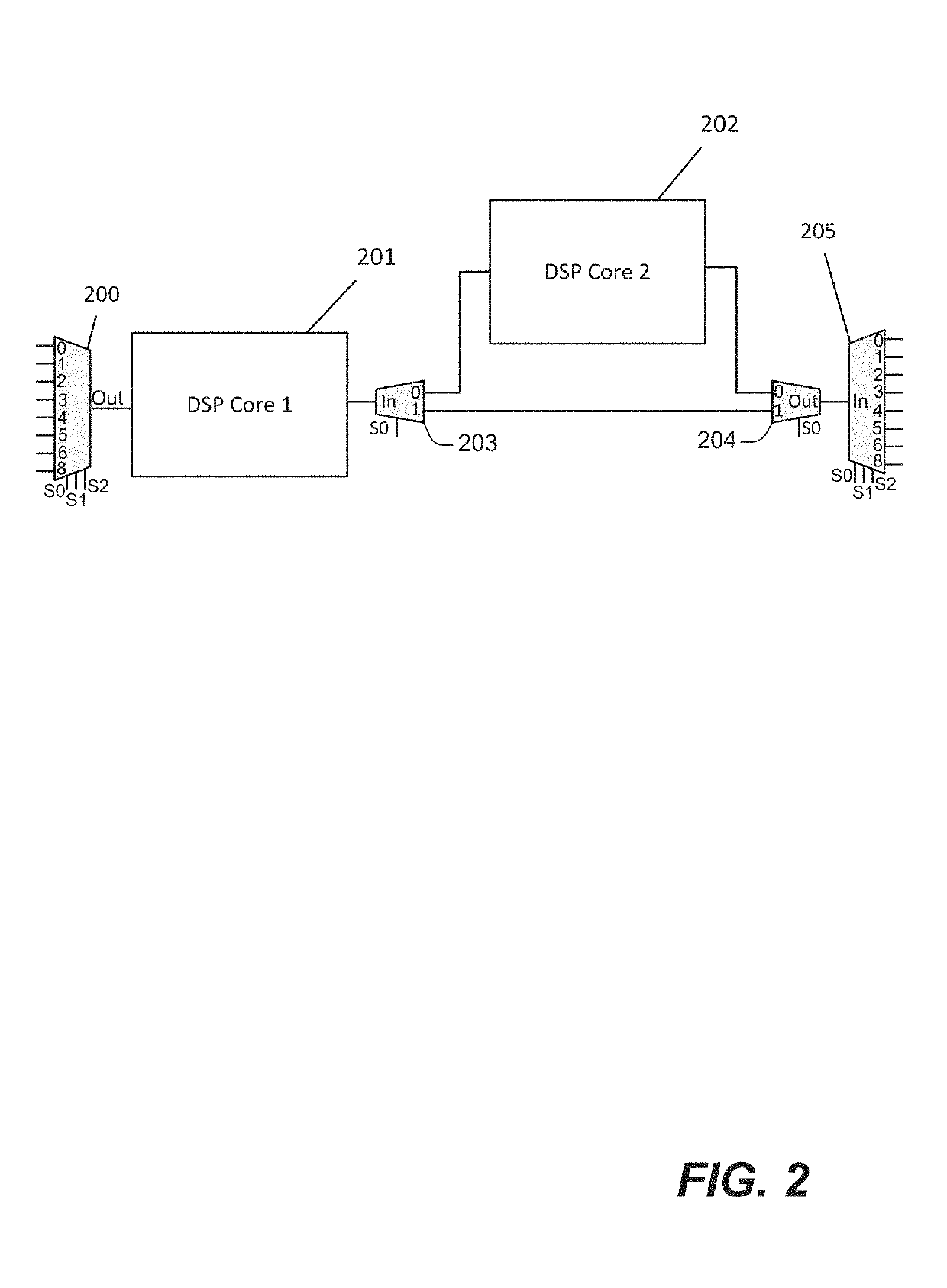

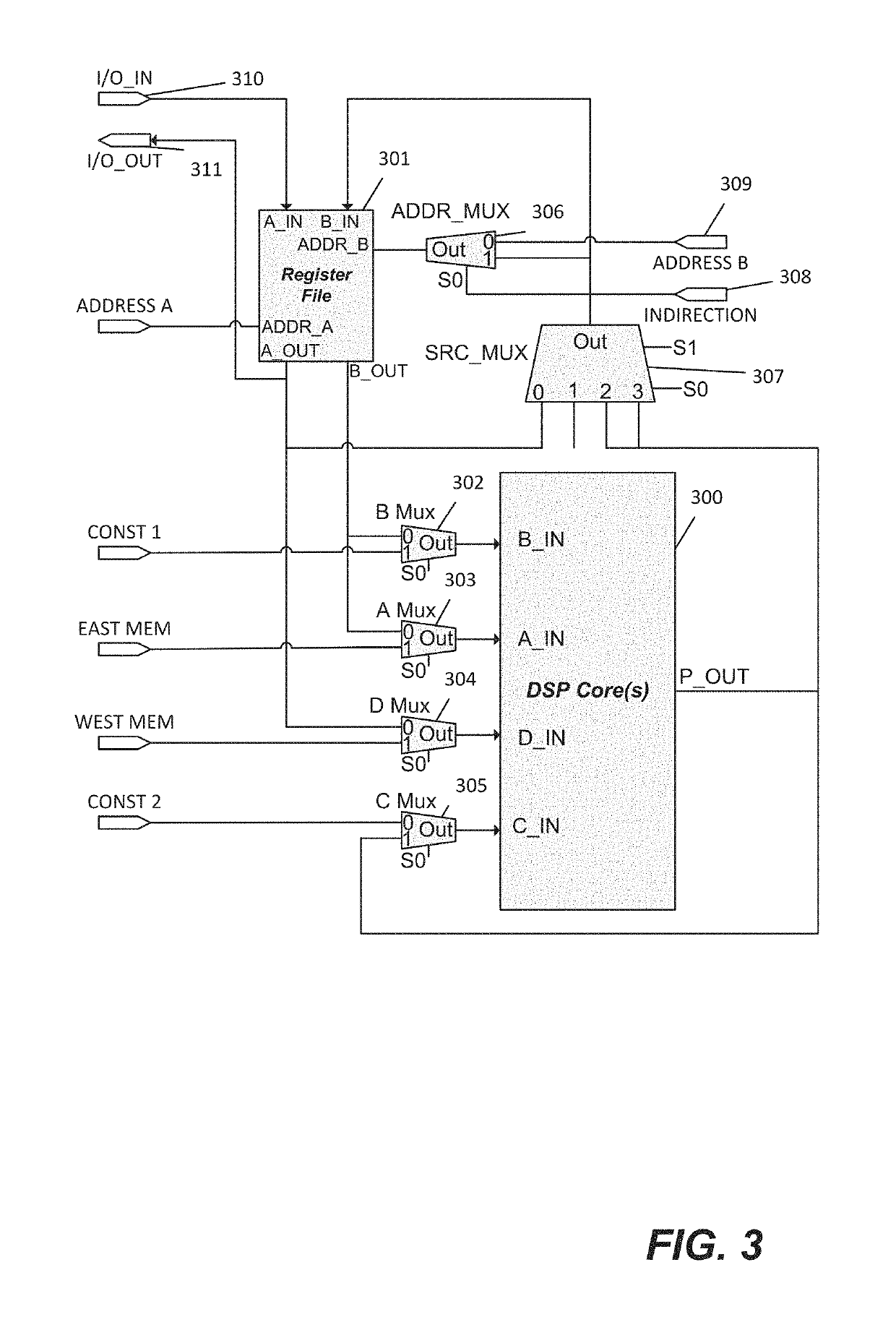

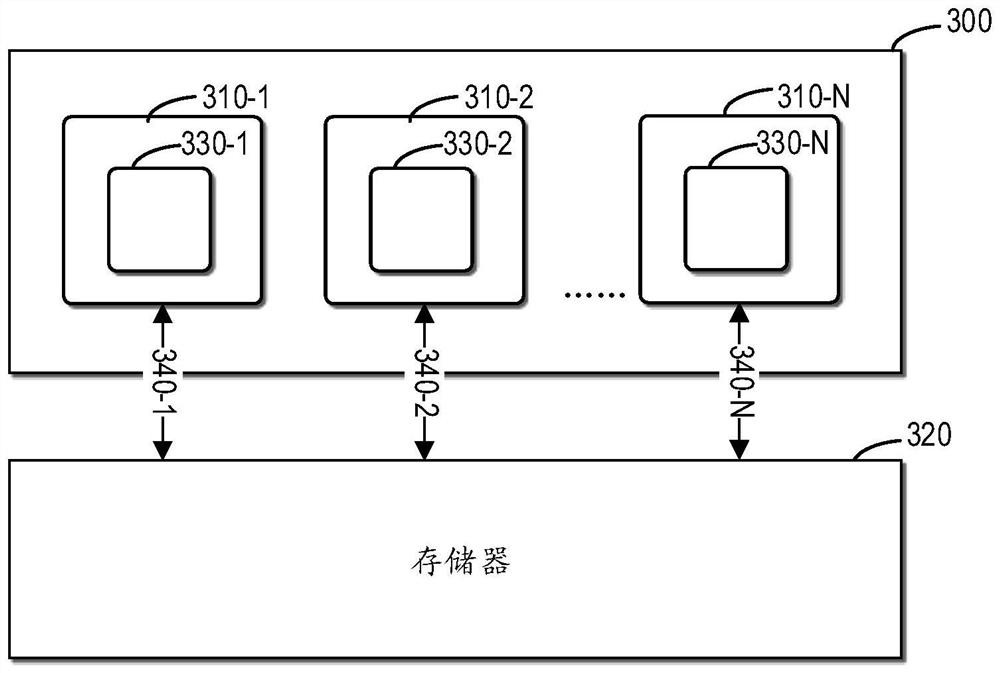

Digital signal processing array using integrated processing elements

Status: Active | Patent: US20190079761A1 | Tags: Minimize and eliminate redundant processing; Eliminate in processing data; Architecture with multiple processing units; Logic circuits; Digital signal processing; Single instruction, multiple threads

Techniques and mechanisms described herein include a signal processor implemented as an overlay on a field-programmable gate array (FPGA) device that utilizes special purpose, hardened intellectual property (IP) modules such as memory blocks and digital signal processing (DSP) cores. A Processing Element (PE) is built from one or more DSP cores connected to additional logic. Interconnected as an array, the PEs may operate in a computational model such as Single Instruction-Multiple Thread (SIMT). A software hierarchy is described that transforms the SIMT array into an effective signal processor.

Owner:ACORN INNOVATIONS INC

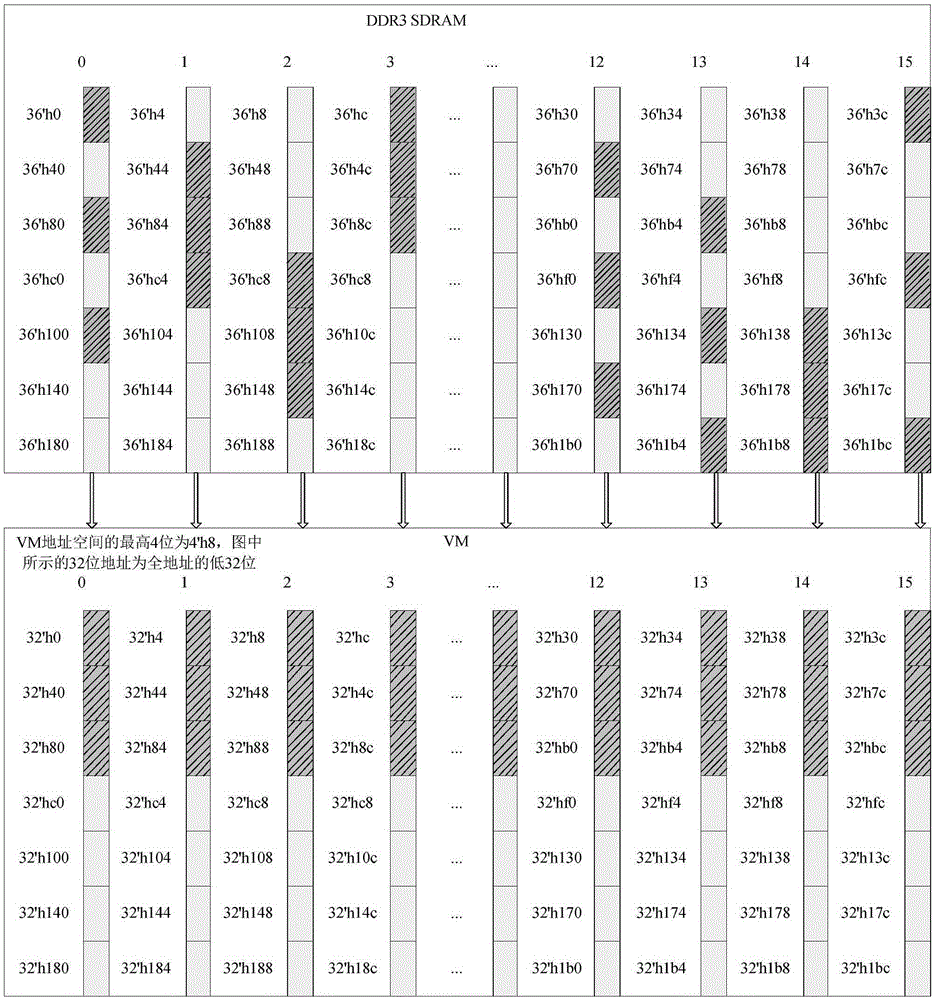

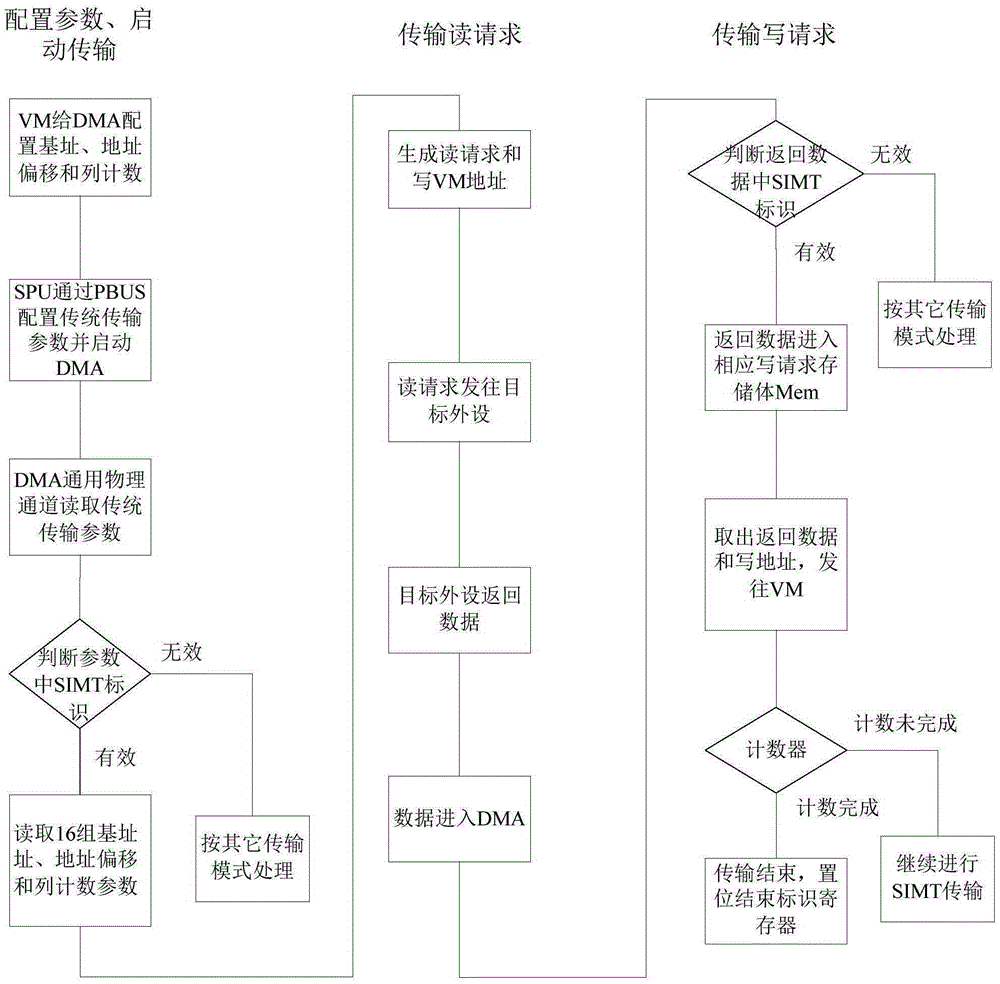

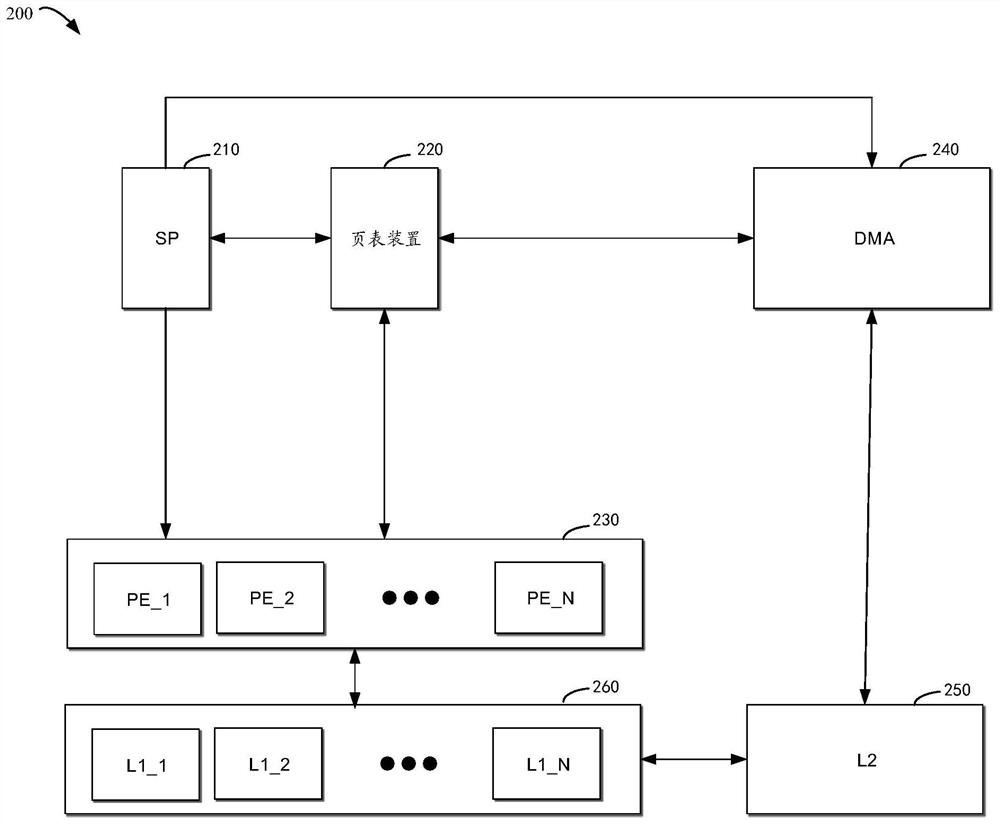

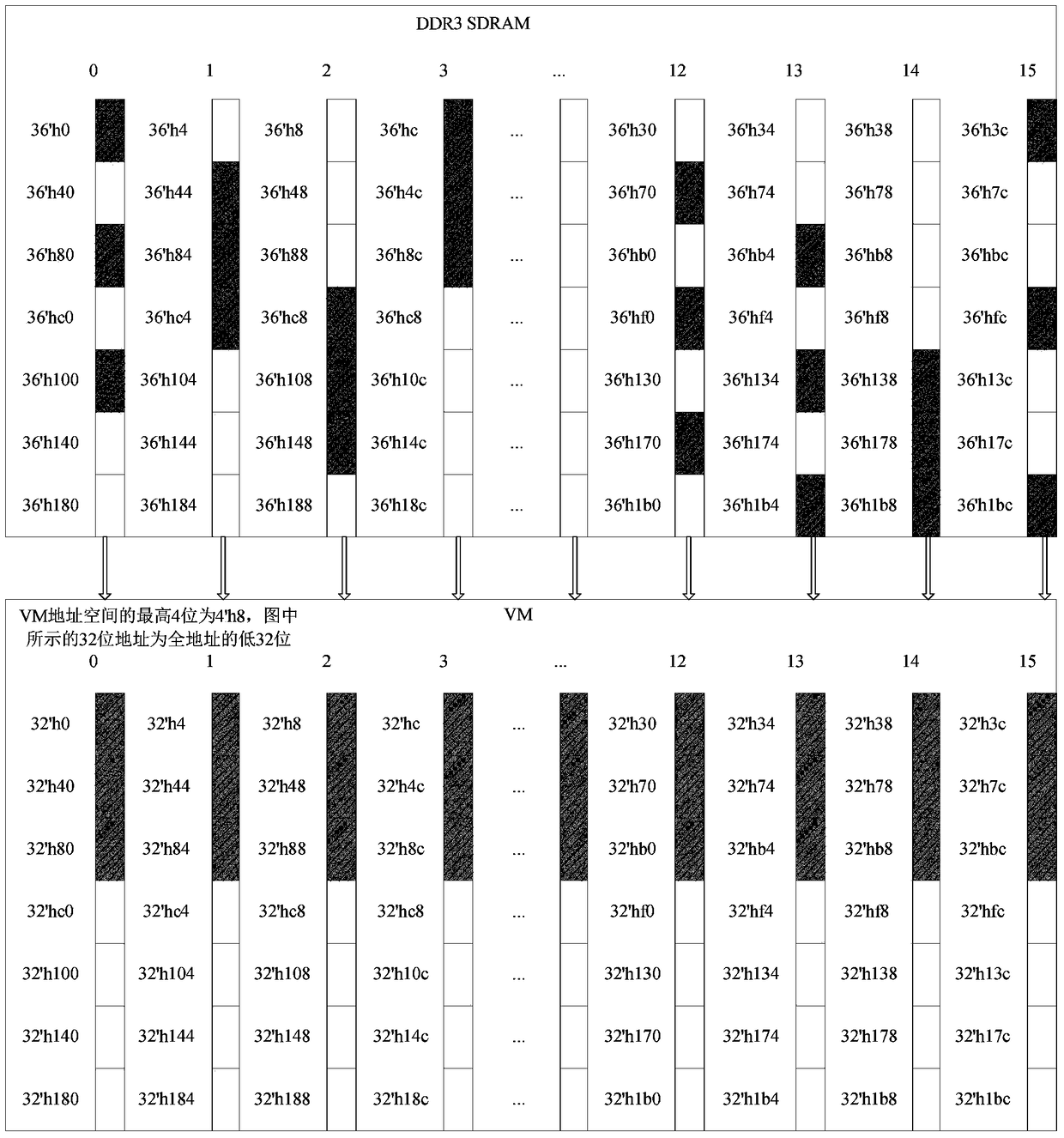

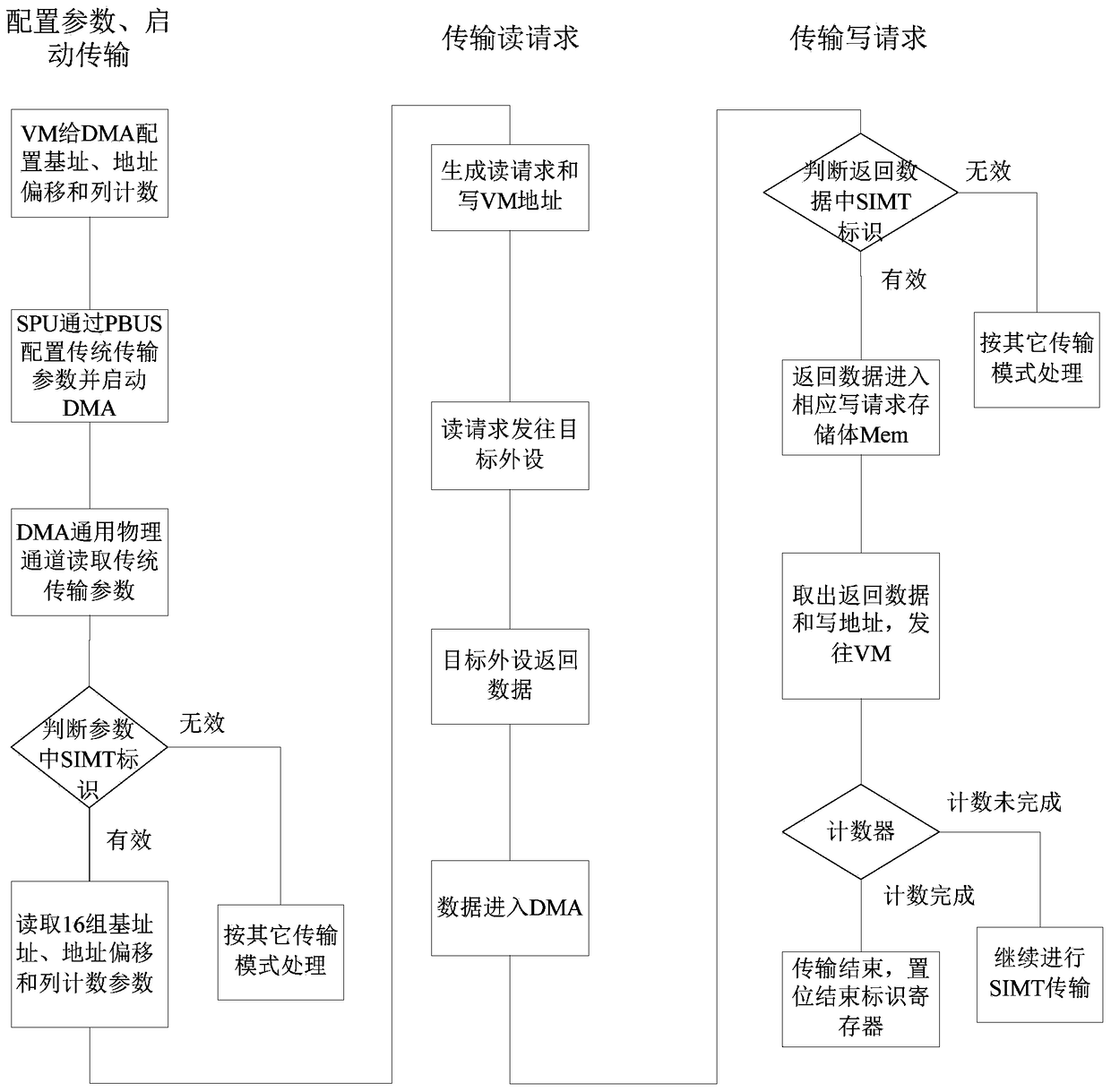

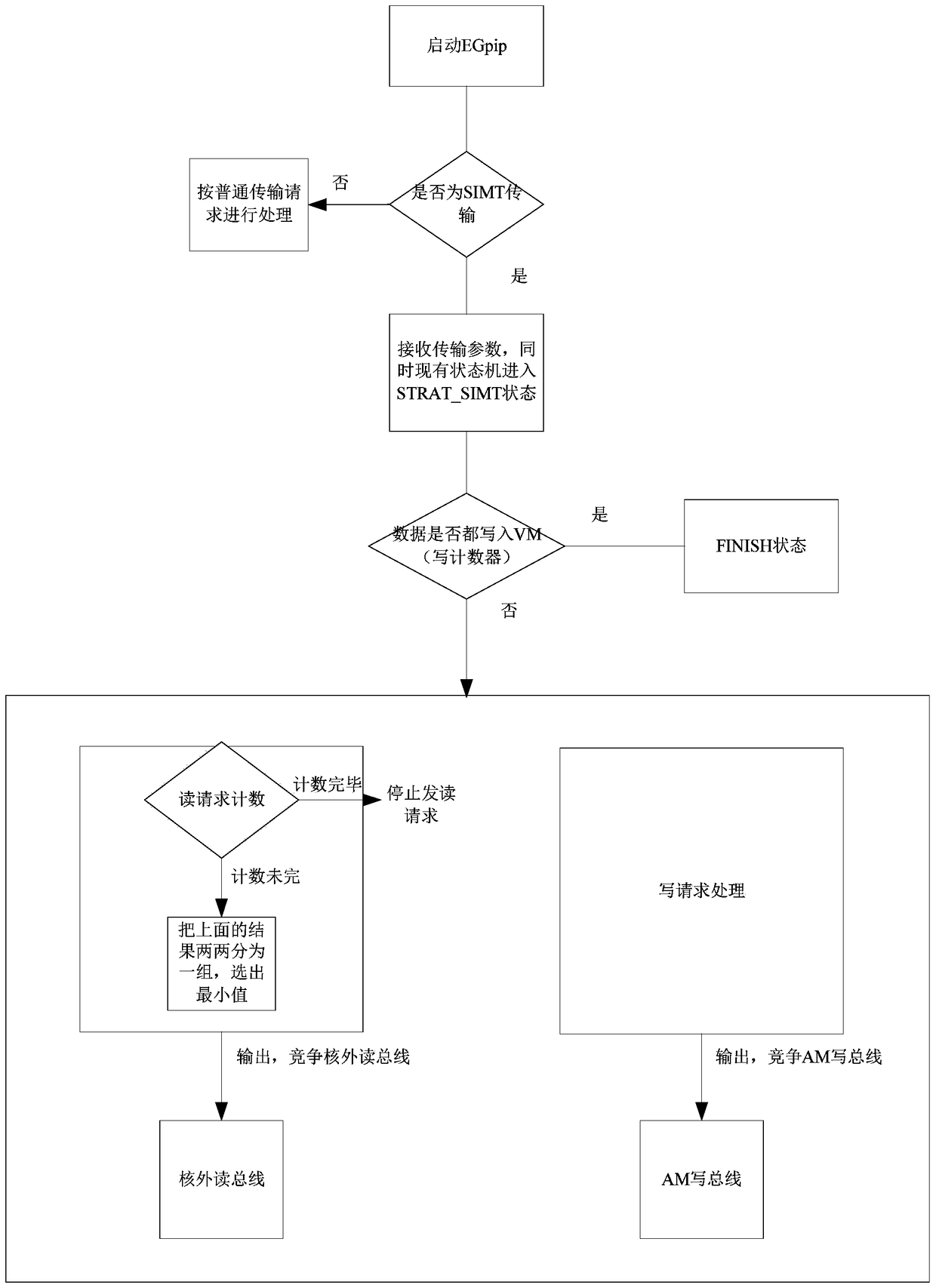

Single-instruction multi-thread mode oriented method for DMA transmission in GPDSP

Status: Active | Patent: CN105302749A | Tags: Support mining; Fast execution; Electric digital data processing; General purpose; Single instruction, multiple threads

The invention discloses a single-instruction multi-thread (SIMT) oriented DMA (Direct Memory Access) transfer method for a GPDSP (General Purpose Digital Signal Processor). With a single DMA transfer configuration, data of SIMT (Single Instruction Multiple Thread) programs stored irregularly in off-core memory is transferred to the VM (Vector Memory) of a core; after the transfer, the data is stored regularly in the VM, so the vector computation units can access it concurrently. The method is simple in principle, easy to operate and configurable in transfer size; it supplies data to SIMT programs efficiently in the background, which both better supports SIMT program execution and substantially improves the performance of the GPDSP.

Owner:NAT UNIV OF DEFENSE TECH
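
The "irregular in, regular out" idea can be sketched as a single gather descriptor: each SIMT thread's data starts at its own off-core address, and the transfer lays element i of every thread side by side in VM so one vector access fetches element i for all threads. The descriptor format, the lane-interleaved layout and the names `GatherDescriptor` and `dma_gather` are assumptions, not the patent's design.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GatherDescriptor:
    """Hypothetical single DMA transaction covering all SIMT threads."""
    src_addrs: List[int]    # per-thread start address in off-core memory
    elems_per_thread: int   # configurable transfer amount per thread


def dma_gather(mem: List[int], desc: GatherDescriptor) -> List[int]:
    """Copy scattered per-thread data into a lane-interleaved VM image."""
    lanes = len(desc.src_addrs)
    vm = [0] * (lanes * desc.elems_per_thread)
    for lane, base in enumerate(desc.src_addrs):
        for i in range(desc.elems_per_thread):
            # Row i of VM holds element i of every thread: one vector load.
            vm[i * lanes + lane] = mem[base + i]
    return vm
```

For example, with `mem = list(range(100))` and threads starting at addresses 10, 50 and 3, two elements each, VM becomes `[10, 50, 3, 11, 51, 4]`: row 0 is `[10, 50, 3]` and row 1 is `[11, 51, 4]`.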

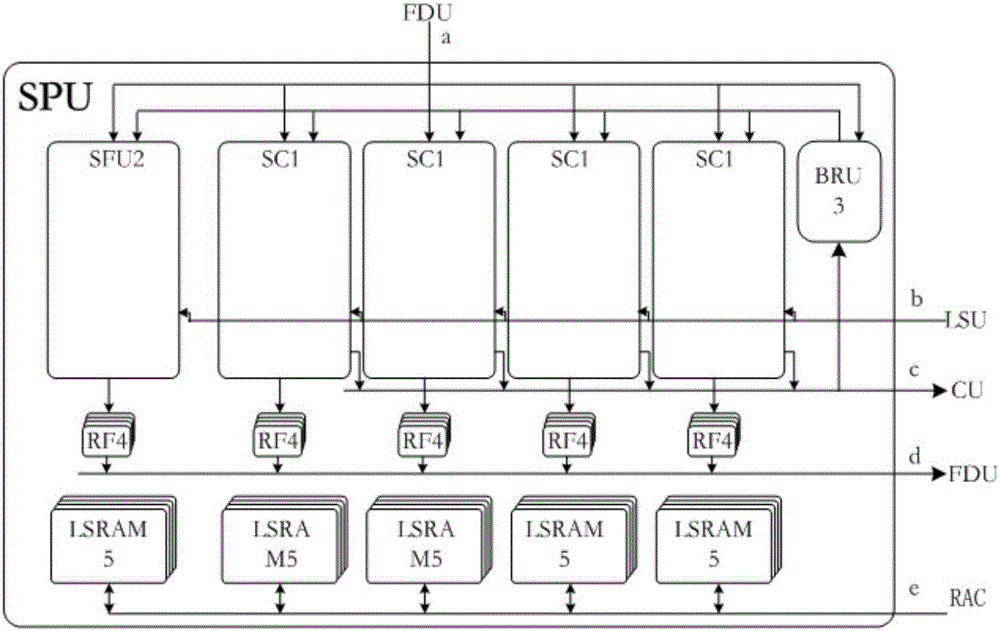

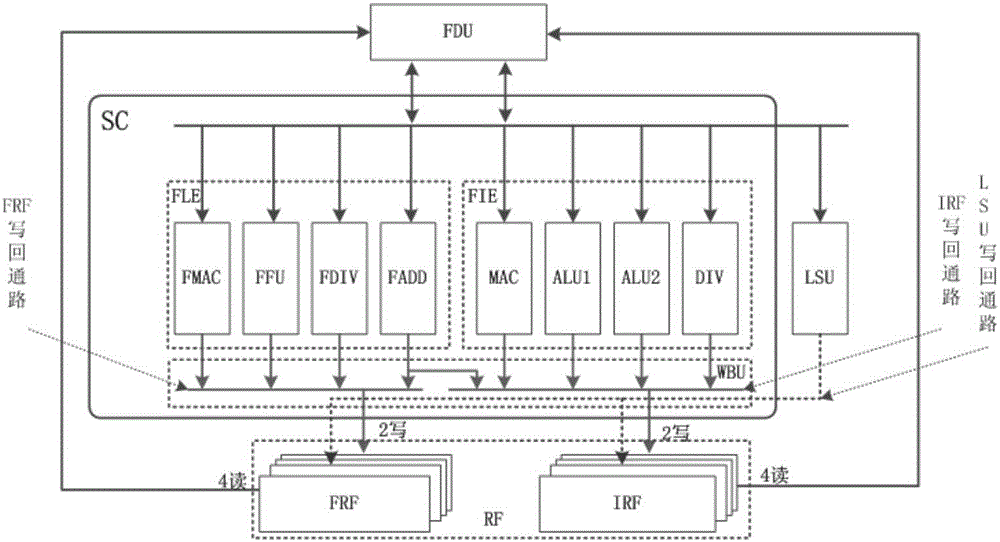

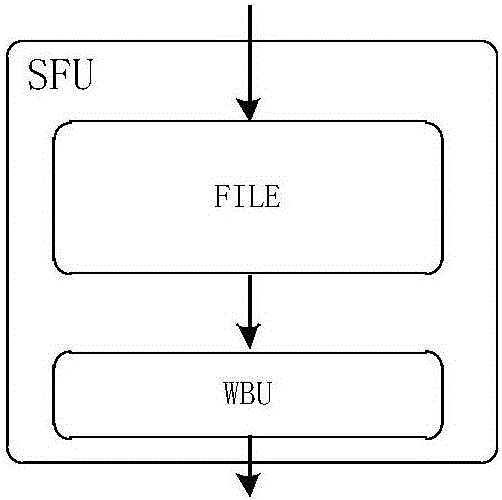

Single-instruction multi-thread shading processing unit structure for a unified shader graphics processing unit

Status: Inactive | Patent: CN106709858A | Tags: Accelerated routine; Improve features; Processor architectures/configuration; Graphics; Performance function

The invention belongs to the field of graphics processing unit design and relates to a single-instruction multi-thread shading processing unit for a unified shader graphics processing unit. The processing unit comprises four SC (Shading Core) units (1) for executing regular operations, an SFU (Special Function Unit) (2) for executing special functions, a BRU (Branching Unit) (3) for program branching, five RF (Register File) units (4) for data storage, and five LSRAM (Local Storage Random-Access Memory) units (5) for data storage. One RF unit (4) serves the SFU (2) and the remaining four RF units (4) correspond one-to-one to the four SC units (1); likewise, one LSRAM unit (5) serves the SFU (2) and the remaining four LSRAM units (5) correspond one-to-one to the four SC units (1).

Owner:XIAN AVIATION COMPUTING TECH RES INST OF AVIATION IND CORP OF CHINA

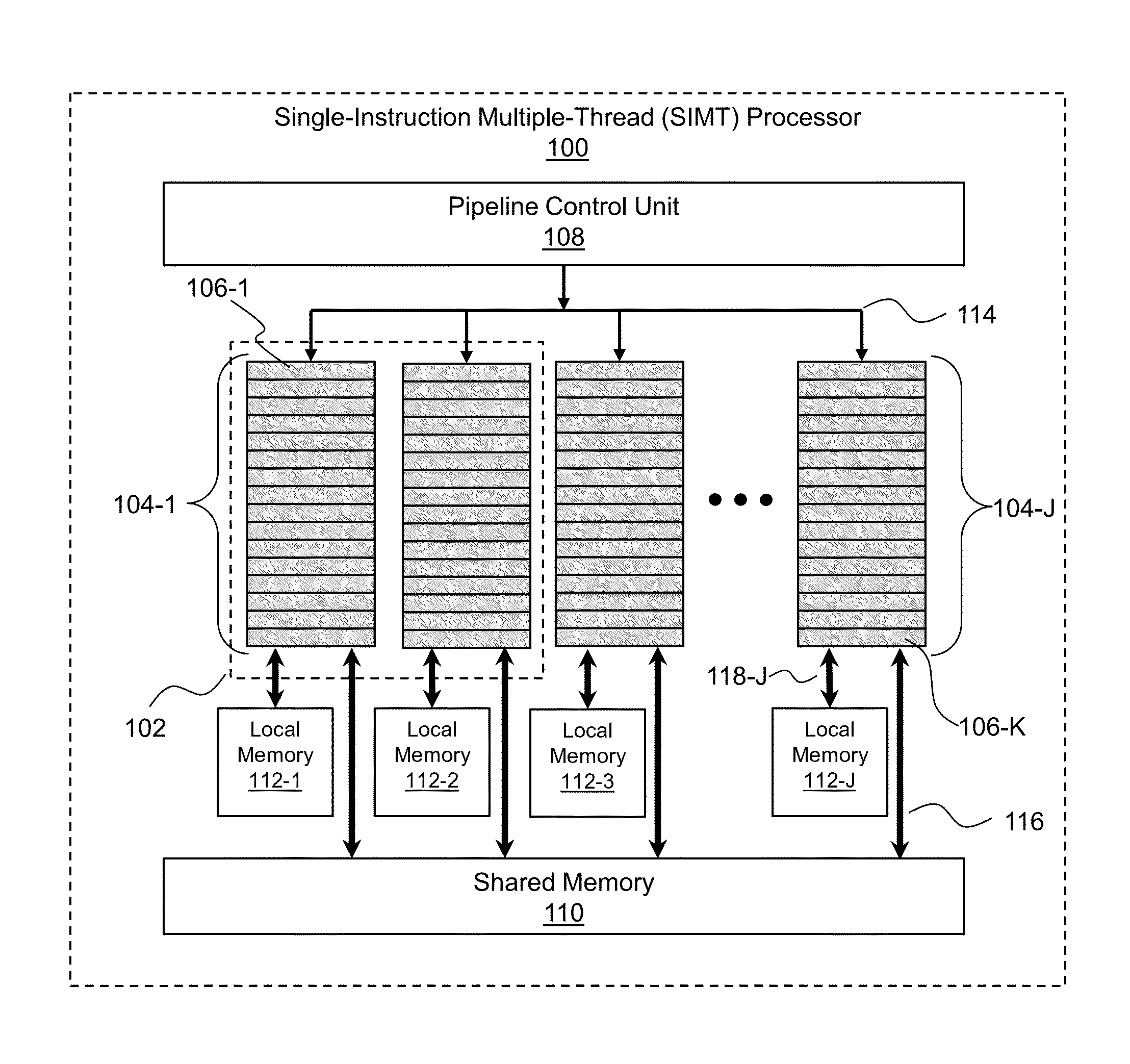

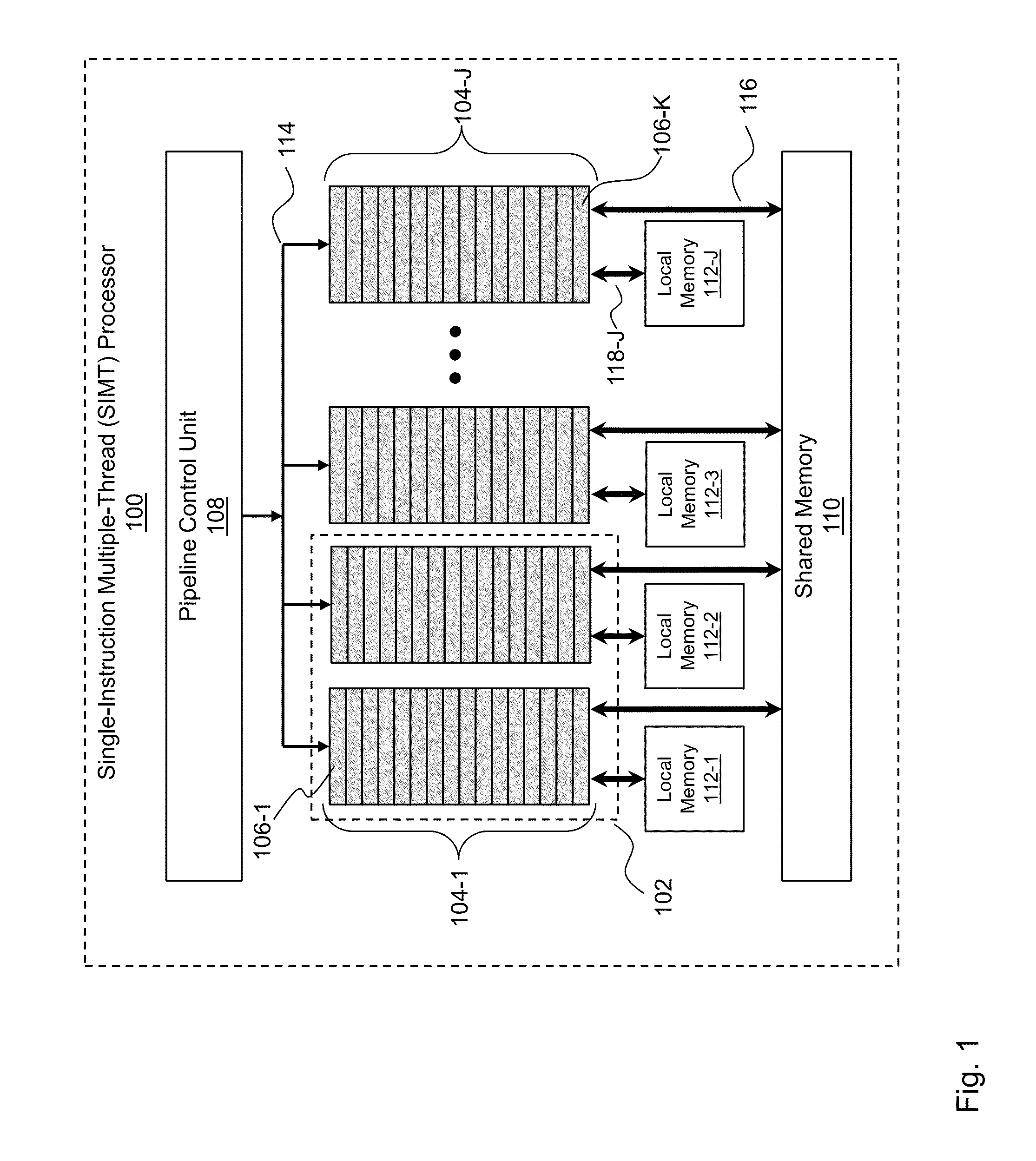

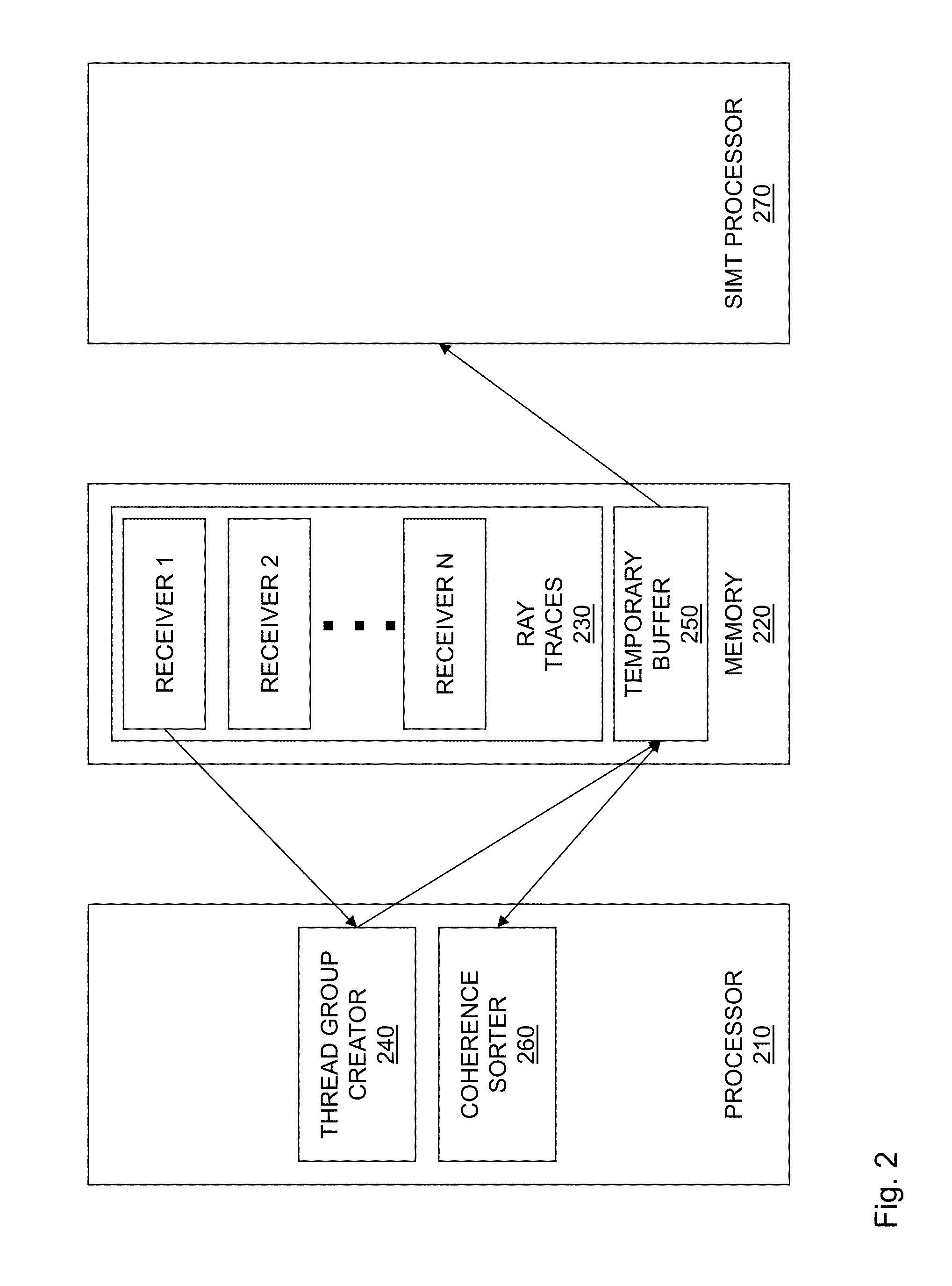

System and method for computing gathers using a single-instruction multiple-thread processor

Status: Inactive | Patent: US20150221123A1 | Tags: Reduce dispersion; Details involving 3D image data; Concurrent instruction execution; Single instruction, multiple threads; Parallel computing

Systems for, and methods of, computing gathers for processing on a SIMT processor. In one embodiment, the system includes: (1) a thread group creator executing on a processor and operable to assign ray traces pertaining to a single receiver to threads for execution by a SIMT processor and (2) a memory configured to contain at least some of the threads for execution by the SIMT processor.

Owner:NVIDIA CORP

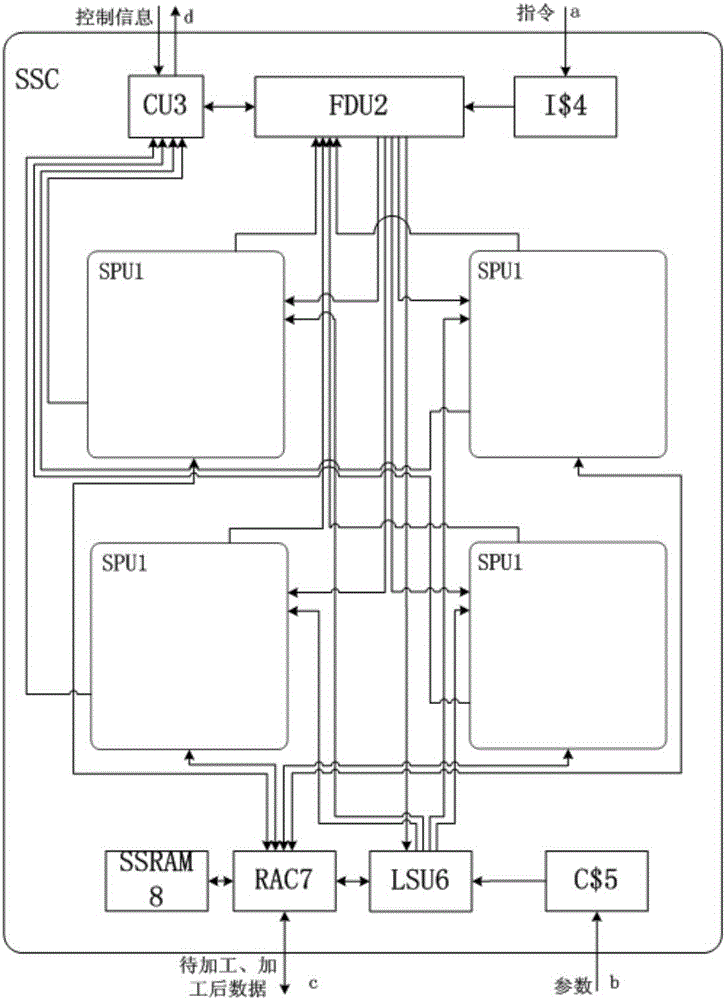

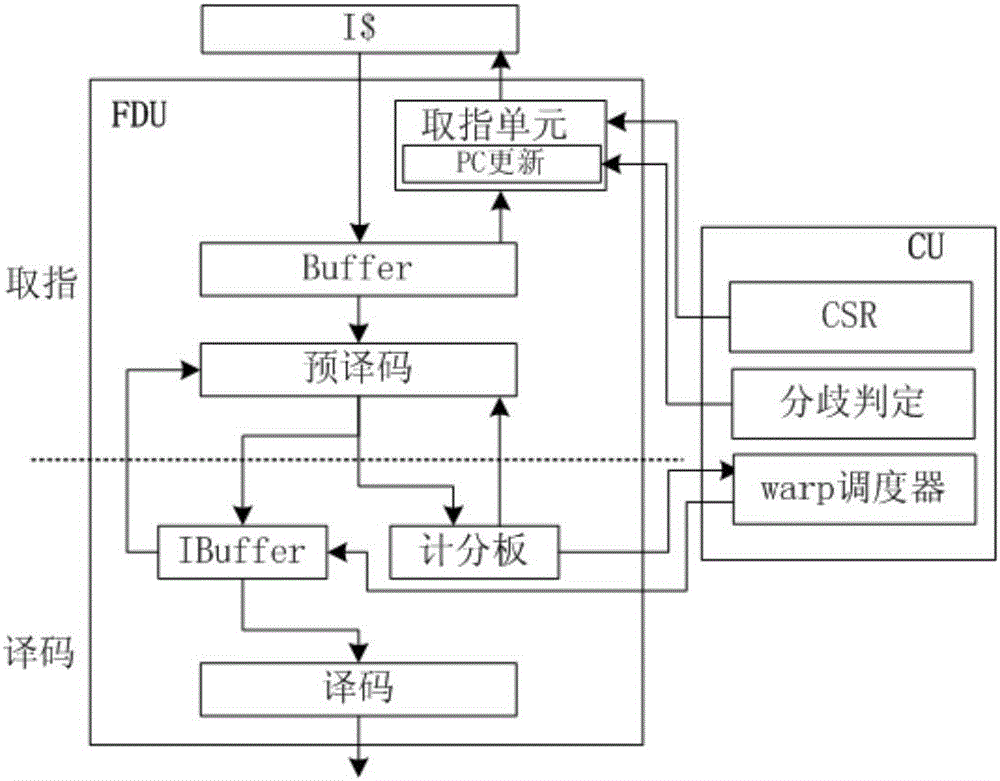

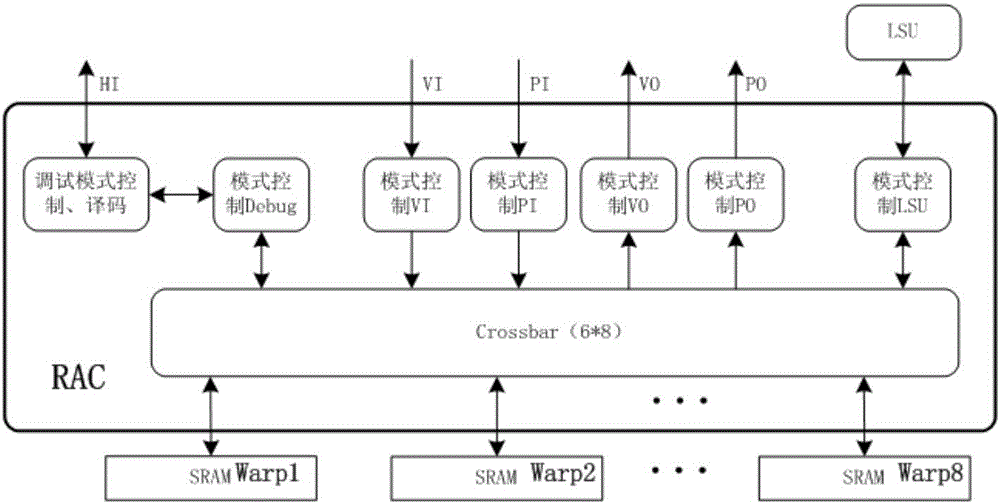

Single-instruction multi-thread shading cluster structure of a unified shader architecture graphics processor

Status: Active | Patent: CN106651742A | Tags: Realize the processing method; Strong computing power; Concurrent instruction execution; Processor architectures/configuration; Internal memory; Graphics

The invention belongs to the field of graphics processor design and relates to a single-instruction multi-thread shading cluster (SSC) structure for a unified shader architecture graphics processor. The structure comprises a CU (Control Unit) (3), an FDU (Fetch Decode Unit) (2), an I$ (Instruction Cache) unit (4), a plurality of SPUs (Shading Processing Units) (1), an SSRAM (Synchronous Static Random Access Memory) unit (8), a RAC (RAM Access Control) unit (7), an LSU (Load Store Unit) (6) and a C$ (Constant Cache) unit (5). The CU (3) controls and schedules the SSC; the FDU (2) fetches and decodes instructions; the I$ unit (4) speeds up instruction access; the SPUs execute shader programs; the SSRAM unit (8) shares data among the SPUs; the RAC unit (7) performs decoding and arbitration control for internal memory accesses; the LSU (6) exchanges data among the SSRAM unit (8), the internal memories of the SPUs and the RF (Register File) unit; and the C$ unit (5) speeds up constant access. The structure implements single-instruction multi-thread processing.

Owner:XIAN AVIATION COMPUTING TECH RES INST OF AVIATION IND CORP OF CHINA

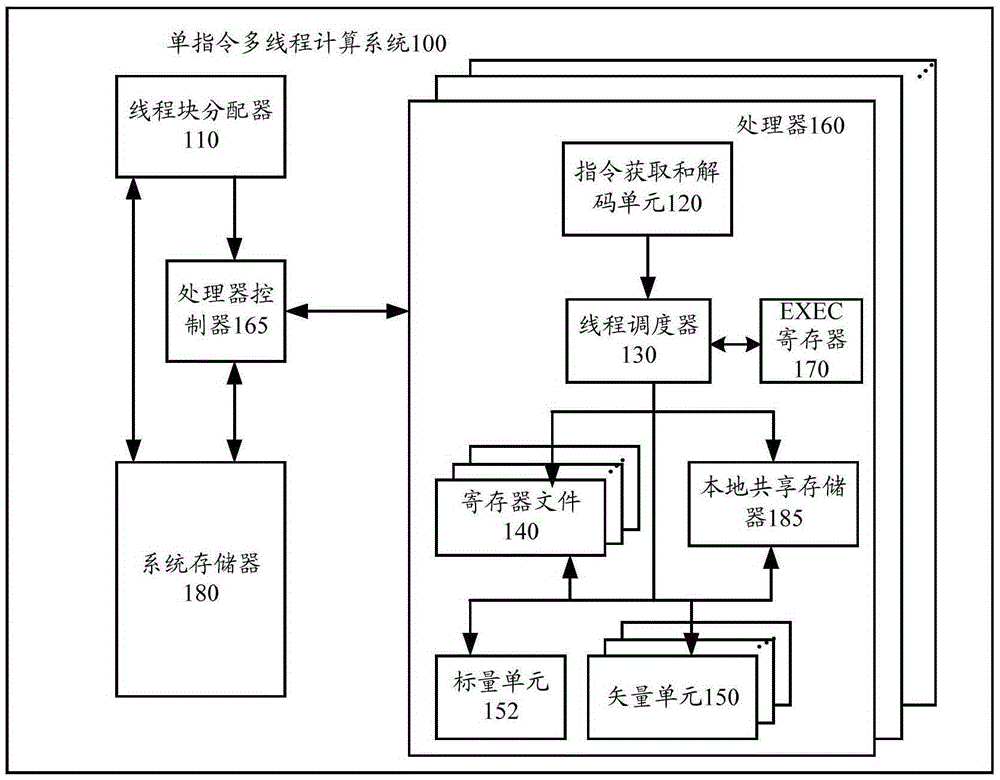

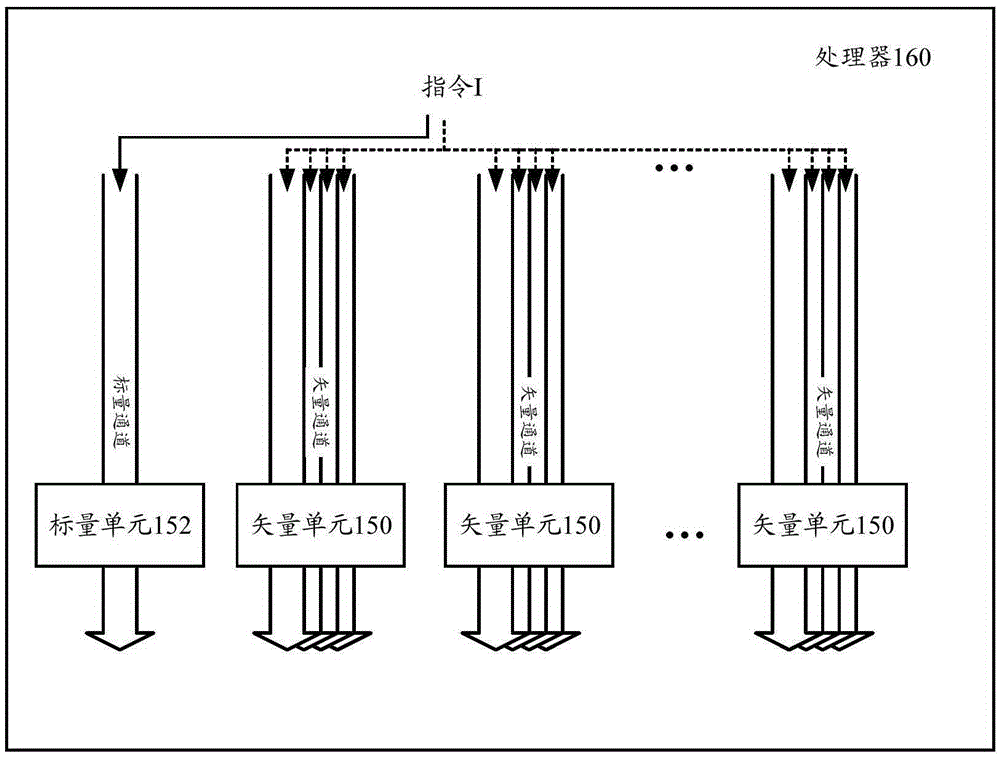

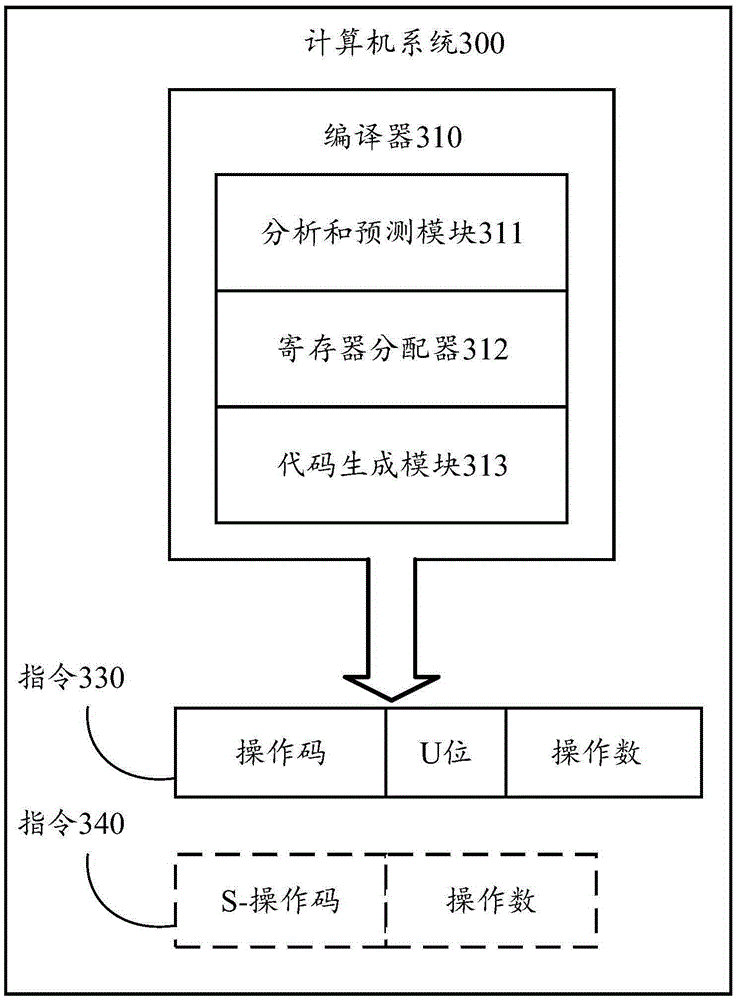

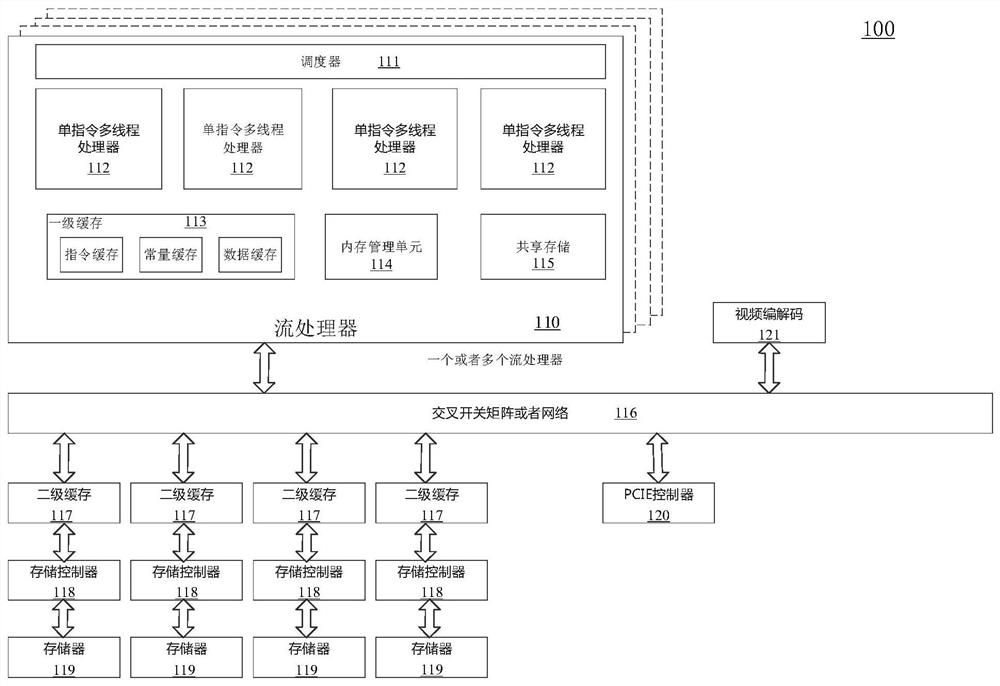

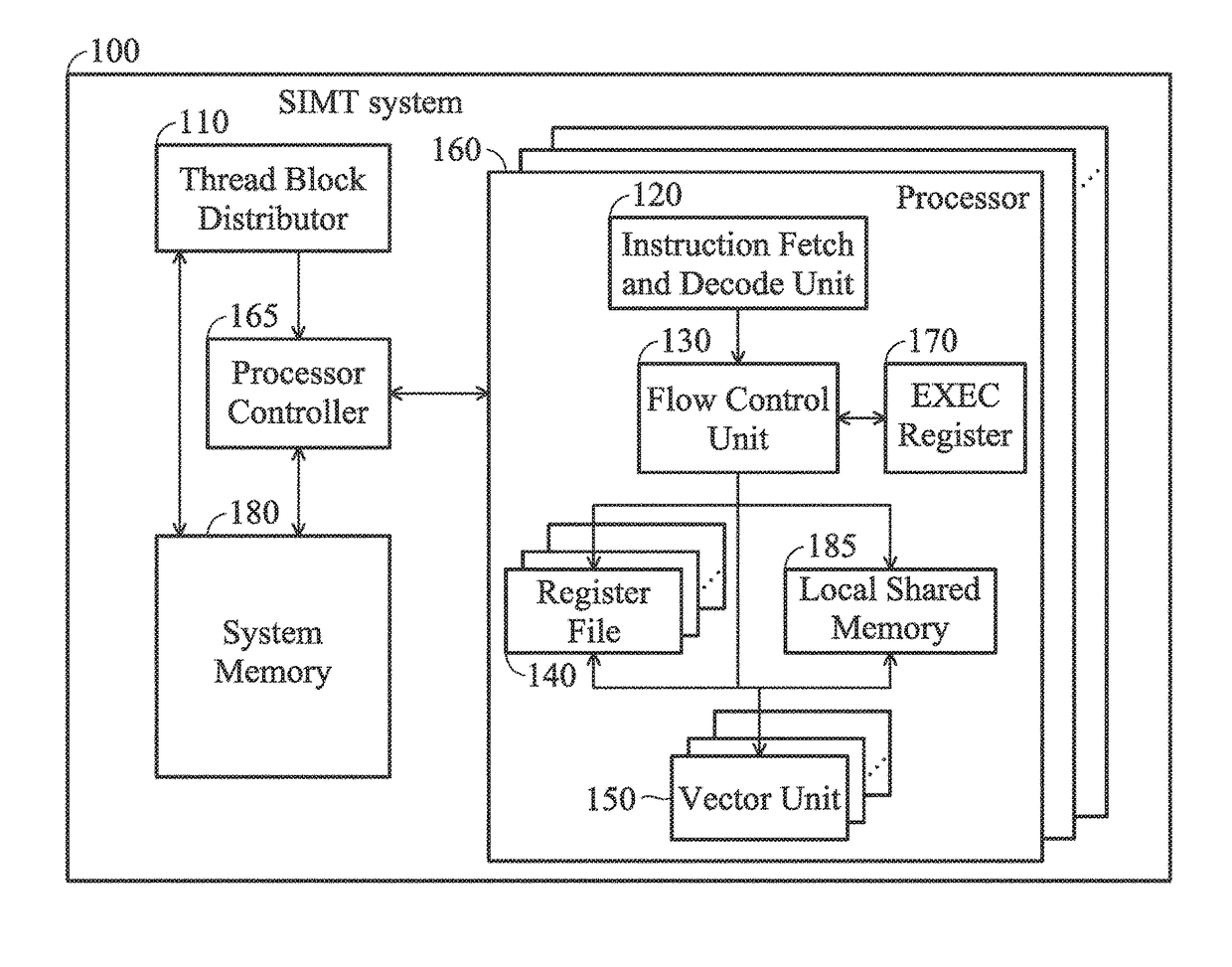

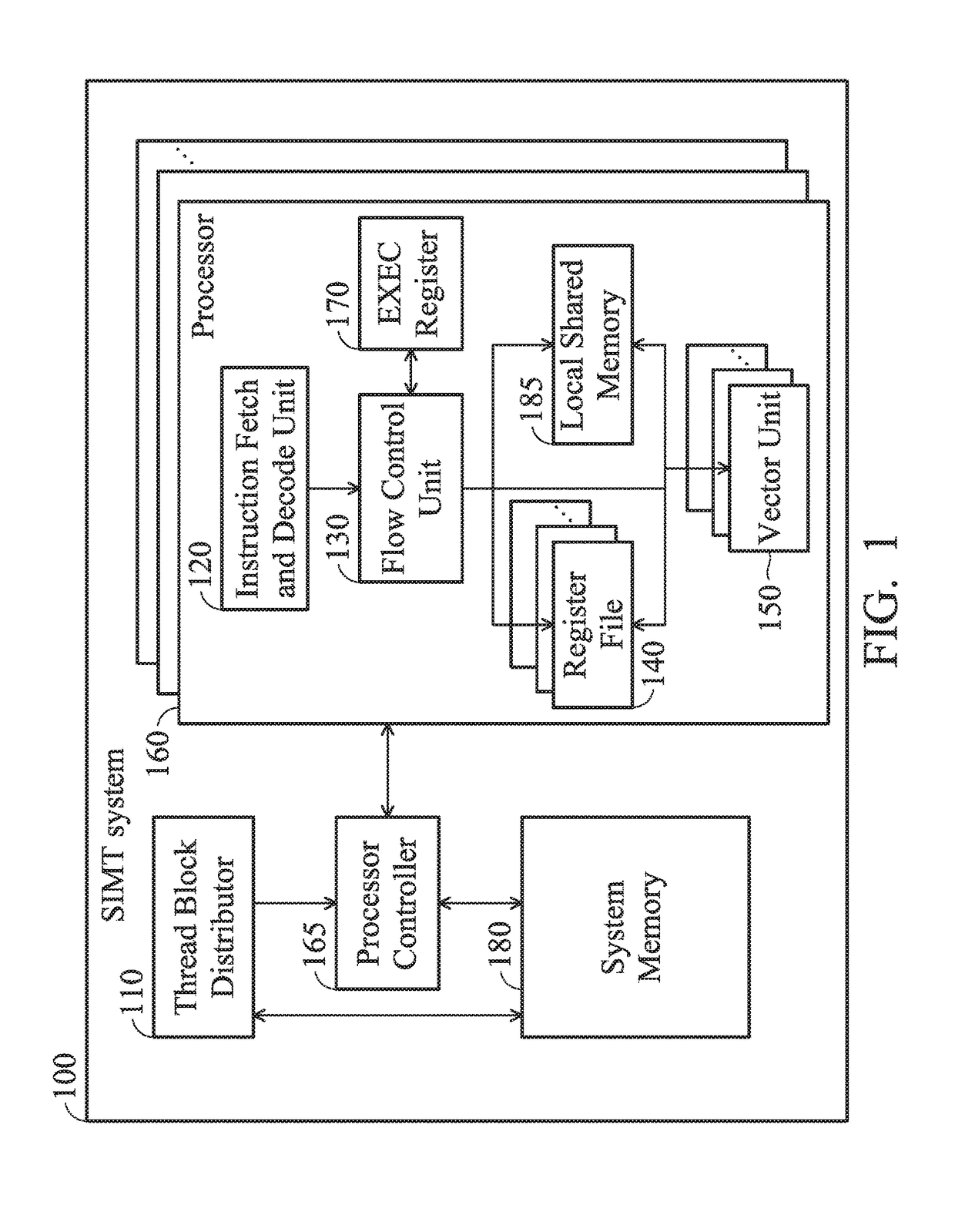

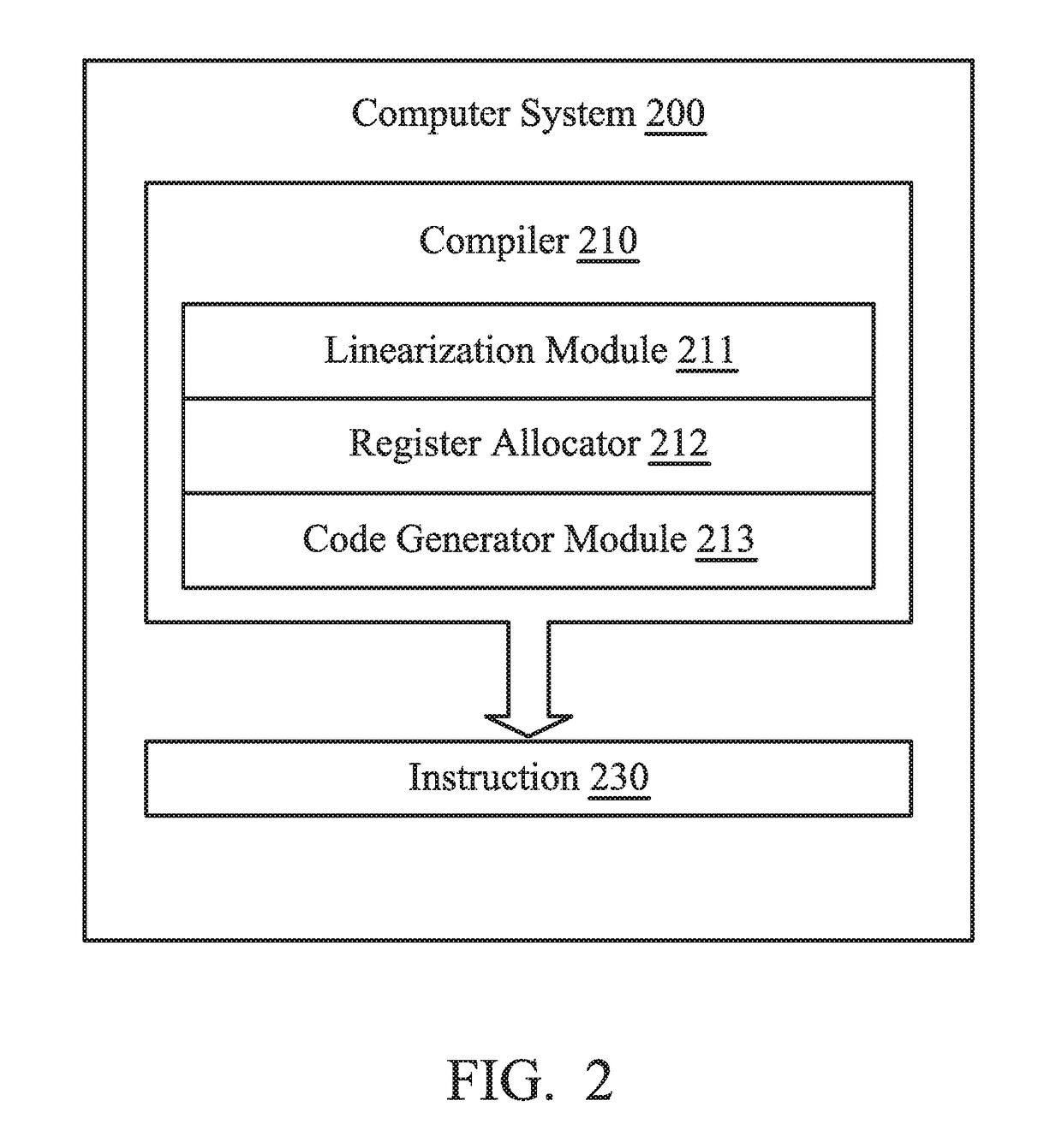

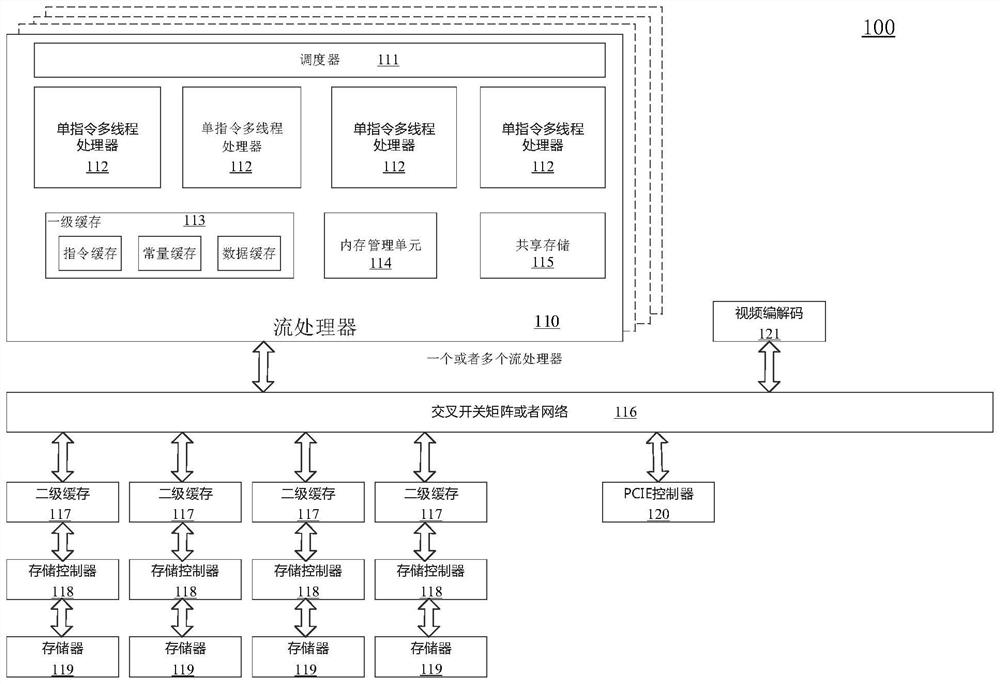

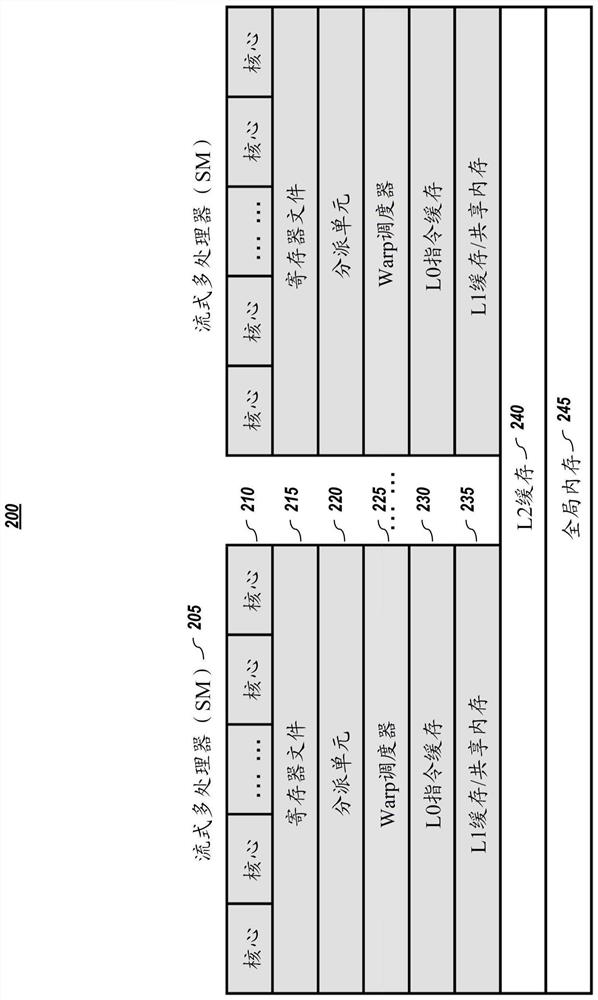

Single-Instruction-Multiple-Threads (SIMT) computing system and method thereof

Status: Active | Patent: CN106257411A | Tags: Improve performance; Concurrent instruction execution; Single instruction, multiple threads; Parallel computing

The invention provides a Single-Instruction-Multiple-Threads (SIMT) computing system including multiple processors and a scheduler that schedules multiple threads to each of the processors. Each processor includes a scalar unit providing a scalar lane for scalar execution and vector units providing N parallel lanes for vector execution. During execution, a processor detects that an instruction of N threads has been predicted by a compiler to have (N − M) inactive threads and the same source operands for the M active threads, where N > M ≥ 1. Upon this detection, the instruction is sent to the scalar unit for scalar execution. The SIMT computing system can thereby improve system performance.

Owner:MEDIATEK INC
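
The condition in the abstract (N > M ≥ 1 active lanes, all with identical source operands) can be checked and acted on as below. In the patent the compiler makes the prediction and the processor detects it; this sketch stands in for both by testing the condition directly at run time, and the names `can_scalarize` and `execute` are hypothetical.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Operands = Tuple[int, ...]


def can_scalarize(active: Sequence[bool], operands: Sequence[Operands]) -> bool:
    """True when N > M >= 1 and all M active lanes share the same operands."""
    act = [ops for on, ops in zip(active, operands) if on]
    n, m = len(active), len(act)
    return 1 <= m < n and all(ops == act[0] for ops in act)


def execute(op: Callable[..., int], active: Sequence[bool],
            operands: Sequence[Operands]) -> Tuple[List[Optional[int]], str]:
    """Per-lane results (None for inactive lanes) and the unit that ran them."""
    if can_scalarize(active, operands):
        first = next(ops for on, ops in zip(active, operands) if on)
        r = op(*first)  # computed once on the scalar lane, then broadcast
        return [r if on else None for on in active], "scalar"
    return [op(*ops) if on else None for on, ops in zip(active, operands)], "vector"
```

When all N lanes are active the instruction stays on the vector units even if the operands match, because the abstract requires N > M.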

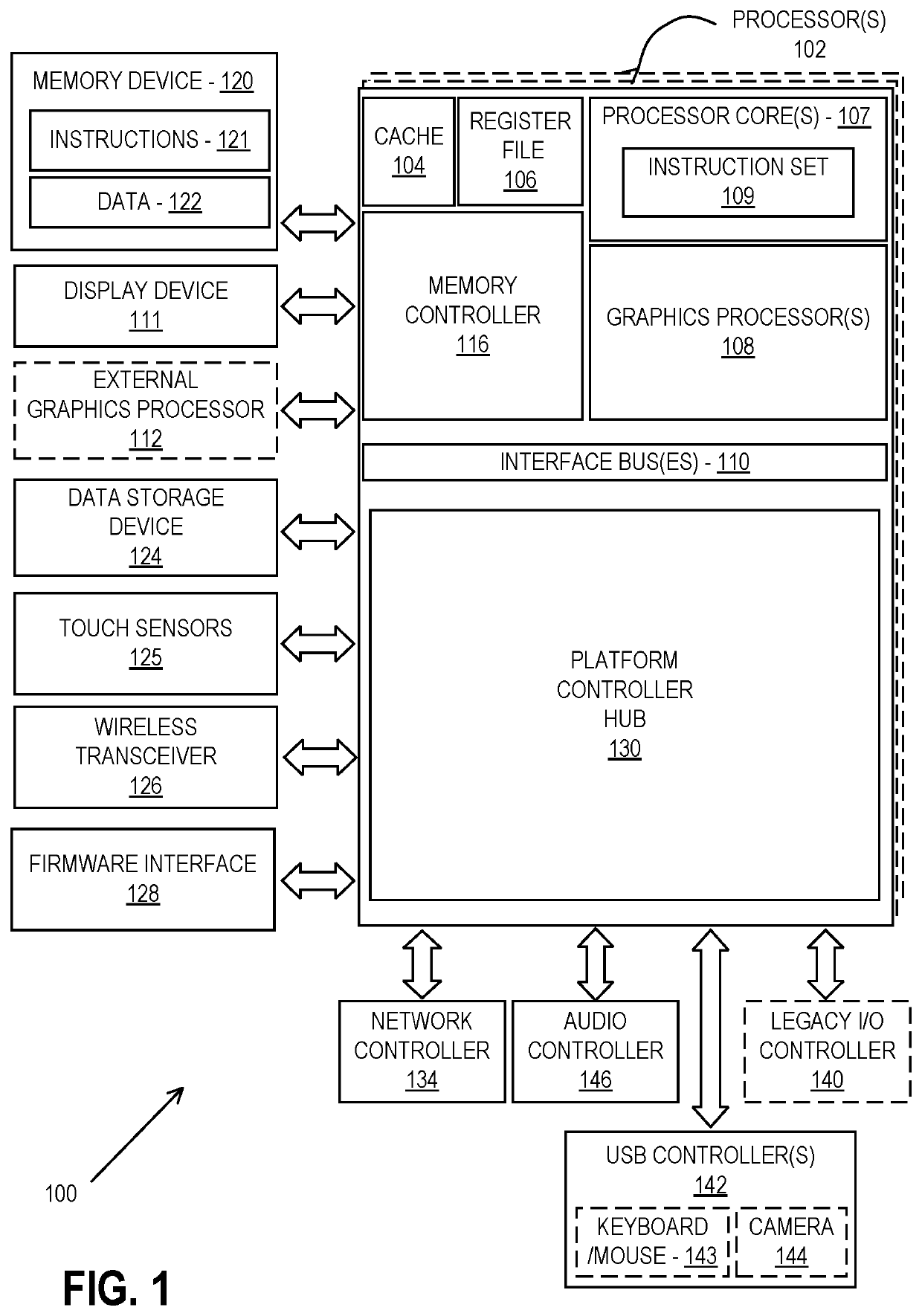

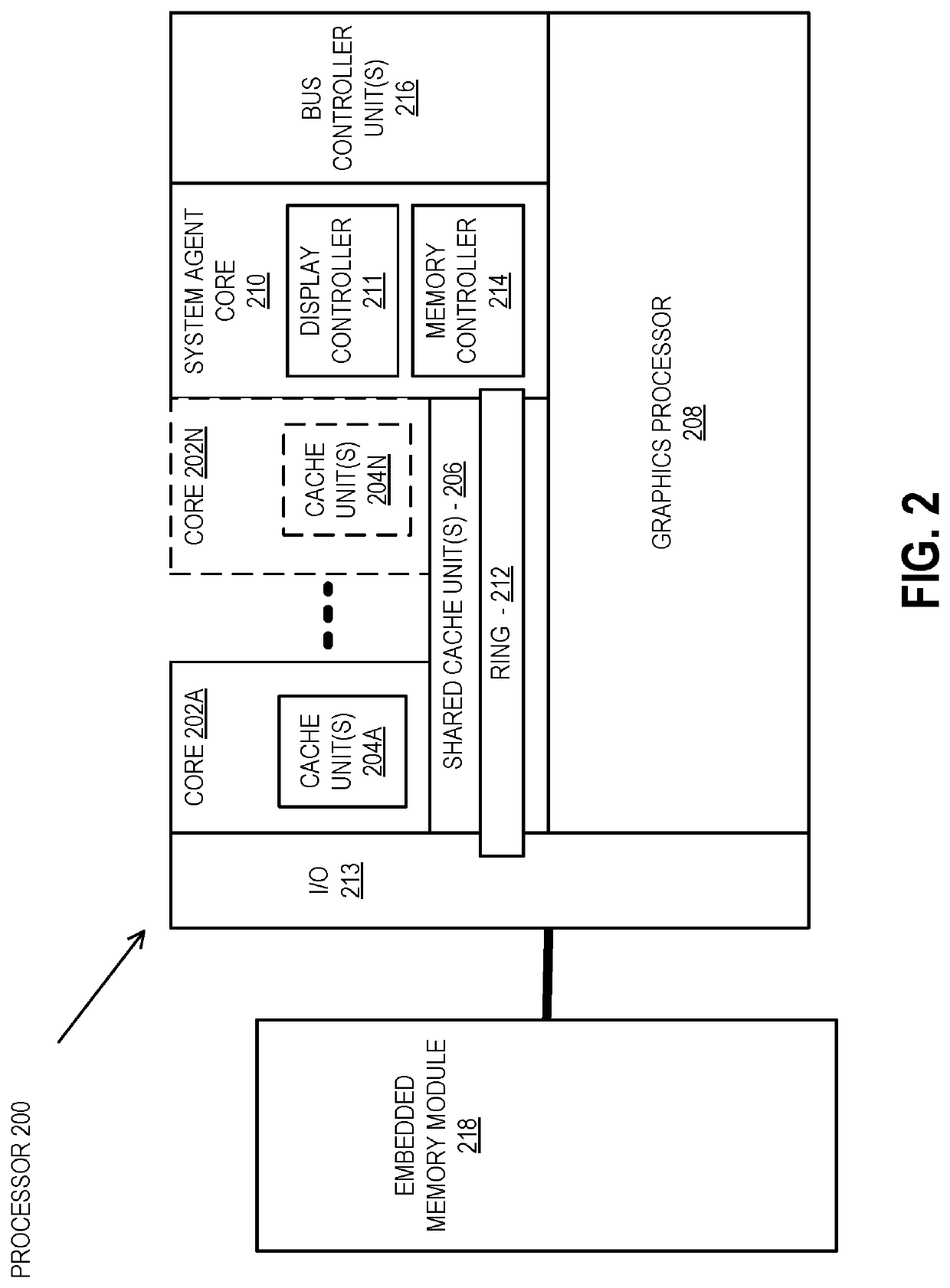

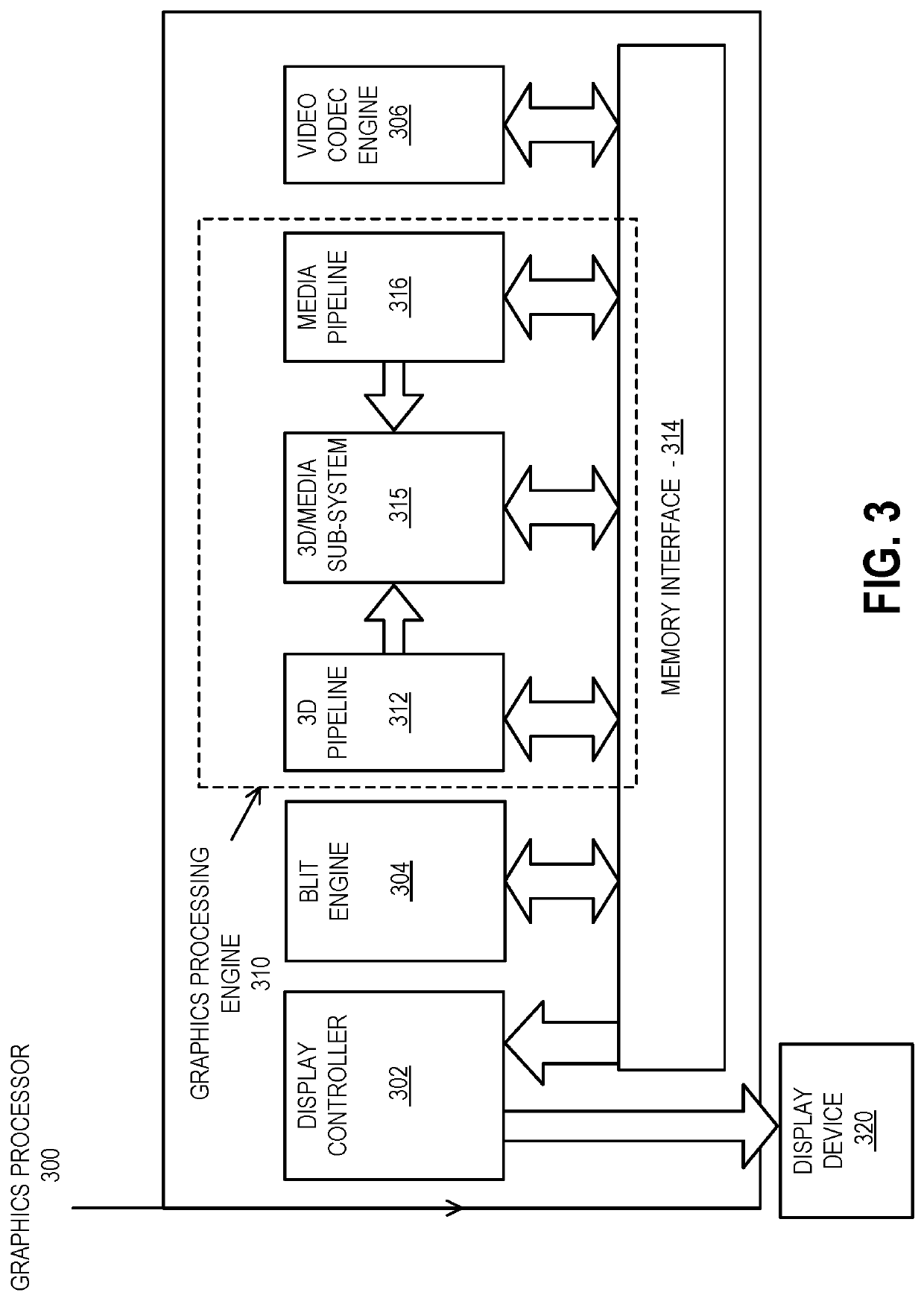

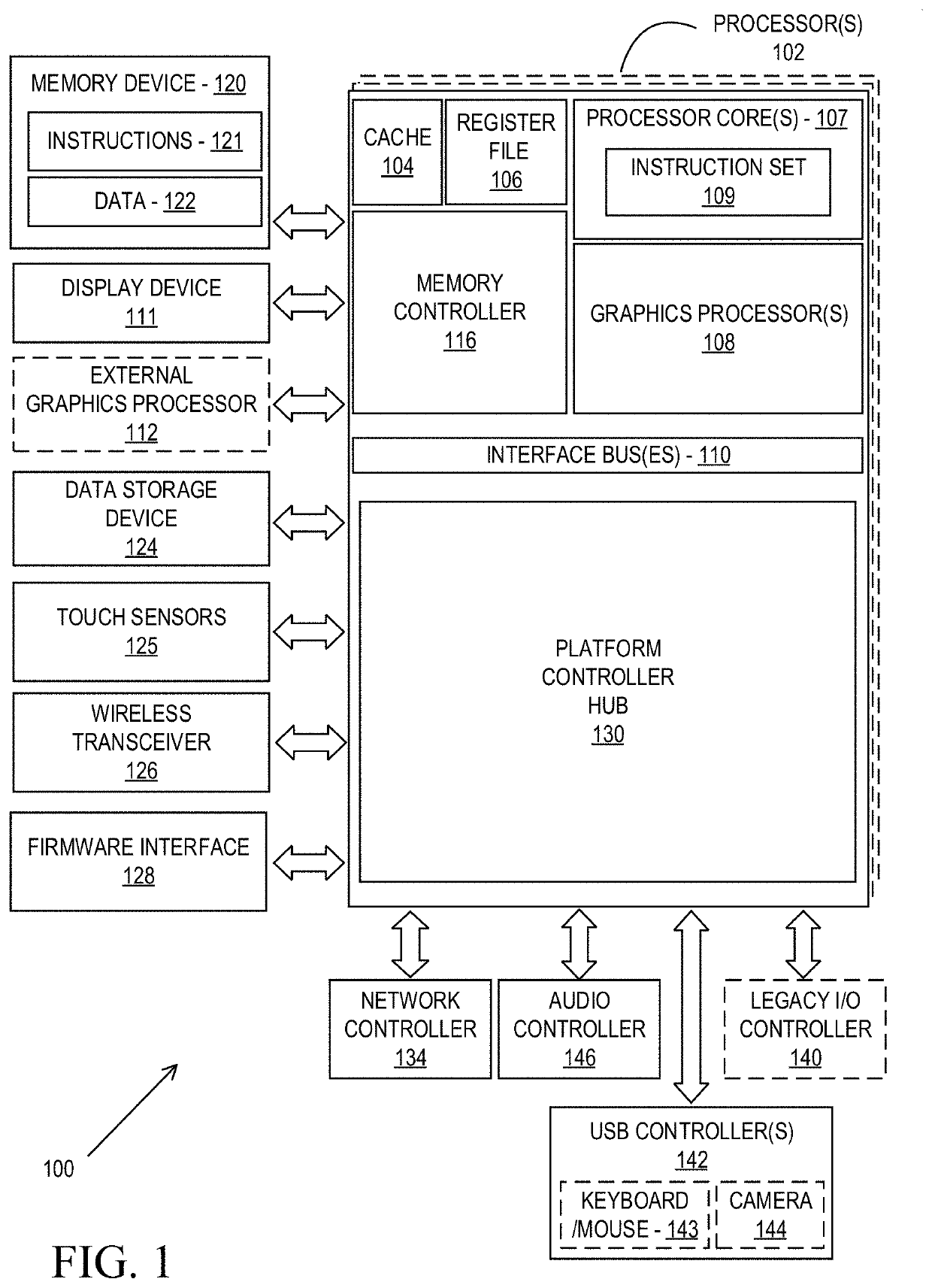

Speculative execution of hit and intersection shaders on programmable ray tracing architectures

Apparatus and method for speculative execution of hit and intersection shaders on programmable ray tracing architectures. For example, one embodiment of an apparatus comprises: single-instruction multiple-data (SIMD) or single-instruction multiple-thread (SIMT) execution units (EUs) to execute shaders; and ray tracing circuitry to execute a ray traversal thread, the ray tracing engine comprising: traversal / intersection circuitry, responsive to the traversal thread, to traverse a ray through an acceleration data structure comprising a plurality of hierarchically arranged nodes and to intersect the ray with a primitive contained within at least one of the nodes; and shader deferral circuitry to defer and aggregate multiple shader invocations resulting from the traversal thread until a particular triggering event is detected, wherein the multiple shaders are to be dispatched on the EUs in a single shader batch upon detection of the triggering event.

Owner:INTEL CORP
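
The deferral circuitry's job is to hold shader invocations produced during traversal and release them as one batch when a triggering event fires. The sketch assumes two triggers, a full batch and the end of traversal, and sorts each batch so invocations of the same shader sit in adjacent lanes; the class name, the triggers and the sort are hypothetical.

```python
from typing import Callable, List, Tuple

Invocation = Tuple[str, int]  # (shader name, ray id)


class ShaderDeferral:
    """Hypothetical model of deferring and aggregating shader invocations."""

    def __init__(self, batch_size: int, dispatch: Callable[[List[Invocation]], None]):
        self.batch_size = batch_size
        self.dispatch = dispatch
        self.pending: List[Invocation] = []

    def defer(self, inv: Invocation) -> None:
        self.pending.append(inv)
        if len(self.pending) >= self.batch_size:  # trigger: batch is full
            self.flush()

    def traversal_done(self) -> None:             # trigger: traversal finished
        self.flush()

    def flush(self) -> None:
        if self.pending:
            # Group identical shaders together before dispatching to the EUs.
            batch = sorted(self.pending)
            self.pending = []
            self.dispatch(batch)
```

Usage: `ShaderDeferral(3, batches.append)` collects up to three invocations and hands them to `batches.append` in one call, so the EUs see one coherent batch instead of three separate launches.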

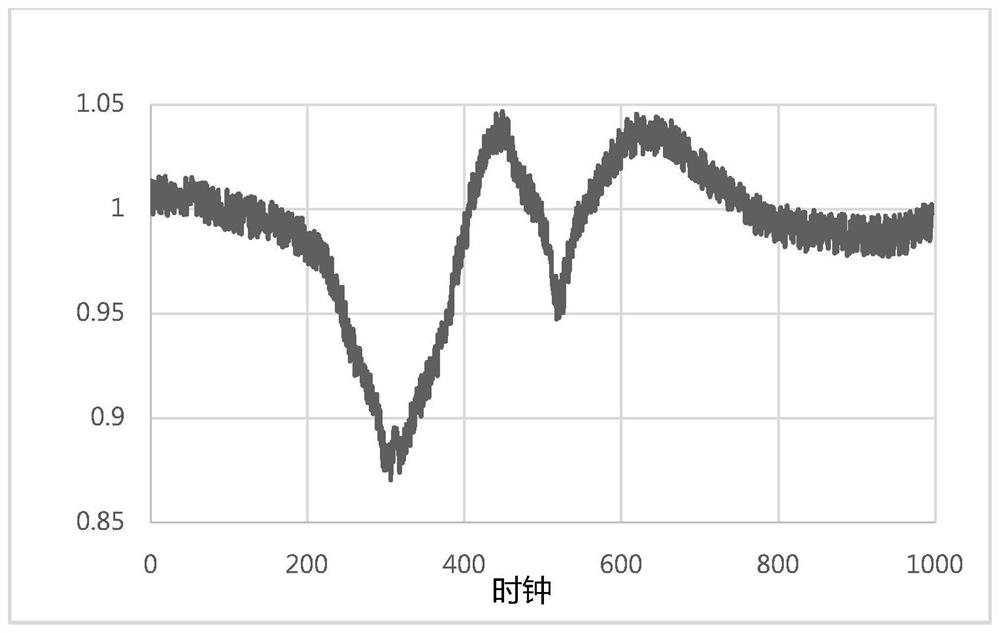

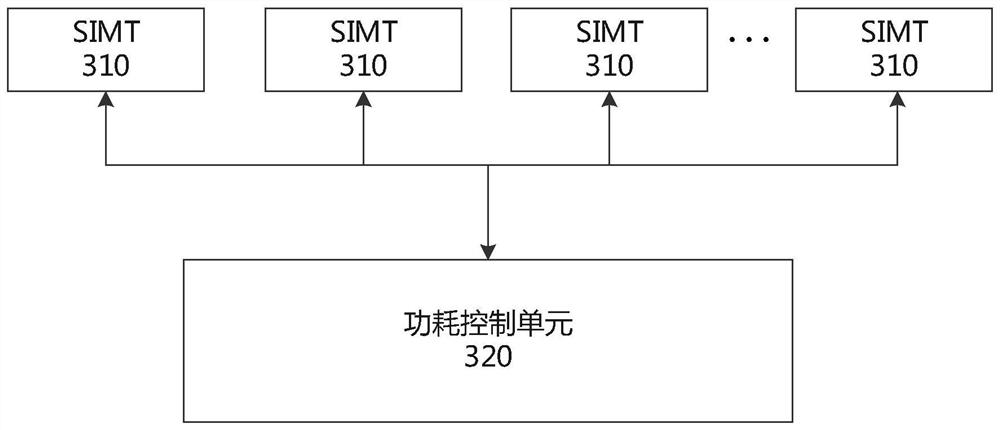

Multi-core processing device and power consumption control method thereof

Status: Active | Patent: CN114442795A | Tags: Stable current; Stable voltage; Digital data processing details; Energy efficient computing; Single instruction, multiple threads; Processing element

The embodiment of the invention discloses a multi-core processing device and a power consumption control method for it. Through a power consumption control unit, the device obtains the workload of each of one or more single-instruction multi-thread processing units during a power consumption adjustment period and determines whether each workload is below a power consumption adjustment threshold. When the workload of any of these single-instruction multi-thread processing units during the period is below the threshold, at least some of the thread bundles (warps) in that unit are instructed to execute power consumption adjustment instructions, which increases that unit's workload. The embodiment keeps the current and voltage of the multi-core processing device relatively stable during active operation and mitigates sudden voltage drops in the device, thereby reducing the overall power consumption of the chip and improving processing frequency and performance.

Owner:METAX INTEGRATED CIRCUITS (SHANGHAI) CO LTD
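
One adjustment period of the control loop described above can be sketched as follows. The abstract only says "at least part" of the thread bundles receive adjustment instructions, so the `fraction` parameter, the `SimtUnit` record and `adjust_power` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SimtUnit:
    name: str
    workload: float        # busy fraction over the last adjustment period
    warps: int             # resident thread bundles (warps)
    filler_warps: int = 0  # warps told to run power-adjustment instructions


def adjust_power(units: List[SimtUnit], threshold: float,
                 fraction: float = 0.5) -> List[str]:
    """Run one adjustment period; return the names of units given filler work."""
    for u in units:
        if u.workload < threshold:
            # Raise the idle unit's load so current draw stays steady.
            u.filler_warps = max(1, int(u.warps * fraction))
        else:
            u.filler_warps = 0
    return [u.name for u in units if u.filler_warps]
```

Keeping an underloaded unit artificially busy trades a little energy for a flatter current profile, which is what limits the sudden voltage drops mentioned in the abstract.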

Methods and systems for managing an instruction sequence with a divergent control flow in a SIMT architecture

A computer-implemented method of executing an instruction sequence containing a recursive function call by a plurality of threads within a thread group in a Single-Instruction-Multiple-Threads (SIMT) system is provided. Each thread is provided with a function call counter (FCC), an active mask, an execution mask and a per-thread program counter (PTPC). The instruction sequence with the recursive function call is executed by the threads in the thread group according to a program counter (PC) indicating a target. Upon executing the recursive function call, for each thread, the active mask is set according to the PTPC and the target indicated by the PC, the FCC is determined when entering or returning from the recursive function call, and the execution mask is determined according to the FCC and the active mask. Whether an execution result of the recursive function call takes effect is determined according to the execution mask.

Owner:XUESHAN TECH INC

Speculative execution of hit and intersection shaders on programmable ray tracing architectures

Apparatus and method for speculative execution of hit and intersection shaders on programmable ray tracing architectures. For example, one embodiment of an apparatus comprises: single-instruction multiple-data (SIMD) or single-instruction multiple-thread (SIMT) execution units (EUs) to execute shaders; and ray tracing circuitry to execute a ray traversal thread, the ray tracing engine comprising: traversal / intersection circuitry, responsive to the traversal thread, to traverse a ray through an acceleration data structure comprising a plurality of hierarchically arranged nodes and to intersect the ray with a primitive contained within at least one of the nodes; and shader deferral circuitry to defer and aggregate multiple shader invocations resulting from the traversal thread until a particular triggering event is detected, wherein the multiple shaders are to be dispatched on the EUs in a single shader batch upon detection of the triggering event.

Owner:INTEL CORP

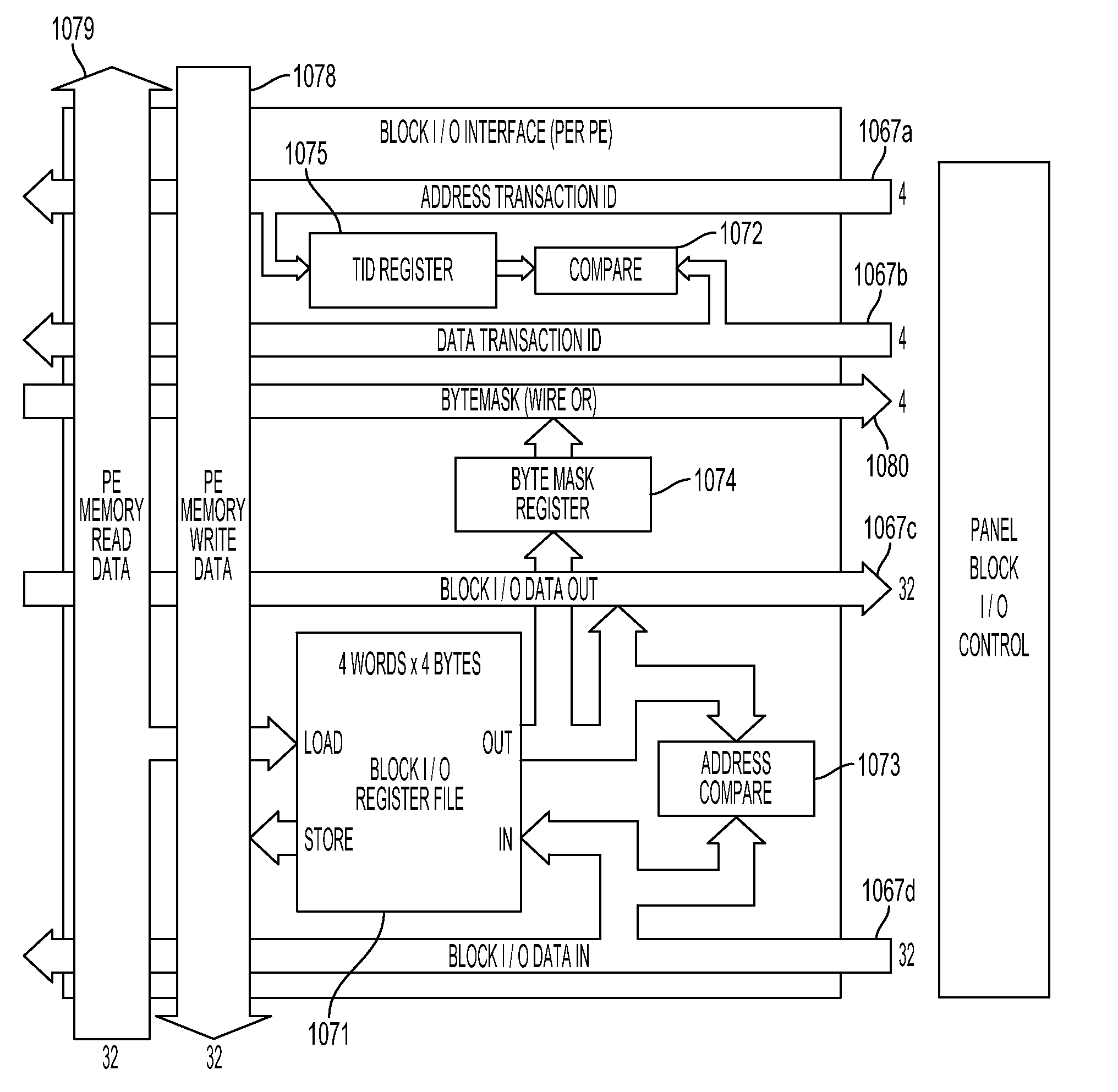

Memory access consolidation for SIMD processing elements using transaction identifiers

Status: Inactive | Patent: US8762691B2 | Tags: Single instruction multiple data multiprocessors; Processor architectures/configuration; Internal memory; Single instruction, multiple threads

A data processing apparatus includes a plurality of processing elements arranged in a single instruction multiple data array. The apparatus includes an instruction controller operable to receive instructions from a plurality of instruction streams and to transfer instructions from those streams to the processing elements in the array, so that the data processing apparatus can process a plurality of processing threads substantially in parallel with one another. A data transfer controller is provided which is operable to control the transfer of data between the internal memory units associated with the processing elements and memory external to the array.

Owner:RAMBUS INC

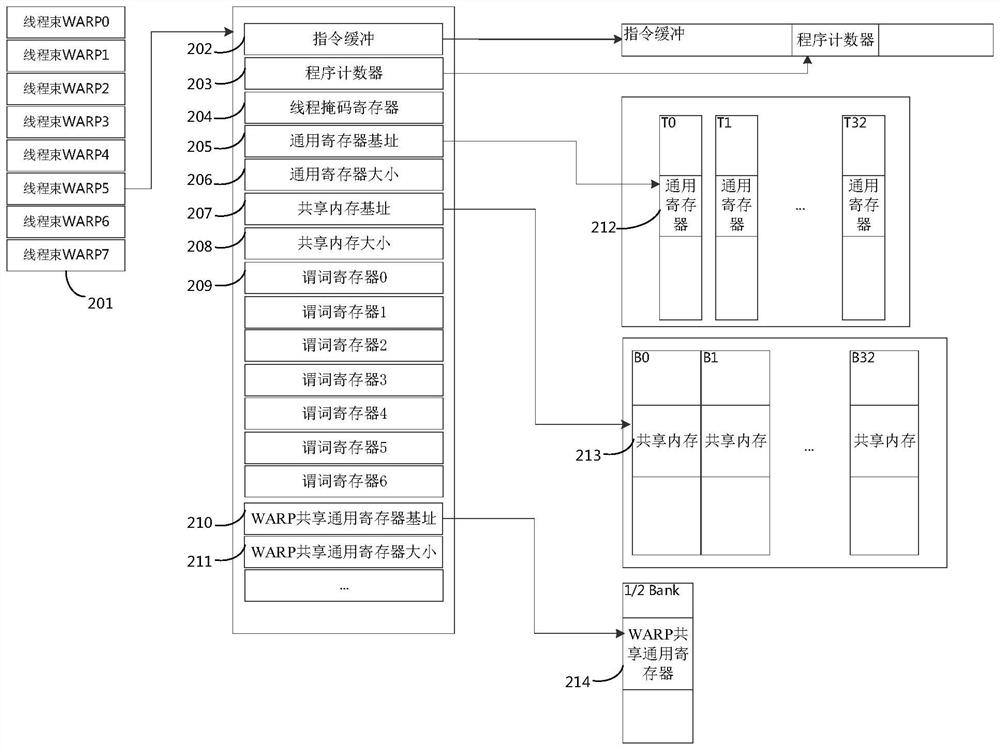

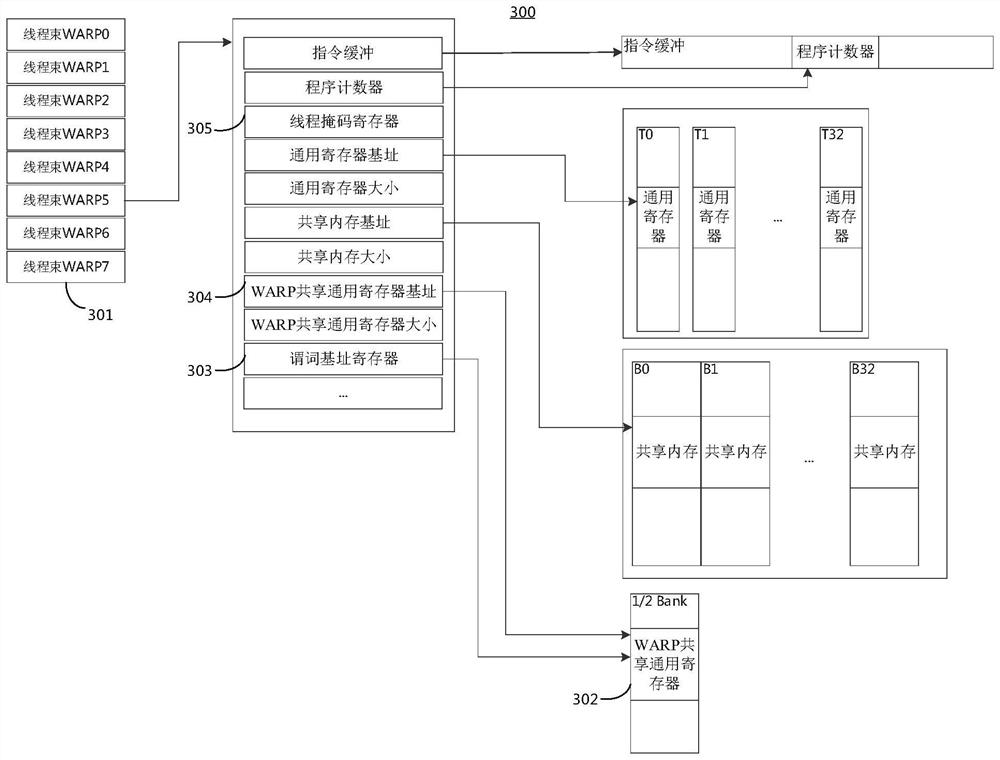

Processor device, instruction execution method thereof and computing equipment

Status: Active | Patent: CN114489791A | Tags: Realize dynamic expansion; Take advantage of; Register arrangements; Concurrent instruction execution; General purpose; Computer architecture

The embodiment of the invention discloses a processor device, an instruction execution method for it, and computing equipment. The device comprises one or more single-instruction multi-thread processing units, each comprising: one or more thread bundles (warps) for executing instructions; a shared register group containing a plurality of general purpose registers shared among the thread bundles; and, for each thread bundle, a predicate base address register indicating the base address, within the shared register group, of the set of general purpose registers used as that thread bundle's predicate registers. Each thread bundle performs predicated execution of instructions based on the predicate values in its set of predicate general purpose registers. The embodiment removes the dedicated predicate registers that each thread bundle had in the original processor architecture, allows each thread bundle's predicate register resources to expand dynamically, makes full use of processor resources, reduces the overhead of switching instructions, and improves instruction processing performance.

Owner:METAX INTEGRATED CIRCUITS (SHANGHAI) CO LTD
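
The key idea is indirection: a warp's predicate p lives in general purpose register `base + p`, where `base` comes from that warp's predicate base address register. The sketch below models the shared register group and predicated execution under that assumption; `SharedRegisterFile` and its methods are hypothetical names.

```python
from typing import Callable, Dict, List, Sequence


class SharedRegisterFile:
    """Hypothetical model: GPRs shared by warps, with a per-warp predicate base."""

    def __init__(self, num_regs: int, lanes: int):
        self.regs: List[List[int]] = [[0] * lanes for _ in range(num_regs)]
        self.pred_base: Dict[int, int] = {}  # warp id -> first predicate GPR

    def set_pred_base(self, warp: int, base: int) -> None:
        # Re-pointing the base hands a warp a different (or larger) GPR range
        # for predicates, with no dedicated predicate registers needed.
        self.pred_base[warp] = base

    def write_pred(self, warp: int, p: int, values: Sequence[int]) -> None:
        self.regs[self.pred_base[warp] + p] = list(values)

    def predicated(self, warp: int, p: int, op: Callable[[int], int],
                   dst: Sequence[int], src: Sequence[int]) -> List[int]:
        """Lanes whose predicate is nonzero get op(src); the others keep dst."""
        mask = self.regs[self.pred_base[warp] + p]
        return [op(s) if m else d for m, d, s in zip(mask, dst, src)]
```

Two warps can use the same predicate index p while reading different GPRs, because each warp's base points at its own slice of the shared register group.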

Digital signal processing array using integrated processing elements

Status: Active | Patent: US10353709B2 | Tags: Eliminate redundancy; Minimize and eliminate redundant processing; Interprogram communication; Architecture with multiple processing units; Digital signal processing; Intellectual property

Techniques and mechanisms described herein include a signal processor implemented as an overlay on a field-programmable gate array (FPGA) device that utilizes special purpose, hardened intellectual property (IP) modules such as memory blocks and digital signal processing (DSP) cores. A Processing Element (PE) is built from one or more DSP cores connected to additional logic. Interconnected as an array, the PEs may operate in a computational model such as Single Instruction-Multiple Thread (SIMT). A software hierarchy is described that transforms the SIMT array into an effective signal processor.

Owner:ACORN INNOVATIONS INC

Method and apparatus for loading data in single instruction multi-thread computing system

Status: Active | Patent: CN114510271A | Tags: Improve data exchange efficiency; Program initiation/switching; Register arrangements; Load instruction; Single instruction, multiple threads

The embodiment of the invention relates to a method and an electronic device for loading data in a single-instruction multi-thread computing system. In the method, a plurality of predicates for a plurality of threads are determined based on a single received load instruction, each predicate indicating whether the address specified by the respective thread, which is used to access data in a memory, is valid; at least one execution thread among the plurality of threads is determined based on these predicates; target data is determined for each execution thread; and the set of target data of the at least one execution thread is written into the register file of each of the plurality of threads. In this way, a single load instruction determines the target data for every execution thread and writes the set of target data to each target thread, which improves the efficiency of data exchange between registers and memory.

Owner:HEXAFLAKE (NANJING) INFORMATION TECH CO LTD
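
The four steps of the abstract can be sketched in Python. The abstract leaves the register-file layout open, so this sketch assumes one reading of it: the whole set of loaded values is broadcast into every thread's register file, with empty slots for threads whose predicate is off. `predicated_load` and the list-based memory and register model are hypothetical illustrations, not Hexaflake's implementation.

```python
def predicated_load(memory, addresses):
    """Sketch of one SIMT load instruction with per-thread predicates.

    memory:    flat list standing in for device memory
    addresses: one address per thread (None or out of range = invalid)
    Returns one register-file slice per thread.
    """
    # Step 1: one predicate per thread, True if its address is valid.
    predicates = [a is not None and 0 <= a < len(memory) for a in addresses]
    # Step 2: execution threads are those whose predicate is set.
    exec_threads = [t for t, ok in enumerate(predicates) if ok]
    # Step 3: determine the target data for each execution thread.
    target = {t: memory[addresses[t]] for t in exec_threads}
    # Step 4: write the whole set of target data into every thread's
    # register file; slots of predicated-off threads stay None.
    data_set = [target.get(t) for t in range(len(addresses))]
    return [list(data_set) for _ in addresses]


memory = [10, 20, 30, 40]
regs = predicated_load(memory, [2, None, 0, 9])
print(regs[1])  # [30, None, 10, None]
```

Thread 1 (no address) and thread 3 (out-of-range address 9) are predicated off, yet every thread still receives the values loaded by threads 0 and 2 from the one instruction.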

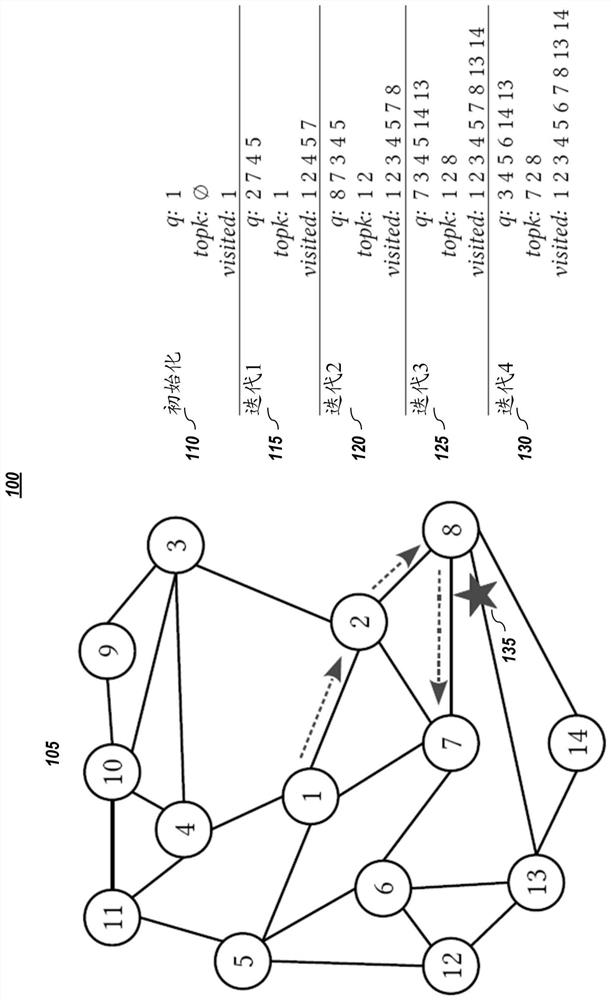

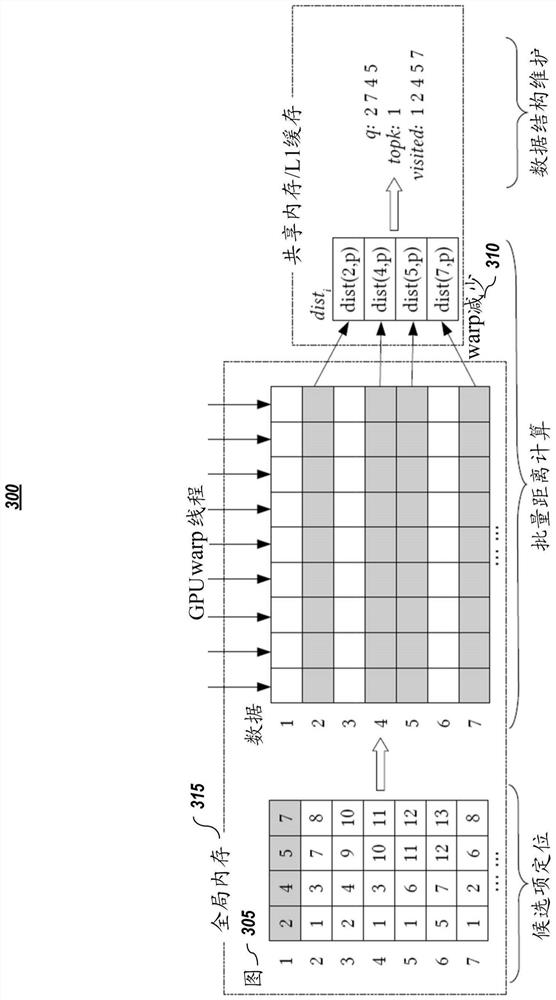

Approximate nearest neighbor search for single instruction, multiple thread (SIMT) or single instruction, multiple data (SIMD) type processors

ActiveCN112835627ASingle instruction multiple data multiprocessorsConcurrent instruction executionGraphicsSingle instruction, multiple threads

Approximate nearest neighbor (ANN) search is a fundamental problem in computer science with numerous applications in areas such as machine learning and data mining. Typical graph-based ANN methods execute the search iteratively, and this execution dependency prevents adaptation to graphics processing units (GPUs) and GPU-type processors. Presented herein are embodiments of a novel framework that decouples graph-based search into stages so that the performance-critical distance computation can be parallelized. Furthermore, in one or more embodiments, to obtain better parallelism on GPU-type components, novel ANN-specific optimization methods are also disclosed that eliminate dynamic memory allocations and trade extra computation for lower memory consumption. Embodiments were empirically compared against other methods, and the results confirm their effectiveness.

Owner:BAIDU USA LLC
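
The staged idea can be illustrated with a plain best-first search over a proximity graph, a common graph-ANN scheme and not necessarily Baidu's. Each iteration is split into three stages, and only stage 2, the batched distance computation, is data-parallel; `batch_distances` stands in for what would be a GPU kernel. The function names, the toy 1-D data, and the stopping rule are assumptions for illustration, and the memory-allocation optimizations from the abstract are not modeled.

```python
import heapq
import math


def batch_distances(query, vectors, ids):
    # Stage 2: independent distance computations. On a GPU, each id could
    # map to its own thread; here a list comprehension stands in for that.
    return [math.dist(query, vectors[i]) for i in ids]


def graph_ann_search(query, vectors, graph, entry, k):
    """Best-first search over a proximity graph, split into stages."""
    visited = {entry}
    pool = [(math.dist(query, vectors[entry]), entry)]  # min-heap of candidates
    best = []  # up to k (distance, id) pairs, sorted ascending
    while pool:
        d, node = heapq.heappop(pool)
        # Stop once the closest candidate cannot improve the top-k list.
        if len(best) >= k and d > best[-1][0]:
            break
        best = sorted(best + [(d, node)])[:k]
        # Stage 1: gather unvisited neighbours (sequential, cheap).
        nbrs = [n for n in graph[node] if n not in visited]
        visited.update(nbrs)
        # Stage 2: batched distance computation (the parallel part).
        dists = batch_distances(query, vectors, nbrs)
        # Stage 3: merge the new candidates into the pool.
        for dn, n in zip(dists, nbrs):
            heapq.heappush(pool, (dn, n))
    return [i for _, i in best]


vectors = [(0.0,), (1.0,), (2.0,), (3.0,), (4.0,)]
graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
print(graph_ann_search((3.2,), vectors, graph, entry=0, k=2))  # [3, 4]
```

The outer loop remains sequential, which is the execution dependency the abstract describes; batching stage 2 is what exposes parallel work inside each iteration.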

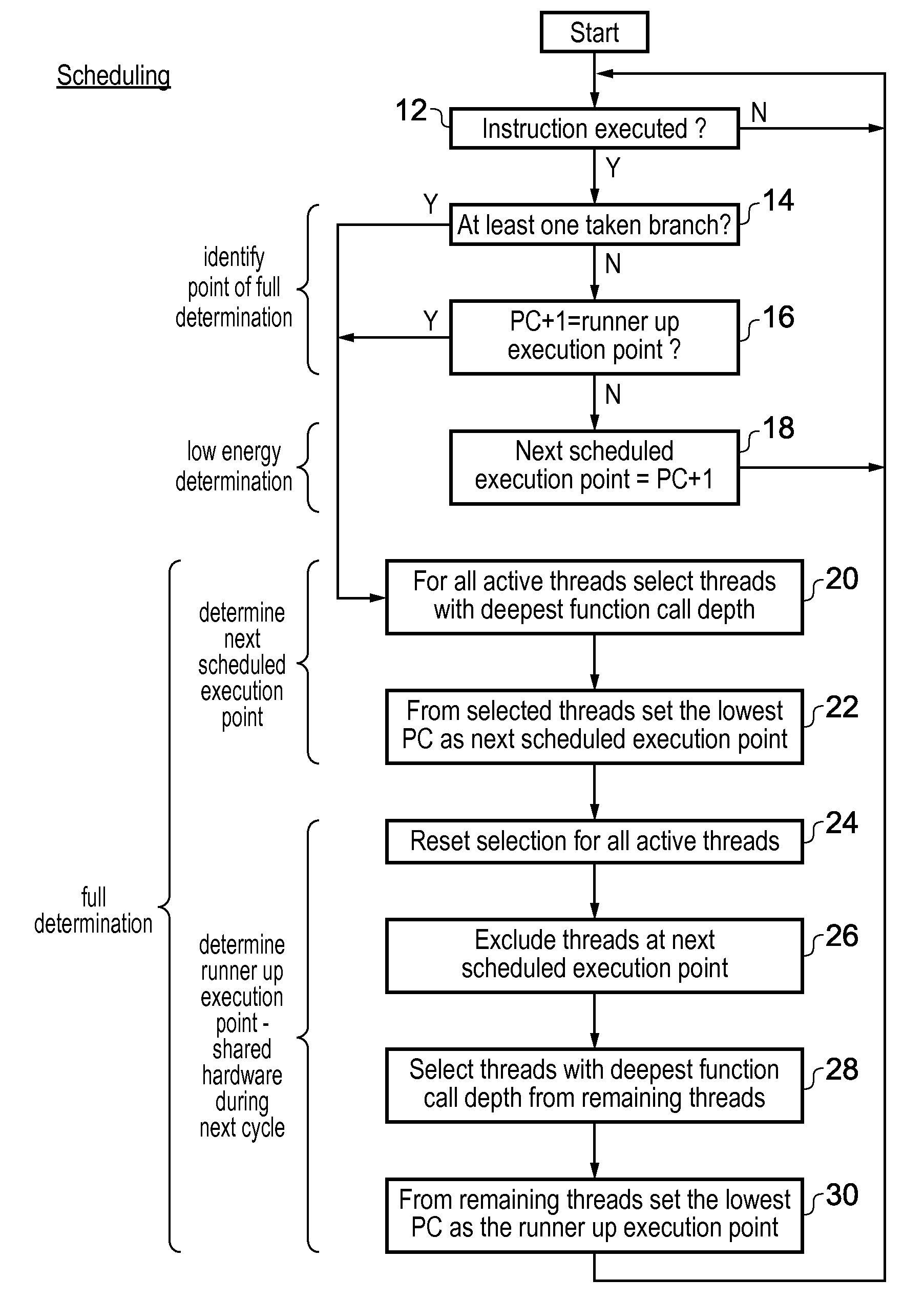

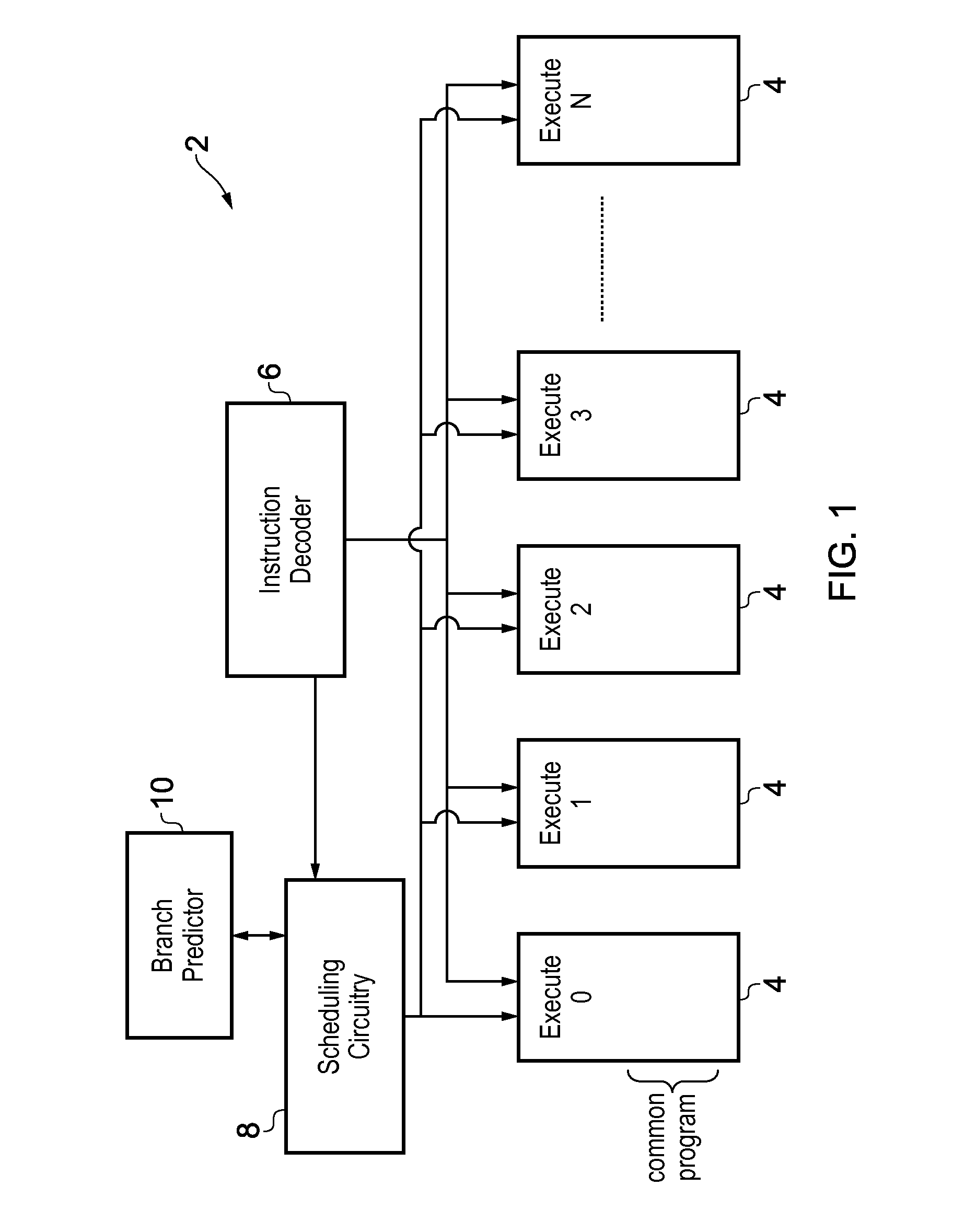

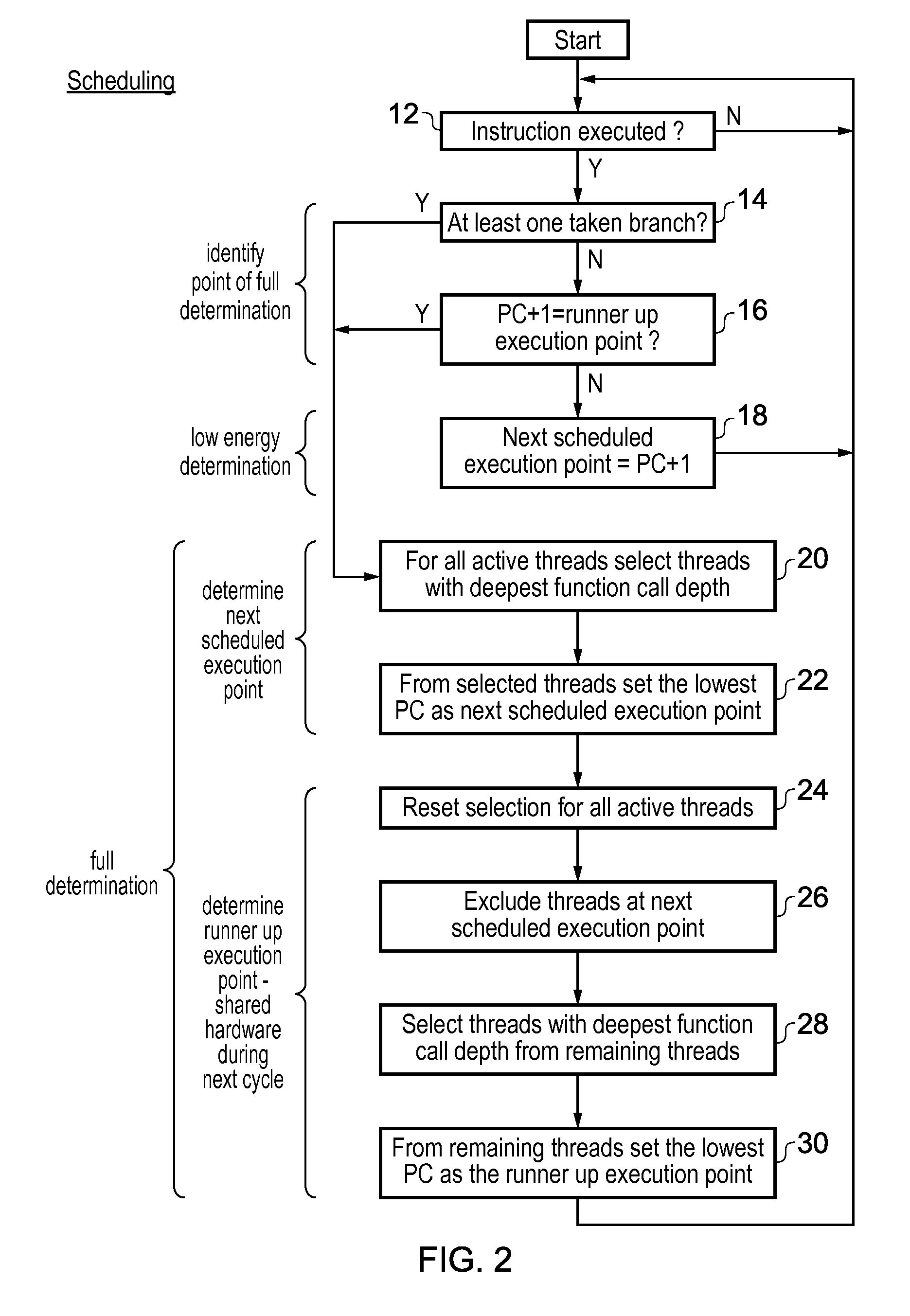

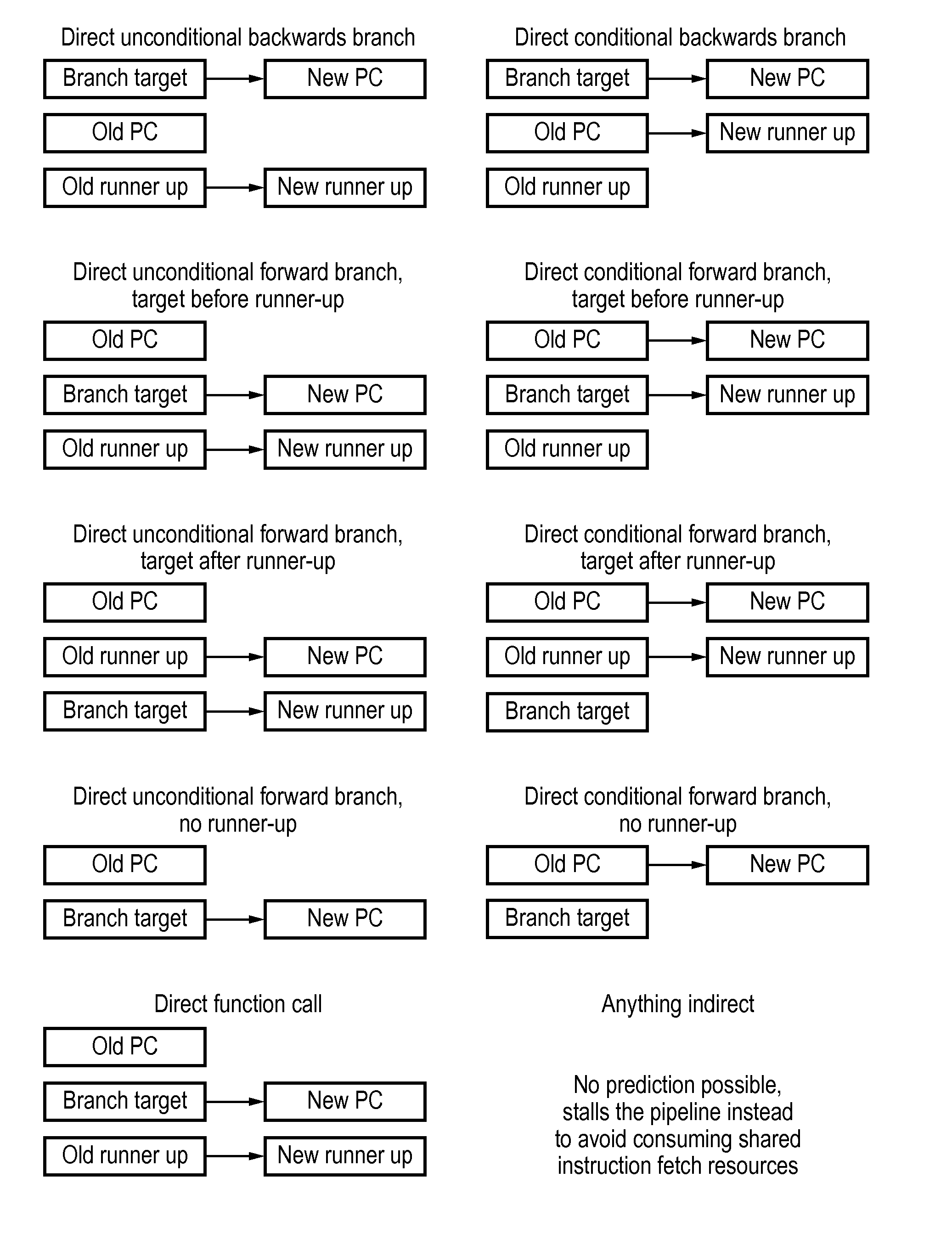

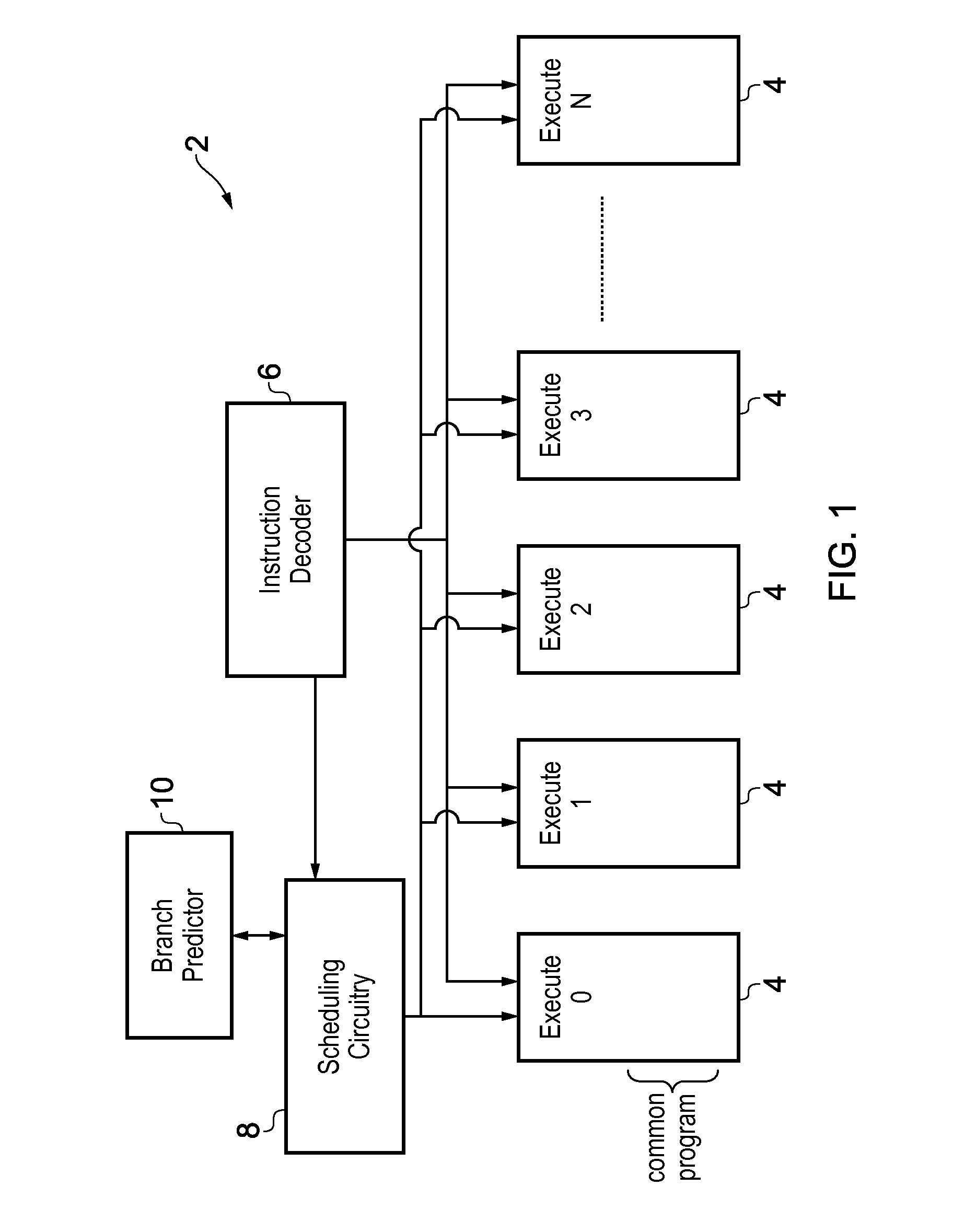

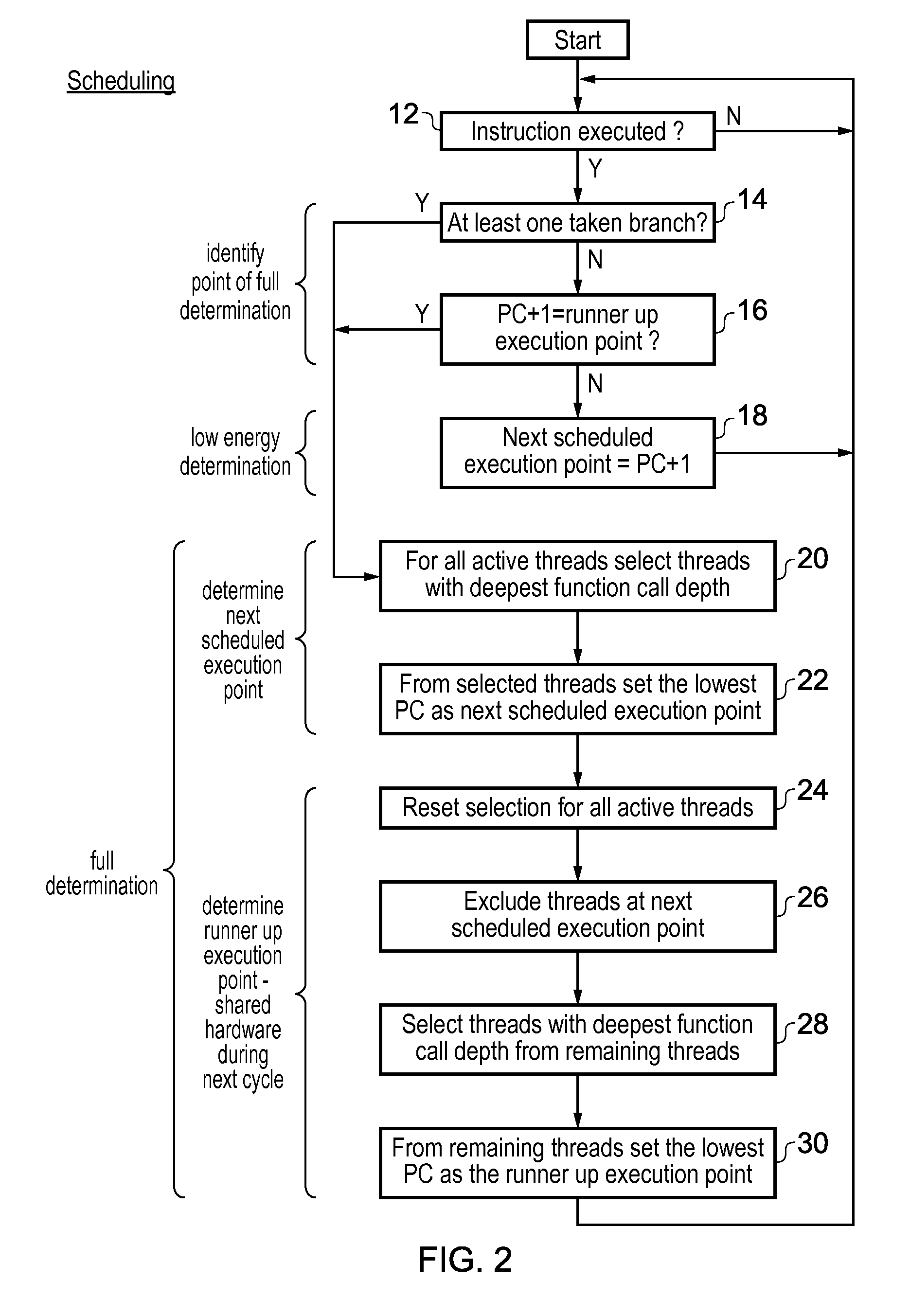

Scheduling program instructions with a runner-up execution position

ActiveUS20150100768A1Reduce workloadImprove system efficiencyInstruction analysisDigital computer detailsProgram instructionSingle instruction, multiple threads

A single instruction multiple thread (SIMT) processor 2 includes scheduling circuitry 8 for calculating a next scheduled execution point for execution circuits 4, which execute respective threads corresponding to a common program. In addition to calculating the next scheduled execution point, the scheduling circuitry determines a runner-up execution point, which would have been determined as the next scheduled execution point if the threads that actually correspond to the next scheduled execution point had been removed from consideration. This runner-up execution point is used to identify points of re-convergence within the program flow and as part of the operation of a static branch predictor 10.

Owner:ARM LTD

Scheduling program instructions with a runner-up execution position

ActiveUS9436473B2Improve system efficiencyReduce workloadInstruction analysisNext instruction address formationProgram instructionSingle instruction, multiple threads

A single instruction multiple thread (SIMT) processor includes scheduling circuitry for calculating a next scheduled execution point for execution circuits, which execute respective threads corresponding to a common program. In addition to calculating the next scheduled execution point, the scheduling circuitry determines a runner-up execution point, which would have been determined as the next scheduled execution point if the threads that actually correspond to the next scheduled execution point had been removed from consideration. This runner-up execution point is used to identify points of re-convergence within the program flow and as part of the operation of a static branch predictor.

Owner:ARM LTD
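
The runner-up idea in the two ARM entries above can be sketched in Python under one stated assumption: the scheduler picks the lowest program counter among live threads (a common min-PC policy; the abstracts do not say which policy is used). Under that policy, removing the threads at the chosen point leaves the second-smallest distinct PC, which is the runner-up. `schedule` and its return shape are hypothetical.

```python
def schedule(pcs):
    """Pick the next execution point and the runner-up (assumed min-PC policy).

    pcs: per-thread program counter, or None for a finished thread.
    Returns (next_point, runner_up, active_mask).
    """
    points = sorted({pc for pc in pcs if pc is not None})
    if not points:
        return None, None, [False] * len(pcs)
    next_point = points[0]
    # The runner-up is what the same policy would pick if every thread
    # sitting at next_point were removed from consideration.
    runner_up = points[1] if len(points) > 1 else None
    active_mask = [pc == next_point for pc in pcs]
    return next_point, runner_up, active_mask


# Threads 0 and 2 wait at PC 8, thread 1 is at PC 4,
# thread 3 has finished, and thread 4 is at PC 12.
nxt, runner, mask = schedule([8, 4, 8, None, 12])
print(nxt, runner, mask)  # 4 8 [False, True, False, False, False]
```

If thread 1 later reaches PC 8, it arrives at the runner-up point where threads 0 and 2 are waiting, so the three can re-converge and execute together. How the runner-up feeds the static branch predictor is not described in the abstracts and is not modeled here.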

DMA transfer method for single-instruction multithreading mode in GPDSP

ActiveCN105302749BSupport miningFast executionElectric digital data processingGeneral purposeComputer architecture

The invention discloses a method for DMA (Direct Memory Access) transfer in a GPDSP (General Purpose Digital Signal Processor), oriented to the single-instruction multi-thread mode. The method comprises: transferring the data of SIMT (Single Instruction Multiple Thread) programs, stored irregularly in an out-of-core storage space, to the VM (Vector Memory) of a core by configuring a single DMA transfer transaction; and, after the transfer, storing the data regularly in the VM so that the vector processing units can access it concurrently. The method is simple in principle, easy to operate, and configurable in the amount of data transferred. It can efficiently supply data to SIMT programs as a background operation, which both better supports the execution of SIMT programs and greatly improves the computational performance of the GPDSP.

Owner:NAT UNIV OF DEFENSE TECH
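
A minimal Python model of the gather-then-regularize idea, assuming the regular VM layout puts element r of every thread in row r, so that one vector operation on a row touches all lanes at once. The actual GPDSP layout and descriptor format are not given in the abstract; `DmaDescriptor` and `dma_gather_to_vm` are hypothetical names. A real DMA engine would perform this copy in the background while the cores compute.

```python
from dataclasses import dataclass


@dataclass
class DmaDescriptor:
    """Hypothetical descriptor configured once for the whole transfer."""
    base_addrs: list        # start of each SIMT thread's data off-core
    elems_per_thread: int   # configurable transfer amount


def dma_gather_to_vm(ext_mem, desc):
    """Copy irregularly placed per-thread data into a regular VM layout.

    vm[r][lane] holds element r of thread `lane`, so one vector operation
    on row r touches every lane at once.
    """
    lanes = len(desc.base_addrs)
    vm = [[0] * lanes for _ in range(desc.elems_per_thread)]
    for lane, base in enumerate(desc.base_addrs):
        for r in range(desc.elems_per_thread):
            vm[r][lane] = ext_mem[base + r]
    return vm


ext_mem = list(range(100, 120))  # stand-in for out-of-core memory
desc = DmaDescriptor(base_addrs=[0, 7, 3], elems_per_thread=2)
print(dma_gather_to_vm(ext_mem, desc))  # [[100, 107, 103], [101, 108, 104]]
```

The three threads' data start at unrelated addresses (0, 7, 3), but after the single configured transfer each VM row is a dense vector with one element per thread.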

© 2025 PatSnap. All rights reserved.