69 results for "Thread (computing)" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

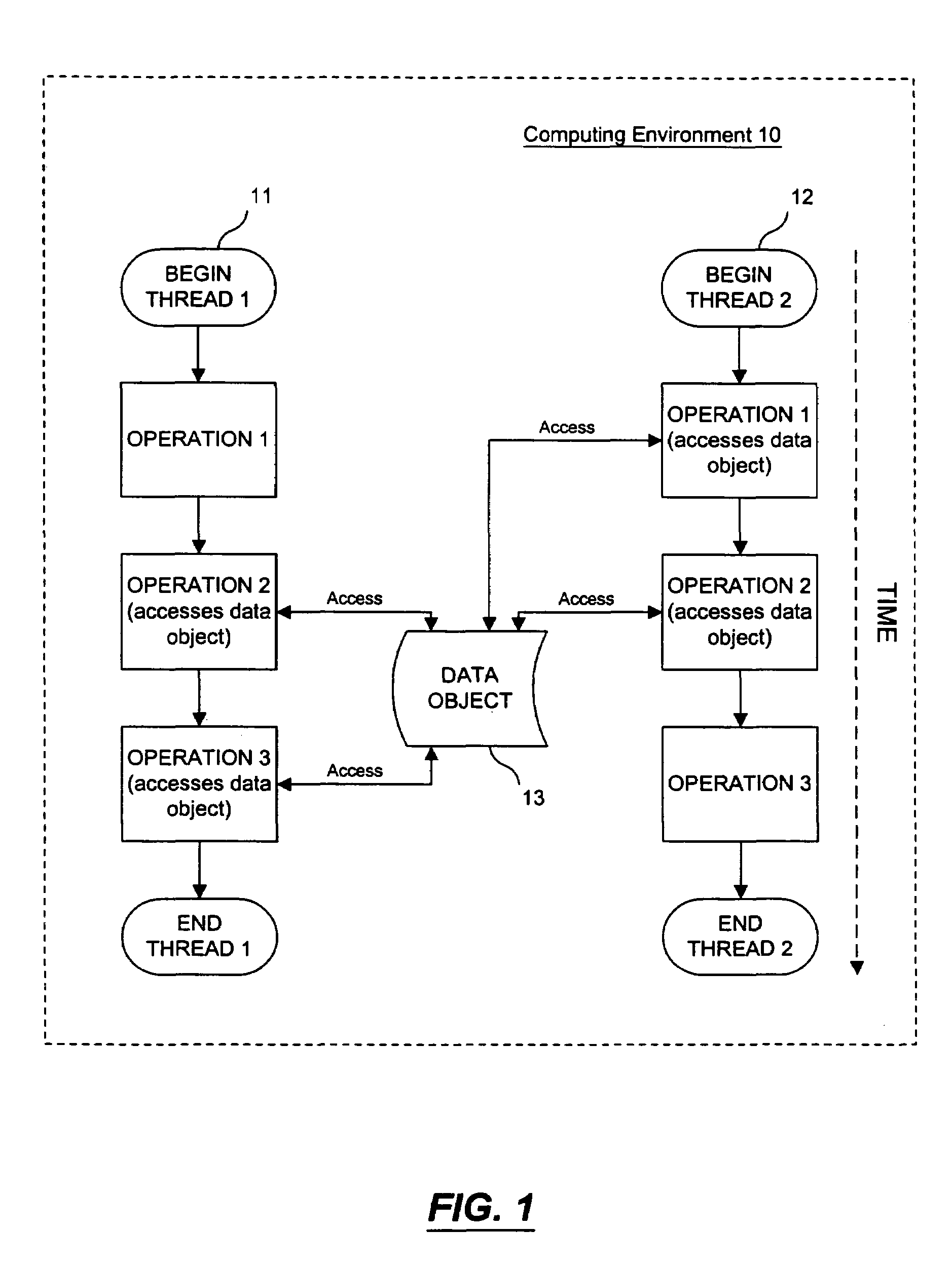

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems, but in most cases a thread is a component of a process. Multiple threads can exist within one process, executing concurrently and sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non-thread-local global variables at any given time.
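Here is a minimal Python sketch of the shared-versus-private distinction. It uses only the standard `threading` module and is an illustration, not drawn from any of the patents below. Four threads in one process all update the same global `counter`, guarded by a lock. Each thread also keeps a value in thread-local storage that the other threads cannot see.

```python
import threading

counter = 0                      # one variable shared by every thread in the process
counter_lock = threading.Lock()  # serializes read-modify-write updates to counter
local = threading.local()        # attributes set here are private to each thread
seen = []                        # shared list collecting each thread's private value

def worker(n: int) -> None:
    global counter
    local.value = n * 10         # visible only to the thread that set it
    for _ in range(1000):
        with counter_lock:
            counter += 1
    with counter_lock:
        seen.append(local.value)

threads = [threading.Thread(target=worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)       # 4000: every thread updated the same variable
print(sorted(seen))  # [0, 10, 20, 30]: each thread kept its own local.value
```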

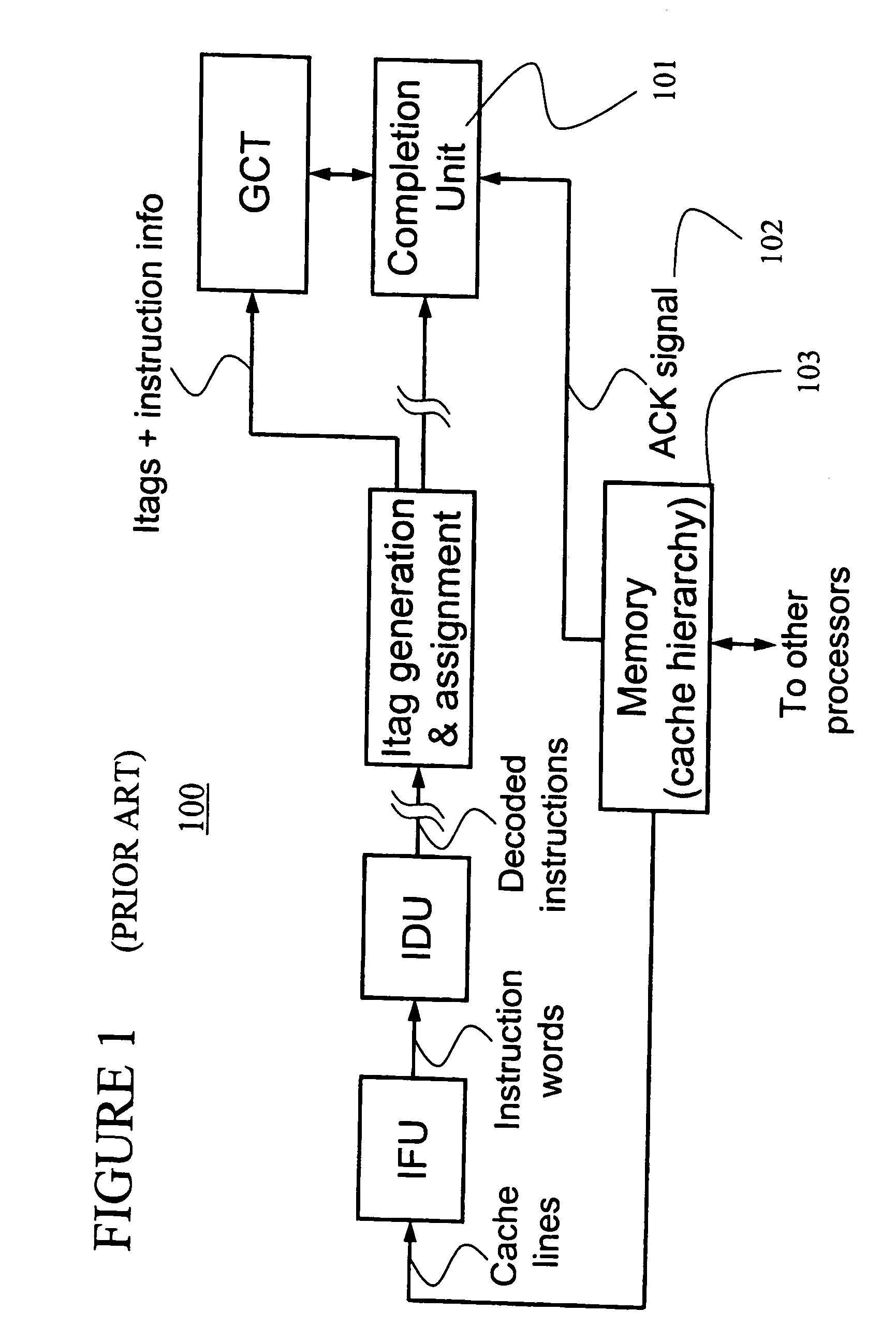

Method, apparatus and computer program product for efficient, large counts of per thread performance events

Status: Inactive | Publication: US6931354B2 | Tags: Maximum count; Solve excessive overhead; Digital computer details; Nuclear monitoring; Computer science; Computer program

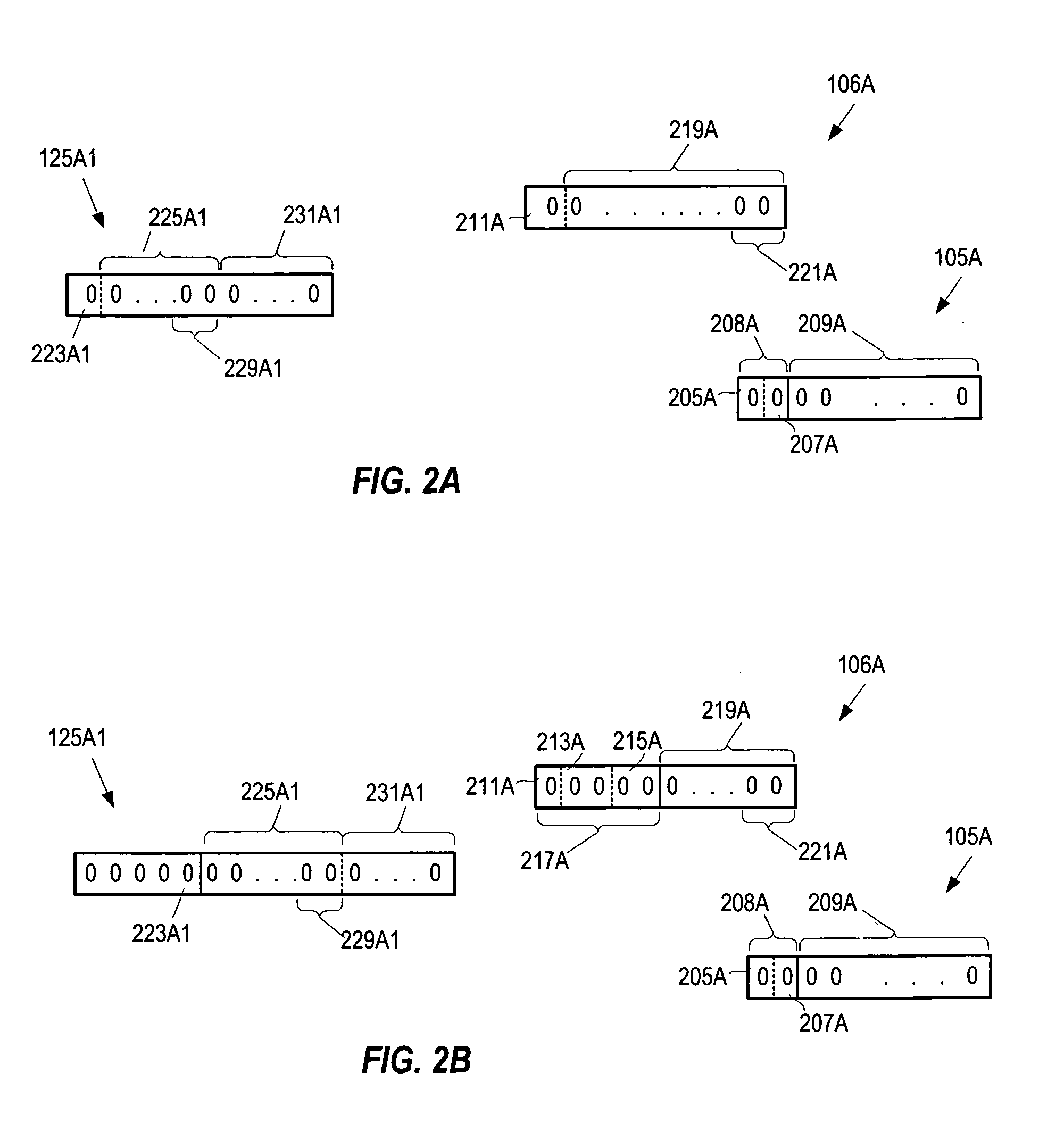

Performance events are counted for a computing system. This includes designating a first processor counter as a low-order counter for counting a certain performance event encountered by the processor and associating with the first counter a second counter as a high-order counter. The first counter is incremented responsive to detecting the performance event for a first processing thread. Responsive to a second thread, an accumulator in system memory for the first thread and first and second counters is updated. Responsive to the first thread becoming active, values of the first and second counters are loaded from the accumulator. Responsive to a user call to read and return a combined value, a first instance of the second counter is read, then the first counter is read and a second instance of the second counter is read before returning the combined value.

Owner:INT BUSINESS MASCH CORP
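The three-step read in the last sentence of the abstract guards against the low-order counter wrapping between reads. A minimal Python sketch of that idea follows. The 32-bit split, the `SplitCounter` class and the retry-when-the-two-high-reads-differ policy are illustrative assumptions; the abstract does not say how a mismatch is resolved. In a real system the low-order counter would be a hardware register rather than a Python attribute.

```python
from dataclasses import dataclass

LOW_BITS = 32
LOW_MASK = (1 << LOW_BITS) - 1

@dataclass
class SplitCounter:
    """Hypothetical event count split into a 32-bit low-order counter
    and an unbounded high-order counter that absorbs carries."""
    low: int = 0
    high: int = 0

    def increment(self, n: int = 1) -> None:
        total = self.low + n
        self.low = total & LOW_MASK
        self.high += total >> LOW_BITS    # carry into the high-order counter on wrap

def read_combined(c: SplitCounter, max_retries: int = 8) -> int:
    """Read high, then low, then high again. If the two high-order reads differ,
    the low counter wrapped mid-read and the low value may be stale, so retry
    (an assumed policy)."""
    for _ in range(max_retries):
        high_first = c.high
        low = c.low
        high_second = c.high
        if high_first == high_second:
            return (high_second << LOW_BITS) | low
    raise RuntimeError("counter kept wrapping while being read")

c = SplitCounter(low=LOW_MASK)   # low-order counter one step from wrapping
c.increment(2)                   # wraps: low becomes 1, high becomes 1
print(read_combined(c))          # 4294967297, i.e. 2**32 + 1
```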

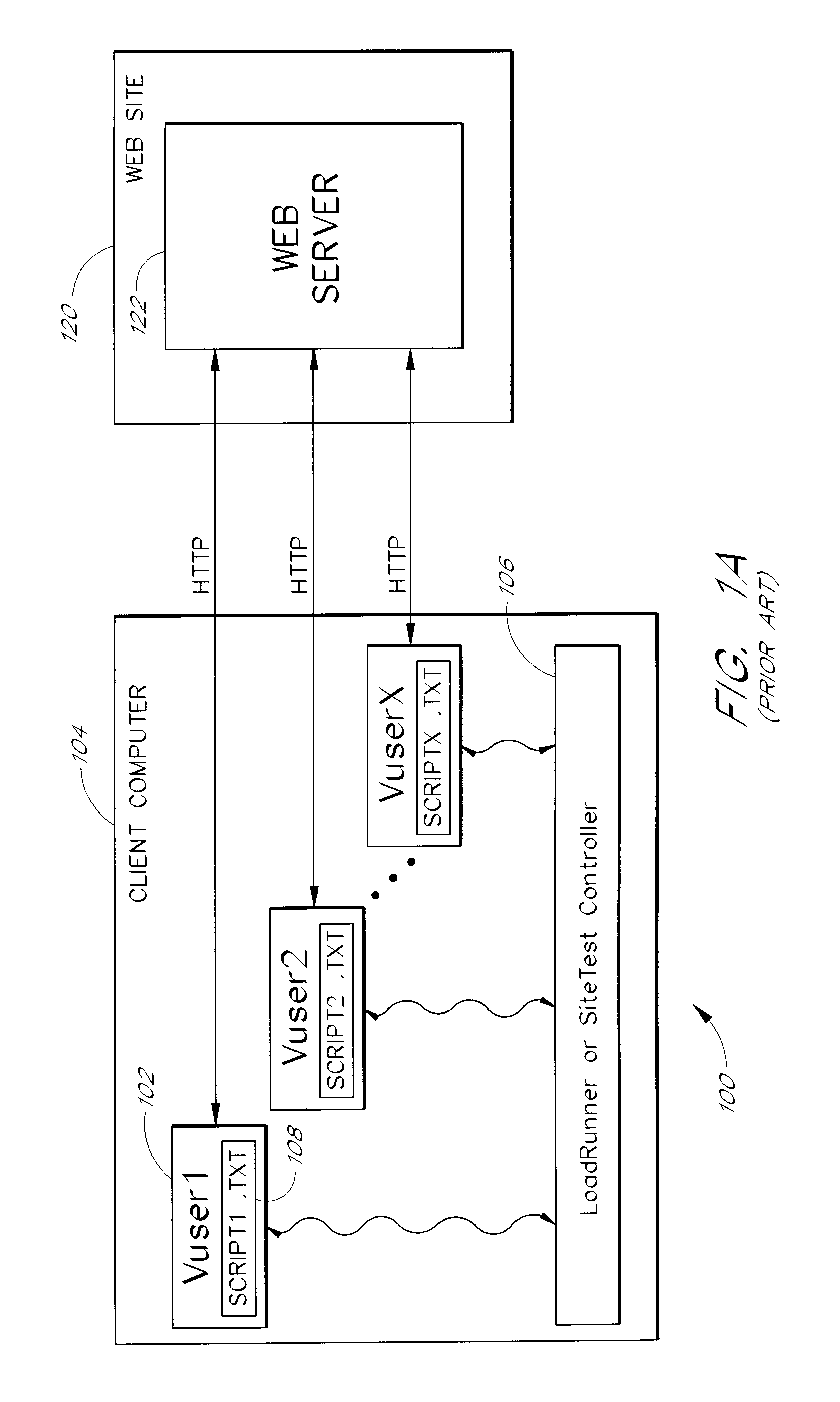

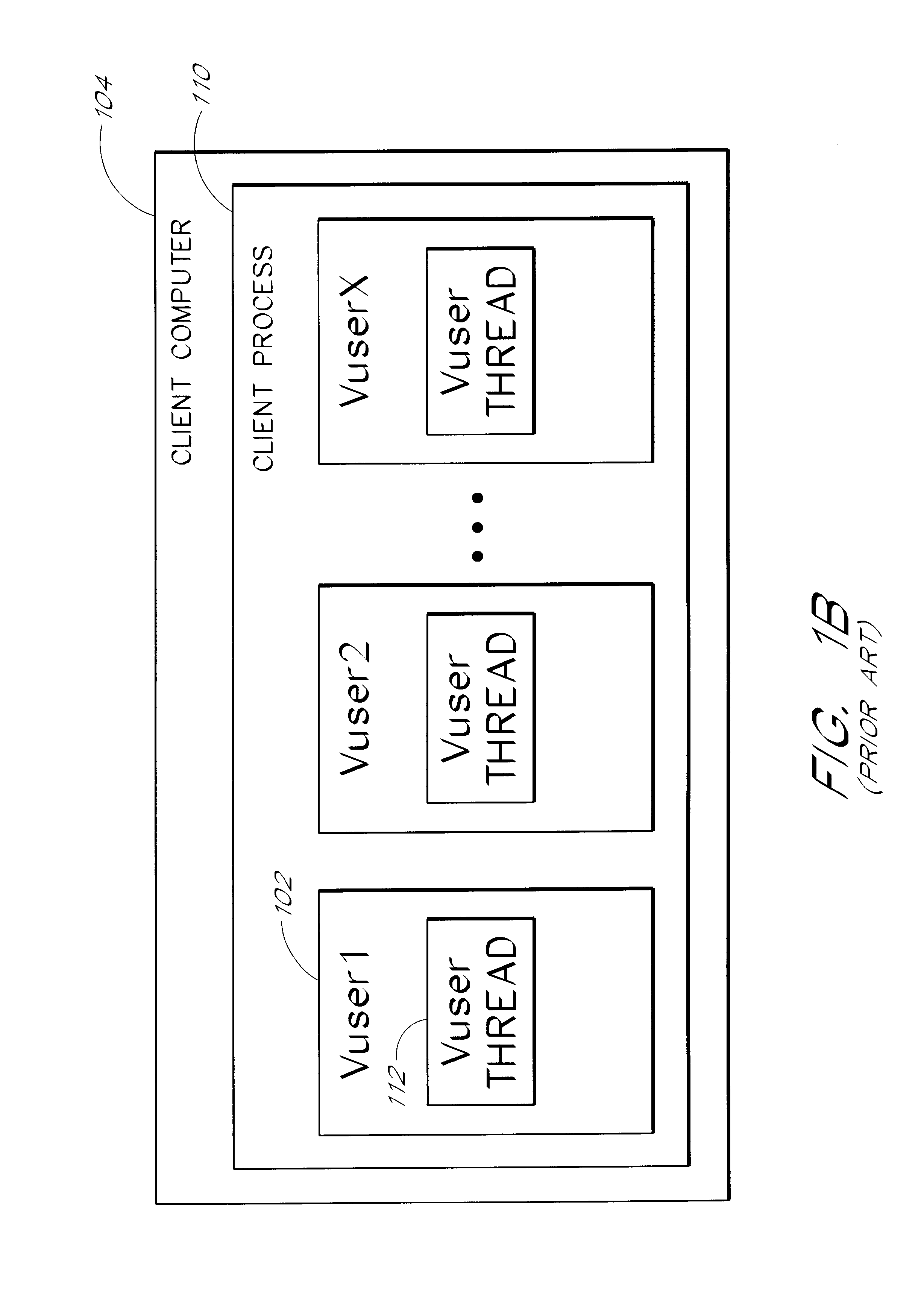

Use of a single thread to support multiple network connections for server load testing

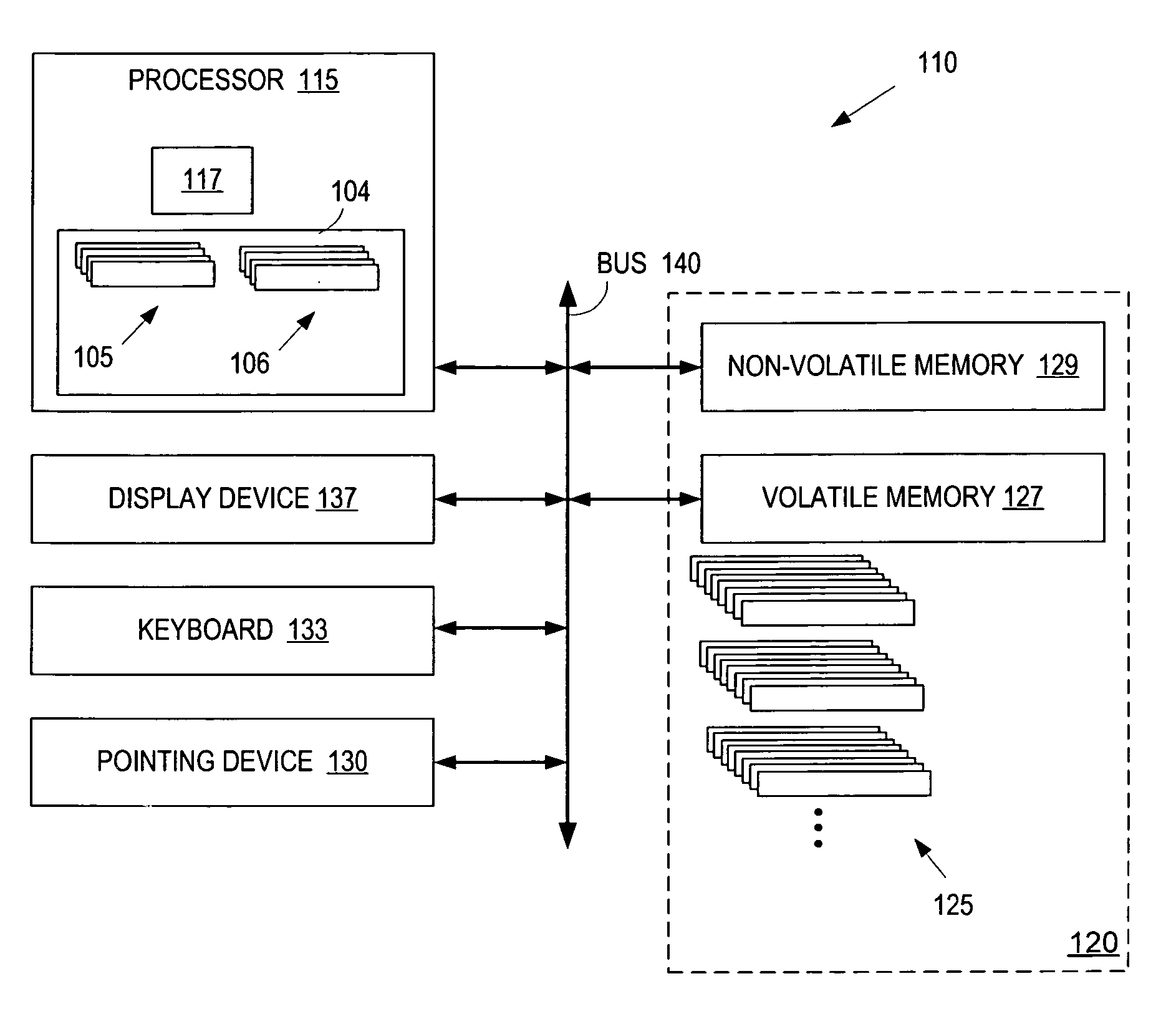

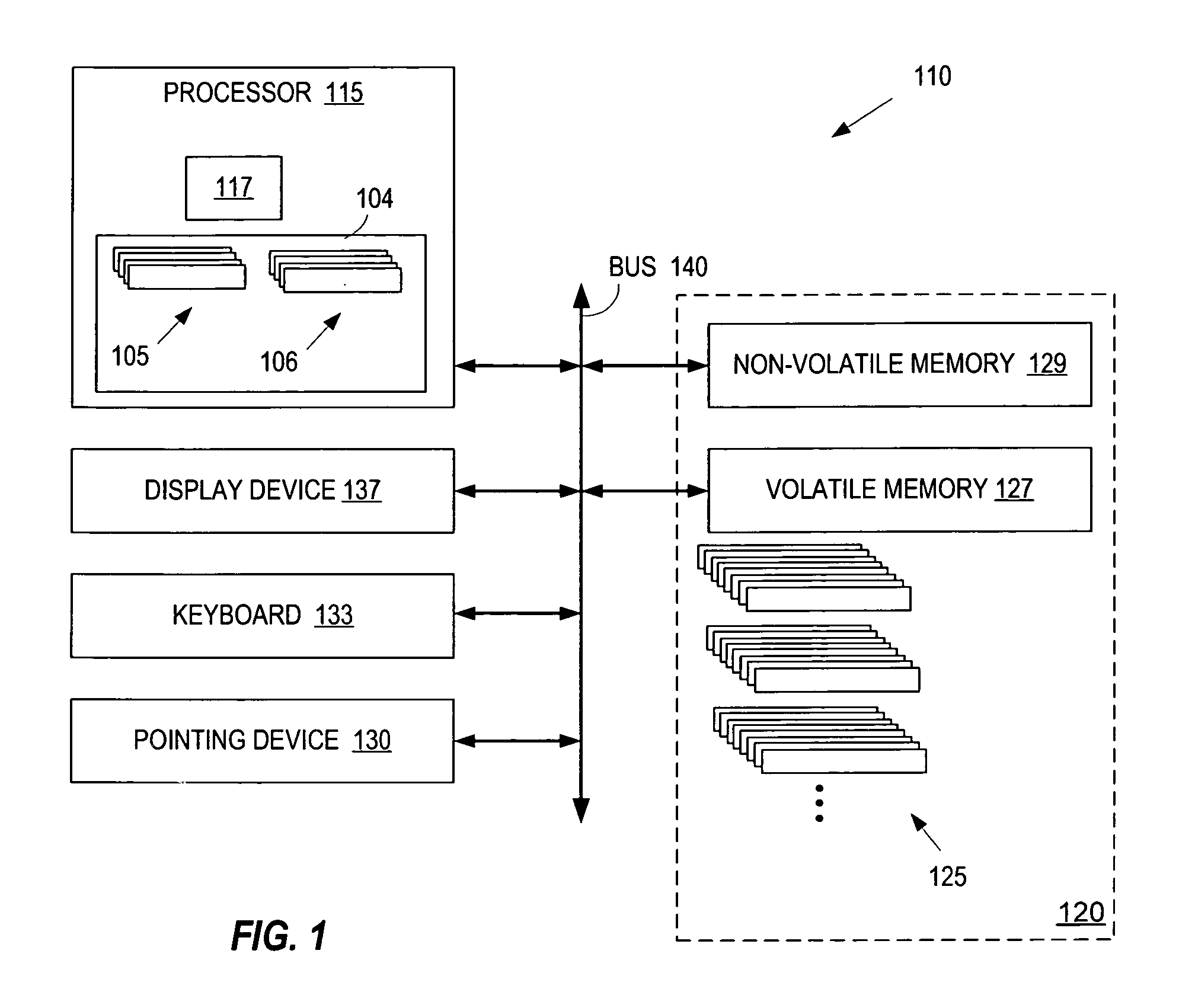

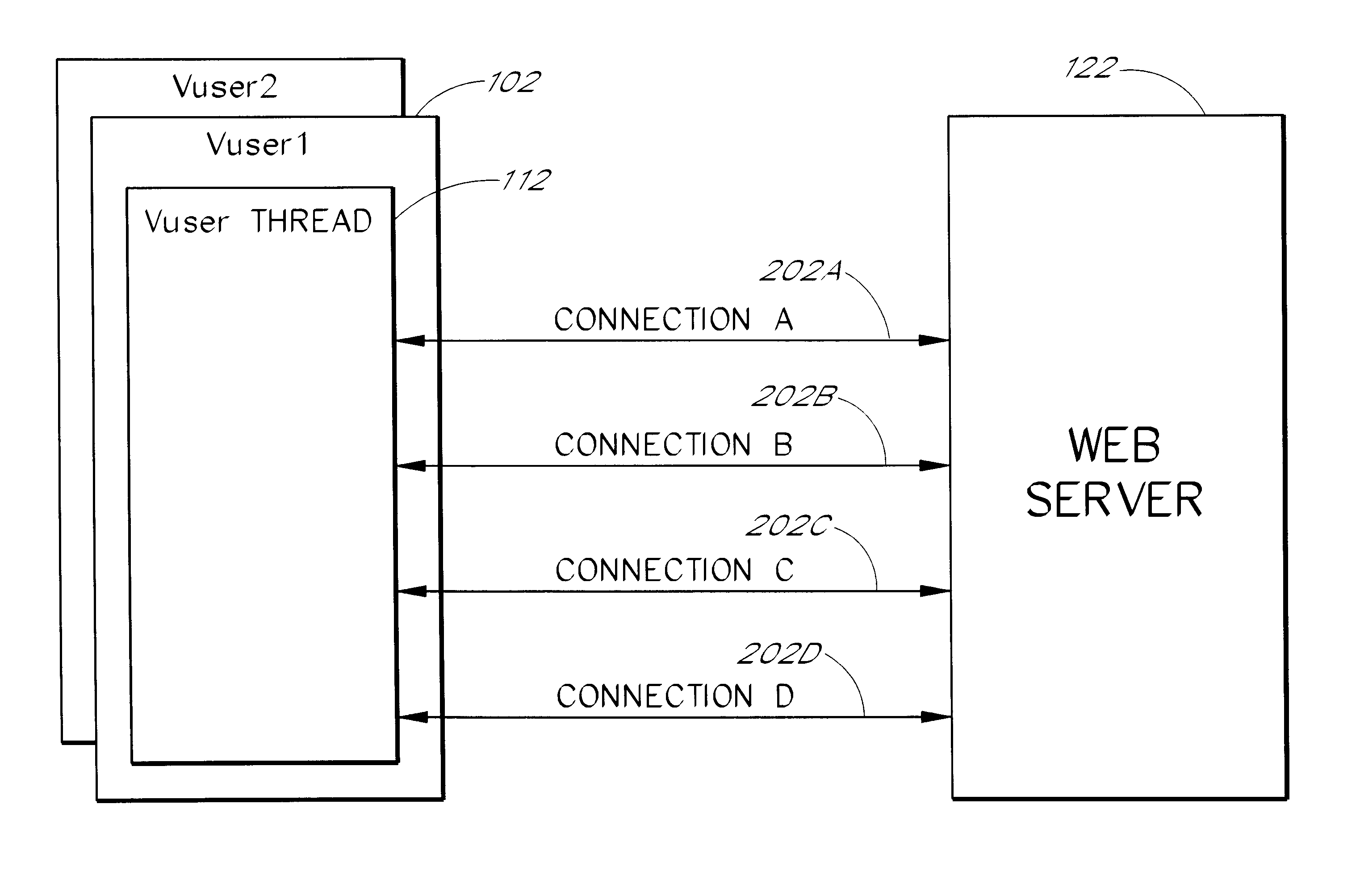

A load testing system for testing a web site or other type of server system uses a thread architecture that reduces the computing resources needed to generate a desired load. The load testing system runs several virtual users on one or more clients to simulate user interactions with the web site. Each virtual user is executed as a virtual user thread under a process on a client computer. Each virtual user thread itself establishes and supports multiple connections to the web site; therefore, an additional thread need not be created for each connection. For each connection, the virtual user thread performs a sequence of functions in an asynchronous mode to establish and support the connection. If a function cannot complete without blocking, it immediately returns a RESOURCE UNAVAILABLE error code. If a function returns a RESOURCE UNAVAILABLE code, the calling thread switches execution to another task. After the condition causing the RESOURCE UNAVAILABLE error code has been resolved, the thread can switch back to executing the interrupted task. In this manner, the single thread is able to support multiple simultaneous connections.

Owner:MICRO FOCUS LLC
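In the abstract, one thread drives several connections by switching tasks whenever a call would block. This can be sketched in Python with non-blocking sockets:

* `BlockingIOError`, which Python raises for EAGAIN/EWOULDBLOCK, plays the role of the RESOURCE UNAVAILABLE code.
* Each connection is a generator that yields when it would block.
* A local `socketpair` echo task stands in for the web site.

All names are hypothetical. The round-robin loop busy-polls; a real load tester would more likely wait on readiness with the `selectors` module.

```python
import socket
from collections import deque

def client_task(sock: socket.socket, payload: bytes):
    """One connection of a virtual user: send a request, then read the reply.
    Yields whenever a non-blocking call reports the resource is unavailable."""
    sent = 0
    while sent < len(payload):
        try:
            sent += sock.send(payload[sent:])
        except BlockingIOError:          # EAGAIN/EWOULDBLOCK: would block
            yield                        # let the scheduler run another task
    reply = b""
    while len(reply) < len(payload):
        try:
            chunk = sock.recv(4096)
        except BlockingIOError:
            yield
            continue
        if not chunk:                    # peer closed the connection
            break
        reply += chunk
    return reply

def echo_task(sock: socket.socket, nbytes: int):
    """Stand-in for the server under test: echo nbytes back to the client."""
    data = b""
    while len(data) < nbytes:
        try:
            chunk = sock.recv(4096)
        except BlockingIOError:
            yield
            continue
        if not chunk:
            break
        data += chunk
    sent = 0
    while sent < len(data):
        try:
            sent += sock.send(data[sent:])
        except BlockingIOError:
            yield
    return data

def run_on_one_thread(tasks):
    """Round-robin every task on the calling thread until all finish."""
    results = {}
    ready = deque(tasks.items())
    while ready:
        name, task = ready.popleft()
        try:
            next(task)                   # run until the task would block
            ready.append((name, task))   # resume it later
        except StopIteration as done:
            results[name] = done.value
    return results

sockets, tasks = [], {}
for i in range(3):
    a, b = socket.socketpair()
    a.setblocking(False)
    b.setblocking(False)
    sockets += [a, b]
    msg = f"request-{i}".encode()
    tasks[f"client{i}"] = client_task(a, msg)
    tasks[f"server{i}"] = echo_task(b, len(msg))

results = run_on_one_thread(tasks)
for s in sockets:
    s.close()
print(results["client1"])  # b'request-1'
```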

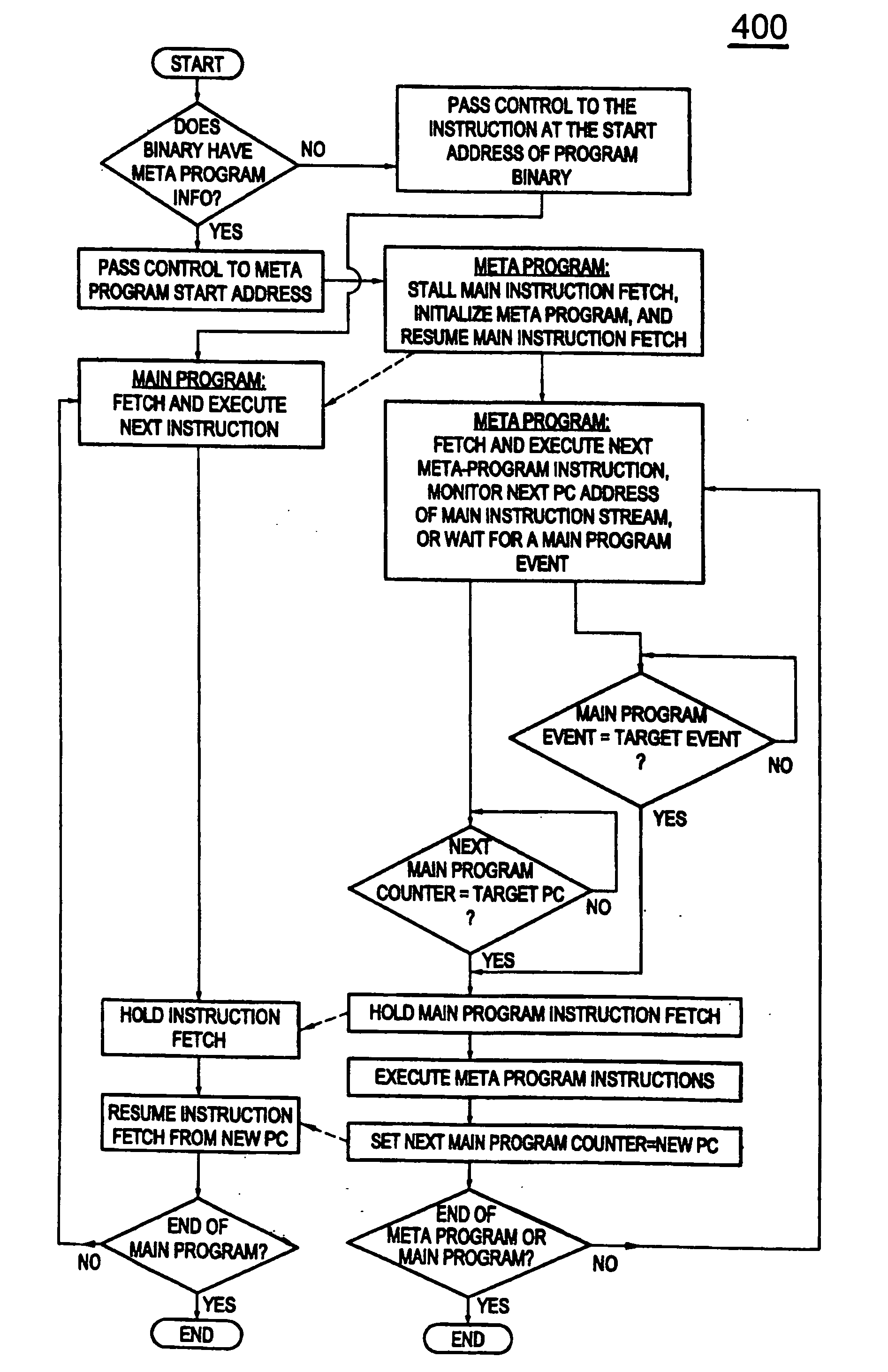

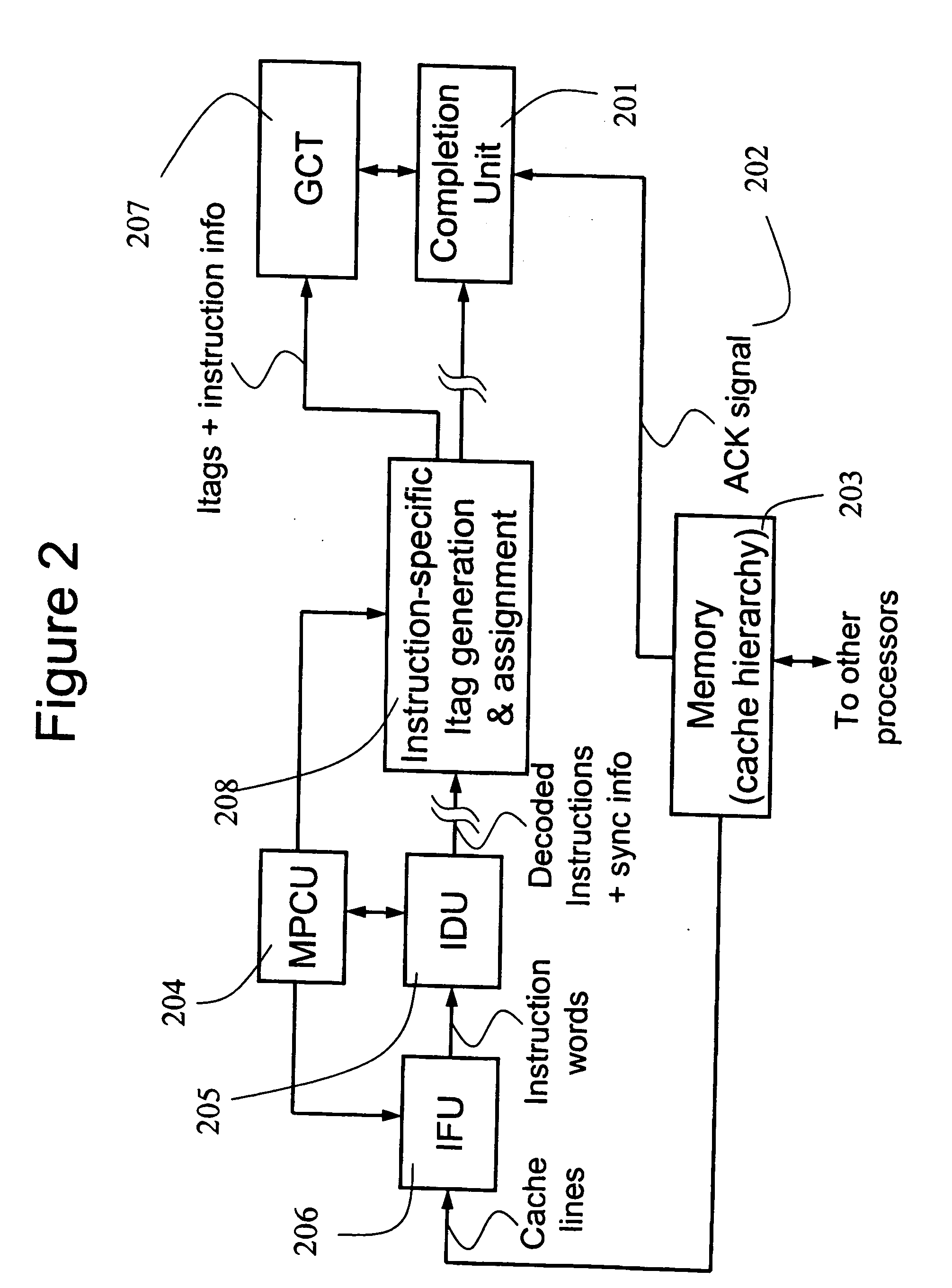

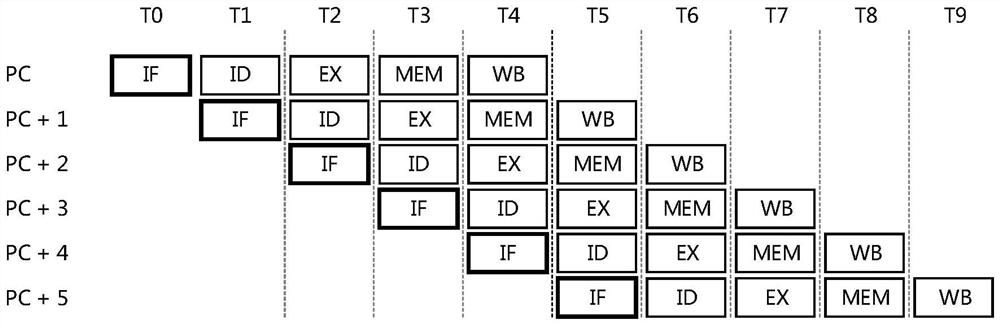

Method and apparatus for fast synchronization and out-of-order execution of instructions in a meta-program based computing system

Status: Inactive | Publication: US20080028196A1 | Tags: Simple and efficient and fast method; Digital computer details; Program loading/initiating; Out-of-order execution; Computer architecture

Owner:IBM CORP

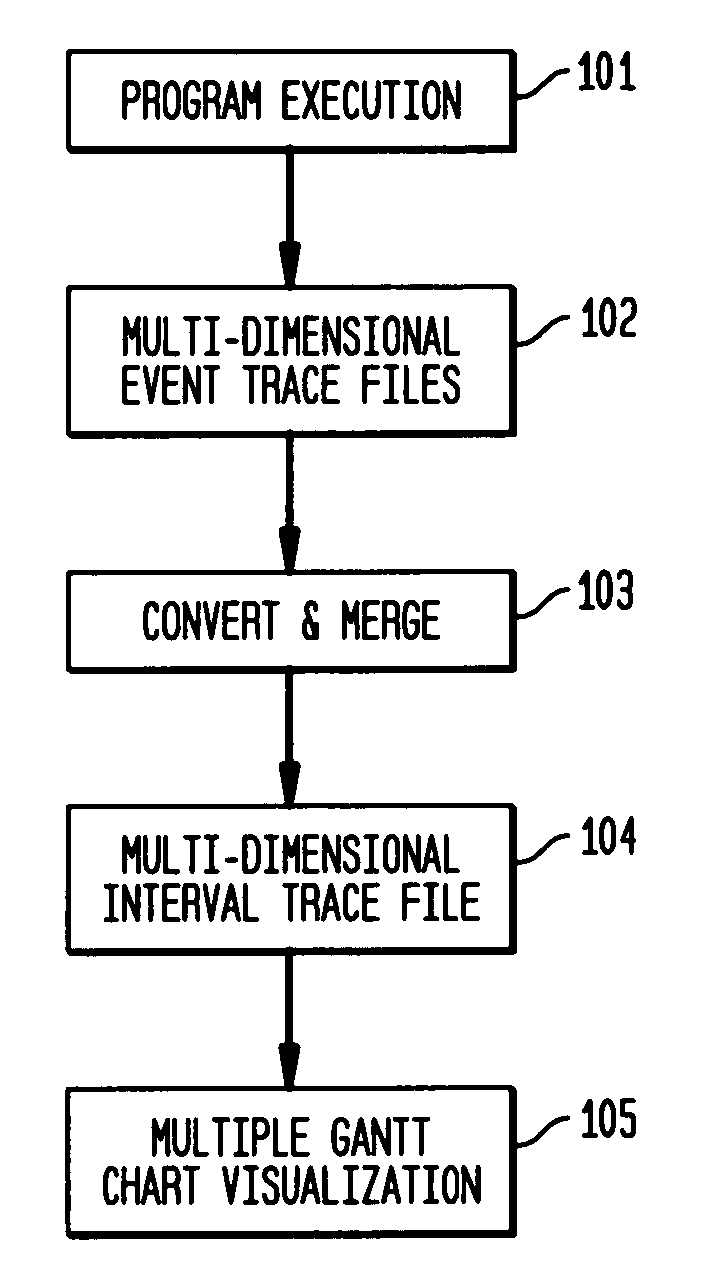

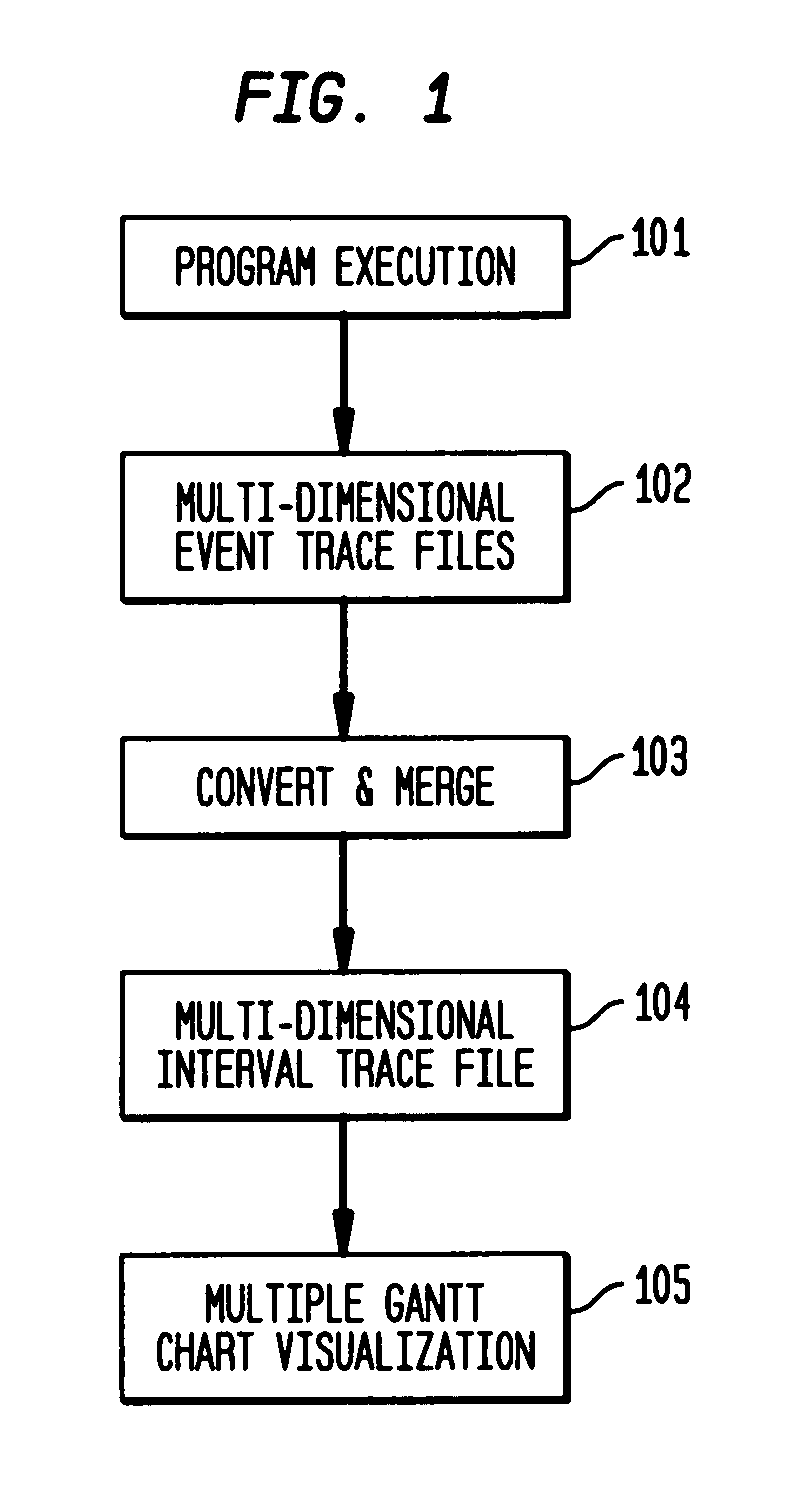

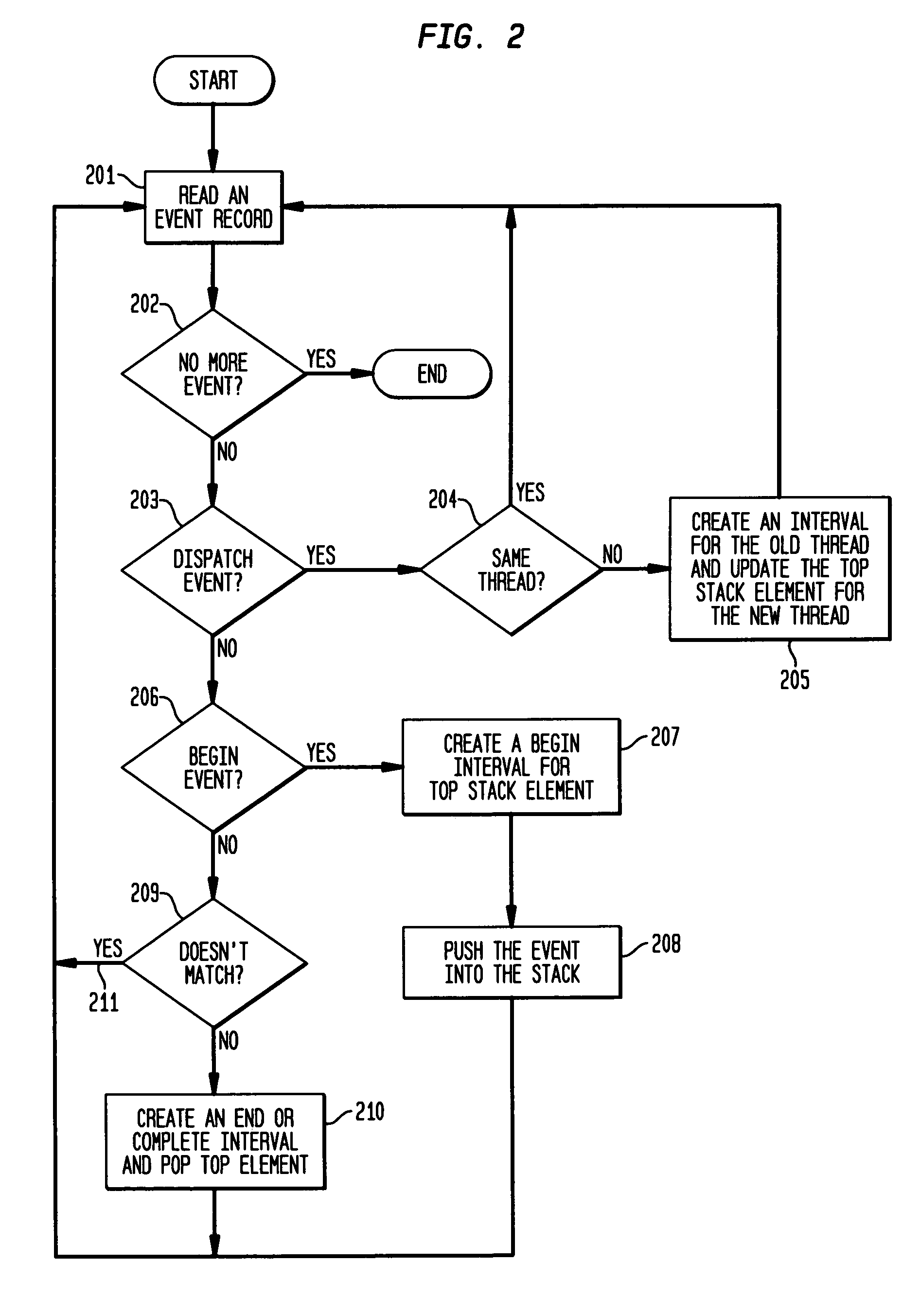

System and method on generating multi-dimensional trace files and visualizing them using multiple Gantt charts

Status: Inactive | Publication: US7131113B2 | Tags: Elimination of trace file explosion; Easy to understand; Error prevention; Error detection/correction; Gantt chart; Multiple dimension

A system and method for visualizing data in two-dimensional time-space diagrams, including generating multi-dimensional program trace files, converting and merging them into interval trace files, and generating multiple time-space diagrams (multiple Gantt charts) to visualize the resulting multi-dimensional interval trace files. The events and trace information required to form multi-dimensional event traces are described in event trace files, typically gathered from running programs such as parallel programs for technical computing and Web server processes. The method includes converting event traces into interval traces. In one selectable design, the two-dimensional time-space diagrams include three discriminator types (thread, processor, and activity) and six discriminator-legend combinations, capable of producing seven possible views. The six discriminator-legend combinations are thread-activity, thread-processor, processor-thread, processor-activity, activity-thread, and activity-processor. Each discriminator-legend combination corresponds to one time-space diagram, except that the thread-activity combination has two possible views: Thread-Activity View and Connected Thread-Activity View.

Owner:IBM CORP
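The Python below roughly sketches the event-to-interval conversion. It also shows how the six discriminator-legend combinations arise: they are every ordered pair of two distinct discriminators. The `Event` and `Interval` records are assumptions for illustration. So is the rule that pairs each begin event with the next matching end event; the abstract does not define the trace file format. The seventh view (Connected Thread-Activity) is not modeled.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import Dict, Iterable, List, Tuple

DISCRIMINATORS = ("thread", "processor", "activity")

@dataclass(frozen=True)
class Event:
    time: float
    kind: str          # "begin" or "end"
    thread: int
    processor: int
    activity: str

@dataclass(frozen=True)
class Interval:
    start: float
    end: float
    thread: int
    processor: int
    activity: str

def to_intervals(events: Iterable[Event]) -> List[Interval]:
    """Pair each 'begin' with the next 'end' that has the same
    (thread, processor, activity) key (an assumed pairing rule)."""
    open_at: Dict[Tuple[int, int, str], float] = {}
    intervals: List[Interval] = []
    for ev in sorted(events, key=lambda e: e.time):
        key = (ev.thread, ev.processor, ev.activity)
        if ev.kind == "begin":
            open_at[key] = ev.time
        elif key in open_at:
            intervals.append(Interval(open_at.pop(key), ev.time, *key))
    return intervals

def gantt_rows(intervals: List[Interval], discriminator: str, legend: str):
    """One Gantt row per discriminator value; each bar is labeled by the legend attribute."""
    rows: Dict[object, list] = {}
    for iv in intervals:
        rows.setdefault(getattr(iv, discriminator), []).append(
            (iv.start, iv.end, getattr(iv, legend)))
    return rows

combos = list(permutations(DISCRIMINATORS, 2))   # ordered (discriminator, legend) pairs

events = [
    Event(0.0, "begin", 0, 1, "compute"), Event(2.0, "end", 0, 1, "compute"),
    Event(1.0, "begin", 1, 0, "send"), Event(3.0, "end", 1, 0, "send"),
]
rows = gantt_rows(to_intervals(events), "thread", "activity")
print(len(combos))  # 6
print(rows)         # {0: [(0.0, 2.0, 'compute')], 1: [(1.0, 3.0, 'send')]}
```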

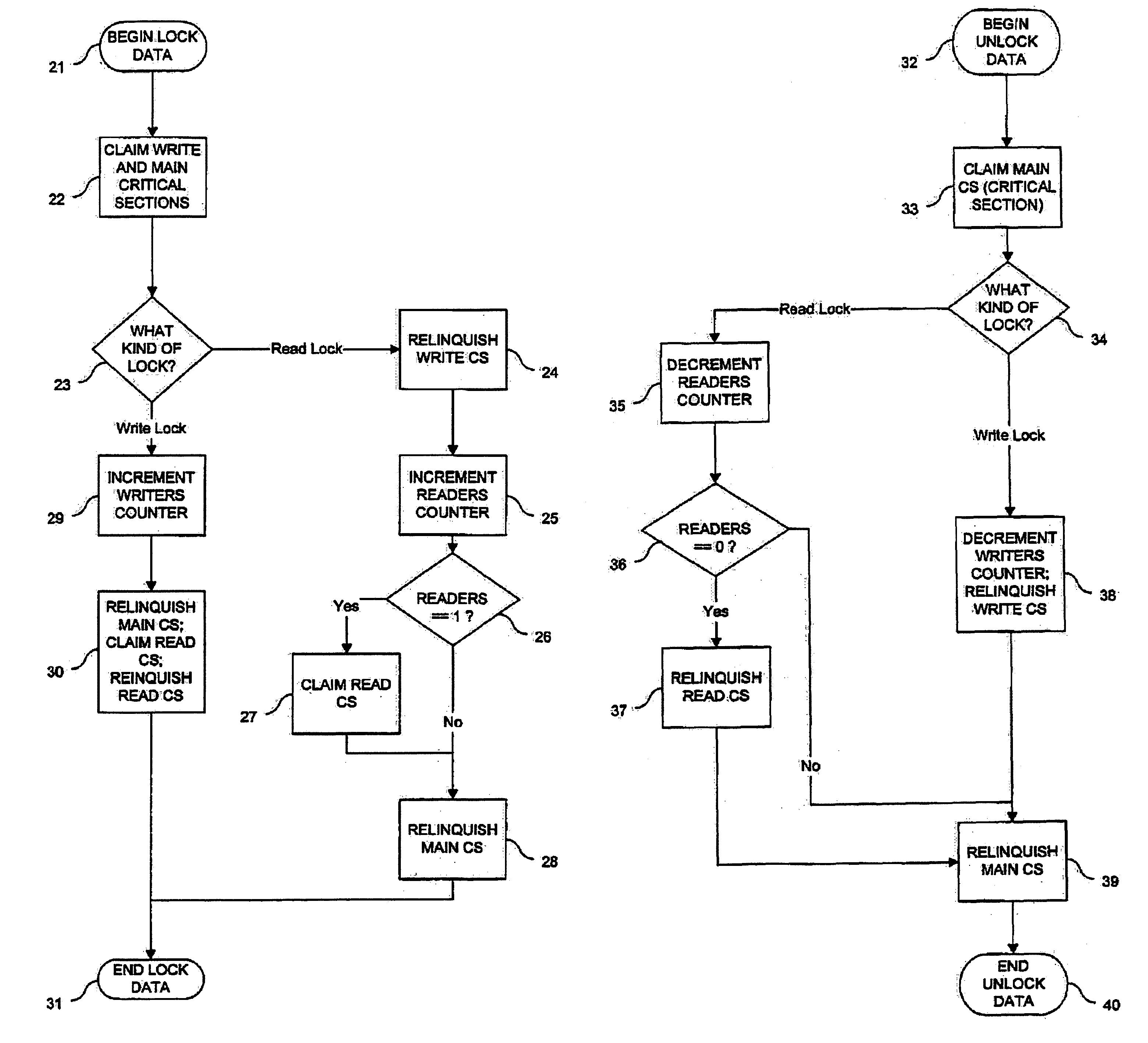

Architecture for a read/write thread lock

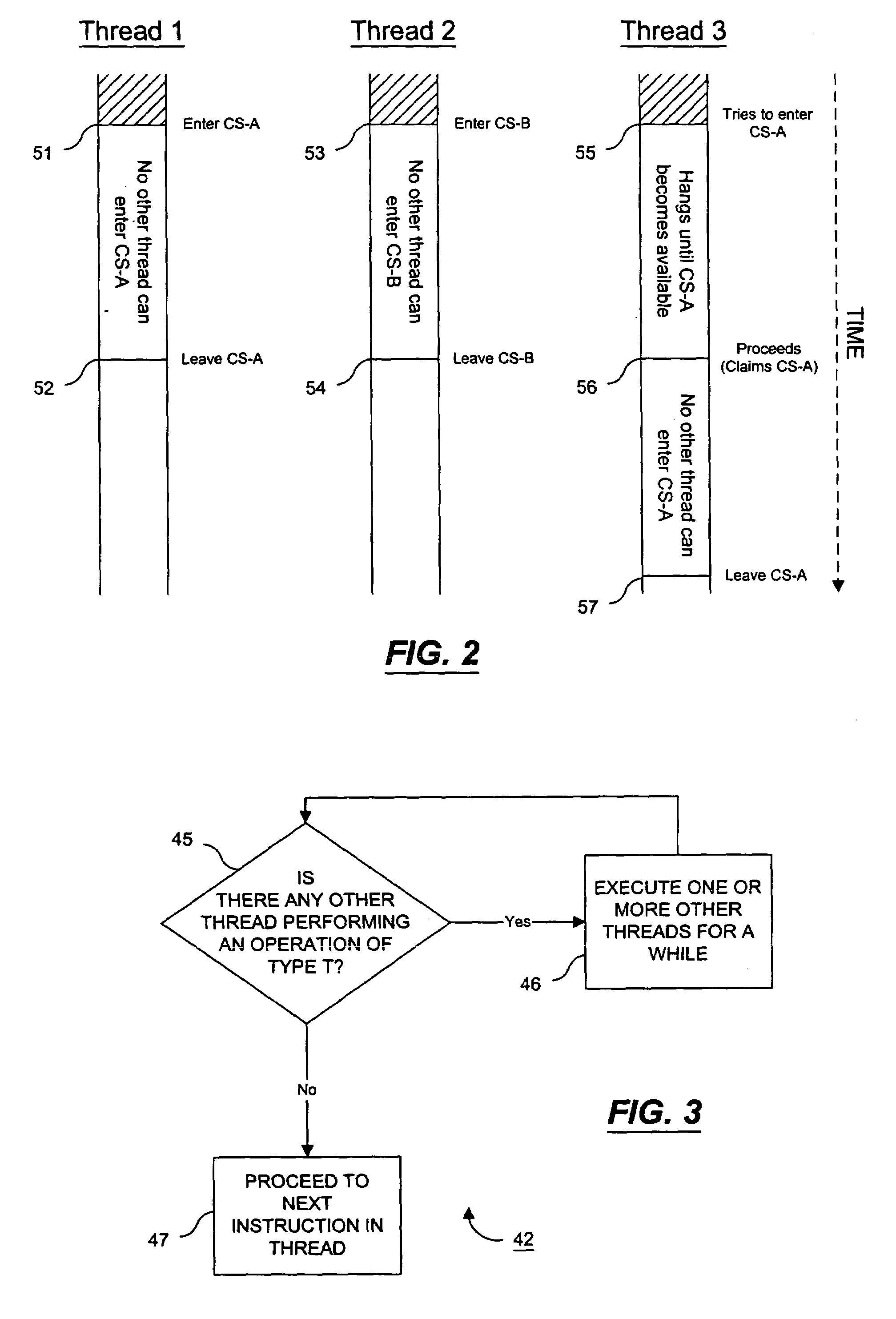

Status: Inactive | Publication: US7188344B1 | Tags: Minimizing chance; Multiprogramming arrangements; Memory systems; Computer architecture; Critical section

An architecture for a read / write thread lock is provided for use in a computing environment where several sets of computer instructions, or “threads,” can execute concurrently. The disclosed thread lock allows concurrently-executing threads to share access to a resource, such as a data object. The thread lock allows a plurality of threads to read from a resource at the same time, while providing a thread exclusive access to the resource when that thread is writing to the resource. The thread lock uses critical sections to suspend execution of other threads when one thread needs exclusive access to the resource. Additionally, a technique is provided whereby the invention can be deployed as constructors and destructors in a programming language, such as C++, where constructors and destructors are available. When the invention is deployed in such a manner, it is possible for a programmer to issue an instruction to lock a resource, without having to issue a corresponding unlock instruction.

Owner:UNISYS CORP
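In Python, a `with` block gives the same lock-without-explicit-unlock behavior that the abstract attributes to C++ constructors and destructors. The sketch below is a minimal readers-preferred read/write lock built on `threading.Condition`. It is a swapped-in technique for illustration, not the patented critical-section design. Because readers are preferred, a continuous stream of readers can starve writers.

```python
import threading
from contextlib import contextmanager

class ReadWriteLock:
    """Many concurrent readers or one exclusive writer (readers preferred)."""

    def __init__(self) -> None:
        self._cond = threading.Condition()   # guards _readers and _writer
        self._readers = 0
        self._writer = False

    @contextmanager
    def read(self):
        with self._cond:
            while self._writer:
                self._cond.wait()
            self._readers += 1
        try:
            yield
        finally:                             # runs on normal exit or exception
            with self._cond:
                self._readers -= 1
                if self._readers == 0:
                    self._cond.notify_all()

    @contextmanager
    def write(self):
        with self._cond:
            while self._writer or self._readers:
                self._cond.wait()
            self._writer = True
        try:
            yield
        finally:
            with self._cond:
                self._writer = False
                self._cond.notify_all()

rw = ReadWriteLock()
data = {"hits": 0}
snapshots = []

def reader() -> None:
    for _ in range(100):
        with rw.read():                      # shared access alongside other readers
            snapshots.append(data["hits"])

def writer() -> None:
    for _ in range(100):
        with rw.write():                     # no explicit unlock: leaving the block releases it
            data["hits"] += 1

threads = [threading.Thread(target=writer) for _ in range(4)]
threads += [threading.Thread(target=reader) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(data["hits"])  # 400
```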

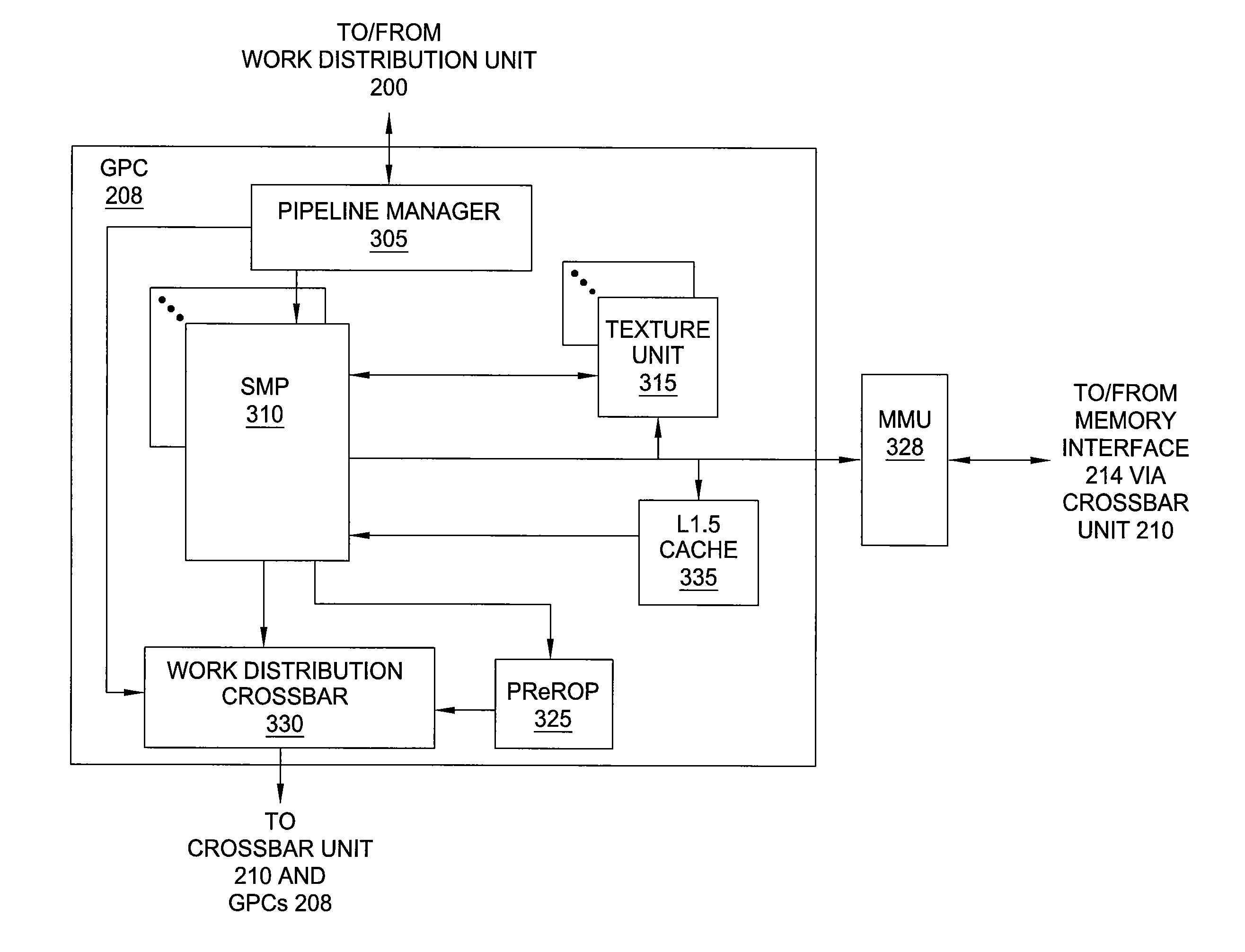

Thread group scheduler for computing on a parallel thread processor

Status: Active | Publication: US20120110586A1 | Tags: Reduce processing steps; Process is inefficient; Multiprogramming arrangements; Memory systems; Scheduling instructions; Parallel computing

A parallel thread processor executes thread groups belonging to multiple cooperative thread arrays (CTAs). At each cycle of the parallel thread processor, an instruction scheduler selects a thread group to be issued for execution during a subsequent cycle. The instruction scheduler selects a thread group to issue for execution by (i) identifying a pool of available thread groups, (ii) identifying a CTA that has the greatest seniority value, and (iii) selecting the thread group that has the greatest credit value from within the CTA with the greatest seniority value.

Owner:NVIDIA CORP
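The three-step selection rule translates directly into code. In this Python sketch, the `ThreadGroup` record and the example seniority and credit numbers are hypothetical. The abstract does not say how seniority or credit values are computed or updated, so here they are simply inputs.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional

@dataclass(frozen=True)
class ThreadGroup:
    group_id: int
    cta_id: int        # cooperative thread array this group belongs to
    credit: int        # per-group credit value
    available: bool    # eligible to issue on the next cycle

def select_thread_group(groups: Iterable[ThreadGroup],
                        cta_seniority: Dict[int, int]) -> Optional[ThreadGroup]:
    # (i) pool of available thread groups
    pool = [g for g in groups if g.available]
    if not pool:
        return None
    # (ii) CTA with the greatest seniority among CTAs that have an available group
    senior_cta = max({g.cta_id for g in pool}, key=lambda c: cta_seniority[c])
    # (iii) the available group with the greatest credit inside that CTA
    return max((g for g in pool if g.cta_id == senior_cta), key=lambda g: g.credit)

groups = [
    ThreadGroup(0, cta_id=0, credit=5, available=True),
    ThreadGroup(1, cta_id=0, credit=9, available=False),
    ThreadGroup(2, cta_id=1, credit=3, available=True),
    ThreadGroup(3, cta_id=1, credit=7, available=True),
]
seniority = {0: 1, 1: 4}
print(select_thread_group(groups, seniority).group_id)  # 3
```

Group 1 has the most credit overall but is not available. CTA 1 outranks CTA 0 on seniority, so group 3 is chosen.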

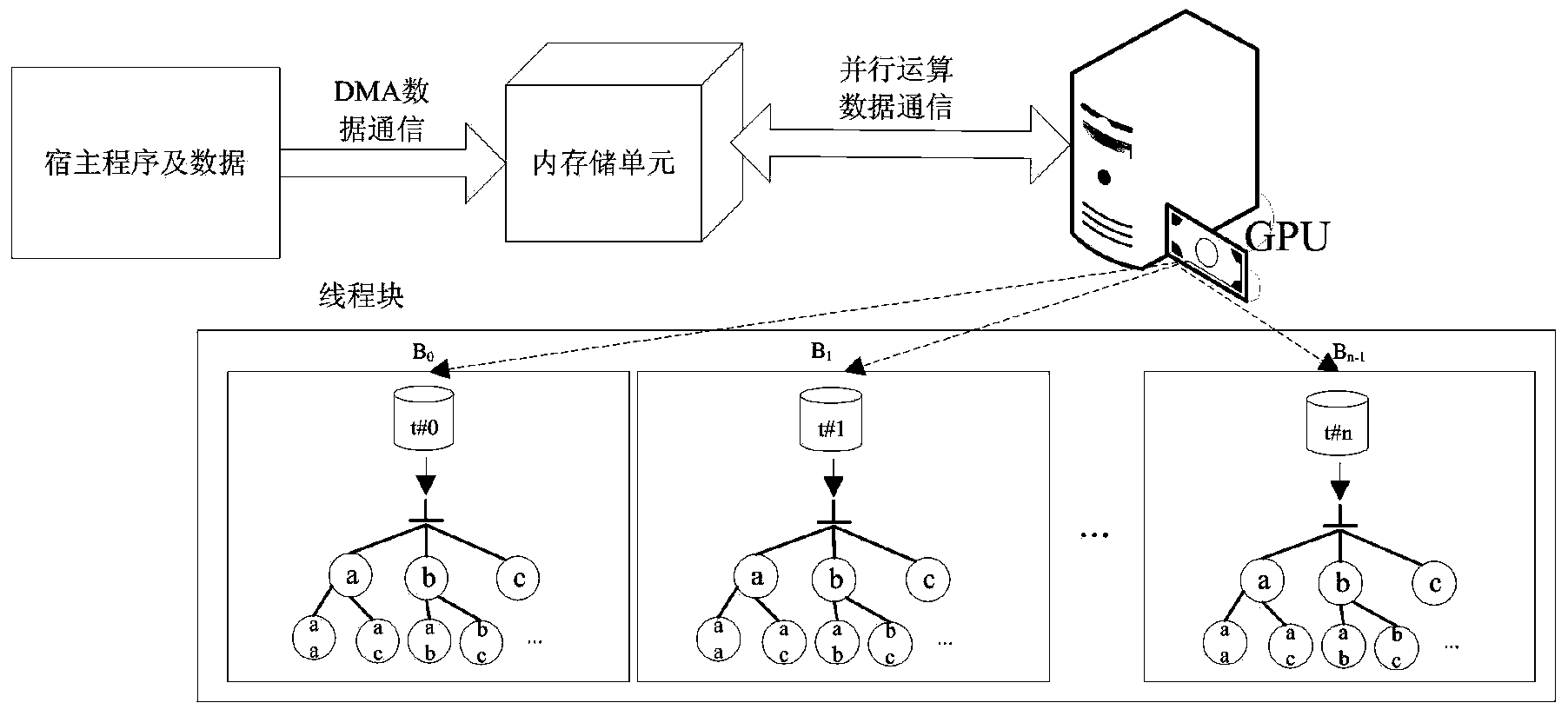

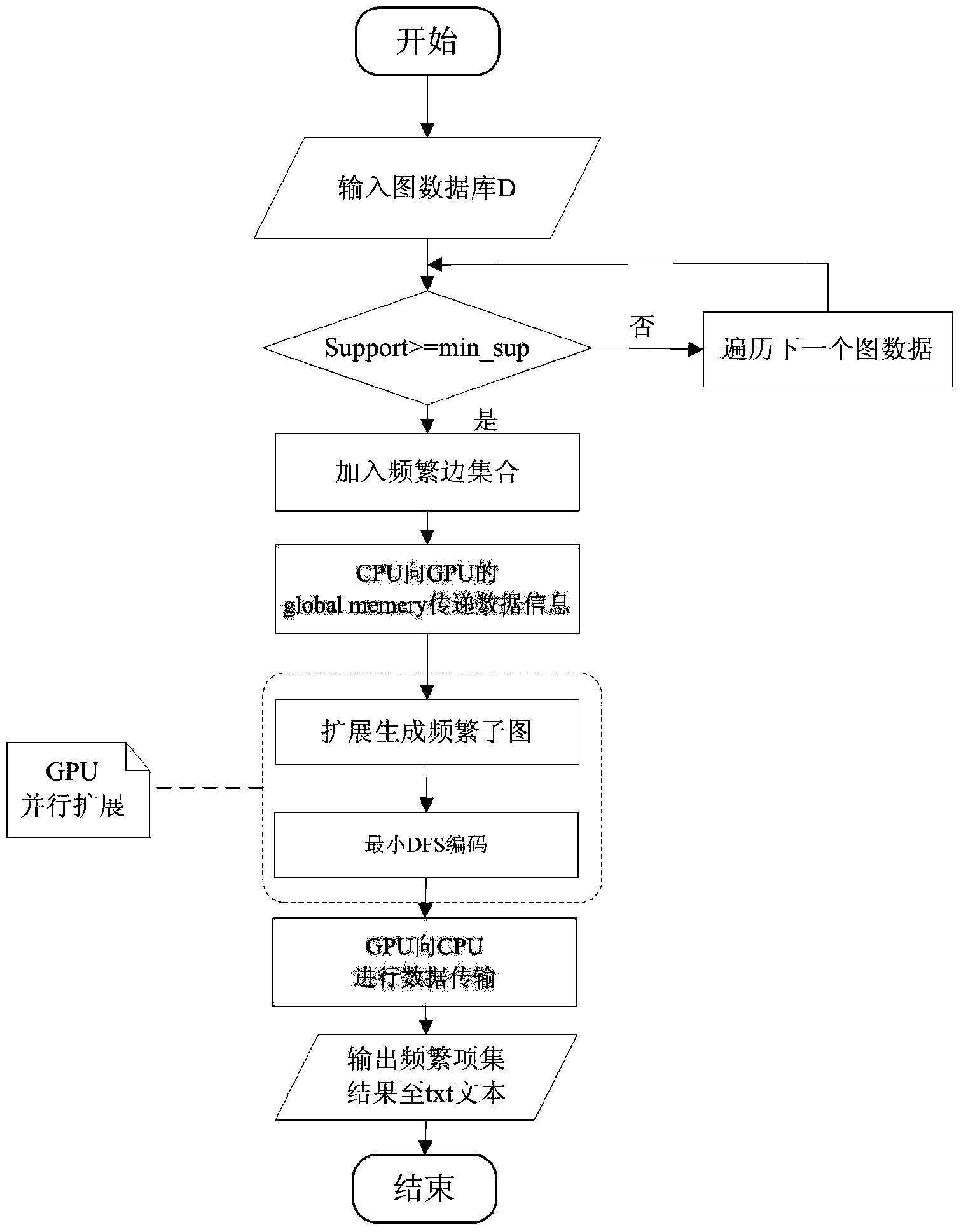

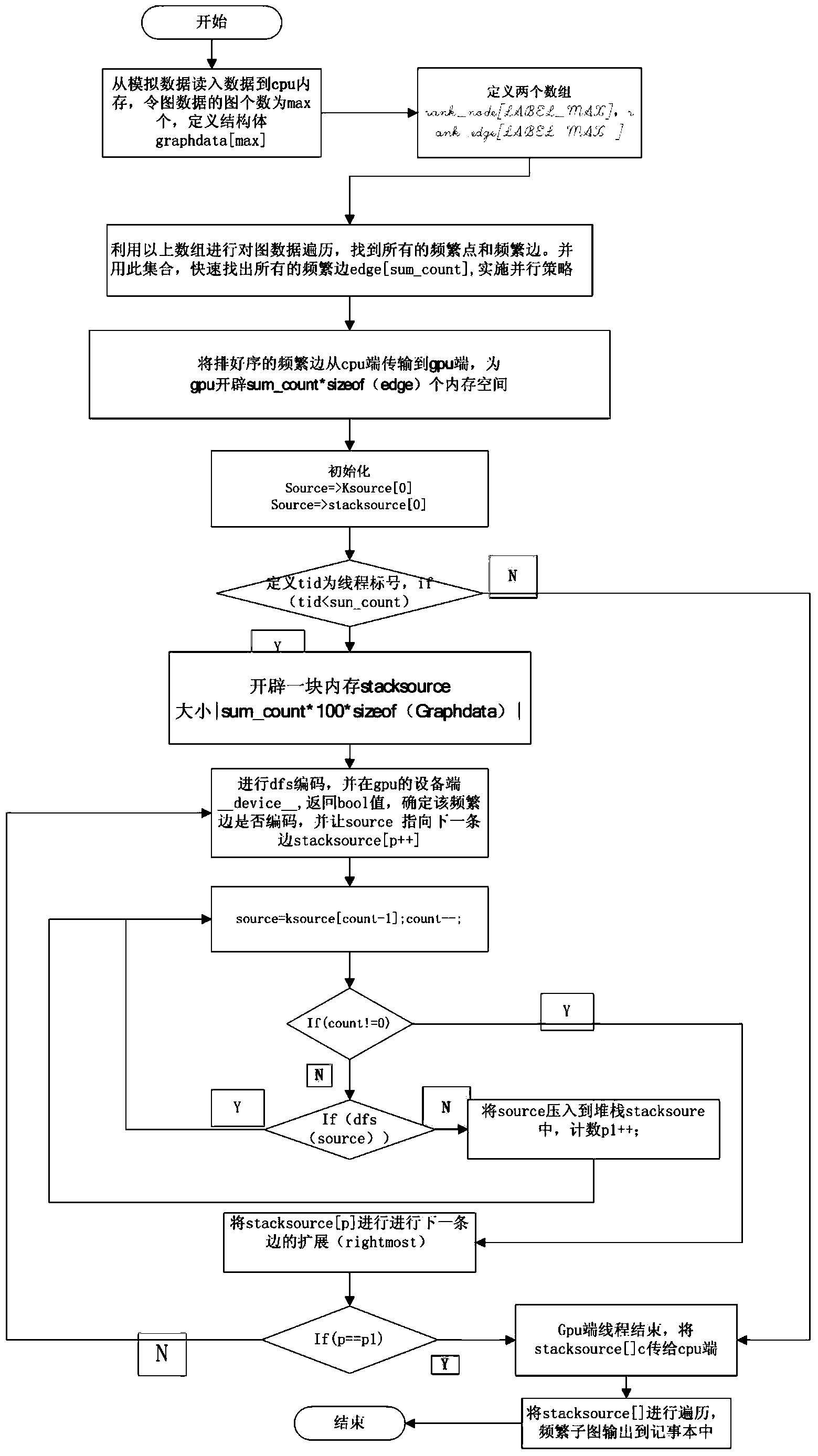

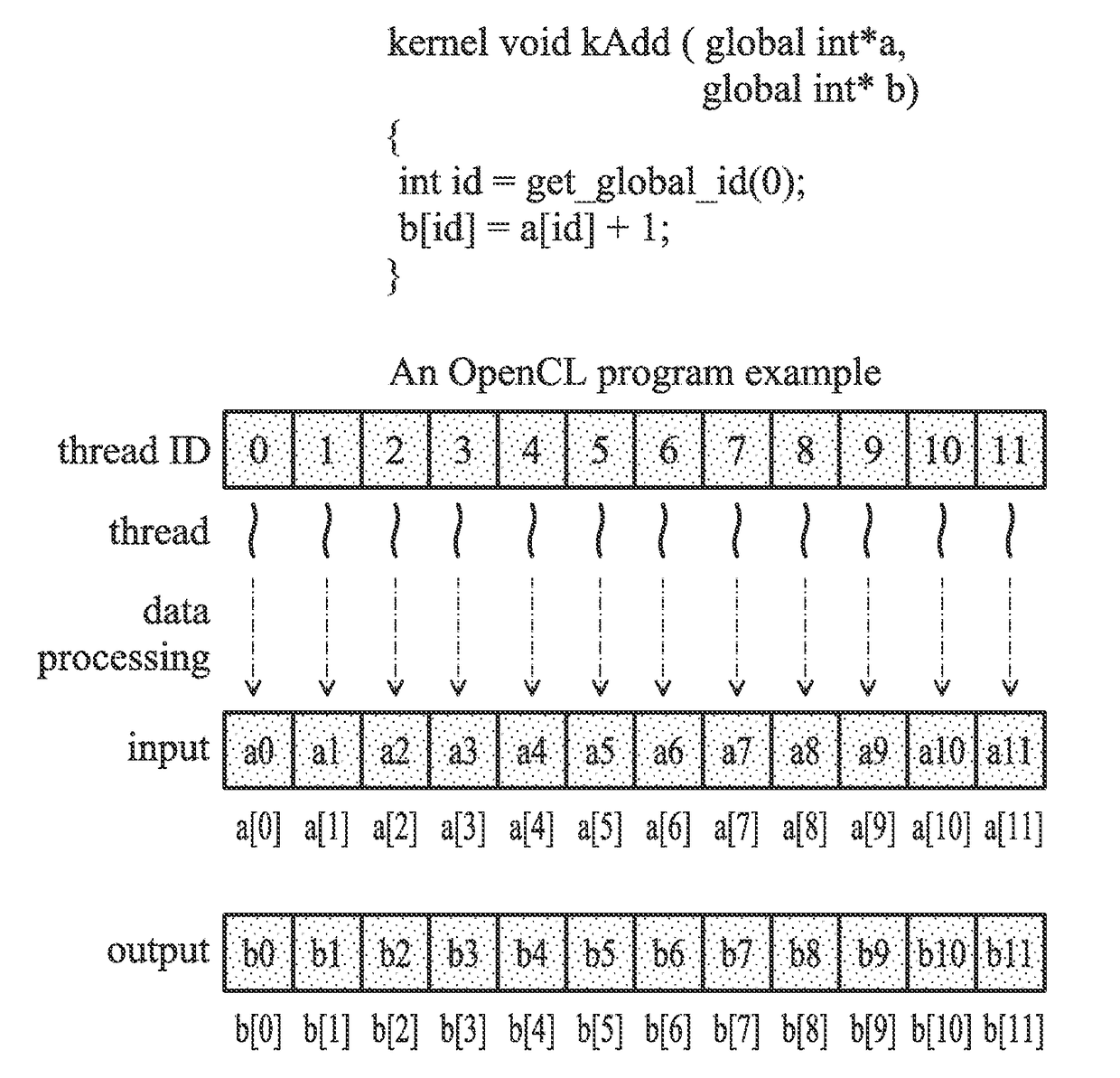

Frequent subgraph mining method based on graphics processor parallel computing

Status: Active | Publication: CN103559016A | Tags: Collaborative Computing Realization; Improve data processing capabilities; Resource allocation; Concurrent instruction execution; Graphics; Data set

The invention discloses a frequent subgraph mining method based on graphics processor parallel computing. The method includes partitioning the work into a plurality of thread blocks on a graphics processing unit (GPU) and evenly distributing frequent edges to different threads for parallel processing. Different extension subgraphs are obtained through rightmost extension, and the graph mining data set obtained by each thread is returned to its thread block. Finally, the GPU exchanges data with memory and returns the result to a central processing unit (CPU) for processing. The method is feasible and effective. It optimizes graph mining performance in data-intensive, large-scale environments and improves mining efficiency. It also supplies data for scientific research analysis, market research and the like quickly and reliably, and realizes a parallel mining method on the Compute Unified Device Architecture (CUDA).

Owner:JIANGXI UNIV OF SCI & TECH

Techniques for dynamically assigning jobs to processors in a cluster based on broadcast information

Status: Active | Publication: US8122132B2 | Tags: Digital computer details; Multiprogramming arrangements; Performance computing; Parallel computing

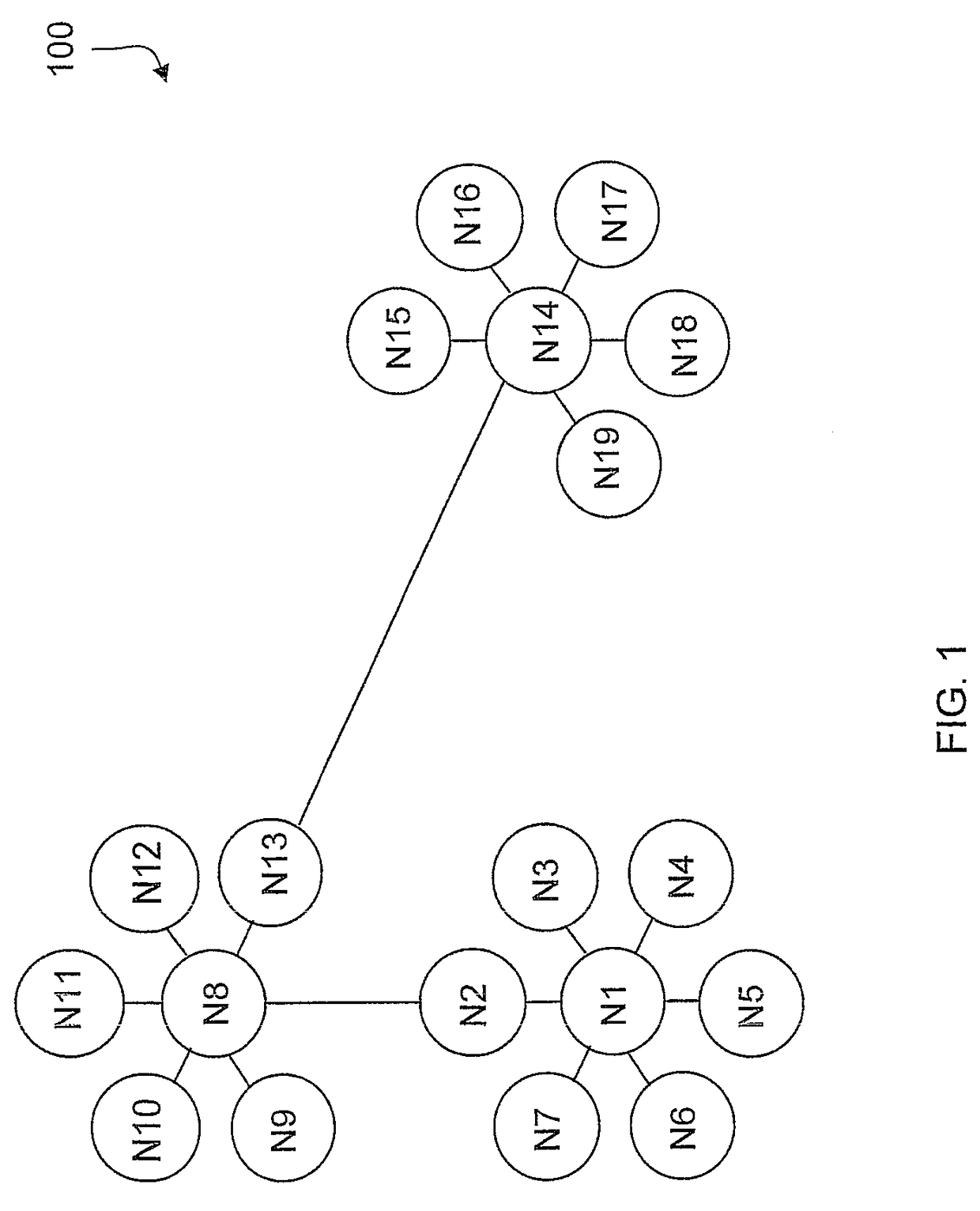

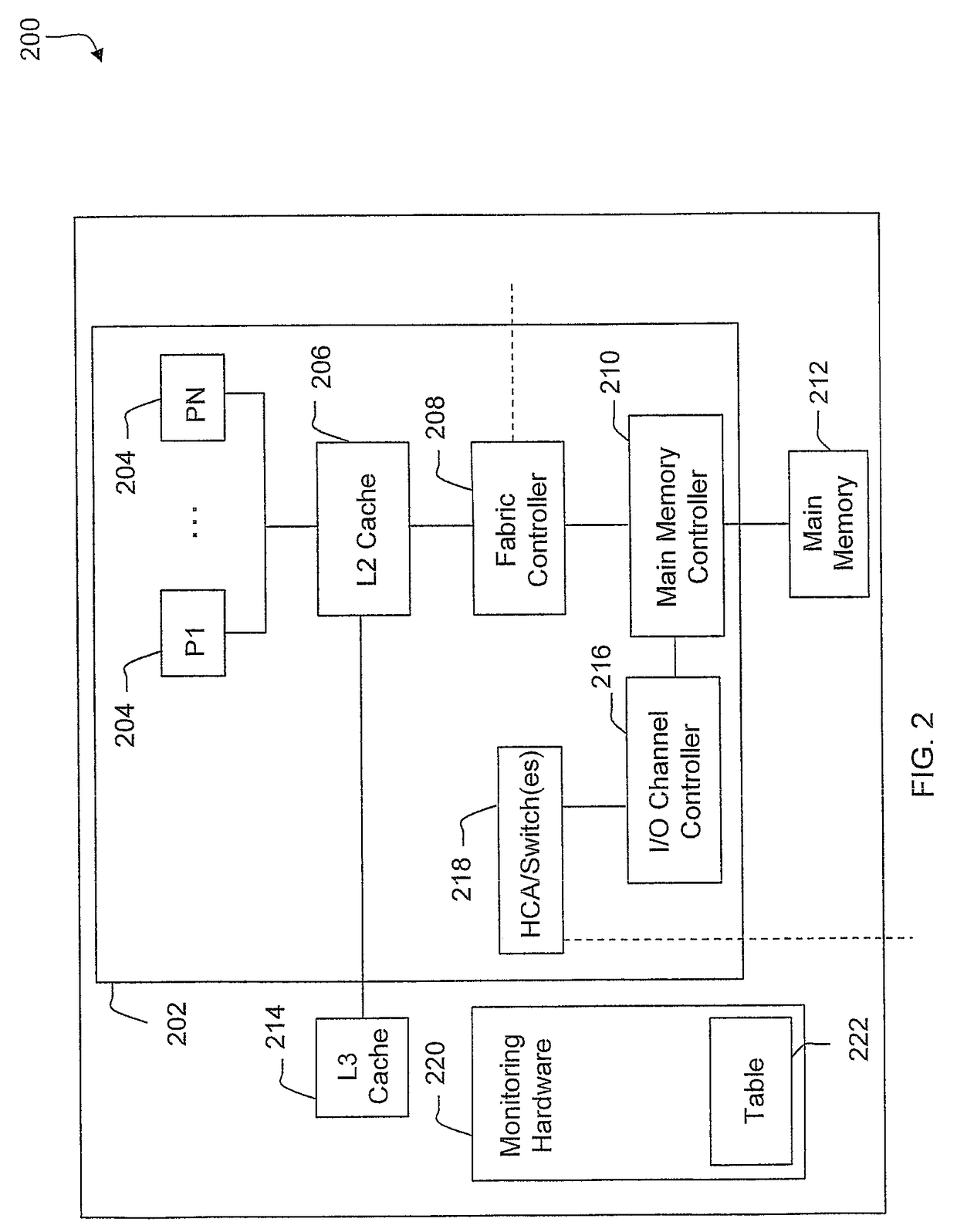

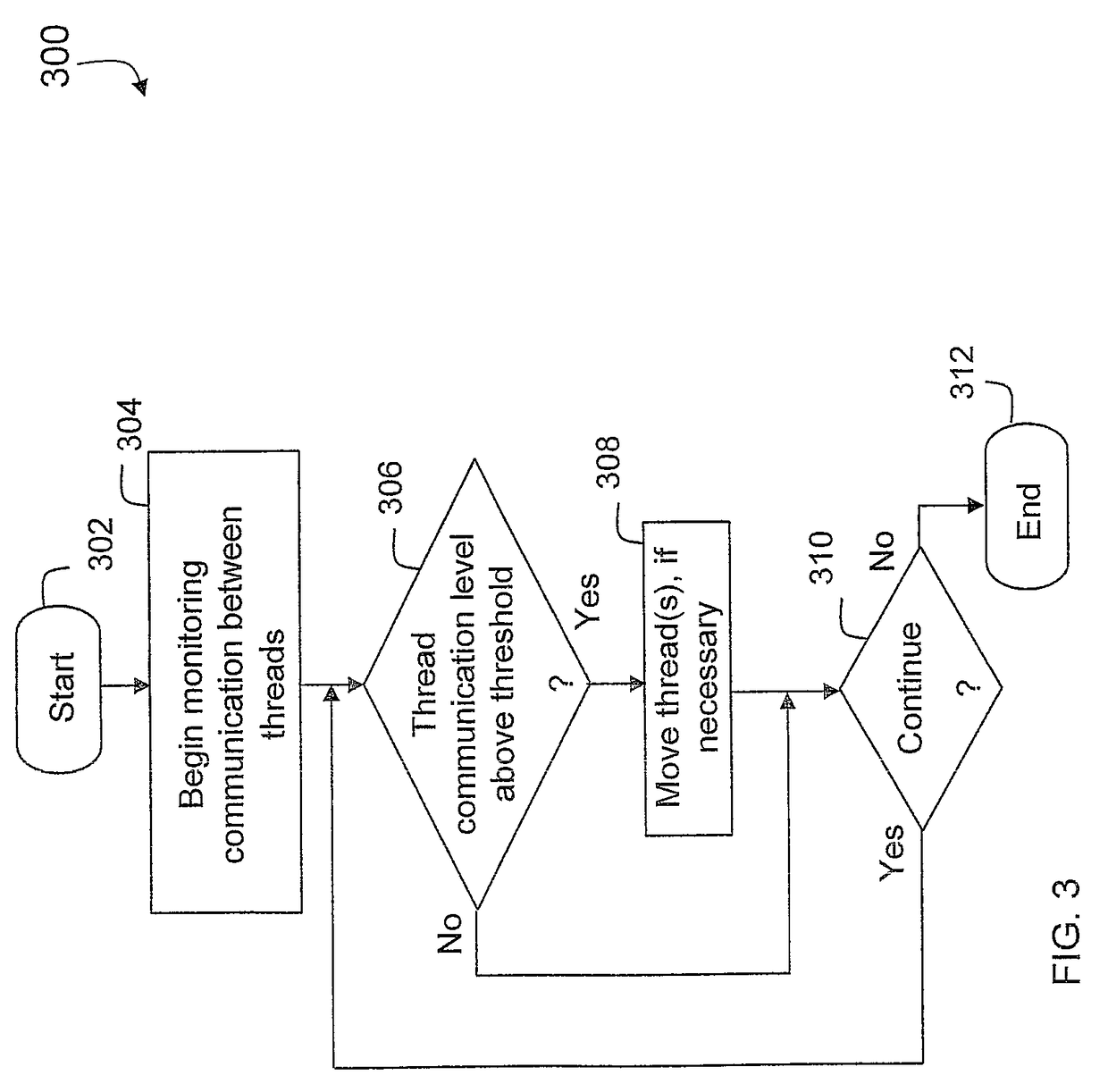

A technique for operating a high performance computing cluster (HPC) having multiple nodes (each of which include multiple processors) includes periodically broadcasting information, related to processor utilization and network utilization at each of the multiple nodes, from each of the multiple nodes to remaining ones of the multiple nodes. Respective local job tables maintained in each of the multiple nodes are updated based on the broadcast information. One or more threads are then moved from one or more of the multiple processors to a different one of the multiple processors (based on the broadcast information in the respective local job tables).

Owner:INT BUSINESS MASCH CORP
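The abstract leaves open how the broadcast figures are turned into a migration decision. The Python sketch below assumes one simple policy, purely for illustration. Each node keeps a local job table holding the latest broadcast from every node. For a moved thread, it picks the processor with the lowest weighted score of processor and network utilization as the destination. `NodeStatus`, `LocalJobTable` and the weighting are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

@dataclass
class NodeStatus:
    cpu_util: Dict[int, float]   # processor id -> utilization in [0, 1]
    net_util: float              # node network utilization in [0, 1]

@dataclass
class LocalJobTable:
    node_id: str
    view: Dict[str, NodeStatus] = field(default_factory=dict)

    def on_broadcast(self, sender: str, status: NodeStatus) -> None:
        # Each periodic broadcast overwrites this node's view of the sender.
        self.view[sender] = status

    def pick_target(self, net_weight: float = 0.5) -> Tuple[str, int]:
        """Least-loaded (node, processor) by a weighted score (hypothetical formula)."""
        best = None
        for node, st in self.view.items():
            for cpu, util in st.cpu_util.items():
                score = util + net_weight * st.net_util
                if best is None or score < best[0]:
                    best = (score, node, cpu)
        if best is None:
            raise ValueError("no broadcast information received yet")
        return best[1], best[2]

table = LocalJobTable("n0")
table.on_broadcast("n0", NodeStatus({0: 0.9, 1: 0.8}, net_util=0.1))
table.on_broadcast("n1", NodeStatus({0: 0.2, 1: 0.6}, net_util=0.4))
table.on_broadcast("n2", NodeStatus({0: 0.3}, net_util=0.0))
print(table.pick_target())  # ('n2', 0)
```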

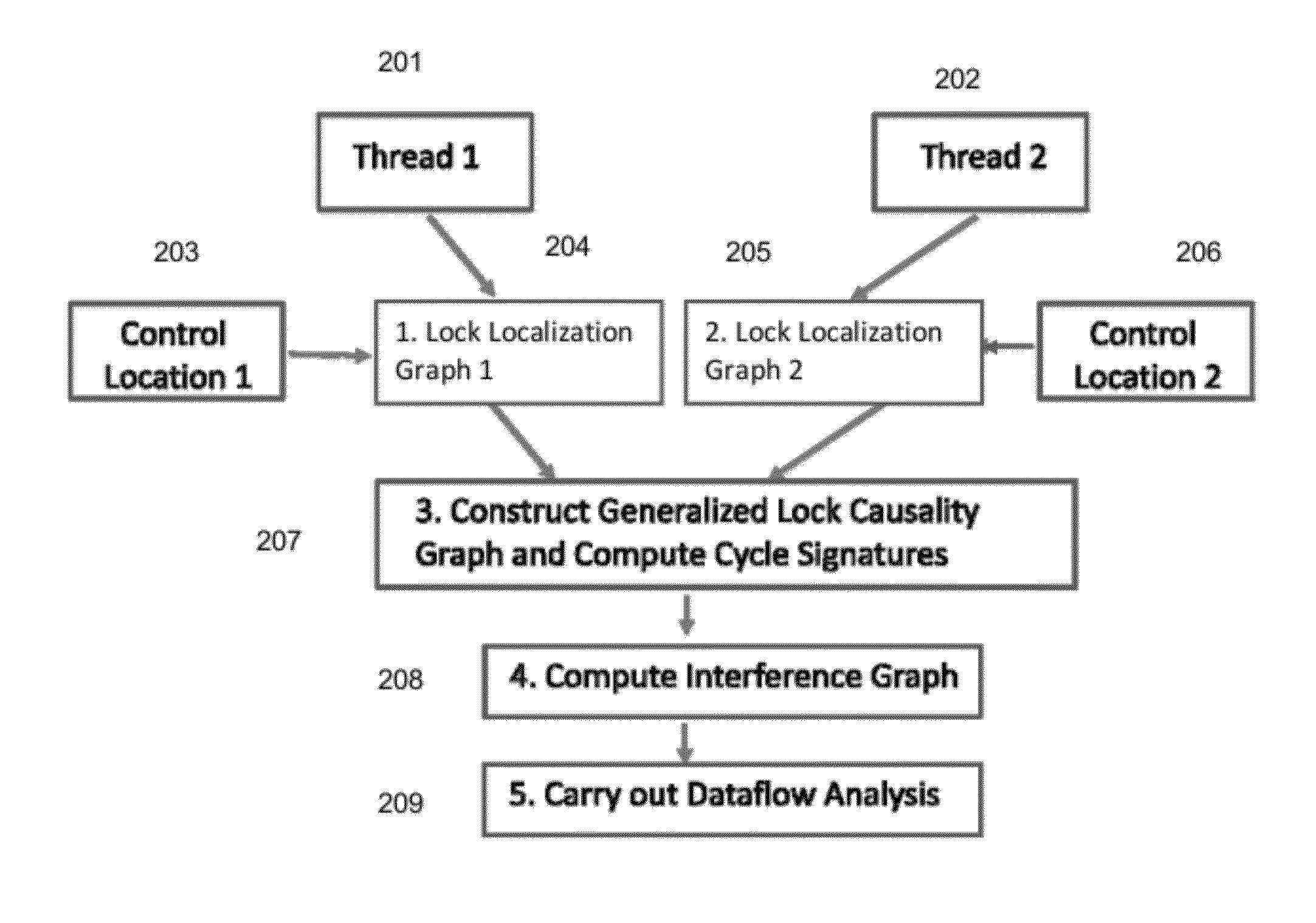

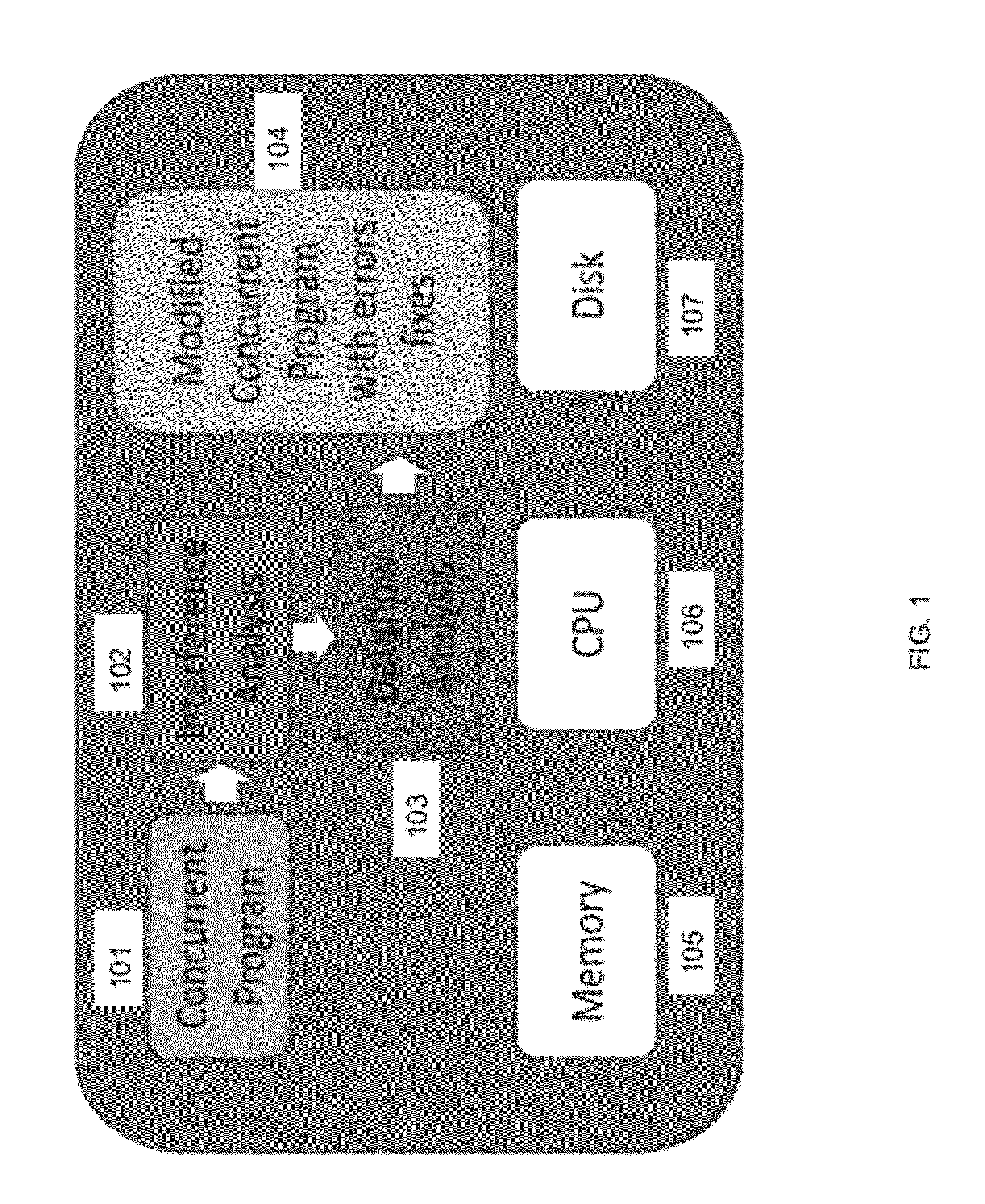

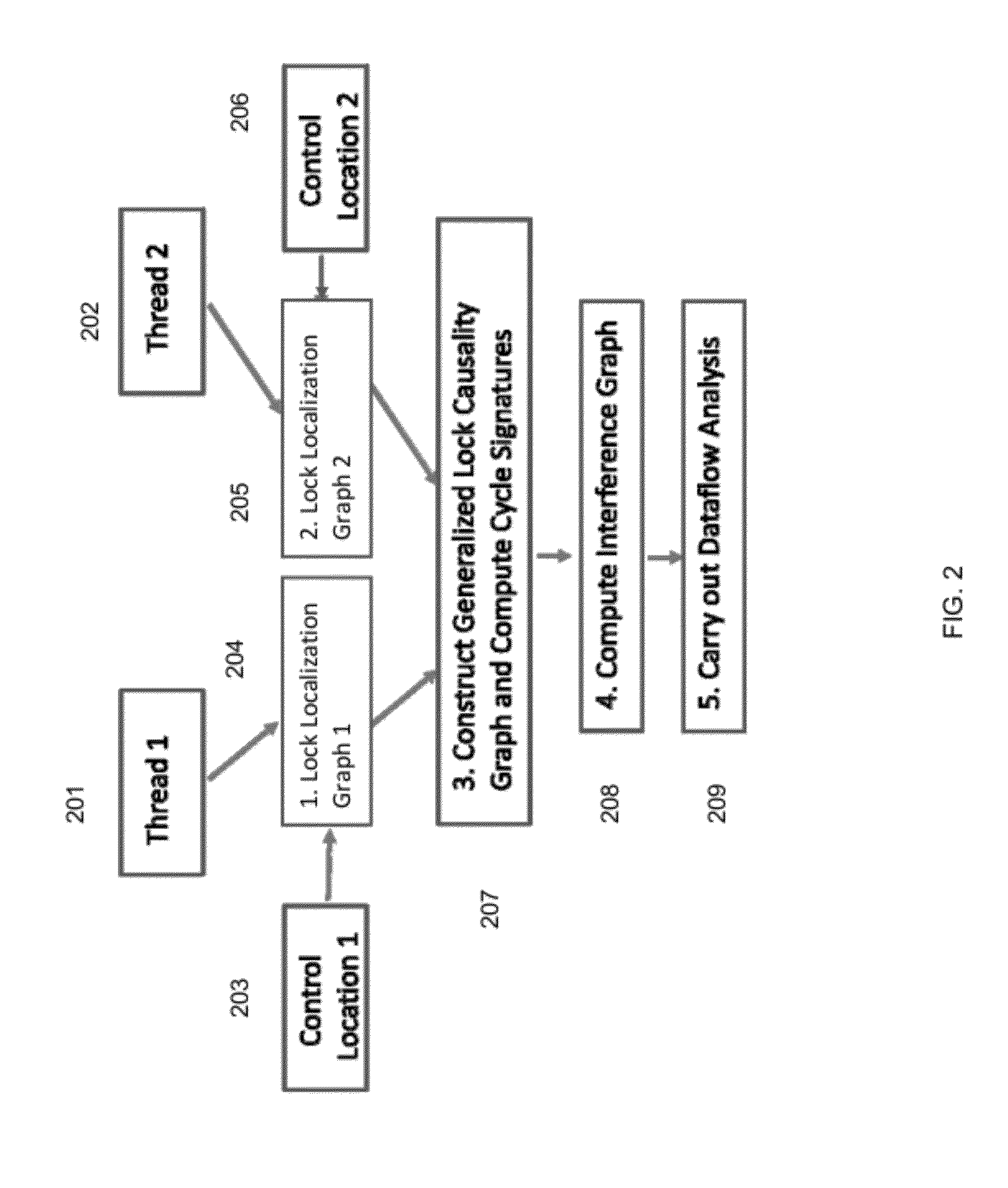

Computer Implemented Method for Precise May-Happen-in-Parallel Analysis with Applications to Dataflow Analysis of Concurrent Programs

A computer-implemented method for automatically determining errors in a concurrent program. Lock localization graphs capture the few lock/unlock statements and function calls relevant to reasoning about interference at the thread location at hand. Responsive to first and second threads of the concurrent program, generalized lock causality graphs are constructed and cycle signatures are computed. Errors in the concurrent program are then determined by computing an interference graph and performing dataflow analysis.

Owner:NEC LAB AMERICA

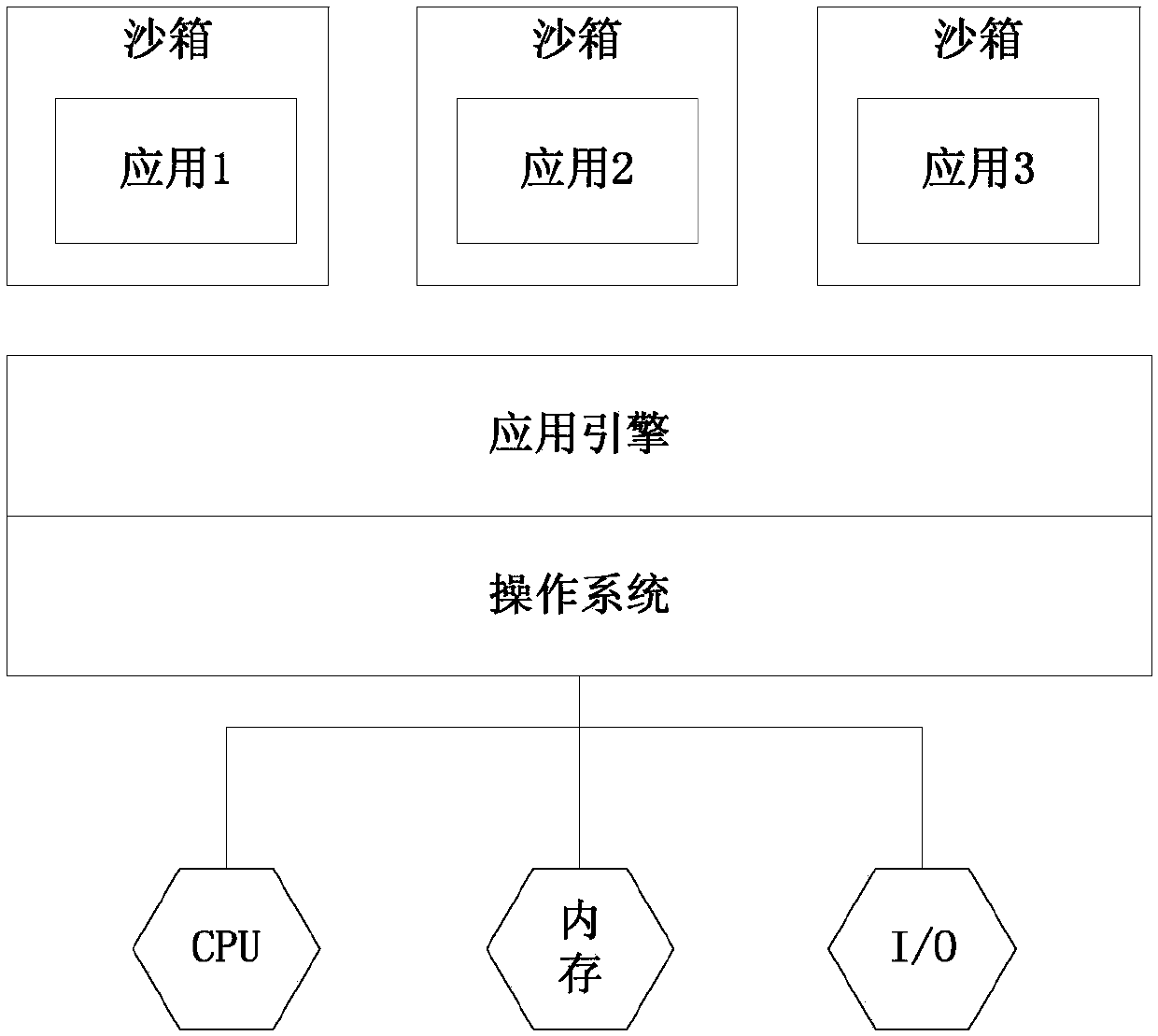

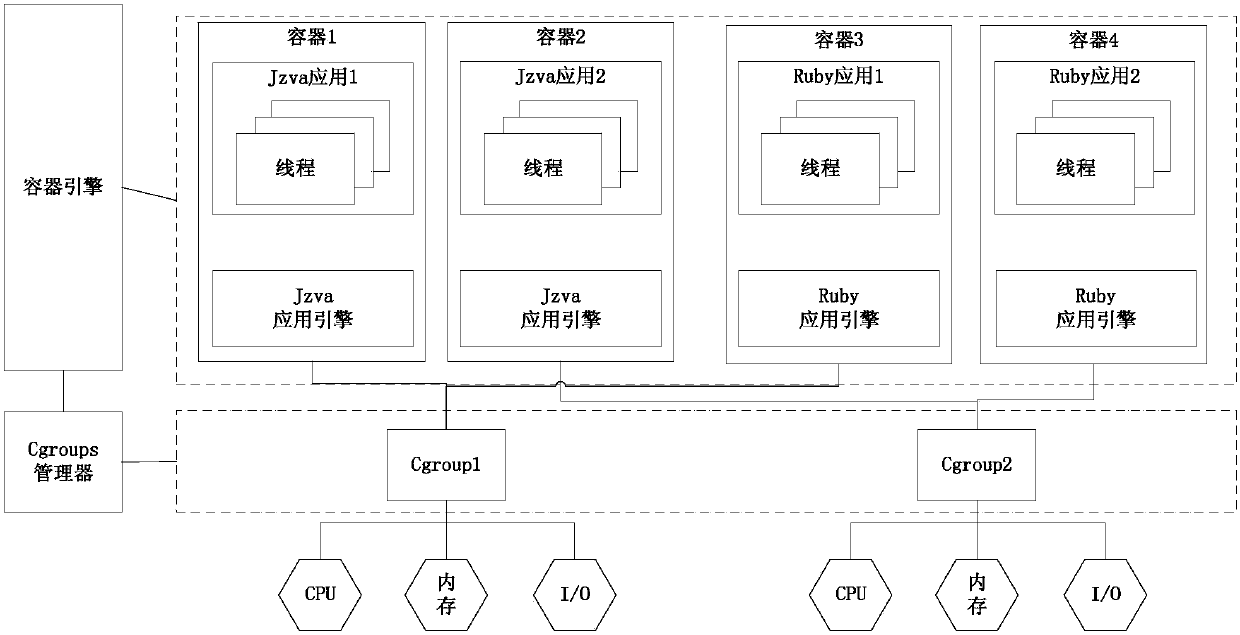

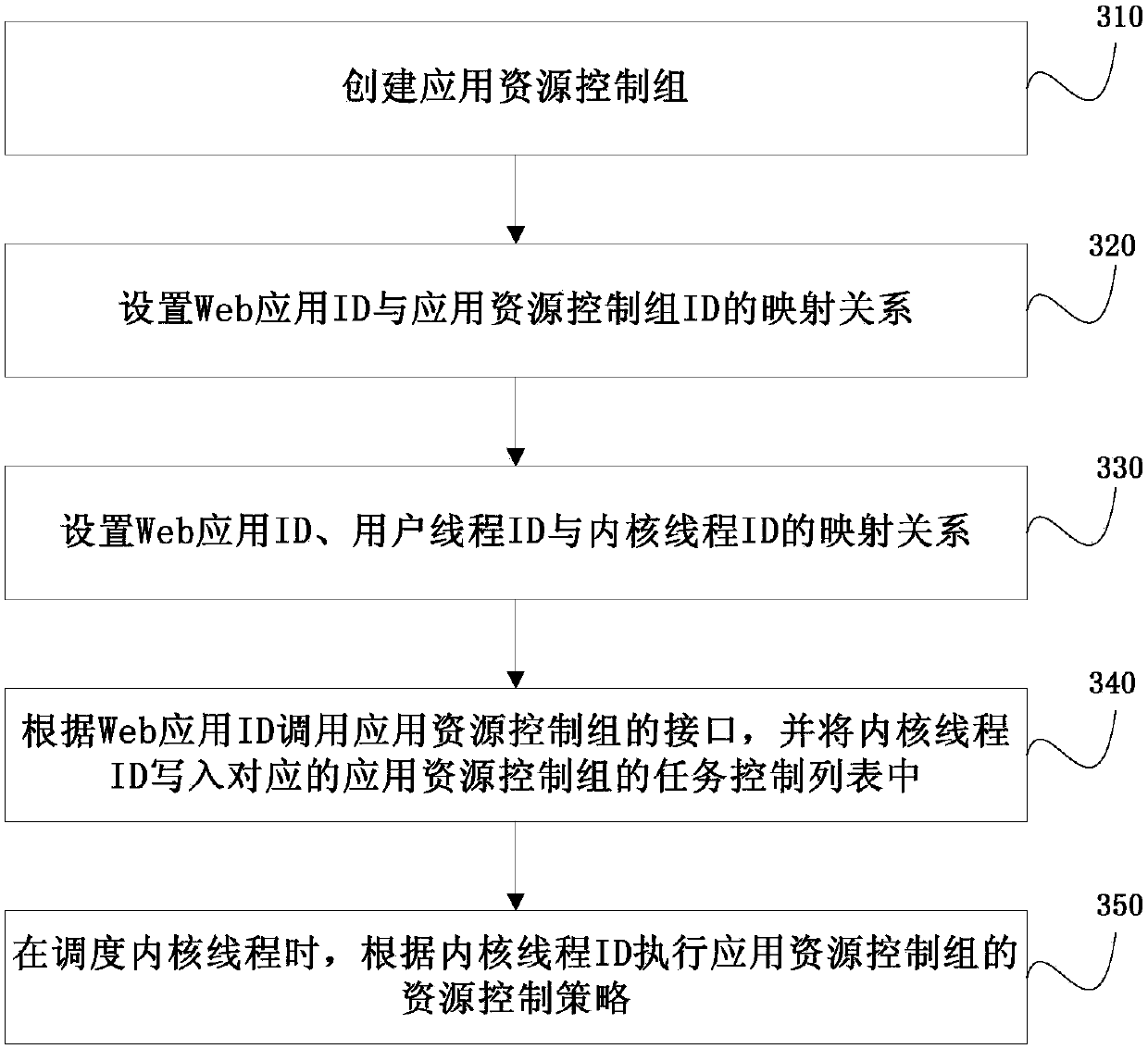

Method and device for controlling Web application resources based on Linux container

The invention discloses a method and device for controlling Web application resources based on a Linux container and relates to the field of cloud computing. The method includes the following steps:
- An application resource control group is created.
- A mapping between a Web application ID and an application resource control group ID is set.
- A mapping among the Web application ID, a user thread ID and a kernel thread ID is set.
- According to the Web application ID, an interface of the application resource control group is called to write the kernel thread ID into the task control list of the corresponding application resource control group.

When the kernel thread is scheduled, the resource control policy of the application resource control group is enforced according to the kernel thread ID. This achieves fine-grained control of Web application resource quotas at thread granularity.

Owner:CHINA TELECOM CORP LTD

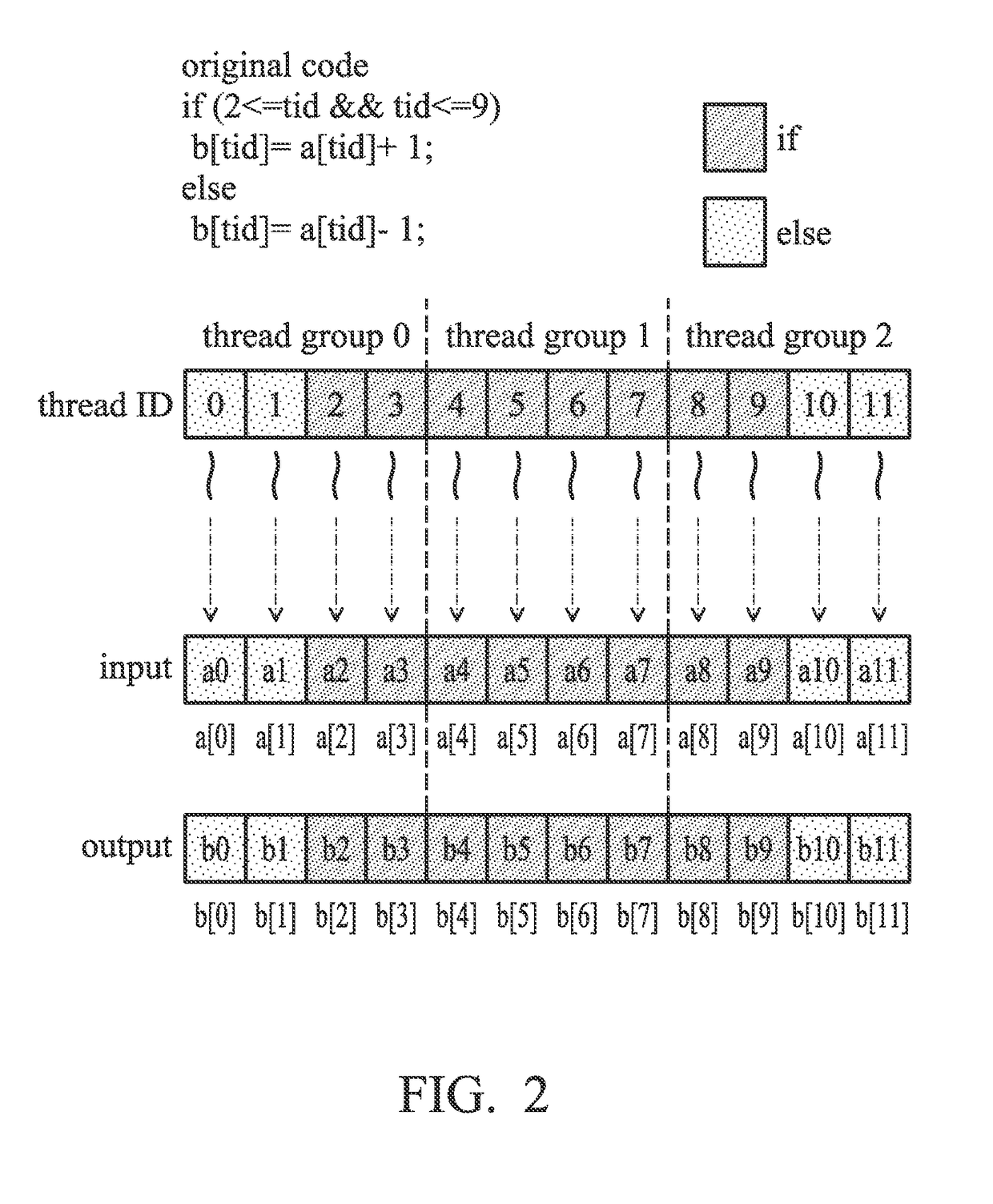

System and method for managing static divergence in a SIMD computing architecture

Status: Active | Publication: US20170097825A1 | Tags: Software engineering; Concurrent instruction execution; Computer architecture; Engineering

A method is presented for processing one or more instructions to be executed on multiple threads in a Single-Instruction-Multiple-Data (SIMD) computing system. The method includes the steps of analyzing the instructions to collect divergent threads among a plurality of thread groups of the multiple threads; obtaining a redirection array for thread-operand association adjustment among the divergent threads according to the analysis, where the redirection array is used for exchanging a first operand associated with a first divergent thread in a first thread group with a second operand associated with a second divergent thread in a second thread group; and generating compiled code corresponding to the instructions according to the redirection array.

Owner:MEDIATEK INC

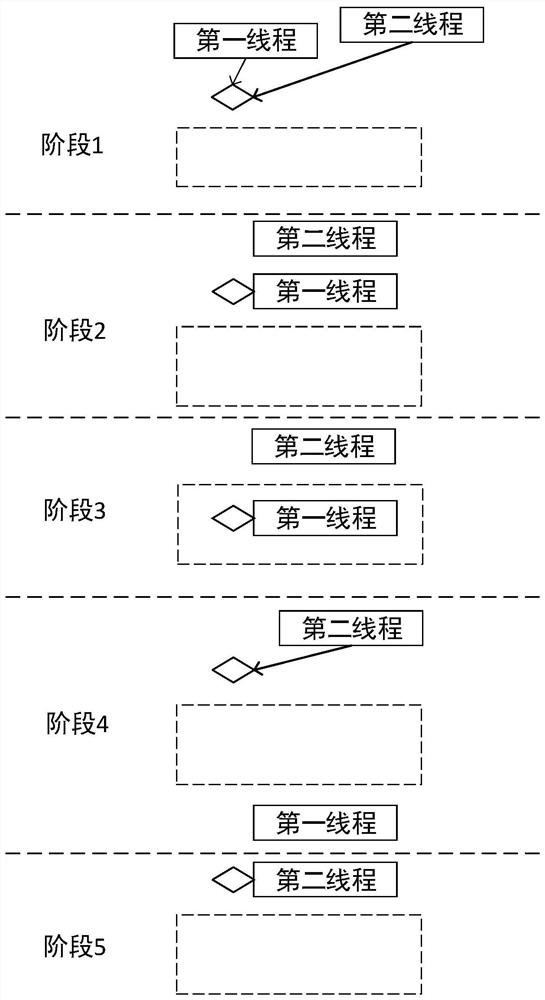

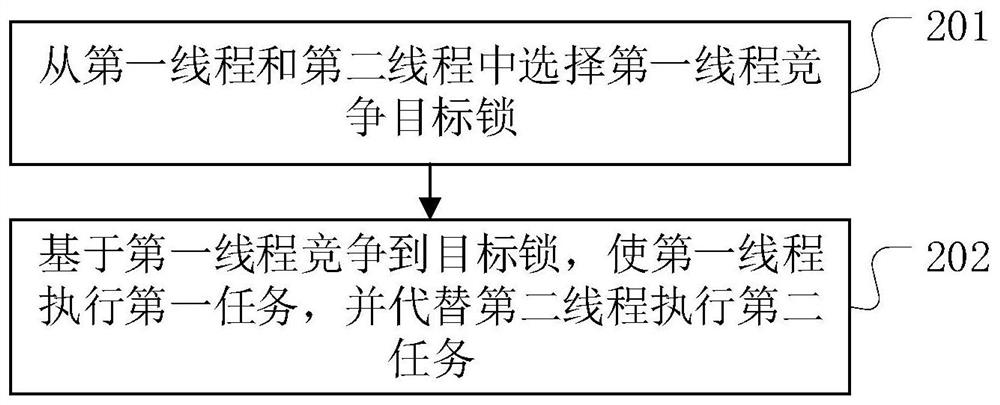

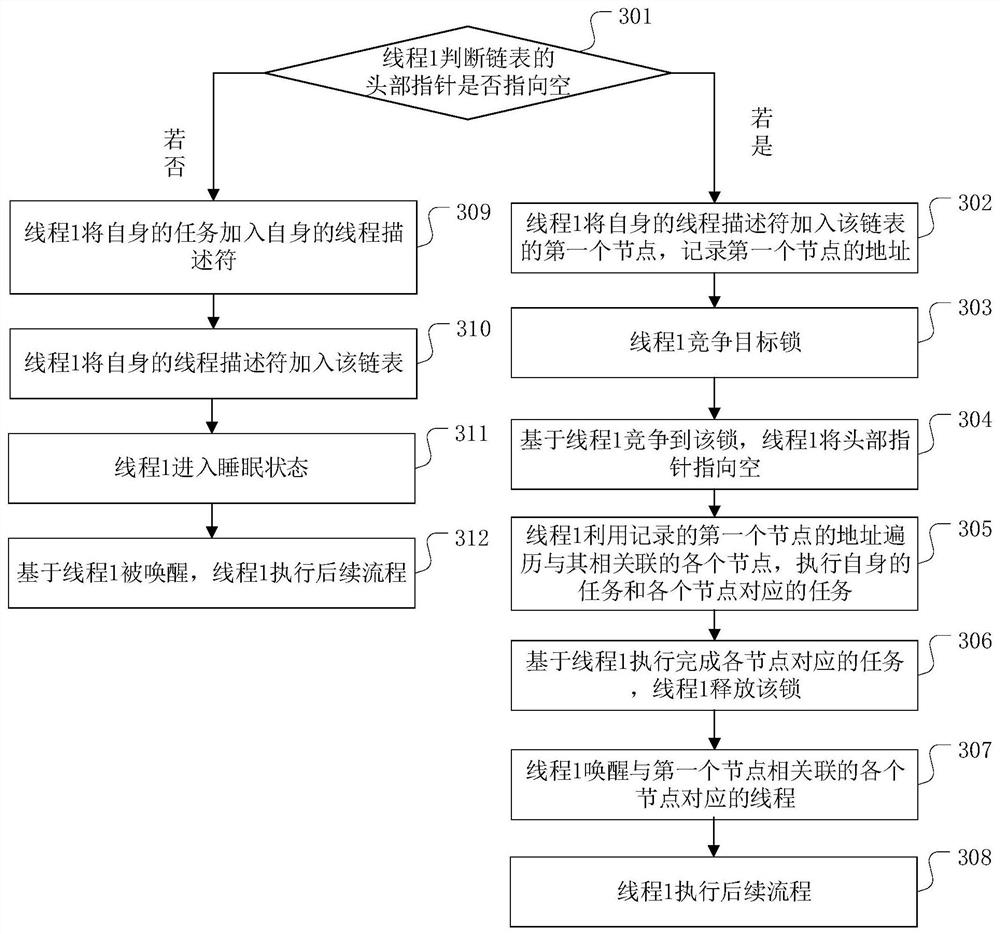

Critical resource access method and device, computer equipment and readable storage medium

Status: Pending | Publication: CN112306699A | Tags: Reduce the number of operations; Performance impact; Resource allocation; Program synchronisation; Access method; Engineering

The embodiment of the invention discloses a critical resource access method and device, computer equipment and a readable storage medium. These help reduce the number of operations spent by threads competing for a lock, save computing resources and improve the performance of ARM-architecture processors. The critical resource access method comprises the following steps:
- Select, from a first thread and a second thread, the first thread to compete for a target lock. A first task to be executed by the first thread and a second task to be executed by the second thread both correspond to the target lock.
- Compete for the target lock through the first thread, so that the first thread executes the first task and also executes the second task in place of the second thread.

Owner:HUAWEI TECH CO LTD
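Letting the lock winner execute other threads' tasks resembles the flat-combining technique. The Python sketch below illustrates that general idea and is not the patented method. Each thread enqueues its critical-section task, and whichever thread holds the lock runs every pending task. A thread whose task was already run by another holder stops competing for the lock. `CombiningLock` is a hypothetical name.

```python
import threading
from collections import deque

class _Request:
    """One thread's pending critical-section task and its outcome."""

    def __init__(self, task) -> None:
        self.task = task
        self.done = threading.Event()
        self.result = None
        self.error = None

class CombiningLock:
    def __init__(self) -> None:
        self._lock = threading.Lock()          # the contended "target lock"
        self._queue_lock = threading.Lock()    # protects the pending queue
        self._pending = deque()

    def run(self, task):
        req = _Request(task)
        with self._queue_lock:
            self._pending.append(req)
        # Compete for the lock only until some lock holder (this thread
        # or another one) has executed our task.
        while not req.done.is_set():
            if self._lock.acquire(timeout=0.001):
                try:
                    self._drain()
                finally:
                    self._lock.release()
        if req.error is not None:
            raise req.error
        return req.result

    def _drain(self) -> None:
        # Called with self._lock held: execute every queued task, ours or not.
        while True:
            with self._queue_lock:
                if not self._pending:
                    return
                req = self._pending.popleft()
            try:
                req.result = req.task()
            except Exception as exc:
                req.error = exc
            finally:
                req.done.set()

combiner = CombiningLock()
state = {"n": 0}

def increment() -> None:
    state["n"] += 1          # critical section: only ever runs under the target lock

def worker() -> None:
    for _ in range(100):
        combiner.run(increment)

threads = [threading.Thread(target=worker) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(state["n"])  # 800
```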

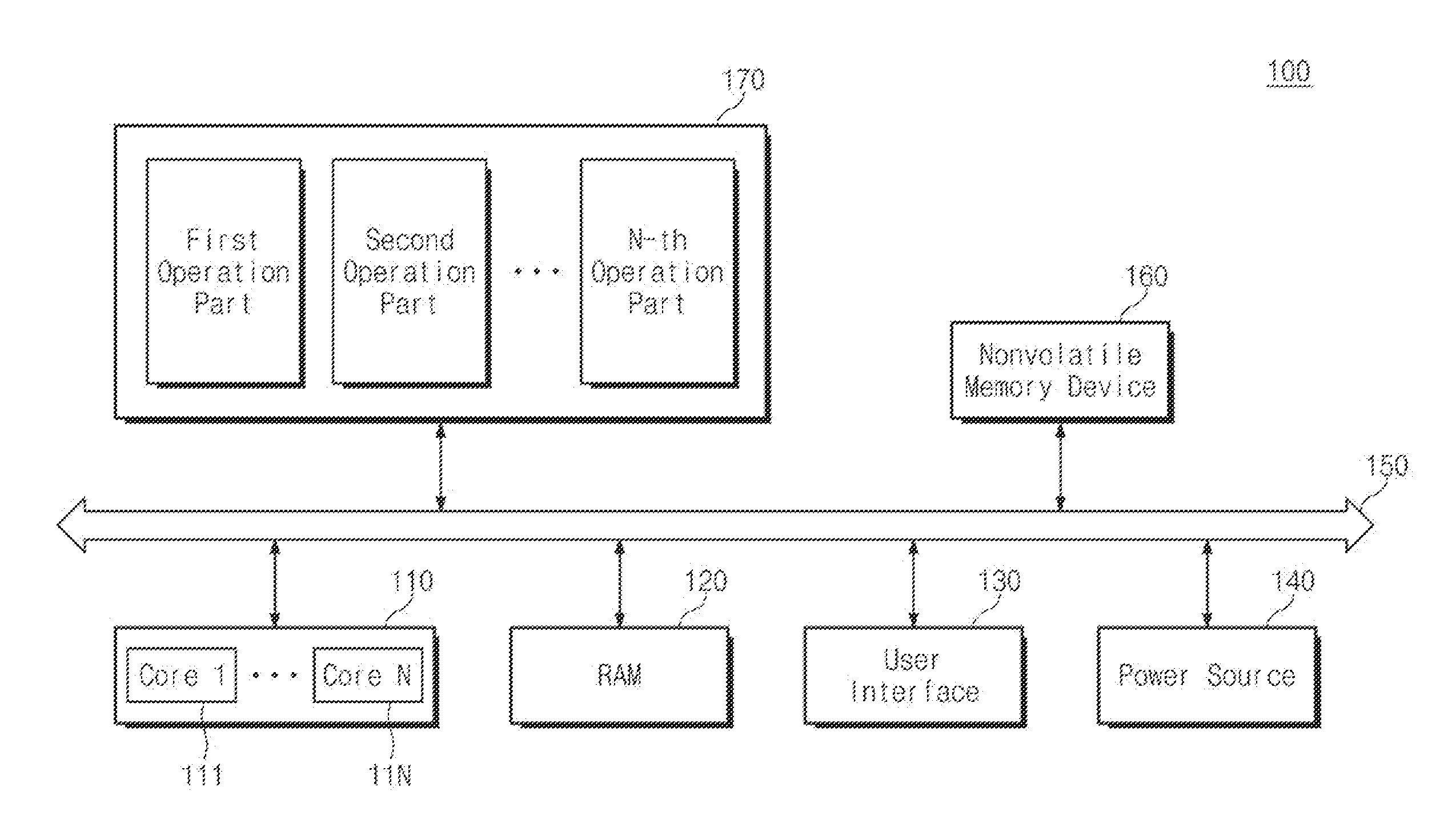

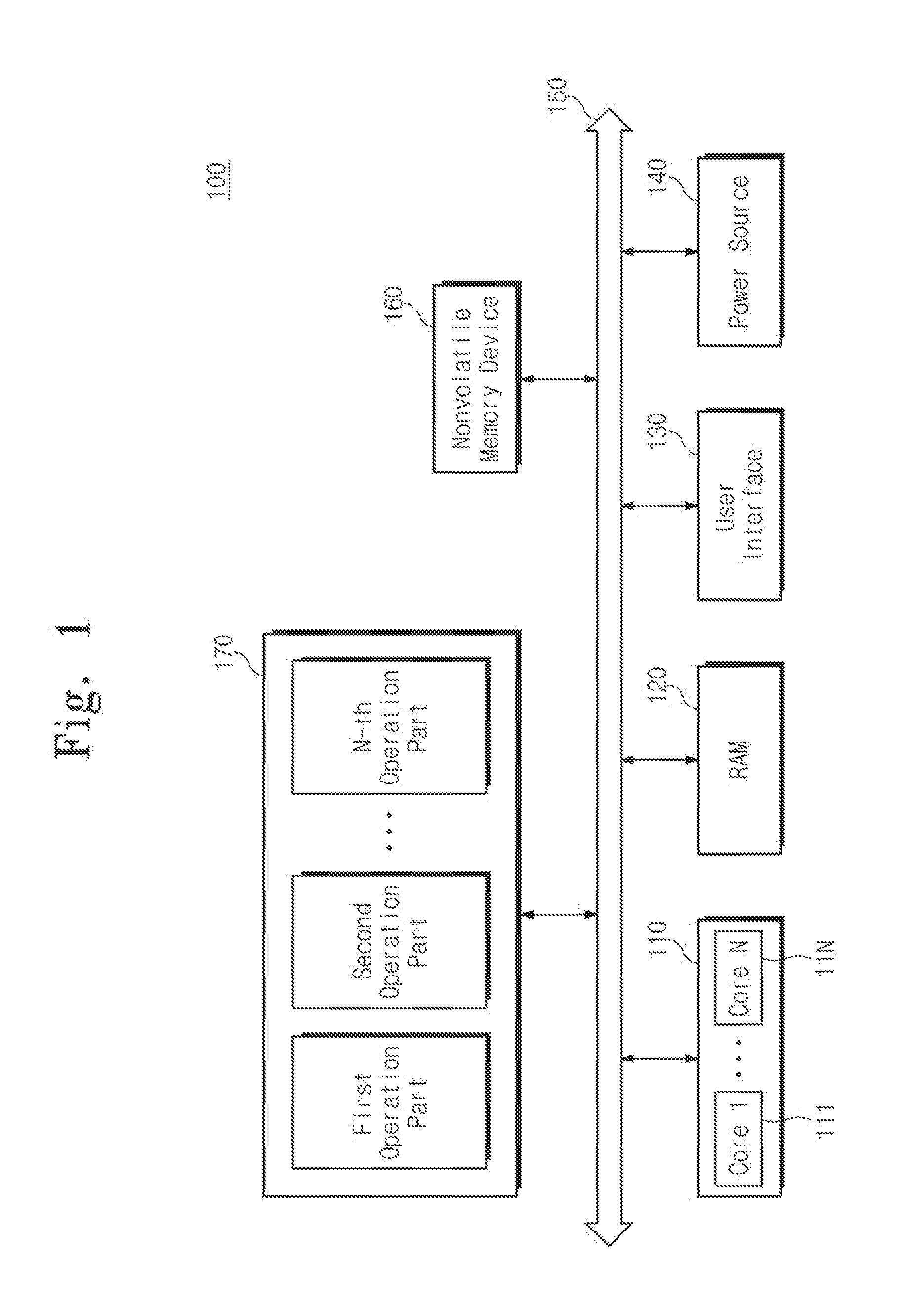

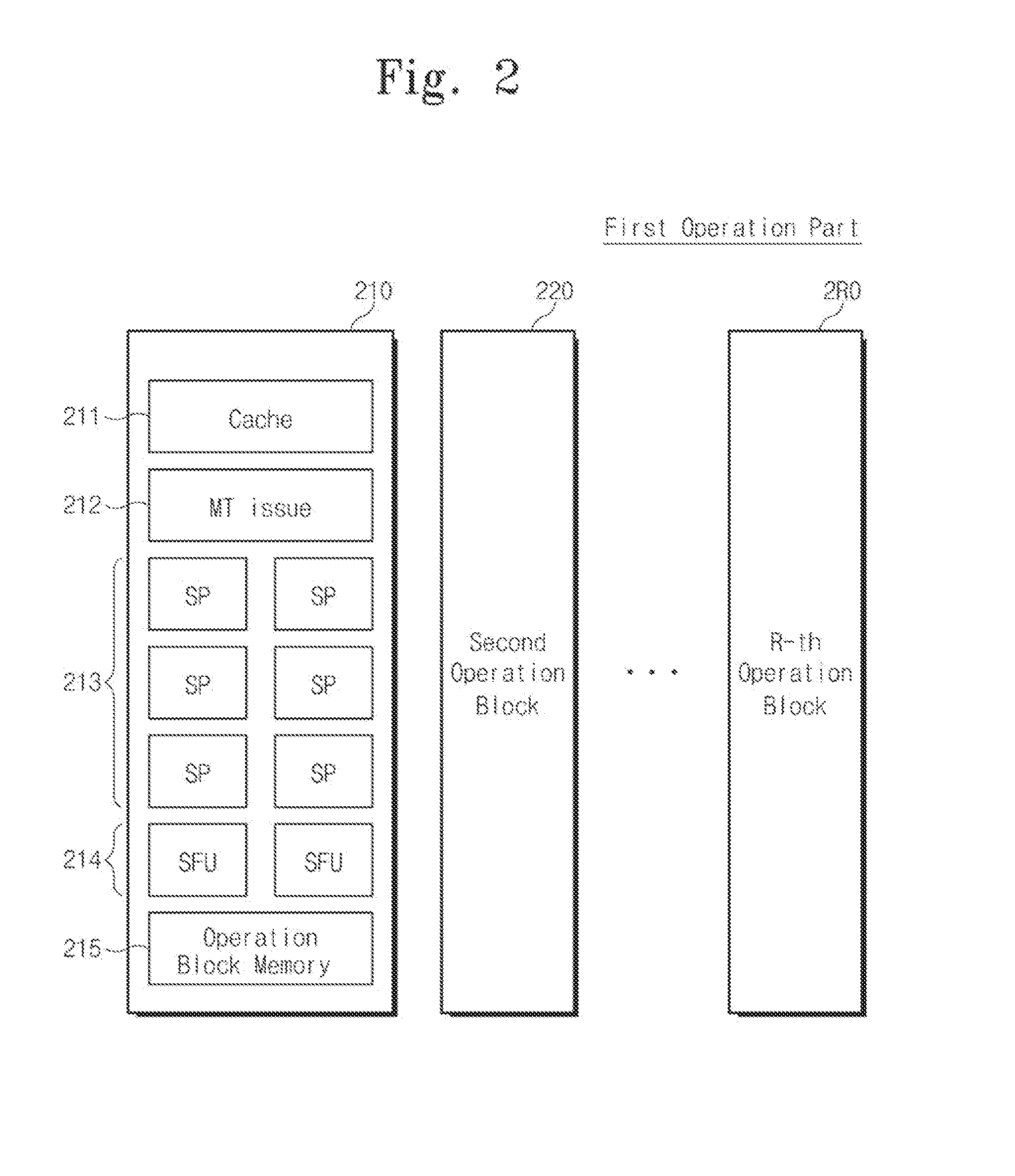

Central processing unit, GPU simulation method thereof, and computing system including the same

Status: Active | Publication: US20130207983A1 | Tags: Improved GPU simulation speed; Increase speed; Processor architectures/configuration; Program control; Graphics; Computer architecture

A central processing unit (CPU) according to embodiments of the inventive concept may include an upper core allocated with a main thread and a plurality of lower cores, each of the plurality of the lower cores being allocated with at least one worker thread. The worker thread may perform simulation operations on operation units of a graphic processing unit (GPU) to generate simulation data, and the main thread may generate synchronization data based on the generated simulation data.

Owner:IND ACADEMIC CORP FOUND YONSEI UNIV

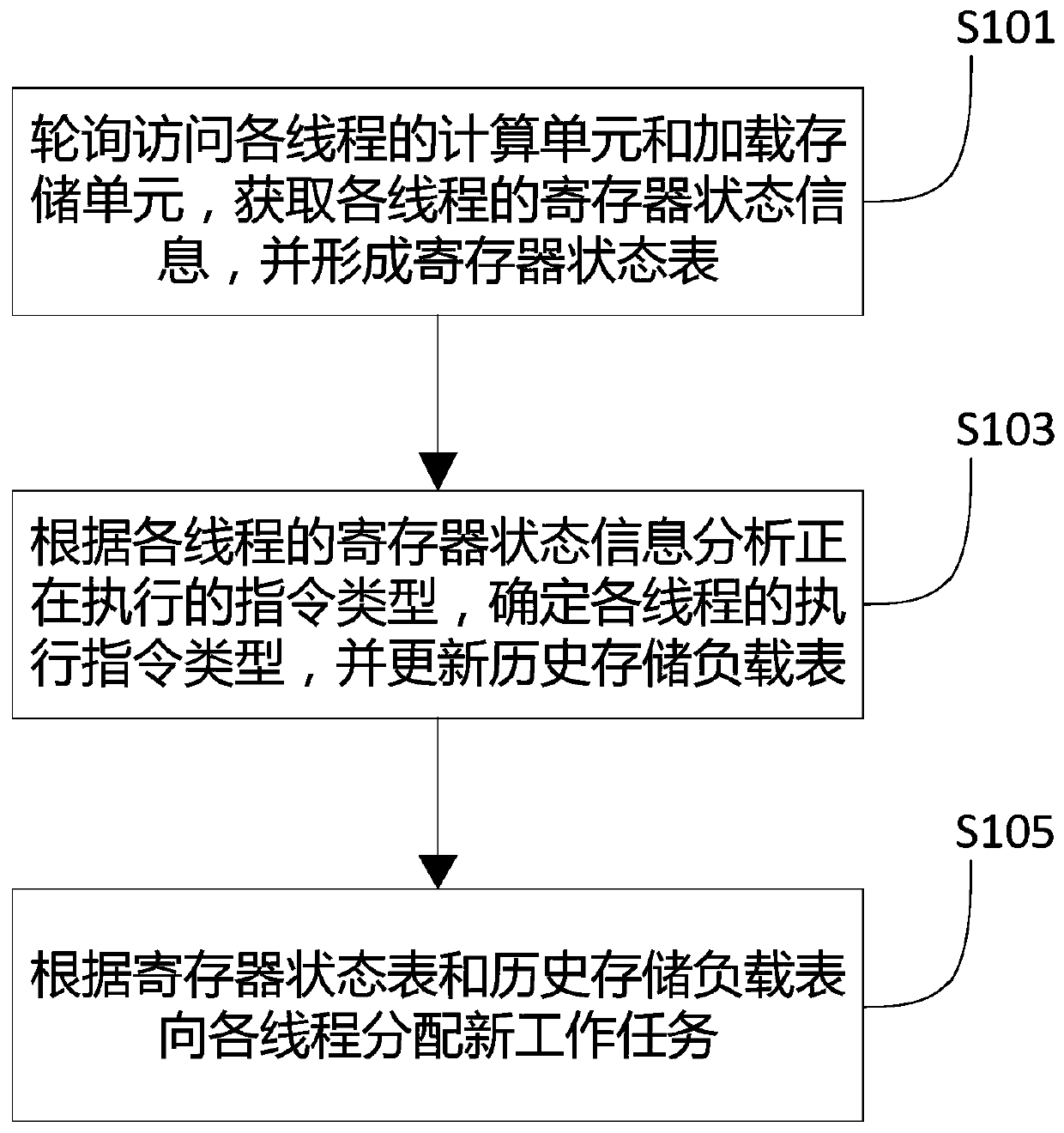

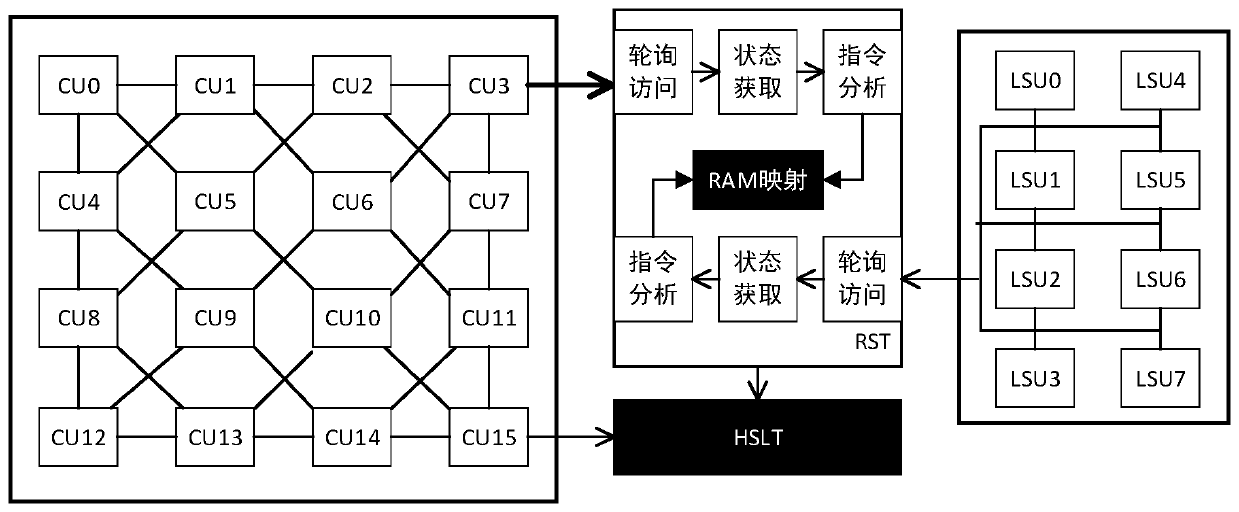

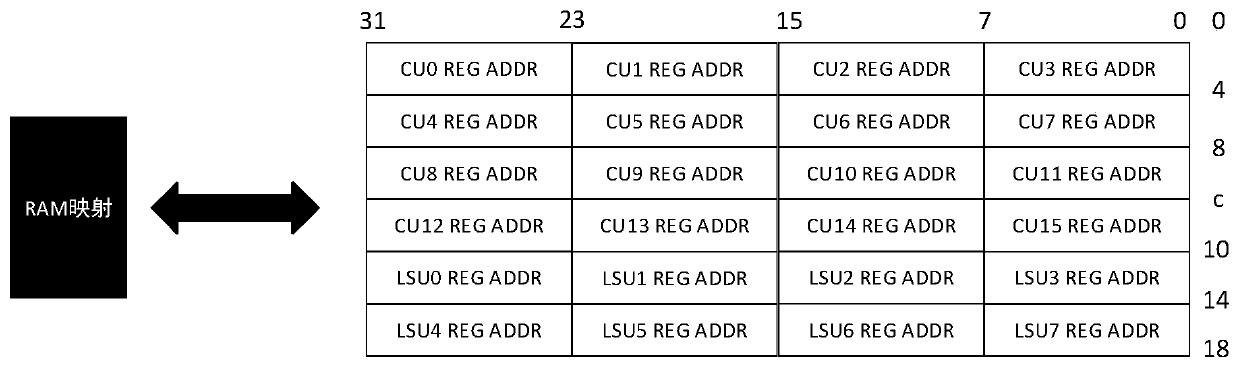

GPU thread load balancing method and device

Status: Active | Publication: CN111078394A | Tags: Load balancing; Extended service life; Resource allocation; Processor architectures/configuration; Computer architecture; Parallel computing

The invention discloses a GPU thread load balancing method and device. The method comprises the following steps:
- Poll the compute unit and the load/store unit of each thread to obtain register state information for each thread and form a register state table.
- Analyze the type of instruction being executed according to each thread's register state information, determine each thread's executing-instruction type, and update a historical storage load table.
- Allocate a new work task to each thread according to the register state table and the historical storage load table.

The invention balances the load across the GPU's threads, improves working efficiency and stability, and prolongs the service life of the hardware.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
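A minimal Python sketch of the allocation step follows. It assumes a simplified model. Each polling round reports, per thread, whether the thread is busy and which instruction type it is executing (`ThreadState`). The historical load table counts how often each thread was busy with each type. The abstract does not describe real GPU register state or the patent's exact allocation rule, so all names and the tie-breaking policy are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict

@dataclass
class ThreadState:
    busy: bool         # from the register state table (hypothetical field)
    instr_type: str    # instruction type inferred from register state, e.g. "load_store"

@dataclass
class LoadBalancer:
    # Historical load table: thread id -> instruction type -> times observed busy on it.
    history: Dict[int, Dict[str, int]] = field(default_factory=dict)

    def observe(self, states: Dict[int, ThreadState]) -> None:
        """One polling round: fold each busy thread's current instruction type into history."""
        for tid, st in states.items():
            counts = self.history.setdefault(tid, {})
            if st.busy:
                counts[st.instr_type] = counts.get(st.instr_type, 0) + 1

    def assign(self, states: Dict[int, ThreadState], task_type: str) -> int:
        """Pick a thread for a new task. Idle threads come first, then the least
        historical load of this task's type; ties go to the lowest thread id."""
        candidates = [tid for tid, st in states.items() if not st.busy] or list(states)
        return min(candidates, key=lambda t: (self.history.get(t, {}).get(task_type, 0), t))

TS = ThreadState
balancer = LoadBalancer()
balancer.observe({0: TS(True, "load_store"), 1: TS(True, "load_store"), 2: TS(False, "compute")})
balancer.observe({0: TS(True, "compute"), 1: TS(True, "load_store"), 2: TS(True, "load_store")})
now = {0: TS(False, "compute"), 1: TS(False, "compute"), 2: TS(True, "compute")}
print(balancer.assign(now, "load_store"))  # 0
```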

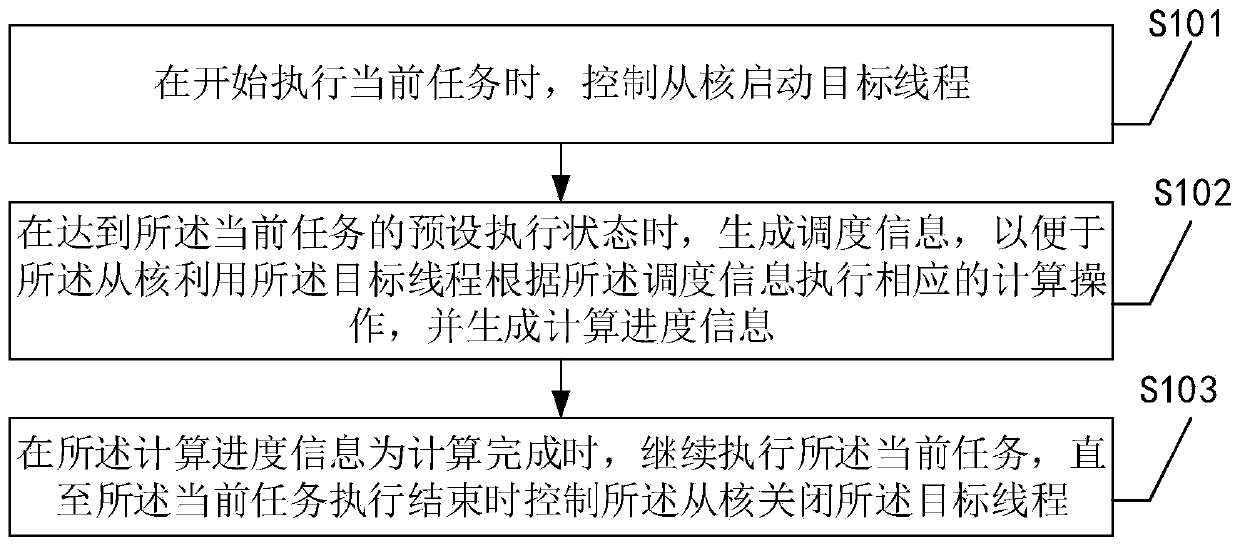

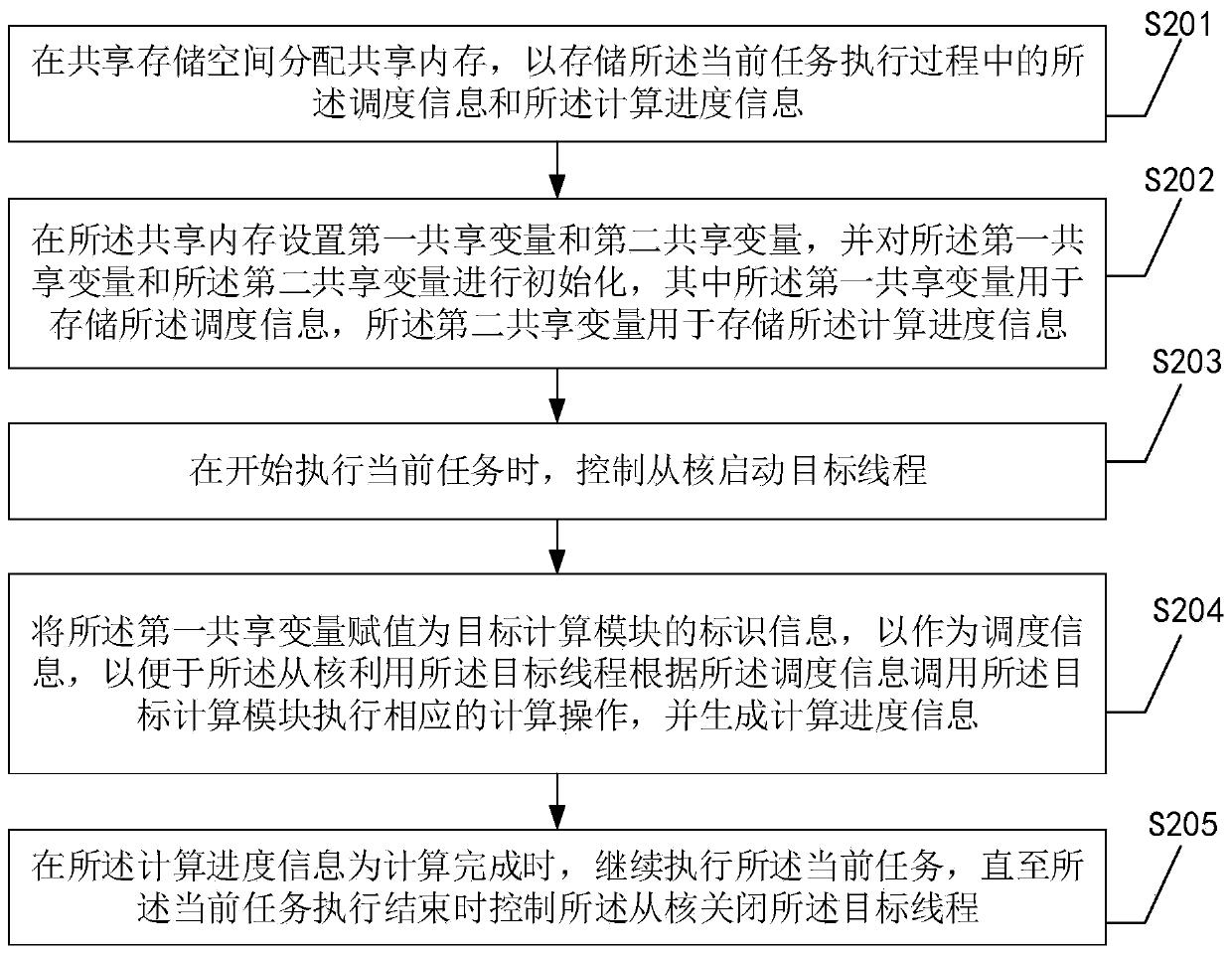

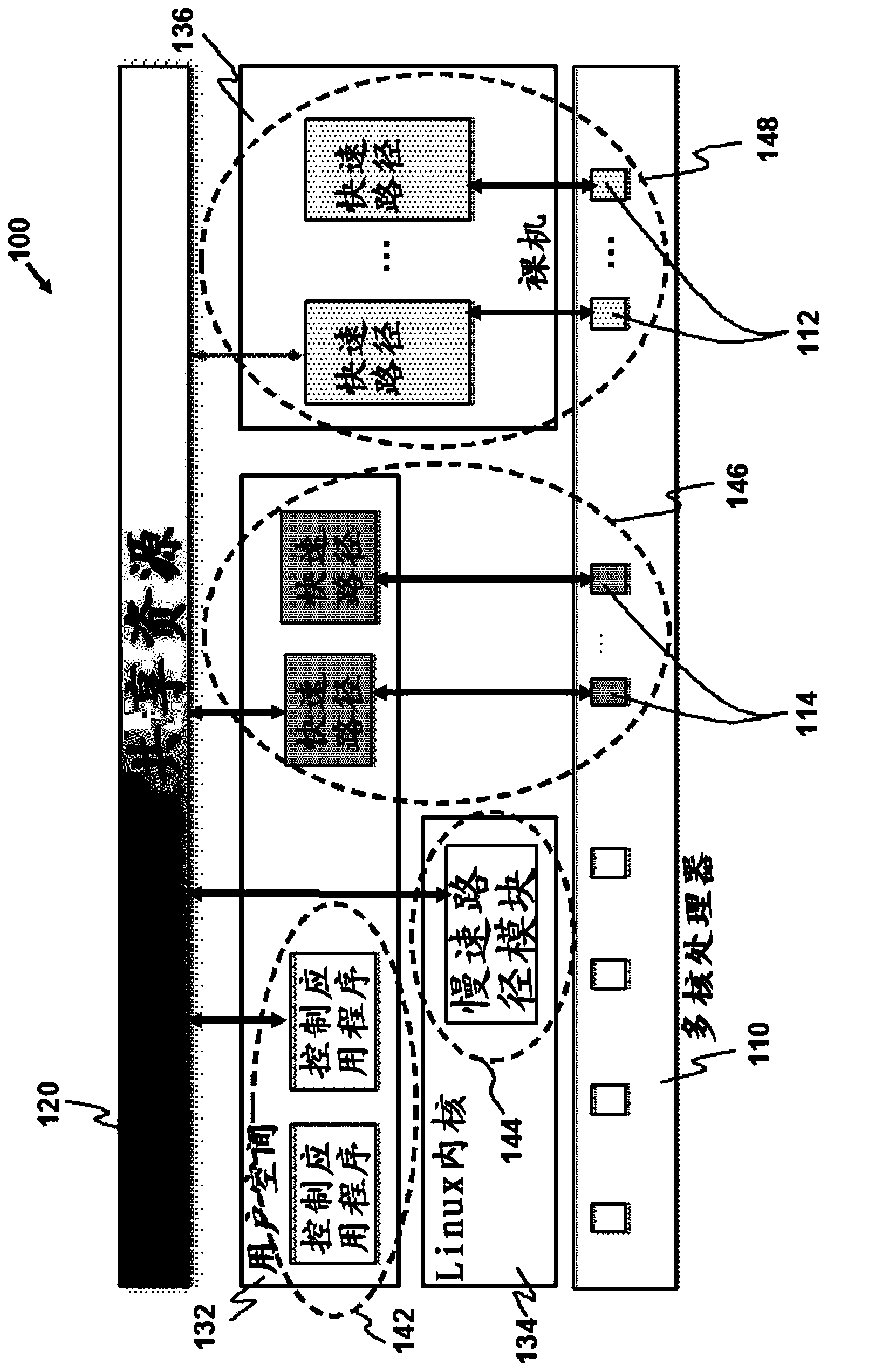

Service processing method based on heterogeneous computing platform

Status: Pending | Publication: CN110990151A | Tags: Easy to calculate and operate; Improve computing efficiency; Resource allocation; Interprogram communication; Thread (computing); Processing

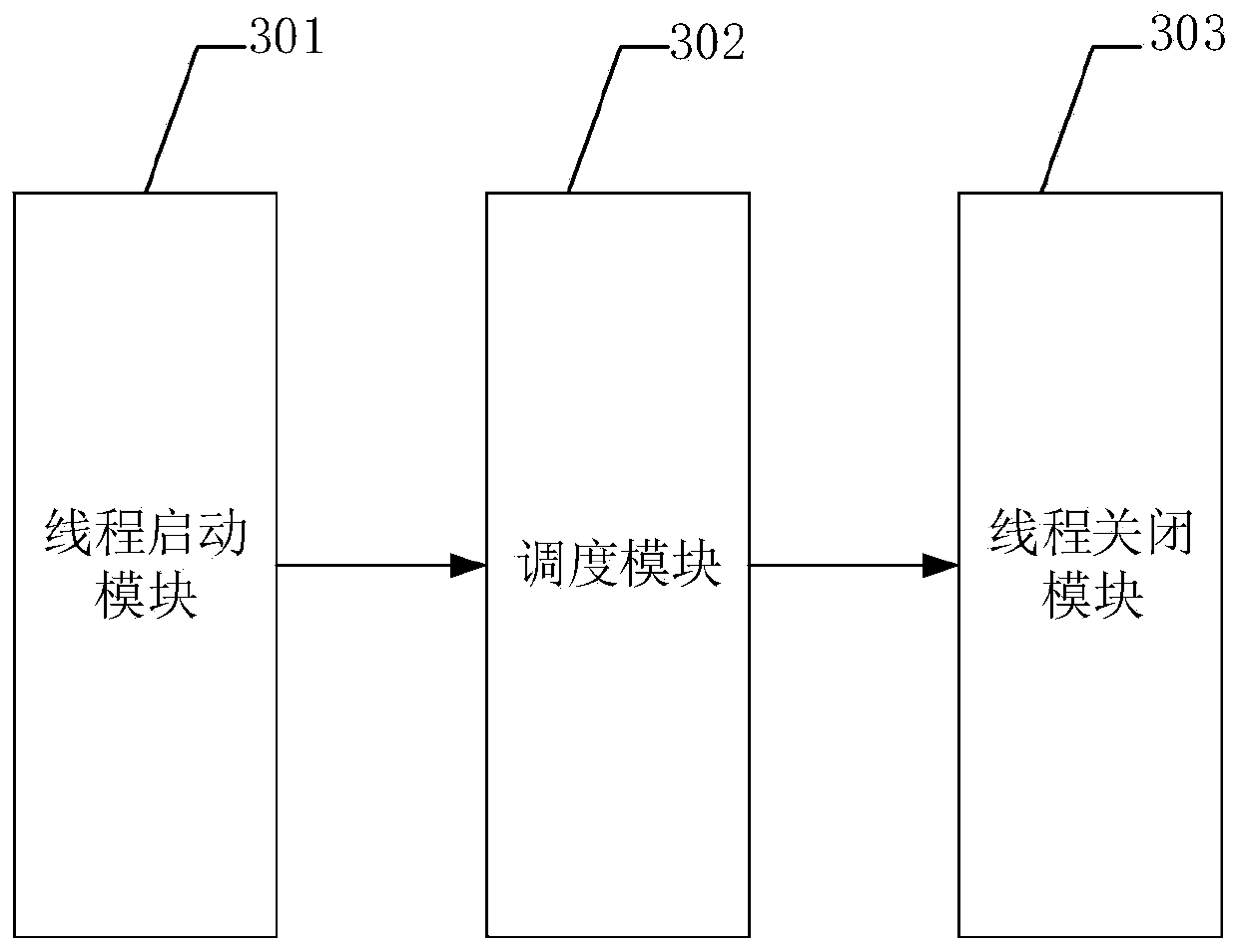

The invention discloses a heterogeneous computing platform, a service processing method and device thereof, and a master core. Under a unified computing framework, the scheme starts and ends a thread only once during service processing. This avoids the overhead of frequently starting and closing threads and improves the efficiency of heterogeneous computing. Calculation progress is communicated between a master core and a slave core through a master-slave core communication framework and a synchronization mechanism. This ensures that:
- the master core performs the next operation only after the slave core finishes its calculation;
- the slave core starts the corresponding slave-core calculation module to execute the corresponding calculation operation at different moments;
- the calculation is correct.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

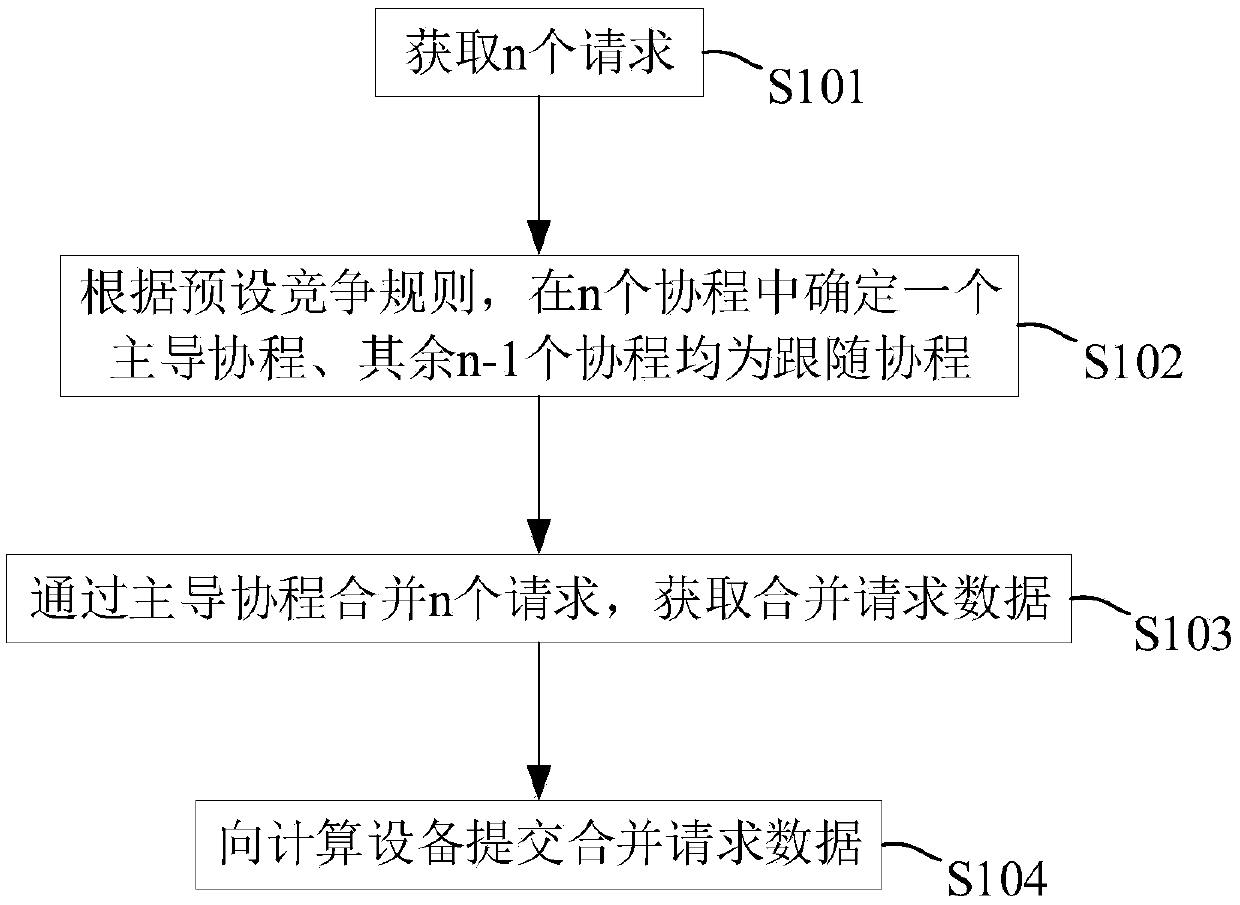

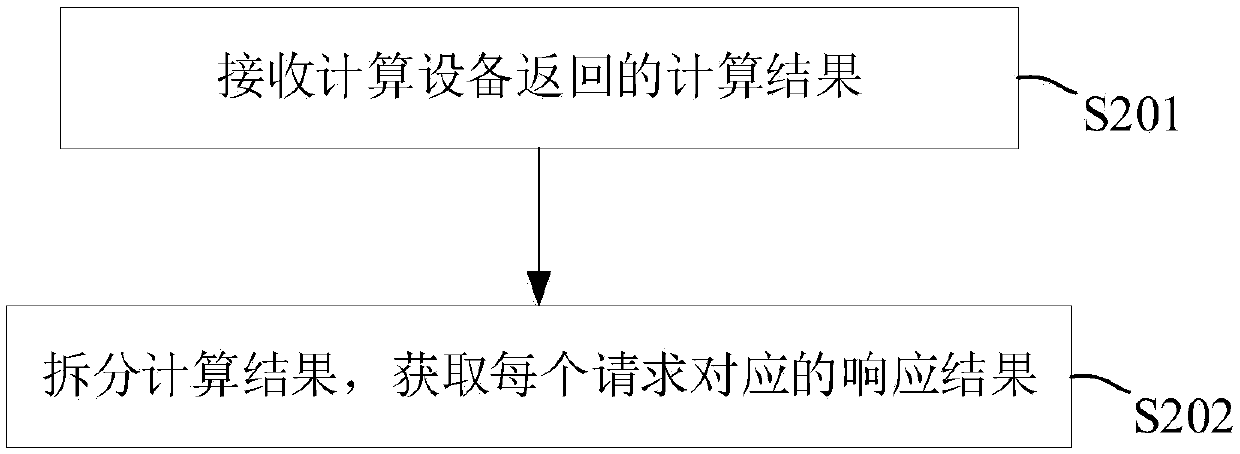

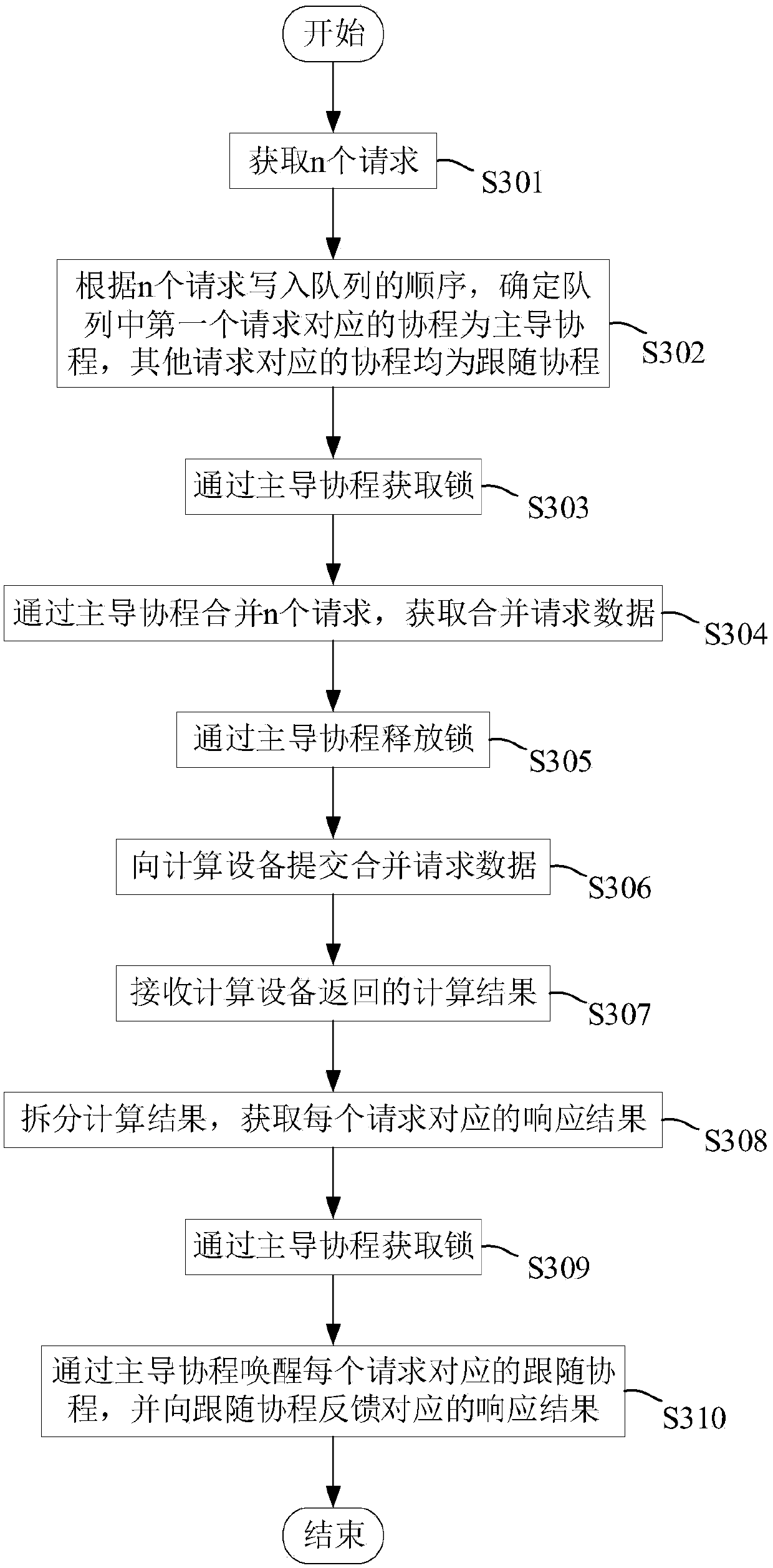

Request processing method and device, electronic equipment and computer readable storage medium

Status: Pending | Publication: CN111209094A | Tags: Avoid competition; Reduce resource consumption; Program initiation/switching; Resource allocation; Software engineering; Execution unit

The invention provides a request processing method and device, electronic equipment and a computer readable storage medium, and relates to the technical field of data processing. The method comprises the following steps:
- Obtain n requests. Each request corresponds to a coroutine on the thread, and n is an integer greater than 0 and less than or equal to a preset threshold.
- Determine one dominant coroutine among the n coroutines according to a preset competition rule, and treat the other n-1 coroutines as following coroutines.
- Merge the n requests through the dominant coroutine to obtain merged request data.
- Submit the merged request data to the computing device.

When multiple user requests are processed, coroutines are introduced as execution units, with one coroutine per request. The dominant coroutine, determined by the preset competition rule, merges the n requests. This reduces the server resource consumption caused by cross-thread competition, so the server's computing performance can be fully utilized.

Owner:BEIJING XIAOJU TECHNOLOGY CO LTD (北京小桔科技有限公司)
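The merge-by-dominant-coroutine flow can be sketched with Python's `asyncio`. The abstract does not specify the details, so the sketch makes three assumptions:
- the preset competition rule is first arrival wins;
- the leader waits at most `wait_s` seconds for followers;
- a batch closes once it reaches `max_batch` requests (the preset threshold).

`RequestMerger` and `compute` are hypothetical names. `compute` receives the merged requests and returns one result per request.

```python
import asyncio
from typing import Any, Callable, List, Optional, Tuple

class RequestMerger:
    """Merge requests from many coroutines into one call to `compute`.
    The first coroutine to arrive while no batch is open becomes the
    dominant coroutine (an assumed competition rule); later arrivals follow."""

    def __init__(self, compute: Callable[[List[Any]], List[Any]],
                 max_batch: int, wait_s: float = 0.01) -> None:
        self.compute = compute          # takes merged requests, returns one result each
        self.max_batch = max_batch      # the preset threshold on n
        self.wait_s = wait_s            # how long the leader waits for followers
        self._batch: Optional[List[Tuple[Any, asyncio.Future]]] = None
        self._full: Optional[asyncio.Event] = None

    async def submit(self, request: Any) -> Any:
        fut = asyncio.get_running_loop().create_future()
        if self._batch is None:
            # Dominant coroutine: open a batch and wait for followers.
            batch = self._batch = [(request, fut)]
            full = self._full = asyncio.Event()
            try:
                await asyncio.wait_for(full.wait(), self.wait_s)
            except asyncio.TimeoutError:
                pass
            if self._batch is batch:    # close it unless a follower already did
                self._batch = None
            results = self.compute([req for req, _ in batch])  # one merged submission
            for (_, f), res in zip(batch, results):
                f.set_result(res)
        else:
            # Following coroutine: join the open batch.
            self._batch.append((request, fut))
            if len(self._batch) >= self.max_batch:
                self._full.set()        # wake the leader early
                self._batch = None      # later arrivals start a new batch
        return await fut

calls = []

def compute(requests):
    calls.append(len(requests))         # record the size of each merged submission
    return [r * 2 for r in requests]

async def main():
    merger = RequestMerger(compute, max_batch=4)
    return await asyncio.gather(*(merger.submit(i) for i in range(6)))

results = asyncio.run(main())
print(results)  # [0, 2, 4, 6, 8, 10]
print(calls)    # [4, 2]
```

Six concurrent submissions are served by two merged calls. The first is a full batch of four. The second is a batch of two, which its leader closes when its timeout expires.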

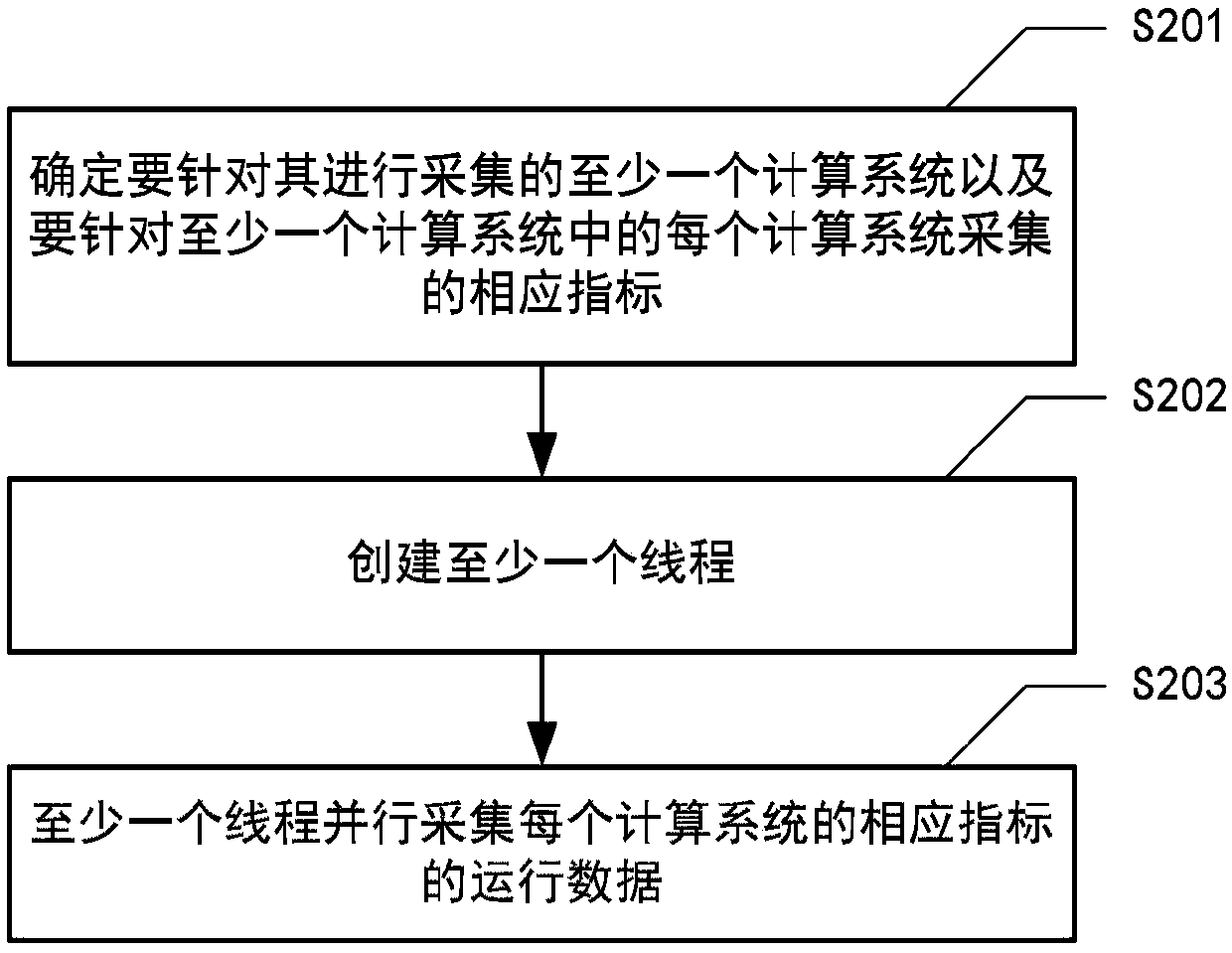

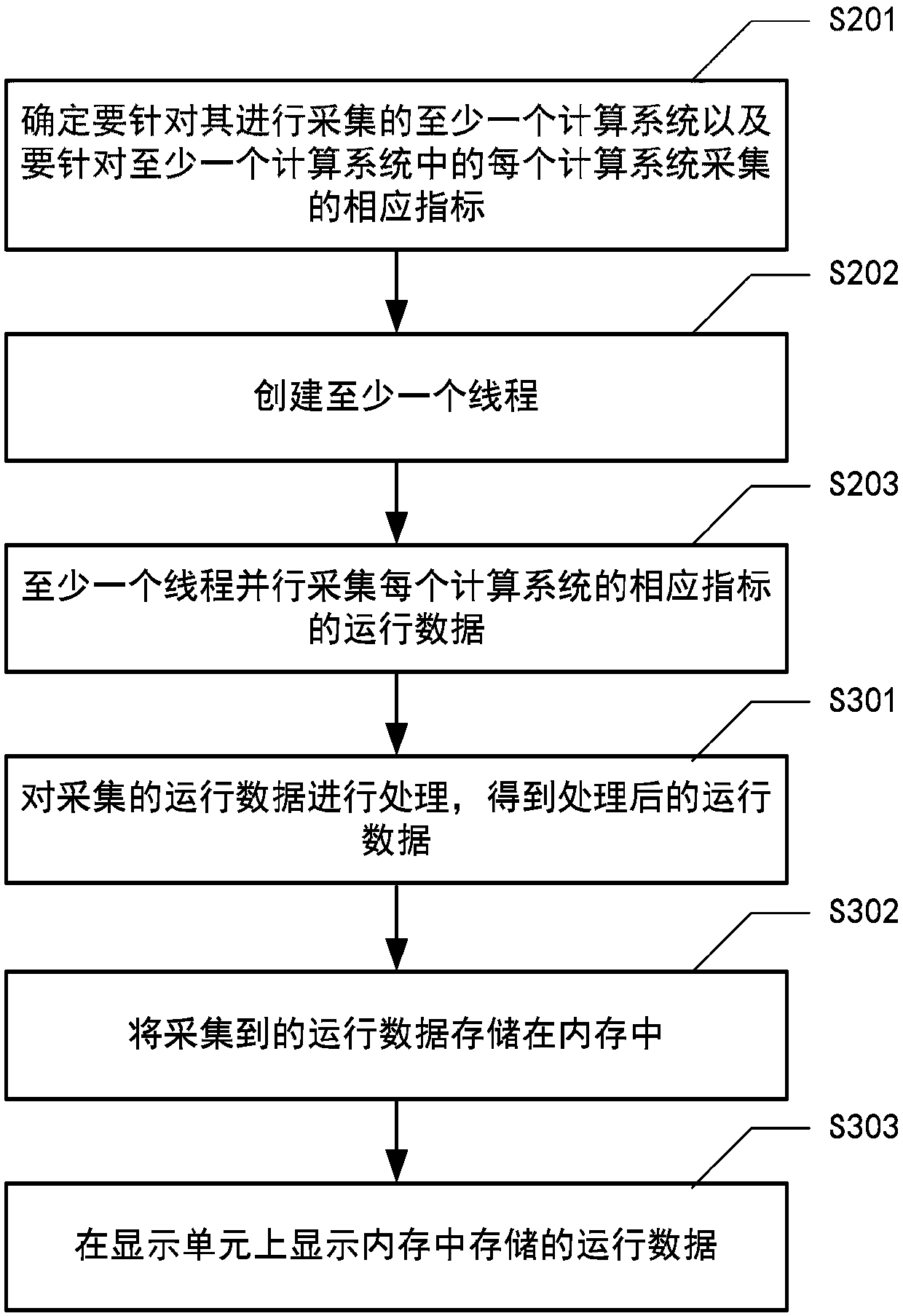

Data acquisition method and system

Status: Pending | Publication: CN109710497A | Tags: Acquisition speed is fast; Acquisition speed reduced; Hardware monitoring; Computing systems; Collections data

The invention provides a data collection method for collecting operation data of a computing system. The method comprises the following steps:
- Determine at least one computing system to collect from and, for each of those computing systems, the corresponding metrics to be collected.
- Create at least one thread.
- Use the at least one thread to collect, in parallel, the operation data for each computing system's corresponding metrics.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
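The collection scheme maps naturally onto a thread pool. In this Python sketch, `collect` and the caller-supplied `fetch(system, metric)` function are hypothetical. `concurrent.futures.ThreadPoolExecutor` from the standard library supplies the worker threads. `pool.map` returns results in submission order, so each value can be matched back to its system and metric.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

def collect(targets: Dict[str, List[str]],
            fetch: Callable[[str, str], float],
            max_workers: int = 8) -> Dict[str, Dict[str, float]]:
    """targets maps each computing system to the metrics to collect from it;
    fetch(system, metric) is a caller-supplied collector (hypothetical)."""
    jobs: List[Tuple[str, str]] = [(s, m) for s, metrics in targets.items() for m in metrics]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Worker threads run fetch() calls in parallel; map keeps results in job order.
        values = list(pool.map(lambda job: fetch(*job), jobs))
    result: Dict[str, Dict[str, float]] = {}
    for (system, metric), value in zip(jobs, values):
        result.setdefault(system, {})[metric] = value
    return result

def fake_fetch(system: str, metric: str) -> float:
    time.sleep(0.01)                        # stands in for network or I/O latency
    return float(len(system) + len(metric))

data = collect({"db01": ["cpu", "mem"], "web01": ["cpu"]}, fake_fetch)
print(data)  # {'db01': {'cpu': 7.0, 'mem': 7.0}, 'web01': {'cpu': 8.0}}
```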

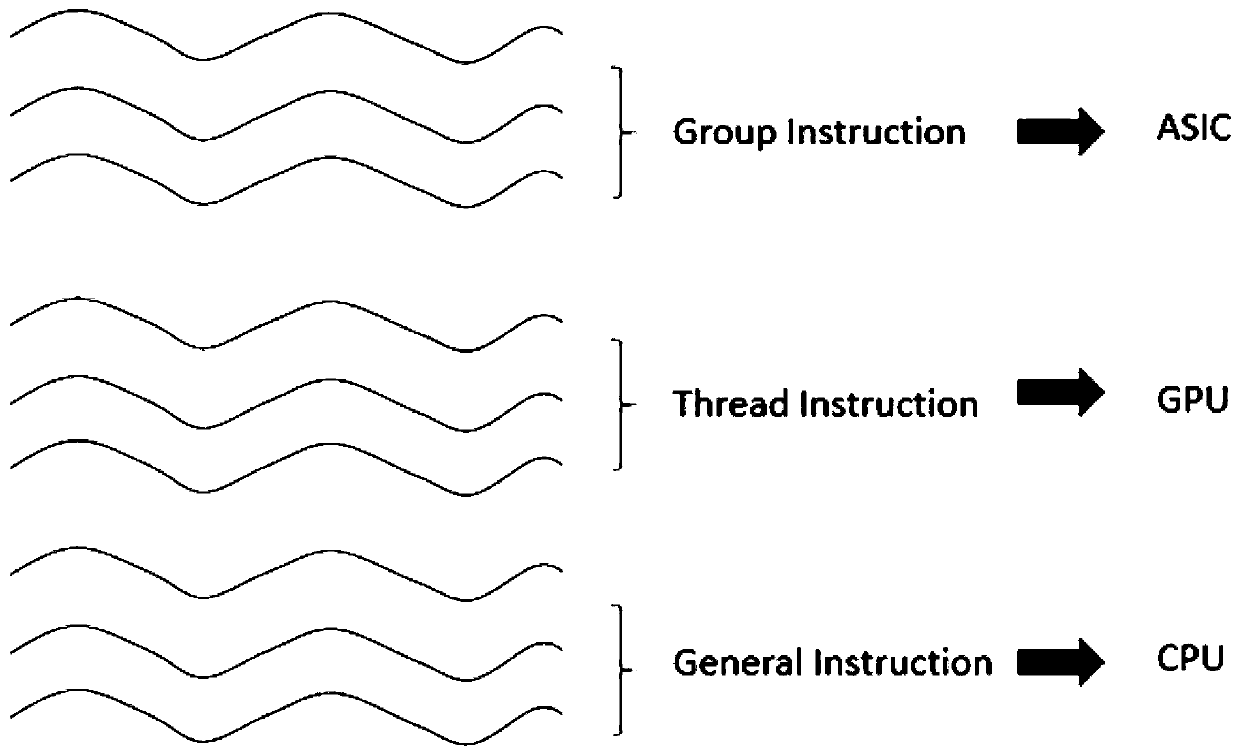

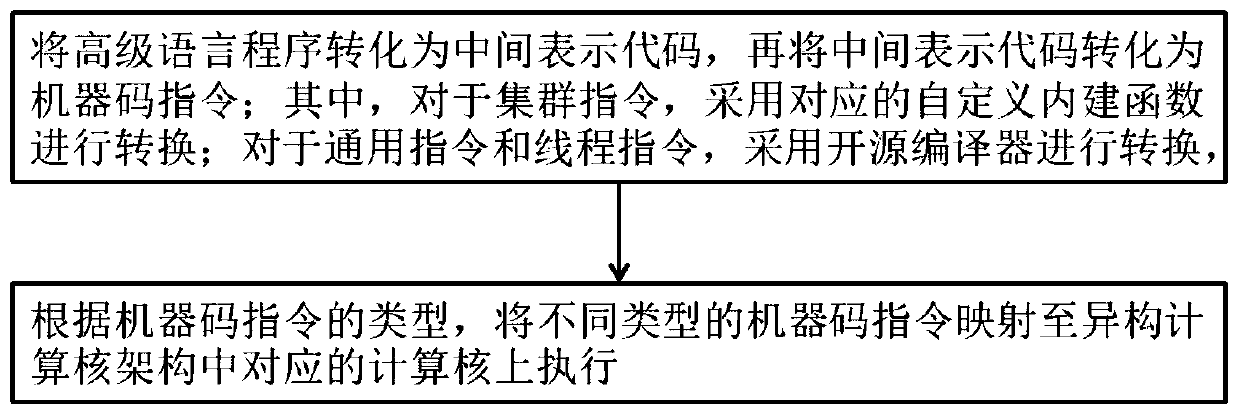

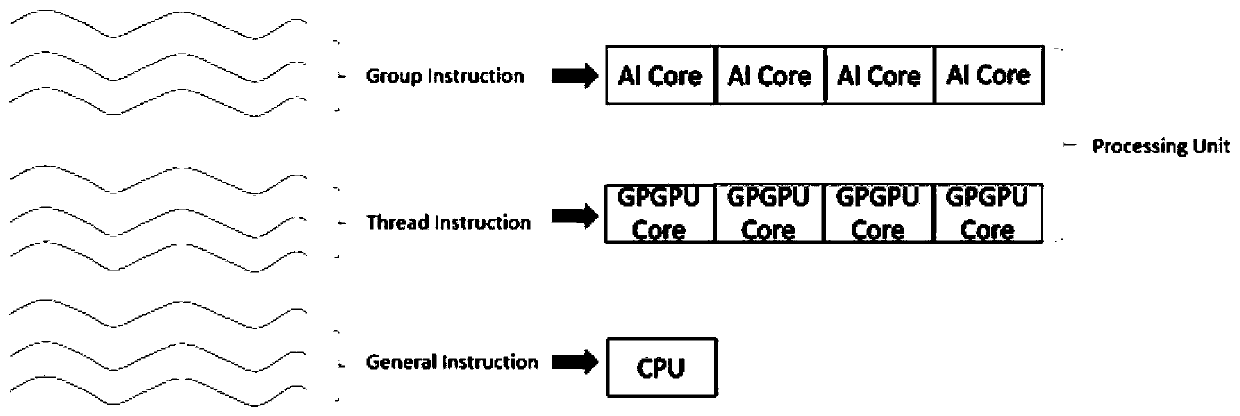

Compiler implementation method and system supporting heterogeneous computing core architecture

Status: Active | Publication: CN110865814A | Tags: Improve execution performance; Avoid transmission; Code compilation; Computer architecture; Engineering

The invention discloses a compiler implementation method supporting a heterogeneous computing core architecture. The method comprises the following steps:
- Convert a high-level language program into intermediate representation code.
- Convert the intermediate representation code into machine code instructions.
- According to their types, map machine code instructions of different types to the corresponding computing cores of the heterogeneous computing core architecture for execution.

The machine code instructions comprise general instructions, cluster instructions and thread instructions. Cluster instructions are converted using corresponding custom built-in functions. General and thread instructions are converted using existing built-in functions or instructions of an open-source compiler. With this method, many types of high-level language programs can be processed automatically. Each is converted in turn into intermediate representation code and then into executable machine code instructions. These are distributed to different computing cores according to their attribute types. Data transmission over the system bus is avoided, and instruction execution performance is improved.

Owner:SHANGHAI ZHIRUI ELECTRONIC TECHNOLOGY CO LTD (上海芷锐电子科技有限公司)

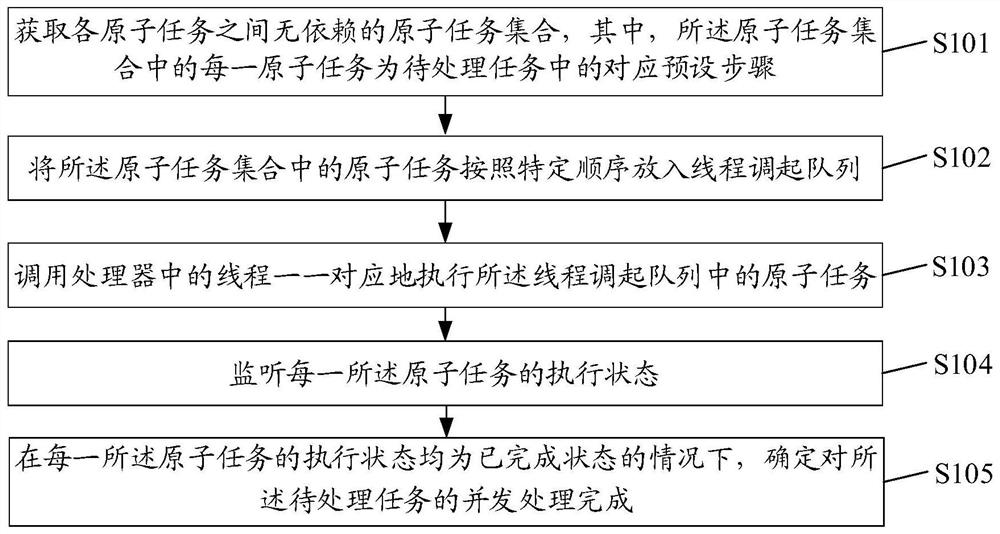

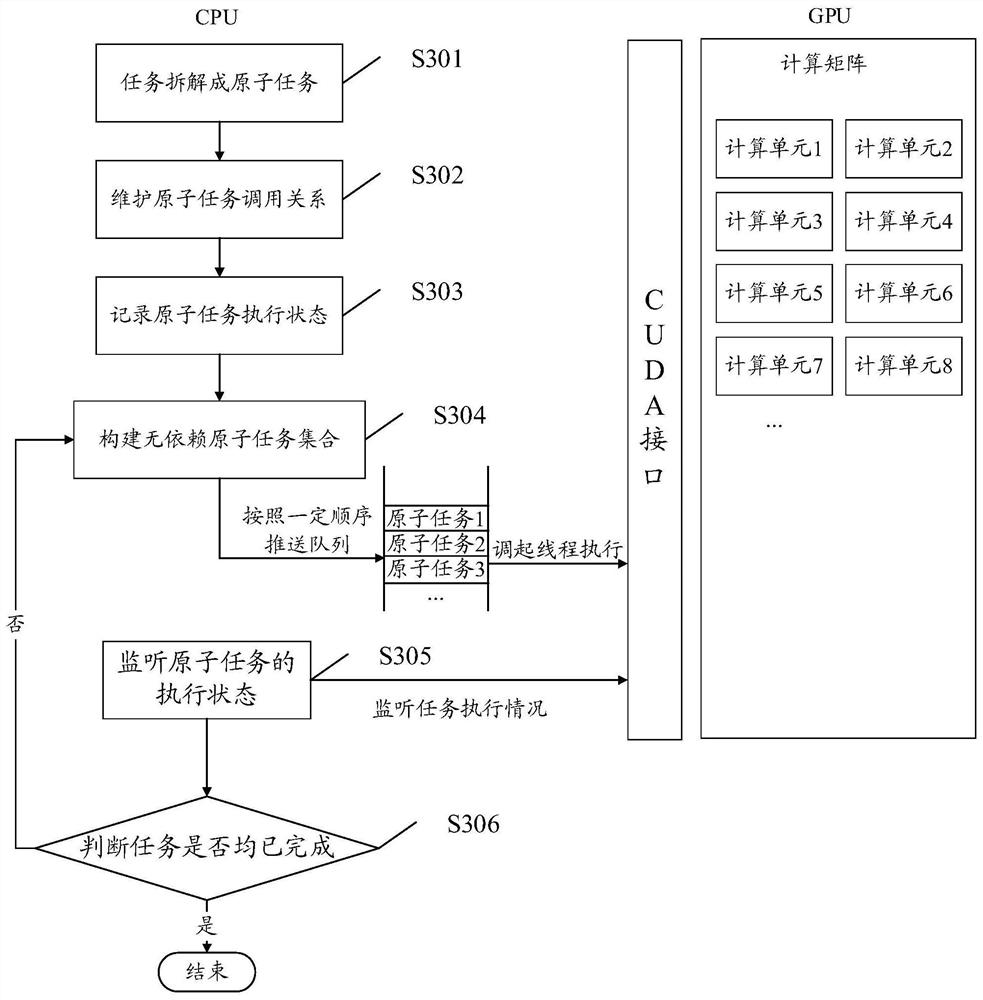

Concurrent computing method and device for tasks, equipment and storage medium

Status: Pending | Publication: CN112306713A | Tags: Improve execution efficiency; Take advantage of parallel computing power; Resource allocation; Interprogram communication; Concurrent computing; Thread (computing)

The embodiment of the invention discloses a concurrent computing method and device for tasks, equipment and a storage medium. The method comprises the following steps:
- Obtain an independent atomic task set from all atomic tasks. Each atomic task in the set corresponds to a preset step of a to-be-processed task.
- Put the atomic tasks in the set into a thread calling queue in a specific order.
- Call threads in a processor to execute the atomic tasks in the queue in one-to-one correspondence.
- Monitor the execution state of each atomic task.
- When every atomic task is in the completed state, determine that concurrent processing of the to-be-processed task is complete.

Owner:WEBANK (CHINA)
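Below is a minimal Python sketch of the queue, execute and monitor steps. It assumes the independent atomic task set has already been identified; the abstract does not describe how. Each queued task is taken by exactly one worker thread. A state table records PENDING, RUNNING, DONE or FAILED. The overall task counts as complete only when every atomic task is DONE. `run_atomic_tasks` and `State` are hypothetical names.

```python
import queue
import threading
from enum import Enum
from typing import Callable, Dict, List, Tuple

class State(Enum):
    PENDING = "pending"
    RUNNING = "running"
    DONE = "done"
    FAILED = "failed"

def run_atomic_tasks(tasks: List[Tuple[str, Callable[[], object]]], n_threads: int = 4):
    """Queue independent atomic tasks, run each on exactly one worker thread,
    track per-task state, and report whether the whole task completed."""
    call_queue: "queue.Queue[Tuple[str, Callable[[], object]]]" = queue.Queue()
    states: Dict[str, State] = {}
    results: Dict[str, object] = {}
    lock = threading.Lock()              # guards states and results
    for name, fn in tasks:               # enqueue in the caller's chosen order
        states[name] = State.PENDING
        call_queue.put((name, fn))

    def worker() -> None:
        while True:
            try:
                name, fn = call_queue.get_nowait()
            except queue.Empty:
                return                   # queue drained: this worker is finished
            with lock:
                states[name] = State.RUNNING
            try:
                value = fn()
            except Exception:
                with lock:
                    states[name] = State.FAILED
                continue
            with lock:
                results[name] = value
                states[name] = State.DONE

    workers = [threading.Thread(target=worker) for _ in range(n_threads)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    # Monitoring step: the overall task is complete only if every atomic task is DONE.
    complete = all(s is State.DONE for s in states.values())
    return complete, results, states

steps = [(f"step{i}", lambda i=i: i * i) for i in range(5)]
complete, results, states = run_atomic_tasks(steps)
print(complete)          # True
print(results["step3"])  # 9
```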

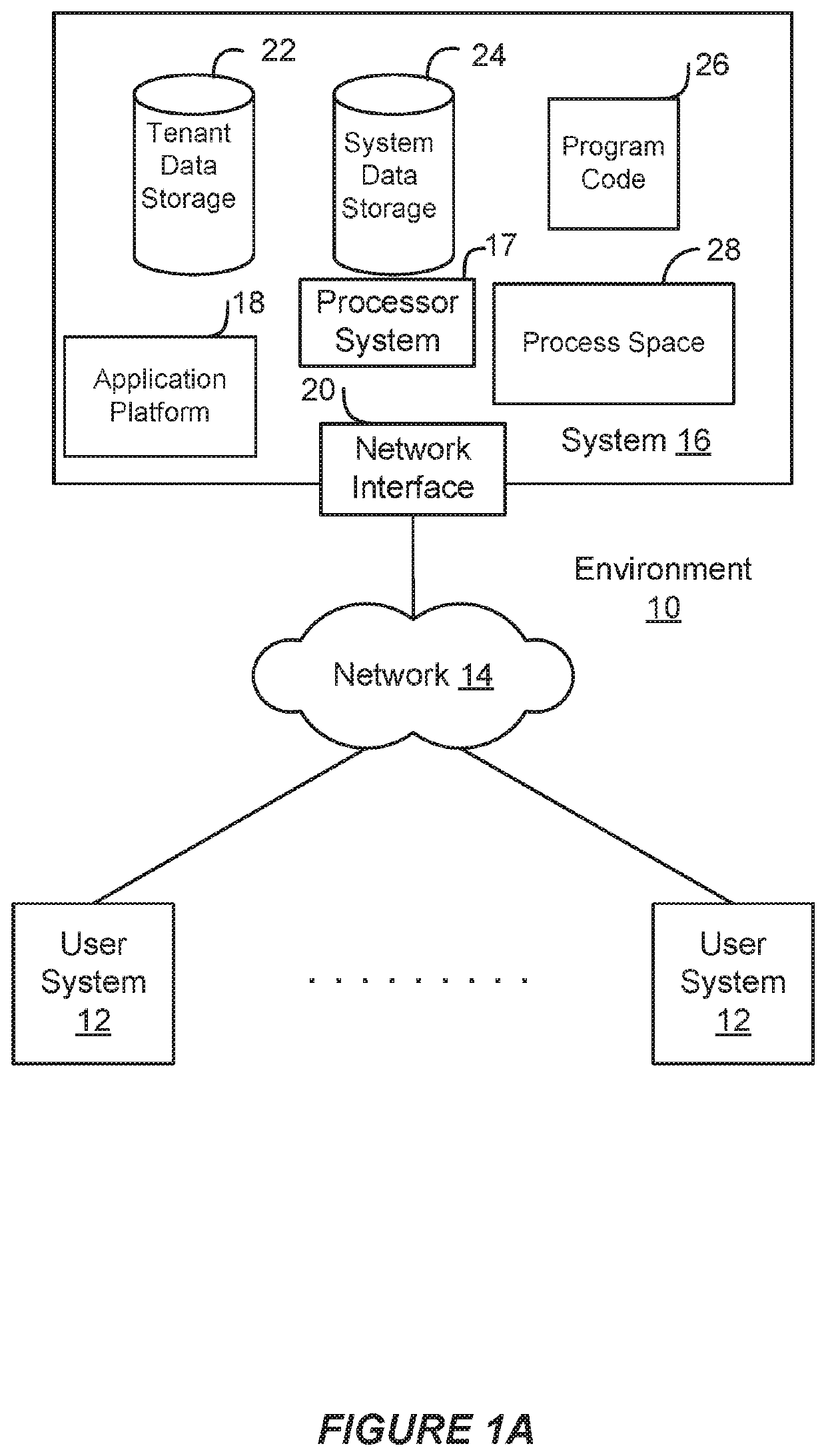

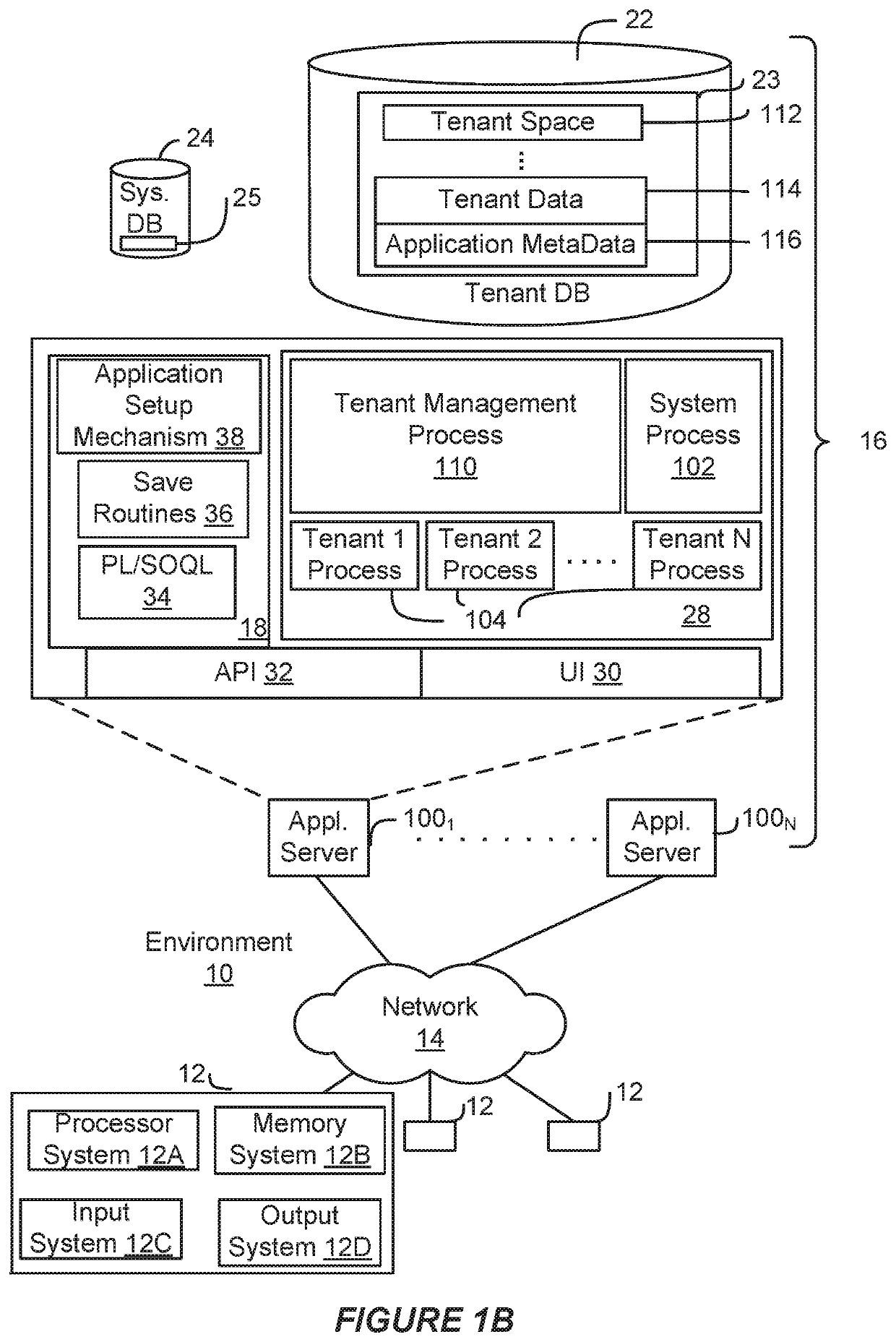

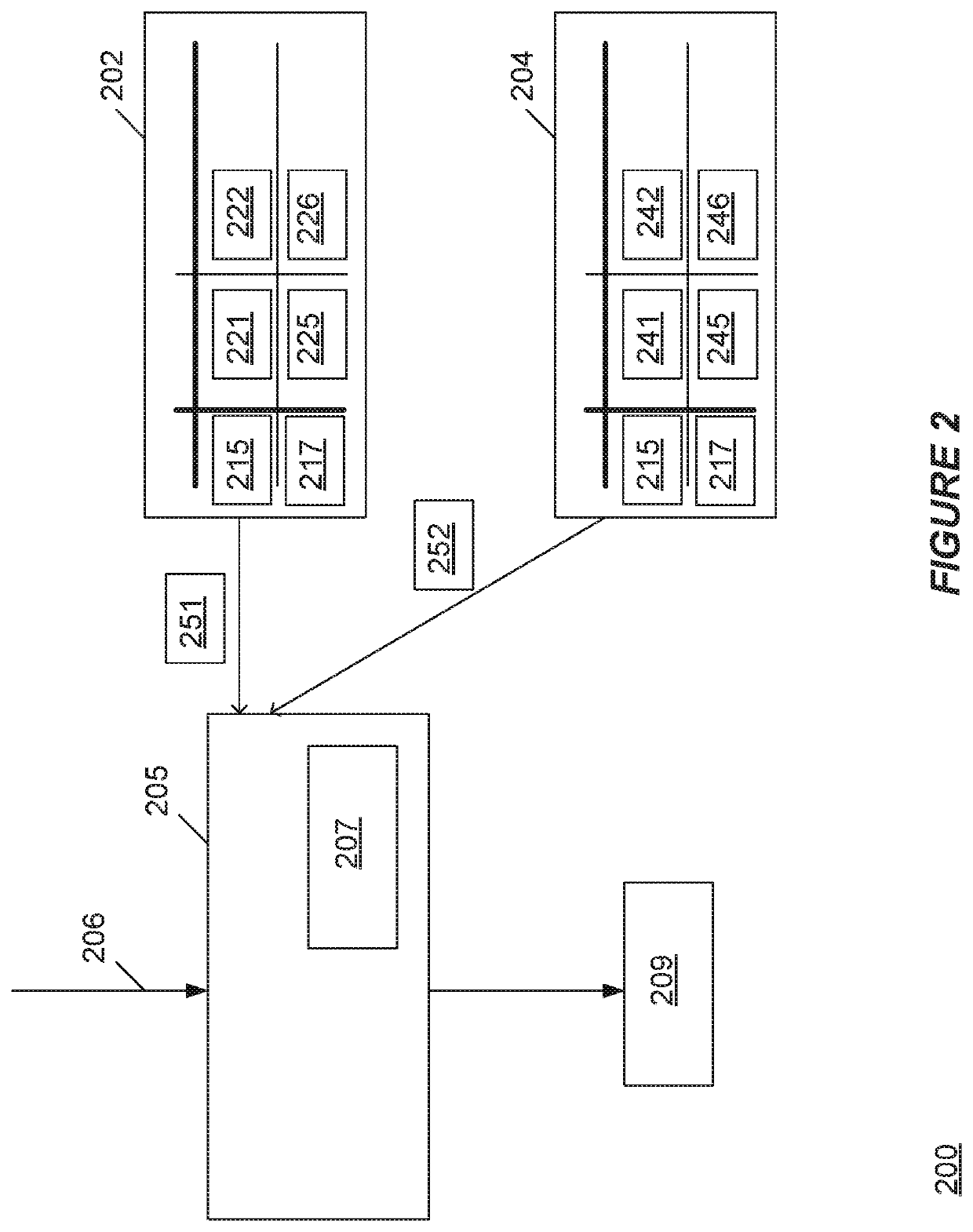

Thread record provider

Owner:SALESFORCE COM INC

Thread migration method and device, storage medium and electronic equipment

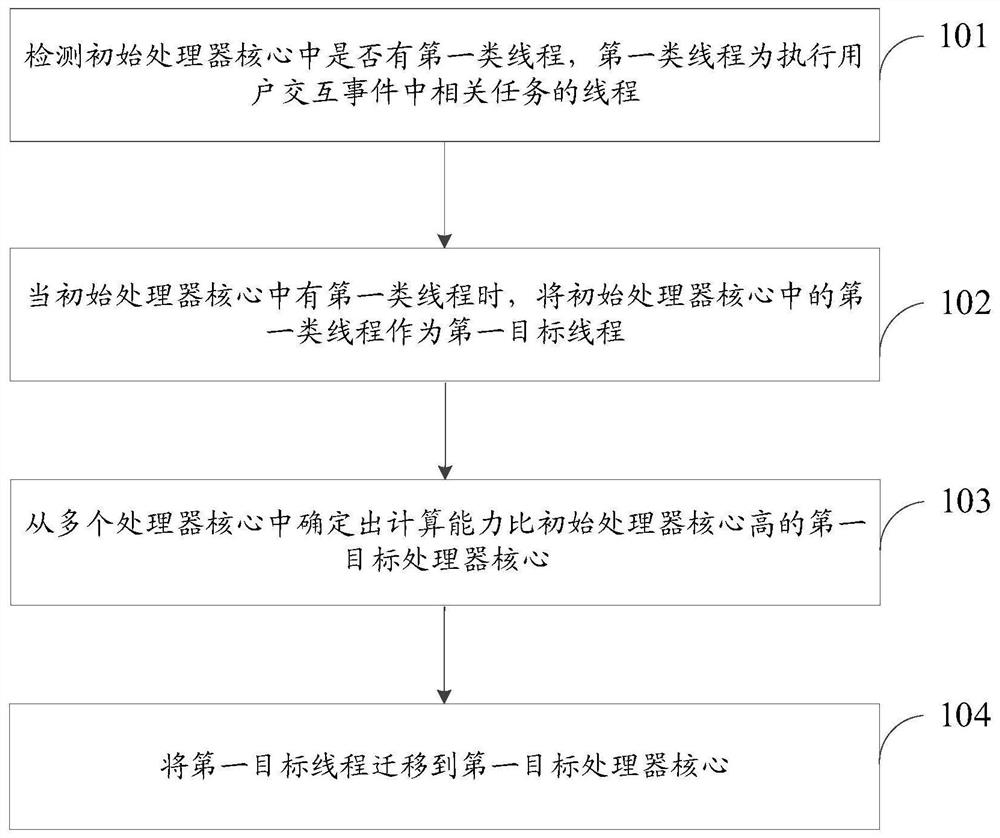

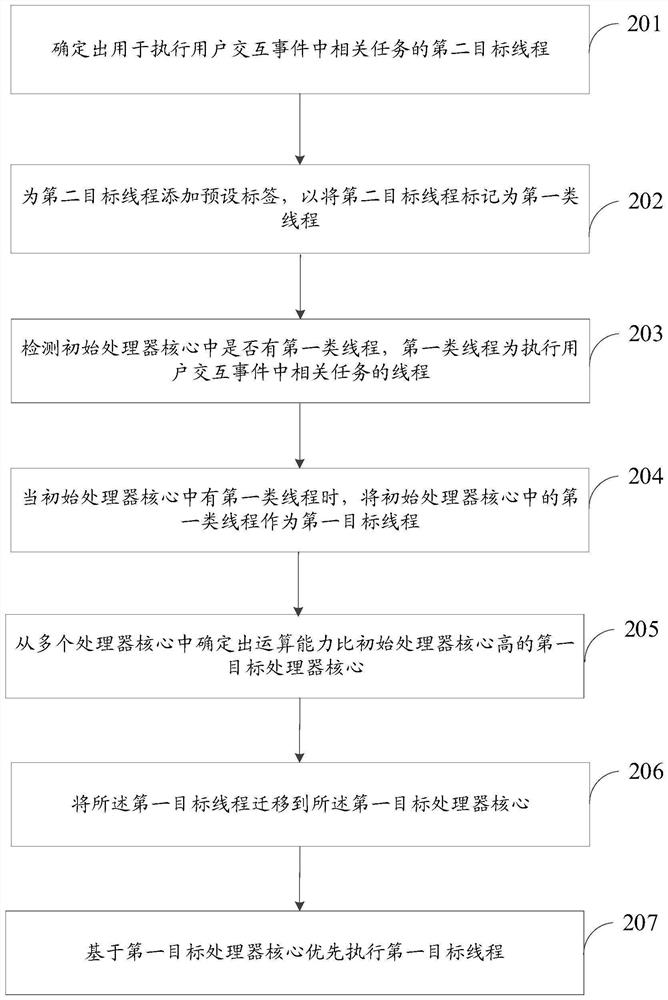

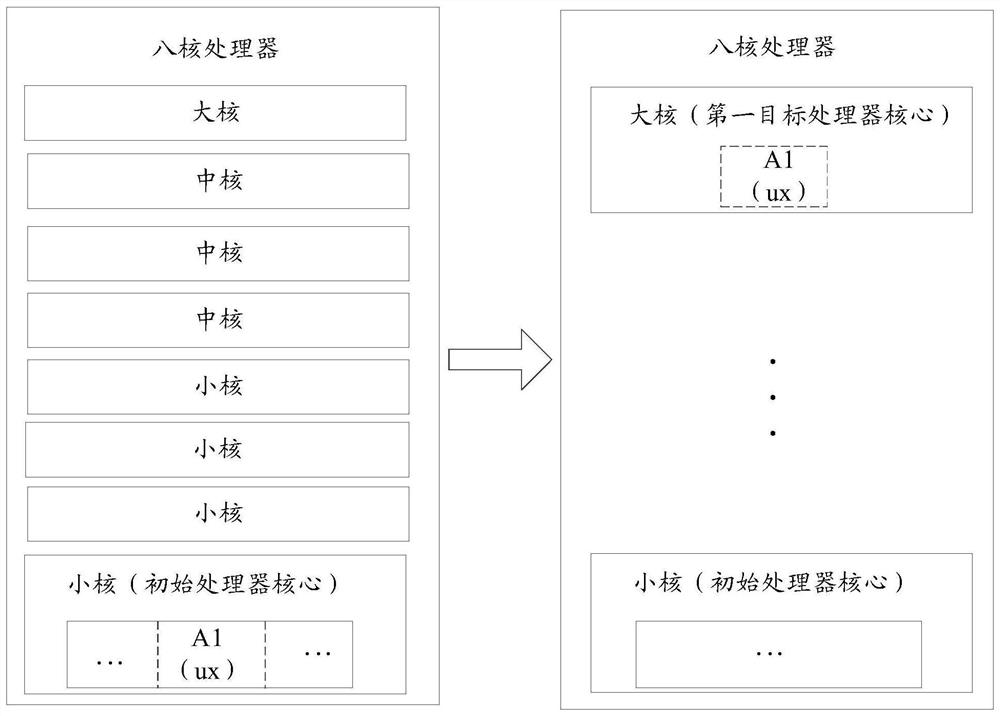

Status: Pending | Publication: CN111831414A | Tags: Reduce stuttering; Improve experience; Program initiation/switching; Resource allocation; Computer architecture; Parallel computing

The invention discloses a thread migration method and device, a storage medium and electronic equipment. The method comprises the following steps:
- Detect whether a first type of thread exists on an initial processor core. A first-type thread is a thread that executes tasks related to a user interaction event.
- When first-type threads exist on the initial processor core, take them as first target threads.
- Determine, from the plurality of processor cores, a first target processor core whose computing power is higher than that of the initial processor core.
- Migrate the first target threads to the first target processor core.

When first-type threads are detected on a core with low computing power, they are migrated to a core with higher computing power. Threads executing user-interaction tasks are therefore processed more efficiently, and stuttering in user interaction scenarios is reduced.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

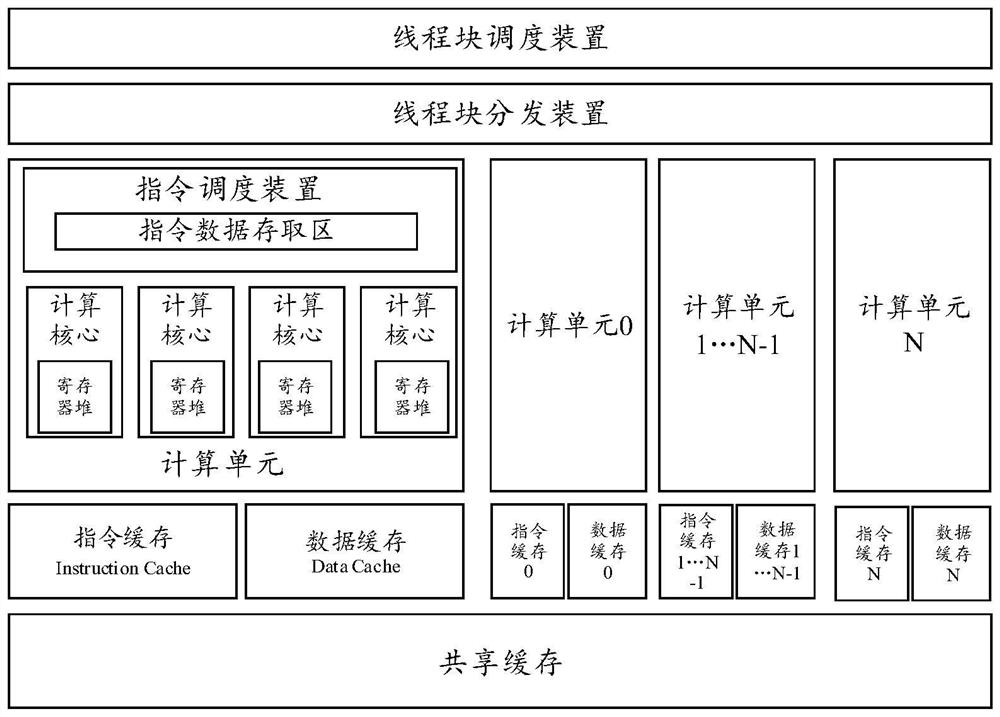

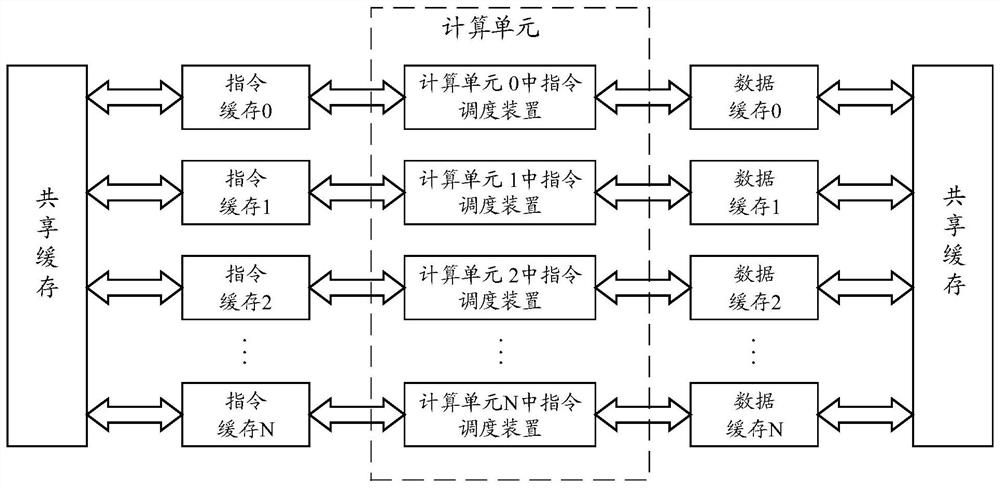

Instruction scheduling method, instruction scheduling device, processor and storage medium

Status: Pending | Publication: CN114153500A | Tags: Concurrent instruction execution; Processor architectures/configuration; Computer architecture; Scheduling instructions

An instruction scheduling method, an instruction scheduling device, a processor and a storage medium are provided. The instruction scheduling method comprises the following steps:
- Select a first instruction fetch request, initiated by a first thread bundle (warp) for a first instruction address, and perform an instruction fetch operation for that address.
- Receive first instruction data that is returned from the first instruction address and corresponds to the first instruction fetch request.
- In response to a second fetch request initiated by a second thread bundle for the same first instruction address, broadcast the first instruction data within the first clock cycle. It is written to both the write address of the first thread bundle's instruction data access area and the write address of the second thread bundle's instruction data access area.

The method reduces the compute unit's accesses, caused by instruction fetching, to the instruction cache or to other cache systems such as a multi-level cache. It thereby reduces the access bandwidth consumed in those caches. As a result, the access bandwidth of cache systems, such as the data cache or other multi-level caches, for the data required to execute the instructions can also be reduced.

Owner:HYGON INFORMATION TECH CO LTD

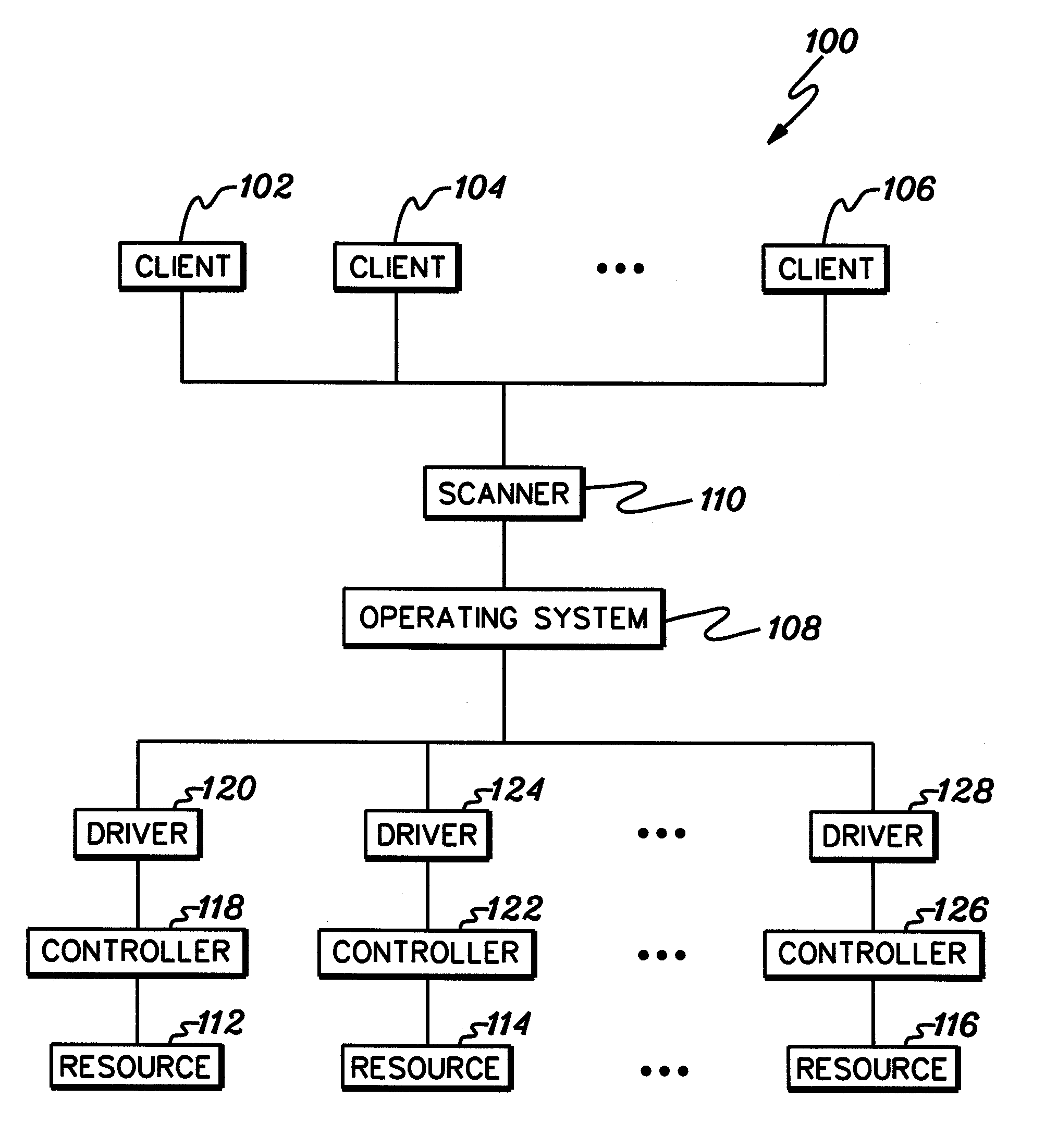

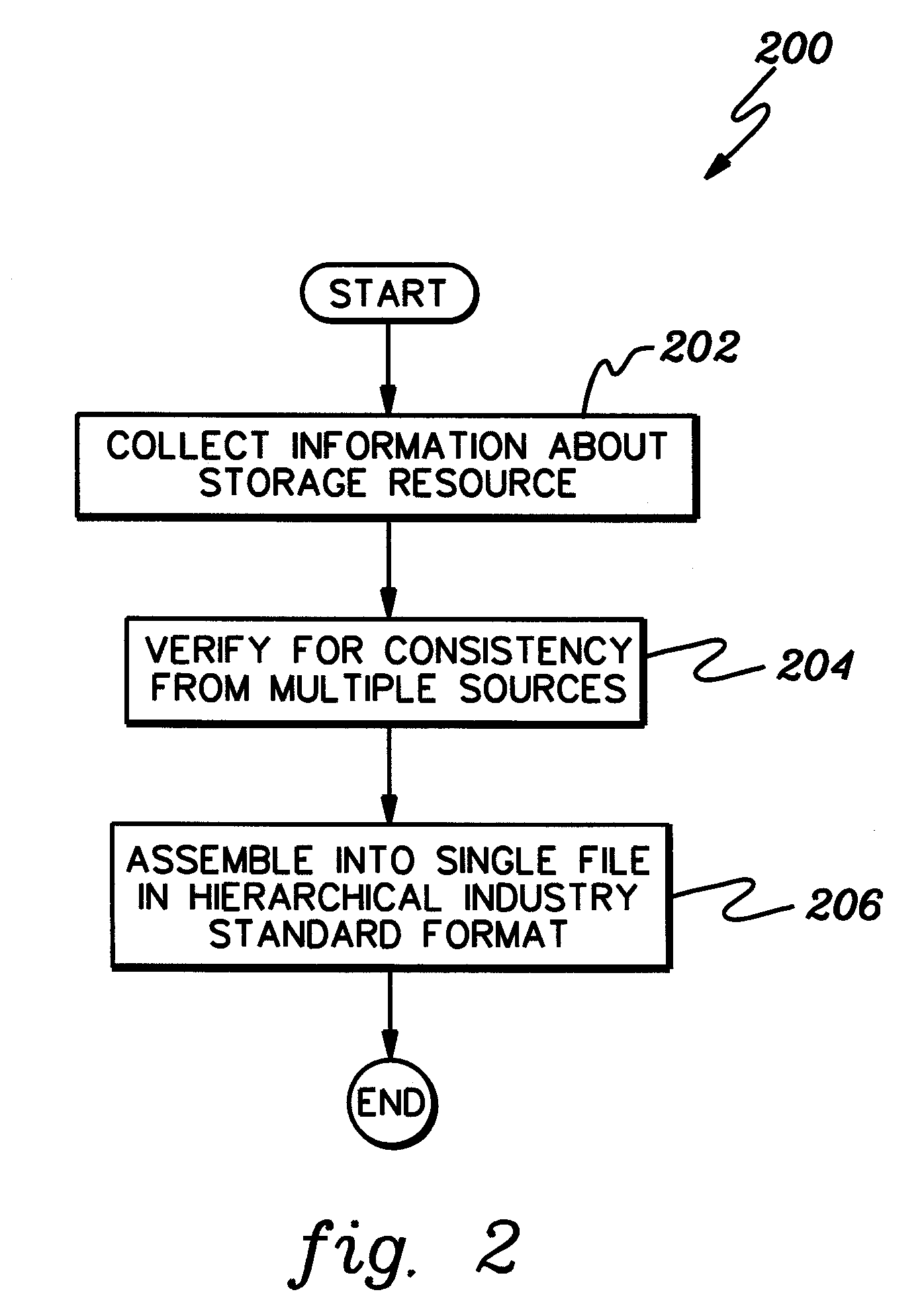

Storage resource scan

Status: Active | Publication: US20070055645A1 | Tags: Reliable completion; Digital computer details; Special data processing applications; Operational system; Operating system

Various information about storage resources in a UNIX or UNIX derivative operating system computing environment is gathered from various sources in response to scan requests. Where a given type of information for a given storage resource is gathered from multiple sources, the information is verified for consistency, and placed in a single file in an industry standard hierarchical format. Scan threads are timed to provide reliable performance.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

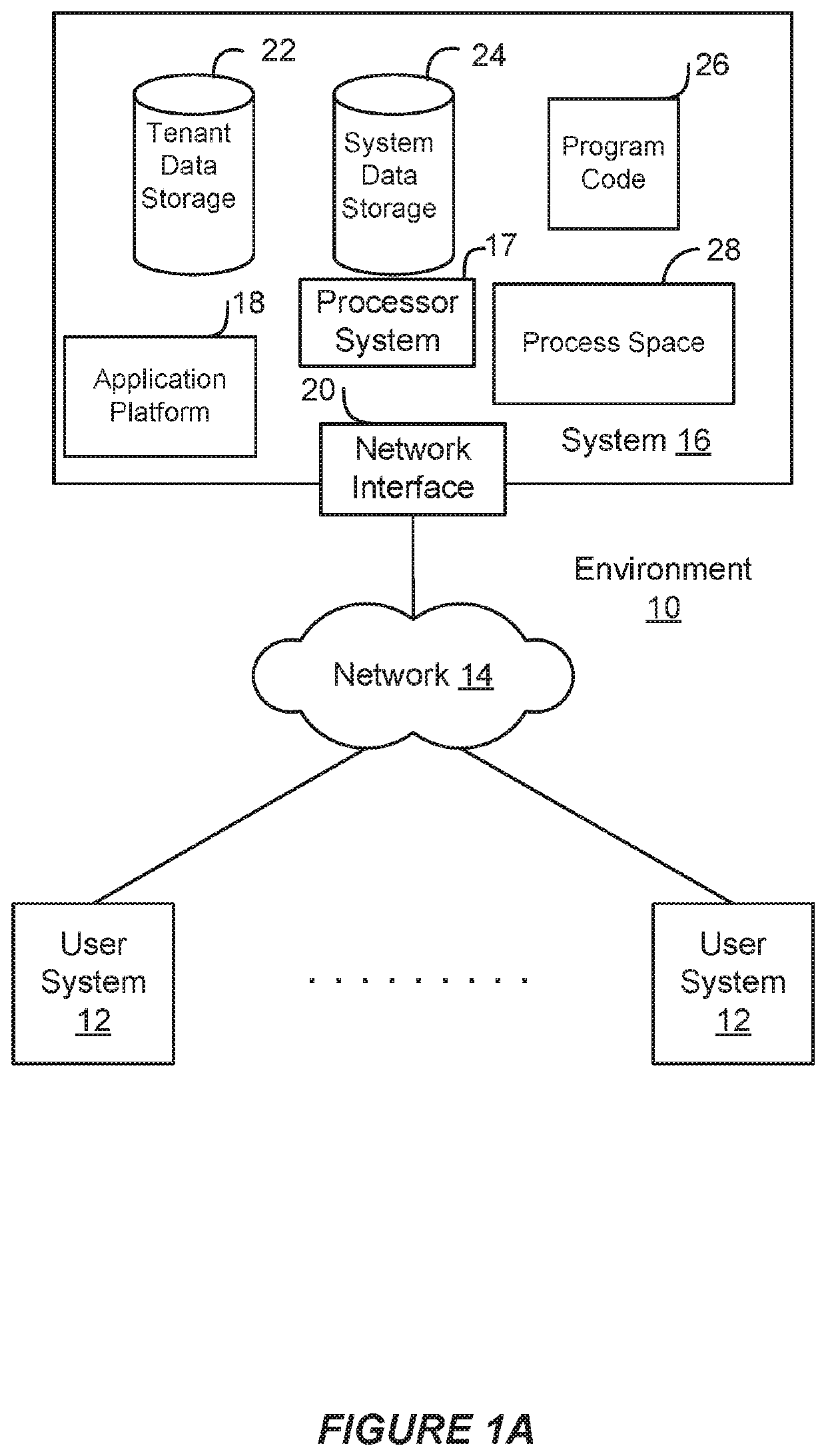

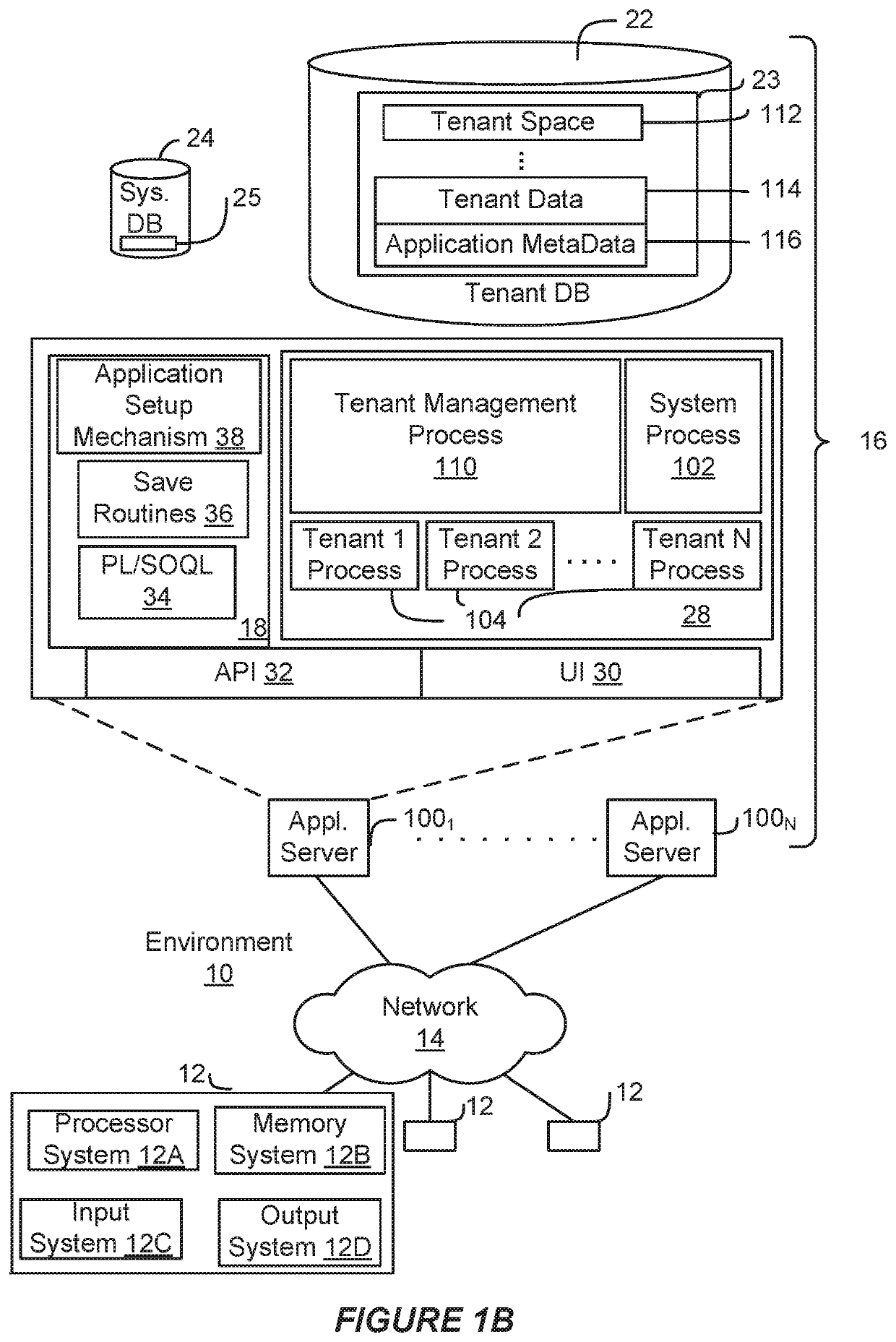

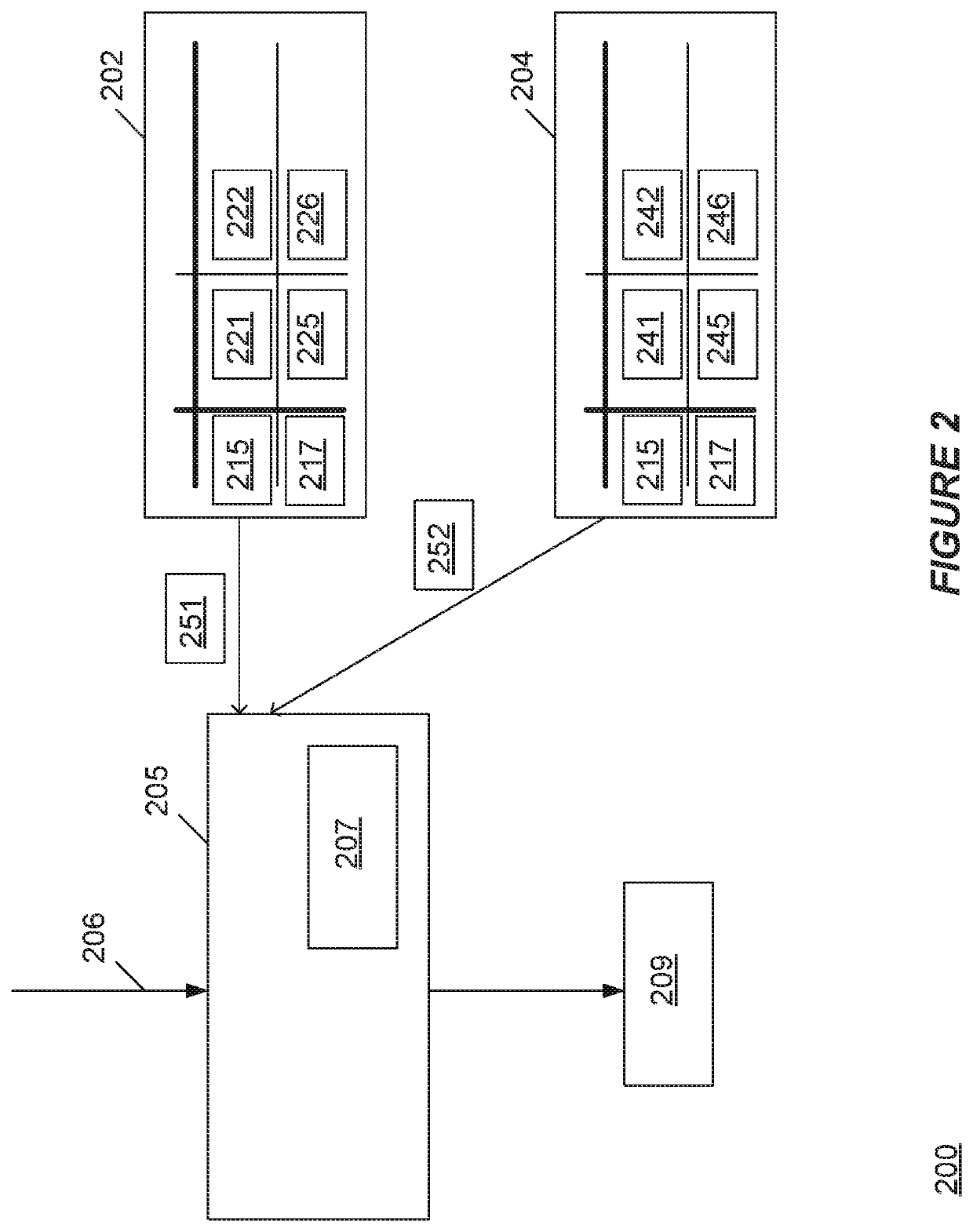

Thread record provider

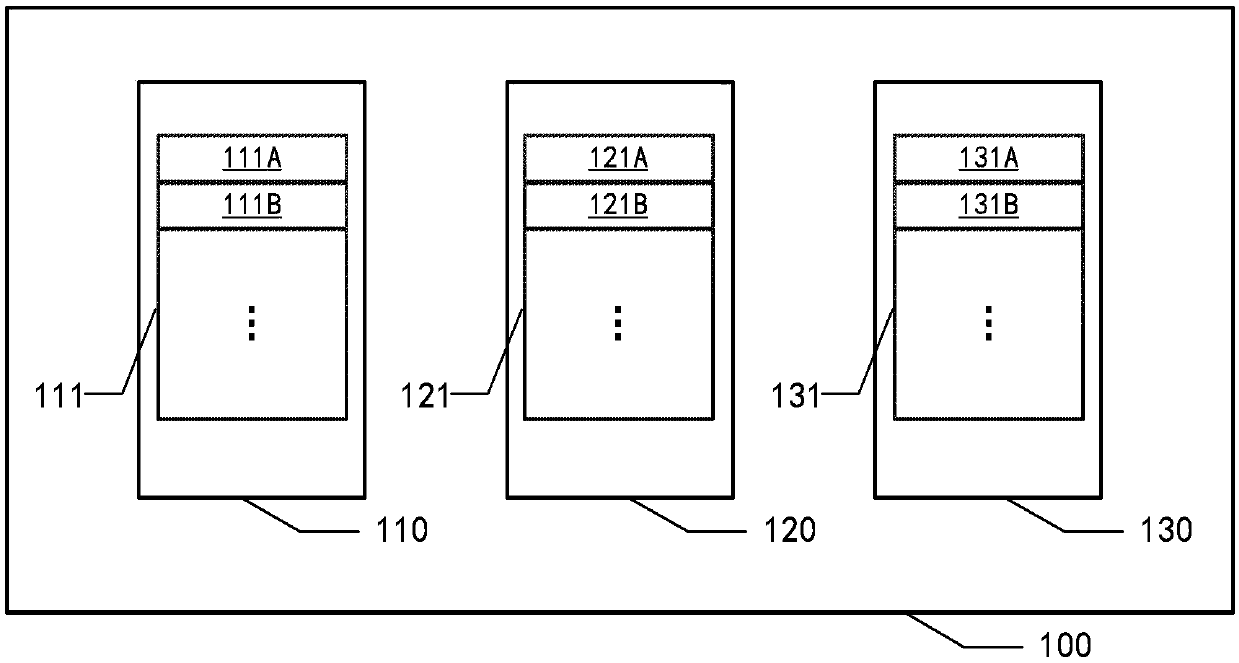

Status: Active | Publication: US10810230B2 | Tags: Database management systems; Error detection/correction; Thread (computing); Data mining

In an example, a computing system may include a thread record provider. In some examples, the computing system may:
- incrementally change a first data structure as threads are established and completed, where the first data structure comprises first information about currently active threads;
- incrementally change a second, different data structure responsive to a portion of the changes to the first data structure, where the second data structure correlates second information, different from the first information, with the currently active threads;
- identify a plurality of times;
- at each selected time, synchronously extract content from the first and second data structures for a selected thread and concatenate the extracted content to form a record for that thread.

Owner:SALESFORCE COM INC
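A minimal Python sketch of the two-structure design follows. The field names are assumptions for illustration. So is the rule that only `annotate` calls reach the second structure. Extraction takes a single lock, one simple way to read both structures synchronously so that each record reflects one consistent moment.

```python
import threading
from typing import Dict, Optional

class ThreadRecordProvider:
    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._active: Dict[int, Dict[str, object]] = {}    # first structure: active threads
        self._context: Dict[int, Dict[str, object]] = {}   # second structure: other per-thread info

    def thread_started(self, tid: int, name: str, start_time: float) -> None:
        with self._lock:
            self._active[tid] = {"name": name, "start": start_time}

    def annotate(self, tid: int, **info: object) -> None:
        # Only this kind of change propagates to the second structure (hypothetical rule).
        with self._lock:
            if tid in self._active:
                self._context.setdefault(tid, {}).update(info)

    def thread_finished(self, tid: int) -> None:
        with self._lock:
            self._active.pop(tid, None)
            self._context.pop(tid, None)

    def snapshot(self, tid: int, at_time: float) -> Optional[Dict[str, object]]:
        # Read both structures under one lock, then concatenate into a single record.
        with self._lock:
            if tid not in self._active:
                return None
            return {"time": at_time, "tid": tid,
                    **self._active[tid], **self._context.get(tid, {})}

provider = ThreadRecordProvider()
provider.thread_started(7, "worker-7", 100.0)
provider.annotate(7, request_id="r-42")
records = [provider.snapshot(7, t) for t in (101.0, 102.0)]   # selected times
provider.thread_finished(7)
print(records[0])  # {'time': 101.0, 'tid': 7, 'name': 'worker-7', 'start': 100.0, 'request_id': 'r-42'}
print(provider.snapshot(7, 103.0))  # None
```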

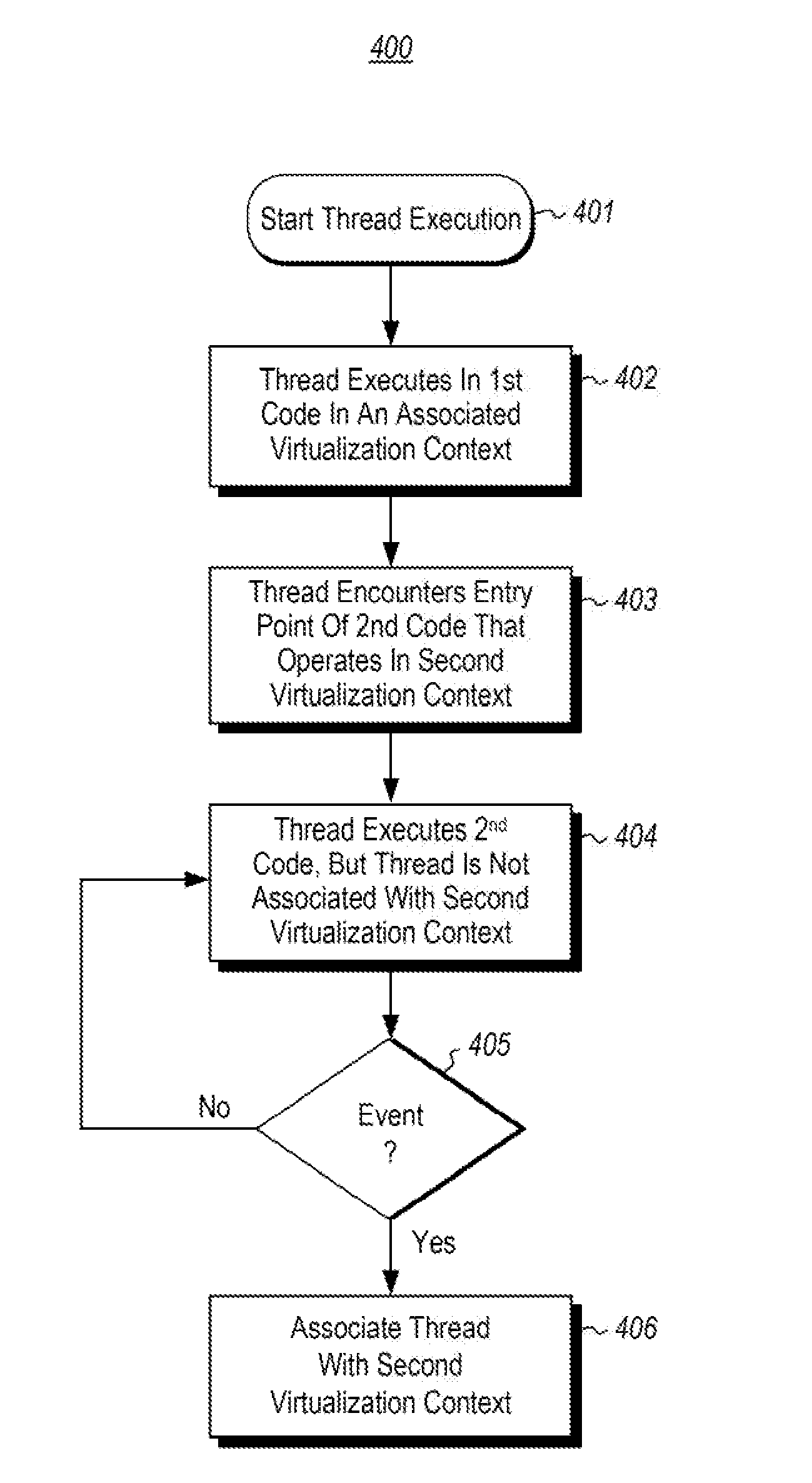

Thread operation across virtualization contexts

Status: Active | Publication: US20140373008A1 | Tags: Software simulation/interpretation/emulation; Memory systems; Virtualization; Entry point

Application virtualization is performed at the thread level, rather than at the process level, allowing a thread to operate across virtualization contexts. For instance, one virtualization context might be a native environment. Another might be a virtualization environment in which code running inside a virtualization package has access to virtualized computing resources. A thread operating in a first virtualization context enters an entry point to code associated with a second virtualization context. For instance, a native thread might enter a plug-in operating as part of a virtualized package in a virtualization environment. While operating on that code, the thread might request access to second computing resources associated with the second virtualization context. In response, the thread is associated with the second virtualization context so that it has access to those second computing resources.

Owner:MICROSOFT TECH LICENSING LLC

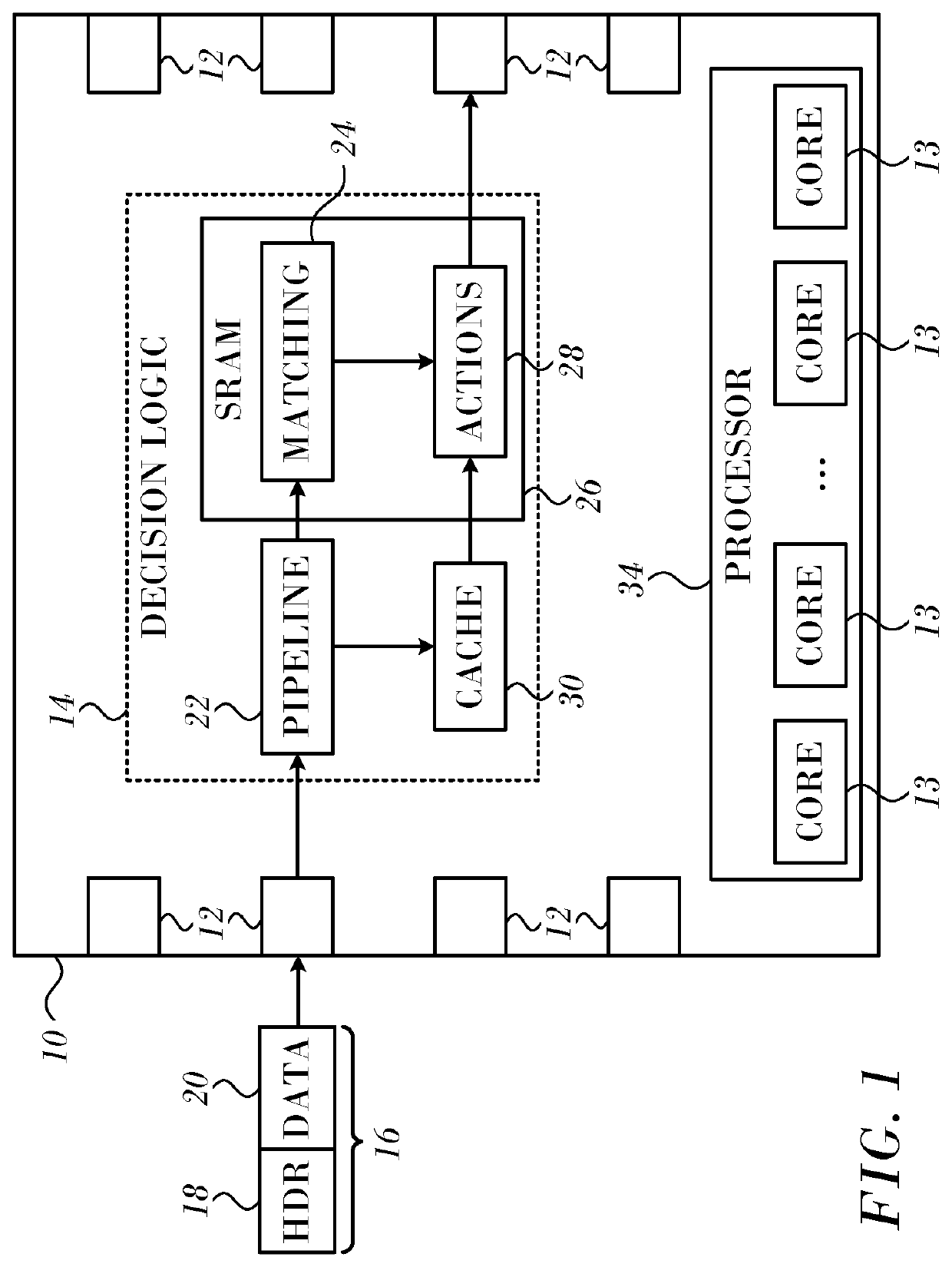

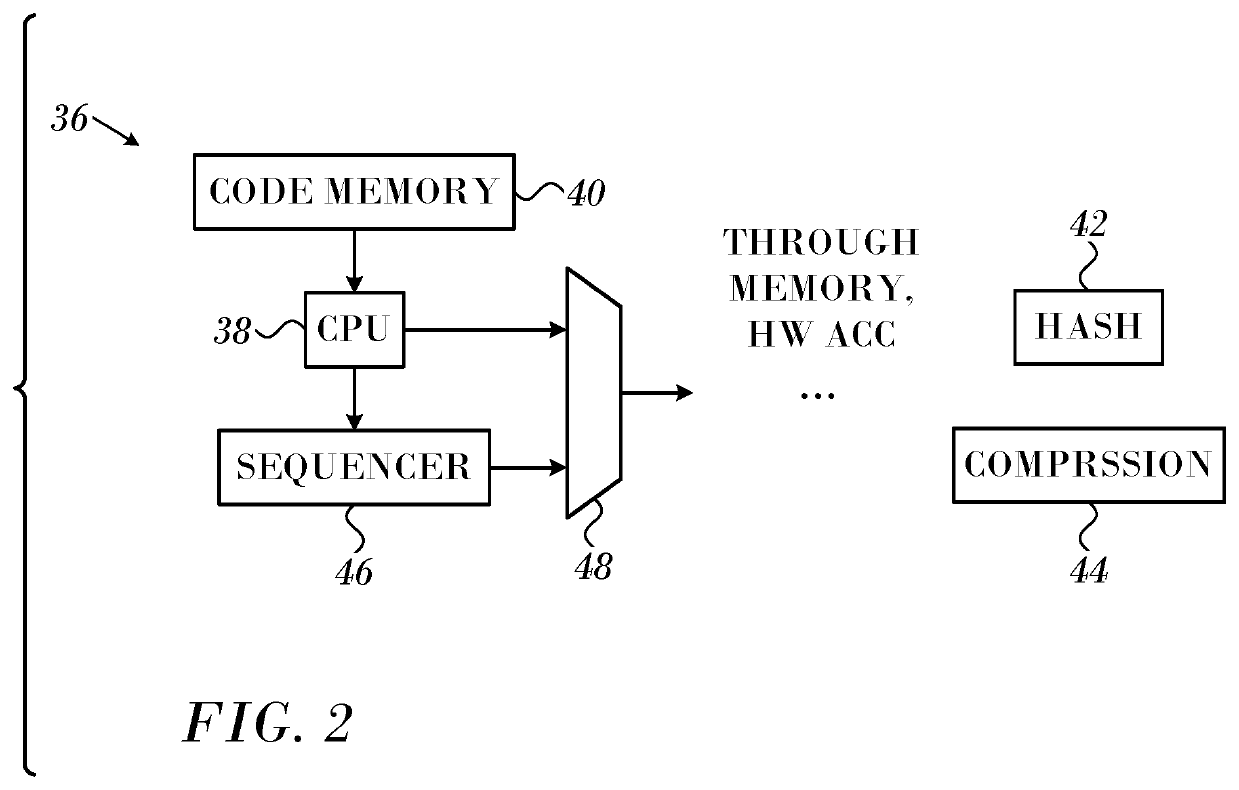

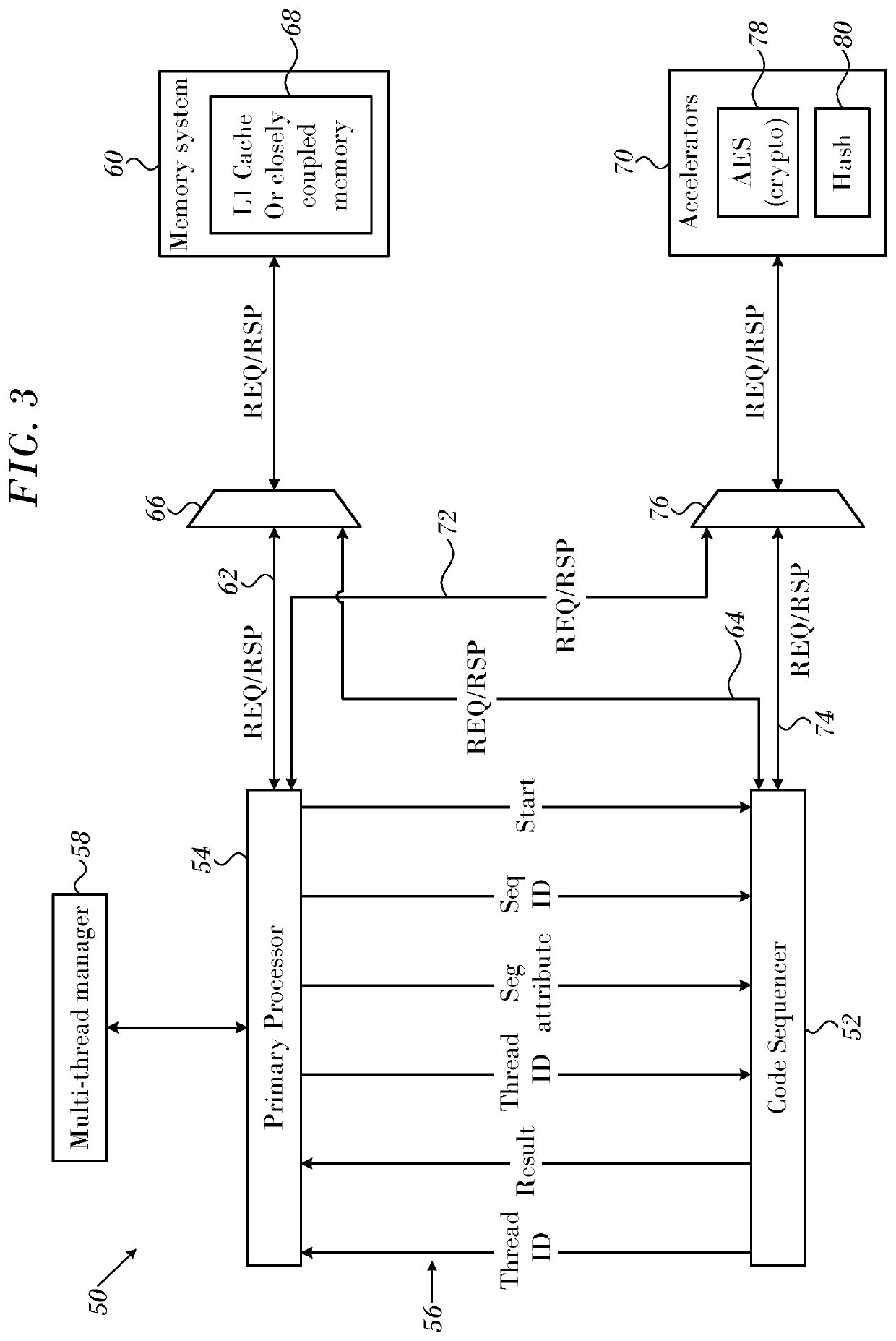

Code sequencer that, in response to a primary processing unit encountering a trigger instruction, receives a thread identifier, executes predefined instruction sequences, and offloads computations to at least one accelerator

Status: Active | Publication: US10599441B2 | Tags: Reduce load; Reduce in quantity; Memory architecture accessing/allocation; Encryption apparatus with shift registers/memories; Main processing unit; Computer architecture

Instruction code is executed in a central processing unit of a network computing device. Besides the central processing unit the device is provided with a code sequencer operative to execute predefined instruction sequences. The code sequencer is invoked by a trigger instruction in the instruction code, which is encountered by the central processing unit. Responsively to its invocations the code sequencer executes the predefined instruction sequences.

Owner:MELLANOX TECHNOLOGIES LTD

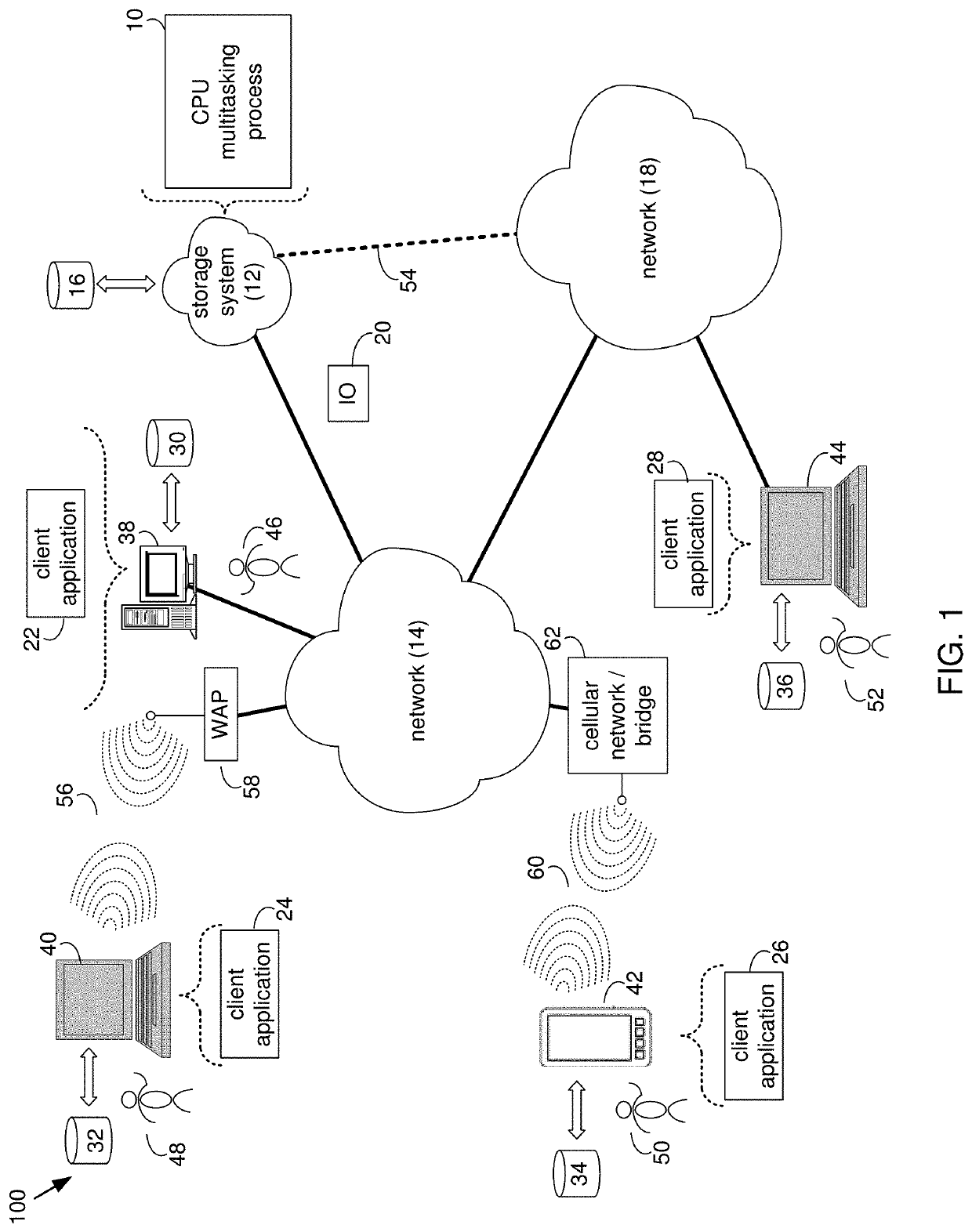

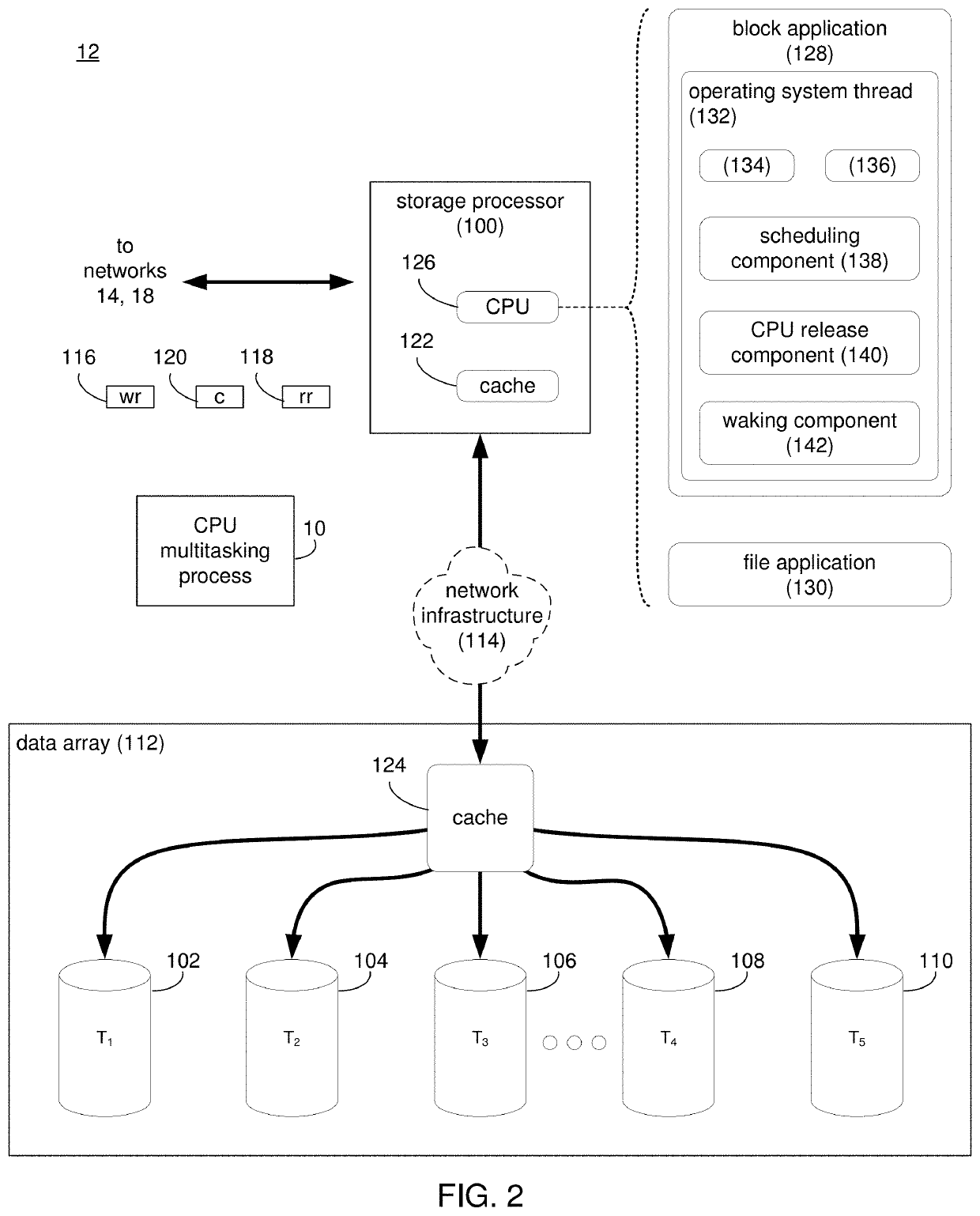

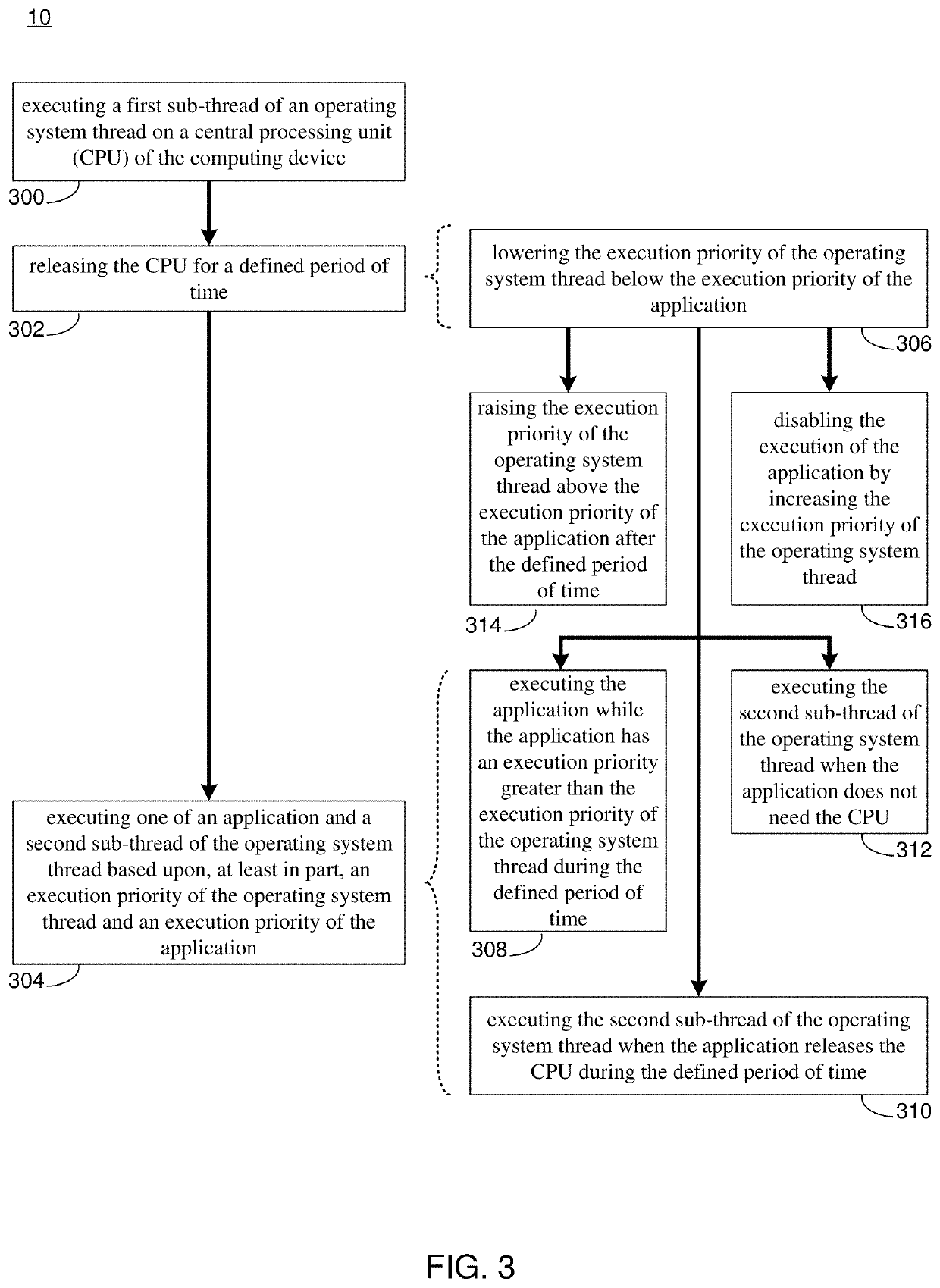

Priority-Based CPU Multitasking System and Method

Status: Active | Publication: US20210124619A1 | Tags: Program initiation/switching; Resource allocation; Thread (computing); Computing systems

A method, computer program product, and computing system for executing a first sub-thread of an operating system thread on a central processing unit (CPU) of the computing device. The CPU may be released for a defined period of time. One of an application and a second sub-thread of the operating system thread may be executed based upon, at least in part, an execution priority of the operating system thread and an execution priority of the application.

Owner:EMC IP HLDG CO LLC

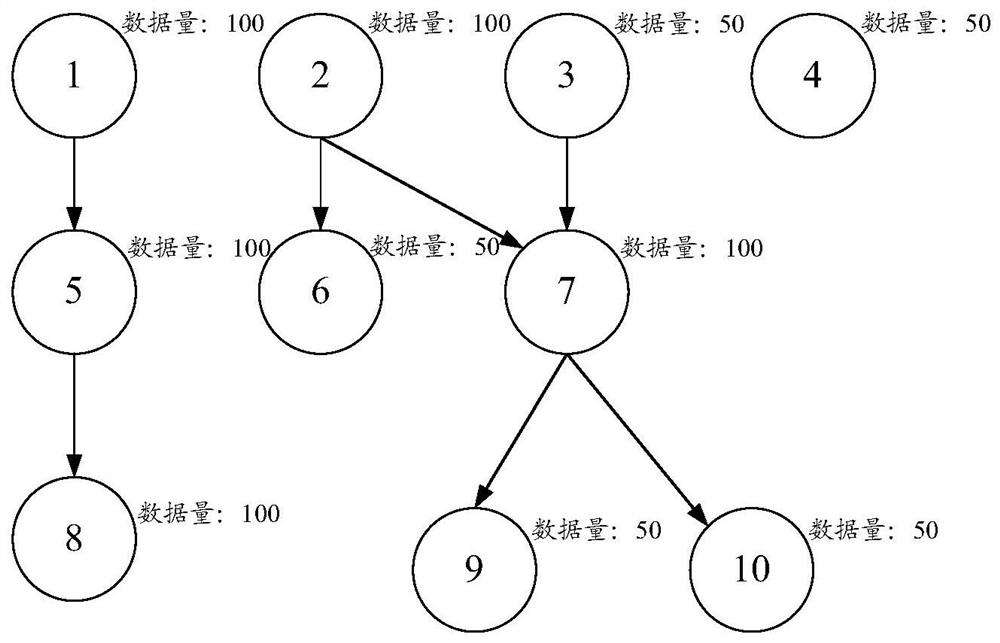

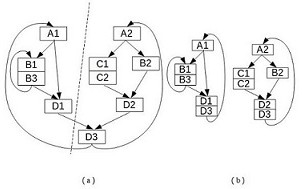

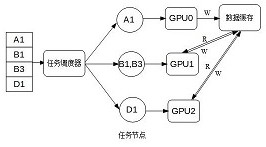

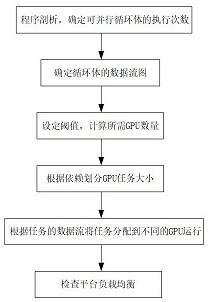

Dynamic resource scheduling method based on analysis technology and data flow information on heterogeneous many-cores

Status: Pending | Publication: CN113886057A | Tags: Improve computing resource utilization; Improve parallelism; Resource allocation; Data stream; Dynamic resource

The invention discloses a dynamic resource scheduling method for heterogeneous many-core systems, based on program analysis and data flow information, and relates to the field of heterogeneous many-core systems. The method comprises the following steps:
- Analyze a program and determine the execution counts of parallelizable loop bodies.
- Determine the data flow graph of each loop body.
- Set a threshold and calculate the number of GPUs required.
- Divide the GPU task sizes according to dependences.
- Distribute the tasks to different GPUs according to their data flows.
- Check whether the platform is load-balanced.

Currently, programmers must set the number of GPUs and divide tasks manually on a heterogeneous platform. The invention addresses this with a resource scheduling method based on program analysis information and program data flow information. The loop-statement execution counts and data dependences obtained through analysis are used, together with a threshold, to determine the number of GPUs. Task division that respects data dependences increases thread granularity. Data flow information is used to distribute tasks to each GPU. Active load detection combined with a work-stealing algorithm addresses platform load balancing. The result is a dynamic resource scheduling method that automatically sets the number of GPUs, improves GPU computing resource utilization, and actively detects and achieves load balance.

Owner:SOUTHWEST UNIV OF SCI & TECH

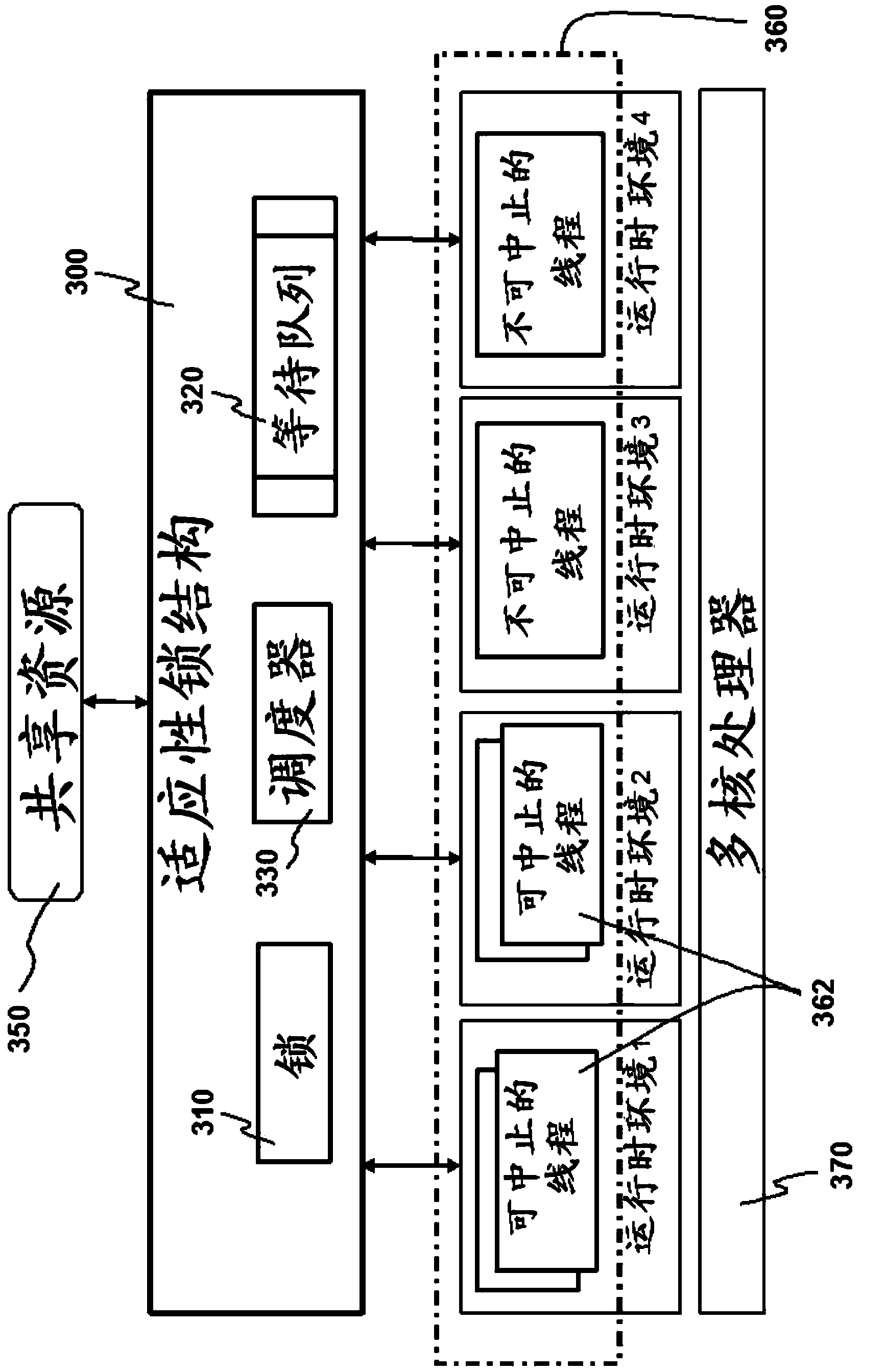

Computing system and method of operating lock in same

The invention provides a method of operating a lock in a computing system with a plurality of processing units in a plurality of runtime environments. The method includes the following steps:
- When the lock is owned by an owner thread and a requester thread tries to obtain it, determine whether the owner thread is suspendable.
- If the owner thread is not suspendable, place the requester thread in a spinning state, regardless of whether the requester thread is suspendable.
- If the owner thread is suspendable, determine whether the requester thread is suspendable, unless the requester thread gives up obtaining the lock.
- If the requester thread is not suspendable, arrange for it to try again to obtain the lock. If it is suspendable, add it to a waiting queue as a suspended thread.
- Subsequently, allow the suspended threads stored in the waiting queue to be resumed to obtain the lock.

The method is applicable to computing systems with a multi-core processor.

Owner:HONG KONG APPLIED SCI & TECH RES INST
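The decision rules can be sketched in Python as below. This is an illustration under stated assumptions, not the patented implementation:
- callers pass their own suspendability flag;
- spinning yields the CPU with `time.sleep(0)` on each retry;
- suspended requesters wait on a per-thread `threading.Event` in a FIFO queue and retry after `release` wakes one of them;
- the give-up path is omitted.

`HybridLock` is a hypothetical name.

```python
import threading
import time
from collections import deque
from typing import Deque, Optional, Tuple

class HybridLock:
    """Spin-or-suspend lock following the decision rules in the abstract (a sketch)."""

    def __init__(self) -> None:
        self._guard = threading.Lock()                   # protects the fields below
        self._owner: Optional[Tuple[int, bool]] = None   # (thread id, owner suspendable?)
        self._waiters: Deque[threading.Event] = deque()  # suspended requesters

    def acquire(self, suspendable: bool) -> None:
        me = threading.get_ident()
        while True:
            with self._guard:
                if self._owner is None:
                    self._owner = (me, suspendable)
                    return
                owner_suspendable = self._owner[1]
                wake = None
                if owner_suspendable and suspendable:
                    wake = threading.Event()             # suspend: join the waiting queue
                    self._waiters.append(wake)
            if wake is not None:
                wake.wait()                              # resumed by release(), then retry
            else:
                time.sleep(0)                            # spin: owner or requester not suspendable

    def release(self) -> None:
        with self._guard:
            self._owner = None
            if self._waiters:
                self._waiters.popleft().set()            # resume one suspended thread

lock = HybridLock()
counter = {"n": 0}

def worker(suspendable: bool) -> None:
    for _ in range(200):
        lock.acquire(suspendable)
        try:
            counter["n"] += 1
        finally:
            lock.release()

threads = [threading.Thread(target=worker, args=(i % 2 == 0,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter["n"])  # 800
```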

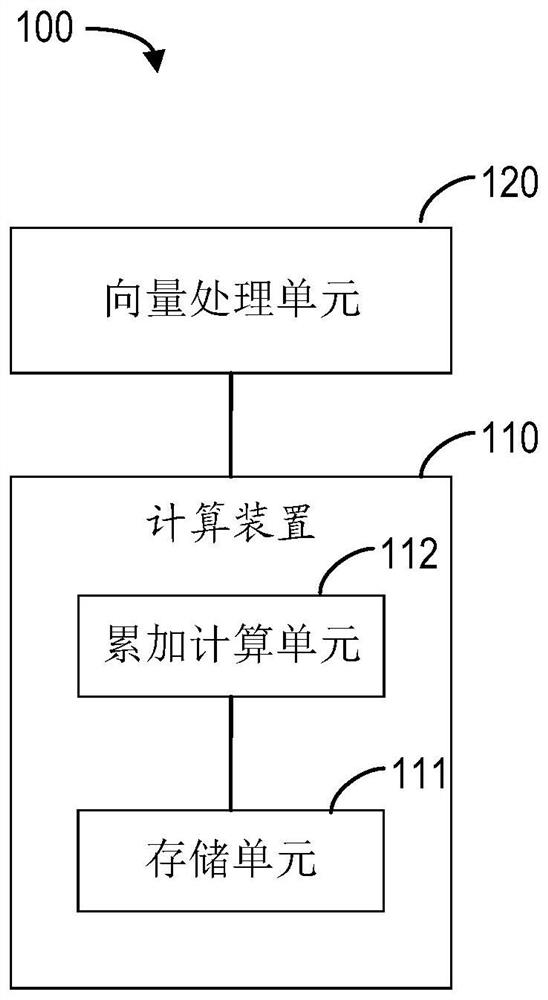

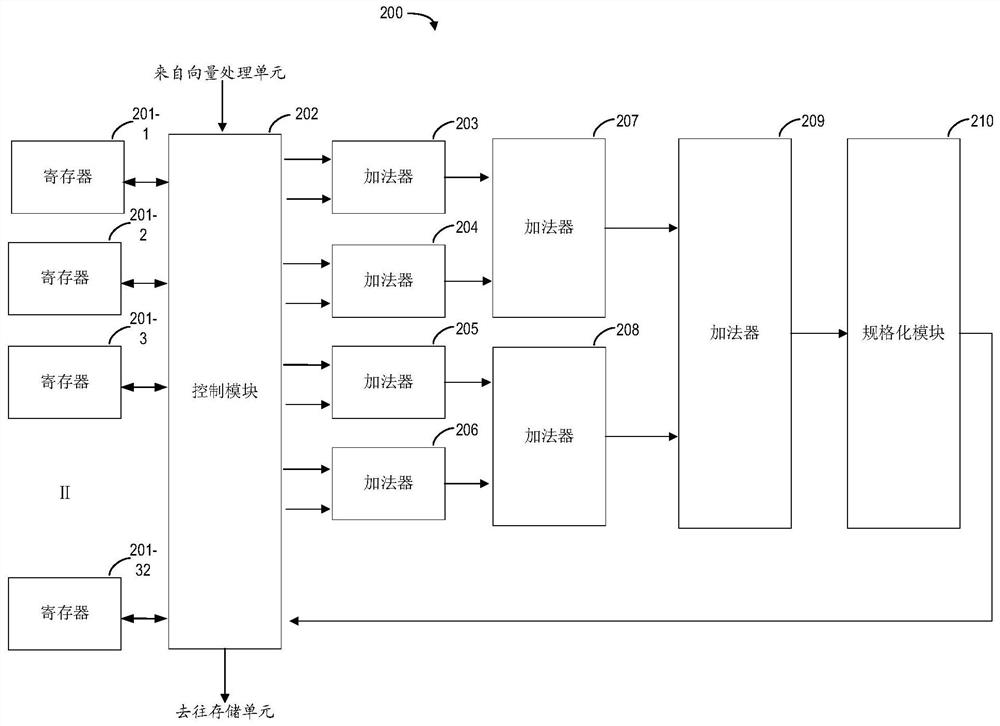

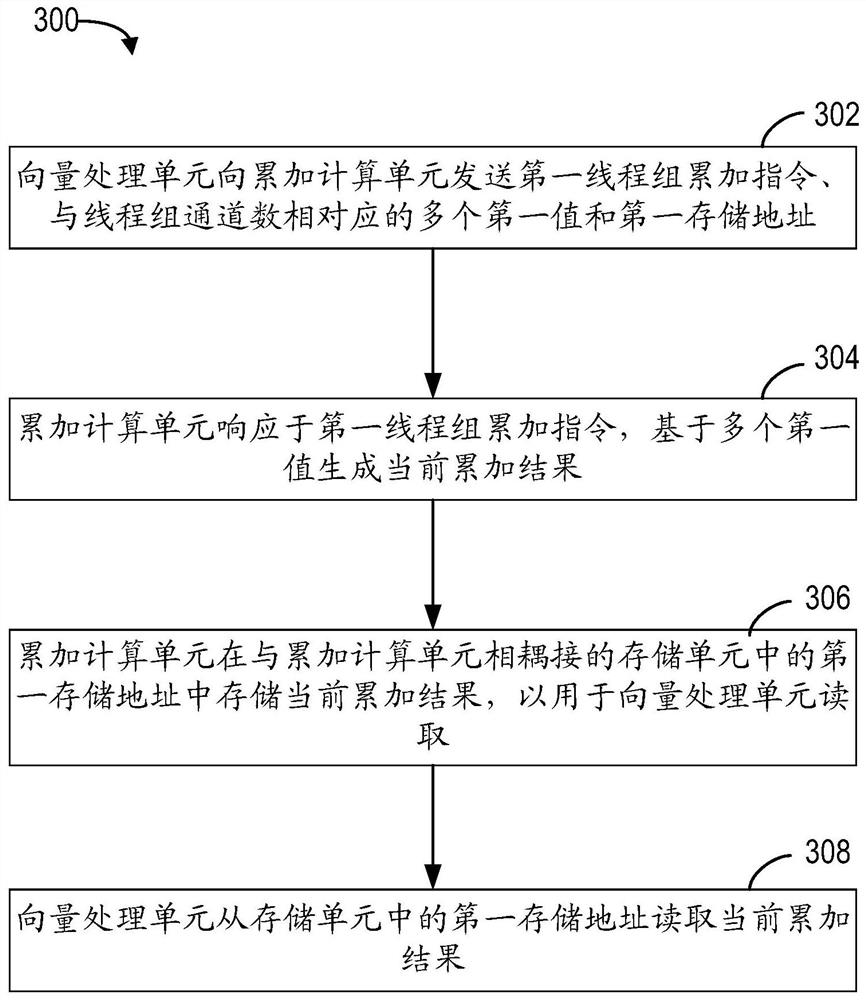

Computing device, computing equipment and method for thread group accumulation

Status: Pending | Publication: CN112817735A | Tags: Program initiation/switching; Computation using non-contact making devices; Computer architecture; Engineering

The embodiment of the invention relates to a computing device, computing equipment and a method for thread group accumulation, in the field of computers. The computing device includes a storage unit and an accumulation calculation unit coupled with the storage unit. The accumulation calculation unit is configured to:
- receive, from a vector processing unit coupled with the computing device, a first thread group accumulation instruction, a number of first values equal to the number of thread group channels, and a first storage address;
- in response to the instruction, generate a current accumulation result based on the first values;
- store that result at the first storage address in the storage unit, where the vector processing unit can read it.

Accumulation within a thread group is thus offloaded to dedicated hardware, which significantly improves overall accumulation performance.

Owner:SHANGHAI BIREN TECH CO LTD
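Functionally, the accumulation unit reduces one value per thread-group channel to a single result and stores it at the given address. The Python sketch below models that behavior with a pairwise tree reduction, a common choice for hardware adders. The abstract does not describe the unit's internal structure, so the reduction order, `AccumulationUnit`, and the dictionary standing in for the storage unit are all assumptions.

```python
from typing import Dict, List

def group_accumulate(values: List[int]) -> int:
    """Sum one value per channel by pairwise (tree) reduction.
    Inputs are padded with zeros up to a power-of-two channel count."""
    size = 1
    while size < len(values):
        size *= 2
    lanes = list(values) + [0] * (size - len(values))
    stride = size // 2
    while stride:
        for i in range(stride):          # lane i absorbs lane i + stride
            lanes[i] += lanes[i + stride]
        stride //= 2
    return lanes[0]

class AccumulationUnit:
    """Hypothetical model of the accumulation calculation unit and its storage unit."""

    def __init__(self) -> None:
        self.storage: Dict[int, int] = {}    # storage address -> stored result

    def execute(self, values: List[int], address: int) -> None:
        # Handle one thread group accumulation instruction.
        self.storage[address] = group_accumulate(values)

    def read(self, address: int) -> int:
        # What the vector processing unit would read back.
        return self.storage[address]

unit = AccumulationUnit()
unit.execute(list(range(32)), address=0x40)   # 32 channels holding 0..31
print(unit.read(0x40))                        # 496
```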

© 2025 PatSnap. All rights reserved.