57 results about how to "Improve computing resource utilization" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

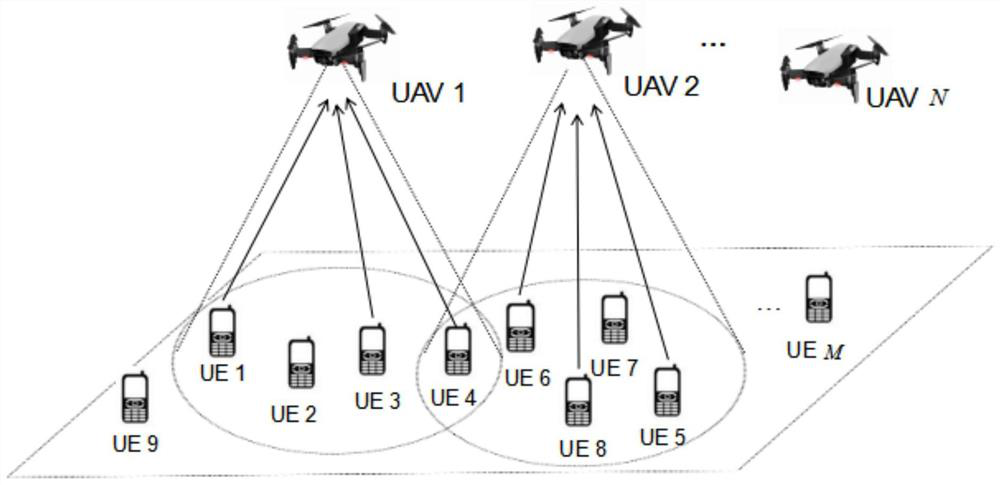

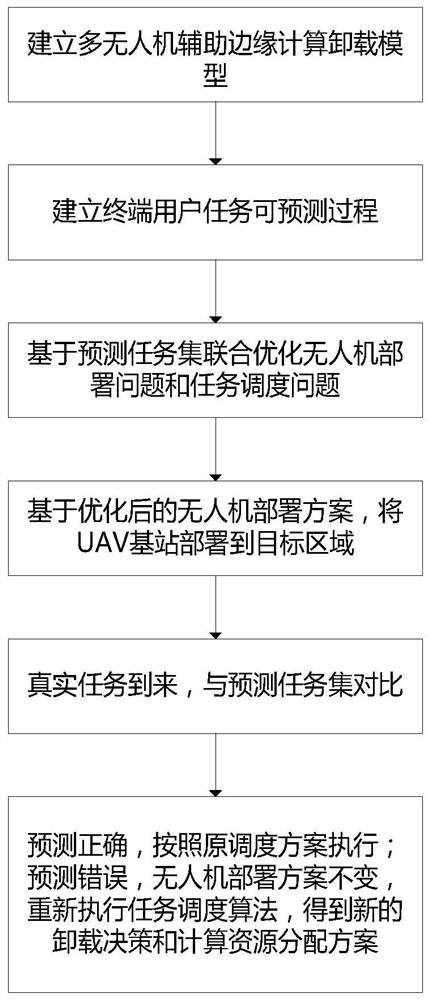

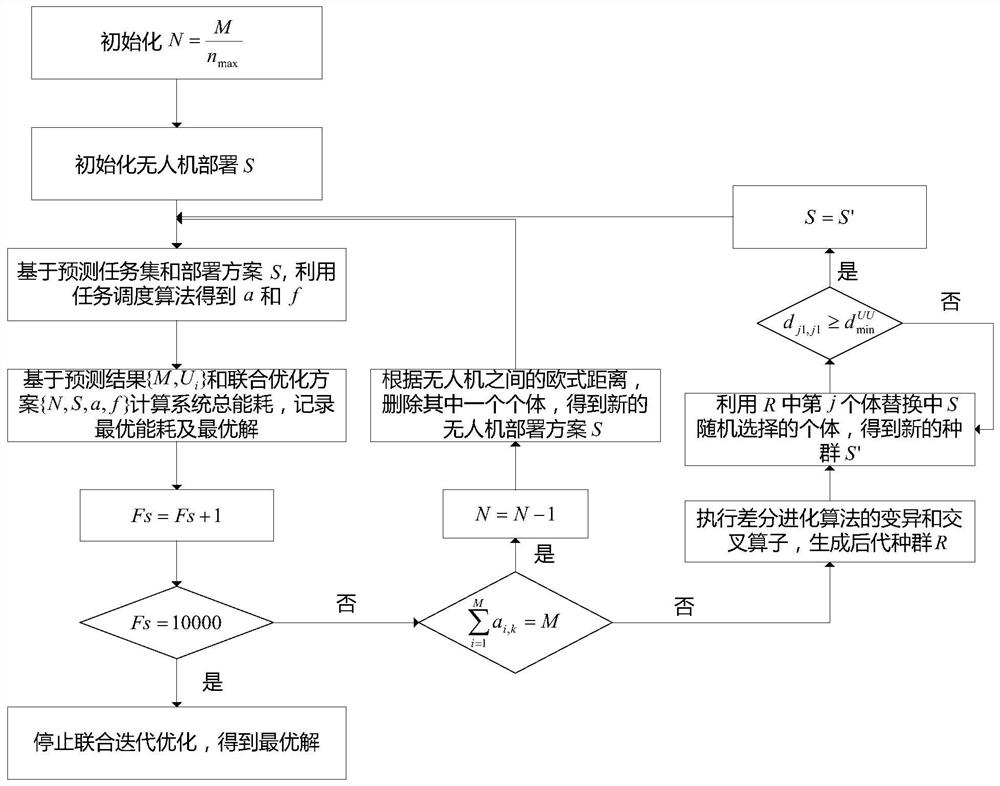

Multi-unmanned aerial vehicle auxiliary edge computing resource allocation method based on task prediction

Status: Active | Publication: CN112351503A | Tags: Reduce response delay; Reduce energy consumption while hovering; High level techniques; Wireless communication; Energy consumption minimization; Resource assignment

The invention discloses a task-prediction-based resource allocation method for edge computing assisted by multiple unmanned aerial vehicles (UAVs). First, a communication model, a computing model and an energy-loss model are built for the UAV-assisted edge computing offloading scenario. Minimizing the total system energy consumption of the UAV-assisted offloading network is then formulated as a problem over the predictable task process of the terminal devices. Prediction model parameters for each terminal device are obtained by centralized training on that device's historical data. From the task information of the currently connected terminal devices, the prediction model produces the predicted task set for the next time slot. Based on that set, the original problem is decomposed into a UAV deployment problem and a task scheduling problem, which are optimized jointly. The deep learning algorithm effectively reduces the response delay and completion delay of tasks, which in turn lowers computing energy consumption. An evolutionary algorithm is introduced to solve the joint UAV deployment and task scheduling problem; this greatly reduces the hovering energy consumption of the UAVs and increases computing resource utilization.

Owner: DALIAN UNIV OF TECH
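
The scheduling half of the joint problem can be illustrated with a toy example. The sketch assumes a hypothetical energy model: every UAV hovers until the busiest one finishes, and each task adds a per-task transmission cost. It uses a simple (1+1) evolution strategy. The abstract does not describe its evolutionary operators, the UAV deployment step, or the deep-learning predictor, so none of those are reproduced here. All names are illustrative.

```python
import random

def total_energy(assign, task_sizes, tx_energy, rate, hover_power):
    """Hypothetical energy model: every UAV hovers until the busiest one has
    finished its queue, and each task pays a per-UAV transmission cost."""
    load = [0.0] * len(rate)
    tx = 0.0
    for task, uav in enumerate(assign):
        load[uav] += task_sizes[task] / rate[uav]   # processing time on that UAV
        tx += tx_energy[task][uav]
    return hover_power * len(rate) * max(load) + tx

def evolve_assignment(task_sizes, tx_energy, rate, hover_power=1.0,
                      iterations=2000, seed=0):
    """(1+1) evolution strategy: mutate one gene, keep the child if it is no worse."""
    rng = random.Random(seed)
    n_tasks, n_uav = len(task_sizes), len(rate)
    best = [rng.randrange(n_uav) for _ in range(n_tasks)]
    best_cost = total_energy(best, task_sizes, tx_energy, rate, hover_power)
    for _ in range(iterations):
        child = best[:]
        child[rng.randrange(n_tasks)] = rng.randrange(n_uav)   # point mutation
        cost = total_energy(child, task_sizes, tx_energy, rate, hover_power)
        if cost <= best_cost:   # accepting ties lets the search drift off plateaus
            best, best_cost = child, cost
    return best, best_cost

# Four predicted tasks, two UAVs of equal speed, free transmission.
sizes = [4, 4, 2, 2]
tx = [[0.0, 0.0] for _ in sizes]
assign, energy = evolve_assignment(sizes, tx, rate=[1.0, 1.0])
print(assign, energy)
```

Accepting ties (`<=`) is deliberate. With strict improvement only, a single-gene mutation can stall where moving one task leaves the busiest UAV's load unchanged.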

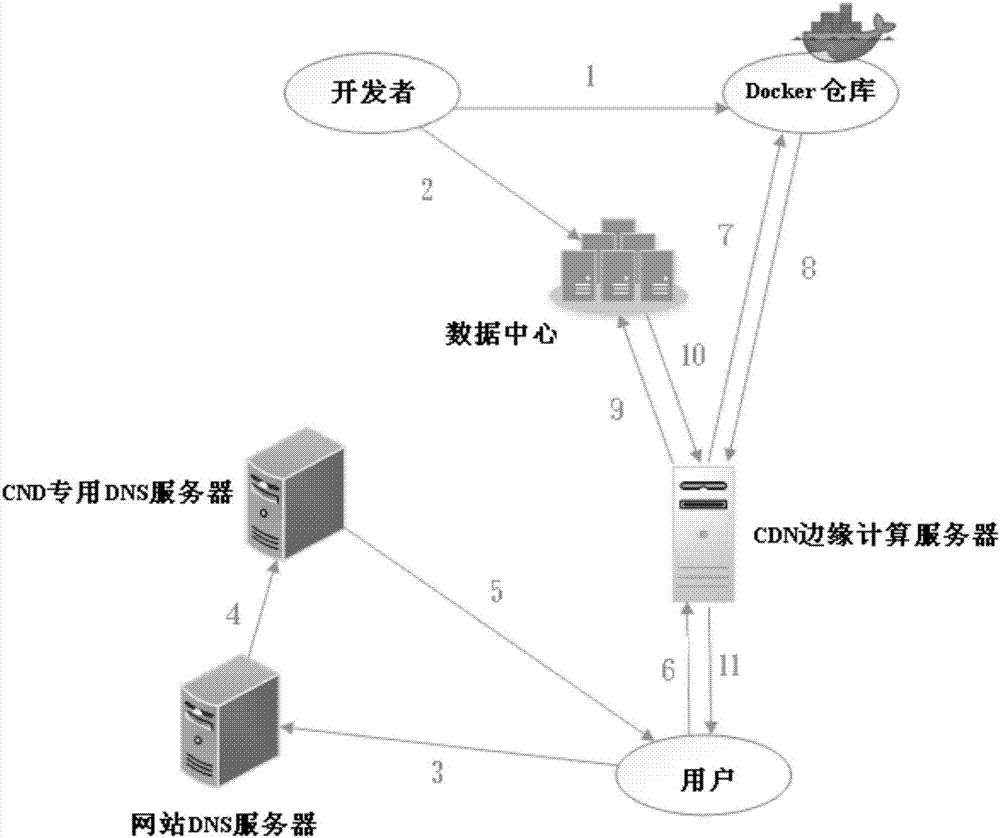

Docker technology based CDN dynamic content acceleration method and system

Status: Active | Publication: CN107105029A | Tags: Improve compatibility; Compatibility does not affect; Program loading/initiating; Transmission; Edge computing; Resource utilization

The invention discloses a Docker-based method and system for accelerating dynamic content delivery in a CDN, in the technical field of computer applications. The website's DNS server delegates resolution of the host name in the requested URL to a dedicated CDN DNS server, which returns the IP address of a CDN edge computing server to the user. The user then requests the resource referenced by the target URL from that edge server. If the resource includes dynamic content, the edge server requests a Docker image from a Docker registry; the image contains the resource's program and database. The server downloads the image, starts a Docker container, runs the program in the image, and returns the generated dynamic content to the user. If the resource contains no dynamic content, a static content lookup is performed and the result is returned to the user. This reduces response time for dynamic Internet content, reduces total network traffic, and improves the computing resource utilization of CDN nodes.

Owner: Beijing Youpu Information Technology Co., Ltd. (北京友普信息技术有限公司)
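
The edge server's dispatch rule can be sketched with a hypothetical `EdgeServer` class. URLs listed in `image_for_url` are treated as dynamic and served by running the program packaged in the named image. Everything else is looked up in a static cache. The real image pull and container start are injected as the `run_container` callable so the example stays self-contained. A real deployment would call the Docker CLI or SDK at that point.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set

@dataclass
class EdgeServer:
    """Hypothetical CDN edge node. `image_for_url` marks which URLs are dynamic
    and which image holds their program; `run_container` stands in for pulling
    the image and running it in a container."""
    static_cache: Dict[str, bytes]
    image_for_url: Dict[str, str]
    run_container: Callable[[str, str], bytes]
    pulled: Set[str] = field(default_factory=set)

    def handle(self, url: str) -> bytes:
        image = self.image_for_url.get(url)
        if image is None:
            return self.static_cache[url]      # static content query
        if image not in self.pulled:
            self.pulled.add(image)             # first request: the image would be downloaded here
        return self.run_container(image, url)  # dynamic content generated in a container

server = EdgeServer(
    static_cache={"/logo.png": b"PNG"},
    image_for_url={"/cart": "shop-app"},
    run_container=lambda image, url: f"{image} rendered {url}".encode(),
)
print(server.handle("/cart"), server.handle("/logo.png"))
```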

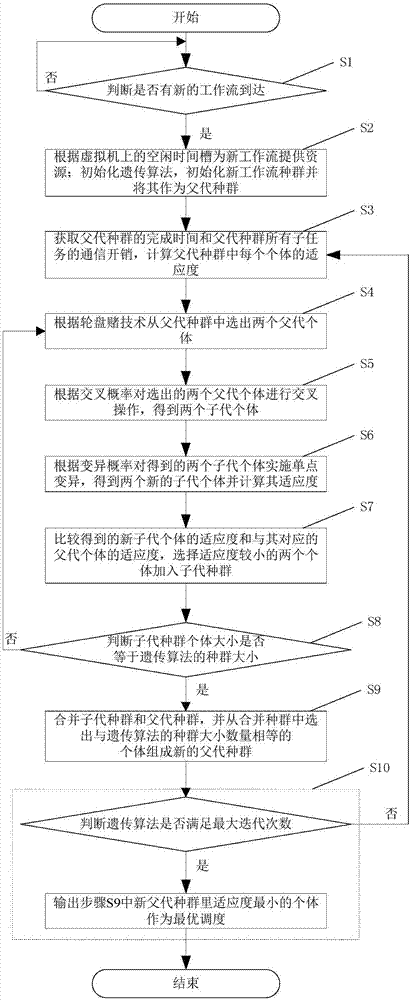

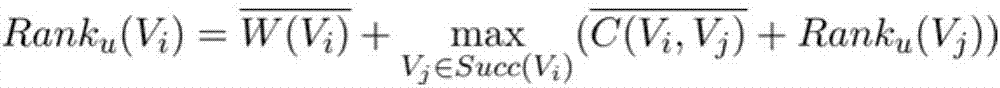

Multi-workflow scheduling method based on genetic algorithm under cloud environment

Status: Active | Publication: CN107967171A | Tags: Reduce complexity; Improve computing resource utilization; Program initiation/switching; Artificial life; Optimal scheduling; New population

The invention discloses a genetic-algorithm-based method for scheduling multiple workflows in a cloud environment. The scheduling state of previous workflows is preserved, and the genetic algorithm and the new workflow are initialized. The fitness of each individual for the new workflow is calculated, and two parent individuals are selected. The parents undergo crossover and single-point mutation to produce offspring. The offspring's fitness is calculated and compared with that of the corresponding parents, and the two with the smaller fitness values are added to the offspring population. Once the offspring population is as large as the parent population, the two populations are merged. The individuals that satisfy the genetic algorithm's selection criterion are then chosen from the merged population to form the new population; otherwise, the method returns to the parent selection step. Finally, after the configured number of iterations, the optimal schedule is output. The method avoids disrupting previous workflow schedules, which would otherwise incur additional communication cost, and further increases the computing resource utilization of the virtual machines.

Owner: UNIV OF ELECTRONICS SCI & TECH OF CHINA
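
The loop described above can be sketched under a few assumptions:

- An individual maps each task of the new workflow to a VM.
- Fitness is the makespan, where lower is better.
- The reserved schedule of earlier workflows appears only as `base_load`, the busy time already committed on each VM.

The function names and parameters are hypothetical. Keeping the two fittest of each parent/child group of four is one reading of the abstract's selection rule.

```python
import random

def makespan(assign, lengths, speeds, base_load):
    """Finish time of the busiest VM; base_load is the busy time already
    committed by earlier workflows, whose schedule is left untouched."""
    load = list(base_load)
    for task, vm in enumerate(assign):
        load[vm] += lengths[task] / speeds[vm]
    return max(load)

def ga_schedule(lengths, speeds, base_load, pop_size=20, generations=100, seed=1):
    rng = random.Random(seed)
    n, m = len(lengths), len(speeds)
    fitness = lambda ind: makespan(ind, lengths, speeds, base_load)  # lower is better
    population = [[rng.randrange(m) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        offspring = []
        while len(offspring) < pop_size:
            p1, p2 = rng.sample(population, 2)
            cut = rng.randrange(1, n) if n > 1 else 0
            c1, c2 = p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]   # one-point crossover
            for child in (c1, c2):
                child[rng.randrange(n)] = rng.randrange(m)       # single-point mutation
            # Compare children with their parents and keep the two fittest.
            offspring.extend(sorted([p1, p2, c1, c2], key=fitness)[:2])
        # Offspring population is full: merge with parents, keep the best pop_size.
        population = sorted(population + offspring[:pop_size], key=fitness)[:pop_size]
    best = min(population, key=fitness)
    return best, fitness(best)

# New workflow: four tasks on two equal-speed VMs that start idle.
best, span = ga_schedule([4, 4, 2, 2], [1.0, 1.0], [0.0, 0.0])
print(best, span)
```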

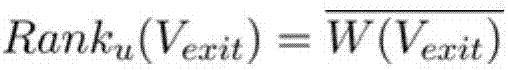

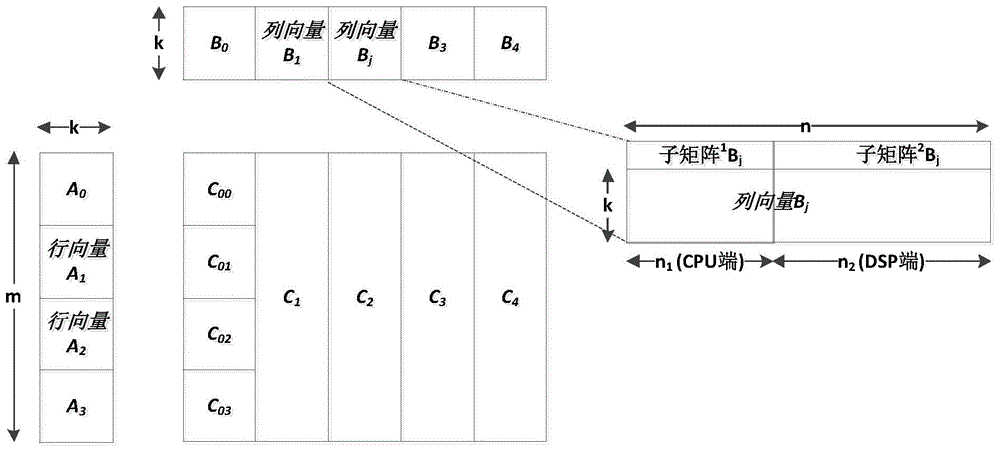

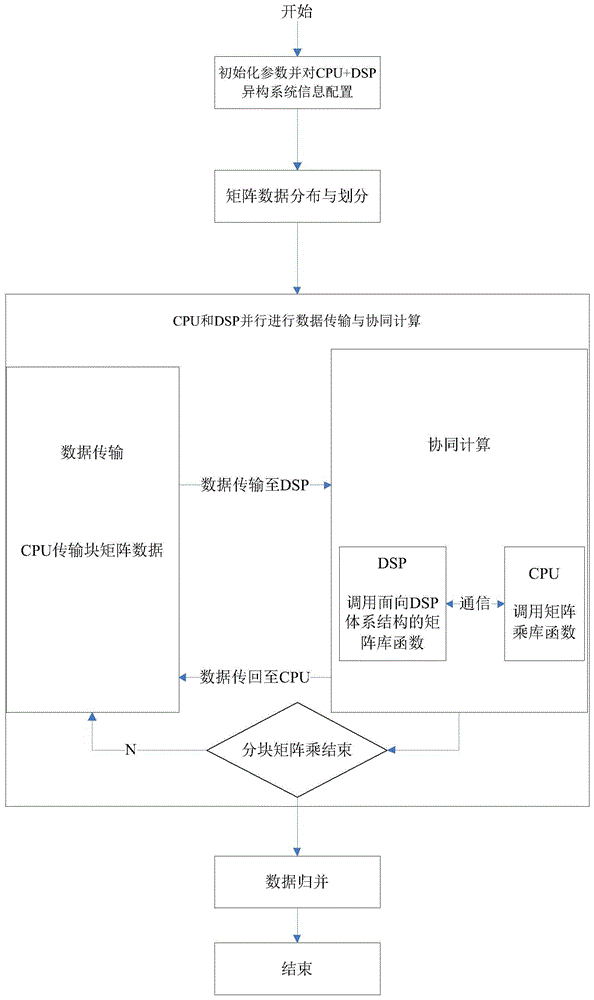

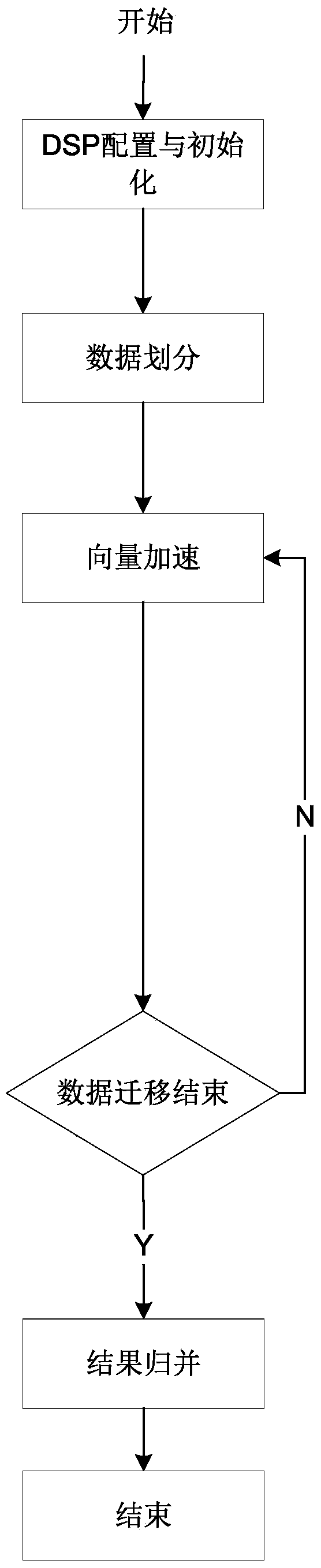

Matrix multiplication accelerating method for CPU+DSP (Central Processing Unit + Digital Signal Processor) heterogeneous system

Status: Active | Publication: CN104317768A | Tags: Improve the speed of matrix multiplication; Improve computing resource utilization; Multiple digital computer combinations; Complex mathematical operations; Parallel computing; Data transmission

The invention discloses a matrix multiplication acceleration method for CPU+DSP (Central Processing Unit + Digital Signal Processor) heterogeneous systems. Its aim is an efficient cooperative scheme that increases the speed of matrix multiplication and maximizes the computing efficiency of the heterogeneous system. The method proceeds as follows:

1. Parameters are initialized and the CPU+DSP system is configured.
2. The data assigned to a computing node is partitioned between the CPU (the main processor) and the DSP (the accelerator) for cooperative processing, according to their different design goals and computing performance.
3. The CPU and DSP perform data transfer and cooperative computation concurrently, producing ⌈M/m⌉ × ⌈N/n⌉ block matrices C(i-1)(j-1).
4. The block matrices C(i-1)(j-1) are merged into the M × N result matrix C.

With this method, the CPU actively cooperates with the DSP on the matrix multiplication while also handling data transfer and program control. Data transfer overlaps with cooperative computation, so the matrix multiplication speed of the CPU+DSP system increases and computing resource utilization improves.

Owner: NAT UNIV OF DEFENSE TECH
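
The tiling and merge steps can be sketched with NumPy standing in for both processors. C is cut into m × n blocks, giving ⌈M/m⌉ × ⌈N/n⌉ blocks as in the abstract. The block list is split between the "DSP" and the "CPU" by a hypothetical `dsp_share` ratio. The sketch does not model the overlap of data transfer with computation, which the method relies on for its speed-up.

```python
import math
import numpy as np

def cooperative_matmul(A, B, m, n, dsp_share=0.75):
    """Tile C = A @ B into m x n blocks and split the blocks between two
    'devices' (both simulated with NumPy). dsp_share is a hypothetical
    performance ratio; the patent derives the split from measured performance."""
    M, K = A.shape
    if B.shape[0] != K:
        raise ValueError("inner dimensions do not match")
    N = B.shape[1]
    blocks = [(i, j) for i in range(math.ceil(M / m)) for j in range(math.ceil(N / n))]
    cut = round(len(blocks) * dsp_share)
    plan = {"dsp": blocks[:cut], "cpu": blocks[cut:]}
    C = np.zeros((M, N), dtype=np.result_type(A, B))
    for assigned in plan.values():
        for i, j in assigned:
            rows, cols = slice(i * m, (i + 1) * m), slice(j * n, (j + 1) * n)
            C[rows, cols] = A[rows, :] @ B[:, cols]   # block C(i, j), 0-based here
    return C, {device: len(b) for device, b in plan.items()}

A = np.arange(15, dtype=float).reshape(5, 3)
B = np.ones((3, 7))
C, split = cooperative_matmul(A, B, m=2, n=3)
print(split)
```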

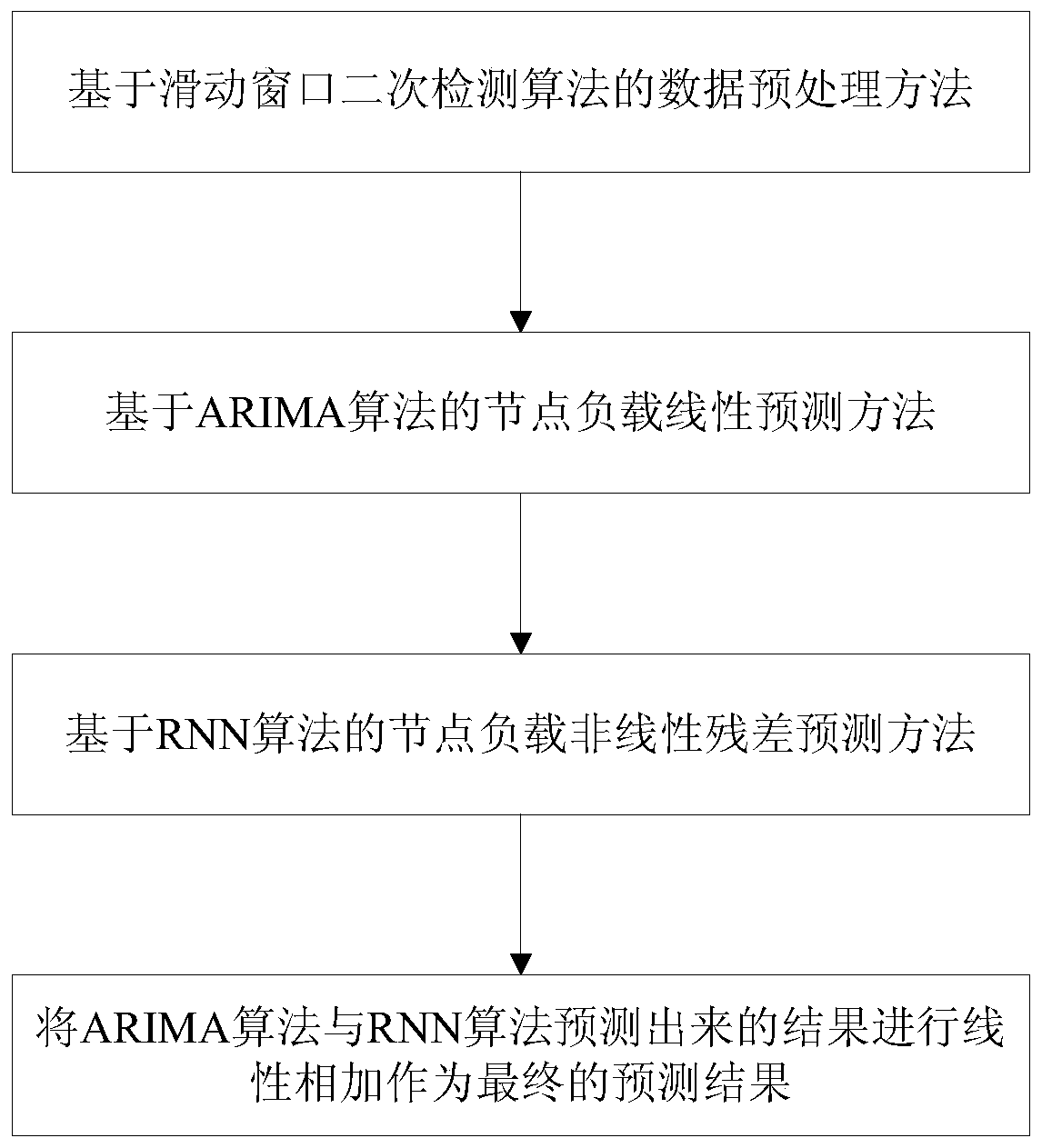

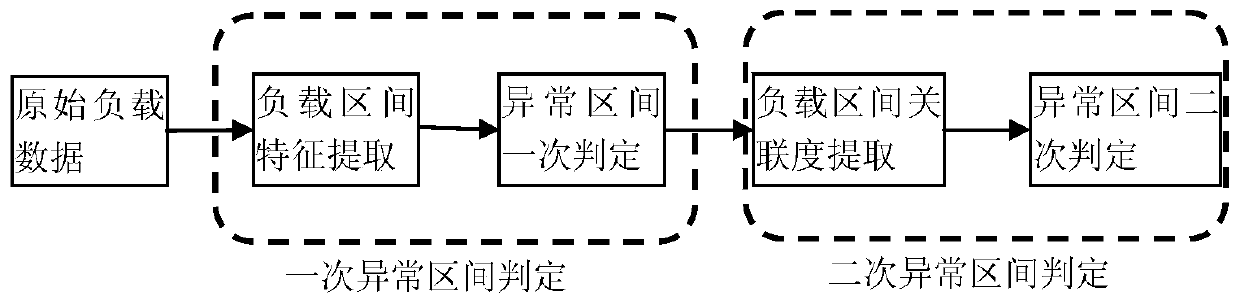

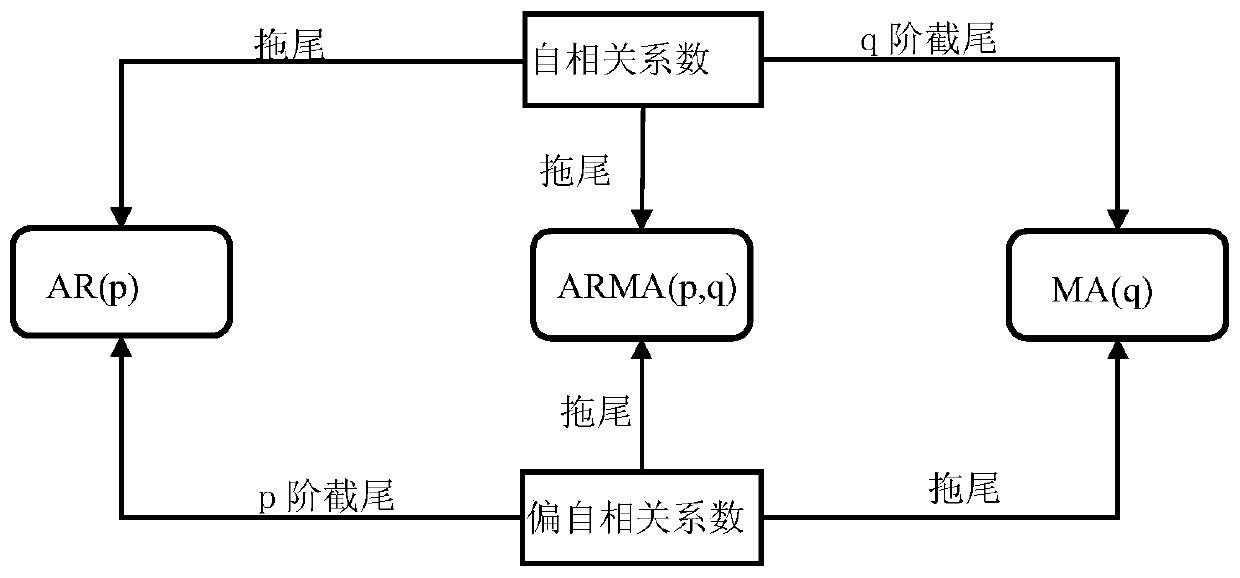

Hadoop platform computing node load prediction method

Status: Active | Publication: CN110149237A | Tags: Accurate prediction; Accurate forecast; Data switching networks; Algorithm; Data pre-processing

The invention provides a load prediction method for Hadoop platform computing nodes. It comprises:

- a data preprocessing method based on a sliding-window secondary detection algorithm;
- a linear node-load prediction method based on ARIMA;
- a nonlinear residual prediction method based on an RNN;
- linear addition of the ARIMA and RNN predictions to obtain the final prediction.

By analyzing the historical data of each computing node, the method extracts useful information and reasonably predicts the node's load in the next time period. Accurate load prediction gives the resource manager a basis for allocating resources sensibly to the AppMaster. This relieves pressure on heavily loaded nodes, raises the computing resource utilization of lightly loaded nodes, and improves the reliability and performance of the Hadoop cluster. Combining the ARIMA and RNN models makes the load prediction more accurate.

Owner: Shenyang Linlong Technology Co., Ltd. (沈阳麟龙科技股份有限公司)
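
The hybrid structure can be sketched with NumPy: a linear forecast plus a separately modeled residual, added together. This is not the patented method. The substitutions are:

- A least-squares AR(p) model replaces ARIMA, with no differencing or moving-average terms.
- A k-nearest-neighbour regressor on recent residuals replaces the RNN.
- `clean_outliers` is an assumed sliding-window median filter, because the abstract does not define its secondary detection rule.

```python
import numpy as np

def clean_outliers(x, window=5, k=3.0):
    """Assumed preprocessing: replace points far from their sliding-window median."""
    x = np.asarray(x, dtype=float).copy()
    for t in range(len(x)):
        seg = x[max(0, t - window): t + window + 1]
        med = np.median(seg)
        mad = np.median(np.abs(seg - med)) or 1e-9   # avoid a zero scale
        if abs(x[t] - med) > k * mad:
            x[t] = med
    return x

def fit_ar(x, p):
    """Least-squares AR(p) with intercept (linear stand-in for ARIMA)."""
    X = np.column_stack([np.ones(len(x) - p)] +
                        [x[p - i: len(x) - i] for i in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, x[p:], rcond=None)
    return coef, x[p:] - X @ coef                      # coefficients, residuals

def knn_residual(res, q=3, k=3):
    """Nonlinear stand-in for the RNN: average the residual that followed the
    k most similar windows of q past residuals."""
    if len(res) <= q:
        return 0.0
    feats = np.array([res[t - q: t] for t in range(q, len(res))])
    dist = np.linalg.norm(feats - res[-q:], axis=1)
    return float(res[q:][np.argsort(dist)[:k]].mean())

def hybrid_forecast(x, p=2, q=3, k=3):
    """Next-step load = linear AR forecast + predicted residual."""
    x = np.asarray(x, dtype=float)
    coef, res = fit_ar(x, p)
    linear = coef[0] + sum(coef[i] * x[-i] for i in range(1, p + 1))
    return float(linear + knn_residual(res, q, k))

# Synthetic load series that follows x[t] = 0.9 * x[t-1] + 1.
load = [0.0]
for _ in range(39):
    load.append(0.9 * load[-1] + 1.0)
print(hybrid_forecast(load, p=1))
```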

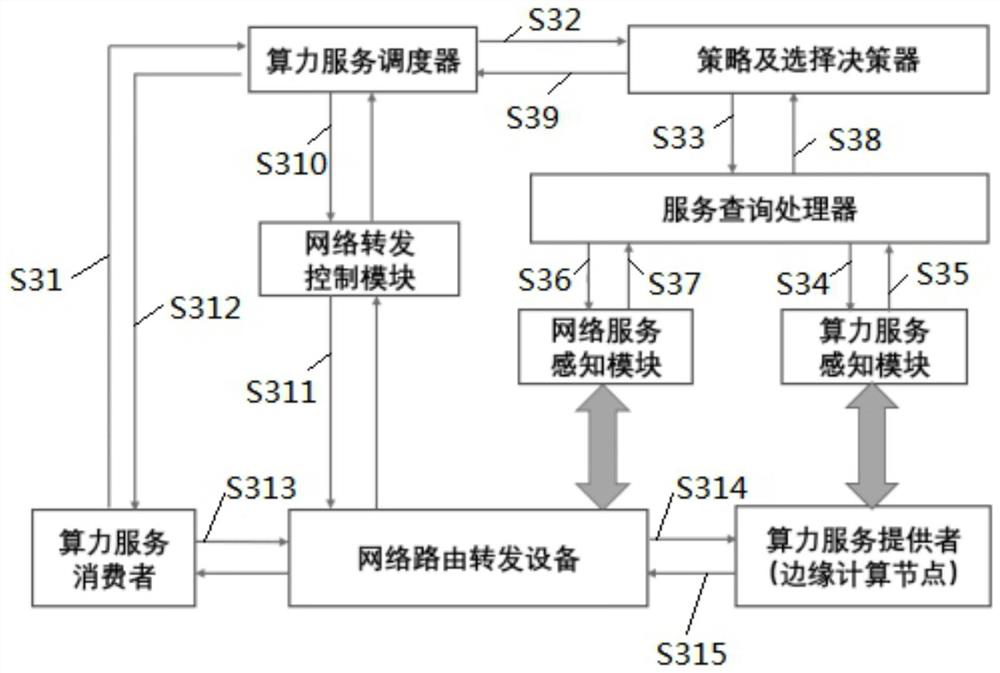

Service-oriented computing power network system, working method and storage medium

Status: Pending | Publication: CN113079218A | Tags: Improve computing resource utilization; Performance optimization; Data switching networks; Service information; Engineering

The invention discloses a service-oriented computing power network system, a working method and a storage medium. The system comprises a computing power service sensing module, a network service sensing module, a service query processor, a policy and selection decision maker, a computing power service scheduler, a network forwarding control module, and network routing and forwarding equipment. The working method comprises:

- S1, a mechanism for sensing computing power service information;
- S2, a mechanism for sensing network service information;
- S3, distribution and scheduling of computing power services.

The system connects scattered edge computing nodes into a network so that their distributed computing resources can be fully used, which raises their computing resource utilization. It senses both the network state and the computing power of each edge node. It can therefore dispatch a computing task to the best edge node over the best network path, which optimizes the performance of the edge computing network and improves task distribution efficiency.

Owner: PURPLE MOUNTAIN LAB
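
The selection step can be illustrated with a small sketch. Each edge node is scored by its network latency from the user's access point (Dijkstra over link latencies) plus the task's compute time on that node, and the node with the minimum score is picked. The scoring formula, the `idle_mhz` capacity metric and all names are assumptions for illustration. The abstract does not specify how the decision maker weighs network and computing state.

```python
import heapq

def path_latency(graph, src):
    """Dijkstra over link latencies in ms; graph maps node -> {neighbor: ms}."""
    dist = {src: 0.0}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue                      # stale heap entry
        for v, w in graph.get(u, {}).items():
            if d + w < dist.get(v, float("inf")):
                dist[v] = d + w
                heapq.heappush(heap, (d + w, v))
    return dist

def pick_edge_node(graph, access, idle_mhz, task_mcycles):
    """Choose the edge node with the lowest network latency + compute time (ms).
    idle_mhz (free megacycles per second) is a hypothetical capacity metric."""
    dist = path_latency(graph, access)
    options = [(dist[node] + task_mcycles * 1000.0 / mhz, node)
               for node, mhz in idle_mhz.items() if node in dist and mhz > 0]
    total, node = min(options)            # raises ValueError if no node is reachable
    return node, total

links = {"ap": {"e1": 5.0, "e2": 20.0},
         "e1": {"ap": 5.0, "e2": 5.0},
         "e2": {"ap": 20.0, "e1": 5.0}}
print(pick_edge_node(links, "ap", {"e1": 1000.0, "e2": 4000.0}, task_mcycles=100.0))
```

In this example the more distant node `e2` wins: its 10 ms path plus 25 ms of compute beats `e1`'s 5 ms path plus 100 ms of compute.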

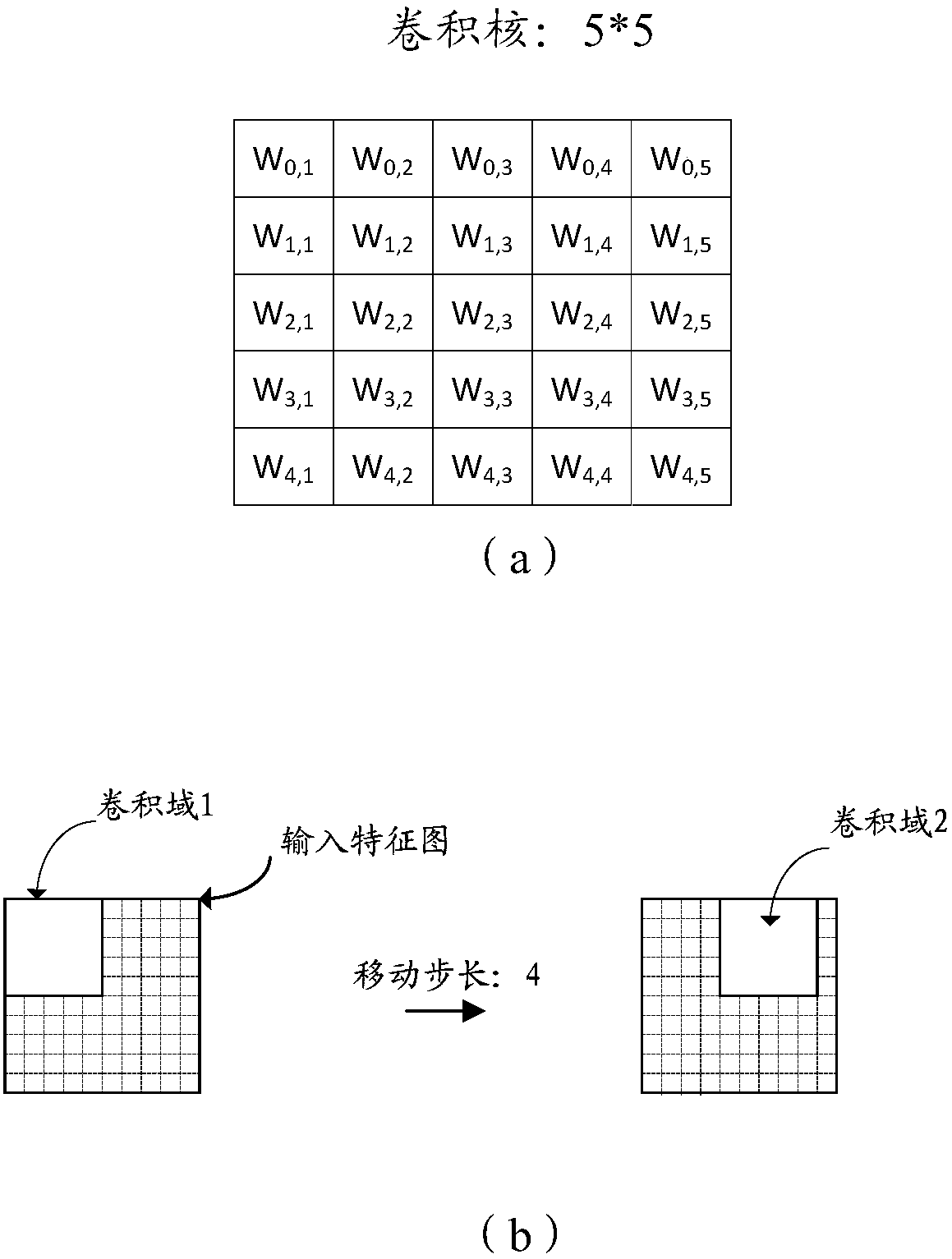

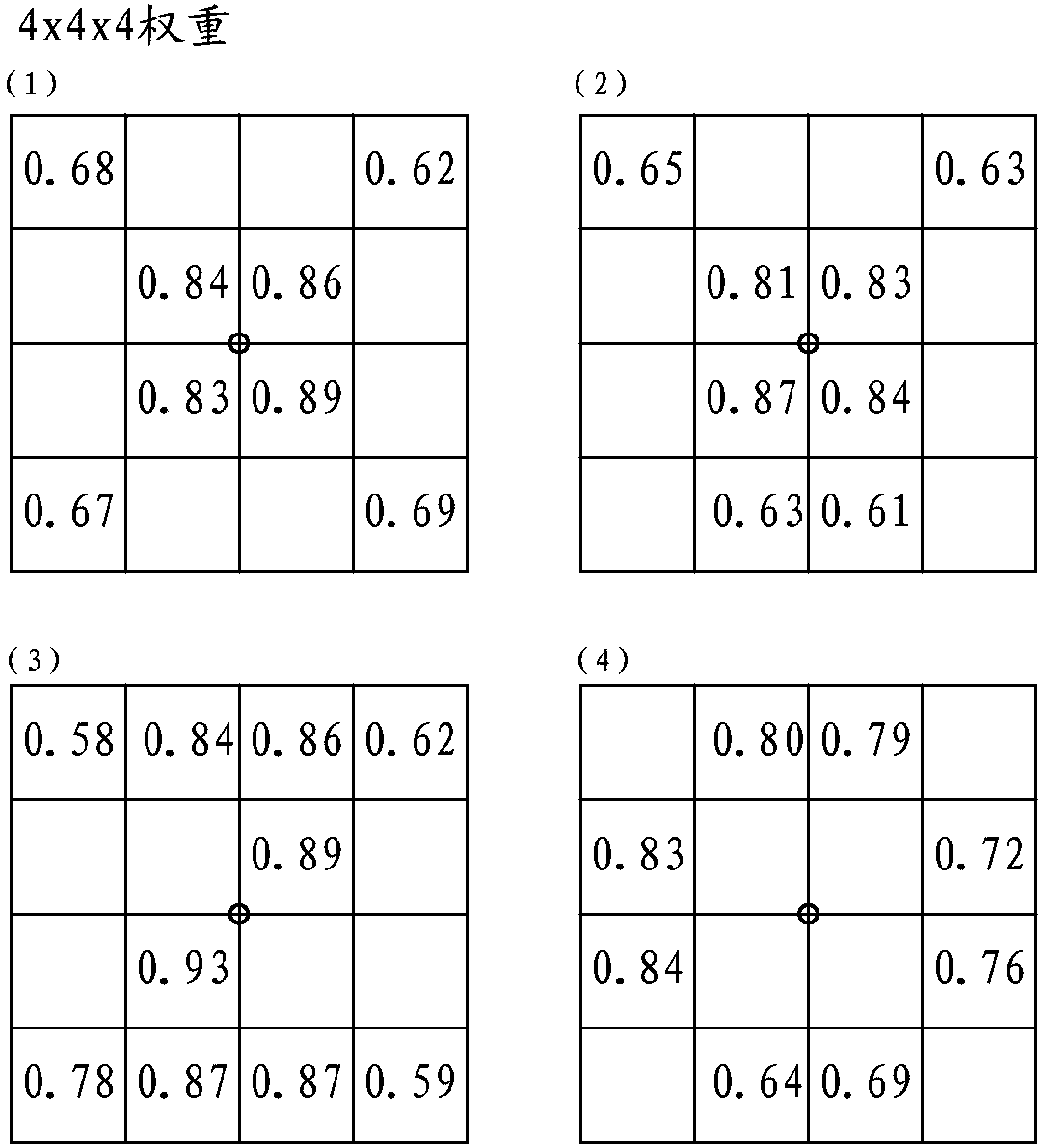

Weight storage method in a neural network and processor based on the same

Status: Active | Publication: CN108510058A | Tags: Reduced operating power consumption; Save storage space; Neural architectures; Physical realisation; Neural network; Three-dimensional space

The invention provides a weight storage method for a neural network, and a neural network memory based on it. The method comprises:

1. Building the original two-dimensional weight convolution kernels into a three-dimensional spatial matrix.
2. Searching the matrix for valid weights and building valid-weight indexes. Valid weights are the non-zero weights, and the indexes mark their positions in the three-dimensional matrix.
3. Storing the valid weights together with their indexes.

The weight storage method and the associated convolution computing method save storage space and improve computing efficiency.

Owner: INST OF COMPUTING TECH CHINESE ACAD OF SCI
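
The storage format can be sketched with NumPy. The sketch assumes the 2-D kernels are stacked along a new first axis to form the three-dimensional matrix; the abstract does not give the layout. Only non-zero weights are kept, each with a (kernel, row, column) index. `sparse_dot` shows why the index helps at compute time: the multiply-accumulate visits valid weights only.

```python
import numpy as np

def compress(kernels):
    """Keep only the valid (non-zero) weights and their 3-D positions."""
    kernels = np.asarray(kernels)
    index = np.argwhere(kernels != 0)            # one (k, row, col) row per valid weight
    return kernels[tuple(index.T)], index, kernels.shape

def decompress(values, index, shape):
    dense = np.zeros(shape, dtype=values.dtype)
    dense[tuple(index.T)] = values
    return dense

def sparse_dot(values, index, activations):
    """Multiply-accumulate over valid weights only (zeros are never visited)."""
    return float(np.sum(values * activations[tuple(index.T)]))

# Two 2x2 kernels stacked along a new first axis.
kernels = np.array([[[0, 1], [0, 0]],
                    [[2, 0], [0, 3]]], dtype=float)
values, index, shape = compress(kernels)
print(values, index.tolist())
```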

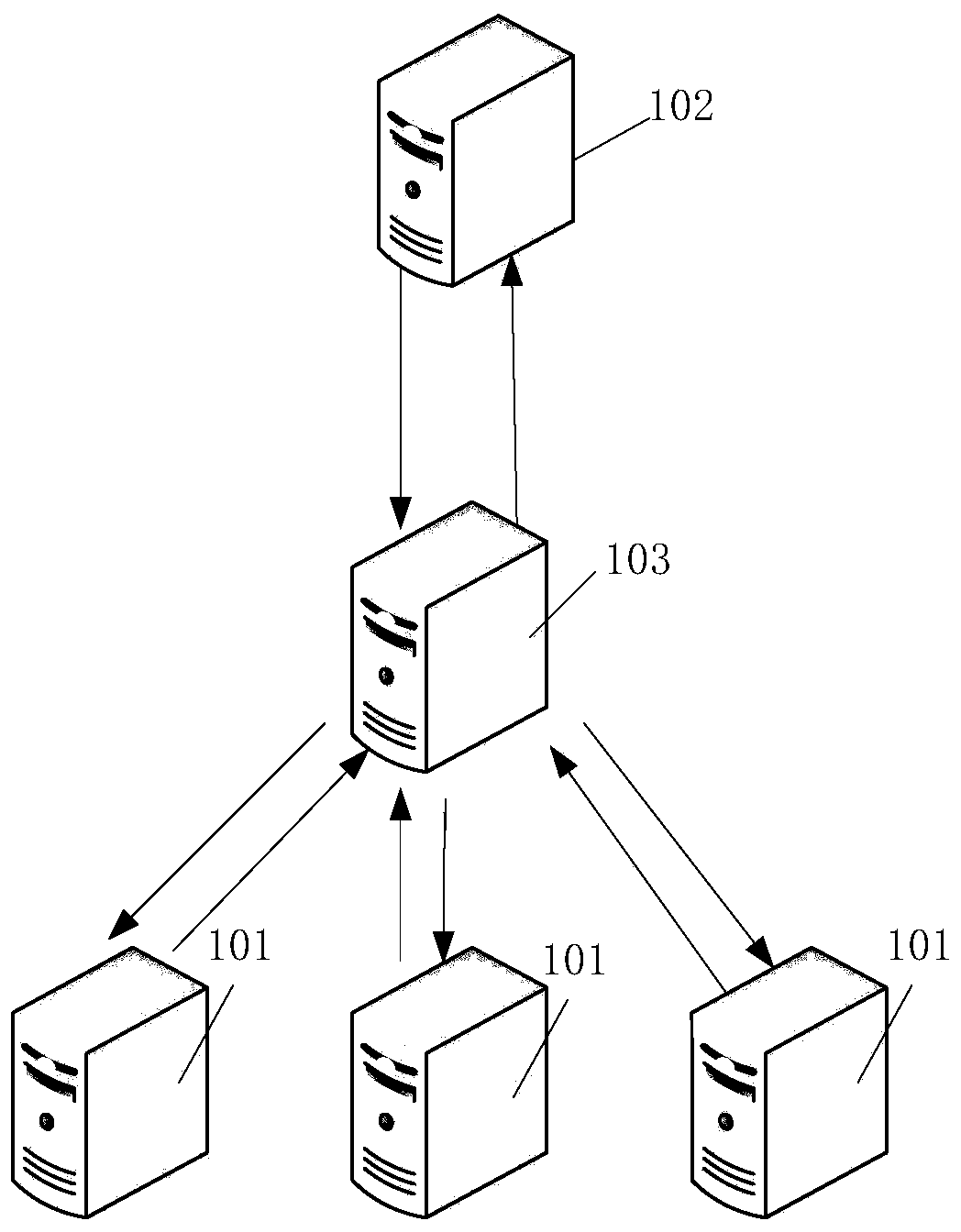

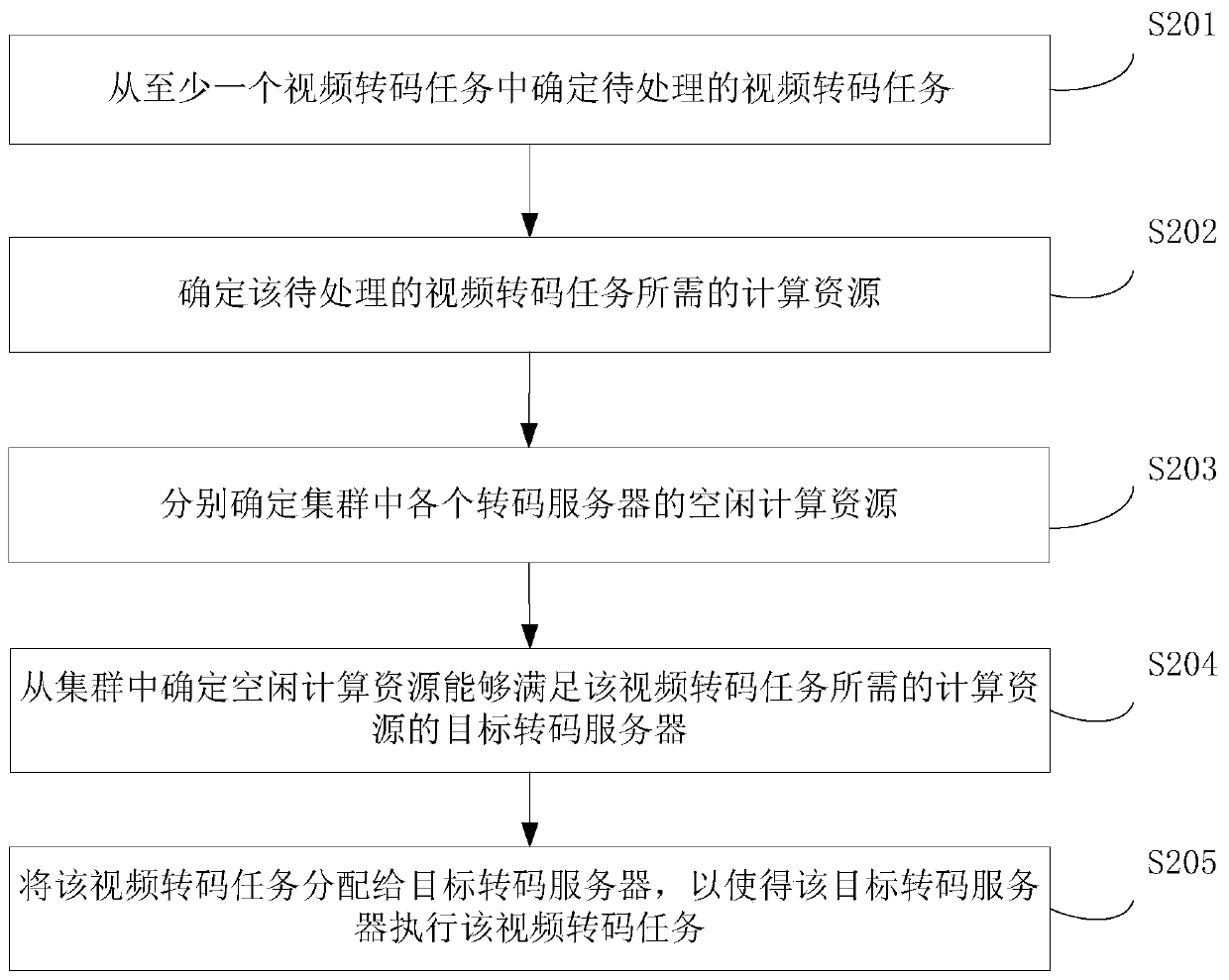

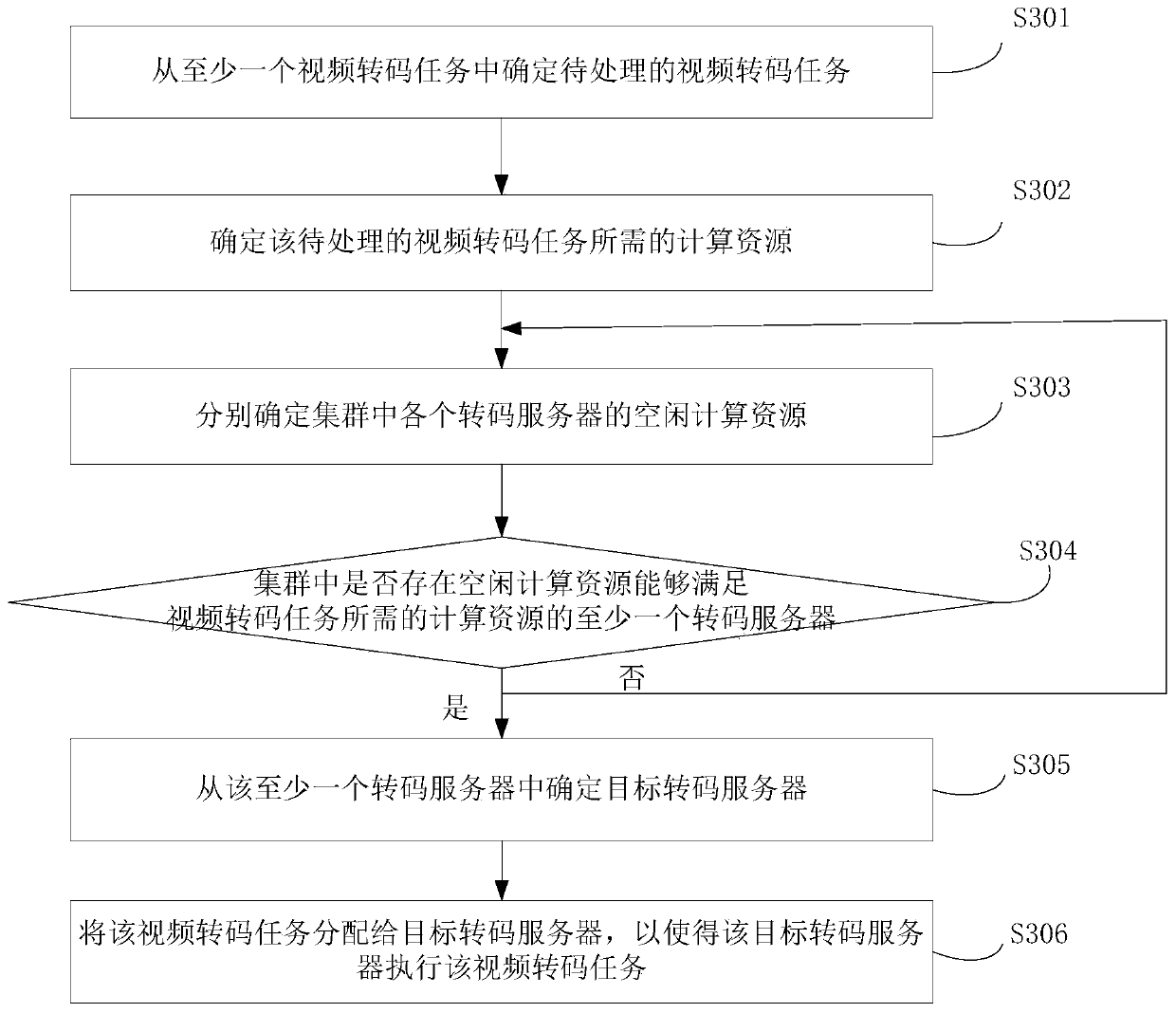

Video transcoding method, device and system

Status: Inactive | Publication: CN109788315A | Tags: Reduce uneven resource utilization; Improve computing resource utilization; Digital video signal modification; Transmission; Computational resource; Real-time computing

The invention provides a video transcoding method, device and system. When a video transcoding task is scheduled, the method calculates the computing resources the pending task requires. It then identifies a transcoding server in the cluster whose idle computing resources can meet that requirement, and assigns the task to that server. Allocating resources on demand in this way distributes transcoding tasks across the cluster's servers more sensibly and reduces uneven use of the cluster's computing resources. It also improves the cluster's computing resource utilization and transcoding efficiency.

Owner: HUNAN HAPPLY SUNSHINE INTERACTIVE ENTERTAINMENT MEDIA CO LTD
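
The scheduling rule can be sketched as a best-fit dispatcher. The per-task estimate in `required_cores` is a hypothetical linear cost model. Choosing the eligible server with the least leftover capacity is also an assumption; the abstract only requires that the chosen server's idle resources cover the task.

```python
from dataclasses import dataclass

@dataclass
class TranscodeServer:
    name: str
    idle_cores: float

def required_cores(width, height, fps, cores_per_mpixel_s=0.02):
    """Hypothetical estimate: cores grow linearly with pixel throughput."""
    return width * height * fps / 1e6 * cores_per_mpixel_s

def dispatch(cores, servers):
    """Best fit among servers whose idle cores cover the task; reserves the
    cores on the chosen server. Returns None when no server can take it."""
    candidates = [s for s in servers if s.idle_cores >= cores]
    if not candidates:
        return None
    chosen = min(candidates, key=lambda s: s.idle_cores - cores)
    chosen.idle_cores -= cores
    return chosen.name

cluster = [TranscodeServer("a", 2.0), TranscodeServer("b", 8.0)]
print([dispatch(1.5, cluster), dispatch(1.5, cluster), dispatch(10.0, cluster)])
```

Best fit keeps the large server free for heavy tasks. Here the first task fills the small server, the second spills to the large one, and the oversized third task is rejected.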

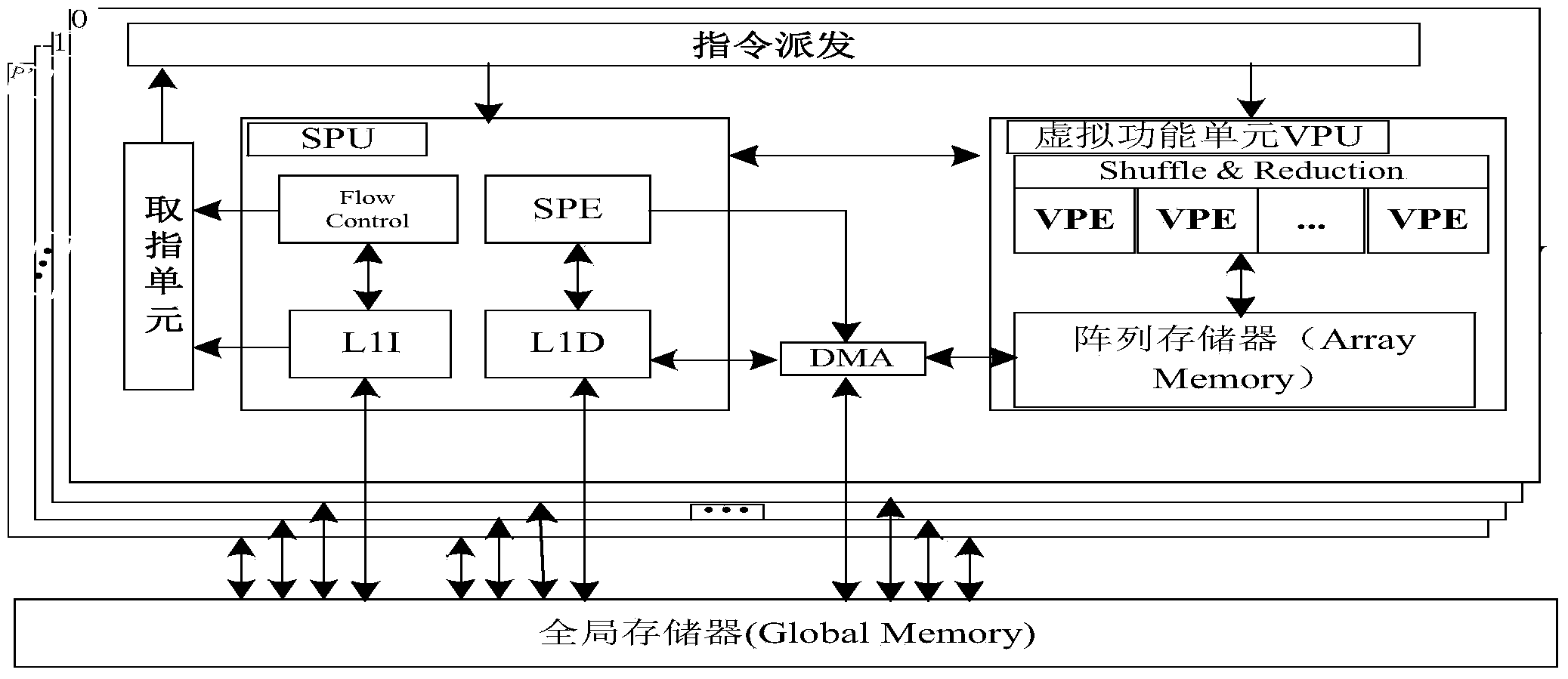

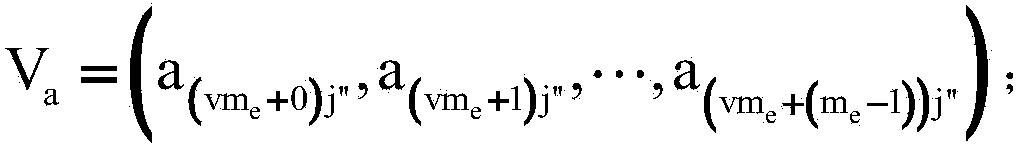

Matrix multiplication accelerating method oriented to general multi-core DSP

Status: Active | Publication: CN104346318A | Tags: Increase the speed of multiplication; Improve computing resource utilization; Complex mathematical operations; Digital signal processing; Resource utilization

The invention discloses a matrix multiplication acceleration method for general-purpose multi-core DSPs (Digital Signal Processors). It aims to increase the speed of matrix multiplication and maximize the computing efficiency of the multi-core DSP. The technical scheme is as follows:

1. The DSP is configured and initialized.
2. Matrices A and B are partitioned, converting the original matrix multiplication into block matrix multiplication according to the mg × ng topology of the VPUs (Virtual Processing Units).
3. Each VPU performs data migration synchronously and in parallel.
4. The results in the AM (Array Memory) of each VPU are combined according to the data distribution principle to form the result matrix C = A × B.

The method increases the matrix multiplication speed of the general multi-core DSP architecture and the computing resource utilization of the multi-core DSP system.

Owner: NAT UNIV OF DEFENSE TECH
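
The block decomposition over an mg × ng grid can be sketched with NumPy. The sketch assumes each grid position computes one tile of C from a row band of A and a column band of B. This generic 2-D partition is only an illustration. The abstract does not say exactly how A and B are divided, or how data moves between AM and the VPUs.

```python
import numpy as np

def grid_matmul(A, B, mg, ng):
    """C = A @ B on an mg x ng grid of cores: core (p, q) takes the p-th row
    band of A and the q-th column band of B and computes its own tile of C."""
    row_bands = np.array_split(np.arange(A.shape[0]), mg)
    col_bands = np.array_split(np.arange(B.shape[1]), ng)
    C = np.empty((A.shape[0], B.shape[1]), dtype=np.result_type(A, B))
    for rows in row_bands:
        for cols in col_bands:
            # On the DSP each tile would be computed by a different core in parallel.
            C[np.ix_(rows, cols)] = A[rows, :] @ B[:, cols]
    return C

rng = np.random.default_rng(0)
A, B = rng.standard_normal((7, 4)), rng.standard_normal((4, 5))
print(np.allclose(grid_matmul(A, B, mg=2, ng=3), A @ B))
```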

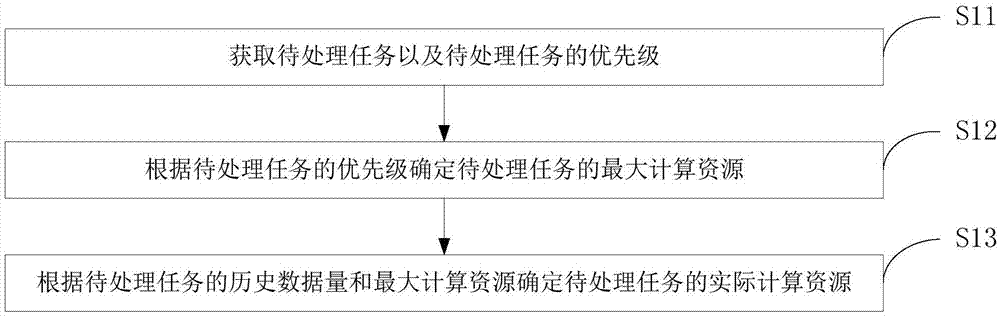

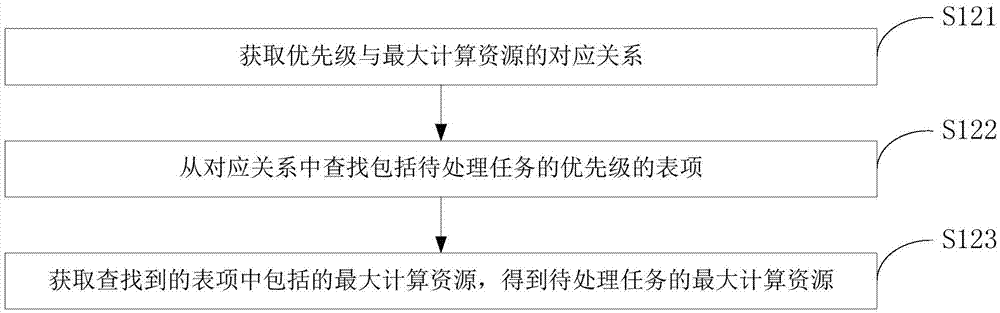

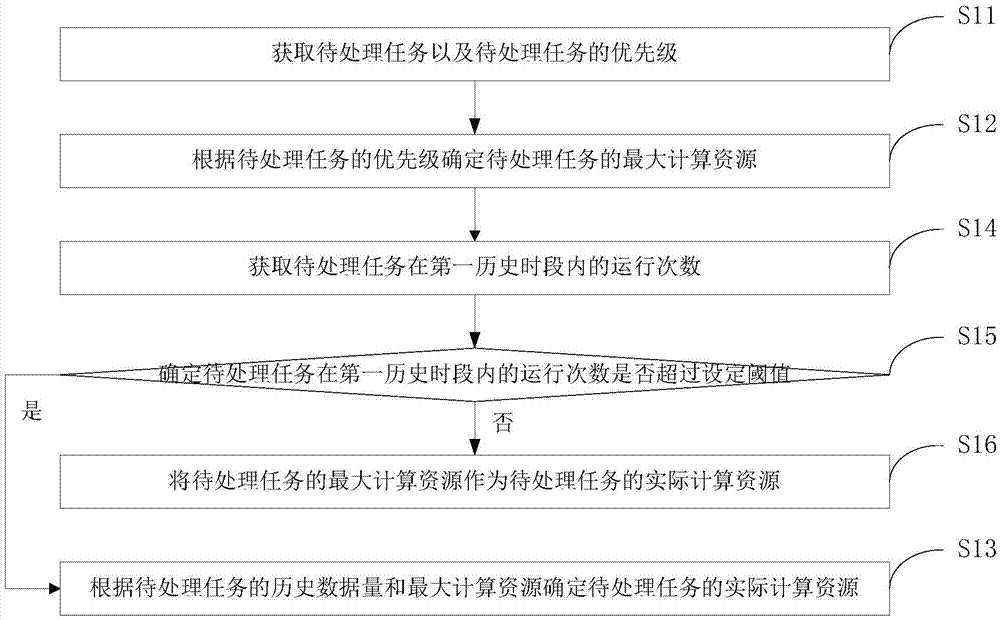

Computing resource distributing method and apparatus

Status: Active | Publication: CN106874100A | Tags: Improve computing resource utilization; Improve task throughput; Resource allocation; Computational resource; Resource utilization

The invention discloses a computing resource allocation method and apparatus in the field of computer technology. The method comprises:

1. Acquiring a pending task and its priority.
2. Determining the maximum computing resource for the task according to that priority.
3. Determining the actual computing resource for the task from its historical data volume and the maximum computing resource.

The actual resource is determined from the historical maximum resource and historical data volume associated with the task's priority, rather than from fixed parameters. Tasks with different priorities and historical data volumes therefore receive different amounts of computing resources. Each pending task can be allocated computing resources sensibly, which improves cluster computing resource utilization and task throughput.

Owner: Alibaba Hubei Co., Ltd. (阿里巴巴湖北有限公司)
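
The two-step sizing rule can be sketched with two hypothetical inputs. The first is a priority table of executor ceilings. The second is a `gb_per_executor` ratio that turns historical data volume into a resource request. The patent's actual mapping is not given in the abstract.

```python
import math

# Hypothetical ceilings on executors per priority level.
MAX_EXECUTORS = {"high": 64, "medium": 32, "low": 8}

def allocate_executors(priority, history_gb, gb_per_executor=2.0, minimum=1):
    """Size a task from its historical input volume, capped by its priority's ceiling."""
    ceiling = MAX_EXECUTORS[priority]                 # step 1: maximum by priority
    wanted = math.ceil(history_gb / gb_per_executor)  # step 2: demand from history
    return max(minimum, min(ceiling, wanted))

print([allocate_executors(p, gb) for p, gb in [("high", 100), ("low", 100), ("medium", 3)]])
```

The same historical volume (100 GB) yields 50 executors at high priority but only 8 at low priority.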

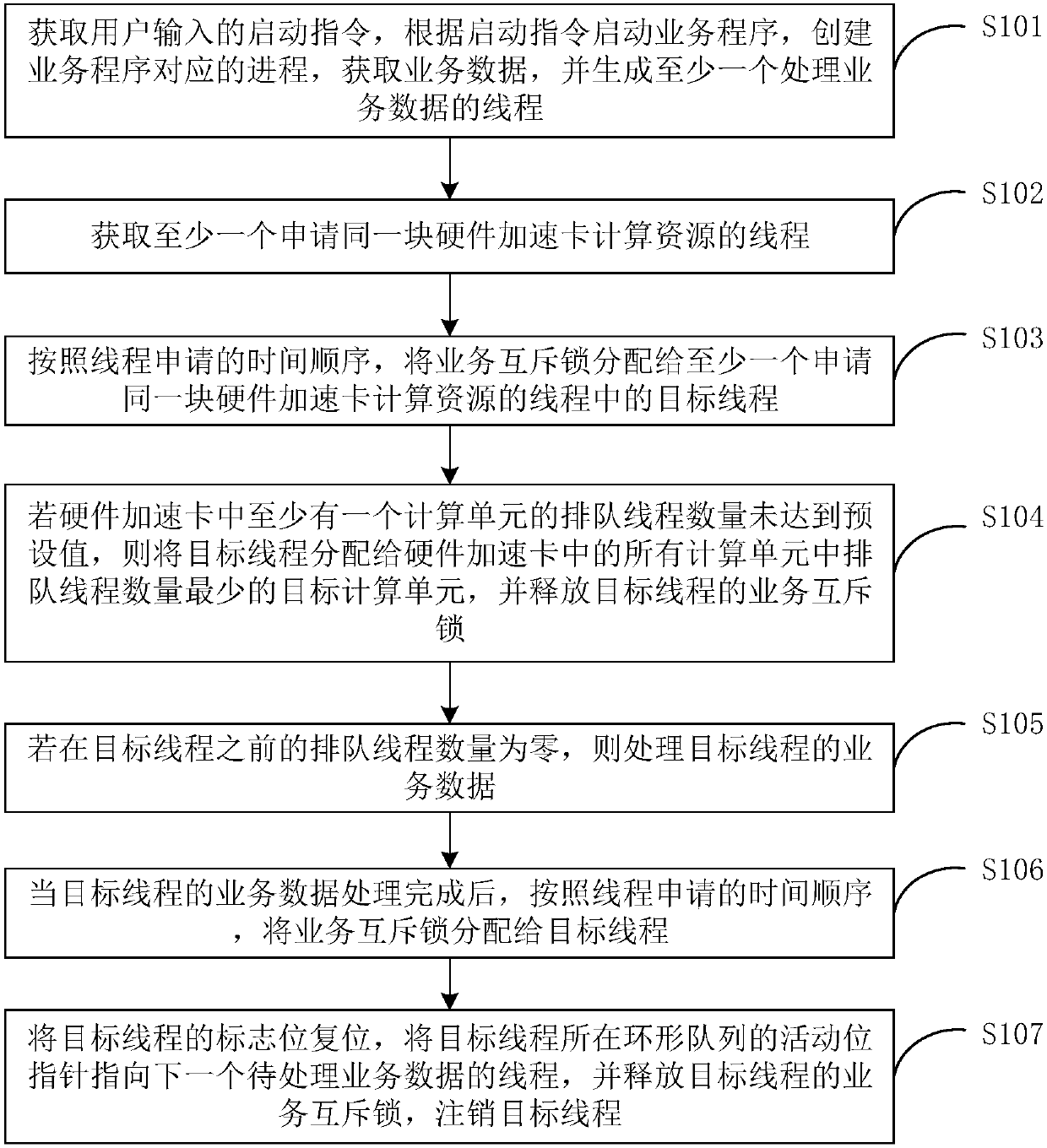

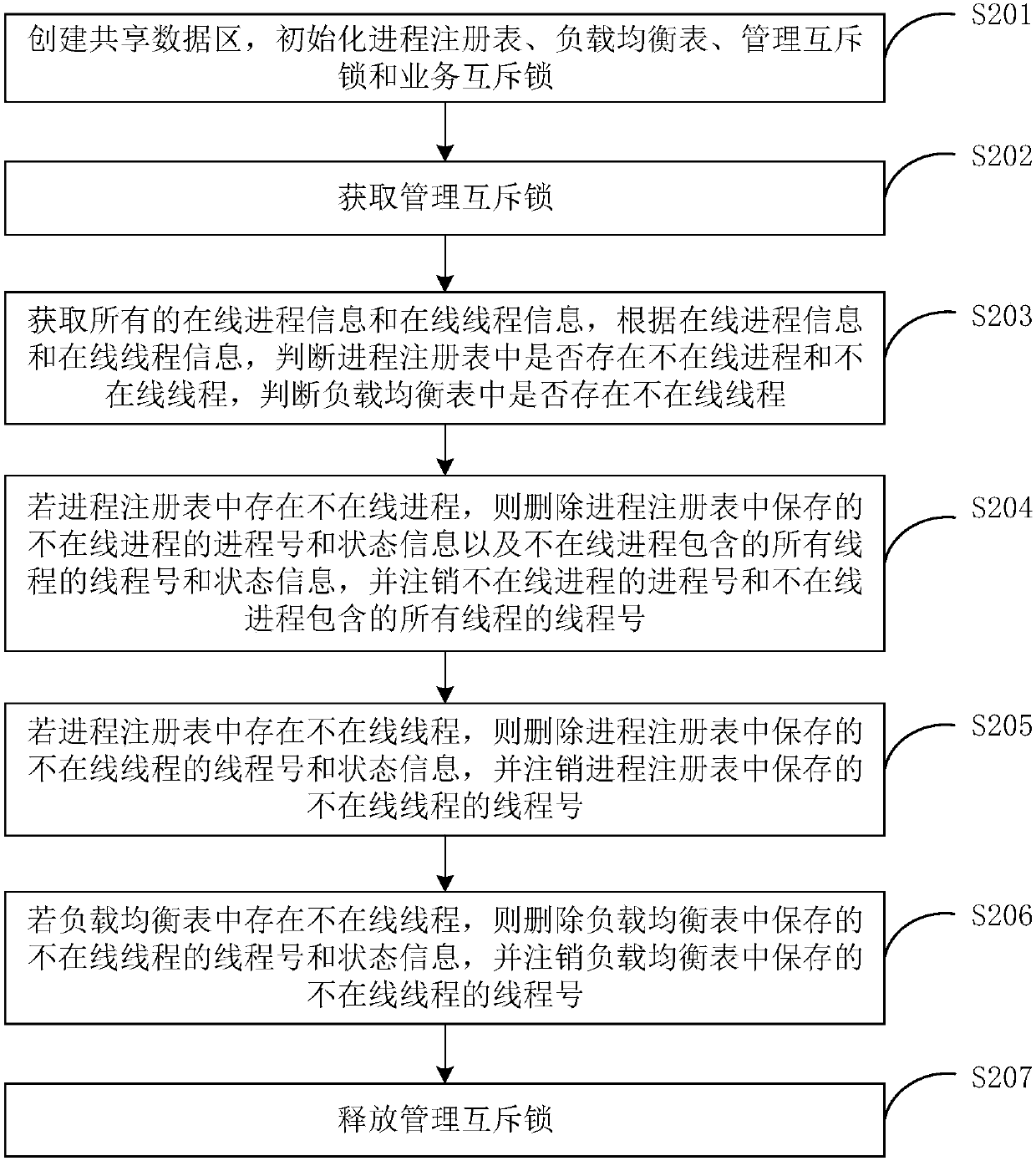

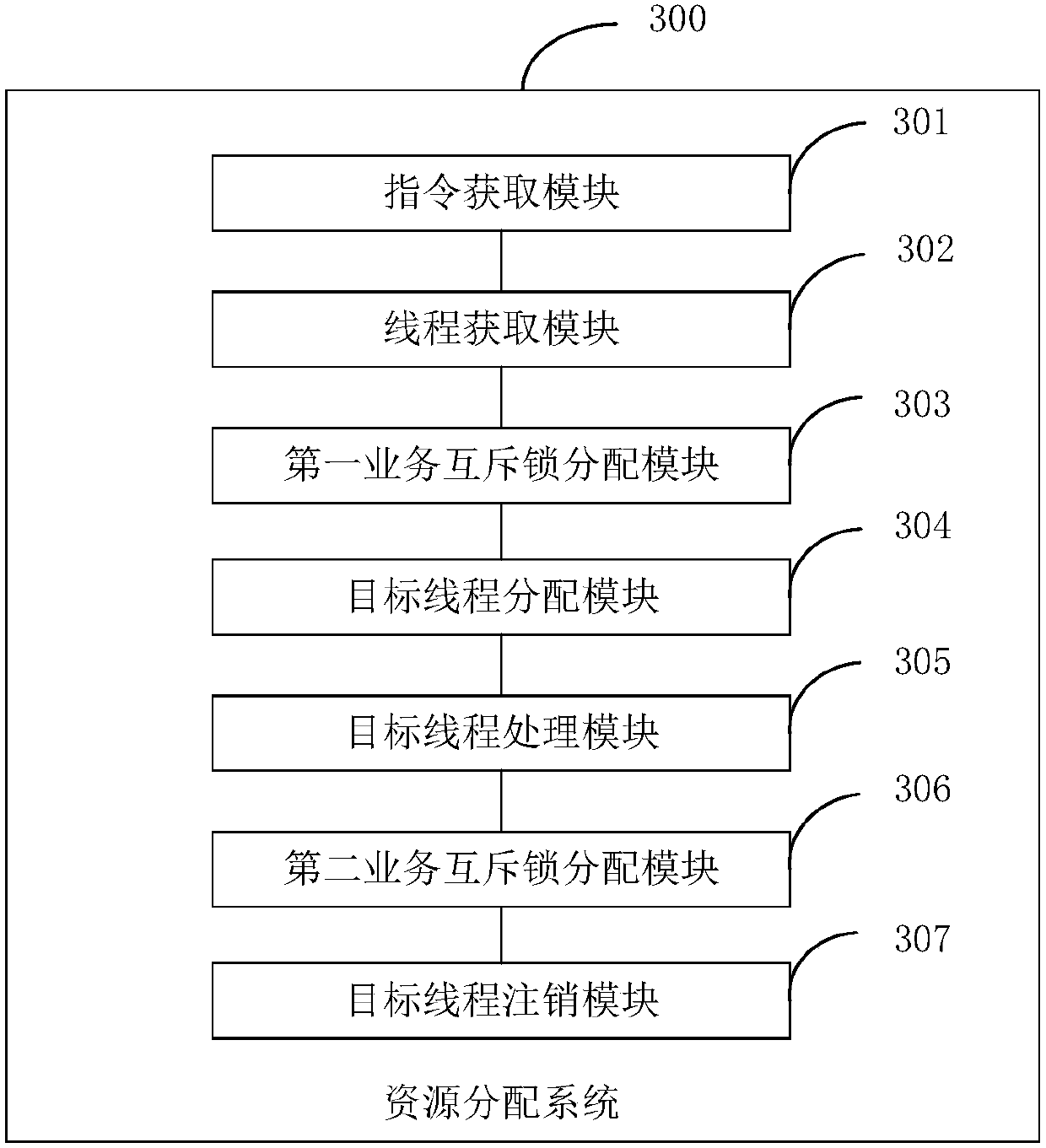

Resource allocation method and system

Status: Active | Publication: CN108052396A | Tags: Solve the problem of unbalanced distribution and low utilization of computing resources; Increase profit; Resource allocation; Program synchronisation; User input; Resource utilization

The invention discloses a resource allocation method and system for the field of resource scheduling. The method comprises:

1. Acquiring a start instruction entered by a user, starting a service program accordingly, and creating at least one thread.
2. Acquiring the threads that request computing resources of the same hardware acceleration card.
3. Granting a service mutex lock to a target thread in the order in which threads applied.
4. Assigning the target thread to the target computing unit with the fewest queued threads, and releasing its service mutex lock.
5. Processing the target thread's service data once no threads are queued ahead of it.
6. Granting the service mutex lock to the target thread again.
7. Resetting the target thread's flag bit, advancing the pointer of the target thread's queue to the next thread whose service data is to be processed, releasing the target thread's service mutex lock, and terminating the target thread.

The method can significantly increase computing resource utilization and the application value of the hardware acceleration card.

Owner: Shenzhen Hengyang Data Co., Ltd. (深圳市恒扬数据股份有限公司)
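
The queueing discipline can be sketched in Python, with one `threading.Lock` standing in for the service mutex. Two simplifications are assumptions: a single lock replaces per-thread lock grants, and a `deque` head replaces the queue pointer and flag bit. The class and method names are hypothetical.

```python
import threading
from collections import deque

class AccelCardScheduler:
    """Queue threads on the compute unit of a shared accelerator card that has
    the fewest waiting threads. One lock plays the role of the service mutex."""
    def __init__(self, num_units):
        self.queues = [deque() for _ in range(num_units)]
        self.lock = threading.Lock()

    def enqueue(self, thread_id):
        with self.lock:   # requests are served in the order they acquire the lock
            unit = min(range(len(self.queues)), key=lambda u: len(self.queues[u]))
            self.queues[unit].append(thread_id)
            return unit

    def may_run(self, unit, thread_id):
        """A thread processes its data only when nobody is queued ahead of it."""
        with self.lock:
            return bool(self.queues[unit]) and self.queues[unit][0] == thread_id

    def finish(self, unit):
        """Advance the queue pointer to the next thread and drop the finished one."""
        with self.lock:
            return self.queues[unit].popleft()

sched = AccelCardScheduler(num_units=2)
print([sched.enqueue(t) for t in range(5)])
```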

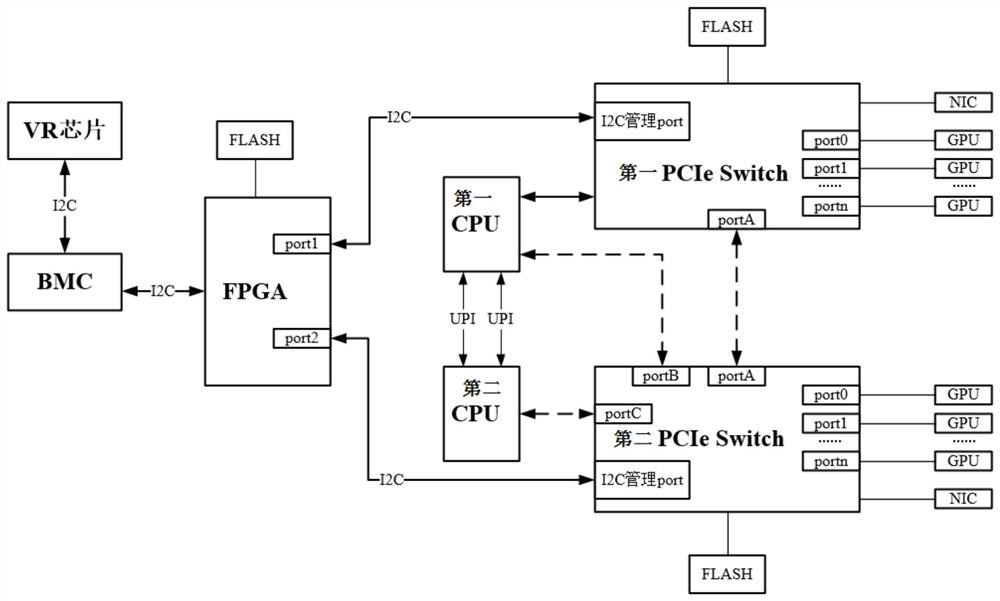

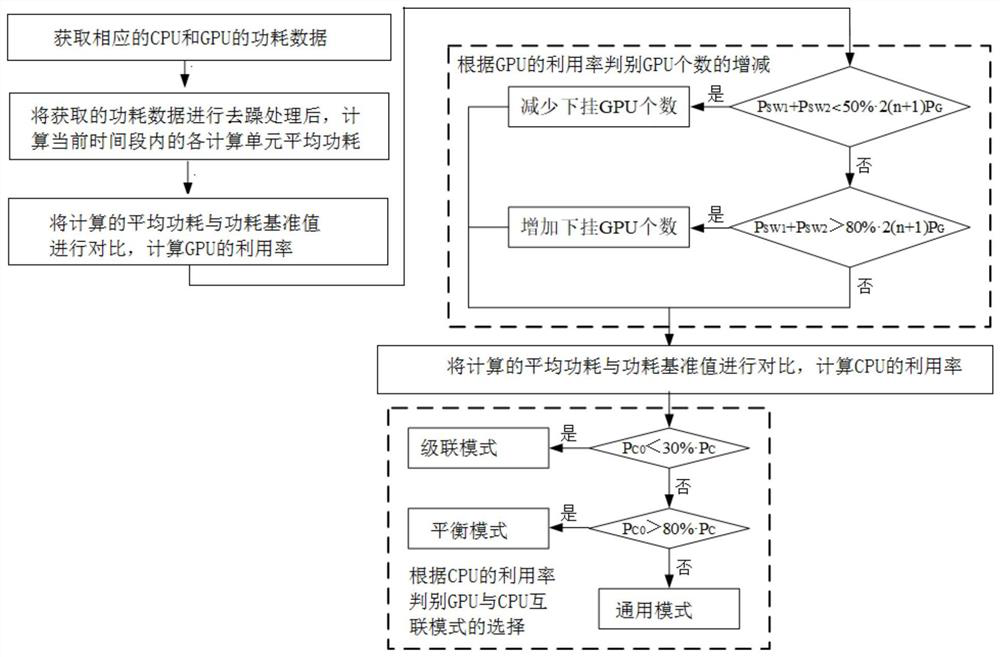

AI server computing unit architecture and implementation method

Status: Active | Publication: CN111737184A | Tags: Improve computing resource utilization; Increase power consumption; Multiple digital computer combinations; Energy efficient computing; Computer hardware; Computer architecture

The invention provides an AI server computing unit architecture and an implementation method. The architecture comprises a power consumption acquisition module, a control module, a first PCIe Switch chip and a second PCIe Switch chip. The control module is communicatively connected to both PCIe Switch chips. The power consumption acquisition module acquires power consumption data from the CPU and the GPUs. The control module obtains this data from the acquisition module, analyzes and processes it, and sends control instruction packets to the PCIe Switch chips according to the result. The PCIe Switch chips then set each port's on/off state and upstream/downstream attribute as instructed. Through these settings the architecture adjusts the number of GPUs participating in computation and the interconnection between the GPUs and the CPU.

Owner: INSPUR SUZHOU INTELLIGENT TECH CO LTD

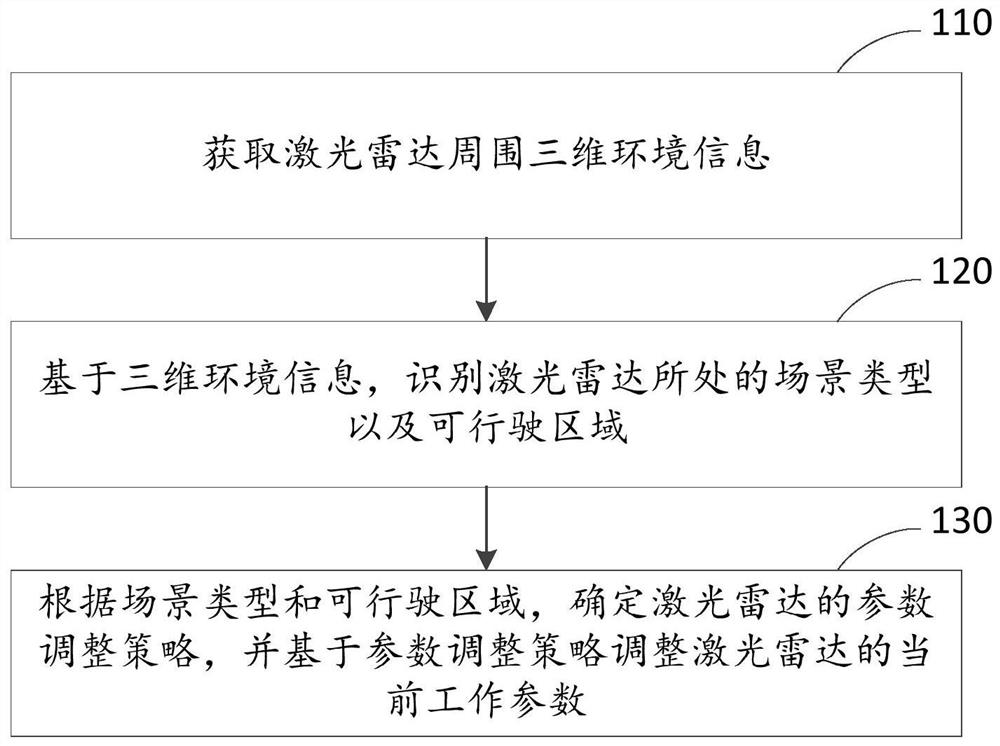

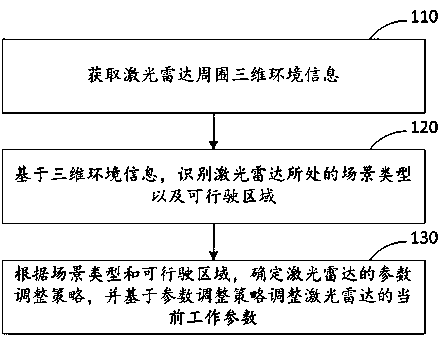

Laser radar parameter adjustment method, laser radar system and computer storage medium

Status: Active | Publication: CN111999720A | Tags: Improve work efficiency; Improve detection rate; Electromagnetic wave reradiation; Radar systems; Engineering

The embodiments of the invention relate to the field of radar technology and disclose a lidar parameter adjustment method, a lidar system and a computer storage medium. The method comprises:

1. Acquiring three-dimensional environment information around the lidar.
2. Identifying, from this information, the type of scene the lidar is in and the drivable area.
3. Determining a parameter adjustment strategy for the lidar according to the scene type and drivable area, then adjusting the lidar's current working parameters accordingly.

The lidar's working parameters can thus be adjusted automatically for different scenes.

Owner: SUTENG INNOVATION TECH CO LTD

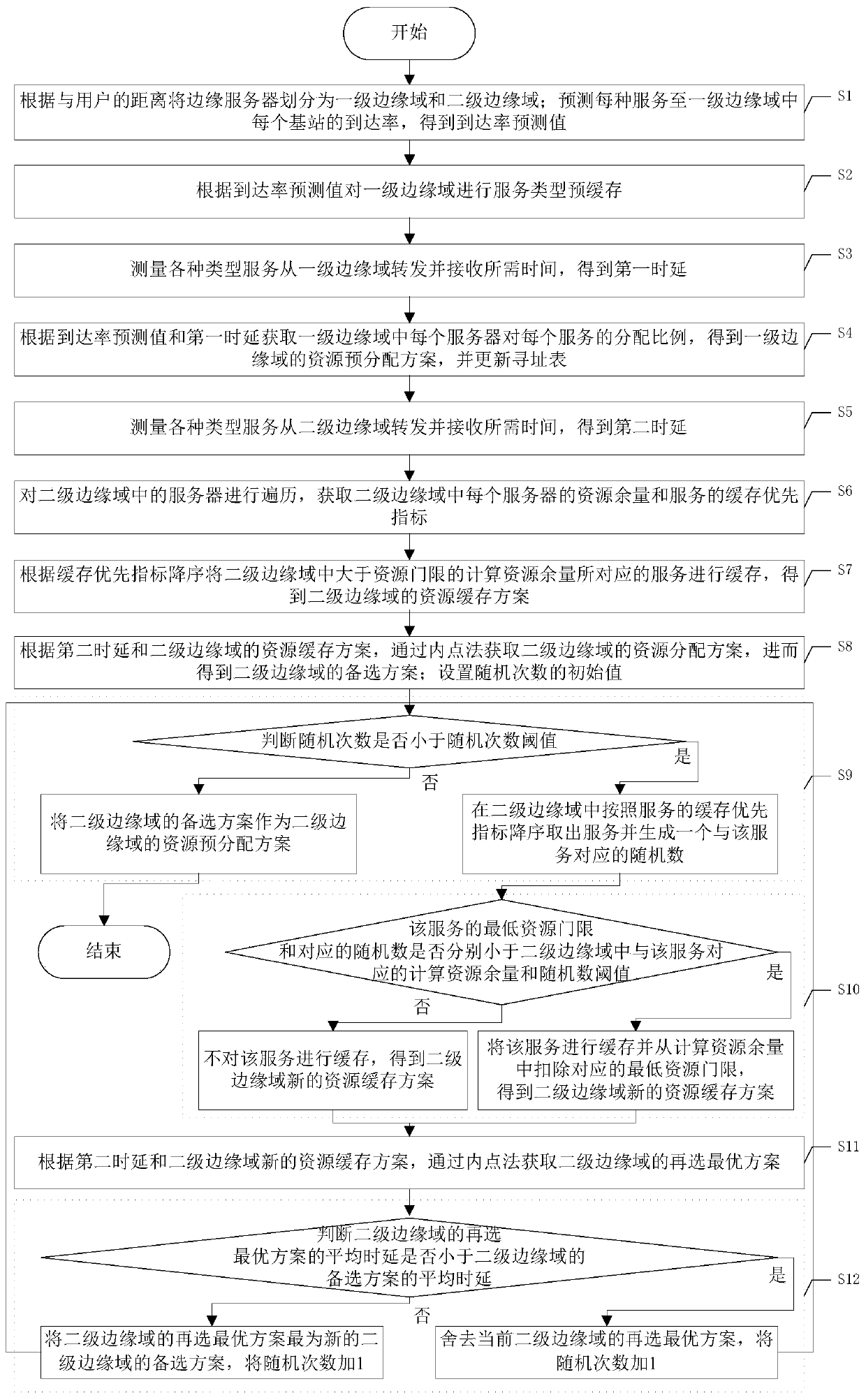

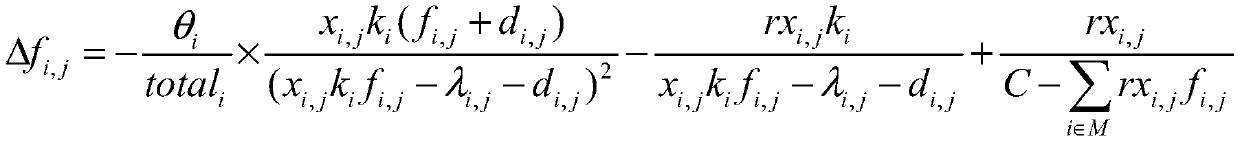

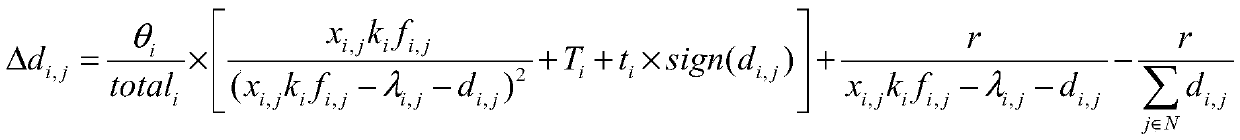

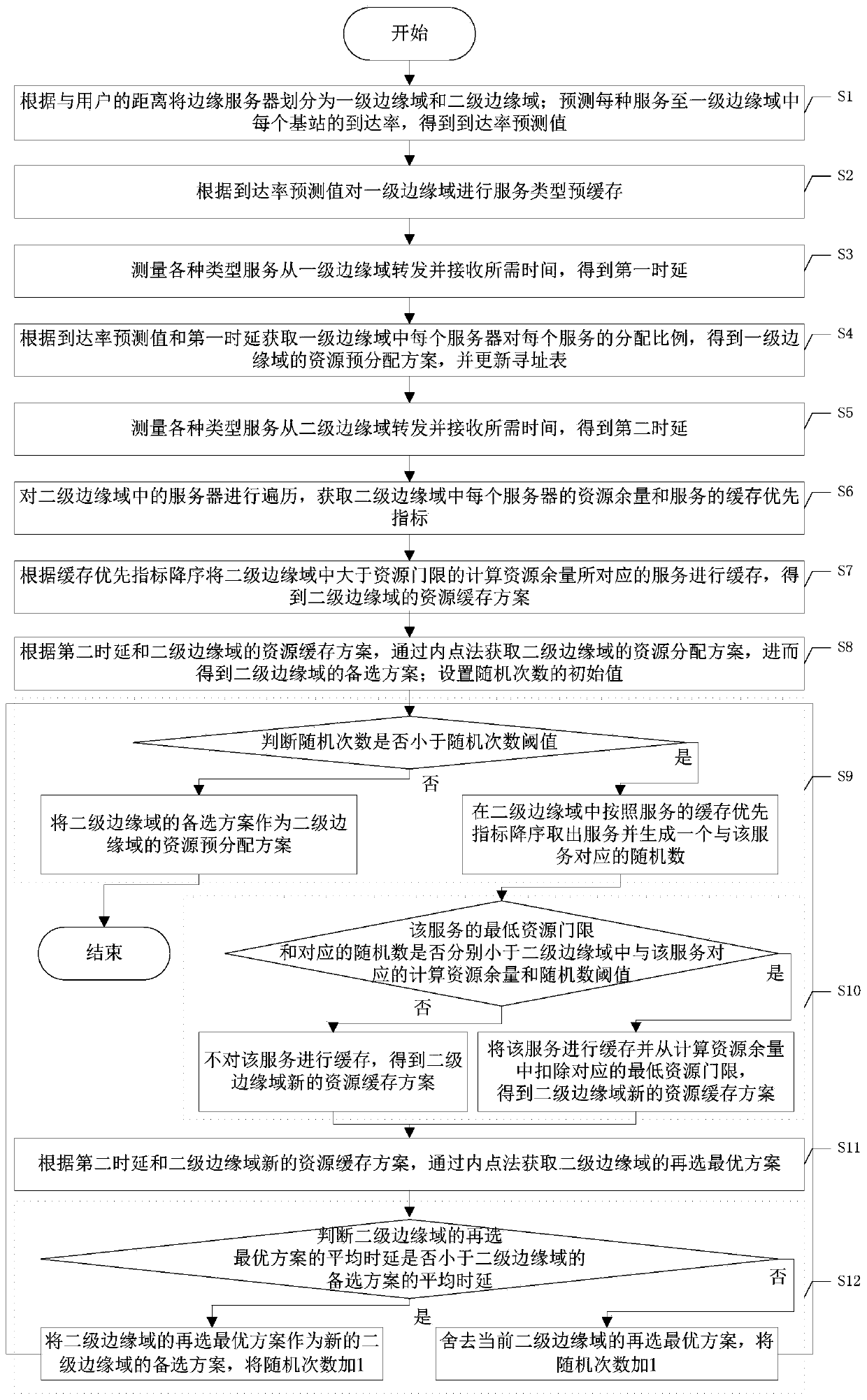

Pre-distribution method for edge domain resources in edge computing scene

Status: Active | Publication: CN110177055A | Tags: Efficient configuration; Increase profit; Data switching networks; Edge server; Interior point method

The invention discloses a method for pre-allocating edge-domain resources in an edge computing scenario. The method comprises:

1. Predicting the arrival rate and measuring the forwarding delay.
2. In the first-level edge domain, determining which service types to pre-cache according to the arrival rates and service weights, and determining each service's allocation proportion according to a first delay.
3. In the second-level edge domain, determining which service types to pre-cache according to a second delay, and obtaining an initial caching scheme with an interior point method.
4. Obtaining a new caching scheme by randomly selecting service caching types.
5. Choosing whichever of the initial and new schemes has the smaller average delay as the final pre-allocation scheme.

The method uses statistical data to estimate user demand in the next period and pre-allocates service types and quantities on the edge servers accordingly. This enables more efficient resource allocation, higher resource utilization and shorter application delay.

Owner: UNIV OF ELECTRONICS SCI & TECH OF CHINA
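
The final selection step can be sketched as below: compare an initial scheme with randomly generated ones and keep the lower average delay. The two-level delay model is hypothetical. The initial scheme comes from a greedy fill ranked by delay saved per unit of storage. That greedy fill stands in for the interior-point solution, which the abstract mentions but does not detail.

```python
import random

def avg_delay(cached, rates, edge_ms, forward_ms):
    """Arrival-rate-weighted mean delay under a hypothetical two-level model:
    cached services are answered at the edge, the rest are forwarded."""
    total = sum(rates.values())
    return sum(r * (edge_ms if s in cached else forward_ms[s])
               for s, r in rates.items()) / total

def fill(order, sizes, capacity):
    """Cache services in the given order while storage capacity allows."""
    cached, used = set(), 0
    for s in order:
        if used + sizes[s] <= capacity:
            cached.add(s)
            used += sizes[s]
    return cached

def pre_distribute(rates, sizes, capacity, edge_ms, forward_ms, trials=50, seed=0):
    # Initial scheme: greedy by delay saved per unit of storage
    # (a stand-in for the interior-point solution in the abstract).
    gain = lambda s: rates[s] * (forward_ms[s] - edge_ms) / sizes[s]
    best = fill(sorted(rates, key=gain, reverse=True), sizes, capacity)
    best_delay = avg_delay(best, rates, edge_ms, forward_ms)
    rng = random.Random(seed)
    for _ in range(trials):
        order = list(rates)
        rng.shuffle(order)                       # randomly selected caching scheme
        cand = fill(order, sizes, capacity)
        d = avg_delay(cand, rates, edge_ms, forward_ms)
        if d < best_delay:                       # keep whichever has lower delay
            best, best_delay = cand, d
    return best, best_delay

rates = {"video": 10.0, "ar": 5.0, "iot": 1.0}   # predicted requests per second
sizes = {"video": 2, "ar": 1, "iot": 1}
print(pre_distribute(rates, sizes, capacity=2, edge_ms=5.0,
                     forward_ms={"video": 20.0, "ar": 20.0, "iot": 20.0}))
```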

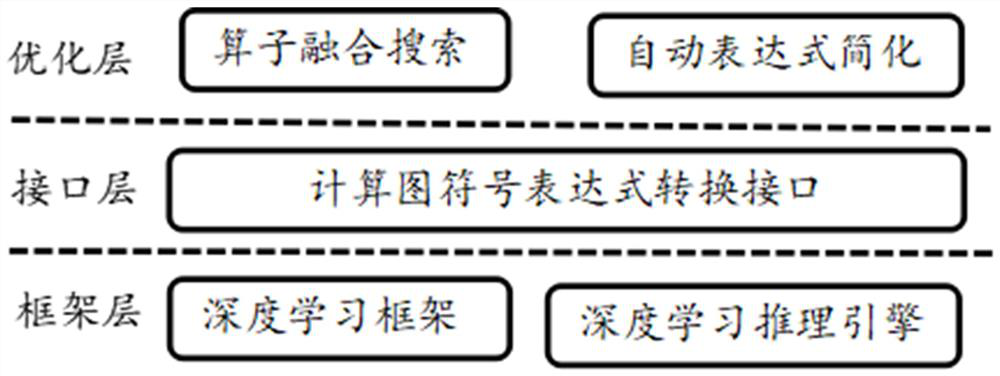

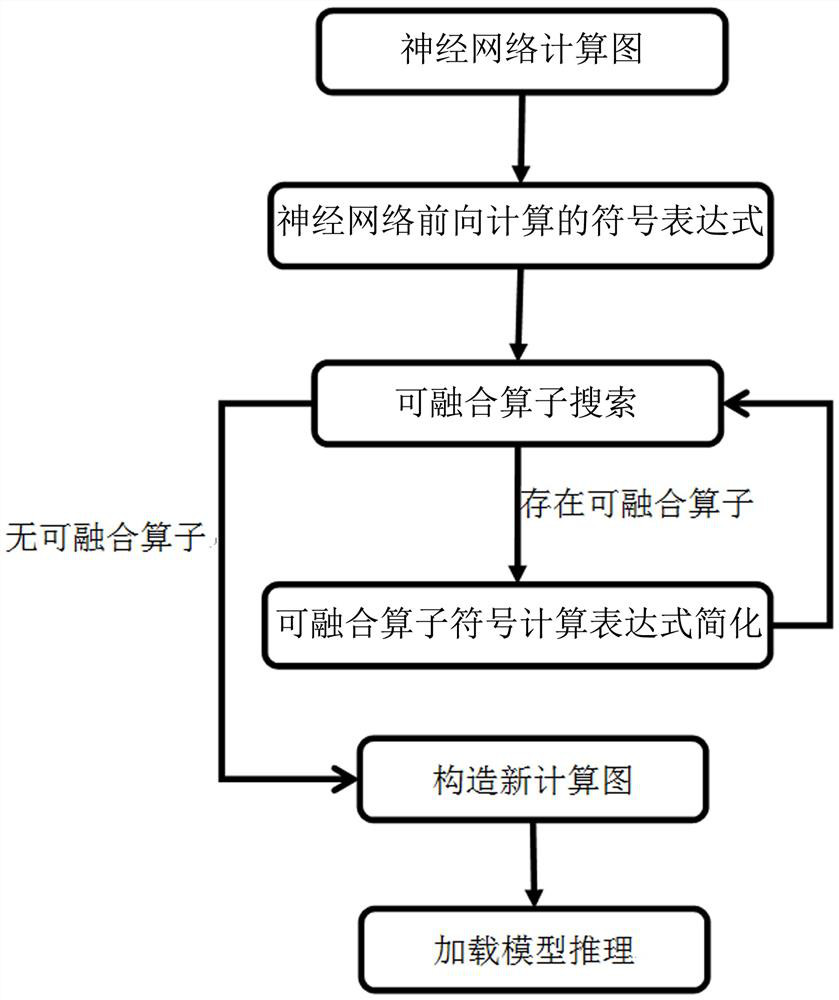

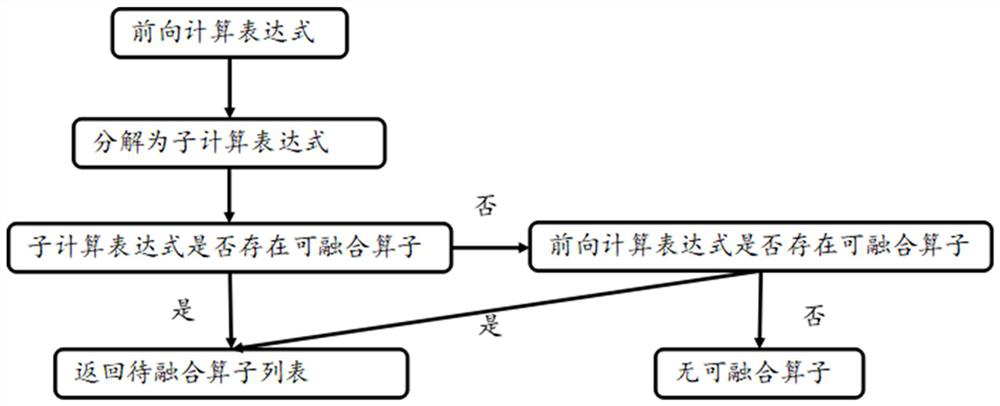

Deep neural network reasoning acceleration method and system based on multi-operator fusion

Status: Pending | Publication: CN113420865A | Tags: Optimize inference speed; Reduce overhead; Neural architectures; Inference methods; Algorithm; Theoretical computer science

The invention relates to a method and system for accelerating deep neural network inference through multi-operator fusion. The steps are:

1. A neural network computation graph is input to obtain the network's computational logic graph. A complete symbolic expression for the network's forward computation is derived from the computational relationships among its operators.
2. A fusible-operator search method uses the operators' symbolic expressions to automatically simplify the forward-computation expression. This yields the simplest symbolic expression and thereby fuses multiple operators.
3. From the fusion result and the simplest expression, a new inference logic graph is constructed. The simplest expression is decoupled and computed offline, the new model parameters are stored, and a corresponding network model structure is built.
4. The new model parameters are loaded to accelerate inference.

The invention reduces the overhead of gaps between operator executions, improves the utilization of the device's computing resources, and speeds up overall network inference.

Owner: ZHEJIANG LAB
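
Offline symbolic simplification can, for example, fold an inference-mode batch normalization into the preceding linear operator. The fused weights are computed once and stored as new model parameters. The sketch below hand-derives that single pattern with NumPy. It is not the patent's general fusible-operator search.

```python
import numpy as np

def linear_bn(x, W, b, gamma, beta, mean, var, eps=1e-5):
    """Unfused reference: a linear operator followed by inference-mode batch norm."""
    z = x @ W.T + b
    return gamma * (z - mean) / np.sqrt(var + eps) + beta

def fuse_linear_bn(W, b, gamma, beta, mean, var, eps=1e-5):
    """Fold the batch norm into the linear weights offline, so inference runs
    one operator instead of two."""
    scale = gamma / np.sqrt(var + eps)
    return W * scale[:, None], (b - mean) * scale + beta

rng = np.random.default_rng(0)
W, b = rng.standard_normal((4, 3)), rng.standard_normal(4)
gamma, beta, mean = rng.standard_normal(4), rng.standard_normal(4), rng.standard_normal(4)
var = rng.random(4) + 0.5
x = rng.standard_normal((5, 3))
W_f, b_f = fuse_linear_bn(W, b, gamma, beta, mean, var)
print(np.allclose(x @ W_f.T + b_f, linear_bn(x, W, b, gamma, beta, mean, var)))
```

The identity behind the fold is scale · (xWᵀ + b − mean) + beta = x(scale · W)ᵀ + (scale · (b − mean) + beta), with scale applied per output channel.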

Laser radar parameter adjusting method and device and laser radar

Status: Active | Publication: CN111537980A | Tags: Improve work efficiency; Improve detection rate; Electromagnetic wave reradiation; Radar; Engineering

The embodiments of the invention relate to the field of radar technology and disclose a lidar parameter adjustment method and device, and a lidar. The method comprises:

1. Acquiring three-dimensional environment information around the lidar.
2. Identifying, from this information, the type of scene the lidar is in and the drivable area.
3. Determining a parameter adjustment strategy according to the scene type and drivable area, then adjusting the lidar's current working parameters accordingly.

The lidar's working parameters can thus be adjusted automatically for different scenes.

Owner: SUTENG INNOVATION TECH CO LTD

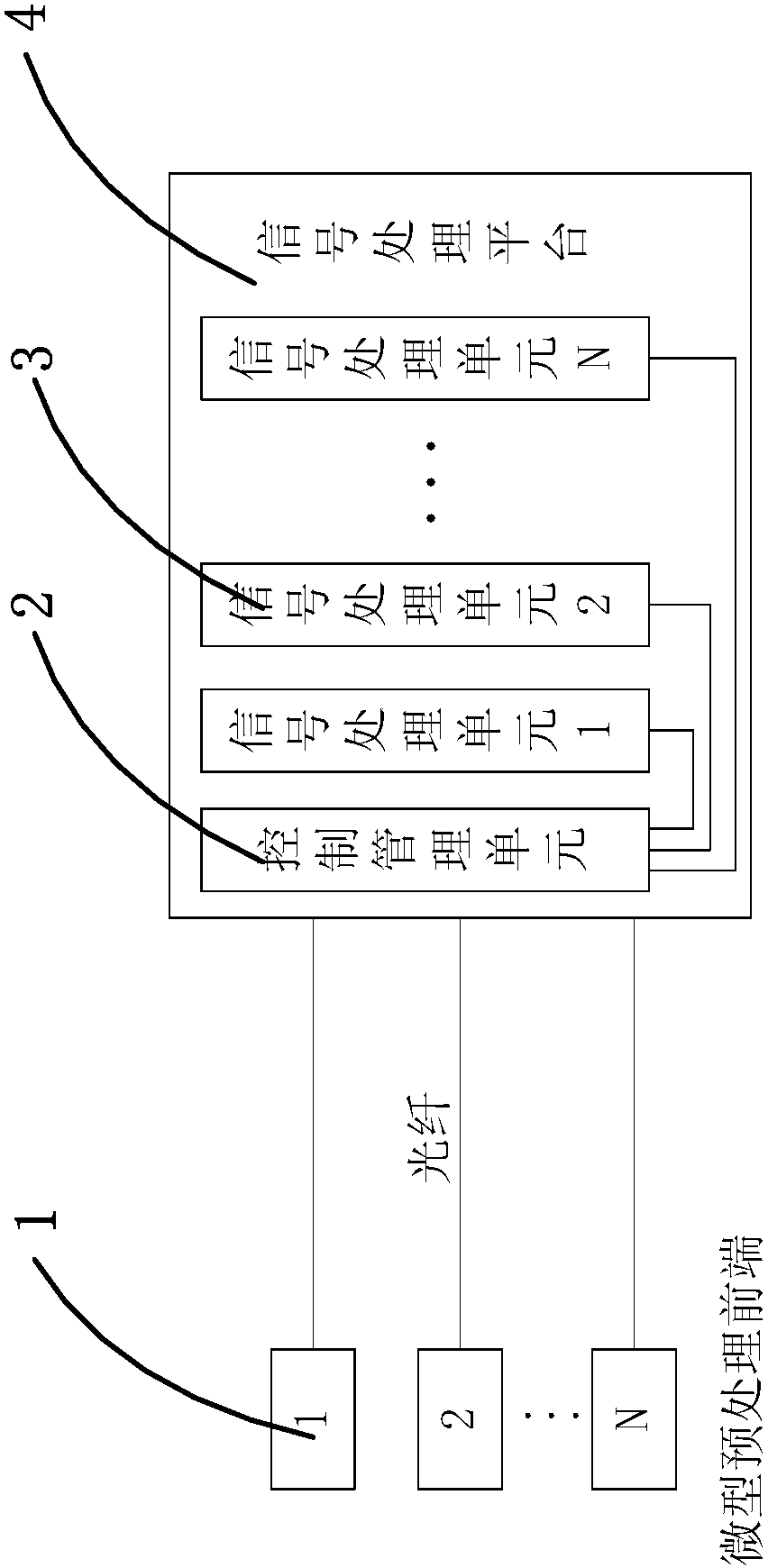

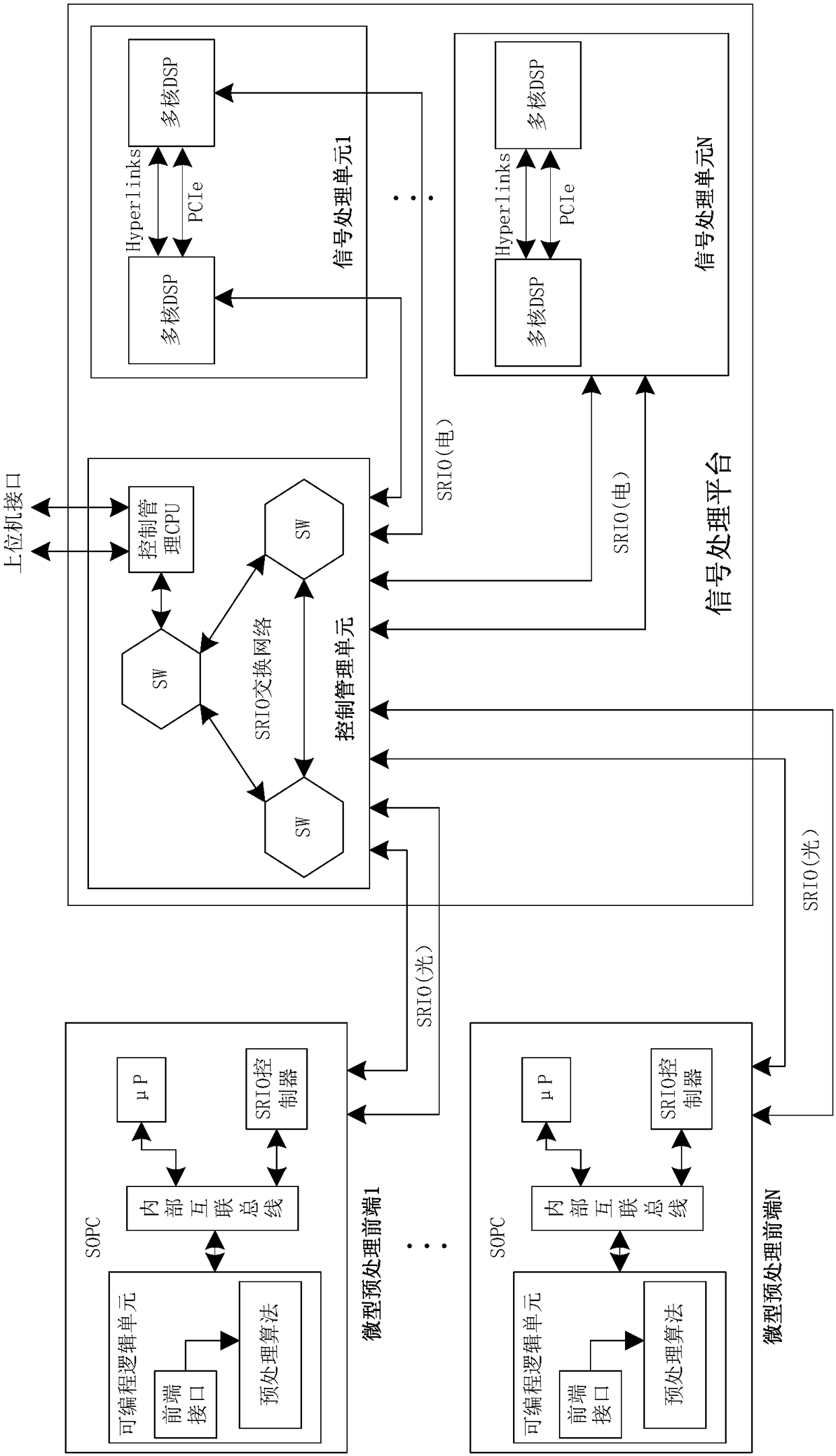

Integrated embedded signal processing system

Status: Active | Publication: CN108614788A | Tags: Increase flexibility; Improve data fusion capabilities; Electric digital data processing; Fiber; Electricity

The invention belongs to the field of embedded computer system design and relates to an integrated embedded signal processing system. The system consists of several miniature preprocessing front ends and a signal processing platform. The platform comprises several signal processing units and a control management unit. The miniature preprocessing front ends and the signal processing units each connect independently, via optical and electrical signals, to a high-speed switched network in the control management unit. Each miniature preprocessing front end is embedded deep in the data acquisition front end of an embedded application system, where it completes data preprocessing. The signals are then sent over high-speed optical fiber to the signal processing platform, which performs data fusion and signal processing.

Owner: XIAN AVIATION COMPUTING TECH RES INST OF AVIATION IND CORP OF CHINA

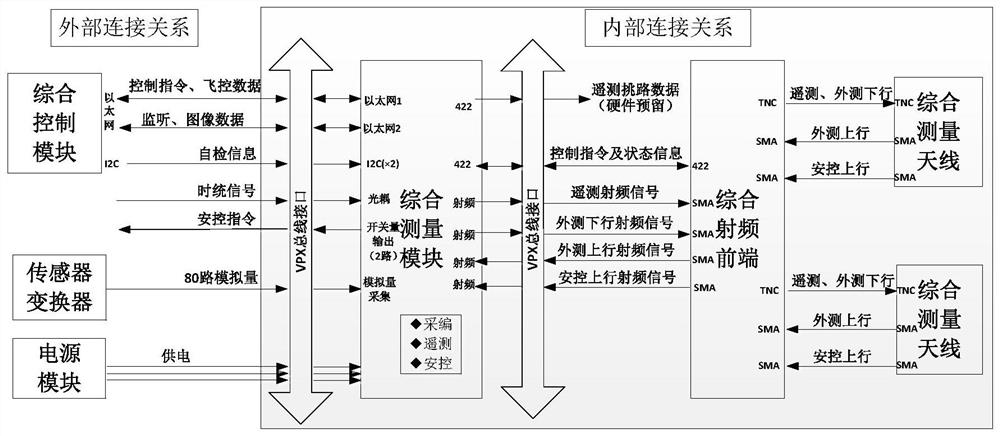

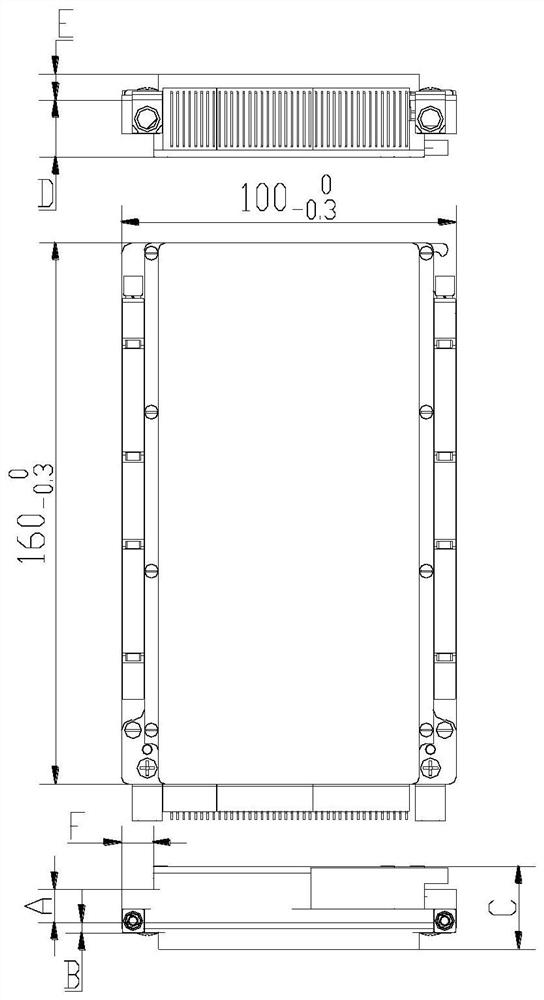

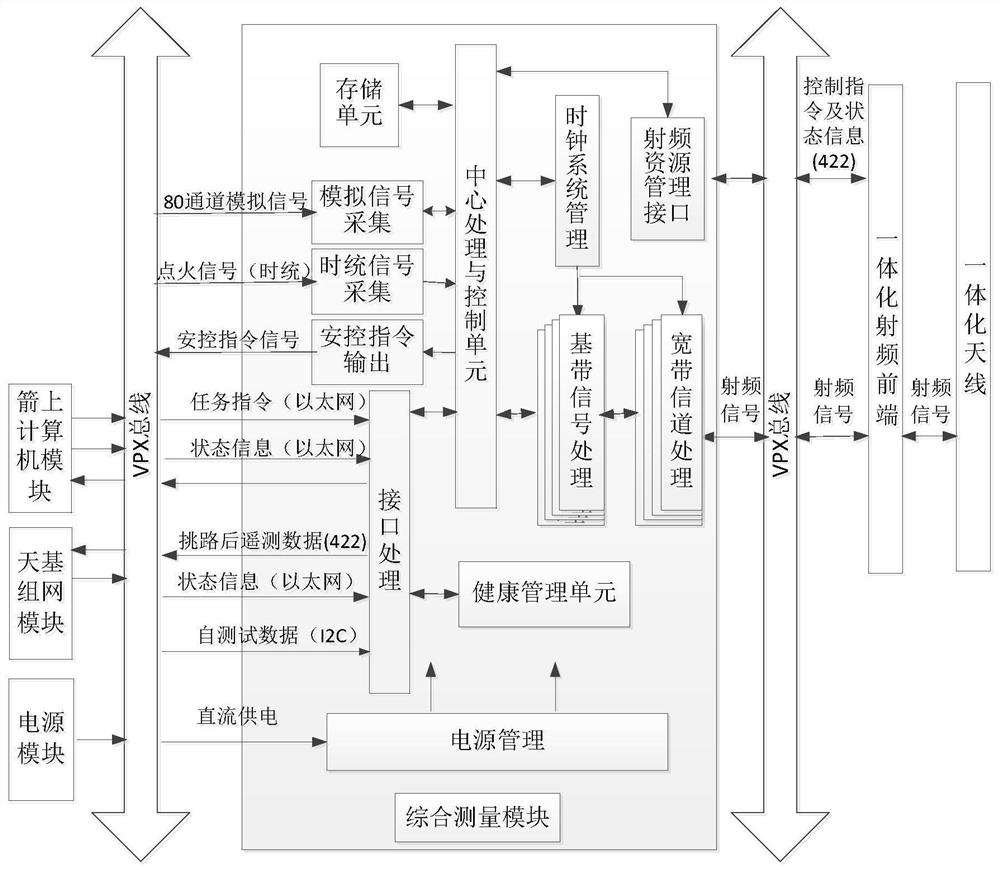

On-rocket integrated comprehensive radio frequency system

Status: Inactive | Publication: CN112118022A | Tags: Reduce volume; Low cost; Cosmonautic vehicles; Cosmonautic parts; Third party; Bus interface

An on-rocket integrated radio frequency system comprises an integrated measurement module, an integrated RF front end and an integrated measurement antenna. The integrated measurement module connects to the RF front end through a VPX bus interface, and the RF front end connects to the antenna. The components have the following roles:

- The integrated measurement module handles acquisition of multiple analog quantities, telemetry data processing, external-measurement uplink and downlink communication, safety-control uplink and self-destruct control, and third-party monitoring.
- The integrated RF front end performs amplification and filtering for the telemetry downlink, the external-measurement uplink, the external-measurement downlink, and the safety-control uplink.
- The integrated measurement antenna combines a telemetry/external-measurement downlink antenna, an external-measurement uplink antenna and a safety-control uplink antenna.

The scheme integrates acquisition, telemetry, external measurement and safety control. It consolidates scattered standalone units into a 3U VPX module, a power amplifier and an antenna, which reduces size and system complexity while increasing computing resource utilization.

Owner: CHINA ACAD OF LAUNCH VEHICLE TECH

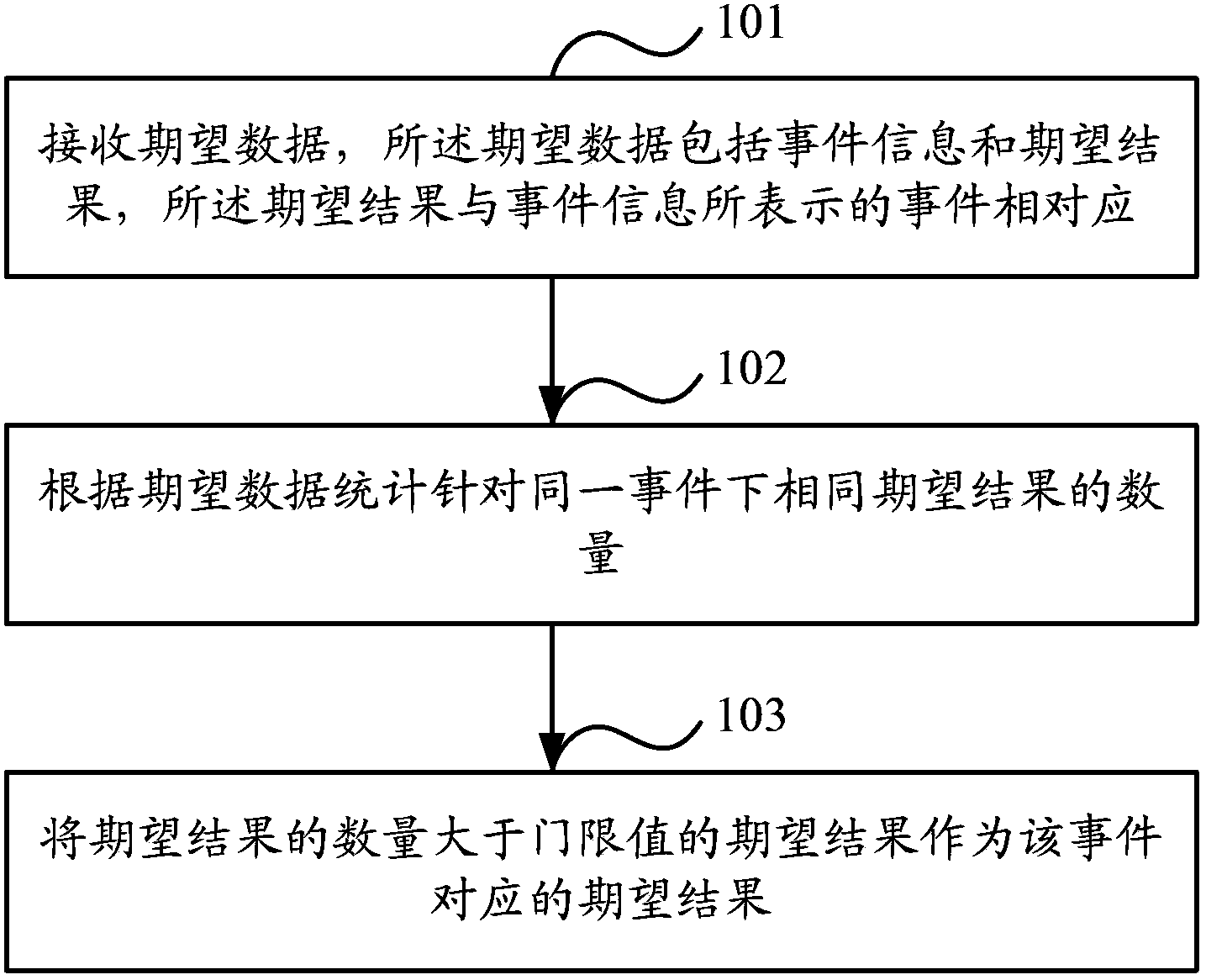

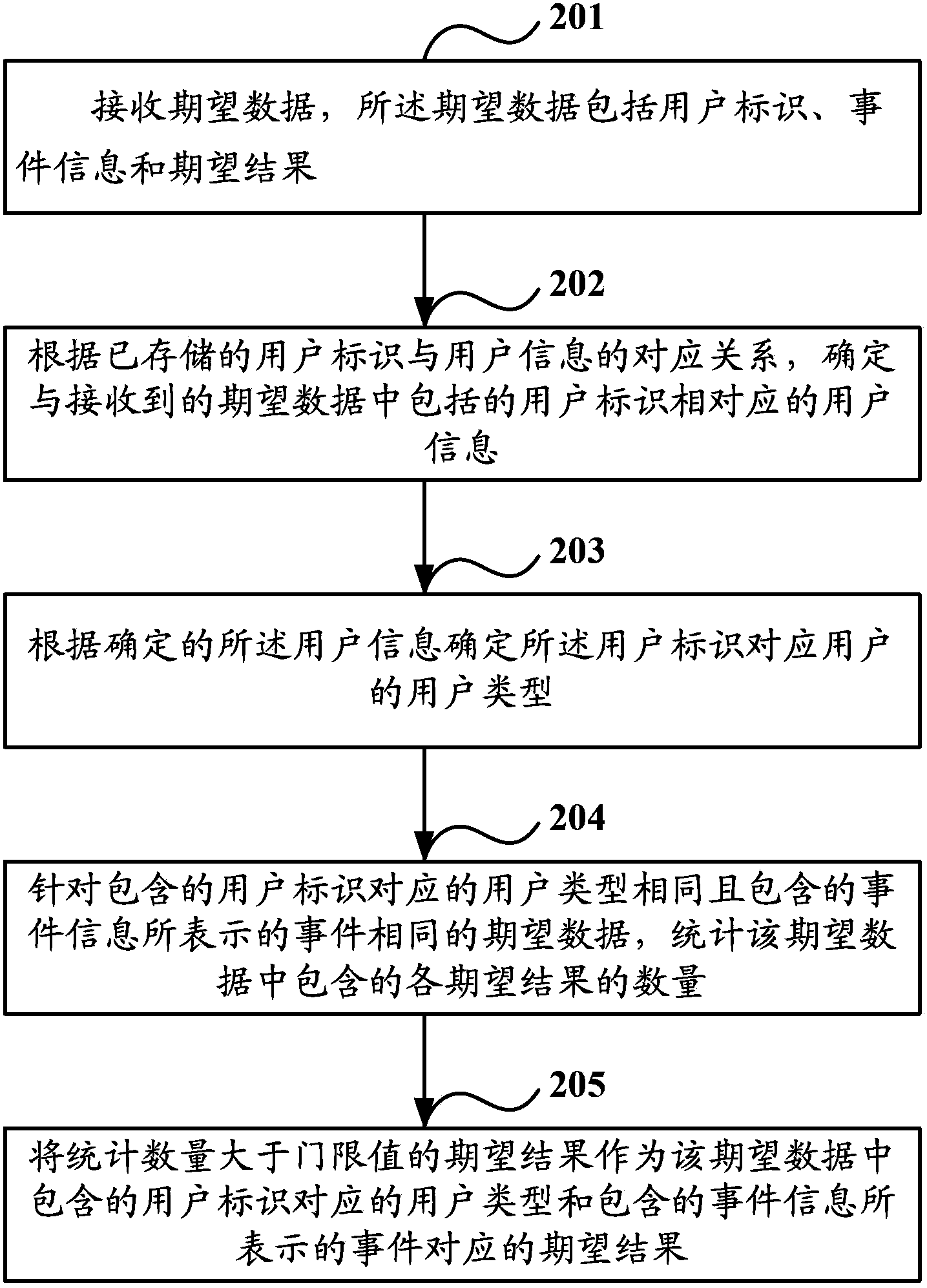

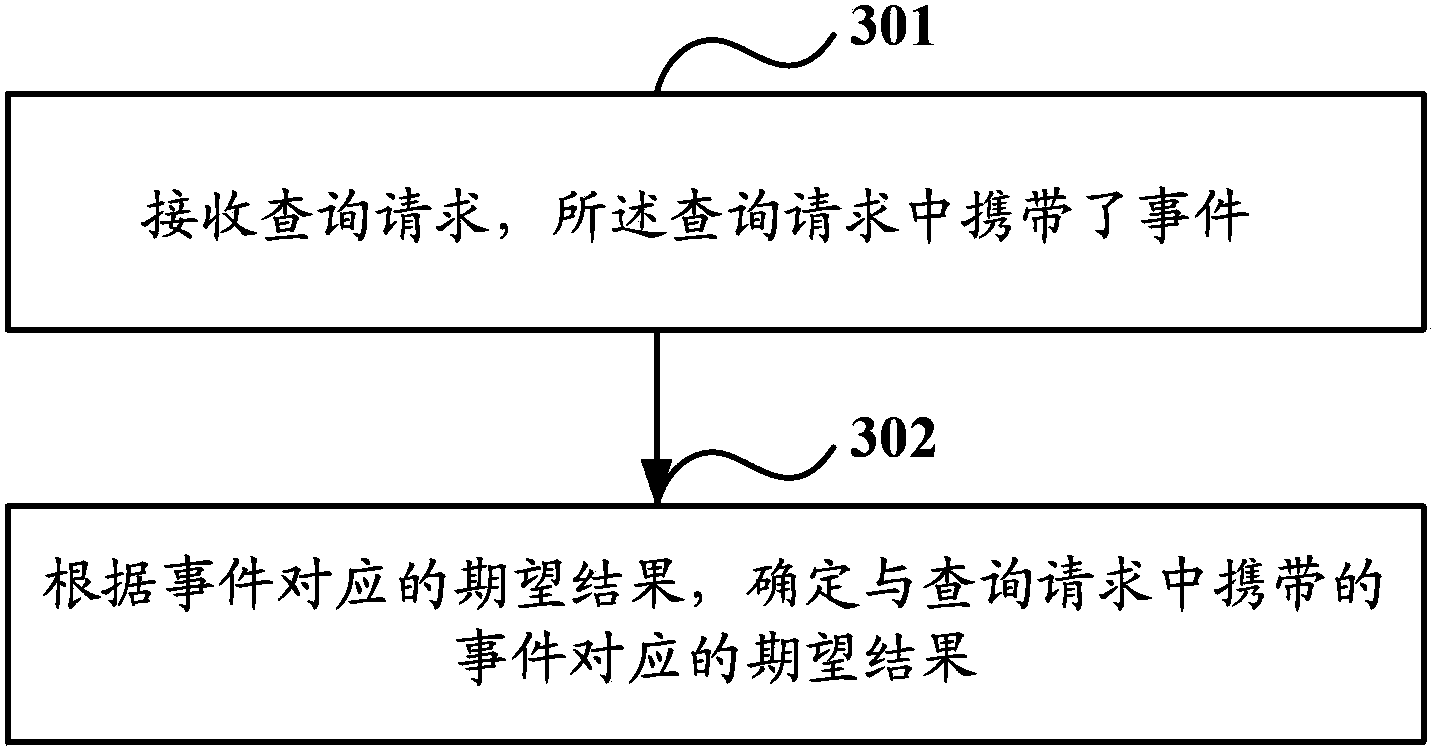

Method and device for data analysis and data query

Status: Inactive | Publication: CN103577429A | Tags: Personal preference determination; Improve computing resource utilization; Special data processing applications; Data query; Statistical analysis

The invention discloses a method and device for data analysis and data query. The method mainly comprises:

1. Receiving expected data that contains event information and expected results, where each expected result corresponds to the event described by the event information.
2. Counting, from the expected data, how many identical expected results correspond to the same event.
3. Taking the expected results whose count exceeds a threshold as the expected results for that event.

Because a large amount of expected data reflecting users' individual preferences is collected, counted and analyzed, users' common preferences for the same event can be obtained. These common preferences can then be used to infer an individual user's preferences when that user's behavior cannot be learned directly.

Owner: ALIBABA GRP HLDG LTD
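
The counting-and-threshold step can be sketched with `collections.Counter`. The function name is hypothetical. Counting strictly more than `threshold` occurrences follows the abstract's "larger than a threshold value".

```python
from collections import Counter, defaultdict

def common_expectations(records, threshold):
    """records: (event, expected_result) pairs collected from many users.
    Returns, per event, the results that more than `threshold` users expected."""
    counts = defaultdict(Counter)
    for event, result in records:
        counts[event][result] += 1
    return {event: sorted(r for r, n in c.items() if n > threshold)
            for event, c in counts.items()}

votes = [("final", "team A"), ("final", "team A"), ("final", "team B"),
         ("semi", "team C")]
print(common_expectations(votes, threshold=1))
```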

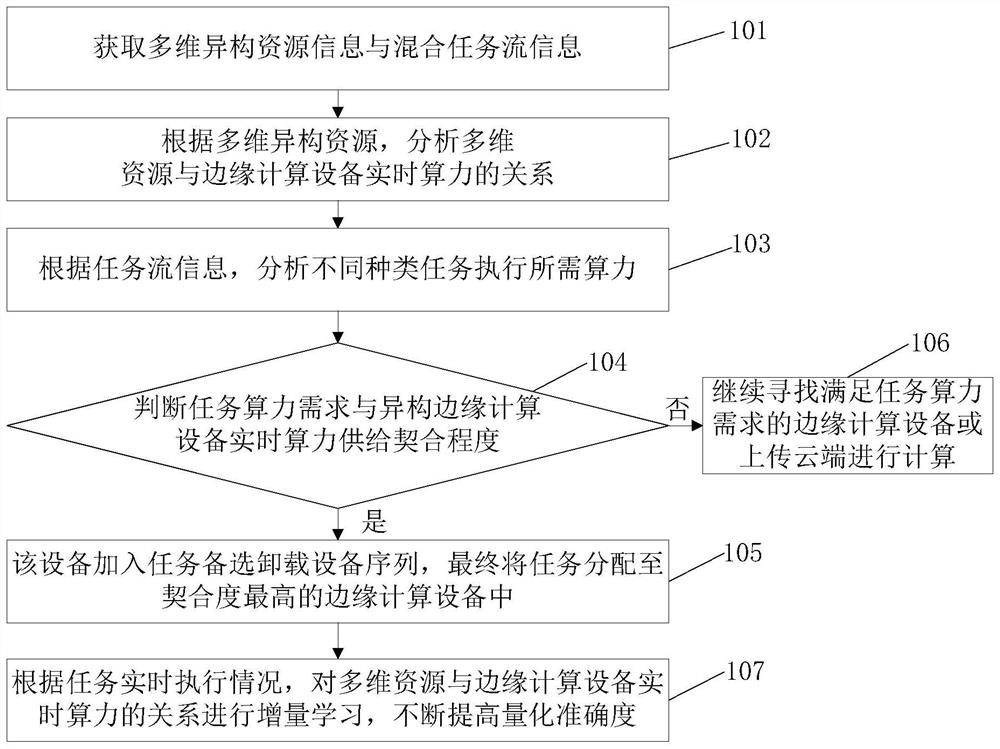

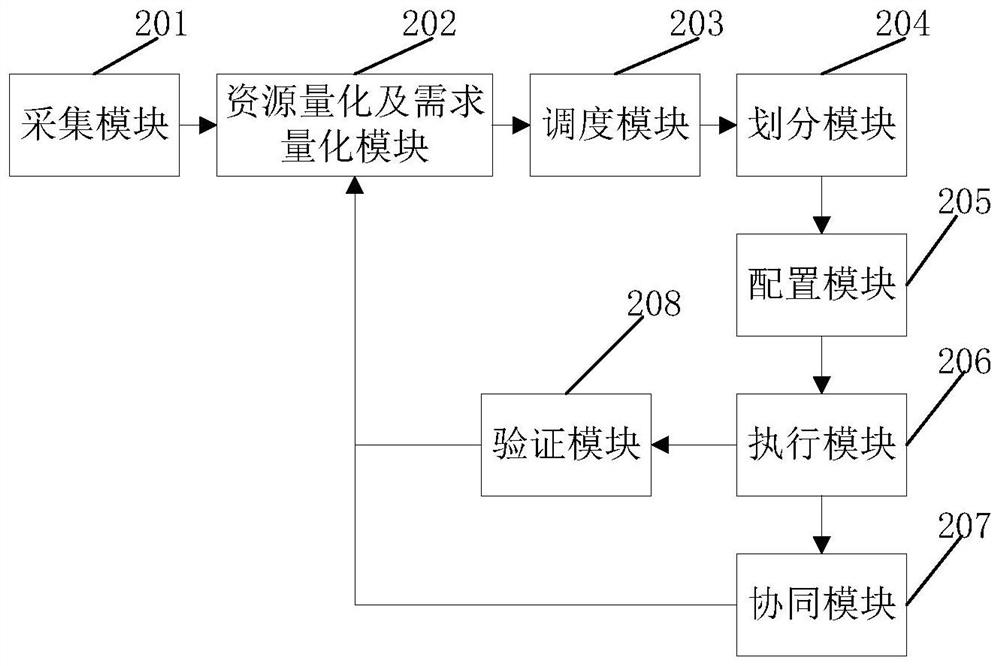

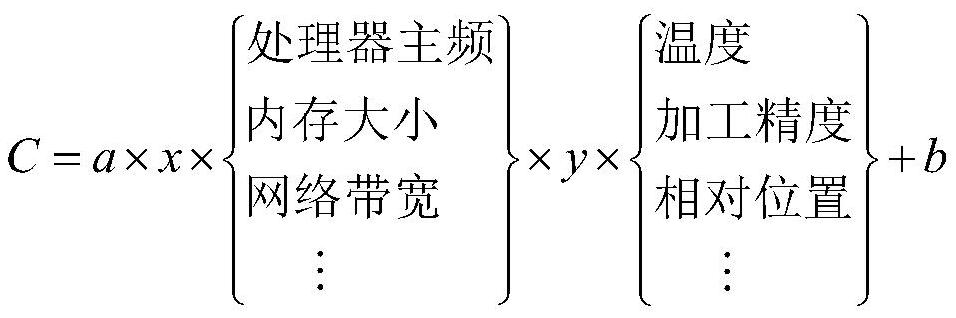

Multi-dimensional heterogeneous resource quantification method and device based on industrial edge computing system

Status: Pending | Publication: CN114064261A | Tags: Improve computing resource utilization; Reliable execution; Resource allocation; Neural architectures; Personalization; The Internet

The invention belongs to the field of the industrial Internet and relates in particular to a method and device for quantifying multi-dimensional heterogeneous resources in an industrial edge computing system. The method comprises:

1. Acquiring multi-dimensional heterogeneous resource information and mixed task flow information.
2. Analyzing, from the heterogeneous resource information, the relationship between the multi-dimensional resources and the real-time computing power of edge computing devices.
3. Analyzing, from the task flow information, the computing power required to execute different types of tasks.
4. Determining how well each task's computing power demand matches the real-time computing power supply of the heterogeneous edge devices, and whether the supply meets the demand.
5. Incrementally learning the relationship between the multi-dimensional resources and the real-time computing power of the edge devices, so that quantification accuracy improves continuously.

The method raises the overall utilization of scattered heterogeneous resources in the industrial edge computing system and reduces network communication load and private data exchange. It also supports personalized, flexible production on the industrial Internet.

Owner: SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI
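
The incremental-learning and matching steps can be sketched with a linear model updated by accumulating least-squares statistics. Each new measurement refines the fit without retraining from scratch. The linear form, the feature choice and the small ridge term are assumptions; the abstract does not name the learning model.

```python
import numpy as np

class ComputePowerModel:
    """Incrementally learned linear map from resource readings (hypothetical
    features such as free CPU share and free memory share) to usable compute
    power. Each update only accumulates sufficient statistics."""
    def __init__(self, n_features, ridge=1e-6):
        self.A = ridge * np.eye(n_features + 1)   # running X^T X (bias column included)
        self.b = np.zeros(n_features + 1)         # running X^T y

    def update(self, features, measured_power):
        x = np.append(features, 1.0)
        self.A += np.outer(x, x)
        self.b += measured_power * x

    def predict(self, features):
        w = np.linalg.solve(self.A, self.b)
        return float(np.append(features, 1.0) @ w)

def matches(model, features, demand):
    """Matching check: does the predicted real-time supply cover the task demand?"""
    return model.predict(features) >= demand

model = ComputePowerModel(n_features=2)
rng = np.random.default_rng(0)
for _ in range(50):
    f = rng.random(2)
    model.update(f, 3.0 * f[0] + 2.0 * f[1] + 1.0)   # synthetic ground truth
print(round(model.predict([0.5, 0.5]), 3))
```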

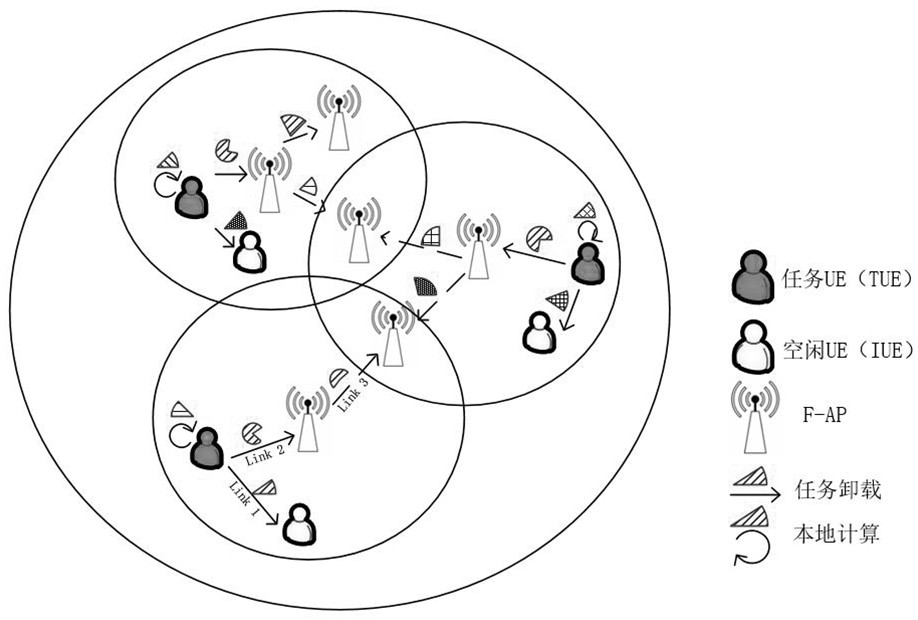

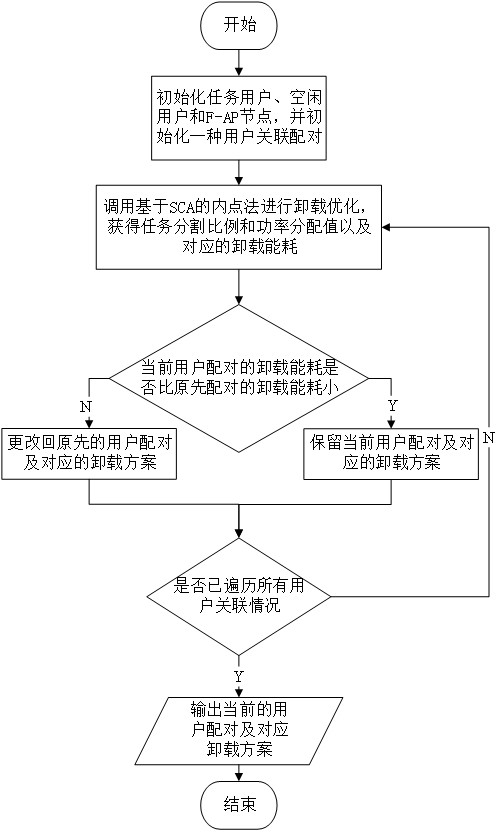

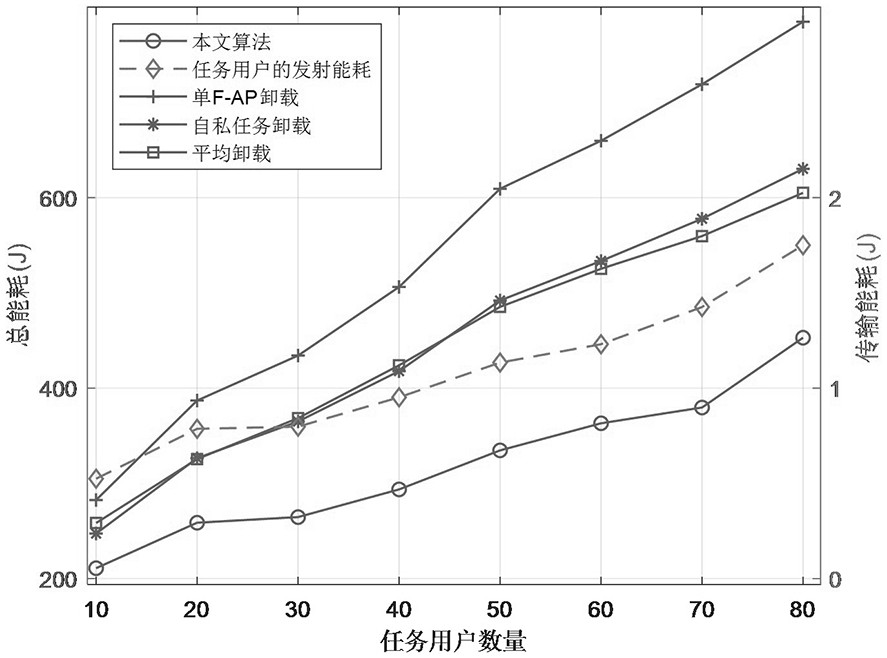

Method for cooperative computing offloading in F-RAN architecture

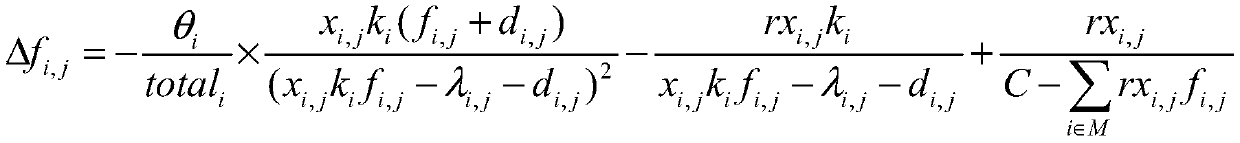

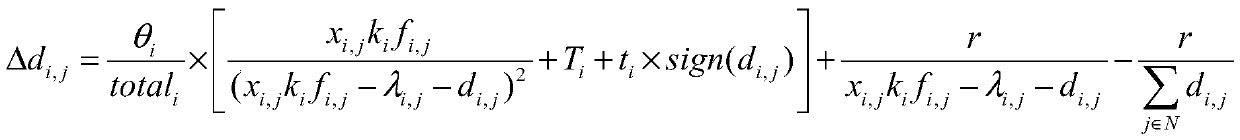

Status: Pending | Publication: CN114189521A | Tags: Reduce offload latency; Improve computing resource utilization; Transmission; Complex mathematical operations; Time delays; Interior point method

The invention provides a cooperative computation offloading method for the F-RAN (fog radio access network) architecture. It comprises a NOMA-based F-RAN offloading scheme and an offloading method based on SCA (successive convex approximation), the interior point method and coalition games, so that the computing resources of edge nodes in the network are used efficiently. Under the offloading scheme, a task user can use NOMA to offload a computing task both to its associated primary F-AP and to idle users with spare computing resources. The primary F-AP can further offload the task to other secondary F-APs through cooperative communication between F-APs. Taking users' delay tolerance into account, the method uses a two-layer iterative algorithm:

- The inner layer combines SCA with the interior point method to obtain the offloading scheme once user association is fixed.
- The outer layer optimizes user association based on coalition game theory.

Together the two layers minimize total system energy consumption and improve system reliability. Compared with common offloading schemes and algorithms in the prior art, the method significantly reduces total system energy consumption.

Owner: FUZHOU UNIV

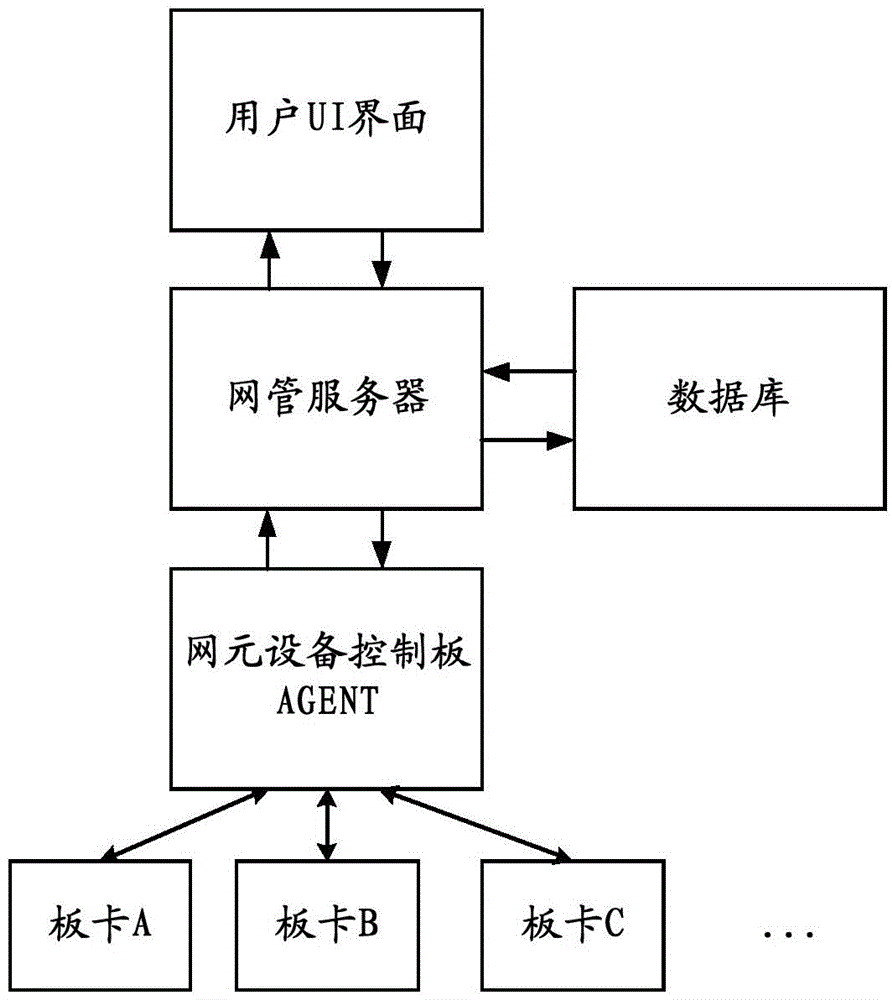

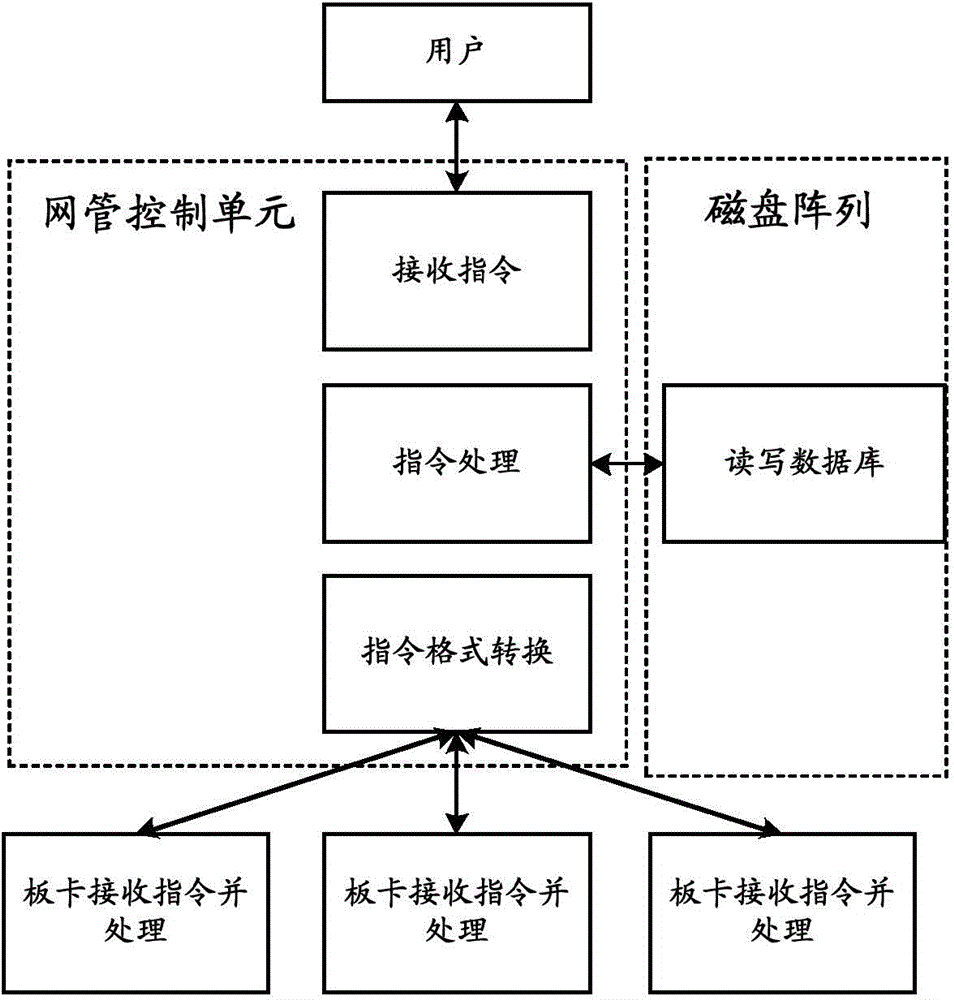

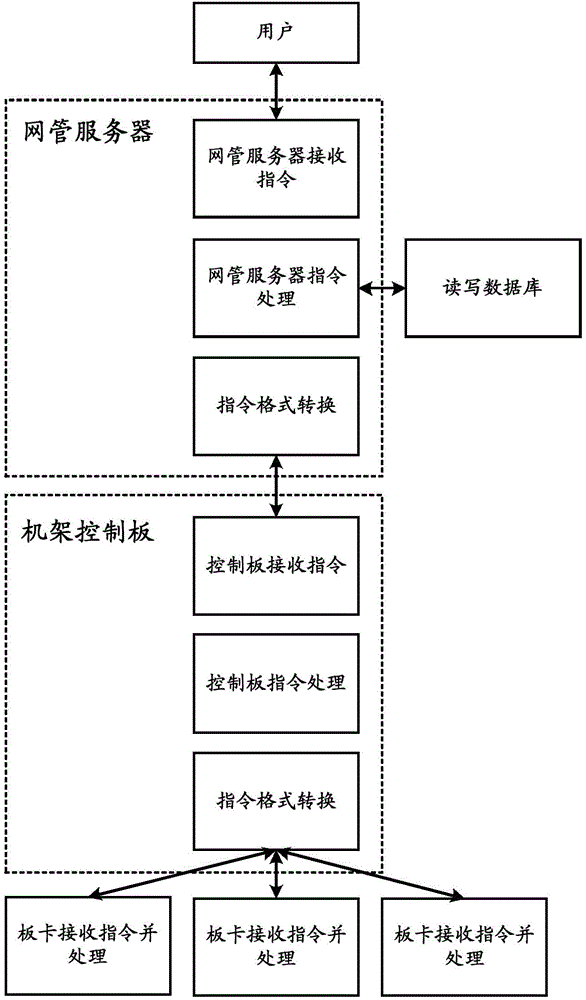

Frame and communication method

Inactive | CN105721956A | Improve computing resource utilization | Achieve direct control | Fibre transmission | Data switching networks | Maintainability | Network management

The invention provides a frame and a communication method, and relates to the technical field of optical communication. The frame includes a frame housing as well as a network management control unit, a disk array and a data processing unit, which are arranged in a first frame housing and connected to external equipment through a backplane interface on the first frame housing. The network management control unit processes user commands, converts their format, and sends them to the service board cards, the data processing unit and the disk array; it also returns the service status reported by the service board cards to the user. The data processing unit processes the data in the service board cards, the network management control unit and the disk array, and reports its processing results in real time. The disk array stores the data of the network management control unit, the status information of each service board card, and the log information of the service board cards. The frame not only improves reliability and maintainability but also improves response capability and service life.

Owner:ZTE CORP

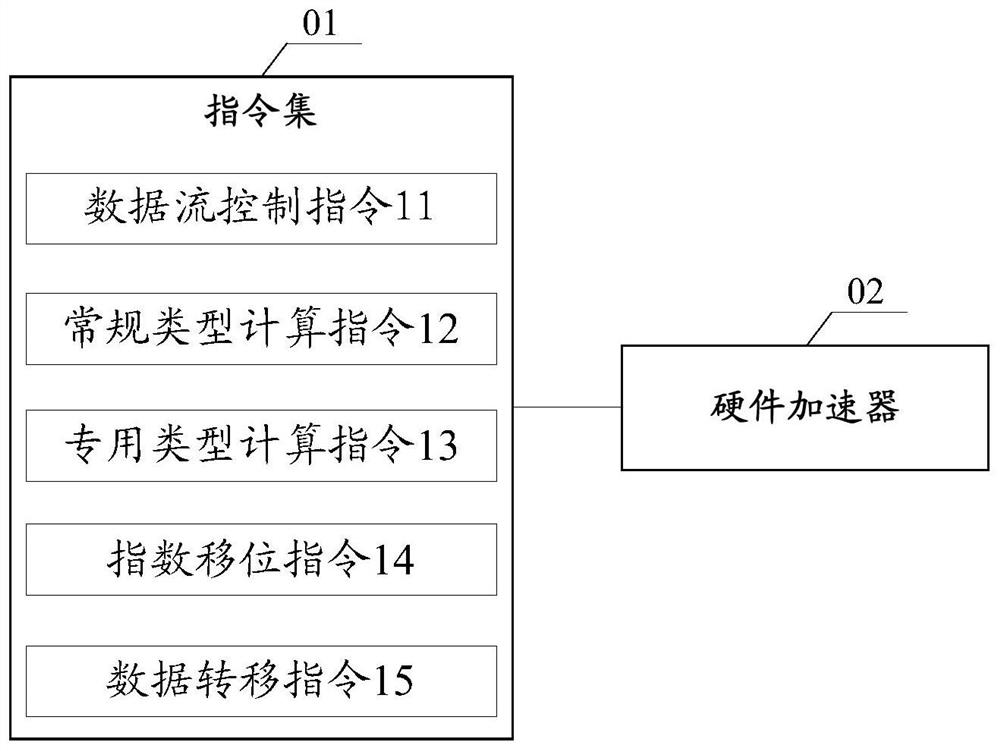

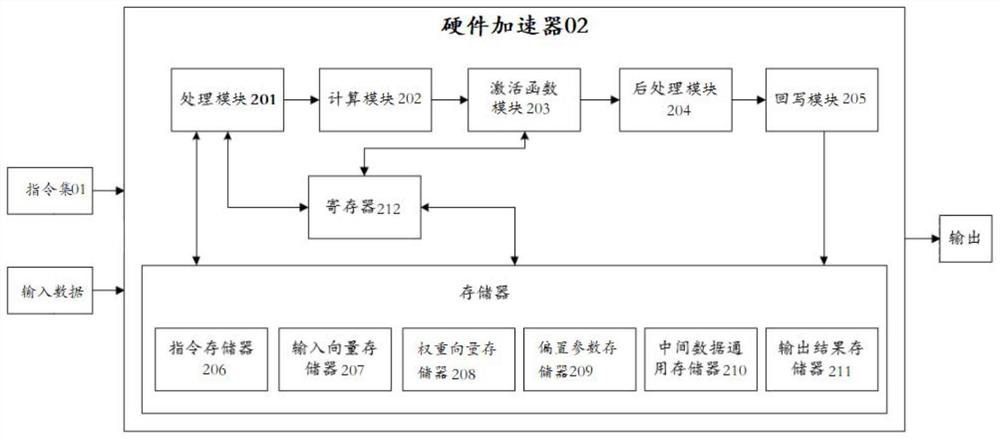

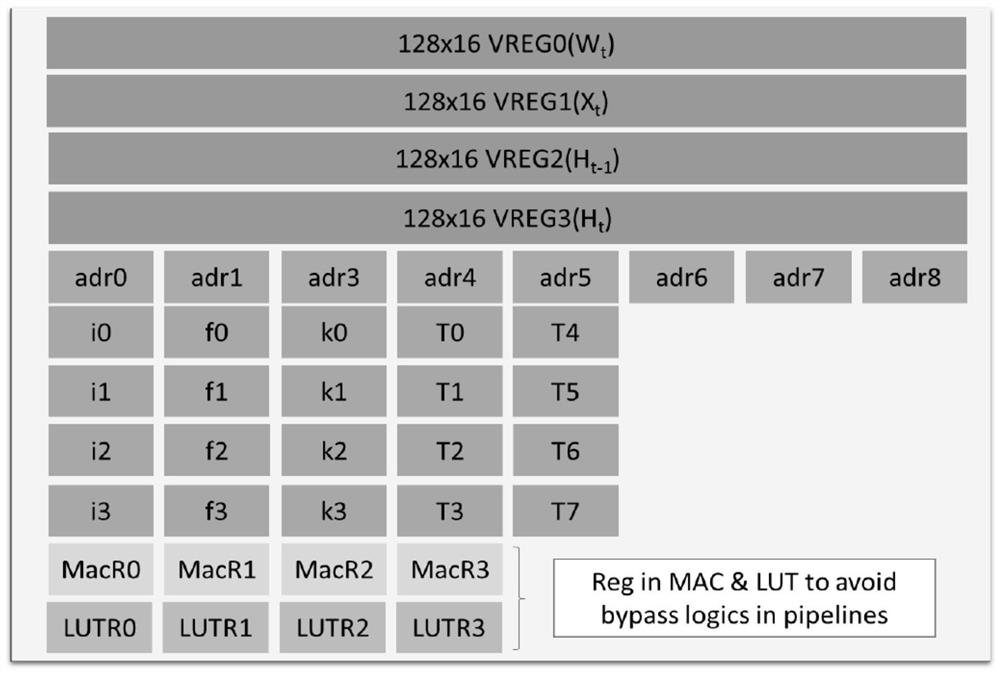

Hardware accelerator, data processing method, system-on-chip and medium

Active | CN112784970A | Improve computing resource utilization | Avoid data | Operational speed enhancement | Register arrangements | Computer hardware | Complete data

The invention discloses a hardware accelerator, a data processing method, a system-on-chip and a medium. The instruction set processed by the hardware accelerator comprises: a data flow control instruction for performing data flow control; a conventional-type calculation instruction for performing the conventional calculations in a recurrent neural network; a special-type calculation instruction for performing the special calculations in the recurrent neural network; an exponential shift instruction for performing exponential shifts to complete data normalization in recurrent neural network calculations; and a data transfer instruction for transferring data between registers, and between registers and memory, during recurrent neural network calculation. With this technical scheme, the computing resource utilization of a hardware accelerator running a recurrent neural network is effectively improved, and data and resource conflicts are effectively avoided.

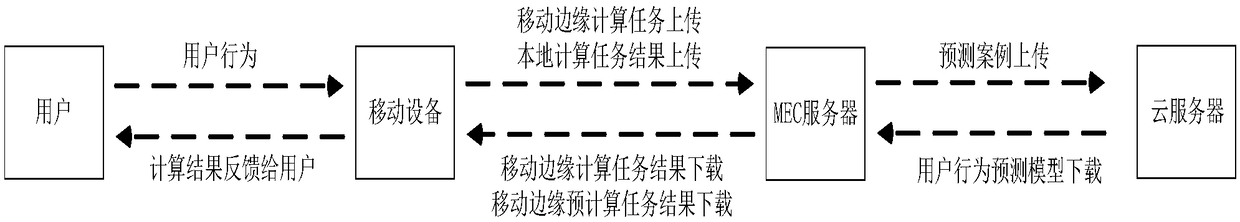

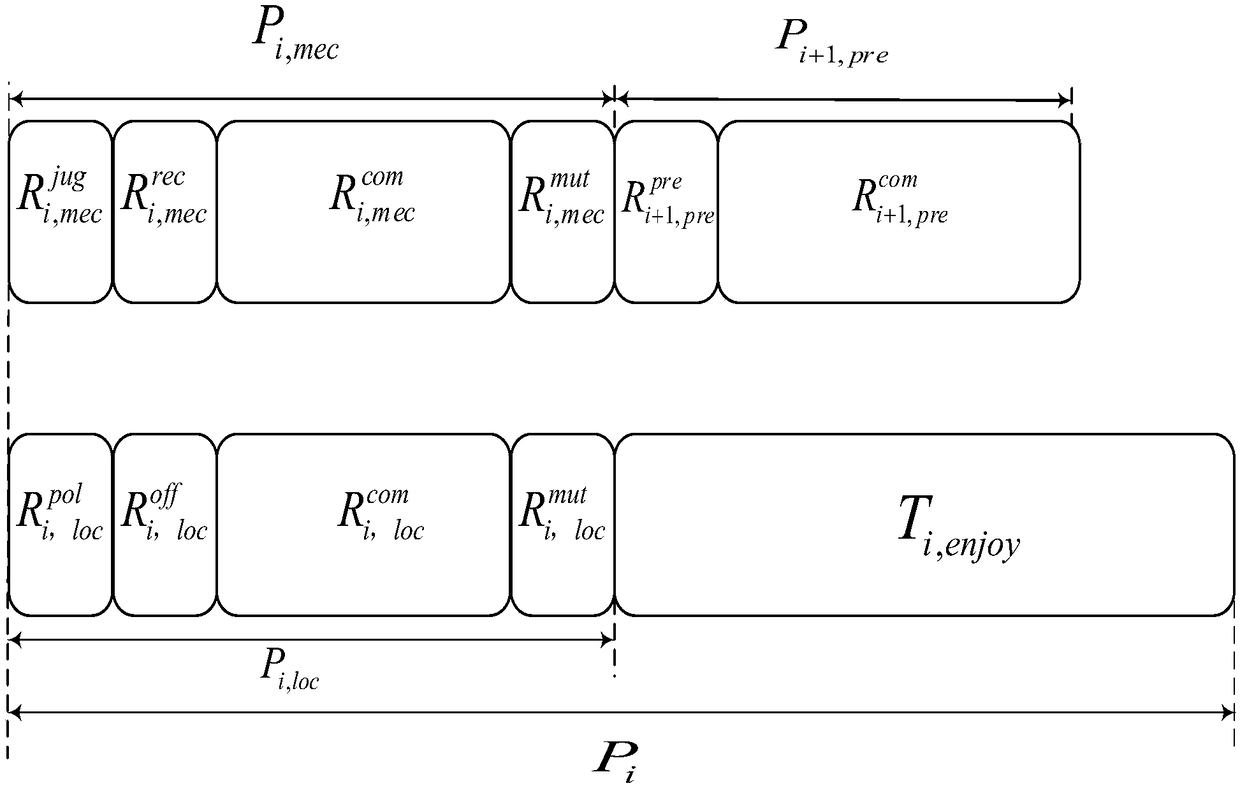

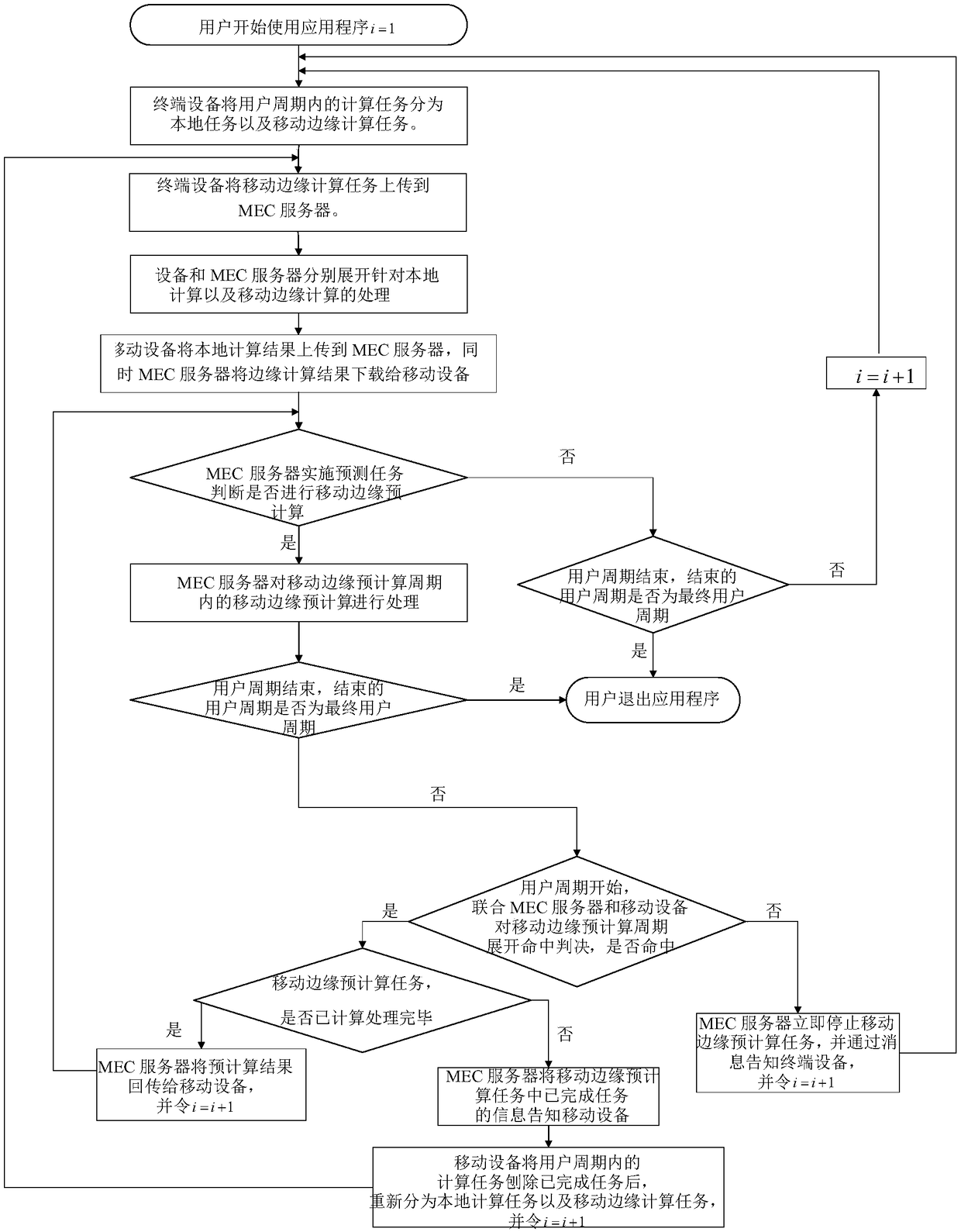

Mobile edge pre-calculation method based on MEC

Active | CN109189570A | Respond quickly to computing requests | Reduce computation wait time | Resource allocation | Resource utilization | Mobile device

The invention relates to a mobile edge pre-calculation method based on MEC (mobile edge computing), which uses an MEC server, during user cycle P_i, to pre-calculate on a best-effort basis the calculation task R_{i+1} that has the largest occurrence probability in the next user cycle P_{i+1}. Compared with the traditional computing resource utilization strategy, this method improves the utilization rate of network computing resources, responds quickly to users' computing requests, reduces users' computing waiting time, and addresses the problem that mobile devices with limited computing power cannot meet the computing requirements of compute-intensive, delay-sensitive applications.

Owner:JILIN UNIV
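A best-effort pre-computation loop can be sketched as follows. The patent does not say how the most probable next task is estimated; this sketch assumes a first-order model that counts which task followed which in earlier cycles, and `PreComputeServer` and `compute_fn` are hypothetical names.

```python
from collections import Counter, defaultdict


class PreComputeServer:
    """Hypothetical MEC server that pre-computes the most probable next task."""

    def __init__(self, compute_fn):
        self.compute_fn = compute_fn
        self.transitions = defaultdict(Counter)  # task -> counts of the task that followed it
        self.cache = {}  # pre-computed results keyed by task
        self.last_task = None

    def most_probable_next(self, task):
        counts = self.transitions.get(task)
        return counts.most_common(1)[0][0] if counts else None

    def handle_request(self, task):
        """Serve a request (from cache if pre-computed), learn from it, then pre-compute."""
        hit = task in self.cache
        result = self.cache.pop(task) if hit else self.compute_fn(task)
        if self.last_task is not None:
            self.transitions[self.last_task][task] += 1
        self.last_task = task
        # Best effort: pre-compute only the single most probable next task.
        predicted = self.most_probable_next(task)
        if predicted is not None and predicted not in self.cache:
            self.cache[predicted] = self.compute_fn(predicted)
        return result, hit


server = PreComputeServer(compute_fn=lambda task: f"result:{task}")
hits = [server.handle_request(t)[1] for t in ["A", "B", "A", "B", "A", "B"]]
print(hits)  # [False, False, False, True, True, True]
```

Only the single most likely next task is pre-computed per request, which keeps the extra server load bounded; a wrong guess costs one unused computation.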

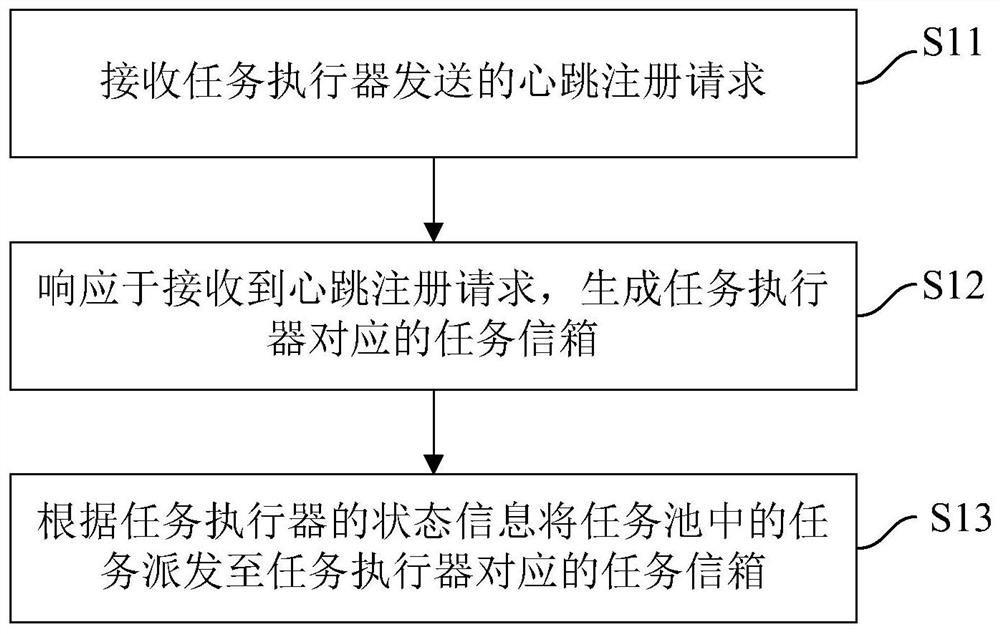

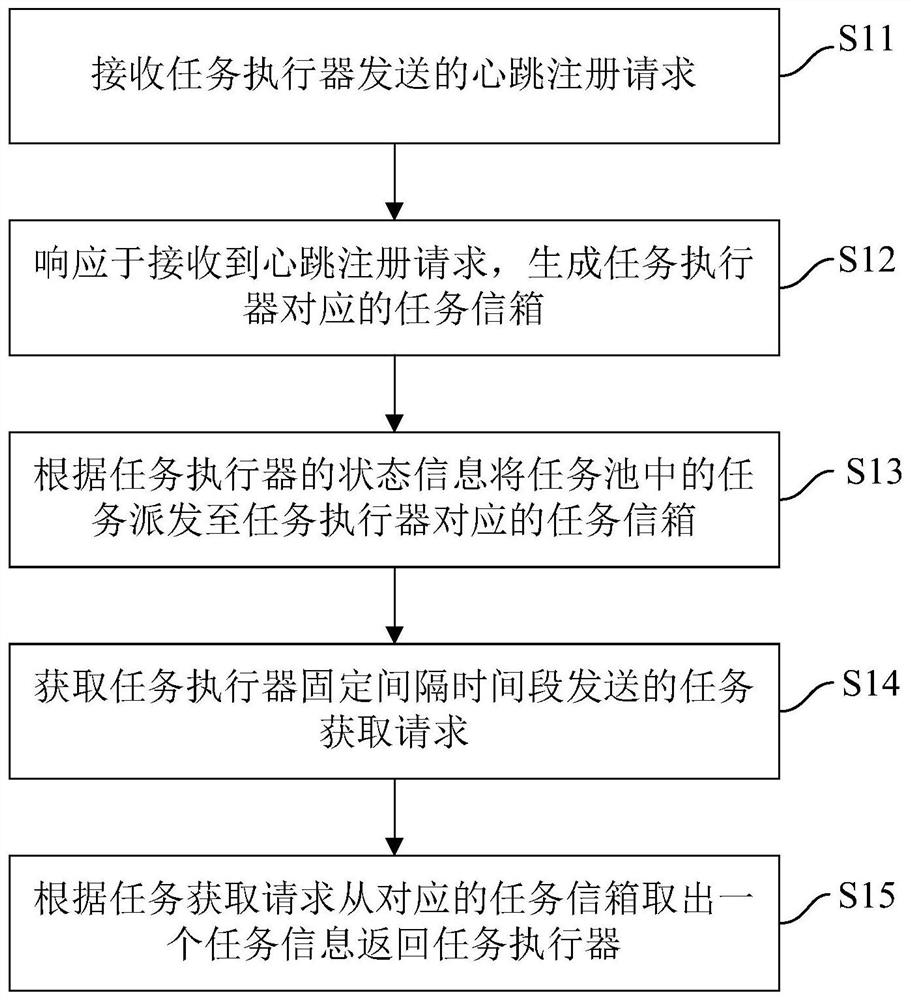

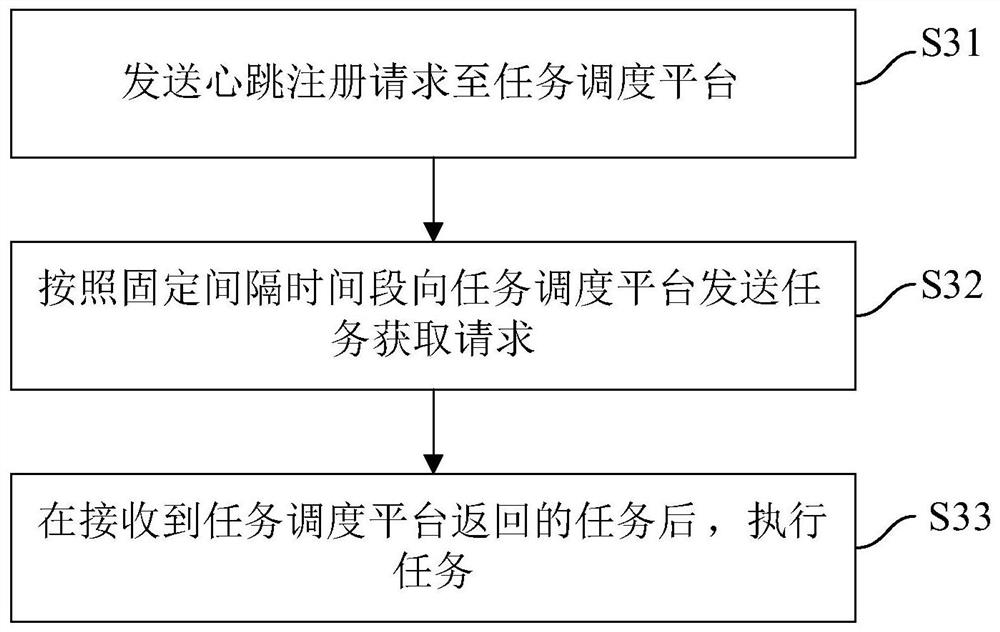

Distributed task scheduling method, task scheduling platform and task executor

Pending | CN112685184A | Improve computing resource utilization | Resource allocation | Computer architecture | Engineering

The embodiment of the invention discloses a distributed task scheduling method, a task scheduling platform and a task executor. The task scheduling platform communicates with the task executor, which is deployed on a cloud server and/or an edge device. The method comprises the following steps: receiving a heartbeat registration request sent by the task executor, the request containing the executor's state information; generating a task mailbox for the task executor in response to the heartbeat registration request; and distributing tasks from the task pool to the task executor's mailbox according to its state information. As a result, task executors can run on servers and edge devices at the same time, a single machine can host multiple task executors, and the computing resource utilization of distributed task scheduling is effectively increased.

Owner:GUANGZHOU XAIRCRAFT TECH CO LTD
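The registration, mailbox and dispatch steps can be sketched in Python. This is a minimal in-memory illustration, not the patented platform: it assumes the state information is a hypothetical `free_slots` count and that tasks go to the executor with the most spare slots; the class and method names are likewise hypothetical.

```python
from collections import deque


class TaskSchedulingPlatform:
    """Hypothetical in-memory platform: heartbeat registration, mailboxes, dispatch."""

    def __init__(self):
        self.task_pool = deque()
        self.mailboxes = {}  # executor_id -> deque of tasks waiting for that executor
        self.states = {}     # executor_id -> state reported in the latest heartbeat

    def on_heartbeat(self, executor_id, state):
        # The heartbeat carries the executor's state; the first one creates its mailbox.
        self.states[executor_id] = state
        self.mailboxes.setdefault(executor_id, deque())

    def submit(self, task):
        self.task_pool.append(task)

    def dispatch(self):
        """Move tasks from the pool into mailboxes, preferring the most spare capacity."""
        free = {eid: s["free_slots"] - len(self.mailboxes[eid]) for eid, s in self.states.items()}
        while self.task_pool:
            eid = max(free, key=free.get, default=None)
            if eid is None or free[eid] <= 0:
                break  # no executor has spare capacity; remaining tasks stay pooled
            self.mailboxes[eid].append(self.task_pool.popleft())
            free[eid] -= 1


scheduler = TaskSchedulingPlatform()
scheduler.on_heartbeat("cloud-1", {"free_slots": 2})
scheduler.on_heartbeat("edge-1", {"free_slots": 1})
for task in ["t1", "t2", "t3", "t4"]:
    scheduler.submit(task)
scheduler.dispatch()
print({eid: list(box) for eid, box in scheduler.mailboxes.items()})
```

Because each executor is identified only by its ID, several executors on one machine, or executors split across cloud servers and edge devices, are handled the same way.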

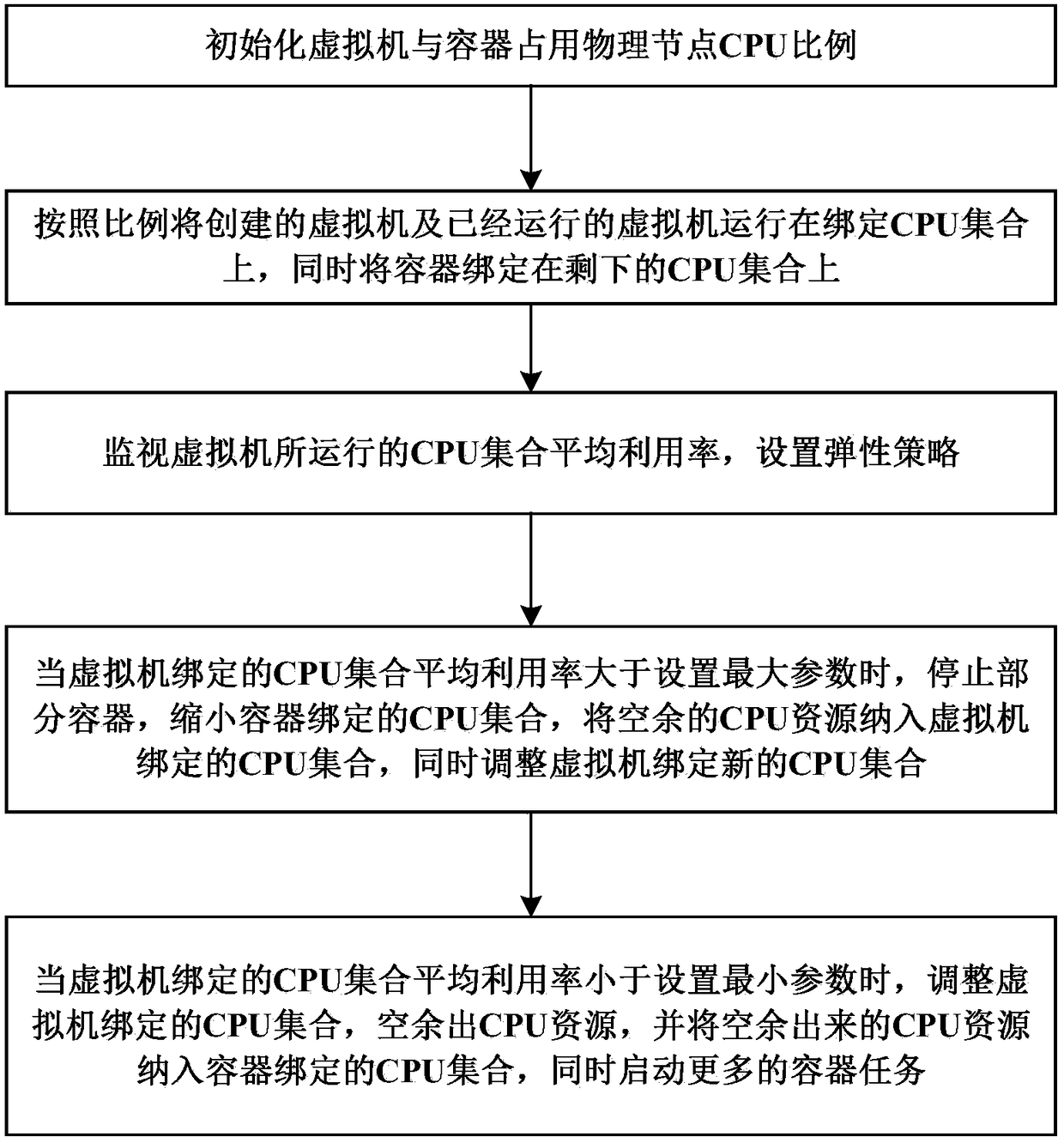

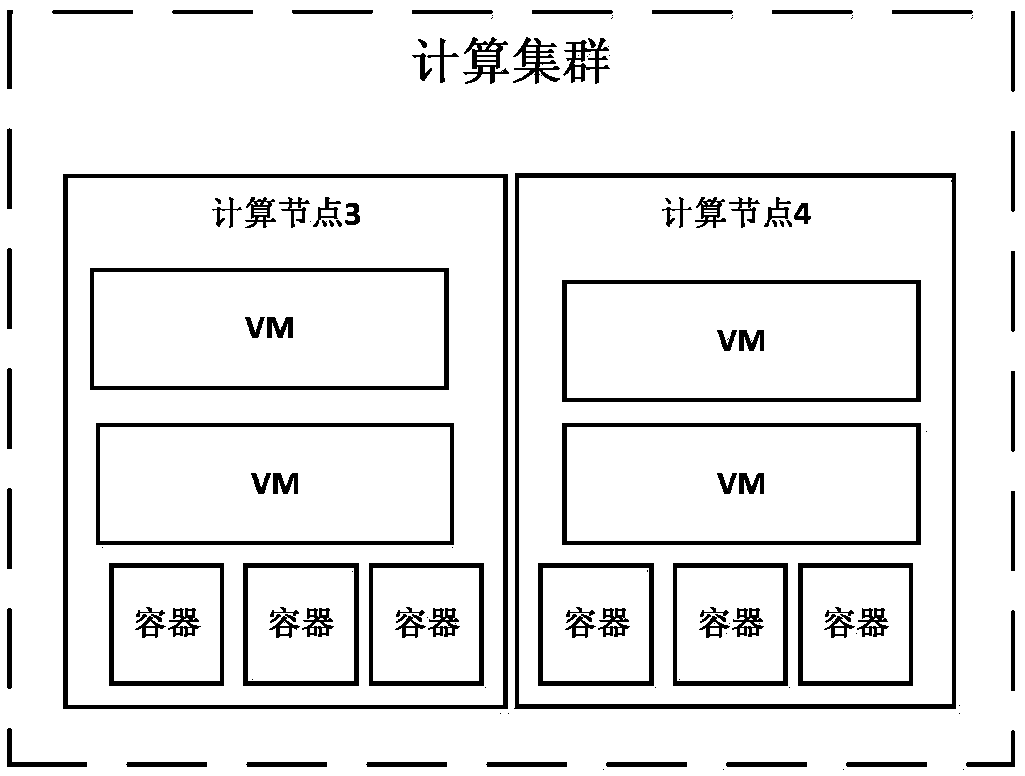

Virtual machine and container parallel scheduling method

Active | CN108287748A | Improve computing resource utilization | Reduce resource consumption | Resource allocation | Software simulation/interpretation/emulation | Parallel scheduling | Virtual machine

The invention relates to the technical field of cloud computing, and in particular to a method for scheduling virtual machines and containers in parallel. The method includes setting the CPU occupancy ratio and a flexible policy for the virtual machine and the container, monitoring the CPU usage of the virtual machine and the container, and adjusting the CPU ratio of the virtual machine or the container when the configured policy is triggered. The method improves computing resource utilization as well as the security and computing performance of the container, and can be used to manage the computing resources of virtual machines and containers.

Owner:G CLOUD TECH
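The monitor-and-adjust step can be sketched as a single rebalancing function. The thresholds, step size and the rule of moving CPU share from an idle workload to a saturated one are assumptions made for illustration; `Workload`, `ElasticPolicy` and `rebalance` are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cpu_share: float  # fraction of host CPU allocated, e.g. 0.5 = 50%
    cpu_used: float   # fraction of host CPU currently consumed

    @property
    def utilization(self):
        return self.cpu_used / self.cpu_share if self.cpu_share else 0.0


@dataclass
class ElasticPolicy:
    high: float = 0.9       # utilization that triggers an increase
    low: float = 0.4        # utilization at or below which share may be given away
    step: float = 0.1       # share moved per adjustment
    min_share: float = 0.1  # floor that the donor keeps


def rebalance(vm, container, policy):
    """If the policy triggers, move CPU share from the idle workload to the busy one."""
    for busy, idle in ((vm, container), (container, vm)):
        if busy.utilization >= policy.high and idle.utilization <= policy.low:
            # Never shrink the donor below its floor or below what it is using now.
            movable = min(policy.step, idle.cpu_share - max(policy.min_share, idle.cpu_used))
            if movable > 0:
                idle.cpu_share -= movable
                busy.cpu_share += movable
                return busy.name
    return None


vm = Workload("vm", cpu_share=0.5, cpu_used=0.15)
ctr = Workload("container", cpu_share=0.5, cpu_used=0.48)
moved_to = rebalance(vm, ctr, ElasticPolicy())
print(moved_to, round(vm.cpu_share, 2), round(ctr.cpu_share, 2))  # container 0.4 0.6
```

Calling `rebalance` periodically with fresh usage figures corresponds to the monitoring loop; the `min_share` floor keeps the donor workload from being starved.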

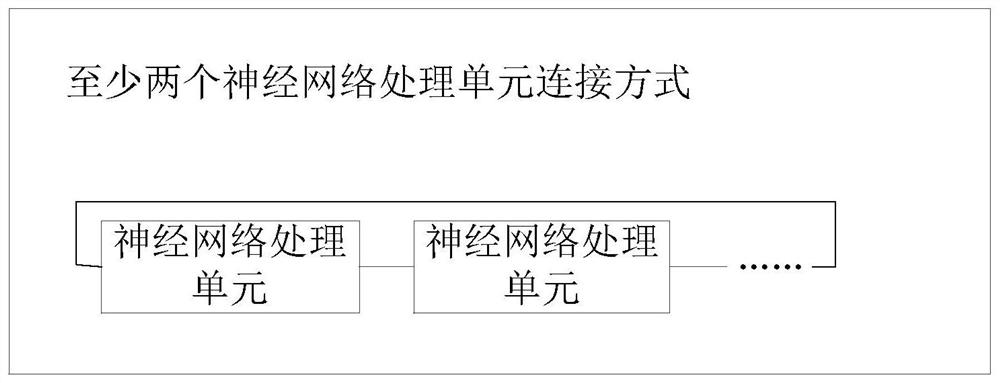

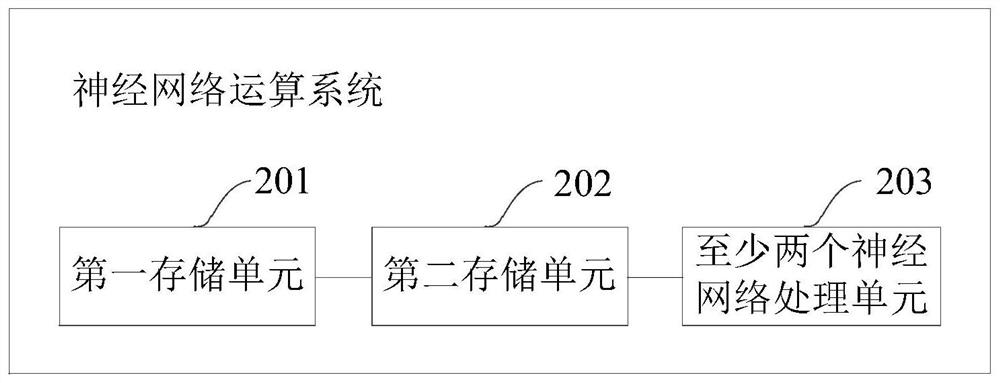

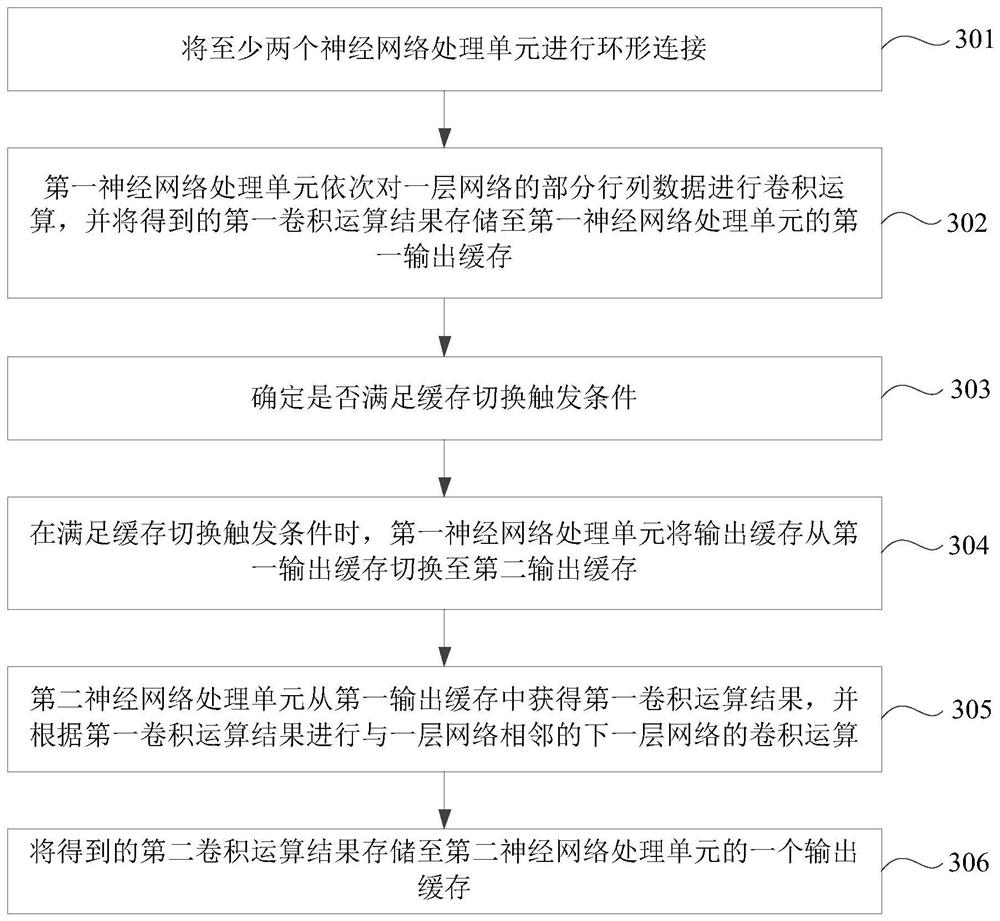

Neural network operation system, method, device and storage medium

Active | CN112860597A | Reduce handling | Improve computing resource utilization | Memory architecture accessing/allocation | Physical realisation | Network processing unit | Engineering

The invention provides a neural network operation system, method, device and storage medium that reduce data migration during neural network computation and improve the operating efficiency of a neural network processor. The system comprises at least two neural network processing units, a first storage unit and a second storage unit. The first storage unit stores the input and output data of the neural network and the operation parameters required by each layer. The second storage unit provides input and output caches for each of the neural network processing units; each processing unit has two input caches and two output caches, and for any two adjacent processing units, the two output caches of one serve as the two input caches of the other. The processing units are connected in a ring.

Owner:GREE ELECTRIC APPLIANCES INC
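The cache-sharing topology can be modelled in a few lines of Python. This is a toy software model, not the patented hardware: sharing one `Buffer` object between neighbouring units stands in for sharing a physical cache, and reading the two caches per unit as ping-pong buffers is an assumption; all names are hypothetical.

```python
class Buffer:
    """A cache slot; sharing one object between units models one physical cache."""

    def __init__(self, name):
        self.name = name
        self.data = None


class ProcessingUnit:
    def __init__(self, index):
        self.index = index
        self.inputs = ()   # two input caches, filled in by build_ring
        self.outputs = ()  # two output caches

    def process(self, slot, layer_fn):
        # Read one input cache and write the matching output cache. With two slots,
        # the next unit can read one cache while this unit fills the other (ping-pong).
        self.outputs[slot].data = layer_fn(self.inputs[slot].data)


def build_ring(n_units):
    """Wire units in a ring: unit k's two output caches are unit k+1's two input caches."""
    units = [ProcessingUnit(k) for k in range(n_units)]
    links = [(Buffer(f"link{k}a"), Buffer(f"link{k}b")) for k in range(n_units)]
    for k, unit in enumerate(units):
        unit.outputs = links[k]
        unit.inputs = links[(k - 1) % n_units]  # the last unit feeds the first one
    return units


units = build_ring(3)
units[0].inputs[0].data = [1, 2, 3]  # network input placed in unit 0's input cache
for unit in units:  # each unit runs one (hypothetical) layer in turn, with no copying
    unit.process(0, lambda xs: [2 * x for x in xs])
print(units[2].outputs[0].data)  # [8, 16, 24]
```

In this model each unit reads its predecessor's output caches in place, so intermediate results are not copied between units.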

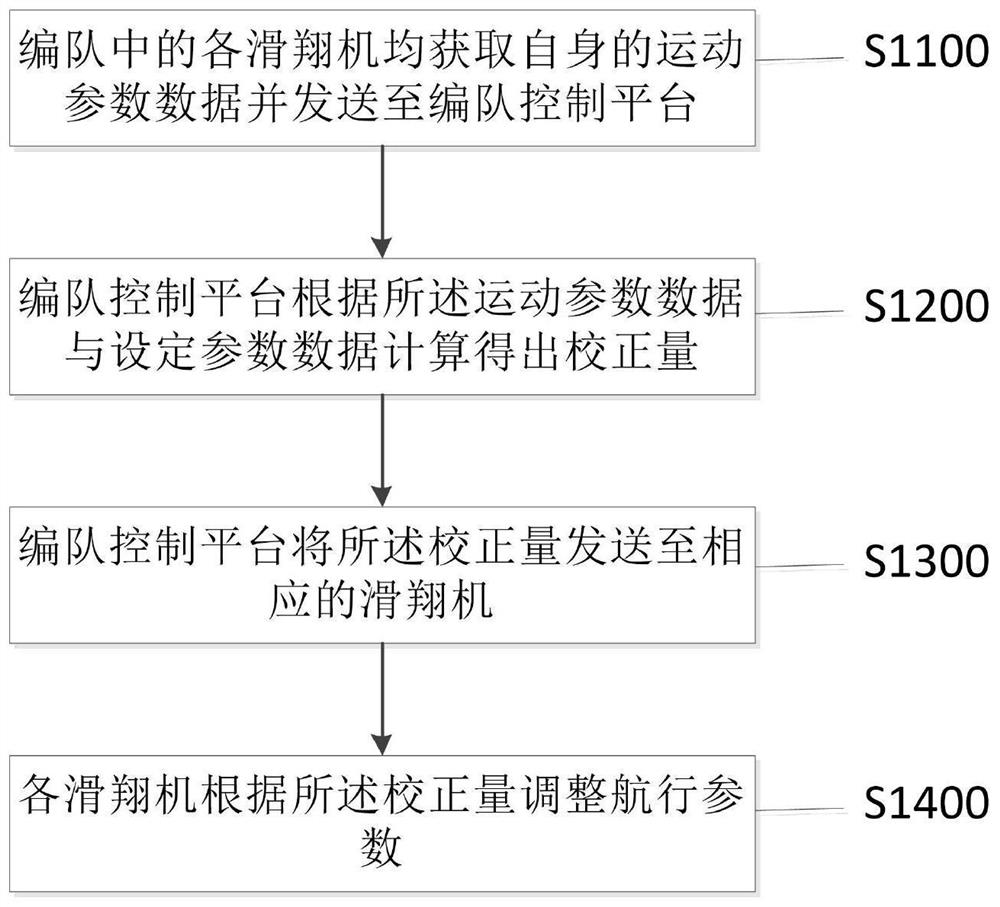

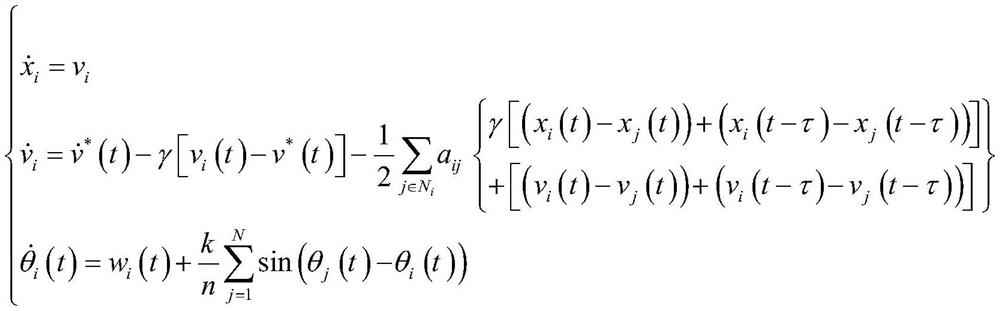

Cooperative control method for underwater glider formation

Active | CN112947503A | Save computing resources | Improve computing resource utilization | Total factory control | Altitude or depth control | Classical mechanics | Motion parameter

The invention discloses a cooperative control method for an underwater glider formation, comprising the following steps: step 1, each underwater glider in the formation obtains its motion parameter data and sends it to a formation control platform; step 2, after receiving the motion parameter data, the formation control platform calculates a correction value for each underwater glider from the motion parameter data and the set parameter data; step 3, the formation control platform sends each correction value to the corresponding underwater glider; and step 4, each underwater glider adjusts its navigation parameters according to the received correction value. Because the formation control platform calculates the correction values, the computing resources of the underwater gliders are saved and their utilization is improved. The formation control platform also holds the motion parameter data of every member of the formation and knows each glider's position in the formation in greater detail, so the formation pattern can be controlled more accurately.

Owner:THE PLA NAVY SUBMARINE INST
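Steps 2 to 4 can be sketched in Python. The choice of heading, depth and speed as the motion parameters, and of a correction equal to set value minus reported value, are assumptions for illustration; a real controller would typically add gains and limits. `MotionData` and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MotionData:
    heading_deg: float
    depth_m: float
    speed_mps: float


def heading_error(target, current):
    """Signed shortest turn from current to target heading, in [-180, 180)."""
    return (target - current + 180.0) % 360.0 - 180.0


def compute_corrections(reports, setpoints):
    """Platform side: correction = set value - reported value, for each glider."""
    return {
        gid: MotionData(
            heading_deg=heading_error(setpoints[gid].heading_deg, data.heading_deg),
            depth_m=setpoints[gid].depth_m - data.depth_m,
            speed_mps=setpoints[gid].speed_mps - data.speed_mps,
        )
        for gid, data in reports.items()
    }


def apply_correction(data, corr):
    """Glider side: adjust navigation parameters by the received correction."""
    return MotionData(
        heading_deg=(data.heading_deg + corr.heading_deg) % 360.0,
        depth_m=data.depth_m + corr.depth_m,
        speed_mps=data.speed_mps + corr.speed_mps,
    )


reports = {"g1": MotionData(350.0, 95.0, 0.30), "g2": MotionData(15.0, 110.0, 0.25)}
setpoints = {"g1": MotionData(10.0, 100.0, 0.30), "g2": MotionData(10.0, 100.0, 0.30)}
corrections = compute_corrections(reports, setpoints)
print(corrections["g1"].heading_deg, corrections["g2"].heading_deg, corrections["g2"].depth_m)
# 20.0 -5.0 -10.0
```

Wrapping the heading difference keeps a glider at 350° heading for 10° from being told to turn 340° the long way round.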

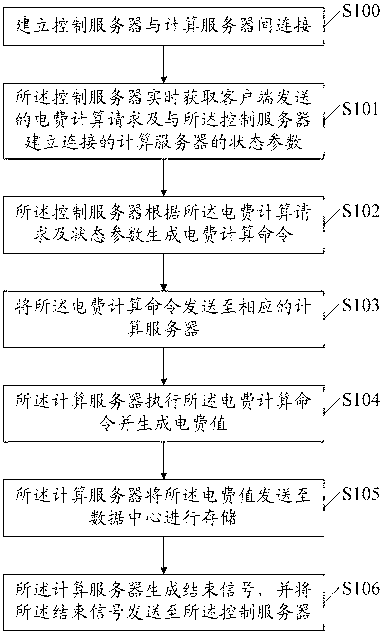

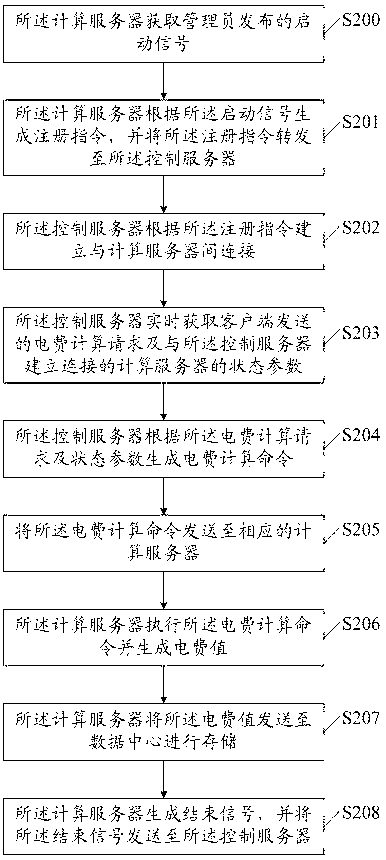

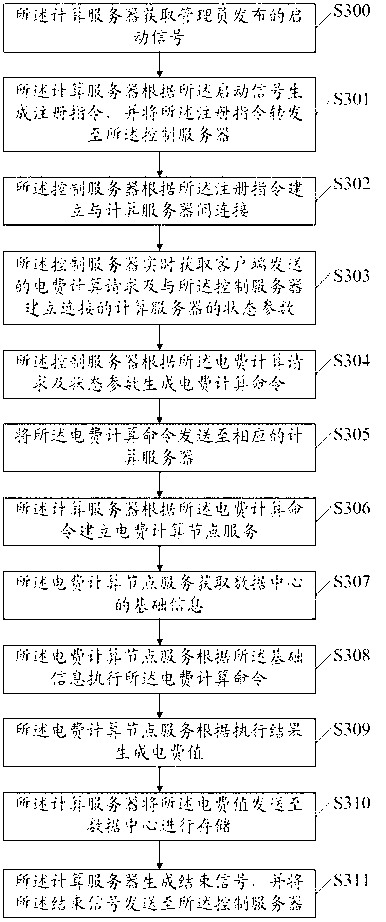

Electric charge calculating method, control server, calculating server and system

Active | CN102843354A | Improve computing resource utilization | Realize elastic expansion | Transmission | Real-time computing | Utilization rate

The invention discloses an electric charge calculating method, comprising the following steps: a connection is established between a control server and a calculating server; the control server obtains, in real time, an electric charge calculating request sent by a client and the state parameters of the calculating servers connected to it; the control server generates an electric charge calculating order from the request and the state parameters and sends it to the corresponding calculating server; the calculating server executes the order and generates an electric charge value; the calculating server sends the electric charge value to a data center for storage; and the calculating server generates an end signal and sends it to the control server. The invention further discloses a control server, a calculating server and an electric charge calculating system. With this method, the number of calculating servers can be adjusted dynamically and the load is spread across multiple calculating servers working in parallel, which improves the utilization of computing resources and enables elastic scaling of resources.

Owner:GUANGDONG POWER GRID CO LTD INFORMATION CENT +1
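The dispatch flow can be sketched in Python. It is not the patented system: the state parameter is assumed to be each server's number of pending orders, orders go to the least-loaded server via a heap, and the flat tariff, field names and class names are hypothetical.

```python
import heapq


class CalculatingServer:
    def __init__(self, name):
        self.name = name
        self.pending = 0  # state parameter reported to the control server

    def execute(self, order, data_center):
        # Hypothetical flat tariff: charge = consumption (kWh) x price per kWh.
        for account, kwh in order["accounts"]:
            data_center[account] = round(kwh * order["price_per_kwh"], 2)
        self.pending -= 1
        return ("done", self.name, order["order_id"])  # end signal for the control server


class ControlServer:
    def __init__(self):
        self.servers = {}

    def connect(self, server):
        self.servers[server.name] = server  # servers can join or leave at any time

    def disconnect(self, name):
        self.servers.pop(name, None)

    def dispatch(self, accounts, price_per_kwh, batch_size):
        """Split a request into orders and give each to the least-loaded server."""
        if not self.servers:
            raise RuntimeError("no calculating server connected")
        heap = [(s.pending, name) for name, s in self.servers.items()]
        heapq.heapify(heap)
        assignments = []
        for start in range(0, len(accounts), batch_size):
            pending, name = heapq.heappop(heap)
            order = {"order_id": start // batch_size,
                     "accounts": accounts[start:start + batch_size],
                     "price_per_kwh": price_per_kwh}
            self.servers[name].pending += 1
            heapq.heappush(heap, (pending + 1, name))
            assignments.append((name, order))
        return assignments


control = ControlServer()
for name in ("calc-1", "calc-2"):
    control.connect(CalculatingServer(name))
data_center = {}
accounts = [("acct-1", 120.0), ("acct-2", 80.0), ("acct-3", 200.0)]
signals = [control.servers[name].execute(order, data_center)
           for name, order in control.dispatch(accounts, price_per_kwh=0.5, batch_size=1)]
print(signals)
print(data_center)
```

Connecting or disconnecting a `CalculatingServer` changes the pool that the next `dispatch` call draws from, which mirrors the elastic scaling described in the abstract.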

A pre-allocation method of edge domain resources in edge computing scenarios

Active | CN110177055B | Efficient configuration | Increase profit | Data switching networks | Edge computing | Distributed computing

The invention discloses a method for pre-allocating edge domain resources in an edge computing scenario, which includes the following steps: predicting the arrival rate and measuring the forwarding delay; in the first-level edge domain, determining the service pre-cache value according to the arrival rate and the weight of each service type, and determining the allocation ratio of each service according to the first delay; in the second-level edge domain, determining the types of services to pre-cache according to the second delay, and obtaining an initial caching scheme through the interior point method; obtaining a new resource caching scheme by randomly selecting the service cache types; and selecting, from the initial caching scheme and the new resource caching scheme, the one with the smaller average delay as the final pre-allocation scheme, which completes the pre-allocation. The invention uses statistical data to estimate user demand in the next period and pre-allocates service types and quantities to edge servers according to these estimates, so that resources are allocated more efficiently, resource utilization is improved, and application latency is reduced.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
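The compare-and-keep step can be sketched in Python. Two substitutions are made and should be read as assumptions: a greedy fill by delay saving per unit of capacity replaces the interior point method for the initial scheme, and several random schemes are tried instead of one. The delay model, sizes and function names are hypothetical.

```python
import random


def average_delay(cached, arrival, forward_delay, edge_delay):
    """Arrival-rate-weighted mean delay; cached services are served at the edge."""
    total = sum(arrival.values())
    return sum(rate * (edge_delay if s in cached else forward_delay[s])
               for s, rate in arrival.items()) / total


def fill(order, sizes, capacity):
    """Cache services in the given order while they still fit in the capacity."""
    cached, used = set(), 0
    for s in order:
        if used + sizes[s] <= capacity:
            cached.add(s)
            used += sizes[s]
    return cached


def pre_allocate(arrival, forward_delay, sizes, edge_delay, capacity, trials=50, seed=0):
    # Initial scheme: greedy by delay saving per unit of capacity. This stands in for
    # the interior-point step described in the patent; it is not that method.
    def density(s):
        return arrival[s] * (forward_delay[s] - edge_delay) / sizes[s]

    best = fill(sorted(arrival, key=density, reverse=True), sizes, capacity)
    best_delay = average_delay(best, arrival, forward_delay, edge_delay)
    rng = random.Random(seed)
    for _ in range(trials):
        order = sorted(arrival)
        rng.shuffle(order)  # new scheme from a random choice of service cache types
        candidate = fill(order, sizes, capacity)
        delay = average_delay(candidate, arrival, forward_delay, edge_delay)
        if delay < best_delay:  # keep whichever scheme has the smaller average delay
            best, best_delay = candidate, delay
    return best, best_delay


arrival = {"video": 3.0, "ar": 2.0, "vr": 5.0}           # predicted requests/s
forward_delay = {"video": 27.0, "ar": 30.0, "vr": 15.0}  # ms when not cached at the edge
sizes = {"video": 6, "ar": 5, "vr": 5}                   # capacity units per service
scheme, delay = pre_allocate(arrival, forward_delay, sizes, edge_delay=5.0, capacity=10)
print(sorted(scheme), round(delay, 2))
```

With these numbers the greedy start caches only `video`, while any random order that begins with `ar` or `vr` caches both of them and lowers the average delay.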

© 2025 PatSnap. All rights reserved.