Matrix multiplication acceleration method for a CPU+DSP (Central Processing Unit + Digital Signal Processor) heterogeneous system

The invention relates to heterogeneous systems and matrix multiplication technology, applied in the field of matrix multiplication acceleration. It addresses the problem that existing matrix multiplication methods for heterogeneous systems cannot meet the design goals of a CPU+DSP heterogeneous computing system.


Embodiment Construction

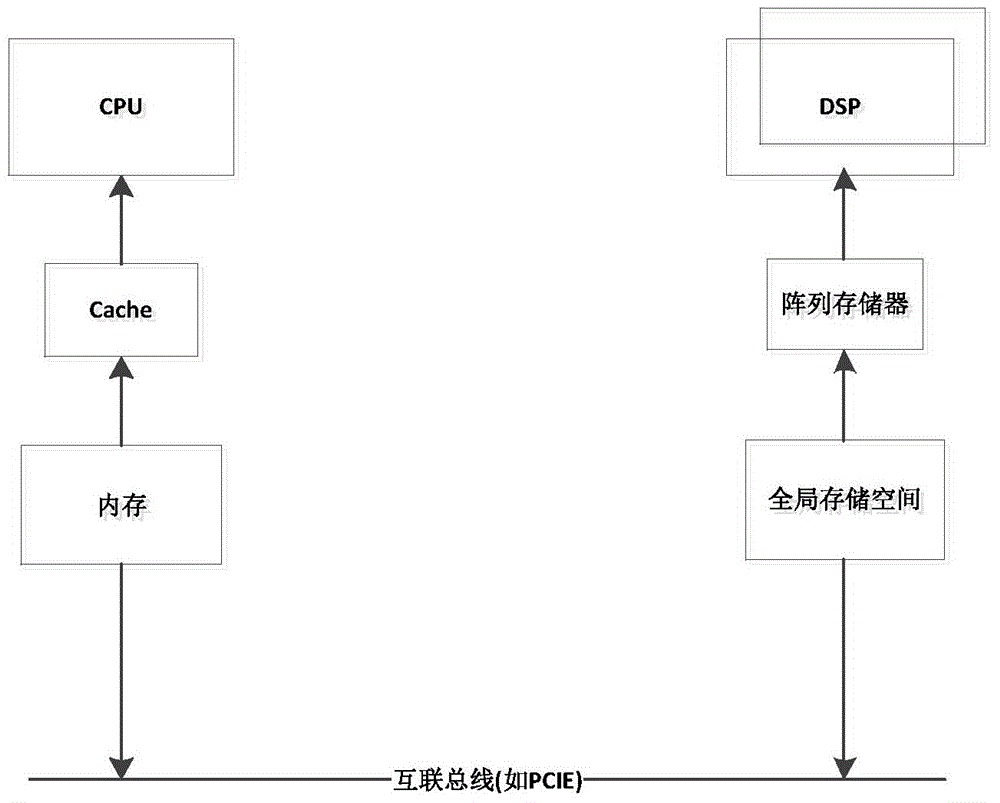

[0077] Figure 1 is a schematic diagram of the architecture of a heterogeneous computing system composed of a main processor (CPU) and an accelerator (DSP) that communicate over PCIe. The main processor side has main memory and cache; the accelerator side has global storage space and array memory. The main processor and the accelerator can communicate and transfer data only through the PCIe bus.

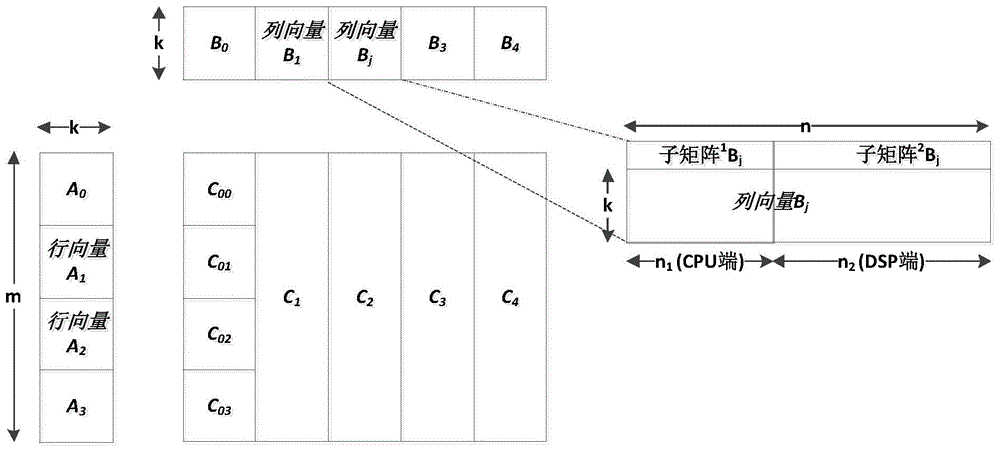

[0078] Figure 2 is a schematic diagram of the division and merging of the matrix data. In the figure, the $M \times K$ matrix $A$ is divided into $m \times K$ block matrices (row vectors) $A_i$; the $K \times N$ matrix $B$ is divided into $K \times n$ block matrices (column vectors) $B_j$, and each $B_j$ is further divided into two submatrices, ${}^{1}B_j$ and ${}^{2}B_j$. The computation on ${}^{1}B_j$ is completed on the CPU side while, at the same time, the computation on ${}^{2}B_j$ is done on the DSP side.
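The division and merging described for Figure 2 can be illustrated with a minimal Python sketch. It is not the patented implementation. It assumes that each $B_j$ is split column-wise, so the two partial products can be merged by concatenation; the source does not state the split direction. The names `hetero_matmul`, `split_columns`, and `cpu_share` are hypothetical, and two threads stand in for the CPU and the DSP.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul(a, b):
    """Plain product of two list-of-lists matrices (stand-in for a device kernel)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def split_columns(b, start, stop):
    """Return columns [start, stop) of matrix b."""
    return [row[start:stop] for row in b]


def hetero_matmul(a, b, m, n, cpu_share=0.5):
    """Blocked C = A * B with every B_j shared between two workers.

    m, n      -- block sizes for the row blocks A_i and column blocks B_j
    cpu_share -- hypothetical fraction of B_j's columns given to the "CPU" worker
    """
    M, N = len(a), len(b[0])
    c = [[0] * N for _ in range(M)]
    with ThreadPoolExecutor(max_workers=2) as pool:
        for i0 in range(0, M, m):
            a_i = a[i0:i0 + m]                       # row block A_i (m x K)
            for j0 in range(0, N, n):
                j1 = min(j0 + n, N)
                cut = j0 + int((j1 - j0) * cpu_share)
                b1 = split_columns(b, j0, cut)       # sub-block 1 of B_j ("CPU" part)
                b2 = split_columns(b, cut, j1)       # sub-block 2 of B_j ("DSP" part)
                f_cpu = pool.submit(matmul, a_i, b1)
                f_dsp = pool.submit(matmul, a_i, b2)
                # Merge: concatenate the two partial results row by row.
                for r, (left, right) in enumerate(zip(f_cpu.result(), f_dsp.result())):
                    c[i0 + r][j0:j1] = left + right
    return c
```

In a real system the second worker would be replaced by a PCIe transfer plus a DSP kernel launch, and `cpu_share` would presumably be tuned to the relative throughput of the two devices; both points are assumptions rather than details taken from the source.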

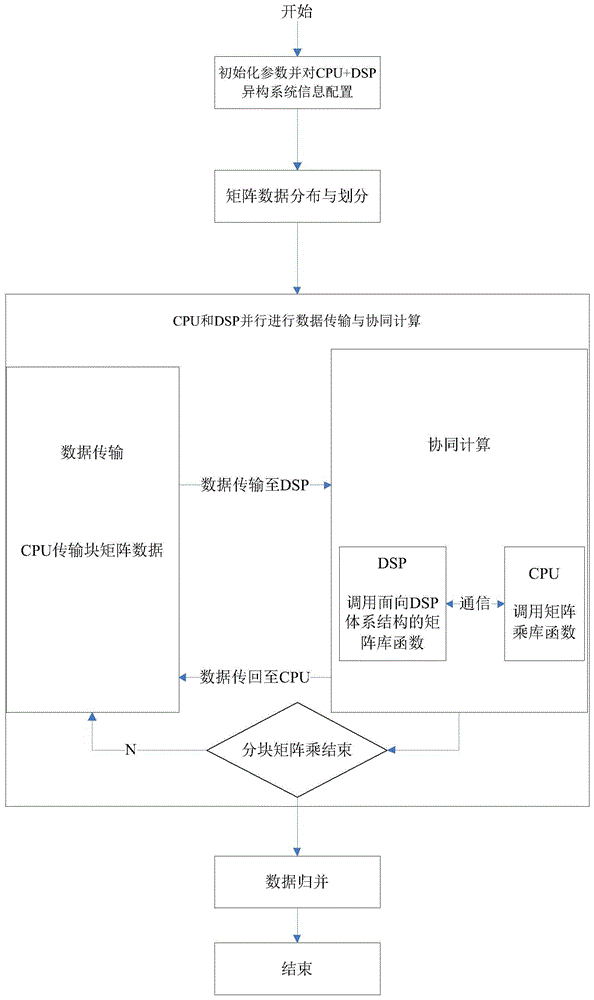

[0079] The concrete implementation steps of the present invention are as follows:

[0080] The fi...
