848 results about "Scheduling instructions" patented technology

In computer science, instruction scheduling is a compiler optimization used to improve instruction-level parallelism, which improves performance on machines with instruction pipelines. Put more simply, it tries to avoid pipeline stalls by rearranging the order of instructions without changing the meaning of the code.
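As a rough illustration of the definition above, the following sketch counts cycles on a toy in-order pipeline for two orderings of the same four instructions. The pipeline model, the register names and the latencies (3 cycles for a load, 1 for an add) are assumptions made for this example and are not taken from any of the listed patents.

```python
def total_cycles(program):
    """Cycles needed by a toy in-order pipeline that issues one instruction per cycle.

    program: list of (dest, sources, latency) tuples; an instruction stalls
    until every source register has been produced.
    """
    ready_at = {}  # register -> cycle at which its value becomes available
    cycle = 0      # earliest cycle at which the next instruction may issue
    for dest, sources, latency in program:
        start = max([cycle] + [ready_at.get(src, 0) for src in sources])
        ready_at[dest] = start + latency
        cycle = start + 1
    return max(ready_at.values())


LOAD, ADD = 3, 1  # assumed latencies in cycles
original = [("r1", [], LOAD), ("r2", ["r1", "r0"], ADD),
            ("r3", [], LOAD), ("r4", ["r3", "r0"], ADD)]
# Same instructions, with the second load hoisted above the first add.
reordered = [original[0], original[2], original[1], original[3]]

print(total_cycles(original), total_cycles(reordered))  # 8 5
```

Both orderings compute the same values; hoisting the independent load lets it overlap with the first load's latency, which is the kind of stall avoidance the definition describes.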

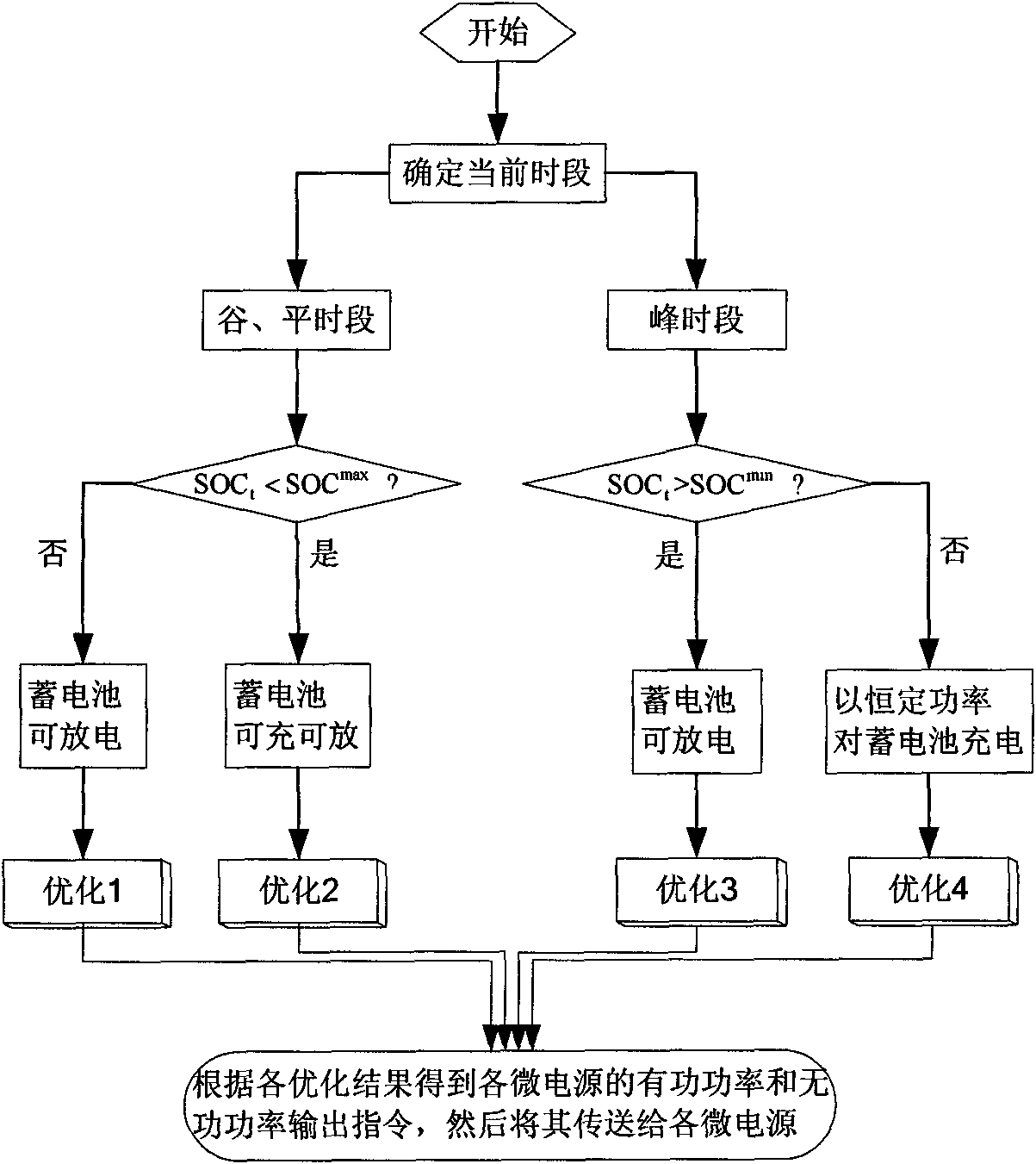

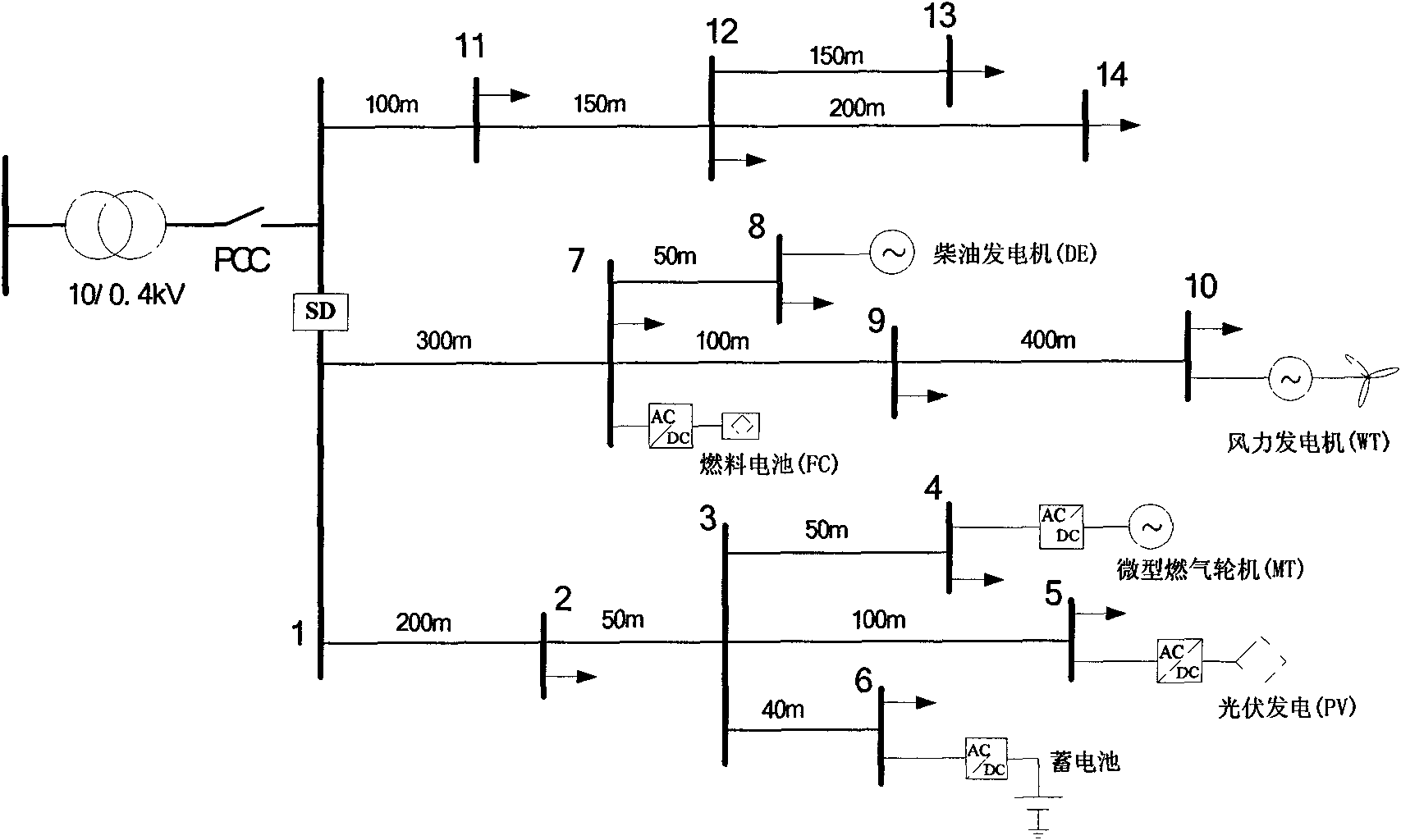

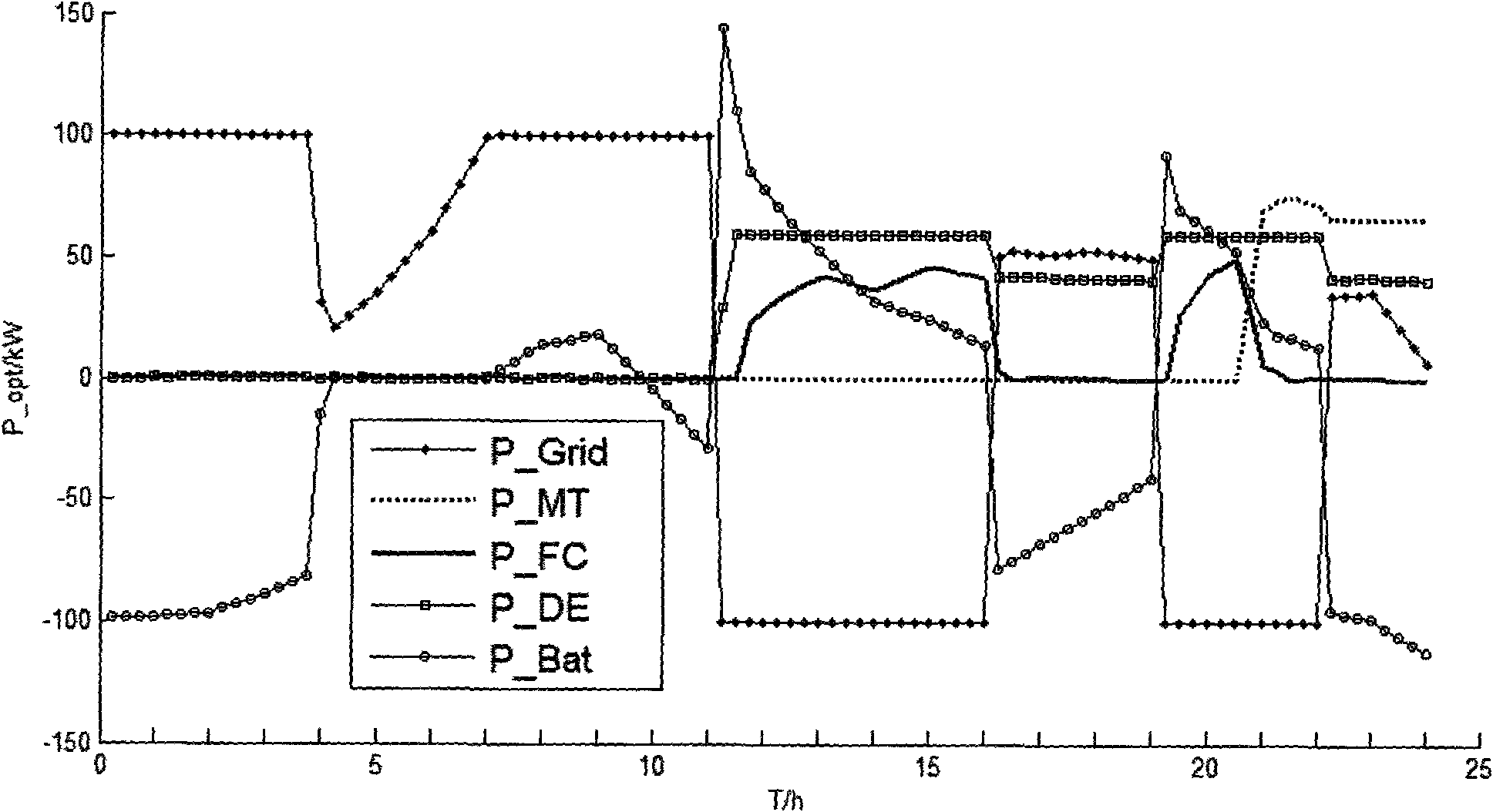

Microgrid real-time energy optimizing and scheduling method in parallel running mode

Active | CN102104251A | Improve operational efficiency | Contribute to "shaving peaks and filling valleys" | Energy industry | AC network load balancing | Microgrid | Scheduling instructions

The invention discloses a microgrid real-time energy optimizing and scheduling method in a parallel running mode. The method comprises the following steps: first, dividing the 24 hours of a day into a peak period, a general period and a trough period according to the load condition of the large power grid; then, monitoring the working state of the accumulator in the microgrid in real time while the microgrid is running, and adopting different energy optimizing strategies according to the period of time and the working state of the accumulator so as to determine the active power output of the various schedulable micro power supplies, the charging and discharging power of the accumulator, and the scheduling instructions for the active power exchanged with the power grid and for the reactive power of the reactive-power-adjustable supplies in the microgrid. The microgrid to which the invention applies comprises a renewable energy generator, the schedulable micro power supplies and the accumulator. The invention not only improves the economy and reliability of the microgrid, but also helps the large power grid cut peaks and fill troughs and helps prolong the service life of the accumulator.

Owner:ZHEJIANG UNIV

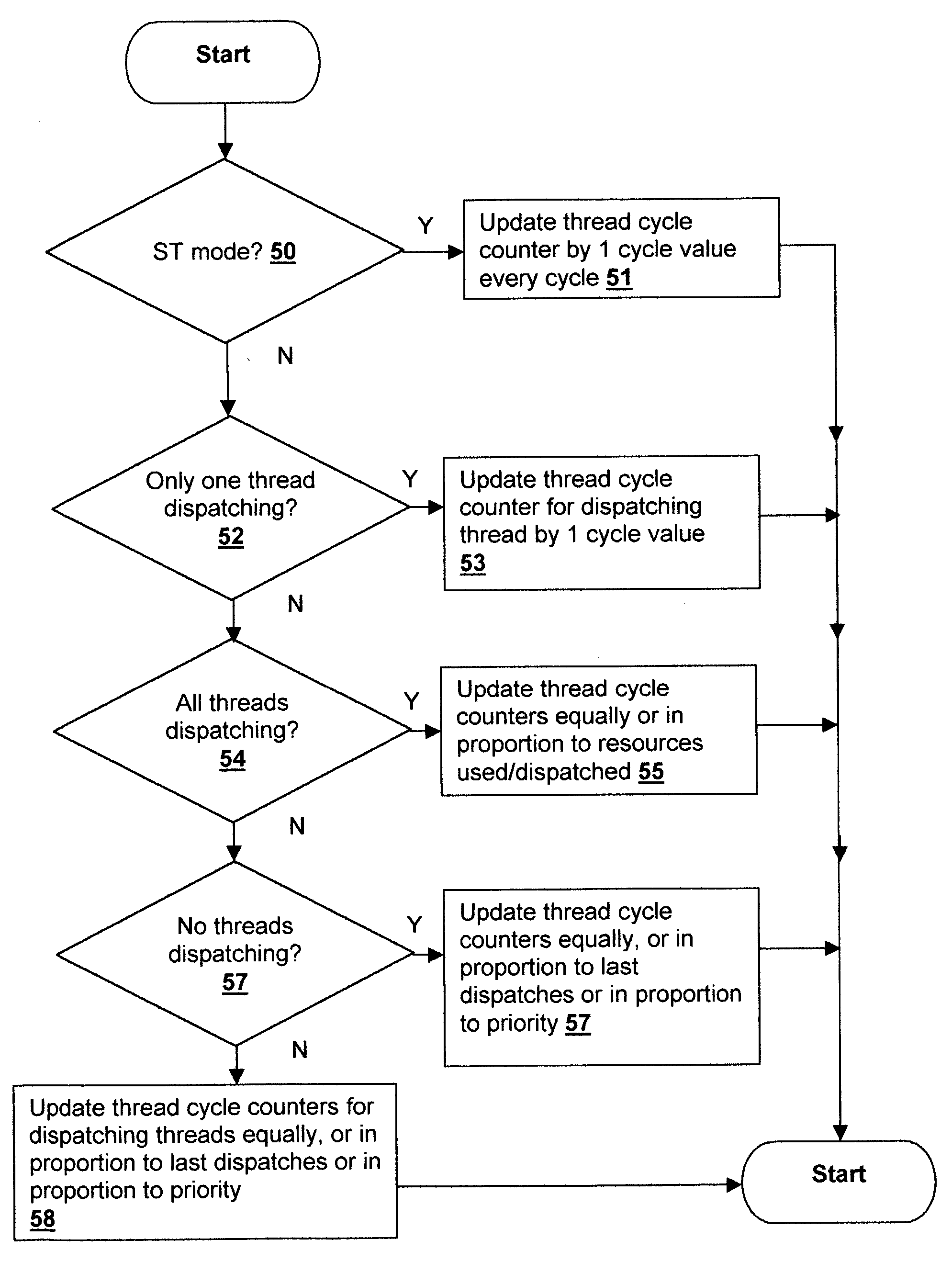

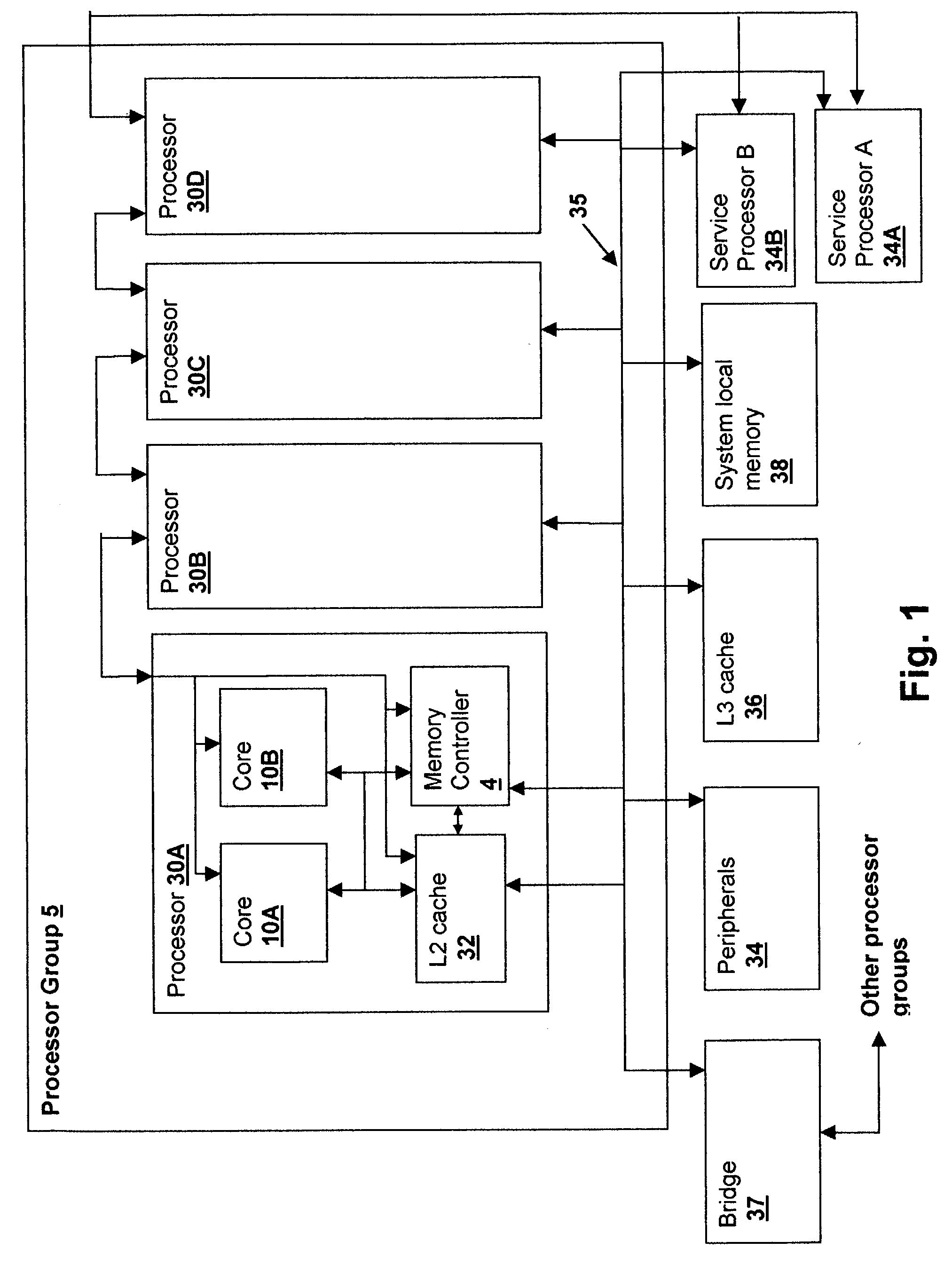

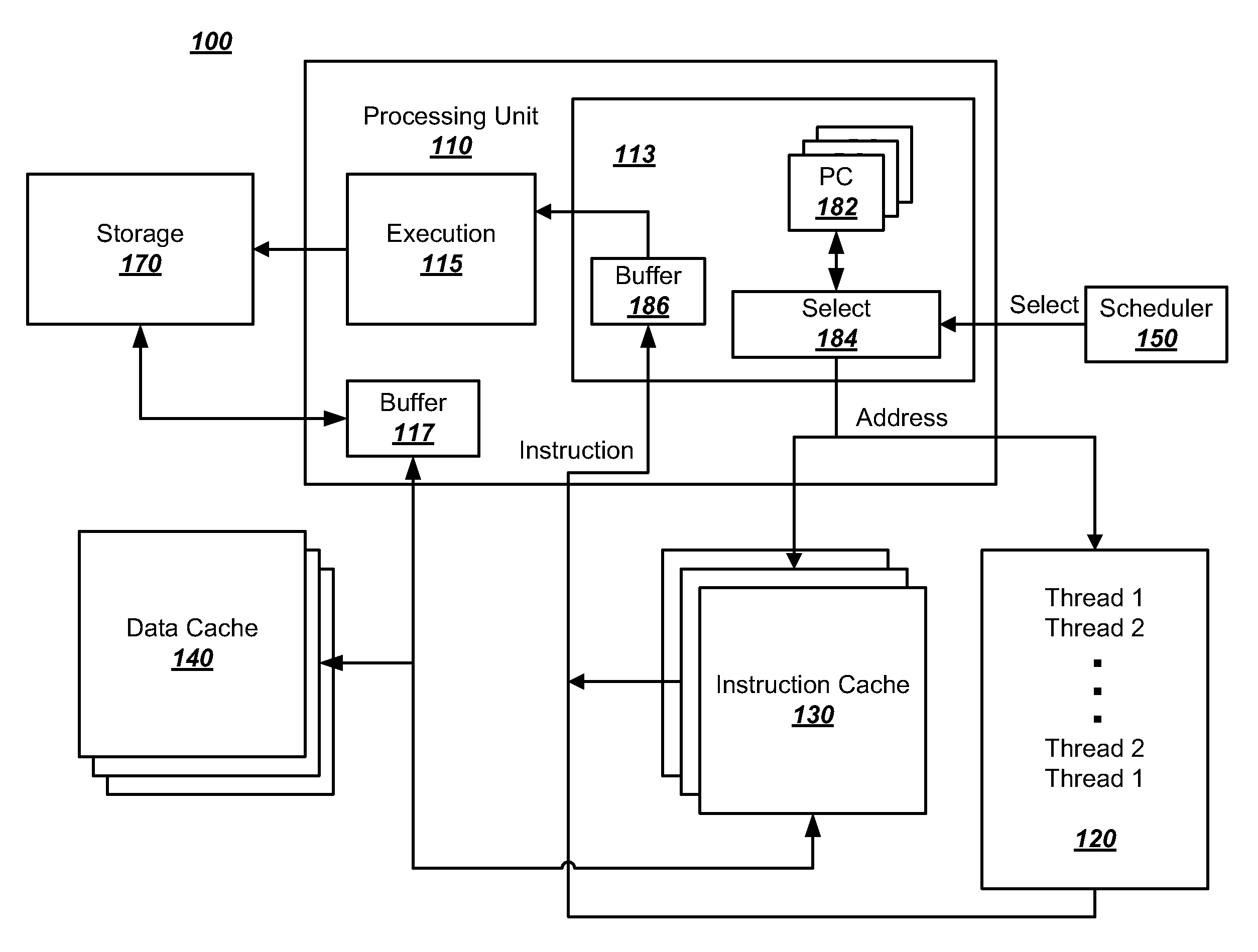

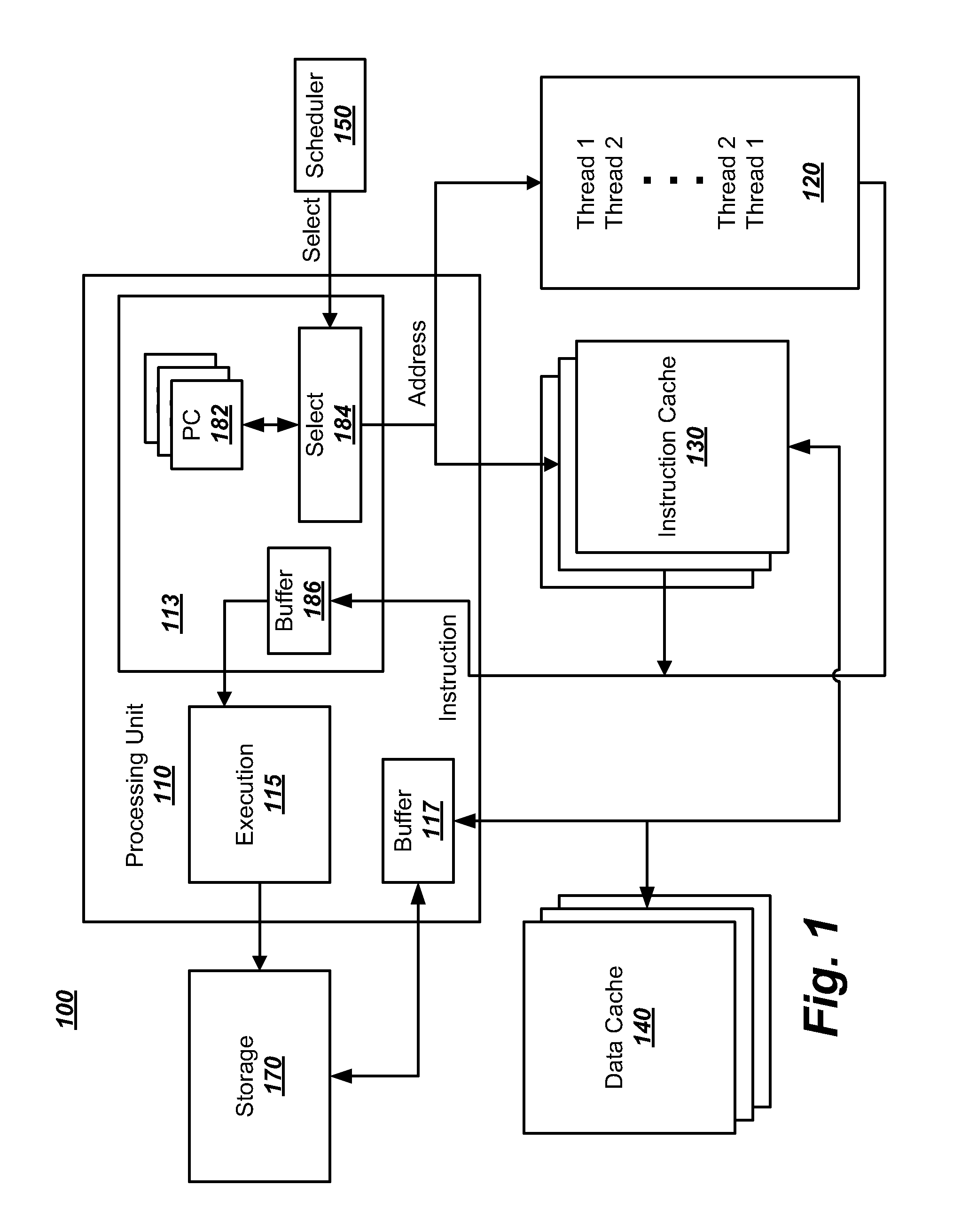

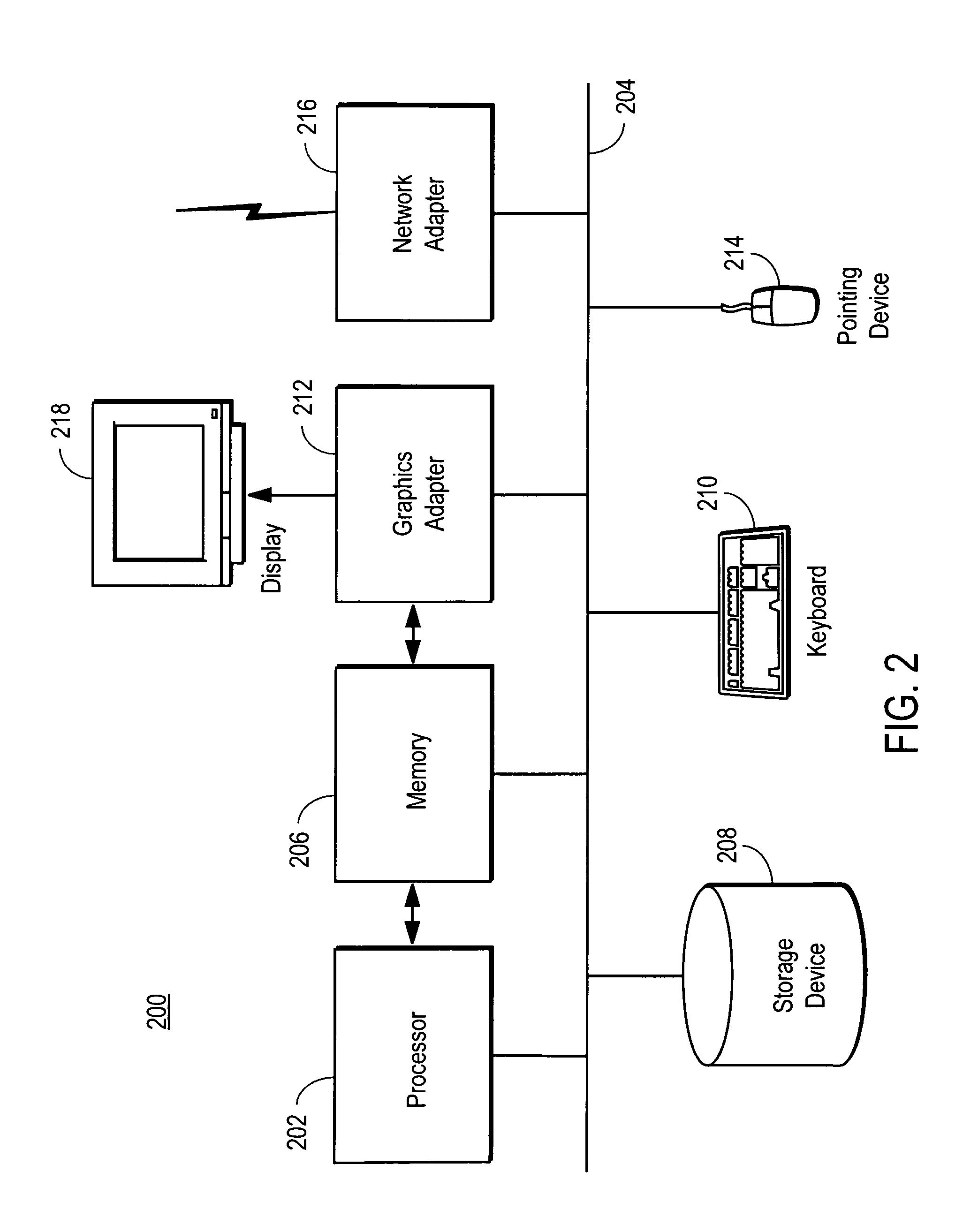

Accounting method and logic for determining per-thread processor resource utilization in a simultaneous multi-threaded (SMT) processor

Inactive | US20040216113A1 | Resource allocation | Program control using stored programs | Resource utilization | Scheduling instructions

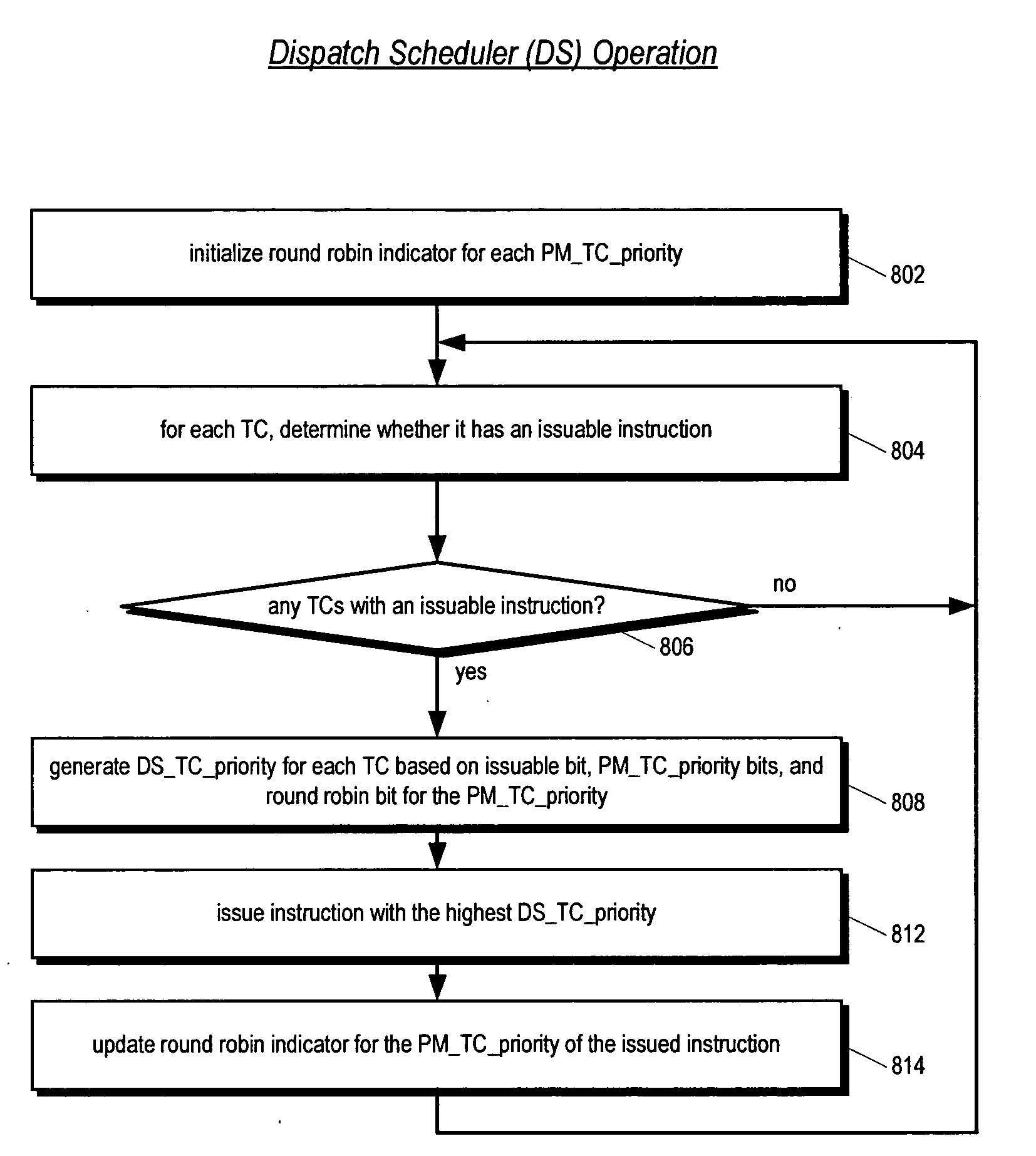

An accounting method and logic for determining per-thread processor resource utilization in a simultaneous multi-threaded (SMT) processor provides a mechanism for accounting for processor resource usage by programs and threads within programs. Relative resource use is determined by detecting instruction dispatches for multiple threads active within the processor, which may include idle threads that are still occupying processor resources. If instructions are dispatched for all threads or no threads, the processor cycle is accounted equally to all threads. Alternatively, if no threads are in a dispatch state, the accounting may be made using a prior state, or in conformity with ratios of the threads' priority levels. If only one thread is dispatching, that thread is accounted the entire processor cycle. If multiple threads are dispatching, but fewer than all threads (in processors supporting more than two threads), the processor cycle is billed evenly across the dispatching threads. Multiple dispatches may be detected for the threads and a fractional resource usage determined for each thread, and the counters may be updated in accordance with their fractional usage.

Owner:IBM CORP
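The per-cycle accounting rules in the SMT abstract above can be sketched as follows. This is a minimal illustration of the basic rules only (equal split when all or no threads dispatch, even split across the dispatching threads otherwise); the function name, the thread numbering and the sample trace are assumptions, and the prior-state and priority-ratio alternatives are not modelled.

```python
from fractions import Fraction


def account_cycle(dispatching, num_threads):
    """Return each thread's share of one processor cycle.

    dispatching: set of thread ids that dispatched an instruction this cycle.
    """
    if not dispatching or len(dispatching) == num_threads:
        # All threads or no threads dispatching: split the cycle equally.
        share = Fraction(1, num_threads)
        return {t: share for t in range(num_threads)}
    # One or several (but not all) threads dispatching: bill only those, evenly.
    share = Fraction(1, len(dispatching))
    return {t: share if t in dispatching else Fraction(0)
            for t in range(num_threads)}


# Hypothetical four-cycle trace on a four-thread processor.
counters = {t: Fraction(0) for t in range(4)}
for cycle in [{0}, {0, 1}, set(), {0, 1, 2, 3}]:
    for thread, share in account_cycle(cycle, 4).items():
        counters[thread] += share

print(counters)
```

Exact fractions are used so that the per-thread counters always sum to the number of cycles accounted.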

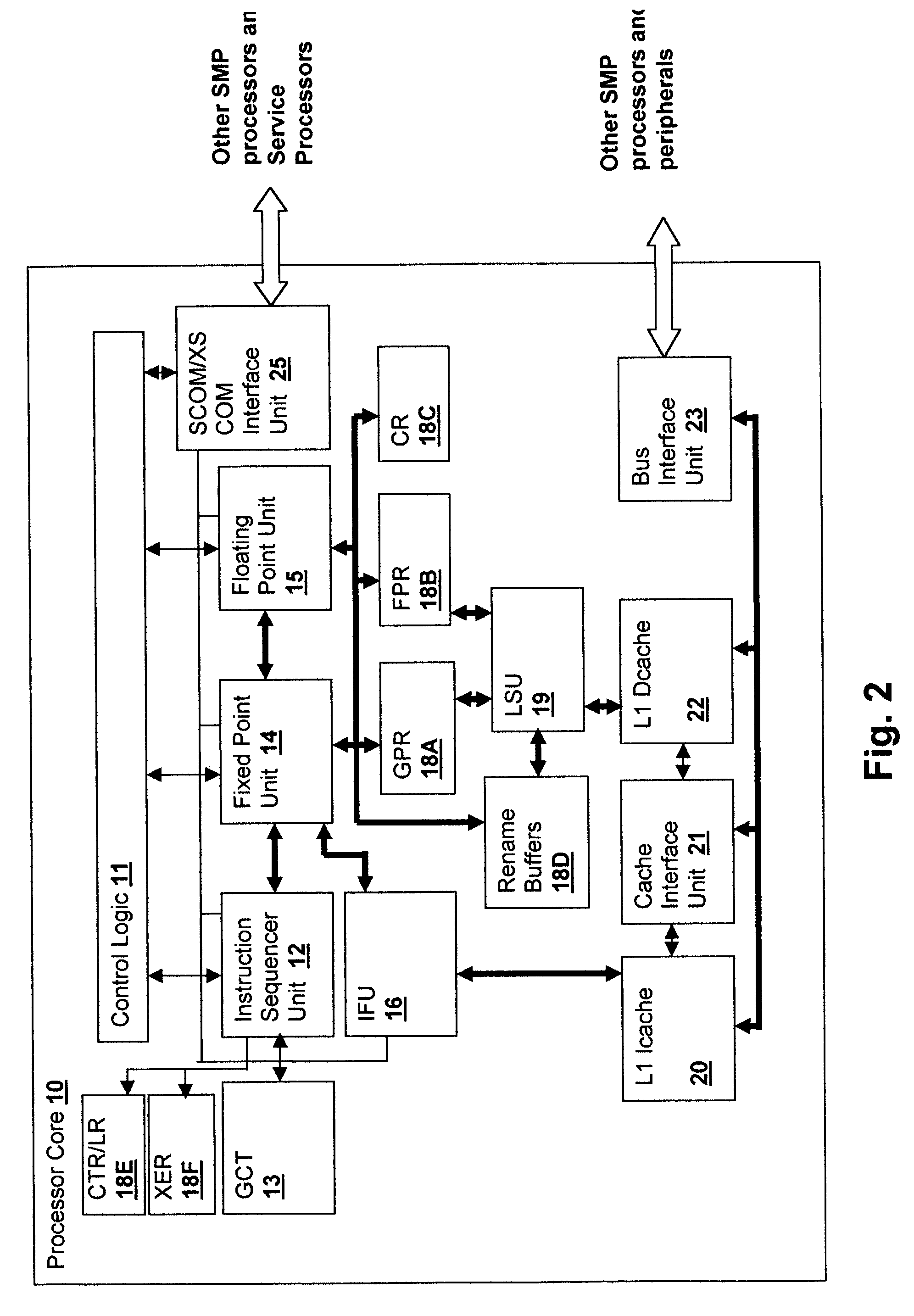

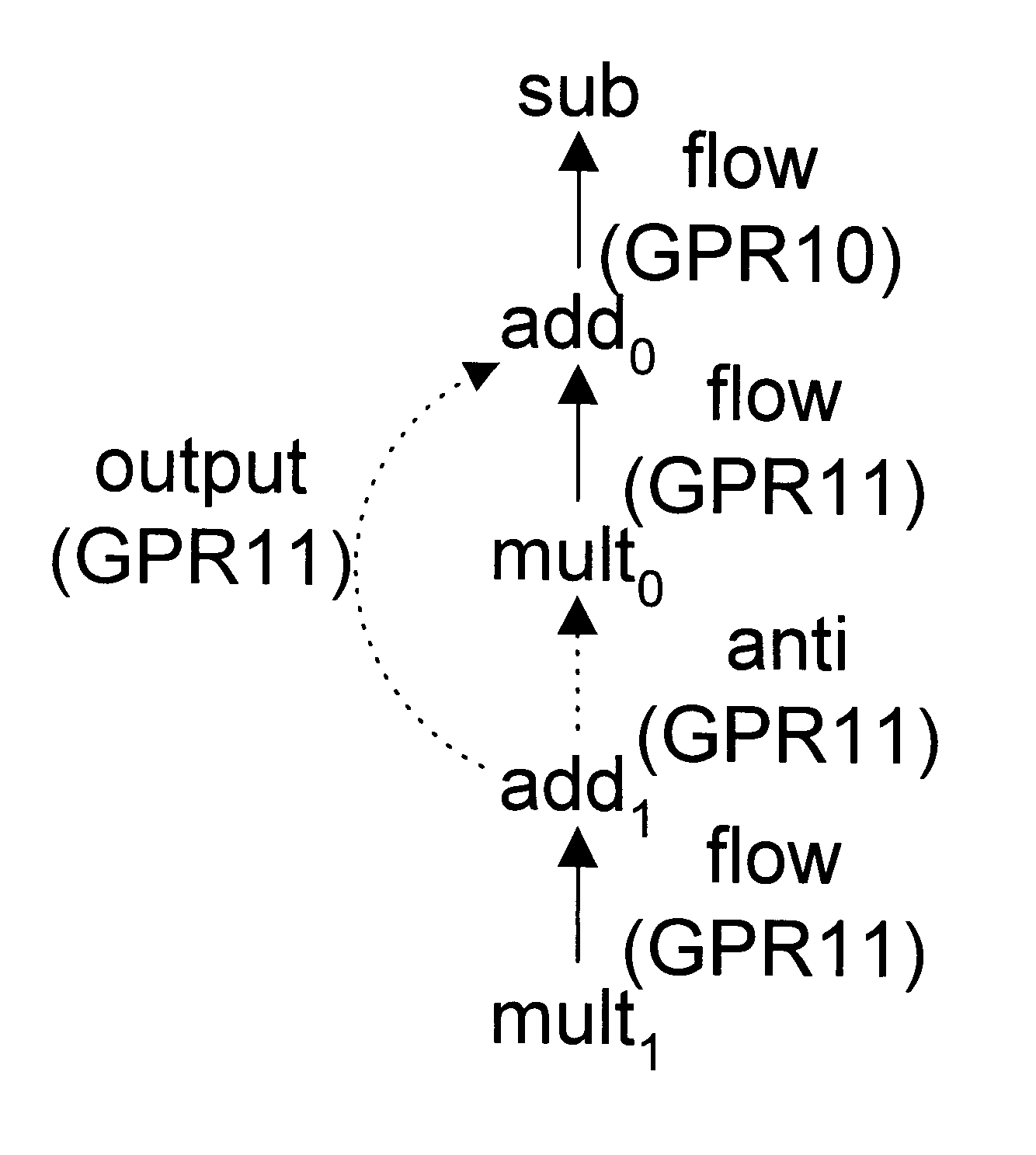

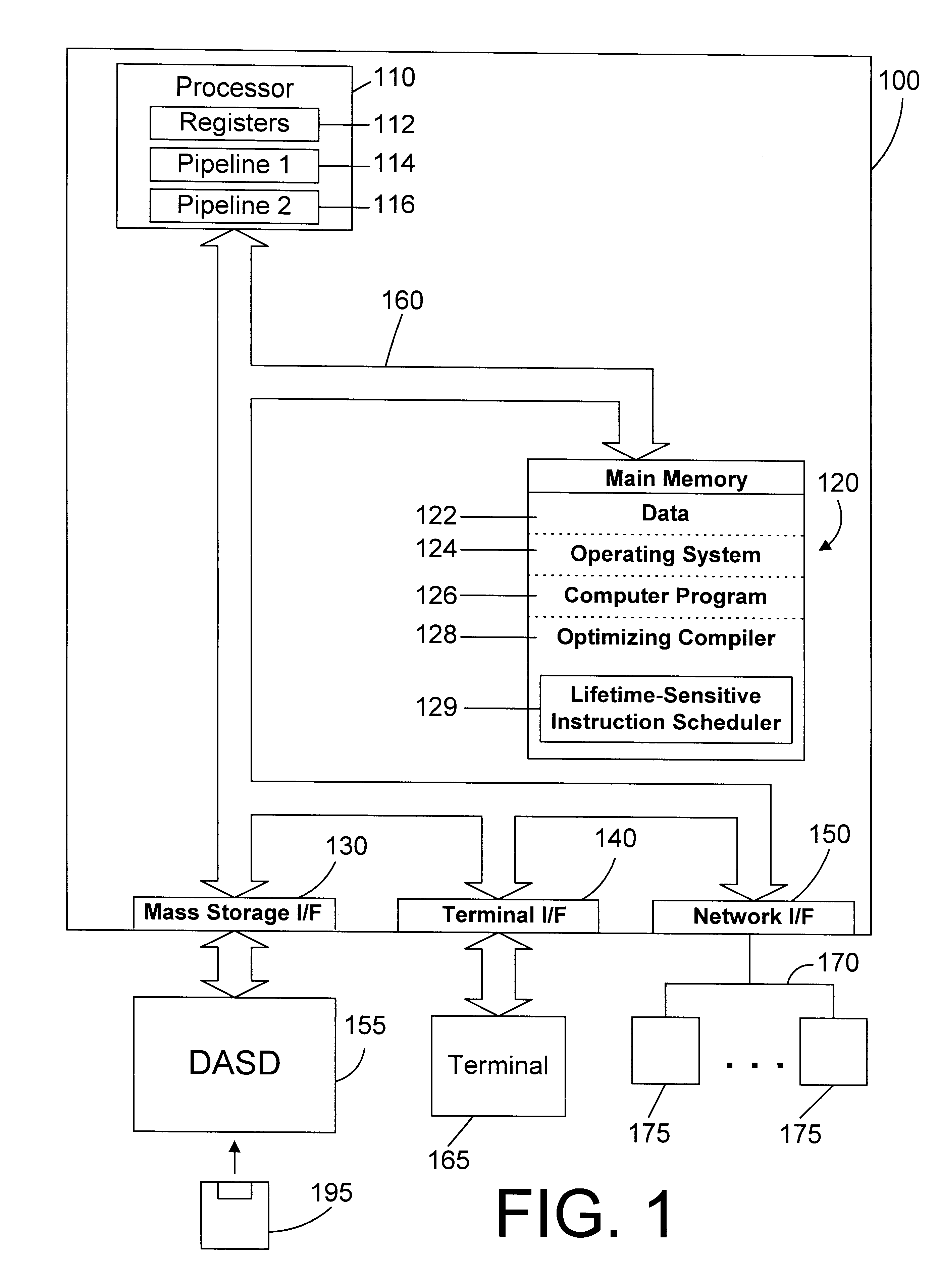

Lifetime-sensitive instruction scheduling mechanism and method

Inactive | US6305014B1 | Effective instruction | High degree of parallelism | Software engineering | Specific program execution arrangements | Scheduling instructions | Degree of parallelism

An instruction scheduler in an optimizing compiler schedules instructions in a computer program by determining the lifetimes of fixed registers in the computer program. By determining the lifetimes of fixed registers, the instruction scheduler can achieve a schedule that has a higher degree of parallelism by relaxing dependences between instructions in independent lifetimes of a fixed register so that instructions can be scheduled earlier than would otherwise be possible if those dependences were precisely honored.

Owner:IBM CORP

Processor and method for synchronous load multiple fetching sequence and pipeline stage result tracking to facilitate early address generation interlock bypass

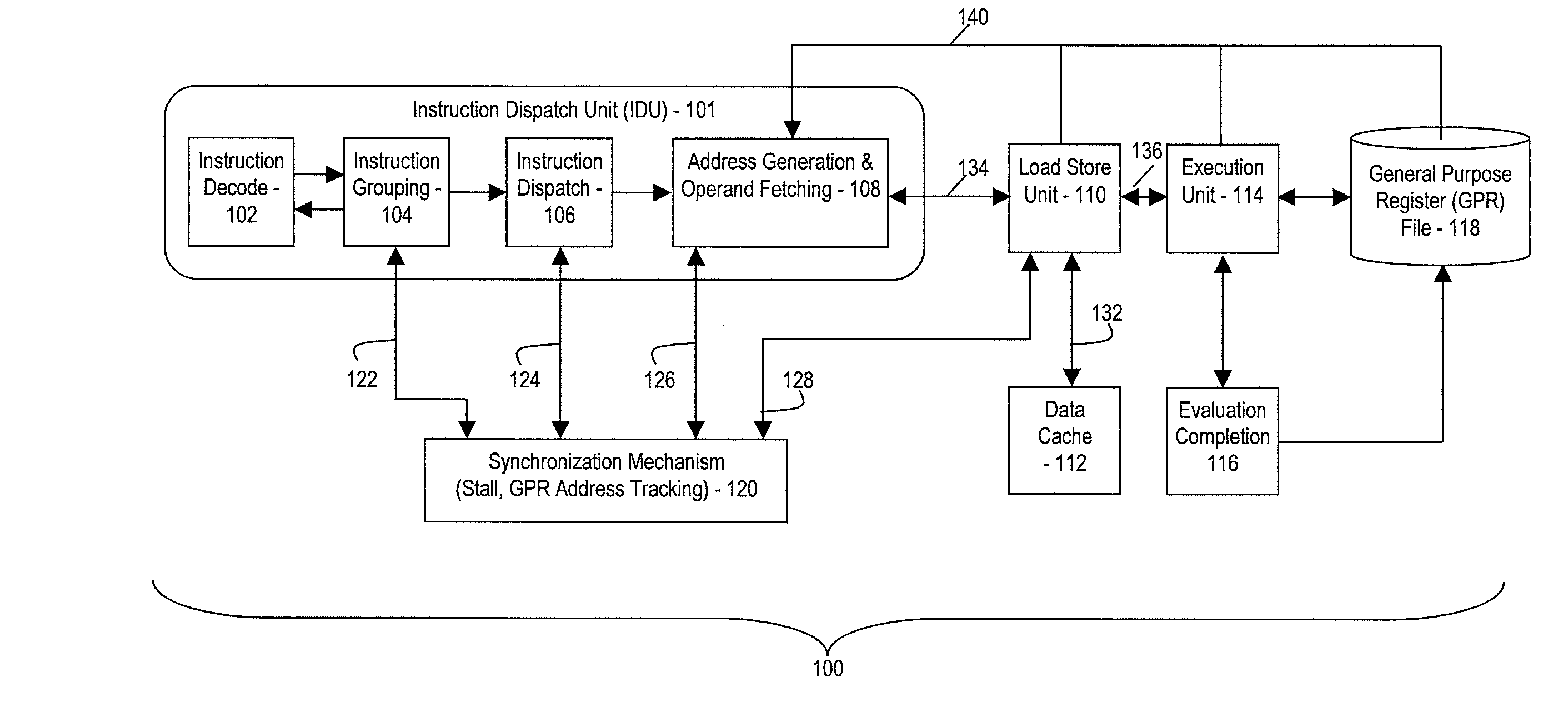

A pipelined processor including an architecture for address generation interlocking, the processor including: an instruction grouping unit to detect a read-after-write dependency and to resolve instruction interdependency; an instruction dispatch unit (IDU) including address generation interlock (AGI) and operand fetching logic for dispatching an instruction to at least one of a load store unit and an execution unit; wherein the load store unit is configured with access to a data cache and to return fetched data to the execution unit; wherein the execution unit is configured to write data into a general purpose register bank; and wherein the architecture provides support for bypassing of results of a load multiple instruction for address generation while such instruction is executing in the execution unit before the general purpose register bank is written. A method and a computer system are also provided.

Owner:IBM CORP

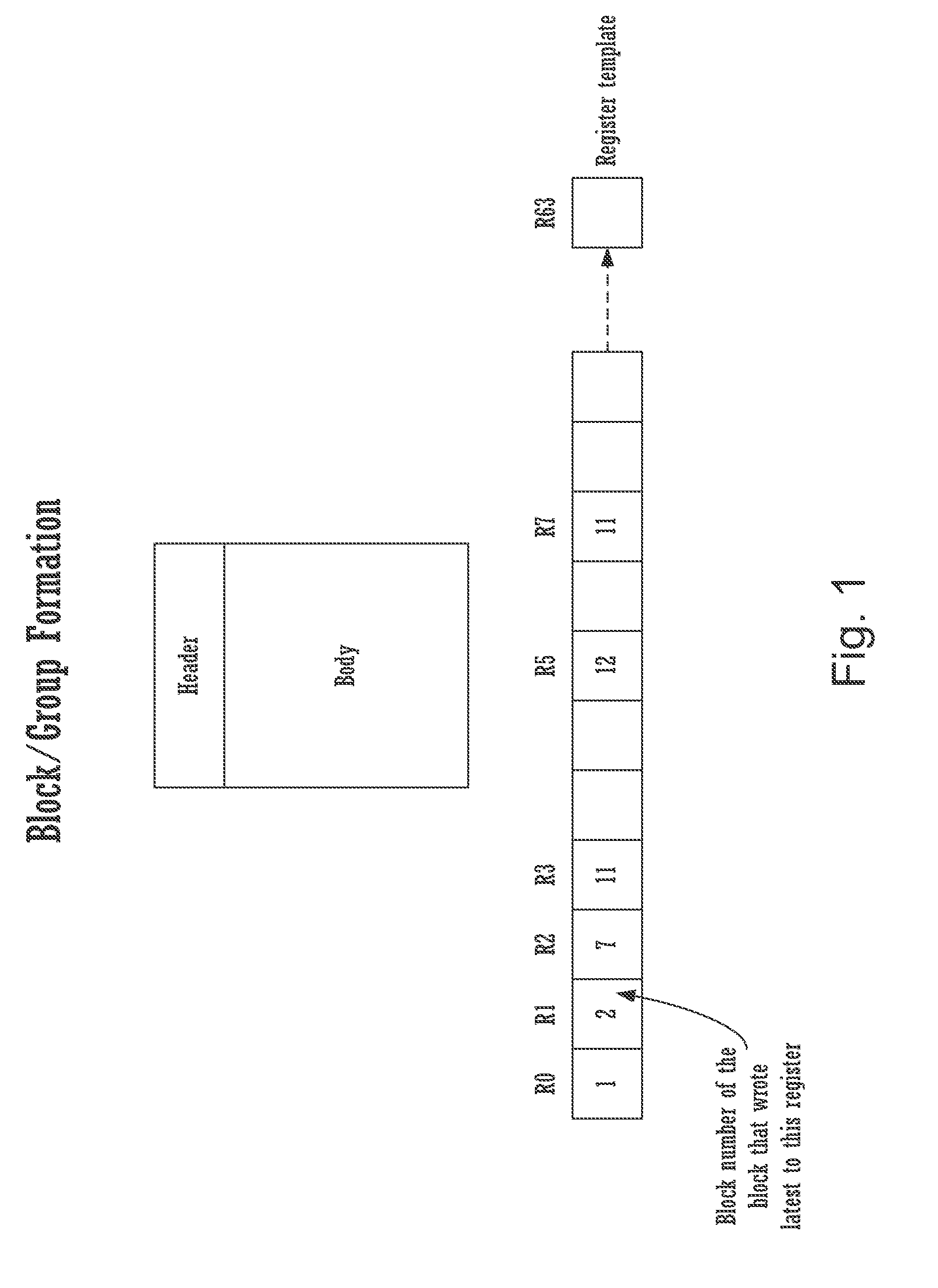

Scheduling instructions with different latencies

Inactive | US6035389A | Digital computer details | Concurrent instruction execution | Computer hardware | Processor register

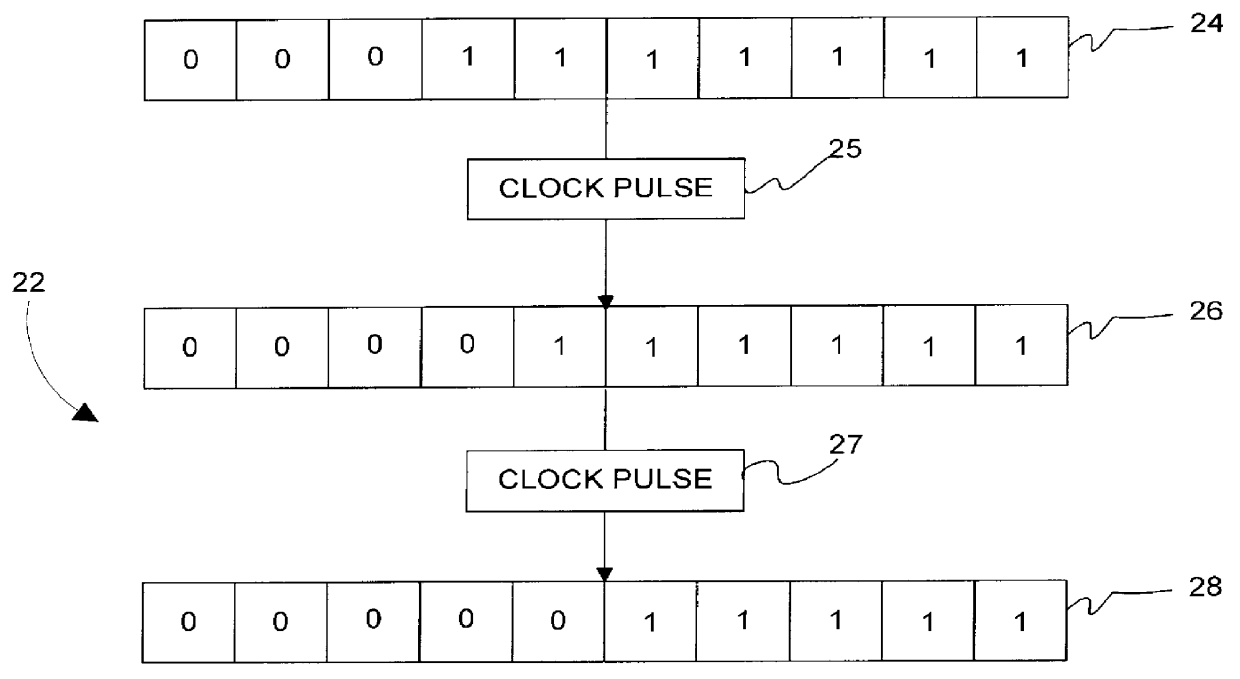

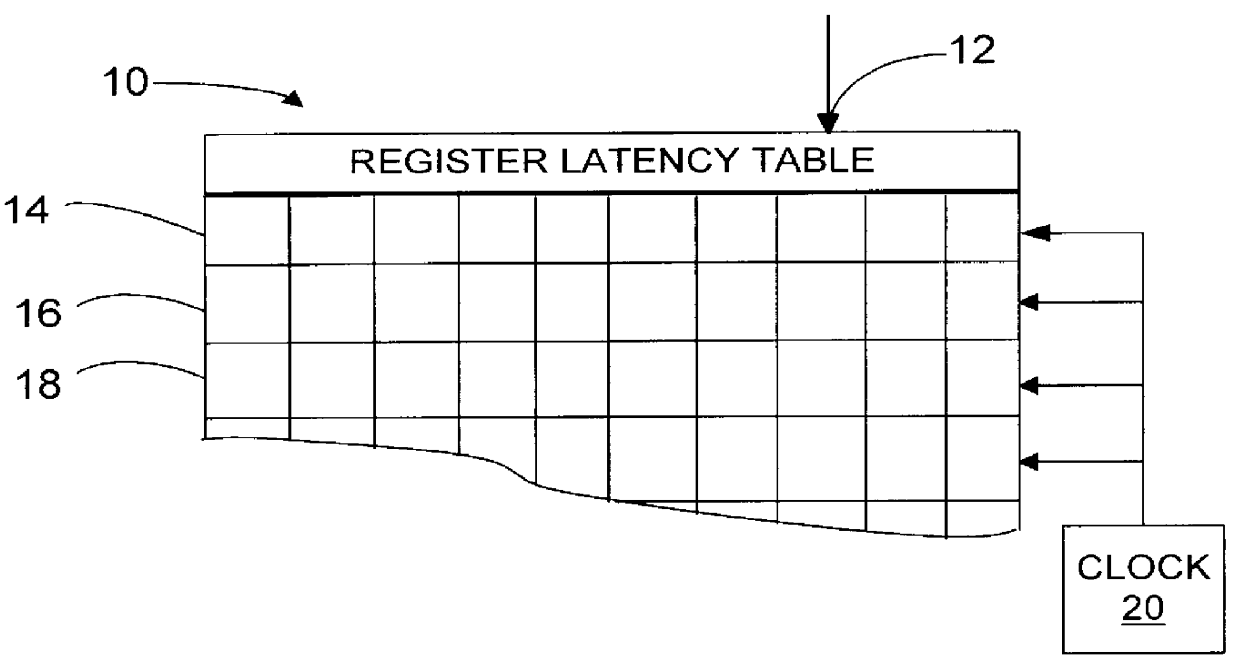

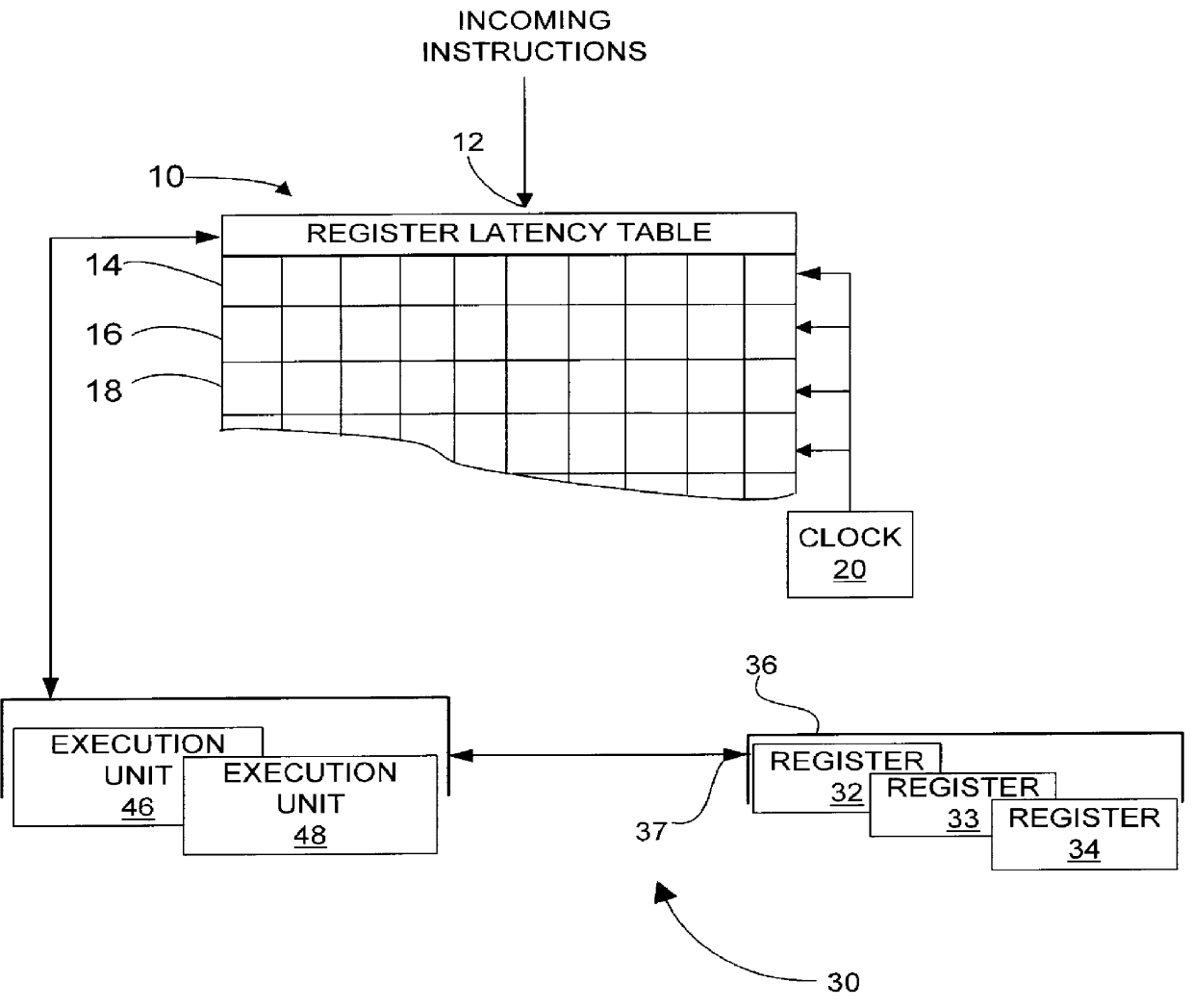

An apparatus includes a clock to produce pulses and an electronic hardware structure having a plurality of rows and one or more ports. Each row is adapted to record a separate latency vector written through one of the ports. The latency vector recorded therein is responsive to the clock. A method of dispatching instructions in a processor includes updating a plurality of expected latencies to a portion of rows of a register latency table, and decreasing the expected latencies remaining in other of the rows in response to a clock pulse. The rows of the portion correspond to particular registers.

Owner:INTEL CORP
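A software model of the register latency table described in the abstract above might look like the sketch below. The class and method names are hypothetical, and the readiness check (an instruction may dispatch once the rows of all its source registers have counted down to zero) is an assumption about how such a table would be used rather than something the abstract states.

```python
class RegisterLatencyTable:
    """One row per register, holding the cycles left until its value is ready."""

    def __init__(self, num_registers):
        self.rows = [0] * num_registers

    def clock(self, updates=None):
        """Apply one clock pulse.

        updates: register -> expected latency for rows written this cycle.
        Rows that are not written count down towards zero.
        """
        updates = updates or {}
        for reg in range(len(self.rows)):
            if reg in updates:
                self.rows[reg] = updates[reg]
            elif self.rows[reg] > 0:
                self.rows[reg] -= 1

    def ready(self, sources):
        """Assumed use: all source registers have zero remaining latency."""
        return all(self.rows[reg] == 0 for reg in sources)


table = RegisterLatencyTable(4)
table.clock({1: 2, 3: 1})   # two producers dispatched this cycle
print(table.ready([1]))     # False: register 1 still has 2 cycles to go
table.clock()
table.clock()
print(table.ready([1, 3]))  # True: both rows have counted down to zero
```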

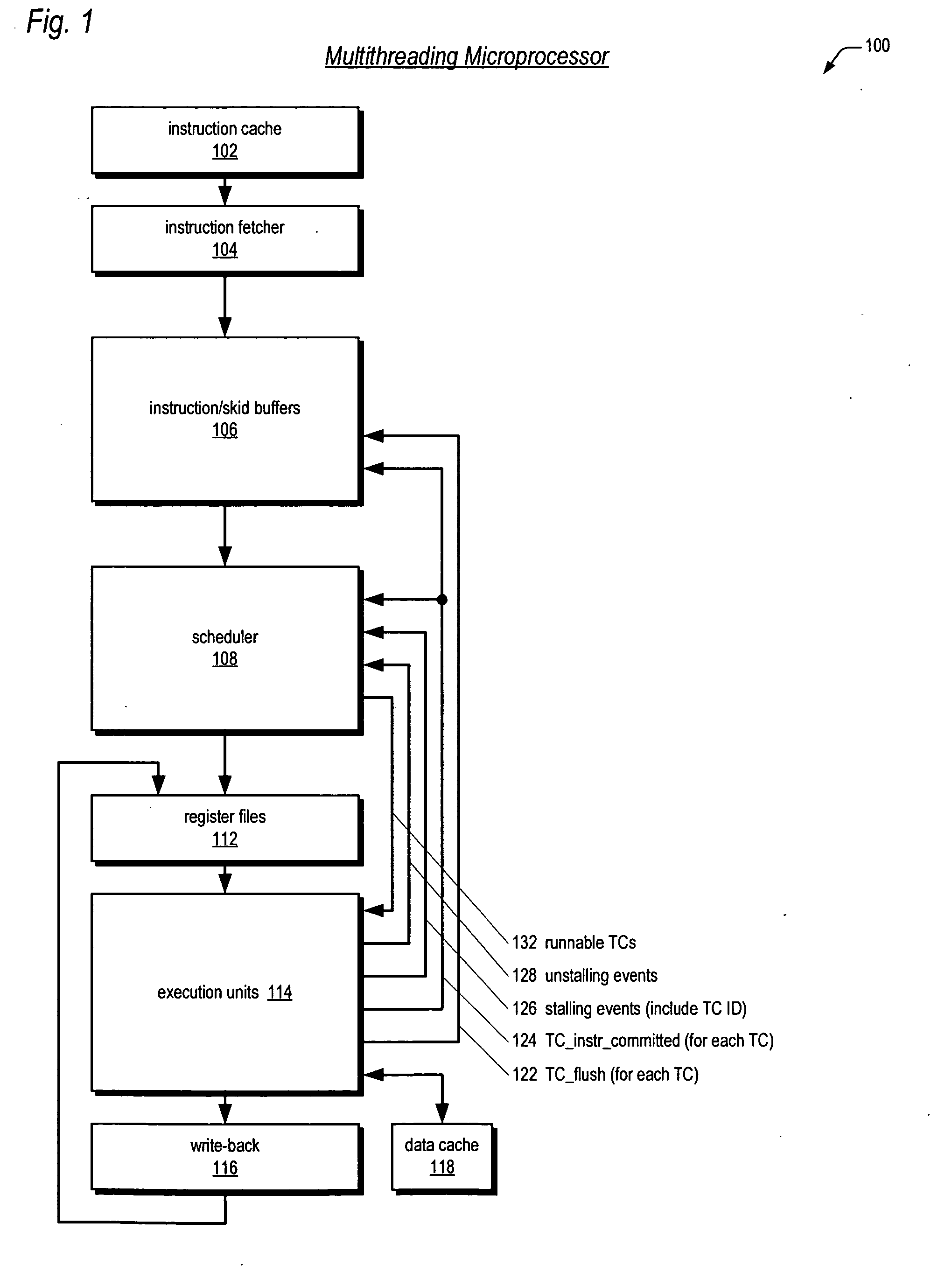

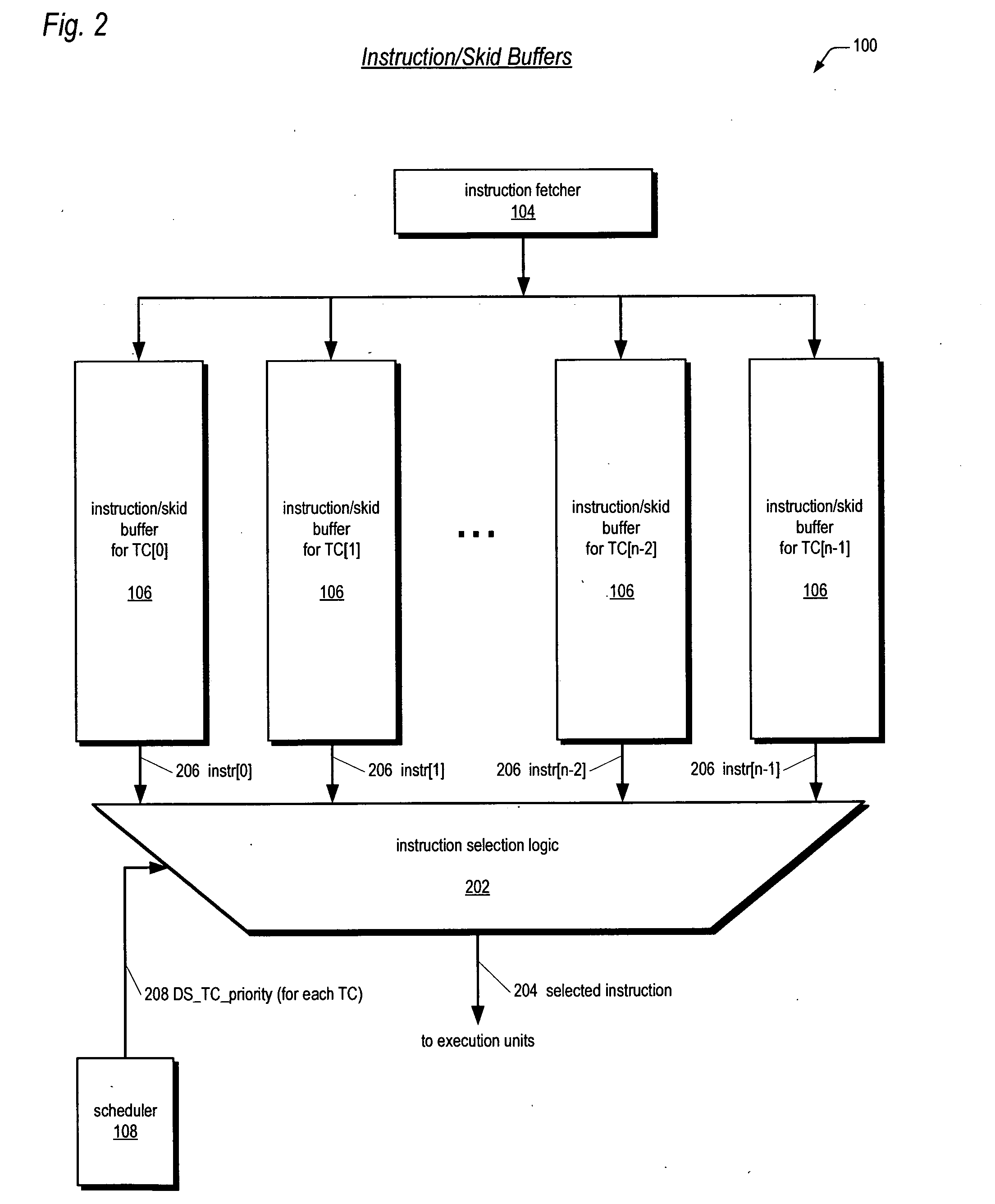

Multithreading processor including thread scheduler based on instruction stall likelihood prediction

Active | US20060179280A1 | Increase processor efficiency | Reduce in quantity | Digital computer details | Multiprogramming arrangements | Load instruction | Scheduling instructions

An apparatus for scheduling dispatch of instructions among a plurality of threads being concurrently executed in a multithreading processor is provided. The apparatus includes an instruction decoder that generates register usage information for an instruction from each of the threads, a priority generator that generates a priority for each instruction based on the register usage information and state information of instructions currently executing in an execution pipeline, and selection logic that dispatches at least one instruction from at least one thread based on the priority of the instructions. The priority indicates the likelihood the instruction will execute in the execution pipeline without stalling. For example, an instruction may have a high priority if it has few or no register dependencies or its data is known to be available; or may have a low priority if it has strong register dependencies or is an uncacheable or synchronized storage space load instruction.

Owner:ARM FINANCE OVERSEAS LTD

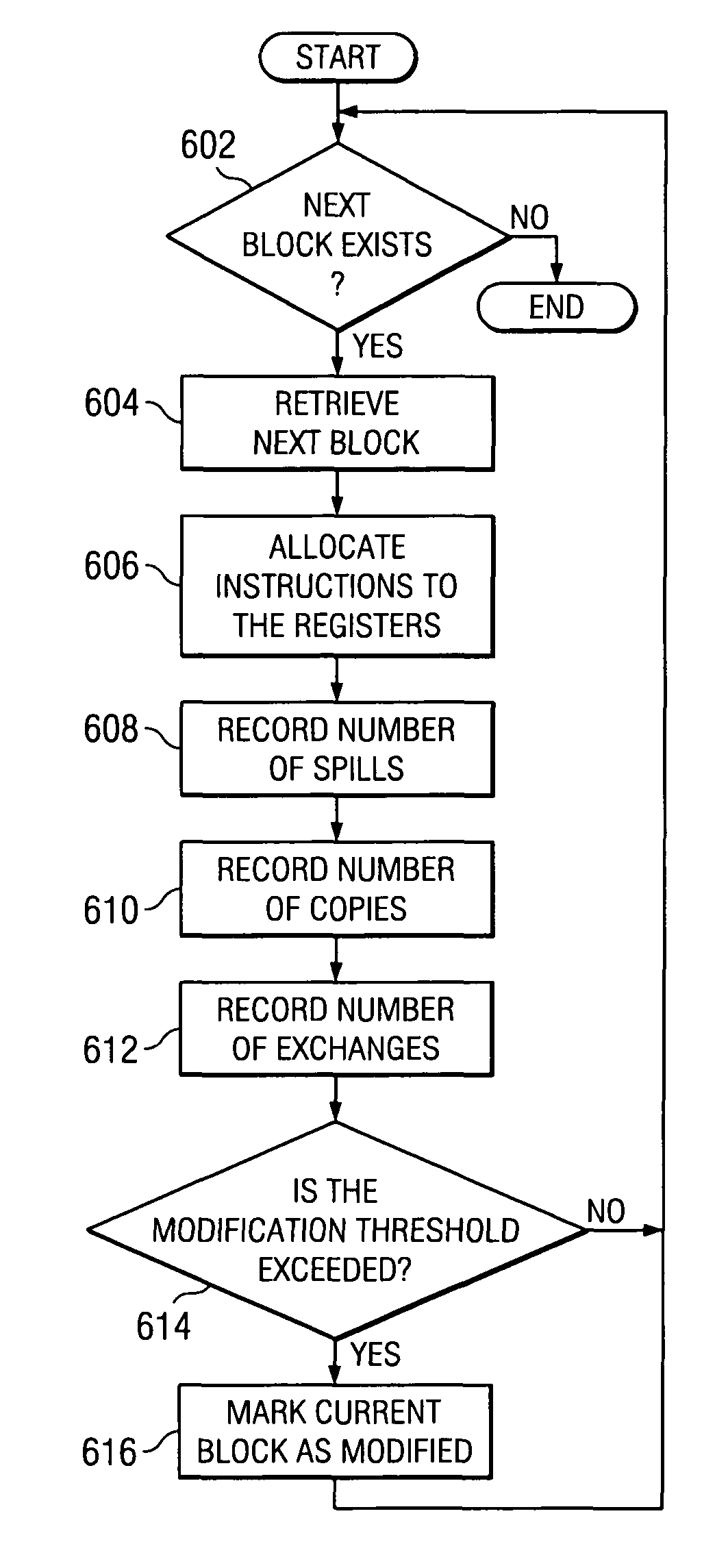

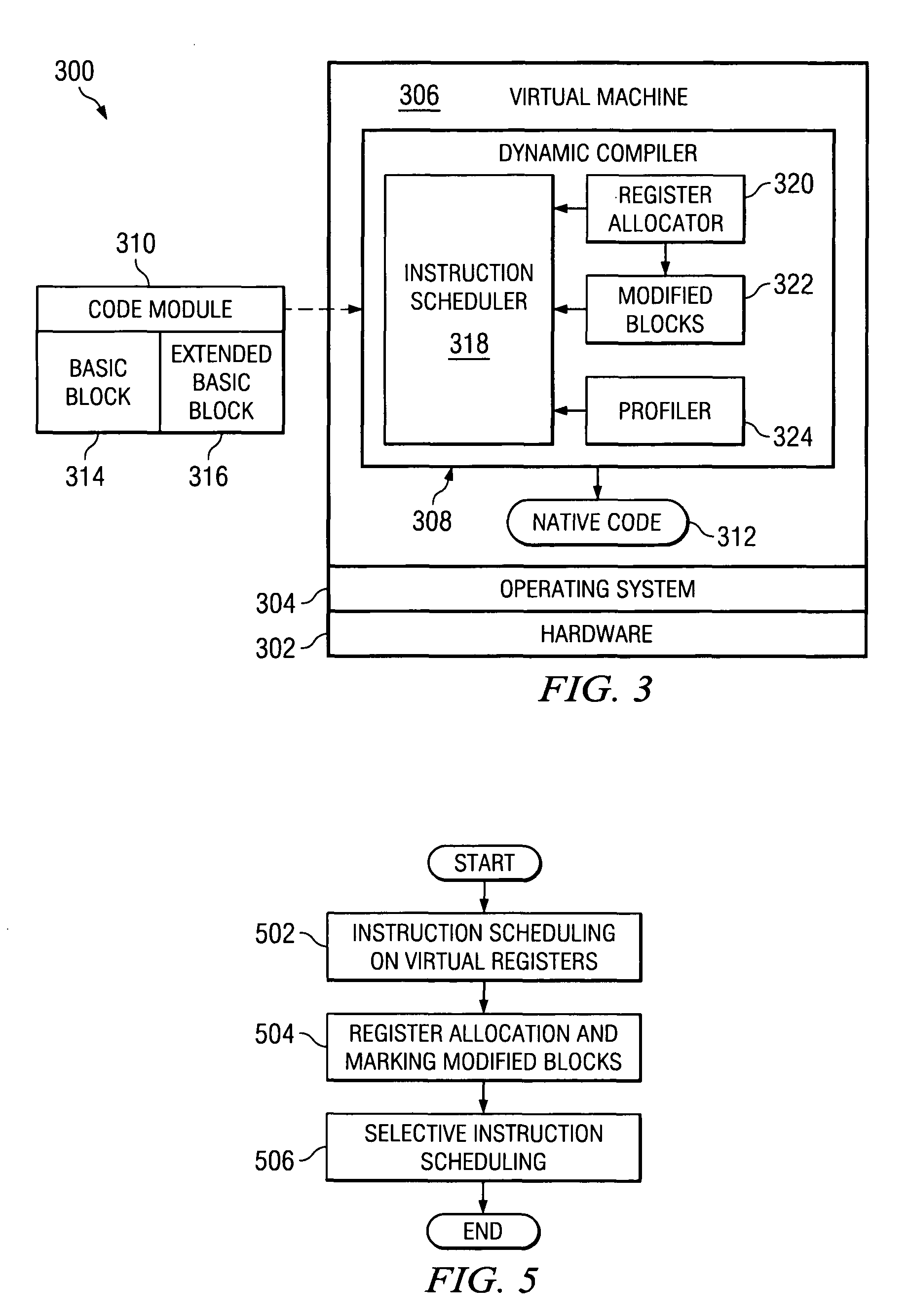

Post-register allocation profile directed instruction scheduling

Active | US7770161B2 | Software engineering | Special data processing applications | Register allocation | Processor register

A computer implemented method, system, and computer usable program code for selective instruction scheduling. A determination is made whether a region of code exceeds a modification threshold after performing register allocation on the region of code. The region of code is marked as a modified region of code in response to the determination that the region of code exceeds the modification threshold. A determination is made whether the region of code exceeds an execution threshold in response to the determination that the region of code is marked as a modified region of code. Post-register allocation instruction scheduling is performed on the region of code in response to the determination that the region of code is marked as a modified region of code and the determination that the region of code exceeds the execution threshold.

Owner:TWITTER INC
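The two-threshold decision in the abstract above can be sketched as a small predicate. The abstract does not say how modification or execution is measured, so the `Region` fields, the fraction-of-instructions metric and the default threshold values below are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    instructions_before_ra: int
    instructions_changed_by_ra: int  # assumed metric, e.g. spill code added
    execution_count: int             # assumed to come from profile data


def should_reschedule(region, modification_threshold=0.1, execution_threshold=1000):
    """Decide whether to run post-register-allocation scheduling on a region."""
    changed = region.instructions_changed_by_ra / max(1, region.instructions_before_ra)
    marked_modified = changed > modification_threshold
    if not marked_modified:
        return False  # register allocation barely touched this region
    # Only modified regions are checked against the execution threshold.
    return region.execution_count > execution_threshold


hot_loop = Region("hot_loop", 40, 8, 50_000)
cold_path = Region("cold_path", 40, 8, 12)
untouched = Region("untouched", 40, 1, 50_000)
print([should_reschedule(r) for r in (hot_loop, cold_path, untouched)])
# [True, False, False]
```

The point of the gating is to spend scheduling time only where register allocation changed the code enough to matter and the profile says the code runs often.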

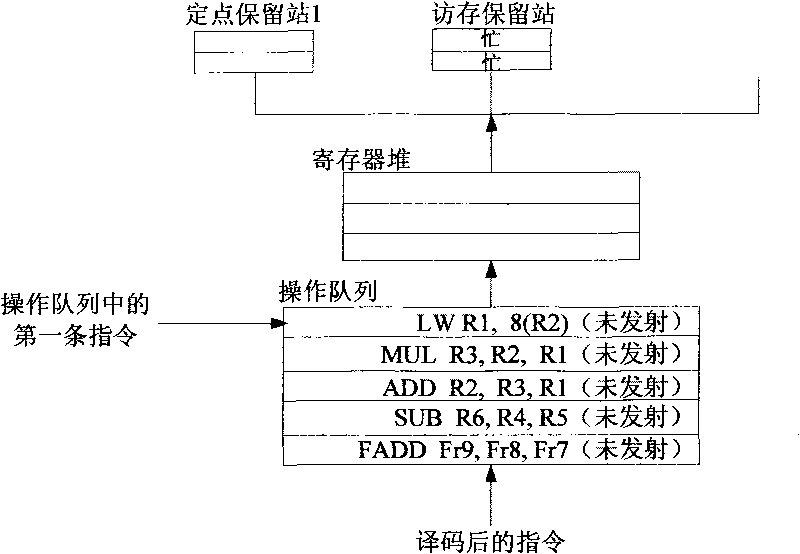

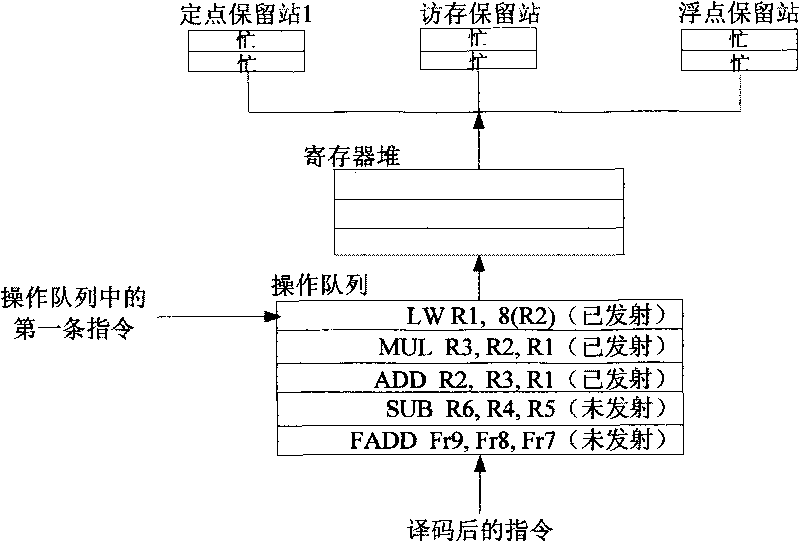

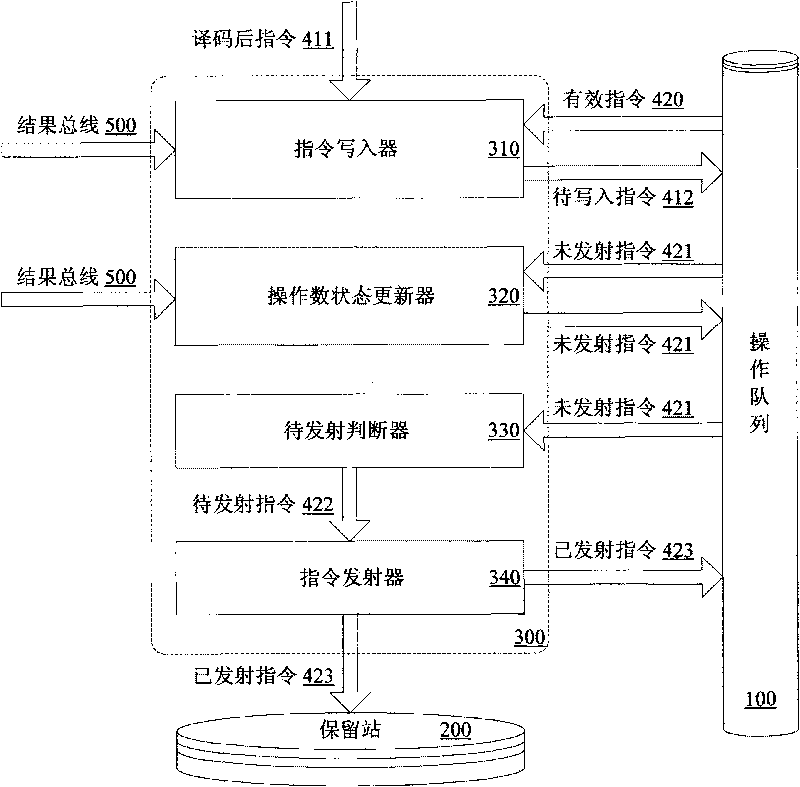

Device and method for instruction scheduling

Active | CN101710272A | Improve efficiency | Improve performance | Concurrent instruction execution | Reservation station | Scheduling instructions

The invention provides a device and a method for dynamically scheduling instructions transmitted from an operation queue to a reservation station in a microprocessor. The method comprises the following: a step of writing instructions, which is to set and then write the operand states of the decoded instructions on the basis of data correlation between the decoded instructions to be written into the operation queue and effective instructions in the operation queue, as well as instruction execution results which have been written back and are being written; a step of updating the operand states, which is to update the operand state of each instruction not transmitted on the basis of the data correlation between each instruction not transmitted and the instructions being written back of instruction execution results; a step of judging to-be-transmitted instructions, which is to judge whether the to-be-transmitted instructions with all operands ready exist on the basis of the operand state of each instruction not transmitted; and a step of transmitting instructions, which is to transmit the judged to-be-transmitted instructions to the reservation station when the reservation station has vacancies. Pipeline efficiency can be effectively improved by transmitting the instructions with the operands ready to the reservation station on the basis of the data correlation between the instructions.

Owner:LOONGSON TECH CORP

Construction method of GPU and CPU combined processor

Inactive | CN101526934A | Work is not tired | Digital computer details | Concurrent instruction execution | Video memory | Operational system

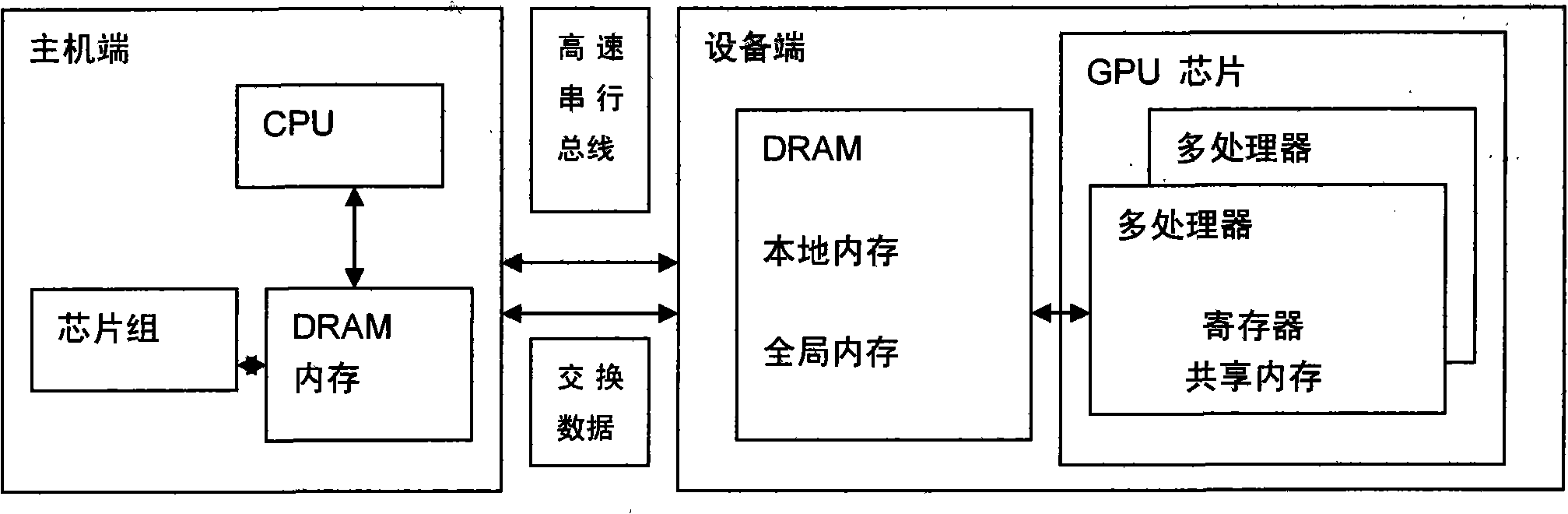

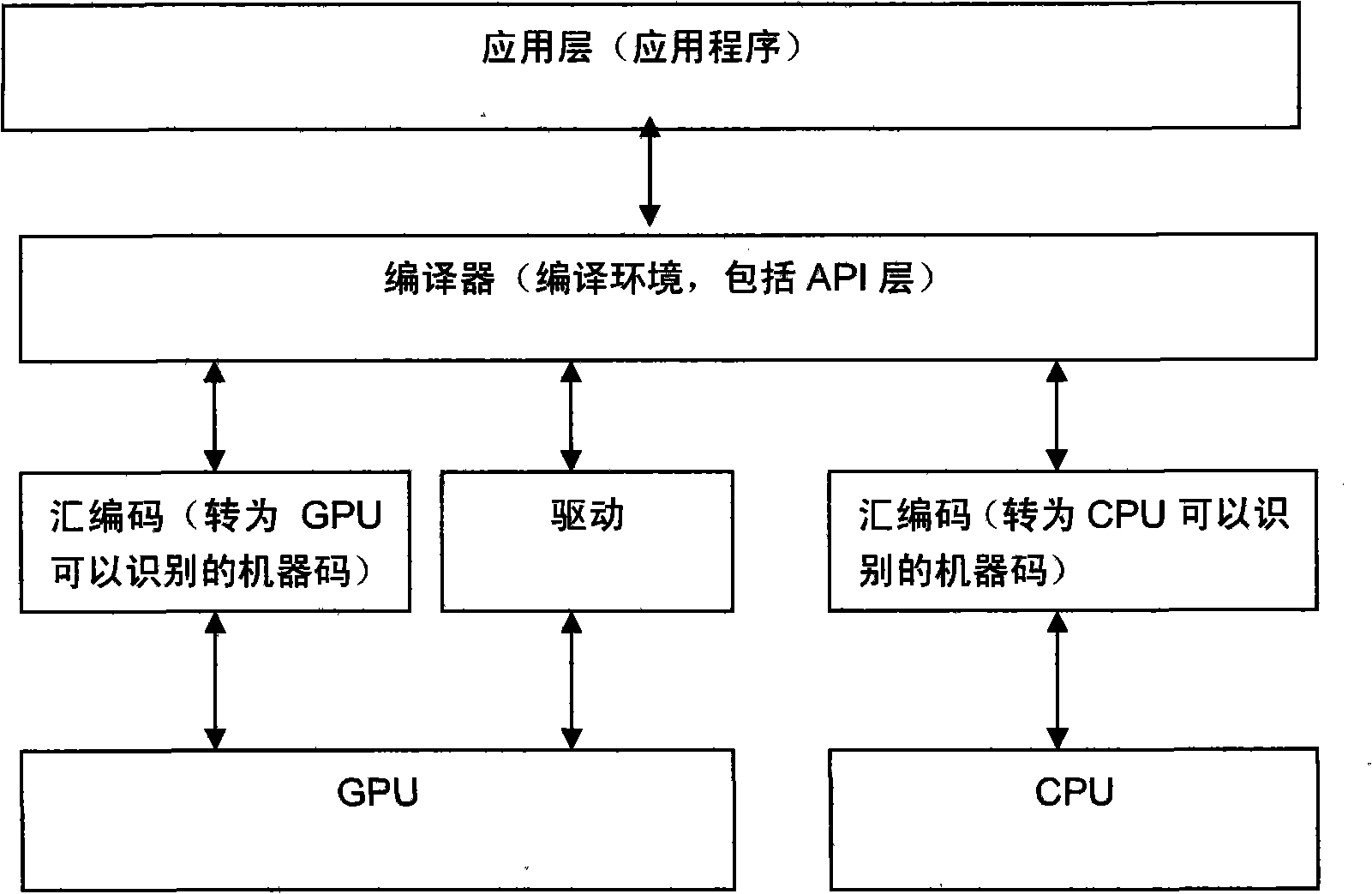

The invention provides a construction method of a GPU and CPU combined processor, which comprises the following steps: a CPU and a GPU are coupled to construct a combined processor, wherein the CPU is responsible for general-purpose processing tasks such as the operating system, system software and general-purpose application programs, which involve complex instruction scheduling, loops, branches and logical judgment; the GPU is responsible for highly parallel computation on large-scale data without logical dependencies; and the CPU and the GPU jointly complete the same large-scale parallel computing application. In the GPU and CPU combined processor, the cores of the CPU communicate with each other and carry out calculation over a memory bus, and the cores of the GPU exchange data and carry out calculation through a uniform shared memory (the video memory on the GPU); the GPU and the CPU are connected by a high-speed serial bus and exchange calculation data through the memory of the CPU and the shared memory of the GPU. The construction method can fully utilize the parallel processing capacity of the GPU cores and realize rapid parallel calculation on enormous data volumes.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

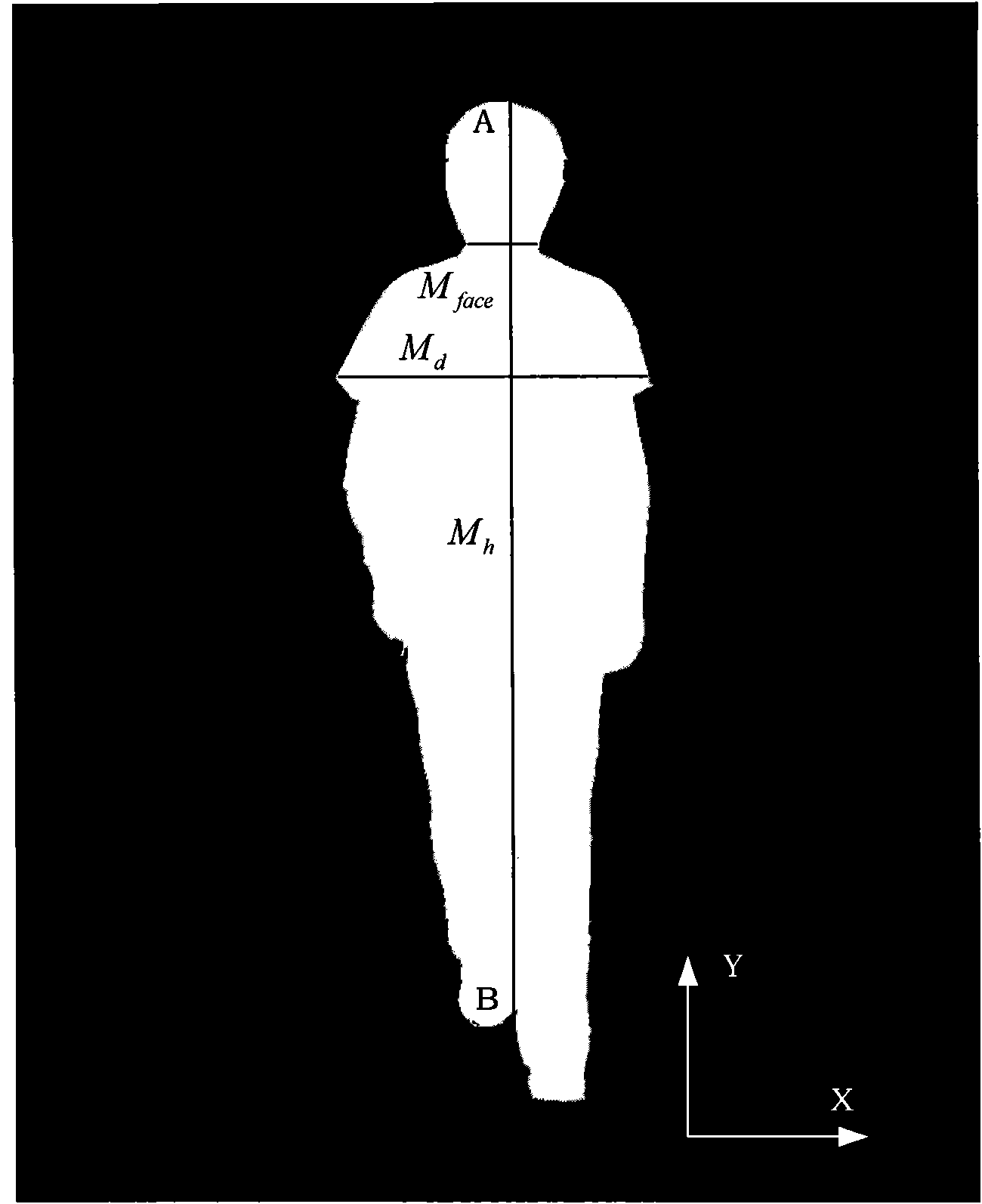

Epidemic preventing and controlling system for detecting and searching people with abnormal temperatures

Inactive | CN101999888A | Pay attention to the epidemic situation in real time | Effective prevention and control information | Diagnostic recording/measuring | Sensors | Scheduling instructions | Computer module

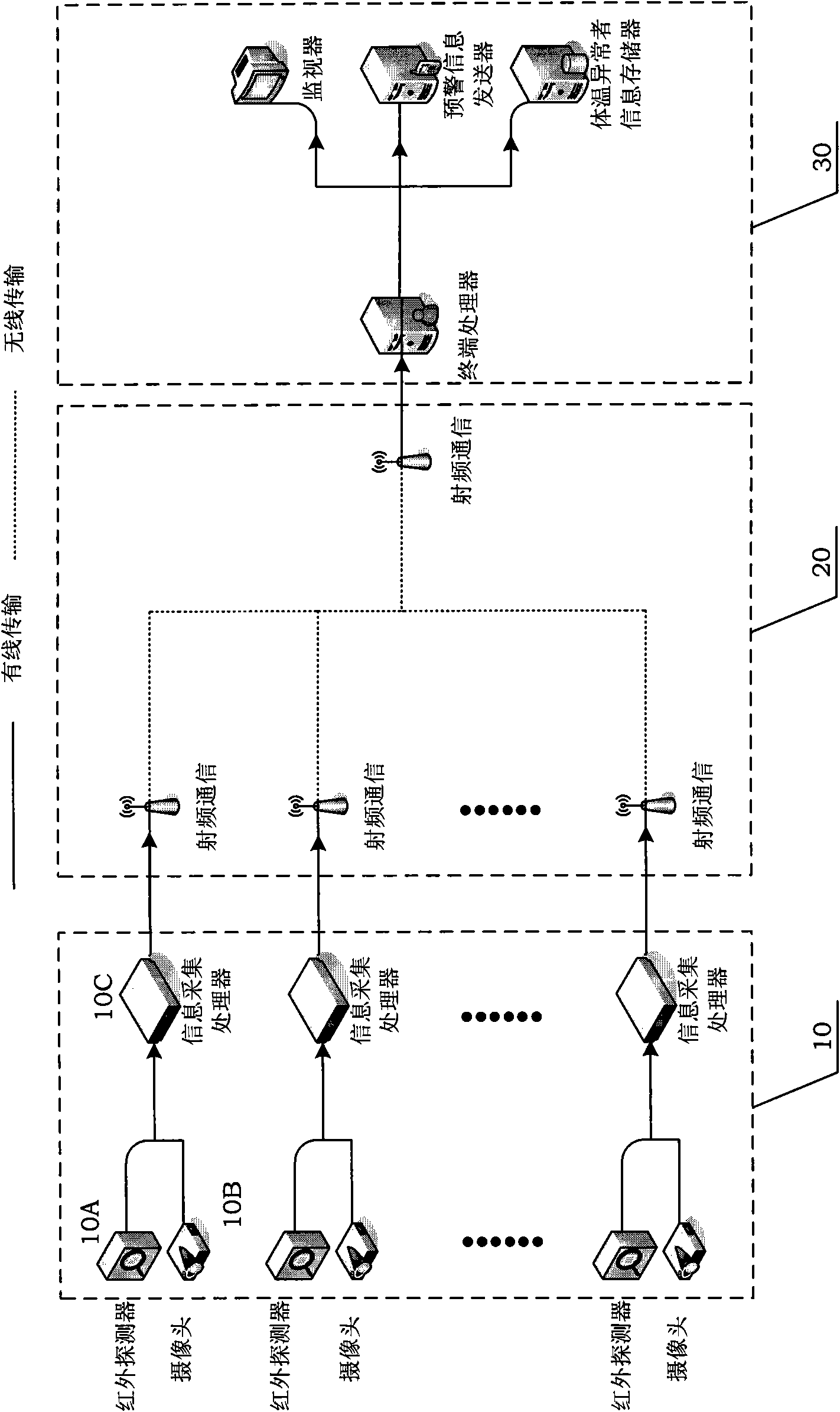

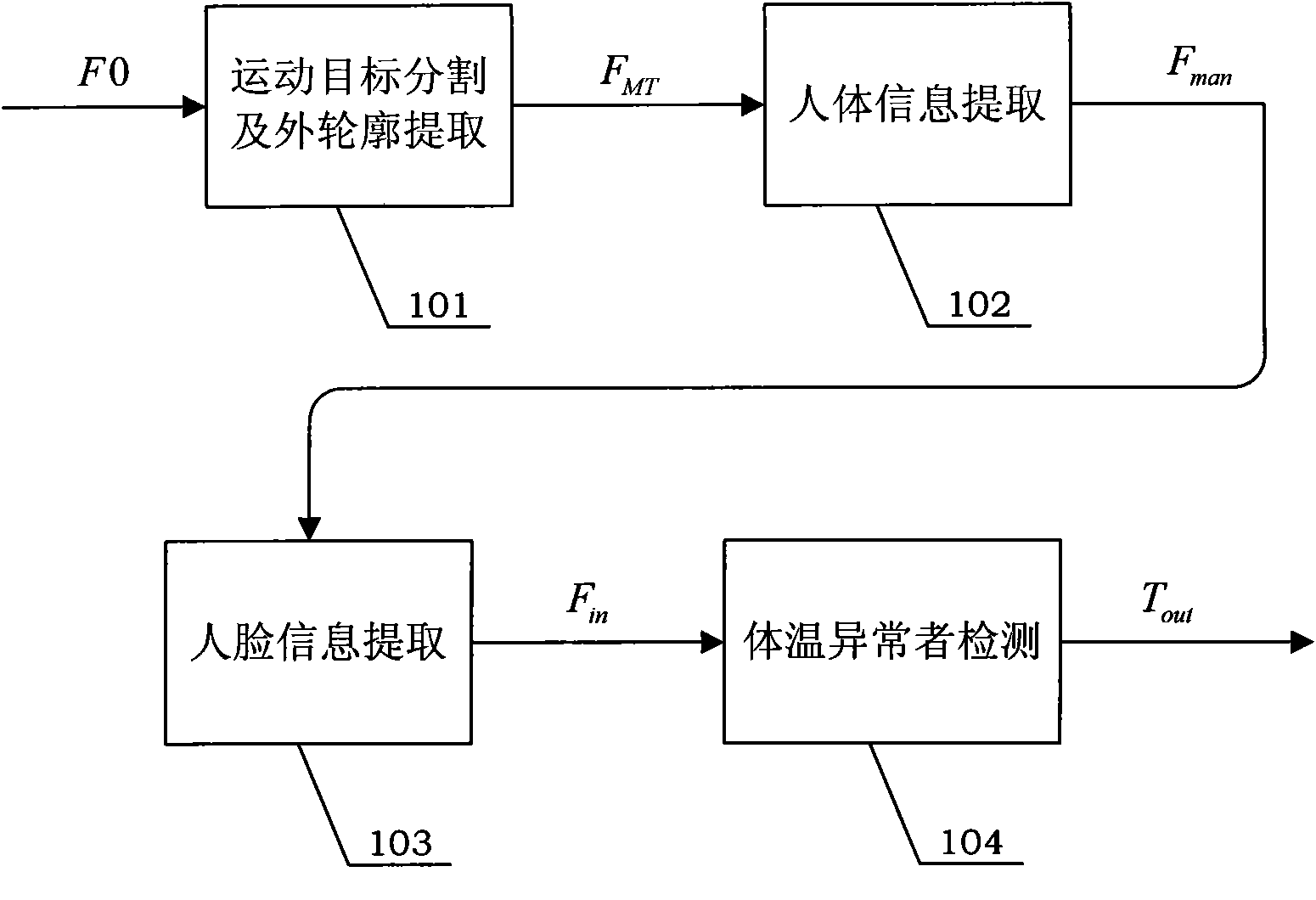

The invention discloses an epidemic preventing and controlling system for detecting and searching people with abnormal temperatures. The system comprises an information acquisition processing module, a communication processing module and a terminal processing module. The information acquisition processing module consists of an infrared detector, a camera and an information acquisition processor, wherein the infrared detector is used for acquiring face temperature information; the camera is used for acquiring face video information; and the information acquisition processor is used to perform moving-target segmentation and profile extraction on the face temperature information and the face video information so as to obtain information on people with abnormal temperatures. The communication processing module is used for transmitting the information on the people with abnormal temperatures to the terminal processing module. The terminal processing module performs real-time dynamic searching for the people with abnormal temperatures in a monitored area and can send scheduling instruction information for giving an epidemic prompt, tracking people and the like. The system of the invention can monitor and recognize people with abnormal temperatures in the monitored area, respond accordingly and give early warning of coming epidemics, so that even if an epidemic breaks out, the people with abnormal temperatures can be found promptly and the efficiency of epidemic prevention is improved as far as possible.

Owner:BEIHANG UNIV

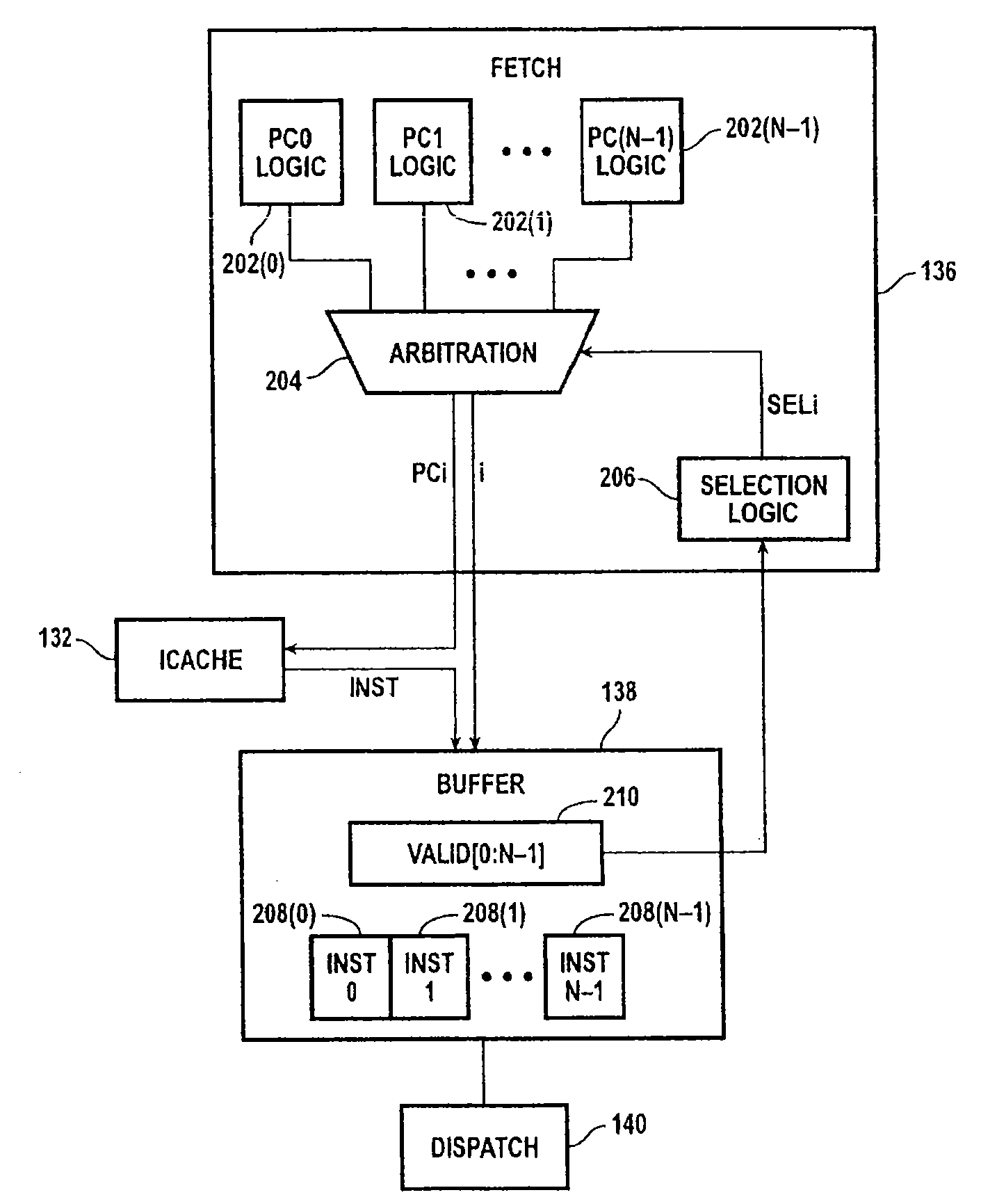

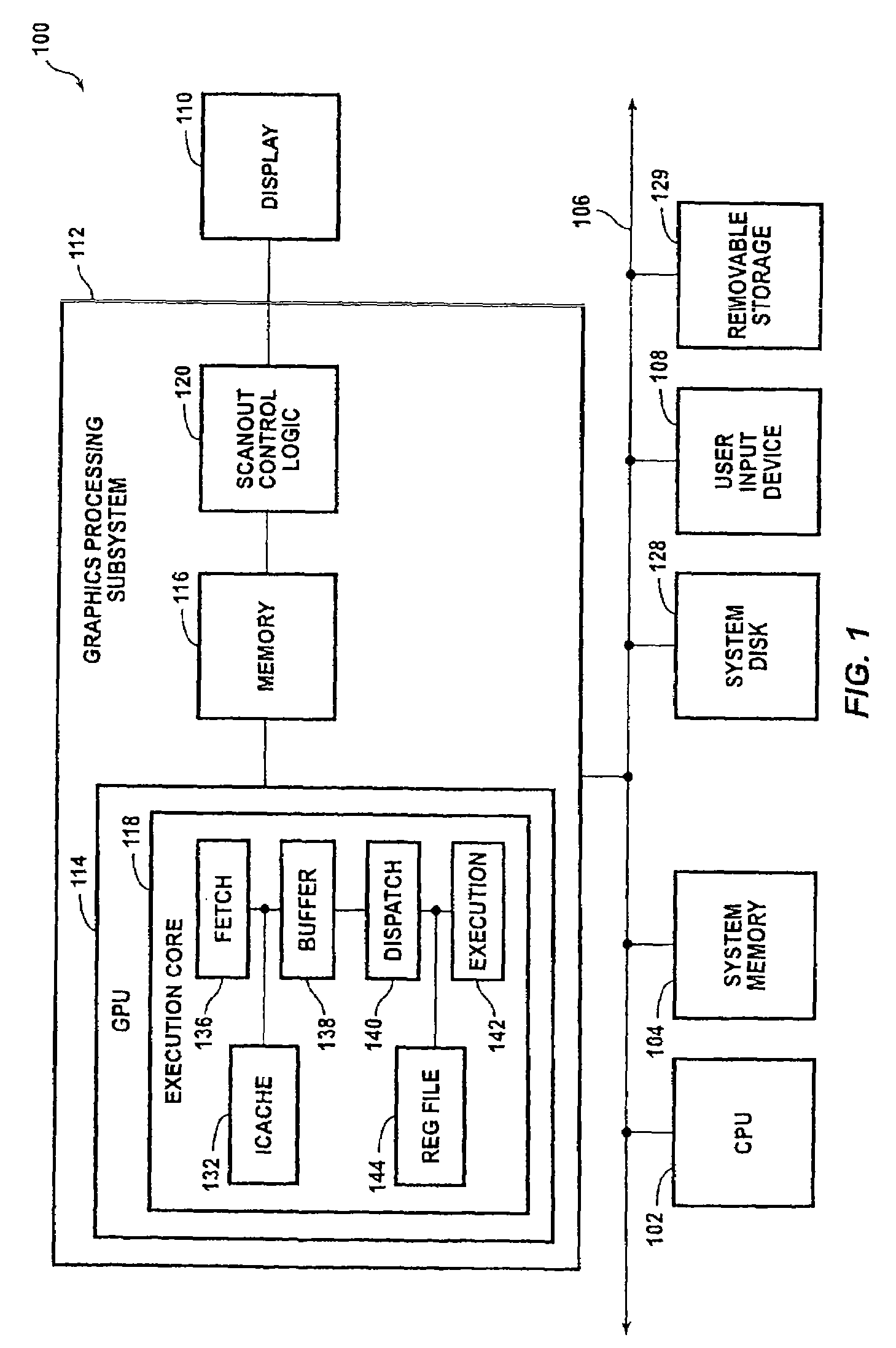

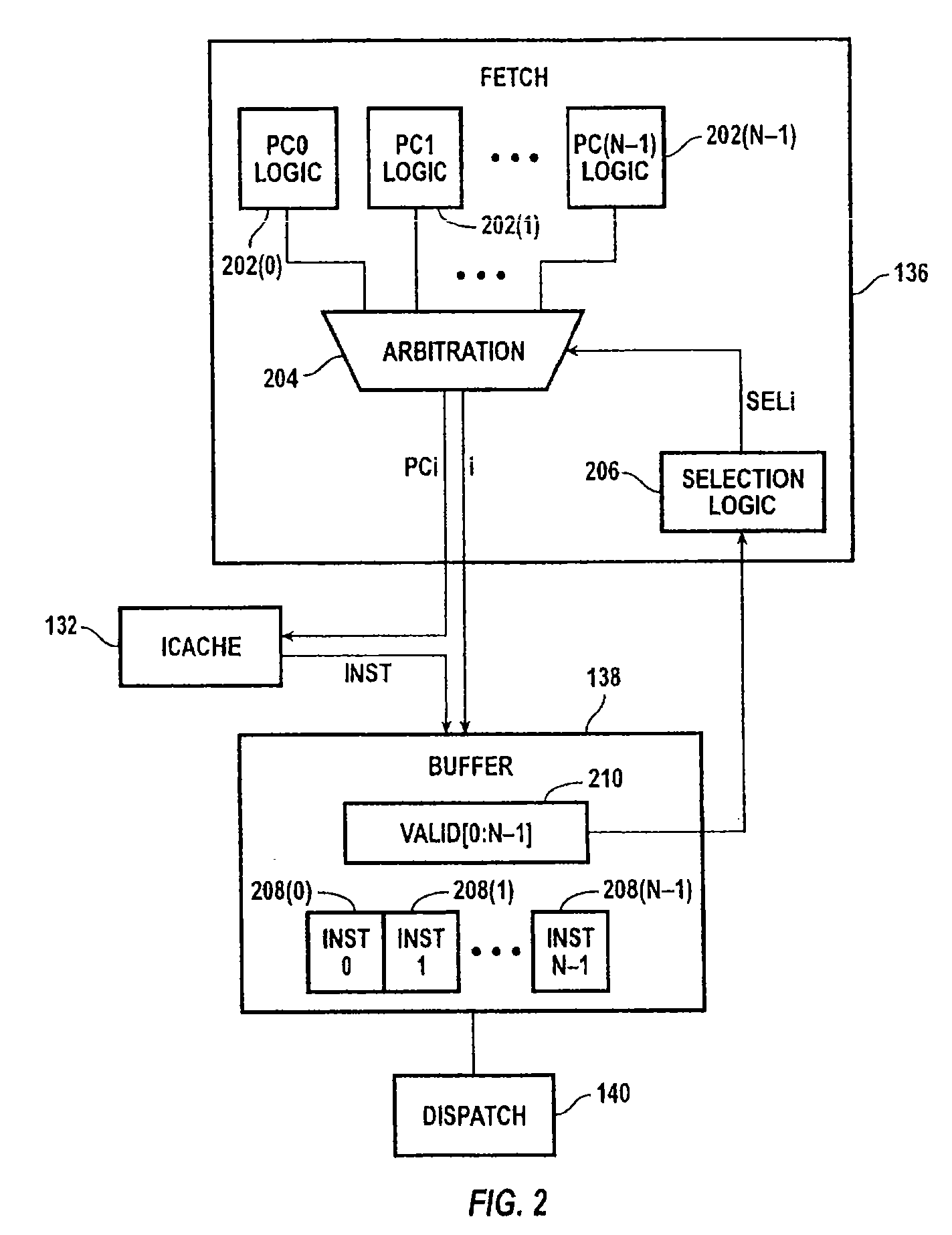

Across-thread out of order instruction dispatch in a multithreaded graphics processor

Active | US7310722B2 | Digital computer details | Multiprogramming arrangements | Graphics | Scheduling instructions

Instruction dispatch in a multithreaded microprocessor such as a graphics processor is not constrained by an order among the threads. Instructions are fetched into an instruction buffer that is configured to store an instruction from each of the threads. A dispatch circuit determines which instructions in the buffer are ready to execute and may issue any ready instruction for execution. An instruction from one thread may be issued prior to an instruction from another thread regardless of which instruction was fetched into the buffer first. Once an instruction from a particular thread has issued, the fetch circuit fills the available buffer location with the following instruction from that thread.

Owner:NVIDIA CORP
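The buffer-and-refill behaviour in the abstract above can be modelled in a few lines. This is a simplified sketch: the one-issue-per-cycle limit, the `(name, ready_cycle)` instruction encoding and the `is_ready` callback are assumptions for the example, not details from the patent.

```python
from collections import deque


def dispatch(threads, is_ready, max_cycles=100):
    """threads: thread id -> list of instructions in program order."""
    pending = {t: deque(instrs) for t, instrs in threads.items()}
    # The buffer holds at most one instruction per thread.
    buffer = {t: q.popleft() for t, q in pending.items() if q}
    issued = []
    for cycle in range(max_cycles):
        if not buffer:
            break
        for thread, instr in list(buffer.items()):
            if is_ready(instr, cycle):
                issued.append((cycle, thread, instr))
                if pending[thread]:
                    # Refill the freed slot with that thread's next instruction.
                    buffer[thread] = pending[thread].popleft()
                else:
                    del buffer[thread]
                break  # assumed limit: one issue per cycle
    return issued


# Each instruction is (name, first cycle at which its operands are available).
threads = {0: [("a0", 3), ("a1", 0)], 1: [("b0", 0), ("b1", 0)]}
trace = dispatch(threads, lambda instr, cycle: cycle >= instr[1])
print([(cycle, instr[0]) for cycle, _, instr in trace])
# [(0, 'b0'), (1, 'b1'), (3, 'a0'), (4, 'a1')]
```

Thread 1 issues ahead of thread 0 even though thread 0's instruction entered the buffer first, while each thread's own instructions still issue in program order.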

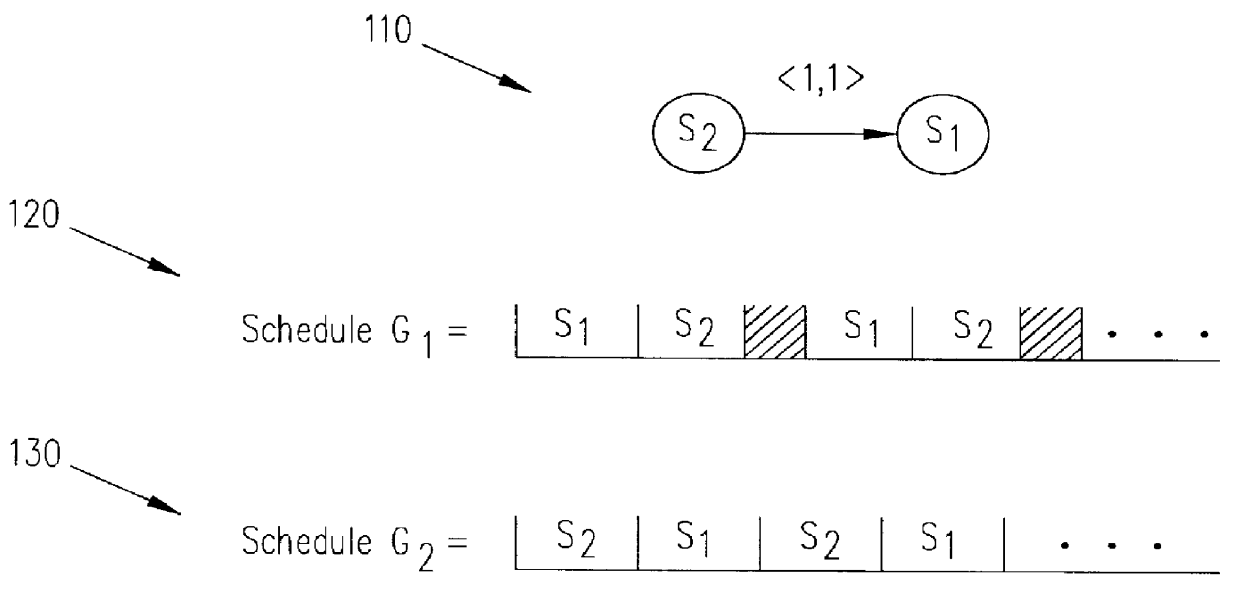

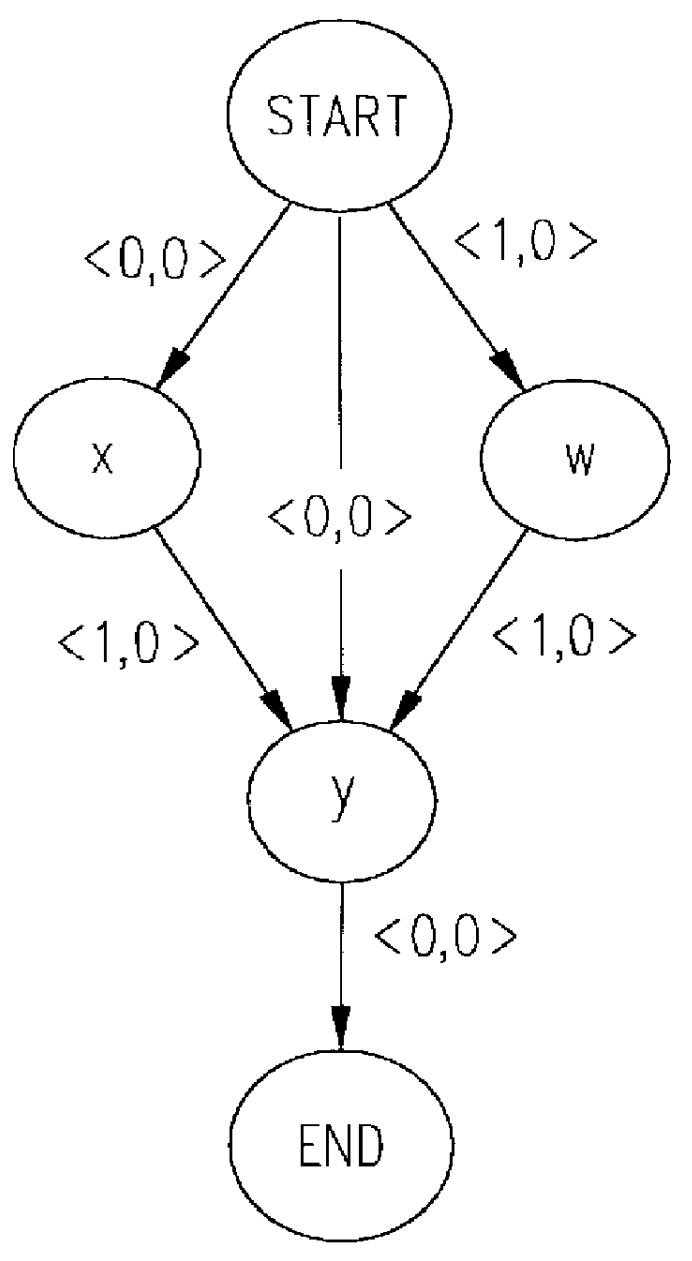

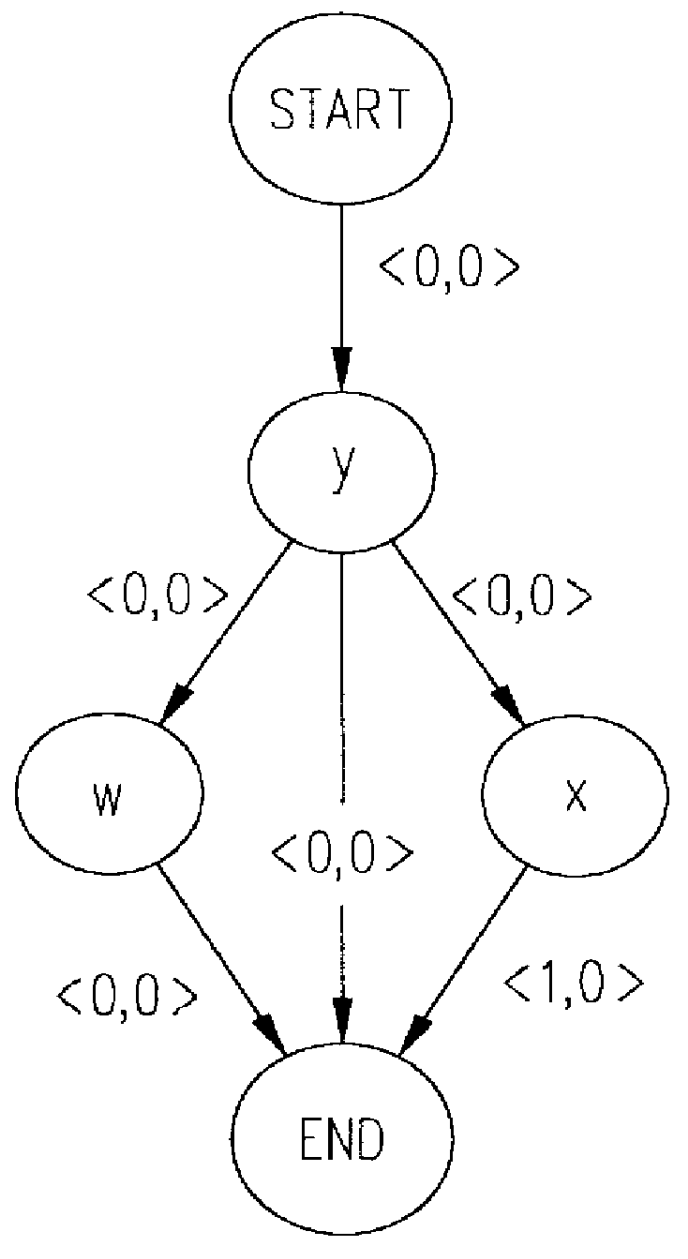

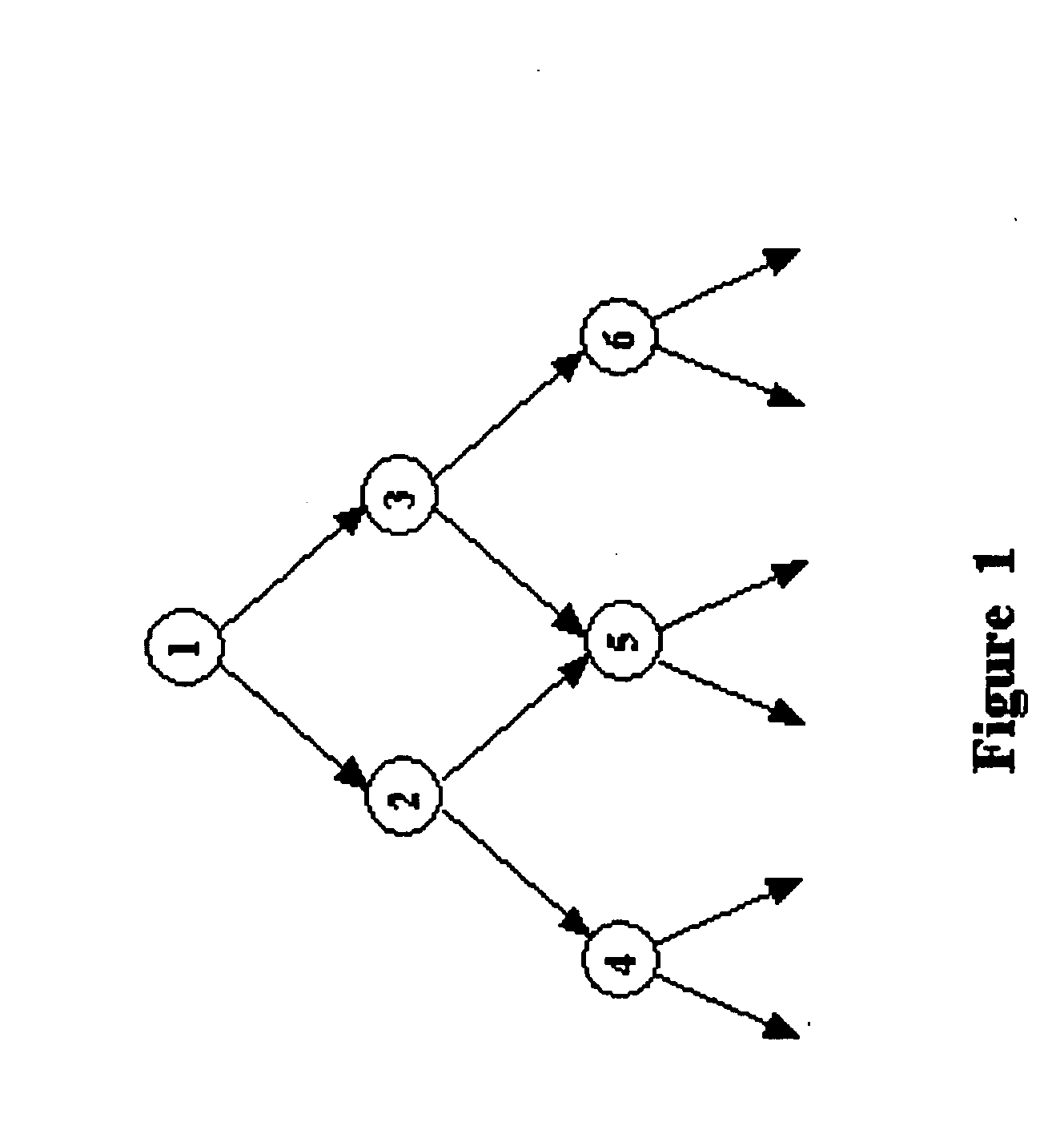

System, method, and program product for loop instruction scheduling hardware lookahead

Inactive | US6044222A | Software engineering | Digital computer details | Scheduling instructions | Computerized system

Improved scheduling of instructions within a loop for execution by a computer system having hardware lookahead is provided. A dependence graph is constructed which contains all the nodes of a dependence graph corresponding to the loop, but which only contains loop-independent dependence edges. A start node simulating a previous iteration of the loop may be added to the dependence graph, and an end node simulating a next iteration of the loop may also be added to the dependence graph. A loop-independent edge between a source node and the start node is added to the dependence graph, and a loop-independent edge between a sink node and the end node is added to the dependence graph. Loop-carried edges which satisfy a computed lower bound on the time required for a single loop iteration are eliminated from a dependence graph, and loop-carried edges which do not satisfy the computed lower bound are replaced by a pair of loop-independent edges. Instructions may be scheduled for execution based on the dependence graph.

Owner:IBM CORP

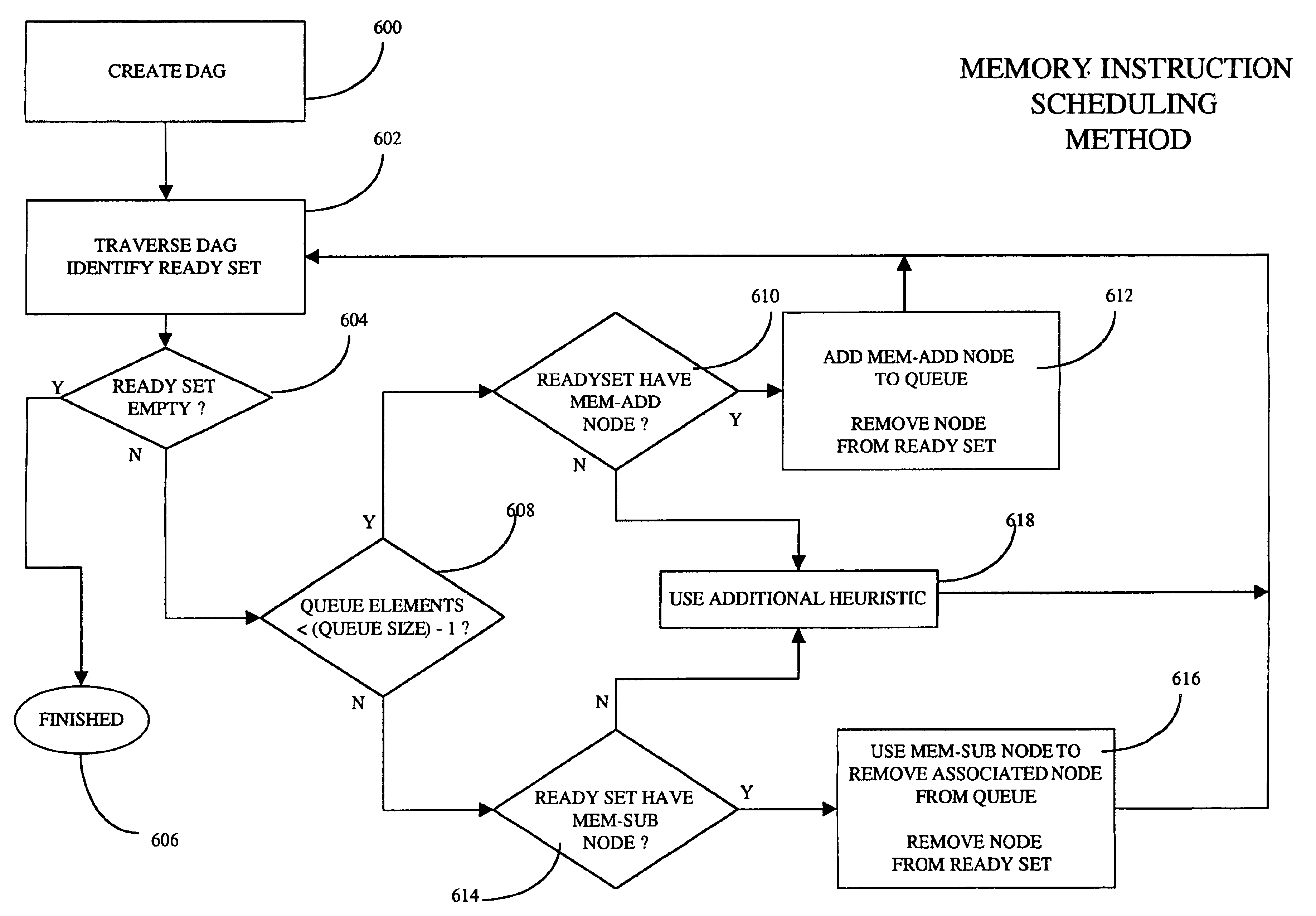

System and method for scheduling instructions to maximize outstanding prefetches and loads

Inactive | US6918111B1 | Minimizes processor stall | Minimizes processor stalls | Software engineering | Digital computer details | Scheduling instructions | Parallel computing

The present invention discloses a method and device for ordering memory operation instructions in an optimizing compiler for a processor that can potentially enter a stall state if a memory queue is full. The method uses a dependency graph coupled with one or more memory queues. The dependency graph is used to show the dependency relationships between instructions in a program being compiled. After creating the dependency graph, the ready nodes are identified. Dependency graph nodes that correspond to memory operations may have the effect of adding an element to the memory queue or removing one or more elements from the memory queue. The ideal situation is to keep the memory queue as full as possible without exceeding the maximum desirable number of elements, by scheduling memory operations to maximize the parallelism of memory operations while avoiding stalls on the target processor.

Owner:ORACLE INT CORP

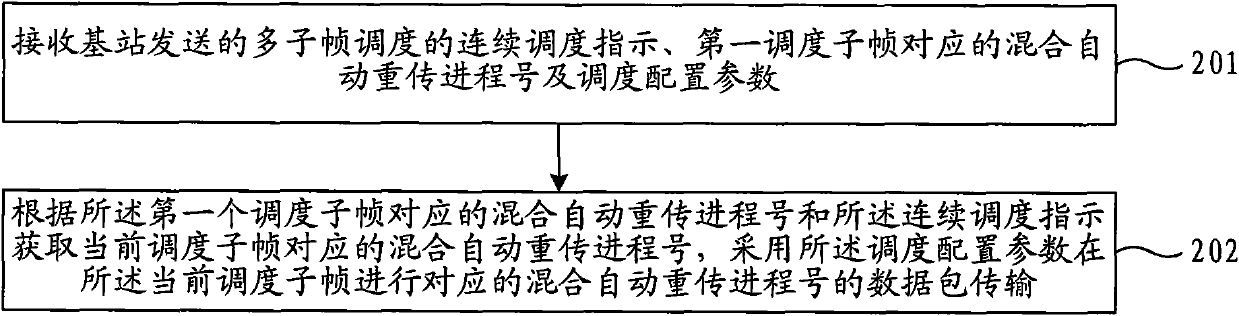

Method, system and device for scheduling multiple subframes

Active | CN102202408A | Reduce overhead | Multi-subframe scheduling implementation | Error prevention/detection by using return channel | Network traffic/resource management | Frequency spectrum | Scheduling instructions

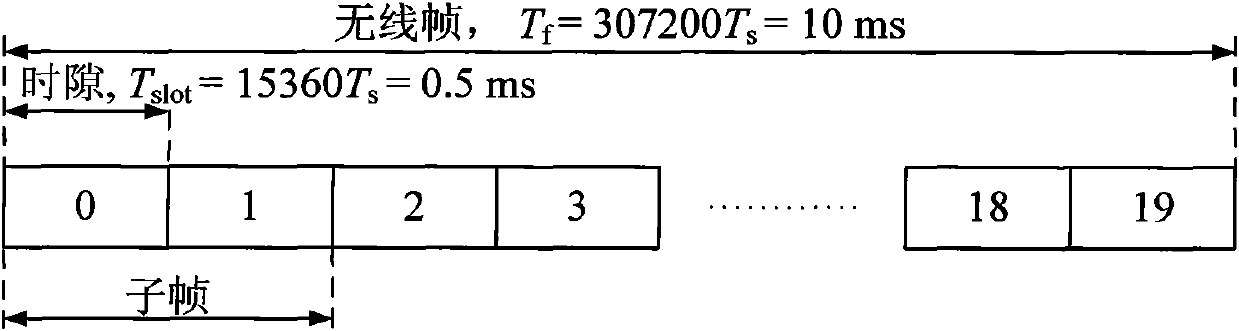

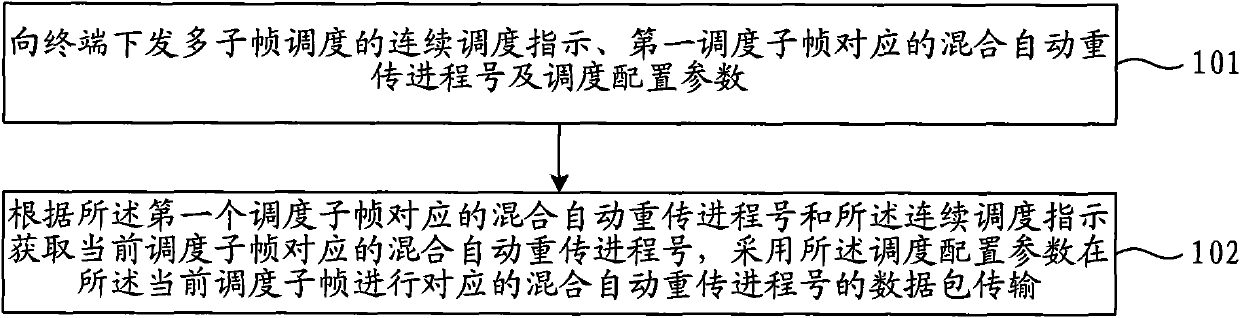

The invention discloses a method, a system and a device for scheduling multiple subframes. The method for scheduling multiple subframes comprises the following steps of: issuing a continuous scheduling instruction for scheduling multiple subframes, a hybrid automatic retransmission process number and a scheduling configuration parameter corresponding to a first scheduled subframe to a terminal; and obtaining the hybrid automatic retransmission process number corresponding to a currently scheduled subframe according to the continuous scheduling instruction and the hybrid automatic retransmission process number corresponding to the first scheduled subframe, and transmitting data packets of corresponding hybrid automatic retransmission process number at the currently scheduled subframe by using the scheduling configuration parameter. Each scheduled subframe in the multiple subframe scheduling process carries one data packet and uses the scheduling configuration parameter. In the embodiment of the invention, by issuing the hybrid automatic retransmission process number and the continuous scheduling instruction of the currently scheduled subframe each time, the multiple subframe scheduling can be implemented, control signaling cost of the system is saved and spectral efficiency of the system is improved.

Owner:HUAWEI TECH CO LTD

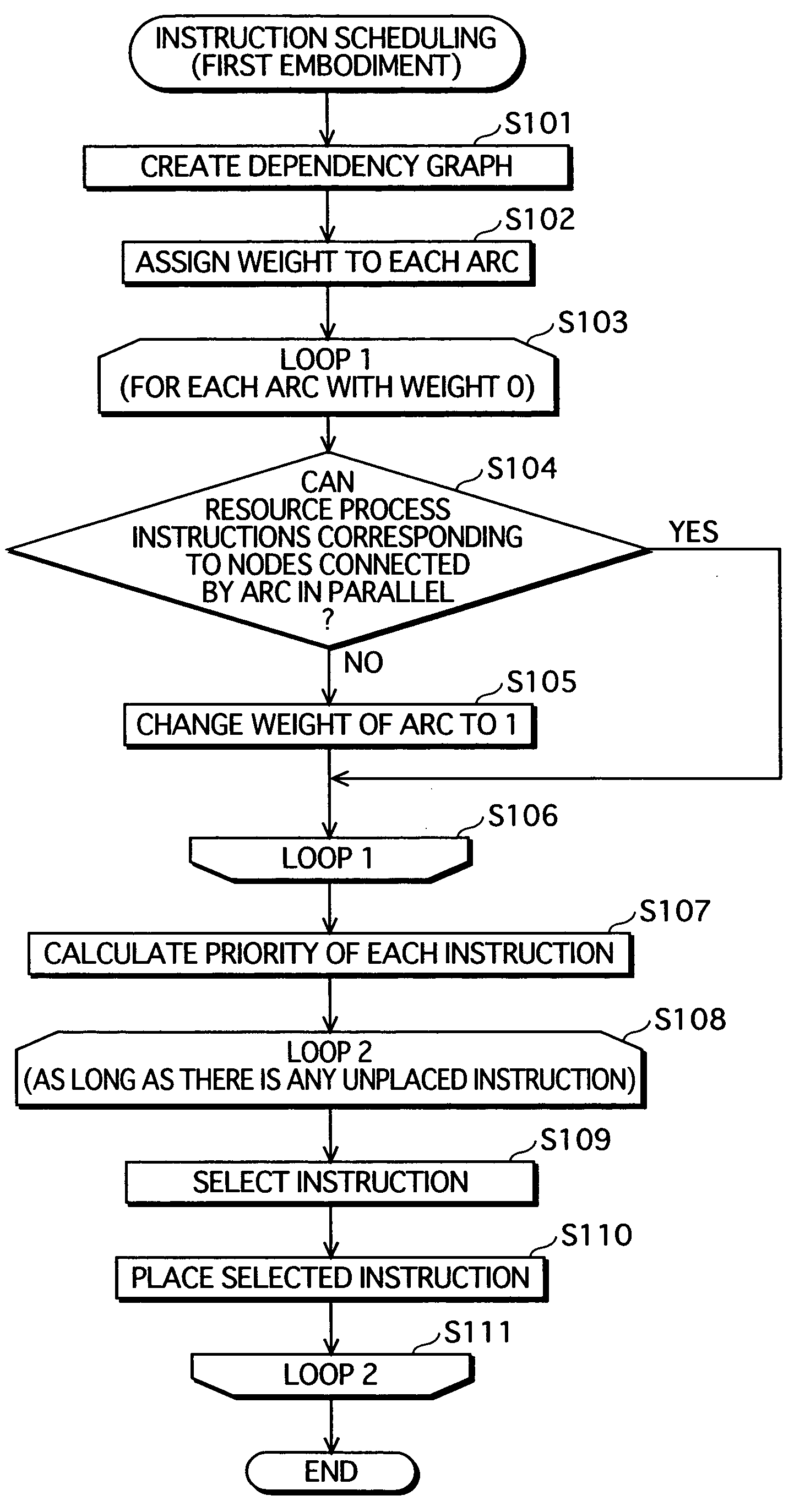

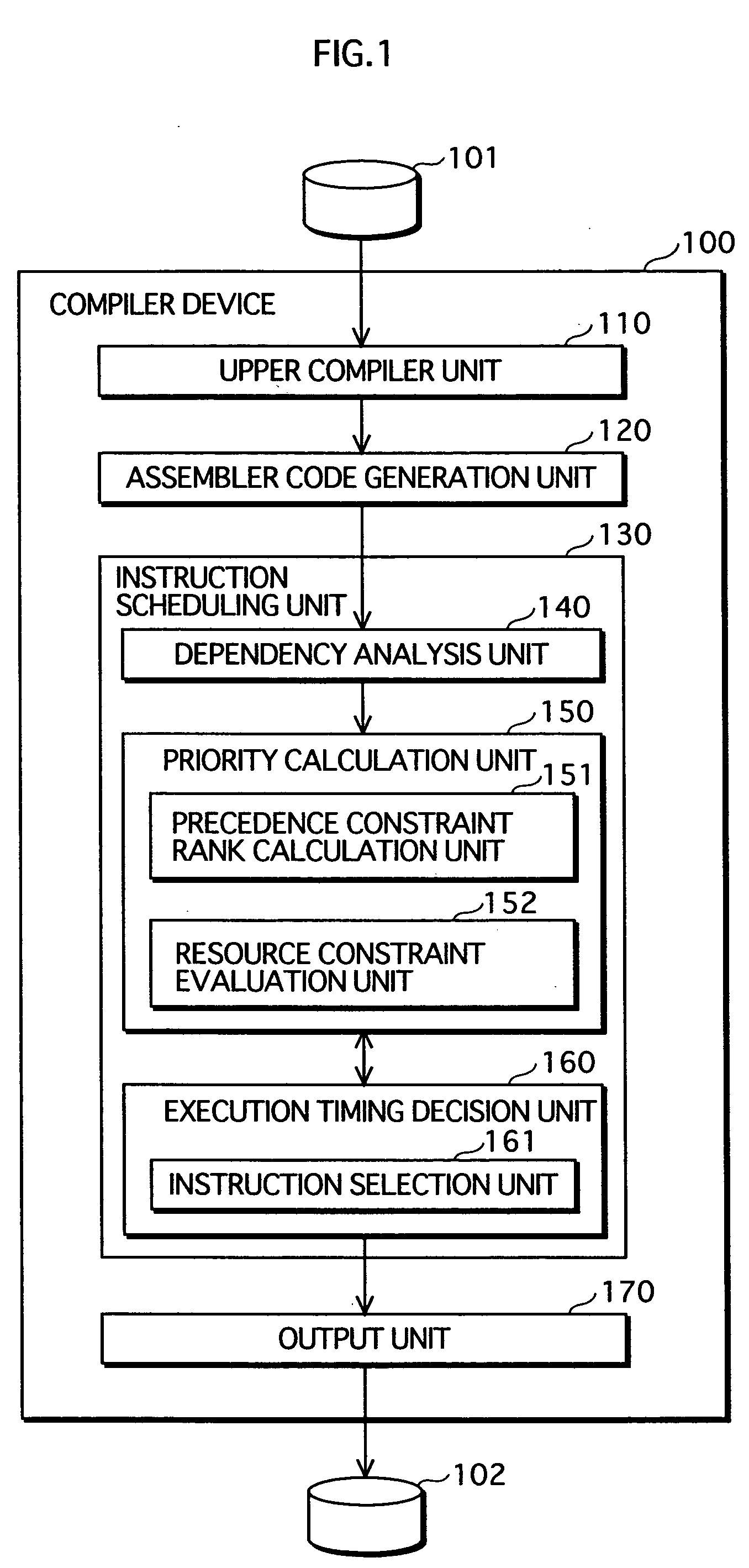

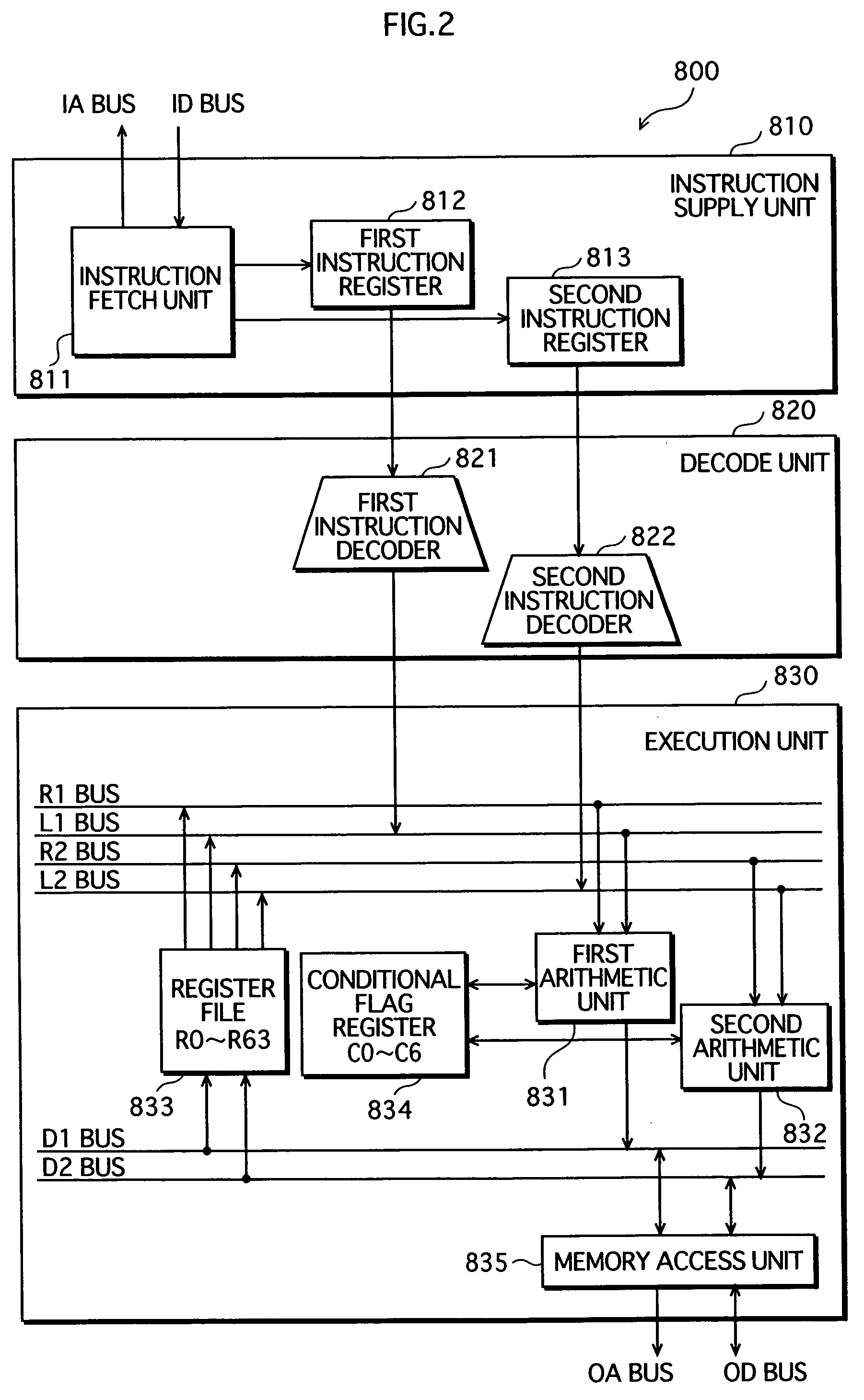

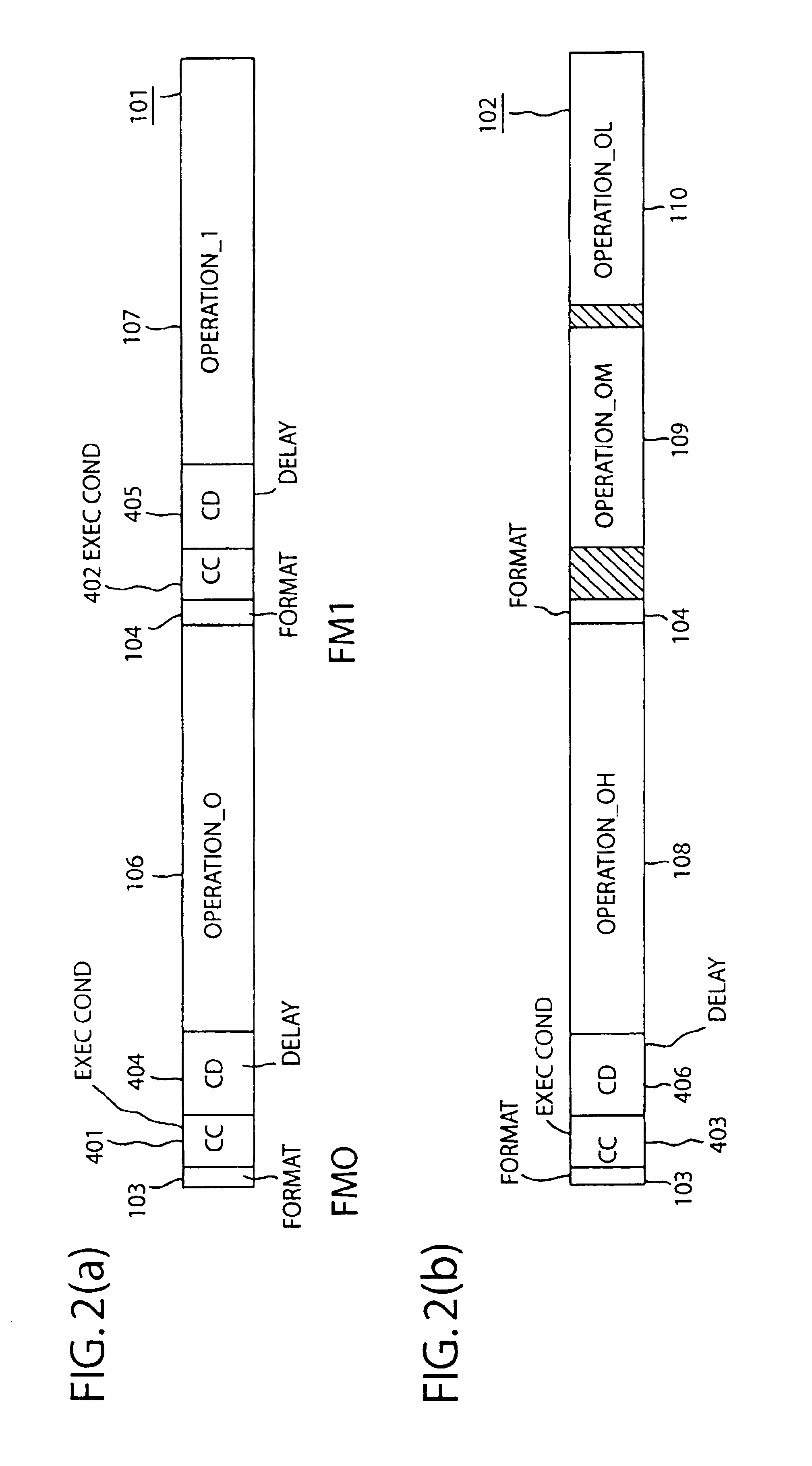

Instruction scheduling method, instruction scheduling device, and instruction scheduling program

Inactive | US20040083468A1 | Software engineering | Program control | Scheduling instructions | Parallel processing

A dependency analysis unit creates a dependency graph showing dependencies between instructions acquired from an assembler code generation unit. A precedence constraint rank calculation unit assigns predetermined weights to arcs in the graph, and adds up weights to calculate a precedence constraint rank of each instruction. When a predecessor and a successor having a dependency and an equal precedence constraint rank cannot be processed in parallel due to a resource constraint, a resource constraint evaluation unit raises the precedence constraint rank of the predecessor. A priority calculation unit sets the raised precedence constraint rank as a priority of the predecessor. An instruction selection unit selects an instruction having a highest priority. An execution timing decision unit places the selected instruction in a clock cycle. The selection by the instruction selection unit and the placement by the execution timing decision unit are repeated until all instructions are placed in clock cycles.

Owner:PANASONIC CORP
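The select-and-place loop in the abstract above resembles classic rank-based list scheduling, which can be sketched as follows. This is a simplified stand-in, not the patented method: the rank here is assumed to be the largest sum of arc weights on any path to the end of the graph, the resource-constraint step that raises a predecessor's rank is omitted, and the instruction names and weights are invented for the example.

```python
from functools import lru_cache


def list_schedule(succs, width=1):
    """succs: instruction -> {successor: arc weight in cycles}. Returns cycle per instruction."""

    @lru_cache(maxsize=None)
    def rank(instr):
        # Assumed rank: largest sum of arc weights on any path leaving instr.
        return max((w + rank(s) for s, w in succs[instr].items()), default=0)

    preds = {instr: {} for instr in succs}
    for instr, out in succs.items():
        for succ, weight in out.items():
            preds[succ][instr] = weight

    placed = {}
    cycle = 0
    while len(placed) < len(succs):
        ready = [i for i in succs if i not in placed and
                 all(p in placed and placed[p] + w <= cycle
                     for p, w in preds[i].items())]
        ready.sort(key=lambda i: (-rank(i), i))  # highest priority first
        for instr in ready[:width]:              # at most `width` per cycle
            placed[instr] = cycle
        cycle += 1
    return placed


graph = {"load": {"add": 2}, "mul": {"add": 1}, "add": {"store": 1}, "store": {}}
print(list_schedule(graph))
# {'load': 0, 'mul': 1, 'add': 2, 'store': 3}
```

With a single issue slot, `load` goes first because it heads the longest weighted path, and `mul` fills the cycle in which `add` would otherwise wait for the load.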

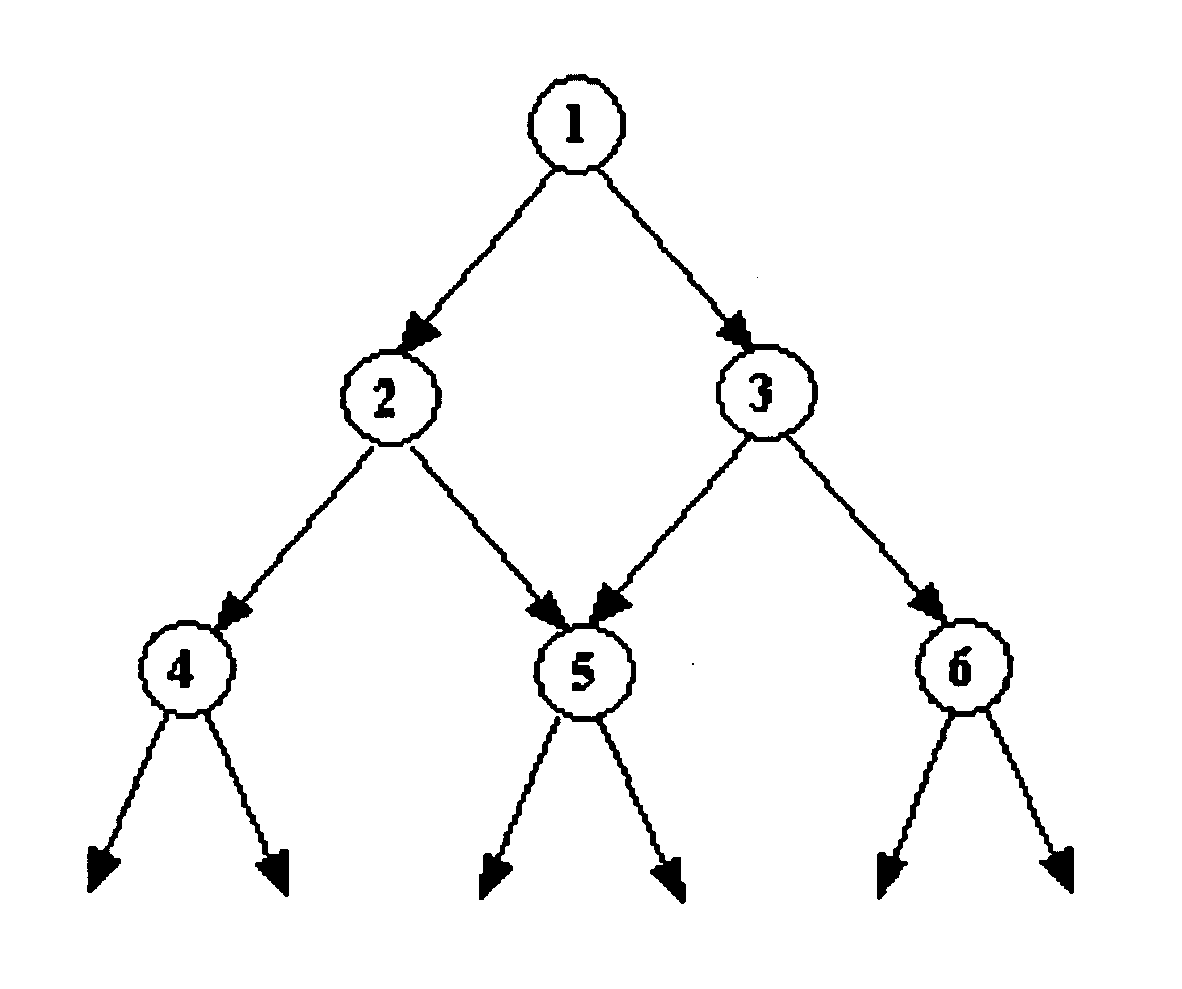

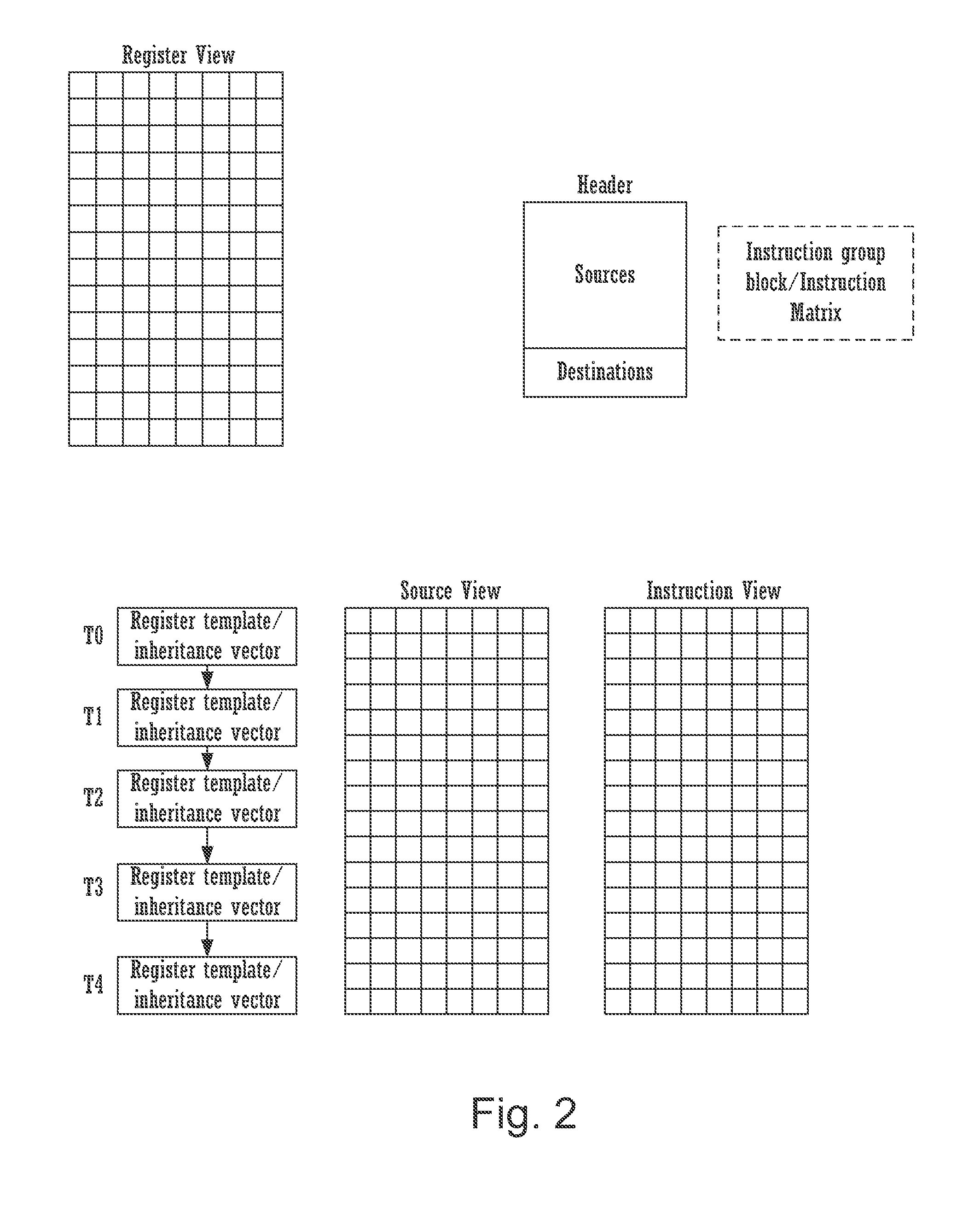

Method for executing structured symbolic machine code on a microprocessor

Inactive | US20060090063A1 | Software engineering | Instruction analysis | Scheduling instructions | High probability

The invention describes a method for executing structured symbolic machine code on a microprocessor. Said structured symbolic machine code contains a set of one or more regions, where each of said regions contains symbolic machine code containing, in addition to the proper instructions, information about the symbolic variables, the symbolic constants, the branch tree, pointers and function arguments used within each of said regions. This information is fetched by the microprocessor from the instruction cache and stored into dedicated memories before the proper instructions of each region are fetched and executed. Said information is used by the microprocessor in order to improve the degree of parallelism achieved during instruction scheduling and execution. Among other purposes, said information allows the microprocessor to perform so-called speculative branch prediction. Speculative branch prediction does branch prediction along a branch path containing several dependent branches in the shortest time possible (in only a few clock cycles) without having to wait for branches to resolve. This is a key issue which allows region scheduling to be applied in practice, e.g. treegion scheduling, where machine code must be fetched and speculatively executed from the trace having the highest probability or confidence among several traces. This allows the computation resources (e.g. the FUs) of the microprocessor to be used in the most efficient way. Finally, said information allows instructions to be re-executed in the right order and wrong data to be overwritten with the correct data when mispredictions occur.

Owner:THEIS JEAN PAUL

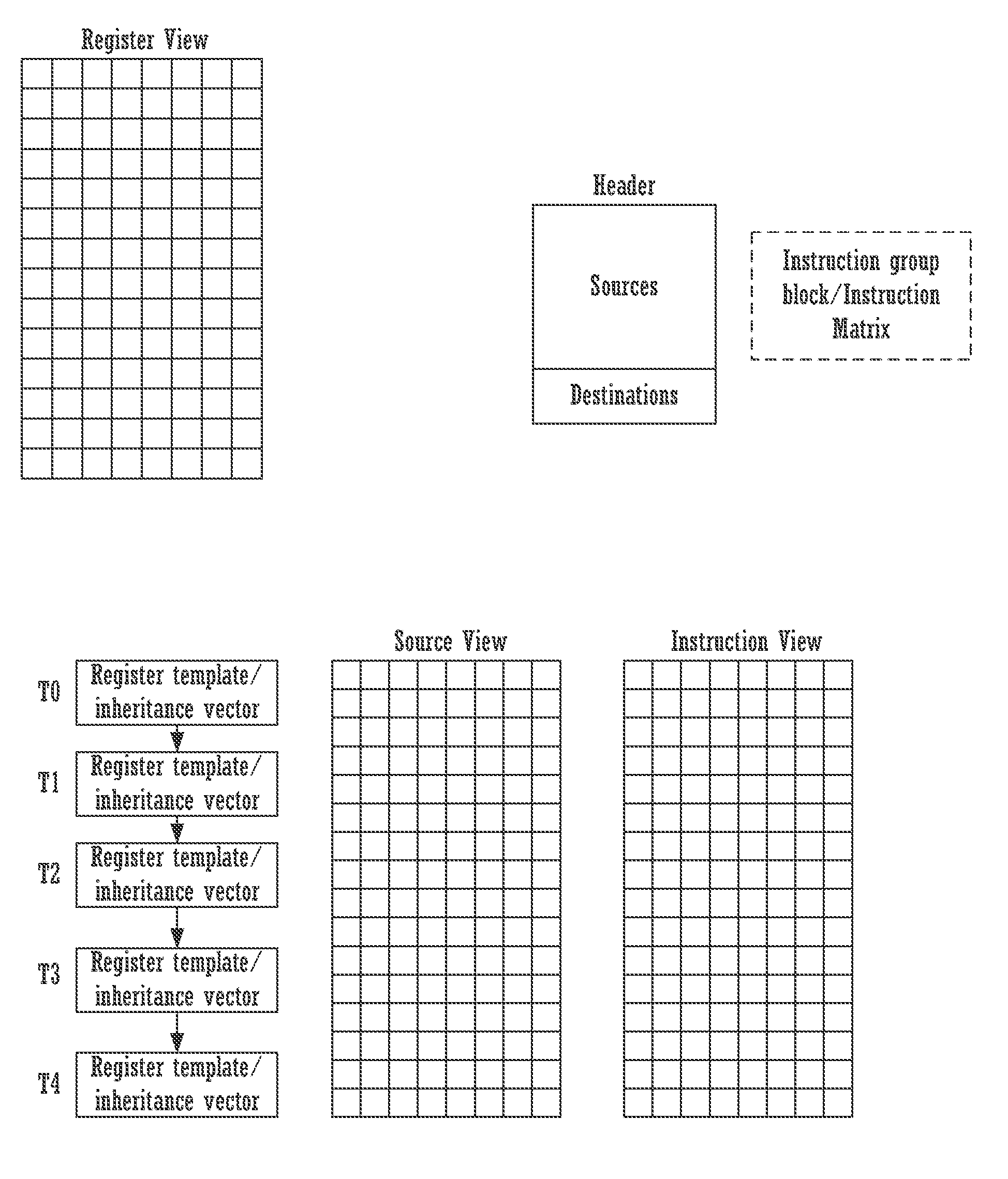

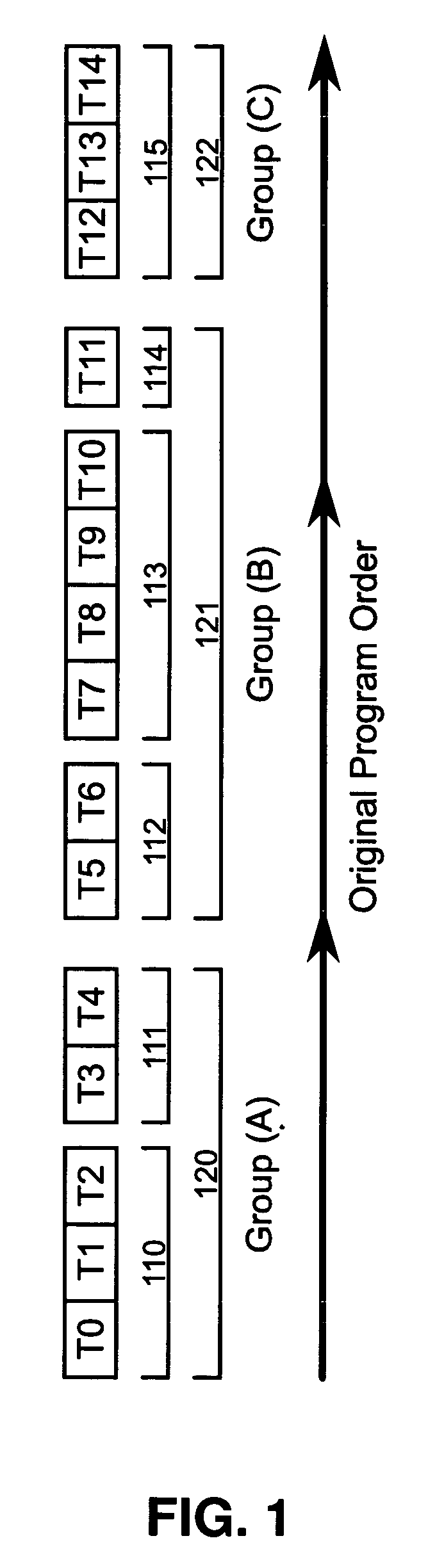

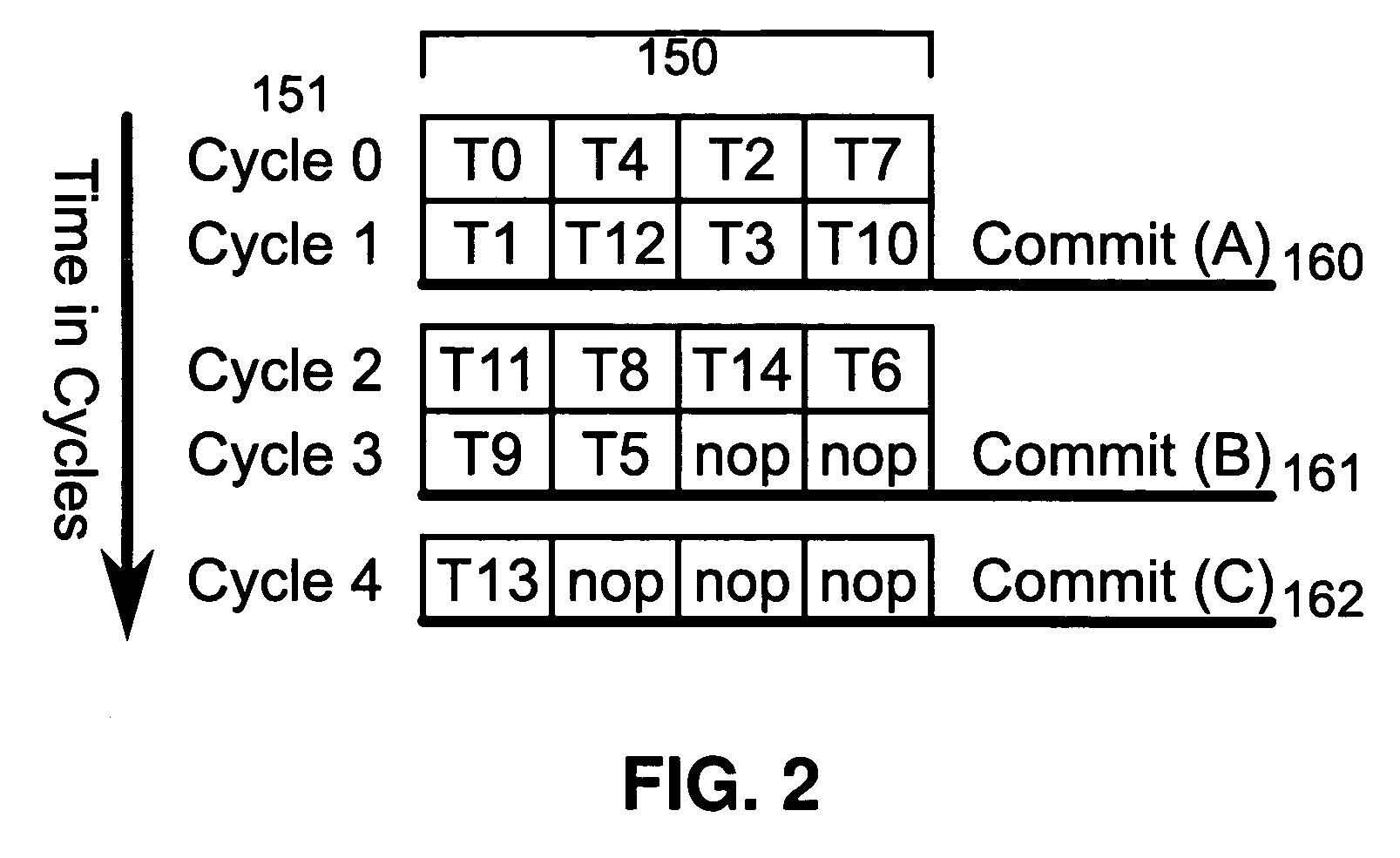

Method for performing dual dispatch of blocks and half blocks

Active | US20140317387A1 | Program initiation/switching | Digital computer details | Scheduling instructions | Parallel computing

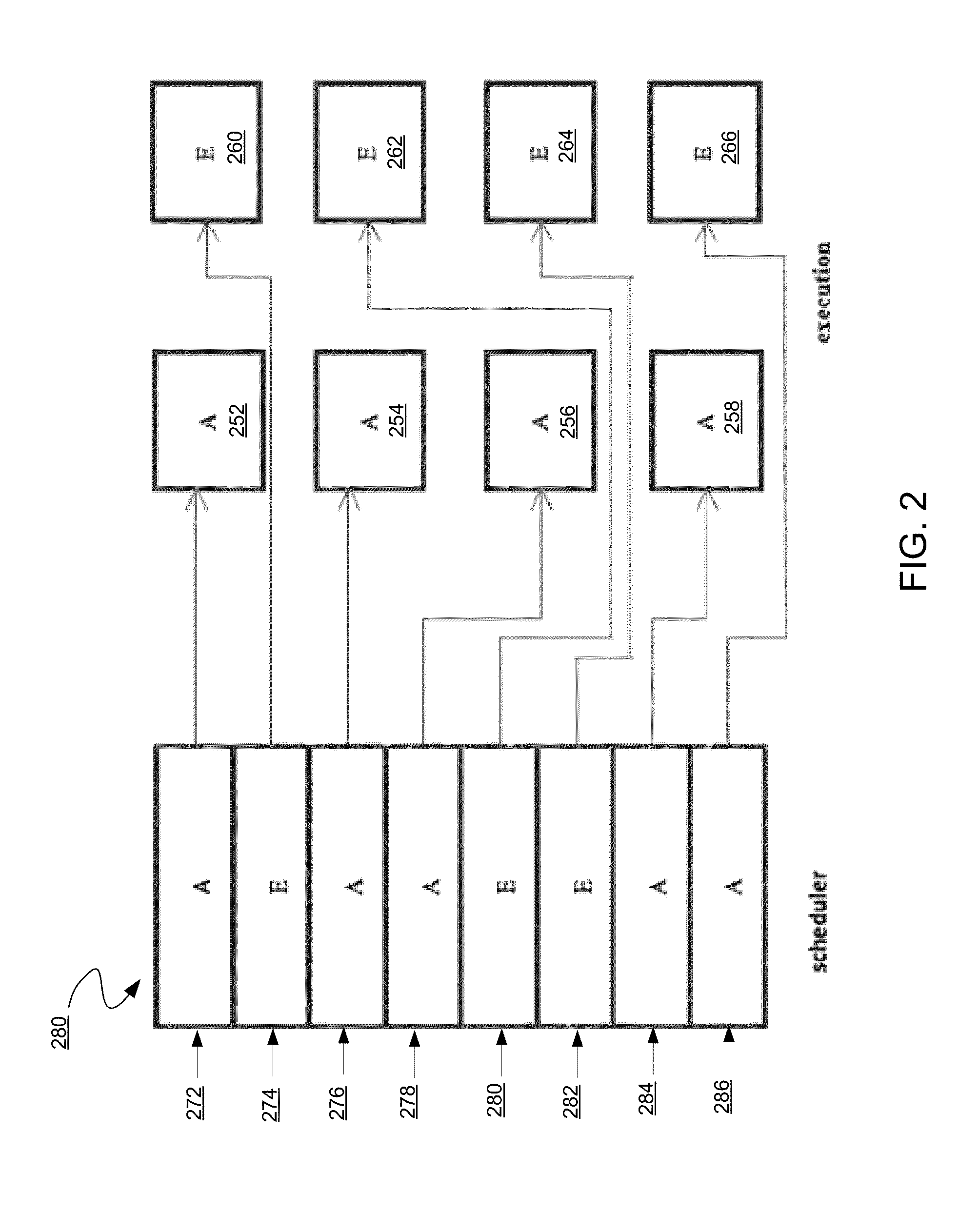

A method for executing dual dispatch of blocks and half blocks. The method includes receiving an incoming instruction sequence using a global front end; grouping the instructions to form instruction blocks, wherein each of the instruction blocks comprises two half blocks; scheduling the instructions of the instruction block to execute in accordance with a scheduler; and performing a dual dispatch of the two half blocks for execution on an execution unit.

Owner:INTEL CORP

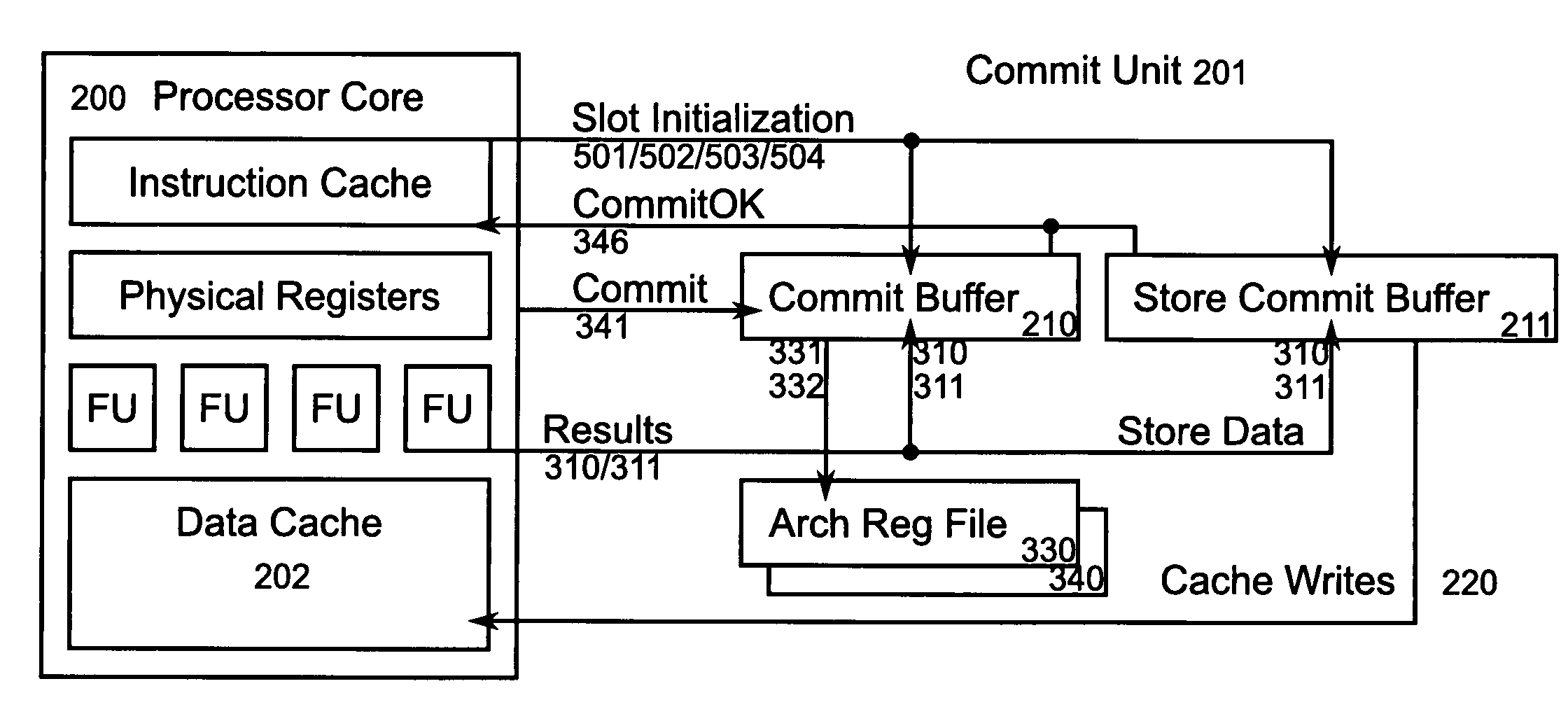

Method and apparatus for incremental commitment to architectural state in a microprocessor

Inactive | US20060112261A1 | Minimize overhead | Improve performance | Digital computer details | Memory systems | Scheduling instructions | Operating system

Method and hardware apparatus are disclosed for reducing the rollback penalty on exceptions in a microprocessor executing traces of scheduled instructions. Speculative state is committed to the architectural state of the microprocessor at a series of commit points within a trace, rather than committing the state as a single atomic operation at the end of the trace.

Owner:VAN DIKE KORBIN S +2

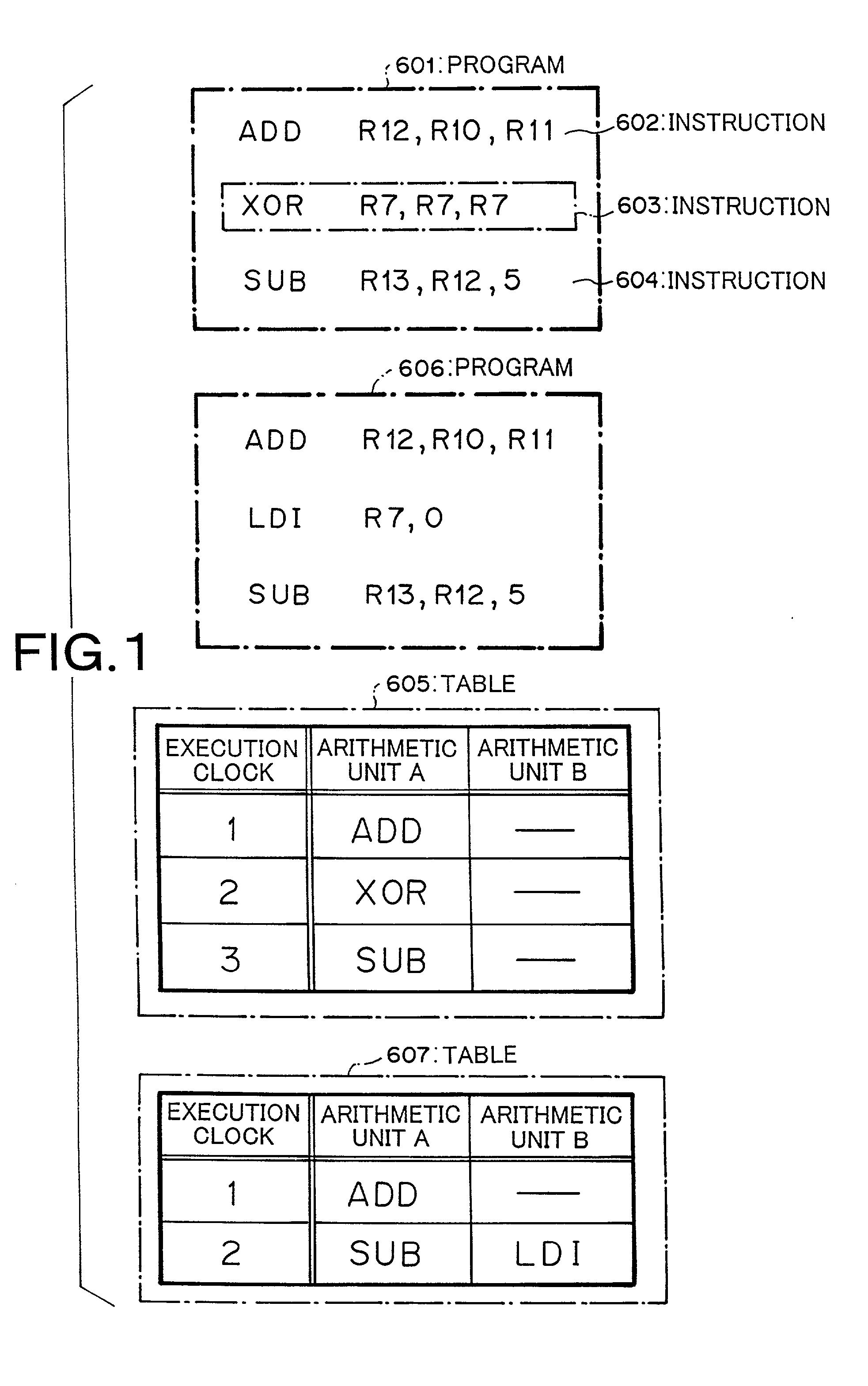

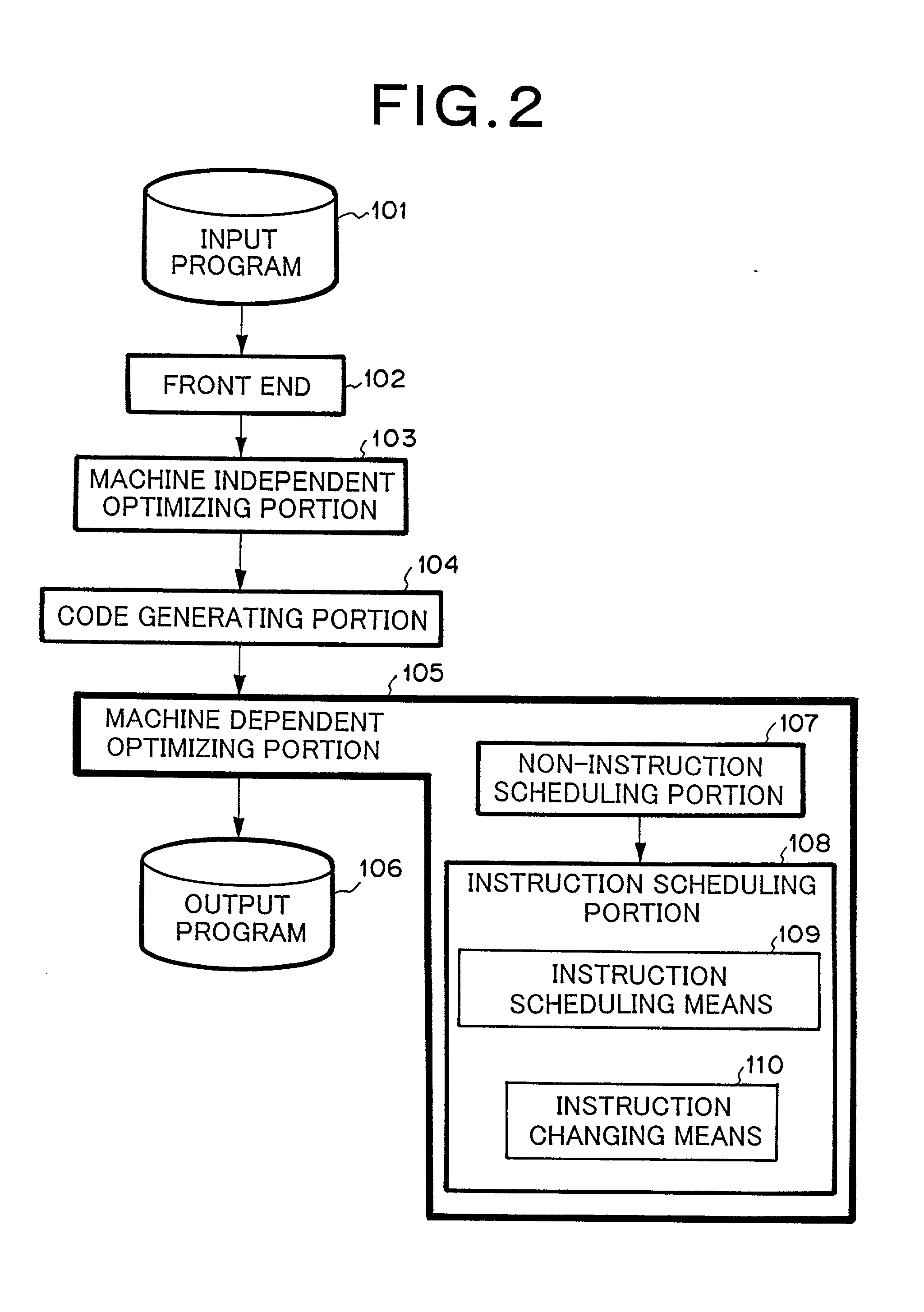

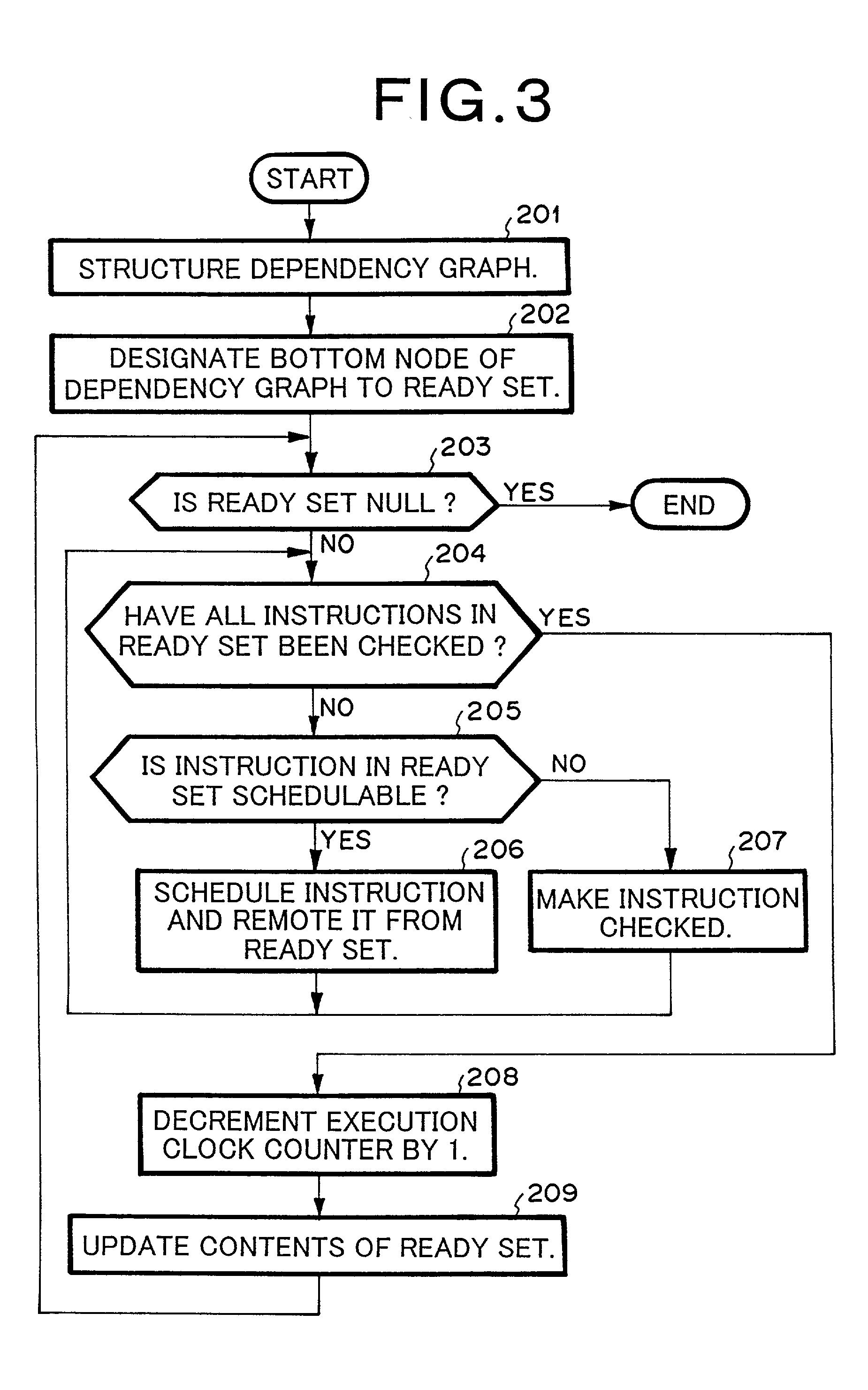

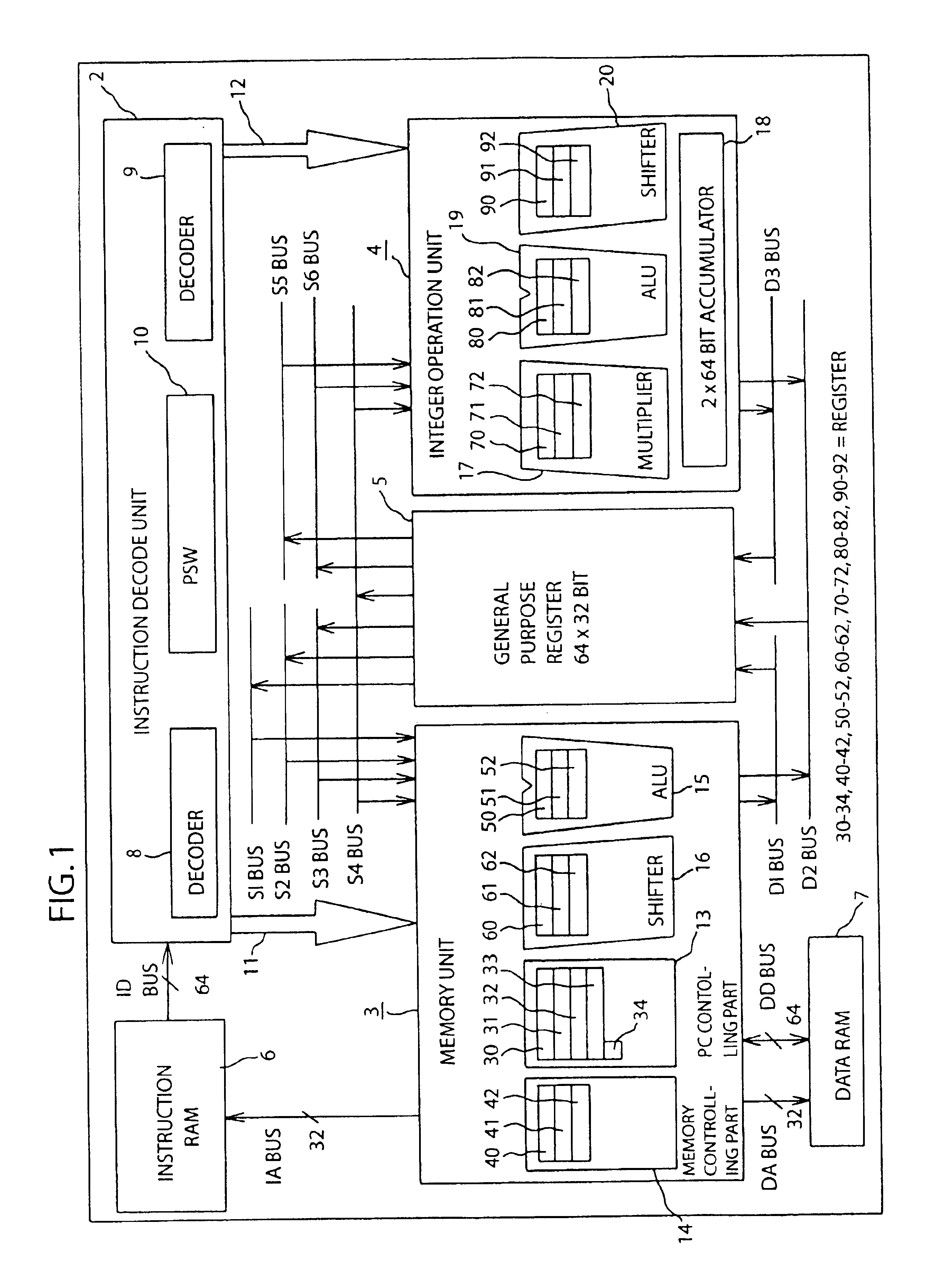

Compiler processing system for generating assembly program codes for a computer comprising a plurality of arithmetic units

Inactive | US20010039654A1 | Software engineering | Concurrent instruction execution | Scheduling instructions | Program code

Provided is a compiler processing system for generating assembly program codes from a source program for a computer comprising a plurality of arithmetic units, the system comprising: a front end; a machine-independent optimization portion; a code generating portion; and a machine-dependent optimization portion; wherein the machine-dependent optimization portion comprises: a non-instruction scheduling portion; and an instruction scheduling portion comprising: means for determining if an arithmetic unit is available for an inspected instruction at an execution clock concerned; means for determining if there is a substitutional instruction which performs a function equivalent to that of the inspected instruction if an arithmetic unit is not available for the inspected instruction; means for determining if an arithmetic unit is available at the execution clock for the substitutional instruction, if any; and means for changing the inspected instruction to the substitutional instruction if an arithmetic unit is available for the substitutional instruction.

Owner:NEC ELECTRONICS CORP

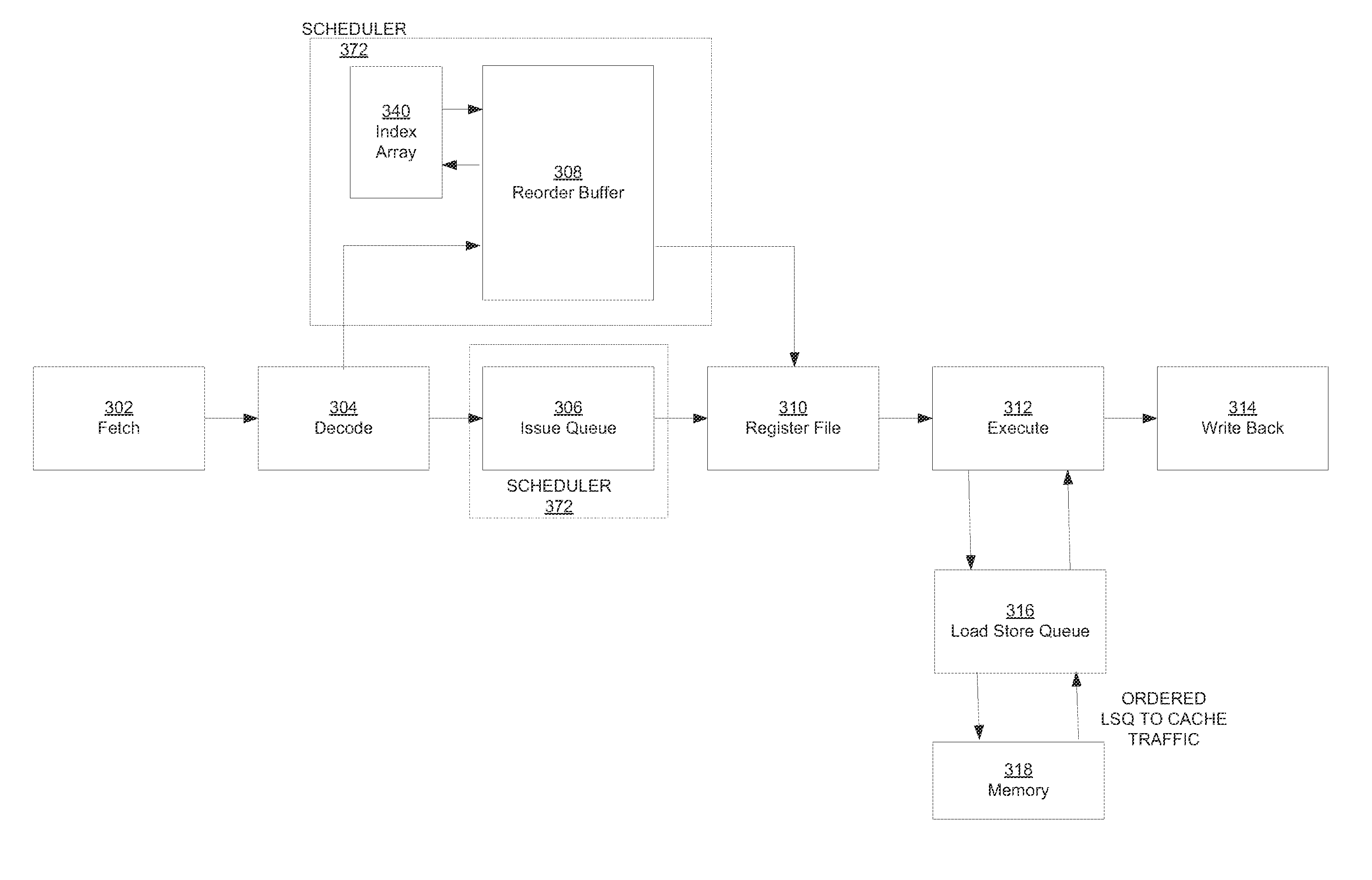

Method and apparatus for efficient scheduling for asymmetrical execution units

Active | US20140373022A1 | Efficient scheduling | Optimizes dispatch throughput | Program initiation/switching | Concurrent instruction execution | Scheduling instructions | Parallel computing

A method for performing instruction scheduling in an out-of-order microprocessor pipeline is disclosed. The method comprises selecting a first set of instructions to dispatch from a scheduler to an execution module, wherein the execution module comprises two types of execution units. The first type of execution unit executes both a first and a second type of instruction and the second type of execution unit executes only the second type. Next, the method comprises selecting a second set of instructions to dispatch, which is a subset of the first set and comprises only instructions of the second type. Next, the method comprises determining a third set of instructions, which comprises instructions not selected as part of the second set. Finally, the method comprises dispatching the second set for execution using the second type of execution unit and dispatching the third set for execution using the first type of execution unit.

Owner:INTEL CORP
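The three-set selection in the abstract above can be sketched as a simple partition. In this illustration the instruction kinds are labelled "A" (first type) and "B" (second type), and the second set is capped at the number of restricted units; the cap, the labels and the oldest-first ordering are assumptions, since the abstract does not state how the second set is sized.

```python
def split_for_dispatch(first_set, num_restricted_units):
    """Partition selected instructions between the two kinds of execution unit.

    first_set: list of (name, kind) tuples, oldest first; kind is "A" or "B".
    Restricted units run only kind "B"; general units run both kinds.
    """
    b_positions = [i for i, (_, kind) in enumerate(first_set) if kind == "B"]
    chosen = set(b_positions[:num_restricted_units])
    # Second set: kind-"B" instructions sent to the restricted units.
    second_set = [ins for i, ins in enumerate(first_set) if i in chosen]
    # Third set: everything else in the first set, sent to the general units.
    third_set = [ins for i, ins in enumerate(first_set) if i not in chosen]
    return second_set, third_set


first_set = [("i0", "A"), ("i1", "B"), ("i2", "B"), ("i3", "B")]
to_restricted, to_general = split_for_dispatch(first_set, num_restricted_units=2)
print(to_restricted)  # [('i1', 'B'), ('i2', 'B')]
print(to_general)     # [('i0', 'A'), ('i3', 'B')]
```

Steering kind-"B" work to the restricted units first leaves the general units free for the instructions that only they can execute.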

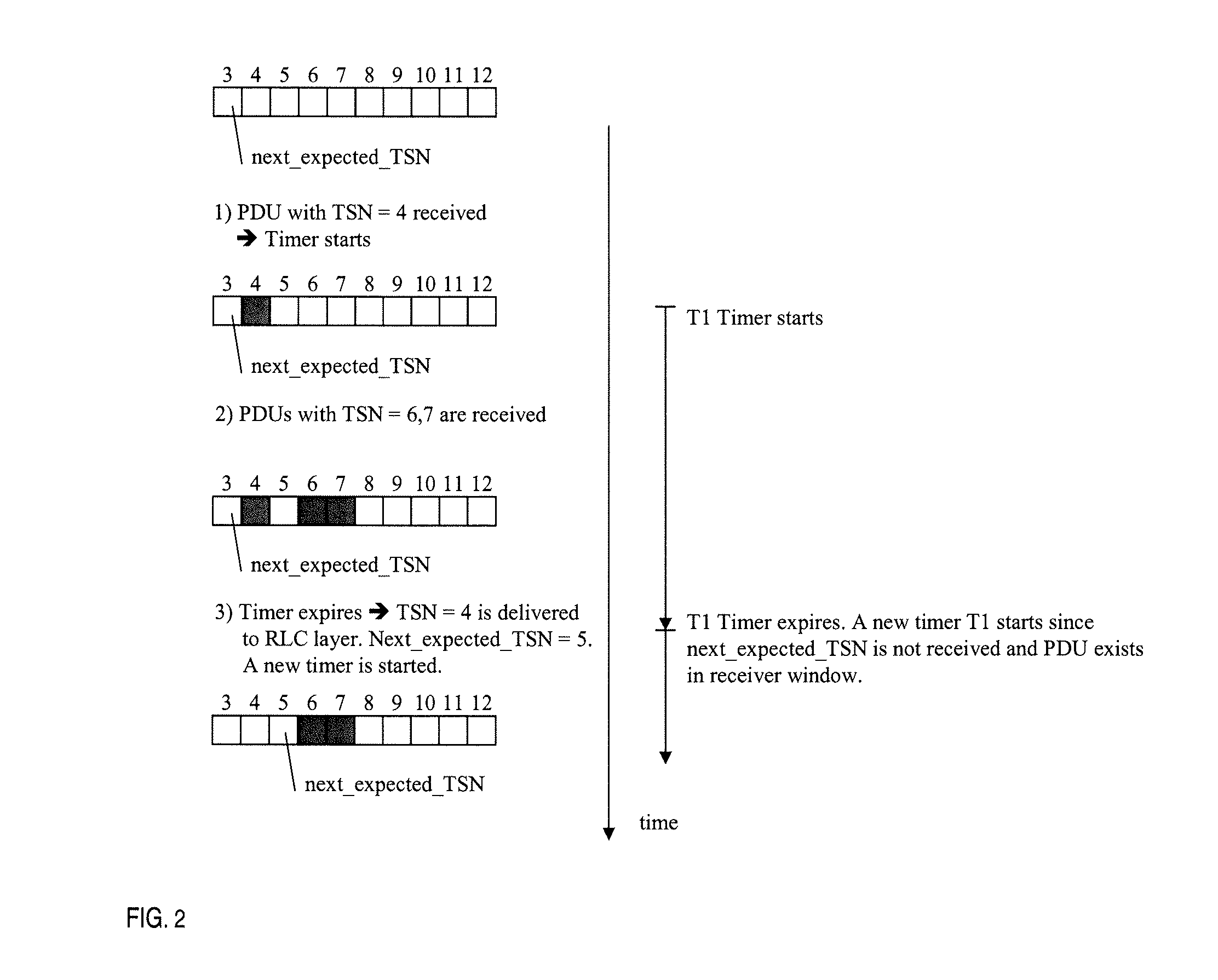

Next data indicator handling

Active | US20100325502A1 | Error prevention/detection by using return channel | Transmission systems | Scheduling instructions | Computer science

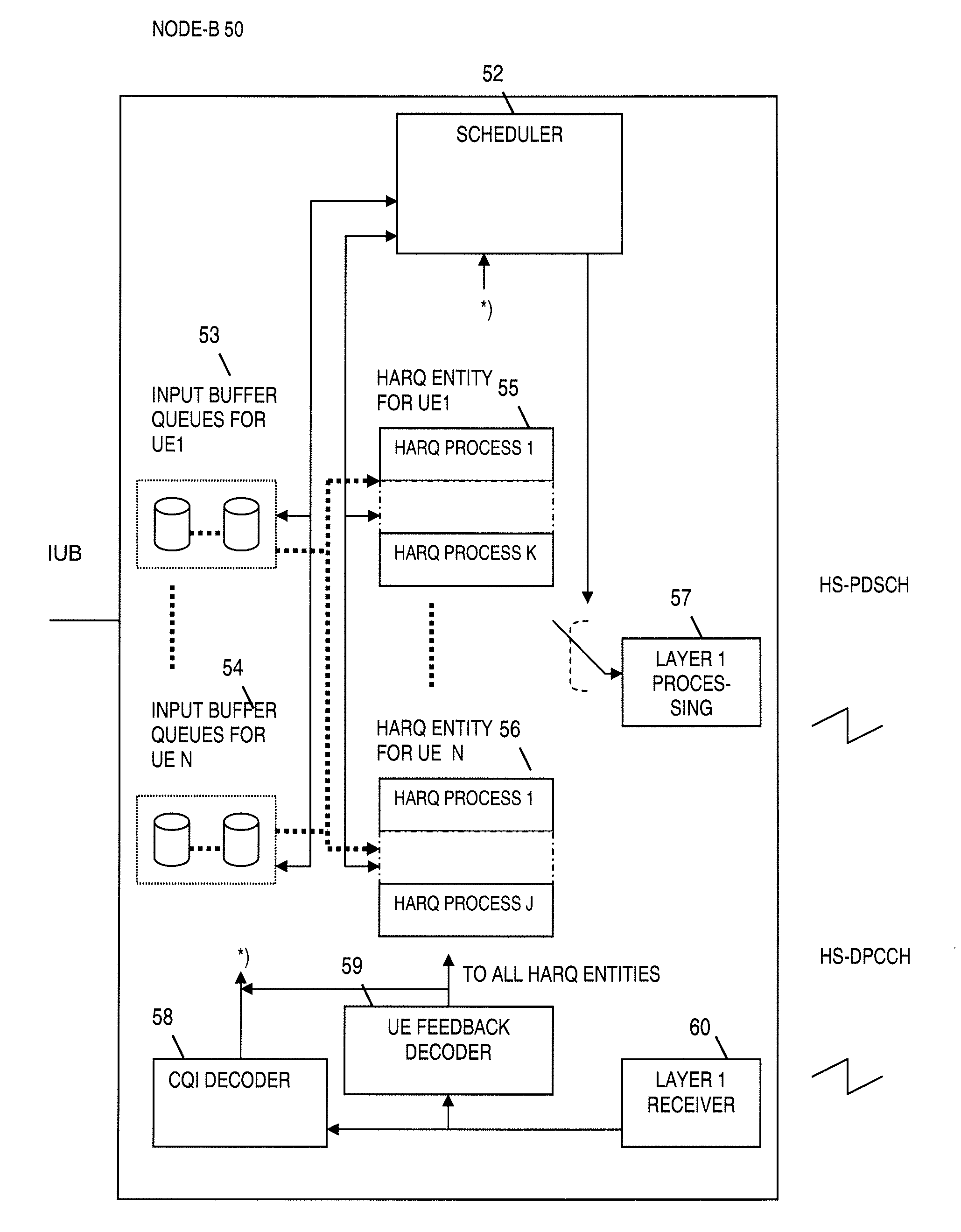

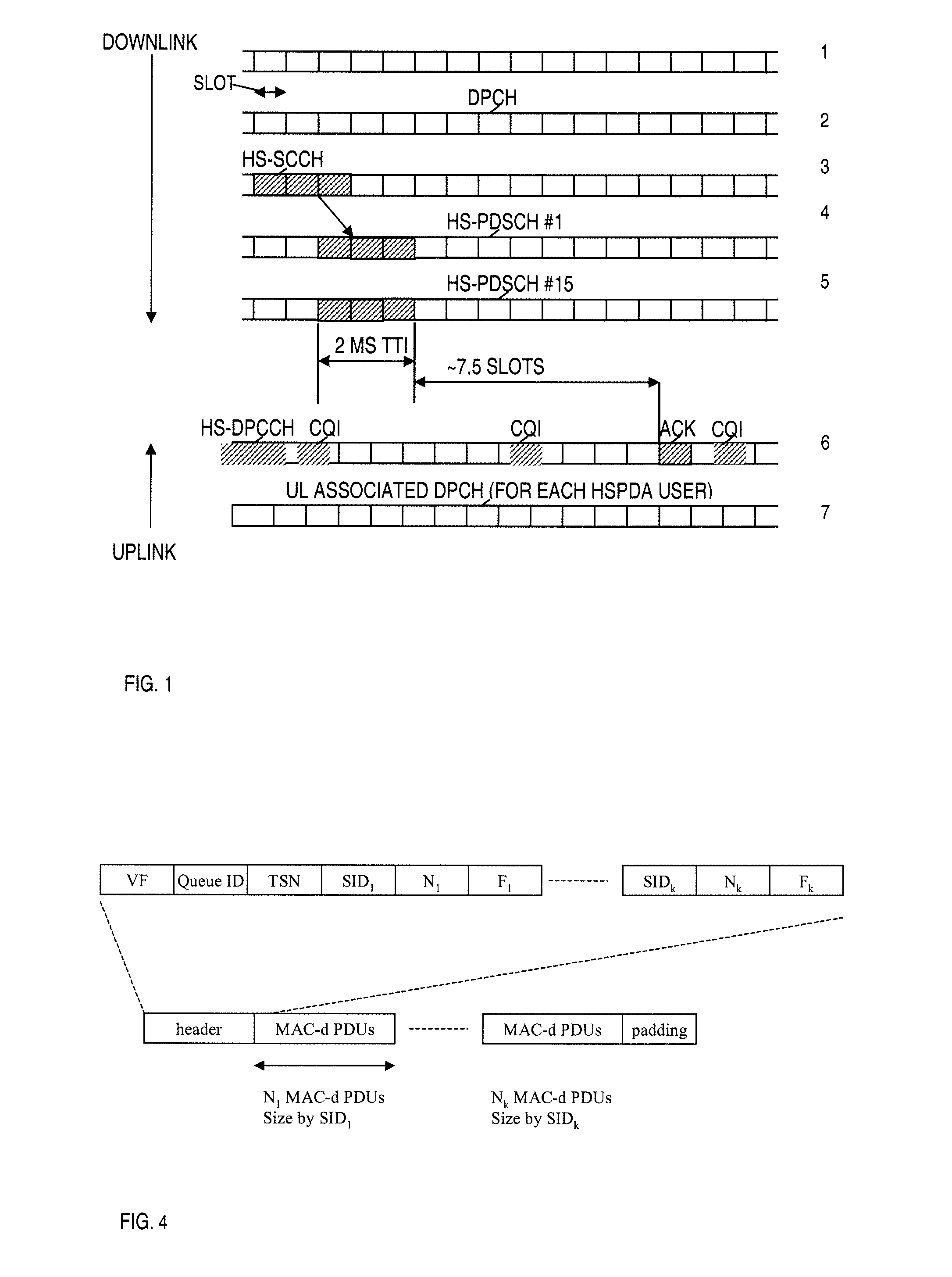

Method and apparatus for a base station engaging in transmissions via at least a media access layer with a user entity is disclosed. The base station transmits data to the user entity over a high speed scheduling channel and a high speed packet data scheduling channel. The base station has a plurality of hybrid automatic repeat request (HARQ) entities cooperating with a scheduler for transmitting frames from at least the base station to the user entity for a given HARQ process. Each HARQ entity receives either a not acknowledge signal or an acknowledge signal or detects a discontinuous transmission for a given HARQ process. The base station transmits a next data indicator to the user entity. The user entity has at least one buffer associated with a given HARQ process. The buffer stores and performs incremental combining of received data relating to data from the base station. The buffer is capable of being flushed.

A method for a base station is disclosed. The method begins by awaiting a scheduling instruction and scheduling of download data. If the outcome from previous media access transmission or transmissions is detected as a discontinuous transmission the next data indicator is set to the value previously used. Otherwise the next data indicator value is toggled. Data for the given HARQ process is transmitted.

Owner:TELEFON AB LM ERICSSON (PUBL)
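The next data indicator rule stated in the abstract above reduces to a one-line decision, sketched here. The one-bit encoding of the indicator and the outcome labels "ACK", "NACK" and "DTX" are assumptions made for this example; the rule itself (reuse the previous value after a detected discontinuous transmission, toggle otherwise) follows the abstract's wording.

```python
def next_data_indicator(previous_ndi, previous_outcome):
    """Choose the indicator for the next transmission on one HARQ process.

    previous_outcome: "ACK", "NACK" or "DTX" (hypothetical labels).
    """
    if previous_outcome == "DTX":
        # The earlier transmission was not detected: reuse the earlier value.
        return previous_ndi
    return previous_ndi ^ 1  # otherwise toggle the one-bit indicator


ndi = 0
history = []
for outcome in ["ACK", "DTX", "NACK"]:
    ndi = next_data_indicator(ndi, outcome)
    history.append(ndi)

print(history)  # [1, 1, 0]
```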

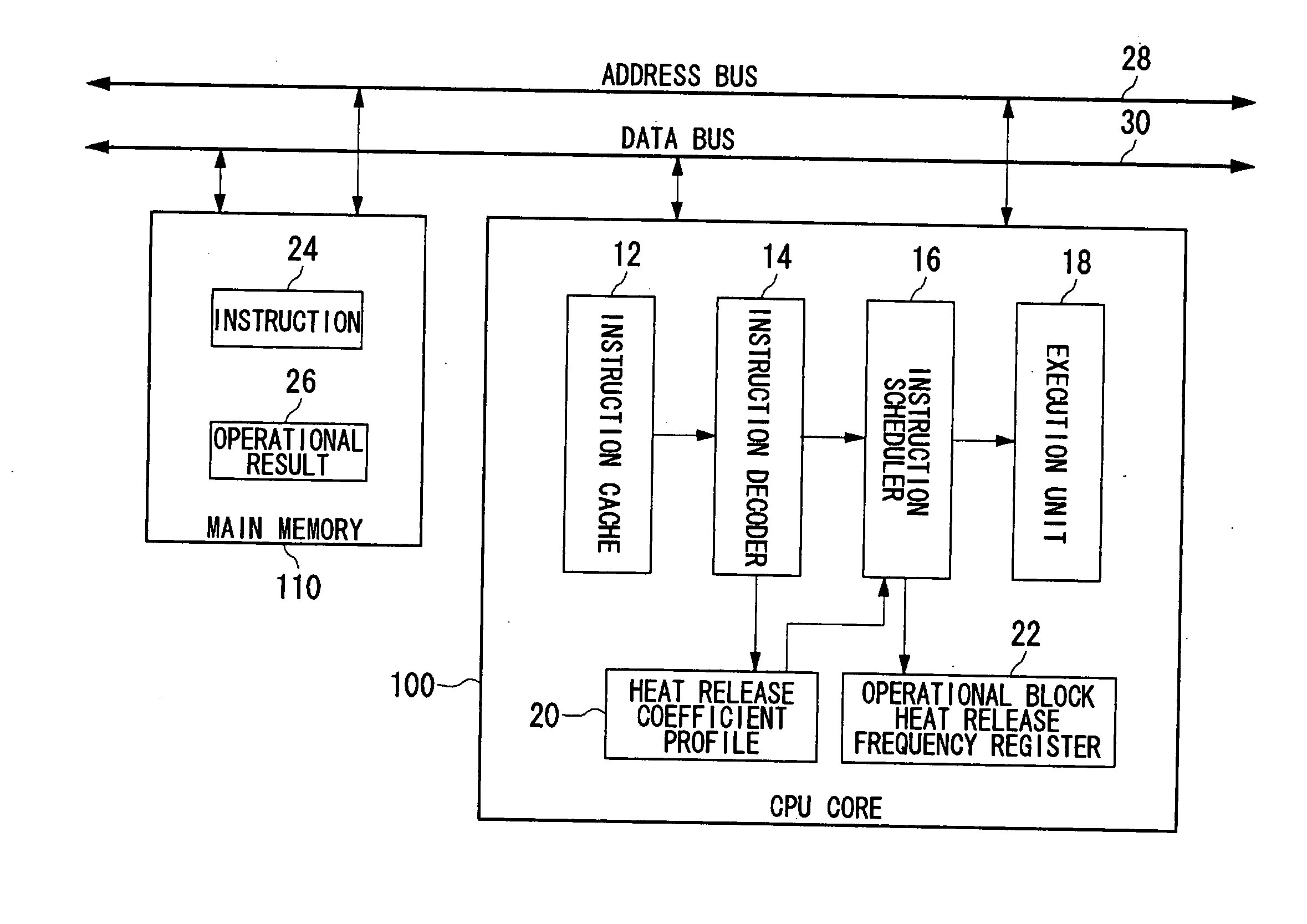

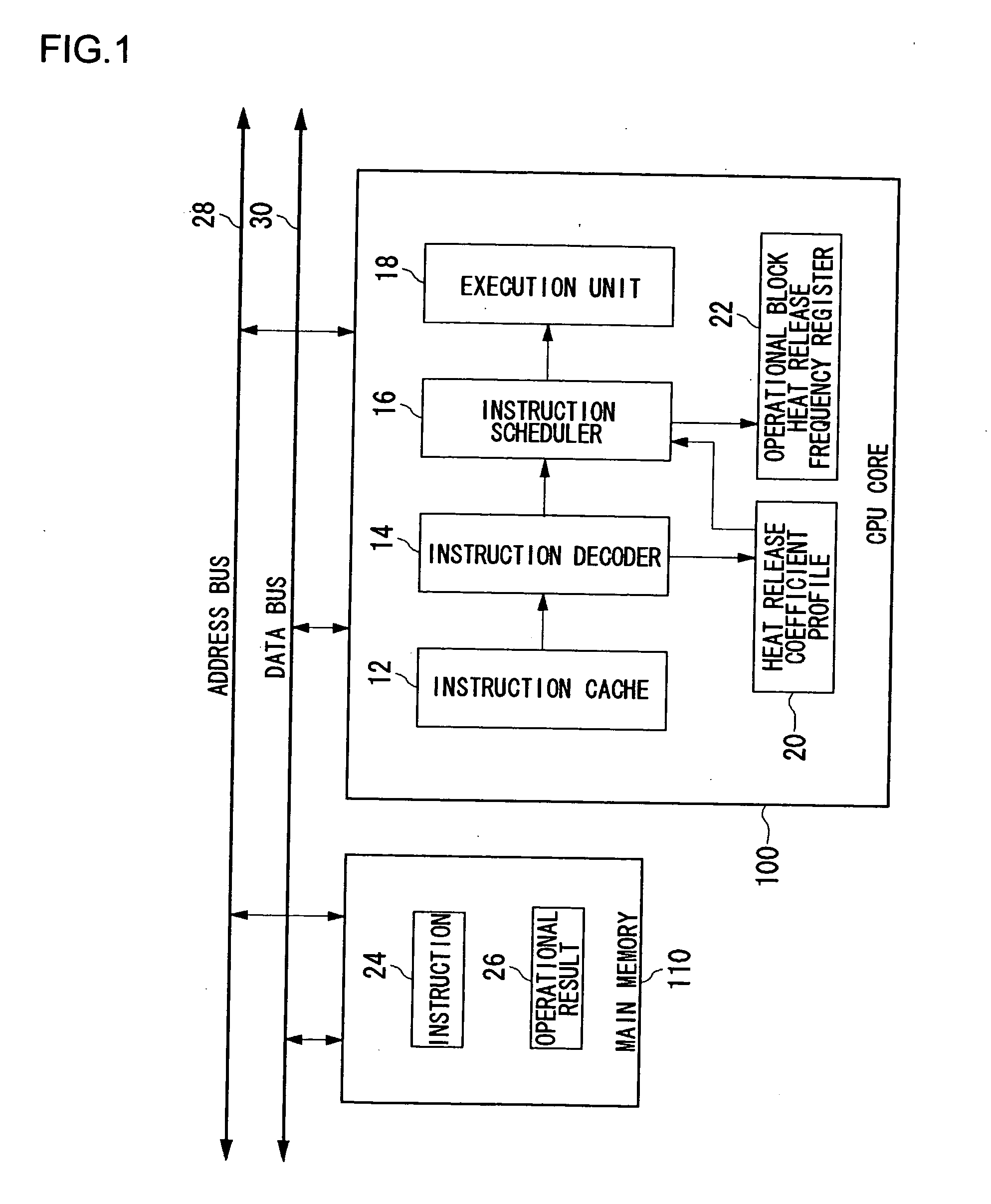

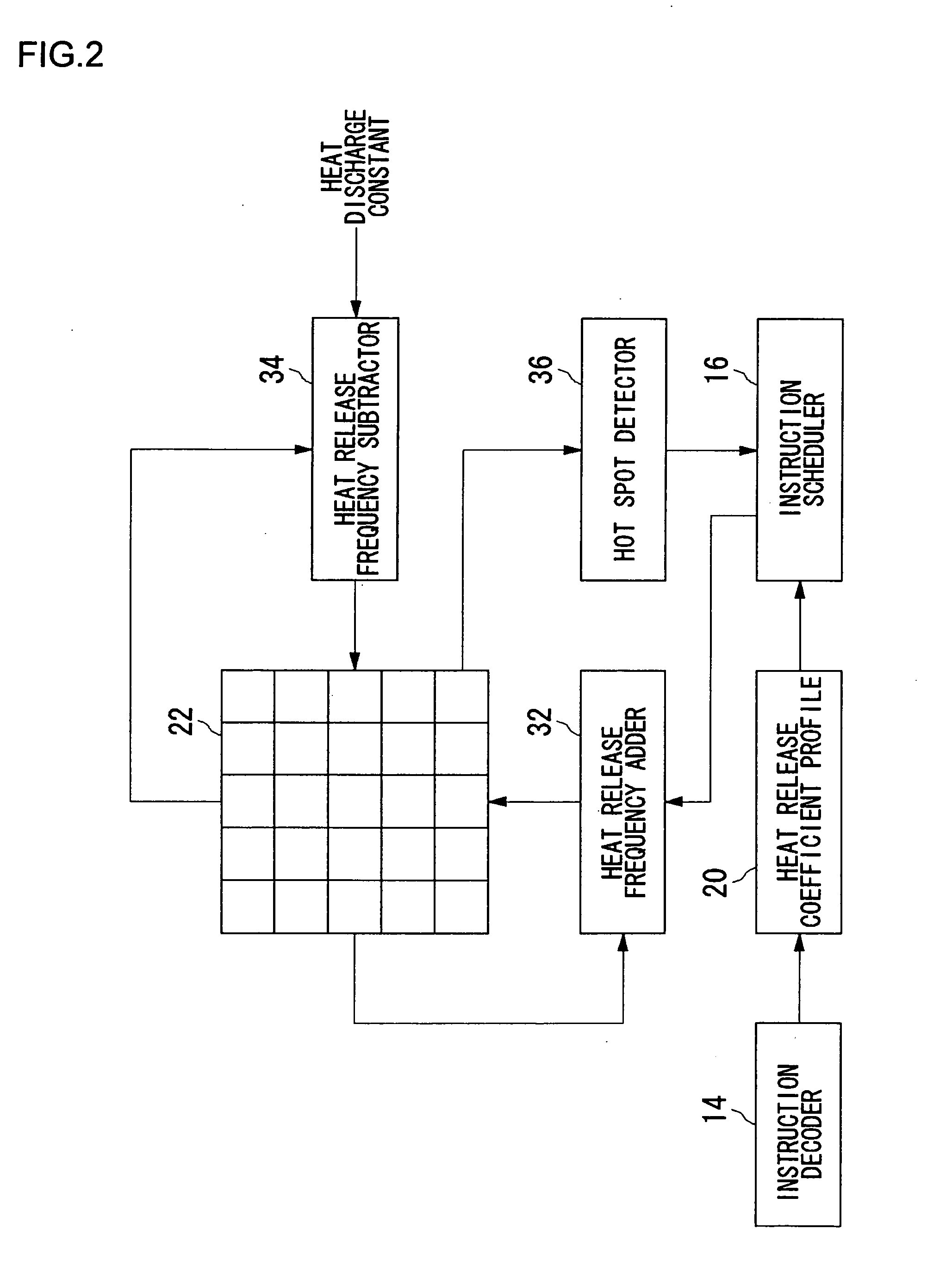

Processor, multiprocessor system, processor system, information processing apparatus, and temperature control method

Inactive | US20070198134A1 | Avoid misuse | Without incurring degradation in processor performance | Energy efficient ICT | Resource allocation | Temperature control | Information processing

An instruction decoder identifies, for each instruction, an operational block involved in the execution of the instruction and an associated heat release coefficient. The instruction decoder stores identified information in a heat release coefficient profile. An instruction scheduler schedules the instructions in accordance with the dependence of the instructions on data. A heat release frequency adder cumulatively adds the heat release coefficient to the heat release frequency of the operational block held in the operational block heat release frequency register as the execution of the scheduled instructions proceeds. A heat release frequency subtractor subtracts from the heat release frequency of the operational blocks in the operational block heat release frequency register in accordance with heat discharge that occurs with time. A hot spot detector detects an operational block with its heat release frequency, held in the operational block heat release frequency register, exceeding a predetermined threshold value as a hot spot. The instruction scheduler delays the execution of the instruction involving for its execution the operational block identified as a hot spot.

Owner:SONY COMPUTER ENTERTAINMENT INC
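The add, subtract and threshold steps in the abstract above can be simulated with a small loop. This is a rough sketch under several assumptions: instructions issue strictly in order (so a hot block stalls everything behind it), every block cools by a fixed amount per cycle, and the block name, coefficients and threshold are invented values.

```python
def issue_with_hot_spot_delay(instructions, threshold, cooling):
    """instructions: list of (block, heat_coefficient). Returns the issue cycle of each."""
    heat = {}           # block -> accumulated heat release frequency
    issue_cycles = []
    pending = list(instructions)
    cycle = 0
    while pending:
        block, coefficient = pending[0]
        if heat.get(block, 0) > threshold:
            pass  # the block is a hot spot: delay this instruction
        else:
            heat[block] = heat.get(block, 0) + coefficient  # adder step
            issue_cycles.append(cycle)
            pending.pop(0)
        for name in heat:
            # Subtractor step: heat discharged as time passes (needs cooling > 0).
            heat[name] = max(0, heat[name] - cooling)
        cycle += 1
    return issue_cycles


cycles = issue_with_hot_spot_delay([("fpu", 3)] * 4, threshold=5, cooling=1)
print(cycles)  # [0, 1, 2, 4]
```

The fourth instruction is held back for one cycle because the accumulated value for the hypothetical `fpu` block exceeds the threshold after three back-to-back uses.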

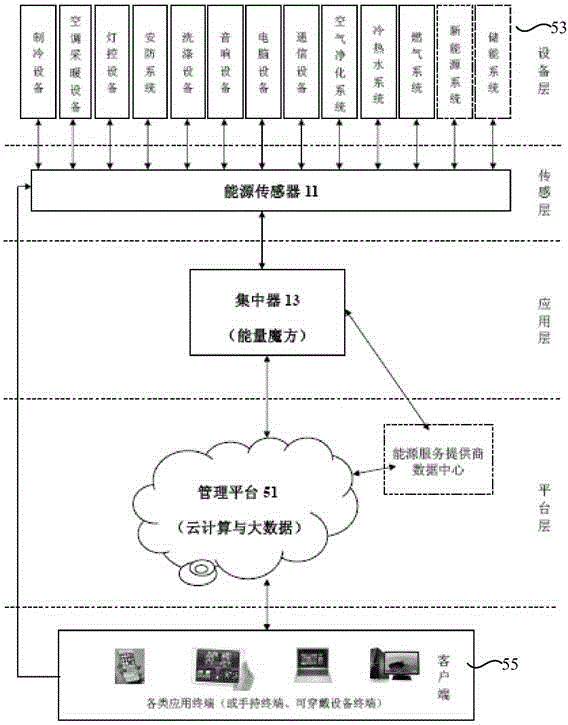

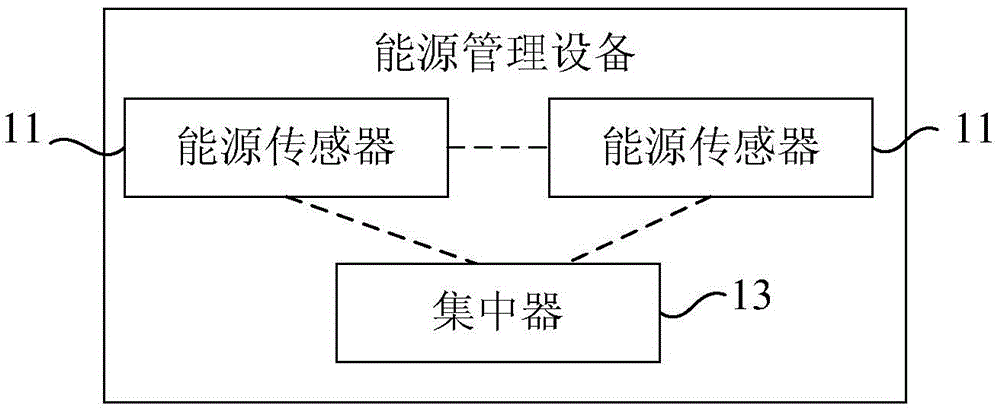

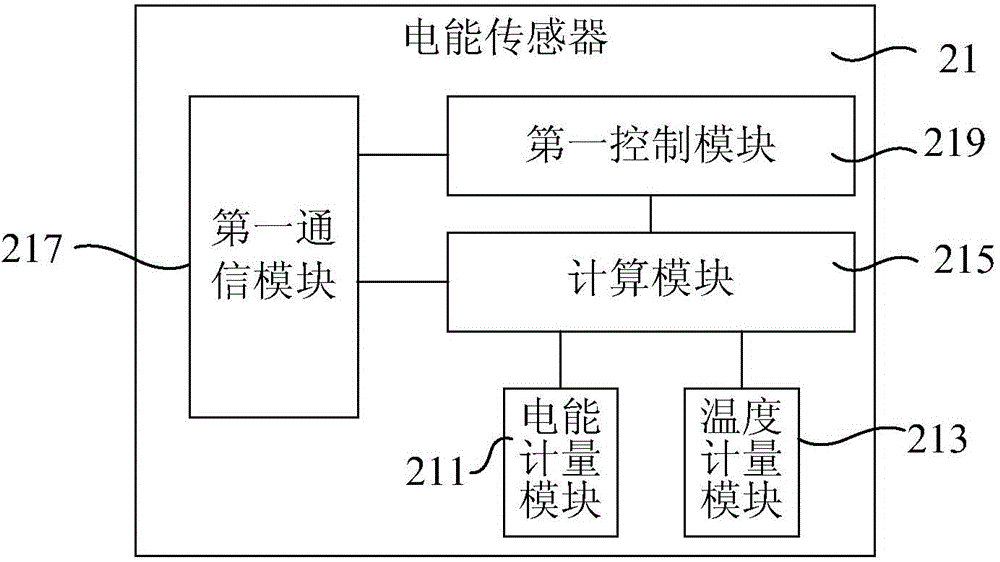

Energy management device and system

Inactive | CN103984316A | Meet energy scheduling needs | Accurate analysis | Data processing applications | Transmission systems | Rate parameter | Scheduling instructions

The invention relates to an energy management device and system. The energy management device comprises at least two energy sensors and a concentrator, wherein the energy sensors are connected with at least two energy terminals on a user side respectively and used for collecting energy data of the energy terminals according to a time series; the concentrator is connected with the energy sensors and used for conducting analysis through combination with rated parameters and service data of the energy terminals on the basis of the energy data so as to determine the working conditions of the energy terminals and transmitting scheduling instructions to the energy sensors according to the determined working conditions so as to enable the energy sensors to be capable of responding to the scheduling instructions and controlling the working conditions of the energy terminals. The energy data, collected by various types of energy sensors, of various types of energy terminals can be gathered and analyzed comprehensively through the concentrator, so that the energy use condition of the user side is analyzed more accurately, and the requirements of users for energy scheduling on the condition that various kinds of energy are used can be met.

Owner:刘玮

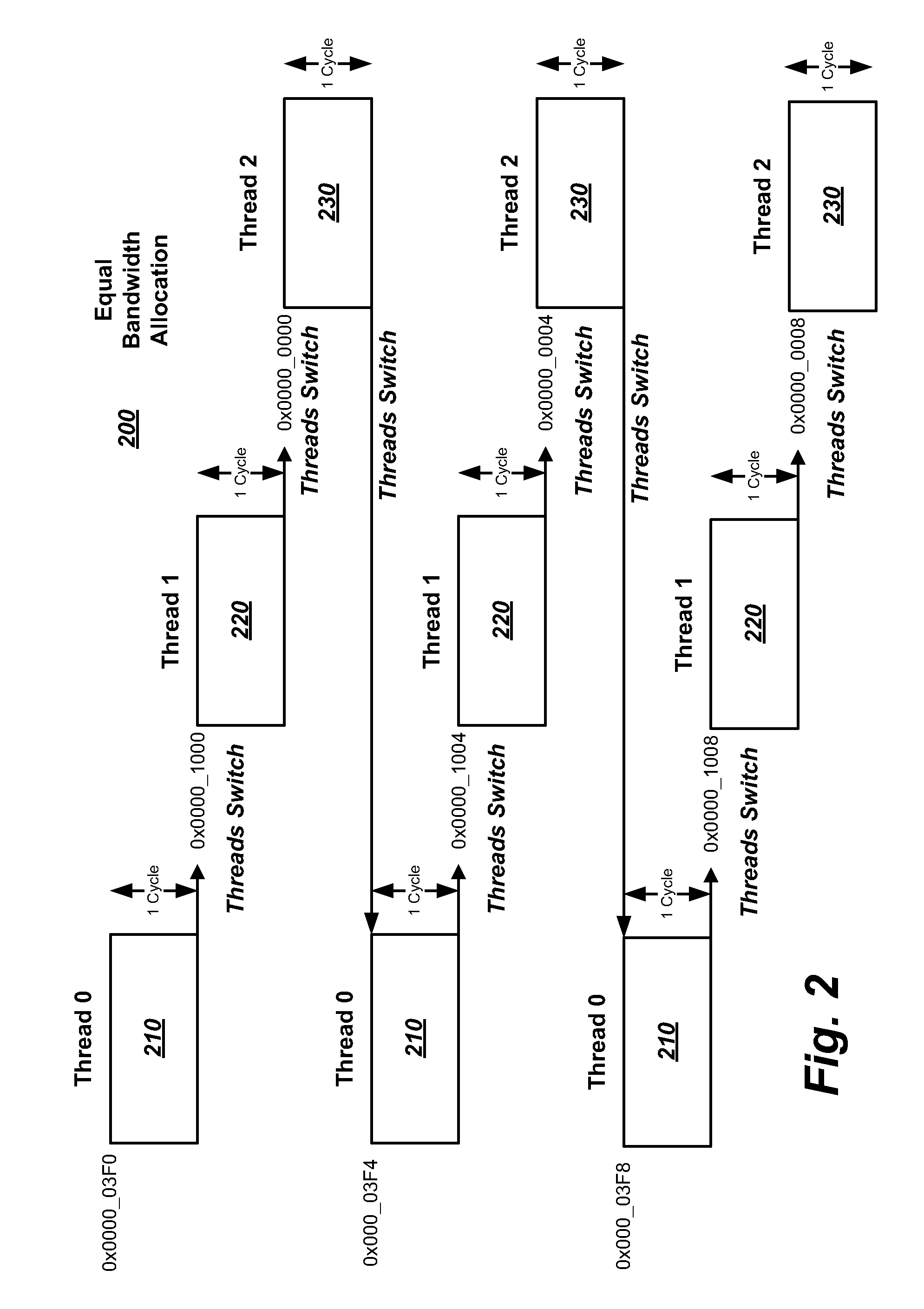

Instruction dispatching method and apparatus

Inactive | US20080040724A1 | Avoid delay | General purpose stored program computer | Multiprogramming arrangements | Scheduling instructions | Parallel computing

A system, apparatus and method for instruction dispatch on a multi-thread processing device are described herein. The instruction dispatching method includes, in an instruction execution period having a plurality of execution cycles, successively fetching and issuing an instruction for each of a plurality of instruction execution threads according to an allocation of execution cycles of the instruction execution period among the plurality of instruction execution threads. Remaining execution cycles are subsequently used to successively fetch and issue another instruction for each of the plurality of instruction execution threads having at least one remaining allocated execution cycle of the instruction execution period. Other embodiments may be described and claimed.

Owner:MARVELL WORLD TRADE LTD

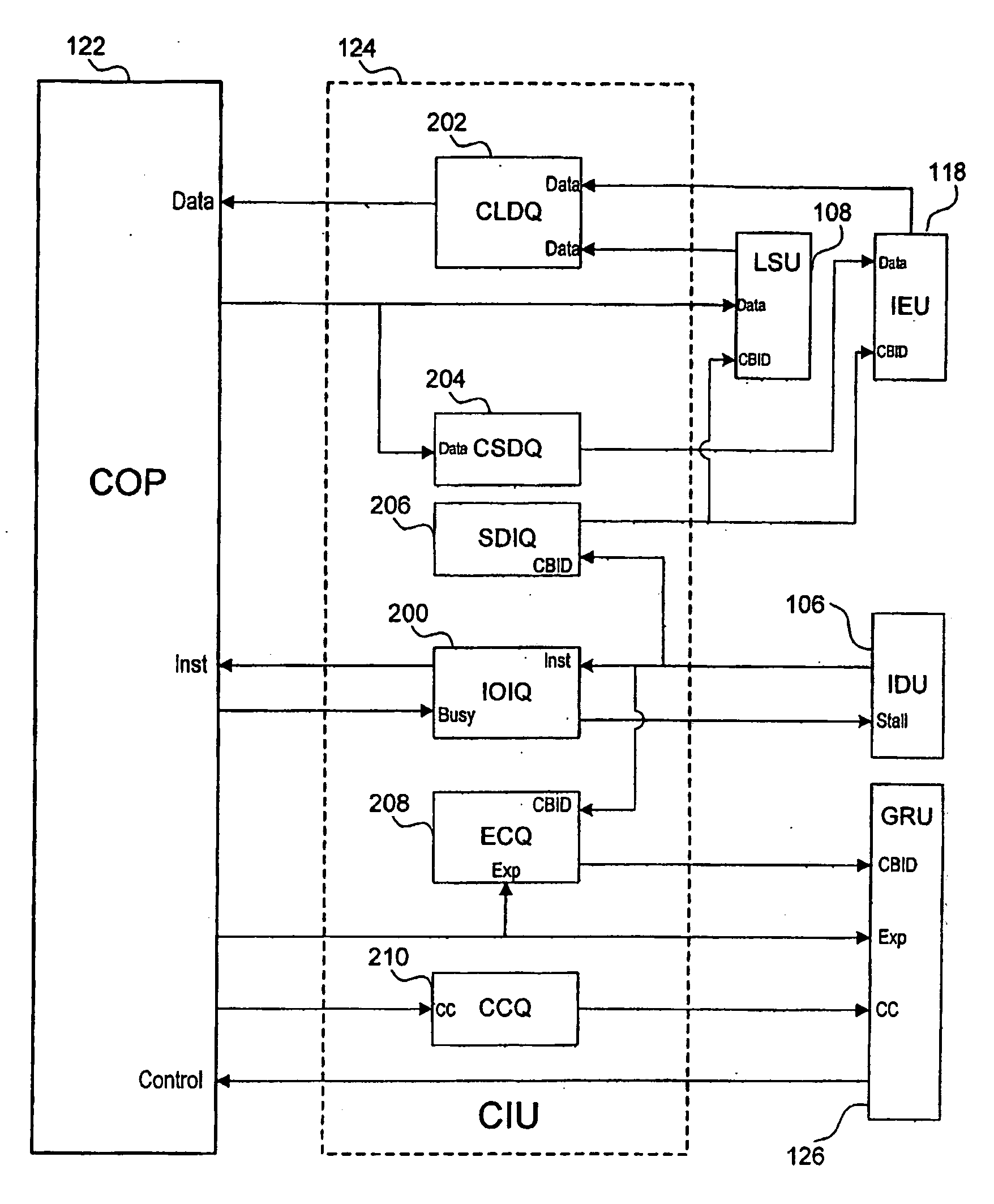

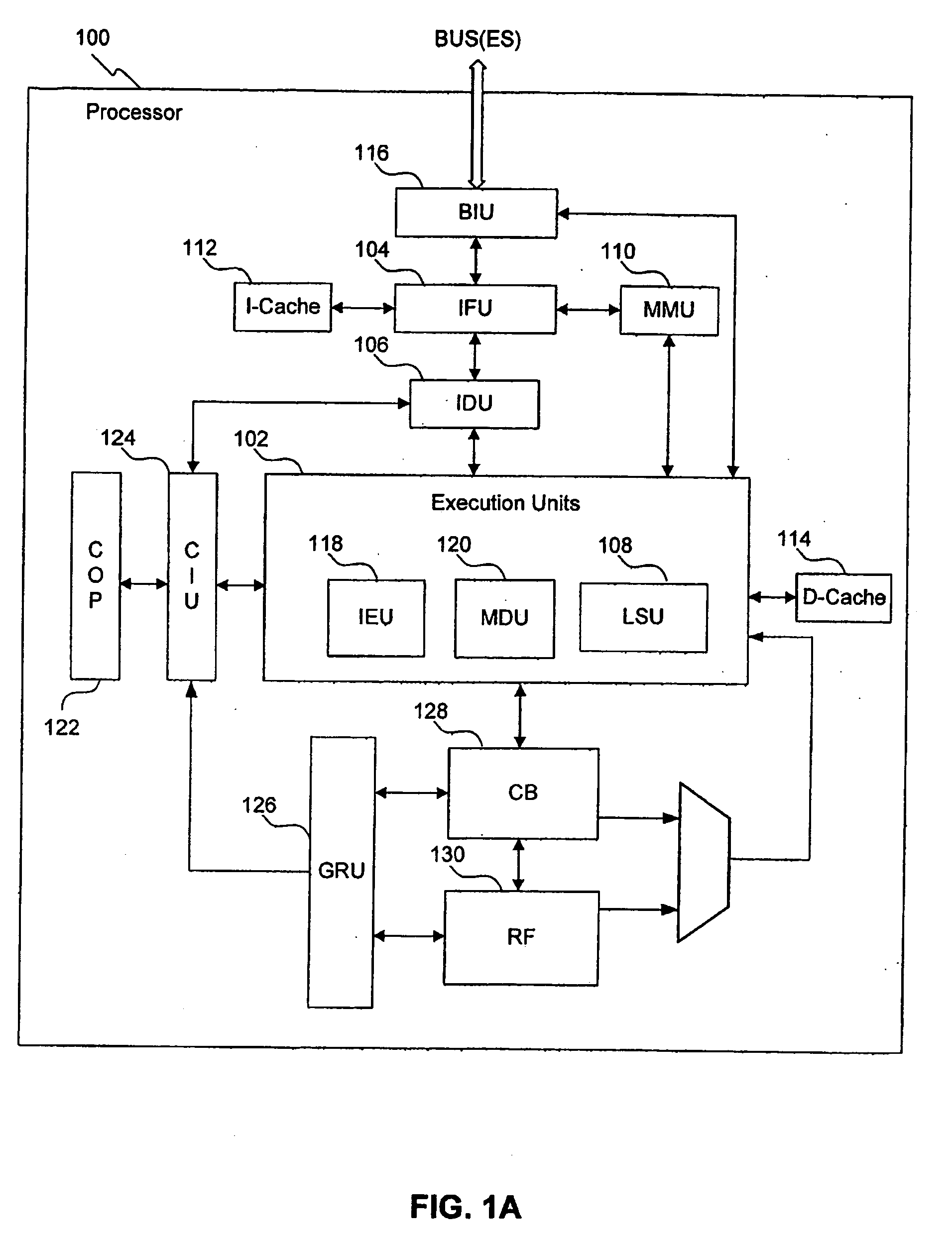

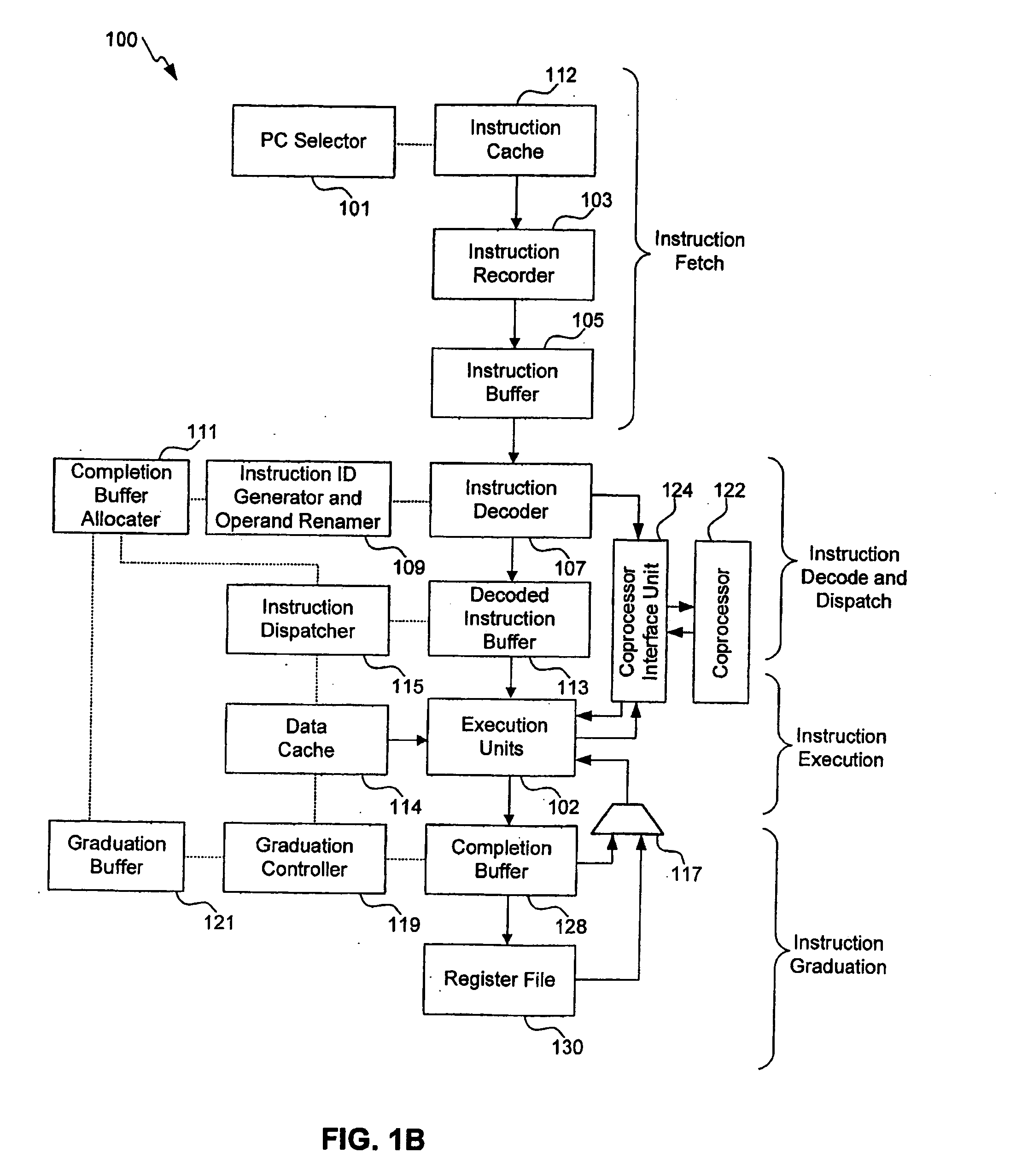

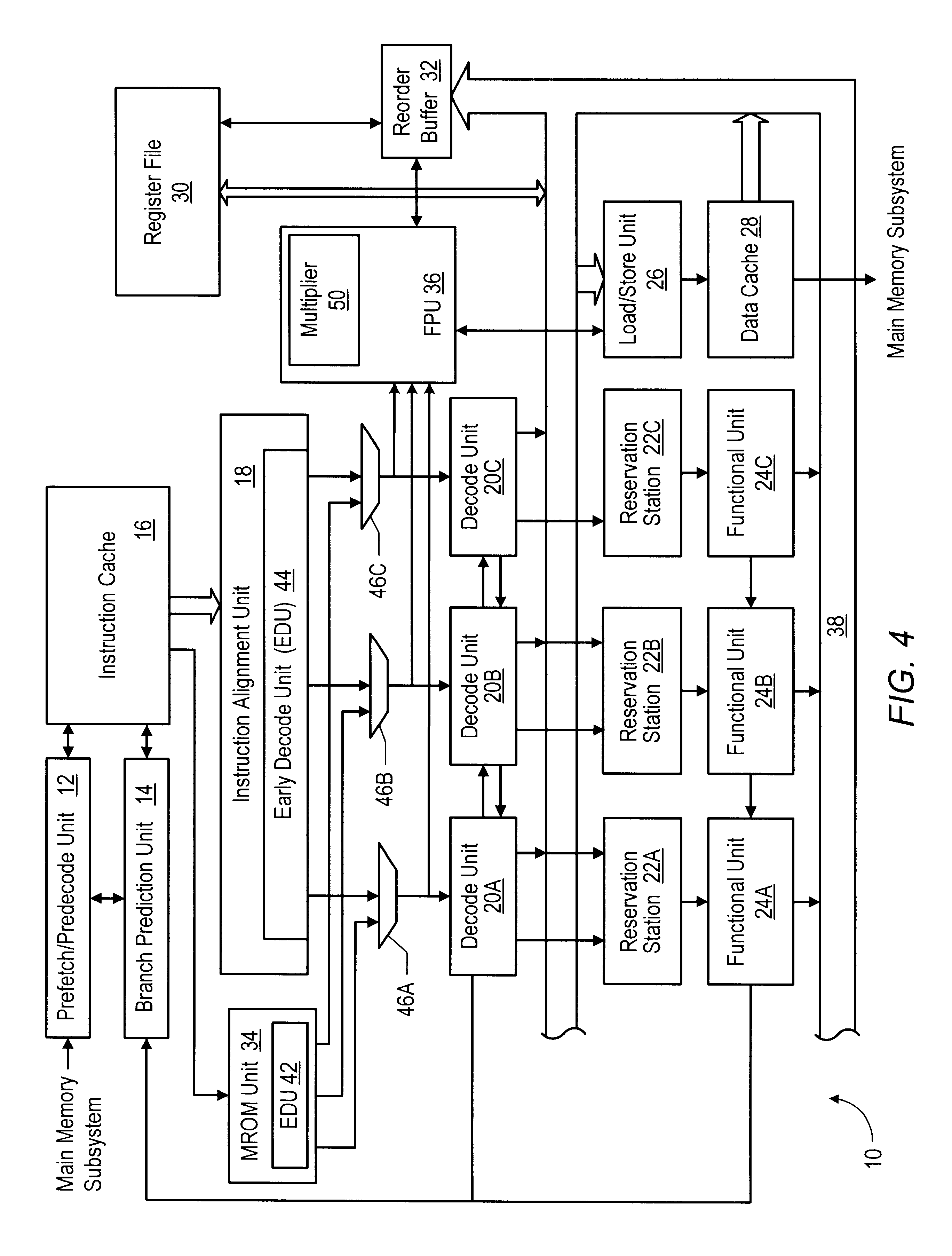

Out-of-order processor having an in-order coprocessor, and applications thereof

Active | US20080059771A1 | Runtime instruction translation | General purpose stored program computer | Coprocessor | Scheduling instructions

An in-order coprocessor is interfaced to an out-of-order execution pipeline. In an embodiment, the interfacing is achieved using a coprocessor interface unit that includes an in-order instruction queue, a coprocessor load data queue, and a coprocessor store data queue. Instructions are written into the in-order instruction queue by an instruction dispatch unit. Instructions exit the in-order instruction queue and enter the coprocessor. In the coprocessor, the instructions operate on data read from the coprocessor load data queue. Data is written back, for example, to memory or a register file by inserting the data into the out-of-order execution pipeline, either directly or via the coprocessor store data queue, which writes back the data.

Owner:ARM FINANCE OVERSEAS LTD

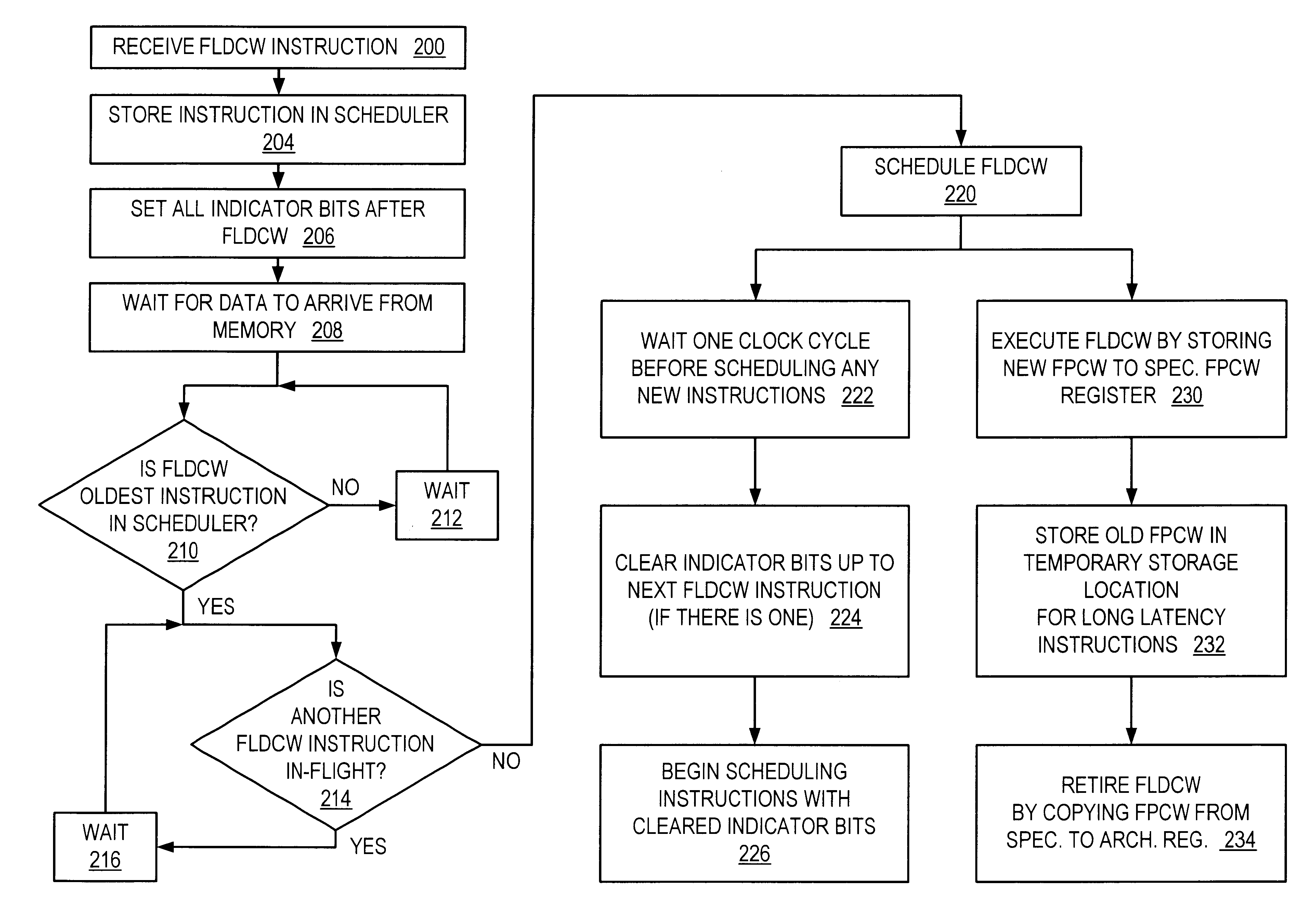

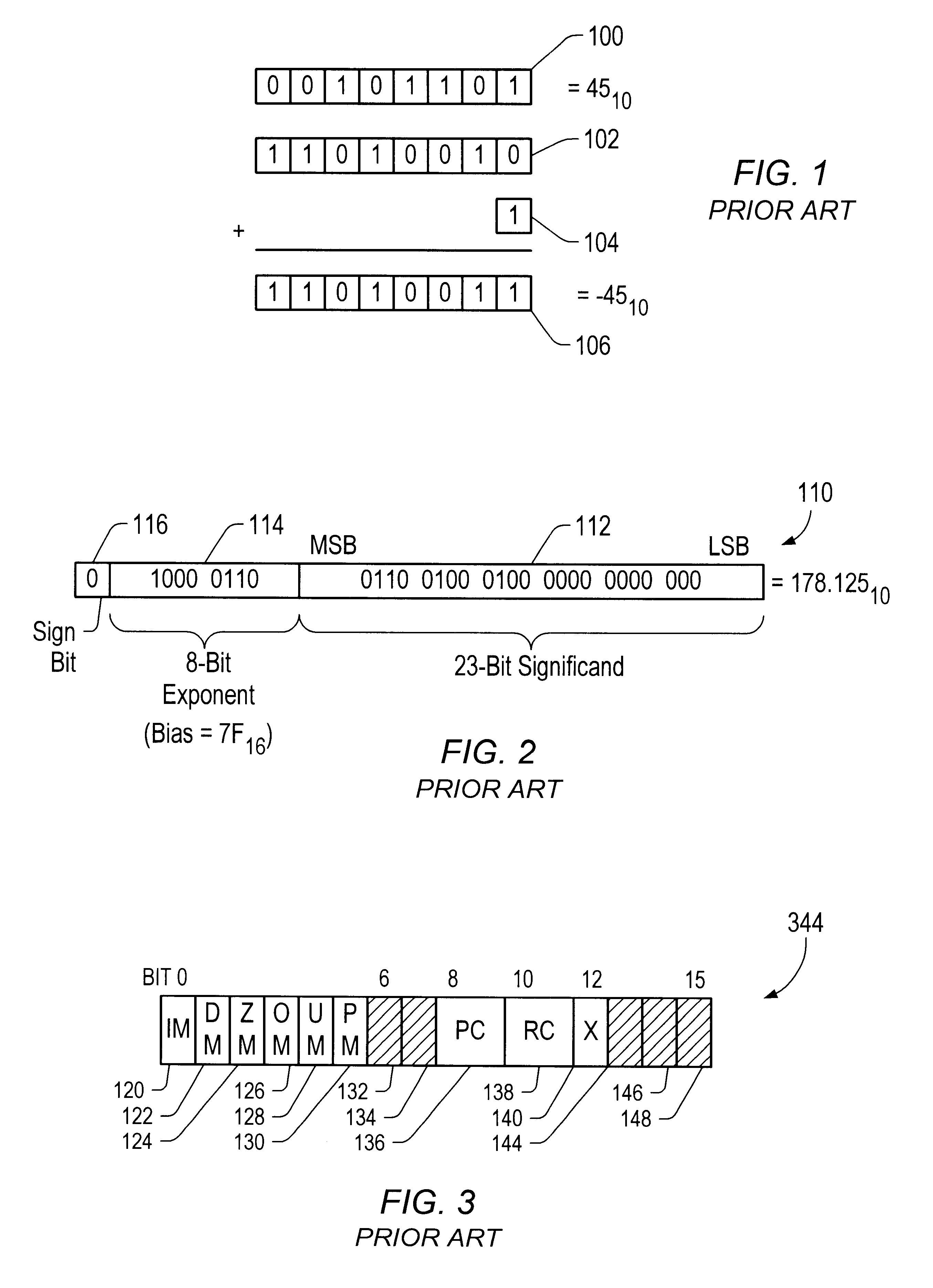

Rapid execution of floating point load control word instructions

Inactive | US6405305B1 | Conditional code generation | Digital computer details | Scheduling instructions | Parallel computing

A microprocessor with a floating point unit configured to rapidly execute floating point load control word (FLDCW) type instructions in an out of program order context is disclosed. The floating point unit is configured to schedule instructions older than the FLDCW-type instruction before the FLDCW-type instruction is scheduled. The FLDCW-type instruction acts as a barrier to prevent instructions occurring after the FLDCW-type instruction in program order from executing before the FLDCW-type instruction. Indicator bits may be used to simplify instruction scheduling, and copies of the floating point control word may be stored for instructions that have long execution cycles. A method and computer configured to rapidly execute FLDCW-type instructions in an out of program order context are also disclosed.
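The barrier behaviour in this abstract can be illustrated with a small software model. It is a sketch under stated assumptions, not the hardware described in the patent: the `Instr` record, the `ready` cycle used to reorder instructions, and the `schedule` function are hypothetical, and the indicator bits and control word copies are not modelled.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    name: str
    ready: int                # assumed cycle at which operands are available
    is_barrier: bool = False  # True for an FLDCW-type instruction


def schedule(program):
    """Return an issue order for `program`, given in program order.

    Instructions between barriers may be reordered by readiness, but a
    barrier issues only after every older instruction, and no younger
    instruction issues before the barrier.
    """
    order, segment = [], []
    for instr in program:
        if instr.is_barrier:
            # Drain all older instructions, then issue the barrier itself.
            order.extend(sorted(segment, key=lambda i: i.ready))
            order.append(instr)
            segment = []
        else:
            segment.append(instr)
    order.extend(sorted(segment, key=lambda i: i.ready))
    return [i.name for i in order]


program = [
    Instr("fadd", 5),
    Instr("fmul", 1),
    Instr("fldcw", 0, is_barrier=True),
    Instr("fsub", 3),
    Instr("fdiv", 2),
]
print(schedule(program))
```

In this model `fmul` may overtake `fadd`, and `fdiv` may overtake `fsub`, but nothing crosses `fldcw` in either direction.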

Owner:ADVANCED MICRO DEVICES INC

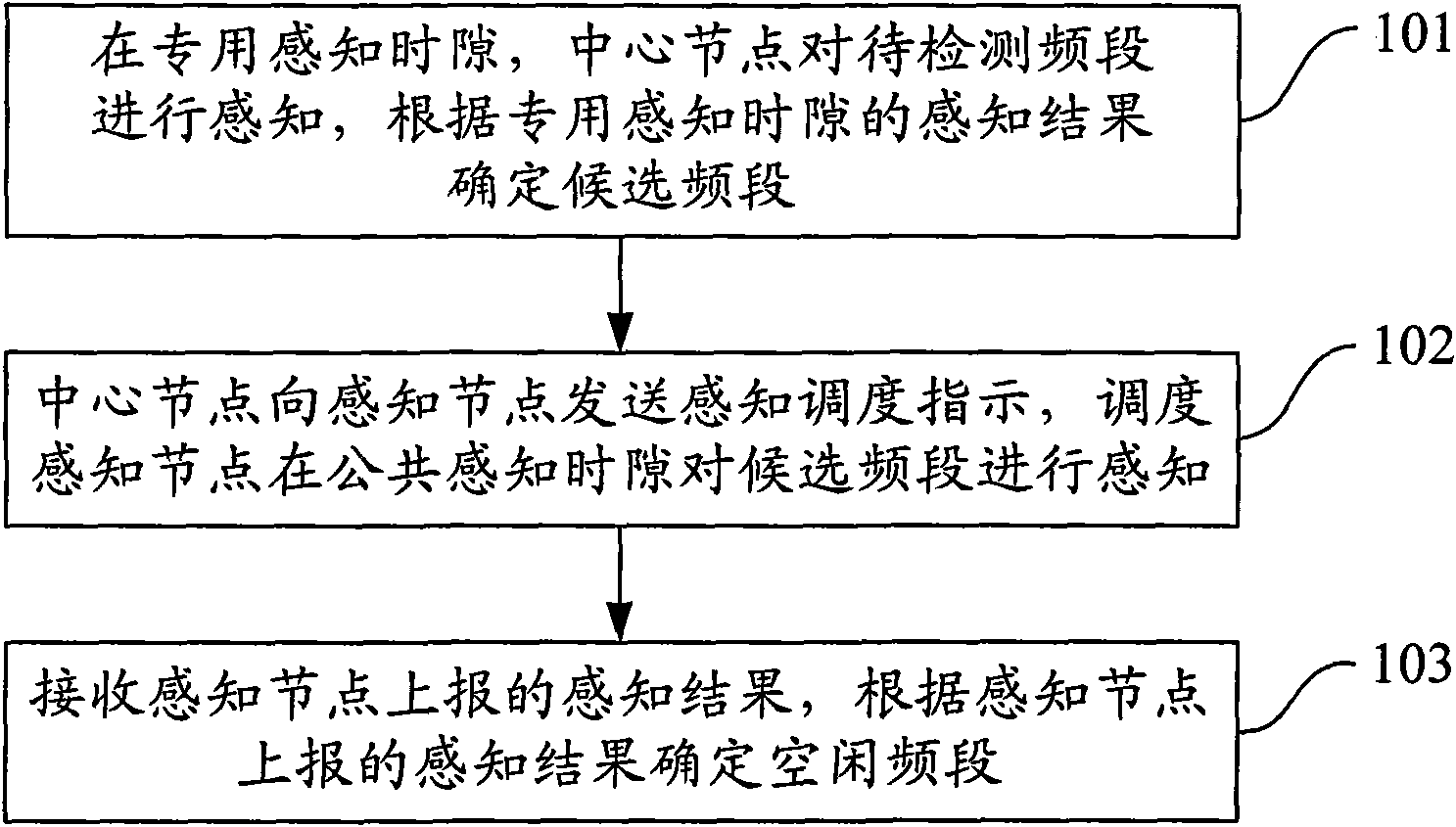

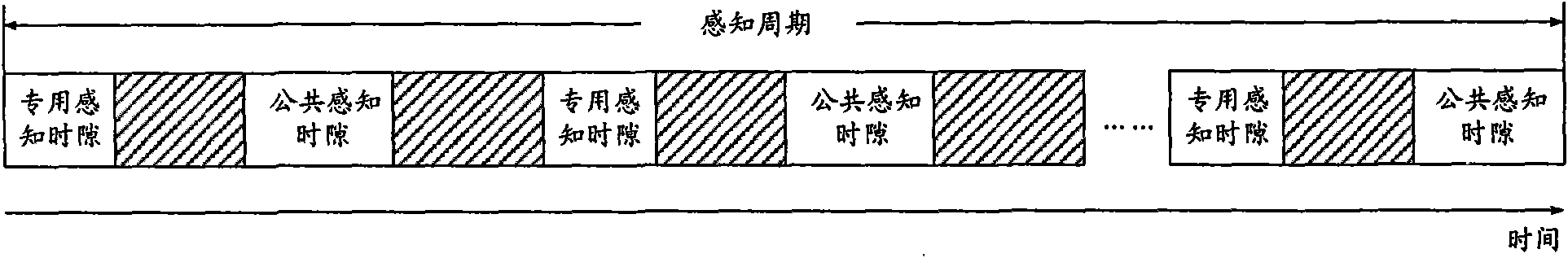

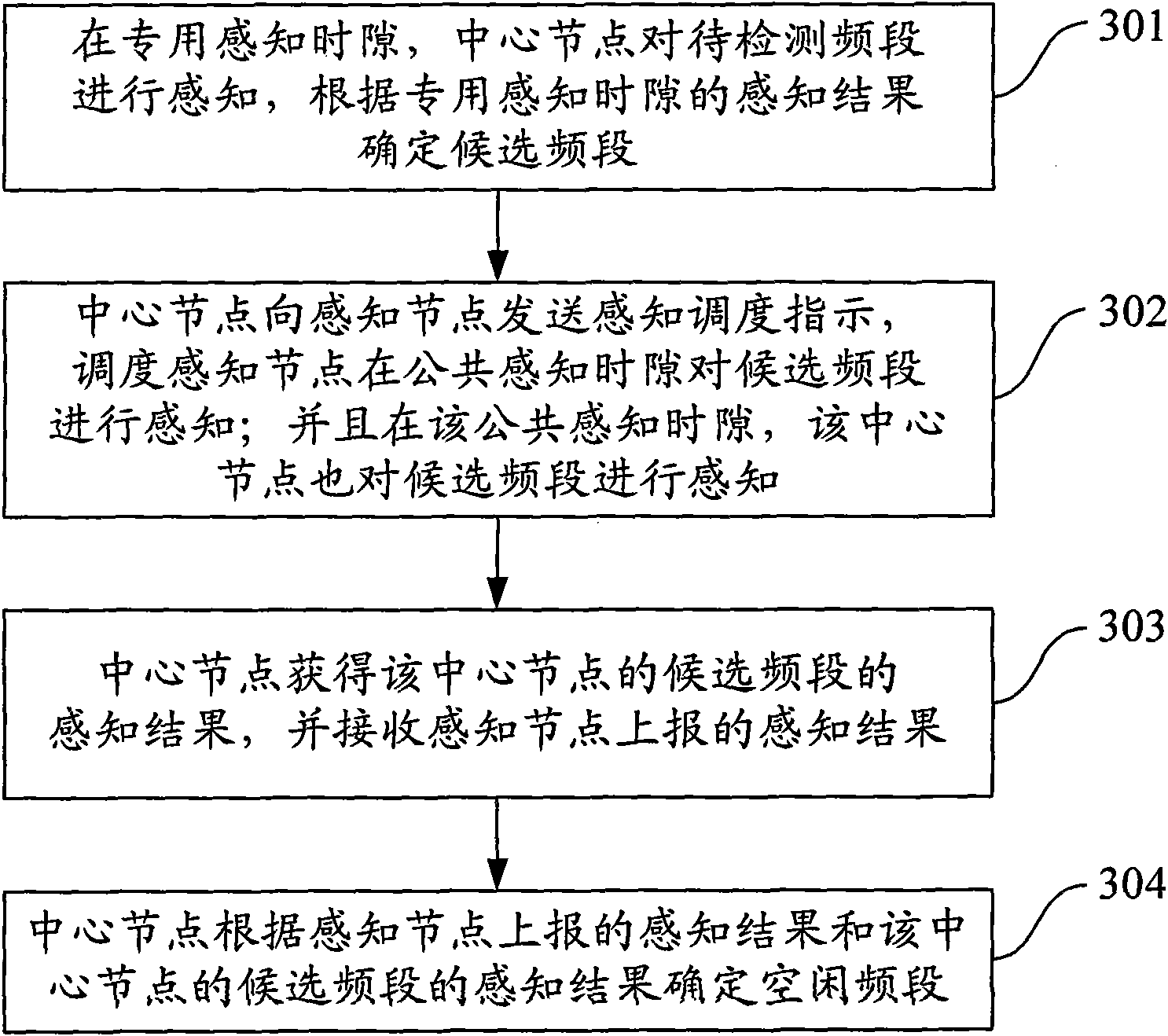

Method, system, central node and sensing node for determining idle frequency band

Active | CN101990231A | Reduce power consumption | Reduce overhead | Energy efficient ICT | Assess restriction | Frequency spectrum | Scheduling instructions

The embodiment of the invention discloses a method, a system, a central node and a sensing node for determining an idle frequency band. The method for determining the idle frequency band comprises the following steps: sensing a to-be-detected frequency band in a special sensing time slot and determining a candidate frequency band according to the sensing result of that slot; transmitting a sensing and scheduling instruction to the sensing node, scheduling it to sense the candidate frequency band in a common sensing time slot; and receiving the sensing result reported by the sensing node and determining the idle frequency band according to that result. The embodiment reduces the signaling overhead of the central node and the sensing node in multi-point cooperative spectrum sensing, and reduces the total power consumption of the sensing node.
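The two-stage flow in this abstract can be sketched as below. The abstract does not say how a band is judged idle, so the energy threshold, the rule that every reporting node must agree, and the names `candidate_bands` and `idle_bands` are assumptions made purely for illustration.

```python
def candidate_bands(central_energy, threshold):
    """Stage 1: the central node senses all bands in its special time slot.

    `central_energy` maps a band name to the energy the central node measured;
    bands below the assumed threshold become candidates.
    """
    return [band for band, energy in central_energy.items() if energy < threshold]


def idle_bands(candidates, reports, threshold):
    """Stage 2: sensing nodes sense only the candidates in the common slot.

    `reports` is a list with one dict per sensing node, mapping each candidate
    band to the energy that node measured. A band is declared idle here only
    if every node measured it below the threshold (an assumed fusion rule).
    """
    return [
        band
        for band in candidates
        if all(report[band] < threshold for report in reports)
    ]


central = {"f1": 0.2, "f2": 0.9, "f3": 0.1}
candidates = candidate_bands(central, threshold=0.5)
reports = [{"f1": 0.3, "f3": 0.7}, {"f1": 0.1, "f3": 0.2}]
print(idle_bands(candidates, reports, threshold=0.5))
```

Because the sensing nodes are scheduled only for the candidate bands, they never measure or report `f2`, which is where the reduction in signaling and power consumption would come from in this sketch.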

Owner:HONOR DEVICE CO LTD

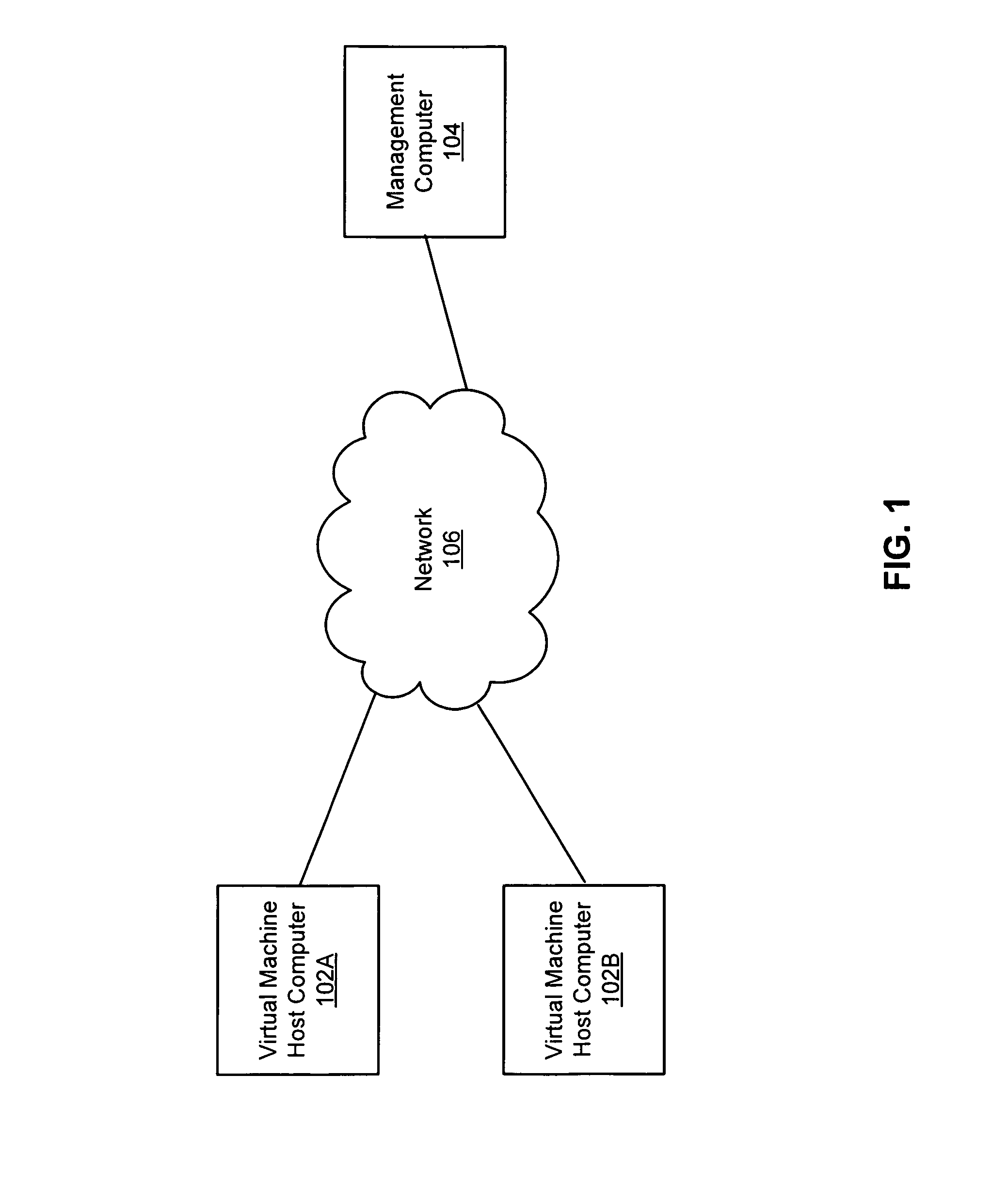

Coordinated virtualization activities

Active | US8443363B1 | Minimize hardware resource conflict | Multiprogramming arrangements | Software simulation/interpretation/emulation | Virtualization | Scheduling instructions

A computer includes a plurality of activity modules in multiple virtual machines. An activity module performs an activity, such as malware detection, in a virtual machine. A monitoring module receives virtualized infrastructure information of the computer comprising a hardware configuration of the computer and a virtual machine configuration of the computer. A scheduling module determines, based on the virtualized infrastructure information, scheduling instructions for an activity module. A supervisor communication module causes the activity module to execute based on the scheduling instructions. The scheduling instructions minimize hardware resource conflicts between the activity module and the other activity modules of the computer.

Owner:CA TECH INC

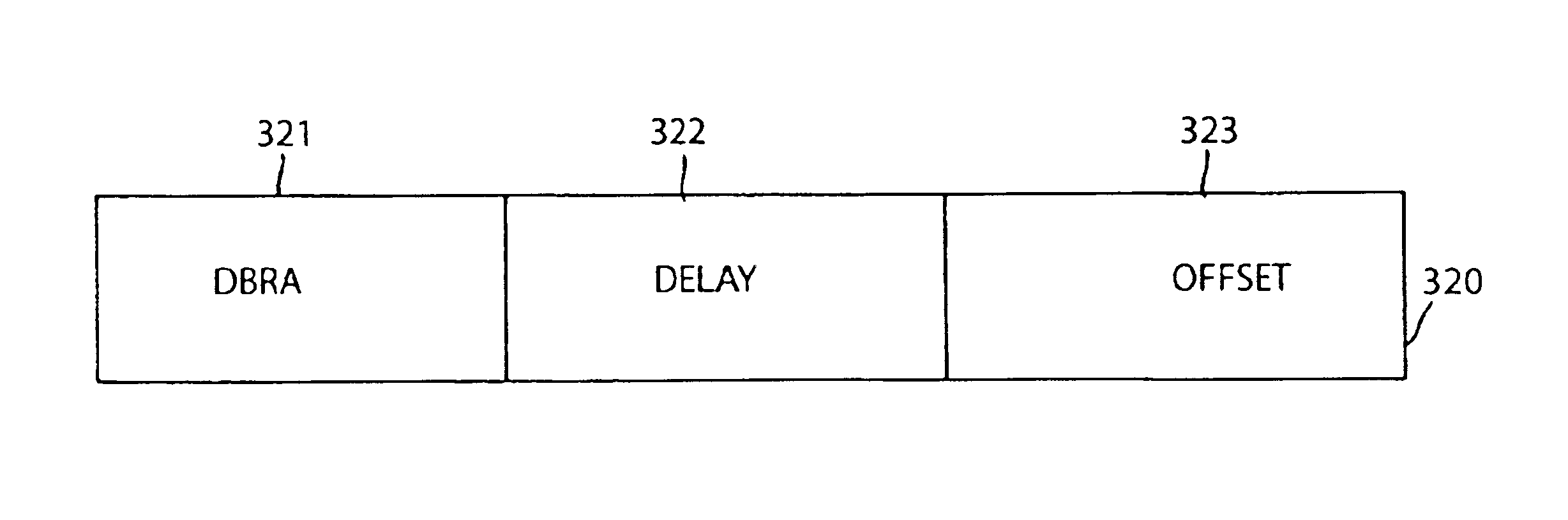

Data processing device

Inactive | US6865666B2 | General purpose stored program computer | Next instruction address formation | Processor register | Scheduling instructions

A data processing device has a PC controlling part for executing a branch operation. The PC controlling part has a first register for holding a result of decoding in an instruction decode unit, a register for holding a description indicating an execution condition of the operation (the value of a condition-designating field), and a register for holding a description indicating a time for executing the operation (a PC address value). Judgment of the execution condition starts when the address value held in the latter register agrees with the PC value; if the condition is satisfied, the PC controlling part executes the operation based on the content held in the register. This makes it possible to delay the time at which the execution condition is judged, which increases the degree of freedom in scheduling instructions: the branch instruction can be positioned in the program before the operation instruction that determines the execution condition.

Owner:RENESAS ELECTRONICS CORP

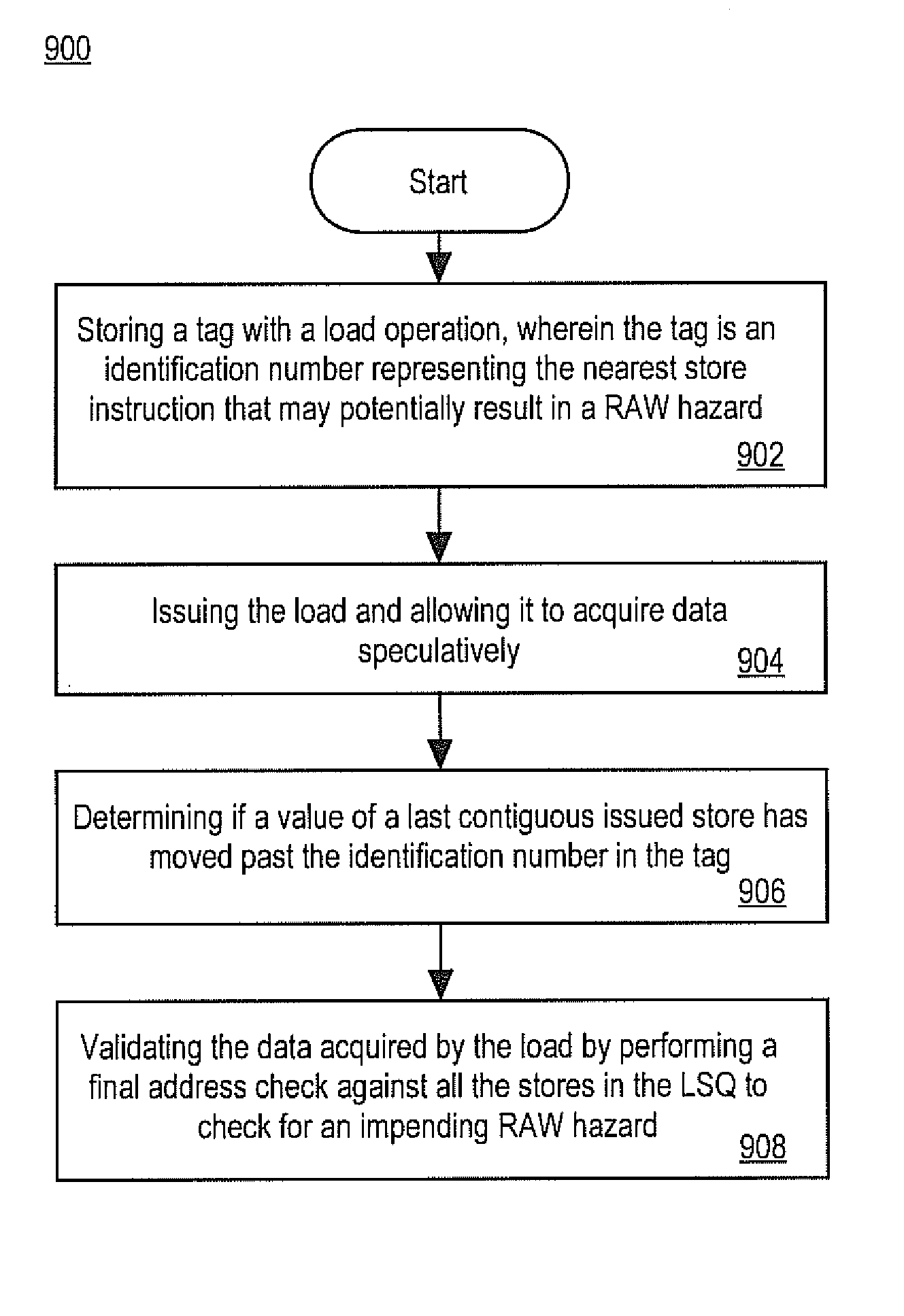

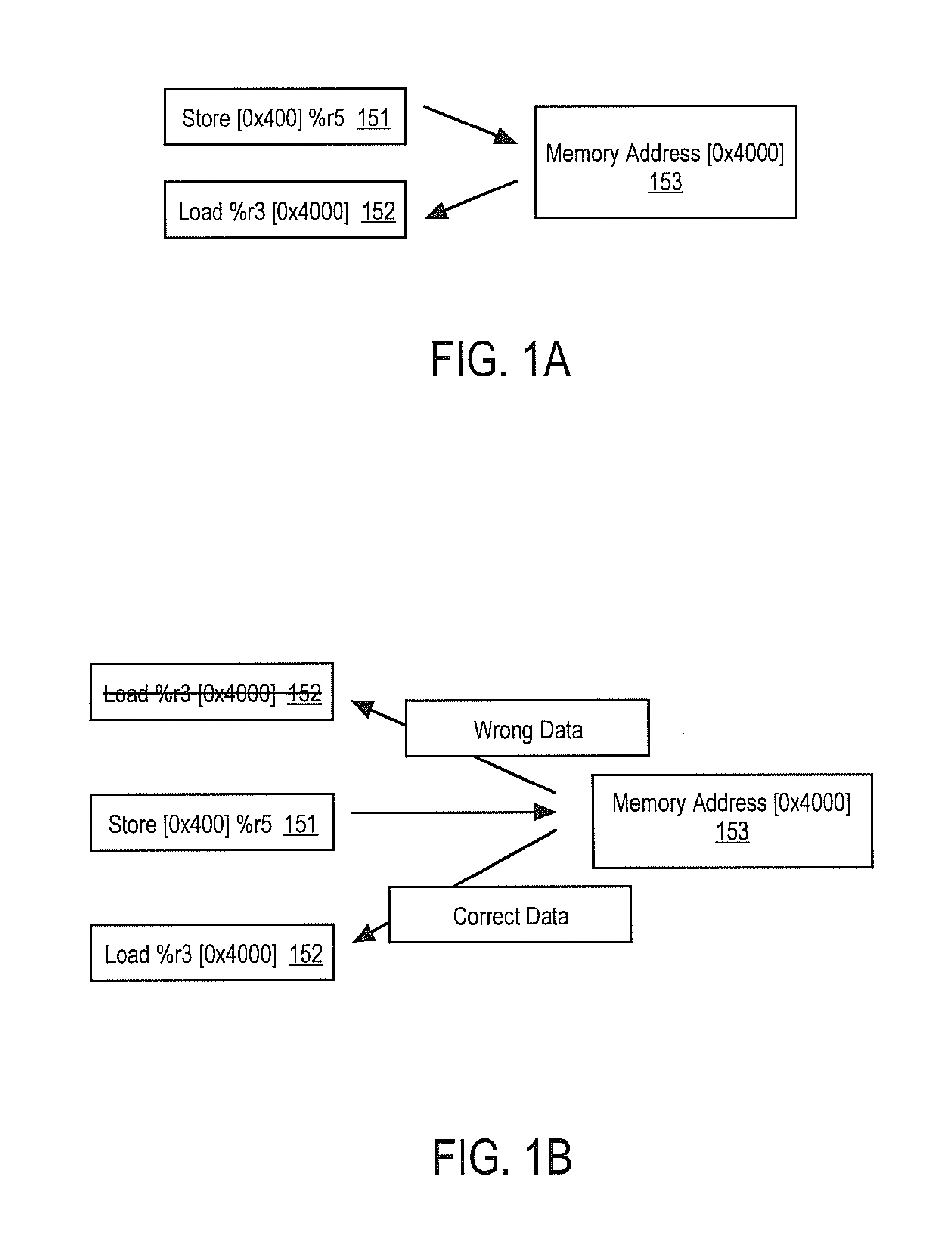

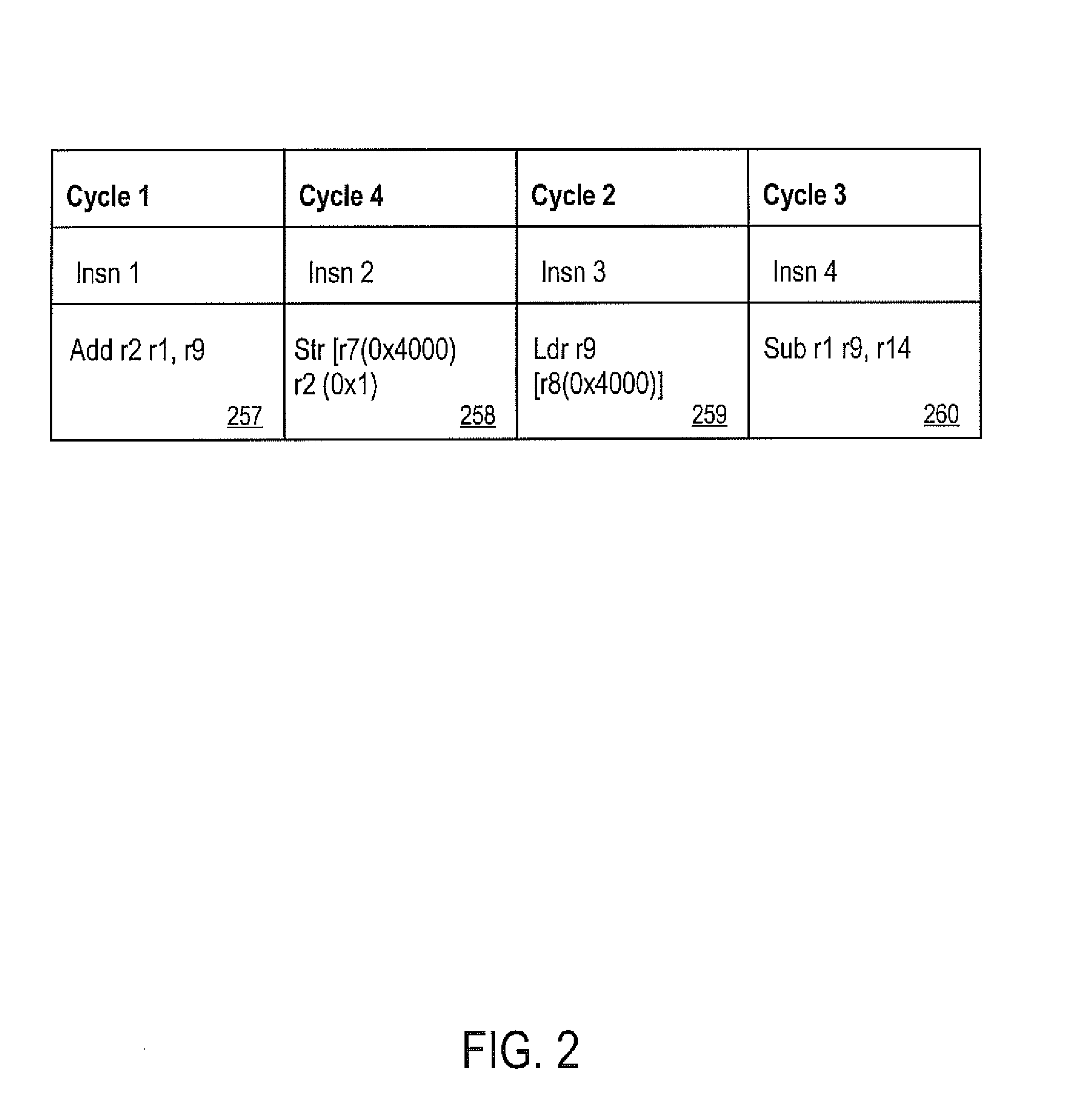

Method and apparatus for nearest potential store tagging

Active | US20140281409A1 | Easy to understand | Digital computer details | Concurrent instruction execution | Scheduling instructions | Parallel computing

A method for performing memory disambiguation in an out-of-order microprocessor pipeline is disclosed. The method comprises storing a tag with a load operation, wherein the tag is an identification number representing a store instruction nearest to the load operation, wherein the store instruction is older with respect to the load operation and wherein the store has potential to result in a read-after-write (RAW) violation in conjunction with the load operation. The method also comprises issuing the load operation from an instruction scheduling module. Further, the method comprises acquiring data for the load operation speculatively after the load operation has arrived at a load store queue module. Finally, the method comprises determining if an identification number associated with a last contiguous issued store with respect to the load operation is equal to or greater than the tag and gating a validation process for the load operation in response to the determination.
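The final comparison step in this abstract can be sketched in software. This is an illustrative model only: it assumes store identification numbers increase monotonically in program order starting from `first_id` and never wrap around, and the names `last_contiguous_issued` and `may_validate` are hypothetical.

```python
def last_contiguous_issued(issued_ids, first_id=0):
    """Return the largest id N such that every store from first_id to N has issued.

    Returns first_id - 1 when the oldest store has not issued yet.
    """
    last = first_id - 1
    while last + 1 in issued_ids:
        last += 1
    return last


def may_validate(load_tag, issued_ids):
    """Gate validation of a speculatively executed load.

    `load_tag` is the id of the nearest older store that could cause a RAW
    violation. Validation is allowed once that store, and every older store,
    has issued.
    """
    return last_contiguous_issued(issued_ids) >= load_tag


issued = {0, 1, 2, 4}            # store 3 has not issued yet
print(may_validate(2, issued))   # the tagged store and all older stores issued
print(may_validate(3, issued))   # store 3 is still outstanding
```

In this model a load tagged with store 3 stays gated even though the younger store 4 has issued, because the contiguous run of issued stores ends at store 2.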

Owner:INTEL CORP


© 2025 PatSnap. All rights reserved.