86 results about How to "High degree of parallelism" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

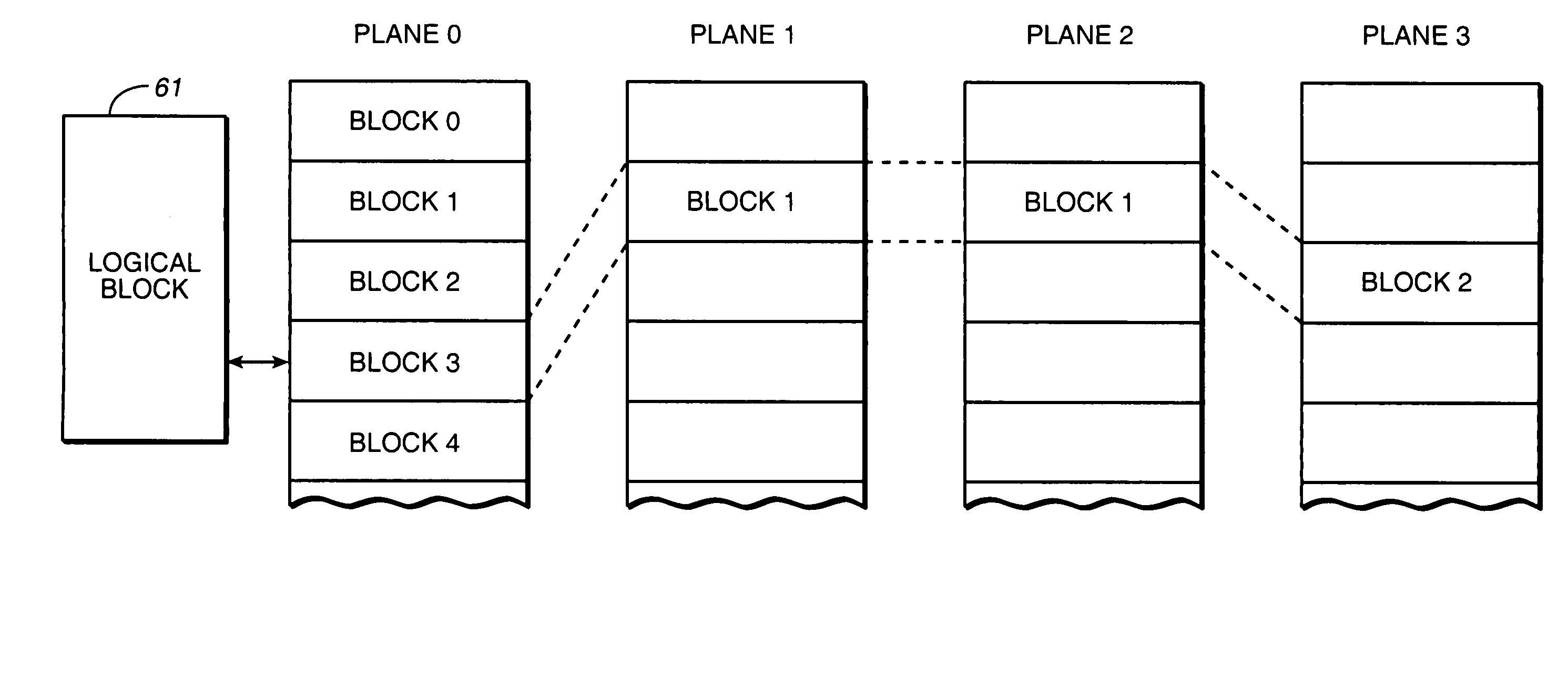

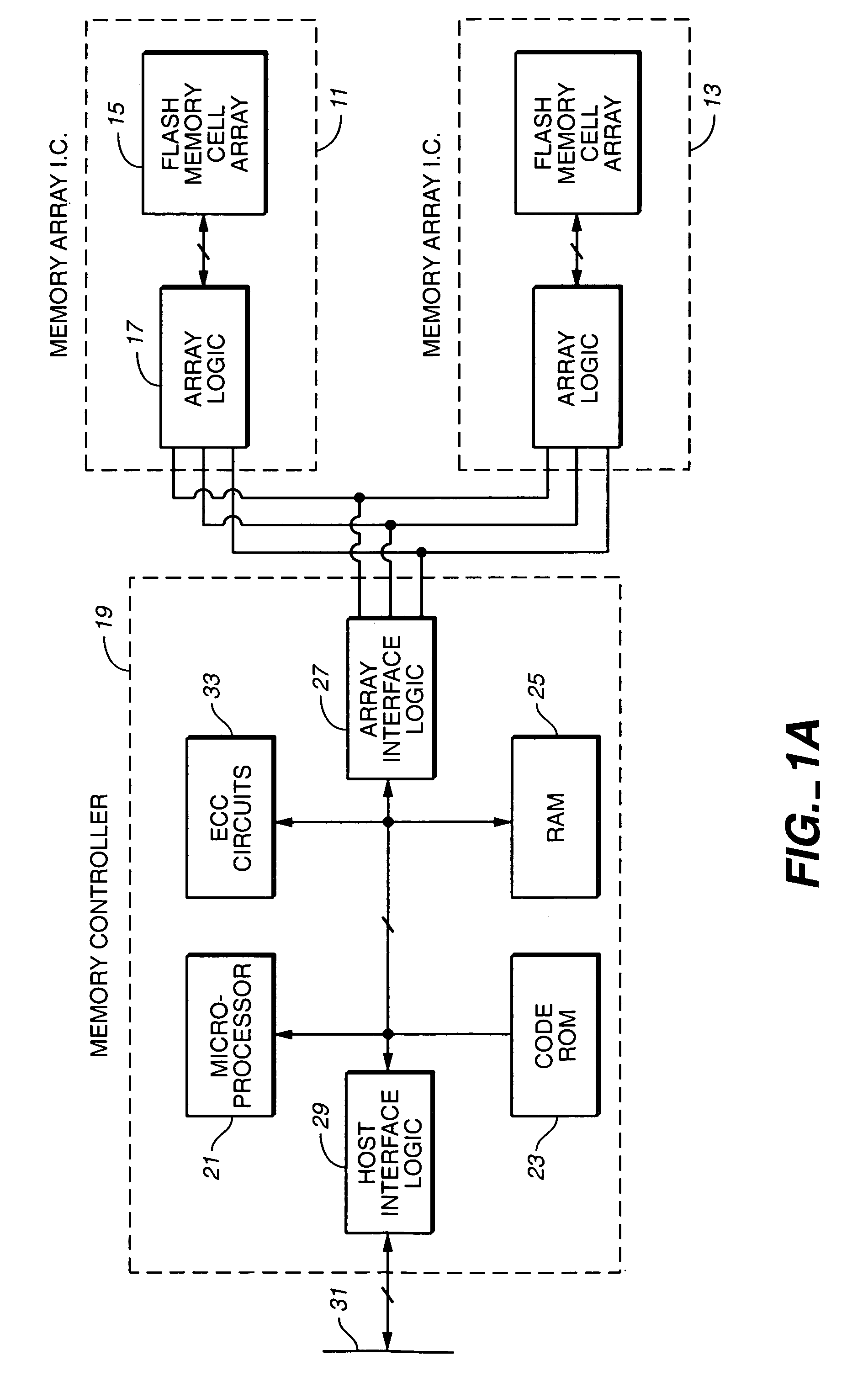

Adaptive metablocks

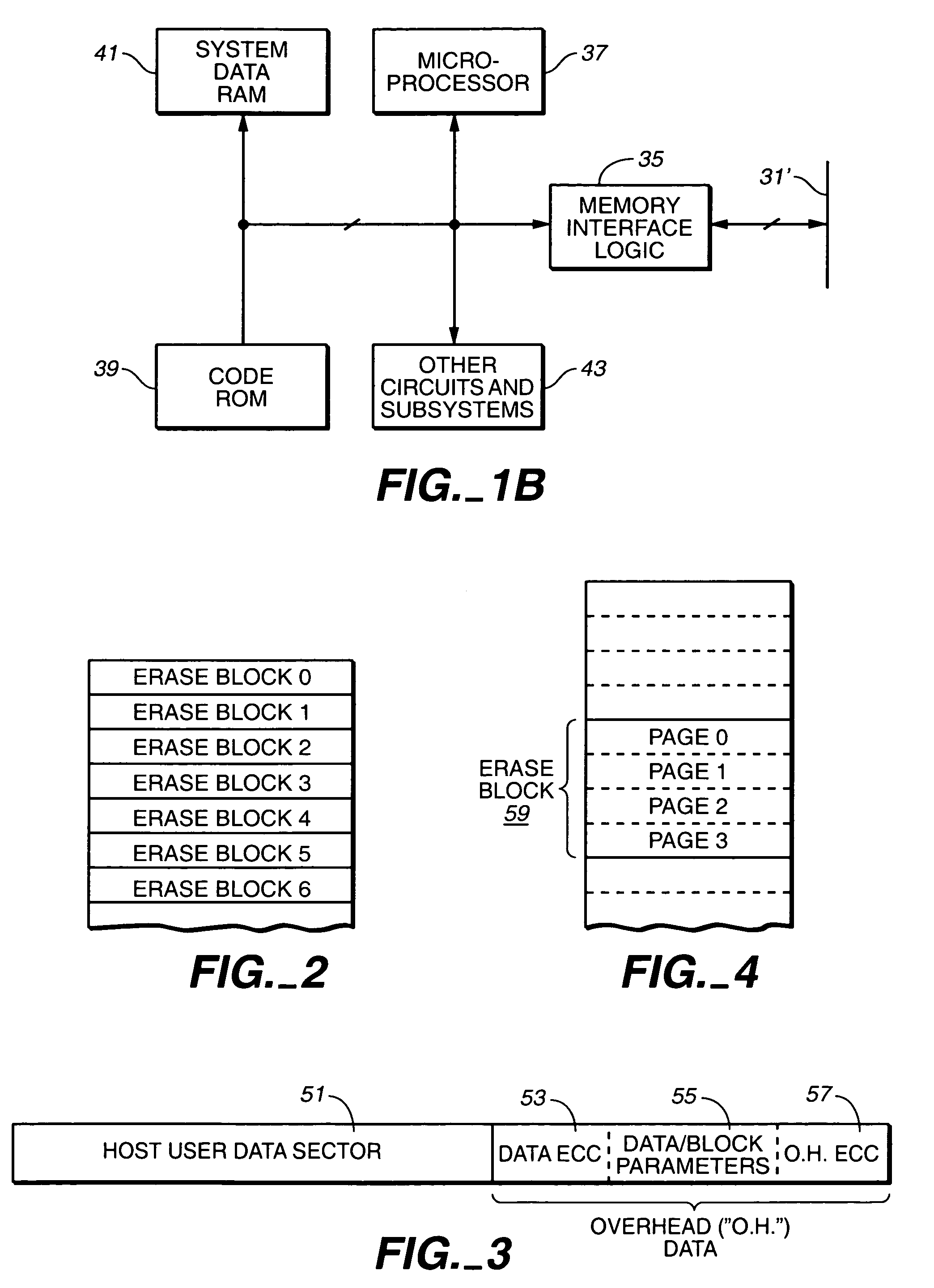

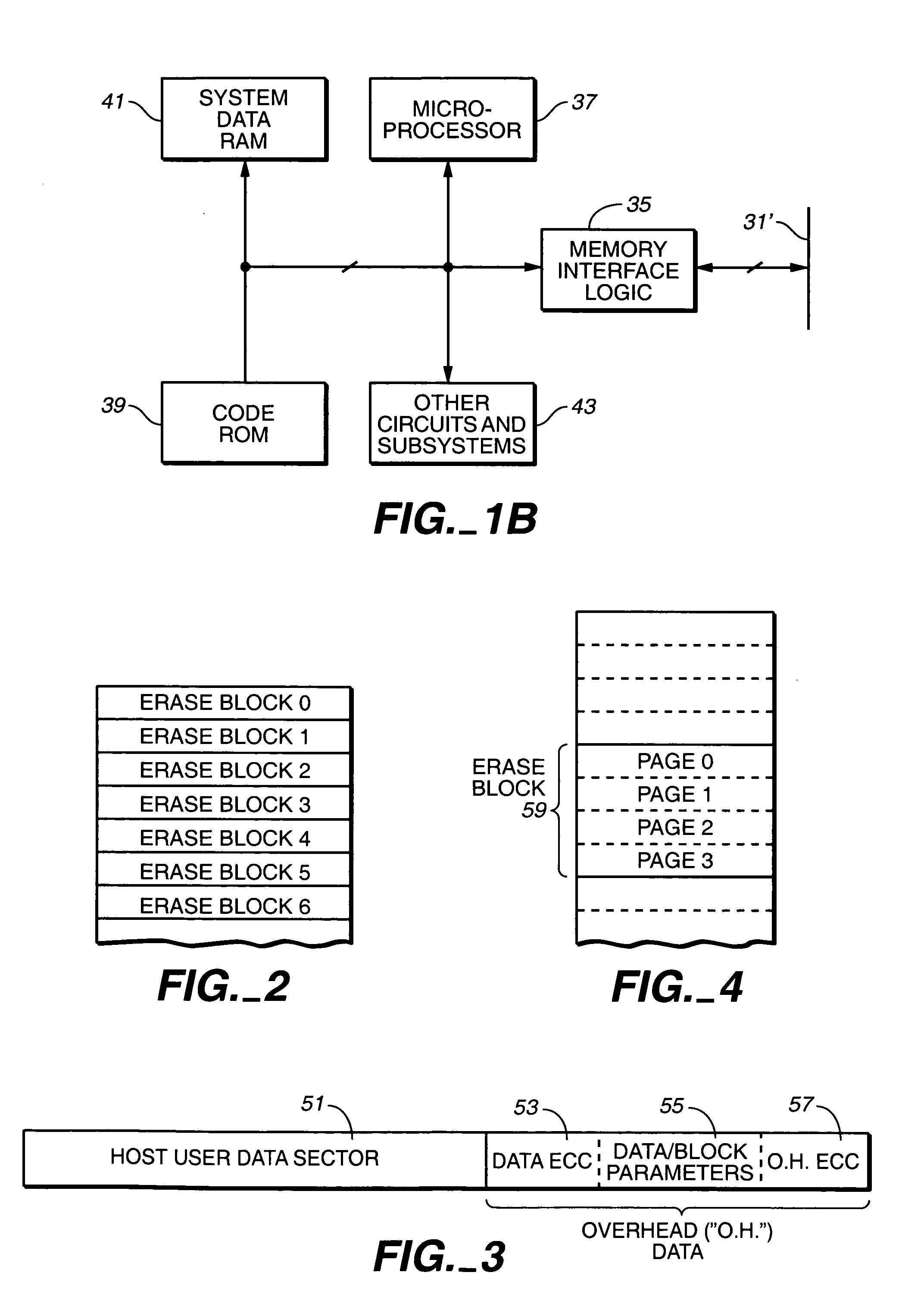

Active | US20050144357A1 | Reduce the impact | Efficient update | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data selection | Theoretical computer science

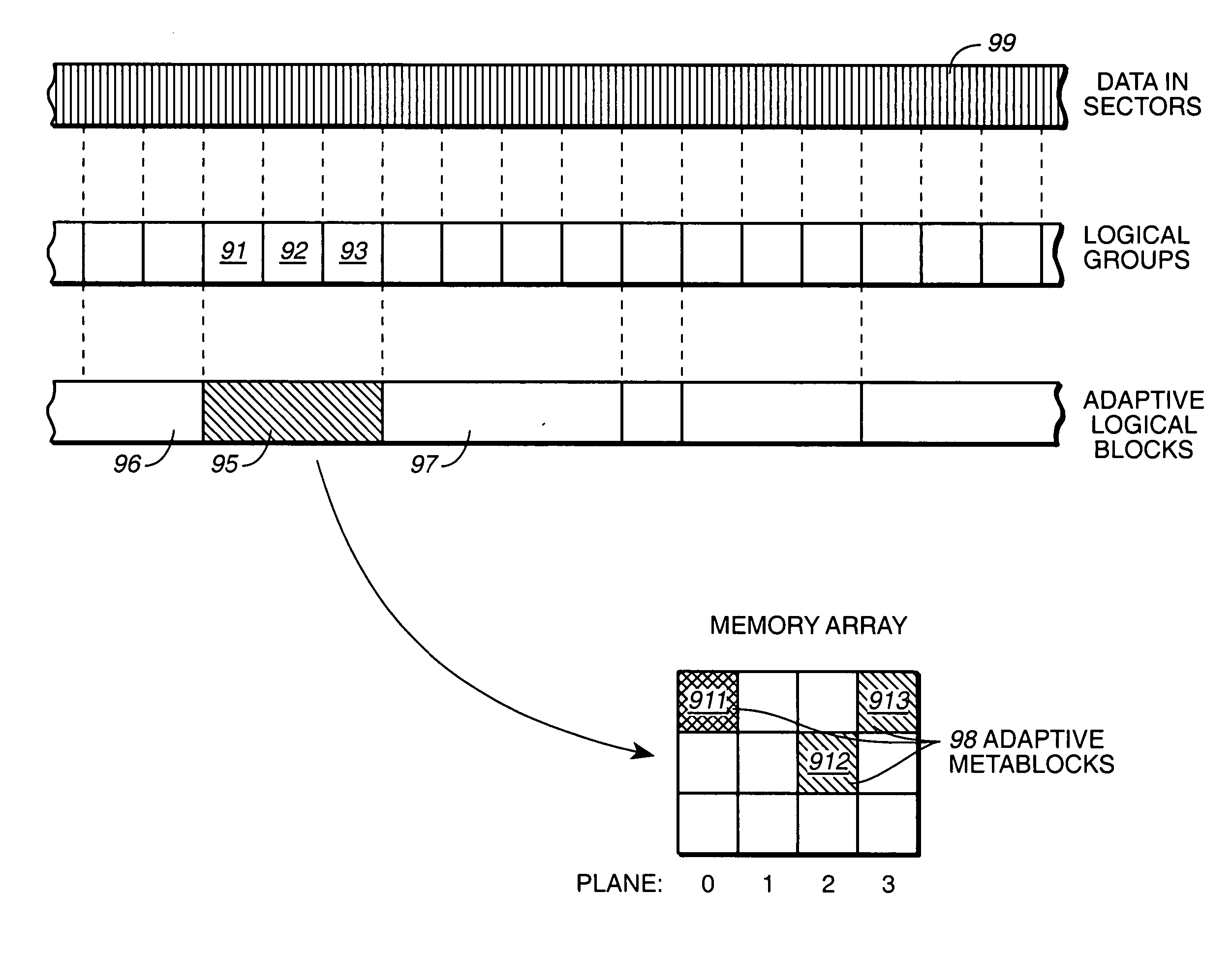

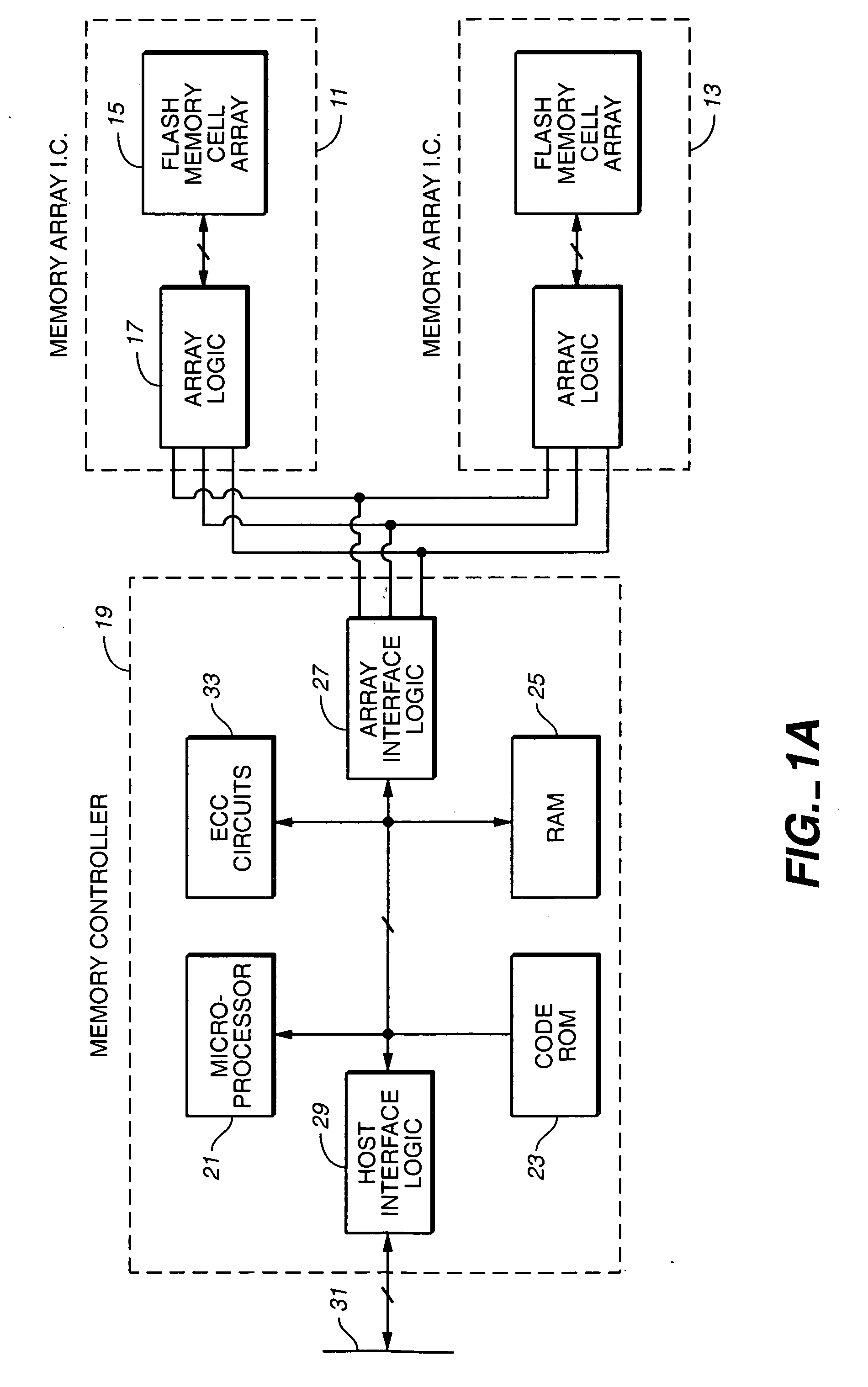

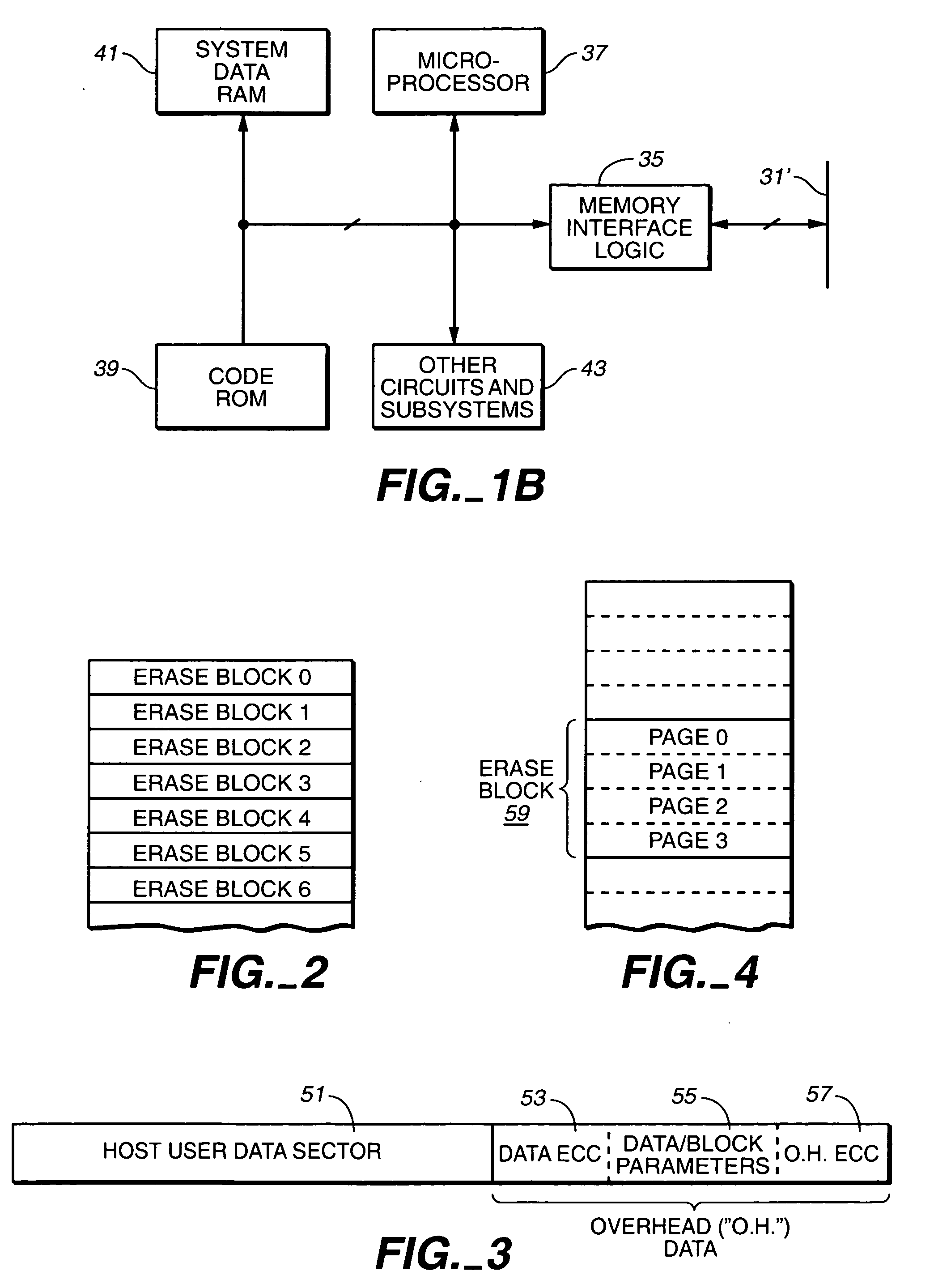

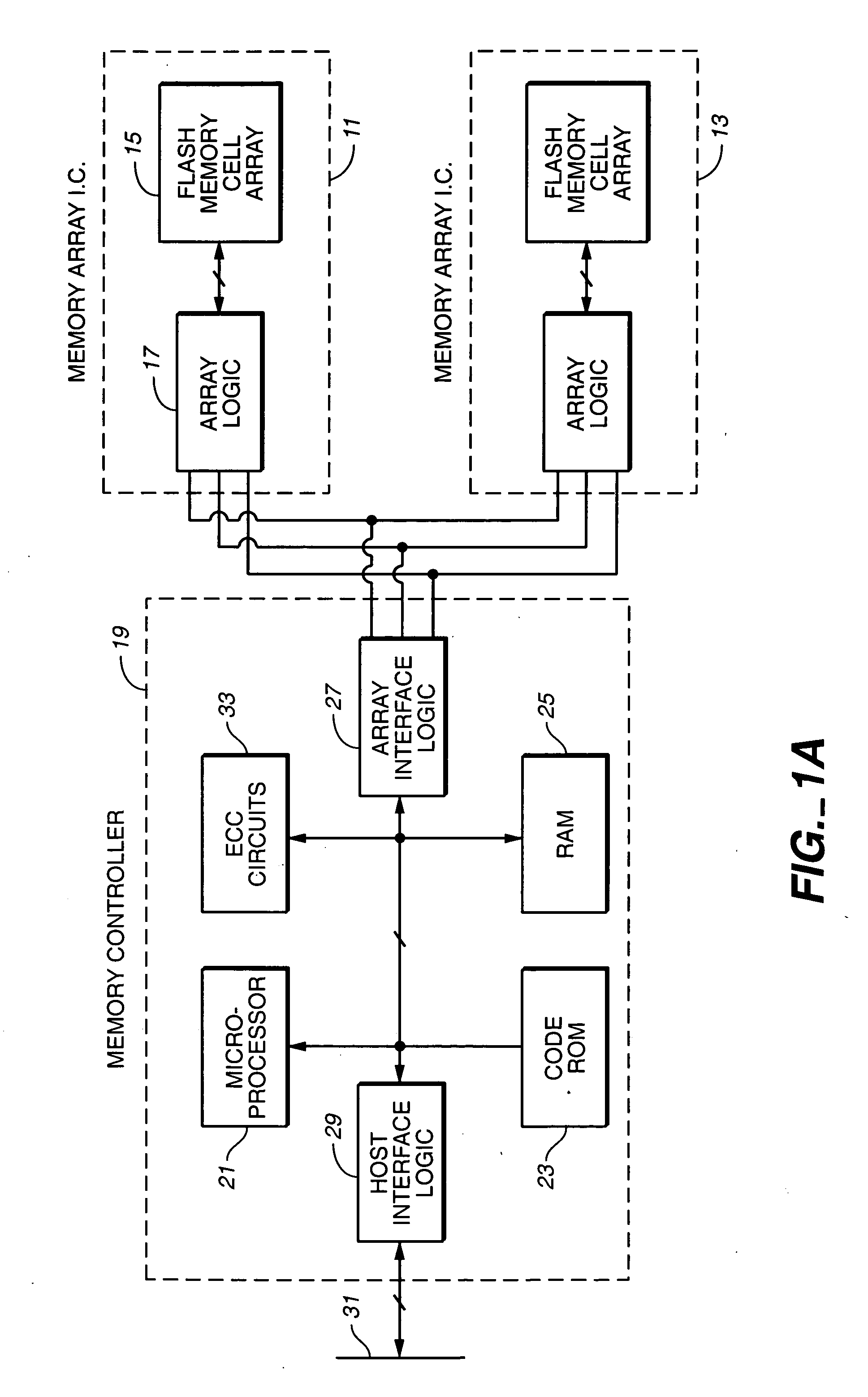

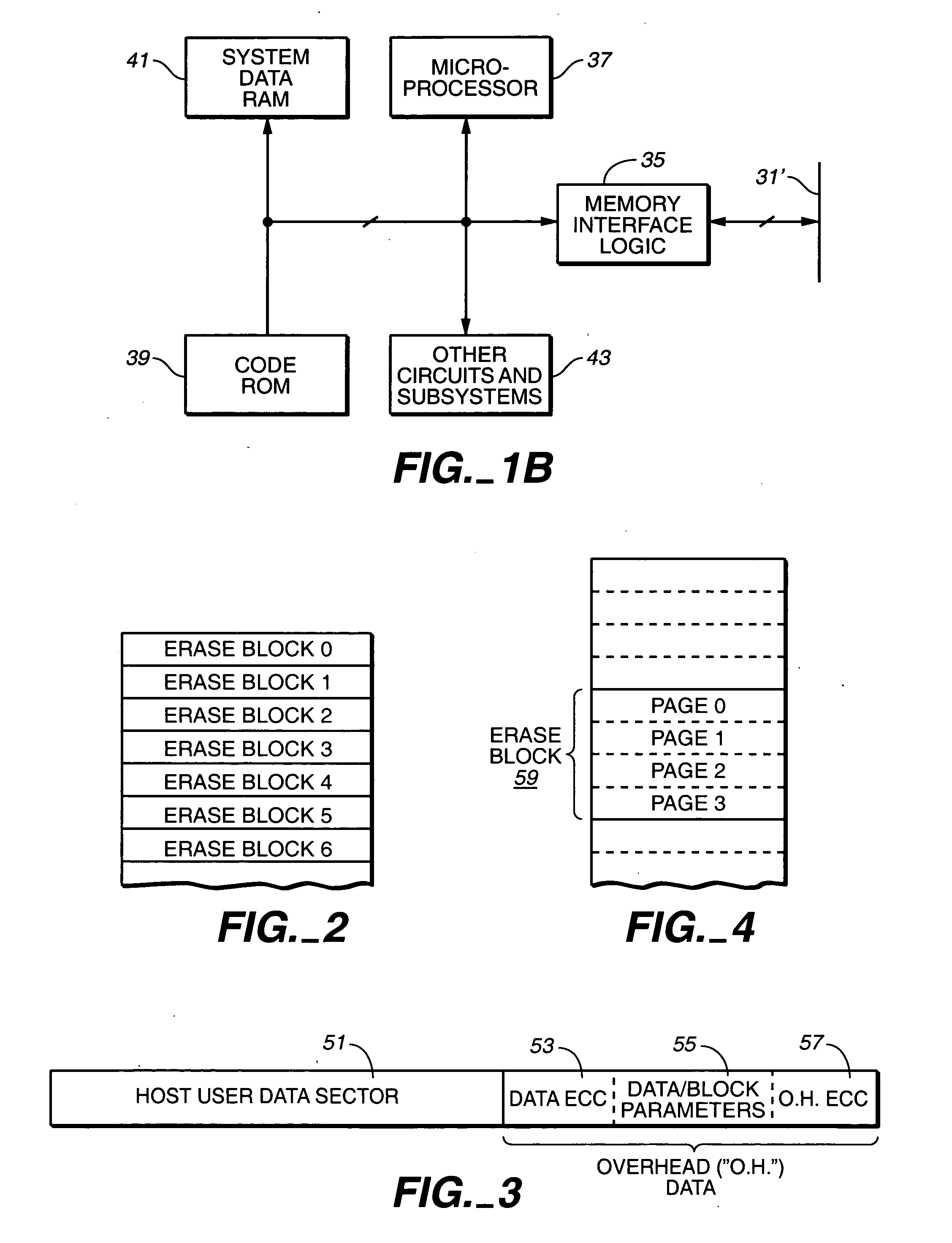

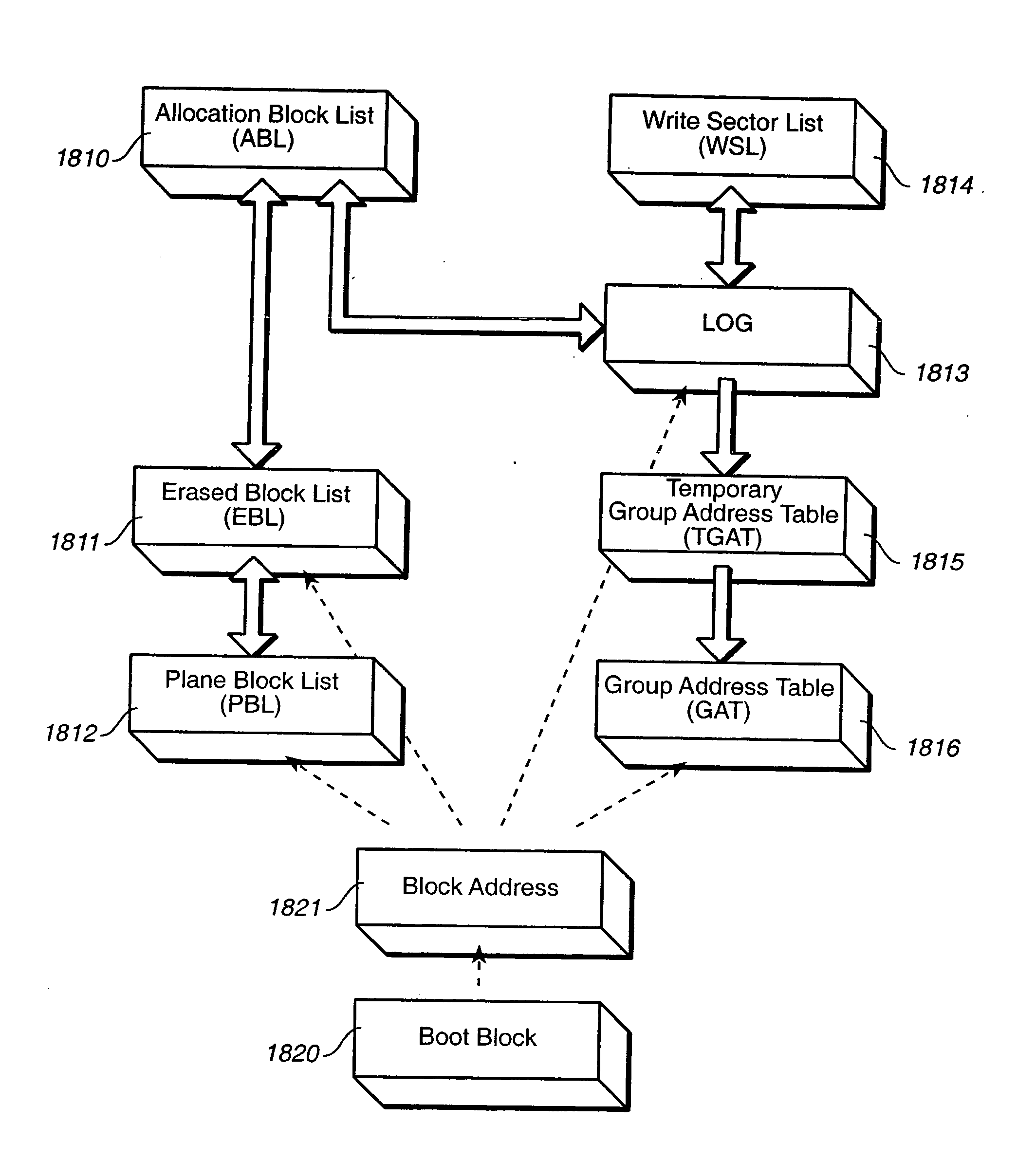

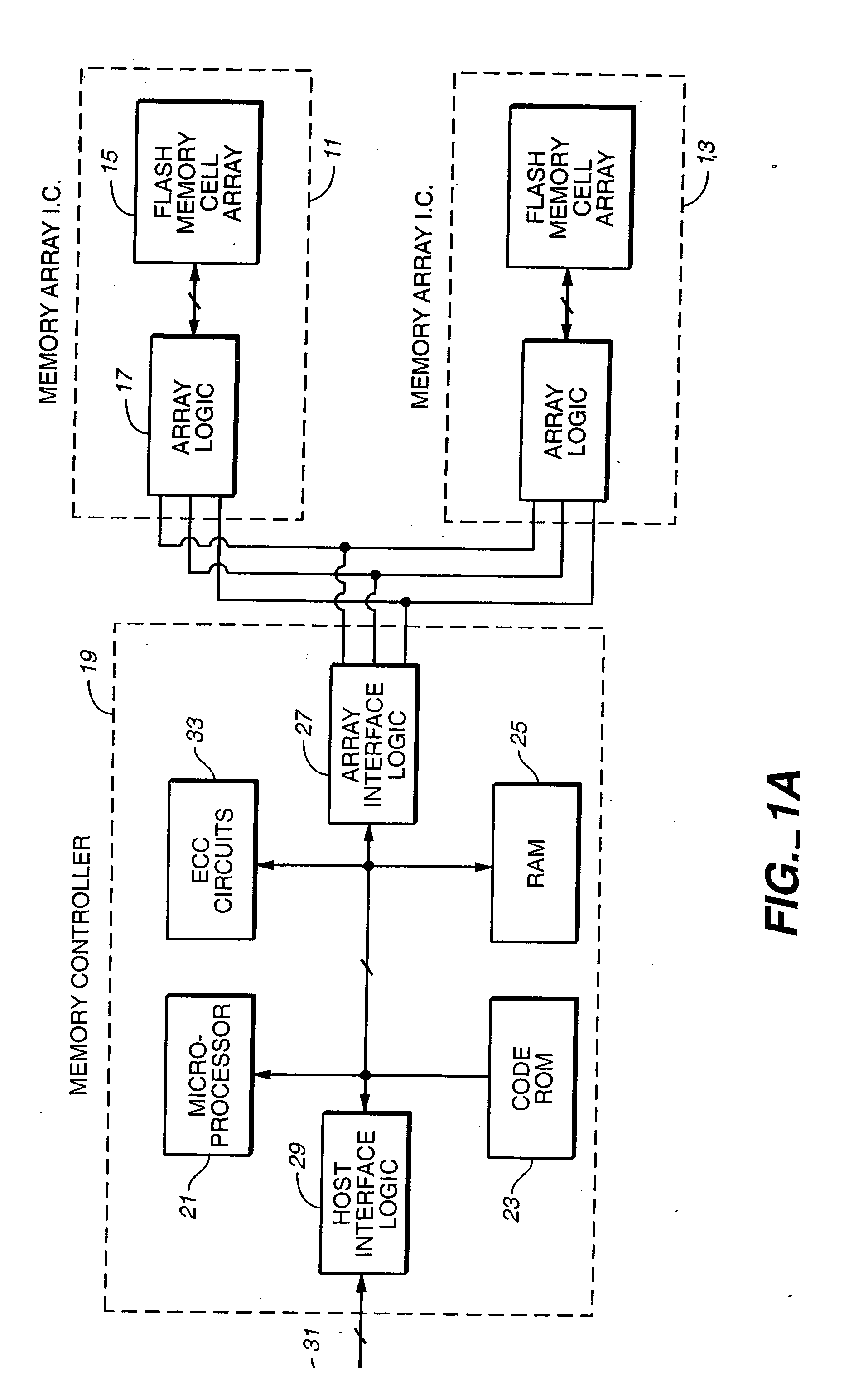

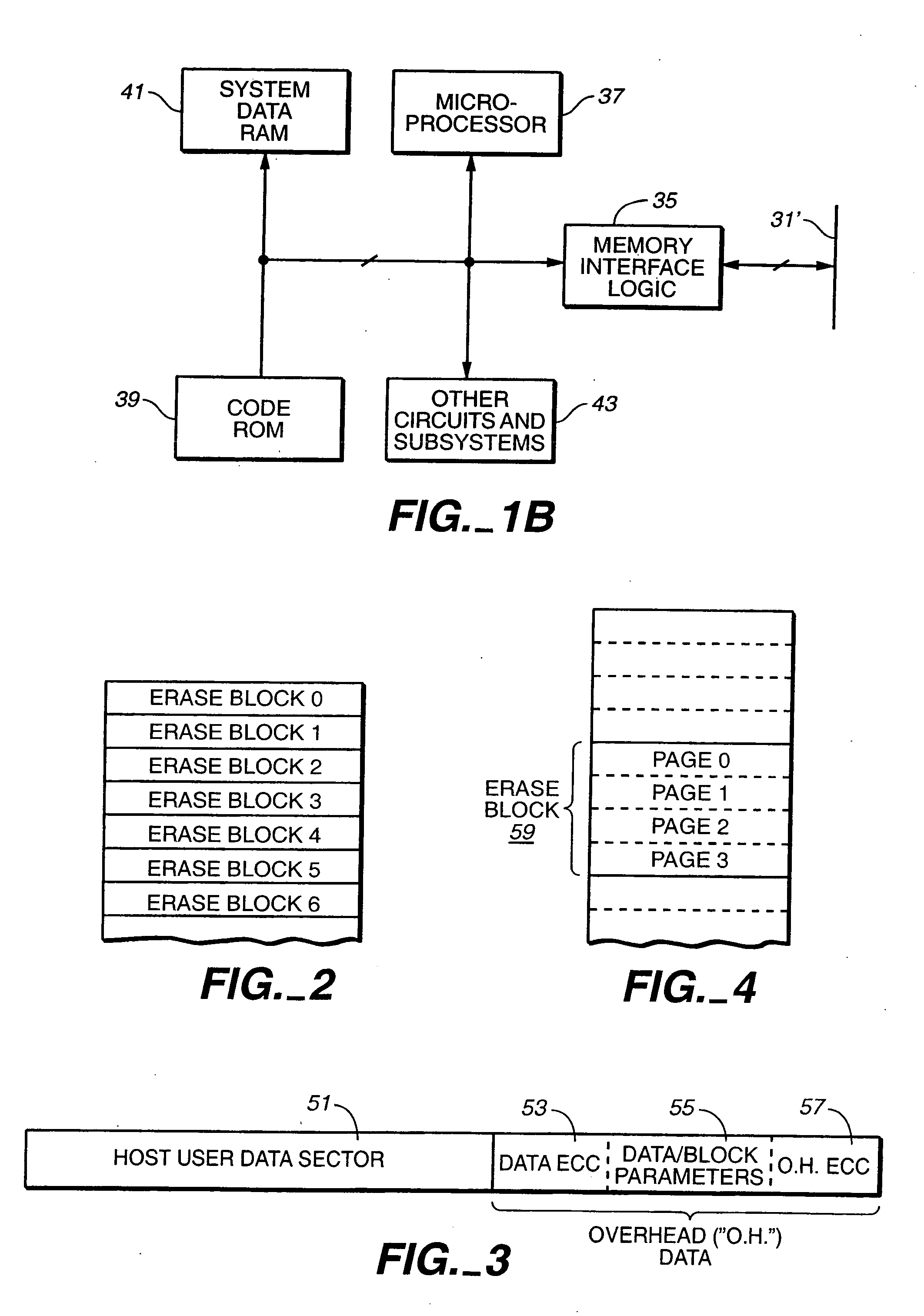

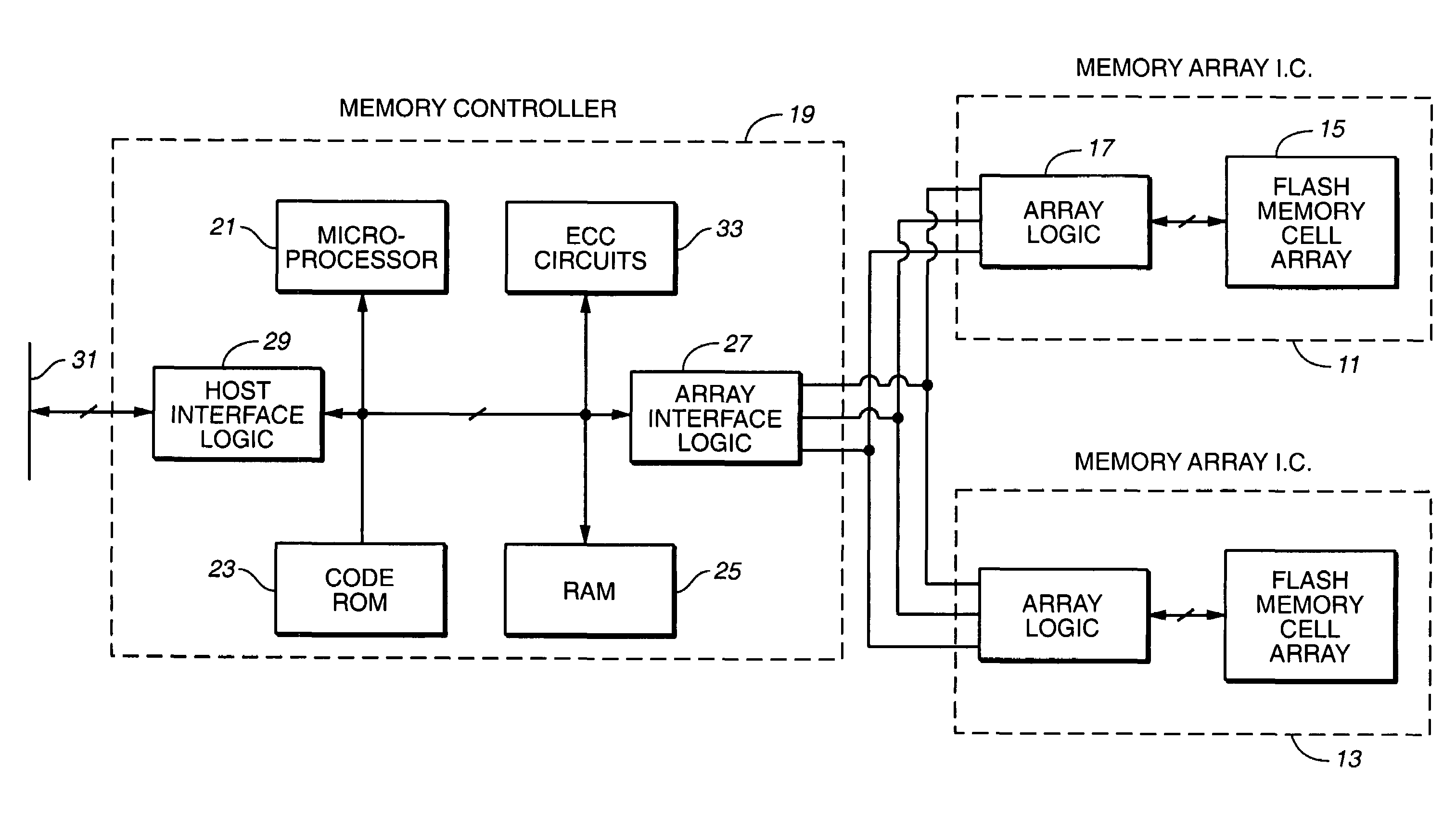

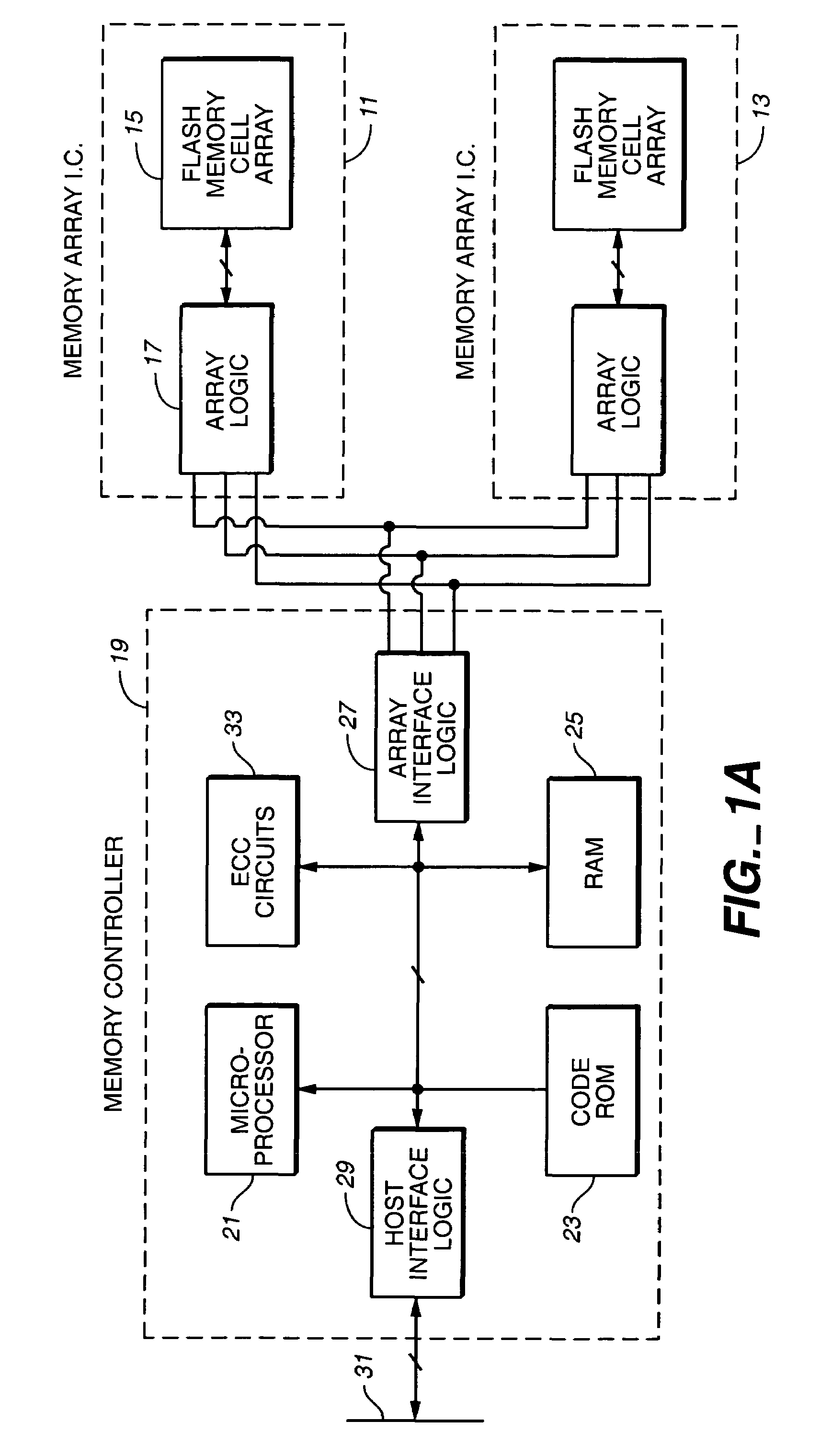

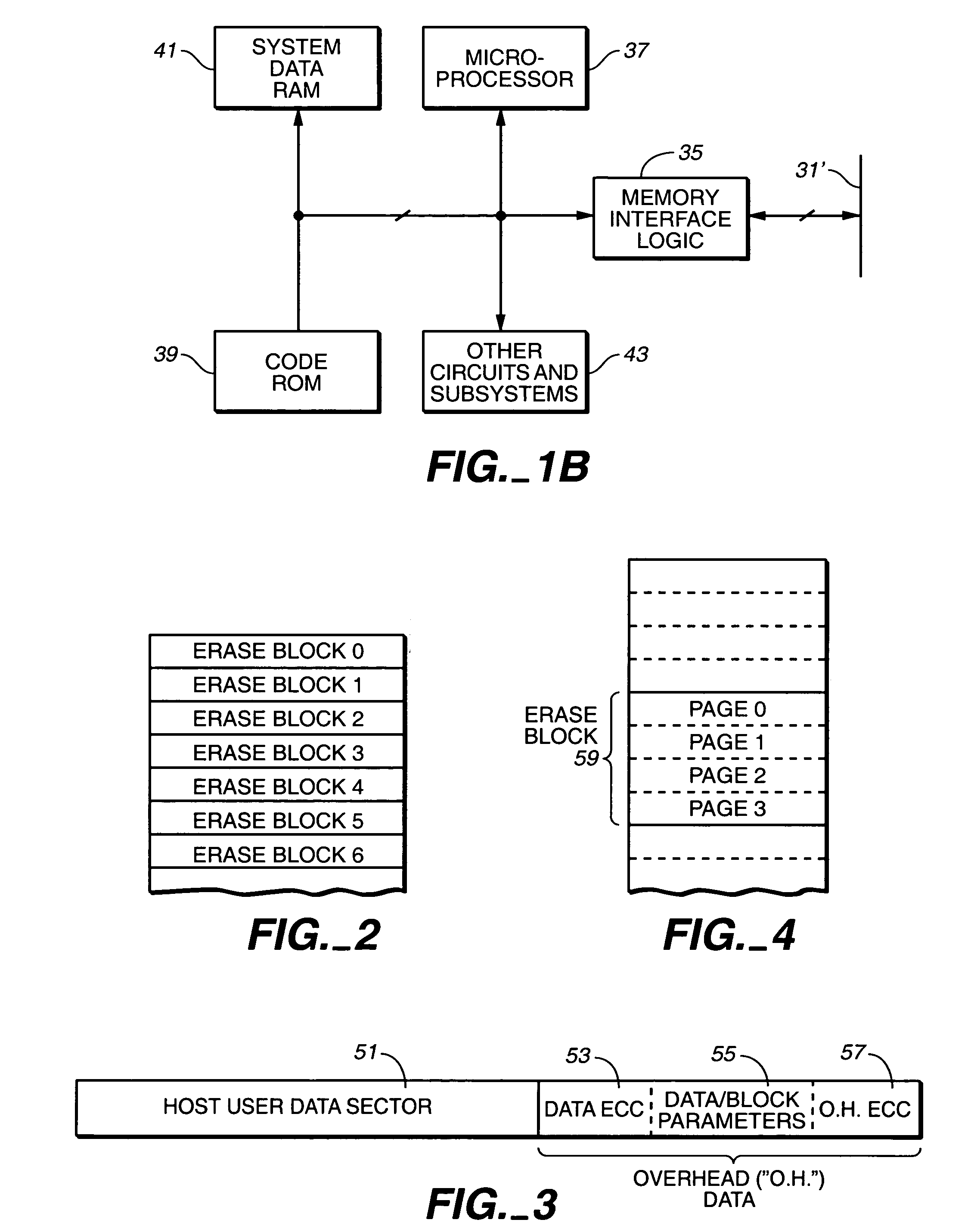

In a memory system having multiple erase blocks in multiple planes, a selected number of erase blocks are programmed together as an adaptive metablock. The number of erase blocks in an adaptive metablock is chosen according to the data to be programmed. Logical address space is divided into logical groups, a logical group having the same size as one erase block. Adaptive logical blocks are formed from logical groups. One adaptive logical block is stored in one adaptive metablock.

Owner:SANDISK TECH LLC
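
The sizing idea in the abstract above can be sketched as follows. This is a minimal illustration, not the patented method: the function name, the sector-based addressing, and the rule of capping a metablock at one erase block per plane are all assumptions made for the example.

```python
def adaptive_logical_blocks(first_sector, n_sectors, sectors_per_group, planes):
    """Group the logical groups touched by a data run into adaptive logical blocks.

    Hypothetical model: a logical group is the size of one erase block, and an
    adaptive metablock holds at most one erase block per plane.
    """
    first_group = first_sector // sectors_per_group
    last_group = (first_sector + n_sectors - 1) // sectors_per_group
    groups = list(range(first_group, last_group + 1))
    # Each slice becomes one adaptive logical block, stored in one adaptive
    # metablock with as many erase blocks as the slice has logical groups.
    return [groups[i:i + planes] for i in range(0, len(groups), planes)]


# A short run needs a 3-block metablock; a longer run is split into 4 + 2.
short_run = adaptive_logical_blocks(0, 10, sectors_per_group=4, planes=4)
long_run = adaptive_logical_blocks(0, 24, sectors_per_group=4, planes=4)
```

The point of the sketch is only that the metablock size follows the data: a short run does not force a full-width metablock to be programmed.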

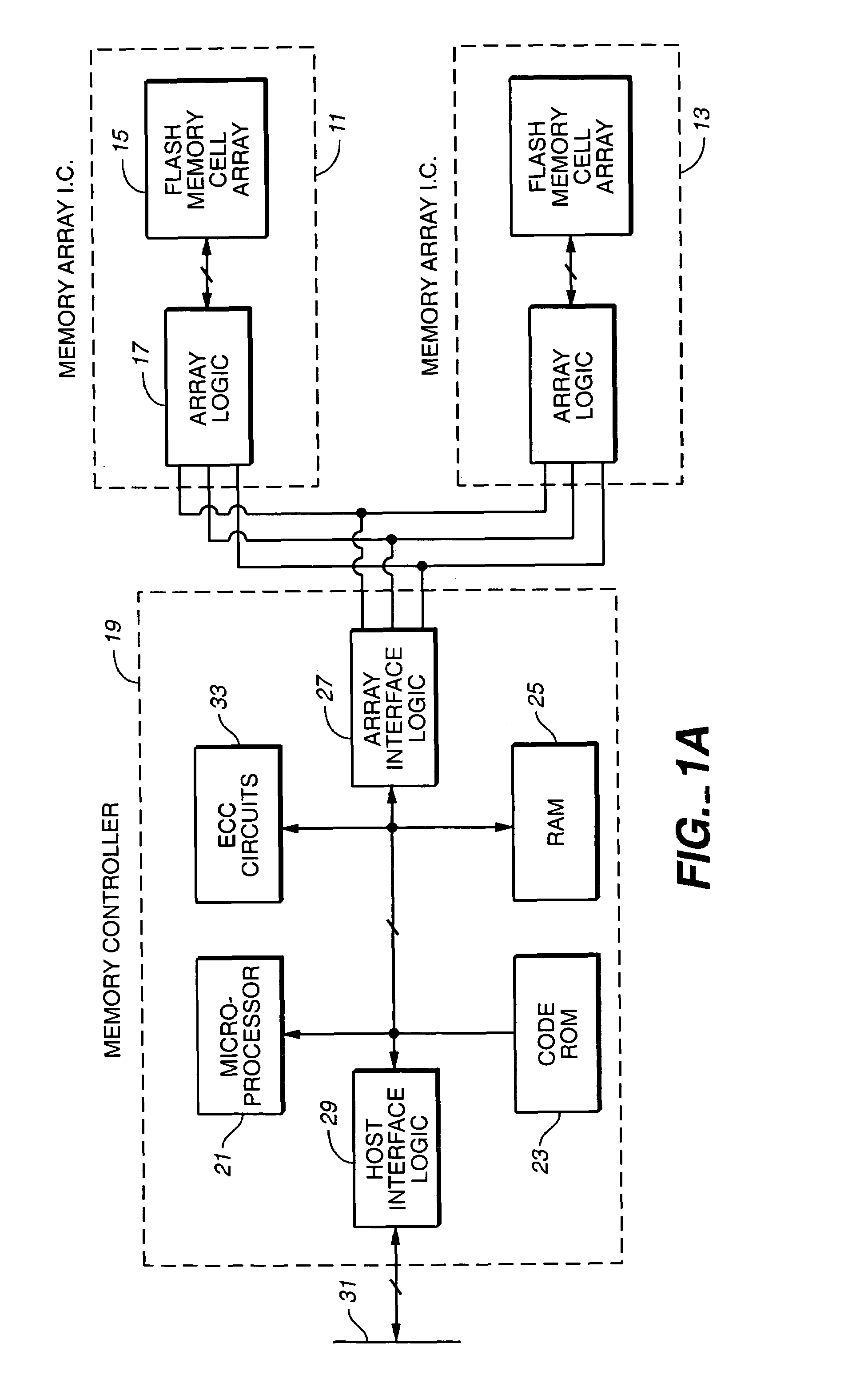

Data boundary management

Inactive | US20050144363A1 | Reduce the impact | Reduce impact | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Static random-access memory | Random access memory

Data may be stored in a non-volatile memory array in adaptive metablocks that are configured according to the locations of data boundaries in the data. Data may be stored in an intermediate format and later copied to adaptive metablocks configured for the data. Data in intermediate format may be stored in non-volatile random access memory or in a portion of the non-volatile memory array.

Owner:SANDISK TECH LLC

Data run programming

Active | US20050144367A1 | Reduce the impact | Reduce impact | Memory architecture accessing/allocation | Program control using stored programs | Data store | Non-volatile memory

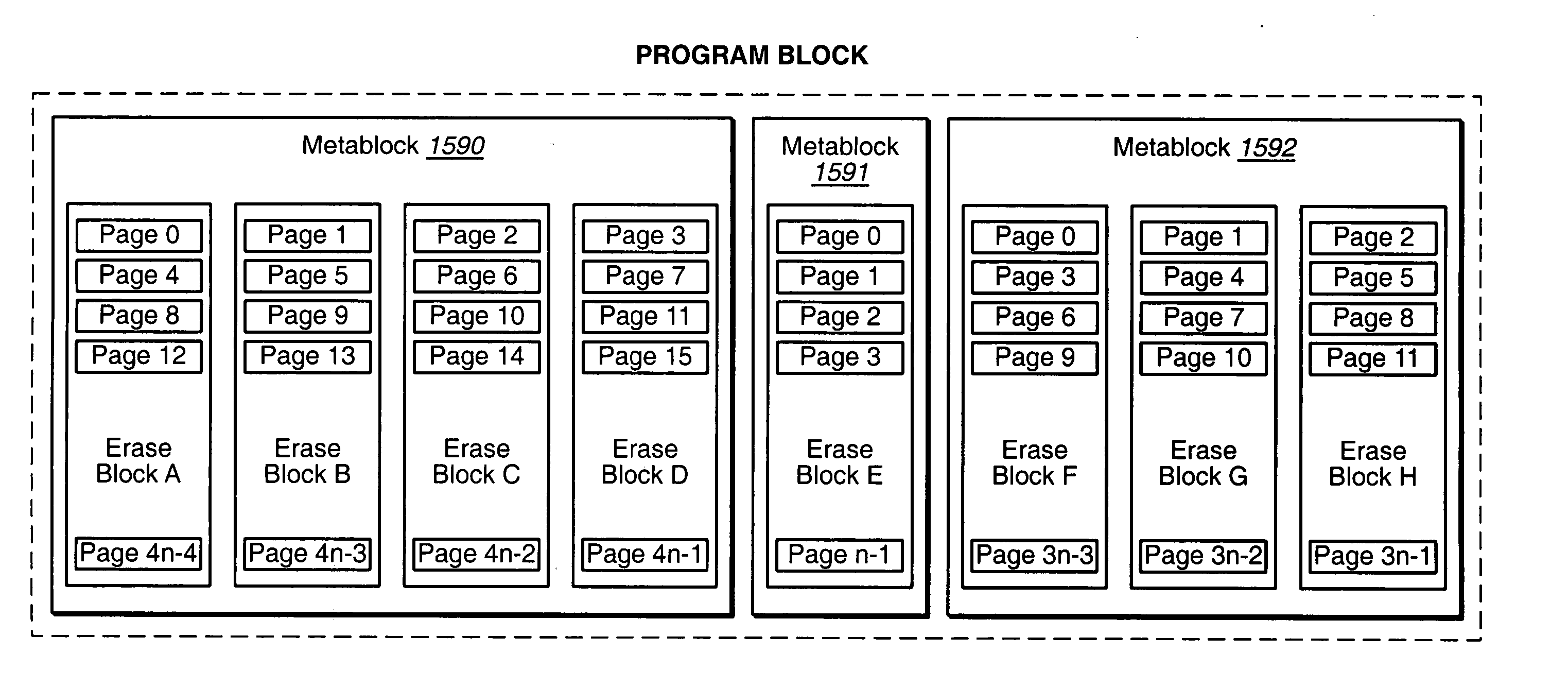

Data in data runs are stored in a non-volatile memory array in adaptive metablocks that are configured according to the locations of data boundaries. A serial flash buffer is used to store some data, while other data are directly stored in non-volatile memory. Data may be stored with alignment to data boundaries during updating of the data to improve efficiency of subsequent updates.

Owner:SANDISK TECH LLC

Scratch pad block

Active | US7315916B2 | Improve performance | Write efficiently | Memory architecture accessing/allocation | Read-only memories | High density | High velocity

In a memory array having a minimum unit of erase of a block, a scratch pad block is used to store data that is later written to another block. The data may be written to the scratch pad block with a low degree of parallelism and later written to another location with a high degree of parallelism so that it is stored with high density. Data may be temporarily stored in the scratch pad block until it can be more efficiently stored elsewhere. This may be when some other data is received. Unrelated data may be stored in the same page of a scratch pad block.

Owner:SANDISK TECH LLC

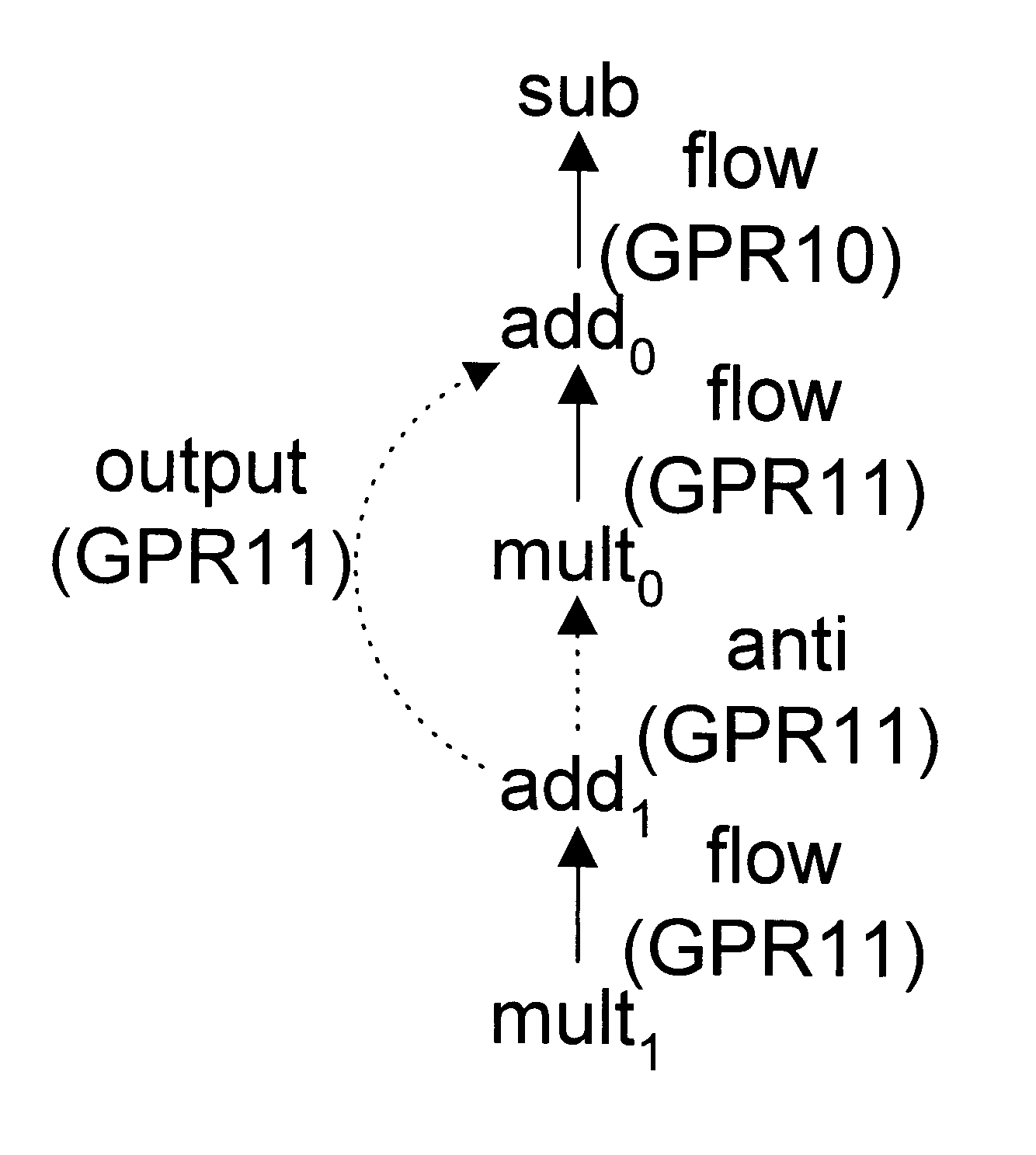

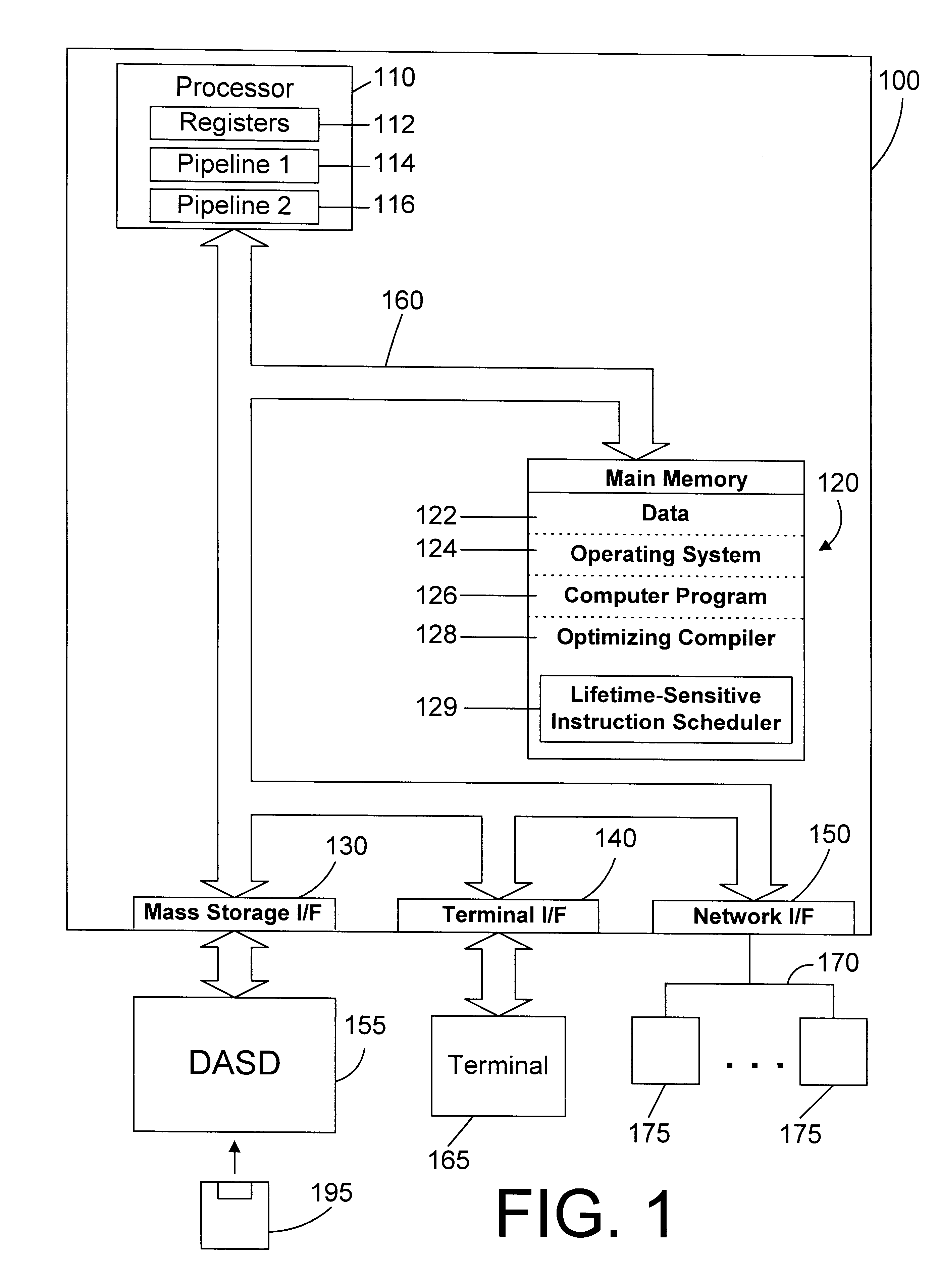

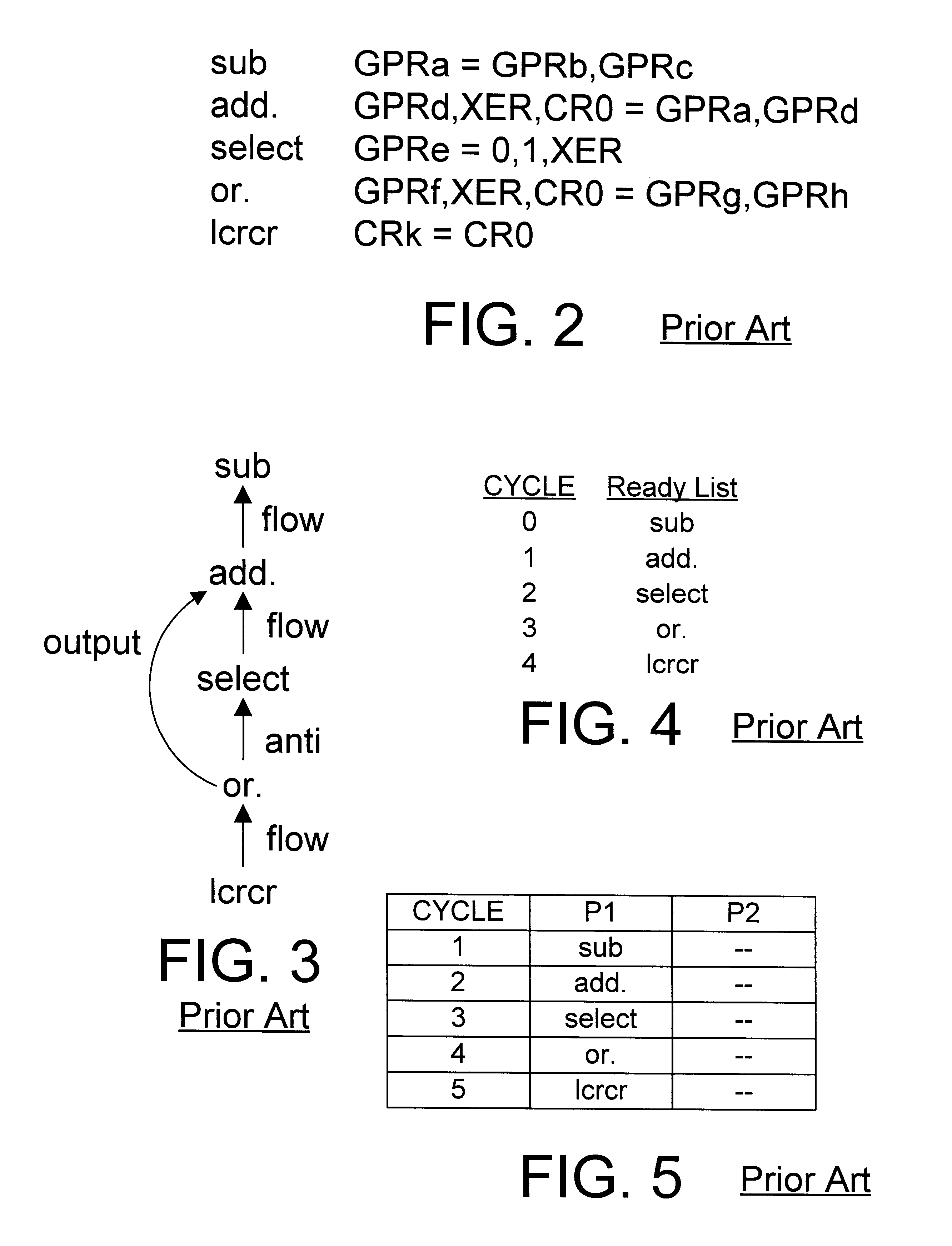

Lifetime-sensitive instruction scheduling mechanism and method

Inactive | US6305014B1 | Effective instruction | High degree of parallelism | Software engineering | Specific program execution arrangements | Scheduling instructions | Degree of parallelism

An instruction scheduler in an optimizing compiler schedules instructions in a computer program by determining the lifetimes of fixed registers in the computer program. By determining the lifetimes of fixed registers, the instruction scheduler can achieve a schedule that has a higher degree of parallelism by relaxing dependences between instructions in independent lifetimes of a fixed register so that instructions can be scheduled earlier than would otherwise be possible if those dependences were precisely honored.

Owner:IBM CORP

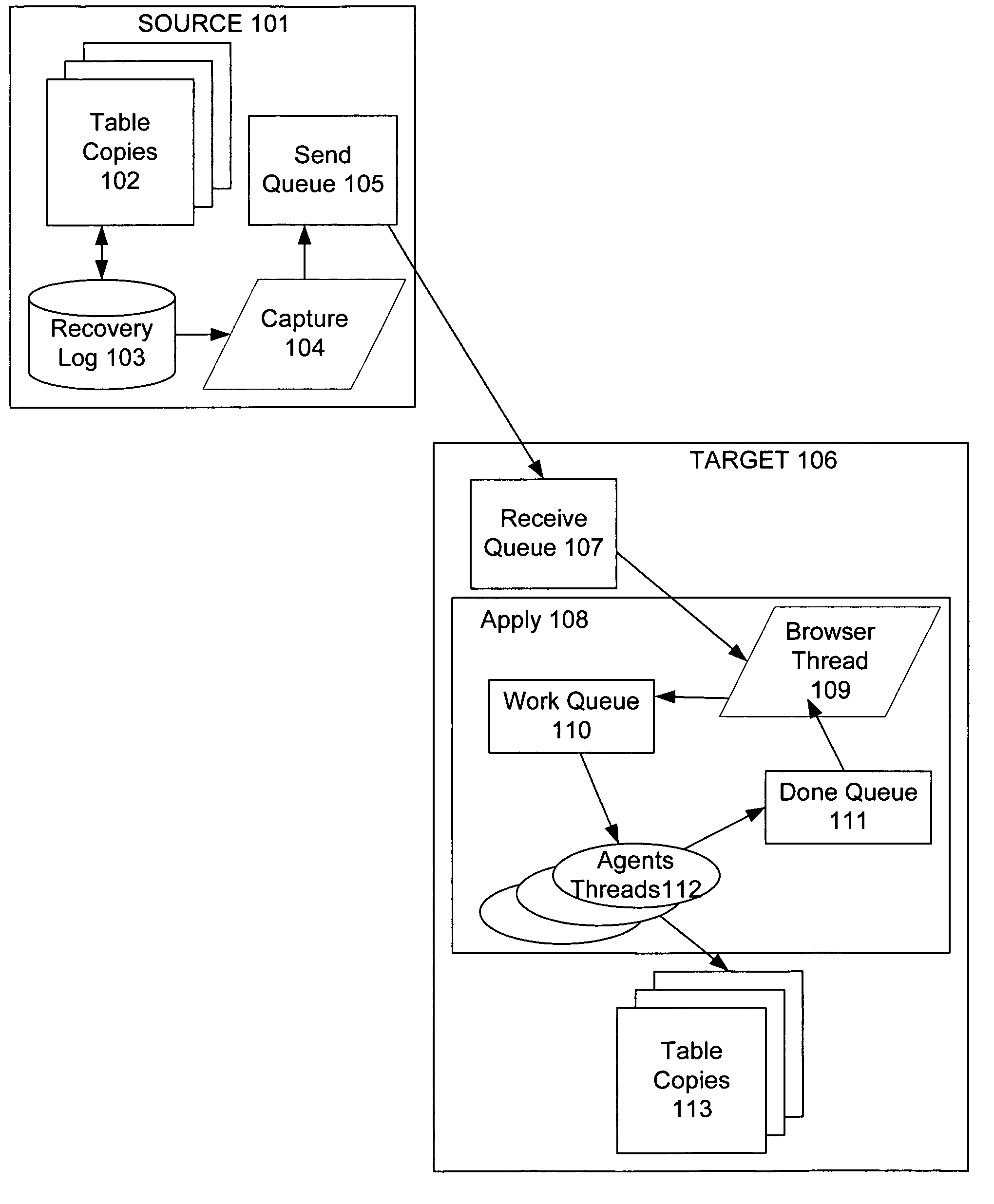

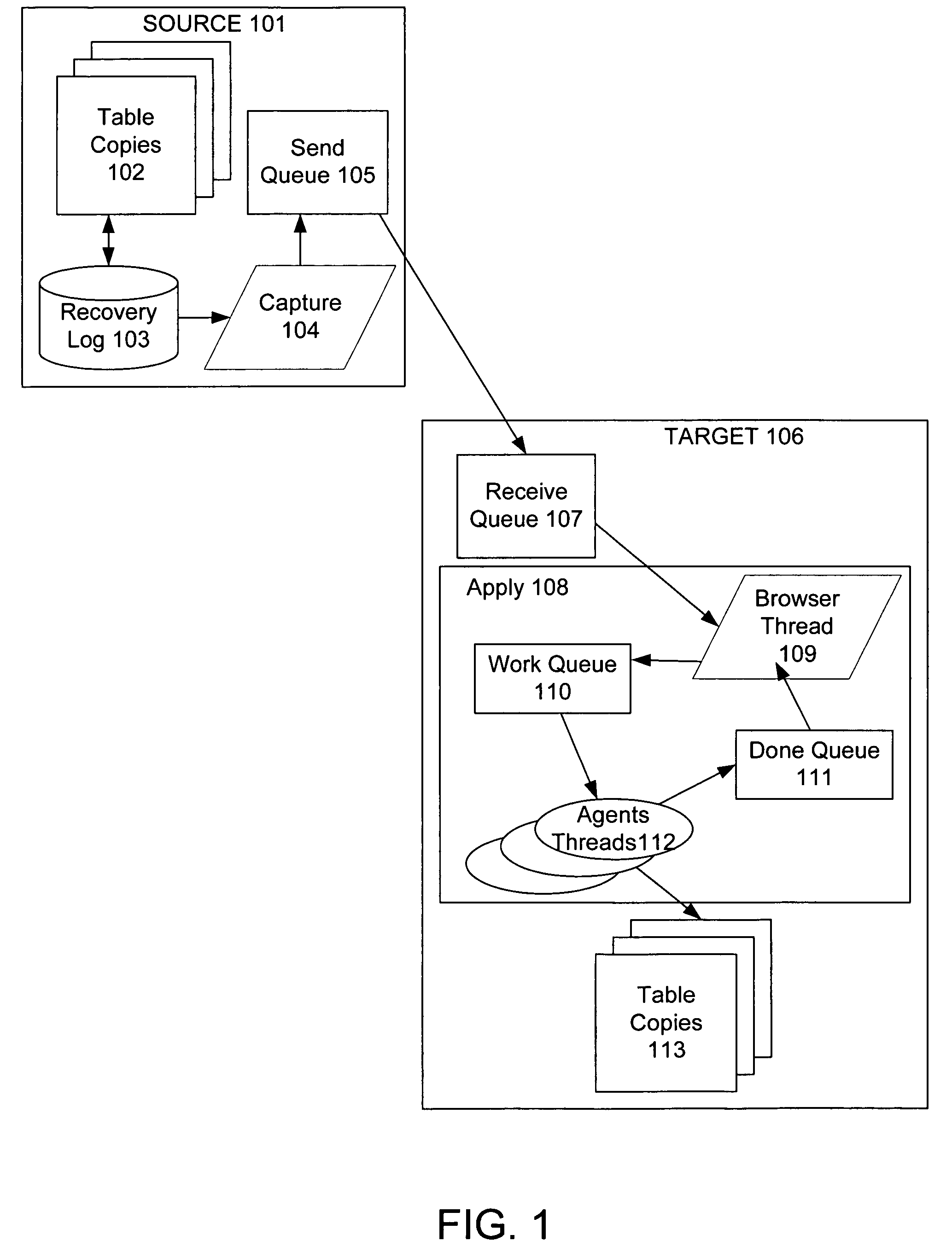

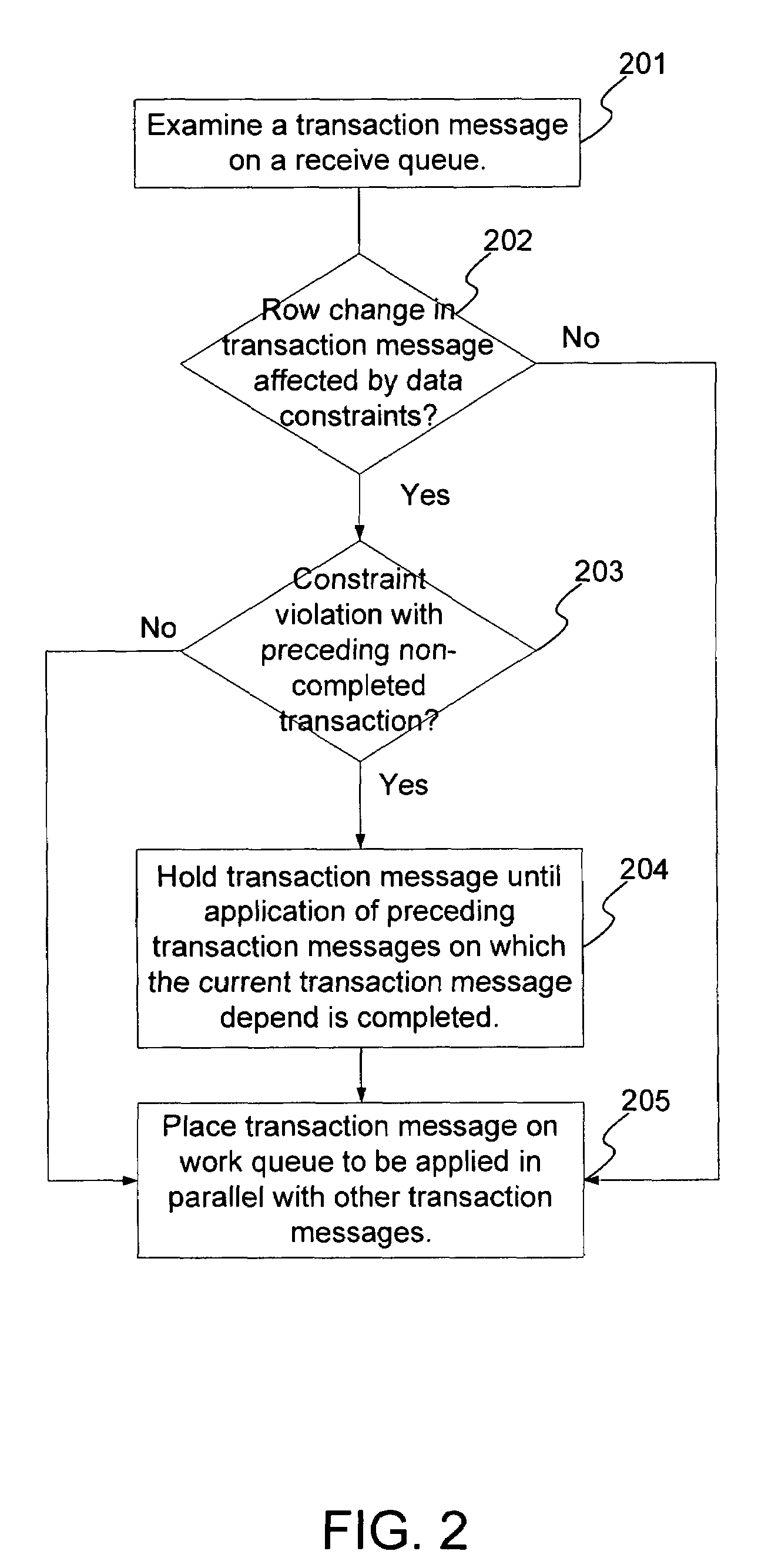

Techniques to preserve data constraints and referential integrity in asynchronous transactional replication of relational tables

Inactive | US7240054B2 | High degree of parallelism | Data processing applications | Digital data information retrieval | Computer hardware | Improved method

An improved method and system for preserving data constraints during parallel apply in asynchronous transaction replication in a database system have been disclosed. The method and system preserves secondary unique constraints and referential integrity constraints, while also allowing a high degree of parallelism in the application of asynchronous replication transactions. The method and system also detects and resolves ordering problems introduced by referential integrity cascade deletes, and allows the parallel initial loading of parent and child tables of a referential integrity constraint.

Owner:IBM CORP

Adaptive metablocks

Active | US7433993B2 | Efficient update | High degree of parallelism | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data selection | Theoretical computer science

In a memory system having multiple erase blocks in multiple planes, a selected number of erase blocks are programmed together as an adaptive metablock. The number of erase blocks in an adaptive metablock is chosen according to the data to be programmed. Logical address space is divided into logical groups, a logical group having the same size as one erase block. Adaptive logical blocks are formed from logical groups. One adaptive logical block is stored in one adaptive metablock.

Owner:SANDISK TECH LLC

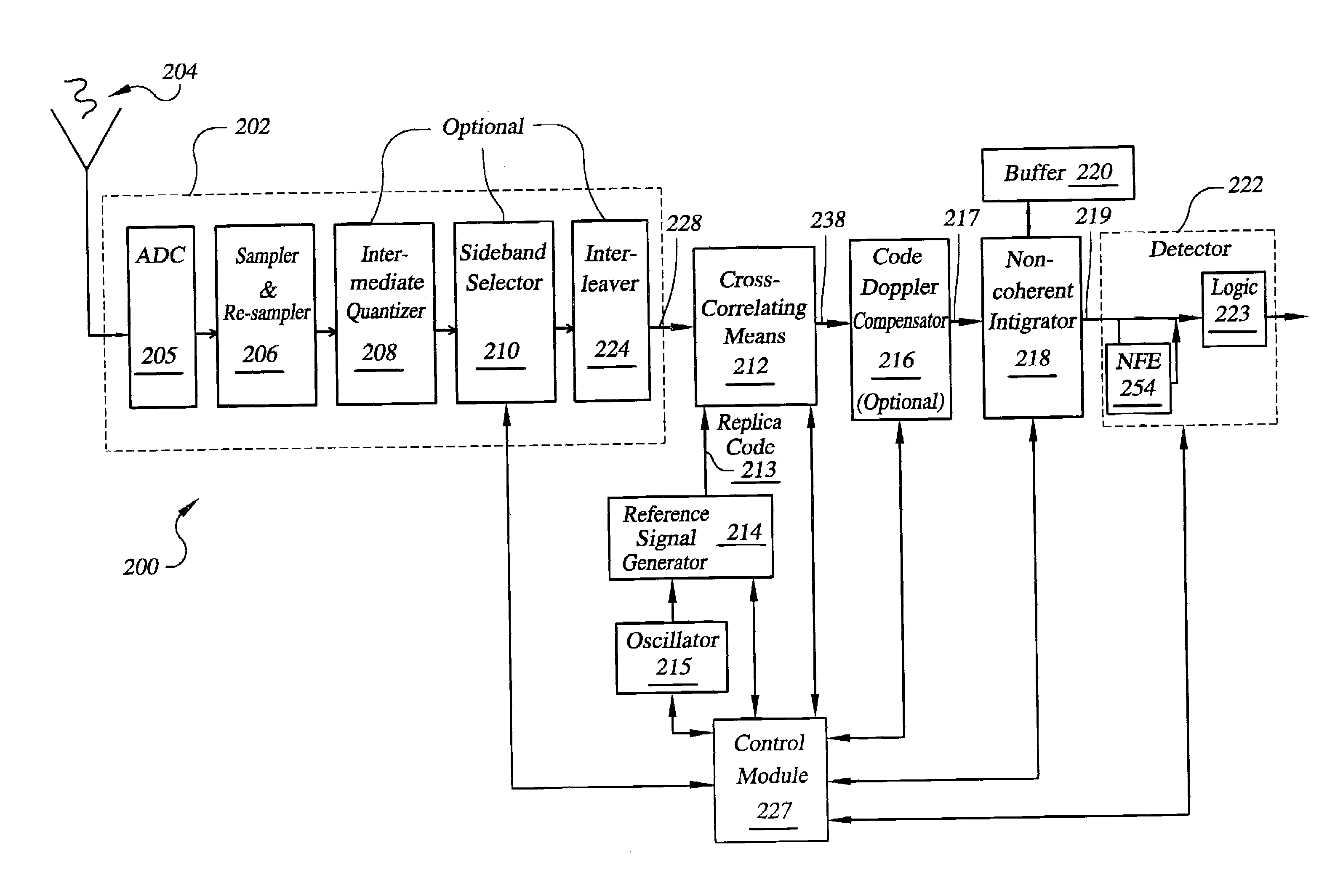

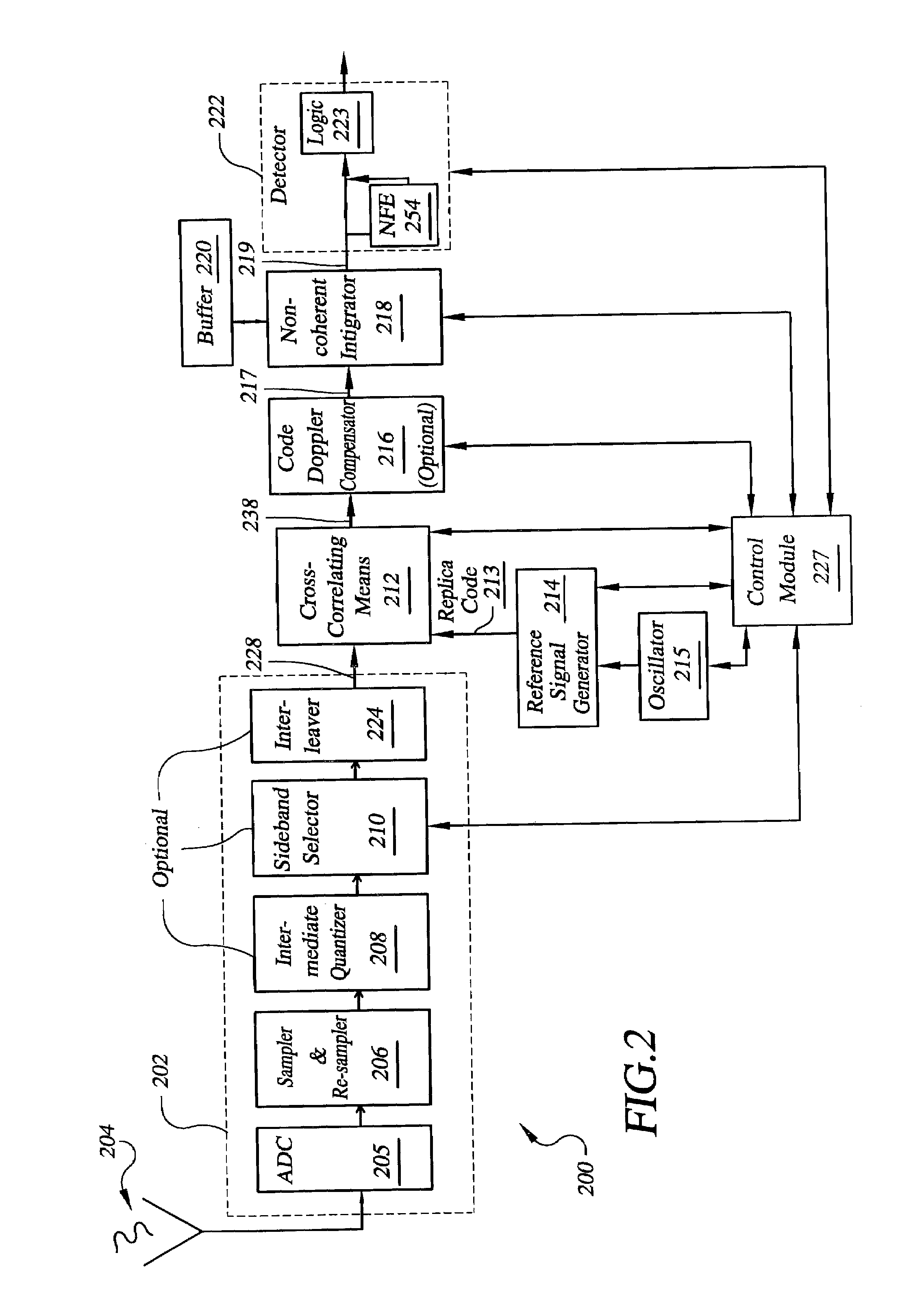

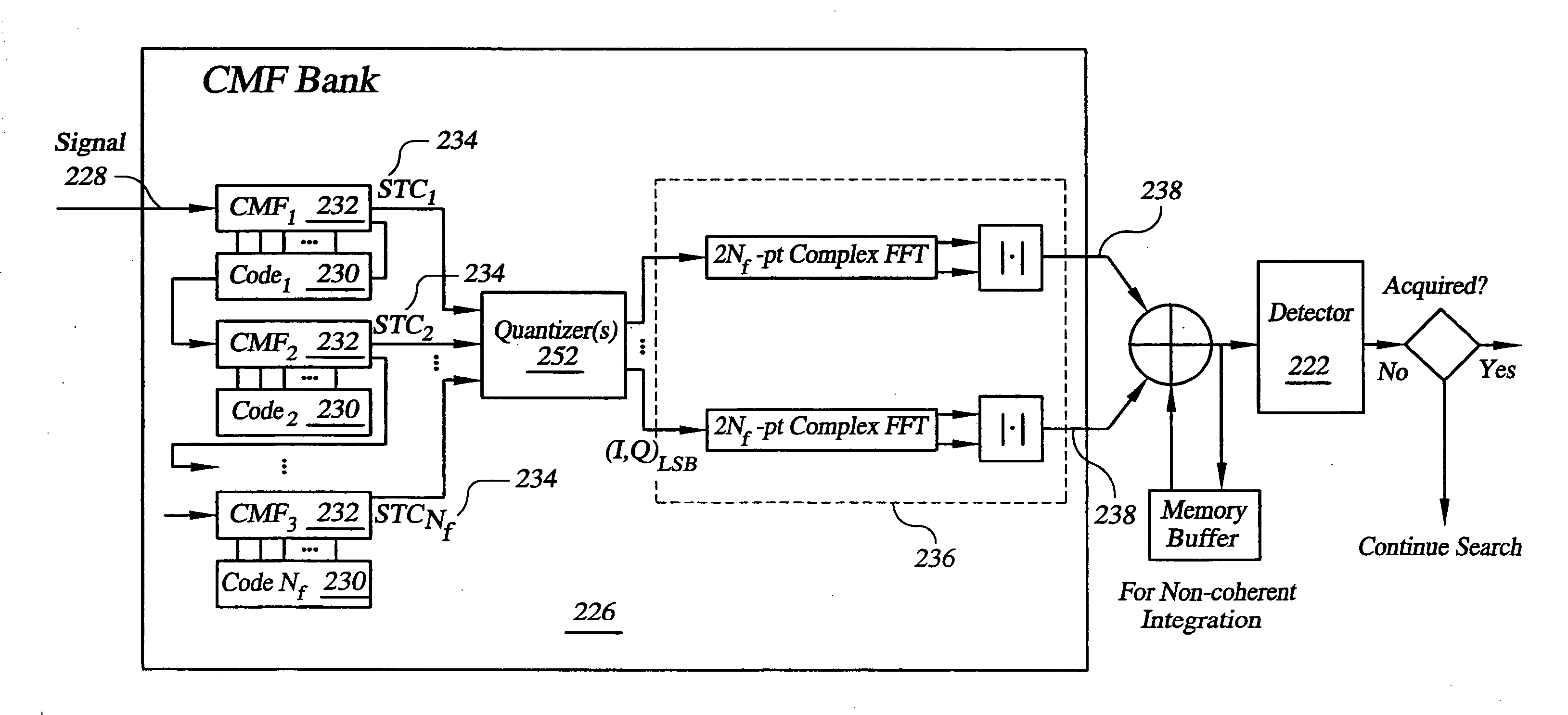

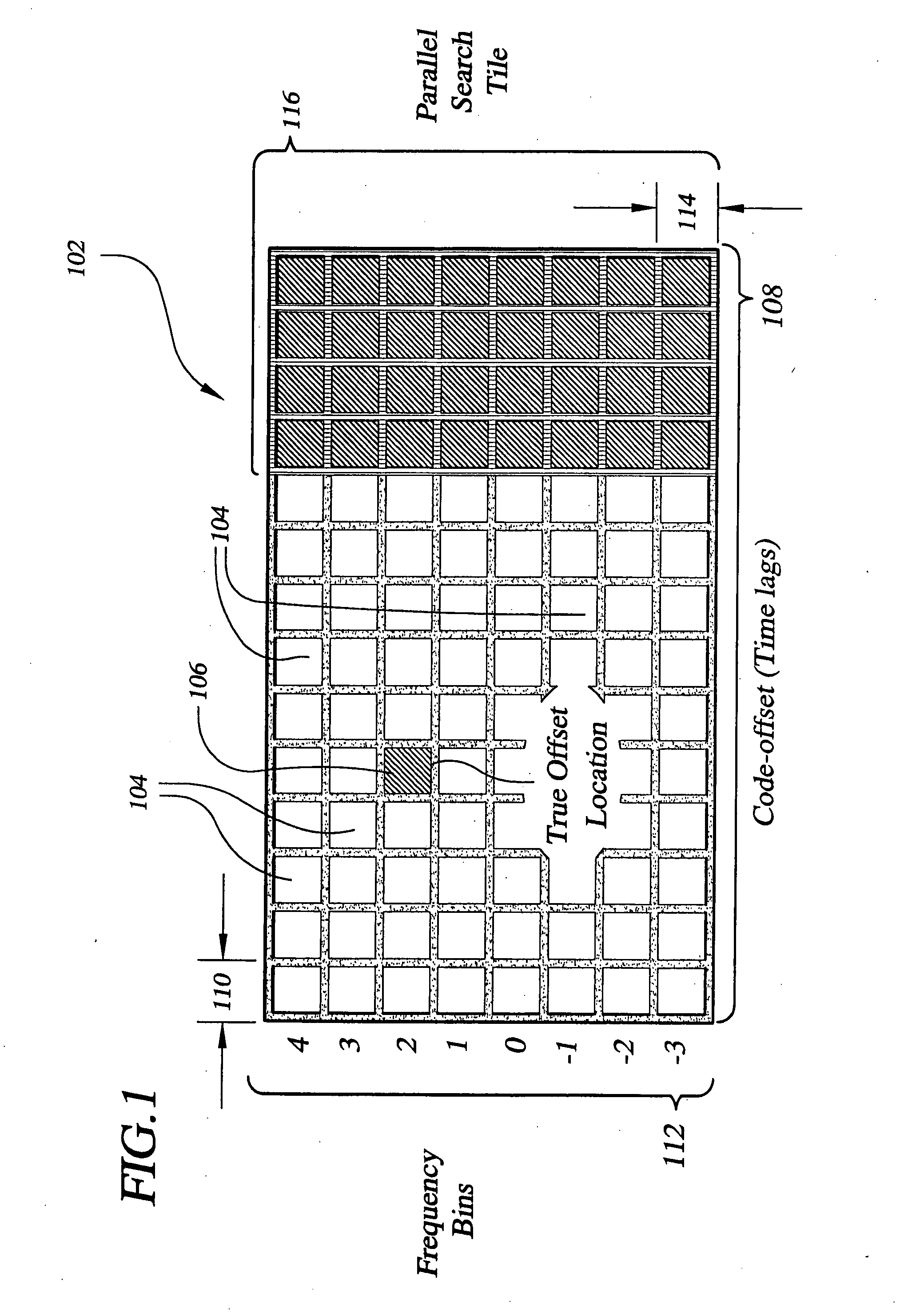

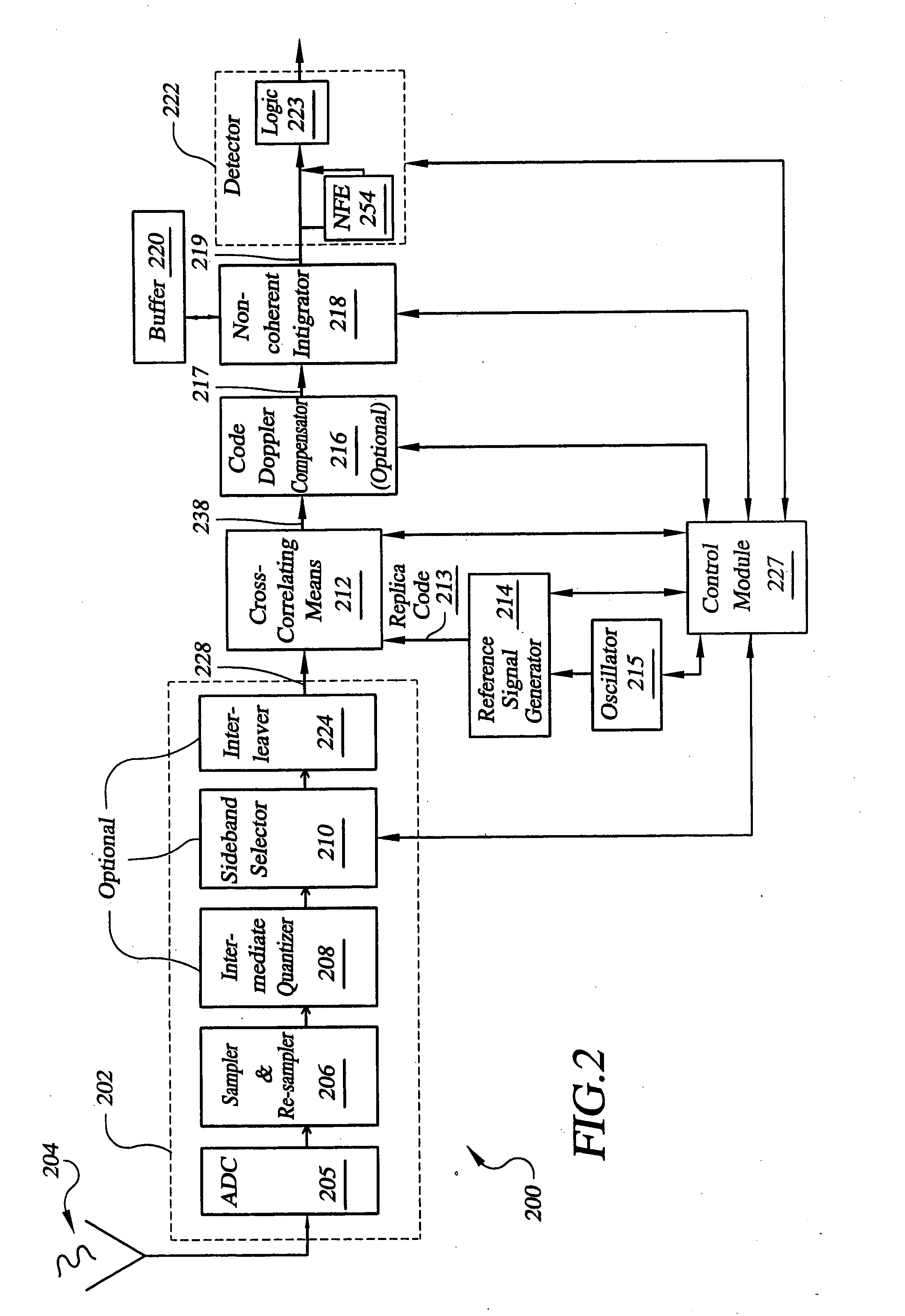

System for direct acquisition of received signals

Active | US7224721B2 | High degree of parallelism | Quick alignment | Amplitude-modulated carrier systems | Satellite radio beaconing | Time correlation | Data acquisition

Signal processing architectures for direct acquisition of spread spectrum signals using long codes. Techniques are described for achieving a high degree of parallelism, employing code matched filter (CMF) banks and other hardware sharing. In one embodiment, upper and lower sidebands are treated as two independent signals with identical spreading codes. Cross-correlators, in preferred embodiments, comprise one or more banks of CMFs for computing parallel short-time correlations (STCs) of received signal samples and replica code sequence samples, and a means for calculating the cross-correlation values utilizing discrete-time Fourier analysis of the computed STCs. One or more intermediate quantizers may optionally be disposed between the bank of code matched filters and the cross-correlation calculation means for reducing word-sizes of the STCs prior to Fourier analysis. The techniques described may be used with BOC modulated signals or with any signal having at least two distinct sidebands.

Owner:MITRE SPORTS INT LTD

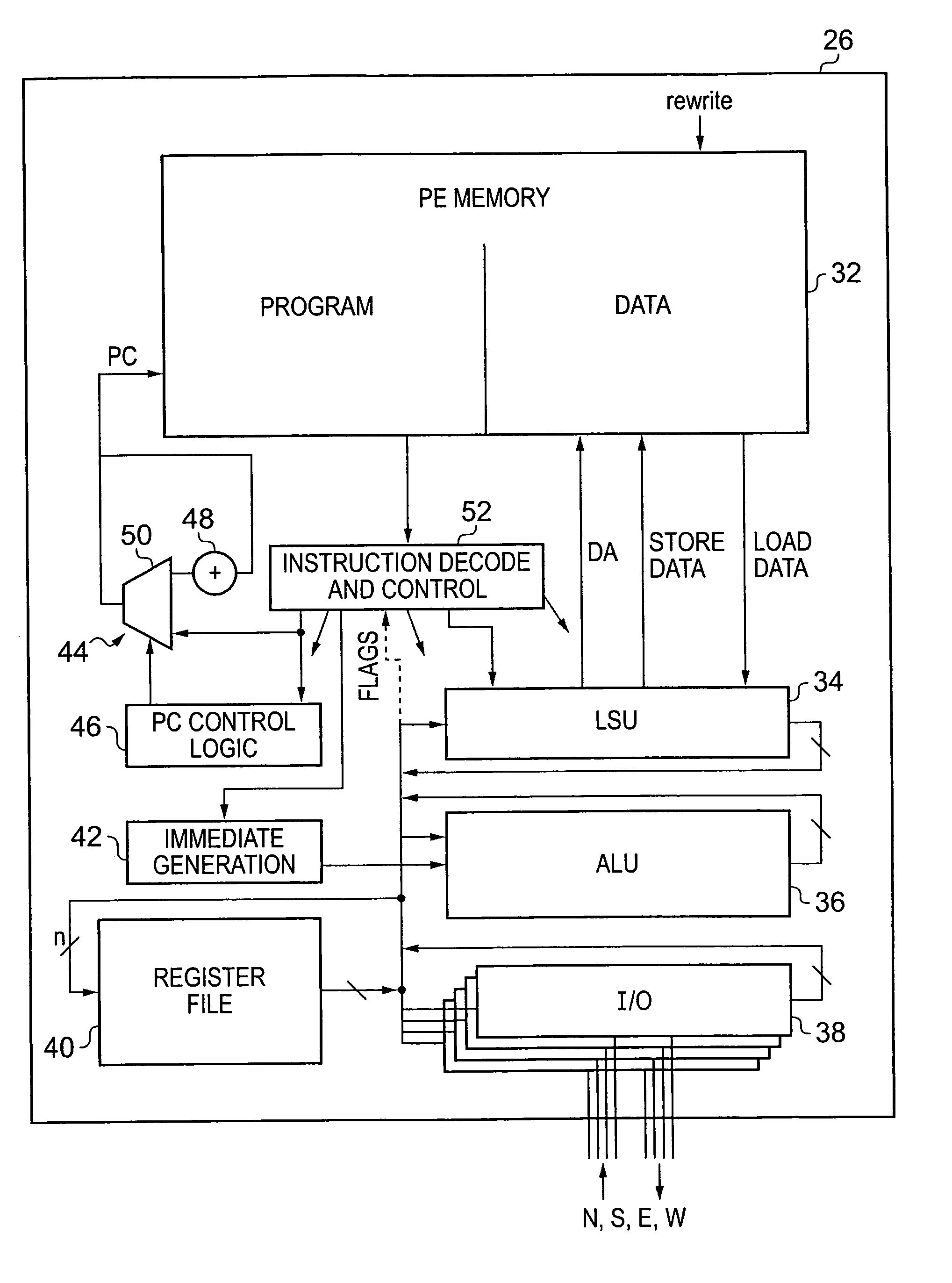

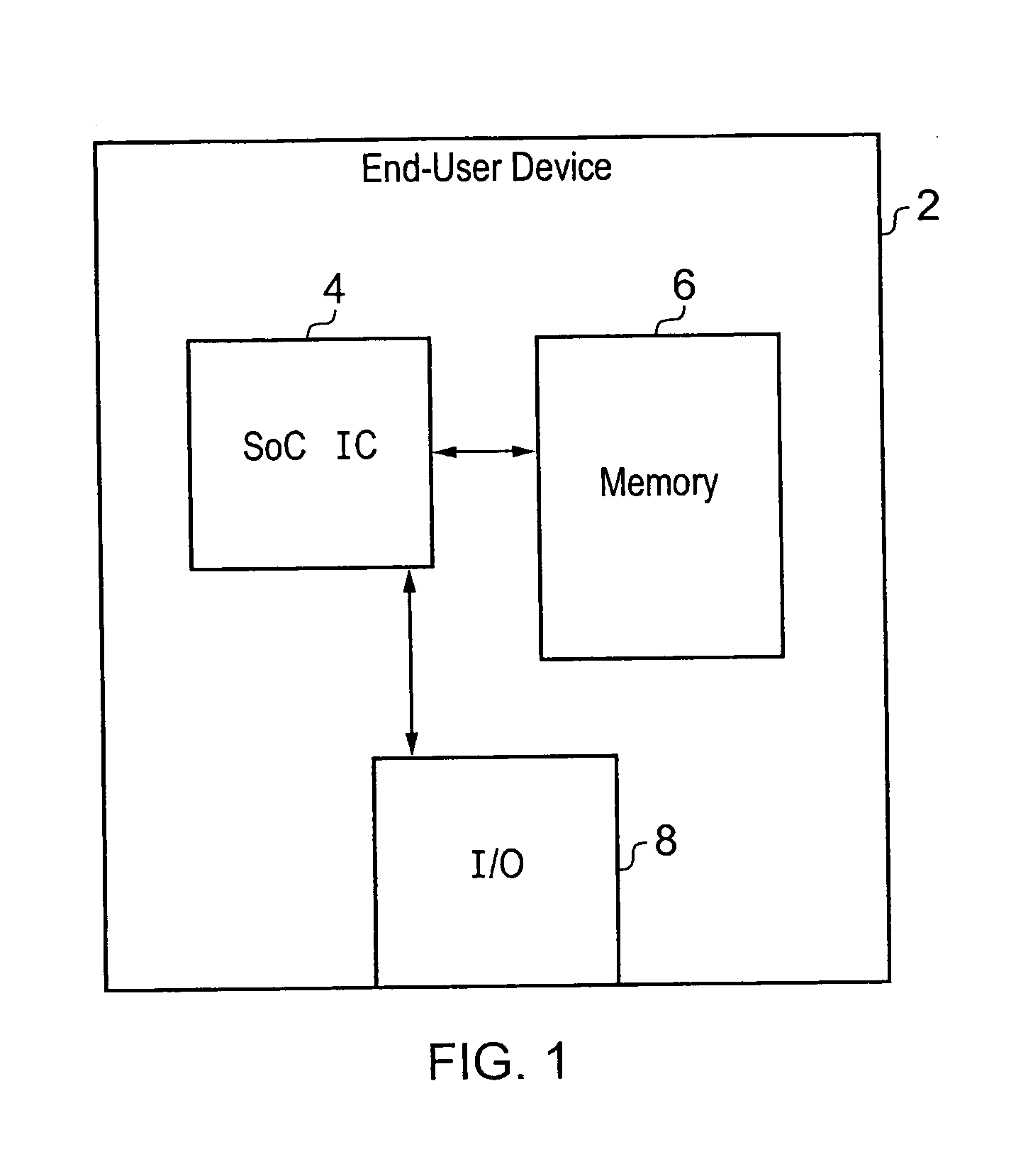

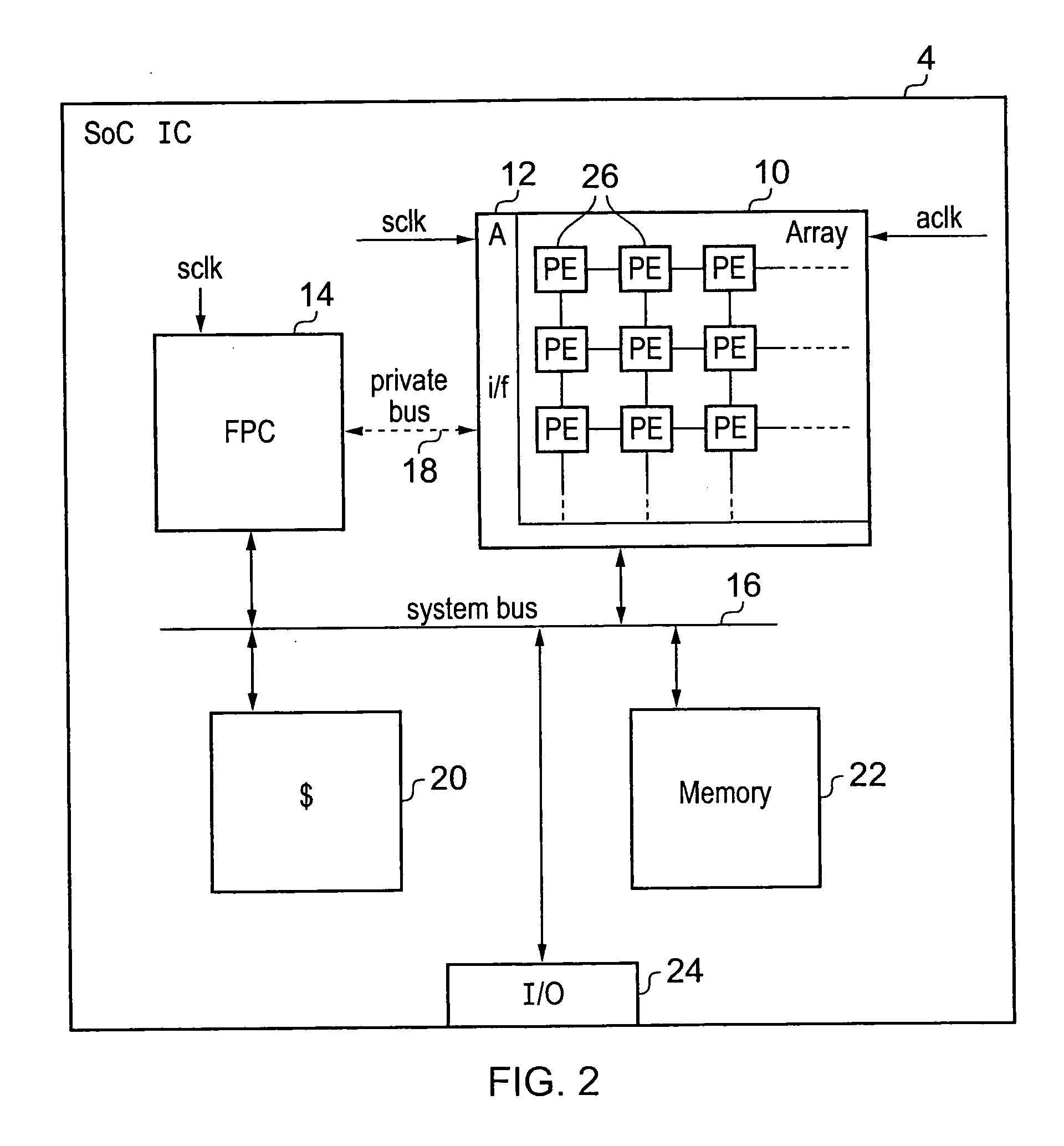

Integrated circuit incorporating an array of interconnected processors executing a cycle-based program

Inactive | US20100100704A1 | Facilitate communication | Improve performance | Single instruction multiple data multiprocessors | Program control using wired connections | Array data structure | State variable

An integrated circuit 4 is provided including an array 10 of processors 26 with interface circuitry 12 providing communication with further processing circuitry 14. The processors 26 within the array 10 execute individual programs which together provide the functionality of a cycle-based program. During each program-cycle of the cycle based program, each of the processors executes its respective program starting from a predetermined execution start point to evaluate a next state of at least some of the state variables of the cycle-based program. A boundary between program-cycles provides a synchronisation time (point) for processing operations performed by the array.

Owner:ARM LTD

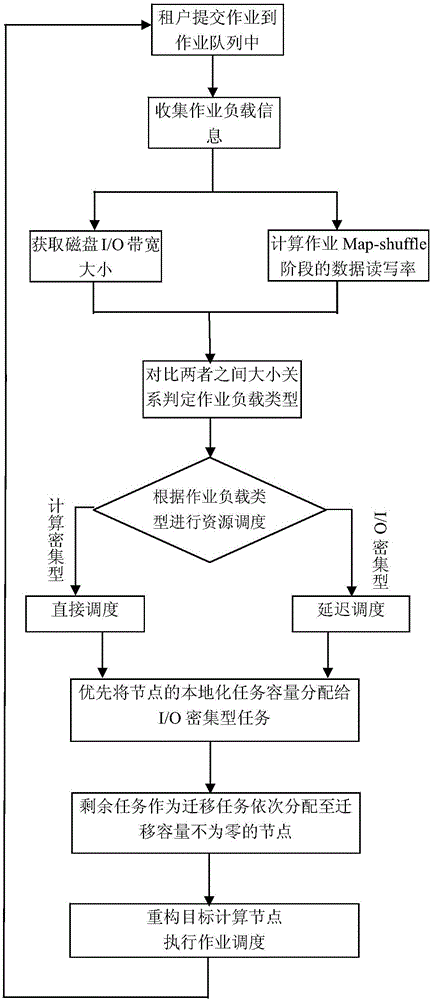

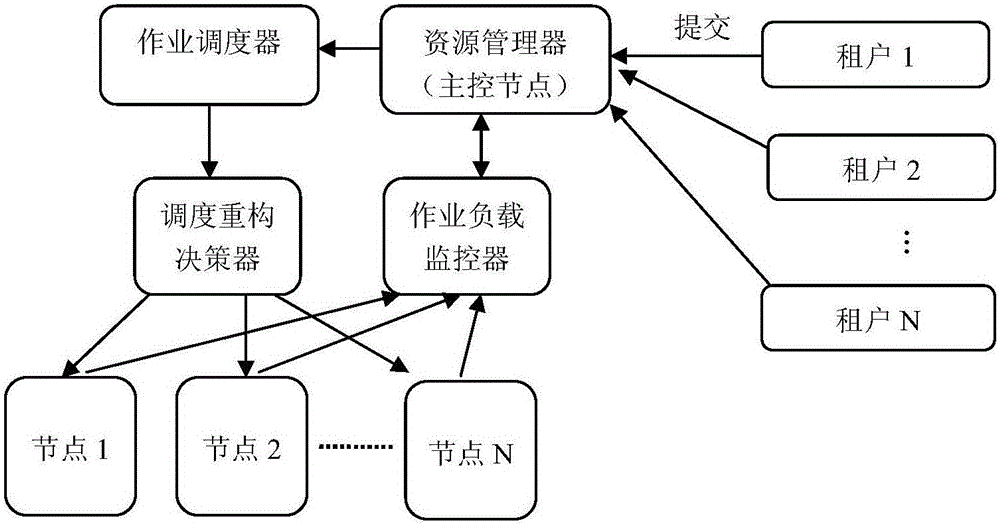

Multi-tenant resource optimization scheduling method facing different types of loads

Active | CN106502792A | Achieve localization | Improve throughput | Resource allocation | Resource management | Distributed computing

The invention relates to a multi-tenant resource optimization scheduling method for different types of loads. The method comprises the following steps: (1) system tenants submit jobs, which are added to a job queue; (2) job load information is collected and sent to a resource manager; (3) the resource manager determines the load type of each job from the job load information and sends the type information to a job scheduler; (4) the job scheduler schedules jobs according to their load types: a computation-intensive job is scheduled at the node, while an I/O-intensive job is delayed and waits; and (5) job scheduling decision information is collected by a scheduling reconstruction decision-maker, the target compute node is reconstructed, and the job is scheduled according to the final decision. The method realizes a cluster shared by multiple tenants, which reduces the cost of building independent clusters and lets multiple tenants share more large data set resources. Optimizing for the different load types achieves better data locality and a good balance between fairness and efficiency in job scheduling, and improves the computing performance of the whole cluster, such as throughput and job response time.

Owner:SOUTH CHINA UNIV OF TECH
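
Steps 3 and 4 of the abstract above might look roughly like the sketch below. Everything concrete in it is an assumption for illustration: the `Job` fields, the I/O-to-CPU ratio used to classify load, both threshold constants, and the reading of "delaying and waiting" as waiting a bounded number of offers for a node that holds the job's data. Step 5 (scheduling reconstruction) is not modeled.

```python
from dataclasses import dataclass

# Hypothetical tuning constants, not taken from the patent.
IO_BYTES_PER_CPU_SECOND = 50e6   # above this ratio a job counts as I/O-intensive
MAX_DELAY = 3                    # offers an I/O job may decline while waiting


@dataclass
class Job:
    name: str
    cpu_seconds: float       # collected load information (assumed form)
    io_bytes: float
    data_nodes: frozenset    # nodes that hold the job's input data
    waited: int = 0


def classify(job):
    """Step 3: label the job from its load information."""
    ratio = job.io_bytes / max(job.cpu_seconds, 1e-9)
    return "io" if ratio > IO_BYTES_PER_CPU_SECOND else "cpu"


def offer(job, node):
    """Step 4: decide whether `job` takes the free slot offered by `node`."""
    if classify(job) == "cpu":
        return True                      # compute-intensive: run where offered
    if node in job.data_nodes or job.waited >= MAX_DELAY:
        return True                      # data is local, or the job waited long enough
    job.waited += 1                      # I/O-intensive: delay and wait
    return False
```

Bounding the wait is one simple way to trade locality against fairness: an I/O-heavy job gets a few chances to land next to its data without being starved.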

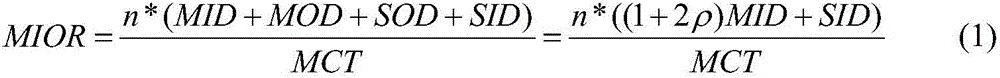

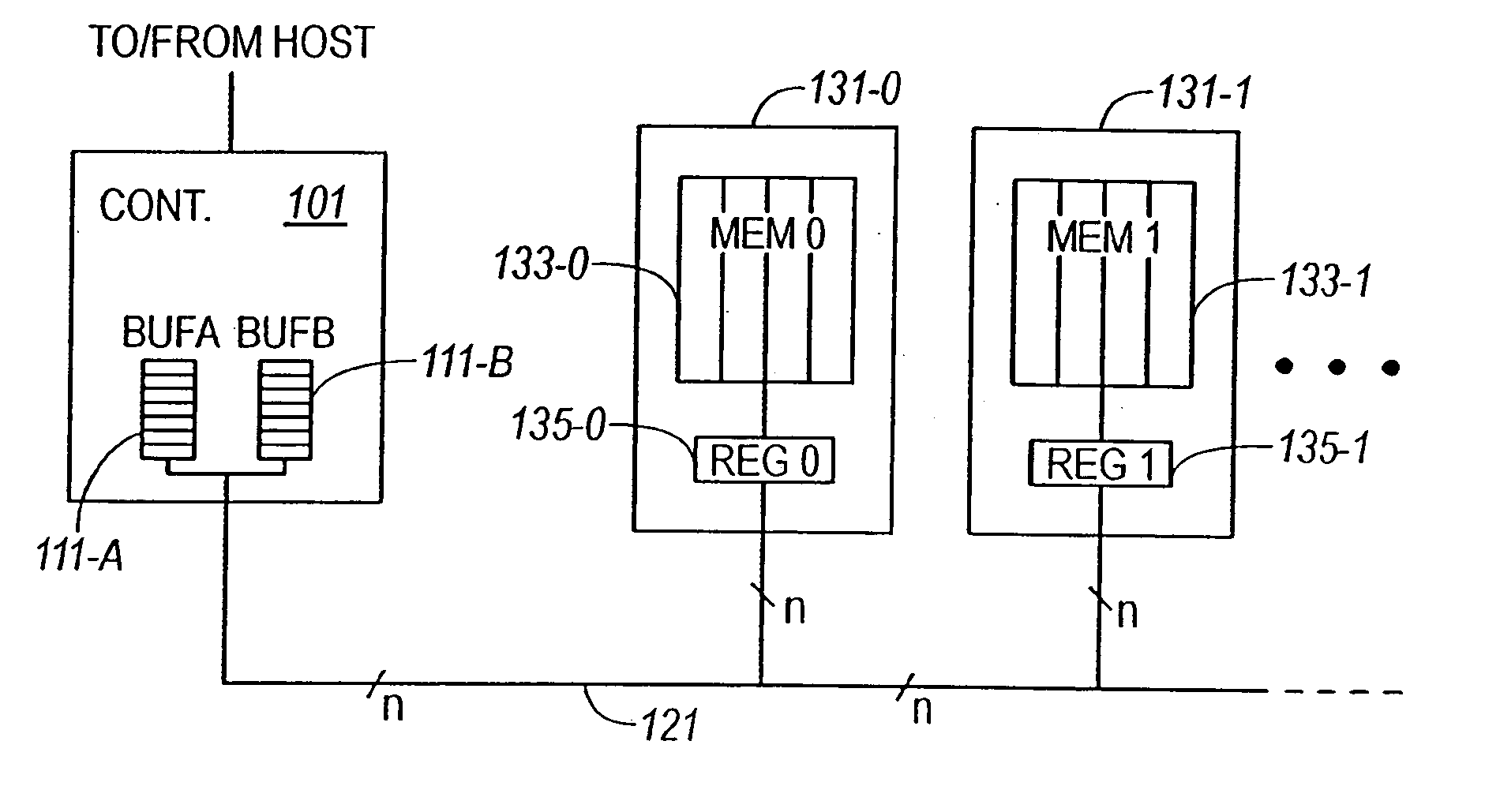

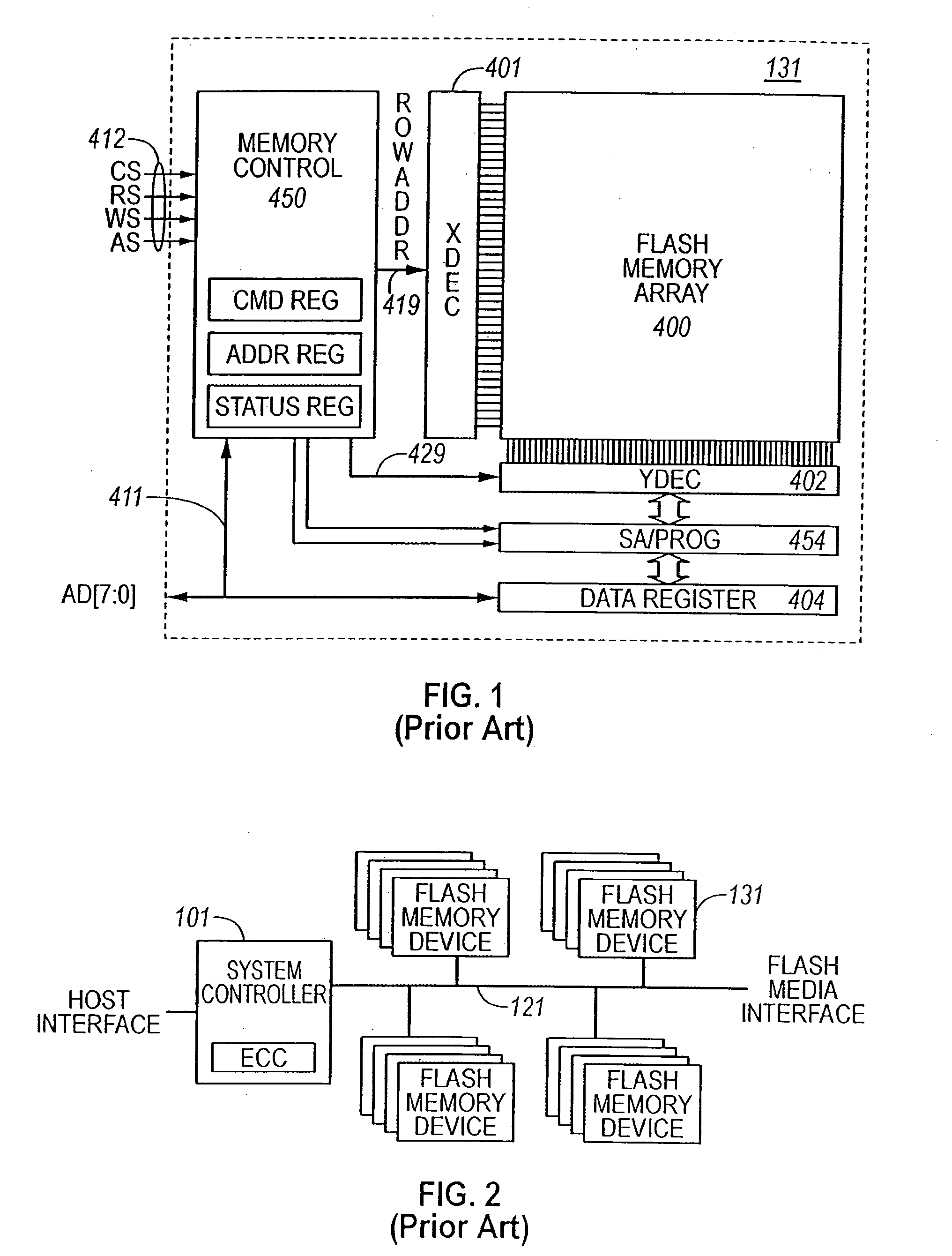

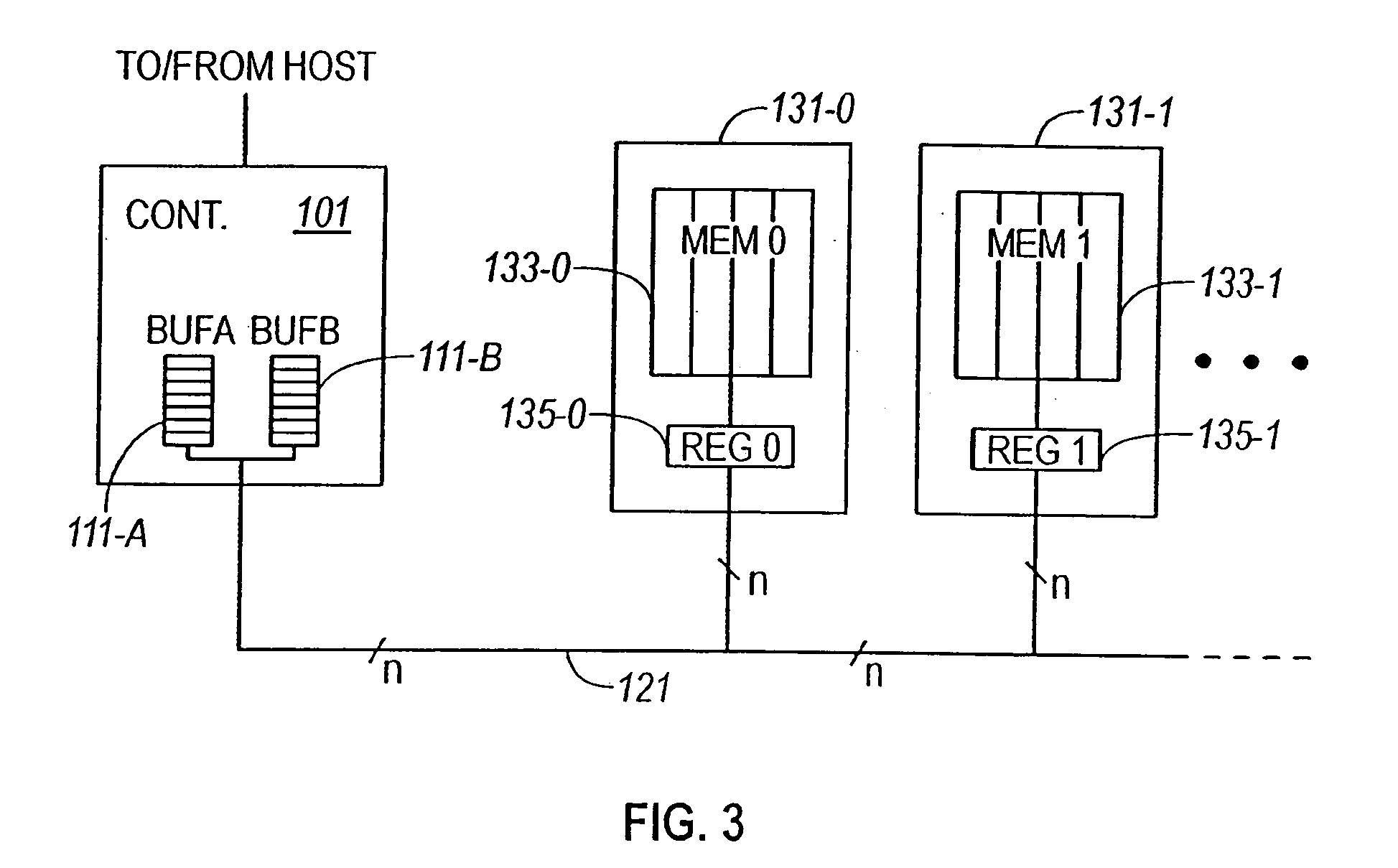

Pipelined parallel programming operation in a non-volatile memory system

Inactive | US20050146939A1 | Programming parallelism in the system is increased | High degree of parallelism | Read-only memories | Digital storage | Memory chip | High rate

The present invention allows for an increase in programming parallelism in a non-volatile memory system without incurring additional data transfer latency. Data is transferred from a controller to a first memory chip and a programming operation is caused to begin. While that first memory chip is busy performing that program operation, data is transferred from the controller to a second memory chip and a programming operation is caused to begin in that chip. Data transfer can begin to the first memory chip again once it has completed its programming operation even though the second chip is still busy performing its program operation. In this manner high parallelism of programming operation is achieved without incurring the latency cost of performing the additional data transfers. Two sets of embodiments are presented, one that preserves the host data in a buffer until successful programming of that data is confirmed and one that does not require that success be achieved and that does not preserve the data thus achieving a higher rate of data programming throughput.

Owner:SANDISK TECH LLC
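
The timing benefit described in the abstract above can be illustrated with a small timeline model. It is a sketch under stated assumptions, not the patented controller: one shared data bus, pages assigned to chips in rotation, a chip that cannot accept new data until its current program operation finishes, and the hypothetical parameter names `t_xfer` and `t_prog`.

```python
def pipelined_time(pages, chips, t_xfer, t_prog):
    """Return the time at which the last program operation completes."""
    bus_free = 0.0                  # when the shared bus can start the next transfer
    chip_free = [0.0] * chips       # when each chip finishes its current program
    for page in range(pages):
        chip = page % chips
        # A transfer needs both the bus and an idle target chip.
        start = max(bus_free, chip_free[chip])
        bus_free = start + t_xfer
        # Programming starts as soon as the data has arrived.
        chip_free[chip] = bus_free + t_prog
    return max(chip_free)


# With one chip every transfer waits for the previous program to finish.
serial = pipelined_time(pages=4, chips=1, t_xfer=1, t_prog=3)
# With two chips, transfers to one chip overlap programming in the other.
overlapped = pipelined_time(pages=4, chips=2, t_xfer=1, t_prog=3)
```

In this model the single-chip case takes `pages * (t_xfer + t_prog)`, while the two-chip case hides most transfers behind the other chip's program time.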

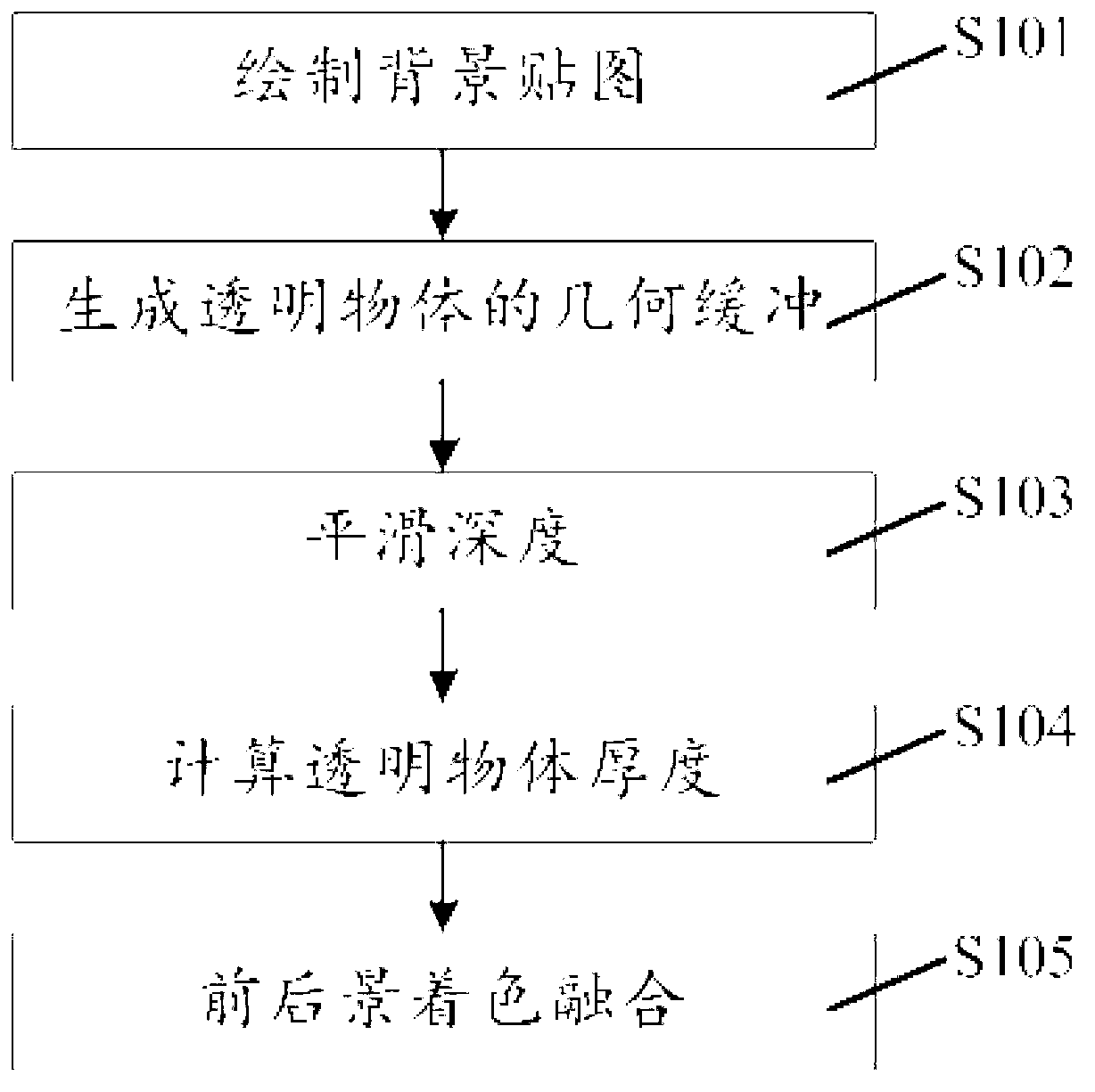

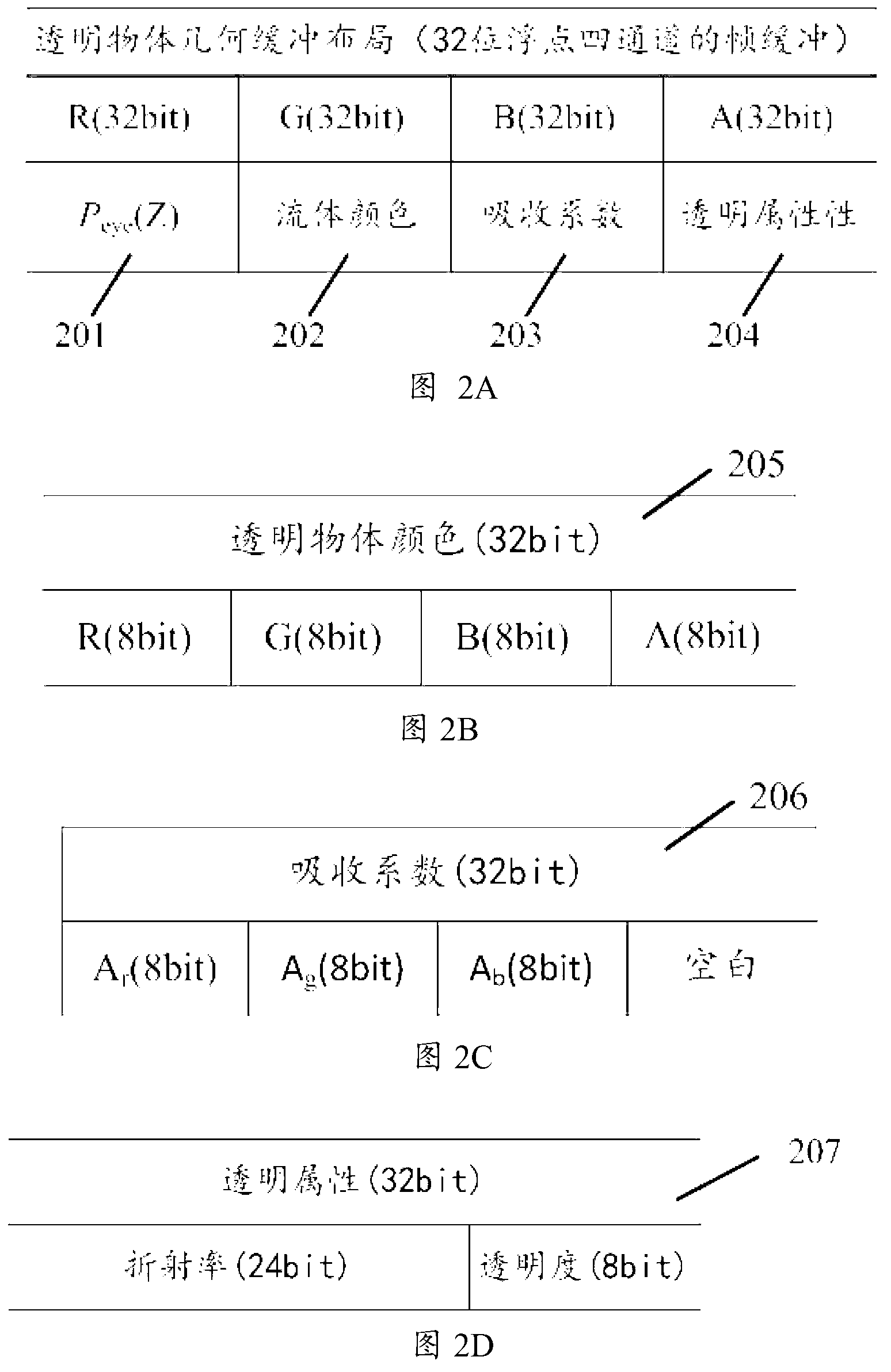

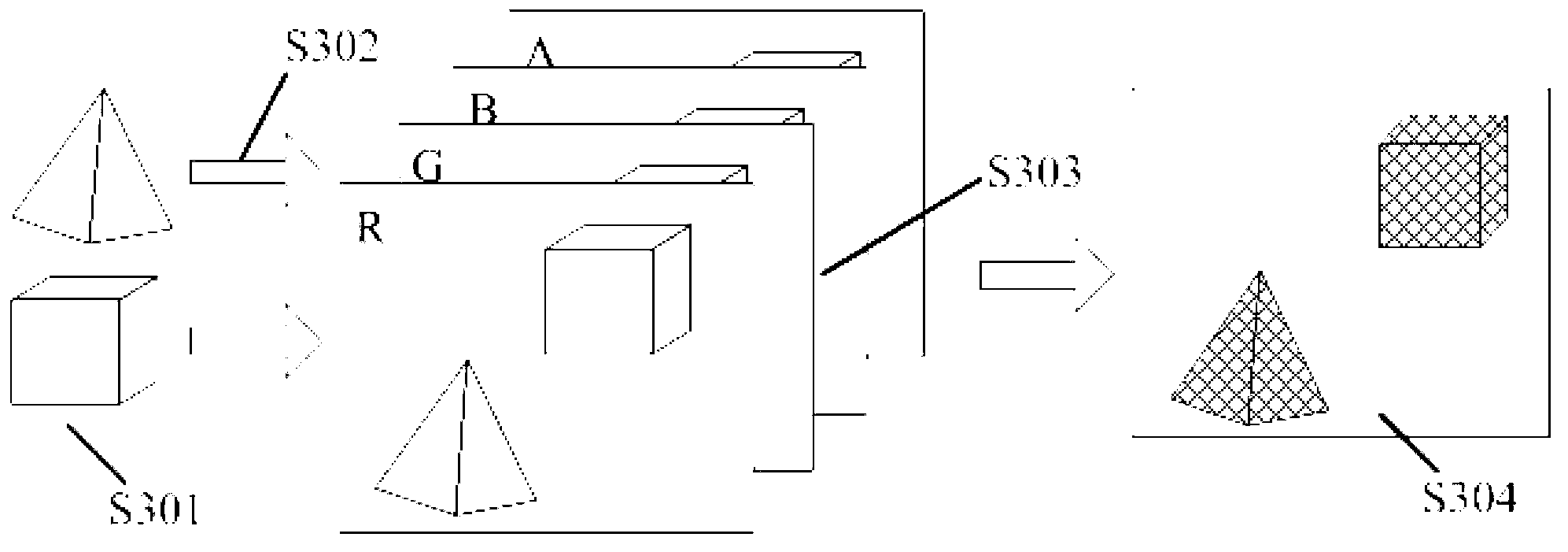

Real-time transparent object GPU (graphic processing unit) parallel generating method based on three-dimensional point cloud

The invention discloses a real-time transparent object GPU (graphic processing unit) parallel generating method based on three-dimensional point cloud, which comprises the steps of: (1) generating a background map of the nontransparent objects; (2) generating a geometric buffer for the transparent object: generating all three-dimensional points as spheres, using hardware depth testing to acquire the depth of an approximate plane, and simultaneously saving the material information of the transparent object; (3) smoothing the depth: using the depth information in the geometric buffer to smooth-filter the depth and obtain a smooth surface; (4) calculating the thickness of the transparent object: generating all three-dimensional points and using hardware alpha blending to compute the thickness of the transparent object; and (5) coloring the transparent object: using the depth and the material information to perform the illumination computation, using the thickness to calculate the refraction and reflection properties of the transparent object, and using the background map to finish coloring. The method avoids the surface rebuilding step of the traditional method and can meet the requirement of real-time generation of transparent objects from point clouds on the scale of millions of points.

Owner:BEIHANG UNIV

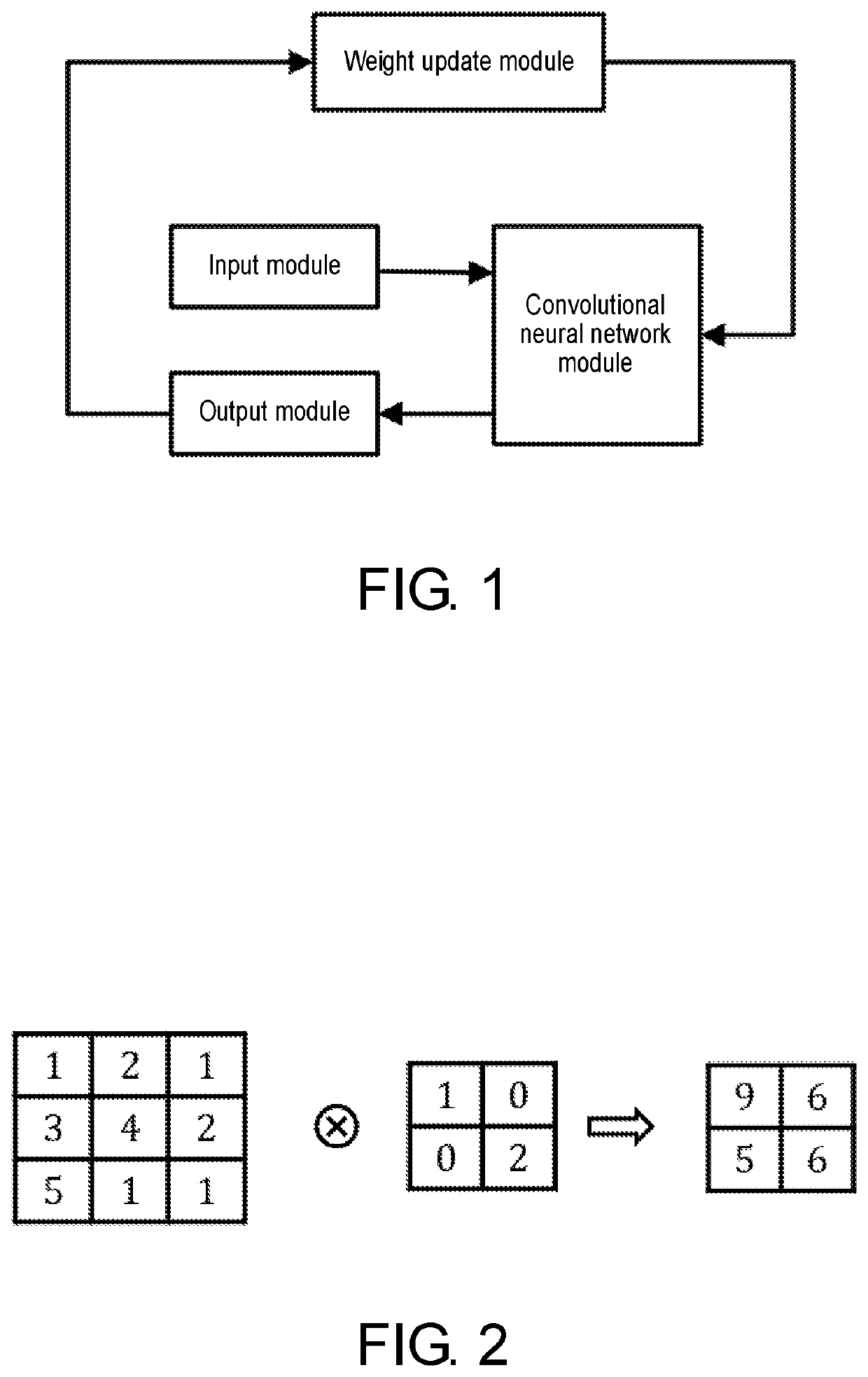

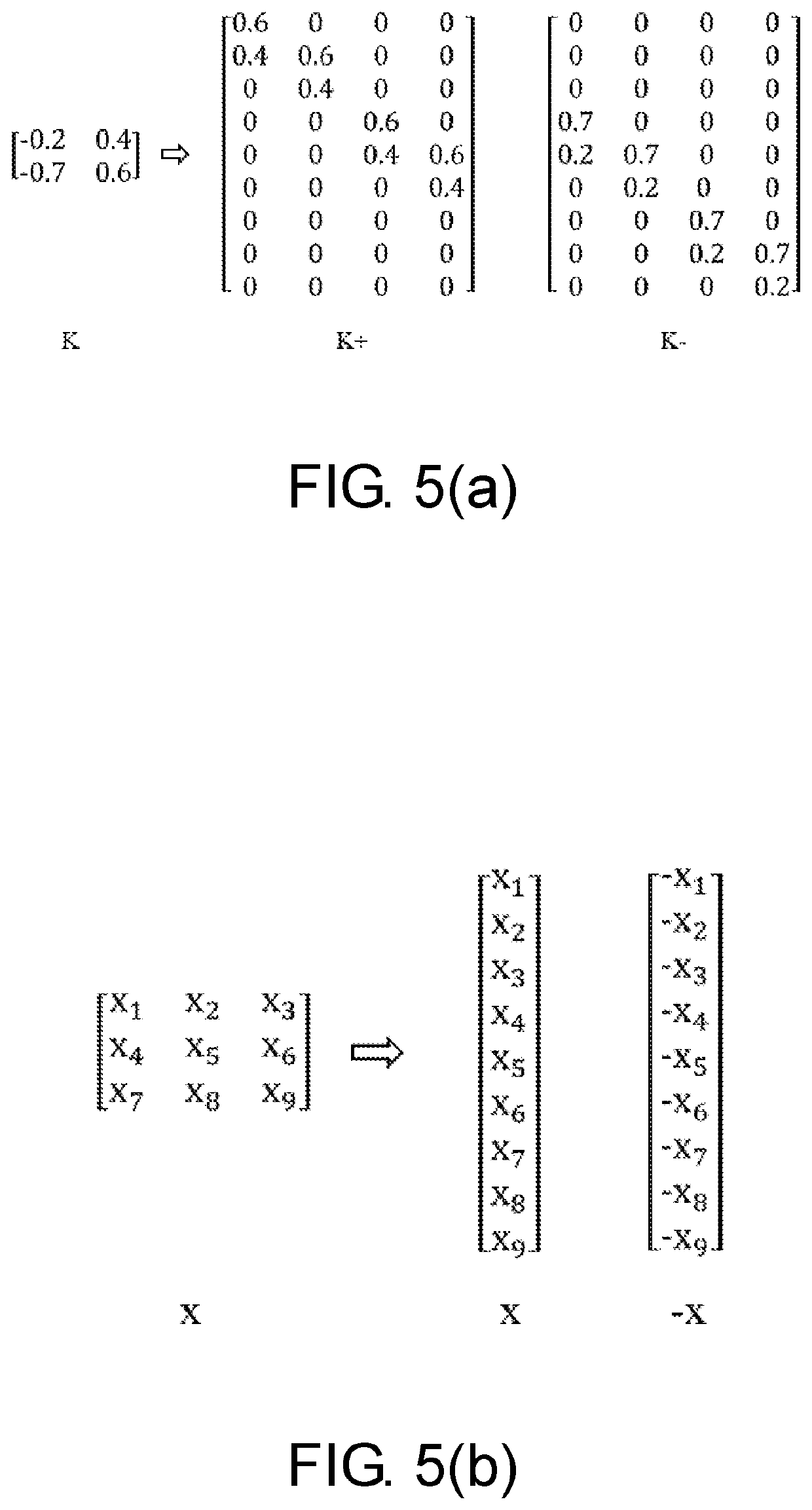

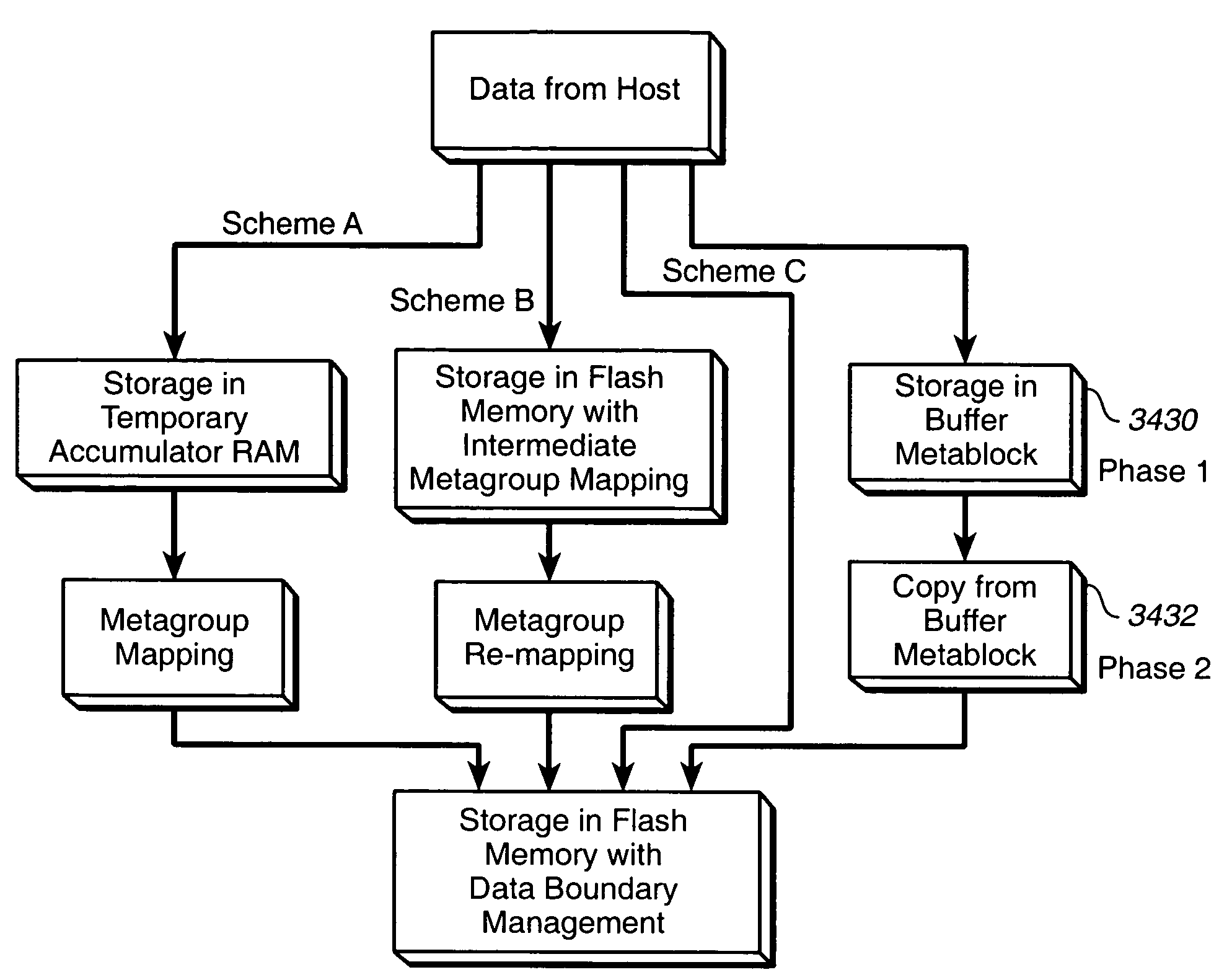

Convolutional neural network on-chip learning system based on non-volatile memory

Pending | US20200342301A1 | Improve operation speed and density | High degree of parallelism | Neural architectures | Physical realisation | Synaptic weight | Engineering

The disclosure provides a convolutional neural network on-chip learning system based on non-volatile memory, comprising an input module, a convolutional neural network module, an output module and a weight update module. On-chip learning in the convolutional neural network module implements the synaptic function by exploiting the characteristic that the conductance of a memristor changes according to an applied pulse, with the convolutional kernel values or synaptic weight values stored in memristor units; the input module converts an input signal into the voltage signal required by the convolutional neural network module; the convolutional neural network module converts the input voltage signal level by level and transmits the result to the output module to obtain the output of the network; and the weight update module adjusts the conductance values of the memristors in the convolutional neural network module according to the result of the output module, thereby updating the network's convolutional kernel values or synaptic weight values.

Owner:HUAZHONG UNIV OF SCI & TECH

Data run programming

Active | US7383375B2 | Reduce impact | Efficient update | Memory architecture accessing/allocation | Program control using stored programs | Data store | Non-volatile memory

Data in data runs are stored in a non-volatile memory array in adaptive metablocks that are configured according to the locations of data boundaries. A serial flash buffer is used to store some data, while other data are directly stored in non-volatile memory. Data may be stored with alignment to data boundaries during updating of the data to improve efficiency of subsequent updates.

Owner:SANDISK TECH LLC

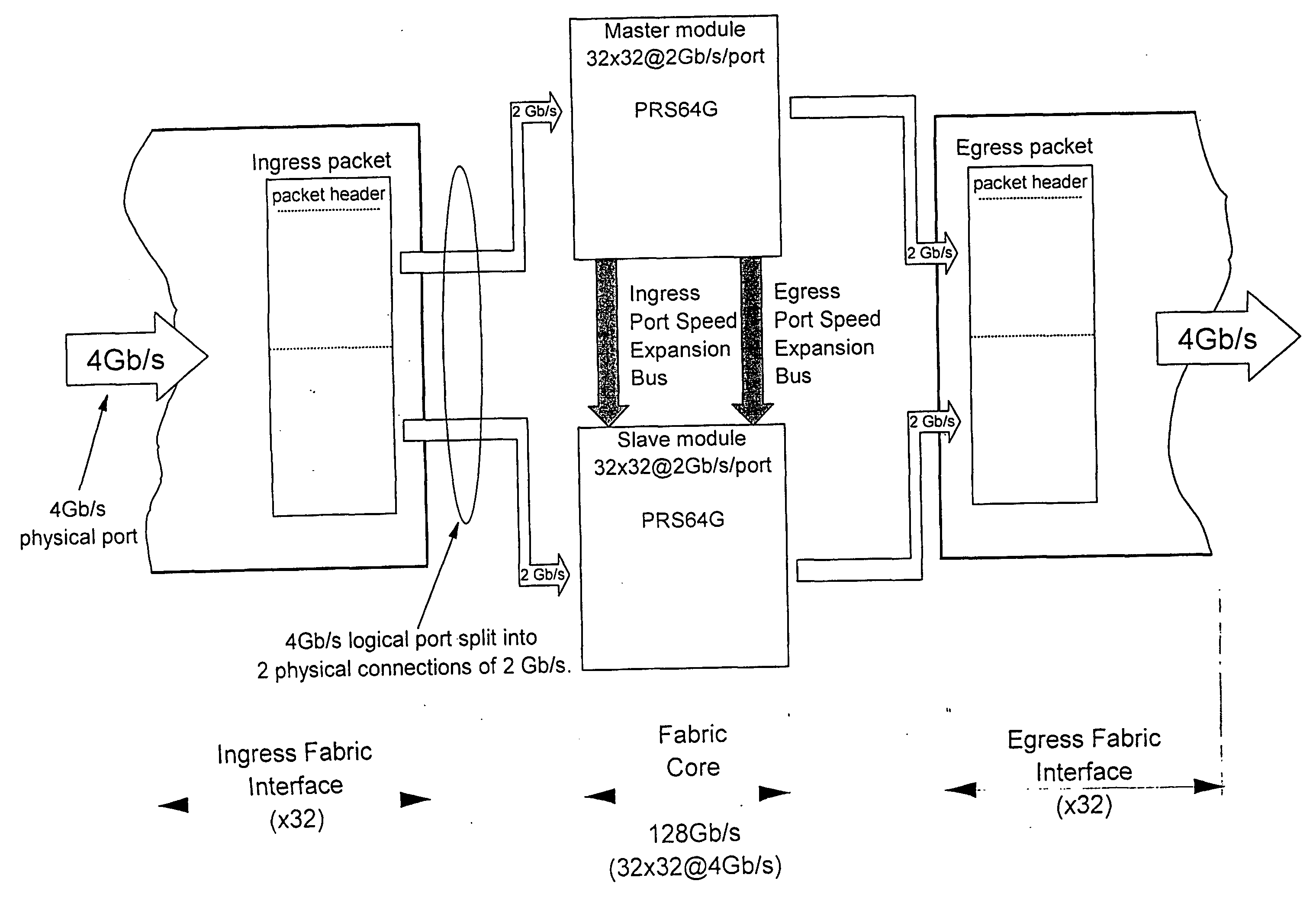

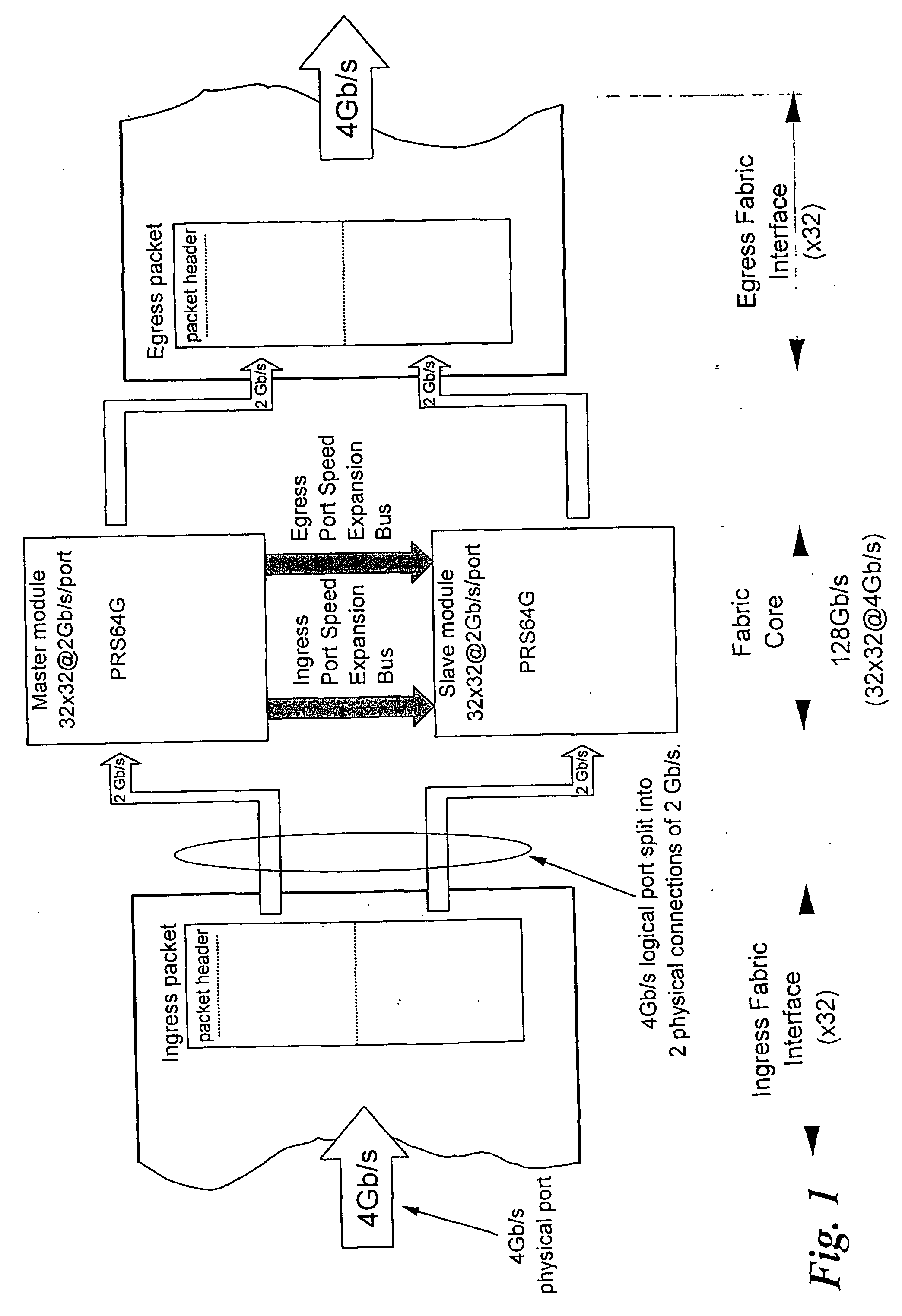

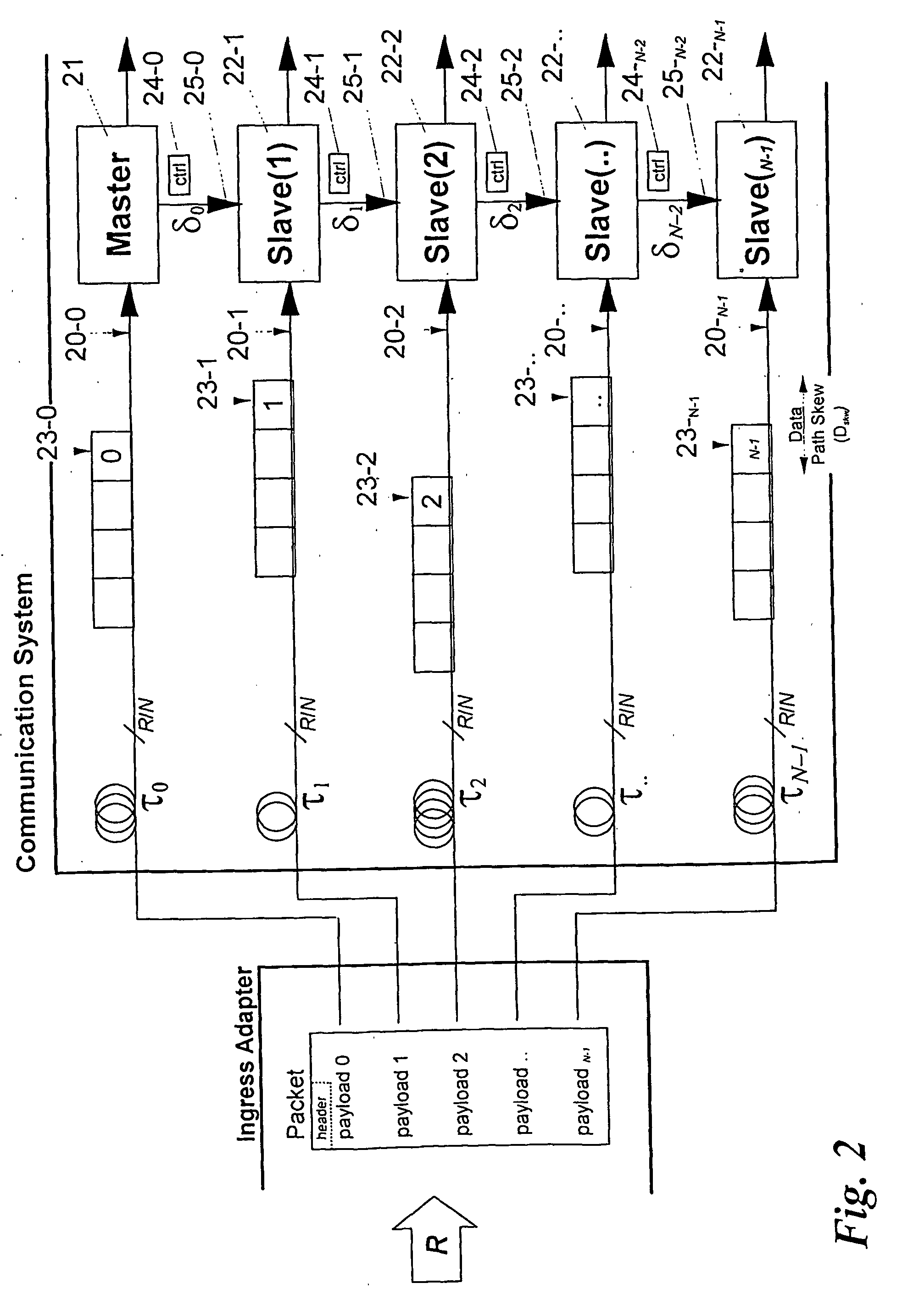

Method and arrangement for local sychronization in master-slave distributed communication systems

Inactive | US20060251124A1 | High bandwidth | Enough time | Time-division multiplex | Data switching by path configuration | Propagation delay | Communications system

For switching or transmitting data packets, one can provide communication systems which consist of several modules—operating in parallel on segments of a packet—to increase speed and handling capacity. One module acts as master (21), the others are slave modules (22) controlled by control signals (25) derived by the master module. It is important that in each module the data segment and the respective control signal of each packet are correctly synchronized, because in large systems the data paths carrying packet segments and the control signal paths may have substantially different delays. The invention provides for measurement of the propagation delay differences and for introducing a controlled delay in each slave module, so that data segments and control signals can be correctly correlated by delaying either the one or the other. Synchronization packets are transmitted besides normal data packets, for obtaining time stamps which are used to determine the delay difference.

Owner:IBM CORP

System for direct acquisition of received signals

Inactive | US20070195867A1 | High degree of parallelism | Quick alignment | Amplitude-modulated carrier systems | Satellite radio beaconing | Time correlation | Sideband

Signal processing architectures for direct acquisition of spread spectrum signals using long codes. Techniques are described for achieving a high degree of parallelism, employing code matched filter (CMF) banks and other hardware sharing. In one embodiment, upper and lower sidebands are treated as two independent signals with identical spreading codes. Cross-correlators, in preferred embodiments, comprise one or more banks of CMFs for computing parallel short-time correlations (STCs) of received signal samples and replica code sequence samples, and a means for calculating the cross-correlation values utilizing discrete-time Fourier analysis of the computed STCs. One or more intermediate quantizers may optionally be disposed between the bank of code matched filters and the cross-correlation calculation means for reducing word-sizes of the STCs prior to Fourier analysis. The techniques described may be used with BOC modulated signals or with any signal having at least two distinct sidebands.

Owner:MITRE SPORTS INT LTD

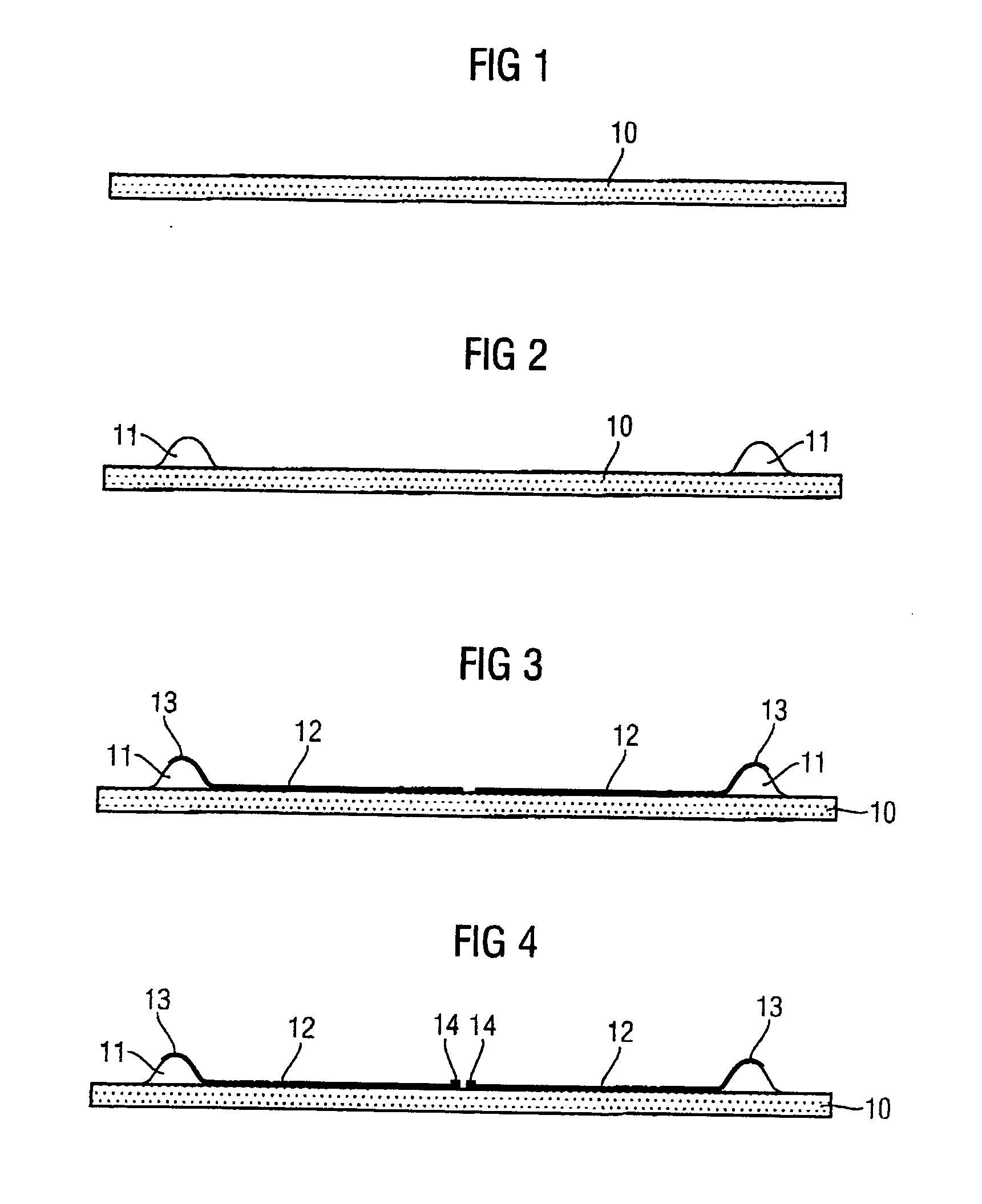

Method for producing a multichip module and multichip module

Inactive | US20050077632A1 | High degree of parallelism | Simple and cost-effective | Semiconductor/solid-state device details | Solid-state devices | Electrically conductive | Engineering

A method for producing a multi-chip module having application of at least one contact elevation onto a substrate, application and patterning of a rewiring device onto the substrate and the at least one contact elevation with provision of a contact device on the at least one contact elevation, application of a semiconductor chip onto the substrate with electrical contact-connection of the rewiring device; application of an encapsulating device that is not electrically conductive onto the semiconductor chip, the substrate, the rewiring device and the at least one contact elevation, the contact device on the at least one contact elevation at least touching a first surface of the encapsulating device; and repetition at least once of at least the first two steps, the first surface of the encapsulating device serving as a substrate and the correspondingly produced rewiring device making electrical contact with the contact device of the at least one contact elevation of the underlying plane.

Owner:POLARIS INNOVATIONS

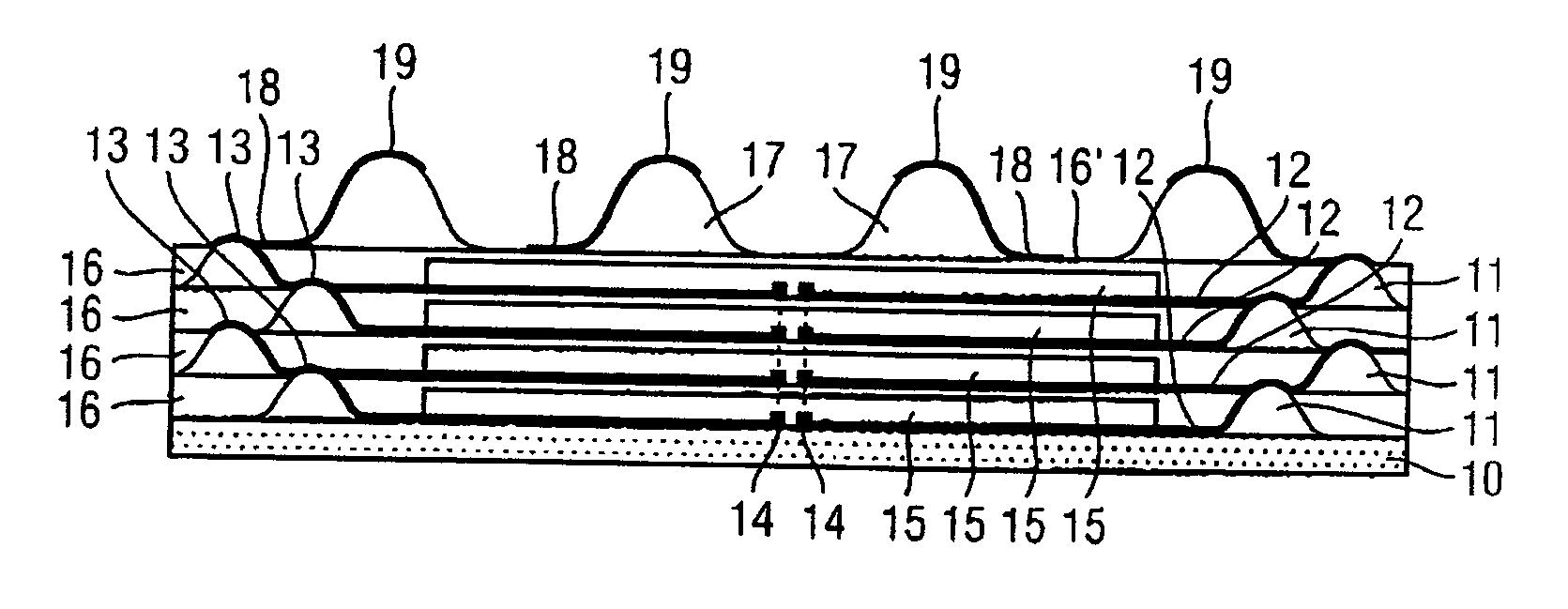

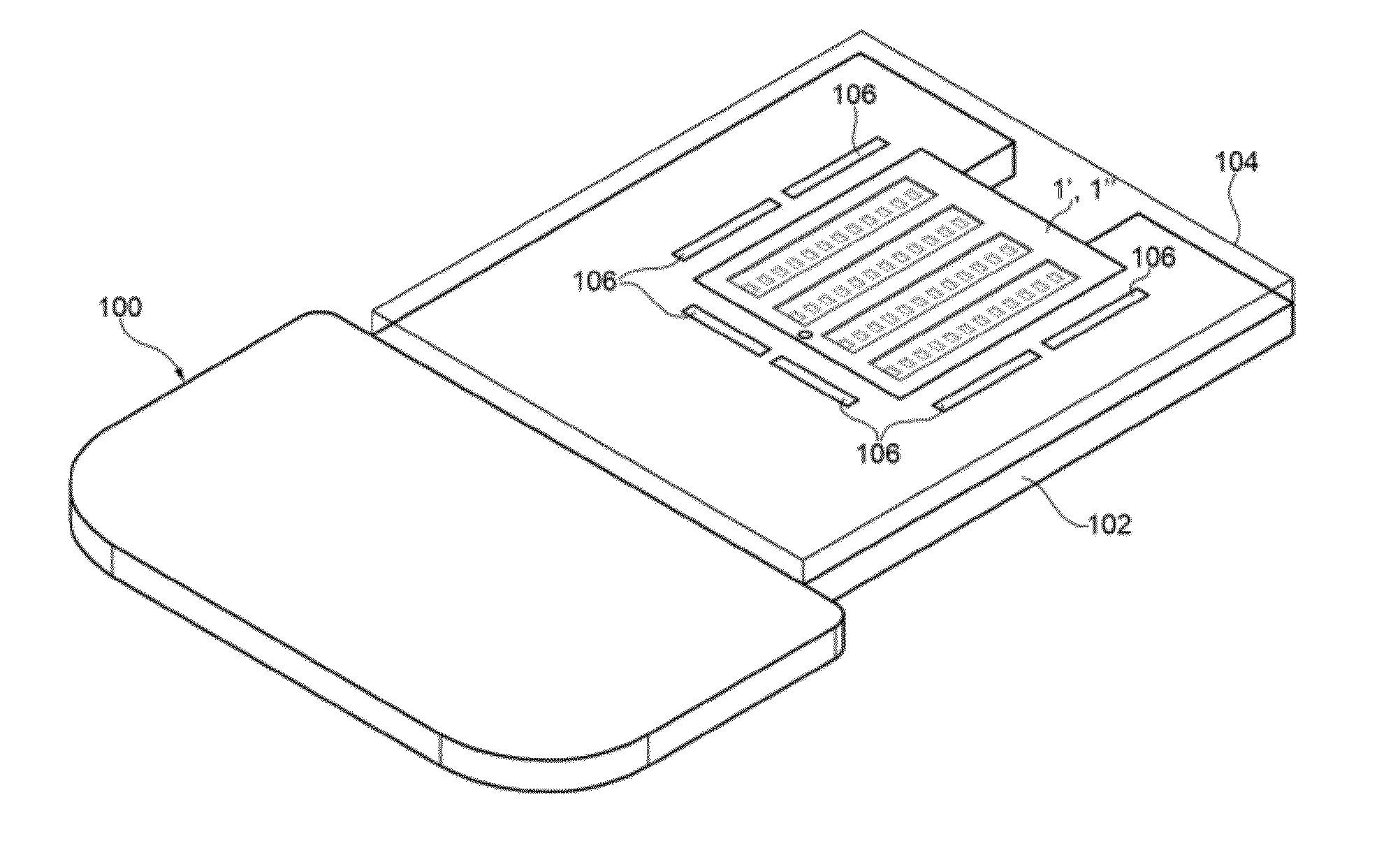

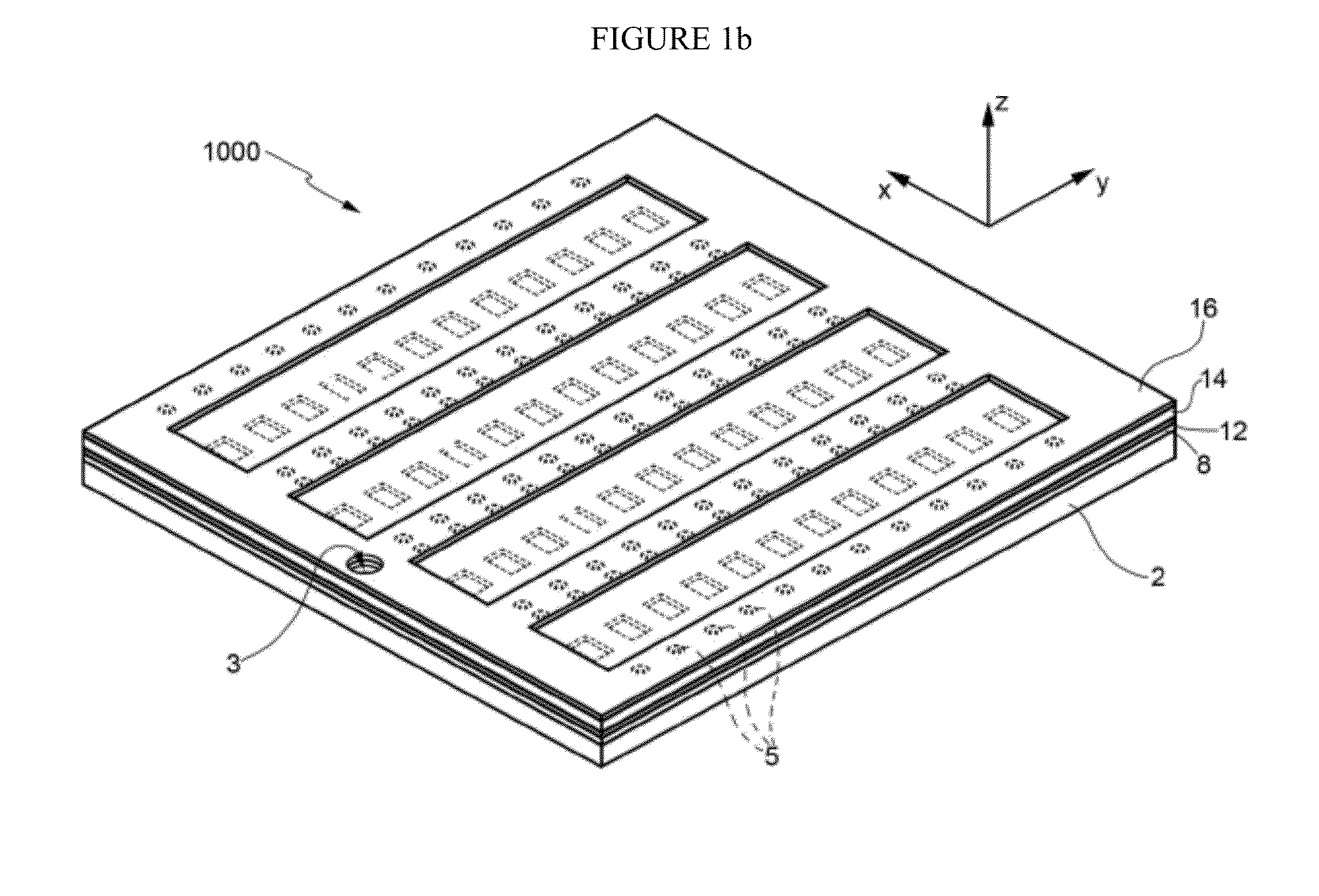

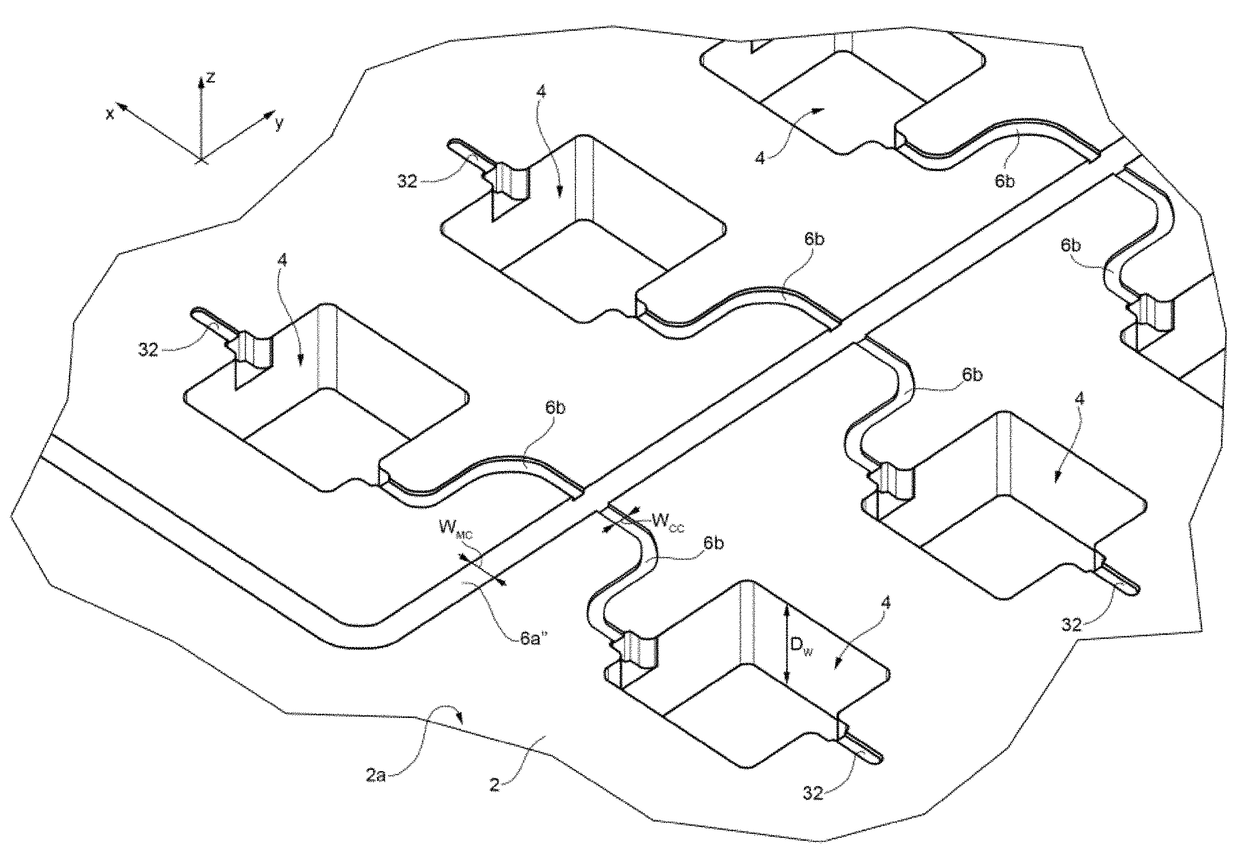

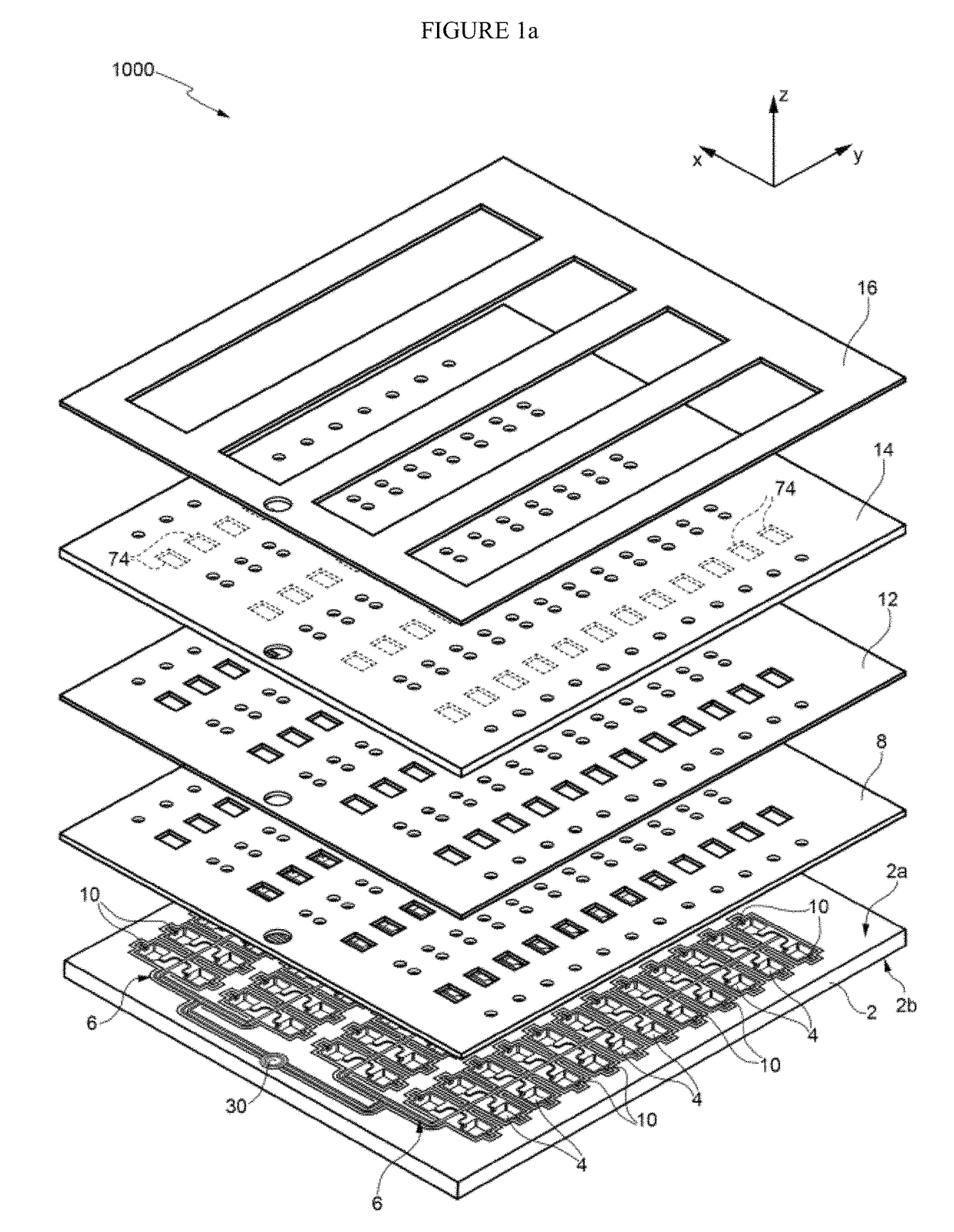

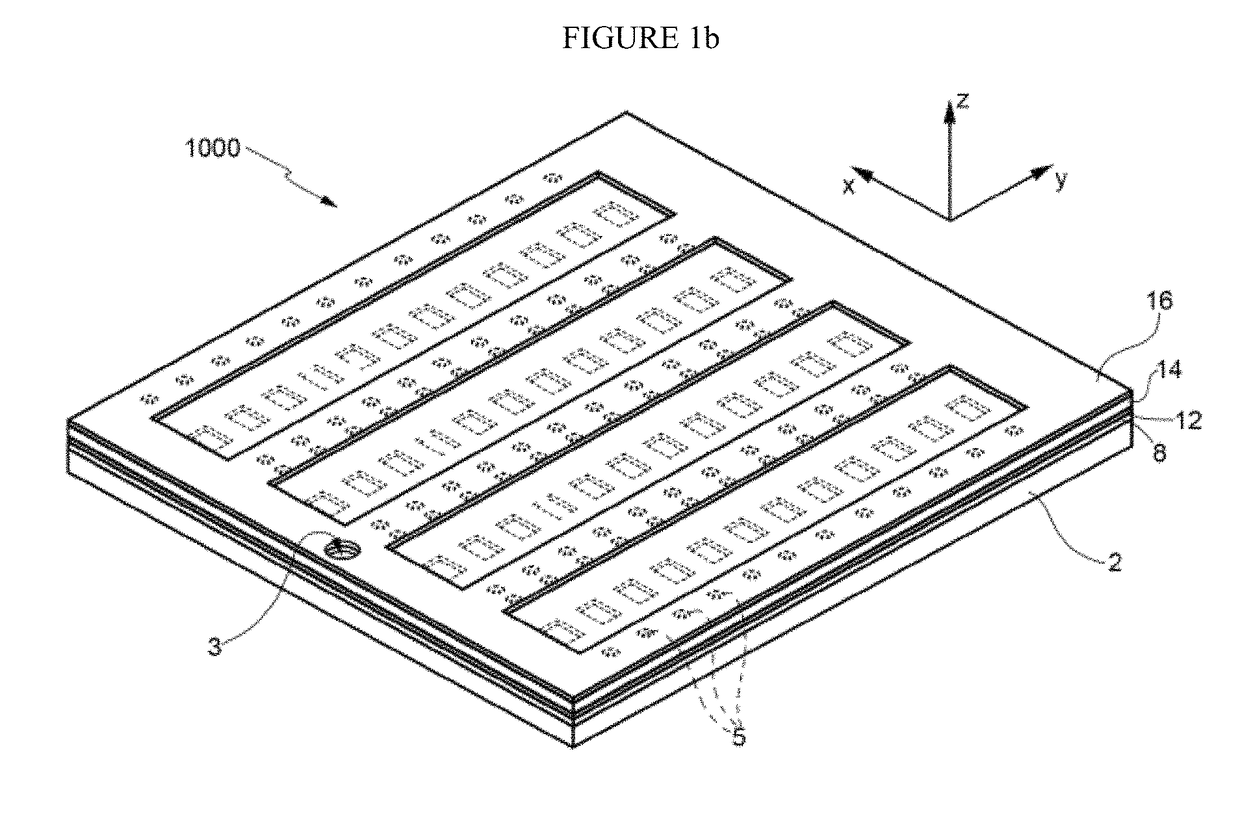

Microfluidic PCR device

Active | US20140038193A1 | High degree of parallelism | Reduce and eliminate cross contamination | Bioreactor/fermenter combinations | Biological substance pretreatments | Main channel | Engineering

A microfluidic device (1000-1005), comprising: a semiconductor body (2) having a first side (2a) and a second side (2b) opposite to one another, and housing, at the first side, a plurality of wells (4), having a first depth; an inlet region (30) forming an entrance point for a fluid to be supplied to the wells; a main channel (6a) fluidically connected to the inlet region, and having a second depth; and a plurality of secondary channels (6b) fluidically connecting the main channel to a respective well, and having a third depth. The first depth is higher than the second depth, which in turn is higher than the third depth. According to an aspect, the microfluidic device further comprises a cover layer (8), arranged above the first side of the semiconductor body, configured for sealing the wells and provided with at least a first valve hole (54) which extends through the cover layer and overlaps, at least partially, the secondary channels; and a flexible layer (14), arranged above the cover layer and provided with at least a protrusion (74) extending through the first valve hole towards the semiconductor body and overlapping, at least partially, the secondary channels, the flexible layer being configured such that, when a pressure is applied on it, the protrusion contacts the semiconductor body and enters the secondary channels thus fluidically isolating the wells from one another.

Owner:STMICROELECTRONICS SRL +1

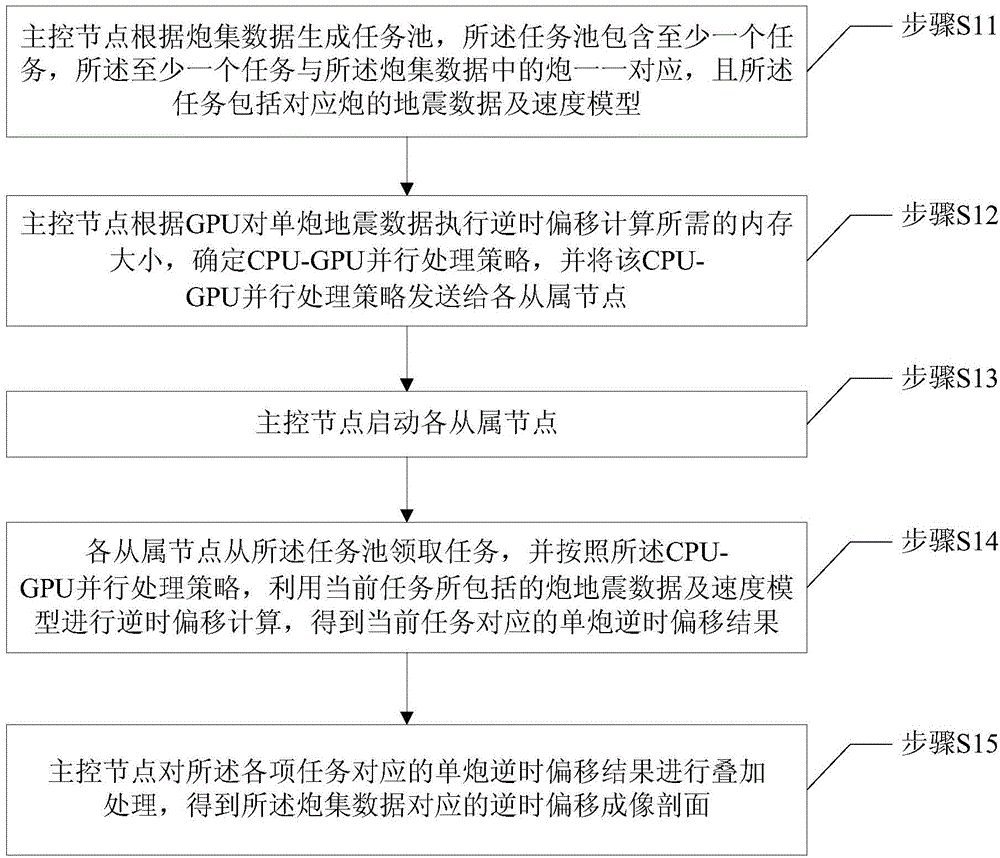

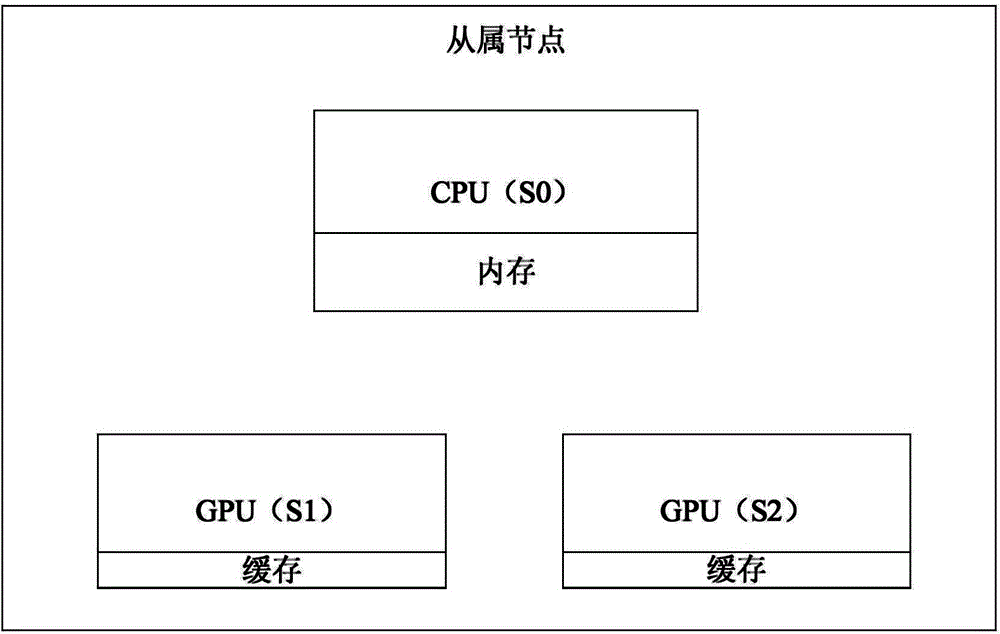

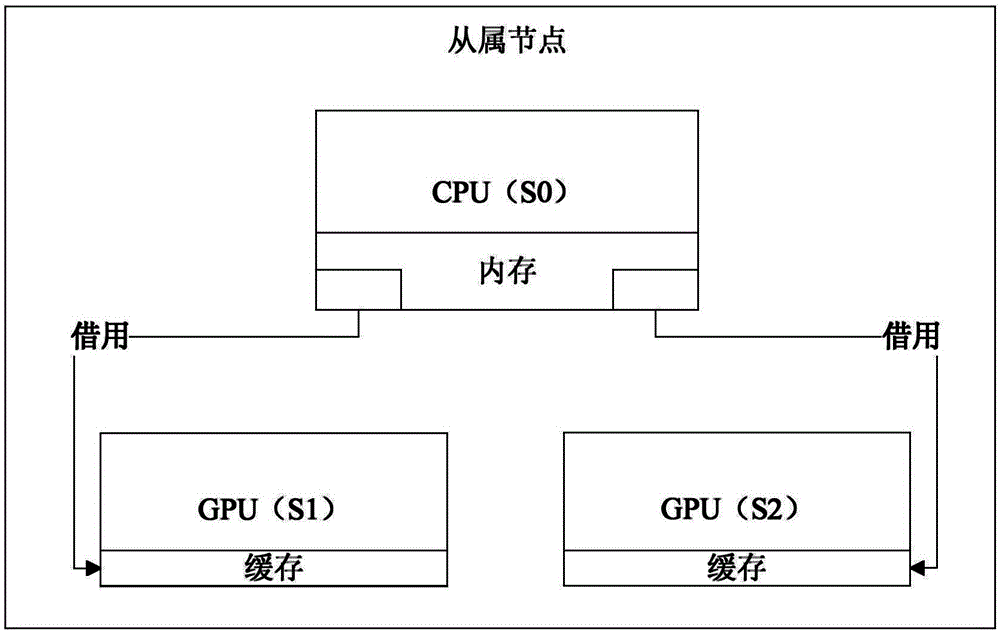

Method of using CPU-GPU platform for seismic wave reverse-time migration imaging

Active | CN104635258A | Improve resource utilization | Take full advantage of the ability of parallel computing | Seismic signal processing | Reverse time | Reflected waves

The invention provides a method of using a CPU-GPU platform for seismic wave reverse-time migration imaging, applicable to the technical field of reflected-wave seismic data processing. The method comprises the following steps: a master node generates a task pool from shot gather data; the master node determines a CPU-GPU parallel processing strategy according to the memory size the GPU needs to compute reverse-time migration of single-shot seismic data, and sends the strategy to each slave node; the master node starts each slave node; each slave node receives a task from the task pool and, following the CPU-GPU parallel processing strategy, uses the shot seismic data and velocity model contained in the current task to perform the reverse-time migration calculation, obtaining a single-shot reverse-time migration result for that task; the master node stacks the single-shot reverse-time migration results of all tasks to obtain the reverse-time migration imaging profile corresponding to the shot gather data. The method makes full use of the parallel computing capability of the CPU-GPU platform, offers a high degree of parallelism and is easy to implement, and saves a shot-point wavefield simulation pass.

Owner:BC P INC CHINA NAT PETROLEUM CORP +1

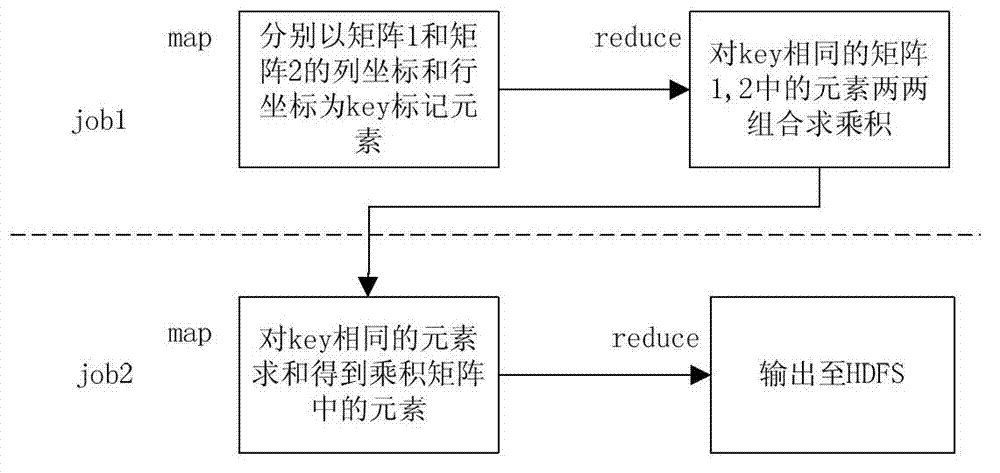

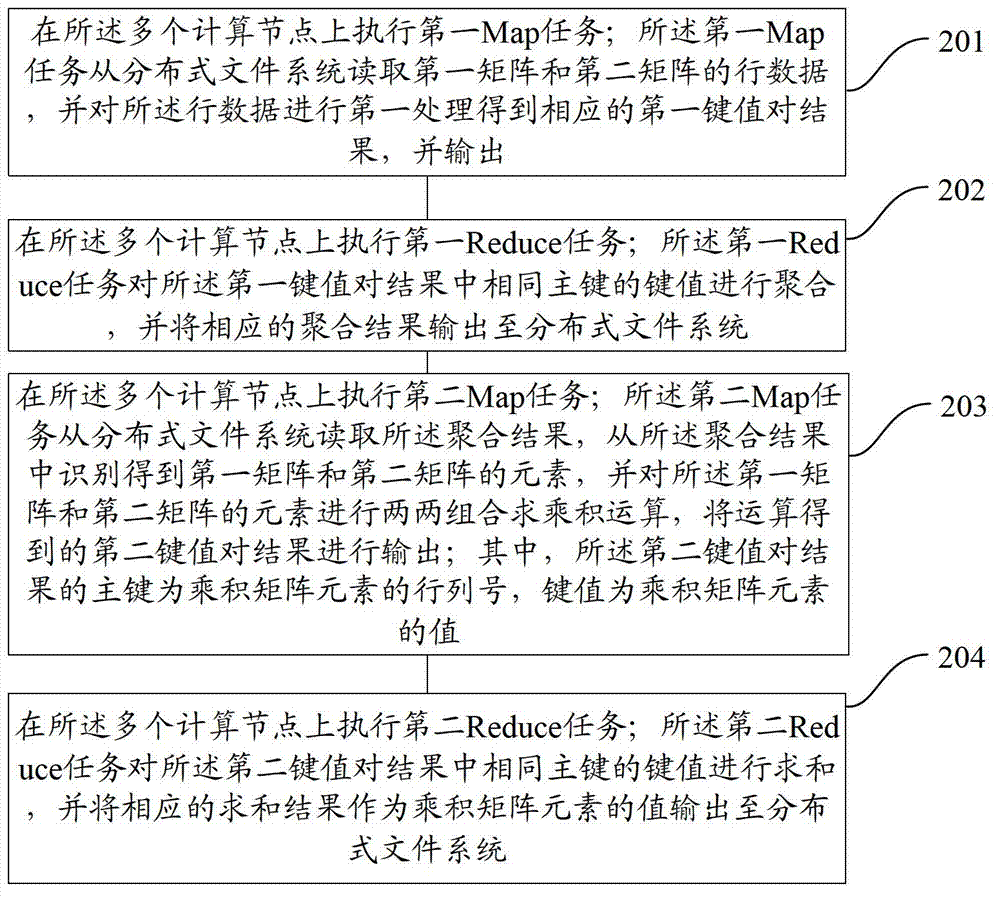

Method and system for carrying out matrix product operation on computer cluster

Inactive | CN102831102A | Fast operation | High degree of parallelism | Multiprogramming arrangements | Complex mathematical operations | Computer cluster | Distributed File System

The invention provides a method and a system for carrying out matrix product operations on a computer cluster. A distributed file system and a plurality of calculation nodes for executing Map tasks and Reduce tasks are arranged on the computer cluster. The method comprises executing a first Map task, a first Reduce task, a second Map task and a second Reduce task on the calculation nodes, wherein the first Map task performs a first processing step to obtain a first key-value pair result; the first Reduce task gathers the values that share the same key in the first key-value pair result; the second Map task identifies the elements of a first matrix and a second matrix and combines and multiplies them pairwise to obtain a second key-value pair result; and the second Reduce task sums the values that share the same key in the second key-value pair result. The method and the system provided by the invention improve the calculation speed of the matrix product.

Owner:BEIJING IZP NETWORK TECH CO LTD
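
A two-pass MapReduce matrix product of the kind the abstract above outlines can be simulated in memory as below. The abstract does not say which keys are used, so the choice here (the shared inner index in the first pass, the output cell in the second) is an assumption borrowed from the common textbook formulation; the function names are illustrative and no Hadoop API is involved.

```python
from collections import defaultdict


def map1(name, row, col, value):
    """First Map: key every element by the inner index j shared by A[i][j] and B[j][k]."""
    if name == "A":
        return (col, ("A", row, value))
    return (row, ("B", col, value))


def reduce1(pairs):
    """First Reduce: gather all values that share the same key j."""
    groups = defaultdict(list)
    for key, val in pairs:
        groups[key].append(val)
    return groups


def map2(group):
    """Second Map: pair each A element with each B element and multiply."""
    a_elems = [(i, v) for tag, i, v in group if tag == "A"]
    b_elems = [(k, v) for tag, k, v in group if tag == "B"]
    return [((i, k), a * b) for i, a in a_elems for k, b in b_elems]


def reduce2(pairs):
    """Second Reduce: sum the partial products that belong to the same output cell."""
    sums = defaultdict(int)
    for key, val in pairs:
        sums[key] += val
    return dict(sums)


def matrix_product(a, b):
    elements = [("A", i, j, v) for i, row in enumerate(a) for j, v in enumerate(row)]
    elements += [("B", j, k, v) for j, row in enumerate(b) for k, v in enumerate(row)]
    groups = reduce1(map1(*e) for e in elements)
    products = [p for g in groups.values() for p in map2(g)]
    cells = reduce2(products)
    return [[cells.get((i, k), 0) for k in range(len(b[0]))] for i in range(len(a))]


result = matrix_product([[1, 2], [3, 4]], [[5, 6], [7, 8]])  # [[19, 22], [43, 50]]
```

Each group in the second Map can be processed independently, which is where the parallelism across calculation nodes would come from.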

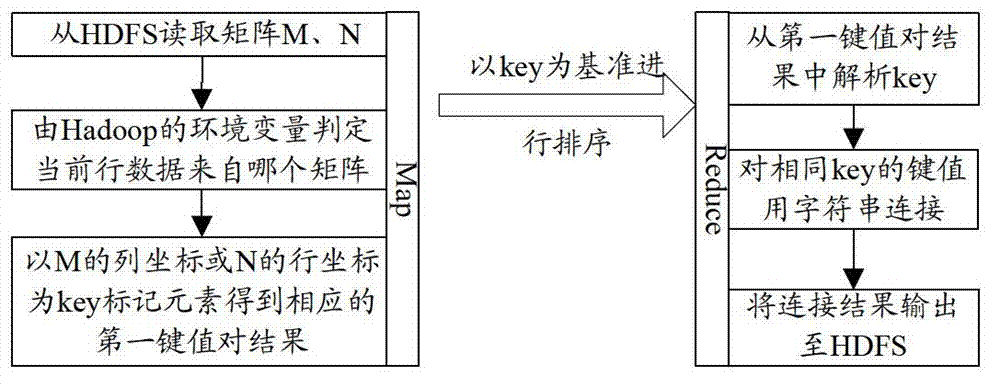

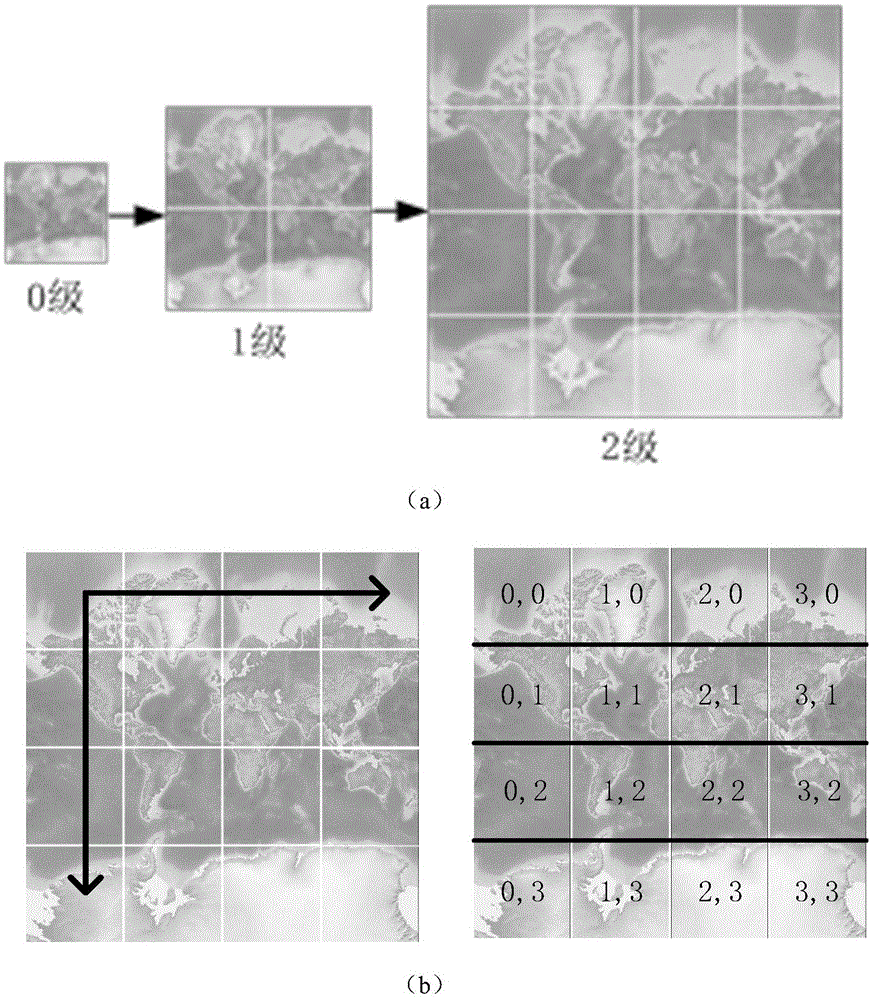

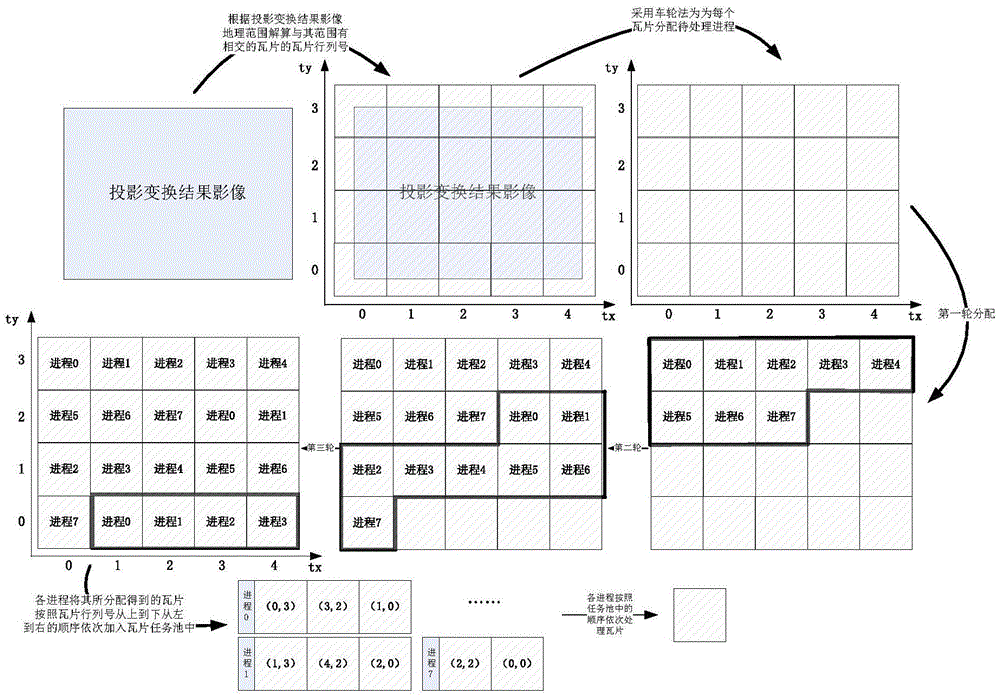

Parallel mode grid image slicing method

Active | CN105550977A | Simple configuration | Fast integration | Image enhancement | Image analysis | Information processing | Algorithm

The present invention belongs to the field of geospatial information processing technology and relates to a parallel grid (raster) image slicing method. The method comprises the following steps: step 1, obtain an original grid image and set the target tile level and the total number of processes; step 2, read the metadata of the original grid image; step 3, convert the original grid image to the WebMercator projection; step 4, calculate the maximum and minimum tile levels of the projected image; step 5, calculate the range of tile row and column numbers of the projected image and create an empty tile file; step 6, divide the tasks among the processes using a round-robin ("wheel") method; step 7, have each process read the tiles in its task pool one by one, calculate back the geographic extent of each tile, calculate the relative position and size of the region where it intersects the projected image, and read the original grid data of the intersecting region into memory; and step 8, resample the original grid data of the intersecting region and write the resampled data into the empty tile file.

Owner:NAT UNIV OF DEFENSE TECH
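
Steps 5 to 7 of the abstract above can be sketched with the widely used Web Mercator tile-numbering formulas. The patent does not give its formulas, so the tiling scheme (columns counted from the west, rows from the north, 2^zoom tiles per axis), the function names, and the reading of the task division as round-robin are assumptions for illustration; reading pixels and resampling are left out.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Tile (col, row) containing a longitude/latitude at a given level."""
    n = 2 ** zoom
    col = int((lon + 180.0) / 360.0 * n)
    row = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    return min(col, n - 1), min(row, n - 1)


def tile_bounds(col, row, zoom):
    """Inverse calculation: (west, south, east, north) of a tile in degrees."""
    n = 2 ** zoom

    def lon(c):
        return c / n * 360.0 - 180.0

    def lat(r):
        return math.degrees(math.atan(math.sinh(math.pi * (1 - 2 * r / n))))

    return lon(col), lat(row + 1), lon(col + 1), lat(row)


def tiles_in_extent(west, south, east, north, zoom):
    """Step 5: every tile whose row/column falls inside the image extent."""
    c0, r0 = lonlat_to_tile(west, north, zoom)
    c1, r1 = lonlat_to_tile(east, south, zoom)
    return [(c, r) for r in range(r0, r1 + 1) for c in range(c0, c1 + 1)]


def round_robin(tasks, n_procs):
    """Step 6: deal the tiles out to the processes in turn."""
    return [tasks[p::n_procs] for p in range(n_procs)]


tiles = tiles_in_extent(-179.9, -85.0, 179.9, 85.0, zoom=1)
pools = round_robin(tiles, n_procs=3)
```

Dealing tiles out in turn keeps the per-process pools within one tile of each other in size, which is a simple way to balance the work when tiles cost roughly the same to produce.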

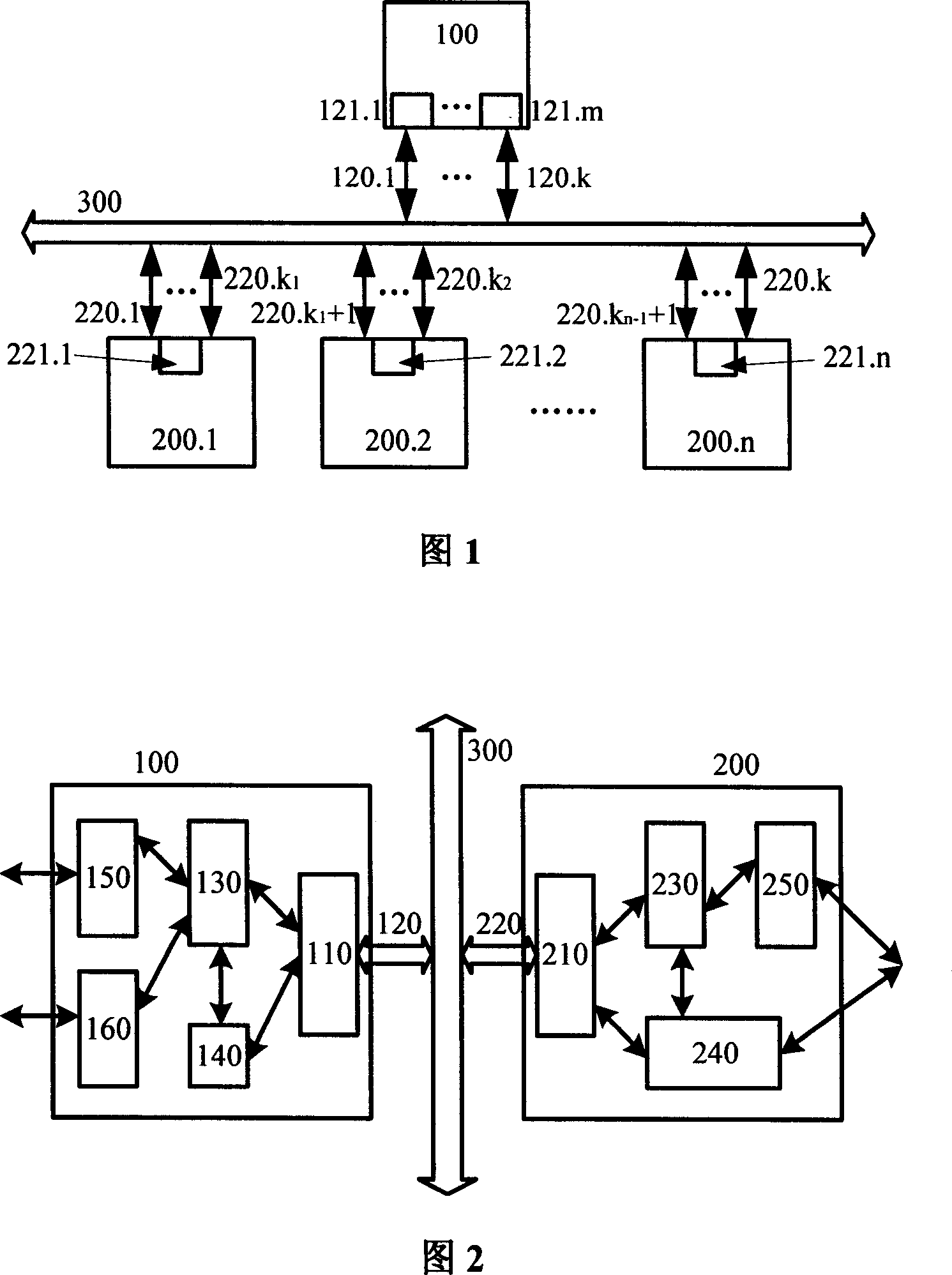

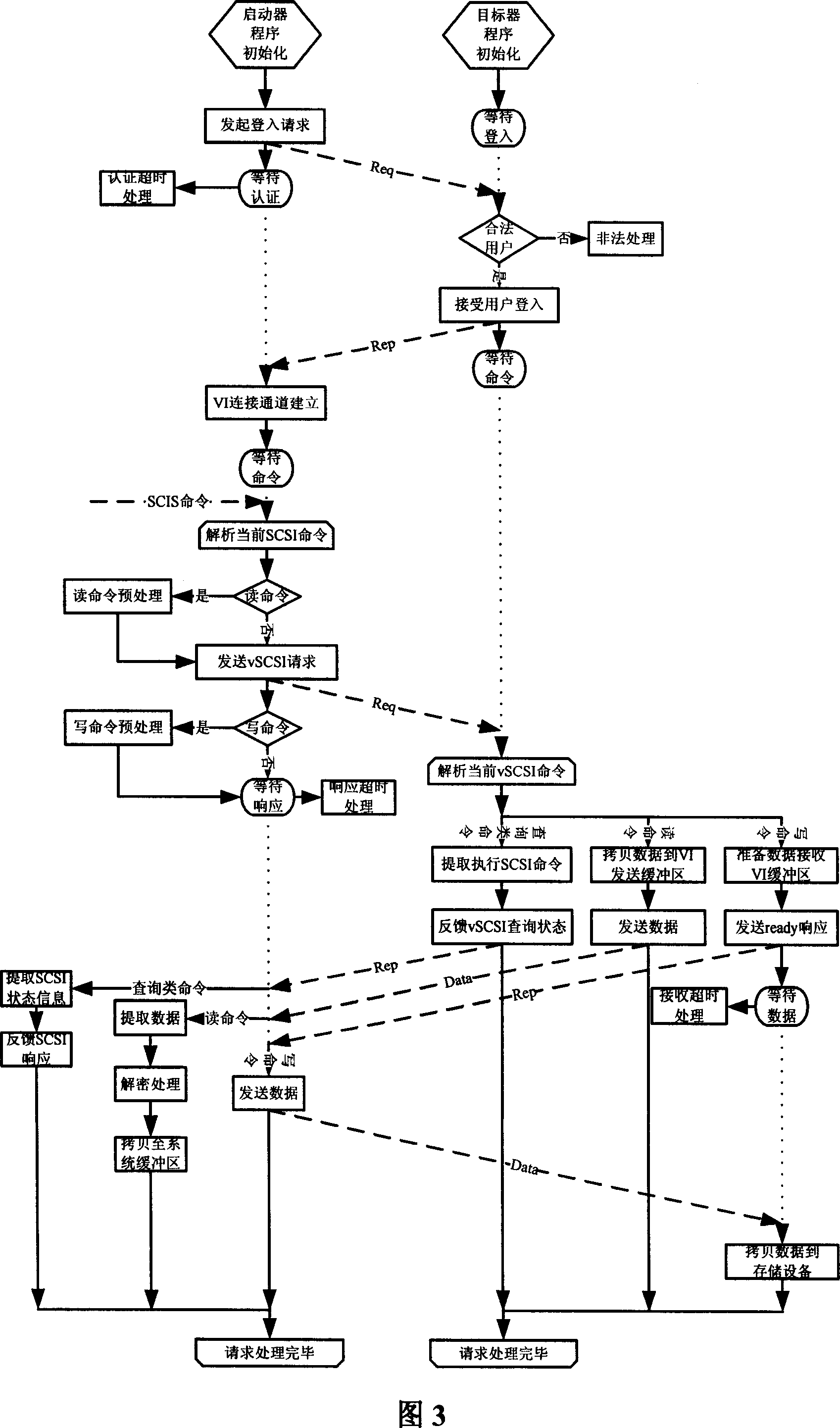

A protocol for network storage and its system

Inactive | CN1997033A | Improve transmission performance | Low Latency Features | Transmission | Interoperability | The Internet

This invention relates to a protocol and a system for network storage, in the field of computer information storage technology. The protocol uses VI and is composed of an initiator process and a target process. The system comprises one initiator device and N target devices, connected to the network through VI network interfaces and VI connection channels; the initiator and target devices transmit data over more than one VI connection channel.

Owner:HUAZHONG UNIV OF SCI & TECH

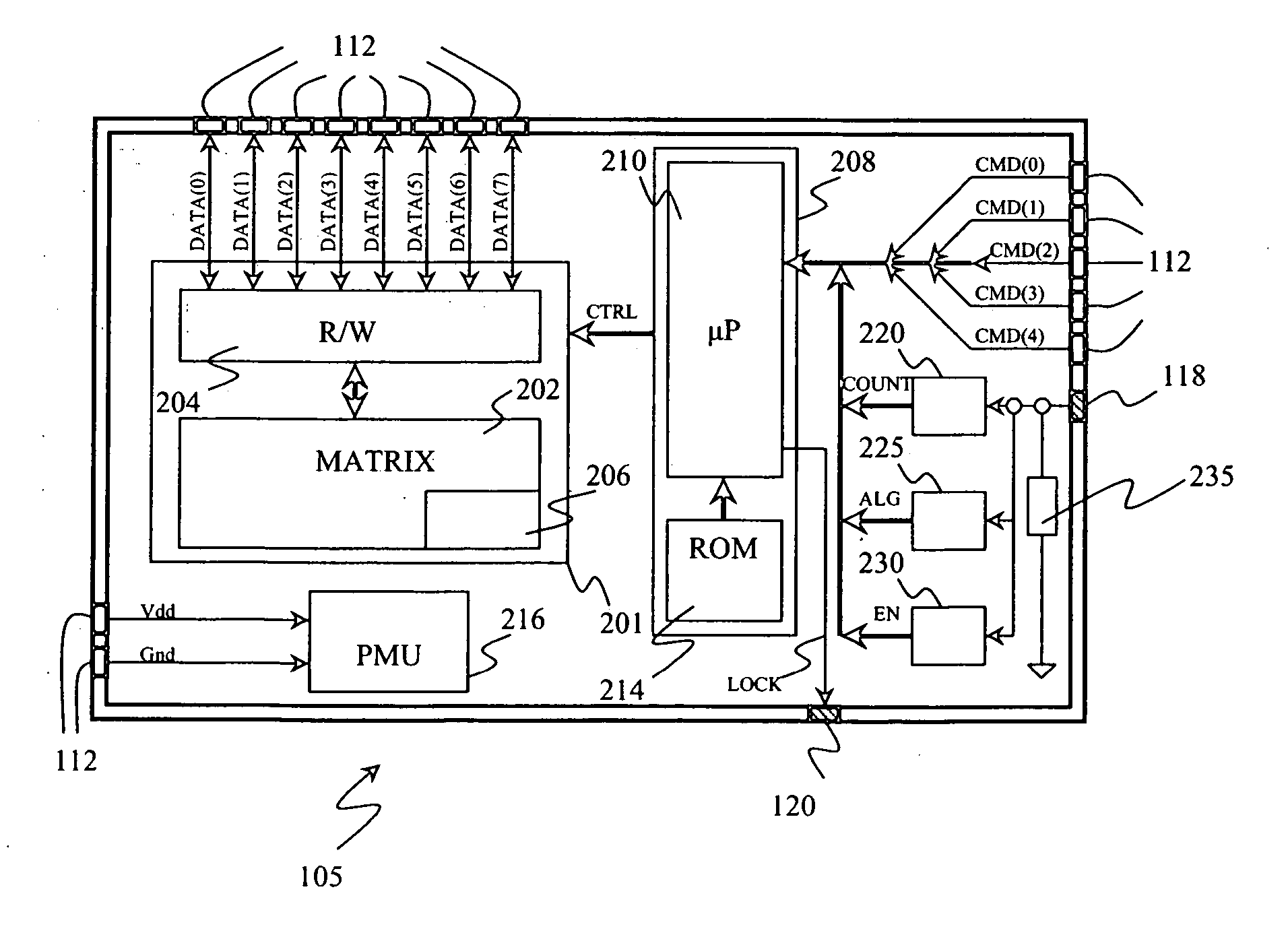

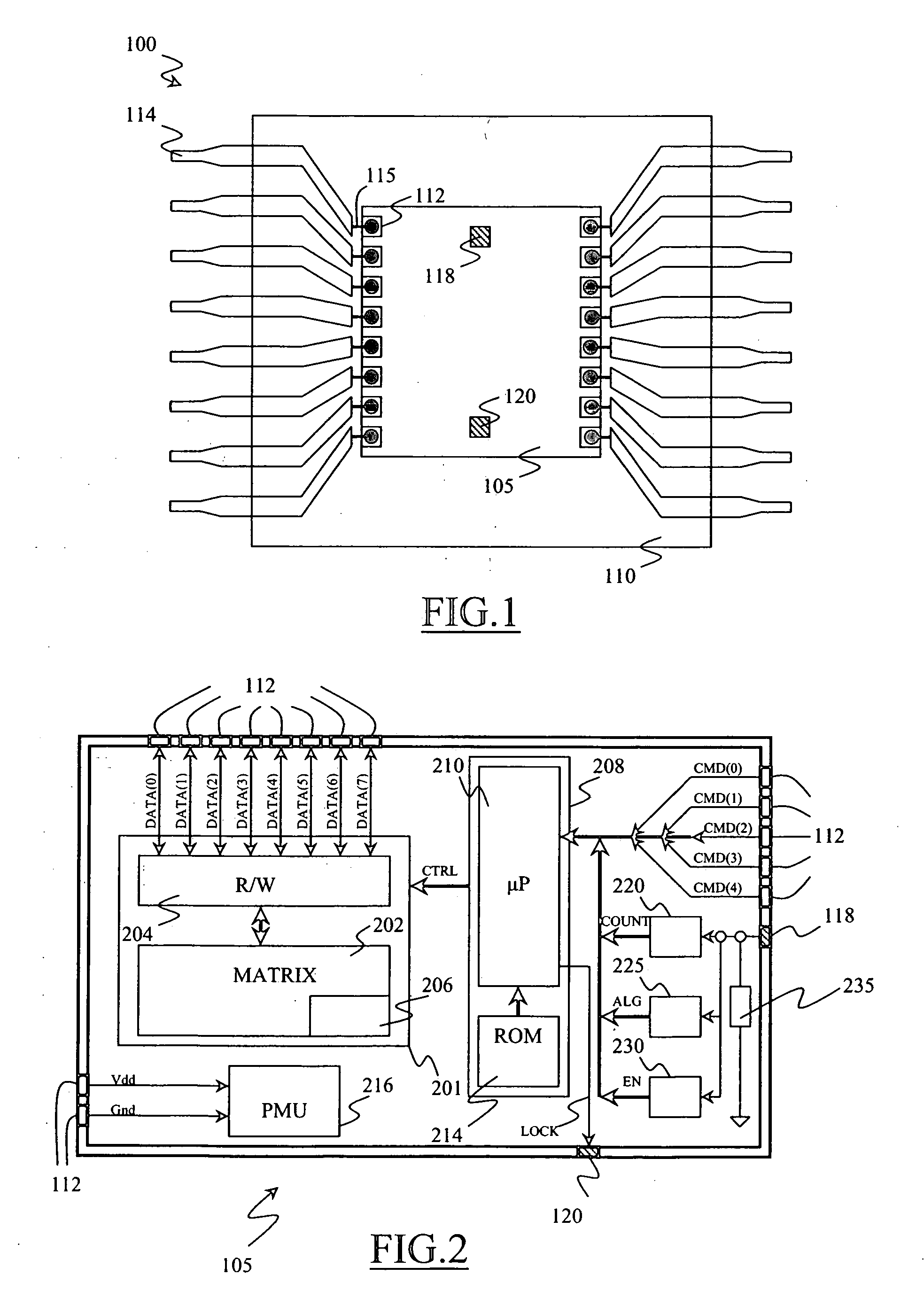

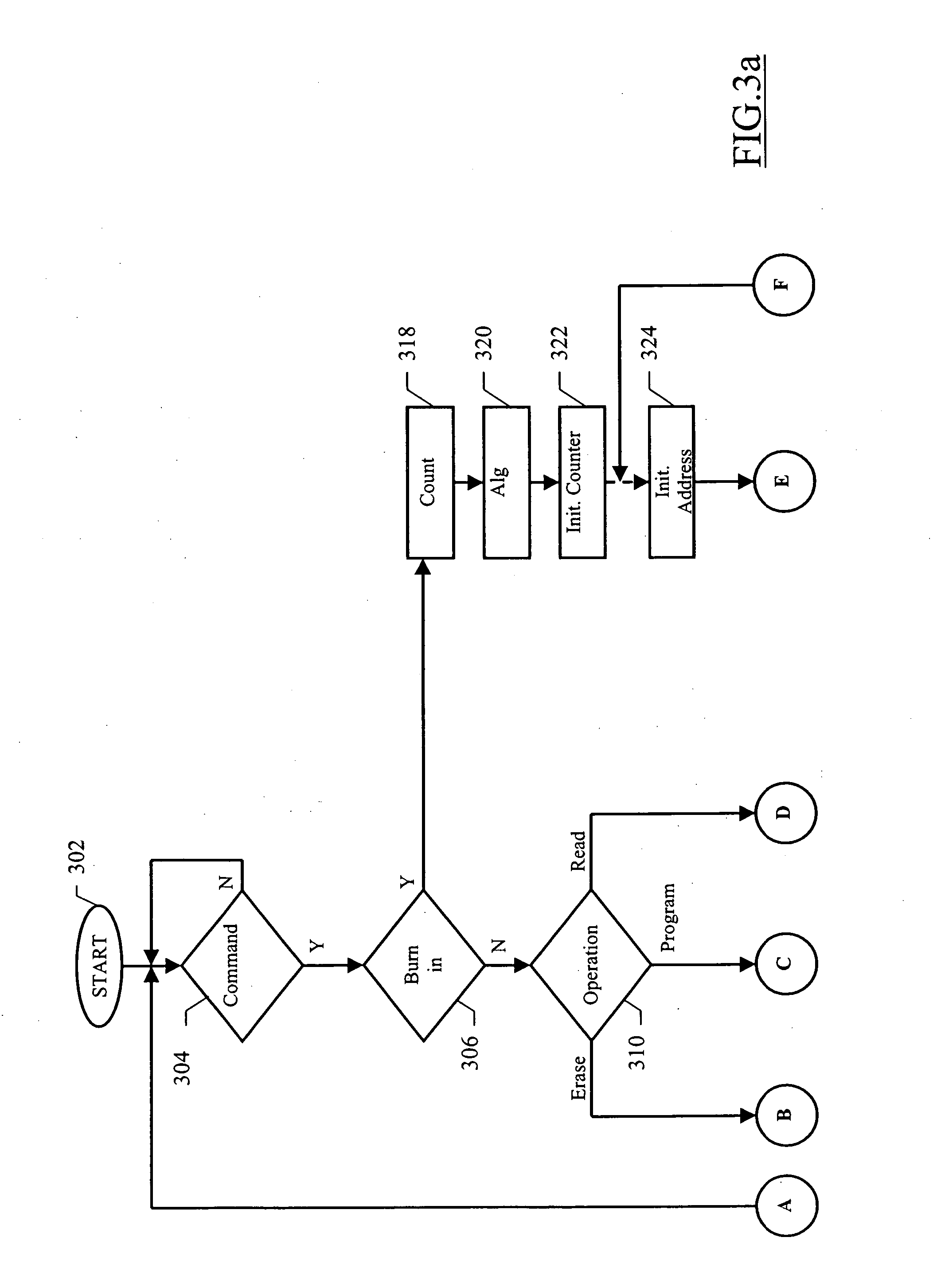

Non-volatile memory device supporting high-parallelism test at wafer level

Inactive | US20060161825A1 | Easy to implement | Without wasting silicon area | Read-only memories | Semiconductor materials | Test set

A non-volatile memory device includes a chip of semiconductor material. The chip includes a memory and control means for performing a programming operation, an erasing operation and a reading operation on the memory in response to corresponding external commands. The chip further includes testing means for performing at least one test process including the repetition of at least one of said operations by the control means, and a single access element for enabling the testing means.

Owner:STMICROELECTRONICS SRL +1

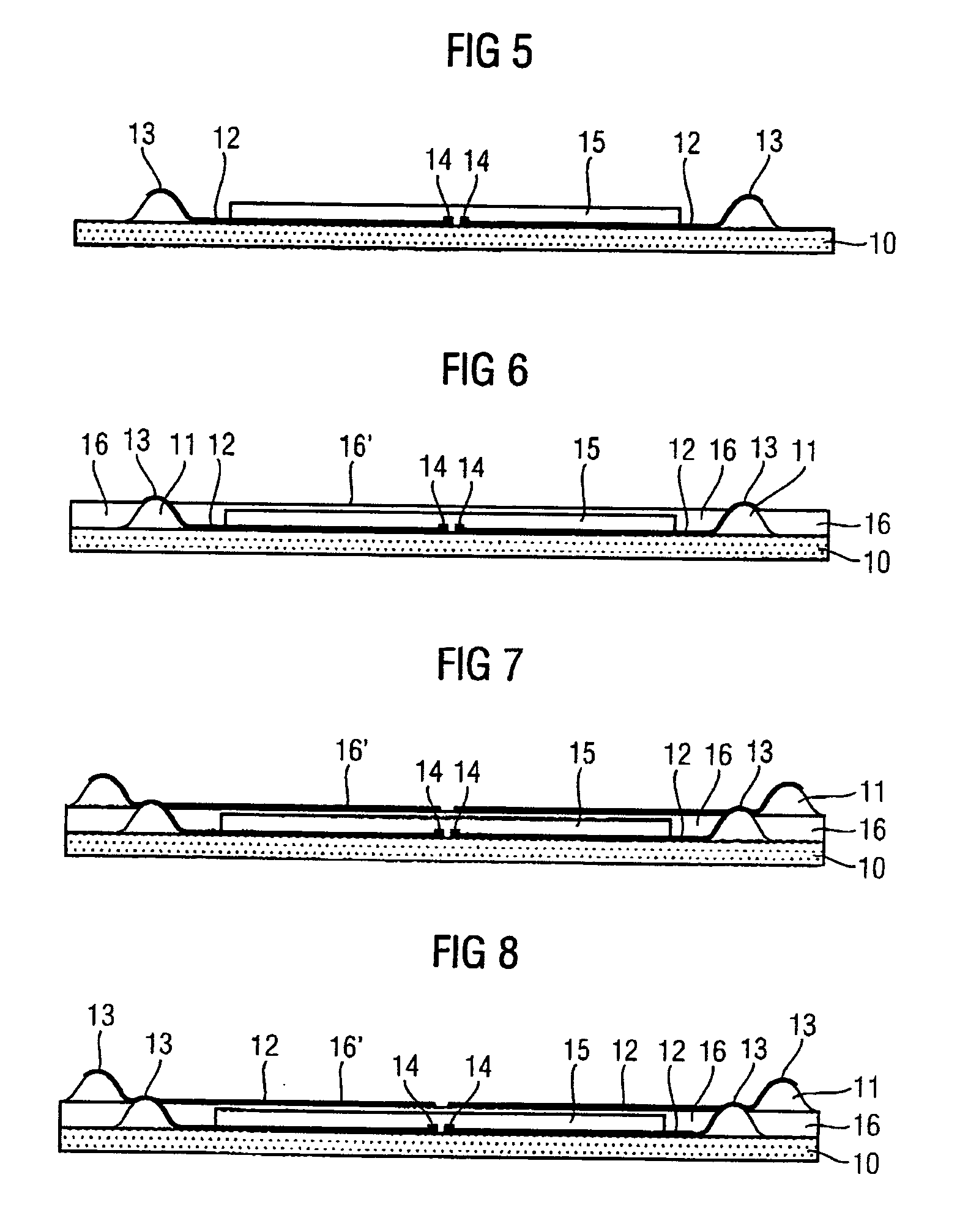

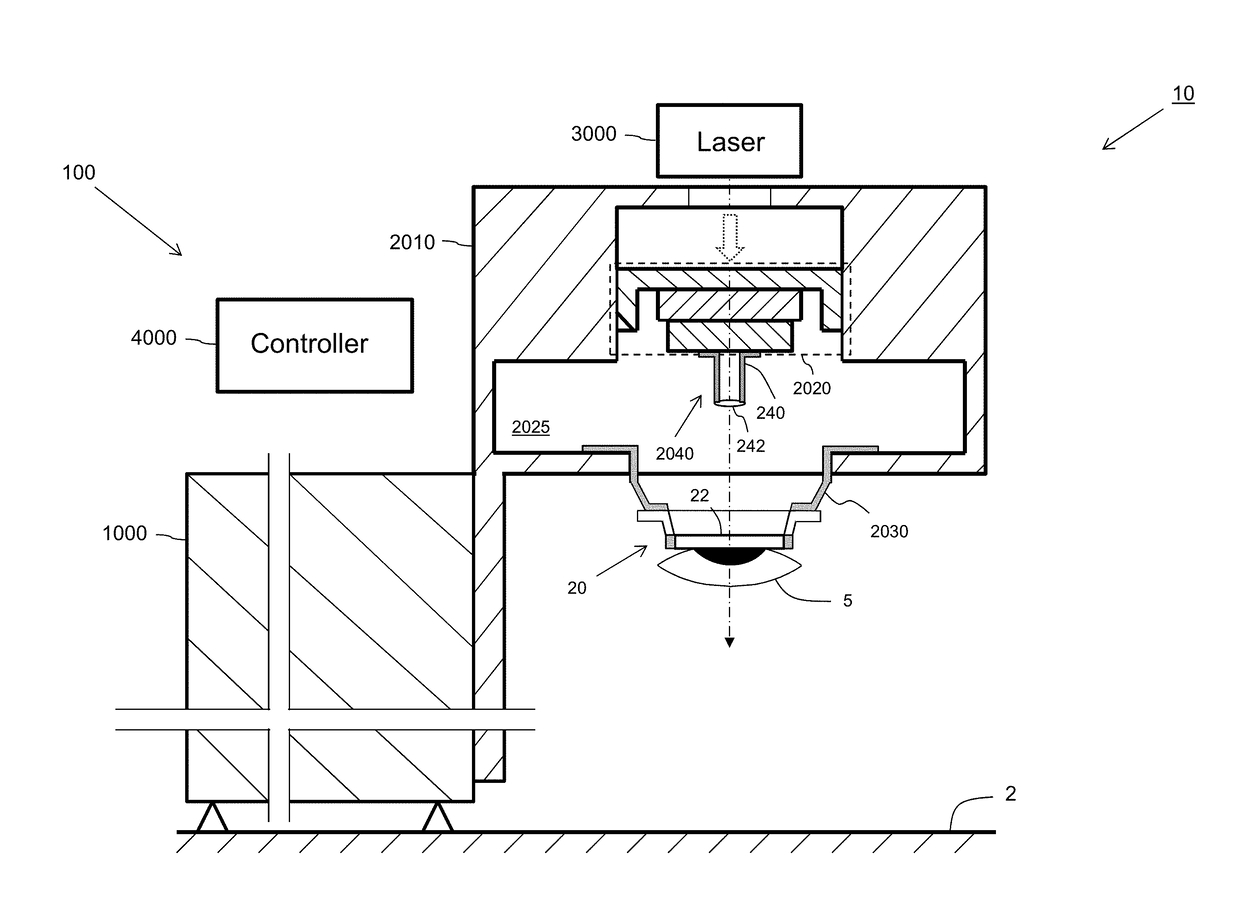

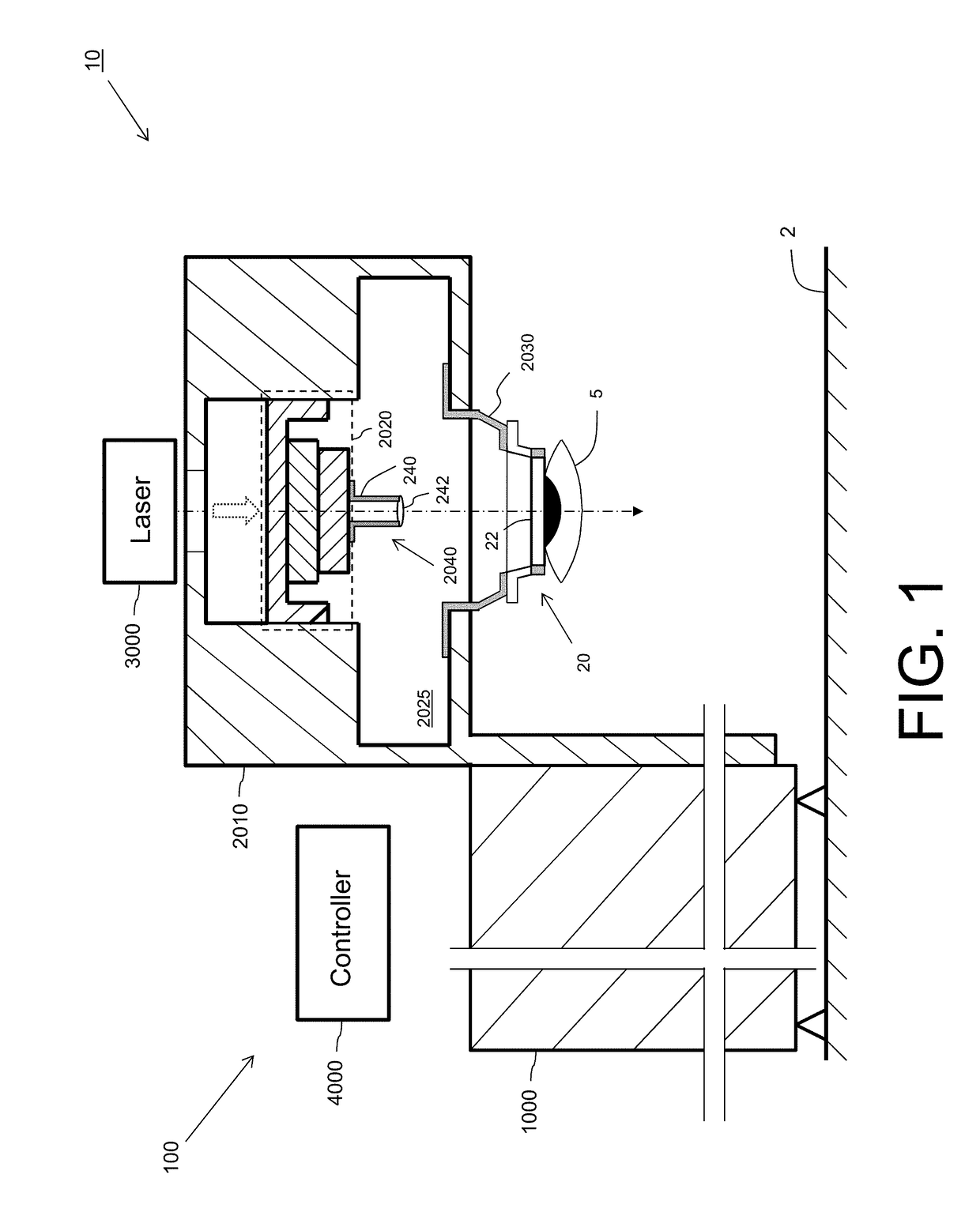

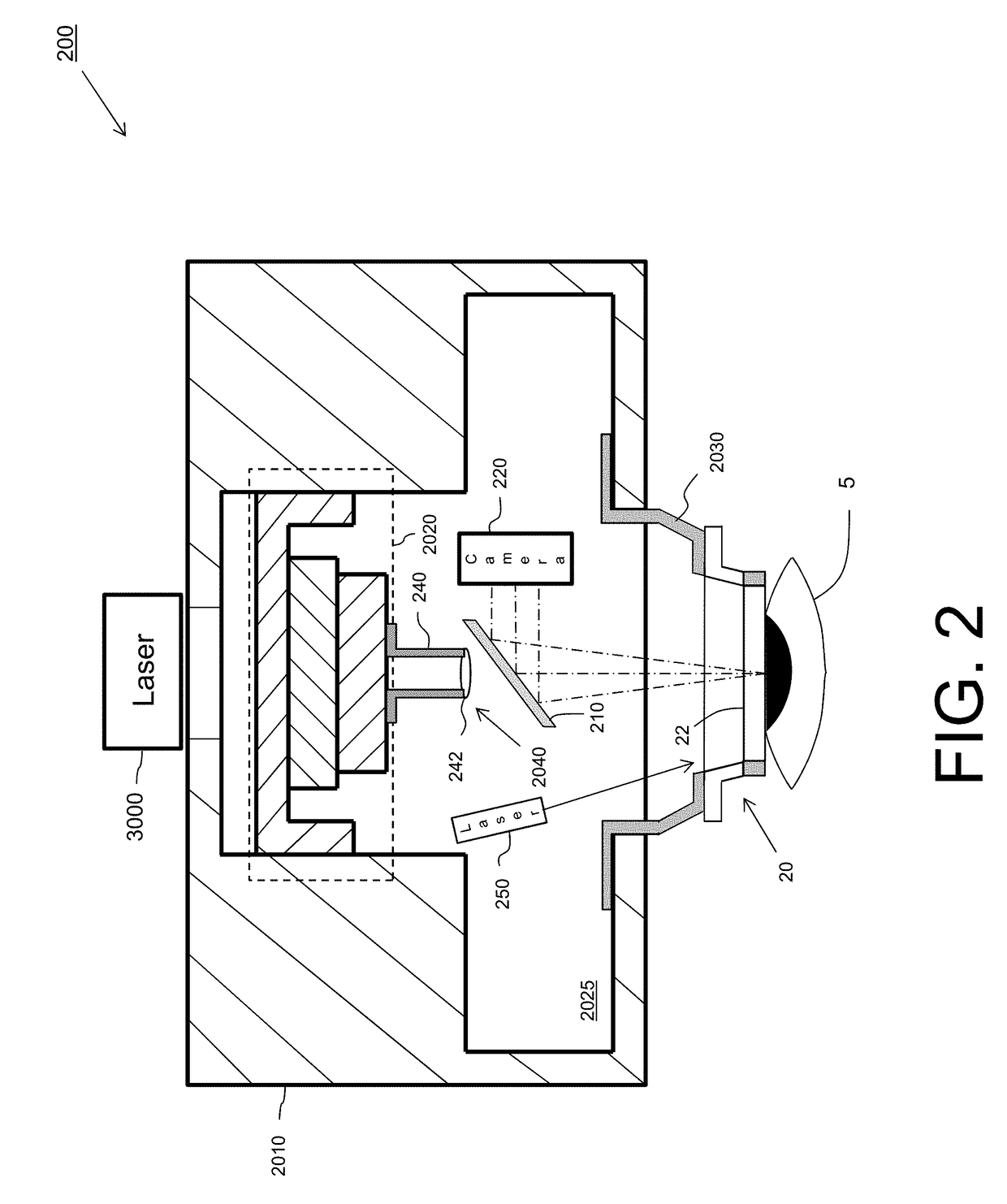

Patient interface device for laser eye surgery having light guiding structure for illuminating eye

Active | US20170281407A1 | High degree of parallelism | Improve Manufacturing Tolerances | Laser surgery | Surgical instrument details | Light guide | Laser surgery

A patient interface device includes: a first interface port configured to be interfaced with a laser surgery apparatus; a second interface port configured to be interfaced with a patient's eye, the second interface port including an applanating lens for application to a patient's eye during a laser surgery procedure; a chamber extending between the first interface port and the second interface port and defining a chamber therein, wherein air may be evacuated from the chamber by the laser surgery apparatus via the first interface port; and a tubular light guiding structure having at a first end thereof a light receiving surface, configured to receive light, and having at a second end thereof a light-emitting surface, wherein the second surface is disposed adjacent the applanating lens and configured to provide the light in a vicinity of the patient's eye when the applanating lens is applied to the patient's eye.

Owner:AMO DEVMENT

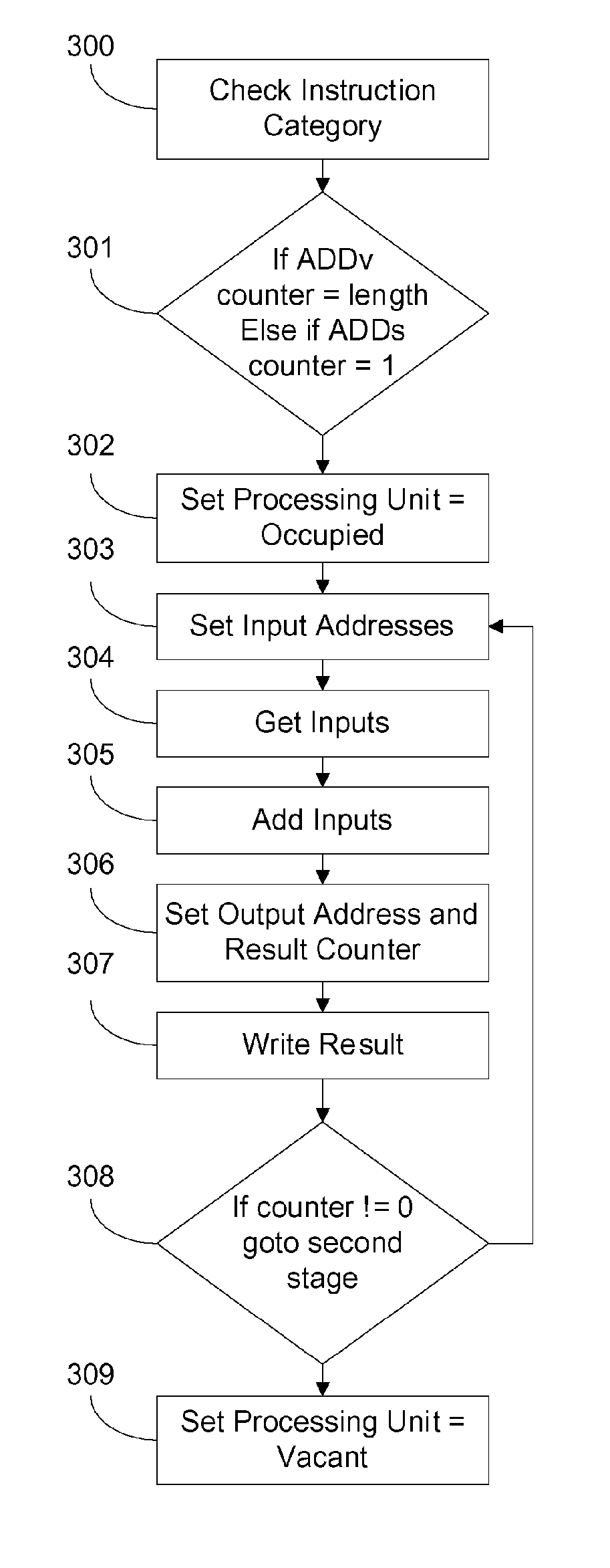

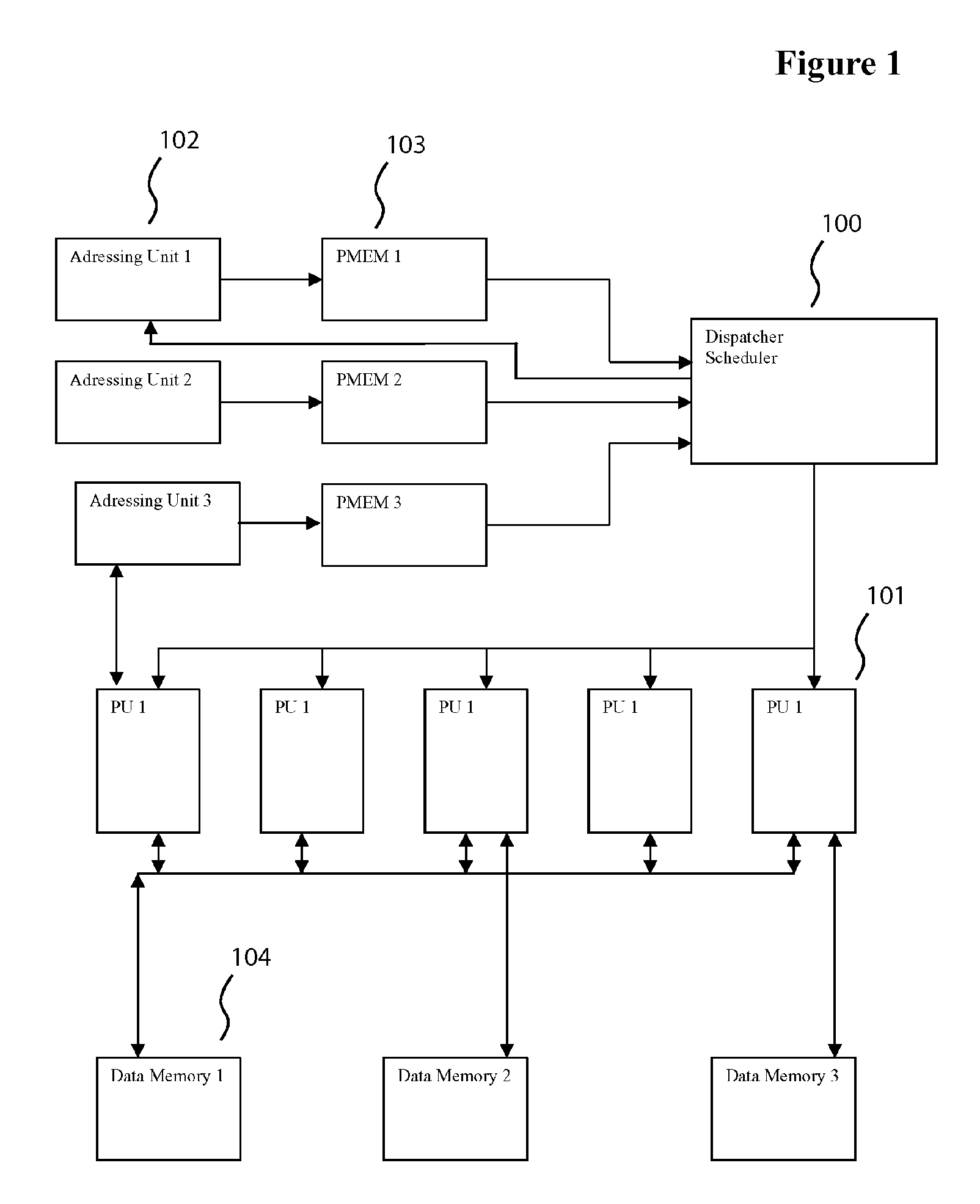

High-level language processor apparatus and method

Inactive | US20060200648A1 | Improve execution | Save power | Digital computer details | Memory systems | Language processor | Parallel computing

A digital computing component and method for computing configured to execute the constructs of a high-level software programming language via optimizing hardware targeted at the particular high-level software programming language. The architecture employed allows for parallel execution of processing components utilizing instructions that execute in an unknown number of cycles and allowing for power control by manipulating the power supply to unused elements. The architecture employed by one or more embodiments of the invention comprise at least one dispatcher, at least one processing unit, at least one program memory, at least one program address generator, at least one data memory. Instruction decoding is performed in two stages. First the dispatcher decodes a category from each instruction and dispatches instructions to processing units that decode the remaining processing unit specific portion of the instruction to complete the execution.

Owner:FALKENBERG ANDREAS

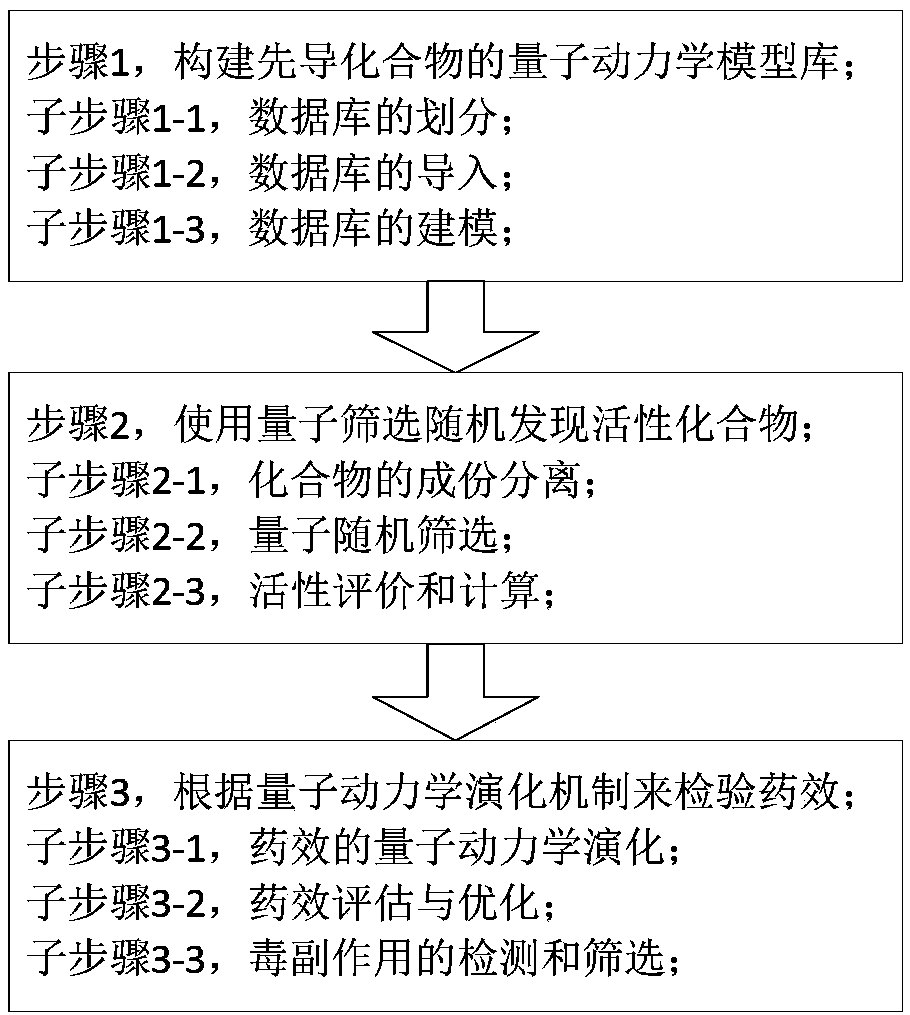

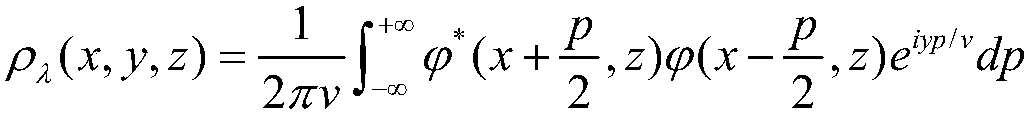

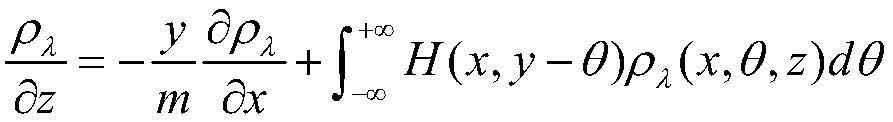

Quantum dynamic computation method for lead compounds

Pending | CN109584969A | Improve accuracy | Increase success rate | Computational theoretical chemistry | Instruments | Dynamic method | Lead compound

The invention discloses a quantum dynamic computation method for lead compounds. The method includes the following steps: a quantum dynamic model base of the lead compounds is established; related information on the lead compounds is quantized and a database of the lead compounds is established; active compounds are discovered at random through quantum screening; lead compounds and active ingredients meeting the required properties are searched for in a database system, true random numbers generated through a quantum dynamic model are combined, and various compound models are established through a quantum screening mechanism; through the quantum dynamic model, the interactions between active compound molecules and between biological cells are modeled; the chemical effect is checked according to a quantum dynamic evolution mechanism; and a synthesized active compound is subjected to a later quantum test simulation experiment, in which a quantum dynamic model of compound molecules binding to the target protein is established. By analyzing the evolution of the interactions between molecular structures with the quantum dynamic method, lead compounds corresponding to a given pathological structure can be obtained with higher accuracy and at lower cost.

Owner:CHINA THREE GORGES UNIV

Microfluidic PCR device

Active | US9777317B2 | High degree of parallelism | Reduce and eliminate cross contamination | Microbiological testing/measurement | Laboratory glasswares | Main channel | Semiconductor

A microfluidic device (1000-1005), comprising: a semiconductor body (2) having a first side (2a) and a second side (2b) opposite to one another, and housing, at the first side, a plurality of wells (4), having a first depth; an inlet region (30) forming an entrance point for a fluid to be supplied to the wells; a main channel (6a) fluidically connected to the inlet region, and having a second depth; and a plurality of secondary channels (6b) fluidically connecting the main channel to a respective well, and having a third depth. The first depth is higher than the second depth, which in turn is higher than the third depth. According to an aspect, the microfluidic device further comprises a cover layer (8), arranged above the first side of the semiconductor body, configured for sealing the wells and provided with at least a first valve hole (54) which extends through the cover layer and overlaps, at least partially, the secondary channels; and a flexible layer (14), arranged above the cover layer and provided with at least a protrusion (74) extending through the first valve hole towards the semiconductor body and overlapping, at least partially, the secondary channels, the flexible layer being configured such that, when a pressure is applied on it, the protrusion contacts the semiconductor body and enters the secondary channels thus fluidically isolating the wells from one another.

Owner:STMICROELECTRONICS SRL +1

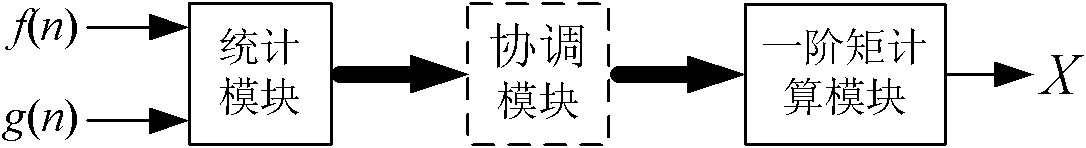

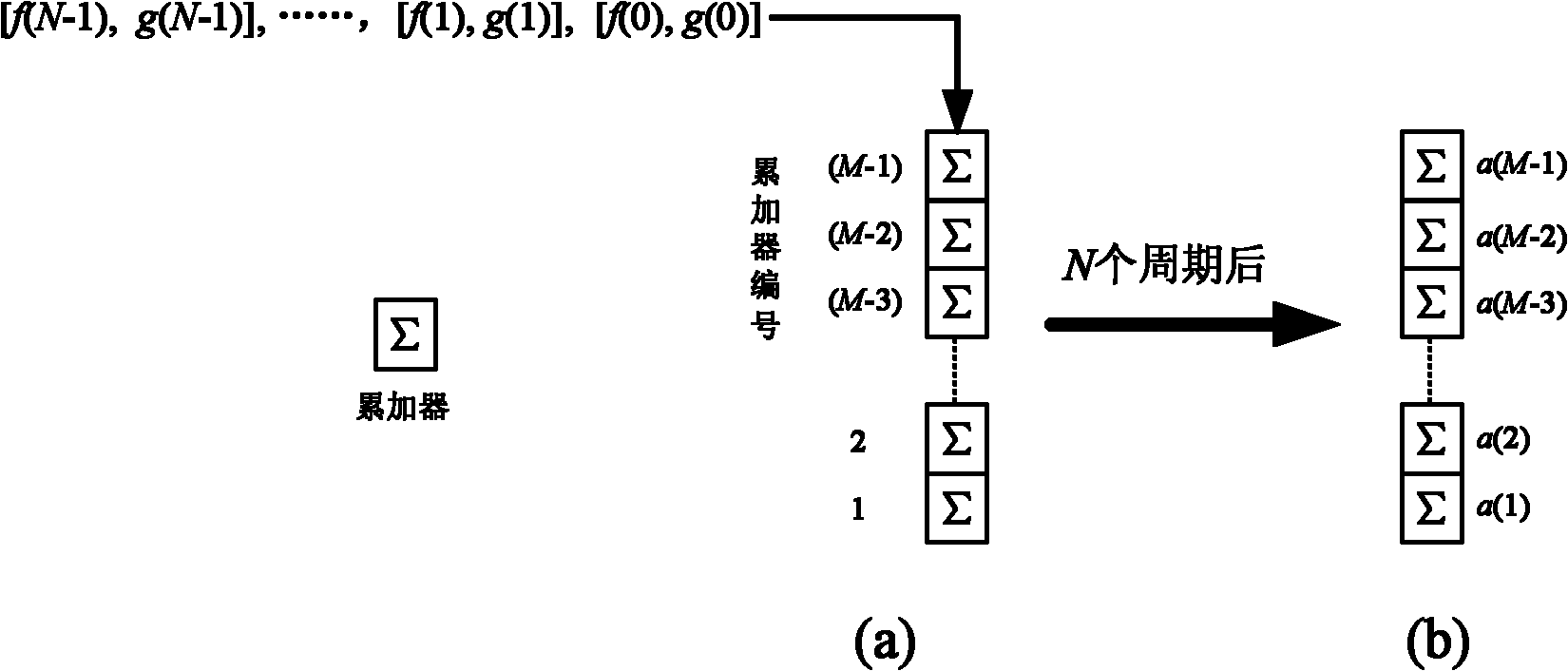

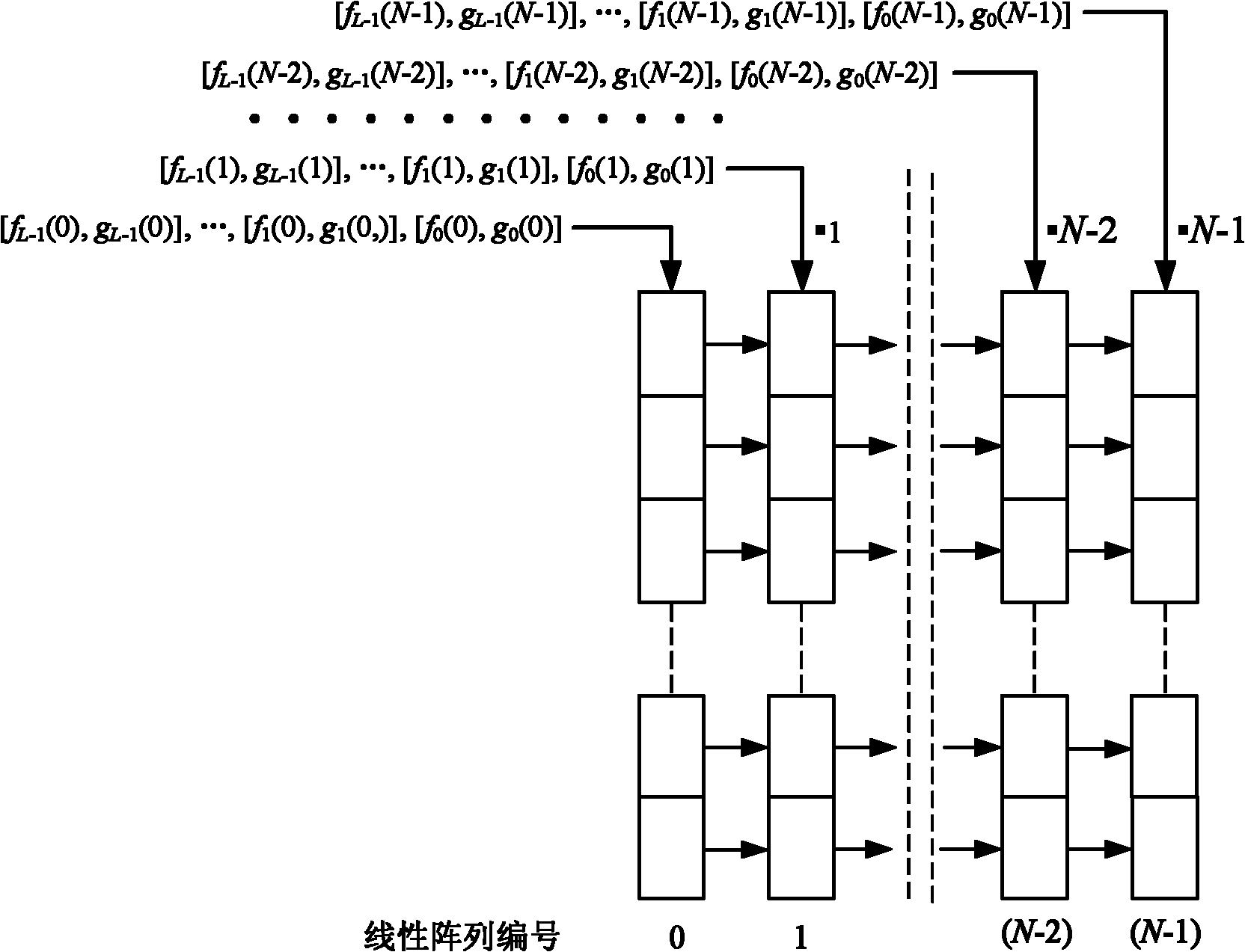

Digital inner product calculator based on first moment

Inactive | CN101957738A | High degree of parallelism | Easy to implement | Digital data processing details | Calculator | Data input

The invention provides a digital inner product calculator that implements various digital sequence calculations using the digital inner product as the basic form of calculation. The calculator comprises a statistical module, a first moment calculation module, and a coordination module. The statistical module converts an inner product into first moment form through value statistics; the first moment calculation module rapidly computes the first moment produced by the statistical module to obtain the inner product result; and the coordination module connects the statistical module and the first moment calculation module and coordinates their operation. The calculator uses only adders and registers. When the form of the inner product changes, inner products of different forms can be calculated simply by changing the data input mode, so the structure of the calculator itself does not need to be changed.
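The abstract does not spell out the arithmetic, so the following is only a minimal sketch of one way an inner product can be turned into a first moment and evaluated with additions alone. It assumes one operand takes small non-negative integer values in `range(levels)`; the function name `inner_product_first_moment` and the parameter `levels` are hypothetical and do not come from the patent.

```python
def inner_product_first_moment(a, b, levels):
    """Return sum(a[i] * b[i]) for integer b[i] in range(levels), using additions only.

    Illustrative assumption: b is quantized to `levels` non-negative integer values.
    """
    # Statistics step: bucket[k] accumulates every a[i] whose partner b[i] equals k.
    bucket = [0] * levels
    for x, k in zip(a, b):
        if not 0 <= k < levels:
            raise ValueError("b values must lie in range(levels)")
        bucket[k] += x

    # First-moment step: sum_k k * bucket[k] equals the sum of the suffix sums
    # bucket[j] + ... + bucket[levels - 1] for j = 1 .. levels - 1,
    # so no multiplier is needed, only running additions.
    suffix = 0
    moment = 0
    for k in range(levels - 1, 0, -1):
        suffix += bucket[k]
        moment += suffix
    return moment


if __name__ == "__main__":
    a = [3, 1, 4, 1, 5]
    b = [2, 0, 3, 1, 2]
    print(inner_product_first_moment(a, b, levels=4))
    print(sum(x * y for x, y in zip(a, b)))  # direct reference computation
```

In this sketch the bucket array plays the role of the statistics and the two running sums play the role of the first moment calculation. A differently shaped inner product only changes how values are fed into the buckets, which is in the spirit of the abstract's remark about changing the data input mode.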

Owner:HUAZHONG UNIV OF SCI & TECH

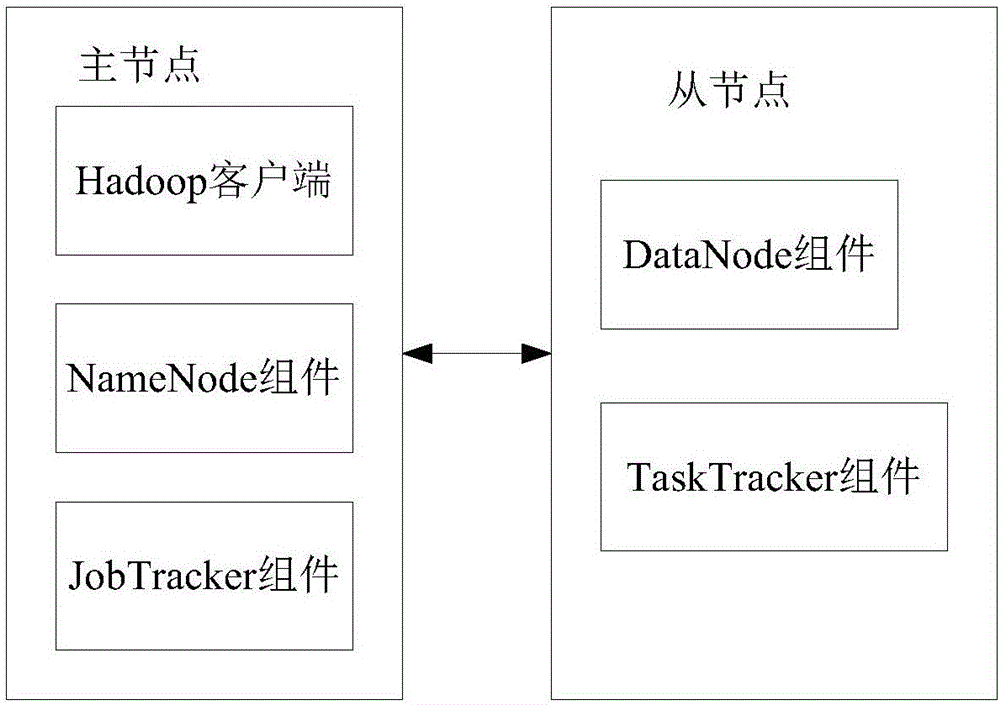

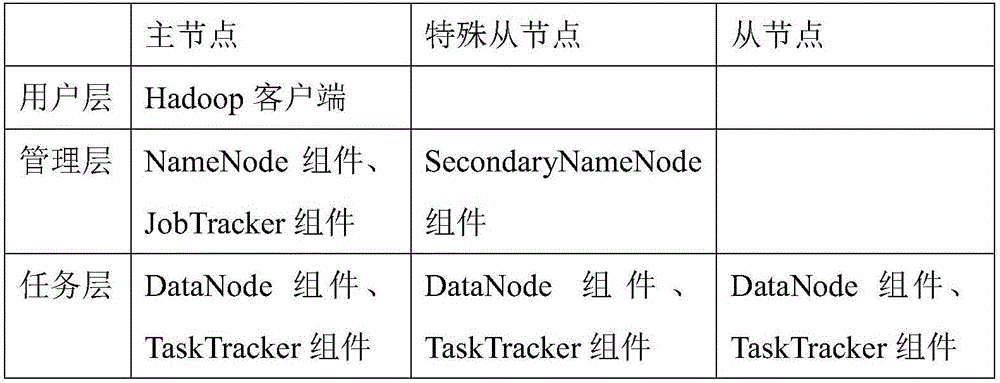

Cloud-computing-based large scale curved surface reconstruction system

Inactive | CN106447775A | Improved Surface Reconstruction Capabilities | Strong computing power | 3D modelling | Coupling | Surface reconstruction

The invention discloses a cloud-computing-based large-scale curved surface reconstruction system. The system comprises a cloud computing platform on which the Hadoop framework is deployed. The cloud computing platform includes a main node and a sub-node. The main node is provided with a Hadoop user terminal, a NameNode element, and a JobTracker element. The sub-node is provided with a DataNode element and a TaskTracker element. By introducing cloud computing into curved surface reconstruction and exploiting its advantages, such as high computing capability and a high degree of system parallelism, the invention greatly increases the reconstruction capability of the system. The reconstruction system is highly scalable, uses resources appropriately, and achieves low coupling. The system addresses the insufficient memory and computing capability of a single machine, as well as the problem of topologically merging the curved surfaces.
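The abstract names the Hadoop roles but does not describe how the reconstruction work is divided among them. As a purely illustrative sketch of the map, shuffle, and reduce pattern that a JobTracker and its TaskTrackers schedule, the code below assumes the point cloud is partitioned into cubic grid cells and that each cell is processed independently. The functions `map_point`, `shuffle`, and `reduce_cell`, the `cell_size` parameter, and the centroid stand-in for a real per-cell surface fit are all hypothetical.

```python
from collections import defaultdict


def map_point(point, cell_size):
    """Map step: tag a 3D point with the grid cell that contains it."""
    x, y, z = point
    key = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
    return key, point


def shuffle(pairs):
    """Shuffle step: group mapped points by cell key, as the framework would."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_cell(key, points):
    """Reduce step: placeholder for per-cell reconstruction.

    A real system would fit a surface patch here; this sketch only
    returns the point count and centroid of the cell.
    """
    n = len(points)
    centroid = tuple(sum(coords) / n for coords in zip(*points))
    return key, n, centroid


if __name__ == "__main__":
    cloud = [(0.1, 0.2, 0.3), (0.4, 0.4, 0.4), (1.5, 0.2, 0.1)]
    grouped = shuffle(map_point(p, 1.0) for p in cloud)
    for key in sorted(grouped):
        print(reduce_cell(key, grouped[key]))
```

Because each cell is reduced independently, the cells can be spread over as many sub-nodes as are available. Stitching neighbouring patches together, which the abstract refers to as topological merging, is not attempted in this sketch.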

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

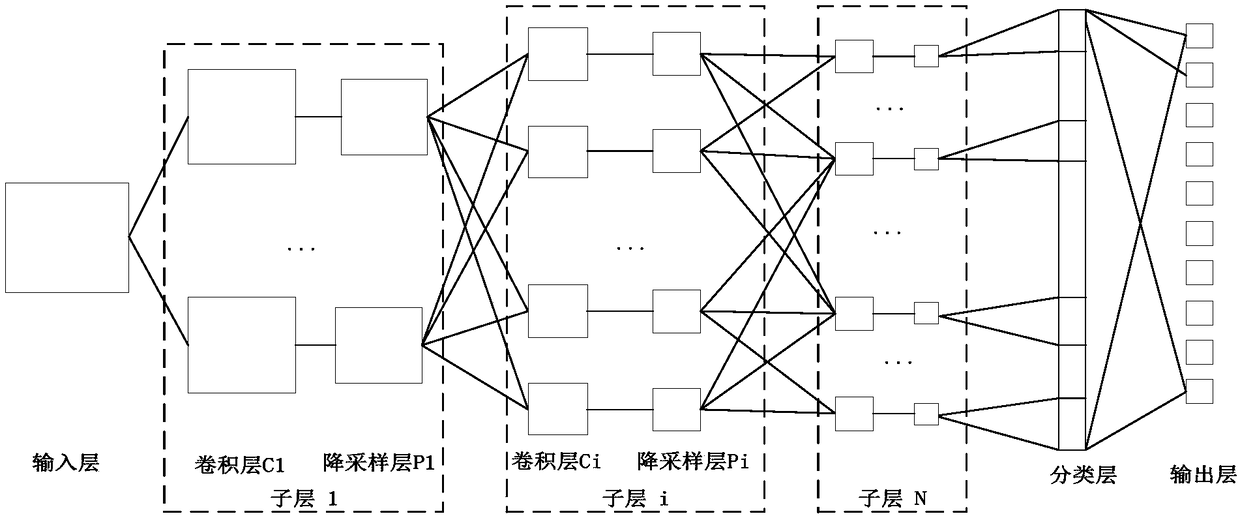

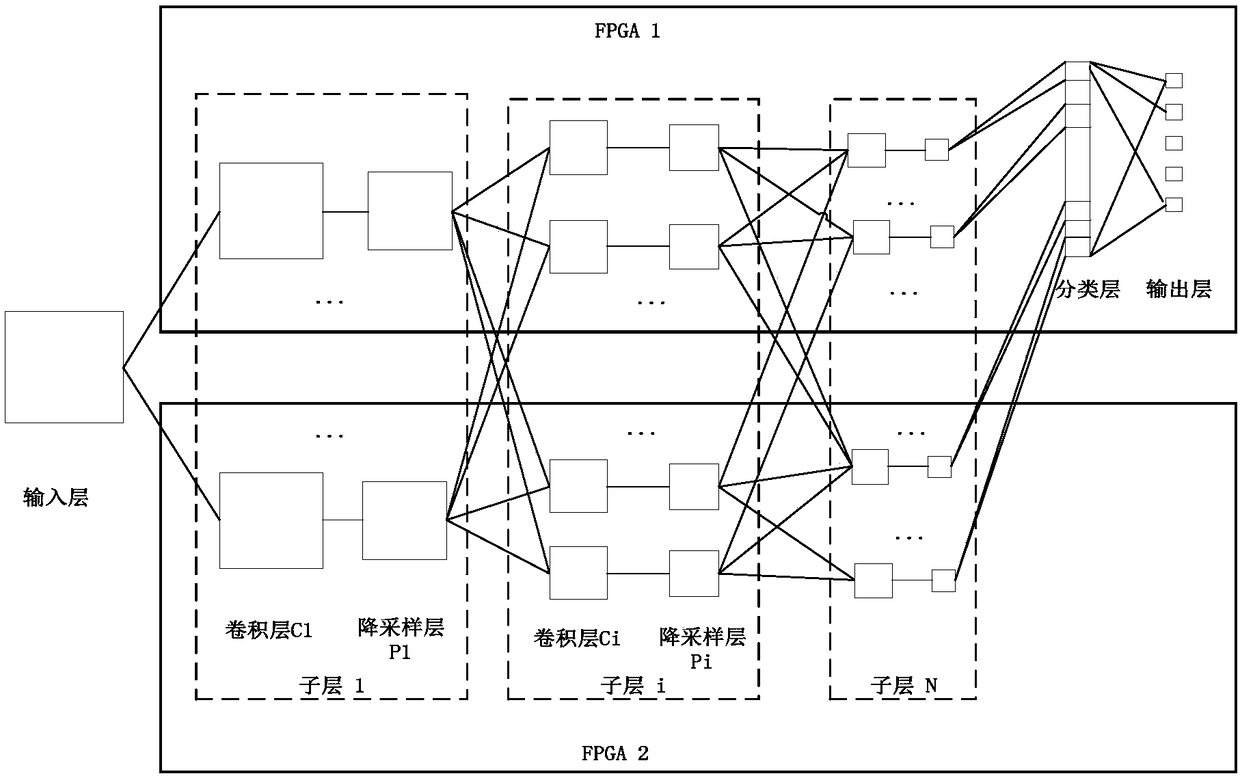

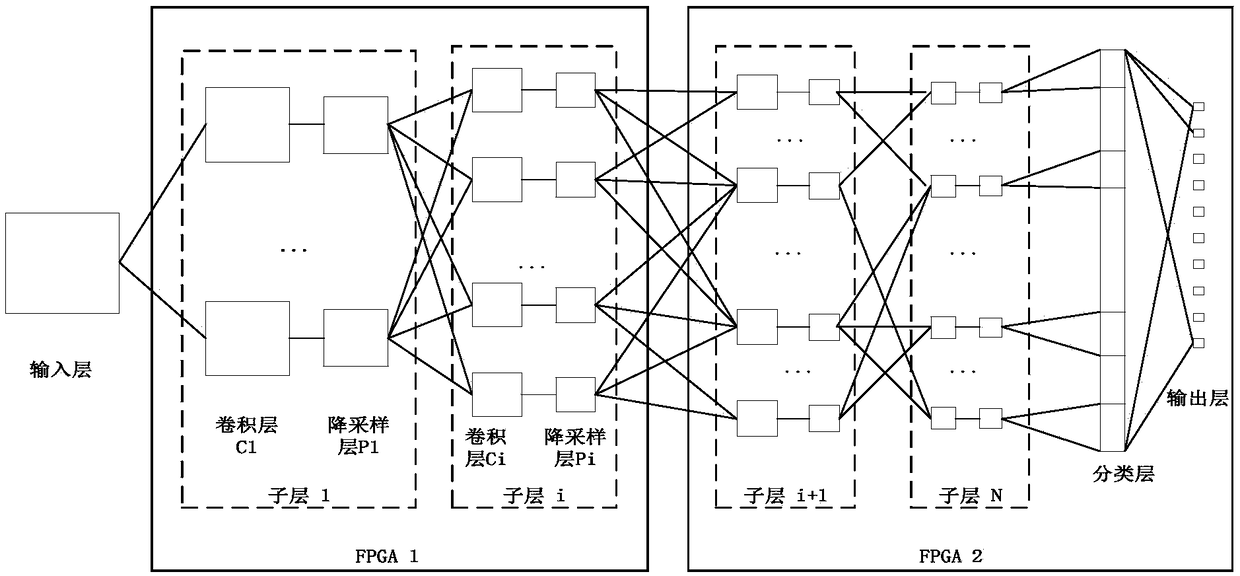

Double-FPGA cooperative work method for deep neural network

Inactive | CN108228969A | High degree of parallelism | Calculation speed | Special data processing applications | Network model | Fpga chip

The invention belongs to the field of onboard intelligent computation and provides a double-FPGA cooperative work method for a deep neural network. In the method, a deep neural network model is analyzed and, because the model is composed of multiple sub-layers, the calculation task is divided so that two FPGA chips work cooperatively. The calculation task can be divided by serial division or by parallel division. The method effectively overcomes the limits of hardware resources by trading space for time: the degree of parallelism of the neural network in an embedded computing environment is greatly increased, which in turn increases the calculation speed of the network. The method can likewise be extended to multi-FPGA cooperative work in order to implement larger neural networks.
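The abstract names serial and parallel division without giving details. The sketch below shows one plausible reading of each, under stated assumptions: serial division assigns consecutive layers to two devices so that their estimated workloads are balanced, and parallel division splits the output rows of a single fully connected layer between the two devices. The functions `serial_split`, `dense`, and `parallel_dense`, and the per-layer cost figures, are hypothetical and plain Python stands in for the FPGA hardware.

```python
def serial_split(layer_costs):
    """Serial division: return s so layers[:s] run on device A and layers[s:] on device B.

    The split point minimizes the difference between the two devices' total cost.
    """
    if len(layer_costs) < 2:
        raise ValueError("need at least two layers to split")
    total = sum(layer_costs)
    best_s, best_gap = 1, None
    running = 0
    for s in range(1, len(layer_costs)):
        running += layer_costs[s - 1]     # cost assigned to device A
        gap = abs(total - 2 * running)    # |cost_B - cost_A|
        if best_gap is None or gap < best_gap:
            best_s, best_gap = s, gap
    return best_s


def dense(weights, x):
    """Reference fully connected layer: one dot product per output row."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def parallel_dense(weights, x):
    """Parallel division: each device computes half of the output rows."""
    half = len(weights) // 2
    out_a = dense(weights[:half], x)   # would run on device A
    out_b = dense(weights[half:], x)   # would run on device B
    return out_a + out_b               # concatenate the partial outputs


if __name__ == "__main__":
    print(serial_split([1, 1, 1, 1]))
    w = [[1, 0], [0, 1], [1, 1], [2, -1]]
    print(parallel_dense(w, [3, 4]) == dense(w, [3, 4]))
```

Under these assumptions, serial division behaves like a two-stage pipeline that pays off when several inputs are in flight at once, while parallel division shortens the latency of a single layer. Either scheme generalizes to more than two devices by splitting into more groups.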

Owner:XIAN AVIATION COMPUTING TECH RES INST OF AVIATION IND CORP OF CHINA

© 2025 PatSnap. All rights reserved.