491 results about how to "Keep details" patented technology

Method of performing fast bilateral filtering and using the same for the display of high-dynamic-range images

Active | US7146059B1 | Reduce image contrast | Keep details | Image enhancement | Image analysis | Nonlinear filter | Decomposition

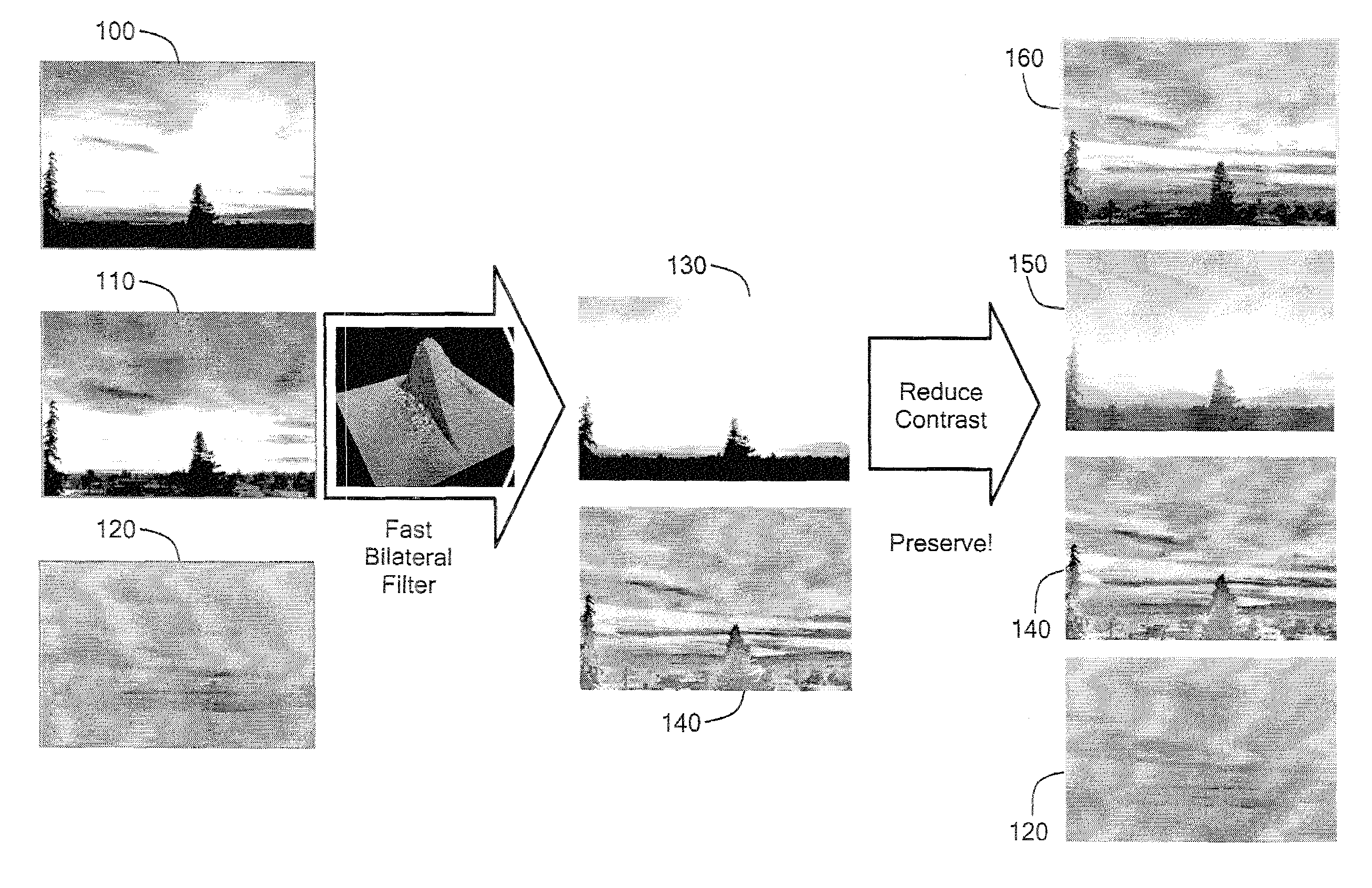

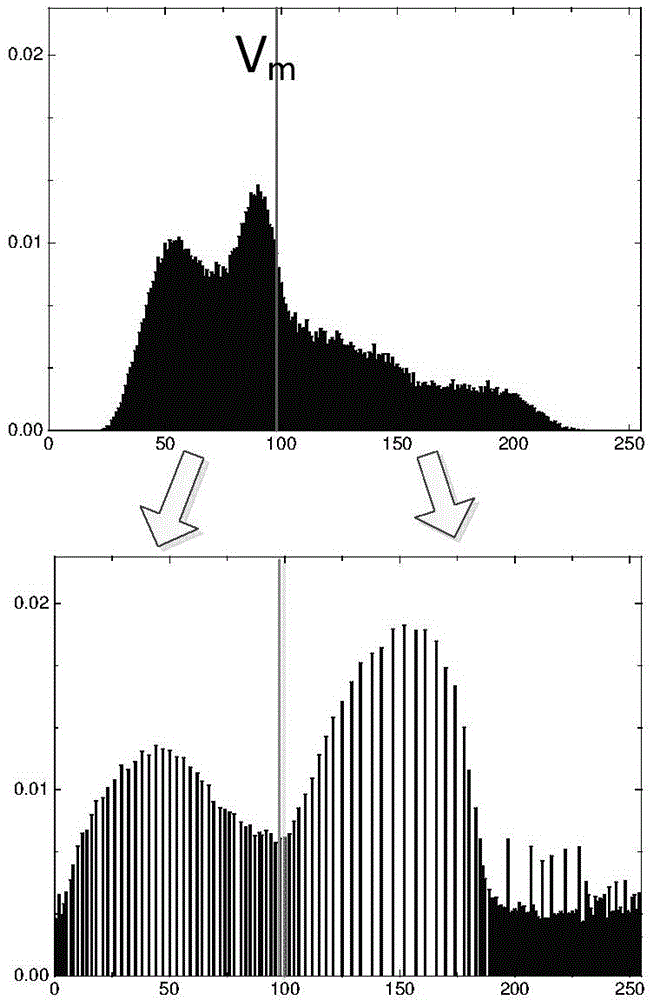

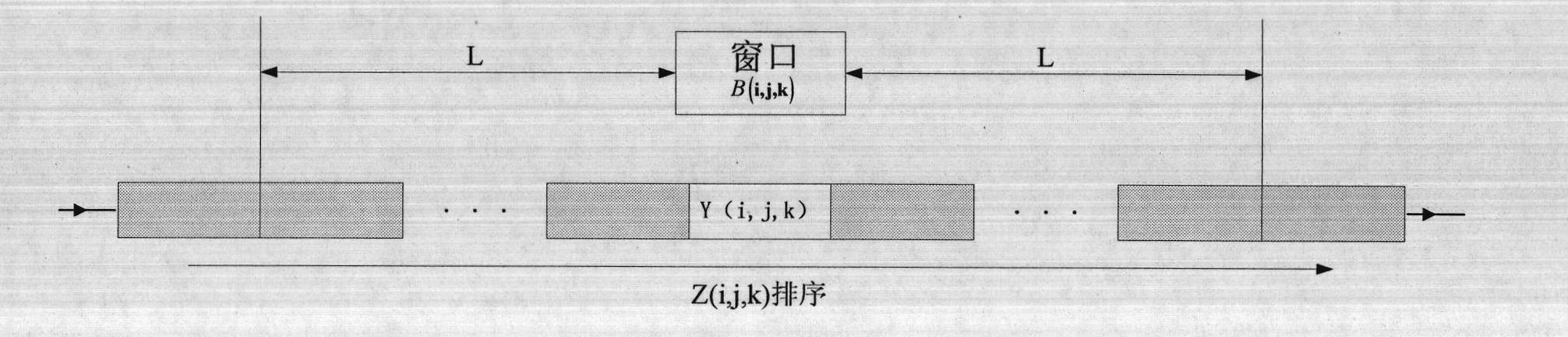

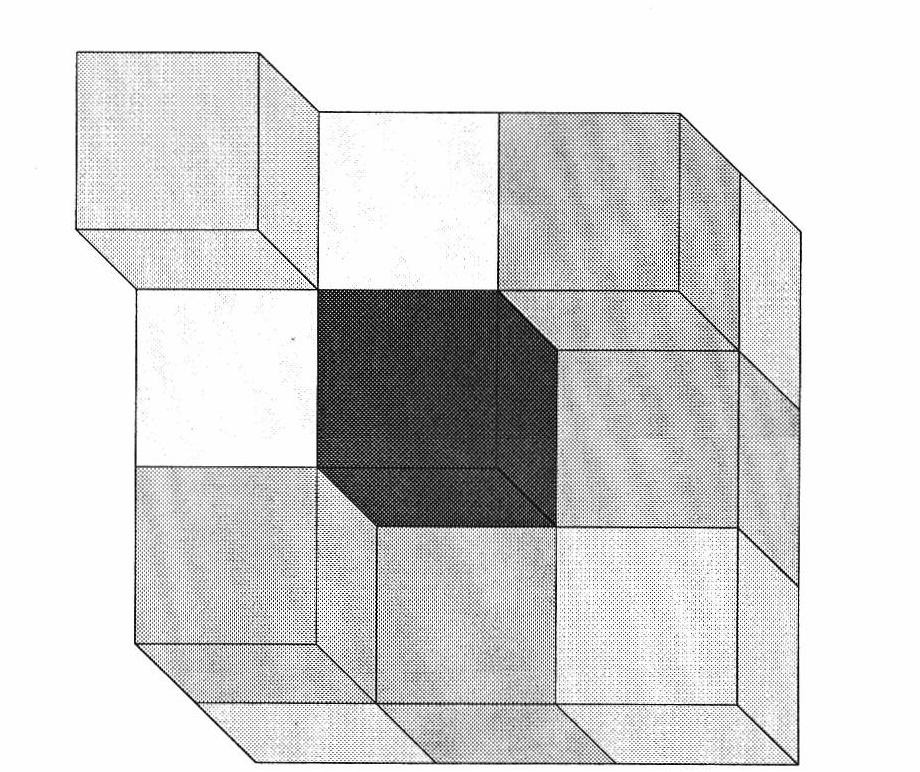

A method of performing bilateral filtering and using the method for displaying high-dynamic-range images is presented. The method reduces the contrast of the image while preserving detail of the image. The presently disclosed method incorporates a two-scale decomposition of the image into a base layer encoding large-scale variations, and a detail layer. The base layer has its contrast reduced, thereby preserving detail. The base layer is obtained using an edge-preserving bilateral filter. The bilateral filter is a non-linear filter, where the weight of each pixel is computed using a Gaussian in the spatial domain multiplied by an influence function in the intensity domain that decreases the weight of pixels with large intensity differences. The bilateral filtering is accelerated by using a piecewise-linear approximation in the intensity domain and appropriate subsampling.

Owner:MASSACHUSETTS INST OF TECH
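
The two-scale decomposition described in this abstract can be sketched in plain Python. This is a minimal, brute-force illustration. It assumes a grayscale luminance image stored as nested lists and filtered in log10 space. The parameter values (`sigma_s`, `sigma_r`, `target_log_range`) are illustrative assumptions, not values from the patent. The piecewise-linear and subsampling acceleration that the patent claims is deliberately omitted, so the cost is O(N·r²).

```python
import math

def bilateral_filter(img, sigma_s=2.0, sigma_r=0.4):
    """Brute-force bilateral filter on a 2D list of floats (no piecewise-linear speed-up)."""
    h, w = len(img), len(img[0])
    radius = int(math.ceil(2 * sigma_s))
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = den = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if not (0 <= yy < h and 0 <= xx < w):
                        continue
                    # Spatial Gaussian weight times a Gaussian influence function on the
                    # intensity difference: pixels across a strong edge get ~0 weight.
                    ws = math.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))
                    diff = img[yy][xx] - img[y][x]
                    wr = math.exp(-(diff * diff) / (2 * sigma_r ** 2))
                    num += ws * wr * img[yy][xx]
                    den += ws * wr
            out[y][x] = num / den  # den > 0: the centre pixel always has weight 1
    return out

def tone_map(luminance, target_log_range=math.log10(50.0)):
    """Two-scale decomposition in log10 space: compress the base layer, keep the detail layer."""
    log_l = [[math.log10(max(v, 1e-6)) for v in row] for row in luminance]
    base = bilateral_filter(log_l)
    detail = [[l - b for l, b in zip(lr, br)] for lr, br in zip(log_l, base)]
    flat = [v for row in base for v in row]
    lo, hi = min(flat), max(flat)
    scale = target_log_range / (hi - lo) if hi > lo else 1.0
    # Only the base layer is rescaled (brightest base maps to 1.0); detail is added back unchanged.
    return [[10 ** ((b - hi) * scale + d) for b, d in zip(br, dr)]
            for br, dr in zip(base, detail)]
```

Only the base layer is rescaled. A strong step between a dark and a bright region is therefore compressed, while small texture inside each region passes through almost unchanged.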

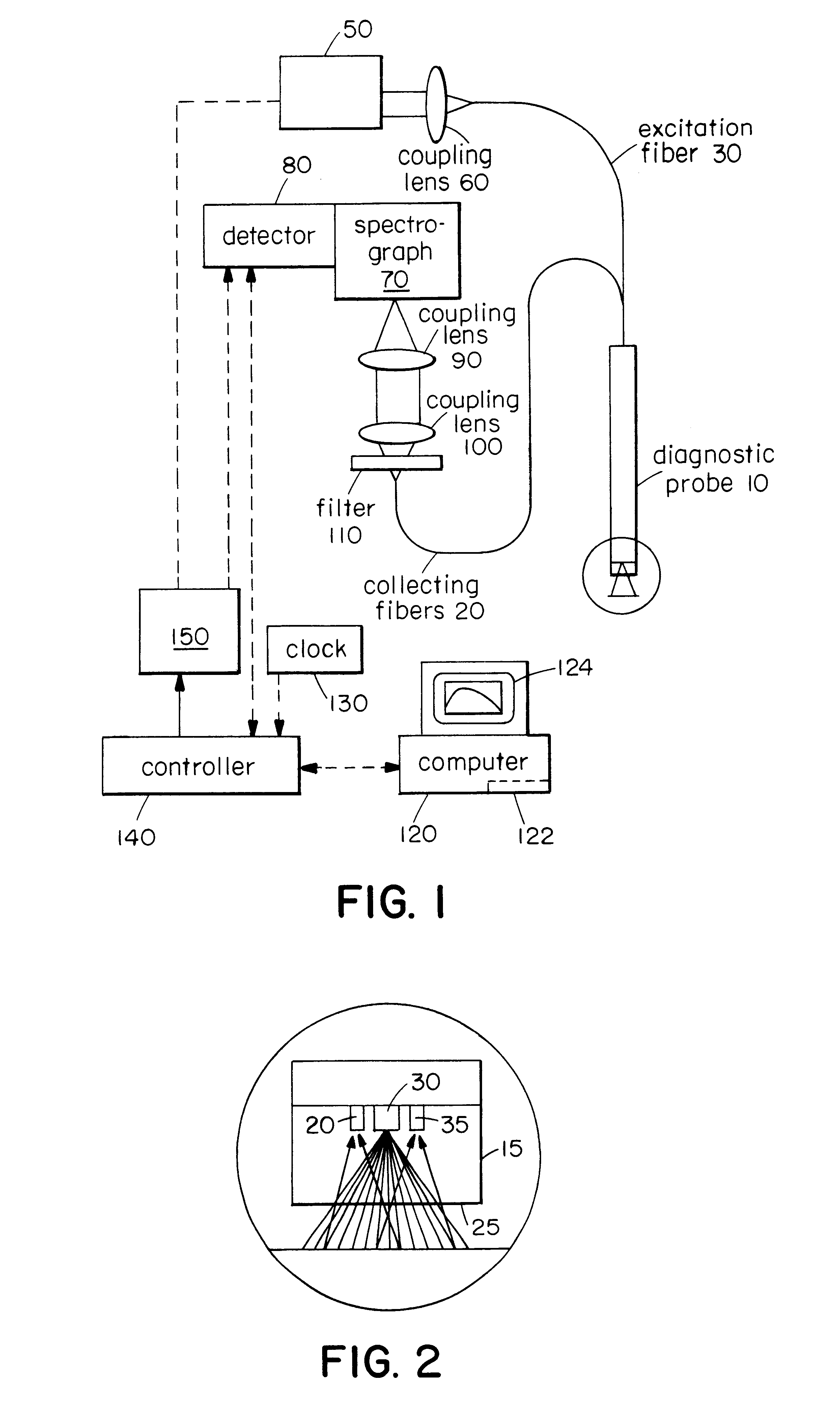

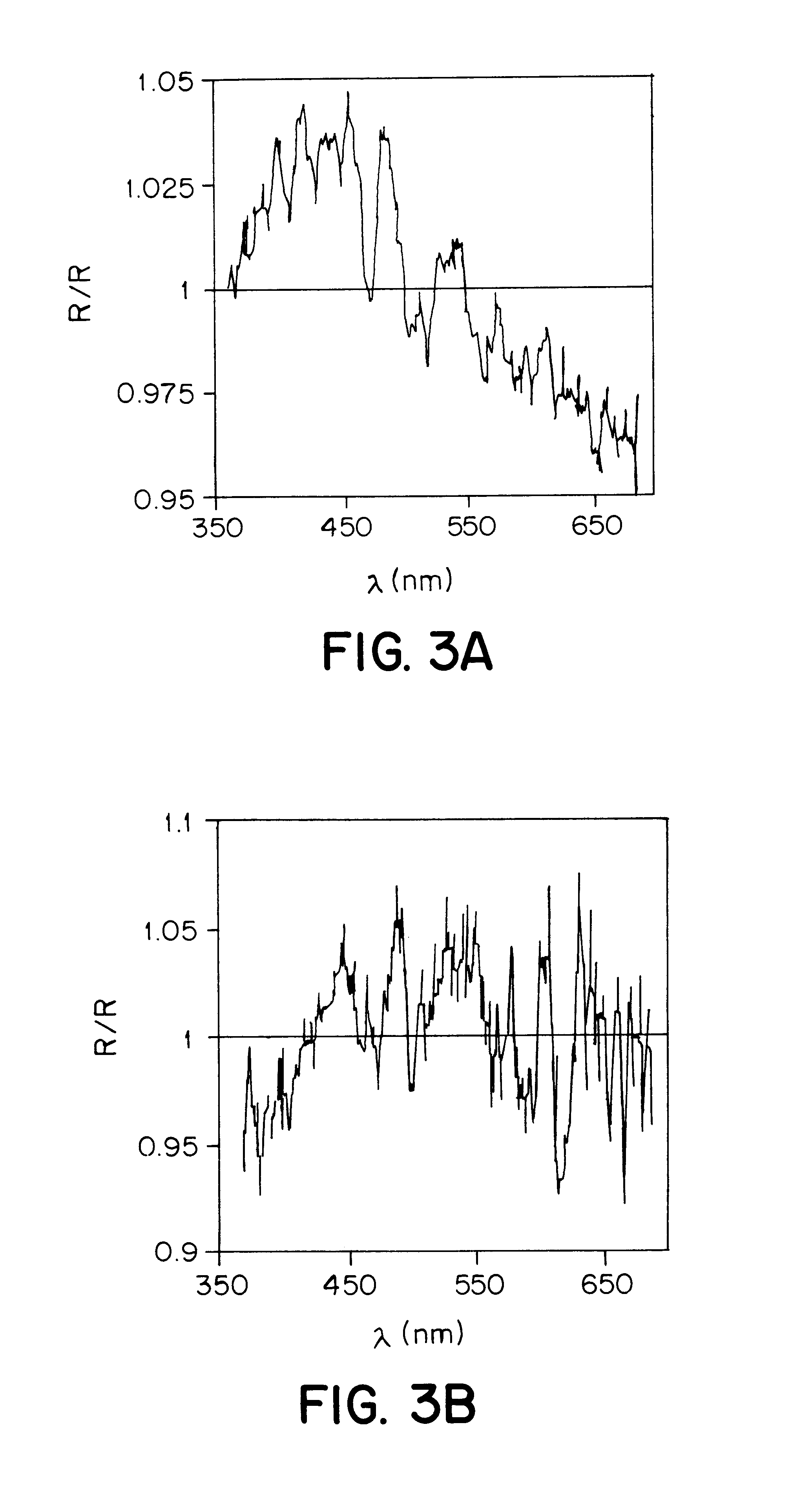

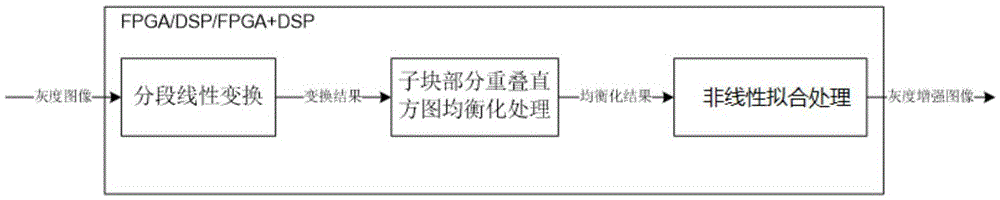

Method for measuring tissue morphology

Inactive | US6922583B1 | High density | High refractive index | Diagnostics using spectroscopy | Surgery | Optical radiation | Fiber

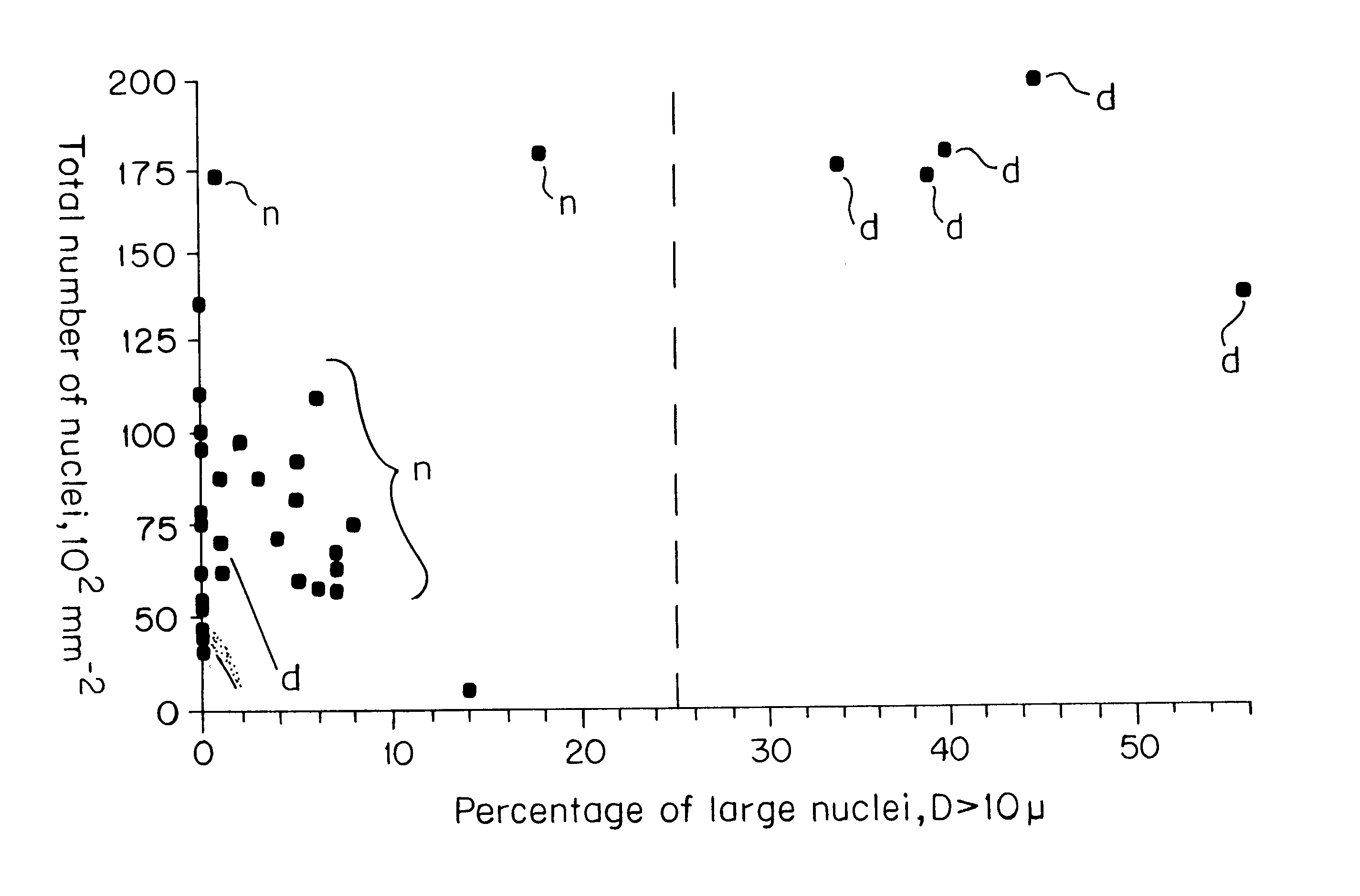

The present invention relates to systems and methods for measuring one or more physical characteristics of material such as tissue using optical radiation. The system can use light that is scattered by a tissue layer to determine, for example, the size of nuclei in the tissue layer to aid in the characterization of the tissue. These methods can include the use of fiber optic devices to deliver and collect light from a tissue region of interest to diagnose, for example, whether the tissue is normal or precancerous.

Owner:MASSACHUSETTS INST OF TECH

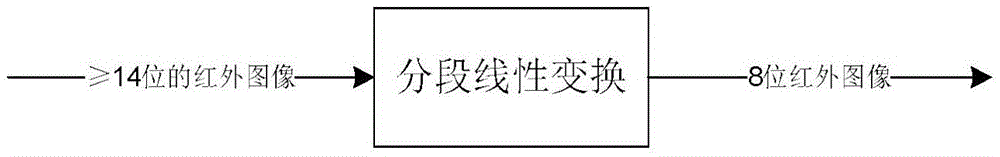

Gray scale image fitting enhancement method based on local histogram equalization

Inactive | CN105654438A | Suppresses "cold reflection" images | Evenly distributed | Image enhancement | Image analysis | Image contrast | Block effect

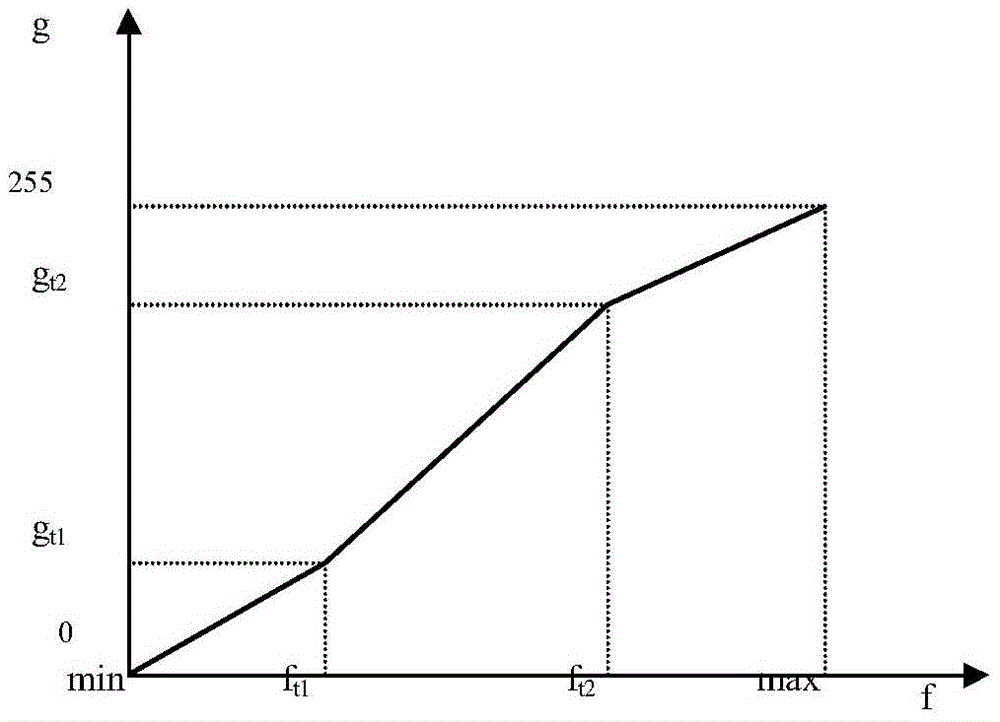

The invention provides a gray-scale image fitting enhancement method based on local histogram equalization. The method improves gray-scale image contrast and detail information while eliminating block effects and over-enhancement. It comprises the following steps:

- performing a piecewise linear transformation on a gray-scale image with an over-wide dynamic range to obtain a gray-scale image with an appropriate dynamic range. This divides the image's gray-level distribution range into two or more segments and adjusts the segmentation points and the slope of the transformation line for each segment, so that any given gray-level interval can be expanded or compressed;
- performing partially overlapping sub-block histogram equalization on the transformation result. The transformation function of the current sub-block is obtained as a weighted sum of the sub-block transformation functions in its neighborhood and is then used to equalize the current sub-block;
- performing nonlinear fitting on the equalized gray-scale image to correct the histogram distribution of the image obtained after partially overlapping sub-block histogram equalization.

Owner:SOUTH WEST INST OF TECHN PHYSICS
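
The first step, a segmental (piecewise) linear transformation that maps an over-wide dynamic range into a displayable one, can be illustrated with a short sketch. The knot lists are hypothetical inputs: the patent adjusts the segment points and slopes per image. The subsequent overlapping sub-block equalization and nonlinear fitting are not shown.

```python
def piecewise_linear(value, in_knots, out_knots):
    """Map one gray value through a piecewise-linear curve given by matching, increasing knot lists.

    Each segment's slope is (delta out / delta in). A steeper segment expands that gray
    interval; a shallower one compresses it.
    """
    if value <= in_knots[0]:
        return float(out_knots[0])
    if value >= in_knots[-1]:
        return float(out_knots[-1])
    for i in range(1, len(in_knots)):
        if value <= in_knots[i]:
            x0, x1 = in_knots[i - 1], in_knots[i]
            y0, y1 = out_knots[i - 1], out_knots[i]
            return y0 + (y1 - y0) * (value - x0) / (x1 - x0)
    raise ValueError("knots must be increasing")

def remap_image(img, in_knots, out_knots):
    """Apply the curve to every pixel and round to integer output levels."""
    return [[int(round(piecewise_linear(v, in_knots, out_knots))) for v in row] for row in img]
```

For example, the hypothetical knots `[0, 4000, 6000, 16383]` → `[0, 40, 220, 255]` map a 14-bit input to 8 bits. They give most of the output range to the 4000 to 6000 band, where the histogram is assumed to be concentrated.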

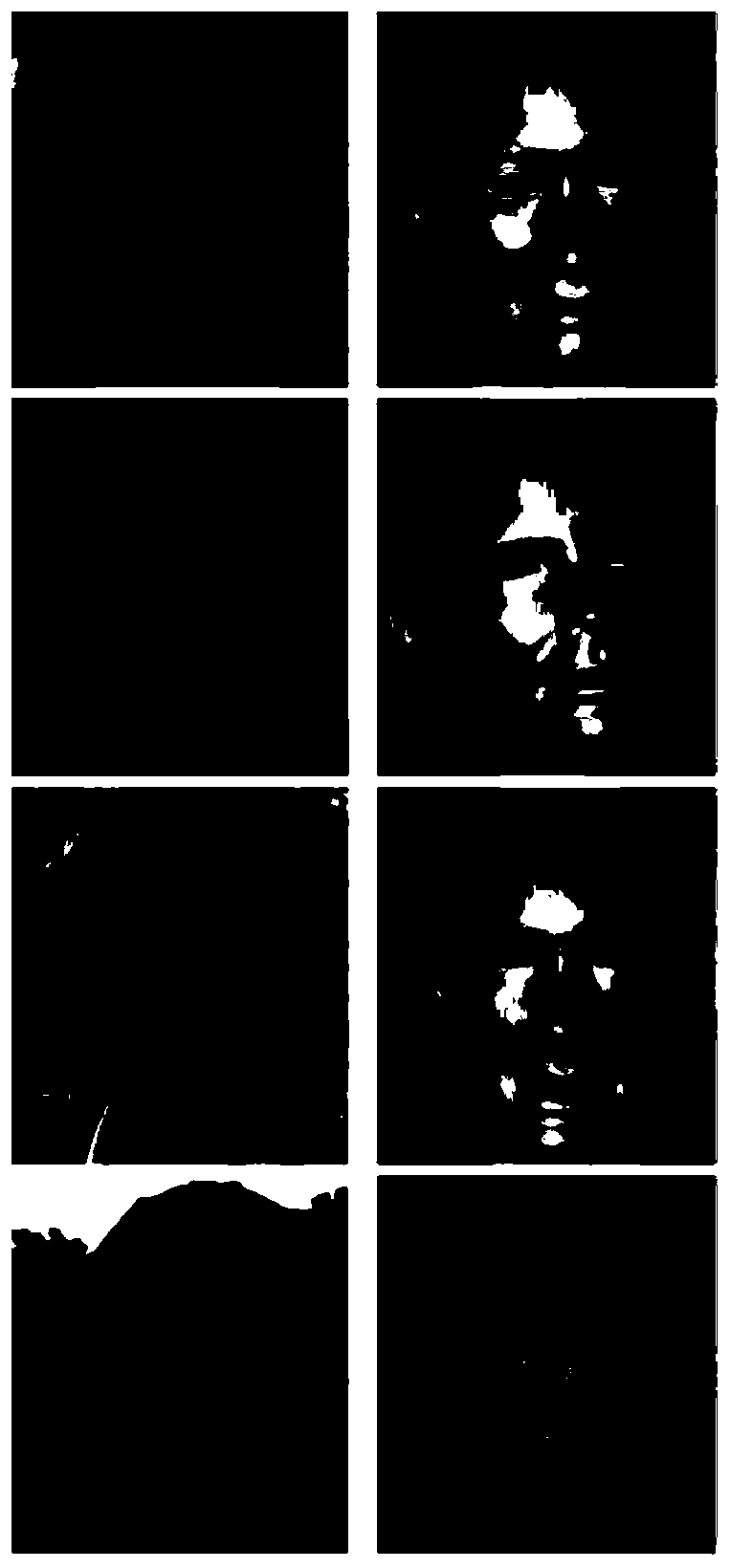

Double exposure implementation method for inhomogeneous illumination image

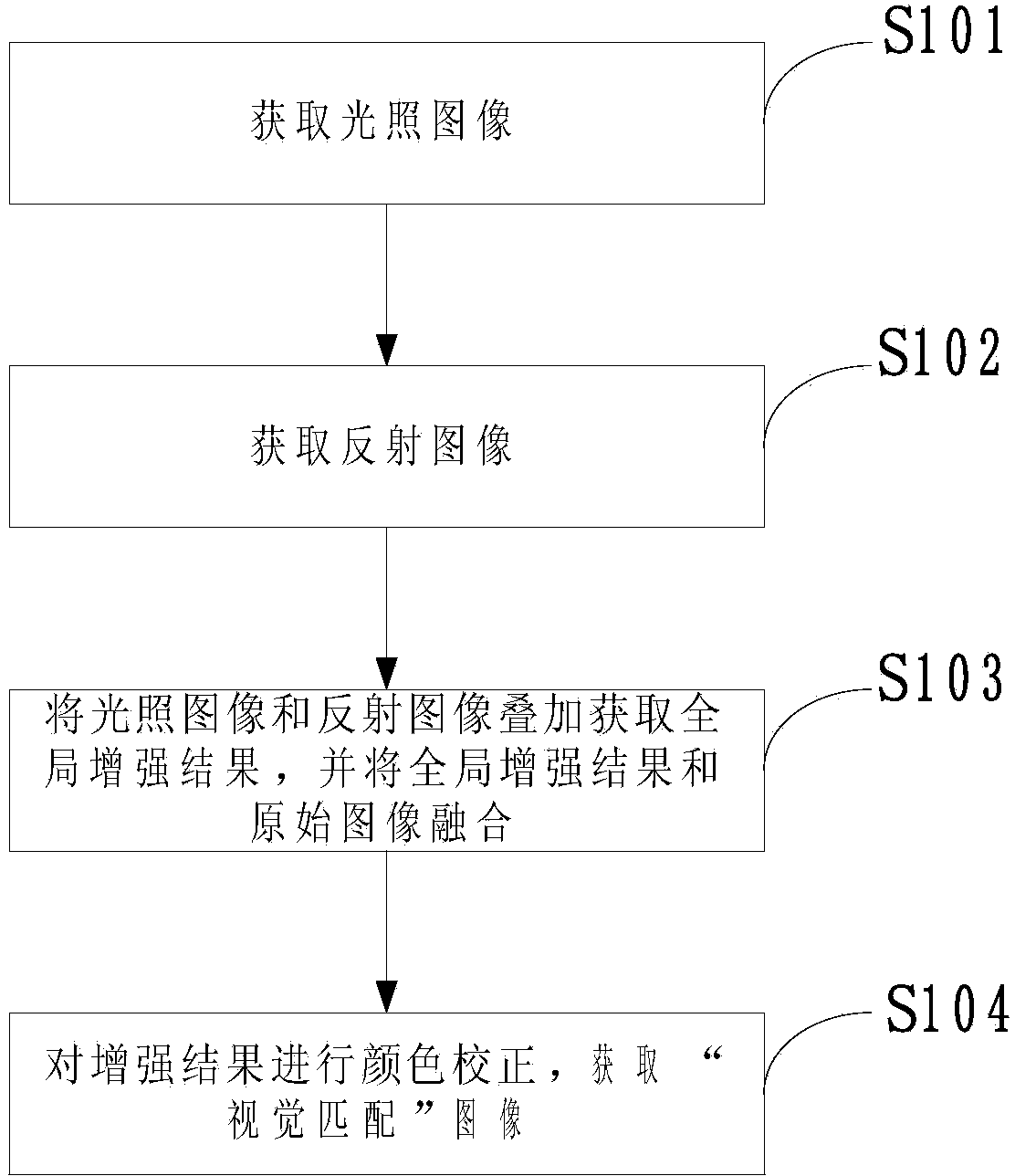

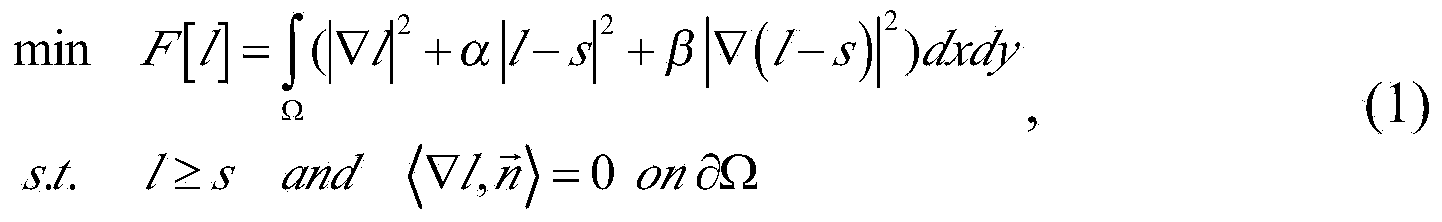

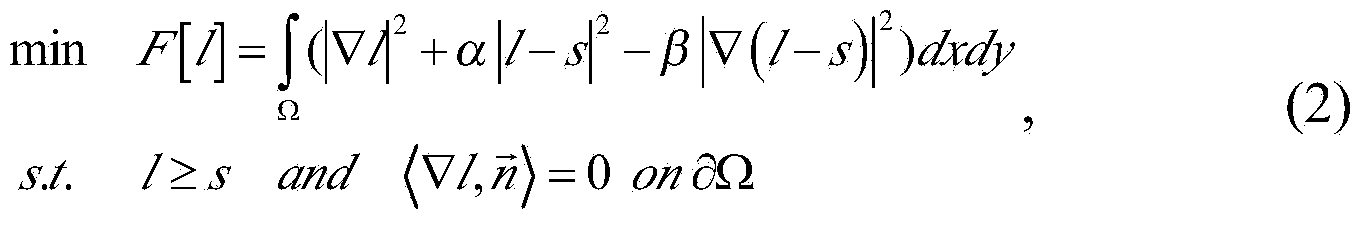

The invention discloses a double exposure implementation method for an inhomogeneous illumination image. The method comprises the following steps: obtaining an illumination image; obtaining a reflection image; overlaying the illumination image with the reflection image to obtain a global enhancement result; fusing the global enhancement result with the original image; and performing color correction on the enhancement result to obtain an image that matches human visual perception.

The smoothness of the illumination image is constrained, and the reflection image is sharpened using a visual threshold characteristic so that image detail is preserved. The image fusion step effectively retains the luminance, contrast and color information of the original image within its luminance range. A model of human visual perception of average background brightness is incorporated, so the fused image effectively eliminates color distortion near shadow boundaries. Color correction restores the colors of low-illuminance regions. As a result, neither the low-illuminance regions nor the rest of the luminance range shows obvious color distortion, continuity is good, and the visual effect is more natural.

Owner:AIR FORCE UNIV PLA

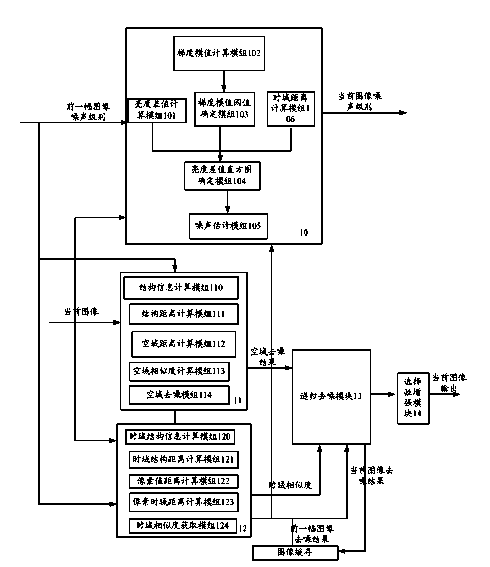

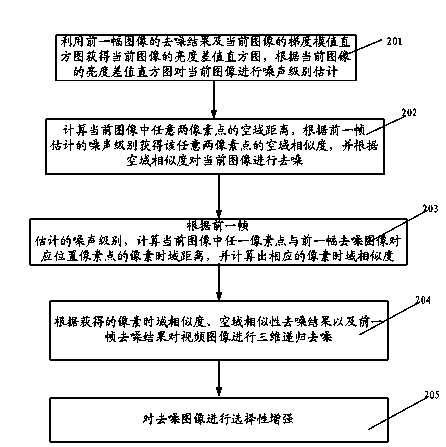

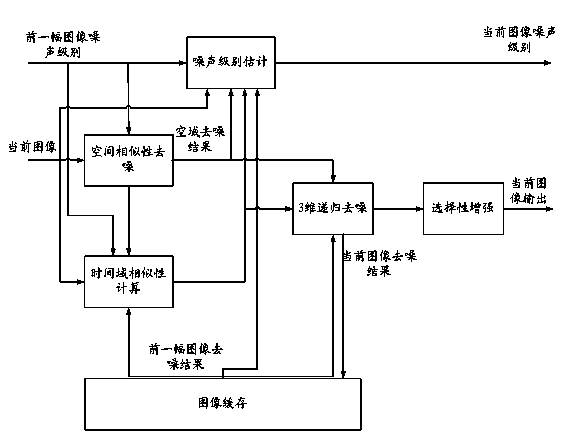

Video noise reduction device and video noise reduction method

Active | CN103369209A | Guaranteed stability | Preserve image detail | Image enhancement | Television system details | Time domain | Noise level

The invention discloses a video noise reduction device and a video noise reduction method. The method comprises the following steps:

1. obtaining a brightness difference histogram of the current image from the denoising result of the previous frame and the gradient magnitude histogram of the current image;
2. estimating the noise level of the current image from the brightness difference histogram;
3. calculating the spatial distance between any two pixels in the current image to obtain their spatial similarity;
4. denoising the current image according to the spatial similarity;
5. calculating the temporal distance between each pixel in the current image and the pixel at the corresponding position in the previous denoised frame, and calculating the corresponding temporal similarity;
6. performing three-dimensional recursive denoising on the video image according to the temporal similarity, the spatial-similarity denoising result and the previous frame's denoising result.

Because the three-dimensional recursive denoising exploits the correlation of pixels in both space and time, strong and complex noise can be removed, image detail is preserved, and the denoising effect remains stable.

Owner:SHANGHAI TONGTU SEMICON TECH
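
The temporal part of the three-dimensional recursive denoising can be pictured as a per-pixel blend with the previous denoised frame, weighted by a temporal similarity. This is a sketch under stated assumptions:

- the Gaussian similarity is a hypothetical choice;
- the `sigma_t` parameter is assumed to be driven by the noise-level estimate;
- the `max_history` cap is also hypothetical.

The spatial-similarity denoising and histogram-based noise estimation are not reproduced.

```python
import math

def temporal_recursive_denoise(cur, prev_denoised, sigma_t, max_history=0.5):
    """Blend each pixel with the co-located pixel of the previous denoised frame.

    The weight on the previous frame falls off with the temporal distance |cur - prev|.
    Static areas are therefore averaged over time, while moving areas keep the current value.
    """
    out = []
    for c_row, p_row in zip(cur, prev_denoised):
        row = []
        for c, p in zip(c_row, p_row):
            d = c - p
            similarity = math.exp(-(d * d) / (2.0 * sigma_t * sigma_t))  # 1 when identical
            k = max_history * similarity  # hypothetical cap on trust in the past frame
            row.append(k * p + (1.0 - k) * c)
        out.append(row)
    return out
```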

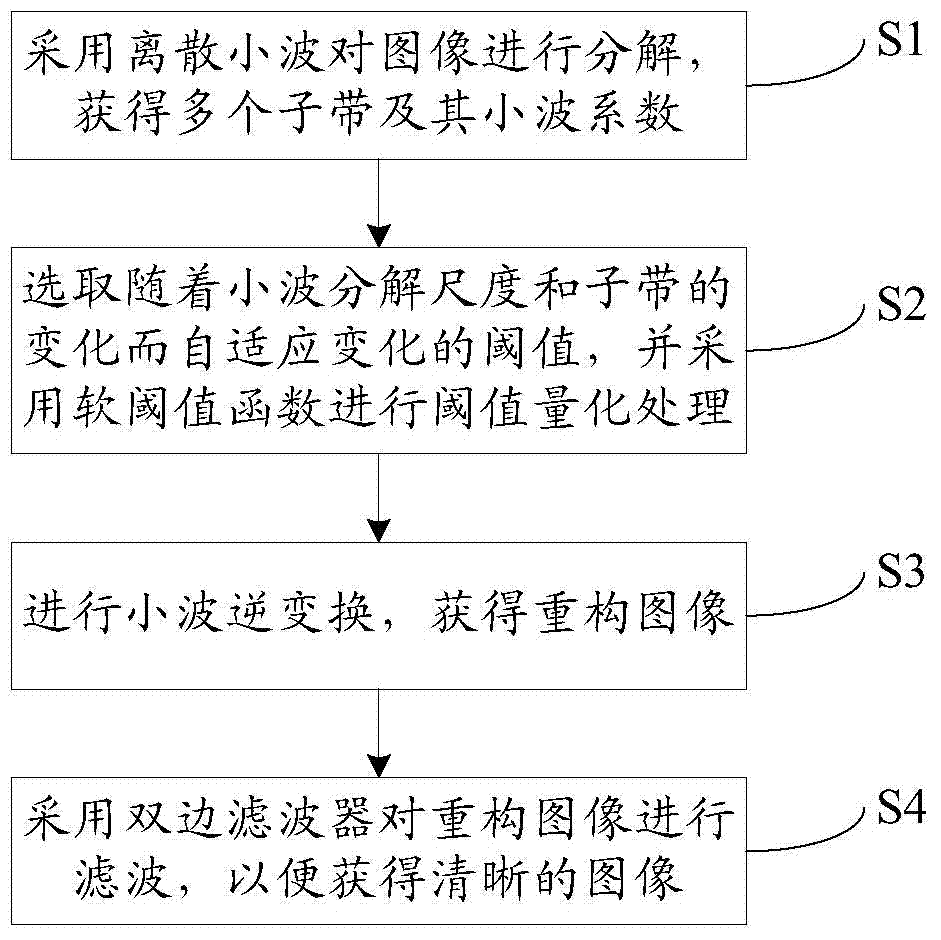

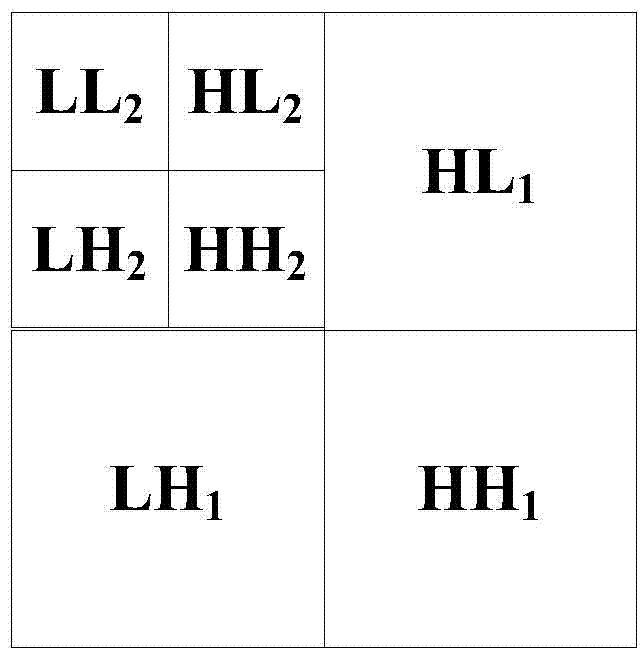

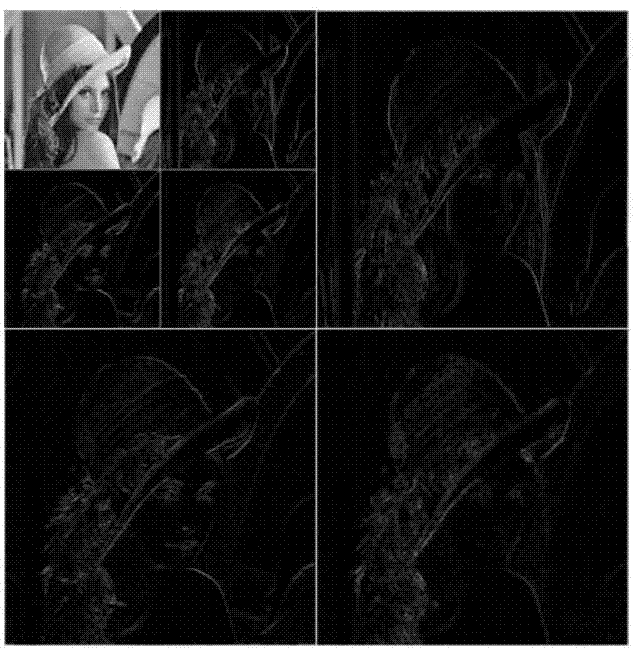

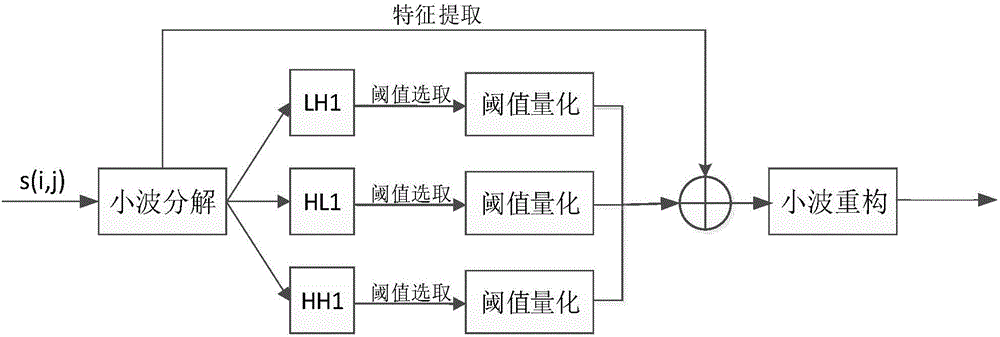

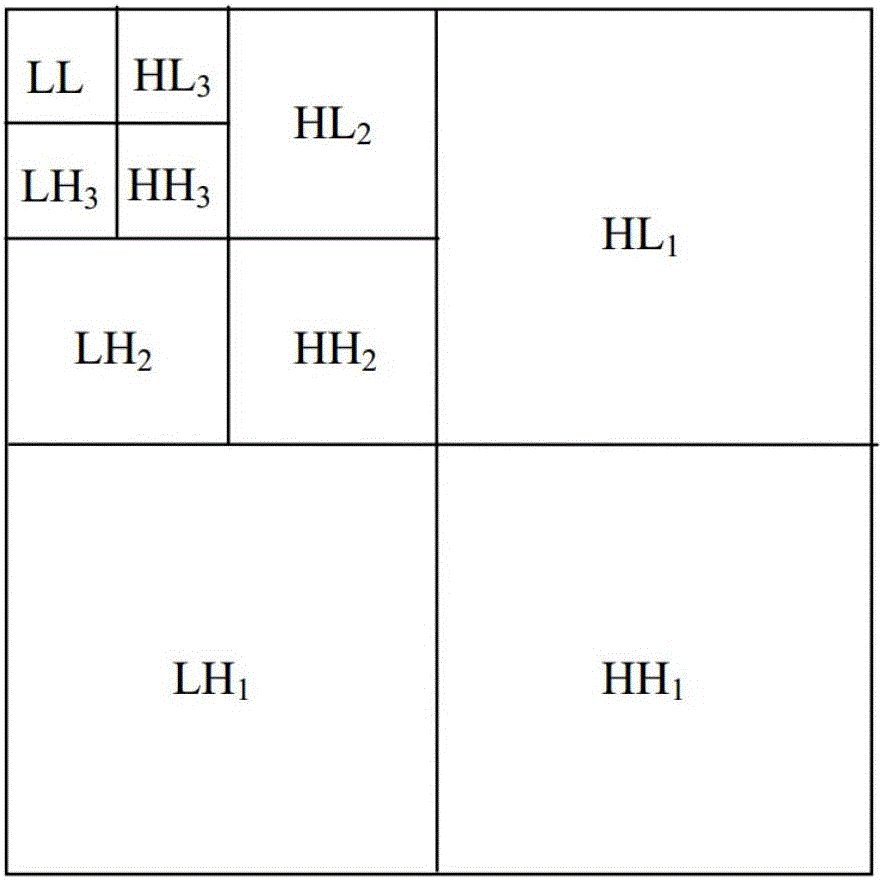

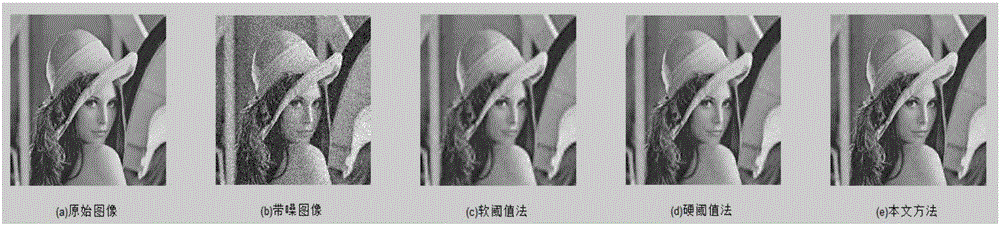

Image denoising method based on self-adaptive wavelet threshold and two-sided filter

Inactive | CN103700072A | Efficient removal | Keep details | Image enhancement | Denoising algorithm | Adaptive wavelet

The invention provides an image denoising method based on a self-adaptive wavelet threshold and a two-sided (bilateral) filter. It aims to improve the wavelet threshold denoising algorithm and to better protect the edges and detail information of an image. The algorithm comprises the following steps:

1. decomposing the image with a discrete wavelet transform to obtain several sub-bands and their wavelet coefficients;
2. selecting a threshold that adapts to the wavelet decomposition scale and the sub-band, and thresholding the coefficients with a soft threshold function;
3. performing the inverse wavelet transform to obtain a reconstructed image;
4. filtering the reconstructed image with the bilateral filter to obtain a clean image.

Wavelet threshold denoising uses a threshold adapted to the decomposition scale and sub-band, and it is combined with bilateral filtering. As a result, the algorithm not only removes white Gaussian noise effectively but also preserves the edges and detail information of the image well.

Owner:BEIJING UNIV OF TECH
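
Level-dependent wavelet thresholding with a soft threshold function can be illustrated in one dimension. The sketch assumes an orthonormal Haar transform. As a stand-in for the patent's own adaptive threshold, it swaps in a common rule: a MAD-based noise estimate per level times sqrt(2 ln n). The 2D sub-band structure and the final bilateral filtering step are not shown.

```python
import math
import statistics

def haar_forward(signal, levels):
    """Multi-level orthonormal 1D Haar DWT; returns (approx, [detail_level1, detail_level2, ...])."""
    assert len(signal) % (2 ** levels) == 0, "length must be divisible by 2**levels"
    approx, details = list(signal), []
    s = math.sqrt(2.0)
    for _ in range(levels):
        pairs = range(len(approx) // 2)
        details.append([(approx[2 * i] - approx[2 * i + 1]) / s for i in pairs])
        approx = [(approx[2 * i] + approx[2 * i + 1]) / s for i in pairs]
    return approx, details

def haar_inverse(approx, details):
    """Invert haar_forward, coarsest level first."""
    s = math.sqrt(2.0)
    a = list(approx)
    for d in reversed(details):
        rec = []
        for ai, di in zip(a, d):
            rec.extend(((ai + di) / s, (ai - di) / s))
        a = rec
    return a

def soft_threshold(c, t):
    """Soft threshold function: shrink |c| by t, setting small coefficients to zero."""
    return math.copysign(max(abs(c) - t, 0.0), c)

def wavelet_denoise(signal, levels=3):
    approx, details = haar_forward(signal, levels)
    shrunk = []
    for d in details:
        # Level-adaptive threshold: robust noise estimate (MAD / 0.6745) of this level's
        # detail coefficients times the universal factor sqrt(2 ln n).
        sigma = statistics.median([abs(c) for c in d]) / 0.6745
        t = sigma * math.sqrt(2.0 * math.log(len(d)))
        shrunk.append([soft_threshold(c, t) for c in d])
    return haar_inverse(approx, shrunk)  # approximation band is left untouched
```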

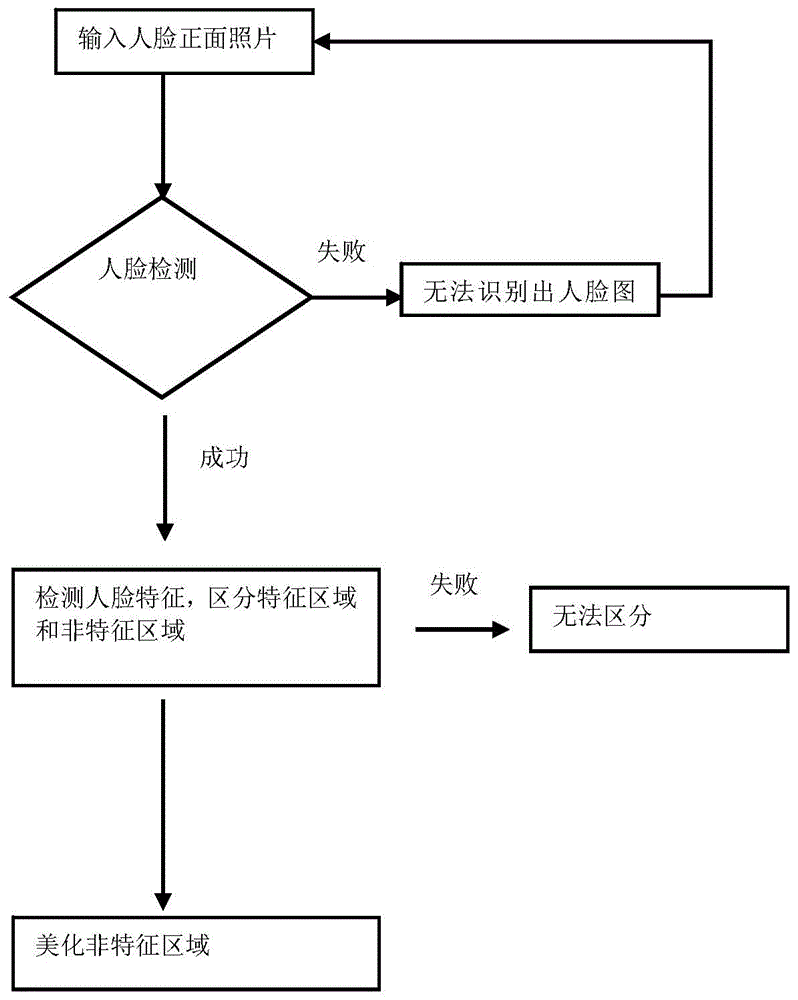

Method and system for replacing skin through human face photos

Inactive | CN104318262A | Fast conversion | Quick check | Image enhancement | Image analysis | Face detection | Digital image

The invention provides a method and a system for retouching the skin in human face photos. The method comprises the following steps:

1. performing face recognition on the picture to be processed to detect whether it contains any faces, and executing step 2 if it does;
2. determining the skin region to be processed within the face region according to the detection result of step 1, and locating the skin flaws in that region;
3. removing the skin flaws in the face region.

The method and system can quickly detect and locate multiple faces in a digital image or video and convert them into three-dimensional face forms. Automatic skin retouching is carried out in the face region to remove flaws while the details of the face image are fully retained, so that the face is beautified automatically.

Owner:上海明穆电子科技有限公司 (Shanghai Mingmu Electronic Technology Co., Ltd.)

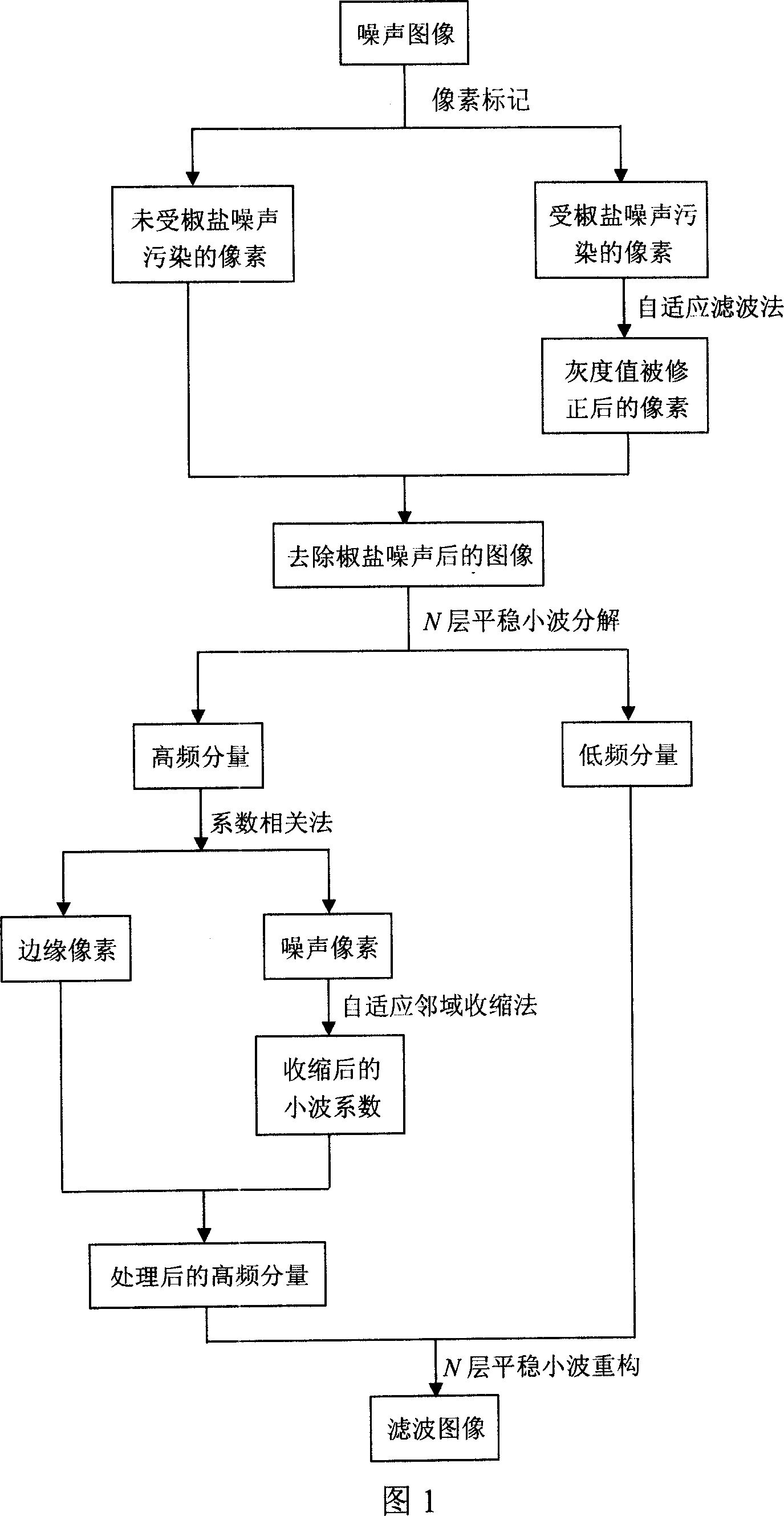

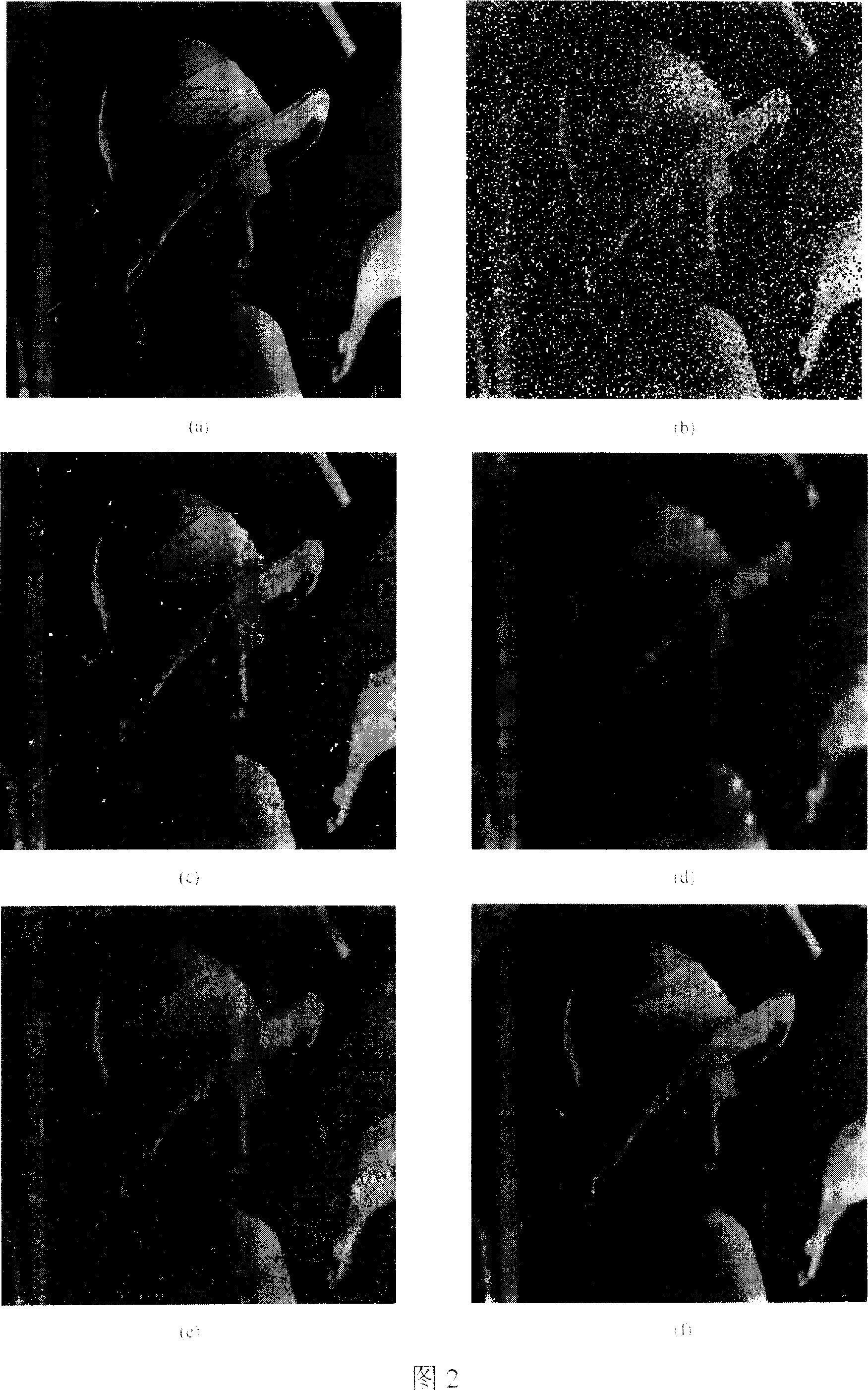

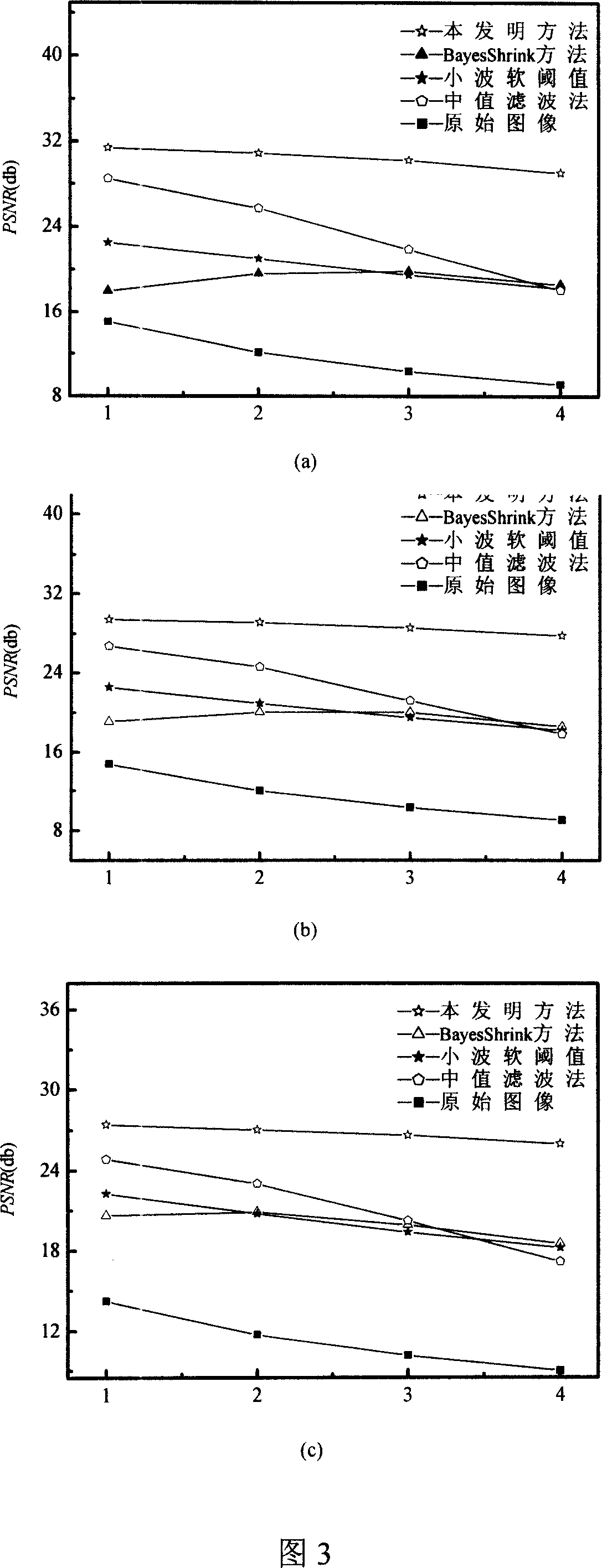

Self-adapting method for filtering image with edge being retained

Inactive | CN101094312A | Good removal effect | Efficient removal | Television system details | Color television details | Self adaptive | Salt-and-pepper noise

The method comprises the following steps:

1. using an extreme-value method to detect image pixels corrupted by salt-and-pepper noise;
2. using a self-adaptive filtering method to correct the gray values of the noisy pixels, which yields an image free of salt-and-pepper noise;
3. performing a stationary wavelet decomposition to obtain the corresponding low-frequency and high-frequency components;
4. leaving the low-frequency component unchanged and applying a coefficient correlation method to the high-frequency components to mark each pixel as noise or edge. If a pixel is marked as edge, its value is left unchanged; otherwise its wavelet coefficient is shrunk using a self-adaptive neighborhood method;
5. applying the inverse stationary wavelet transform to the processed coefficients to obtain the denoised image.

Owner:HAOYUN TECH CO LTD
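
The first two steps can be sketched as below: extreme-value detection of salt-and-pepper pixels, followed by adaptive correction of only those pixels. The sketch makes two assumptions. It treats the image as 8-bit, with impulse noise taking exactly the values 0 and 255. It corrects each noisy pixel with the median of its uncorrupted neighbours, growing the window until such neighbours exist. The stationary wavelet edge/noise classification that follows in the patent is not reproduced.

```python
def remove_salt_pepper(img, lo=0, hi=255, max_radius=3):
    """Replace only pixels flagged as impulse noise; all other pixels pass through unchanged."""
    h, w = len(img), len(img[0])
    # Extreme-value detection: assume impulses sit exactly at the ends of the gray range.
    noisy = [[v == lo or v == hi for v in row] for row in img]
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            if not noisy[y][x]:
                continue
            # Adaptive window: grow until at least one uncorrupted neighbour is found.
            for r in range(1, max_radius + 1):
                clean = [img[yy][xx]
                         for yy in range(max(0, y - r), min(h, y + r + 1))
                         for xx in range(max(0, x - r), min(w, x + r + 1))
                         if not noisy[yy][xx]]
                if clean:
                    clean.sort()
                    out[y][x] = clean[len(clean) // 2]  # (upper) median of clean neighbours
                    break
    return out
```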

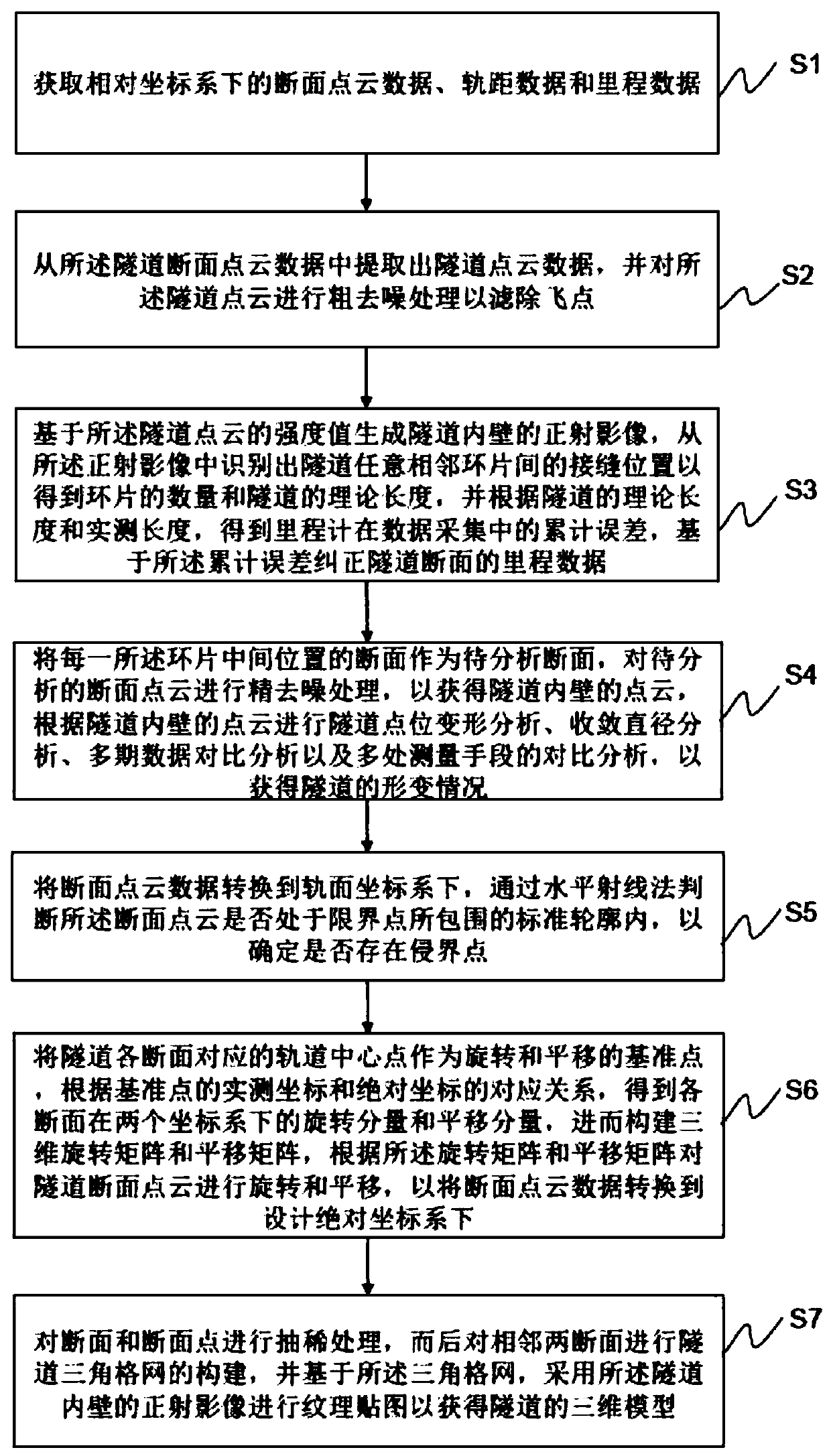

Mobile tunnel laser detection data processing method

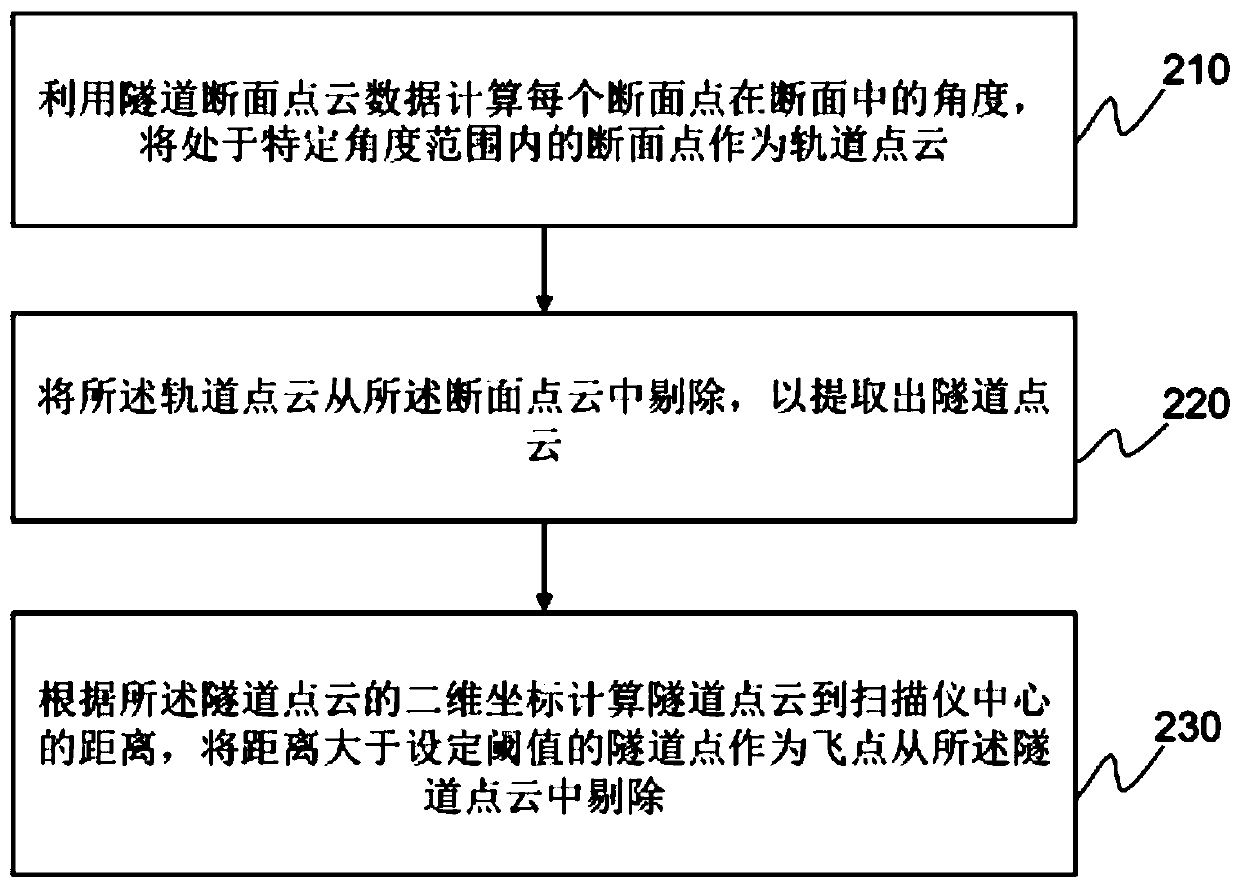

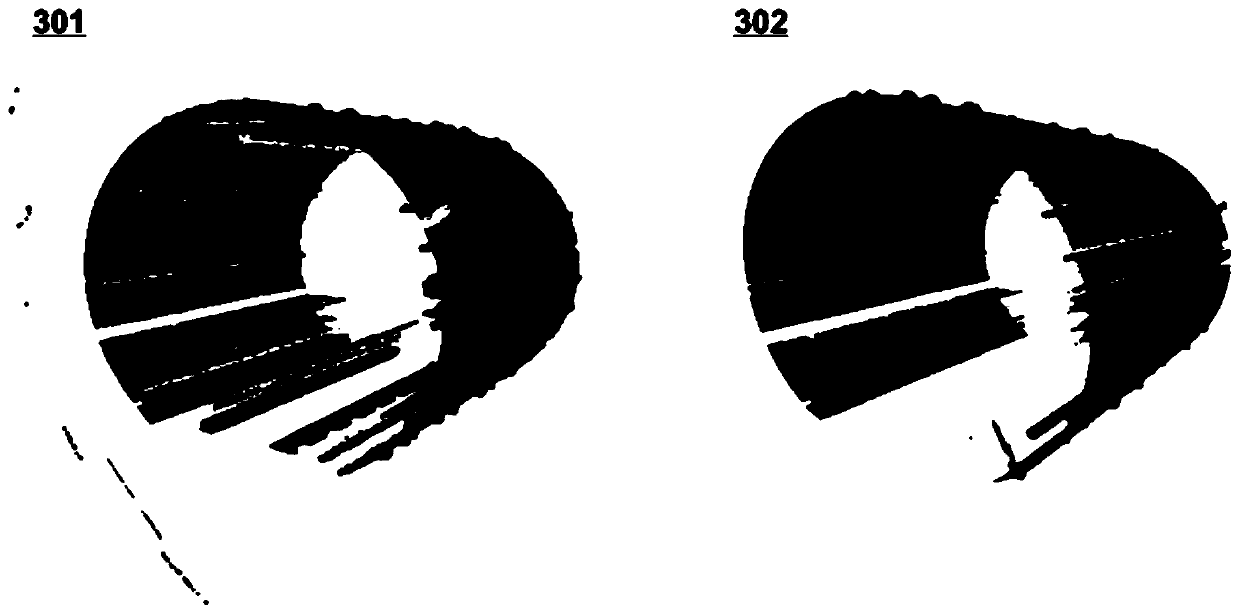

Active | CN110411361A | Improve processing efficiency | Accurate removal | Using optical means | Point cloud | Projection image

The invention relates to a mobile tunnel laser detection data processing method. The method comprises the following steps:

1. acquiring the original, actual tunnel section point cloud data;
2. correcting the tunnel section mileage data based on accumulated errors;
3. performing tunnel point deformation analysis, convergence diameter analysis, multi-period data comparison and analysis, and comparison and analysis across multiple measurement methods on the point cloud of the tunnel's inner wall, to obtain the tunnel deformation status;
4. using a horizontal ray method to determine whether the tunnel section point cloud lies within the standard profile enclosed by the limit points, and thus whether any intrusion points exist;
5. converting the section point cloud data into the designed absolute coordinate system to restore the design alignment of the tunnel;
6. performing texture mapping with an orthographic projection image of the tunnel's inner wall to obtain a 3D model of the tunnel.

With this method, tunnel deformation analysis, mileage correction, boundary detection, restoration of the tunnel's design alignment and 3D modelling of the tunnel can all be performed from the original, actual sections, and the data are processed rapidly.

Owner:CAPITAL NORMAL UNIVERSITY
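
The horizontal ray method used for intrusion checking is the classic crossing-number point-in-polygon test. The sketch assumes that each section point and the limit profile are given as hypothetical 2D (x, y) coordinates in the same section plane. Points lying exactly on the profile boundary are not treated specially.

```python
def point_in_polygon(px, py, polygon):
    """Horizontal-ray (crossing-number) test: cast a ray toward +x and count edge crossings."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # edge straddles the ray's height, so y1 != y2
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:  # crossing lies to the right of the point
                inside = not inside
    return inside

def intrusion_points(section_points, limit_profile):
    """Return the section points that fall inside the clearance (limit) profile, i.e. intrude."""
    return [p for p in section_points if point_in_polygon(p[0], p[1], limit_profile)]
```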

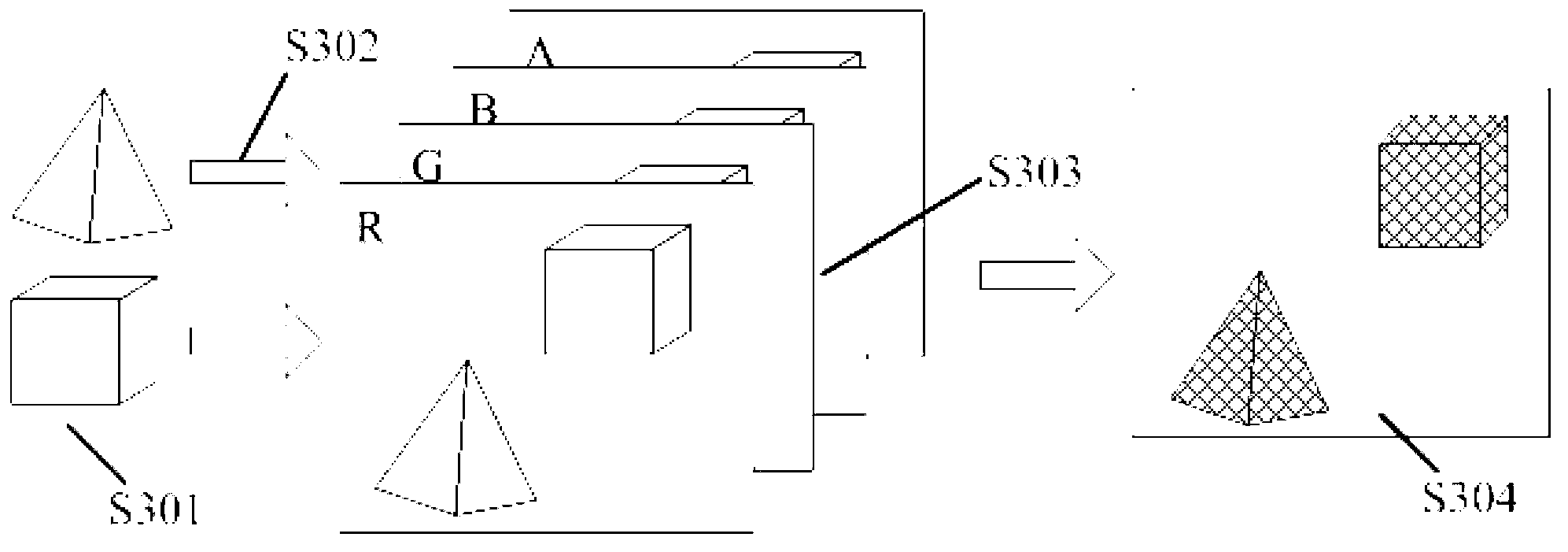

Multi-scale gradient domain image fusion algorithm

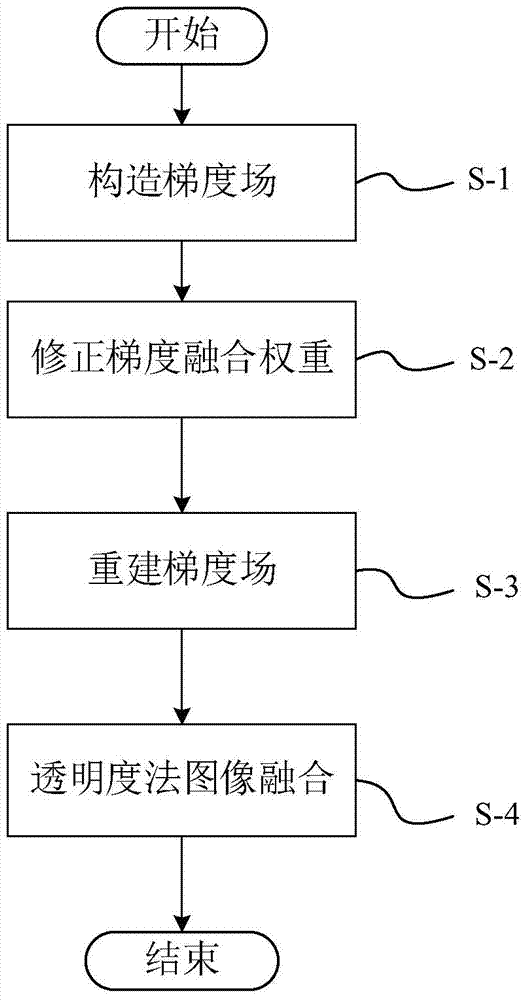

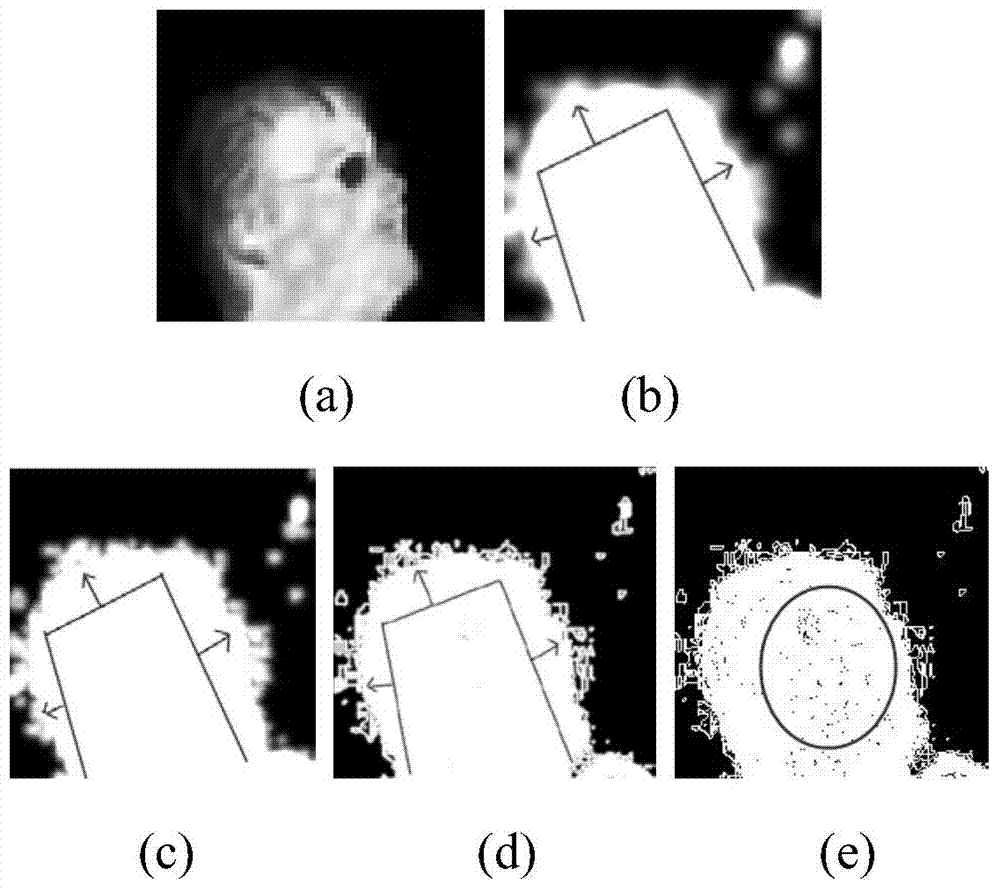

Inactive | CN104504670A | Keep details | Solve the problem of not being able to identify the thermal hazard of the device | Image enhancement | Geometric image transformation | Image fusion algorithm | Computer vision

The invention provides a multi-scale gradient domain image fusion algorithm for fusing infrared thermal images with visible light images. The algorithm comprises the following steps: constructing gradient fields; correcting the gradient fusion weights; reconstructing the gradient fields; and fusing the images by a transparency method. The algorithm simultaneously preserves the temperature rise areas of the infrared thermal image and the detail information of the visible light image. It also removes halo artifacts in the background and the redundant information caused by interaction between the images, and it avoids color inconsistency in the temperature rise areas of the infrared thermal image.

Owner:UNIV OF SHANGHAI FOR SCI & TECH

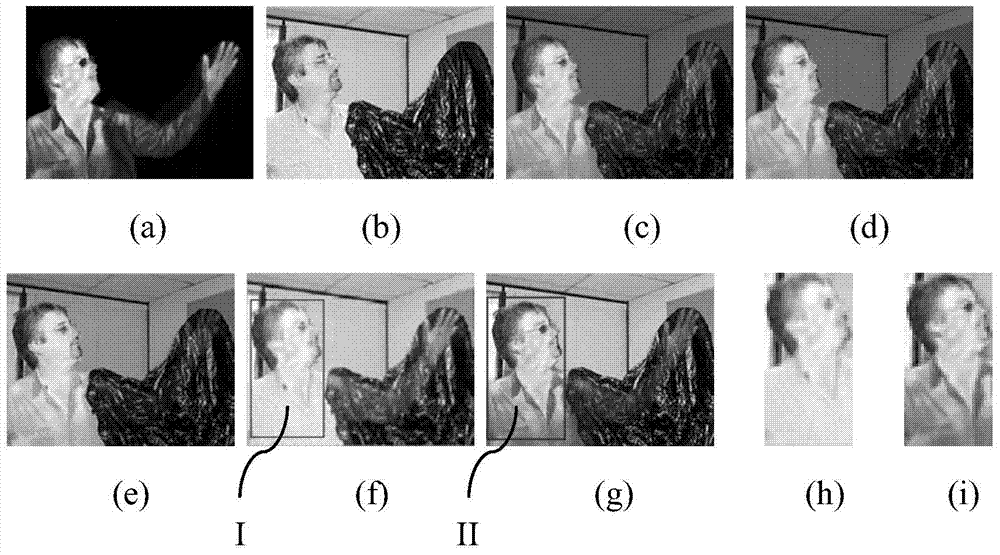

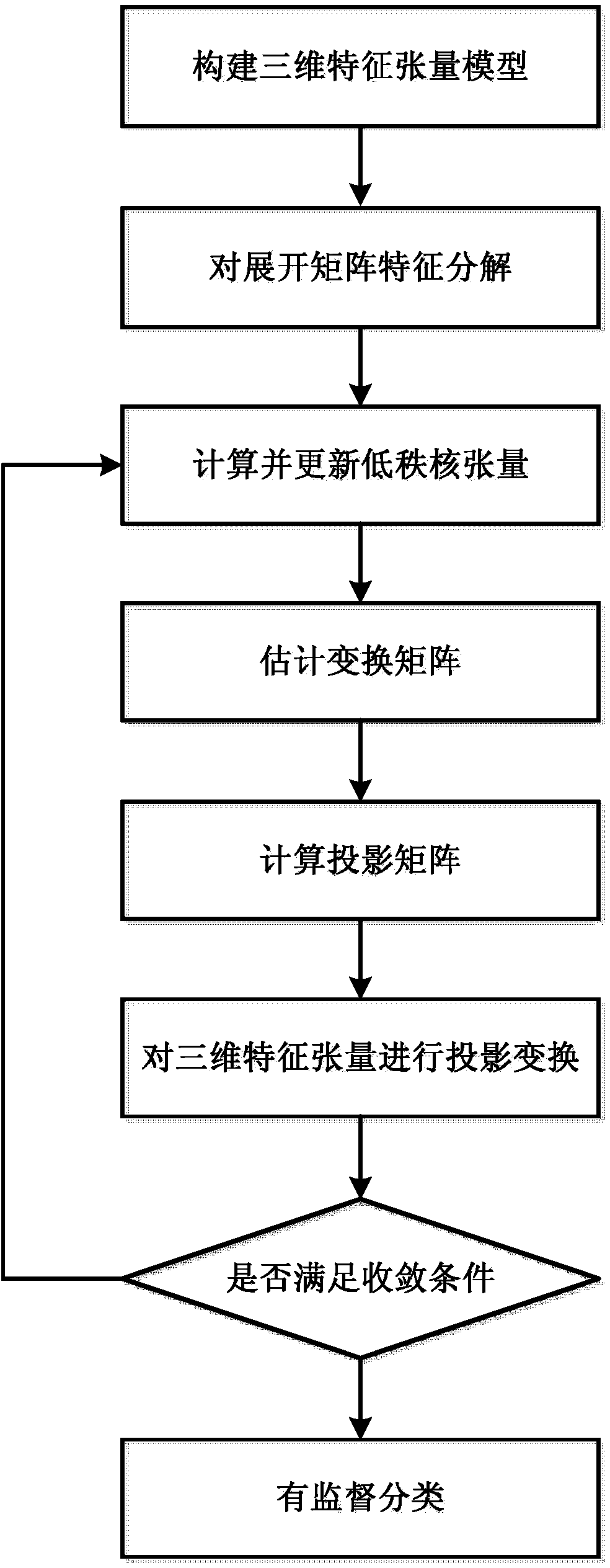

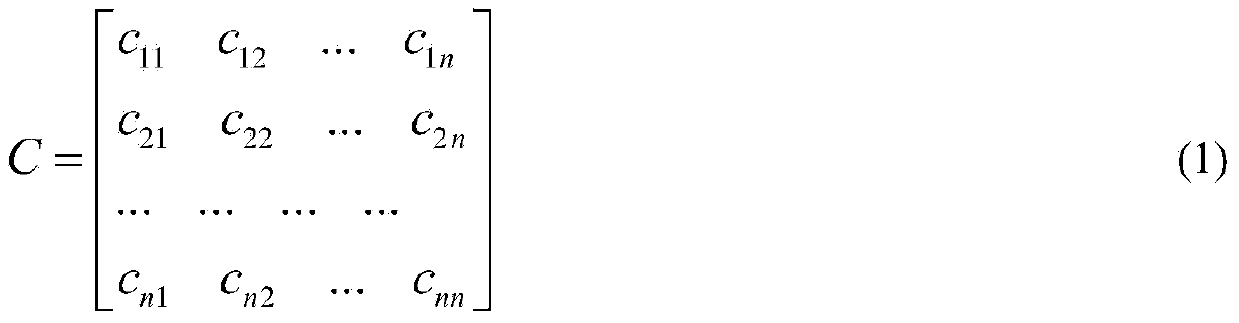

Polarization image sorting method based on tensor decomposition and dimension reduction

Active | CN103886329A | Improve efficiency | Full of information | Character and pattern recognition | Terrain | Imaging processing

The invention belongs to the technical field of image processing and relates to POLSAR (polarimetric synthetic aperture radar) image processing. It discloses a polarization image sorting (classification) method based on tensor decomposition and dimension reduction. The method proceeds as follows:

1. Polarization data and a matrix of polarization feature quantities are used to construct a three-dimensional polarization feature tensor.
2. A low-dimensional feature tensor is obtained by a tensor-decomposition-based dimension reduction method.
3. Training samples are selected from the low-dimensional feature tensor for SVM classification.

The method preserves the structure of the three-dimensional polarization feature tensor and the spatial relationships between adjacent pixels. It removes redundancy between feature quantities, avoids the curse of dimensionality, clearly improves the classification result, and improves the efficiency and robustness of the algorithm. The method can be applied to the classification of various complex terrains.

Owner:XIDIAN UNIV

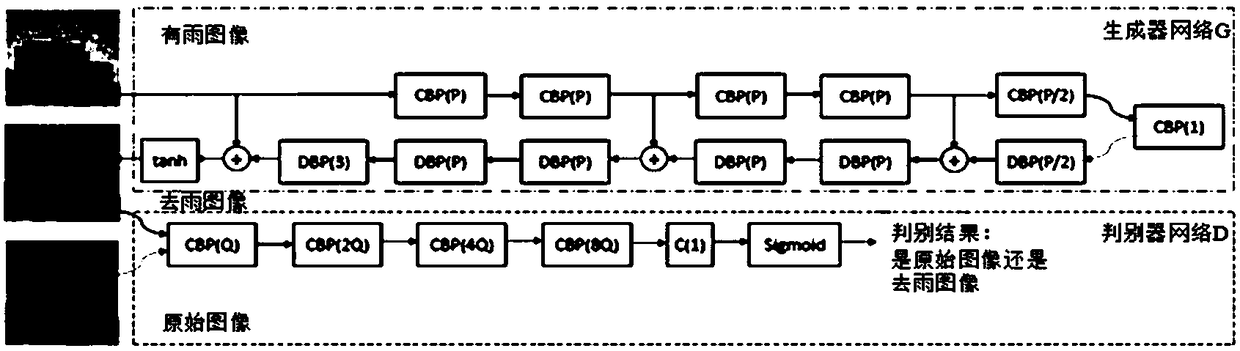

Single-image rain removal method based on image partitioning and a generative adversarial network

The invention provides a single-image rain removal method based on image partitioning and a generative adversarial network. The image is divided into non-overlapping image blocks of the same size, and each block is used as the conditional input of the generative adversarial network, which reduces the input dimension. A generative adversarial network is trained to learn the nonlinear mapping from rainy image blocks to rain-free image blocks. This overcomes the problem of many details being neglected and removes rain streaks at every scale as far as possible. To keep structure and color consistent between the rain-free image blocks, a new error function is constructed from a bilateral filter and a non-local means denoising algorithm. This error function is added to the total error function of the conditional generative adversarial network. The invention needs no prior knowledge and no pre- or post-processing of the image, which ensures the integrity of the overall structure. Results on the test set show an improvement of 4-7 dB over the classical algorithm.

Owner:SHANGHAI JIAO TONG UNIV

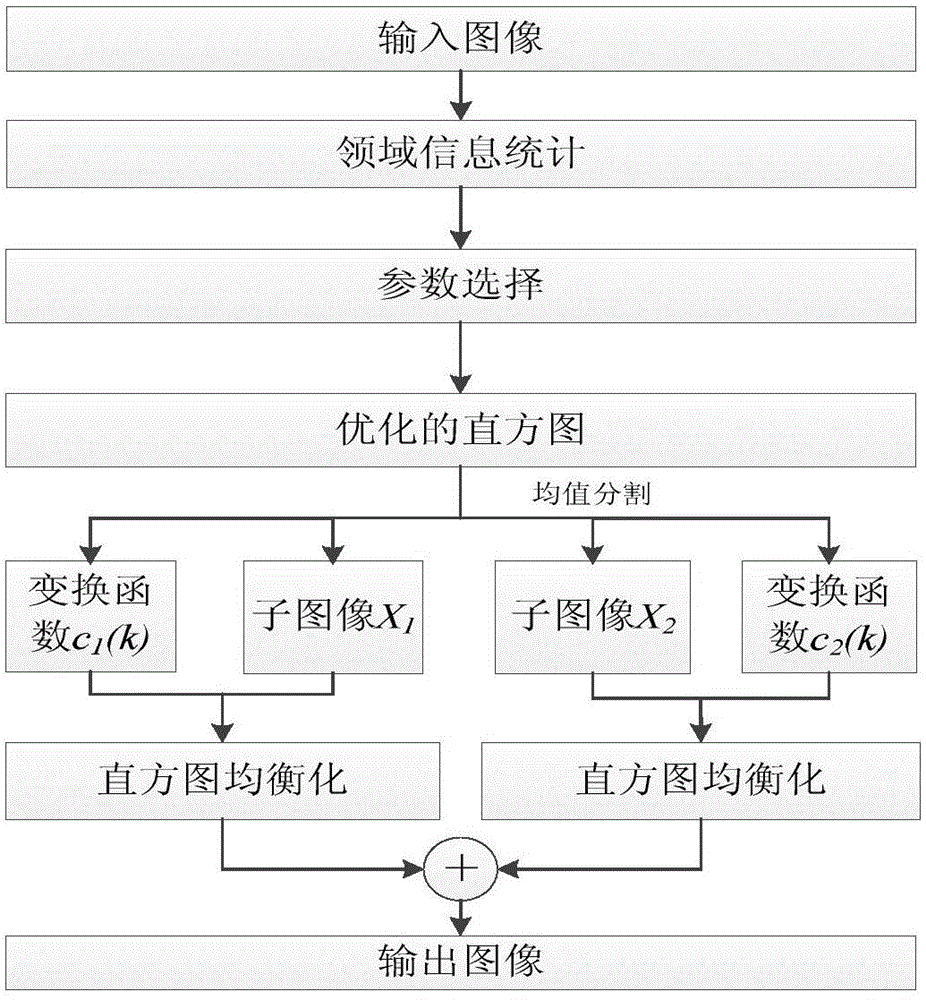

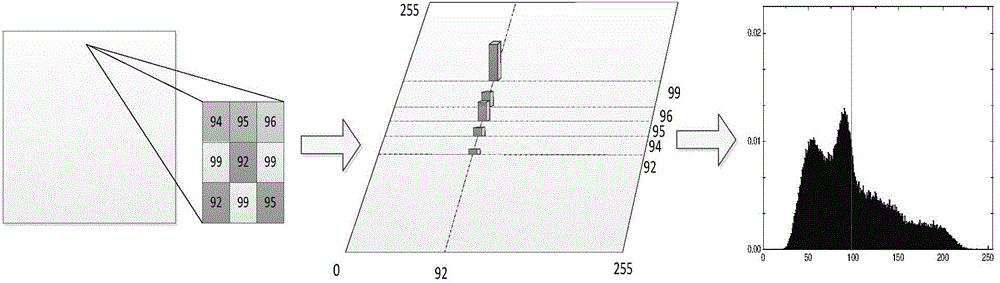

Histogram equalization method for maintaining background and detail information

Inactive | CN103606137A | Keep details | Increase contrast | Image enhancement | Imaging quality | Contrast enhancement

The invention relates to the field of digital image processing. It aims to enhance digital image contrast while weakening the adverse effects on image quality caused by over-enhancement, halo effects and mean-brightness shift. To this end, the invention adopts a histogram equalization method that maintains background and detail information. A histogram statistics method that gathers local neighborhood information is used to generate an optimized histogram, and a parameter is set to adjust the degree of contrast enhancement. Before equalization, the optimized histogram is split at a chosen value, and histogram equalization is then performed on each part separately. The method is mainly applied in digital image processing.

Owner:TIANJIN UNIV
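
Splitting the histogram at a chosen value and equalizing each part separately is the idea behind bi-histogram equalization. The sketch below assumes 8-bit pixels in a flat list. By default it splits at the mean gray level, as in brightness-preserving bi-histogram equalization. The patent's locally optimized histogram and its contrast-control parameter are not modelled.

```python
def bi_histogram_equalize(pixels, levels=256, split=None):
    """Equalize gray levels [0, split] and [split + 1, levels - 1] independently."""
    if split is None:
        split = int(sum(pixels) / len(pixels))  # assumed split point: the mean gray level
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    def build_map(lo, hi):
        # Cumulative-histogram mapping confined to the output range [lo, hi].
        total = sum(hist[lo:hi + 1])
        mapping, cum = {}, 0
        for g in range(lo, hi + 1):
            cum += hist[g]
            mapping[g] = lo + round((hi - lo) * cum / total) if total else g
        return mapping

    lut = {**build_map(0, split), **build_map(split + 1, levels - 1)}
    return [lut[p] for p in pixels]
```

Because each half is stretched only within its own range, dark pixels stay below the split and bright pixels stay above it. This limits the mean-brightness drift that plain histogram equalization can cause.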

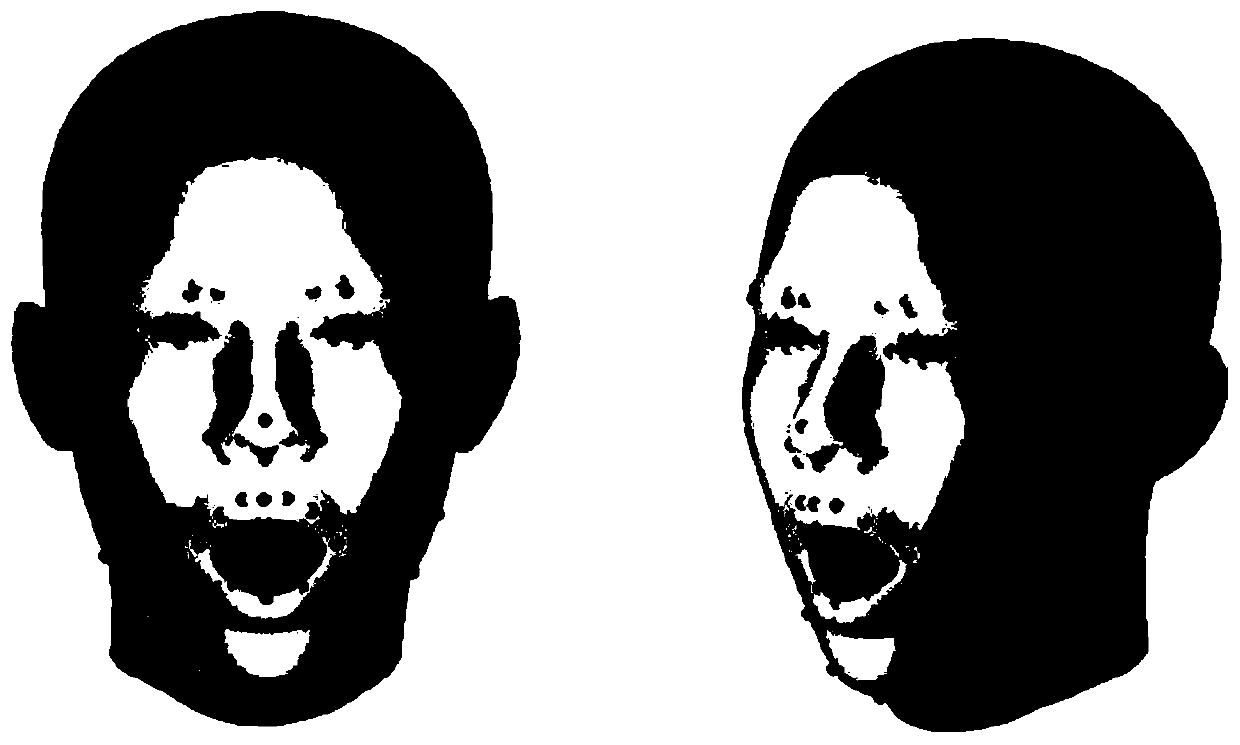

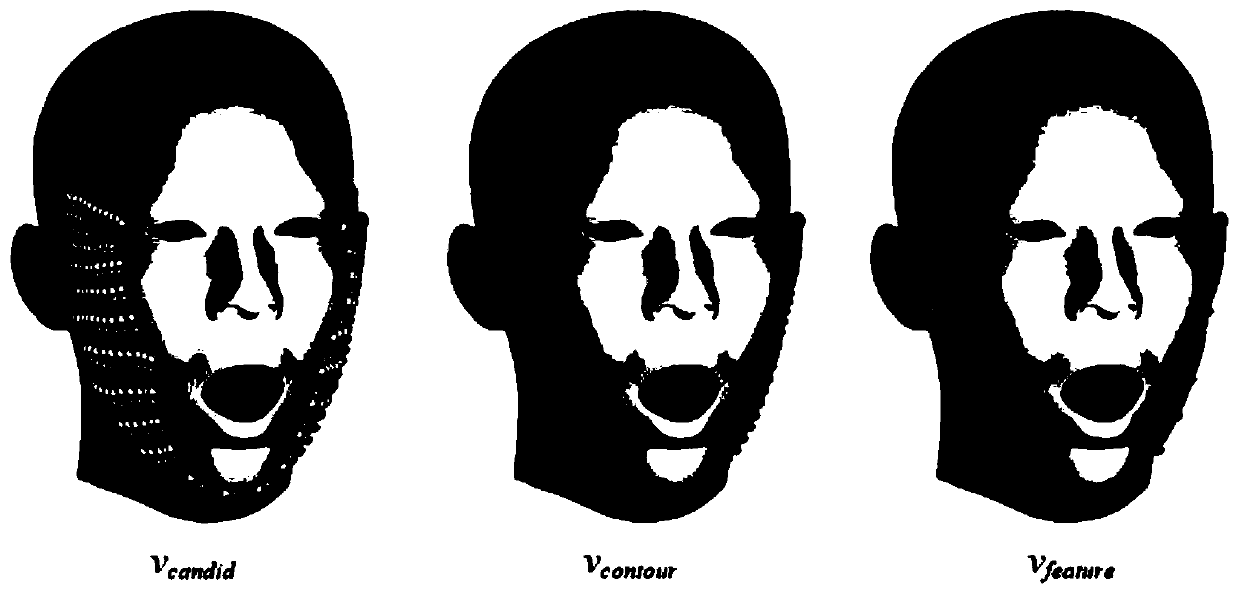

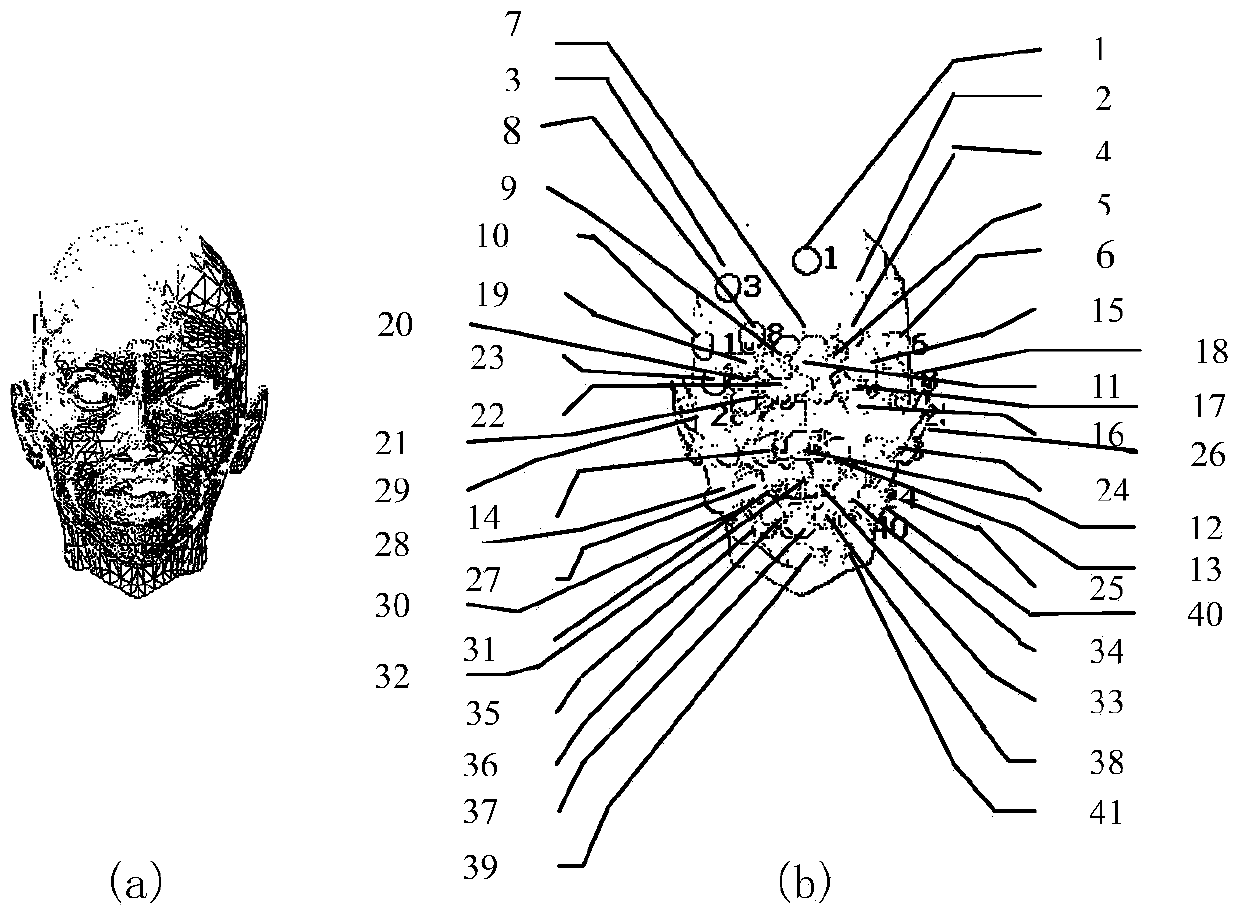

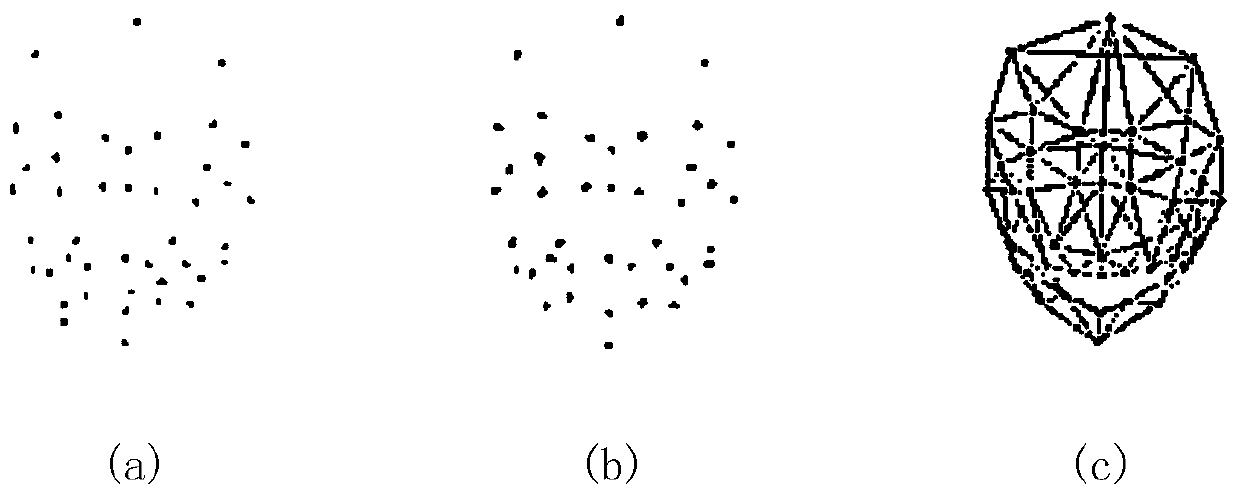

Three-dimensional human head and face model reconstruction method based on random face image

Active | CN110443885A | Keep details | Suppress distortion details | Character and pattern recognition | Artificial life | Pattern recognition | Point cloud

The invention provides a three-dimensional human head and face model reconstruction method based on an arbitrary face image. The method includes the following steps:

1. establishing a bilinear face model and an optimization algorithm using a three-dimensional face database;
2. using two-dimensional feature points to progressively separate the spatial pose of the face, the camera parameters, and the identity and expression features that determine the geometric shape of the face, then refining the generated three-dimensional face model with Laplacian deformation correction to obtain a low-resolution three-dimensional face model;
3. finally, calculating the face depth and registering a high-resolution template model with the point cloud model to achieve high-precision three-dimensional reconstruction of the target face, so that the reconstructed face model conforms to the shape of the target face.

The method eliminates distorted facial details while keeping the main original details of the face, so the reconstruction is more accurate. Especially in facial detail reconstruction, detail distortion and the influence of expression are effectively reduced, and the generated face model looks more realistic.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Self-adaptive wavelet threshold image de-noising algorithm and device

The invention proposes a self-adaptive wavelet threshold image de-noising algorithm and device. The algorithm comprises the following steps:

1. applying a wavelet transform to the noisy image to obtain the wavelet coefficients of every level;
2. to account for signal correlation, averaging, within each level, the coefficients in the neighborhood of each coefficient;
3. determining the threshold from wavelet coefficients obtained by an absolute-mean estimation method, and using a self-adaptive threshold method to determine a suitable threshold for each scale;
4. constructing self-adaptive threshold functions for every direction at every level from the wavelet coefficients and thresholds;
5. performing the inverse wavelet transform and reconstruction to obtain the de-noised image.

The thresholds are determined adaptively, so a single global threshold is replaced with scale-specific thresholds. De-noising uses both the self-adaptive thresholds and the self-adaptive threshold functions, which protects the detail information of the image. The algorithm outperforms conventional wavelet threshold de-noising in terms of peak signal-to-noise ratio and visual perception.

Owner:JINAN UNIVERSITY
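
Averaging over each coefficient's neighbourhood before thresholding is similar in spirit to NeighShrink-style shrinkage. The sketch below applies such a rule to one detail sub-band held as a flat list. Several choices are assumptions: the window size, the MAD noise estimate, and the shrink factor max(0, 1 - T²/S²). They stand in for the patent's absolute-mean estimator and threshold functions, which the abstract does not specify.

```python
import math
import statistics

def neigh_shrink(coeffs, window=3):
    """Shrink one detail sub-band using the energy of each coefficient's neighbourhood."""
    n = len(coeffs)
    sigma = statistics.median([abs(c) for c in coeffs]) / 0.6745  # robust noise estimate
    thresh = sigma * math.sqrt(2.0 * math.log(n))                   # per-sub-band threshold T
    half = window // 2
    out = []
    for k, c in enumerate(coeffs):
        neigh = coeffs[max(0, k - half): k + half + 1]
        energy = sum(v * v for v in neigh)                          # S^2 over the window
        factor = max(0.0, 1.0 - thresh * thresh / energy) if energy > 0 else 0.0
        out.append(c * factor)
    return out
```

A small coefficient next to a large one is kept, because their shared neighbourhood energy is high. Isolated small coefficients are zeroed. This is how neighbourhood averaging helps protect edge detail.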

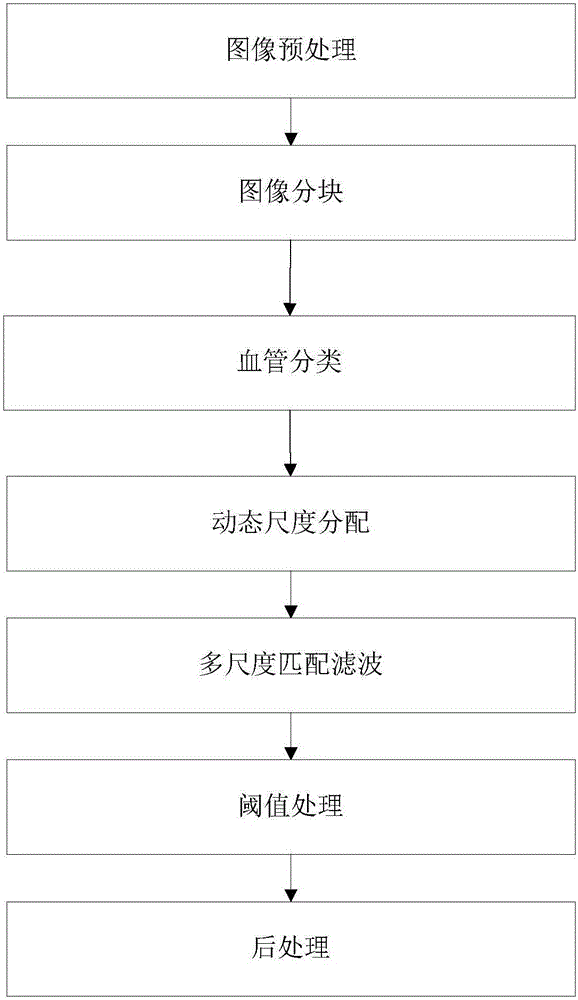

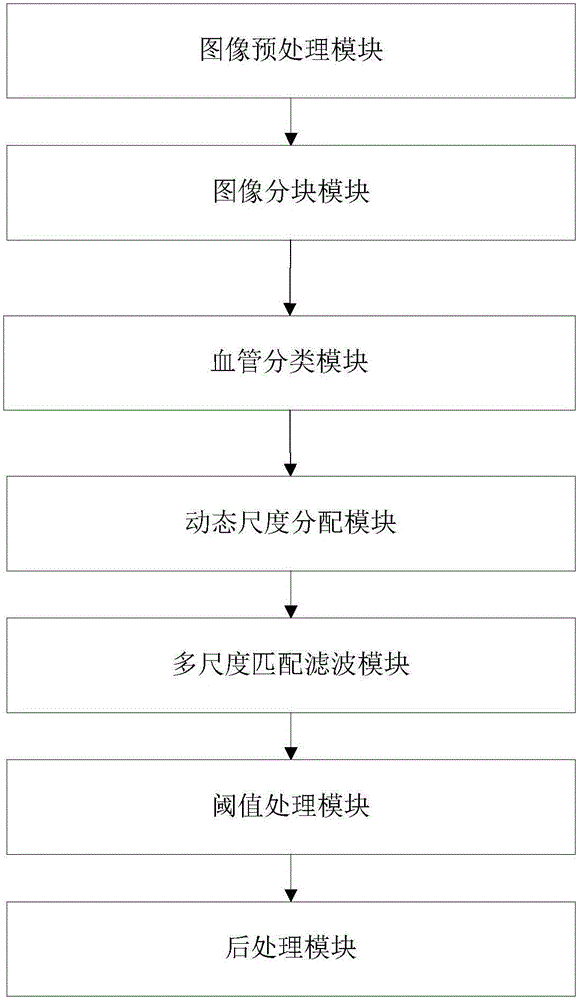

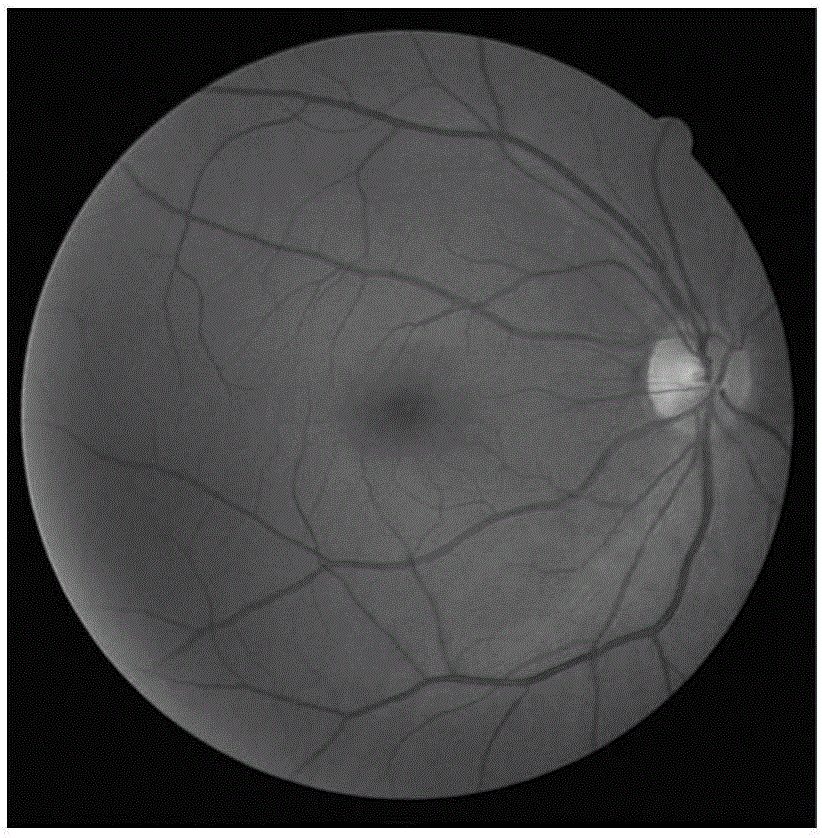

Dynamic scale distribution-based retinal vessel extraction method and system

Active | CN106407917A | Reduce the chance of mis-segmentation | Improve performance | Image enhancement | Image analysis | Contrast enhancement | Computer vision

The present invention discloses a dynamic scale distribution-based retinal vessel extraction method and system. The method includes the following steps:

- retinal image preprocessing: contrast enhancement is performed on the green channel of a color retinal image;
- image segmentation: the preprocessed retinal image is divided into a set number of sub-images;
- vessel classification: the vessels in each sub-image are divided into three categories (large, medium and small);
- dynamic scale allocation: filters of different scales are dynamically selected to enhance vessels of different widths;
- multi-scale matched filtering;
- threshold processing: vascular structures are extracted, nonvascular structures are removed, and the extraction results of all sub-images are stitched back together to obtain a binary image of the retinal vessel network;
- post-processing, which yields a retinal vessel network image with high segmentation accuracy.

With the method and system, retinal vessels can be extracted from the retinal image, and overestimation of vessel widths is avoided when complex nonvascular structures are removed. Retinal vessel extraction thus becomes simpler and more accurate.

Owner:SHANDONG UNIV

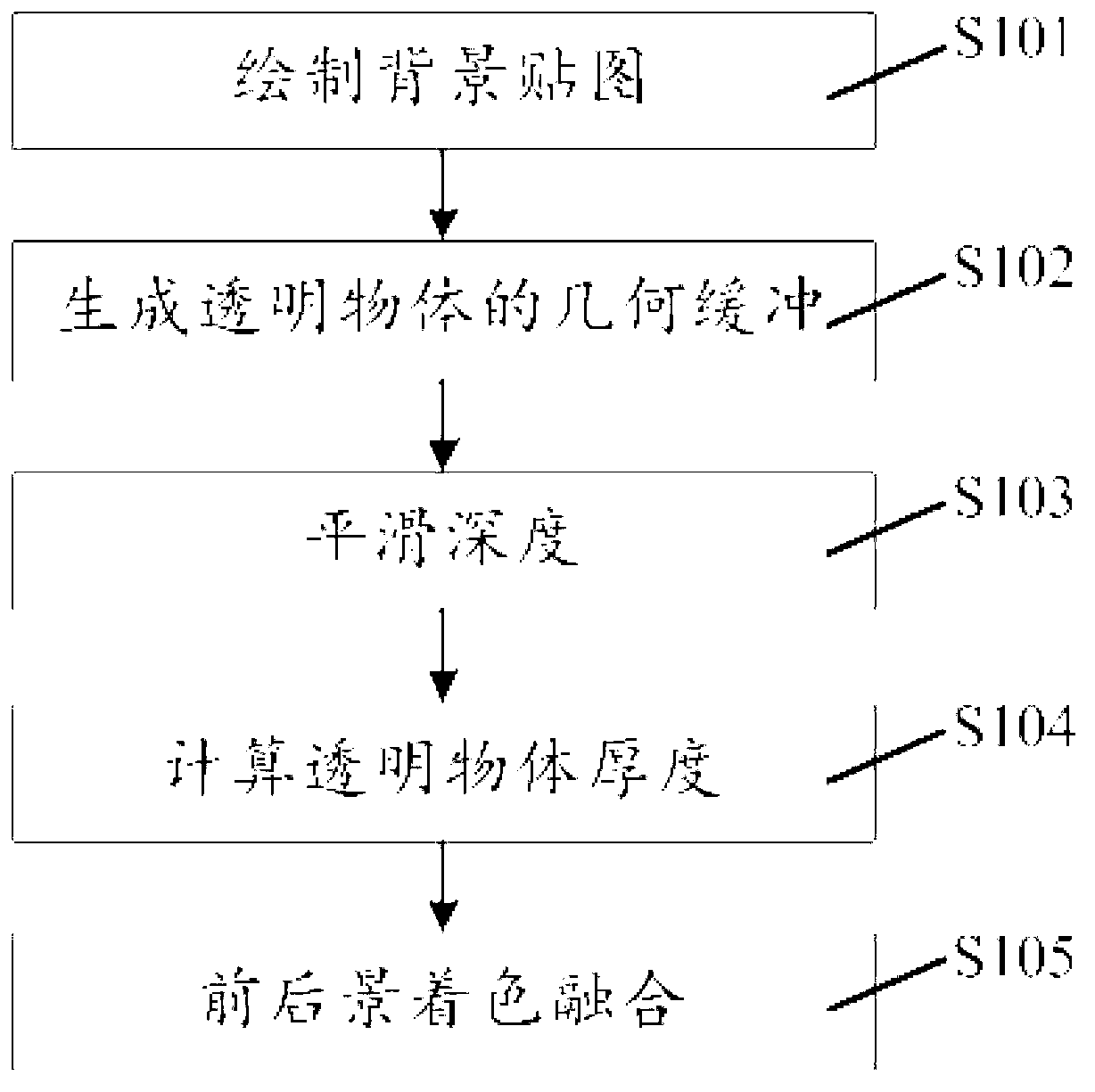

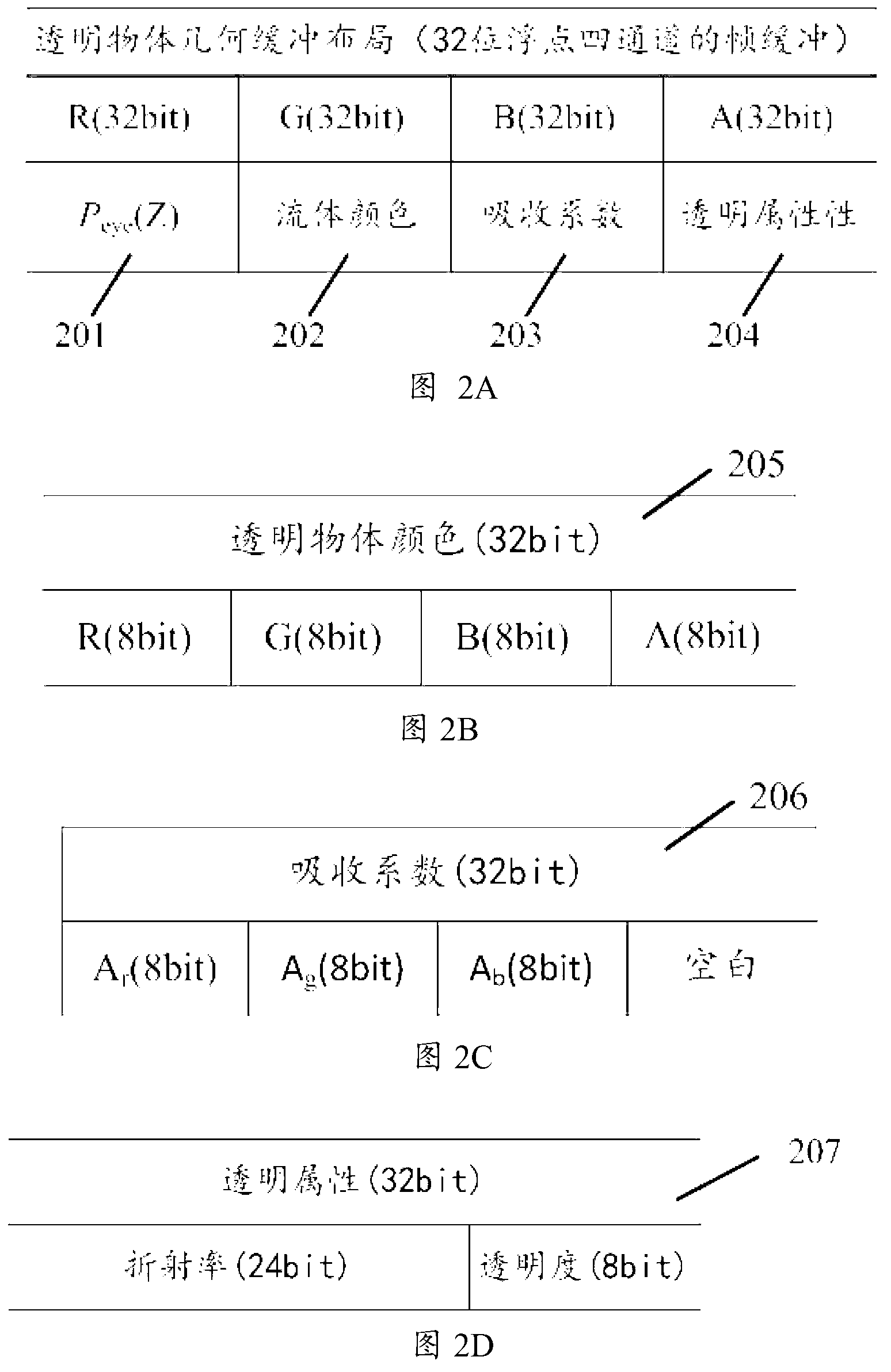

Real-time transparent object GPU (graphics processing unit) parallel generating method based on three-dimensional point cloud

The invention discloses a real-time GPU (graphics processing unit) parallel rendering method for transparent objects based on three-dimensional point clouds. It comprises the following steps:

1. generating a background map of the non-transparent objects;
2. generating a geometry buffer for the transparent object: all three-dimensional points are rendered as spheres, hardware depth testing is used to obtain the depth of an approximate surface, and the material information of the transparent object is saved at the same time;
3. smoothing the depth: the depth information in the geometry buffer is smoothed by filtering to obtain a smooth surface;
4. calculating the thickness of the transparent object: all three-dimensional points are rendered again, and hardware alpha blending is used to accumulate the thickness of the transparent object;
5. shading the transparent object: the depth and material information are used for the lighting computation, the thickness is used to calculate the refraction and reflection properties of the transparent object, and the background map is used to complete the shading.

The method avoids the surface reconstruction step of traditional methods and can meet the requirement of real-time rendering of point-cloud-based transparent objects at the million-point scale.

Owner:BEIHANG UNIV

Visible light and infrared image fusion algorithm based on NSCT domain bottom layer visual features

The invention provides a visible light and infrared image fusion algorithm based on low-level visual features in the nonsubsampled contourlet transform (NSCT) domain. The algorithm proceeds as follows:

1. The visible light and infrared images are decomposed with the NSCT to obtain their high- and low-frequency sub-band coefficients.
2. Phase congruency, neighborhood spatial frequency, neighborhood energy and other information are combined to measure the activity level of each pixel in the low-frequency sub-bands. This gives the fusion weights of the visible and infrared low-frequency coefficients, and hence the low-frequency coefficients of the fused image.
3. The activity level of the high-frequency sub-band coefficients is measured by combining phase congruency, sharpness, brightness and other information. This gives the fusion weights, and then the high-frequency coefficients of the fused image.
4. The inverse NSCT yields the final fused image.

The detail information of the source images is effectively preserved, while the useful information of the visible light and infrared images is combined.

Owner:云南联合视觉科技有限公司 (Yunnan United Vision Technology Co., Ltd.)

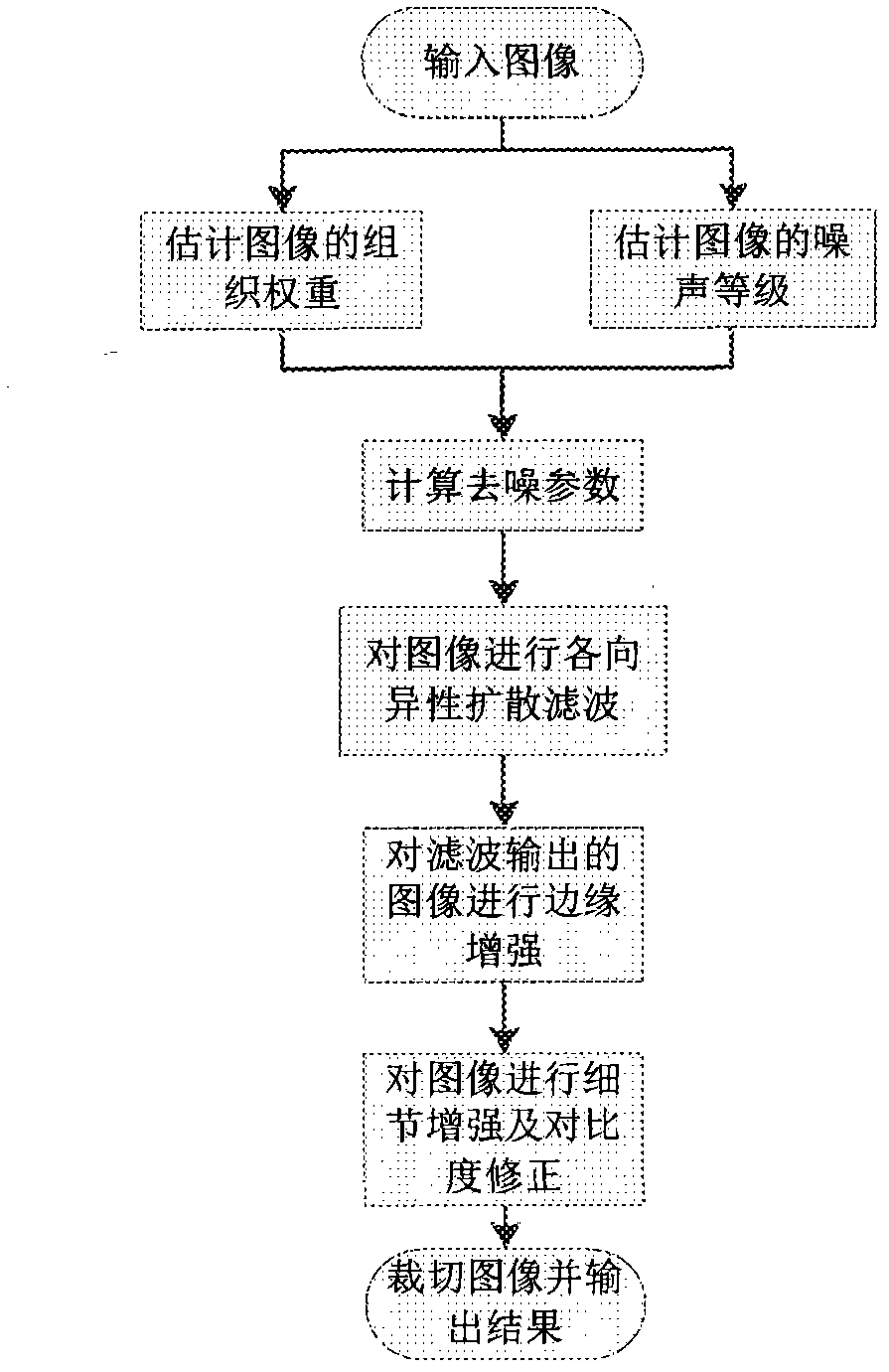

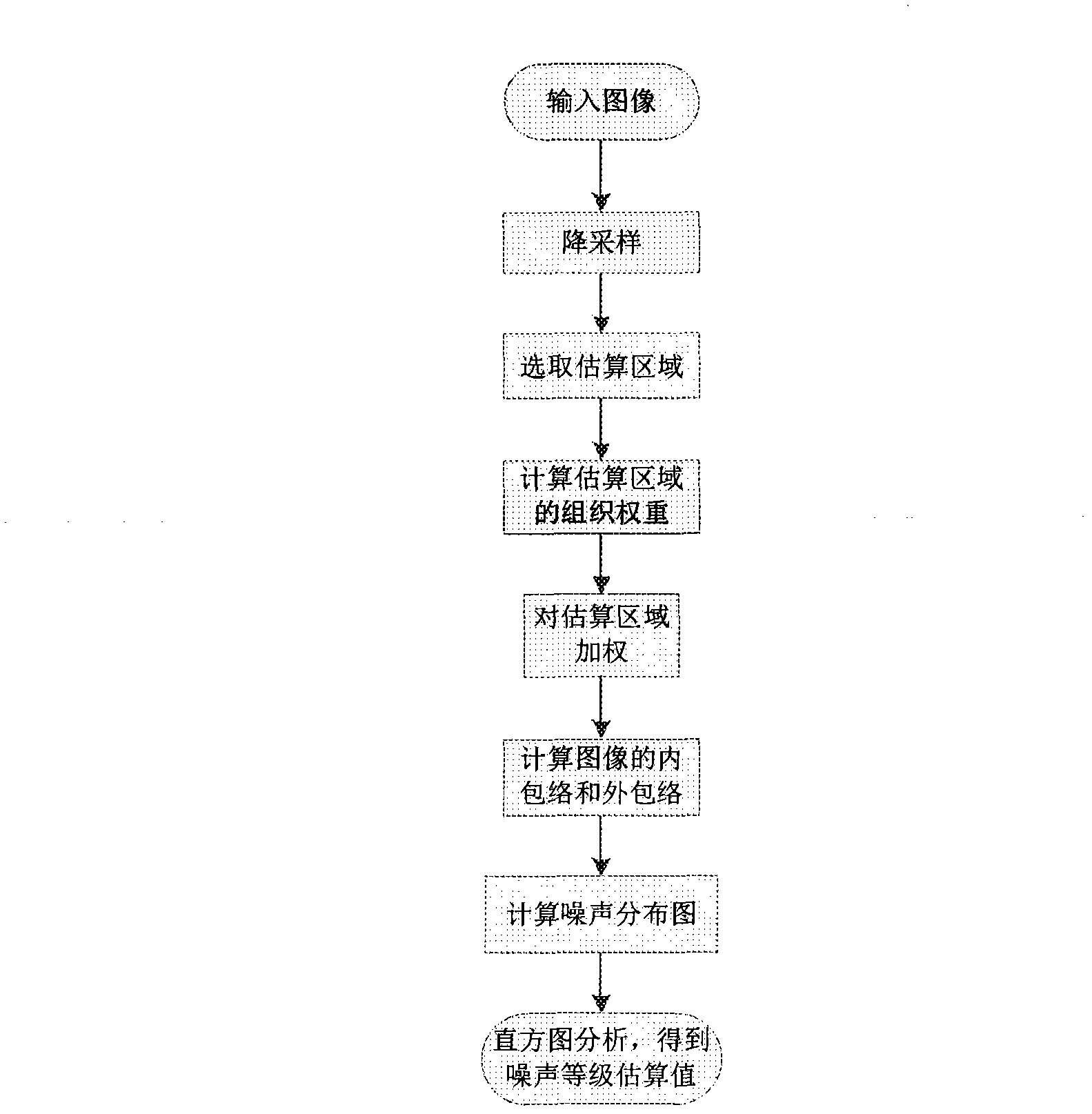

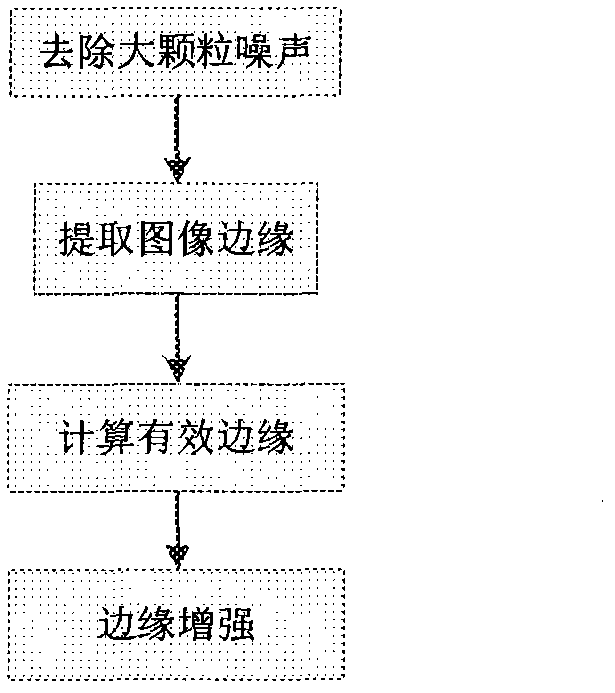

Method and device for removing CT (computed tomography) image noises

Active | CN103186888A | Keep details | Does not weaken contrast | Image enhancement | Computed tomography | Noise level

The invention discloses a method and a device for removing noise from CT (computed tomography) images, and relates to the technical field of image noise removal. The method comprises the following steps:

1. estimating the tissue weight of the image;
2. estimating the noise level of the image;
3. calculating a noise removal parameter;
4. applying anisotropic diffusion filtering to the image;
5. performing edge enhancement on the filtered output;
6. enhancing image details and correcting the contrast;
7. cutting the image and outputting the result.

The device comprises one module for each of these steps. The method and device effectively remove high-frequency noise from CT images while maintaining the image edges and the original contrast of the image.

Owner:GE MEDICAL SYST GLOBAL TECH CO LLC
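
Anisotropic diffusion filtering of the kind named in this abstract is commonly implemented with the Perona-Malik scheme. The sketch below uses that scheme as an assumption. `kappa`, `step` and the iteration count are hypothetical, whereas the patent derives its parameters from the estimated tissue weight and noise level. With four neighbours, `step` should stay at or below 0.25 for the explicit update to remain stable.

```python
import math

def perona_malik(img, iterations=10, kappa=15.0, step=0.2):
    """Explicit Perona-Malik diffusion on a 2D list; zero-flux (Neumann) image borders."""
    h, w = len(img), len(img[0])
    u = [[float(v) for v in row] for row in img]
    for _ in range(iterations):
        new = [row[:] for row in u]
        for y in range(h):
            for x in range(w):
                total = 0.0
                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        grad = u[yy][xx] - u[y][x]
                        # Conduction coefficient: close to 1 for small (noise) gradients,
                        # close to 0 across strong edges, so edges are not blurred.
                        c = math.exp(-(grad / kappa) ** 2)
                        total += c * grad
                new[y][x] = u[y][x] + step * total
        u = new
    return u
```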

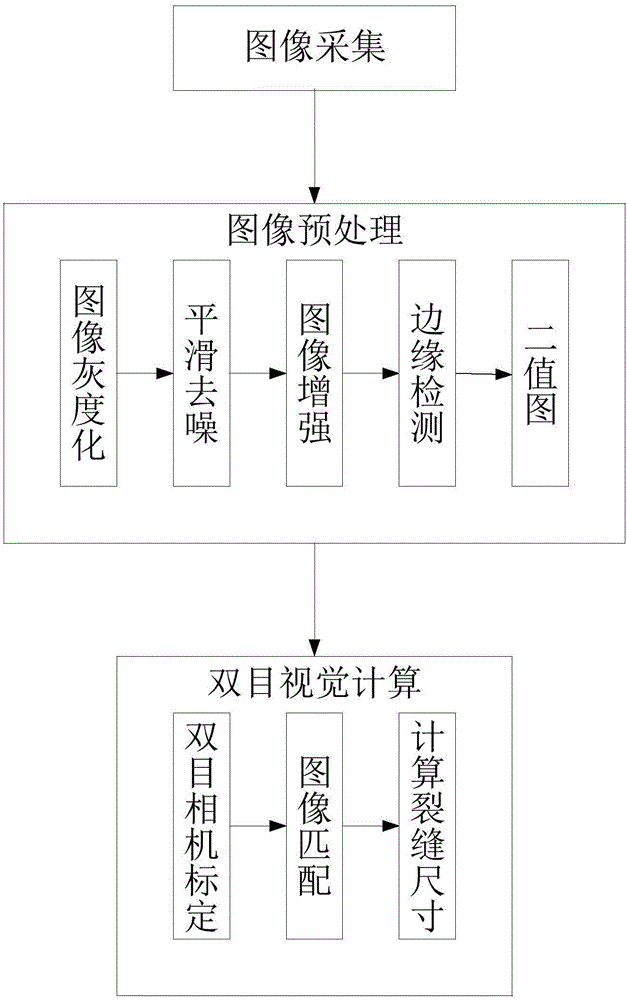

Bridge bottom surface crack detecting method based on binocular vision

Inactive | CN107179322A | Keep details | Avoid excessive smoothing | Optically investigating flaws/contamination | Imaging processing | Calculation error

The invention discloses a bridge bottom surface crack detection method. Binocular vision is used to recover the real size of cracks, which greatly reduces errors. Monocular vision has several problems that the binocular method avoids:

- The camera's image plane is generally not parallel to the bridge bottom surface, so the captured crack image is only the projection of the crack onto the monocular camera's image plane.
- The crack picture receives only simple image processing, so the calculation error is large.
- The size obtained is only the projected size of the crack on the image plane rather than its real size.

For image processing, an improved median filter is used. The traditional median filter replaces every pixel in the image with a median; here, only detected noise points are replaced. As a result, the detail information of the crack in the image is largely preserved, and excessive smoothing of the crack image after filtering is avoided.

Owner:CHANGAN UNIV
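
Binocular vision recovers real crack size through stereo triangulation. The sketch assumes a rectified stereo pair with focal length `f` in pixels, baseline `B` in metres and disparity `d` in pixels. It uses standard pinhole geometry: Z = f·B/d, then width = w·Z/f. The patent does not state its formulas. The width step further assumes the crack segment is roughly parallel to the image plane at the measured depth.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Rectified stereo pair: Z = f * B / d (f and d in pixels, B in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def crack_width_m(width_px, depth_m, focal_px):
    """Pinhole back-projection: an image length of w pixels at depth Z spans w * Z / f metres."""
    return width_px * depth_m / focal_px
```

For instance, with an assumed f = 2000 px, B = 0.2 m and d = 100 px, the crack lies at Z = 4 m. A crack that appears 5 px wide is then about 5 × 4 / 2000 = 0.01 m (10 mm) wide.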

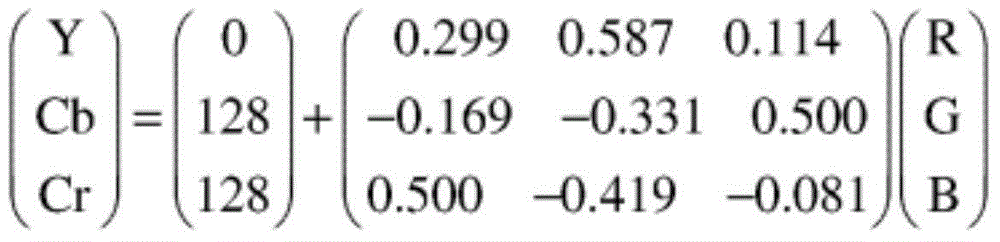

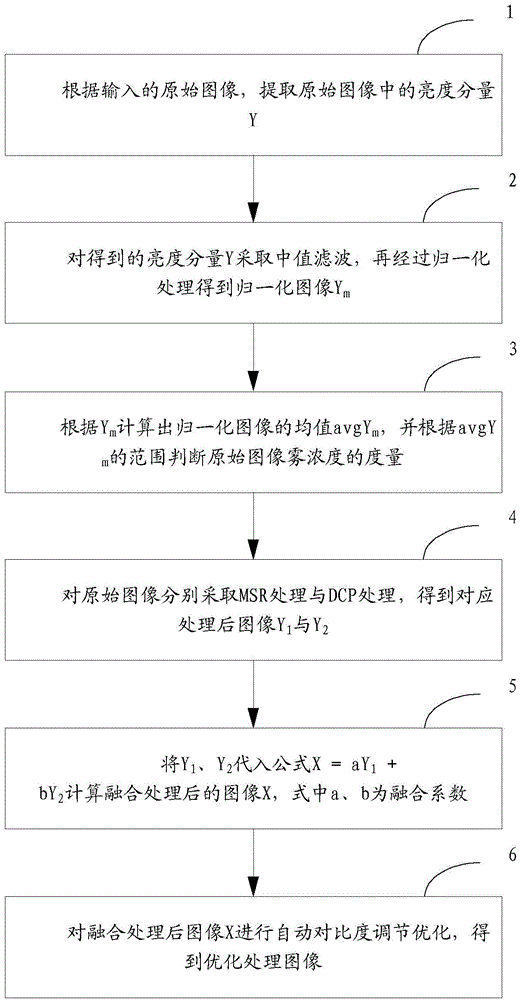

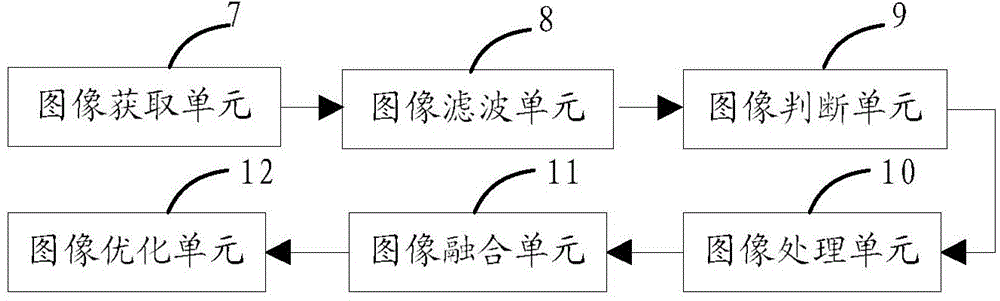

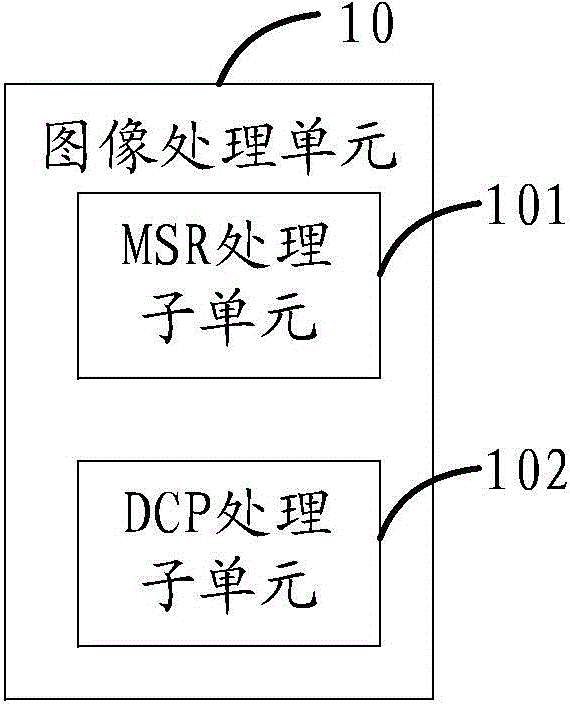

Method, device and system for image enhancement based on YCbCr color space

Inactive | CN104318524A | Coordinated and natural look and feel | Real-time processing | Image enhancement | YCbCr color space | Lightness

The invention belongs to the technical field of image enhancement and relates in particular to a method, a device and a system for image enhancement based on the YCbCr color space. The method comprises the following steps:

1. extracting the luminance component Y from the input original image;
2. applying median filtering to Y and then normalizing it to obtain a normalized image Ym;
3. calculating the average value avgYm of Ym and estimating the fog density of the original image from the range in which avgYm falls;
4. applying MSR (multi-scale Retinex) processing and DCP (dark channel prior) processing to the original image to obtain the processed images Y1 and Y2;
5. computing the fused image X = aY1 + bY2, where a and b are fusion coefficients;
6. applying automatic contrast control optimization to X to obtain the optimized image.

The method effectively eliminates halo and over-enhancement artifacts, improving the stability and quality of the output image.

Owner:IRAY TECH CO LTD
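
The luminance extraction, fog estimate and fusion formula X = aY1 + bY2 can be sketched as below. The MSR and DCP outputs `y1` and `y2` are taken as inputs rather than implemented. The fog-density bands and coefficient values are hypothetical, since the abstract does not give them. The Y weights are the standard ITU-R BT.601 luma coefficients.

```python
def luma_bt601(r, g, b):
    """Y component of YCbCr using ITU-R BT.601 weights."""
    return 0.299 * r + 0.587 * g + 0.114 * b

def normalized_mean(y_values, max_value=255.0):
    """avgYm: mean of the (already median-filtered) luminance scaled to [0, 1]."""
    return sum(v / max_value for v in y_values) / len(y_values)

def fusion_coefficients(avg_ym):
    """Pick (a, b) from the fog estimate; bands and weights here are hypothetical."""
    if avg_ym > 0.6:   # bright, washed-out frame: assume dense fog, favour the DCP result
        return 0.3, 0.7
    if avg_ym > 0.4:   # moderate fog
        return 0.5, 0.5
    return 0.7, 0.3    # light fog: favour the MSR result

def fuse(y1, y2, avg_ym):
    """X = a*Y1 + b*Y2, with Y1 from MSR and Y2 from DCP (both computed elsewhere)."""
    a, b = fusion_coefficients(avg_ym)
    return [a * p + b * q for p, q in zip(y1, y2)]
```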

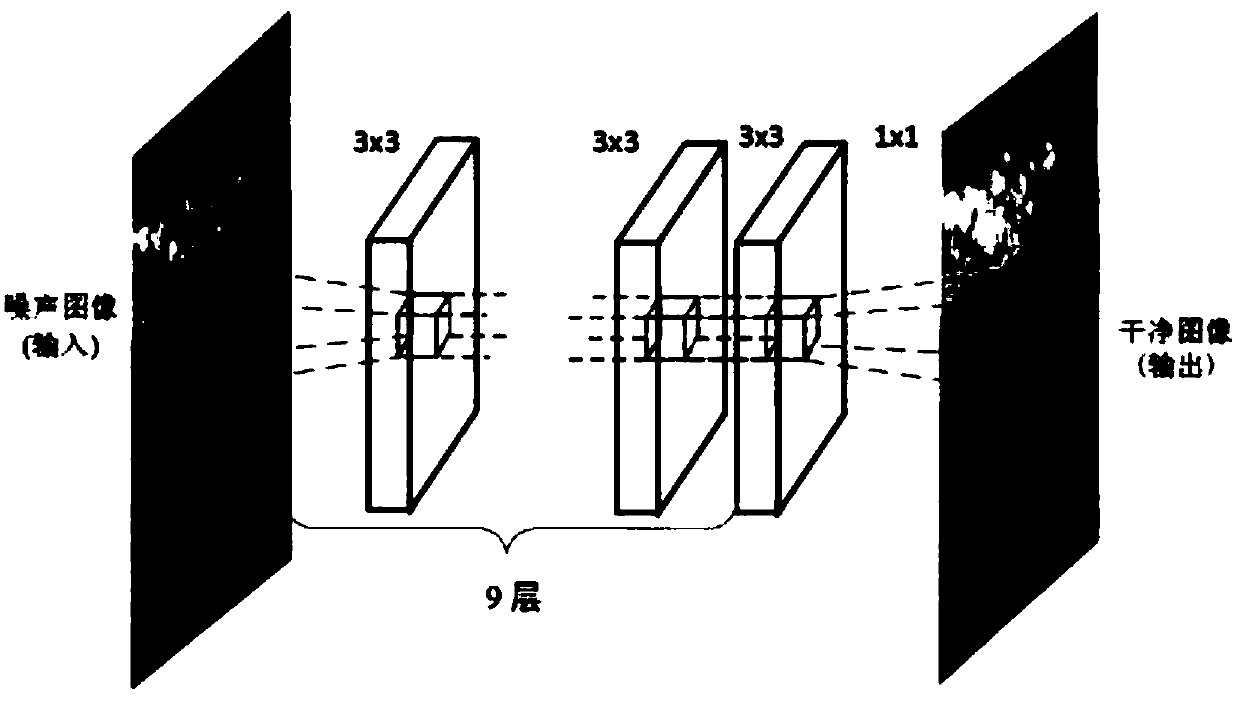

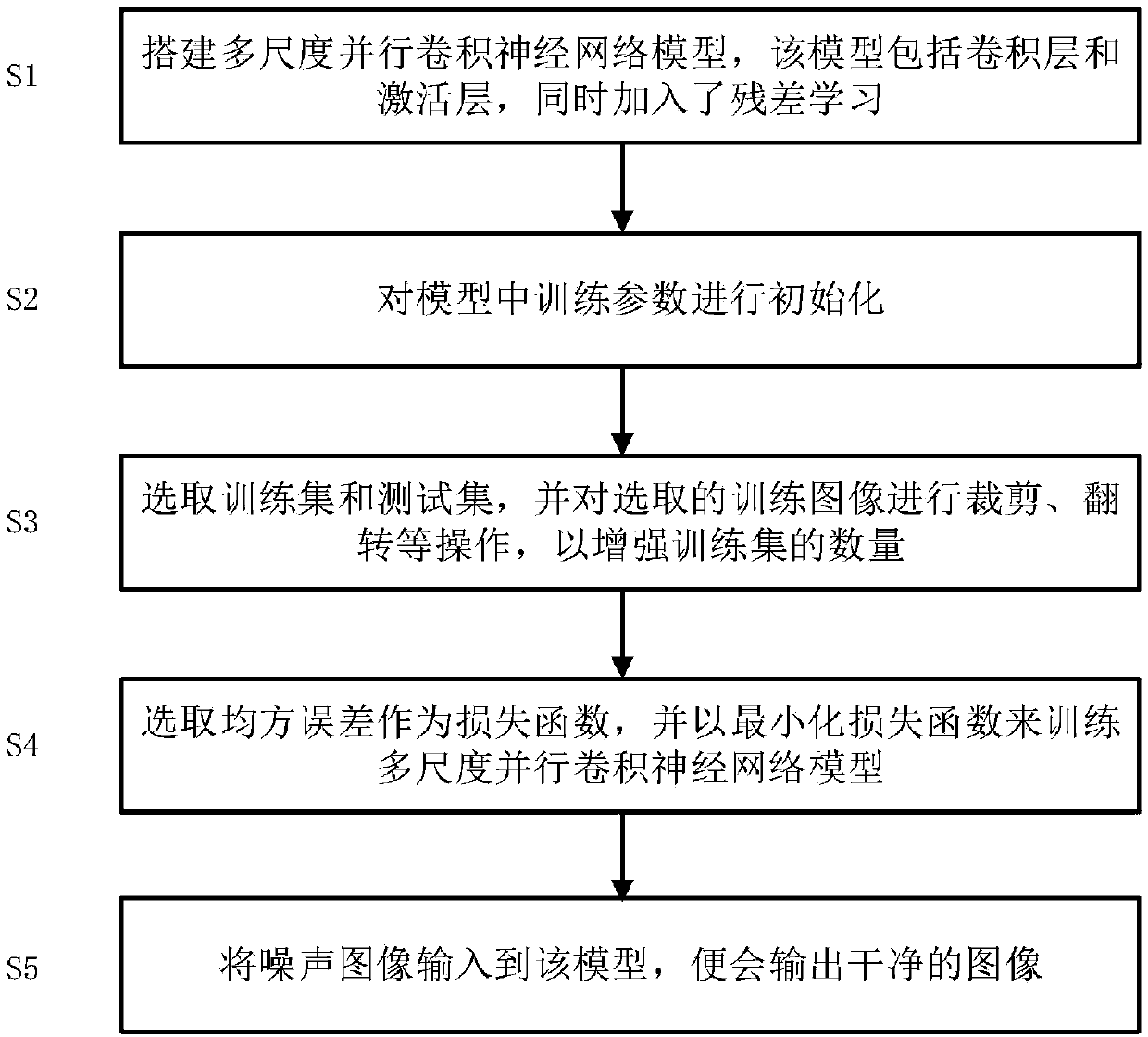

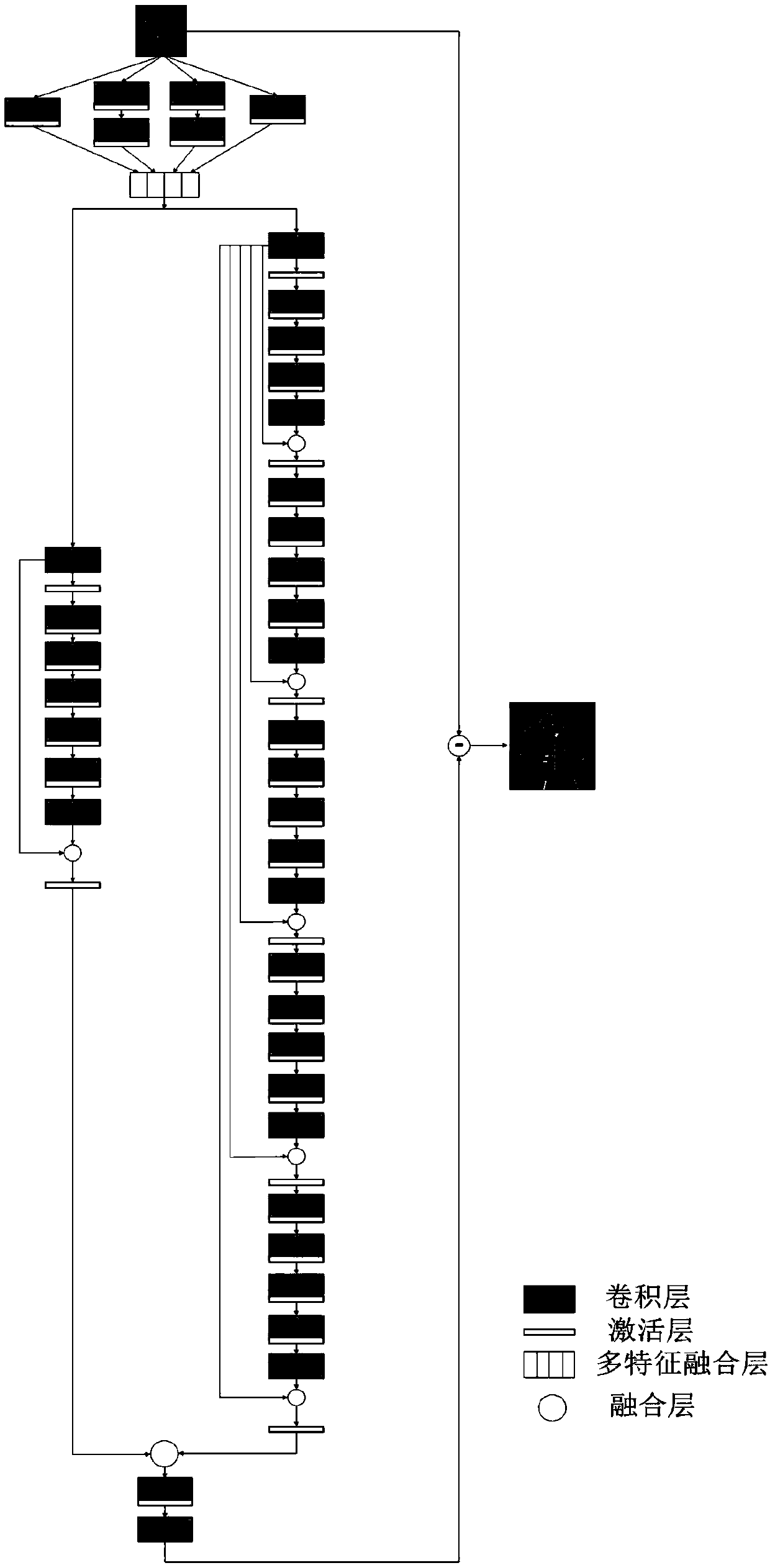

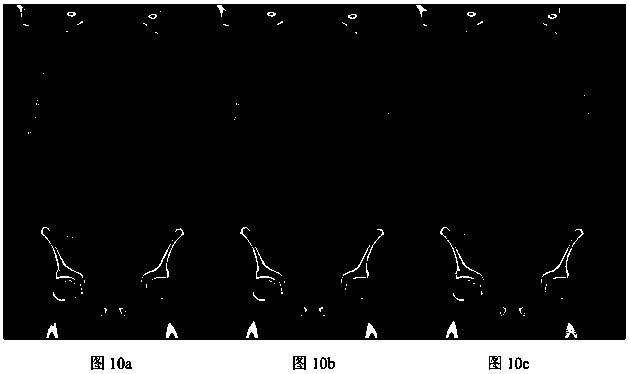

An image denoising method based on multi-scale parallel CNNs

Active | CN109003240A | Extract comprehensive | Favorable image reconstruction | Image enhancement | Image analysis | Image denoising | Mean square

The invention discloses an image denoising method based on multi-scale parallel CNNs, comprising five steps:

1. building a multi-scale parallel convolutional neural network model that contains only convolution layers and activation layers, with residual learning added;
2. setting the training parameters of the model;
3. selecting a training set, and cropping and flipping the selected training images to enlarge the training set;
4. selecting the mean square error as the loss function and training the model by minimizing the loss, to obtain an image denoising model;
5. feeding a noisy image of arbitrary size into the image denoising model and outputting the denoised clean image.

The invention preserves the edge and detail information of the image as far as possible while denoising, improves the structural similarity of the image, and produces high-quality denoised images.

Owner:ANHUI UNIV OF SCI & TECH

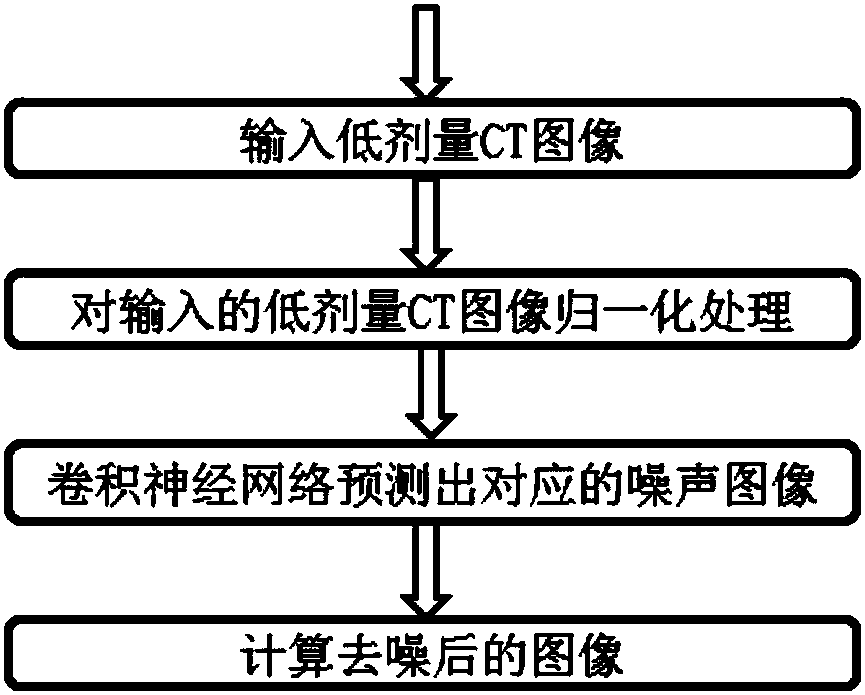

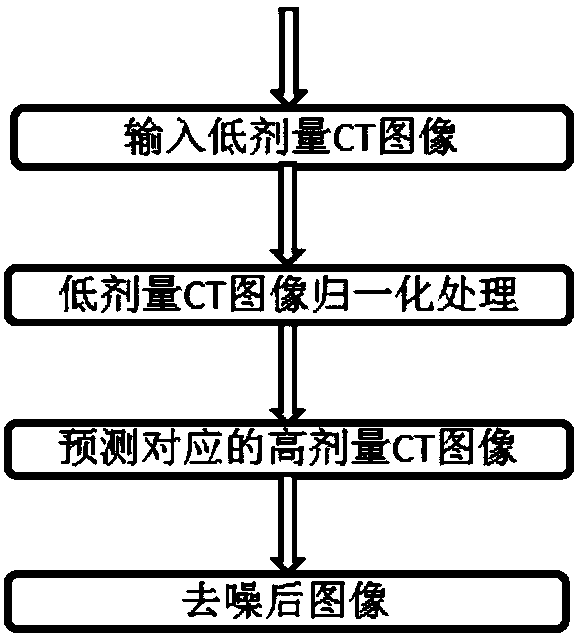

Convolutional neural network-based low-dosage CT image noise inhibition method

Pending · CN108564553A · Avoid cumbersome · Preserve image details · Image enhancement · Image analysis · Disease · Gray level

The invention relates to a convolutional neural network-based method for suppressing noise in low-dose CT images. The method comprises the following steps: (1) normalizing the input original low-dose CT image L, acquired by scanning with low tube current and tube voltage, by computing the mean and standard deviation of the gray levels of all its pixels, subtracting the mean from L and dividing by the standard deviation to obtain the CT image L0; (2) feeding the preprocessed low-dose CT image L0 into the convolutional neural network to predict the noise CT image D0 corresponding to the low-dose CT image L; and (3) subtracting the predicted noise image D0 from L0, multiplying by the standard deviation of the low-dose CT image and adding its mean to obtain the denoised image H0. Because the convolutional neural network denoises the low-dose CT image, the image meets diagnostic quality while the radiation dose to the subject is reduced, which raises the lesion detection rate and supports early diagnosis of disease.

Owner:SOUTHERN MEDICAL UNIVERSITY
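
The normalization in step (1) and the inverse mapping in step (3) amount to L0 = (L - μ)/σ and H0 = (L0 - D0)·σ + μ. The sketch below assumes the population standard deviation (the abstract does not specify) and treats the CNN as an arbitrary callable `predict_noise`; `zero_noise` is a hypothetical stand-in used only to show that the round trip is lossless when no noise is predicted.

```python
from statistics import mean, pstdev

def normalize(img):
    """Step (1): z-score a 2-D image; returns (L0, mean, std)."""
    flat = [v for row in img for v in row]
    mu = mean(flat)
    sigma = pstdev(flat) or 1.0  # guard against a constant image
    return [[(v - mu) / sigma for v in row] for row in img], mu, sigma

def denoise_ct(low_dose, predict_noise):
    """Steps (2)-(3): predict noise D0 on L0, subtract it, undo the normalization."""
    l0, mu, sigma = normalize(low_dose)
    d0 = predict_noise(l0)
    return [[(a - b) * sigma + mu for a, b in zip(r0, rd)] for r0, rd in zip(l0, d0)]

def zero_noise(l0):
    """Hypothetical stand-in for the trained CNN: predicts no noise at all."""
    return [[0.0] * len(row) for row in l0]
```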

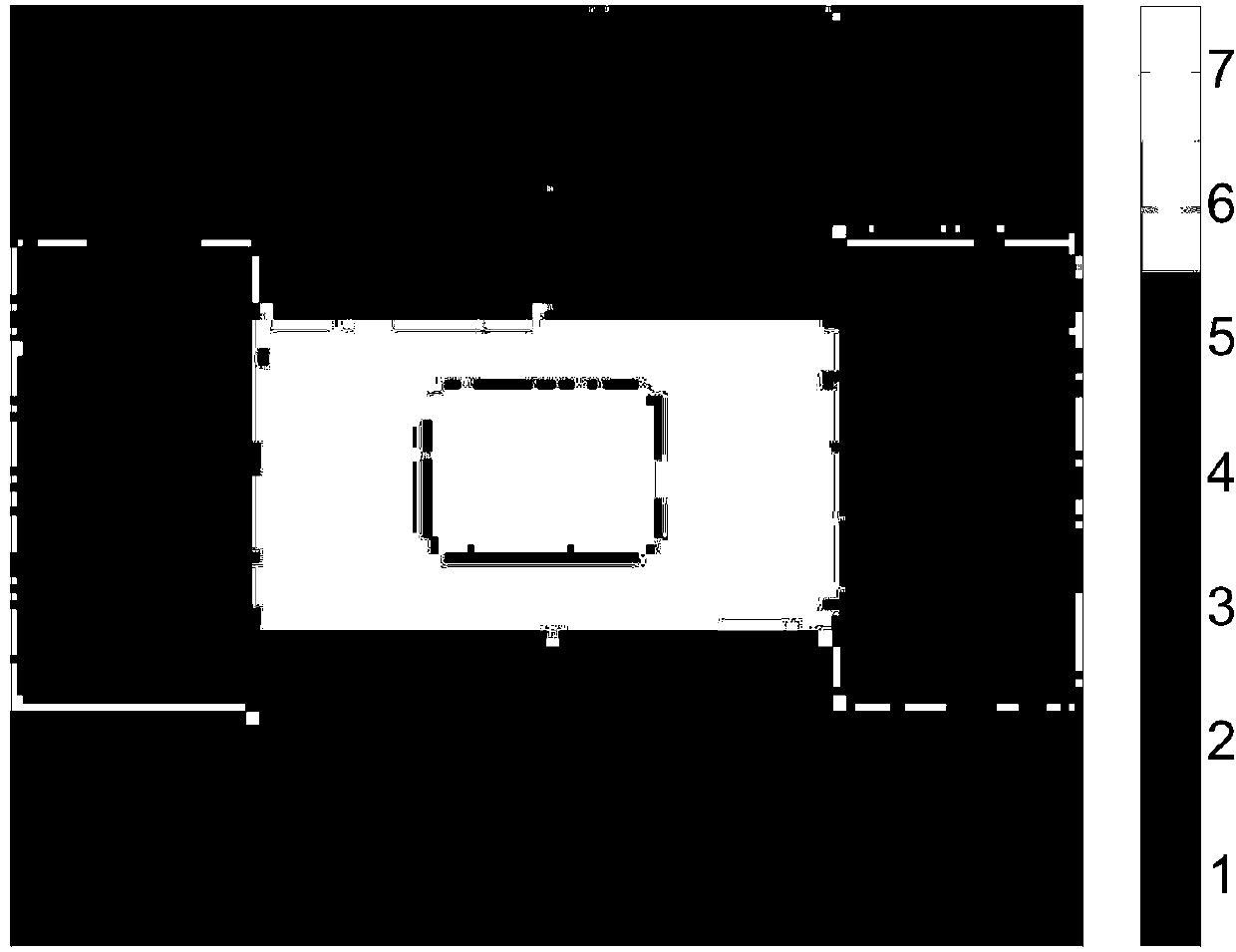

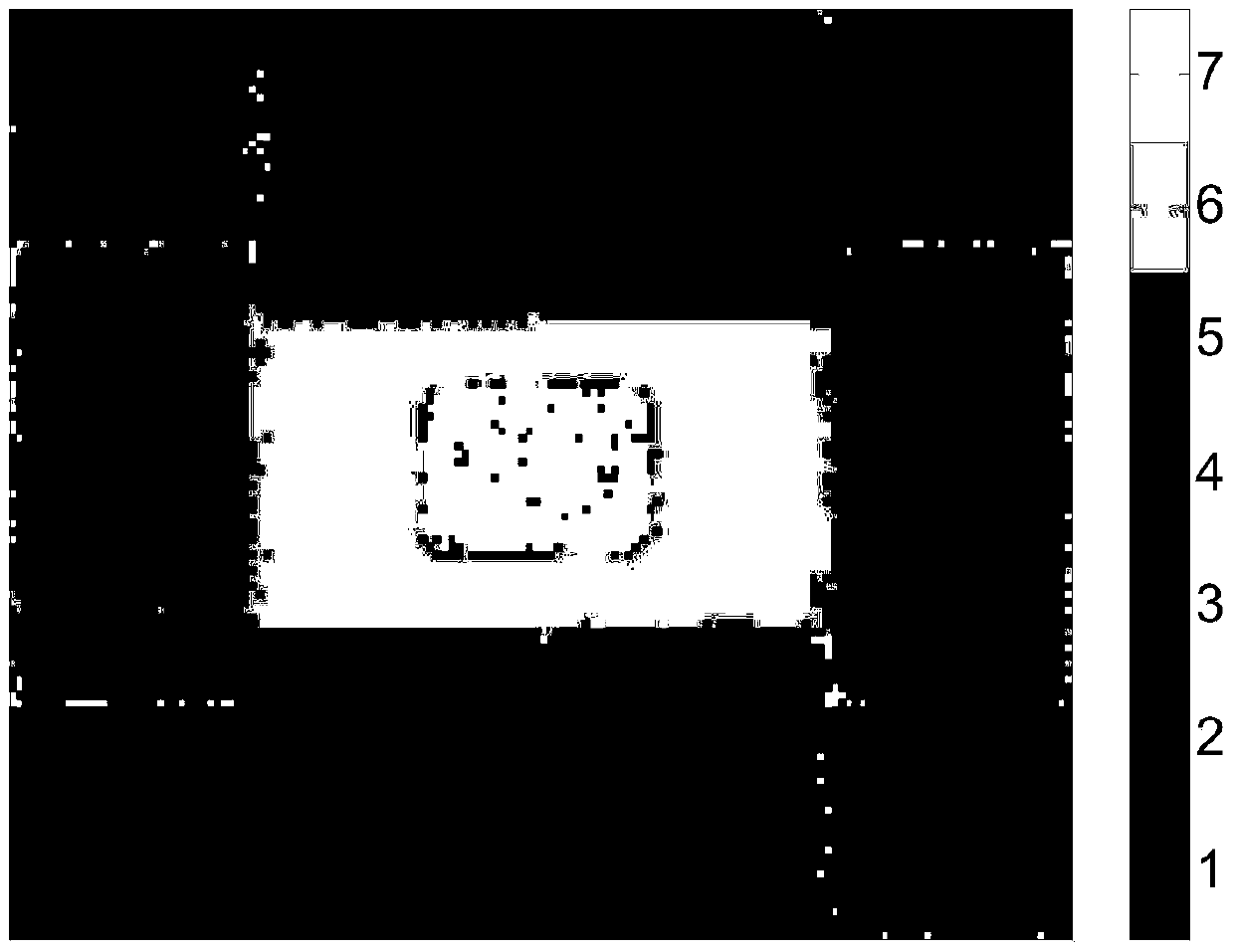

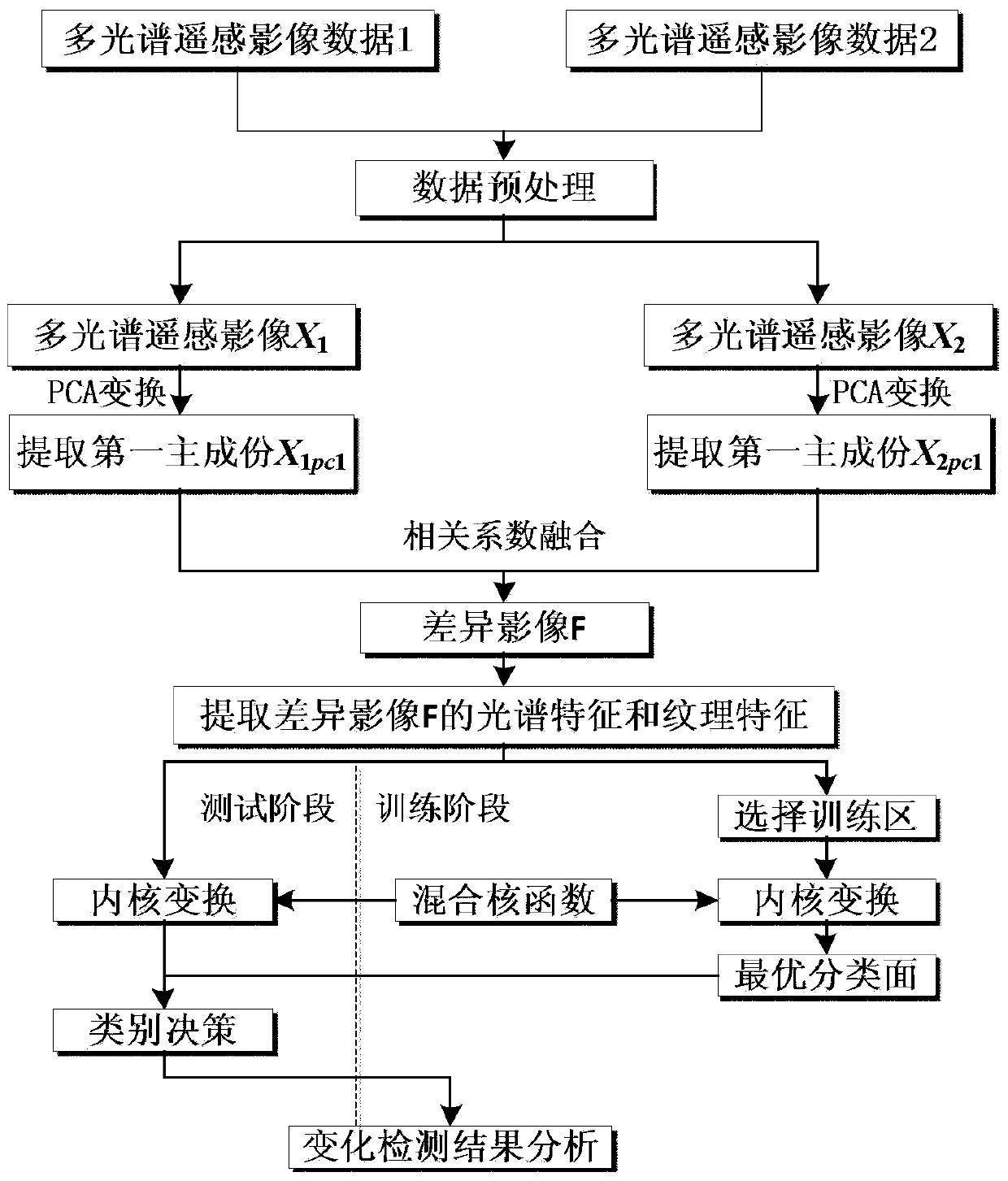

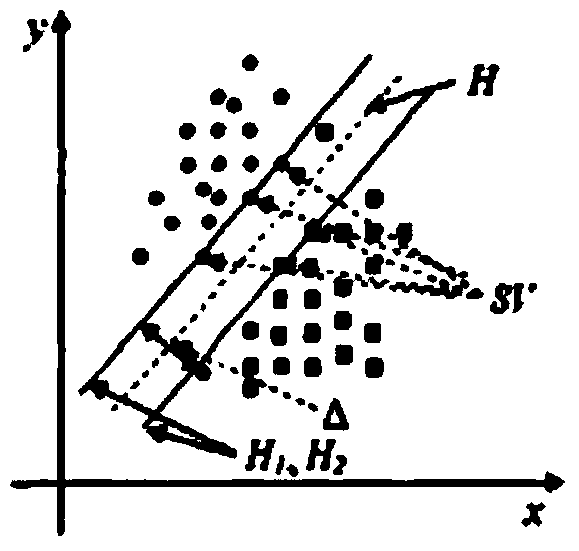

Multi-spectrum remote sensing image change detection method

Inactive · CN103500450A · Eliminate dependencies · Reduce distractions · Image analysis · Support vector machine · Correlation coefficient

The invention discloses a multispectral remote sensing image change detection method. Traditional remote sensing change detection forms a difference image from two remote sensing images acquired at different times by an algebraic method, then models the difference image, determines a change threshold, and detects the changes. However, the difference image may not fit the assumed model and the change threshold is difficult to determine, so a remote sensing image change detection method based on an SVM (support vector machine) with a mixed kernel function is proposed to solve these problems. The implementation proceeds as follows: first, the difference image is constructed by combining a PCA (principal component analysis) transform with a correlation coefficient method; then the gray-level and texture features of the difference image are extracted, normalized and formatted; next, a training area is selected and the SVM with the mixed kernel function is trained; finally, the mixed-kernel SVM classifier classifies the difference image to obtain the change detection result for the target image.

Owner:HOHAI UNIV +1
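
The abstract does not say which kernels make up the "mixed kernel function". A common choice, assumed here, is a convex combination of an RBF (local) kernel and a polynomial (global) kernel; because both are positive semidefinite, any weight in [0, 1] yields a valid kernel. The weight, `gamma` and `degree` values are illustrative only.

```python
import math

def rbf(x, y, gamma):
    """Gaussian RBF kernel exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))

def poly(x, y, degree, coef0=1.0):
    """Polynomial kernel (x . y + coef0) ** degree."""
    return (sum(a * b for a, b in zip(x, y)) + coef0) ** degree

def mixed_kernel(x, y, weight=0.5, gamma=1.0, degree=2):
    """Convex combination of RBF and polynomial kernels (assumed mixture)."""
    return weight * rbf(x, y, gamma) + (1 - weight) * poly(x, y, degree)

def gram(xs, ys, **kw):
    """Gram matrix K[i][j] = mixed_kernel(xs[i], ys[j])."""
    return [[mixed_kernel(x, y, **kw) for y in ys] for x in xs]
```

With a thin adapter that returns an array, a Gram-matrix function like `gram` could be supplied as a callable kernel to an SVM library such as scikit-learn's `SVC`.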

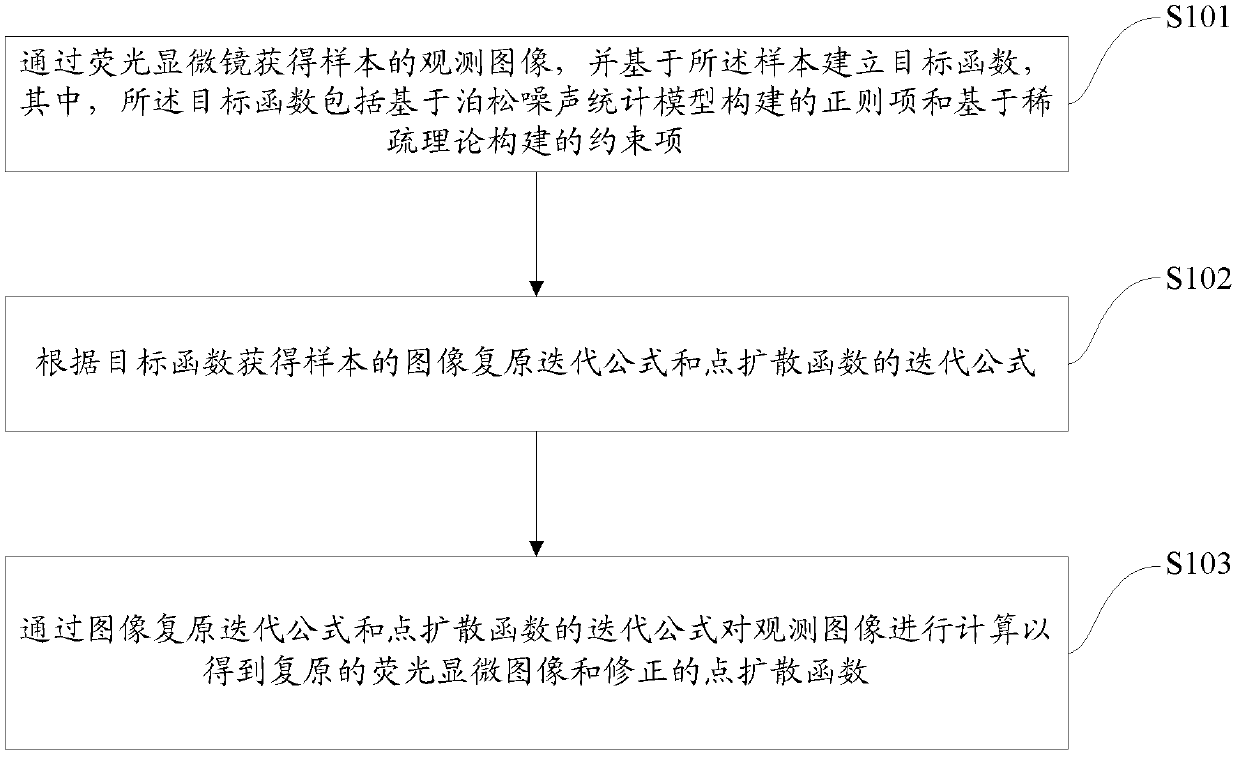

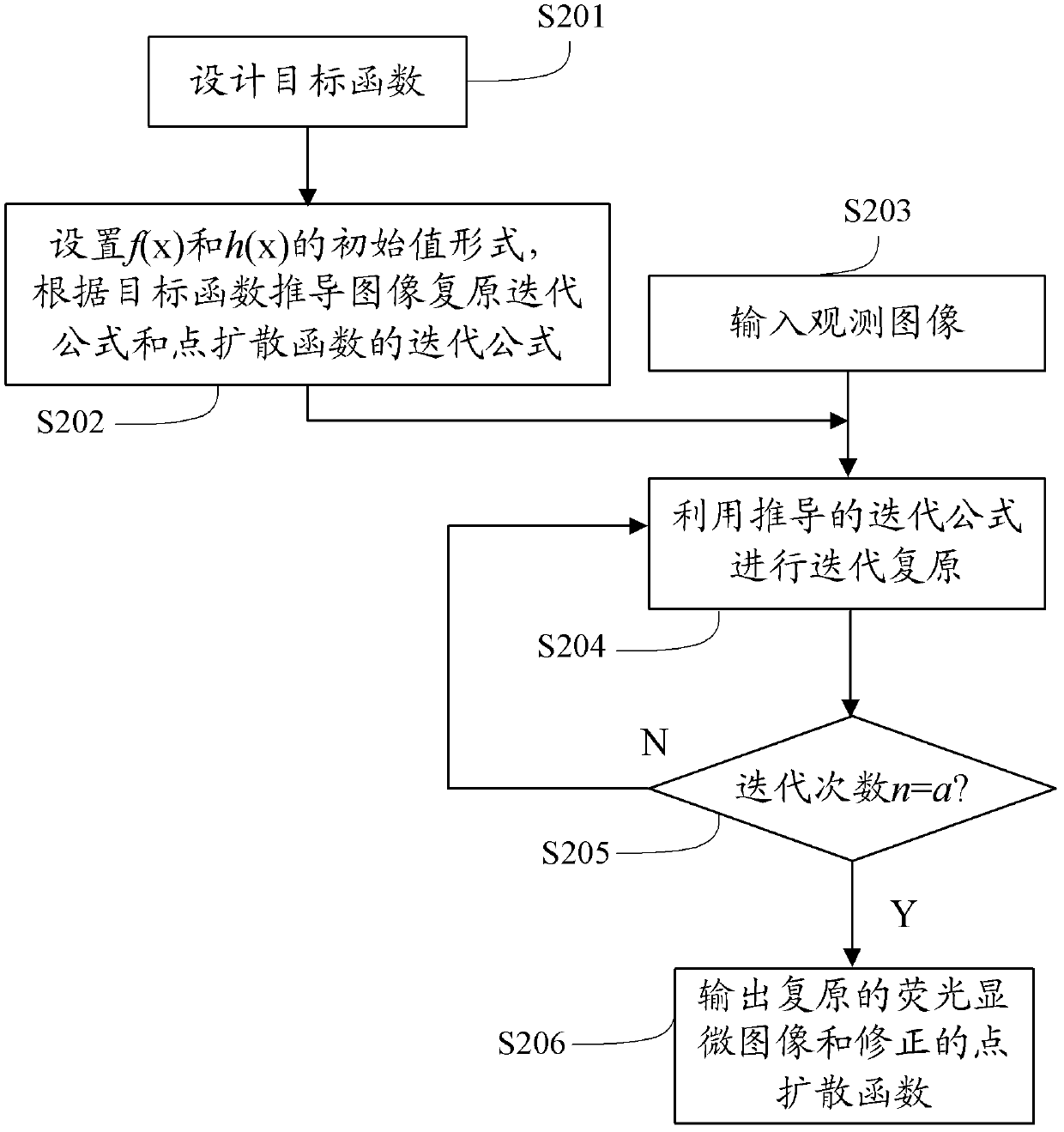

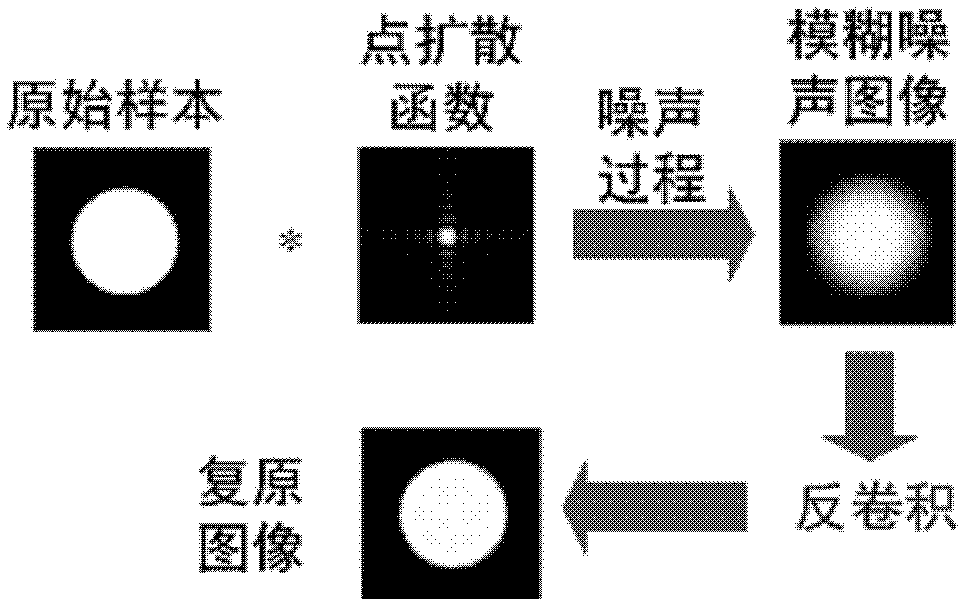

Fluorescent microscopic image restoring method based on blind deconvolution and sparse representation and device thereof

Inactive · CN102708543A · Improve accuracy · Keep details · Image enhancement · Pattern recognition · Microscopic image

The invention provides a fluorescence microscopy image restoration method and device based on blind deconvolution and sparse representation. The method comprises the following steps: acquiring an observed image of a sample with a fluorescence microscope and building an objective function for the sample that contains a regularization term and a constraint term; deriving from the objective function an image restoration iteration formula and a point spread function iteration formula for the sample; and applying both iteration formulas to the observed image to obtain the restored fluorescence microscopy image and the corrected point spread function. With embodiments of the invention, the restored image has high contrast and sharpness, texture details are effectively preserved, the visual result is clearer, and the point spread function obtained after restoration is also highly accurate.

Owner:BEIJING TECHNOLOGY AND BUSINESS UNIVERSITY
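
The patent's objective function and iteration formulas are not given in the abstract, so the sketch below substitutes a well-known alternative to show the alternating structure: blind Richardson-Lucy deconvolution, which updates the PSF with the image fixed and then the image with the PSF fixed. It is 1-D and circular for brevity, has no sparse-representation term, and `blind_richardson_lucy` and its parameters are hypothetical names.

```python
def circ_conv(x, h):
    """Circular convolution of two equal-length sequences."""
    n = len(x)
    return [sum(x[(i - j) % n] * h[j] for j in range(n)) for i in range(n)]

def circ_corr(x, h):
    """Circular correlation, the adjoint of convolving with h."""
    n = len(x)
    return [sum(x[(i + j) % n] * h[j] for j in range(n)) for i in range(n)]

def blind_richardson_lucy(g, psf0, iters=30, eps=1e-12):
    """Alternate multiplicative RL updates of the PSF h and the image f."""
    f = list(g)      # image estimate starts from the observation
    h = list(psf0)   # PSF estimate starts from a user-supplied guess
    for _ in range(iters):
        # PSF step (image fixed), then renormalize the PSF to unit sum
        ratio = [gi / max(bi, eps) for gi, bi in zip(g, circ_conv(f, h))]
        h = [hi * ci for hi, ci in zip(h, circ_corr(ratio, f))]
        total = sum(h)
        h = [hi / total for hi in h]
        # Image step (PSF fixed)
        ratio = [gi / max(bi, eps) for gi, bi in zip(g, circ_conv(f, h))]
        f = [fi * ci for fi, ci in zip(f, circ_corr(ratio, h))]
    return f, h
```

The multiplicative updates keep both estimates non-negative, which suits fluorescence intensities; PSF entries that start at zero stay zero, so the initial guess also fixes the PSF support.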

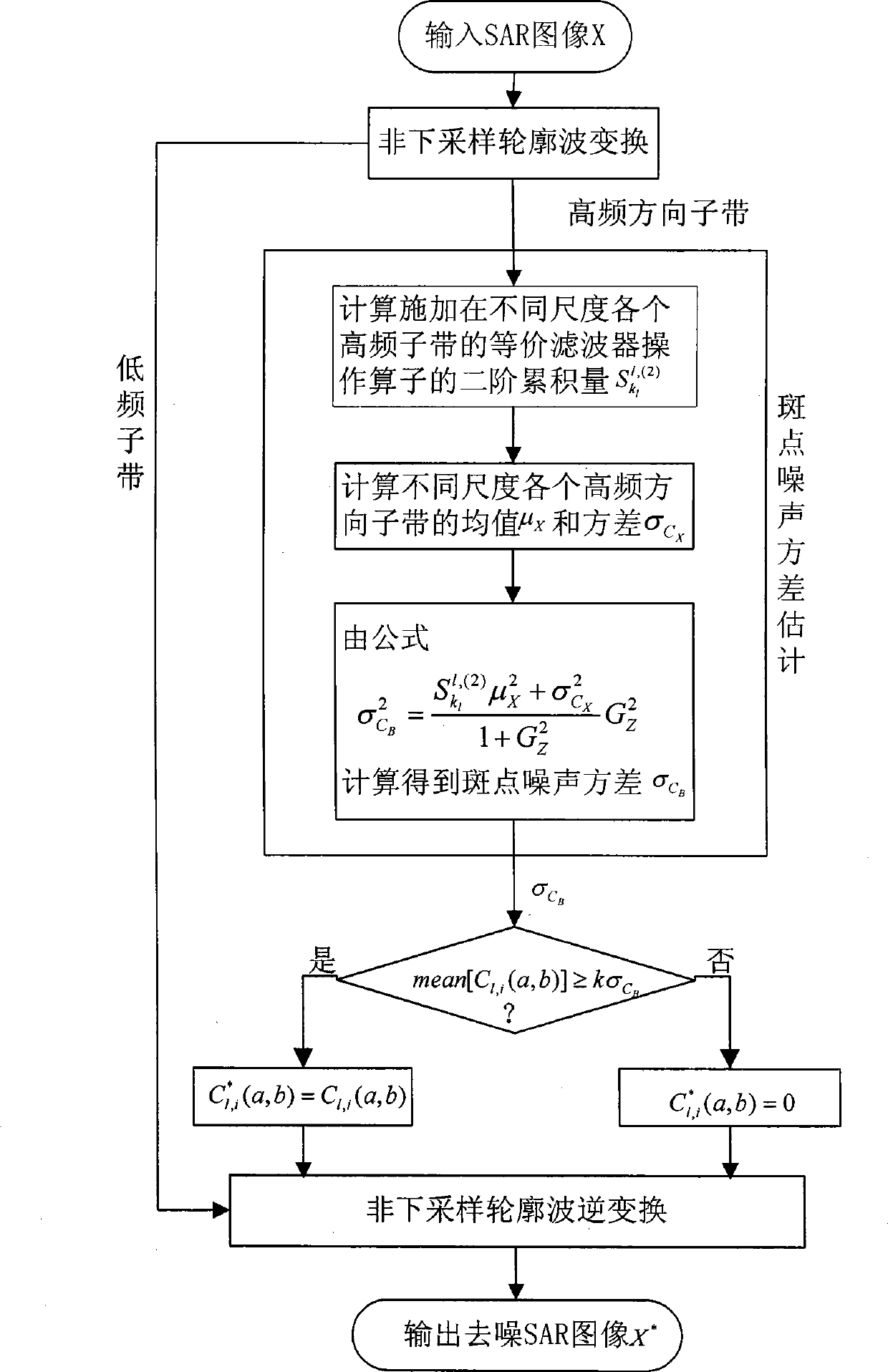

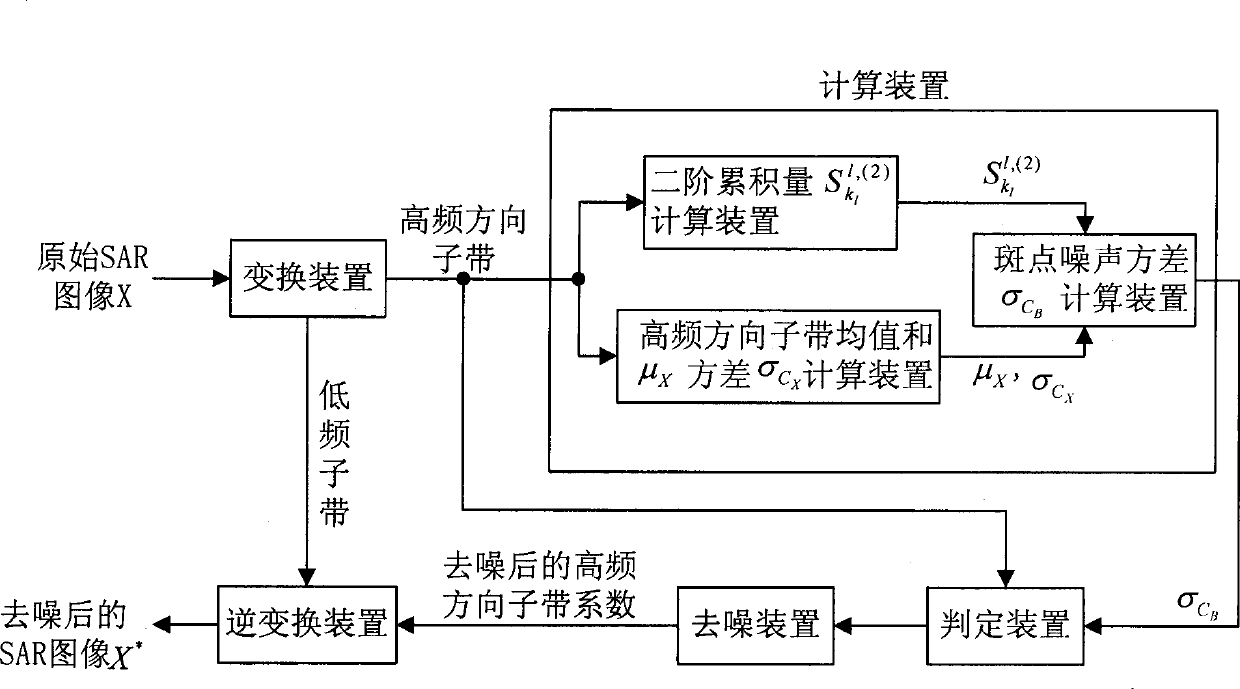

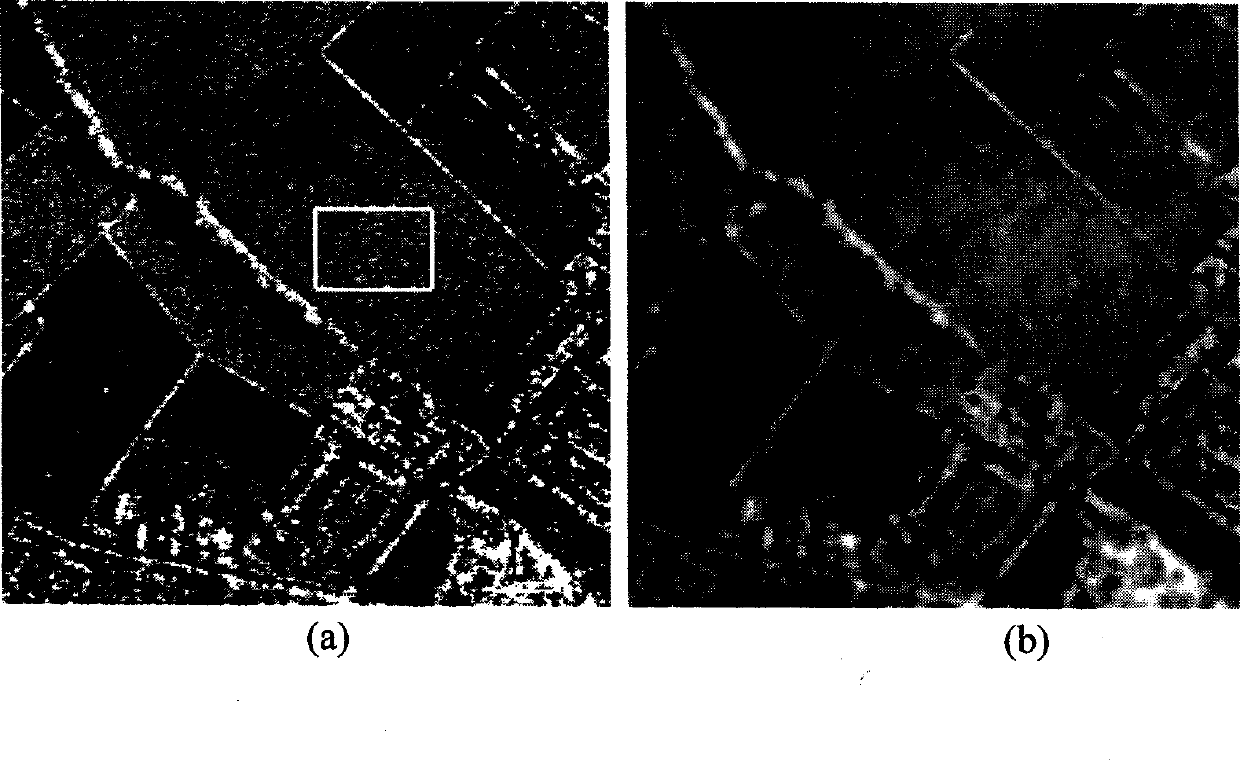

Synthetic aperture radar image denoising method based on the nonsubsampled contourlet transform

Active · CN101482617A · Avoid jitter distortion · Adaptive denoising · Image enhancement · Radio wave reradiation/reflection · Synthetic aperture radar · Radar

The invention discloses a synthetic aperture radar (SAR) image denoising method based on the nonsubsampled contourlet transform, mainly addressing the difficulty existing methods have in effectively preserving image detail. The method comprises: (1) inputting a SAR image X and applying an L-level nonsubsampled contourlet transform; (2) calculating the speckle noise variance of each high-frequency directional subband at each scale; (3) classifying each high-frequency directional subband coefficient C_{l,i}(a, b) as a signal coefficient or a noise coefficient according to its local mean mean[C_{l,i}(a, b)]; (4) retaining the coefficients judged to be signal to obtain the denoised high-frequency directional subband coefficients; (5) applying the inverse nonsubsampled contourlet transform to the low-frequency subband and the denoised high-frequency directional subband coefficients to obtain the denoised SAR image. The invention effectively removes coherent speckle noise while preserving image detail; the denoised image is free of jitter and distortion, and the method can be used in the preprocessing stage of SAR image analysis.

Owner:XIDIAN UNIV
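
Steps (3) and (4) amount to a per-coefficient keep-or-discard decision driven by a local mean. The sketch below assumes the rule is "keep C_{l,i}(a, b) if the mean magnitude in its 3x3 neighborhood exceeds k times the subband's noise standard deviation"; the factor `k`, the window size and the use of magnitudes are assumptions, and the contourlet transform itself is not implemented.

```python
def local_mean_abs(sub, y, x, r=1):
    """Mean |coefficient| in the (2r+1)x(2r+1) window around (y, x), clipped at borders."""
    h, w = len(sub), len(sub[0])
    vals = [abs(sub[j][i])
            for j in range(max(0, y - r), min(h, y + r + 1))
            for i in range(max(0, x - r), min(w, x + r + 1))]
    return sum(vals) / len(vals)

def keep_signal(sub, noise_std, k=3.0):
    """Keep coefficients classified as signal; zero those classified as noise."""
    return [[c if local_mean_abs(sub, y, x) > k * noise_std else 0.0
             for x, c in enumerate(row)]
            for y, row in enumerate(sub)]
```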

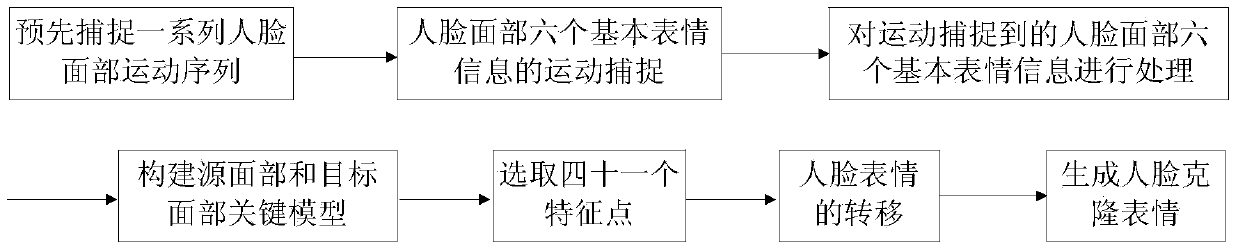

Human face expression cloning method

Active · CN104008564A · Increase authenticity · Overcoming low fidelity · Animation · Facial characteristic · 3D image processing

The invention provides a human face expression cloning method in the field of 3D image processing, specifically one based on motion capture data. First, a human face motion sequence is captured in advance; second, the six basic expressions of anger, disgust, fear, joy, sadness and surprise are motion-captured; third, the captured information for these six basic expressions is processed; fourth, key models of the source face and the target face are built; fifth, forty-one facial feature points are selected; sixth, the facial expressions are transferred; seventh, the cloned facial expressions are generated. The method solves the prior-art problems of low fidelity with uniform weights and unstable computation of cotangent weights, and overcomes the drawbacks of demanding motion capture equipment requirements and slow processing.

Owner:HEBEI UNIV OF TECH
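
For context on the prior-art problems cited above: in mesh Laplacian editing, uniform weights set every neighbor weight to 1, while cotangent weights use w_ij = (cot α + cot β)/2, where α and β are the angles opposite edge (i, j) in its two adjacent triangles. The sketch shows how a nearly degenerate triangle makes the cotangent term blow up; it illustrates the prior art only, not the patent's cloning method, and the helper names are hypothetical.

```python
import math

def cot_at(p, q, r):
    """Cotangent of the angle at vertex p in triangle (p, q, r); points are 3-D tuples."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    dot = sum(a * b for a, b in zip(u, v))
    cross = [u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0]]
    # cot = cos / sin = (u . v) / |u x v|; undefined for a zero-area triangle
    return dot / math.sqrt(sum(c * c for c in cross))

def cotan_weight(vi, vj, alpha_vertex, beta_vertex):
    """w_ij = (cot(alpha) + cot(beta)) / 2 for edge (vi, vj) shared by two triangles."""
    return 0.5 * (cot_at(alpha_vertex, vi, vj) + cot_at(beta_vertex, vi, vj))
```

For two equilateral triangles the weight is 1/√3 ≈ 0.577, but moving one opposite vertex to within 1e-6 of the edge drives it to roughly -125000, which is the kind of instability the abstract attributes to cotangent weights.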

Semi-inverse method-based rapid single image dehazing algorithm

Active · CN104252698A · Improve automatic performance · Improve robustness · Image enhancement · Scattered light · Global illumination

The invention discloses a fast single-image dehazing algorithm based on the semi-inverse method, comprising the following steps. First, based on the atmospheric scattering model, the global atmospheric light is estimated with an improved semi-inverse algorithm; this estimate is more robust than taking the maximum gray value in the dark channel. Second, using the image's edge information as the synthesis condition, the edge information and the scene depth information of the image are fused according to the characteristics of atmospheric scattered light to accurately estimate the atmospheric veil. Then, an initial haze-free image is restored according to the atmospheric scattering model. Finally, color adjustment and detail enhancement are applied to the initially dehazed image to obtain a realistic haze-free image. The algorithm handles abrupt depth changes and foreground pixels very well, which eliminates halo artifacts; extensive experiments show that it preserves color and detail information well, is more automatic and robust, and can be further used in video dehazing systems.

Owner:SOUTHWEST UNIV OF SCI & TECH
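
Two building blocks named above can be sketched per pixel. The semi-inverse of an image takes, for each channel, max(I, 1 - I); the patent's improvements to it and its edge-guided veil estimate are not reproduced. `recover` inverts the scattering model I = J·t + A(1 - t) written in terms of the veil V = A(1 - t); the lower bound `t_min` is an assumed safeguard, not taken from the abstract.

```python
def semi_inverse(pixel):
    """Semi-inverse of an RGB pixel with channels in [0, 1]: per-channel max(I, 1 - I)."""
    return tuple(max(c, 1.0 - c) for c in pixel)

def recover(intensity, veil, airlight, t_min=0.1):
    """Invert I = J*t + A*(1 - t) for J, with transmission t = 1 - V/A (clamped below)."""
    t = max(1.0 - veil / airlight, t_min)
    return (intensity - airlight * (1.0 - t)) / t
```

For example, a scene value J = 0.5 seen through t = 0.6 with A = 1 gives I = 0.7 and V = 0.4, and `recover(0.7, 0.4, 1.0)` returns approximately 0.5.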

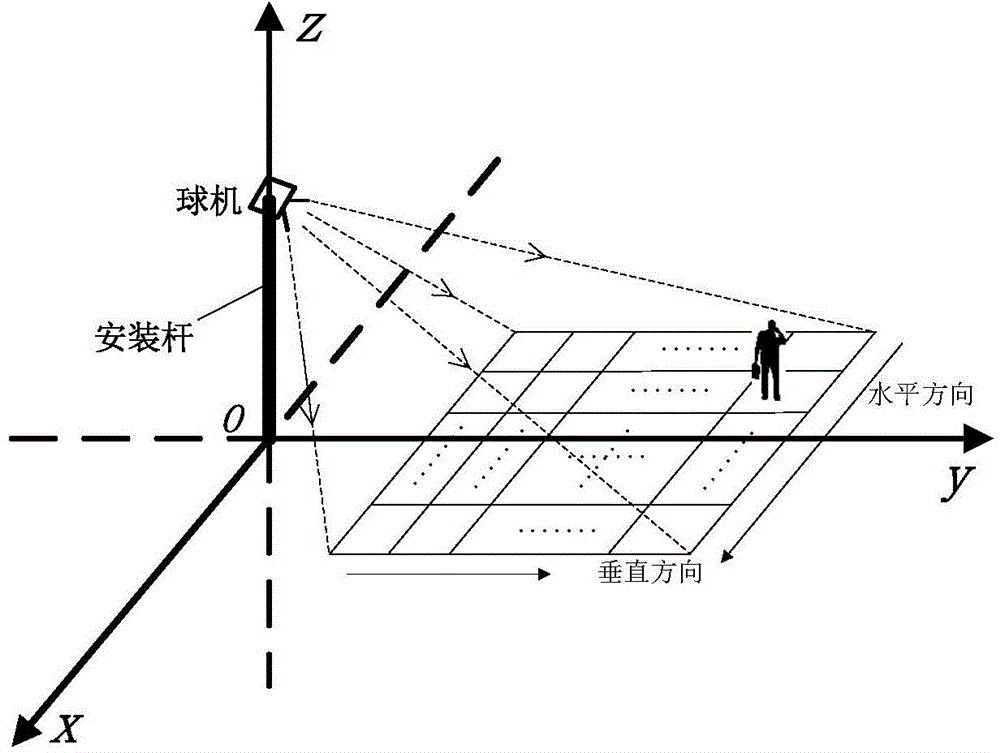

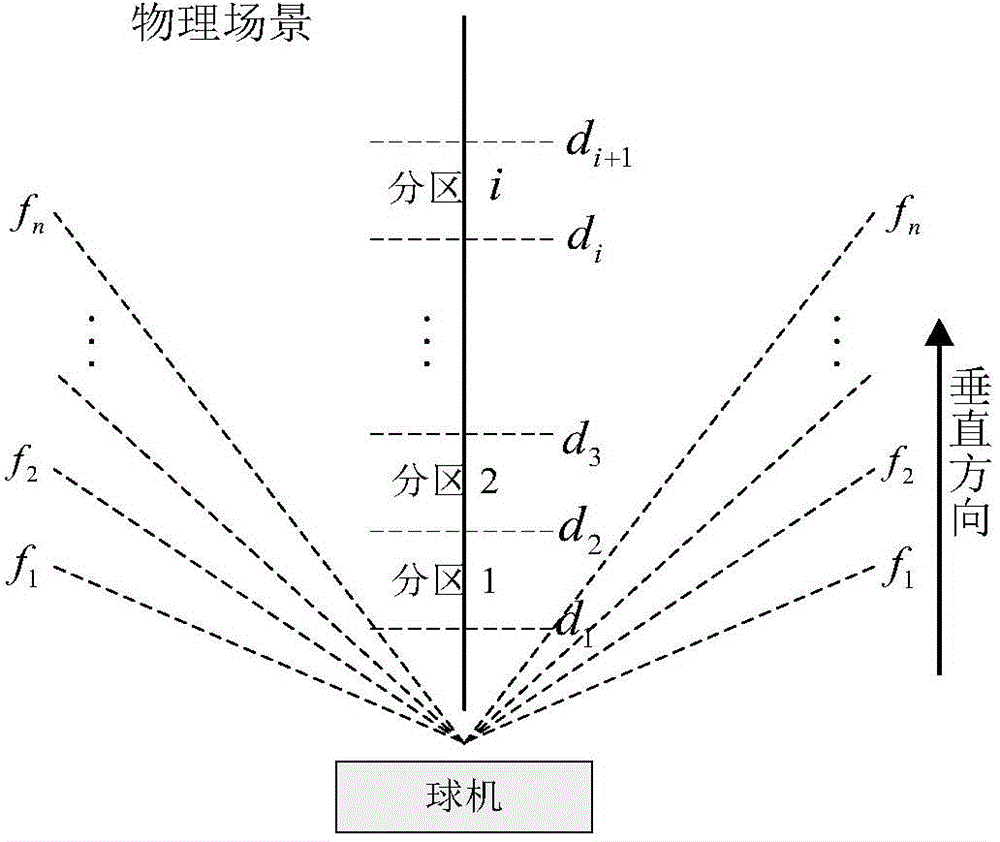

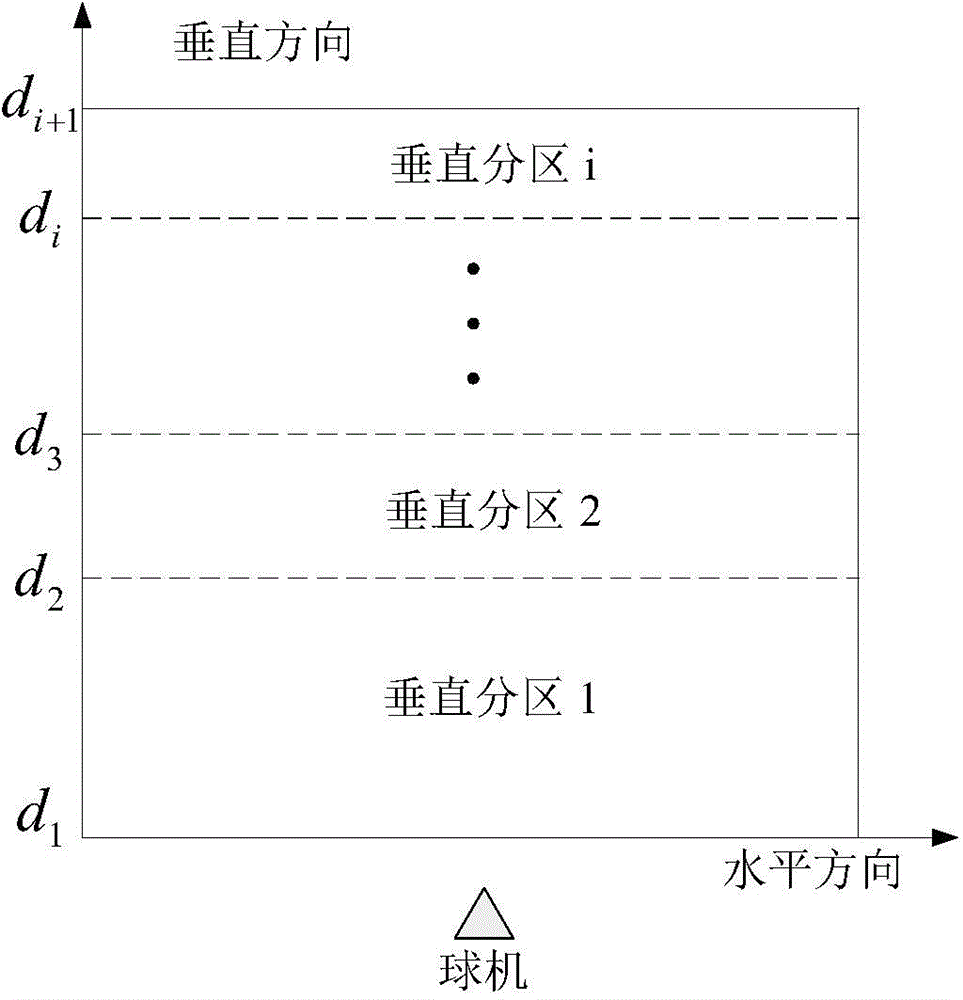

Control method for a monitoring dome camera

Inactive · CN104639908A · Fast capture · Guaranteed shooting effect · Image analysis · Closed circuit television systems · Video monitoring · Control engineering

The invention discloses a control method for a monitoring dome camera. The method comprises the following steps: (1) dividing the space to be monitored vertically and horizontally into a number of small partitions and setting a shooting focal length for each partition; (2) setting presets for the center point and the edge points of each partition, and storing the dome camera's horizontal position, vertical position and focal length for each preset; (3) reading a video frame and performing target detection on it; (4) mapping the detected target to the corresponding preset according to its direction in the monitored scene; (5) having the dome camera call that preset to acquire the monitoring image. This overcomes the drawbacks of dome cameras in traditional video surveillance systems: a low degree of control automation, insufficient real-time performance and flexibility, and the need for manual intervention. The control method is easy to operate, highly automated and responsive in real time, and is particularly effective at capturing images of fast-moving targets.

Owner:HUAZHONG UNIV OF SCI & TECH
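
Steps (1), (2) and (4) can be sketched as a lookup from a detection's position to a stored preset. The sketch assumes the camera's field of view maps linearly onto a rows x cols grid of partitions and stores only the center-point preset of each partition (the edge-point presets are omitted); `Preset`, the pan/tilt ranges and the per-row focal lengths are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Preset:
    pan_deg: float
    tilt_deg: float
    focal_mm: float

def build_presets(rows, cols, pan_range, tilt_range, focal_by_row):
    """Steps (1)-(2): one center-point preset per (row, col) partition."""
    pan_step = (pan_range[1] - pan_range[0]) / cols
    tilt_step = (tilt_range[1] - tilt_range[0]) / rows
    return {(r, c): Preset(pan_range[0] + (c + 0.5) * pan_step,
                           tilt_range[0] + (r + 0.5) * tilt_step,
                           focal_by_row[r])
            for r in range(rows) for c in range(cols)}

def partition_of(x, y, rows, cols):
    """Map a position normalized to [0, 1] onto its (row, col) partition."""
    col = min(int(x * cols), cols - 1)
    row = min(int(y * rows), rows - 1)
    return row, col

def preset_for_target(box, frame_w, frame_h, presets, rows, cols):
    """Step (4): box = (x0, y0, x1, y1) in pixels from a detector; returns the preset to call."""
    cx = (box[0] + box[2]) / 2 / frame_w
    cy = (box[1] + box[3]) / 2 / frame_h
    return presets[partition_of(cx, cy, rows, cols)]
```

Step (5) would then send the returned pan, tilt and focal values to the camera through whatever PTZ protocol it supports.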

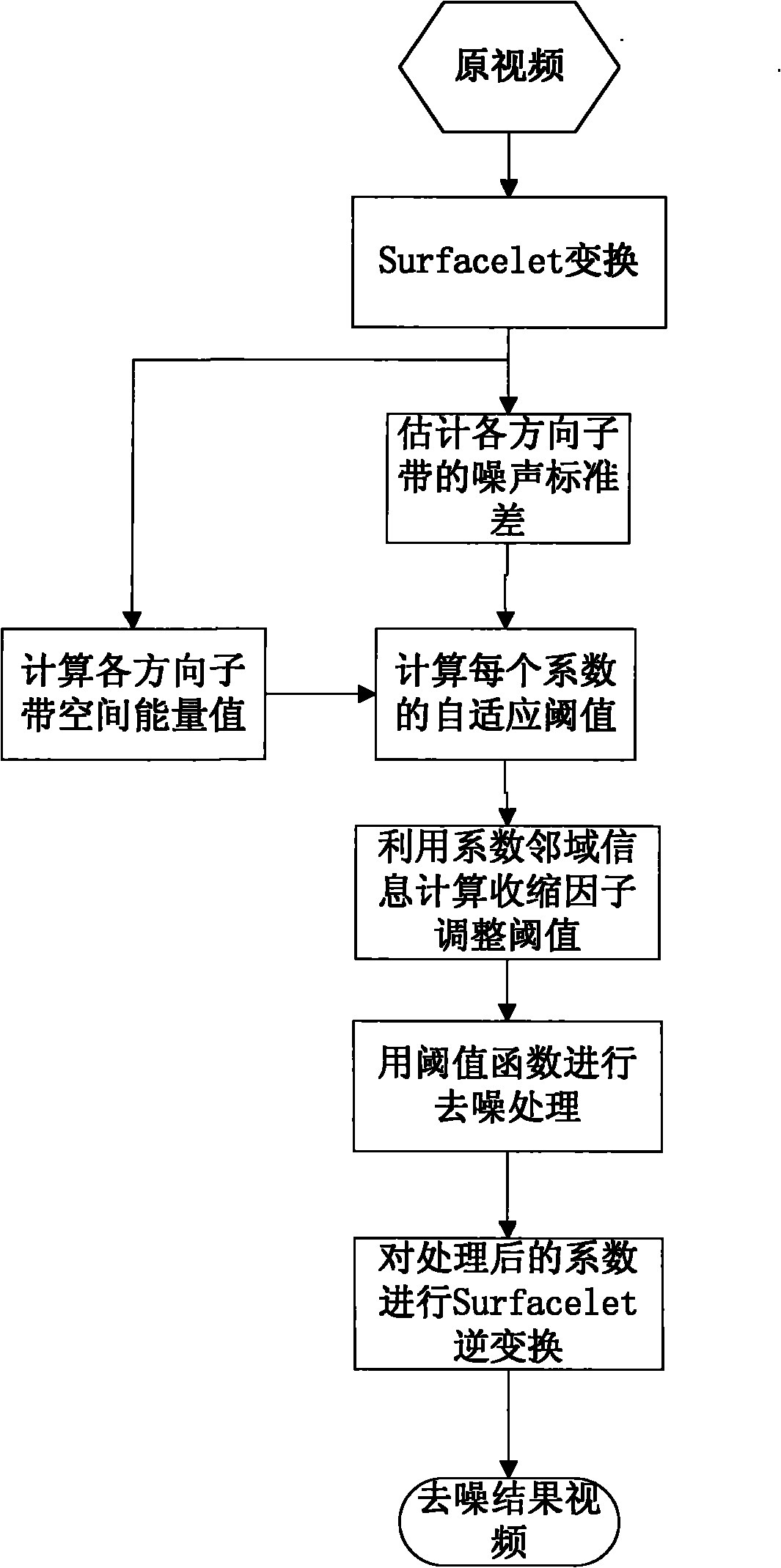

Video denoising method with spatially adaptive thresholds in the Surfacelet transform domain

Inactive · CN102158637A · Improve efficiency · Accurate capture · Television system details · Color television details · Self adaptive · Decomposition

The invention discloses a spatially adaptive threshold video denoising method in the Surfacelet transform domain, mainly addressing unsatisfactory video denoising results, excessive complexity of the denoising process, and artifacts and pseudo-Gibbs phenomena in the result. The method proceeds as follows: inputting the video to be denoised and applying the Surfacelet transform; estimating the noise of the coefficients in each directional subband of the Surfacelet decomposition; computing adaptive thresholds from the spatial energy of the coefficients; adjusting the thresholds with the neighborhood information of the coefficients; denoising with a threshold function; and reconstructing from the denoised coefficients to obtain the denoised video. Compared with the prior art, the method markedly reduces computational complexity, raises the PSNR (peak signal-to-noise ratio) of the denoised video, effectively preserves the video's detail information, and can be used for natural video denoising and three-dimensional image denoising.

Owner:XIDIAN UNIV
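
The threshold computation and threshold function can be sketched for one 2-D subband. The abstract does not give its formulas, so this assumes a BayesShrink-style rule, T = σ_n² / σ_x with σ_x estimated from the local energy in a 3x3 window, followed by soft thresholding; the Surfacelet transform and the neighborhood-based adjustment step are not implemented.

```python
import math

def soft_threshold(c, t):
    """Shrink c toward zero by t; magnitudes below t become zero."""
    return math.copysign(max(abs(c) - t, 0.0), c)

def adaptive_threshold(sub, y, x, noise_var, r=1, eps=1e-12):
    """T = sigma_n^2 / sigma_x, with sigma_x from the local energy around (y, x)."""
    h, w = len(sub), len(sub[0])
    window = [sub[j][i] ** 2
              for j in range(max(0, y - r), min(h, y + r + 1))
              for i in range(max(0, x - r), min(w, x + r + 1))]
    energy = sum(window) / len(window)
    signal_std = math.sqrt(max(energy - noise_var, eps))  # tiny where noise dominates
    return noise_var / signal_std

def denoise_subband(sub, noise_var):
    """Apply the spatially adaptive soft threshold to every coefficient."""
    return [[soft_threshold(c, adaptive_threshold(sub, y, x, noise_var))
             for x, c in enumerate(row)]
            for y, row in enumerate(sub)]
```

Where local energy barely exceeds the noise variance, the threshold becomes very large and the coefficient is removed; in high-energy regions it shrinks toward a small value, which is how such rules preserve detail.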

© 2025 PatSnap. All rights reserved.