3096 results about "Source image" patented technology

How to find the source of an image: The towel: Go to images.google.com and click the photo icon. Click “upload an image”, then “choose file”. Locate the file on your computer and click “upload”. Scroll through the search results to find the original image. Mine happened to be the first result and those below it led to my first result.

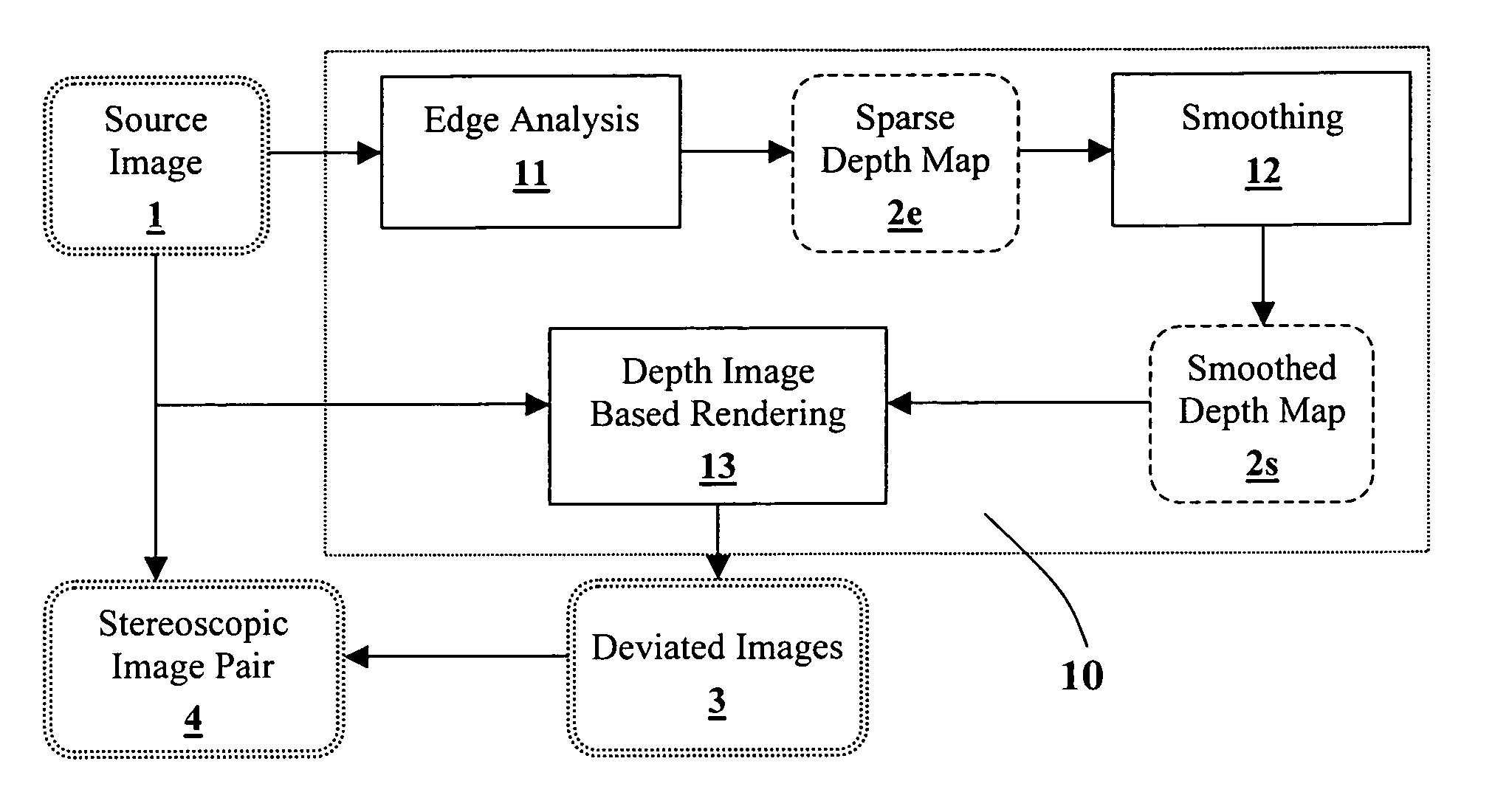

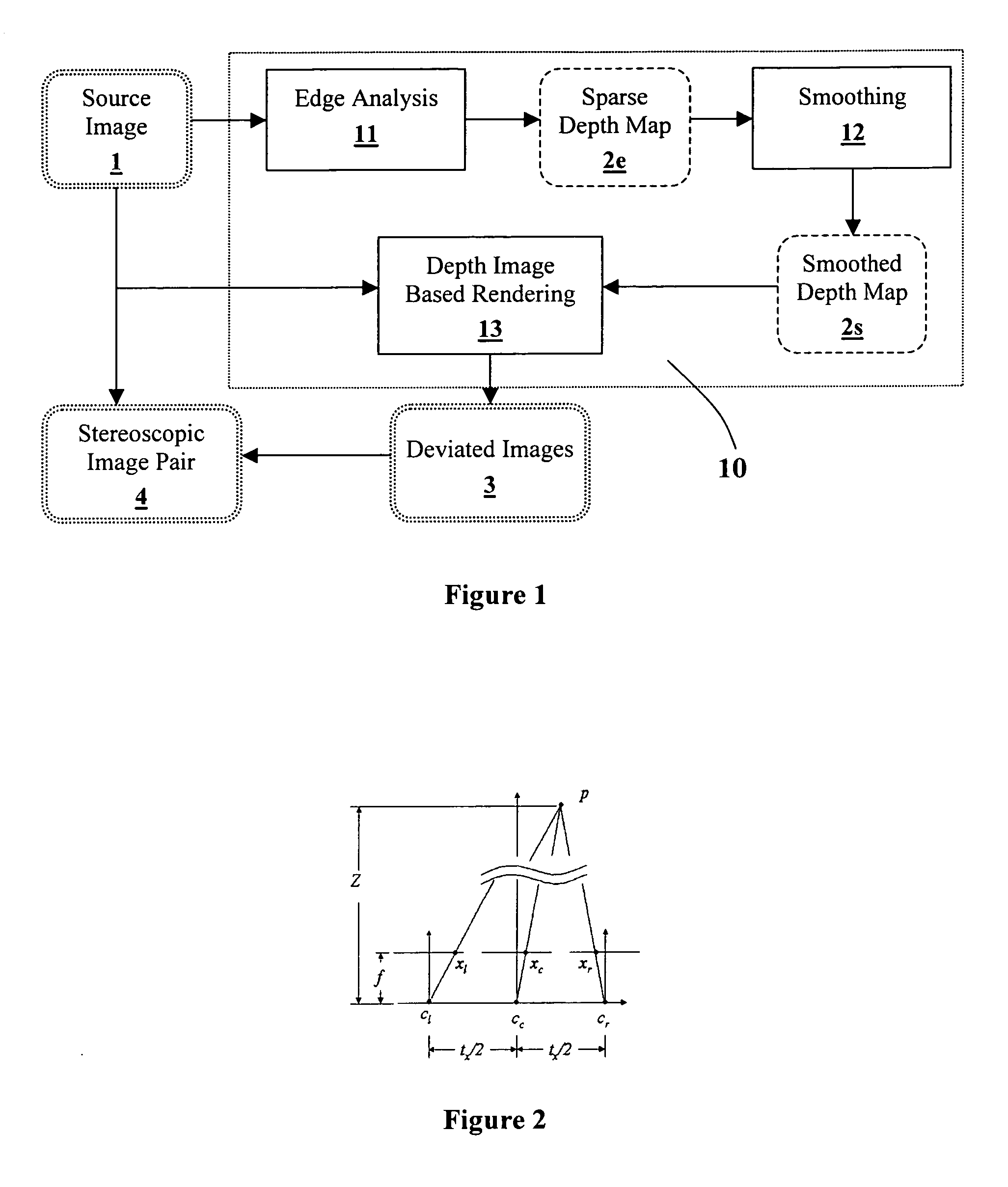

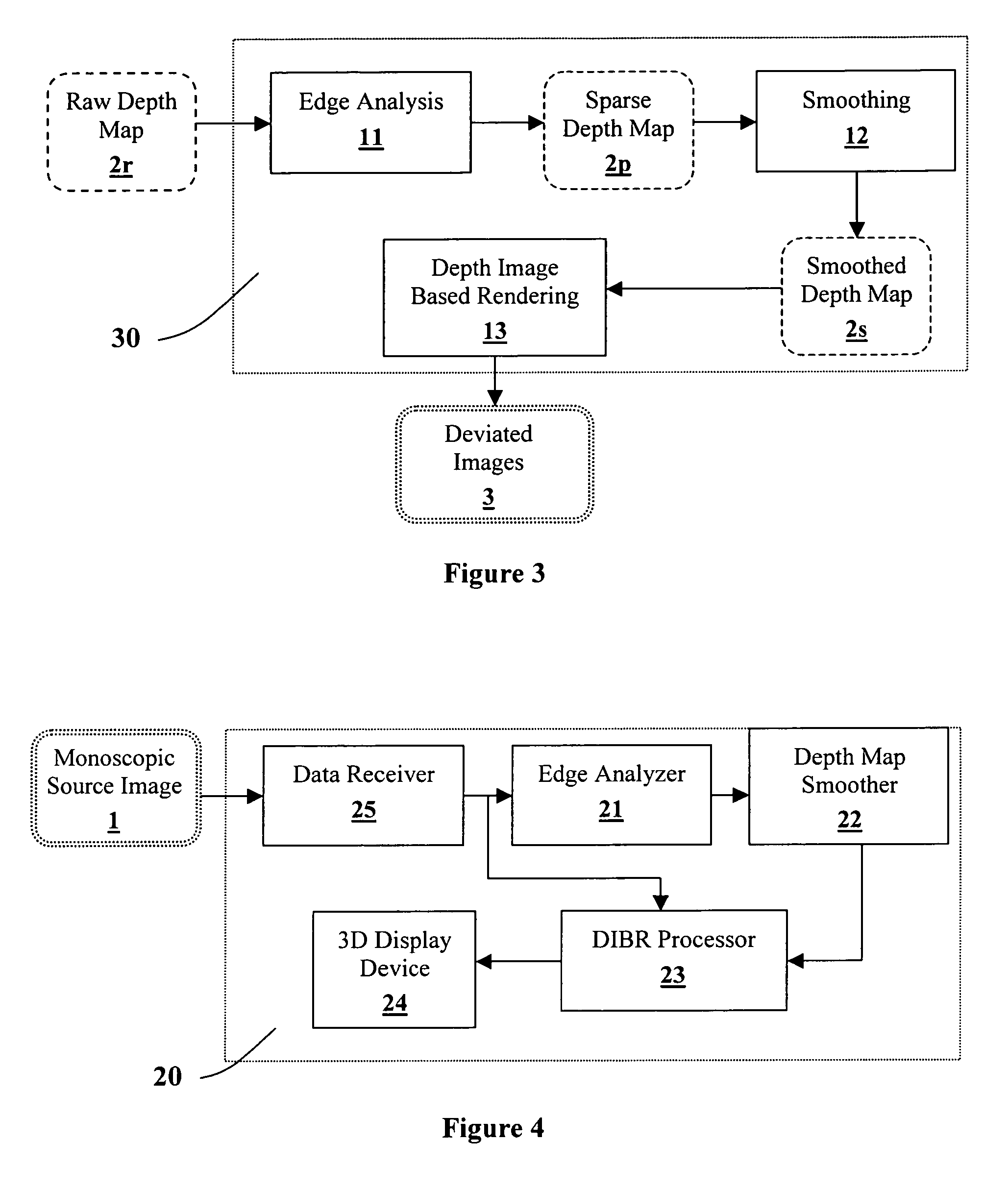

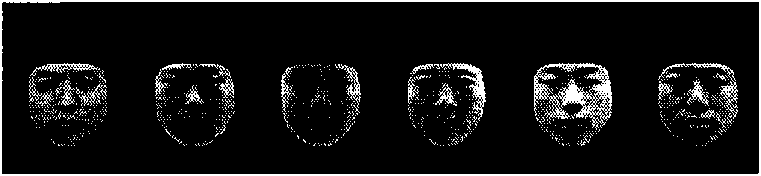

Generating a depth map from a two-dimensional source image for stereoscopic and multiview imaging

Inactive · US20070024614A1 · Saving in bandwidth requirement · Increase width · Image enhancement · Image analysis · Viewpoints · Image pair

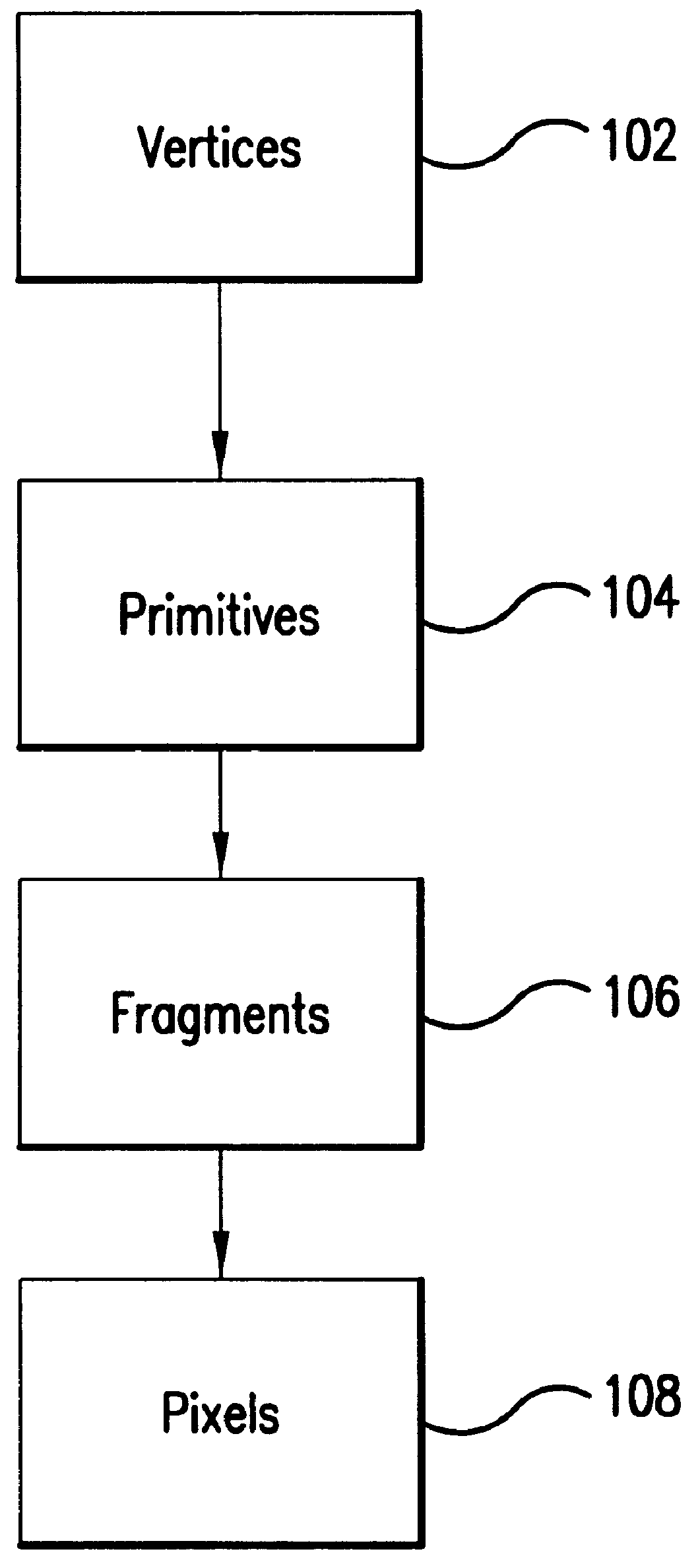

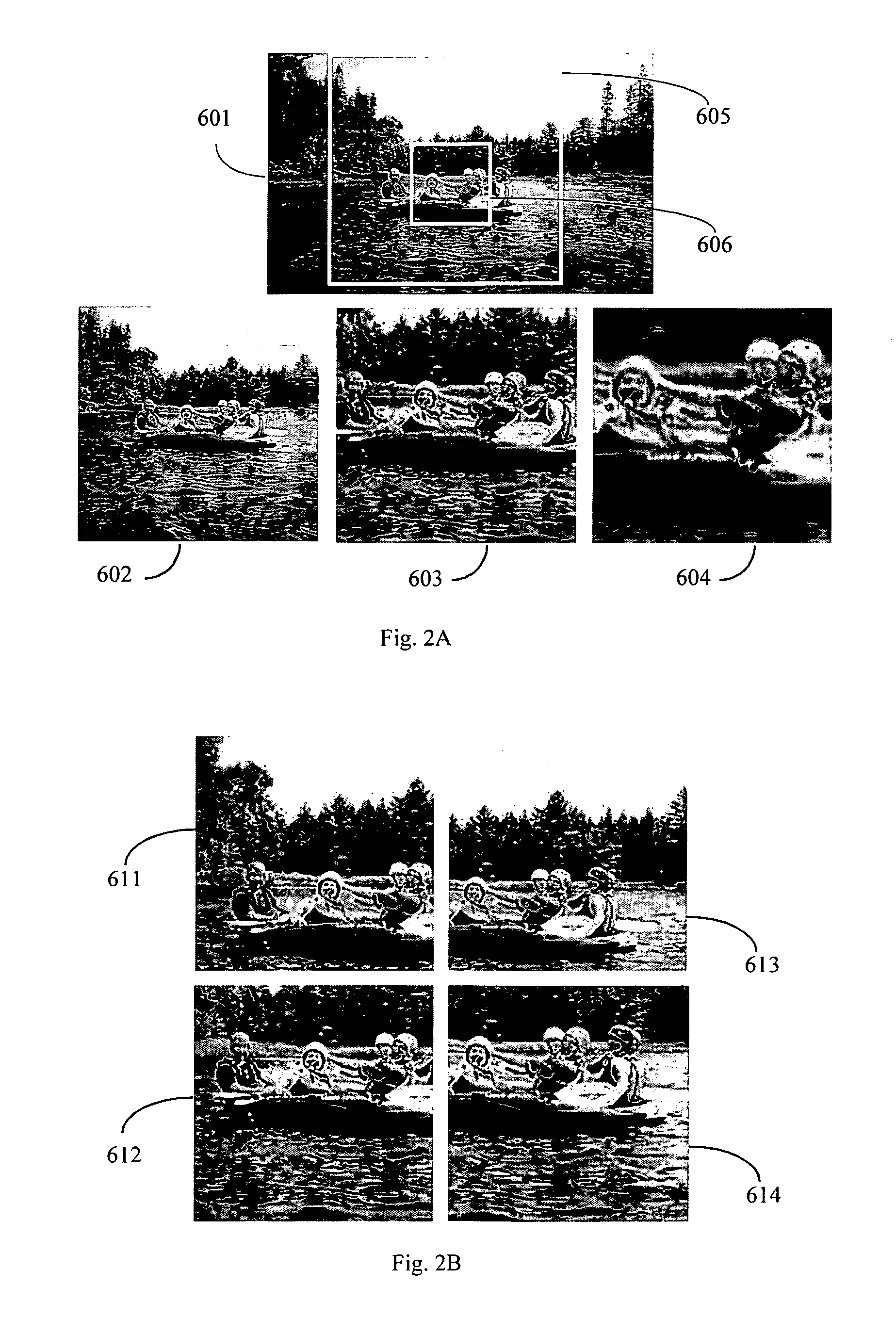

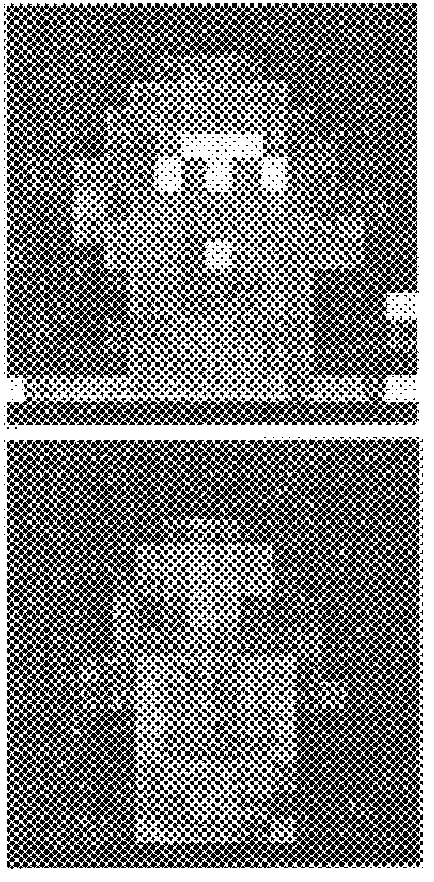

Depth maps are generated from monoscopic source images and asymmetrically smoothed to a near-saturation level. Each depth map contains depth values focused on edges of local regions in the source image. Each edge is defined by a predetermined image parameter having an estimated value exceeding a predefined threshold. The depth values are based on the corresponding estimated values of the image parameter. The depth map is used to process the source image by a depth image based rendering algorithm to create at least one deviated image, which forms with the source image a set of monoscopic images. At least one stereoscopic image pair is selected from such a set for use in generating different viewpoints for multiview and stereoscopic purposes, including still and moving images.

Owner:HER MAJESTY THE QUEEN & RIGHT OF CANADA REPRESENTED BY THE MIN OF IND THROUGH THE COMM RES CENT
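
As a rough illustration of the edge-based depth map described in the abstract above, the sketch below assigns depth only where a gradient estimate exceeds a threshold and then smooths the result with different horizontal and vertical radii. The function names, the central-difference gradient, and the box filter are assumptions made for illustration; the patent does not specify them here, and the depth image based rendering step is omitted.

```python
from typing import List

Image = List[List[float]]


def edge_depth_map(gray: Image, threshold: float) -> Image:
    """Assign a depth value only where the horizontal gradient exceeds a threshold."""
    height, width = len(gray), len(gray[0])
    depth = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(1, width - 1):
            # Central difference stands in for the "image parameter" (assumption).
            grad = abs(gray[y][x + 1] - gray[y][x - 1]) / 2.0
            if grad > threshold:
                depth[y][x] = grad
    return depth


def smooth_asymmetric(depth: Image, radius_h: int, radius_v: int) -> Image:
    """Box-smooth the depth map with separate horizontal and vertical radii."""
    height, width = len(depth), len(depth[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius_v), min(height, y + radius_v + 1)):
                for xx in range(max(0, x - radius_h), min(width, x + radius_h + 1)):
                    total += depth[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out
```

Choosing `radius_v` larger than `radius_h` smooths more strongly in the vertical direction, which is one way to read "asymmetrically smoothed".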

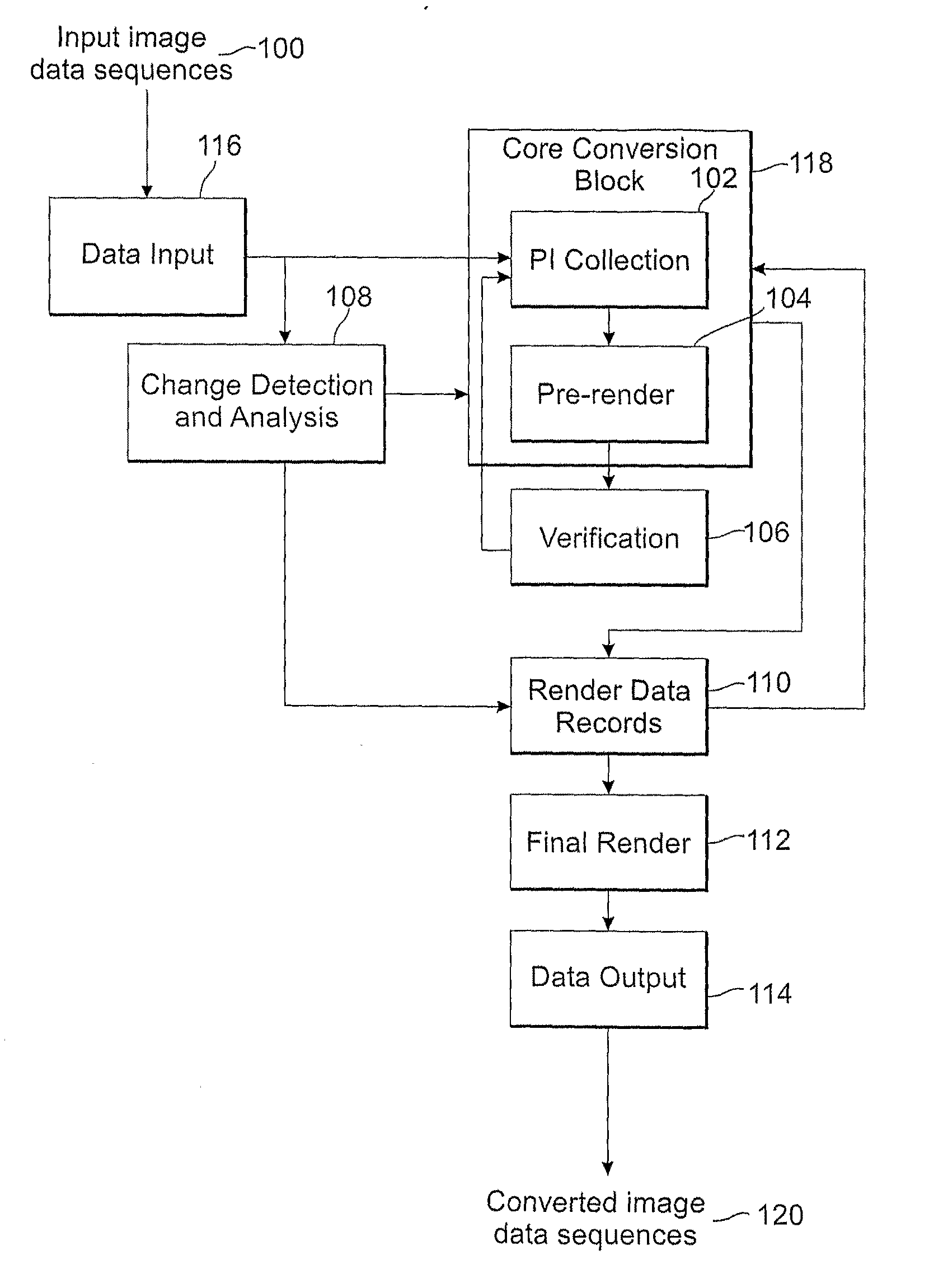

Methods and systems for converting 2d motion pictures for stereoscopic 3D exhibition

Active · US20090116732A1 · Improve image quality · Improve visual quality · Picture reproducers using cathode ray tubes · Picture reproducers with optical-mechanical scanning · Imaging quality · 3D image

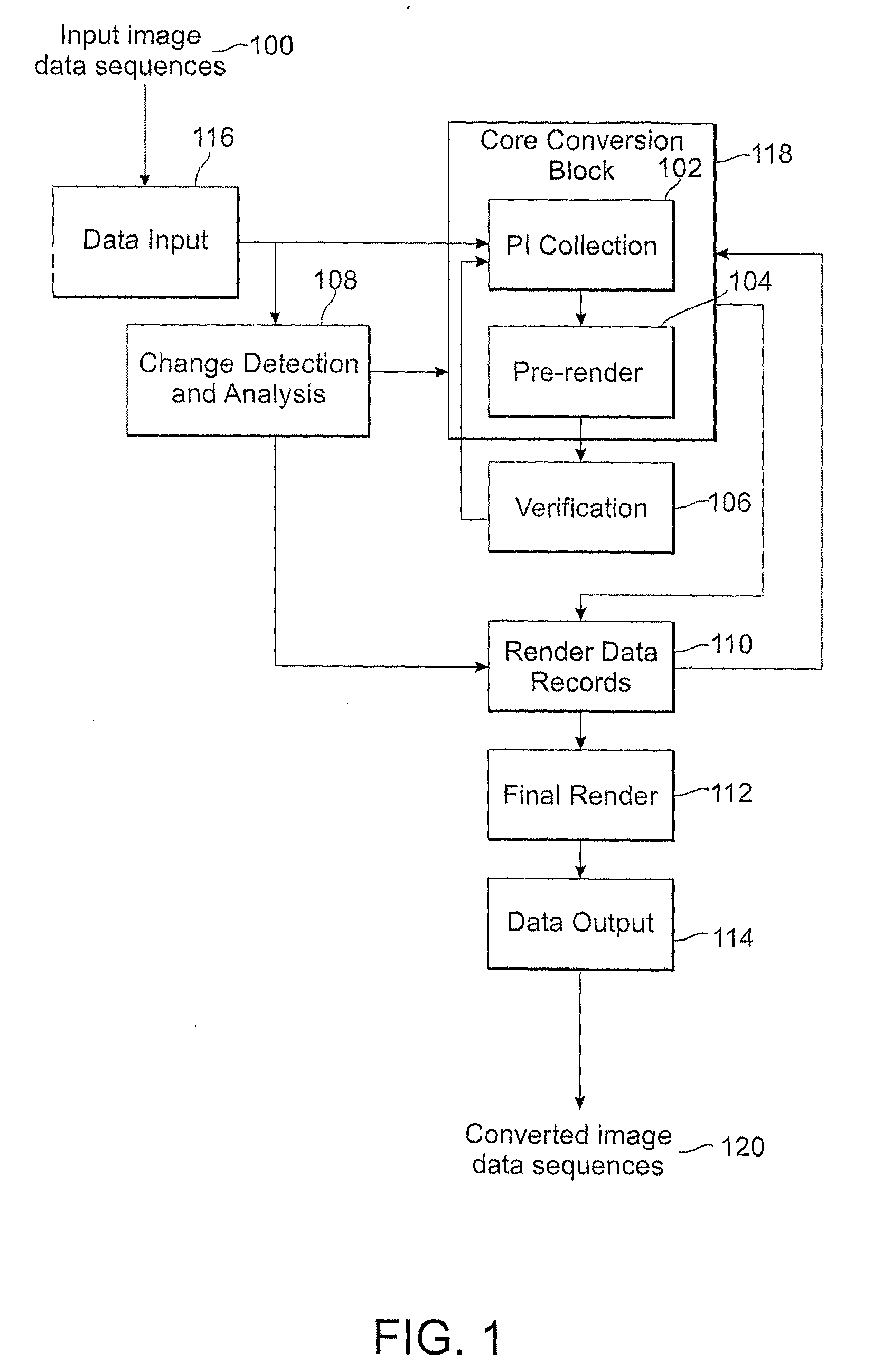

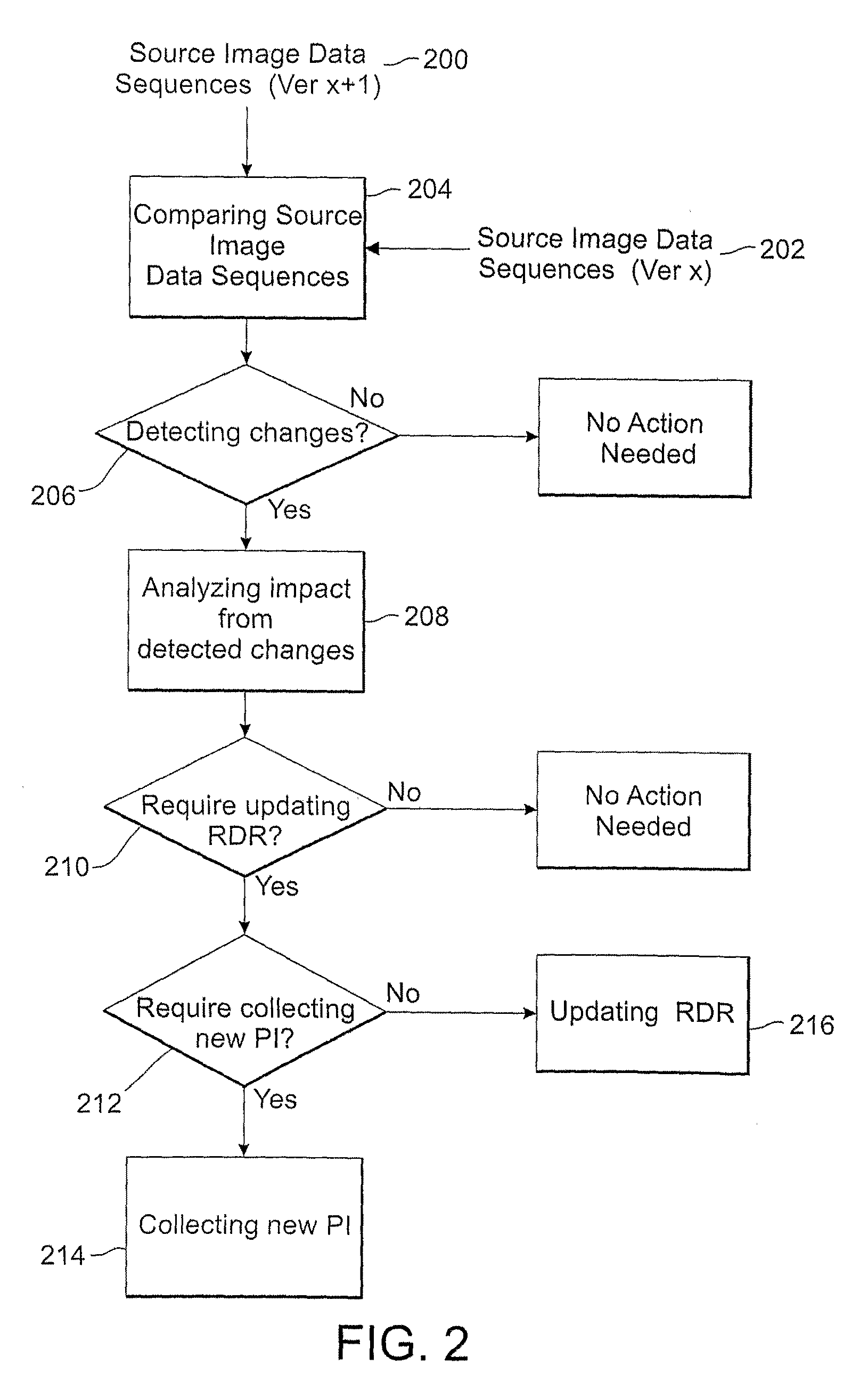

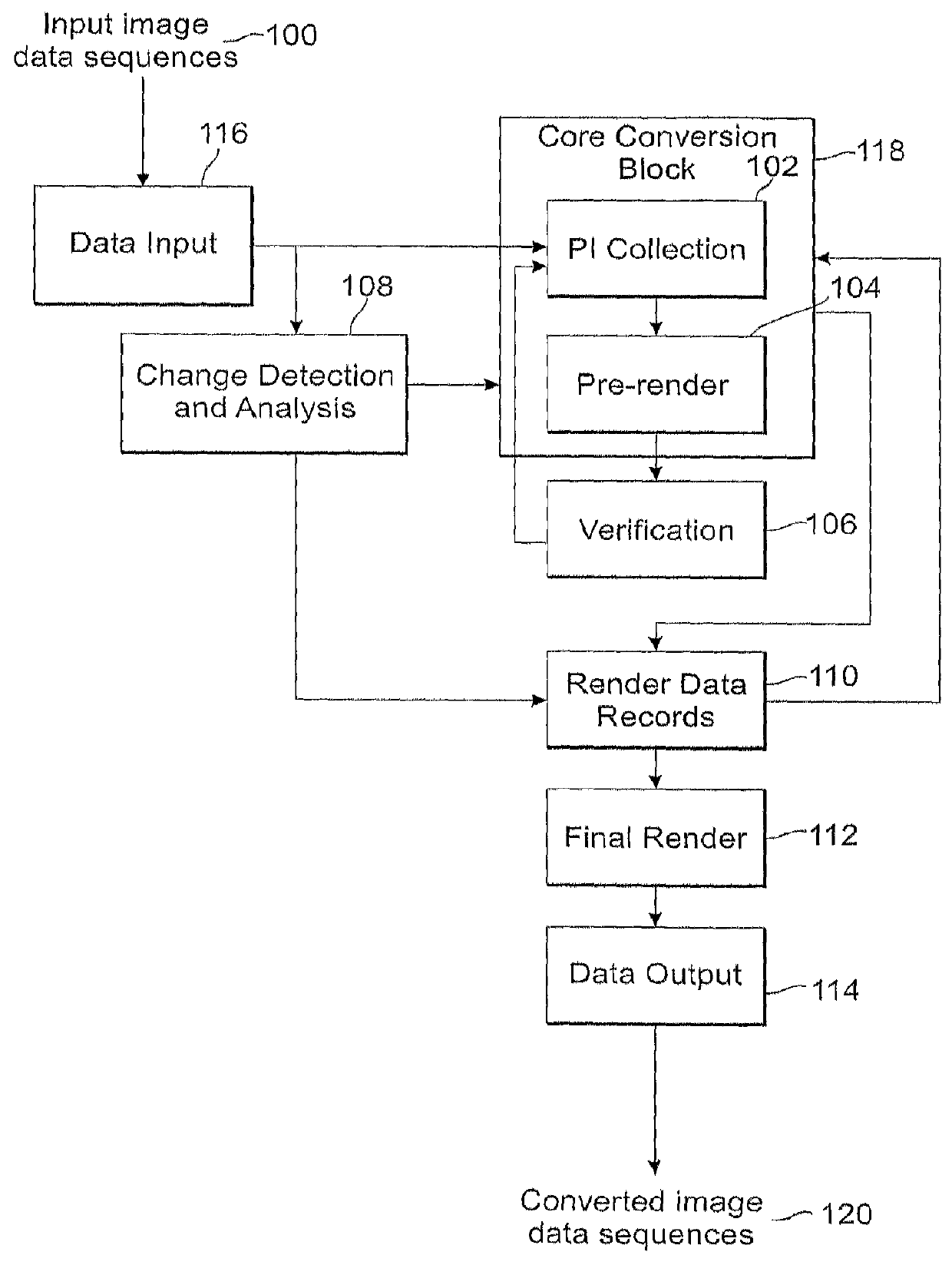

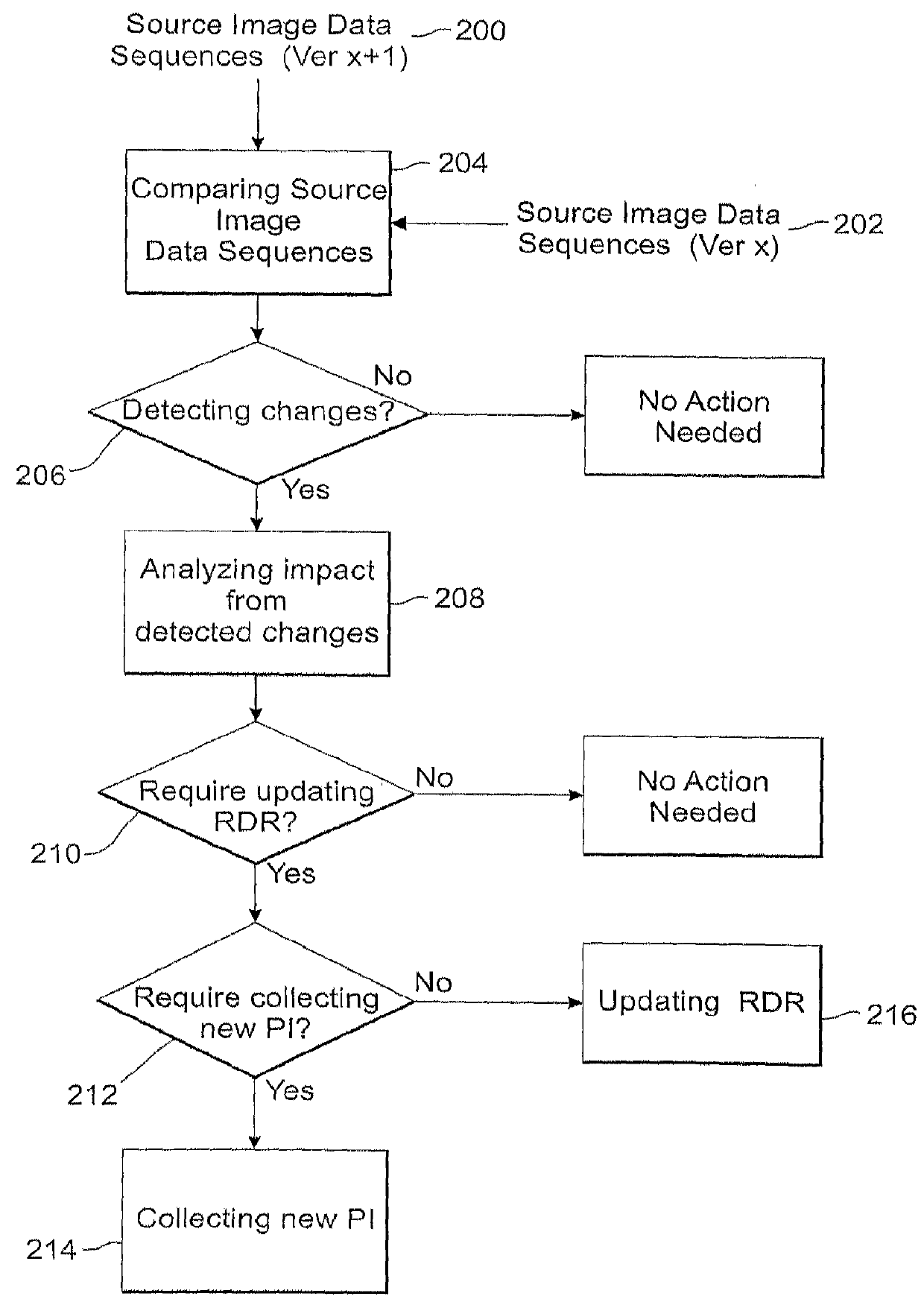

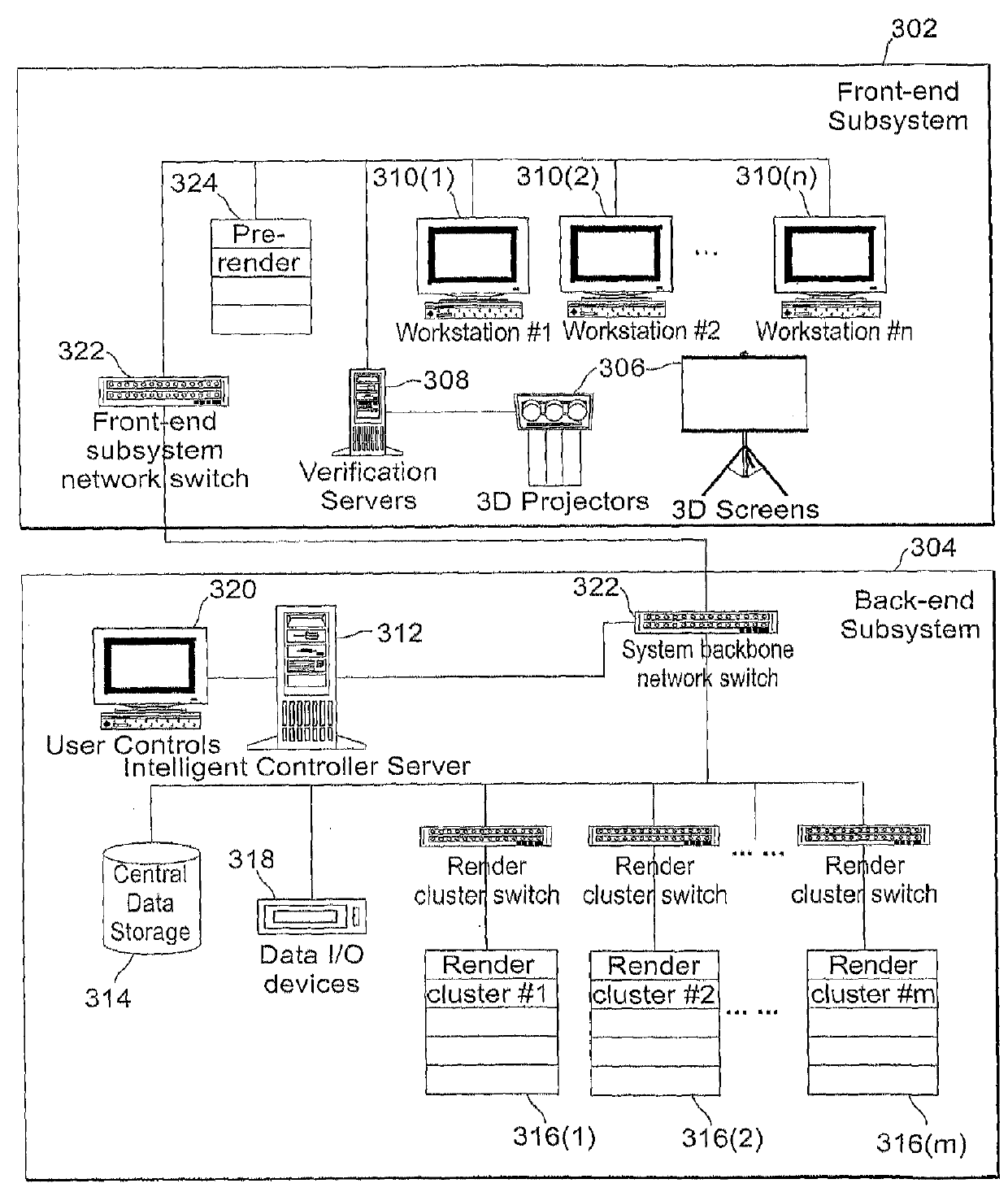

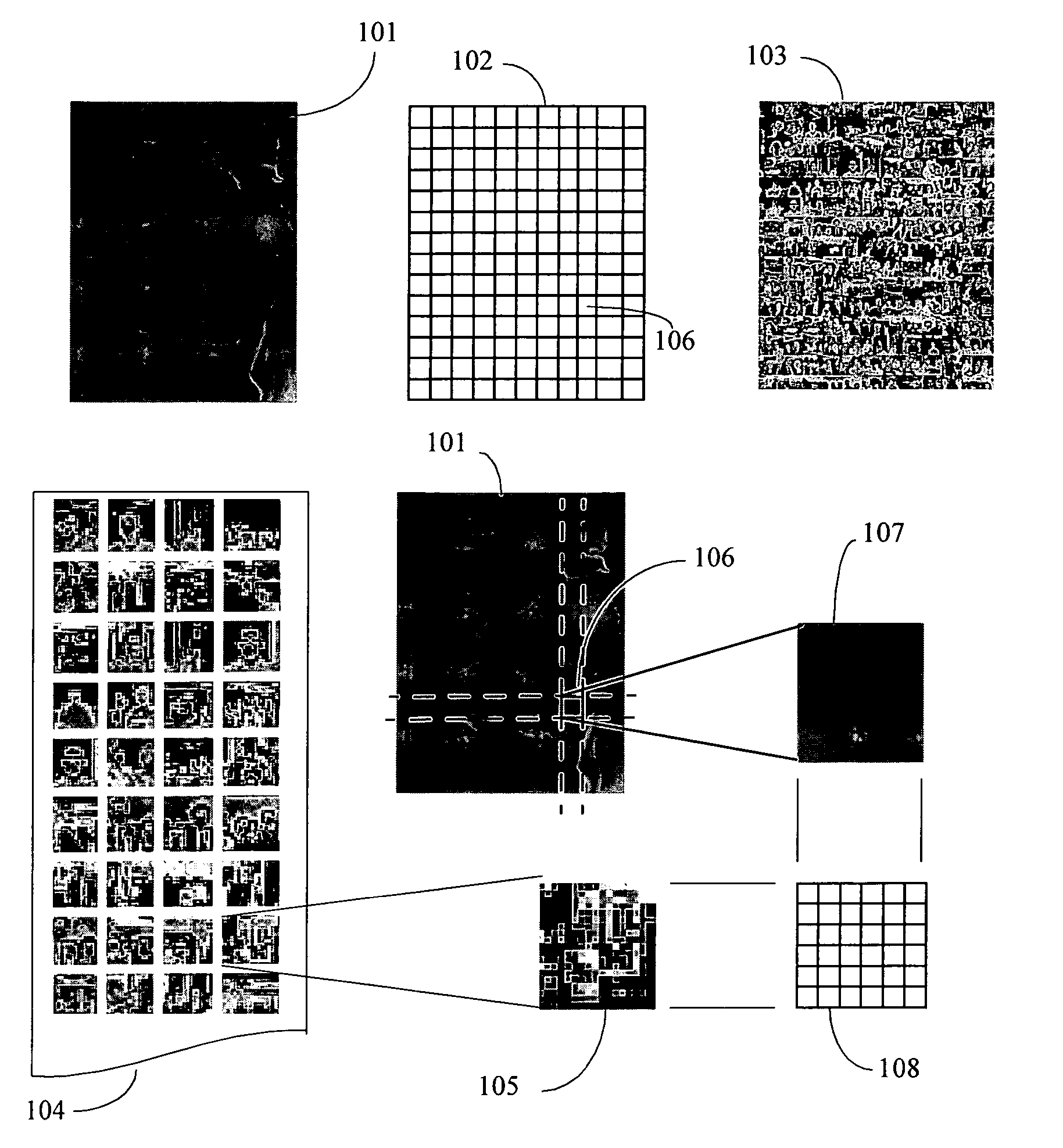

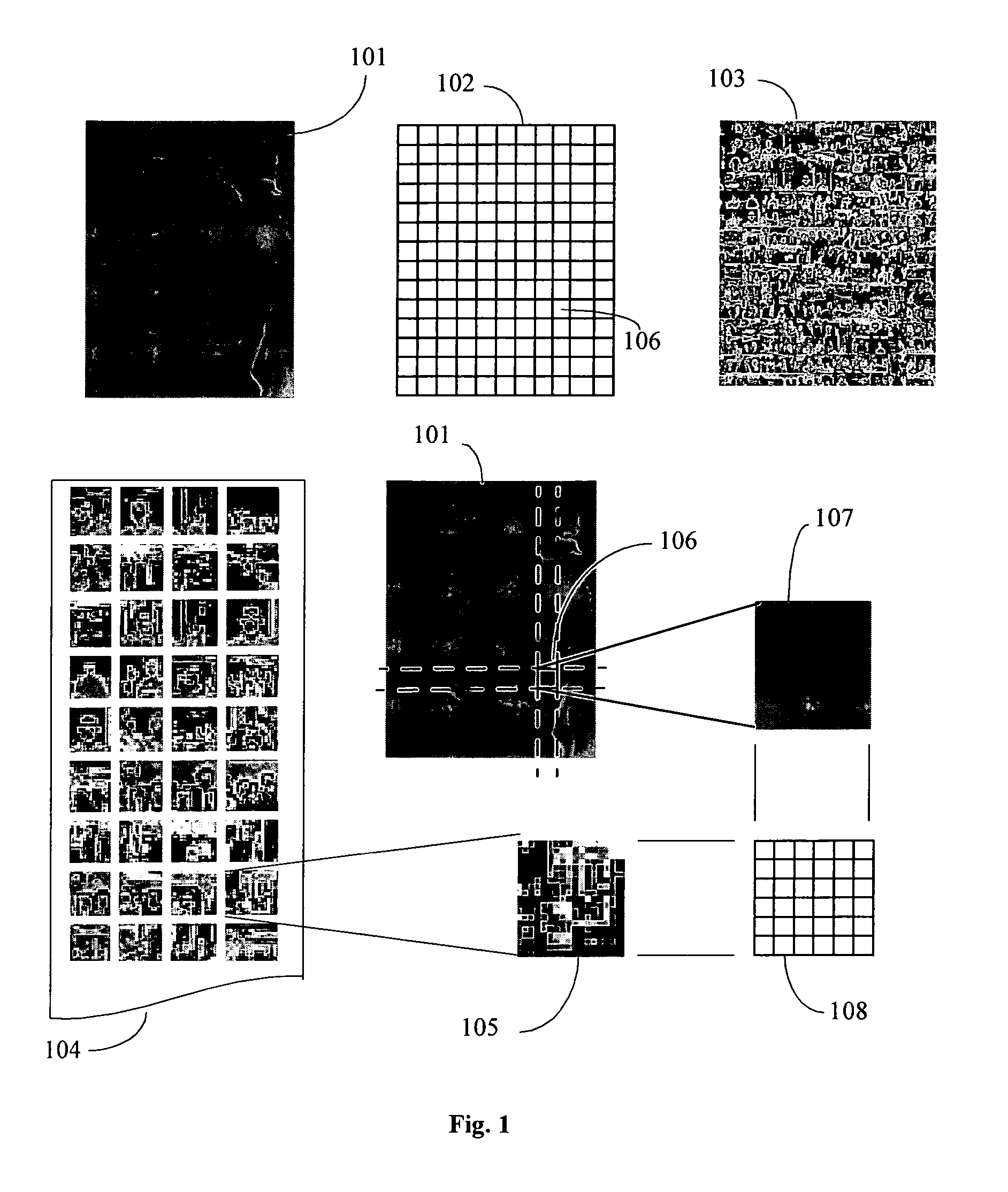

The present invention discloses methods of digitally converting 2D motion pictures or any other 2D image sequences to stereoscopic 3D image data for 3D exhibition. In one embodiment, various types of image data cues can be collected from 2D source images by various methods and then used for producing two distinct stereoscopic 3D views. Embodiments of the disclosed methods can be implemented within a highly efficient system comprising both software and computing hardware. The architectural model of some embodiments of the system is equally applicable to a wide range of conversion, re-mastering and visual enhancement applications for motion pictures and other image sequences, including converting a 2D motion picture or a 2D image sequence to 3D, re-mastering a motion picture or a video sequence to a different frame rate, enhancing the quality of a motion picture or other image sequences, or other conversions that facilitate further improvement in visual image quality within a projector to produce the enhanced images.

Owner:IMAX CORP

Image Blending System, Method and Video Generation System

Inactive · US20080043041A2 · Advanced concept · Quick mix · Color signal processing circuits · Character and pattern recognition · Computer graphics (images) · Radiology

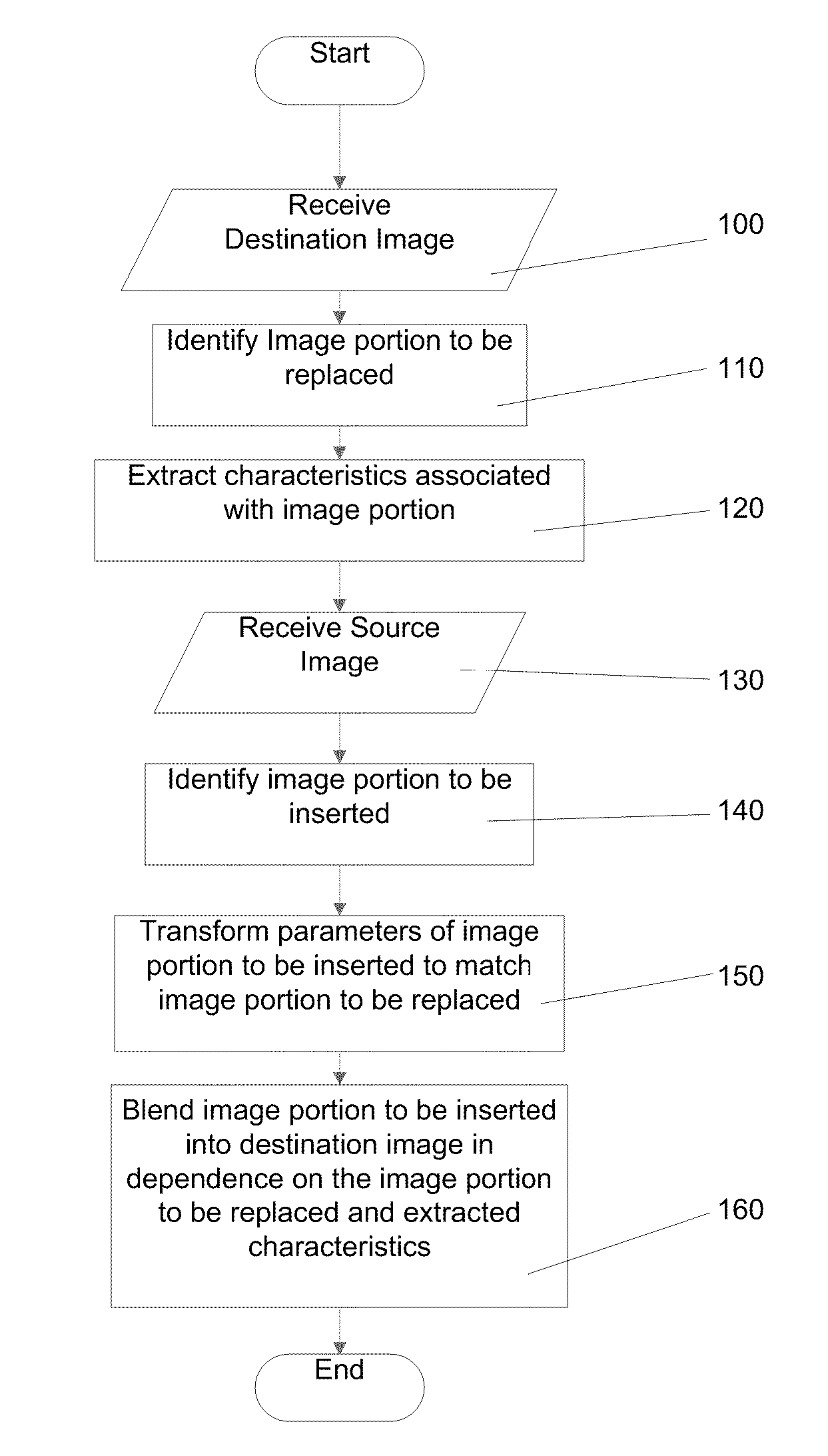

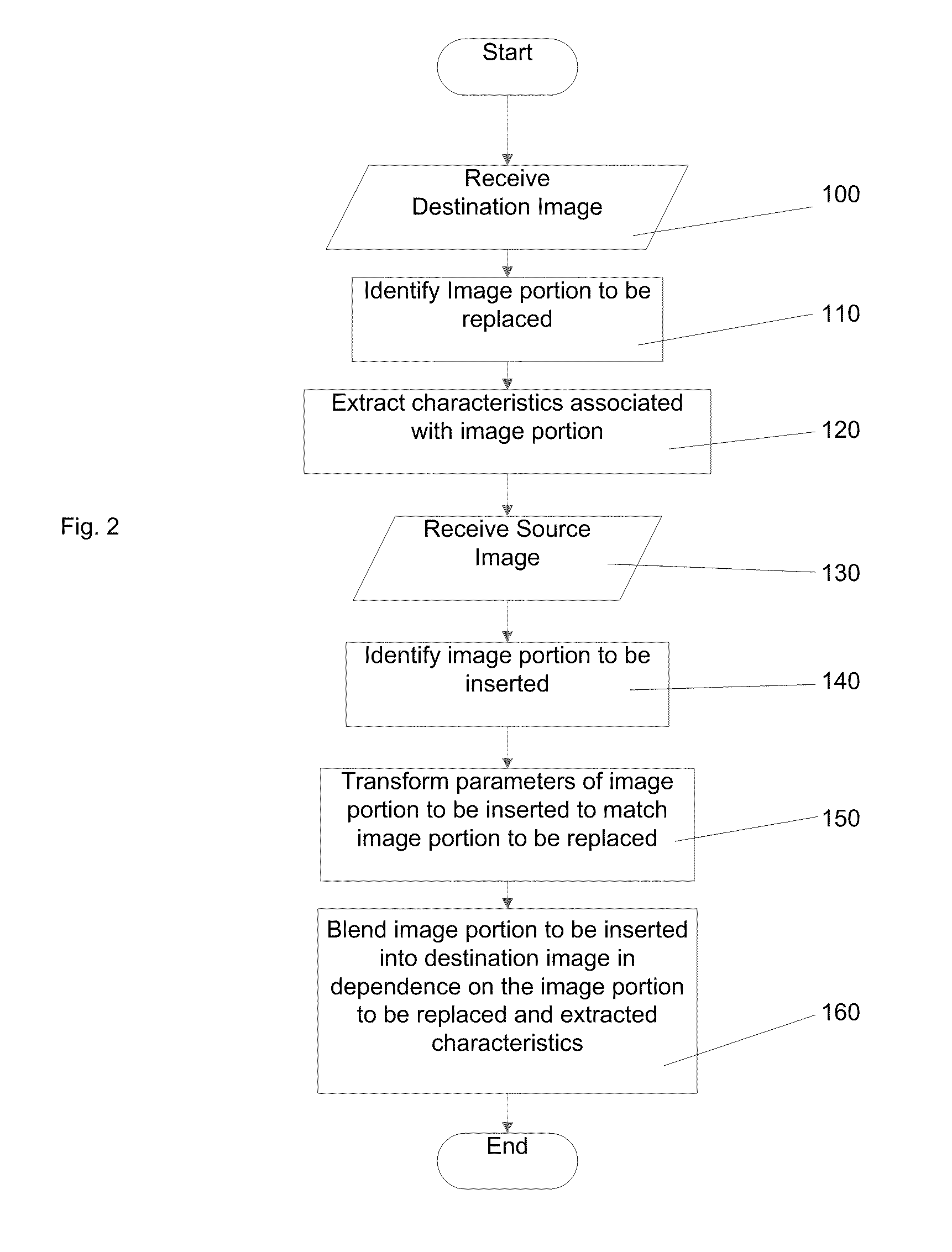

A method and system for image blending is disclosed. A destination image is received (100), the destination image including an image portion to be replaced and having characteristics associated with the identified image portion. A source image is also received (130). An image portion of the source image to be inserted into the destination image is identified (140). Where necessary, parameters of the image portion to be inserted are transformed to match those of the image portion to be replaced (150). The image portion to be inserted is then blended into the destination image in dependence on the image portion to be replaced and its associated characteristics (160). A video generation system using these features is also disclosed.

Owner:FREMANTLEMEDIA
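
The transform-then-blend steps in the abstract above can be sketched as follows. This is a minimal illustration, assuming grayscale images stored as nested lists, a brightness offset as the only "parameter" that is matched, and a constant `alpha` for blending; `blend_region` and its arguments are hypothetical names, not part of the patent.

```python
from typing import List

Image = List[List[float]]


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def blend_region(dest: Image, src_patch: Image, top: int, left: int, alpha: float) -> Image:
    """Blend src_patch into dest at (top, left) after matching mean brightness."""
    h, w = len(src_patch), len(src_patch[0])
    replaced = [dest[top + y][left + x] for y in range(h) for x in range(w)]
    inserted = [v for row in src_patch for v in row]
    # Transform step: shift the patch so its mean matches the region it replaces.
    offset = mean(replaced) - mean(inserted)
    out = [row[:] for row in dest]
    for y in range(h):
        for x in range(w):
            adjusted = src_patch[y][x] + offset
            # Blend step: weighted mix of the adjusted patch and the original pixel.
            out[top + y][left + x] = alpha * adjusted + (1.0 - alpha) * dest[top + y][left + x]
    return out
```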

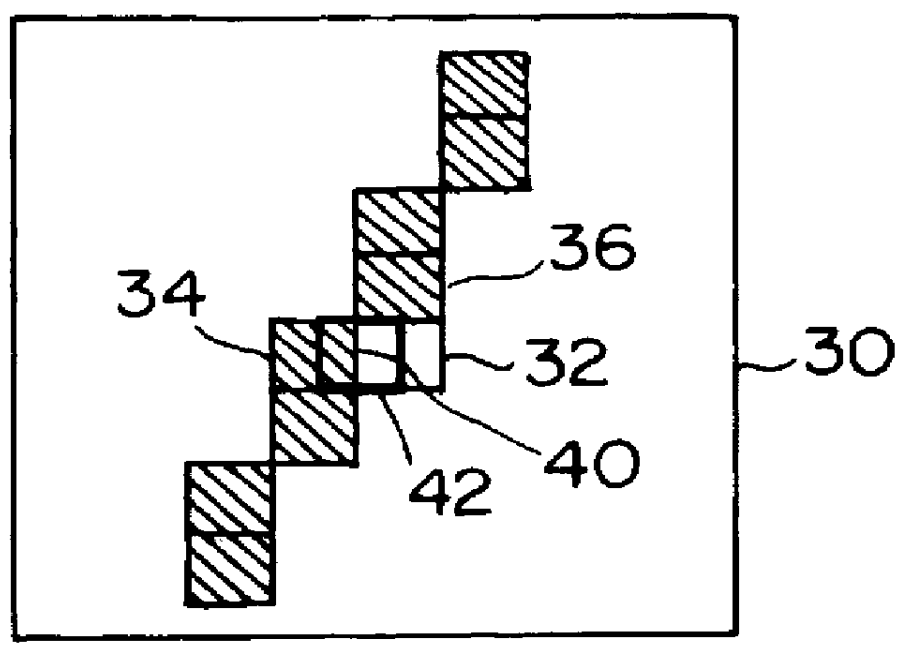

Arbitrary fractional pixel movement

Active · US20100060646A1 · Continuous appearance · Picture reproducers using cathode ray tubes · Picture reproducers with optical-mechanical scanning · Computer graphics (images) · Source image

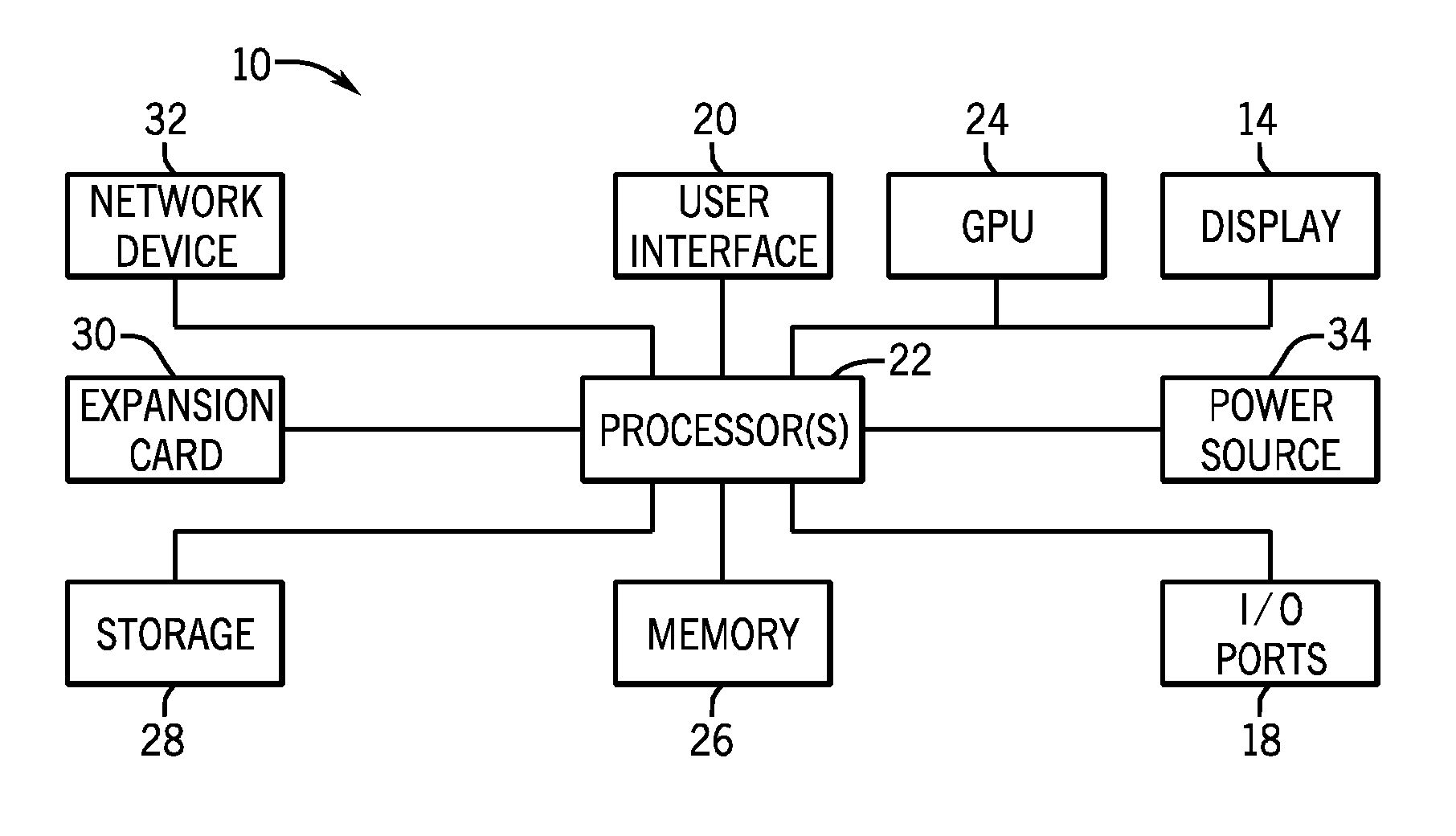

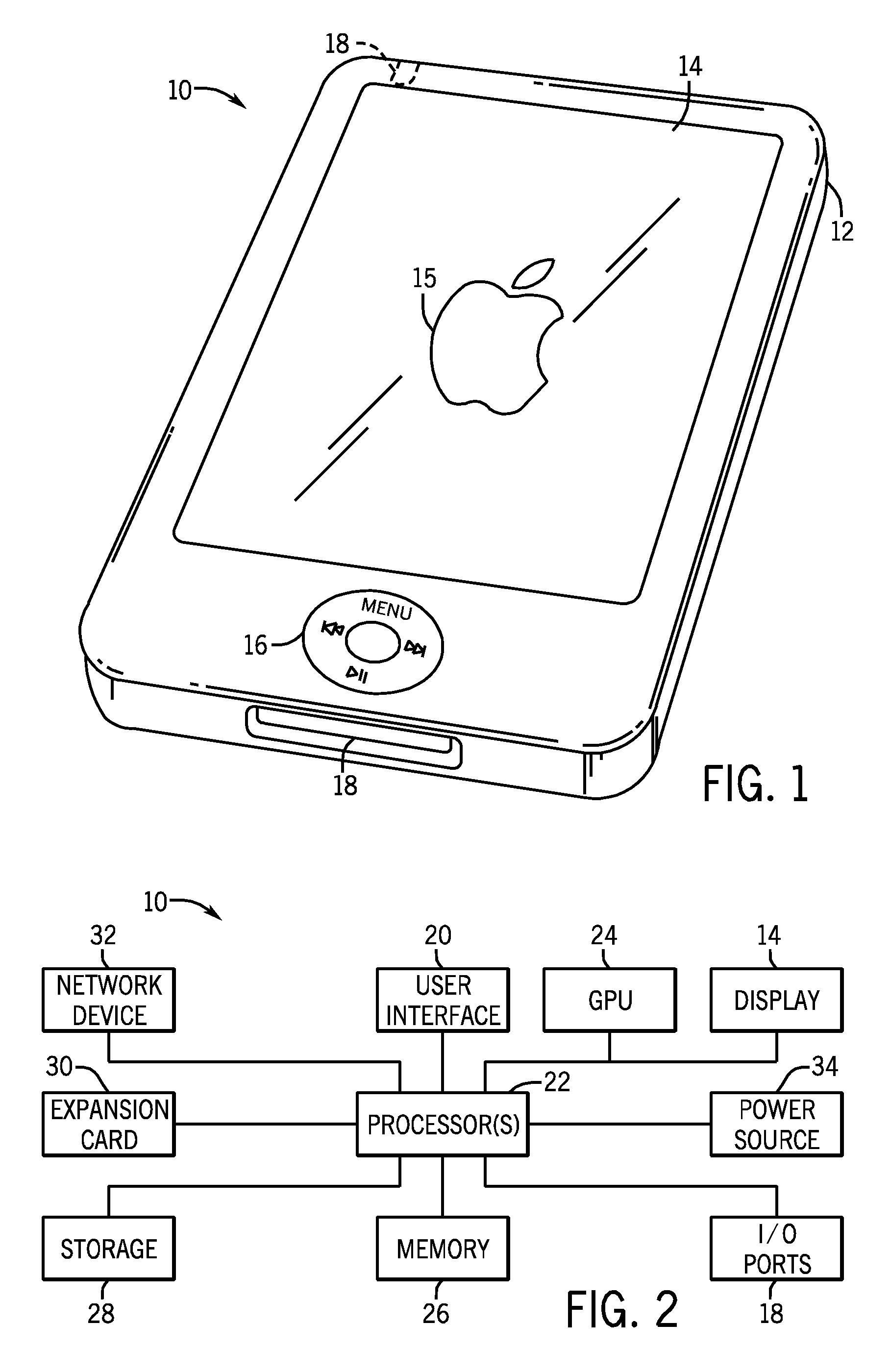

A technique is provided for displaying pixels of an image at arbitrary subpixel positions. In accordance with aspects of this technique, interpolated intensity values for the pixels of the image are derived based on the arbitrary subpixel location and an intensity distribution or profile. Reference to the intensity distribution provides appropriate multipliers for the source image. Based on these multipliers, the image may be rendered at respective physical pixel locations such that the pixel intensities are summed with each rendering, resulting in a destination image having suitable interpolated pixel intensities for the arbitrary subpixel position.

Owner:APPLE INC
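
One way to picture the summed-rendering idea in the abstract above is to split a fractional offset into four integer offsets and accumulate the image at each one, scaled by a multiplier. The sketch below assumes a bilinear (tent) intensity profile, which is an illustrative choice rather than the profile used in the patent; `subpixel_weights` and `render_at` are hypothetical names.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def subpixel_weights(dx: float, dy: float) -> List[Tuple[Tuple[int, int], float]]:
    """Split a fractional offset into four integer offsets with bilinear multipliers."""
    ix, iy = math.floor(dx), math.floor(dy)
    fx, fy = dx - ix, dy - iy
    return [
        ((ix, iy), (1 - fx) * (1 - fy)),
        ((ix + 1, iy), fx * (1 - fy)),
        ((ix, iy + 1), (1 - fx) * fy),
        ((ix + 1, iy + 1), fx * fy),
    ]


def render_at(image: Image, dx: float, dy: float, width: int, height: int) -> Image:
    """Render the image once per integer offset and sum the weighted intensities."""
    dest = [[0.0] * width for _ in range(height)]
    for (ox, oy), weight in subpixel_weights(dx, dy):
        if weight == 0.0:
            continue  # nothing to add for this physical pixel position
        for y, row in enumerate(image):
            for x, value in enumerate(row):
                tx, ty = x + ox, y + oy
                if 0 <= tx < width and 0 <= ty < height:
                    dest[ty][tx] += weight * value
    return dest
```

For example, a single pixel of intensity 8 placed a quarter pixel to the right spreads as 6 and 2 across two neighboring physical pixels.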

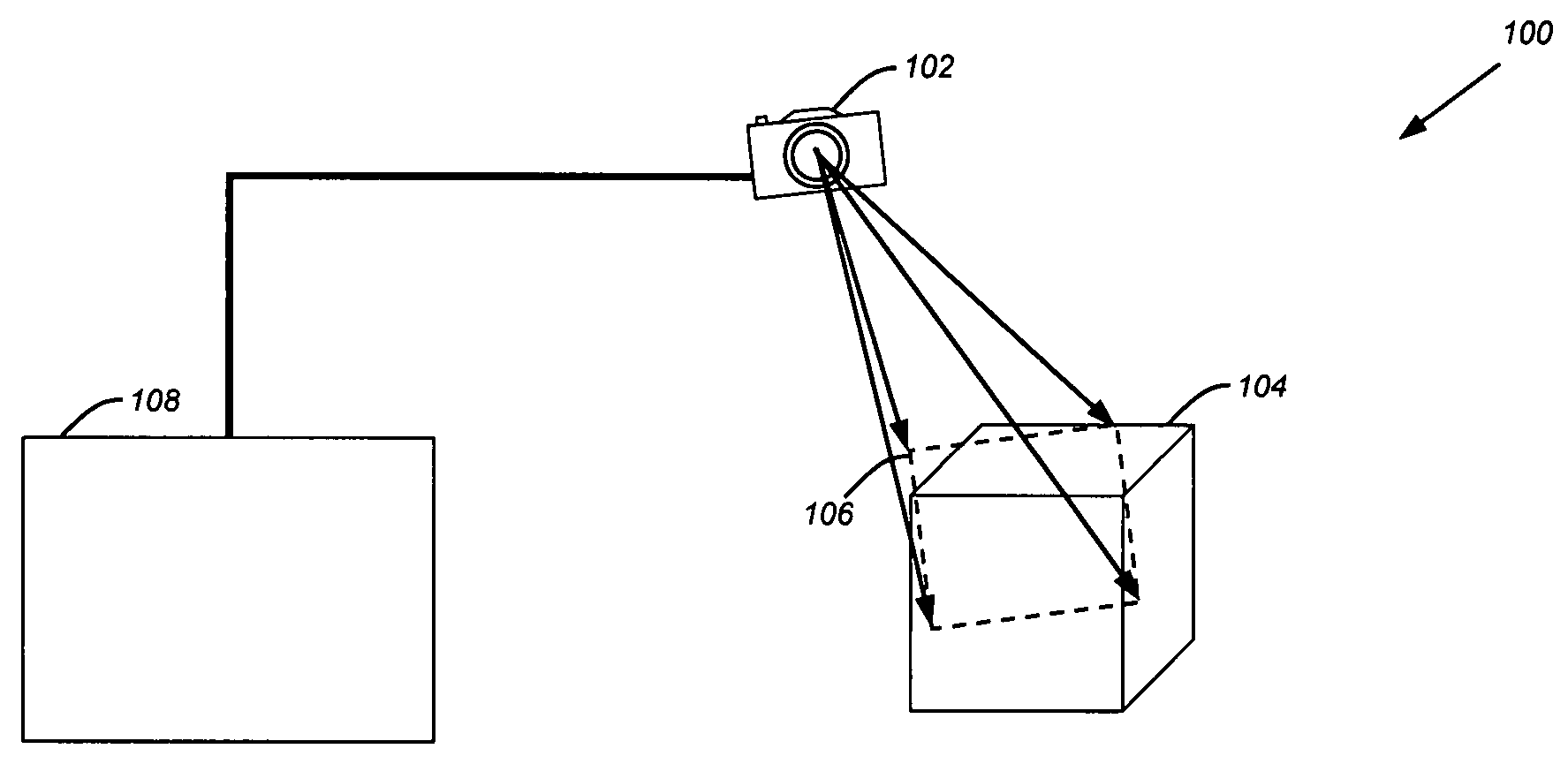

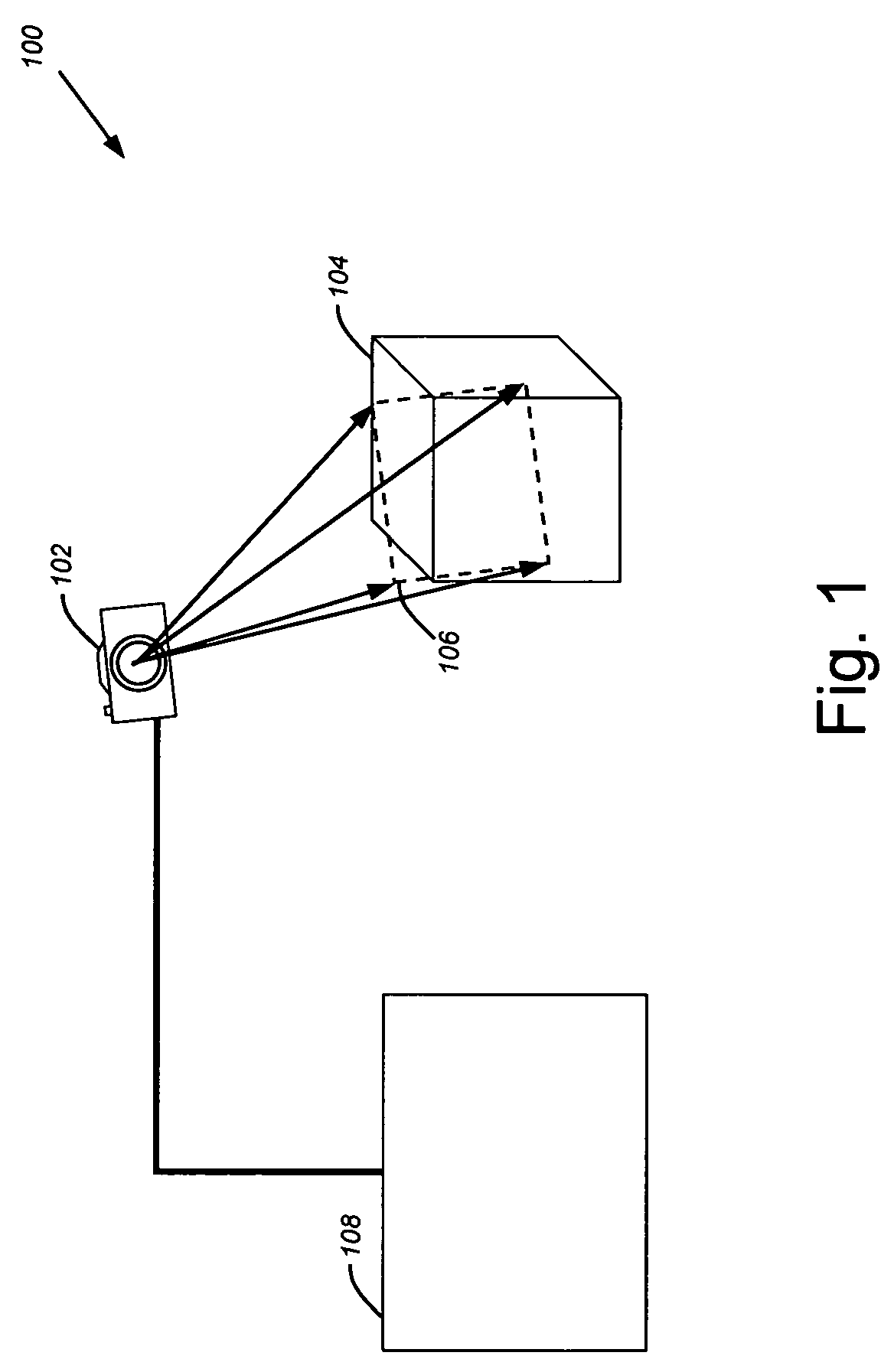

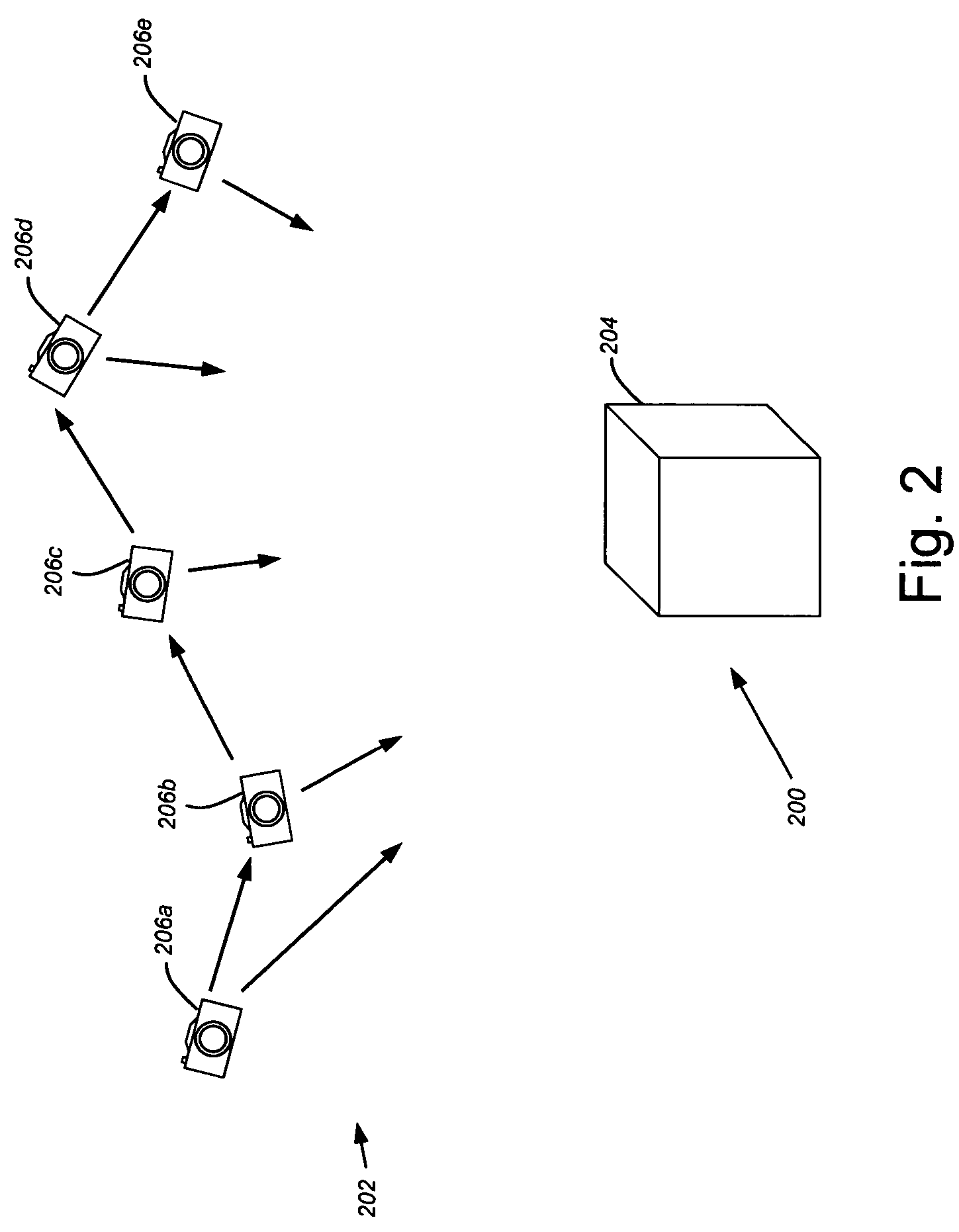

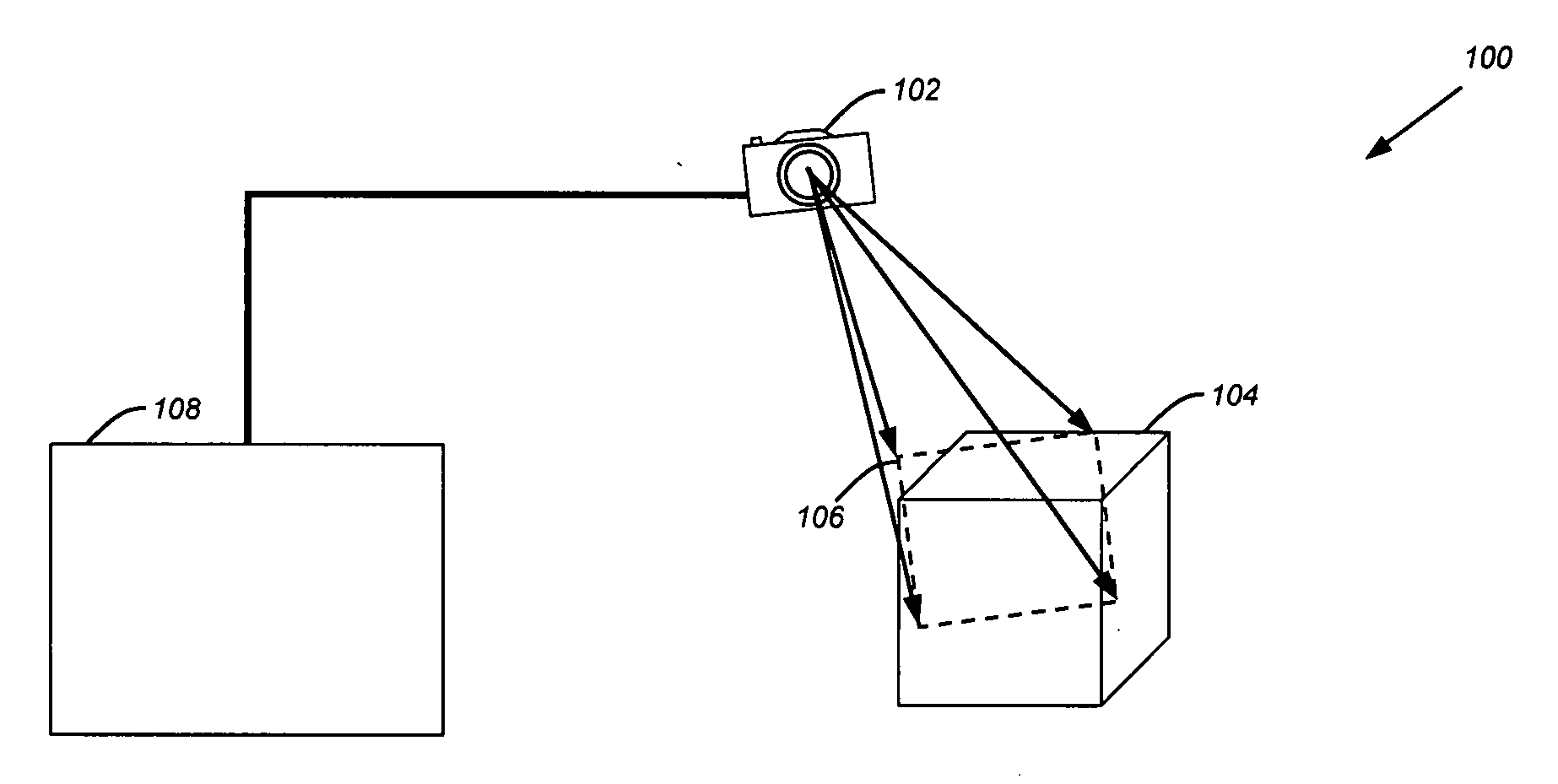

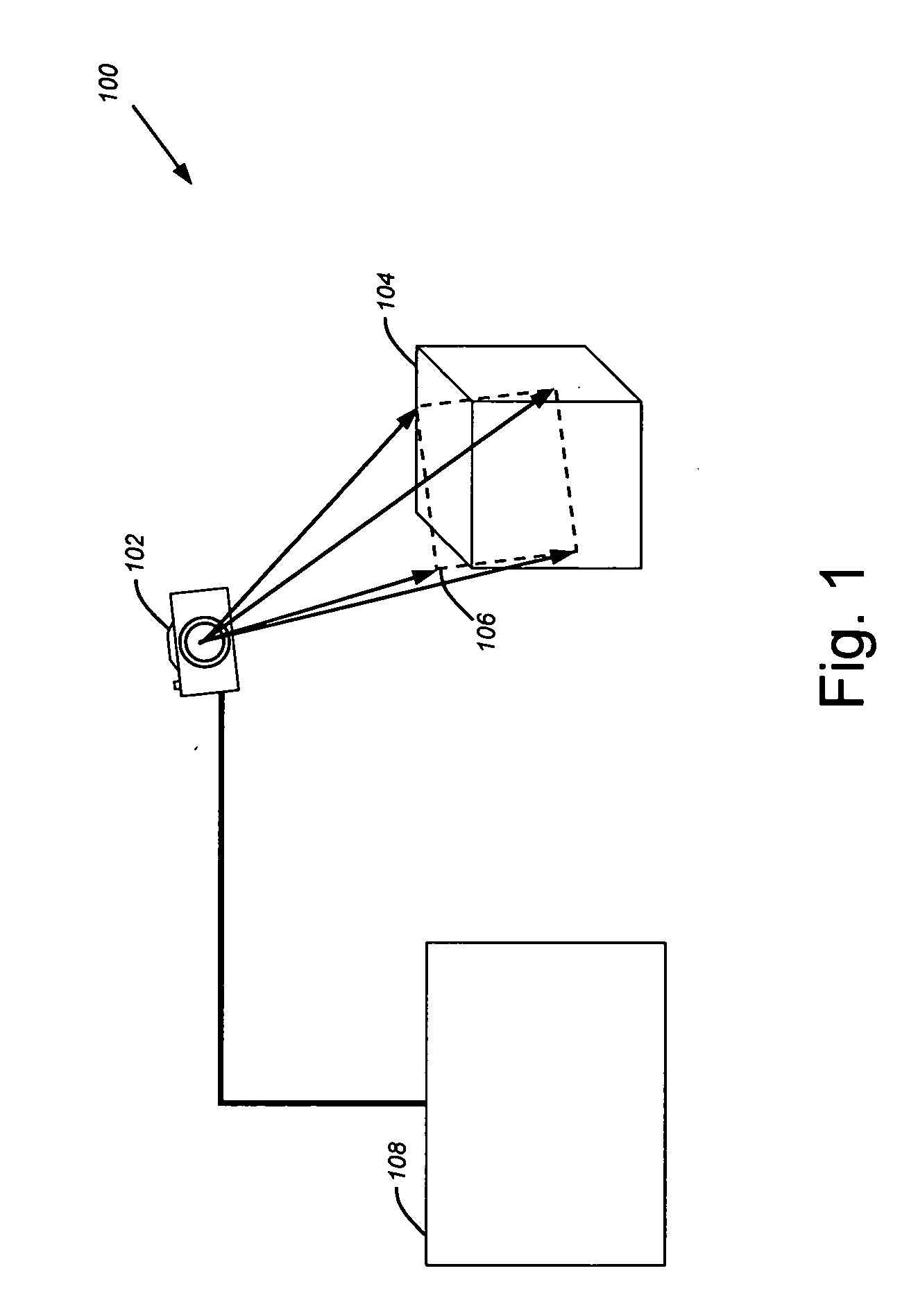

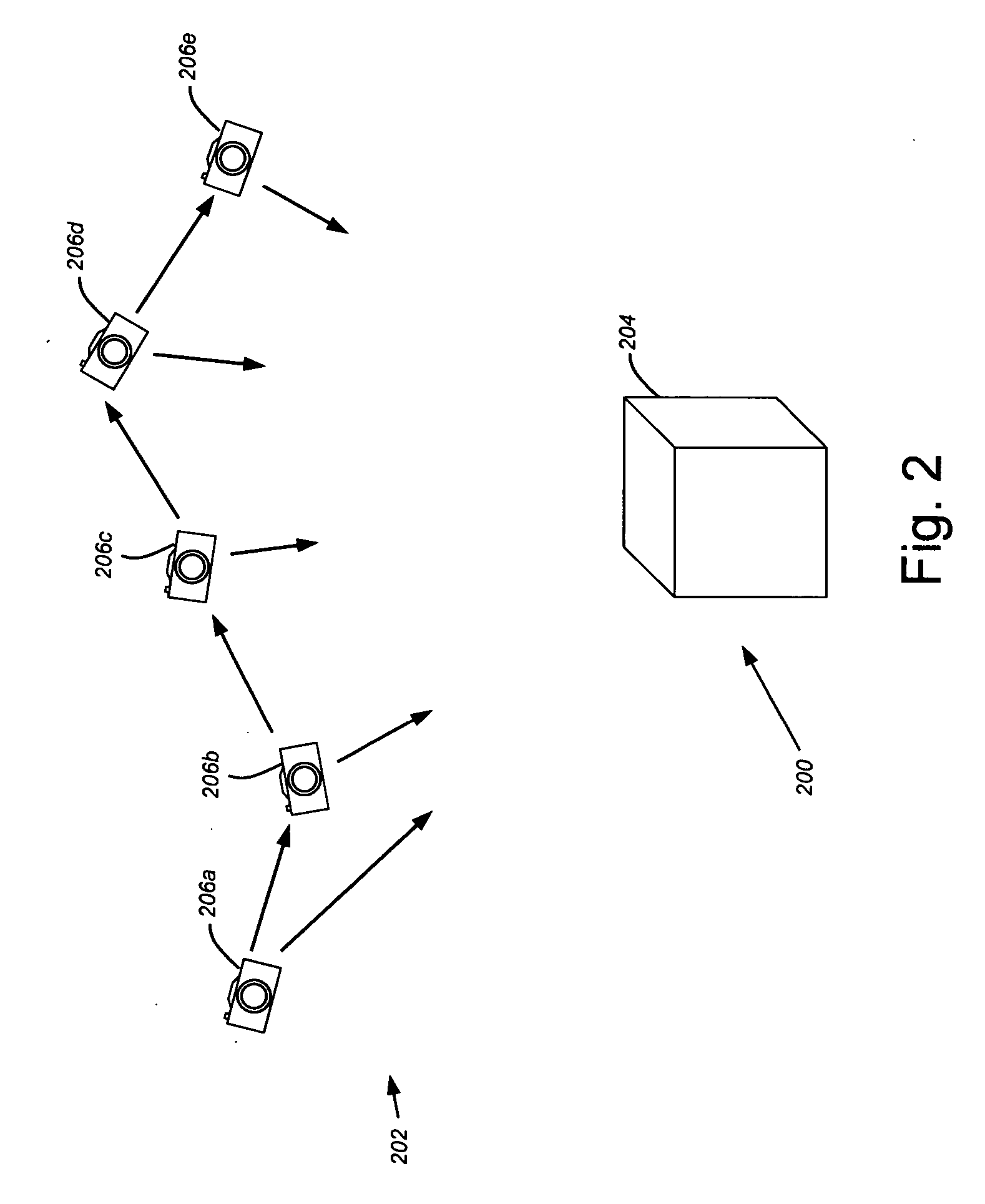

Determining camera motion

Active · US7605817B2 · Efficiently parameterized · Television system details · Image analysis · Point cloud · Source image

Camera motion is determined in a three-dimensional image capture system using a combination of two-dimensional image data and three-dimensional point cloud data available from a stereoscopic, multi-aperture, or similar camera system. More specifically, a rigid transformation of point cloud data between two three-dimensional point clouds may be more efficiently parameterized using point correspondence established between two-dimensional pixels in source images for the three-dimensional point clouds.

Owner:MEDIT CORP

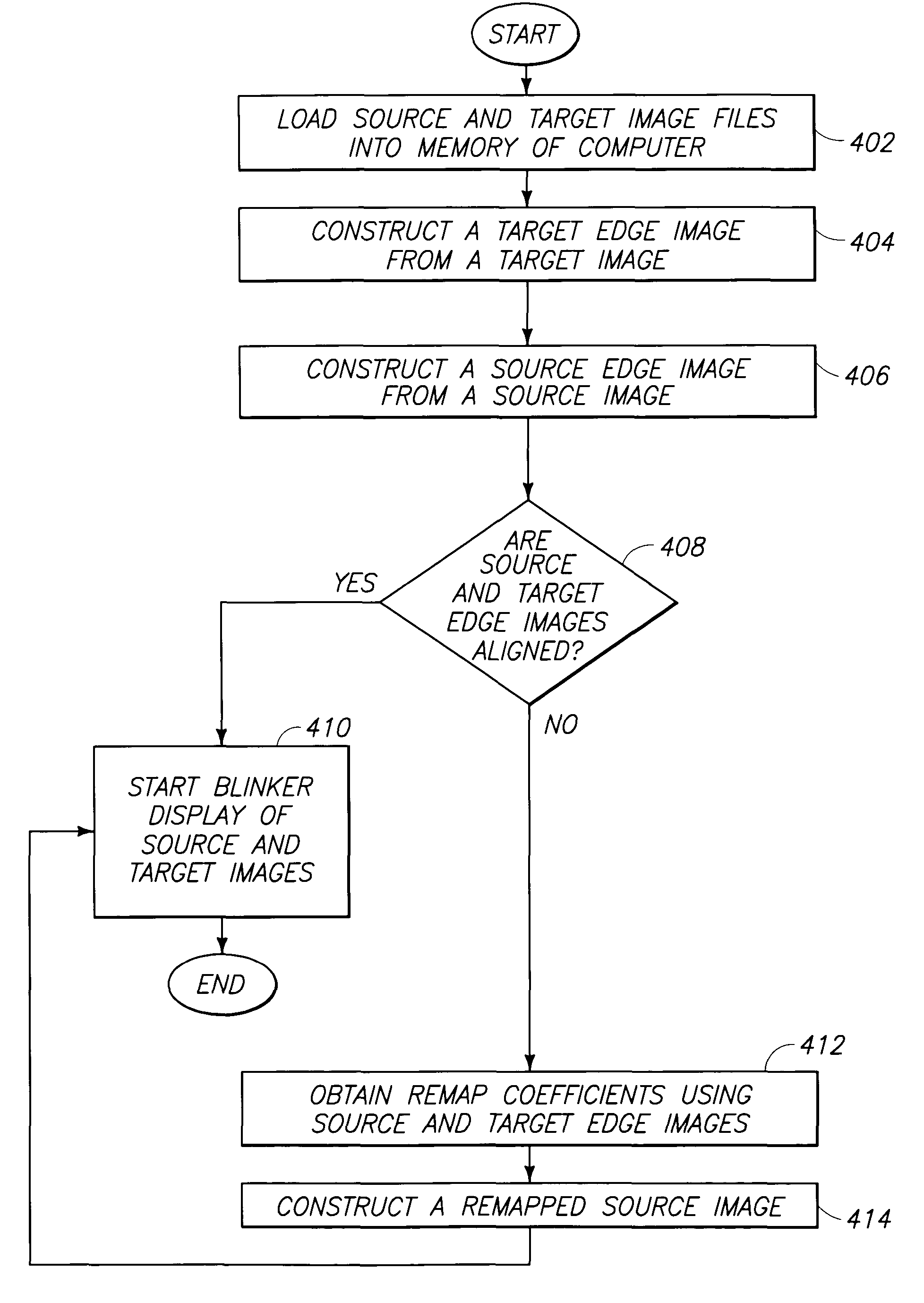

Image change detection systems, methods, and articles of manufacture

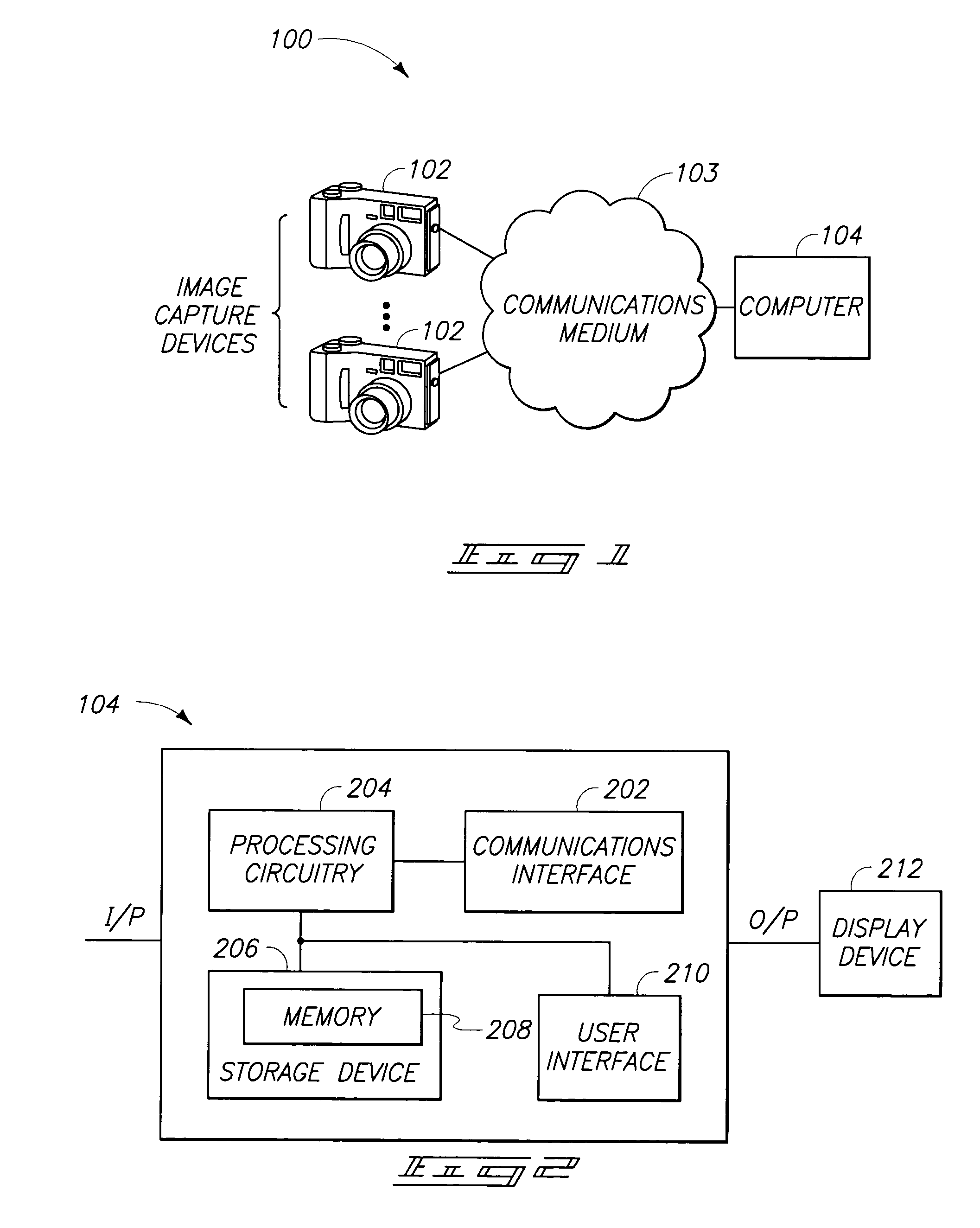

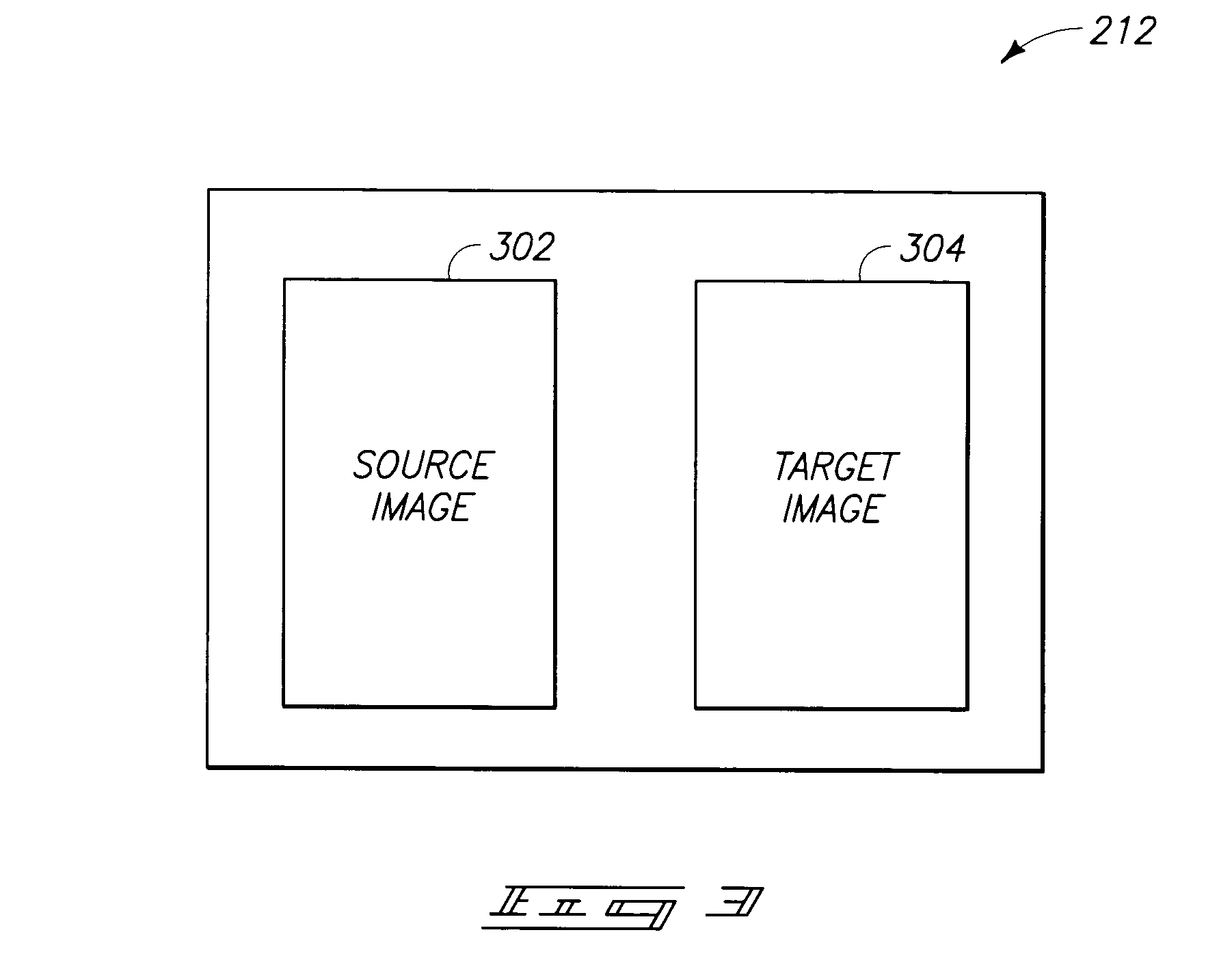

Aspects of the invention relate to image change detection systems, methods, and articles of manufacture. According to one aspect, a method of identifying differences between a plurality of images is described. The method includes loading a source image and a target image into memory of a computer, constructing source and target edge images from the source and target images to enable processing of multiband images, displaying the source and target images on a display device of the computer, aligning the source and target edge images, and switching between display of the source image and the target image on the display device to enable identification of differences between the source image and the target image.

Owner:BATTELLE ENERGY ALLIANCE LLC

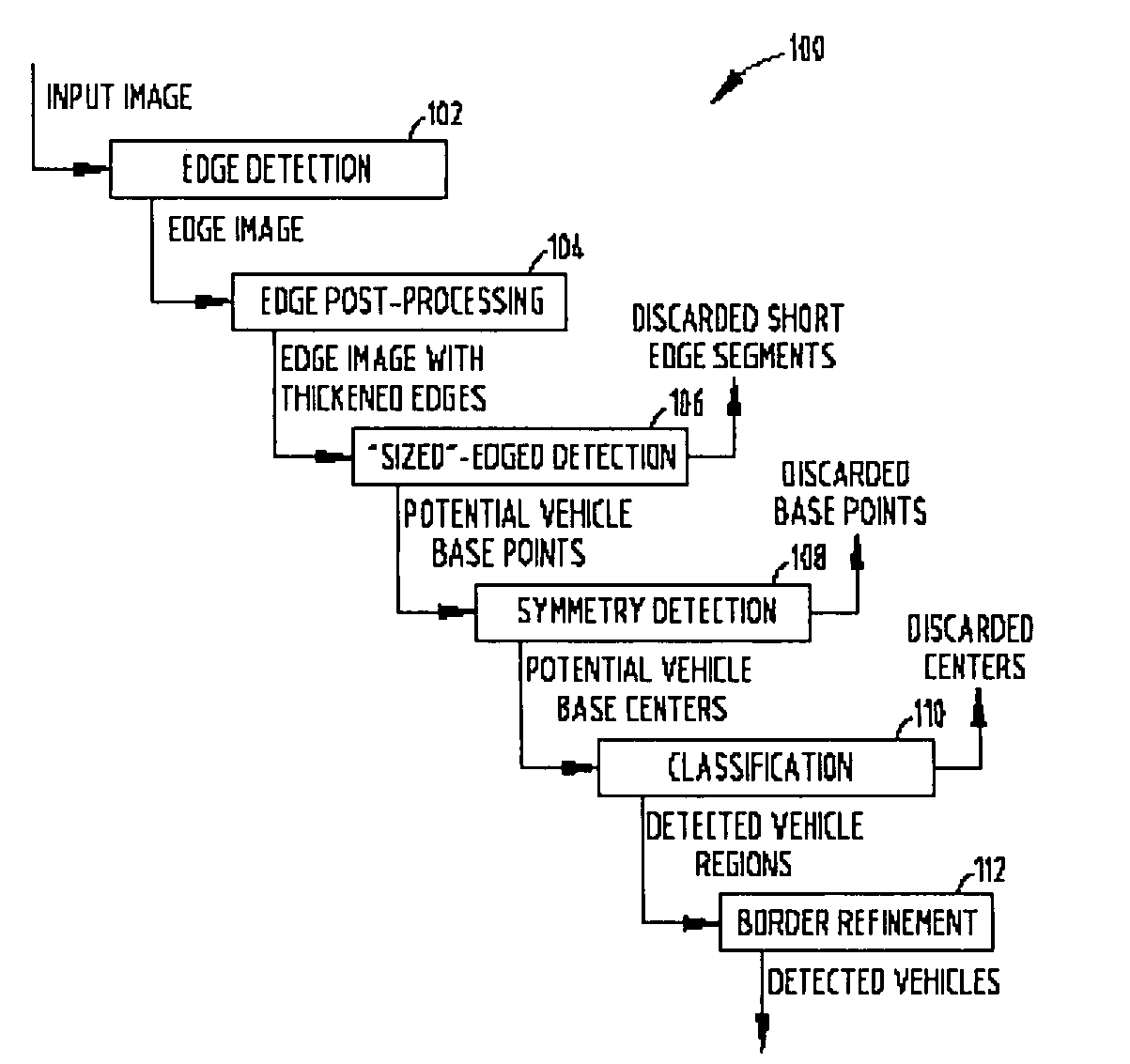

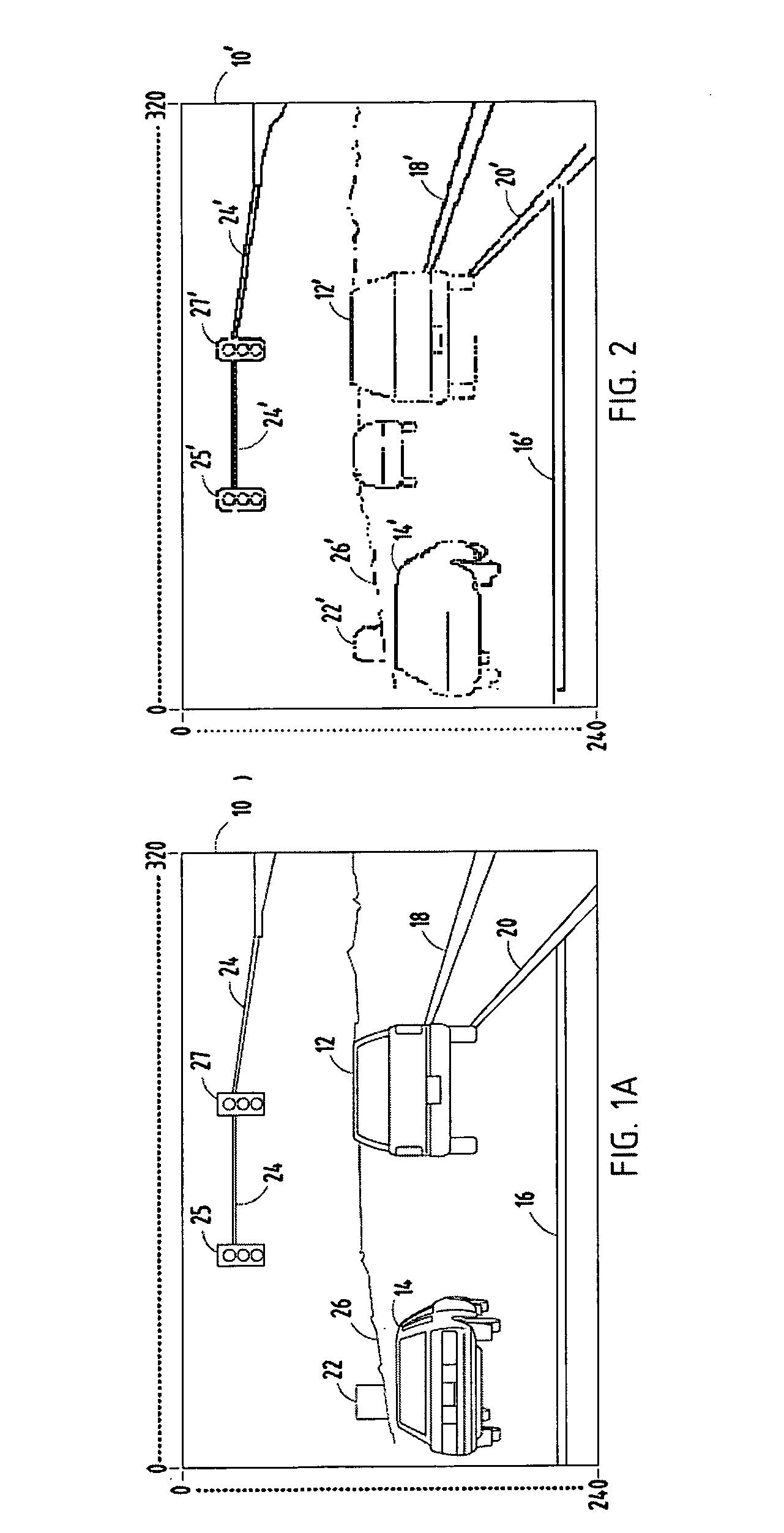

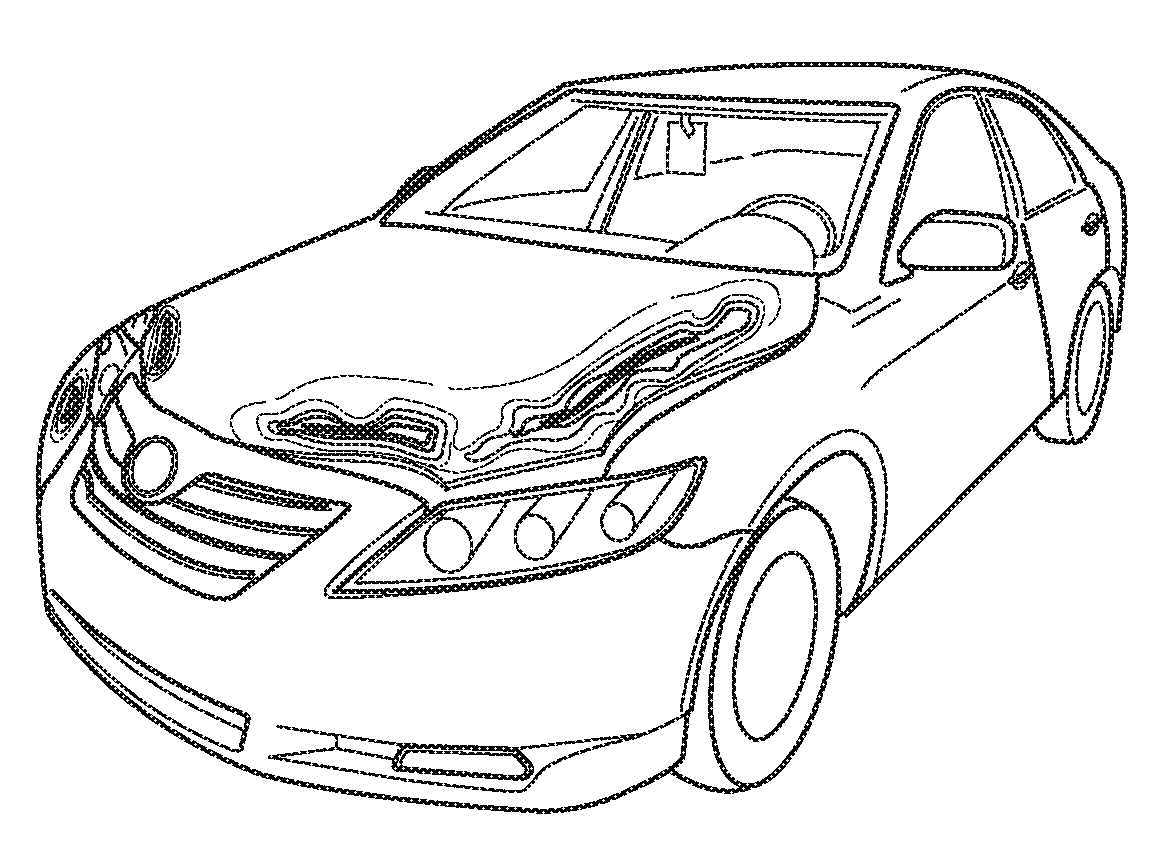

Method for identifying vehicles in electronic images

A method for identifying objects in an electronic image is provided. The method includes the steps of providing an electronic source image and processing the electronic source image to identify edge pixels. The method further includes the steps of providing an electronic representation of the edge pixels and processing the electronic representation of the edge pixels to identify valid edge center pixels. The method still further includes the step of providing an electronic representation of the valid edge center pixels. Each valid edge center pixel represents the approximate center of a horizontal edge segment of a target width. The horizontal edge segment is made up of essentially contiguous edge pixels. The method also includes the steps of determining symmetry values of test regions associated with valid edge center pixels, and classifying the test regions based on factors including symmetry.

Owner:APTIV TECH LTD

Determining camera motion

Active · US20070103460A1 · Efficiently parameterized · Television system details · Image analysis · Point cloud · 3D image

Camera motion is determined in a three-dimensional image capture system using a combination of two-dimensional image data and three-dimensional point cloud data available from a stereoscopic, multi-aperture, or similar camera system. More specifically, a rigid transformation of point cloud data between two three-dimensional point clouds may be more efficiently parameterized using point correspondence established between two-dimensional pixels in source images for the three-dimensional point clouds.

Owner:MEDIT CORP

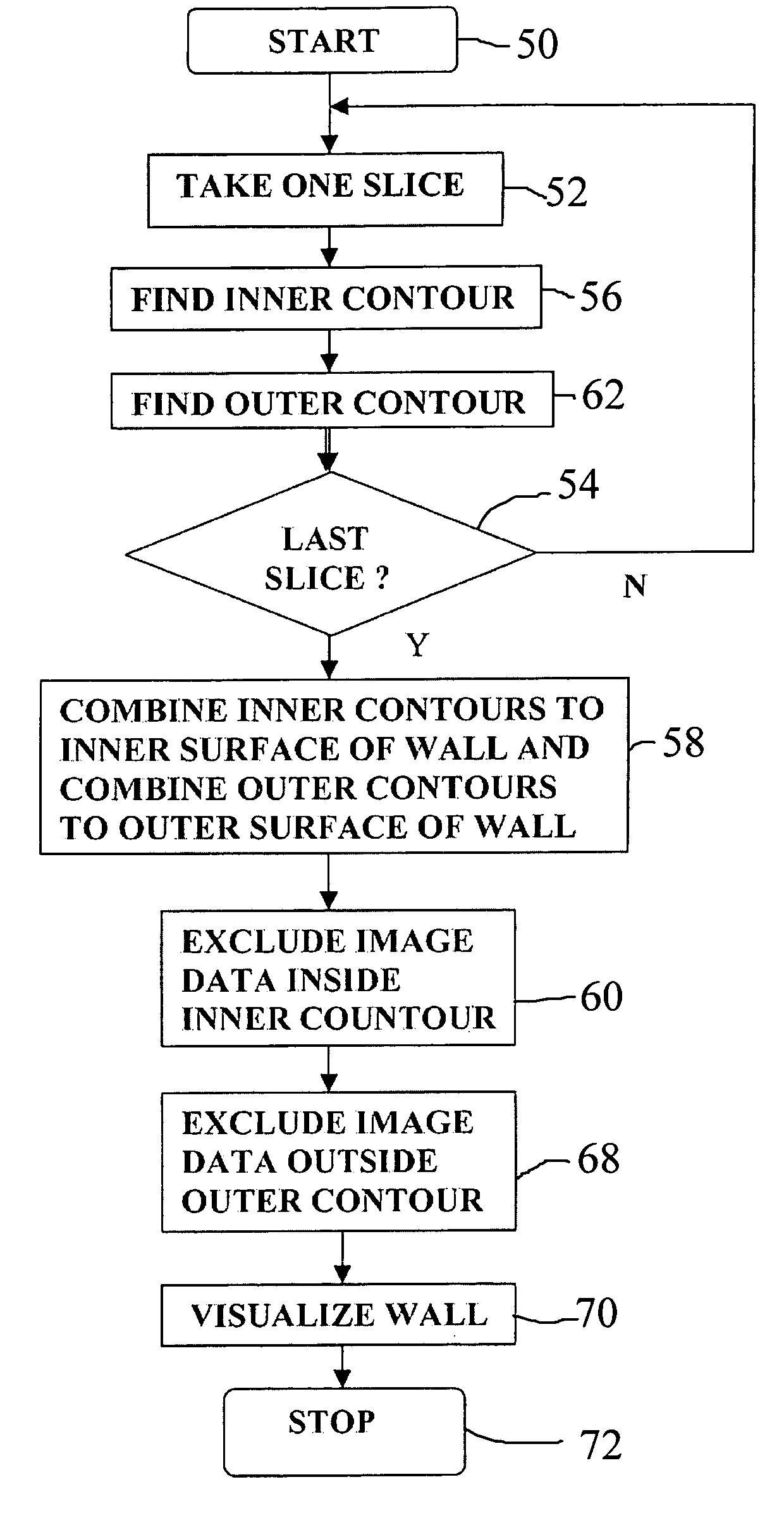

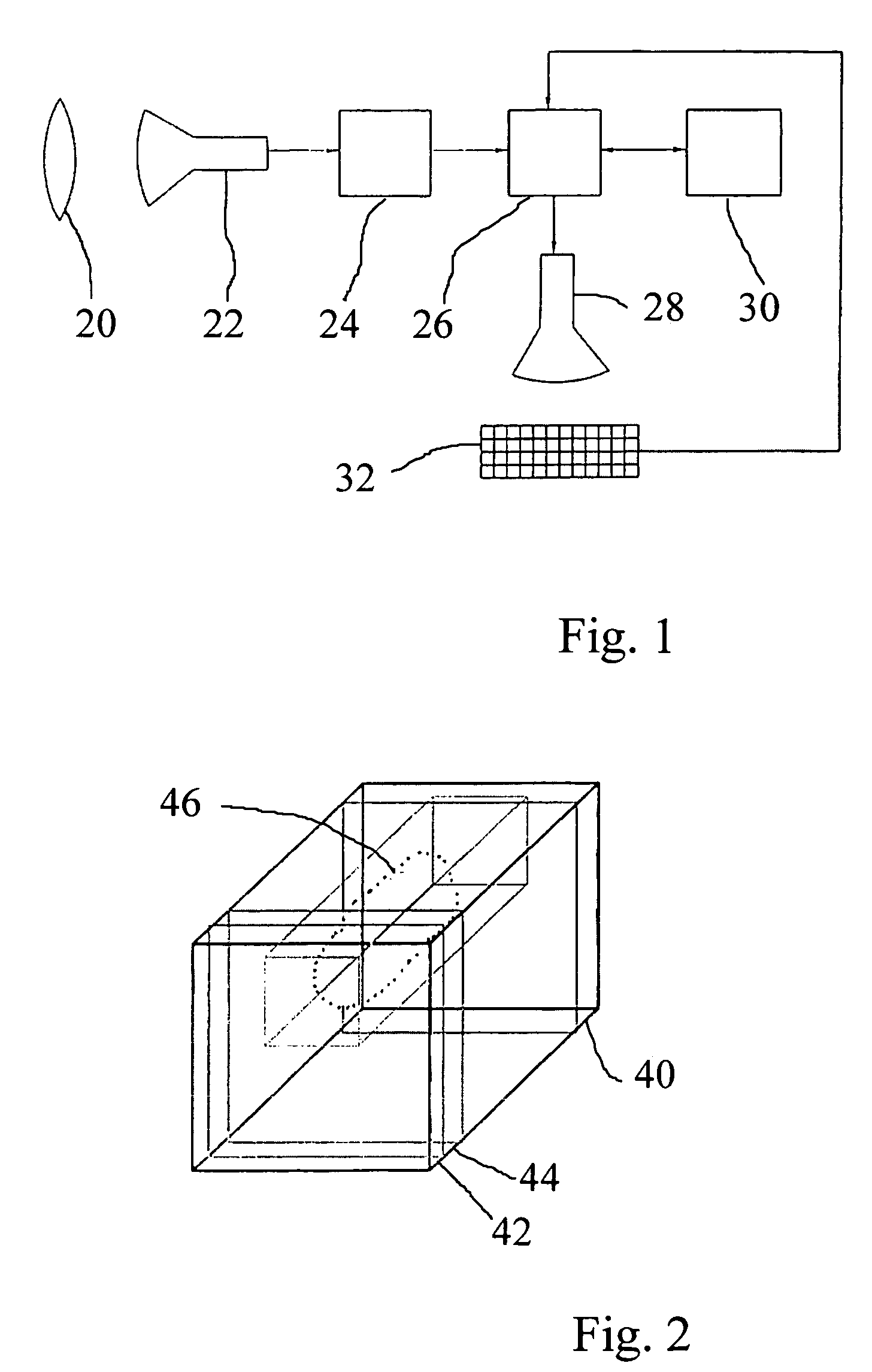

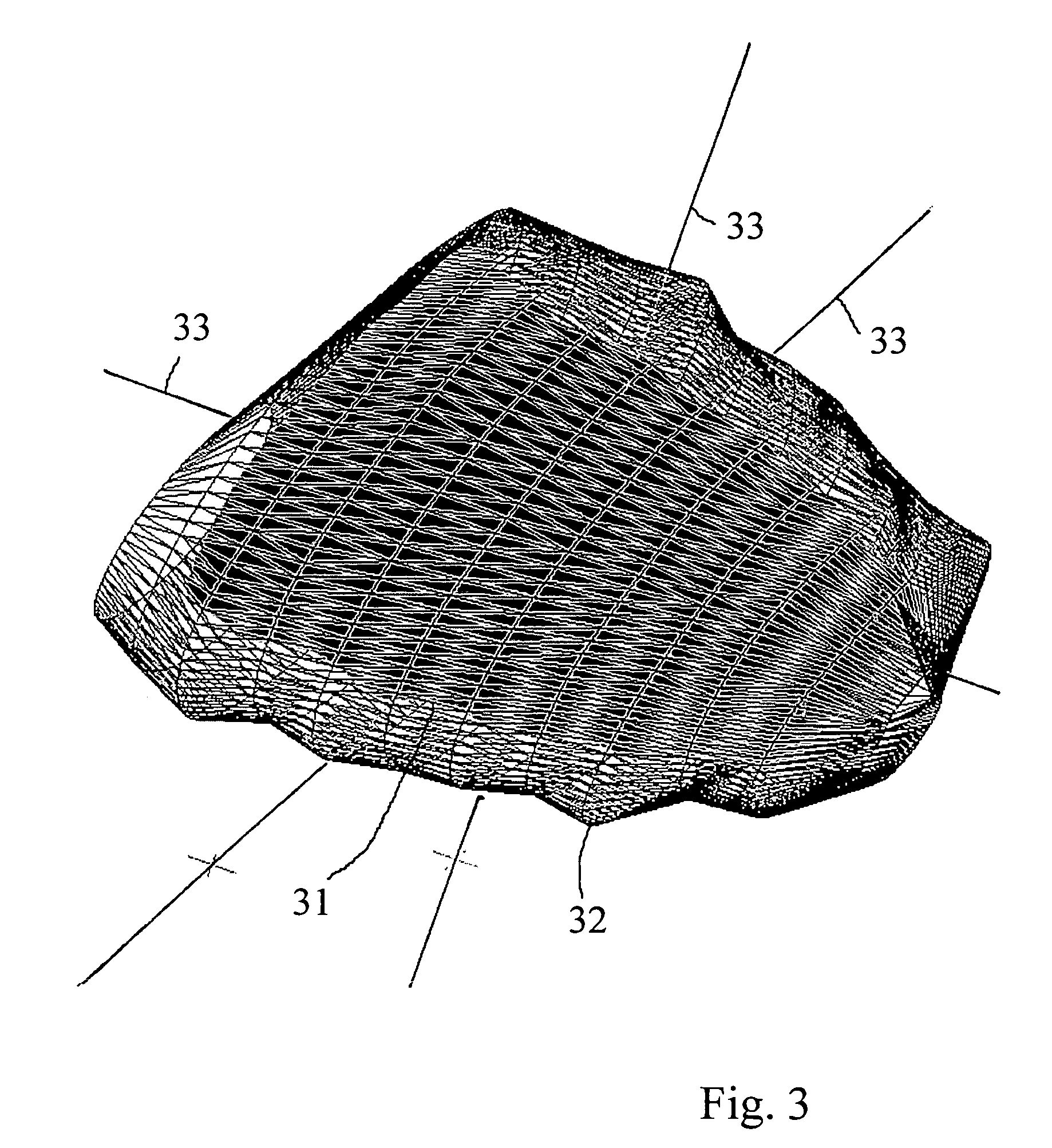

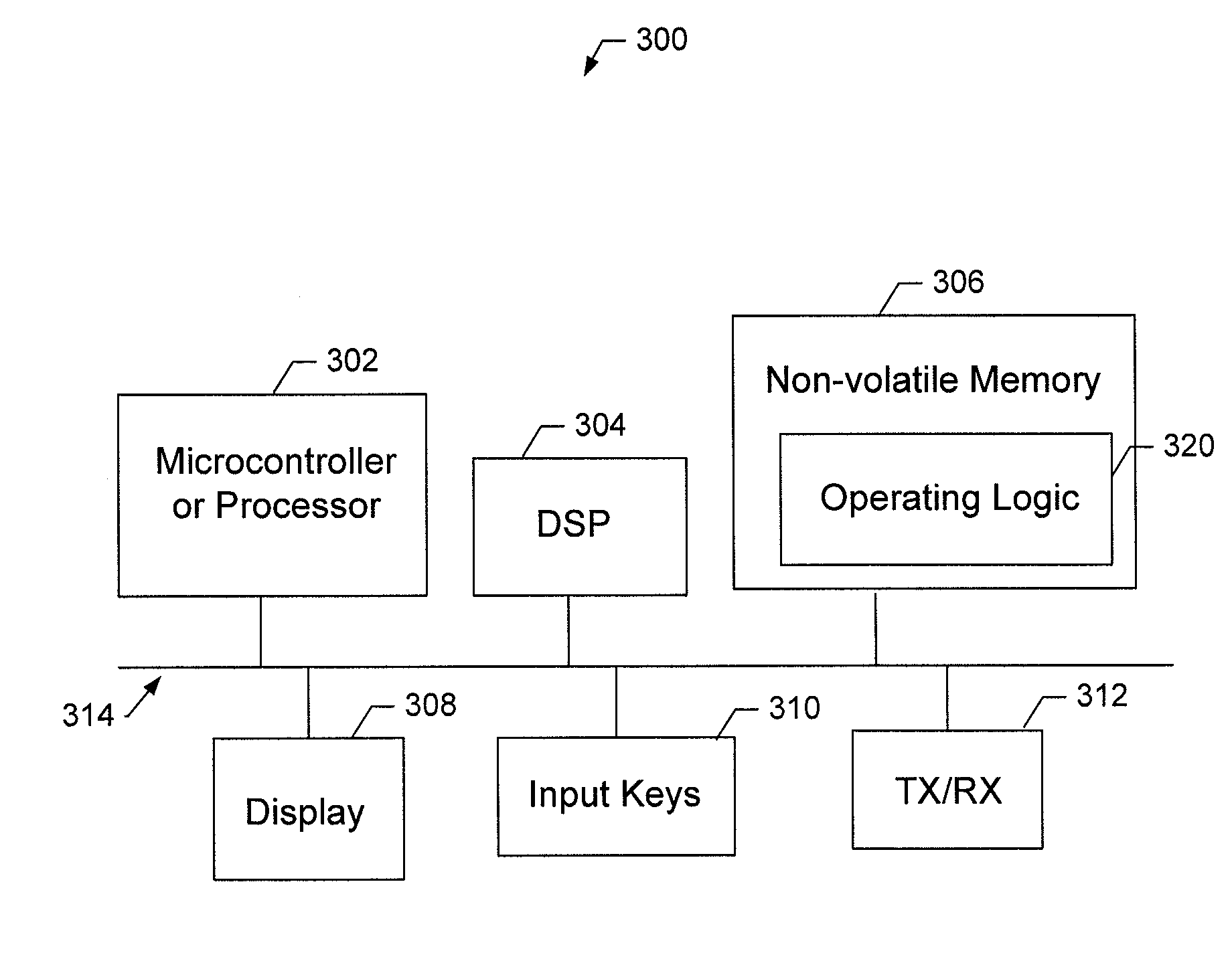

Method and apparatus for visualization of biological structures with use of 3D position information from segmentation results

A data processing methodology and corresponding apparatus for visualizing the characteristics of a particular object volume in an overall medical / biological environment receives a source image data set pertaining to the overall environment. First and second contour surfaces within the environment are established which collectively define a target object volume. By way of segmenting, all information pertaining to structures outside the target object volume is excluded from the image data. A visual representation of the target object based on non-excluded information is displayed. In particular, the method establishes i) the second contour surface through combining both voxel intensities and relative positions among voxel subsets, and ii) a target volume by excluding all data outside the outer surface and inside the inner surface (which allows non-uniform spacing between the first and second contour surfaces). The second contour surface is used as a discriminator for the segmenting.

Owner:PIE MEDICAL IMAGING

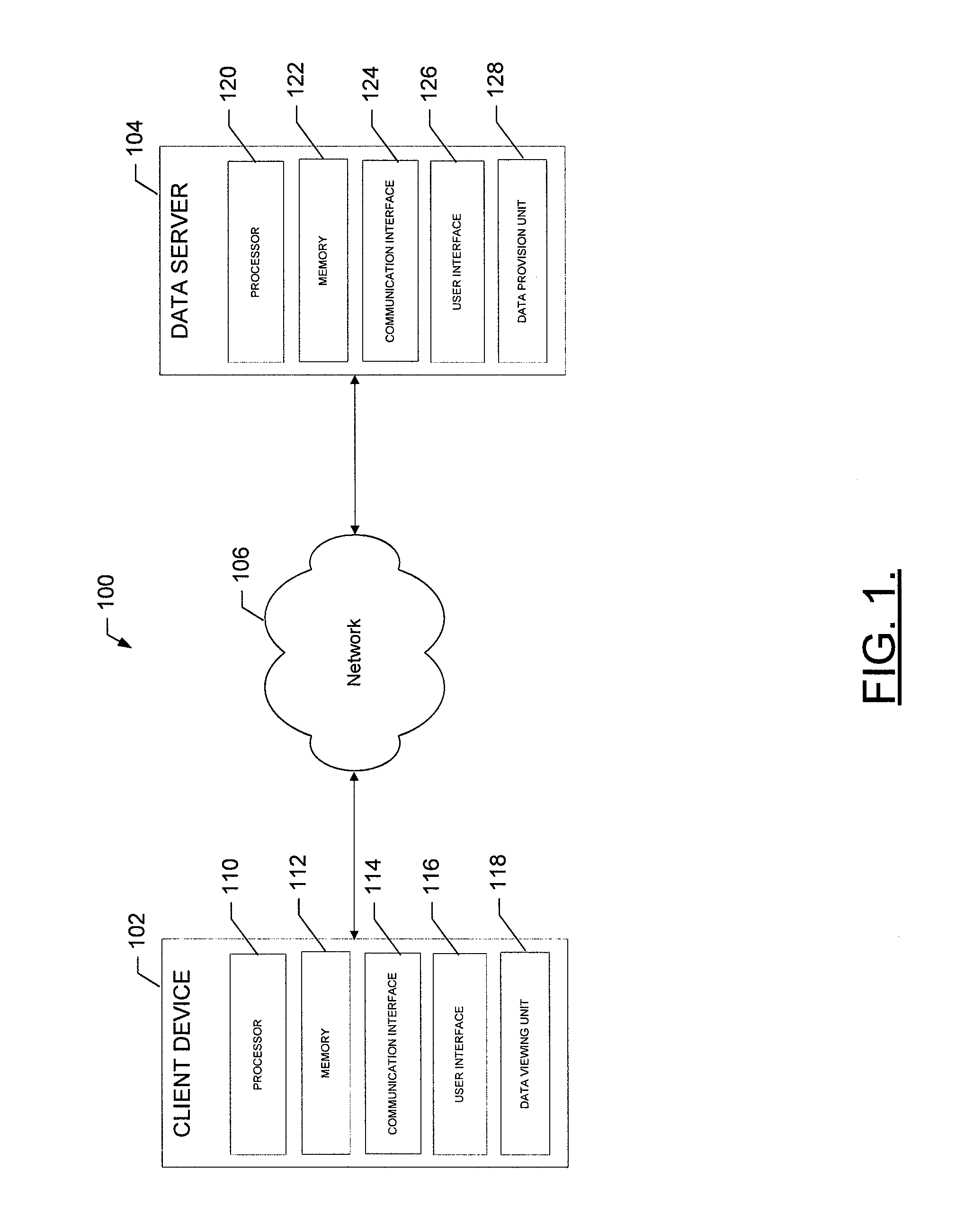

Methods, computer program products, apparatuses, and systems to accommodate decision support and reference case management for diagnostic imaging

Active · US20090274384A1 · High resolution · Limiting problem pixelization · Still image data indexing · Character and pattern recognition · Image resolution · Reference case

A method, apparatus, and computer program product are provided to accommodate decision support and reference case management for diagnostic imaging. An apparatus may include a processor configured to receive a request for an image from a client device. The processor may be further configured to retrieve a source image corresponding to the requested image from a memory. The processor may additionally be configured to process the source image to generate a second image having a greater resolution than the source image. The processor may also be configured to provide the second image to the client device to facilitate viewing and manipulation of the second image at the client device. Corresponding methods and computer program products are also provided.

Owner:CHANGE HEALTHCARE HLDG LLC

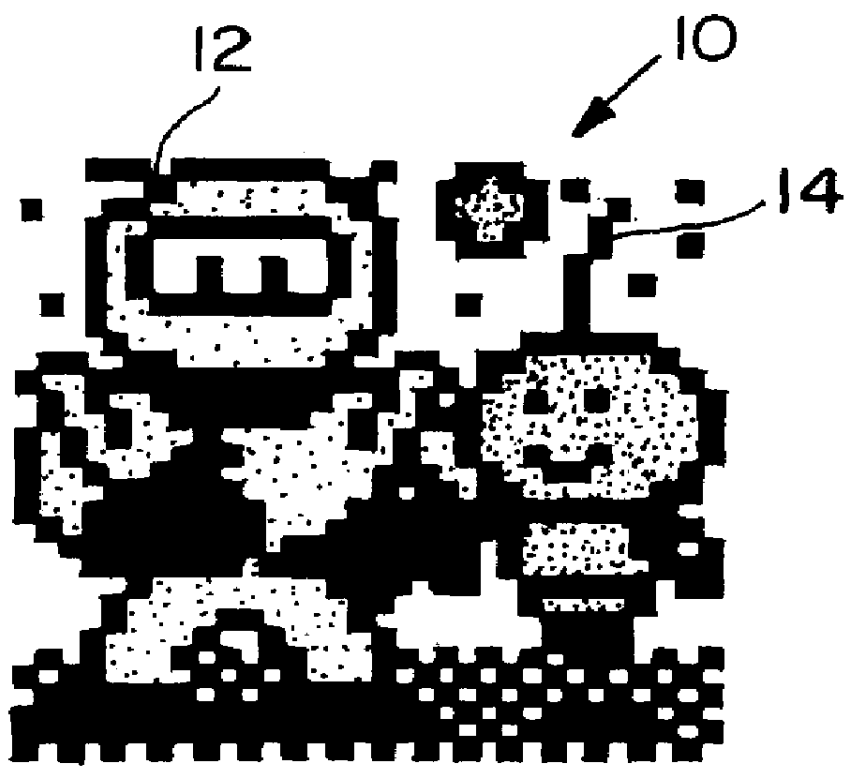

Pixel image enhancement system and method

Inactive · US6038348A · Low cost · Reduce memory · Character and pattern recognition · Pictorial communication · Display device · Output device

A pixel image enhancement system which operates on color or monochrome source images to produce output cells the same size as the source pixels but not spatially coincident or one-to-one correspondent with them. By operating upon a set of input pixels surrounding each output cell with a set of logic operations implementing unique Boolean equations, the system generates "case numbers" characterizing inferred-edge pieces within each output cell. A rendering subsystem, responsive to the case numbers and source-pixel colors, then produces signals for driving an output device (printer or display) to display the output cells, including the inferred-edge pieces, to the best of the output device's ability and at its resolution.

Owner:CSR TECH INC

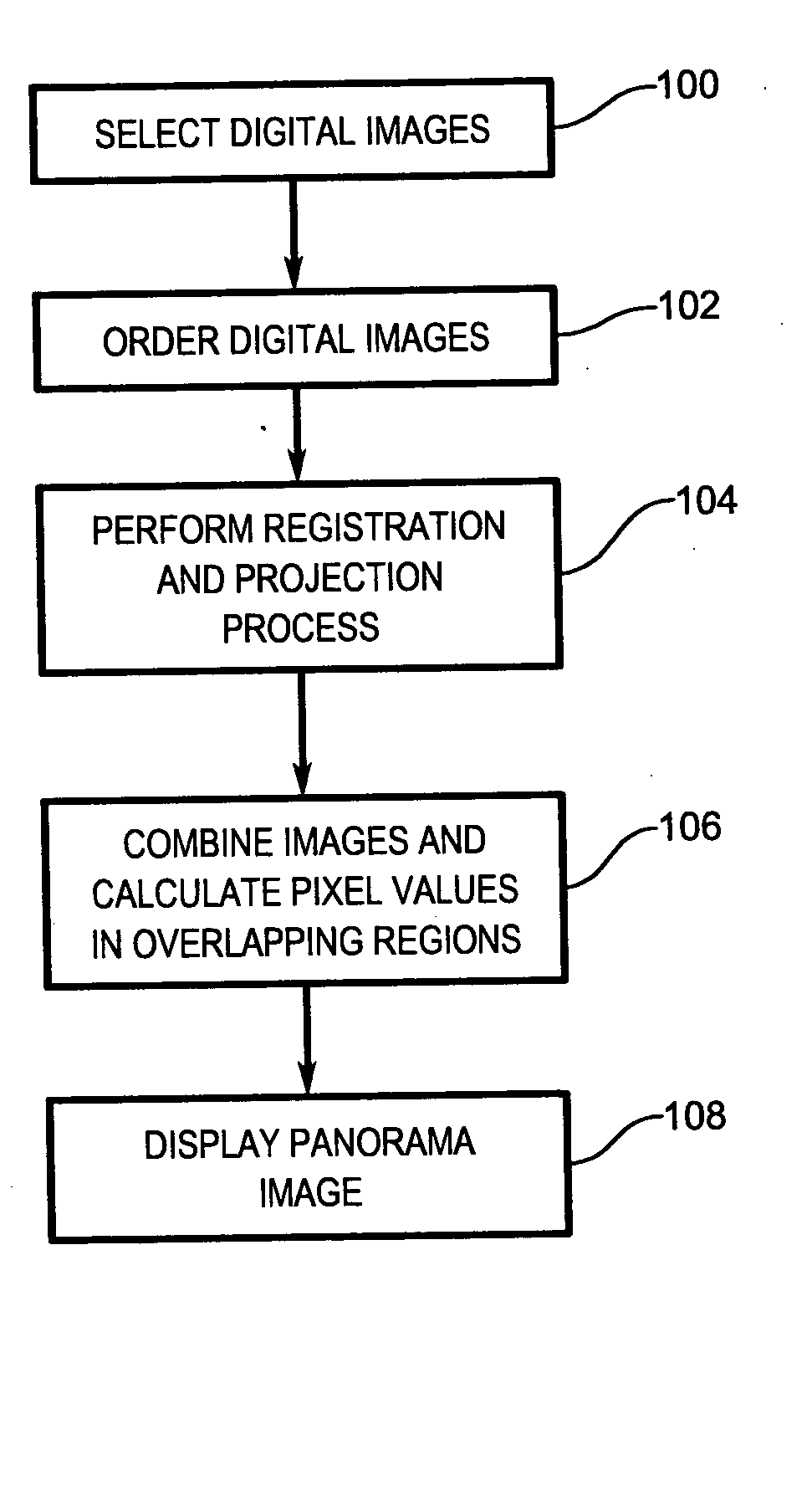

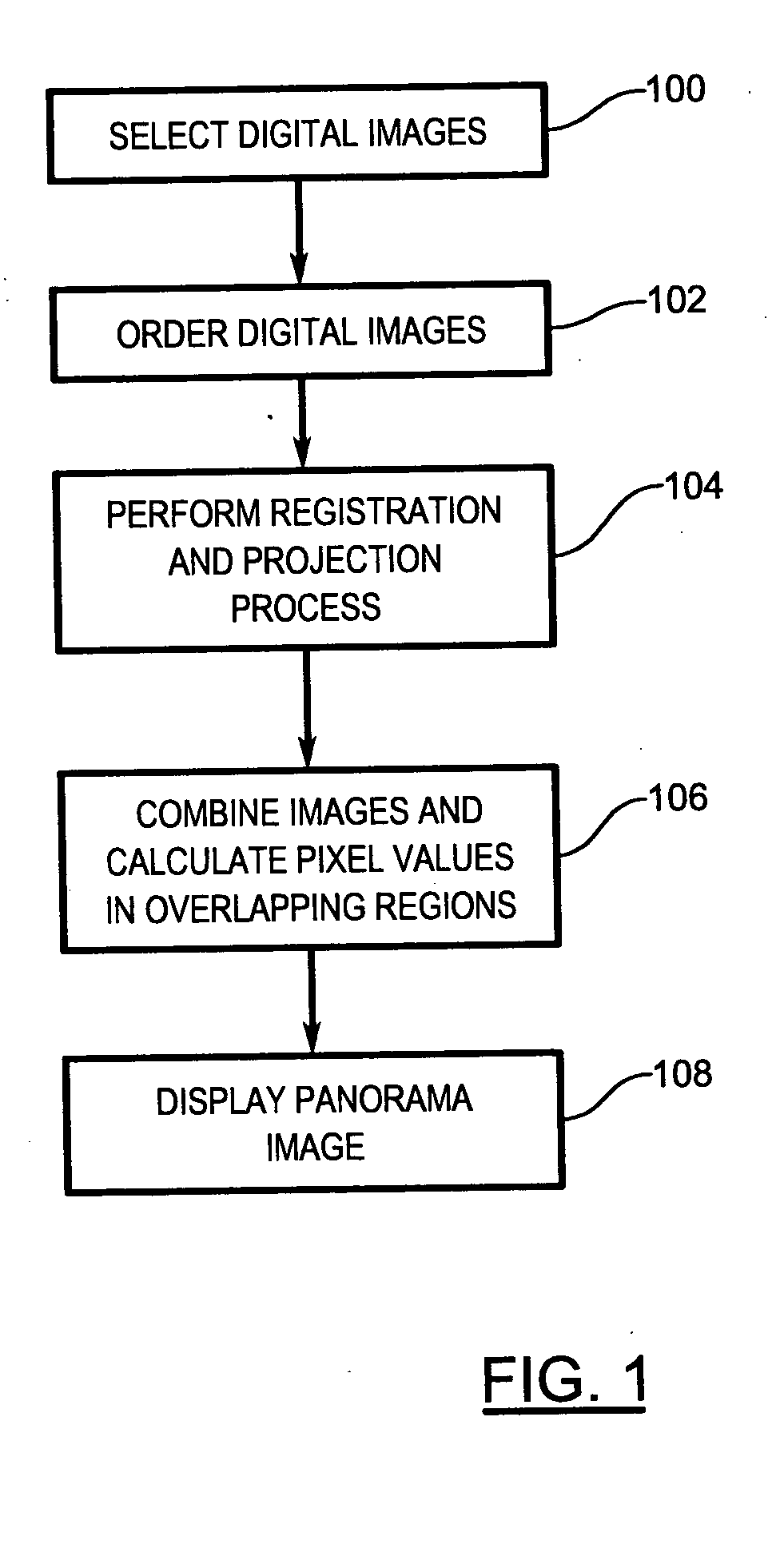

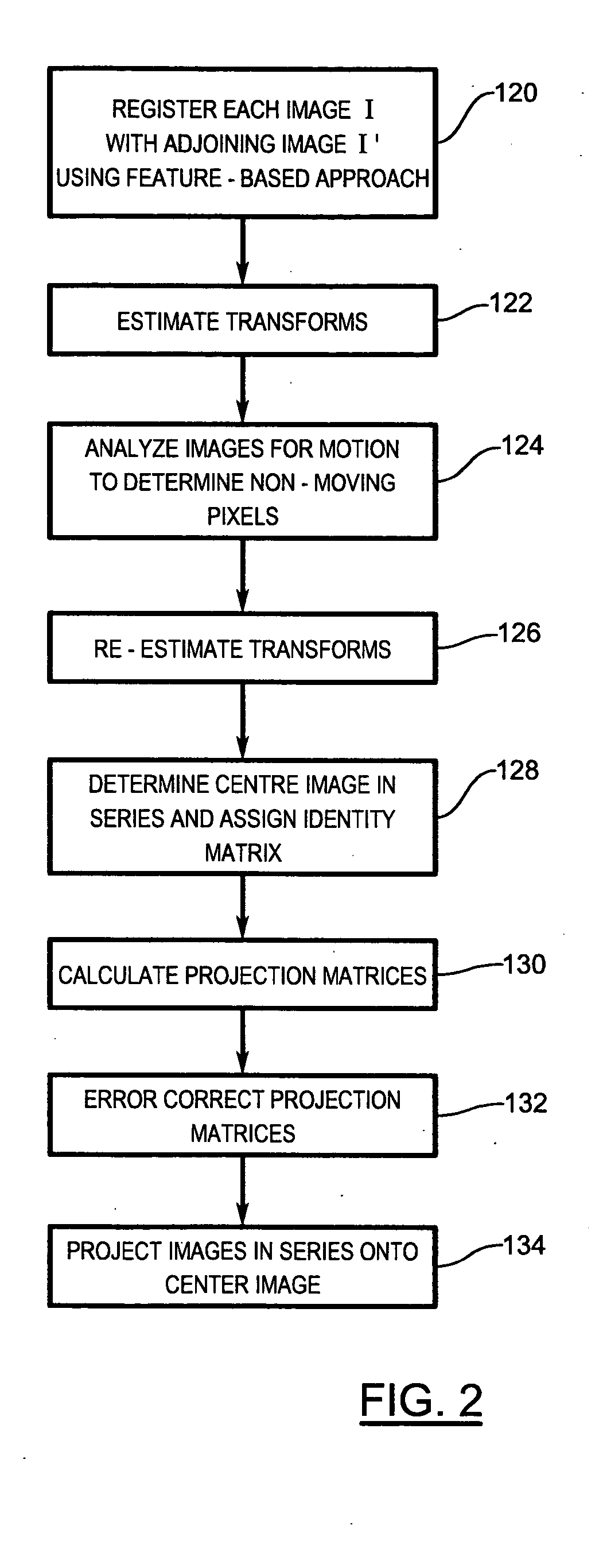

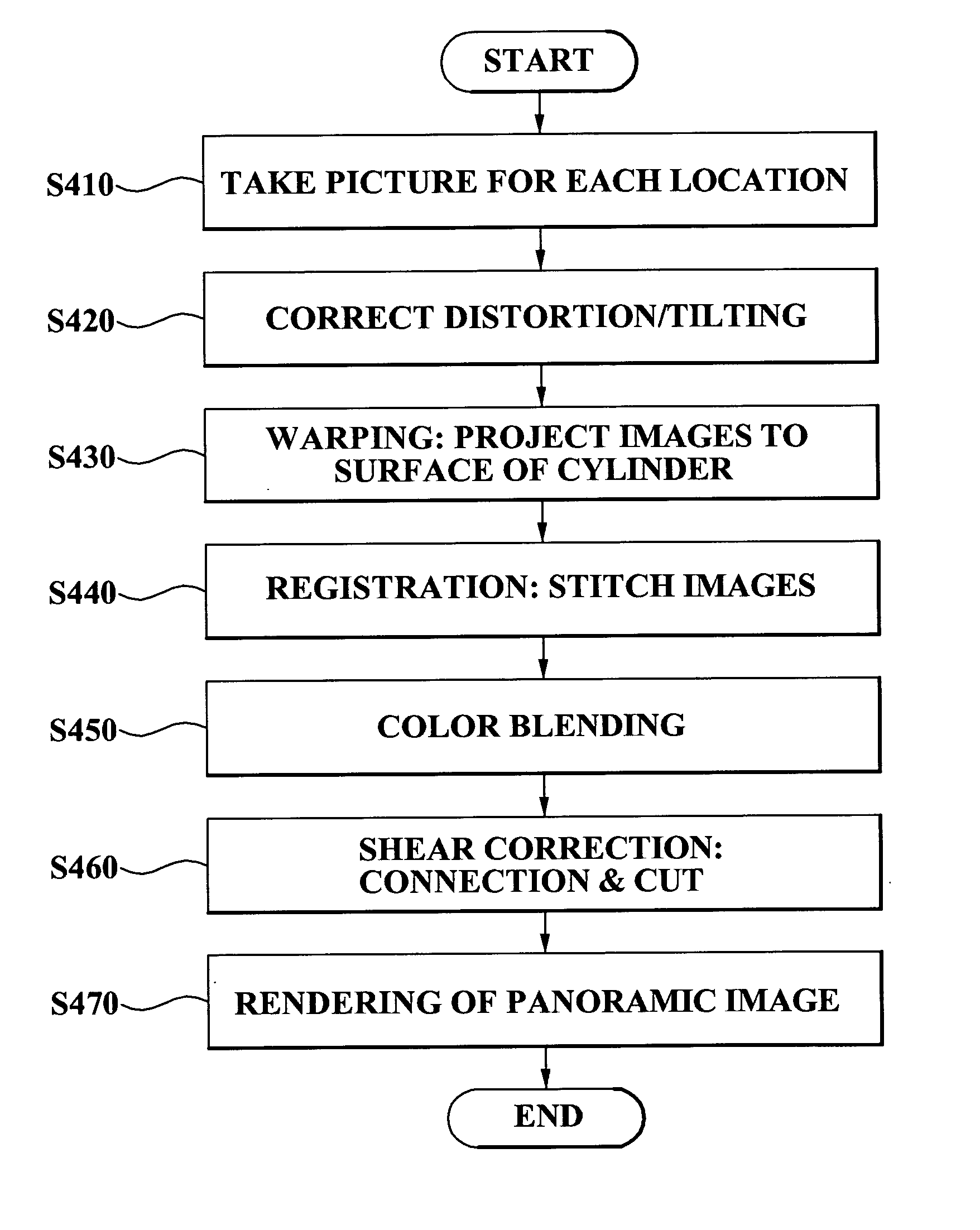

System and method for creating a panorama image from a plurality of source images

Inactive · US20050063608A1 · Fast and efficient · Television system details · Image enhancement · Projection image · Panorama

A system and method of creating a panorama image from a series of source images includes registering adjoining pairs of images in the series based on common features within the adjoining pairs of images. A transform between each adjoining pair of images is estimated using the common features. Each image is projected onto a designated image in the series using the estimated transforms associated with the image and with images between the image in question and the designated image. Overlapping portions of the projected images are blended to form the panorama image.

Owner:SEIKO EPSON CORP
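
The projection step in the abstract above chains the transforms of every image pair lying between an image and the designated image. A minimal sketch of that chaining, assuming 3x3 homogeneous matrices, that `pairwise[i]` maps coordinates of image `i + 1` into image `i`, and that the designated image is image 0 (all of these are illustrative assumptions, as are the function names):

```python
from typing import List, Tuple

Matrix = List[List[float]]


def identity3() -> Matrix:
    return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def translation(tx: float, ty: float) -> Matrix:
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def matmul3(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transform_to_designated(pairwise: List[Matrix], index: int) -> Matrix:
    """Compose pairwise[0] * pairwise[1] * ... * pairwise[index - 1]."""
    result = identity3()
    for step in pairwise[:index]:
        result = matmul3(result, step)
    return result


def apply_transform(h: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map a point through a homogeneous transform, dividing by the last coordinate."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)
```

If the designated image sits in the middle of the series, images on its other side would need the inverse of each pairwise transform, which this sketch leaves out.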

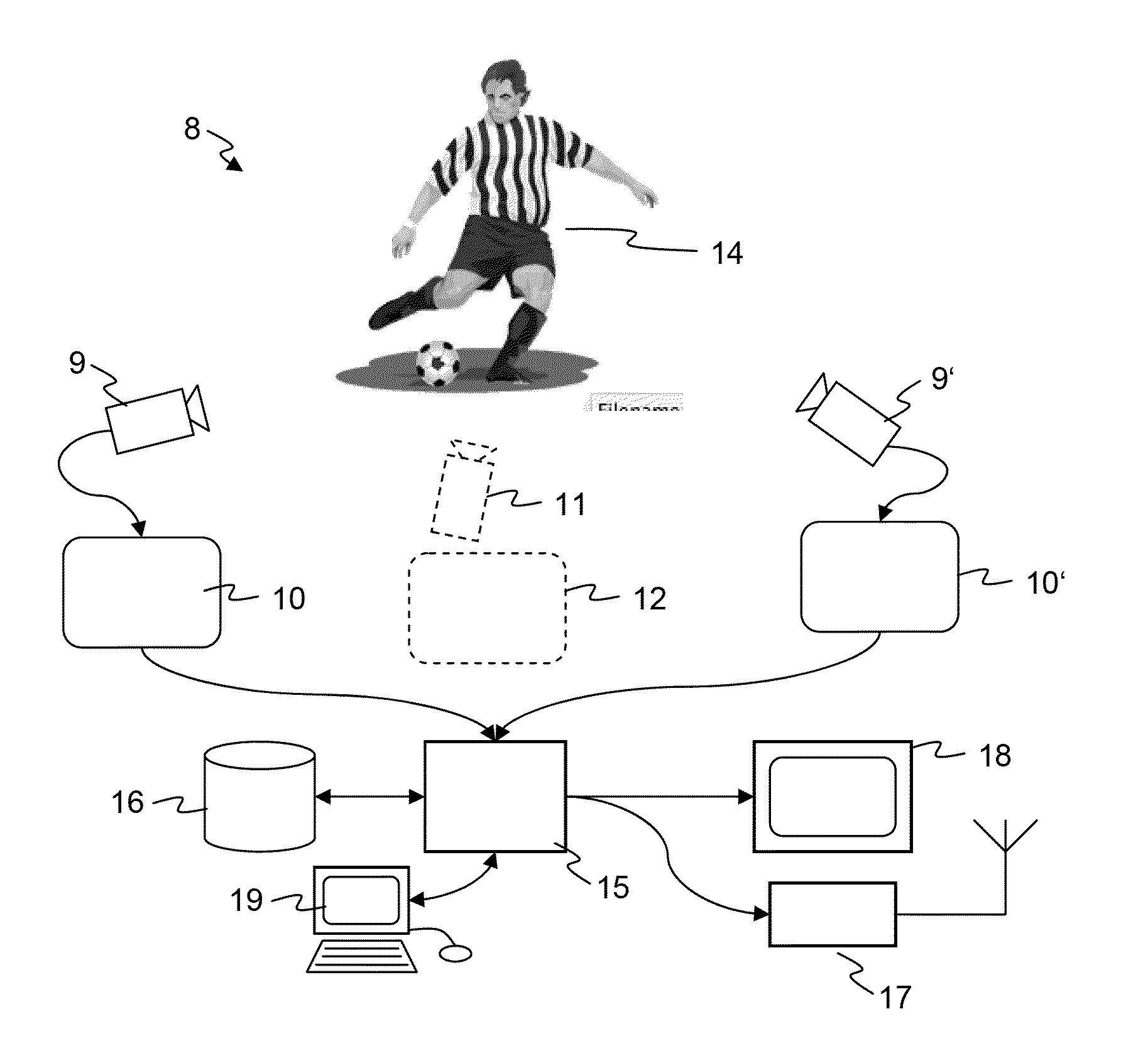

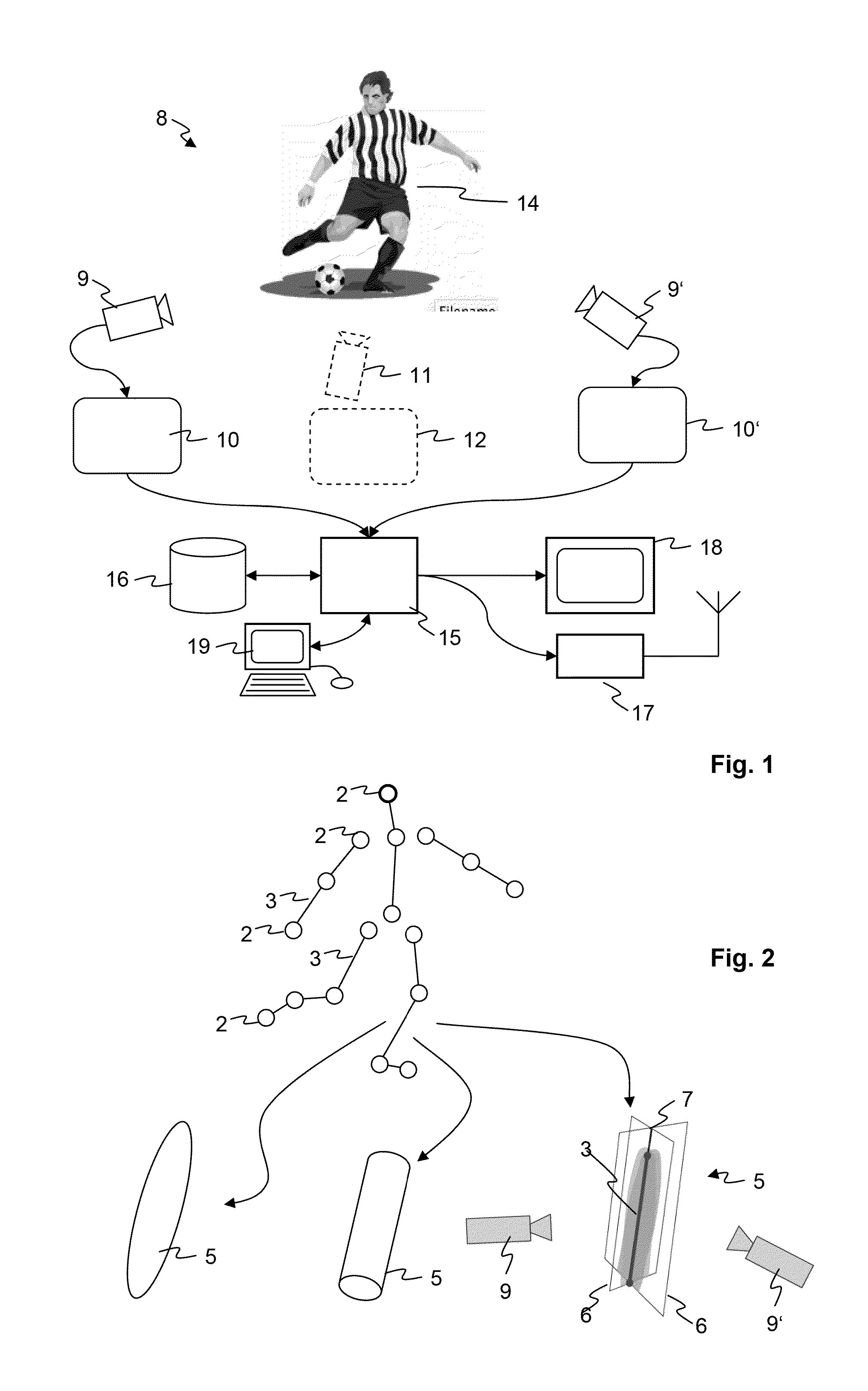

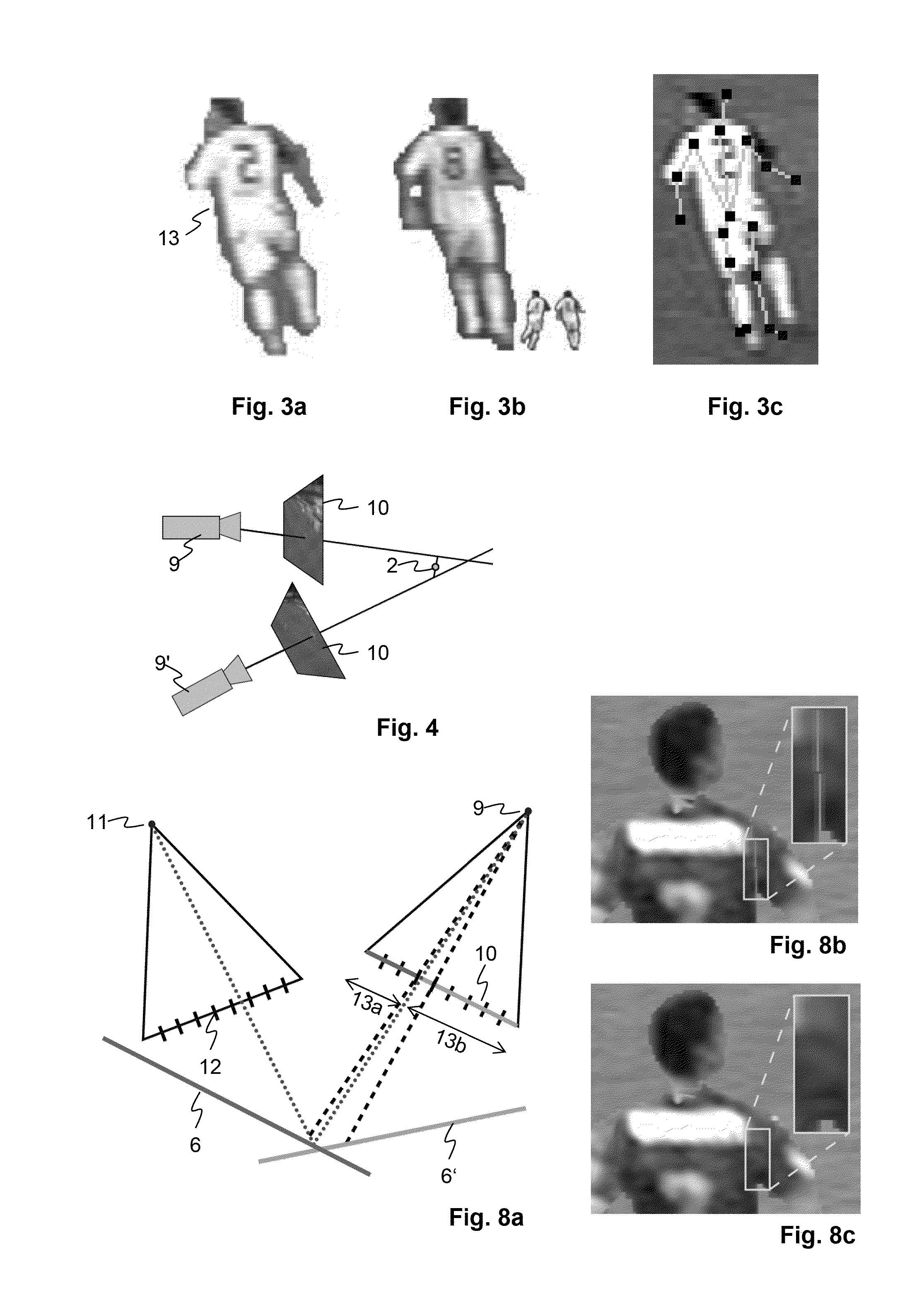

Method for estimating a pose of an articulated object model

Active · US20110267344A1 · Eliminate visible discontinuity · Minimize ghosting artifact · Image enhancement · Image analysis · Source image · Computer based

A computer-implemented method for estimating a pose of an articulated object model (4), wherein the articulated object model (4) is a computer based 3D model (1) of a real world object (14) observed by one or more source cameras (9), and wherein the pose of the articulated object model (4) is defined by the spatial location of joints (2) of the articulated object model (4), comprises the steps of:

- obtaining a source image (10) from a video stream;
- processing the source image (10) to extract a source image segment (13);
- maintaining, in a database, a set of reference silhouettes, each being associated with an articulated object model (4) and a corresponding reference pose;
- comparing the source image segment (13) to the reference silhouettes and selecting reference silhouettes by taking into account, for each reference silhouette,
  - a matching error that indicates how closely the reference silhouette matches the source image segment (13) and / or
  - a coherence error that indicates how much the reference pose is consistent with the pose of the same real world object (14) as estimated from a preceding source image (10);
- retrieving the corresponding reference poses of the articulated object models (4); and
- computing an estimate of the pose of the articulated object model (4) from the reference poses of the selected reference silhouettes.

Owner:VIZRT AG
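
The selection step in the claim above combines a matching error with a coherence error. The sketch below shows one possible scoring scheme, assuming binary silhouette masks of equal size, poses given as lists of joint coordinates, a pixel-mismatch fraction as the matching error, a mean joint distance as the coherence error, and a plain average of the best poses as the estimate. The weighting, the `keep` count, and all names are hypothetical.

```python
import math
from typing import List, Tuple

Mask = List[List[int]]
Pose = List[Tuple[float, ...]]


def matching_error(segment: Mask, silhouette: Mask) -> float:
    """Fraction of pixels where the segment and the reference silhouette differ."""
    total = sum(len(row) for row in segment)
    differing = sum(a != b for ra, rb in zip(segment, silhouette) for a, b in zip(ra, rb))
    return differing / total


def coherence_error(pose: Pose, previous: Pose) -> float:
    """Mean joint distance between a reference pose and the previous estimate."""
    return sum(math.dist(p, q) for p, q in zip(pose, previous)) / len(pose)


def estimate_pose(segment: Mask, references: List[Tuple[Mask, Pose]],
                  previous: Pose, weight: float = 0.5, keep: int = 2) -> Pose:
    """Pick the `keep` best-scoring references and average their poses."""
    scored = sorted(
        references,
        key=lambda ref: matching_error(segment, ref[0]) + weight * coherence_error(ref[1], previous),
    )
    selected = [pose for _, pose in scored[:keep]]
    # Average each joint coordinate across the selected reference poses.
    return [
        tuple(sum(c) / len(selected) for c in zip(*(pose[j] for pose in selected)))
        for j in range(len(selected[0]))
    ]
```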

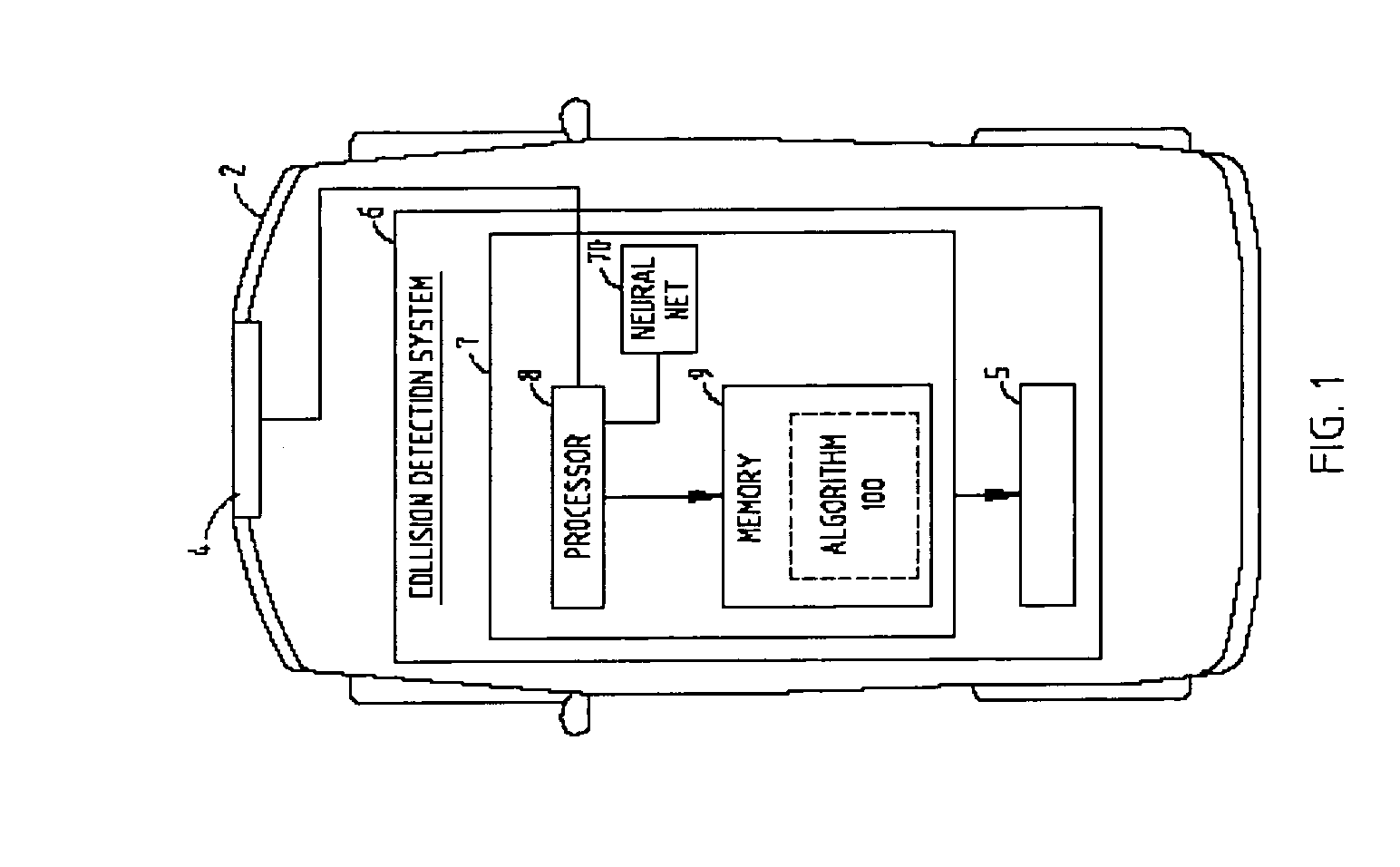

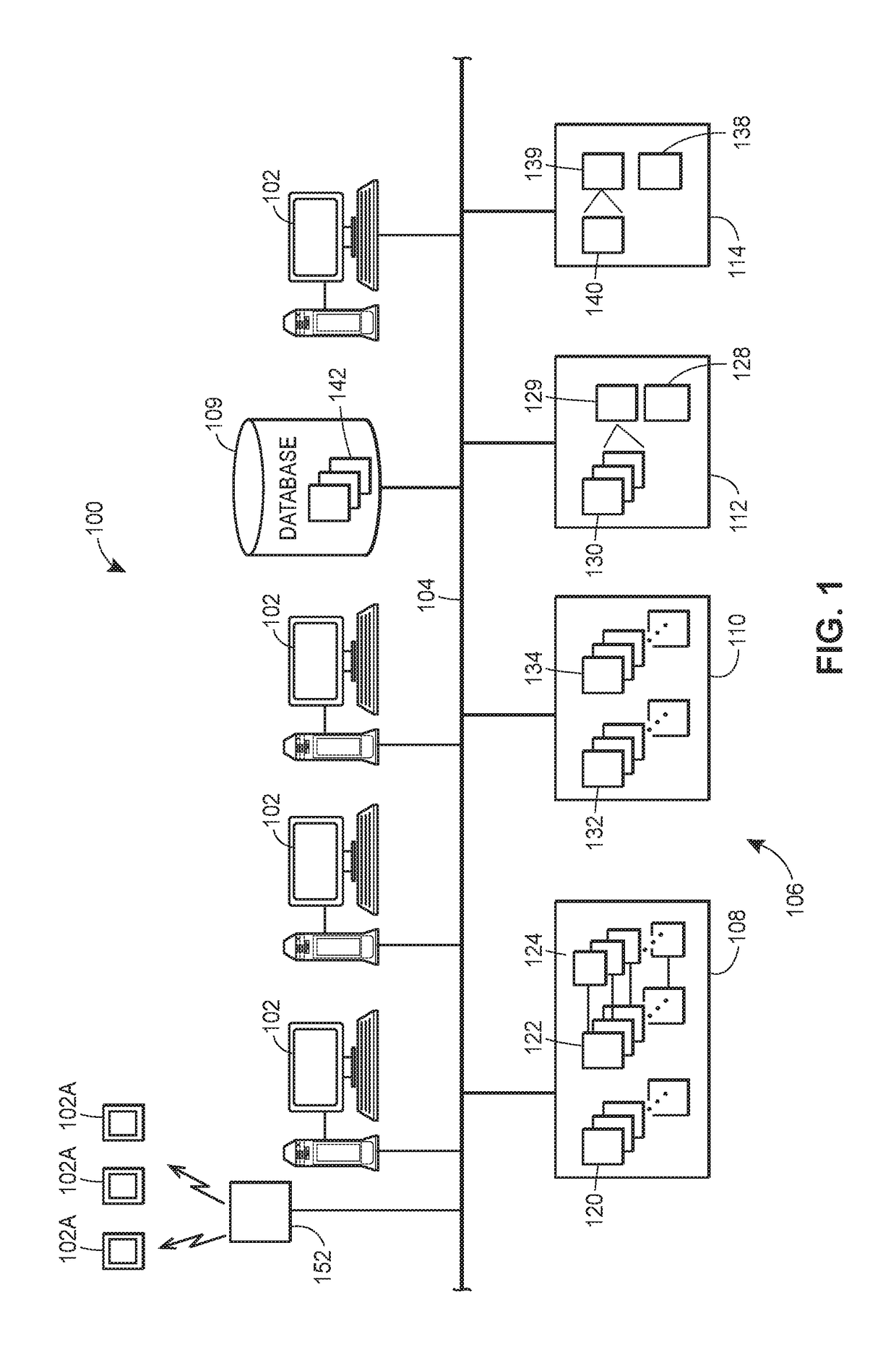

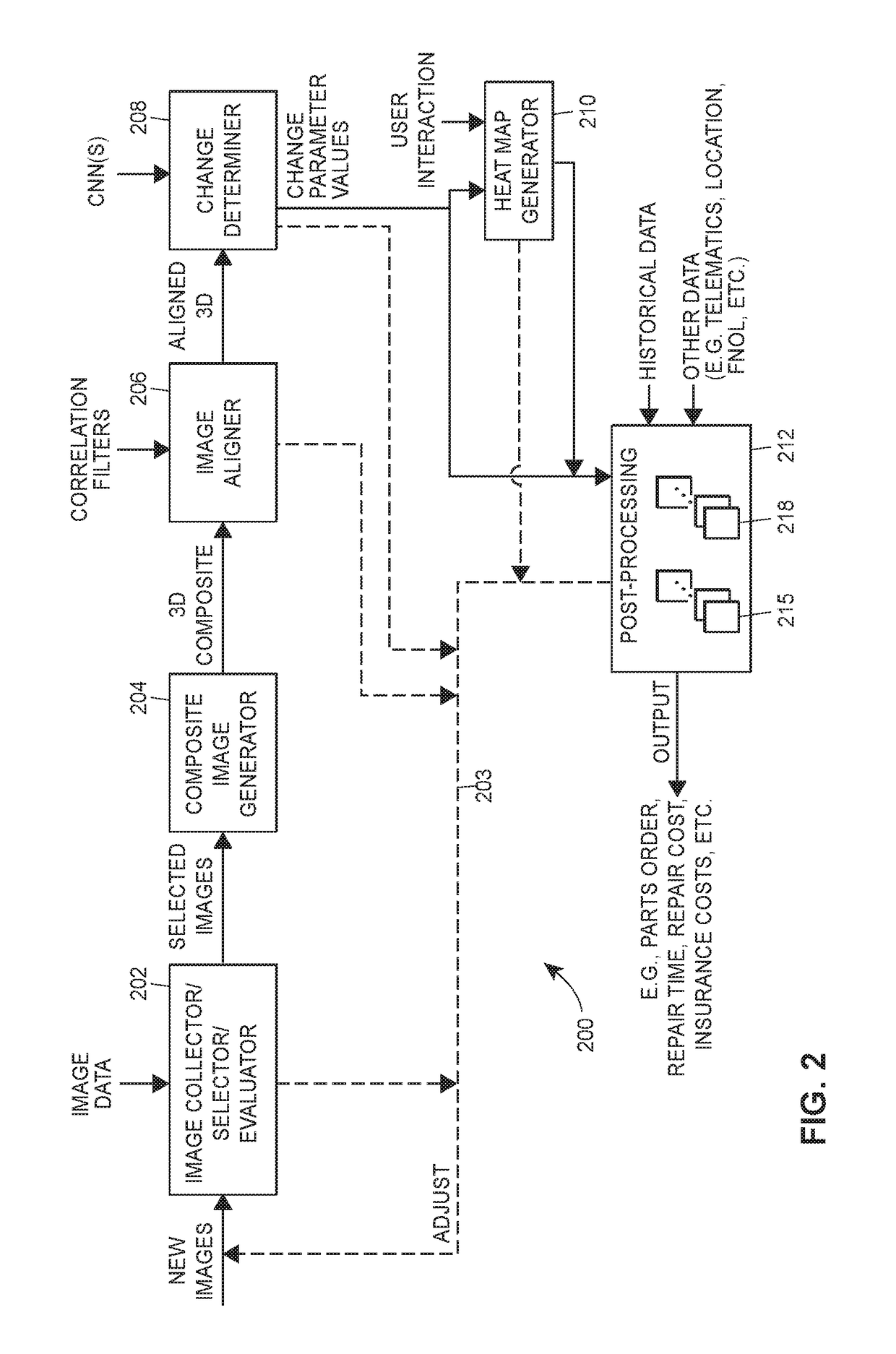

Heat map of vehicle damage

An image processing system and/or method obtains source images in which a damaged vehicle is represented, and performs image processing techniques to determine, predict, estimate, and/or detect damage that has occurred at various locations on the vehicle. The image processing techniques may include generating a composite image of the damaged vehicle, aligning and/or isolating the image, applying convolutional neural network techniques to the image to generate damage parameter values, where each value corresponds to damage in a particular location of the vehicle, and/or other techniques. Based on the damage values, the image processing system/method generates and displays a heat map for the vehicle, where each color and/or color gradation corresponds to respective damage at a respective location on the vehicle. The heat map may be manipulatable by the user, and may include user controls for displaying additional information corresponding to the damage at a particular location on the vehicle.

Owner:CCC INFORMATION SERVICES

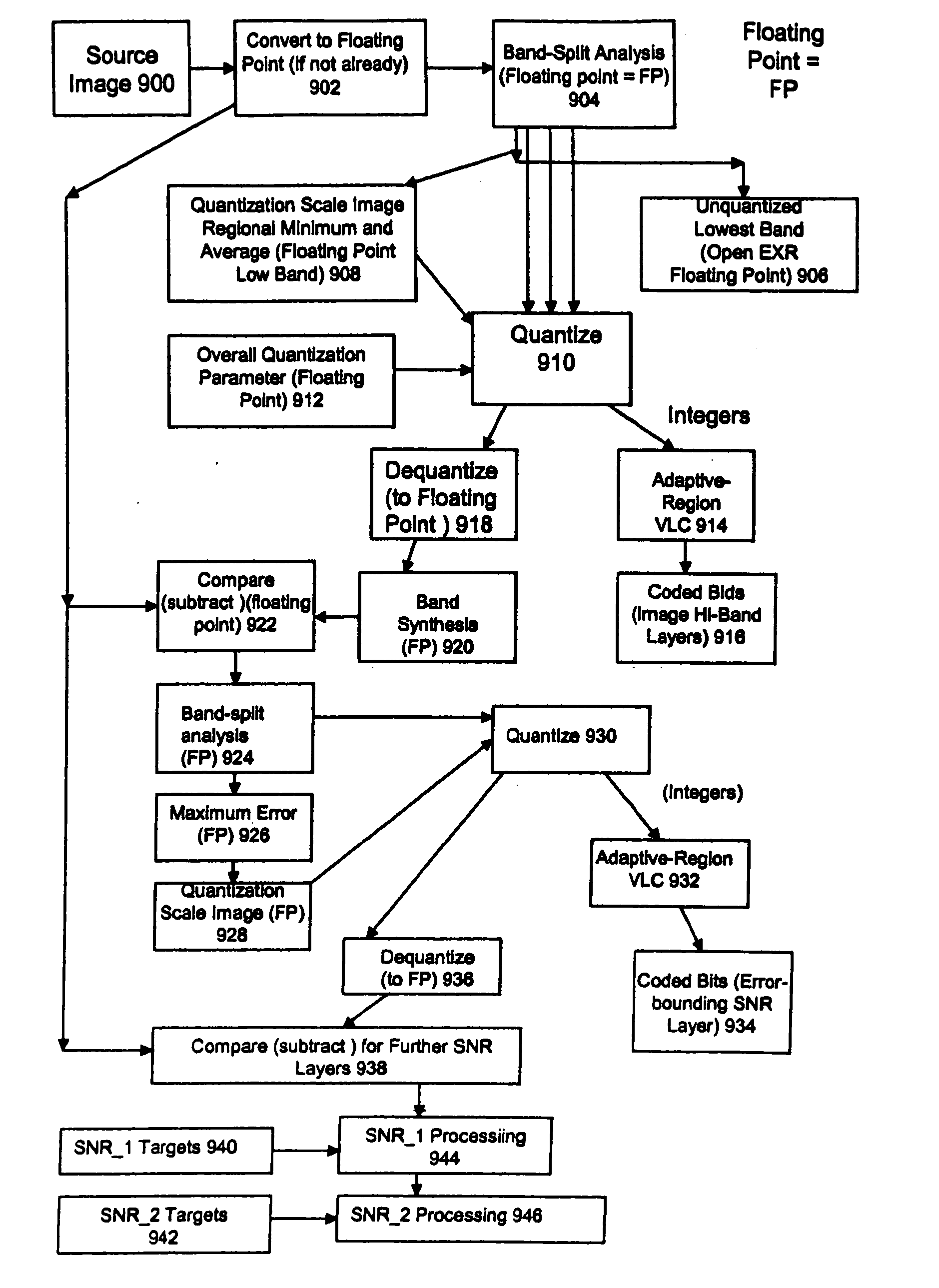

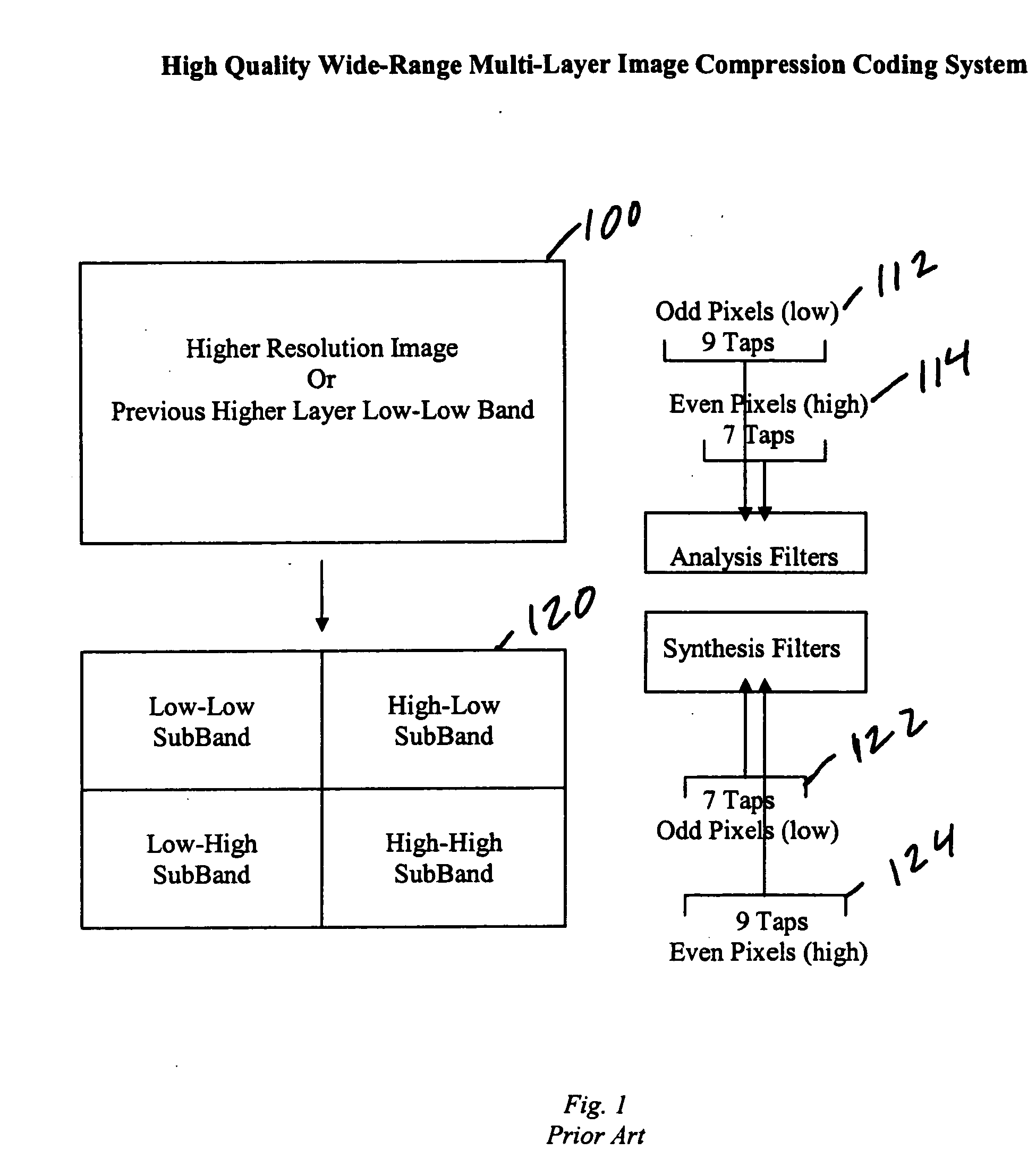

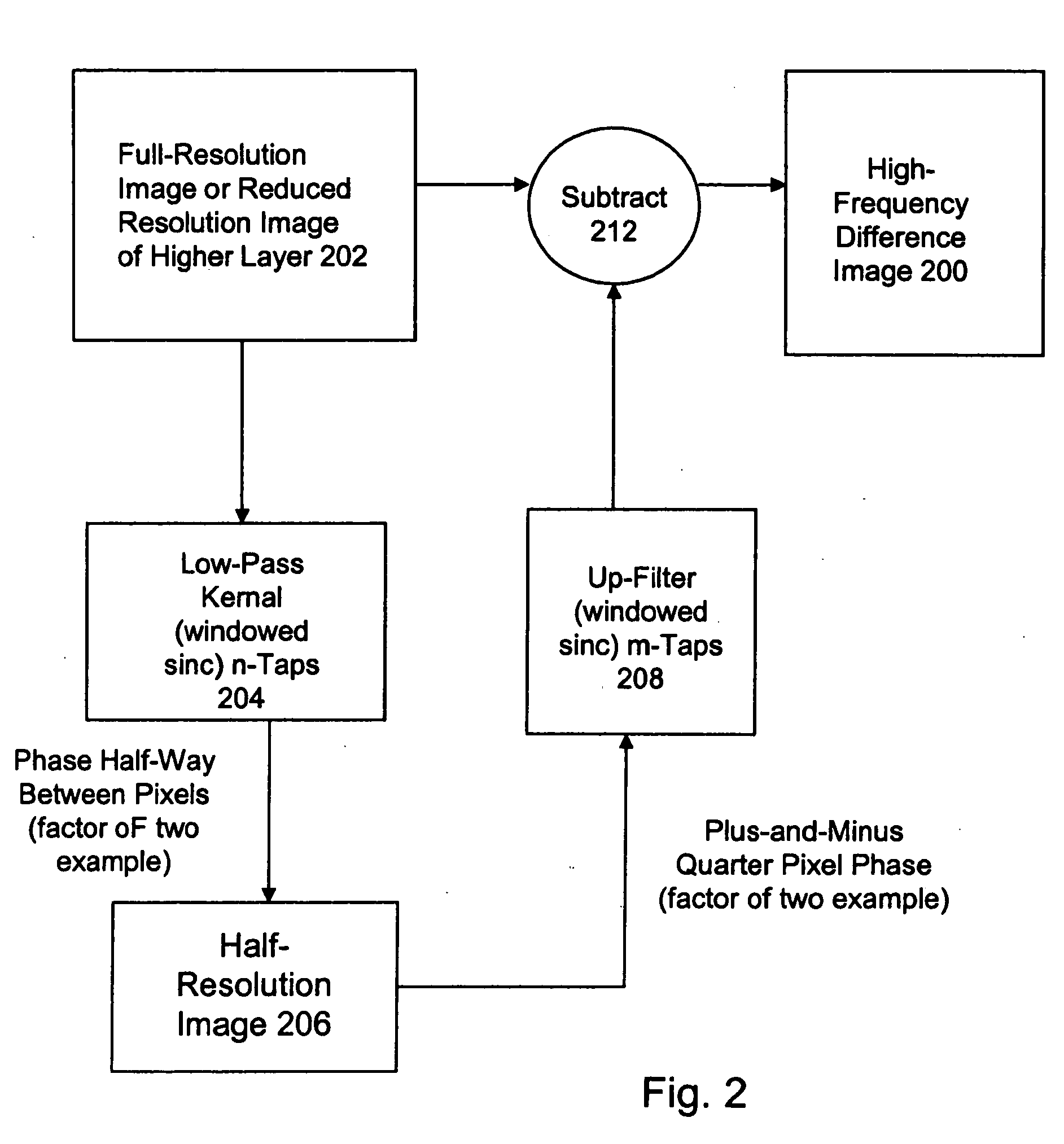

High quality wide-range multi-layer image compression coding system

Active · US20060071825A1 · Reduce sharpness · Reduce details · Code conversion · Character and pattern recognition · Floating point · Image compression

Systems, methods, and computer programs for high quality wide-range multi-layer image compression coding, including consistent ubiquitous use of floating point values in essentially all computations; an adjustable floating-point deadband; use of an optimal band-split filter; use of entire SNR layers at lower resolution levels; targeting of specific SNR layers to specific quality improvements; concentration of coding bits in regions of interest in targeted band-split and SNR layers; use of statically-assigned targets for high-pass and / or for SNR layers; improved SNR by using a lower quantization value for regions of an image showing a higher compression coding error; application of non-linear functions of color when computing difference values when creating an SNR layer; use of finer overall quantization at lower resolution levels with regional quantization scaling; removal of source image noise before motion-compensated compression or film steadying; use of one or more full-range low bands; use of alternate quantization control images for SNR bands and other high resolution enhancing bands; application of lossless variable-length coding using adaptive regions; use of a folder and file structure for layers of bits; and a method of inserting new intra frames by counting the number of bits needed for a motion compensated frame.

Owner:DEMOS GARY

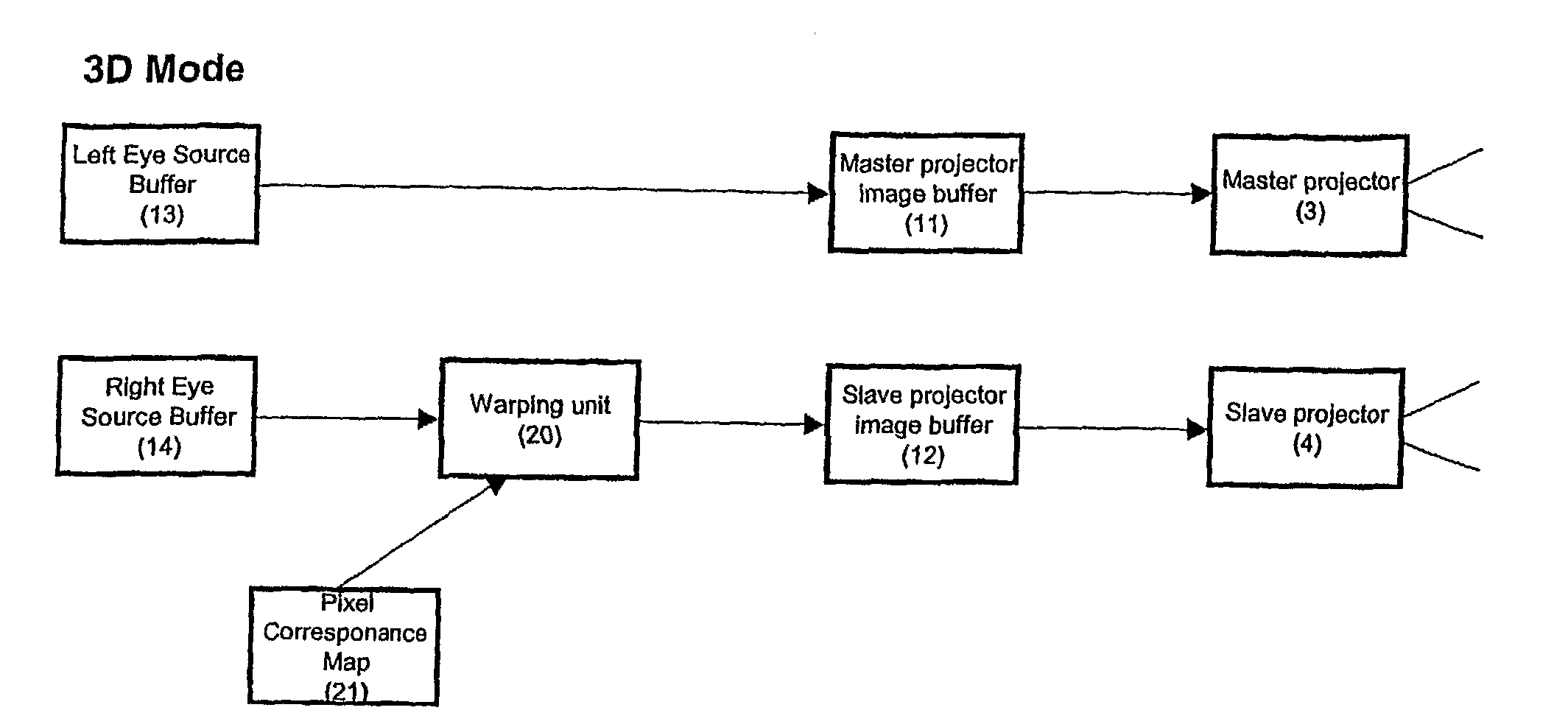

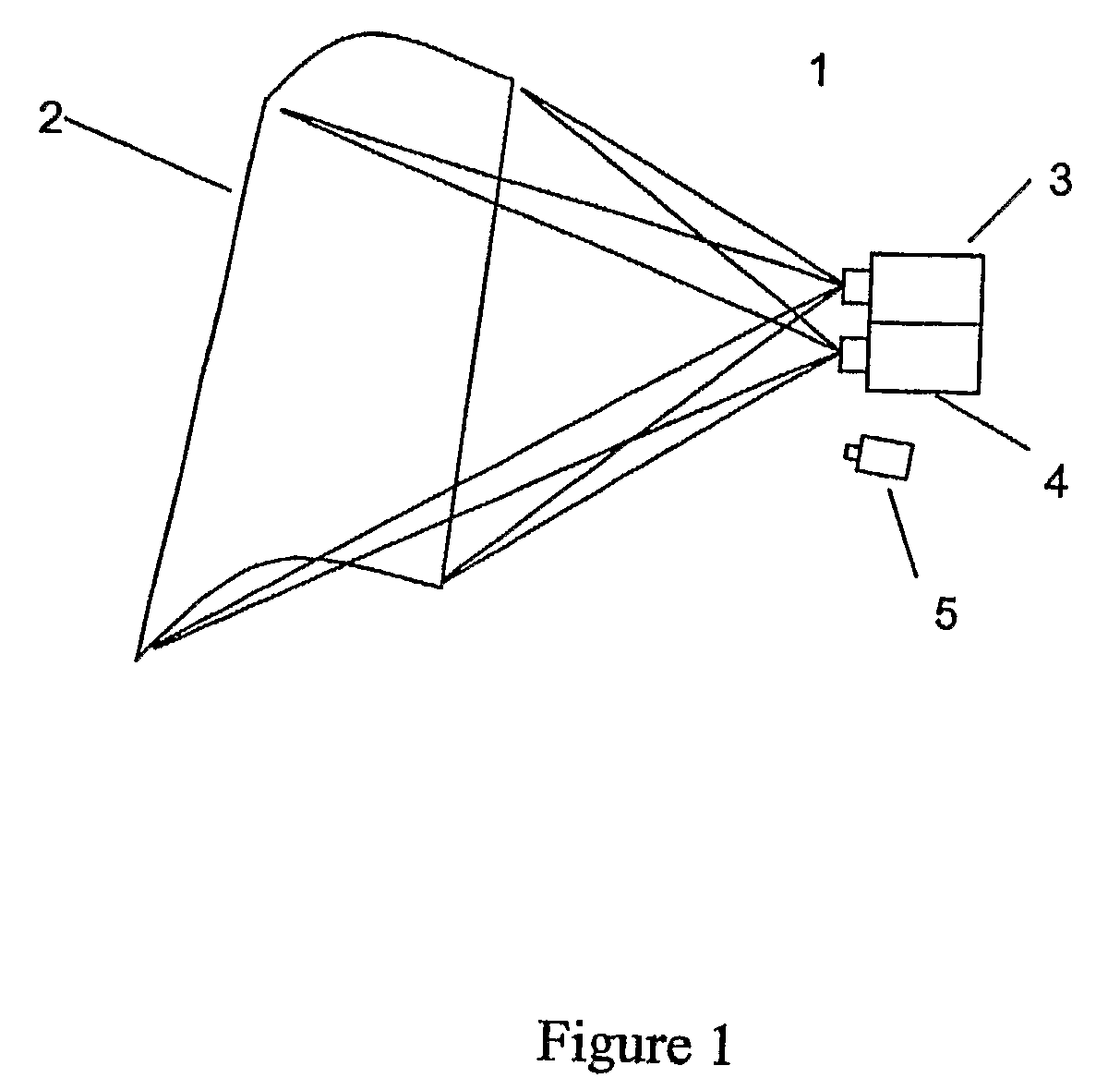

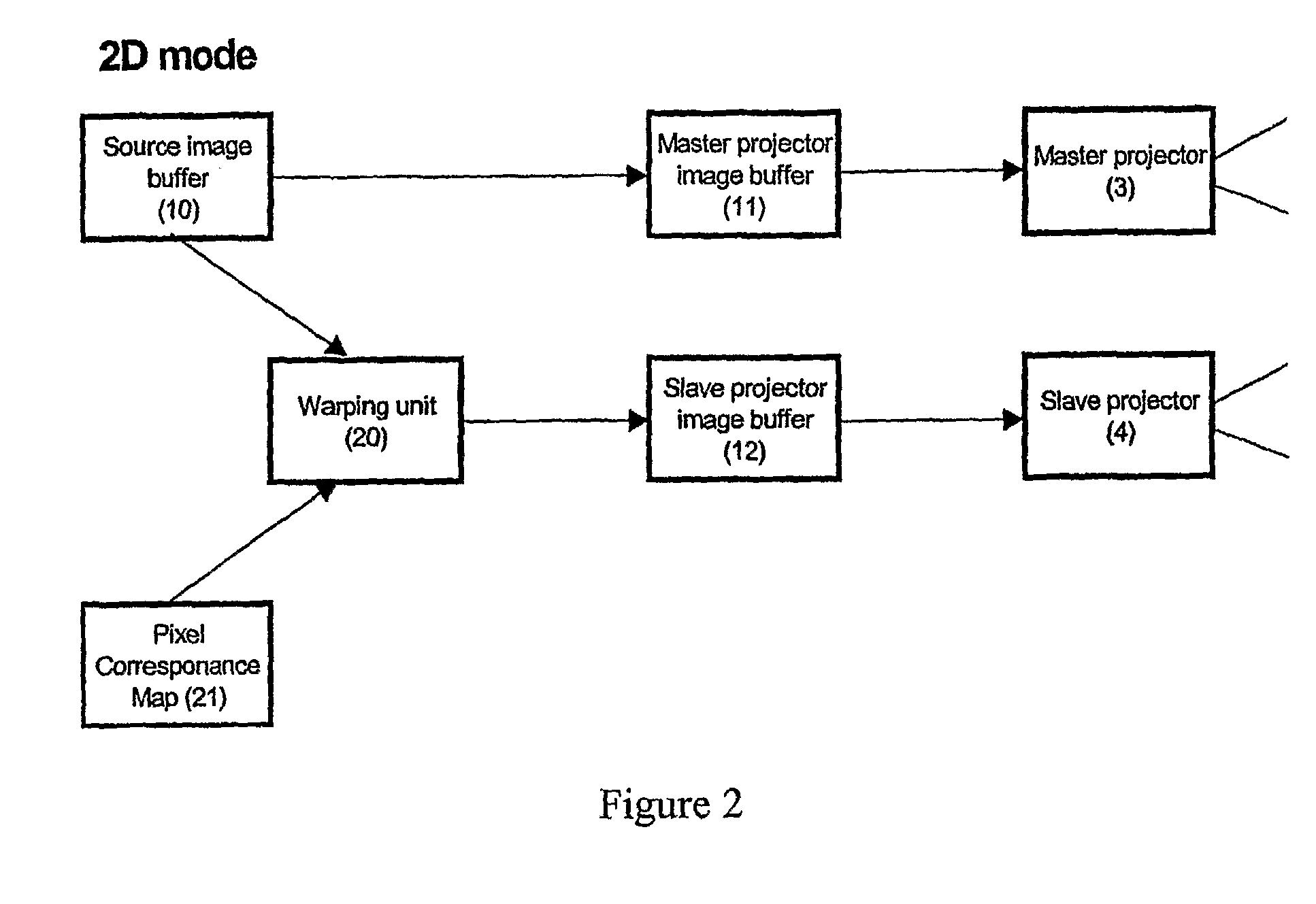

Electronic Projection Systems and Methods

Active · US20080309884A1 · Improve accuracy · Eliminate need · Television system details · Projectors · Source image · Projection system

Embodiments of the present invention comprise electronic projection systems and methods. One embodiment of the present invention comprises a method of creating composite images with a projection system comprising a first projector and at least a second projector, comprising generating a correspondence map of pixels for images by determining offsets between pixels from at least a second image from the second projector and corresponding pixels from a first image from the first projector, receiving a source image, warping the source image based at least in part on the correspondence map to produce a warped image, and displaying the source image by the first projector and displaying the warped image by the second projector to create a composite image.

Owner:IMAX CORP

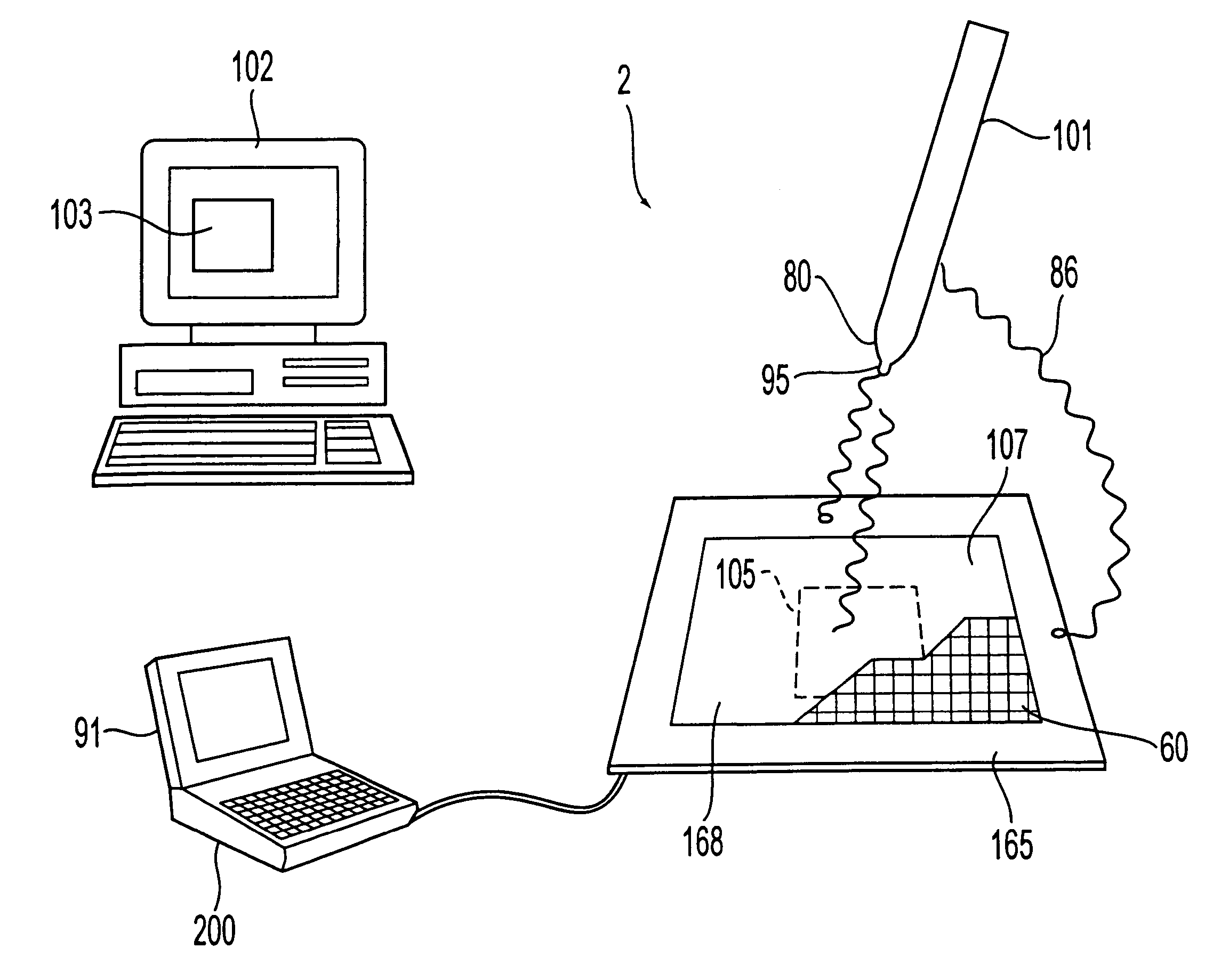

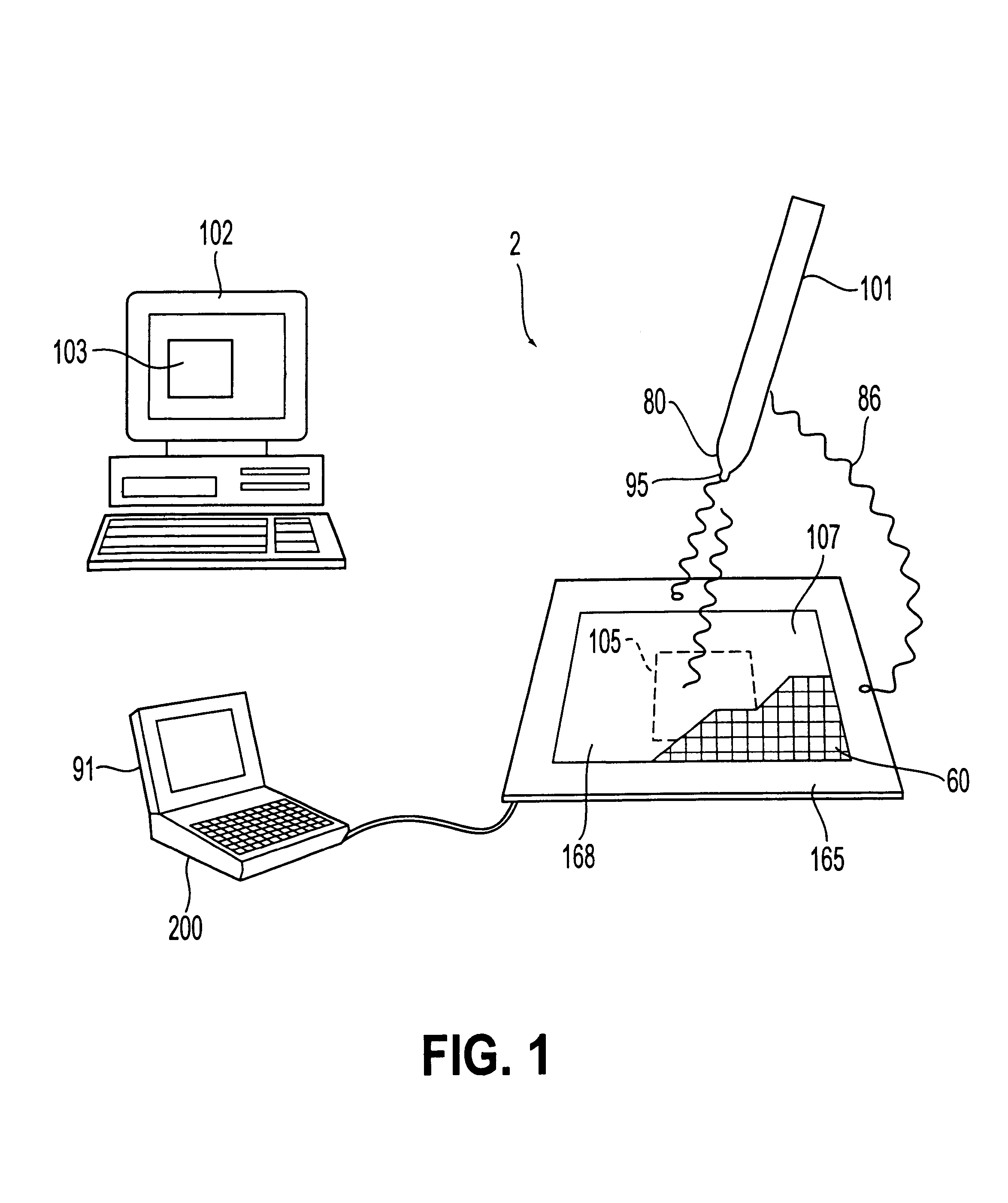

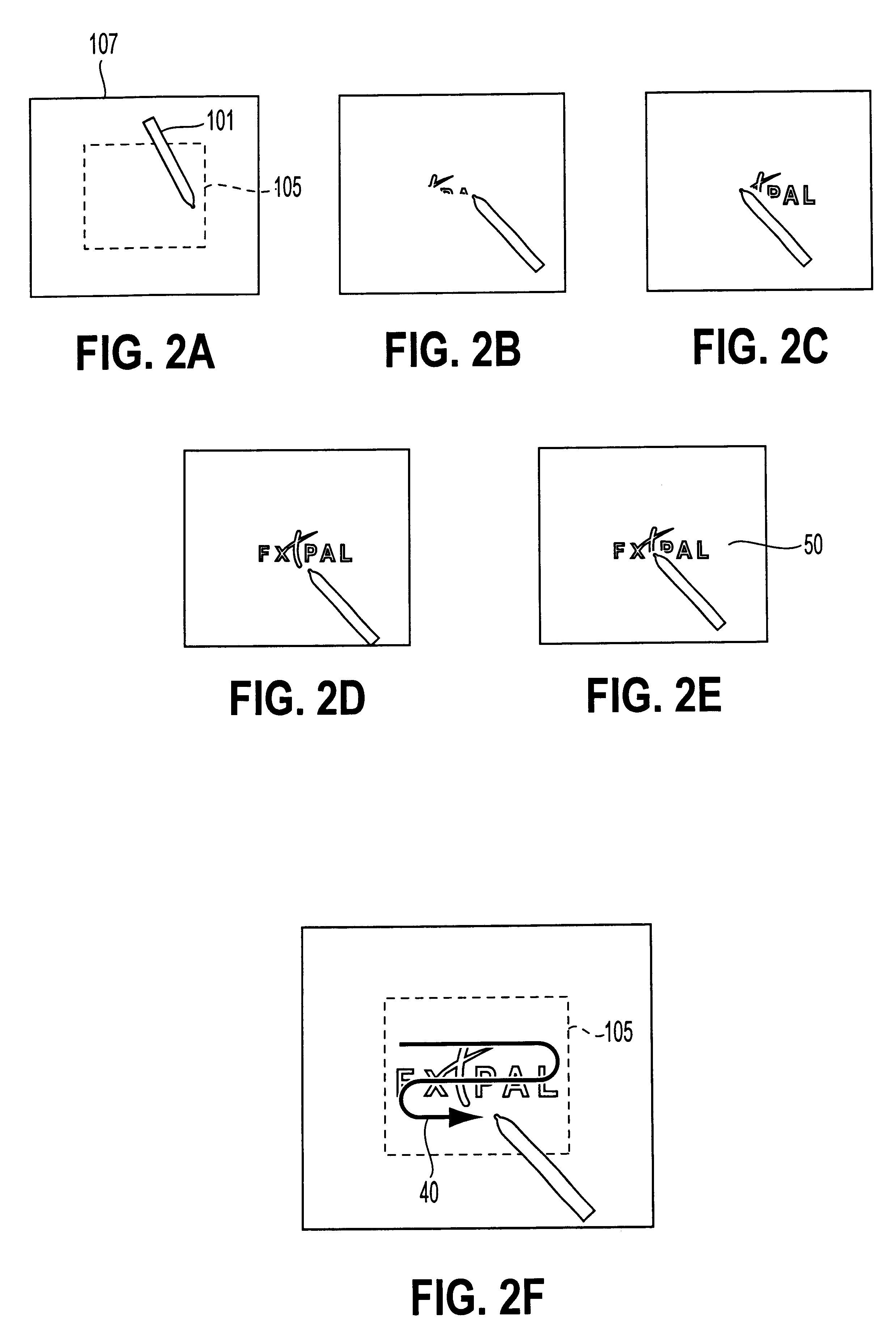

Method and system for position-aware freeform printing within a position-sensed area

Owner:FUJIFILM BUSINESS INNOVATION CORP

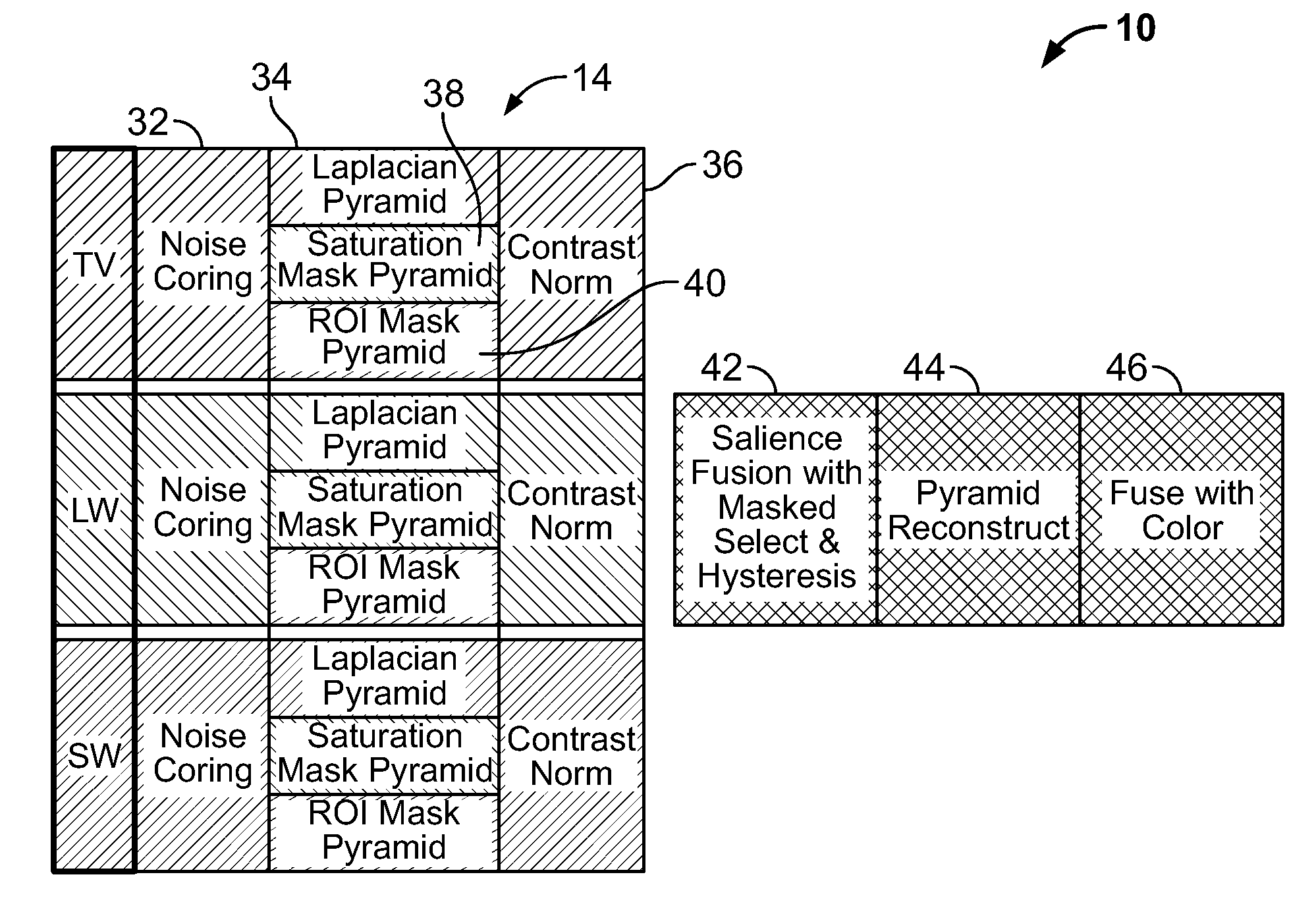

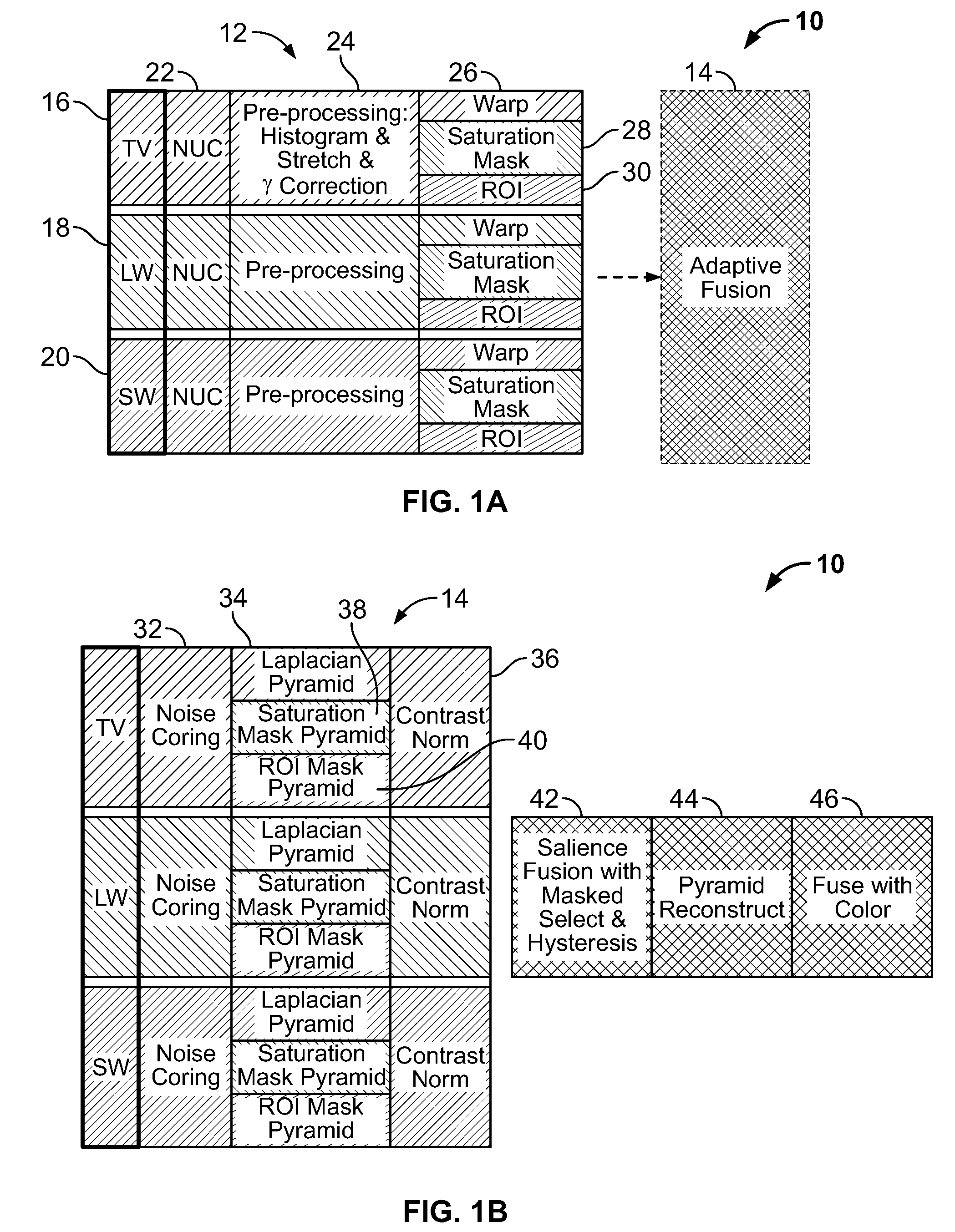

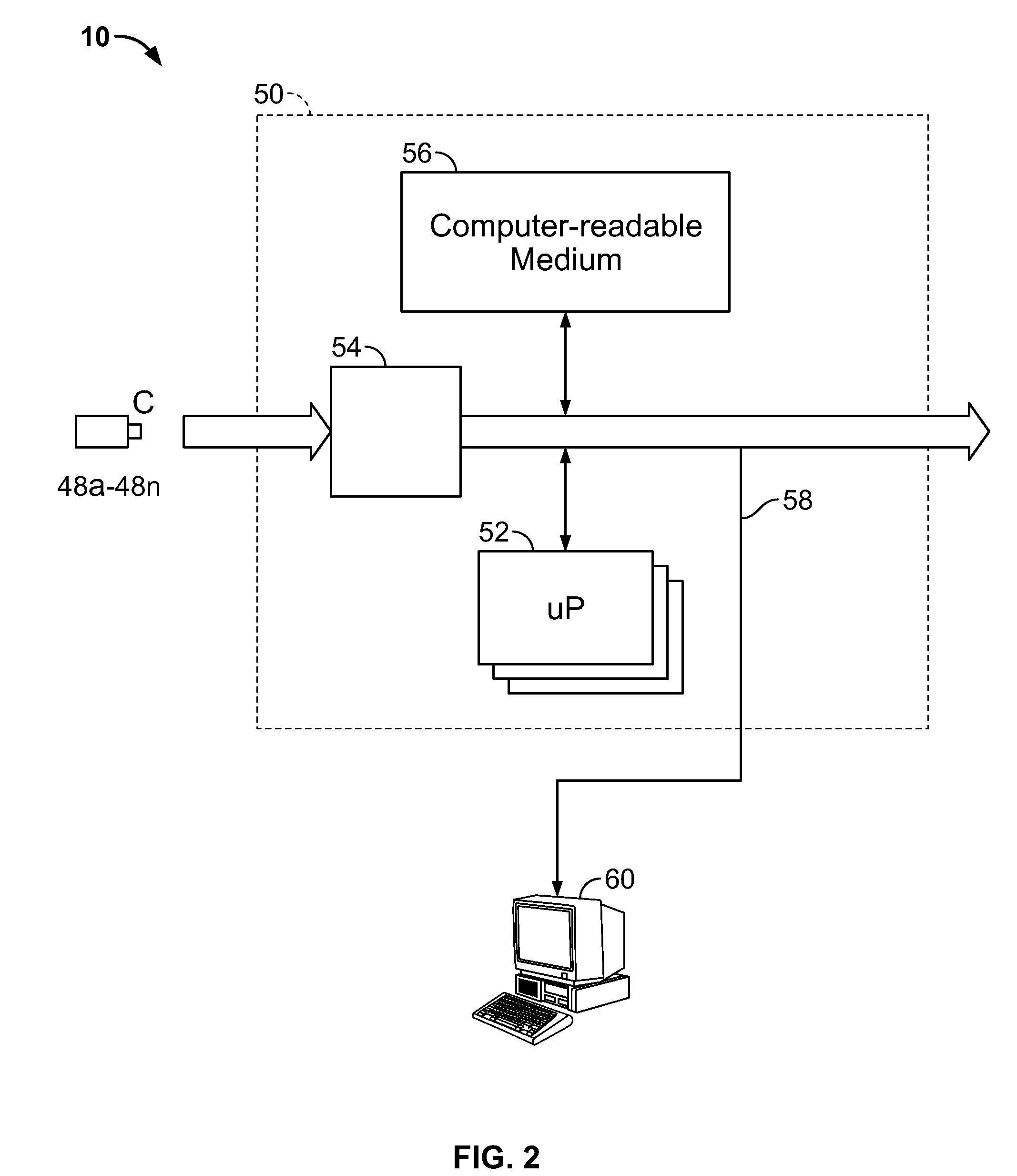

Multi-scale multi-camera adaptive fusion with contrast normalization

Active · US20090169102A1 · Reduce flickering artifact · Reduce artifacts · Television system details · Image enhancement · Multi camera · Energy based

A computer implemented method for fusing images taken by a plurality of cameras is disclosed, comprising the steps of: receiving a plurality of images of the same scene taken by the plurality of cameras; generating Laplacian pyramid images for each source image of the plurality of images; applying contrast normalization to the Laplacian pyramid images; performing pixel-level fusion on the Laplacian pyramid images based on a local salience measure that reduces aliasing artifacts to produce one salience-selected Laplacian pyramid image for each pyramid level; and combining the salience-selected Laplacian pyramid images into a fused image. Applying contrast normalization further comprises, for each Laplacian image at a given level: obtaining an energy image from the Laplacian image; determining a gain factor that is based on at least the energy image and a target contrast; and multiplying the Laplacian image by the gain factor to produce a normalized Laplacian image.

Owner:SRI INTERNATIONAL
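
The contrast normalization and fusion steps in the abstract above might look like the following for a single pyramid level. This sketch assumes the energy image is a 3x3 local mean of squared coefficients, that the gain is one scalar per level computed as target contrast over root-mean energy, and that salience is simply the absolute coefficient value. None of these choices is stated in the abstract, and pyramid construction and reconstruction are omitted.

```python
import math
from typing import List, Tuple

Image = List[List[float]]


def energy_image(lap: Image) -> Image:
    """Local mean of squared coefficients over a 3x3 window clipped at the borders."""
    height, width = len(lap), len(lap[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [lap[yy][xx] ** 2
                      for yy in range(max(0, y - 1), min(height, y + 2))
                      for xx in range(max(0, x - 1), min(width, x + 2))]
            out[y][x] = sum(window) / len(window)
    return out


def normalize_contrast(lap: Image, target_contrast: float, eps: float = 1e-9) -> Tuple[Image, float]:
    """Scale one Laplacian level so its root-mean energy approaches the target."""
    energy = energy_image(lap)
    mean_energy = sum(sum(row) for row in energy) / (len(lap) * len(lap[0]))
    gain = target_contrast / (math.sqrt(mean_energy) + eps)
    return [[gain * v for v in row] for row in lap], gain


def fuse_by_salience(a: Image, b: Image) -> Image:
    """Per pixel, keep the coefficient with the larger magnitude (assumed salience)."""
    return [[va if abs(va) >= abs(vb) else vb for va, vb in zip(ra, rb)]
            for ra, rb in zip(a, b)]
```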

Method for digital transmission and display of weather imagery

Inactive · US7039505B1 · Reduce bandwidth requirements · High-resolution image · Digital data processing details · Road vehicles traffic control · Computer vision · Source image

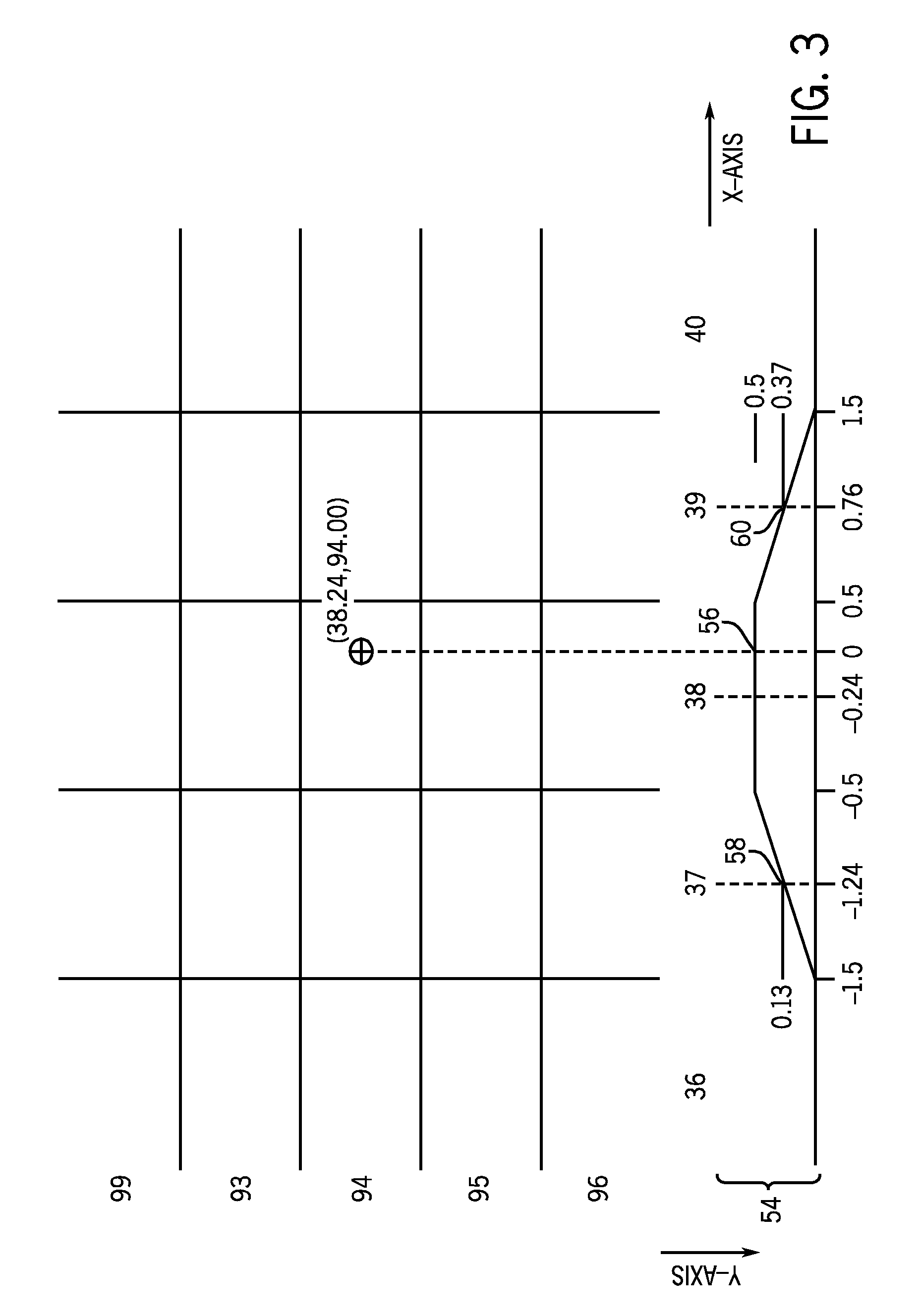

A method for creating minimal data representing a source image is presented. The source image is divided into a grid of cells. A color is selected for each cell corner based on sampling an area defined by the cell corner. An indication of the selected color is stored in an array dependent on the co-ordinates of the cell corner in the source image.

Owner:AVIDYNE CORPORATION
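
The cell-corner sampling in the abstract above can be sketched as below. The image is assumed to hold palette indices (as weather radar imagery often does), the sampled area is assumed to be a square window around each corner, and the selected color is assumed to be the most frequent value in that window; `corner_colors` is a hypothetical name.

```python
from collections import Counter
from typing import List

Image = List[List[int]]


def corner_colors(image: Image, cell: int) -> List[List[int]]:
    """Return one selected color per cell corner, indexed by corner row and column."""
    height, width = len(image), len(image[0])
    radius = max(1, cell // 2)  # half a cell on each side of the corner (assumption)
    grid = []
    for cy in range(0, height, cell):
        row = []
        for cx in range(0, width, cell):
            samples = [image[y][x]
                       for y in range(max(0, cy - radius), min(height, cy + radius + 1))
                       for x in range(max(0, cx - radius), min(width, cx + radius + 1))]
            # Pick the most common color in the sampled area.
            row.append(Counter(samples).most_common(1)[0][0])
        grid.append(row)
    return grid
```

A 5x5 image with 2-pixel cells collapses to a 3x3 array of corner colors, which is the reduced representation that would be transmitted.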

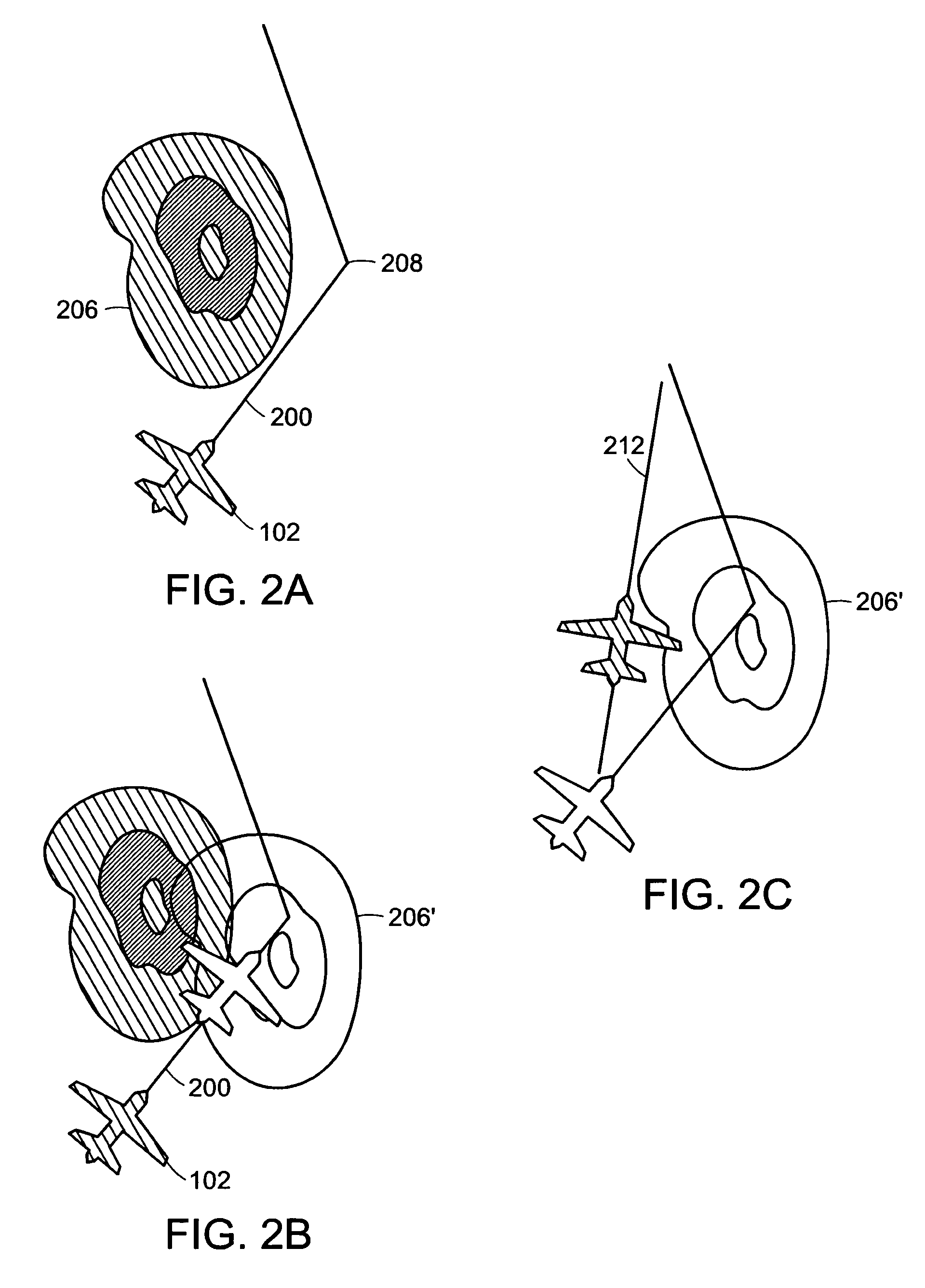

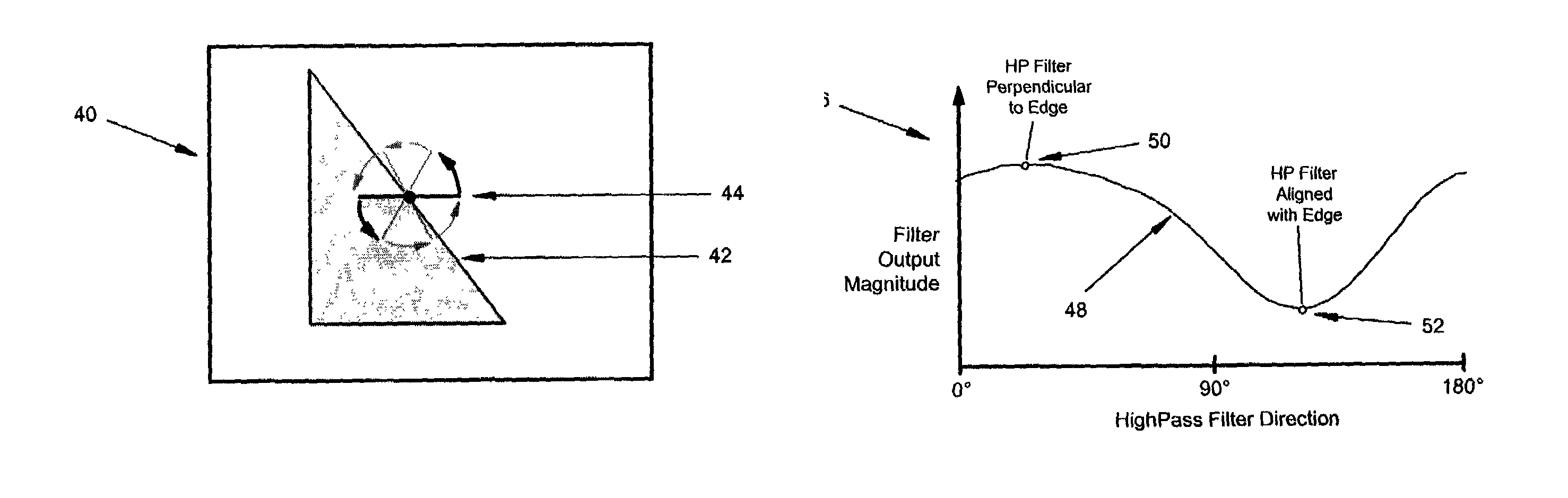

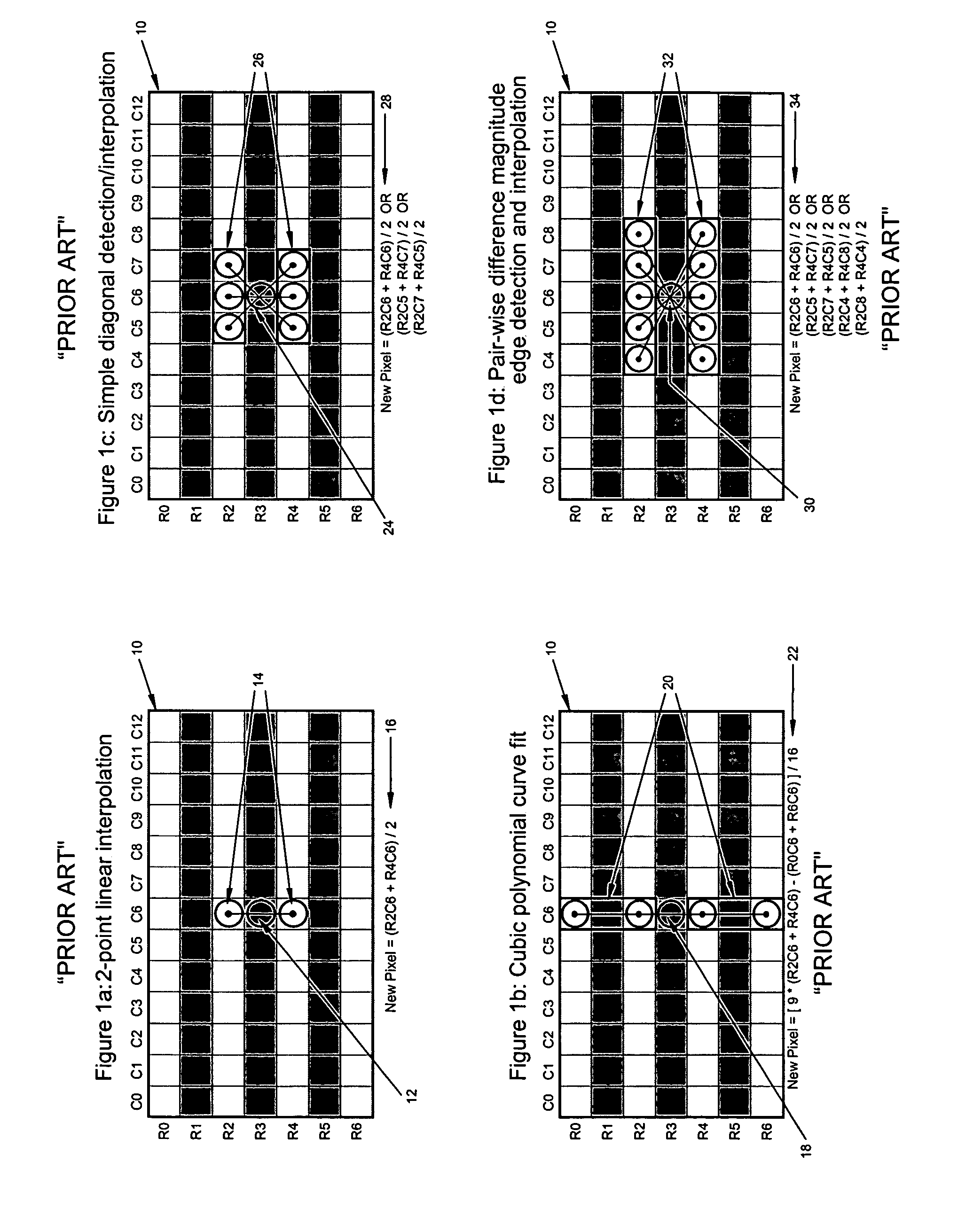

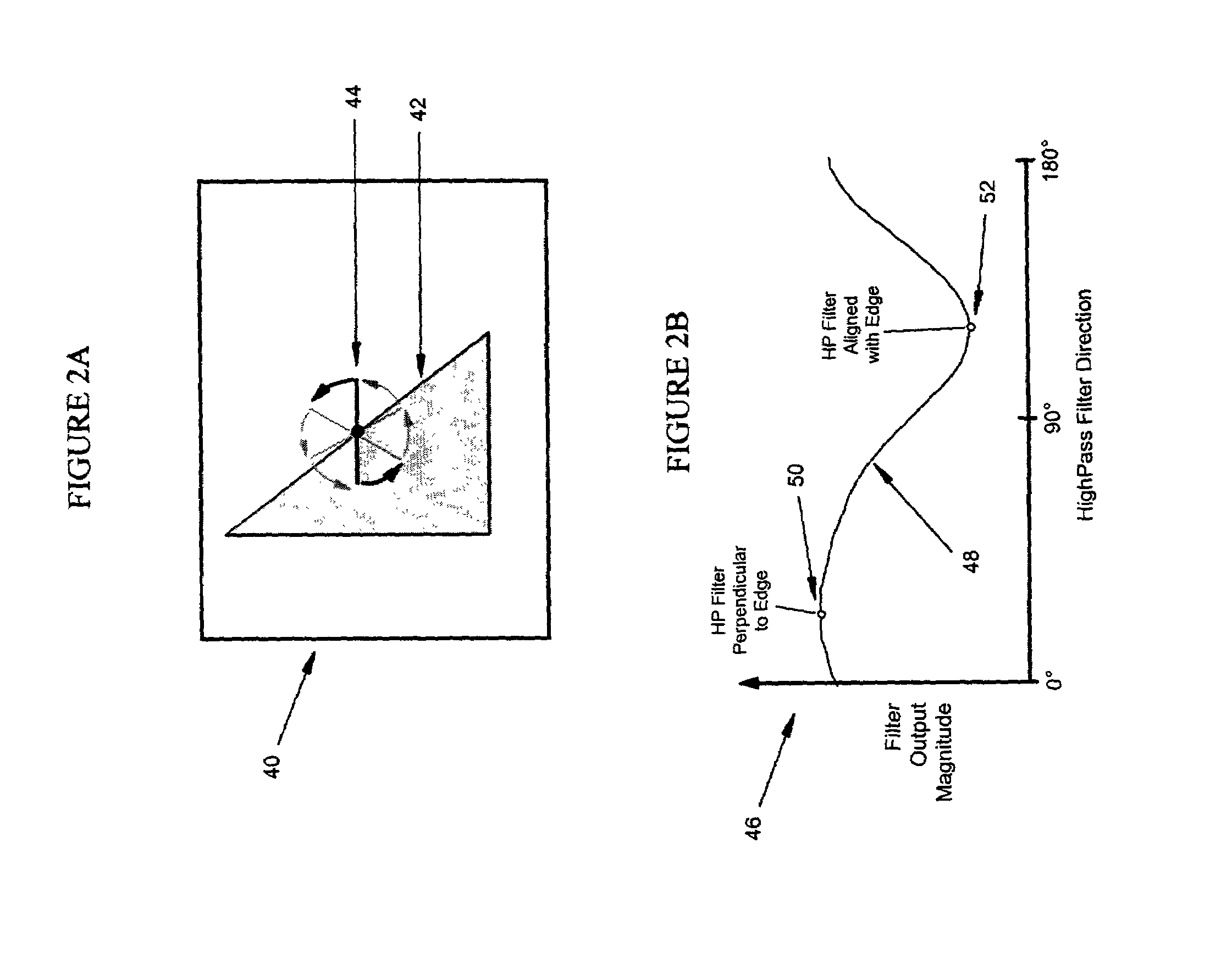

Deinterlacing of video sources via image feature edge detection

Active · US7023487B1 · Reduce artifacts · Preserves maximum amount of vertical detail · Image enhancement · Television system details · Interlaced video · Progressive scan

An interlaced to progressive scan video converter which identifies object edges and directions, and calculates new pixel values based on the edge information. Source image data from a single video field is analyzed to detect object edges and the orientation of those edges. A 2-dimensional array of image elements surrounding each pixel location in the field is high-pass filtered along a number of different rotational vectors, and a null or minimum in the set of filtered data indicates a candidate object edge as well as the direction of that edge. A 2-dimensional array of edge candidates surrounding each pixel location is characterized to invalidate false edges by determining the number of similar and dissimilar edge orientations in the array, and then disqualifying locations which have too many dissimilar or too few similar surrounding edge candidates. The surviving edge candidates are then passed through multiple low-pass and smoothing filters to remove edge detection irregularities and spurious detections, yielding a final edge detection value for each source image pixel location. For pixel locations with a valid edge detection, new pixel data for the progressive output image is calculated by interpolating from source image pixels which are located along the detected edge orientation.

Owner:LATTICE SEMICON CORP
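
To illustrate the final step of the abstract above (interpolating new pixels along a detected edge orientation), here is a much simpler stand-in: edge-based line averaging, which tests three candidate directions per pixel and averages along the one with the smallest difference. It is not the patent's detector, which uses directional high-pass filtering, edge validation, and smoothing; the function names are hypothetical.

```python
from typing import List, Sequence

Line = Sequence[float]


def interpolate_line(above: Line, below: Line) -> List[float]:
    """Fill a missing scan line by averaging along the best-matching direction."""
    width = len(above)
    line = []
    for x in range(width):
        best_diff, best_value = None, 0.0
        # Try vertical first so ties favor the plain vertical average.
        for d in (0, -1, 1):
            xa, xb = x + d, x - d
            if 0 <= xa < width and 0 <= xb < width:
                diff = abs(above[xa] - below[xb])
                if best_diff is None or diff < best_diff:
                    best_diff = diff
                    best_value = (above[xa] + below[xb]) / 2.0
        line.append(best_value)
    return line


def deinterlace_field(field: List[Line]) -> List[List[float]]:
    """Expand one field to a full frame by interpolating between its lines."""
    frame = []
    for i, row in enumerate(field):
        frame.append(list(row))
        if i + 1 < len(field):
            frame.append(interpolate_line(row, field[i + 1]))
        else:
            frame.append(list(row))  # no line below: repeat the last line
    return frame
```

On a diagonal edge, the directional average keeps the edge sharp where a plain vertical average would produce a half-intensity step.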

Methods and systems for converting 2D motion pictures for stereoscopic 3D exhibition

Active · US8411931B2 · Improve image quality · Color television with pulse code modulation · Color television with bandwidth reduction · Imaging quality · Video sequence

The present invention discloses methods of digitally converting 2D motion pictures or any other 2D image sequences to stereoscopic 3D image data for 3D exhibition. In one embodiment, various types of image data cues can be collected from 2D source images by various methods and then used for producing two distinct stereoscopic 3D views. Embodiments of the disclosed methods can be implemented within a highly efficient system comprising both software and computing hardware. The architectural model of some embodiments of the system is equally applicable to a wide range of conversion, re-mastering and visual enhancement applications for motion pictures and other image sequences, including converting a 2D motion picture or a 2D image sequence to 3D, re-mastering a motion picture or a video sequence to a different frame rate, enhancing the quality of a motion picture or other image sequences, or other conversions that facilitate further improvement in visual image quality within a projector to produce the enhanced images.

Owner:IMAX CORP

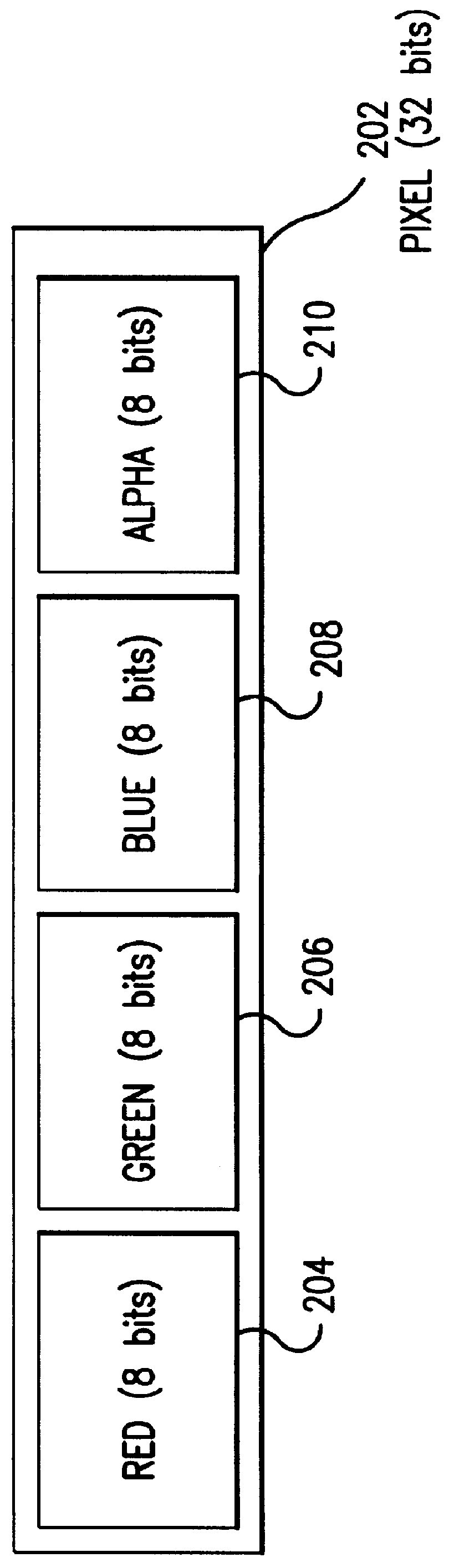

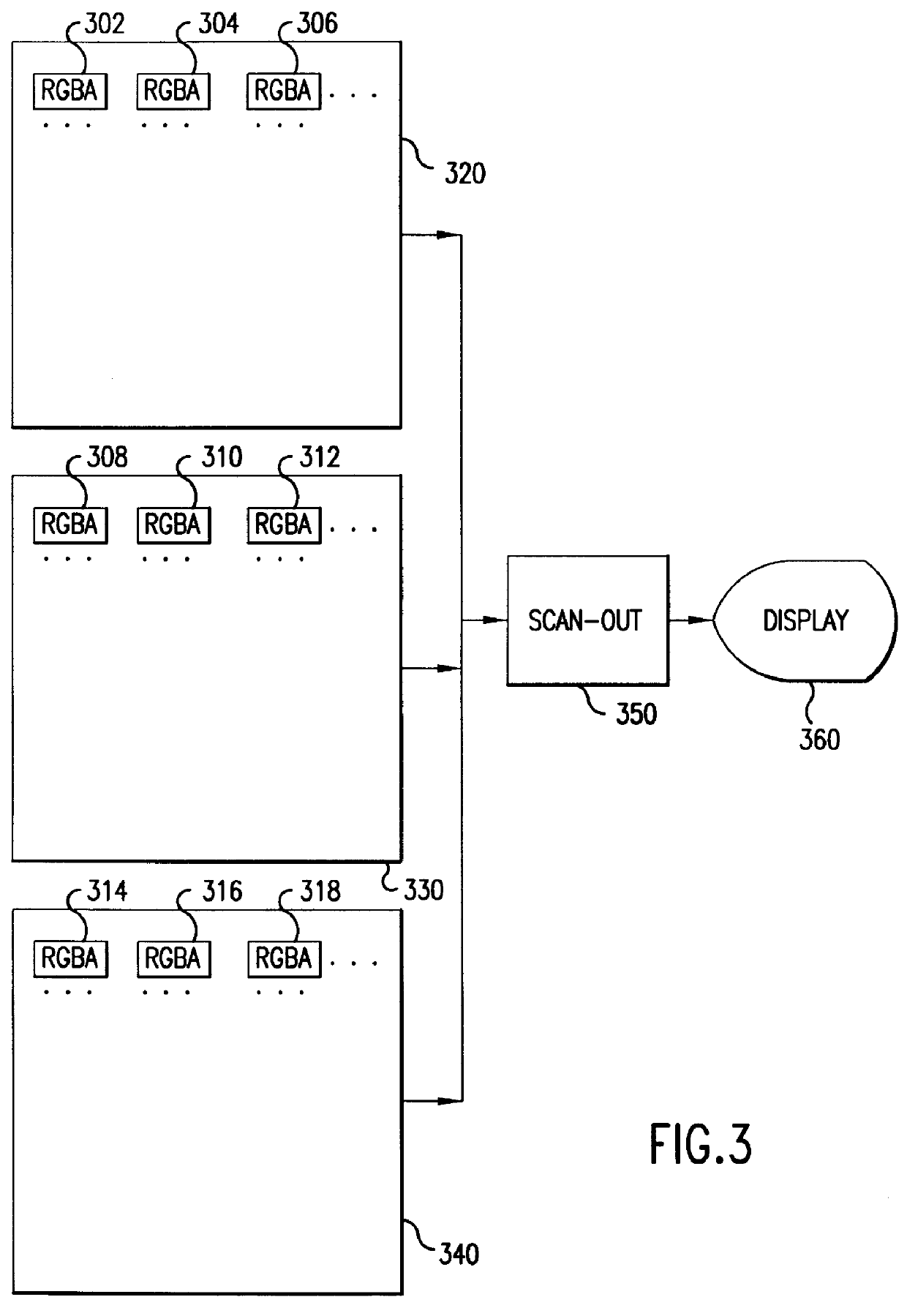

System and method for performing high-precision, multi-channel blending using multiple blending passes

A high-precision multi-channel blending operation replaces a single pass blending operation to overcome distortions resulting from an insufficient number of bits available per pixel in a hardware frame buffer. A desired frame buffer configuration, with fewer channels and a larger number of bits available per channel than are available for a single pass blending operation, is specified and allocated in memory. The same, smaller number of channels from a destination image is written into the frame buffer. The frame buffer is configured for blending, and the same, smaller number of channels from the source image is blended into the frame buffer. The contents of the frame buffer are written into a memory location. The above steps are repeated until all of the channels have been blended and written into different parts of memory. The channel information from the memory locations is combined to form an image having a user-desired bit resolution.

Owner:AUTODESK INC

Digital composition of a mosaic image

Active · US20050147322A1 · High similarity · Easy to control · Image enhancement · Image analysis · Subject matter · Image description

A mosaic image resembling a target image is composed of images from a data base. The target image is divided into regions of a specified size and shape, and the individual images from the data base are compared to each region to find the best matching tile. The comparison is performed by calculating a figure of visual difference between each tile and each region. The data base of tile images is created from raw source images using digital image processing, whereby multiple instances of each individual raw source image are produced. The tile images are organized by subject matter, and tile matching is performed such that all required subject matters are represented in the final mosaic. The digital image processing involves the adjustment of colour, brightness and contrast of tile images, as well as cropping. An image description index locates each image in the final mosaic.

Owner:TAMIRAS PER PTE LTD LLC
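
The region-by-region tile matching in the abstract above can be sketched as follows. The "figure of visual difference" is assumed here to be a sum of squared pixel differences on grayscale tiles, and `brightness_variants` stands in for the step that produces multiple instances of each raw source image. Subject-matter constraints and cropping are left out, and all names are hypothetical.

```python
from typing import List

Image = List[List[int]]


def visual_difference(region: Image, tile: Image) -> int:
    """Sum of squared pixel differences between a target region and a tile."""
    return sum((a - b) ** 2 for ra, rb in zip(region, tile) for a, b in zip(ra, rb))


def brightness_variants(tile: Image, offsets: List[int]) -> List[Image]:
    """Produce several instances of one raw image by shifting its brightness."""
    return [[[min(255, max(0, v + off)) for v in row] for row in tile] for off in offsets]


def compose_mosaic(target: Image, tiles: List[Image], size: int) -> List[List[int]]:
    """For each size x size region of the target, return the index of the best tile."""
    index_grid = []
    for top in range(0, len(target) - size + 1, size):
        row = []
        for left in range(0, len(target[0]) - size + 1, size):
            region = [r[left:left + size] for r in target[top:top + size]]
            scores = [visual_difference(region, t) for t in tiles]
            row.append(scores.index(min(scores)))
        index_grid.append(row)
    return index_grid
```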

Multiresolutional critical point filter and image matching using the same

A multiresolutional filter called a critical point filter is introduced. This filter extracts a maximum, a minimum, and two types of saddle points of pixel intensity for every 2x2 (horizontal x vertical) pixels so that an image of a lower level of resolution is newly generated for every type of a critical point. Using this multiresolutional filter, a source image and a destination image are hierarchized, and source hierarchical images and destination hierarchical images are matched using image characteristics recognized through a filtering operation.

Owner:JBF PARTNERS
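
One level of the filter described above can be sketched as four reductions over each 2x2 block, producing a minimum image, a maximum image, and two saddle images at half the resolution. The nested min/max combinations below are one common way to write such a filter and should be read as an assumption, including which pixel pairs are grouped; the dictionary keys are illustrative names.

```python
from typing import Dict, List

Image = List[List[float]]


def critical_point_filter(image: Image) -> Dict[str, Image]:
    """Reduce each 2x2 block to four values: min, two saddle candidates, and max."""
    half_h, half_w = len(image) // 2, len(image[0]) // 2
    names = ("min", "saddle_a", "saddle_b", "max")
    levels: Dict[str, Image] = {name: [] for name in names}
    for i in range(half_h):
        rows: Dict[str, List[float]] = {name: [] for name in names}
        for j in range(half_w):
            a, b = image[2 * i][2 * j], image[2 * i][2 * j + 1]
            c, d = image[2 * i + 1][2 * j], image[2 * i + 1][2 * j + 1]
            rows["min"].append(min(min(a, b), min(c, d)))
            rows["saddle_a"].append(max(min(a, b), min(c, d)))
            rows["saddle_b"].append(min(max(a, b), max(c, d)))
            rows["max"].append(max(max(a, b), max(c, d)))
        for name in names:
            levels[name].append(rows[name])
    return levels
```

Applying the function repeatedly to each output yields the hierarchy of source and destination images that the matching stage would then compare level by level.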

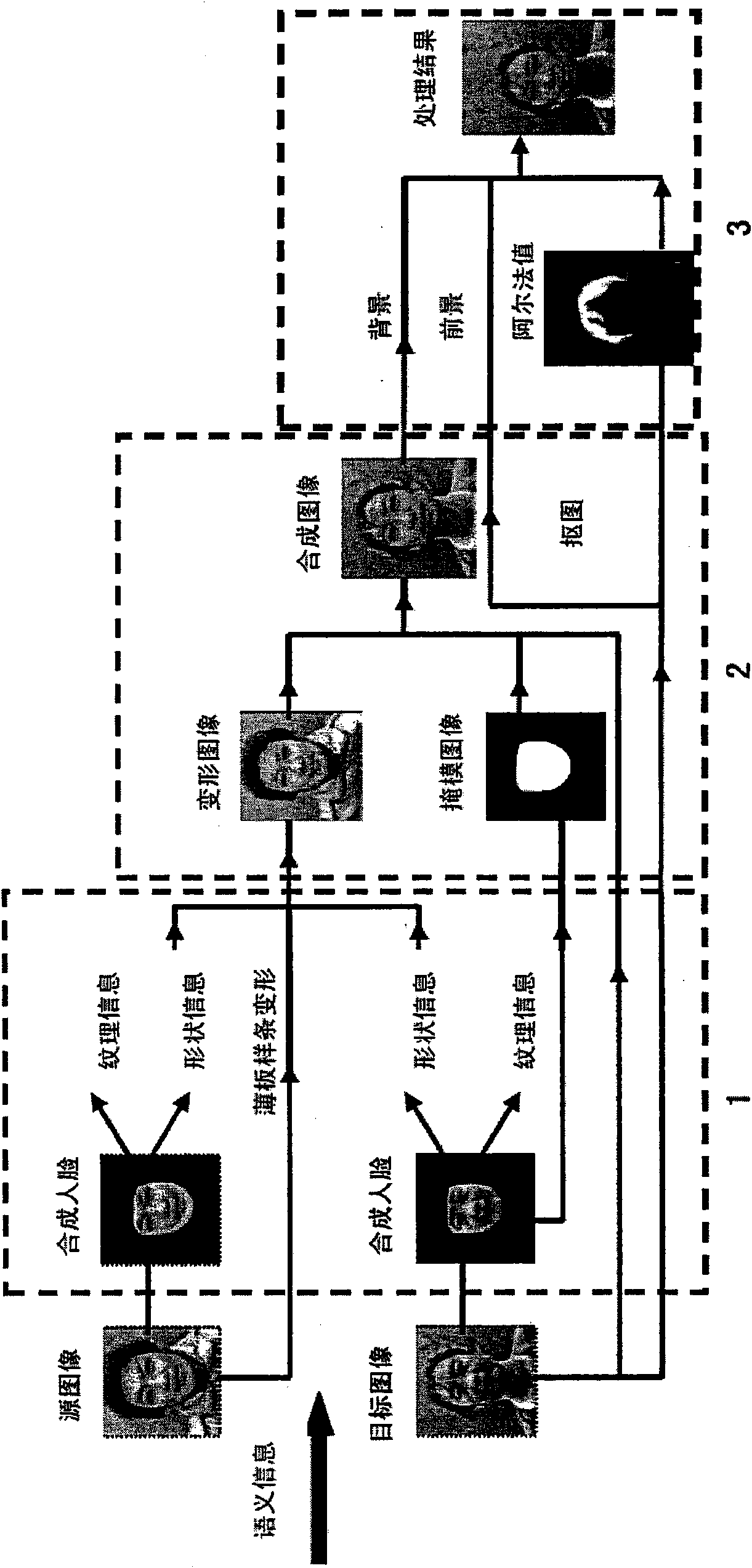

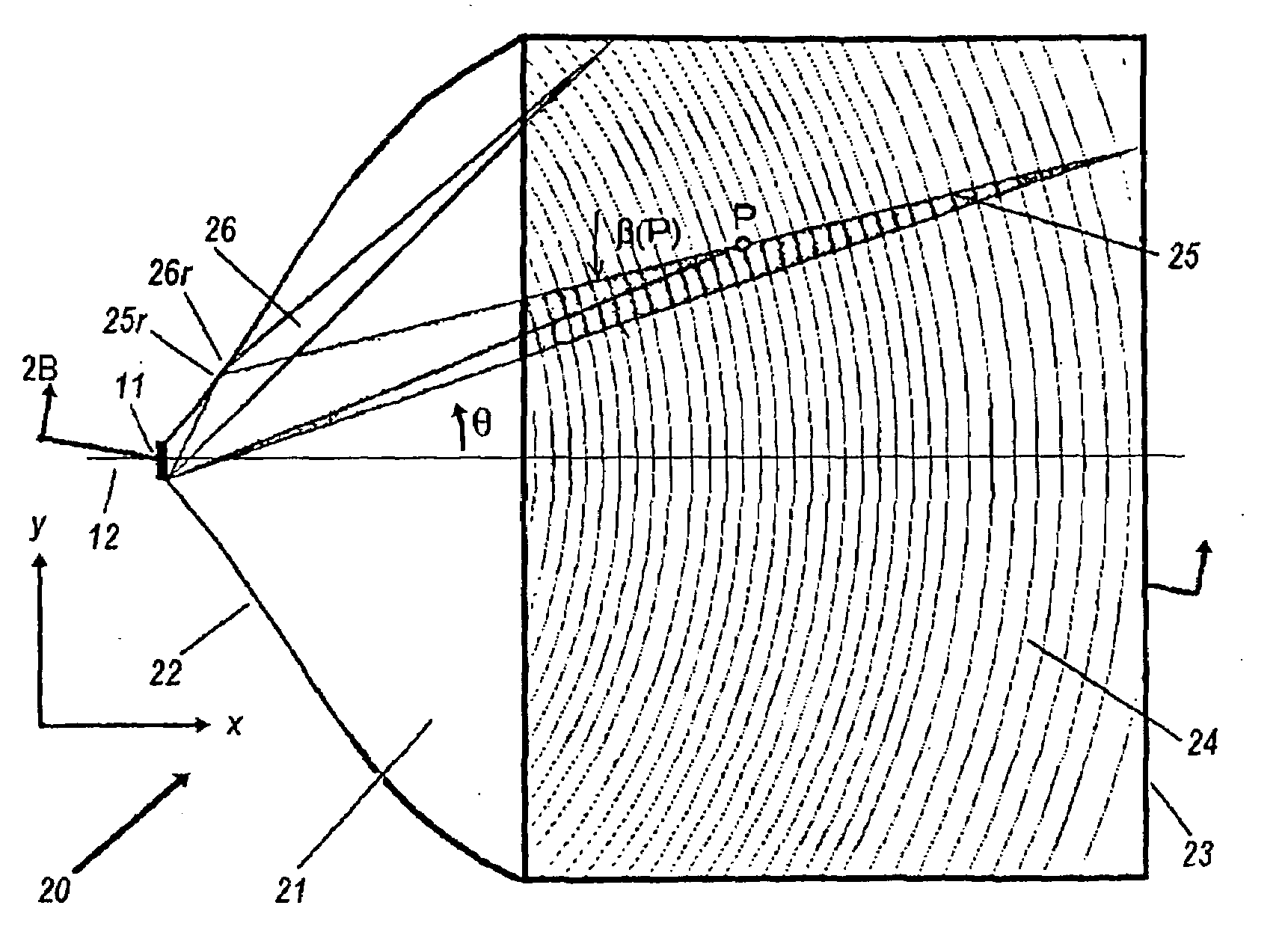

Automatic synthesis method for facial image

The invention provides an automatic synthesis method for facial images. A user inputs two facial source images, a target image, and semantic information about the area to be edited. A model matching module uses an active appearance model to search each image automatically and provide the facial shape information and facial texture information of the facial image. The shapes of the two facial images are then aligned using the characteristics of the model, and the shapes of the source images in the edited area are aligned to the corresponding area in the target image by thin-plate spline deformation. A characteristic synthesis module synthesizes the shape information and the texture information of the aligned source images and the target image to generate a synthetic image automatically. An occlusion processing module then handles occlusions in the synthetic image: the occlusion areas of the source images are divided, matched, and seamlessly integrated into the target image. Compared with traditional facial synthesis, the invention integrates the characteristic areas of the two images and solves the problem of image distortion caused by partial face occlusion in the target image.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

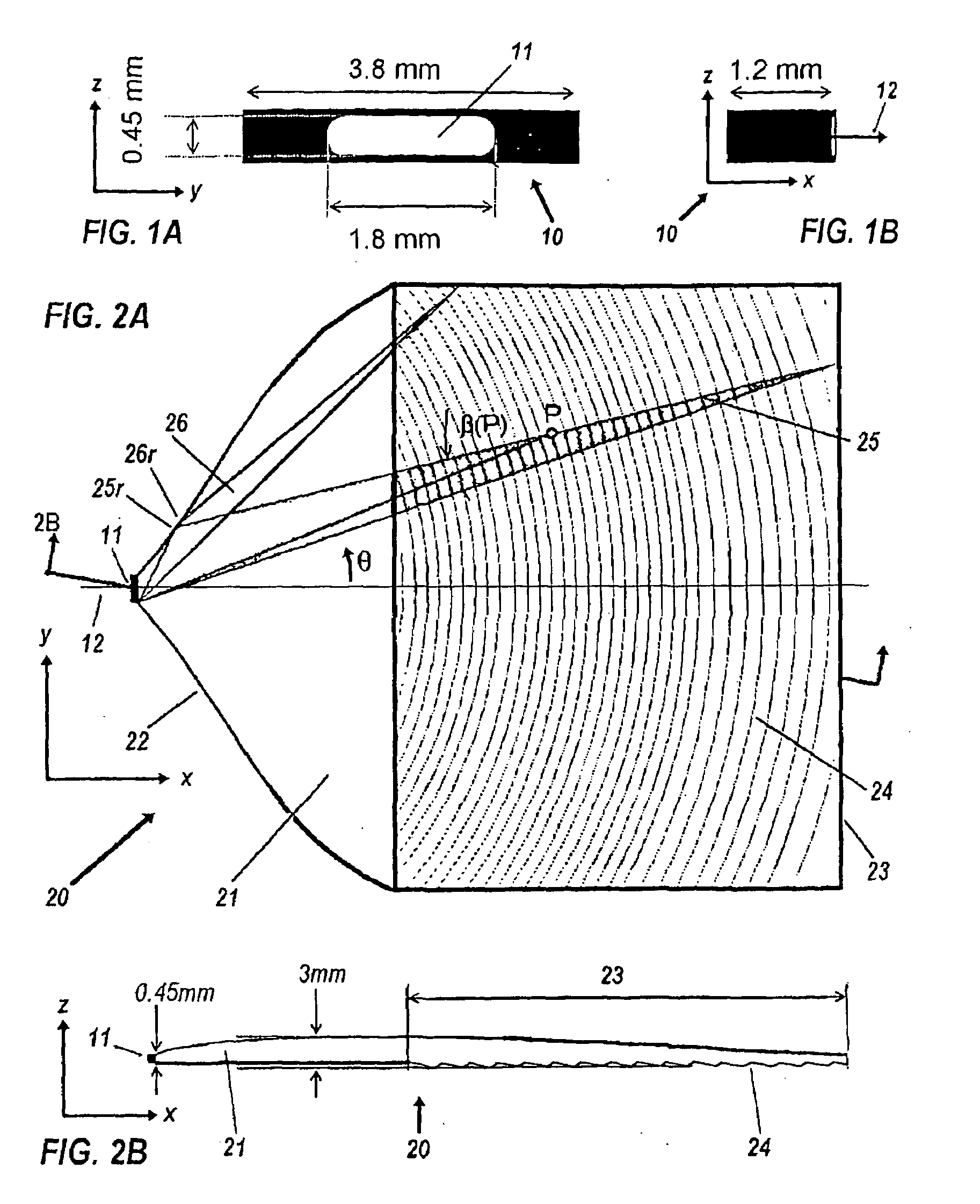

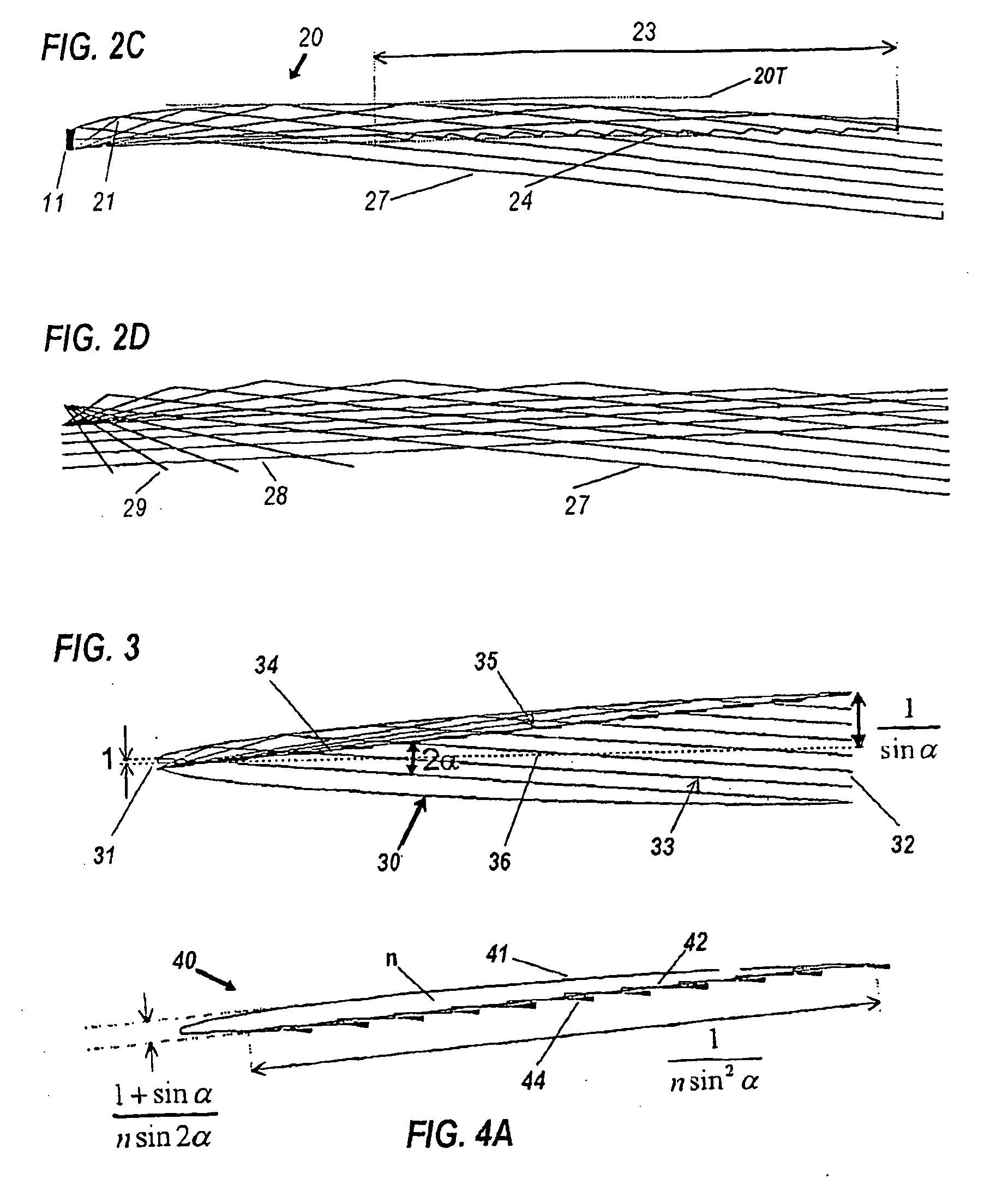

Etendue-conserving illumination-optics for backlights and frontlights

Inactive · US20090167651A1 · Bright enough · Reduce area · Show cabinets · Impedance networks · Total internal reflection · Light beam

Some embodiments provide luminance-preserving non-imaging backlights that comprise a luminous source emitting light, an input port that receives the light, an injector and a beam-expanding ejector. The injector includes the input port and a larger output port with a profile that expands away from the input port acting via total internal reflection to keep x-y angular width of the source image inversely proportional to its luminance. The injector is defined by a surface of revolution with an axis on the source and a swept profile that is a first portion of an upper half of a CPC tilted by its acceptance angle. The beam-expanding ejector comprises a planar waveguide optically coupled to the output port of the injector. The ejector includes a smooth upper surface, and a reflective lower surface comprising microstructured facets that refract upwardly reflected light into a collimated direction common to the facets.

Owner:LIGHT PRESCRIPTIONS INNOVATORS

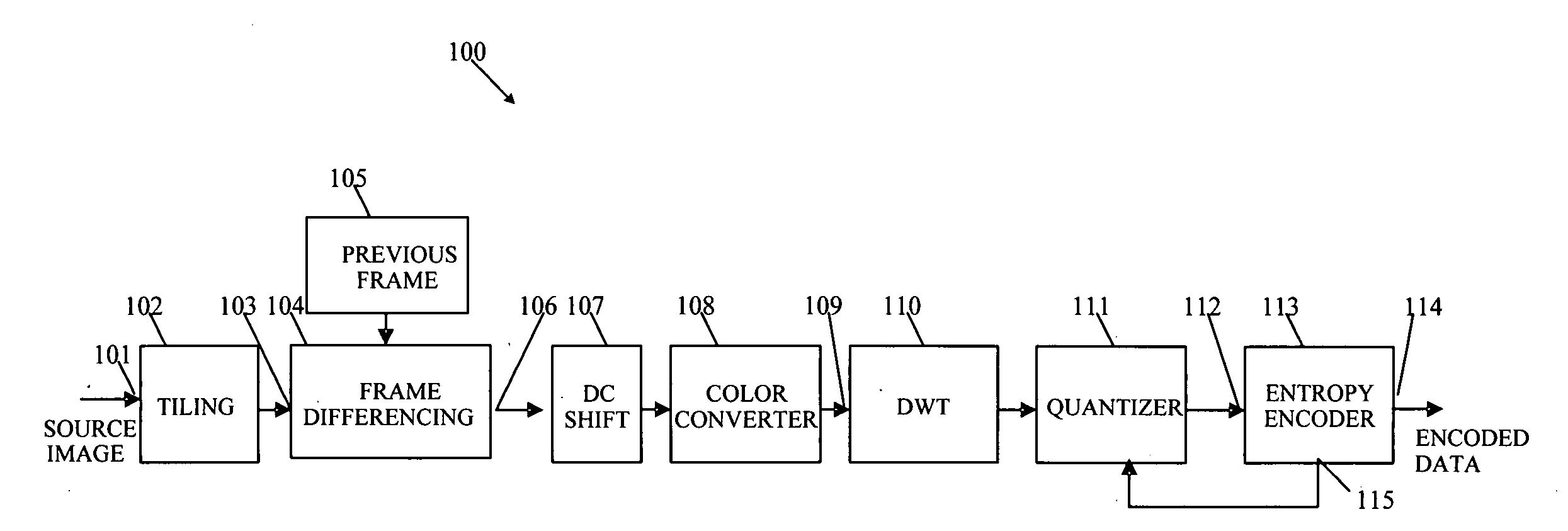

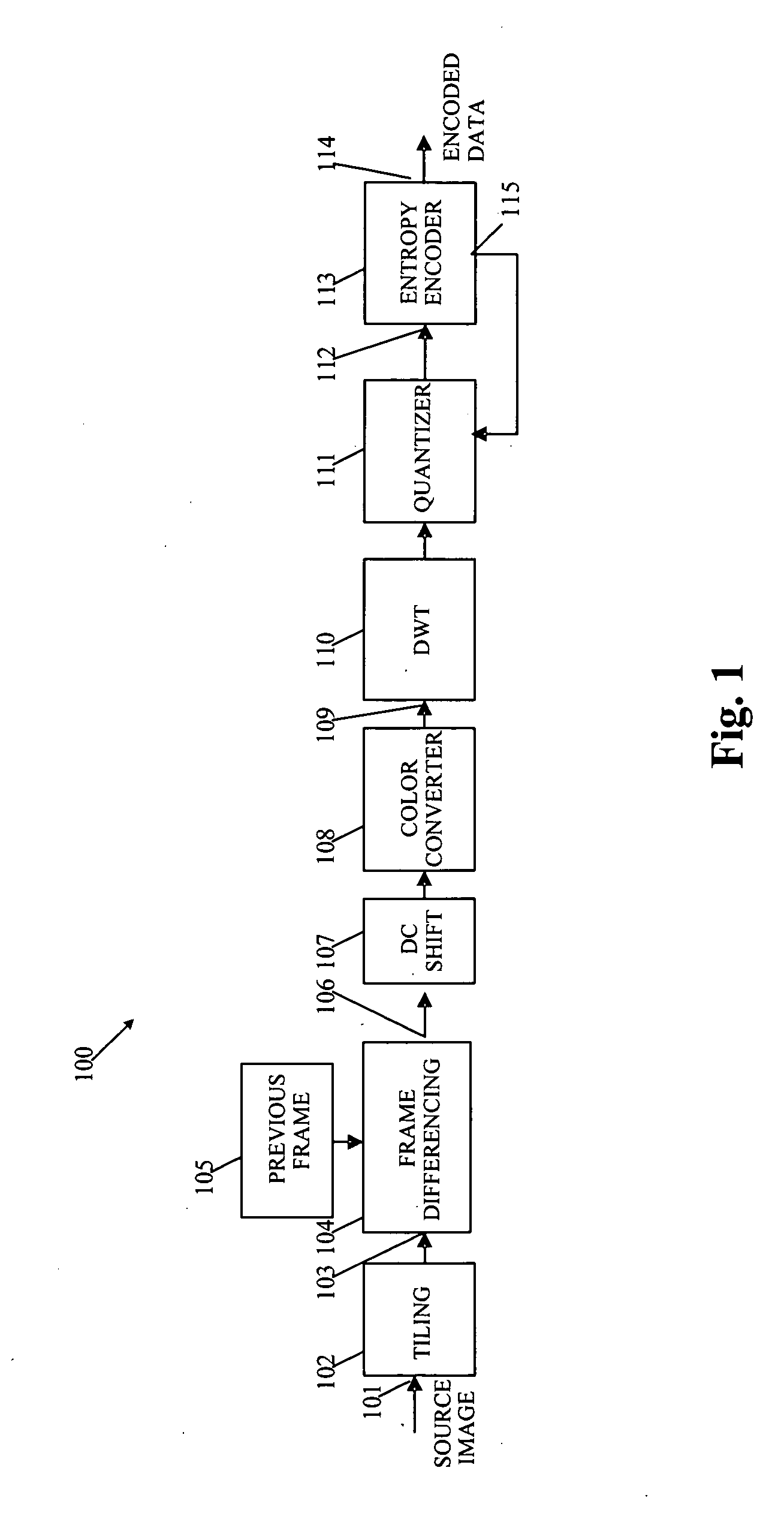

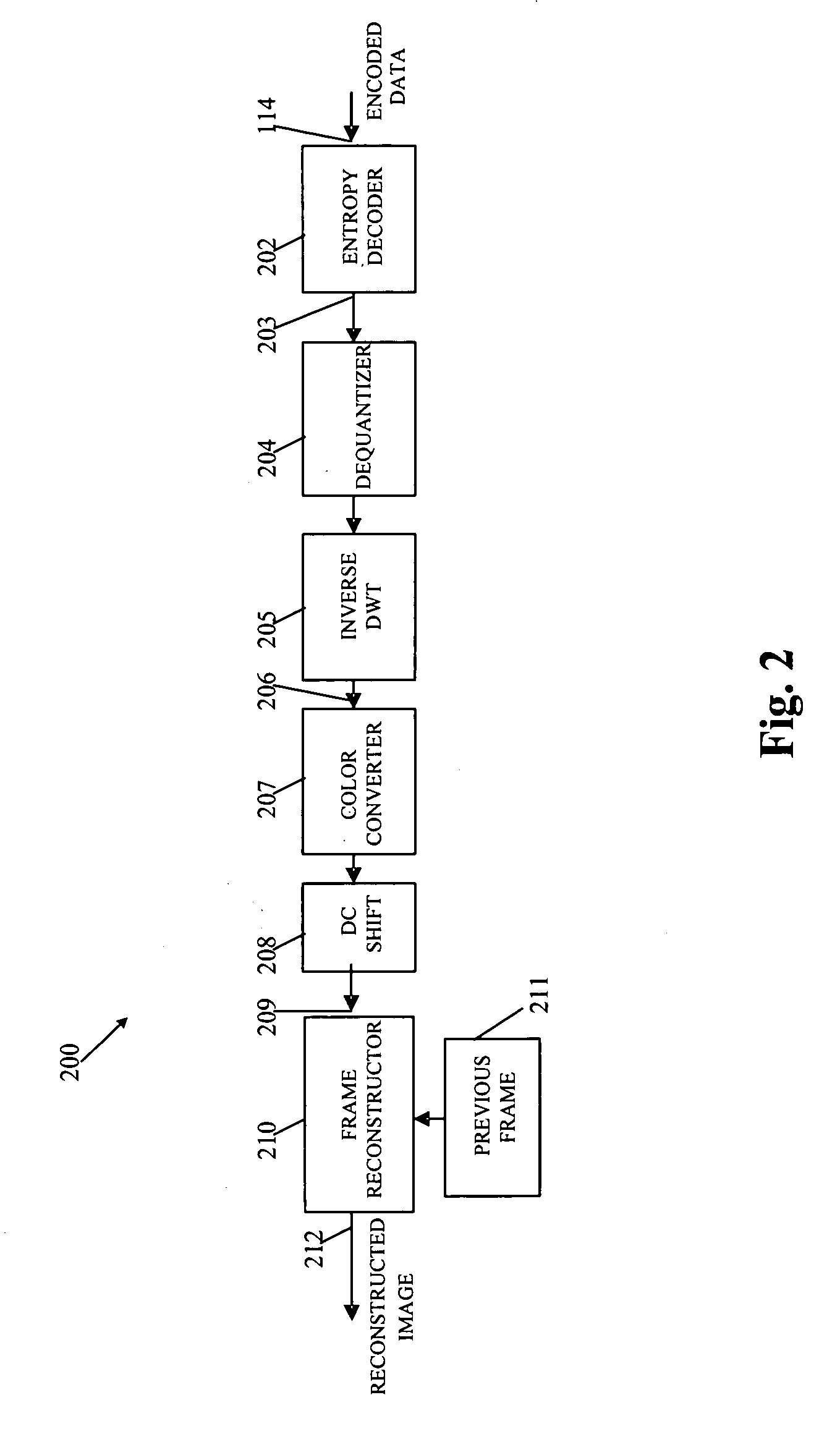

System and method for effectively encoding and decoding electronic information

Active · US20080112489A1 · Effective information · Color television with pulse code modulation · Color television with bandwidth reduction · Frame difference · Source image

A system and method for effectively encoding and decoding electronic information includes an encoding system with a tiling module that initially divides source image data into data tiles. A frame differencing module then outputs only altered data tiles to various processing modules that convert the altered data tiles into corresponding tile components. A quantizer performs a compression procedure upon the tile components to generate compressed data according to an adjustable quantization parameter. An adaptive entropy selector then selects one of a plurality of available entropy encoders to most effectively perform an entropy encoding procedure to thereby produce encoded data. The entropy encoder may also utilize a feedback loop to adjust the quantization parameter in light of current transmission bandwidth characteristics.

Owner:MICROSOFT TECH LICENSING LLC
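
The tiling and frame-differencing stages in the abstract above can be sketched as below: split each frame into tiles, pass on only the tiles that changed since the previous frame, and quantize them with an adjustable parameter. The sketch assumes integer pixel values and quantizes the pixels directly, whereas the described system first converts tiles into other components; entropy coding and the bandwidth feedback loop are omitted, and the names are hypothetical.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]
Tile = Tuple[Tuple[int, ...], ...]


def split_tiles(frame: Frame, size: int) -> Dict[Tuple[int, int], Tile]:
    """Divide a frame into size x size tiles keyed by (tile_row, tile_col)."""
    tiles = {}
    for top in range(0, len(frame), size):
        for left in range(0, len(frame[0]), size):
            tiles[(top // size, left // size)] = tuple(
                tuple(row[left:left + size]) for row in frame[top:top + size]
            )
    return tiles


def altered_tiles(previous: Frame, current: Frame, size: int) -> Dict[Tuple[int, int], Tile]:
    """Frame differencing: keep only tiles that differ from the previous frame."""
    old, new = split_tiles(previous, size), split_tiles(current, size)
    return {key: tile for key, tile in new.items() if old.get(key) != tile}


def quantize(tile: Tile, step: int) -> Tile:
    """Coarsen tile values; a larger step means stronger compression."""
    return tuple(tuple(v // step for v in row) for row in tile)
```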

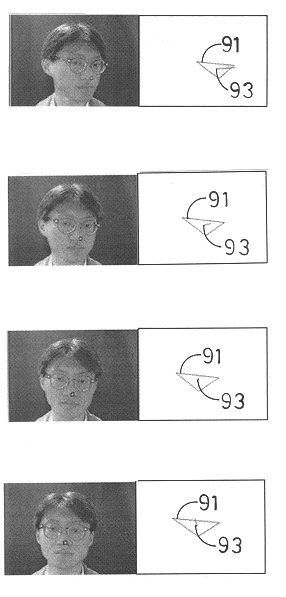

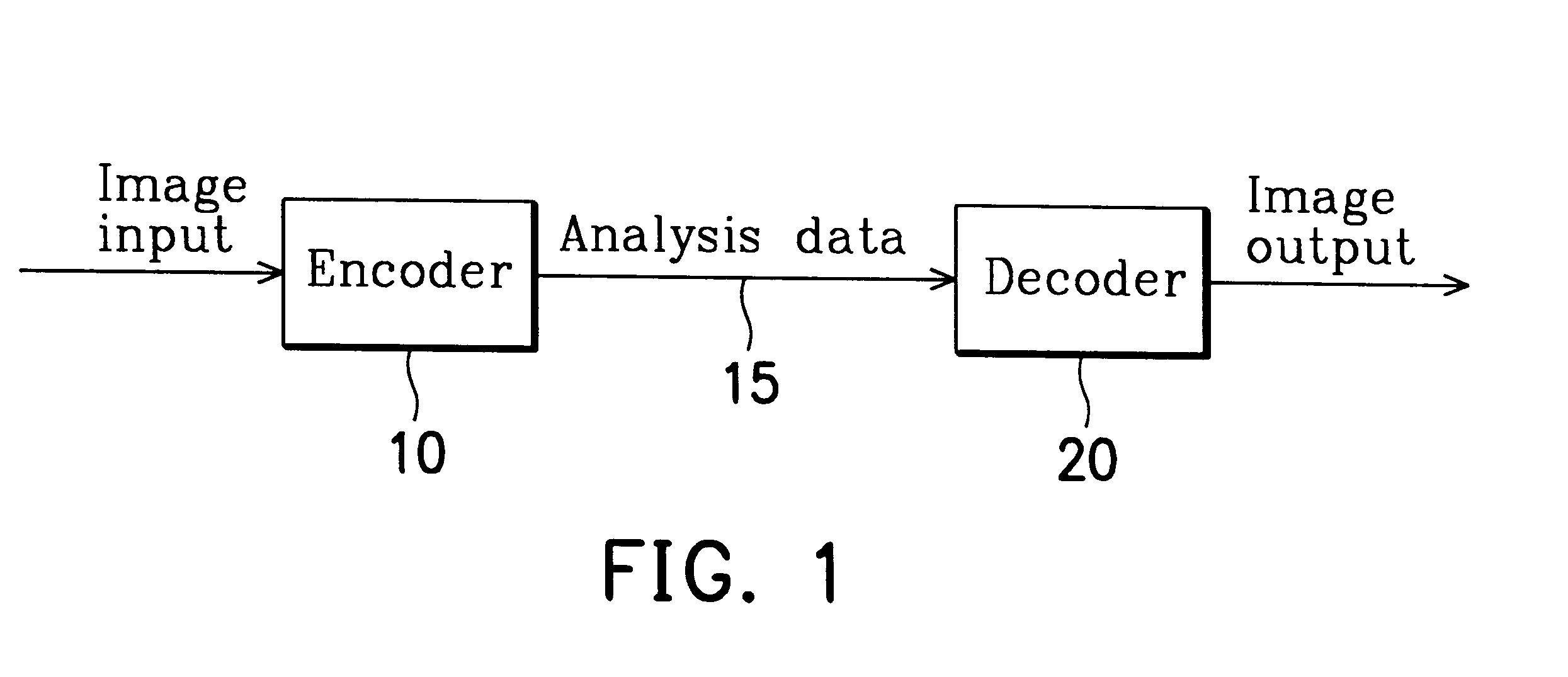

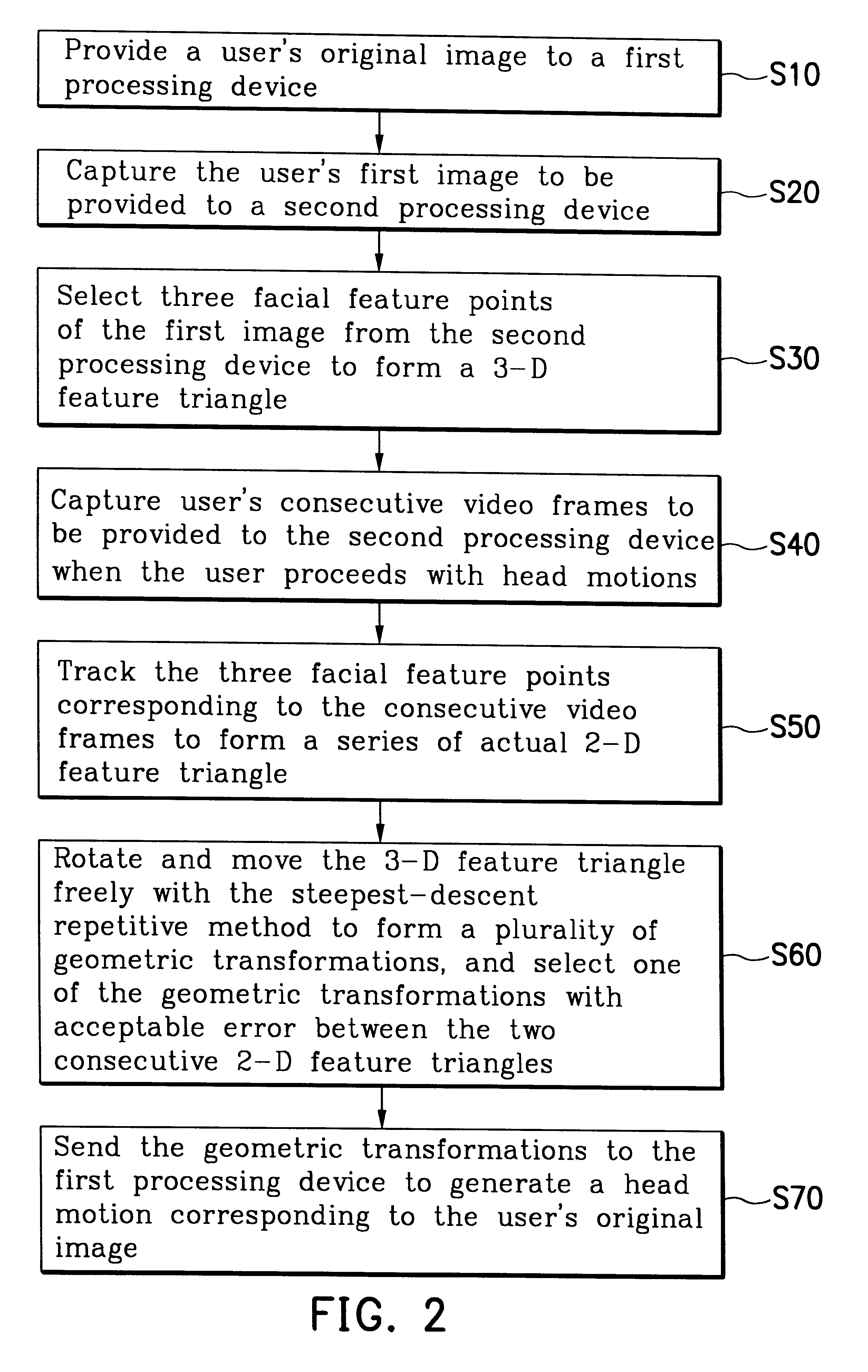

Method of image processing using three facial feature points in three-dimensional head motion tracking

A method of image processing in three-dimensional (3-D) head motion tracking is disclosed. The method includes: providing a user's source image to a first processing device; capturing the user's first image and providing it to a second processing device; selecting three facial feature points of the first image from the second processing device to form a 3-D feature triangle; capturing the user's consecutive video frames and providing them to the second processing device when the user proceeds with head motions; tracking the three facial feature points corresponding to the consecutive video frames to form a series of actual 2-D feature triangles; rotating and translating the 3-D feature triangle freely to form a plurality of geometric transformations, selecting one of the geometric transformations with acceptable error between the two consecutive 2-D feature triangles, and repeating the step until the last frame of the consecutive video frames, so that geometric transformations corresponding to the various consecutive video frames are formed; and providing the geometric transformations to the first processing device to generate a head motion corresponding to the user's source image.

Owner:CYBERLINK

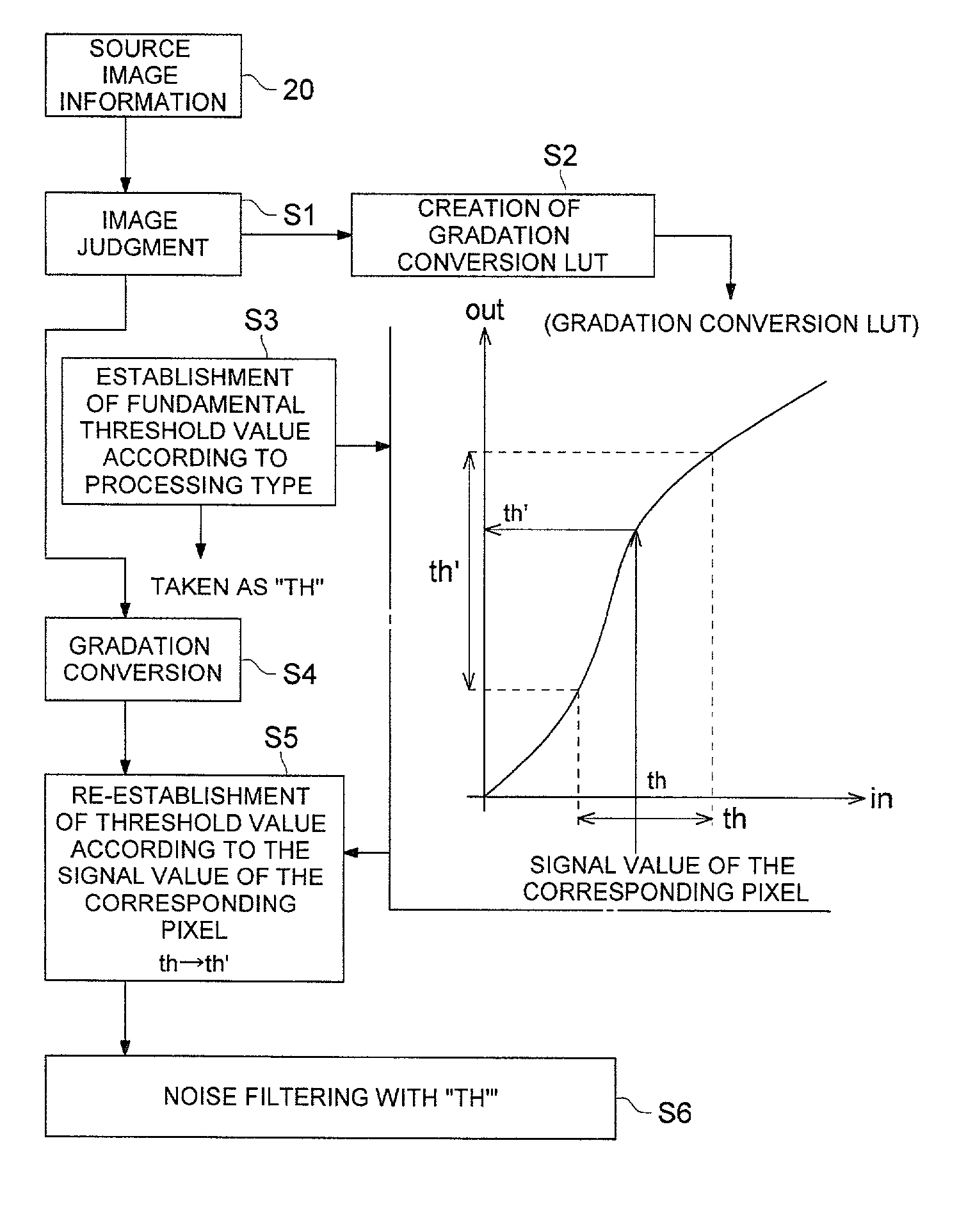

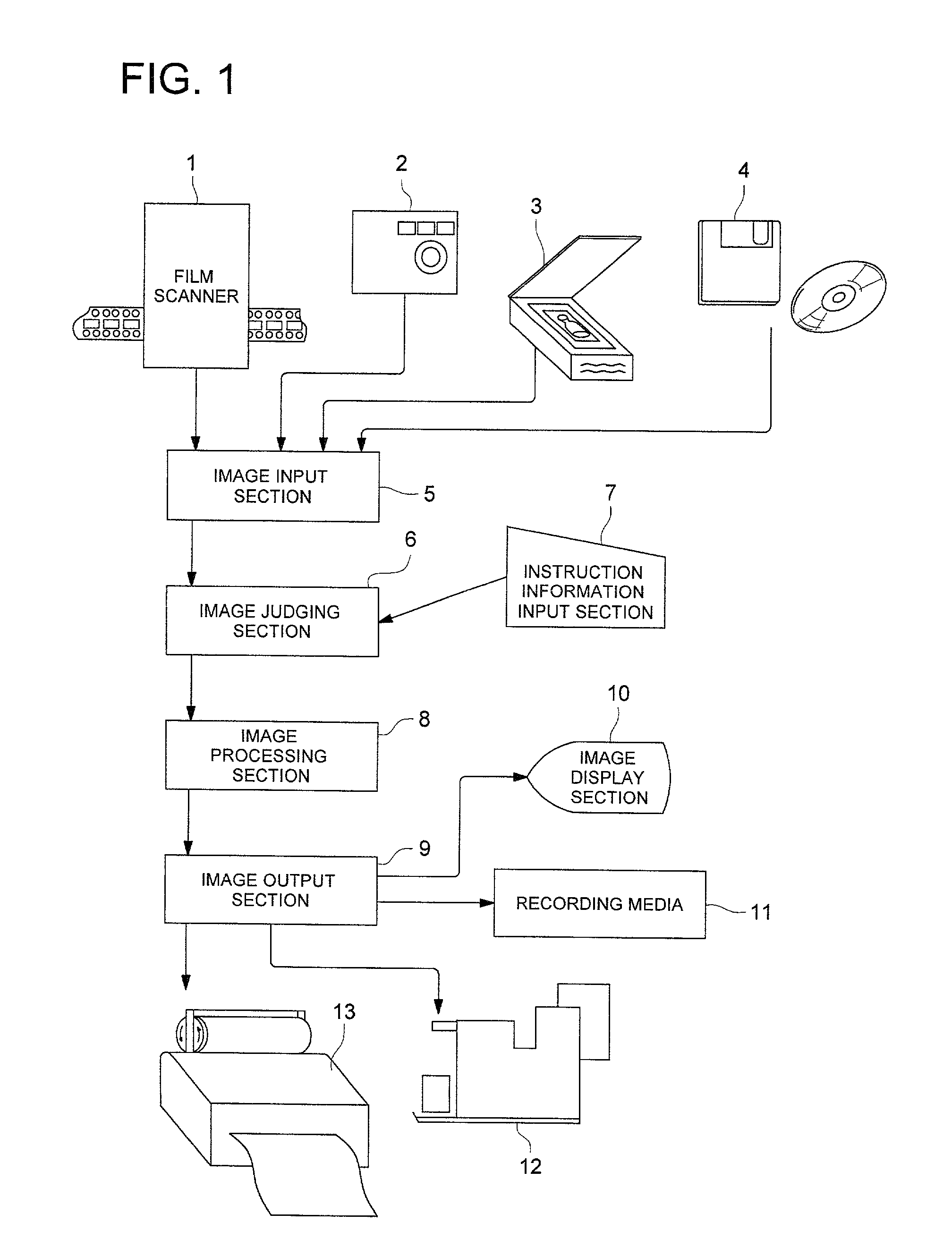

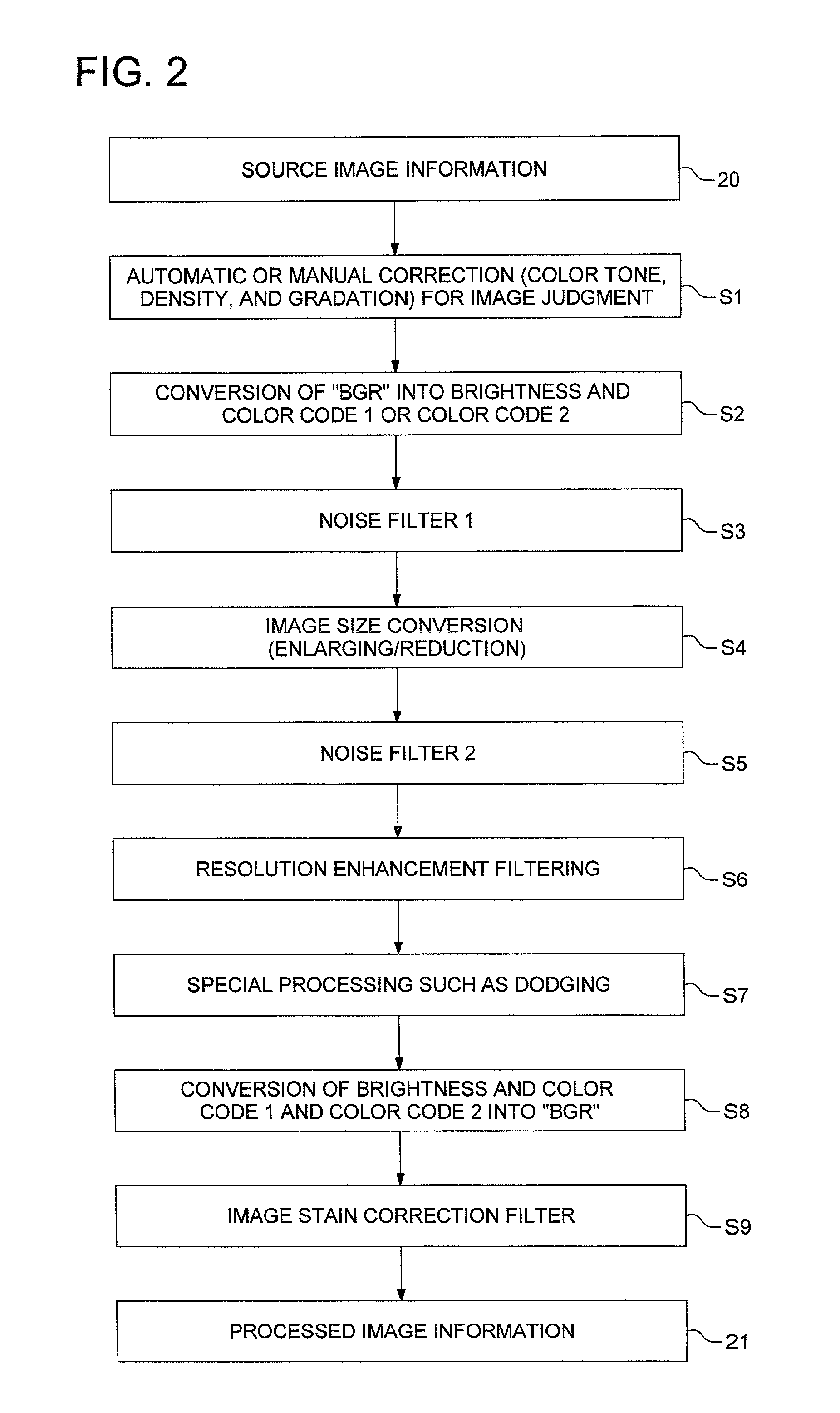

Image processing methods and image processing apparatus

Inactive · US6990249B2 · Minimum image noise · Fast processing · Image enhancement · Color signal processing circuits · Imaging processing · Imaging quality

Image-processing methods and image-processing apparatus are described that enable sharpness, scaling coefficients, and image quality to be adjusted with minimum image noise and minimum image quality deterioration, and at high processing speed. One of the image-processing methods, which creates processed image data by applying spatial-filtering processing to source image data, comprises the steps of: setting a predetermined upper-limit value for a variation amount of the source image data before performing an image-conversion processing, through which the source image data are converted to the processed image data by applying the spatial-filtering processing; and performing the image-conversion processing on the source image data within a range of the variation amount limited by the predetermined upper-limit value. A plurality of spatial-filtering processes whose characteristics differ from each other are performed either simultaneously in parallel or sequentially, one by one, in the image-conversion processing.

Owner:KONICA CORP
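
A minimal sketch of the upper-limit idea in the abstract above, assuming the spatial filter is a 3x3 unsharp mask and that the "variation amount" is the per-pixel change the filter would apply. The clamp keeps sharpening from amplifying isolated noise beyond the limit. `sharpen_limited`, `amount`, and `limit` are hypothetical names and parameters.

```python
from typing import List

Image = List[List[float]]


def sharpen_limited(image: Image, amount: float, limit: float) -> Image:
    """Apply a 3x3 unsharp mask, clamping each pixel's change to [-limit, limit]."""
    height, width = len(image), len(image[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [image[yy][xx]
                      for yy in range(max(0, y - 1), min(height, y + 2))
                      for xx in range(max(0, x - 1), min(width, x + 2))]
            blurred = sum(window) / len(window)
            # Unfiltered sharpening result for this pixel.
            filtered = image[y][x] + amount * (image[y][x] - blurred)
            # Limit how far the pixel may move away from its source value.
            change = max(-limit, min(limit, filtered - image[y][x]))
            row.append(image[y][x] + change)
        out.append(row)
    return out
```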

Method and apparatus for providing panoramic view with high speed image matching and mild mixed color blending

Active · US20070159524A1 · Improves speed of color · Improve image stitching speed · Television system details · Image analysis · Source image · Image matching

An apparatus and method of providing a panoramic image, the method including: preparing images of a plurality of levels, which are made by scaling each of two source images at several rates; computing a Sum of Square Difference (SSD) between two corresponding images in each of the plurality of levels of the two source images to form an image stitched together; and when stitching the two images, the overlapping area is divided into a plurality of parts and each part is assigned a weight which is linearly applied respectively to the divided part of each source image for blending color. The apparatus includes stitching units to stitch source images together at the plurality of levels and blending units to color blend the source images in the overlapped area.

Owner:SAMSUNG ELECTRONICS CO LTD
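
The matching and blending steps in the abstract above can be illustrated on a single scanline: search for the overlap width with the lowest mean SSD, then blend the overlap with linearly increasing weights. This sketch works at one scale only, so the multi-level search over scaled images is omitted, and it treats each overlapping pixel as its own "part". The function names are hypothetical.

```python
from typing import List, Sequence


def ssd(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared differences between two equally long pixel runs."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_overlap(left: Sequence[float], right: Sequence[float], min_overlap: int = 1) -> int:
    """Return the overlap width whose mean SSD is smallest."""
    best_width, best_score = min_overlap, None
    for width in range(min_overlap, min(len(left), len(right)) + 1):
        score = ssd(left[-width:], right[:width]) / width
        if best_score is None or score < best_score:
            best_width, best_score = width, score
    return best_width


def stitch(left: Sequence[float], right: Sequence[float], overlap: int) -> List[float]:
    """Join two scanlines, blending the overlap with linearly varying weights."""
    blended = []
    for i in range(overlap):
        weight = (i + 1) / (overlap + 1)  # grows toward the right image
        l, r = left[len(left) - overlap + i], right[i]
        blended.append(l + weight * (r - l))
    return list(left[:len(left) - overlap]) + blended + list(right[overlap:])
```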

© 2025 PatSnap. All rights reserved.