201 results about "Rigid transformation" patented technology

In mathematics, a rigid transformation (also called Euclidean transformation or Euclidean isometry) is a geometric transformation of a Euclidean space that preserves the Euclidean distance between every pair of points.
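As a minimal sketch of this definition, the snippet below applies a rotation plus a translation to a few 3D points and checks that all pairwise distances are unchanged. The helper names (`rotation_z`, `apply_rigid`, `pairwise_distances`) and the sample values are illustrative assumptions, not part of any patent listed here. Reflections also preserve distances; the sketch uses a proper rotation only.

```python
import numpy as np

def rotation_z(theta):
    """Rotation by theta radians about the z-axis (a proper rotation, det = +1)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s,  c, 0.0],
                     [0.0, 0.0, 1.0]])

def apply_rigid(R, t, points):
    """Apply x -> R @ x + t to every row of an (N, 3) array."""
    return points @ R.T + t

def pairwise_distances(points):
    """Return the (N, N) matrix of Euclidean distances between rows."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)

# Illustrative data: five random points, an arbitrary angle and offset.
rng = np.random.default_rng(0)
points = rng.normal(size=(5, 3))
R = rotation_z(0.7)
t = np.array([1.0, -2.0, 0.5])

moved = apply_rigid(R, t, points)
distances_preserved = np.allclose(pairwise_distances(points),
                                  pairwise_distances(moved))
```

The check succeeds because `R` is orthogonal, so `R @ (x - y)` has the same length as `x - y`, and the translation cancels in every difference.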

Determining camera motion

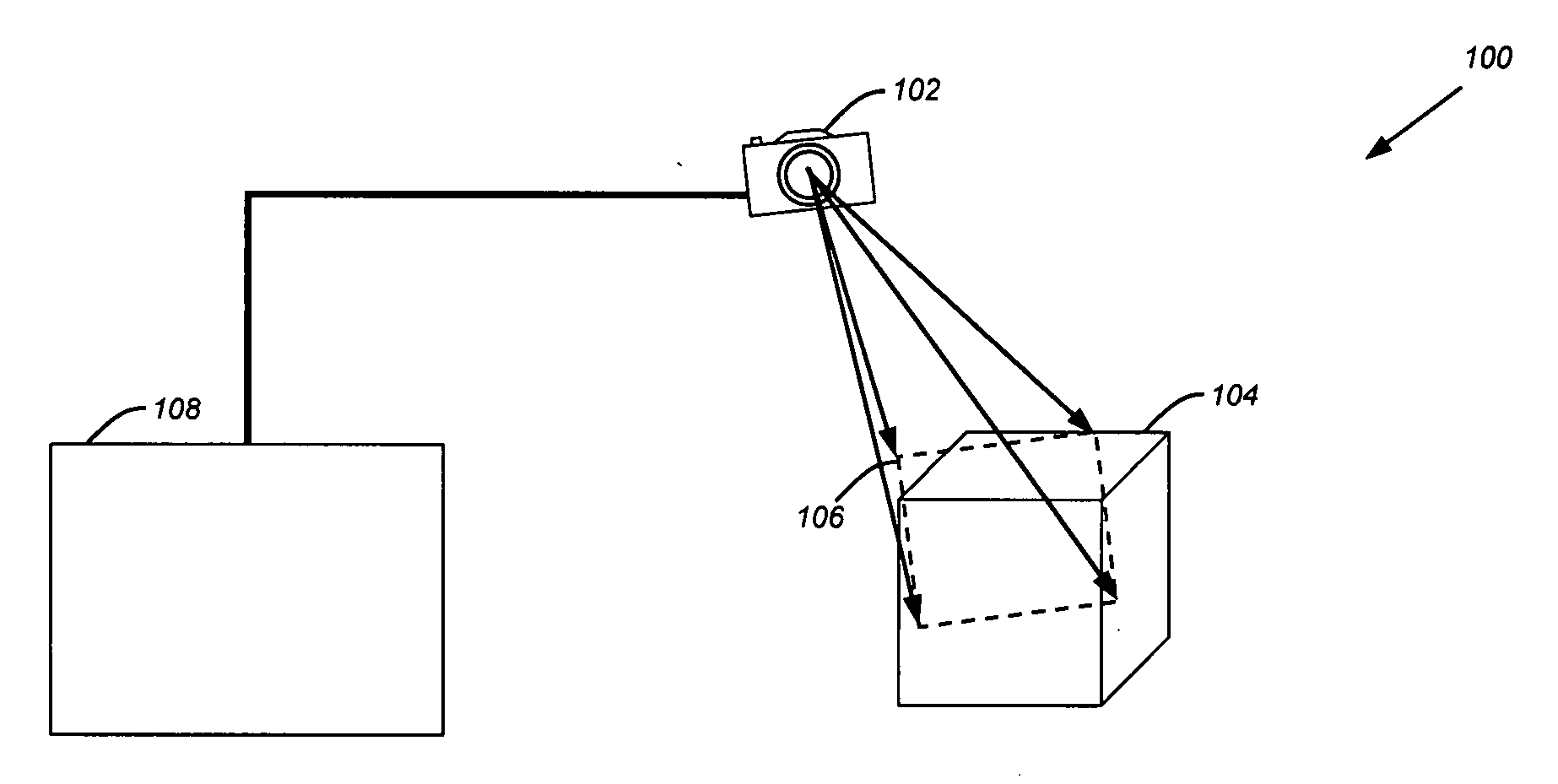

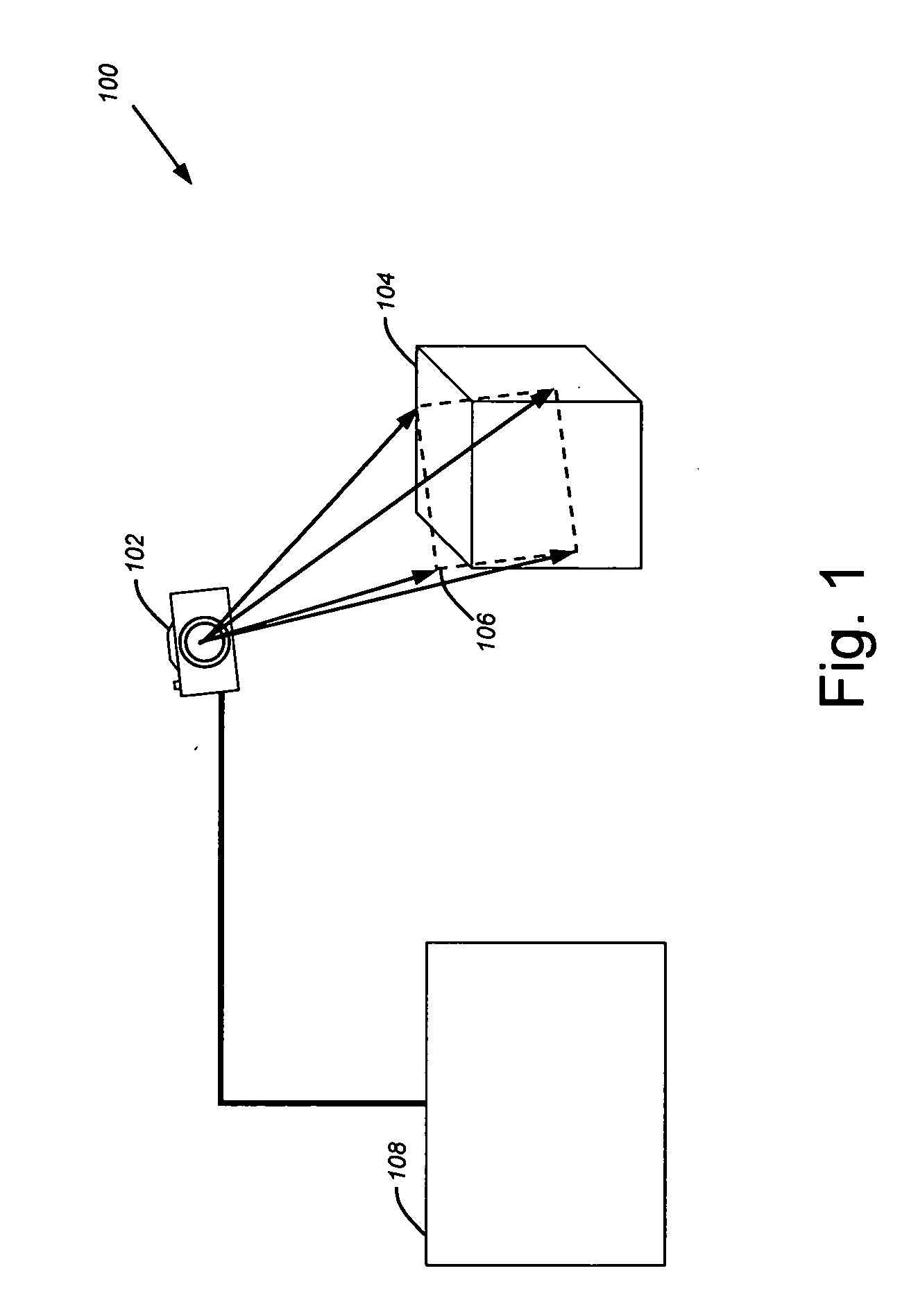

Active | US7605817B2 | Efficiently parameterized | Television system details | Image analysis | Point cloud | Source image

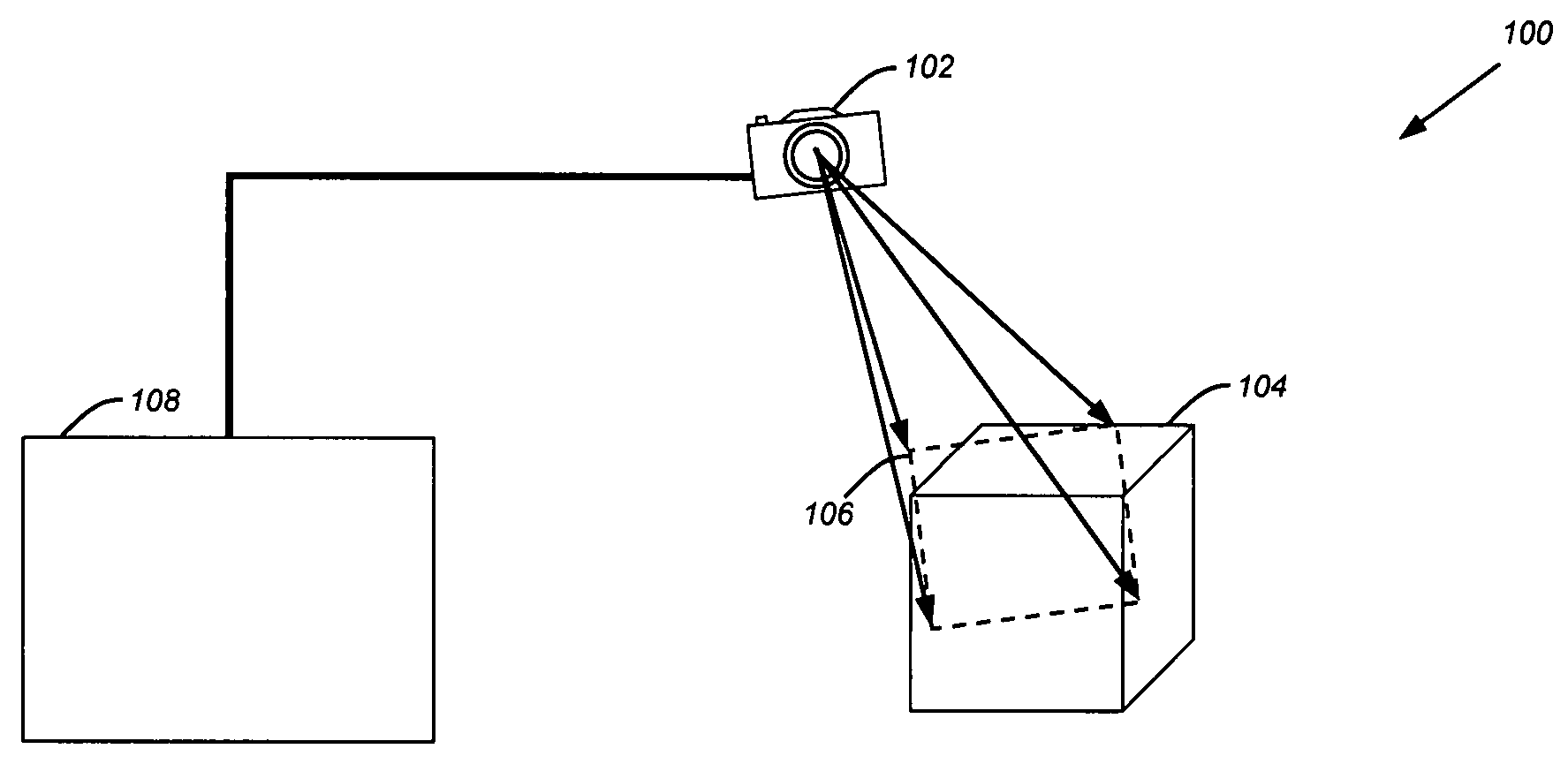

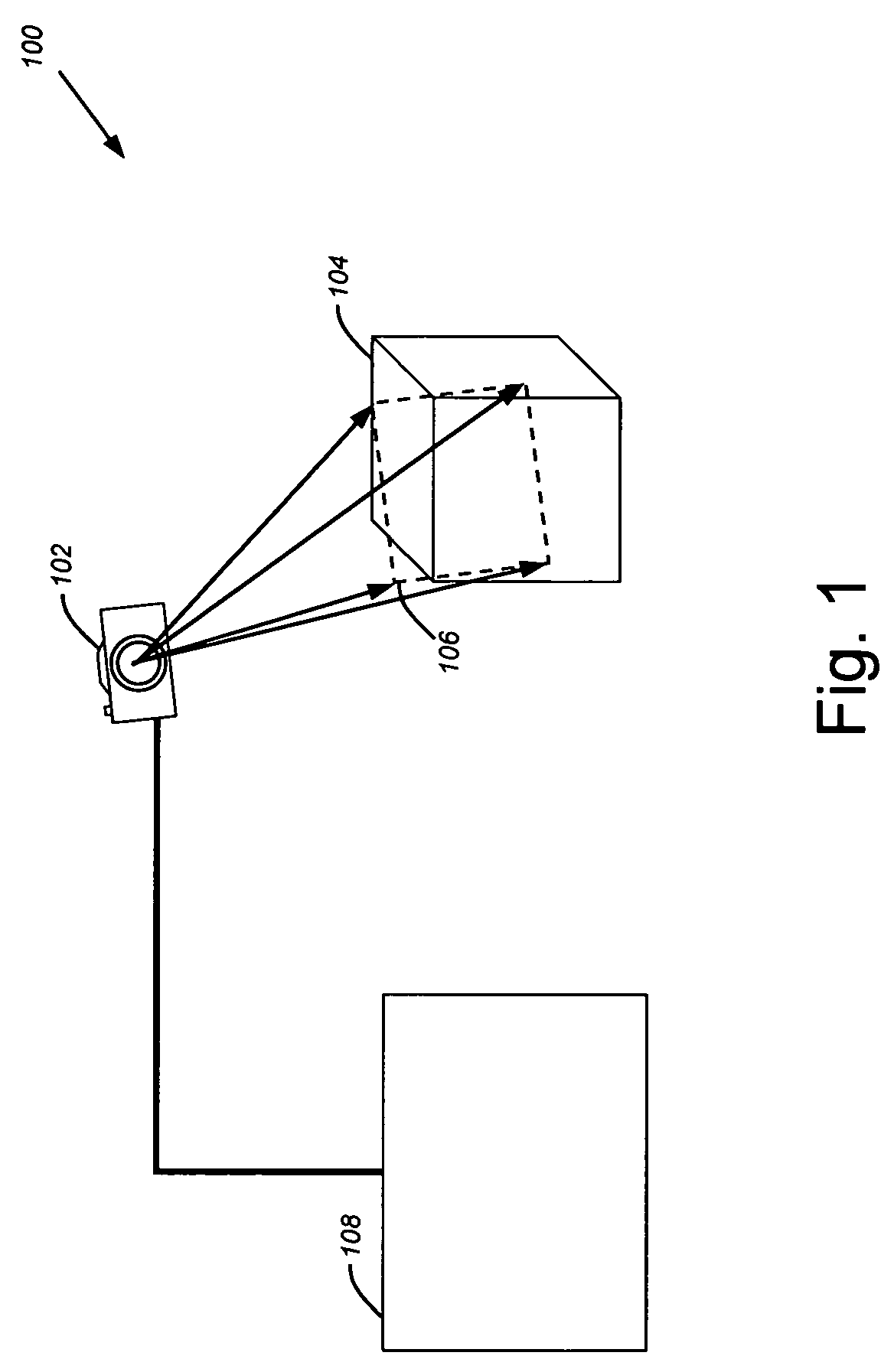

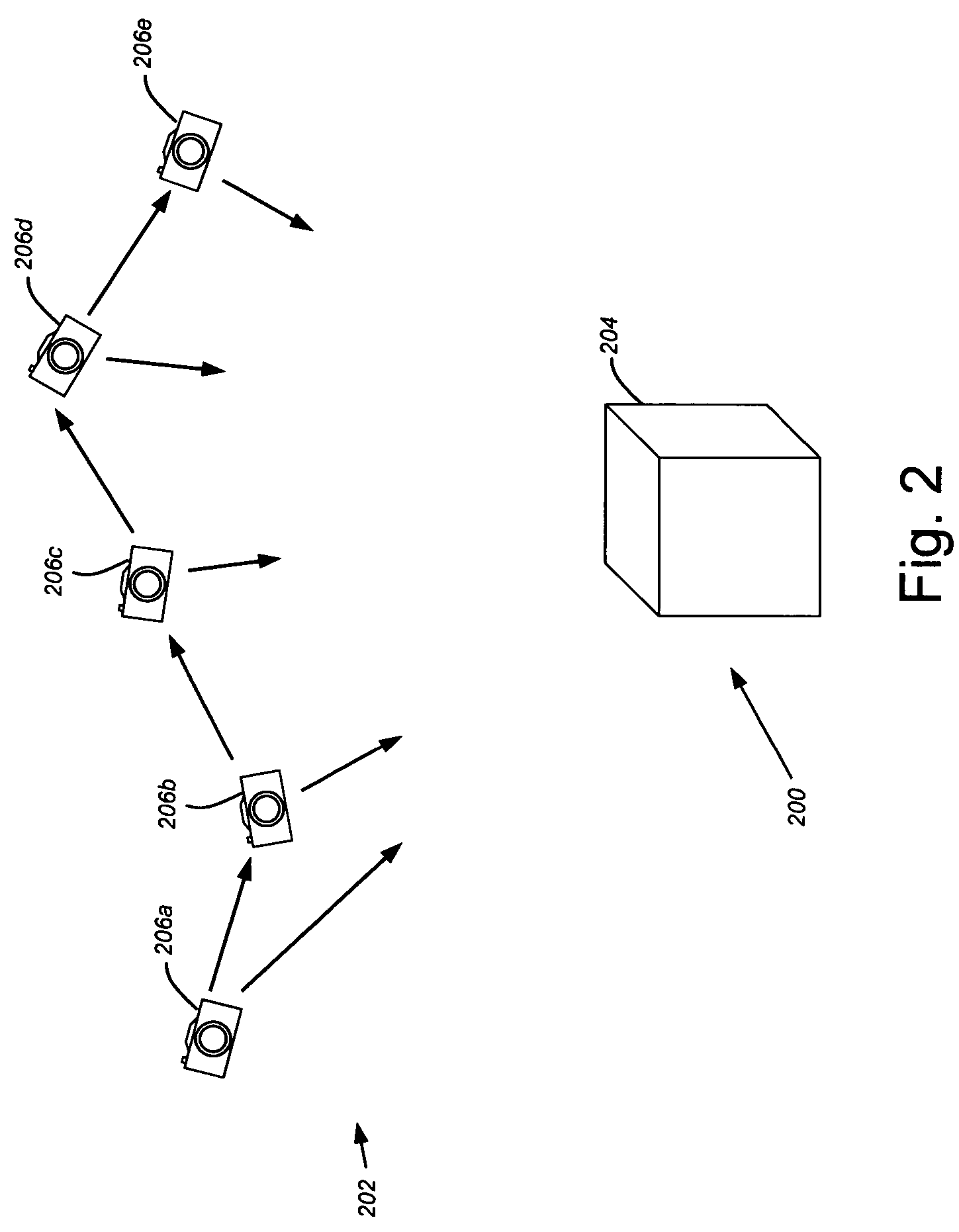

Camera motion is determined in a three-dimensional image capture system using a combination of two-dimensional image data and three-dimensional point cloud data available from a stereoscopic, multi-aperture, or similar camera system. More specifically, a rigid transformation of point cloud data between two three-dimensional point clouds may be more efficiently parameterized using point correspondence established between two-dimensional pixels in source images for the three-dimensional point clouds.

Owner:MEDIT CORP
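The abstract above does not say how the rigid transformation is computed once point correspondences are known. One common choice, shown here purely as an assumption, is the SVD-based least-squares fit often called the Kabsch method. The function name `estimate_rigid_transform` and the synthetic clouds are hypothetical, and the sketch assumes the correspondences are already given as matching rows.

```python
import numpy as np

def estimate_rigid_transform(src, dst):
    """Least-squares R, t such that dst is approximately src @ R.T + t.

    src and dst are (N, 3) arrays whose rows correspond one to one.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Force det(R) = +1 so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

# Synthetic example: cloud_b is cloud_a moved by a known rotation and offset.
rng = np.random.default_rng(1)
cloud_a = rng.normal(size=(30, 3))
angle = 0.3
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.2, -0.1, 0.4])
cloud_b = cloud_a @ R_true.T + t_true

R_est, t_est = estimate_rigid_transform(cloud_a, cloud_b)
```

With noise-free correspondences the estimate matches the known motion up to floating-point error; with noisy data it gives the least-squares best fit.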

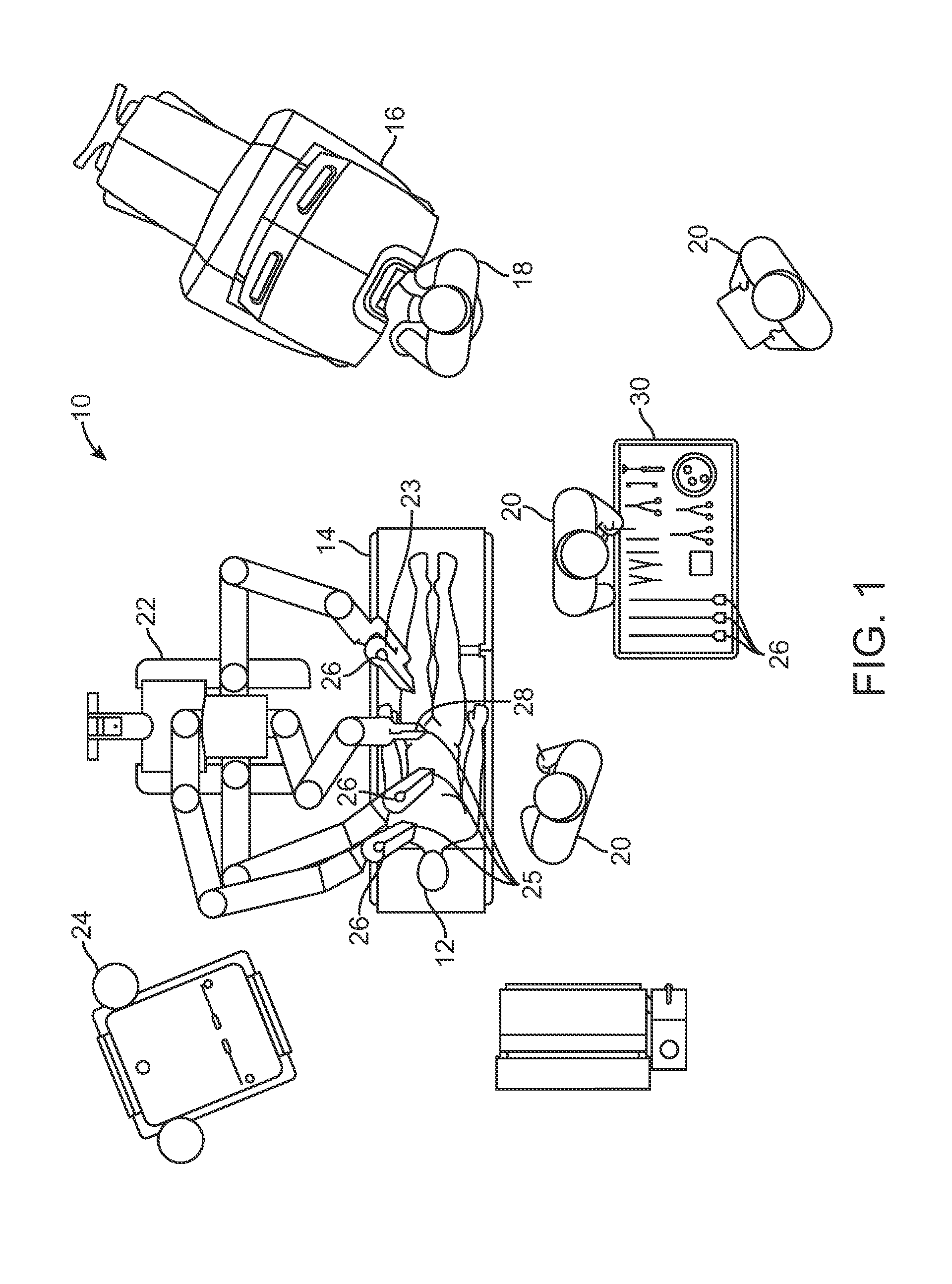

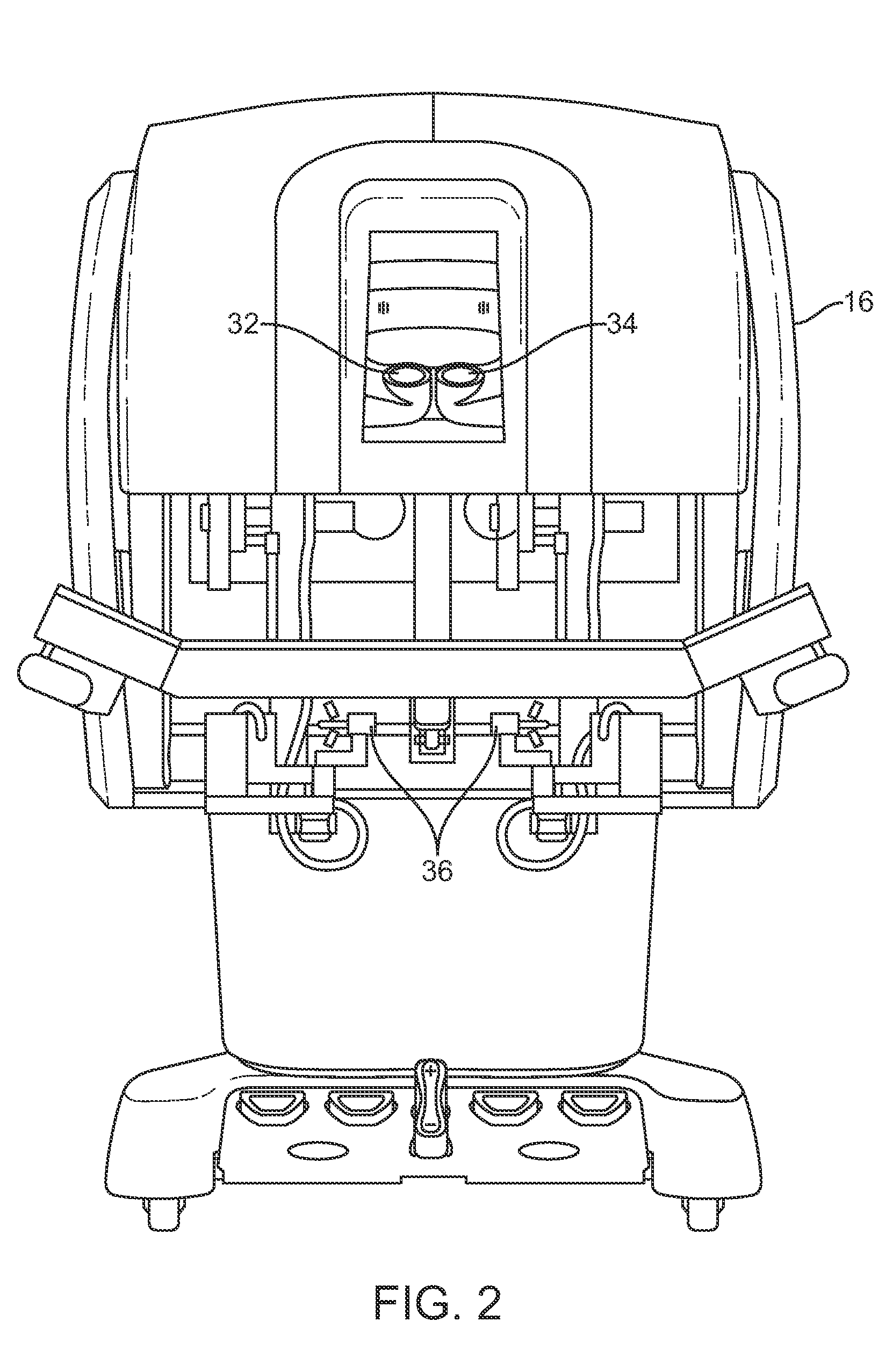

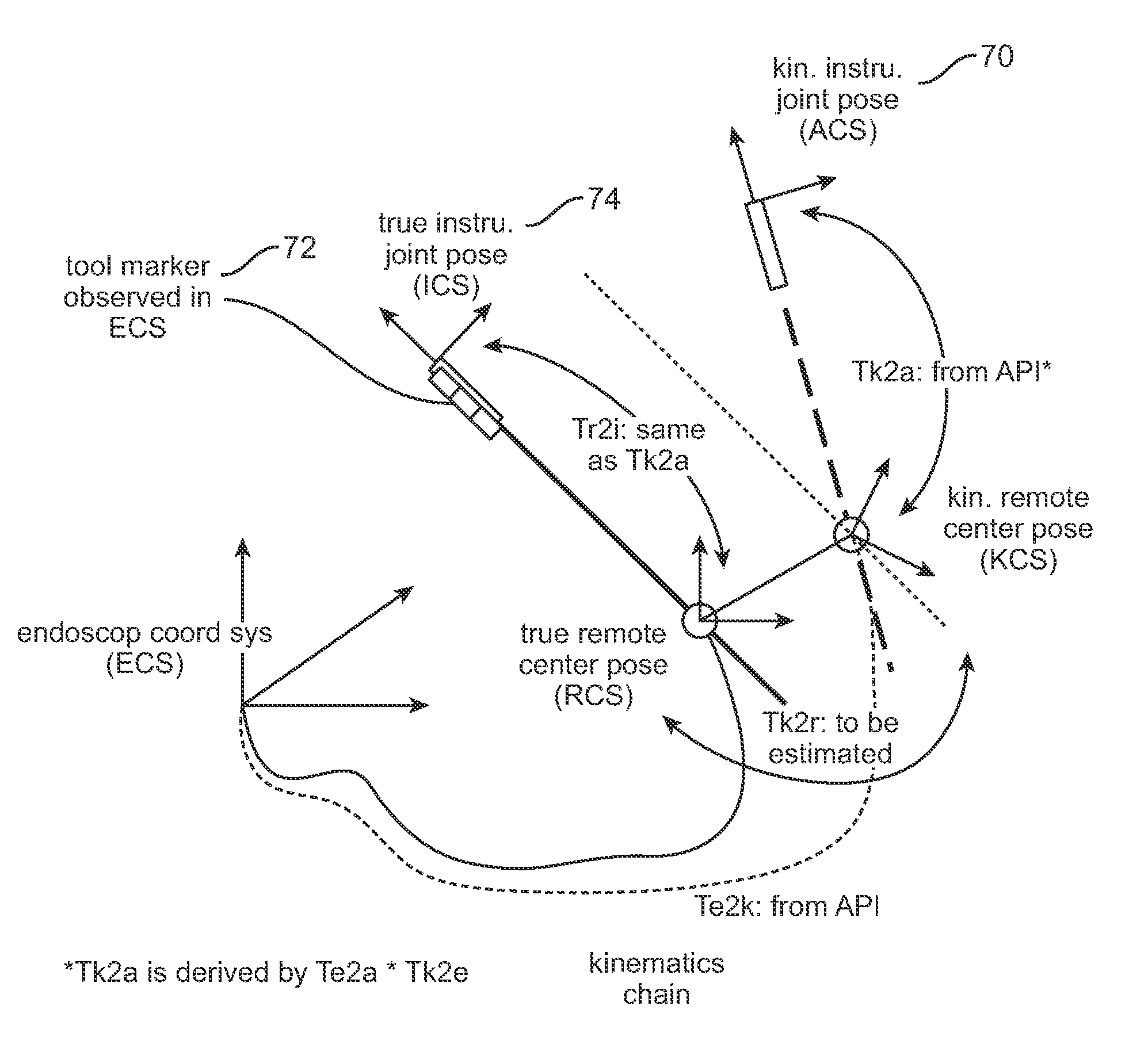

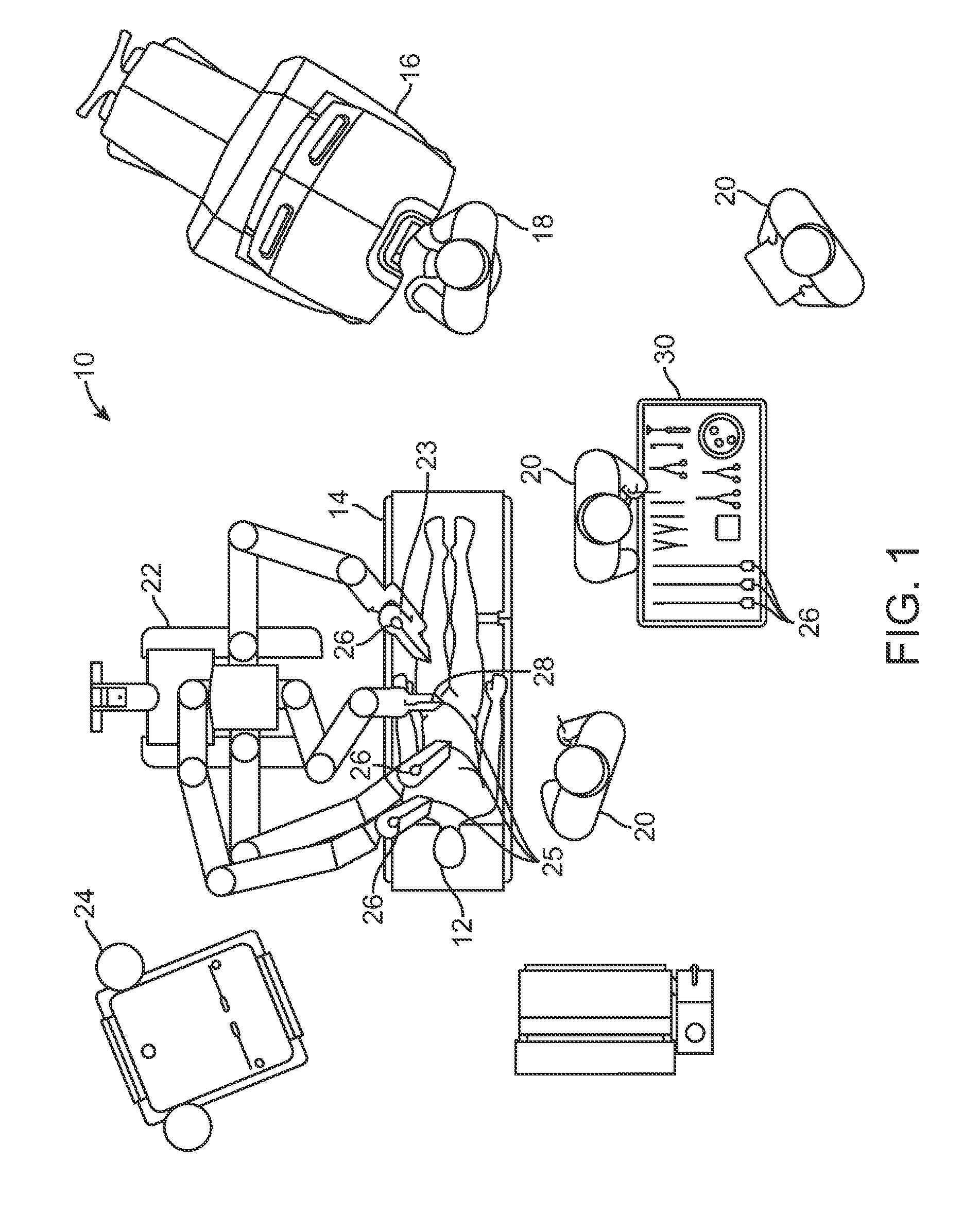

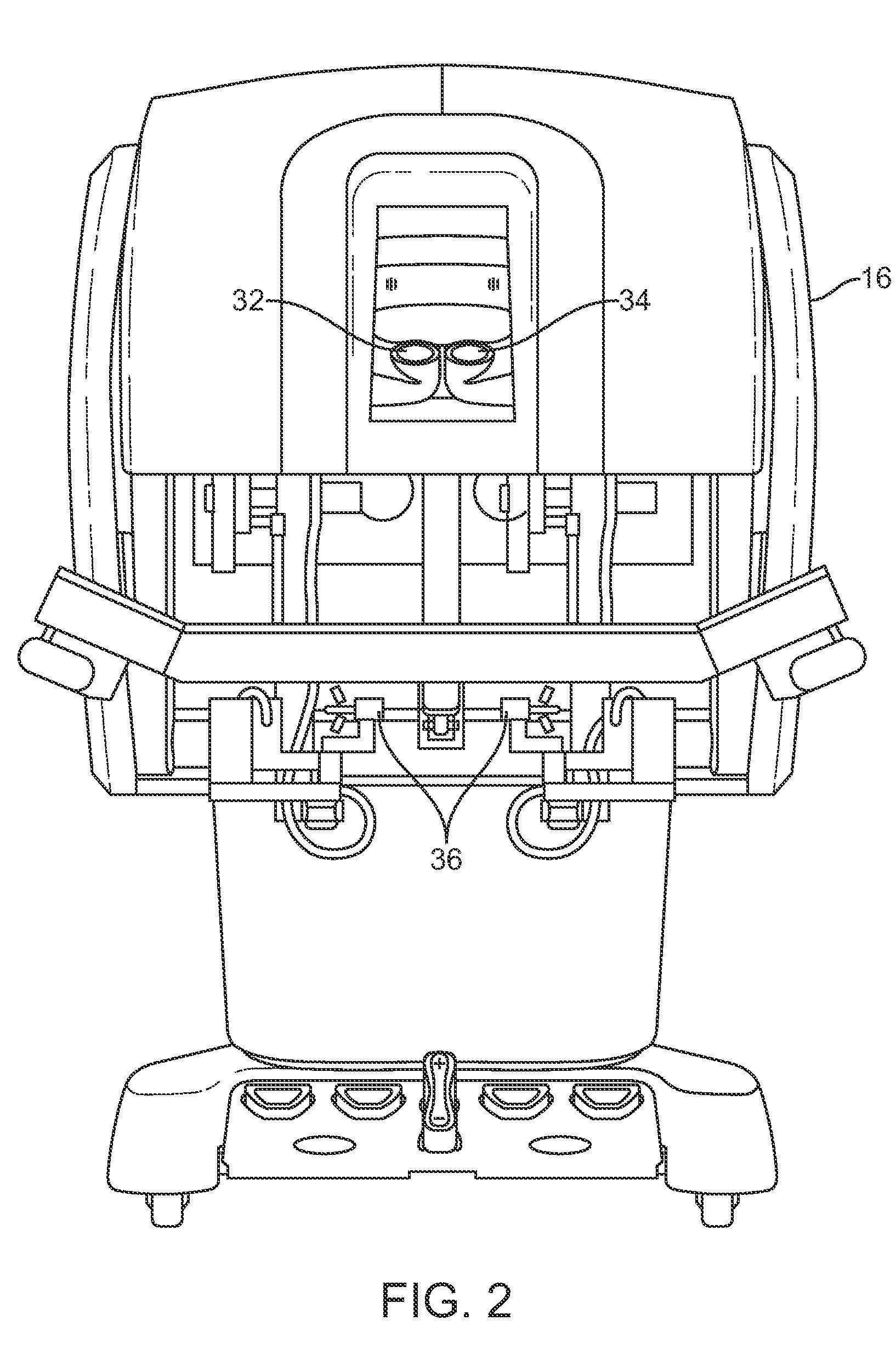

Efficient Vision and Kinematic Data Fusion For Robotic Surgical Instruments and Other Applications

Active | US20100331855A1 | Easy to adjust | Simple and relatively stable adjustment or bias offset | Diagnostics | Surgical robots | Remote surgery | Engineering

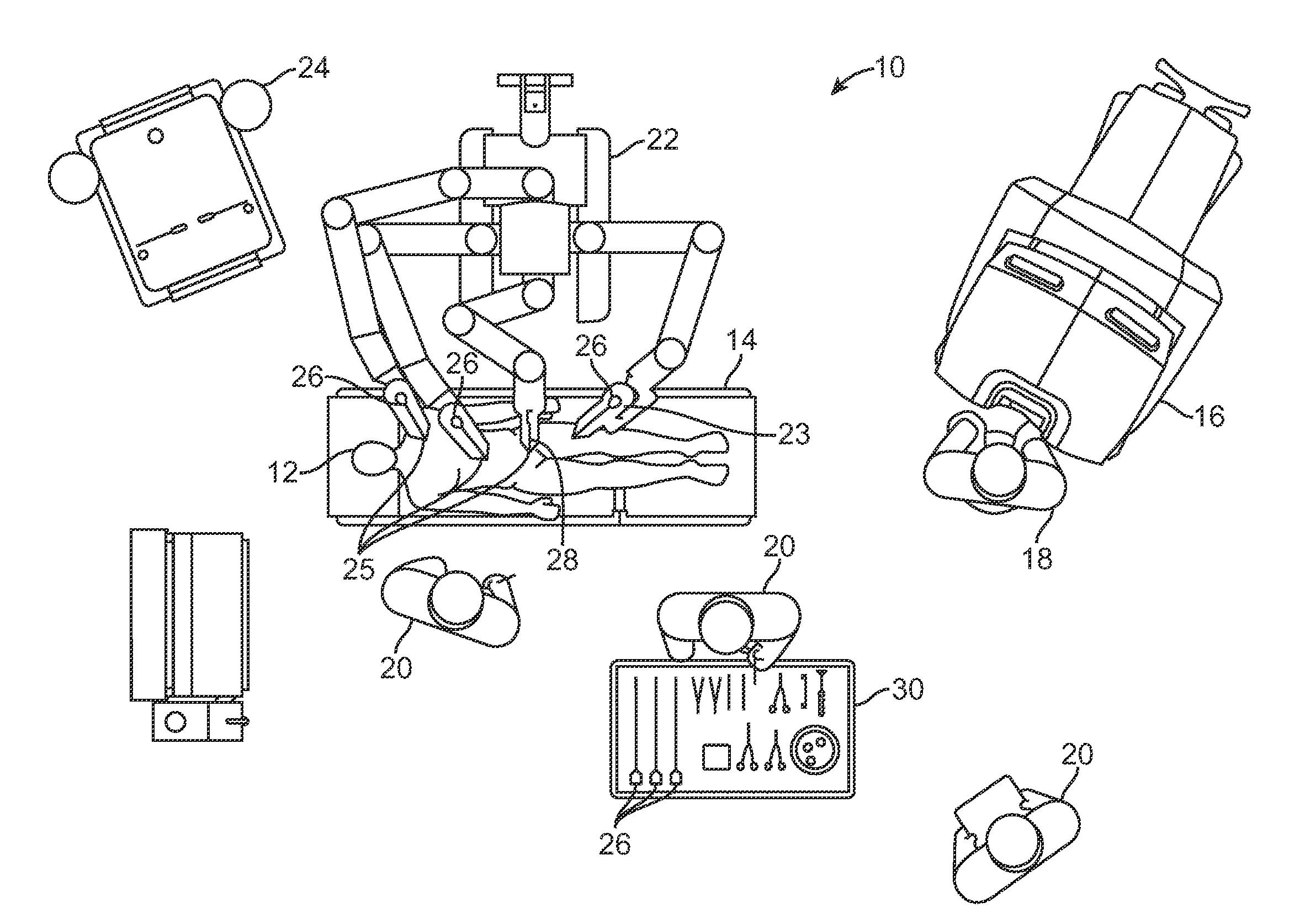

Robotic devices, systems, and methods for use in telesurgical therapies through minimally invasive apertures make use of joint-based data throughout much of the robotic kinematic chain, but selectively rely on information from an image capture device to determine location and orientation along the linkage adjacent a pivotal center at which a shaft of the robotic surgical tool enters the patient. A bias offset may be applied to a pose (including both an orientation and a location) at the pivotal center to enhance accuracy. The bias offset may be applied as a simple rigid transformation from the image-based pivotal center pose to a joint-based pivotal center pose.

Owner:INTUITIVE SURGICAL OPERATIONS INC

Context and Epsilon Stereo Constrained Correspondence Matching

Inactive | US20130002828A1 | Television system details | Character and pattern recognition | Parallax | Greek letter epsilon

A catadioptric camera having a perspective camera and multiple curved mirrors images the multiple curved mirrors and uses the epsilon constraint to establish a vertical parallax between points in one mirror and their corresponding reflection in another. An ASIFT transform is applied to all the mirror images to establish a collection of corresponding feature points, and edge detection is applied on mirror images to identify edge pixels. A first edge pixel in a first imaged mirror is selected, its 25 nearest feature points are identified, and a rigid transform is applied to them. The rigid transform is fitted to 25 corresponding feature points in a second imaged mirror. The closest edge pixel to the expected location as determined by the fitted rigid transform is identified, and its distance to the vertical parallax is determined. If the distance is not greater than a predefined maximum, then it is deemed to correlate to the edge pixel in the first imaged mirror.

Owner:SEIKO EPSON CORP

Determining camera motion

Active | US20070103460A1 | Efficiently parameterized | Television system details | Image analysis | Point cloud | 3d image

Camera motion is determined in a three-dimensional image capture system using a combination of two-dimensional image data and three-dimensional point cloud data available from a stereoscopic, multi-aperture, or similar camera system. More specifically, a rigid transformation of point cloud data between two three-dimensional point clouds may be more efficiently parameterized using point correspondence established between two-dimensional pixels in source images for the three-dimensional point clouds.

Owner:MEDIT CORP

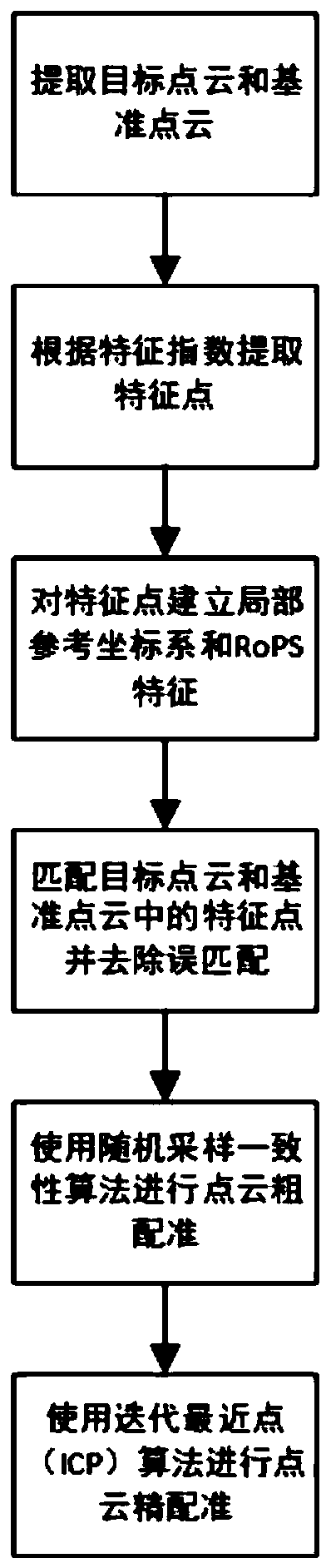

Automatic registration method for three-dimensional point cloud data

Inactive | CN106780459A | Reduce manual paste | Eliminate workload | Image enhancement | Image analysis | Point cloud | System transformation

The invention discloses an automatic registration method for three-dimensional point cloud data. The method comprises the steps that two point clouds to be registered are sampled to obtain feature points, rotation invariant feature factors of the feature points are calculated, and the rotation invariant feature factors of the feature points in the two point clouds are subjected to matching search to obtain an initial corresponding relation between the feature points; then, a random sample consensus algorithm is adopted to judge and remove mismatching points existing in an initial matching point set to obtain an optimized feature point corresponding relation, and a rough rigid transformation relation between the two point clouds is obtained through calculation to realize rough registration; a rigid transformation consistency detection algorithm is provided, a local coordinate system transformation relation between the matching feature points is utilized to perform binding detection on the rough registration result, and verification of the correctness of the rough registration result is completed; and an ICP algorithm is adopted to optimize the rigid transformation relation between the point cloud data to realize automatic precise registration of the point clouds finally.

Owner:HUAZHONG UNIV OF SCI & TECH
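The final refinement step of the registration method above uses an ICP algorithm. A bare-bones sketch of point-to-point ICP follows, assuming a rough alignment is already available; it does not cover the feature matching, random sample consensus, or consistency check described in the abstract. The names `kabsch` and `icp`, the brute-force nearest-neighbour search, and the grid test data are all illustrative assumptions.

```python
import numpy as np

def kabsch(src, dst):
    """Least-squares rotation R and translation t mapping src rows onto dst rows."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    U, _, Vt = np.linalg.svd((src - src_c).T @ (dst - dst_c))
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c

def icp(src, dst, iterations=20, tol=1e-10):
    """Iteratively match each src point to its closest dst point and refit R, t."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force squared distances from every current point to every dst point.
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)
        nearest = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(current)), nearest]).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
        R, t = kabsch(current, dst[nearest])
        current = current @ R.T + t
        # Accumulate the overall transform from the original src to dst.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total, current

# Test data: a 4x4x4 unit grid as target, and a slightly moved copy as source.
axis = np.arange(4.0)
target = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1).reshape(-1, 3)
theta = 0.05
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
source = target @ R_true.T + np.array([0.1, -0.05, 0.05])

R_est, t_est, aligned = icp(source, target)
residual = np.abs(aligned - target).max()
```

ICP only converges to the correct alignment when the starting misalignment is small relative to the point spacing, which is why pipelines like the one in the abstract run a rough registration first.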

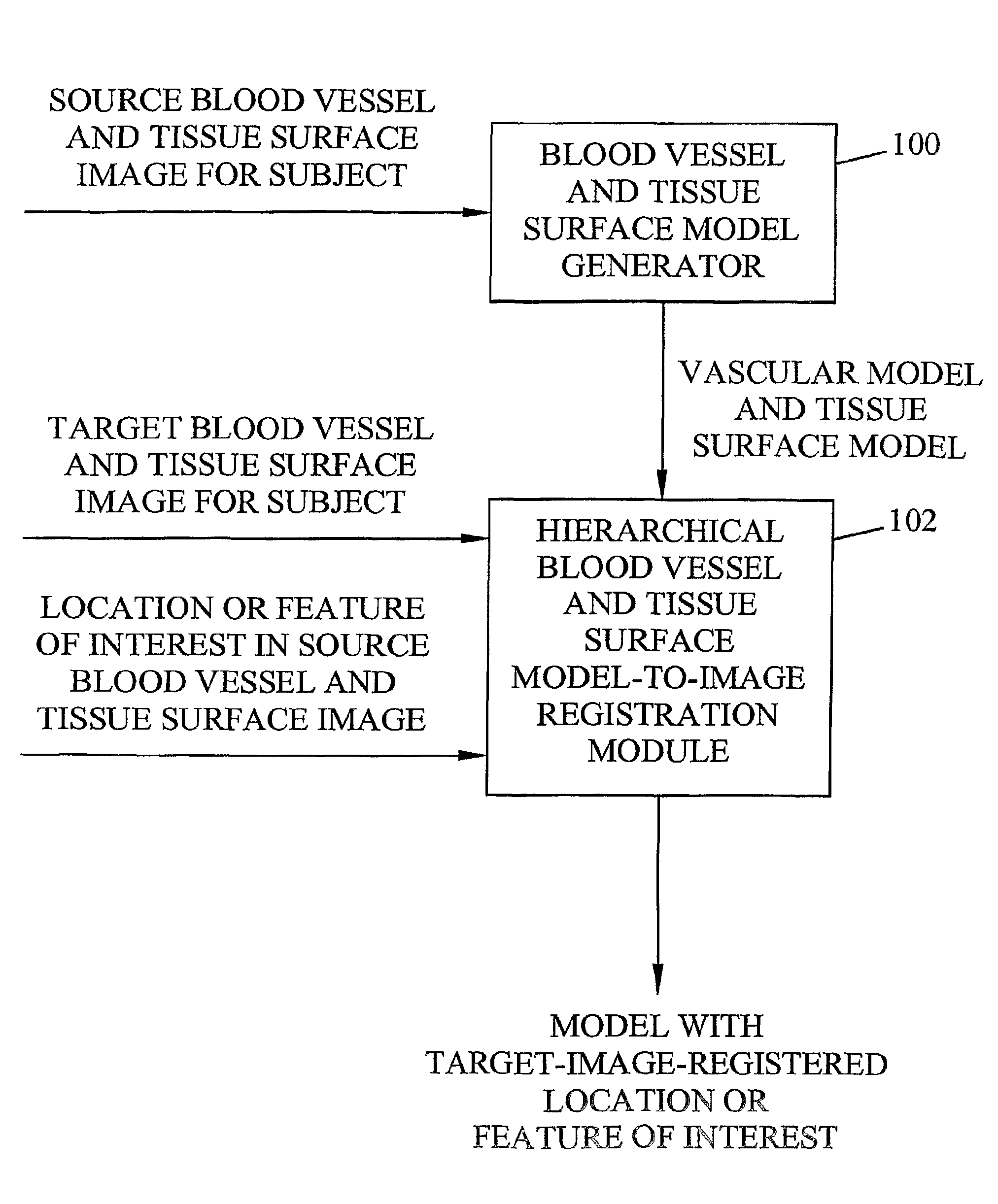

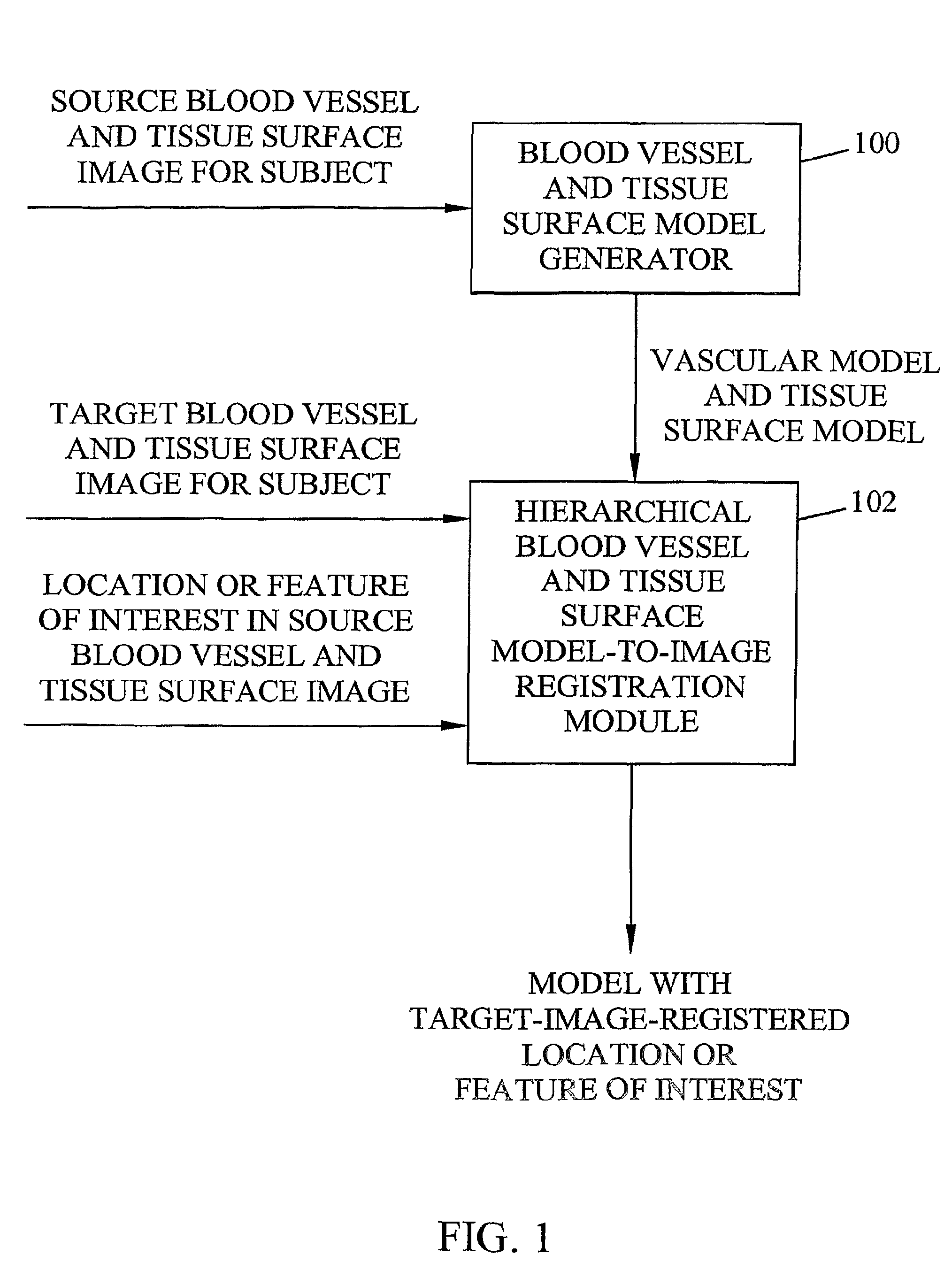

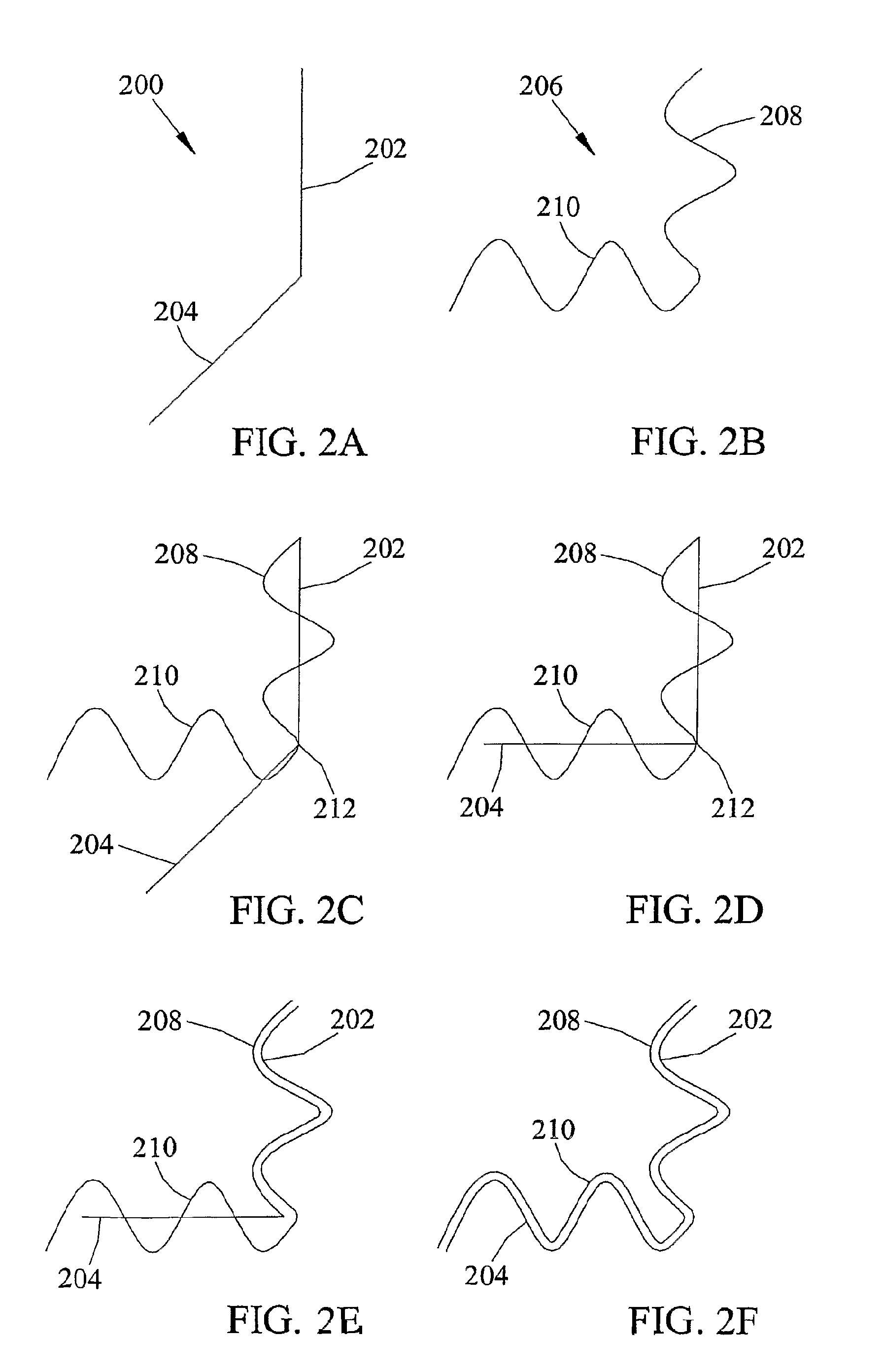

Methods, systems, and computer program products for hierarchical registration between a blood vessel and tissue surface model for a subject and a blood vessel and tissue surface image for the subject

Methods, systems, and computer program products for hierarchical registration (102) between a blood vessel and tissue surface model (100) for a subject and a blood vessel and tissue surface image for the subject are disclosed. According to one method, hierarchical registration of a vascular model to a vascular image is provided. According to the method, a vascular model is mapped to a target image using a global rigid transformation to produce a global-rigid-transformed model. Piecewise rigid transformations are applied in a hierarchical manner to each vessel tree in the global-rigid-transformed model to produce a piecewise-rigid-transformed model. Piecewise deformable transformations are applied to branches in the vascular tree in the piecewise-rigid-transformed model to produce a piecewise-deformable-transformed model.

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

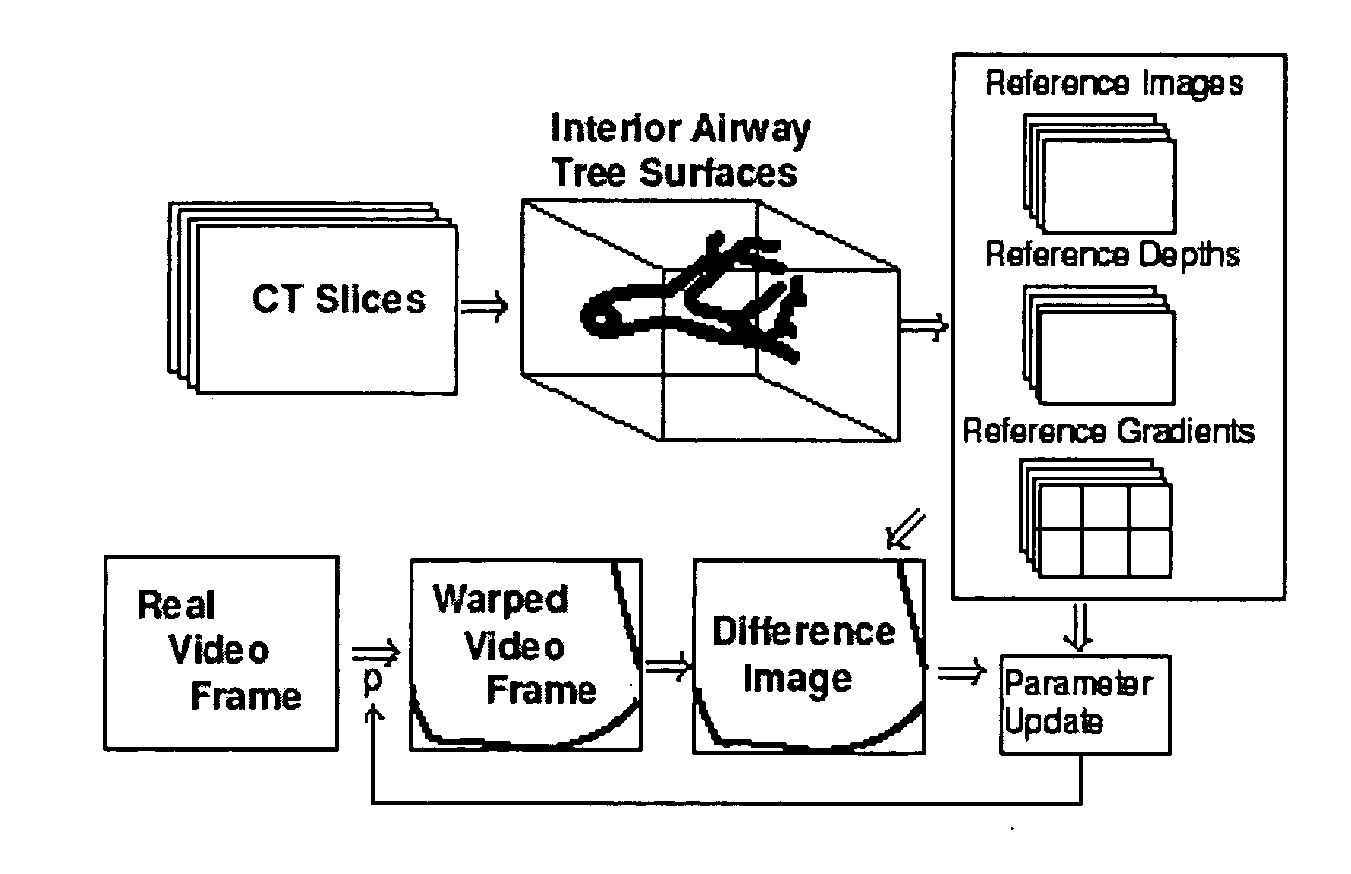

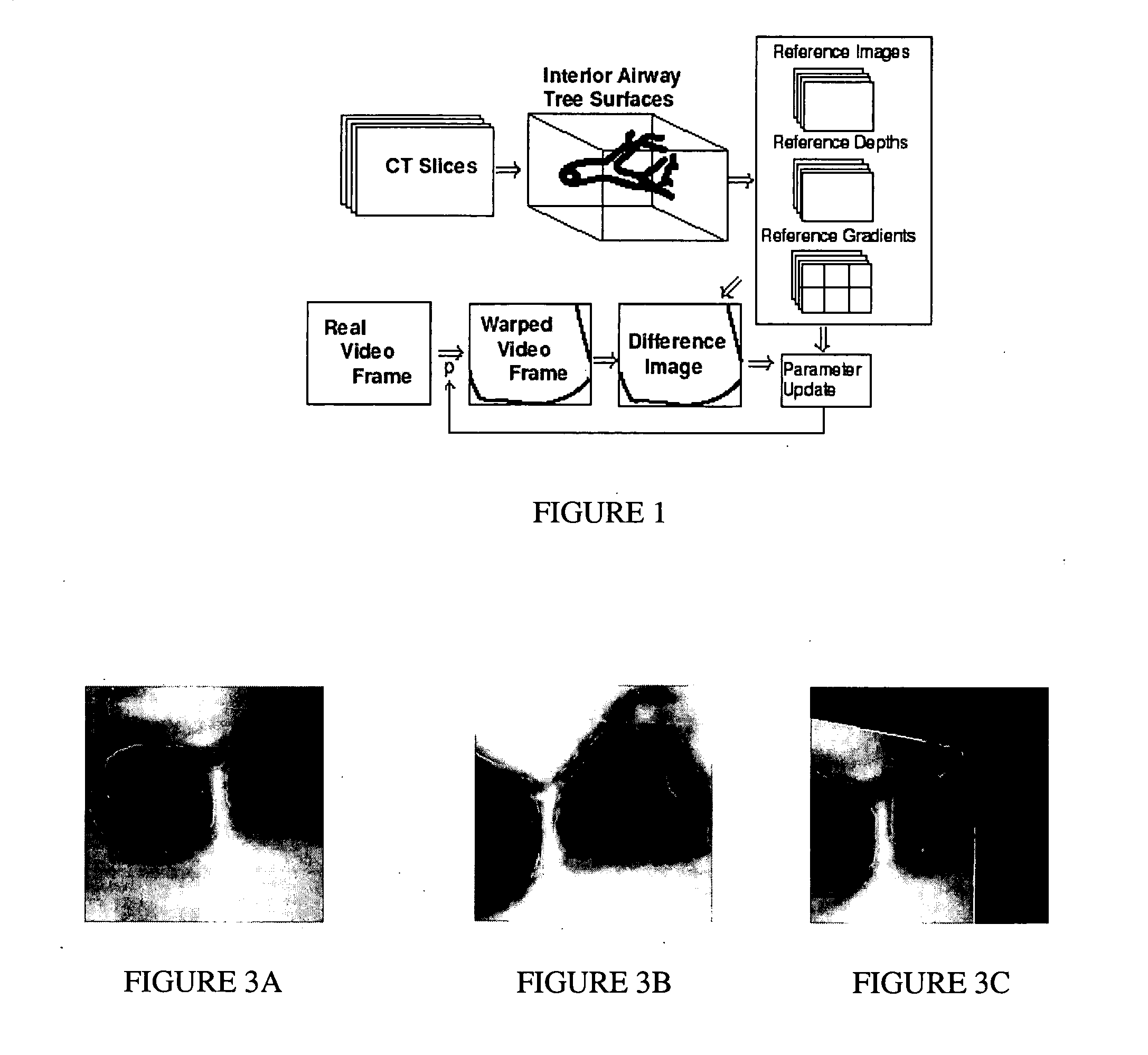

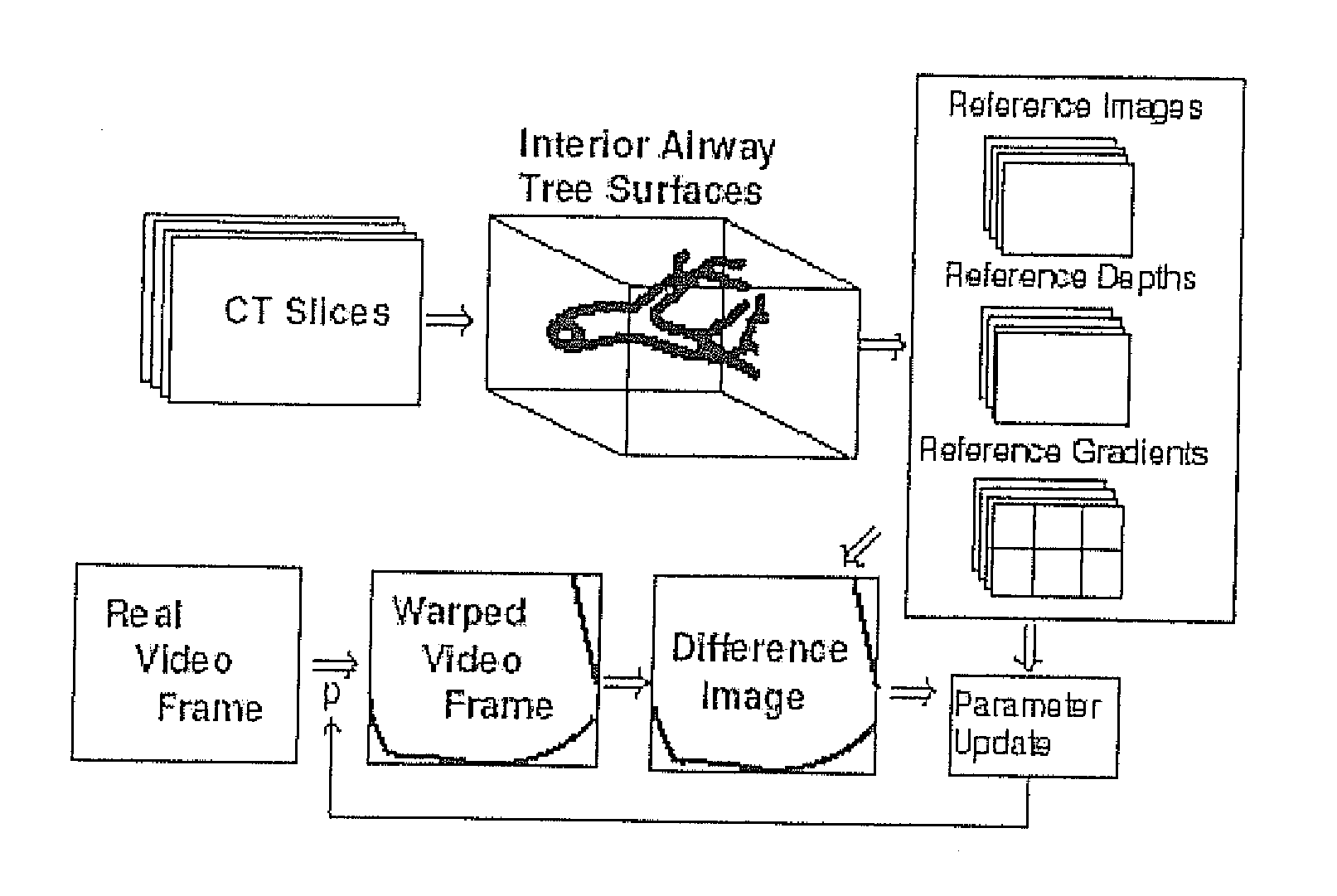

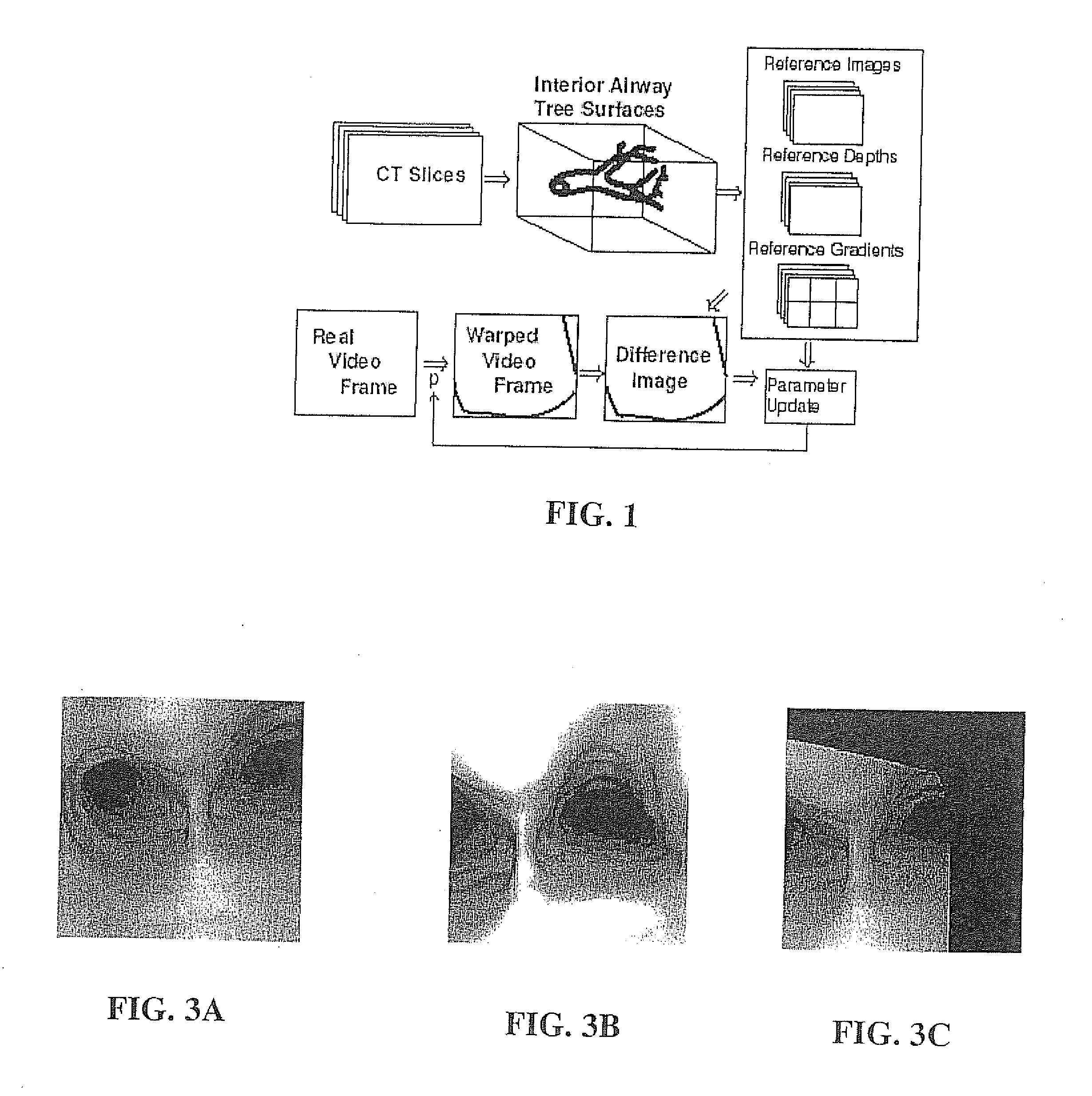

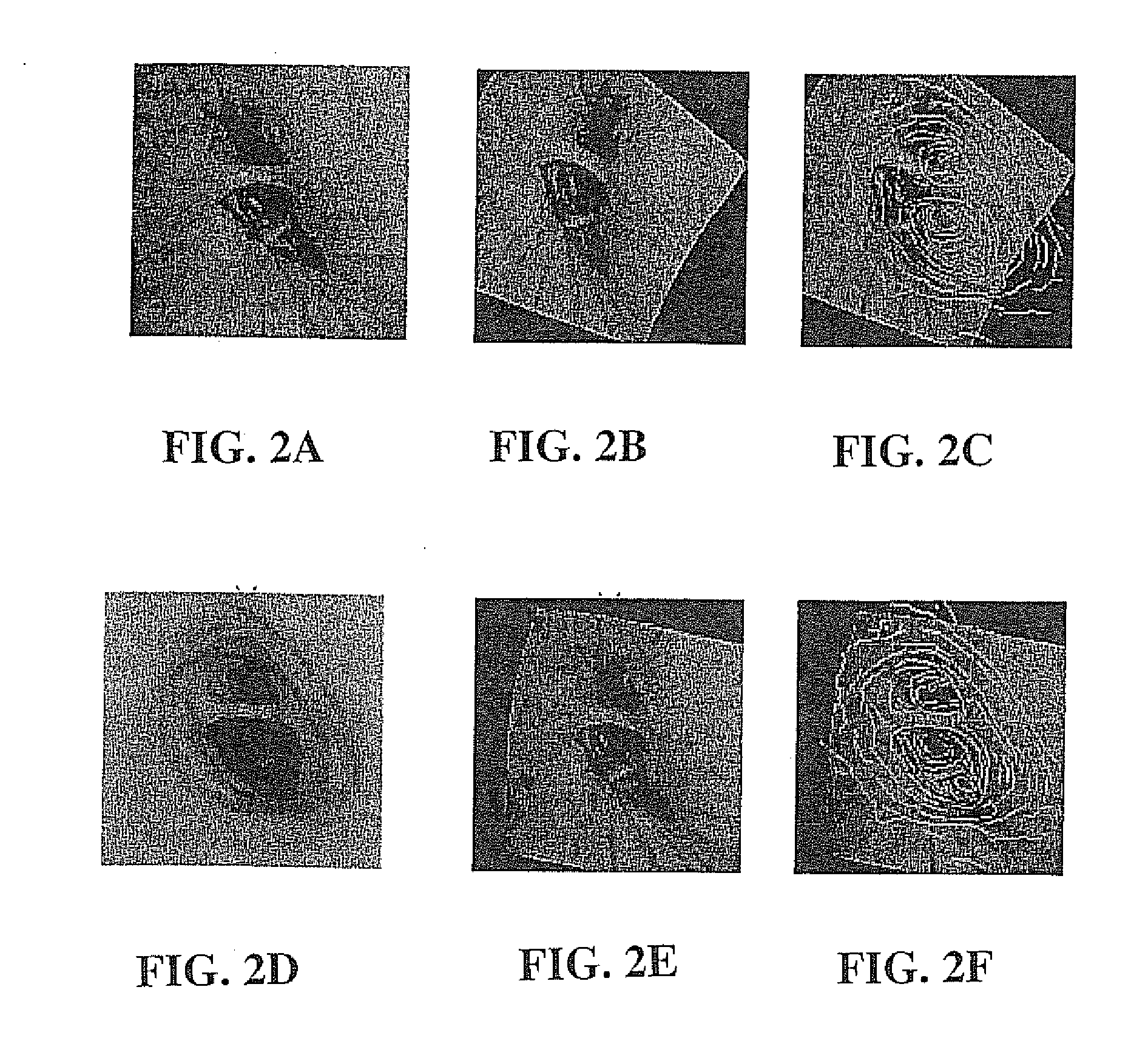

Fast 3D-2D image registration method with application to continuously guided endoscopy

A novel framework for fast and continuous registration between two imaging modalities is disclosed. The approach makes it possible to completely determine the rigid transformation between multiple sources at real-time or near real-time frame-rates in order to localize the cameras and register the two sources. A disclosed example includes computing or capturing a set of reference images within a known environment, complete with corresponding depth maps and image gradients. The collection of these images and depth maps constitutes the reference source. The second source is a real-time or near-real time source which may include a live video feed. Given one frame from this video feed, and starting from an initial guess of viewpoint, the real-time video frame is warped to the nearest viewing site of the reference source. An image difference is computed between the warped video frame and the reference image. The viewpoint is updated via a Gauss-Newton parameter update and certain of the steps are repeated for each frame until the viewpoint converges or the next video frame becomes available. The final viewpoint gives an estimate of the relative rotation and translation between the camera at that particular video frame and the reference source. The invention has far-reaching applications, particularly in the field of assisted endoscopy, including bronchoscopy and colonoscopy. Other applications include aerial and ground-based navigation.

Owner:PENN STATE RES FOUND

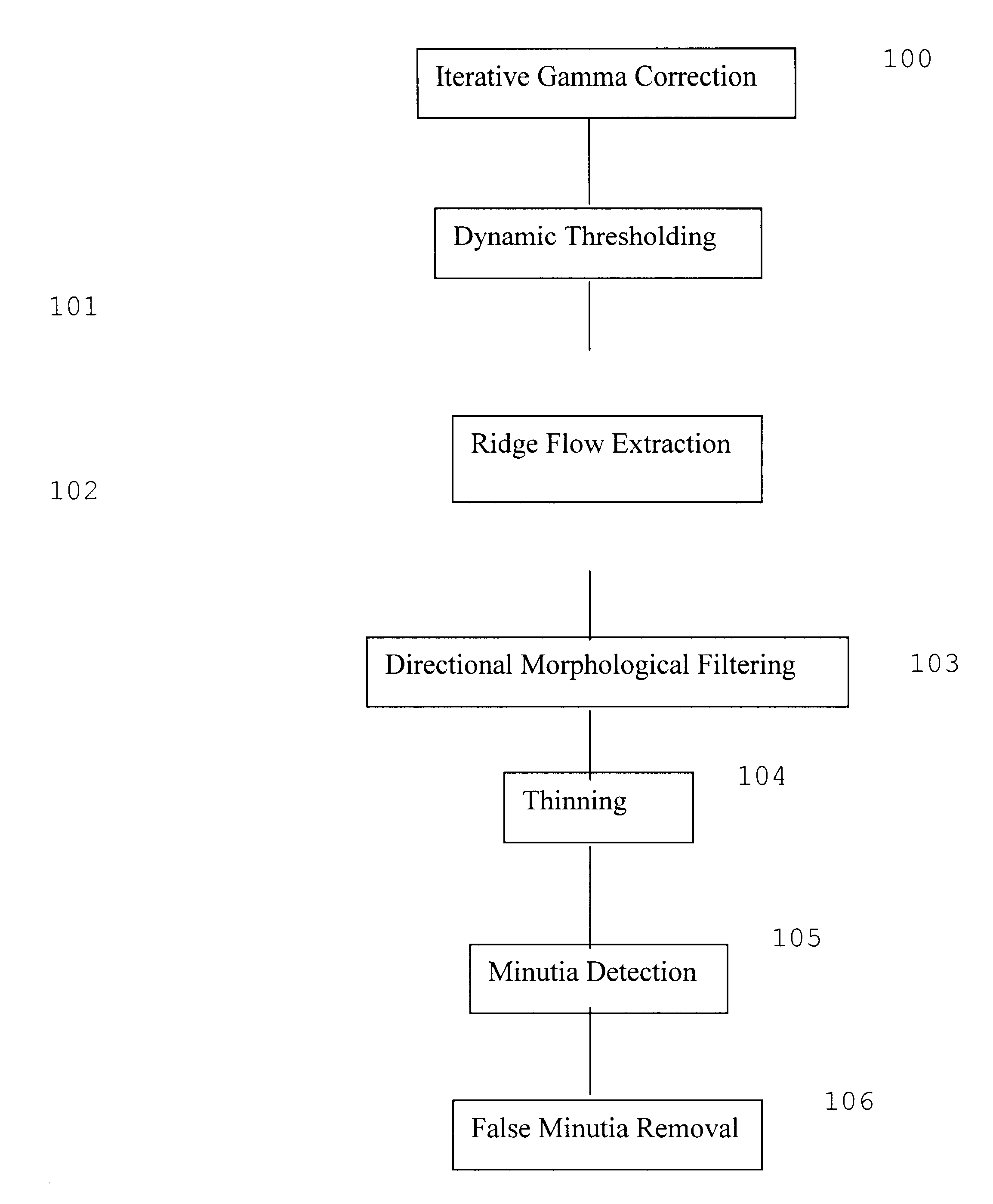

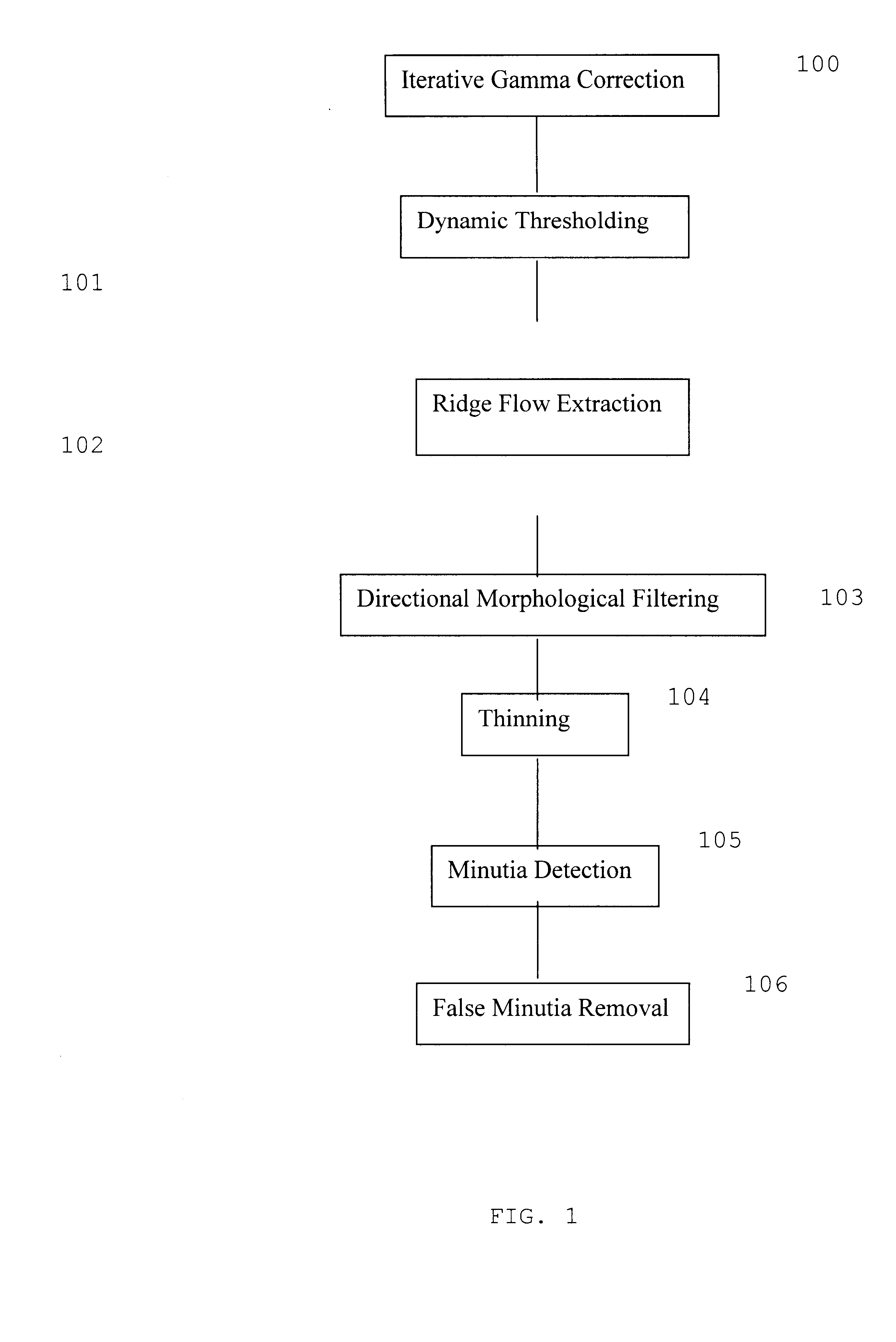

Apparatus and method for fingerprint recognition system

Inactive | US6763127B1 | Compensation effect | Matching and classification | Feature extraction | Morphological filtering

A fingerprint recognition method includes iterative gamma correction that compensates for moisture effects, feature extraction operations, directional morphological filtering that effectively links broken ridges and breaks smeared ridges, adaptive image alignment by local minutia matching, global matching by relaxed rigid transform, and statistical matching with Gaussian weighting functions.

Owner:KIOBA PROCESSING LLC

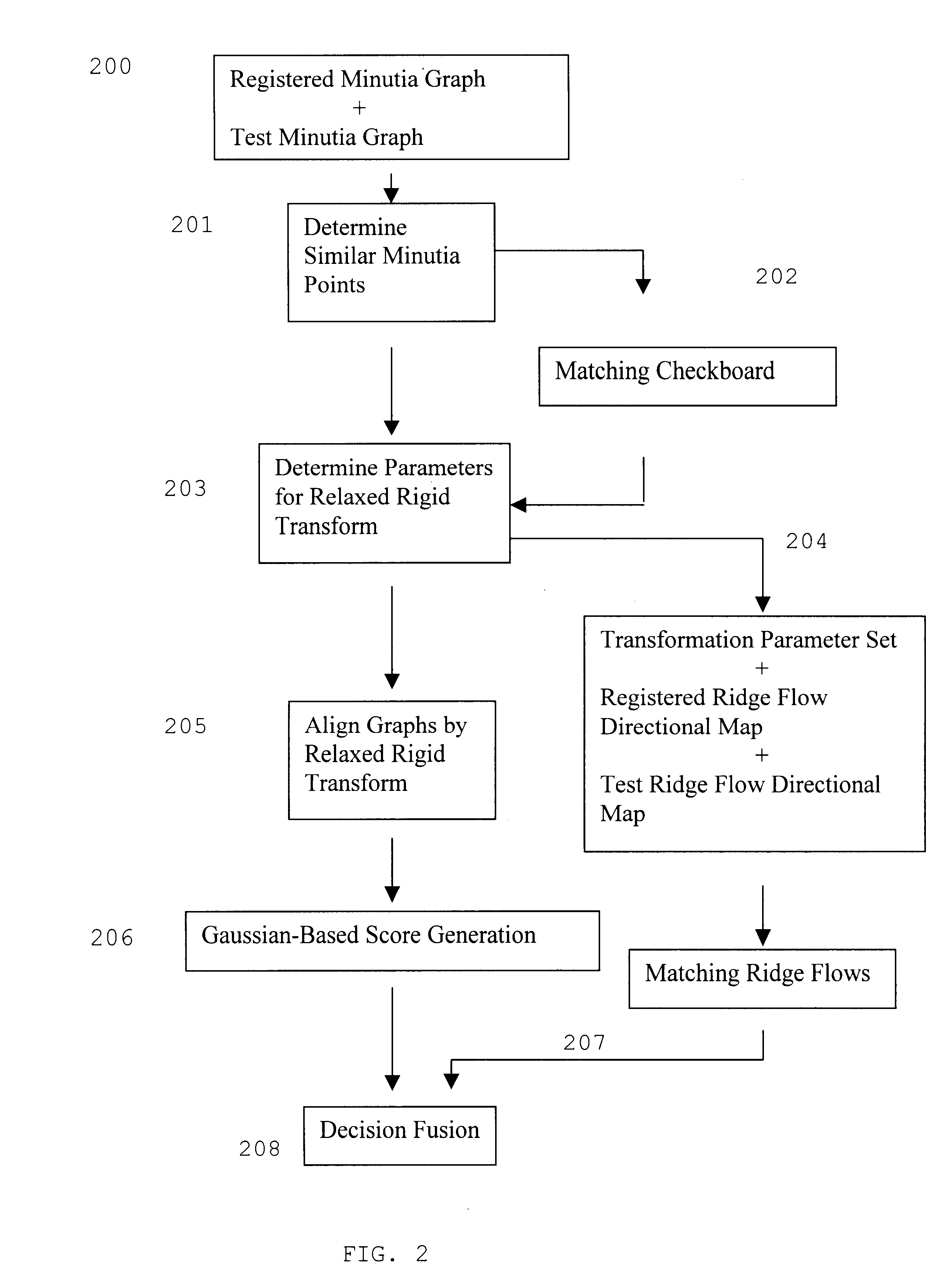

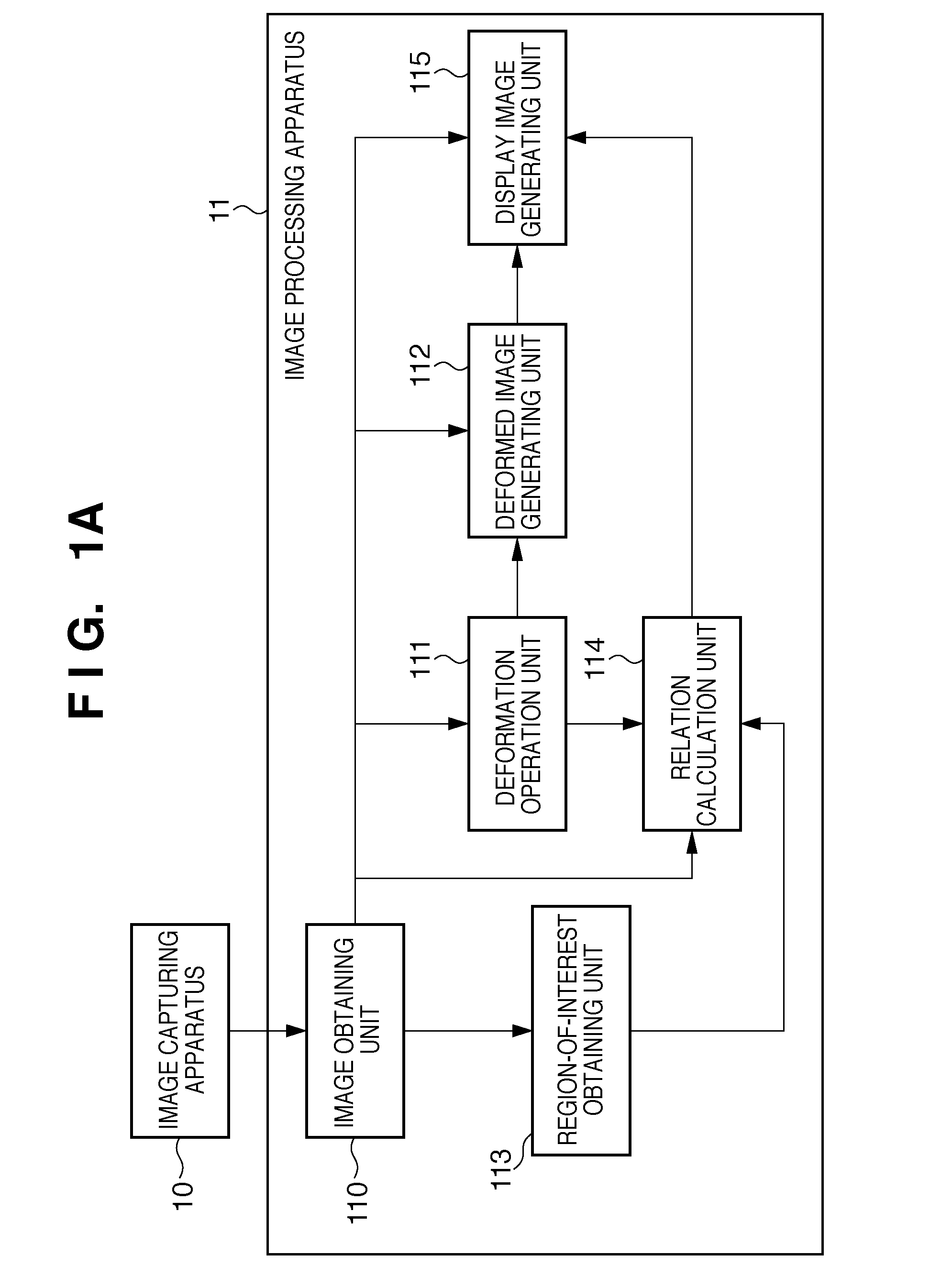

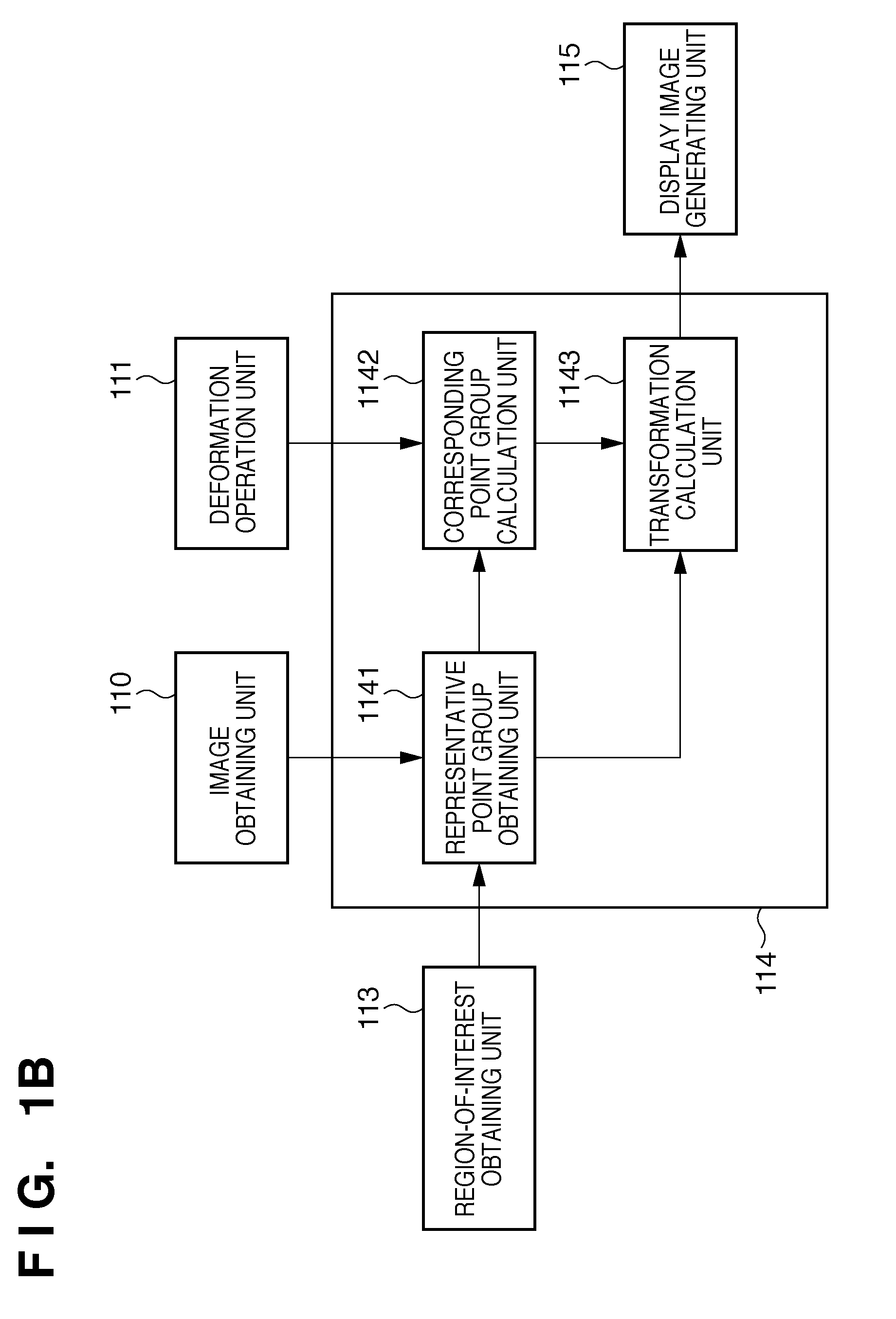

Image processing apparatus, image processing method, and storage medium

An image processing apparatus comprises: a deformation unit adapted to deform a first 3D image to a second 3D image; a calculation unit adapted to obtain a relation according to which rigid transformation is performed such that a region of interest in the first 3D image overlaps a region in the second 3D image that corresponds to the region of interest in the first 3D image; and an obtaining unit adapted to obtain, based on the relation, a cross section image of the region of interest in the second 3D image and a cross section image of the region of interest in the first 3D image that corresponds to the orientation of the cross section image in the second 3D image.

Owner:CANON KK

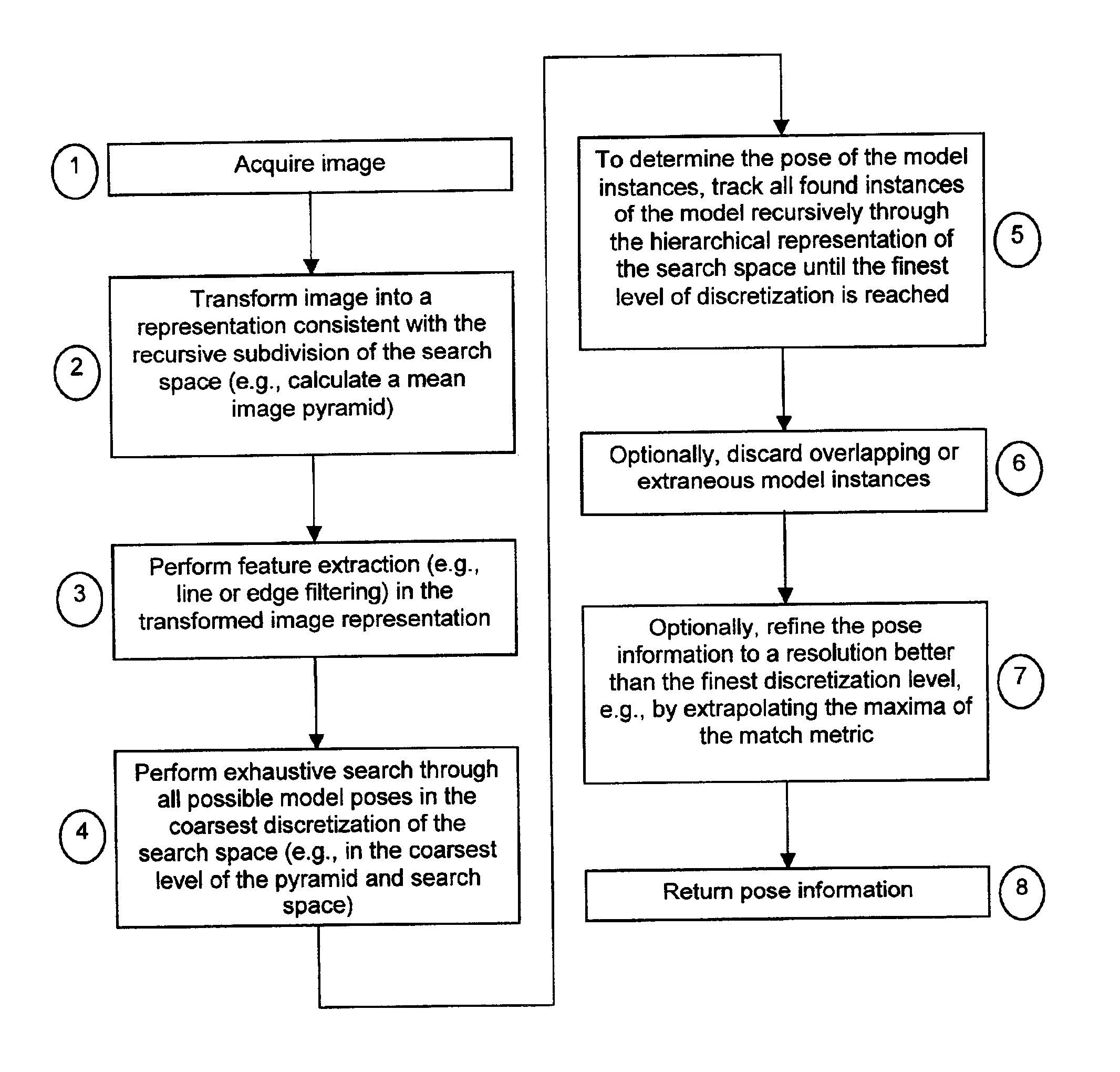

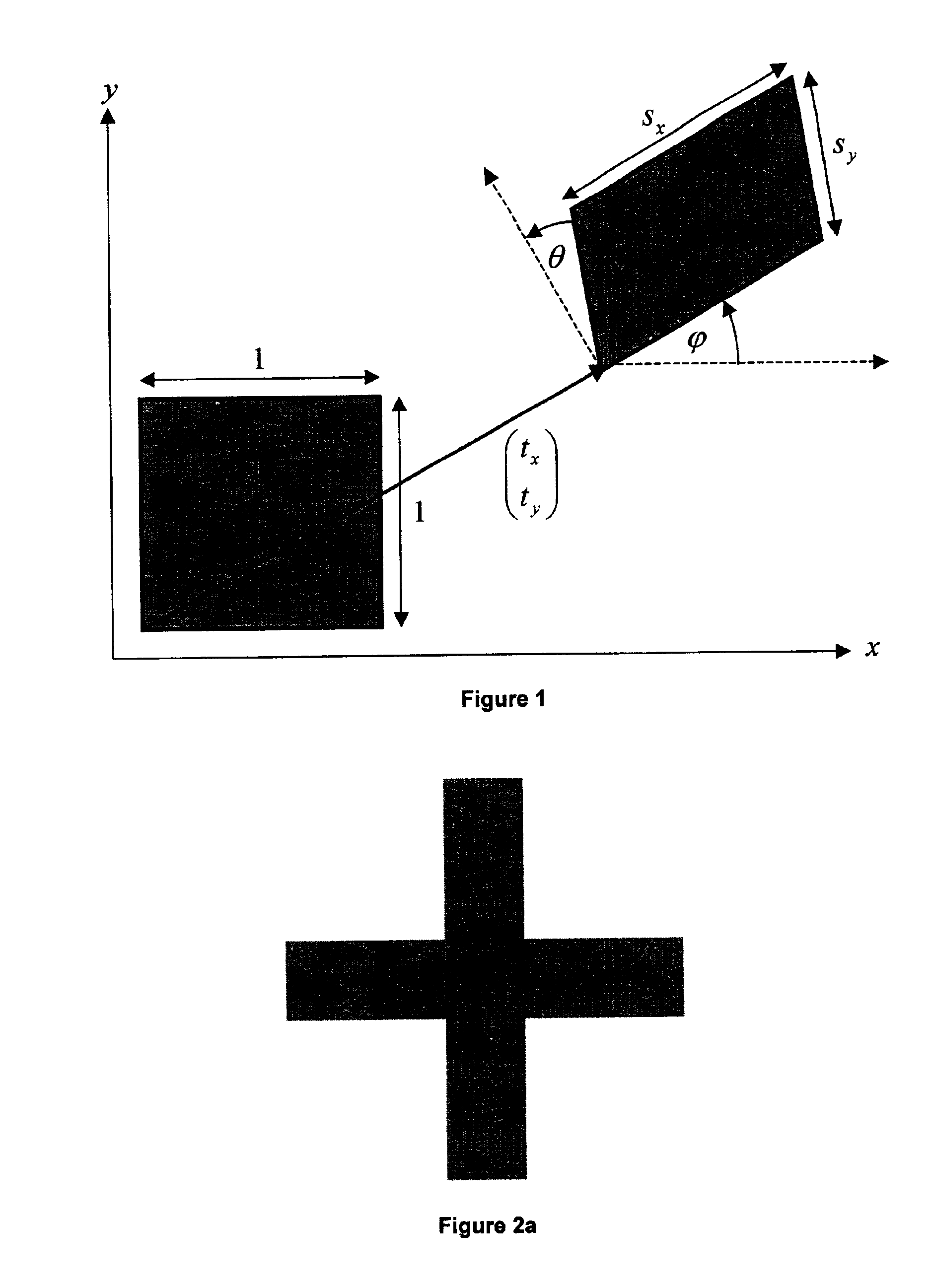

System and method for object recognition

Active | US7062093B2 | Speed up object recognition process | Image analysis | Character and pattern recognition | Pattern recognition | Rigid transformation

A system and method recognize a user-defined model object within an image. The system and method recognize the model object with occlusion when the model object to be found is only partially visible. The system and method also recognize the model object with clutter when there may be other objects in the image, even within the model object. The system and method also recognize the model object with non-linear illumination changes as well as global or local contrast reversals. The model object to be found may have been distorted, when compared to the user-defined model object, from geometric transformations of a certain class such as translations, rigid transformations by translation and rotation, arbitrary affine transformations, as well as similarity transformations by translation, rotation, and scaling.

Owner:MVTEC SOFTWARE
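The transformation classes named in the abstract above (translations, rigid transformations, similarity transformations, affine transformations) are nested, and the difference shows up in the 2x2 linear part of a 2D homogeneous matrix. The sketch below illustrates that nesting only; the function `classify_2d_transform` and its tolerance are hypothetical and are not taken from the patent.

```python
import numpy as np

def classify_2d_transform(M, tol=1e-9):
    """Name the most specific class of a 3x3 homogeneous 2D transform.

    Assumes the matrix is invertible. Matrices that include a reflection
    fall through to "affine" in this sketch.
    """
    A = M[:2, :2]
    if np.allclose(A, np.eye(2), atol=tol):
        return "translation"
    gram = A.T @ A
    scale_sq = gram[0, 0]
    # A scaled rotation satisfies A.T @ A = s^2 * I with positive determinant.
    if np.allclose(gram, scale_sq * np.eye(2), atol=tol) and np.linalg.det(A) > 0:
        return "rigid" if np.isclose(scale_sq, 1.0, atol=tol) else "similarity"
    return "affine"

def homogeneous(A, t):
    """Build a 3x3 homogeneous matrix from a 2x2 linear part and an offset."""
    M = np.eye(3)
    M[:2, :2] = A
    M[:2, 2] = t
    return M

c, s = np.cos(0.5), np.sin(0.5)
rot = np.array([[c, -s], [s, c]])
shear = np.array([[1.0, 0.5], [0.0, 1.0]])

labels = [
    classify_2d_transform(homogeneous(np.eye(2), [3.0, 1.0])),
    classify_2d_transform(homogeneous(rot, [3.0, 1.0])),
    classify_2d_transform(homogeneous(2.0 * rot, [3.0, 1.0])),
    classify_2d_transform(homogeneous(shear, [3.0, 1.0])),
]
```

Each class adds degrees of freedom to the previous one: a 2D translation has 2, a rigid transformation 3, a similarity 4, and a general affine map 6.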

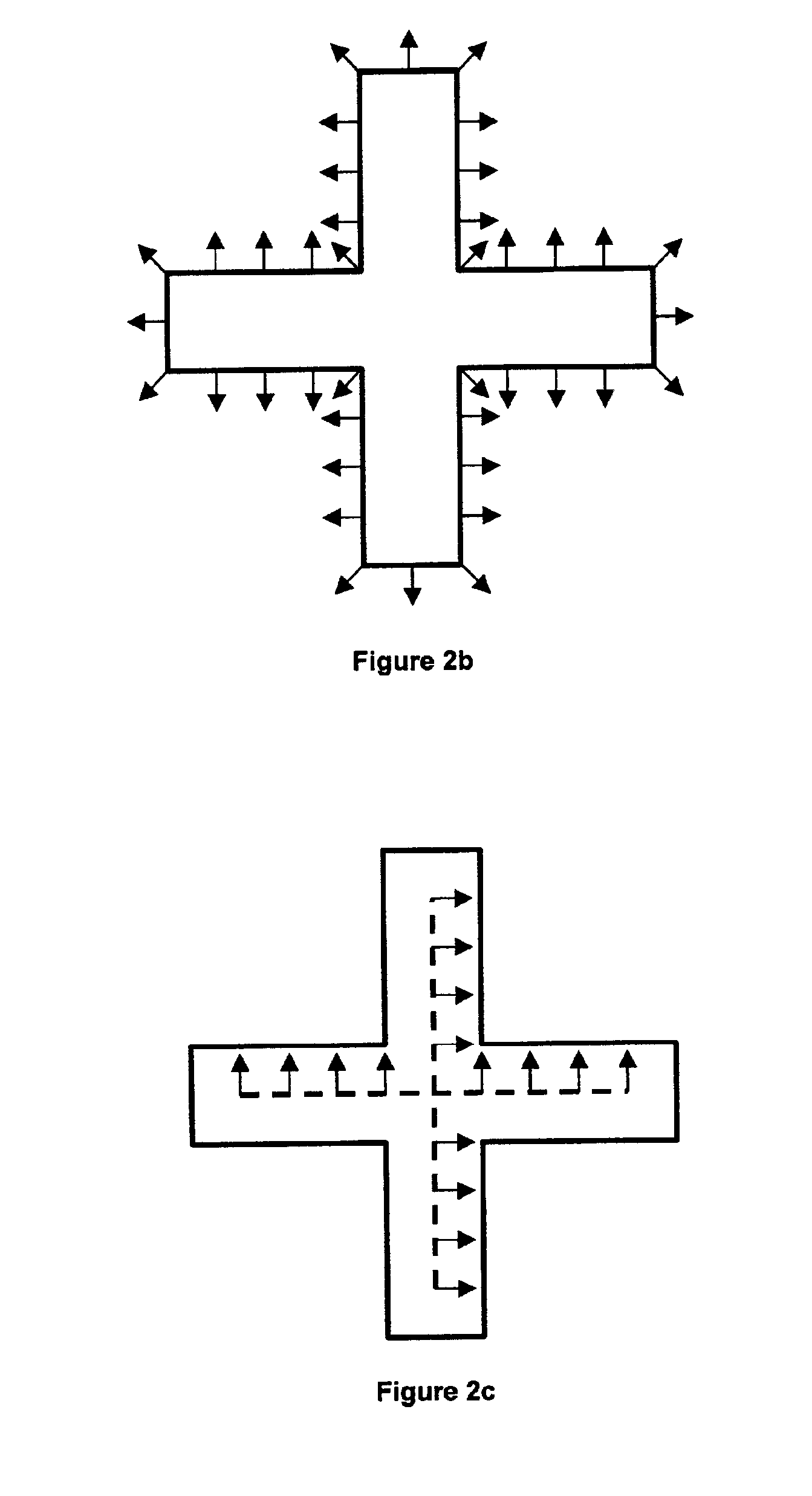

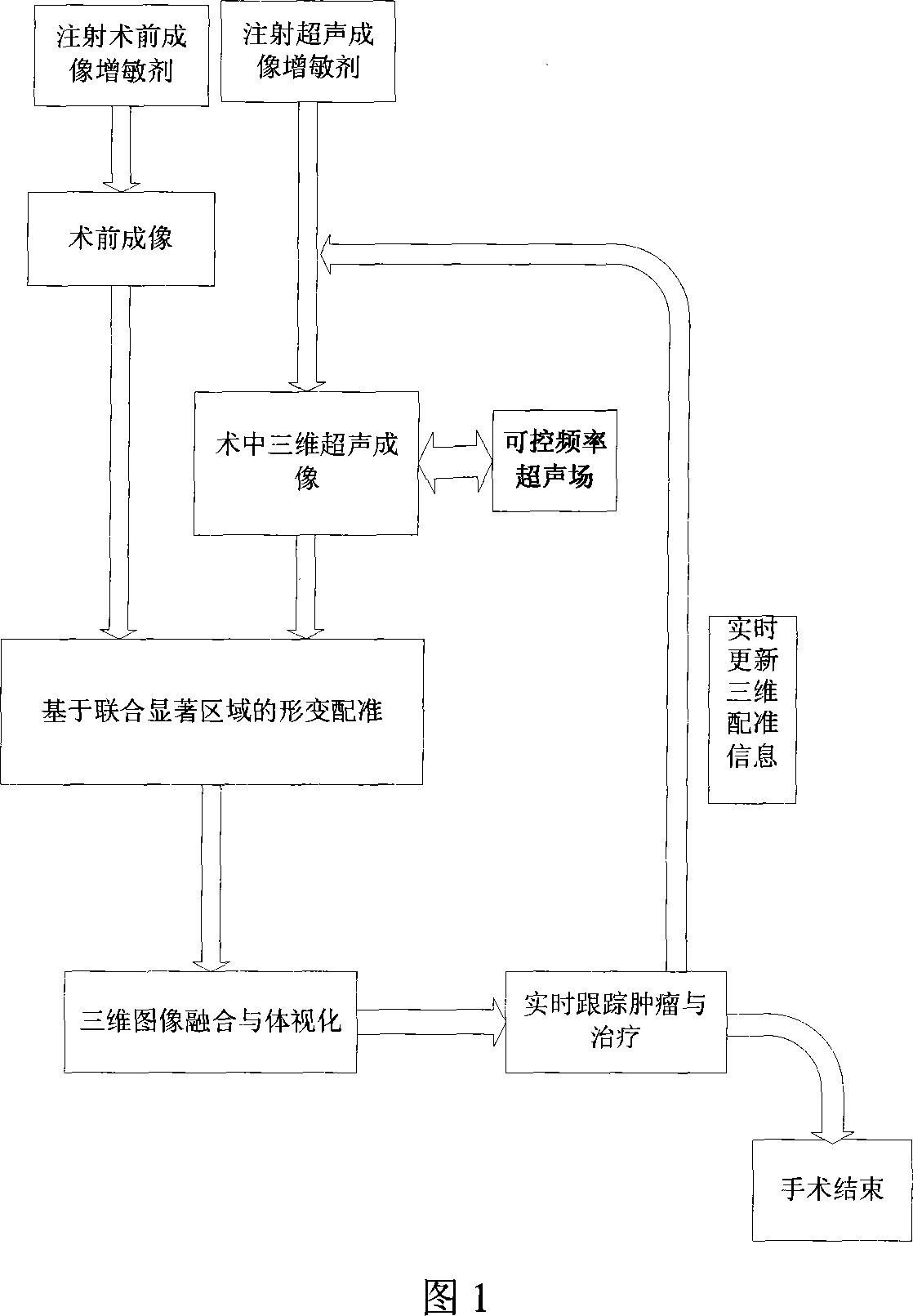

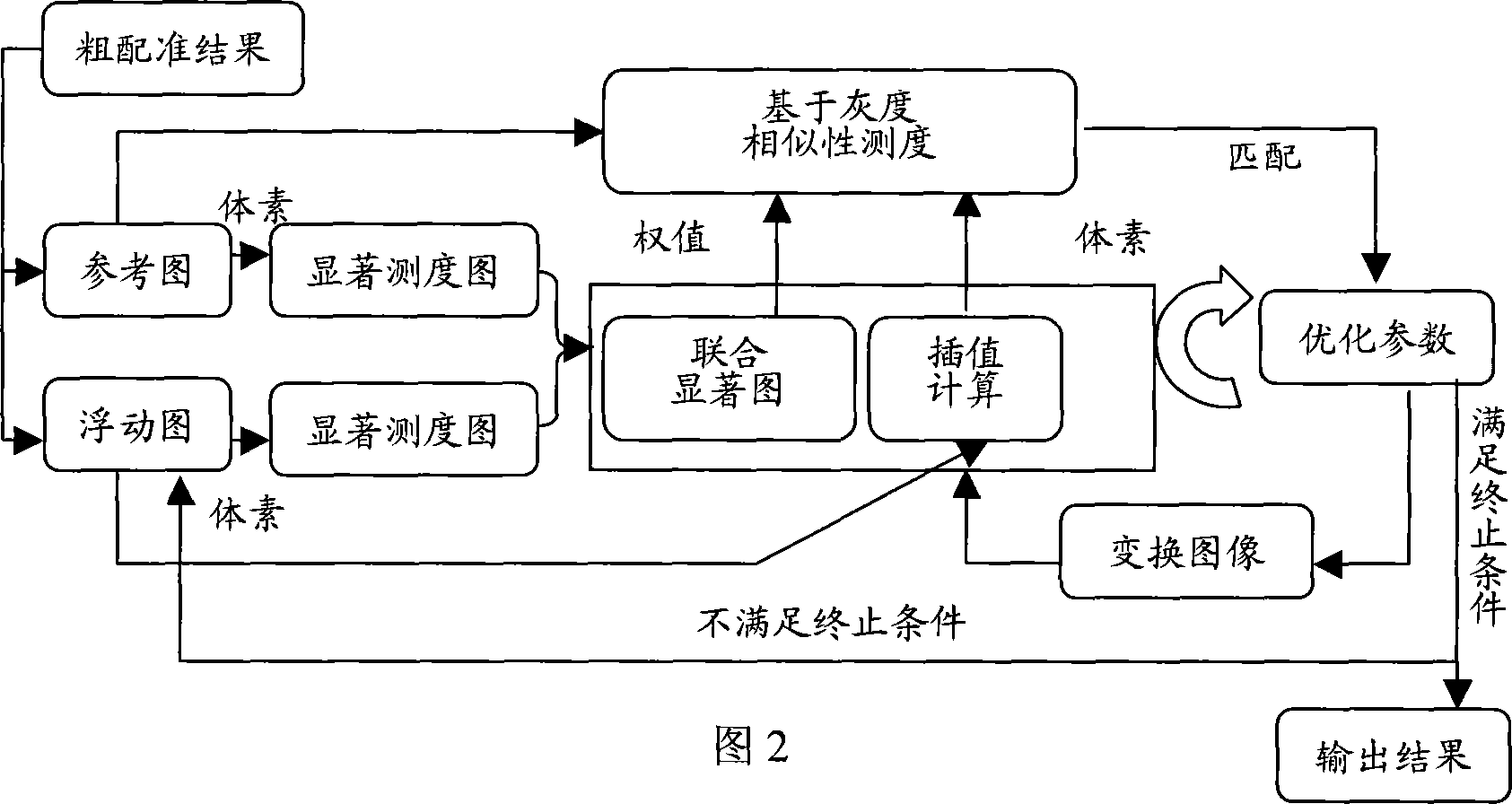

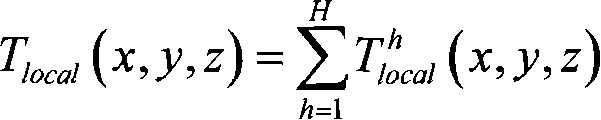

Early tumor positioning and tracking method based on multi-mold sensitivity intensifying and imaging fusion

Inactive | CN101053531A | Improve target tracking accuracy | Image enhancement | Surgery | Staging tumors | Stage tumor

An early-stage tumor localization and tracking method based on multimodal sensitivity-enhanced imaging fusion, belonging to the field of medical image processing. The method includes acquiring a preoperative sensitivity-enhanced medical image of the target tumor lesion and acquiring an intraoperative sensitivity-enhanced ultrasound image of the target tumor lesion. During image-guided therapy, a combination of a global rigid transformation and a local non-rigid transformation around the target tumor lesion is used as the geometric transformation model for deformable registration. The preoperative and intraoperative enhanced images are deformably registered based on a jointly marked region and then fused to reconstruct a three-dimensional visualization model of the tumor lesion region. The same deformable registration method is used to compensate for motion deformation in the preoperative image, after which tracking of the target tumor lesion is completed automatically. The invention can be used in applications such as early tumor diagnosis, image-guided early tumor intervention, image-guided minimally invasive surgery, and image-guided physiotherapy.

Owner:SHANGHAI JIAO TONG UNIV

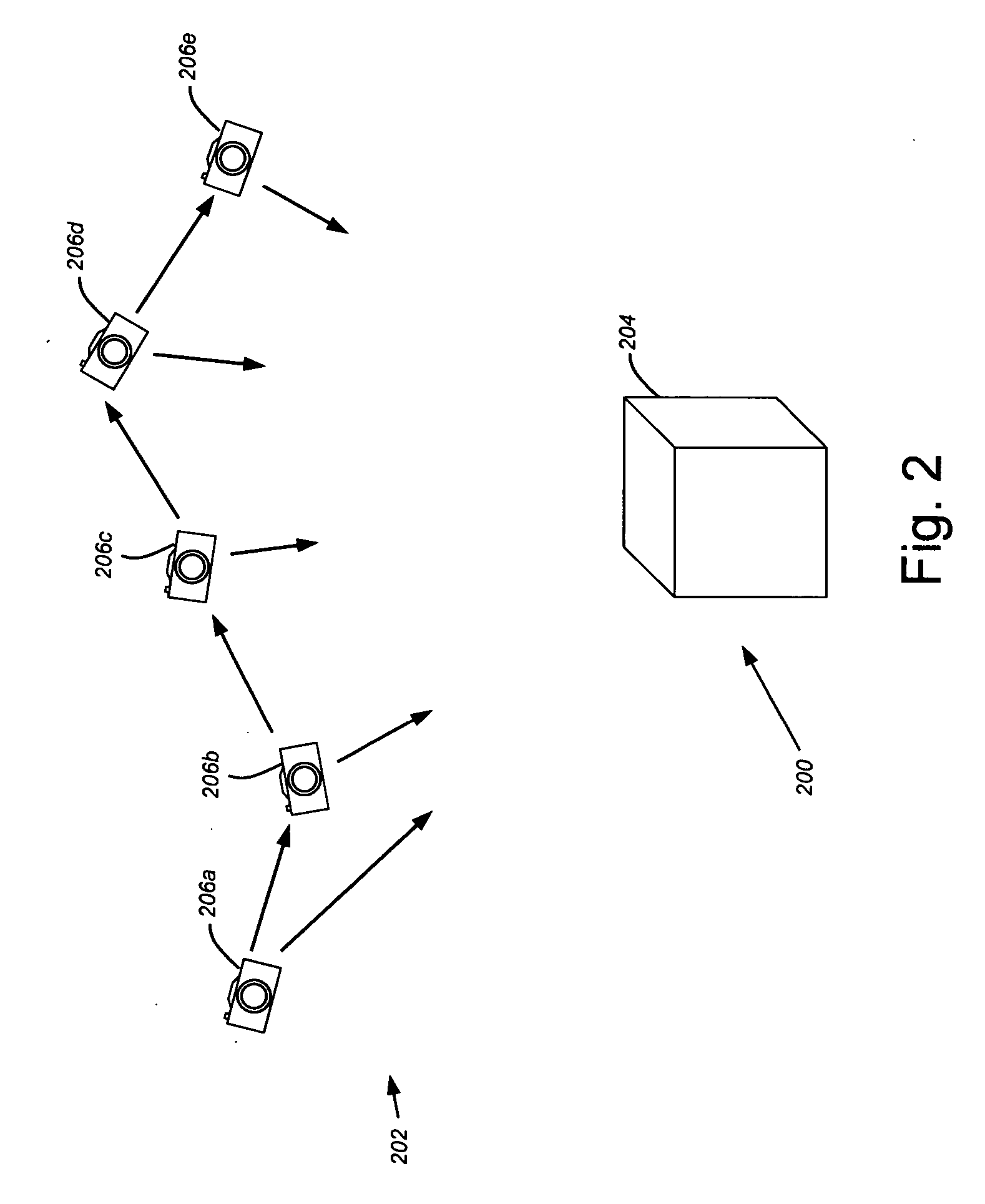

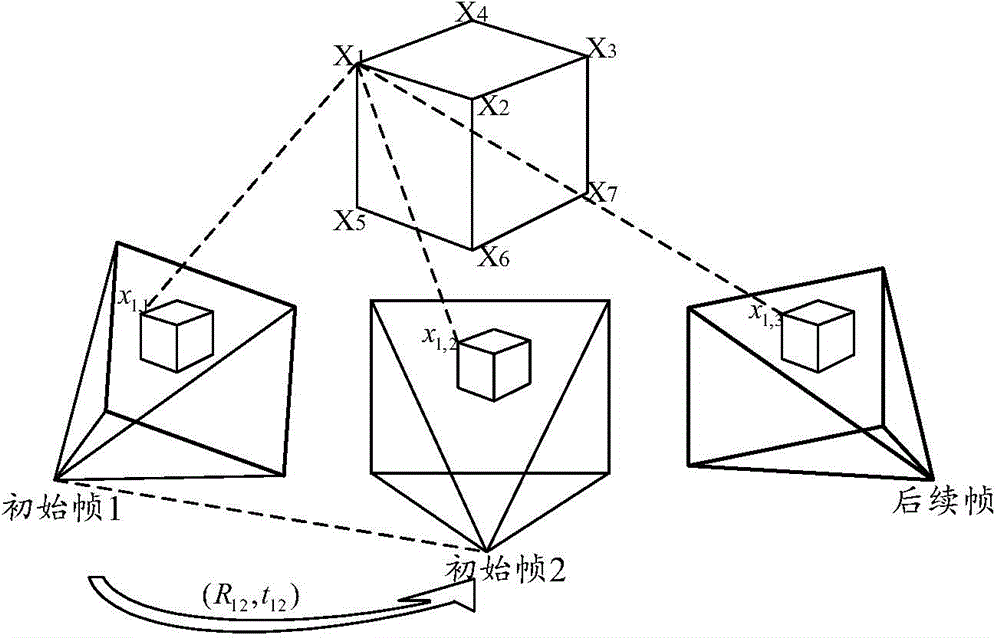

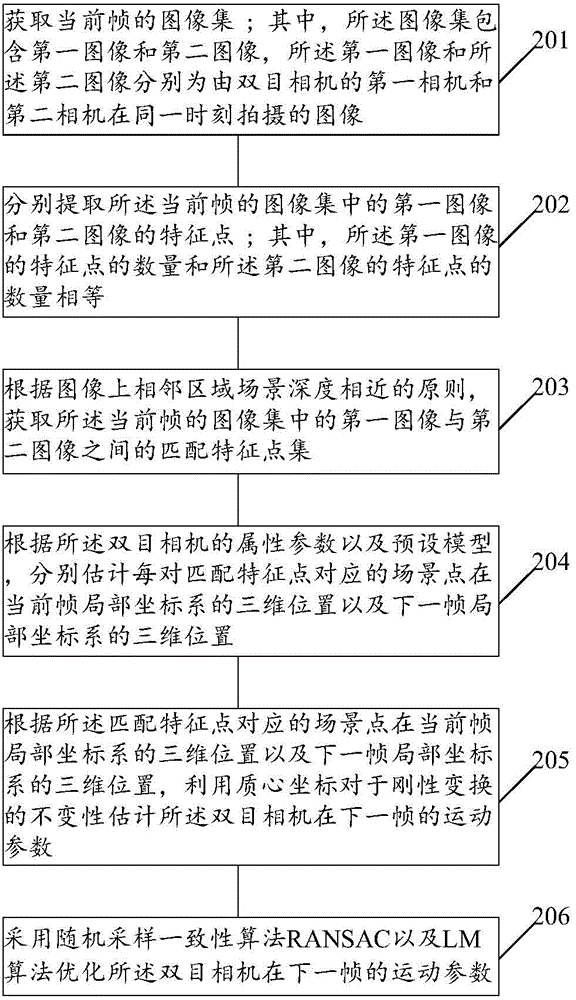

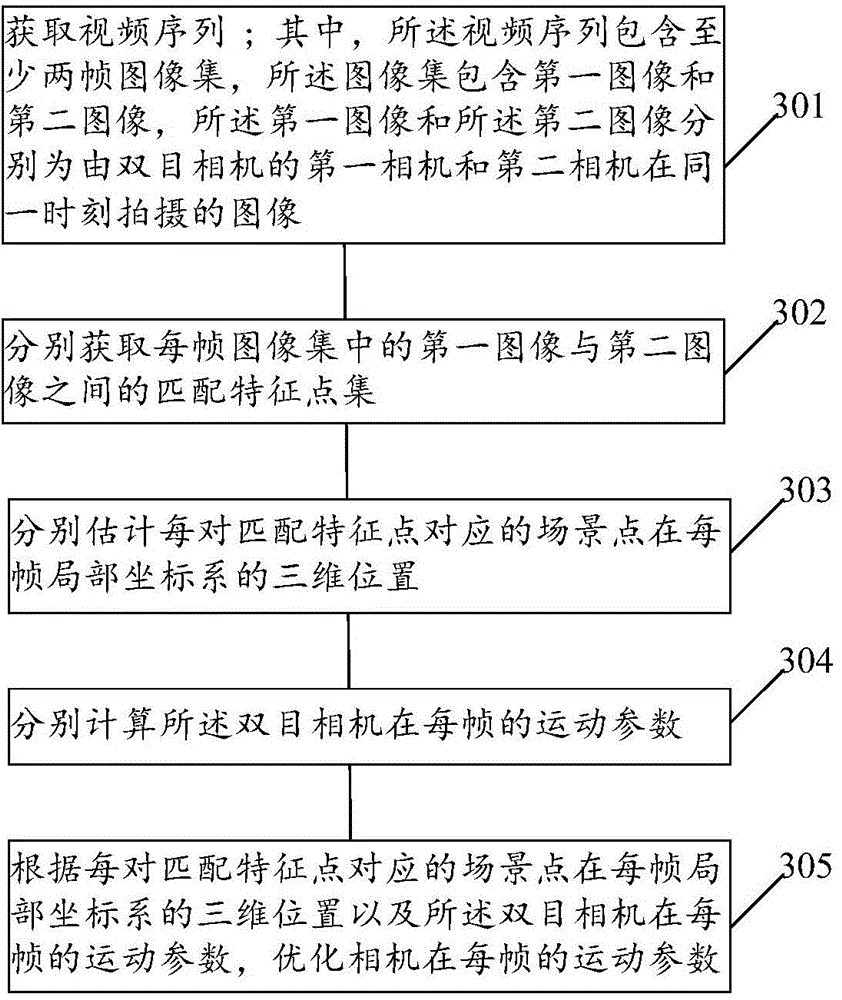

Camera tracking method and device

Inactive | CN104915965A | Avoid the defect of low tracking accuracy | Improve tracking accuracy | Image enhancement | Image analysis | Motion parameter | Rigid transformation

Provided are a camera tracking method and device, which use a binocular video image to perform camera tracking, thereby improving the tracking accuracy. The camera tracking method provided in the embodiments of the present invention comprises: acquiring an image set of a current frame; respectively extracting feature points of each image in the image set of the current frame; according to a principle that depths of scene in adjacent regions on an image are similar, acquiring a matched feature point set of the image set of the current frame; according to an attribute parameter and a pre-set model of a binocular camera, respectively estimating three-dimensional positions of scene points corresponding to each pair of matched feature points in a local coordinate system of the current frame and a local coordinate system of the next frame; and according to the three-dimensional positions of the scene points corresponding to the matched feature points in the local coordinate system of the current frame and the local coordinate system of the next frame, estimating a motion parameter of the binocular camera in the next frame using the invariance of a barycentric coordinate with respect to rigid transformation, and optimizing the motion parameter of the binocular camera in the next frame.

Owner:HUAWEI TECH CO LTD
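The camera tracking abstract above relies on barycentric coordinates being invariant under rigid transformation. The sketch below demonstrates only that property, not the patent's motion estimation. The control points, the test point, and the helper `barycentric_coords` are illustrative assumptions.

```python
import numpy as np

def barycentric_coords(point, controls):
    """Weights a (summing to 1) with point = sum_i a[i] * controls[i].

    controls is a (4, 3) array of non-coplanar points.
    """
    # Stack the three coordinate equations with the sum-to-one constraint.
    A = np.vstack([controls.T, np.ones(4)])
    b = np.append(point, 1.0)
    return np.linalg.solve(A, b)

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

controls = np.array([[0.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0]])
point = np.array([0.2, 0.3, 0.1])

# An arbitrary rigid motion, standing in for the frame-to-frame camera motion.
R = rot_x(0.4) @ rot_z(1.1)
t = np.array([0.5, -1.0, 2.0])

weights_before = barycentric_coords(point, controls)
weights_after = barycentric_coords(R @ point + t, controls @ R.T + t)
```

The weights agree because `R @ p + t = sum_i a[i] * (R @ c_i + t)` whenever the weights sum to one, so the same coordinates describe a scene point in both local coordinate systems.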

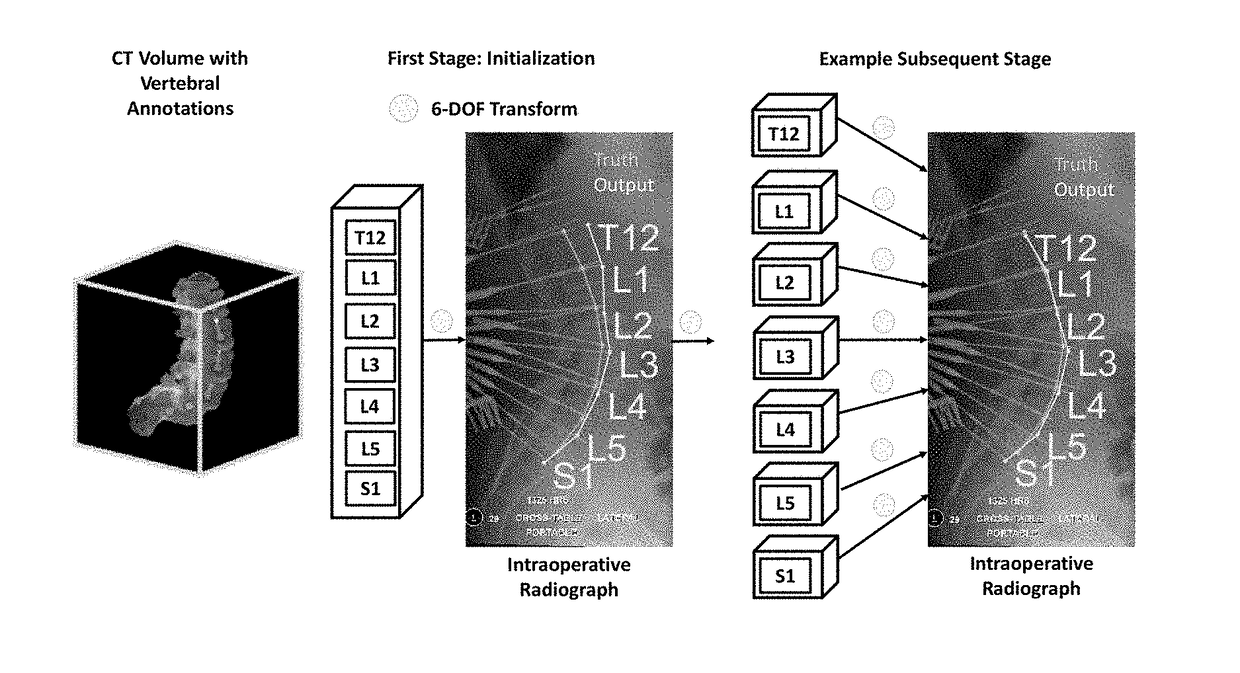

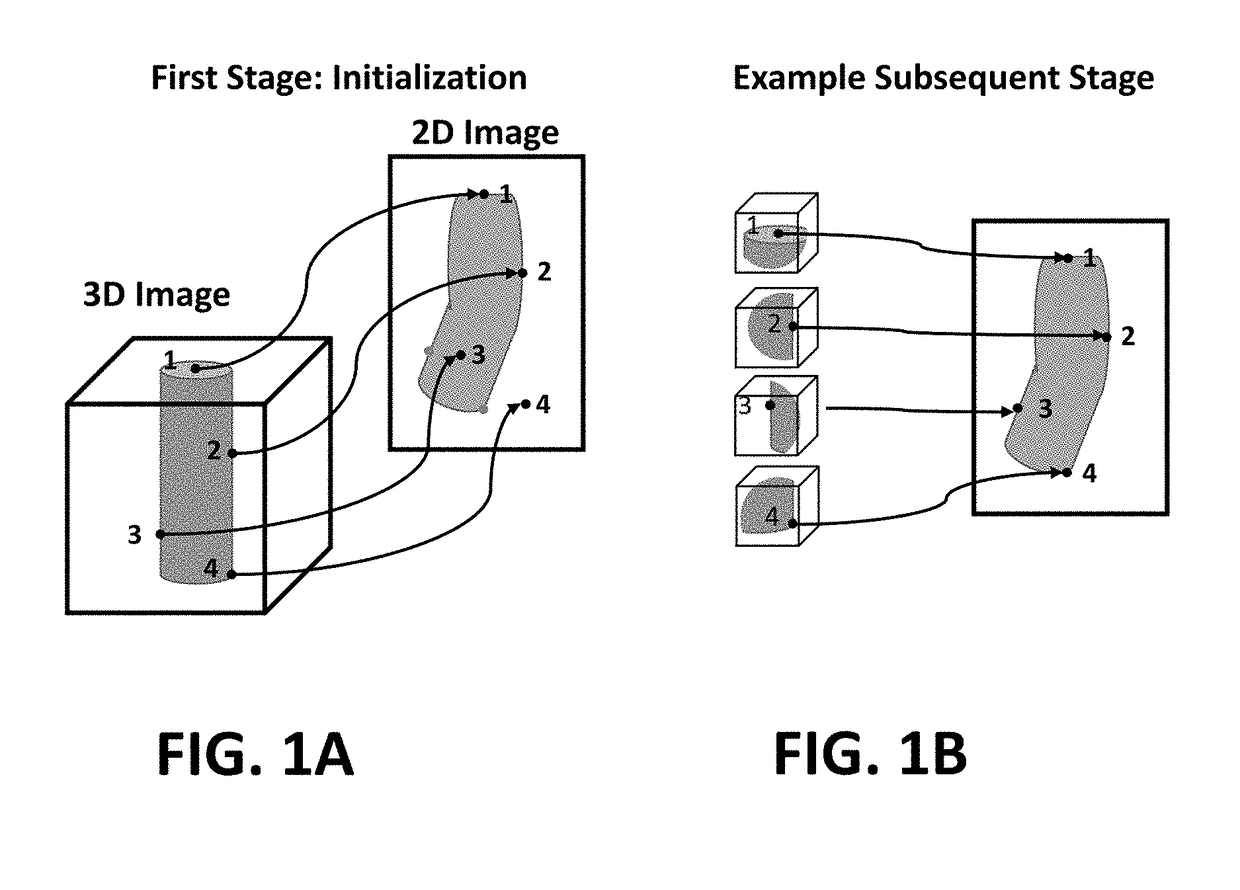

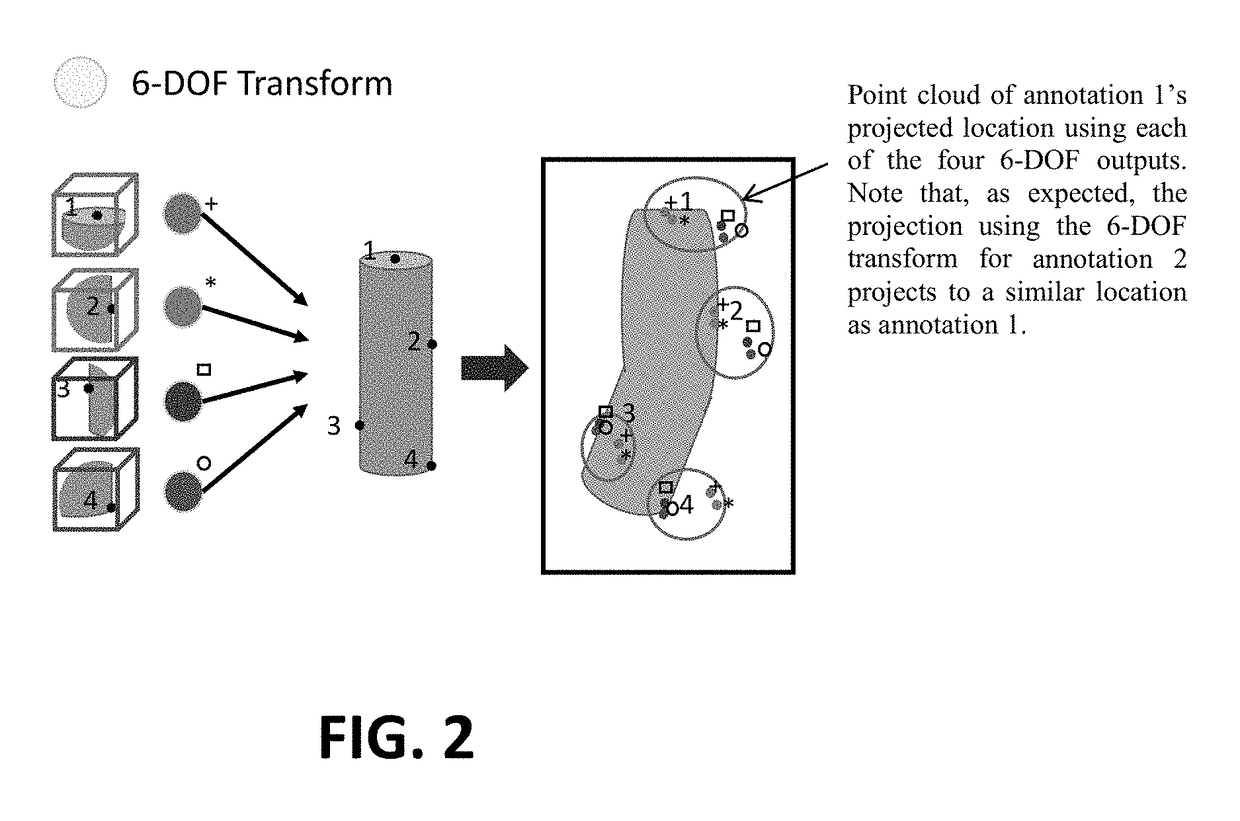

Method for deformable 3d-2d registration using multiple locally rigid registrations

An embodiment in accordance with the present invention provides a method for 3D-2D registration (for example, registration of a 3D CT image to a 2D radiograph) that permits deformable motion between structures defined in the 3D image based on a series of locally rigid transformations. This invention utilizes predefined annotations in 3D images (e.g., the location of anatomical features of interest) to perform multiple locally rigid registrations that yield improved accuracy in aligning structures that have undergone deformation between the acquisition of the 3D and 2D images (e.g., a preoperative CT compared to an intraoperative radiograph). The 3D image is divided into subregions that are masked according to the annotations, and the registration is computed simultaneously for each divided region by incorporating a volumetric masking method within the 3D-2D registration process.

Owner:THE JOHN HOPKINS UNIV SCHOOL OF MEDICINE

Efficient vision and kinematic data fusion for robotic surgical instruments and other applications

Active | US8971597B2 | Easy to adjust | Simple and relatively stable adjustment or bias offset | Diagnostics | Character and pattern recognition | Remote surgery | Engineering

Robotic devices, systems, and methods for use in telesurgical therapies through minimally invasive apertures make use of joint-based data throughout much of the robotic kinematic chain, but selectively rely on information from an image capture device to determine location and orientation along the linkage adjacent a pivotal center at which a shaft of the robotic surgical tool enters the patient. A bias offset may be applied to a pose (including both an orientation and a location) at the pivotal center to enhance accuracy. The bias offset may be applied as a simple rigid transformation from the image-based pivotal center pose to a joint-based pivotal center pose.

Owner:INTUITIVE SURGICAL OPERATIONS INC

Fast 3d-2d image registration method with application to continuously guided endoscopy

A novel framework for fast and continuous registration between two imaging modalities is disclosed. The approach makes it possible to completely determine the rigid transformation between multiple sources at real-time or near real-time frame-rates in order to localize the cameras and register the two sources. A disclosed example includes computing or capturing a set of reference images within a known environment, complete with corresponding depth maps and image gradients. The collection of these images and depth maps constitutes the reference source. The second source is a real-time or near-real time source which may include a live video feed. Given one frame from this video feed, and starting from an initial guess of viewpoint, the real-time video frame is warped to the nearest viewing site of the reference source. An image difference is computed between the warped video frame and the reference image. The viewpoint is updated via a Gauss-Newton parameter update and certain of the steps are repeated for each frame until the viewpoint converges or the next video frame becomes available. The final viewpoint gives an estimate of the relative rotation and translation between the camera at that particular video frame and the reference source. The invention has far-reaching applications, particularly in the field of assisted endoscopy, including bronchoscopy and colonoscopy. Other applications include aerial and ground-based navigation.

Owner:PENN STATE RES FOUND

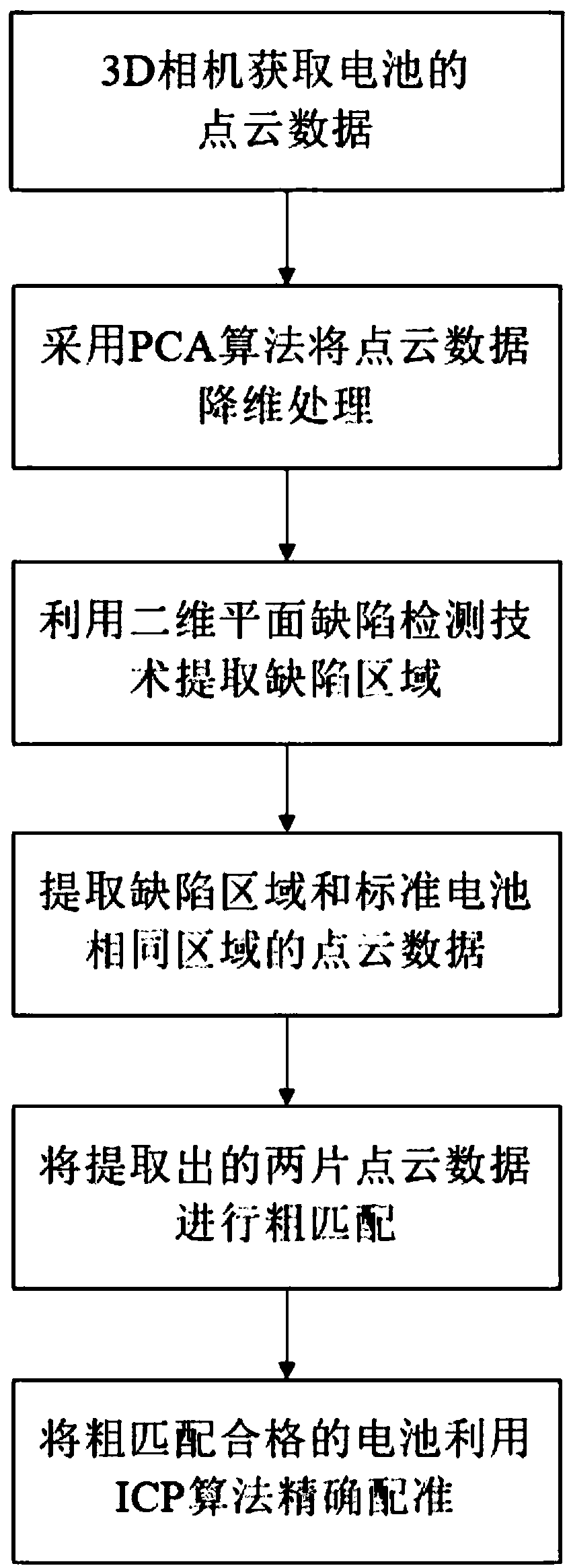

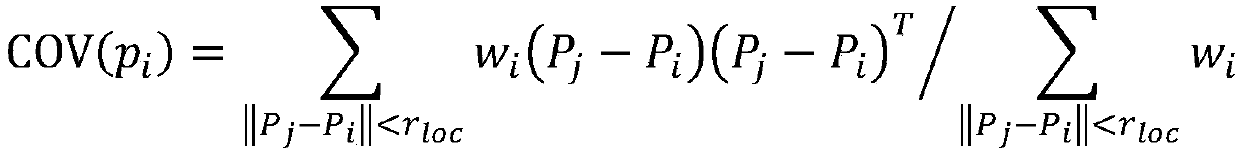

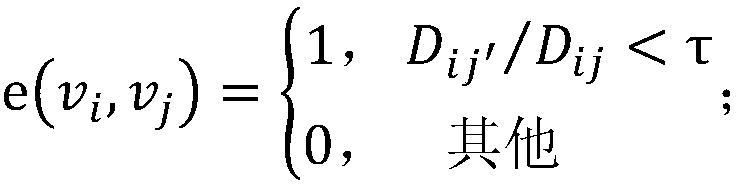

A battery appearance defect detection method based on dimension reduction and point cloud data matching

Inactive | CN109523501A | Narrow detection range | Reduce running time | Image enhancement | Image analysis | Data matching | Machine vision

The invention discloses a battery appearance defect detection method based on dimension reduction and point cloud data matching, belonging to the technical field of machine vision detection. The method obtains three-dimensional point cloud data of a battery to be detected and reduces the dimension of the point cloud data; obtains a defect area of the battery to be detected and extracts the point cloud data of the defect area; extracts the point cloud data of the same area of a standard battery; samples the two pieces of point cloud data and performs a matching search; acquires the optimized correspondence of feature points and calculates a rough rigid transformation relation to realize rough registration; performs constraint detection on the rough registration result to verify its correctness; and optimizes the rigid transformation relationship between the point cloud data to achieve automatic and accurate registration, which determines whether the battery appearance is qualified. A 3D image is changed into a 2D image by a dimension reduction algorithm, and the point cloud data of a defect area is acquired by applying a plane defect detection technique to that 2D image, so that the point cloud data is matched, the detection range is narrowed, the running time is reduced, and the accuracy is improved.

Owner:JIANGSU UNIV OF TECH

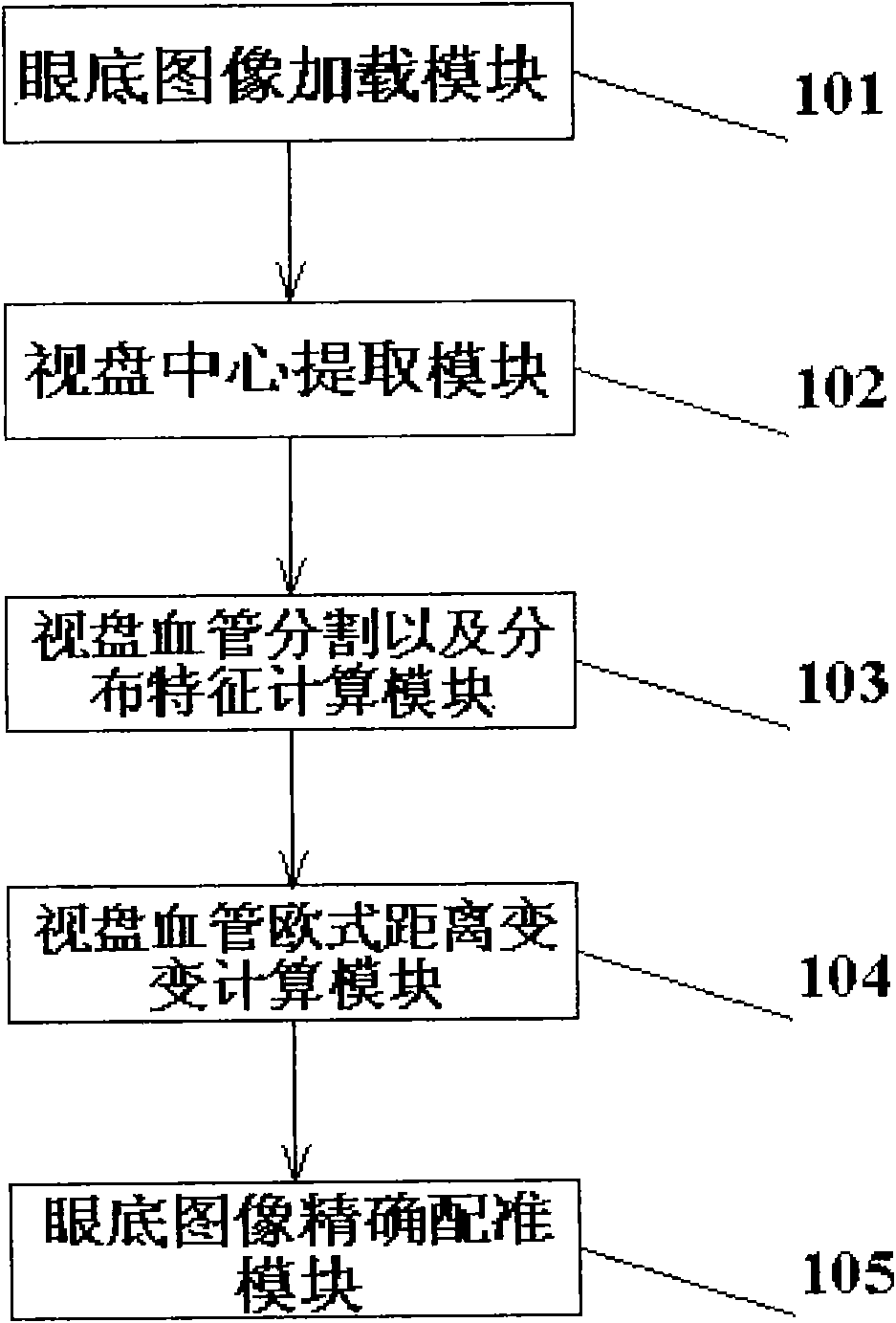

Ocular fundus image registration method estimated based on distance transformation parameter and rigid transformation parameter

Inactive | CN101593351A | Run fast | Improve robustness | Image analysis | Transformation parameter | Rigid transformation

The invention provides an ocular fundus image registration method based on distance transformation and rigid transformation parameter estimation, comprising the following main steps: (1) ocular fundus images are loaded; (2) the optic disk center is extracted to estimate the image translation parameters; (3) gradient vectors of pixel points in the neighborhood of the optic disk are calculated, vessel segmentation is carried out, vessel distribution probability characteristics are calculated, and an estimate of the image rotation parameters is obtained by minimizing the relative entropy (Kullback-Leibler divergence) between the two probability distributions; (4) the Euclidean distance transformation of the vessels segmented in step 3 is calculated; (5) accurate registration of the images is carried out. The invention is a quick, precise, robust and automatic ocular fundus image registration algorithm, and has great application value in ocular fundus image registration.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

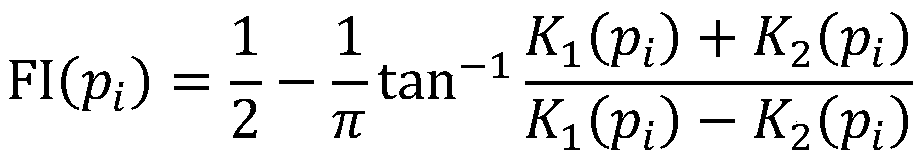

Point cloud registration method based on feature extraction

The invention belongs to the field of three-dimensional measurement, and particularly discloses a point cloud registration method based on feature extraction. The point cloud registration method comprises the steps: firstly calculating a feature index of each point through the maximum principal curvature and the minimum principal curvature of each point in a reference point cloud and a target point cloud; determining a neighborhood point of each point according to a preset number of neighborhood points, and obtaining feature points in the reference point cloud and the target point cloud according to the relationship between the feature indexes of the points and the feature indexes of the neighborhood points; constructing a local reference coordinate system for each feature point; and further obtaining three-dimensional local features of each feature point, matching the feature points in the reference point cloud and the target point cloud according to the three-dimensional local features to obtain multiple pairs of corresponding feature points, obtaining a three-dimensional rigid transformation matrix from the reference point cloud to the target point cloud according to the relationship between the corresponding feature points, and completing point cloud registration. According to the point cloud registration method, the influence of noise in the point cloud, isolated points, local point cloud density non-uniformity and the like on point cloud registration can be reduced, so that the point cloud registration result is accurate.

Owner:HUAZHONG UNIV OF SCI & TECH

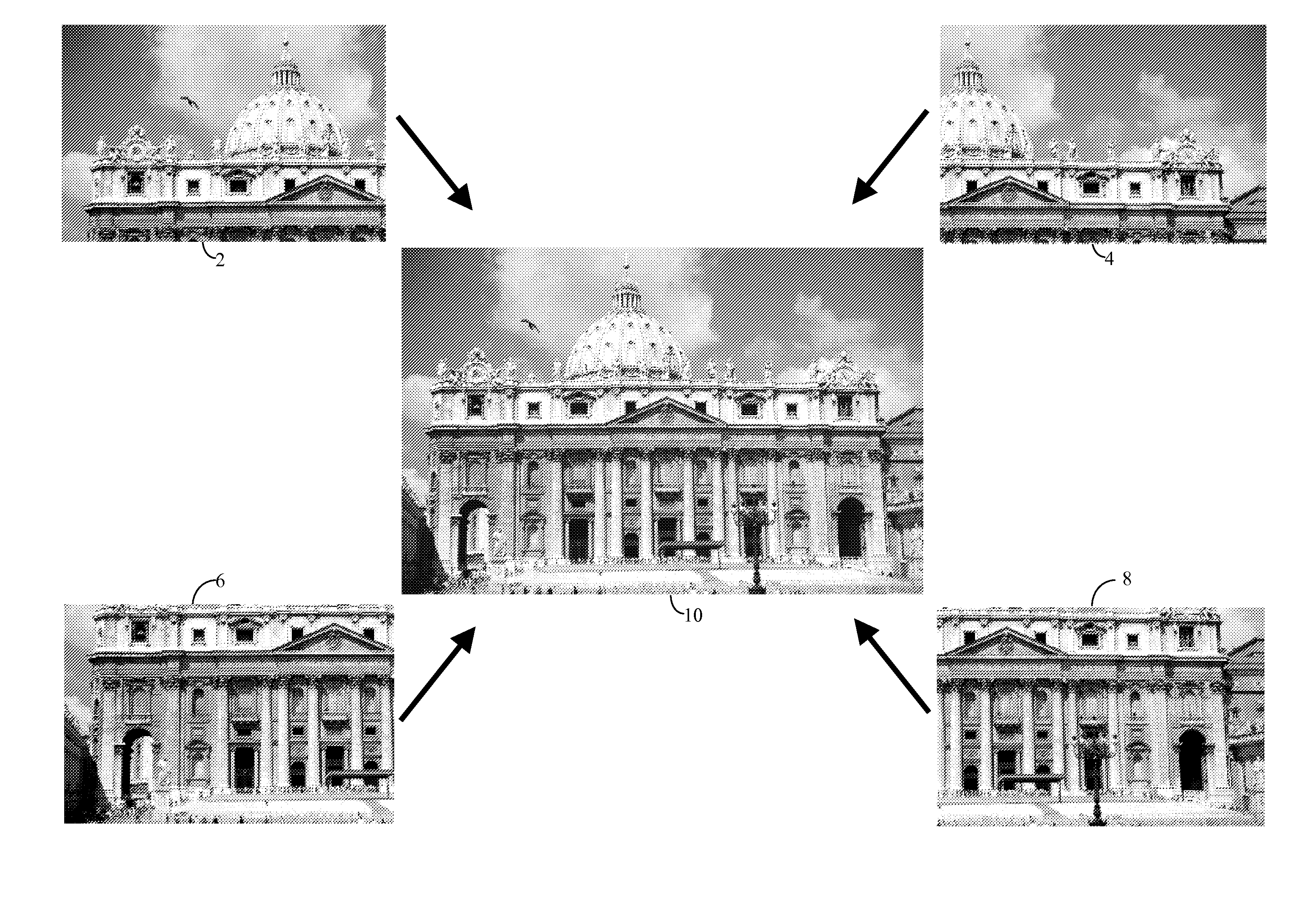

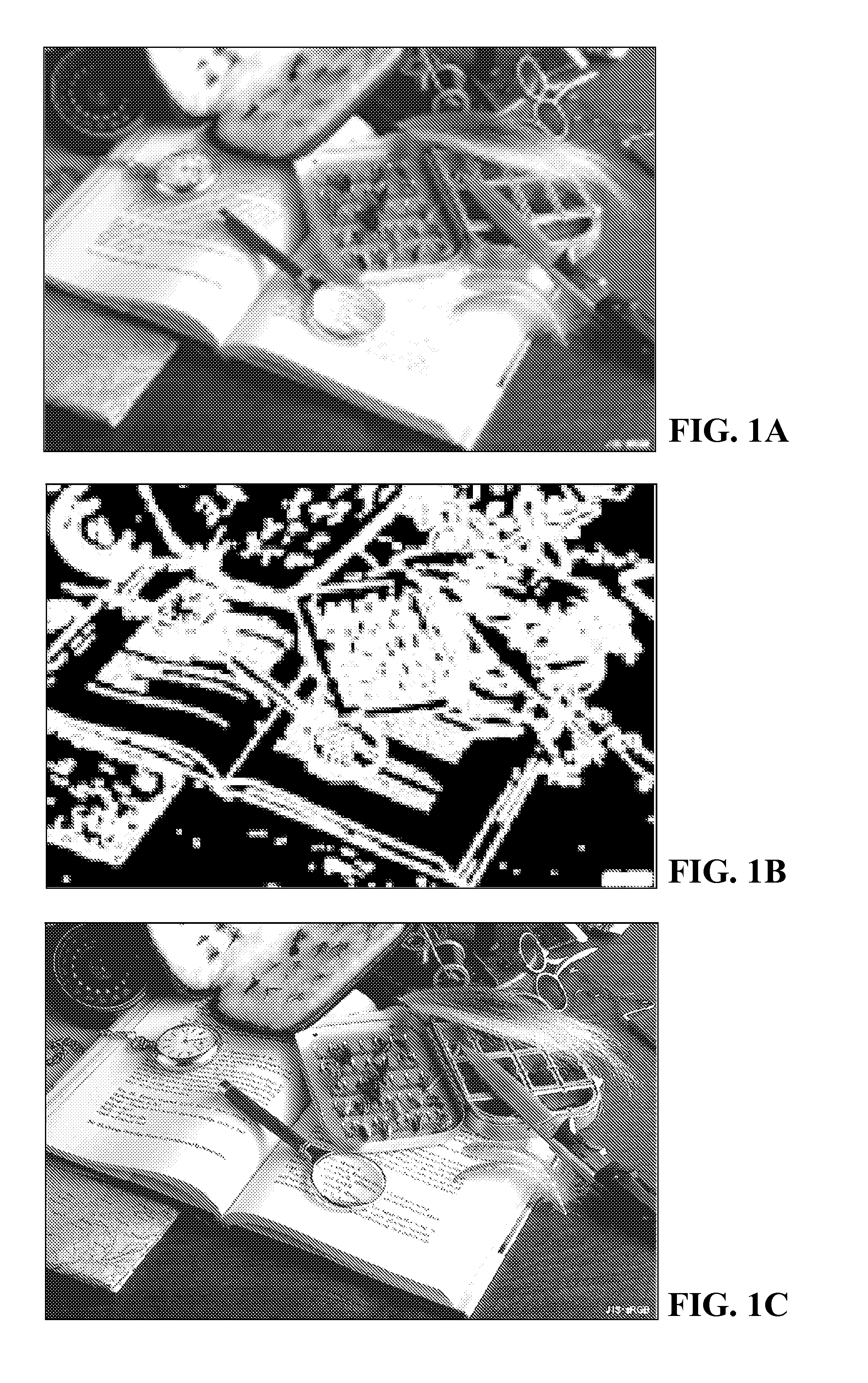

Methods and apparatus for registering and warping image stacks

Active | US20140119595A1 | Promote results | Geometric image transformation | Character and pattern recognition | Reference image | Source image

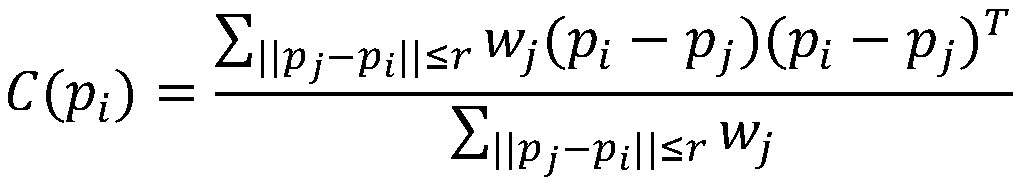

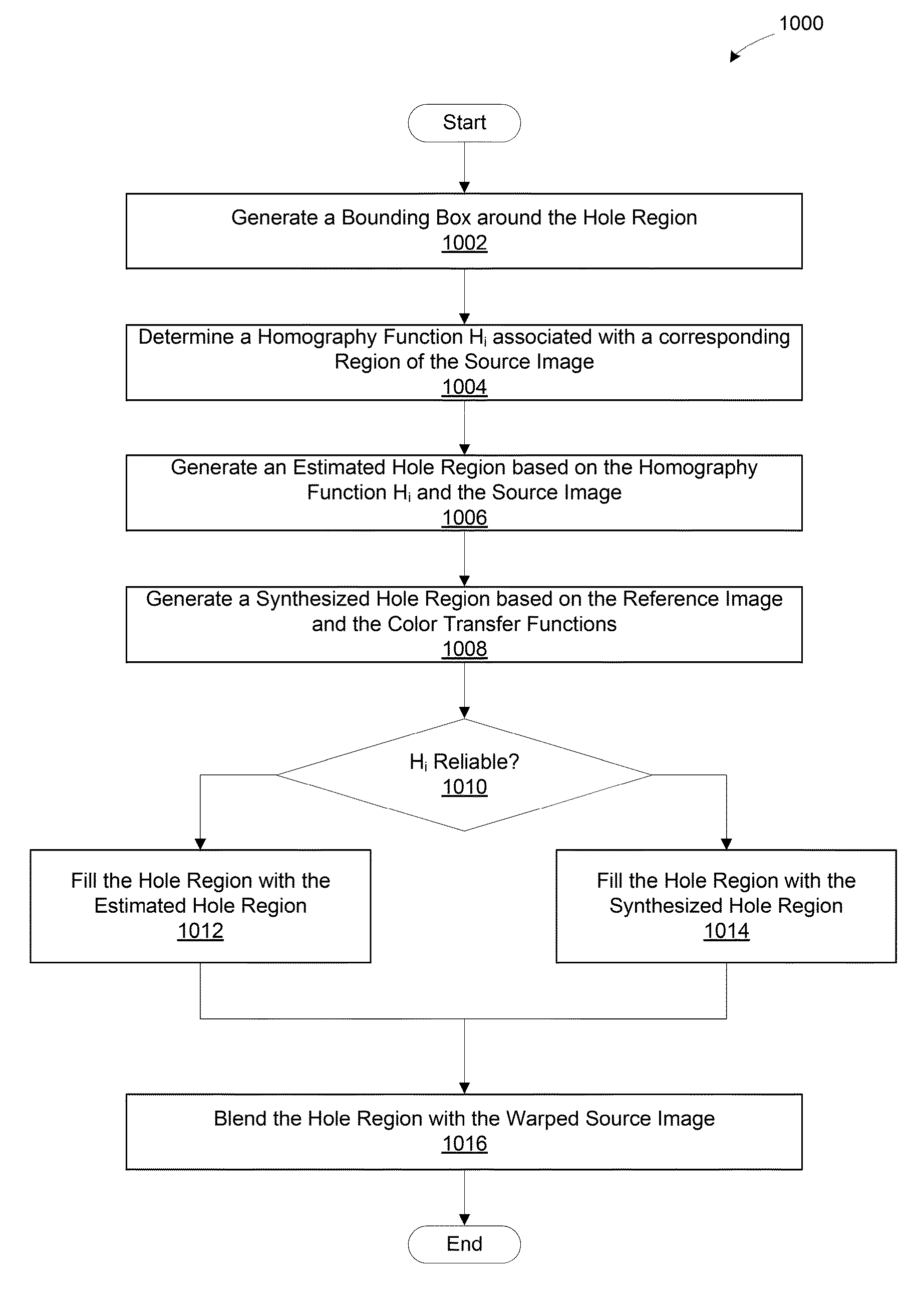

A set of images is processed to modify and register the images to a reference image in preparation for blending the images to create a high-dynamic range image. To modify and register a source image to a reference image, a processing unit generates a correspondence map for the source image based on a non-rigid dense correspondence algorithm, generates a warped source image based on the correspondence map, estimates one or more color transfer functions for the source image, and fills the holes in the warped source image. The holes in the warped source image are filled based on either a rigid transformation of a corresponding region of the source image or a transformation of the reference image based on the color transfer functions.

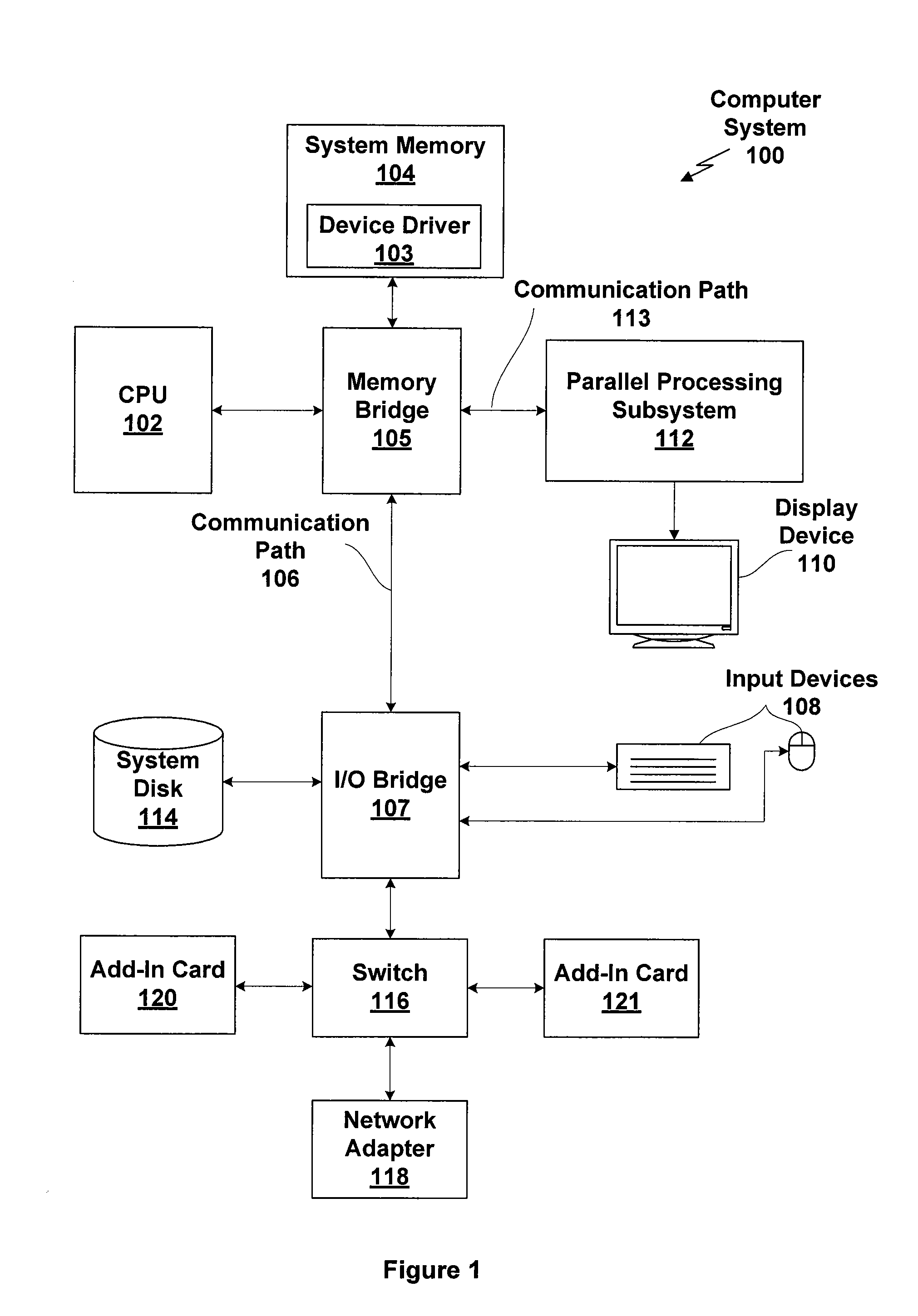

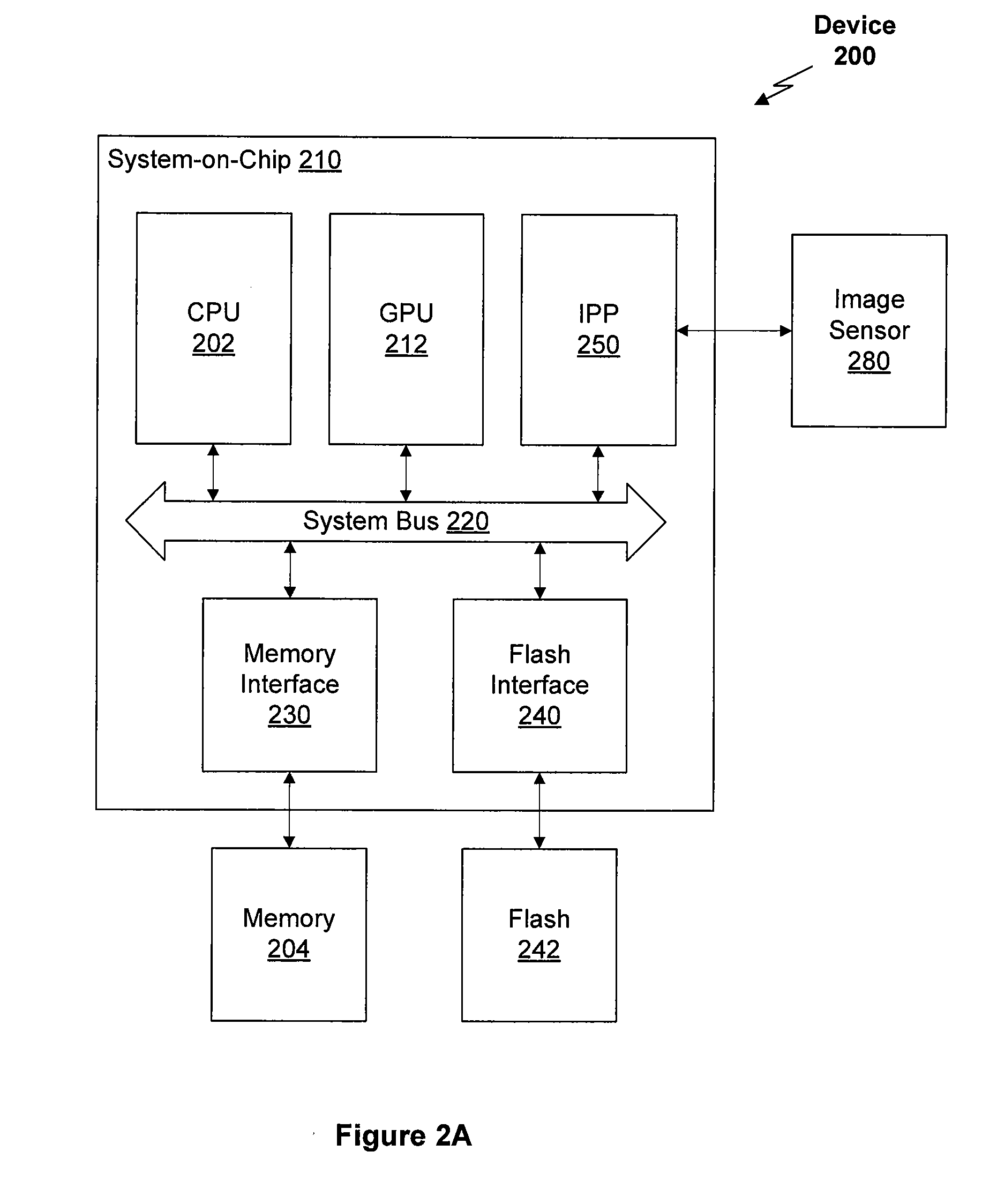

Owner:NVIDIA CORP

Techniques for registering and warping image stacks

Active | US20140118402A1 | Less computational effort | Robust result | Image enhancement | Image analysis | Reference image | Rigid transformation

A set of images is processed to modify and register the images to a reference image in preparation for blending the images to create a high-dynamic range image. To modify and register a source image to a reference image, a processing unit generates correspondence information for the source image based on a global correspondence algorithm, generates a warped source image based on the correspondence information, estimates one or more color transfer functions for the source image, and fills the holes in the warped source image. The holes in the warped source image are filled based on either a rigid transformation of a corresponding region of the source image or a transformation of the reference image based on the color transfer functions.

Owner:NVIDIA CORP

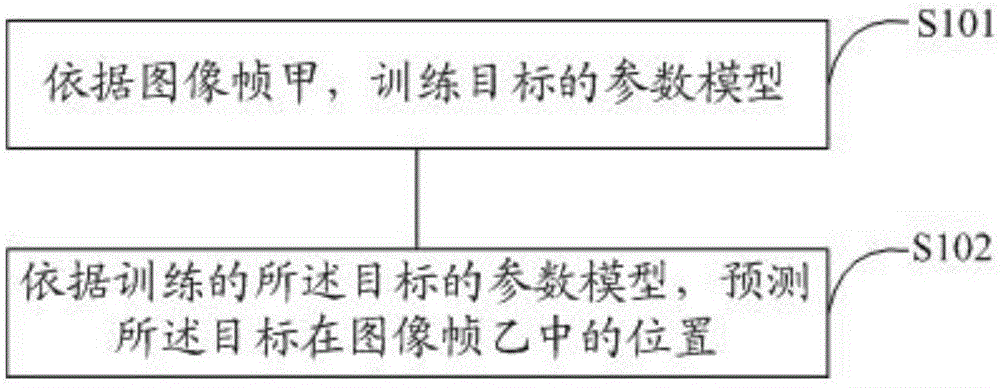

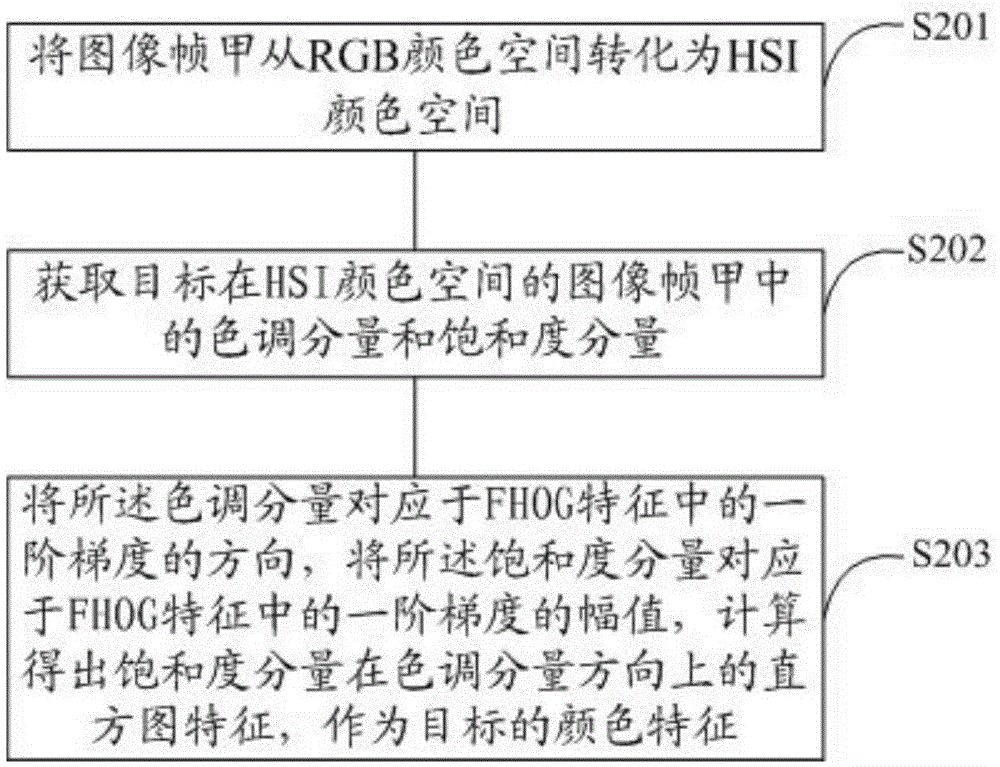

User terminal and object tracking method and device thereof

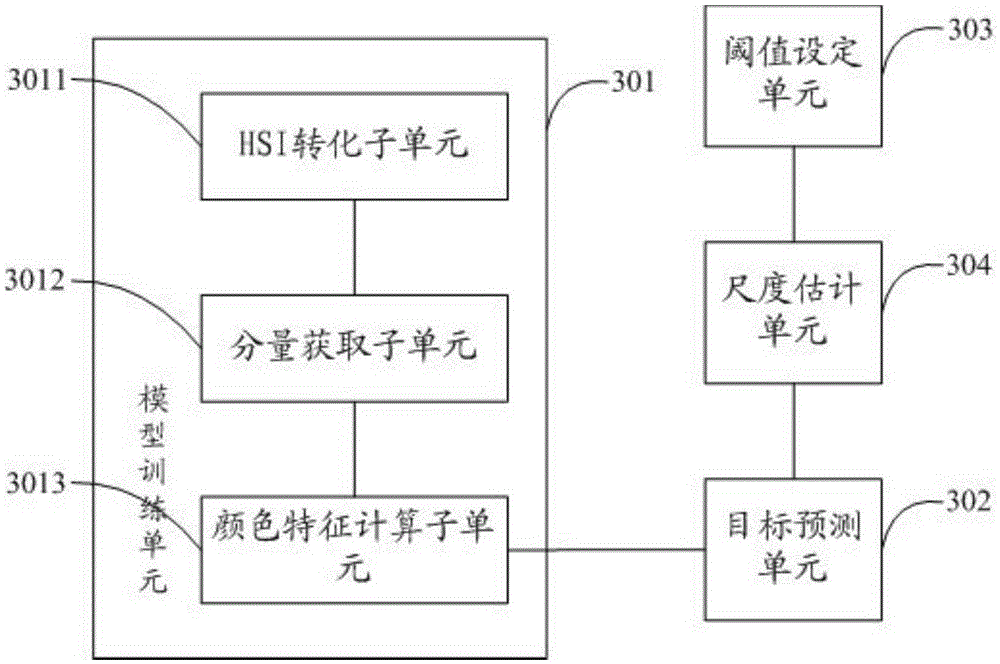

Inactive | CN106683110A | Improve performance | Improve real-time performance | Image enhancement | Image analysis | Pattern recognition | Correlation filter

The invention relates to a user terminal and an object tracking method and device thereof. The method comprises training a parameter model of an object according to a first picture frame, and predicting the position of the object in a second picture frame according to the trained parameter model. Training the parameter model of the object comprises training a first parameter model and a second parameter model of the object, and the second parameter model is described by combining the FHOG and color features of the object. When the object is tracked, the correlation filter of the KCF algorithm is used within a tracking-by-detection framework, and a combined feature formed from the FHOG and color features is added to improve the performance of the algorithm. The object tracking algorithm uses multiple types of features to express information, has high real-time performance, and can effectively handle the adverse influence of factors such as complex background, illumination, and non-rigid transformation on object tracking.

Owner:SPREADTRUM COMM (TIANJIN) INC

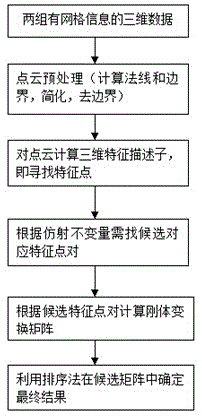

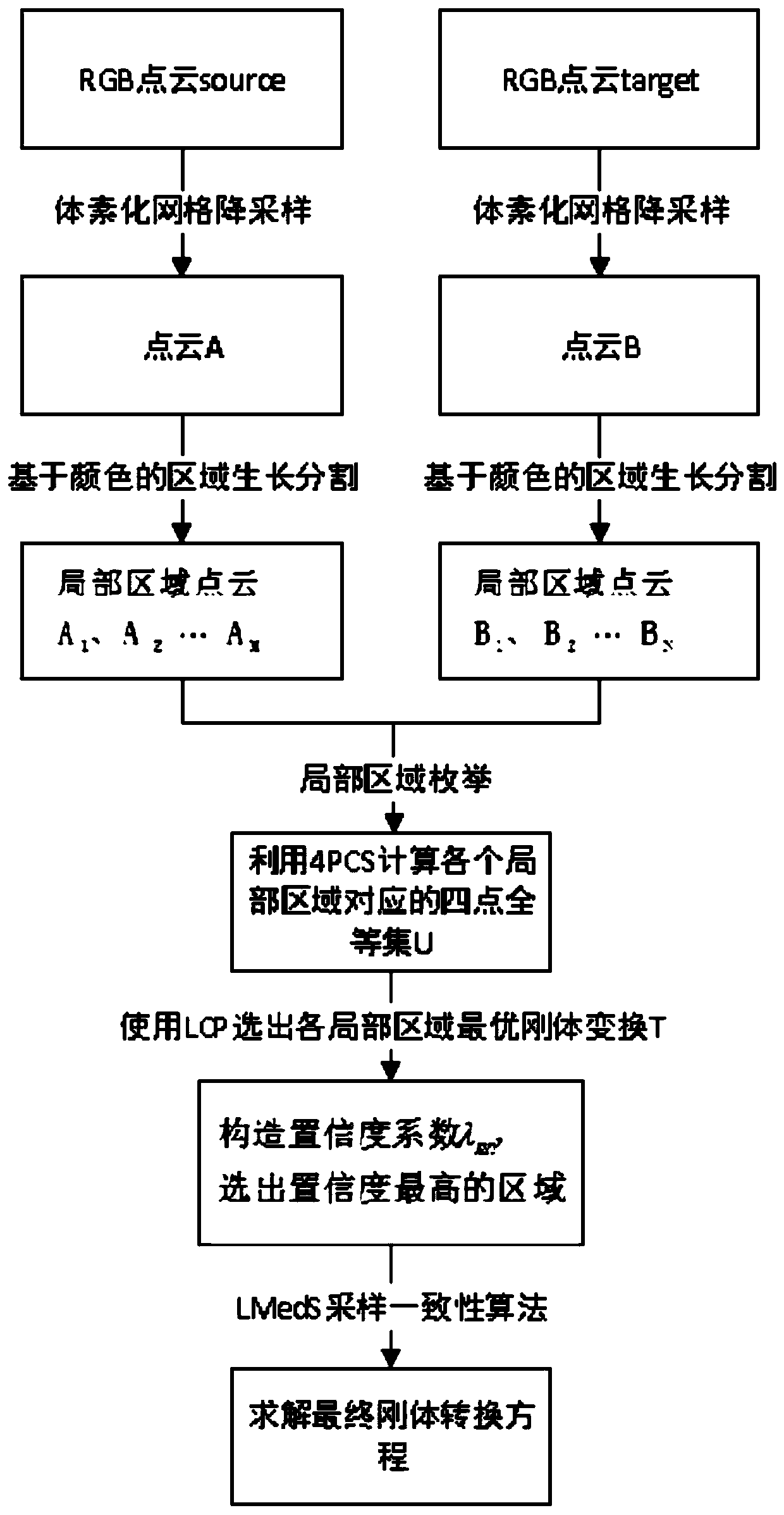

Three-dimensional point cloud full-automatic registration method

Active | CN105654483A | Realize fully automatic registration | Image analysis | Details involving 3D image data | Point cloud | Confidence factor

The invention discloses a three-dimensional point cloud full-automatic registration method. The method includes the following steps: two groups of point cloud data A and B are input, their normal directions and boundaries are calculated, the data are simplified, and boundary points are removed; three-dimensional feature processing is performed on the pre-processed point cloud data A and B to obtain the corresponding three-dimensional feature descriptors Key A and Key B; for each datum in Key A, the several points in Key B nearest to that datum are found and adopted as preliminary corresponding points, and corresponding points that do not satisfy a predetermined condition are removed from the preliminary corresponding points to obtain a final candidate point set; a rigid transformation matrix is calculated for each group of candidate point pairs to form a candidate matrix set; and a confidence factor is calculated for each candidate matrix in the candidate matrix set, the candidate matrix with the maximum confidence factor is selected as the final rigid transformation matrix, and the source point cloud is transformed into the coordinate system of the target point cloud through that rigid transformation matrix.

Owner:WISESOFT CO LTD

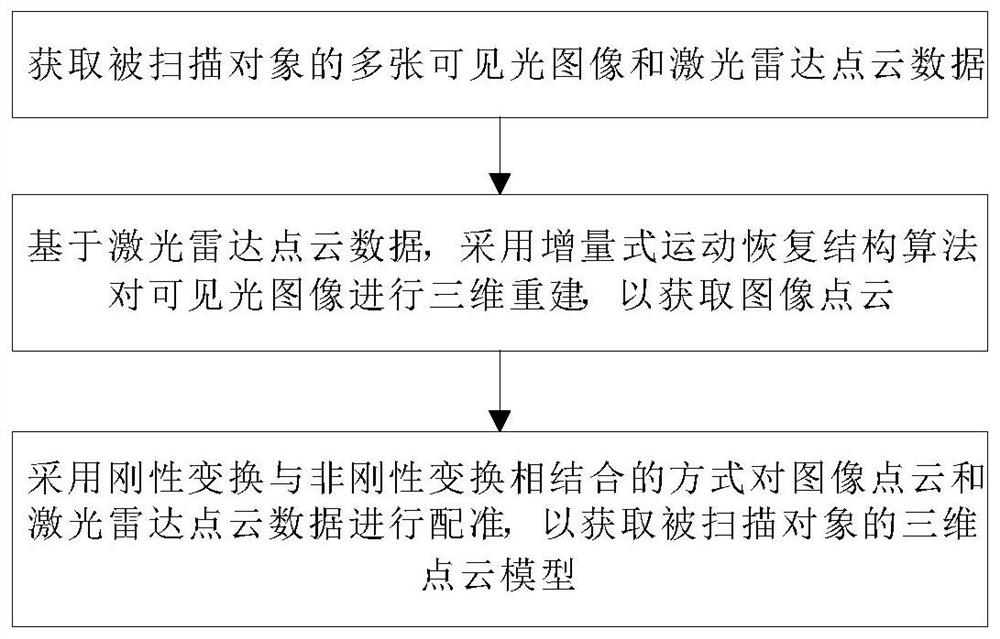

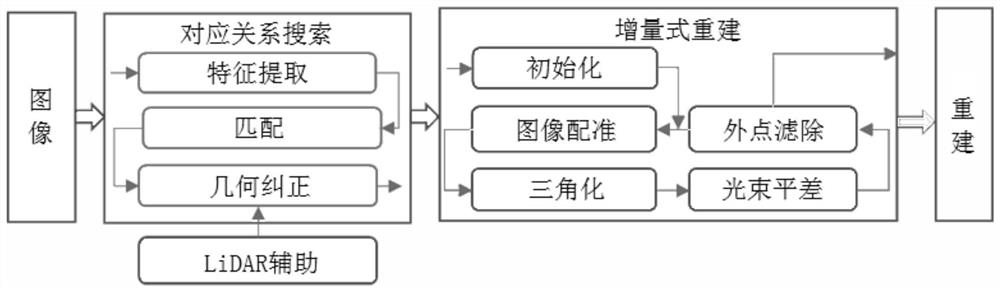

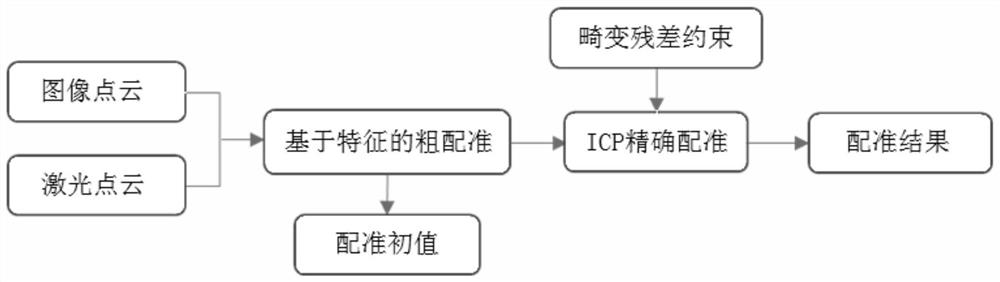

Single-lens three-dimensional image reconstruction method based on laser radar point cloud data assistance

Pending | CN112102458A | Reduce distortion | Implement refactoring | Image enhancement | Image analysis | Point cloud | Model reconstruction

The invention discloses a single-lens three-dimensional image reconstruction method based on laser radar point cloud data assistance. The method comprises the steps of: acquiring multiple visible light images and laser radar point cloud data of a scanned object; based on the laser radar point cloud data, using an incremental structure-from-motion algorithm to carry out three-dimensional reconstruction on the visible light images so as to acquire an image point cloud; and registering the image point cloud and the laser radar point cloud data by combining rigid transformation and non-rigid transformation to obtain a three-dimensional point cloud model of the scanned object. According to the method, geometric correction is carried out by adding virtual ground control points during the three-dimensional reconstruction of the images, found by searching for homonymous (corresponding) points between the images and the laser radar point cloud, so that distortion in the three-dimensional reconstruction of the images can be reduced. Because the image point cloud and the laser radar point cloud data are registered by combining rigid and non-rigid transformation, the registration precision can be improved, and high-precision three-dimensional point cloud model reconstruction is achieved.

Owner:HUNAN SHENGDING TECH DEV CO LTD

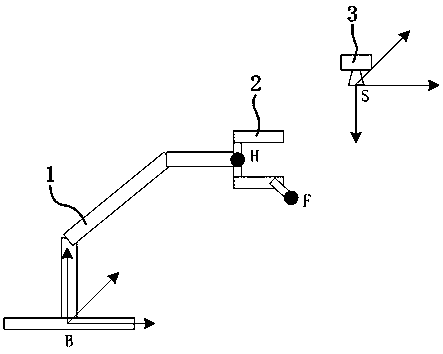

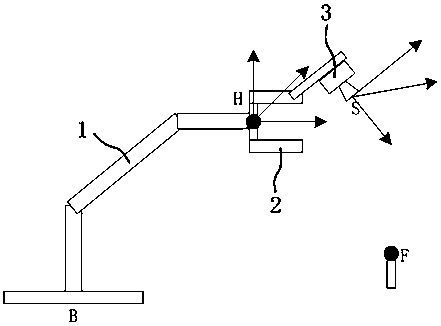

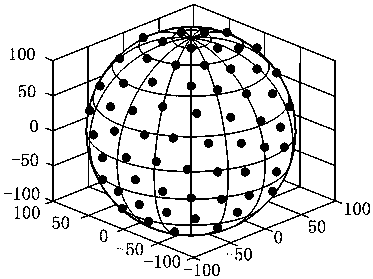

Calibration-target-free universal hand-eye calibration method based on 3D vision

Inactive | CN110450163A | Meet the requirements of fine operation | Reduce error | Programme-controlled manipulator | Hand eye calibration | Nonlinear matrix equation

The invention discloses a calibration-target-free universal hand-eye calibration method based on 3D vision, which applies to both the eye-to-hand and the eye-in-hand configurations. The method comprises the following steps: first, the center position of the flange plate of the end effector of a mechanical arm is kept constant, the end effector is controlled to rotate only, and a 3D vision sensor is used to collect the coordinates of at least four feature points F for sphere center fitting; then, the posture of the end effector is kept constant, the end effector is controlled to translate only, the coordinates of at least three feature points F are collected by a 3D camera, and the robot controller records or calculates the corresponding center positions of the flange plate so as to estimate the rigid transformation parameters. The method has the beneficial effects that the spatial information of the 3D vision sensor is fully utilized, the large error generated when measuring the posture of a calibration target is avoided, and there is no need to solve a complicated high-dimensional nonlinear matrix equation, and therefore the calibration precision and calibration efficiency are high.

Owner:SHANGHAI RO INTELLIGENT SYST
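The hand-eye calibration abstract above mentions fitting a sphere center from at least four feature point coordinates collected while the end effector only rotates. The patent does not state which fitting method it uses; the sketch below assumes a linear (algebraic) least-squares fit, and the function `fit_sphere` and the sample measurements are hypothetical.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit for an (N, 3) array, N >= 4.

    Uses |p - c|^2 = r^2 rewritten as 2 p.c + (r^2 - |c|^2) = |p|^2,
    which is linear in the unknowns c and k = r^2 - |c|^2.
    The points must not all lie in one plane.
    """
    A = np.hstack([2.0 * points, np.ones((len(points), 1))])
    b = (points ** 2).sum(axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    radius = np.sqrt(sol[3] + center @ center)
    return center, radius

# Made-up measurements: five points on a sphere of radius 0.15 around a known center.
true_center = np.array([0.3, -0.2, 1.0])
true_radius = 0.15
directions = np.array([[1.0, 0.0, 0.0],
                       [0.0, 1.0, 0.0],
                       [0.0, 0.0, 1.0],
                       [-1.0, 0.0, 0.0],
                       [0.0, -1.0, 0.0]])
samples = true_center + true_radius * directions

center, radius = fit_sphere(samples)
```

Four non-coplanar points determine the sphere exactly; extra points turn the solve into a least-squares average that damps measurement noise.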

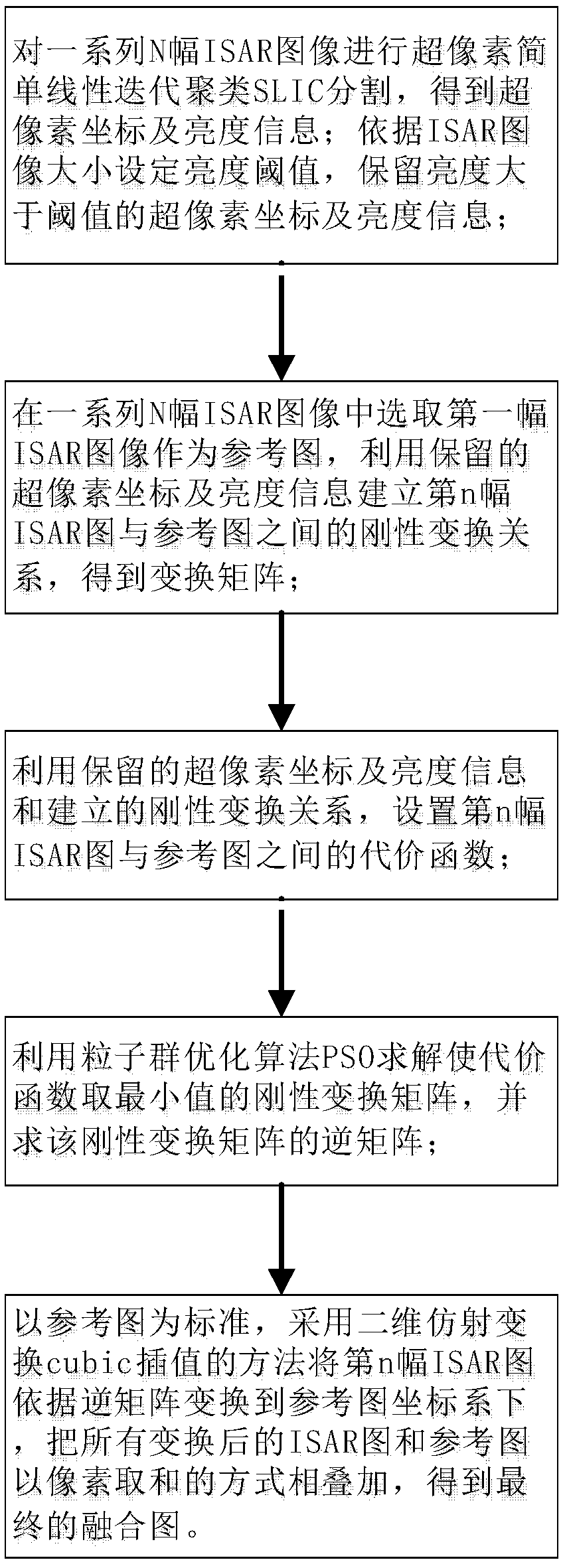

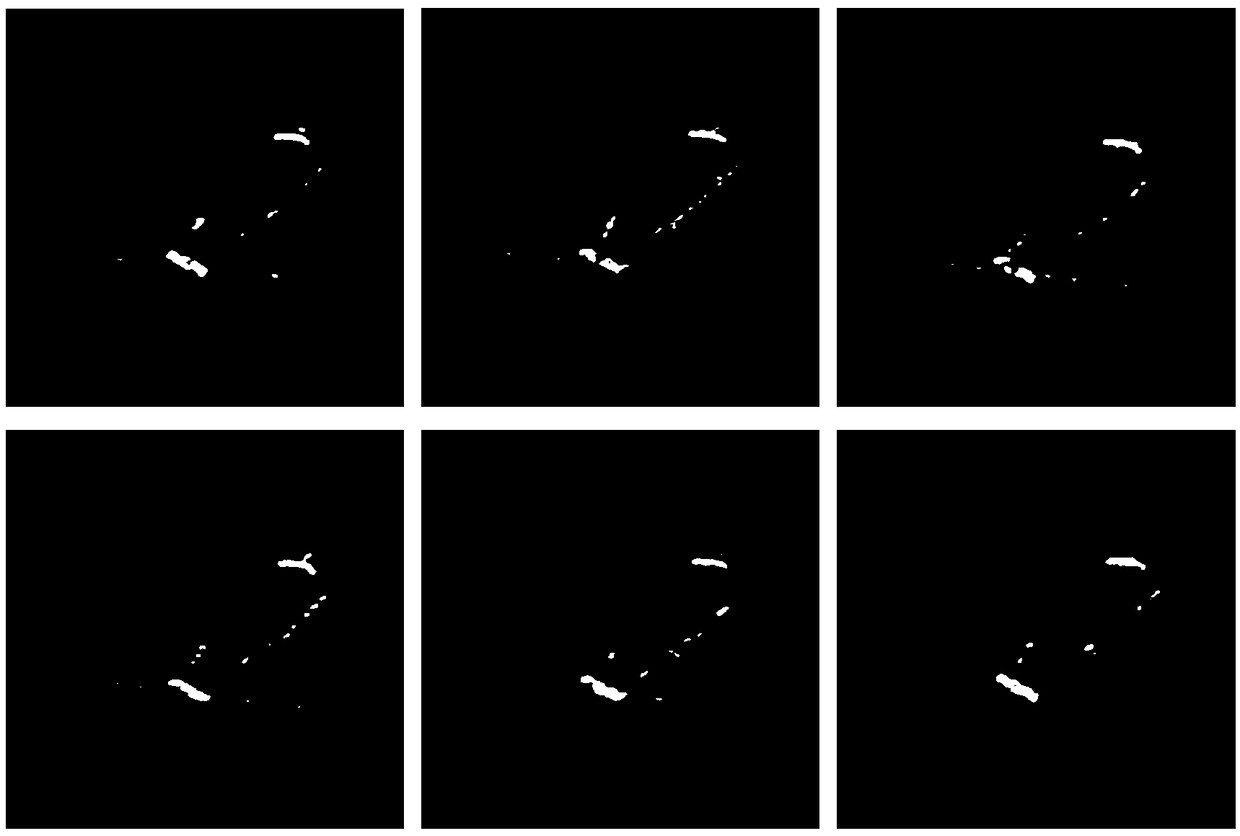

Multi-view ISAR image fusion method

Active | CN109146001A | Achieve registration | Achieve integration | Character and pattern recognition | Reference map | Reference image

The invention discloses a multi-view ISAR image fusion method, which mainly solves the problems of redundant feature points, complex processing, and heavy computation in the prior art. The scheme is as follows: a series of N ISAR images are segmented by superpixel simple linear iterative clustering to obtain the superpixel coordinates X, Y and brightness information L; a brightness threshold is set, and the superpixel information whose L is larger than the threshold is retained; the first ISAR image is selected as the reference image, the rigid transformation relationship between the nth ISAR image and the reference image is established using the retained parameters, and the transformation matrix Bn is obtained; a cost function Jn between the nth ISAR image and the reference image is set; the rigid transformation matrix Bn' that minimizes Jn is solved, and the inverse matrix An of Bn' is obtained; the nth ISAR image is transformed into the reference image coordinate system according to the inverse matrix An, and all the transformed ISAR images and the reference image are superposed to obtain the fused image. The feature points extracted by the invention are refined and the computation is light, and the method can be used for three-dimensional image reconstruction, target recognition and attitude estimation.

Owner:XIDIAN UNIV
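
The fit-then-invert pattern in the abstract (solve for a rigid transformation that minimizes a cost, then apply its inverse to map an image into the reference frame) can be sketched in 2D. The abstract does not specify the cost function Jn, so this sketch assumes a plain sum of squared distances over already matched point pairs; the function names and the `(theta, tx, ty)` parameterization are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point2 = Tuple[float, float]
Rigid2 = Tuple[float, float, float]  # (theta, tx, ty): x -> R(theta) x + t


def apply_rigid_2d(tf: Rigid2, p: Point2) -> Point2:
    """Rotate p by theta, then translate by (tx, ty)."""
    theta, tx, ty = tf
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def fit_rigid_2d(src: Sequence[Point2], dst: Sequence[Point2]) -> Rigid2:
    """Rigid transform minimising sum |R*src_i + t - dst_i|^2 (assumed cost)."""
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched point pairs")
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy  # centred source point
        bx, by = qx - dx, qy - dy  # centred destination point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # optimal rotation angle in closed form
    c, s = math.cos(theta), math.sin(theta)
    # The translation aligns the rotated source centroid with the destination centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def invert_rigid_2d(tf: Rigid2) -> Rigid2:
    """Inverse of x -> R x + t is x -> R^T x - R^T t."""
    theta, tx, ty = tf
    c, s = math.cos(theta), math.sin(theta)
    return -theta, -(c * tx + s * ty), -(-s * tx + c * ty)
```

In the abstract's notation, `fit_rigid_2d(reference_points, nth_image_points)` would play the role of Bn', and `invert_rigid_2d` of An, which is then applied to points of the n-th image to bring them into the reference coordinate system.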

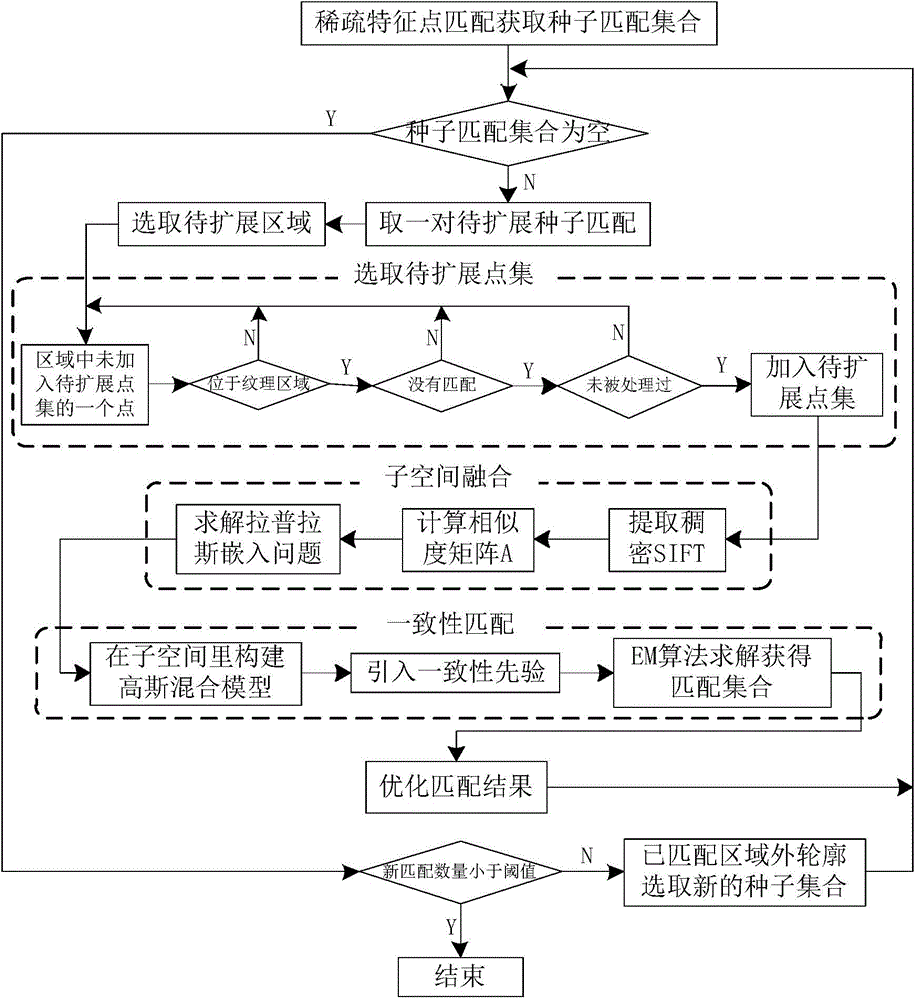

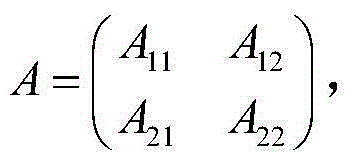

Quasi-dense matching extension method based on subspace fusion and consistency constraint

The invention discloses a quasi-dense matching extension method based on subspace fusion and consistency constraint. The method comprises the following steps: first, reliable seed matches are acquired, and a region to be extended is selected around each seed match; second, dense SIFT features are extracted for all pixels to be extended in the region, and the feature information and position information of those pixels are fused through subspace learning. During match searching, a local non-rigid transformation is learned through the consistency constraint; compared with an affine transformation model and other models, the local non-rigid transformation better describes non-planar, complex scenes. After each extension, the extension result is optimized to remove bad matches, and the extension process is repeated until no new matches can be found. The method is relatively robust for scenes with a complex surface structure and makes accurate, dense reconstruction of a target scene possible from only a few images.

Owner:HUAZHONG UNIV OF SCI & TECH

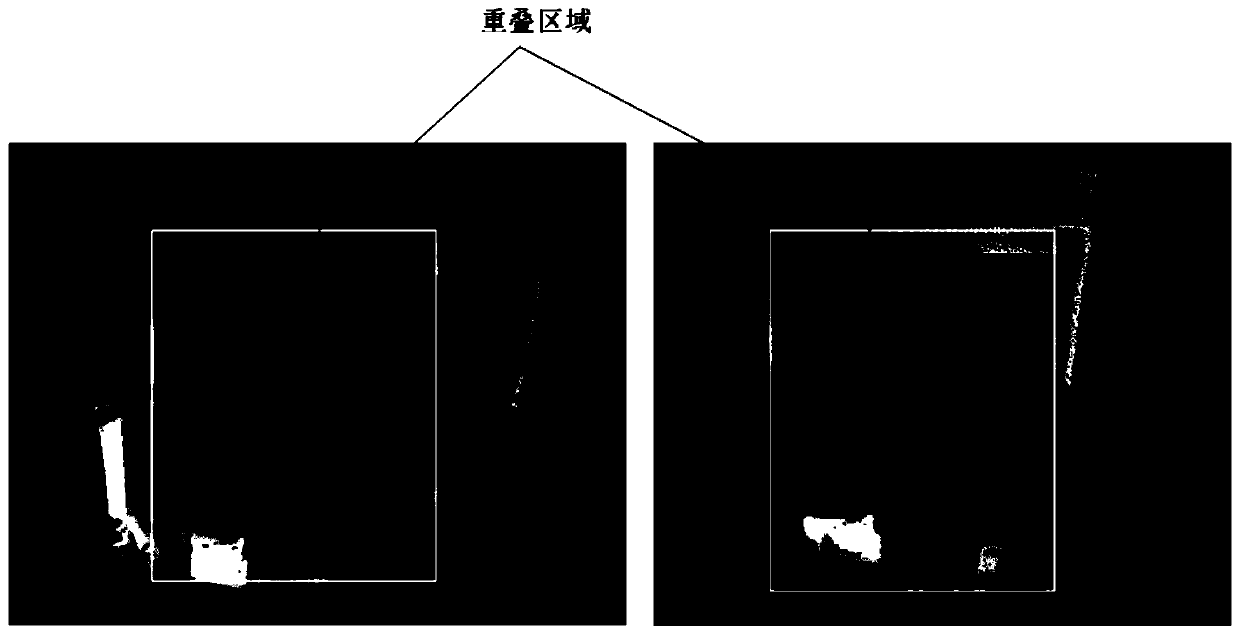

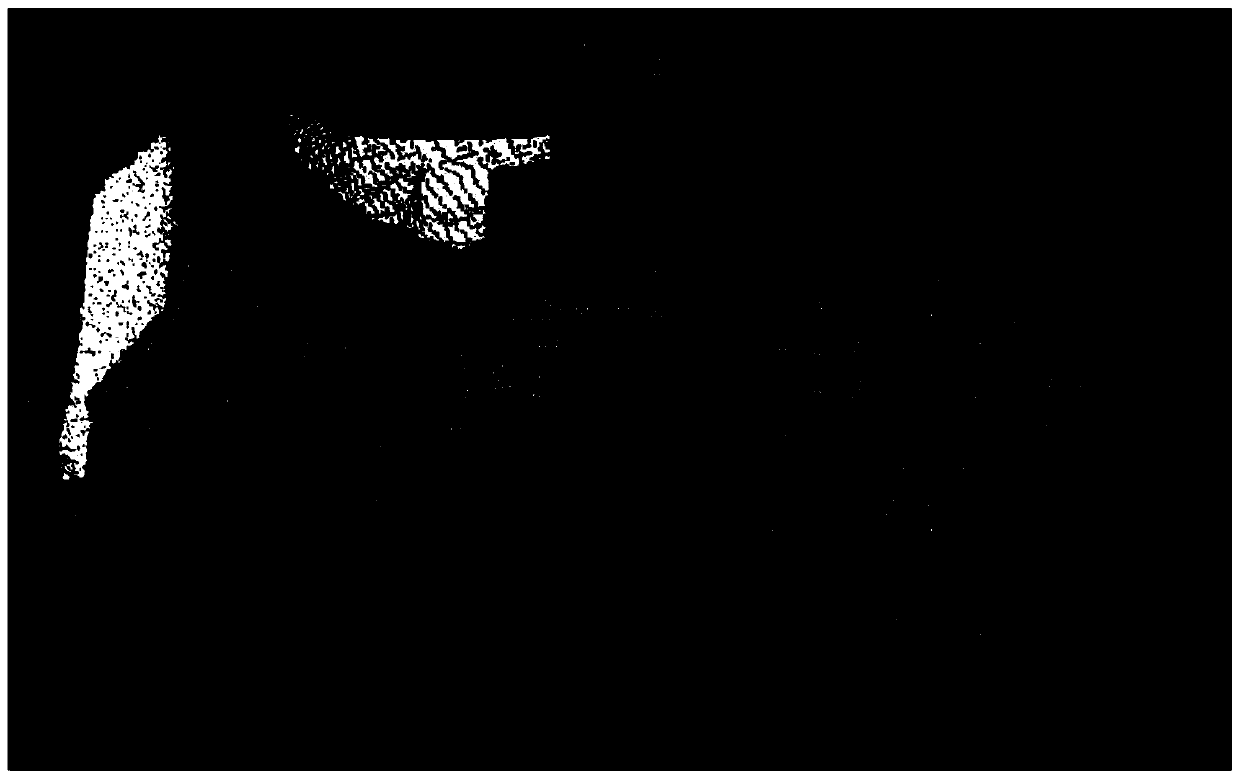

Point cloud registration method with a low overlapping rate

Active | CN109767464A | Overcome failure | Reduce in quantity | Image analysis | 3D modelling | Rigid transformation | Algorithm Selection

The invention discloses a point cloud registration method for low overlapping rates. The method comprises the following steps: step 1, obtaining a source point cloud and a target point cloud; step 2, downsampling the source point cloud and the target point cloud to obtain a point cloud A and a point cloud B, respectively; step 3, segmenting point cloud A and point cloud B each into a plurality of local areas; step 4, performing preliminary matching and selecting a corresponding four-point congruent set through a 4PCS algorithm; step 5, selecting the optimal transformation of the local area; step 6, constructing a matching confidence coefficient of the local area, enumerating and calculating the matching condition of all the local areas, sorting the local areas, and selecting the local area with the highest confidence coefficient; step 7, performing registration using an LMidS sampling consistency algorithm to obtain the final rigid transformation matrix and complete the point cloud registration. The invention ensures good registration precision even when the overlapping rate is low.

Owner:SOUTHWEST JIAOTONG UNIV
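
Step 7 can be illustrated by scoring candidate rigid transformations with a median-based criterion. This sketch assumes that "LMidS" refers to a least-median-of-squares style selection, which the abstract does not confirm; the candidate transforms and matched point pairs are taken as given, and all names are hypothetical.

```python
from statistics import median
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]
Rigid3 = Tuple[Mat3, Vec3]  # (rotation matrix, translation): x -> R x + t


def apply_rigid(tf: Rigid3, p: Vec3) -> Vec3:
    """Apply x -> R x + t to a single 3D point."""
    rot, t = tf
    return tuple(sum(rot[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def median_sq_residual(tf: Rigid3, src: Sequence[Vec3], dst: Sequence[Vec3]) -> float:
    """Median of squared distances between transformed src and matched dst."""
    residuals = []
    for p, q in zip(src, dst):
        x = apply_rigid(tf, p)
        residuals.append(sum((a - b) ** 2 for a, b in zip(x, q)))
    return median(residuals)


def select_by_median(candidates: Sequence[Rigid3],
                     src: Sequence[Vec3],
                     dst: Sequence[Vec3]) -> Rigid3:
    """Pick the candidate whose median squared residual is smallest."""
    return min(candidates, key=lambda tf: median_sq_residual(tf, src, dst))
```

Scoring by the median rather than the mean means a candidate is not penalized by a minority of badly matched pairs, which is one plausible reason such a criterion suits clouds that overlap only partially.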

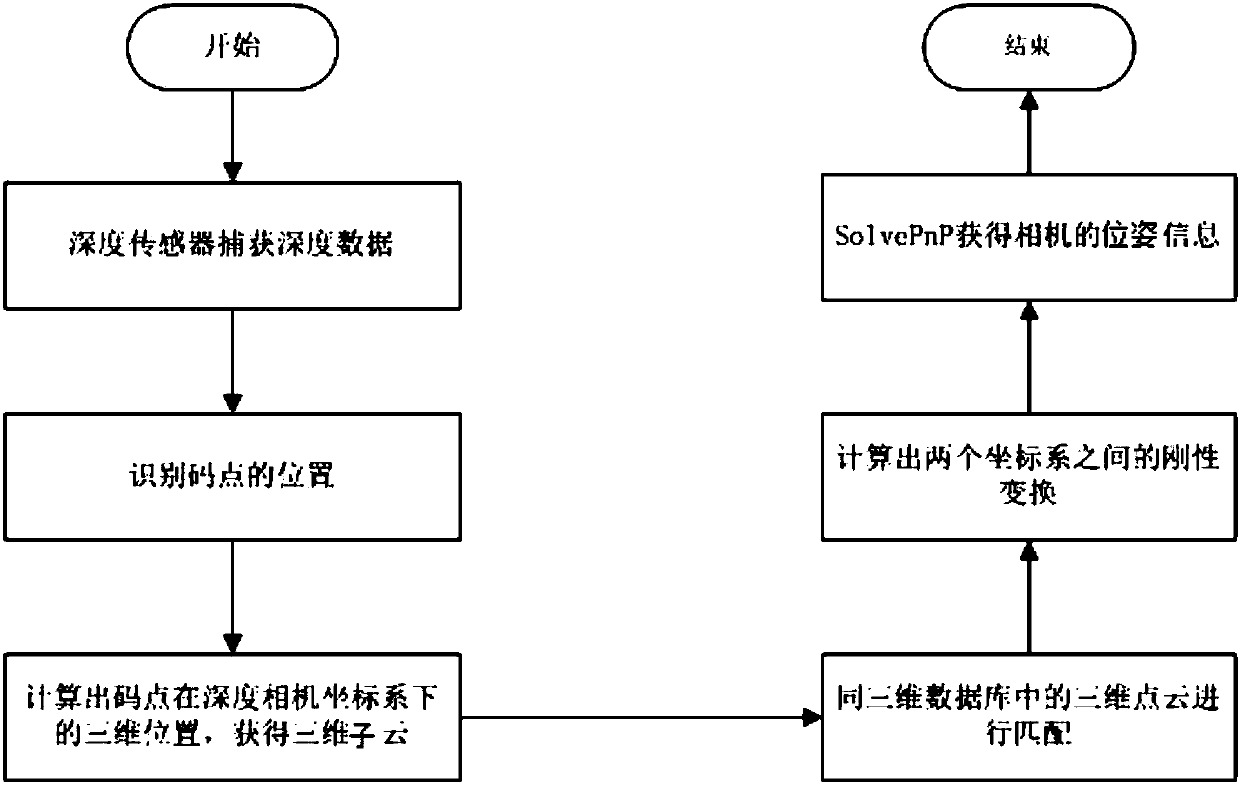

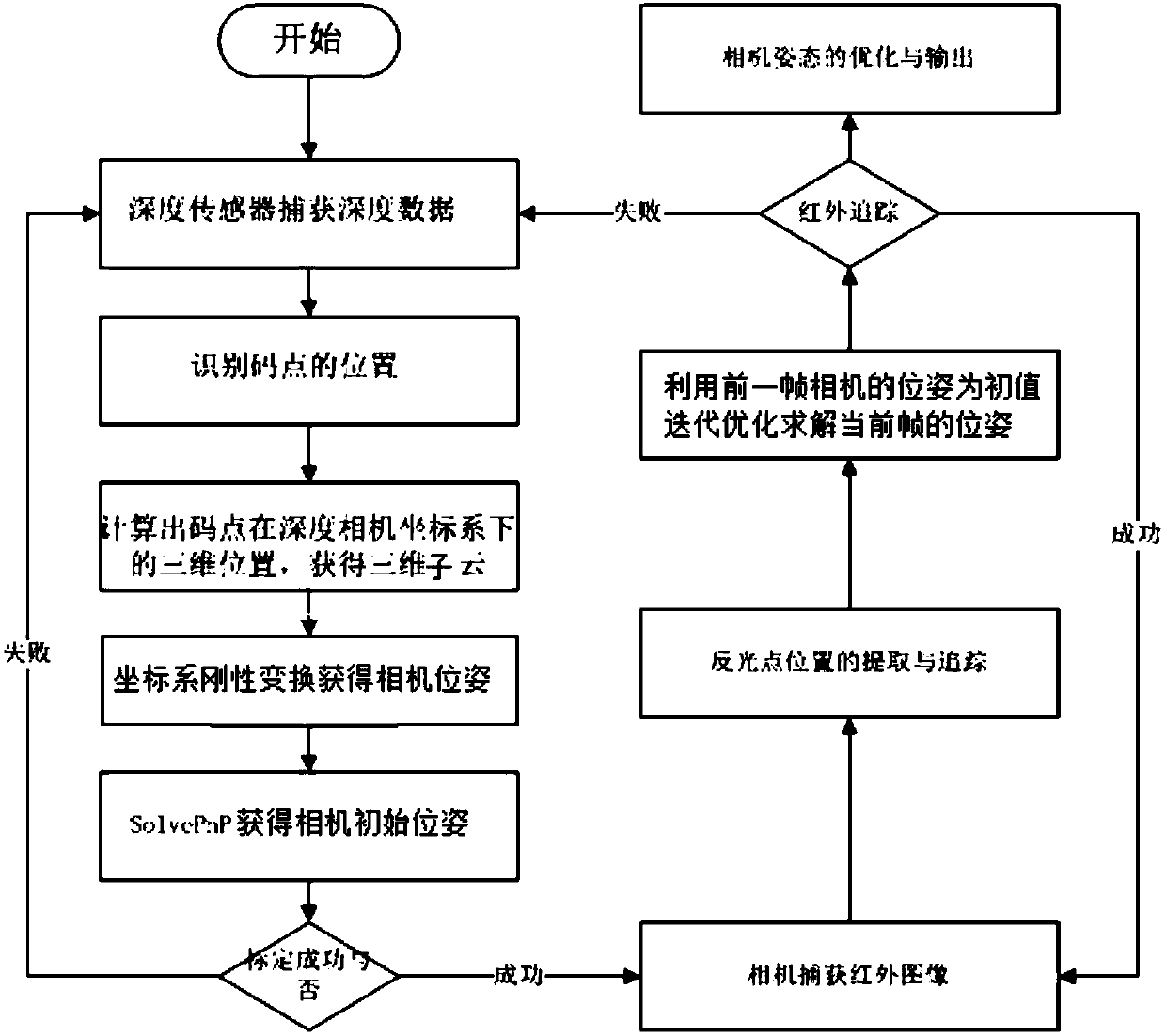

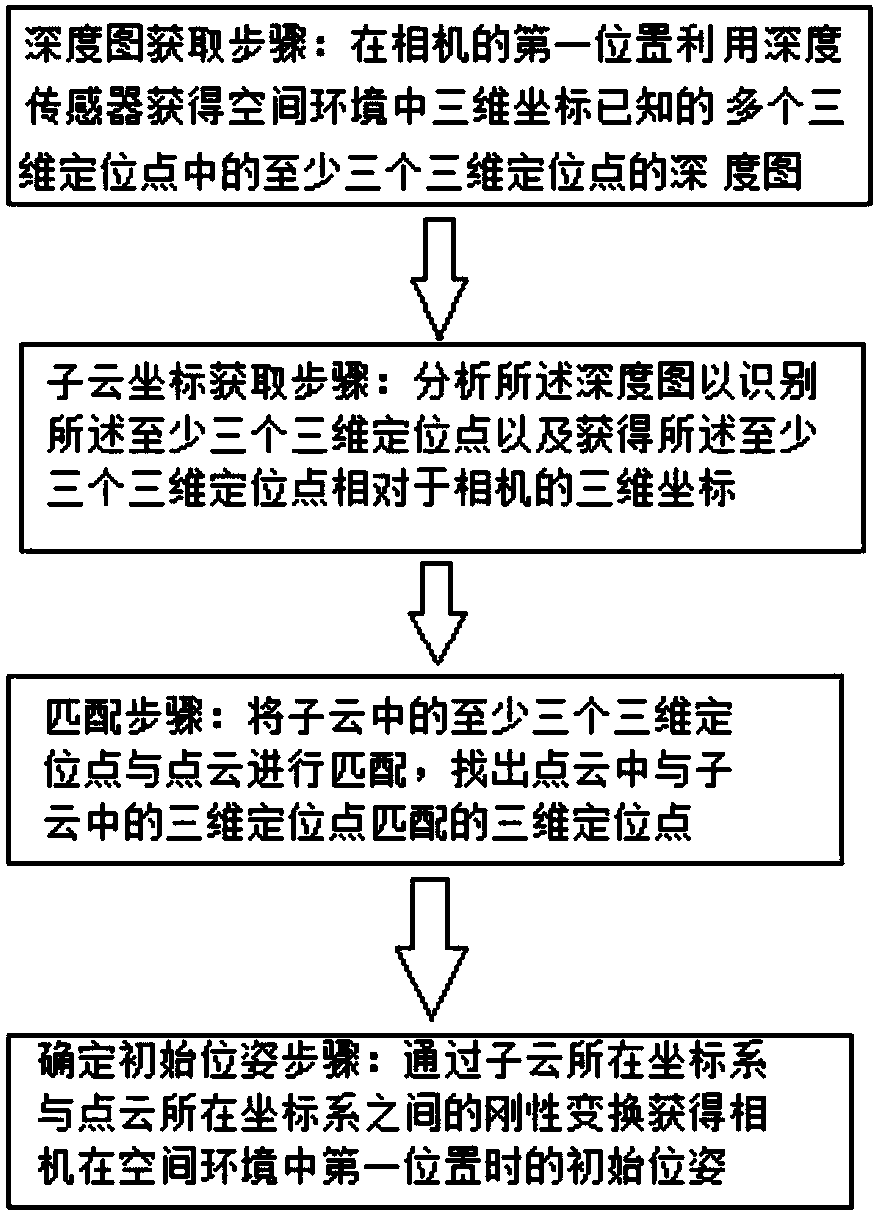

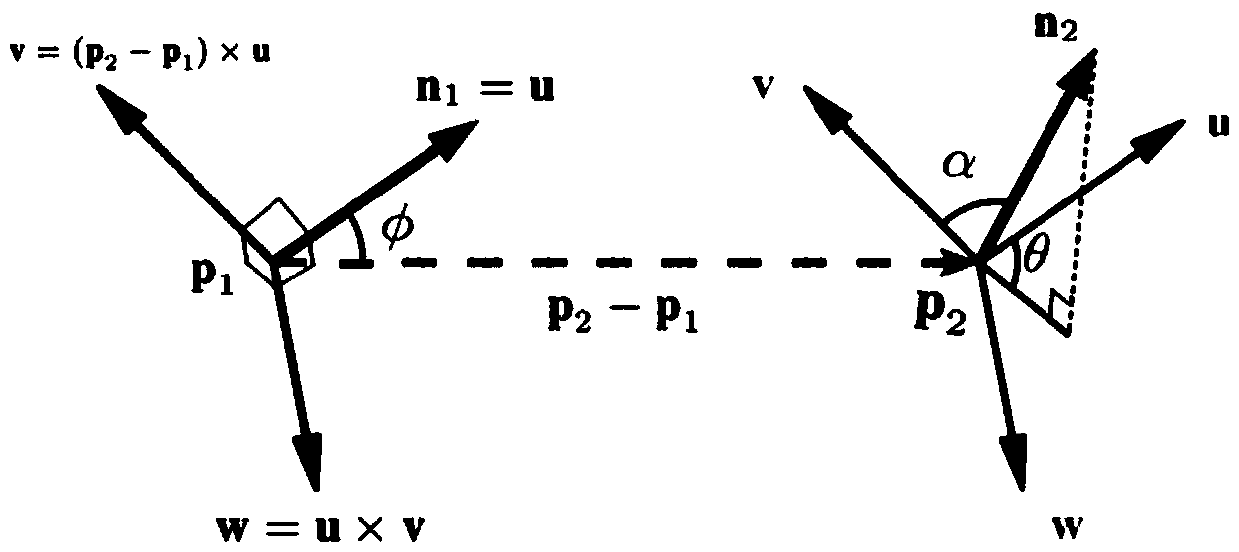

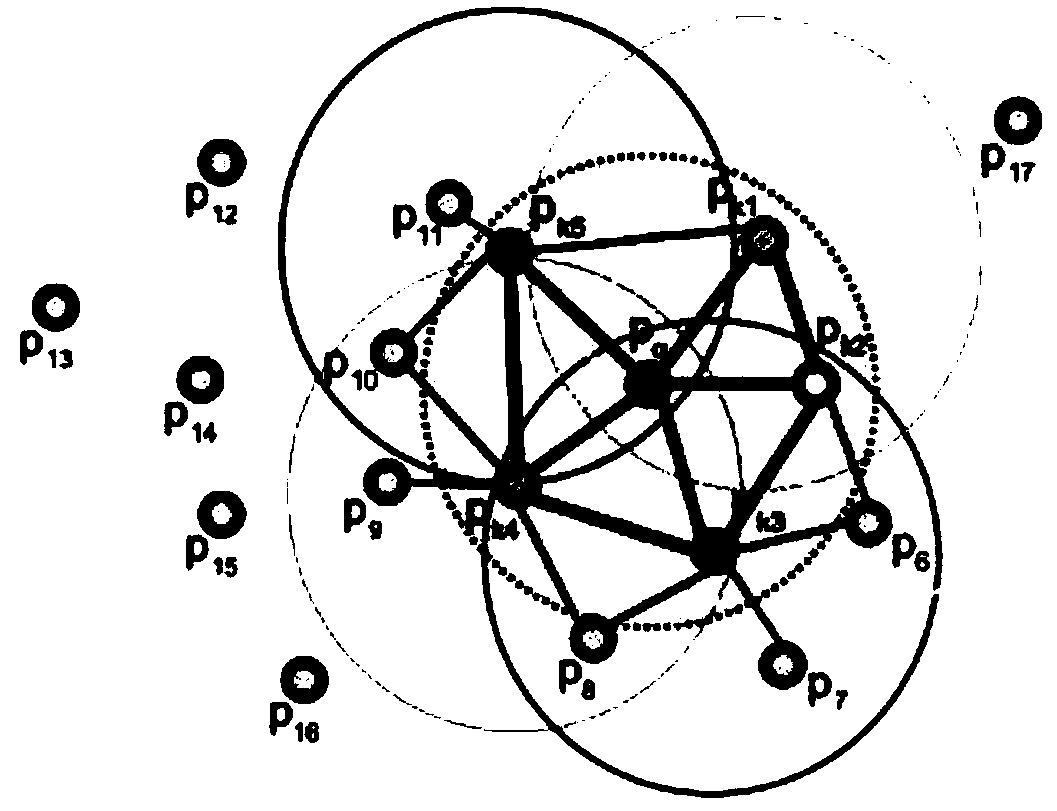

Camera pose determining method and device

The invention relates to a camera pose determining method. The method comprises: a depth map obtaining step, in which, at a first position of a camera, a depth sensor is used to obtain a depth map of at least three 3D positioning points with known 3D coordinates in a space environment, where the 3D positioning points form code points in the depth map and form a point cloud in the space environment; a sub cloud coordinate obtaining step, in which the depth map is analyzed to identify the at least three 3D positioning points and obtain their 3D coordinates relative to the camera, the at least three 3D positioning points forming a sub cloud; a matching step, in which the at least three 3D positioning points in the sub cloud are matched against the point cloud to find the 3D positioning points in the point cloud that correspond to those in the sub cloud; and an initial pose determining step, in which an initial pose of the camera at the first position in the space environment is obtained via a rigid transformation between the coordinate system of the sub cloud and the coordinate system of the point cloud. The invention also relates to a camera pose determining device.

Owner:北京墨土科技有限公司
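
The final step, recovering a rigid transformation between the sub cloud frame and the point cloud frame from three matched points, can be sketched by building an orthonormal frame from each point triple. This frame-based construction is an illustrative stand-in, not the method claimed in the patent; it assumes exactly three noise-free, non-collinear matches, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in a))
    if n < 1e-12:
        raise ValueError("points are coincident or collinear")
    return (a[0] / n, a[1] / n, a[2] / n)


def frame(p1: Vec3, p2: Vec3, p3: Vec3) -> Tuple[Vec3, Vec3, Vec3]:
    """Right-handed orthonormal axes built from three non-collinear points."""
    e1 = unit(sub(p2, p1))
    e3 = unit(cross(e1, sub(p3, p1)))
    e2 = cross(e3, e1)
    return e1, e2, e3


def pose_from_three(cam_pts: Sequence[Vec3],
                    world_pts: Sequence[Vec3]) -> Tuple[List[List[float]], Vec3]:
    """Return (R, t) such that world = R * cam + t for the matched points."""
    f_cam = frame(*cam_pts)
    f_world = frame(*world_pts)
    # R = sum_k w_k c_k^T maps each camera-frame axis c_k onto world axis w_k.
    rot = [[sum(f_world[k][i] * f_cam[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
    p1, q1 = cam_pts[0], world_pts[0]
    t = tuple(q1[i] - sum(rot[i][j] * p1[j] for j in range(3)) for i in range(3))
    return rot, t
```

Under the convention used here, `t` is the camera origin expressed in the point cloud frame and the columns of `R` are the camera axes in that frame. With noisy or more than three matches, a least-squares fit would be the more usual choice.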

A parallel three-dimensional point cloud data automatic registration method

Active | CN109559340A | Reduce computational complexity | High precision | Image enhancement | Image analysis | Point cloud | Rigid transformation

The invention discloses a parallel method for automatic registration of three-dimensional point cloud data. The method comprises: obtaining two point clouds to be registered that are captured from different views and have overlapping regions; downsampling the point clouds to reduce the amount of calculation; calculating the normal vectors of the point cloud data and the fast point feature histogram (FPFH) features; starting a plurality of processes that each select n points from one point cloud and search for the corresponding points in the other point cloud, calculating the rotation-translation matrix of the rigid transformation from the corresponding points, calculating an error metric, and taking the result with the minimum error metric as the result of the current iteration; iterating multiple times and taking the final result as the transformation matrix; and finally, performing fine registration using the ICP iterative algorithm.

Owner:NORTHEASTERN UNIV
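
The downsampling step can be illustrated with a voxel-grid filter that replaces all points falling in the same cubic cell by their centroid. The abstract does not say which downsampling scheme is used, so the voxel grid, the function name `voxel_downsample`, and the `voxel_size` parameter are assumptions made for this sketch.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Vec3], voxel_size: float) -> List[Vec3]:
    """Replace the points inside each cubic voxel by their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    cells: Dict[Tuple[int, int, int], List[Vec3]] = defaultdict(list)
    for p in points:
        # Integer cell index along each axis; floor handles negative coordinates.
        key = tuple(math.floor(c / voxel_size) for c in p)
        cells[key].append(p)
    # One centroid per occupied voxel, in order of first occurrence.
    return [tuple(sum(axis) / len(group) for axis in zip(*group))
            for group in cells.values()]
```

A larger `voxel_size` leaves fewer points for the later feature and correspondence steps at the cost of geometric detail, so the value would normally be chosen relative to the sensor resolution.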

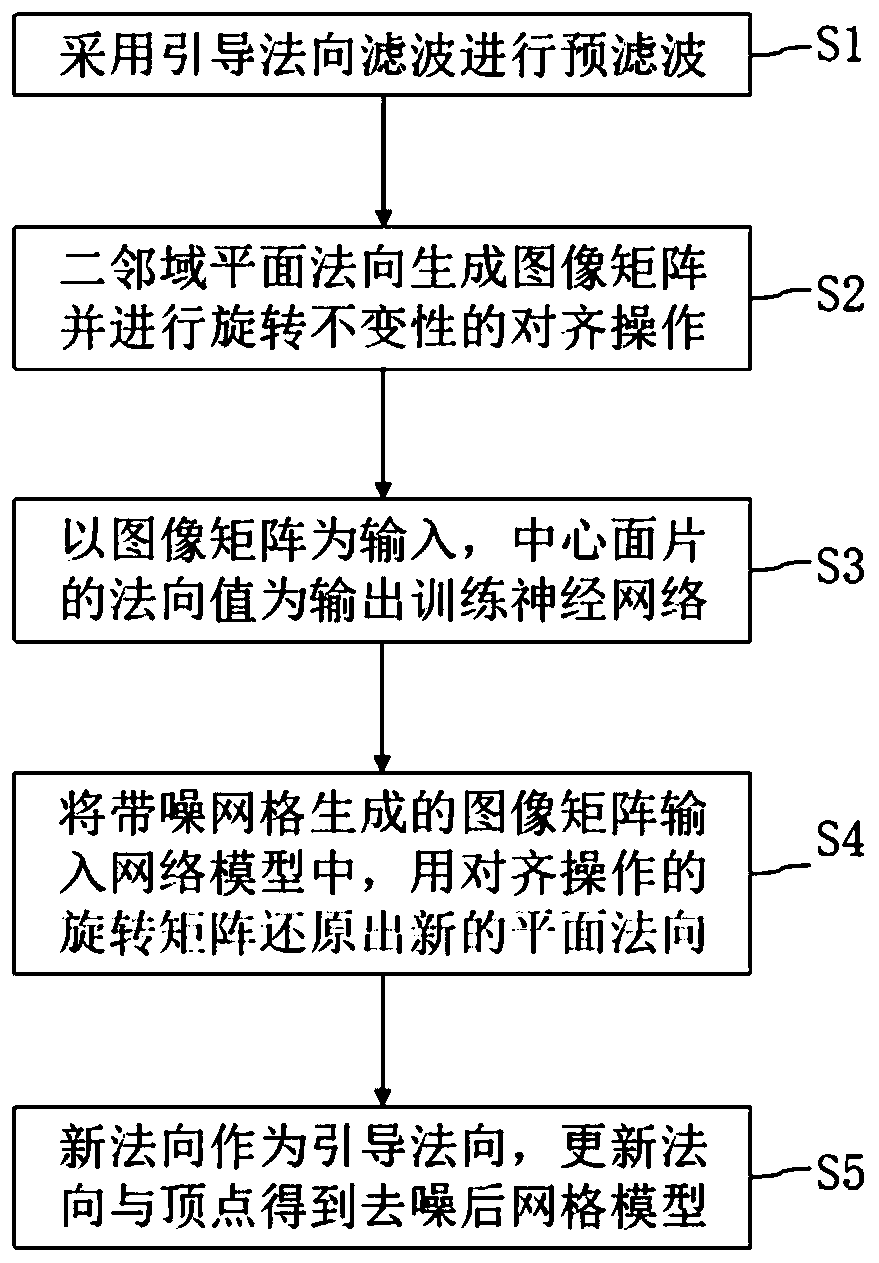

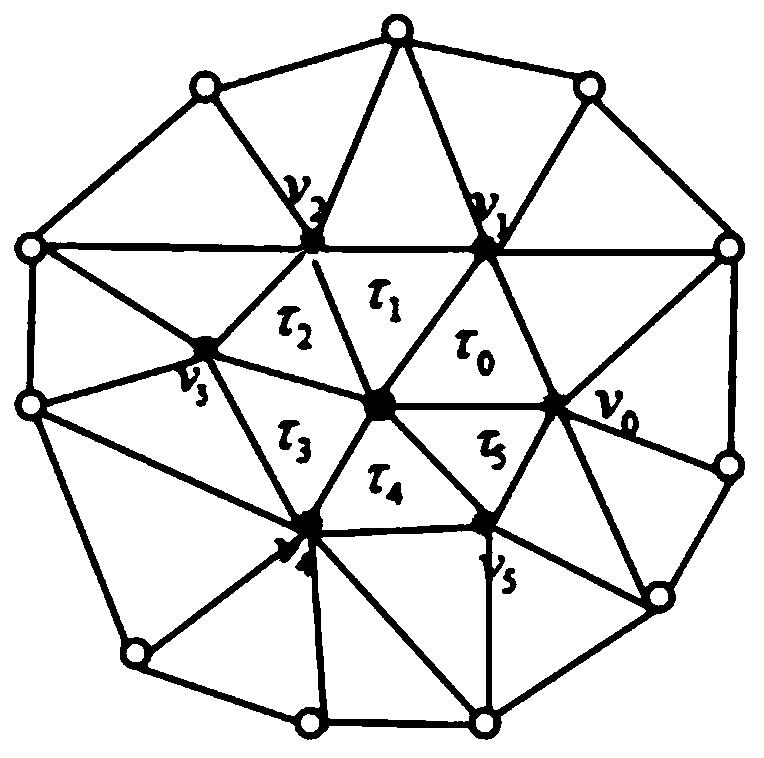

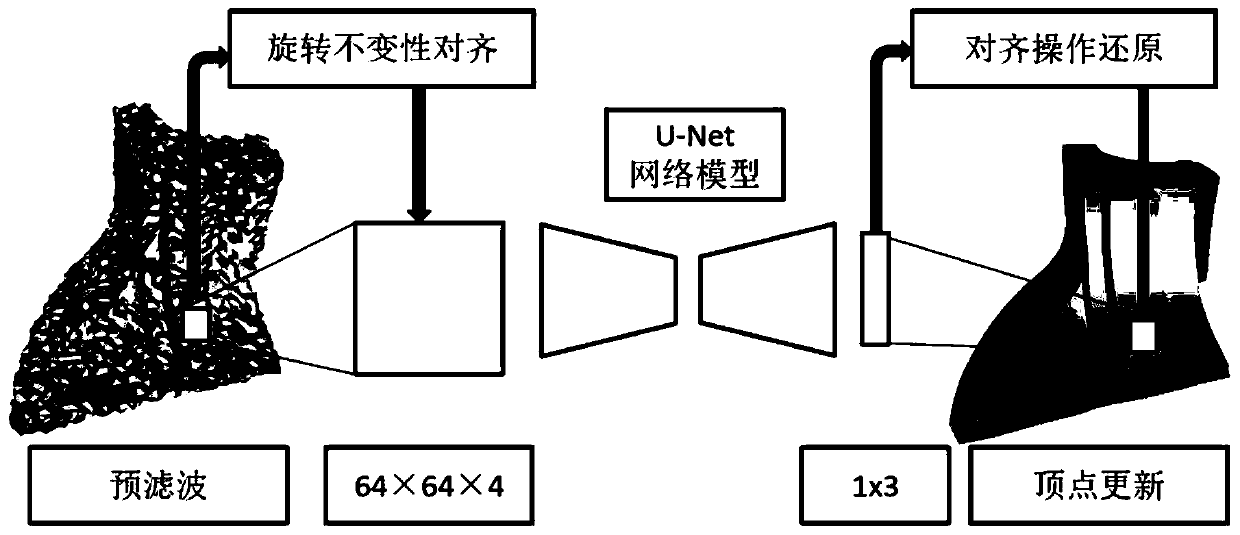

Grid denoising method based on neural network

Active | CN110930334A | Efficient denoising | Many geometric features | Image enhancement | Image analysis | Pattern recognition | Data set

The invention discloses a grid denoising method based on a neural network. The method comprises the following steps: performing a pre-filtering operation using guided normal filtering with fixed parameters, extracting the two-neighborhood plane normals of the pre-filtered grid model to generate an image matrix, performing an alignment operation for rigid transformation and image rotation using normal tensor voting, constructing a data set, and training a neural network; and, in the denoising stage, inputting the image matrix generated after pre-filtering the noisy grid into the trained network model, restoring a new normal direction as the guide normal direction using the rotation matrix, and updating the normal directions and vertex information to obtain a denoised grid model. The method applies a neural network to the denoising problem of three-dimensional grids and maps the surface normals into an image matrix, so that grid denoising that strongly preserves features can be achieved simply and efficiently.

Owner:ZHEJIANG UNIV
