Calibration-target-free universal hand-eye calibration method based on 3D vision

A hand-eye calibration technique that requires no calibration board, applicable to manipulators, program-controlled manipulators, manufacturing tools, and related fields. It addresses the inconvenience of board-based calibration, the inability to calibrate online, and cases where a calibration board cannot be carried.

Examples

Embodiment 1

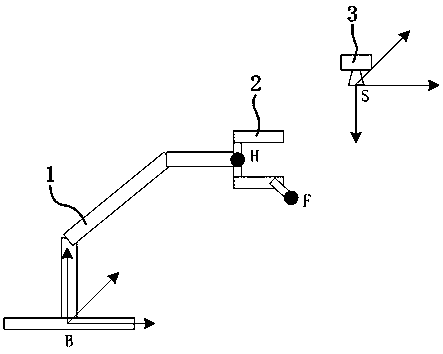

[0060] Example 1 - Eye-to-hand:

[0061] Referring to Figure 1, in this embodiment a table tennis ball is clamped by the end effector of the robotic arm, and the center of the ball is taken as the feature point F. There are at least two ways to extract the center position of the ball. First, apply a Hough transform to the depth image data from the 3D camera. Second, segment the yellow circular region from the 2D image data, find the point in that region closest to the camera, and offset it by one ball radius along the camera depth direction; the resulting point is the center of the ball.
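The second extraction approach can be illustrated with a minimal sketch. It is not taken from the patent text: the function name `ball_center_from_nearest_point`, the pinhole intrinsics `fx, fy, cx, cy`, and the precomputed boolean `mask` (standing in for the yellow-region segmentation) are all assumptions made for illustration. It also assumes the depth image stores Z along the optical axis rather than Euclidean range.

```python
import numpy as np

def ball_center_from_nearest_point(depth, mask, fx, fy, cx, cy, radius):
    """Estimate the ball center in the camera frame (same units as depth).

    depth  : (H, W) array of Z values along the optical axis (assumption)
    mask   : (H, W) boolean array, True on pixels segmented as the ball
    fx, fy, cx, cy : pinhole intrinsics in pixels (assumed known)
    radius : ball radius, in the same units as depth
    """
    valid = mask & np.isfinite(depth) & (depth > 0)
    if not valid.any():
        raise ValueError("no valid depth pixels inside the mask")
    # Pixel of the segmented region that is closest to the camera.
    masked = np.where(valid, depth, np.inf)
    v, u = np.unravel_index(np.argmin(masked), masked.shape)
    z = depth[v, u]
    # Back-project that pixel to a 3D point with the pinhole model.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    # Shift by one radius along the camera depth (Z) direction.
    return np.array([x, y, z + radius])
```

Because this sketch relies on a single nearest pixel, it is sensitive to depth noise; in practice one might average a few of the nearest pixels, though that choice is outside what the source describes.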

[0062] The general hand-eye calibration method based on 3D vision comprises the following steps:

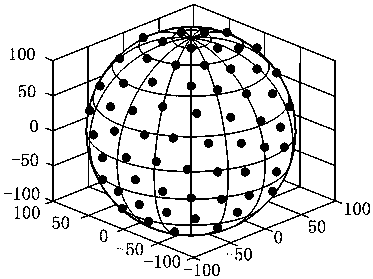

[0063] Step S1: keep the position of the flange center of the robotic arm's end effector unchanged, and command the end effector to perform only rotational motion. At the controller level of the manipulator, only the ABC Euler angles re...

Embodiment 2

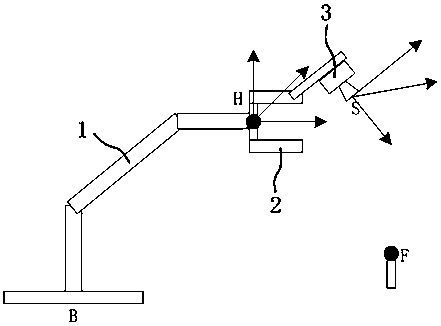

[0104] Example 2 - Eye-in-hand:

[0105] Referring to Figure 2, the operating steps of the eye-in-hand calibration are essentially the same as in the embodiment above, except that step S2 additionally records the pose of the robotic arm. The specific implementation details are not repeated here.
