428 patent results for "3d vision"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

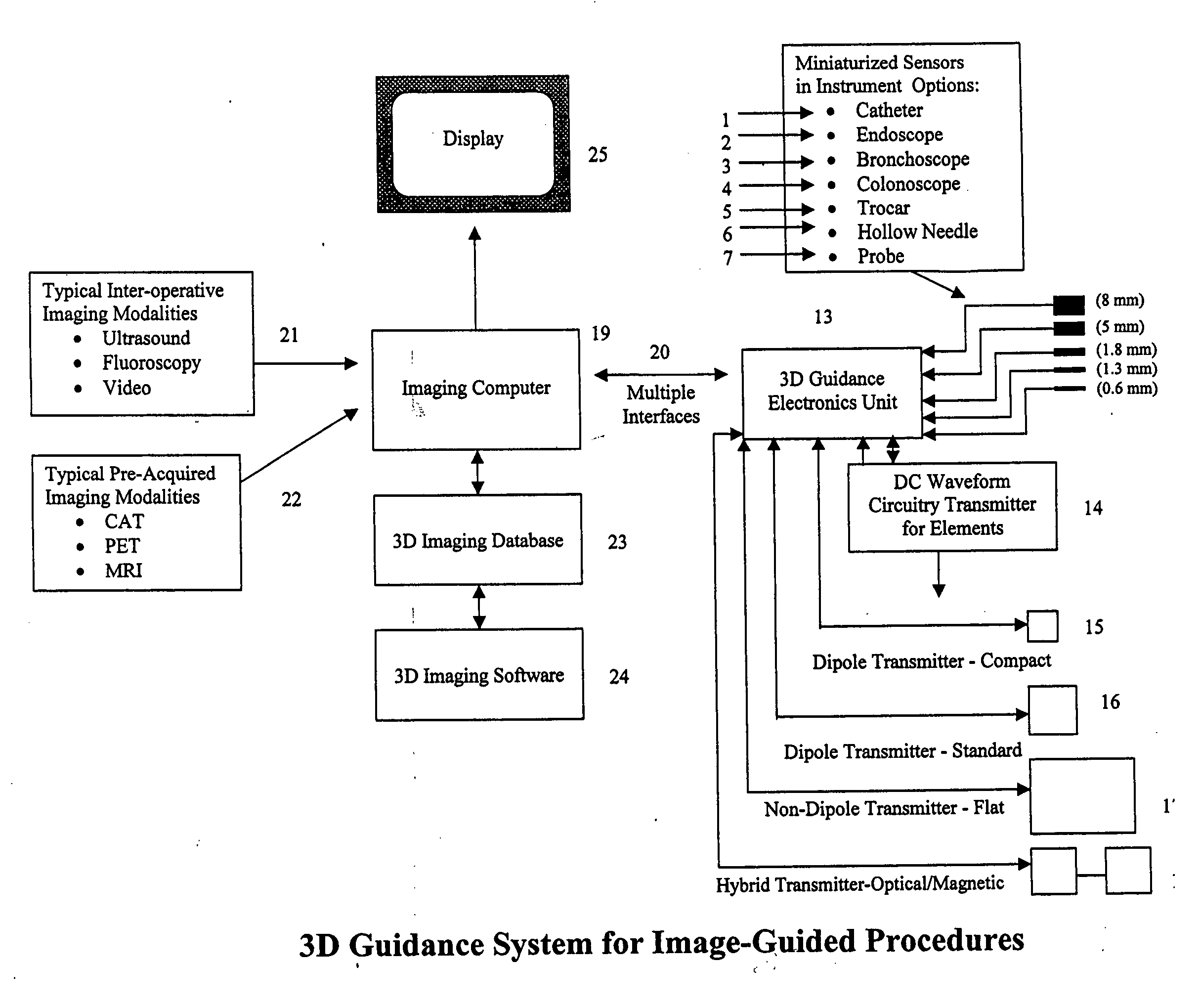

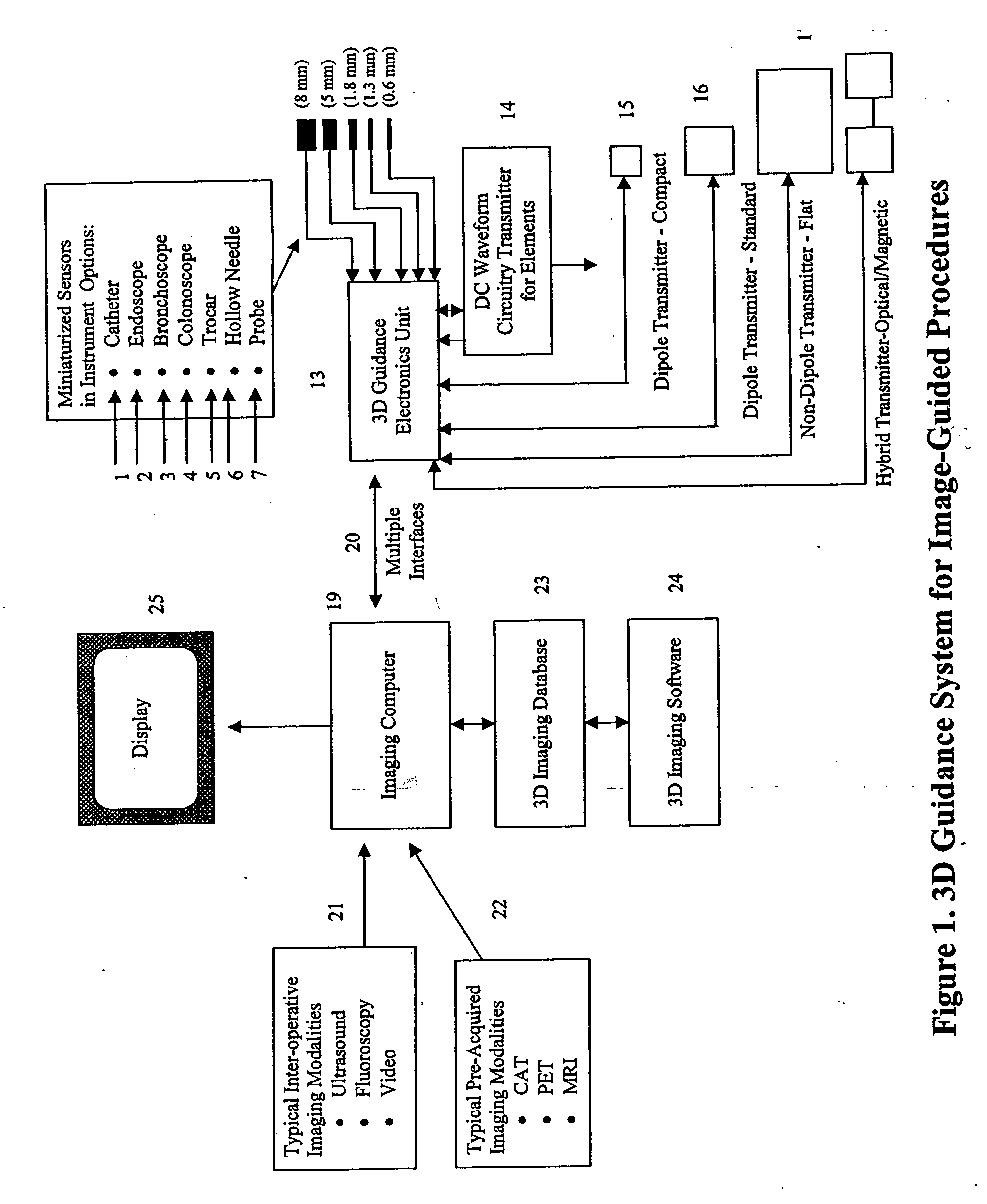

DC magnetic-based position and orientation monitoring system for tracking medical instruments

Status: Active | Publication: US20070078334A1 | Tags: Overcome disposability; Overcome cost issue; Diagnostic recording/measuring; Sensors; 3d sensor; Engineering

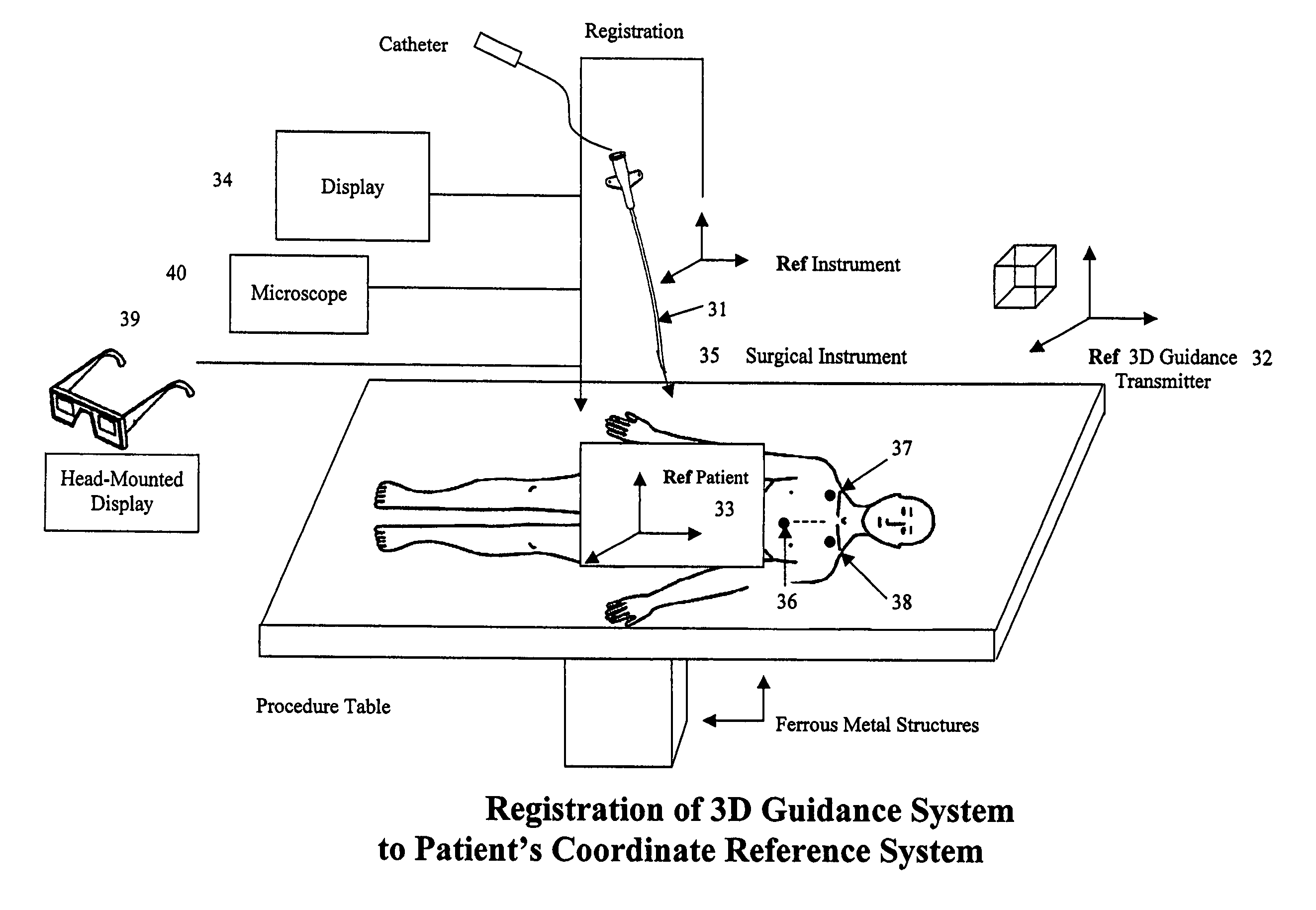

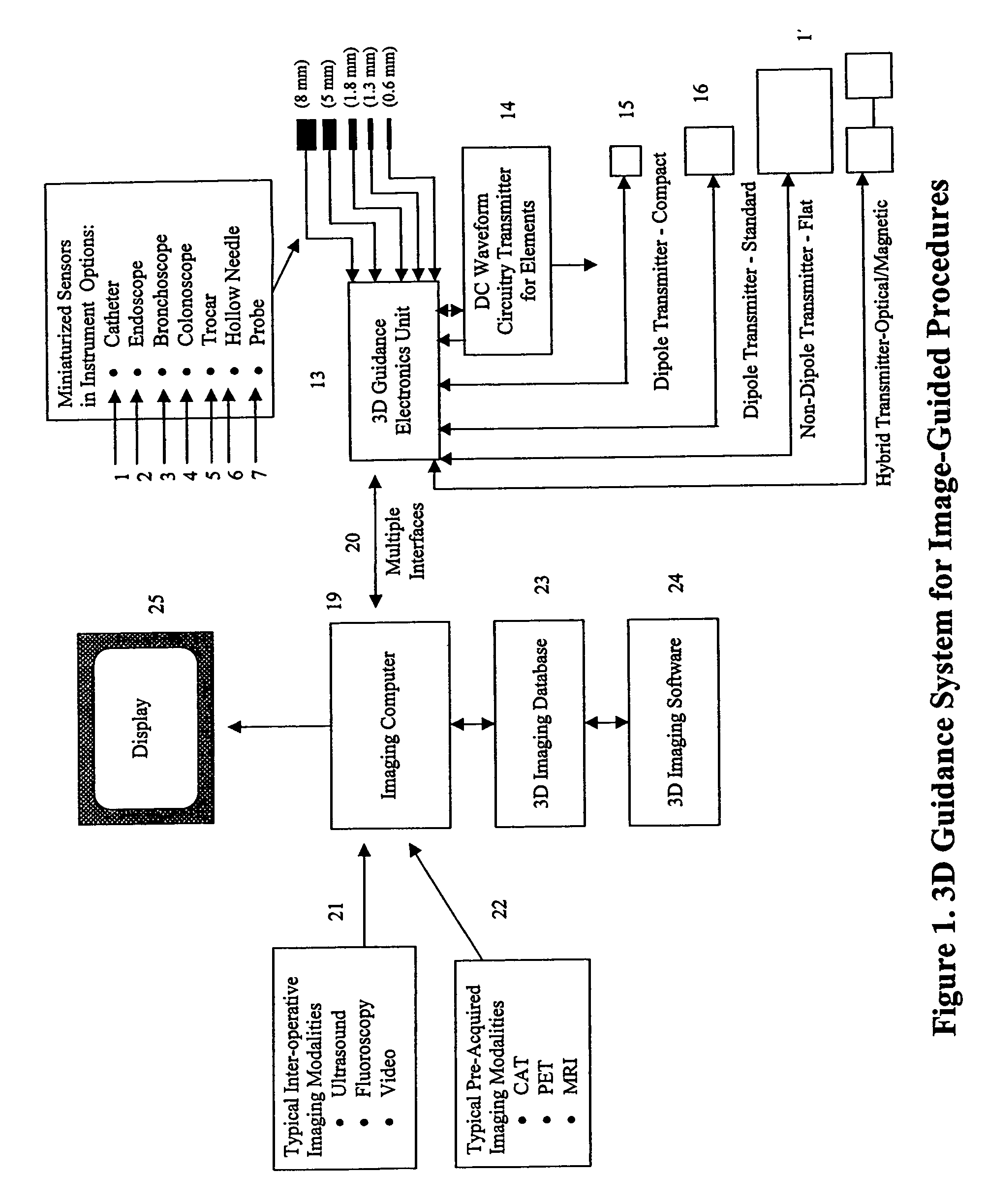

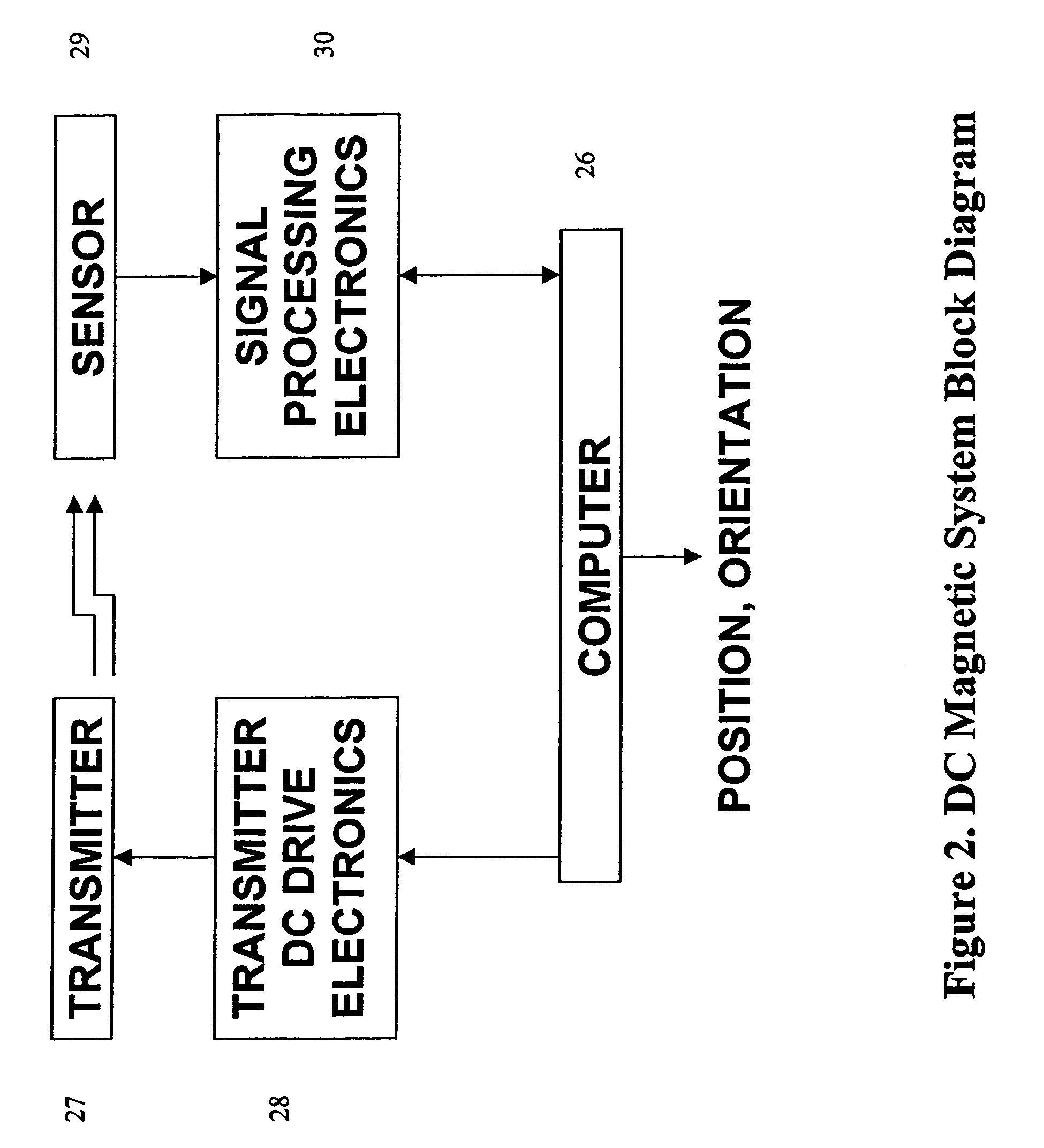

Miniaturized five- and six-degree-of-freedom magnetic sensors, responsive to pulsed DC magnetic field waveforms generated by multiple transmitter options, provide an improved and cost-effective means of guiding medical instruments to targets inside the human body. This is achieved by integrating DC tracking, 3D reconstructions of pre-acquired patient scans, and imaging software into a system that enables a physician to guide an instrument internally with real-time 3D vision for diagnostic and interventional purposes. The integration allows physicians to navigate within the human body by following 3D sensor-tip locations superimposed on anatomical images reconstructed into 3D volumetric computer models. Sensor data can also be integrated with real-time imaging modalities, such as endoscopes, for intrabody navigation of instruments with instantaneous feedback through critical anatomy to locate and remove tissue. To meet stringent medical requirements, the system generates and senses pulsed DC magnetic fields using an assemblage of miniaturized disposable and reposable sensors that work with both dipole and co-planar transmitters.

Owner:NORTHERN DIGITAL
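
The abstract says the sensors respond to pulsed DC fields from dipole or co-planar transmitters. Any pose solver has to compare the measured fields against some field model. The sketch below assumes the textbook point-dipole model. It also assumes a hypothetical helper that removes the ambient field (for example, Earth's) by subtracting a reading taken while the transmitter is off. Both are illustrative assumptions, not the patented system's actual model.

```python
import math
from typing import List, Sequence

MU0_OVER_4PI = 1e-7  # permeability of free space divided by 4*pi, in T*m/A

def dipole_field(moment: Sequence[float], r: Sequence[float]) -> List[float]:
    """Magnetic flux density (tesla) of an ideal point dipole.

    moment: dipole moment vector in A*m^2 (assumed transmitter model)
    r:      sensor position relative to the dipole, in metres
    """
    dist = math.sqrt(sum(c * c for c in r))
    if dist == 0:
        raise ValueError("field is undefined at the dipole itself")
    r_hat = [c / dist for c in r]
    m_dot_r = sum(m * u for m, u in zip(moment, r_hat))
    scale = MU0_OVER_4PI / dist ** 3
    # B = mu0 / (4 pi r^3) * (3 (m . r_hat) r_hat - m)
    return [scale * (3 * m_dot_r * u - m) for m, u in zip(moment, r_hat)]

def pulsed_dc_signal(b_on: Sequence[float], b_off: Sequence[float]) -> List[float]:
    """Hypothetical helper: subtract the transmitter-off (ambient) reading
    from the transmitter-on reading, axis by axis."""
    return [on - off for on, off in zip(b_on, b_off)]
```

A full tracker would search for the sensor pose whose predicted `dipole_field` values best match the ambient-corrected measurements. That solver is not shown here.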

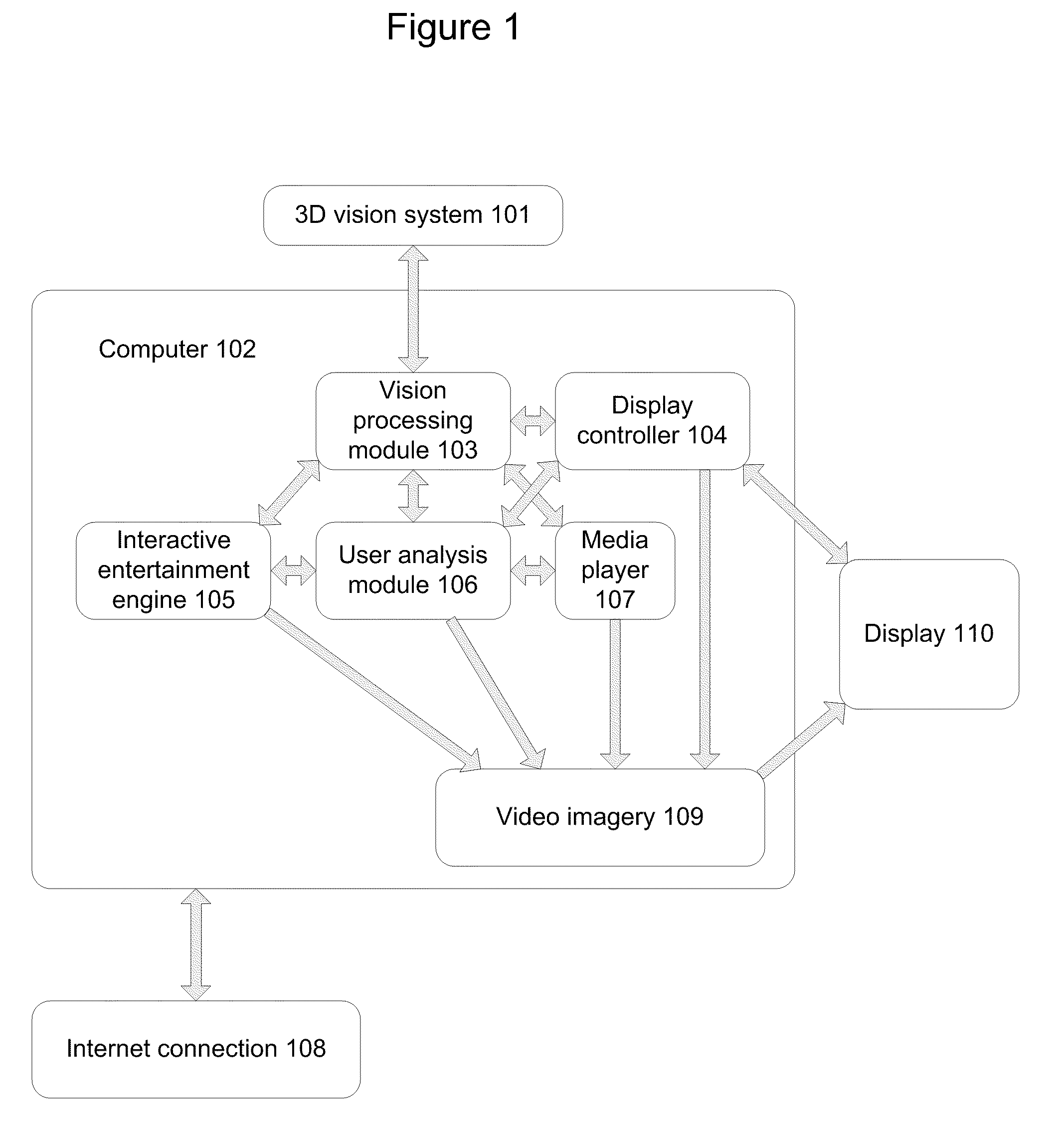

Display with built-in 3D sensing

Owner:META PLATFORMS INC

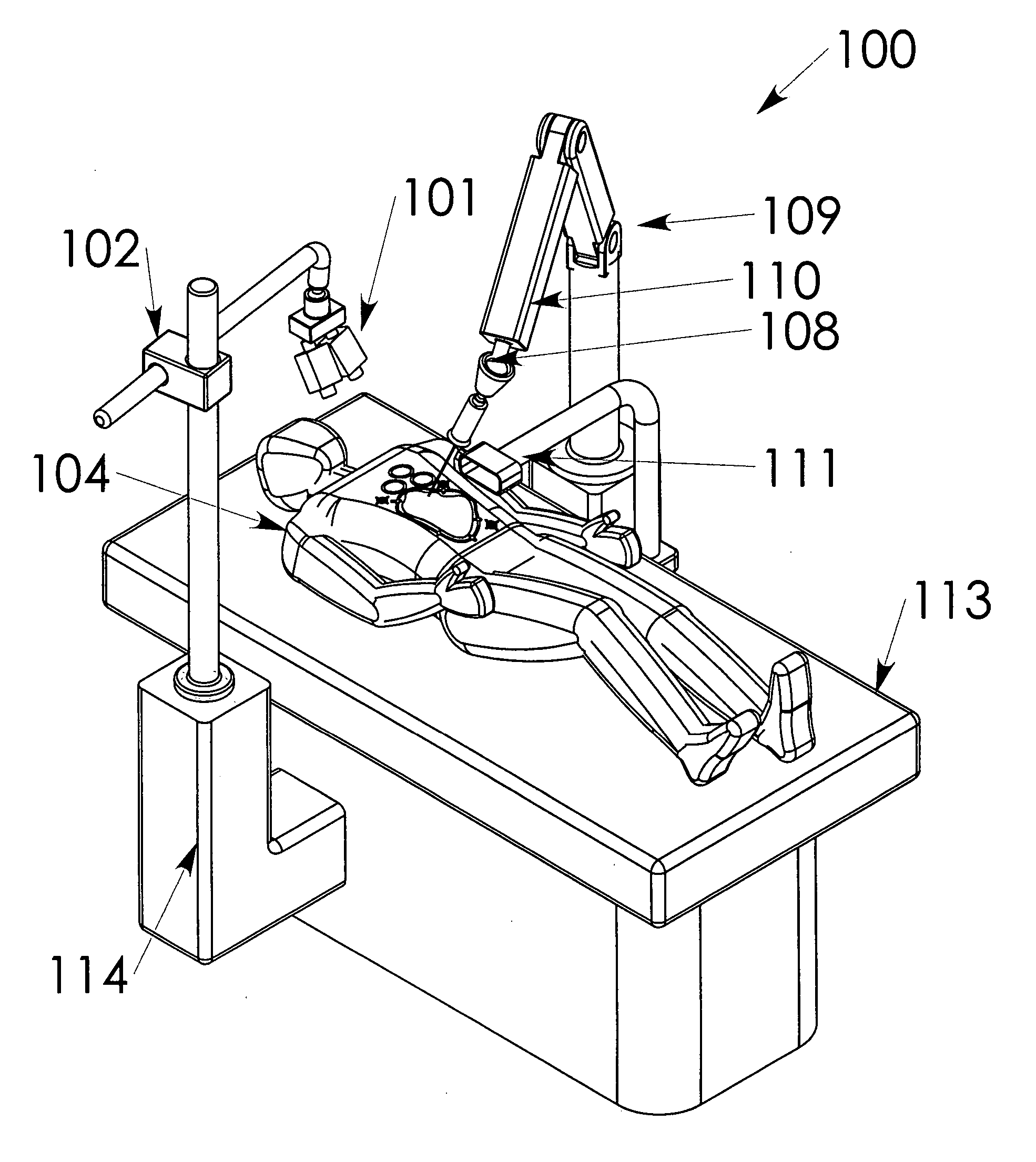

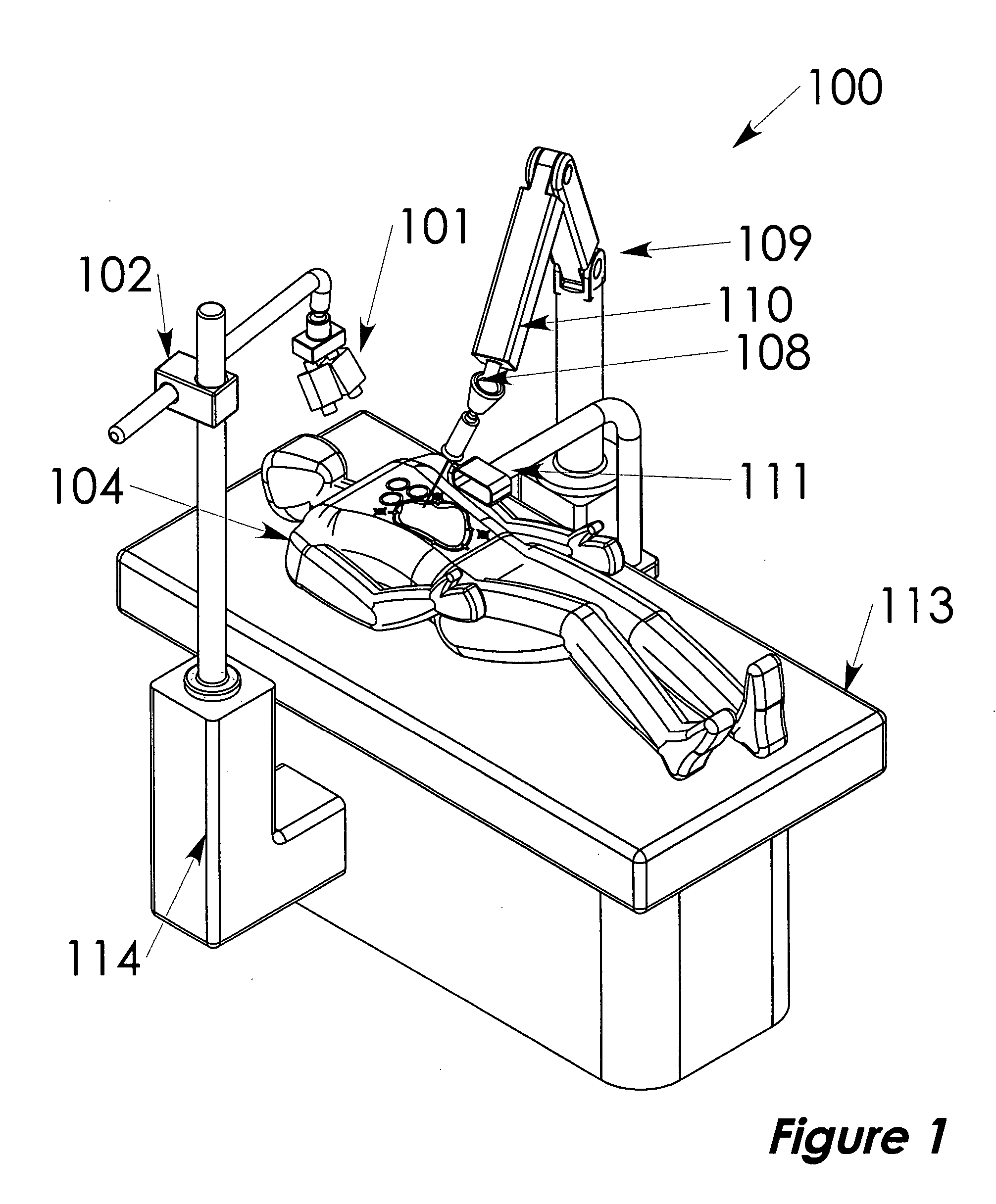

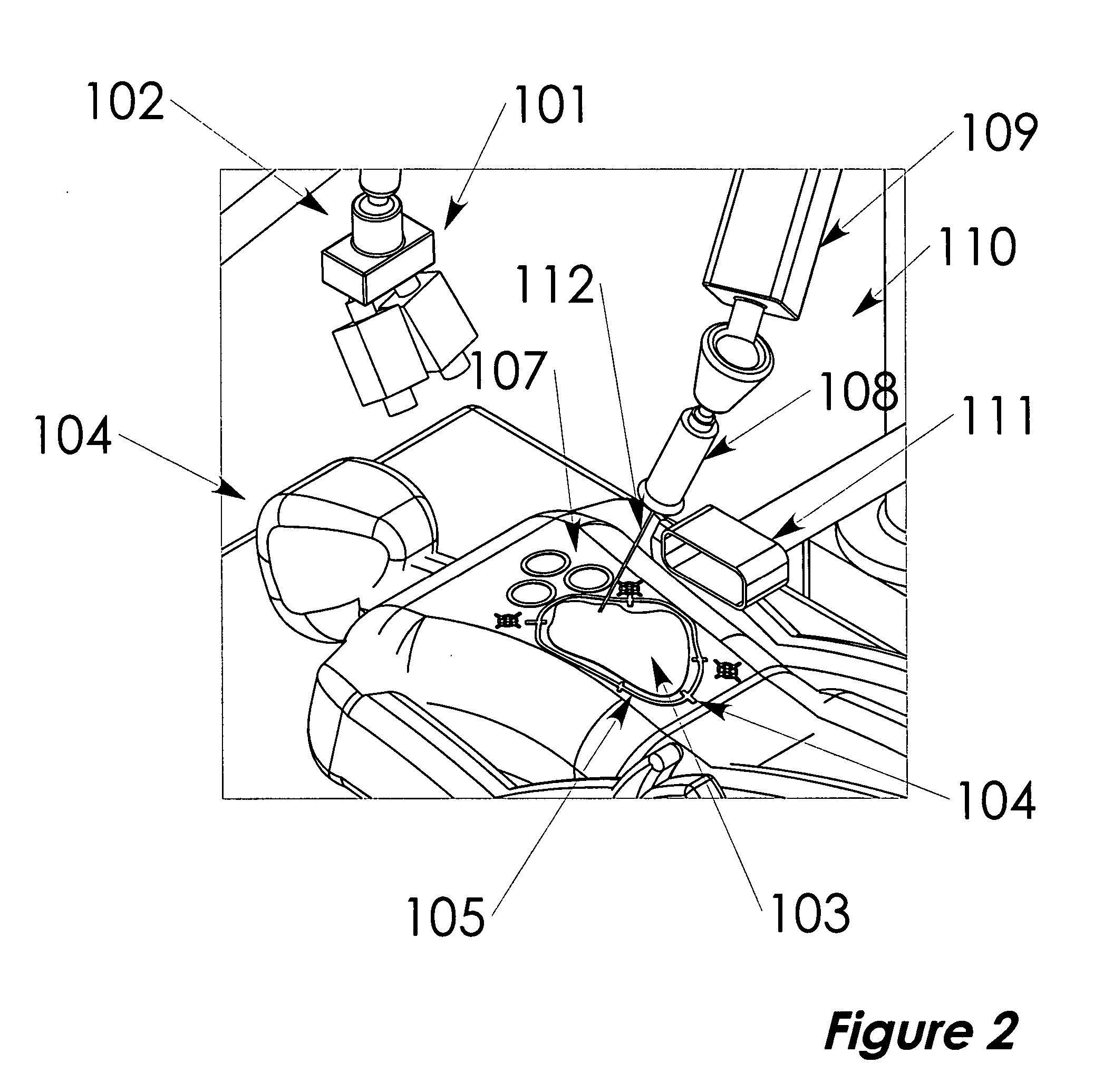

Automated laser-treatment system with real-time integrated 3D vision system for laser debridement and the like

Status: Active | Publication: US20080033410A1 | Tags: Reduce the burden on; Diagnostics; Controlling energy of instrument; Visual perception; 3d vision

A method for automated treatment of an area of a patient's skin with laser energy. The method includes identifying the area to be treated with the laser, modeling the identified area of skin, and controlling the laser so that it directs energy only within the modeled area.

Owner:OMNITEK PARTNERS LLC
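
The final step, keeping laser energy within the modeled area, can be illustrated with a ray-casting point-in-polygon test. This is a minimal sketch. It assumes the modeled area is reduced to a 2D polygon in the scanner's coordinate plane, while the patent models the skin itself. `filter_targets` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: True if (x, y) lies inside the polygon (list of vertices)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing to the right toggles the state
    return inside

def filter_targets(targets: Sequence[Point], treatment_area: Sequence[Point]) -> List[Point]:
    """Hypothetical helper: keep only aim points that fall inside the modeled area."""
    return [p for p in targets if point_in_polygon(p[0], p[1], treatment_area)]
```

Points exactly on an edge may be classified either way. A real controller would probably add a safety margin inside the boundary.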

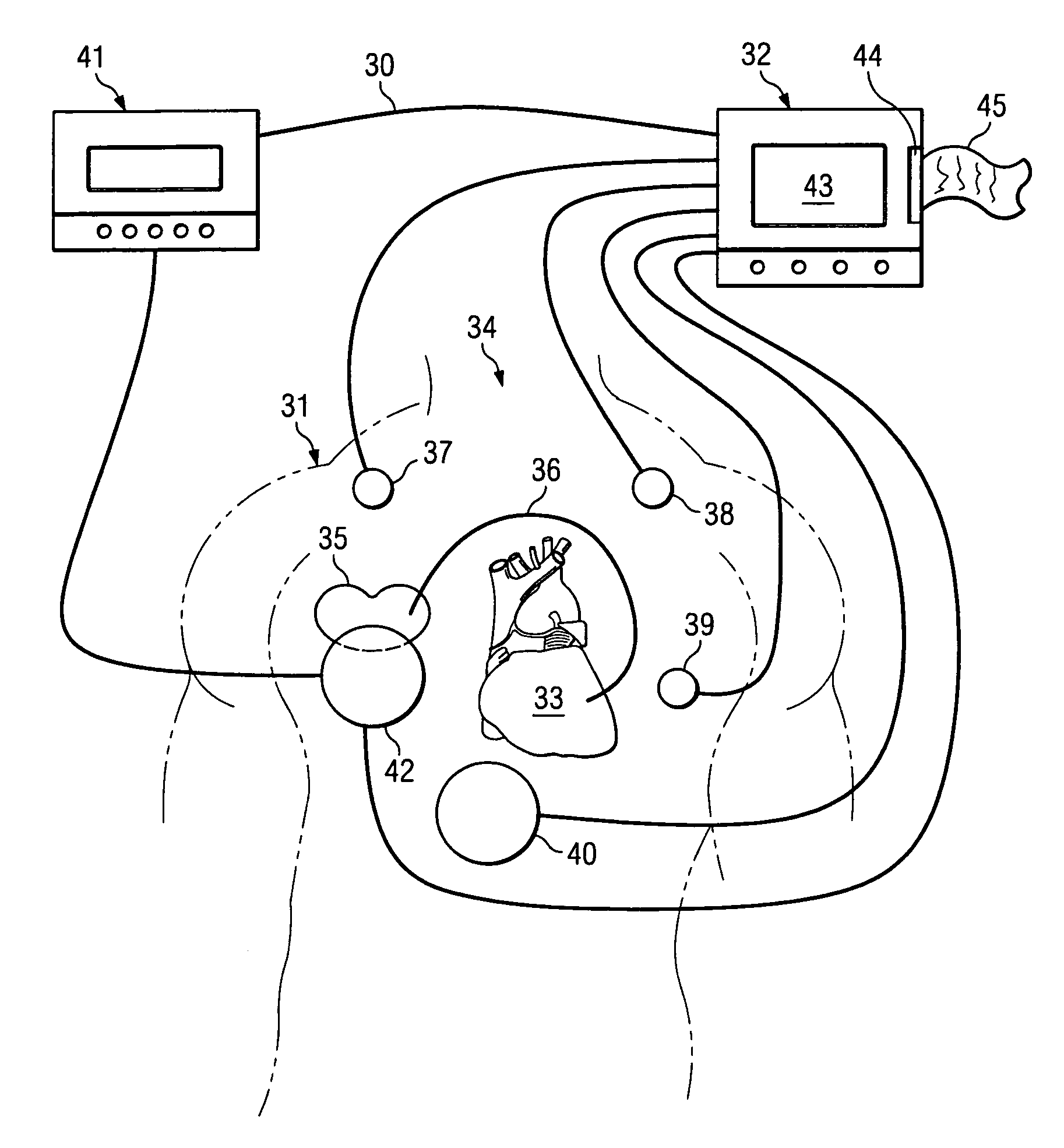

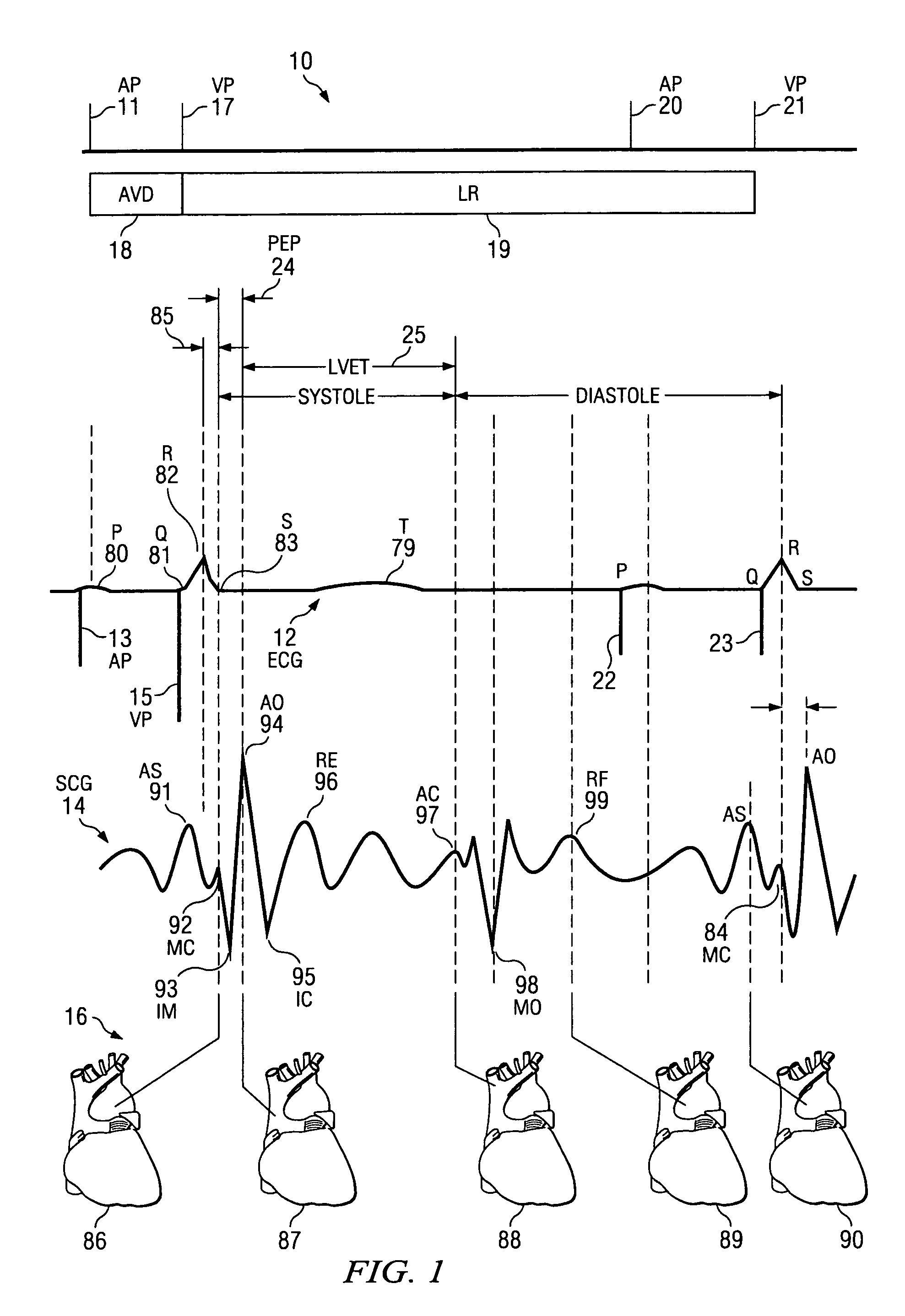

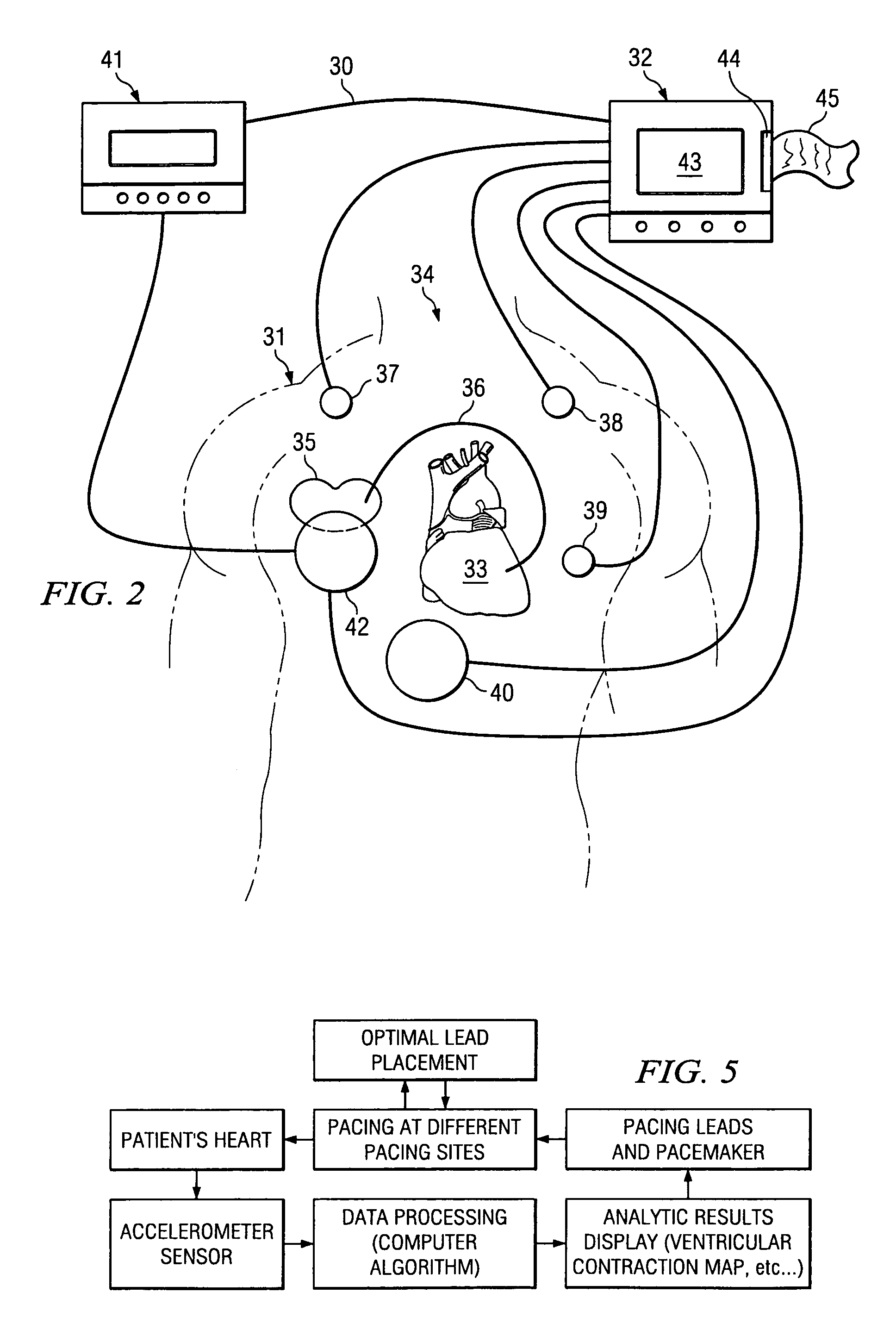

Optimization method for cardiac resynchronization therapy

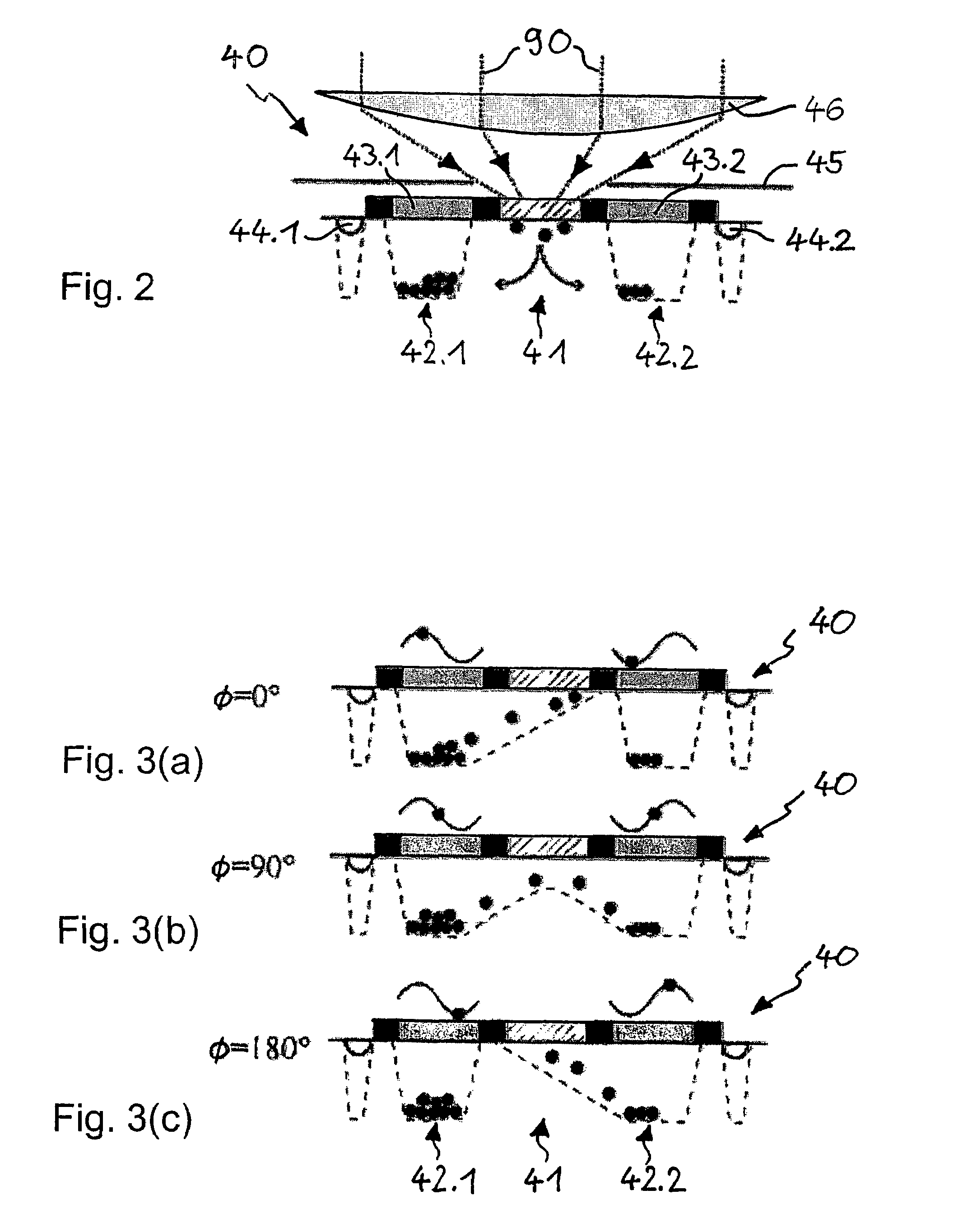

Status: Active | Publication: US6978184B1 | Tags: Shortening of pre-ejection period; Increase in rate of contraction; Internal electrodes; Heart stimulators; Accelerometer; Left ventricular size

The patterns of contraction and relaxation of the heart before and during left ventricular or biventricular pacing are analyzed and displayed in real time. This helps physicians screen patients for cardiac resynchronization therapy, set the optimal A-V or right-ventricle-to-left-ventricle interval delay, and select the pacing site(s) that yield optimal cardiac performance. The system includes an accelerometer sensor, a programmable pacemaker, and a computer data analysis module. It may also include a 2D and 3D visual graphic display of the analytic results, i.e., a Ventricular Contraction Map. A feedback network provides direction for optimal placement of the pacing leads. The method includes:

1. selecting a location for the leads of a cardiac pacing device;
2. collecting seismocardiographic (SCG) data corresponding to heart motion during paced beats of the patient's heart;
3. determining hemodynamic and electrophysiological parameters from the SCG data;
4. repeating these steps for another lead placement location; and
5. selecting the lead placement location that provides the best cardiac performance by comparing the calculated hemodynamic and electrophysiological parameters across the different locations.

Owner:HEART FORCE MEDICAL +1
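
The last step of the method, comparing parameters across candidate lead sites and keeping the best, reduces to an argmax over a performance score. In this sketch the parameter names (`pre_ejection_ms`, `contraction_rate`), the site labels, and the weighting in `example_score` are all hypothetical. They are chosen only to echo the tags above and are not clinical guidance.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class PacingTrial:
    location: str             # candidate lead site label (hypothetical)
    params: Dict[str, float]  # parameters derived from SCG data recorded at that site

def select_lead_location(trials: List[PacingTrial],
                         score: Callable[[Dict[str, float]], float]) -> str:
    """Return the location whose parameters give the highest performance score."""
    if not trials:
        raise ValueError("no pacing trials recorded")
    return max(trials, key=lambda t: score(t.params)).location

def example_score(p: Dict[str, float]) -> float:
    """Hypothetical, non-clinical weighting: a shorter pre-ejection period and a
    faster contraction rate both raise the score."""
    return -p["pre_ejection_ms"] + 0.5 * p["contraction_rate"]
```

Passing the score as a function keeps the selection logic independent of which hemodynamic or electrophysiological parameters a clinician decides to weight.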

DC magnetic-based position and orientation monitoring system for tracking medical instruments

Status: Active | Publication: US7835785B2 | Tags: Low cost; Easy to integrate; Diagnostic recording/measuring; Sensors; 3d sensor; Diagnostic Radiology Modality

Miniaturized five- and six-degree-of-freedom magnetic sensors, responsive to pulsed DC magnetic field waveforms generated by multiple transmitter options, provide an improved and cost-effective means of guiding medical instruments to targets inside the human body. This is achieved by integrating DC tracking, 3D reconstructions of pre-acquired patient scans, and imaging software into a system that enables a physician to guide an instrument internally with real-time 3D vision for diagnostic and interventional purposes. The integration allows physicians to navigate within the human body by following 3D sensor-tip locations superimposed on anatomical images reconstructed into 3D volumetric computer models. Sensor data can also be integrated with real-time imaging modalities, such as endoscopes, for intrabody navigation of instruments with instantaneous feedback through critical anatomy to locate and remove tissue. To meet stringent medical requirements, the system generates and senses pulsed DC magnetic fields using an assemblage of miniaturized disposable and reposable sensors that work with both dipole and co-planar transmitters.

Owner:NORTHERN DIGITAL

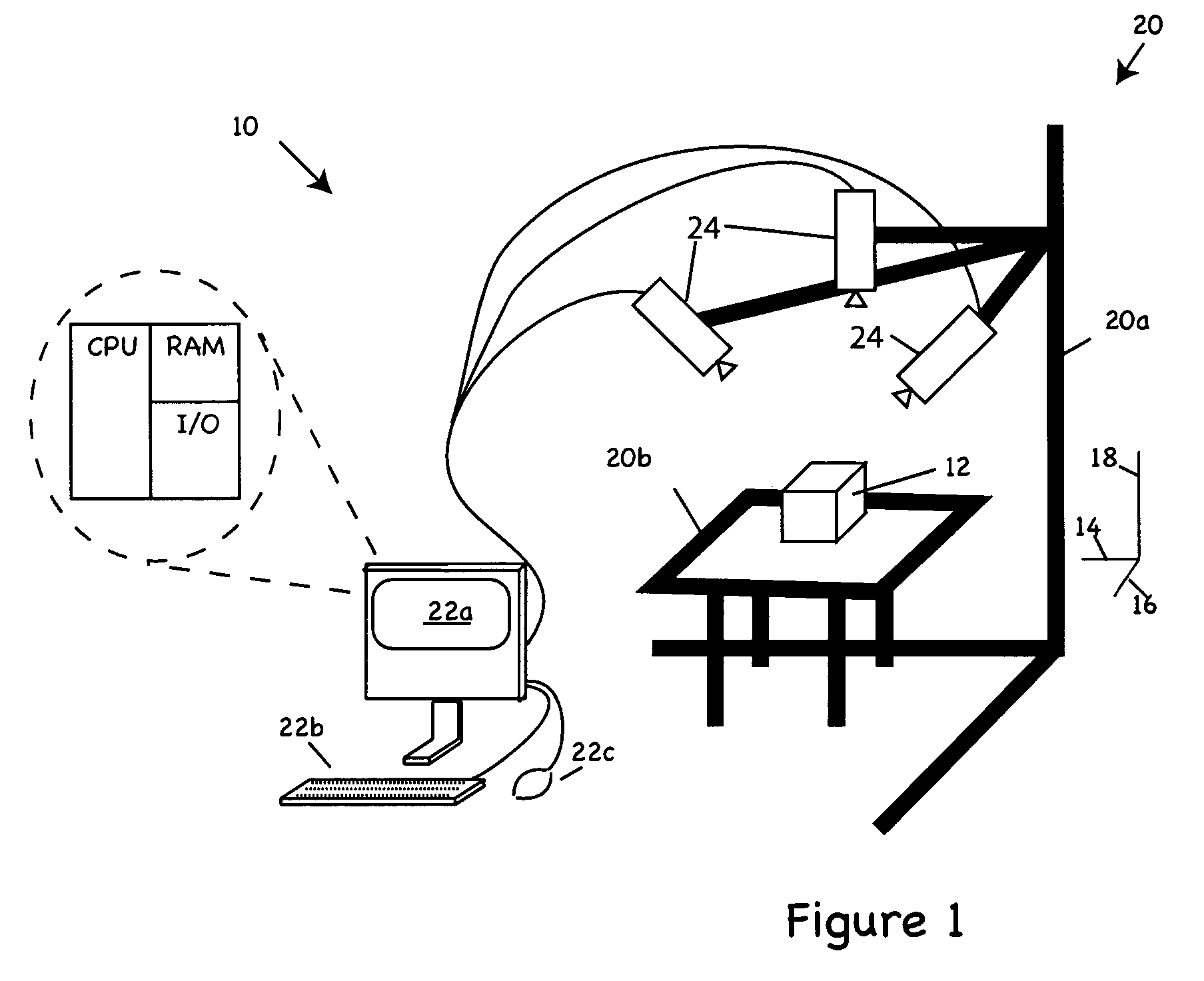

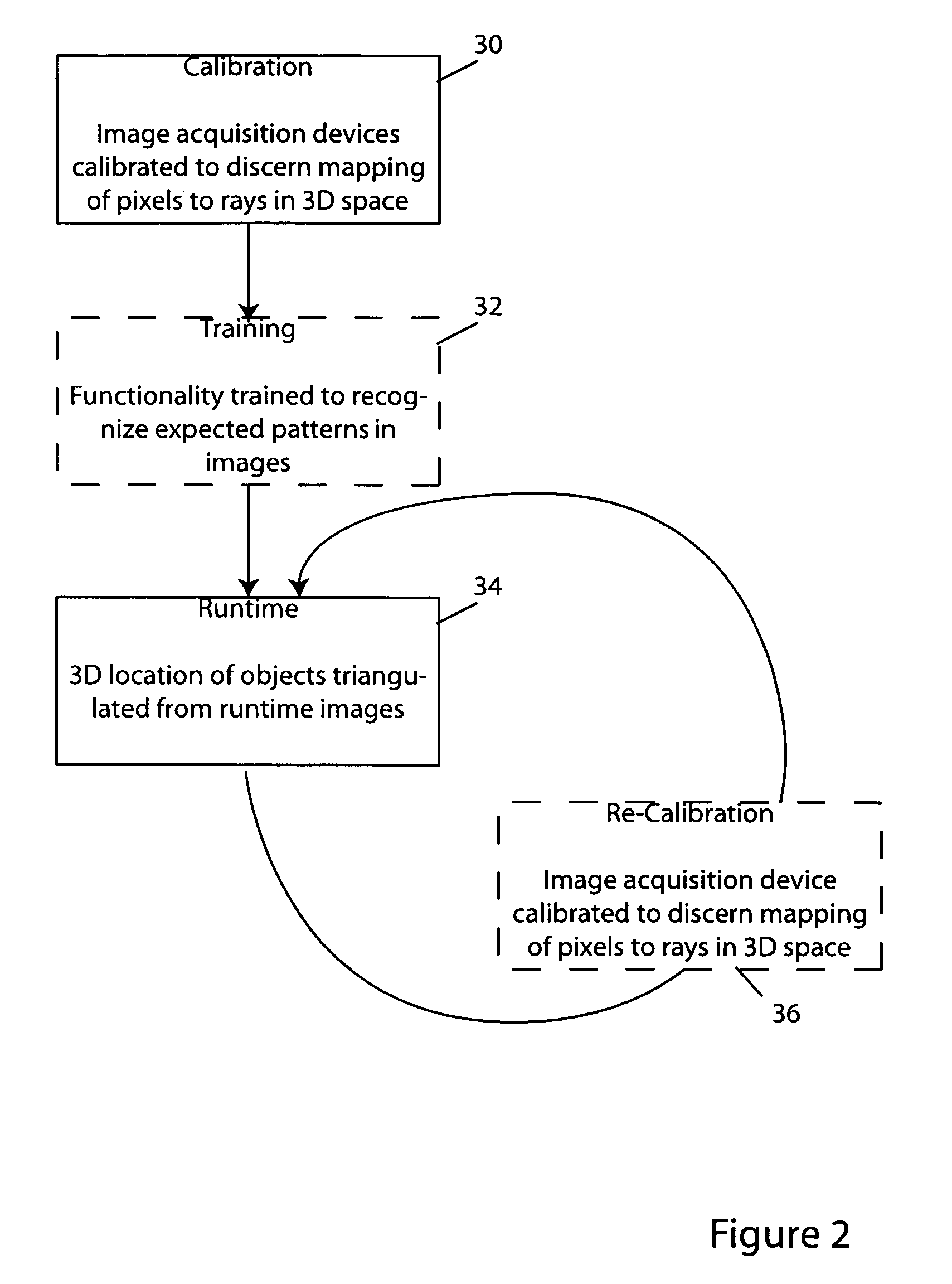

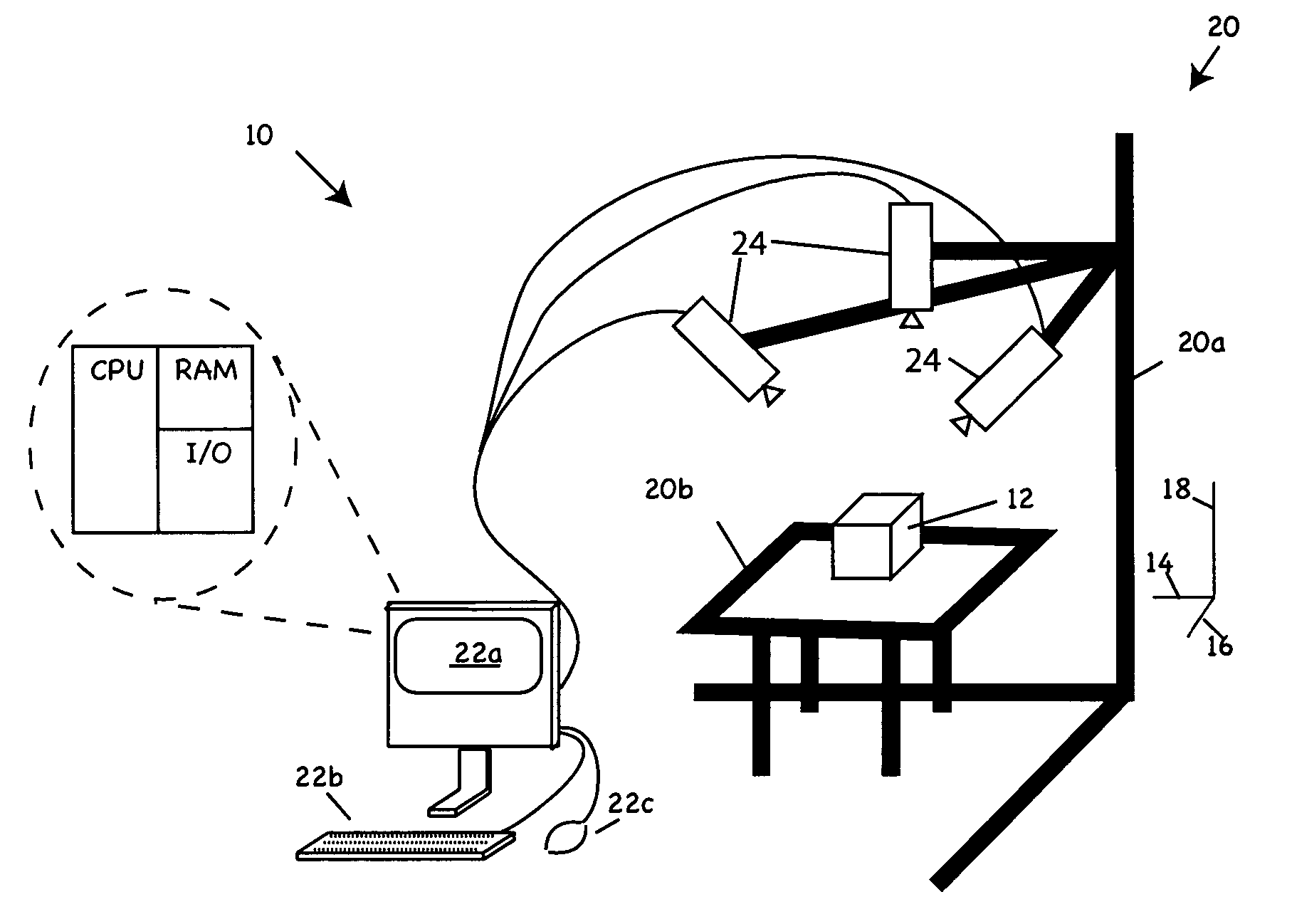

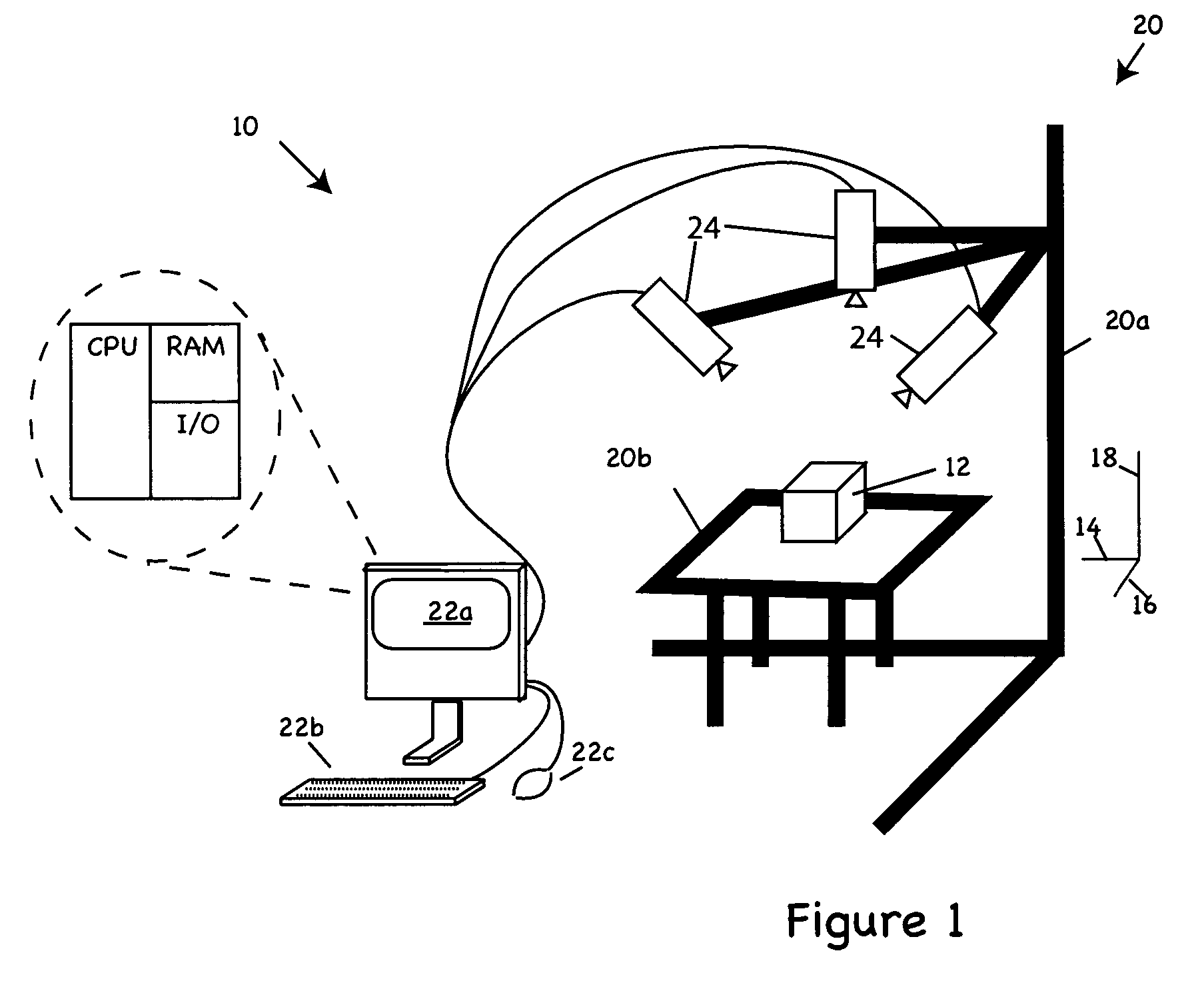

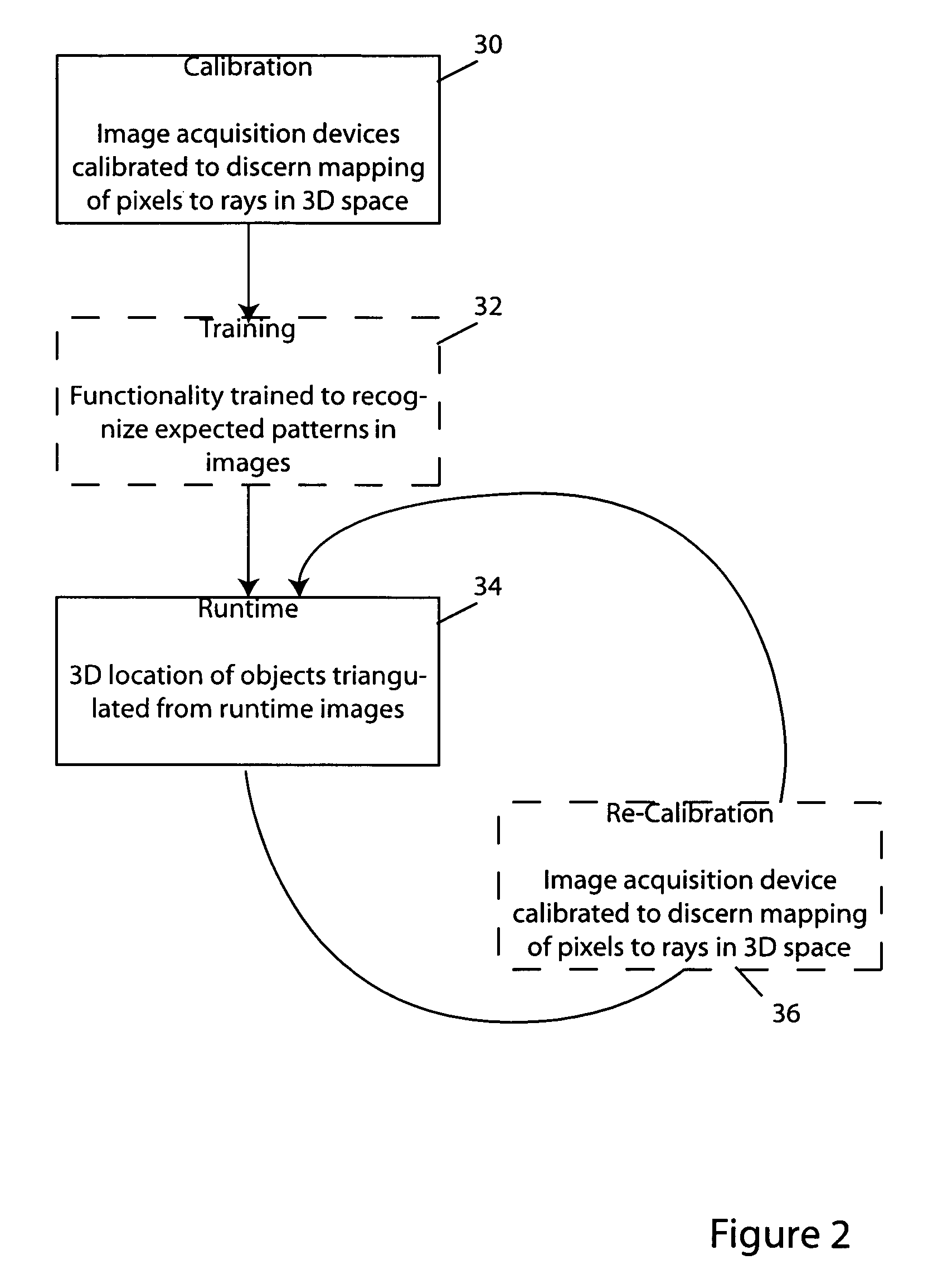

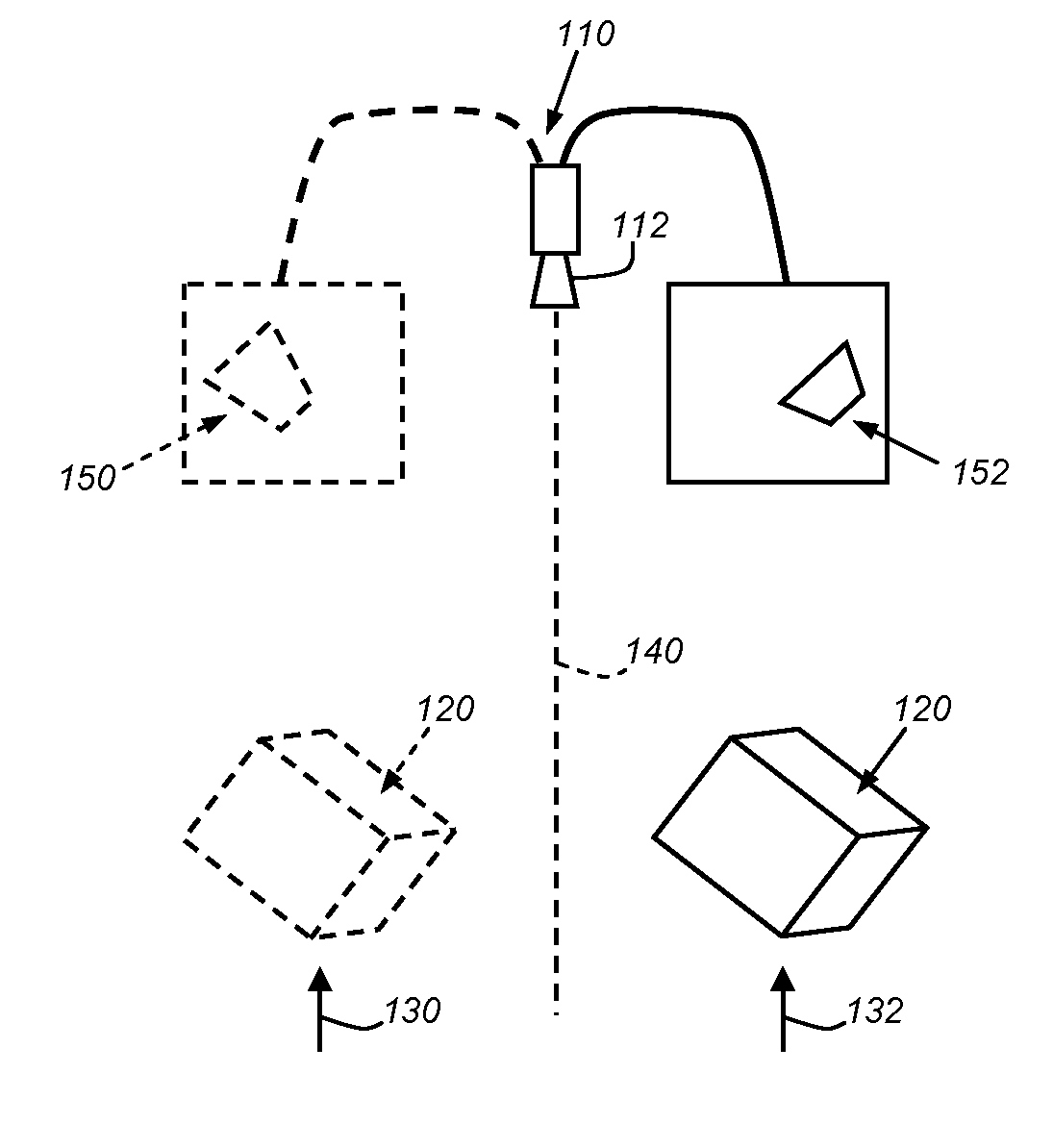

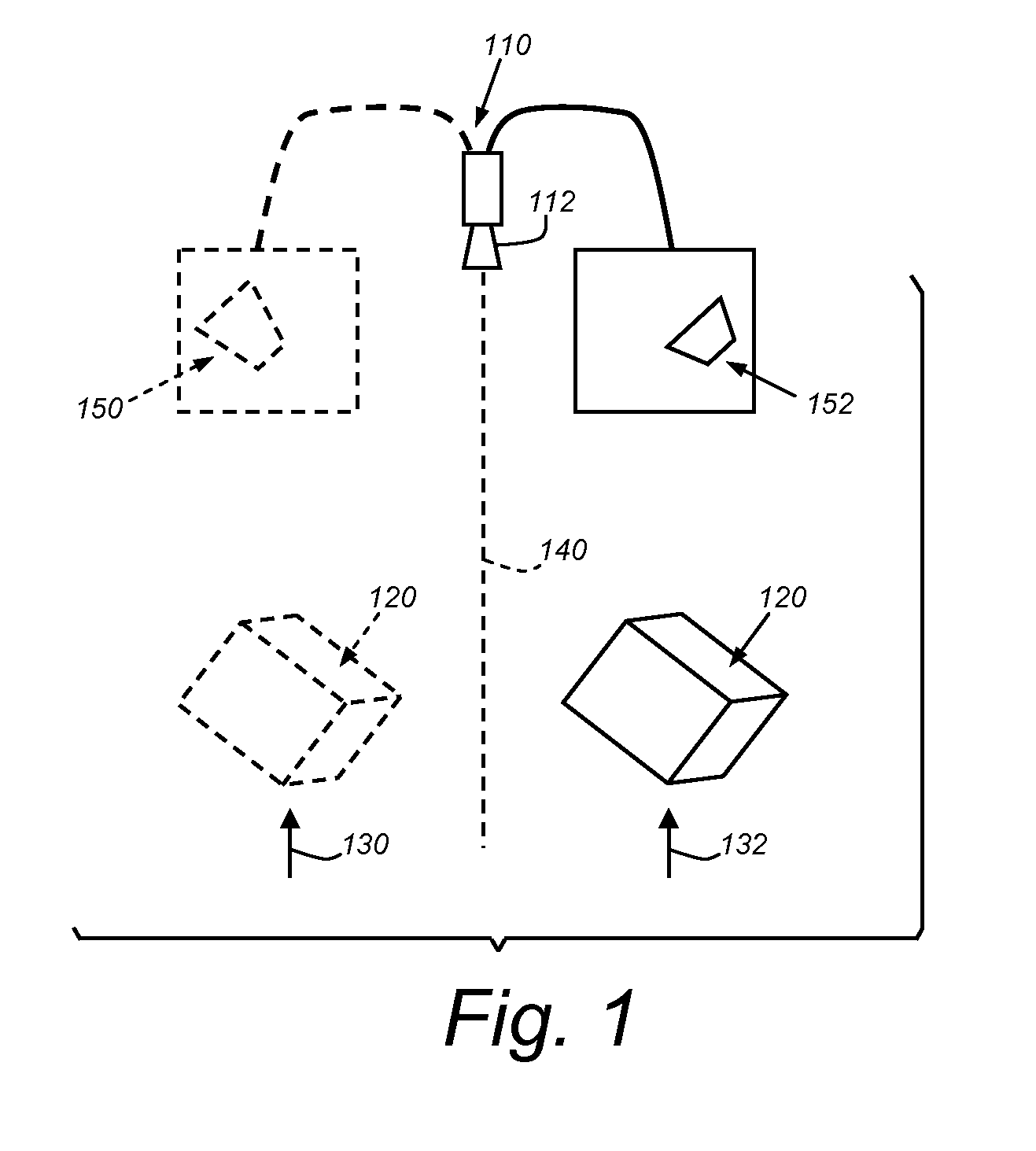

Methods and apparatus for practical 3D vision system

Status: Active | Publication: US20070081714A1 | Tags: Facilitates finding patterns; High match score; Character and pattern recognition; Camera lens; Machine vision

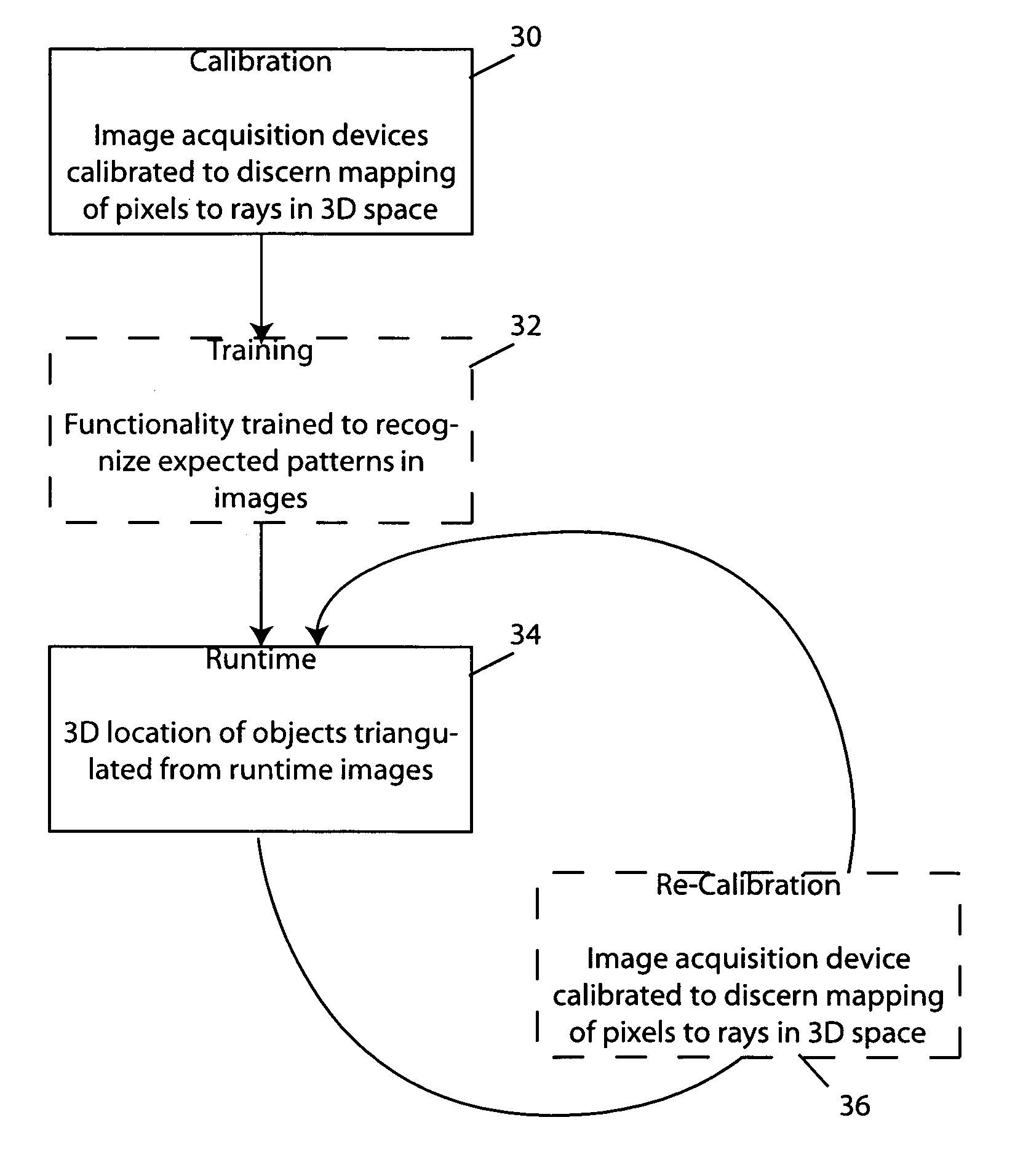

The invention provides, inter alia, methods and apparatus for determining the pose of an object in three dimensions (e.g., position along the x-, y-, and z-axes, and pitch, roll, and yaw, or one or more characteristics of that pose) by triangulating data gleaned from multiple images of the object. For example, in one aspect, the invention provides a method for 3D machine vision with three steps:

- Calibration: multiple cameras, arranged to acquire images of the object from different viewpoints, are calibrated to determine a mapping function. For each camera, this function identifies the rays in 3D space, emanating from the camera's lens, that correspond to pixel locations in its field of view.
- Training: functionality associated with the cameras is trained to recognize expected patterns in images to be acquired of the object.
- Runtime: the 3D locations of one or more of those patterns are triangulated from their pixel-wise positions in images of the object and from the mappings determined during calibration.

Owner:COGNEX TECH & INVESTMENT
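
The runtime triangulation step can be illustrated with a standard least-squares formulation: find the point closest to all the calibrated rays. This is a minimal sketch. It assumes each pattern has already been converted, through the calibration mapping, into a ray given as (origin, direction) in a common world frame. The patent does not specify this particular solver.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def _normalize(v: Sequence[float]) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)

def _det3(m: List[List[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def triangulate(rays: List[Tuple[Vec3, Vec3]]) -> Vec3:
    """Least-squares 3D point closest to all rays, each given as (origin, direction).

    Solves sum_i (I - d_i d_i^T) p = sum_i (I - d_i d_i^T) o_i.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for origin, direction in rays:
        d = _normalize(direction)
        for i in range(3):
            for j in range(3):
                # Entry of the projector onto the plane perpendicular to the ray
                p_ij = (1.0 if i == j else 0.0) - d[i] * d[j]
                a[i][j] += p_ij
                b[i] += p_ij * origin[j]
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("rays are (nearly) parallel; the point is not determined")
    result = []
    for k in range(3):  # Cramer's rule: replace column k of A with b
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        result.append(_det3(m) / det)
    return (result[0], result[1], result[2])
```

With noisy rays that do not meet exactly, the result is the point that minimizes the summed squared perpendicular distances to all rays.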

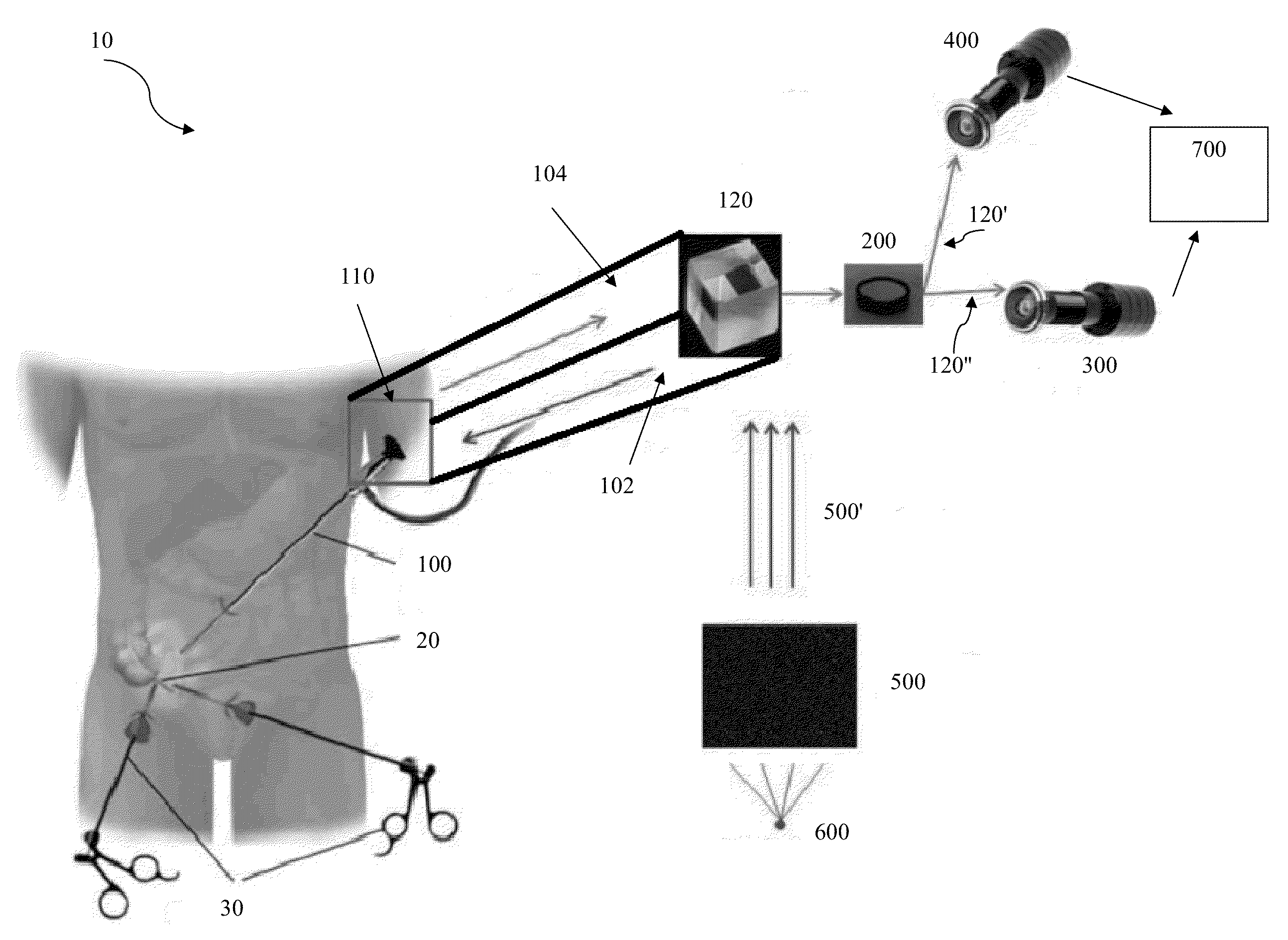

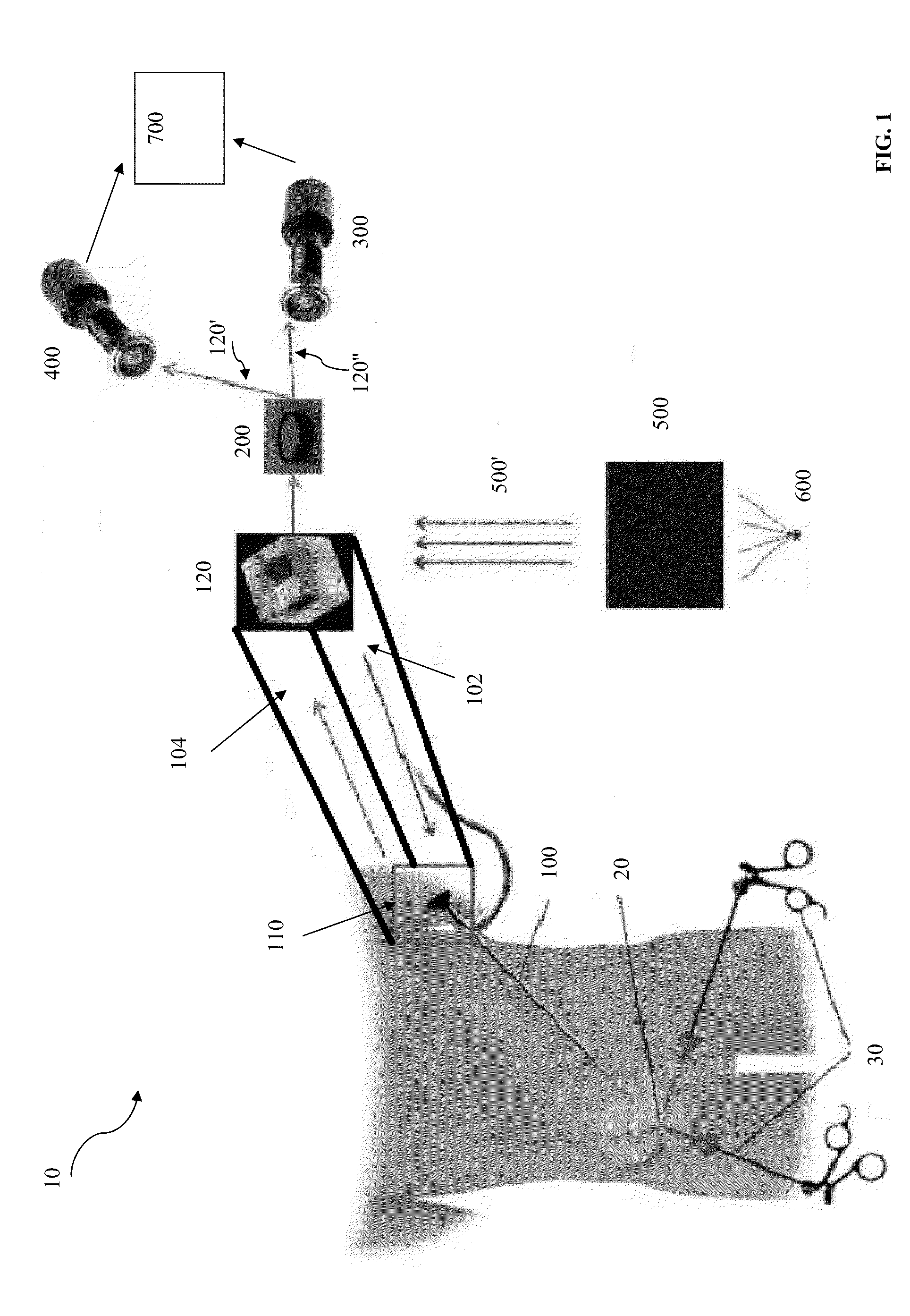

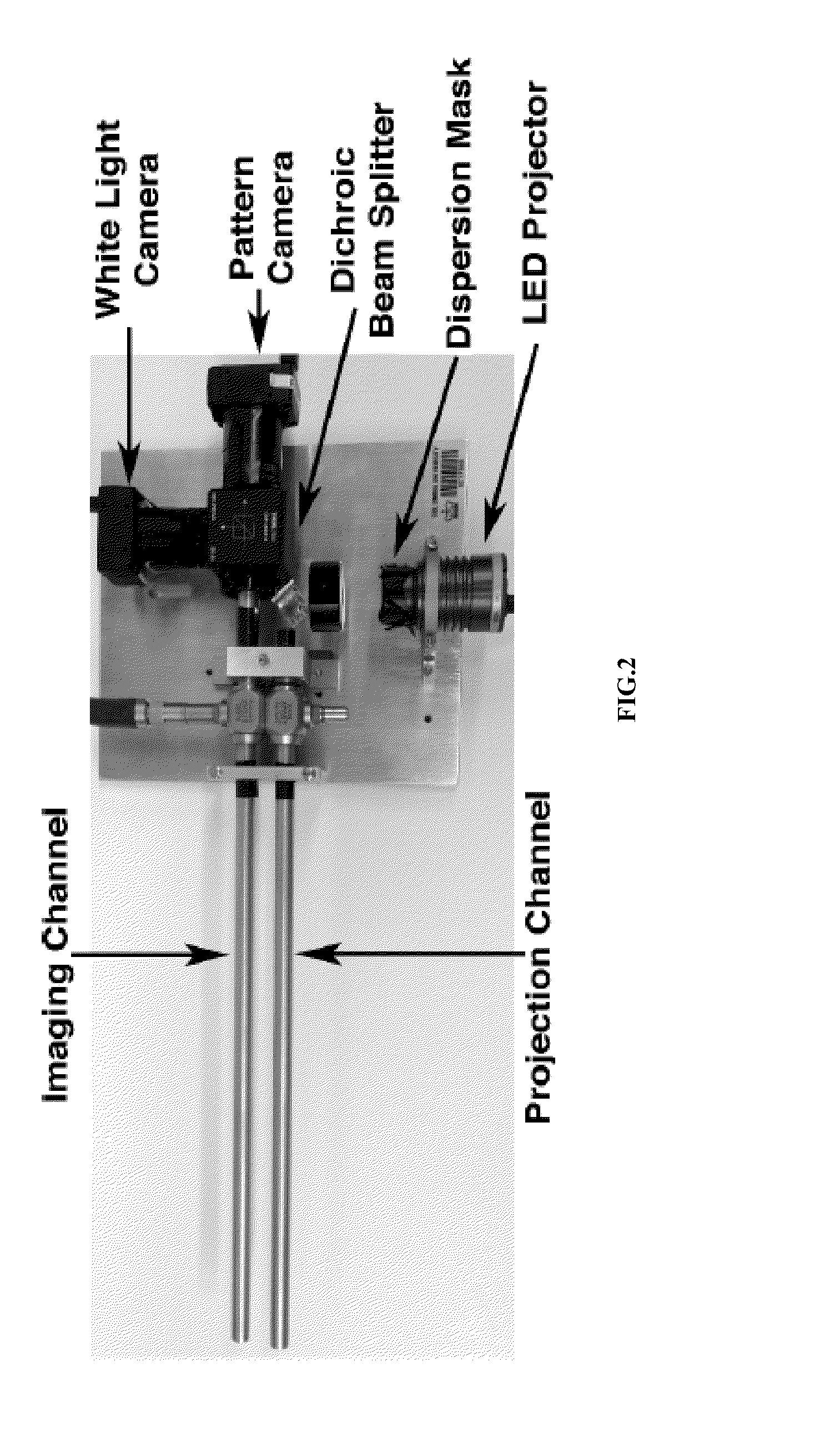

Surgical structured light system

Status: Inactive | Publication: US20140336461A1 | Tags: Improved navigation and safety; Easy to learn; Surgery; Endoscopes; Visual perception; Marine navigation

A Surgical Structured Light (SSL) system is disclosed that provides real-time, dynamic 3D visual information of the surgical environment, allowing registration of pre- and intra-operative imaging, online metric measurements of tissue, and improved navigation and safety within the surgical field.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK

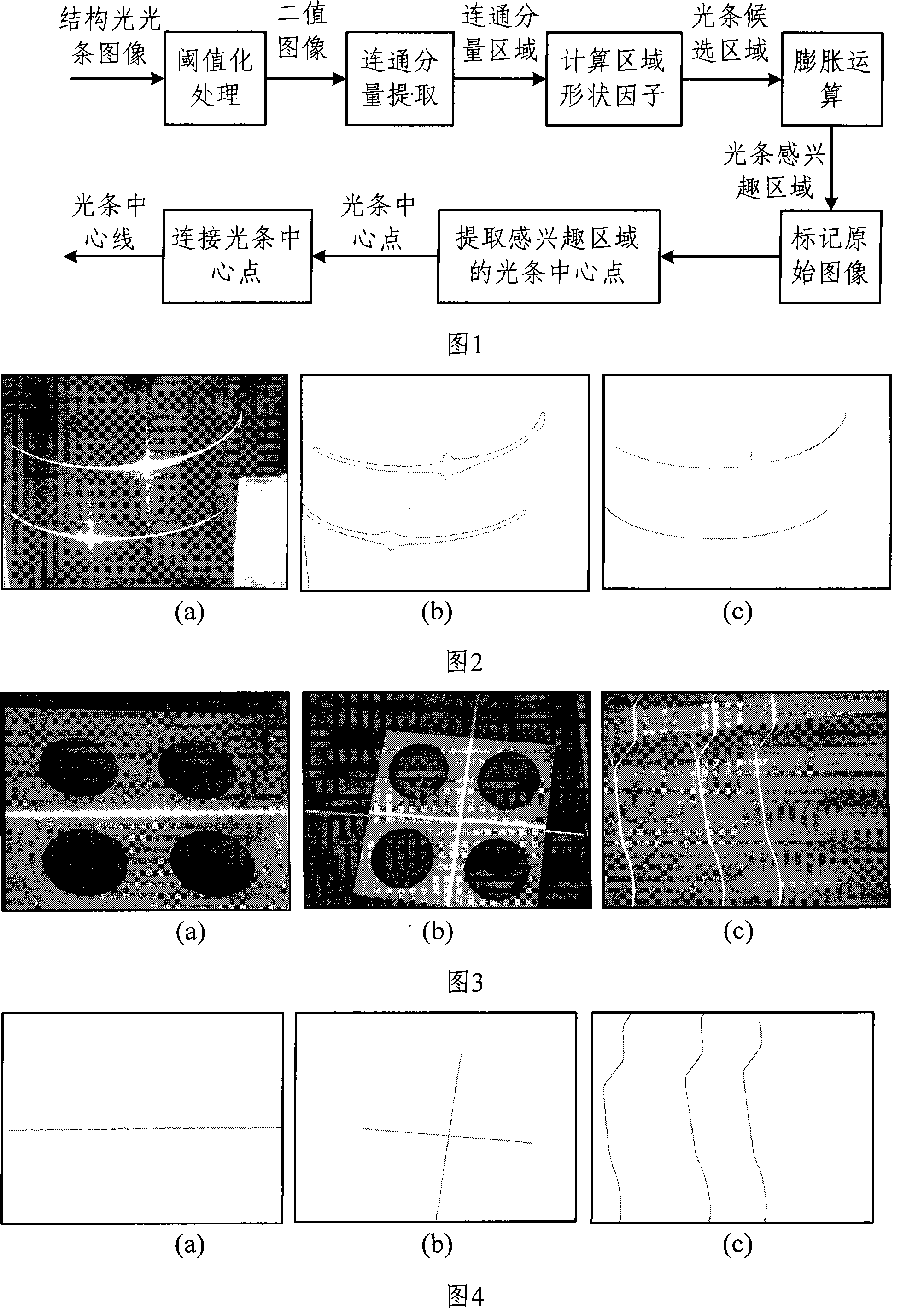

Hybrid image processing method for extracting the center line of structured-light stripes

Status: Inactive | Publication: CN101178812A | Tags: Guaranteed extraction accuracy; Reduce redundant calculations; Image analysis; Using optical means; Three dimensional measurement; Visual perception

The invention relates to a hybrid image processing method, in the field of machine vision, for extracting the center line of a structured-light stripe within a region of interest (ROI). The method combines image thresholding, connected-component extraction, a shape-factor constraint, and dilation to automatically segment the structured-light region as the ROI for stripe extraction. It then applies a Hessian-matrix-based stripe-center extraction method to obtain the sub-pixel center line of the stripe within the ROI. The method offers high precision, good robustness, and a high degree of automation. It also avoids large-template Gaussian convolution over pixels outside the stripe region, which reduces redundant computation and enables fast, precise center-line extraction. This lays a foundation for real-time structured-light 3D vision measurement.

Owner:BEIHANG UNIV
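
The sub-pixel center-line step can be illustrated with a one-dimensional simplification of the Hessian approach. In 2D, the Hessian's eigenvector gives the stripe normal. Here the intensity profile is assumed to be sampled along that normal already, for example across a roughly horizontal stripe. The sketch also swaps in Gaussian smoothing followed by finite differences in place of Gaussian-derivative kernels. The center is the zero of the first derivative, found by the Taylor offset t = -g'/g''.

```python
import math
from typing import List, Optional

def stripe_center(profile: List[float], sigma: float = 1.5) -> Optional[float]:
    """Sub-pixel center of a bright stripe in a 1D intensity profile across it."""
    n = len(profile)
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-k * k / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(weights)
    # Gaussian smoothing with clamped borders
    smooth = []
    for i in range(n):
        acc = 0.0
        for k in range(-radius, radius + 1):
            j = min(max(i + k, 0), n - 1)
            acc += profile[j] * weights[k + radius]
        smooth.append(acc / total)
    best = None  # (sub-pixel position, second derivative)
    for i in range(1, n - 1):
        d1 = (smooth[i + 1] - smooth[i - 1]) / 2.0
        d2 = smooth[i + 1] - 2.0 * smooth[i] + smooth[i - 1]
        if d2 >= 0:
            continue  # a bright line needs negative curvature
        t = -d1 / d2  # offset to the zero of the first derivative
        # Keep the strongest ridge whose extremum lies within this pixel
        if abs(t) <= 0.5 and (best is None or d2 < best[1]):
            best = (i + t, d2)
    return None if best is None else best[0]
```

Restricting this computation to the segmented ROI, as the abstract describes, is what avoids smoothing and differentiating the non-stripe pixels.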

Methods and apparatus for practical 3D vision system

Status: Active | Publication: US8111904B2 | Tags: Facilitates finding patterns; High match score; Character and pattern recognition; Color television details; Machine vision; Computer graphics (images)

Owner:COGNEX TECH & INVESTMENT

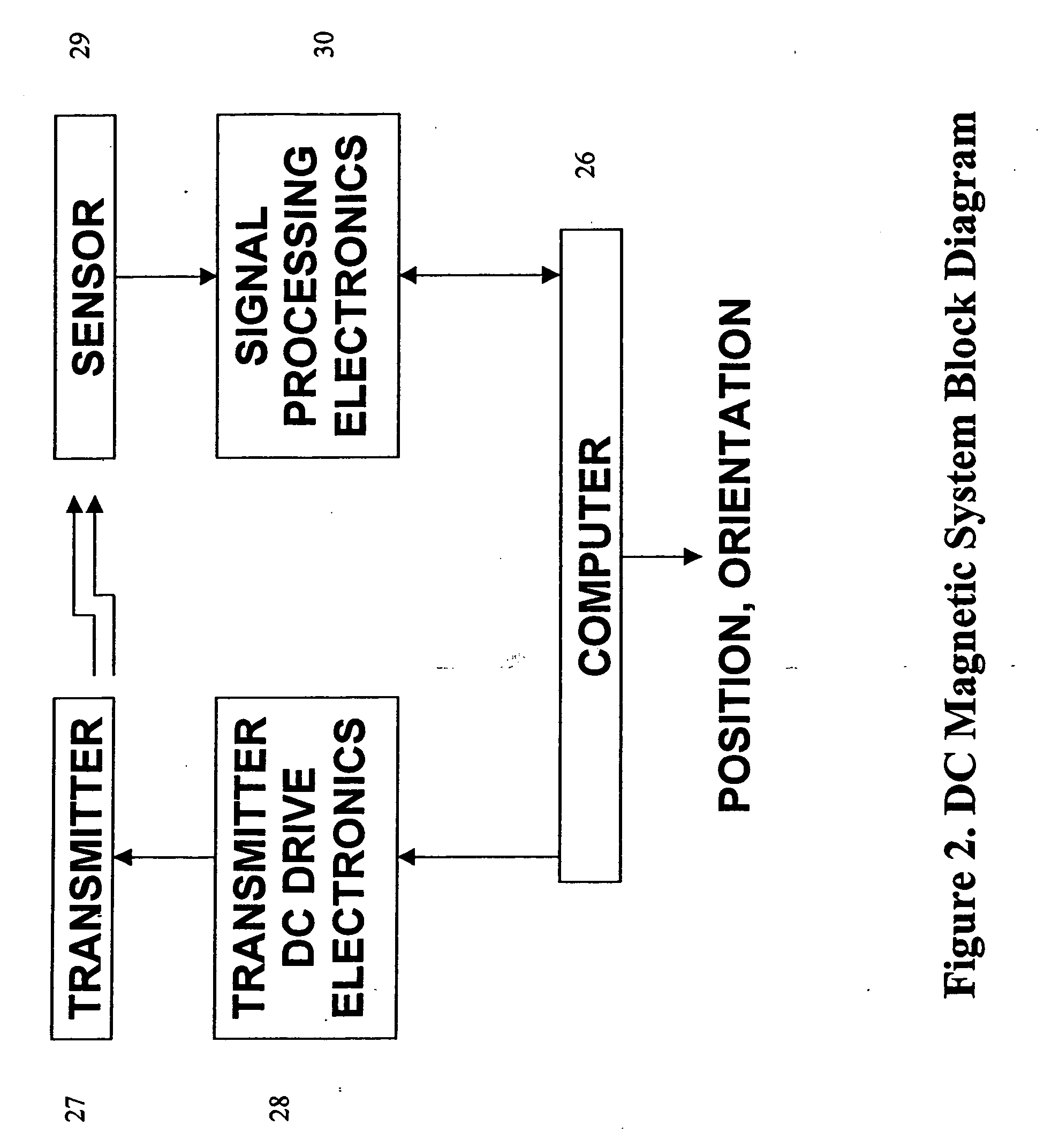

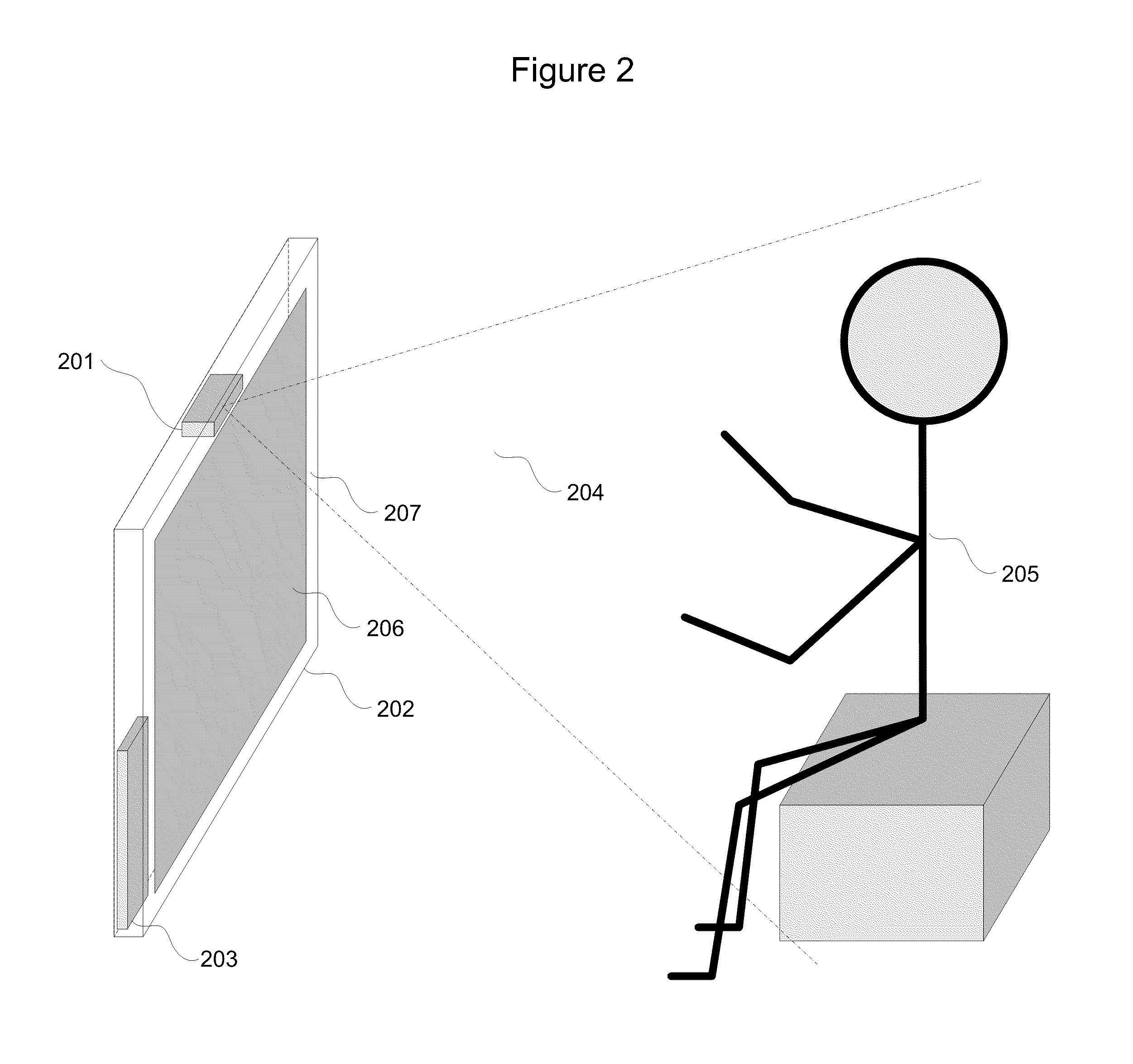

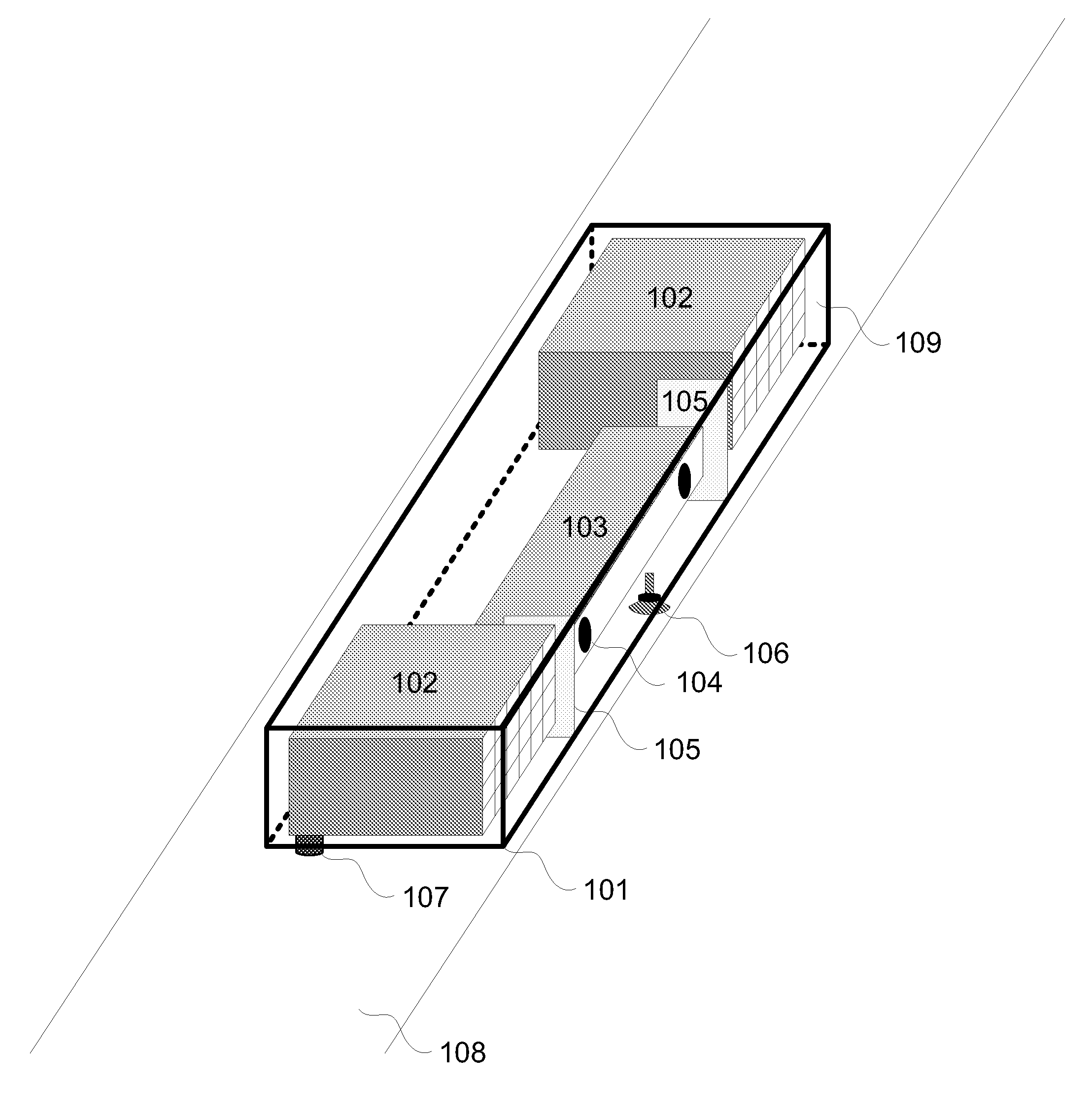

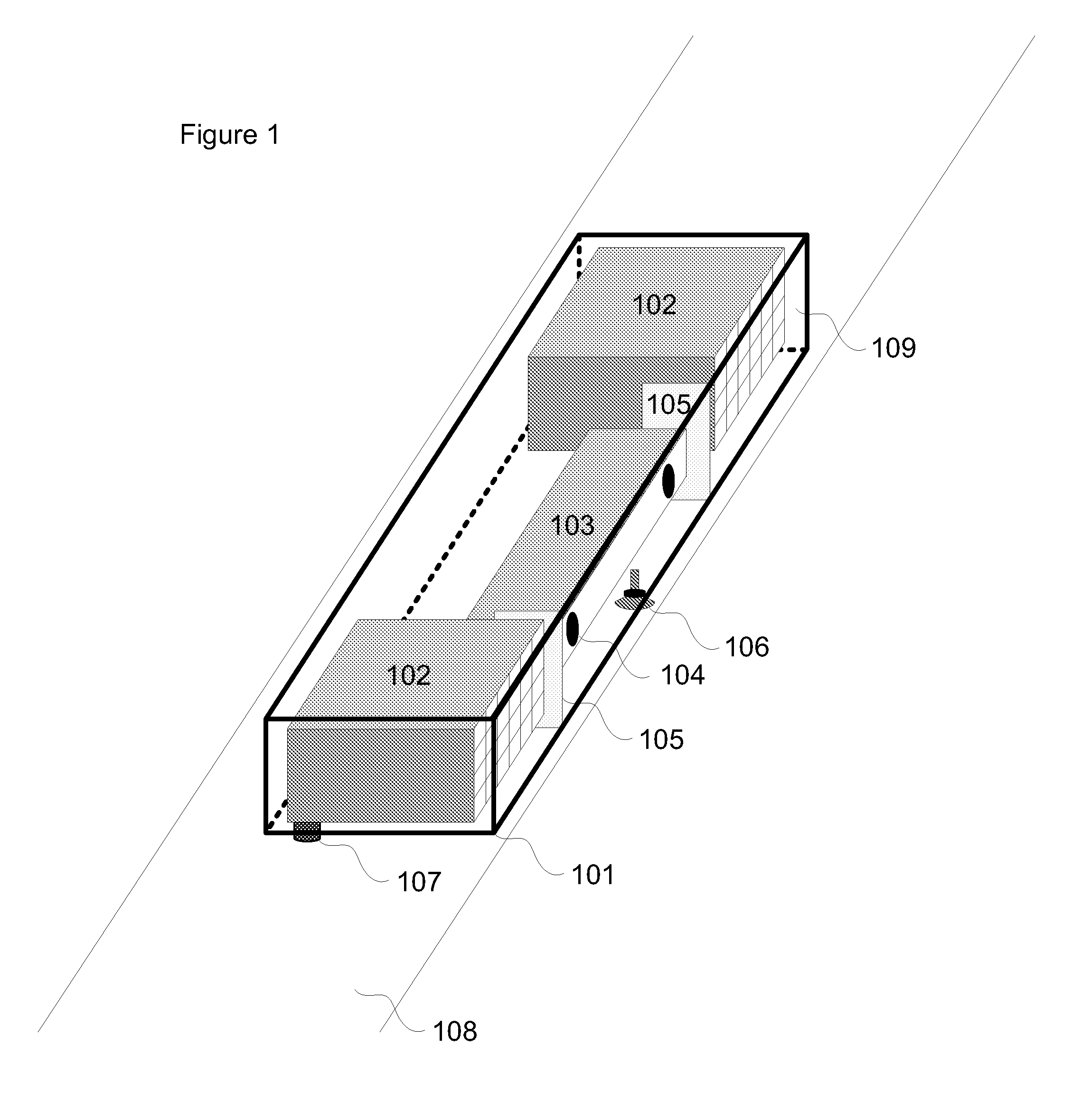

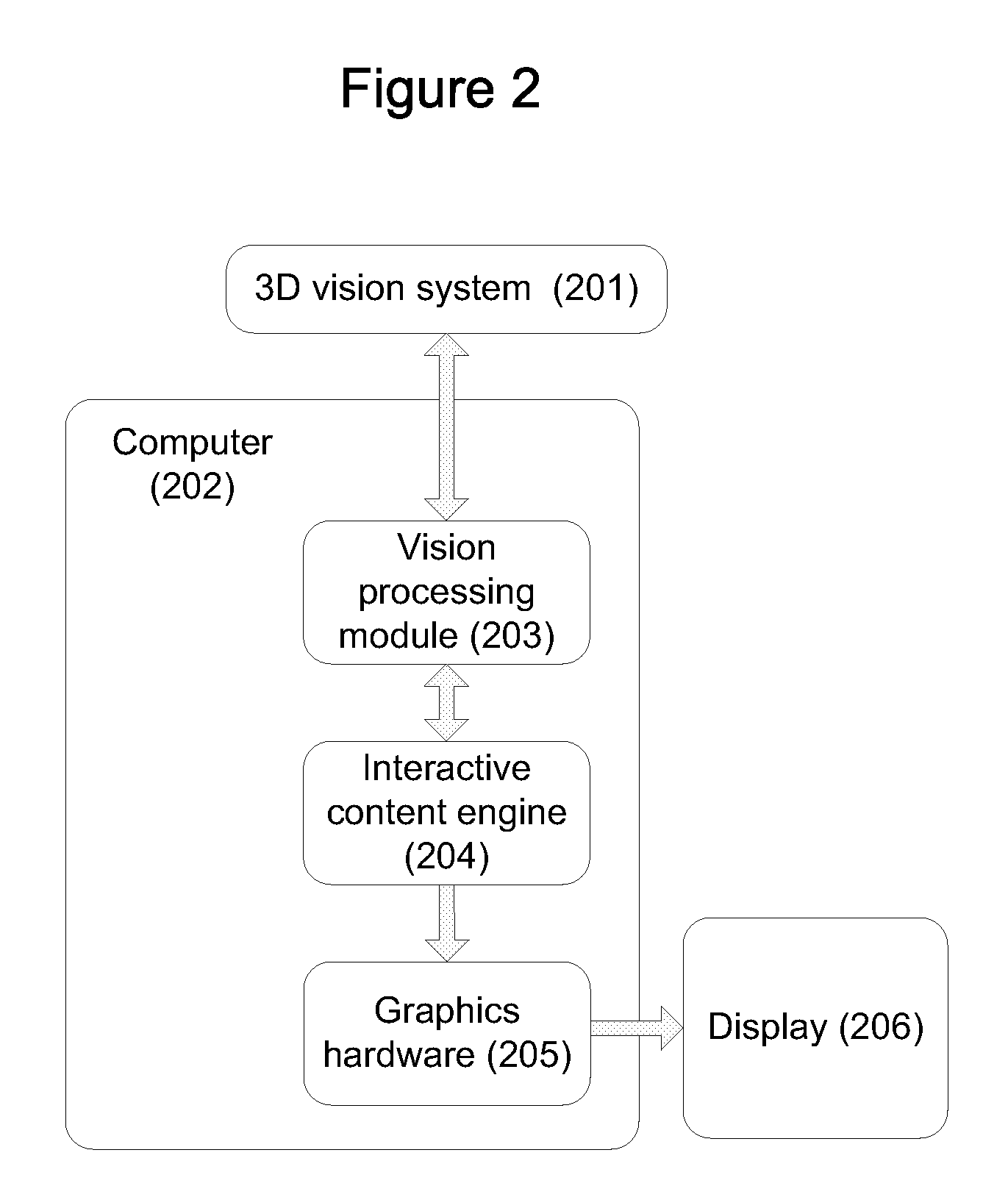

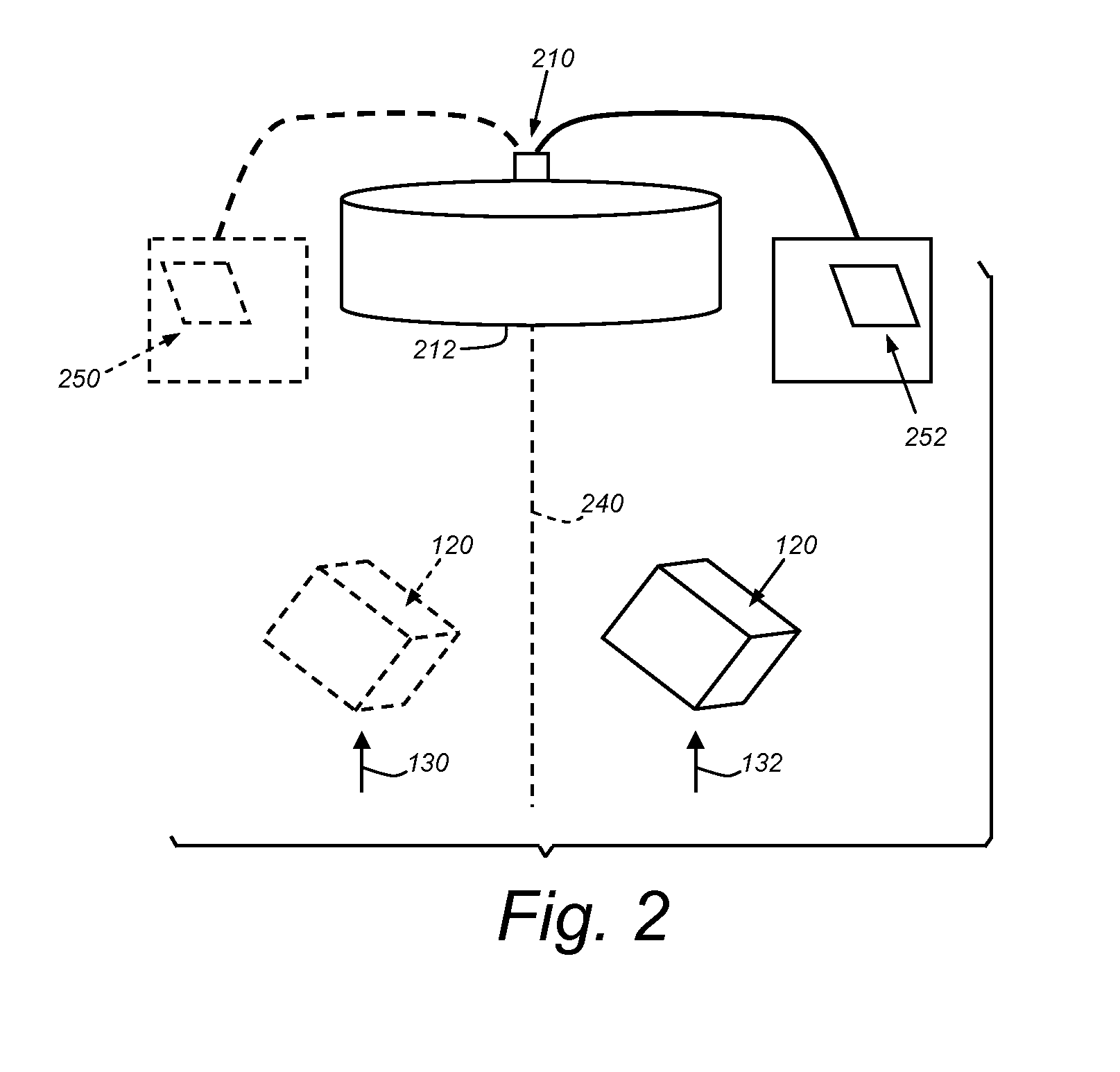

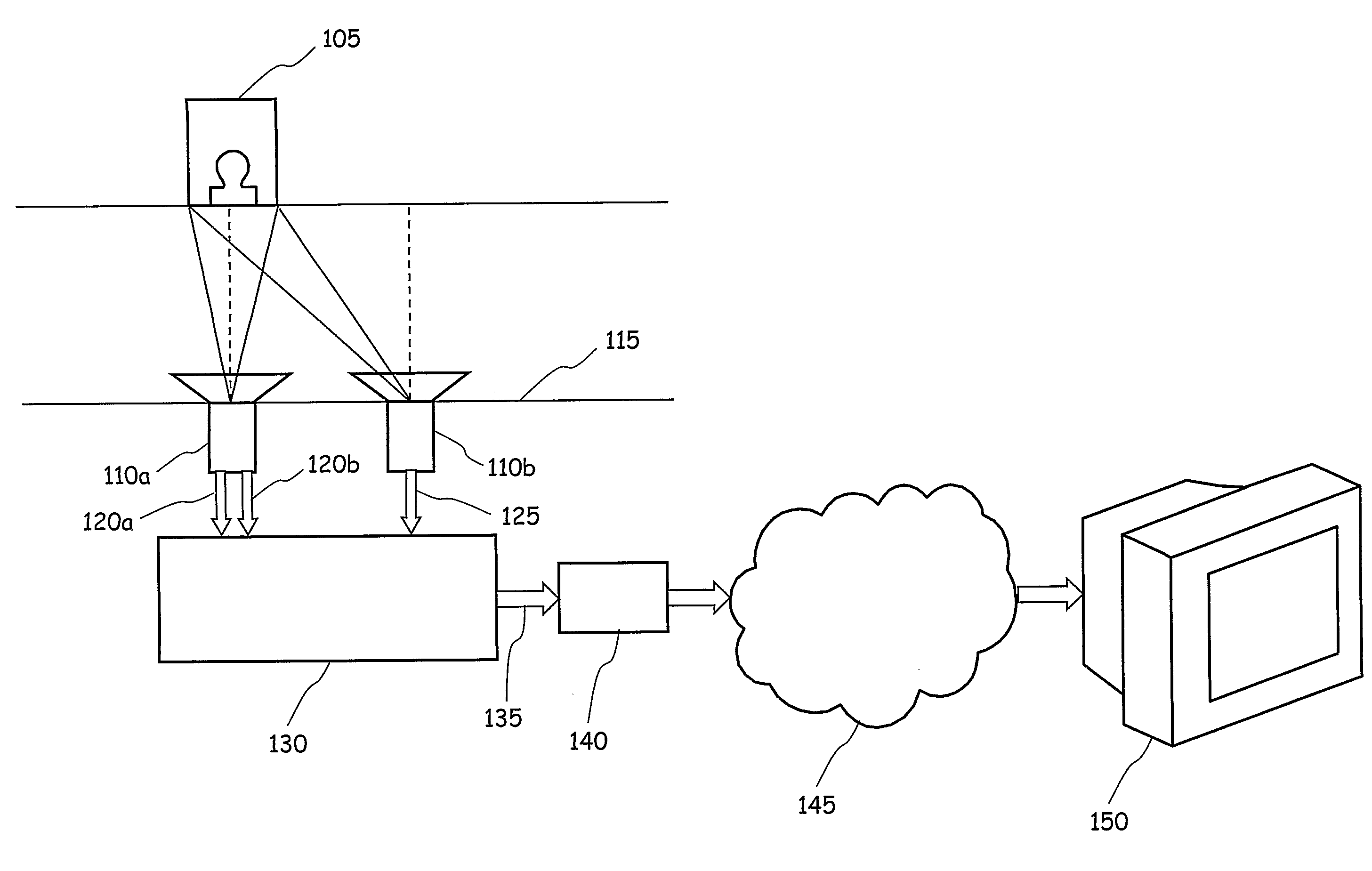

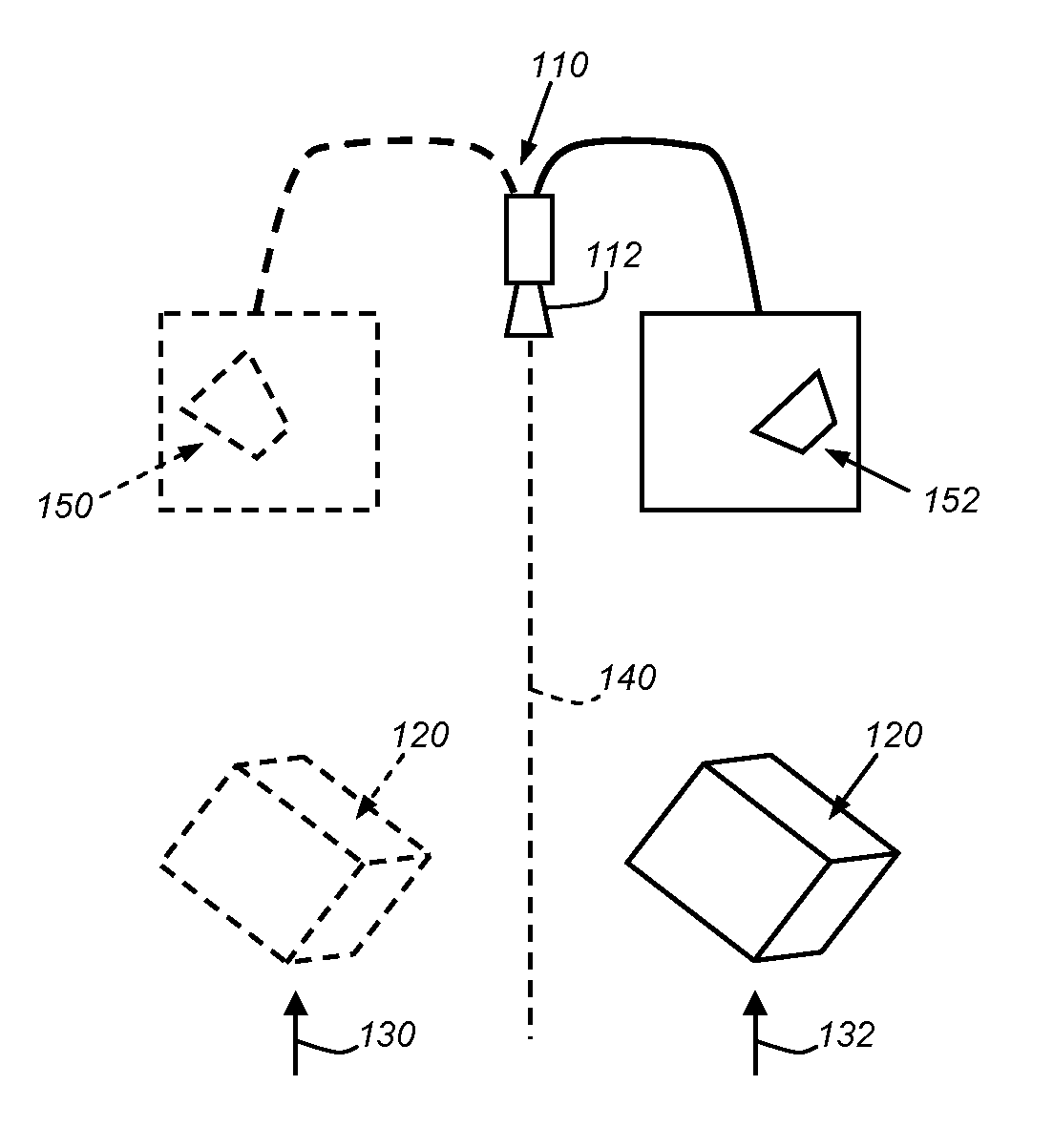

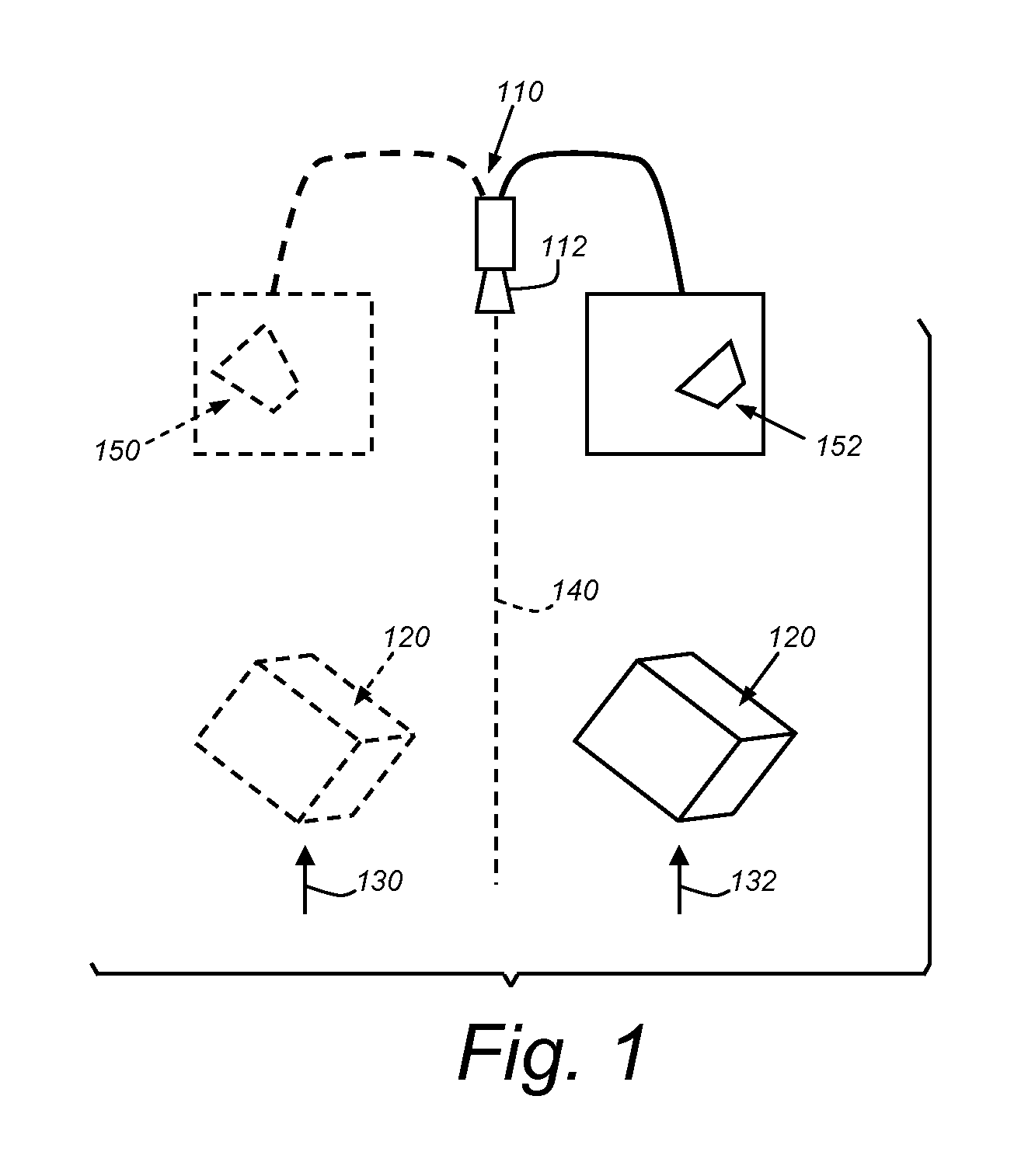

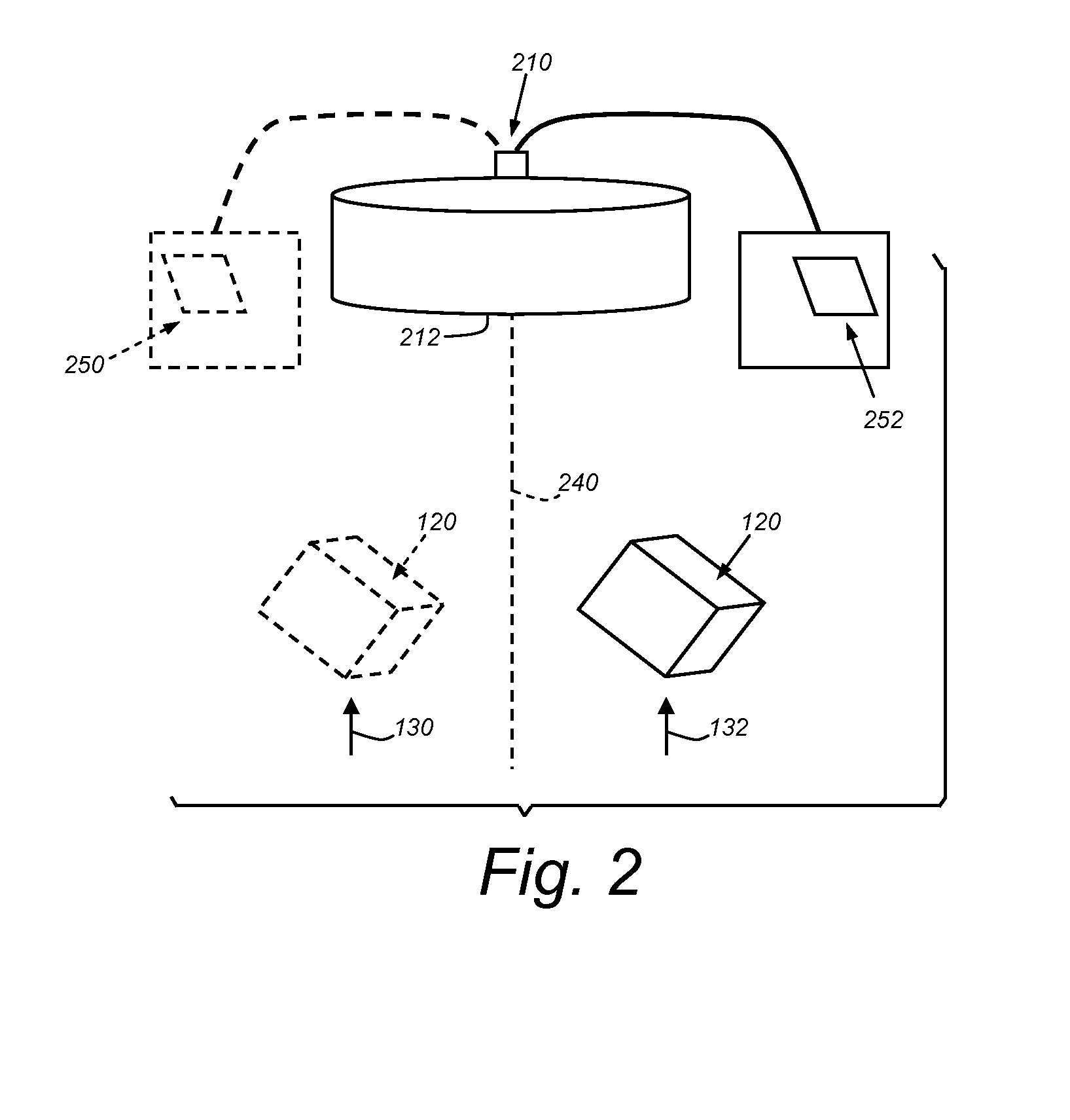

Self-Contained 3D Vision System Utilizing Stereo Camera and Patterned Illuminator

A self-contained hardware and software system that enables reliable stereo vision. The vision hardware includes a stereo camera and at least one illumination source that projects a pattern into the camera's field of view, and may be contained in a single box. The box may include mechanisms that keep it securely in place on a surface such as the top of a display. The vision hardware may also include a physical mechanism for tilting the box, and thus the camera's field of view, up or down so that the camera can see the required region.

Owner:INTELLECTUAL VENTURES HLDG 67
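
The projected pattern gives otherwise featureless surfaces texture, so that a block matcher can find a unique disparity, which triangulation then converts to depth. This is a minimal sketch. It assumes rectified images and equal-length rows, and it swaps in a generic sum-of-absolute-differences (SAD) matcher. The patent does not state which matching method it uses.

```python
from typing import Optional, Sequence

def match_disparity(left_row: Sequence[float], right_row: Sequence[float],
                    x: int, half_window: int, max_disp: int) -> Optional[int]:
    """Disparity at column x of the left row, by sum of absolute differences (SAD)."""
    if x - half_window < 0 or x + half_window >= len(left_row):
        return None  # window does not fit in the left row
    best_d, best_cost = None, float("inf")
    for d in range(max_disp + 1):
        if x - d - half_window < 0:
            break  # larger disparities would push the window off the right row
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half_window, half_window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px
```

On a uniform surface without the projected pattern, many disparities would tie at nearly the same SAD cost. That ambiguity is what patterned illumination is meant to remove.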

System and method for finding correspondence between cameras in a three-dimensional vision system

Status: Active | Publication: US20140118500A1 | Tags: Improve accuracy; Increase speed; Image enhancement; Image analysis; Manipulator; Visual perception

This invention provides a system and method for determining correspondence between camera assemblies in a 3D vision system. The system has a plurality of cameras arranged at different orientations with respect to a scene containing microscopic and near-microscopic objects under manufacture, which are moved by a manipulator. The cameras acquire contemporaneous images of a runtime object, and the system determines the object's pose in order to guide manipulator motion. At least one of the camera assemblies includes a non-perspective lens. The 2D object features found in the acquired non-perspective image correspond to trained object features for that camera assembly. They can be combined with the 2D object features found in the images of the other camera assemblies, based on their own trained features, to generate a set of 3D features and thereby determine the 3D pose of the object.

Owner:COGNEX CORP
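
Non-perspective assemblies need separate treatment because a non-perspective lens maps 3D points to the image differently from a pinhole camera. This sketch assumes the non-perspective lens is an ideal telecentric lens, modeled as scaled orthographic projection. Telecentric lenses are common in microscopic inspection, but the abstract does not say which kind is used. The function names and parameters are illustrative.

```python
from typing import Tuple

Point3 = Tuple[float, float, float]

def project_perspective(p: Point3, focal_px: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole model: image position scales with 1 / depth."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (focal_px * x / z + cx, focal_px * y / z + cy)

def project_telecentric(p: Point3, mag_px_per_m: float, cx: float, cy: float) -> Tuple[float, float]:
    """Ideal telecentric (scaled orthographic) model: depth does not change image position."""
    x, y, _z = p
    return (mag_px_per_m * x + cx, mag_px_per_m * y + cy)
```

Under the telecentric model, a feature's image position carries no depth information. Depth therefore has to come from combining that view with the other camera assemblies, which matches the combination step the abstract describes.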

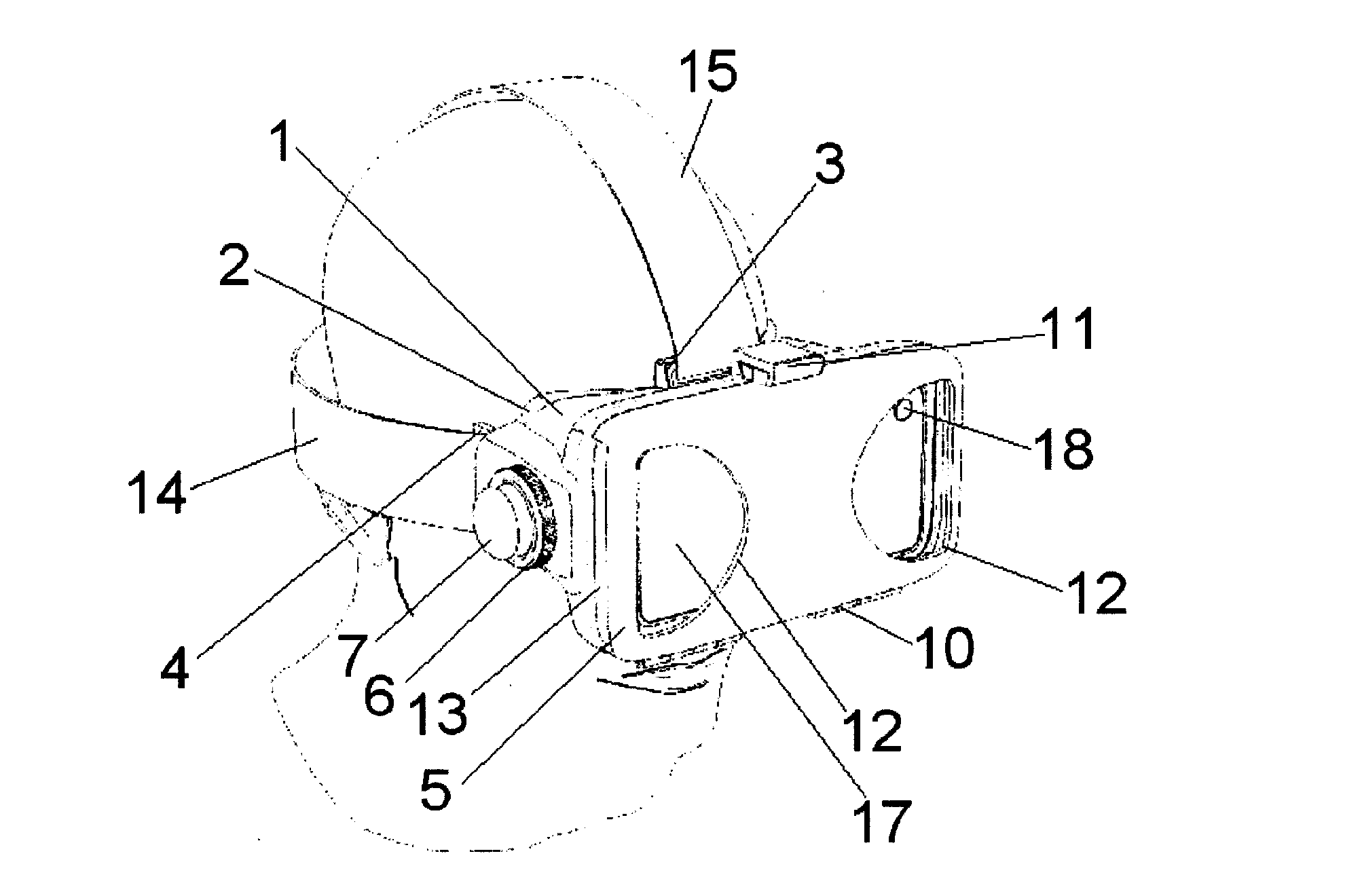

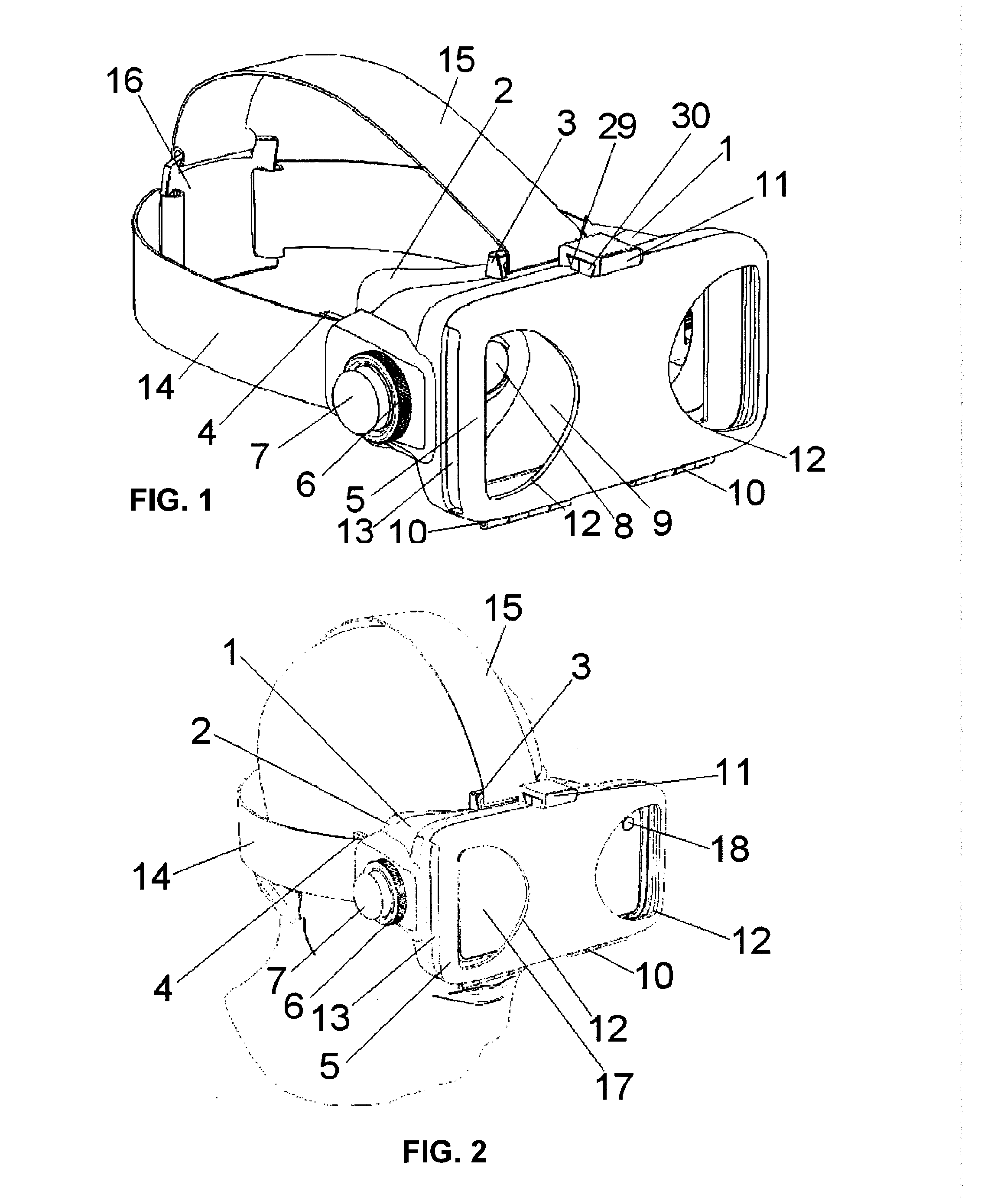

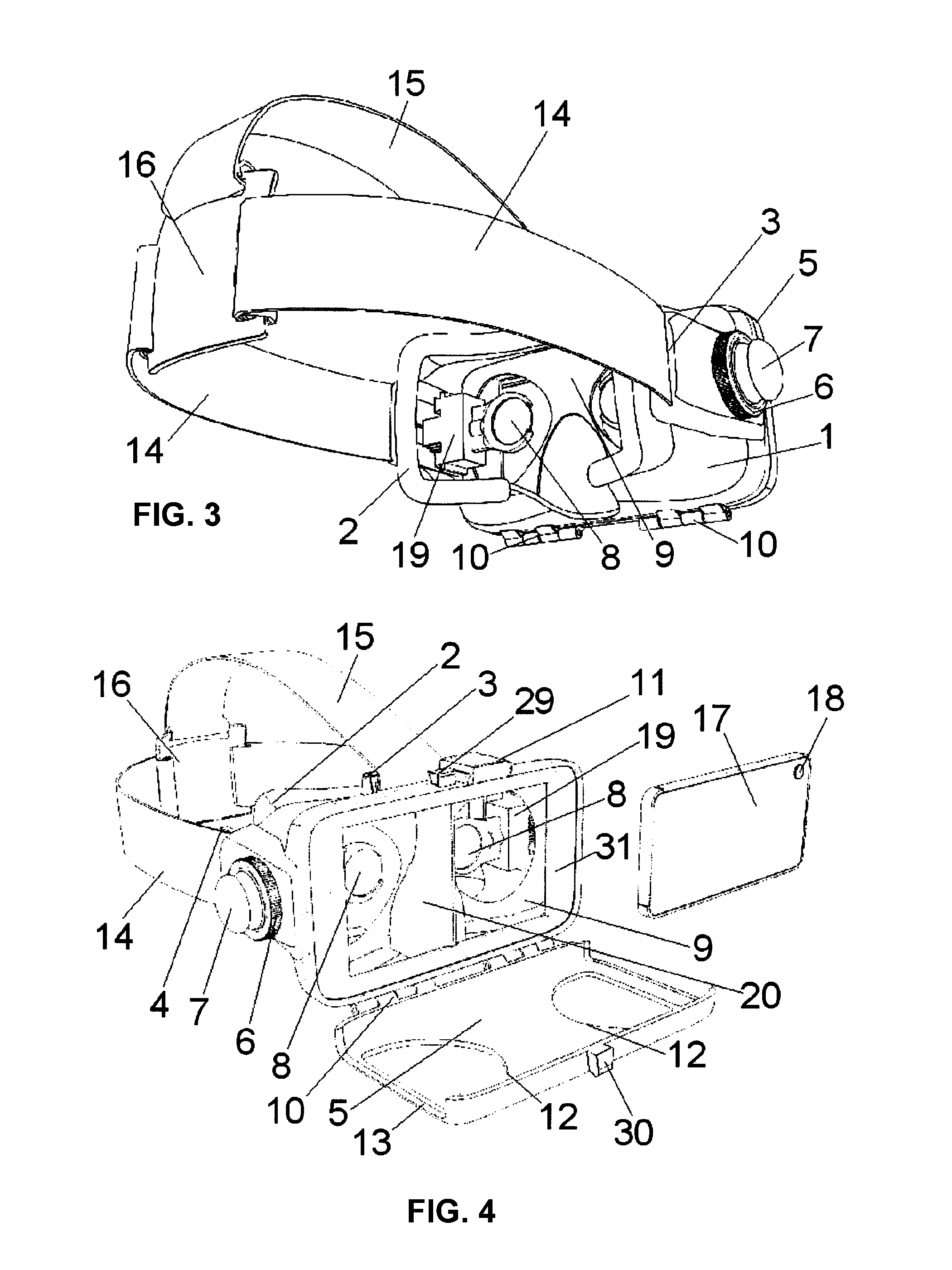

Graphic display adapter device for mobile virtual stereoscopic vision

Status: Inactive | Publication: US20170010471A1 | Tags: Loss of quality; Precision of movement; Image data processing; Stereoscopic systems; Graphics; Multiplexing

An adapter device is provided that is worn on the user's head. A mobile phone or other mobile graphical device can be inserted into the front of the device so that it sits opposite the user's eyes at a short distance. The device also incorporates lenses, and adjustment mechanisms for those lenses, that allow the inserted graphic display device to be viewed properly. The device is thus designed to achieve stereoscopic 3D vision. Together, the adapter assembly and the graphic device multiplex two or more information channels on a single transmission medium by position (side by side) on a mobile phone or other device with a graphic screen, such as a TV. The two visual contents are merged so that the user perceives a three-dimensional image.

Owner:IMMERSIONVRELIA INC
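
Side-by-side multiplexing divides the phone screen into one half per eye, and each lens shows one half to one eye. This is a minimal sketch of the screen split and of packing two eye images into one frame. The function names and the integer-pixel layout are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height)

def side_by_side_viewports(screen_w: int, screen_h: int) -> Tuple[Rect, Rect]:
    """Left-eye and right-eye viewports for a landscape screen split down the middle."""
    half = screen_w // 2
    return (0, 0, half, screen_h), (half, 0, screen_w - half, screen_h)

def pack_side_by_side(left_img: Sequence[Sequence[int]],
                      right_img: Sequence[Sequence[int]]) -> List[List[int]]:
    """Multiplex two equally tall images into one frame, left-eye image on the left."""
    if len(left_img) != len(right_img):
        raise ValueError("images must have the same height")
    return [list(l_row) + list(r_row) for l_row, r_row in zip(left_img, right_img)]
```

Giving any odd leftover column to the right viewport keeps the two rectangles exactly covering the screen.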

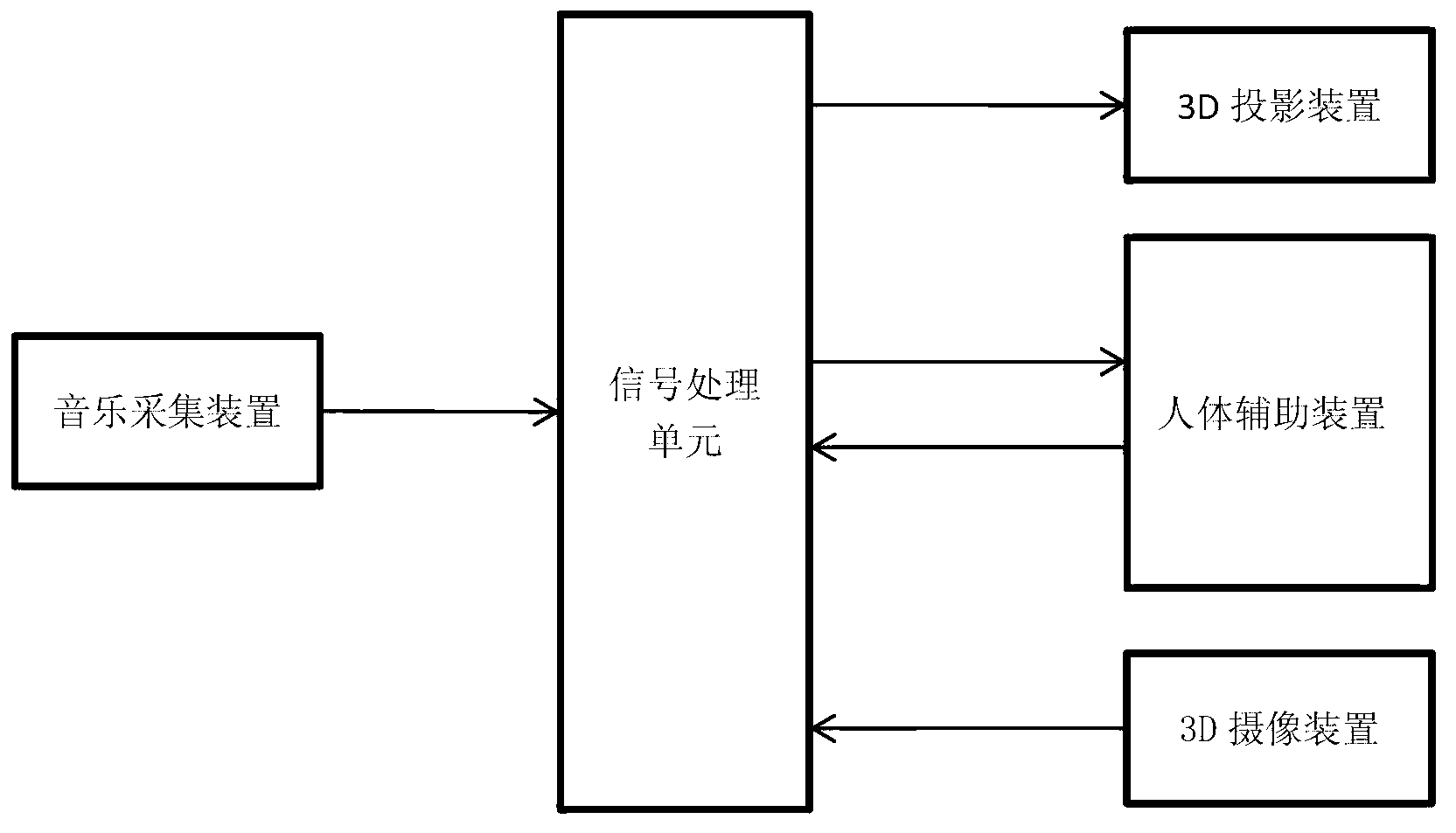

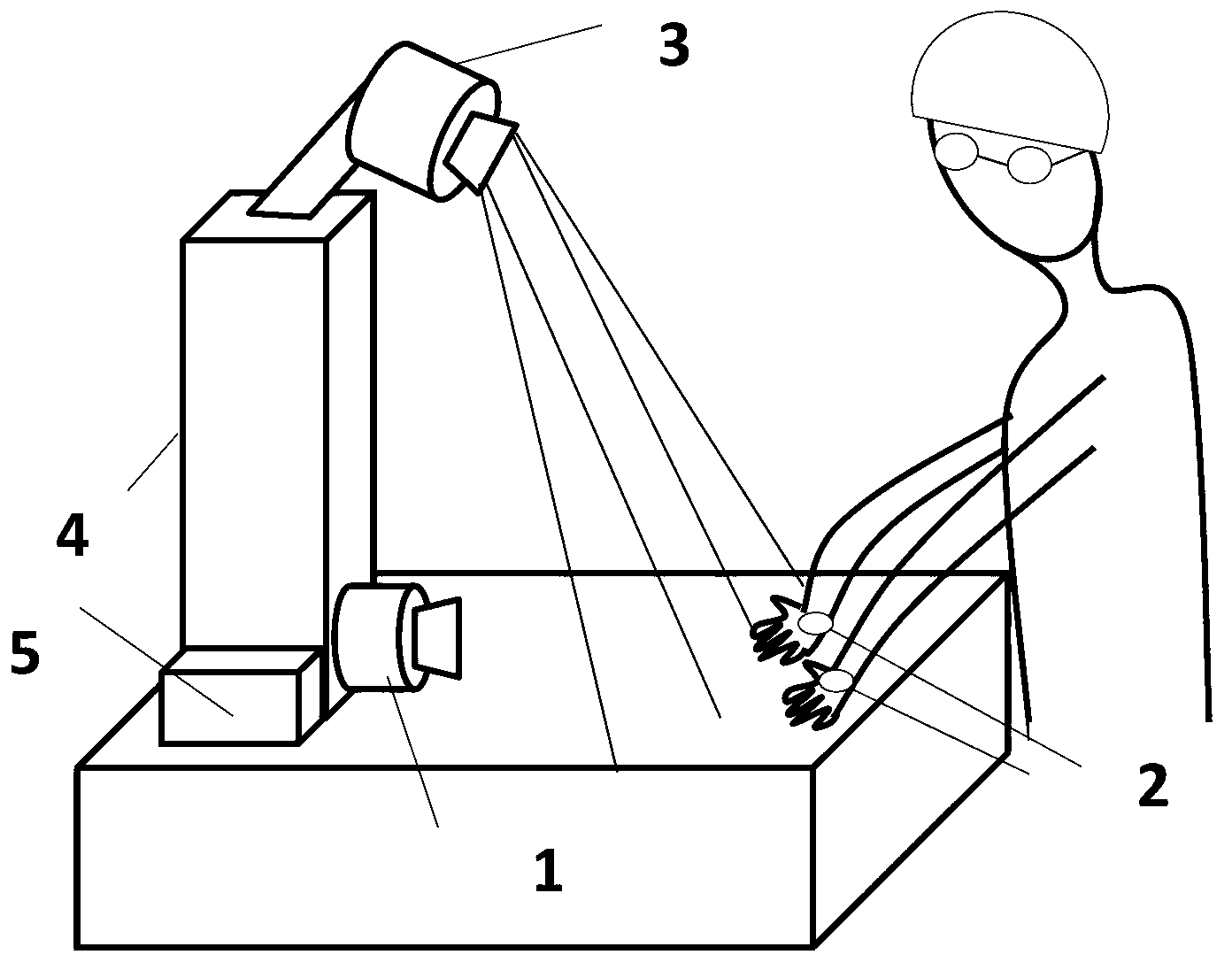

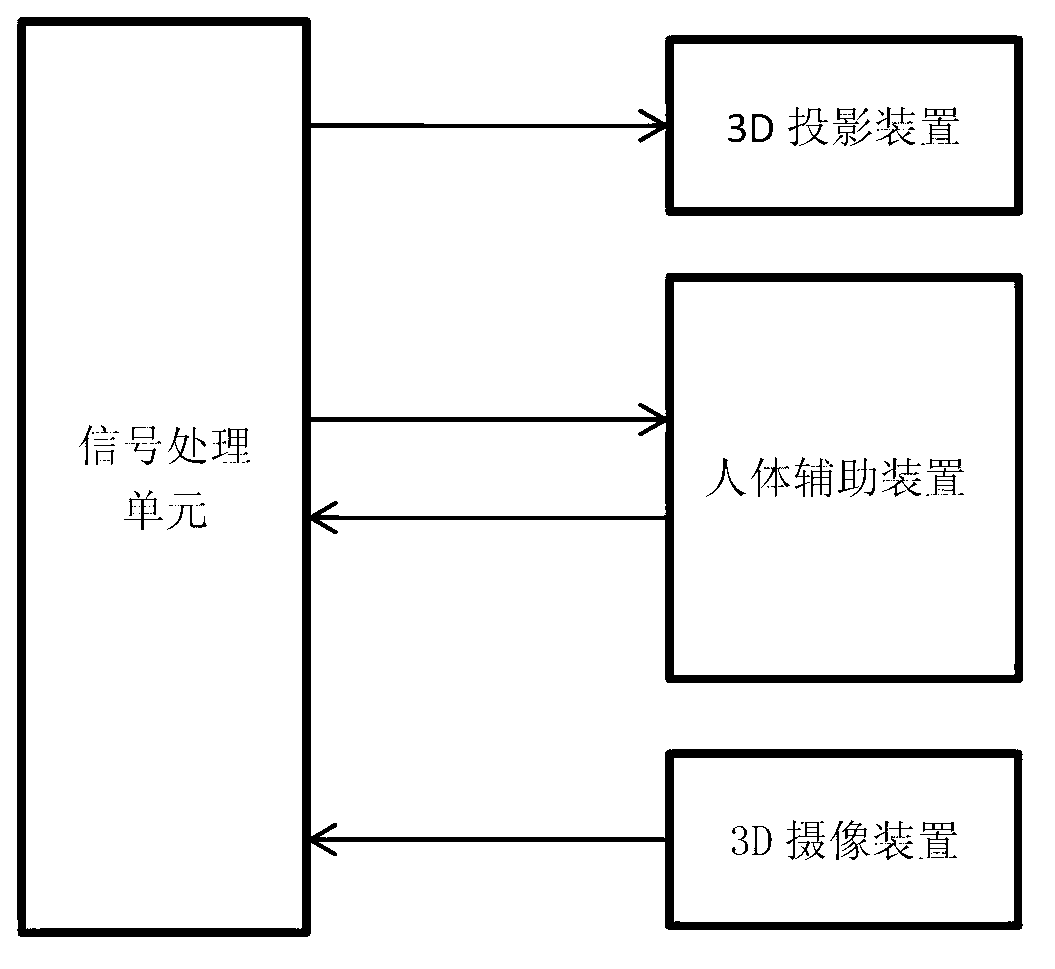

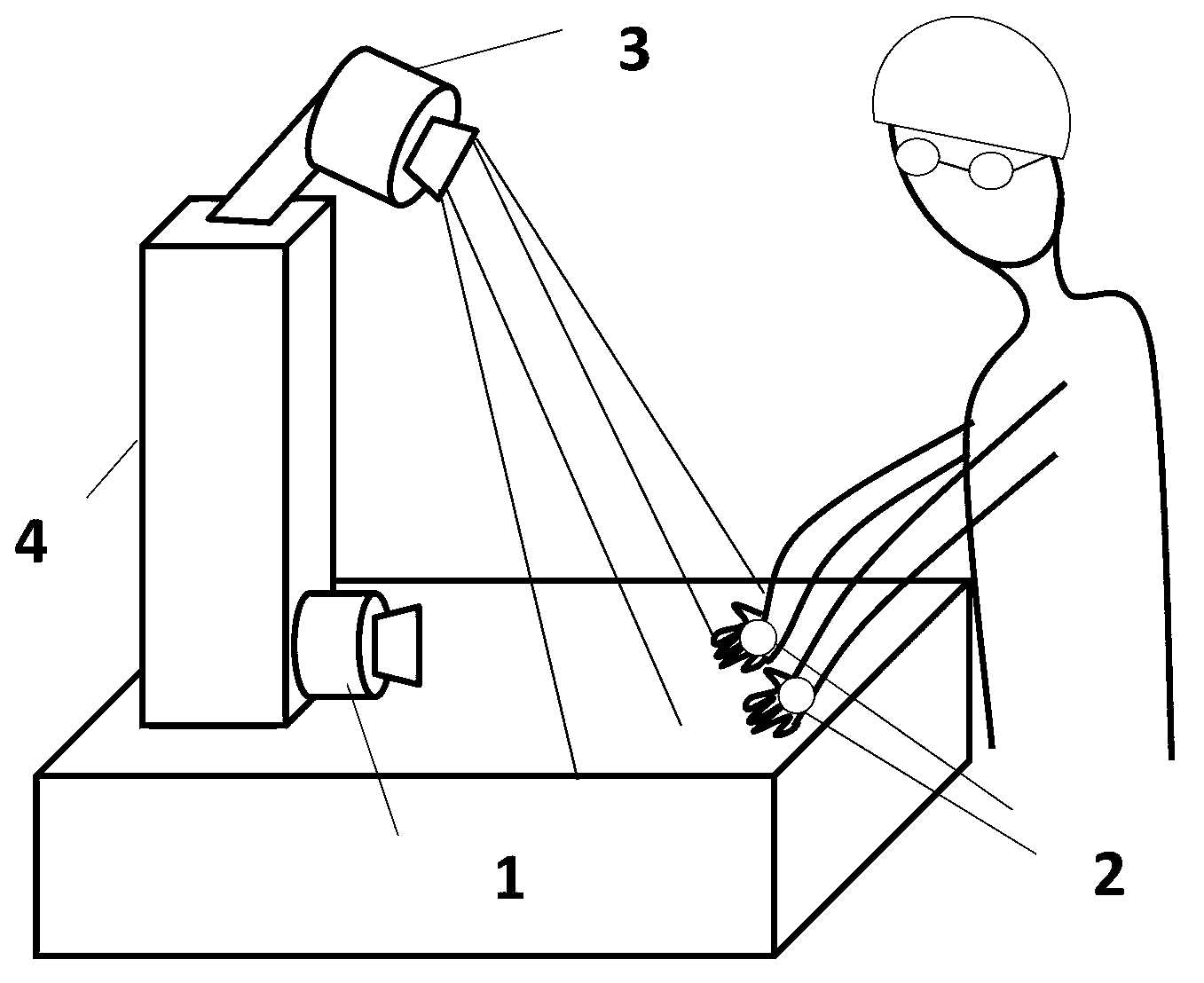

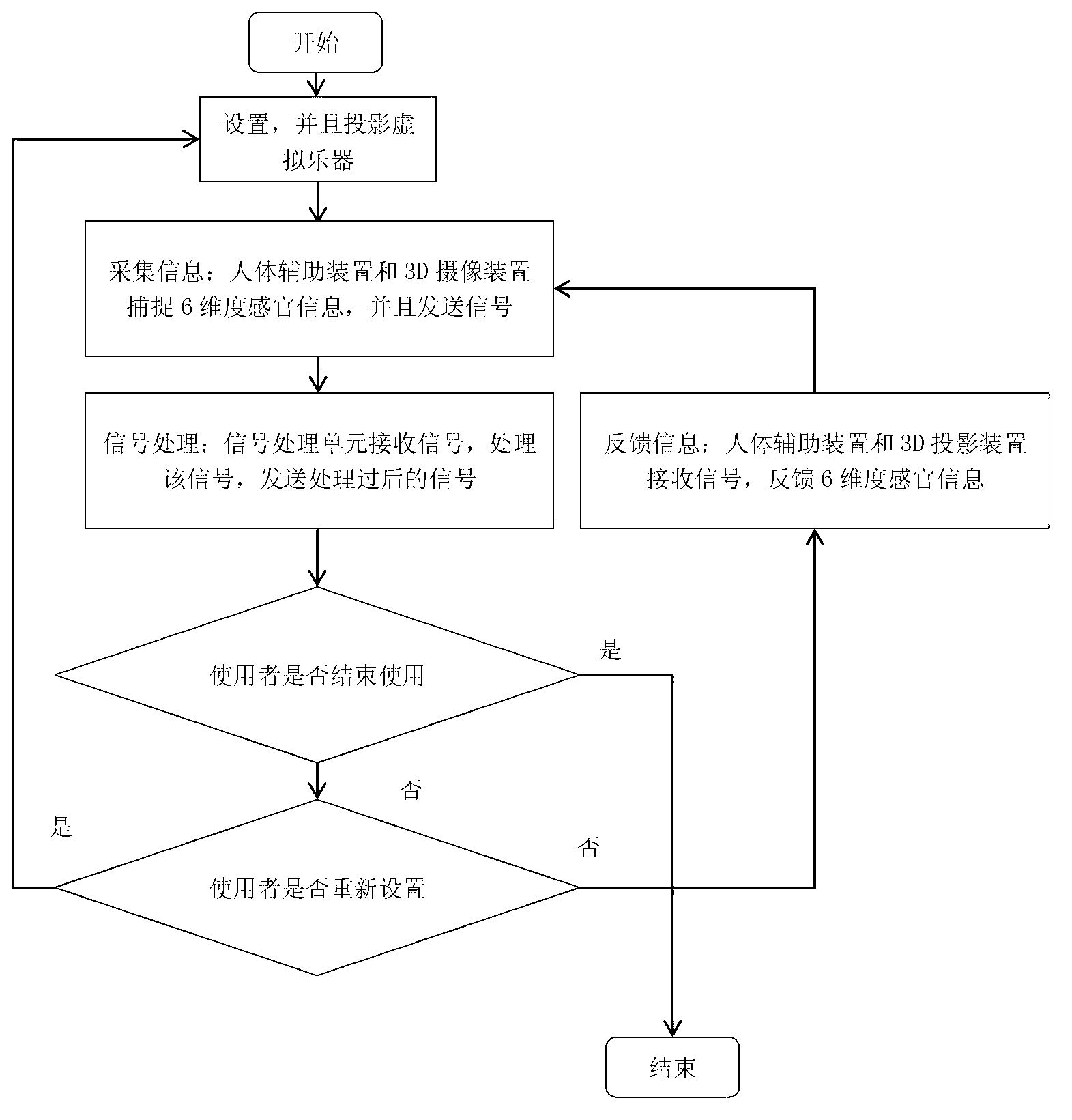

6-dimensional sensory-interactive virtual keyboard instrument system and realization method thereof

Status: Active | Publication: CN103235641A | Tags: Improve experience; Easy to use; Input/output for user-computer interaction; Music; Human body; Piano

The invention provides a 6-dimensional sensory-interactive virtual keyboard instrument system and a method for realizing it. The system comprises a human-body auxiliary device, a 3D camera device, a 3D projection device, a signal processing unit, and a music collection device. It has several working modes: a play mode, an optimal-fingering prompt mode, a fingering-correction mode, and a teaching mode. It therefore provides not only a basic playing experience but also fingering prompts, fingering correction, and teaching demonstrations. The system is based on 3D vision, hearing, touch, and pressure sensing. The virtual instruments provided include the piano, electronic organ, accordion, organ, and other keyboard instruments, so the user experiences an interaction similar to playing a real instrument. The system also provides a powerful teaching function and is simple to use, flexible, and space-saving. It represents a major breakthrough over conventional keyboard instruments and has very broad application prospects.

Owner:ZHEJIANG UNIV

Method for estimating the body size and weight of a yak, and corresponding portable computer device

Status: Active | Publication: CN107180438A | Tags: Automatic Identification; Real-time calculation of body size indicators; Image enhancement; Image analysis; Body size; Weight estimation

The invention provides a 3D-vision-based method for estimating the body size of a yak. The method includes acquiring side images of a yak with image acquisition equipment, extracting foreground images from the side images, identifying key points of the yak's body in the extracted foreground images, and automatically extracting the yak's body size information from those key points. The invention also provides a 3D-vision-based method for estimating a yak's weight. It first performs the body size estimation method above, then feeds the extracted body size information into a yak weight estimation model that predicts the yak's weight. Alternatively, the invention provides a second 3D-vision-based weight estimation method. This method acquires side images of a yak with image acquisition equipment, extracts foreground images from them, and predicts the weight directly from the foreground images using a convolutional neural network. The invention also provides a corresponding portable computer device.

Owner:TSINGHUA UNIV
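
The first weight method feeds extracted body size information into a weight estimation model. The abstract does not specify that model. As a stand-in, this sketch fits an ordinary least-squares line to a single volume-like feature (heart girth squared times body length). That feature, and every function name here, are hypothetical choices inspired by common livestock weighing formulas, not the patent's model.

```python
from typing import Sequence, Tuple

def girth_length_feature(heart_girth_m: float, body_length_m: float) -> float:
    """Hypothetical volume-like feature: girth^2 * body length."""
    return heart_girth_m ** 2 * body_length_m

def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("feature values must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b

def predict_weight(a: float, b: float, feature: float) -> float:
    """Apply the fitted linear model to a new animal's feature value."""
    return a + b * feature
```

The coefficients would have to be fitted on yaks that were actually weighed. The alternative CNN route in the abstract skips explicit body size features altogether.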

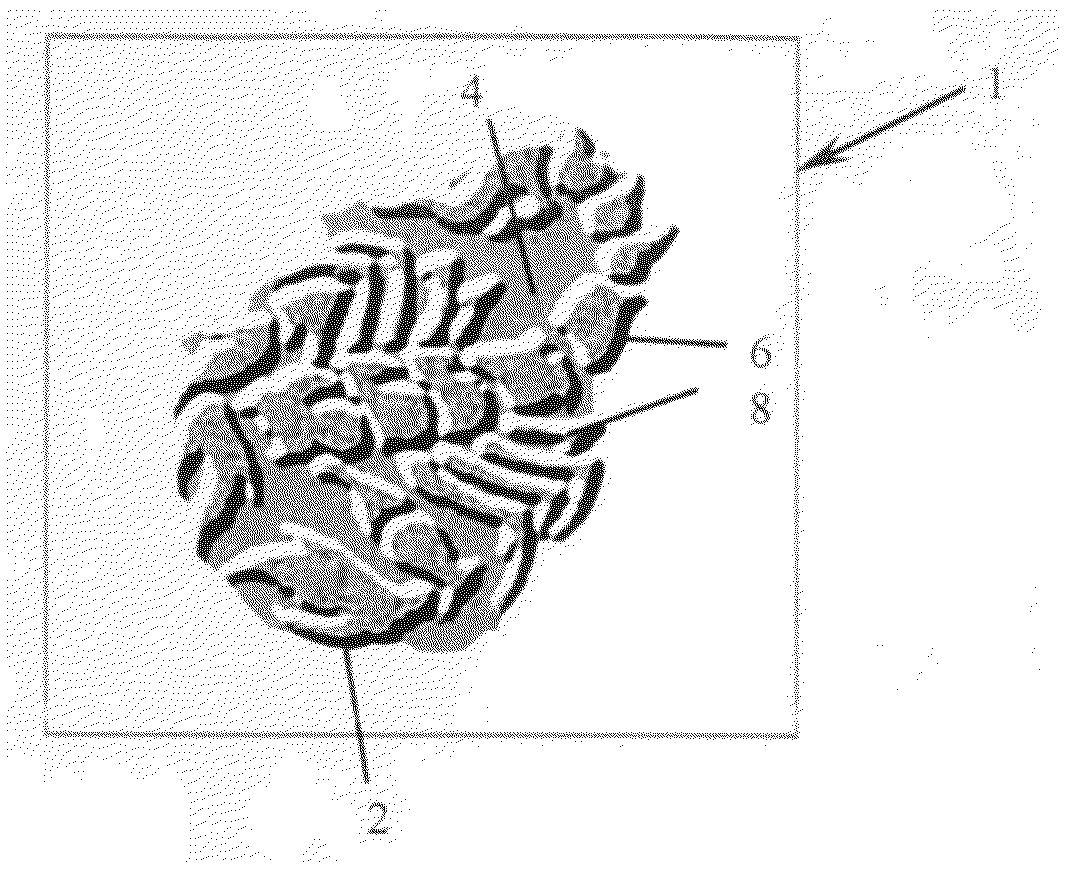

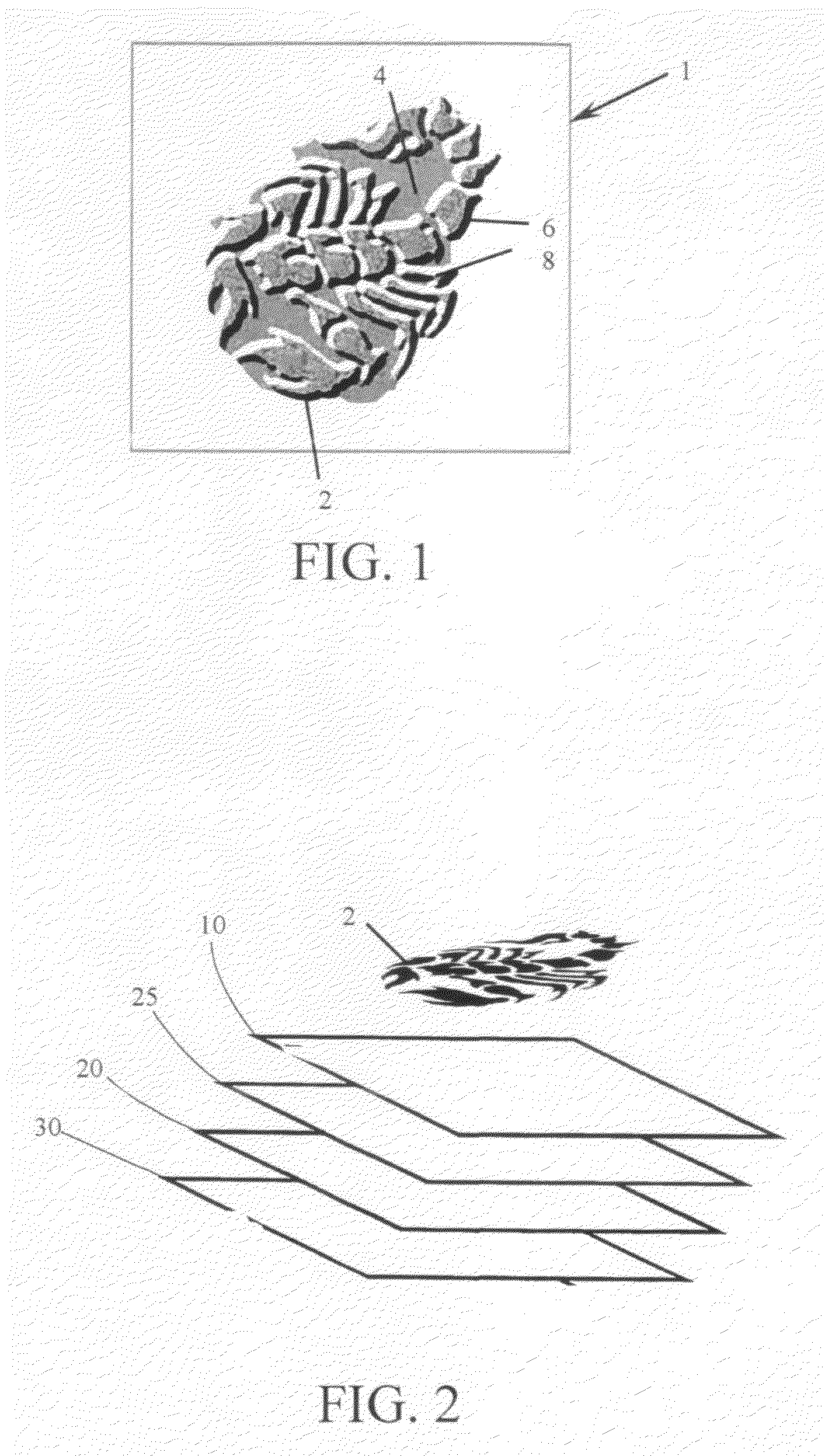

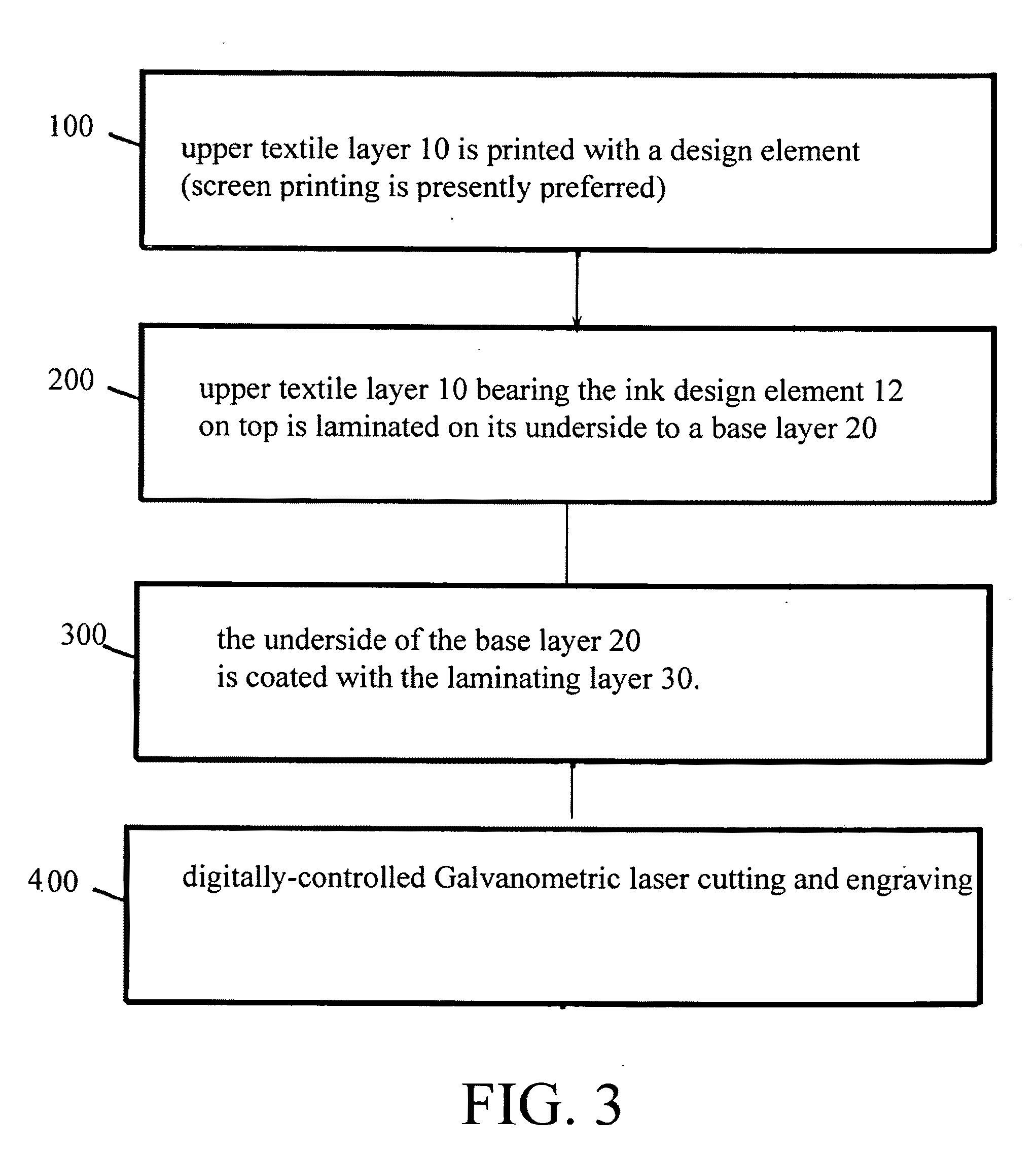

Appliqué having dual color effect by laser engraving

A product and process for producing heat transfers with a contrasting-color 3D appearance. The product comprises a printed layer of woven fabric substrate adhered to a colorfast textile substrate. The woven fabric substrate includes a top substrate printed with a design logo, and the underlying substrate is a solid color. The design logo is engraved away in patterned designs using a galvanometric laser, revealing the contrasting substrate in desired areas and giving a layered-embroidery 3D visual effect. These heat-activated appliqués are particularly suitable for decorating apparel, bags, and home furnishings. Their soft tactile hand feel does not cause discomfort to the wearer. The resulting product has superior care and durability characteristics: it is more washfast, can be ironed, and looks better than other types of heat-transfer appliqués. The heat-transfer capability of the appliqué allows fast customization of finished garments.

Owner:LION BROS CO INC

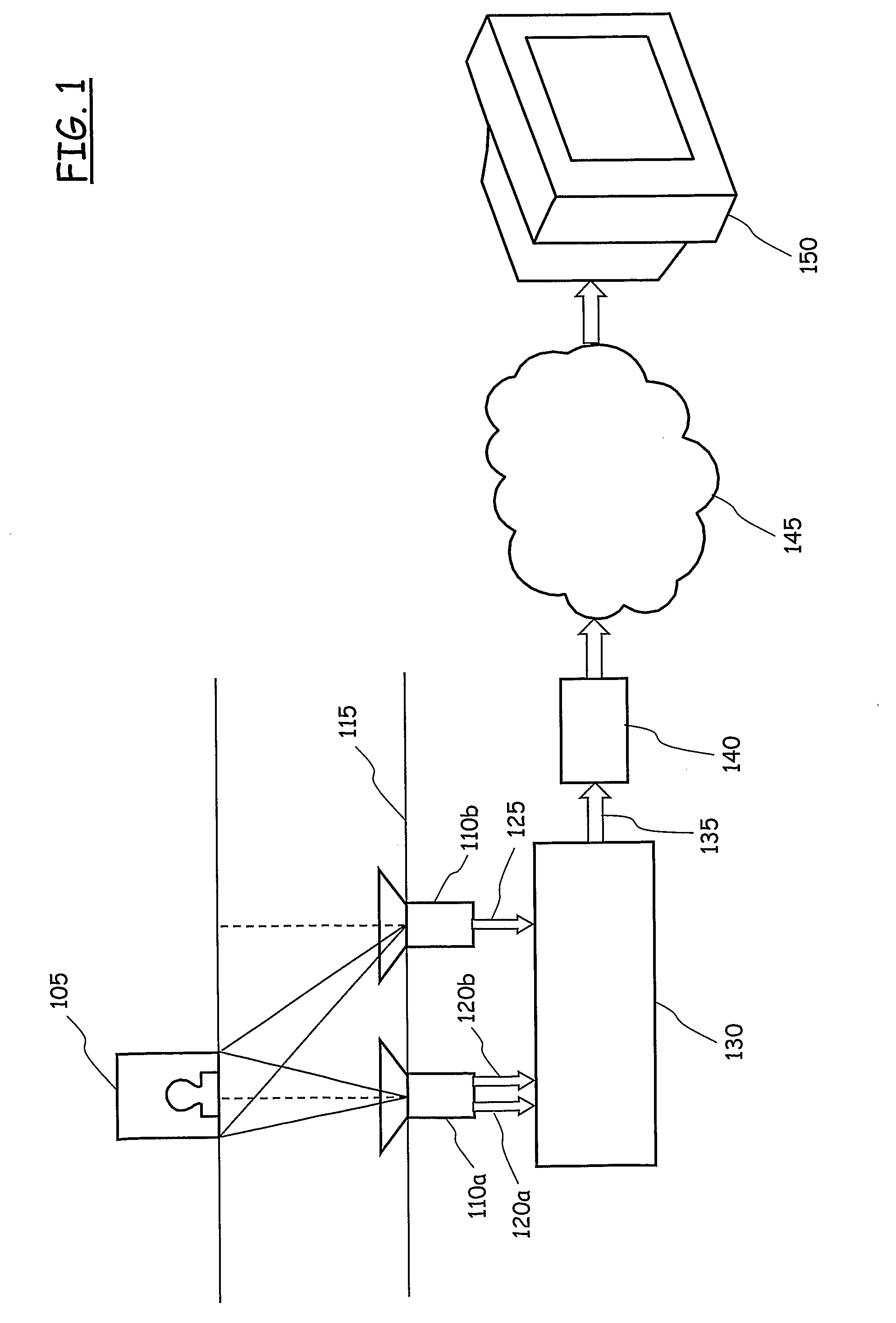

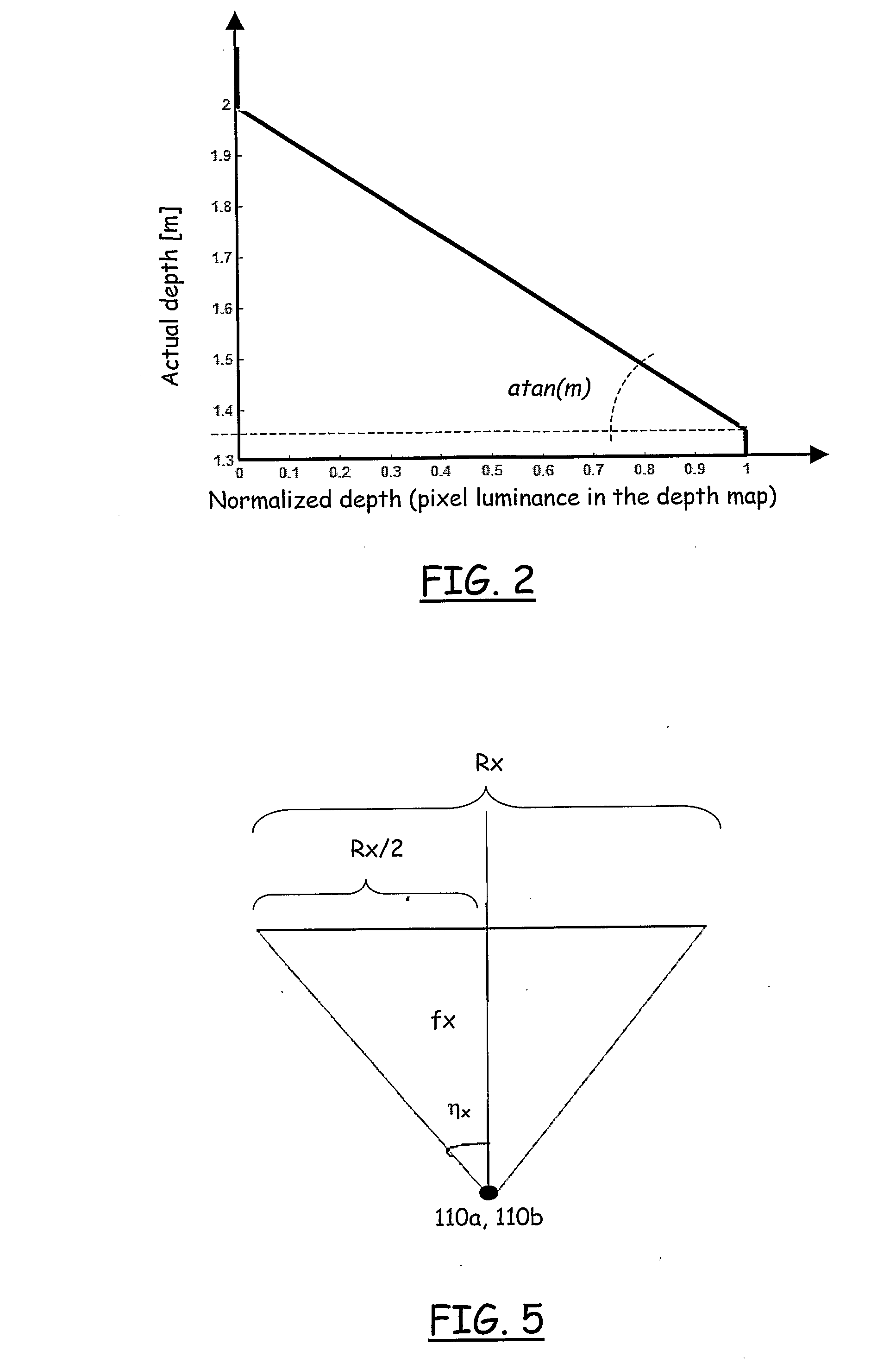

Method and system for producing multi-view 3D visual contents

Status: Active | Publication: US20110211045A1 | Tags: Computationally demanding; Image analysis; Stereoscopic systems; Visual perception; Depth map

A method for producing 3D multi-view visual content. The method includes capturing a visual scene from at least one first point of view to generate a first two-dimensional image of the scene and a corresponding first depth map, which indicates the distance of different parts of the scene from the first point of view. The method further includes:

- capturing the visual scene from at least one second point of view to generate a second two-dimensional image;
- processing the first two-dimensional image to derive at least one predicted second two-dimensional image, which predicts the scene as captured from the at least one second point of view; and
- deriving at least one predicted second depth map, indicating the distance of different parts of the scene from the at least one second point of view, by processing the first depth map, the at least one predicted second two-dimensional image, and the second two-dimensional image.

Owner:TELECOM ITALIA SPA
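
The abstract does not say how the first image is processed into a predicted second view. One common way to predict a nearby view from an image plus a depth map is depth-image-based rendering: every pixel is shifted by the disparity f·B/Z. This sketch is that generic technique, applied to a single row. It assumes the second viewpoint is offset horizontally to the right by `baseline_m`, and it leaves disoccluded holes empty.

```python
from typing import List, Optional, Sequence

def predict_view_row(row: Sequence[float], depth_row: Sequence[float],
                     focal_px: float, baseline_m: float) -> List[Optional[float]]:
    """Forward-warp one image row to a viewpoint shifted baseline_m to the right.

    Pixels move left by the disparity f * B / Z. When two pixels land on the same
    target column, the nearer one (smaller Z) wins. Unfilled columns stay None.
    """
    width = len(row)
    out: List[Optional[float]] = [None] * width
    out_depth = [float("inf")] * width  # z-buffer for occlusion handling
    for x, (value, z) in enumerate(zip(row, depth_row)):
        if z <= 0:
            continue  # skip invalid depth samples
        x_new = int(round(x - focal_px * baseline_m / z))
        if 0 <= x_new < width and z < out_depth[x_new]:
            out[x_new] = value
            out_depth[x_new] = z
    return out
```

The `None` holes are regions visible only from the second viewpoint. Filling them and correcting prediction errors is where the actually captured second image would come in.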

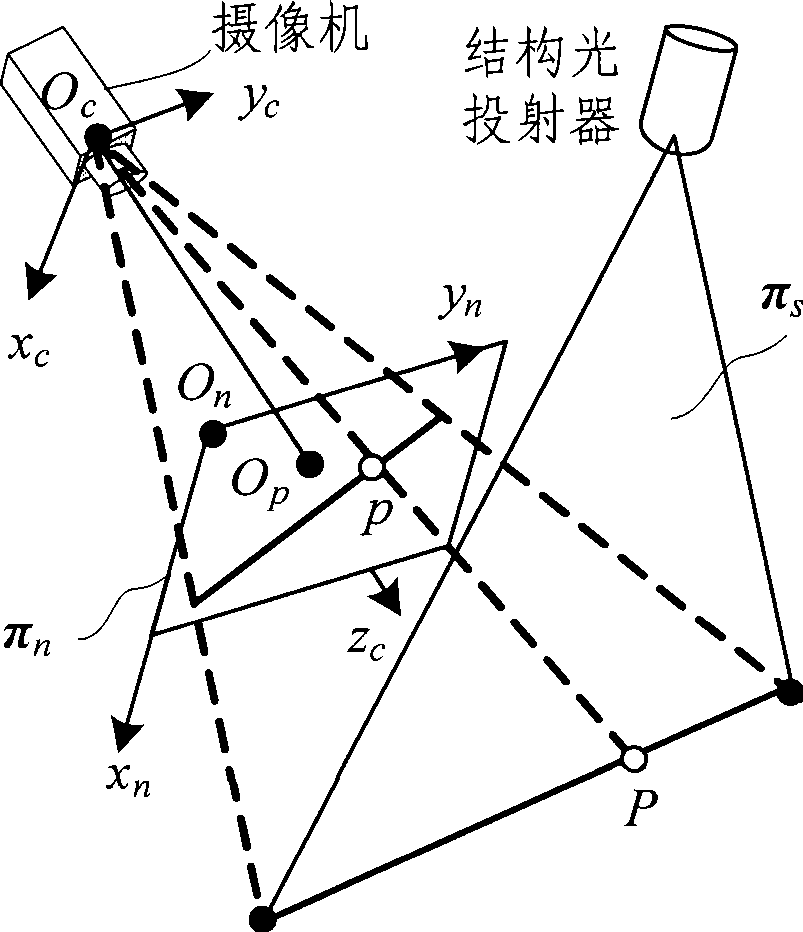

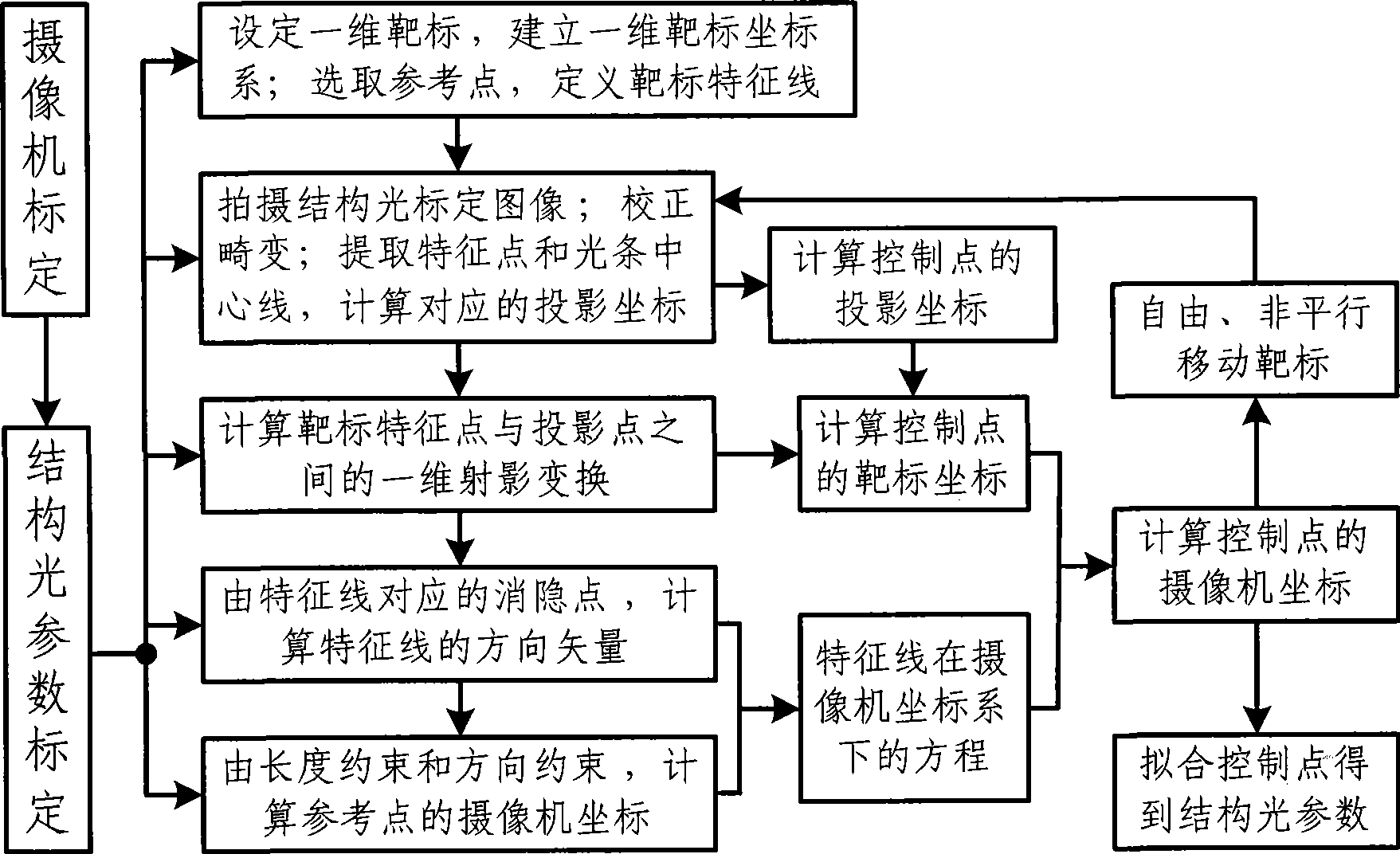

Structured-light parameter calibration method based on a one-dimensional target

Status: Inactive | Publication: CN101419708A | Tags: Low cost; Easy maintenance; Image analysis; Using optical means; Three dimensional measurement; Visual perception

The invention belongs to the field of measurement technology. It improves the calibration of structured-light parameters in structured-light 3D vision measurement by providing a calibration method based on a one-dimensional target. The procedure is as follows:

1. After the sensor is set up, its camera captures multiple images of the one-dimensional target as it is moved freely through non-parallel positions.
2. The vanishing point of a characteristic line on the target is obtained by a one-dimensional projective transformation. The direction vector of the characteristic line in the camera coordinate system is then determined from this projective transformation and the camera's projection center.
3. The camera coordinates of a reference point on the characteristic line are computed from the length constraints between characteristic points and the direction constraint of the characteristic line. This yields the equation of the characteristic line in the camera coordinate system.
4. The camera coordinates of control points on multiple non-collinear light stripes are obtained from the projective transformation and the equation of the characteristic line.
5. These control points are fitted to obtain the structured-light parameters.

The method requires no costly auxiliary adjustment equipment and offers high calibration precision with a simple procedure. It can meet the need for on-site calibration in large-scale structured-light 3D vision measurement.

Owner:BEIHANG UNIV
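
The vanishing-point step can be sketched with a 1D projective map u = (h1·s + h2)/(h3·s + 1). Here s is a point's known position along the target and u is its coordinate along the imaged line. Three collinear points fix the map, and the image of the point at infinity is h1/h3. This sketch assumes the image points have already been parameterized by a single coordinate along the fitted image line. The later steps (direction vector, control points, plane fit) are not shown.

```python
from typing import List, Sequence

def _det3(m: List[List[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def _solve3(a: List[List[float]], b: Sequence[float]) -> List[float]:
    """Solve a 3x3 linear system with Cramer's rule."""
    det = _det3(a)
    if abs(det) < 1e-12:
        raise ValueError("degenerate point configuration")
    out = []
    for k in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]
        out.append(_det3(m) / det)
    return out

def vanishing_point_1d(world_pos: Sequence[float], image_pos: Sequence[float]) -> float:
    """Fit u = (h1*s + h2) / (h3*s + 1) to three collinear target points.

    world_pos: known positions s of the points along the target (e.g. metres)
    image_pos: their coordinates u along the imaged line (e.g. pixels)
    Returns the image of the point at infinity, u = h1 / h3.
    """
    if len(world_pos) != 3 or len(image_pos) != 3:
        raise ValueError("exactly three correspondences are required")
    # Each correspondence gives the linear equation h1*s + h2 - u*s*h3 = u
    a = [[float(s), 1.0, -u * s] for s, u in zip(world_pos, image_pos)]
    h1, _h2, h3 = _solve3(a, image_pos)
    if abs(h3) < 1e-12:
        raise ValueError("target line parallel to the image plane: no finite vanishing point")
    return h1 / h3
```

With more than three marked points, an overdetermined least-squares fit of the same equations would reduce the effect of noise.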

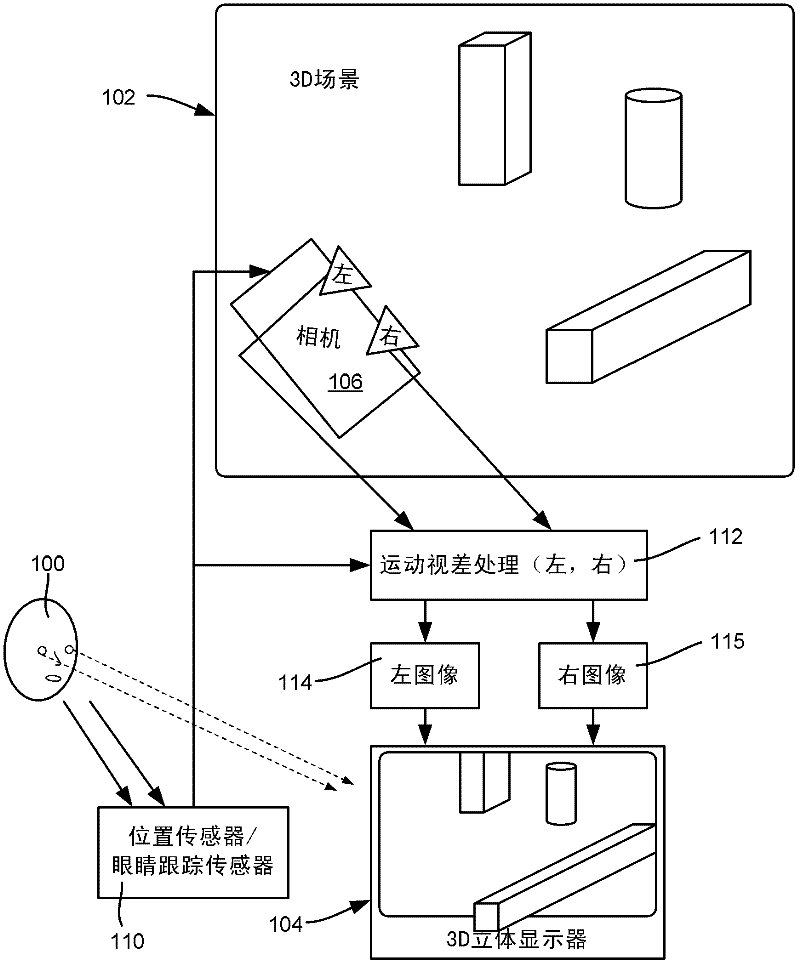

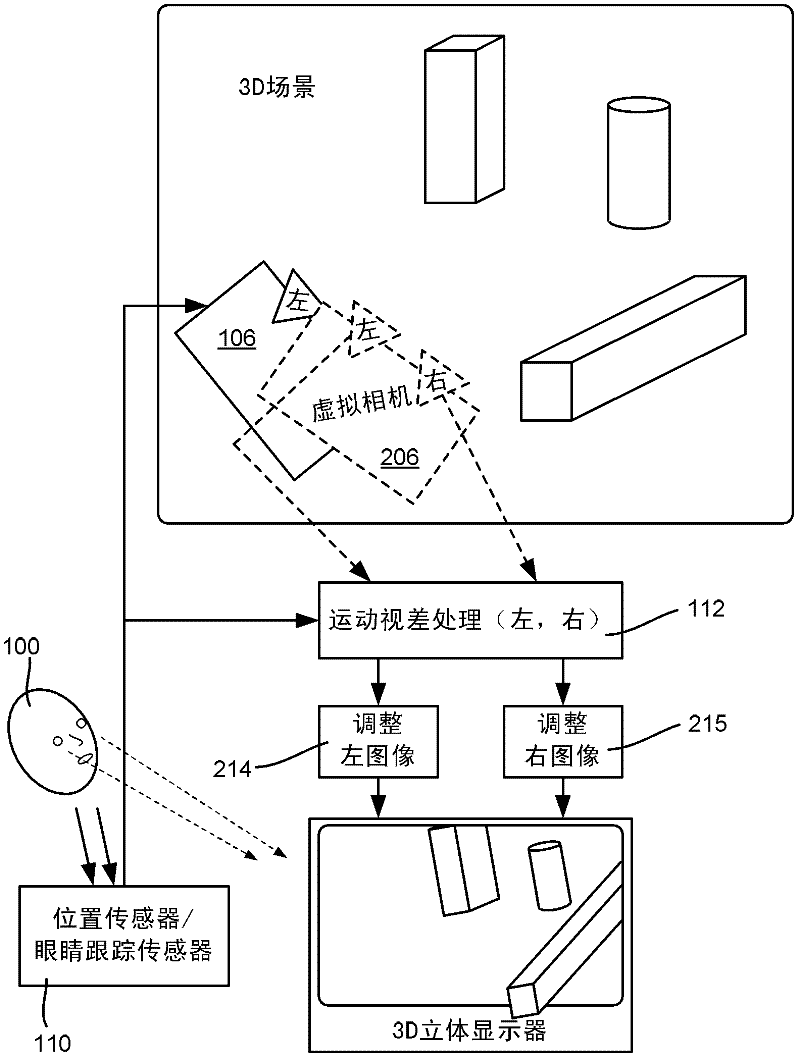

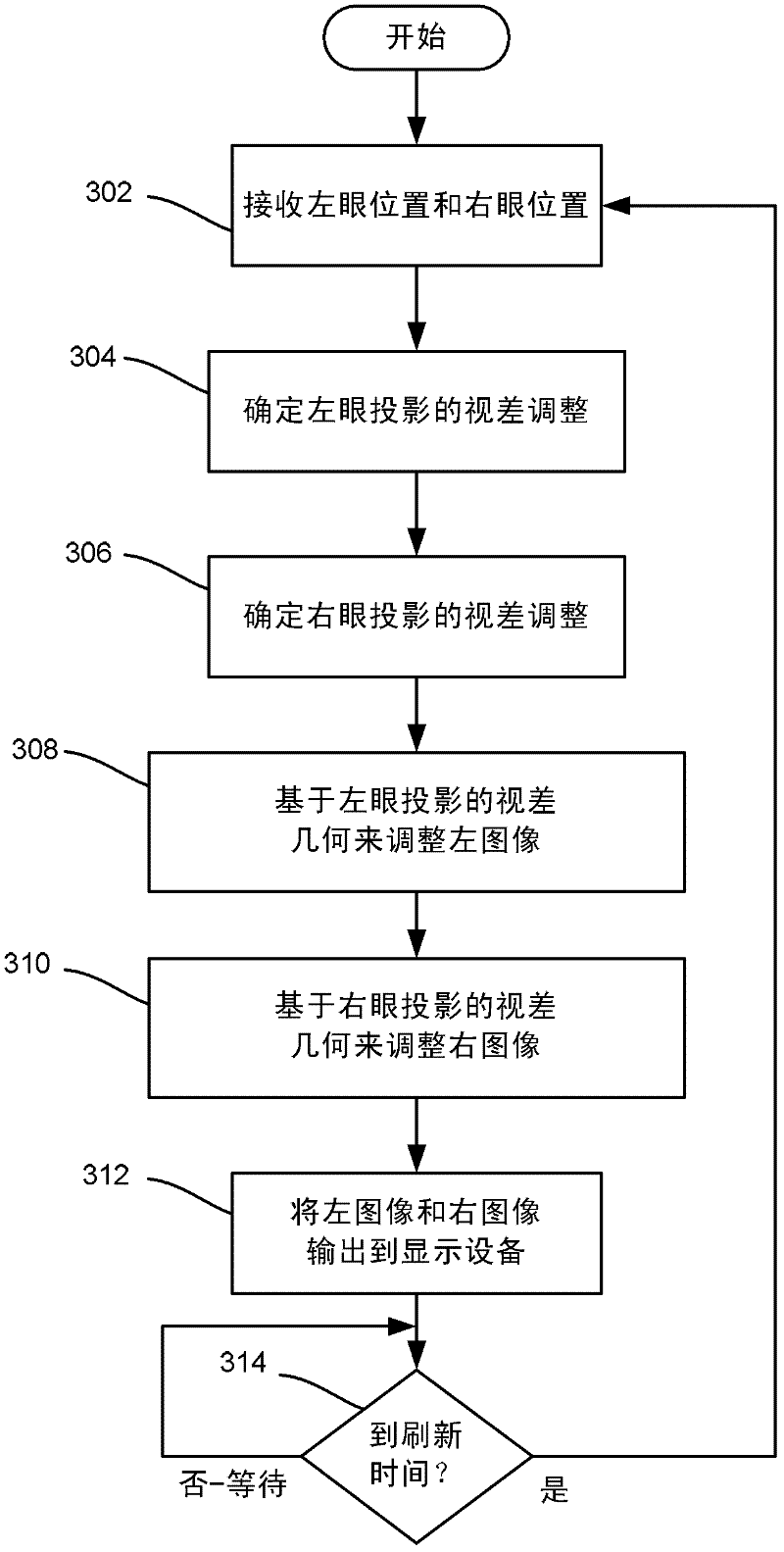

Three-Dimensional Display with Motion Parallax

The subject disclosure is directed towards a hybrid stereo image / motion parallax system that uses stereo 3D vision technology for presenting different images to each eye of a viewer, in combination with motion parallax technology to adjust each image for the positions of a viewer's eyes. In this way, the viewer receives both stereo cues and parallax cues as the viewer moves while viewing a 3D scene, which tends to result in greater visual comfort / less fatigue to the viewer. Also described is the use of goggles for tracking viewer position, including training a computer vision algorithm to recognize goggles instead of only heads / eyes.

Owner:MICROSOFT TECH LICENSING LLC
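
Adjusting each image for the positions of the viewer's eyes is commonly done with an off-axis (asymmetric) projection frustum per eye. This sketch assumes the screen is centered at the origin in the z = 0 plane and the tracked head is at positive z. It also assumes the eyes lie along the screen's x axis, with a default interpupillary distance of 63 mm. These are illustrative choices, not details from the disclosure.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

def eye_positions(head: Vec3, ipd_m: float = 0.063) -> Tuple[Vec3, Vec3]:
    """Left and right eye positions, assuming the eyes lie along the screen's x axis."""
    hx, hy, hz = head
    half = ipd_m / 2.0
    return (hx - half, hy, hz), (hx + half, hy, hz)

def off_axis_frustum(eye: Vec3, screen_w: float, screen_h: float,
                     near: float) -> Tuple[float, float, float, float]:
    """(left, right, bottom, top) at the near plane for a screen centred at the
    origin in the z = 0 plane, viewed from an eye at positive z."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    scale = near / ez  # similar triangles: screen edges projected onto the near plane
    return ((-screen_w / 2 - ex) * scale, (screen_w / 2 - ex) * scale,
            (-screen_h / 2 - ey) * scale, (screen_h / 2 - ey) * scale)
```

The four values follow the argument order of a classic `glFrustum(left, right, bottom, top, near, far)` call. The view transform must also translate the scene by the negative eye position. Recomputing both per eye and per frame as the head moves gives the parallax cue on top of the stereo cue.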

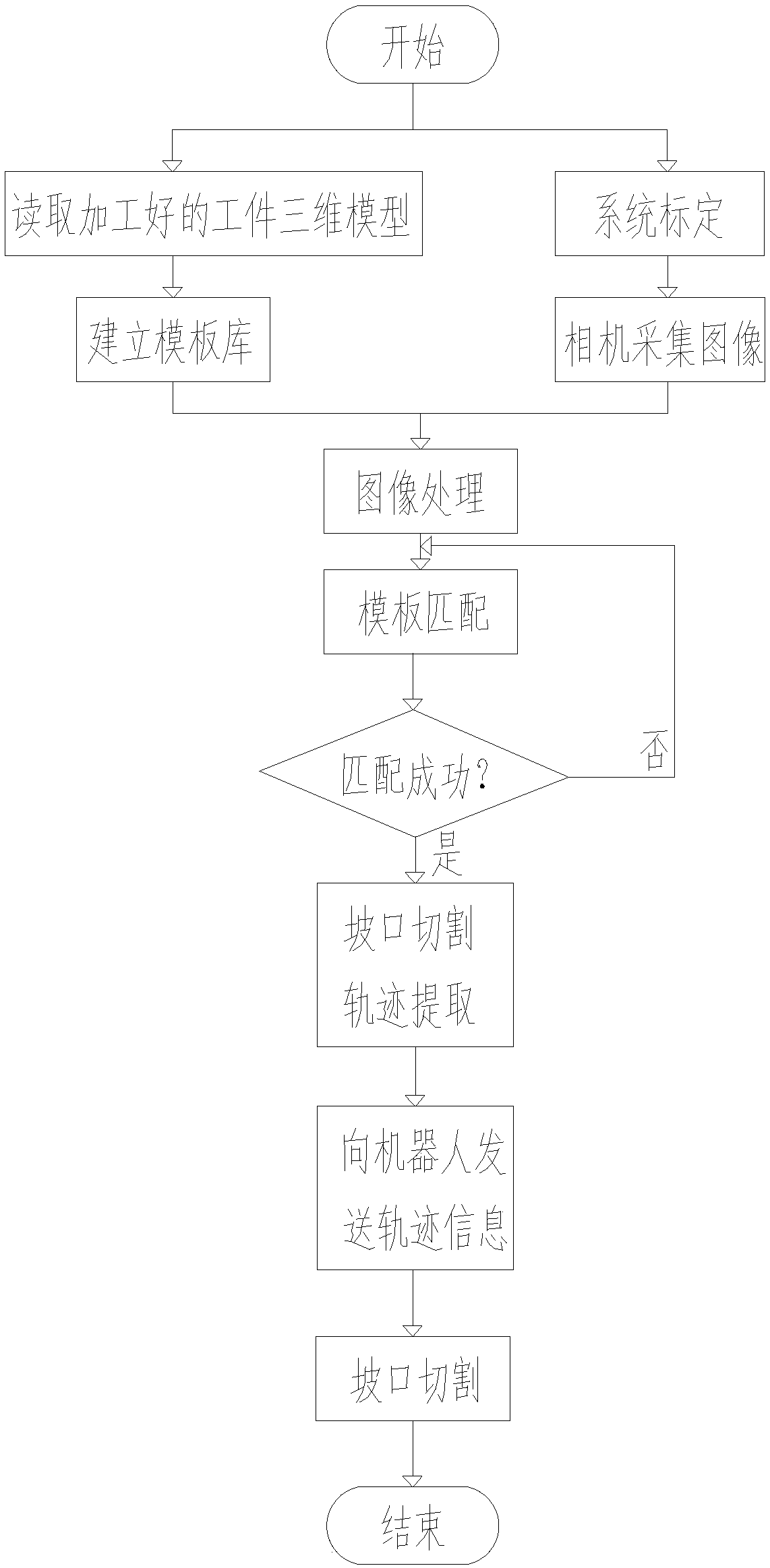

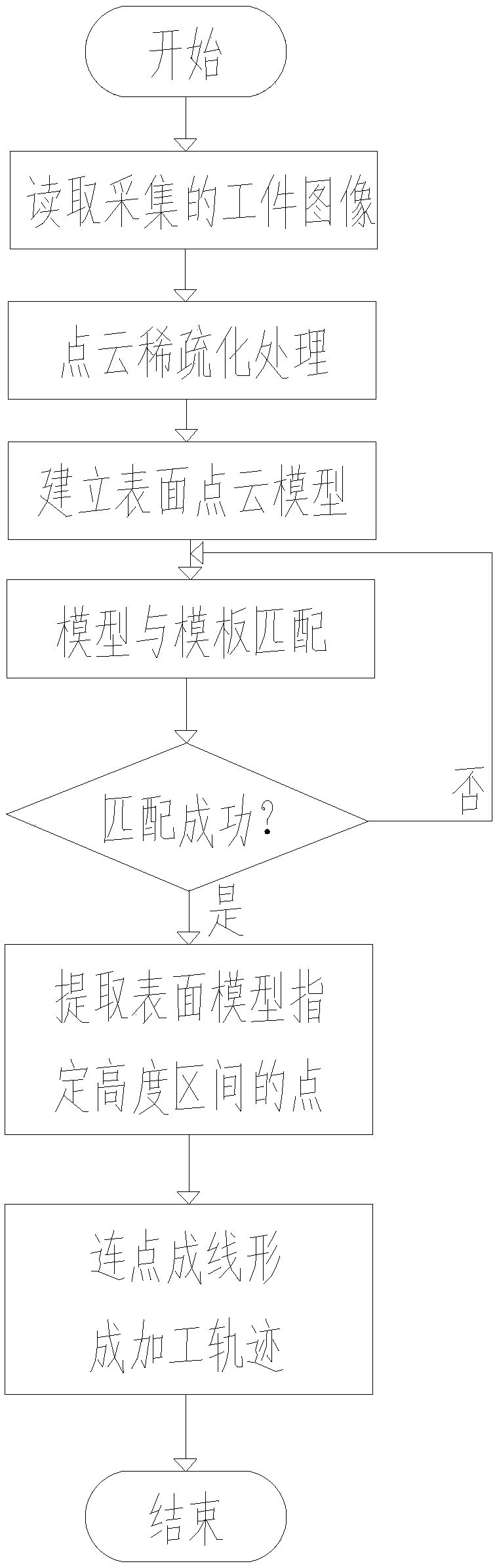

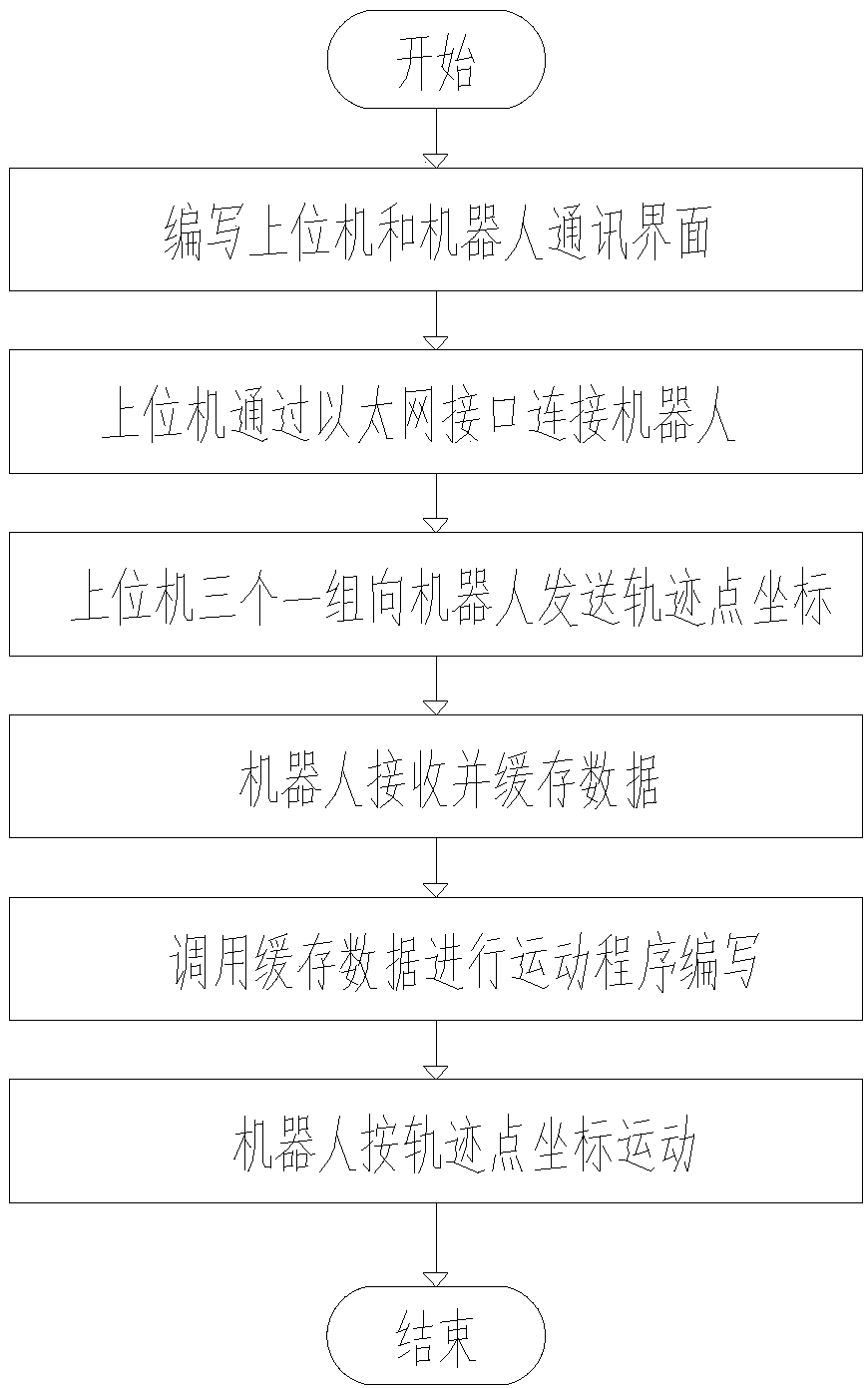

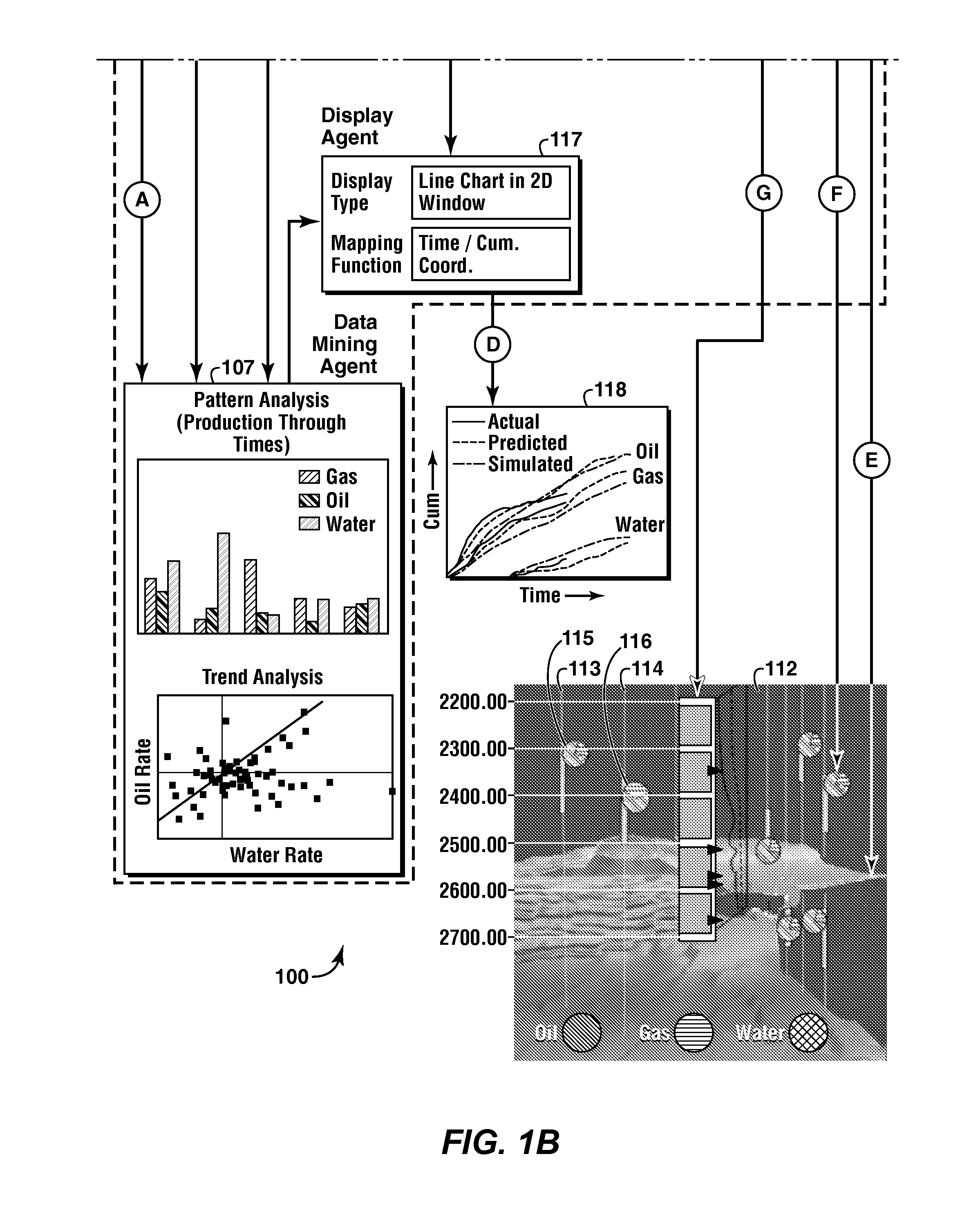

Automatic groove cutting system and cutting method based on three-dimensional vision and model matching

Status: Active | Publication: CN108274092A | Tags: Solve the problems of poor cutting quality, low efficiency and high cost; Solve the technical difficulties of cutting; Gas flame welding apparatus; Process equipment; Engineering

The invention provides an automatic groove cutting system and cutting method based on three-dimensional vision and model matching, in the technical field of groove processing equipment. The system comprises a 3D vision subsystem, a host computer, a motion control system, a cutting robot, and a cutting device. The 3D vision subsystem is connected to the host computer by a signal link, and the host computer is connected to the motion control system by a signal link. The cutting device is mounted on the cutting robot, and the motion control system is electrically connected to the cutting robot. The cutting method uses a 3D vision system and image processing software built on an image processing algorithm. The mapping between the robot, the 3D vision system, and the workpiece coordinate system is established using three techniques: a 3D camera intrinsic and extrinsic parameter calibration algorithm, a three-point calibration method, and a pose matching algorithm. Different workpieces and groove types can be cut automatically with good groove quality and high efficiency, improving the automation and intelligence of the groove cutting system.

Owner:BEIJING INSTITUTE OF PETROCHEMICAL TECHNOLOGY +1
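
The abstract names a three-point calibration method but does not define it. A widely used version defines a workpiece (user) frame from three taught points. The first is the origin, the second lies on the +x axis, and the third lies anywhere in the +y half of the xy plane. This sketch assumes that version and then expresses a point in the resulting frame.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

def _sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def _cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def _unit(a: Sequence[float]) -> Vec3:
    n = math.sqrt(_dot(a, a))
    if n == 0:
        raise ValueError("points are coincident or collinear")
    return (a[0] / n, a[1] / n, a[2] / n)

def frame_from_three_points(origin: Vec3, x_point: Vec3, xy_point: Vec3) -> Tuple[Vec3, Vec3, Vec3]:
    """Right-handed frame: x along origin->x_point, z normal to the plane of the three points."""
    x_axis = _unit(_sub(x_point, origin))
    z_axis = _unit(_cross(x_axis, _sub(xy_point, origin)))
    y_axis = _cross(z_axis, x_axis)  # already unit length: z and x are orthonormal
    return x_axis, y_axis, z_axis

def to_frame(point: Vec3, origin: Vec3, axes: Tuple[Vec3, Vec3, Vec3]) -> Vec3:
    """Coordinates of a base-frame point expressed in the taught frame."""
    d = _sub(point, origin)
    return (_dot(d, axes[0]), _dot(d, axes[1]), _dot(d, axes[2]))
```

Chained with the camera calibration, a frame like this lets groove paths defined on the workpiece model be converted into robot coordinates.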

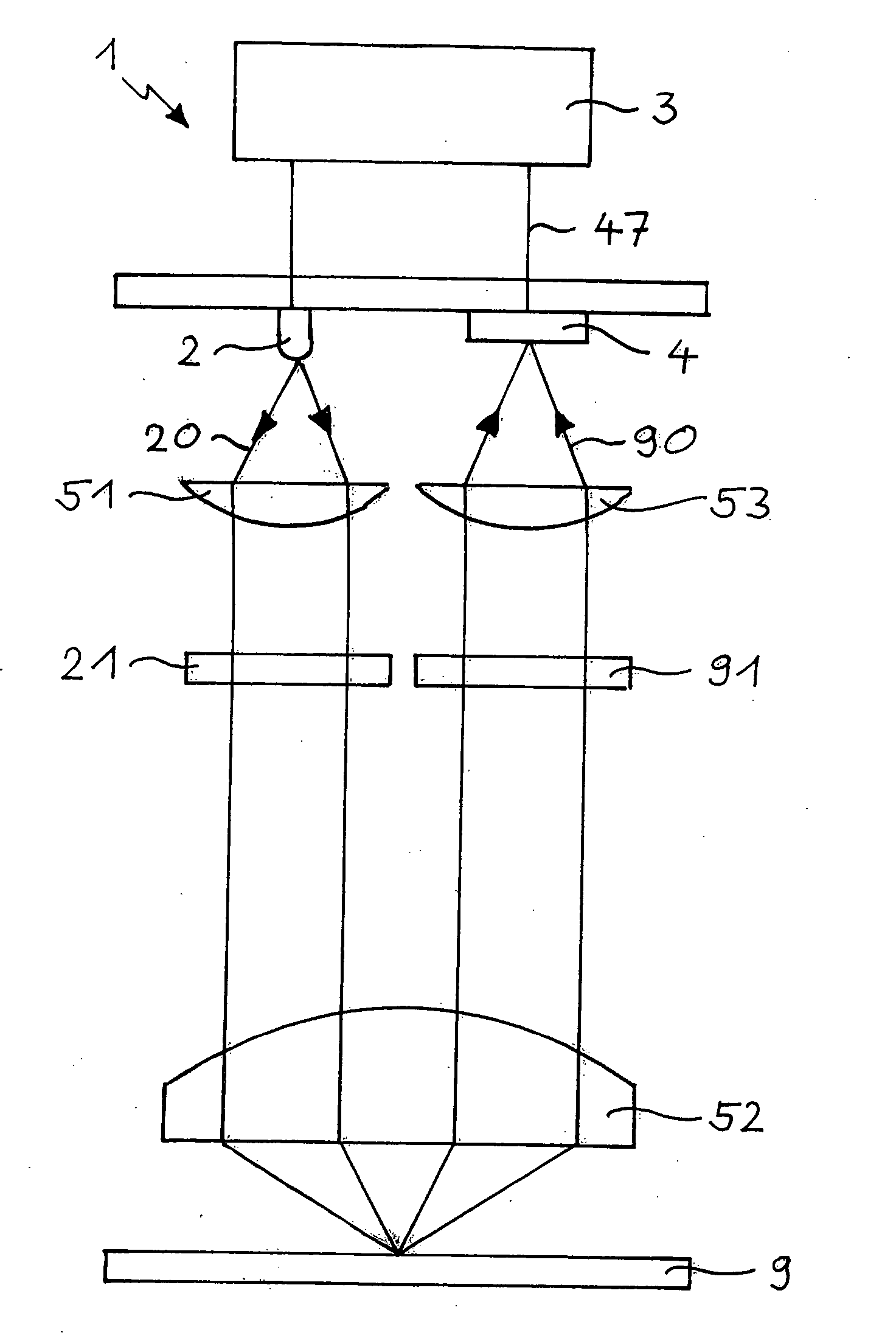

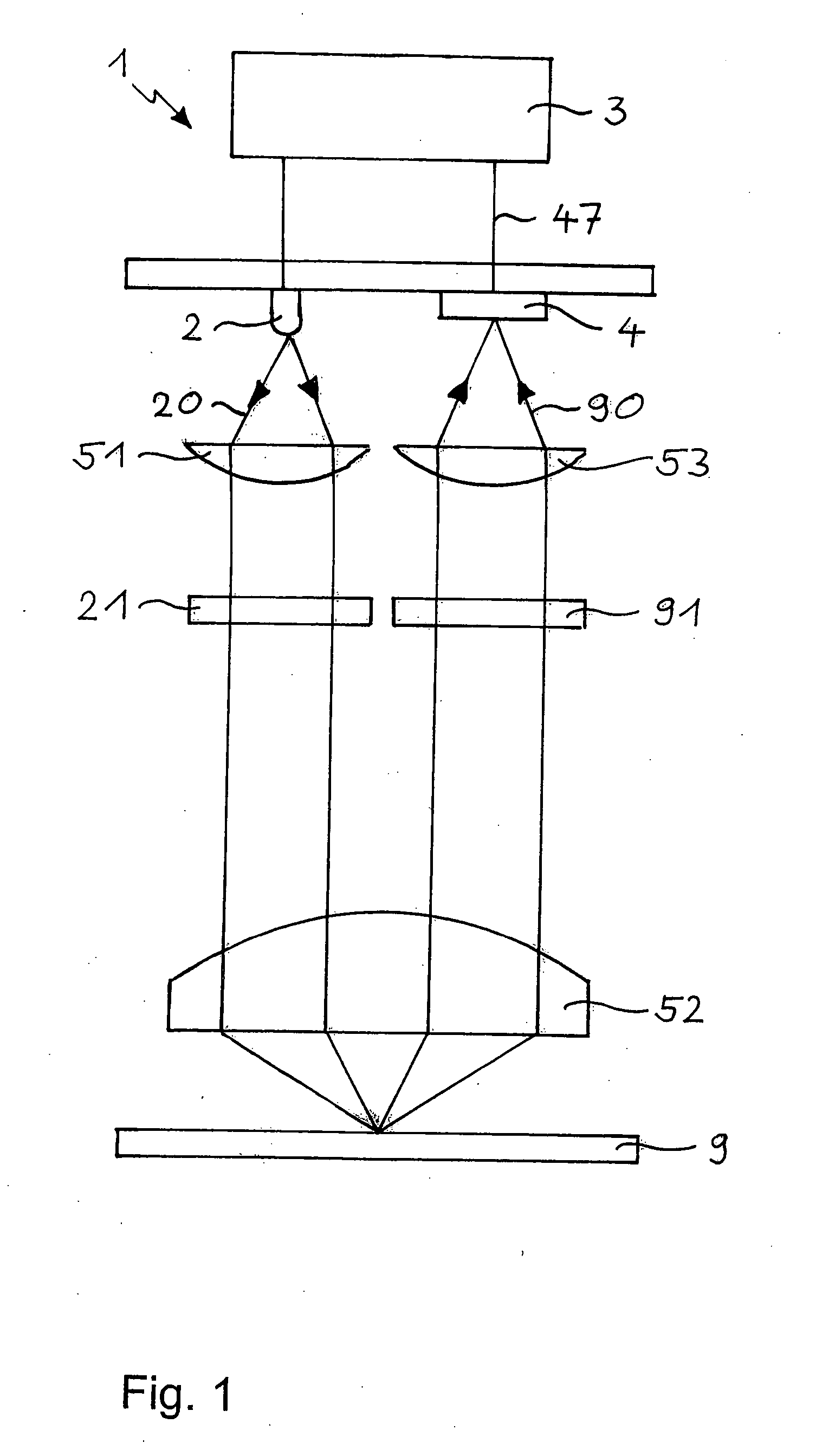

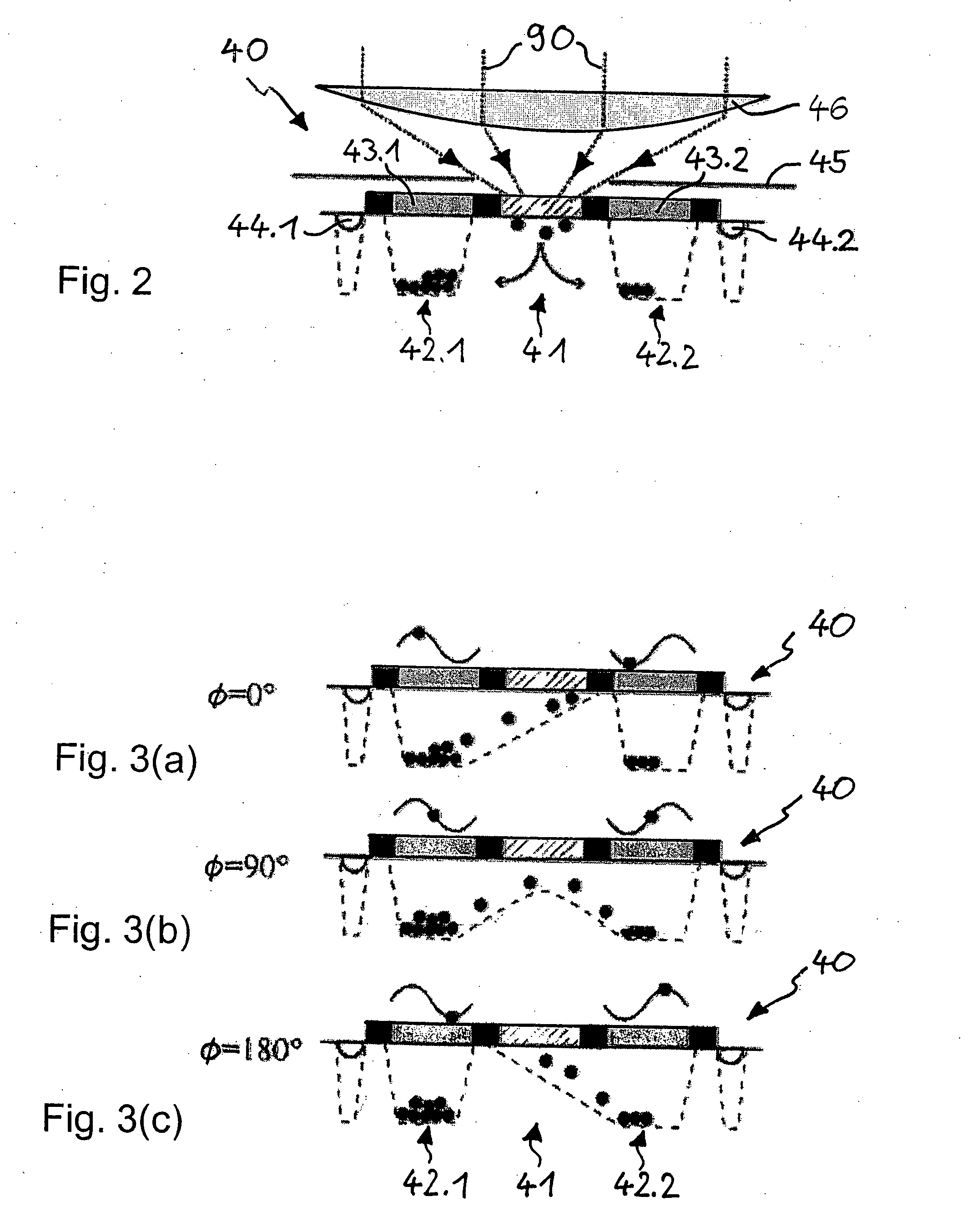

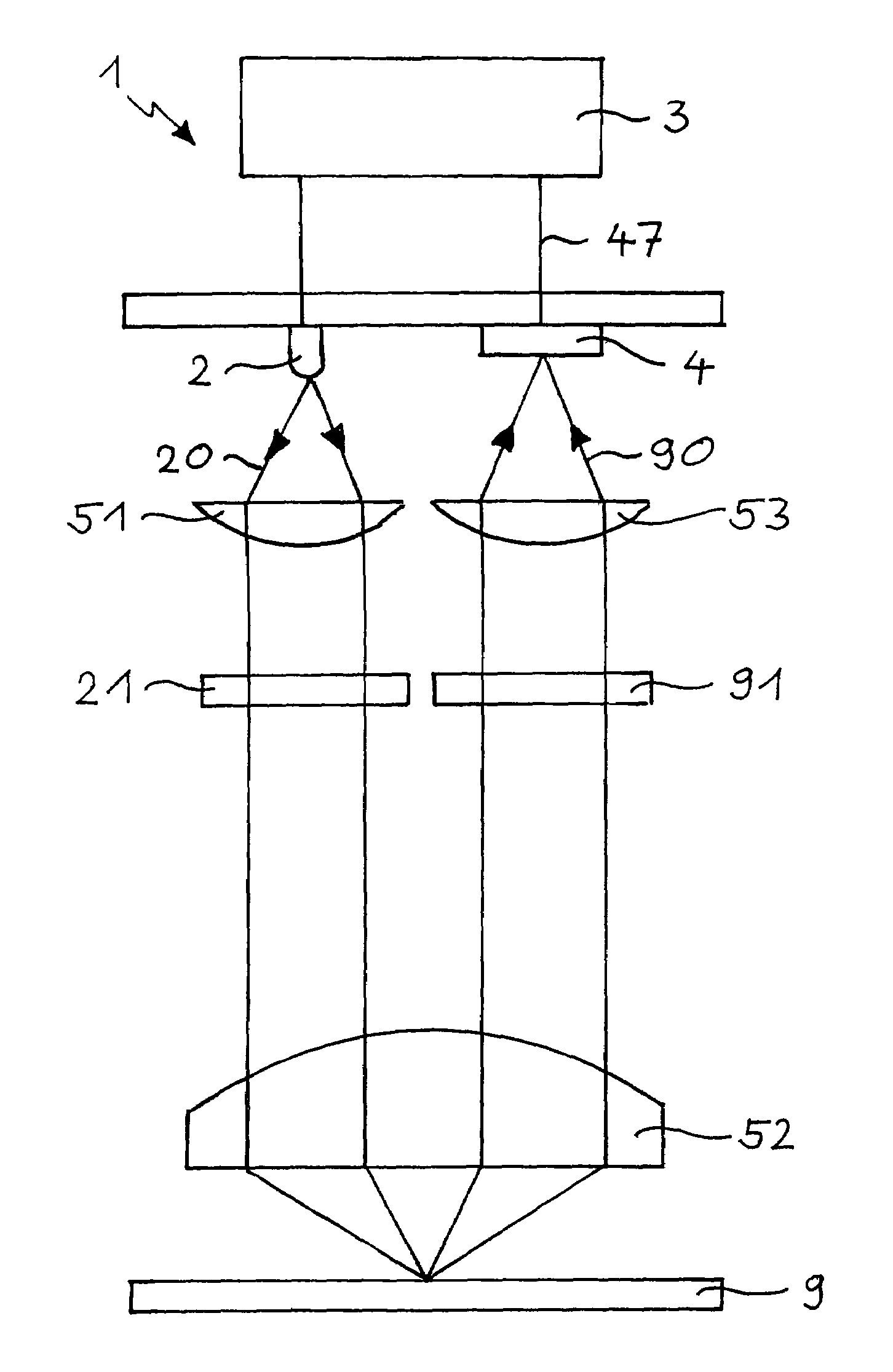

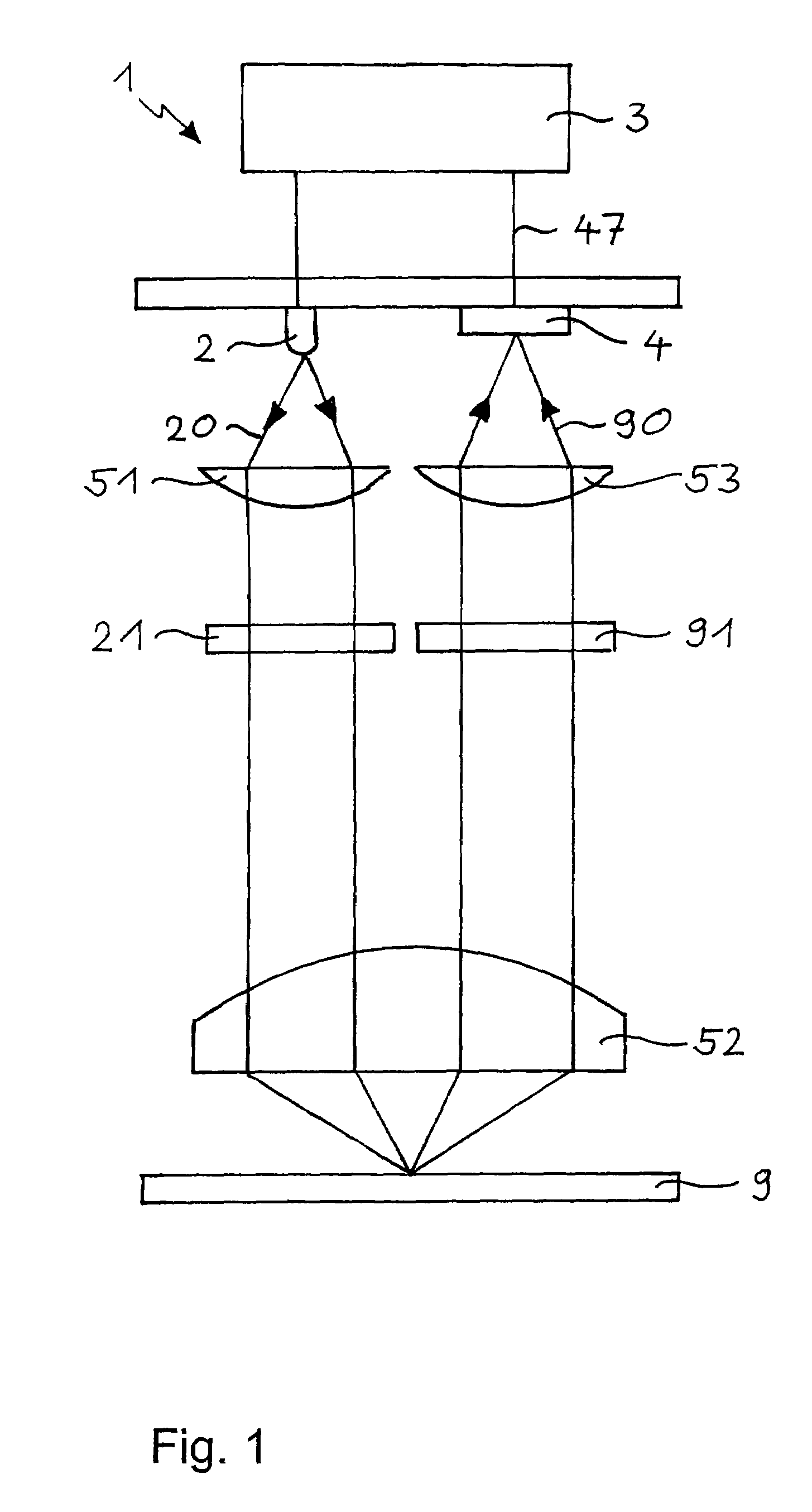

Apparatus and method for all-solid-state fluorescence lifetime imaging

Status: Active | Publication: US20070018116A1 | Tags: More cost-effective; More user-friendly; Radiation pyrometry; Spectrum investigation; All solid state; Video rate

Fluorescence lifetime imaging microscopy (FLIM) is a powerful technique increasingly used in the life sciences during the past decades. An all-solid-state fluorescence-lifetime-imaging microscope (1) with a simple lock-in imager (4) for fluorescence lifetime detection is described. The lock-in imager (4), originally developed for 3D vision, embeds all the functionalities required for FLIM in a compact system. Its combination with a light-emitting diode (2) yields a cost-effective and user-friendly FLIM unit for wide-field microscopes. The system is suitable for nanosecond lifetime measurements and achieves video-rate imaging capabilities.

Owner:CSEM CENT SUISSE DELECTRONIQUE & DE MICROTECHNIQUE SA RECH & DEV
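
A lock-in imager typically samples the modulated signal at four equally spaced phases per period. Frequency-domain FLIM then derives lifetimes from the emission's phase shift and demodulation. The standard relations are τ_φ = tan(φ)/ω and τ_m = √(1/m² − 1)/ω, with ω = 2πf. This sketch assumes the phase is already referenced to the excitation (instrument phase removed) and that the excitation is fully modulated. The four-sample scheme is a common lock-in approach, not necessarily the patent's exact readout.

```python
import math
from typing import Tuple

def demodulate_four_bucket(a0: float, a1: float, a2: float, a3: float) -> Tuple[float, float, float]:
    """Phase, amplitude and offset of B + A*cos(theta - phi) sampled at theta = 0, 90, 180, 270 deg."""
    phase = math.atan2(a1 - a3, a0 - a2)          # a1 - a3 = 2A sin(phi), a0 - a2 = 2A cos(phi)
    amplitude = math.hypot(a0 - a2, a1 - a3) / 2.0
    offset = (a0 + a1 + a2 + a3) / 4.0
    return phase, amplitude, offset

def lifetimes(phase: float, modulation: float, mod_freq_hz: float) -> Tuple[float, float]:
    """Phase lifetime and modulation lifetime (seconds) for a given modulation frequency."""
    omega = 2.0 * math.pi * mod_freq_hz
    tau_phase = math.tan(phase) / omega
    tau_mod = math.sqrt(1.0 / modulation ** 2 - 1.0) / omega
    return tau_phase, tau_mod
```

For a single-exponential decay the two lifetimes agree. A mismatch between them is a common indicator of a multi-exponential decay.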

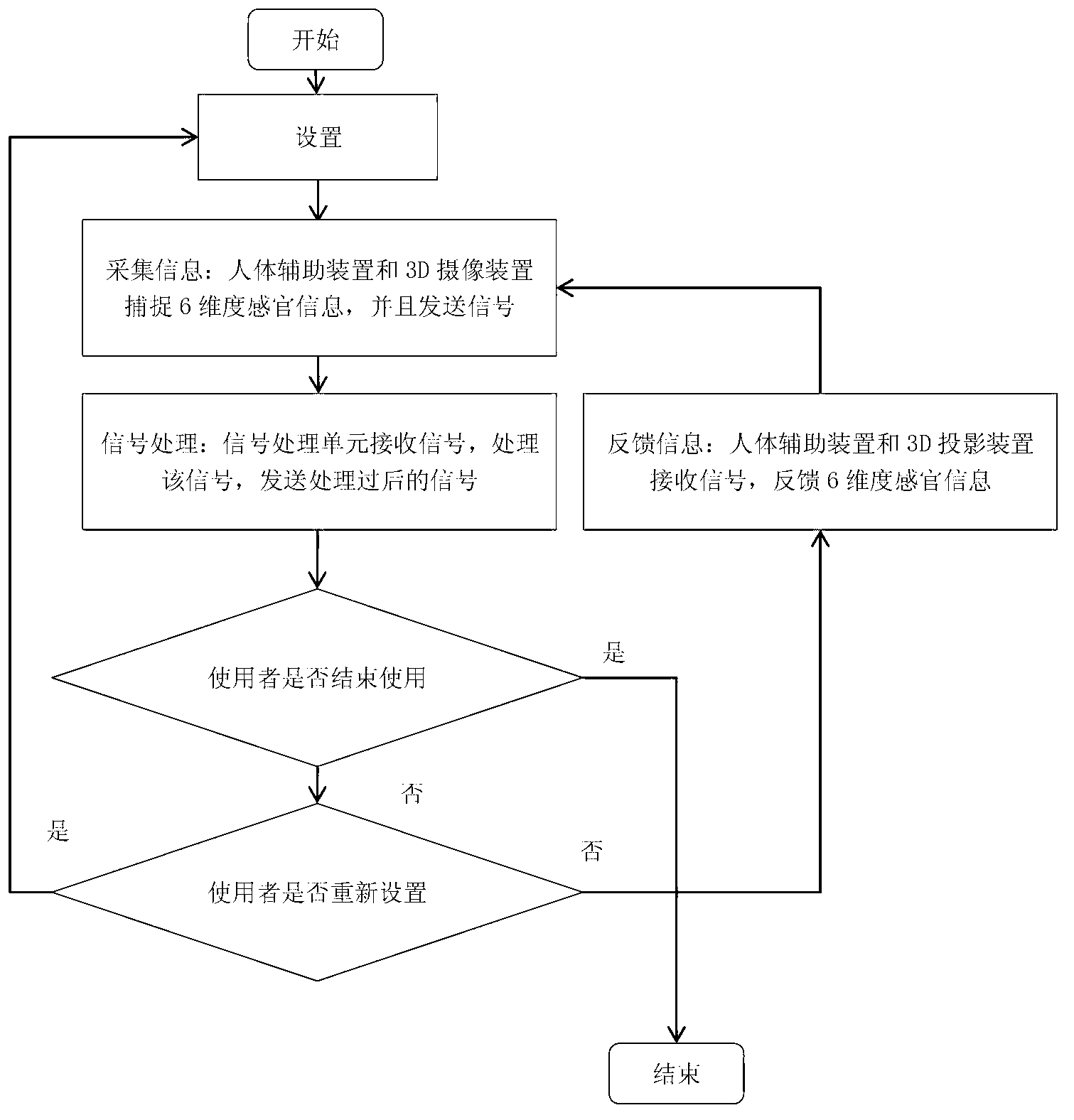

6-dimensional sensory-interactive virtual instrument system and realization method thereof

Status: Active | Publication: CN103235642A | Tags: Improve experience; Easy to use; Input/output for user-computer interaction; Music; 3d vision; Human body

The invention relates to a 6-dimensional sensory-interactive virtual instrument system and a method for realizing it. The system comprises a human-body auxiliary device, a 3D camera device, a 3D projection device, and a signal processing unit. The human-body auxiliary device collects the user's sound, touch, and pressure information, converts it into signals, and sends them to the signal processing unit. The signal processing unit processes these signals and controls the human-body auxiliary device to feed back auditory, tactile, and pressure sensations. It also controls the 3D projection device to feed back 3D visual information. The invention further provides a method for realizing a 6-dimensional sensory-interactive virtual instrument in four steps: setup, information collection, signal processing, and information feedback. The system achieves a high degree of human-machine interaction and virtual reality, letting the user experience an interaction similar to playing a real instrument. It offers powerful functions, convenient use, an attractive appearance, and low cost.

Owner:ZHEJIANG UNIV

Apparatus and method for all-solid-state fluorescence lifetime imaging

Status: Active | Publication: US7508505B2 | Tags: More cost-effective; More user-friendly; Spectrum investigation; Solid-state devices; All solid state; Video rate

Fluorescence lifetime imaging microscopy (FLIM) is a powerful technique increasingly used in the life sciences during the past decades. An all-solid-state fluorescence-lifetime-imaging microscope (1) with a simple lock-in imager (4) for fluorescence lifetime detection is described. The lock-in imager (4), originally developed for 3D vision, embeds all the functionalities required for FLIM in a compact system. Its combination with a light-emitting diode (2) yields a cost-effective and user-friendly FLIM unit for wide-field microscopes. The system is suitable for nanosecond lifetime measurements and achieves video-rate imaging capabilities.

Owner:CSEM CENT SUISSE DELECTRONIQUE & DE MICROTECHNIQUE SA RECH & DEV

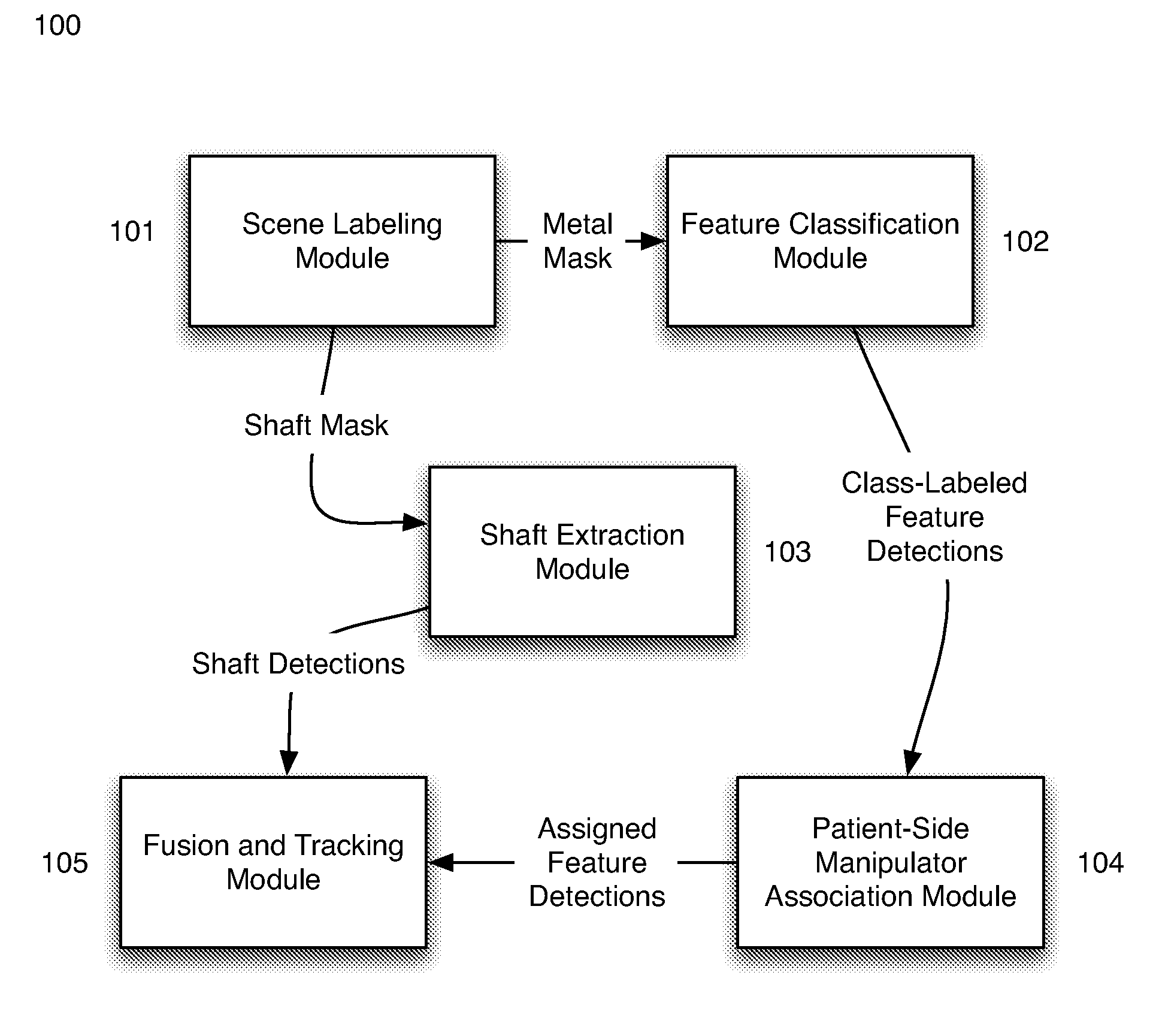

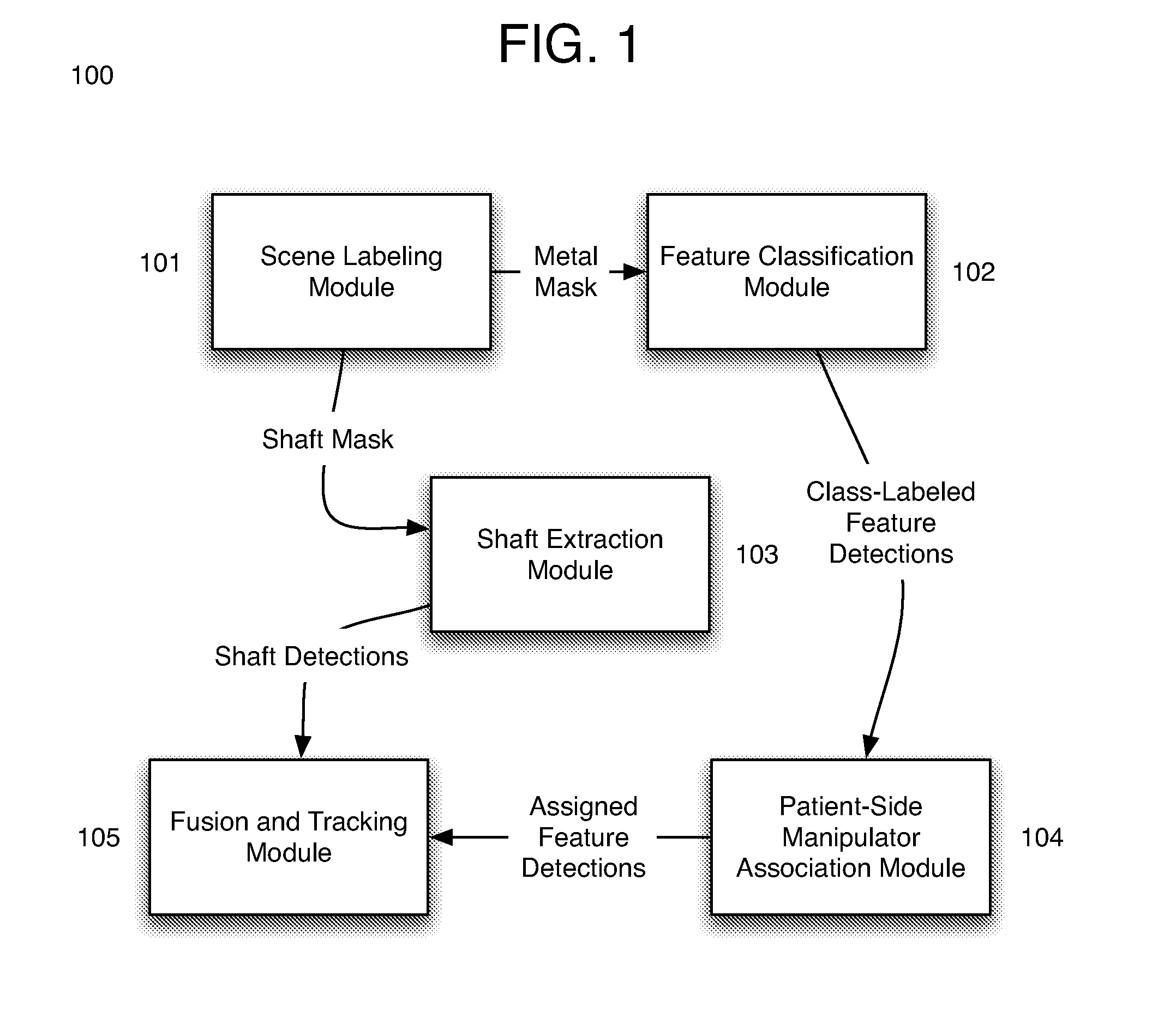

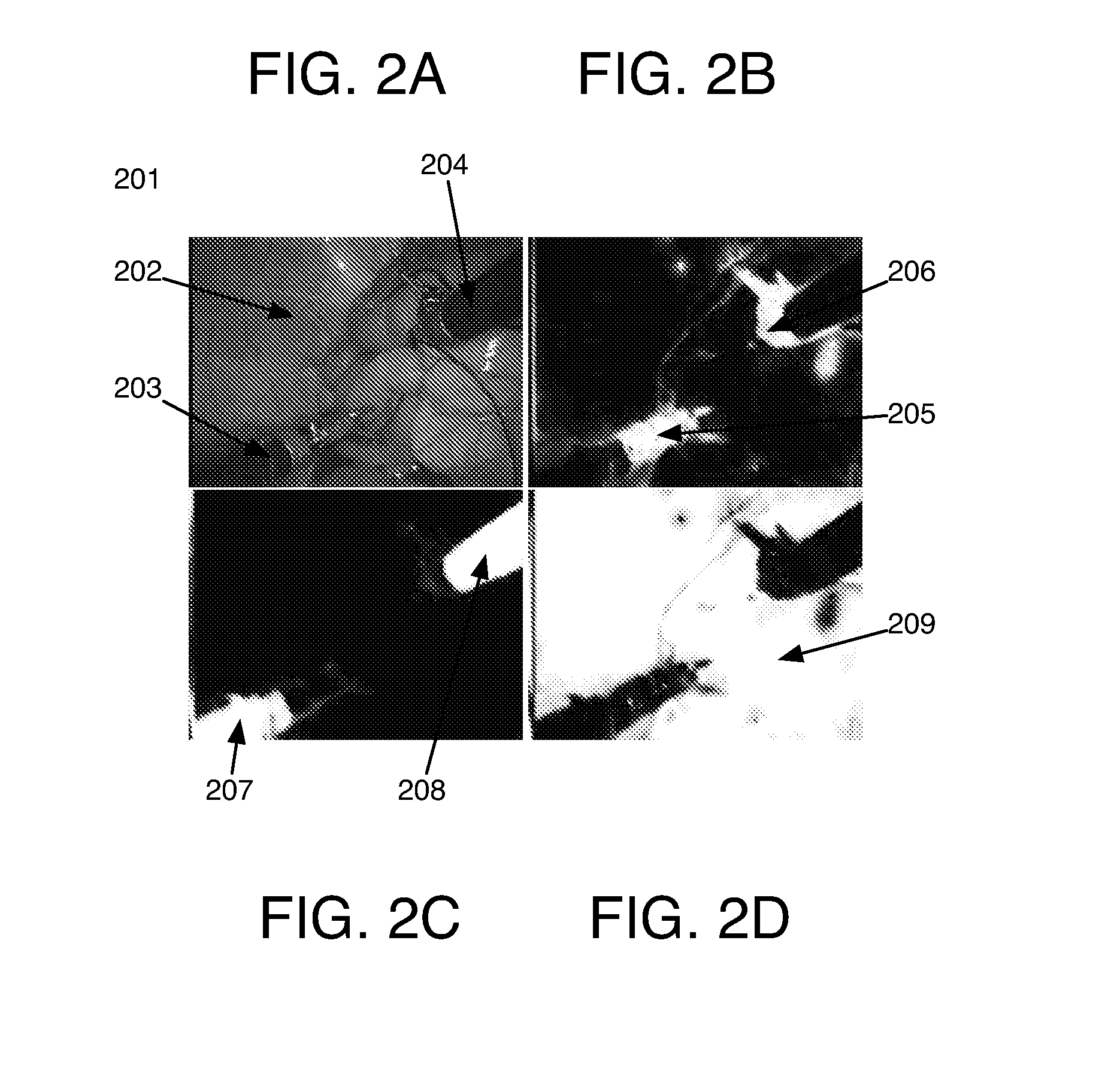

Markerless tracking of robotic surgical tools

Appearance learning systems, methods and computer products for three-dimensional markerless tracking of robotic surgical tools. An appearance learning approach is provided that is used to detect and track surgical robotic tools in laparoscopic sequences. By training a robust visual feature descriptor on low-level landmark features, a framework is built for fusing robot kinematics and 3D visual observations to track surgical tools over long periods of time across various types of environments. Three-dimensional tracking is enabled on multiple tools of multiple types with different overall appearances. The presently disclosed subject matter is applicable to surgical robot systems such as the da Vinci® surgical robot in both ex vivo and in vivo environments.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK
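
The abstract describes fusing robot kinematics with 3D visual observations but does not give the fusion rule. As a deliberately simple stand-in, this sketch combines a kinematic tool-tip estimate and a visual one by inverse-variance weighting. This is a swapped-in textbook technique, not the disclosed appearance-learning framework. The isotropic variances are assumed to be known.

```python
from typing import Sequence, Tuple

def fuse_estimates(kinematic: Sequence[float], kin_var: float,
                   visual: Sequence[float], vis_var: float) -> Tuple[Tuple[float, ...], float]:
    """Inverse-variance weighted average of two independent position estimates.

    kin_var / vis_var: assumed isotropic variances of each estimate.
    Returns the fused position and its variance.
    """
    if kin_var <= 0 or vis_var <= 0:
        raise ValueError("variances must be positive")
    w_k, w_v = 1.0 / kin_var, 1.0 / vis_var
    fused = tuple((w_k * k + w_v * v) / (w_k + w_v) for k, v in zip(kinematic, visual))
    return fused, 1.0 / (w_k + w_v)
```

The fused variance is always smaller than either input variance. When the visual detector loses the tool, dropping the visual term leaves the kinematic estimate alone, which is one reason kinematics helps tracking over long sequences.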

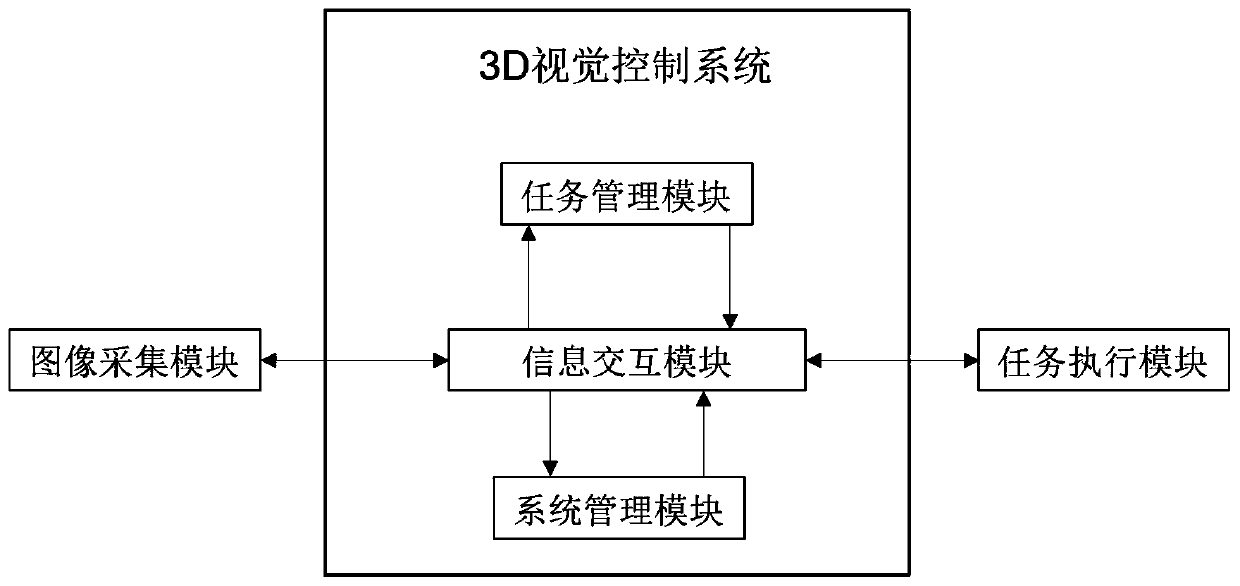

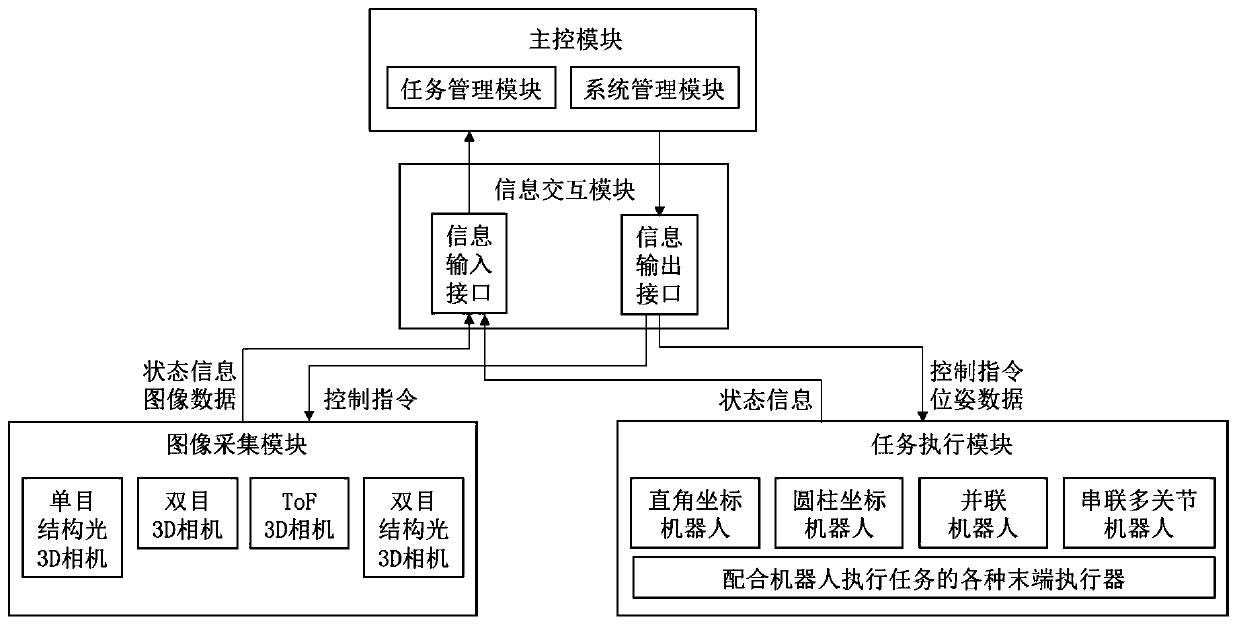

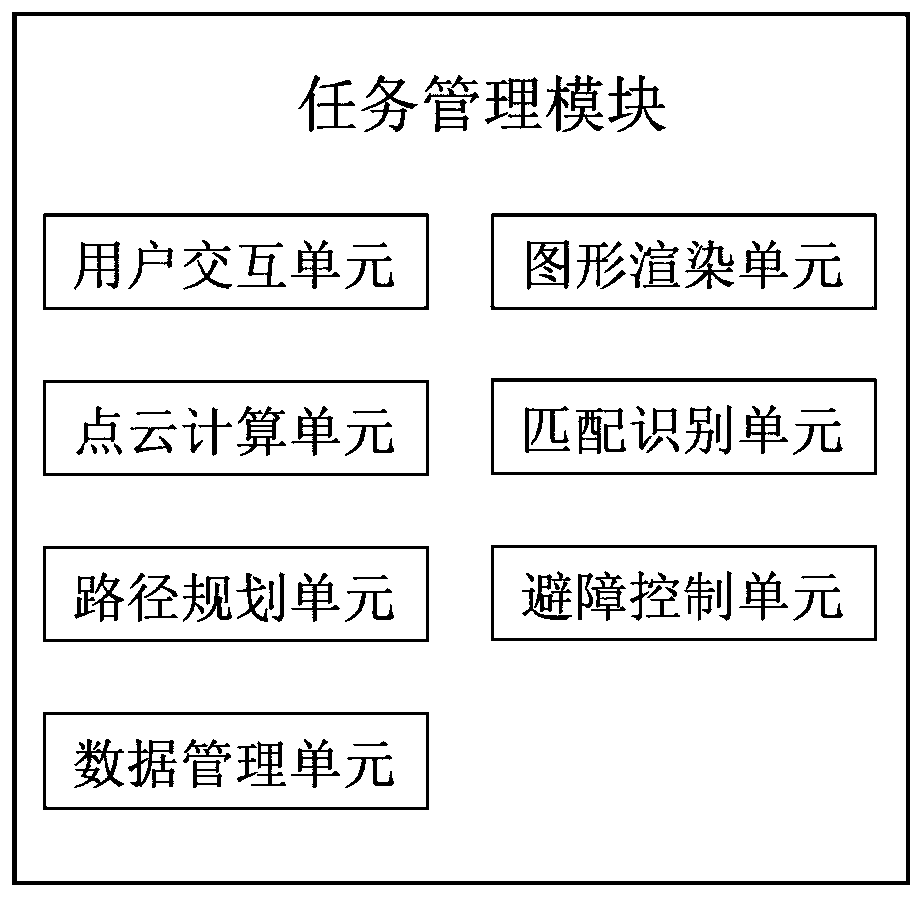

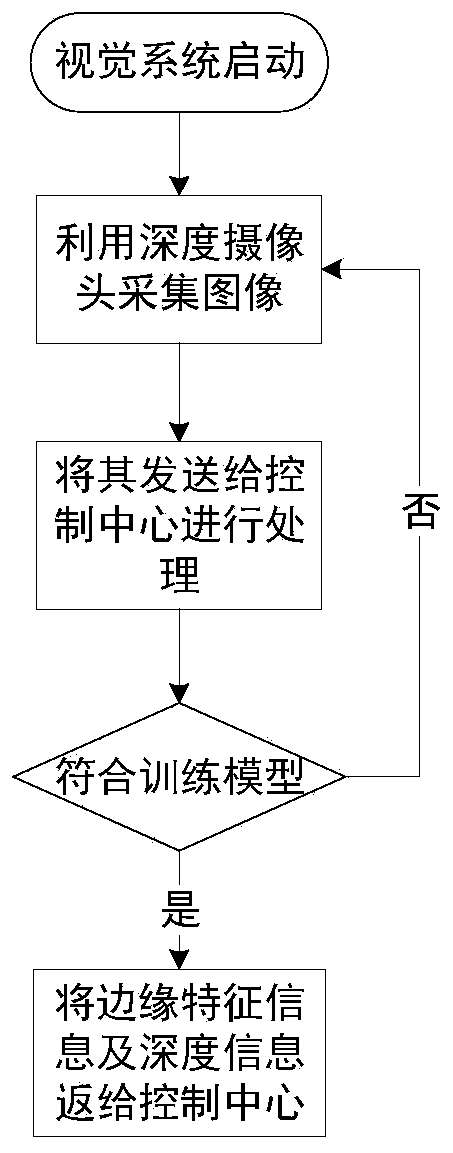

Grasping system for disorderly stacked workpieces based on 3D vision, and interaction method

Status: Active | Publication: CN111508066A | Tags: Lower barriers to use; Reduced commissioning time; Total factory control; Input/output processes for data processing; Visual technology; Systems management

The invention discloses a 3D-vision-based system for grasping disorderly stacked workpieces, and an interaction method, in the technical field of 3D vision. The system comprises a task management module, an information interaction module, a system management module, an image acquisition module, and a task execution module. A process-oriented task-creation form guides workers step by step through creating a grasping task for disordered workpieces. Workers only need to understand the concrete procedure of the grasping task. They do not need to attend to technical details such as generating the workpiece point-cloud model, matching and recognizing workpieces, calculating grasp poses, and planning paths. This improves the usability of the vision software system and lowers the barrier to use and the learning cost for end users. It also shortens the commissioning time of a 3D-vision-guided robot performing grasping tasks and improves production efficiency.

Owner:北京迁移科技有限公司 (Beijing Qianyi Technology Co., Ltd.)

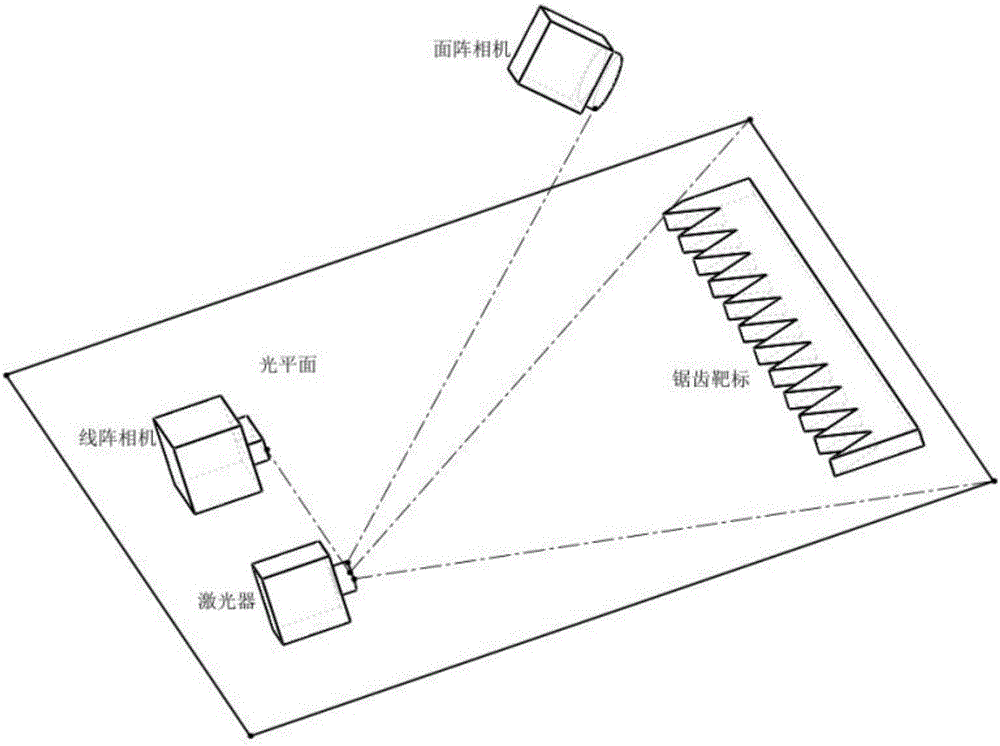

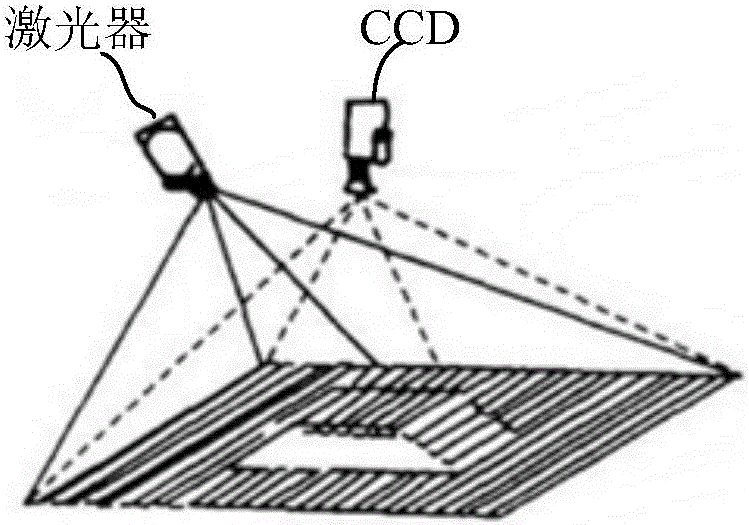

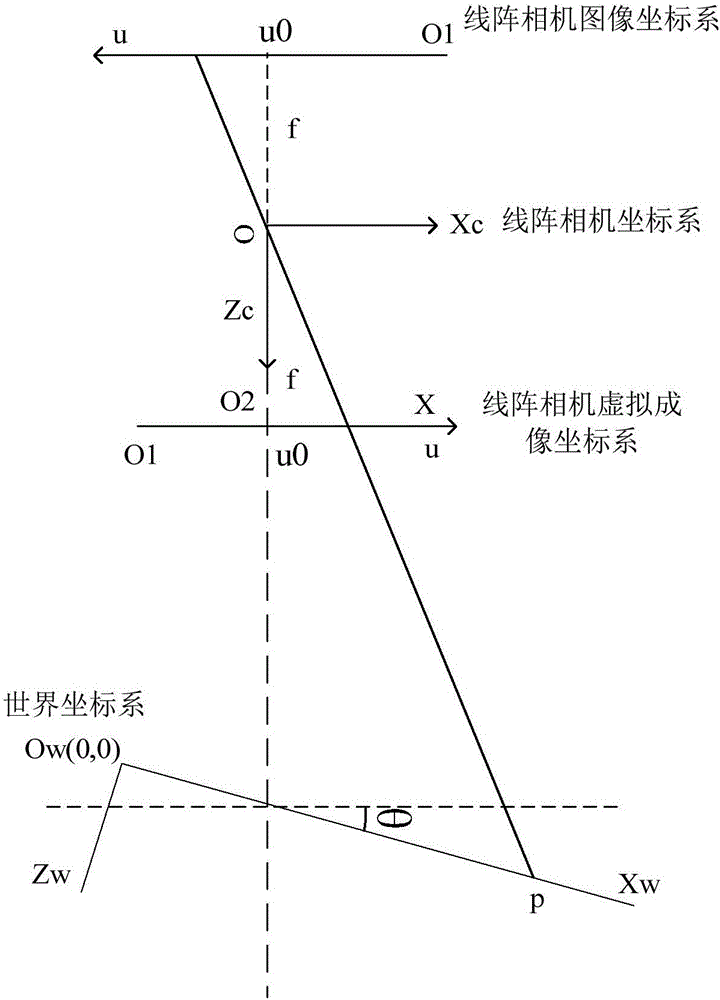

Joint calibration method and apparatus for a structured-light 3D vision system and a line-scan camera

Status: Active | Publication: CN106127745A | Tags: Meet the requirements of traffic measurement; Improve efficiency; Image analysis; Camera image; Visual perception

A method and device for jointly calibrating a structured-light 3D vision system and a line-scan camera. The structured-light 3D vision system includes an area-scan camera and a laser. The method includes:

1. Obtain the transformation between the light-plane coordinate system and the target coordinate system of the structured-light 3D vision system, as the first transformation.
2. From the coordinates of selected feature points in the target coordinate system and in the line-scan camera image coordinate system, establish the transformation between those two coordinate systems, as the second transformation.
3. From the first and second transformations, establish the transformation between the light-plane coordinate system and the line-scan camera image coordinate system, as the third transformation.
4. Using the third transformation, obtain the line-scan camera image coordinates corresponding to each point in the light-plane coordinate system, which achieves joint calibration of the structured-light 3D vision system and the line-scan camera.

Owner:BEIJING LUSTER LIGHTTECH
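
The third transformation in the calibration is the composition of the second with the first. This sketch assumes both relationships can be written as homogeneous matrices. The first is a 4×3 matrix that embeds light-plane coordinates (u, v, 1) into target coordinates (X, Y, Z, 1). The second is a 2×4 matrix that projects target points onto the line-scan camera's single image axis. The abstract does not state these matrix forms, so treat them as illustrative.

```python
from typing import List, Sequence

Matrix = List[List[float]]

def matmul(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def compose(second: Sequence[Sequence[float]], first: Sequence[Sequence[float]]) -> Matrix:
    """Third transformation = second applied after first (2x4 @ 4x3 -> 2x3)."""
    return matmul(second, first)

def plane_to_line_pixel(m: Sequence[Sequence[float]], u: float, v: float) -> float:
    """Line-scan image coordinate of light-plane point (u, v) under a 2x3 homogeneous map."""
    p = m[0][0] * u + m[0][1] * v + m[0][2]
    q = m[1][0] * u + m[1][1] * v + m[1][2]
    return p / q
```

Precomputing the composed 2×3 matrix means each light-plane point needs only one small multiply and divide to find its line-scan pixel.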

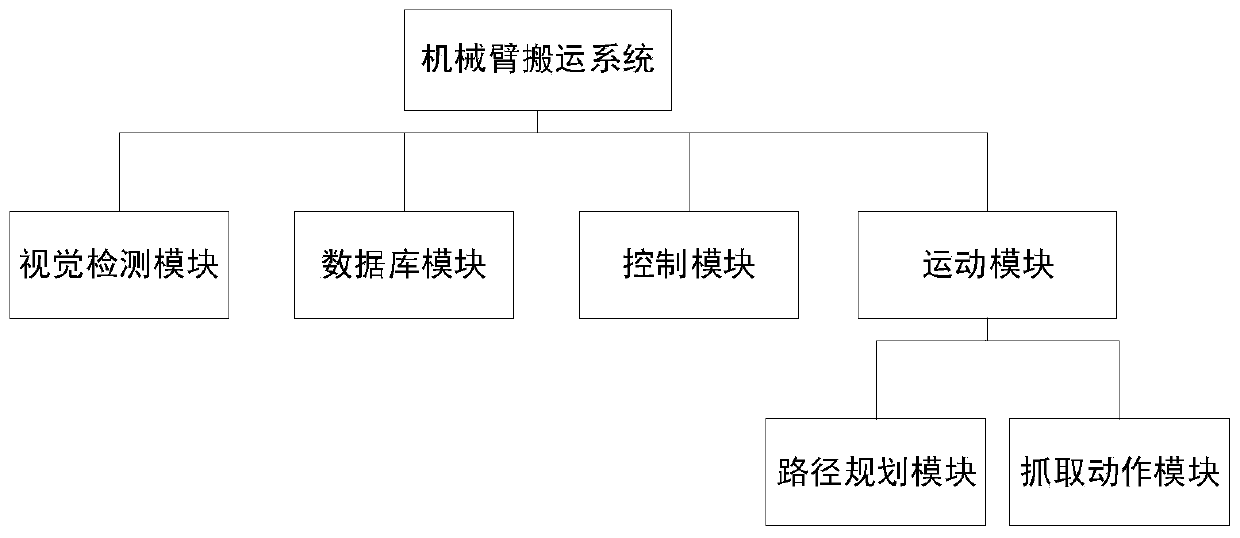

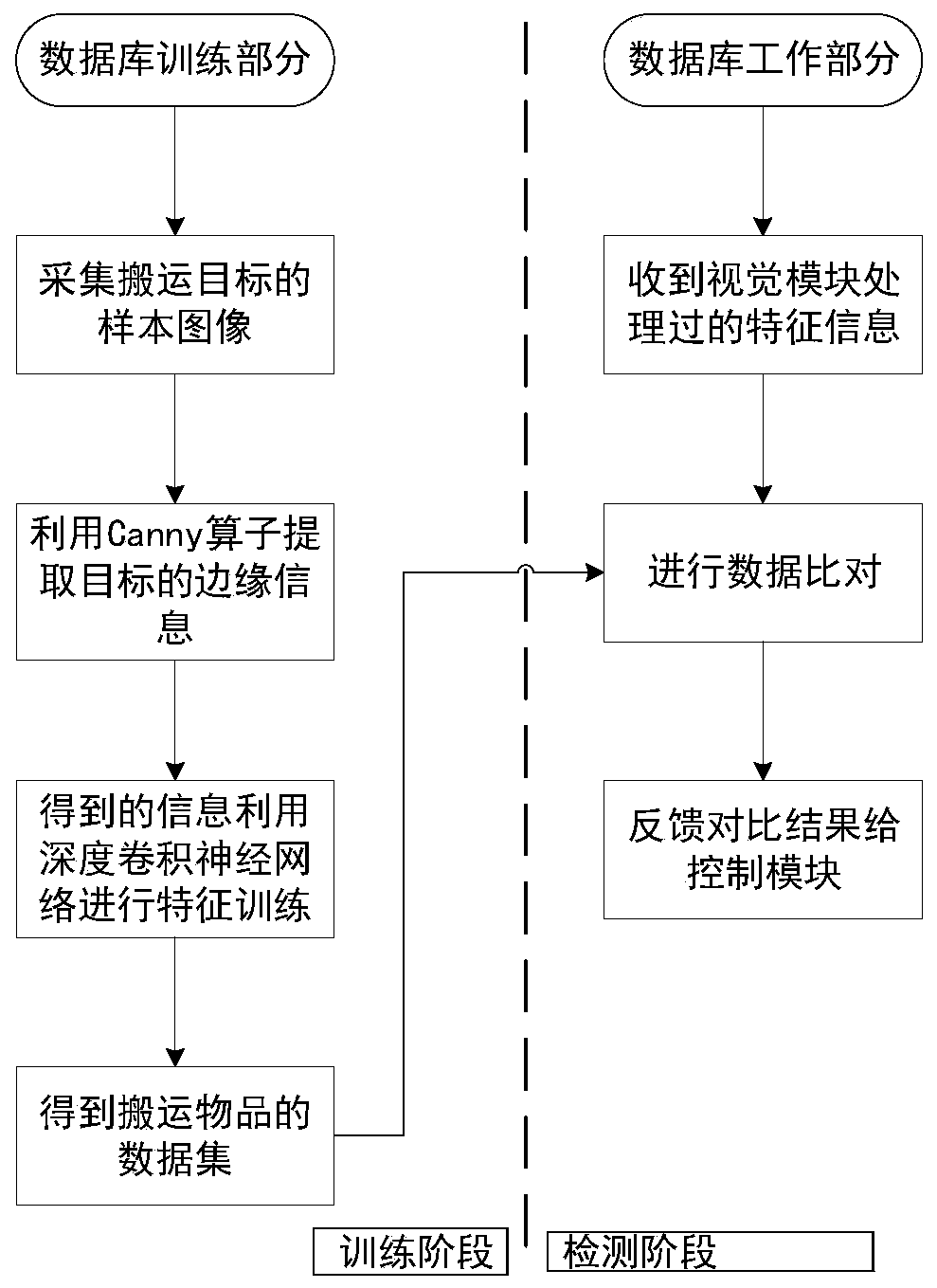

Intelligent handling robotic arm system based on 3D vision and deep learning, and method of use

Status: Active | Publication: CN111496770A | Tags: Increase the scope of work; Good application effect; Programme-controlled manipulator; Image enhancement; Robotic arm; Vision based

The invention proposes an intelligent handling robotic arm system based on 3D vision and deep learning. The system comprises four modules:

- Vision detection module: collects images of objects and sends them to the control module.
- Training and learning module: collects sample data and builds a database of the objects the robotic arm must grasp.
- Motion planning module: comprises a path planning part and a grasping motion planning part. The path planning part plans the robotic arm's path, enabling autonomous path selection and obstacle avoidance. The grasping motion planning part implements the grasping function.
- Control module: processes the information from the vision detection, training and learning, and motion planning modules and sends corresponding commands back to them, so that the robotic arm completes its path movement and grasping.

The invention diversifies work scenarios, makes production and transportation more intelligent, and broadens the application field of robotic arms.

Owner:SHANGHAI DIANJI UNIV

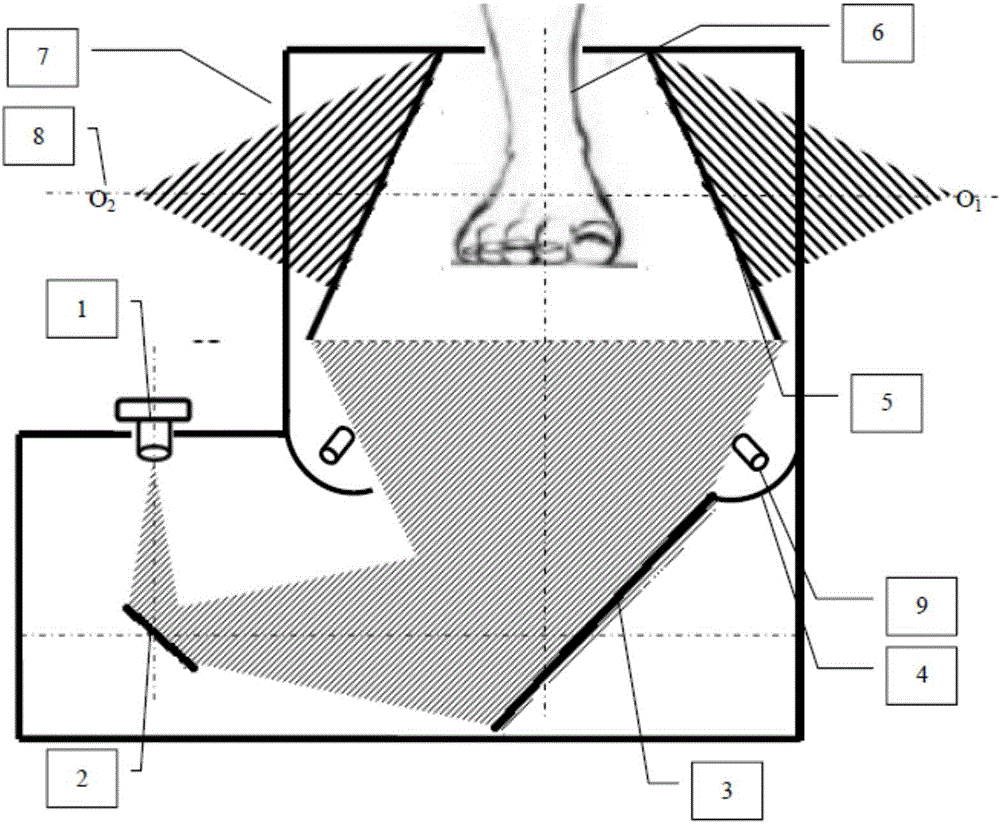

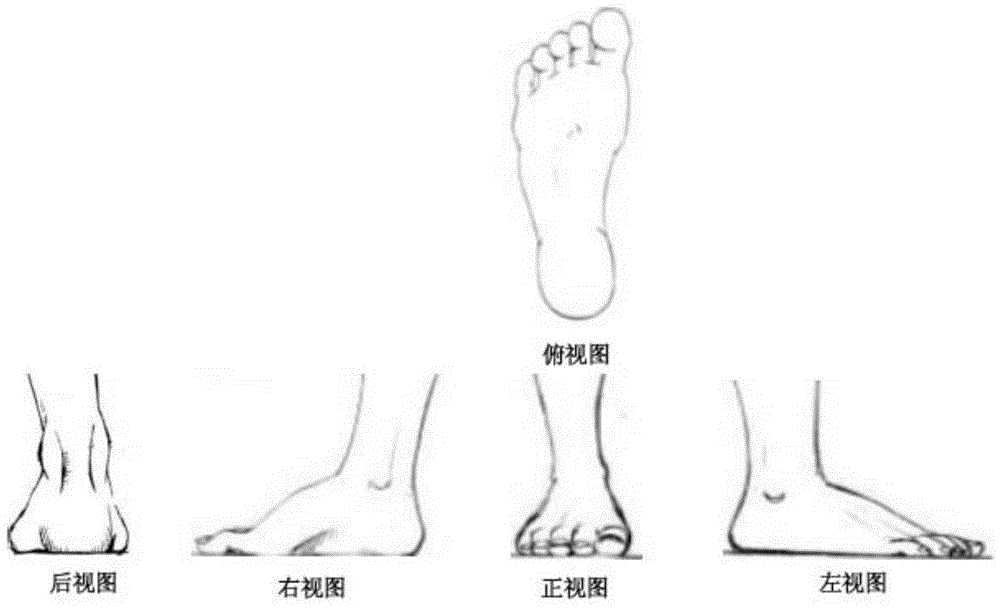

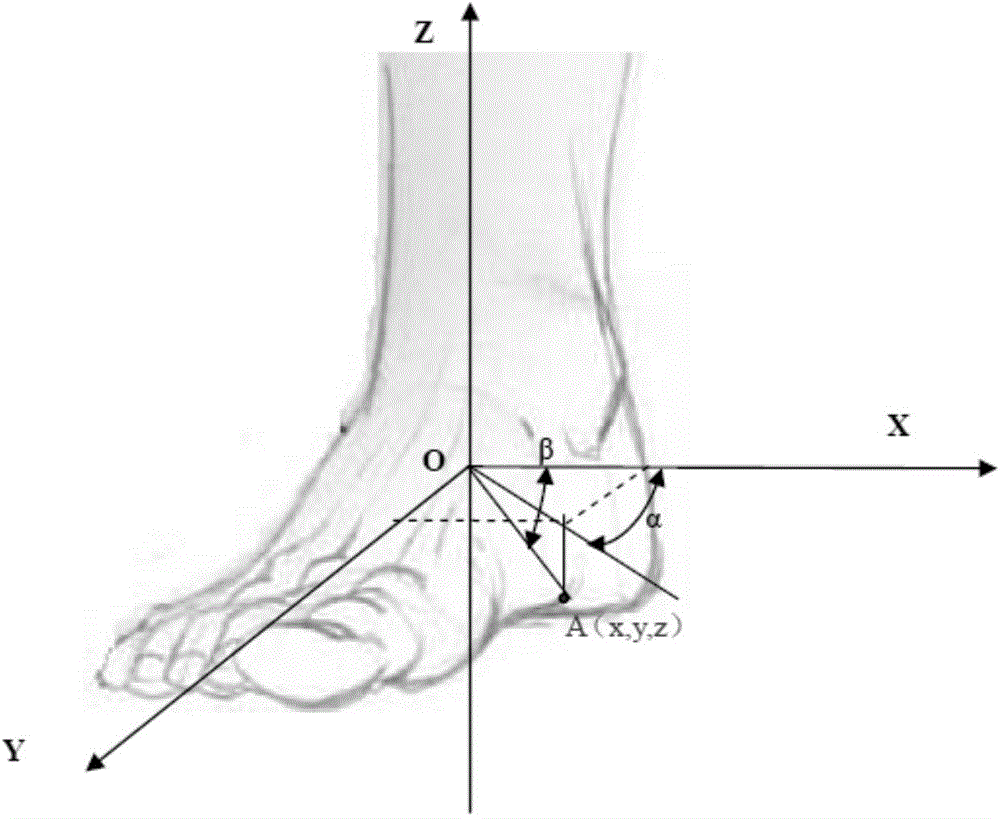

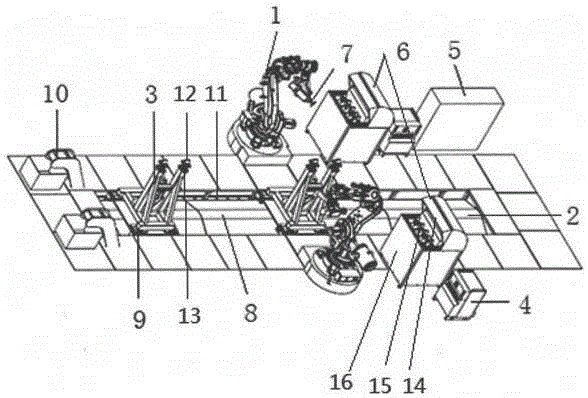

Real-person foot-shape copying device and shoe-last manufacturing method based on monocular multi-view robot vision

Inactive · CN104573180A · Increase silhouette · Constraints for adding silhouettes · Special data processing applications · 3D modelling · Plane mirror · Image segmentation

The invention discloses a real-person shoe type copying device based on single-eye (monocular) multi-angle-of-view robot vision. The device comprises a single-eye multi-angle-of-view 3D (three-dimensional) vision box and a computer. The vision box captures images of a real person's foot from five different angles of view, and the computer performs 3D reconstruction of the foot and automatically generates a 3D printing file. Inside the vision box is a rectangular, bucket-shaped cavity formed by four trapezoidal plane mirrors; the mirror body is wider at the top than at the bottom, and the mirror surfaces face the inside of the cavity. A light source at the bottom of the cavity provides uniform, soft lighting for the foot. Cameras in the vision box capture the foot from multiple angles of view using the catadioptric (refraction and reflection) principle of the mirror planes. The computer comprises a single-eye multi-angle-of-view 3D vision calibration unit, an image segmentation, conversion and correction unit, a real-person foot surface-shape measurement unit and an automatic STL (stereolithography) file generation unit. The invention further discloses a shoe tree manufacturing method based on single-eye multi-angle-of-view robot vision.

Owner:ZHEJIANG UNIV OF TECH
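
The vision box obtains several views from a single camera by looking at the foot through plane mirrors. A standard way to model this (assumed here; the abstract does not give the calibration math) is to treat each mirror as creating a virtual camera whose pose is the real pose reflected across the mirror plane n·x = d, using x' = x - 2(n·x - d)n. The helper names and plane parameters below are illustrative.

```python
import numpy as np

def mirror_matrix(n, d: float) -> np.ndarray:
    """4x4 homogeneous reflection across the plane n . x = d (n need not be unit length)."""
    n = np.asarray(n, dtype=float)
    norm = np.linalg.norm(n)
    n, d = n / norm, d / norm            # normalize so n is a unit normal
    D = np.eye(4)
    D[:3, :3] -= 2.0 * np.outer(n, n)    # Householder reflection I - 2 n n^T
    D[:3, 3] = 2.0 * d * n               # offset for a plane not through the origin
    return D

def virtual_camera_center(cam_center, n, d: float) -> np.ndarray:
    """Center of the virtual camera seen through a plane mirror: the real center, reflected."""
    c = np.append(np.asarray(cam_center, dtype=float), 1.0)
    return (mirror_matrix(n, d) @ c)[:3]

def virtual_camera_pose(T_cam: np.ndarray, n, d: float) -> np.ndarray:
    """4x4 camera-to-world pose of the virtual camera: the reflection applied to the real pose.
    Its rotation block has determinant -1, i.e. the virtual camera is left-handed."""
    return mirror_matrix(n, d) @ T_cam
```

With four mirrors this yields four virtual cameras; adding the direct view is one plausible way to arrive at the five angles of view the abstract mentions, although the abstract does not state that split. Because a reflection has determinant -1, each mirrored image is left-right flipped relative to a real camera placed at the virtual pose.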

System and method for training a model in a plurality of non-perspective cameras and determining 3D pose of an object at runtime with the same

Active · US20120147149A1 · Improve accuracy · Speed training process · Image analysis · Stereoscopic systems · Intrinsics · Postural orientation

This invention provides a system and method for training and performing runtime 3D pose determination of an object using a plurality of camera assemblies in a 3D vision system. The cameras are arranged at different orientations with respect to a scene so as to acquire contemporaneous images of an object, both at training and at runtime. Each camera assembly includes a non-perspective lens that acquires a respective non-perspective image for use in the process. Object features found by searching one of the acquired non-perspective images can be used to define the expected location of those features in the second (or subsequent) non-perspective images, based on an affine transform computed from at least a subset of the intrinsics and extrinsics of each camera. The locations of features in the second and subsequent non-perspective images can then be refined by searching within the expected locations in those images. This approach can be used in training, to generate the training model, and at runtime, operating on acquired images of runtime objects. The non-perspective cameras can employ telecentric lenses.

Owner:COGNEX CORP
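
Telecentric lenses give an affine (orthographic) projection, which is why a feature found in one image can be mapped to an expected location in another by an affine transform built from camera parameters. The sketch below assumes each camera is modeled as pixel = A X + b with A = m R[:2, :] (magnification m, rotation R) and that the features lie on the object plane Z = 0; under those assumptions the view-1-to-view-2 map is affine. The refinement step is a plain arg-max over a score image inside a small window. All names are hypothetical and this is not Cognex's implementation.

```python
import numpy as np

def rot_y(theta: float) -> np.ndarray:
    """Rotation about the y-axis (used only to build example cameras)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def affine_camera(magnification: float, R: np.ndarray, t):
    """Assumed telecentric camera model: pixel = A @ X + b with A = m * R[:2, :]."""
    return magnification * R[:2, :], np.asarray(t, dtype=float)

def view_to_view_affine(cam1, cam2):
    """Affine map (M, c) from a view-1 pixel to the expected view-2 pixel,
    valid for object points on the plane Z = 0 (an assumption of this sketch)."""
    A1, b1 = cam1
    A2, b2 = cam2
    M = A2[:, :2] @ np.linalg.inv(A1[:, :2])  # only X and Y matter when Z = 0
    return M, b2 - M @ b1

def predict(affine, uv1):
    """Expected location (u, v) in view 2 of a feature found at uv1 in view 1."""
    M, c = affine
    return M @ np.asarray(uv1, dtype=float) + c

def refine_in_window(score: np.ndarray, center, half: int = 3):
    """Search a (2*half+1)^2 window of a score image around the predicted (u, v)
    and return the best-scoring pixel as (u, v) = (column, row)."""
    u0, v0 = int(round(center[0])), int(round(center[1]))
    h, w = score.shape
    r0, r1 = max(v0 - half, 0), min(v0 + half + 1, h)
    c0, c1 = max(u0 - half, 0), min(u0 + half + 1, w)
    window = score[r0:r1, c0:c1]
    r, c = np.unravel_index(np.argmax(window), window.shape)
    return int(c0 + c), int(r0 + r)
```

The planar assumption keeps the map a pure 2D affine transform; for a feature at unknown depth, orthographic geometry would instead constrain its expected location to a line in the second image.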

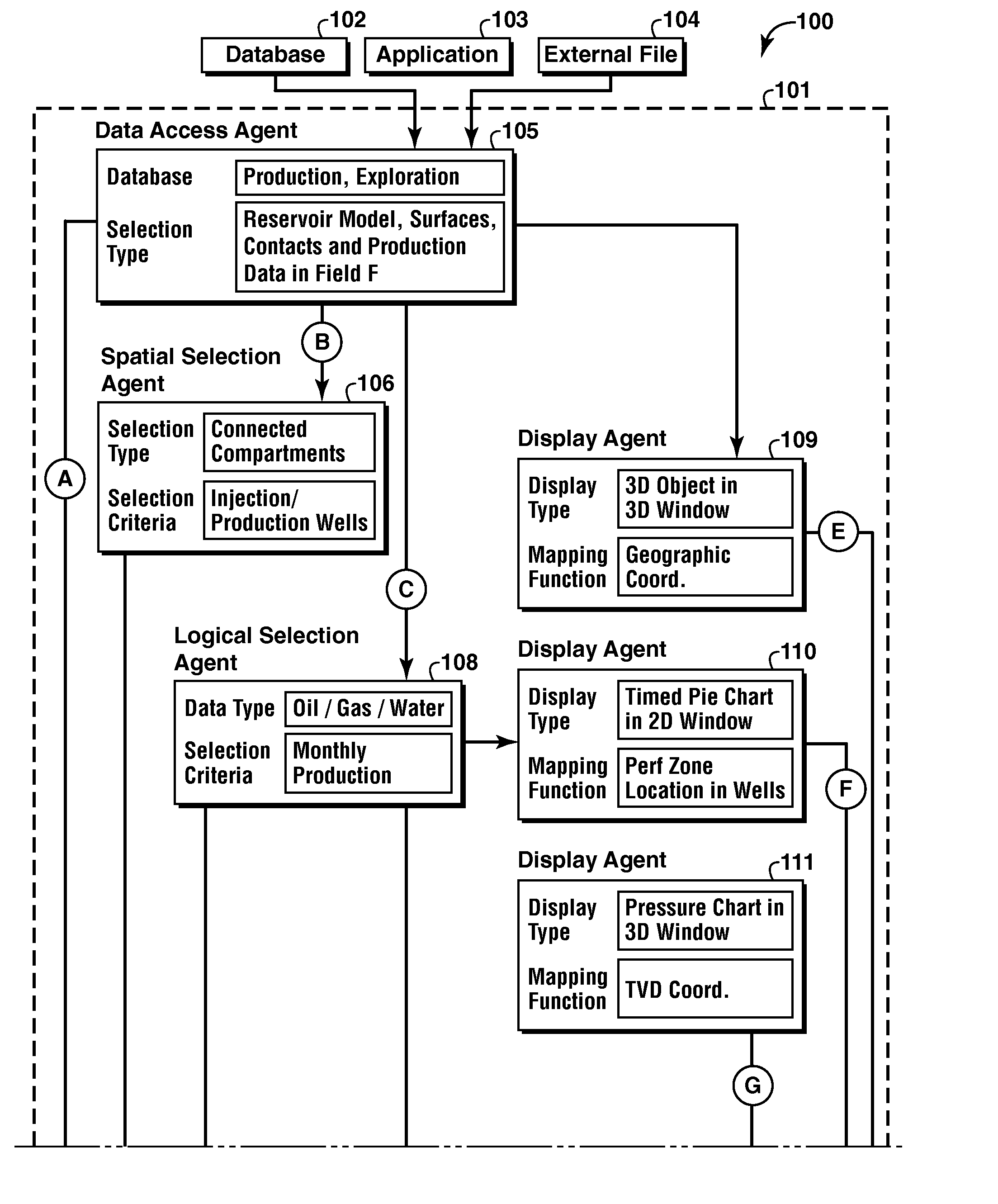

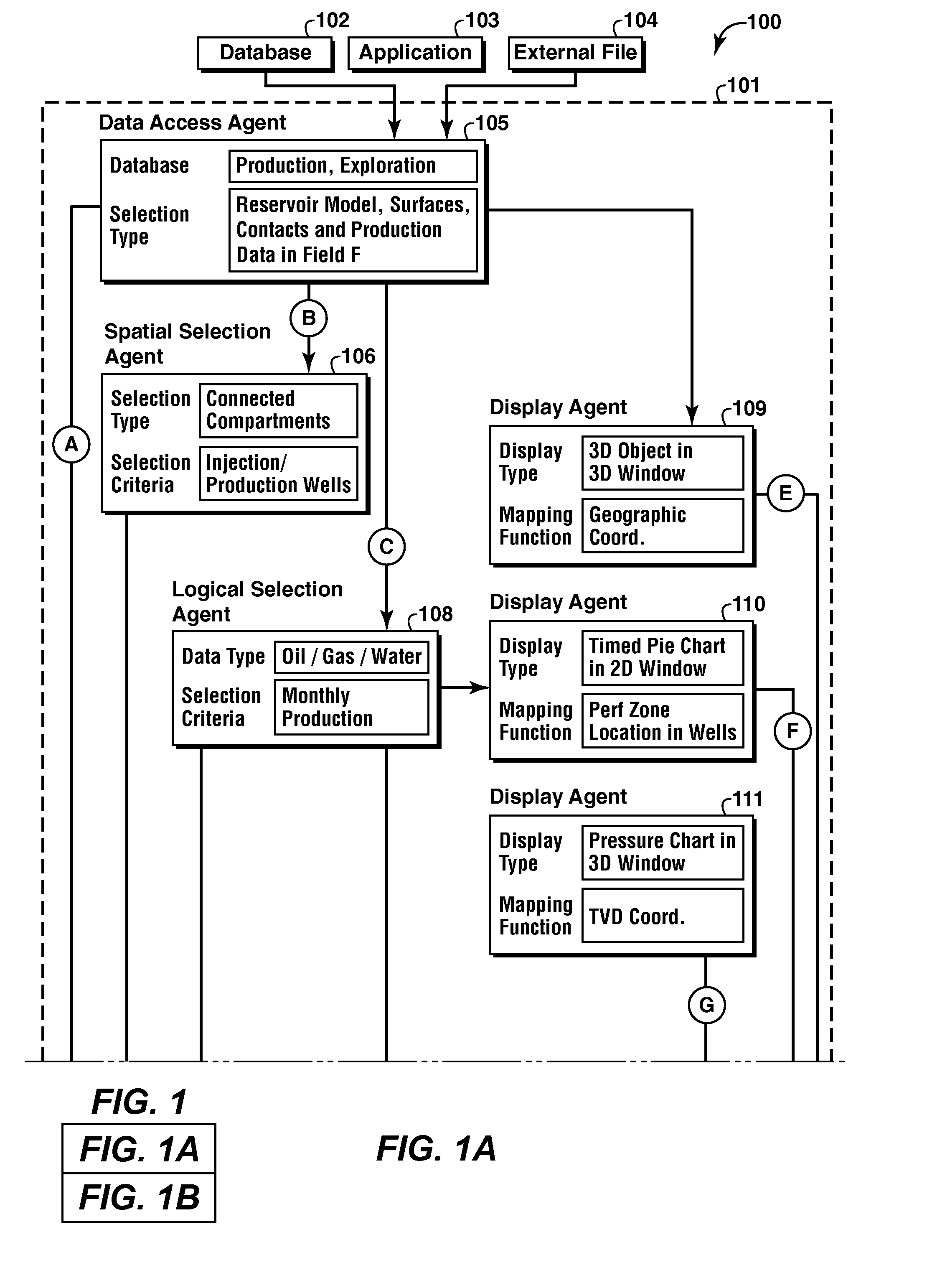

Functional-Based Knowledge Analysis In A 2D and 3D Visual Environment

Active · US20110044532A1 · Digital data information retrieval · Character and pattern recognition · Data source · Visual perception

A method of creating a visual display based on a plurality of data sources is provided. An exemplary embodiment of the method comprises extracting a set of extracted data from the plurality of data sources and processing at least a portion of the extracted data with a set of knowledge agents according to specific criteria to create at least one data assemblage. The exemplary method also comprises providing an integrated two-dimensional/three-dimensional (2D/3D) visual display in which at least one 2D element of the at least one data assemblage is integrated into a 3D visual representation using a mapping identifier and a criteria identifier.

Owner:EXXONMOBIL UPSTREAM RES CO
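
The abstract describes a data flow: extract data from several sources, let knowledge agents select data by criteria into an assemblage, then place 2D elements in a 3D scene using a mapping identifier and a criteria identifier. A minimal sketch of that flow follows, assuming agents are simple predicates and the mapping identifier is a key into a table of 3D anchor points. The record fields, the well/porosity example data and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass
class Record:
    source: str      # which data source the record was extracted from
    mapping_id: str  # hypothetical key linking the record to a location in the 3D model
    attribute: str
    value: float

Agent = Callable[[Record], bool]  # a "knowledge agent" modeled as a criteria predicate

def extract(sources: Dict[str, List[Tuple[str, str, float]]]) -> List[Record]:
    """Flatten every data source into one common list of records."""
    return [Record(name, mid, attr, val)
            for name, rows in sources.items() for mid, attr, val in rows]

def build_assemblage(records: List[Record], agents: Dict[str, Agent]) -> Dict[str, List[Record]]:
    """Apply each agent's criteria; the dict key serves as the criteria identifier."""
    return {criteria_id: [r for r in records if agent(r)] for criteria_id, agent in agents.items()}

def integrate(assemblage: Dict[str, List[Record]],
              anchors_3d: Dict[str, Tuple[float, float, float]]):
    """Place each selected 2D element at the 3D anchor found through its mapping identifier;
    elements without a known anchor are skipped."""
    return [(criteria_id, r.attribute, r.value, anchors_3d[r.mapping_id])
            for criteria_id, recs in assemblage.items()
            for r in recs if r.mapping_id in anchors_3d]
```

Keeping the criteria identifier alongside each placed element lets a display group or style 2D overlays by the agent that selected them.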

Intelligent sensing grinding robot system with anti-explosion function

Active · CN105215809A · Reduce demand · Process safety · Edge grinding machines · Grinding feed control · Control system · Control engineering

The invention relates to an intelligent sensing grinding robot system with an anti-explosion function. The system comprises industrial grinding robots, a 3D vision system, a hybrid force/position control system, an anti-explosion device, a conveying system, a shock-absorbing tool rack, industrial grinding robot control cabinets, a main electrical control cabinet and dust-proof automatic tool-changing magazines. Electric spindles with automatic tool changing are mounted on the arms of the industrial grinding robots. The conveying system consists of a sliding table, a slider and a cover plate, with the sliding table embedded in a groove in the floor. The conveying system, the robot control cabinets, the tool-changing magazines, the anti-explosion device, the 3D vision system and the hybrid force/position control system are all connected to the main electrical control cabinet, which controls and dispatches all of the devices. Through vision guidance and intelligent force and position sensing, the system gives the industrial grinding robots vision, touch and perception, achieving better machining quality on large, complex surfaces.

Owner:SHENZHEN BOLINTE INTELLIGENT ROBOT CO LTD
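
The abstract names a hybrid force/position control system but not its control law. A common textbook formulation is the selection-matrix hybrid scheme of Raibert and Craig: a diagonal matrix S marks which task axes track a position and which track a contact force. The sketch below computes one step of that law; it is a swapped-in illustration rather than the patent's controller, and the gains, axis assignment and the name hybrid_command are hypothetical.

```python
import numpy as np

def hybrid_command(x_des, x, f_des, f, selection, kp: float, kf: float) -> np.ndarray:
    """One step of a selection-matrix hybrid force/position law in task space.

    selection[i] = 1 -> axis i is position-controlled (e.g. tangential feed along the surface),
    selection[i] = 0 -> axis i is force-controlled (e.g. grinding force normal to the surface).
    kp and kf are hypothetical proportional gains for the position and force errors."""
    S = np.diag(np.asarray(selection, dtype=float))
    I = np.eye(len(selection))
    pos_term = kp * (np.asarray(x_des, dtype=float) - np.asarray(x, dtype=float))
    force_term = kf * (np.asarray(f_des, dtype=float) - np.asarray(f, dtype=float))
    # Position error acts only on selected axes; force error only on the complementary axes.
    return S @ pos_term + (I - S) @ force_term
```

For grinding, a natural choice is to position-control the two axes tangent to the workpiece and force-control the normal axis, so the tool follows the path planned from 3D vision while holding a steady contact force.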

© 2025 PatSnap. All rights reserved.