121 results about "Real time vision" patented technology

DC magnetic-based position and orientation monitoring system for tracking medical instruments

Active | US20070078334A1 | Overcome disposability; Overcome cost issue; Diagnostic recording/measuring; Sensors; 3d sensor; Engineering

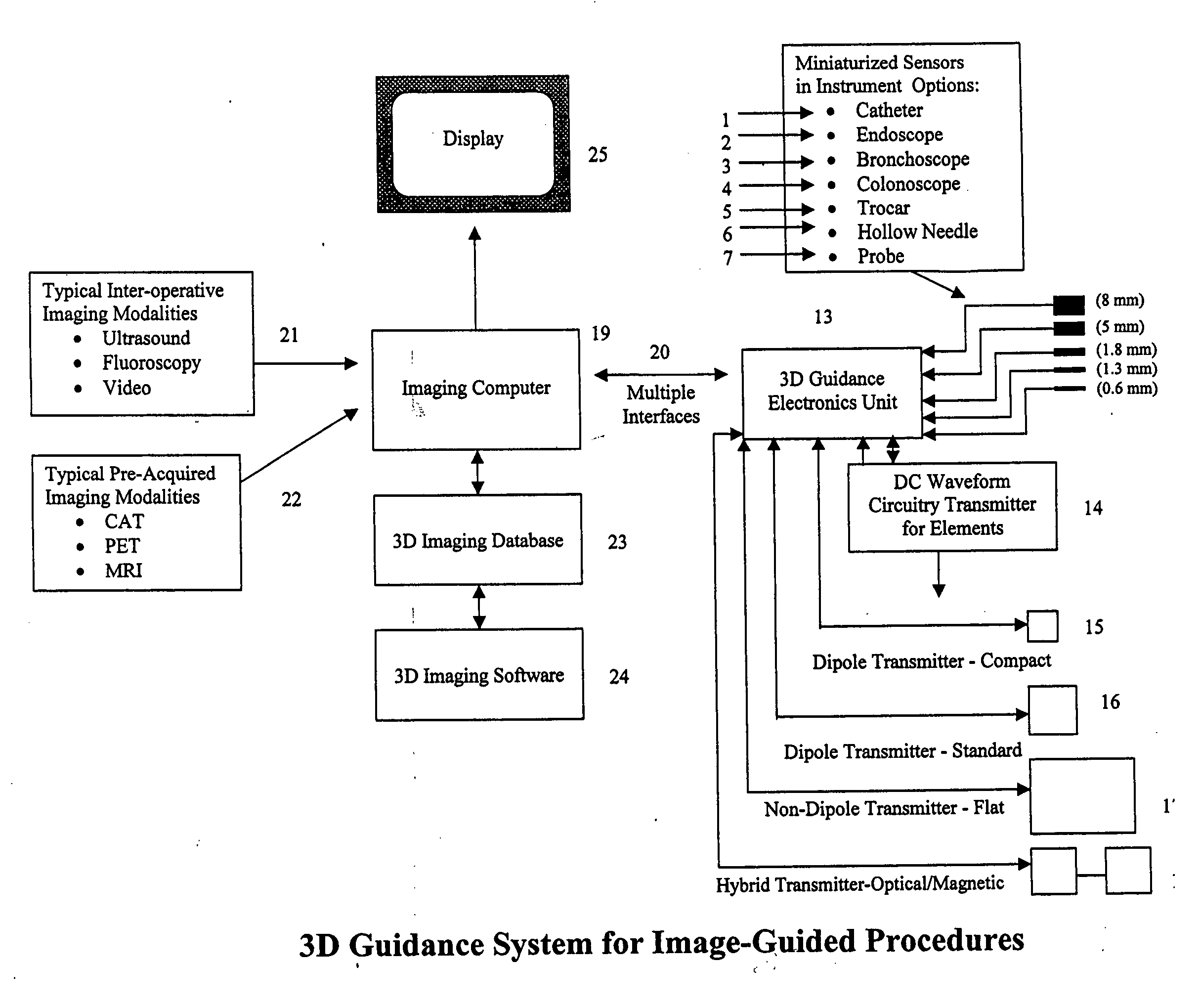

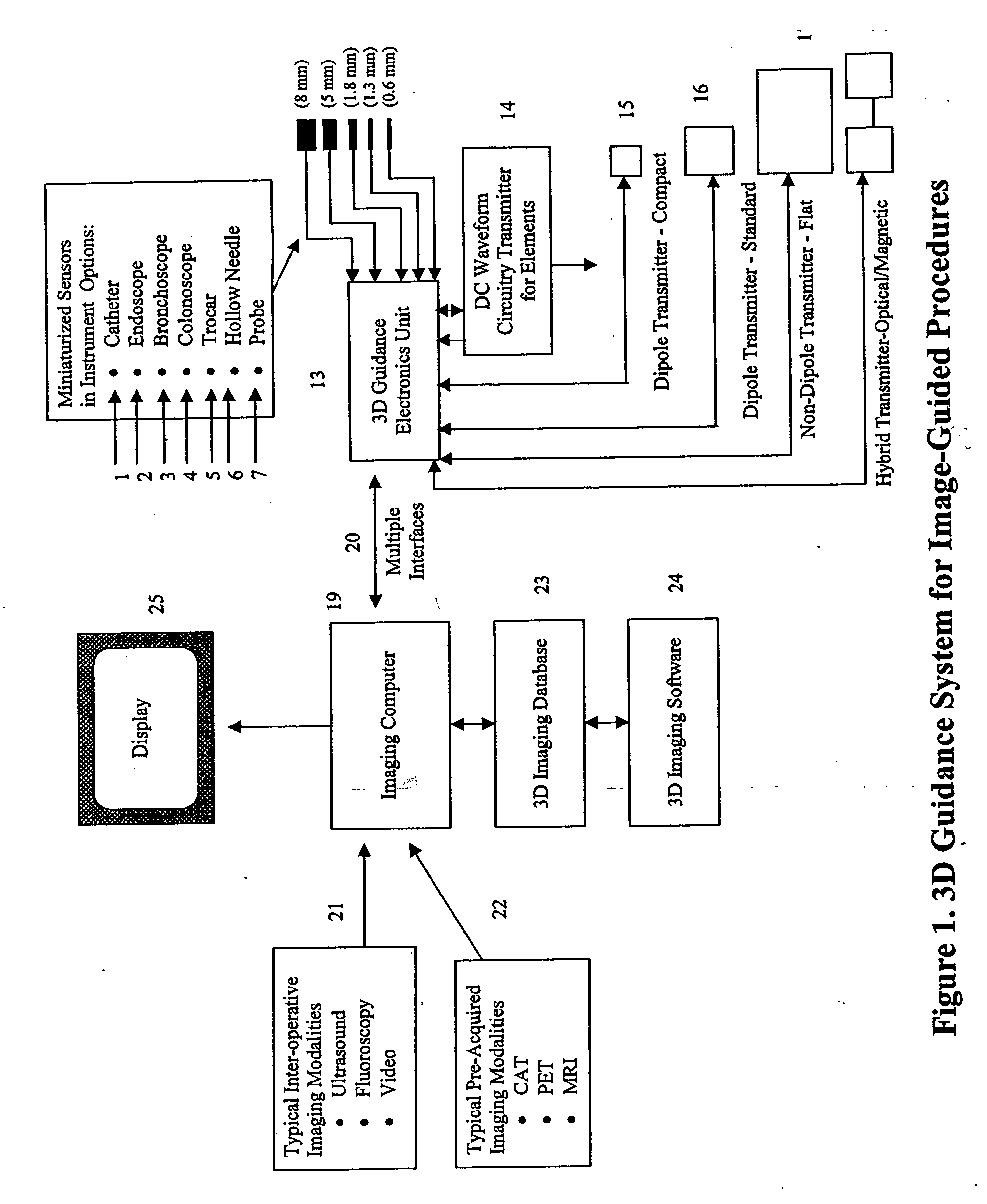

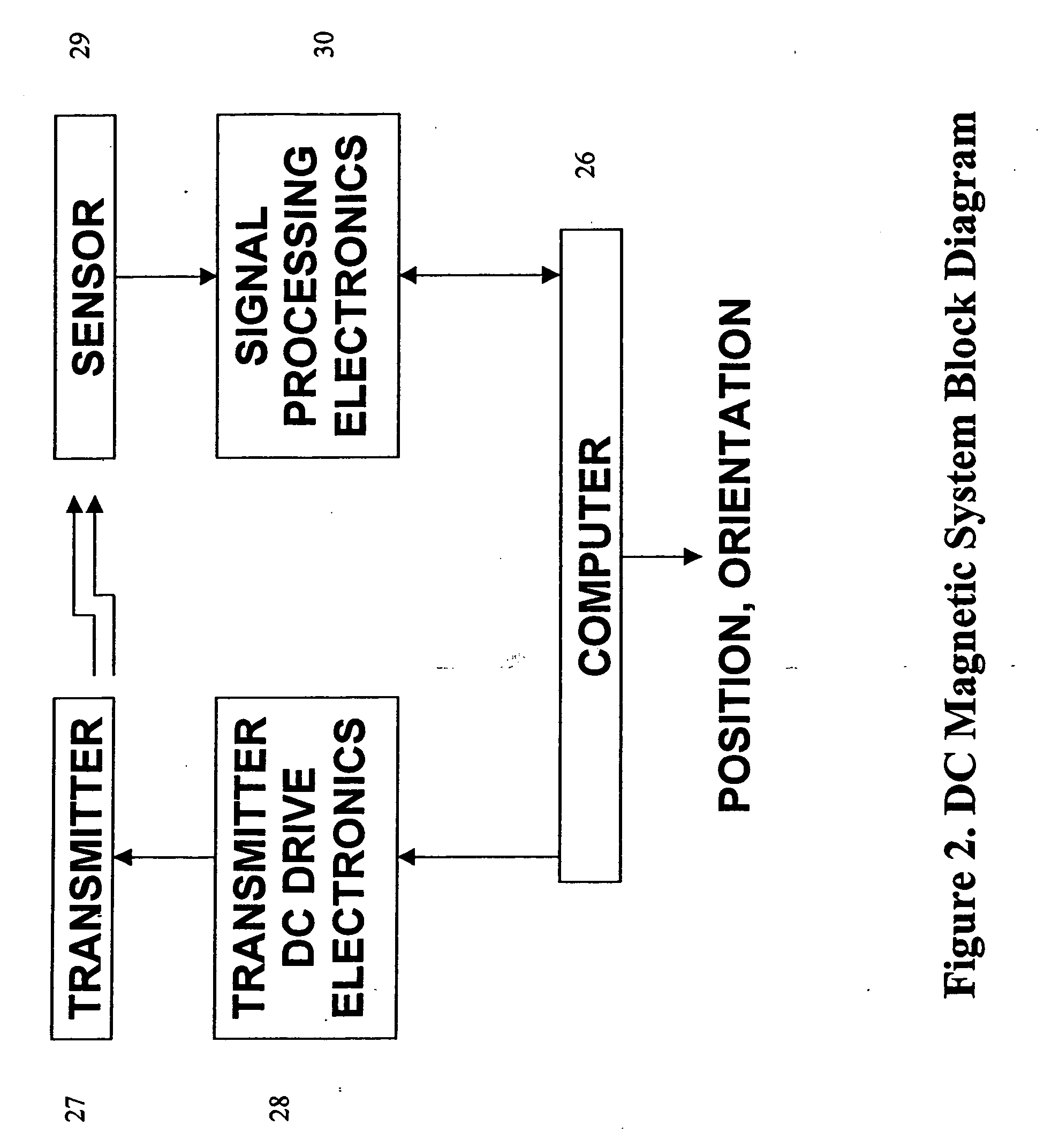

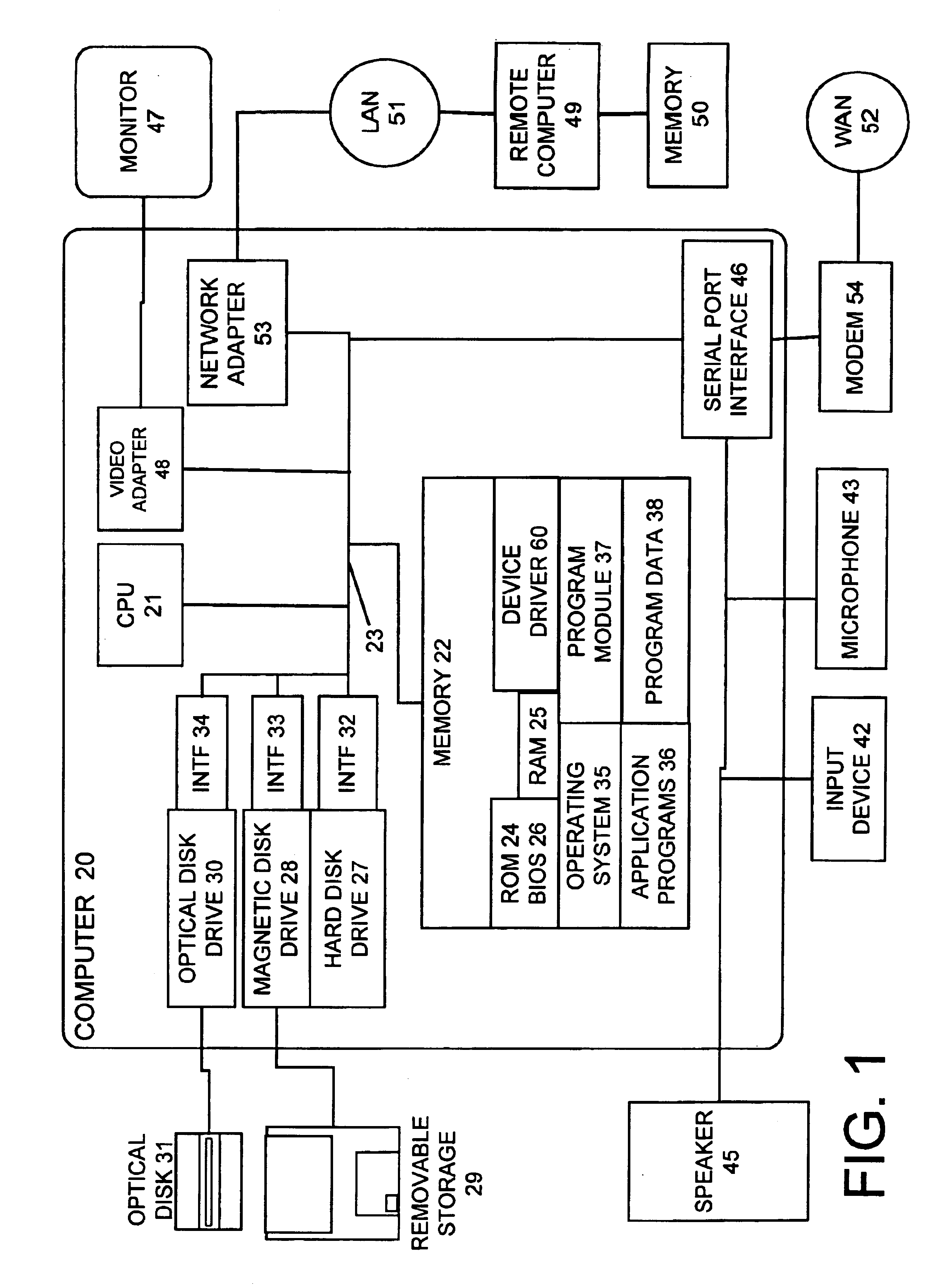

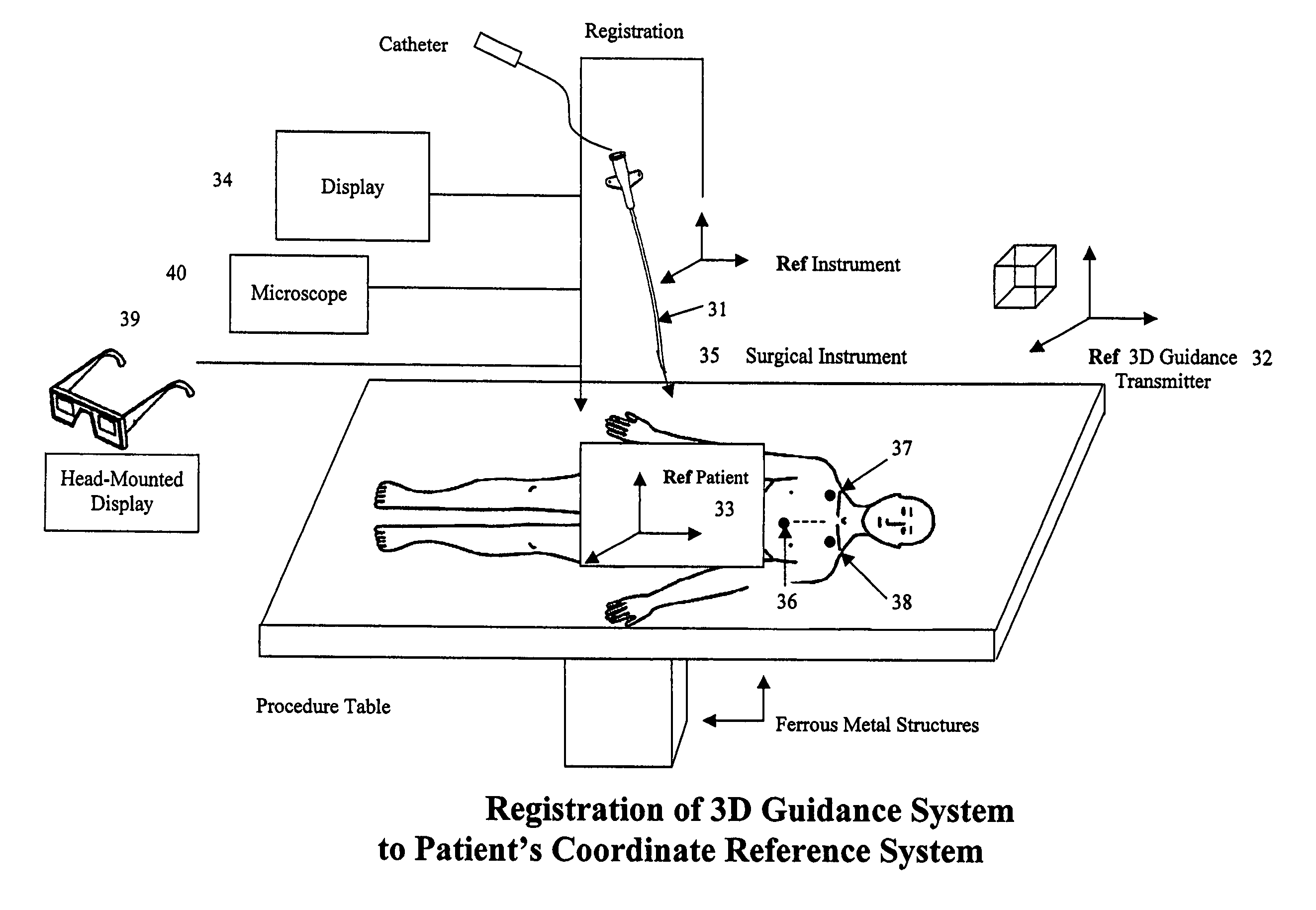

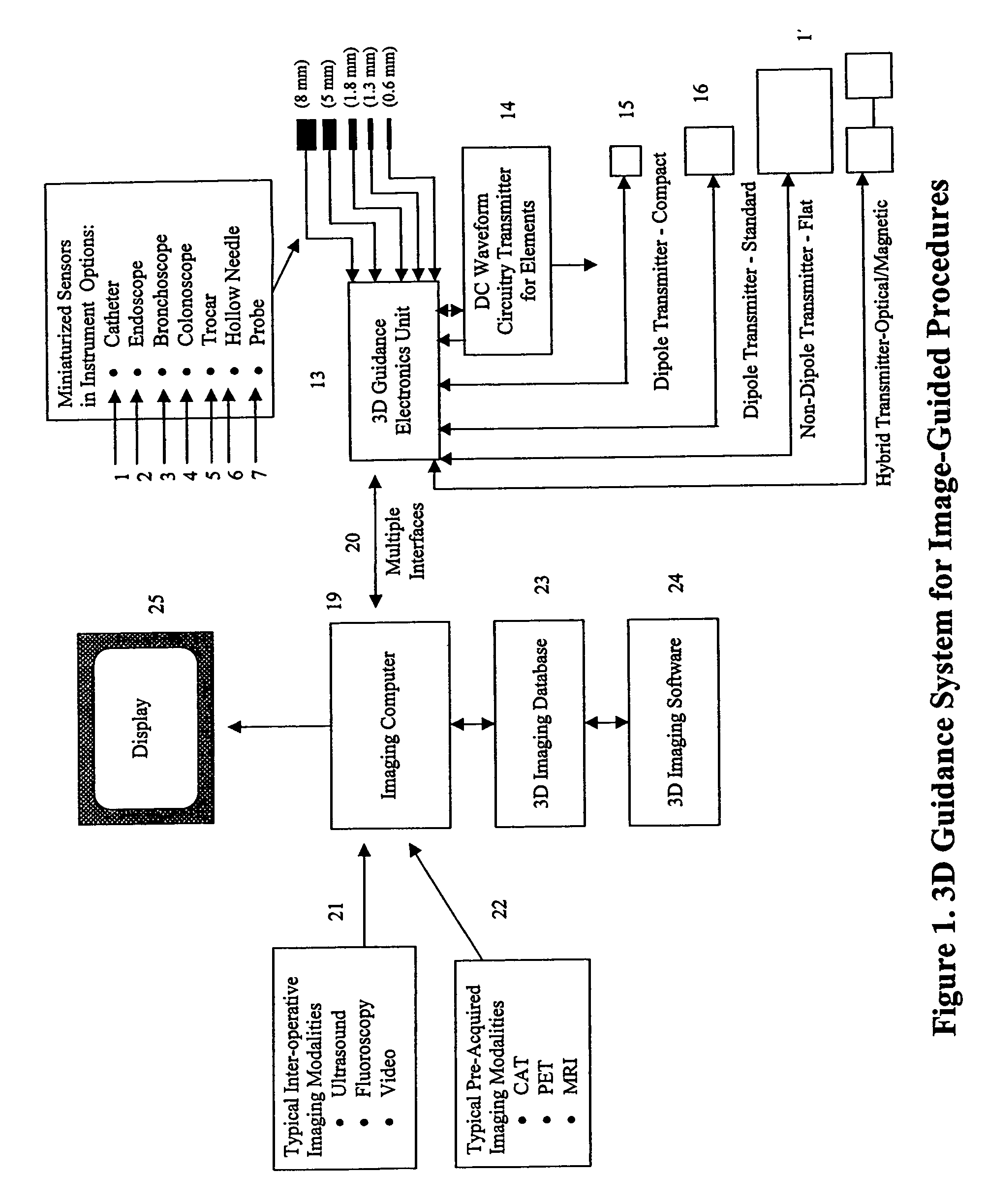

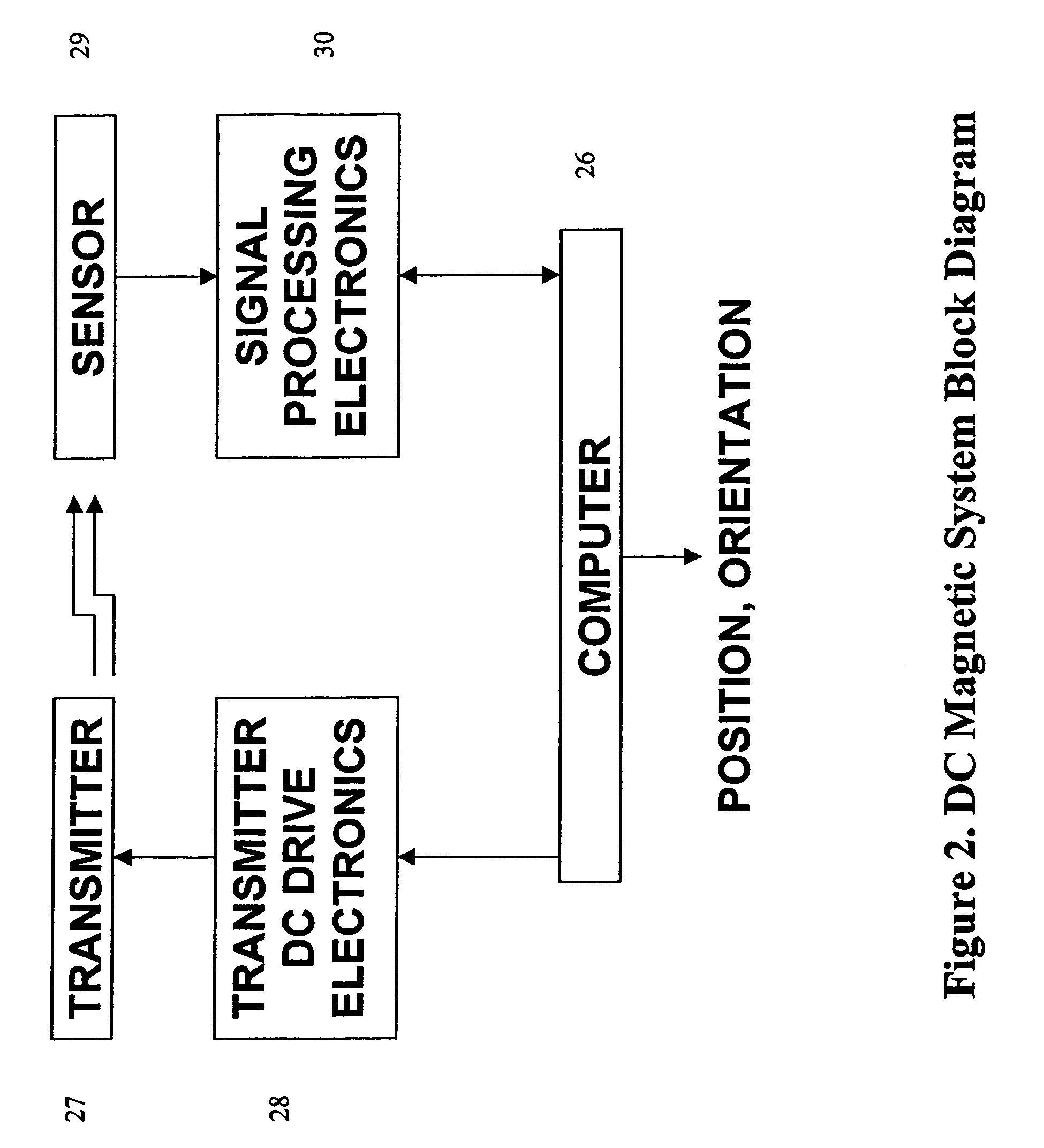

Miniaturized five- and six-degree-of-freedom magnetic sensors, responsive to pulsed DC magnetic field waveforms generated by multiple transmitter options, provide an improved and cost-effective means of guiding medical instruments to targets inside the human body. This is achieved by integrating DC tracking, 3D reconstructions of pre-acquired patient scans, and imaging software into a system that lets a physician guide an instrument internally with real-time 3D vision for diagnostic and interventional purposes. The integration allows physicians to navigate within the body by following 3D sensor-tip locations superimposed on anatomical images reconstructed into 3D volumetric computer models. Sensor data can also be integrated with real-time imaging modalities, such as endoscopes, for intrabody navigation of instruments with instantaneous feedback through critical anatomy to locate and remove tissue. To meet stringent medical requirements, the system generates and senses pulsed DC magnetic fields using an assemblage of miniaturized, disposable and reposable sensors that work with both dipole and co-planar transmitters.

Owner:NORTHERN DIGITAL

Method and apparatus for computer input using six degrees of freedom

Inactive | US6844871B1 | Keep the environment; Improve user interaction; Input/output for user-computer interaction; Cathode-ray tube indicators; Graphics; Graphical user interface

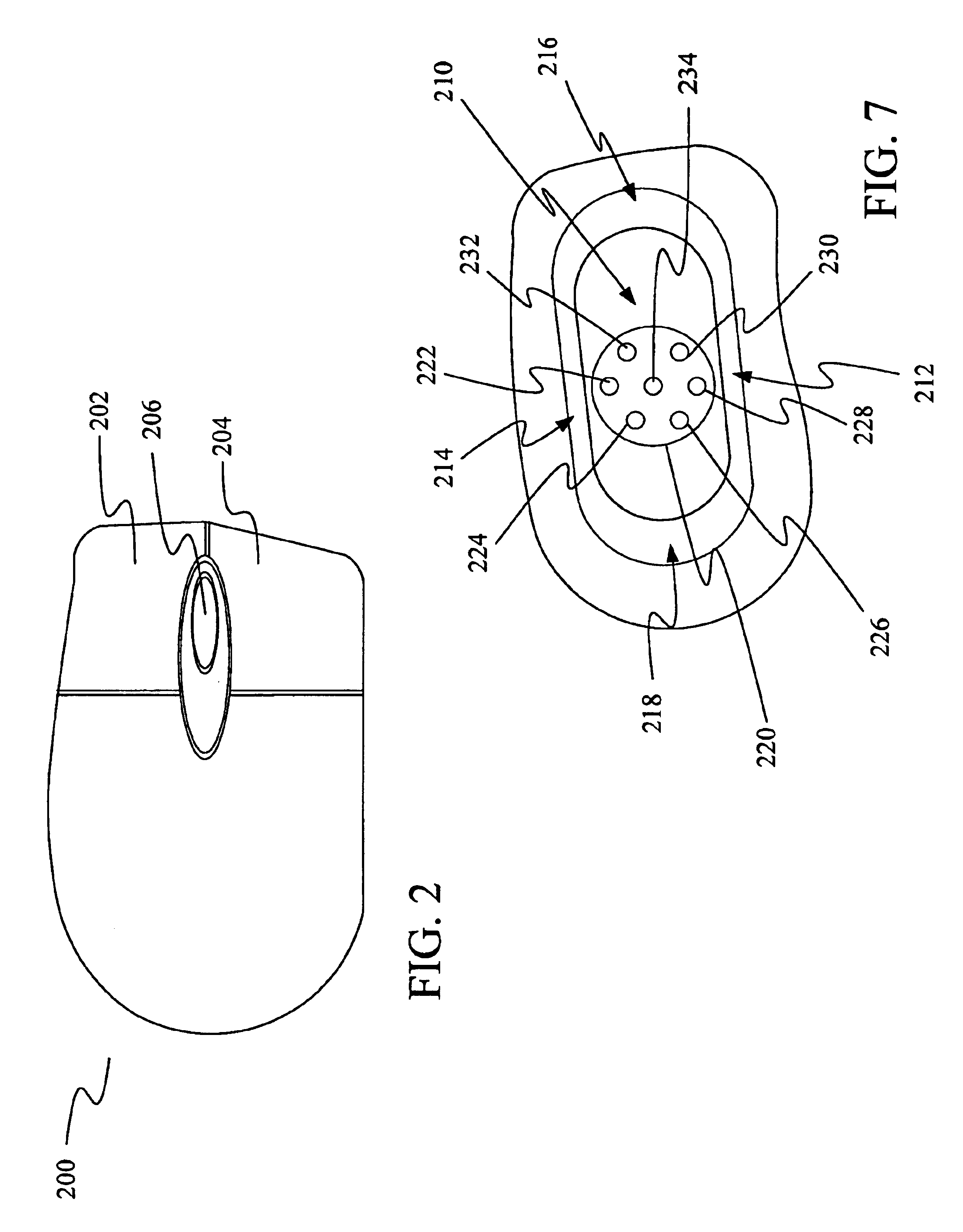

A mouse is provided that uses a camera as its input sensor. A real-time vision algorithm determines the six degree-of-freedom mouse posture, consisting of 2D motion, tilt in the forward / back and left / right axes, rotation of the mouse about its vertical axis, and some limited height sensing. Thus, a familiar 2D device can be extended for three-dimensional manipulation, while remaining suitable for standard 2D Graphical User Interface tasks. The invention includes techniques for mouse functionality, 3D manipulation, navigating large 2D spaces, and using the camera for lightweight scanning tasks.

Owner:MICROSOFT TECH LICENSING LLC

DC magnetic-based position and orientation monitoring system for tracking medical instruments

Active | US7835785B2 | Low cost; Easy to integrate; Diagnostic recording/measuring; Sensors; 3d sensor; Diagnostic Radiology Modality

Miniaturized five- and six-degree-of-freedom magnetic sensors, responsive to pulsed DC magnetic field waveforms generated by multiple transmitter options, provide an improved and cost-effective means of guiding medical instruments to targets inside the human body. This is achieved by integrating DC tracking, 3D reconstructions of pre-acquired patient scans, and imaging software into a system that lets a physician guide an instrument internally with real-time 3D vision for diagnostic and interventional purposes. The integration allows physicians to navigate within the body by following 3D sensor-tip locations superimposed on anatomical images reconstructed into 3D volumetric computer models. Sensor data can also be integrated with real-time imaging modalities, such as endoscopes, for intrabody navigation of instruments with instantaneous feedback through critical anatomy to locate and remove tissue. To meet stringent medical requirements, the system generates and senses pulsed DC magnetic fields using an assemblage of miniaturized, disposable and reposable sensors that work with both dipole and co-planar transmitters.

Owner:NORTHERN DIGITAL

Real-time Visual Feedback for User Positioning with Respect to a Camera and a Display

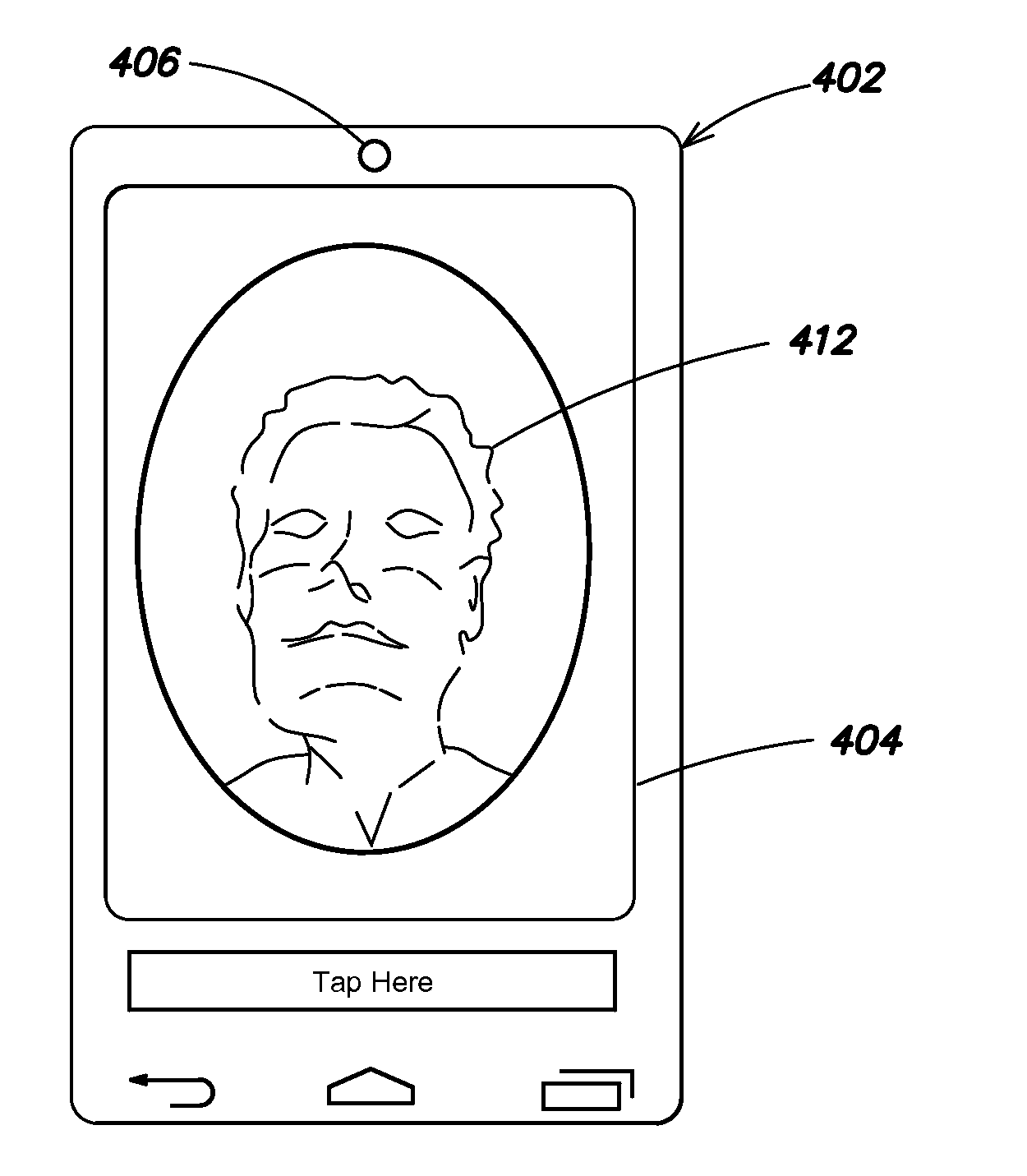

Active | US20160148384A1 | Illuminate user; Increase impression; Image enhancement; Television system details; Computer graphics (images); Display device

Systems, methods, and computer program products provide near real-time feedback to a user of a camera-enabled device, guiding the user to capture self-imagery while in a desired position with respect to the camera and/or the display of the device. The desired position optimizes aspects of the captured self-imagery for applications in which the imagery is not primarily intended for the user's own consumption. One class of such applications relies on illuminating the user's face with light from the device's display screen. The feedback is abstracted to avoid biasing the user with aesthetic considerations; the abstracted imagery may include real-time, cartoon-like line drawings of edges detected in imagery of the user's head or face.

Owner:IPROOV

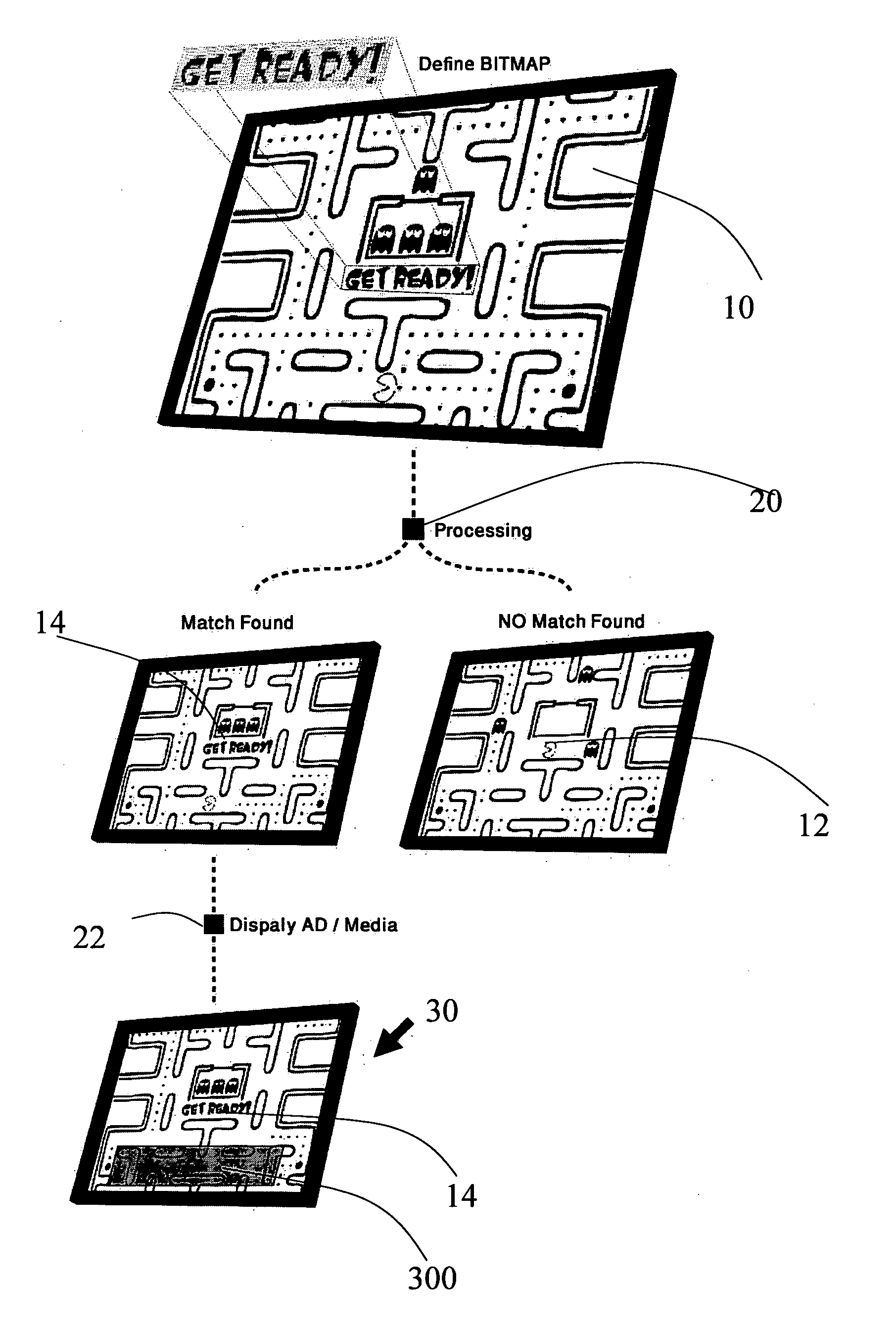

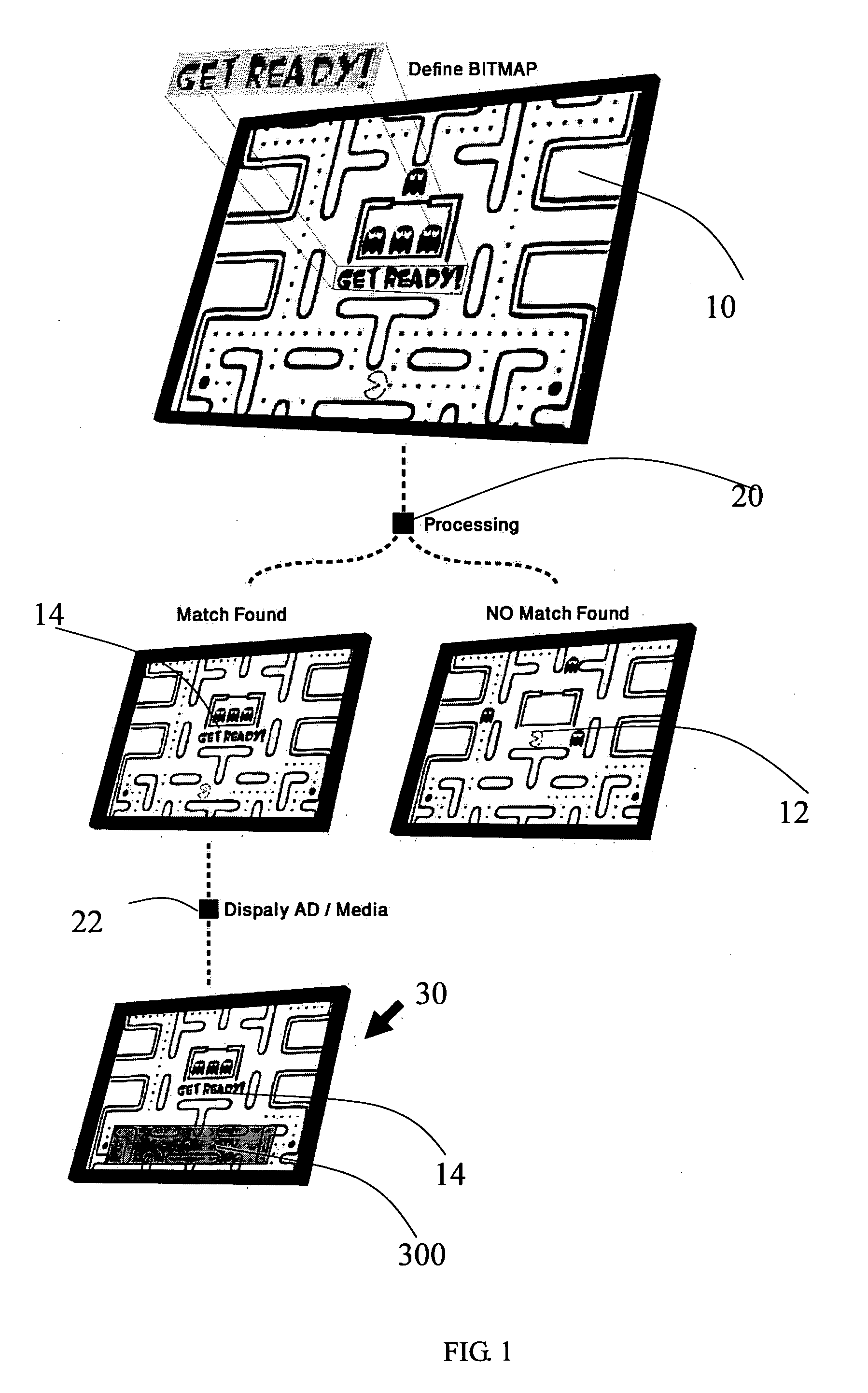

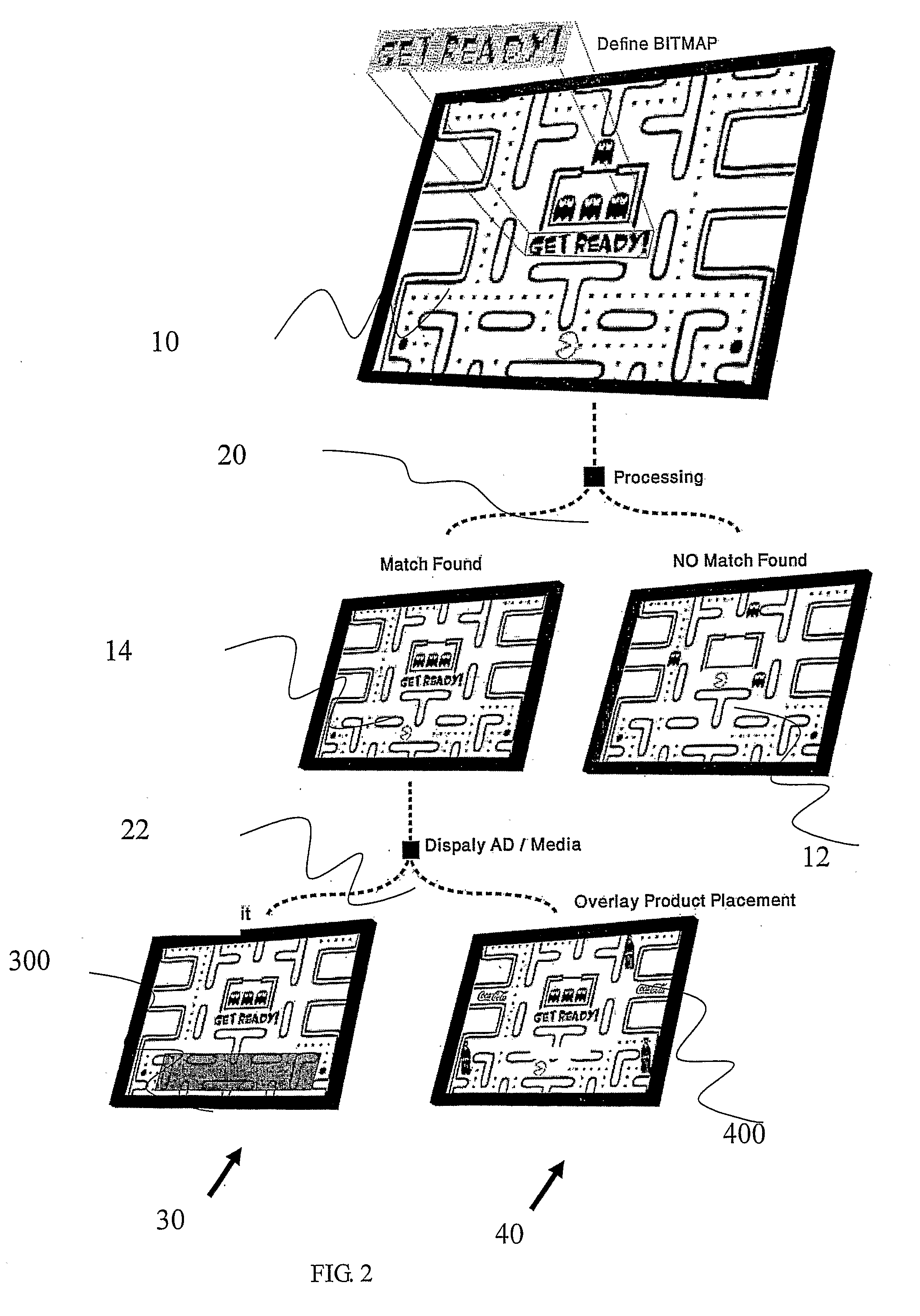

System and method for ad placement in video game content

Inactive | US20120100915A1 | Minimizing time strain is placed upon computer processing power; Video games; Special data processing applications; Visual perception; Game play

The present invention provides an ad placement system for superimposing advertising content onto video games, comprising: a. networked game playing means having access to a video game; b. object identification means having access to visual output of a plurality of game playing iterations of said video game; c. means for obtaining real-time visual captions; d. object tracking means; e. means for superimposing a stream of remotely stored advertising content onto said objects, in networked connection with said game playing means; f. emulation means that superimposes an invisible, click, touch or event enabled emulation layer on top of said visual output from said video game;

Owner:TICTACTI

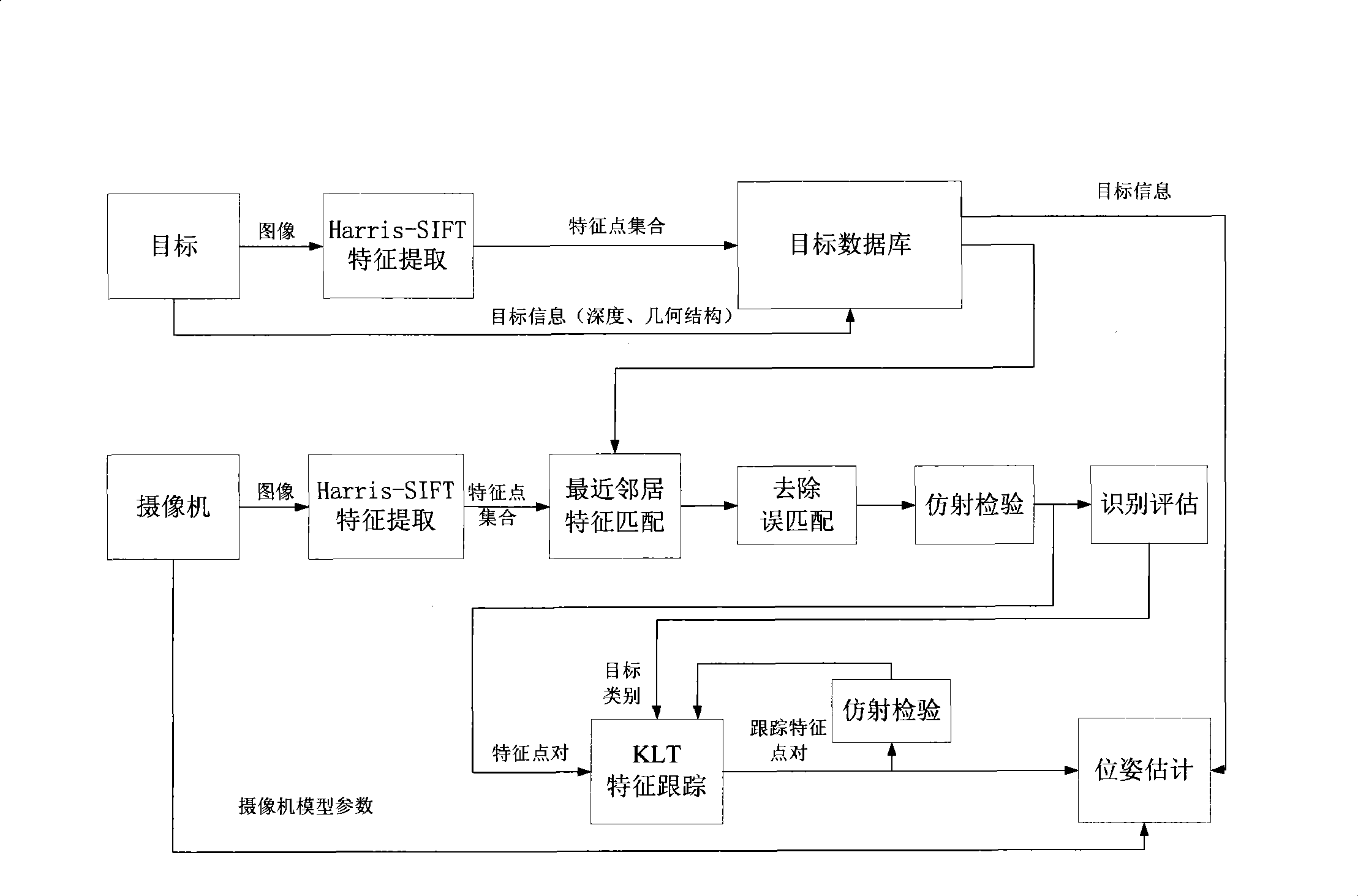

Real time vision positioning method of monocular camera

Inactive | CN101441769A | Reduce complexity; Low cost; Television system details; Image analysis; Model parameters; Visual positioning

The invention relates to a real-time visual positioning method for a monocular camera, in the field of computer vision. The method comprises the following steps: first, acquiring a set of feature points from object images to build an object image database and perform real-time training; second, modeling the camera to obtain its model parameters; and third, extracting a set of feature points from the live camera image, matching them against the feature point sets in the object database, and removing mismatches and performing an affine check to obtain feature point pairs and object type information. The feature point pairs and object type information are used for feature tracking, and the accurately tracked feature points of the object image are combined with the camera model parameters to obtain the three-dimensional pose of the camera. The method achieves self-positioning and navigation with a single camera, which reduces system complexity and cost.

Owner:SHANGHAI JIAO TONG UNIV
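
The final step above combines tracked feature points with the camera's model parameters to recover its 3D pose. Pose solvers of this kind typically minimize reprojection error under a pinhole camera model. The following is a minimal sketch of that model, assuming known intrinsics (fx, fy, cx, cy) and a pose given as a rotation matrix R and translation t; the function names are hypothetical and this is not the patented procedure.

```python
import math

def project(point, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates with a pinhole camera."""
    # Transform into the camera frame: Xc = R * Xw + t
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division, then scale by focal length and shift by principal point
    u = fx * xc[0] / xc[2] + cx
    v = fy * xc[1] / xc[2] + cy
    return u, v

def reprojection_rmse(points3d, pixels, R, t, fx, fy, cx, cy):
    """Root-mean-square pixel error between projected points and observed features."""
    sq = 0.0
    for p, (u_obs, v_obs) in zip(points3d, pixels):
        u, v = project(p, R, t, fx, fy, cx, cy)
        sq += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(sq / len(points3d))
```

A pose estimator would search over R and t to drive `reprojection_rmse` toward zero for the matched feature pairs.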

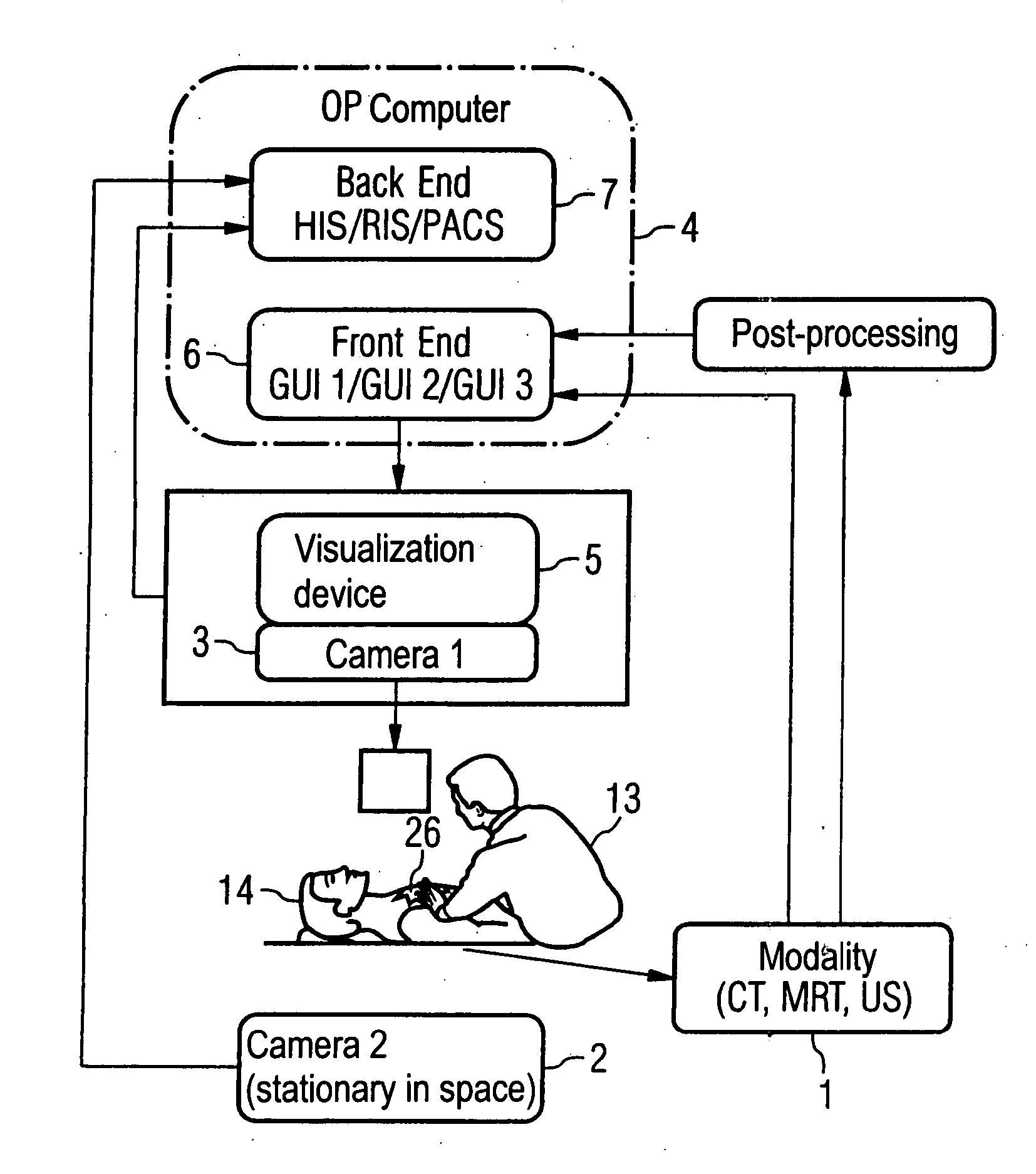

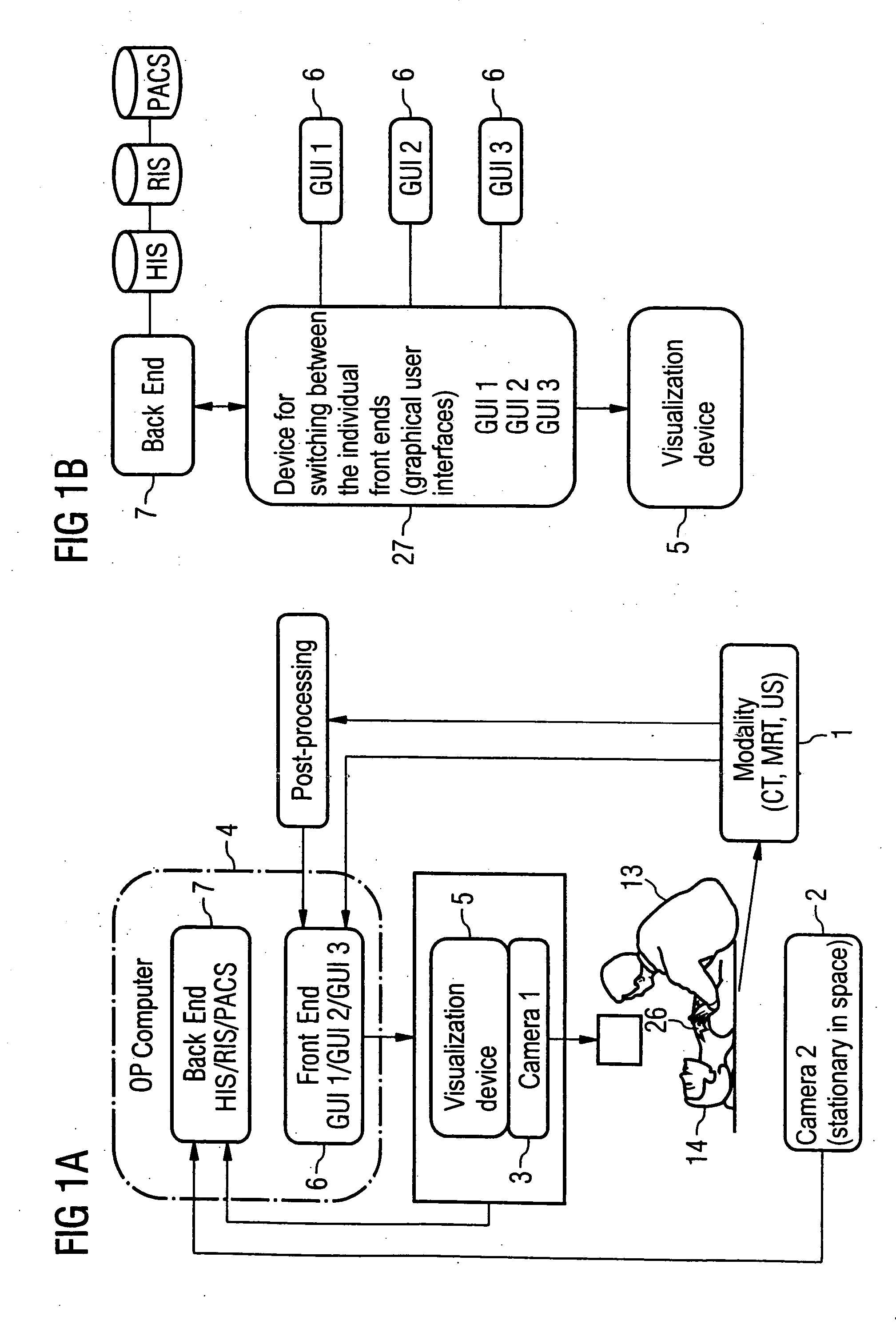

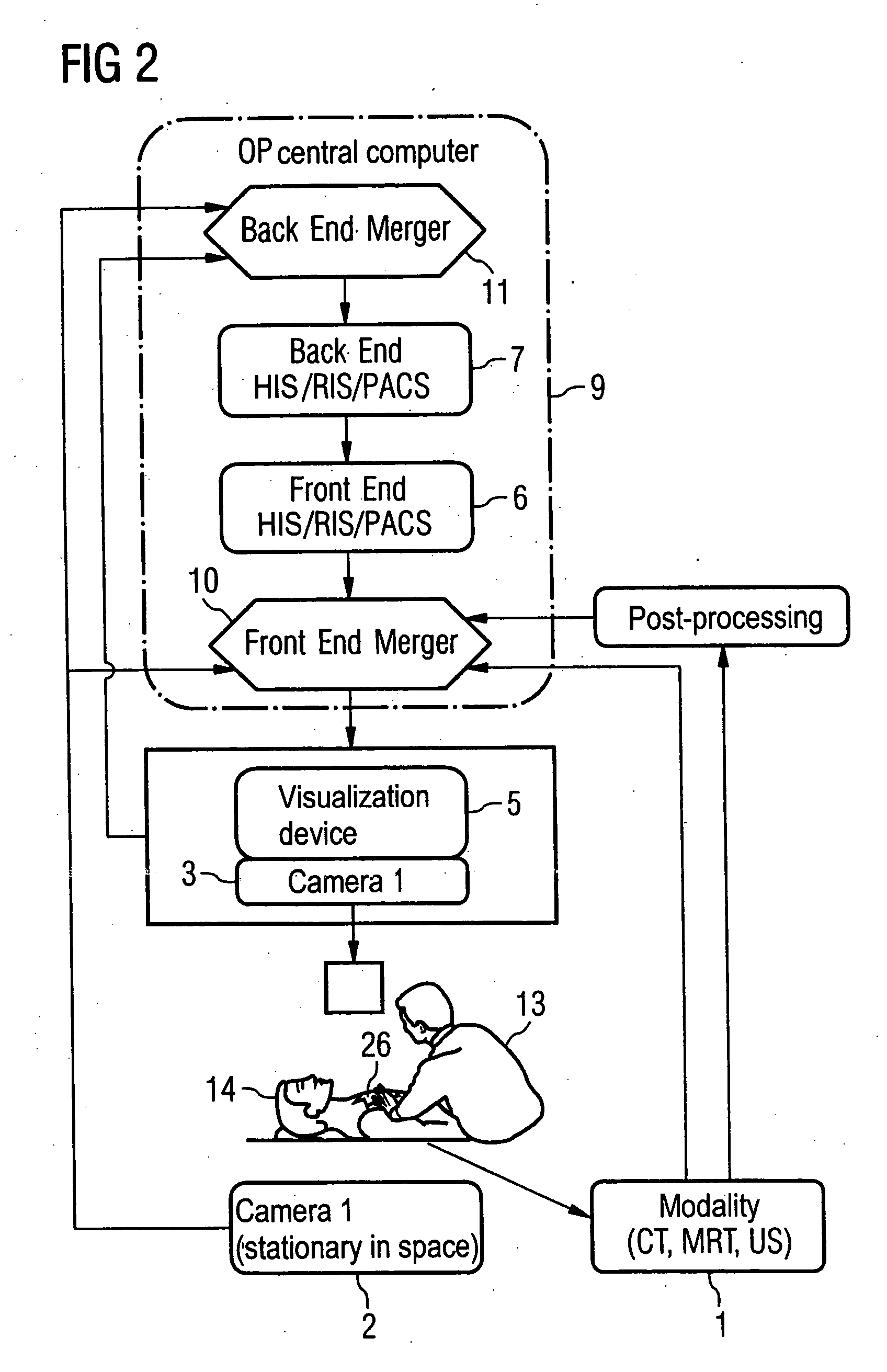

System for providing situation-dependent, real-time visual support to a surgeon, with associated documentation and archiving of visual representations

Inactive | US20060079752A1 | Avoid problems; Mechanical/radiation/invasive therapies; Local control/monitoring; Surgical operation; Visual presentation

A system for providing situation-dependent, real-time visual support to a surgeon during a surgical operation, and for real-time documentation and archiving of the visual impressions generated by the support system and perceived by the surgeon during the operation, has: a visualization device for outputting data to the surgeon during the operation; a first video camera fixed to the visualization device; at least one second video camera focused on the operating area from a viewing angle different from that of the first camera; and at least one central computing unit connected, via a medical information system, to the visualization device, the video cameras, operation-monitoring components, and computing devices. The central computing unit has a front-end merger that controls the visual presentation of data on the visualization device.

Owner:SIEMENS AG

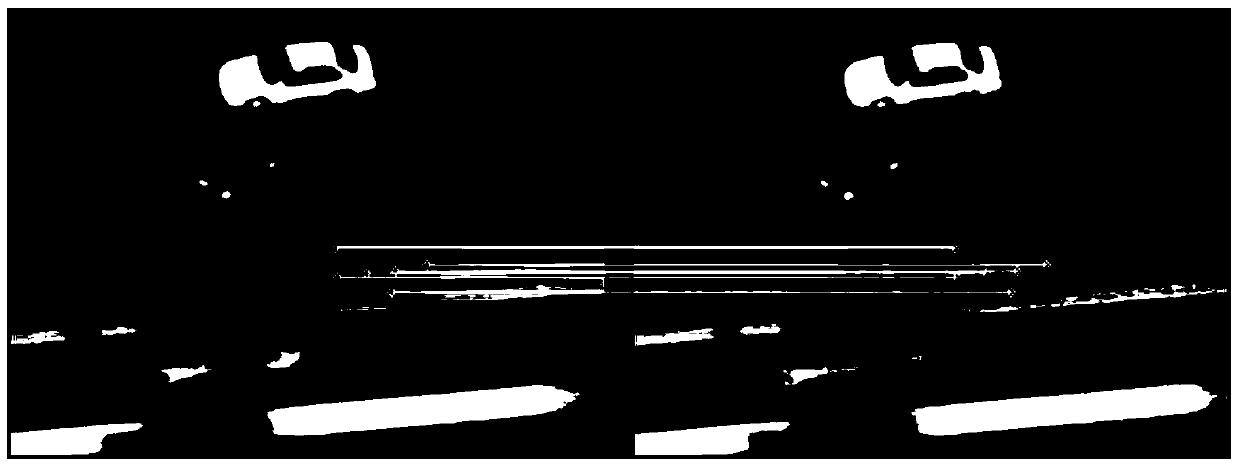

Lightweight peccancy parking detection device based on full view vision

Active | CN103824452A | With automatic detection; Implement automatic detection; Detection of traffic movement; Character and pattern recognition; Video image; Vision sensor

The invention discloses a lightweight illegal-parking detection device based on full-view vision. The device comprises a full-view vision sensor that acquires video of a wide monitoring zone and a microprocessor that analyzes the video and performs illegal-parking detection. A high-definition camera, connected to the microprocessor through a video interface, performs real-time visual detection of vehicles on the road. When illegal parking is detected within the detection range, a voice playing unit warns the driver not to park there. If the parking time exceeds a specified threshold, the system takes a snapshot of the offending vehicle and automatically generates an illegal-parking record. The invention uses a video image detection method that represents regions by points to reduce spatial redundancy, which makes lightweight visual detection of illegal parking possible.

Owner:ENJOYOR COMPANY LIMITED +1
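
The warn-then-record behavior described above amounts to a per-vehicle dwell timer. A minimal sketch, assuming an upstream detector that reports for each frame whether a tracked vehicle is stopped in a no-parking zone; the class, its fields, and the threshold are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class ParkingMonitor:
    """Hypothetical two-stage illegal-parking timer: warn first, record after a threshold."""
    record_after_s: float                            # dwell time before a violation record
    start: dict = field(default_factory=dict)        # vehicle id -> time first seen parked
    warned: set = field(default_factory=set)
    recorded: set = field(default_factory=set)
    records: list = field(default_factory=list)      # (vehicle id, start time, record time)

    def update(self, vehicle_id, parked, now):
        """Process one observation of a vehicle; return 'warn', 'record', or None."""
        if not parked:
            # The vehicle left the no-parking zone: forget its timer and state.
            self.start.pop(vehicle_id, None)
            self.warned.discard(vehicle_id)
            self.recorded.discard(vehicle_id)
            return None
        first_seen = self.start.setdefault(vehicle_id, now)
        if vehicle_id not in self.warned:
            self.warned.add(vehicle_id)
            return "warn"    # e.g. play the voice warning
        if vehicle_id not in self.recorded and now - first_seen >= self.record_after_s:
            self.recorded.add(vehicle_id)
            self.records.append((vehicle_id, first_seen, now))
            return "record"  # e.g. take a snapshot and store the violation
        return None
```

Each vehicle is recorded at most once per stay, and its state resets when it leaves the zone.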

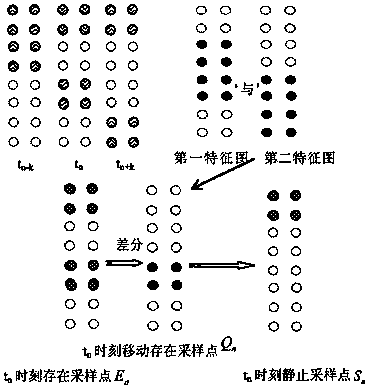

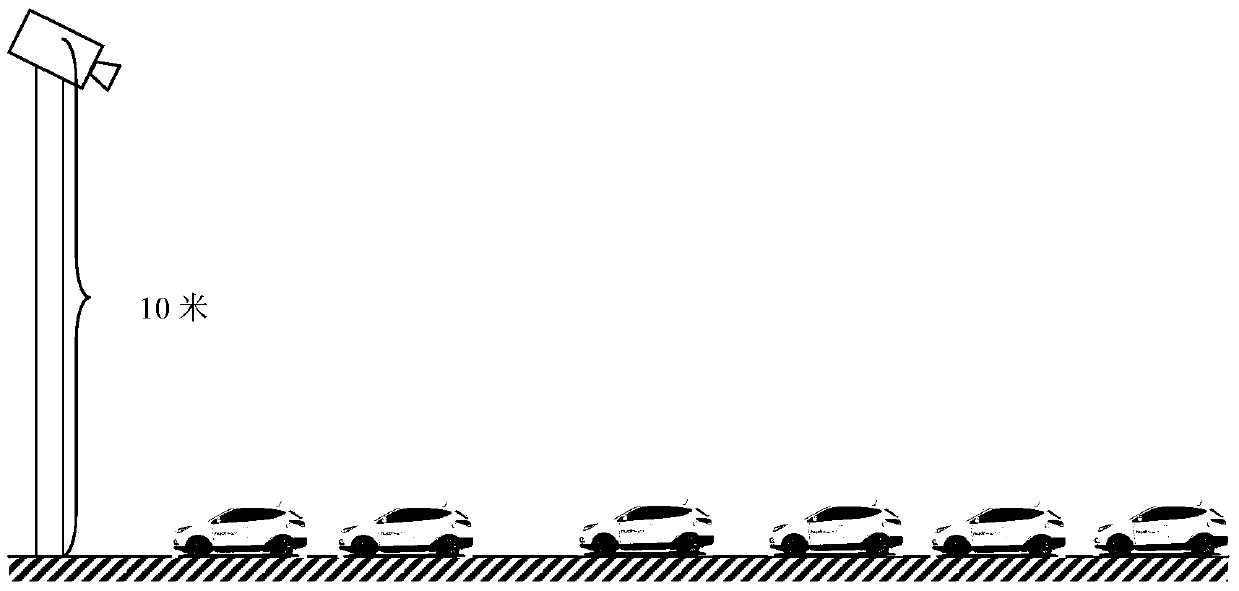

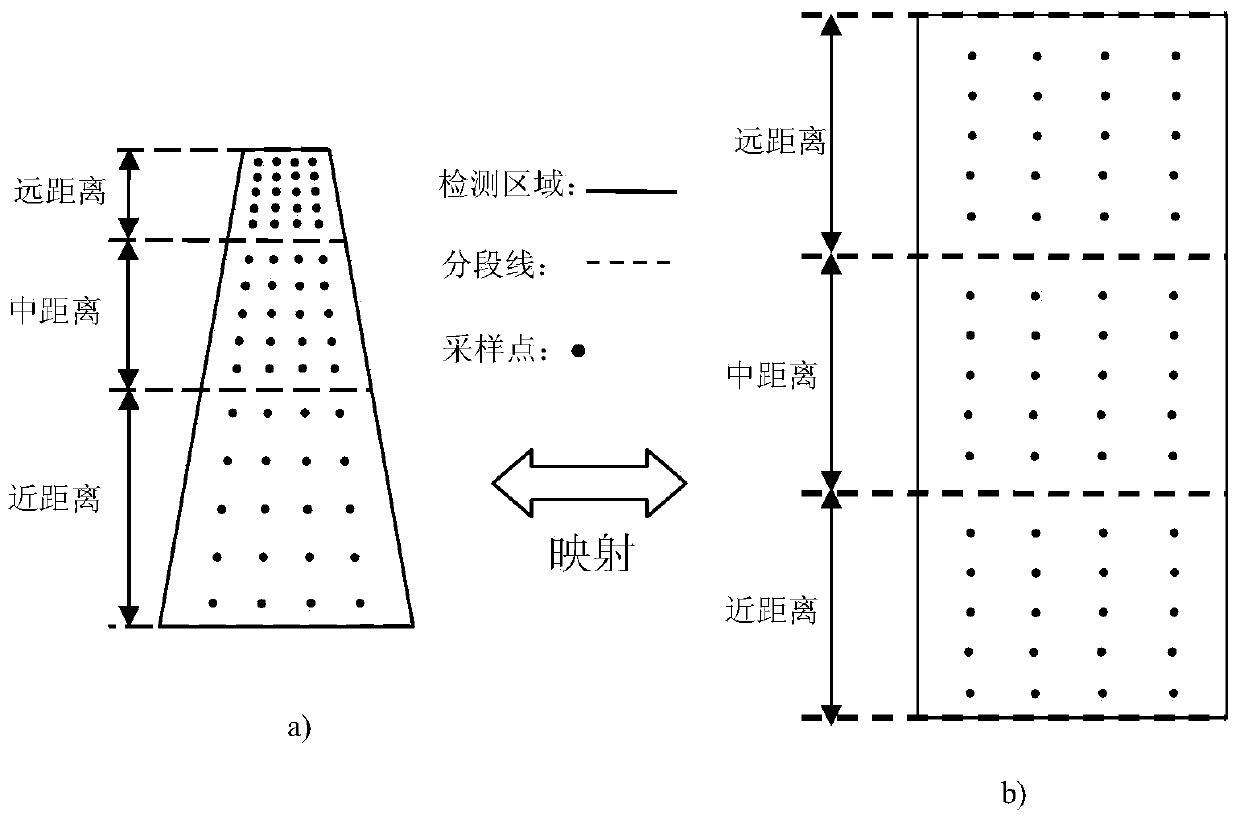

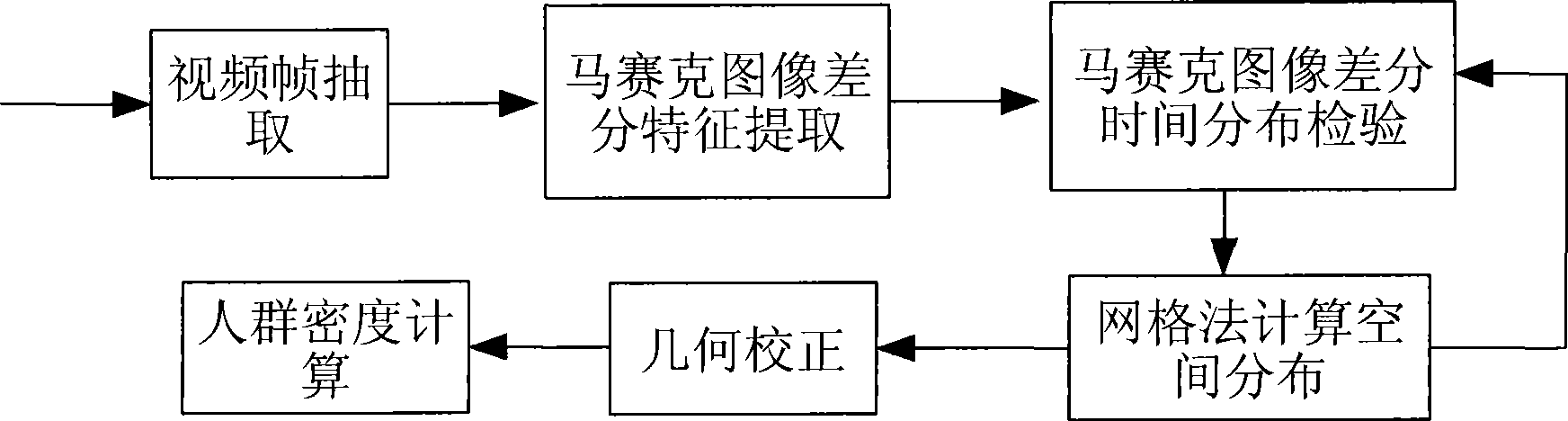

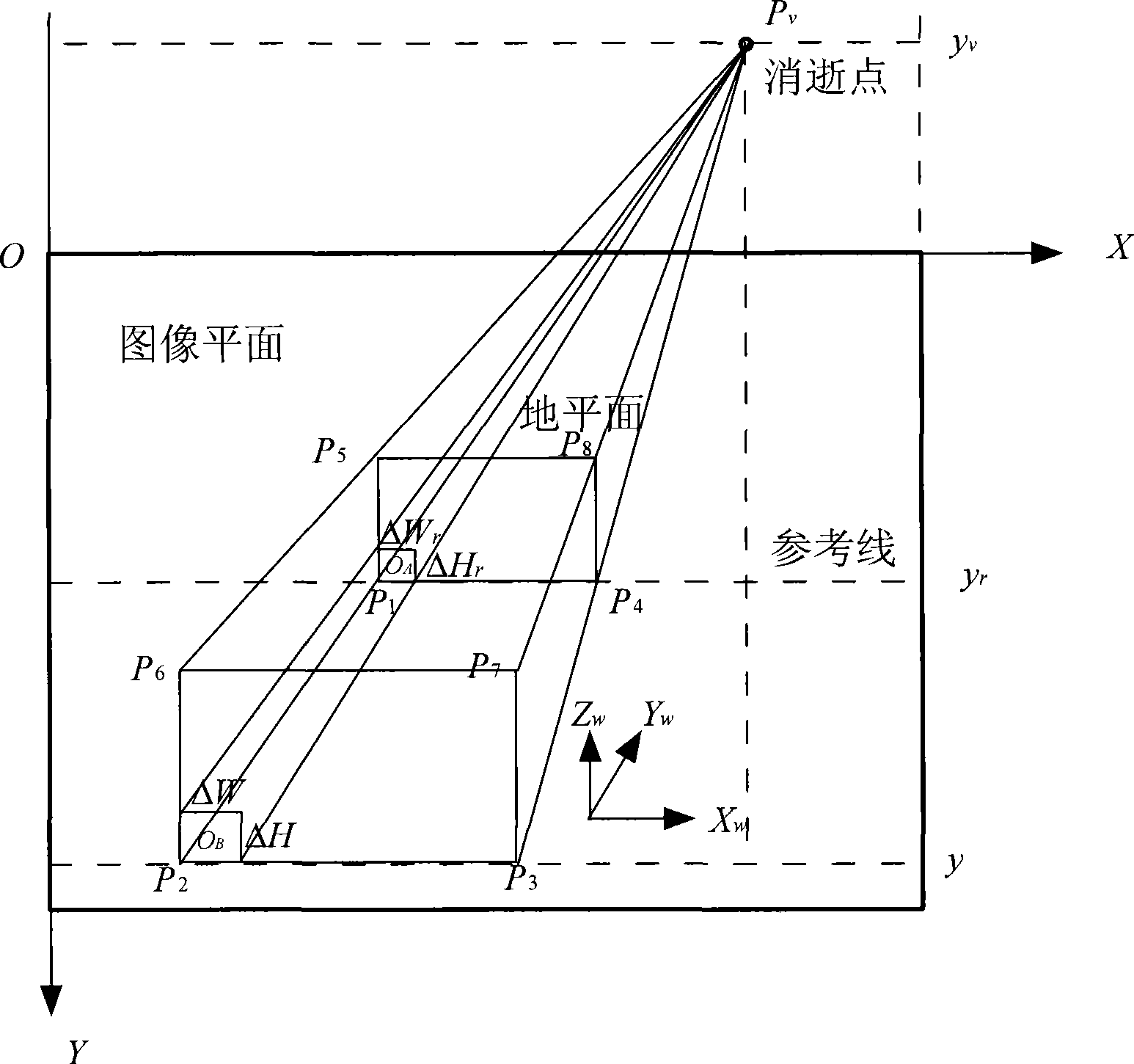

Crowd density analysis method based on statistical characteristics

Inactive | CN101464944A | Mathematical model is simple; Intuitive; Character and pattern recognition; Real time vision; Analysis method

The invention discloses a crowd density analysis method based on statistical characteristics. The method comprises the following steps: video input and frame extraction; extracting mosaic image difference (MID) features from the video frame sequence to detect subtle movements within the crowd; checking the temporal uniformity of the MID feature sequence; applying geometric correction to crowds and scenes with pronounced perspective to obtain, on the image plane, each pixel's contribution factor to crowd density; and weighting the crowd region to obtain the crowd density. Compared with prior methods, it requires neither a reference background nor background modeling, adapts to lighting changes from morning to evening, is robust, and is convenient to apply. Its mathematical model is simple and effective, it accurately locates the spatial distribution and size of the crowd, its results are intuitive, and its computational load is small, making it suitable for real-time visual monitoring. The invention can be widely applied to monitoring and managing public places where crowds gather, such as public transportation, subways, and squares.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
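
The MID feature and the perspective weighting can be illustrated roughly as follows. This sketch assumes that a "mosaic" is the grid of block averages of a grayscale frame, that MID is the absolute difference between the mosaics of consecutive frames, and that the density estimate sums per-block perspective weights over blocks whose MID exceeds a threshold. The block size, threshold, and weights are hypothetical, and the temporal uniformity check is omitted.

```python
def mosaic(frame, block):
    """Average each block x block tile of a grayscale frame (list of rows)."""
    h, w = len(frame), len(frame[0])
    out = []
    # Partial tiles at the right/bottom edges are ignored.
    for by in range(0, h - h % block, block):
        row = []
        for bx in range(0, w - w % block, block):
            total = sum(frame[y][x] for y in range(by, by + block) for x in range(bx, bx + block))
            row.append(total / (block * block))
        out.append(row)
    return out

def mid_feature(prev, curr, block):
    """Mosaic image difference: absolute difference of block means between two frames."""
    a, b = mosaic(prev, block), mosaic(curr, block)
    return [[abs(x - y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def weighted_density(mid, weights, threshold):
    """Sum perspective weights of blocks whose MID exceeds a motion threshold."""
    return sum(w for mrow, wrow in zip(mid, weights)
               for m, w in zip(mrow, wrow) if m > threshold)
```

Larger weights would be assigned to blocks farther from the camera, where each pixel covers more of the scene.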

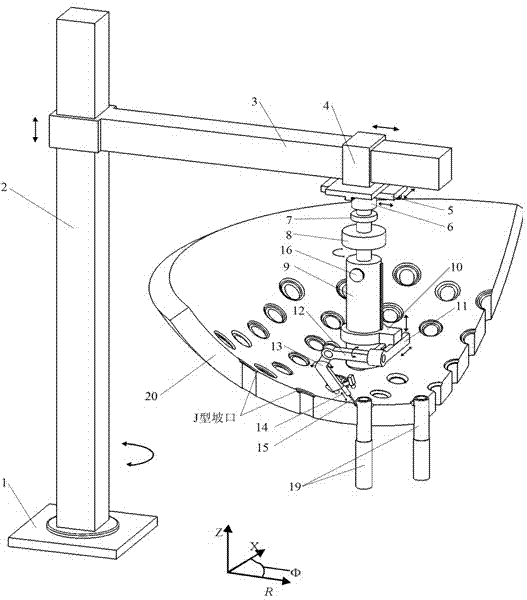

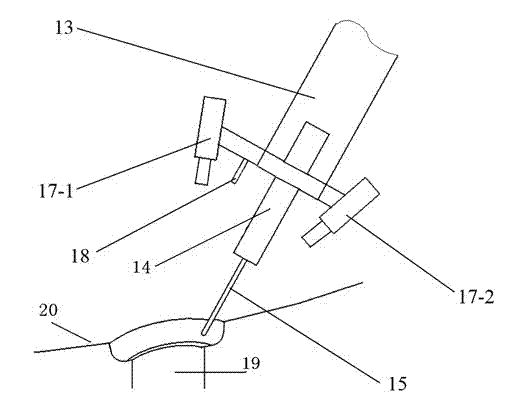

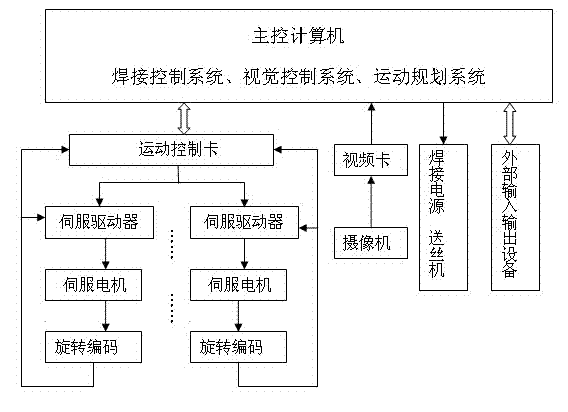

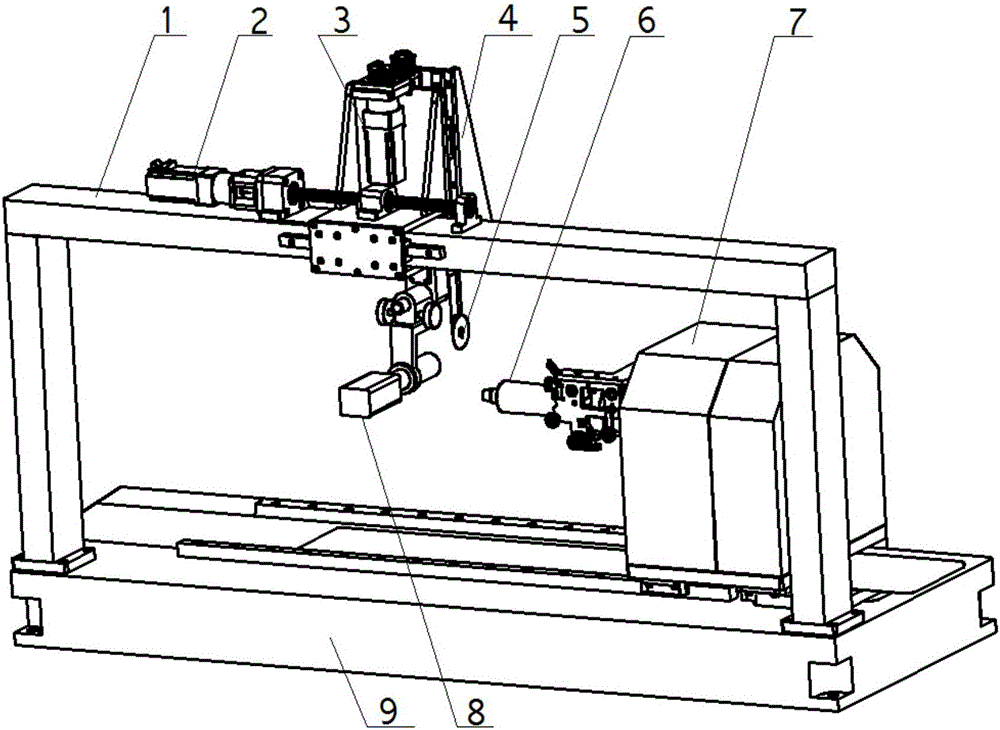

Circular seam welding robot device

Active | CN102049638A | With visual function; Facilitates motion control; Programme-controlled manipulator; Welding/cutting auxiliary devices; Machine; Vision sensor

The invention discloses a robot device for circular seam welding of large spherical-crown workpieces and pipes. The robot main body comprises a machine head and a machine frame. The machine head consists of a cross sliding table mechanism, a six-degree-of-freedom motion system, a slip ring system, and a welding gun; the machine frame consists of a fixed base, upright columns, a cross beam, and a slider. The machine frame performs large-range motion to bring the robot main body to the position to be welded, the cross sliding table mechanism of the machine head compensates for fine-motion accuracy, and six-degree-of-freedom circular seam welding is thereby realized. The welding process is monitored by visual sensing: one video camera, paired with a laser generator, serves as a structured-light optical sensor, and another video camera, mounted with the welding gun, serves as a visual sensor for the weld pool and performs real-time visual inspection of the welding process. The device is particularly suited to the precise positioning motion required for welding large spherical-crown workpieces and pipes, can weld along specific tracks in narrow spaces, and controls thermal welding deformation.

Owner:TIANJIN YANGTIAN TECH CO LTD

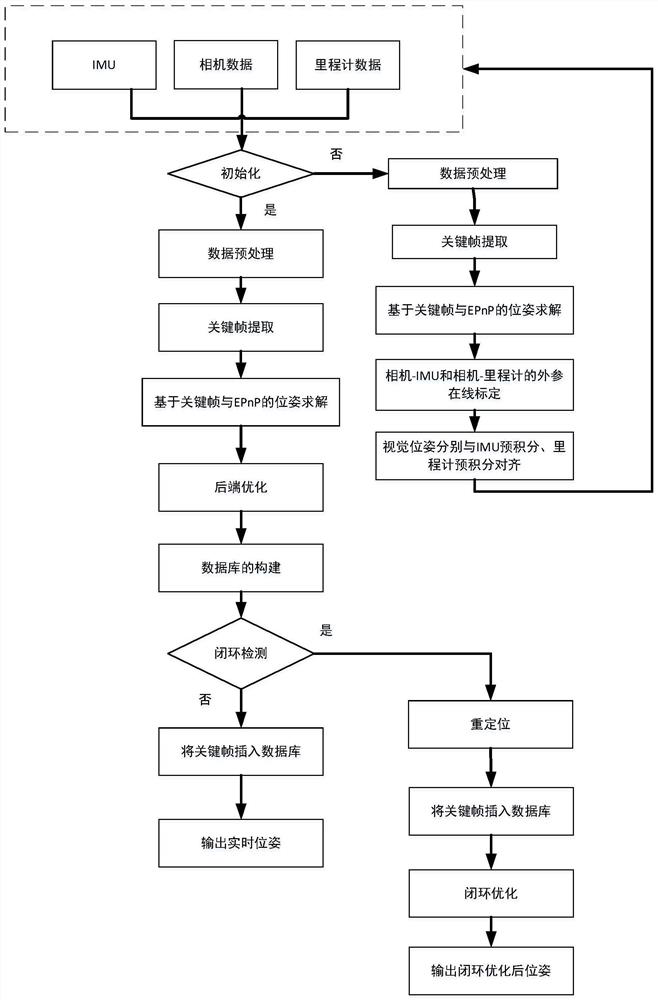

Electric power inspection robot positioning method based on multi-sensor fusion

Active | CN111739063A | A large amount; Evenly distributed; Programme-controlled manipulator; Checking time patrols; Pattern recognition; Gyroscope

The invention provides an electric power inspection robot positioning method based on multi-sensor fusion. First, data collected by a camera, an IMU, and an odometer are preprocessed and the system is calibrated, completing initialization of the robot system. Second, keyframes are extracted, and back-end optimization of the robot's position, velocity, and orientation and of the IMU gyroscope bias is performed using the real-time visual poses of the keyframes to obtain the robot's real-time pose. Then a keyframe database is constructed, and the similarity between the current frame and every keyframe in the database is calculated. Finally, closed-loop optimization is performed on the keyframes in the database that form a loop, and the loop-optimized pose is output to complete robot positioning. The back-end optimization improves positioning precision, and adding closed-loop optimization to the visual positioning process effectively eliminates accumulated error, maintaining accuracy over long periods of operation.

Owner:ZHENGZHOU UNIV
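
The keyframe-similarity step can be sketched as a loop-closure candidate search. This assumes, hypothetically, that each frame is summarized as a bag-of-visual-words histogram and compared with cosine similarity; the patent does not specify the descriptor or score, and the threshold and recency gap below are illustrative.

```python
import math

def cosine(a, b):
    """Cosine similarity between two sparse histograms (dict: word id -> count)."""
    dot = sum(v * b.get(k, 0) for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def loop_candidate(current, keyframes, threshold=0.8, min_gap=30):
    """Return (index, score) of the most similar old keyframe, or None.

    The newest `min_gap` keyframes are skipped: they resemble the current frame
    because they are recent, not because the robot has revisited a place.
    """
    best = None
    for i, kf in enumerate(keyframes[: max(0, len(keyframes) - min_gap)]):
        s = cosine(current, kf)
        if s >= threshold and (best is None or s > best[1]):
            best = (i, s)
    return best
```

A returned candidate would then be verified geometrically before the closed-loop optimization is run.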

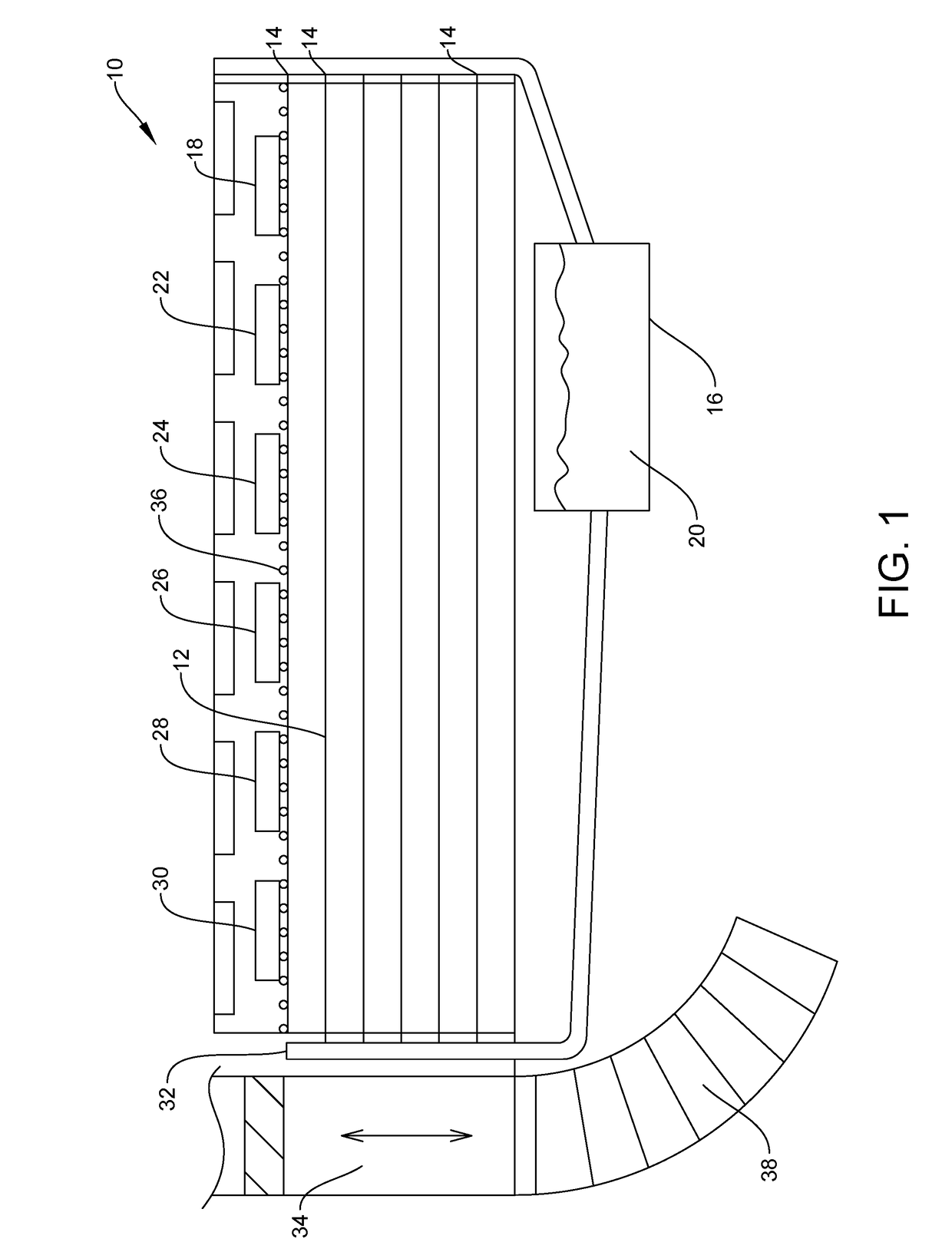

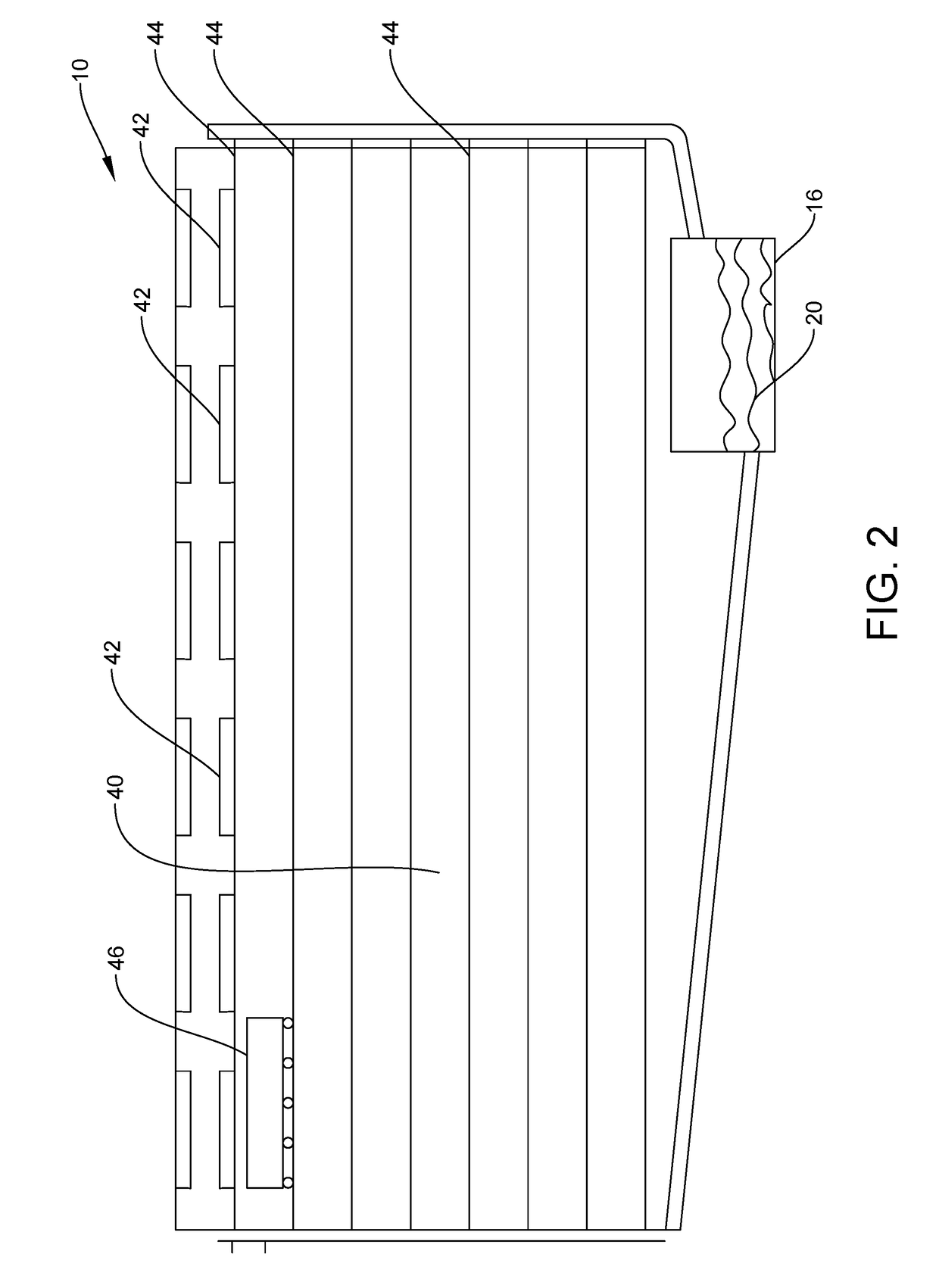

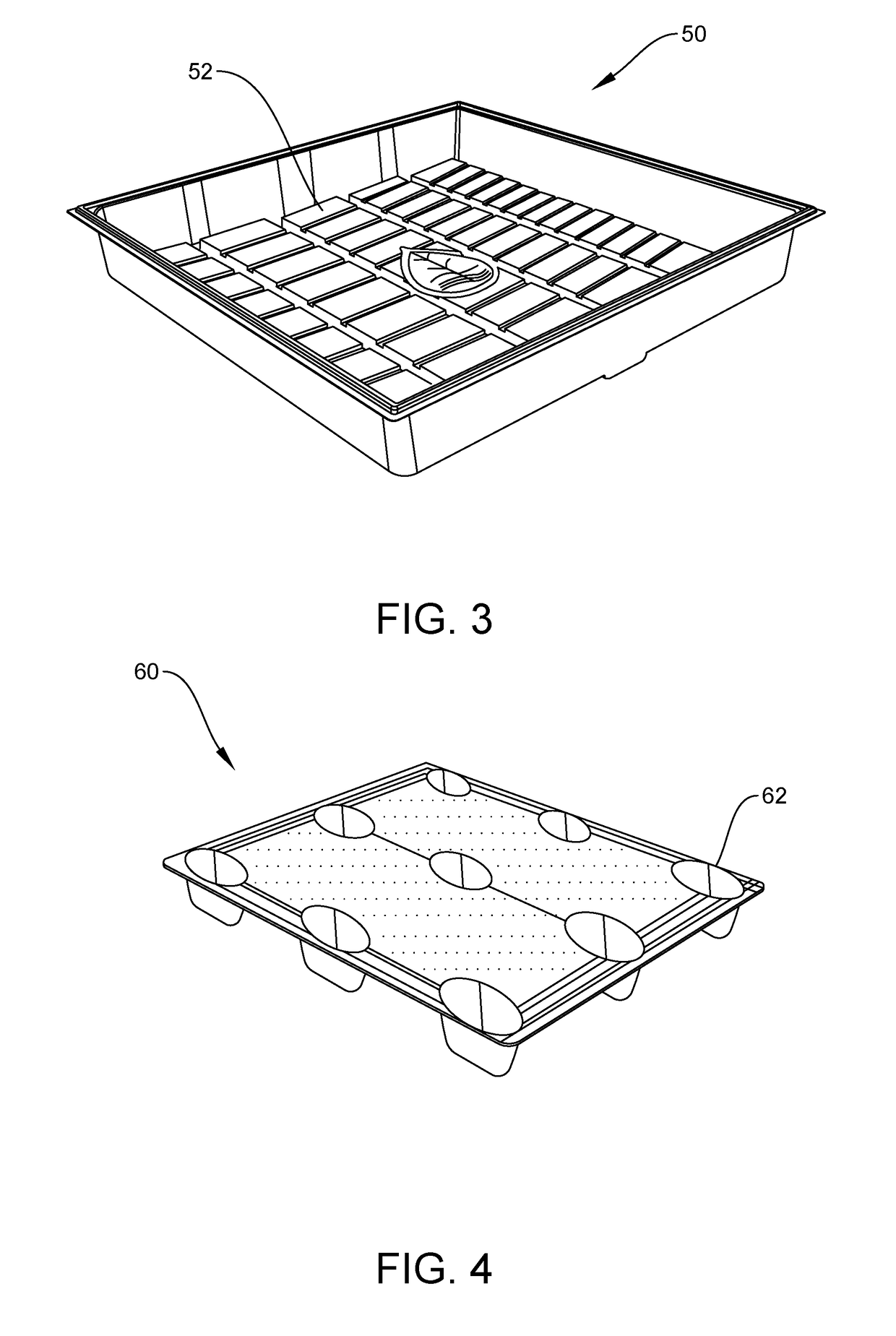

Apparatus, system and methods for improved vertical farming

The present disclosure is directed to improved vertical farming using autonomous systems and methods for growing edible plants, using improved stacking and shelving units configured to allow for gravity-based irrigation, gravity-based loading and unloading, along with a system for autonomous rotation, incorporating novel plant-growing pallets, while being photographed and recorded by camera systems incorporating three dimensional / multispectral cameras, with the images and data recorded automatically sent to a database for processing and for gauging plant health, pest and / or disease issues, and plant life cycle. The present disclosure is also directed to novel harvesting methods, novel modular lighting, novel light intensity management systems, real time vision analysis that allows for the dynamic adjustment and optimization of the plant growing environment, and a novel rack structure system that allows for simplified building and enlarging of vertical farming rack systems.

Owner:WILDER FIELDS LLC

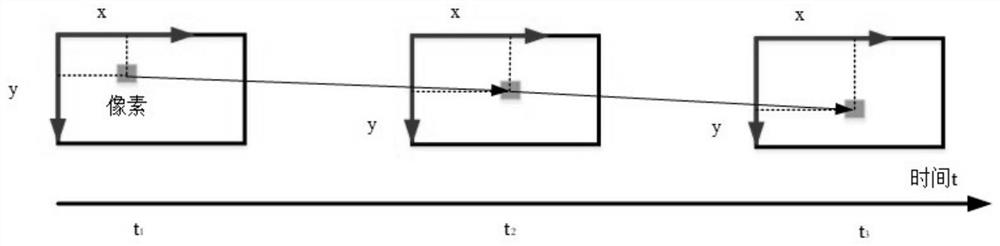

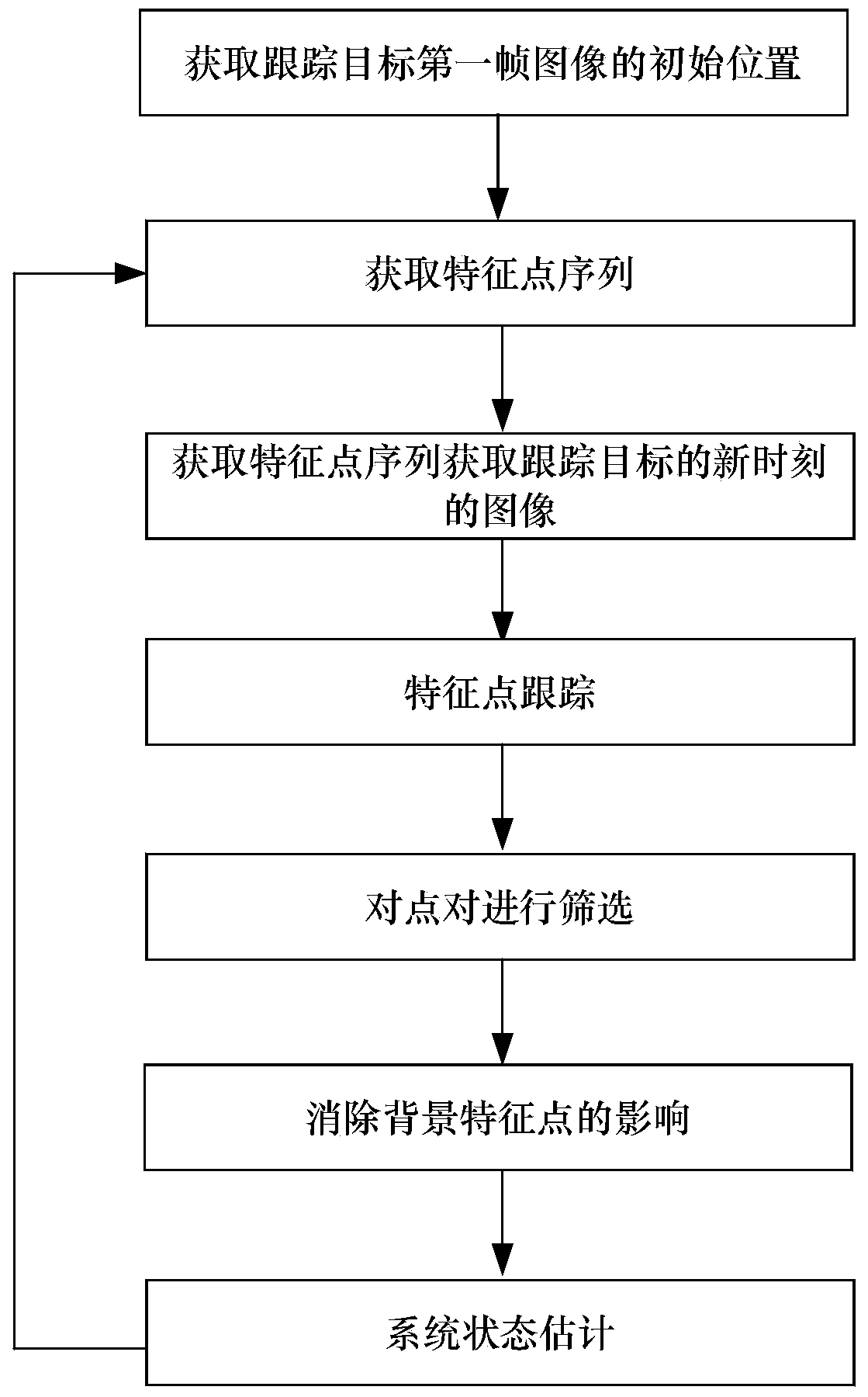

Real-time visual target tracking method based on light streams

Inactive | CN104200494A | Small amount of calculation; Run fast; Image analysis; Object tracking algorithm; Filter algorithm

The invention discloses a real-time visual target tracking method based on optical flow. Feature points rich in texture are selected and tracked between consecutive frames to obtain feature point correspondences. Points with large matching errors are removed with several filters, such as the normalized correlation coefficient, the forward-backward tracking error, and random sample consensus, so that only the most reliable points remain; these give the target's motion velocity in the image. Using this velocity as the observation, a Kalman filter estimates the target position. Compared with existing tracking algorithms, the method is insensitive to lighting changes and tracks the target stably when the target moves, the camera moves, or the target is briefly occluded. It is computationally efficient, and the tracking algorithm was verified for the first time on an ARM platform.

Owner:BEIHANG UNIV
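
Two pieces of this pipeline lend themselves to a short sketch: forward-backward error filtering of tracked points, and a Kalman filter that takes velocity as its observation and estimates position. The following is a one-dimensional illustration under assumed noise parameters (q, r); the normalized-correlation and RANSAC filters are omitted, and all names are hypothetical.

```python
import math
import statistics

def filter_forward_backward(p0, p1, p0_back, max_fb_error):
    """Keep (p0, p1) pairs whose forward-backward tracking error is small.

    p1 is p0 tracked forward one frame; p0_back is p1 tracked back again.
    Reliable tracks return close to where they started.
    """
    return [(a, b) for a, b, a_back in zip(p0, p1, p0_back)
            if math.dist(a, a_back) <= max_fb_error]

def median_velocity(pairs, dt):
    """Robust target velocity (vx, vy) as the median displacement per unit time."""
    vx = statistics.median((b[0] - a[0]) / dt for a, b in pairs)
    vy = statistics.median((b[1] - a[1]) / dt for a, b in pairs)
    return vx, vy

class VelocityObservedKalman:
    """1D constant-velocity Kalman filter whose measurement is velocity, not position."""

    def __init__(self, q=0.01, r=0.01):
        self.x = [0.0, 0.0]                  # state: [position, velocity]
        self.P = [[1.0, 0.0], [0.0, 1.0]]    # state covariance
        self.q, self.r = q, r                # process / measurement noise

    def step(self, z_velocity, dt):
        (p00, p01), (p10, p11) = self.P
        # Predict with F = [[1, dt], [0, 1]]: x = F x, P = F P F^T + Q
        self.x = [self.x[0] + dt * self.x[1], self.x[1]]
        p00, p01, p10 = (p00 + dt * (p10 + p01) + dt * dt * p11 + self.q,
                         p01 + dt * p11,
                         p10 + dt * p11)
        p11 = p11 + self.q
        # Update with H = [0, 1]: only velocity is observed
        s = p11 + self.r
        k0, k1 = p01 / s, p11 / s
        y = z_velocity - self.x[1]
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [[p00 - k0 * p10, p01 - k0 * p11],
                  [p10 - k1 * p10, p11 - k1 * p11]]
        return self.x[0]
```

In two dimensions, one such filter per axis would be fed the output of `median_velocity` each frame.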

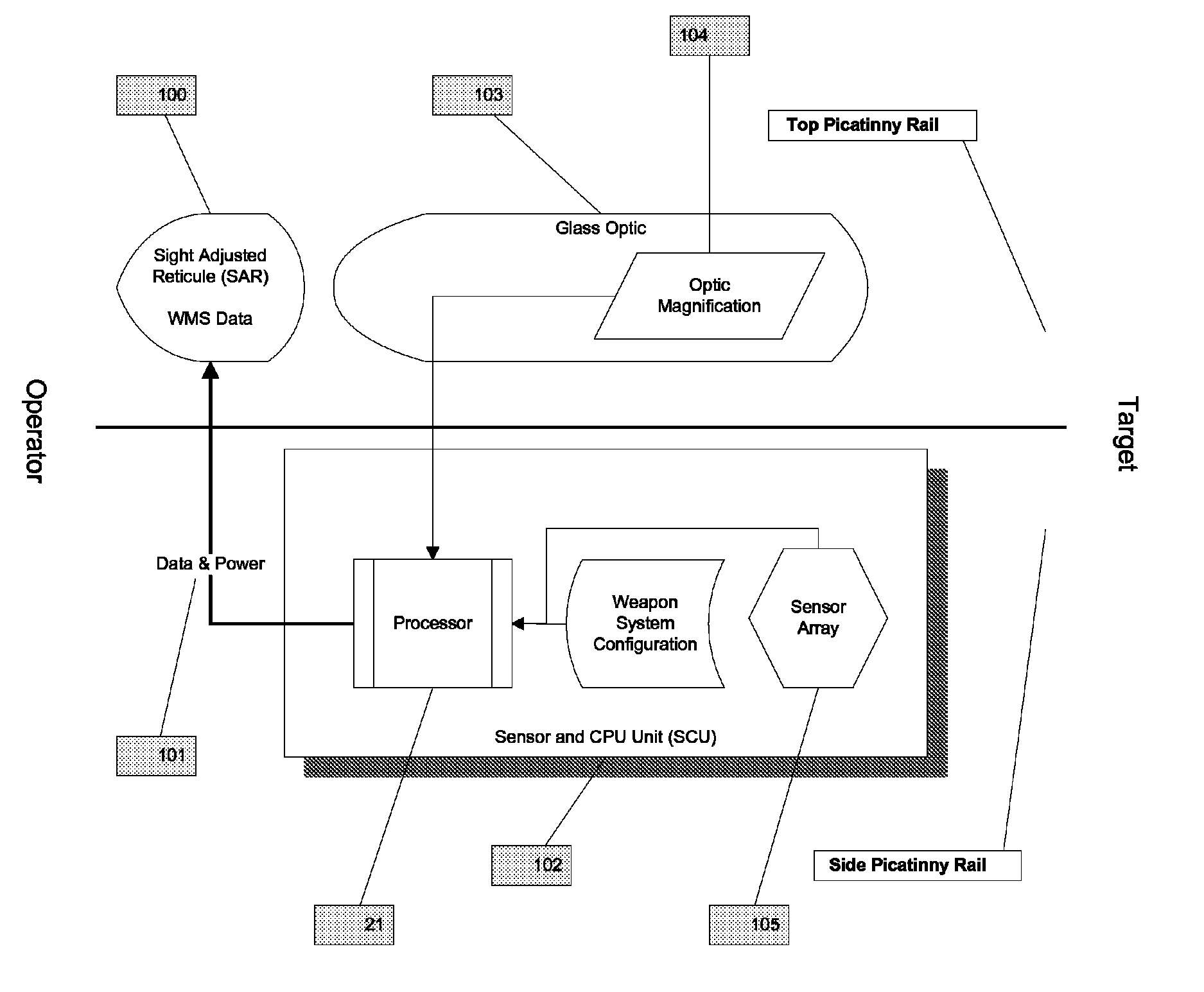

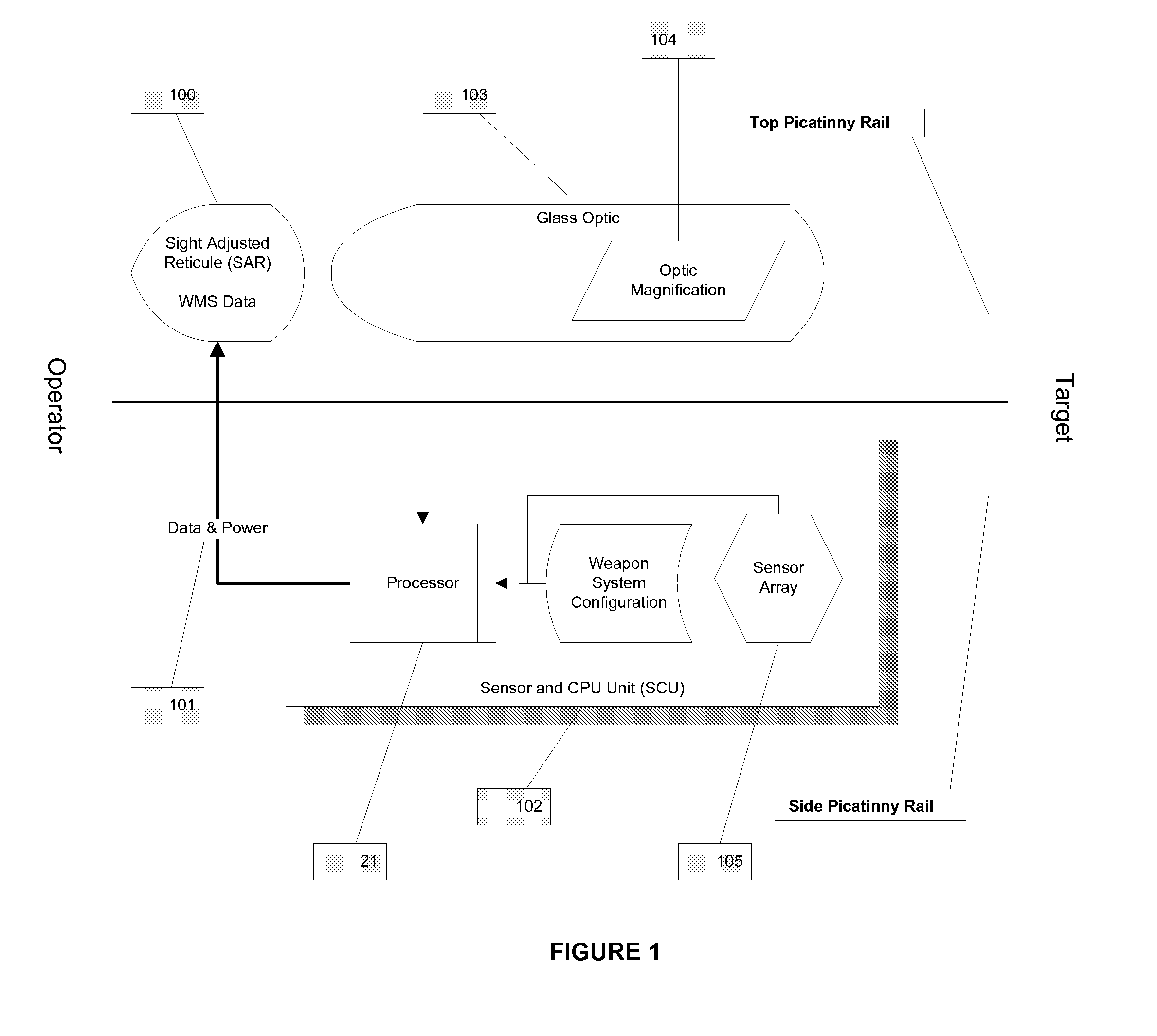

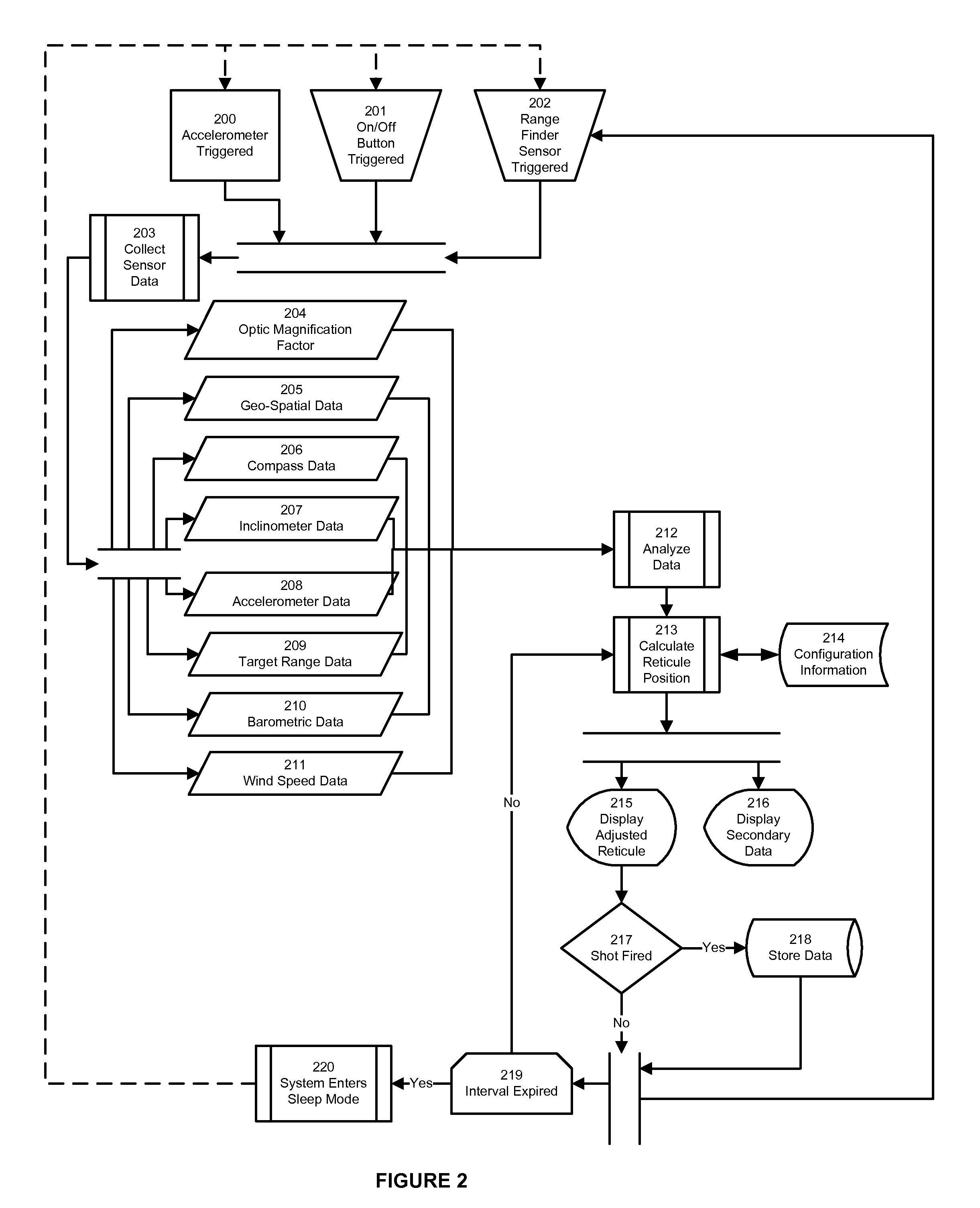

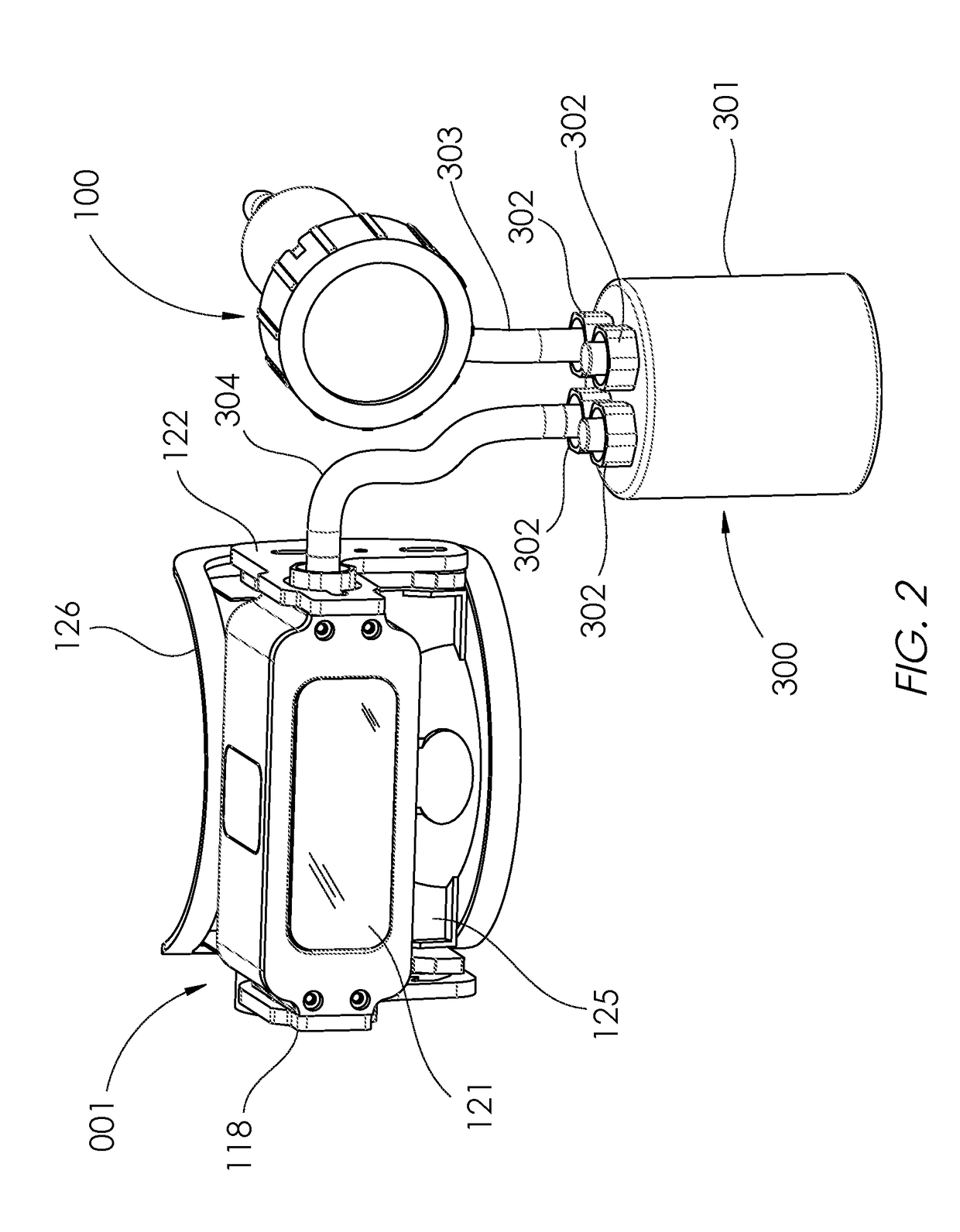

System and method for the display of a ballestic trajectory adjusted reticule

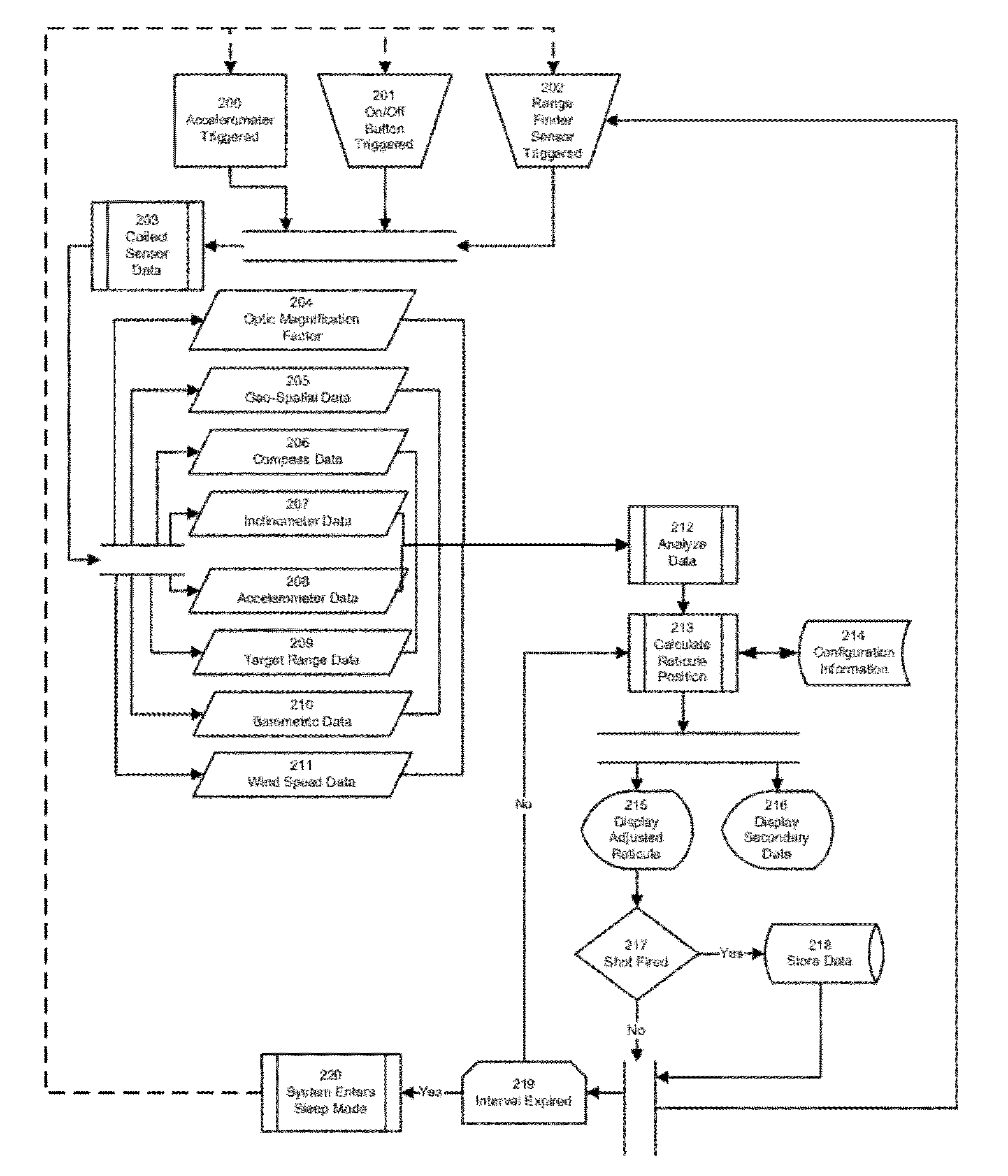

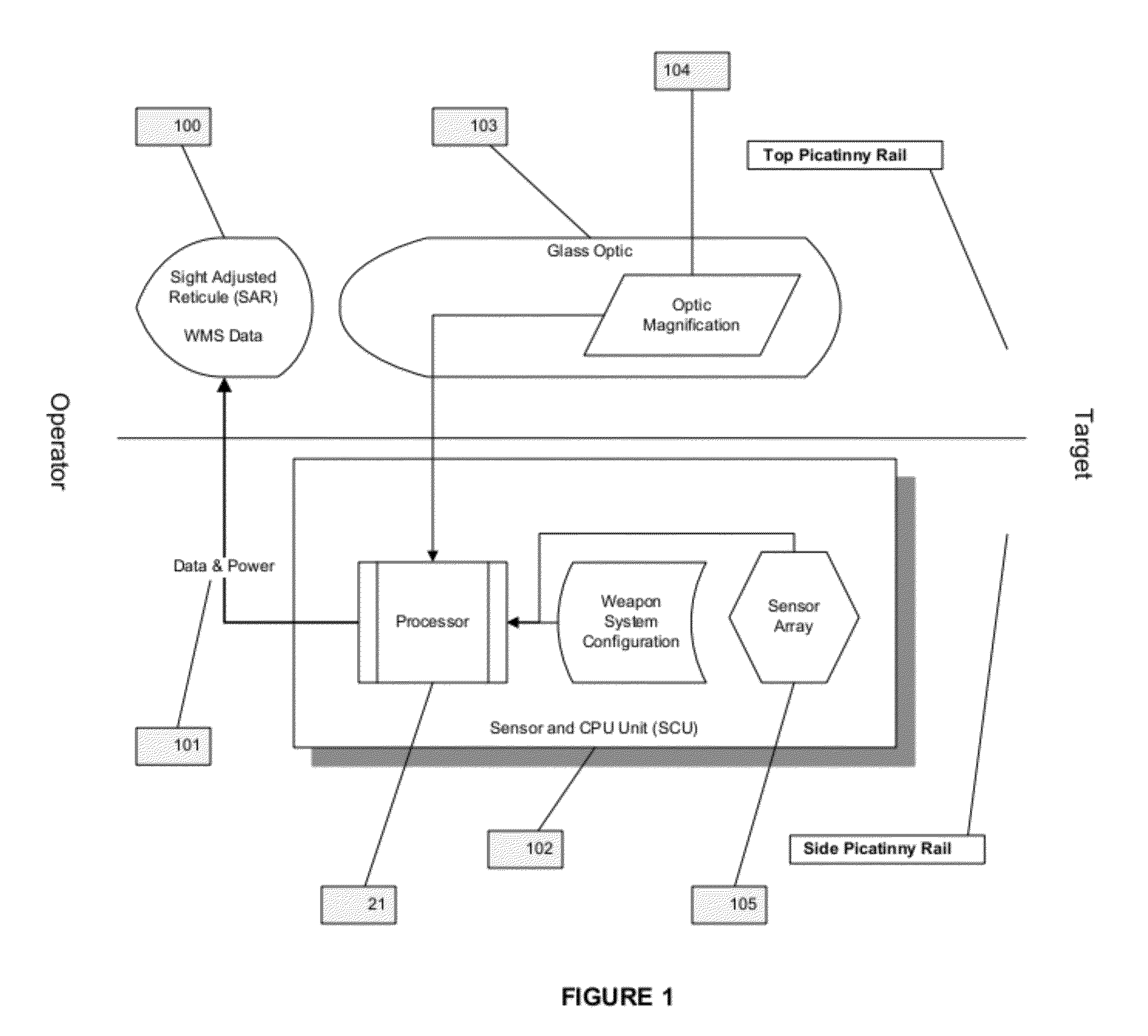

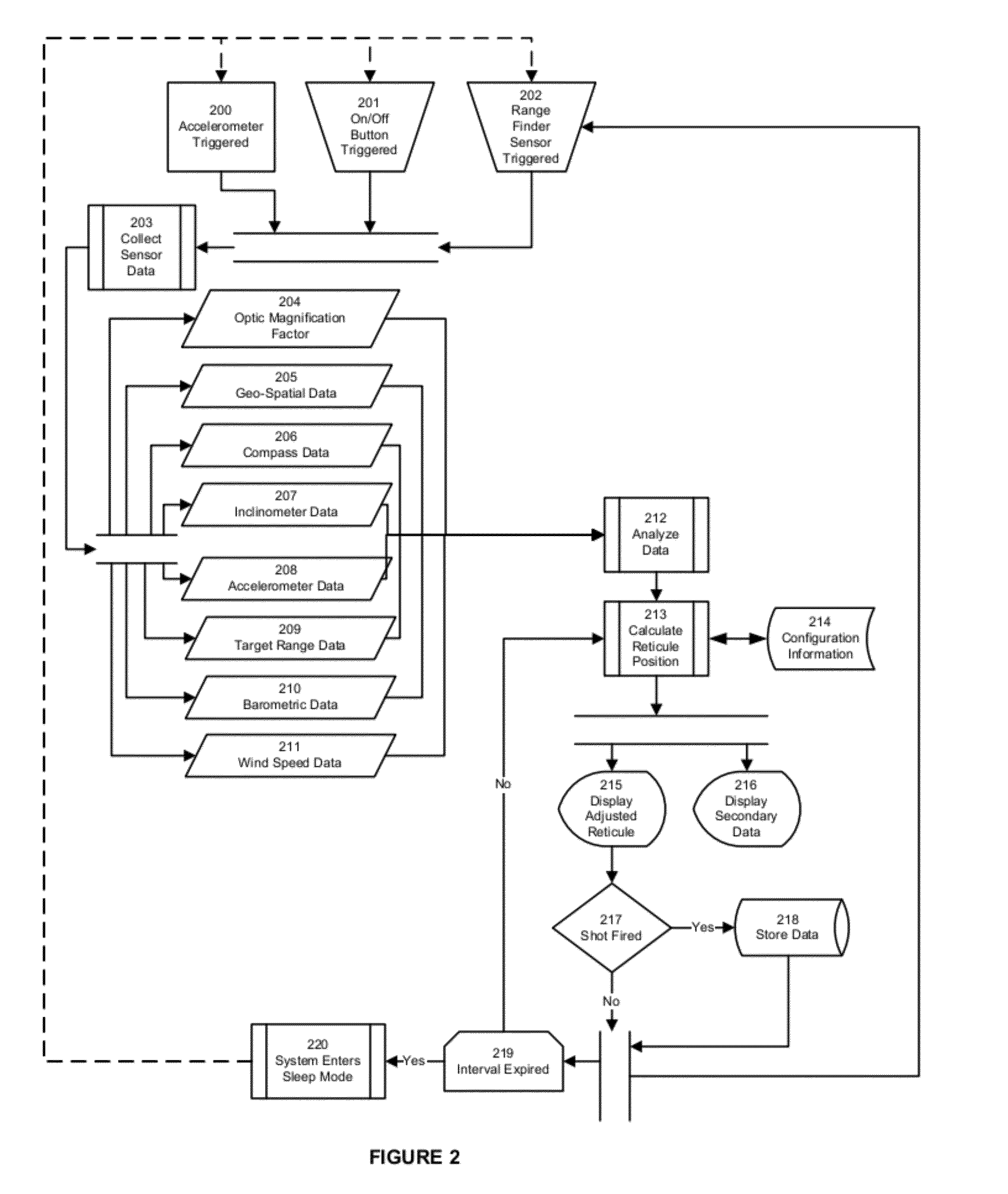

Active | US20120048931A1 | Reduce power consumption; Long operating time; Sighting devices; Aiming means; Display device; Engineering

This invention relates to a method, system, and computer program product that calculates a real-time, accurate firing solution for a man-carried weapon system; specifically, a transparent display located in-line with a weapon-mounted optic, and a device that adjusts the aim point through real-time data collection, analysis, and real-time visual feedback to the operator. A firing solution system mounted on a projectile weapon comprises a Sensor and CPU Unit (SCU), a Sight Adjusted Reticule (SAR), and a PC Dongle configured to facilitate communication between the SCU and a personal computer (PC) or similar computing device, enabling management of the SCU configuration and offloading of sensor-obtained and system-determined data values.

Owner:AWIS
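
As a heavily simplified illustration of how a firing solution turns range into an aim-point offset, the sketch below computes holdover for a drag-free, flat-fire trajectory. It is not the patented SCU algorithm: real solvers model drag, sight height, zero range, wind, and atmosphere, and the function name is hypothetical.

```python
G = 9.80665  # standard gravity, m/s^2

def drop_holdover_mrad(range_m, muzzle_velocity_mps):
    """Holdover in milliradians for a drag-free, flat-fire trajectory.

    Assumes constant horizontal velocity (no air resistance), so time of flight
    is range / velocity and drop is g * t^2 / 2. The angle uses the small-angle
    approximation drop / range.
    """
    t = range_m / muzzle_velocity_mps
    drop_m = 0.5 * G * t * t
    return 1000.0 * drop_m / range_m
```

For example, at 100 m and 800 m/s this gives about 0.77 mrad; a reticle display would shift the aim point by that angle times its pixels-per-milliradian scale.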

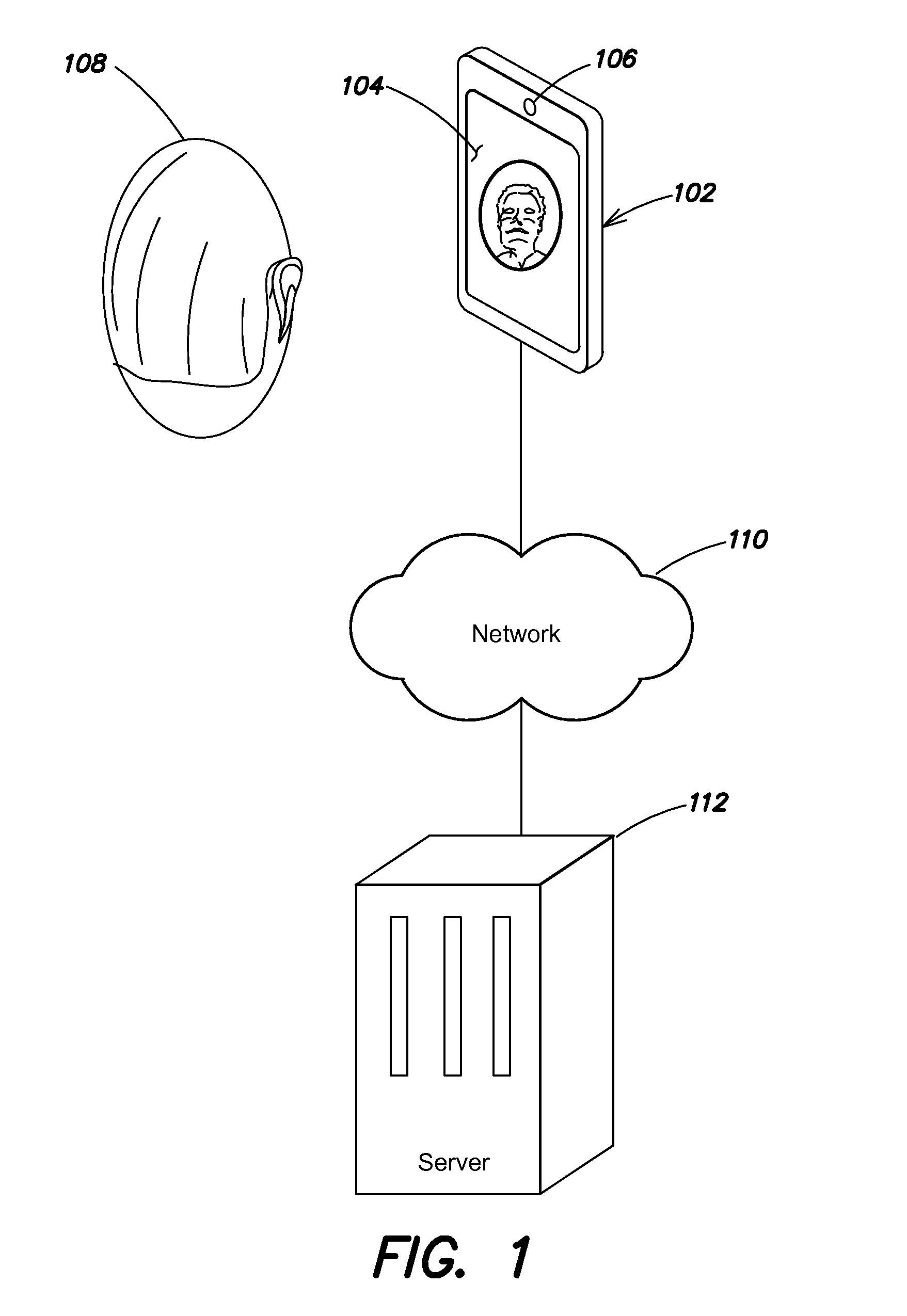

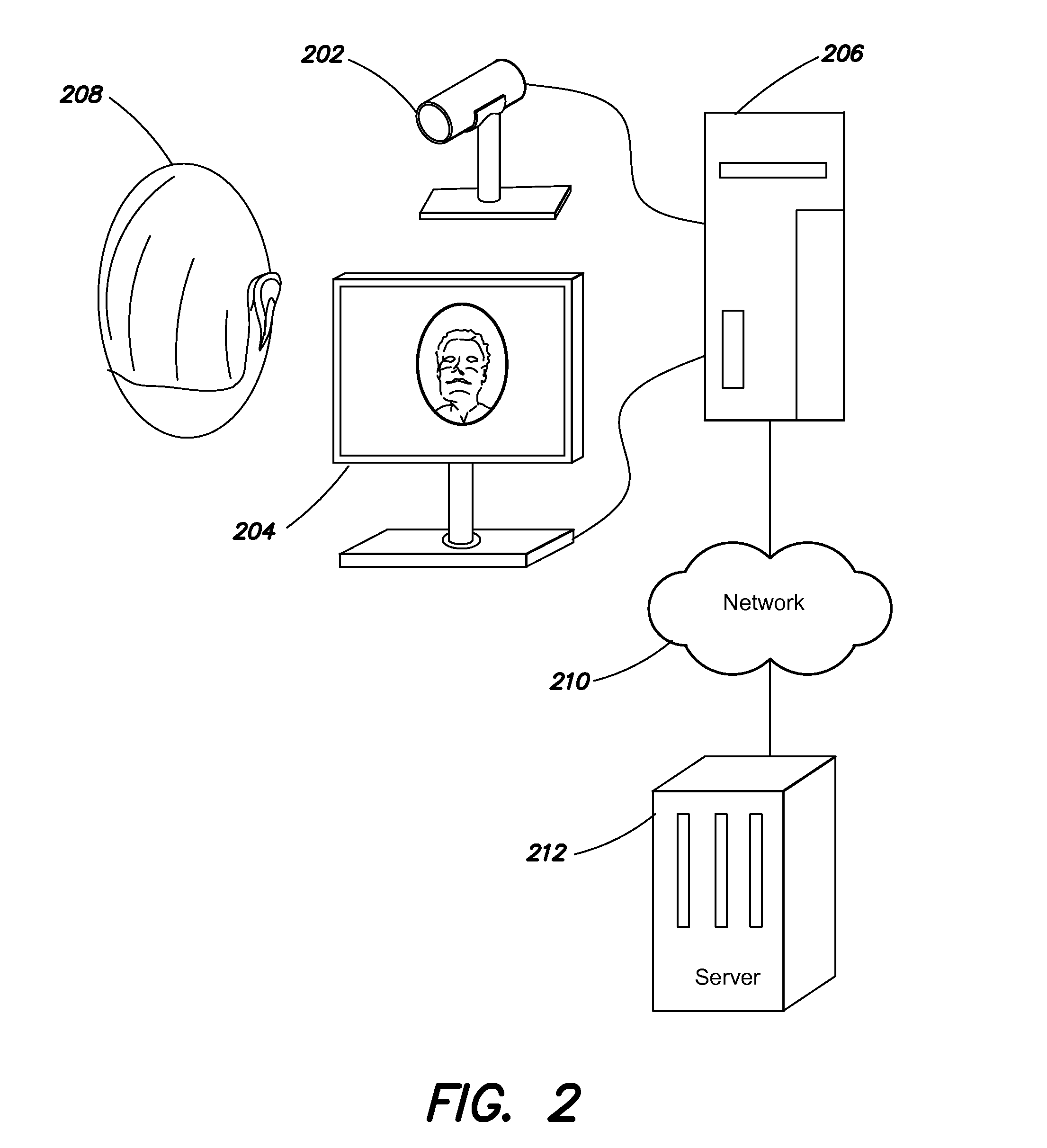

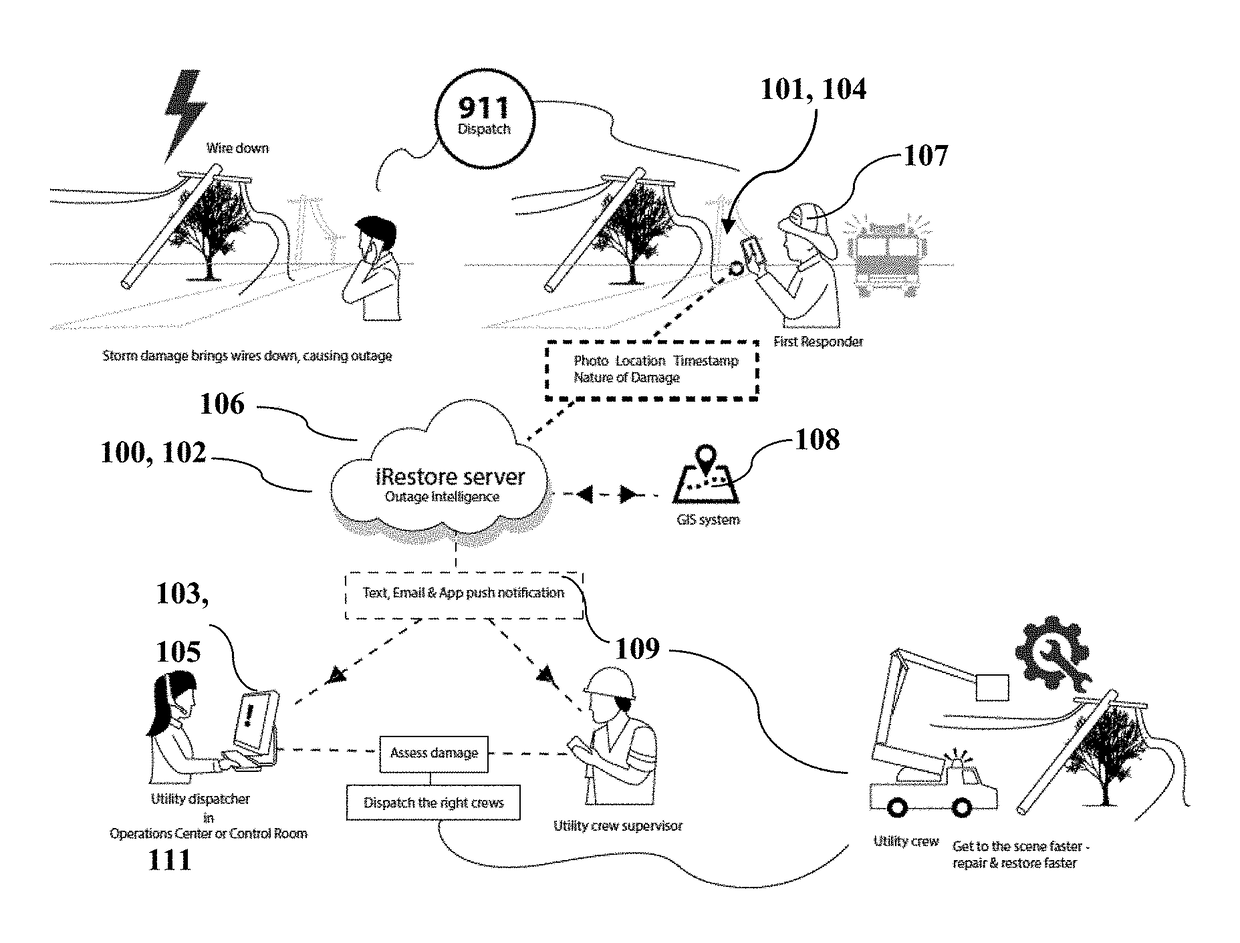

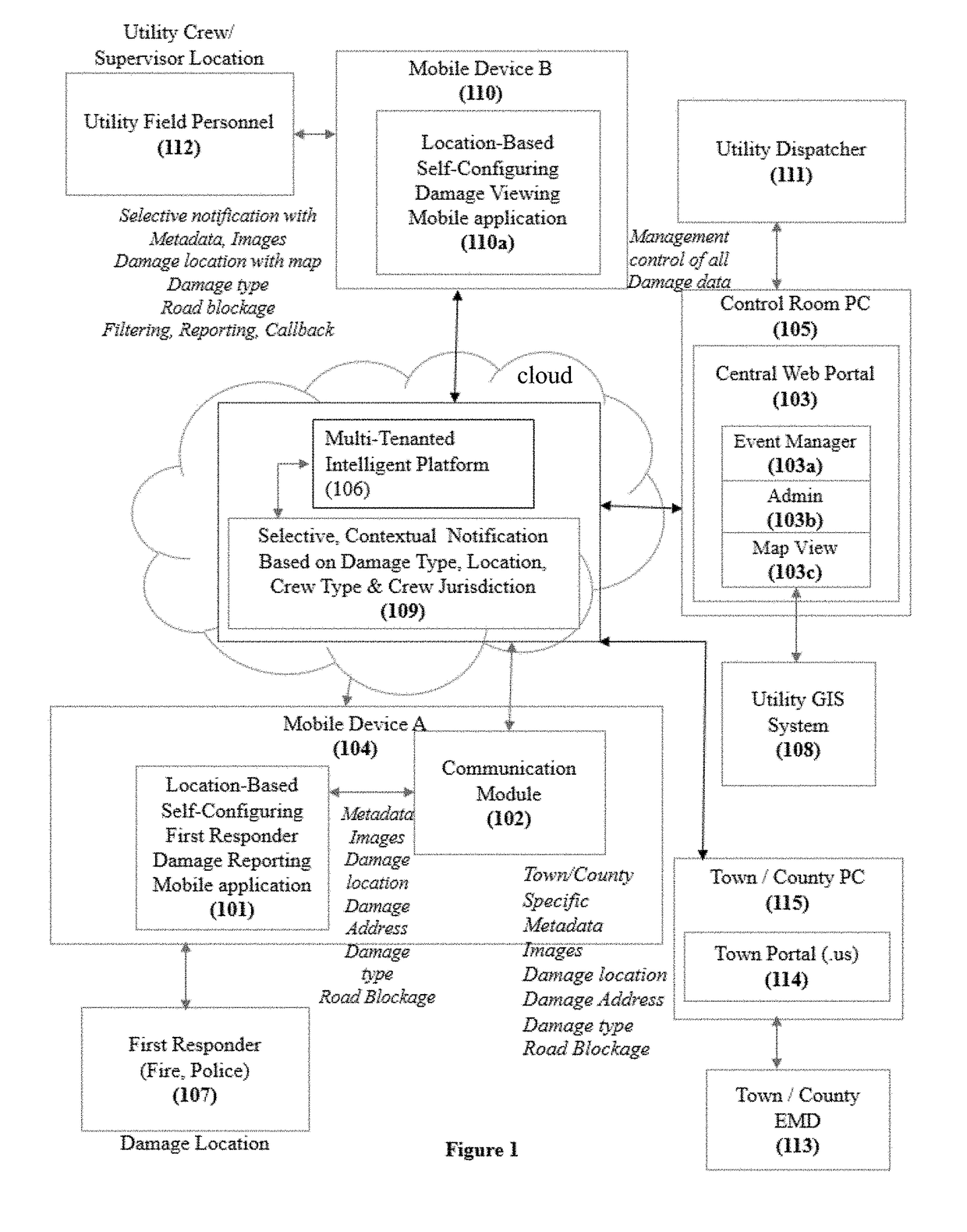

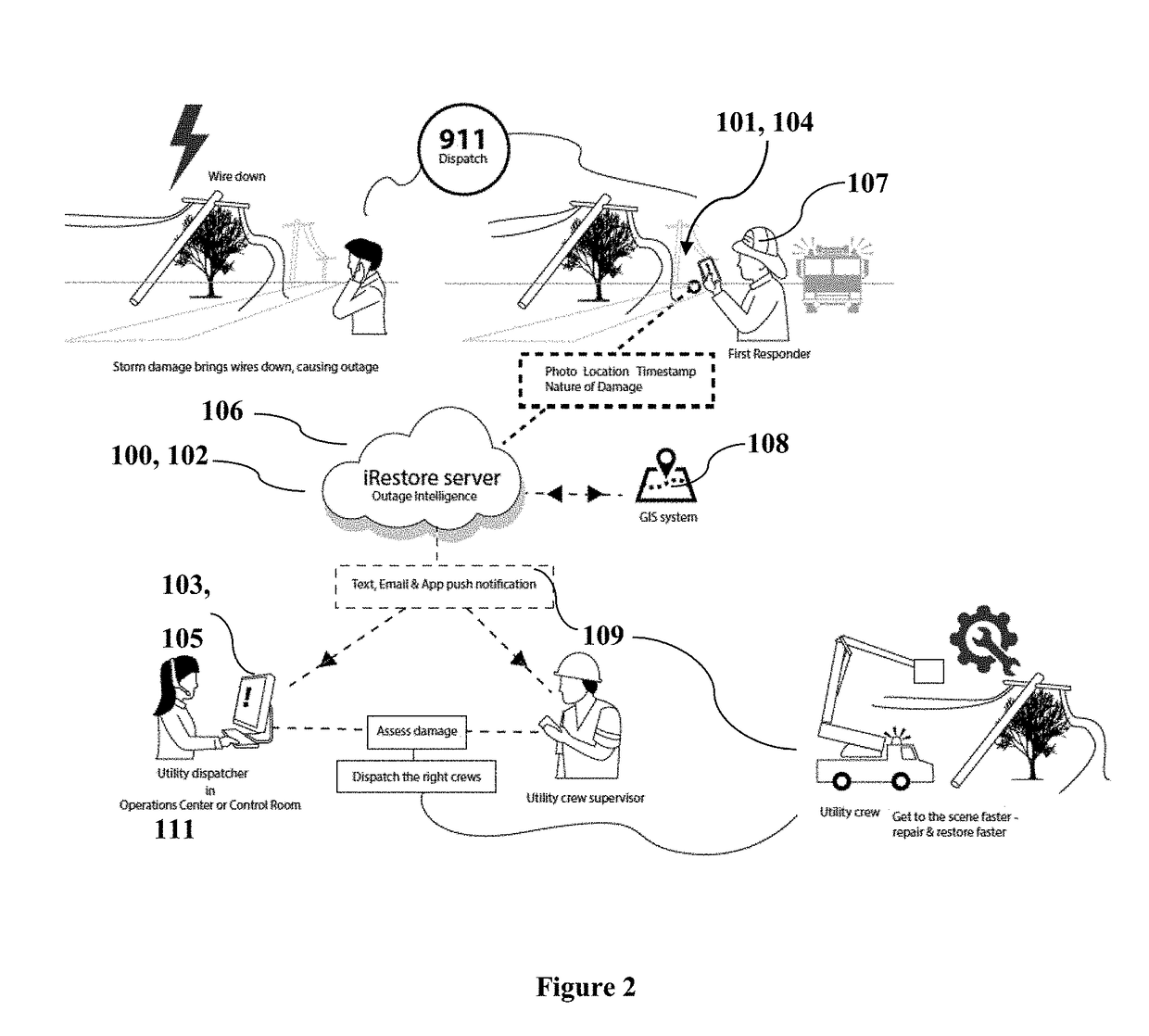

Self-customizing, multi-tenanted mobile system and method for digitally gathering and disseminating real-time visual intelligence on utility asset damage enabling automated priority analysis and enhanced utility outage response

A self-customizing, multi-tenanted mobile system digitally gathers and disseminates real-time visual intelligence on utility asset damage, enabling automated priority analysis and enhanced utility outage response. A preferred embodiment may include, for example, a communication module (102) that transfers geo-coded damage imaging and associated metadata simultaneously from multiple outage-causing damage locations to dispatchers and operations personnel in the utility control room and in the field. A mobile application (101) installed on the first responder's Global Positioning System (GPS) enabled mobile device (104) sends metadata to a multi-tenanted intelligent platform (MTIP) (106). The MTIP (106) determines which utility tenant receives the damage report and customizes all aspects of the technical solution: the first responder mobile application (101), the central web portal (103), and the damage viewing application for utility field personnel (110). The central web portal (103), running in a JavaScript-capable browser or similar environment on a control room personal computer (105), receives geo-coded damage imaging and associated metadata from the mobile application (101) via the MTIP (106), which automatically analyzes event location, relevance, and severity to compute, recommend, and communicate event priority. The MTIP (106) further analyzes inbound images using computer vision technology and wire geometry algorithms to determine the relative risk and event priority of downed wires. The multi-tenanted intelligent platform (106) stores outage-causing damage information and performs damage assessment so that dispatchers can respond appropriately. A preferred embodiment enables external users, specifically municipal first responders (fire, police, and municipal workers), to report outage-causing damage to the electric grid, and provides a simple, easily deployable, and secure system.
The system then uses location, severity, and role-based rules to dynamically notify appropriate utility personnel (112) via text message, email notification, or the damage viewing application on the field personnel's mobile device (110), so they are best able to respond and repair the damage. A preferred embodiment also speeds and improves communication between municipalities and utilities and enhances the transparency of utility damage repair leading to outage resolution.

Owner:BOSSANOVA SYST INC

System and method for the display of a ballestic trajectory adjusted reticule

This invention relates to a method, system, and computer program product that calculates a real-time, accurate firing solution for a man-carried weapon system; specifically, a transparent display located in-line with a weapon-mounted optic, and a device that adjusts the aim point through real-time data collection, analysis, and real-time visual feedback to the operator. A firing solution system mounted on a projectile weapon comprises a Sensor and CPU Unit (SCU), a Sight Adjusted Reticule (SAR), and a PC Dongle configured to facilitate communication between the SCU and a personal computer (PC) or similar computing device, enabling management of the SCU configuration and offloading of sensor-obtained and system-determined data values.

Owner:AWIS LLC

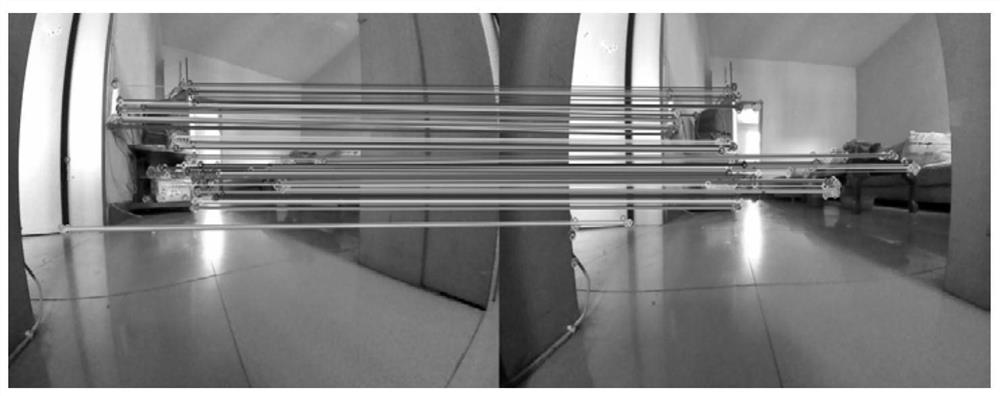

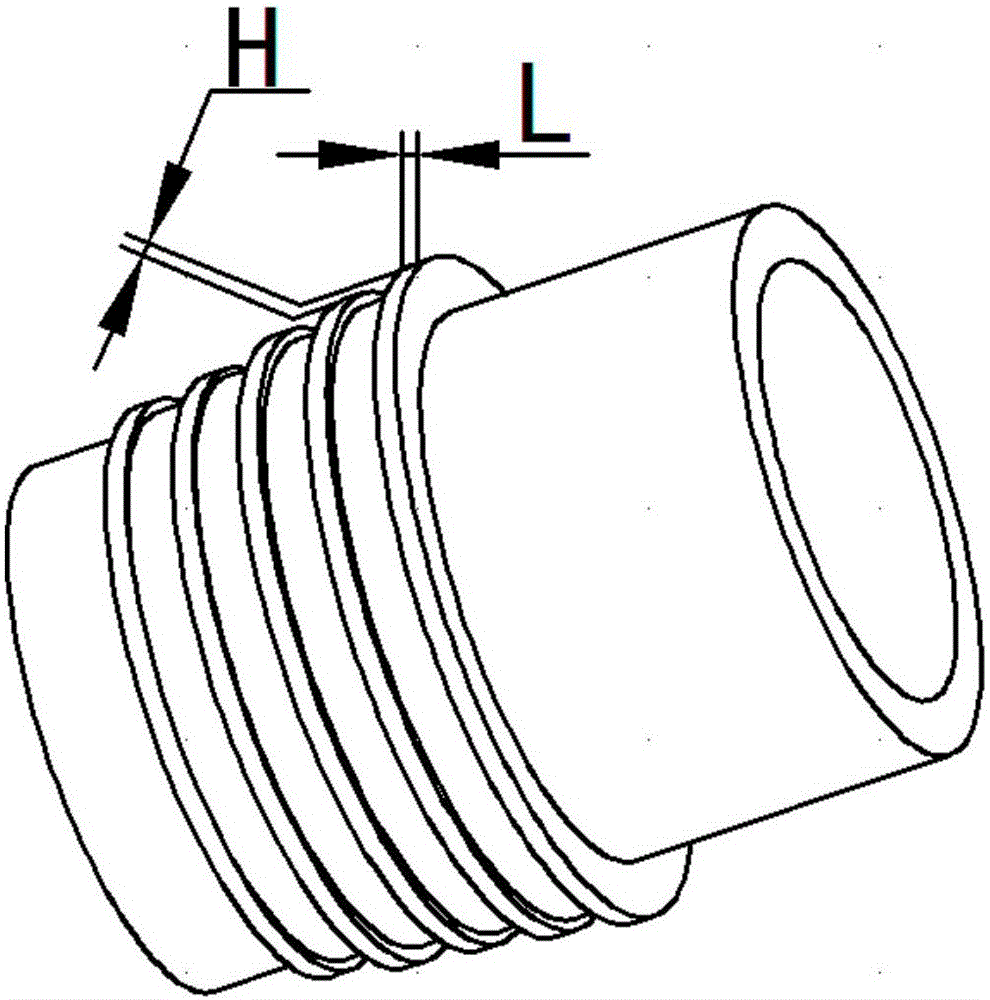

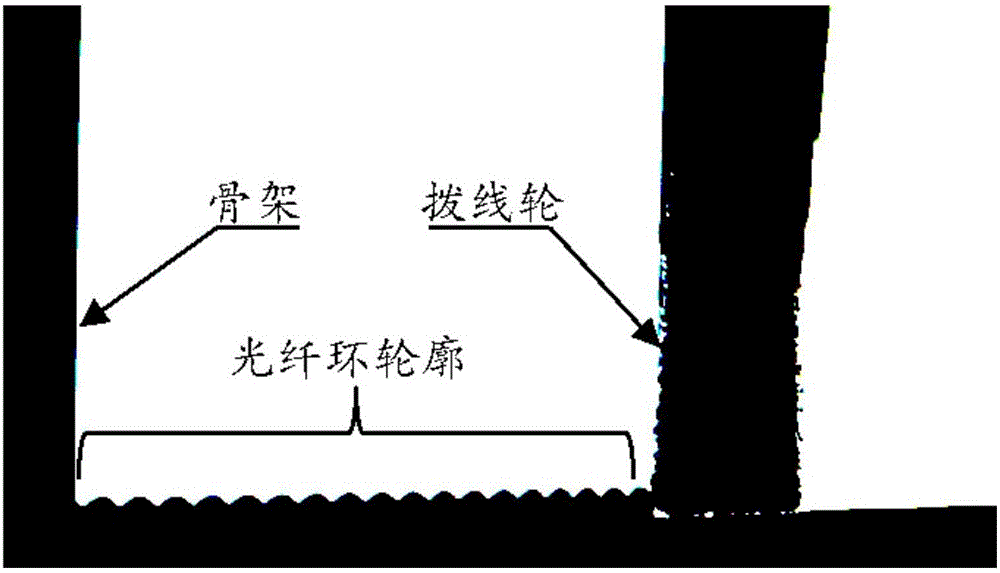

Vision auxiliary control method and device for wire arrangement consistency of optical fiber winding machine

Inactive | CN105841716A | Evenly distributed; Solve the problem of unable to automatically adjust the wire feed; Measurement devices; Filament handling; Winding machine; Reduction drive

The invention provides a vision auxiliary control device for wire arrangement consistency of an optical fiber winding machine. The device comprises a machine base, a rack, and a supporting cross beam, and further comprises a gearbox body that is mounted on the machine base and can move left and right. A winding main shaft is mounted on the gearbox body and moves left and right with it; a first servo motor in the gearbox body drives a speed reducer to produce the rotation that performs the winding. A bracket that can move left, right, up, and down is mounted on the supporting cross beam, and a wire-shifting wheel and a camera, fixed relative to each other, are mounted on the bracket, with the camera lens positioned in front of the winding wheel. The invention further provides a vision auxiliary control method for wire arrangement consistency of the optical fiber winding machine. The method and device overcome the inability of conventional optical fiber winding machines to adjust the feed amount of the wire-shifting wheel automatically: real-time visual feedback is added and used to adjust that feed amount, so that optical fiber rings are wound with higher consistency.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY
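
The real-time visual feedback on the wire-shifting wheel's feed can be sketched as a clamped proportional correction. This assumes, hypothetically, that the camera yields a measured pitch between adjacent fiber turns and that the feed per revolution should track a target pitch; the gain and step limit are illustrative.

```python
def adjust_feed(feed_mm, measured_pitch_mm, target_pitch_mm, gain=0.5, max_step_mm=0.05):
    """Proportional feed correction from a camera-measured winding pitch.

    If turns are spaced too widely the feed per revolution is reduced, and if
    they crowd together it is increased. The step is clamped so that a single
    noisy measurement cannot move the wire-shifting wheel abruptly.
    """
    error = target_pitch_mm - measured_pitch_mm
    step = max(-max_step_mm, min(max_step_mm, gain * error))
    return feed_mm + step
```

Called once per revolution (or per frame), this nudges the feed toward the value that keeps adjacent turns evenly spaced.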

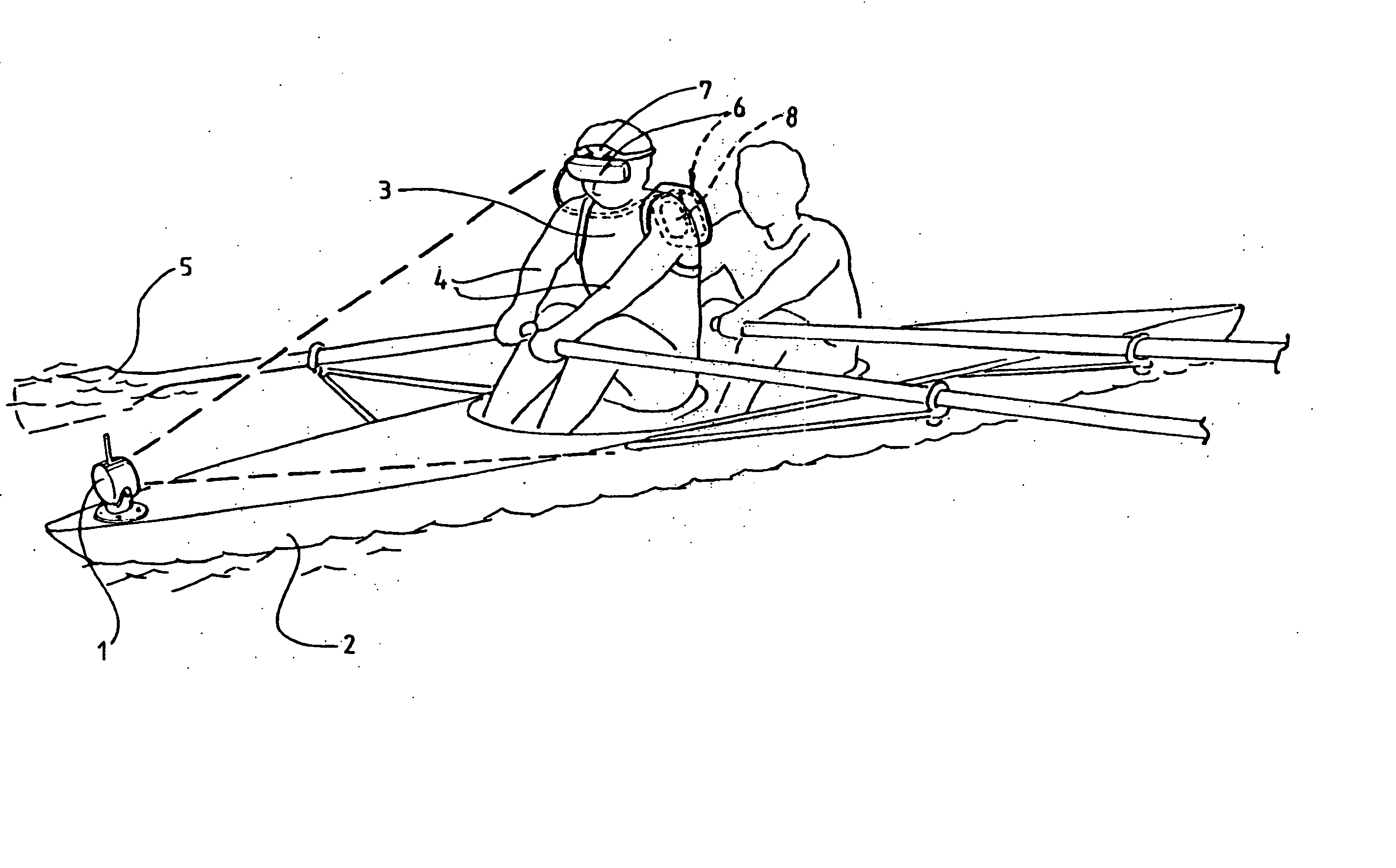

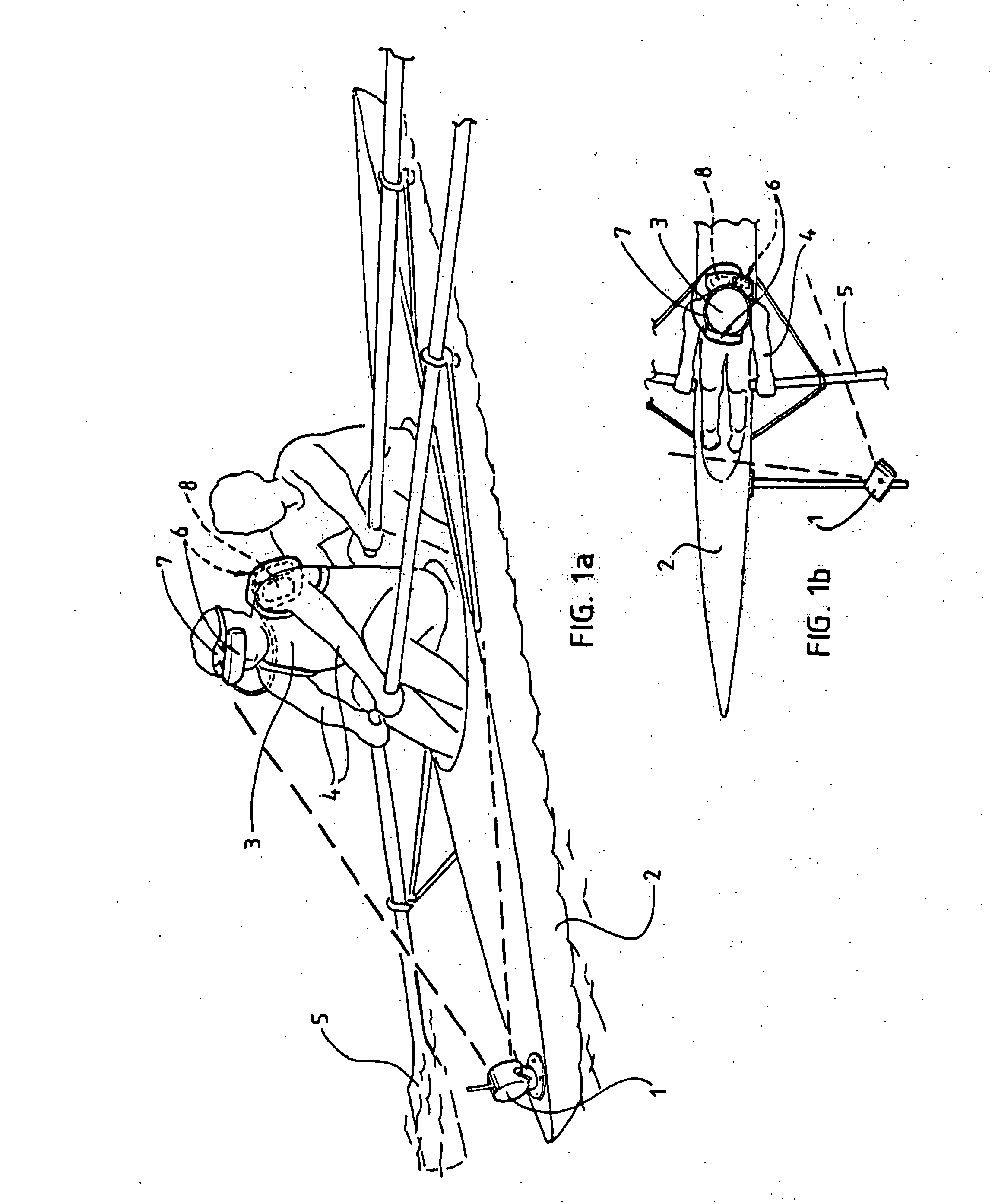

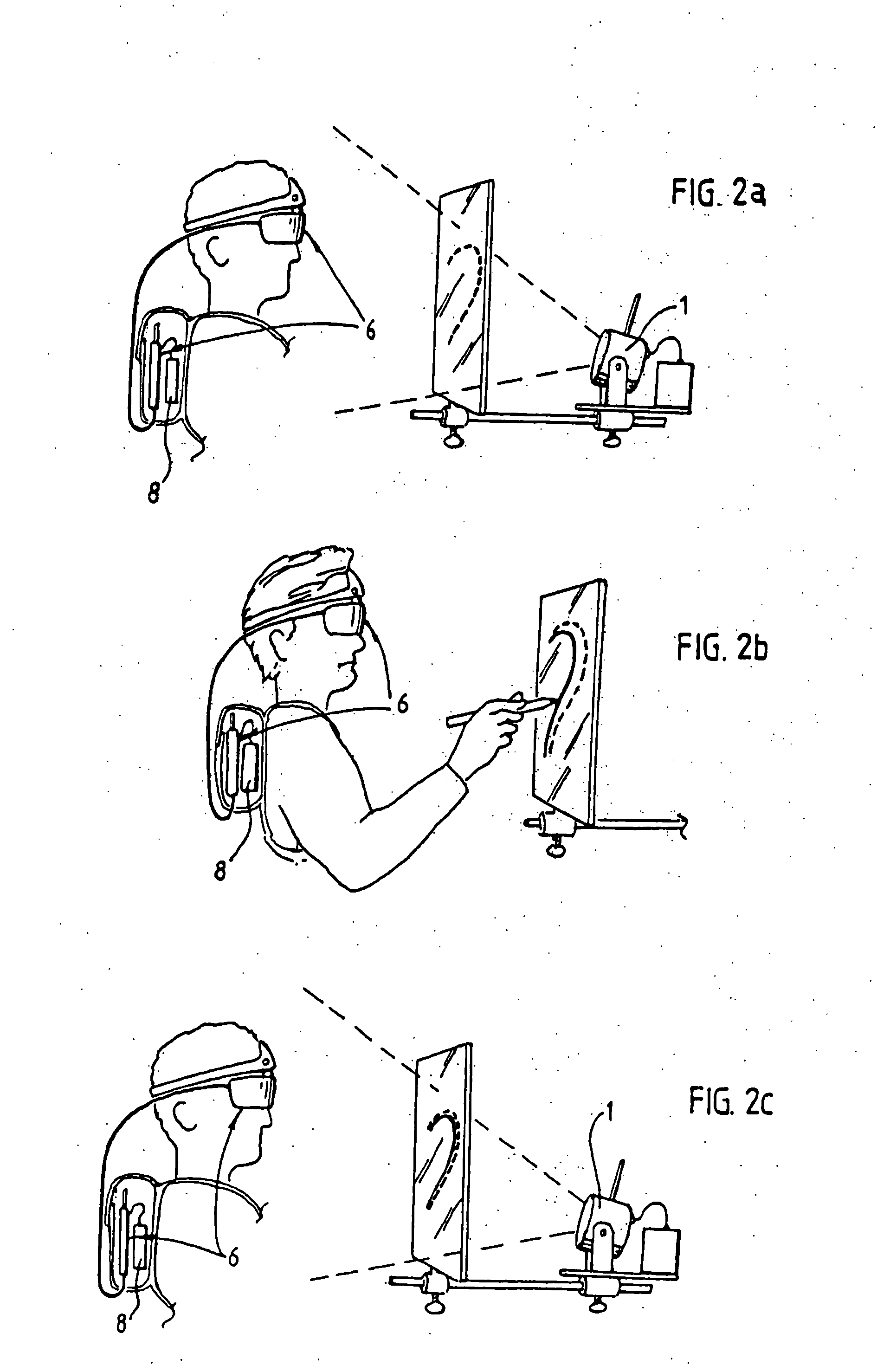

Visual sports training apparatus and method

Inactive | US20070032143A1 | Gymnastic exercising; Muscle power acting propulsive elements; Monitoring system; Real Time Kinematic

A real time visual self-monitoring system for a user, said system comprising a camera (1) adapted for fixed monitoring in selectable relativity to said user (3), a monitoring means (6) for receiving camera output adapted for viewing by said user and a power supply (8) for said camera and monitoring means as required wherein said monitoring allows said user to selectively and / or simultaneously view their own real time motion from a remote vantage point in conjunction with their own peripheral vision.

Owner:SHORT ANDREW LIAM BRENDAN

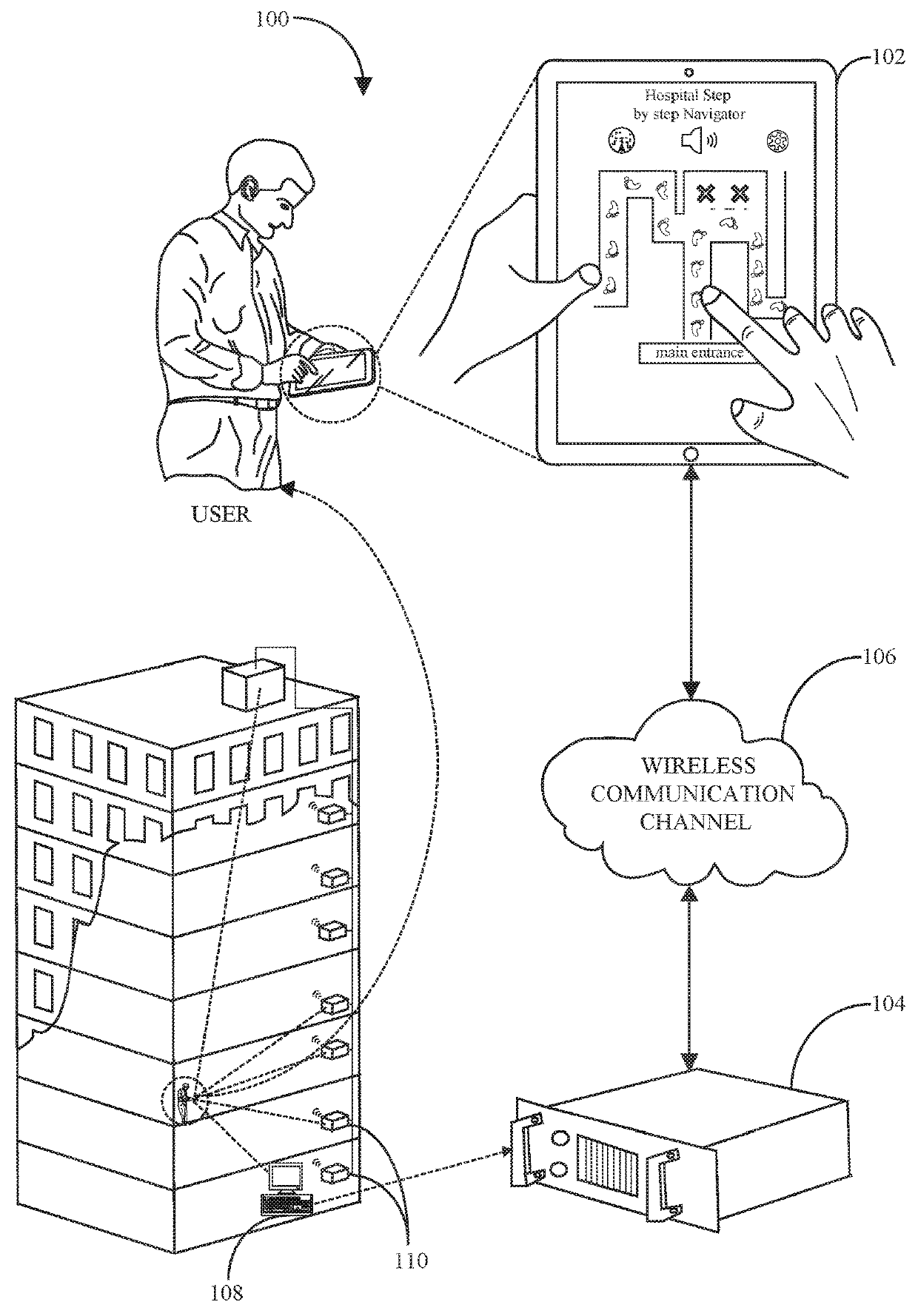

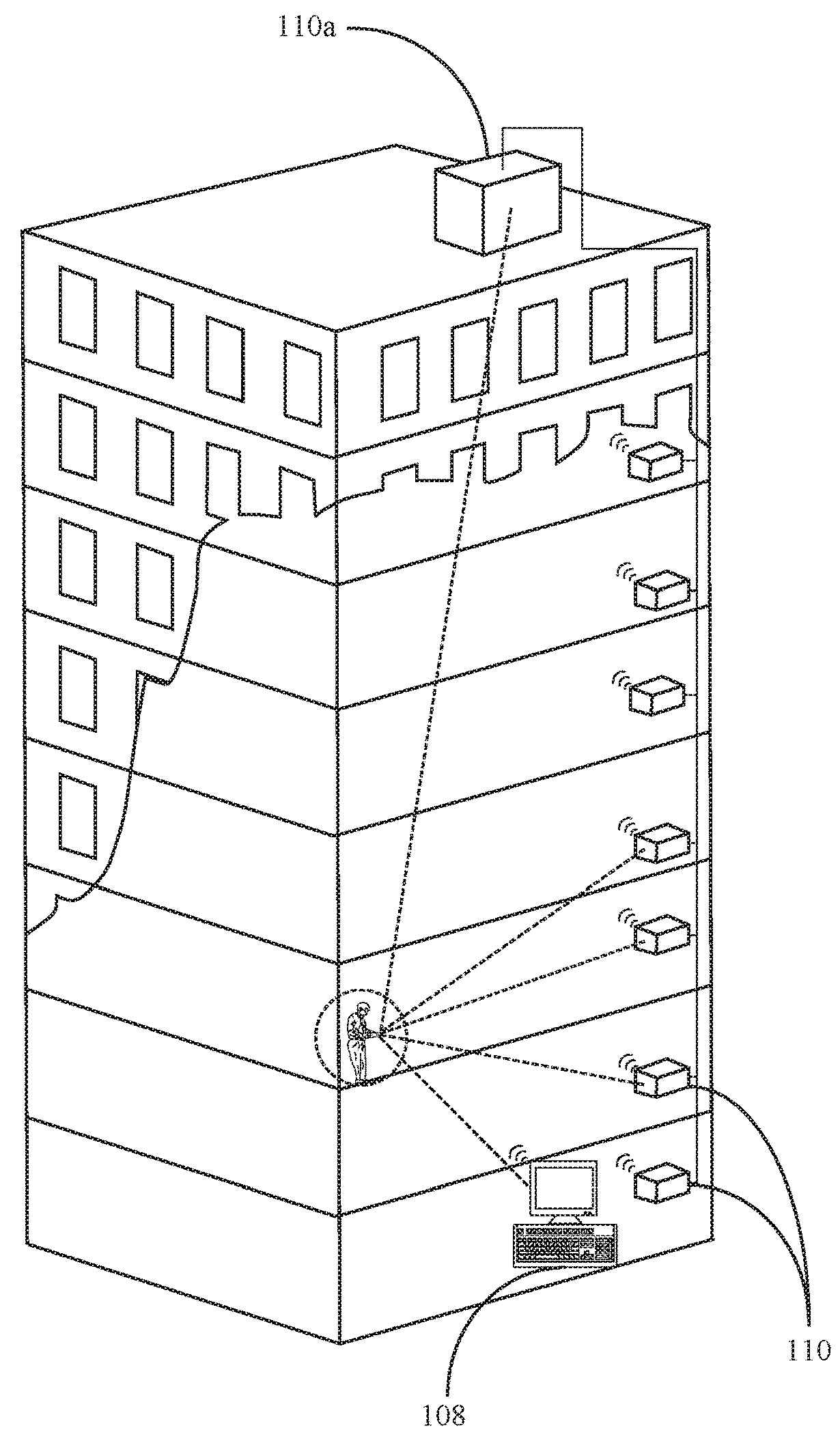

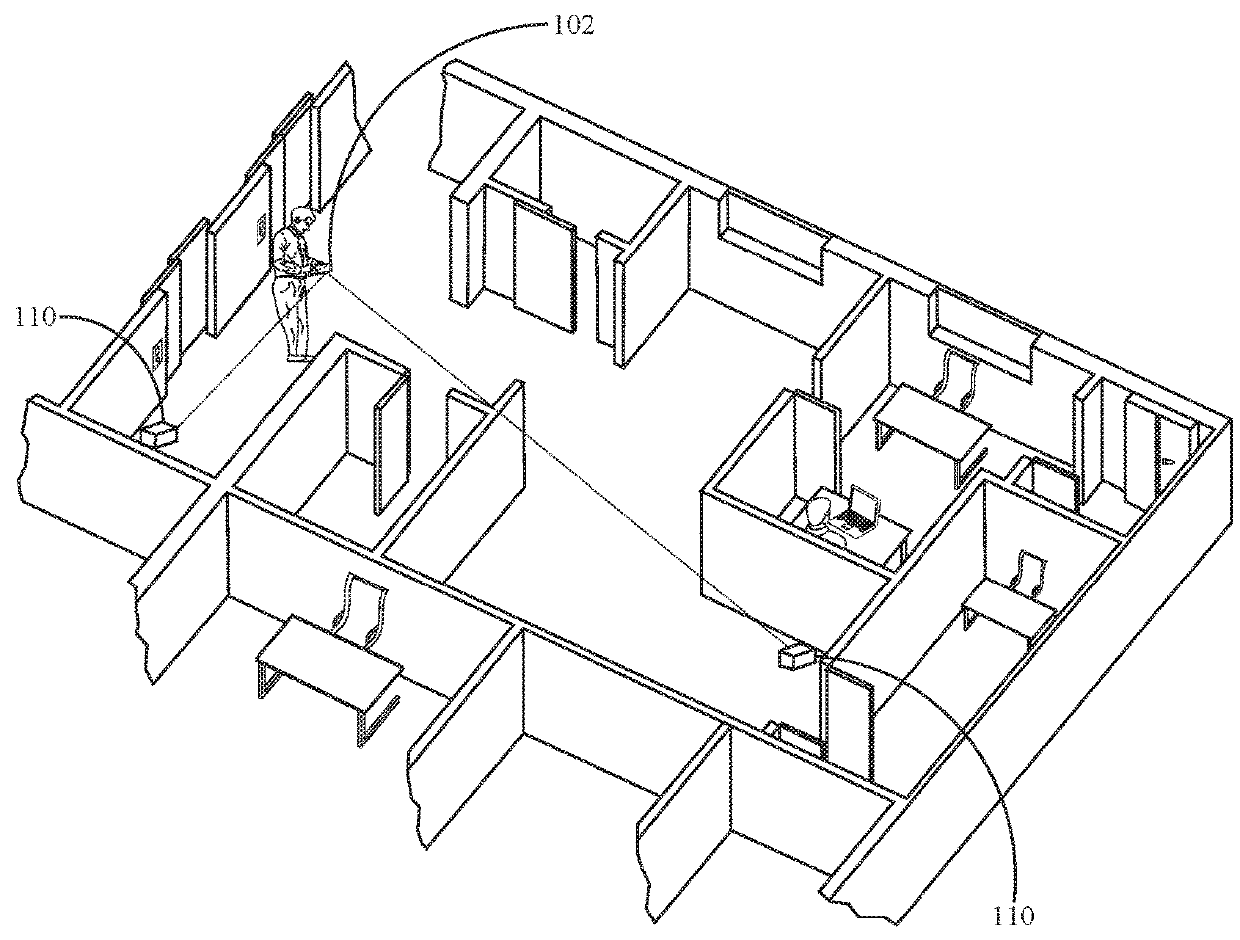

Facility navigation

Inactive | US20190212151A1 | Easy to browse; Navigational calculation instruments; Particular environment based services; Graphics; Graphical user interface

A computer-assisted system for providing navigational assistance to a user toward a desired destination associated with a facility includes: an electronic communication device running an application that provides indoor and outdoor location information associated with the facility and real-time, dynamic, interactive navigational assistance to the desired destination; a number of three-dimensional indoor positioning devices installed at the facility for identifying the real-time location of the electronic communication devices; and a server, in communication with the electronic communication devices over a wireless communication channel, for storing the indoor and outdoor location information associated with the facility. An interactive, dynamic graphical user interface of the application provides step-by-step instructions, in the form of augmented-reality-based real-time visuals and audio, toward the destination selected by the user, based on the real-time location of the electronic communication device identified by the three-dimensional indoor positioning devices at the facility.

Owner:PARKER LYNETTE +1

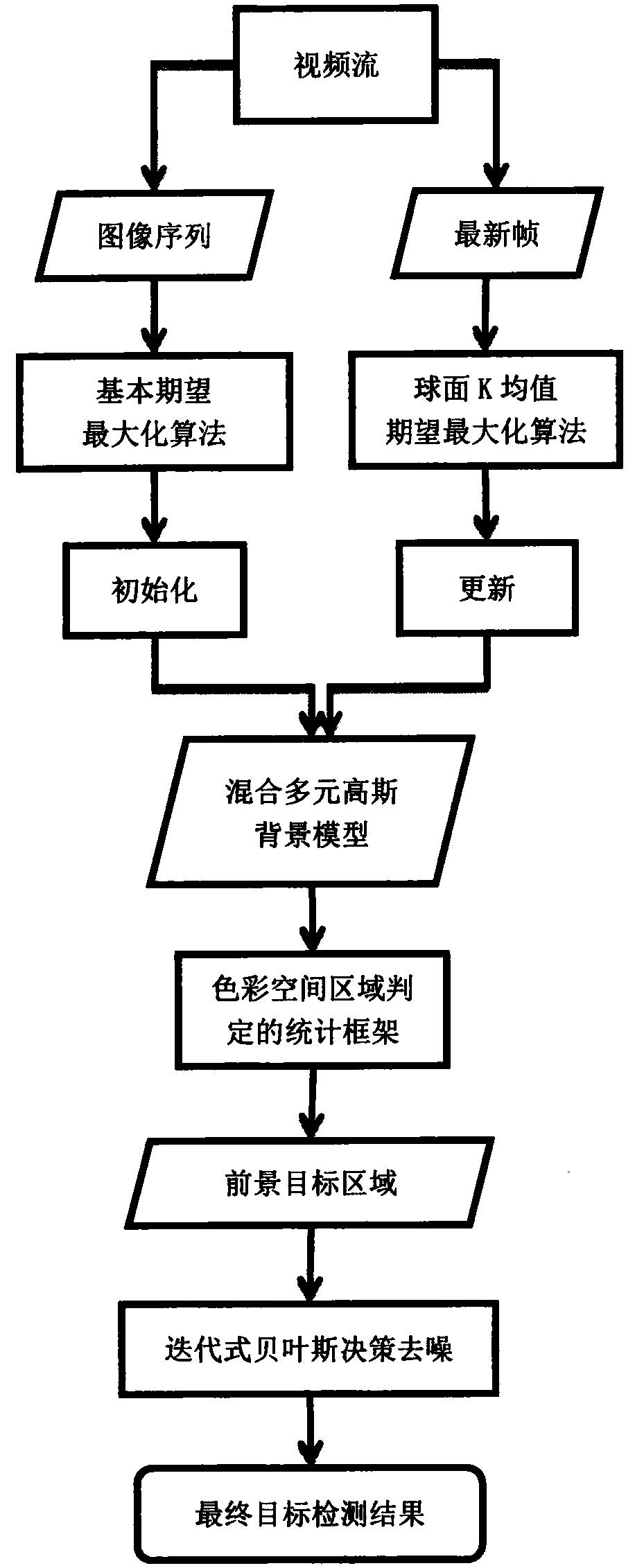

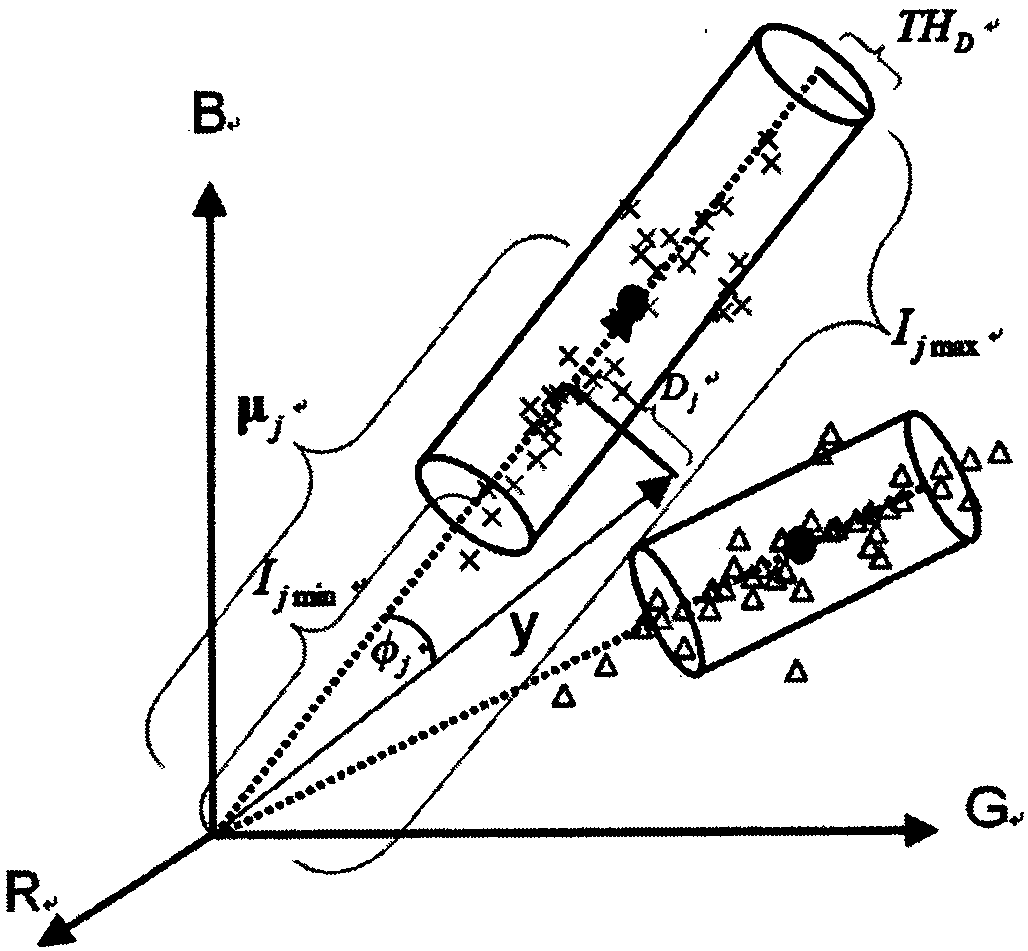

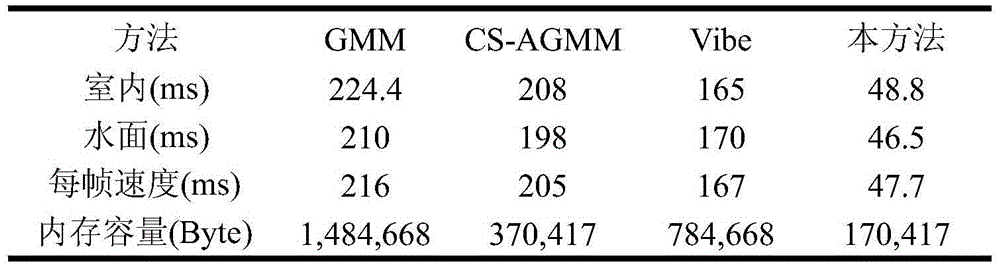

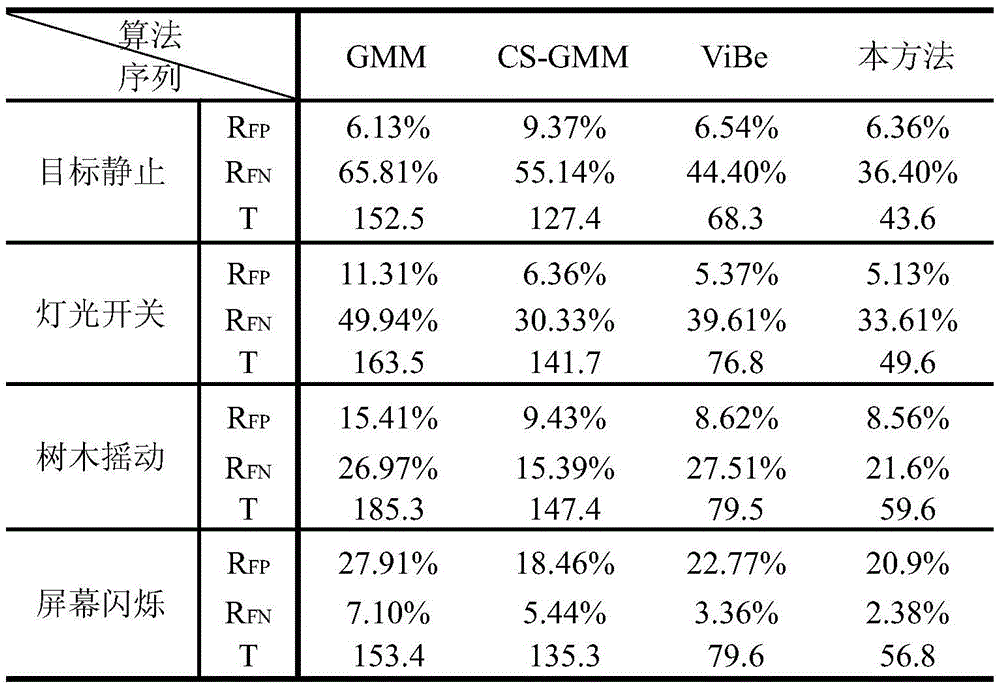

Real-time vision target detection method robust to ambient light change

Inactive | CN107833241A | Accurate detection; Resistance to disturbance; Image enhancement; Image analysis; Expectation–maximization algorithm; Visual field loss

The invention provides a real-time visual target detection method that is robust to ambient light changes. The method comprises: acquiring a historical image sequence from a video stream; initializing a pixel-level multivariate Gaussian mixture background model; designing a background modeling method based on a spherical K-means expectation-maximization algorithm, and updating the background model parameters online whenever a new frame arrives so as to adapt to environmental changes; a foreground target detection step that uses a statistical framework defined by a color-space region and evaluates the latest frame against the background model within that framework to obtain the pixel region containing the foreground target; and an iterative Bayesian decision step that suppresses detection noise while enhancing the target contour. The method detects the position and contour of foreground targets in the field of view accurately and in real time, with a high correct-detection rate and a low false-alarm rate, resists disturbance of the detection result caused by ambient light changes, and is particularly suitable for intelligent video surveillance systems.

Owner:DONGHUA UNIV
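
The online background update and foreground test can be sketched with a simpler model than the patent's. This swaps in a single running Gaussian per pixel in place of the pixel-level Gaussian mixture fitted with spherical K-means EM, and omits the color-space framework and Bayesian noise suppression; `alpha` (learning rate), `k` (threshold in standard deviations), and `init_var` are hypothetical parameters.

```python
class RunningGaussianBackground:
    """Per-pixel single-Gaussian background model with online updates (grayscale)."""

    def __init__(self, first_frame, alpha=0.05, k=2.5, init_var=25.0):
        self.mean = [list(map(float, row)) for row in first_frame]
        self.var = [[init_var] * len(row) for row in first_frame]
        self.alpha, self.k = alpha, k

    def apply(self, frame):
        """Return a boolean foreground mask and update the background statistics."""
        mask = []
        for y, row in enumerate(frame):
            mrow = []
            for x, value in enumerate(row):
                mu, var = self.mean[y][x], self.var[y][x]
                d = value - mu
                # Foreground if the pixel lies more than k standard deviations from the mean.
                fg = d * d > (self.k ** 2) * var
                mrow.append(fg)
                if not fg:
                    # Only background pixels update the model, so targets are not absorbed.
                    self.mean[y][x] = mu + self.alpha * d
                    self.var[y][x] = (1 - self.alpha) * var + self.alpha * d * d
            mask.append(mrow)
        return mask
```

Updating only background pixels keeps slow illumination drift in the model while stopping a moving target from being learned as background.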

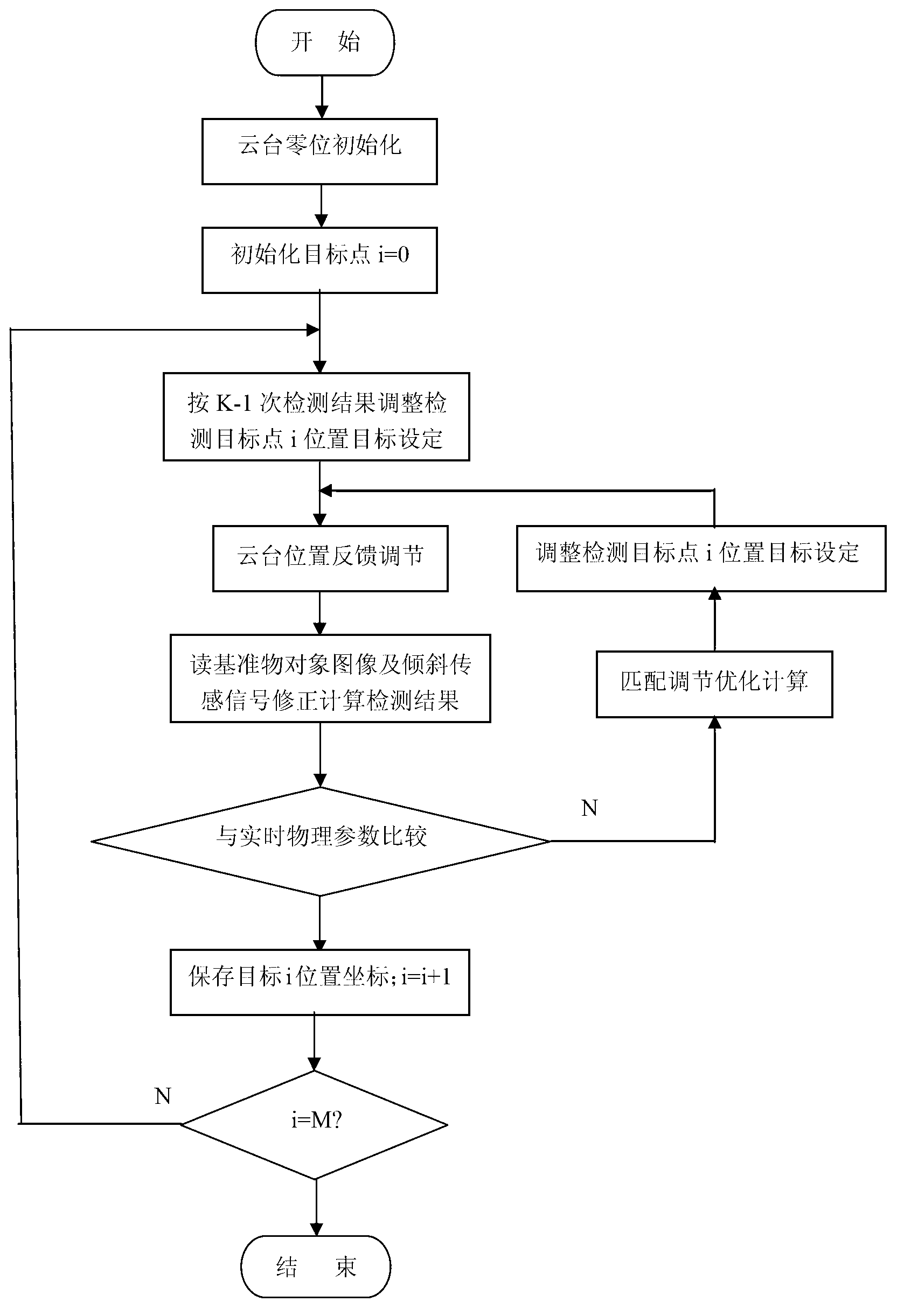

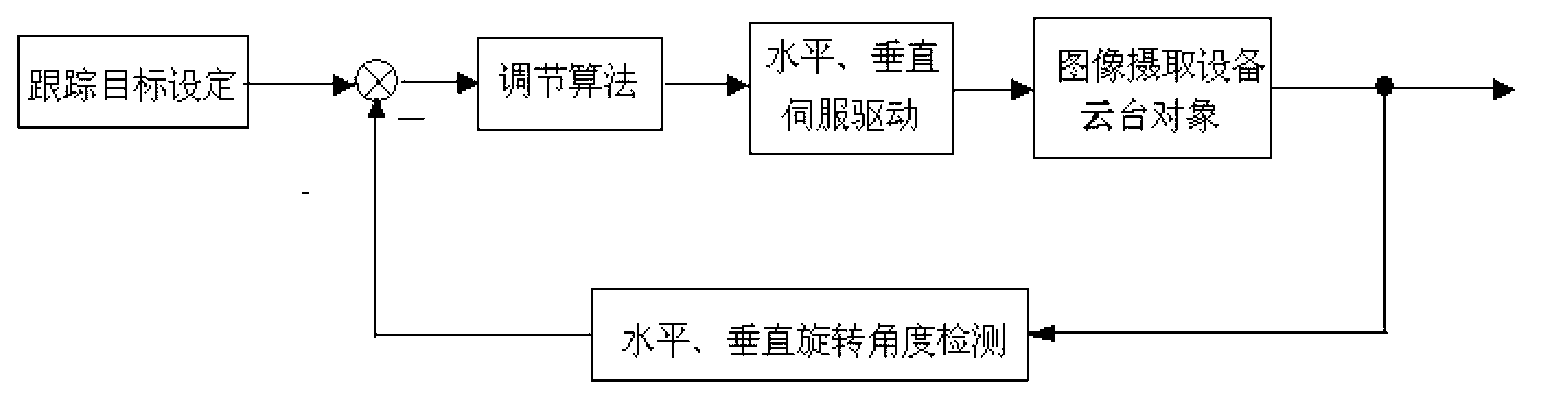

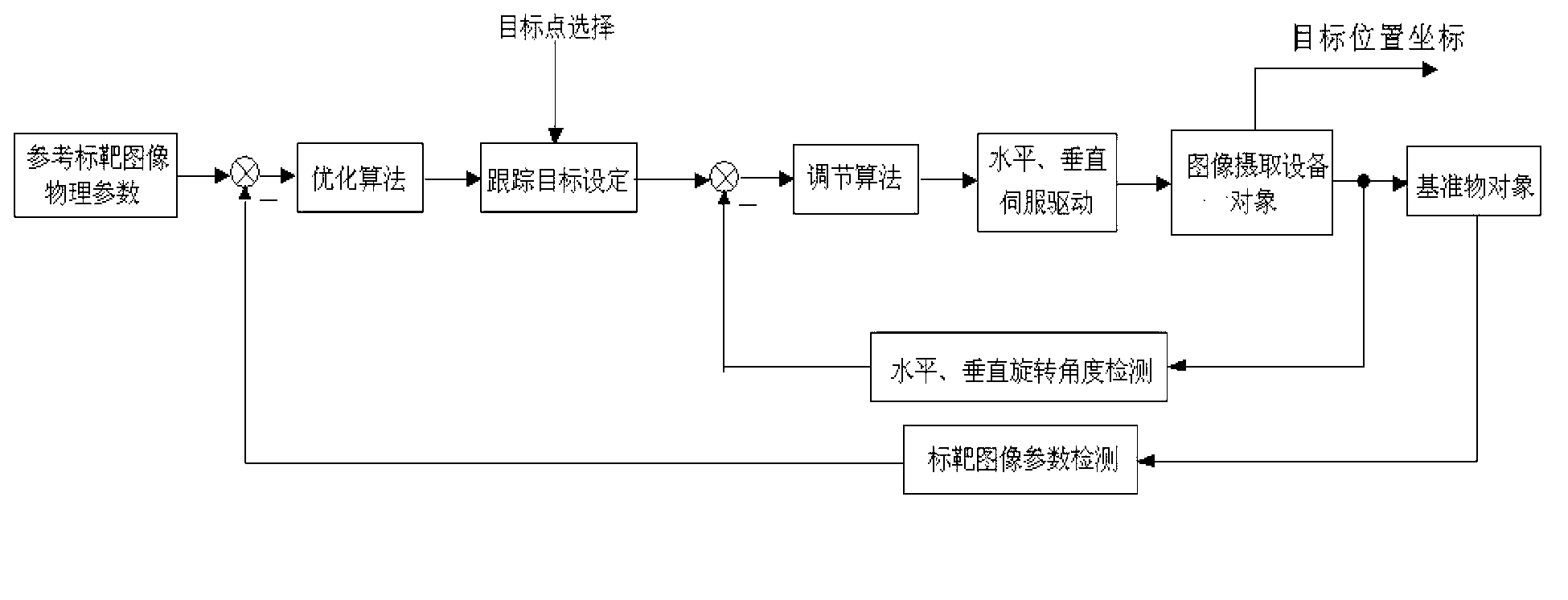

Real-time multi-target position detection method and system based on image

Inactive | CN103075999A | To achieve real-time tracking detection needs; Rapid positioning; Television system details; Optical rangefinders; Image calibration; Engineering

The invention provides an image-based real-time multi-target position detection method and system, relating to real-time visual detection technology. It addresses the problem that traditional real-time visual detection cannot provide continuous, real-time, high-precision detection. The main technical scheme comprises feedback control and adjustment of the pan-tilt head position, and calibration of the image pickup equipment of the image-based real-time multi-target position detection system. Its main advantages are that the detection direction of the imaging system can be positioned quickly and accurately, the imaging system can be calibrated online in real time and adaptively, and different detection requirements can be met, improving image calibration precision, measurement precision, and real-time performance.

Owner:STATE GRID SICHUAN ELECTRIC POWER CORP ELECTRIC POWER RES INST +1
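
Feedback control of the pan-tilt head needs a mapping from a target's pixel offset to a rotation. A minimal sketch, assuming a calibrated pinhole camera with intrinsics fx, fy, cx, cy whose optical axis is aligned with the pan-tilt axes; the function name and sign conventions are hypothetical.

```python
import math

def pan_tilt_correction(u, v, fx, fy, cx, cy):
    """Angles (degrees) to rotate the head so pixel (u, v) moves to the image center.

    Under the pinhole model, a point (u - cx) pixels from the principal point lies
    atan((u - cx) / fx) off the optical axis horizontally, and likewise vertically.
    Positive pan turns right; positive tilt turns down (image v grows downward).
    """
    pan = math.degrees(math.atan2(u - cx, fx))
    tilt = math.degrees(math.atan2(v - cy, fy))
    return pan, tilt
```

Applying these corrections repeatedly, with the target re-detected after each move, closes the feedback loop even if the calibration is slightly off.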

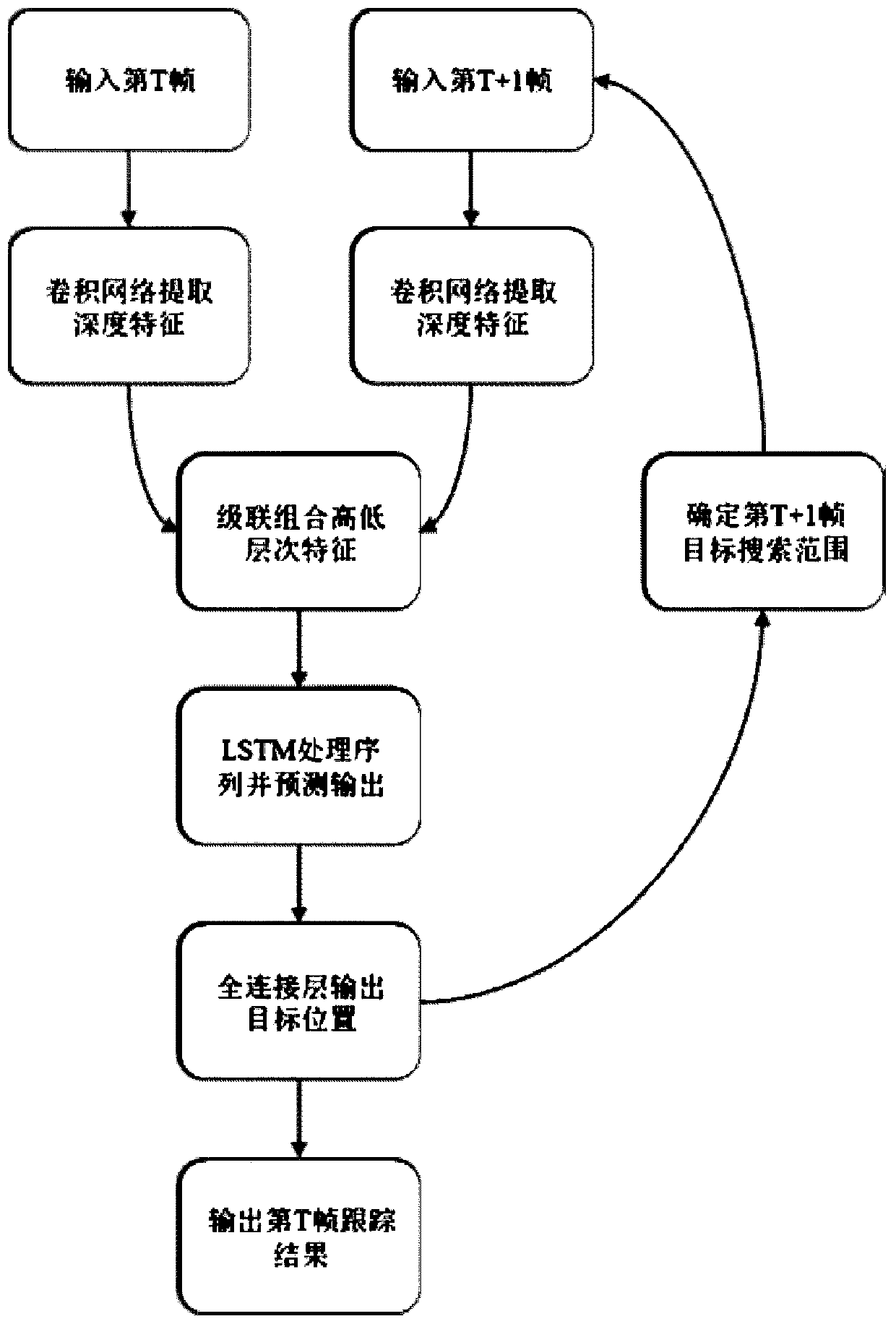

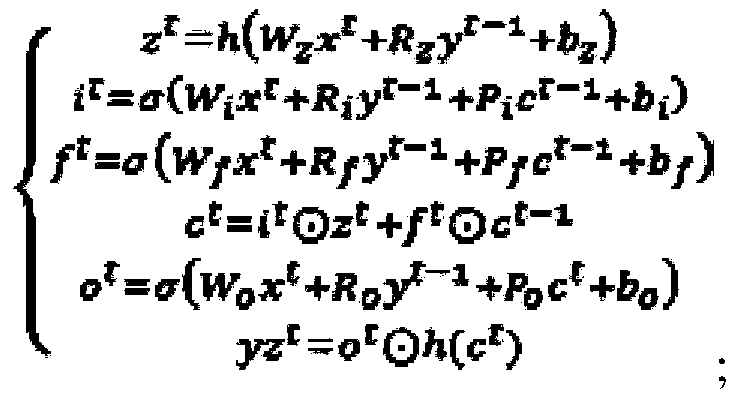

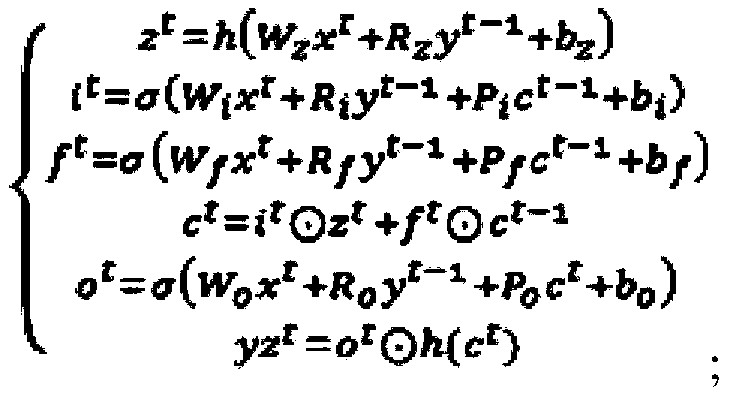

Real-time visual target tracking method based on twin convolutional network and long short-term memory network

Inactive | CN110490906A | Guaranteed stability; Guaranteed accuracy; Image enhancement; Image analysis; Short-term memory; Feature extraction

The invention relates to a real-time visual target tracking method based on a twin (Siamese) convolutional network and a long short-term memory (LSTM) network. First, for the video sequence to be tracked, two consecutive frames are fed to the network at each step. Features are extracted from the two input frames by the twin convolutional network, yielding appearance and semantic features at different levels after convolution, and the high- and low-level depth features are combined by fully connected concatenation. The depth features are passed to an LSTM network containing two LSTM units for sequence modeling: the LSTM forget gate activates and screens target features at different positions in the sequence, and the output gate outputs the current state of the target. Finally, a fully connected layer takes the LSTM output and predicts the target's position coordinates in the current frame, and the search area for the target in the next frame is updated. Tracking speed and real-time performance are greatly improved while a degree of tracking stability and accuracy is maintained.

Owner:NANJING UNIV OF POSTS & TELECOMM
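
The gating described above follows the standard LSTM cell equations. The sketch below shows one cell step with a scalar hidden and cell state for readability; a real tracker would use vector states and a deep-learning framework, and the weight layout here is a hypothetical choice.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_cell(x, h_prev, c_prev, weights):
    """One step of a standard LSTM cell with a scalar hidden/cell state.

    x is a feature vector (list of floats); weights maps each gate name
    ('f', 'i', 'g', 'o') to (w_x, w_h, b), with one w_x entry per feature.
    """
    def pre(gate):
        w_x, w_h, b = weights[gate]
        return sum(w * xi for w, xi in zip(w_x, x)) + w_h * h_prev + b

    f = sigmoid(pre("f"))      # forget gate: how much old cell state to keep
    i = sigmoid(pre("i"))      # input gate: how much new candidate to write
    g = math.tanh(pre("g"))    # candidate cell content
    o = sigmoid(pre("o"))      # output gate: how much of the cell to expose
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c
```

With all weights at zero, every sigmoid gate is 0.5 and the candidate is 0, so the cell simply halves its previous state.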

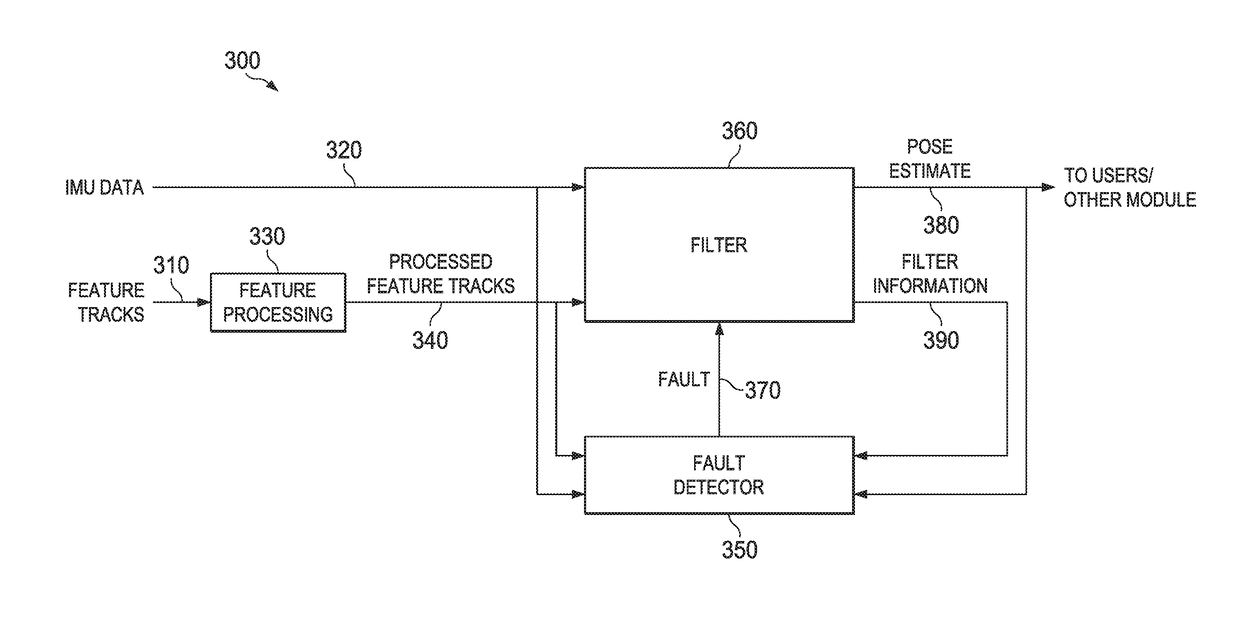

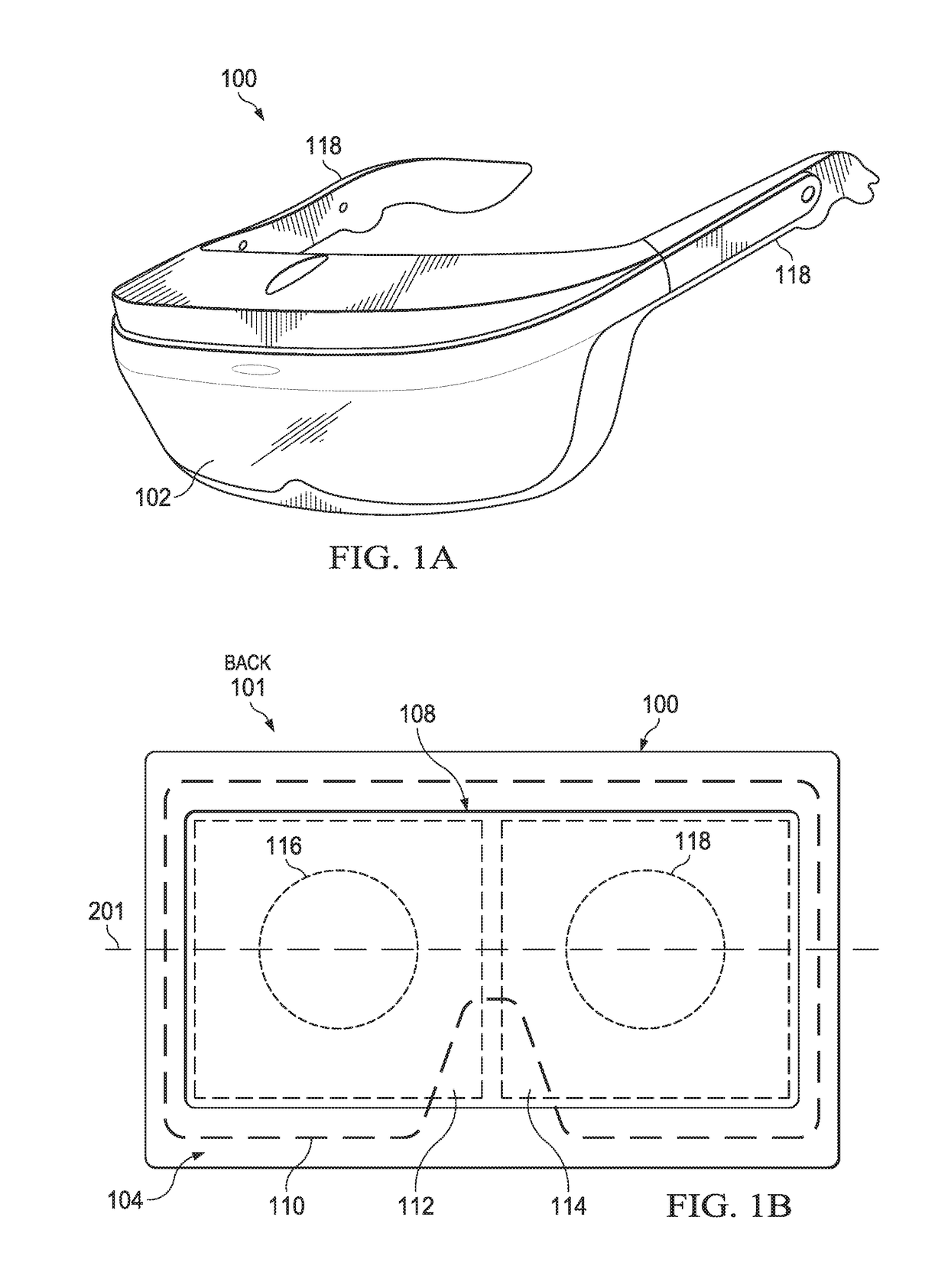

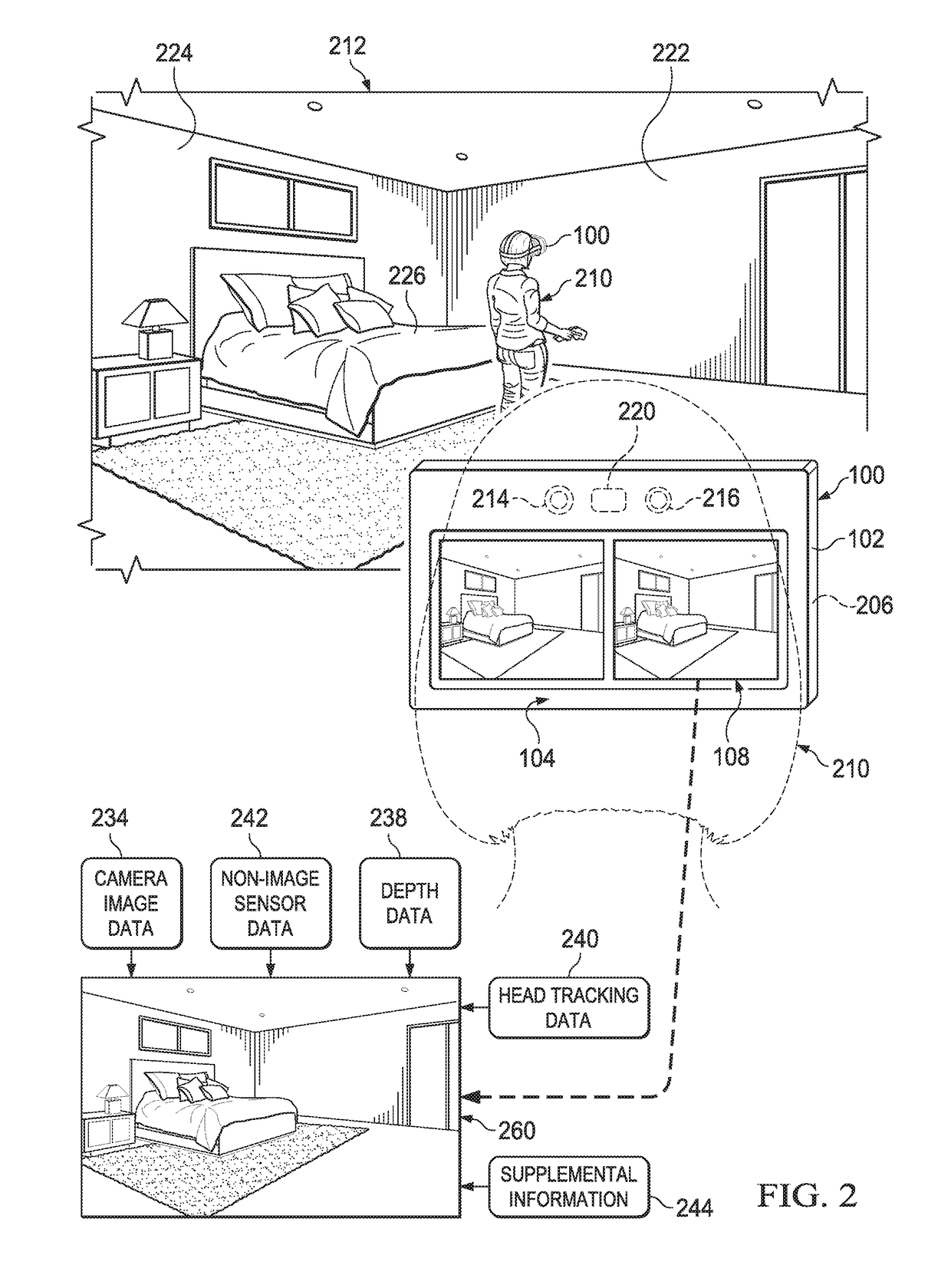

Real-time visual-inertial motion tracking fault detection

Active | US20170336439A1 | Input/output for user-computer interaction; Image analysis; Odometry; Visual perception

Fault detection for real-time visual-inertial odometry motion tracking. A fault detection system allows immediate detection of errors when the motion of a device cannot be accurately determined. The system includes subdetectors that operate independently of, and in parallel with, a main system on the device to determine whether a condition exists that would cause a main system error. Each subdetector covers one phase of six-degree-of-freedom (6DOF) estimation. If any subdetector detects an error, a fault is output to the main system to indicate a motion tracking failure.

Owner:GOOGLE LLC
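
The any-subdetector-fires rule can be sketched as follows. The three checks and their thresholds are hypothetical examples, not the subdetectors in the patent, and they run sequentially here for clarity rather than in parallel with a main tracker.

```python
from typing import Callable, Dict, List

def run_fault_detection(frame_state: dict,
                        subdetectors: Dict[str, Callable[[dict], bool]]) -> List[str]:
    """Return the names of subdetectors that flagged an error (empty list = no fault)."""
    return [name for name, detects_error in subdetectors.items() if detects_error(frame_state)]

# Hypothetical subdetectors, one per estimation phase; thresholds are illustrative only.
SUBDETECTORS: Dict[str, Callable[[dict], bool]] = {
    "too_few_features": lambda s: s["tracked_features"] < 20,
    "imu_saturated": lambda s: abs(s["gyro_rad_s"]) > 30.0,
    "implausible_jump": lambda s: s["position_jump_m"] > 0.5,
}
```

A non-empty result would be reported to the main system as a motion tracking failure.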

Vision enhancing system and method

Active | US20170078645A1 | Increase viewing distance | Reduce light scatter | Television system details | Optical filters | Optical frequencies | NIR light

A digital vision system for use in turbid, dark, or stained water is disclosed. Turbid water is opaque to the optical wavelengths visible to humans but is transparent to near-infrared (NIR) light, so NIR illumination allows viewing of objects that would otherwise not be visible through turbid water. NIR light illuminates the area to be viewed. Video cameras fitted with optical filters receive the NIR light reflected from objects in the camera field of view and produce video signals, which may be processed and sent to projectors that convert them into independent optical output video projected to the eye of the user at optical frequencies visible to humans. The diver is thus provided with a real-time vision system for visualizing objects that would otherwise not be visible under white-light illumination.

Owner:AURIGEMA ANDREW NEIL

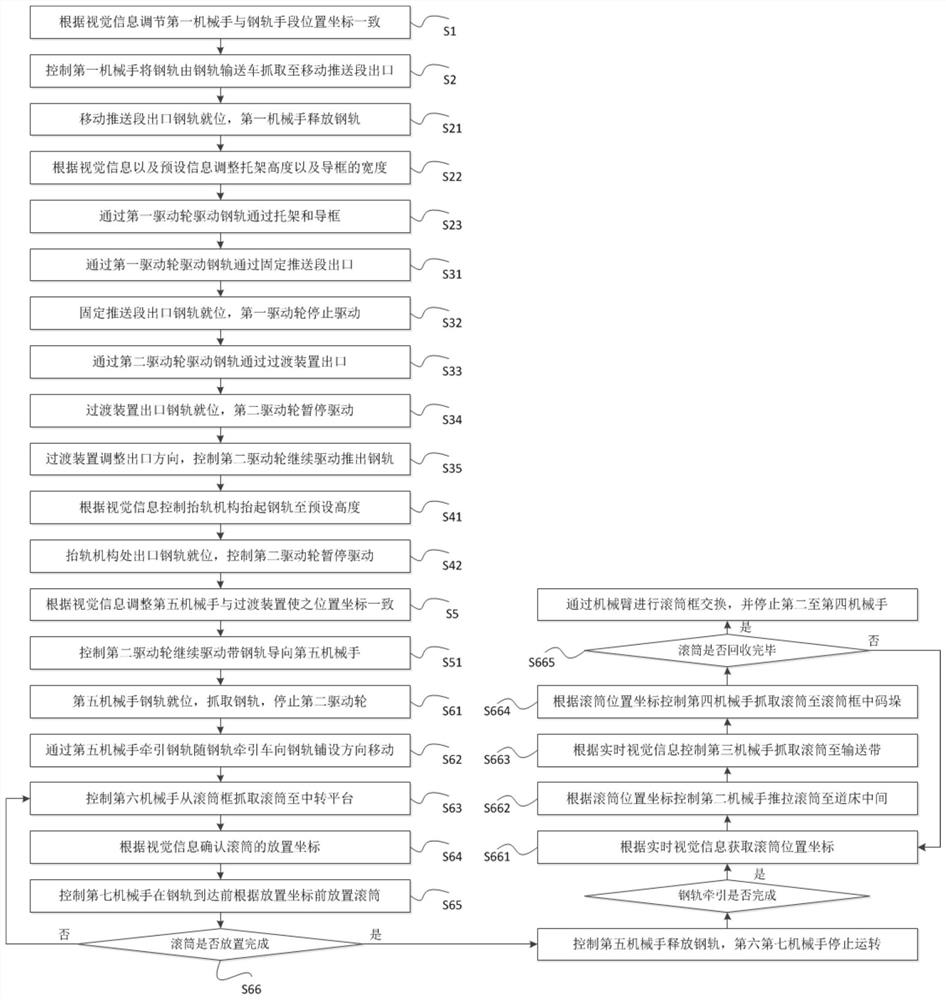

Intelligent control method for ballastless track steel rail laying process

Active | CN112012057A | Guaranteed accuracy | Noise improvement | Image enhancement | Image analysis | Trackway | Control engineering

The invention discloses an intelligent control method for a ballastless track steel rail laying process, in the field of track laying. A visual sensor and a position sensor are arranged at each steel rail connecting piece, and real-time position coordinates of the steel rail are acquired from the visual images. A manipulator is controlled to grab the steel rail according to these real-time coordinates. The rail's trajectory is calibrated by the guide frame and the bracket according to the real-time visual information, so that when the rail is guided out of the transition device it aligns accurately with the center line of the ballast bed. Meanwhile, the manipulator's movement is controlled using the real-time rail coordinates obtained from the visual images, so that when the manipulator grabs and fixes the rail, the grabbed position coordinates remain consistent with the calibrated position coordinates, and laying accuracy is not affected by position deviation of the construction operation vehicle.

Owner:HUNAN YUECHENG ELECTROMECHANICAL SCI & TECH
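One way to read "laying accuracy is not influenced by the position deviation of the construction operation vehicle" is that rail coordinates measured relative to the vehicle are transformed into a fixed site frame before being compared with the calibrated position. The sketch below assumes a planar (2D) rigid transform and a known vehicle pose; the function names, frames, and pose source are hypothetical and not described in the abstract.

```python
import math
from typing import Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (x, y, heading in radians) of the vehicle in the site frame

def vehicle_to_site(p_vehicle: Point, vehicle_pose: Pose) -> Point:
    """Rotate a point from the vehicle frame by the vehicle heading,
    then translate it by the vehicle position."""
    vx, vy, theta = vehicle_pose
    px, py = p_vehicle
    c, s = math.cos(theta), math.sin(theta)
    return (vx + c * px - s * py, vy + s * px + c * py)

def grab_correction(p_vehicle: Point, vehicle_pose: Pose, calibrated_site: Point) -> Point:
    """Site-frame offset the manipulator would apply so the grabbed rail lands
    on the calibrated position, wherever the vehicle happens to have stopped."""
    sx, sy = vehicle_to_site(p_vehicle, vehicle_pose)
    cx, cy = calibrated_site
    return (cx - sx, cy - sy)

# Rail seen 1 m ahead of a vehicle parked at (10, 5); target is 2 cm to the left.
print(grab_correction((1.0, 0.0), (10.0, 5.0, 0.0), (11.0, 5.02)))
```

Working in the site frame means a vehicle that stops a few centimeters off its nominal spot only changes the pose input, not the calibrated target.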

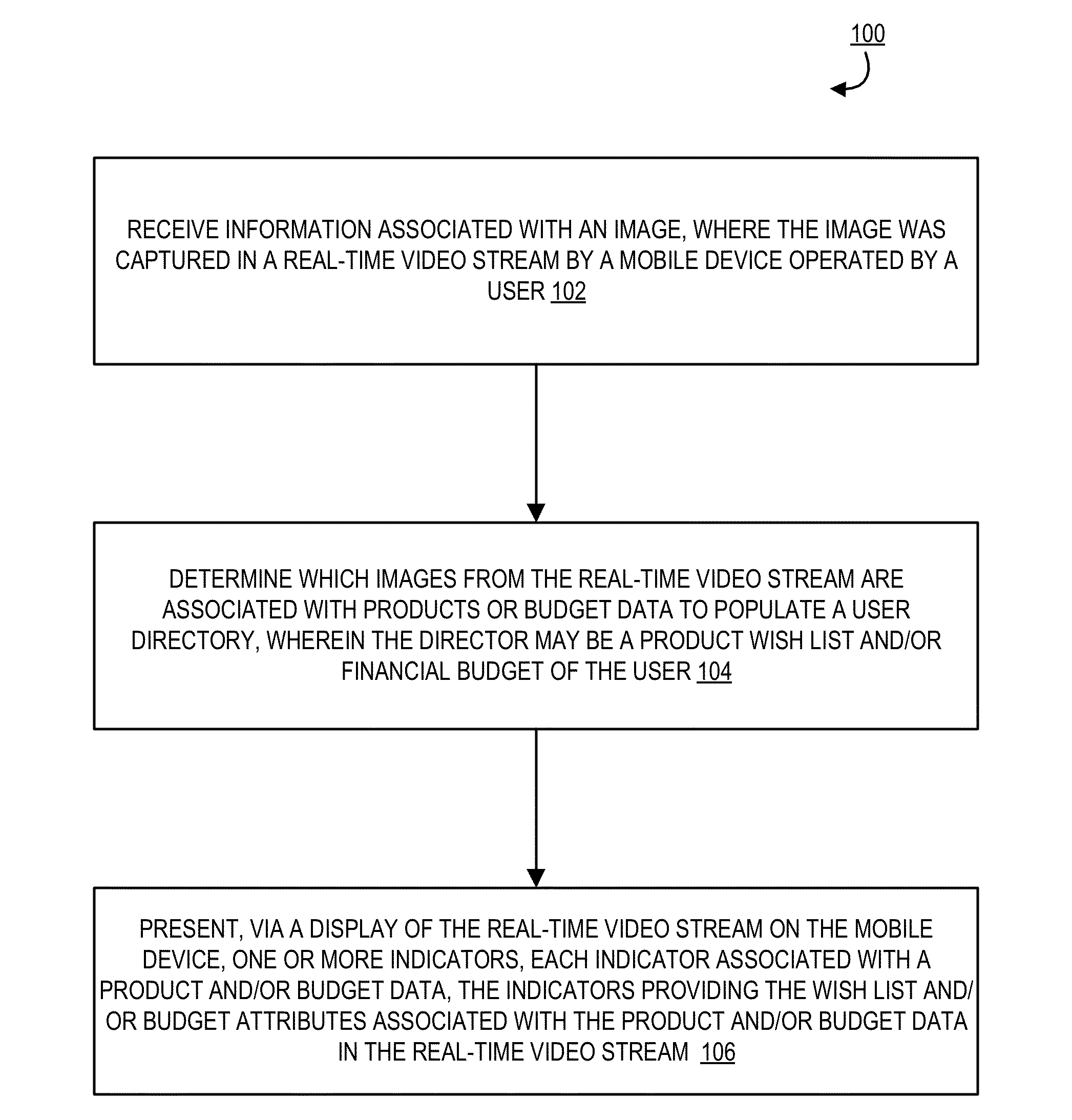

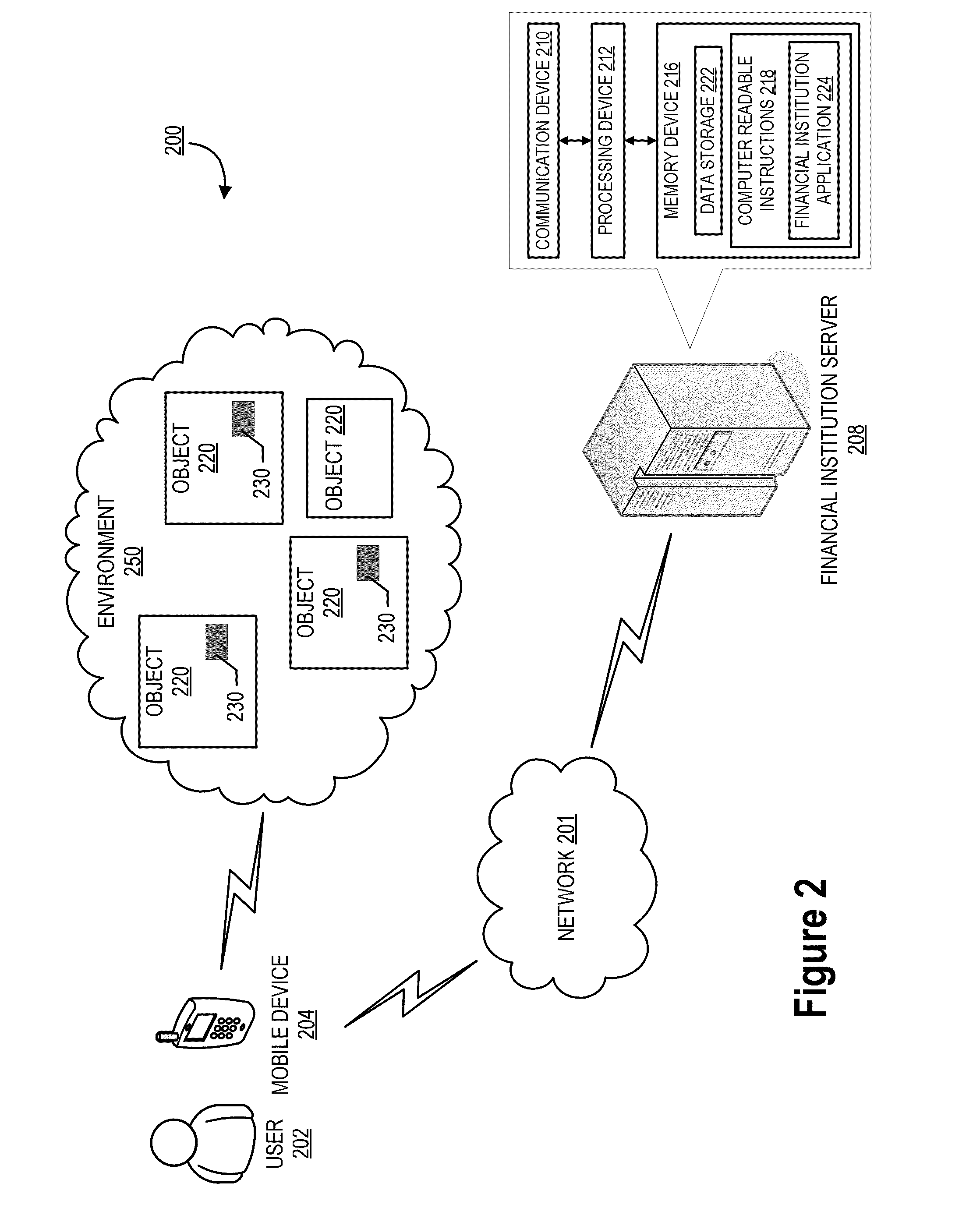

Populating budgets and/or wish lists using real-time video image analysis

Active | US20160163000A1 | Easy to add | Data is available | Finance | Character and pattern recognition | Data matching | Imaging analysis

A system, method, and computer program product are provided for using real-time video analysis, such as augmented reality, to provide the user of a mobile device with real-time budgeting and wish lists. Through real-time visual object recognition, objects, logos, artwork, products, locations, and other features recognized in the real-time video stream can be matched to associated data, giving the user real-time budget-impact and wish-list updates based on the product and budget data determined for each object. In this way, objects in the real-time video stream, which may correspond to products and/or budget data, can be added to a user's budget and/or wish list, so that the user receives real-time budget and/or wish-list updates that incorporate product and/or budget data located in the video stream.

Owner:BANK OF AMERICA CORP
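The matching step described above (a recognized object is looked up in product data and its budget impact reported) can be sketched as a dictionary lookup. The `Budget` class, the `CATALOG` entries, the label format, and the prices are all hypothetical; the abstract does not describe the data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Budget:
    category_limits: Dict[str, float]
    spent: Dict[str, float] = field(default_factory=dict)
    wish_list: List[str] = field(default_factory=list)

    def remaining(self, category: str) -> float:
        return self.category_limits.get(category, 0.0) - self.spent.get(category, 0.0)

# Hypothetical catalog: recognizer label -> (product name, budget category, price).
CATALOG = {
    "logo:acme_coffee": ("Acme coffee maker", "household", 89.99),
    "product:trail_shoe": ("Trail running shoe", "clothing", 120.00),
}

def on_recognized(label: str, budget: Budget) -> Optional[str]:
    """Match a label emitted by real-time object recognition to product data,
    add the product to the wish list, and describe its budget impact."""
    match = CATALOG.get(label)
    if match is None:
        return None  # nothing in the catalog for this object
    name, category, price = match
    if name not in budget.wish_list:
        budget.wish_list.append(name)
    after = budget.remaining(category) - price
    return f"{name}: ${price:.2f}; {category} budget after purchase: ${after:.2f}"

b = Budget({"clothing": 200.0}, {"clothing": 50.0})
print(on_recognized("product:trail_shoe", b))
# Trail running shoe: $120.00; clothing budget after purchase: $30.00
```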

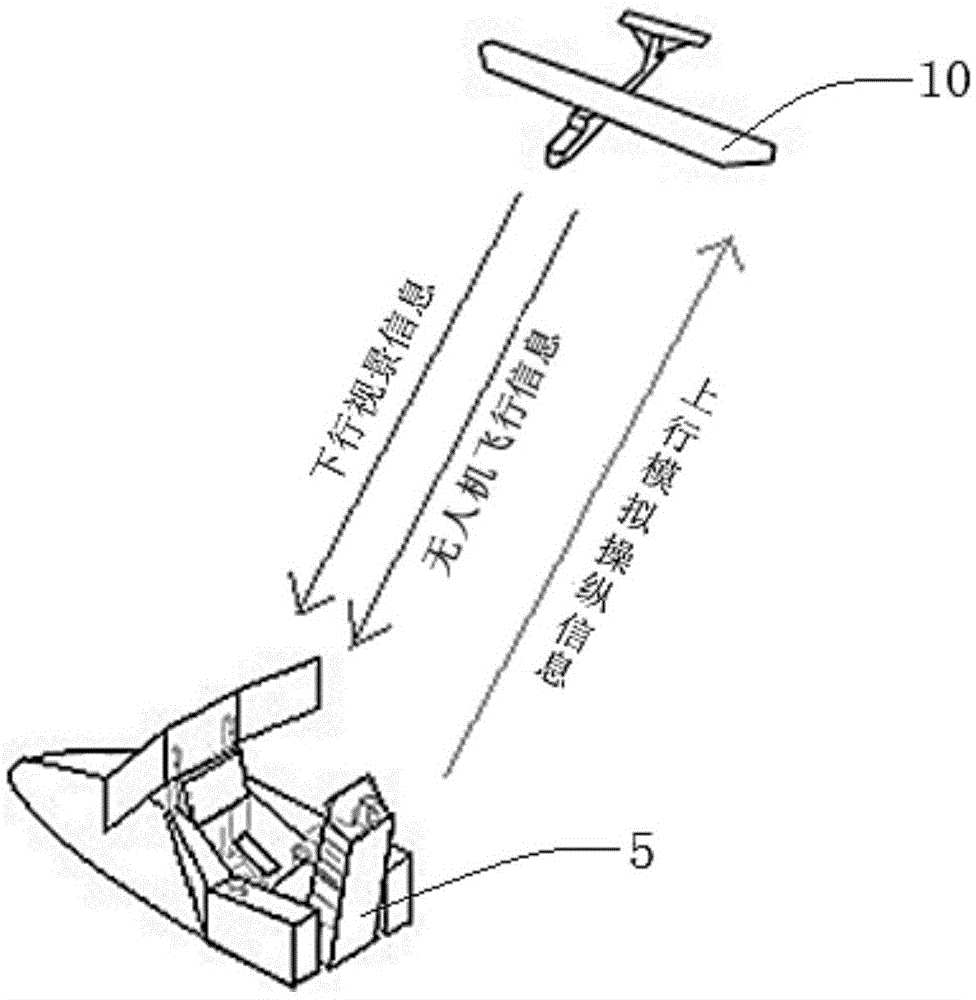

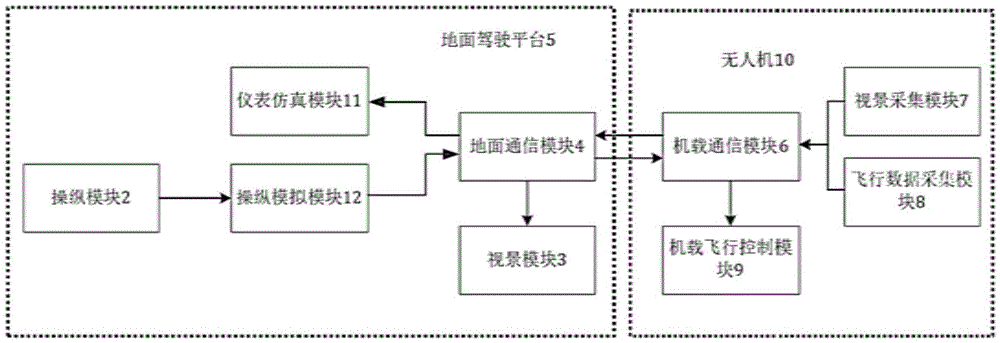

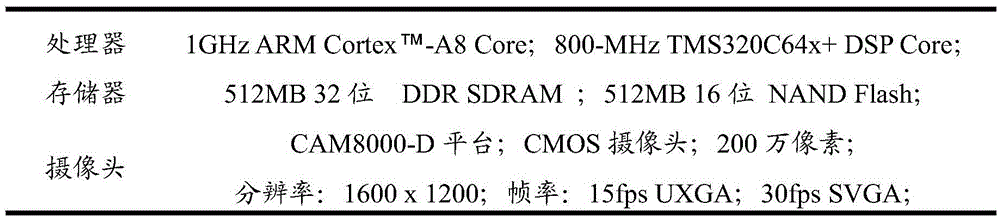

Flight simulation training system based on unmanned aerial vehicle and flight simulation method thereof

Active | CN105608952A | Improve interactive experience | Cosmonautic condition simulations | Simulators | Aviation | Data acquisition

The invention discloses a flight simulation system based on an unmanned aerial vehicle (UAV), in the technical field of simulated flight. The system comprises a ground driving platform and a UAV. The ground driving platform is provided with an instrument simulation module, a maneuvering simulation module, a maneuvering module, a vision module, and a ground communication module; the UAV is provided with an airborne communication module, a vision acquisition module, a flight data acquisition module, and an airborne flight control module. The maneuvering module, maneuvering simulation module, ground communication module, airborne communication module, and airborne flight control module are connected in sequence and transmit simulated UAV maneuvering information. The vision acquisition module and the flight data acquisition module are each connected to the airborne communication module and output real-time vision information and simulated flight information, respectively. The airborne and ground communication modules are connected in sequence to the vision module, to which they output high-fidelity recovered video information, and to the instrument simulation module, to which they output UAV flight information. The system can improve the quality of simulated flight and meet the requirements of flight training, air travel, and research trials.

Owner:SHANGHAI JIAO TONG UNIV

Real-time vision system oriented target compression sensing method

Active | CN105095898A | Fast update | Increase sampling rate | Character and pattern recognition | Computer vision | Visual perception

The invention discloses a target compressive sensing method for real-time vision systems, comprising five steps: image reconstruction, mixed compressive sensing, efficient ViBe target detection, updating, and post-processing. In image reconstruction, the collected image is partitioned into 4×4 blocks according to its size, and each block is converted into a 16×1 vector. In mixed compressive sensing, a mixed sampling matrix is constructed for each image block and used to sample and compress it. In efficient ViBe target detection, each pixel value in an image block is compared with its sample set to judge whether the pixel is a background point. In updating, the detection results determine the background block areas and target block areas of the image, and the adjustment parameters of each block's mixed sampling matrix for the next frame are derived according to whether its pixels belong to a background block or a target block. In post-processing, each image block of the current frame is optimized to obtain the final target image of the current frame.

Owner:SUZHOU INST OF TRADE & COMMERCE
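The block partitioning, ViBe pixel test, and block-level update described above can be sketched in pure Python. This covers only the detection and rate-update logic on plain pixel values; the mixed sampling matrix and compressive reconstruction are not modeled. The radius, match count, sample count, one-frame model initialization (real ViBe samples from each pixel's neighborhood), and the two sampling rates are assumptions, not values from the patent.

```python
from typing import List

BLOCK = 4        # 4x4 blocks, flattened to 16-element vectors as in the abstract
N_SAMPLES = 20   # samples stored per pixel (assumed)
RADIUS = 20      # intensity distance counted as a match (assumed)
MIN_MATCHES = 2  # matches needed to call a pixel background (assumed)

def to_block_vectors(img: List[List[int]]) -> List[List[int]]:
    """Split an H x W image (H, W multiples of 4) into 4x4 blocks, each
    flattened row by row into a 16-element vector."""
    h, w = len(img), len(img[0])
    return [[img[by + r][bx + c] for r in range(BLOCK) for c in range(BLOCK)]
            for by in range(0, h, BLOCK) for bx in range(0, w, BLOCK)]

def init_models(vectors: List[List[int]]) -> List[List[List[int]]]:
    """Simplified initialization: every sample copies the first-frame value."""
    return [[[v] * N_SAMPLES for v in vec] for vec in vectors]

def is_background(value: int, samples: List[int]) -> bool:
    """ViBe test: background if at least MIN_MATCHES samples lie within RADIUS."""
    matches = 0
    for s in samples:
        if abs(value - s) < RADIUS:
            matches += 1
            if matches >= MIN_MATCHES:
                return True
    return False

def classify_blocks(vectors: List[List[int]], models: List[List[List[int]]]) -> List[bool]:
    """True marks a target block (contains any foreground pixel)."""
    return [any(not is_background(v, models[b][i]) for i, v in enumerate(vec))
            for b, vec in enumerate(vectors)]

def next_sampling_rates(target_flags: List[bool], low: float = 0.25, high: float = 0.75) -> List[float]:
    """Hypothetical update rule: sample target blocks densely, background sparsely."""
    return [high if t else low for t in target_flags]

bg = [[100] * 8 for _ in range(4)]          # two 4x4 blocks of flat background
models = init_models(to_block_vectors(bg))
frame = [row[:] for row in bg]
frame[2][5] = 200                            # one bright pixel in the right block
flags = classify_blocks(to_block_vectors(frame), models)
print(flags, next_sampling_rates(flags))     # [False, True] [0.25, 0.75]
```

Spending more measurements on target blocks and fewer on static background is what lets the sampling budget track the moving target from frame to frame.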

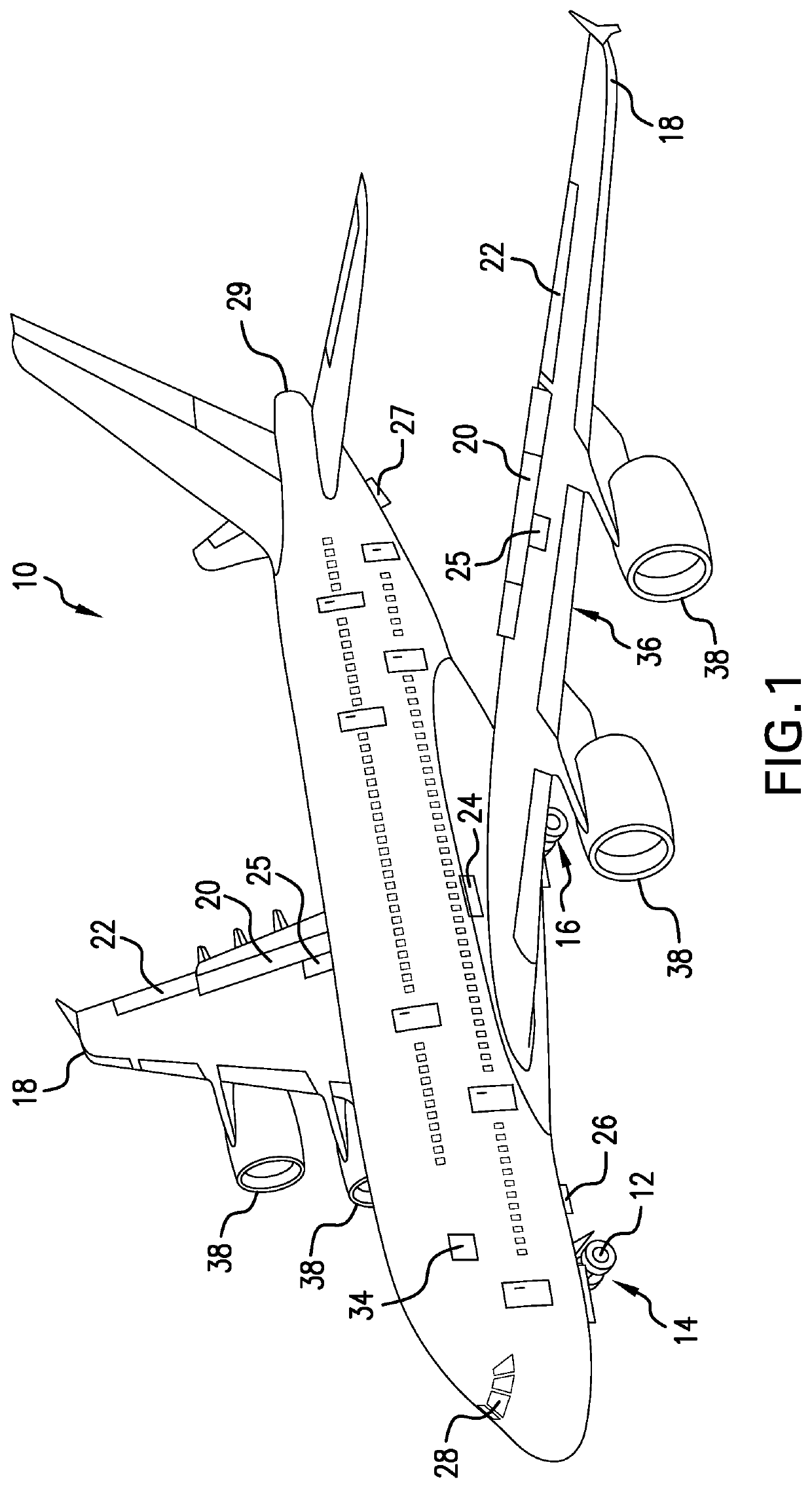

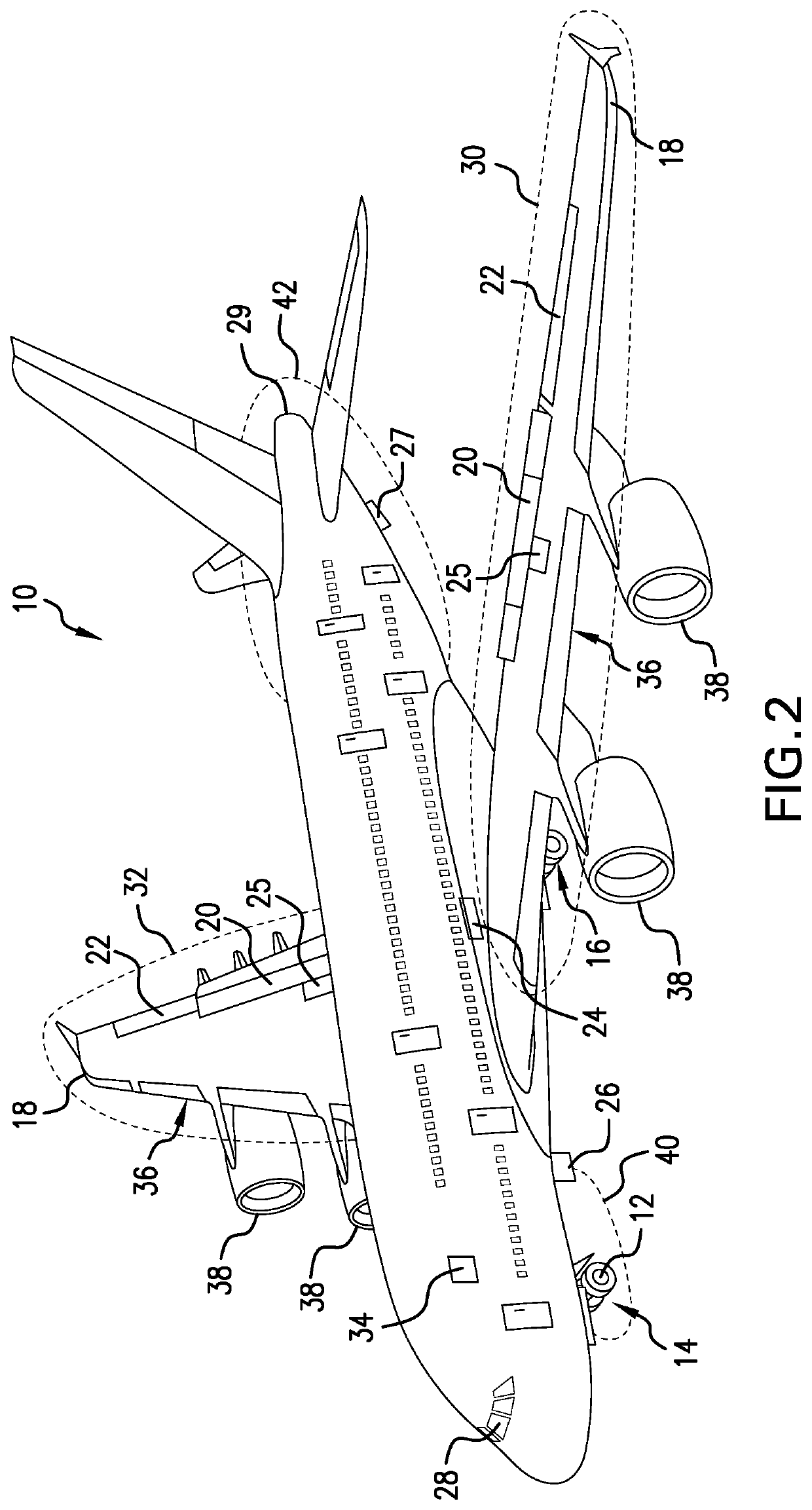

System and method for determining aircraft safe taxi, takeoff, and flight readiness

Active | US20200290750A1 | Maximize safety | Improve security | Energy efficient operational measures | Electronic flight bags adaptations | Data stream | Monitoring system

A monitoring system and method are provided for real-time monitoring of flaps, landing gear, or tail skids to determine safe taxi, takeoff, and flight readiness. Monitoring units, including scanning LiDAR devices combined with cameras or sensors and mounted at exterior locations on the aircraft, produce a stream of meshed data that is securely transmitted to a processing system, which generates a real-time visual display of the flaps, landing gear, or tail skid for the aircraft pilots. The system ensures that actual flap positions align with the optimal flap setting, that landing gear is properly retracted or extended, and that the tail skid is in proper condition. Using the system and method to prevent incidents involving misaligned flaps or improperly positioned landing gear or tail skids improves the safety of aircraft taxi, takeoff, and flight, as well as of airport operations.

Owner:BOREALIS TECH LTD
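The readiness checks the abstract lists (flap position against the optimal setting, landing gear position, tail skid condition) reduce to a comparison step after the LiDAR and camera data have been processed. A minimal sketch, assuming the processing system already yields a flap angle and two boolean states; the `Observation` fields, the 1-degree tolerance, and the pre-takeoff assumption that gear must be down are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Observation:
    """Hypothetical values extracted from the fused LiDAR/camera data."""
    flap_angle_deg: float       # measured flap deflection
    gear_down_and_locked: bool  # landing gear extension state
    tail_skid_ok: bool          # tail skid condition

def readiness_issues(obs: Observation, flap_setting_deg: float,
                     flap_tol_deg: float = 1.0) -> List[str]:
    """List every condition blocking taxi/takeoff; an empty list means ready.
    The 1-degree flap tolerance is an assumed value, not from the patent."""
    issues = []
    if abs(obs.flap_angle_deg - flap_setting_deg) > flap_tol_deg:
        issues.append(f"flaps at {obs.flap_angle_deg:.1f} deg, expected {flap_setting_deg:.1f} deg")
    if not obs.gear_down_and_locked:
        issues.append("landing gear not down and locked")
    if not obs.tail_skid_ok:
        issues.append("tail skid condition fault")
    return issues

print(readiness_issues(Observation(15.3, True, True), 15.0))   # []
print(readiness_issues(Observation(5.0, False, True), 15.0))
```

Returning every failing condition, rather than stopping at the first, lets the cockpit display show the crew the full list in one pass.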

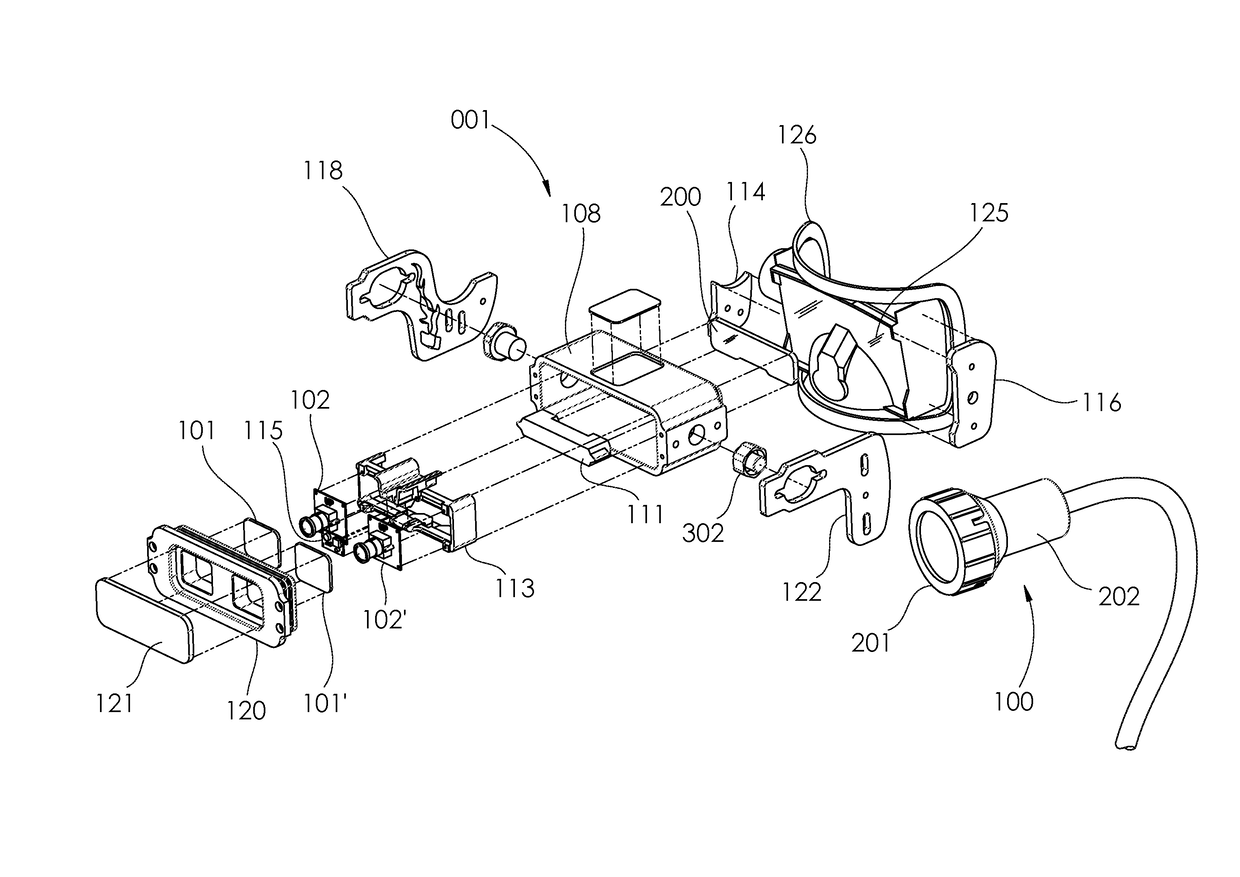

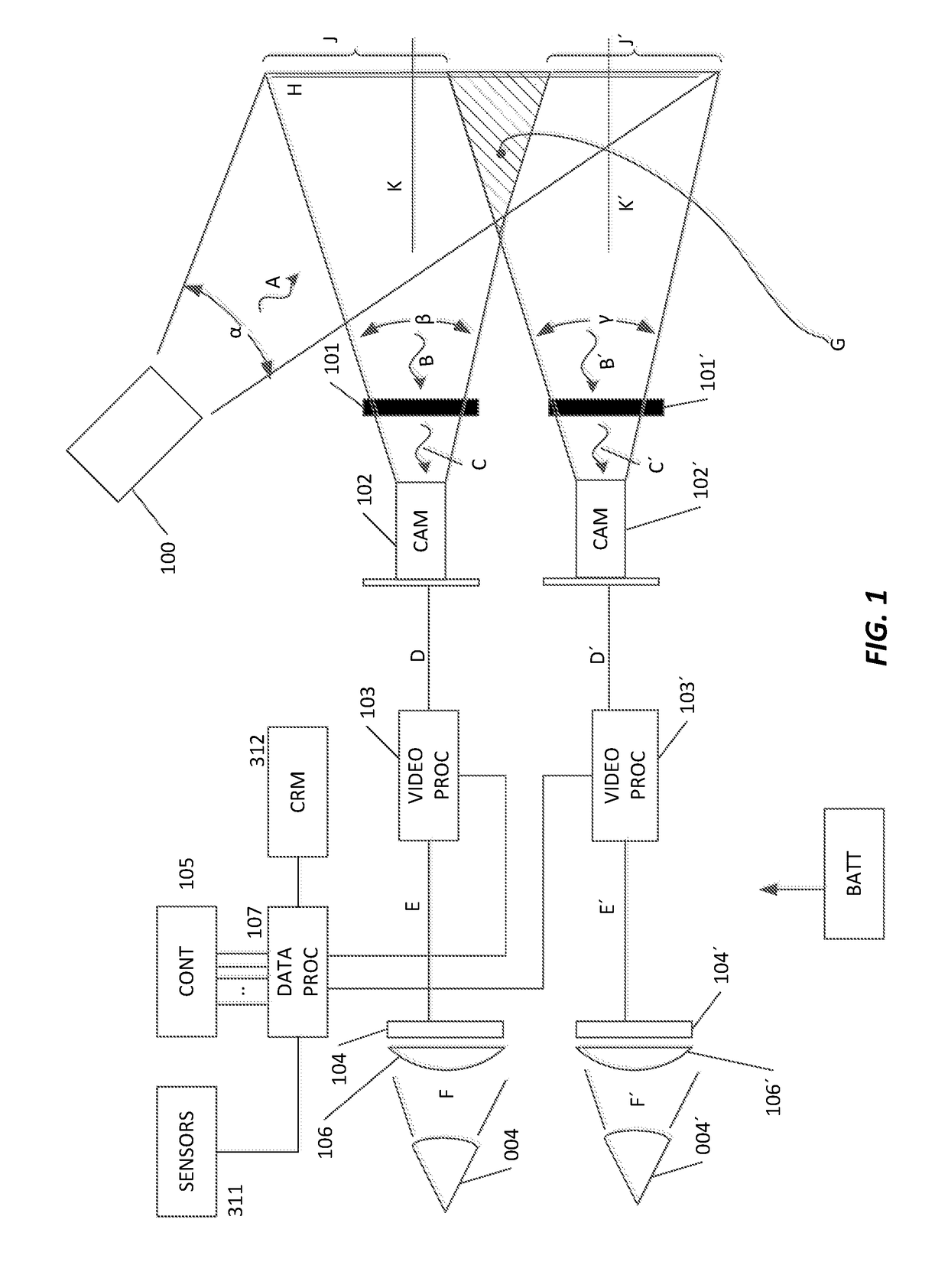

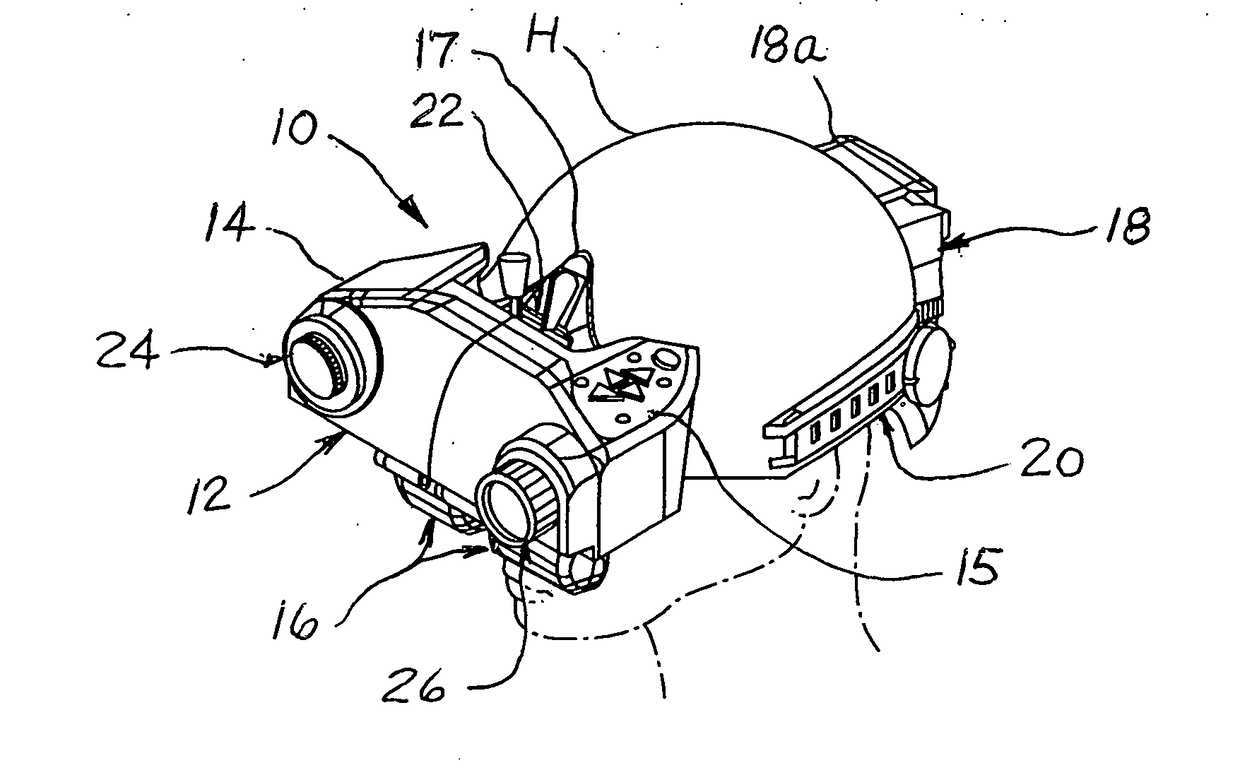

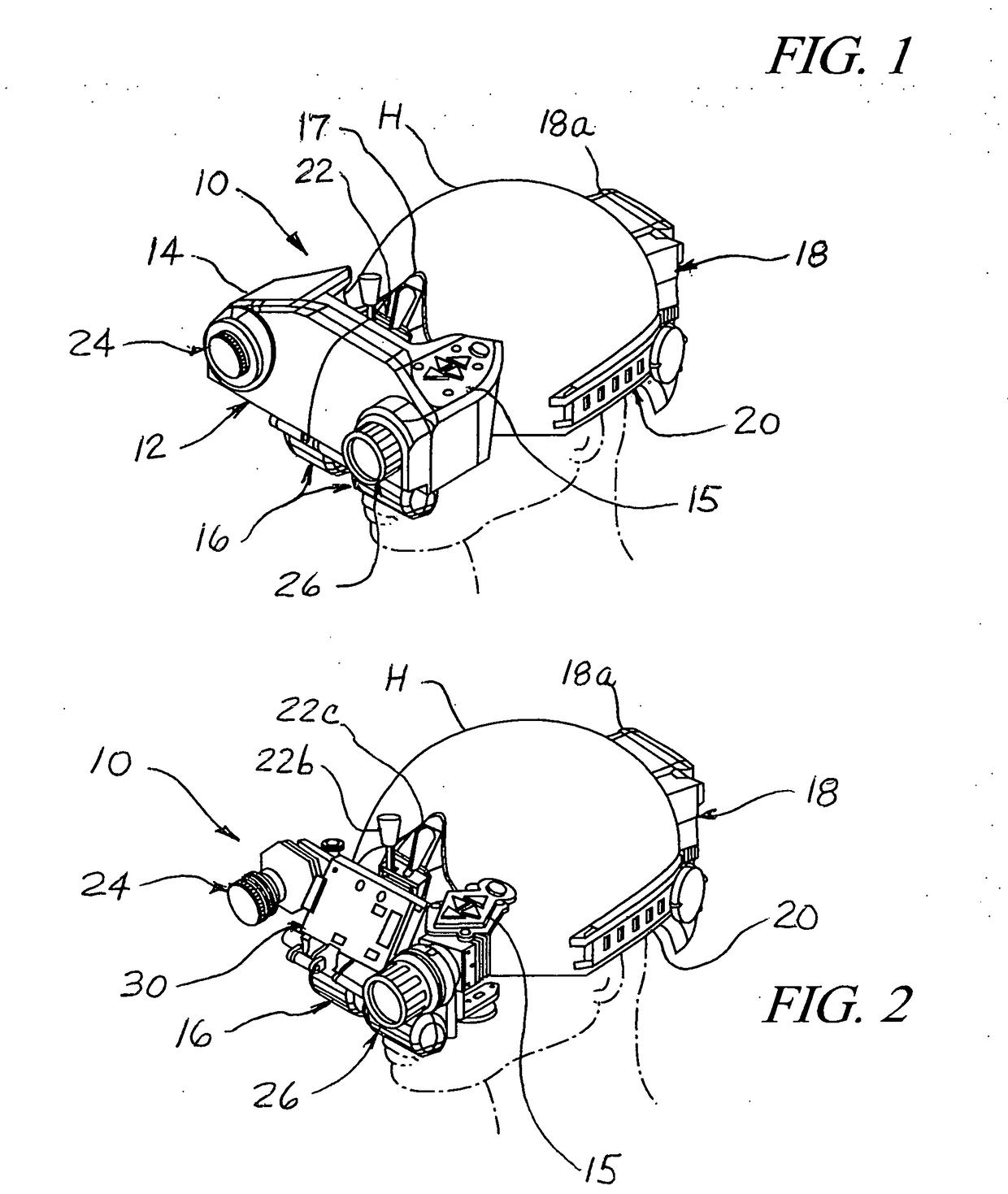

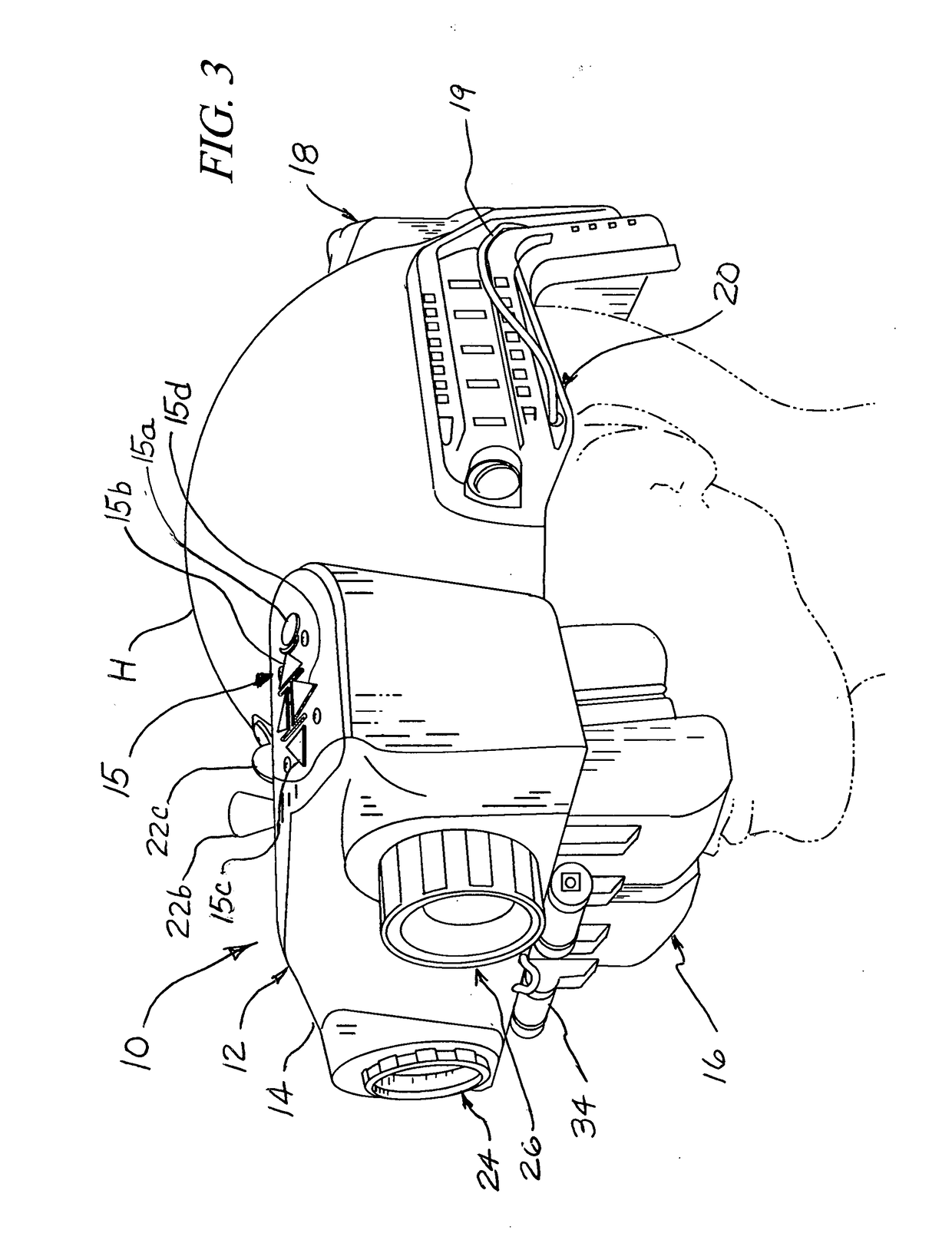

Digital enhanced vision system

Inactive | US20170208262A1 | Minimal impact | Enhance the image | Television system details | Character and pattern recognition | Computer vision | Bolometer

A digital enhanced vision system assembled and adapted for mobile use by personnel in surveillance operations. The system comprises two separate infrared sensor channels: one uses a short-wave infrared (SWIR) camera, and the other a thermal imaging camera with a microbolometer for long-wave infrared (LWIR) detection. Both cameras are fixed and aimed at the same scene to collect real-time visual data in their respective infrared bands. The data outputs of the SWIR and LWIR cameras are connected to an advanced vision processor that digitally fuses them pixel by pixel, significantly enhancing the viewed scene in an image presented on a biocular display to both eyes of the user. The system is housed for helmet-mounted operation and includes an adjustable mounting clip that releasably attaches it to the front of a standard helmet, plus a separate battery pack, connected by cabling routed along the helmet, that mounts on the rear of the helmet.

Owner:SAGE TECH
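The abstract states that SWIR and LWIR data are fused on a pixel-by-pixel basis but does not name the fusion rule. A minimal sketch, assuming co-registered frames of equal size with intensities normalized to [0, 1] and a fixed weighted average as a stand-in for the unspecified algorithm; `fuse_pixelwise` and the 0.6 weight are hypothetical.

```python
from typing import List

Image = List[List[float]]  # intensities normalized to [0, 1]

def fuse_pixelwise(swir: Image, lwir: Image, w_swir: float = 0.6) -> Image:
    """Weighted per-pixel fusion of co-registered SWIR and LWIR frames.
    A fixed weighted average is an assumed stand-in for the patent's
    unspecified fusion method."""
    if len(swir) != len(lwir) or any(len(a) != len(b) for a, b in zip(swir, lwir)):
        raise ValueError("frames must be co-registered and the same size")
    w_lwir = 1.0 - w_swir
    # Each output pixel depends only on the two input pixels at the same position.
    return [[w_swir * s + w_lwir * l for s, l in zip(row_s, row_l)]
            for row_s, row_l in zip(swir, lwir)]

print(fuse_pixelwise([[1.0, 0.0]], [[0.0, 1.0]]))
```

Fixed, aligned cameras are what make a per-pixel rule possible at all; without co-registration, corresponding pixels would not describe the same point in the scene.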


© 2025 PatSnap. All rights reserved.