1640 results about How to "Improve interactive experience" patented technology

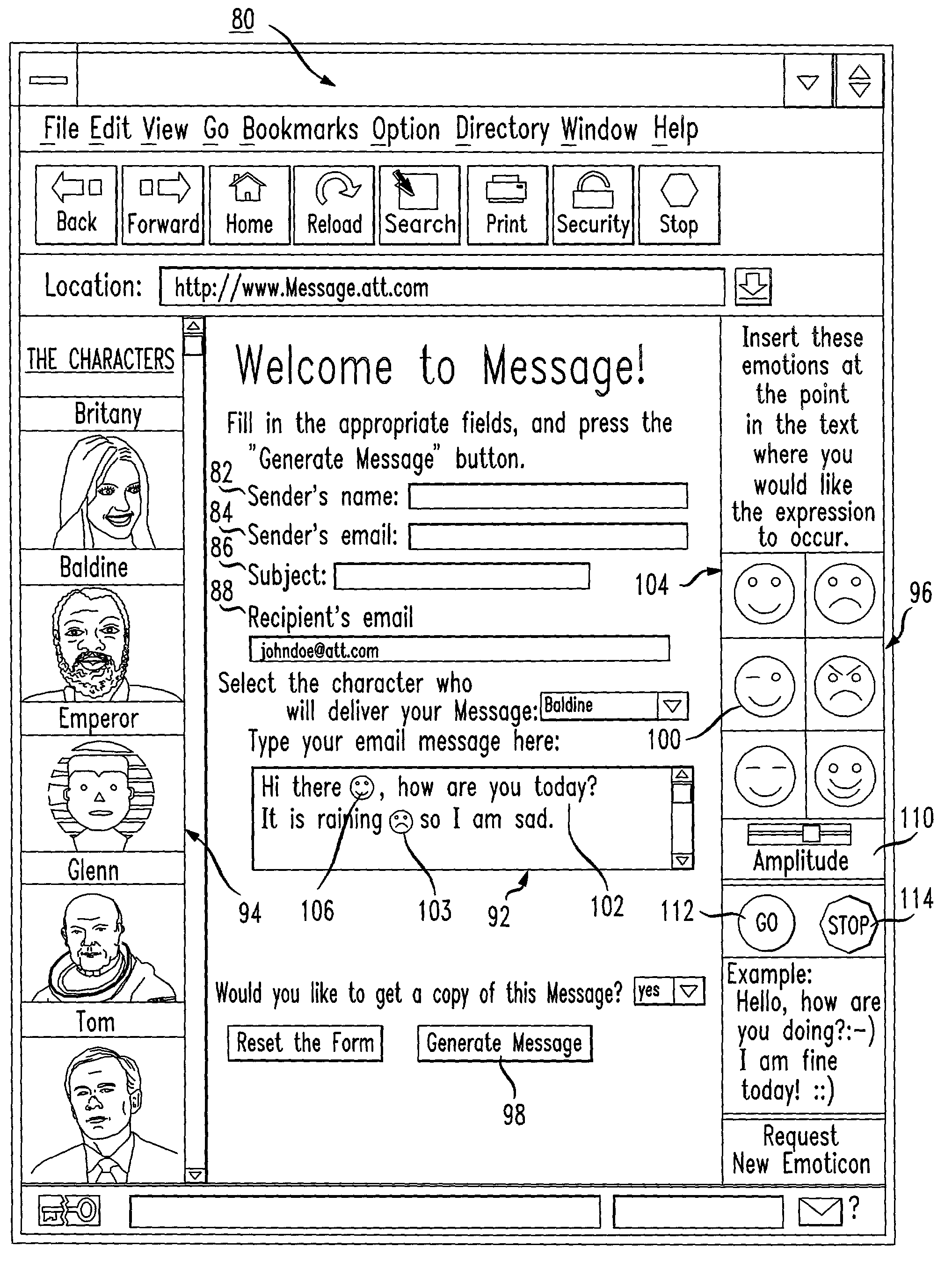

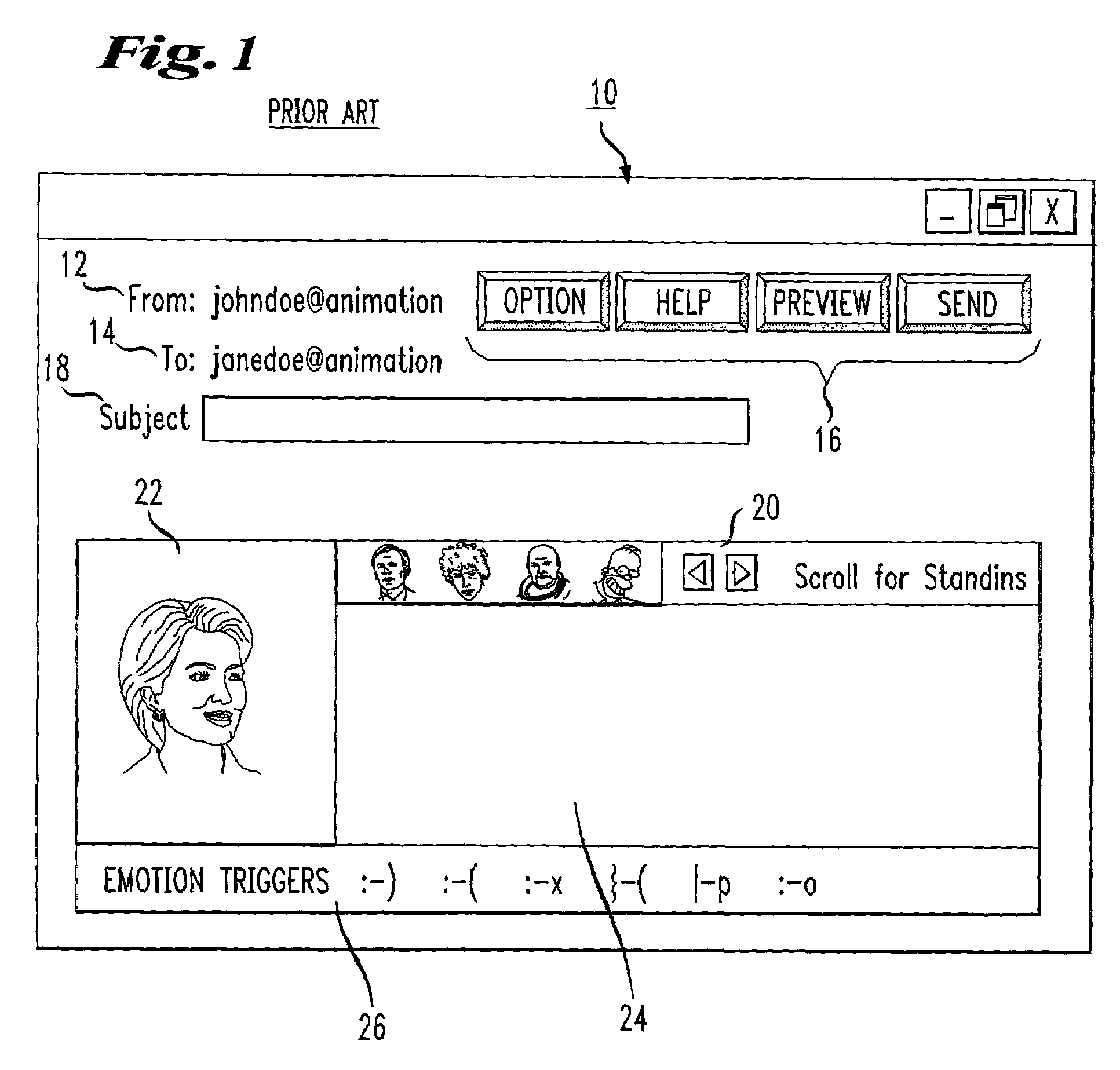

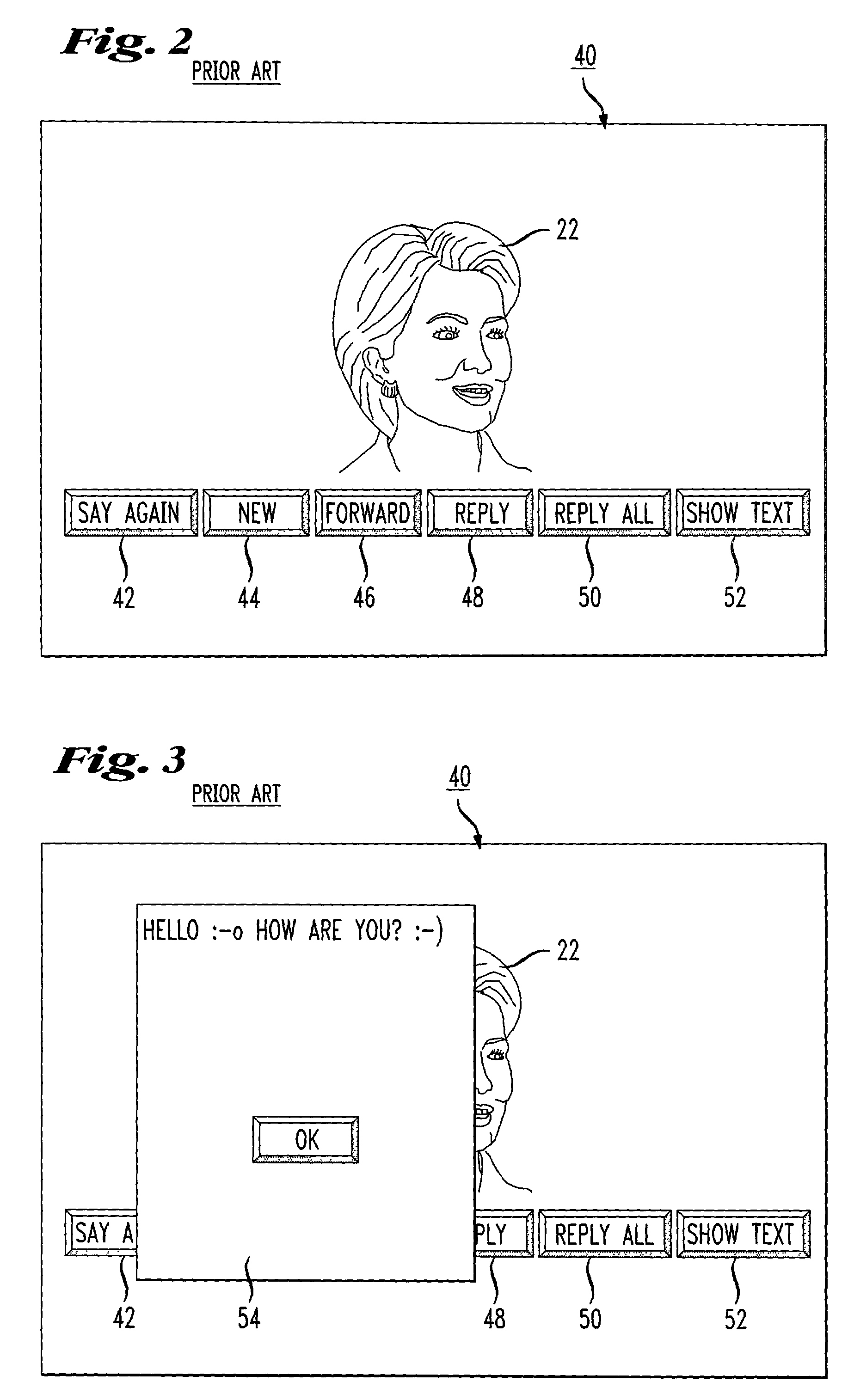

Method for sending multi-media messages using emoticons

Inactive | US6990452B1 | Increase user appreciation | Improve interactive experience | Natural language data processing | Animation | Text message

A system and method of providing sender-customization of multi-media messages through the use of emoticons is disclosed. The sender inserts the emoticons into a text message. As an animated face audibly delivers the text, emoticons associated with the message are started a predetermined period of time or number of words prior to the position of the emoticon in the message text and completed a predetermined length of time or number of words following the location of the emoticon. The sender may insert emoticons through the use of emoticon buttons that are icons available for choosing. Upon sender selection of an emoticon, an icon representing the emoticon is inserted into the text at the position of the cursor. Once an emoticon is chosen, the sender may also choose the amplitude for the emoticon, and the increased or decreased amplitude will be displayed in the icon inserted into the message text.

Owner:AMERICAN TELEPHONE & TELEGRAPH CO
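
The timing rule described in the abstract above (an emoticon expression starts some words before the emoticon's position and ends some words after it) can be sketched roughly as follows. This is only an illustration under stated assumptions, not the patented implementation: the function name `emoticon_window` and the default lead and lag of two words are hypothetical.

```python
def emoticon_window(words, emoticon_index, lead_words=2, lag_words=2):
    """Return (start, end) word indices over which an emoticon expression is shown.

    emoticon_index is the word position at which the emoticon sits in the text.
    lead_words / lag_words are hypothetical "predetermined" counts.
    """
    start = max(0, emoticon_index - lead_words)            # clamp at message start
    end = min(len(words) - 1, emoticon_index + lag_words)  # clamp at message end
    return start, end


words = "I got the job today and I am thrilled".split()
window = emoticon_window(words, emoticon_index=4)
print(window, words[window[0]:window[1] + 1])
```

A time-based variant would work the same way with timestamps in place of word indices.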

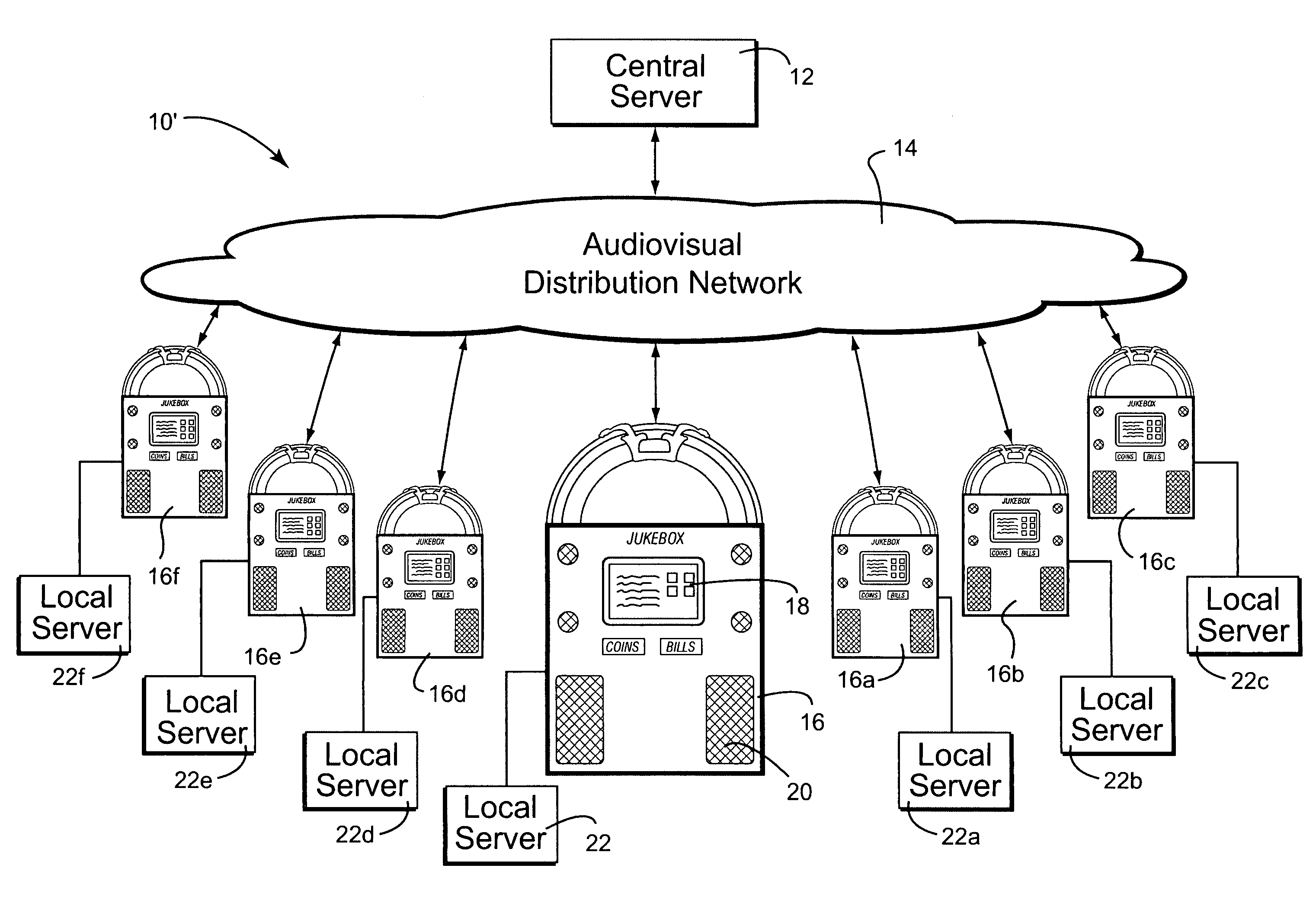

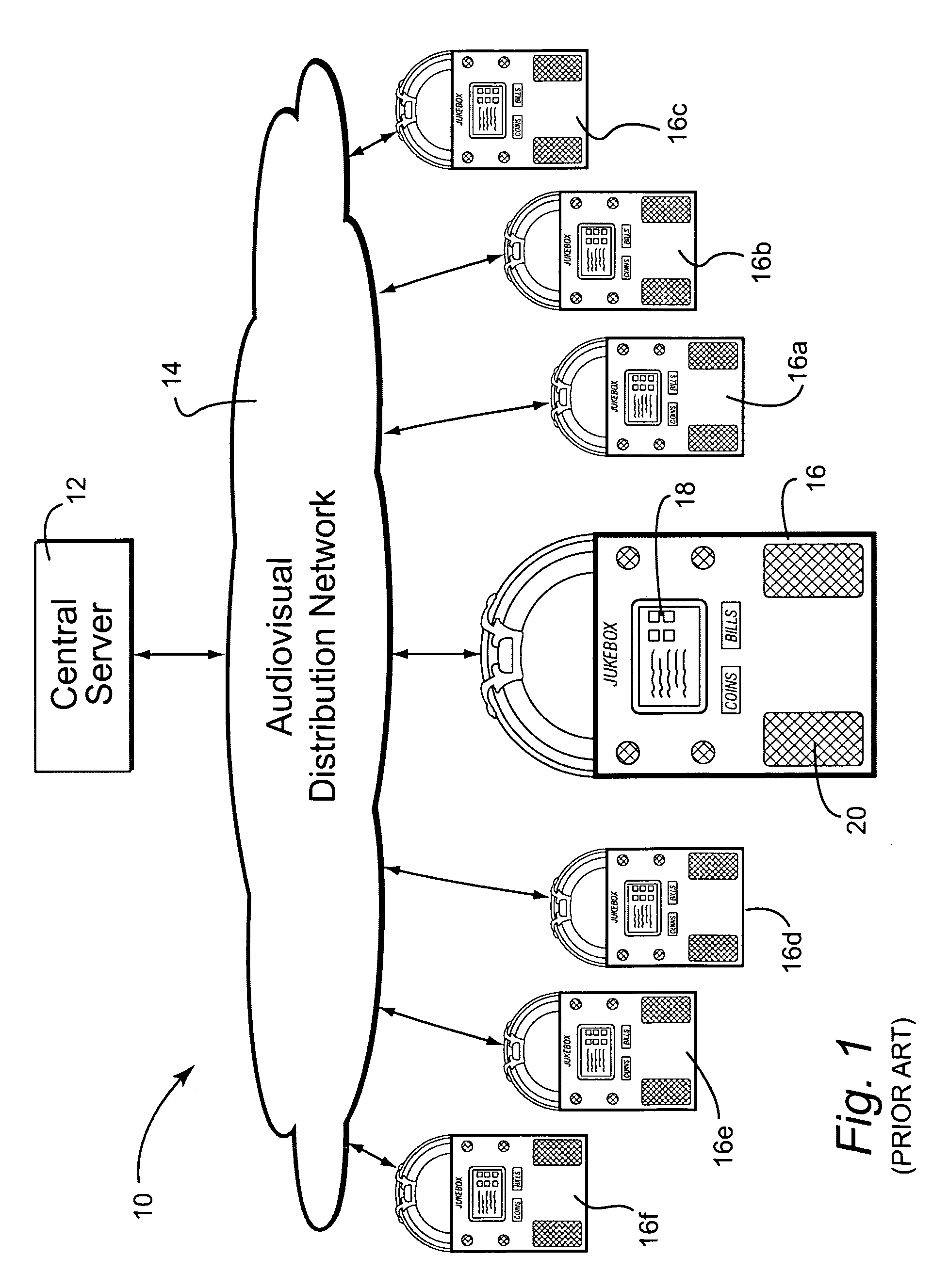

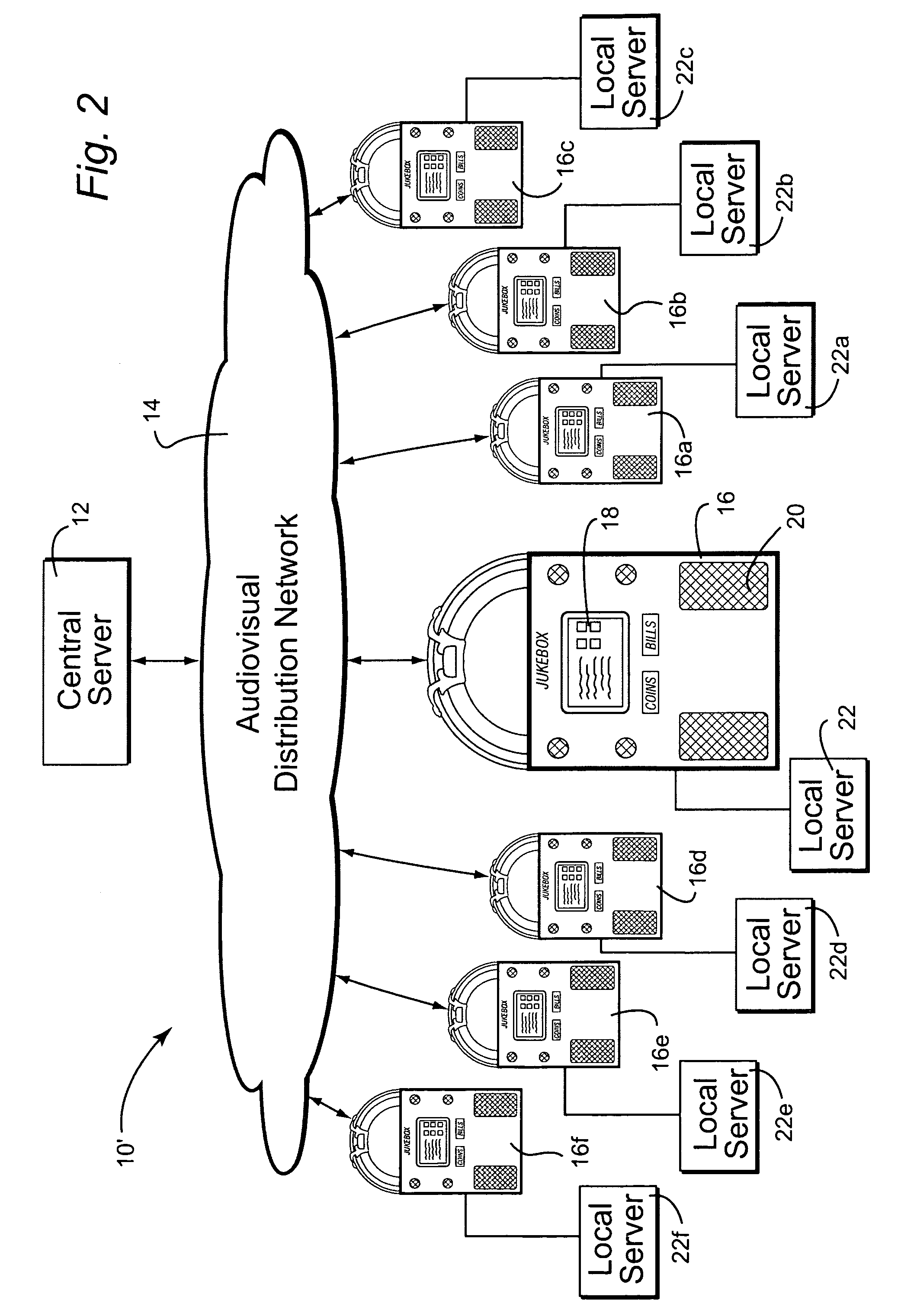

Jukebox with associated video server

Active | US20080239887A1 | Accurate and reliable process | Reduce need | Record information storage | Apparatus for meter-controlled dispensing | Display device | Video processing

In certain example embodiments, jukebox systems that have associated video servers for displaying video content on one or more displays or groups of displays external to the jukebox and/or directly on the jukebox are provided. Such video servers may effectively off-load at least some of the video processing load from the jukebox device. Accordingly, video content may be provided to complement and/or further enhance the interactive experience that jukeboxes currently provide, while also enabling patrons not directly in front of the jukebox to participate in the interactive process. Content may be distributed to the jukeboxes and/or video servers via a network. In addition to creating a compelling entertainment experience for patrons, it also is possible to create new revenue opportunities for customers. For example, operators and national account customers and advertising partners may provide additional value to venues through the innovative use of managed video content.

Owner:TOUCHTUNES MUSIC CO LLC

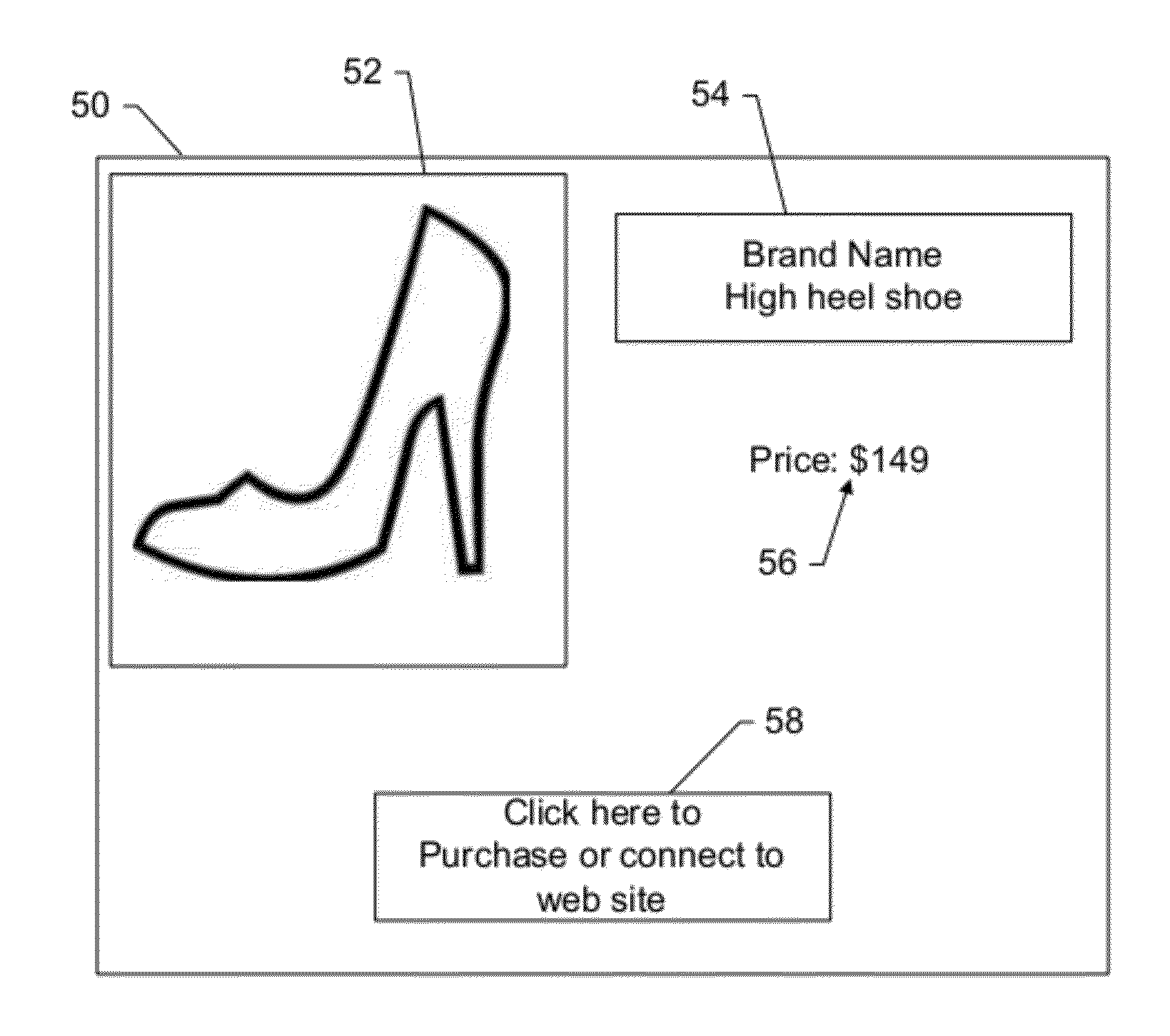

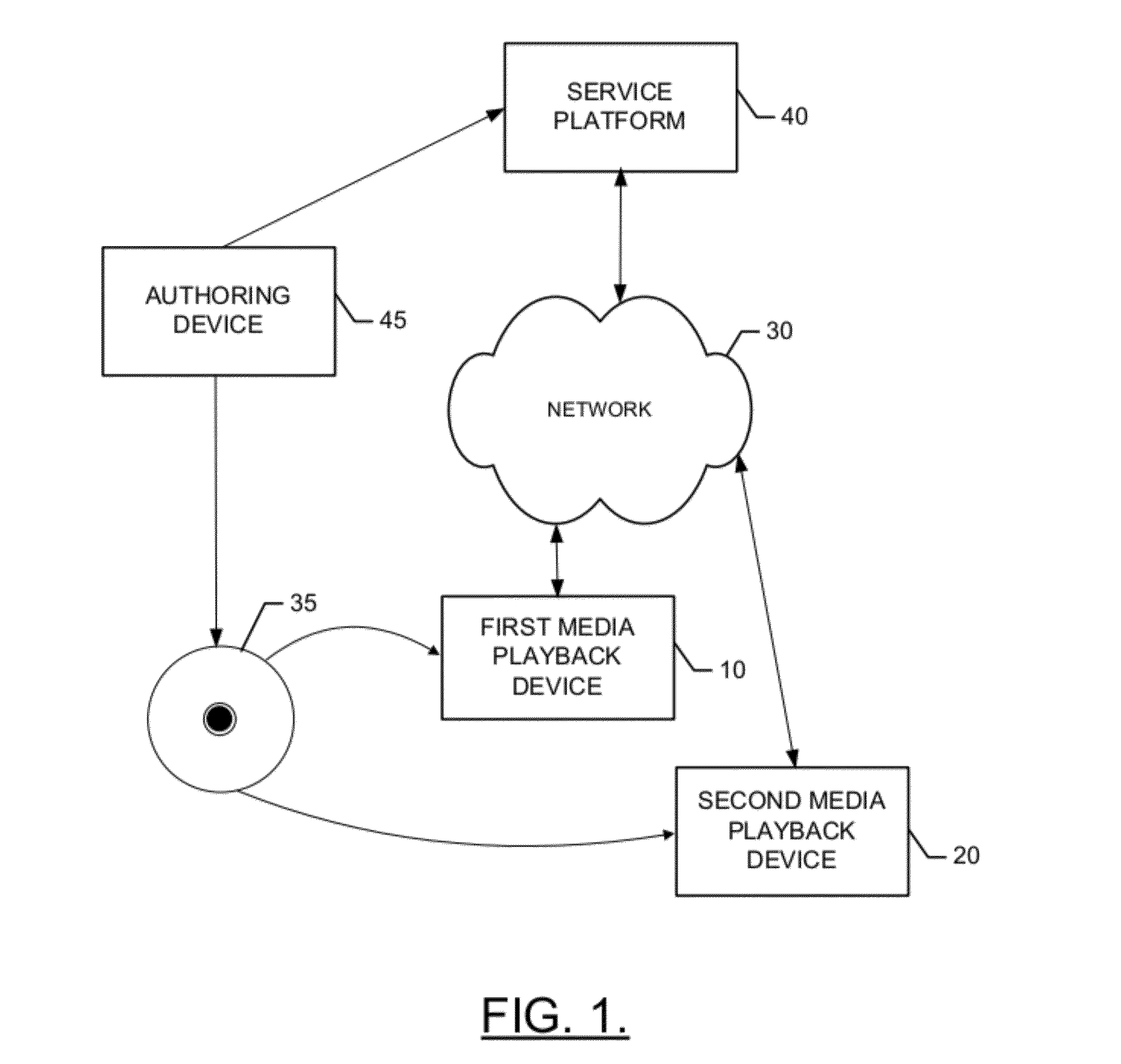

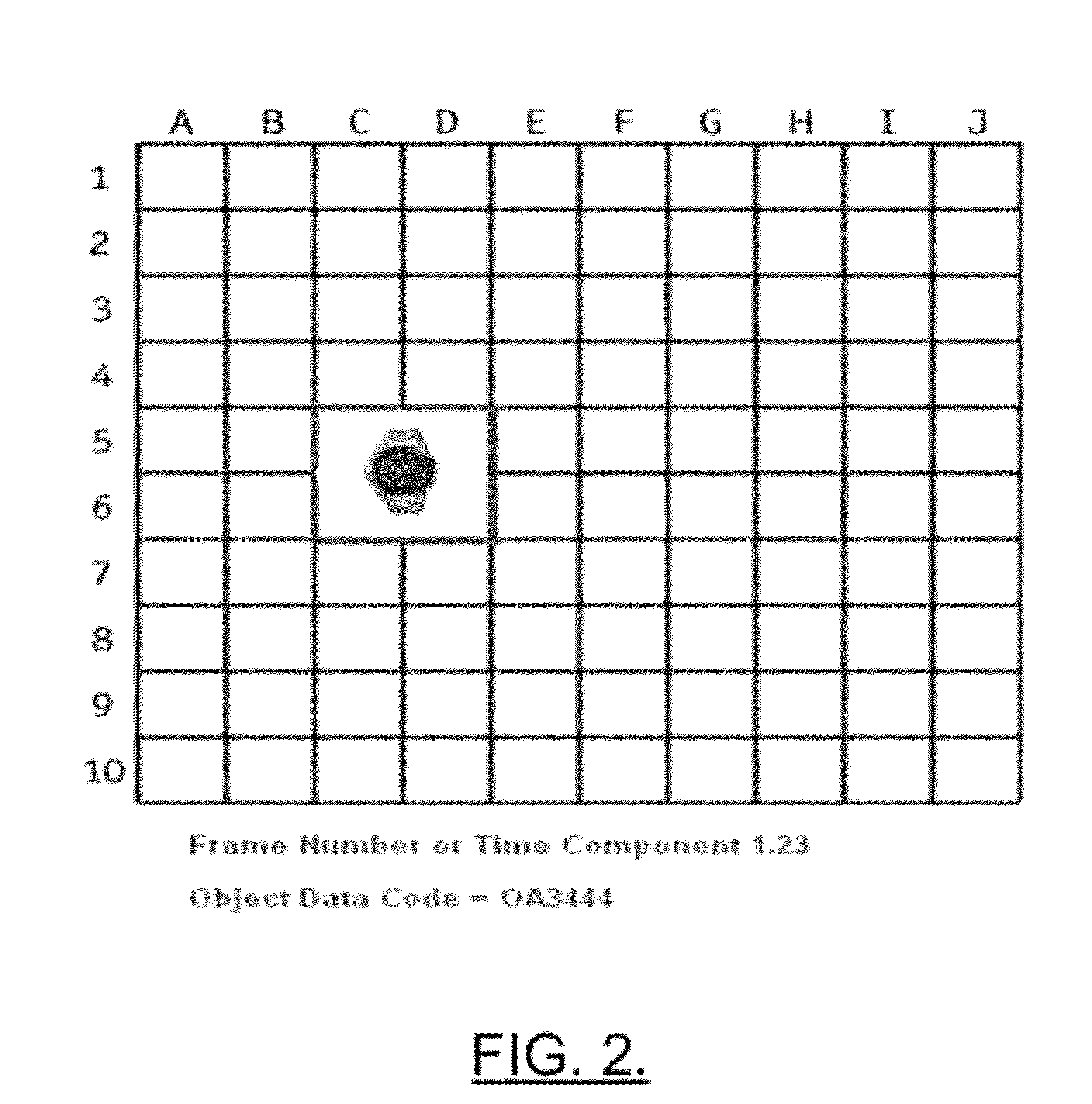

Method and apparatus for providing or utilizing interactive video with tagged objects

Inactive | US20120167146A1 | Improve interaction experience | Improve interactive experience | Television system details | Color television details | Video Media | Object function

A method for utilizing interactive video with tagged objects may include generating video data that includes both video media and an interactive video layer, providing the video data to a remote user via a network, and enabling the remote user to present the video data with or without the interactive video layer based on user selection of an option to turn the interactive video layer on and off, respectively. The interactive video layer may include objects mapped to corresponding identifiers associated with additional information about respective ones of the objects. At least one defined selectable video object may correspond to a mapped object. The selectable video object may be selectable from the interactive video layer during rendering of the video data responsive to the interactive video layer being turned on, where the selectable video object has a corresponding object function call defining an action to be performed responsive to user selection of the selectable video object. A corresponding apparatus is also provided.

Owner:WHITE SQUARE MEDIA

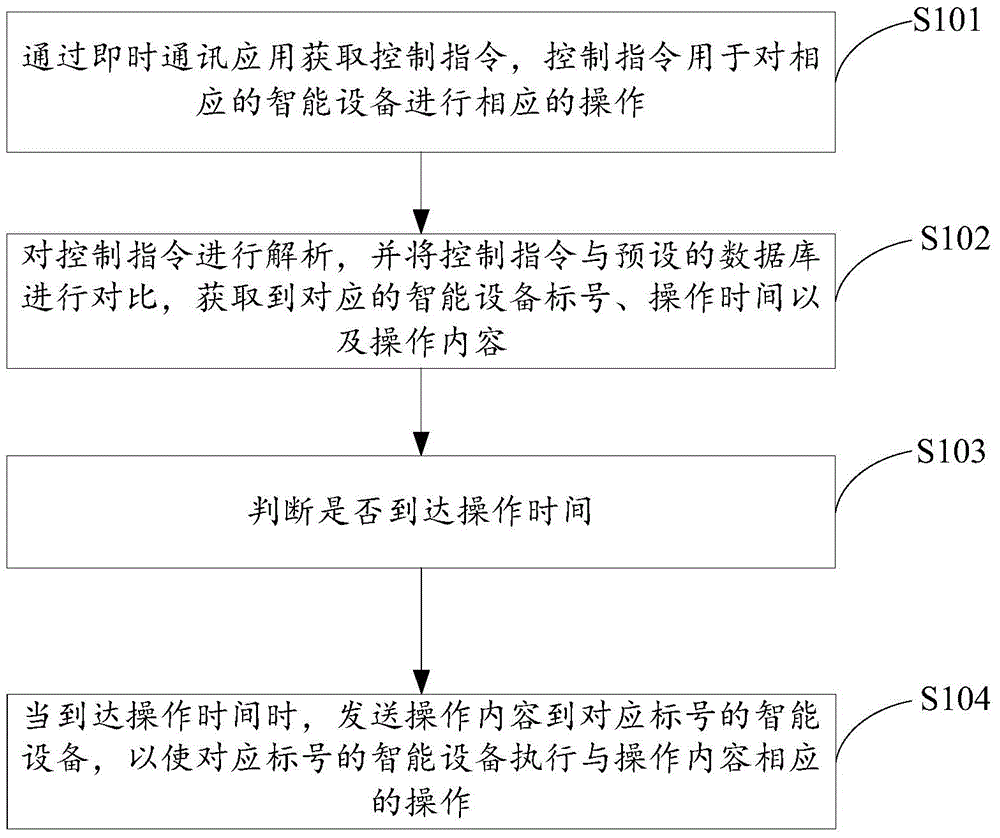

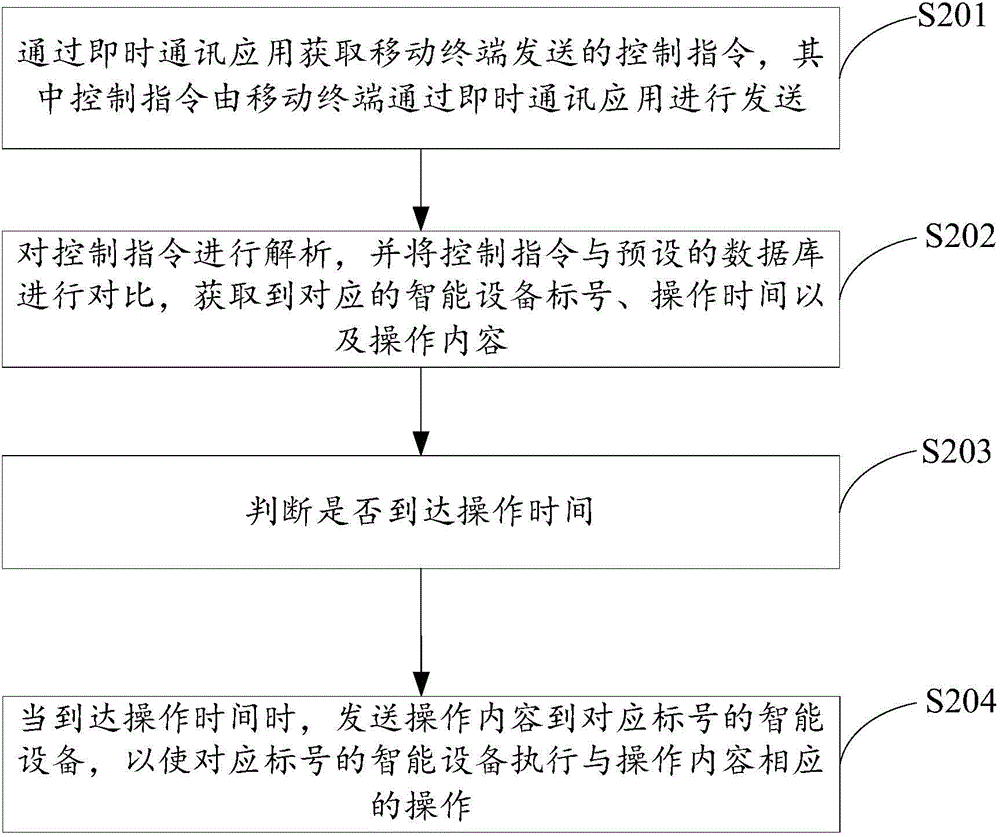

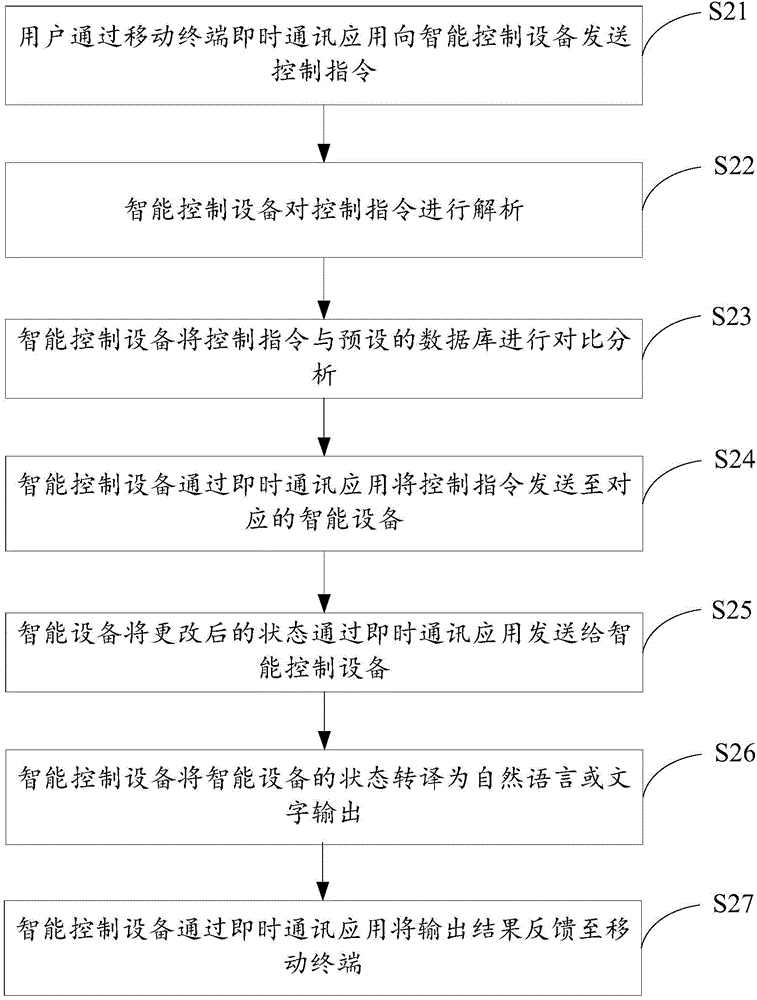

Intelligent control method, device and system based on instant messaging

Active | CN105471705A | Improve interactive experience | Data switching networks | Total factory control | Intelligent control | Operating time

The invention discloses an intelligent control method, device and system based on instant messaging. The method comprises the following steps: obtaining a control instruction through an instant messaging application, wherein the control instruction is used for carrying out corresponding operation on corresponding intelligent devices; analyzing the control instruction, and comparing the control instruction with a preset database to obtain corresponding intelligent device labels, operation time and operation content; judging whether the operation time arrives; and when the operation time arrives, sending the operation content to the intelligent devices of the corresponding labels to enable the intelligent devices of the corresponding labels to carry out operation corresponding to the operation content. The intelligent control device can manage different types of intelligent devices through instant messaging, thereby enhancing man-machine interaction experience and facilitating unified management of the intelligent devices.

Owner:TENCENT TECH (SHENZHEN) CO LTD
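
The control flow in the abstract above (parse the chat instruction, look it up in a preset database, wait for the operation time, then send the operation to the labelled device) might look roughly like this. It is a minimal sketch under assumptions: `PRESET_DB`, the device labels, and the function names are hypothetical, and the operation time is passed in separately rather than parsed from the message.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical preset database: instruction text -> (device label, operation content)
PRESET_DB = {
    "turn on the light": ("light-1", "power_on"),
    "start the air conditioner": ("ac-1", "power_on"),
}


@dataclass
class Task:
    device: str
    content: str
    run_at: datetime


def parse_instruction(text, run_at):
    """Match a chat message against the preset database; return None if unknown."""
    entry = PRESET_DB.get(text.strip().lower())
    if entry is None:
        return None
    device, content = entry
    return Task(device, content, run_at)


def dispatch_due(tasks, now, send):
    """Send every task whose operation time has arrived; return those still pending."""
    pending = []
    for task in tasks:
        if task.run_at <= now:
            send(task.device, task.content)
        else:
            pending.append(task)
    return pending


sent = []
tasks = [
    parse_instruction("Turn on the light", datetime(2024, 1, 1, 18, 0)),
    parse_instruction("start the air conditioner", datetime(2024, 1, 1, 20, 0)),
]
remaining = dispatch_due(tasks, datetime(2024, 1, 1, 19, 0),
                         lambda device, content: sent.append((device, content)))
print(sent, [t.device for t in remaining])
```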

Computer interaction based upon a currently active input device

Inactive | US20060209016A1 | Improve interactive experience | Enhanced interaction | Cathode-ray tube indicators | Input/output processes for data processing | Systems analysis | Human–computer interaction

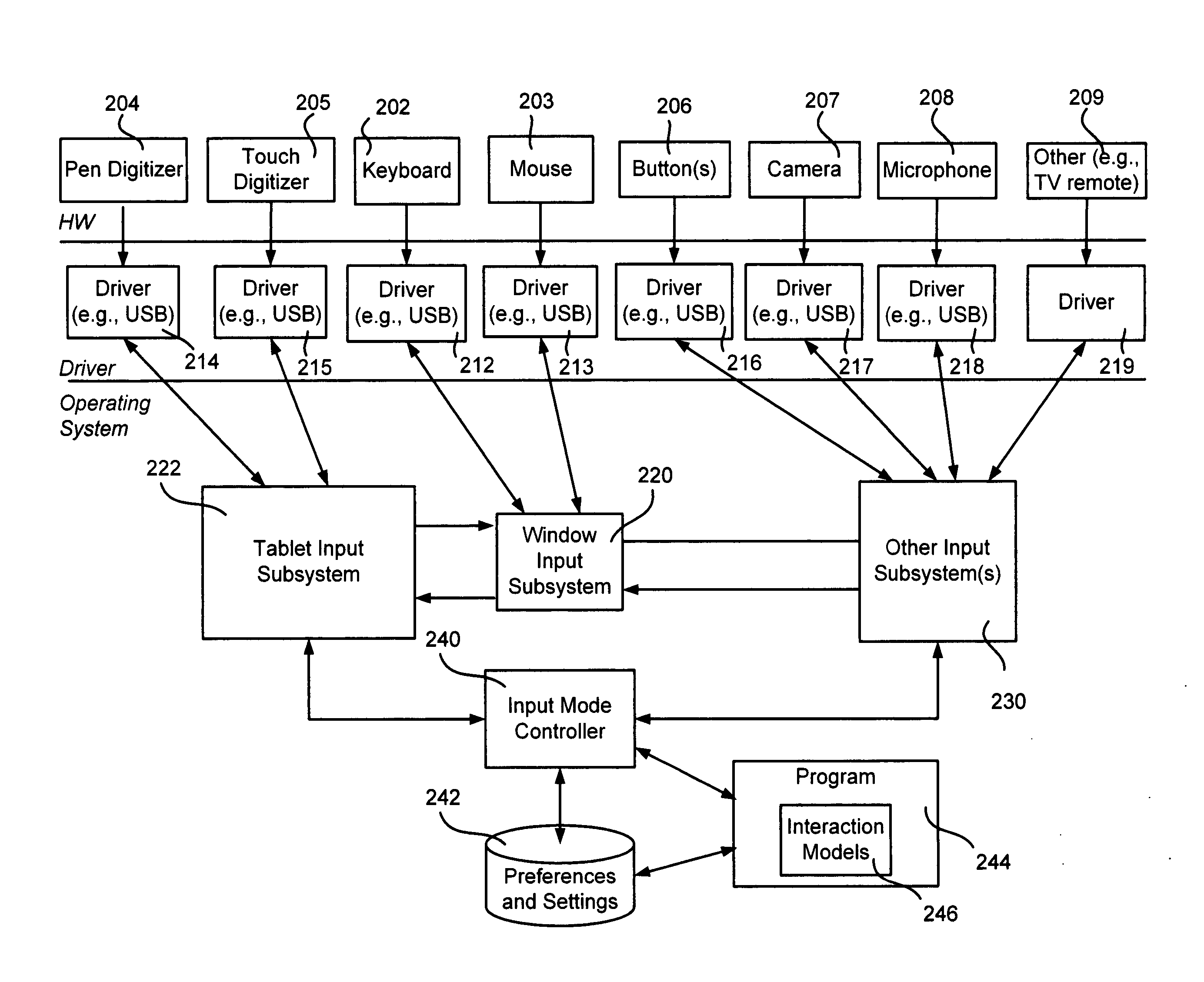

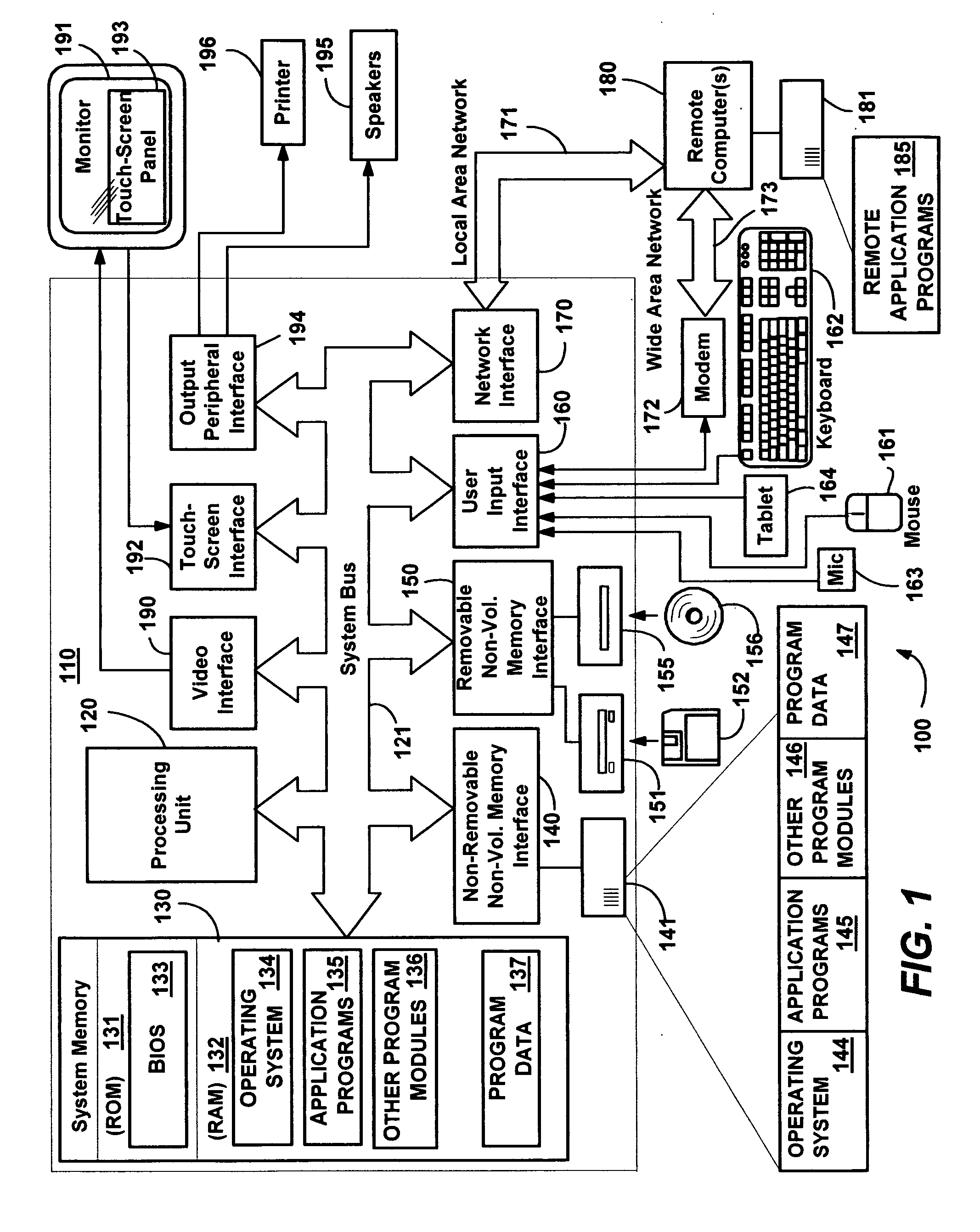

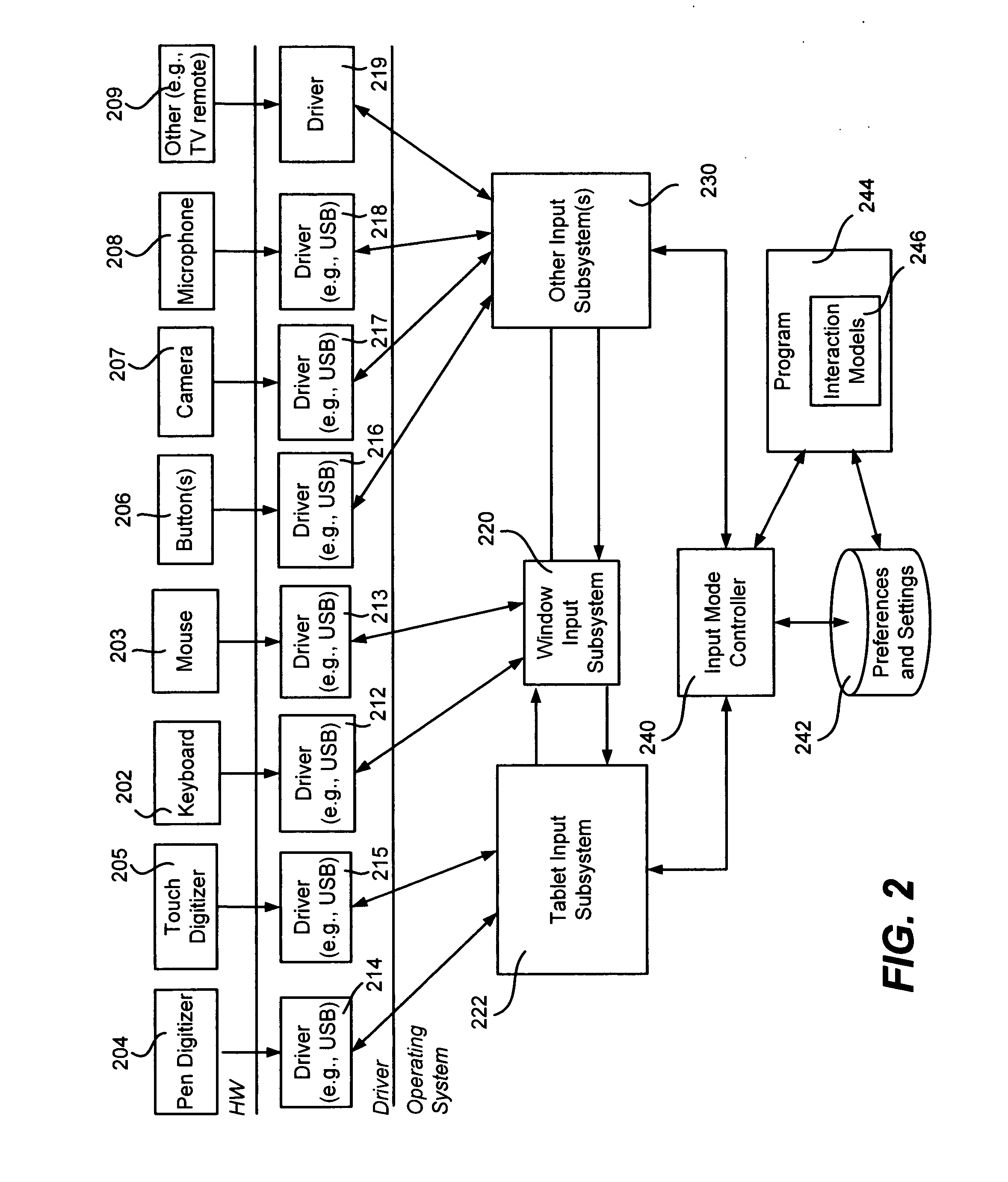

Described is a computer-implemented system and method that dynamically detects which input device (e.g., pen or mouse) is currently in use, and based on the device, varies a program's user interaction model to better optimize the user's ability to interact with the program via that input device. A tablet input subsystem receives pen and touch data, and also obtains keyboard and mouse data. The subsystem analyzes the data and determines which input device is currently active. The active device is mapped to an interaction model, whereby different user interface appearances, behaviors and the like may be presented to the user to facilitate improved interaction. For example, a program may change the size of user interface elements to enable the user to more accurately scroll and make selections. Timing, tolerances and thresholds may change. Pen hovering can become active, and interaction events received at the same location can be handled differently.

Owner:MICROSOFT TECH LICENSING LLC
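
The idea of mapping the currently active input device to an interaction model can be illustrated with a small sketch. Everything here is an assumption made for illustration: the `INTERACTION_MODELS` table, its pixel sizes and hover flags, and the rule that the device with the most recent event counts as active are not taken from the patent.

```python
# Hypothetical interaction models keyed by input device.
INTERACTION_MODELS = {
    "pen": {"target_px": 24, "hover": True},
    "touch": {"target_px": 44, "hover": False},
    "mouse": {"target_px": 16, "hover": True},
}


def active_device(events, default="mouse"):
    """Pick the device that produced the most recent event.

    events is a list of (device_name, timestamp) tuples.
    """
    if not events:
        return default
    device, _ = max(events, key=lambda event: event[1])
    return device


def model_for(events):
    """Return the interaction model for the currently active device."""
    return INTERACTION_MODELS[active_device(events)]


events = [("mouse", 1.0), ("pen", 2.5), ("touch", 2.0)]
print(active_device(events), model_for(events))
```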

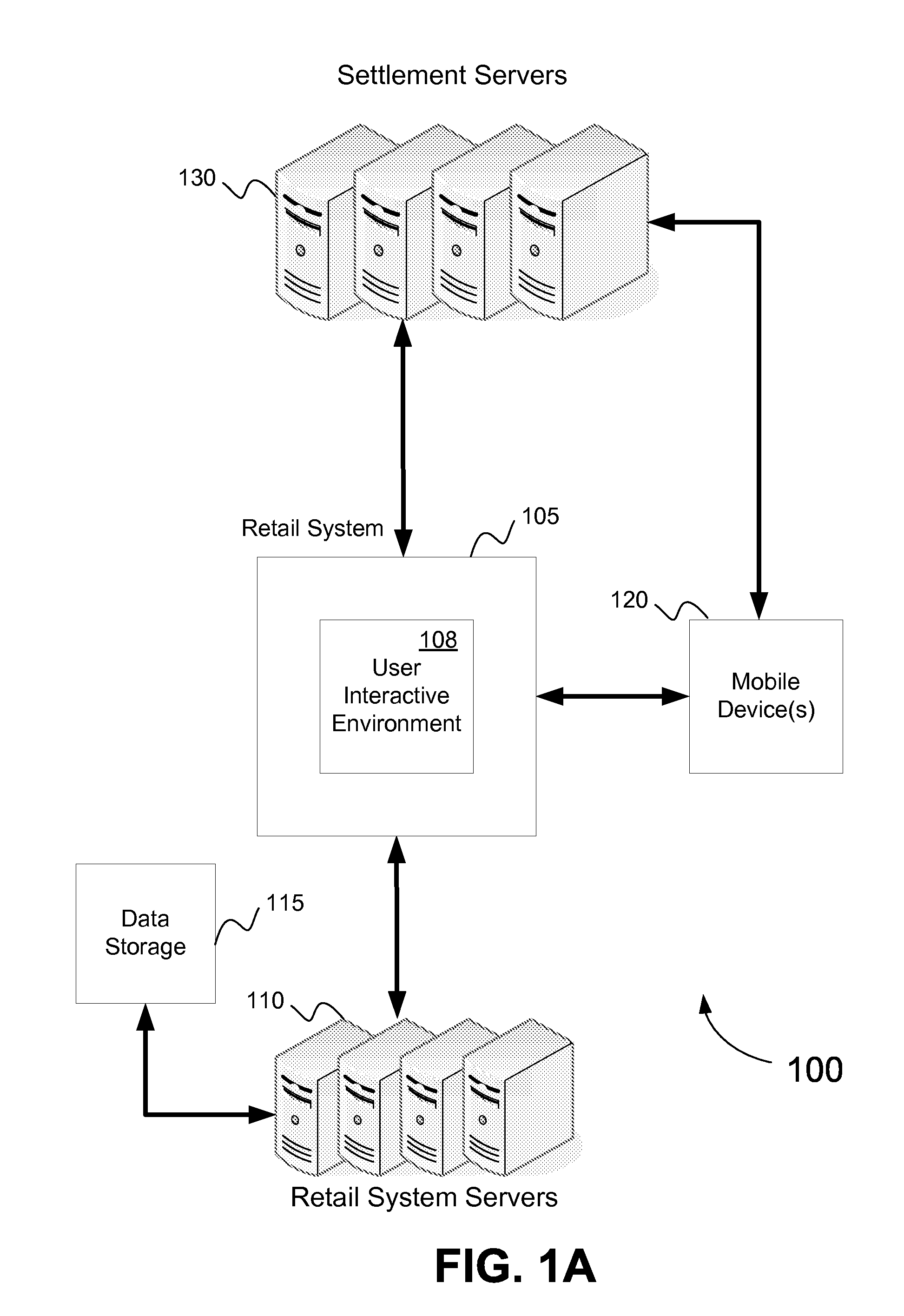

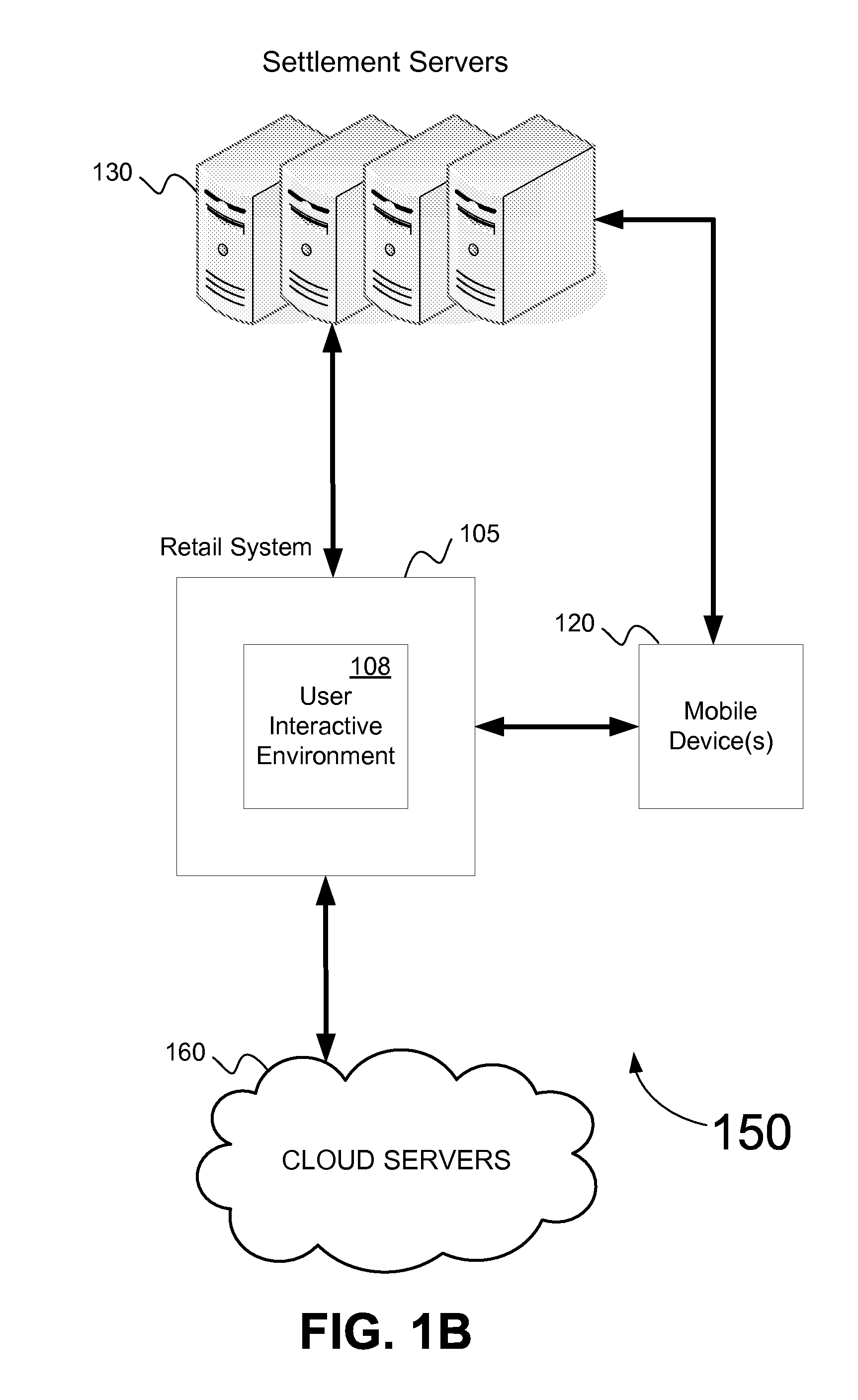

Interactive retail system

Inactive | US20130110666A1 | Great customization | Optimize space | Buying/selling/leasing transactions | Transmission | Display device | Multimedia

Owner:ADIDAS

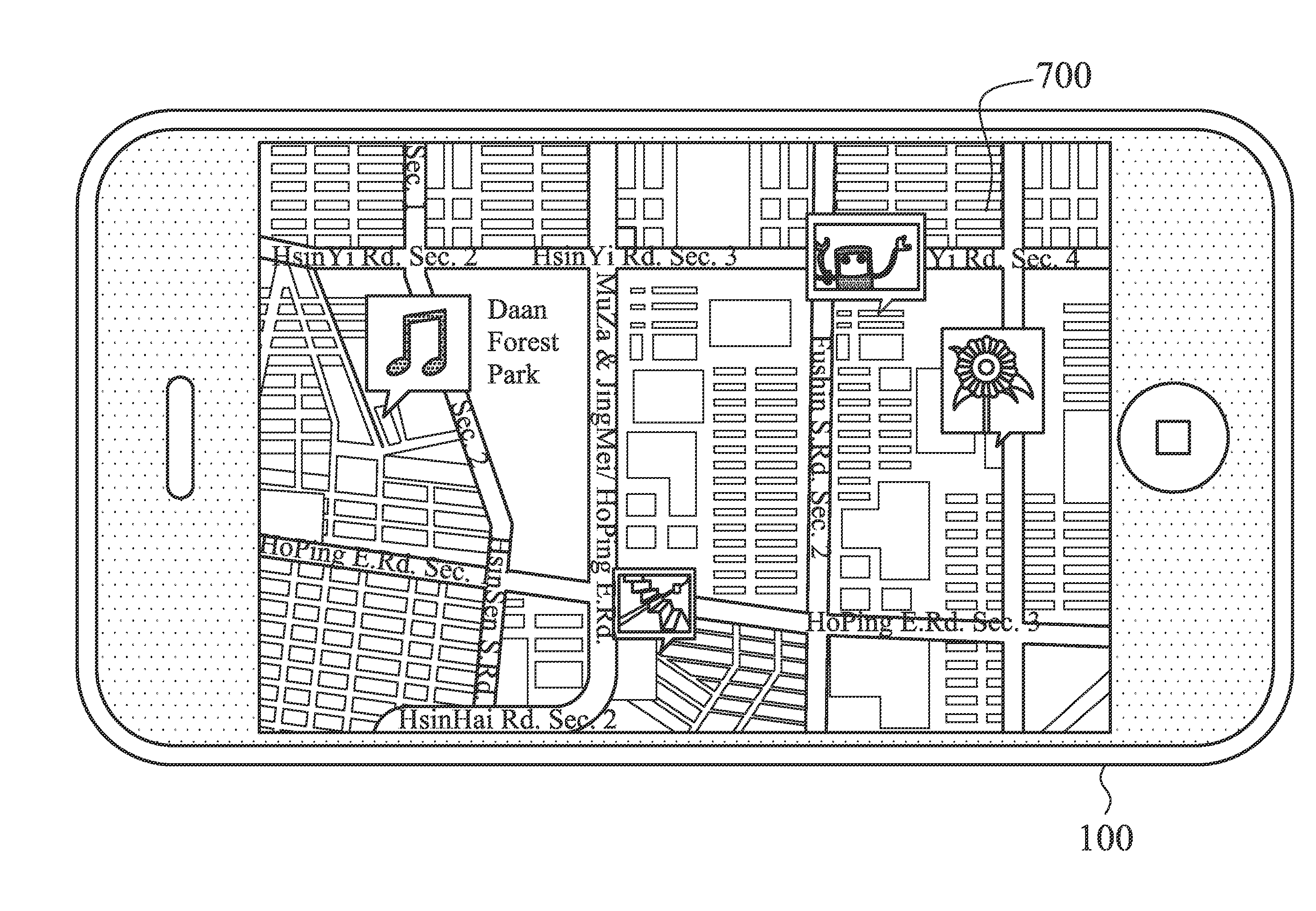

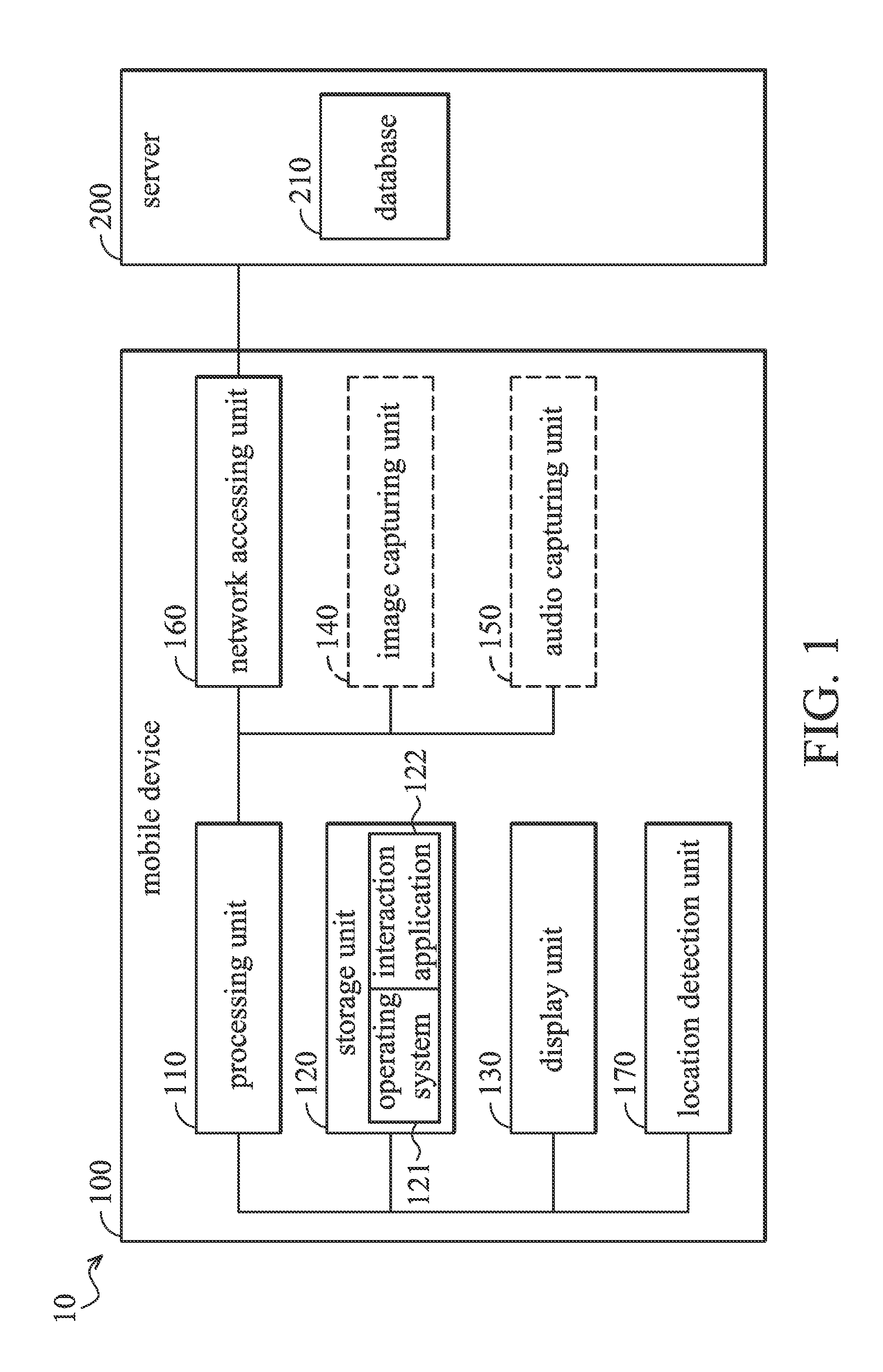

Interaction system

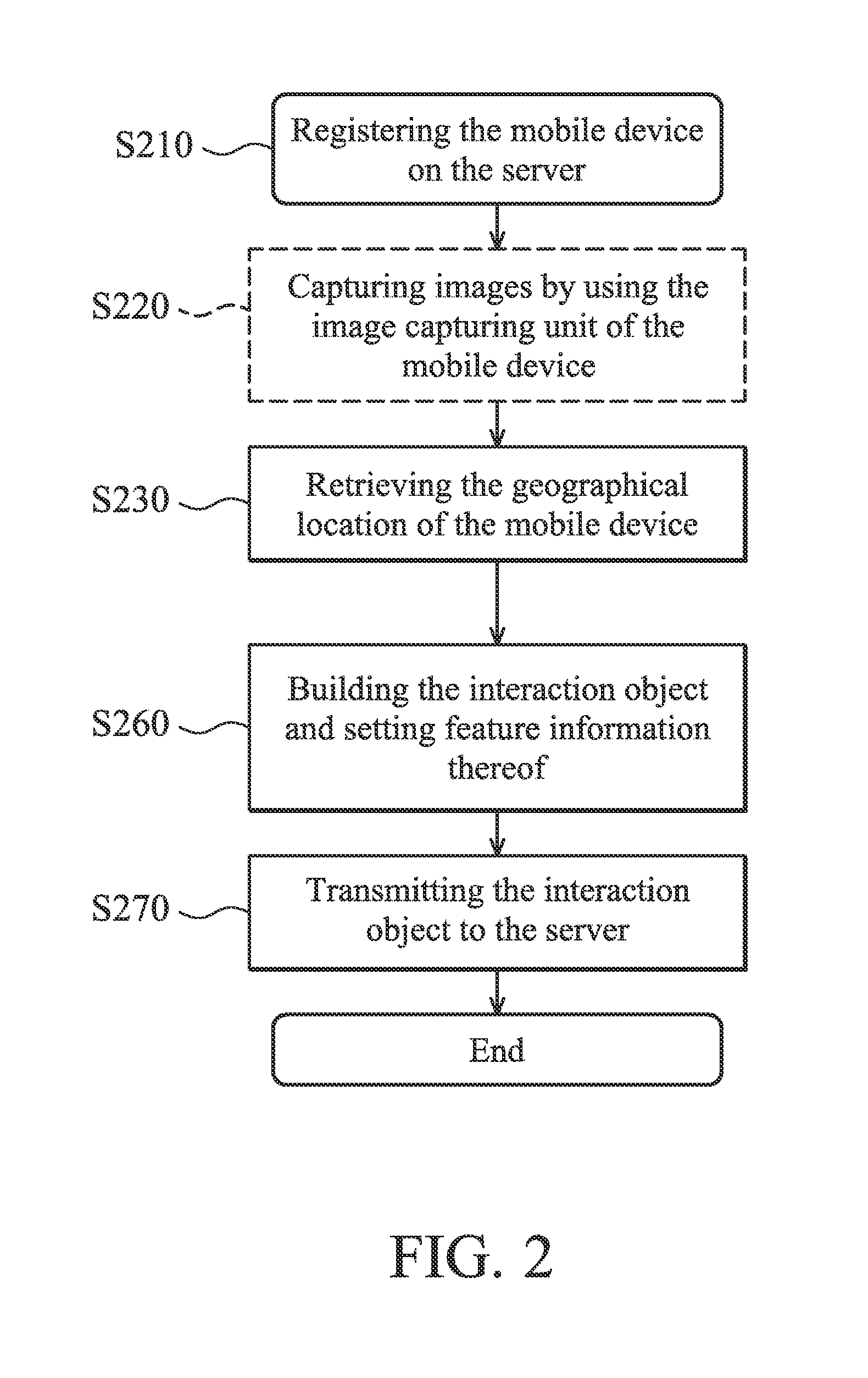

Inactive | US20140004884A1 | Improve interactive experience | Location information based service | Interaction systems | Location detection

An interaction system is provided. The interaction system has: a mobile device having: a location detection unit configured to retrieve a geographical location of the mobile device; and a server configured to retrieve the geographical location of the mobile device, wherein the server has a database configured to store at least one interaction object and location information associated with the interaction object, and the server further determines whether the location information of the interaction object corresponds to the geographical location of the mobile device, wherein when the location information of the interaction object corresponds to the geographical location of the mobile device, the server further transmits the interaction object to the mobile device, so that the mobile device executes the at least one interaction object.

Owner:QUANTA COMPUTER INC
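
The server-side check in the abstract above (does an interaction object's stored location correspond to the mobile device's location?) could be sketched as a distance test. This is an assumption-laden illustration: treating "corresponds" as "within a radius", the 100 m default, and the names `haversine_m` and `objects_for_location` are all hypothetical.

```python
import math


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    r = 6371000.0  # mean Earth radius in metres
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def objects_for_location(db, lat, lon, radius_m=100.0):
    """Return the interaction objects whose stored location is within radius_m."""
    return [name for name, (olat, olon) in db.items()
            if haversine_m(lat, lon, olat, olon) <= radius_m]


# Hypothetical database: interaction object -> (latitude, longitude)
db = {"coupon": (25.0330, 121.5654), "quiz": (25.0478, 121.5319)}
nearby = objects_for_location(db, 25.0331, 121.5655)
print(nearby)
```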

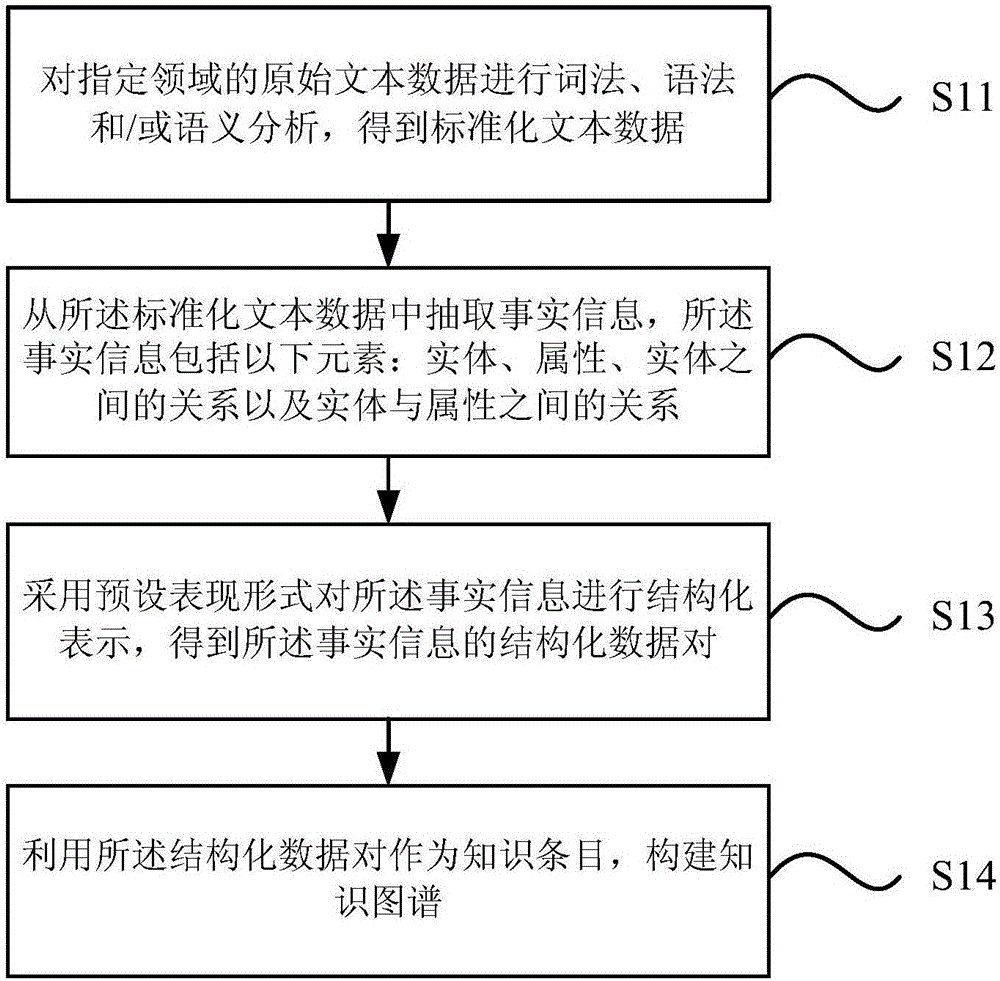

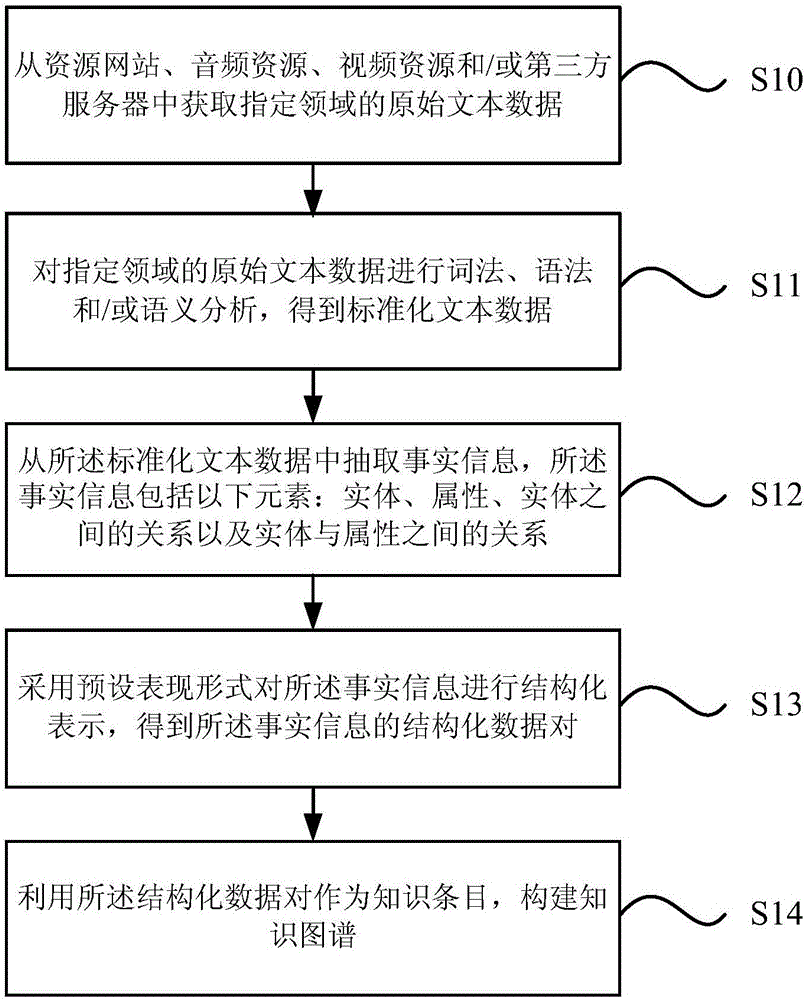

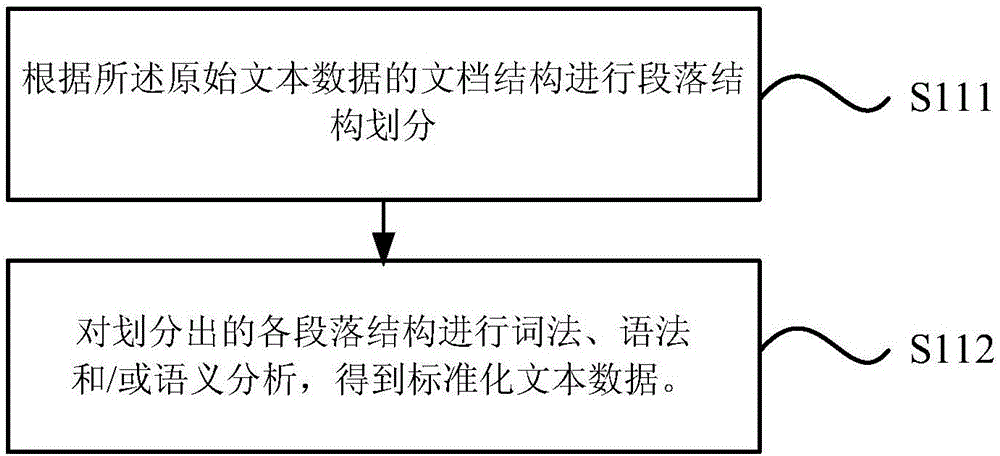

Knowledge graph generating method and device

Active | CN106156365A | Improve interactive experience | Meet the needs of intelligent interaction | Semantic analysis | Special data processing applications | Structural representation | Knowledge graph

The invention provides a knowledge graph generating method and device. The method comprises the following steps: original text data in a designated field are subjected to morphological analysis and/or semantic analysis, and standard text data are obtained; factual information is extracted from the standard text data and comprises the following elements: entities, attributes, the relations among entities, and the relations between the entities and the attributes; structural representation is performed on the factual information in a preset representation form, and structured data pairs of the factual information are obtained; the structured data pairs are adopted as knowledge entries for establishing a knowledge graph. With the knowledge graph generating method, a field-specific knowledge graph can be established, the intelligent interaction requirements of a specific field such as the children's field are met, and the interactive experience of users with different requirements is improved.

Owner:北京如布科技有限公司
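
The final step of the abstract above, turning extracted facts into structured knowledge entries, can be illustrated with a small sketch. The (subject, predicate, object) triple form, the sample facts, and the function names are assumptions chosen for illustration; the patent's actual "preset representation form" is not specified here.

```python
from collections import defaultdict


def build_graph(facts):
    """Index (subject, predicate, object) facts by subject."""
    graph = defaultdict(list)
    for subject, predicate, obj in facts:
        graph[subject].append((predicate, obj))
    return graph


def query(graph, subject, predicate):
    """Return every object linked to subject by predicate."""
    return [obj for pred, obj in graph.get(subject, []) if pred == predicate]


# Hypothetical facts: entity-entity relations and entity-attribute pairs.
facts = [
    ("panda", "is_a", "bear"),
    ("panda", "eats", "bamboo"),
    ("panda", "color", "black and white"),
]
graph = build_graph(facts)
print(query(graph, "panda", "eats"))
```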

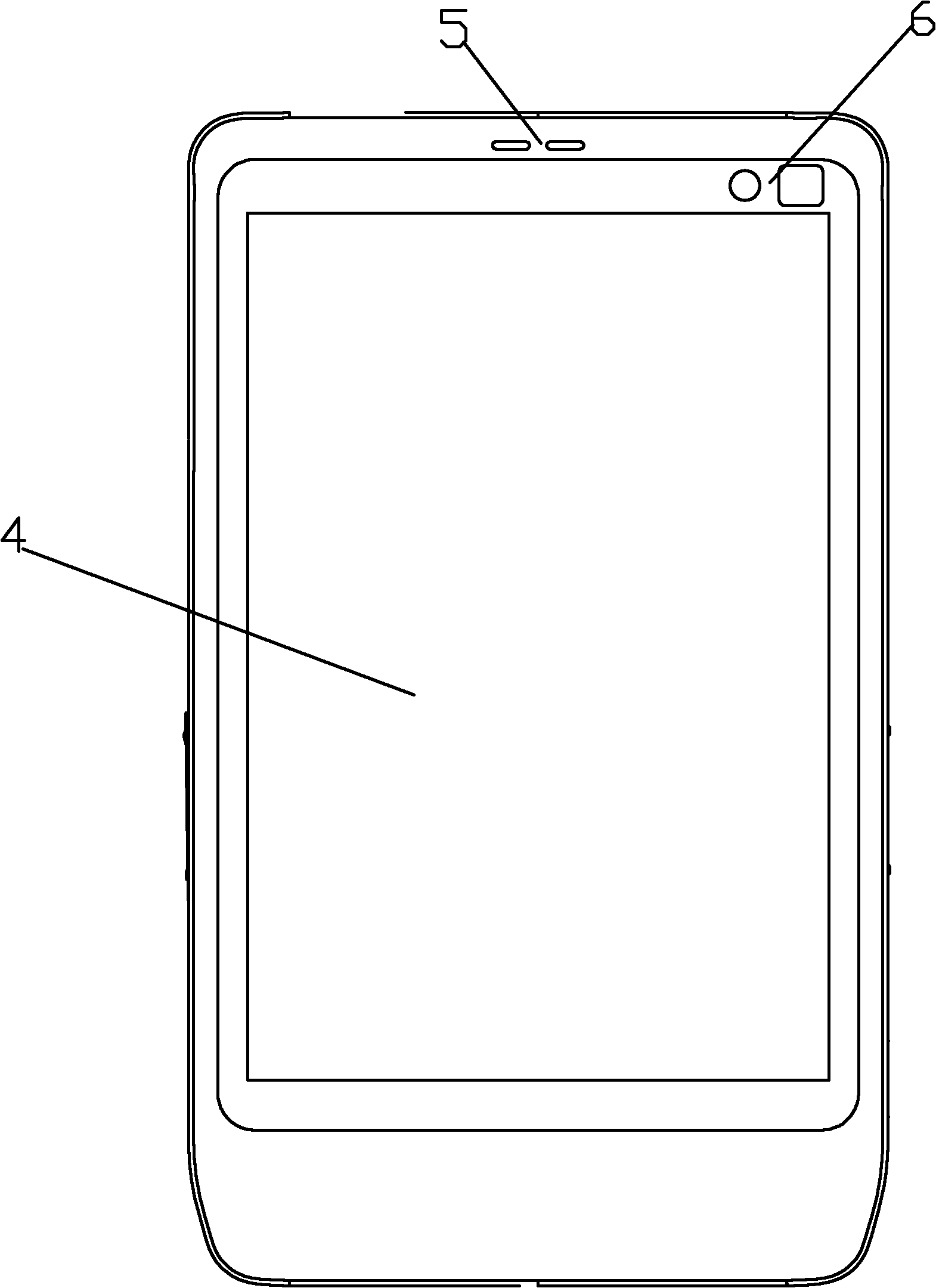

Mobile terminal and design method of operation and control technology thereof

Inactive | CN101984384A | Improve input speed | Reduce the number of swipe selections | Digital data processing details | Input/output processes for data processing | Key pressing | Control system

The invention discloses a mobile terminal and a design method of an operation and control system thereof. The mobile terminal comprises a machine body and an embedded central processing unit, wherein a back surface touch panel is mounted on the back surface of the machine body, and three-state buttons are respectively mounted in lower middle positions on two sides of the machine body; the embedded central processing unit is equipped with the operation and control system, which includes finger action semantic recognition, a virtual keyboard and an input method. The design method comprises the steps of designing the shape of the machine body, designing the finger action semantic recognition under the support of an algorithm of the operation and control system, and designing the virtual keyboard and the input method in the operation and control system. The invention provides a back surface touch type mobile terminal with a natural operation and control concept, thereby freeing up the front surface touch screen as much as possible, making operation and control easy, in particular single-handed text input, improving intelligent operation and control through the full combination of system software algorithms, software and hardware, making the operation match the habitual thinking and natural reactions of a user, and being suitable for long-time use.

Owner:DALIAN POLYTECHNIC UNIVERSITY

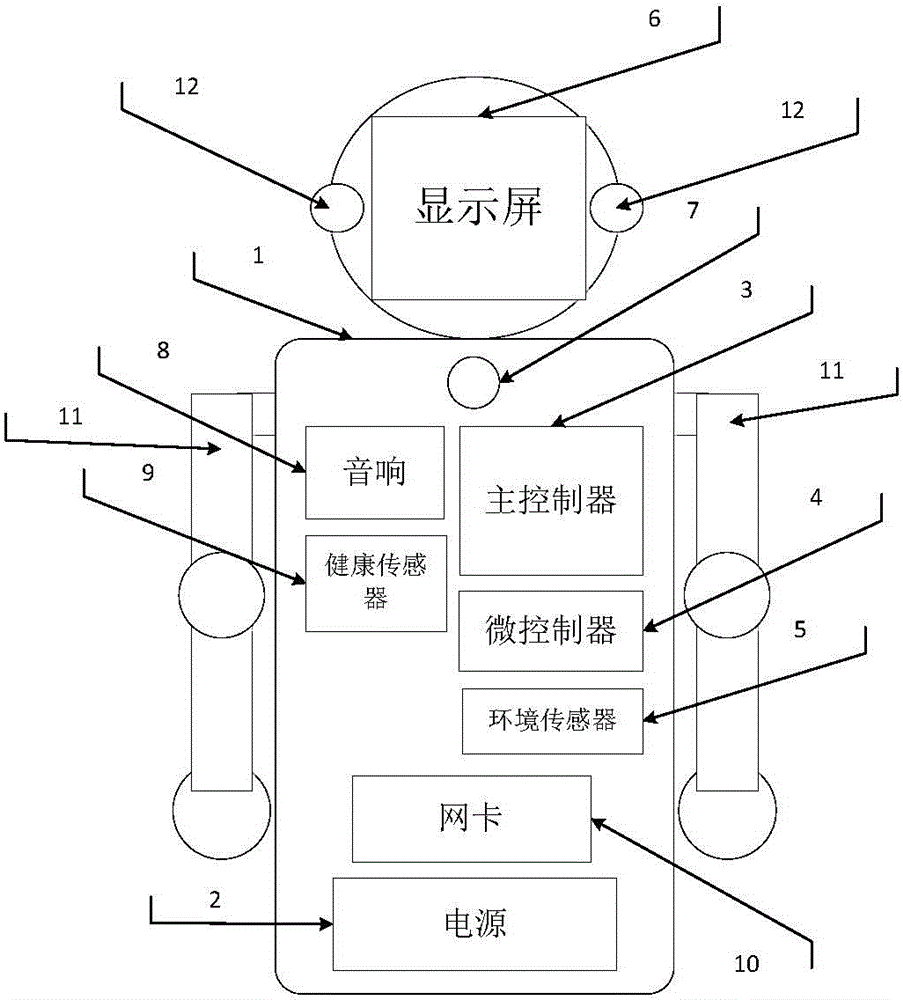

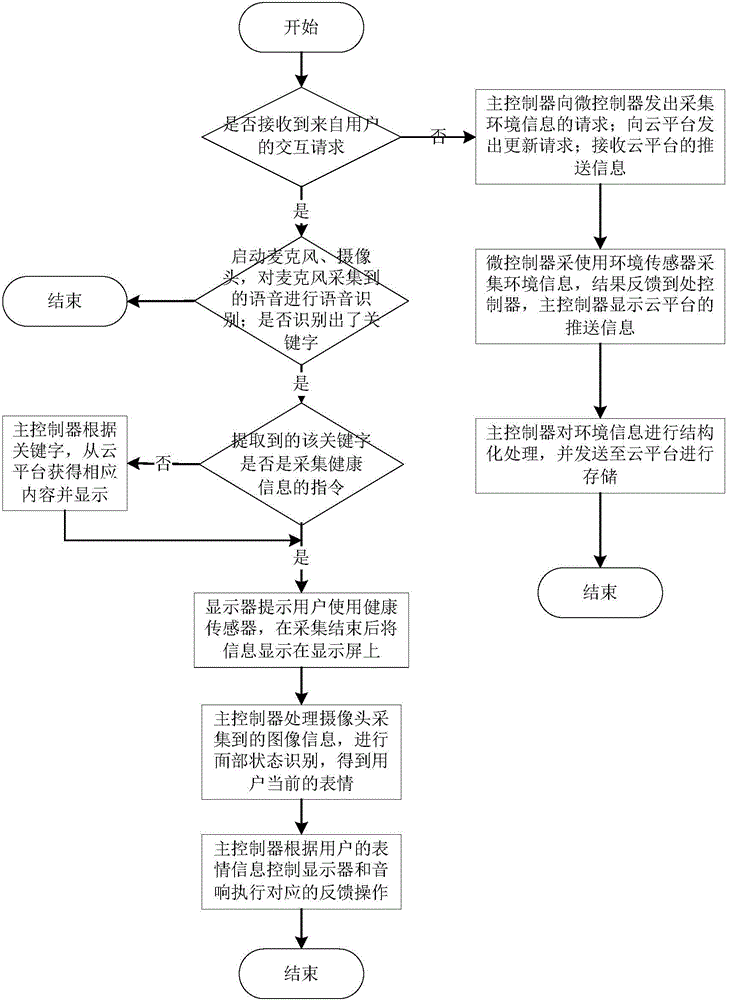

Household information acquisition and user emotion recognition equipment and working method thereof

Inactive | CN104102346A | Various functions | Improve scalability | Programme control | Input/output for user-computer interaction | Microcontroller | Audio electronics

The invention discloses household information acquisition and user emotion recognition equipment, which comprises a shell, a power supply, a main controller, a microcontroller, multiple environmental sensors, a screen, a microphone, an audio, multiple health sensors, a pair of robot arms and a pair of cameras, wherein the microphone is arranged on the shell; the power supply, the main controller, the microcontroller, the environmental sensors, the audio and the pair of cameras are arranged symmetrically relative to the screen respectively on the left and right sides; the robot arms are arranged on the two sides of the shell; the main controller is in communication connection with the microcontroller, and is used for controlling the microcontroller to control the movements of the robot arms through motors of the robot arms; the power supply is connected with the main controller and the microcontroller, and is mainly used for providing energy for the main controller and the microcontroller. In the household information acquisition and user emotion recognition equipment, intelligent speech recognition technology, speech synthesis technology and facial expression recognition technology are integrated, so that the equipment is more convenient to use and its feedback is more reasonable.

Owner:HUAZHONG UNIV OF SCI & TECH

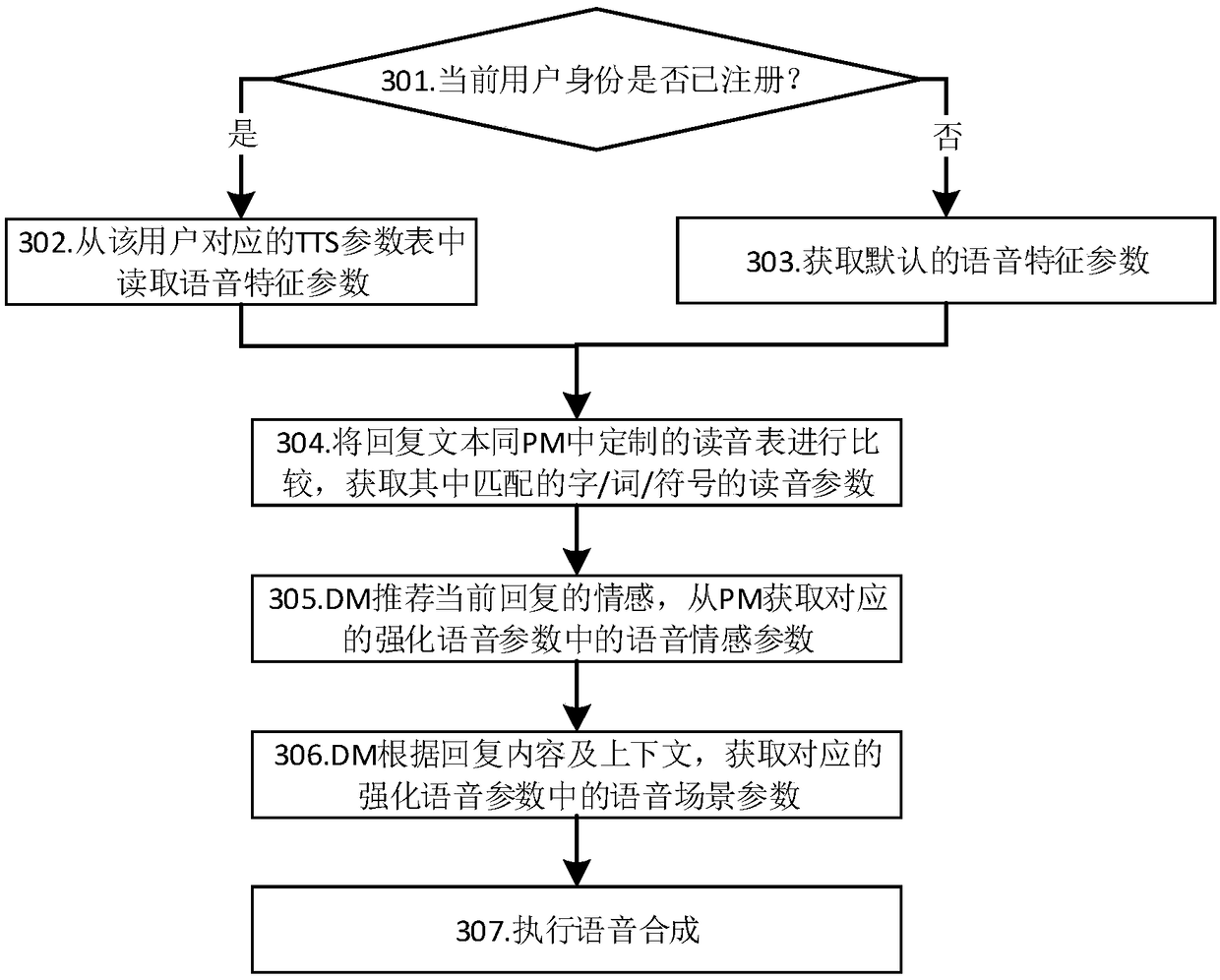

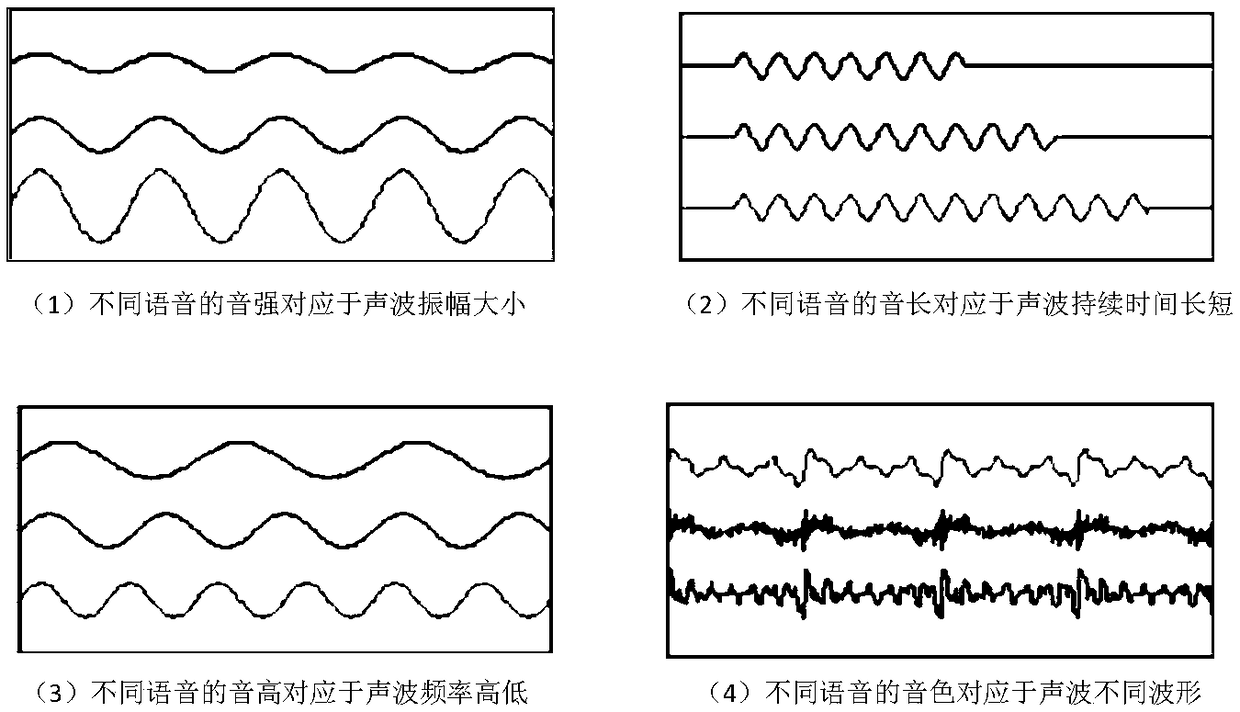

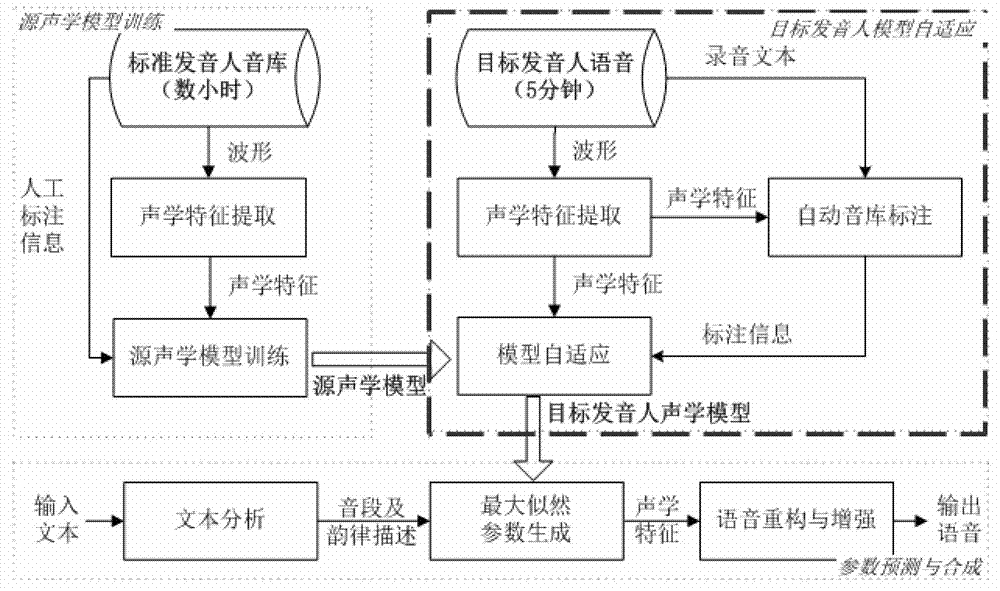

Speech synthesis method and related equipment

Active | CN108962217A | Improve voice interaction experience | Improve interactive experience | Speech synthesis | Personalization | Acoustic model

The present application provides a speech synthesis method and related equipment. The method includes the following steps: the identity of a user is determined according to the current input speech of the user; an acoustic model is obtained from an acoustic model library according to the current input speech; basic speech synthesis information is determined according to the identity of the user, wherein the basic speech synthesis information characterizes variable quantities in the preset sound speed, preset volume, and preset pitch of the acoustic model; a reply text is determined; enhanced speech synthesis information is determined according to the reply text and context information, wherein the enhanced speech synthesis information characterizes variable quantities in the preset timbre, tone and preset rhythm of the acoustic model; and speech synthesis is performed on the reply text through the acoustic model according to the basic speech synthesis information and the enhanced speech synthesis information, so that reply speech for the user can be obtained. With the speech synthesis method and related apparatus provided by the embodiments of the invention, a device can provide a personalized speech synthesis effect to the user during a man-machine interaction process, and therefore the speech interaction experience of the user can be improved.

Owner:HUAWEI TECH CO LTD

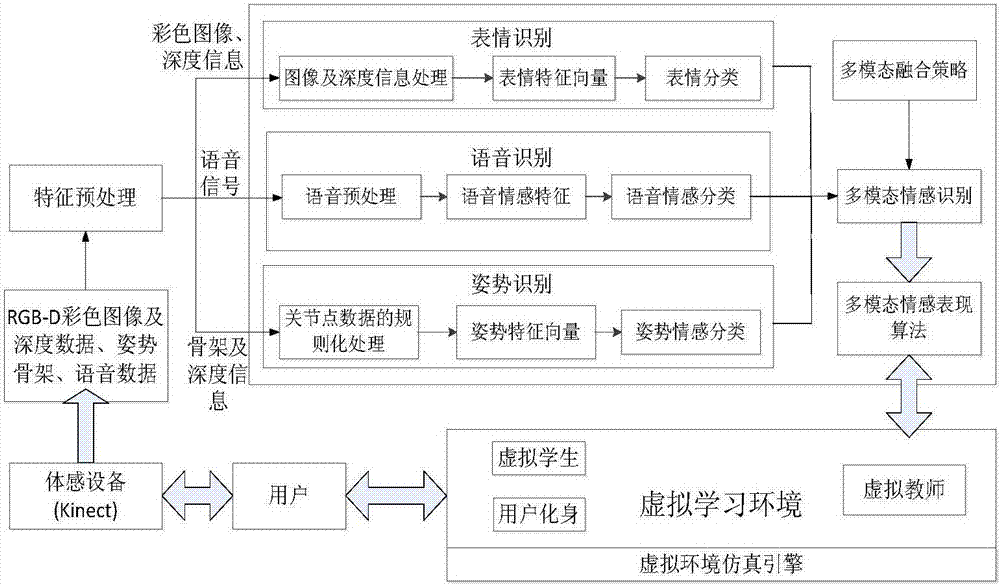

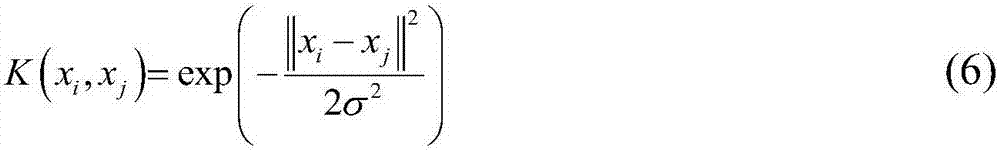

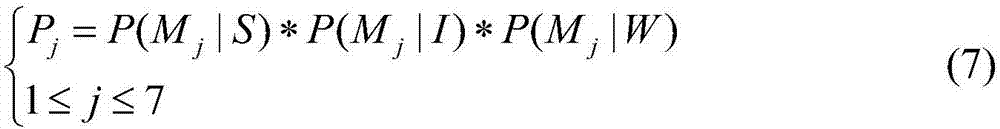

Virtual learning environment natural interaction method based on multimode emotion recognition

Inactive | CN106919251A | Accuracy | Improve practicality | Input/output for user-computer interaction | Speech recognition | Human body | Color image

The invention provides a virtual learning environment natural interaction method based on multimode emotion recognition. The method comprises the steps that expression information, posture information and voice information representing the learning state of a student are acquired, and multimode emotion features based on a color image, depth information, a voice signal and skeleton information are constructed; facial detection, preprocessing and feature extraction are performed on the color image and a depth image, and a support vector machine (SVM) and an AdaBoost method are combined to perform facial expression classification; preprocessing and emotion feature extraction are performed on voice emotion information, and a hidden Markov model is utilized to recognize a voice emotion; regularization processing is performed on the skeleton information to obtain human body posture representation vectors, and a multi-class support vector machine (SVM) is used for performing posture emotion classification; and a quadrature rule fusion algorithm is constructed for recognition results of the three emotions to perform fusion on a decision-making layer, and emotion performance such as the expression, voice and posture of a virtual intelligent body is generated according to the fusion result.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

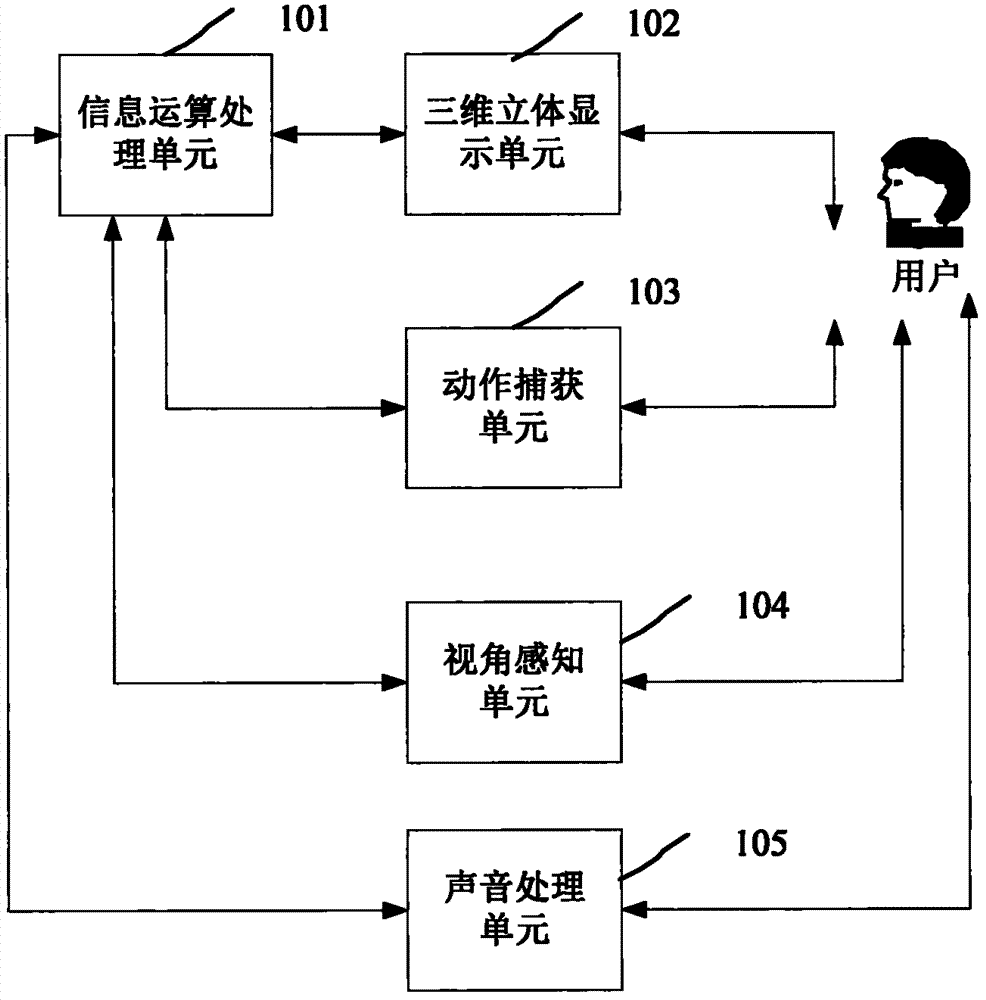

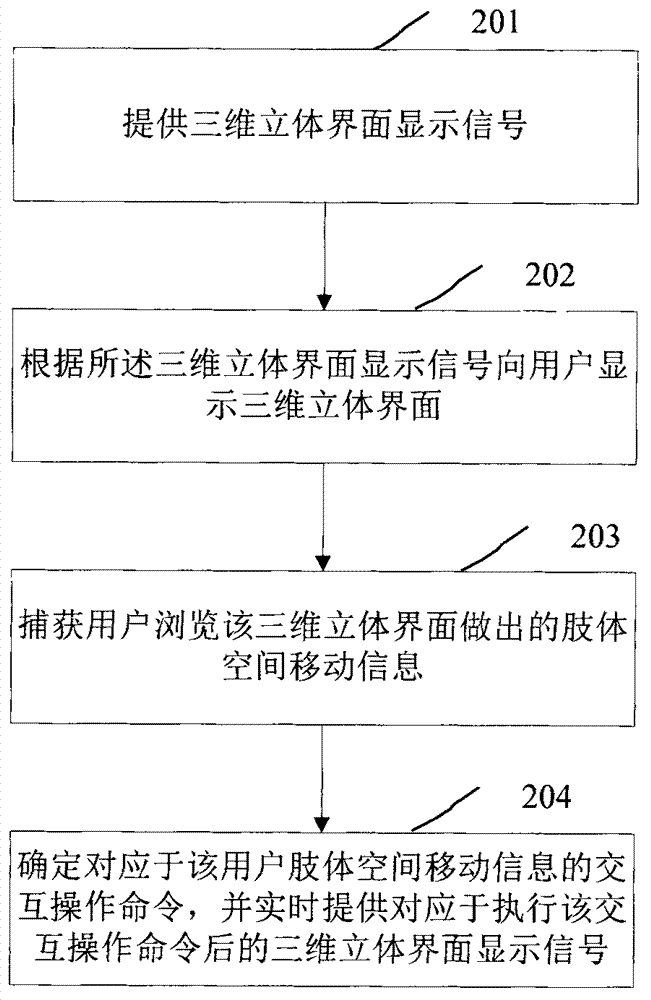

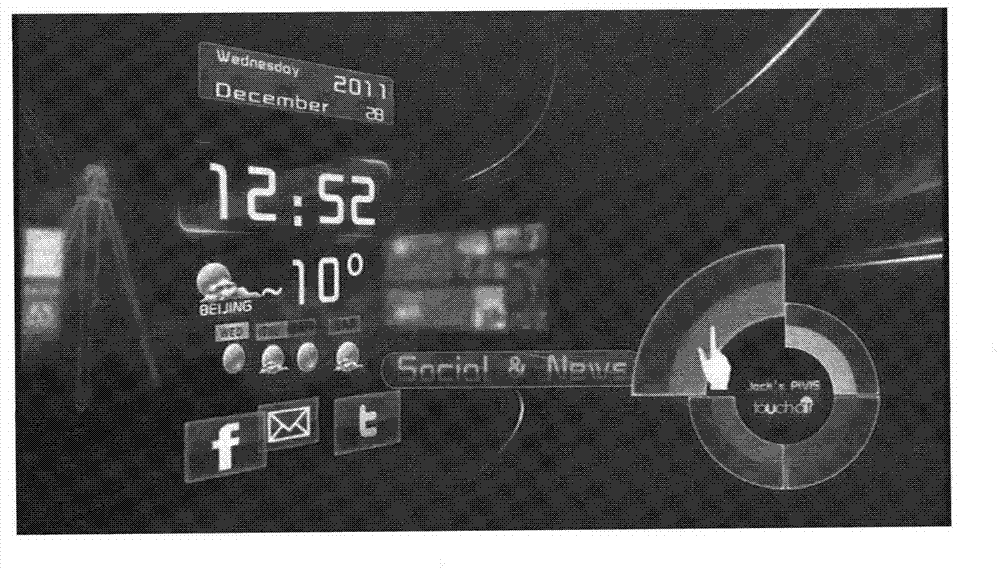

User interaction system and method

Active | CN102789313A | Improve interactive experience | Improve experience | Input/output for user-computer interaction | Graph reading | Interaction systems | Human–computer interaction

The invention discloses a user interaction system and a method. The system comprises an information operation processing unit, a three dimensional display unit and a motion capture unit; wherein the information operation processing unit is used for providing three dimensional interface display signals for the three dimensional display unit; the three dimensional display unit is used for displaying a three dimensional interface to a user according to the three dimensional interface display signals; and the motion capture unit is used for capturing body space movement information made by the user when the three dimensional interface is browsed, and sending the body space movement information to the information operation processing unit. When the user interaction system and the method are applied, the user can be immersed in a private and interesting virtual information interaction space, and natural information interaction is performed in the space, so that user interactive experience is enhanced, a large number of meaningful applications can be derived on the basis, and the user is enabled to interact with a digital information world naturally.

Owner:TOUCHAIR TECH

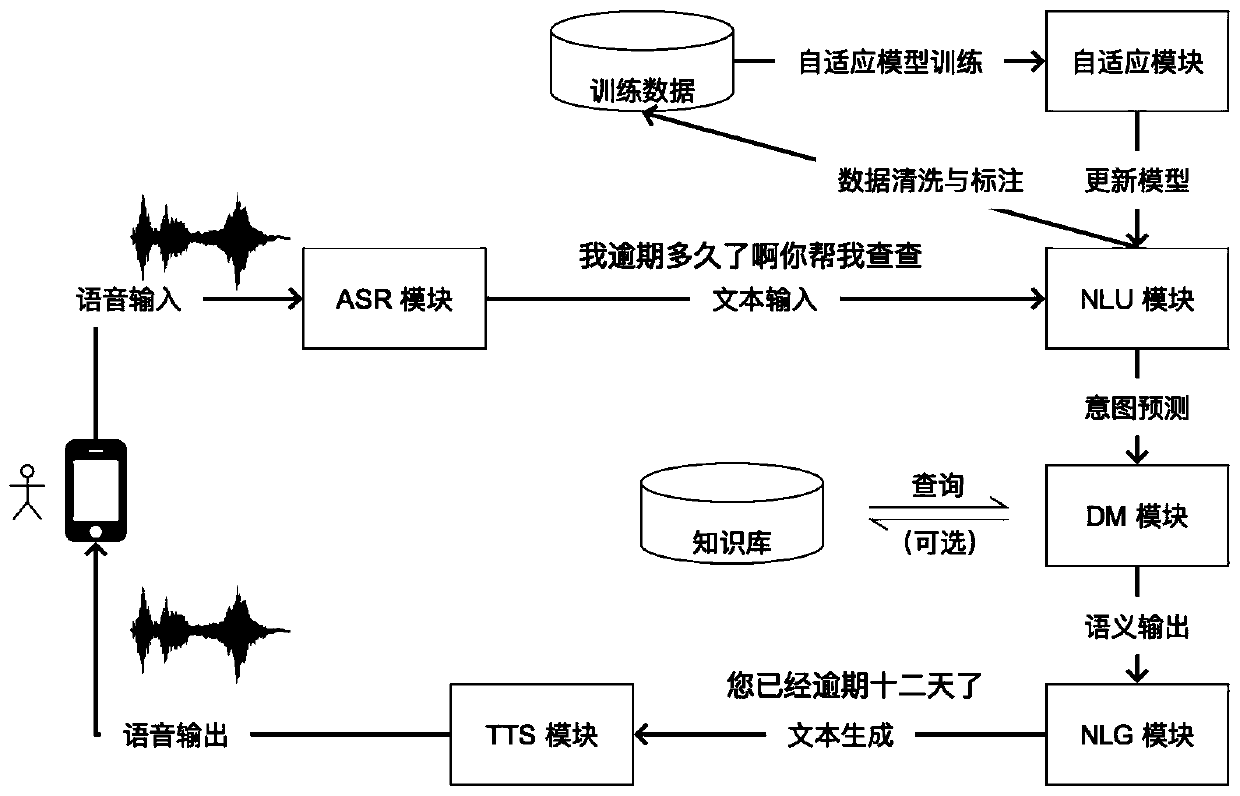

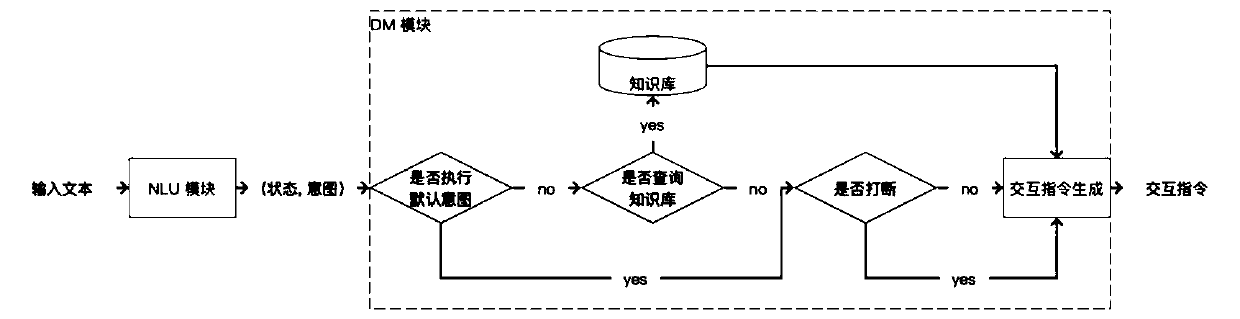

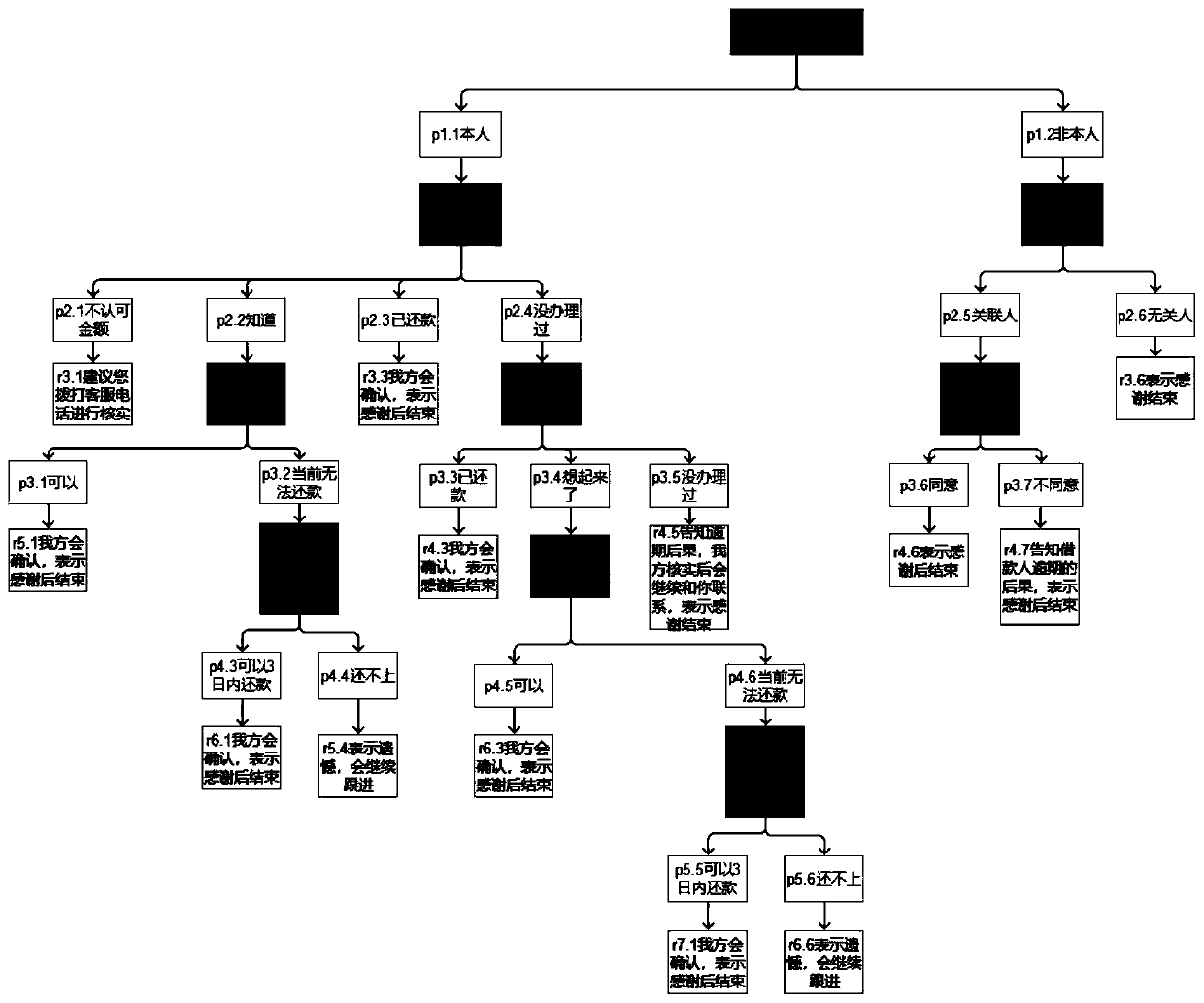

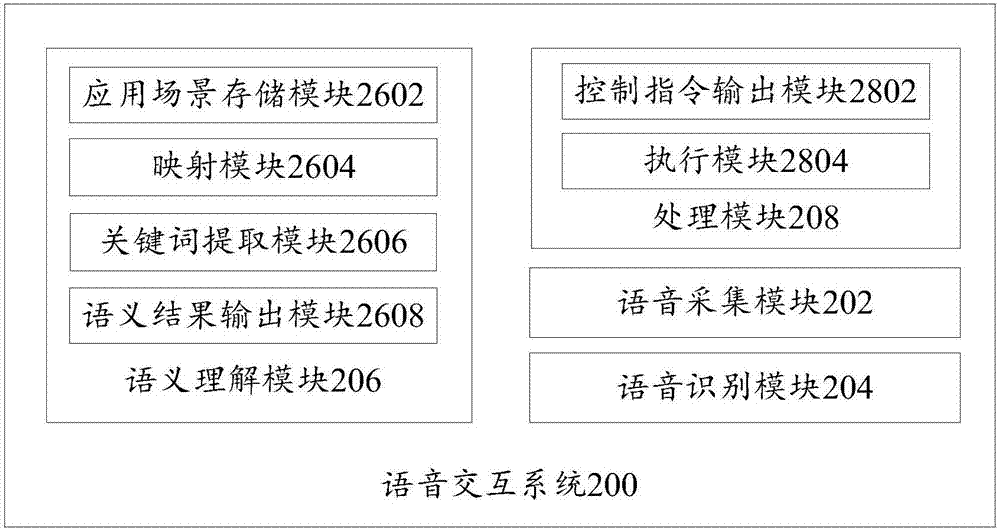

Multi-round dialogue intelligent voice interaction system and device

Active | CN110209791A | Smooth communication | Improve interactive experience | Text database querying | Special data processing applications | Interaction systems | Natural language understanding

The invention discloses a multi-round dialogue intelligent voice interaction system and device. The system comprises a hybrid semantic understanding module, a semantic understanding adaptive module and an automatic dialogue management module. The voice input is converted into text by voice recognition and input to the hybrid semantic understanding module. The hybrid semantic understanding module is used for understanding user intention and extracting corresponding state information; the automatic dialogue management module is used for guiding the dialogue process, outputting dialogue texts and converting the dialogue texts into voice output based on the user intention to realize dialogue; and the semantic understanding adaptive module is used for optimized learning of the hybrid semantic understanding module. According to the invention, a plurality of modules such as speech recognition, natural language understanding, natural language generation, speech synthesis and dialogue management are integrated to form a complete multi-round dialogue intelligent speech interaction system which is easy to expand and configure and can be applied to any scene.

Owner:百融云创科技股份有限公司

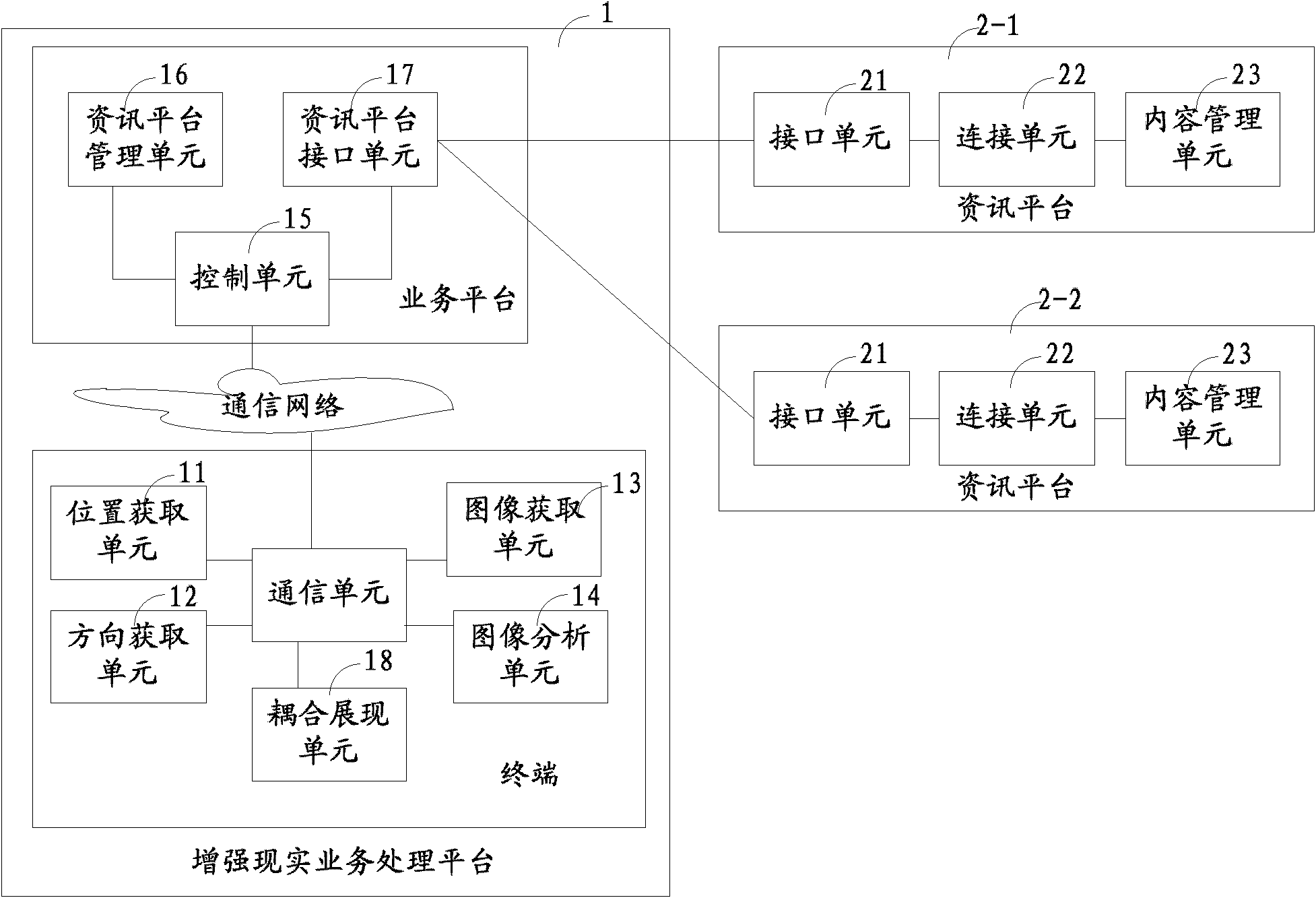

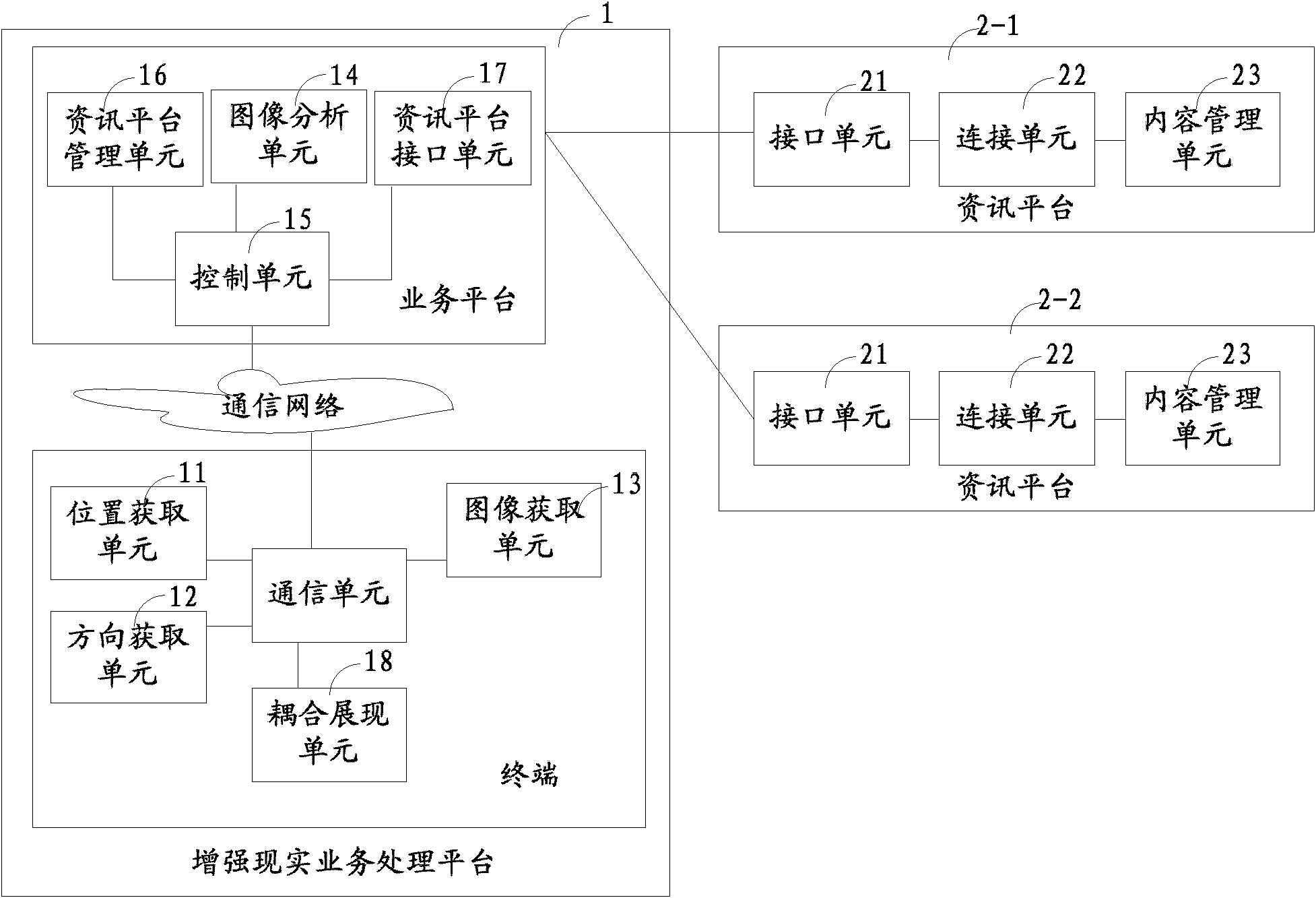

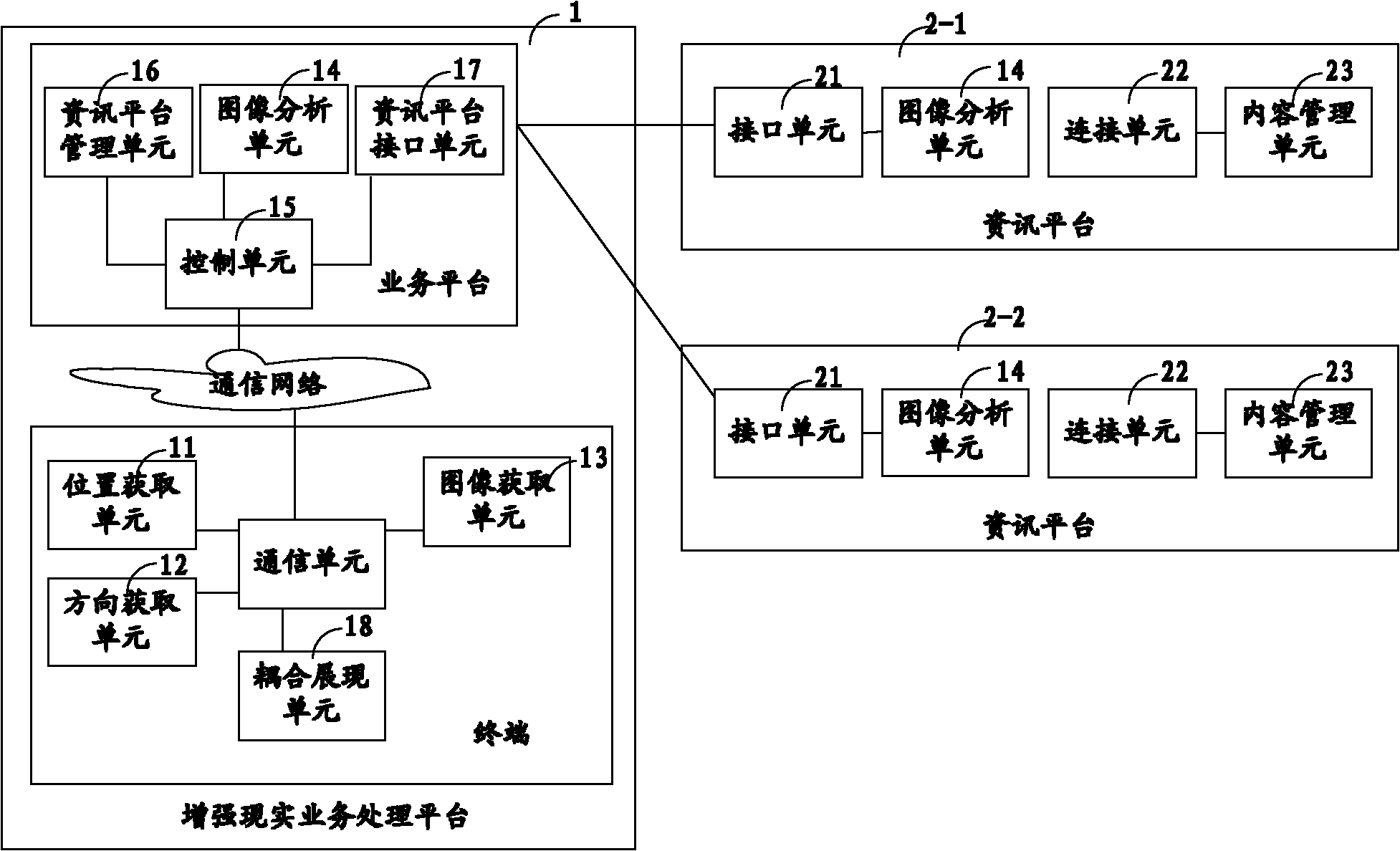

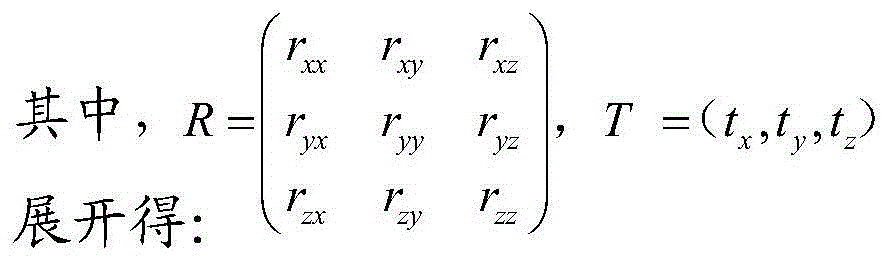

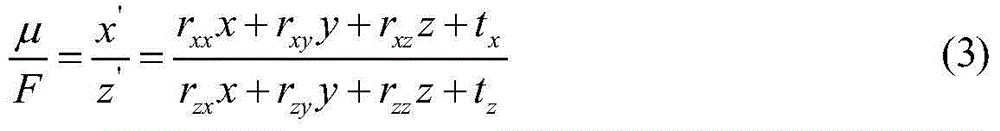

System and method for acquiring mobile augmented reality information

Active | CN102194007A | Improve accuracy | Improve interactive experience | Character and pattern recognition | Special data processing applications | Computer science | Information acquisition

The invention provides a system and a method for acquiring mobile augmented reality information. The method comprises the following steps of: acquiring image information, and extracting image characteristics of the image information; determining an information platform needed to establish connection, and connecting one or a plurality of information platforms according to an information platform address; carrying the image characteristics and position information in a request parameter, and transmitting the request parameter to the information platform; and searching through the information platform according to the request parameter so as to acquire content information matched with the request parameter, and returning the content information to an augmented reality service processing platform. By the method, the acquisition accuracy of the information is improved, and the information can be accurately matched and superposed with realistic scenes.

Owner:CHINA TELECOM CORP LTD

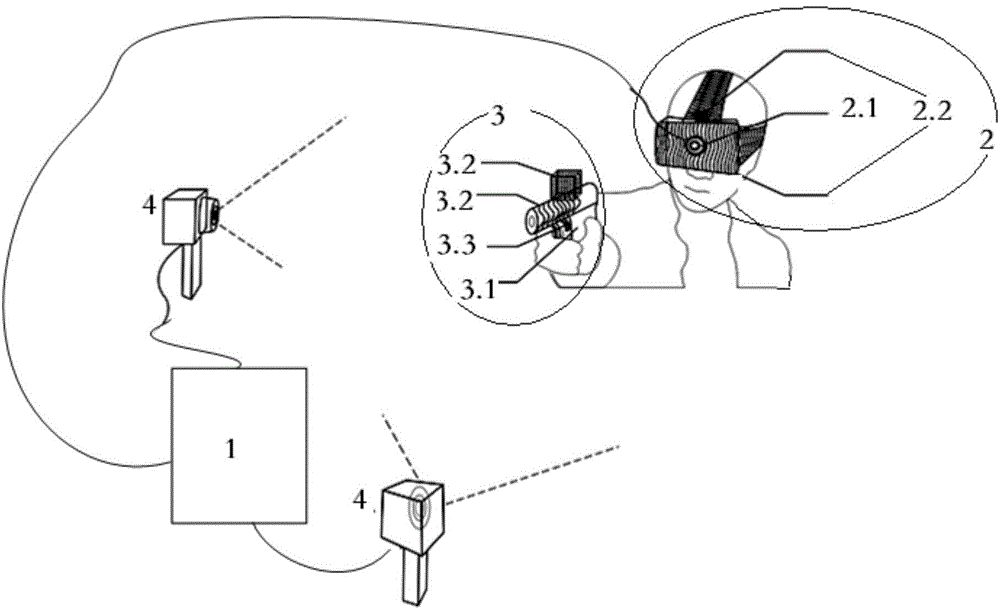

Virtual reality interactive system and method based on machine vision

Active | CN104699247A | Accurate tracking | Improve experience | Input/output for user-computer interaction | Animation | Machine vision

The invention provides a virtual reality interactive system and method based on machine vision. In the method, a virtual environment generation controller obtains the posture change information of the head of a participant in a world coordinate system based on the feature point recognition technology of machine vision, and further obtains the posture change information of the separation portion interactive equipment; an overlapped virtual animation is generated under the condition of the motions of both the helmet equipment and the separation portion interactive equipment by combining the aiming direction of the separation portion interactive equipment and the interactive command of the virtual environment, and the overlapped virtual animation is output to a display screen arranged in a clamping groove at the front end of the helmet equipment. The virtual reality interactive system and method based on machine vision have the advantages of being capable of outputting the overlapped virtual animation under the condition of the motions of the helmet equipment and the separation portion interactive equipment, generating a more natural and smooth animation, and enhancing the experience of a player.

Owner:BEIJING 7INVENSUN TECH

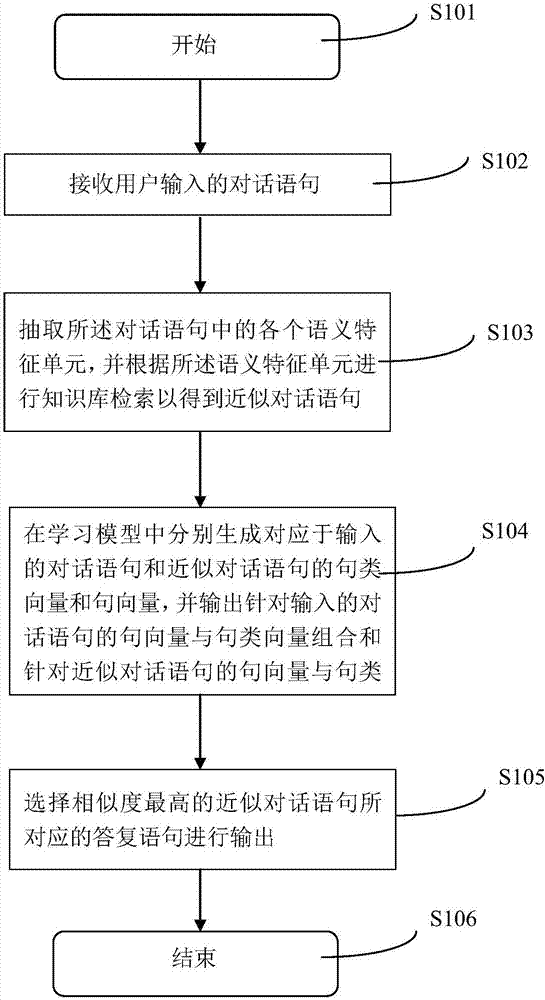

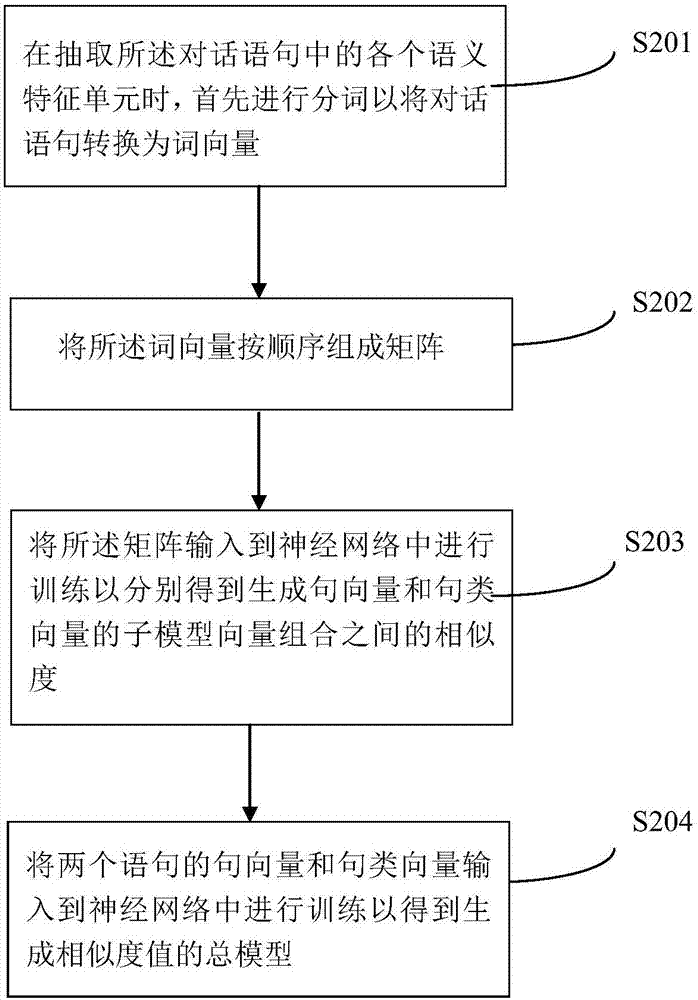

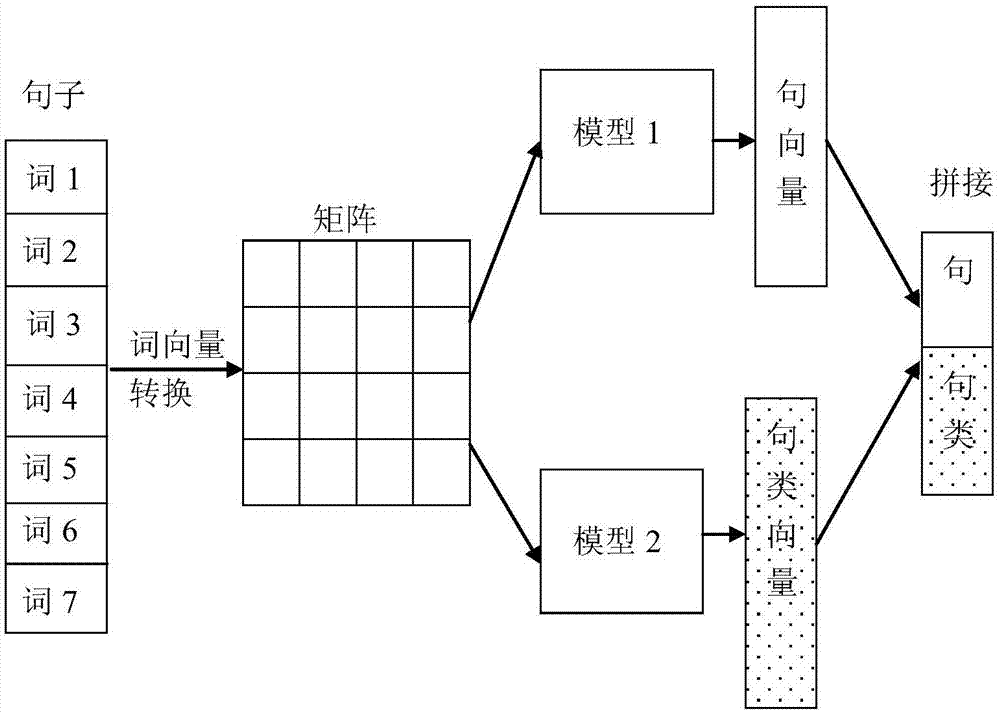

Deep learning-based robot conversation interaction method and apparatus

The invention provides a deep learning-based robot conversation interaction method. The method comprises the steps of receiving a conversation statement input by a user; extracting semantic feature units in the conversation statement, and performing knowledge base retrieval according to the semantic feature units to obtain a similar conversation statement; generating sentence type vectors and sentence vectors corresponding to the input conversation statement and the similar conversation statement respectively in a learning model, and outputting the similarity between a combination of the sentence vectors and the sentence type vectors for the input conversation statement and a combination of the sentence vectors and the sentence type vectors for the similar conversation statement; and selecting and outputting a reply statement corresponding to the similar conversation statement with highest similarity. According to the method, a robot can judge not only semantics according to a single word of Chinese but also statement semantics consisting of the words and the similarity among different statements composed of the same words, so that an appropriate reply is found and output more accurately for an intention of a conversation object, and the man-machine interaction experience effect is greatly improved.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH
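
The selection step in the abstract above (compare the combined sentence and sentence-type vectors of the input with those of each retrieved candidate, then output the reply of the most similar one) can be sketched as follows. Cosine similarity is swapped in here as a stand-in for the similarity that the patent's learning model outputs, and the vectors, field names, and replies are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def best_reply(query_vec, query_type, candidates):
    """Return the reply of the candidate most similar to the query.

    Each vector is the concatenation of a sentence vector and a sentence-type vector.
    """
    query = query_vec + query_type
    best = max(candidates, key=lambda c: cosine(query, c["vec"] + c["type"]))
    return best["reply"]


# Hypothetical candidates retrieved from a knowledge base.
candidates = [
    {"vec": [1.0, 0.0], "type": [1.0, 0.0], "reply": "It is sunny."},
    {"vec": [0.0, 1.0], "type": [0.0, 1.0], "reply": "I am a robot."},
]
print(best_reply([0.9, 0.1], [1.0, 0.0], candidates))
```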

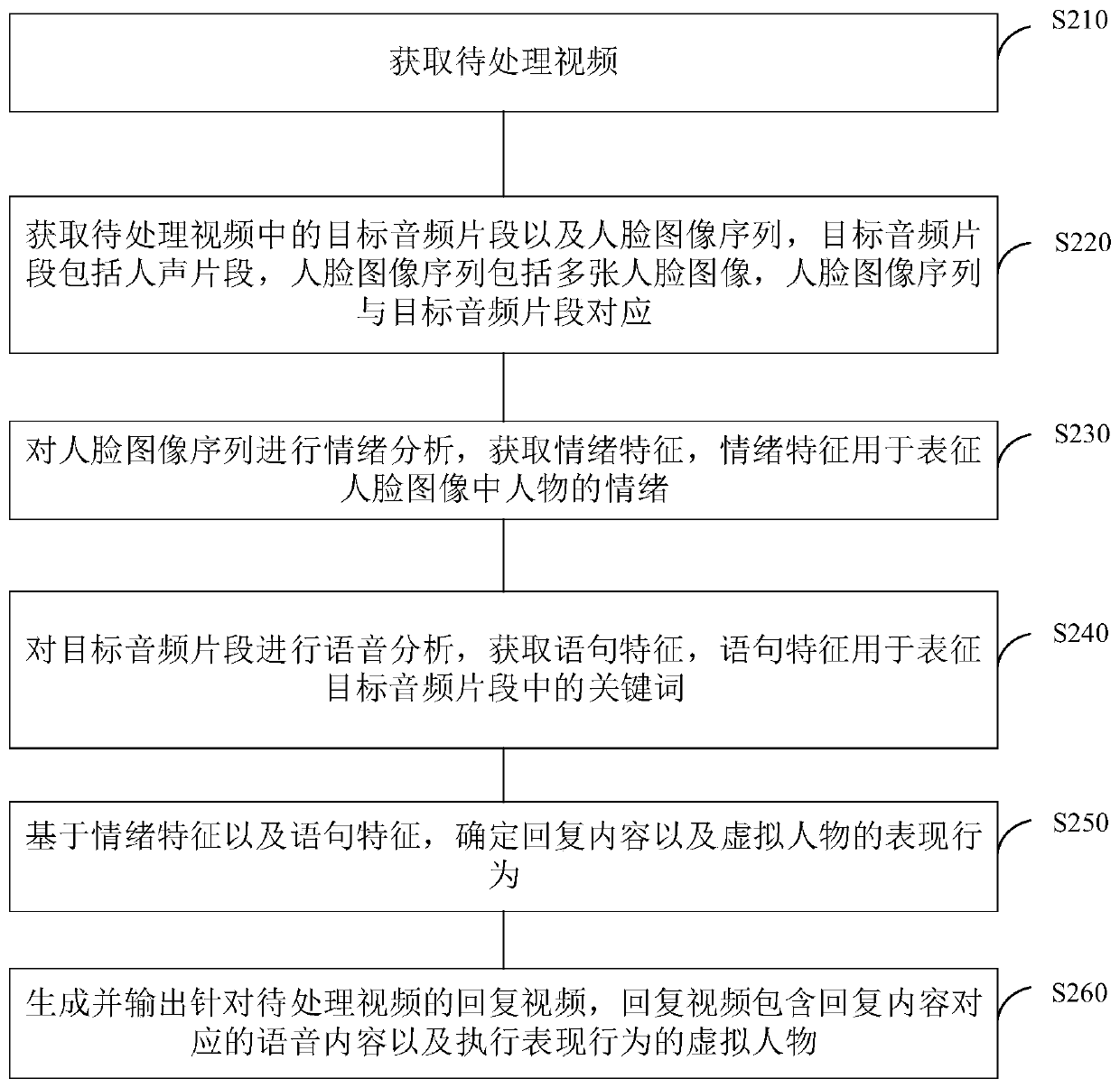

Video processing method, device and system, terminal equipment and storage medium

Active | CN110688911A | Improve the accuracy of semantic understanding | Improve realism | Character and pattern recognition | Speech recognition | Computer graphics (images) | Video processing

The embodiment of the invention discloses a video processing method and device, terminal equipment and a storage medium. The method comprises the steps of obtaining a to-be-processed video; obtaining a target audio clip and a face image sequence in the to-be-processed video; performing emotion analysis on the face image sequence to obtain emotion features; performing voice analysis on the target audio clip to obtain statement features which are used for representing keywords in the target audio clip; based on the emotion features and the statement features, determining reply contents and expression behaviors of the virtual character; and generating and outputting a reply video for the to-be-processed video, wherein the reply video comprises voice content corresponding to the reply content and a virtual character for executing the expression behavior. According to the embodiment of the invention, the emotion features and the statement features of the character can be obtained according to the speaking video of the character, and the virtual character video matched with the emotion features and the statement features is generated as the reply, so that the sense of reality and naturalness of human-computer interaction are improved.

Owner:SHENZHEN ZHUIYI TECH CO LTD

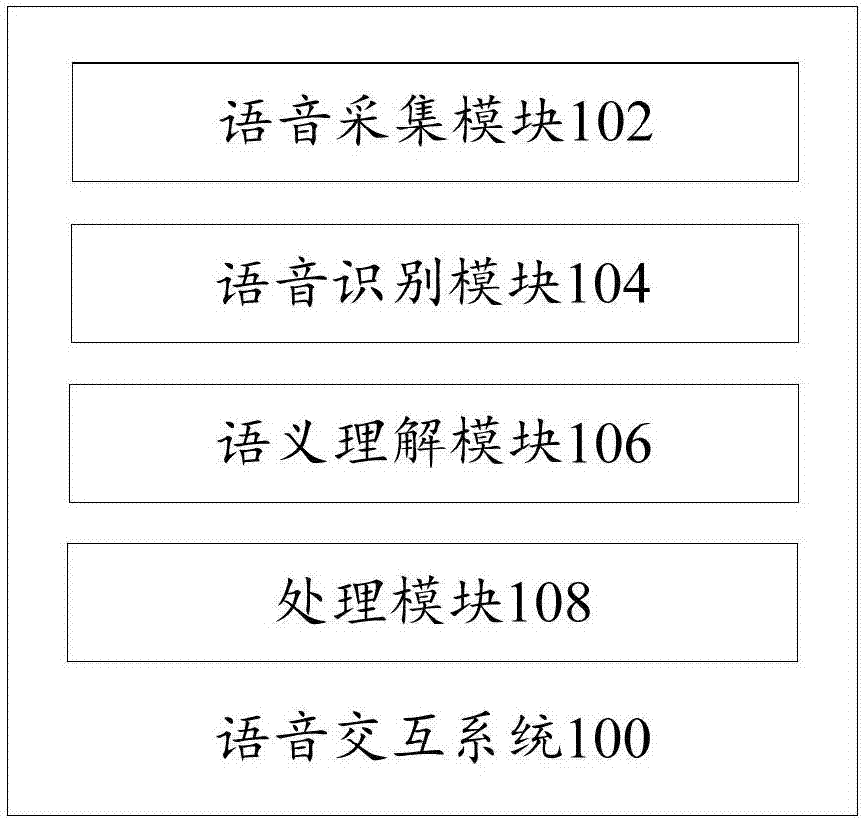

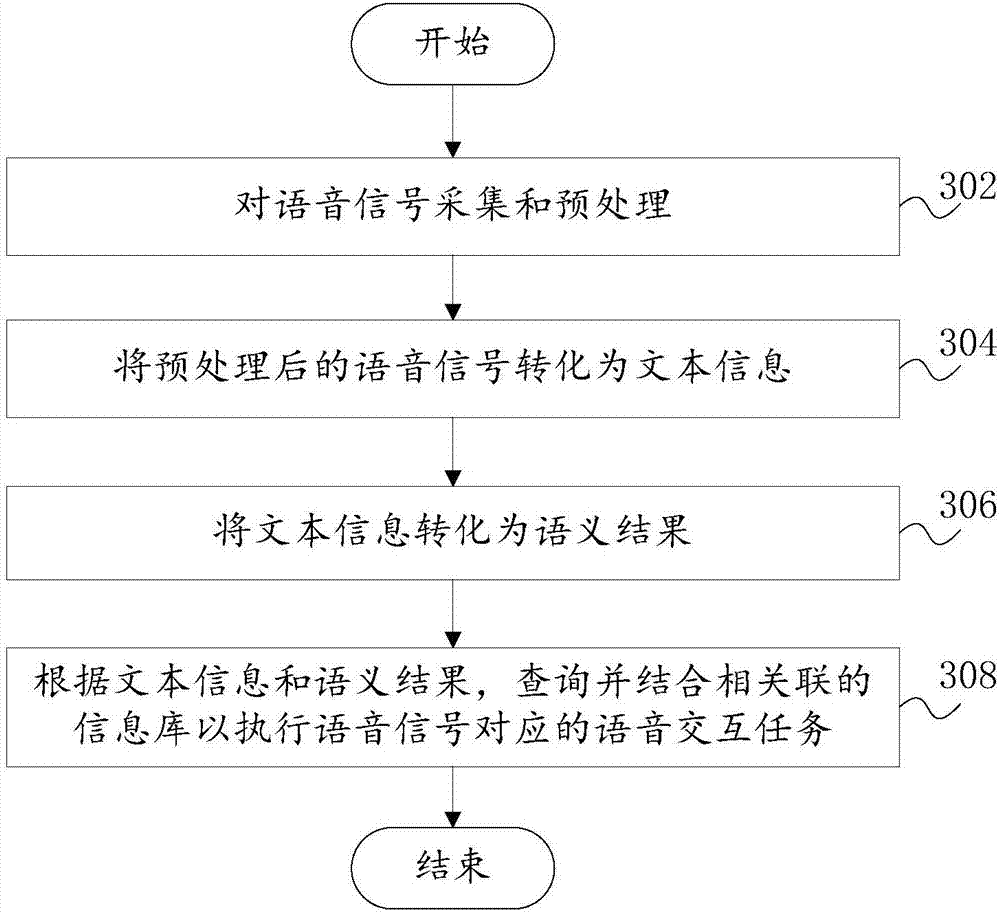

Refrigerator, voice interaction system, method, computer device and readable storage medium

Active | CN107146622A | Accurate understanding | Improve intelligence | Lighting and heating apparatus | Domestic refrigerators | Interaction systems | Information repository

The invention proposes a refrigerator, a voice interaction system, a voice interaction method, a computer device and a computer readable storage medium, wherein the voice interaction system comprises a voice acquiring module used to acquire and pre-process the voice signals and to transmit the pre-processed voice signals to a voice recognition module; the voice recognition module used to convert the processed voice signals into text information and to transmit the text information to a processing module and a semantics understanding module respectively; the semantic understanding module used to convert the text information into semantic result and to transmit the semantic result to the processing module; and the processing module used to check and combine related information base according to the text information and the semantic result to execute voice interaction tasks corresponding to the voice signals. According to the invention, interactive scenes are provided in advance so as to understand the user's languages more accurately, to realize more natural human-machine interactions, to increase the interaction experience and to make the refrigerator more intelligent.

Owner:HEFEI MIDEA INTELLIGENT TECH CO LTD

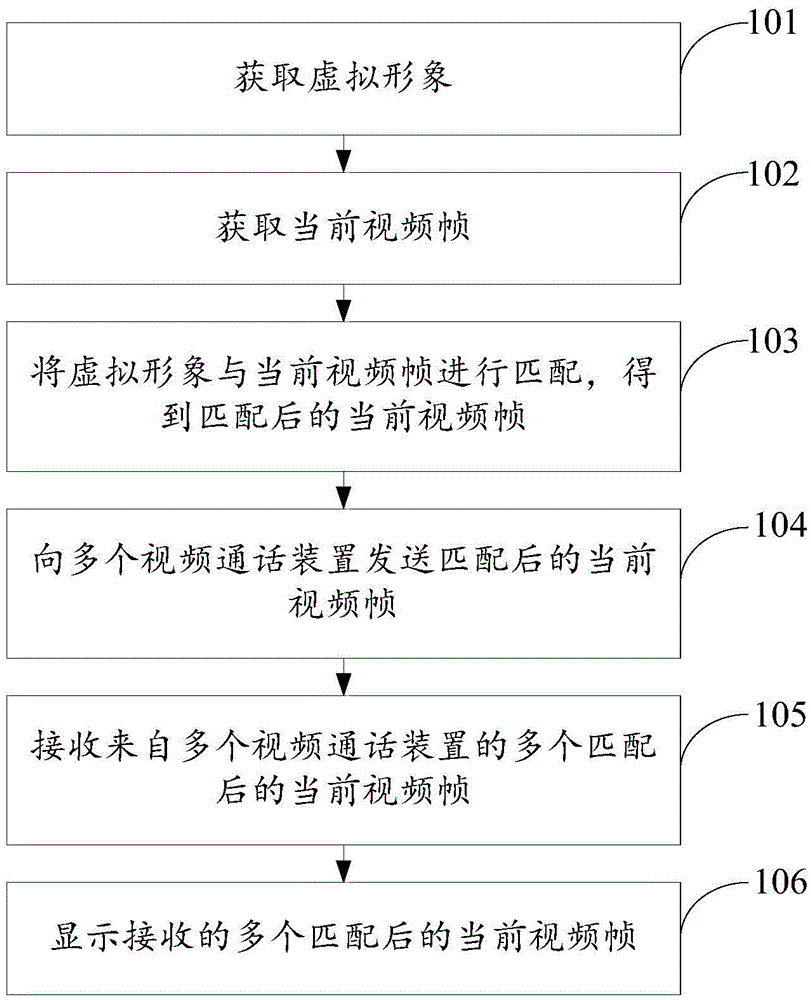

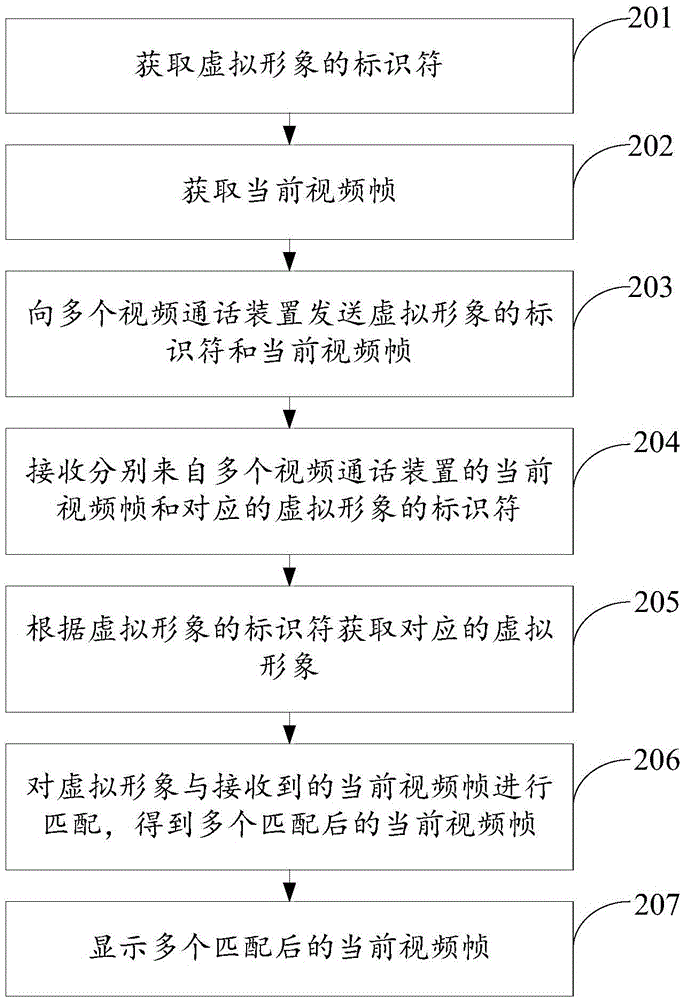

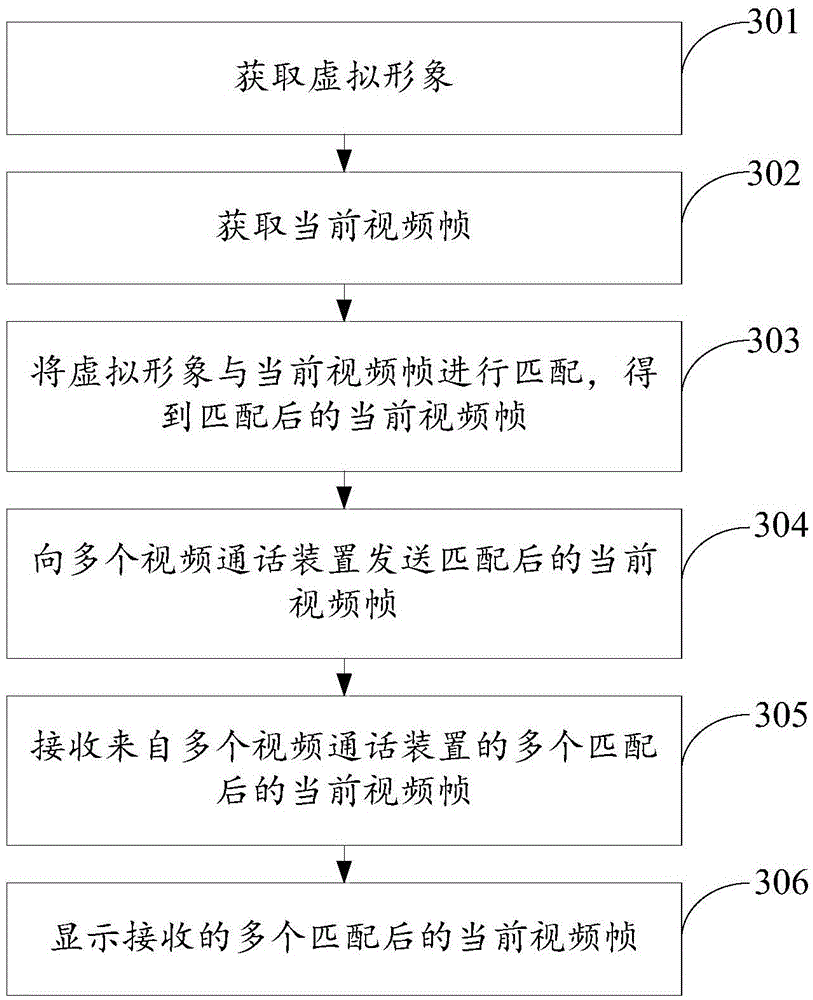

Video call method and device

Inactive | CN105611215A | Improve interactivity | Meet individual needs | Two-way working systems | Personalization | Virtual image

The invention discloses a video call method and device, and belongs to the field of video. The video call method comprises the steps of obtaining a virtual image; obtaining a current video frame; matching the virtual image with the current video frame to obtain a matched current video frame; sending the matched current video frame to multiple video call devices; receiving multiple matched current video frames from the multiple video call devices; and displaying the received multiple matched current video frames. Through displaying the current video frames obtained after the current video frame and the virtual image are matched in a multi-user instant video interaction process, the video call method, compared with the traditional multi-user instant video display method, adds a multi-user instant video display mode, satisfies individual demands of users in the multi-user instant video interaction process, increases interactivity of multi-user instant video participators in the interaction process and improves interaction experience.

Owner:PALMWIN INFORMATION TECH SHANGHAI

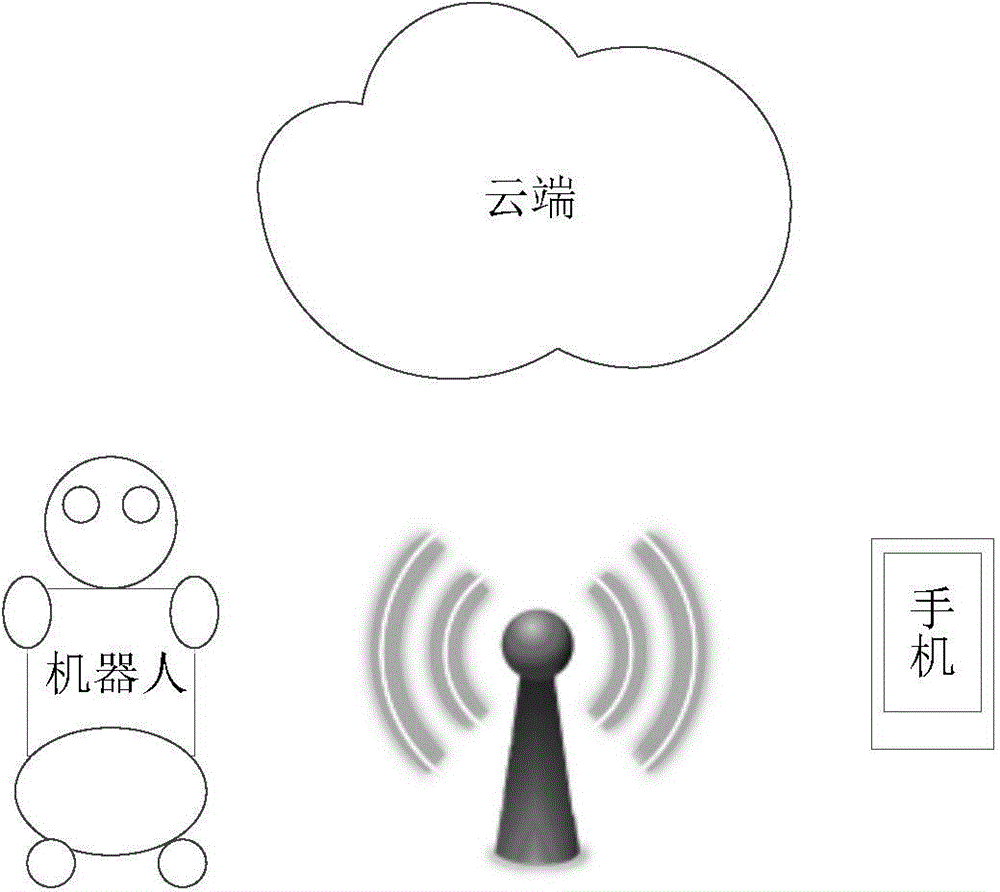

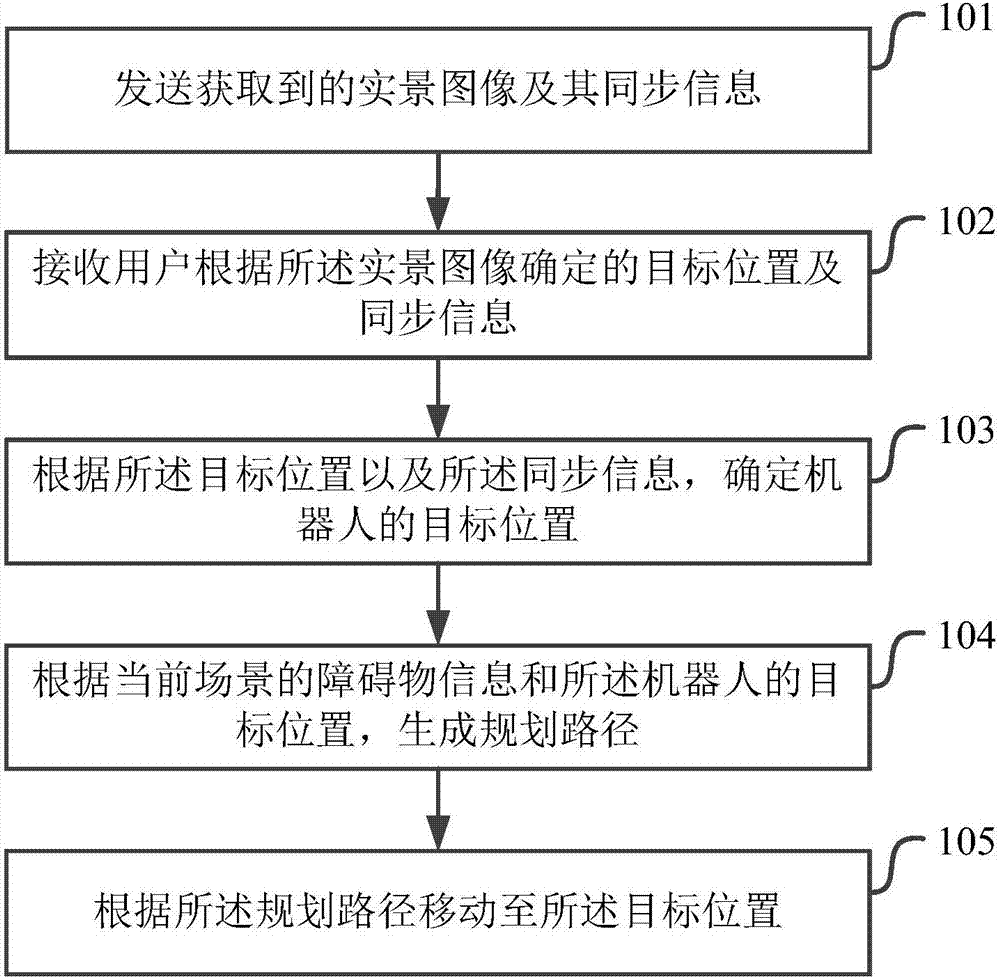

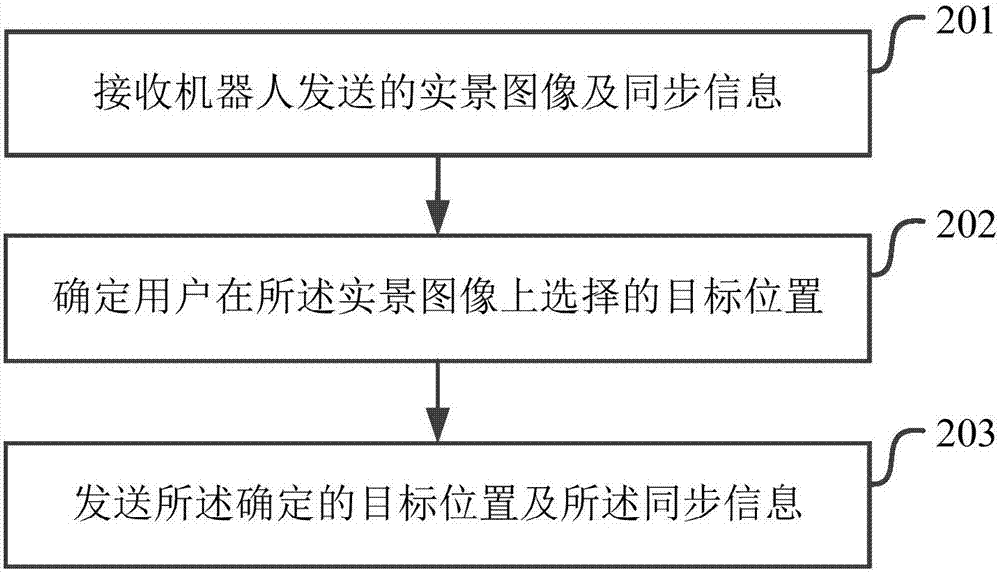

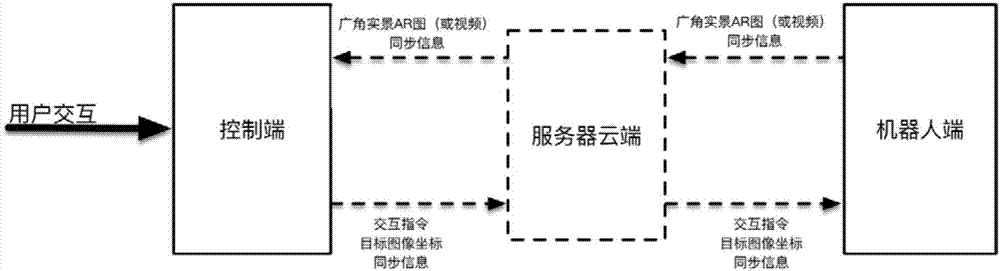

Robot realization method, control method, robot and electronic device

Inactive | CN107515606A | Simple motion control | Low control frequency | Position/course control in two dimensions | Vehicles | Movement control | Electronic equipment

The invention provides a robot realization method, a control method, a robot and an electronic device. The robot realization method comprises steps: the robot sends an acquired real scene image and synchronization information thereof, and a control end determines a target position according to the real scene image and the target position and the synchronization information are sent to the robot; according to the target position and the synchronization information, the robot determines the target position of the robot; according to the obstacle information of the current scene and the target position of the robot, a planning path is generated; and according to the planning path, the robot is moved to the target position. By adopting the technical scheme provided by the invention, a user only needs to specify a target point on the real scene image uploaded by the robot, the robot can be autonomously navigated to the target point according to the target point selected by the user, the motion control on the robot is more convenient, the control frequency is lower, the control instruction amount is reduced, and a friendly interactive experience can also be provided in a condition with a poor remote control network environment.

Owner:BEIJING DEEPGLINT INFORMATION TECH
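
The path-planning step in the abstract above (generate a path from obstacle information and the target position) is not tied to a specific algorithm in the text. As one possible illustration, a breadth-first search on an occupancy grid is sketched below; the grid representation and the name `plan_path` are assumptions, not the patent's method.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are obstacles.

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in parents):
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Hypothetical scene: a wall with a gap on the right-hand side.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = plan_path(grid, (0, 0), (2, 0))
print(path)
```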

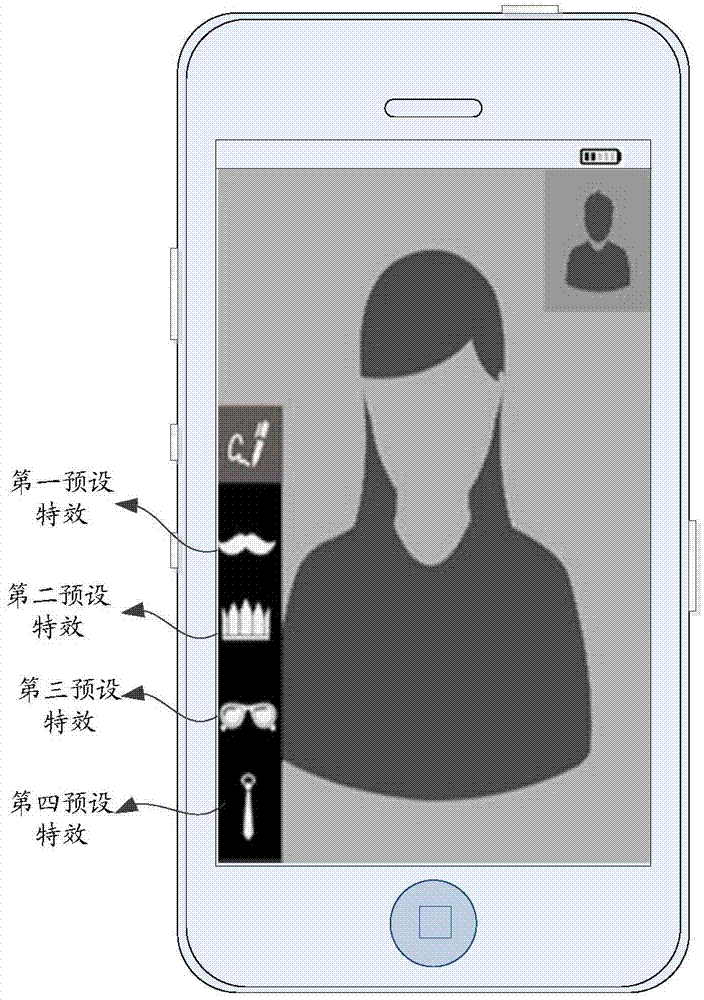

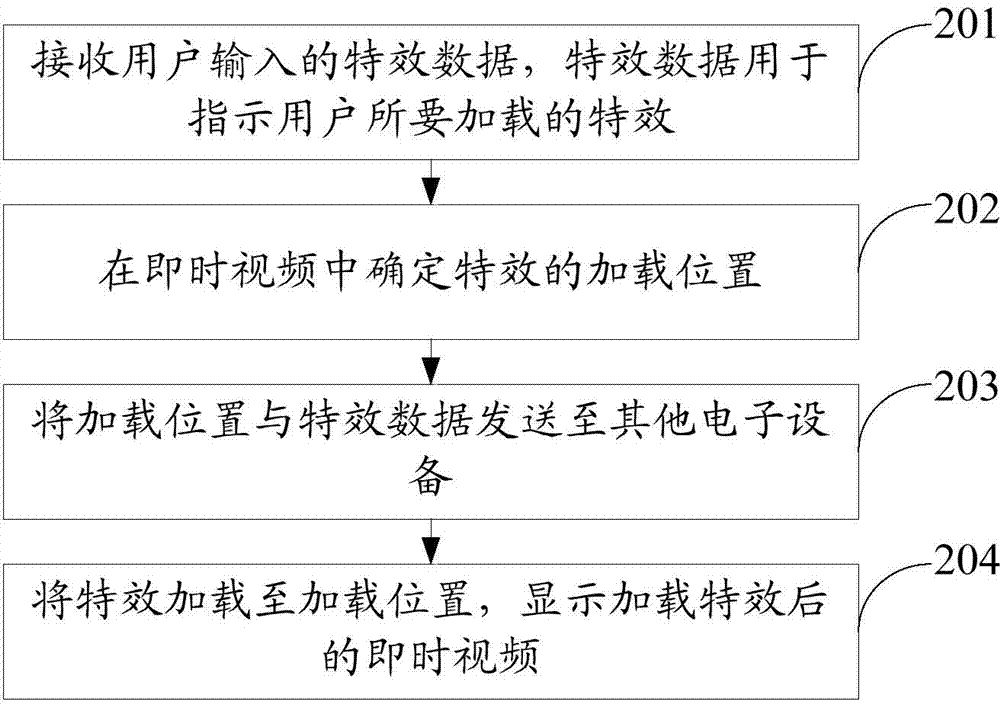

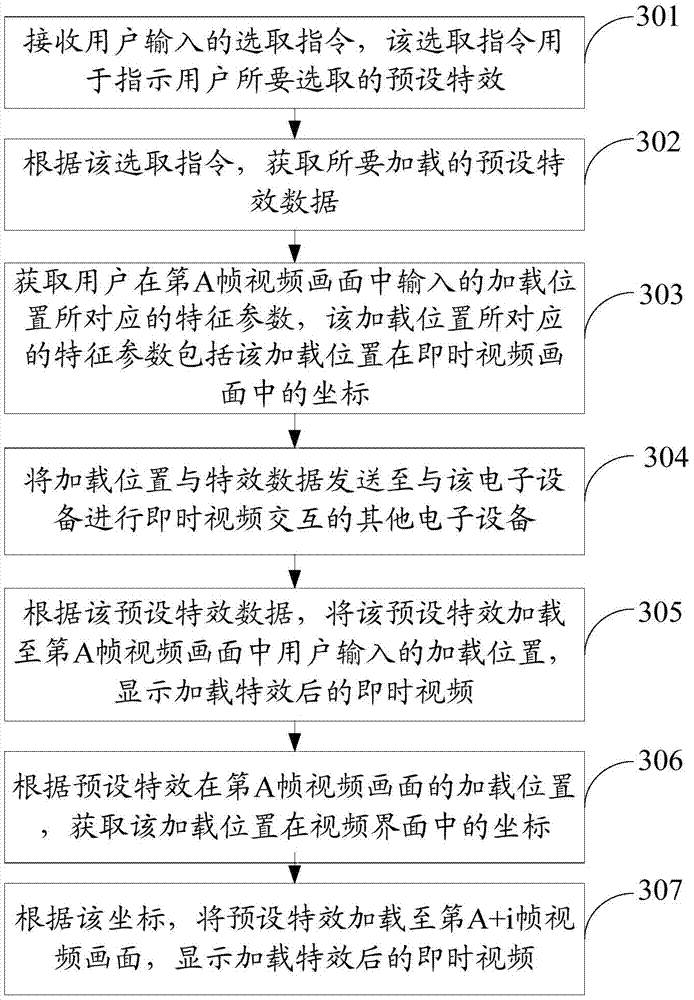

Method and electronic equipment for loading effects in instant video

Inactive | CN104780458A | Improve interactive experience | Rich demand | Selective content distribution | Data input | Electric equipment

The invention provides a method and electronic equipment for loading effects in an instant video, and belongs to the field of videos. The method comprises the following steps: receiving effect data input by a user, wherein the effect data is used for indicating the effect which the user needs to load; determining the loading position input by the user in an instant video image; sending the loading position and the effect data to other electronic equipment; loading the effect at the loading position, and showing the instant video after the effect is loaded. In this way, the effect input by the user is loaded into the instant video, the diversified requirements of the user on instant video communication are satisfied, and the interaction experience of the user is improved.

Owner:CHATGAME

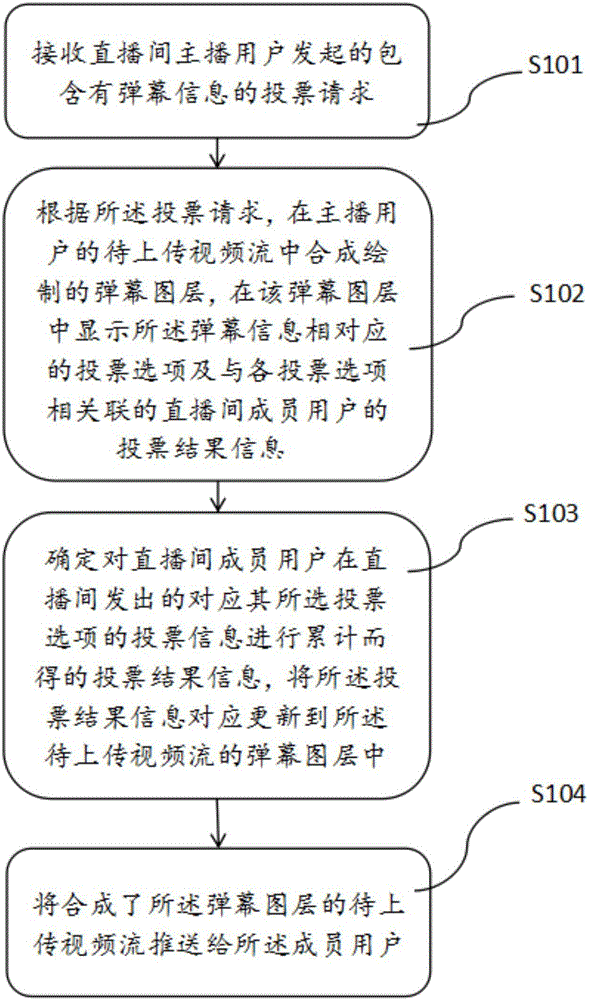

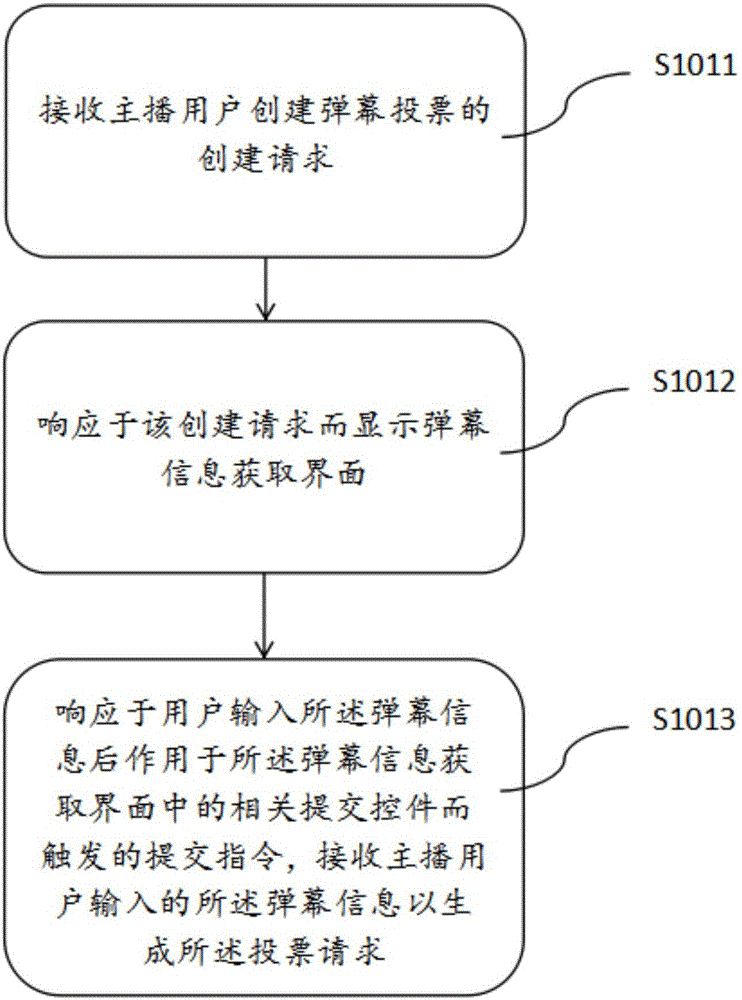

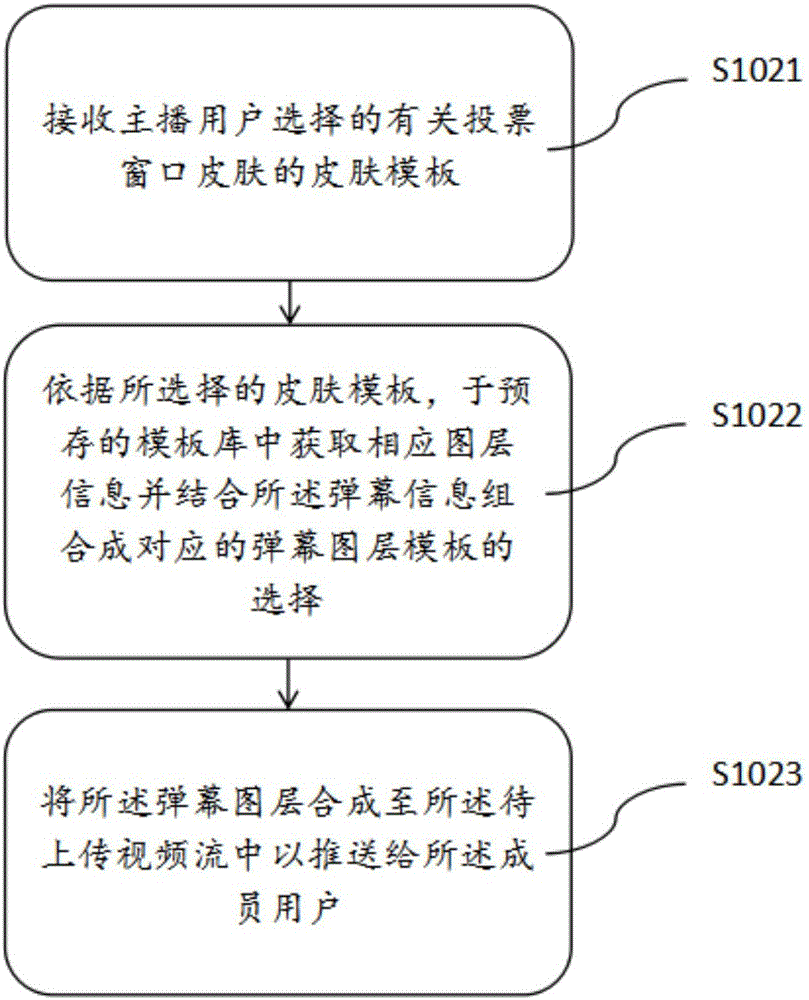

Vote interaction method and device based on live broadcast room video stream bullet screens

Active | CN106792229A | Guaranteed time limit for voting | Ensure fairness | Selective content distribution | Multimedia | Interaction method

The invention provides a vote interaction method and device based on live broadcast room video stream bullet screens. The method comprises the steps of receiving a vote request which is sent by a live broadcast room anchor user and comprises bullet screen information; synthesizing a drawn bullet screen layer into the to-be-uploaded video stream of the anchor user according to the vote request, and displaying, in the bullet screen layer, vote options corresponding to the bullet screen information and vote result information of live broadcast room member users associated with the vote options; determining the vote result information obtained by accumulating the vote information corresponding to the selected vote options sent by the live broadcast room member users in a live broadcast room, and correspondingly updating the vote result information in the bullet screen layer of the to-be-uploaded video stream; and pushing the to-be-uploaded video stream into which the bullet screen layer is synthesized to the member users. According to the method and the device, the timeliness of the data is improved, the machine load of the live broadcast room member users is reduced, and the interaction experience among the users is improved.

Owner:GUANGZHOU HUYA INFORMATION TECH CO LTD
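
The accumulation step in the abstract above can be illustrated with a minimal sketch. It is not the patented implementation: the function names, the `(member_id, choice)` vote format, and the one-vote-per-member rule are assumptions made for illustration, and the actual drawing of the bullet screen layer into the video stream is left out.

```python
from collections import Counter


def tally_votes(options, votes):
    """Accumulate member votes per option.

    Assumption (not stated in the abstract): only the first vote from
    each member counts, and votes for unknown options are ignored.
    """
    counts = Counter({opt: 0 for opt in options})
    seen = set()
    for member_id, choice in votes:
        if choice not in counts or member_id in seen:
            continue
        seen.add(member_id)
        counts[choice] += 1
    return dict(counts)


def render_overlay_text(question, counts):
    """Build the text lines that would be drawn on the overlay layer."""
    total = sum(counts.values())
    lines = [question]
    for opt, n in counts.items():
        share = (100 * n // total) if total else 0
        lines.append(f"{opt}: {n} ({share}%)")
    return lines
```

Because the tally is rendered once on the anchor side and pushed with the stream, member clients only need to play the video, which is consistent with the reduced client load the abstract claims.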

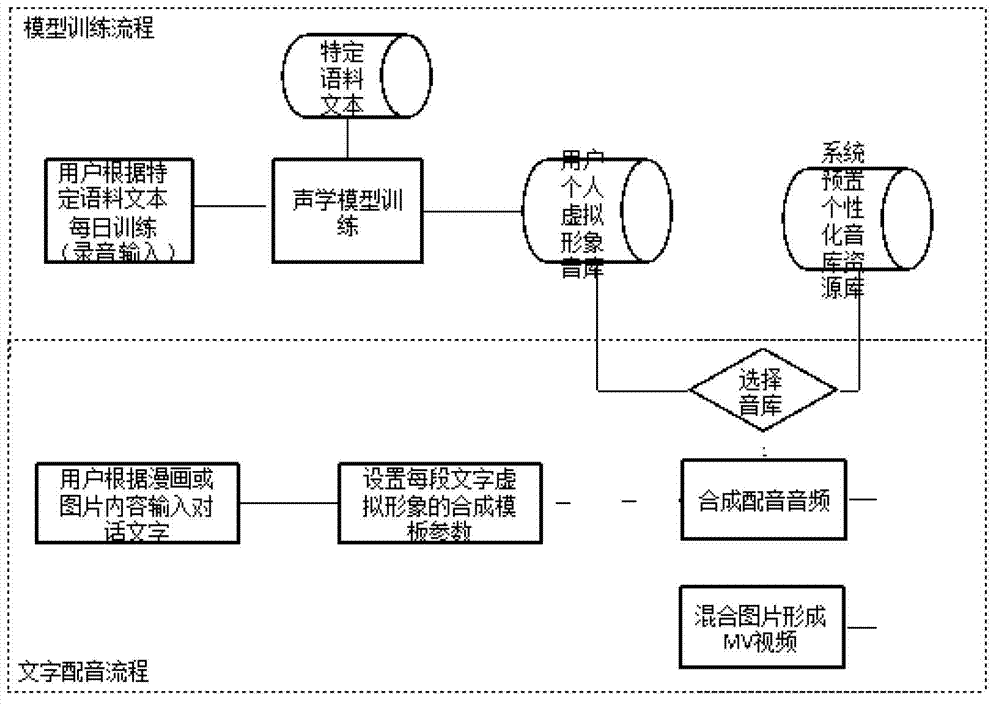

Application method of special human voice synthesis technique in mobile phone cartoon dubbing

The invention discloses a method for applying a special human voice synthesis technique to mobile phone cartoon dubbing. The mobile phone receives characters input by a user; the characters are processed through the special human voice synthesis technique with configured synthesis template parameters, generating audio data that imitates the tones and timbres of different speakers, such as dialogue between men and women or between old people and children; and the audio is used to dub pictures or cartoons on the mobile phone. With this method, the characters input by the user are converted into the voice of a specific person, or even the voice of that person's relatives, which makes the pictures and cartoons more entertaining and improves the user interaction experience.

Owner:IFLYTEK CO LTD

A three-dimensional display system and method based on cloud computing

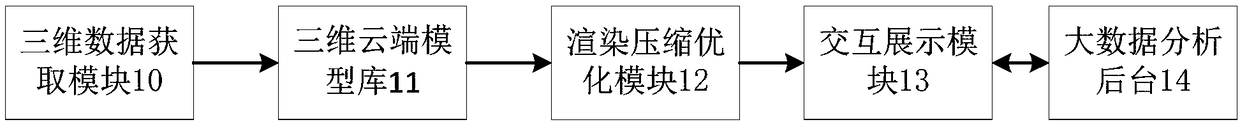

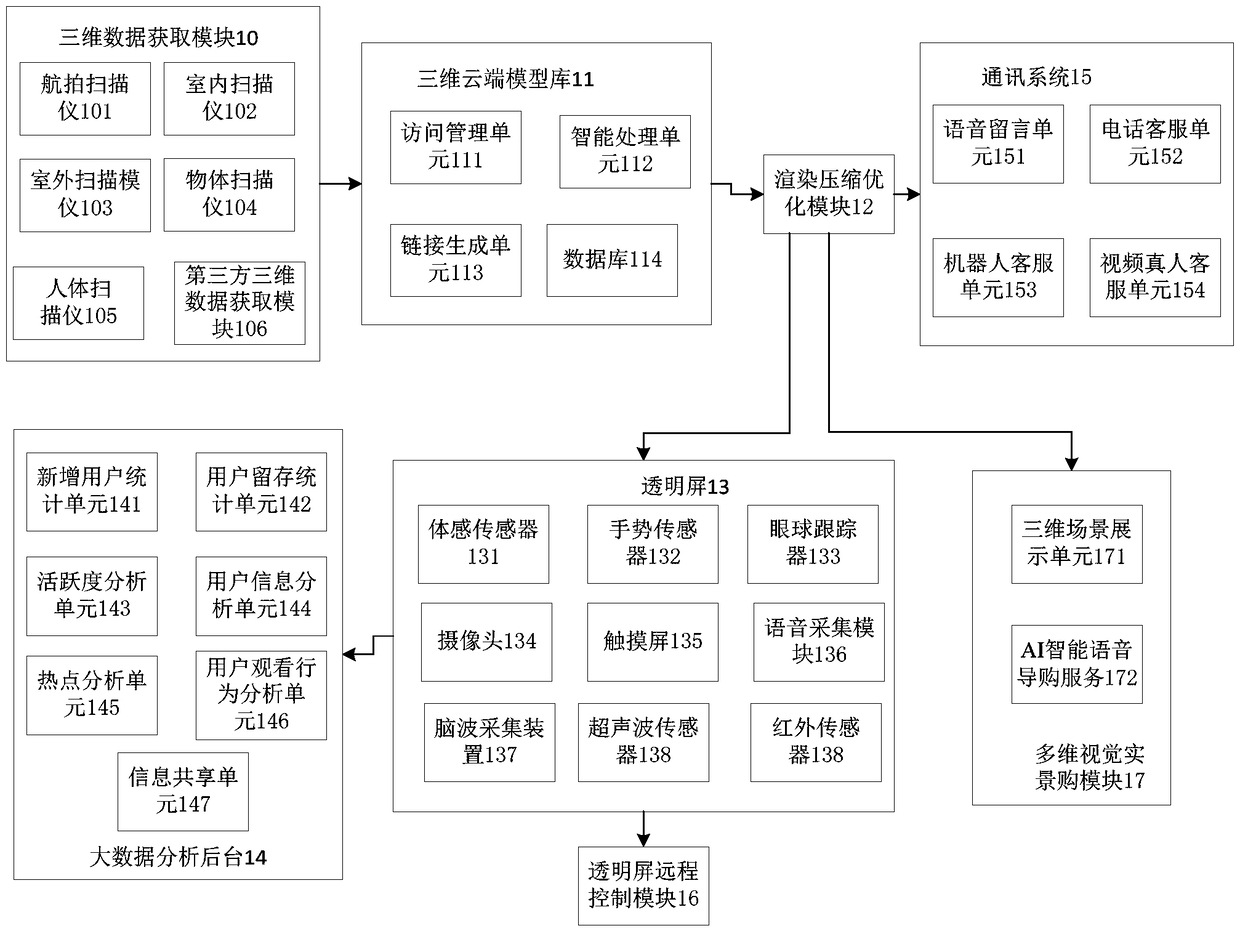

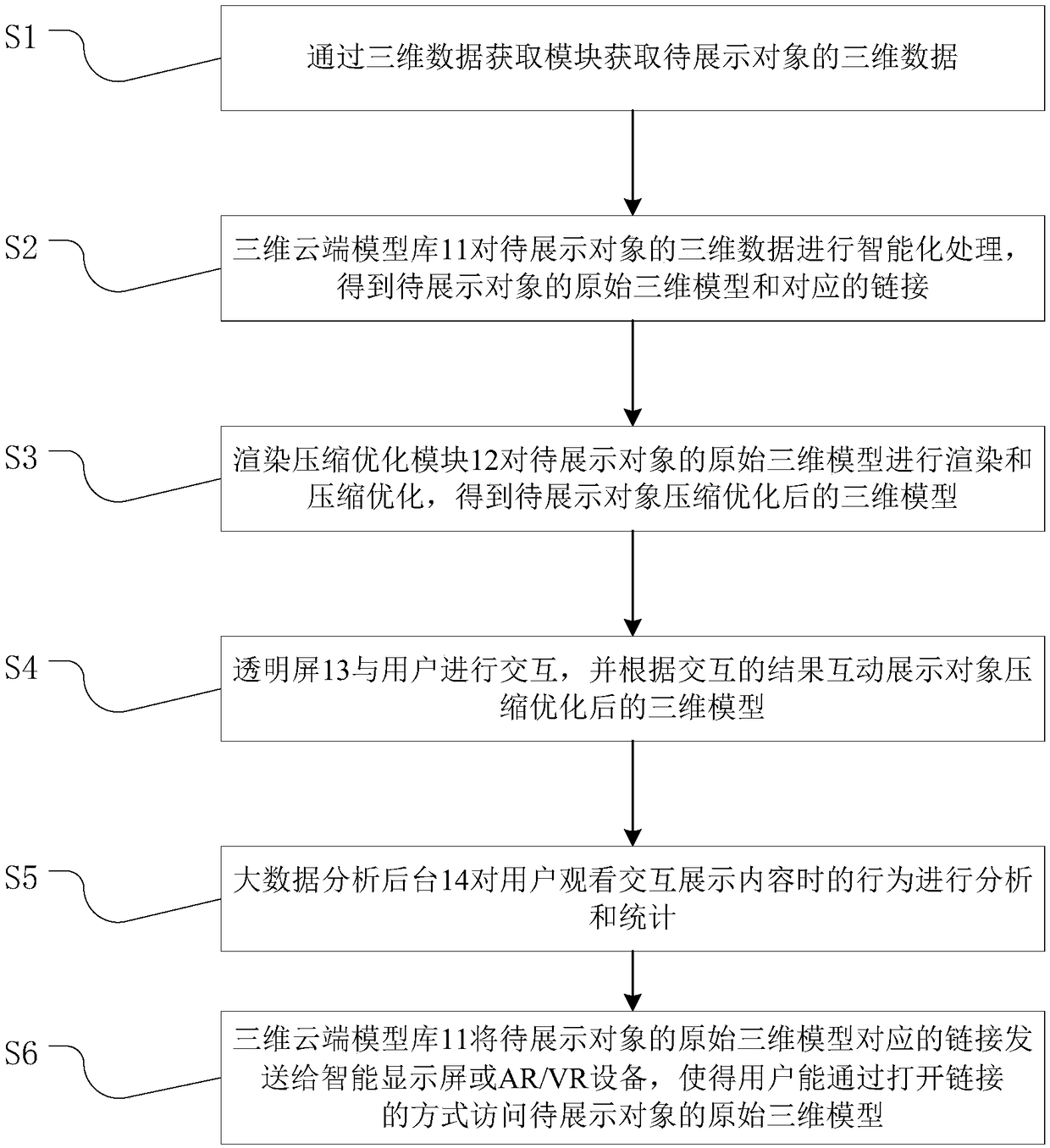

ActiveCN109085966AEfficient use ofImprove interactive experienceAdvertisingInput/output processes for data processingData profilingInteractive displays

The invention discloses a three-dimensional display system and method based on cloud computing. The system comprises a three-dimensional data acquisition module, a three-dimensional cloud model library, a rendering compression optimization module, an interactive display module and a big data analysis background. 3D data of an object to be displayed is intelligently processed based on a cloud computing service to obtain the original three-dimensional model of the object and a corresponding link. The object is then displayed interactively, either through the obtained link or through the three-dimensional model after rendering and compression optimization, so that the object to be displayed can interact with a user and the interaction experience is good. By linking to or interacting with the optimized three-dimensional model, cross-platform display can be realized, and multi-screen interaction and air imaging are also supported, so the functions are richer. The system can also analyze and count user behavior while a user views the interactive display content, so as to obtain the points of interest of different viewers for business use, which makes the system more comprehensive. The system and method can be widely applied to the technical field of display.

Owner:GUANGDONG KANG YUN TECH LTD

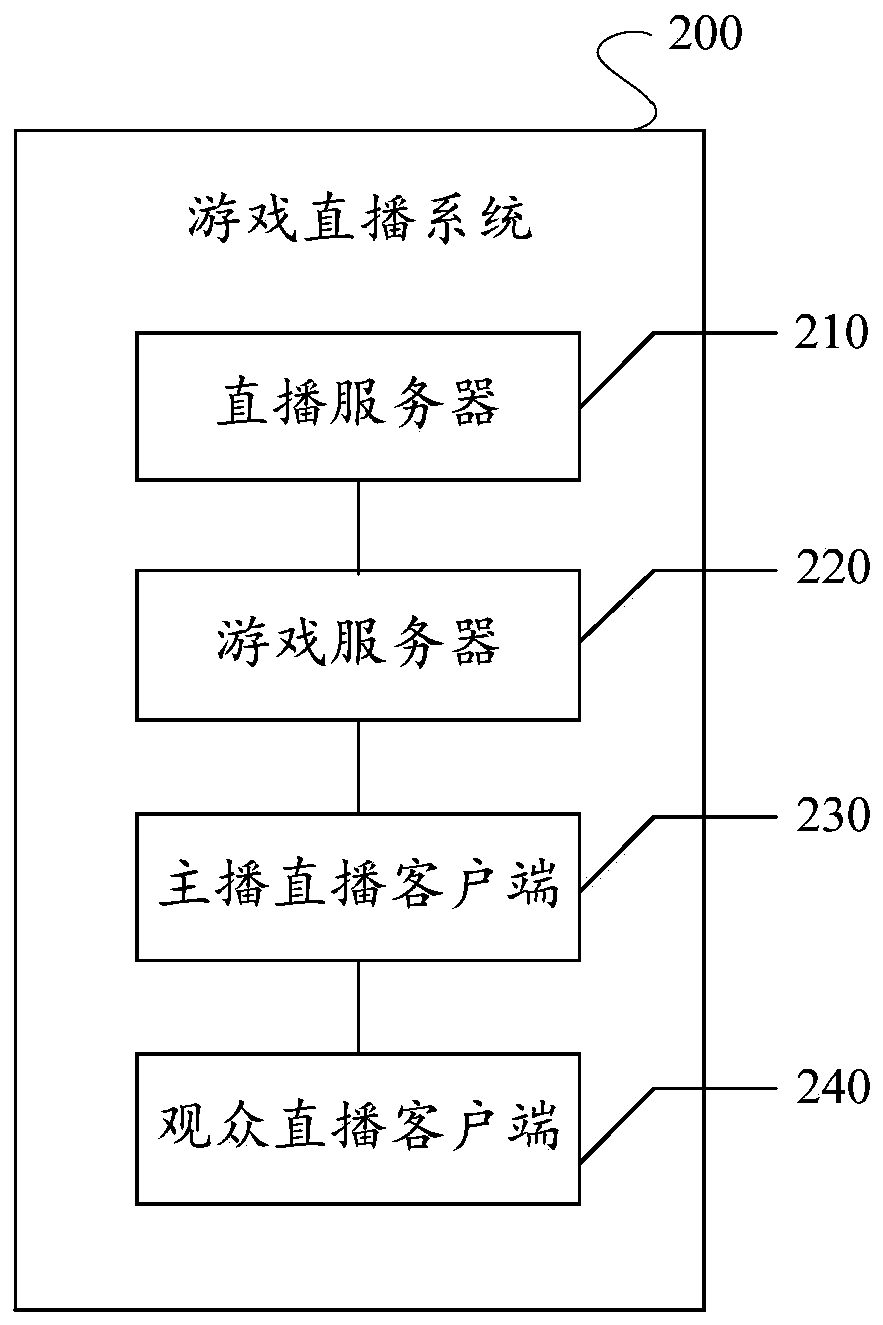

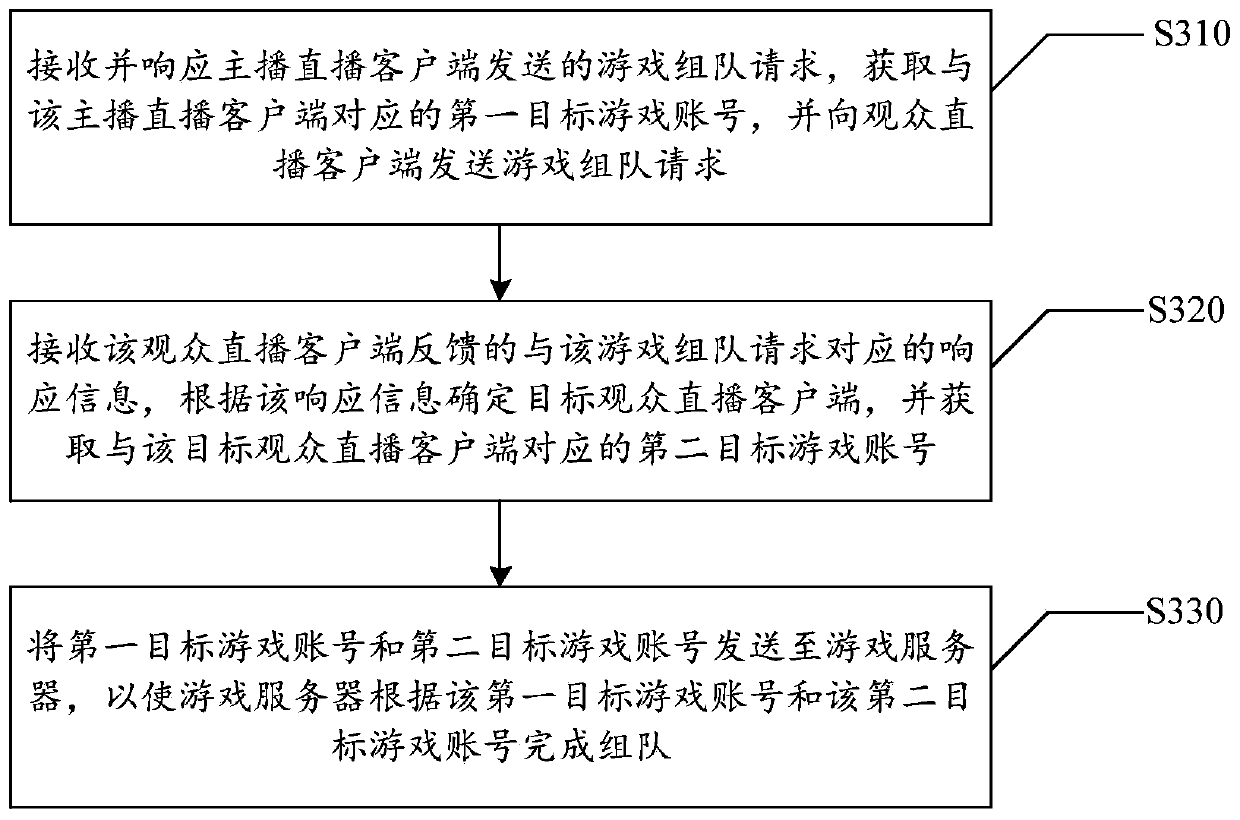

Game team-forming method and device in live streaming, and storage medium and electronic equipment

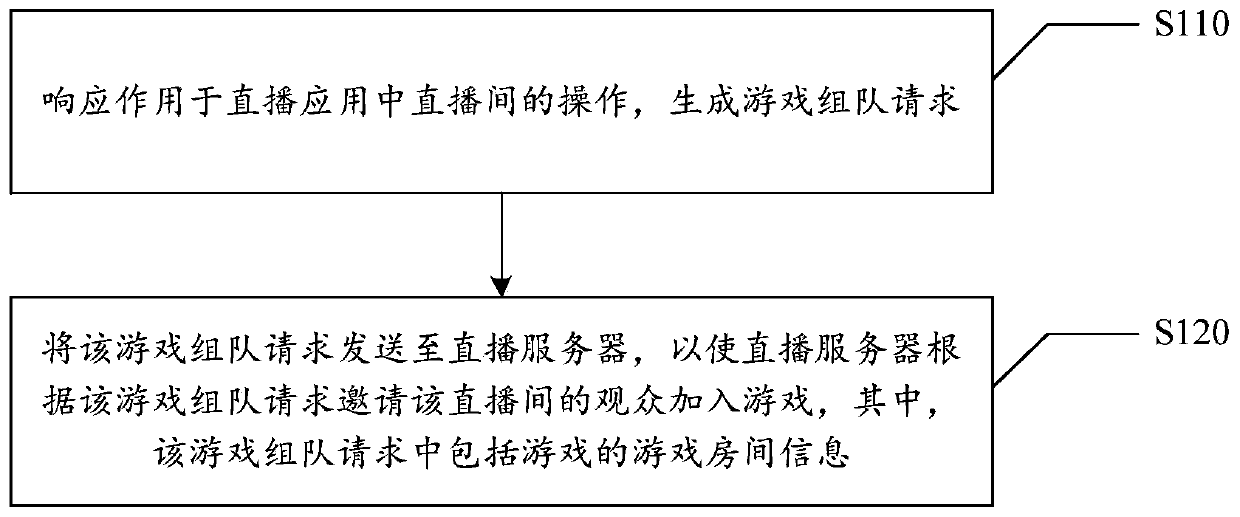

PendingCN110496396AImprove efficiencyIncrease engagementVideo gamesSelective content distributionLive streaming

The invention relates to the technical field of games, and provides a game team-forming method in live streaming, a game team-forming device in live streaming, a computer readable storage medium and electronic equipment. The game team-forming method in live streaming comprises the steps of generating a game team-forming request in response to an operation acting on a live streaming room in a live streaming application; and sending the game team-forming request to a live streaming server so that the live streaming server invites the audience in the live stream to join the game according to the game team-forming request, wherein the game team-forming request comprises game room information of the game. According to the method and the device, game team forming can be completed quickly and efficiently in a game live streaming interface, the tedious settings and process of the prior art are simplified, the efficiency of game team forming is improved, and the interaction between a host and the audience is enhanced through game interaction.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
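
A rough sketch of the request flow described in the abstract above: the host client builds a team-forming request that carries the game room information, and the server turns it into invitations for the audience. The JSON field names and both function names are hypothetical; the abstract does not specify a message format or transport.

```python
import json


def build_team_request(live_room_id, game_room):
    """Host side: package the game room information into a request.

    The field names ("type", "live_room_id", "game_room") are assumed
    for illustration only.
    """
    return json.dumps(
        {"type": "team_request", "live_room_id": live_room_id, "game_room": game_room},
        sort_keys=True,
    )


def handle_team_request(raw_request, audience_ids):
    """Server side: create one invitation per audience member."""
    request = json.loads(raw_request)
    return [
        {"to": uid, "type": "team_invite", "game_room": request["game_room"]}
        for uid in audience_ids
    ]
```

Carrying the room information inside the request is what lets an audience member join directly from the live streaming interface without configuring the room manually.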

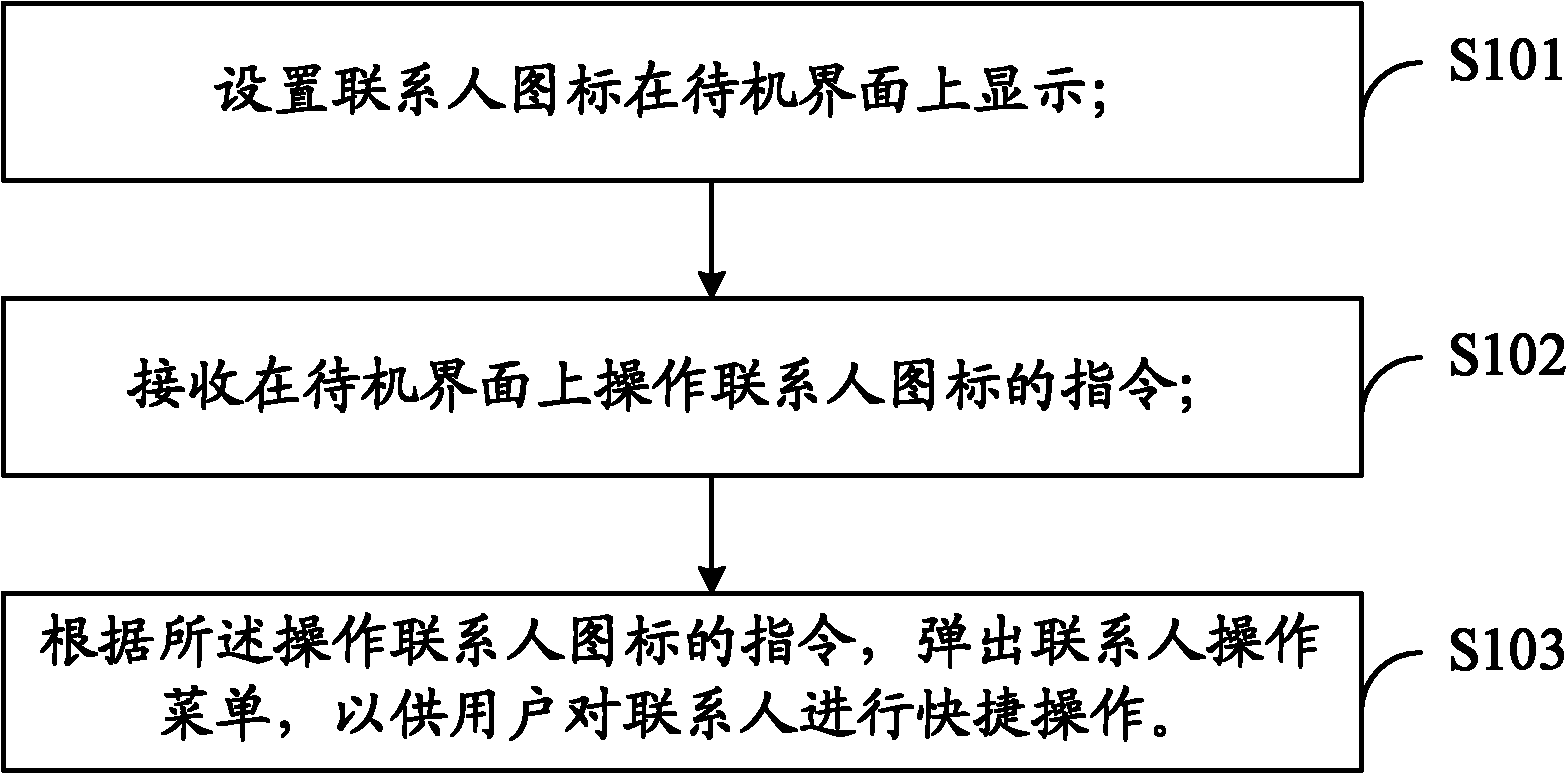

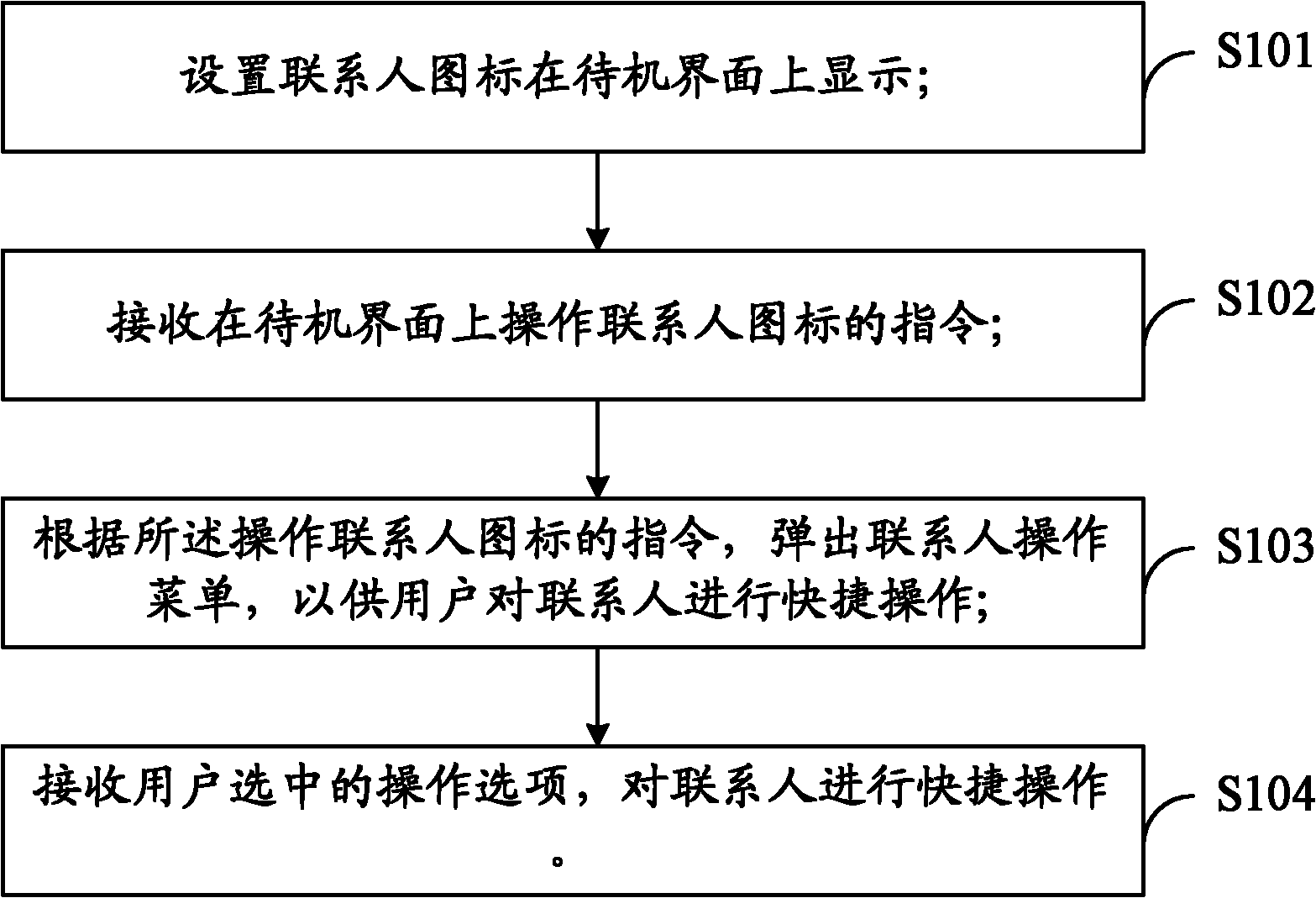

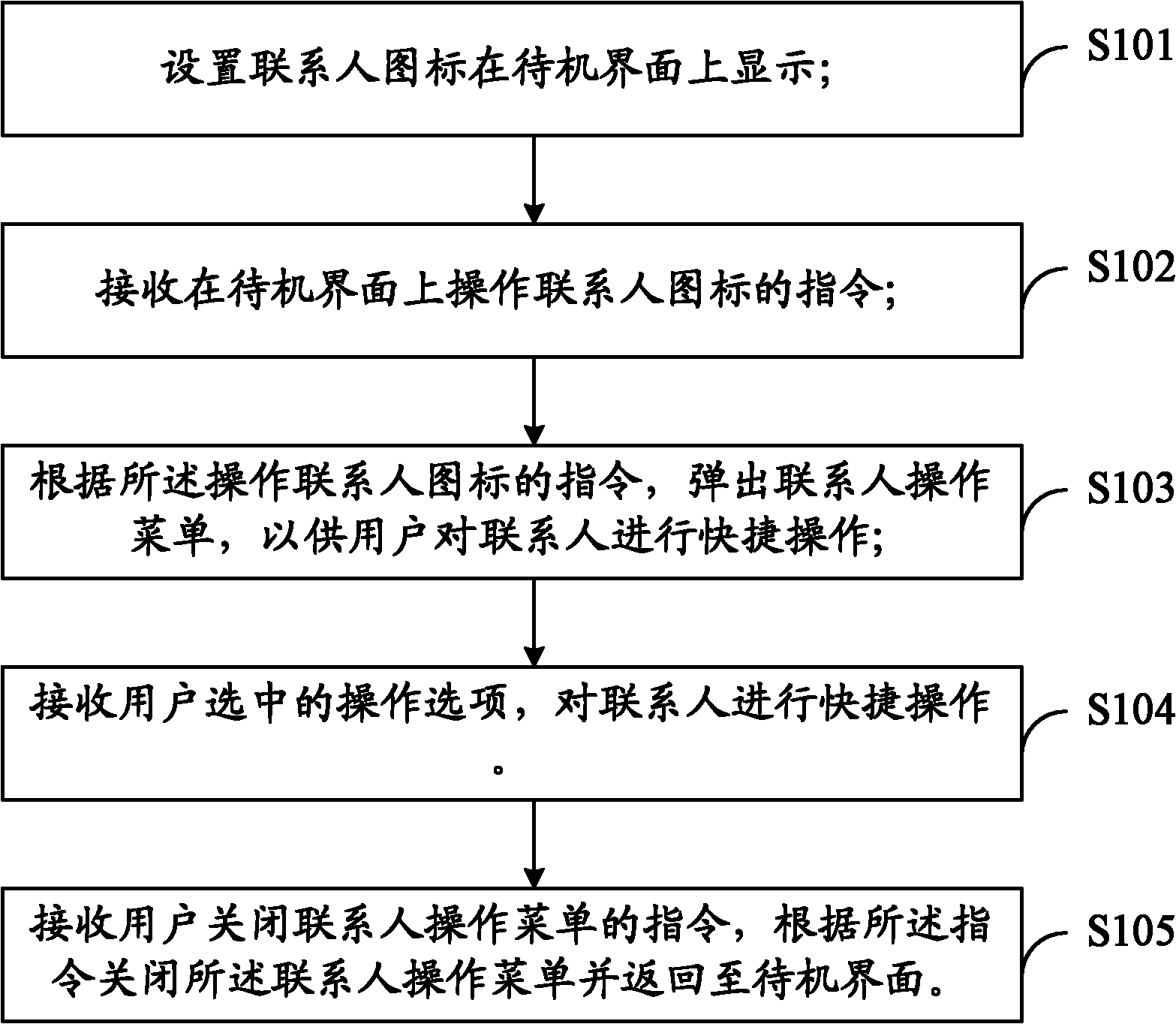

Mobile terminal and method and system for carrying out rapid and convenient operation on contacts

InactiveCN102065166AReduce operating proceduresImprove interactive experienceTelephone sets with user guidance/featuresComputer science

The invention is suitable for the field of mobile terminals and provides a mobile terminal and a method and system for carrying out rapid and convenient operation on contacts. The method comprises the following steps of: displaying a contact icon on a standby interface; receiving a command for operating the contact icon on the standby interface; and popping up a contact operation menu for a user to carry out rapid and convenient operation on the contacts according to the command of operating the contact icon. In the method, an operation process that the user enters an application interface is omitted and the interactive experience and the subjective satisfaction degree of the user are improved.

Owner:DONGGUAN YULONG COMM TECH +1

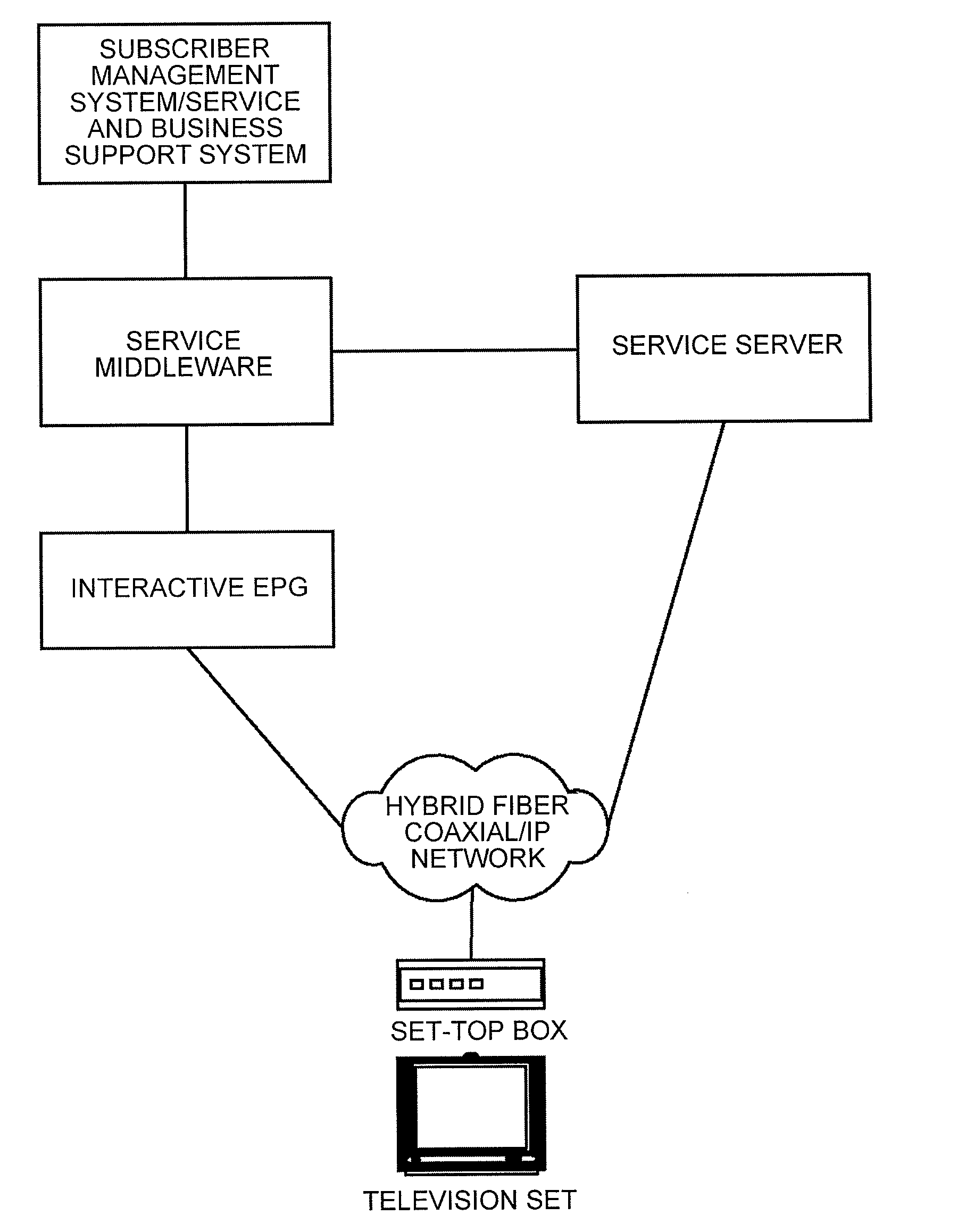

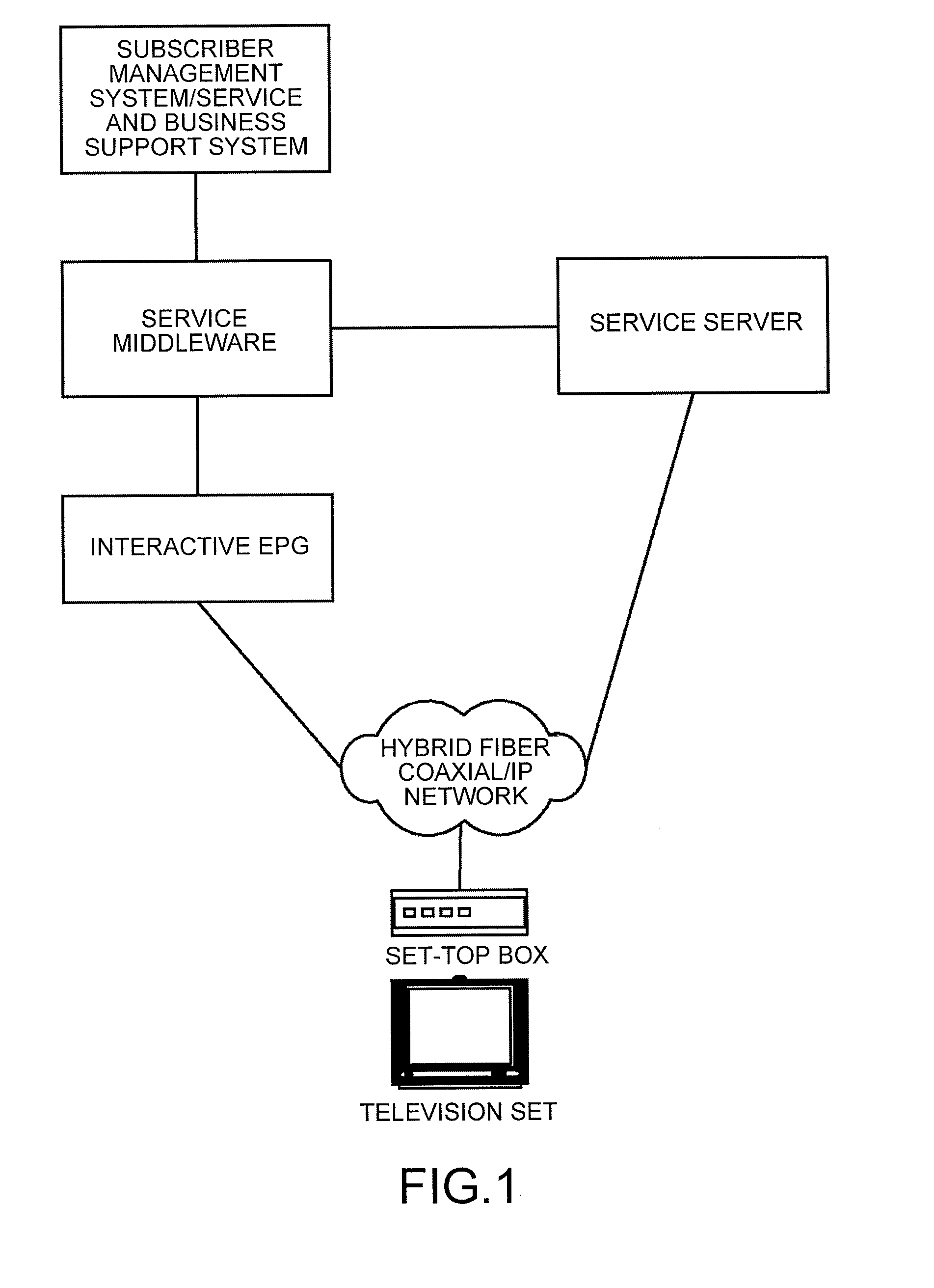

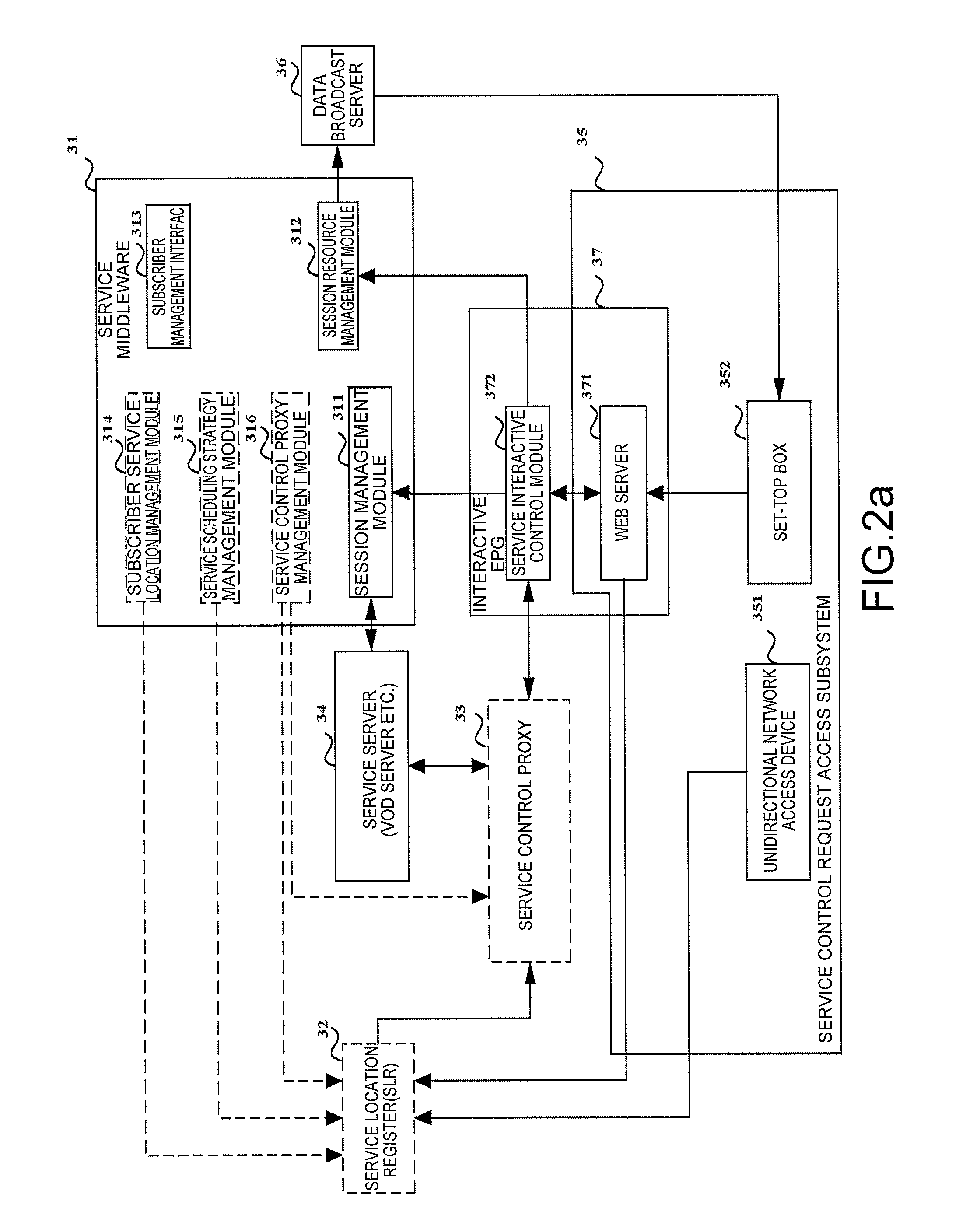

System, method and device for realizing multimedia service

InactiveUS7904925B2Improve interactive experienceLimited level of enhancementTelevision system detailsColor television detailsControl flowInteraction control

A system, method and device for realizing multimedia service are provided. In the system, a service middleware receives multimedia service location information updated by users, a multimedia service scheduling policy and device maintenance information of a service control proxy, and loads them onto a service location register; the service middleware starts up or stops the corresponding service control proxy according to the device maintenance information of the service control proxy; the service location register authenticates a user multimedia service control request according to the multimedia service location information and, for a user who passes authentication, determines a service control proxy according to the multimedia service scheduling policy; the user multimedia service control request is forwarded to the determined service control proxy; and the determined service control proxy provides multimedia service interactive control with an interactive electronic program guide and multimedia service control with a service server. The control flow of multiple multimedia services is thereby unified.

Owner:HUAWEI TECH CO LTD

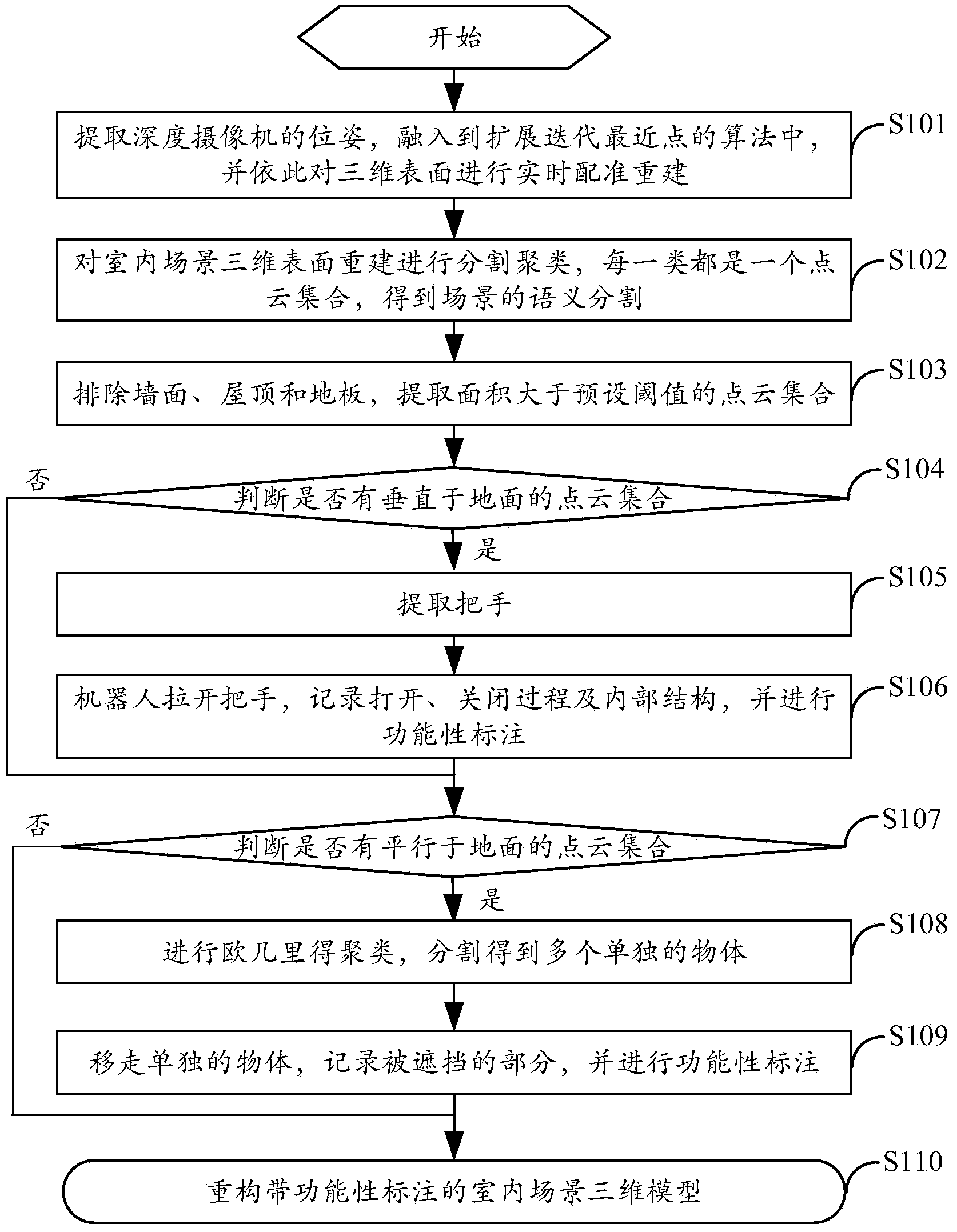

Functional modeling method for indoor scene

The invention provides a functional modeling method for an indoor scene. The functional modeling method includes the first step of extracting the pose of a depth camera, integrating the pose of the depth camera into an ICP algorithm and carrying out real-time registration and reconstruction of three-dimensional surfaces according to the ICP algorithm, the second step of carrying out segmenting and clustering to realize semantic segmentation of the scene, the third step of extracting point-cloud sets with an area larger than a preset threshold value, the fourth step of judging whether point-cloud sets perpendicular to the ground exist, the fifth step of extracting a handle, the sixth step of pulling out the handle, recording the opening process, the closing process and the internal structure and carrying out functional labeling, the seventh step of judging whether point-cloud sets parallel to the ground exist, the eighth step of carrying out Euclidean clustering and segmenting to obtain a plurality of individual objects, the ninth step of moving away the individual objects, recording blocked parts and carrying out functional labeling, and the tenth step of carrying out summarizing to obtain a functional model of the indoor scene. According to the method, functional operations can be conducted on the labeled parts, so the interactive experience of users is greatly improved.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
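
The fourth and seventh steps of the abstract above test whether a planar point-cloud set is perpendicular or parallel to the ground. One common way to make that test, shown here as a sketch and not as the patent's own procedure, is to compare the plane's normal vector with the ground normal. The function name, the default ground normal `(0, 0, 1)`, and the 10 degree tolerance are assumptions.

```python
import math


def classify_plane(normal, ground_normal=(0.0, 0.0, 1.0), tol_deg=10.0):
    """Classify a plane as 'parallel' or 'perpendicular' to the ground.

    A plane parallel to the ground (such as a tabletop) has a normal
    aligned with the ground normal; a plane perpendicular to the ground
    (such as a door) has a normal at a right angle to it.
    """
    dot = sum(a * b for a, b in zip(normal, ground_normal))
    length = math.sqrt(sum(a * a for a in normal)) * math.sqrt(
        sum(b * b for b in ground_normal)
    )
    if length == 0:
        raise ValueError("normal vectors must be non-zero")
    # The sign of the normal is irrelevant, so fold the angle into 0..90 degrees.
    cos_angle = min(1.0, abs(dot) / length)
    angle = math.degrees(math.acos(cos_angle))
    if angle <= tol_deg:
        return "parallel"
    if angle >= 90.0 - tol_deg:
        return "perpendicular"
    return "other"
```

In the abstract's flow, sets classified as perpendicular would go on to handle extraction, and sets classified as parallel would go on to Euclidean clustering of the objects resting on them.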

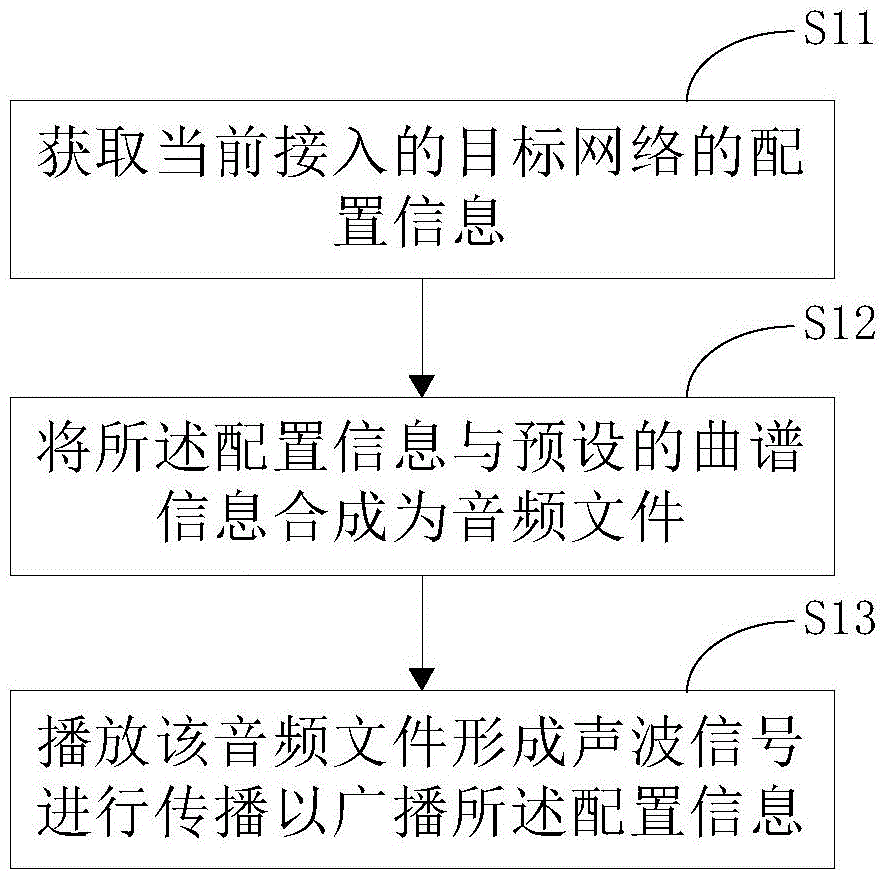

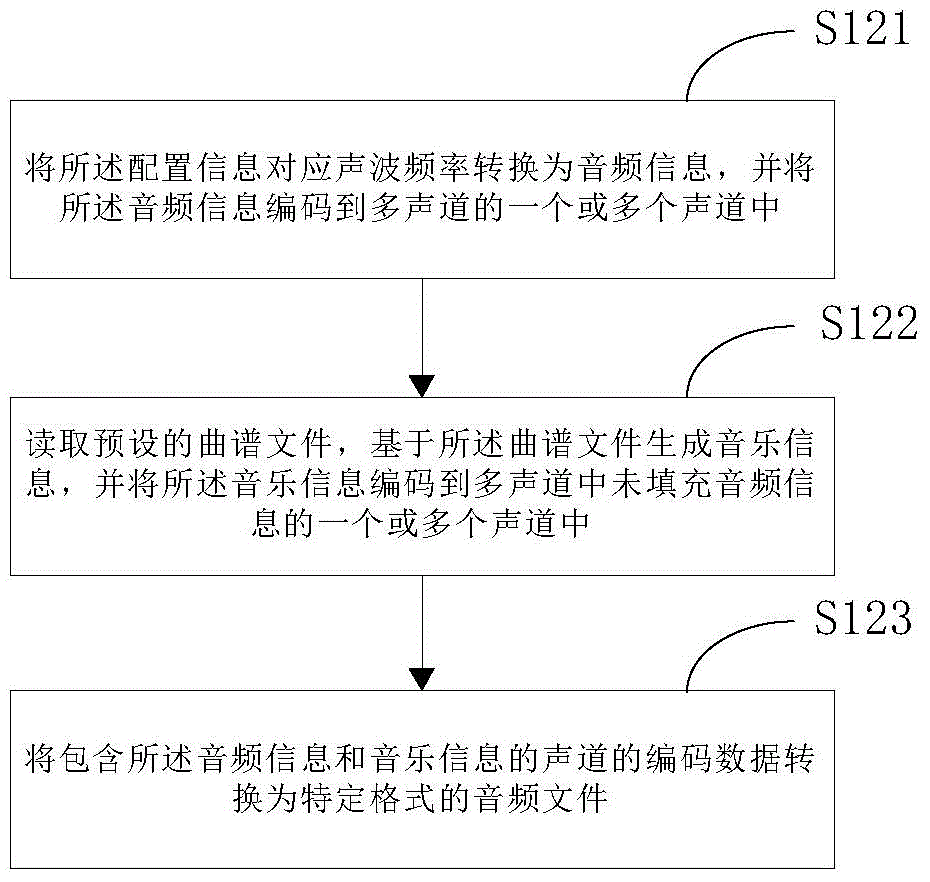

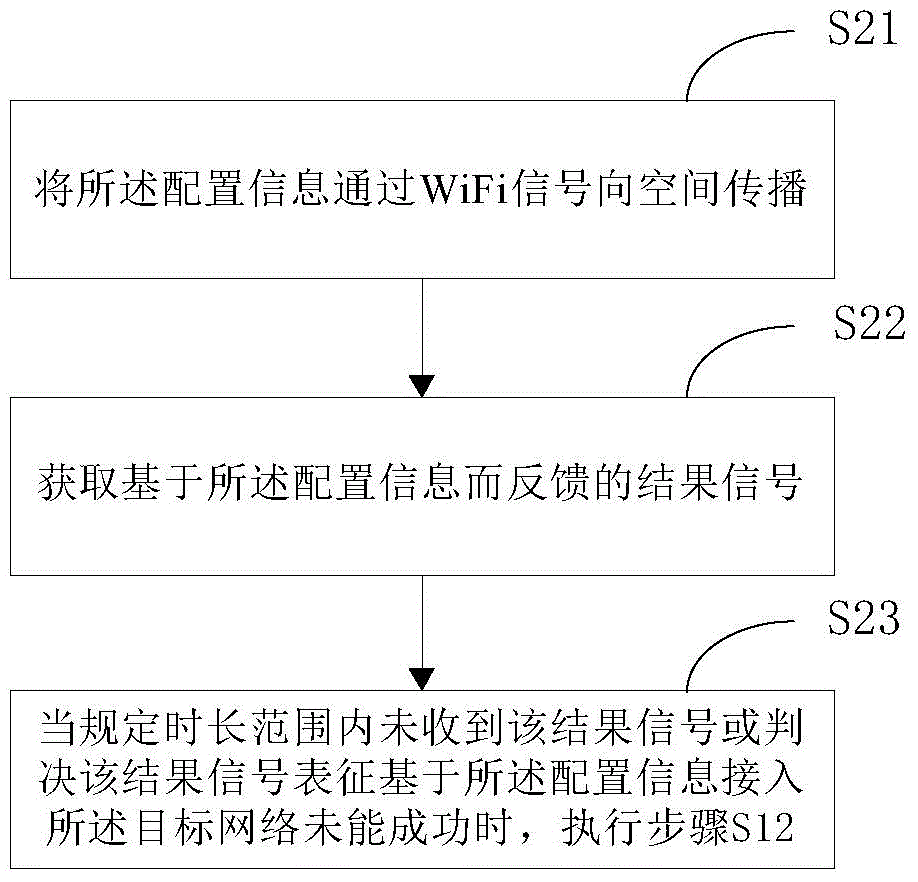

Object network access method, object network access guiding and control methods, and corresponding terminals

InactiveCN104883720AImprove interactive experienceEfficient use ofAccess restrictionAccess methodAcoustic wave

The invention provides an object network access guiding method. The method comprises the following steps: obtaining configuration information of a currently accessed object network; synthesizing the configuration information with preset score information to form an audio file; and playing the audio file to form sound wave signals for propagation so as to broadcast the configuration information. The invention further provides an object network access method implemented on the side of an intelligent terminal that is to be connected to the object network, an access control method, and a mobile phone terminal and an intelligent terminal that apply these methods. The technical core of the invention is transmitting the configuration information of the accessed object network via sound wave signals, which allows a more reliable transmission link to be established between a control end and a device to be accessed, so that the intelligent terminal can be connected to the object network more effectively.

Owner:BEIJING QIHOO TECH CO LTD +1
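
To make the sound-wave idea in the abstract above concrete, here is a minimal sketch of one possible encoding: each 4-bit half of every configuration byte is mapped to one of 16 tone frequencies. The scheme, the constants `BASE_HZ` and `STEP_HZ`, and both function names are assumptions for illustration; the abstract does not describe the patent's actual modulation, and the step of mixing the data with preset score information is not covered.

```python
BASE_HZ = 1000  # assumed frequency for nibble value 0
STEP_HZ = 100   # assumed spacing between adjacent nibble values


def encode_to_tones(config):
    """Map each 4-bit nibble of the UTF-8 config string to a tone frequency."""
    tones = []
    for byte in config.encode("utf-8"):
        for nibble in (byte >> 4, byte & 0x0F):
            tones.append(BASE_HZ + nibble * STEP_HZ)
    return tones


def decode_from_tones(tones):
    """Recover the config string, snapping each tone to the nearest nibble."""
    nibbles = [round((f - BASE_HZ) / STEP_HZ) for f in tones]
    if len(nibbles) % 2:
        raise ValueError("expected an even number of tones")
    data = bytes((hi << 4) | lo for hi, lo in zip(nibbles[0::2], nibbles[1::2]))
    return data.decode("utf-8")
```

A receiving device would estimate the dominant frequency of each tone slot from its microphone input and pass those estimates to `decode_from_tones`; snapping to the nearest step tolerates small frequency drift.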

© 2025 PatSnap. All rights reserved.