421 results about "Interaction object" patented technology

Interaction Object. The interaction object logs inbound or outbound customer contact. Technically speaking, the interaction object is an entry in the contact tracking table that contains details about a customer contact but is not linked to the business transaction.

System, device and method for processing interlaced multimodal user input

Active | US20150019227A1 | Speech recognition | Input/output processes for data processing | User input | Interaction object

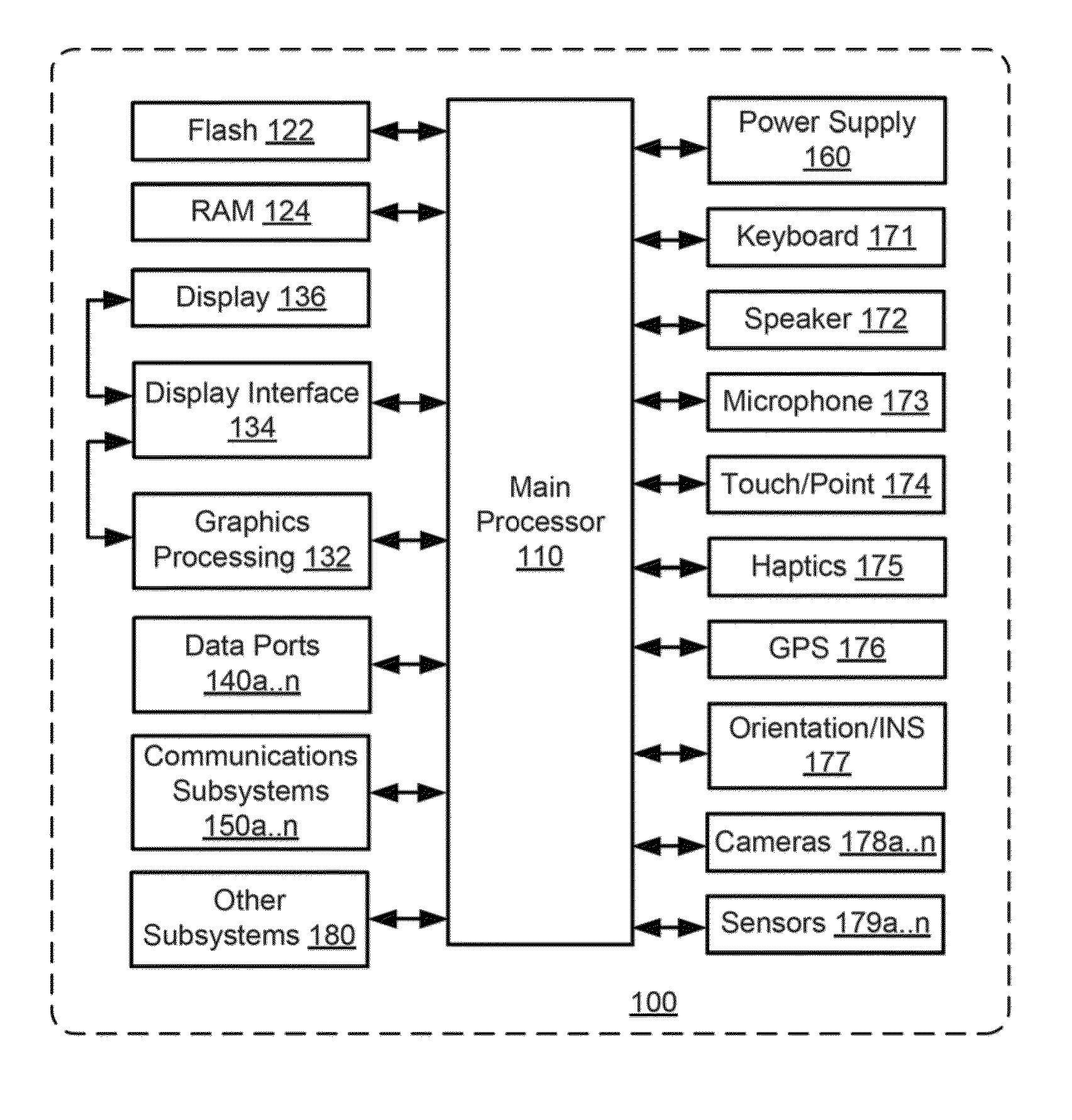

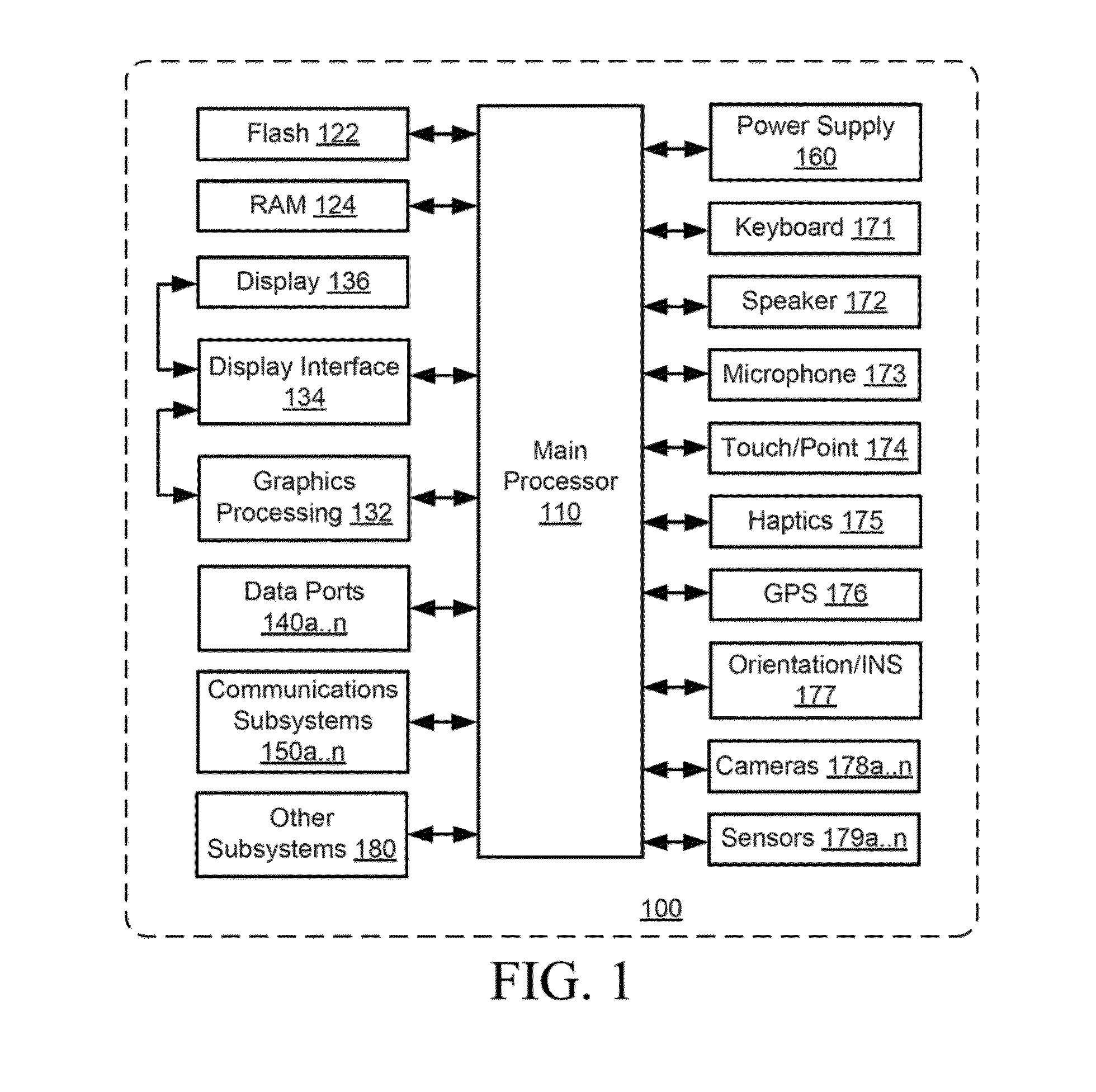

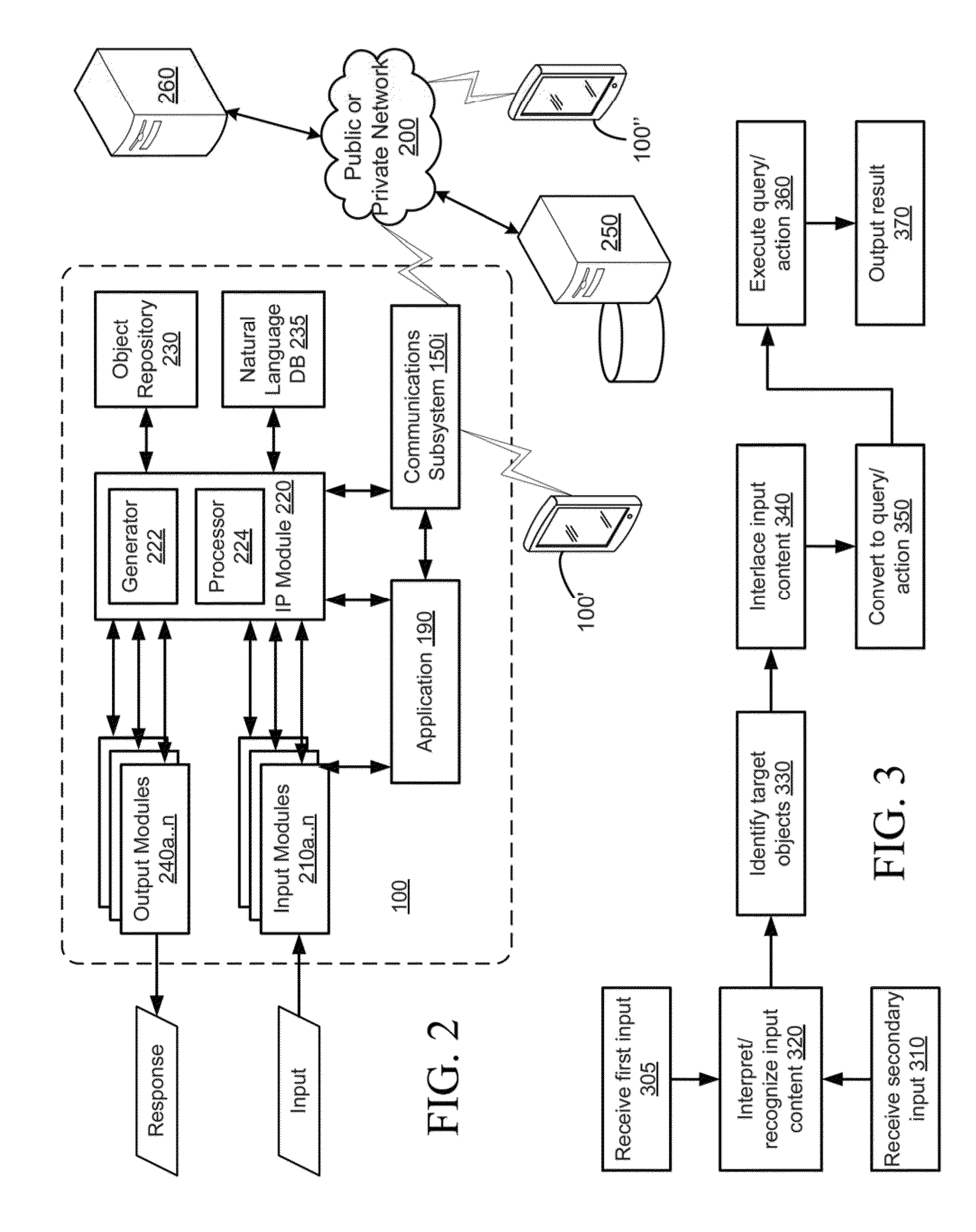

A device, method and system are provided for interpreting and executing operations based on multimodal input received at a computing device. The multimodal input can include one or more verbal and non-verbal inputs, such as a combination of speech and gesture inputs received substantially concurrently via suitable user interface means provided on the computing device. One or more target objects are identified from the non-verbal input, and text is recognized from the verbal input. An interaction object is generated using the recognized text and identified target objects, and thus comprises a natural language expression with embedded target objects. The interaction object is then processed to identify one or more operations to be executed.

Owner:XTREME INTERACTIONS
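
The abstract above describes an interaction object as a natural language expression with embedded target objects. A minimal sketch of that idea follows. The pairing rule (each deictic word such as "this" is bound to the gesture closest in time) and the names `InteractionObject` and `build_interaction_object` are assumptions made for illustration, not details taken from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class InteractionObject:
    """Recognized text in which target objects are embedded as numbered slots."""
    text: str
    targets: list = field(default_factory=list)


def build_interaction_object(recognized_words, gestures,
                             deictic=("this", "that", "here")):
    """Combine speech and gesture input into one interaction object.

    recognized_words: list of (word, timestamp) from a speech recognizer.
    gestures: list of (target_id, timestamp) from the non-verbal input.
    Assumption: a deictic word refers to the unused gesture nearest in time.
    """
    parts, targets = [], []
    remaining = list(gestures)
    for word, t in recognized_words:
        if word.lower() in deictic and remaining:
            nearest = min(remaining, key=lambda g: abs(g[1] - t))
            remaining.remove(nearest)
            targets.append(nearest[0])
            parts.append(f"<{len(targets) - 1}>")  # slot pointing into targets
        else:
            parts.append(word)
    return InteractionObject(" ".join(parts), targets)


words = [("copy", 0.0), ("this", 0.4), ("to", 0.8), ("that", 1.1)]
taps = [("photo_7", 0.5), ("folder_2", 1.2)]
obj = build_interaction_object(words, taps)
```

A later stage would parse `obj.text` to pick the operation and resolve each slot against `obj.targets`.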

Interaction system

Inactive | US20140004884A1 | Improve interactive experience | Location information based service | Interaction systems | Location detection

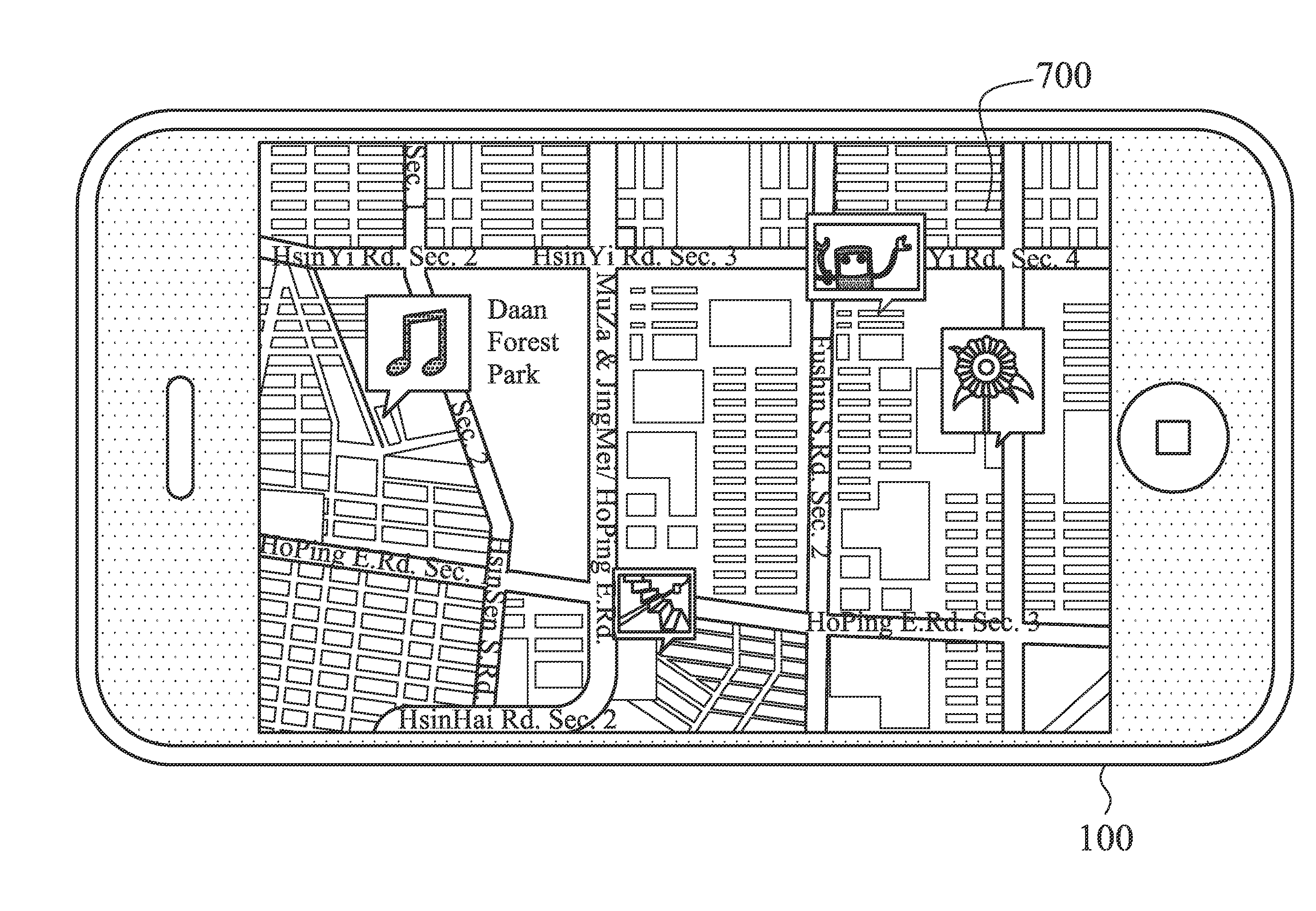

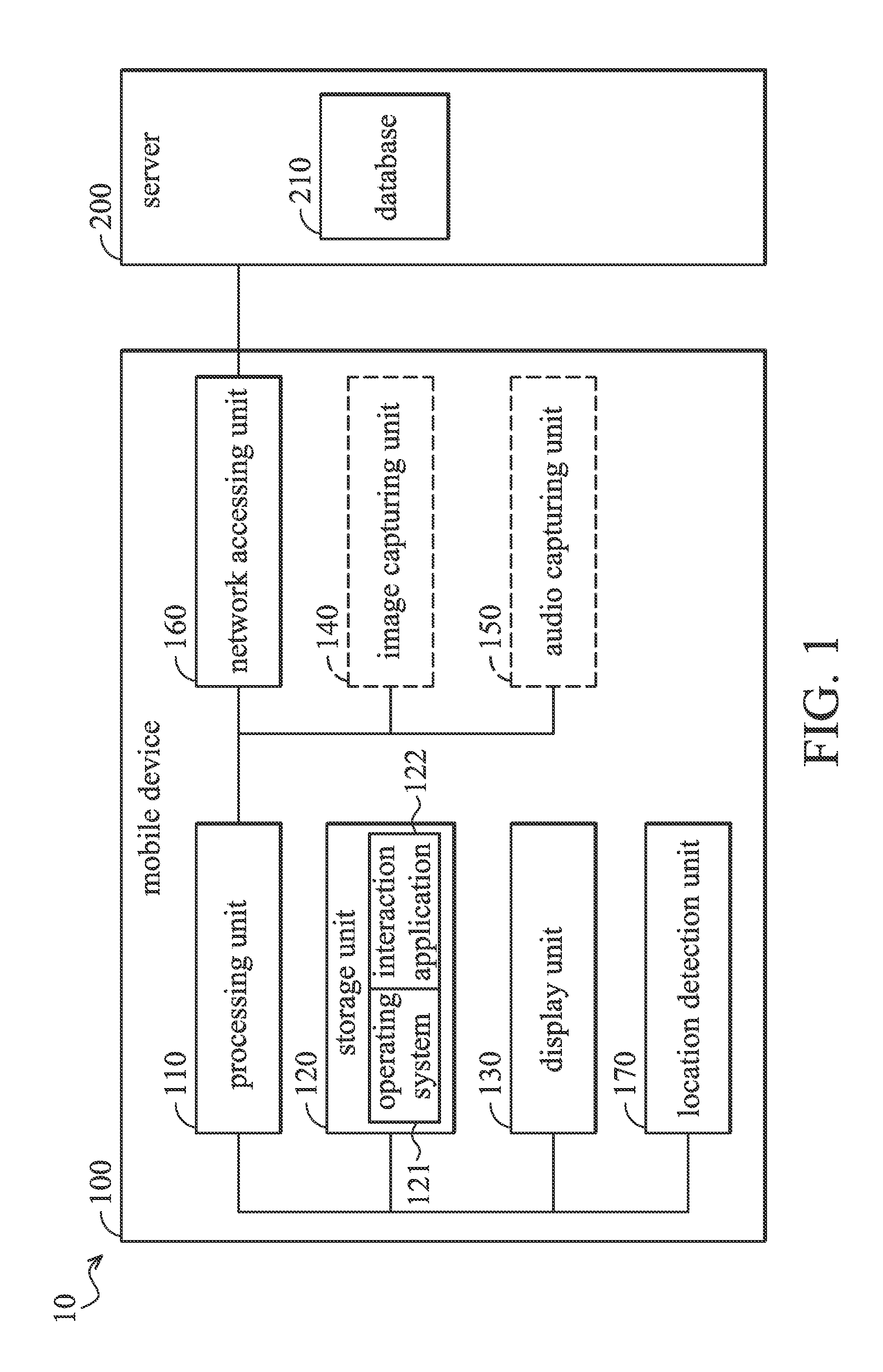

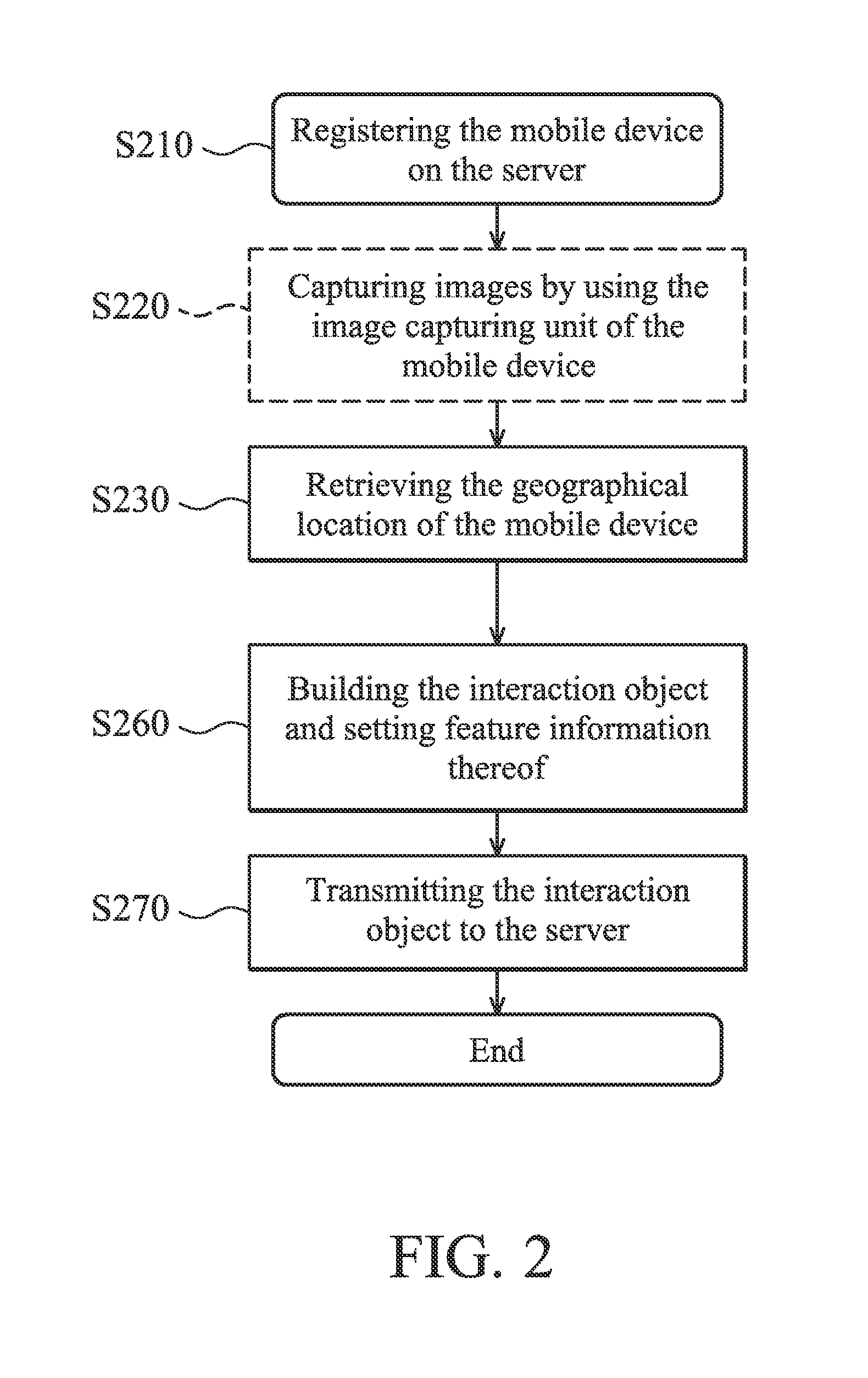

An interaction system is provided. The interaction system has: a mobile device having: a location detection unit configured to retrieve a geographical location of the mobile device; and a server configured to retrieve the geographical location of the mobile device, wherein the server has a database configured to store at least one interaction object and location information associated with the interaction object, and the server further determines whether the location information of the interaction object corresponds to the geographical location of the mobile device, wherein when the location information of the interaction object corresponds to the geographical location of the mobile device, the server further transmits the interaction object to the mobile device, so that the mobile device executes the at least one interaction object.

Owner:QUANTA COMPUTER INC
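
The server-side matching step in the abstract above can be sketched as follows. The abstract only says that the stored location "corresponds to" the device location; treating that as "within a fixed radius" and using the haversine great-circle distance are assumptions, as are the names `StoredObject` and `objects_for_location`.

```python
import math
from dataclasses import dataclass


@dataclass
class StoredObject:
    """An interaction object and its associated location, as stored by the server."""
    name: str
    lat: float
    lon: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    r = 6371000.0  # mean Earth radius in metres
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def objects_for_location(database, lat, lon, radius_m=50.0):
    """Return the stored objects the server would transmit to a device at (lat, lon)."""
    return [o for o in database
            if haversine_m(lat, lon, o.lat, o.lon) <= radius_m]


database = [StoredObject("museum_quiz", 25.0, 121.5),
            StoredObject("park_game", 25.1, 121.5)]
nearby = objects_for_location(database, 25.0001, 121.5)
```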

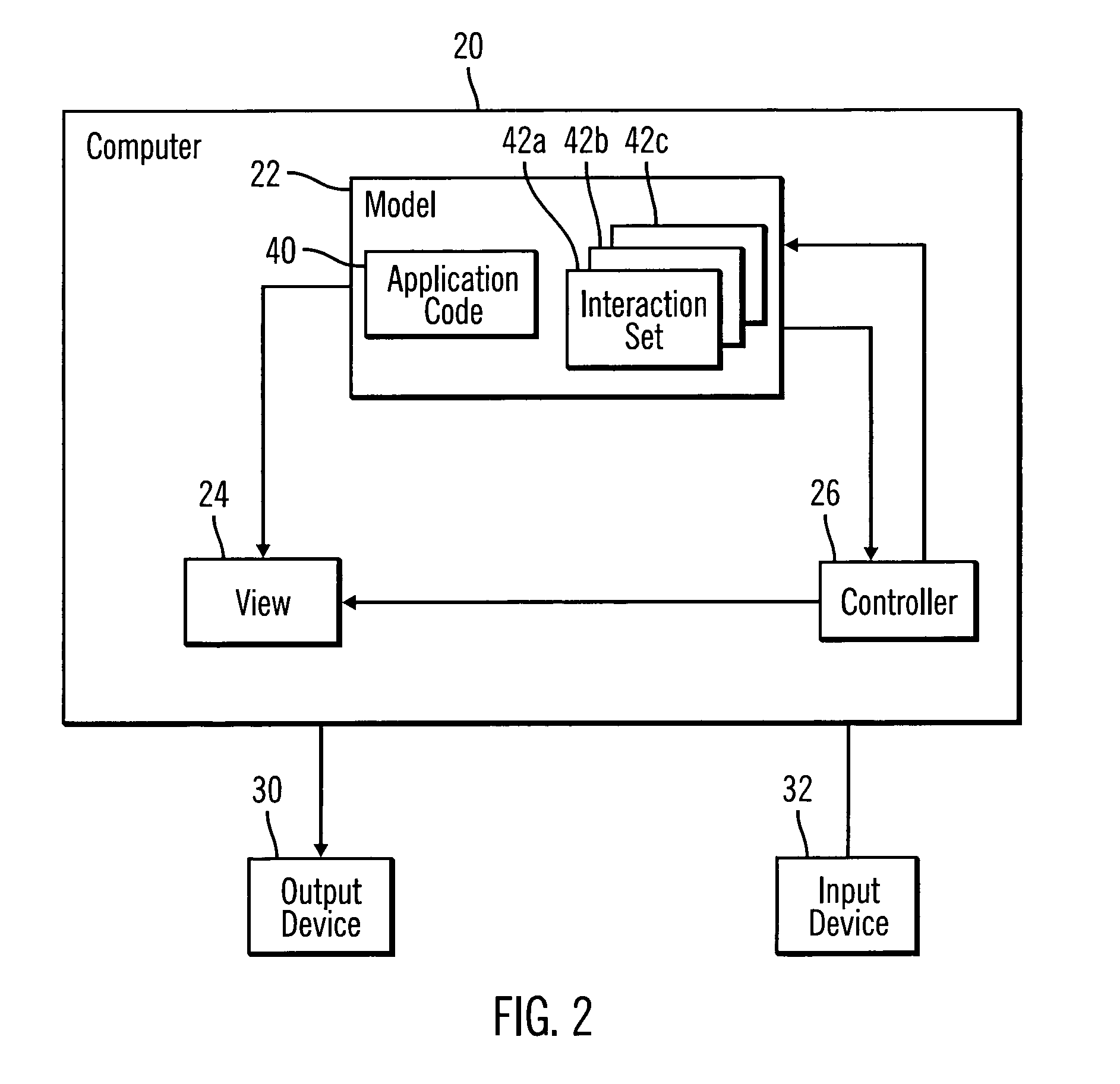

Method, system, and program for generating a user interface

Inactive | US7017145B2 | Software design | Specific program execution arrangements | Output device | Interaction object

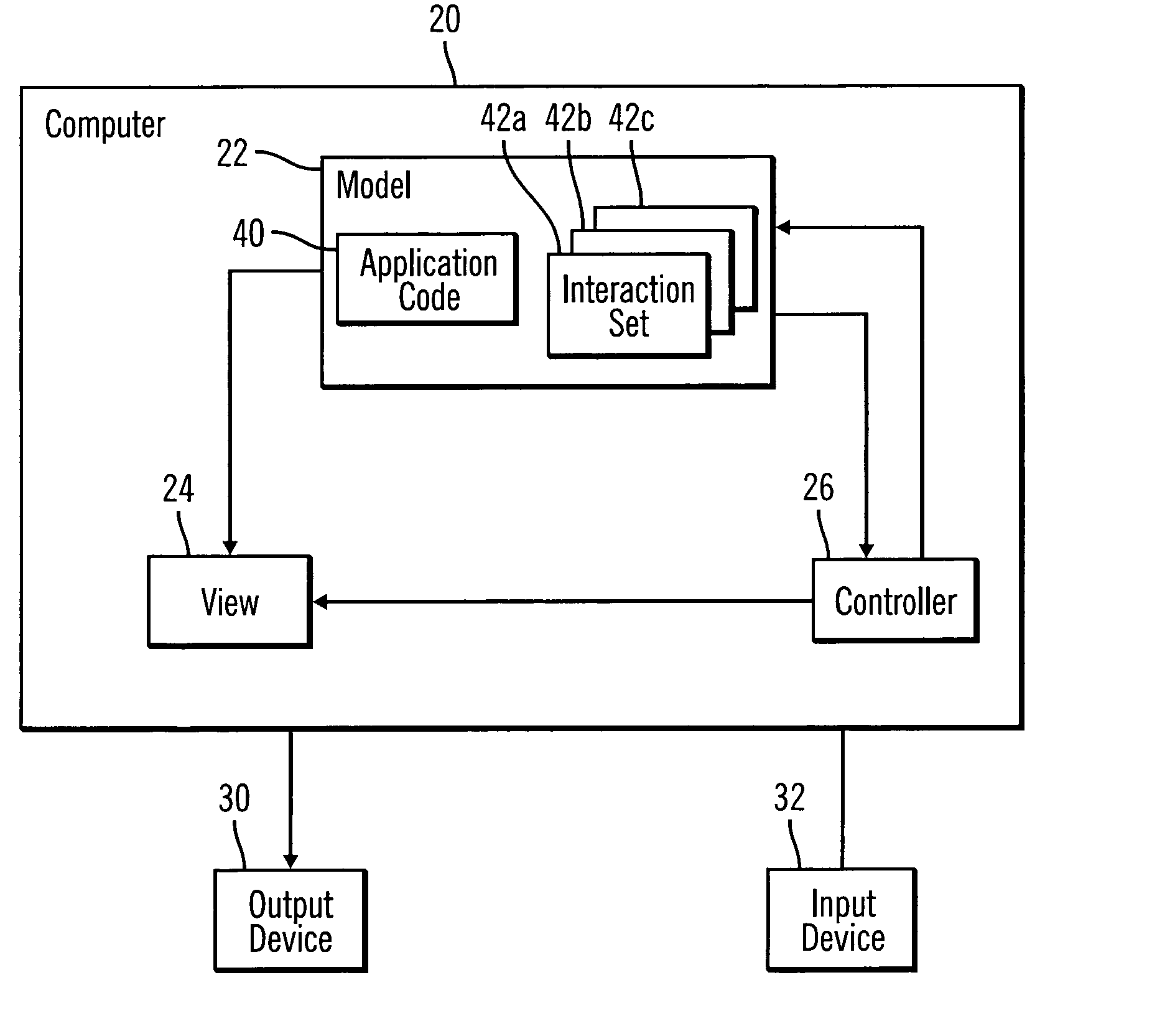

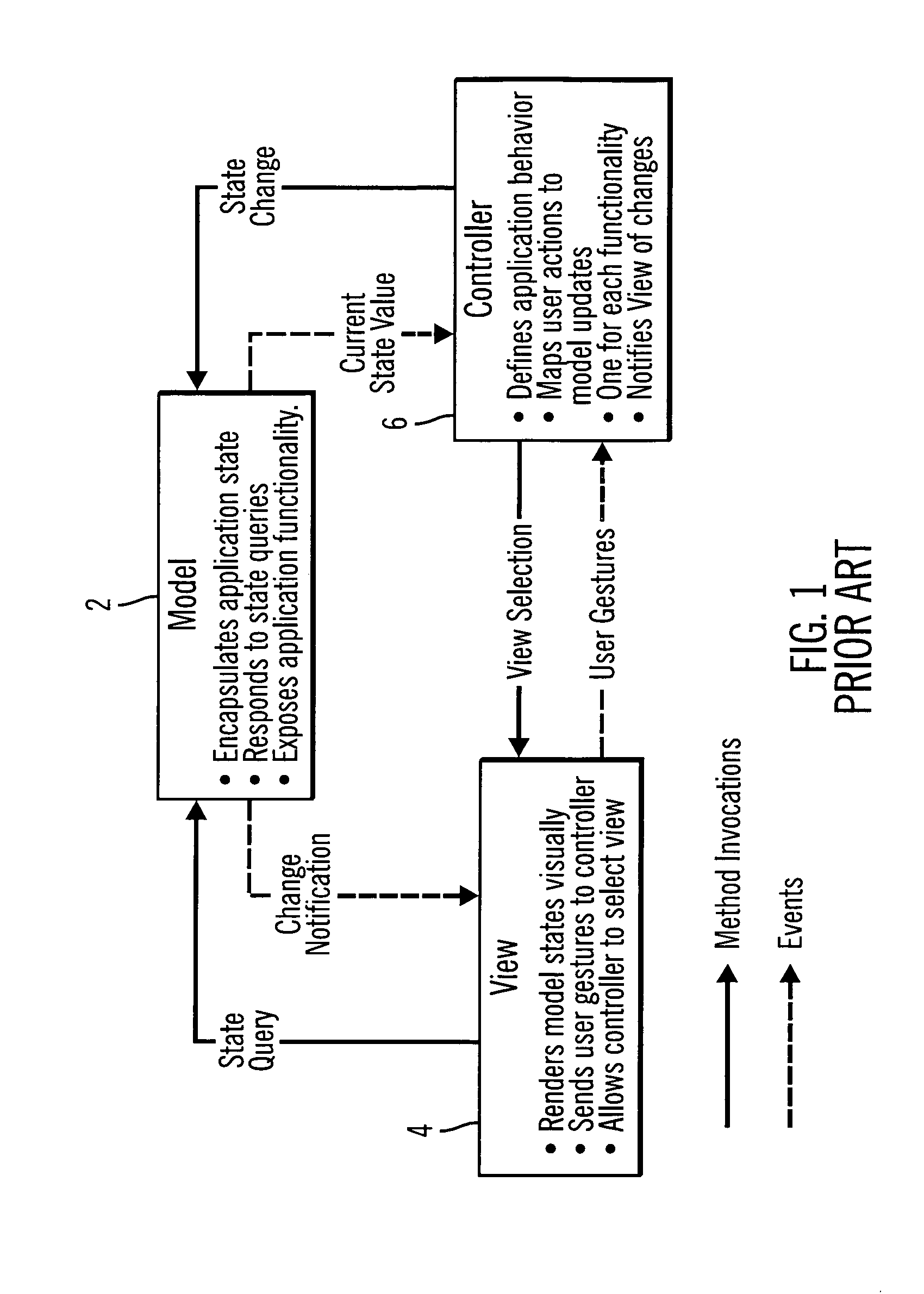

Provided is a method, system, program, and data structures for generating a user interface. An application program processes data and generates application output and a user interface module processes the application output to generate output data to render on an output device. The user interface module generates output data to render on the output device in response to processing statements in the user interface module. The user interface module reaches a processing point where the user interface module does not include statements to generate output data. After reaching the processing point, the user interface module receives an interaction object from the application program specifying data to generate as output data. The user interface module then generates output data to render on the output device from the interaction object.

Owner:ORACLE INT CORP

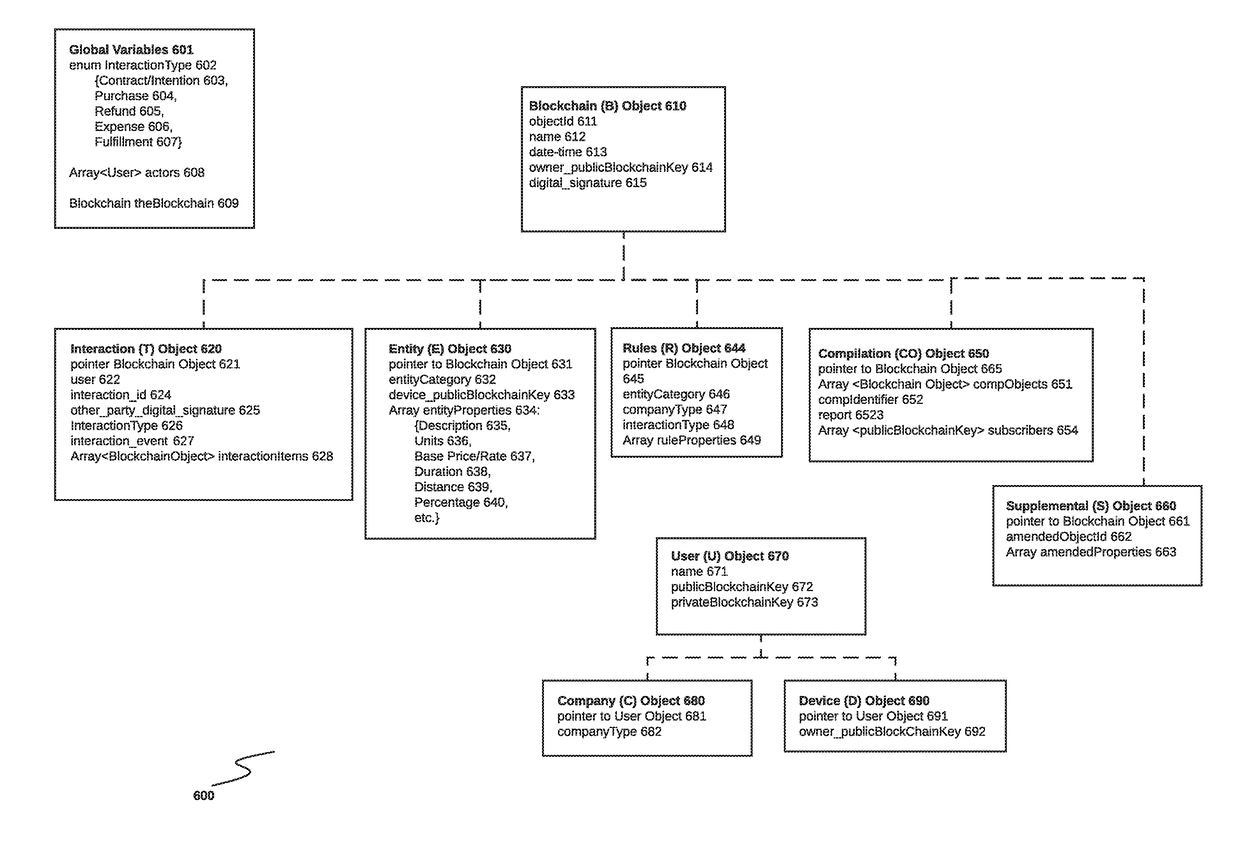

System and method for interaction object reconciliation in a public ledger blockchain environment

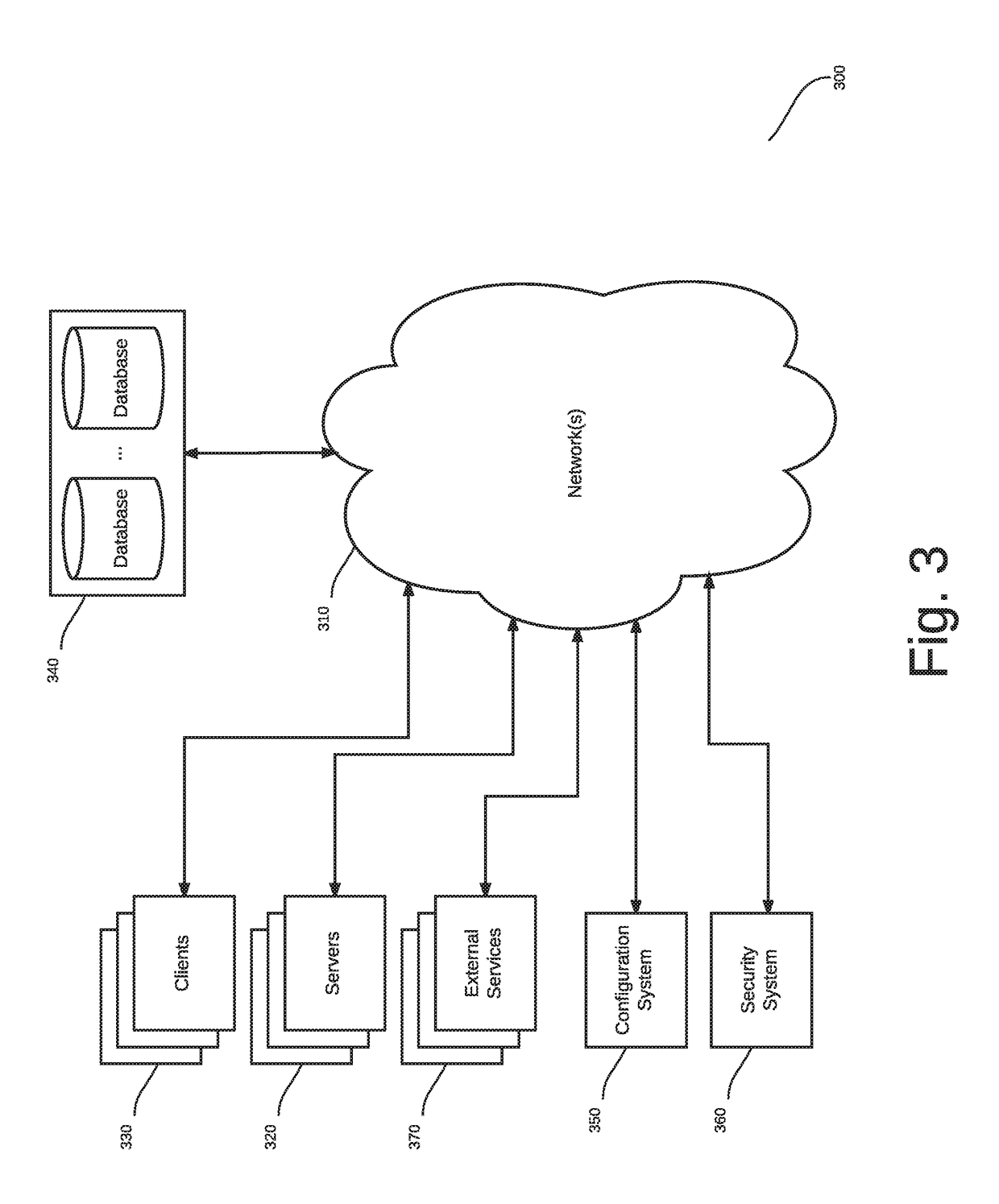

Active | US20180144156A1 | Secure data communication | Encryption apparatus with shift registers/memories | Telegraphic message interchanged in time | Object based | Network connection

A system and method for block reconciliation of interactions comprising a network-connected block reconciliation computer connected to a plurality of connected devices and to one or more blockchains to enable an object compiler to receive a plurality of criteria from a requesting device. The compiler then receives a plurality of blocks from the public ledger blockchains based on the criteria. Each block corresponds to a preconfigured interaction object previously written by devices either during or after the completion of a transaction. The compiler analyzes the preconfigured interaction objects to determine whether there is a corresponding supplemental object. The compiler requests the supplemental blocks from the blockchains and processes supplemental objects based on type; if no corresponding supplemental object is found, the associated interaction object is flagged.

Owner:COSTANZ MARIO A
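
The matching-and-flagging step described in the abstract above might look like the sketch below. Blocks are modelled as plain dictionaries, and the field names `id` and `interaction_id` are hypothetical; the abstract does not specify a block layout or how a supplemental object refers back to its interaction object.

```python
def reconcile(interaction_blocks, supplemental_blocks):
    """Pair each interaction object with its supplemental objects.

    Returns (matched, flagged): matched maps an interaction id to its
    supplemental blocks, flagged lists interaction ids with none.
    """
    supplemental_by_ref = {}
    for s in supplemental_blocks:
        supplemental_by_ref.setdefault(s["interaction_id"], []).append(s)

    matched, flagged = {}, []
    for block in interaction_blocks:
        found = supplemental_by_ref.get(block["id"])
        if found:
            matched[block["id"]] = found
        else:
            flagged.append(block["id"])  # no supplemental object: flag it
    return matched, flagged


interactions = [{"id": "tx1"}, {"id": "tx2"}, {"id": "tx3"}]
supplements = [{"interaction_id": "tx1", "type": "receipt"},
               {"interaction_id": "tx3", "type": "refund"}]
matched, flagged = reconcile(interactions, supplements)
```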

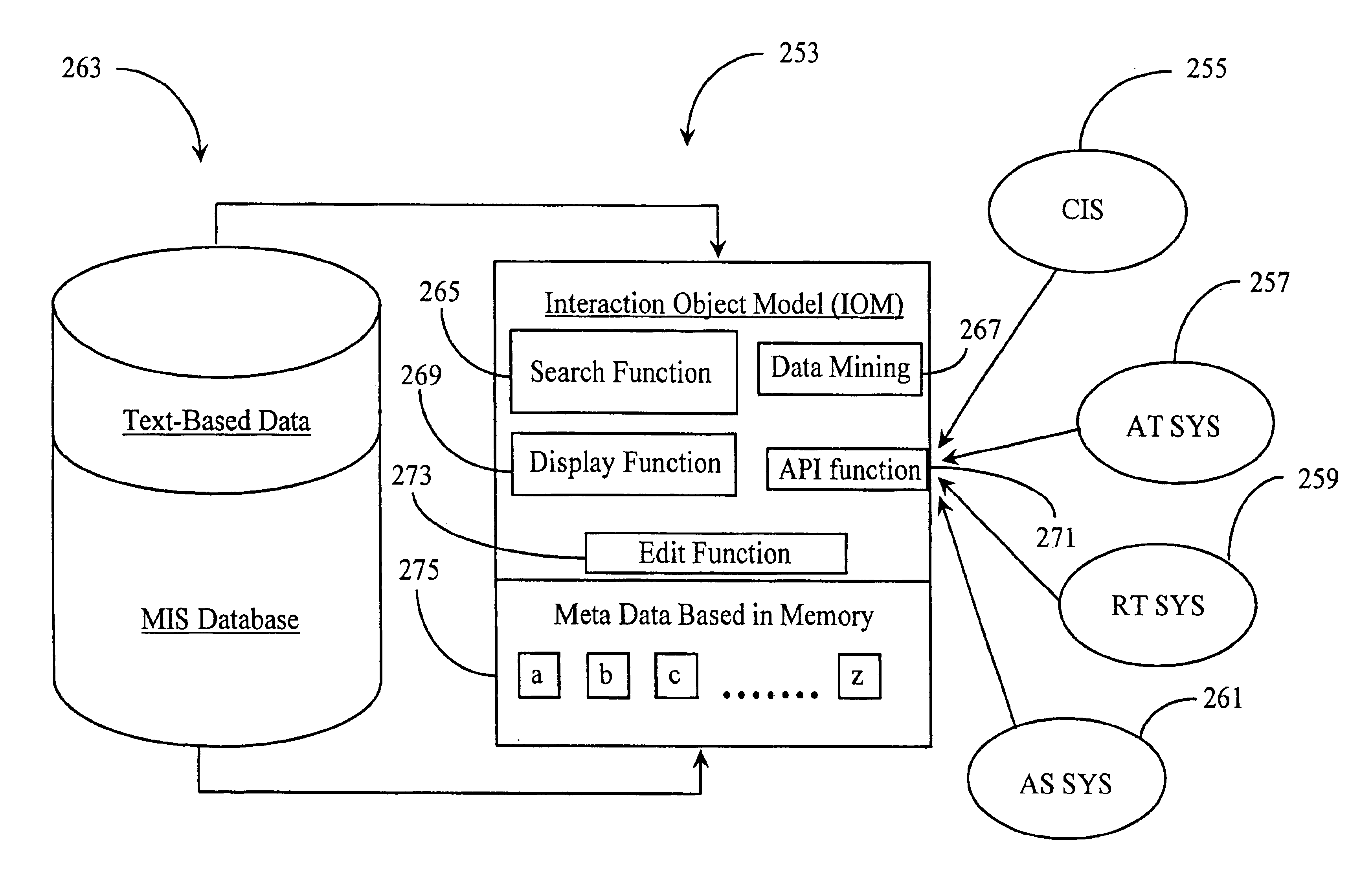

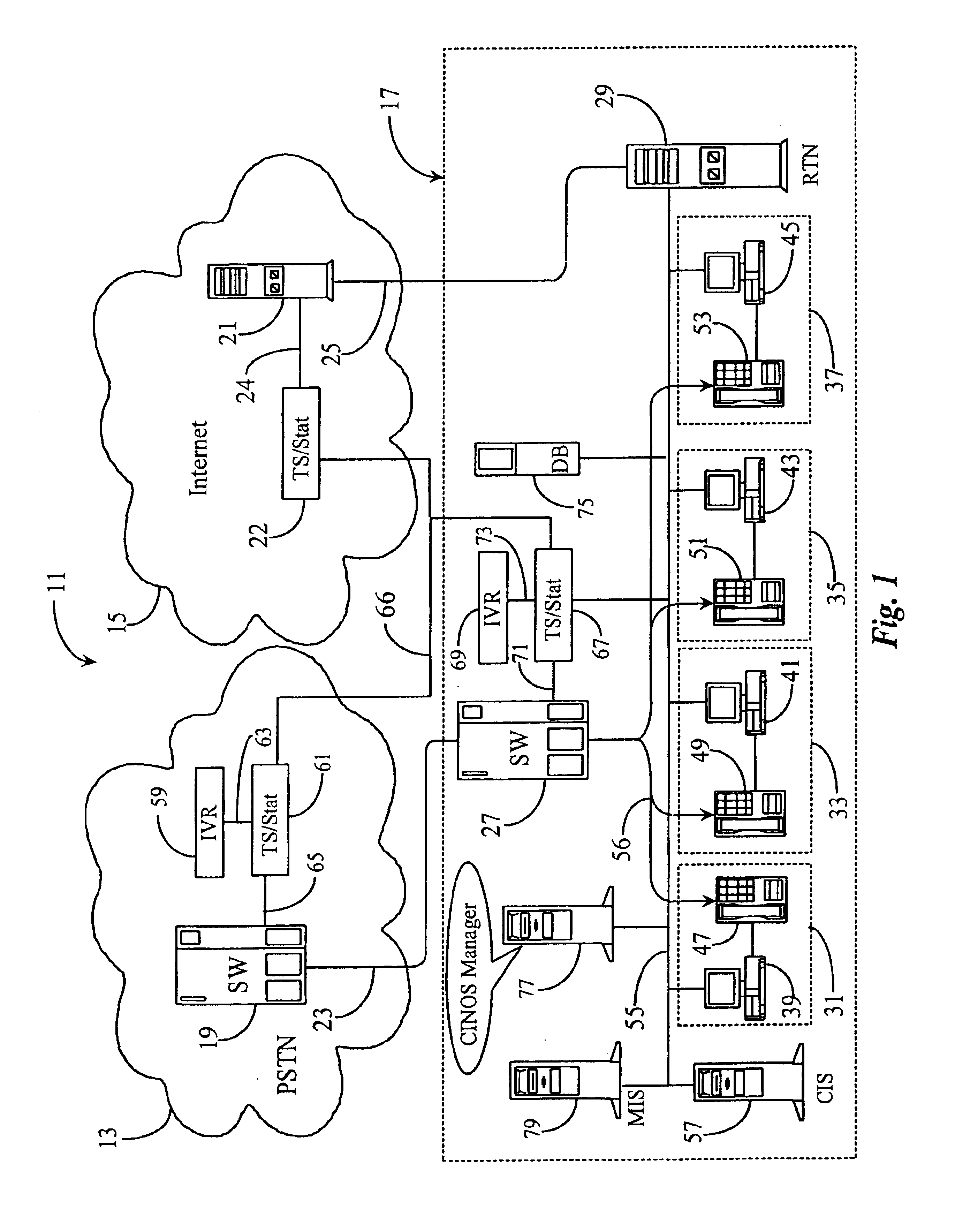

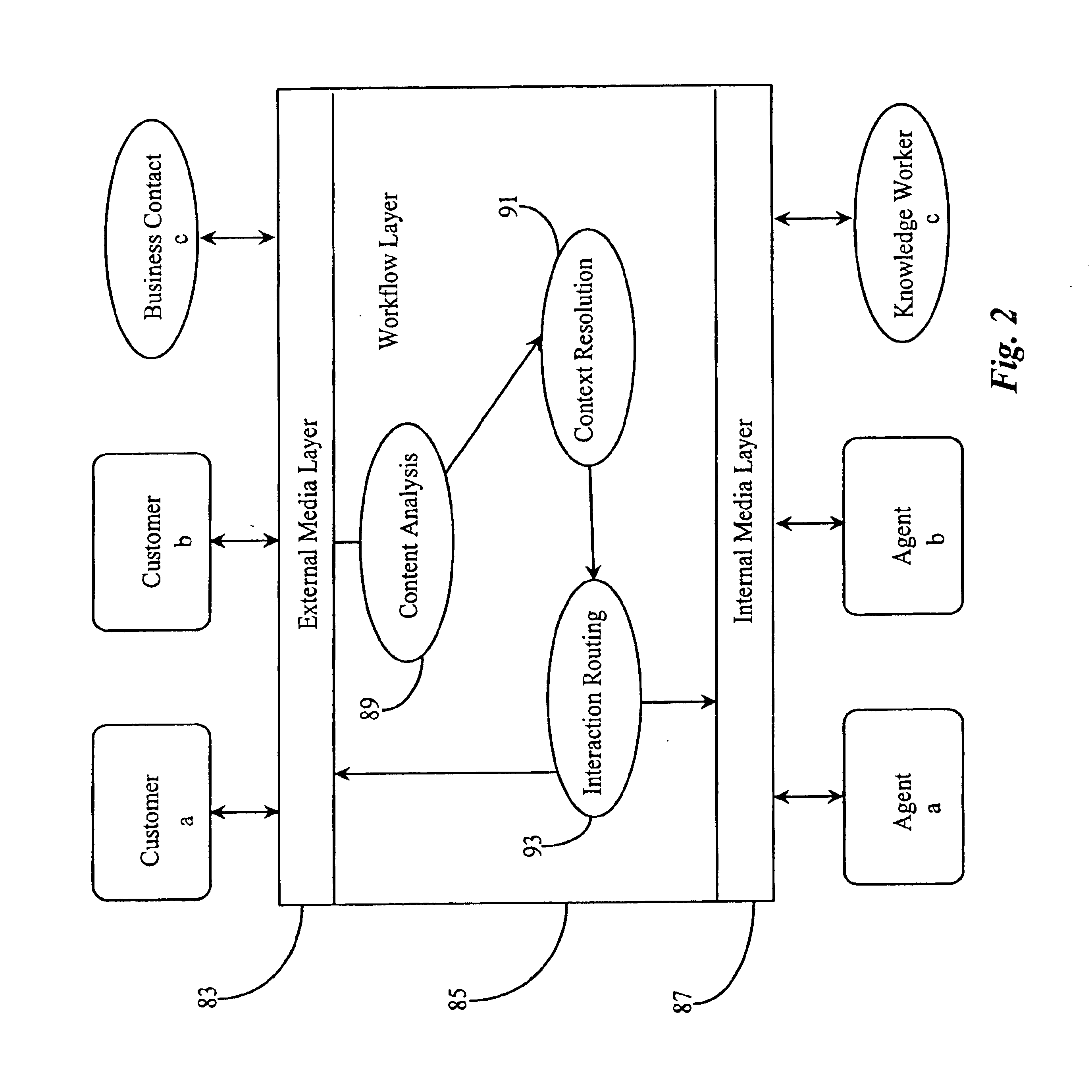

Stored-media interface engine providing an abstract record of stored multimedia files within a multimedia communication center

Inactive | US6874119B2 | Easy access | Input/output for user-computer interaction | Interconnection arrangements | Interaction interface | Data file

An Interaction Object Model (IOM) Interface to a data repository includes objects representing files in the data repository; standardized information about each file, the information associated in the IOM with each object; and an updating interface communicating with the data repository, keeping objects conformal with the files in the data repository. An interaction interface to system function modules requiring data associated with the files in the data repository is provided, wherein through the interaction interface the system function modules are enabled to retrieve required data from the IOM without accessing the data files in the data repository directly. A principal use is in a multimedia call center, storing text and non-text transactions of the call center.

Owner:GENESYS TELECOMMUNICATIONS LABORATORIES INC

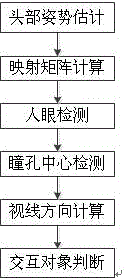

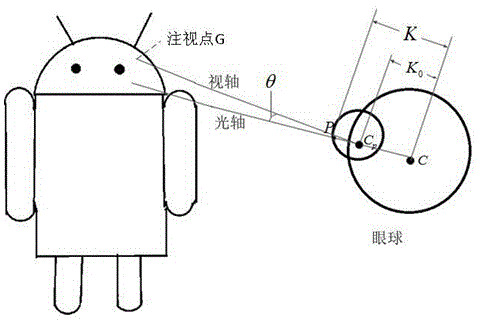

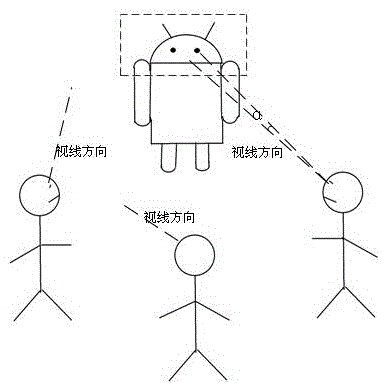

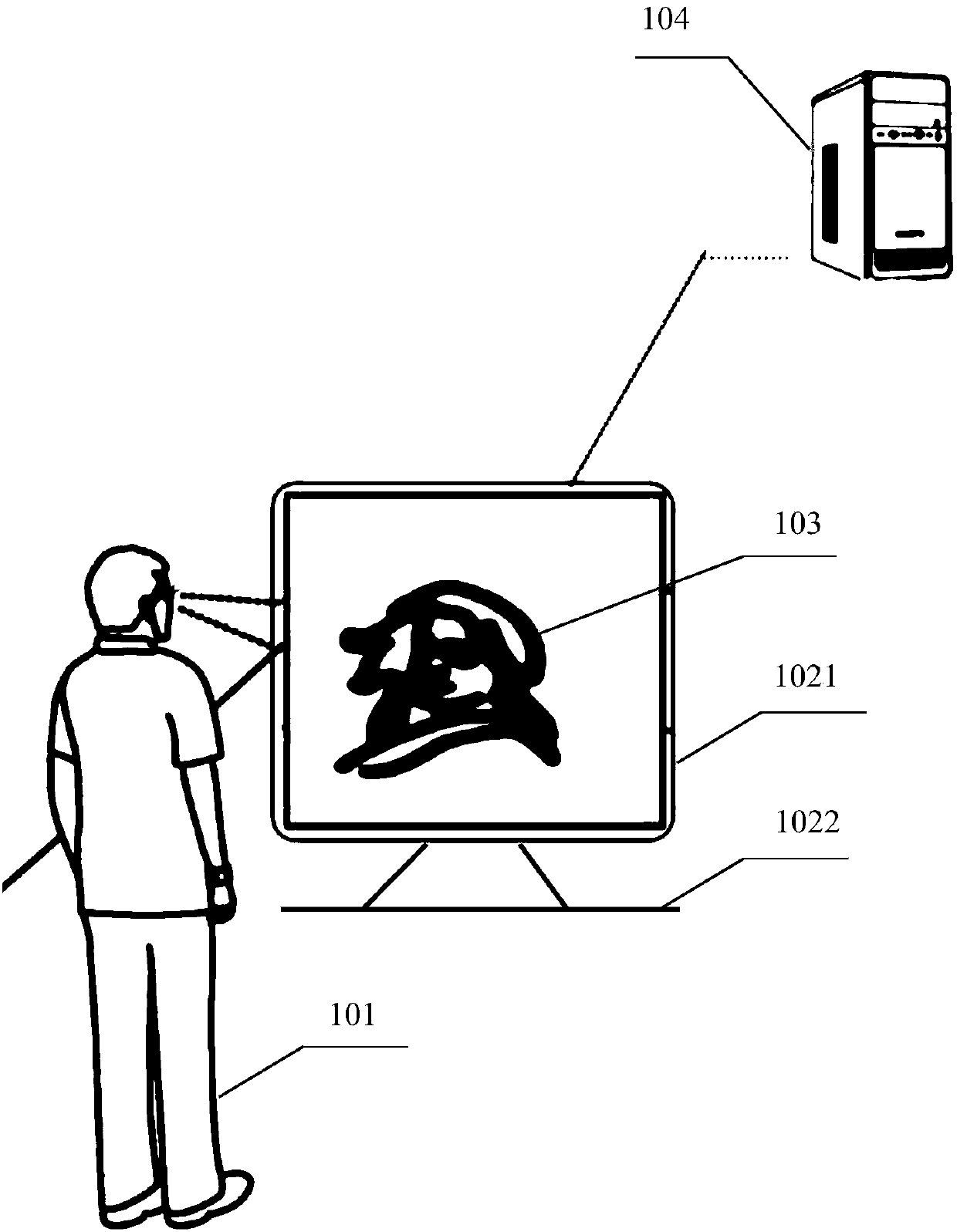

3D (three-dimensional) sight direction estimation method for robot interaction object detection

Active | CN104951808A | Simple hardware | Easy to implement | Acquisition of 3D object measurements | Hough transform | Estimation methods

The invention discloses a 3D (three-dimensional) sight direction estimation method for robot interaction object detection. The method comprises the following steps: S1, head posture estimation; S2, mapping matrix calculation; S3, human eye detection; S4, pupil center detection; S5, sight direction calculation; S6, interaction object judgment. According to the 3D sight direction estimation method for robot interaction object detection, an RGBD (red, green, blue and depth) sensor is used for head posture estimation and applied to a robot; the system uses only the RGBD sensor, requires no other sensors, and is characterized by simple hardware and ease of use. A trained strong classifier is used for human eye detection; it is simple to use and gives good detection and tracking results. A projection integral method, a Hough transform method and perspective correction are used when the pupil center is detected, so the obtained pupil center is more accurate.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

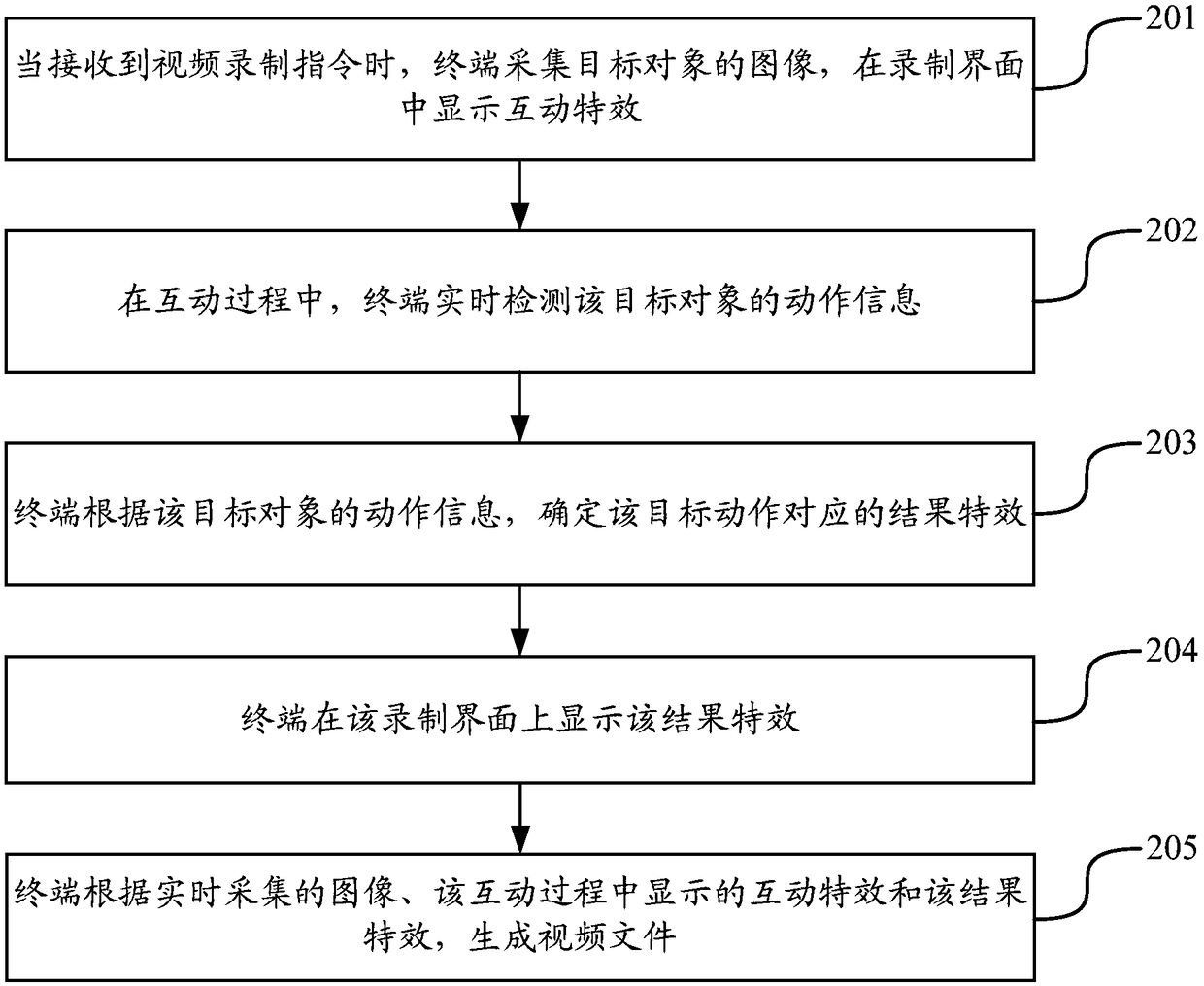

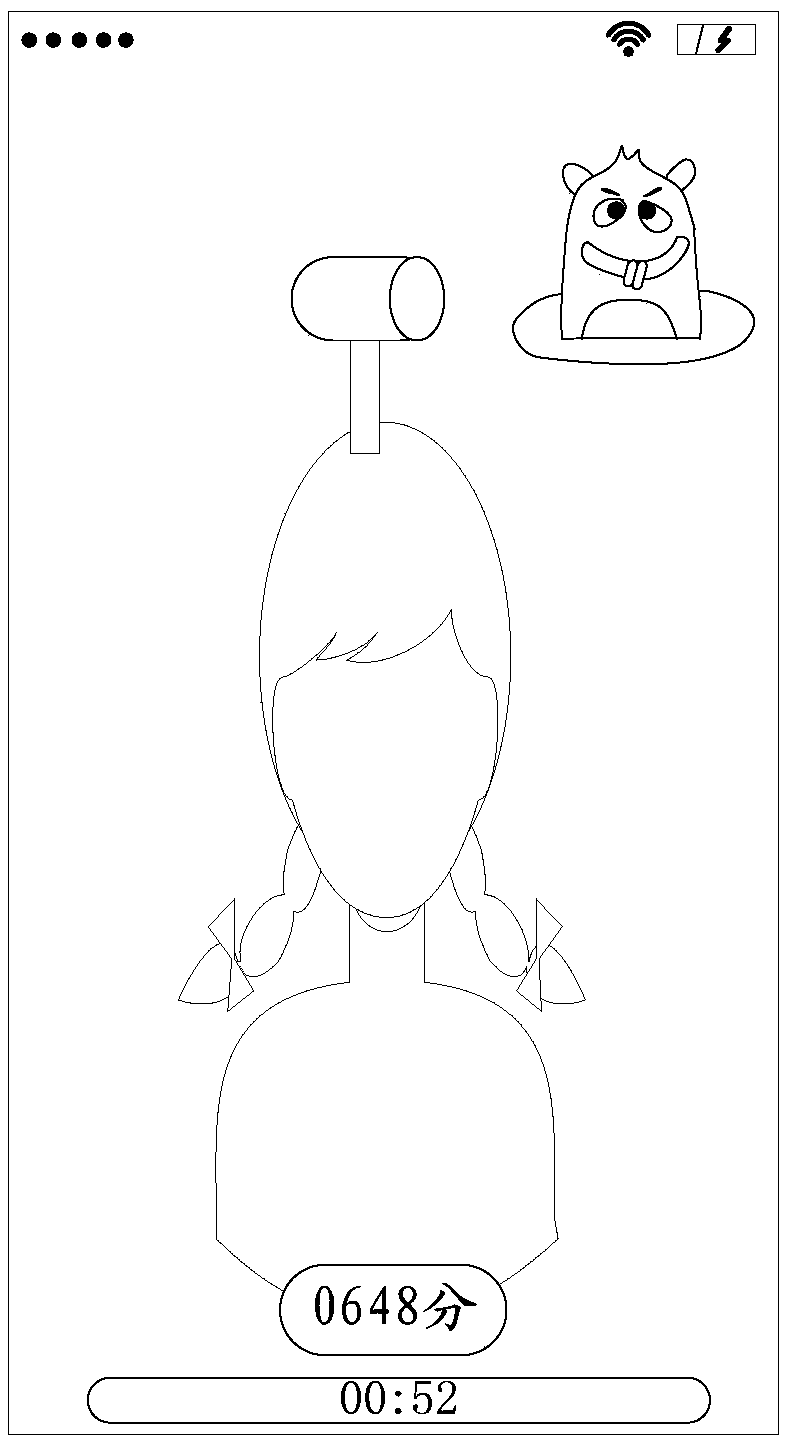

Video record method and device, terminal and storage medium

Active | CN108833818A | Add fun | Add video content | Television system details | Color television details | Computer graphics (images) | Video record

The present invention discloses a video record method and device, a terminal and a storage medium, belonging to the technical field of the Internet. The method comprises the steps of: when a video record command is received, collecting images of a target object in real time, and displaying an interactive special effect in a record interface, wherein the interactive special effect at least comprises the icon of the interaction object which performs interaction through a target motion; in the interaction process, detecting the motion information of the target object in real time; according to the motion information of the target object, determining a result special effect corresponding to the target motion, wherein the result special effect is configured to indicate an interaction effect of interaction of the target motion and the interaction object; displaying the result special effect on the record interface; and according to the images collected in real time and the interactive special effect and the result special effect displayed in the interaction process, generating a video file. The interaction process is added to increase user engagement and allow the video file to include a plurality of highlight interaction moments, so as to enrich the video content and make the video more interesting.

Owner:TENCENT TECH (SHENZHEN) CO LTD

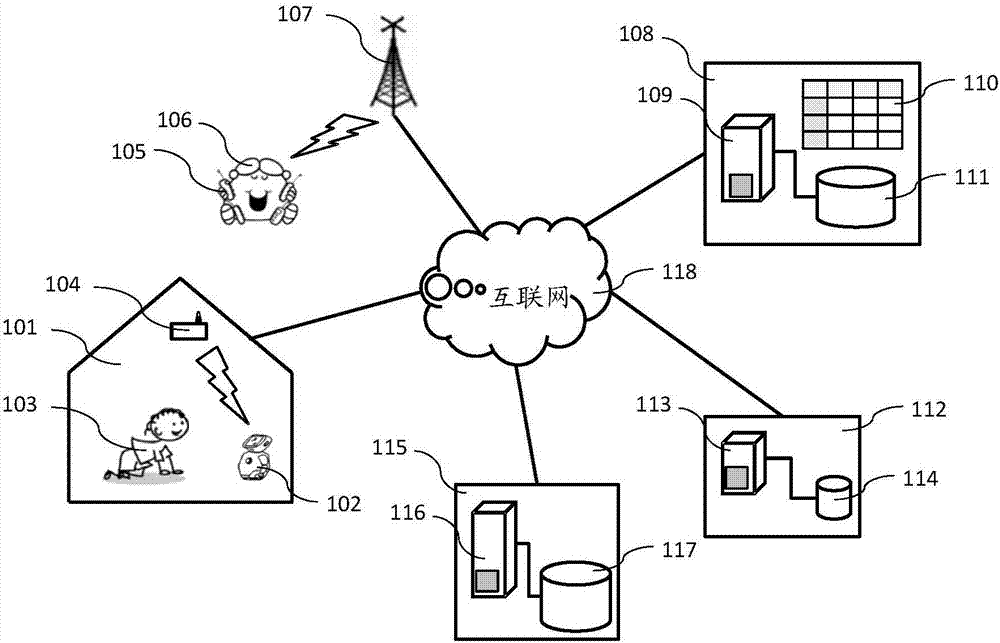

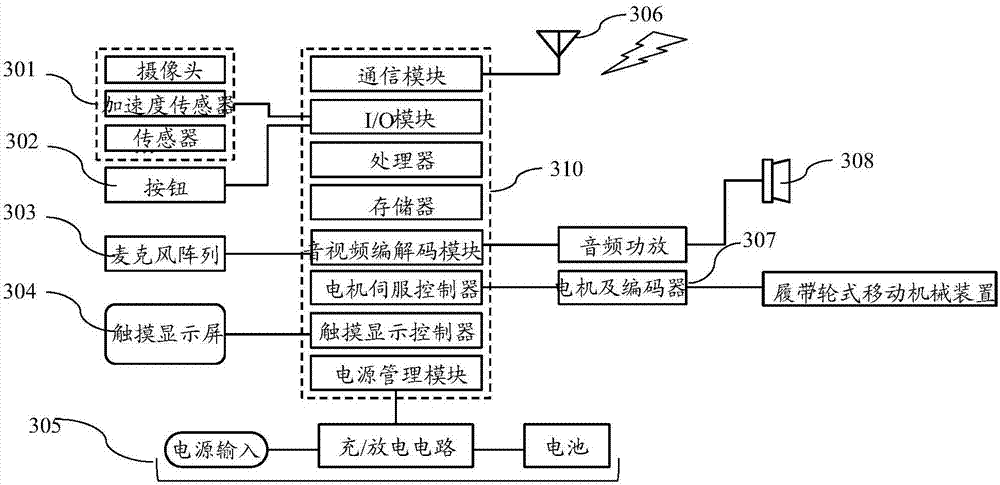

Data processing method and device for nursing robot

Active | CN107030691A | Suitable for interactive | Programme control | Programme-controlled manipulator | Model engine | Algorithm

The invention discloses a data processing method and device for a nursing robot. The method and device are used for solving the problem that a nursing robot in the prior art can only be selected in a set interaction mode by calculating the emotional state of an interaction object and cannot provide a more proper interaction mode for the interaction object. The method includes the steps that a model engine receives the data of a target object to generate a growth model capacity parameter matrix of the target object, the model engine adjusts capacity parameter adjustment values in the growth model capacity parameter matrix according to an adjustment formula coefficient or a standard growth model capacity parameter matrix, the adjusted capacity parameter adjustment values are determined, and the model engine judges whether the adjusted capacity parameter adjustment values exceed a preset threshold value or not; and if the adjusted capacity parameter adjustment values are within the preset threshold value range, the model engine sends the adjusted capacity parameter adjustment values to a machine learning engine.

Owner:HUAWEI TECH CO LTD

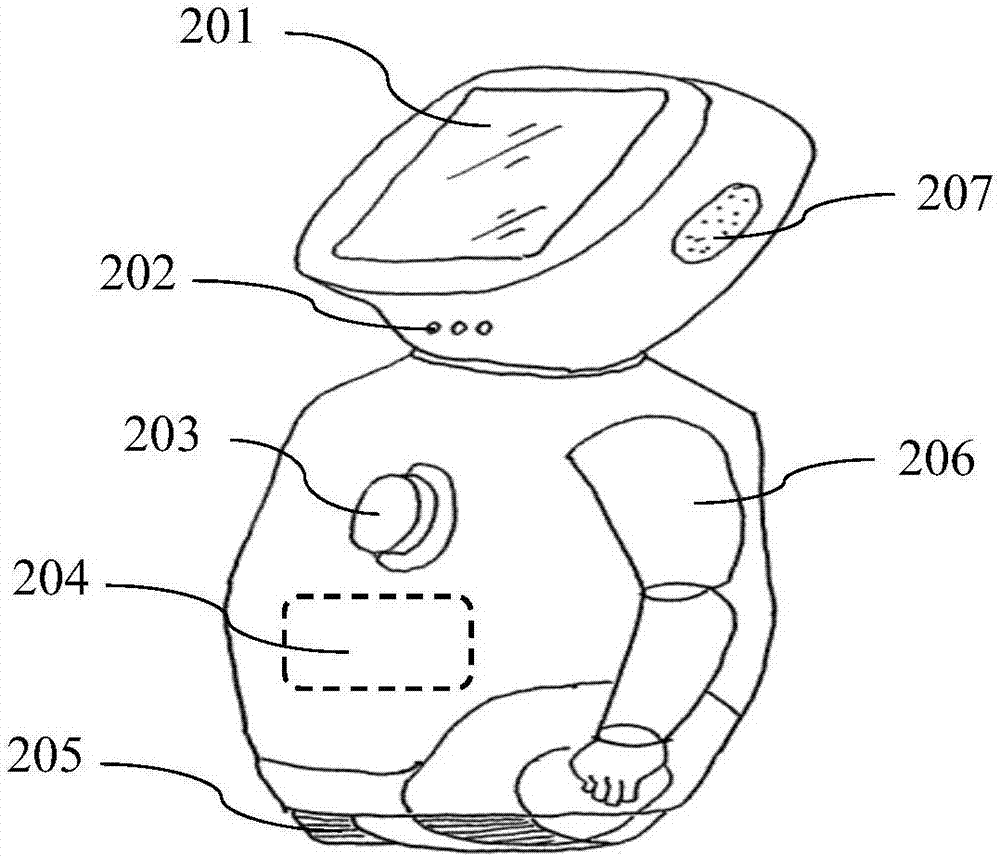

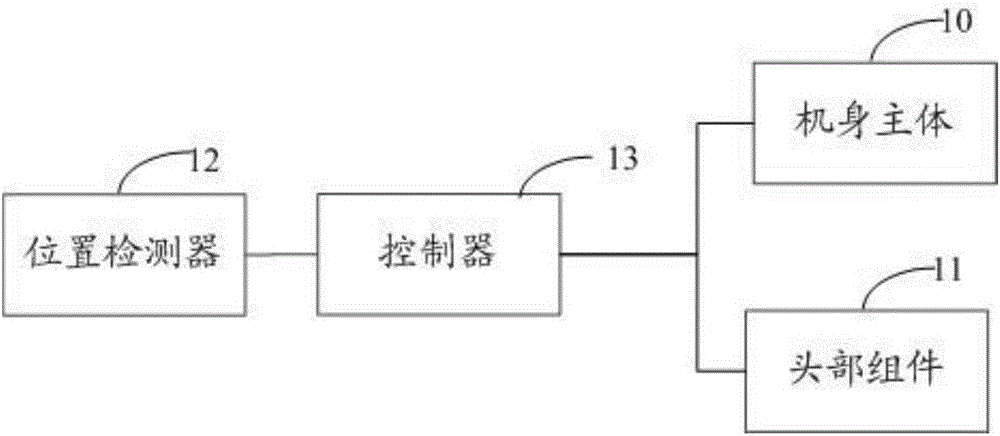

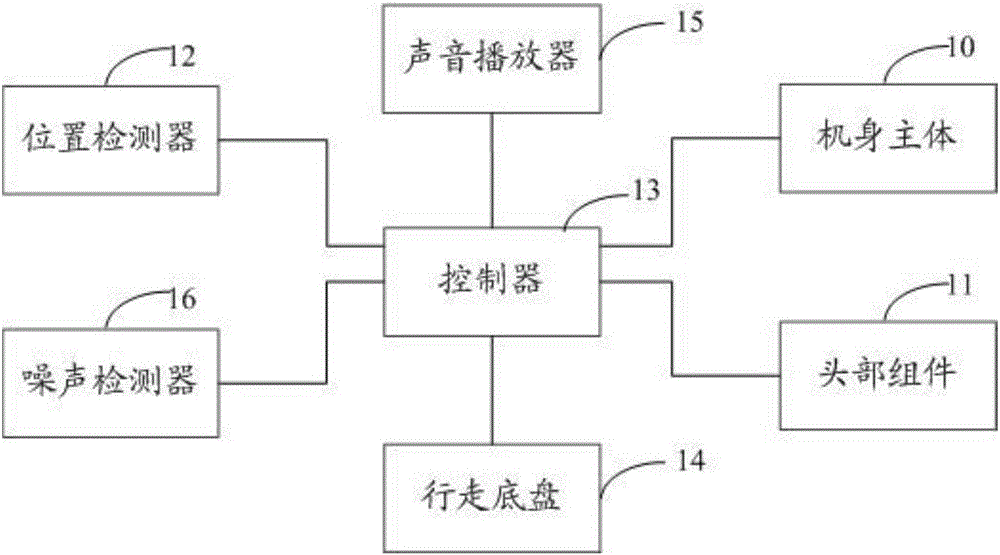

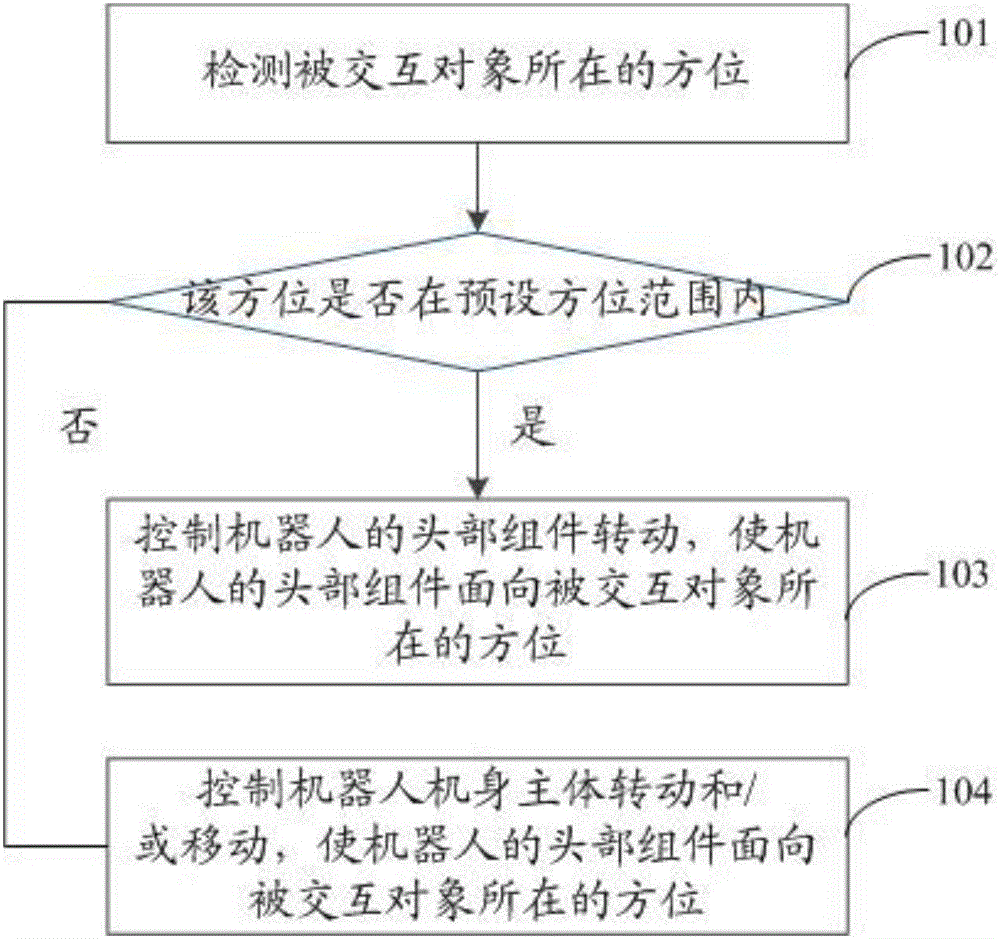

Robot and robot control method

Active | CN106335071A | Improve human-computer interaction | Programme-controlled manipulator | Robot control | Interaction object

The application discloses a robot and a robot control method. The robot comprises a main body, a head assembly, a position detector and a controller, in which the head assembly is rotatably connected to the main body; the position detector is used to detect the orientation of the interaction object; and the controller is used to control the rotation of the head assembly so that the head assembly faces the orientation of the interaction object. With this scheme, the head assembly of the robot can be rotated to the corresponding direction as the position of the interaction object changes, so as to improve the human-machine interaction performance of the robot.

Owner:上海木木聚枞机器人科技有限公司
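
The controller behaviour in the abstract above (turn the head assembly toward the interaction object) can be illustrated with a small geometry sketch. The 2D coordinate frame, the mechanical yaw limit `max_yaw_rad` and the function name `head_yaw_command` are assumptions; the abstract does not describe how the orientation is represented.

```python
import math


def head_yaw_command(robot_xy, robot_heading_rad, target_xy,
                     max_yaw_rad=math.pi / 2):
    """Yaw angle (radians, relative to the body) that points the head at the target.

    The result is wrapped to (-pi, pi] and clamped to an assumed mechanical limit.
    """
    dx = target_xy[0] - robot_xy[0]
    dy = target_xy[1] - robot_xy[1]
    bearing = math.atan2(dy, dx)  # direction of the target in the world frame
    diff = bearing - robot_heading_rad
    relative = math.atan2(math.sin(diff), math.cos(diff))  # wrap to (-pi, pi]
    return max(-max_yaw_rad, min(max_yaw_rad, relative))


yaw = head_yaw_command((0.0, 0.0), 0.0, (1.0, 1.0))
```

Calling the function again whenever the position detector reports a new target position gives the tracking behaviour the abstract describes.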

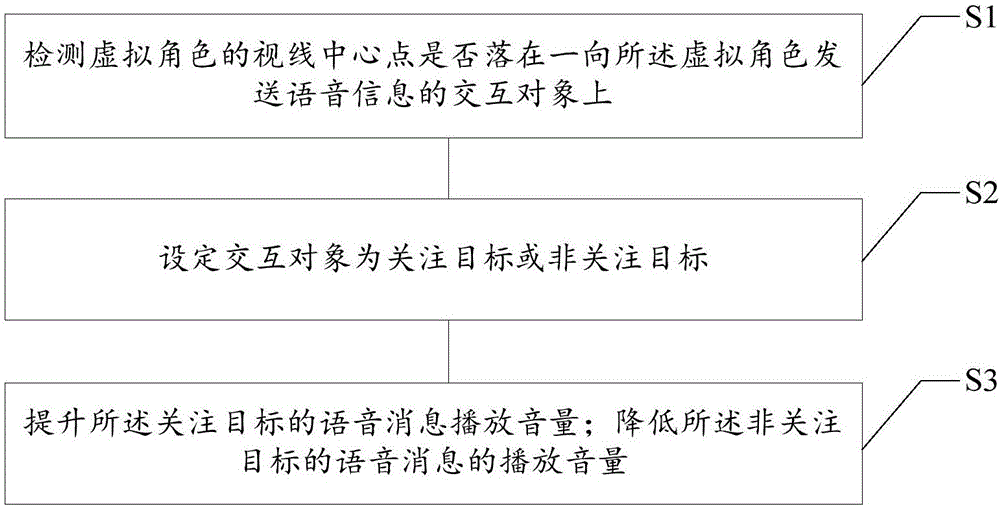

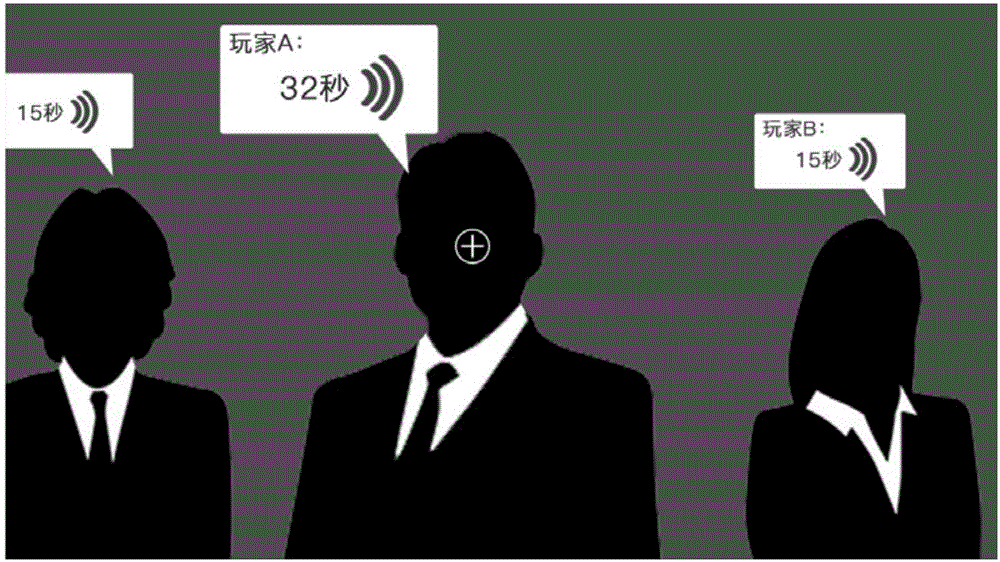

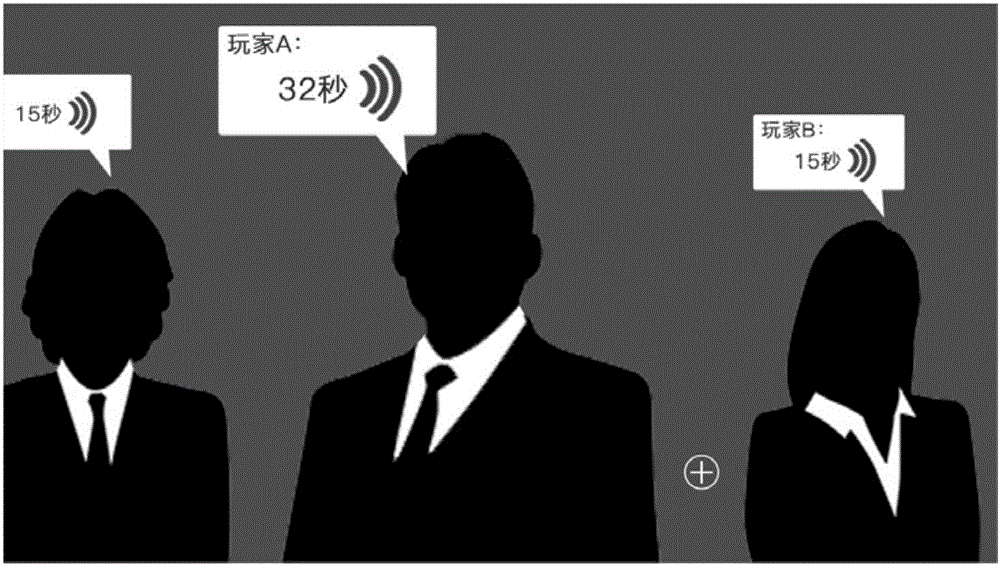

Virtual reality system and voice interaction method and device

Active | CN106774830A | Increase playback volume | Play volume down | Input/output for user-computer interaction | Sound input/output | Visual field loss | Interaction object

The invention relates to a virtual reality system and a voice interaction method and device. The voice interaction method comprises the steps that whether a sight-line central point of a virtual character in a virtual reality scene is located on an interaction object sending a voice message to the virtual character or not is detected; when it is determined that the sight-line central point of the virtual character is located on the interaction object sending the voice message to the virtual character, the interaction object serves as a concerned target, and other interaction objects sending voice messages to the virtual character in a visual field range of the virtual character serve as non-concerned targets; the playing volume of the voice message sent by the concerned target to the virtual character is increased, and the playing volumes of the voice messages sent by the non-concerned targets to the virtual character are decreased. The virtual reality system and the voice interaction method and device can improve the immersion of users.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
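
The volume rule in the abstract above can be sketched as a single function. The multiplicative `boost` and `cut` factors, the cap at 1.0 and the name `adjust_volumes` are assumptions chosen for illustration; the abstract only states that one volume is increased and the others are decreased.

```python
def adjust_volumes(speakers, gaze_target, in_view, boost=1.5, cut=0.5):
    """Rebalance voice volumes according to where the virtual character looks.

    speakers: dict mapping speaker id -> current volume in [0, 1].
    gaze_target: id of the object under the sight-line central point, or None.
    in_view: set of speaker ids inside the character's visual field.
    """
    if gaze_target not in speakers:
        return dict(speakers)  # nobody who is speaking is being looked at
    adjusted = {}
    for sid, volume in speakers.items():
        if sid == gaze_target:
            adjusted[sid] = min(1.0, volume * boost)  # concerned target
        elif sid in in_view:
            adjusted[sid] = volume * cut              # non-concerned target
        else:
            adjusted[sid] = volume                    # outside the visual field
    return adjusted


volumes = adjust_volumes({"a": 0.4, "b": 0.6, "c": 0.5},
                         gaze_target="a", in_view={"a", "b"})
```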

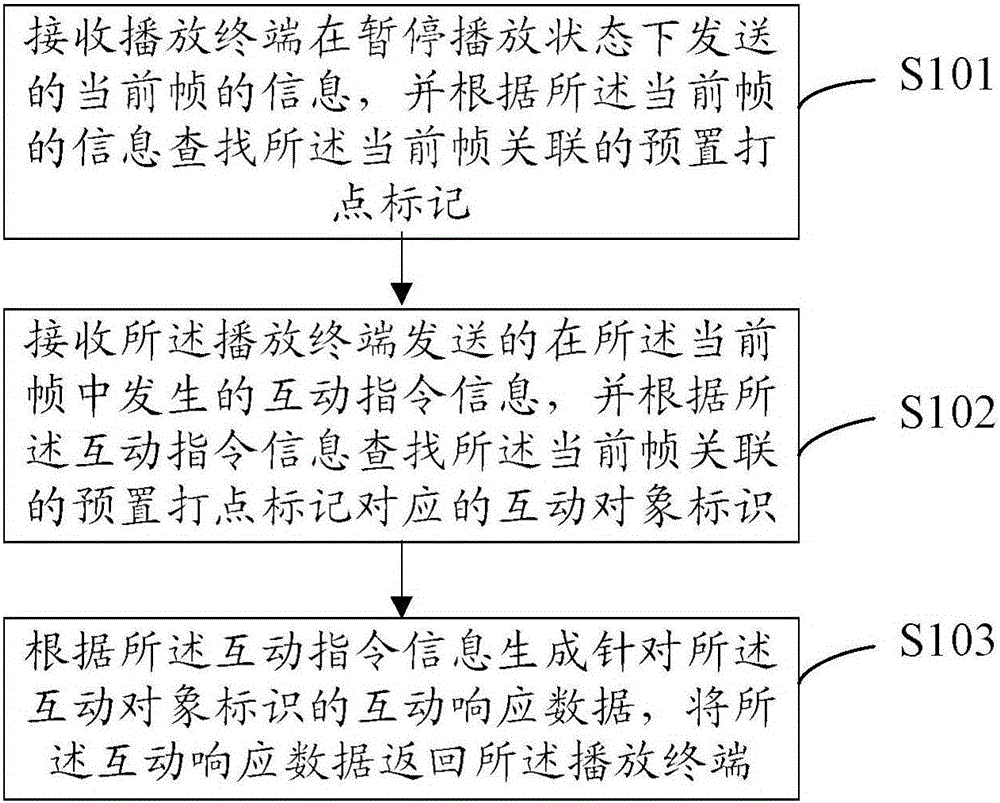

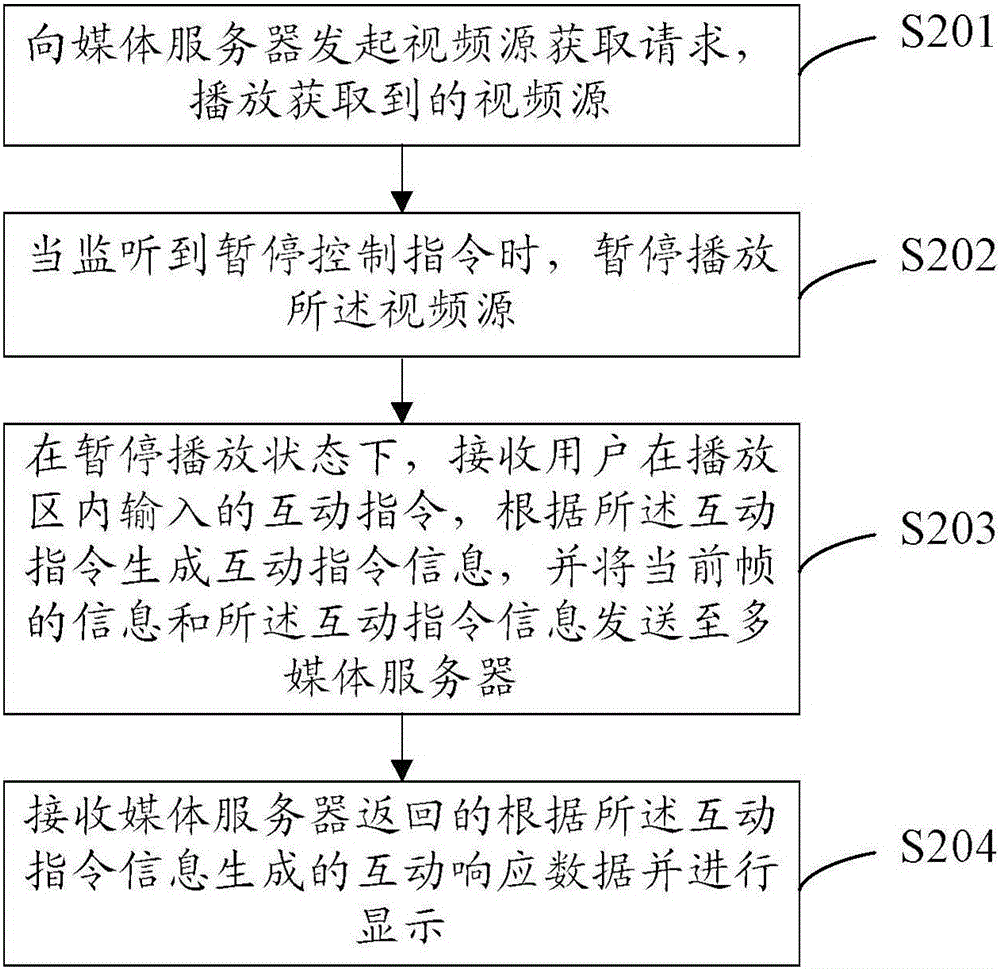

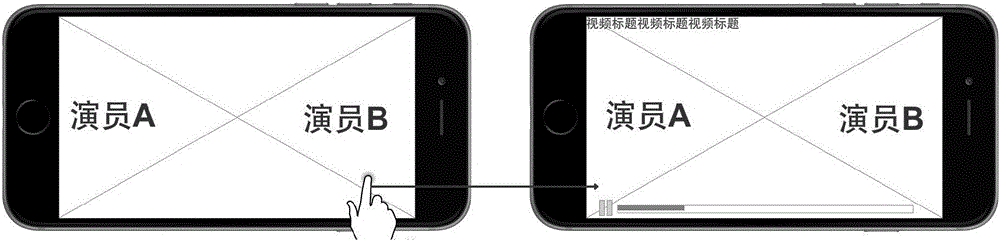

Video playing method, playing terminal, and media server

The invention provides a video playing method, a playing terminal, and a media server. The video playing method is characterized in that information of a current frame transmitted by a playing terminal in a pause-state is received, and a preset dotting marker related to the current frame can be searched according to the information of the current frame; the interaction instruction information generated in the current frame transmitted by the playing terminal can be received, and the interaction object identification corresponding to the dotting marker related to the current frame can be searched according to the interaction instruction information; the interaction response data aiming at the interaction object identification can be generated according to the interaction instruction information, and the interaction response data can be returned to the playing terminal. The corresponding interaction response data can be generated by aiming at the plot and the figure corresponding to the current frame according to the interaction instruction, and the user is not required to leave the playing area, and the interaction with the video content can be initiated at any time, and in addition, the correlation between the interaction content and the video plot and figure can be established.

Owner:LETV HLDG BEIJING CO LTD +1

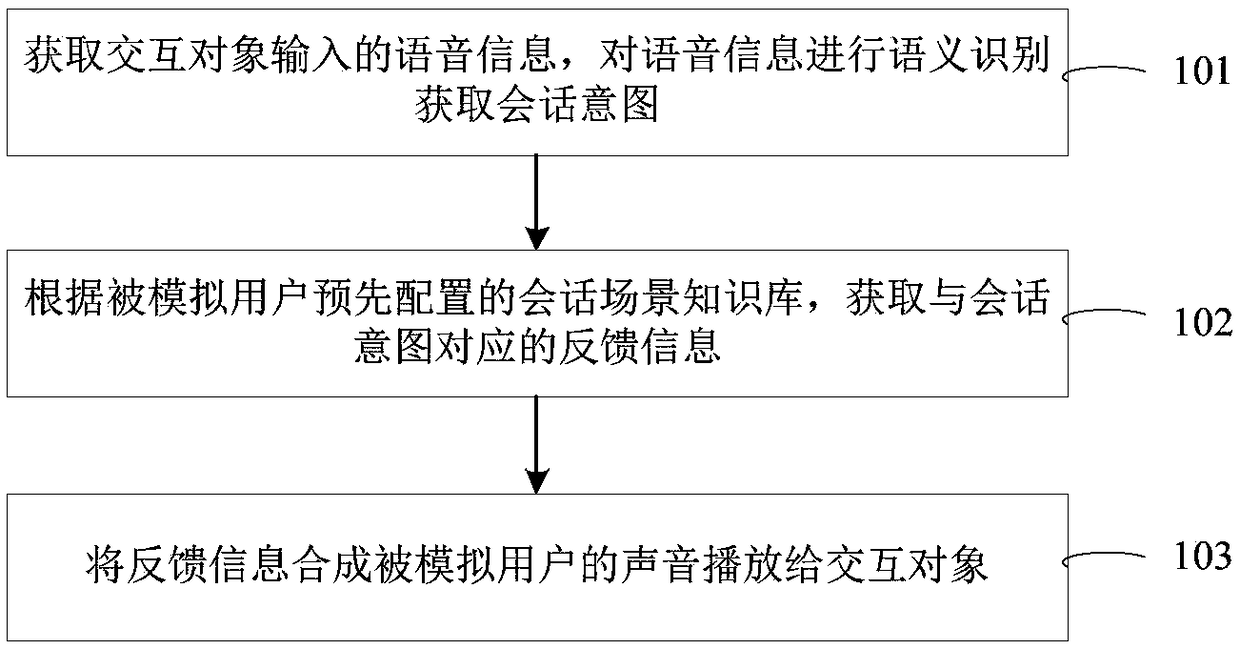

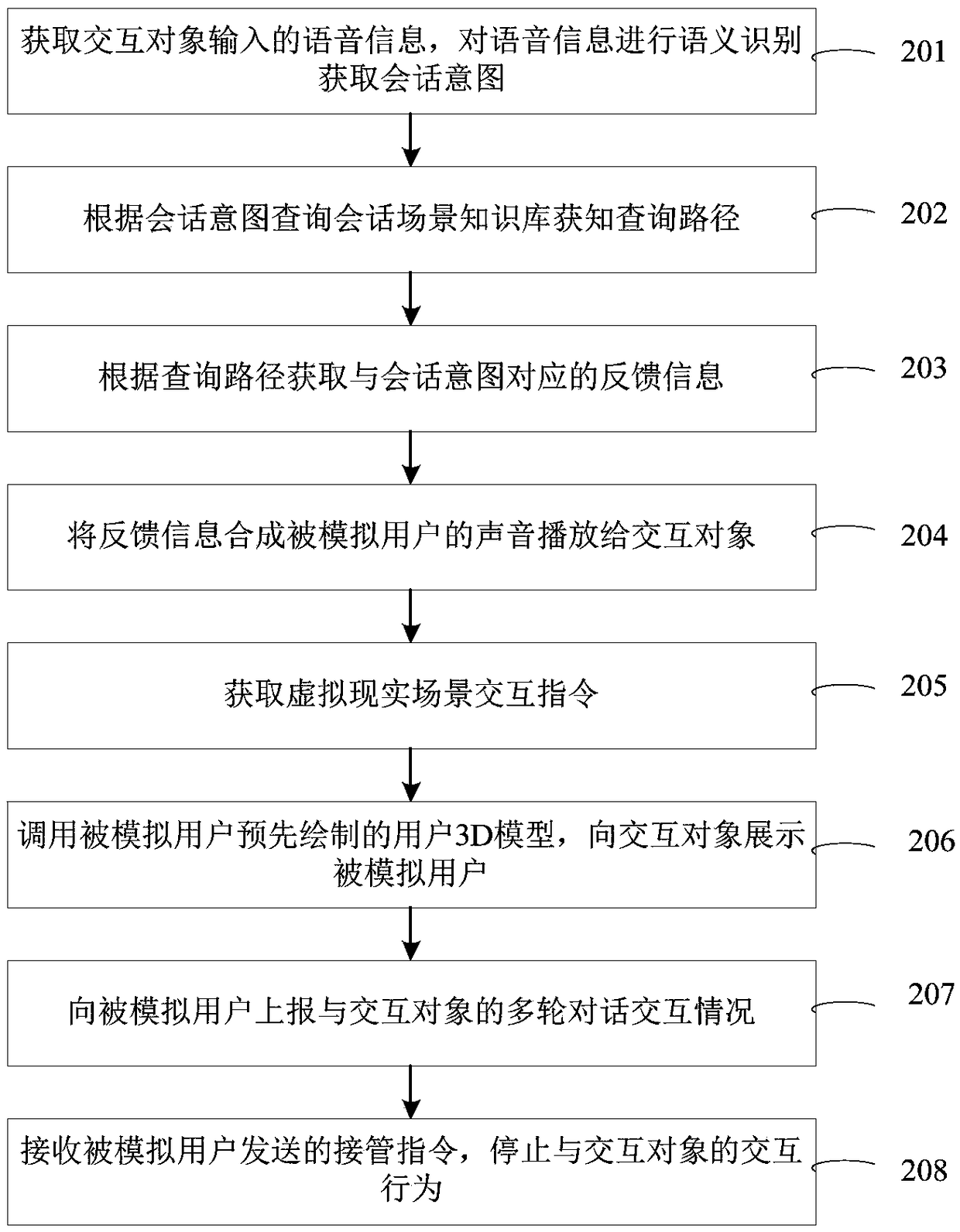

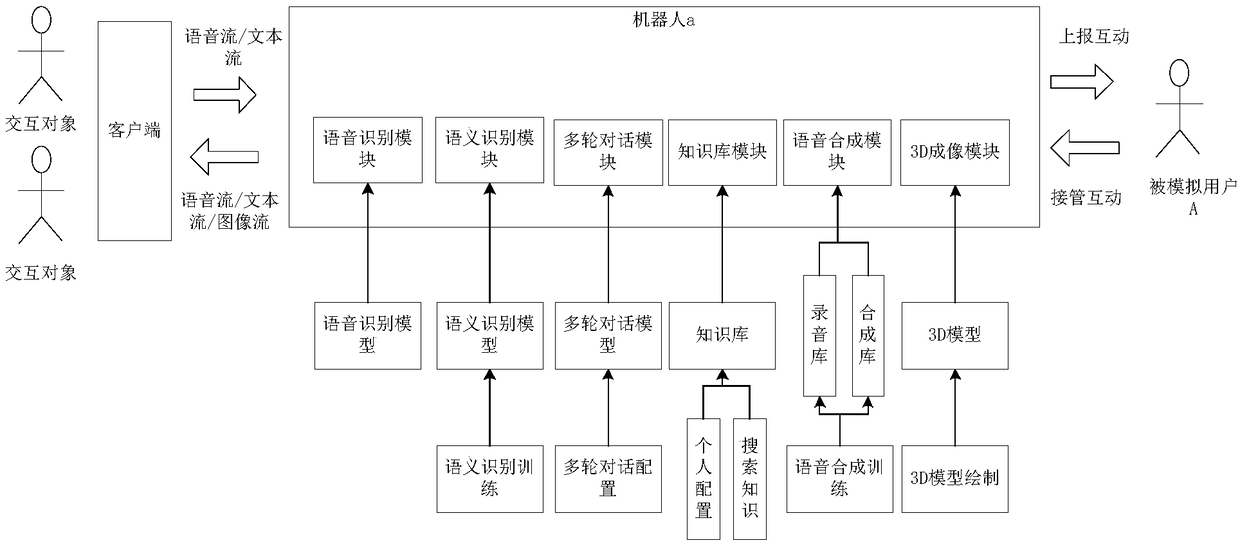

Method and device for robot interaction and equipment

Inactive | CN108897848A | Increase freedom | Improve completeness | Speech recognition | Special data processing applications | Semantics | Human–robot interaction

The invention discloses a method and device for robot interaction and equipment. The method includes the steps that voice information input by an interaction object is obtained and subjected to semantic recognition to obtain a dialogue intention; according to a dialogue scene knowledge base preconfigured for a simulated user, feedback information corresponding to the dialogue intention is obtained; the feedback information is synthesized into the voice of the simulated user and played to the interaction object. In this way, a robot can simulate a specific person to a high degree, and the degree of freedom and intelligence of robot interaction is increased.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

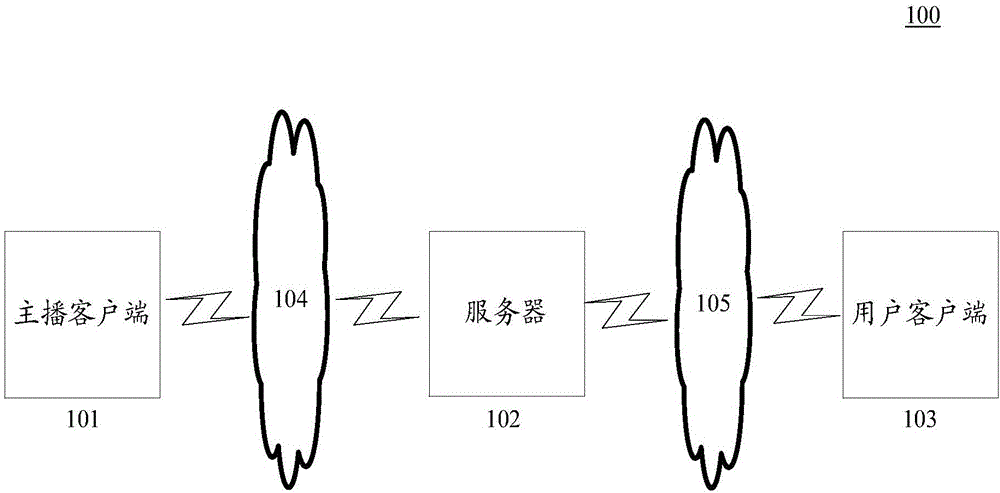

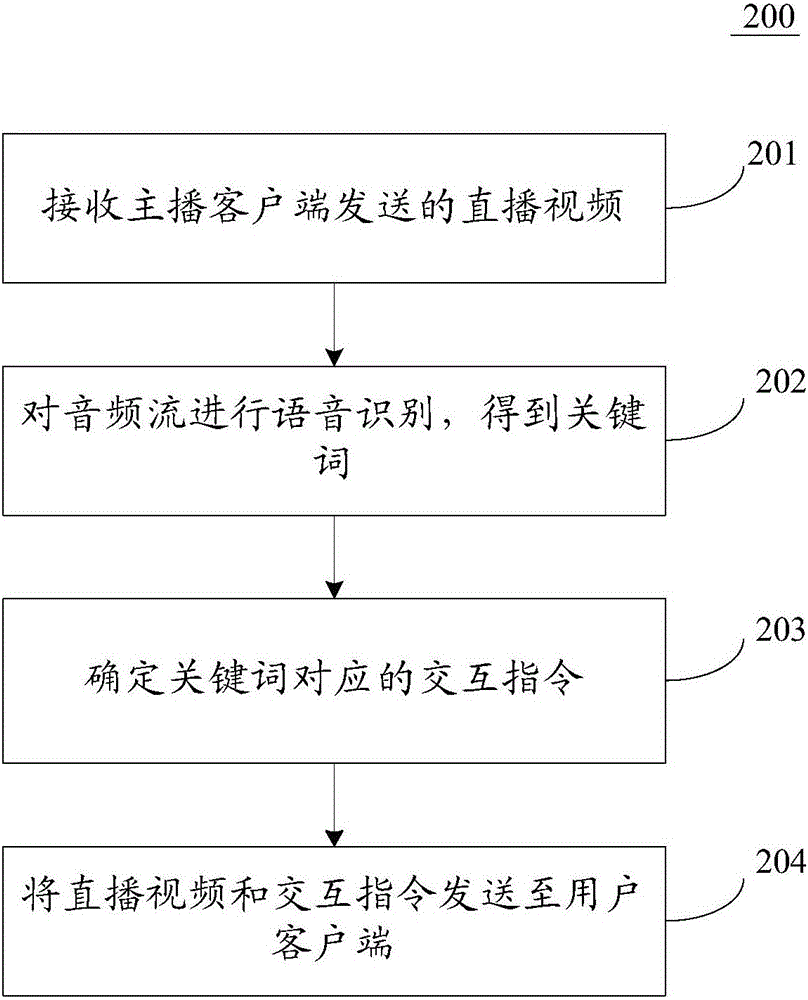

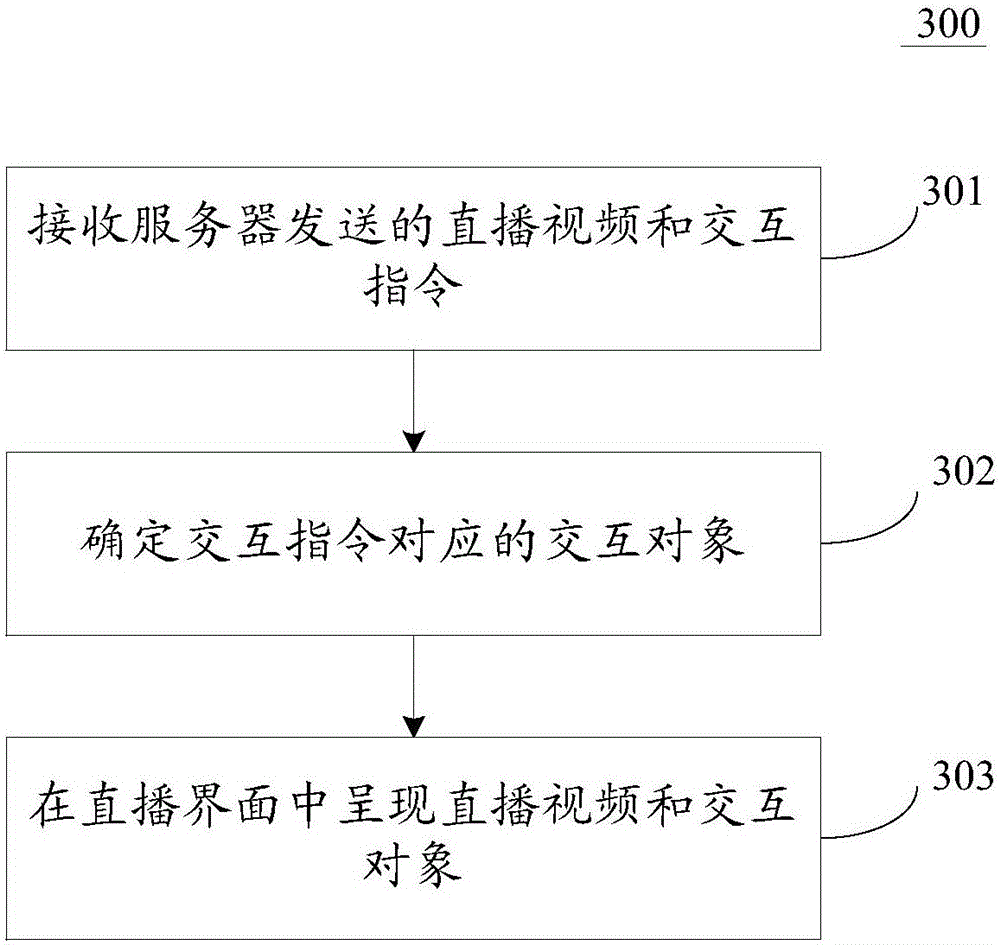

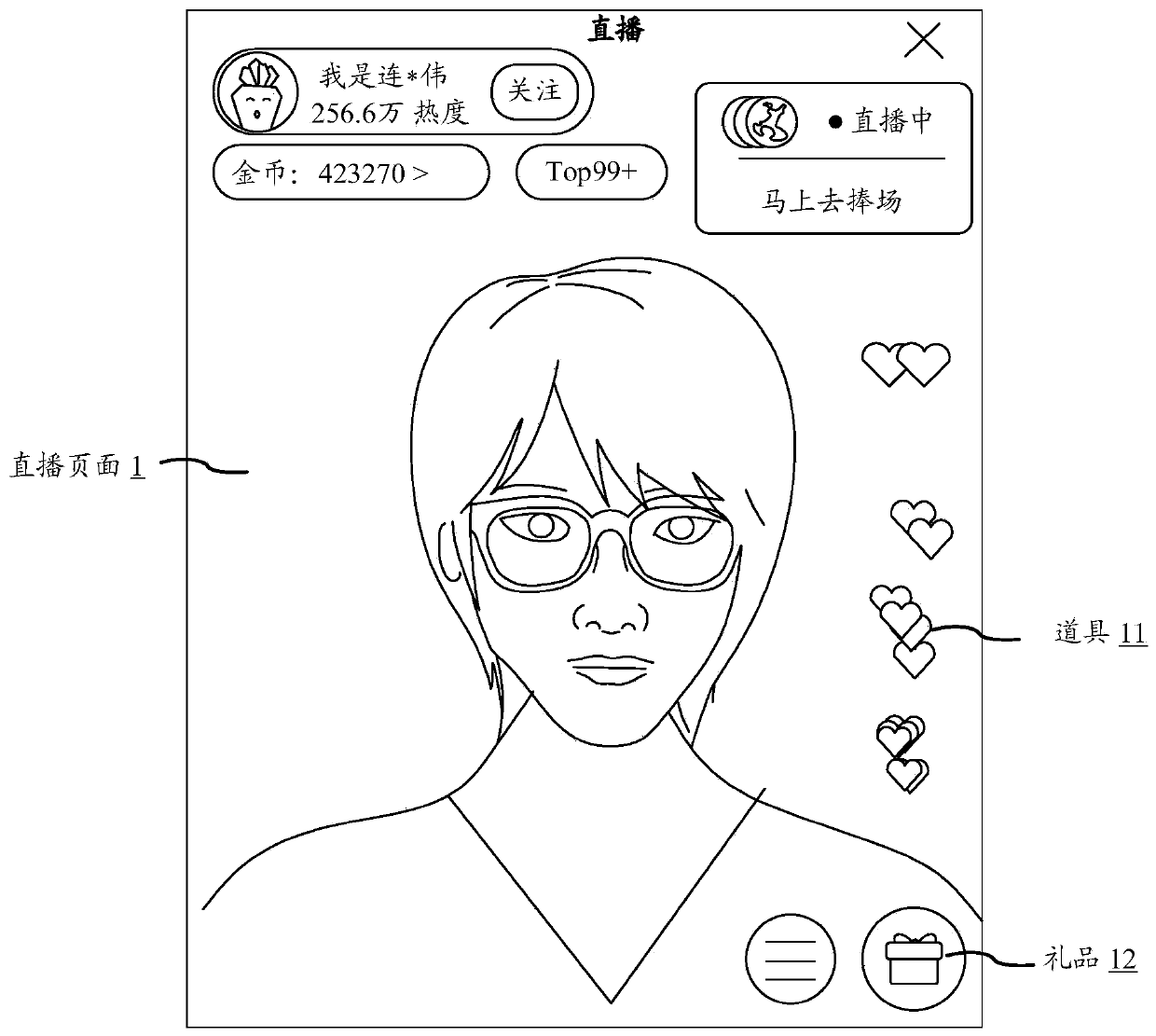

Interaction method and device applied to live video streaming

Active | CN106303658A | Guaranteed smoothness | Easy to operate | Transmission | Selective content distribution | Interaction object | Live video

The invention discloses an interaction method and device applied to live video streaming. One specific embodiment of the method comprises the following steps: receiving a live video transmitted by a host client, wherein the live video is generated by the host client through real-time recording, and comprises video streams and audio streams; performing speech recognition on the audio streams to obtain a keyword; determining an interaction command corresponding to the keyword; and transmitting the live video and the interaction command to a user client in order to present the live video and an interaction object corresponding to the interaction command in a live broadcast interface of the user client. Through adoption of the interaction method and device, operations of a host in the interaction between the host and a user are simplified on the one hand; current live broadcast content does not need to be paused on the other hand; and smoothness of the live video streaming is kept.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
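
The keyword-to-command step in the abstract above can be sketched as a table lookup over an already recognized transcript. Speech recognition itself is out of scope here; the table `KEYWORD_COMMANDS`, its command names and the function `commands_from_transcript` are hypothetical.

```python
# Hypothetical mapping from spoken keywords to interaction commands.
KEYWORD_COMMANDS = {
    "vote": "SHOW_POLL",
    "gift": "SHOW_GIFT_PANEL",
    "lottery": "SHOW_LOTTERY",
}


def commands_from_transcript(transcript, table=KEYWORD_COMMANDS):
    """Return the interaction commands triggered by a transcript, in spoken order.

    Each command is emitted at most once, so a repeated keyword does not
    present the same interaction object twice.
    """
    commands = []
    for word in transcript.lower().split():
        token = word.strip(".,!?")
        command = table.get(token)
        if command and command not in commands:
            commands.append(command)
    return commands


cmds = commands_from_transcript("Time to vote, then a gift! Vote now.")
```

The server would send these commands to the user client alongside the live video, so the host never has to pause the broadcast to trigger them.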

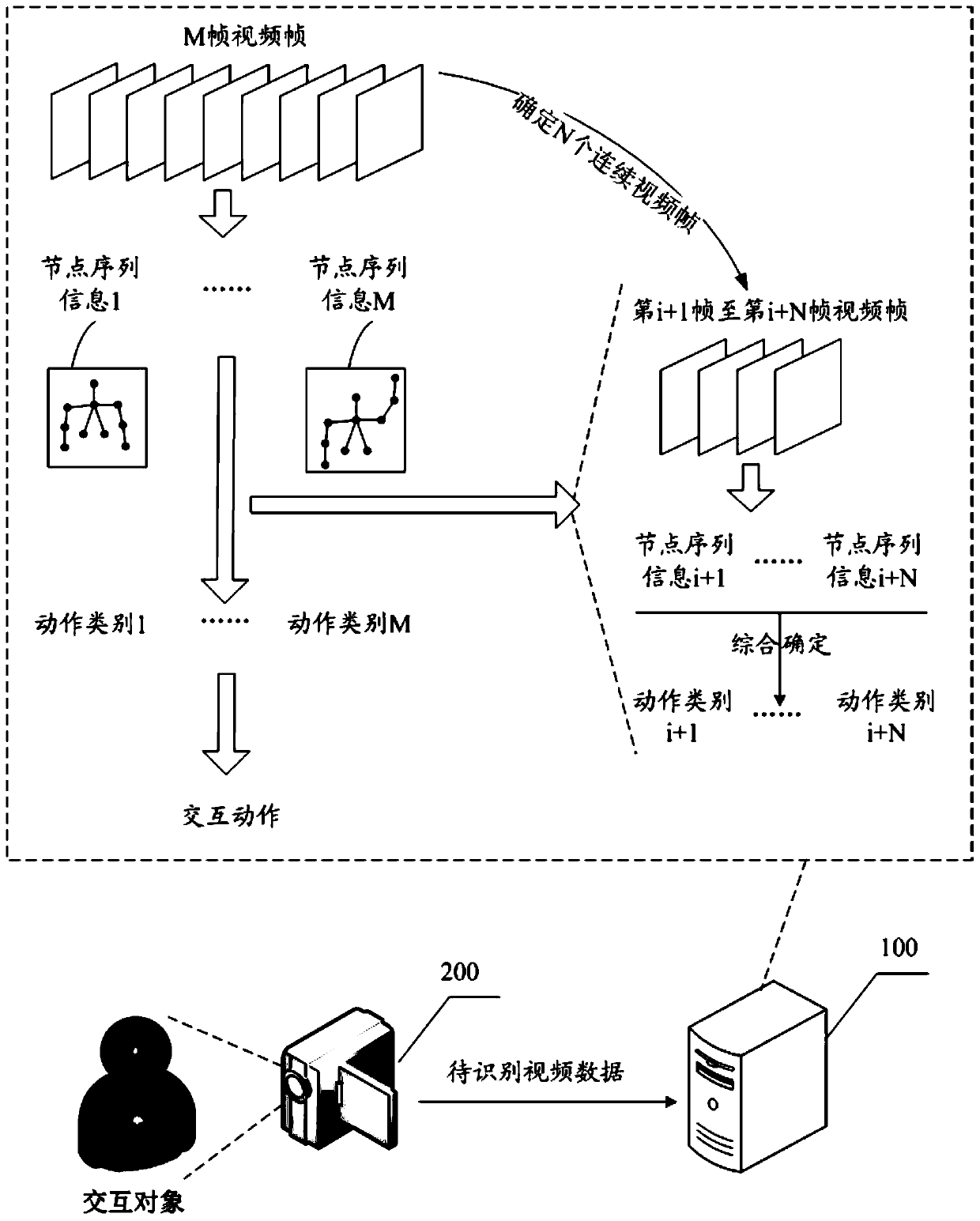

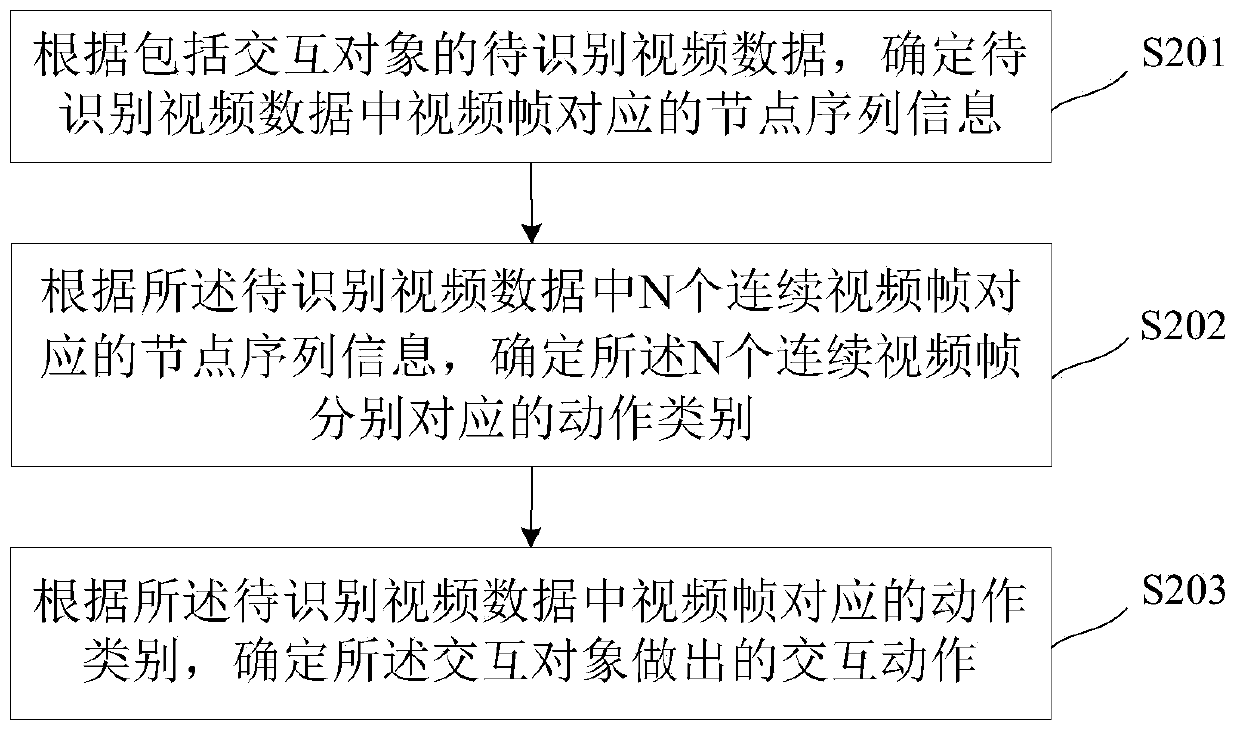

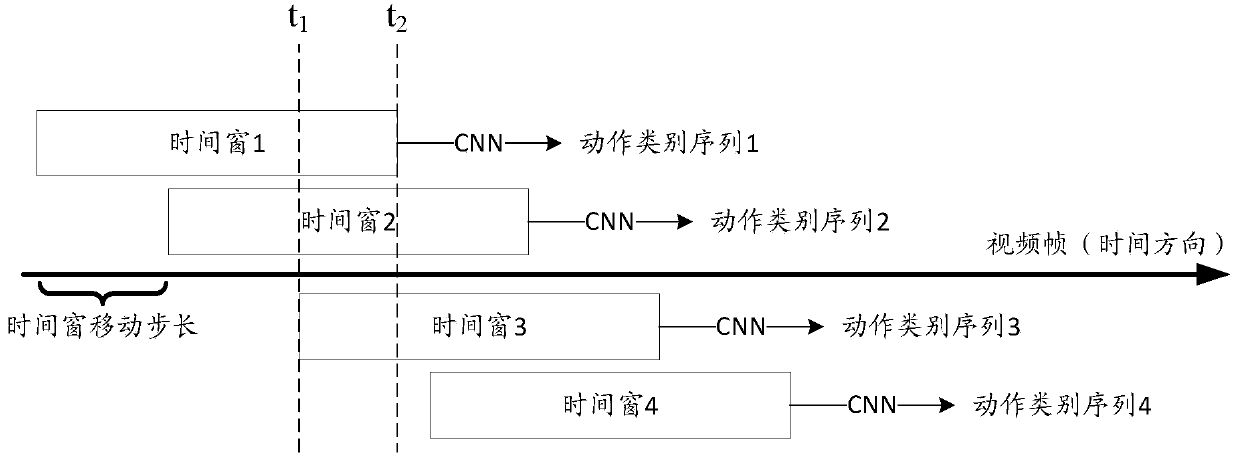

Action recognition method based on artificial intelligence and related device

Active | CN110765967A | Low implementation cost | Good action recognition accuracy | Character and pattern recognition | Neural architectures | Interaction object | Machine learning

The embodiment of the invention discloses an action recognition method based on artificial intelligence and a related device. The method comprises the steps of determining node sequence information corresponding to the video frames in the to-be-identified video data, determining action types corresponding to the video frames according to the node sequence information corresponding to N continuous video frames in the to-be-identified video data, and determining which interaction action the interaction object makes according to the action types. When determining the action type corresponding to a video frame, the information carried by the N continuous video frames in which the video frame is located is referred to, that is, related information of the video frame in past and/or future time is referred to, so more effective information is introduced. Even if the to-be-identified video data is acquired by a non-specialized action recognition acquisition device, relatively high action recognition precision can be achieved by determining the action type of each video frame from a group of continuous video frames, so that the application range of intelligent interaction scenes is widened and their implementation cost is reduced.

Owner:TENCENT TECH (SHENZHEN) CO LTD

System and method for interaction object reconciliation in a blockchain environment

Active | US10192073B2 | Secure data communication | Encryption apparatus with shift registers/memories | User identity/authority verification | Object based | Network connection

Owner:ROCK INNOVATION LLC

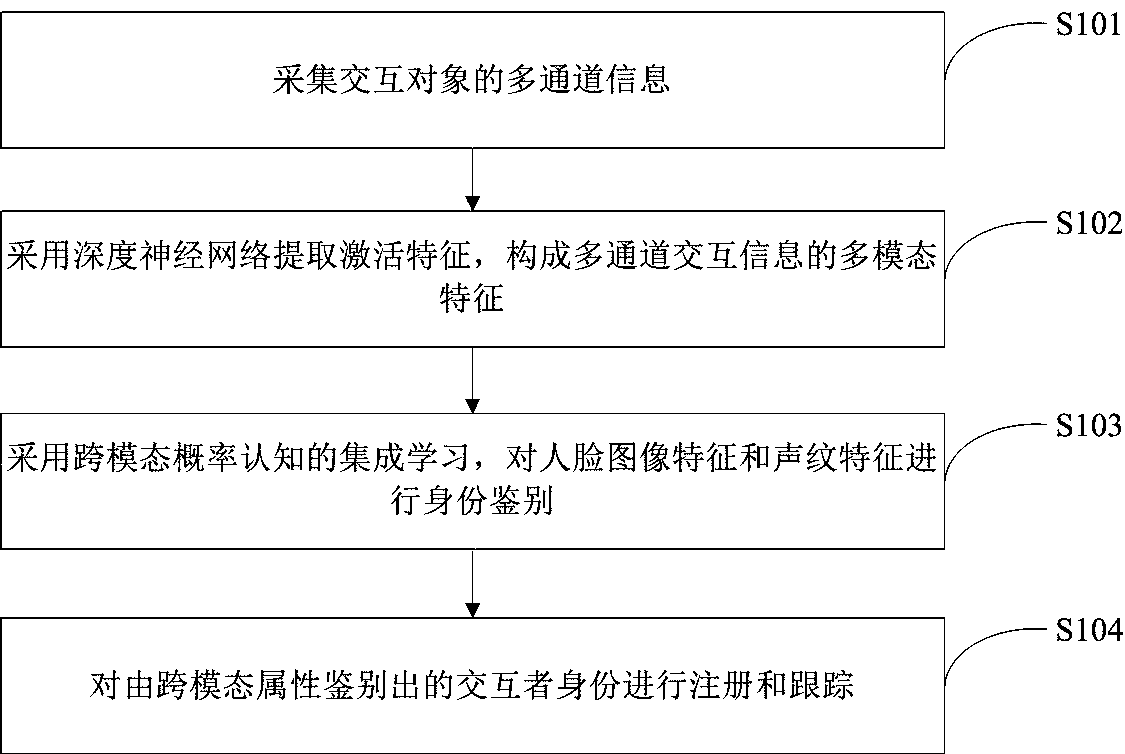

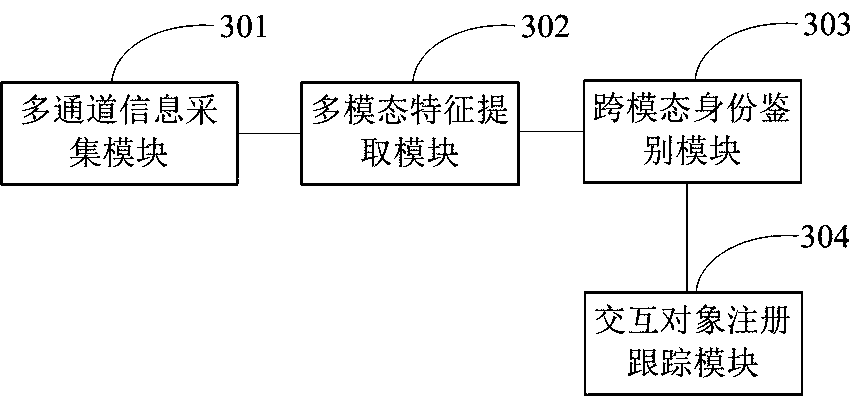

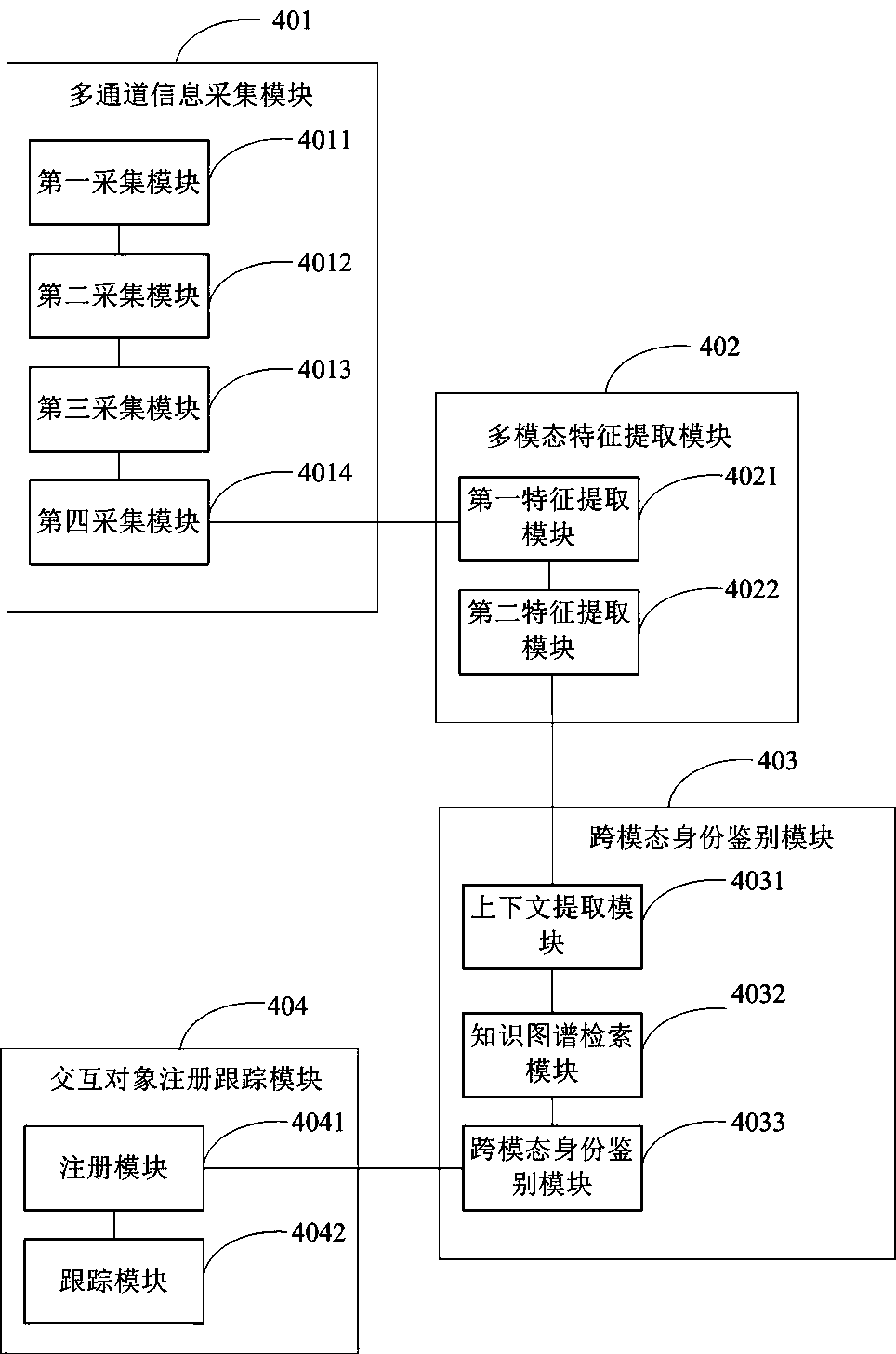

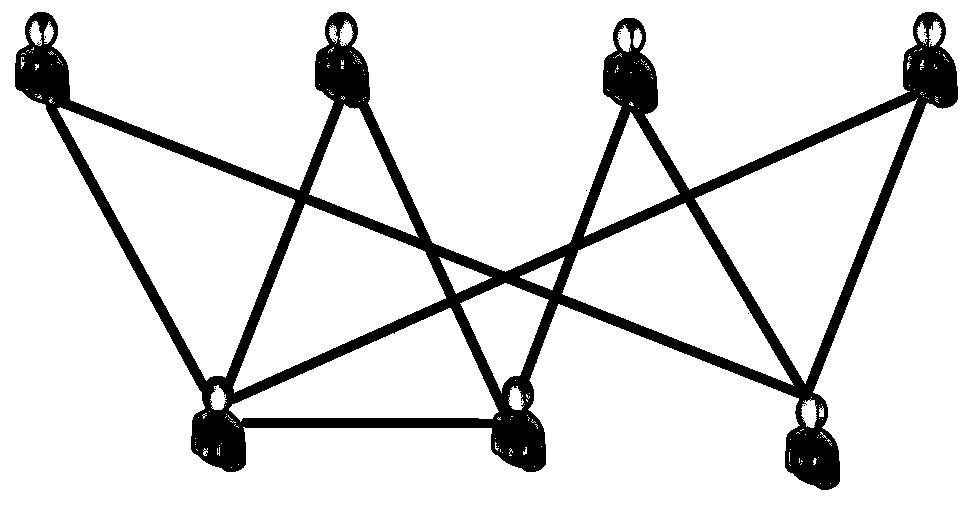

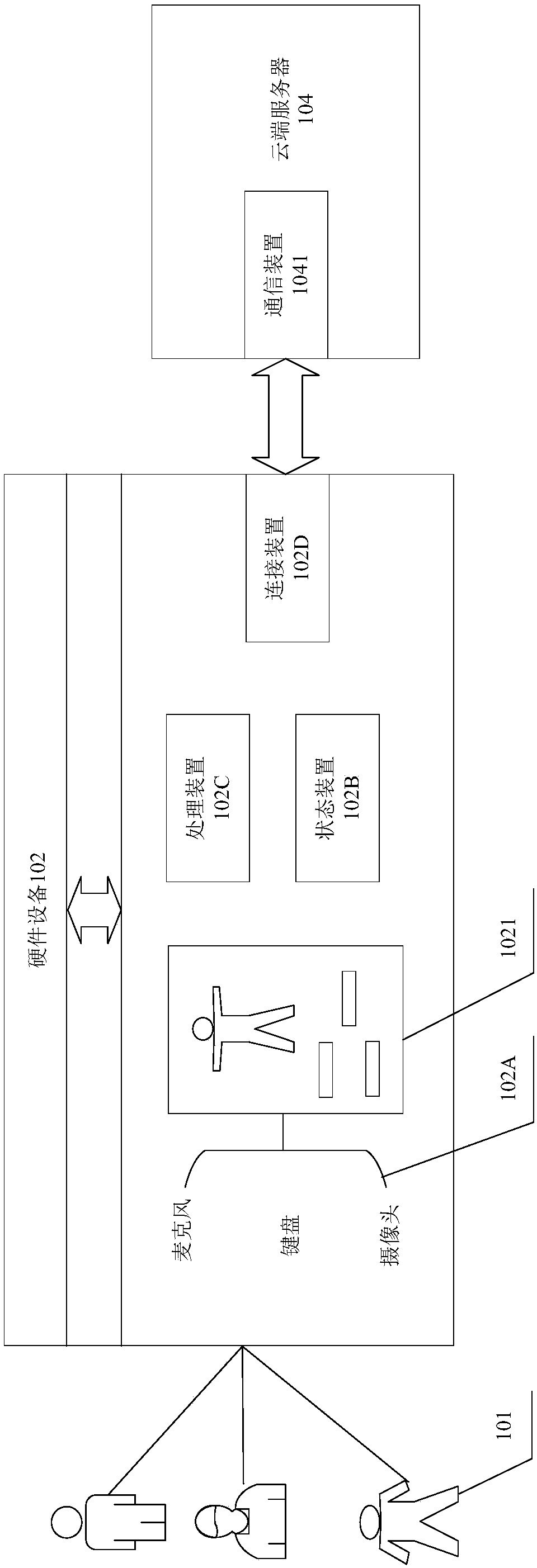

Interactive identity authenticating and tracking method and system based on multi-modal intelligent robot

Active | CN107808145A | Resolve identifiability | Solve the problem of tracking interaction identity | Speech analysis | Character and pattern recognition | Cognition | Interaction object

The invention belongs to the field of intelligent robots, relates to a method for authenticating and tracking the identity of an interaction person for an intelligent dialogue robot, and in particular relates to an interactive identity authenticating and tracking method and system based on a multi-modal intelligent robot. The interactive identity authenticating and tracking method based on the multi-modal intelligent robot comprises the following steps: collecting the multichannel information of an interaction object; adopting a deep neural network to extract activation characteristics, and forming the multi-modal characteristics of the multichannel interaction information; adopting ensemble learning of cross-modal probability cognition, and carrying out identity authentication on face image characteristics and voiceprint characteristics; and carrying out registration and tracking using the identity of the interaction person as authenticated by the cross-modal attributes. The interactive identity authenticating and tracking system based on the multi-modal intelligent robot comprises a multichannel information collection module, a multi-modal characteristic extraction module, a cross-modal identity authentication module and an interaction object registering and tracking module. The method solves the problem that single-modal information is insufficient and the interaction identity cannot be identified and tracked.

Owner:HENAN UNIVERSITY

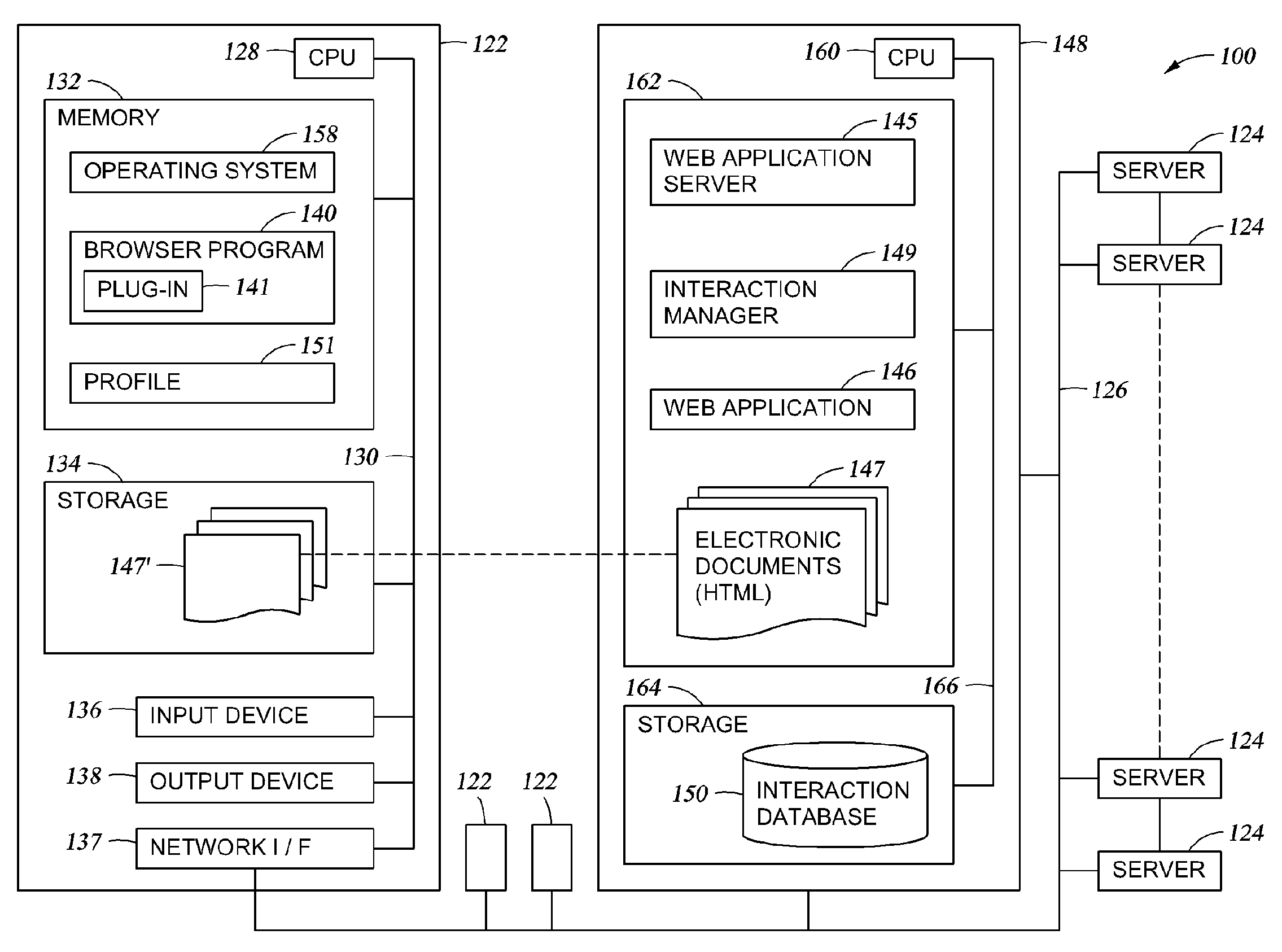

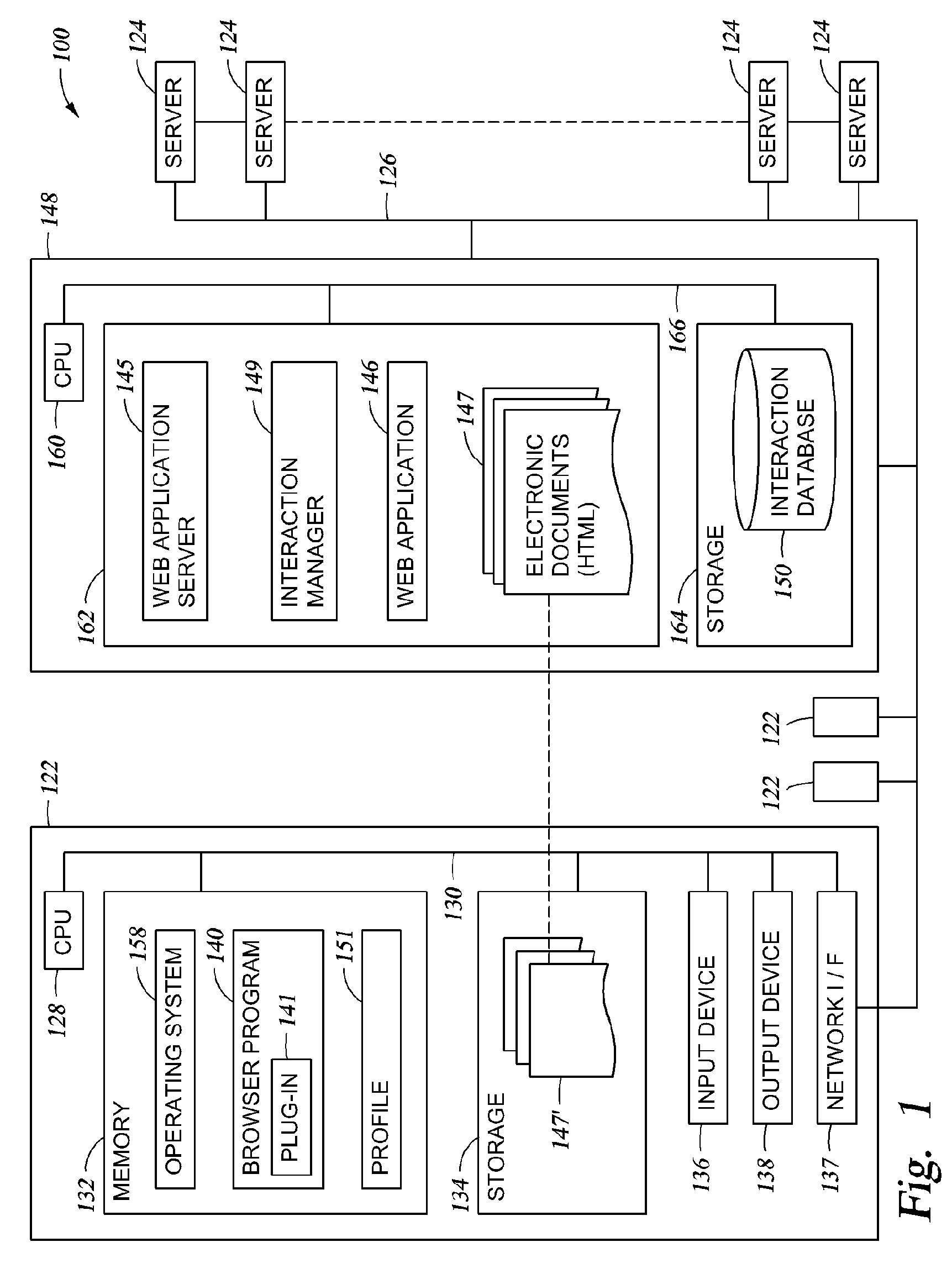

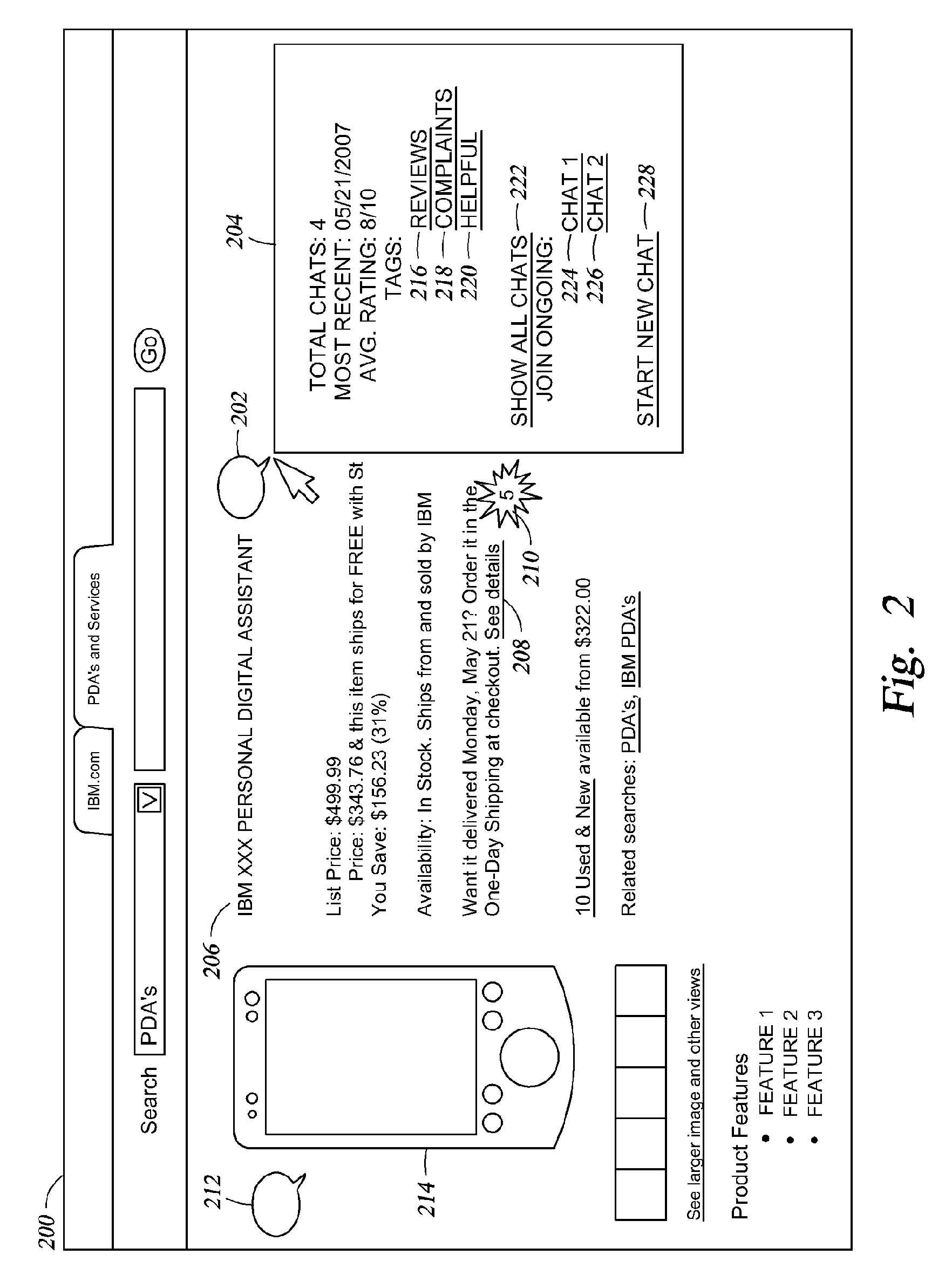

Framework for persistent user interactions within web-pages

Active | US20090019372A1 | Web data navigation | Special data processing applications | Graphics | Interaction object

A method, article of manufacture, and apparatus for tracking user interactions comprising receiving a first request associated with a first user, to exchange a communication with a second user about one of a plurality of elements of a web-page, wherein the plurality of elements includes at least one of graphical elements and textual elements; in response to the first request, exchanging a communication between the first user and the second user; capturing the communication; storing an interaction object comprising the communication, wherein storing includes associating the interaction object with the one of the plurality of elements of the web-page; and serving a modified version of the web-page to a third user; wherein the modified version specifies the interaction object being associated with the one of the plurality of elements of the web-page.

Owner:IBM CORP

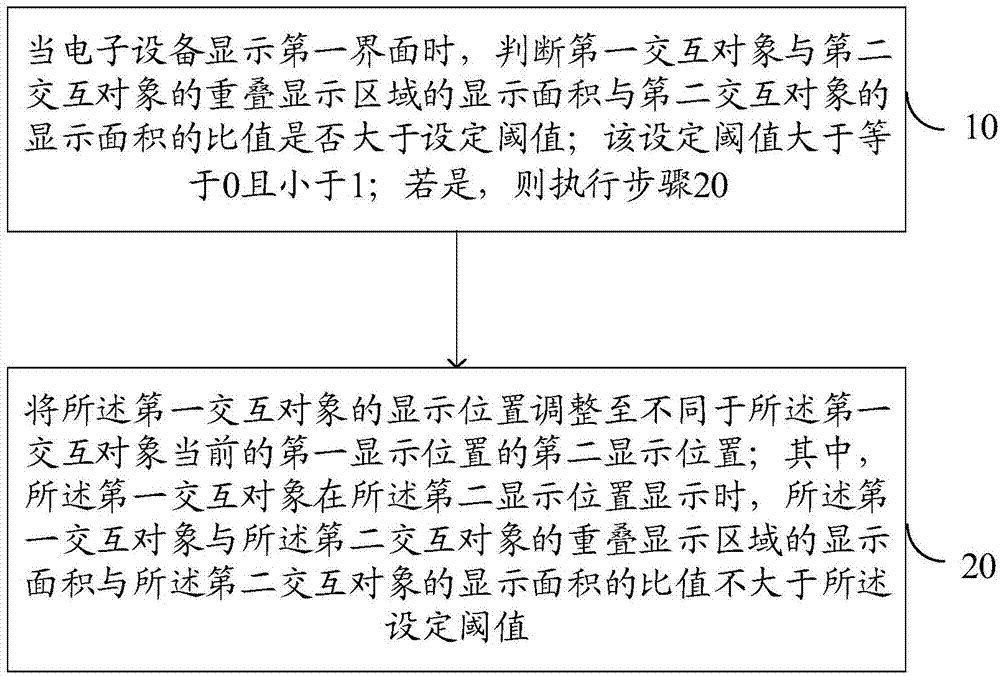

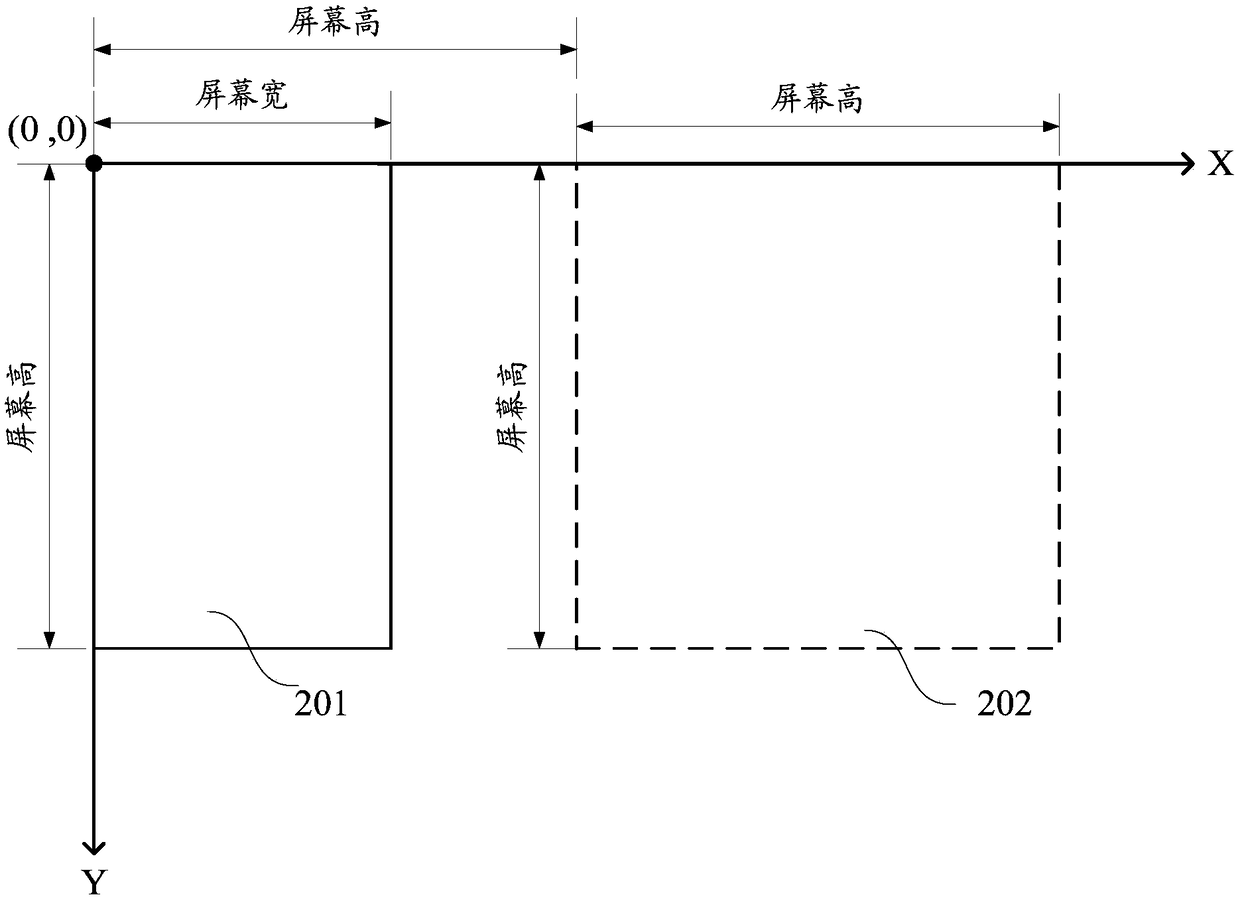

Display method and electronic device

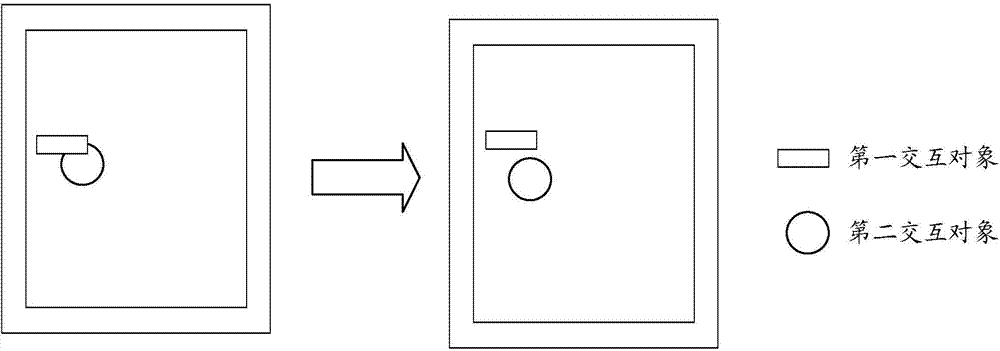

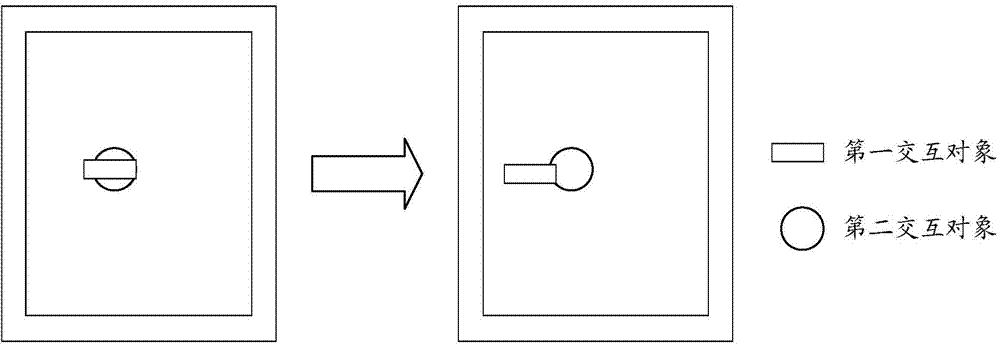

Active | CN103793137A | Improve user experience | Input/output processes for data processing | Interaction object | Electronic equipment

The invention discloses a display method and an electronic device, wherein the display method and the electronic device are used for solving the technical problem in the prior art that front-end display interaction objects in an electronic device block other interaction objects, as a result, operation on the blocked interaction objects can not be correctly responded to. The display method is applied to the electronic device provided with a display unit. The display unit can display a first interface. A first interaction object is displayed on the first interface. When the first interaction object overlaps the second interaction object different from the first interaction object, the first interaction object is displayed at the front end of the second interaction object. The display method comprises the steps that whether the ratio of the display area of the overlapping display area of the first interaction object and the second interaction object to the display area of the second interaction object is larger than a set threshold value is judged when the first interface is displayed on the electronic device, and if yes, the display position of the first interaction object is adjusted to a second display position different from the current first display position of the first interaction object.

Owner:LENOVO (BEIJING) CO LTD
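
The overlap test in the abstract above compares the overlapping area with the area of the second (blocked) interaction object. A minimal sketch, assuming axis-aligned rectangles with non-zero area; the abstract only requires "a second display position different from the current first display position", so moving the front object to the right edge of the blocked one is an arbitrary choice made here, and `Rect` and `reposition_if_blocking` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    """Axis-aligned display area: top-left corner plus width and height."""
    x: float
    y: float
    w: float
    h: float


def overlap_area(a, b):
    """Area of the intersection of two rectangles (0 if they do not overlap)."""
    w = min(a.x + a.w, b.x + b.w) - max(a.x, b.x)
    h = min(a.y + a.h, b.y + b.h) - max(a.y, b.y)
    return max(0.0, w) * max(0.0, h)


def reposition_if_blocking(front, back, threshold=0.5):
    """Move the front object when it hides more than `threshold` of the back one."""
    ratio = overlap_area(front, back) / (back.w * back.h)
    if ratio > threshold:
        # Assumed policy: place the front object just right of the blocked one.
        return Rect(back.x + back.w, front.y, front.w, front.h)
    return front


back = Rect(5, 0, 10, 10)
moved = reposition_if_blocking(Rect(2, 0, 10, 10), back)
kept = reposition_if_blocking(Rect(0, 0, 10, 10), back)
```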

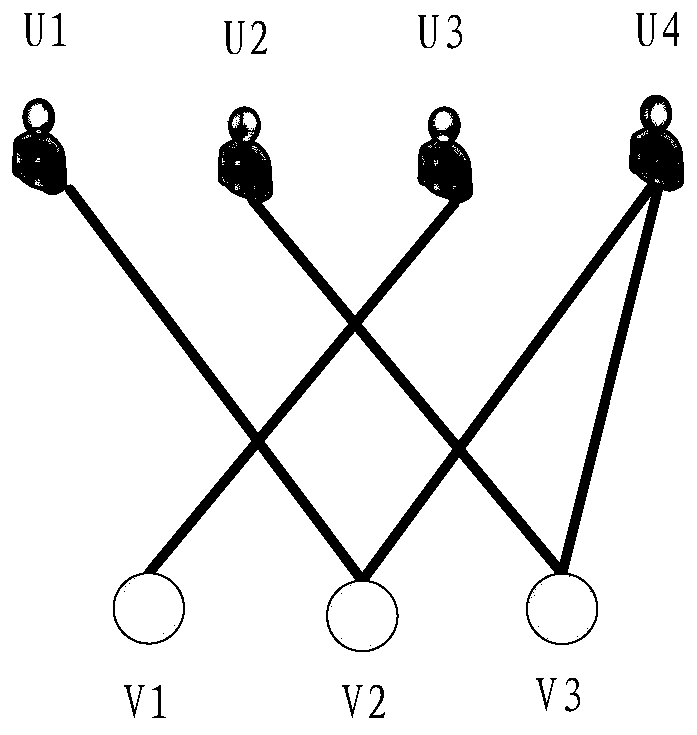

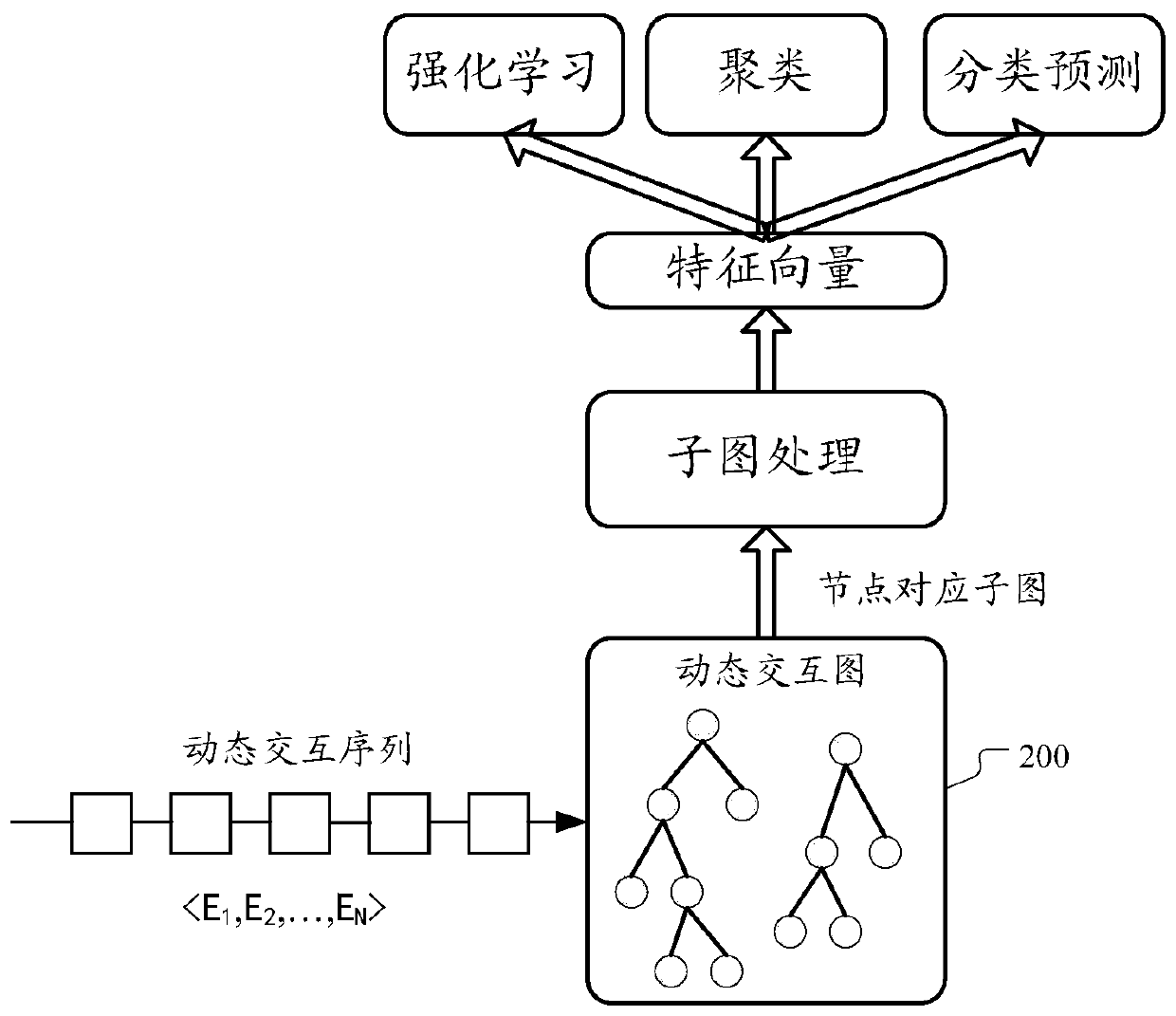

Method and device for processing interaction sequence data

Active | CN110598847A | Express deep features | Character and pattern recognition | Neural architectures | Feature vector | Algorithm

The embodiment of the invention provides a method and device for processing interaction sequence data. The method includes the steps of: firstly, obtaining a dynamic interaction graph constructed according to a dynamic interaction sequence, wherein the dynamic interaction sequence comprises a plurality of interaction events arranged in time order, and the dynamic interaction graph comprises nodes representing all interaction objects in all the interaction events, and any node i points, through connecting edges, to the two nodes corresponding to the previous interaction event in which the object represented by node i participated; and in the dynamic interaction graph, determining a target sub-graph corresponding to the target node, the target sub-graph comprising the nodes that can be reached from the target node via connecting edges within a predetermined range. The feature vector corresponding to the target node can then be determined based on the node features of the nodes contained in the target sub-graph and the pointing relationships of the connecting edges between the nodes.

Owner:ADVANCED NEW TECH CO LTD
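
The graph construction and sub-graph selection in the abstract above can be sketched without any learning component. Here each node is an `(object, event_index)` pair, and the "predetermined range" is interpreted as a maximum number of edge hops; both choices, and the function names, are assumptions. Computing the feature vector from the sub-graph is not shown.

```python
from collections import deque


def build_dynamic_graph(events):
    """Build the dynamic interaction graph from time-ordered (obj_a, obj_b) events.

    Returns a dict mapping each node (object, event_index) to the two nodes of
    the previous event that object took part in, or [] if there is none.
    """
    edges = {}
    last_event = {}  # object -> index of its most recent earlier event
    for i, (a, b) in enumerate(events):
        for obj in (a, b):
            prev = last_event.get(obj)
            if prev is None:
                edges[(obj, i)] = []
            else:
                pa, pb = events[prev]
                edges[(obj, i)] = [(pa, prev), (pb, prev)]
        last_event[a] = i
        last_event[b] = i
    return edges


def target_subgraph(edges, target, max_hops):
    """Nodes reachable from `target` via connecting edges within `max_hops`."""
    seen = {target}
    queue = deque([(target, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for child in edges[node]:
            if child not in seen:
                seen.add(child)
                queue.append((child, depth + 1))
    return seen


events = [("u1", "i1"), ("u2", "i1"), ("u1", "i2")]
graph = build_dynamic_graph(events)
sub = target_subgraph(graph, ("u1", 2), max_hops=1)
```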

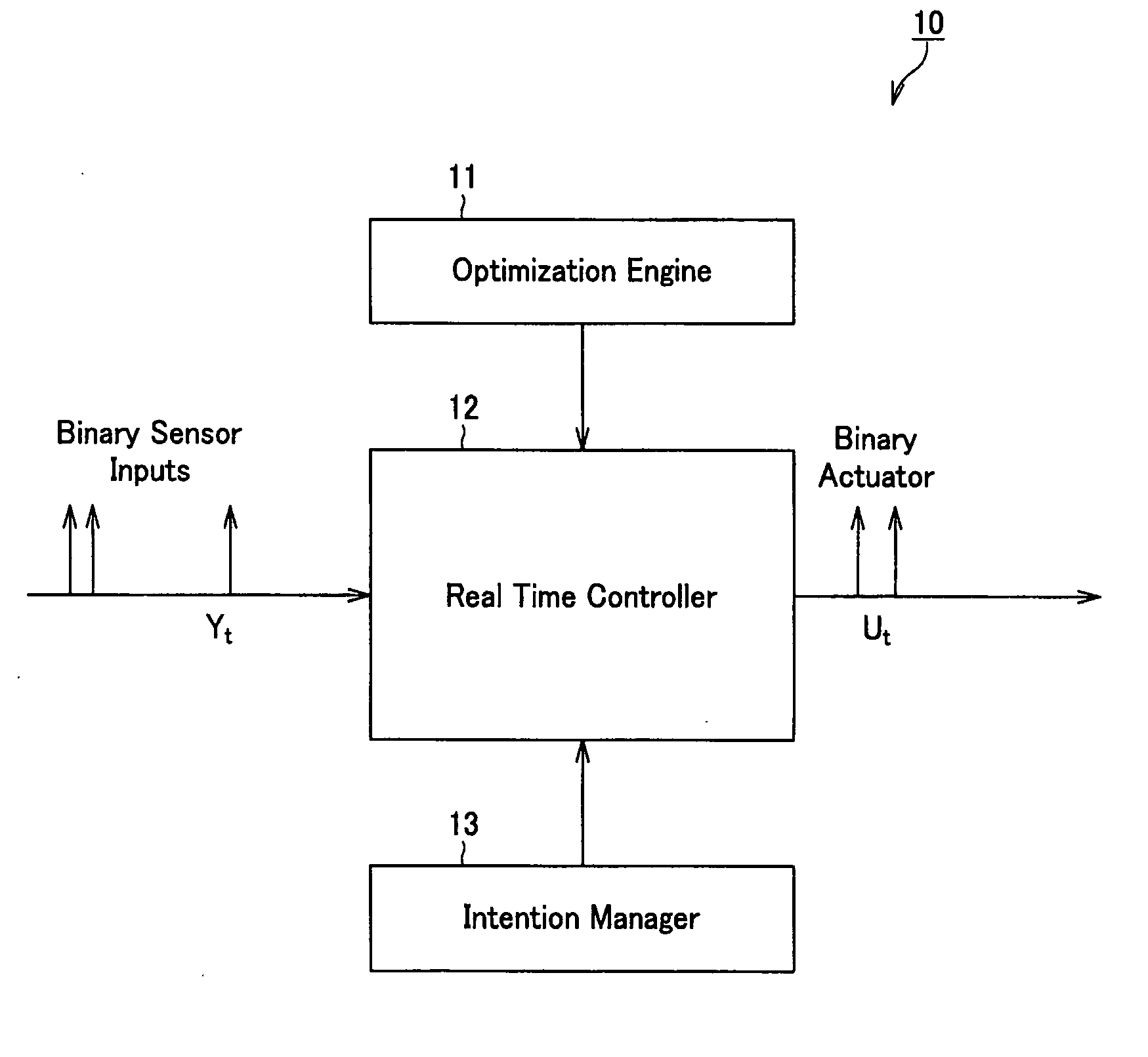

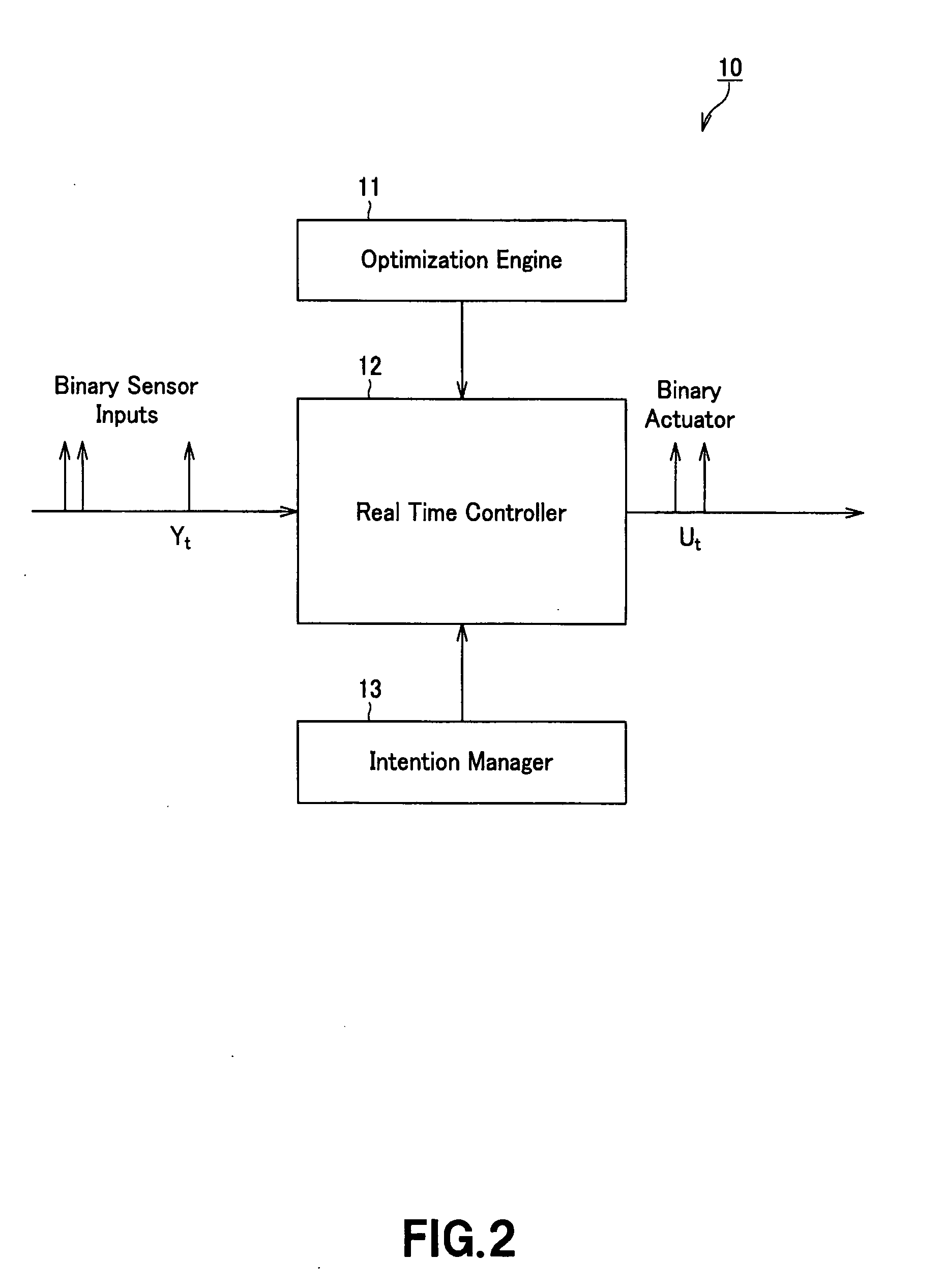

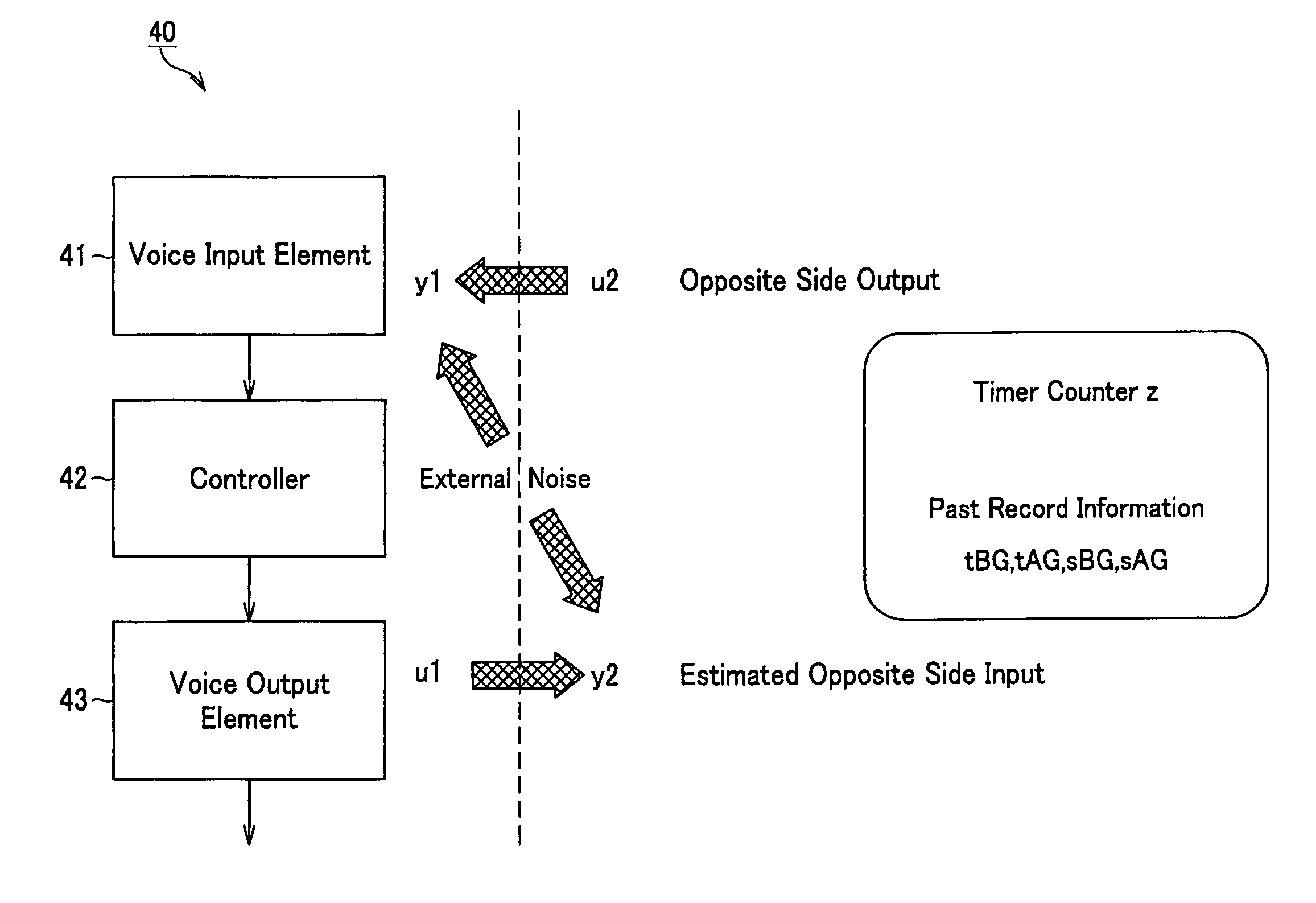

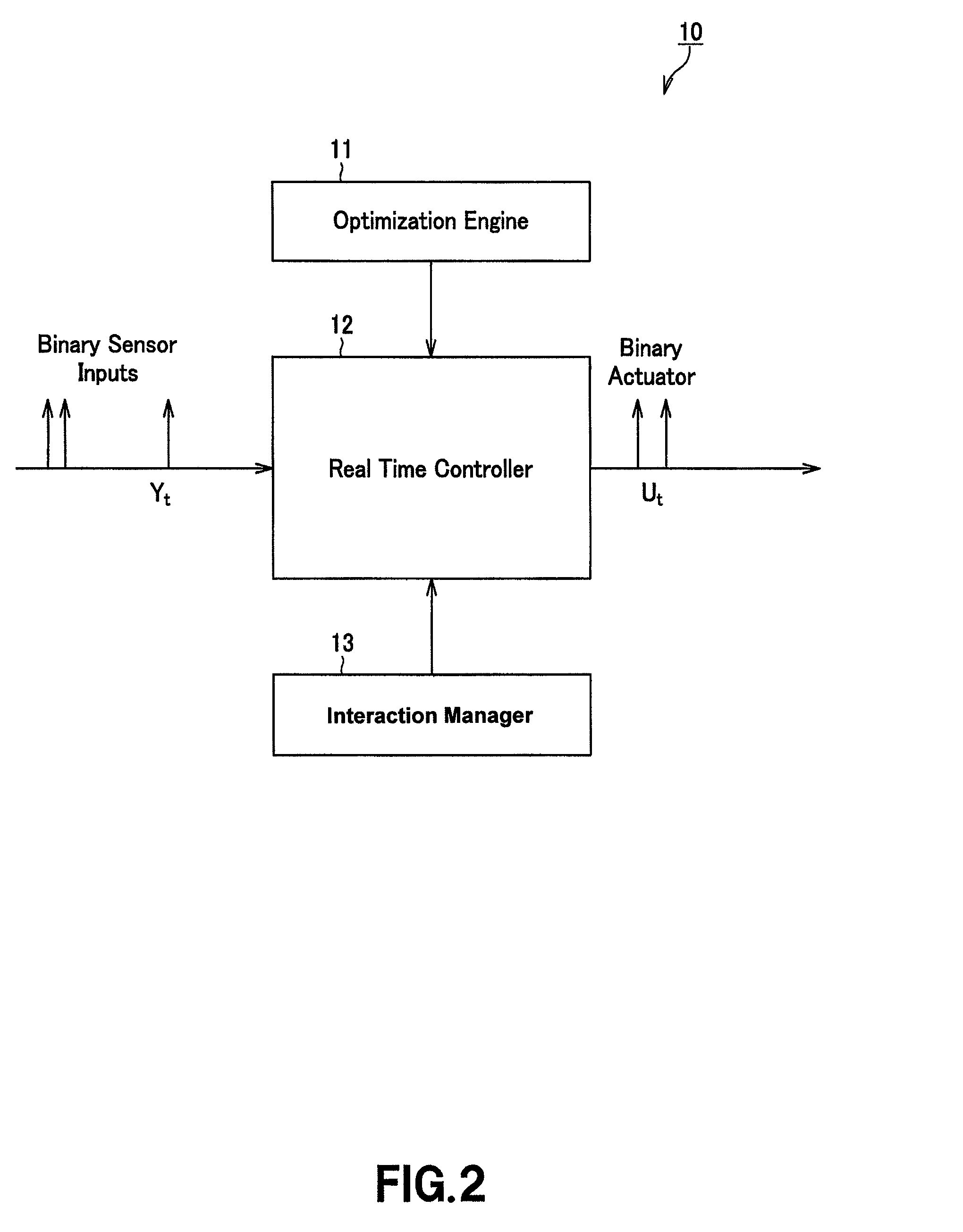

Interaction device

Active | US20070198444A1 | Maximize expectation | Speech analysis | Digital computer details | Interaction device | Social robot

The present invention provides an interaction device adapted to set its own controller so as to maximize the expectation of information defined between a hypothesis about an interaction object and its own input/output. Thus, a social robot can judge, using only simple input/output sensors, whether or not a human being is present in the outside world.

Owner:UNIV OF CALIFORNIA SAN DIEGO REGENTS OF UNIV OF CALIFORNIA +1

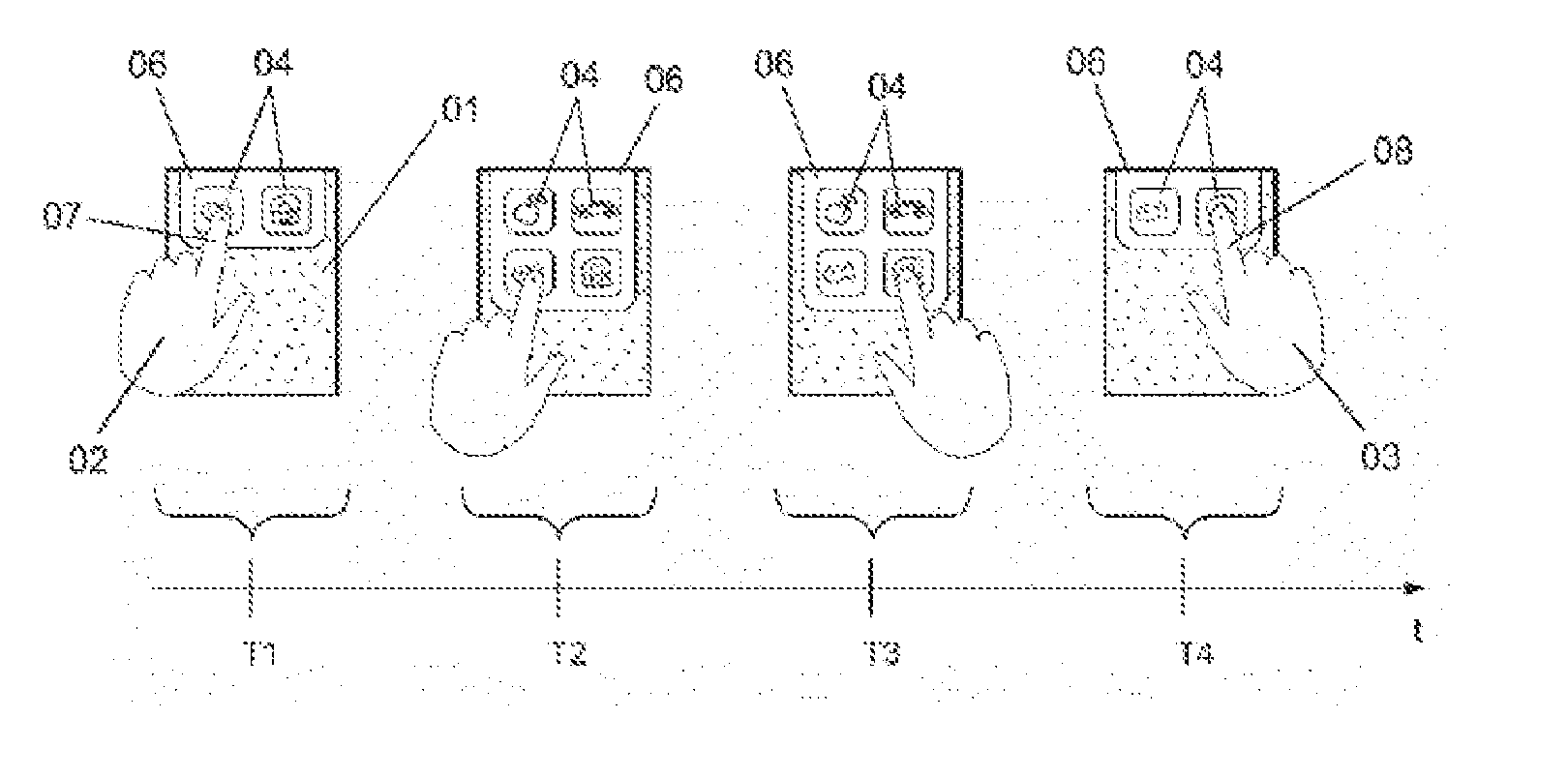

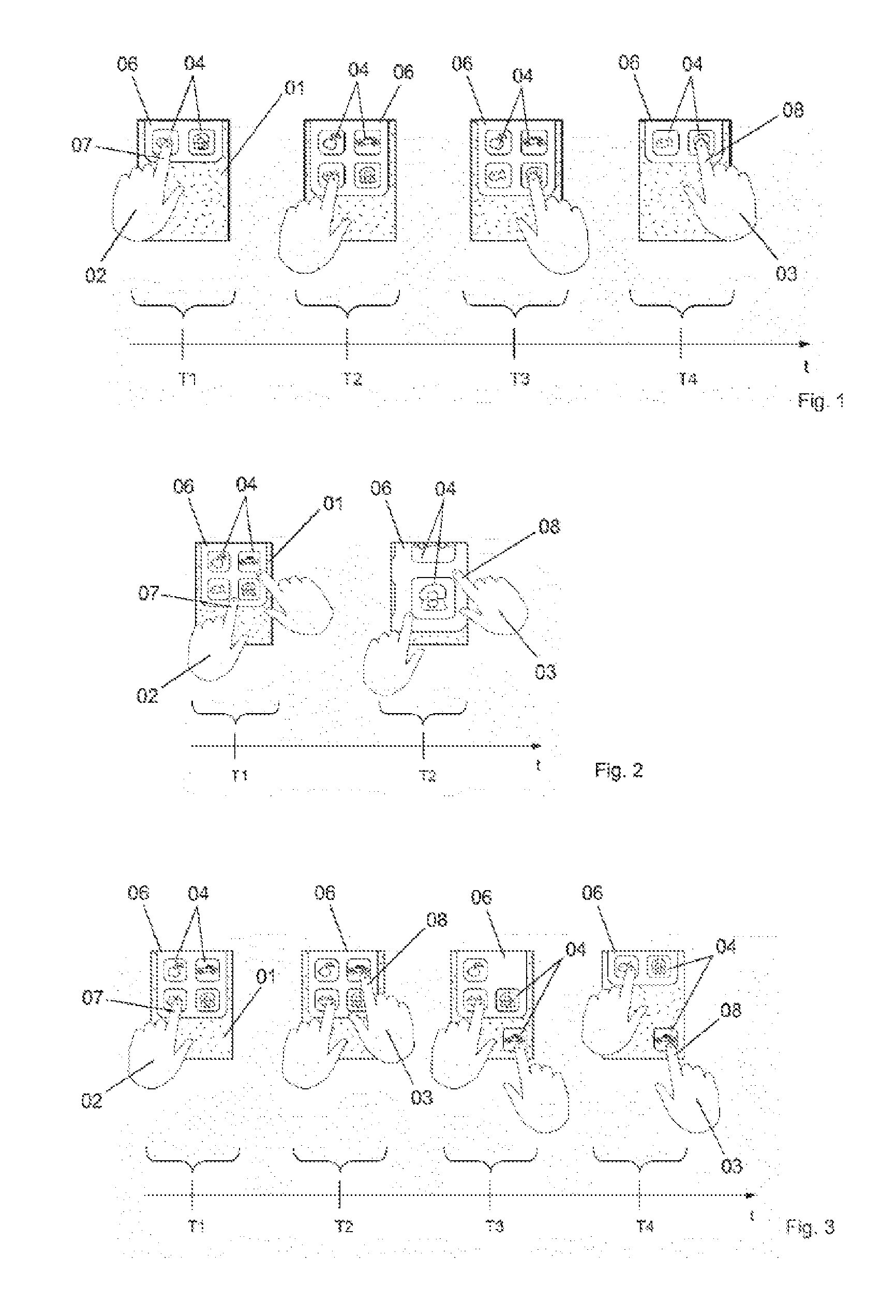

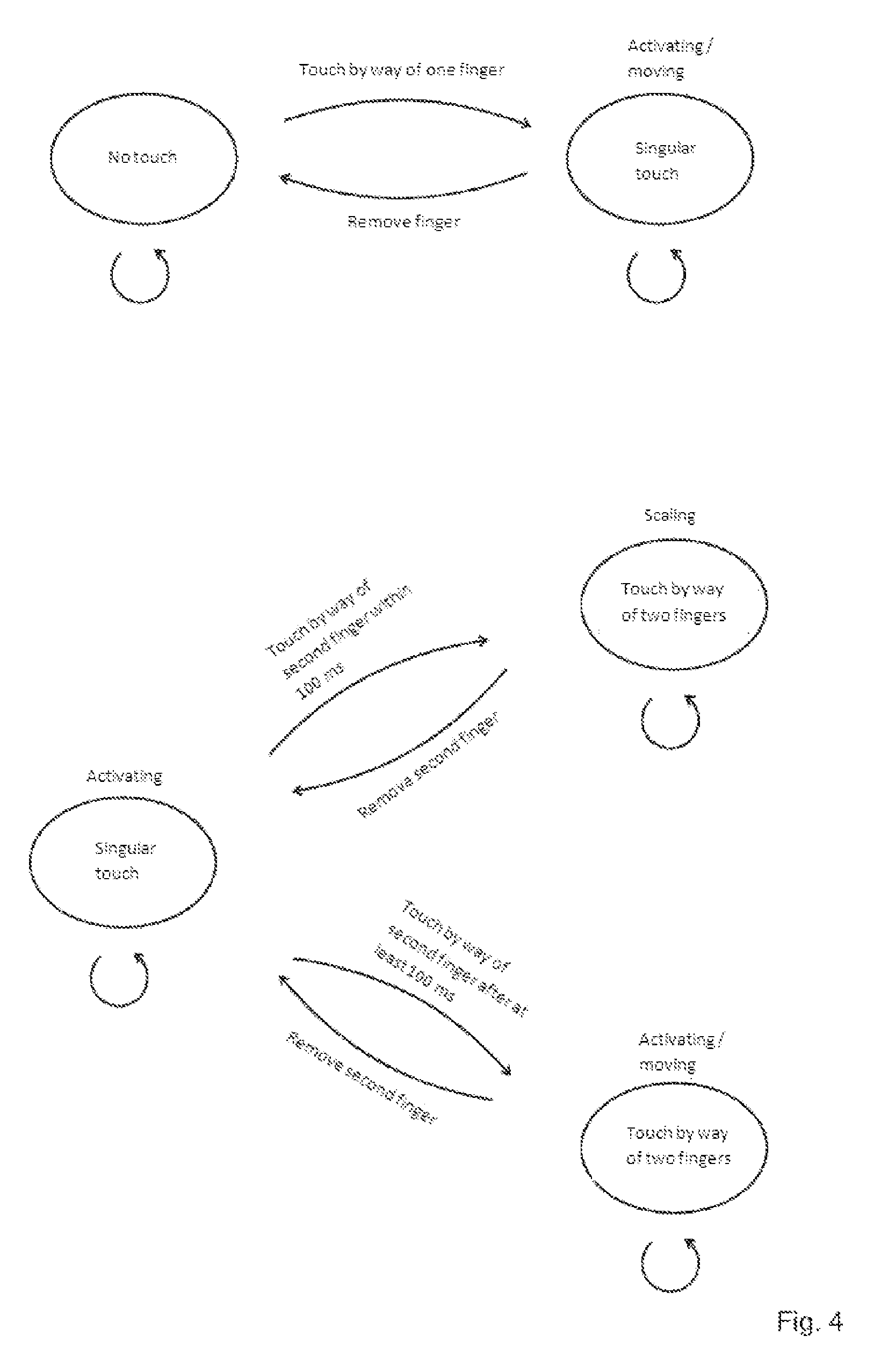

Method for operating a multi-touch-capable display and device having a multi-touch-capable display

Inactive | US20150169122A1 | Undesired change is prevented | Avoid problems | Input/output processes for data processing | Display device | Interaction object

Owner:BAUHAUS UNIVERSITY

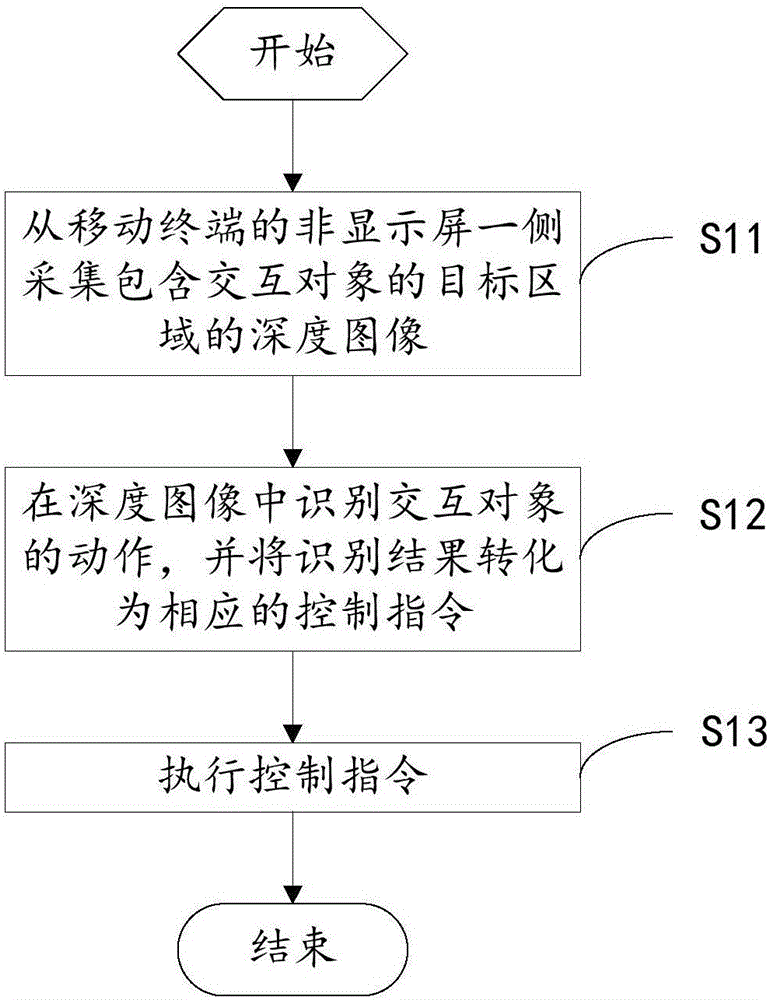

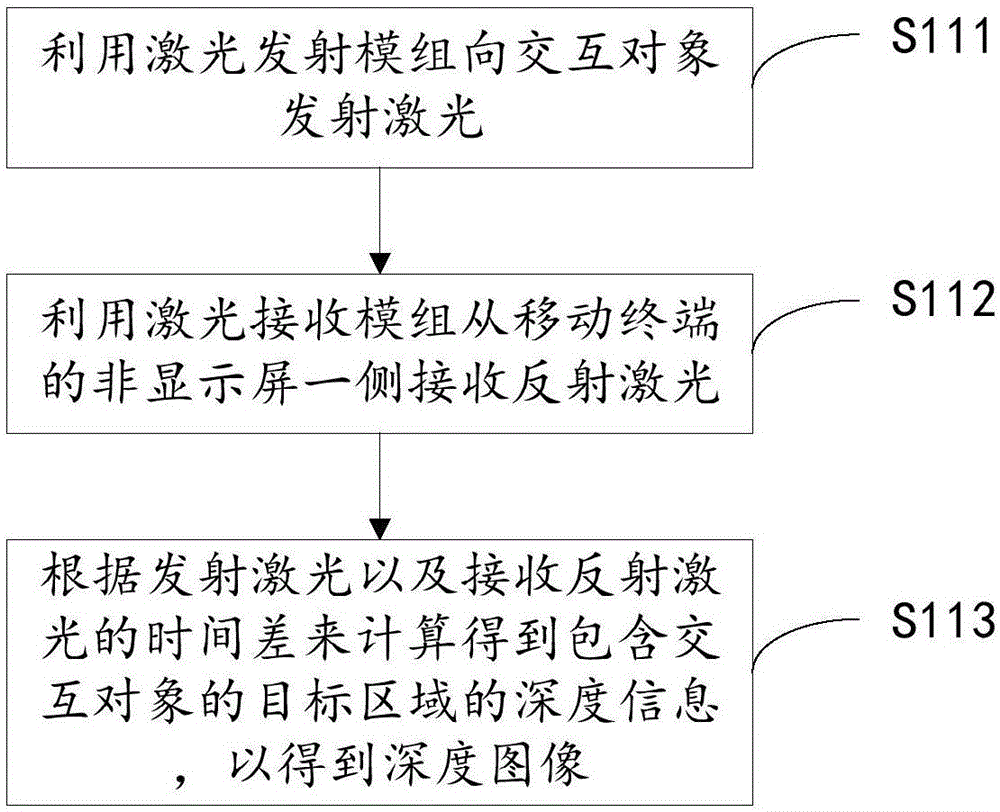

Mobile terminal and interaction control method thereof

Active | CN106774850A | Easy to control | Achieve one-handed operation | Input/output for user-computer interaction | Image enhancement | Interaction control | Large screen

The invention discloses a mobile terminal and an interaction control method thereof. The interaction control method comprises the following steps: acquiring a depth image of a target area containing interaction objects from a non-display-screen side of the mobile terminal; identifying actions of the interaction objects in the depth image, and converting an identification result into a corresponding control instruction; and executing the control instruction. By virtue of the mode, the mobile terminal can be controlled at the non-display-screen side, which is beneficial for achieving one-hand operation on a large-screen device.

Owner:SHENZHEN ORBBEC CO LTD

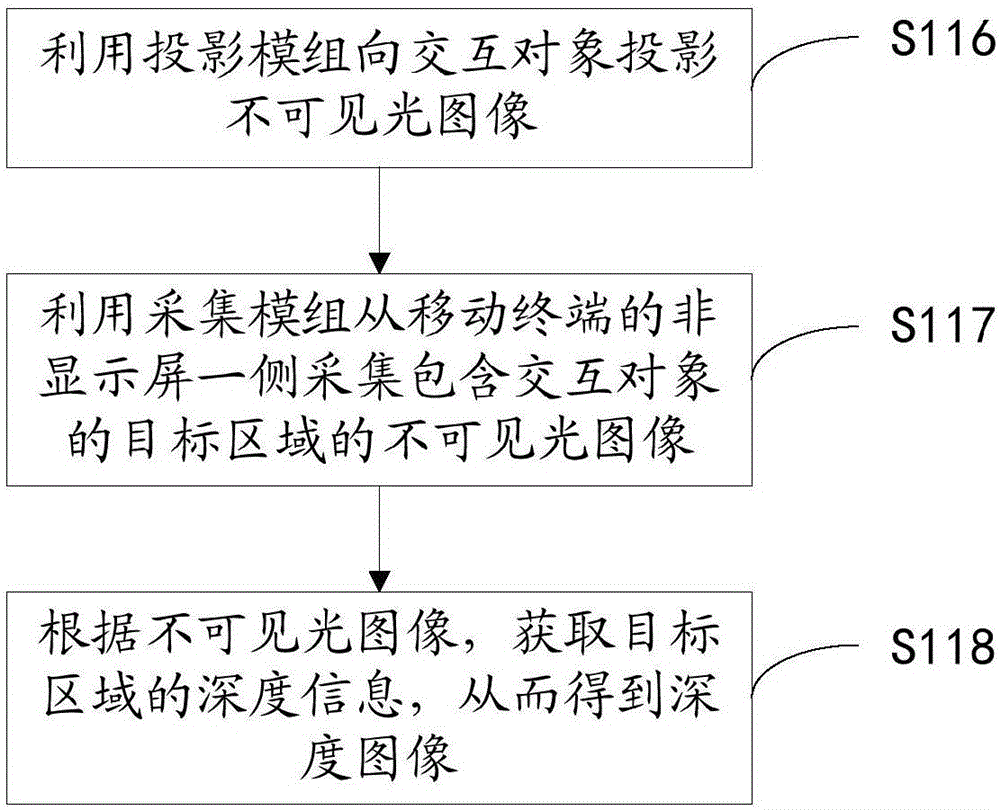

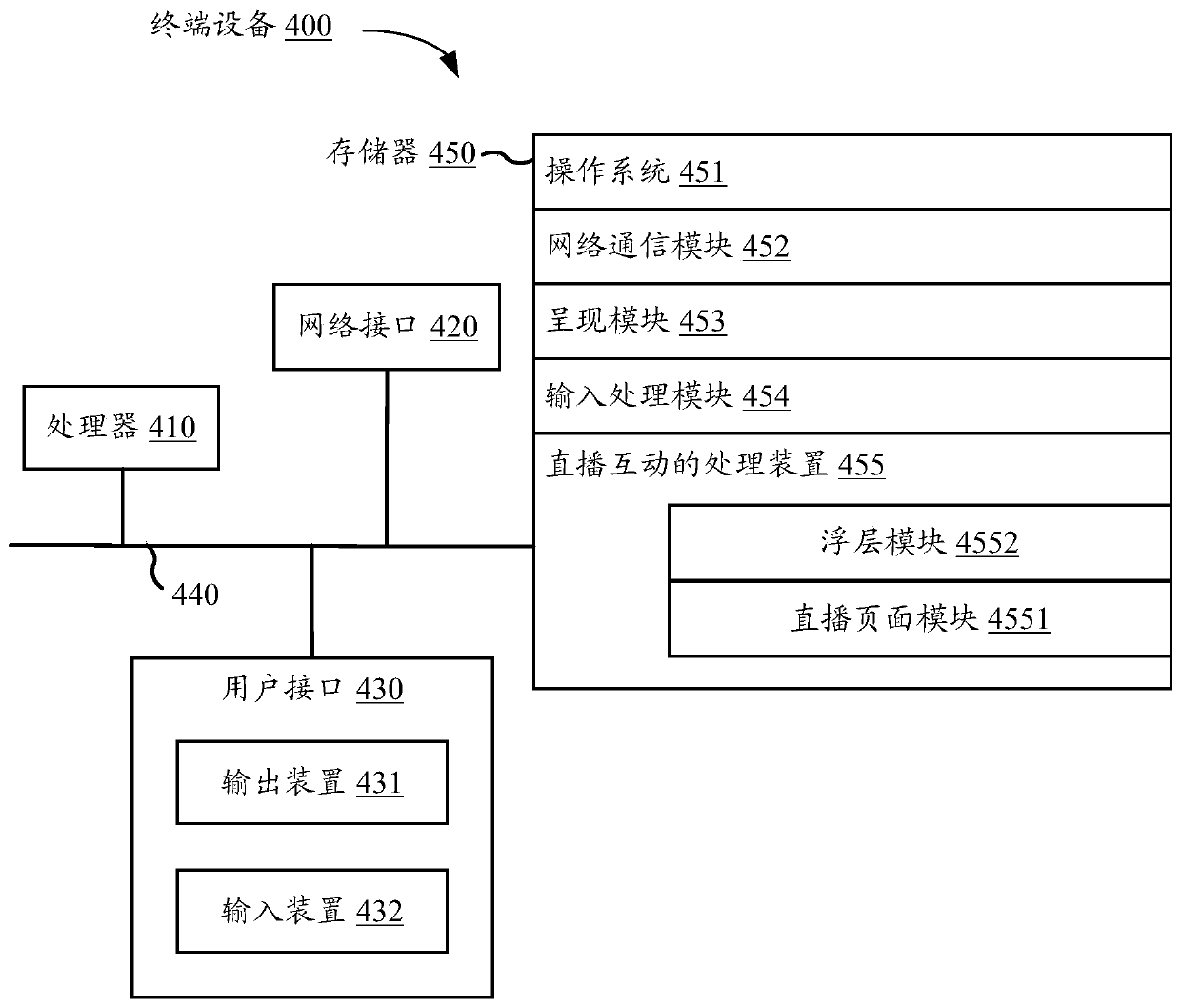

Live broadcast interaction processing method and device, electronic equipment and storage medium

Active | CN110225388A | Efficient dissemination | Improve interactivity | Execution for user interfaces | Selective content distribution | Feedback effect | Interaction object

The invention provides a live broadcast interaction processing method and device, electronic equipment and a storage medium. The method comprises the following steps: presenting live broadcast content in a playing page of a client; when an interaction object appears in the live broadcast content presented by the playing page, loading an interaction entry in the playing page; in response to a touch operation corresponding to the interaction entry, loading a floating layer in the client, and presenting a material for guiding interaction with the interaction object in the floating layer; and in response to a touch operation corresponding to the material, presenting a feedback effect corresponding to the material in the floating layer. According to the invention, flexible and diversified interaction can be realized in live broadcast.

Owner:TENCENT TECH (SHENZHEN) CO LTD

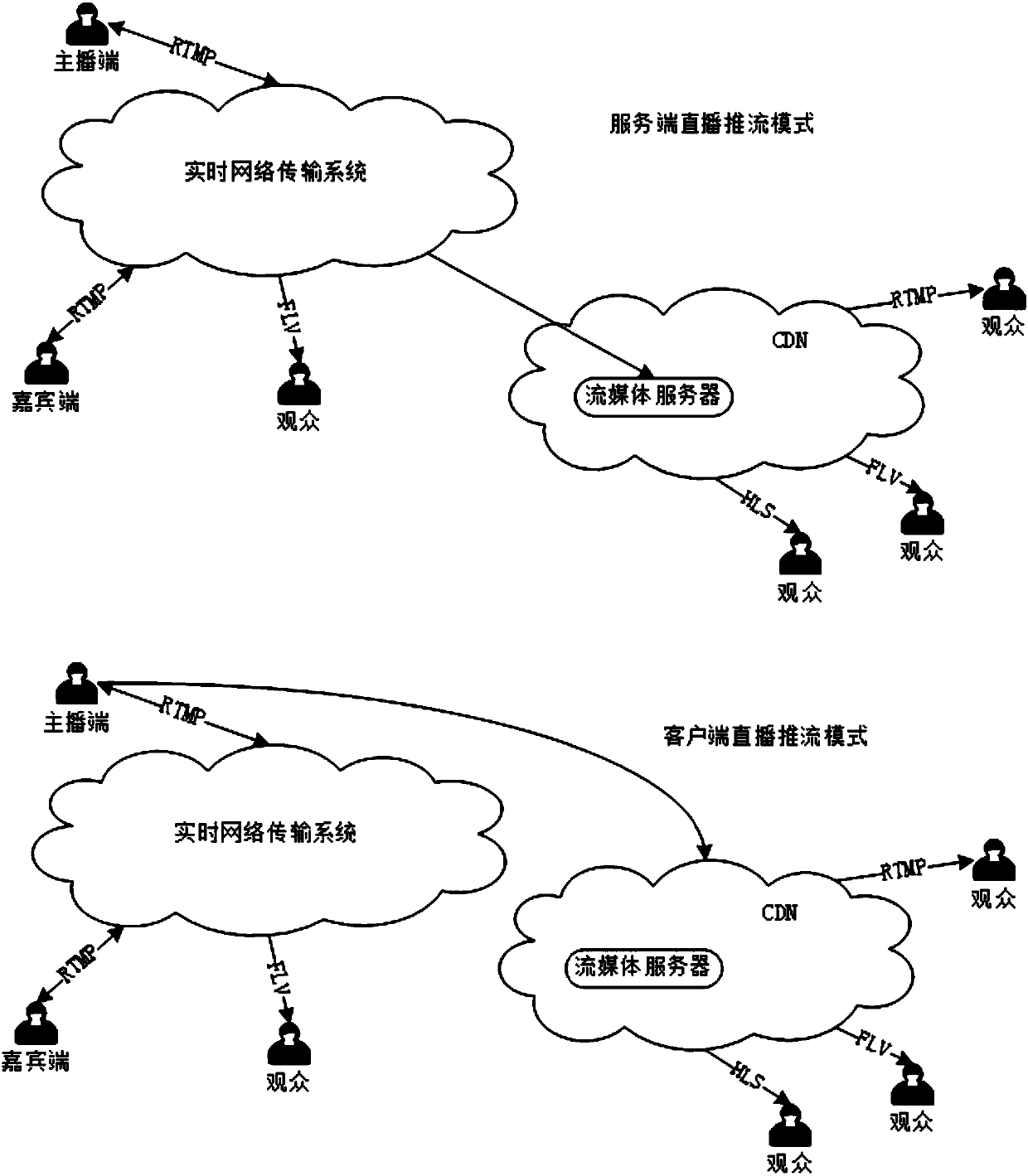

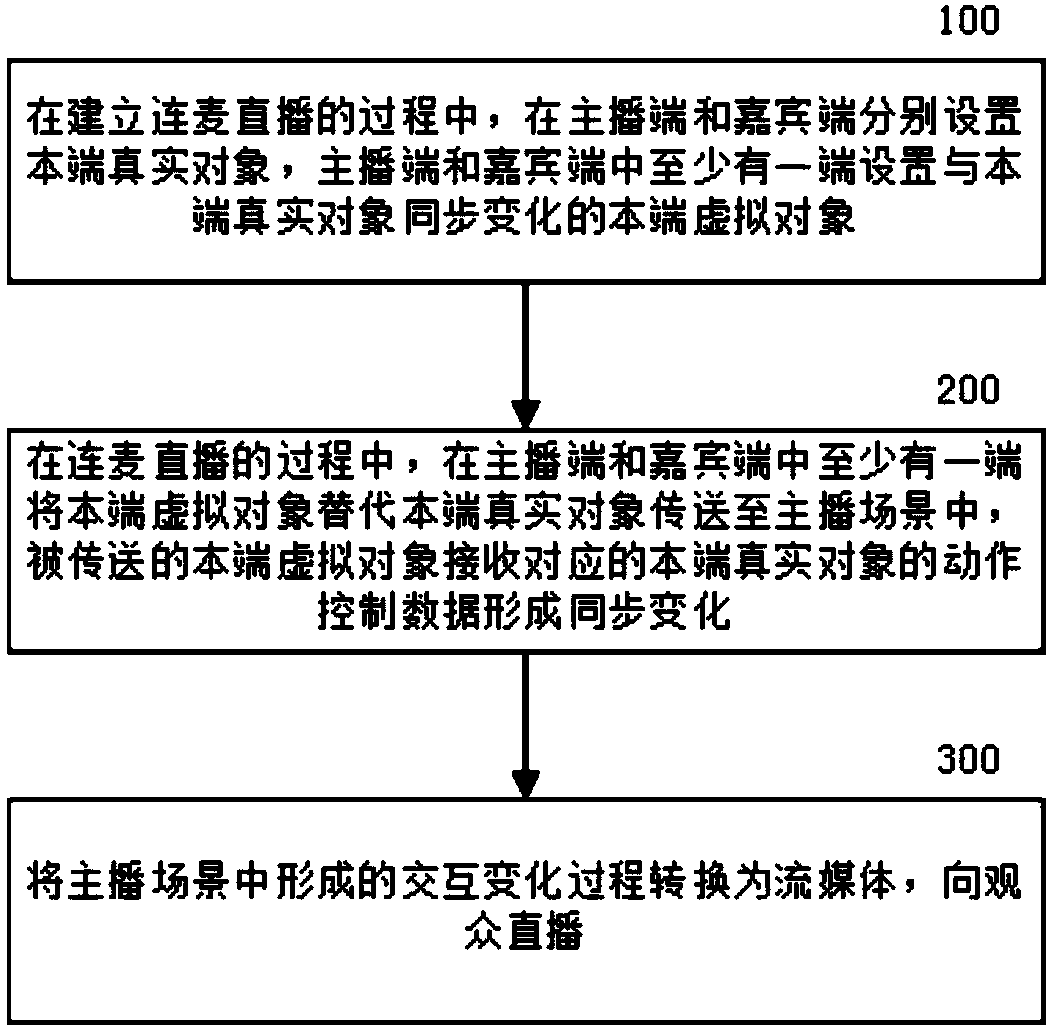

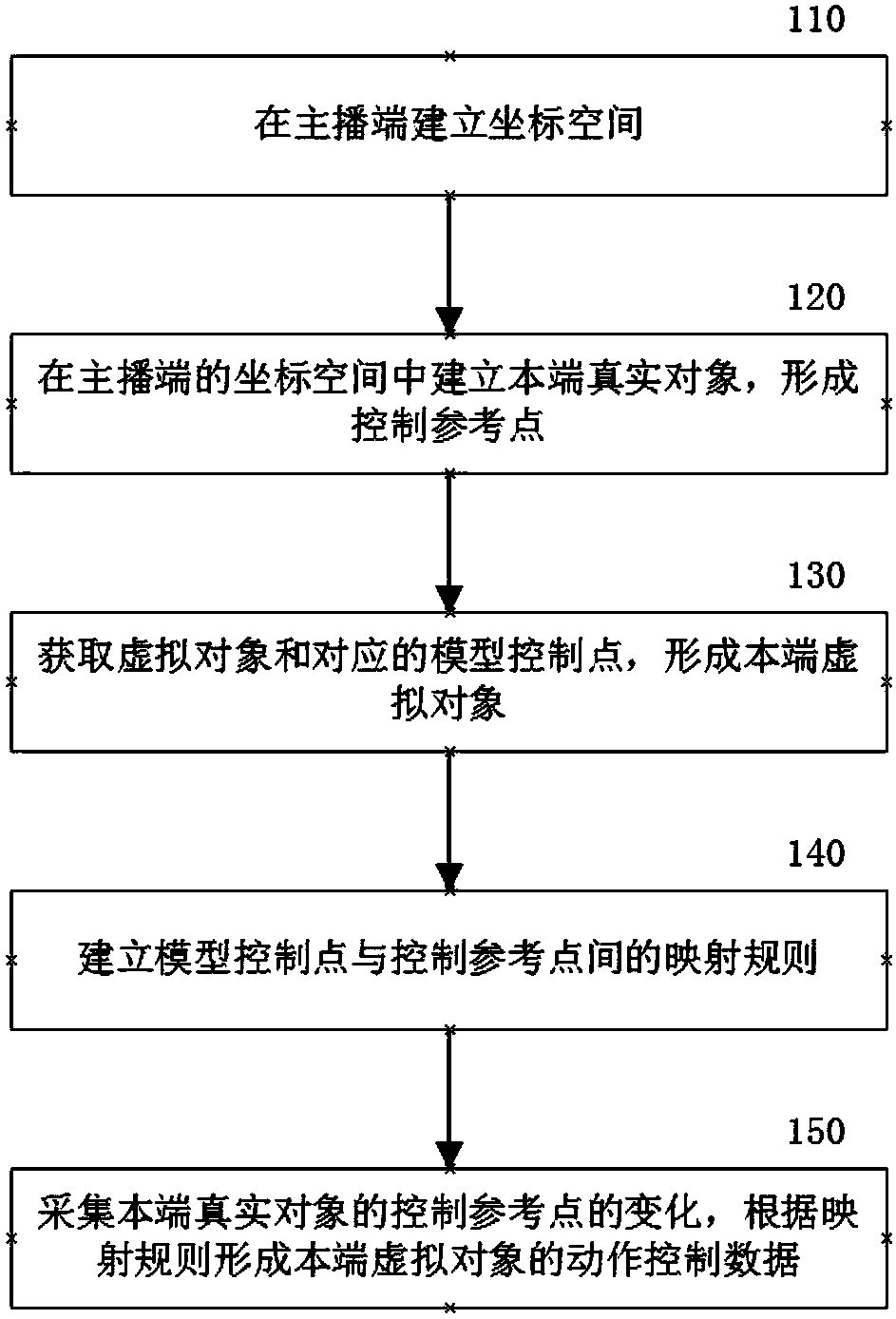

Microphone-connecting live method and system

Active | CN107750014A | Reduce bandwidth requirements | Alleviate high-quality bandwidth pressure | Selective content distribution | Control data | Interaction object

The embodiment of the invention provides a microphone-connected live method and system. The problem that the optimization measure interaction information content in the lack of an interaction object cannot be effectively controlled is solved. The method comprises the following steps: respectively setting a home terminal real object at each of an anchor terminal and a guest terminal in the process of establishing the microphone-connected live session, wherein a home terminal virtual object that changes synchronously with the home terminal real object is arranged on at least one of the anchor terminal and the guest terminal; transmitting the home terminal virtual object, which replaces the home terminal real object, to an anchor scene by at least one of the anchor terminal and the guest terminal during the microphone-connected live session, wherein the transmitted home terminal virtual object receives the control data of the corresponding home terminal real object to form the synchronous change; and converting the interaction change process in the anchor scene into streaming media broadcast live to the audience. The high-quality bandwidth pressure on a real-time data transmission system is relieved by converting the large video stream of the real object into a virtual object driven by small action and expression parameters transmitted in real time, with the interaction subject transmitted in quasi real time.

Owner:APPMAGICS TECH BEIJING LTD

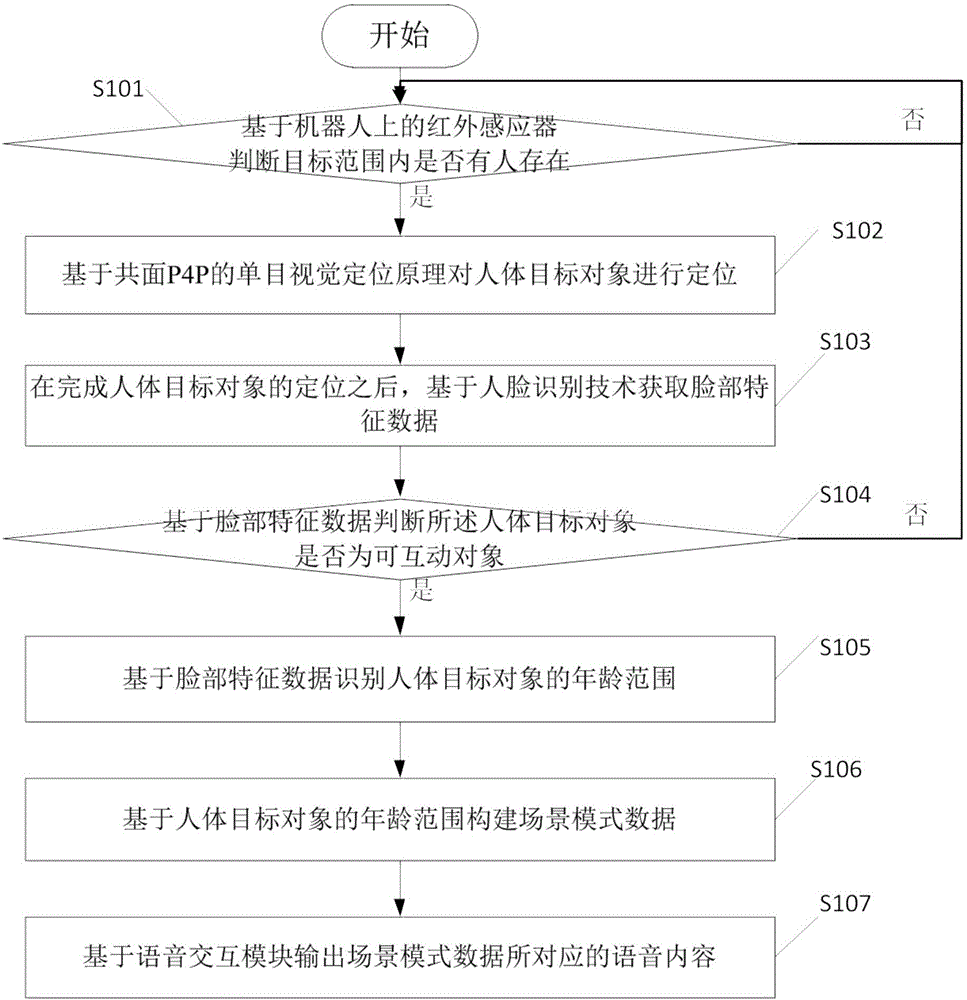

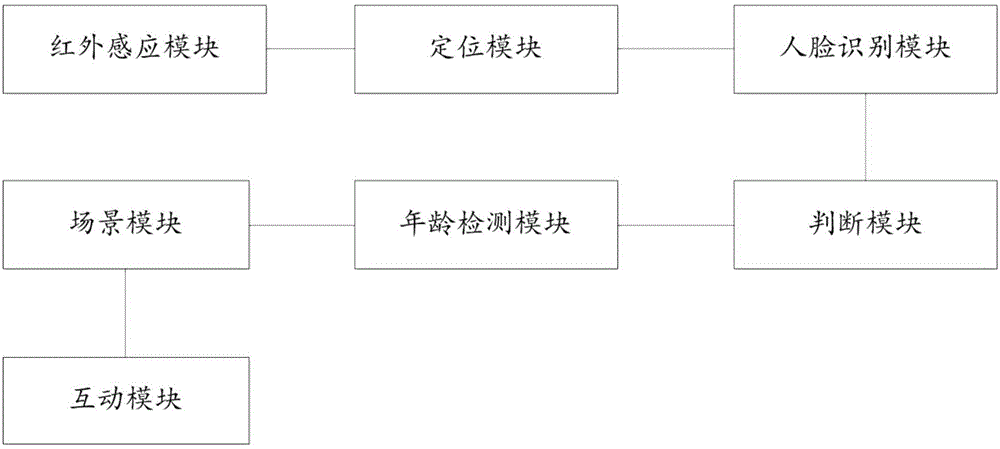

Robot intelligent interaction method and intelligent robot

Inactive | CN106570491A | Add fun | Improve intelligence | Character and pattern recognition | Sound input/output | Human body | Feature data

The invention discloses a robot intelligent interaction method and an intelligent robot. The method comprises the steps that an infrared sensor on the robot judges whether anyone is in a target range; if a human is present, a monocular vision positioning principle based on coplanar P4P is used to position the human body target object; after the human body target object is positioned, face feature data are acquired based on a face identification technology; whether the human body target object is an interaction object is judged based on the face feature data; if the human body target object is an interaction object, the age range of the human body target object is identified based on the face feature data; scene mode data are constructed based on the age range of the human body target object; and speech content corresponding to the scene mode data is output based on a speech interaction module. According to the embodiment of the invention, precise matching of interaction scene contents is realized, and the interaction scene mode is more interesting.

Owner:华南智能机器人创新研究院

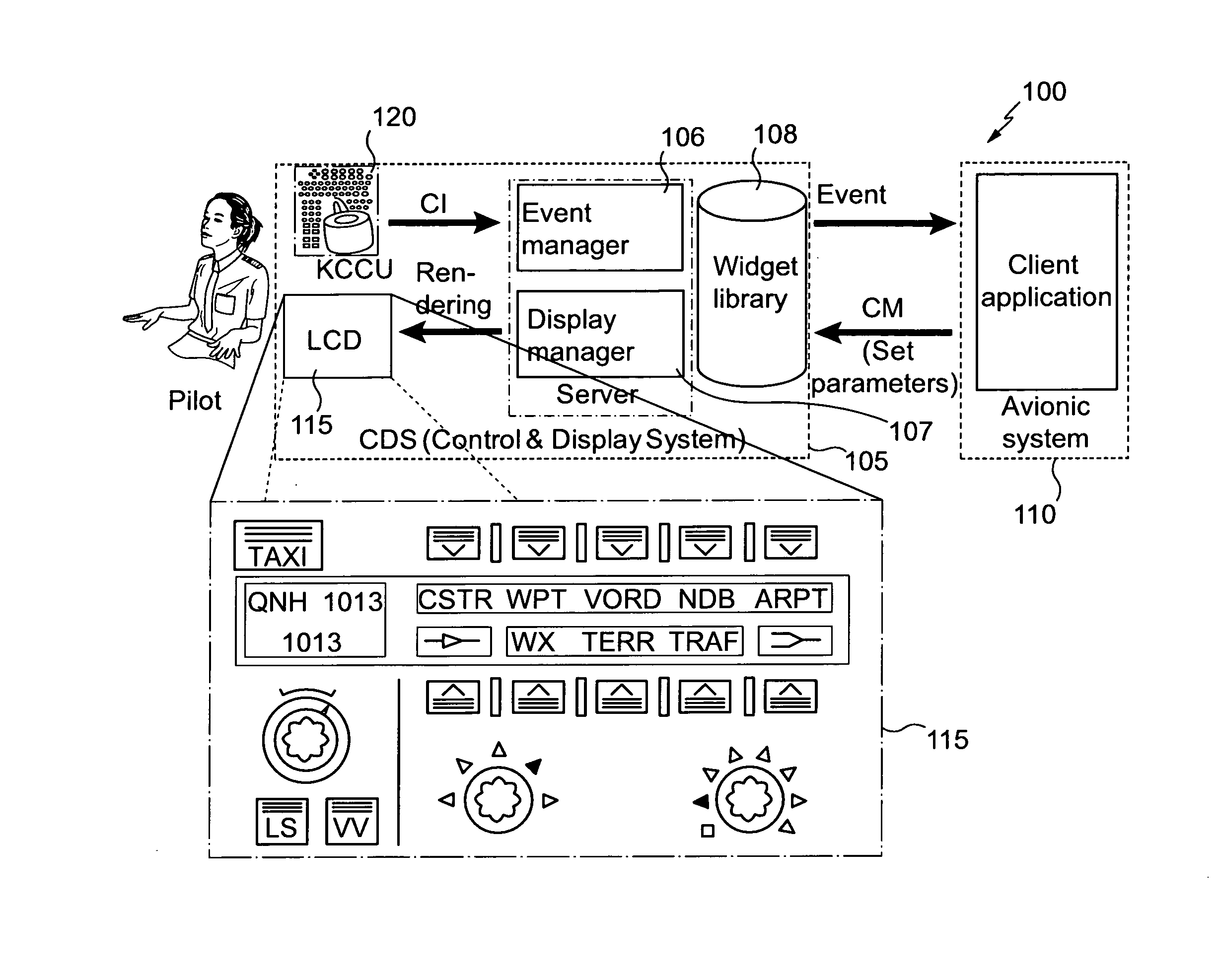

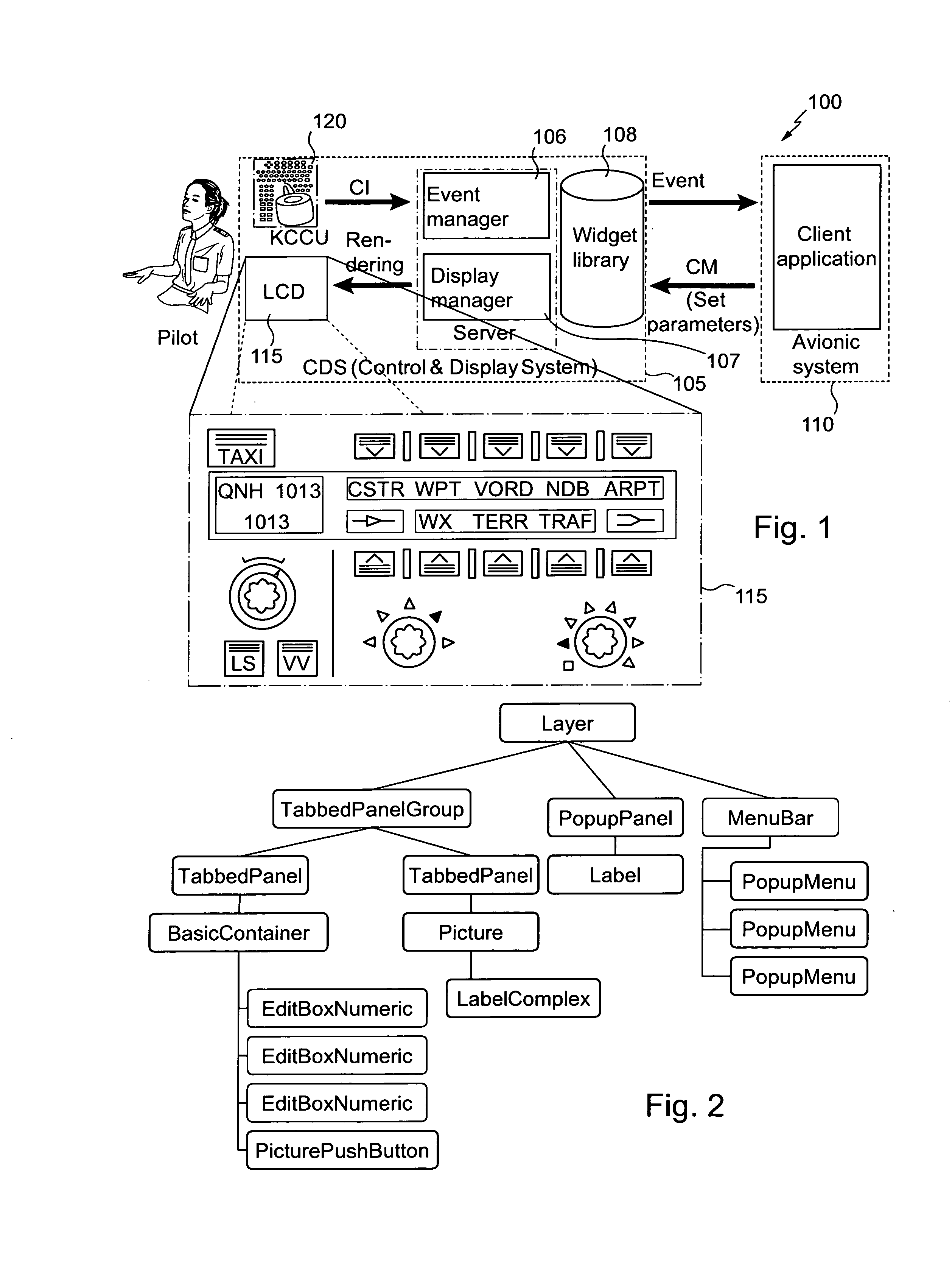

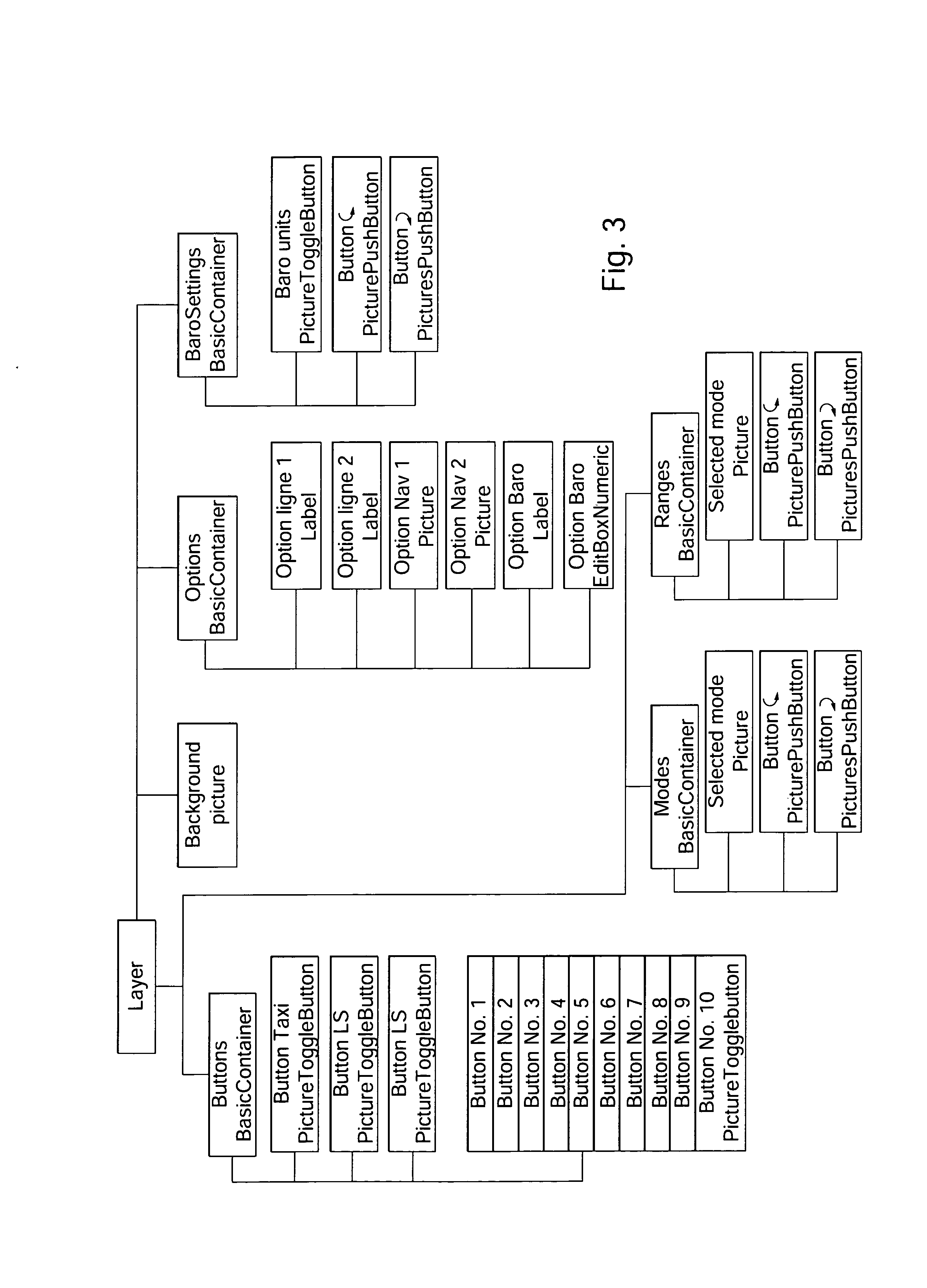

Method and system for monitoring a graphical interface in an aircraft cockpit

Active | US20130179842A1 | Raise security concerns | Preventing situation | Error detection/correction | Execution for user interfaces | Graphics | Graphical user interface

The invention relates in particular to a method and a system for monitoring a graphical interface in a computer system of an aircraft cockpit. The method comprises, in addition to the display of a graphical interface of a client application based on a tree structure of graphical interaction objects composing said graphical interface, the following steps: obtaining a plurality of graphical interaction objects having a tree-structure organization; creating and adding, to said obtained tree structure, at least one new graphical interaction object defining a critical graphical display zone; modifying the tree-structure dependency of at least one critical graphical interaction object of the obtained tree structure to make it dependent on the new graphical interaction object defining the critical zone; and performing critical monitoring only of the critical graphical objects attached to the critical zone.

Owner:AIRBUS OPERATIONS (SAS)
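The re-parenting step described in the abstract above might look like the following sketch. It assumes a simple in-memory widget tree; the `Widget` class, the `critical` flag, and the function names are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass(eq=False)  # identity comparison avoids recursing through parent/children
class Widget:
    name: str
    critical: bool = False
    parent: Optional["Widget"] = field(default=None, repr=False)
    children: List["Widget"] = field(default_factory=list, repr=False)

    def add(self, child: "Widget") -> "Widget":
        """Attach child here, detaching it from any previous parent."""
        if child.parent is not None:
            child.parent.children.remove(child)
        child.parent = self
        self.children.append(child)
        return child


def walk(node: Widget) -> Iterator[Widget]:
    """Depth-first traversal of the tree rooted at node."""
    yield node
    for child in node.children:
        yield from walk(child)


def attach_critical_zone(root: Widget) -> Widget:
    """Create a zone node and make every critical widget depend on it."""
    critical = [w for w in walk(root) if w.critical]  # collect before mutating
    zone = root.add(Widget("critical_zone"))
    for w in critical:
        zone.add(w)
    return zone


def monitored(zone: Widget) -> List[str]:
    """Only widgets attached directly to the zone are monitored."""
    return [w.name for w in zone.children]
```

Grouping the critical objects under one node means a monitor can restrict its checks to that subtree instead of inspecting the whole interface.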

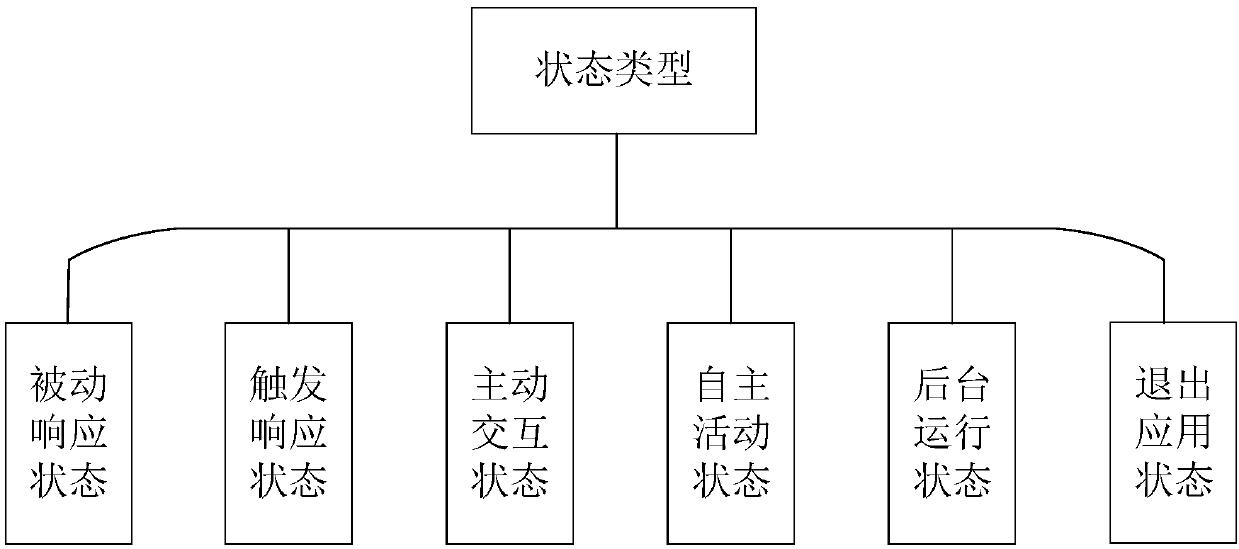

Virtual human state management method and system

Active | CN107704169A | High viscosity | Improve interactive experience | Execution for user interfaces | Special data processing applications | Skill sets | State management

The invention provides a virtual human state management method. The method comprises the following steps: obtaining multi-mode input data; learning the multi-mode interaction history information and current operation state between interaction objects, and the current operation state of the hardware equipment; analyzing the multi-mode input data to obtain intention data; judging, according to the learnt data and the intention data, the new operation state that the virtual human needs to enter; calling a state adjustment ability interface to enter the new operation state and generating multi-mode output data under the new state, wherein the multi-mode output data is associated with the character, attributes and skills of the virtual human; and outputting the multi-mode output data through an image of the virtual human. With the virtual human state management method and system provided by the invention, the virtual human can offer users different interaction experiences in different states, and the multi-mode output of the virtual human is coordinated, so that the users' visual engagement (stickiness) is strengthened and the interaction experience is improved.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

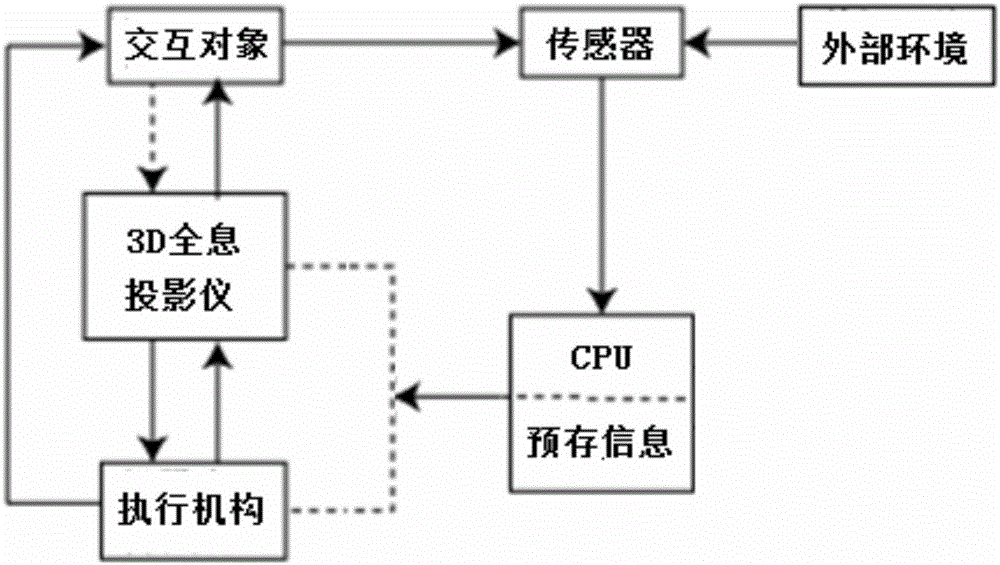

Man-machine interaction method of intelligent robot

Inactive | CN106648074A | Interactive three-dimensional and vivid | Strong entertainment and interactive | Input/output for user-computer interaction | Graph reading | Recreation | Animation

The invention discloses a man-machine interaction method of an intelligent robot. The method includes at least the following steps: a control device sends a command to a 3D holographic projection device to generate an animated image with a holographic effect; an image sensor captures the face image and limb actions of an interaction object and transmits them to the control device; a sound sensor, synchronized with the image sensor, records the sound of the interaction object and transmits it to the control device; the control device processes the information transmitted by the image sensor and the sound sensor, compares it with prestored information, and generates a command for controlling the 3D holographic projection device and the sound device, so that the animated image with the holographic effect performs an action, an execution mechanism is instructed to control whether the robot performs an action, and audio corresponding to the action is played or not played. Holographic projection is integrated into the robot, and man-machine interaction is carried out according to the sound, expression, limb action and other information fed back by the sensors. This replaces the traditional liquid crystal panel interaction interface, so that man-machine interaction is more three-dimensional and vivid and more entertaining. In addition, the robot moves autonomously and can intelligently avoid obstacles.

Owner:JUNFENG MEI

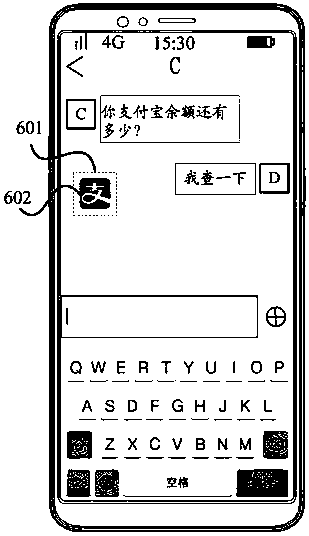

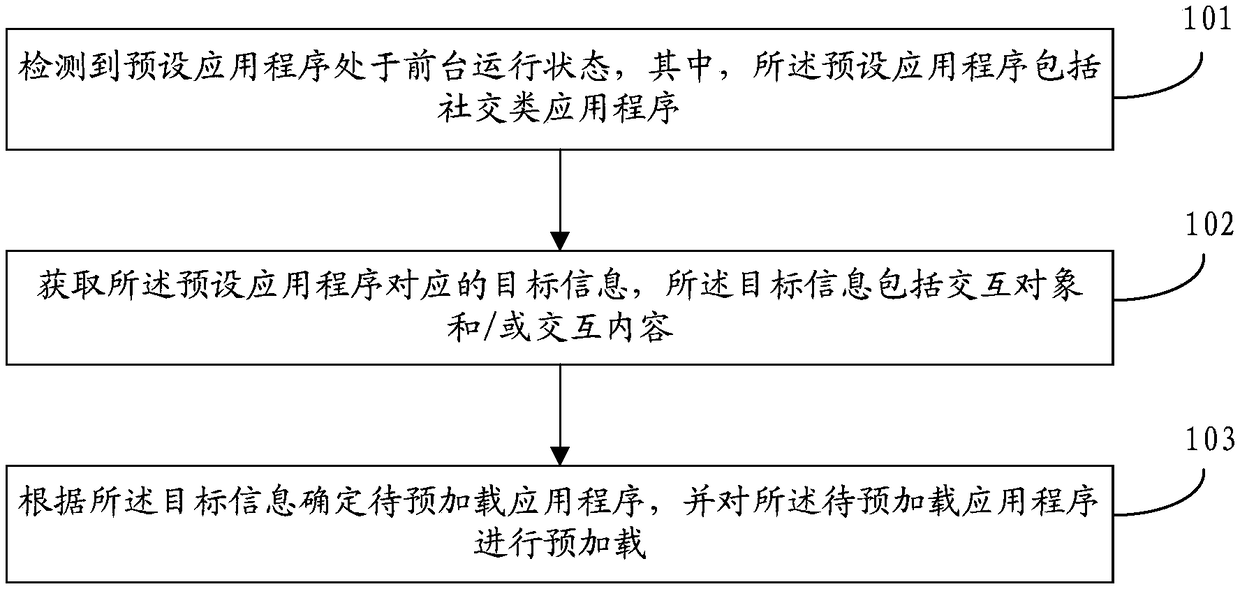

Application program preloading method and apparatus, storage medium and terminal

Active | CN108647052A | Fast startup | Improve accuracy | Program loading/initiating | Machine learning | Computer terminal | Interaction object

The embodiment of the invention discloses an application program preloading method and apparatus, a storage medium and a terminal. The method comprises the following steps: detecting that a preset application program is running in the foreground, wherein the preset application program comprises a social application program; obtaining target information corresponding to the preset application program, wherein the target information comprises an interaction object and/or interaction content; and determining a to-be-preloaded application program according to the target information, and preloading it. With the technical scheme provided by the embodiment of the invention, the application program that a user may open next can be predicted from the interaction object or interaction content of the social application program the user is currently using, and the predicted application program is preloaded, so that the prediction is more accurate and the preloaded application program starts faster.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
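One simple way to picture the prediction step in the abstract above is a frequency table keyed by interaction object or content. The abstract does not say how the prediction is made, so this is only a sketch under that assumption; `PreloadPredictor`, its methods, and the example keys are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


class PreloadPredictor:
    """Counts which app was opened after a given contact or keyword."""

    def __init__(self) -> None:
        # target (contact name or content keyword) -> Counter of opened apps
        self._history = defaultdict(Counter)

    def record(self, target: str, opened_app: str) -> None:
        """Remember that opened_app was launched after interacting with target."""
        self._history[target][opened_app] += 1

    def predict(
        self,
        interaction_object: Optional[str] = None,
        interaction_content: Optional[str] = None,
    ) -> Optional[str]:
        """Return the most frequently opened app for the given target information."""
        votes = Counter()
        for key in (interaction_object, interaction_content):
            if key is not None and key in self._history:
                votes.update(self._history[key])
        if not votes:
            return None  # nothing to preload
        return votes.most_common(1)[0][0]
```

A terminal would call `predict` while the social application is in the foreground and preload the returned application, if any.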

Interaction device implementing a bayesian's estimation

Active | US8484146B2 | Maximize expectation | Speech analysis | Digital computer details | Hypothesis | Interaction device

Owner:UNIV OF CALIFORNIA SAN DIEGO REGENTS OF UNIV OF CALIFORNIA +1
