3D (three-dimensional) gaze direction estimation method for robot interactive object detection

A technology relating to interactive objects and gaze direction, applied in the fields of acquisition, instrumentation, computation, and 3D object measurement, which addresses problems such as a lack of robustness.

Detailed Description of the Embodiments

[0068] The technical solution of the present invention is described in further detail below with reference to the accompanying drawings, but the scope of protection of the present invention is not limited to the following description.

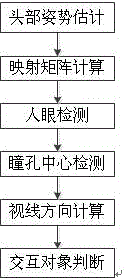

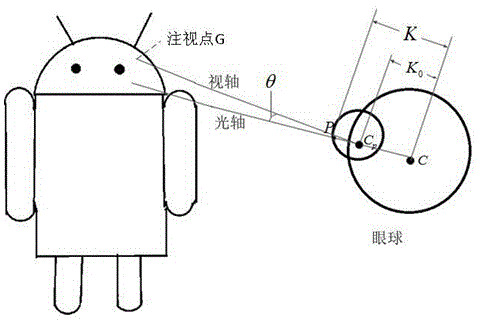

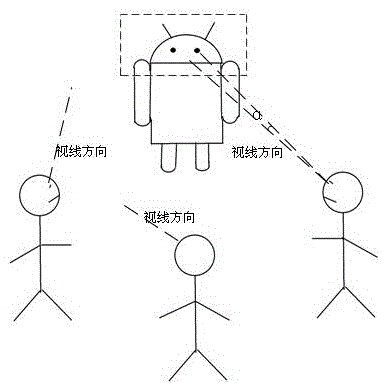

[0069] As shown in Figure 1, a 3D gaze direction estimation method for robot interactive object detection includes the following steps:

[0070] S1. Perform head pose estimation: use an RGB-D sensor to collect color and depth information, and compute the three-dimensional position T of the head and the head pose R from the collected information;

[0071] S2. Compute the mapping matrix M between the head pose R and the head reference pose R0, where R0 is the head pose when the user and the robot face each other, R0 = [0, 0, 1];

[0072] S3. Capture an image of the user's eyes and extract the eye-region image from it;

[0073] S4. After obtaining the ima...
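Step S1 above does not say how the head position T is derived from the RGB-D data. One common approach, assumed here, is to locate a head pixel (u, v) in the color image (for example, the center of a detected face; the detector is not specified in the source) and back-project it with its depth value through the pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy` and the names `PinholeIntrinsics` and `head_position_from_depth` are hypothetical, and estimating the head pose R itself is outside this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PinholeIntrinsics:
    """Hypothetical intrinsics of the RGB-D sensor's color camera, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


def head_position_from_depth(u: float, v: float, depth_m: float,
                             k: PinholeIntrinsics) -> tuple[float, float, float]:
    """Back-project head pixel (u, v) with its depth into a 3D point T (camera frame).

    Assumes the depth image is registered to the color image, so the same (u, v)
    indexes both, and that depth is measured along the optical (z) axis in meters.
    """
    if depth_m <= 0:
        # Many RGB-D sensors report 0 where no depth reading is available.
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)
```

For example, with `fx = fy = 500`, `cx = 320`, `cy = 240`, a pixel 100 px to the right of the principal point at a depth of 1.2 m back-projects to roughly (0.24, 0.0, 1.2) m. A single depth pixel can be noisy, so taking a median over a small patch around (u, v) is a simple robustness tweak.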
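Step S2 gives the reference pose as the vector R0 = [0, 0, 1] but does not specify how R is represented or in which direction M maps. The sketch below assumes R is also a unit facing-direction vector in the camera frame and builds M as the rotation taking R onto R0, using Rodrigues' formula for the rotation between two vectors. `mapping_matrix` and `skew` are hypothetical names, and this is one plausible reading rather than the patent's stated construction.

```python
import numpy as np


def skew(w: np.ndarray) -> np.ndarray:
    """Cross-product matrix [w]_x, so that skew(w) @ x == np.cross(w, x)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def mapping_matrix(head_dir, ref_dir=(0.0, 0.0, 1.0), eps: float = 1e-9) -> np.ndarray:
    """Return the rotation M with M @ head_dir == ref_dir (after normalizing both).

    Assumption: the head pose R is given as a facing-direction vector, like R0.
    """
    a = np.asarray(head_dir, dtype=float)
    b = np.asarray(ref_dir, dtype=float)
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    v = np.cross(a, b)        # rotation axis scaled by sin(theta)
    c = float(np.dot(a, b))   # cos(theta)
    s2 = float(np.dot(v, v))  # sin^2(theta)
    if s2 < eps:
        if c > 0:
            return np.eye(3)  # head already faces the reference direction
        # Opposite directions: rotate 180 degrees about any axis orthogonal to a.
        helper = np.array([1.0, 0.0, 0.0]) if abs(a[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
        u = np.cross(a, helper)
        u /= np.linalg.norm(u)
        return 2.0 * np.outer(u, u) - np.eye(3)
    vx = skew(v)
    # Rodrigues' formula: M = I + [v]x + [v]x^2 * (1 - cos) / sin^2
    return np.eye(3) + vx + vx @ vx * ((1.0 - c) / s2)
```

Under this reading, a gaze vector g estimated in the normalized (frontal) frame maps back to the camera frame as `M.T @ g`, because M is orthonormal. If R is instead a 3×3 rotation matrix, the corresponding relative rotation would be `R_ref @ R.T` for a reference rotation matrix `R_ref`.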
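Step S3 does not specify how the eye region is located. Assuming a facial-landmark detector (not named in the source) returns pixel coordinates outlining one eye, the eye-region image can be cut out as a padded bounding box clipped to the image bounds. `crop_eye_region` and its `margin` parameter are hypothetical.

```python
import numpy as np


def crop_eye_region(image: np.ndarray, eye_landmarks, margin: float = 0.25) -> np.ndarray:
    """Crop an axis-aligned box around hypothetical eye landmarks.

    eye_landmarks: iterable of (x, y) pixel coordinates outlining one eye
    (assumed to come from some facial-landmark detector). The box is padded by
    `margin` times its width/height on each side and clipped to the image.
    """
    pts = np.asarray(eye_landmarks, dtype=float)
    x0, y0 = pts.min(axis=0)
    x1, y1 = pts.max(axis=0)
    dx = (x1 - x0) * margin
    dy = (y1 - y0) * margin
    h, w = image.shape[:2]
    left = max(int(np.floor(x0 - dx)), 0)
    top = max(int(np.floor(y0 - dy)), 0)
    # +1 so the slice end is exclusive but still includes the outermost pixel.
    right = min(int(np.ceil(x1 + dx)) + 1, w)
    bottom = min(int(np.ceil(y1 + dy)) + 1, h)
    return image[top:bottom, left:right]
```

Gaze-estimation models often also resize the crop to a fixed input size; that step is omitted here because the source does not give one.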
