432 results about "Branch" patented technology

A branch is an instruction in a computer program that can cause a computer to begin executing a different instruction sequence and thus deviate from its default behavior of executing instructions in order. Branch (or branching, branched) may also refer to the act of switching execution to a different instruction sequence as a result of executing a branch instruction. Branch instructions are used to implement control flow in program loops and conditionals (i.e., executing a particular sequence of instructions only if certain conditions are satisfied).
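The definition above can be illustrated with a minimal sketch of a toy interpreter. The instruction names (`addi`, `bnez`, `jmp`) and the tuple encoding are assumptions made for this example and do not correspond to any real instruction set.

```python
def run(program, registers):
    """Execute a toy instruction list and return the final registers."""
    pc = 0  # program counter: index of the next instruction to execute
    while pc < len(program):
        op, *args = program[pc]
        if op == "addi":              # addi reg, imm: reg += imm
            registers[args[0]] += args[1]
        elif op == "bnez":            # bnez reg, target: branch if reg != 0
            if registers[args[0]] != 0:
                pc = args[1]          # taken: leave sequential order
                continue
        elif op == "jmp":             # jmp target: unconditional branch
            pc = args[0]
            continue
        pc += 1                       # default: fall through in order
    return registers


# A loop built from a backward conditional branch: add 3 to acc, n times.
LOOP = [
    ("addi", "acc", 3),   # index 0
    ("addi", "n", -1),    # index 1
    ("bnez", "n", 0),     # index 2: jump back to index 0 while n != 0
]
```

With `n` starting at 4, `run(LOOP, {"acc": 0, "n": 4})` leaves `acc` at 12: the branch is taken three times and falls through once.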

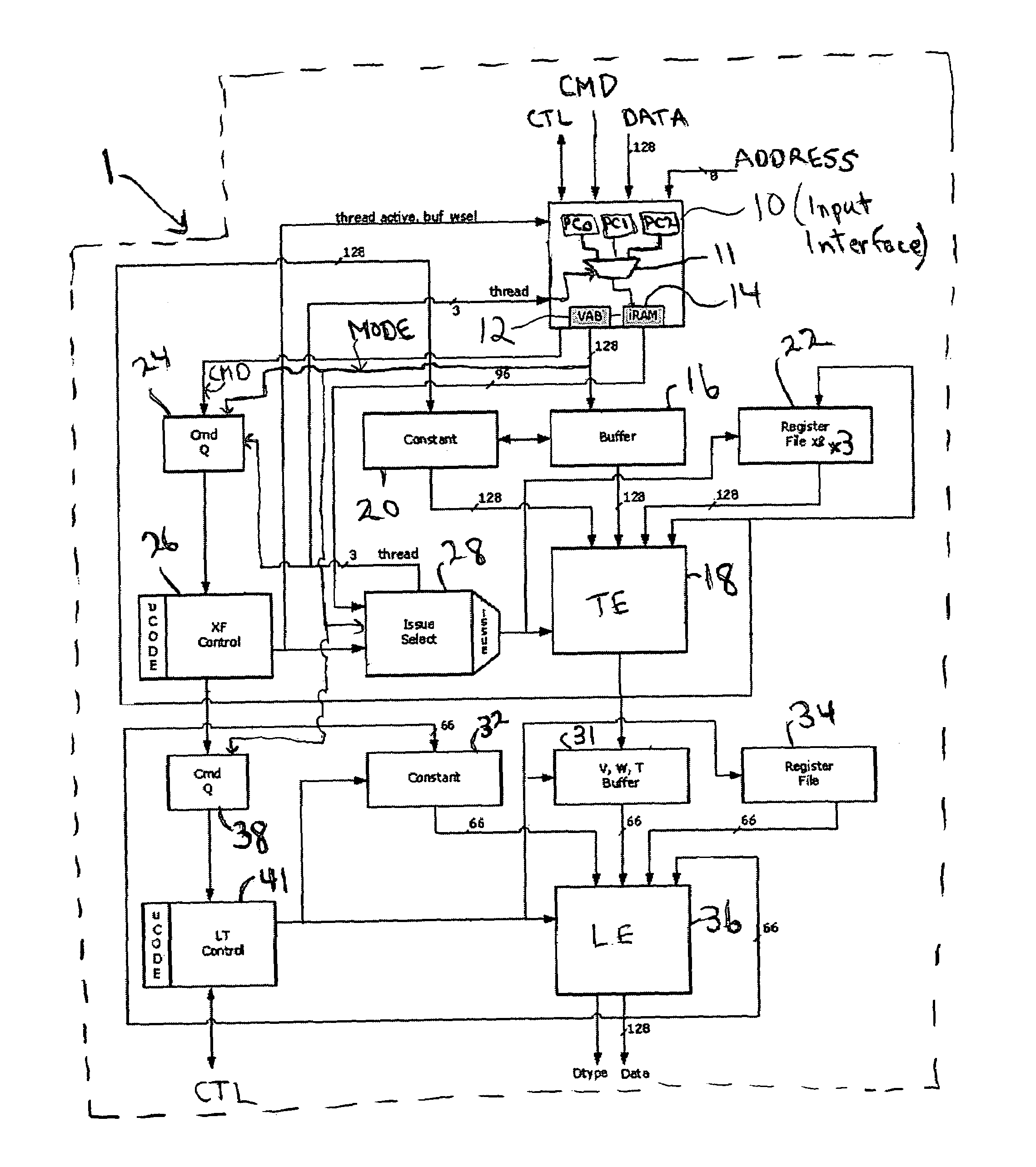

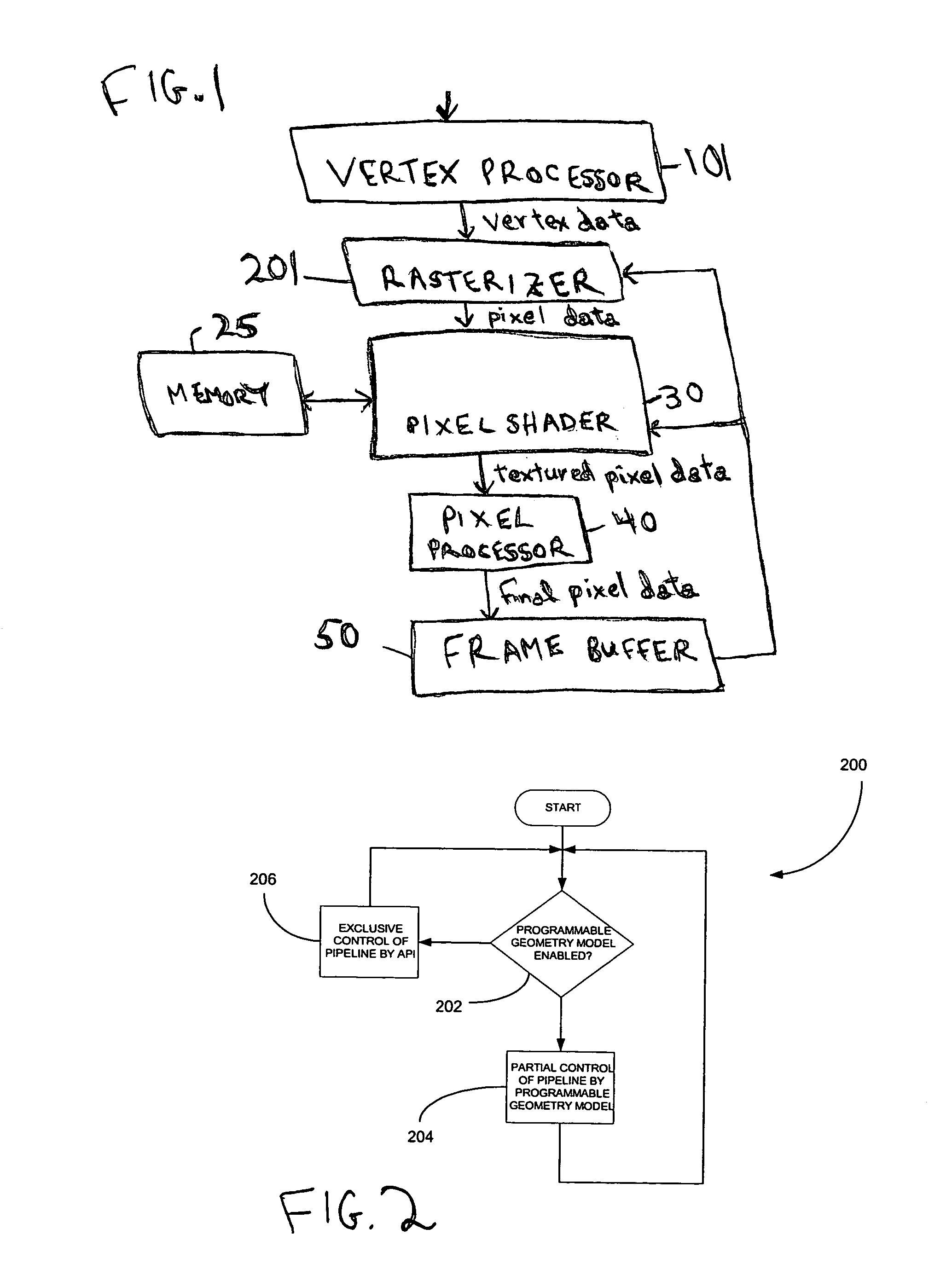

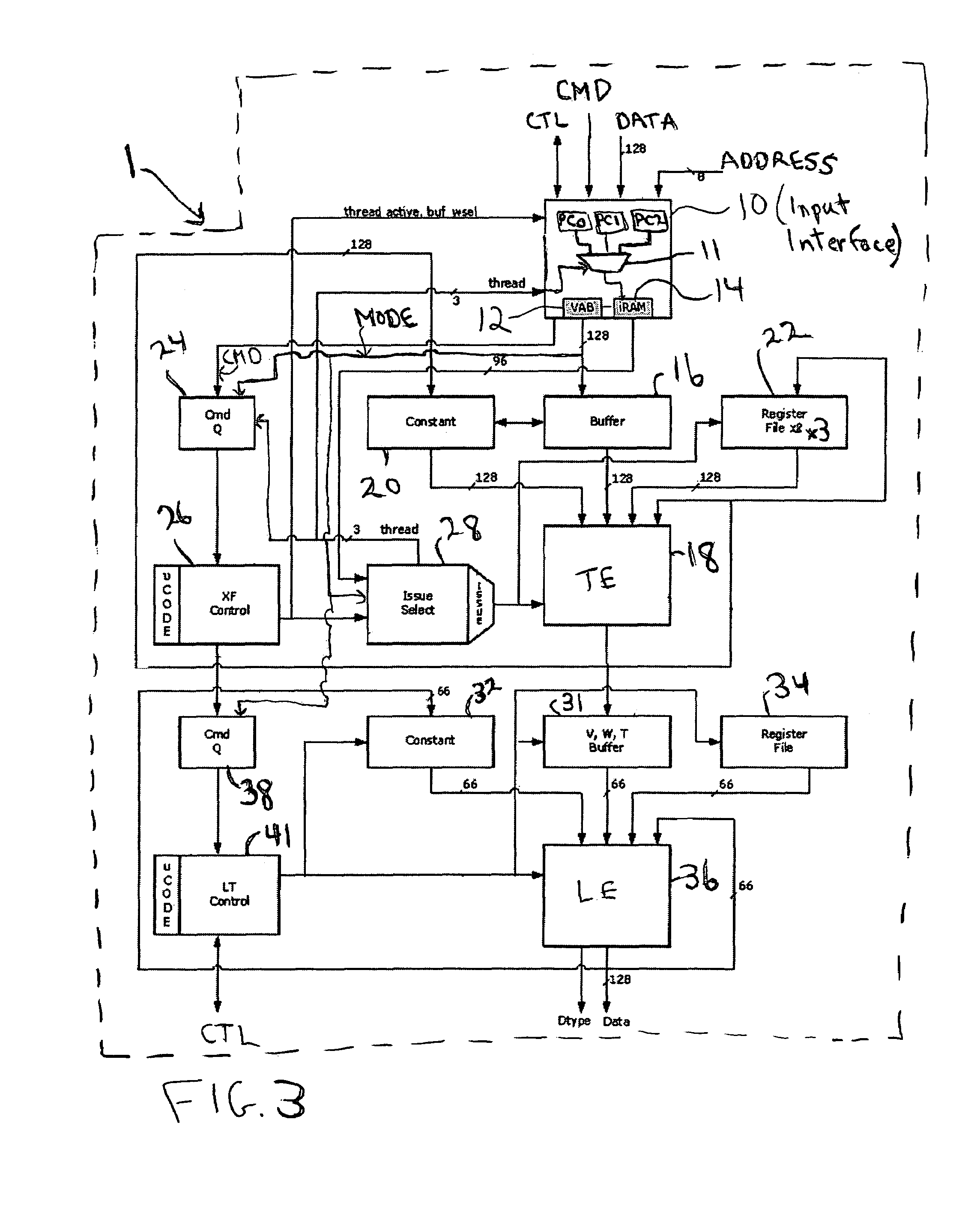

Method and system for programmable pipelined graphics processing with branching instructions

Inactive · US6947047B1 · Avoid conflict · Multiple digital computer combinations · Processor architectures/configuration · Graphics · Mode control

A programmable, pipelined graphics processor (e.g., a vertex processor) having at least two processing pipelines, a graphics processing system including such a processor, and a pipelined graphics data processing method allowing parallel processing and also handling branching instructions and preventing conflicts among pipelines. Preferably, each pipeline processes data in accordance with a program including by executing branch instructions, and the processor is operable in any one of a parallel processing mode in which at least two data values to be processed in parallel in accordance with the same program are launched simultaneously into multiple pipelines, and a serialized mode in which only one pipeline at a time receives input data values to be processed in accordance with the program (and operation of each other pipeline is frozen). During parallel processing mode operation, mode control circuitry recognizes and resolves branch instructions to be executed (before processing of data in accordance with each branch instruction starts) and causes the processor to operate in the serialized mode when (and preferably only for as long as) necessary to prevent any conflict between the pipelines due to branching. In other embodiments, the processor is operable in any one of a parallel processing mode and a limited serialized mode in which operation of each of a sequence of pipelines (or pipeline sets) pauses for a limited number of clock cycles. The processor enters the limited serialized mode in response to detecting a conflict-causing instruction that could cause a conflict between resources shared by the pipelines during parallel processing mode operation.

Owner:NVIDIA CORP

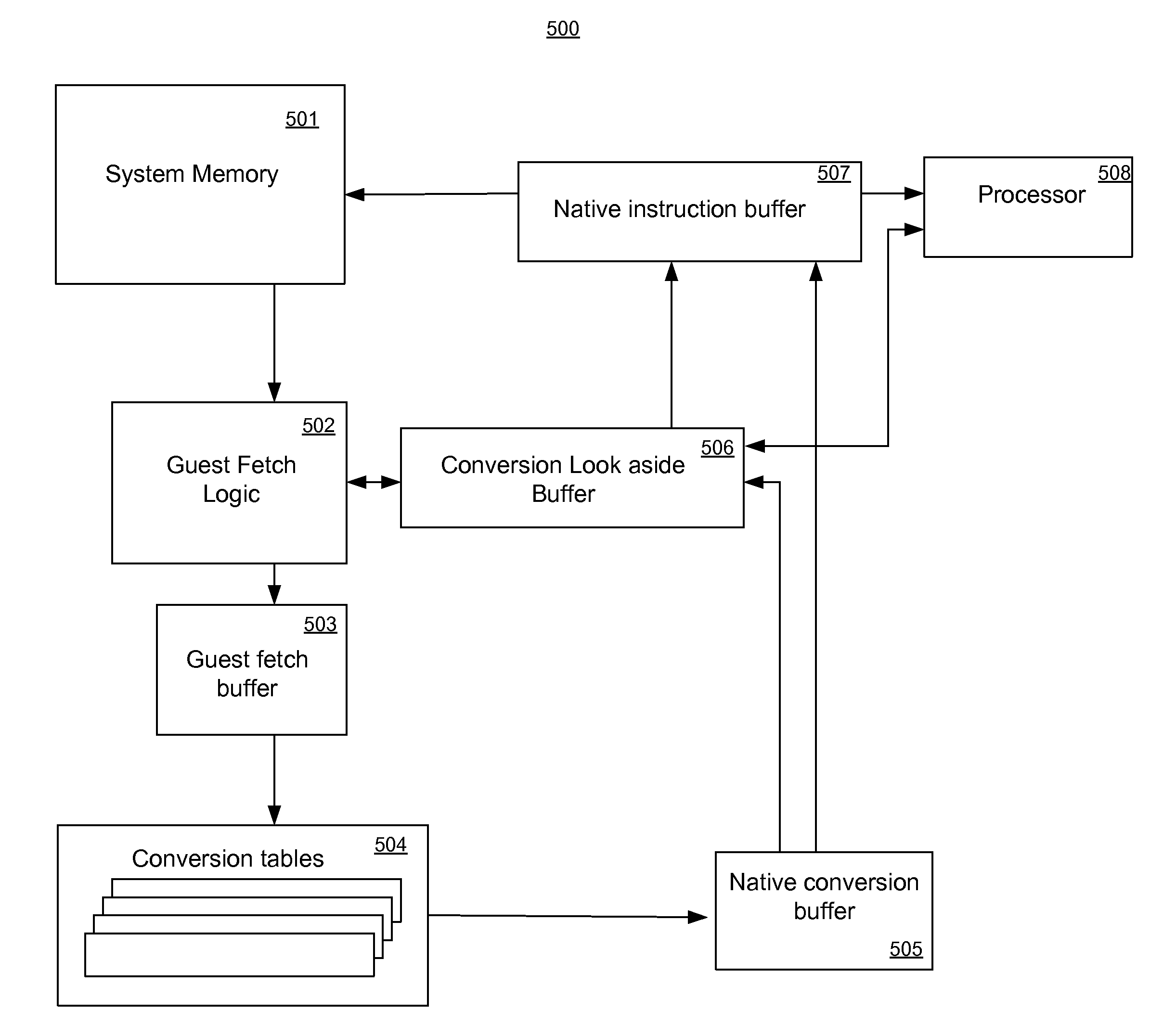

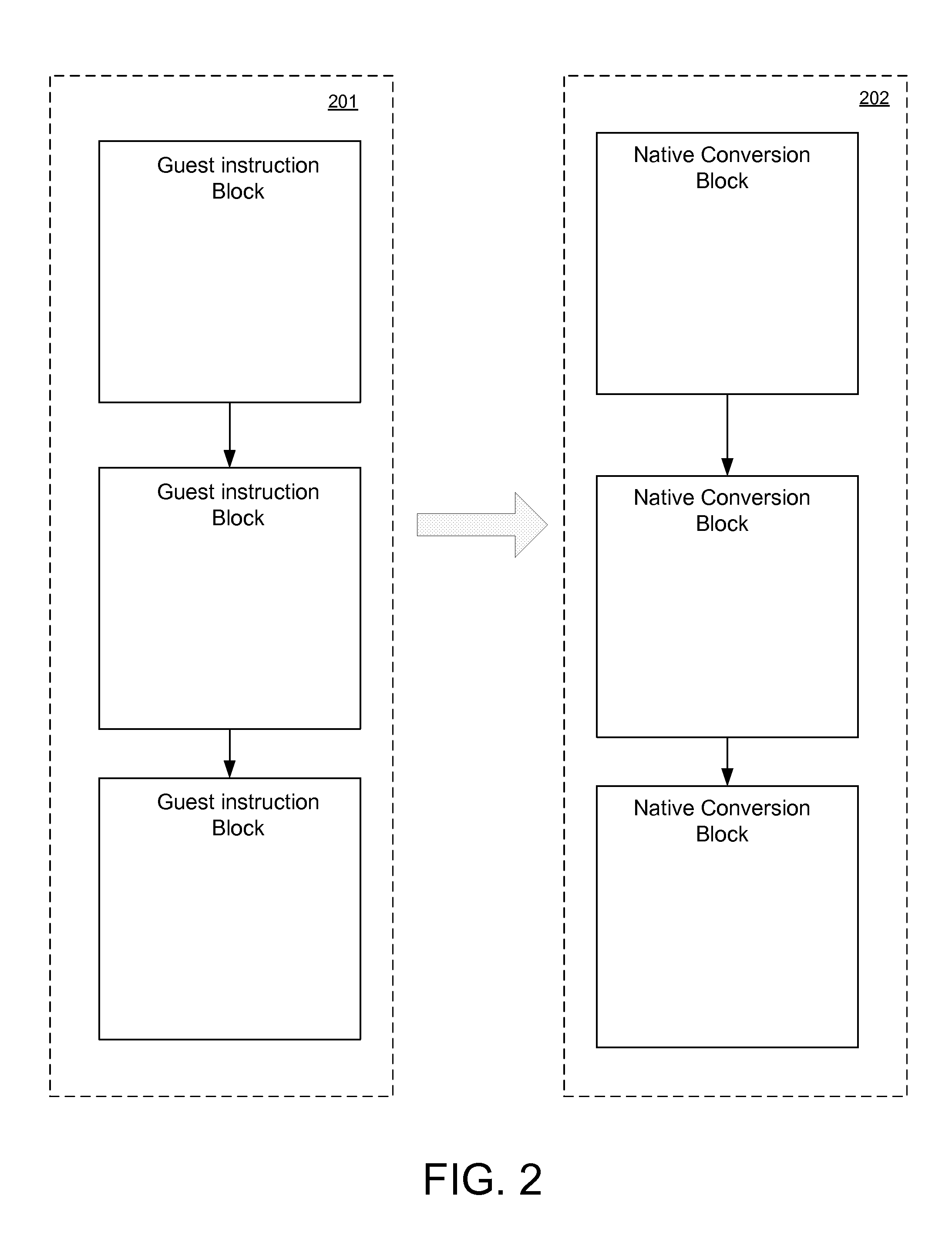

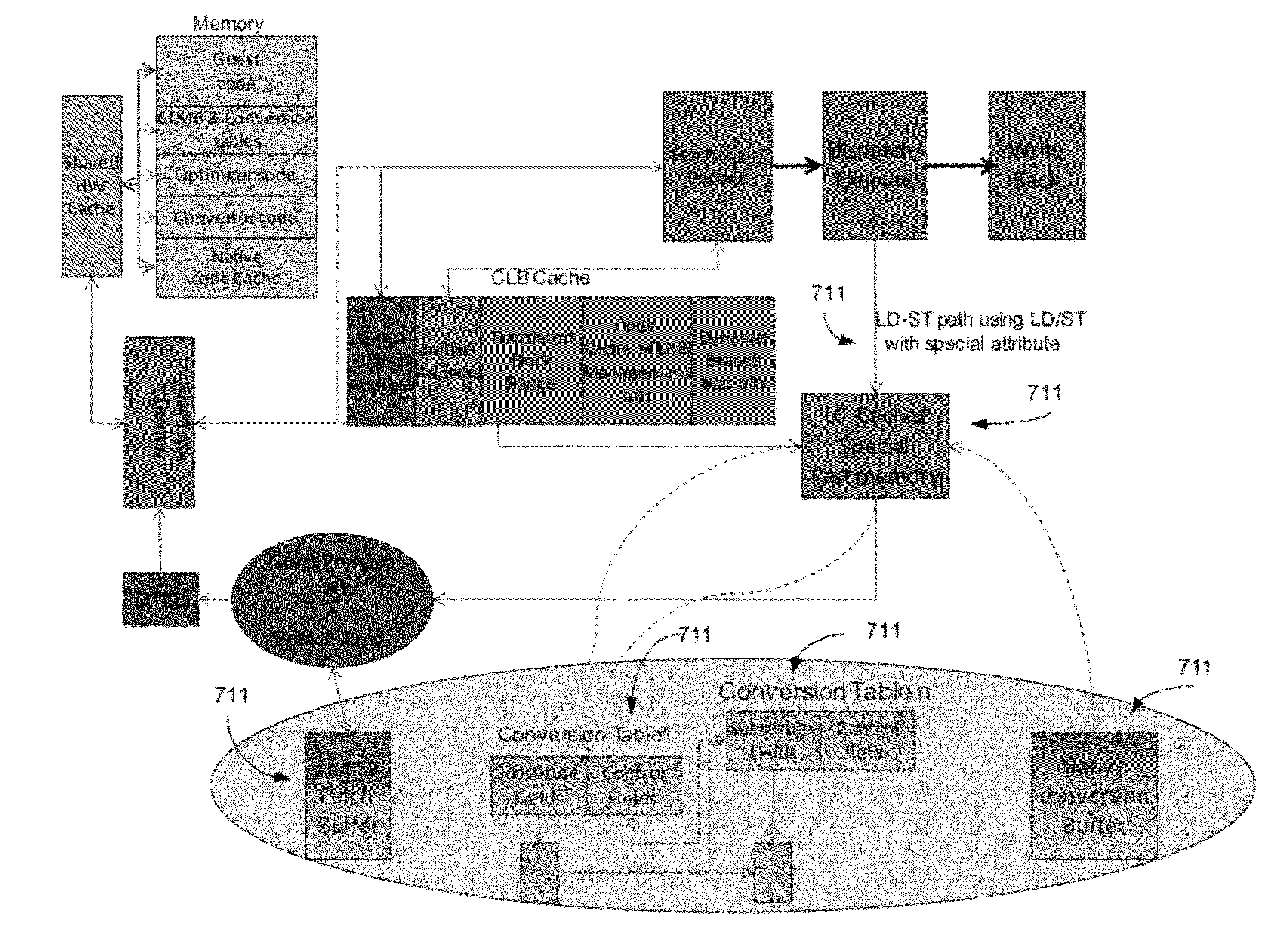

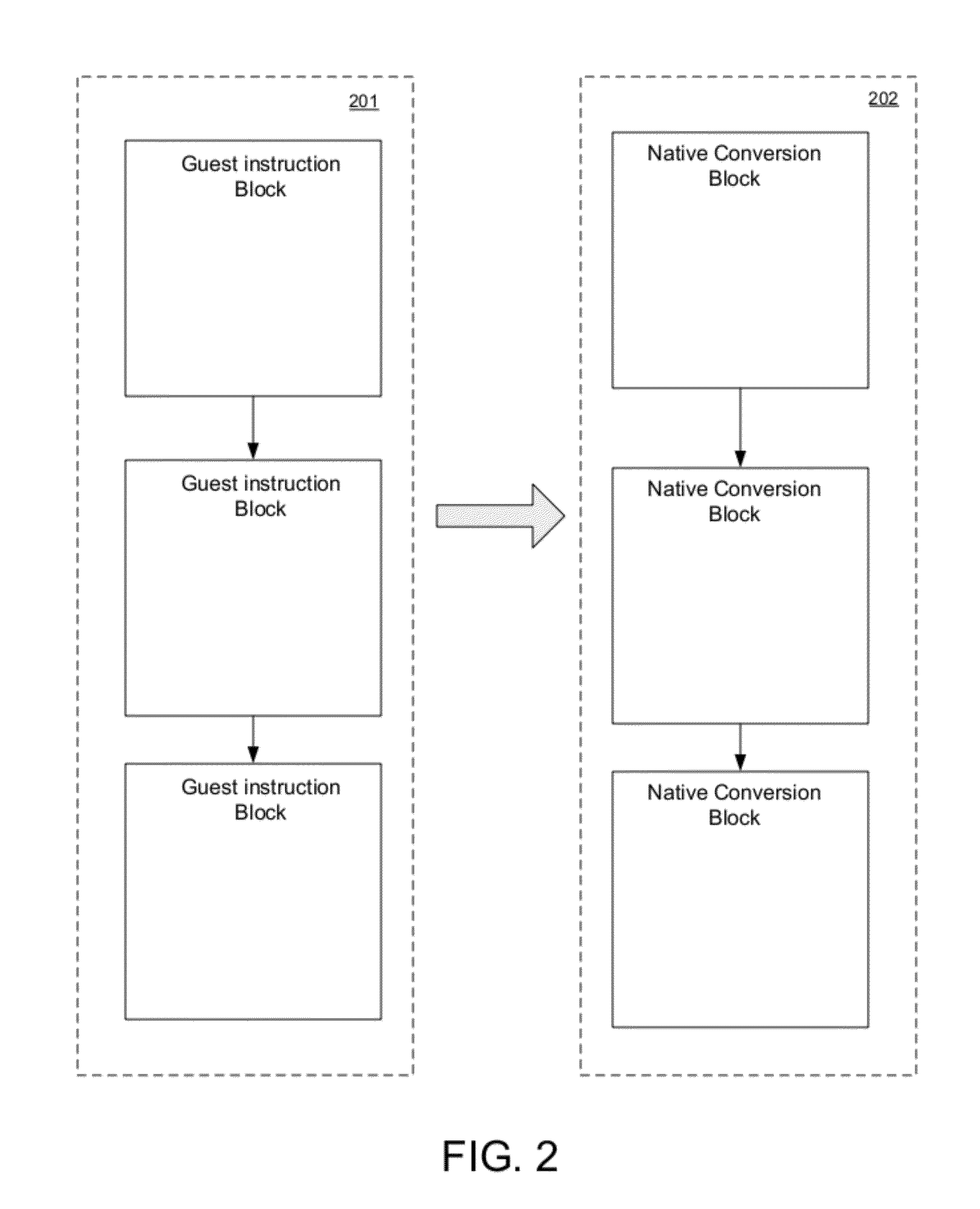

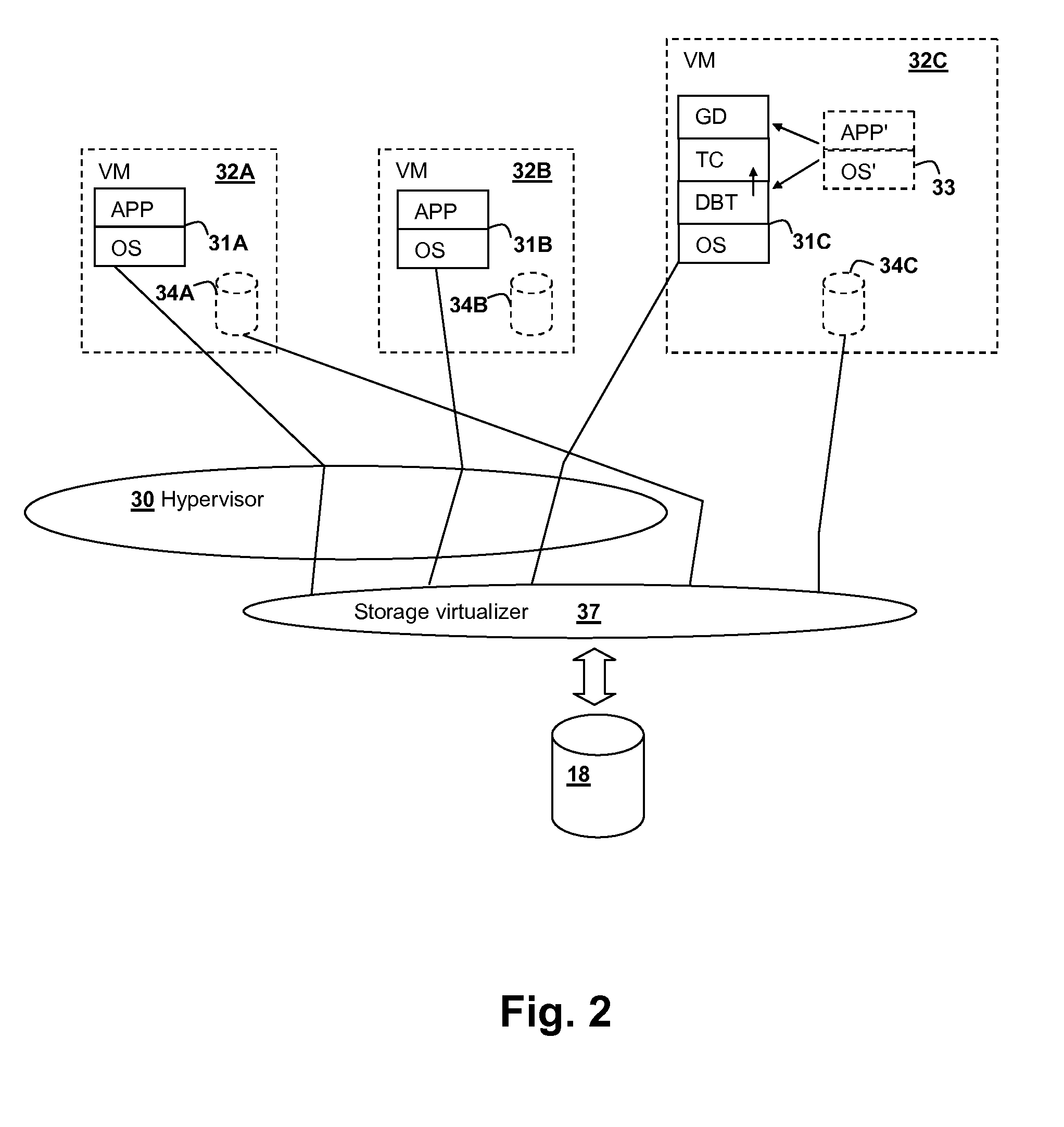

Hardware acceleration components for translating guest instructions to native instructions

Active · US20130024661A1 · Memory architecture accessing/allocation · Single instruction multiple data multiprocessors · Parallel computing · Hardware acceleration

A hardware based translation accelerator. The hardware includes a guest fetch logic component for accessing guest instructions; a guest fetch buffer coupled to the guest fetch logic component and a branch prediction component for assembling guest instructions into a guest instruction block; and conversion tables coupled to the guest fetch buffer for translating the guest instruction block into a corresponding native conversion block. The hardware further includes a native cache coupled to the conversion tables for storing the corresponding native conversion block, and a conversion look aside buffer coupled to the native cache for storing a mapping of the guest instruction block to corresponding native conversion block, wherein upon a subsequent request for a guest instruction, the conversion look aside buffer is indexed to determine whether a hit occurred, wherein the mapping indicates the guest instruction has a corresponding converted native instruction in the native cache.

Owner:INTEL CORP

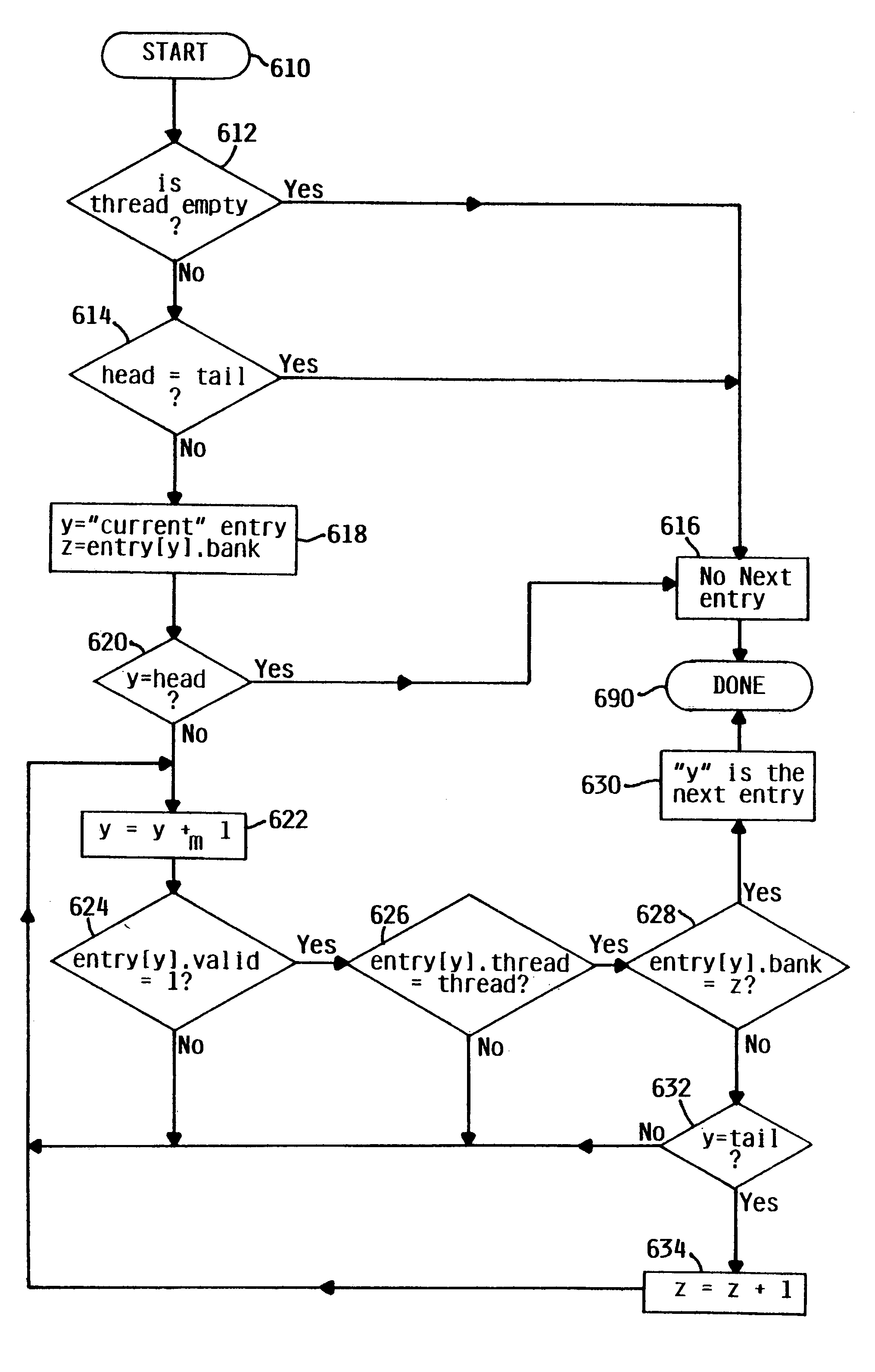

Shared resource queue for simultaneous multithreading processing wherein entries allocated to different threads are capable of being interspersed among each other and a head pointer for one thread is capable of wrapping around its own tail in order to access a free entry

Inactive · US6988186B2 · Improve efficiency · Digital computer details · Concurrent instruction execution · Shared resource · Distributed computing

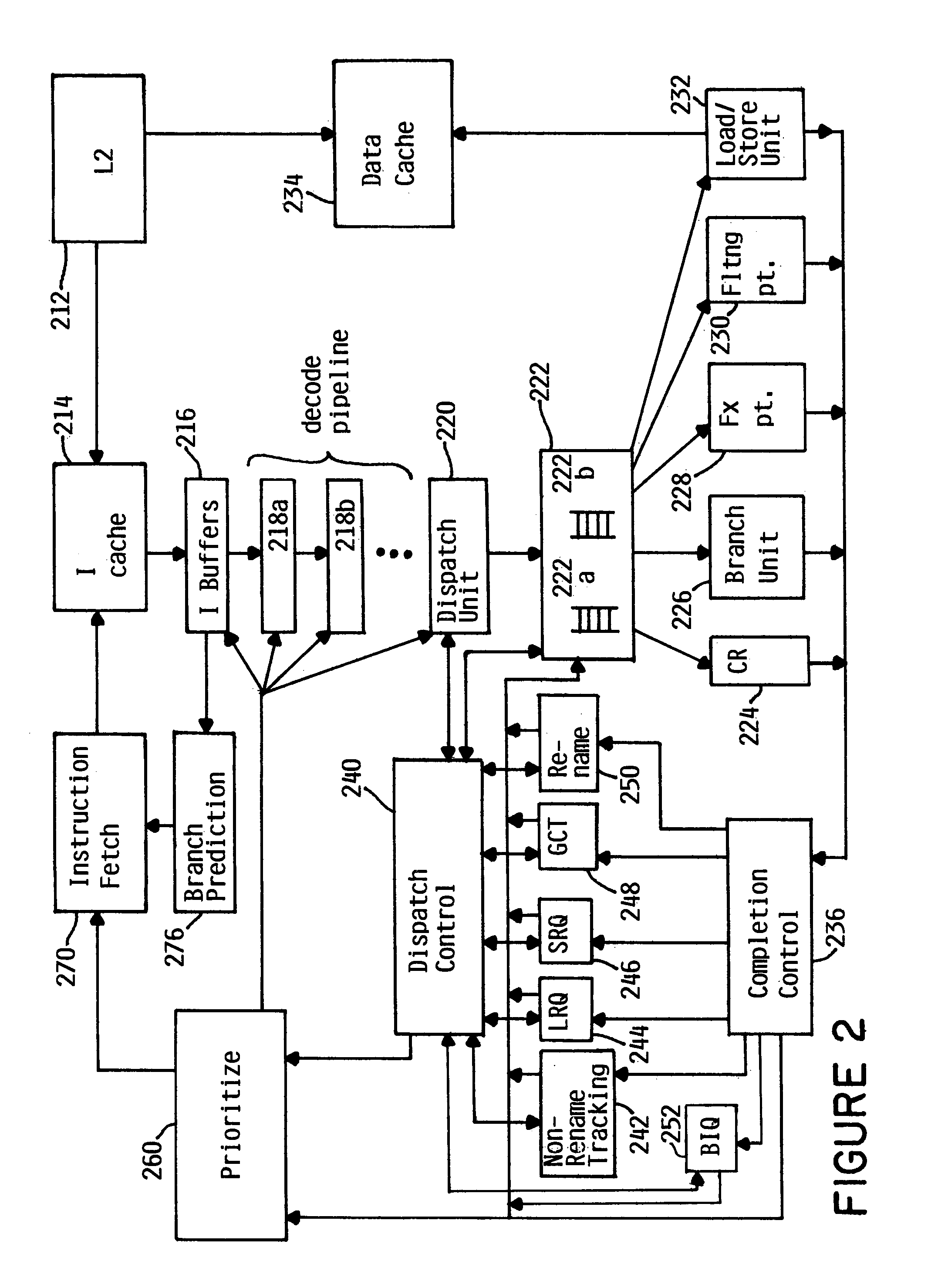

A queue, such as a first-in first-out queue, is incorporated into a processing device, such as a multithreaded pipeline processor. The queue may store the resources of more than one thread in the processing device such that the entries of one thread may be interspersed among the entries of another thread. The entries of each thread may be identified by a thread identification, a valid marker to indicate if the resources within the entry are valid, and a bank number. For a particular thread, the bank number tracks the number of times a head pointer pertaining to the first entry has passed a tail pointer. In this fashion, empty entries may be used and the resources may be efficiently allocated. In a preferred embodiment, the shared resource queue may be implemented into an in-order multithreaded pipelined processor as a queue storing resources to be dispatched for execution of instructions. The shared resource queue may also be implemented into a branch information queue or into any queue where more than one thread may require dynamic registers.

Owner:IBM CORP

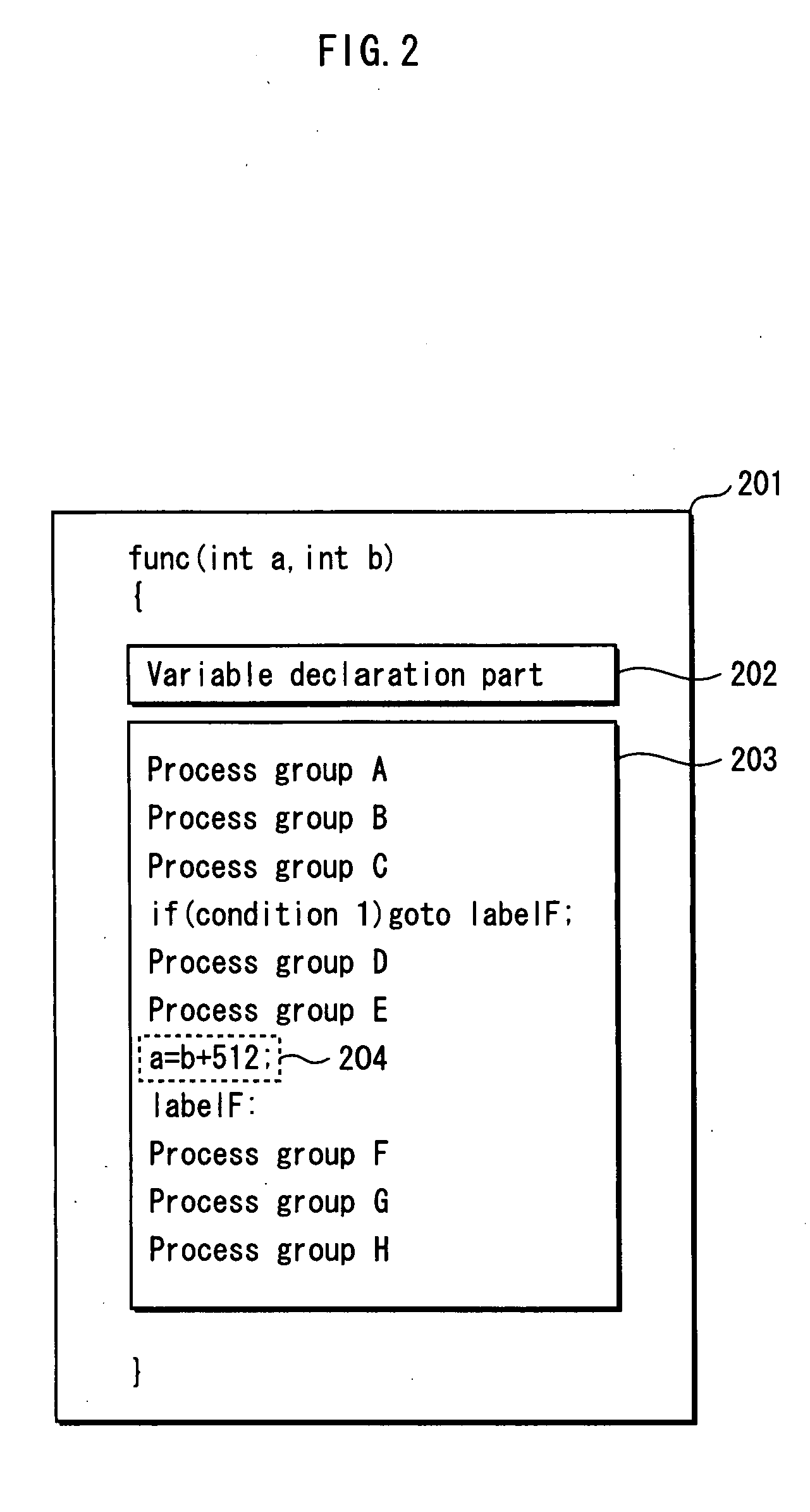

Determination of local variable type and precision in the presence of subroutines

Inactive · US6442751B1 · Accurate tracking · Data processing applications · Software engineering · Local variable · Computer science

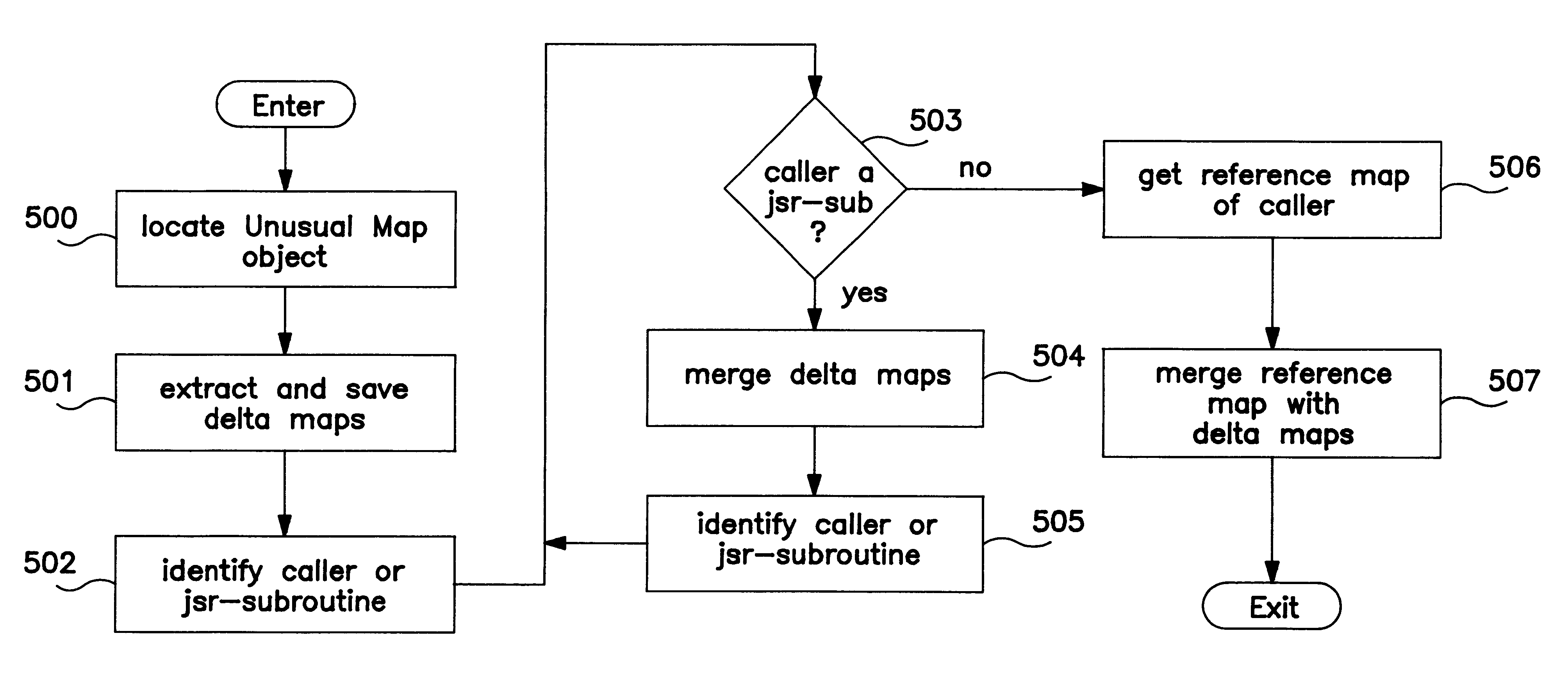

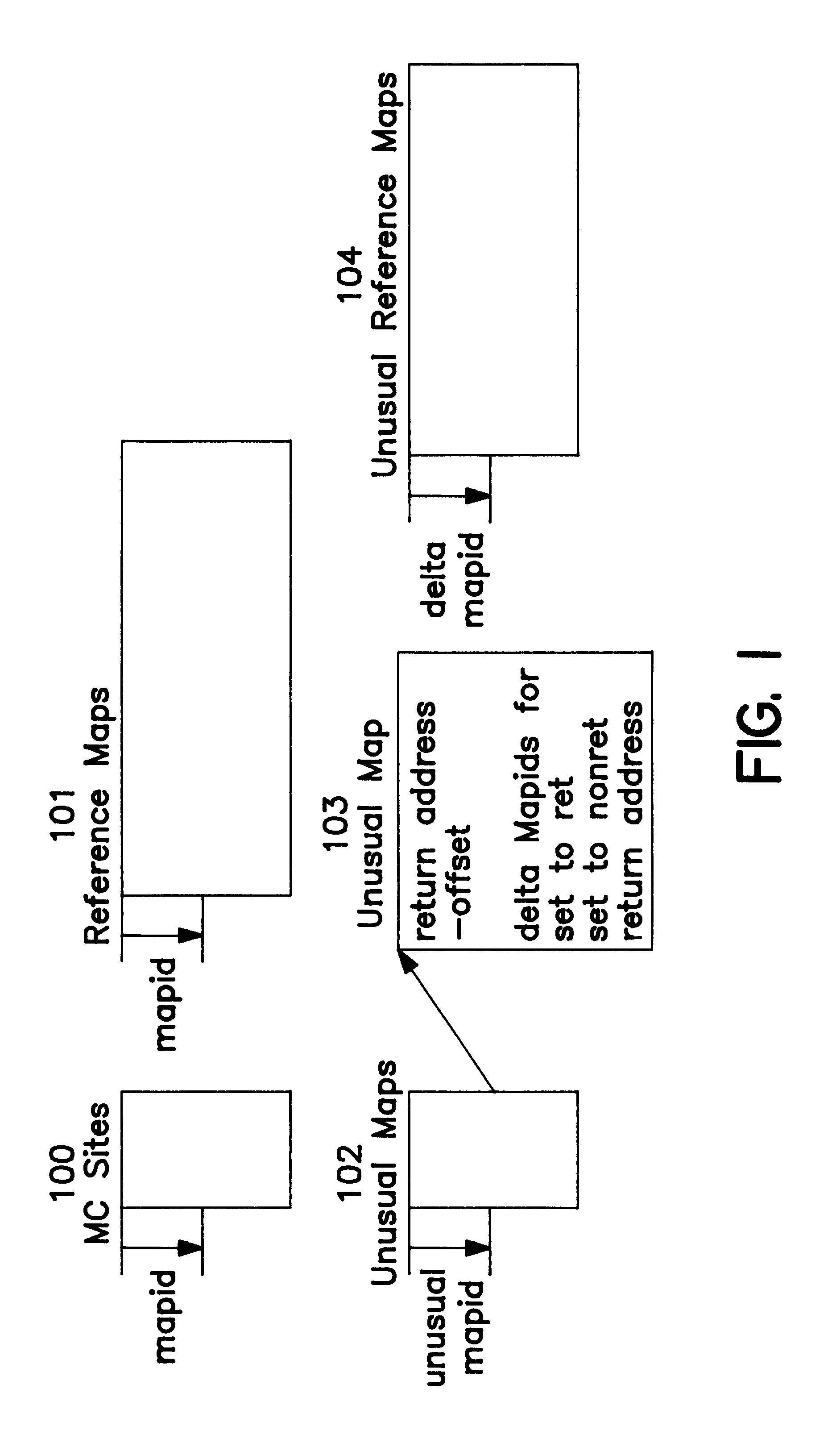

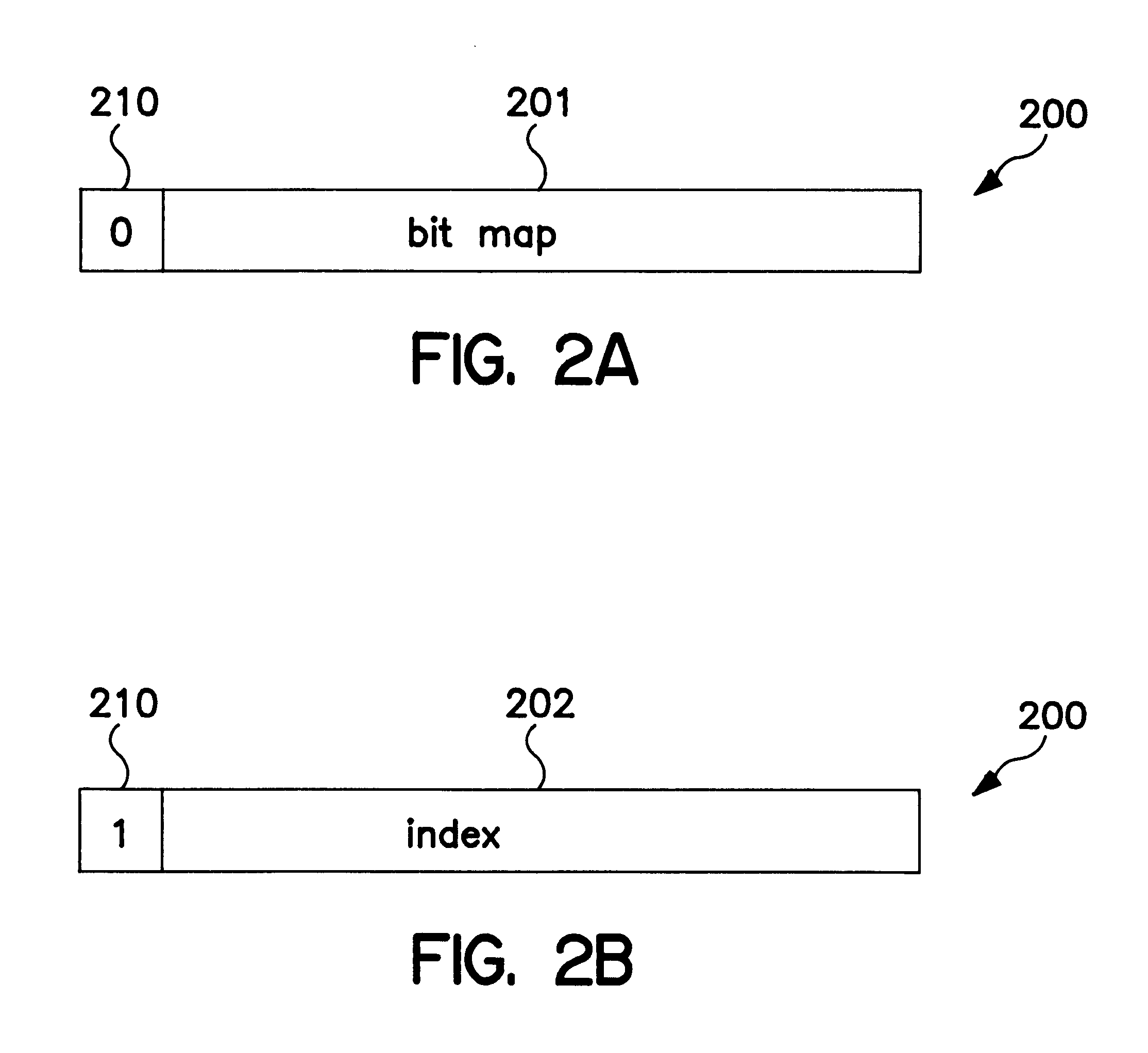

A method is provided for tracking the type of at least one local variable after calling a subroutine. The exemplary method associates each one of a plurality of branch instructions calling the subroutine with a first information, which indicates the type of value stored in the local variable when each one of the plurality of branch instructions is executed. The exemplary method associates at least one execution point-of-interest within the subroutine with a second information. The execution point-of-interest is any point within the subroutine where it may be necessary to ascertain the type of each local variable. The second information is a data structure indicating a change in type made to the local variable after entering the subroutine and before the execution point-of-interest. The exemplary method associates the execution point-of-interest with a return address for the subroutine. This return address enables the method to identify the point in the calling program from which the current subroutine was called. When a request is received to identify the type of the local variable at the execution point-of-interest in the subroutine, the exemplary method obtains a second map from the second information using the execution point-of-interest. The second map indicates the change in type of the local variable made within the subroutine. The method also obtains the return address associated with the execution point-of-interest, and obtains a first map from the first information using the return address. The first map indicates the type of value stored in the local variable when one of the branch instructions is executed to call the subroutine. Given the first and second maps, the exemplary method merges the first map with the second map to identify a current type for the local variable. 
In performing this merge, the method combines the type status of the local variable as modified by the subroutine with the type status of the local variable as it stood before calling the subroutine.

Owner:IBM CORP

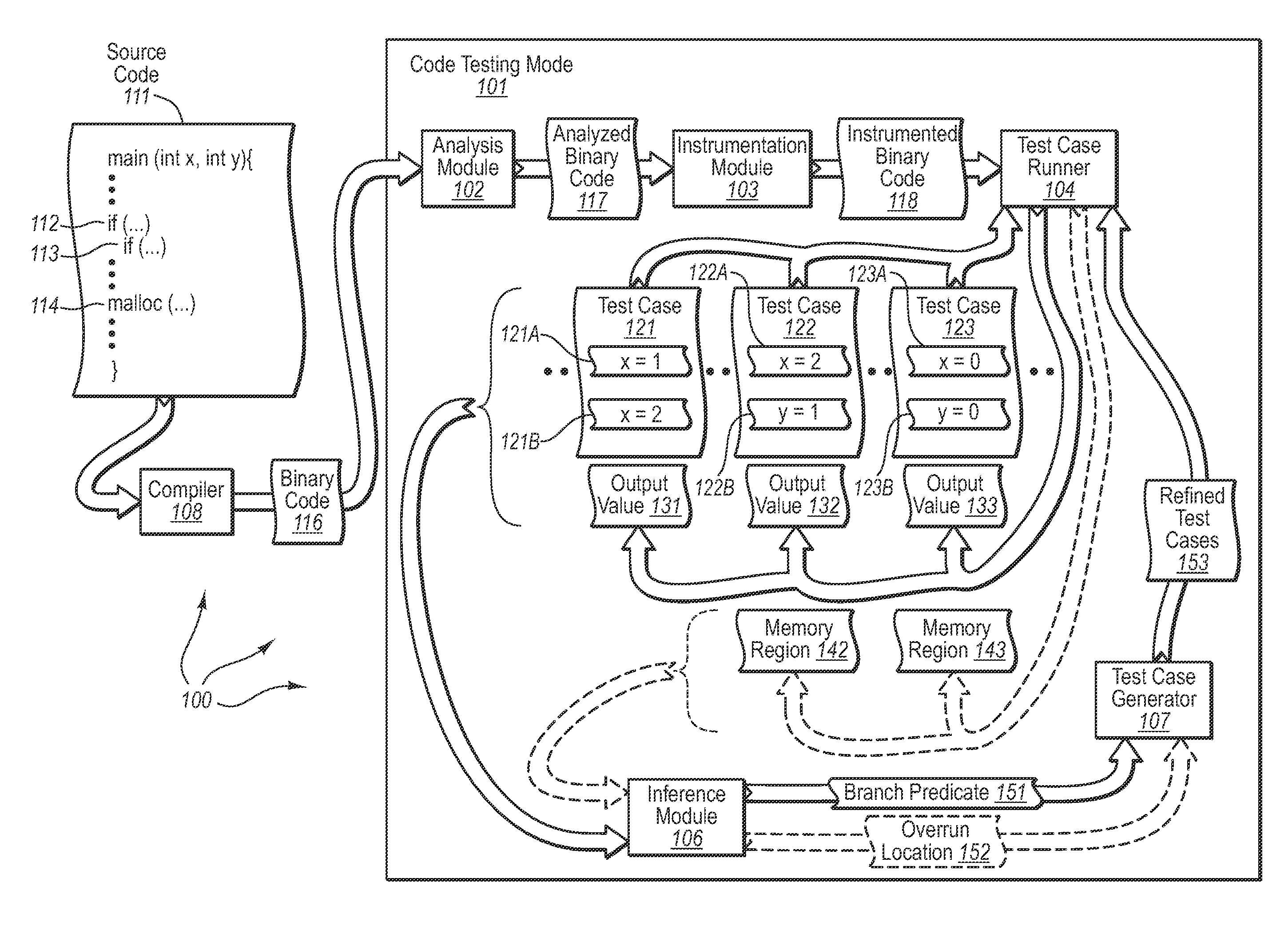

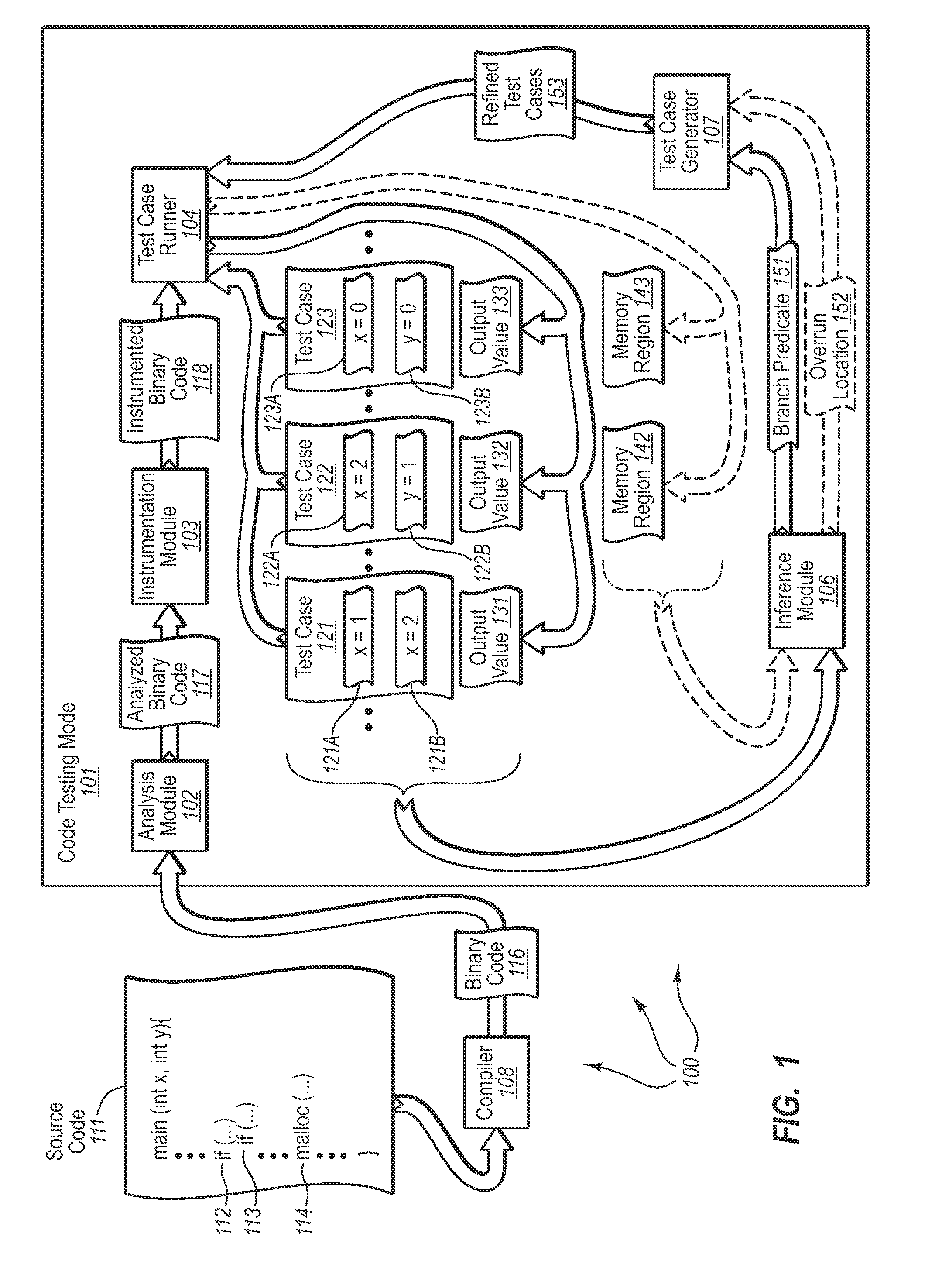

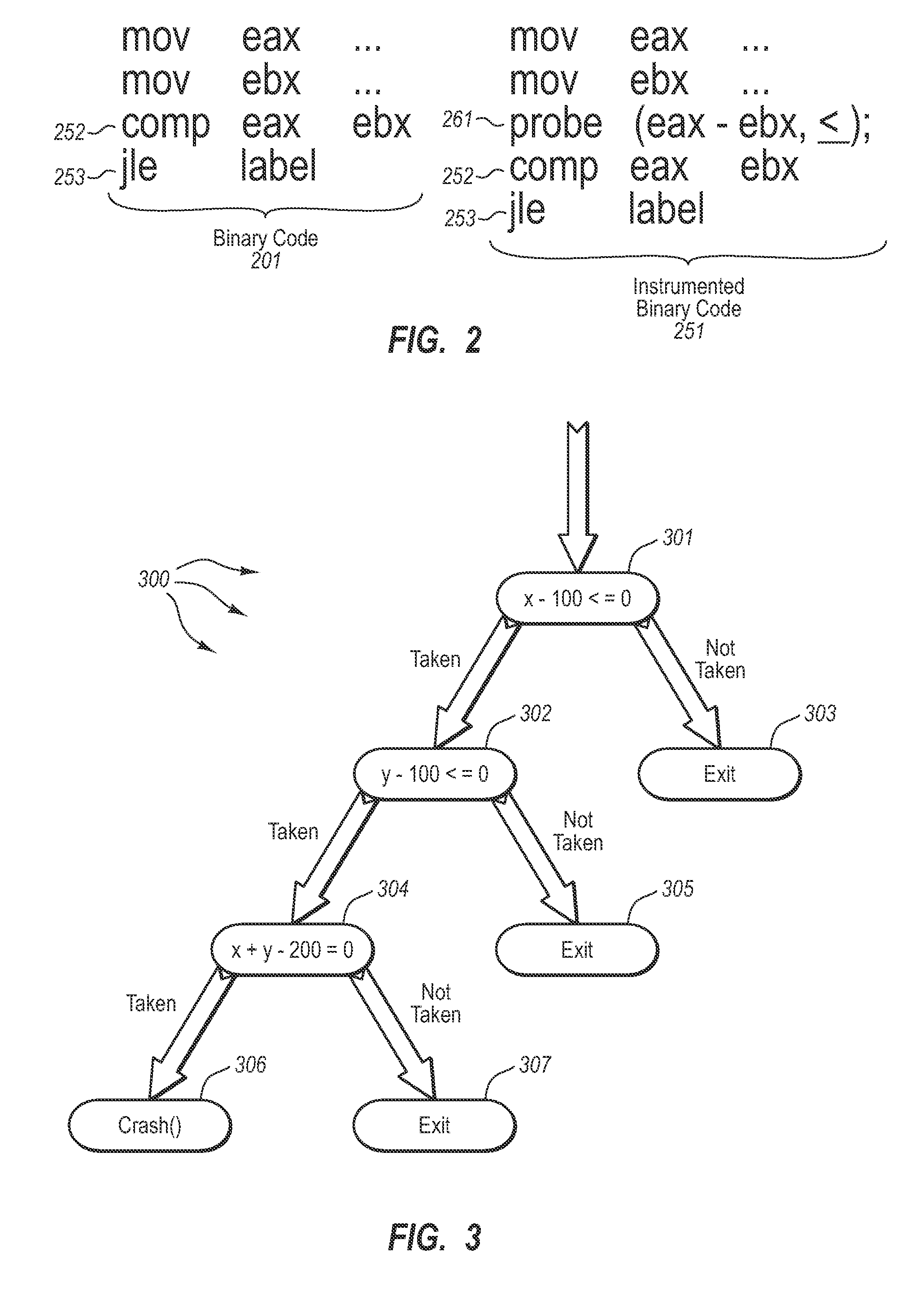

Automatically generating test cases for binary code

Inactive · US20090007077A1 · Efficient testing · Error detection/correction · Specific program execution arrangements · Test input · Parallel computing

The present invention extends to methods, systems, and computer program products for automatically generating test cases for binary code. Embodiments of the present invention can automatically generate test inputs for systematically covering program execution paths within binary code. By monitoring program execution of the binary code on existing or random test cases, branch predicates on execution paths can be dynamically inferred. These inferred branch predicates can then be used to drive the program along previously unexplored execution paths, enabling the learning of further execution paths. Embodiments of the invention can be used in combination with other analysis and testing techniques to provide better test coverage and expose program errors.

Owner:MICROSOFT TECH LICENSING LLC

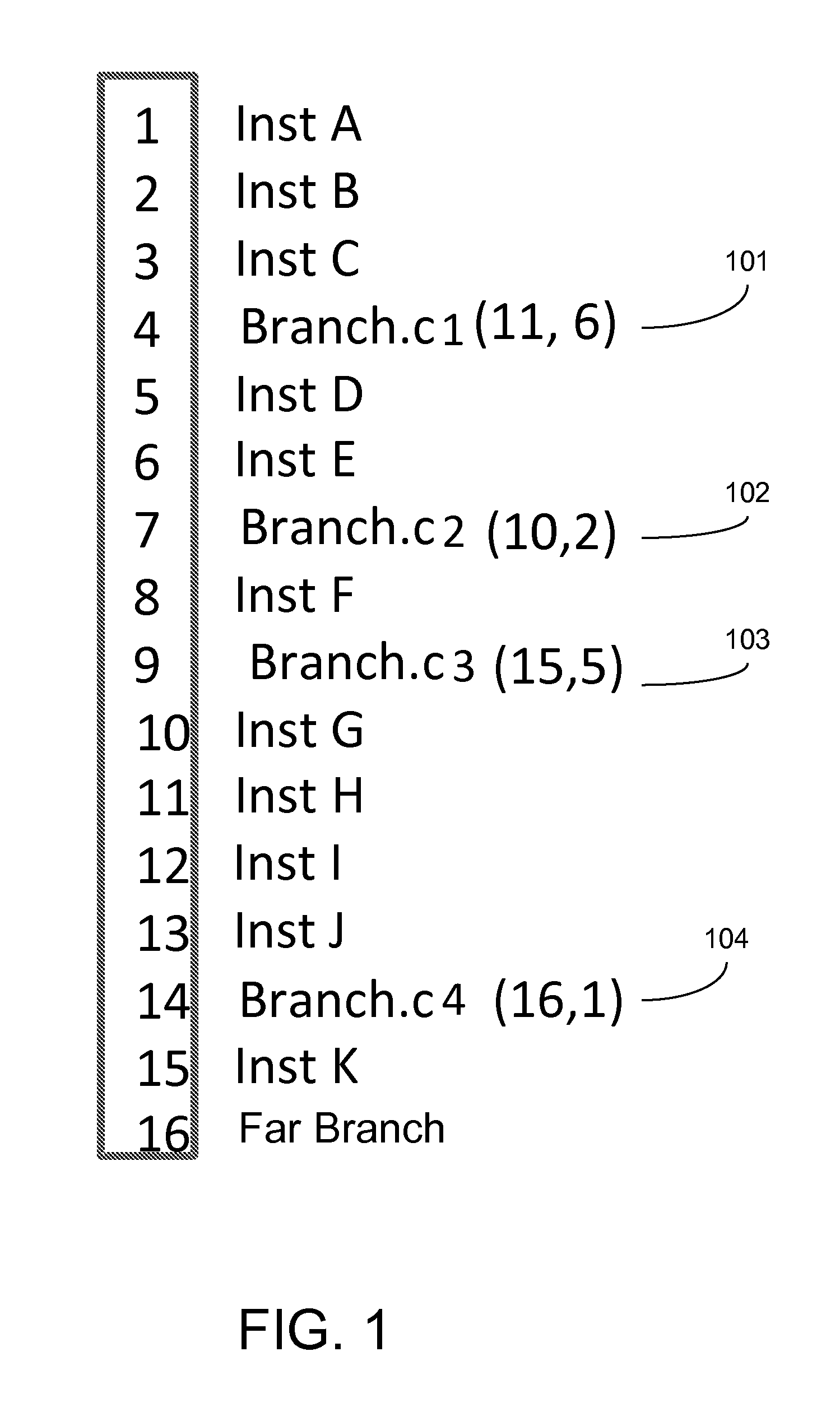

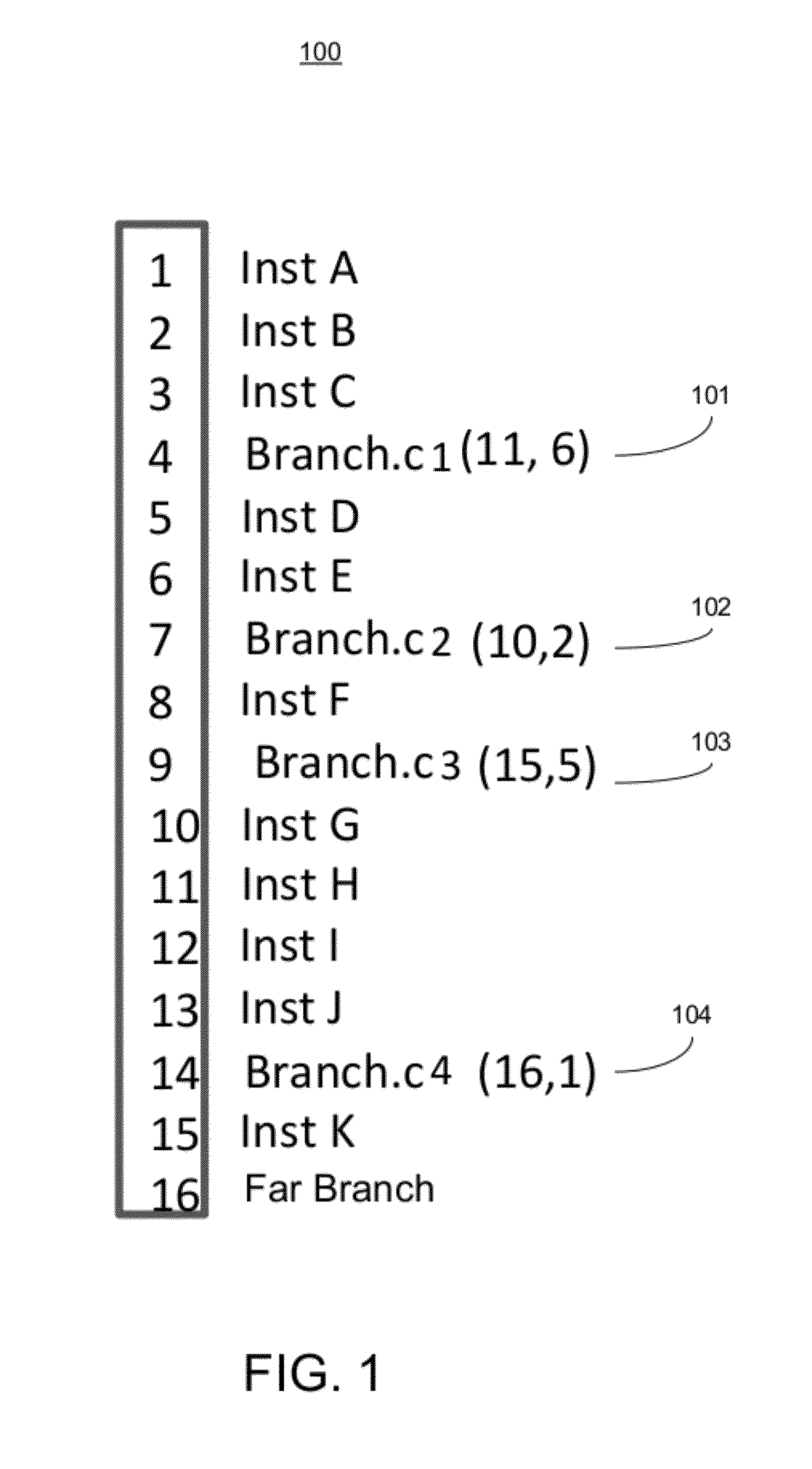

Guest instruction to native instruction range based mapping using a conversion look aside buffer of a processor

Active · US20120198157A1 · Memory architecture accessing/allocation · Binary to binary · Parallel computing · Computer architecture

A method for translating instructions for a processor. The method includes accessing a plurality of guest instructions that comprise multiple guest branch instructions, and assembling the plurality of guest instructions into a guest instruction block. The guest instruction block is converted into a corresponding native conversion block. The native conversion block is stored into a native cache. A mapping of the guest instruction block to corresponding native conversion block is stored in a conversion look aside buffer. Upon a subsequent request for a guest instruction, the conversion look aside buffer is indexed to determine whether a hit occurred, wherein the mapping indicates whether the guest instruction has a corresponding converted native instruction in the native cache. The converted native instruction is forwarded for execution in response to the hit.
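The lookup flow in this abstract can be sketched in software as follows. This is a minimal illustration rather than the patented hardware: the class name, the `translate_block` callback, and the toy translator are all hypothetical, and plain dictionaries stand in for the native cache and the conversion look aside buffer.

```python
class ConversionLookasideBuffer:
    """Maps guest block addresses to already-translated native blocks."""

    def __init__(self, translate_block):
        self._translate_block = translate_block  # hypothetical converter
        self._native_cache = {}   # native block id -> native code
        self._mapping = {}        # guest block address -> native block id
        self.hits = 0
        self.misses = 0

    def fetch(self, guest_addr, guest_block):
        """Return native code for the guest block starting at guest_addr."""
        block_id = self._mapping.get(guest_addr)
        if block_id is not None:
            self.hits += 1                       # hit: reuse cached block
            return self._native_cache[block_id]
        self.misses += 1                         # miss: translate and store
        native = self._translate_block(guest_block)
        block_id = len(self._native_cache)
        self._native_cache[block_id] = native
        self._mapping[guest_addr] = block_id
        return native


def toy_translate(guest_block):
    # Stand-in for the conversion tables: tag each guest instruction.
    return ["native:" + insn for insn in guest_block]
```

The first request for a guest block pays the translation cost; later requests for the same guest address are served from the cache, which is the behavior the abstract describes as a hit.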

Owner:INTEL CORP

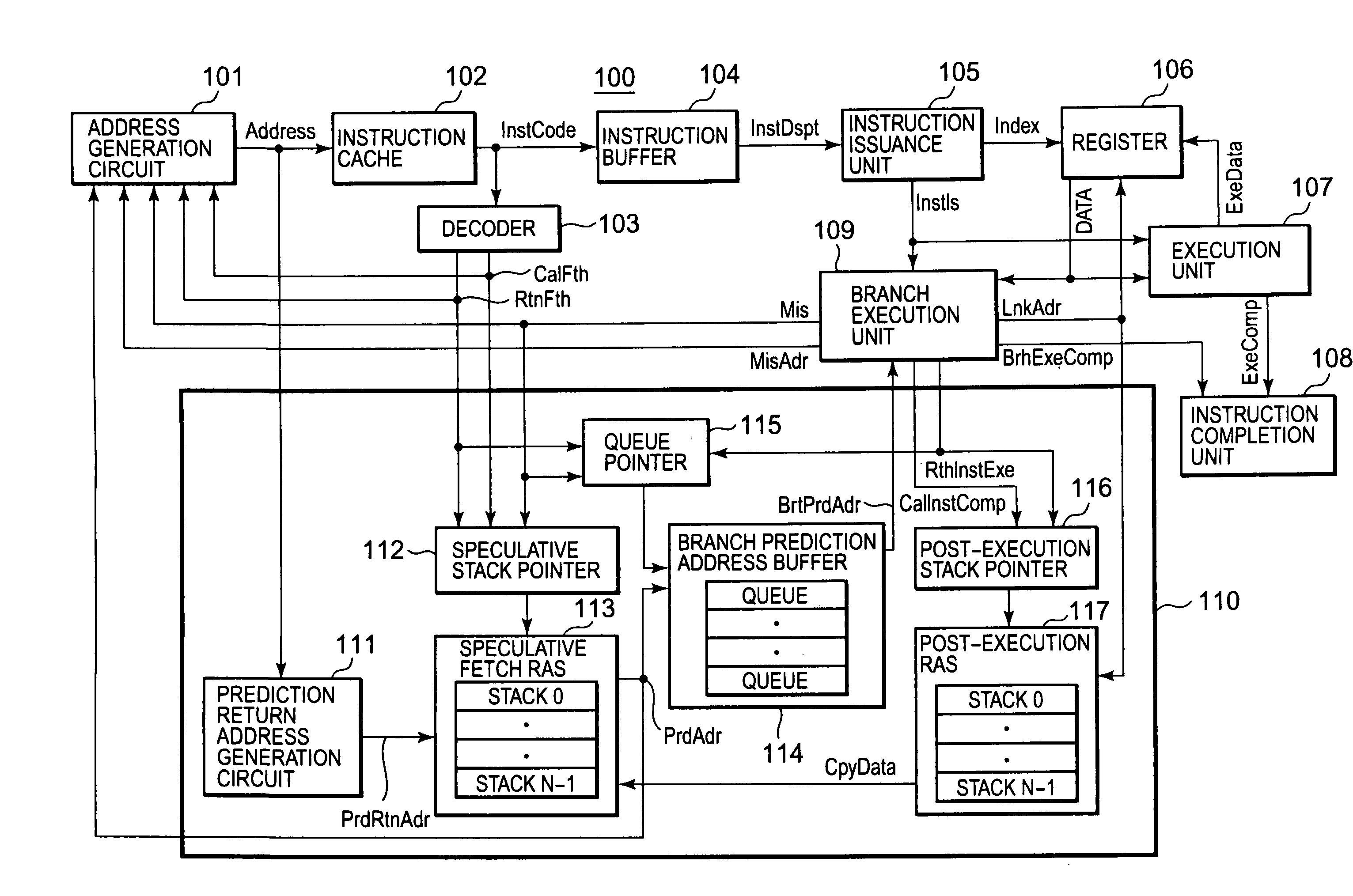

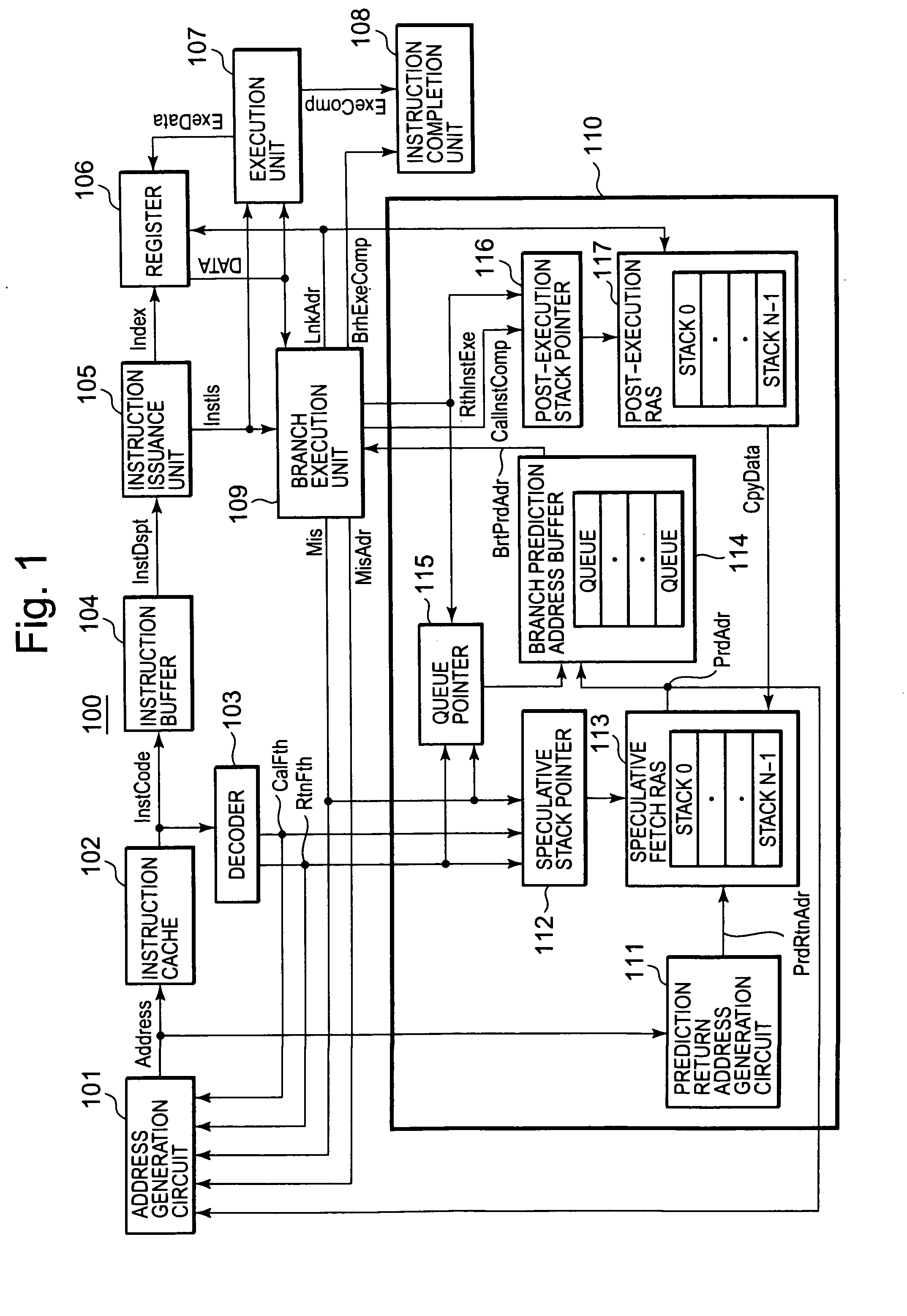

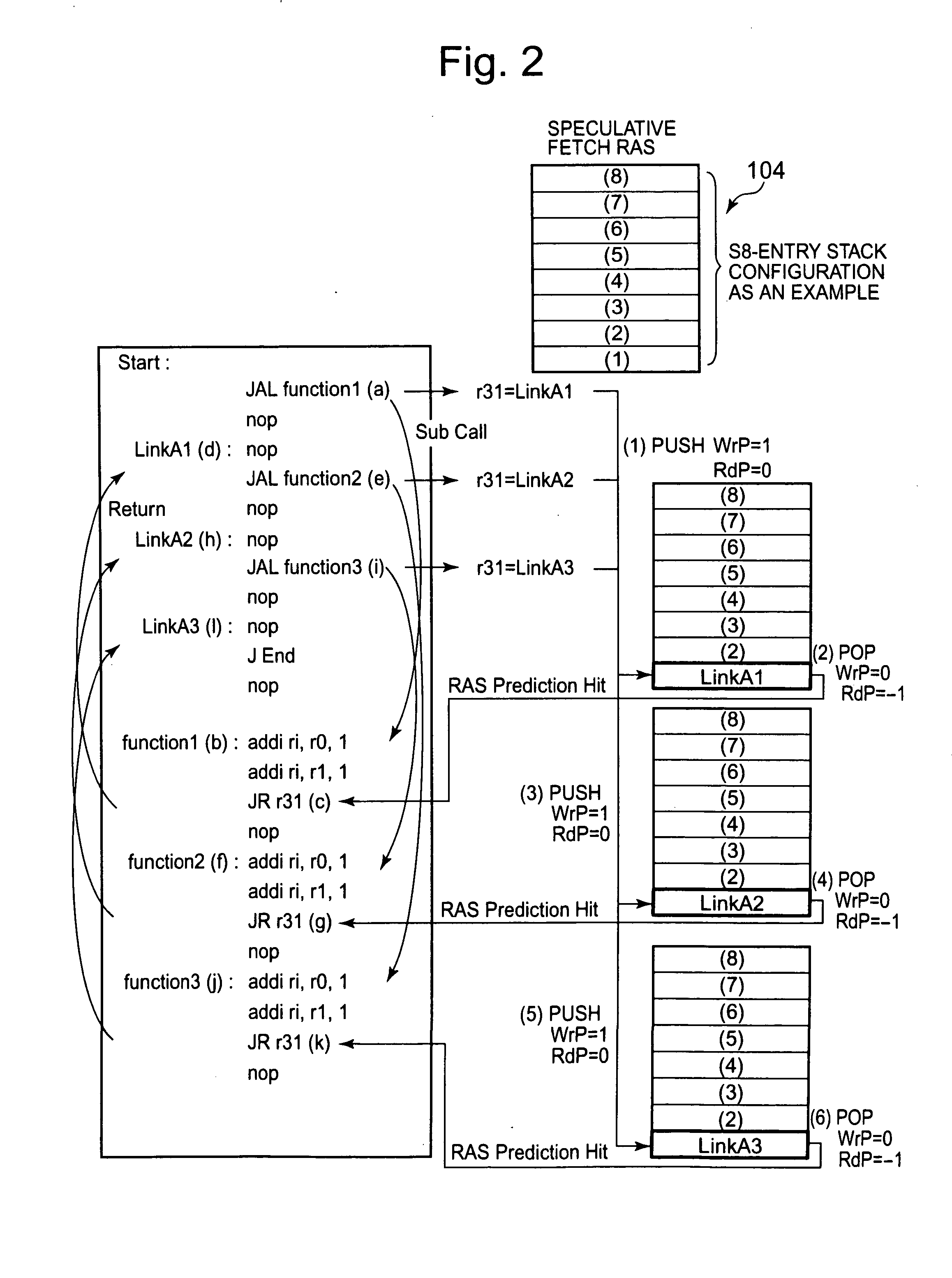

Branch prediction control device having return address stack and method of branch prediction

Inactive · US20080301420A1 · Improve accuracy · Digital computer details · Concurrent instruction execution · Information processing · Return address stack

A branch prediction control device, in an information processing unit which performs a pipeline process, generates a branch prediction address used for verification of an instruction being speculatively executed. The branch prediction control device includes a first return address storage unit storing the prediction return address, a second return address storage unit storing a return address to be generated depending on an execution result of the call instruction, and a branch prediction address storage unit sending a stored prediction return address as a branch prediction address and storing the sent branch prediction address. When the branch prediction address differs from a return address, which is generated after executing a branch instruction or a return instruction, contents stored in the second return address storage unit are copied to the first return address storage unit.
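A loose software sketch of the two-stack idea is shown below. It assumes one stack updated speculatively at fetch time and one updated when calls and returns actually execute; the class and method names are hypothetical, and the real device's storage units and timing are not modeled.

```python
class ReturnAddressPredictor:
    """Speculative return address stack backed by a committed copy."""

    def __init__(self):
        self.speculative = []  # updated when calls/returns are fetched
        self.committed = []    # updated when calls/returns execute

    def fetch_call(self, return_addr):
        self.speculative.append(return_addr)

    def fetch_return(self):
        """Predict the return address, or None if the stack is empty."""
        return self.speculative.pop() if self.speculative else None

    def execute_call(self, return_addr):
        self.committed.append(return_addr)

    def execute_return(self, predicted, actual):
        """Retire a return; repair the speculative stack on a mismatch."""
        if self.committed:
            self.committed.pop()
        if predicted != actual:
            # Misprediction: copy committed state over speculative state.
            self.speculative = list(self.committed)
            return False
        return True
```

If a wrongly fetched call pushes a bogus address onto the speculative stack, the next predicted return is wrong, and the copy from the committed stack restores a consistent state for later predictions.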

Owner:RENESAS ELECTRONICS CORP

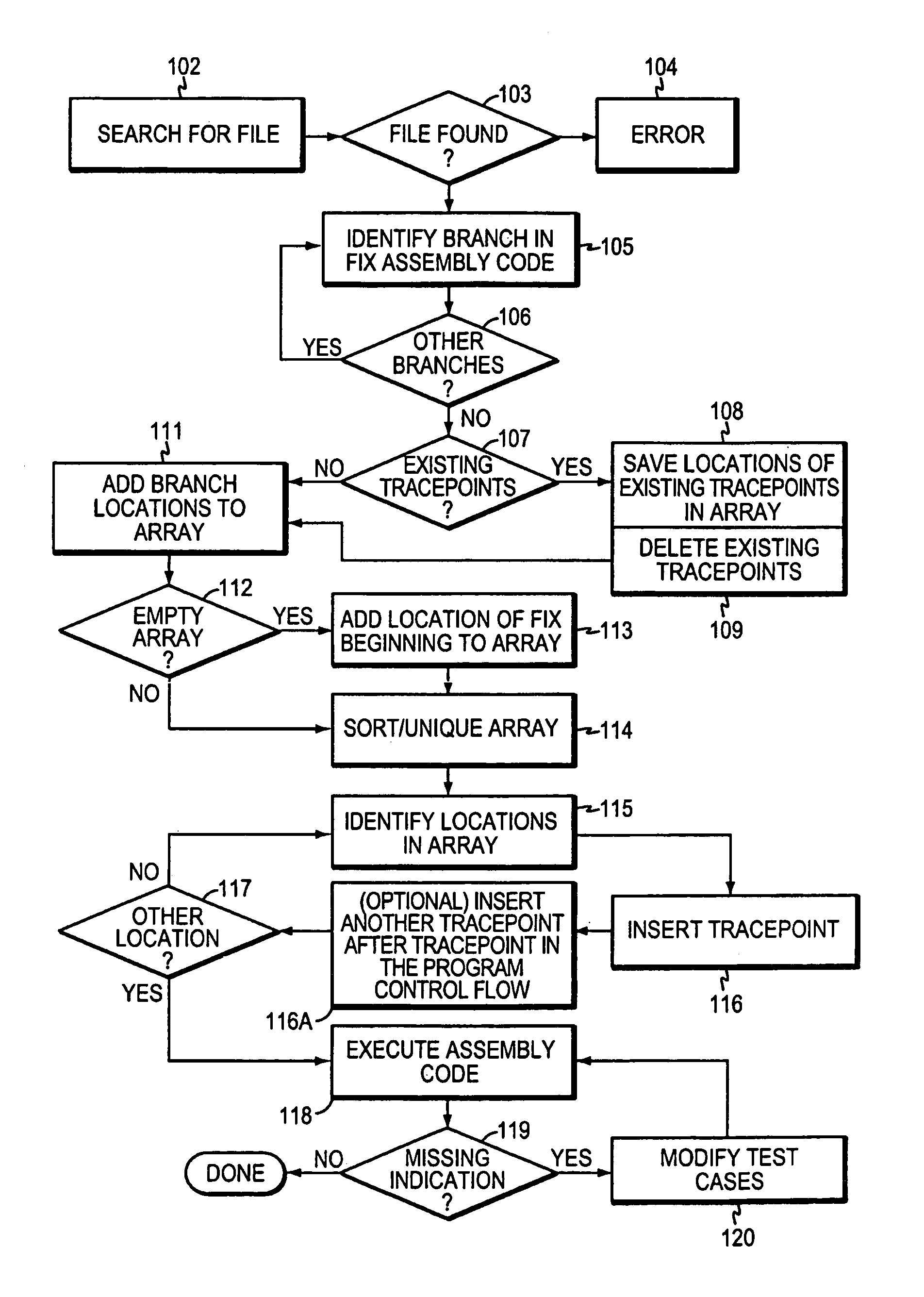

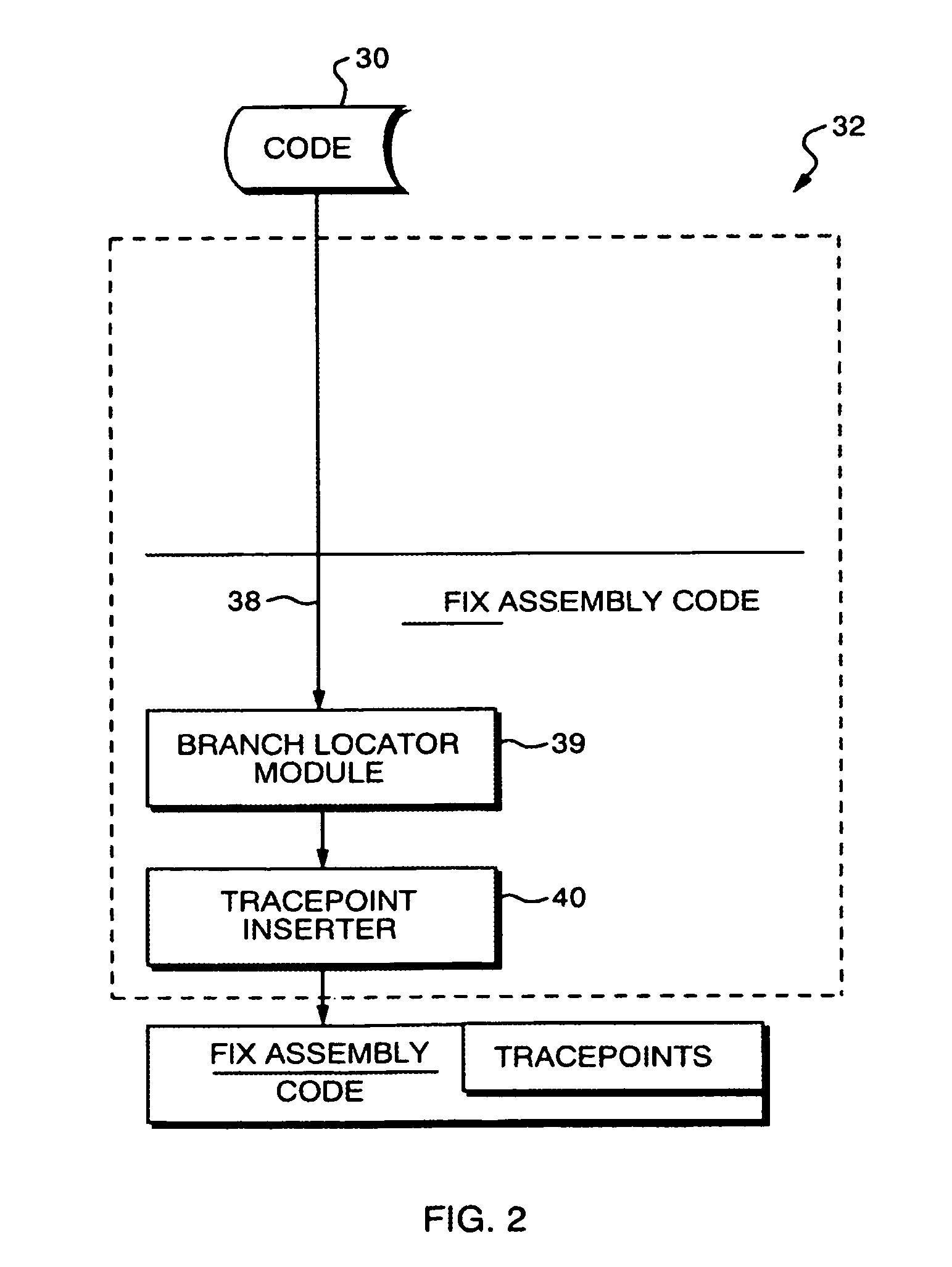

Software debugging tool

Active · US7401322B1 · Raise completely · Increase height · Error detection/correction · Specific program execution arrangements · Test case · Program control

In a method for testing computer code, each branch that occurs within the machine-readable code is located. A first tracepoint is placed immediately after the beginning of the branch and a second tracepoint at the target address of each branch, each tracepoint generating an indicator. When the machine-readable code with the tracepoints is executed on the target computer, the method identifies those indicators that have been generated by their corresponding tracepoints, thereby permitting determination of those branches that the program control flow has not passed through. The test cases are modified to exercise the previously omitted branches, and the converted code is re-executed, until all branches have been properly exercised. The tracepoints are automatically eliminated after they have performed their intended function.
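The tracepoint scheme can be sketched on a toy program as follows. This is an illustrative assumption-laden example: the instruction encoding (`addi`, `bnez`) and all function names are hypothetical, and tracepoints are modeled as entries added to a set rather than as code patched into a binary.

```python
def run_with_tracepoints(program, registers, hits):
    """Run a toy program, recording which side of each branch executed."""
    pc = 0
    while pc < len(program):
        op, *args = program[pc]
        if op == "addi":                       # addi reg, imm
            registers[args[0]] += args[1]
        elif op == "bnez":                     # bnez reg, target
            if registers[args[0]] != 0:
                hits.add((pc, "taken"))        # tracepoint at branch target
                pc = args[1]
                continue
            hits.add((pc, "fallthrough"))      # tracepoint right after branch
        pc += 1
    return registers


def all_tracepoints(program):
    """Two tracepoints per branch: fall-through side and target side."""
    return {(i, side)
            for i, (op, *_) in enumerate(program) if op == "bnez"
            for side in ("taken", "fallthrough")}


def uncovered(program, test_inputs):
    """Return the tracepoints that no test case reached."""
    hits = set()
    for regs in test_inputs:
        run_with_tracepoints(program, dict(regs), hits)
    return all_tracepoints(program) - hits


BRANCHY = [
    ("bnez", "x", 3),     # index 0: skip the next two instructions if x != 0
    ("addi", "y", 1),     # index 1
    ("addi", "y", 1),     # index 2
    ("addi", "y", 10),    # index 3
]
```

A test suite containing only `x = 0` leaves `(0, "taken")` uncovered; adding a case with `x = 1` exercises the remaining side, mirroring the loop of modifying test cases until every branch has been exercised.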

Owner:WILSON PENELOPE S +1

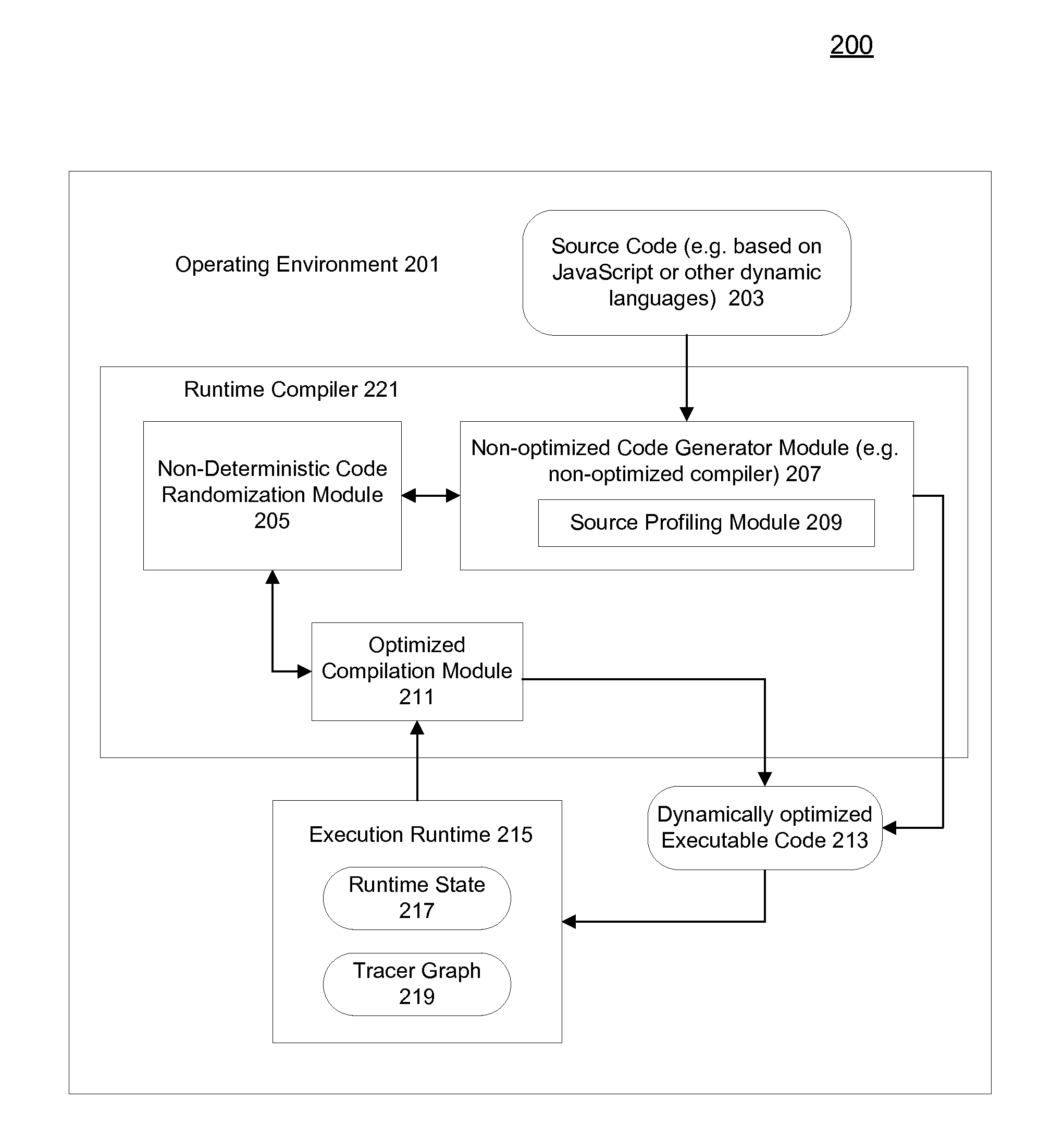

Computer implemented machine learning method and system

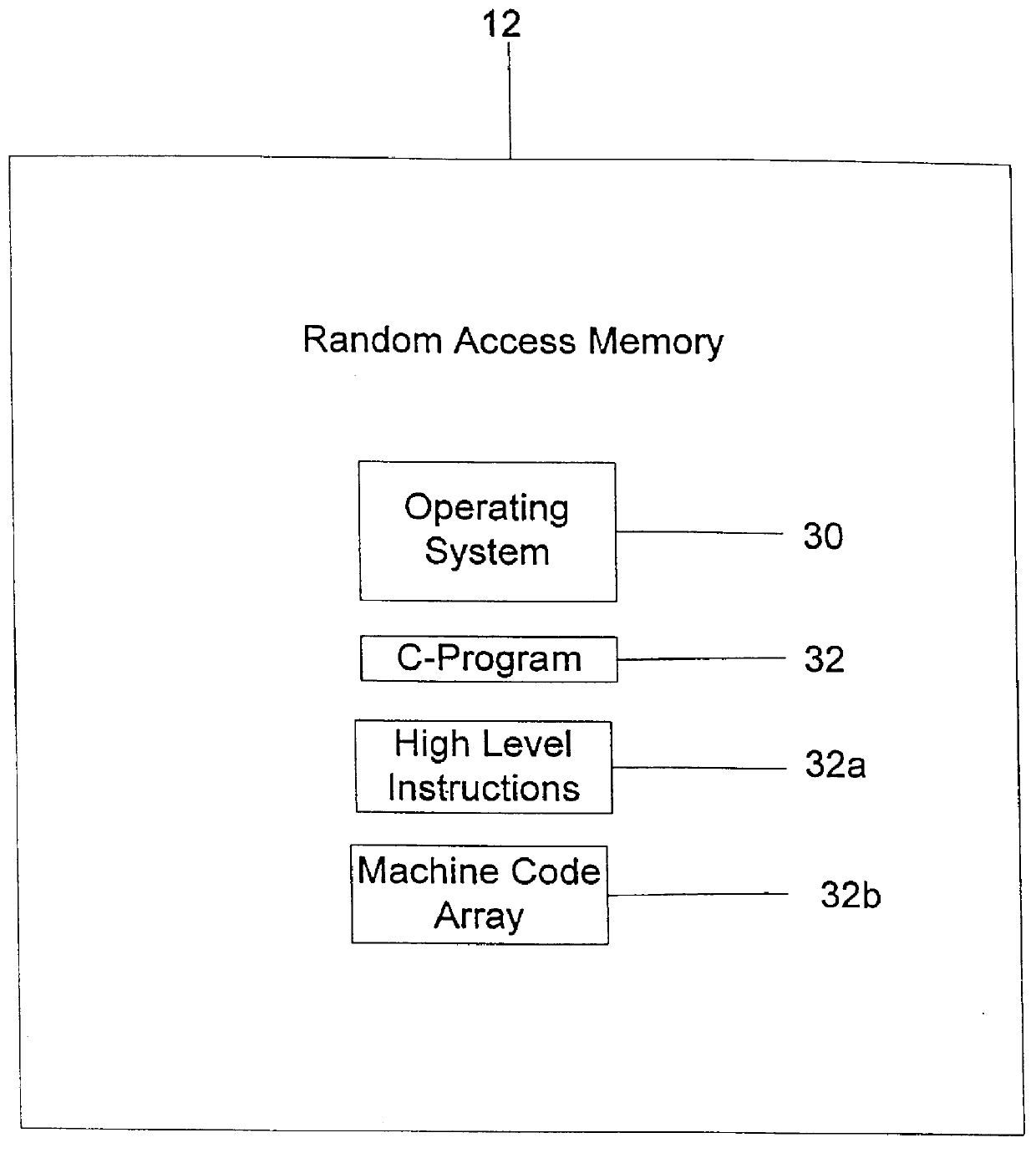

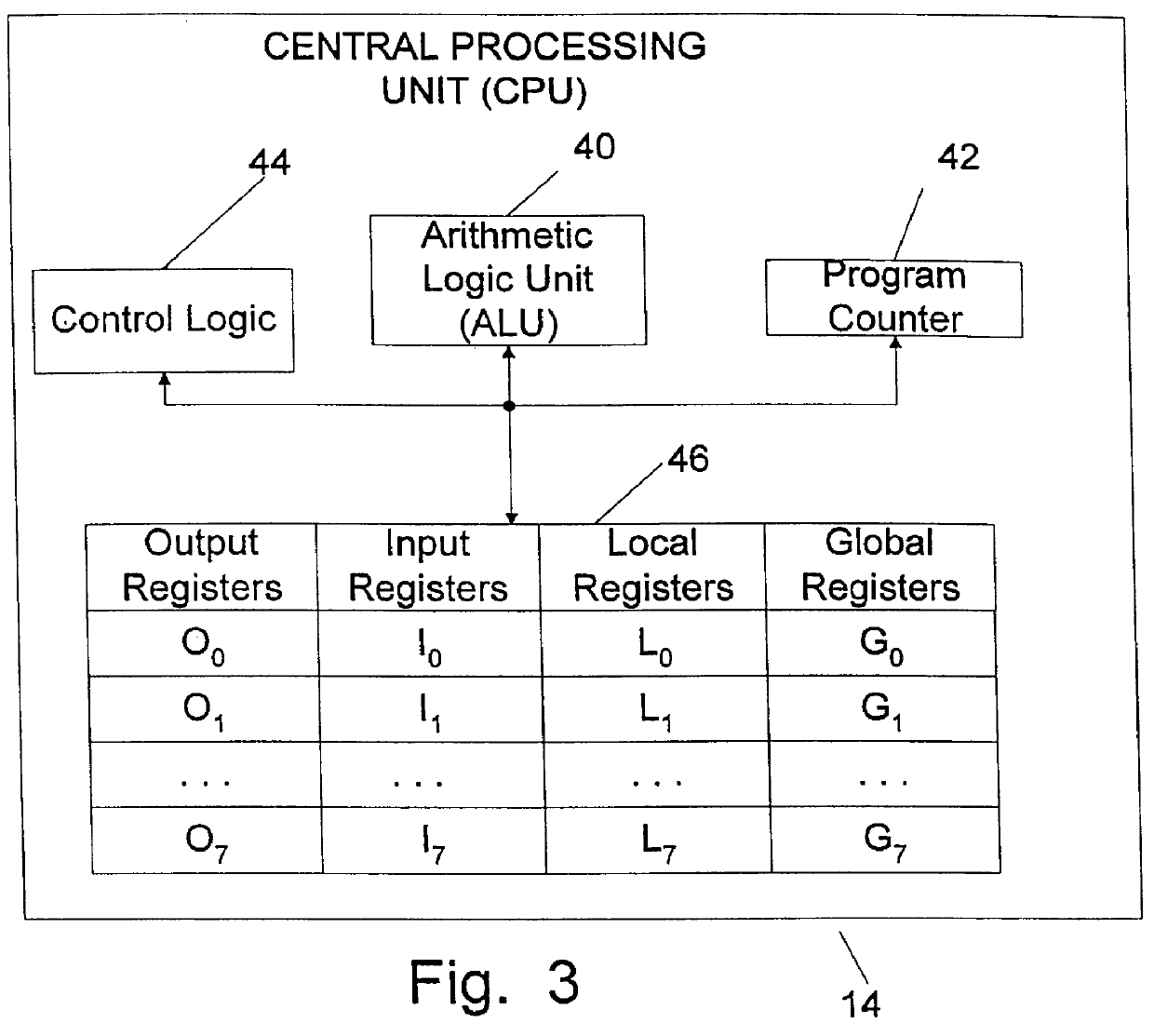

One or more machine code entities such as functions are created which represent solutions to a problem and are directly executable by a computer. The programs are created and altered by a program in a higher level language such as "C" which is not directly executable, but requires translation into executable machine code through compilation, interpretation, translation, etc. The entities are initially created as an integer array that can be altered by the program as data, and are executed by the program by recasting a pointer to the array as a function type. The entities are evaluated by executing them with training data as inputs, and calculating fitnesses based on a predetermined criterion. The entities are then altered based on their fitnesses using a machine learning algorithm by recasting the pointer to the array as a data (e.g. integer) type. This process is iteratively repeated until an end criterion is reached. The entities evolve in such a manner as to improve their fitness, and one entity is ultimately produced which represents an optimal solution to the problem. Each entity includes a plurality of directly executable machine code instructions, a header, a footer, and a return instruction. The instructions include branch instructions which enable subroutines, leaf functions, external function calls, recursion, and loops. The system can be implemented on an integrated circuit chip, with the entities stored in high speed memory in a central processing unit.

Owner:NORDIN PETER +1

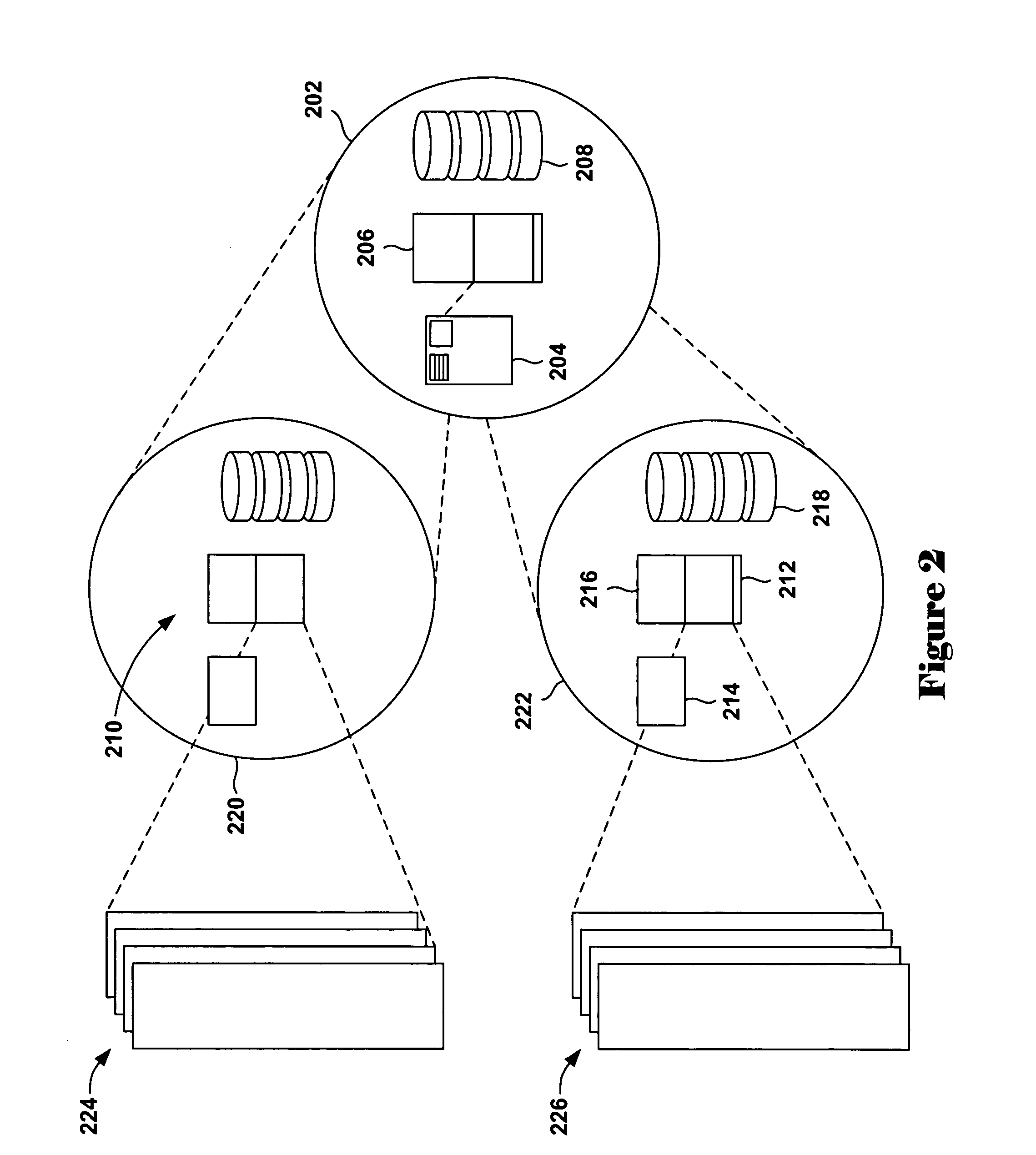

Method and apparatus for segmented sequential storage

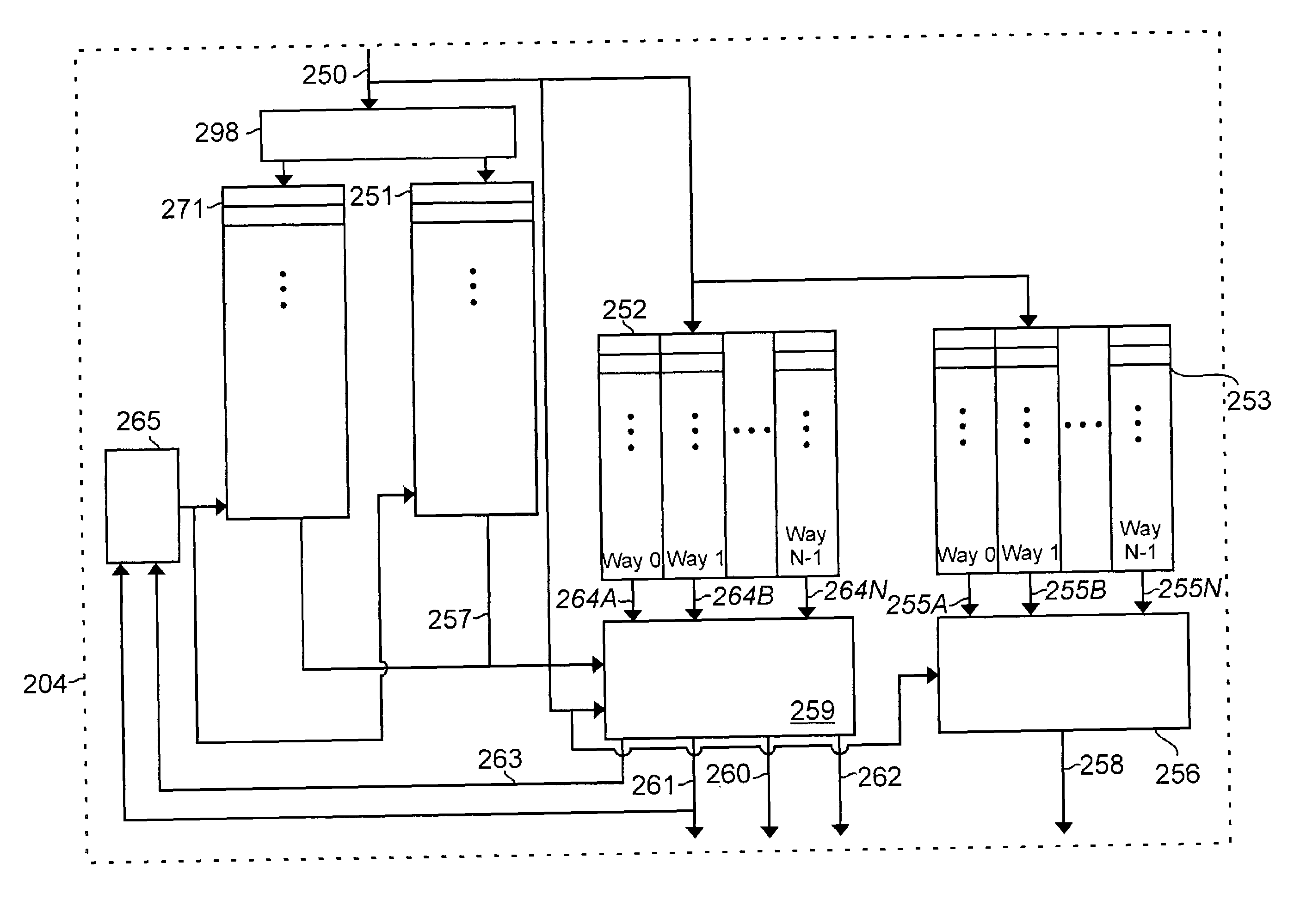

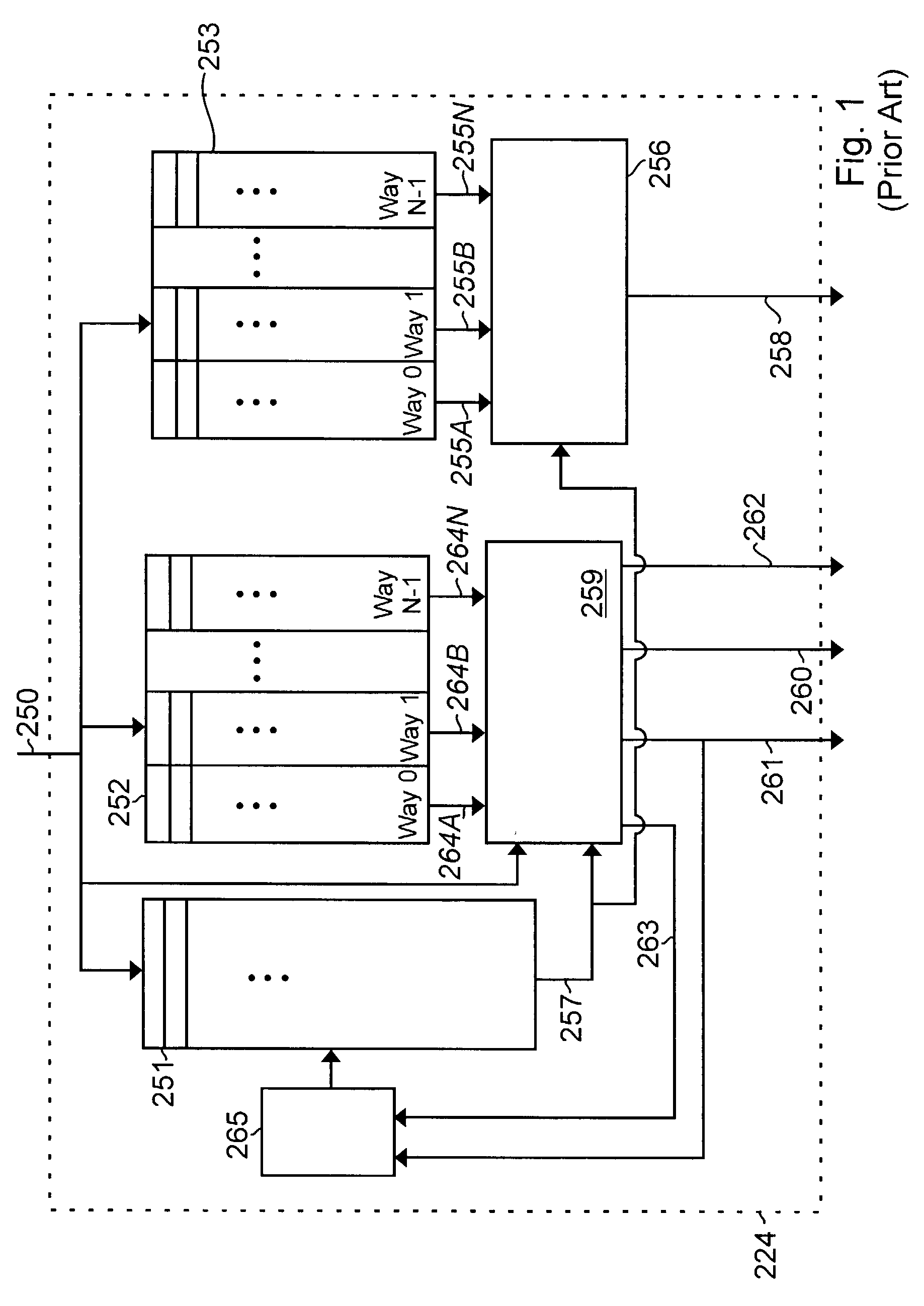

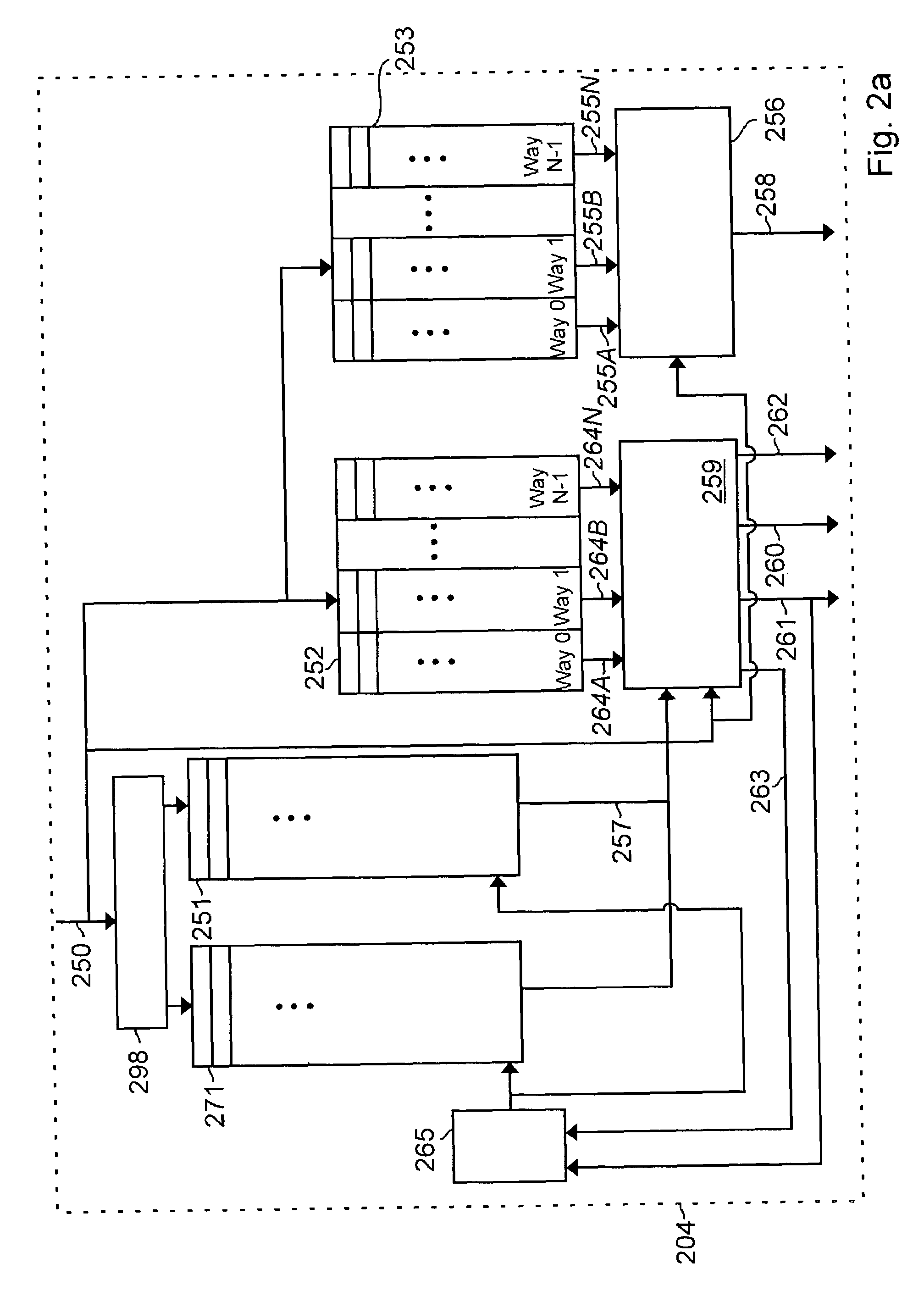

Inactive · US20160098279A1 · Digital computer details · Concurrent instruction execution · Computer architecture · Sequential data

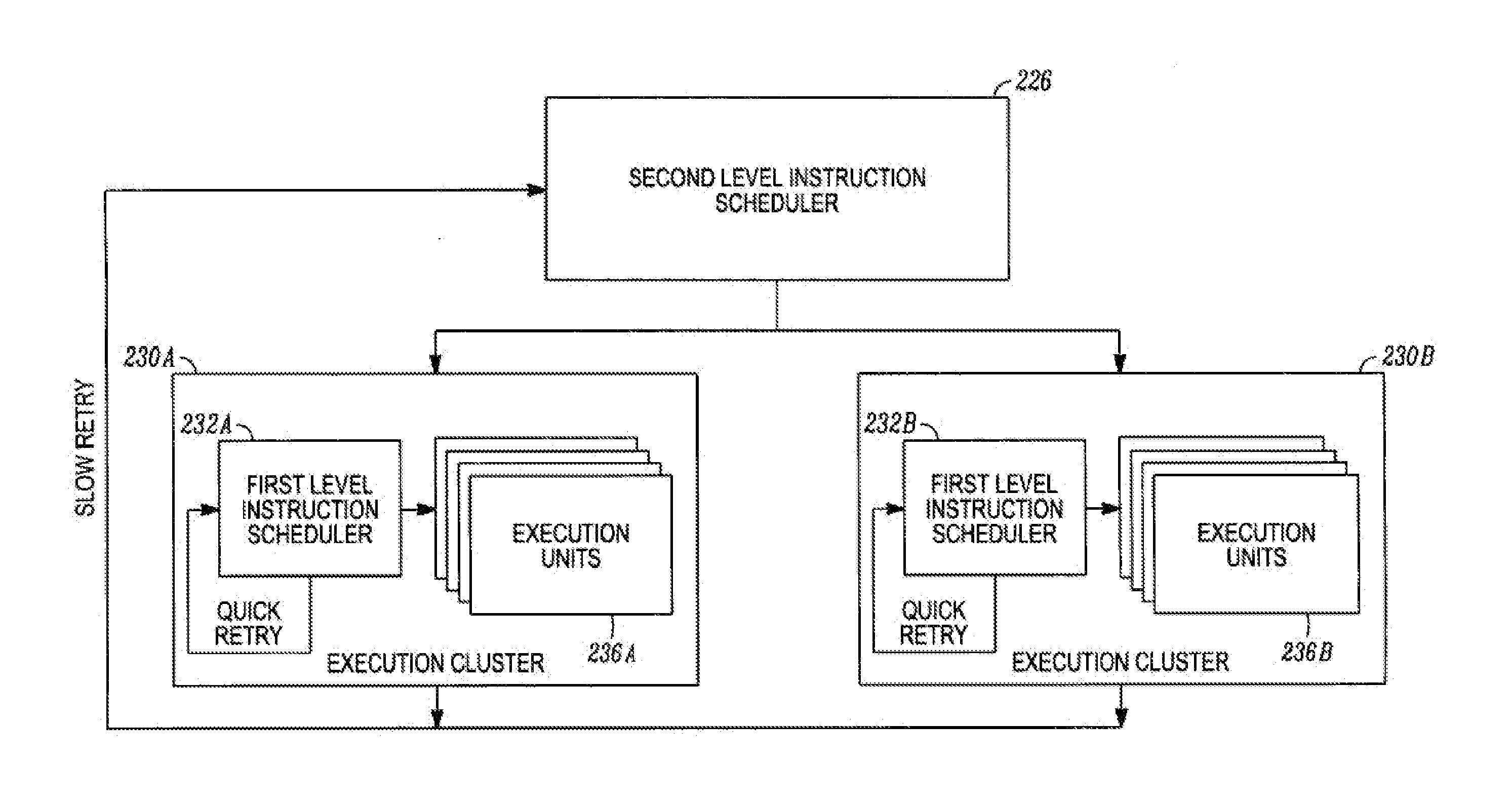

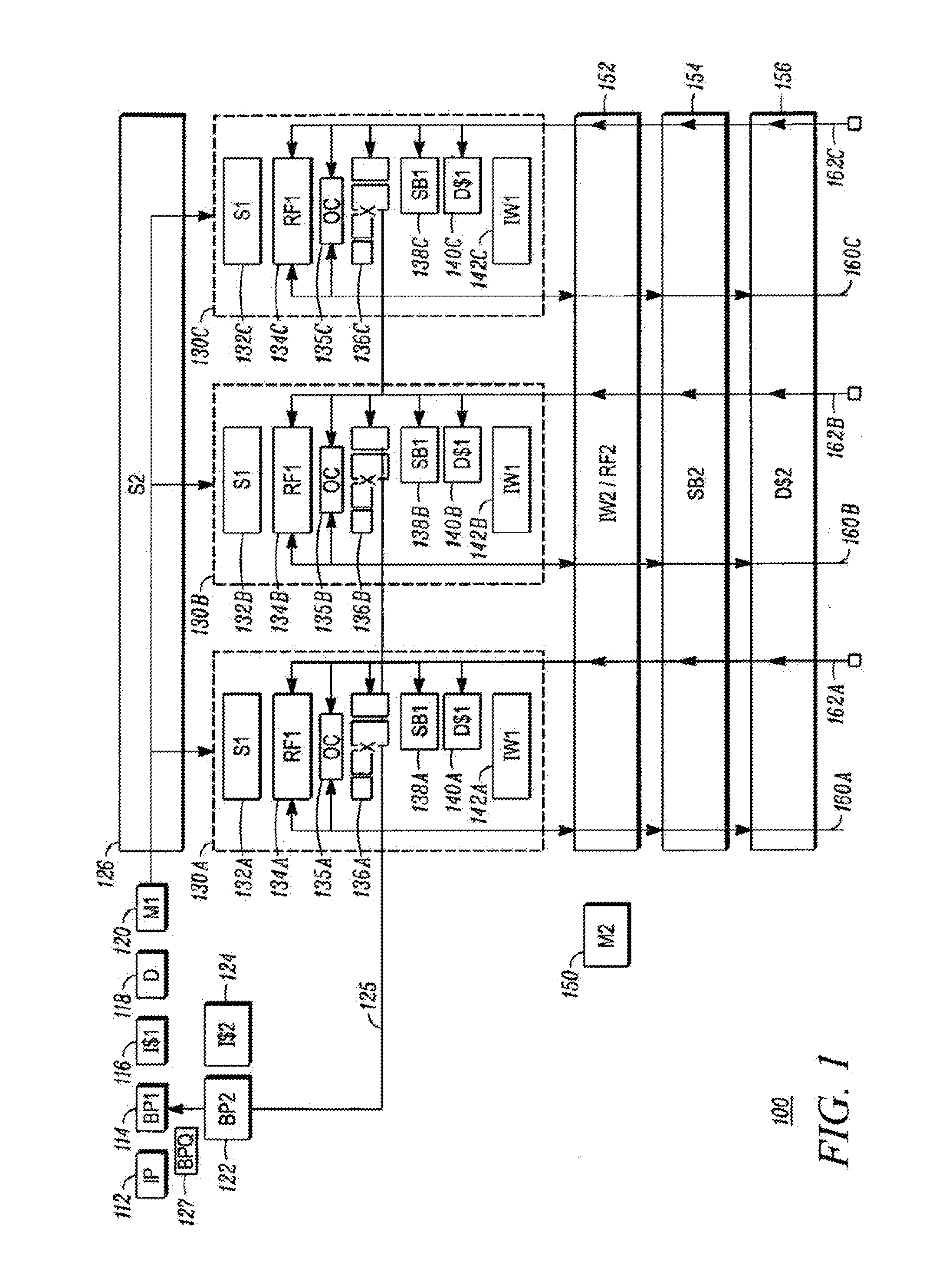

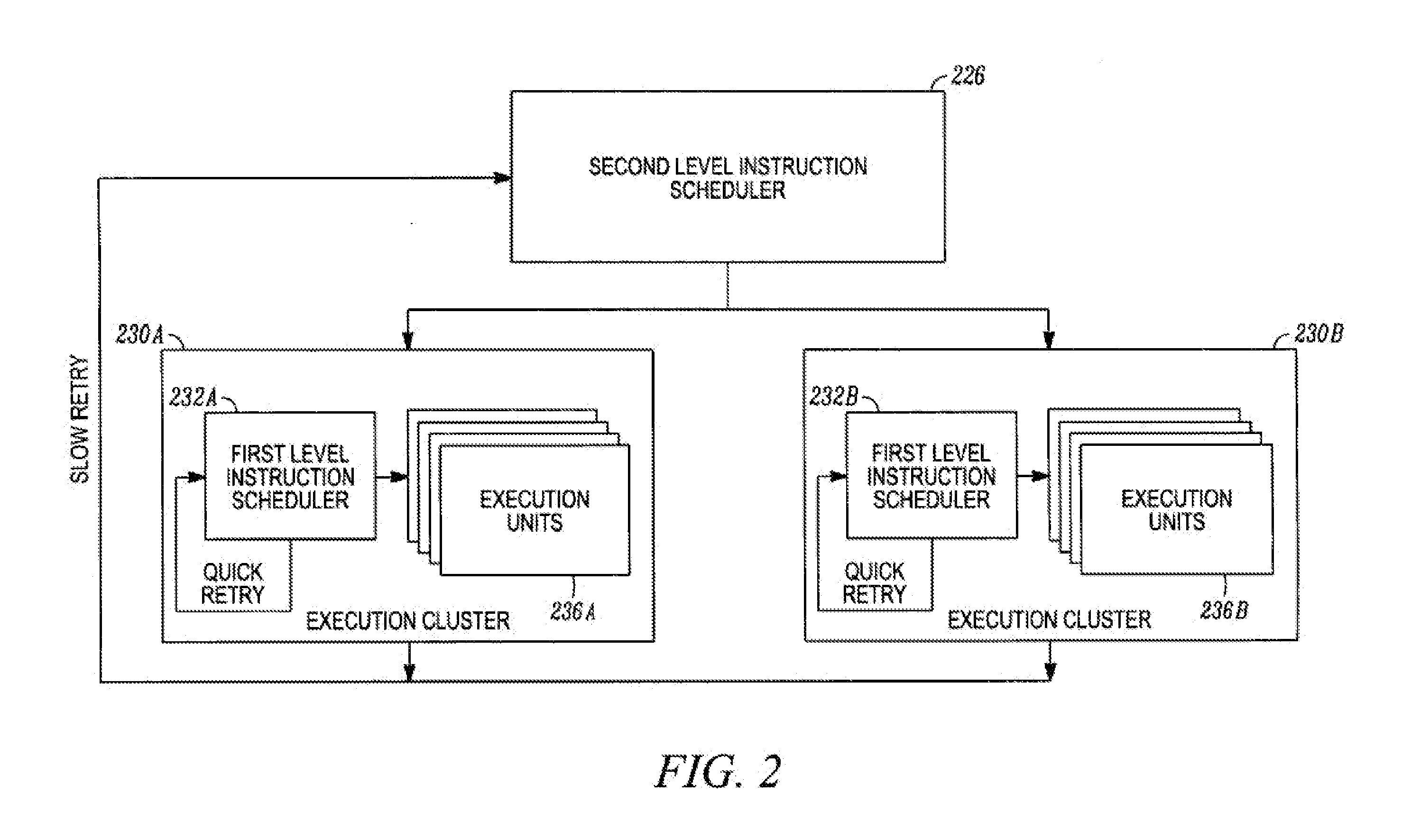

Various embodiments are described relating to processors, hierarchical processors, branch predictors, branch prediction systems, and computing systems. Some or all of a hierarchical instruction scheduler, hierarchical register file, or a hierarchical store buffer may be included in a hierarchical microprocessor. Some or all aspects of the hierarchical microprocessor may be implemented, partially or fully, using a method for sequential data storage.

Owner:RPX CORP

Adaptive fetch gating in multithreaded processors, fetch control and method of controlling fetches

Active · US20060101238A1 · Minimize processor power consumption · Minimize Simultaneous MultiThreaded (SMT) processor power consumption · Digital computer details · Memory systems · Computer architecture · Parallel computing

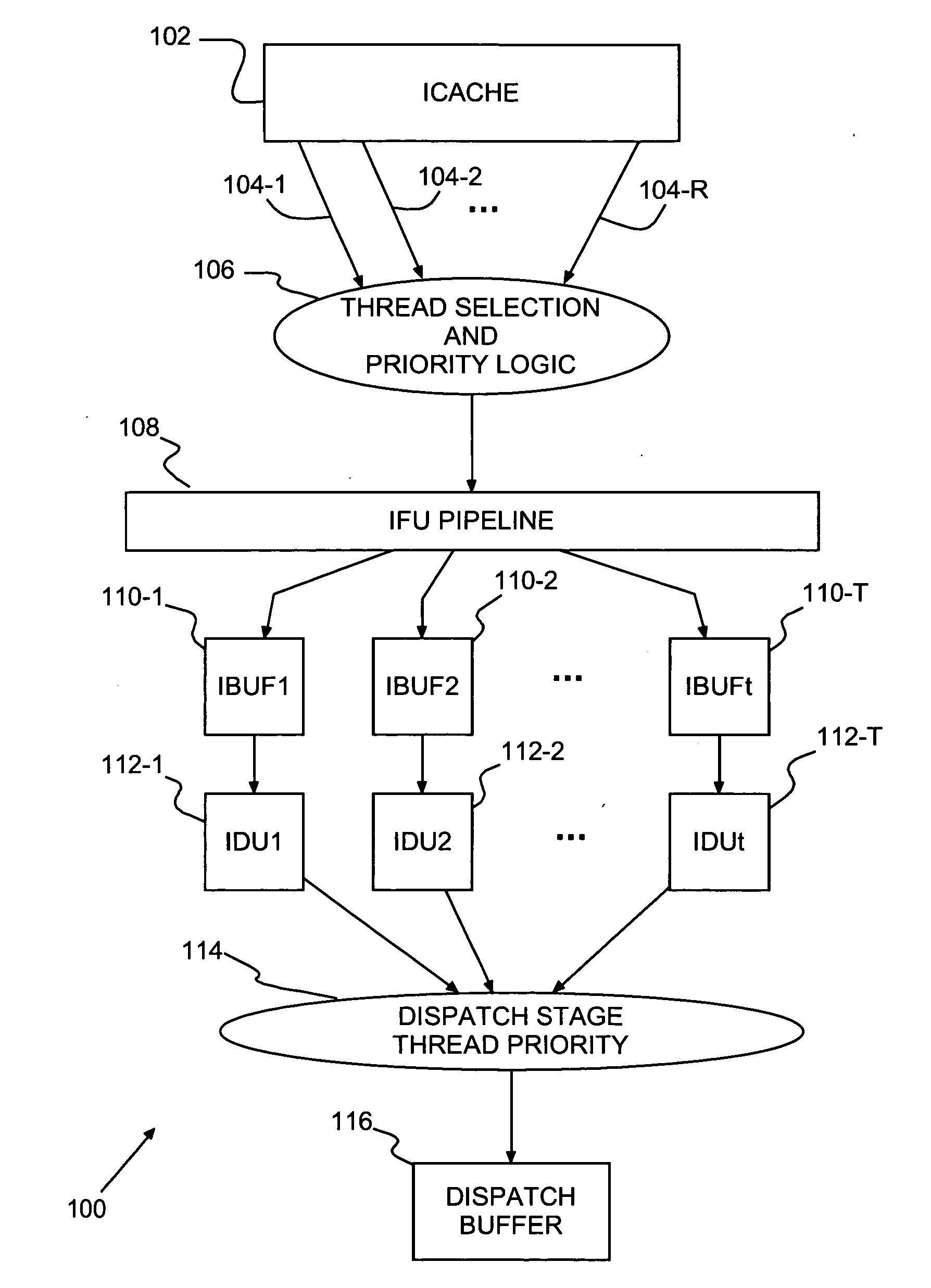

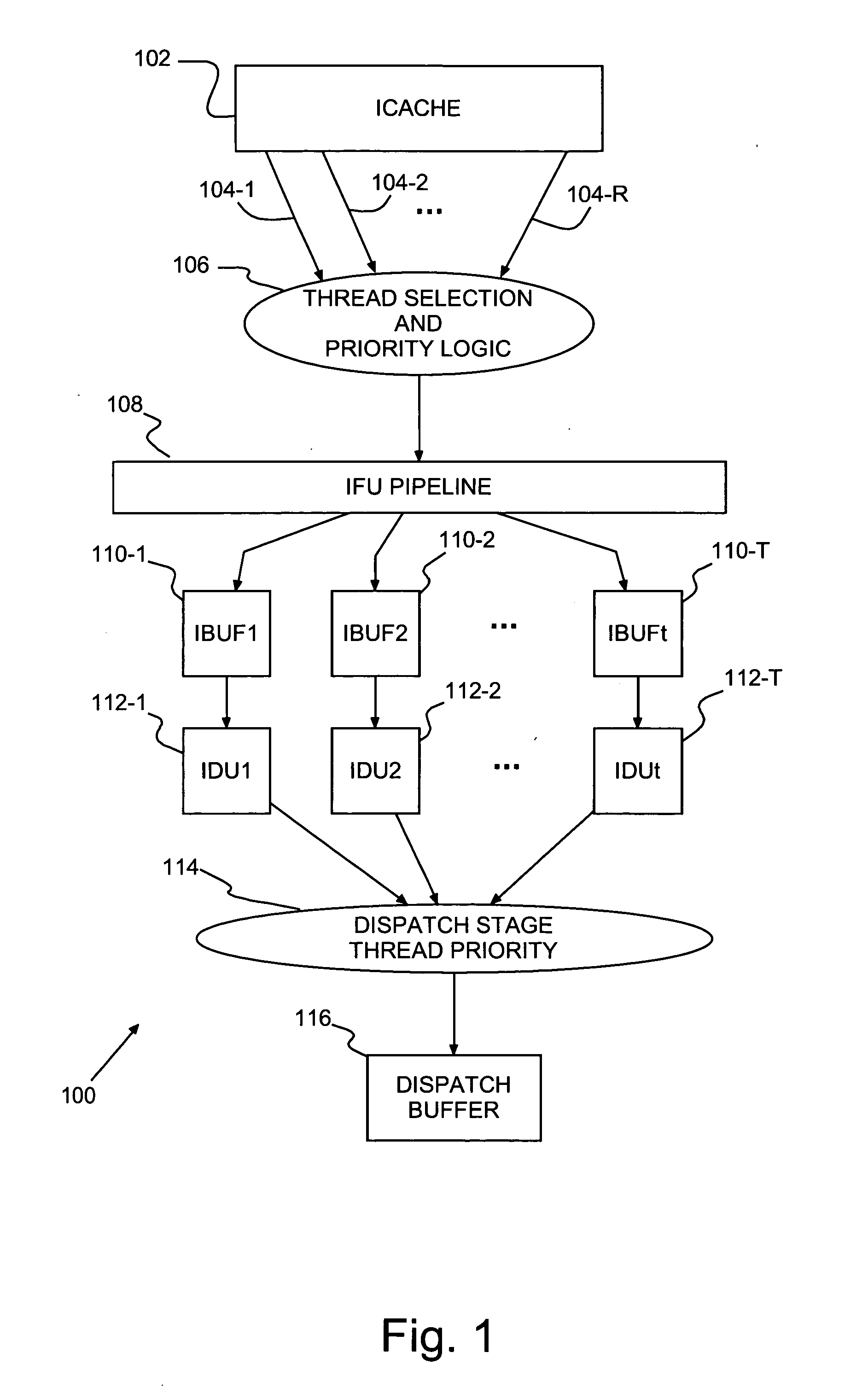

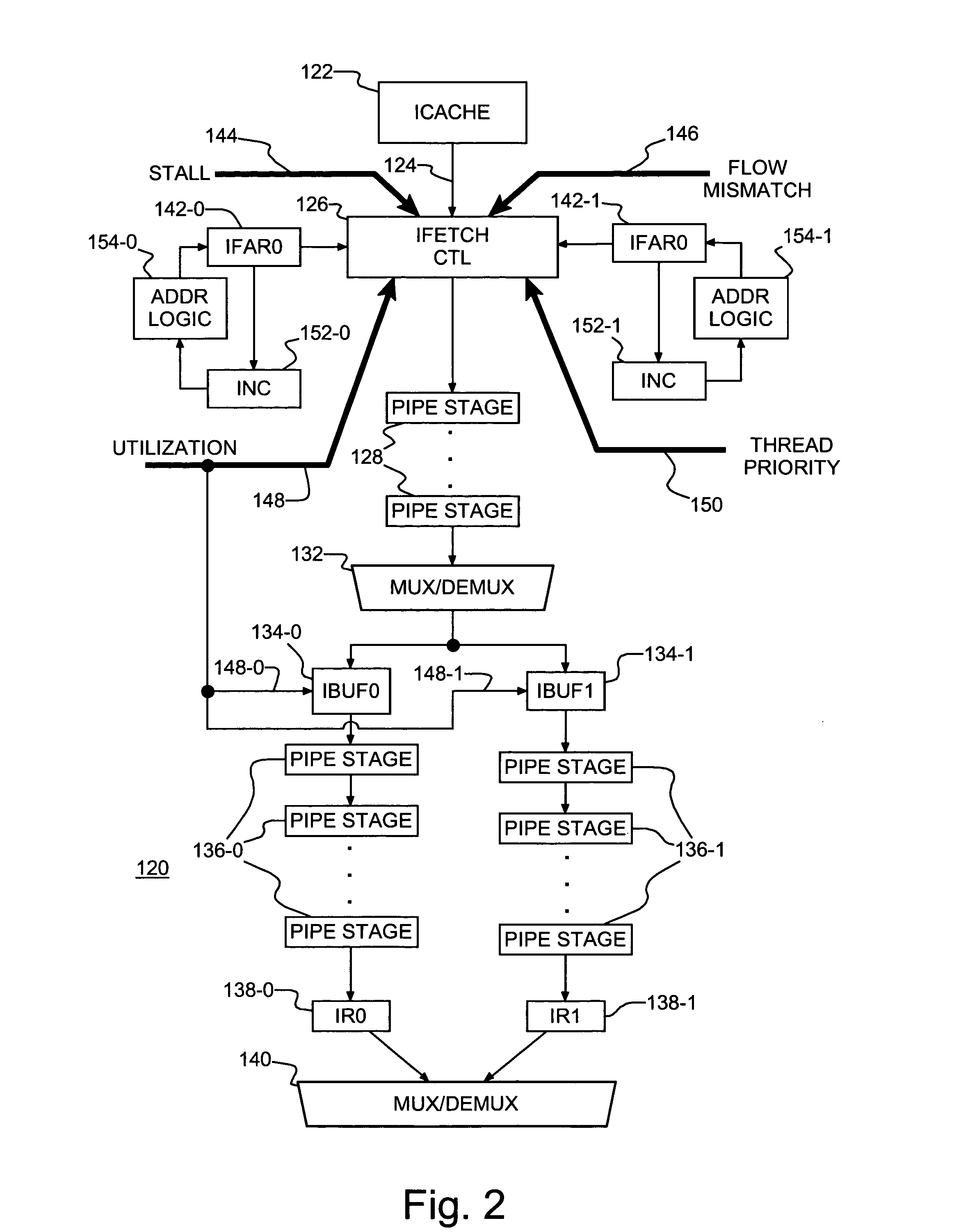

A multithreaded processor, fetch control for a multithreaded processor and a method of fetching in the multithreaded processor. Processor event and use (EU) signs are monitored for downstream pipeline conditions indicating pipeline execution thread states. Instruction cache fetches are skipped for any thread that is incapable of receiving fetched cache contents, e.g., because the thread is full or stalled. Also, consecutive fetches may be selected for the same thread, e.g., on a branch mis-predict. Thus, the processor avoids wasting power on unnecessary or place keeper fetches.

Owner:META PLATFORMS INC

Instruction unit with instruction buffer pipeline bypass

Inactive · US20110320771A1 · Memory addressing/allocation/relocation · Digital computer details · Instruction unit · Method selection

A circuit arrangement and method selectively bypass an instruction buffer for selected instructions so that bypassed instructions can be dispatched without having to first pass through the instruction buffer. Thus, for example, in the case that an instruction buffer is partially or completely flushed as a result of an instruction redirect (e.g., due to a branch mispredict), instructions can be forwarded to subsequent stages in an instruction unit and / or to one or more execution units without the latency associated with passing through the instruction buffer.

Owner:IBM CORP

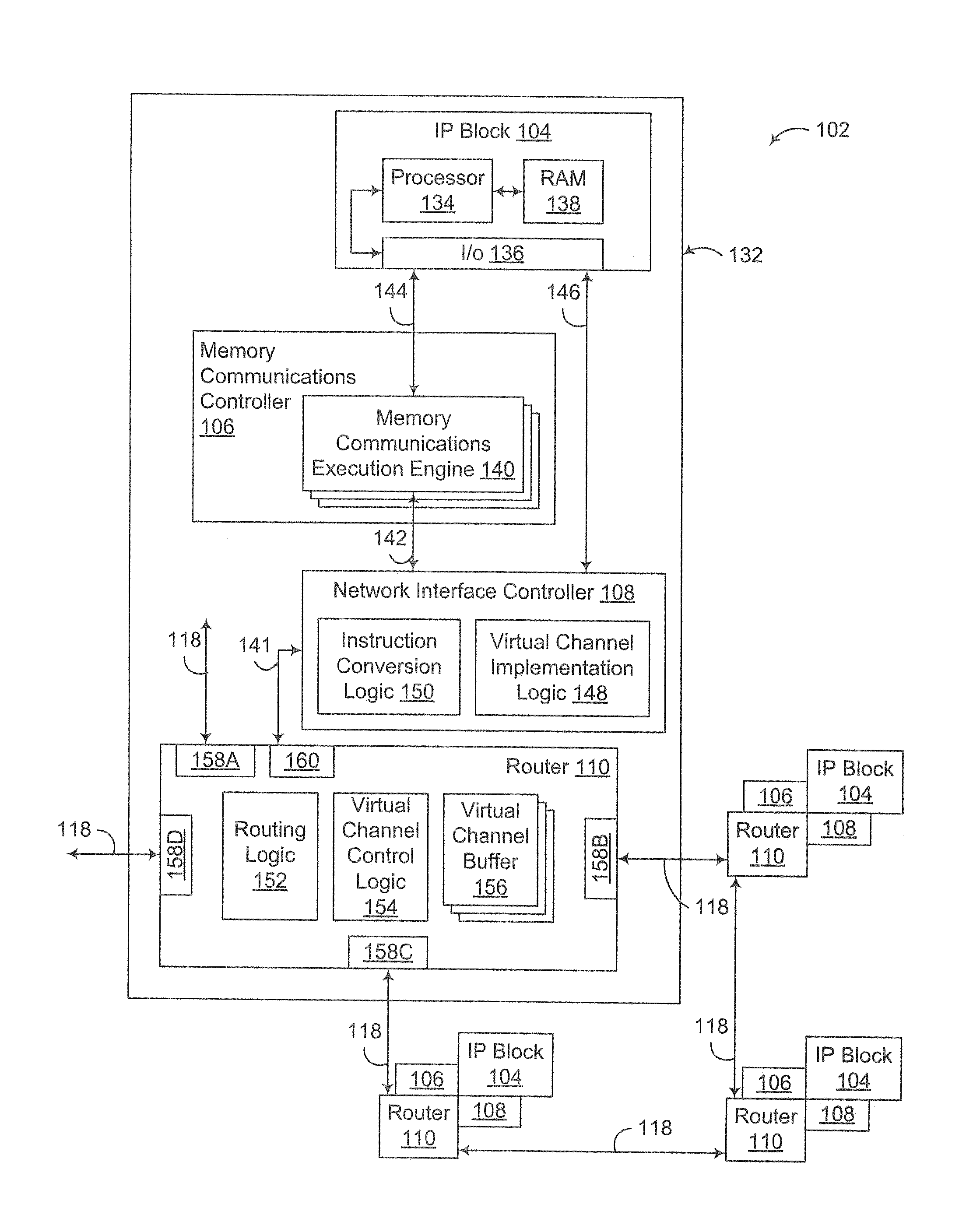

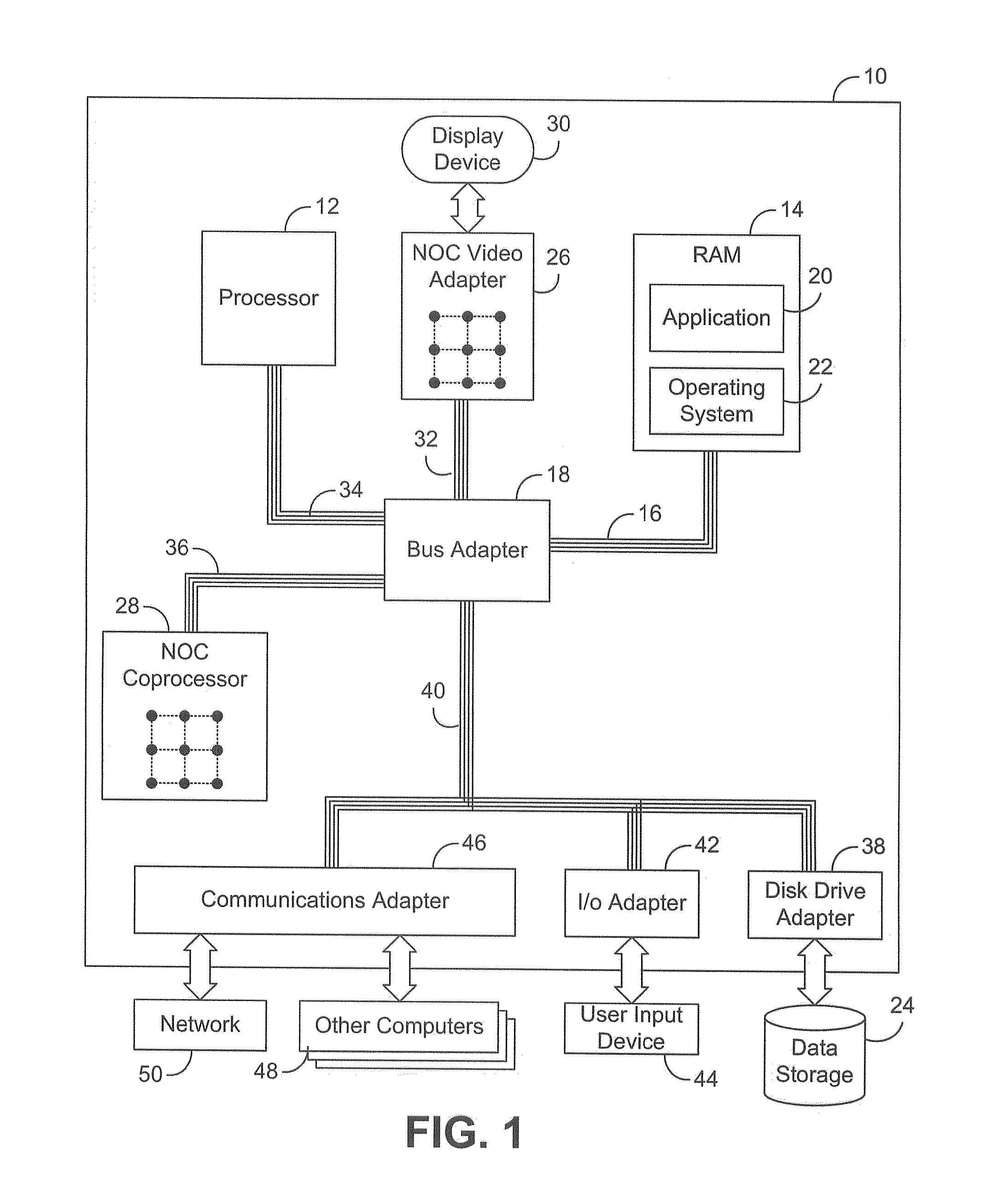

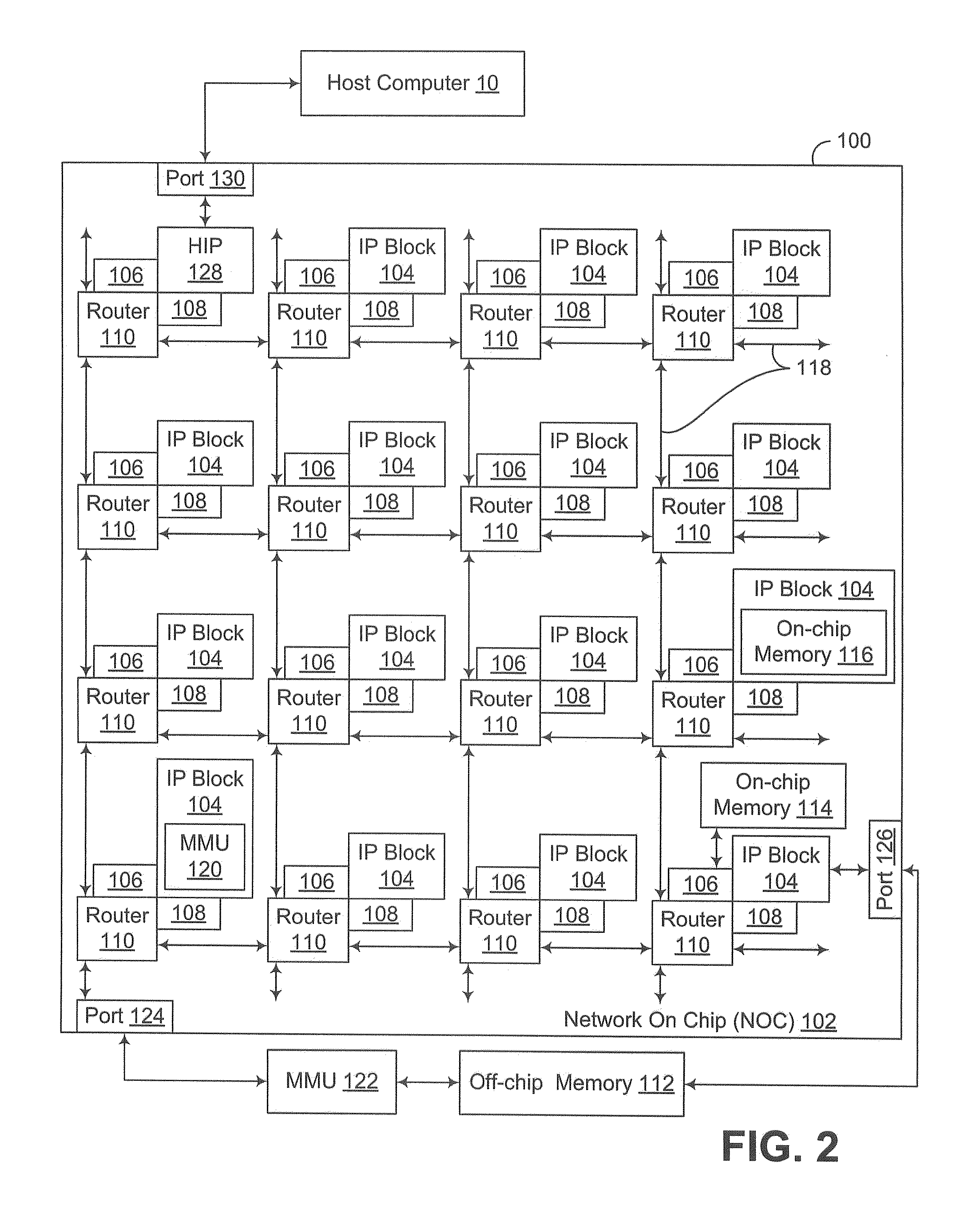

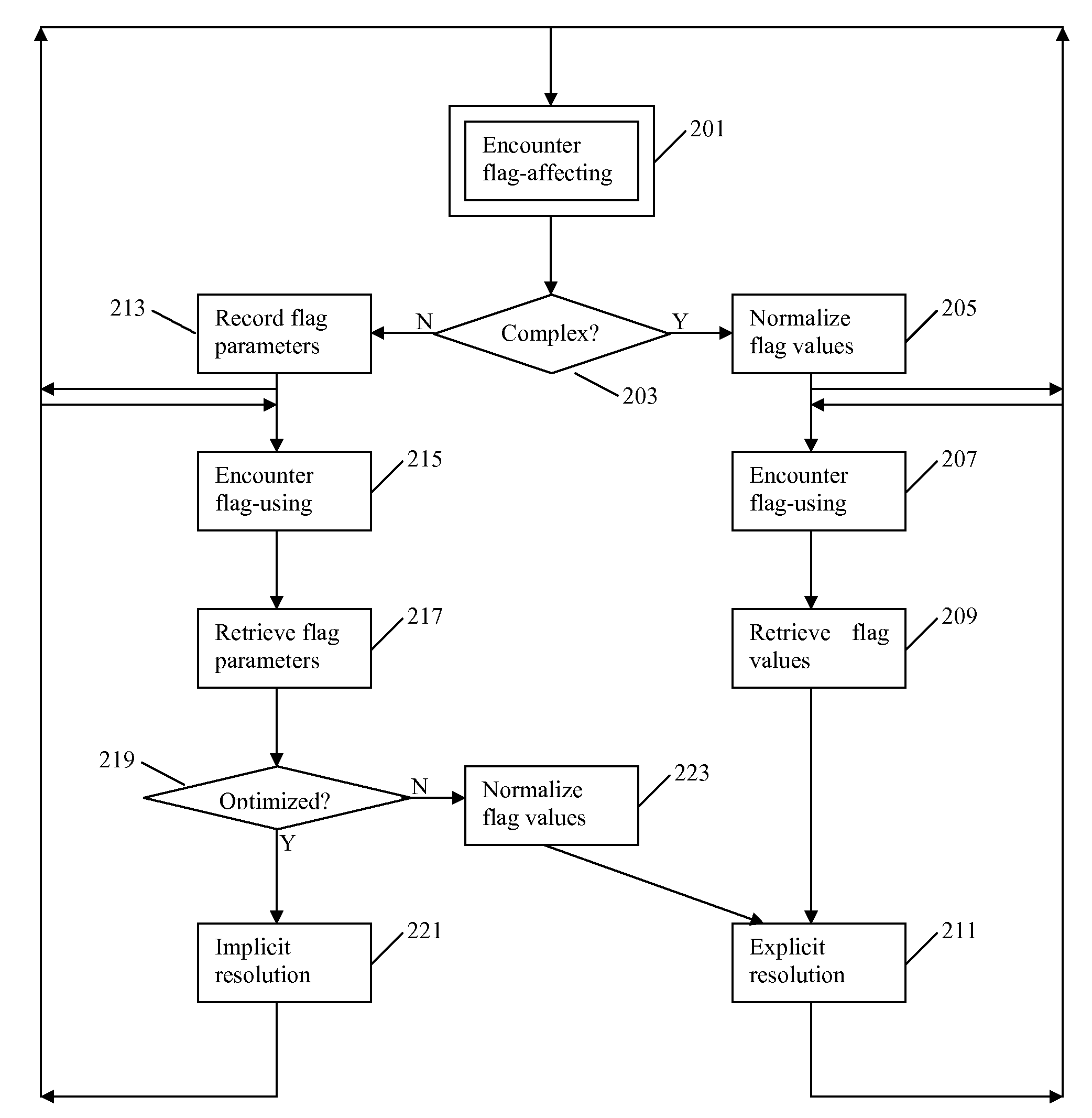

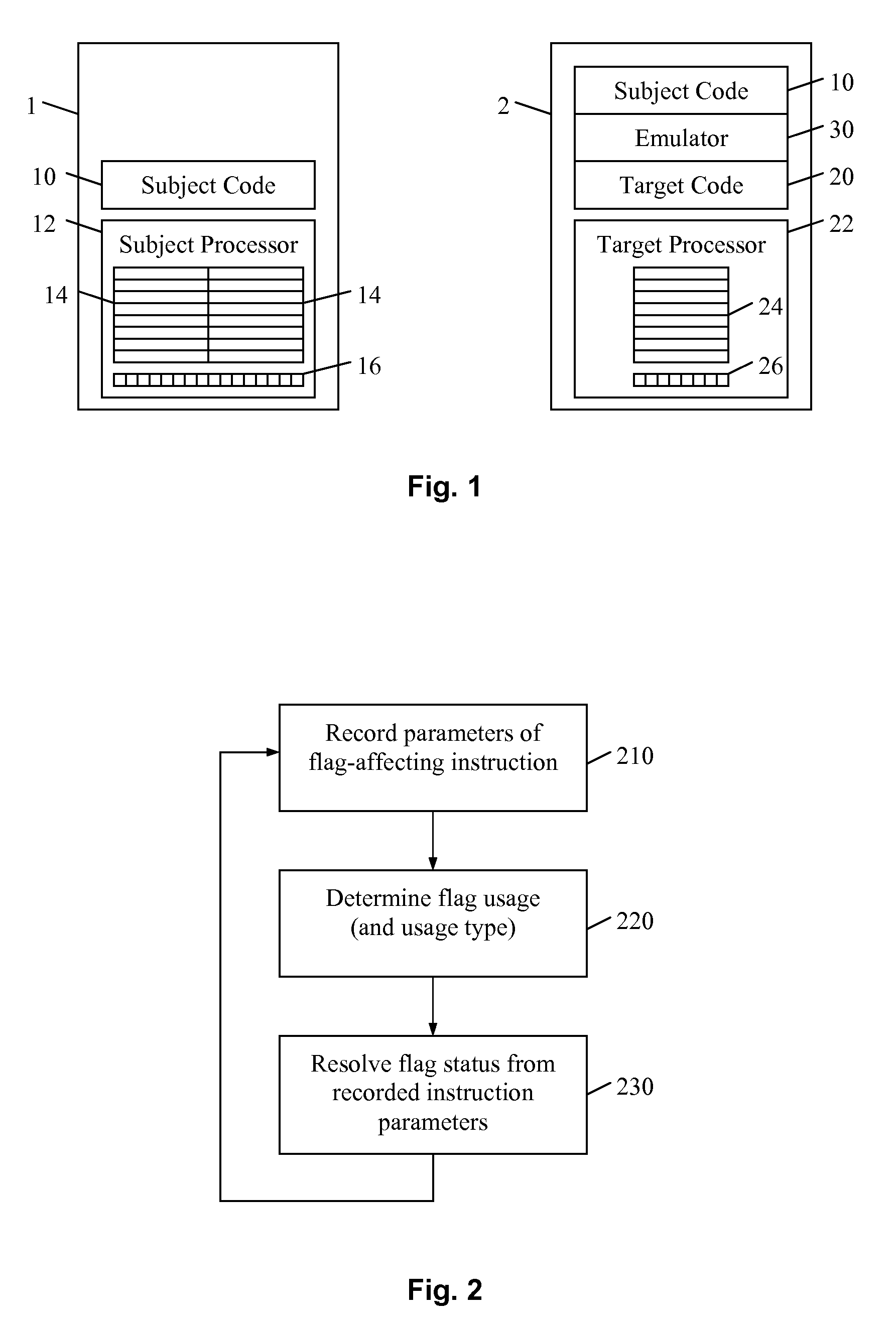

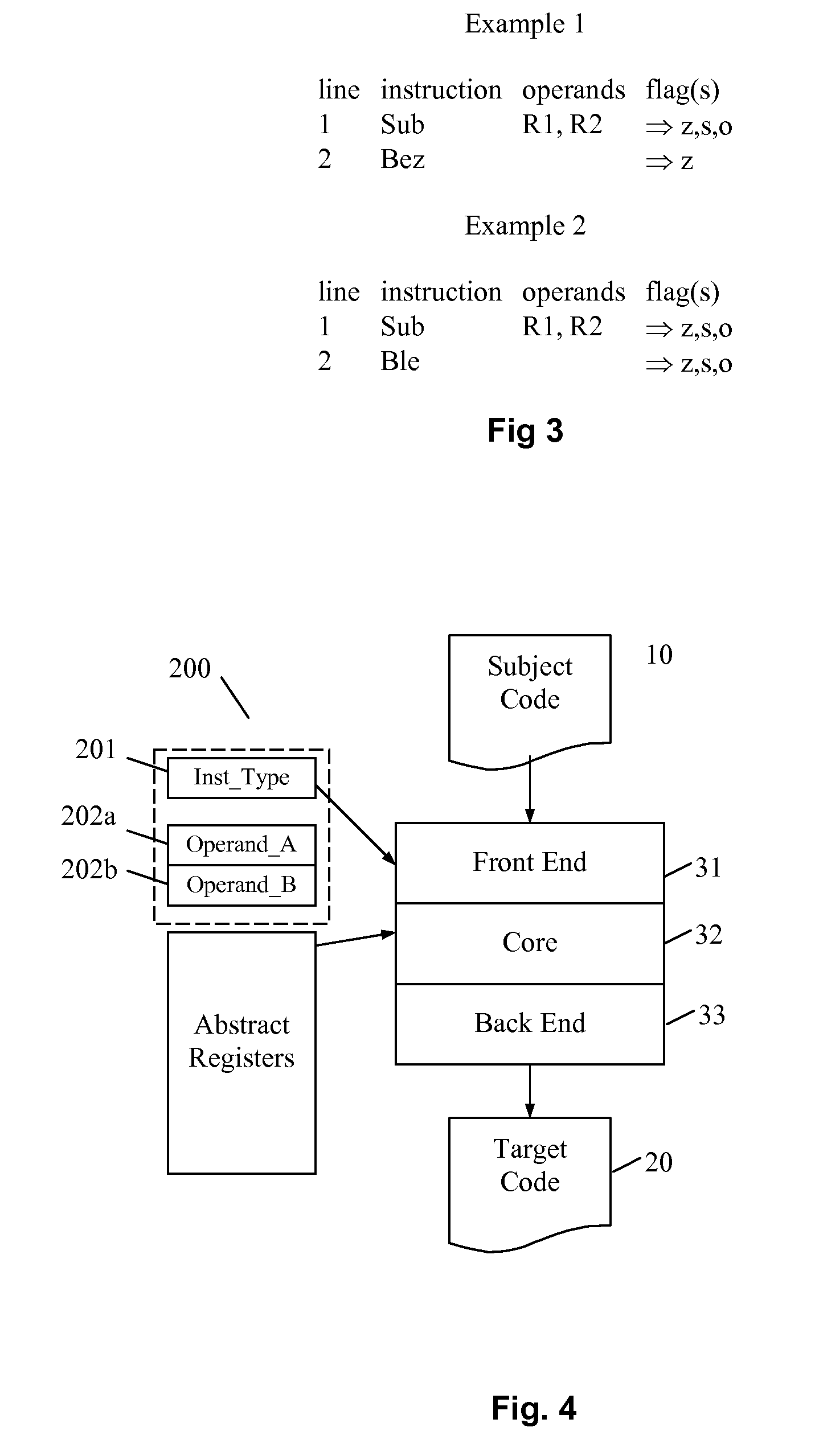

Condition code flag emulation for program code conversion

Active · US20080177985A1 · Low cost · Digital computer details · Software simulation/interpretation/emulation · Program code · Subject matter

An emulator allows subject code written for a subject processor having subject processor registers and condition code flags to run in a non-compatible computing environment. The emulator identifies and records parameters of instructions in the subject code that affect status of the subject condition code flags. Then, when an instruction in the subject code is encountered, such as a branch or jump, that uses the flag status to make a decision, the flag status is resolved from the recorded instruction parameters. Advantageously, emulation overhead is substantially reduced.
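The deferred flag resolution described here can be sketched as below. This is a minimal illustration under stated assumptions: only a subtract/compare instruction and the zero and negative flags are modeled, and the names `LazyFlags`, `record_sub`, and `branch_if_equal` are hypothetical.

```python
class LazyFlags:
    """Record operands of the last flag-setting instruction; resolve later."""

    def __init__(self, bits=32):
        self._mask = (1 << bits) - 1
        self._sign = 1 << (bits - 1)
        self._last = None  # operands of the most recent flag-setting op

    def record_sub(self, a, b):
        # Cheap: store parameters only, compute no flags yet.
        self._last = (a, b)

    def _result(self):
        if self._last is None:
            raise RuntimeError("no flag-setting instruction recorded")
        a, b = self._last
        return (a - b) & self._mask

    def zero(self):
        return self._result() == 0

    def negative(self):
        return bool(self._result() & self._sign)


def branch_if_equal(flags, pc, target):
    """Resolve the zero flag only now, when a branch needs it."""
    return target if flags.zero() else pc + 1
```

Because most flag results are overwritten before any branch reads them, deferring the computation until a branch or jump asks for it avoids work on every arithmetic instruction.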

Owner:IBM CORP

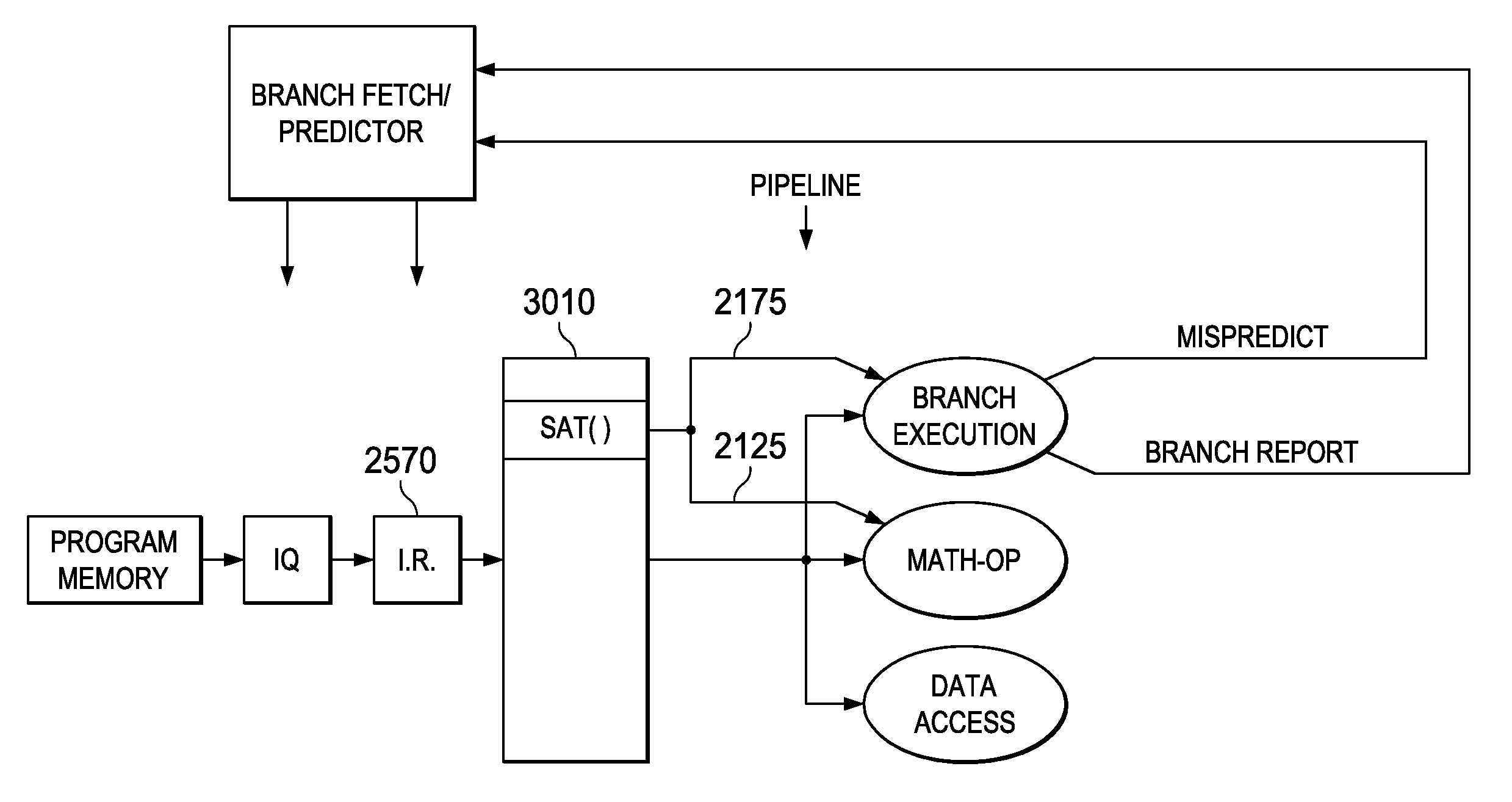

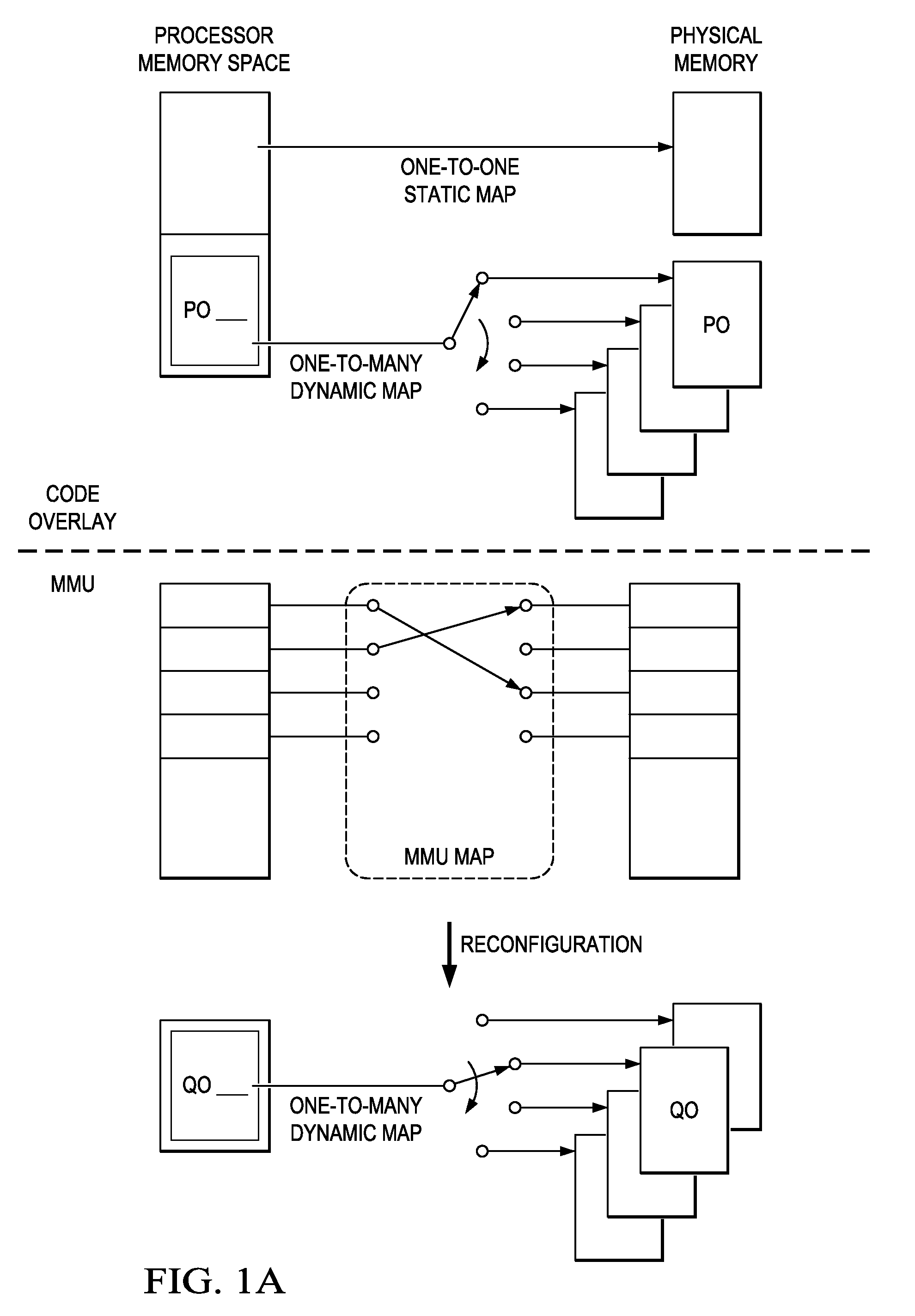

Processors with branch instruction, circuits, systems and processes of manufacture and operation

Active · US20090106541A1 · Avoid dependence · Digital computer details · Concurrent instruction execution · Parallel computing · Logical circuit

An electronic processor is provided for use with a memory (2530) having selectable memory areas. The processor includes a memory area selection circuit (MMU) operable to select one of the selectable memory areas at a time, and an instruction fetch circuit (2520, 2550) operable to fetch a target instruction at an address from the selected one of the selectable memory areas. The processor includes an execution circuit (Pipeline) coupled to execute instructions from the instruction fetch circuit (2520, 2550) and operable to execute a first instruction for changing the selection by the memory area selection circuit (MMU) from a first one of the selectable memory areas to a second one of the selectable memory areas, the execution circuit (Pipeline) further operable to execute a branch instruction that points to a target instruction, access to the target instruction depending on actual change of selection to the second one of the memory areas; and the processor includes a logic circuit (3108, 3120, 3125, 3130, 3140) operable to ensure fetch of the target instruction in response to the branch instruction after actual change of selection. Other circuits, devices, systems, apparatus, and processes are also disclosed.

Owner:TEXAS INSTR INC

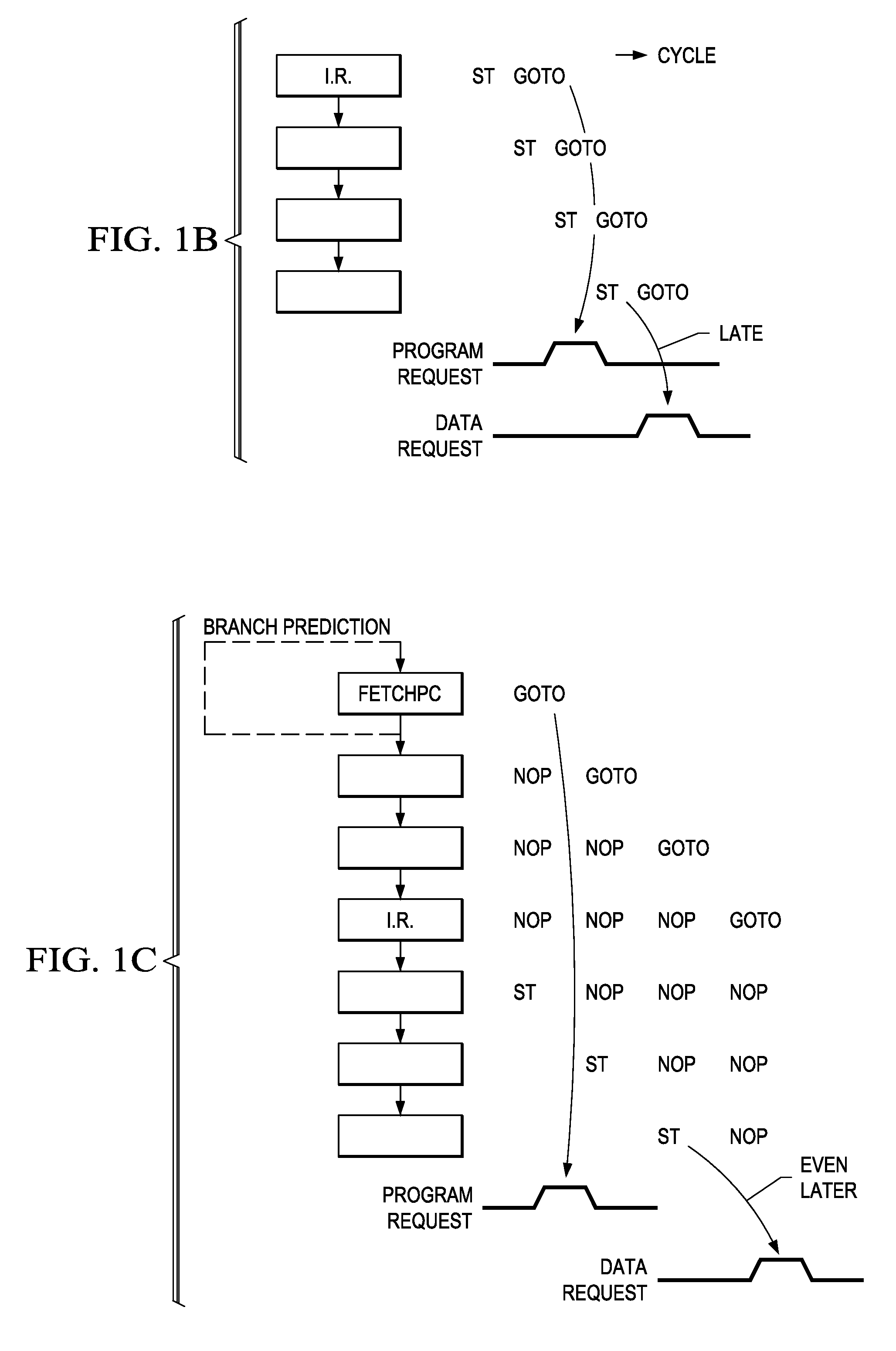

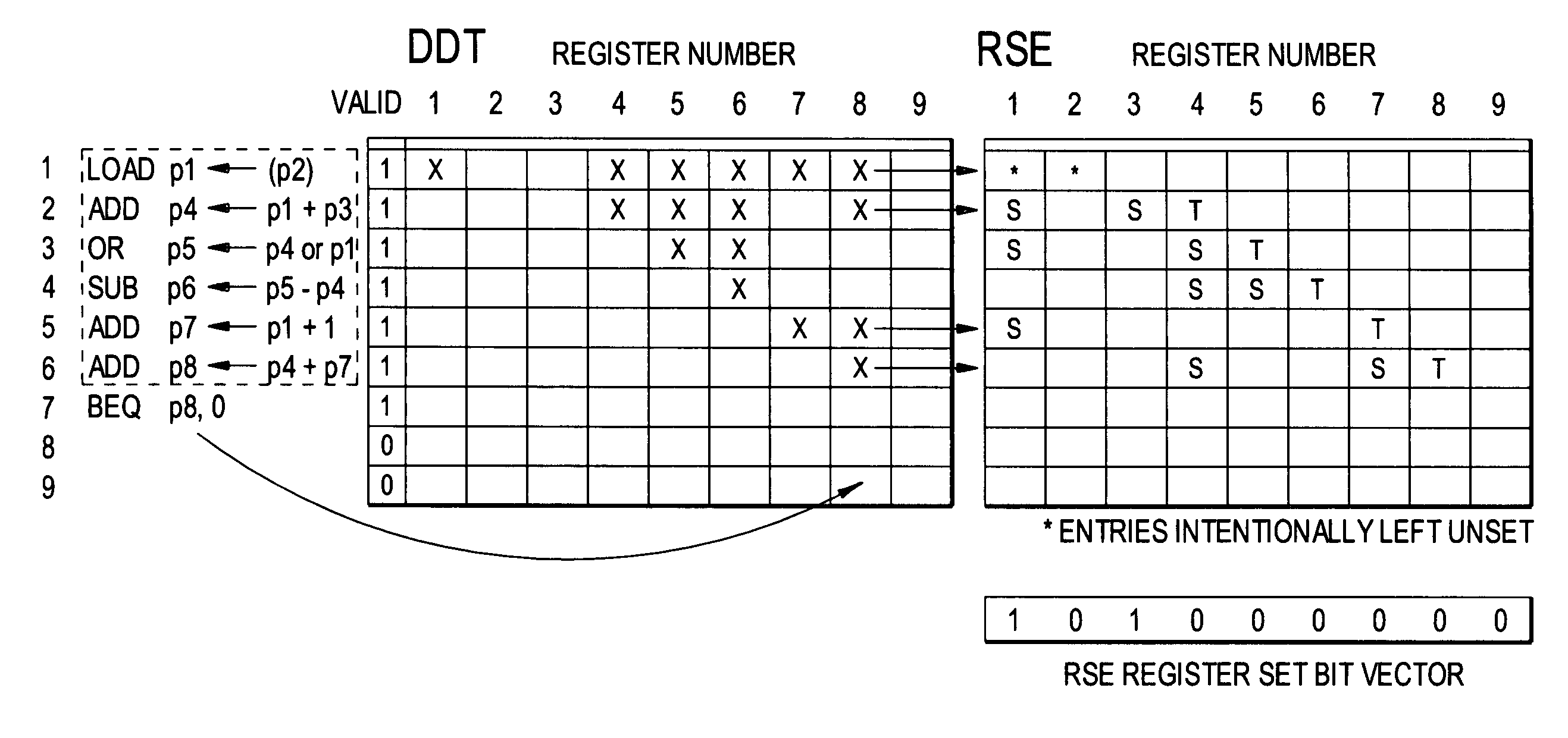

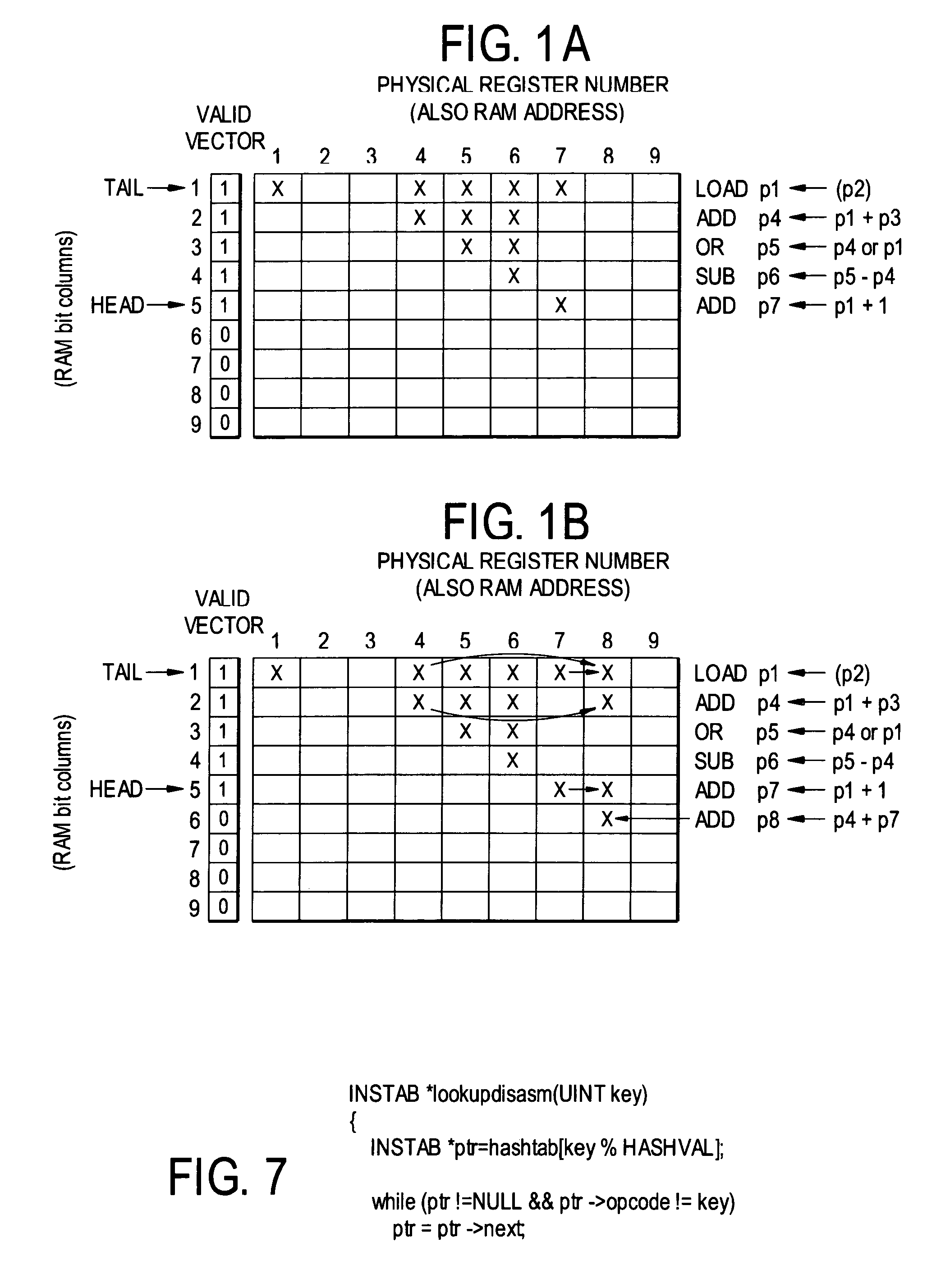

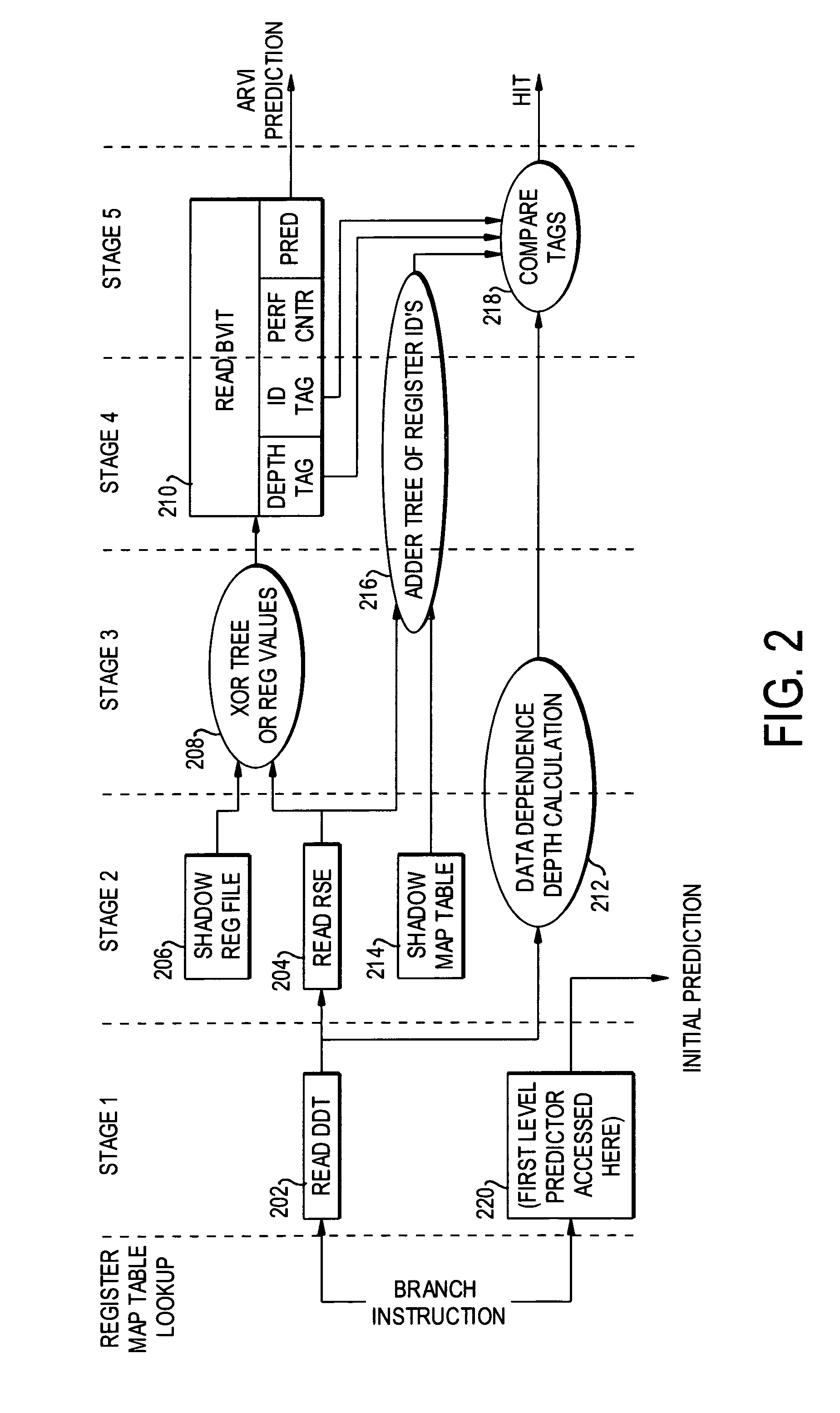

Dynamic data dependence tracking and its application to branch prediction

Active · US7571302B1 · Accurate measurement · Improving the accuracy of criticality measures · Digital computer details · Memory systems · Processor register · Data dependence

A data dependence table in RAM relates physical register addresses to instructions such that for each instruction, the registers on whose data the instruction depends are identified. The table is updated for each instruction added to the pipeline. For a branch instruction, the table identifies the registers relevant to the branch instruction for branch prediction.

Owner:UNIVERSITY OF ROCHESTER

Method, system, and computer program product to generate test instruction streams while guaranteeing loop termination

Inactive · US7877742B2 · Error detection/correction · Digital computer details · Instruction stream · Pseudo random testing

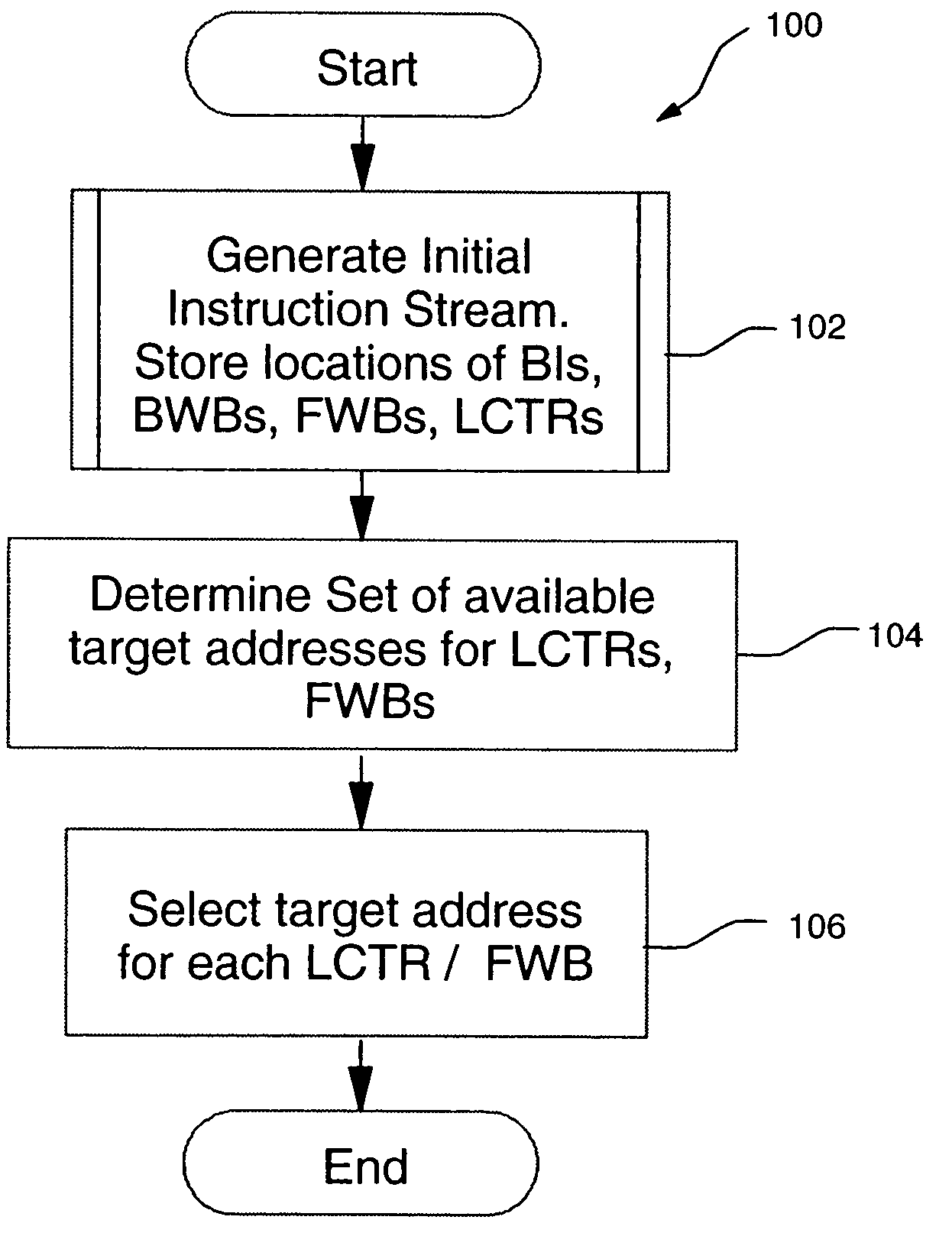

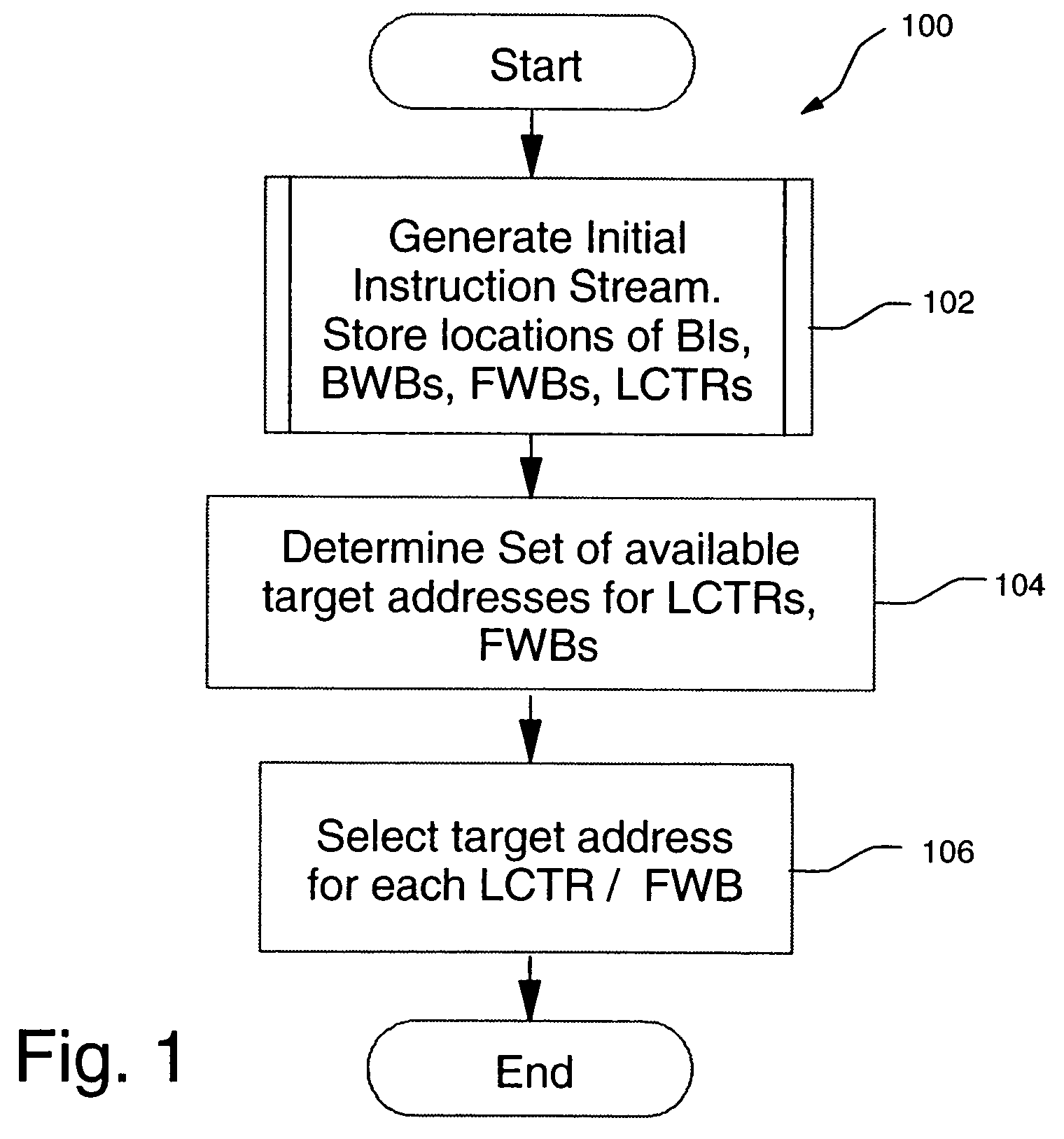

A method, system, and computer program product for generating terminating, pseudo-random test instruction streams, including forward and backward branching instructions. A first instruction stream is generated, including at least one backward branching instruction and at least one forward branching instruction. Each backward branching instruction is preceded by at least one forward branching instruction, which is used to guarantee termination of the loop formed by the backward branching instruction. Backward branching targets are resolved when the backward branching instruction is inserted into the first instruction stream. Forward branching targets remain unresolved in the first instruction stream. A set of potential branch targets is determined for each forward branching instruction. For each forward branching instruction, a branch target is randomly selected from the set of potential branch targets for that forward branching instruction. The final terminating instruction stream consists of the first stream, with all forward branch targets resolved.

Owner:IBM CORP

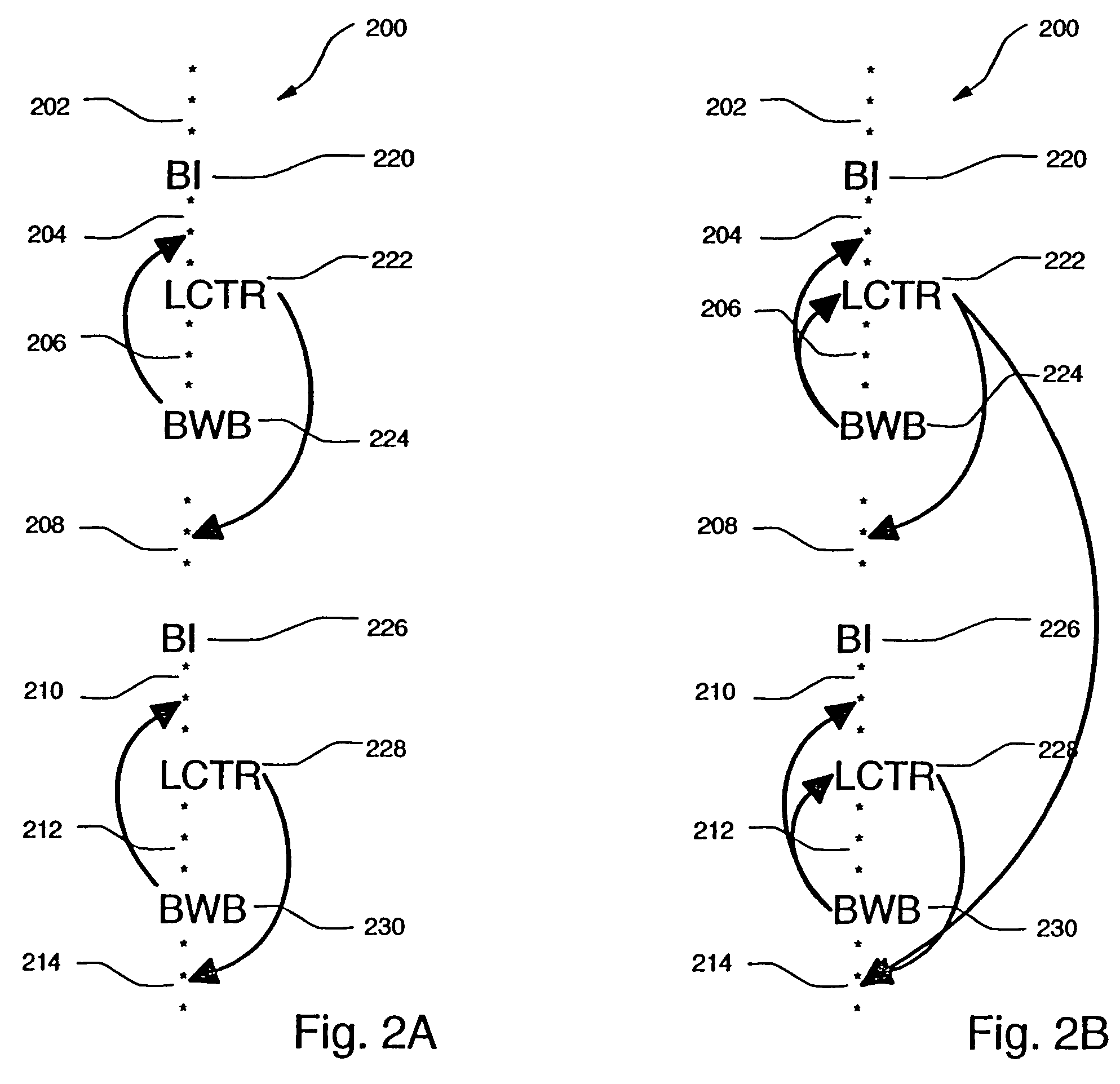

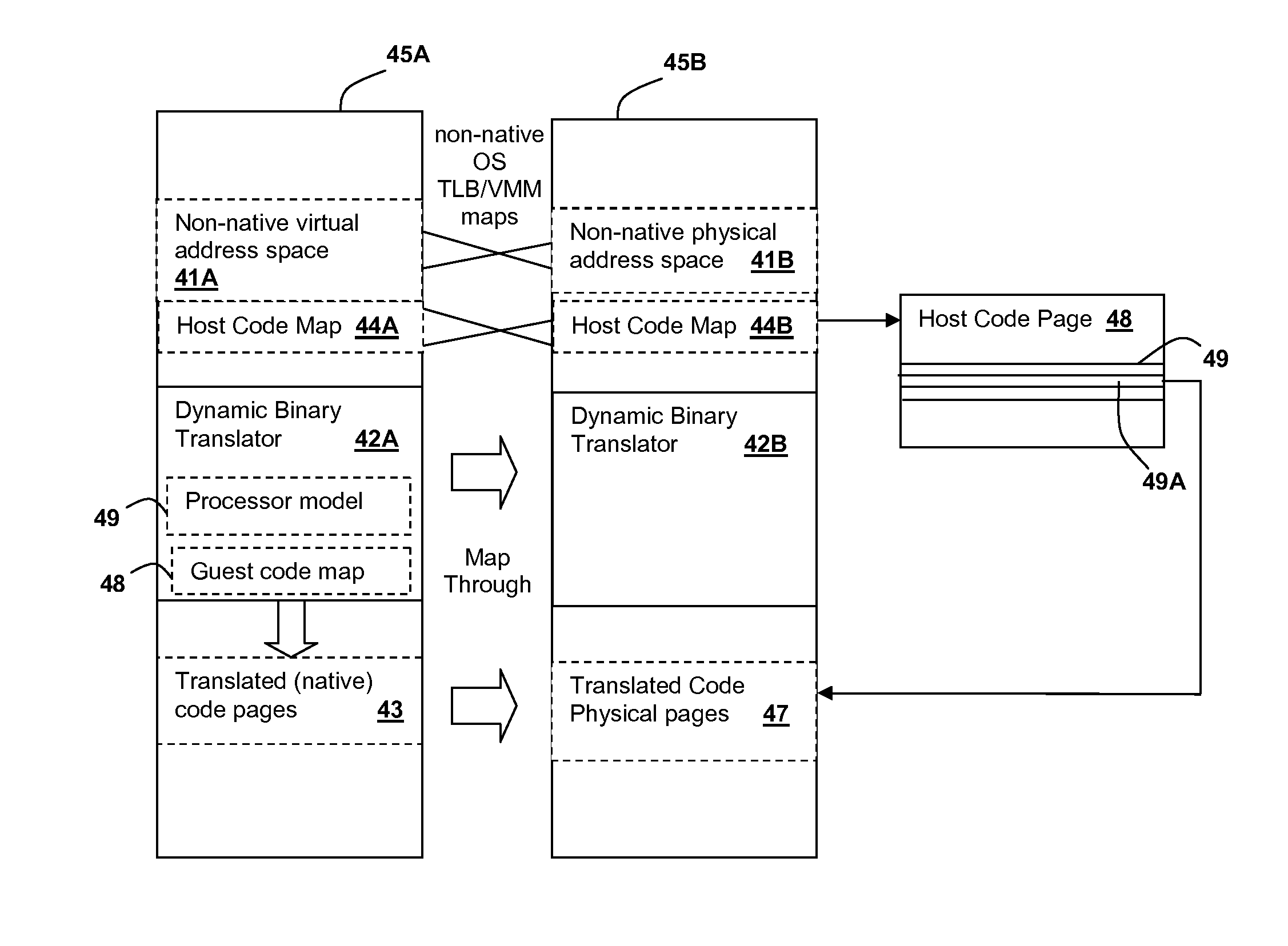

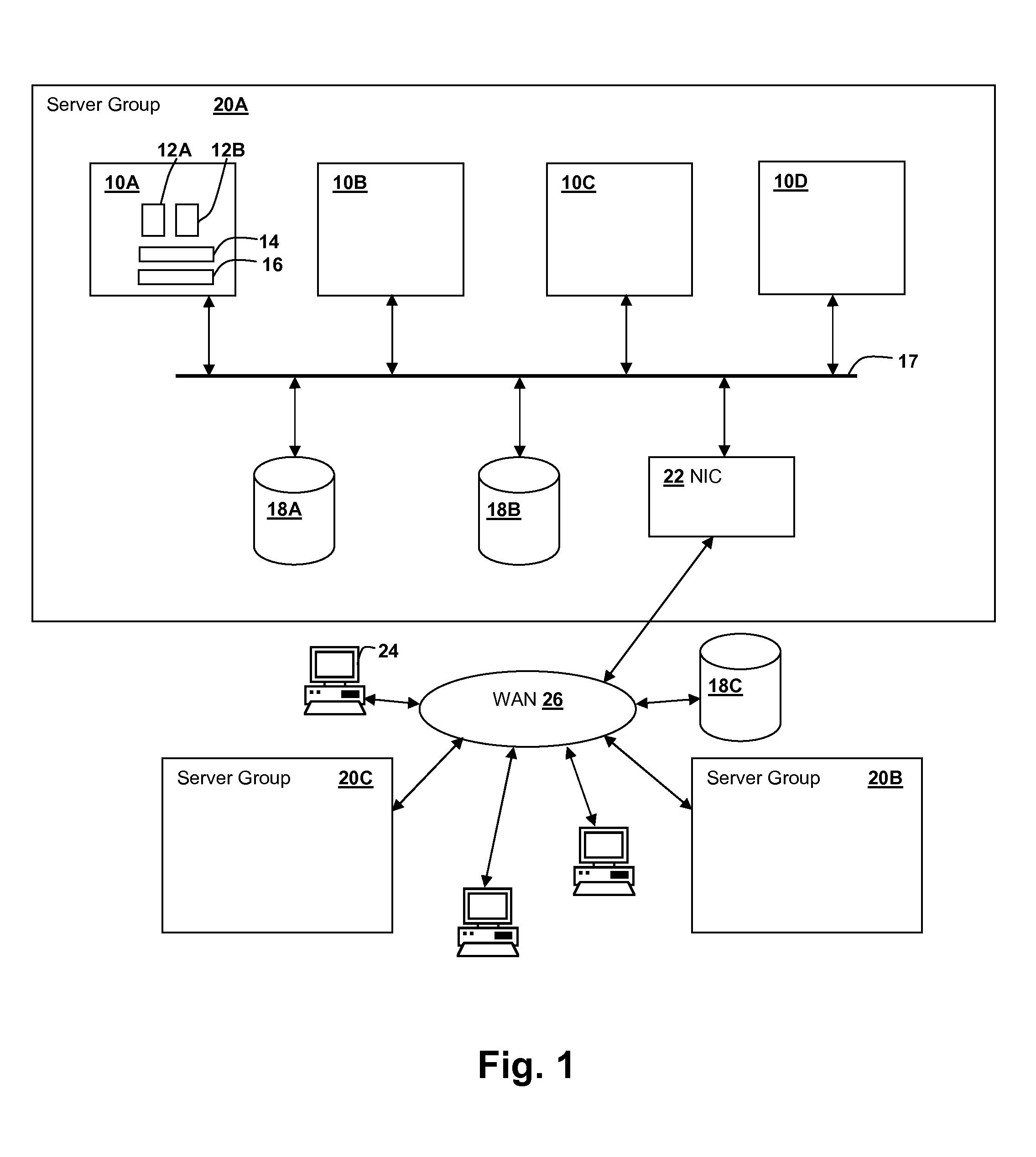

Control flow management for execution of dynamically translated non-native code in a virtual hosting environment

Inactive · US20140025893A1 · Easy retrieval · Memory addressing/allocation/relocation · Program control · Virtualization · Control flow

Execution of non-native operating system images within a virtualized computer system is improved by providing a mechanism for retrieving translated code physical addresses corresponding to un-translated code branch target addresses using a host code map. Hardware acceleration mechanisms, such as content-accessible look-up tables, directory hardware, or processor instructions that operate on tables in memory, can be provided to accelerate the performance of the translation mechanism. The virtual address of the branch instruction target is used as a key to look up a corresponding record that contains a physical address of the translated code page containing the translated branch instruction target, and execution is directed to the physical address obtained from the record once the physical page containing the translated code corresponding to the target address is loaded in memory.

Owner:IBM CORP

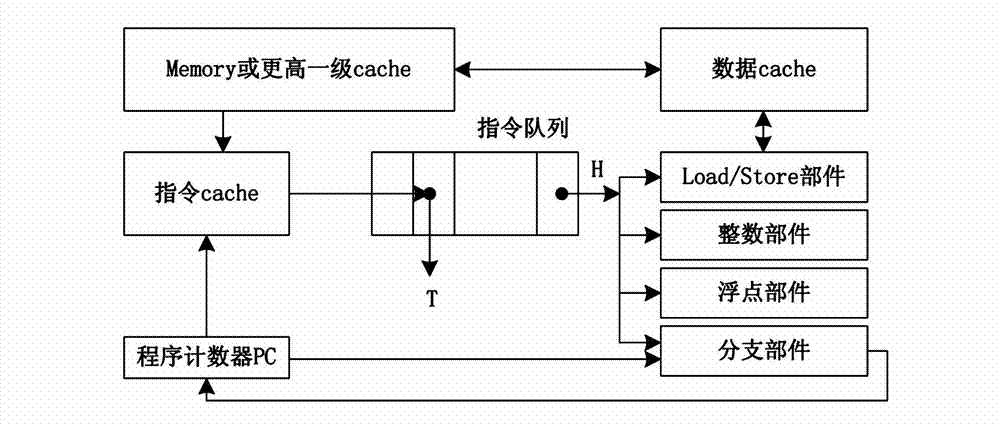

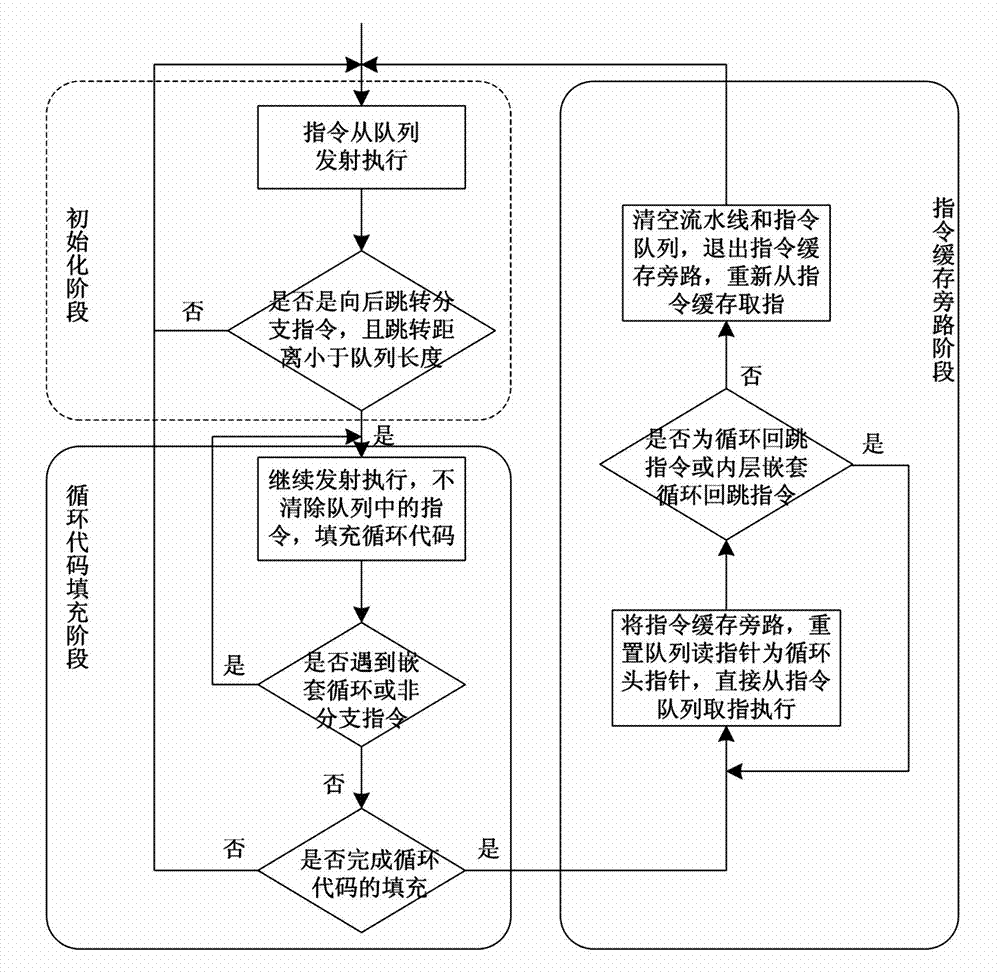

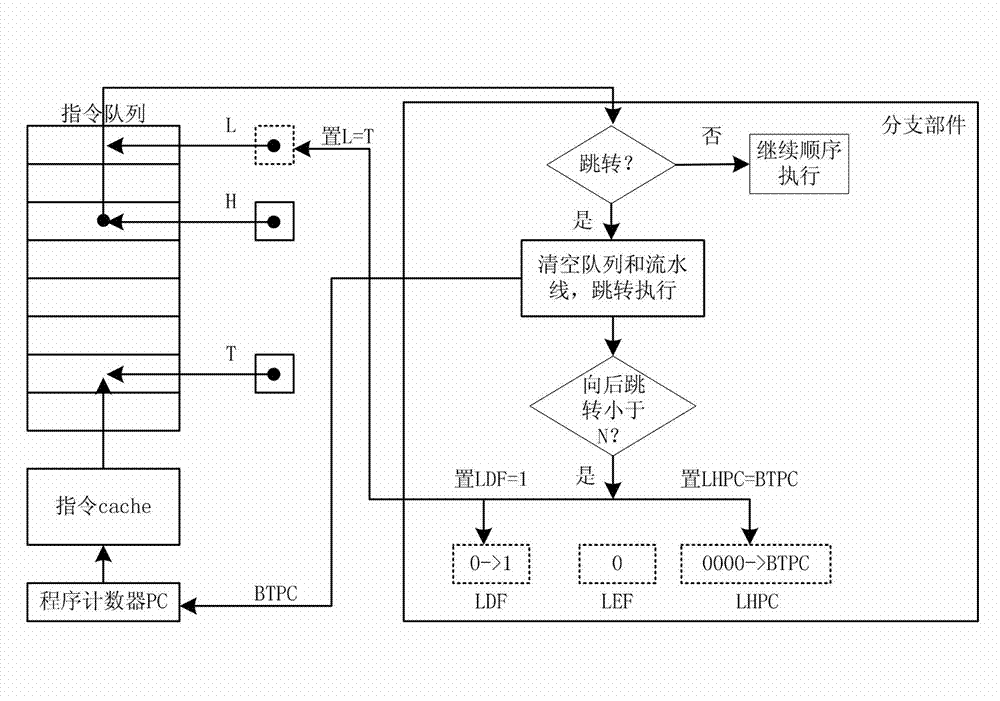

Dynamic detection and execution method of program loop code based on instruction queue

Active · CN102968293A · Improve processing speed · Improve processing efficiency · Machine execution arrangements · Computer compatibility · Filling-in

The invention discloses a method for dynamically detecting and executing program loop code based on an instruction queue. The method comprises the following steps: 1) instructions are fetched from an instruction cache and stored in the instruction queue; the instructions stored in the instruction queue are sent to functional components for execution; when an executed instruction is a branch instruction and the branch is taken, the jump direction and the jump target distance are obtained; if the jump is backward and the jump target distance is within the length of the instruction queue, the next step is executed; 2) the instructions corresponding to the program loop code are fetched from the instruction cache and filled into the instruction queue; and 3) the instruction cache is bypassed, the instructions are fetched from the instruction queue and executed, and the working state of the instruction cache is restored after all the instructions of the program loop code have been executed. The method offers high execution efficiency, good processing performance, low execution power consumption, and low hardware cost; it supports nested loops and has strong compatibility and good extensibility.
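The detection test in step 1 can be sketched as a single predicate. This is an illustration under assumptions the abstract does not state: addresses are instruction indices rather than byte addresses, and the loop body is taken to span from the branch target through the branch itself. The function name is hypothetical.

```python
def loop_fits_in_queue(branch_index, target_index, taken, queue_length):
    """Return True if a taken backward branch forms a loop the queue can hold."""
    if not taken:
        return False                 # only taken branches can form a loop
    if target_index > branch_index:
        return False                 # forward jump: not a loop
    # Loop body runs from the target up to and including the branch.
    loop_length = branch_index - target_index + 1
    return loop_length <= queue_length
```

When the predicate holds, the loop body can be held in the instruction queue and replayed from there, so the instruction cache can be bypassed until the loop exits.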

Owner:NAT UNIV OF DEFENSE TECH

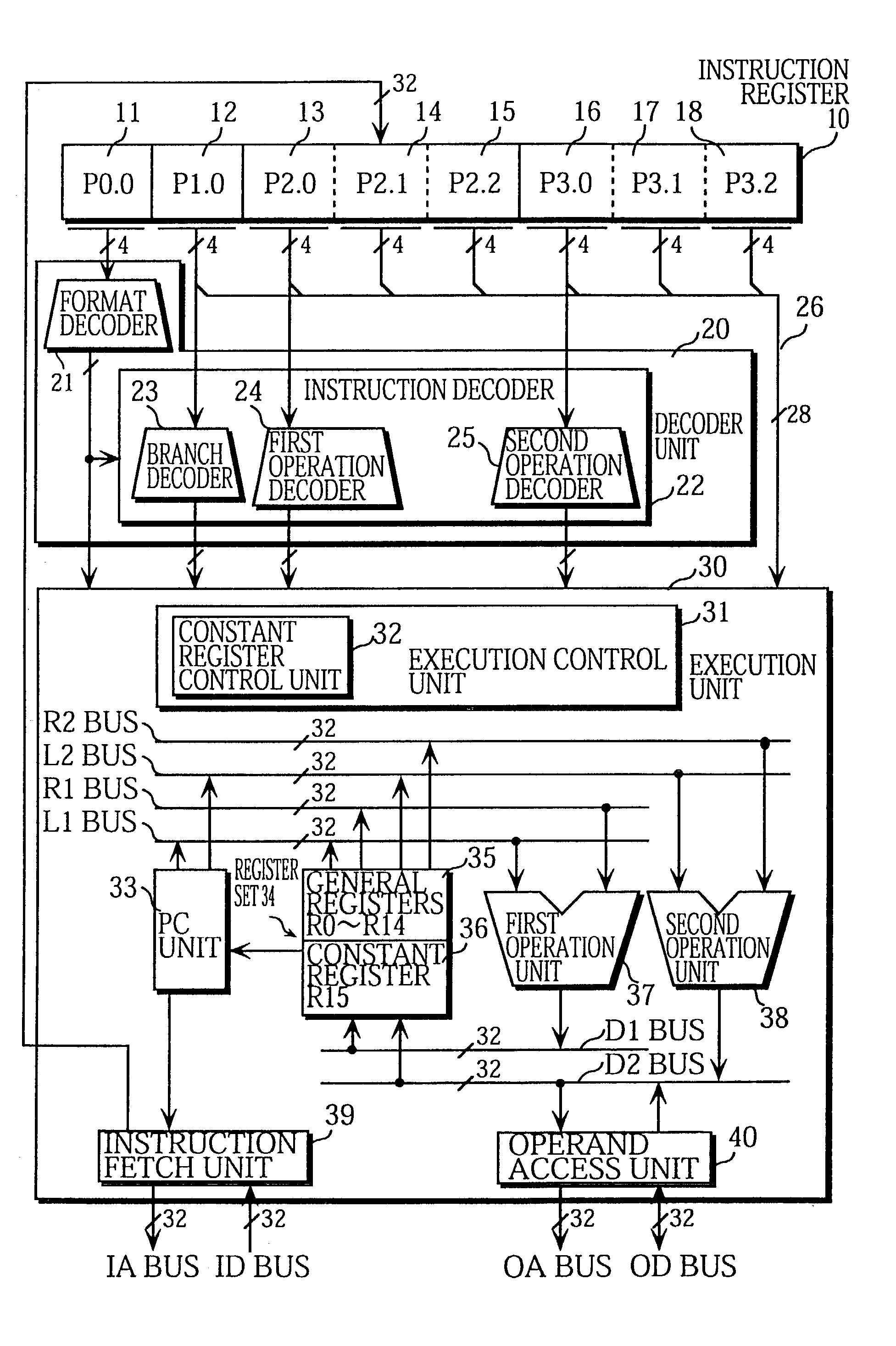

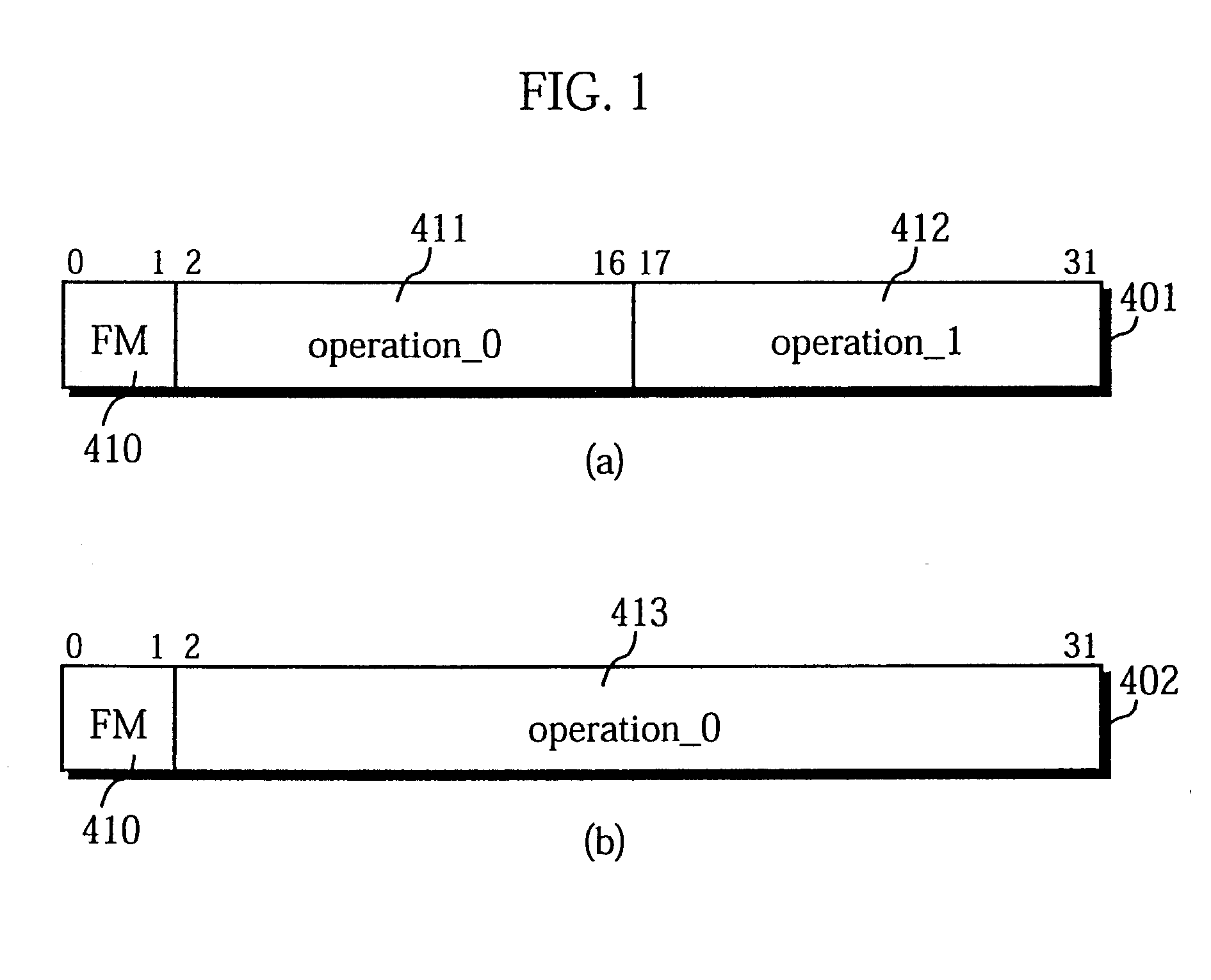

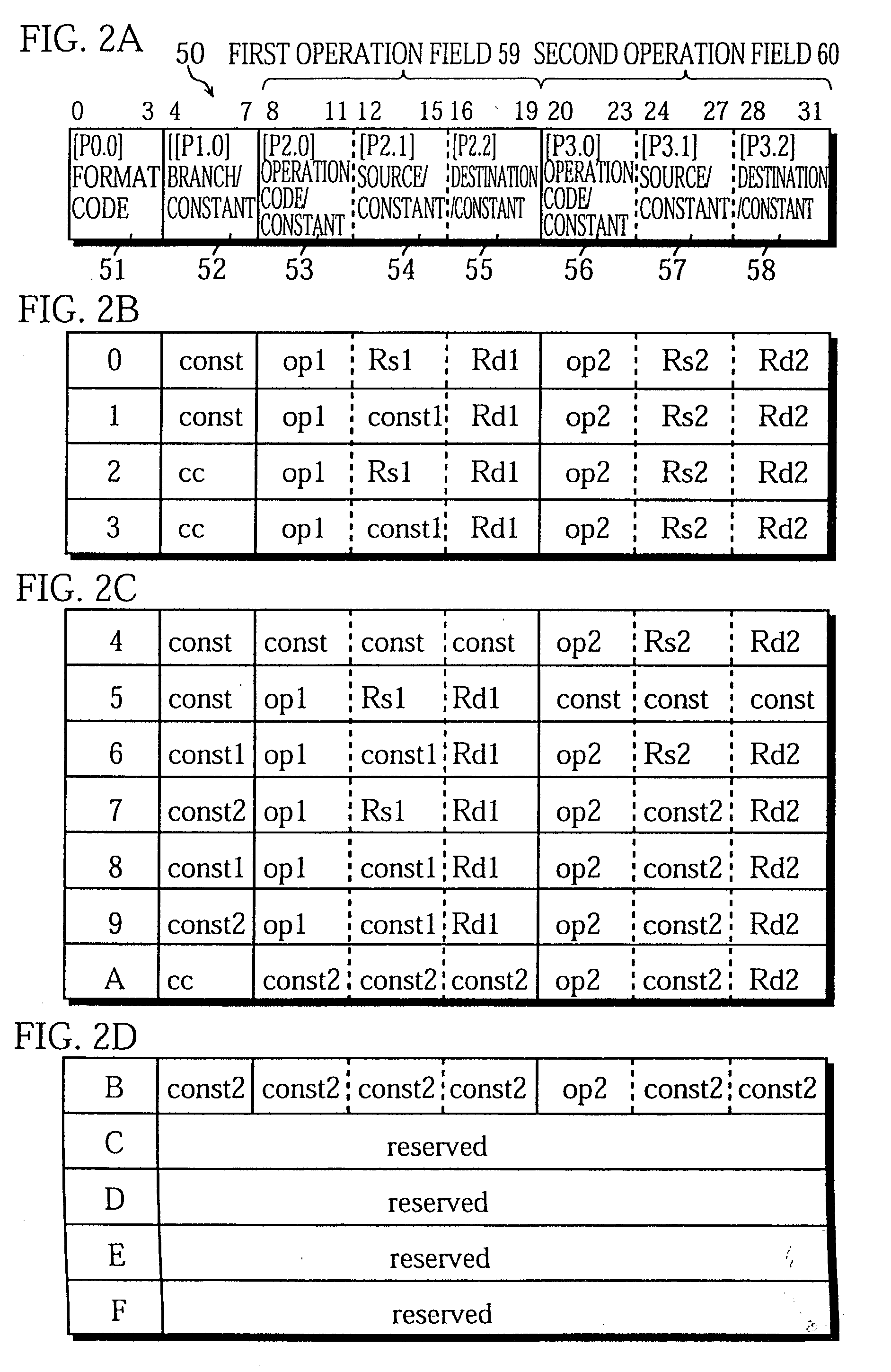

Processor for executing highly efficient VLIW

Inactive · US20020129223A1 · Efficiently construct · Highly efficient code structure · Instruction analysis · General purpose stored program computer · 4-bit · 12-bit

A 32-bit instruction 50 is composed of a 4-bit format field 51, a 4-bit operation field 52, and two 12-bit operation fields 59 and 60. The 4-bit operation field 52 can only include (1) an operation code "cc" that indicates a branch operation which uses a stored value of the implicitly indicated constant register 36 as the branch address, or (2) a constant "const". The content of the 4-bit operation field 52 is specified by a format code provided in the format field 51.

Owner:GK BRIDGE 1

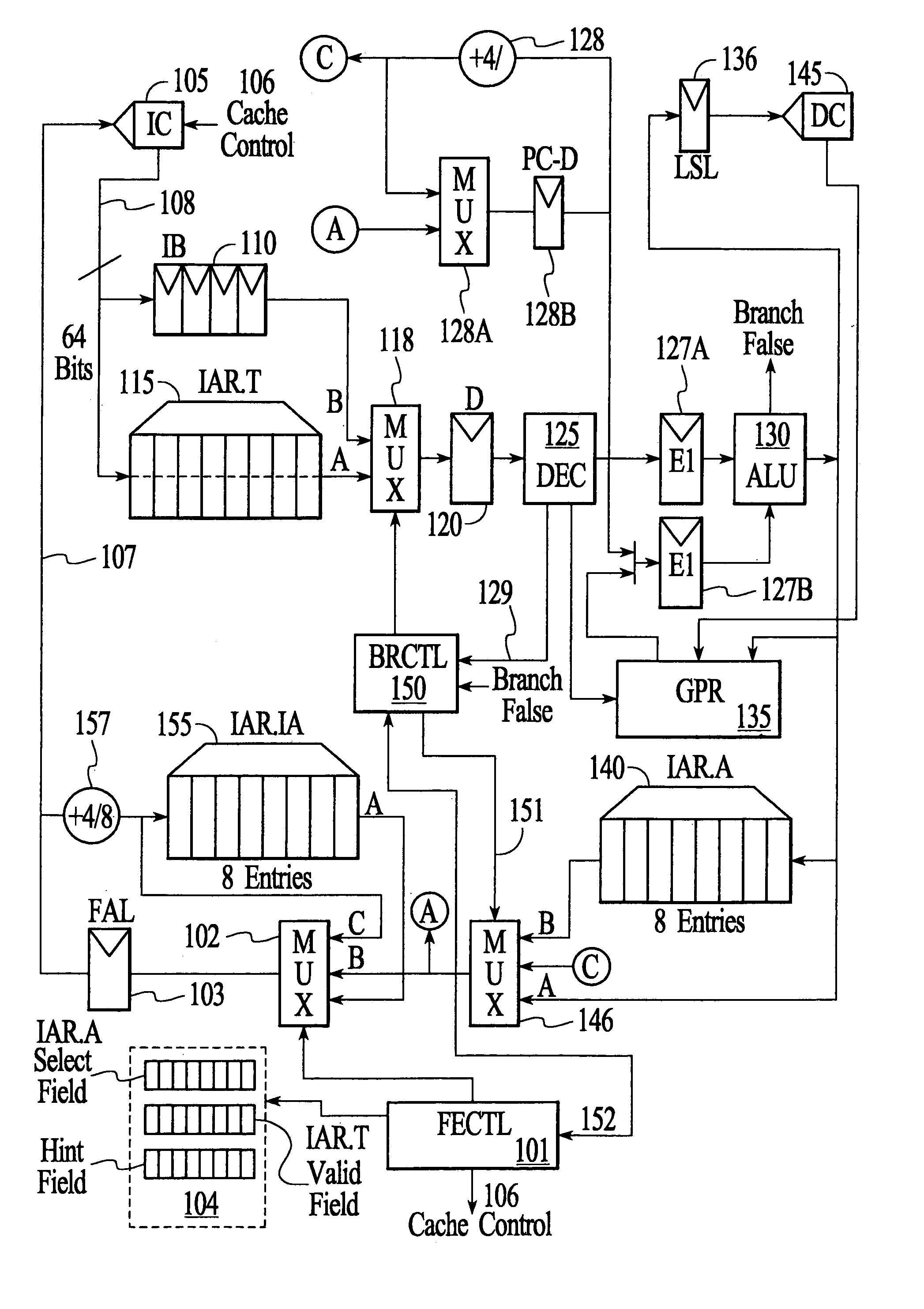

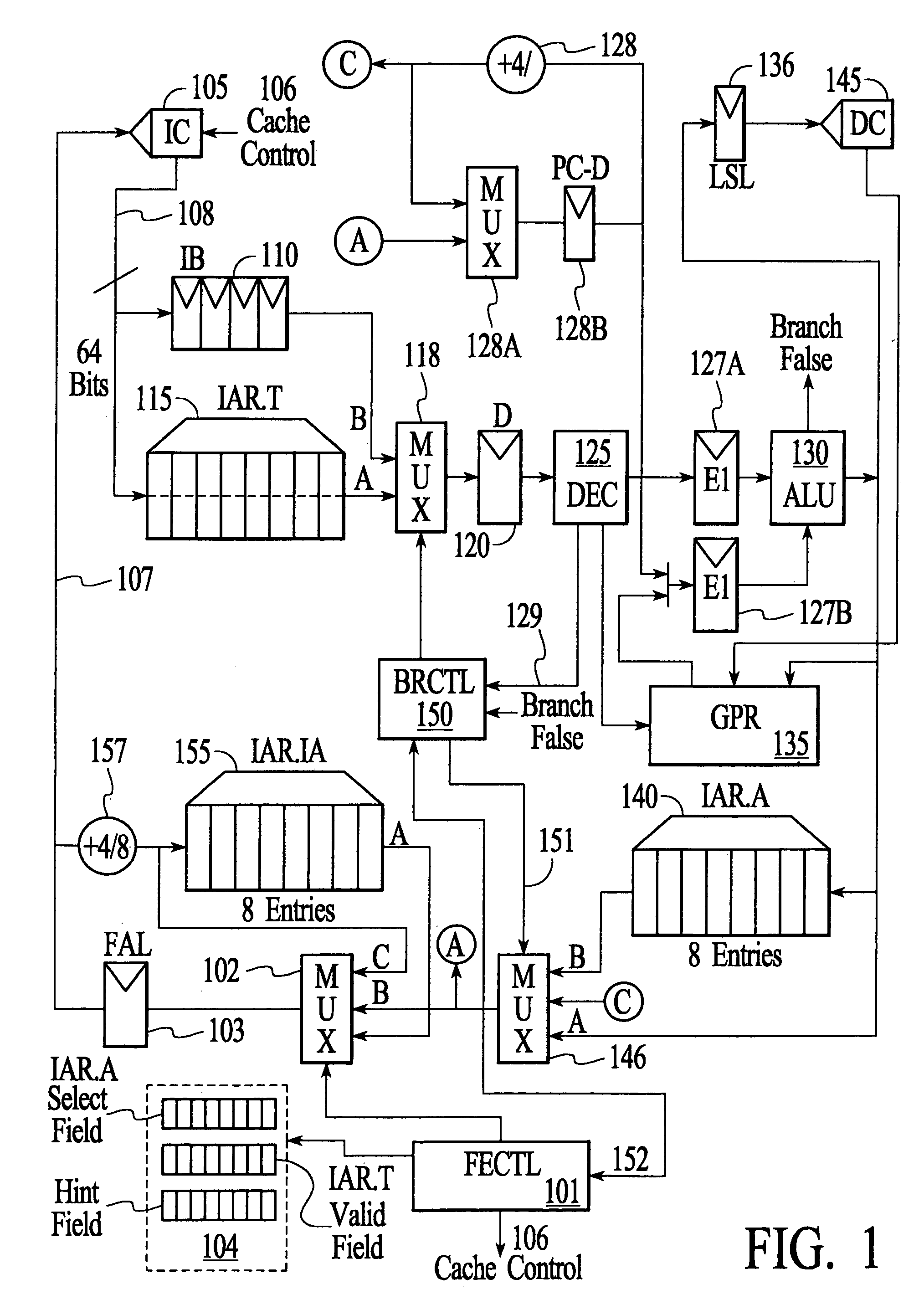

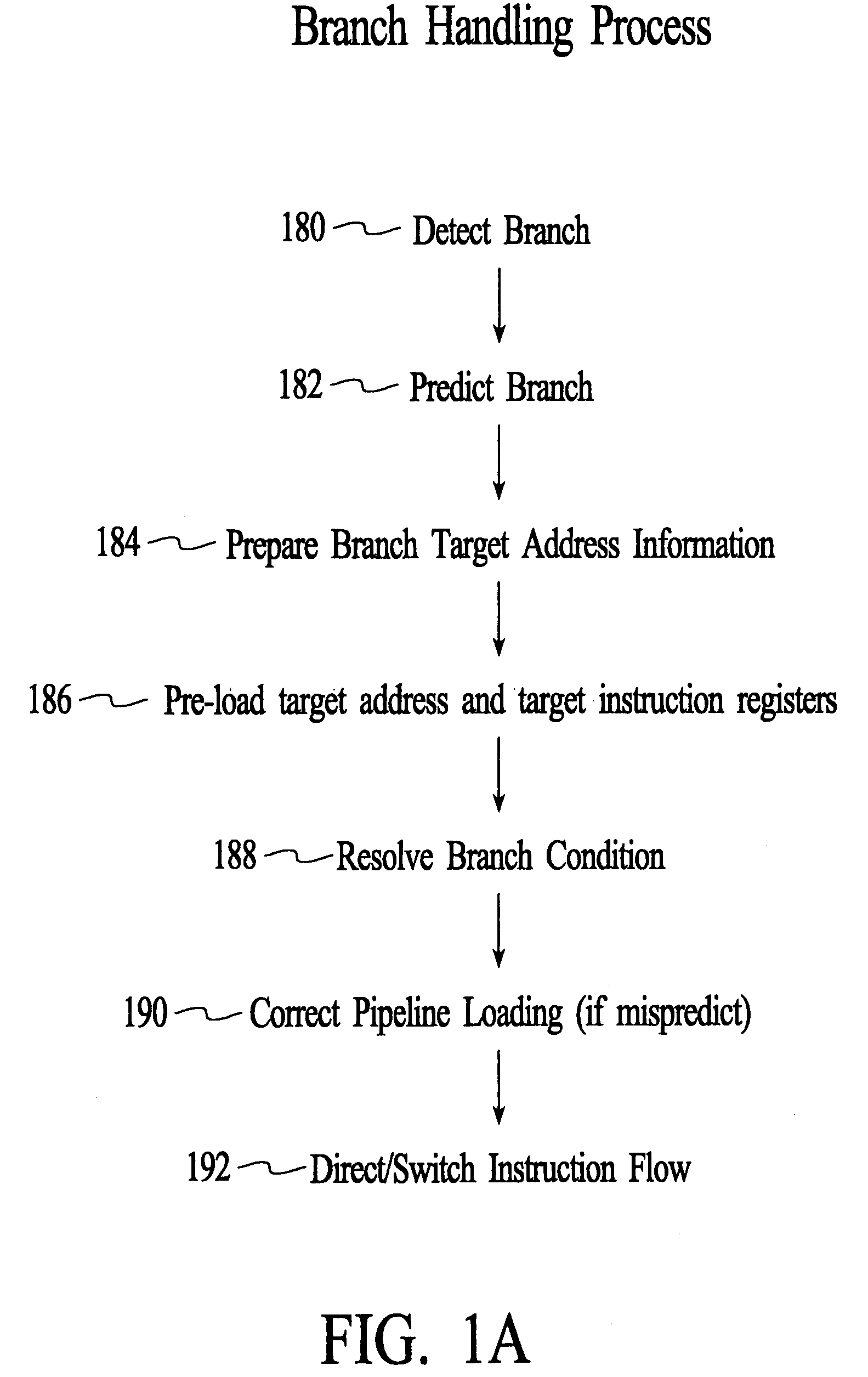

Branch control memory

Inactive · US7159102B2 · Simplify the commissioning process · Improve latency handling · Digital computer details · Next instruction address formation · Parallel computing · Control memory

A branch control memory stores branch instructions that are adapted for optimizing the performance of programs run on electronic processors. Flexible instruction parameter fields permit a variety of new branch control and branch instruction implementations best suited for a particular computing environment. These instructions also have separate prediction bits, which are used to optimize loading of target instruction buffers in advance of program execution, so that a pipeline within the processor achieves superior performance during actual program execution.

Owner:RENESAS ELECTRONICS CORP

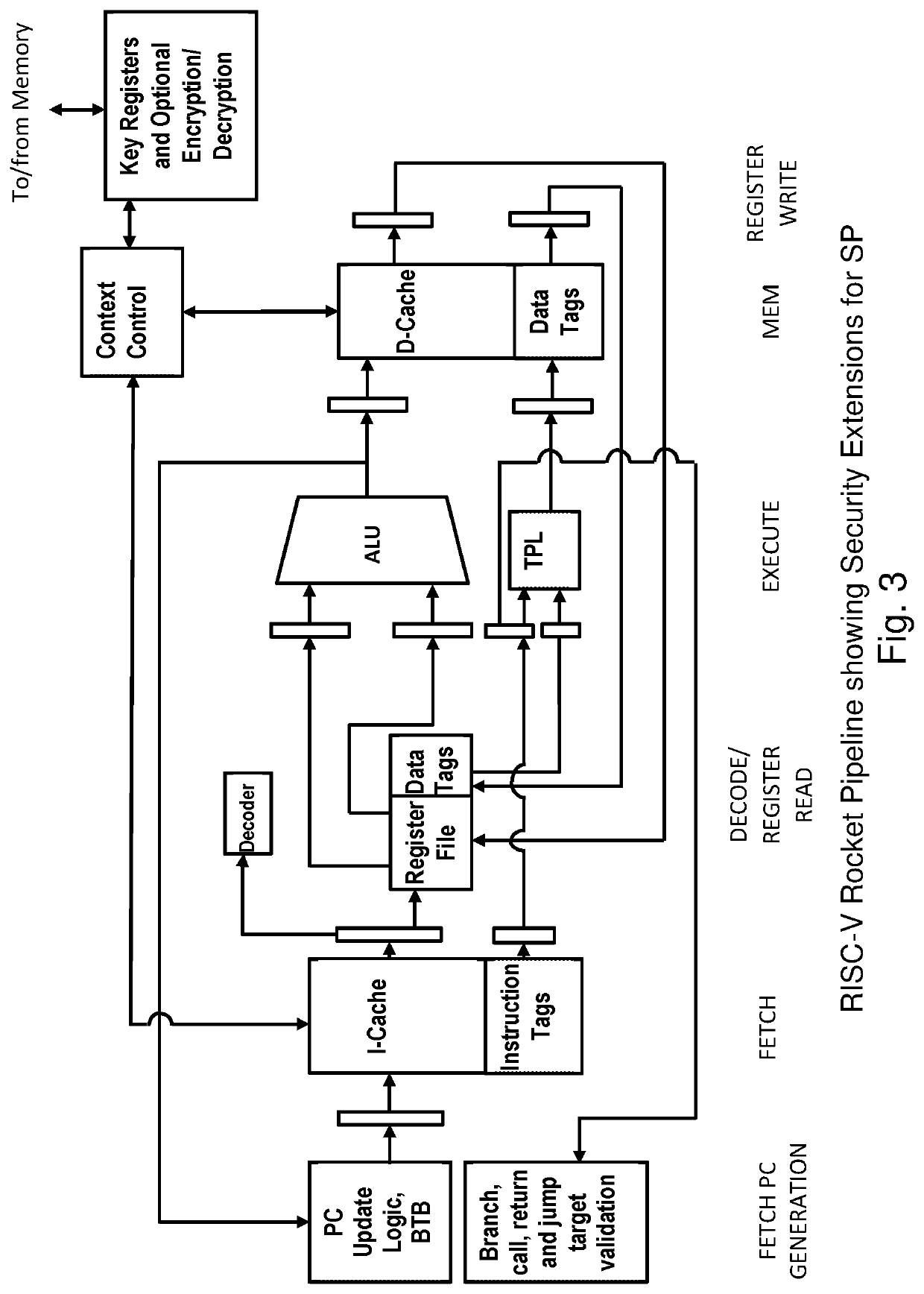

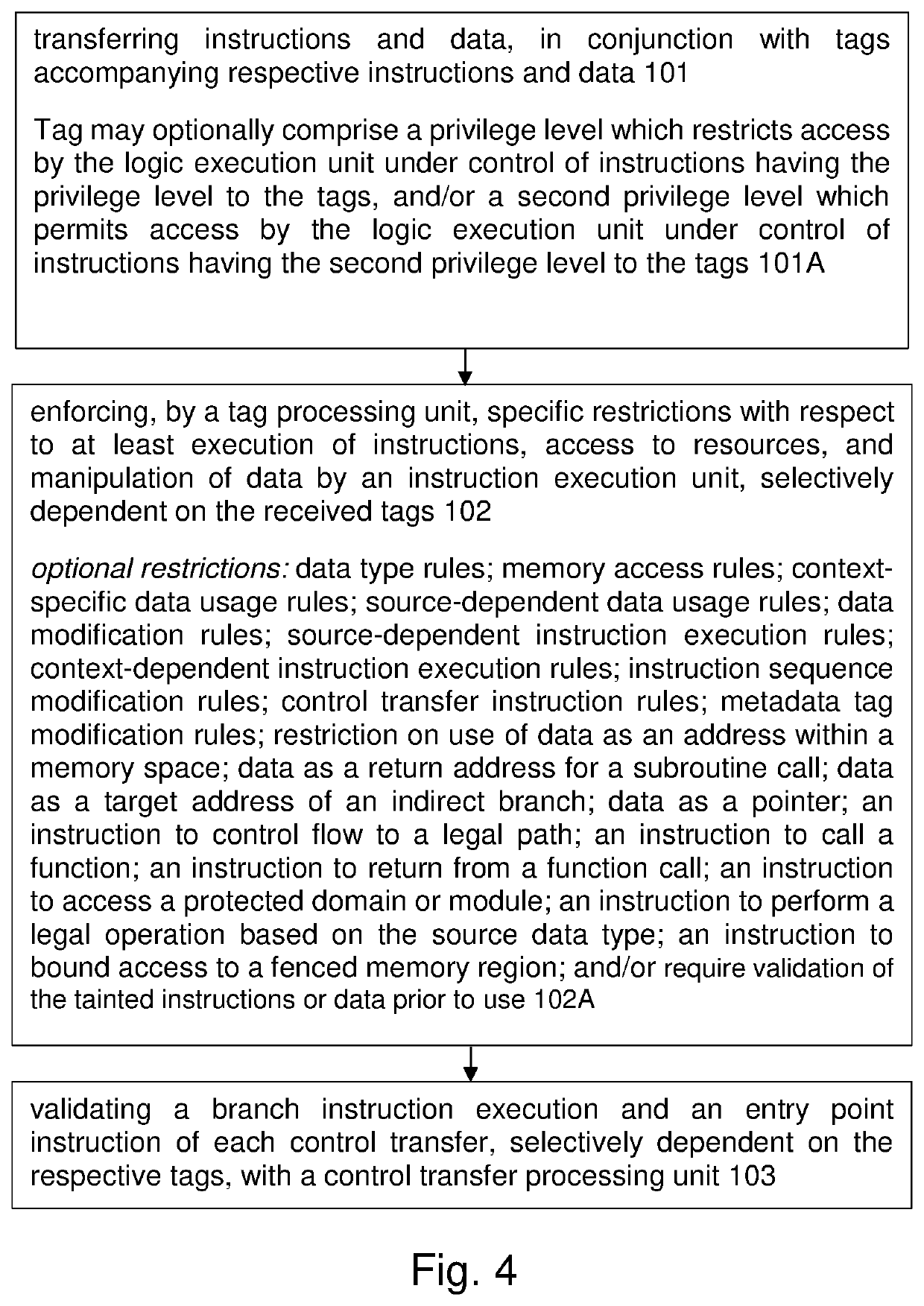

Secure processor for detecting and preventing exploits of software vulnerability

Active · US20200159888A1 · Robust solution · Easy to solve · Memory architecture accessing/allocation · Register arrangements · Communication interface · Branch

A secure processor, comprising a logic execution unit configured to process data based on instructions; a communication interface unit, configured to transfer of the instructions and the data, and metadata tags accompanying respective instructions and data; a metadata processing unit, configured to enforce specific restrictions with respect to at least execution of instructions, access to resources, and manipulation of data, selectively dependent on the received metadata tags; and a control transfer processing unit, configured to validate a branch instruction execution and an entry point instruction of each control transfer, selectively dependent on the respective metadata tags.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

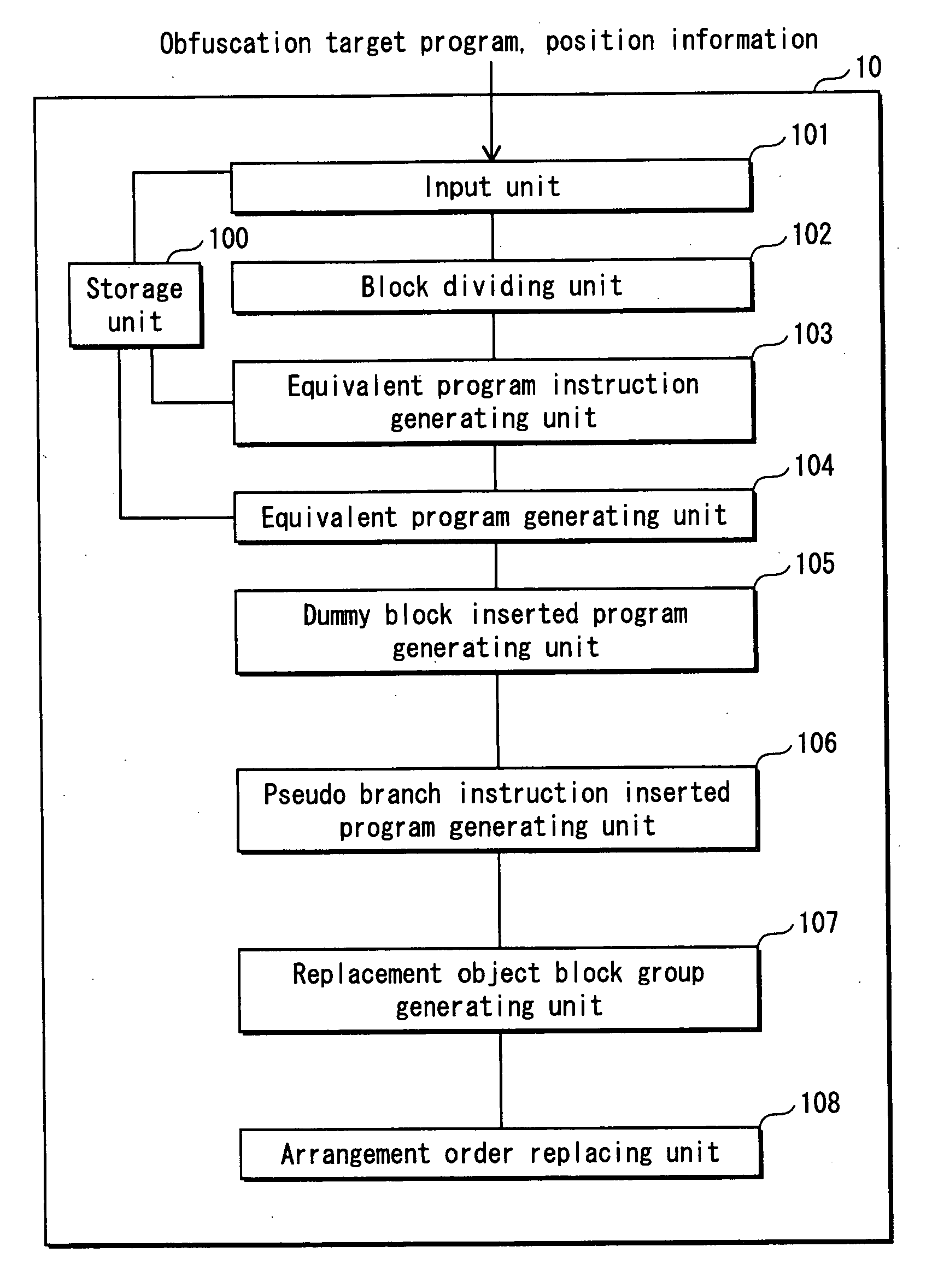

Program illegiblizing device and method

Active · US20090083521A1 · Obtain confidential information · Difficult to analyze · Digital computer details · Program/content distribution protection · Processing Instruction · Program instruction

A program obfuscating device for generating an obfuscated program from which an unauthorized analyzer cannot easily obtain confidential information. The program obfuscating device stores an original program that contains authorized program instructions and a confidential process instruction group containing confidential information that needs to be kept confidential; generates process instructions which, when executed in a predetermined order, provide the same result, with execution of the last process instruction thereof, as the confidential process instruction group; inserts the process instructions into the original program at a position between the start of the original program and the confidential process instruction group so as to be executed in the predetermined order, in place of the confidential process instruction group; generates a dummy block as a dummy of the process instructions; inserts the dummy block and a control instruction, which causes the dummy block to be bypassed, into the original program; and inserts a branch instruction into the dummy block.

Owner:PANASONIC CORP

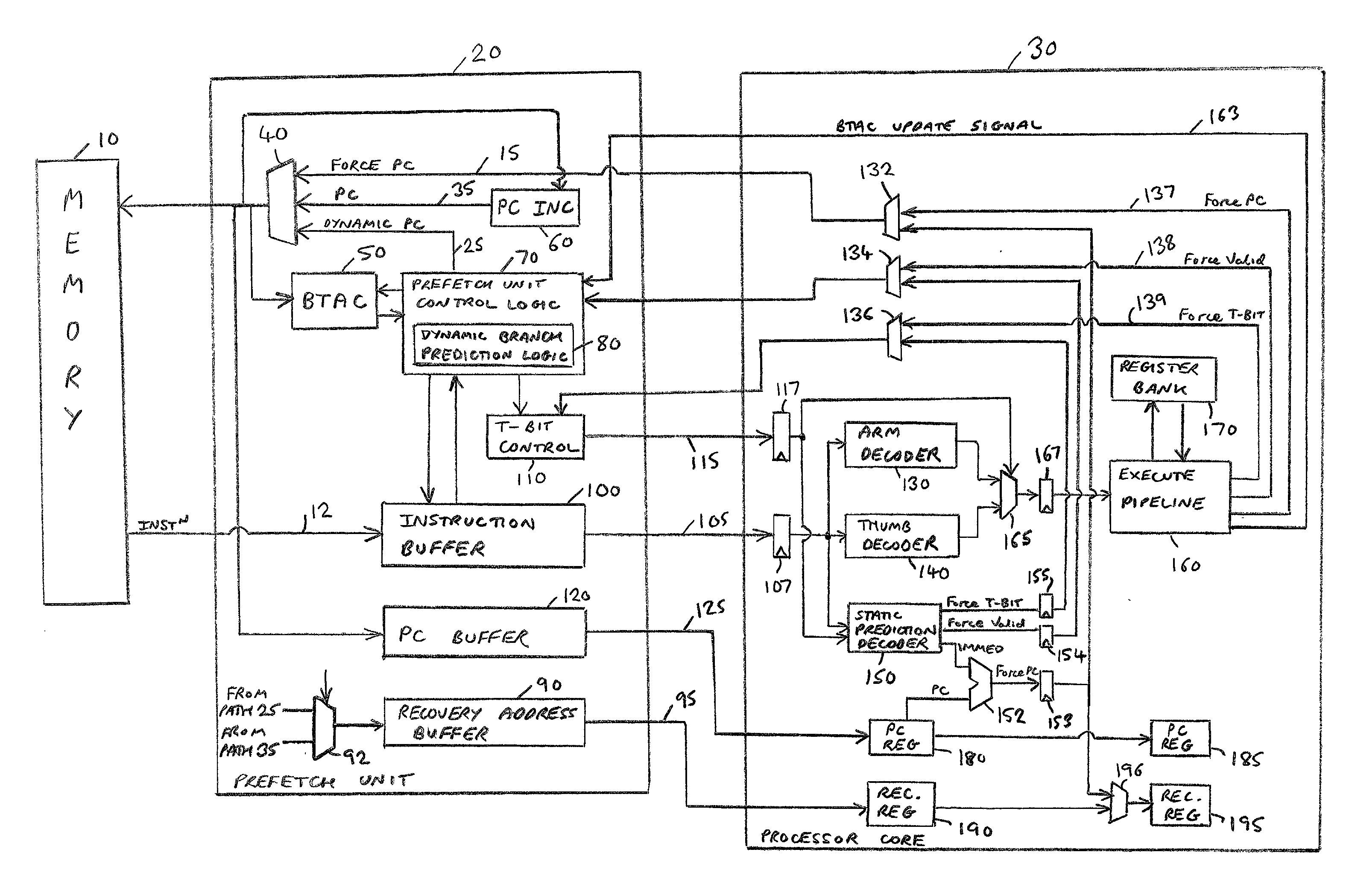

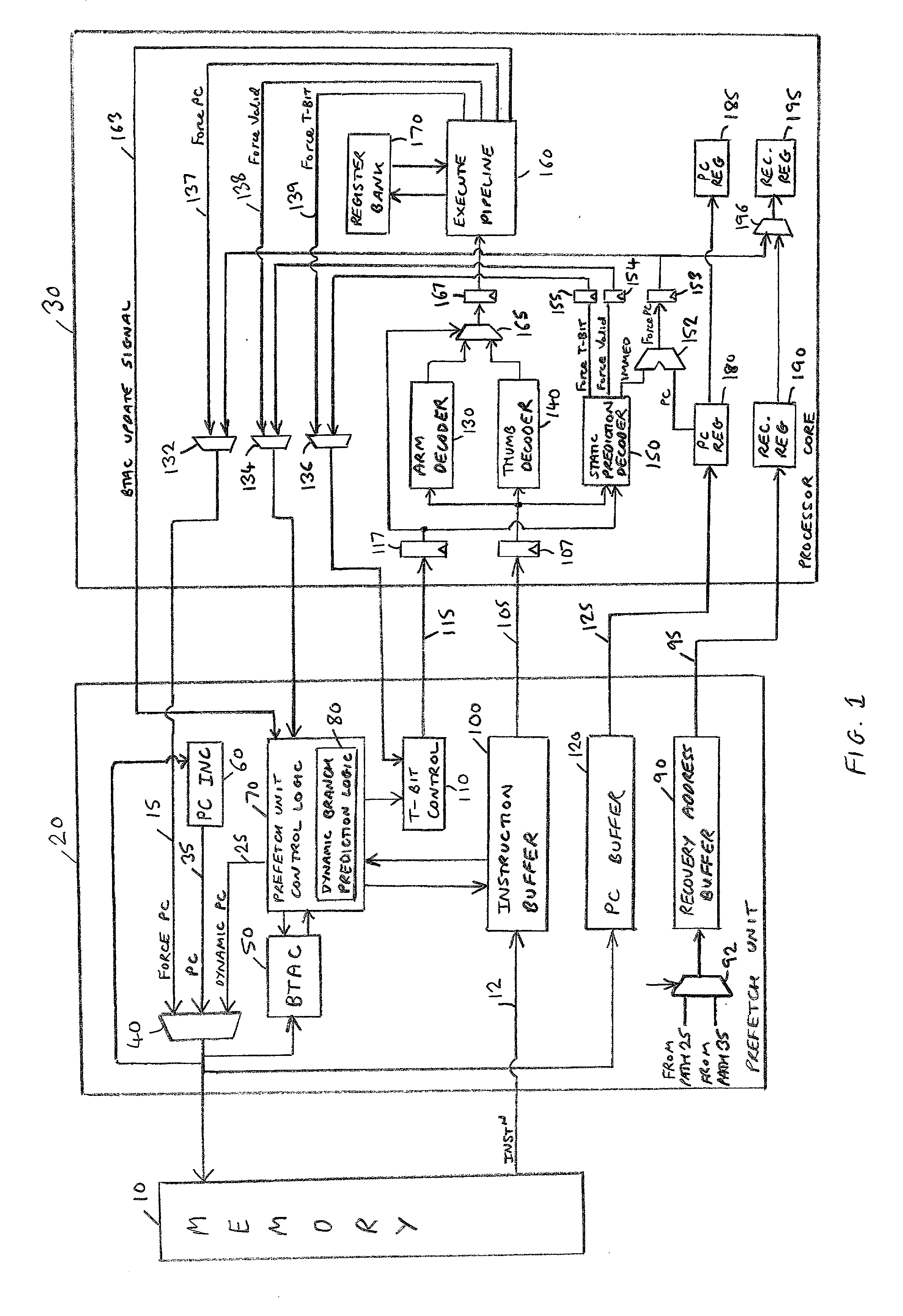

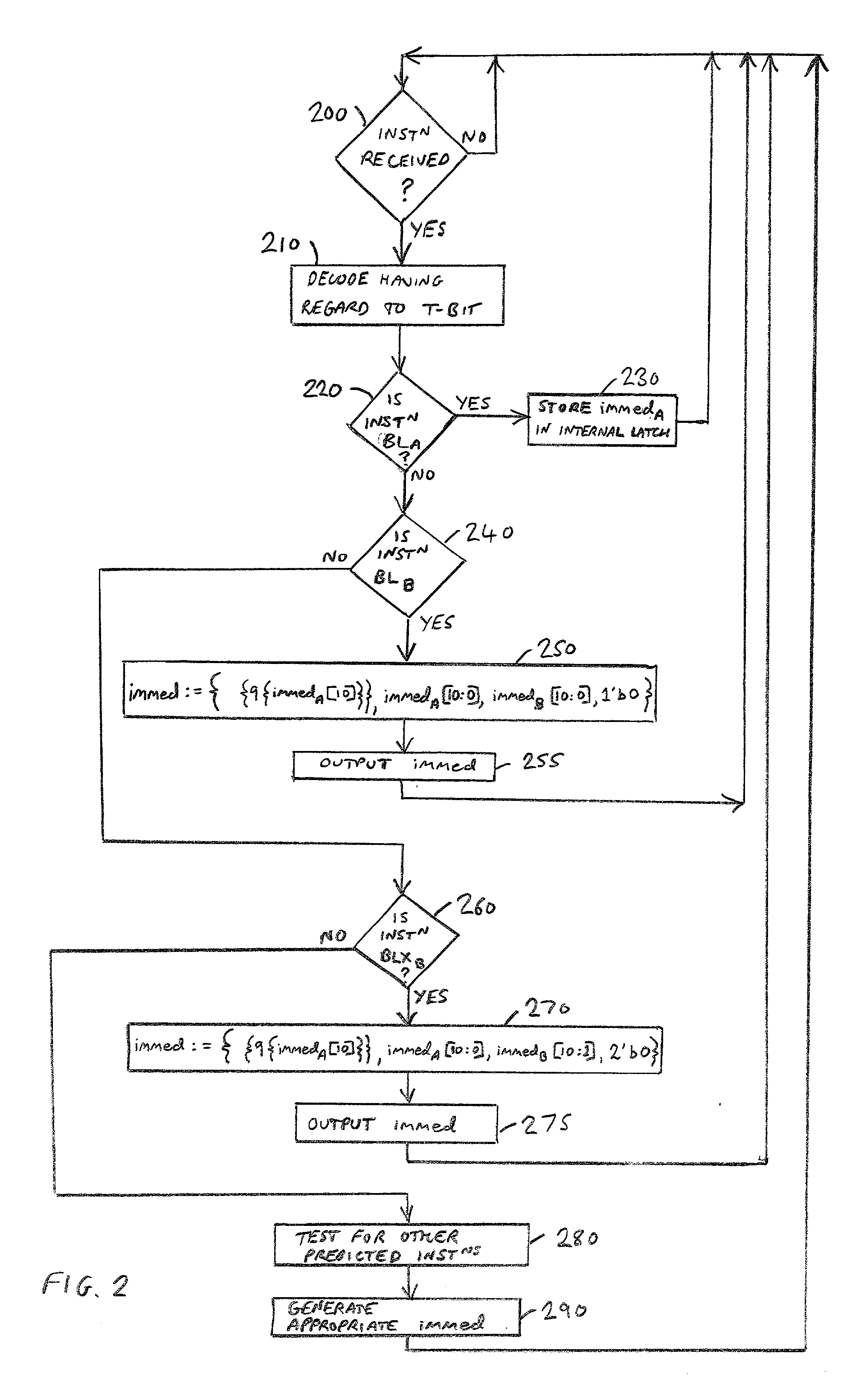

Prediction of branch instructions in a data processing apparatus

Inactive · US20030204705A1 · Significant comprehensive benefits · Increase speed · Instruction analysis · Digital computer details · Instruction stream · Data processing

The present invention provides a data processing apparatus and method for predicting branch instructions in a data processing apparatus. The data processing apparatus comprises a processor for executing instructions, a prefetch unit for prefetching instructions from a memory prior to sending those instructions to the processor for execution, and branch prediction logic for predicting which instruction should be prefetched by the prefetch unit. The branch prediction logic is arranged to predict whether a prefetched instruction specifies a branch operation that will cause a change in instruction flow, and if so to indicate to the prefetch unit a target address within the memory from which a next instruction should be retrieved. The instructions include a first instruction and a second instruction that are executable independently by the processor, but which in combination specify a predetermined branch operation whose target address is uniquely derivable from a combination of attributes of the first and second instruction. The data processing apparatus further comprises target address logic for deriving from the combination of attributes the target address for the predetermined branch operation, the branch prediction logic being arranged to predict whether the predetermined branch operation will cause a change in instruction flow, in which event the branch prediction logic is arranged to indicate to the prefetch unit the target address determined by the target address logic. Accordingly, even though neither the first instruction nor the second instruction itself uniquely identifies the target address, the target address can nonetheless be uniquely determined thereby allowing prediction of the predetermined branch operation specified by the combination of the first and second instructions.

Owner:ARM LTD

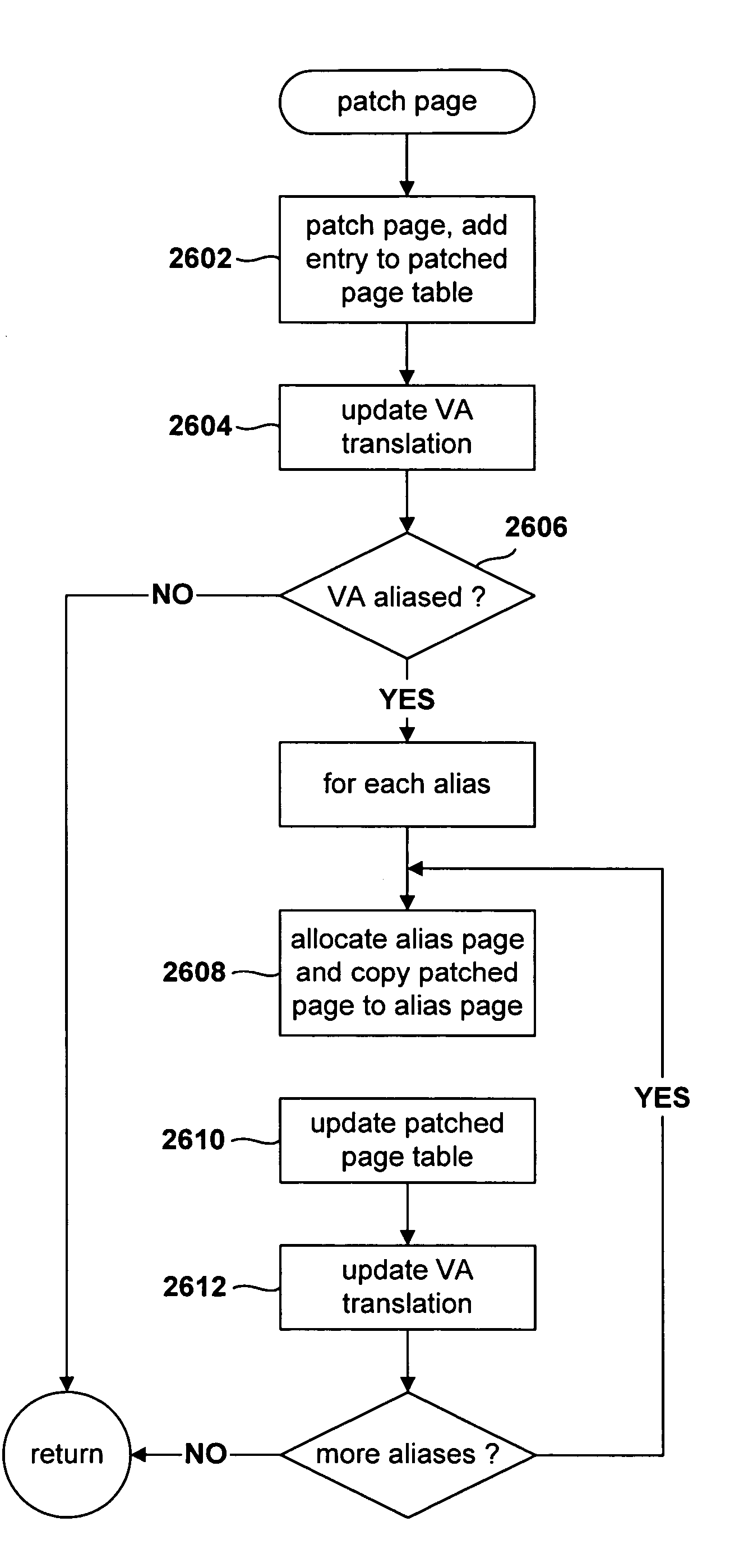

Method for patching virtually aliased pages by a virtual-machine monitor

Inactive · US7213125B2 · Multiprogramming arrangements · Software simulation/interpretation/emulation · Content replication · Software engineering

Various embodiments of the present invention are directed to methods by which a virtual-machine monitor can introduce branch instructions, in order to emulate privileged and other instructions on behalf of a guest operating system, into guest-operating-system code residing on virtually aliased virtual-memory pages. In a described embodiment of the present invention, the virtual-machine monitor physically aliases each virtual alias for a particular physical memory page by allocating a physical page for the virtual alias, copying the original contents of the physical memory page to the allocated physical page, or physical alias page, and subsequently patching each physical alias page appropriate to the physical address of the physical alias page.

Owner:HEWLETT PACKARD DEV CO LP

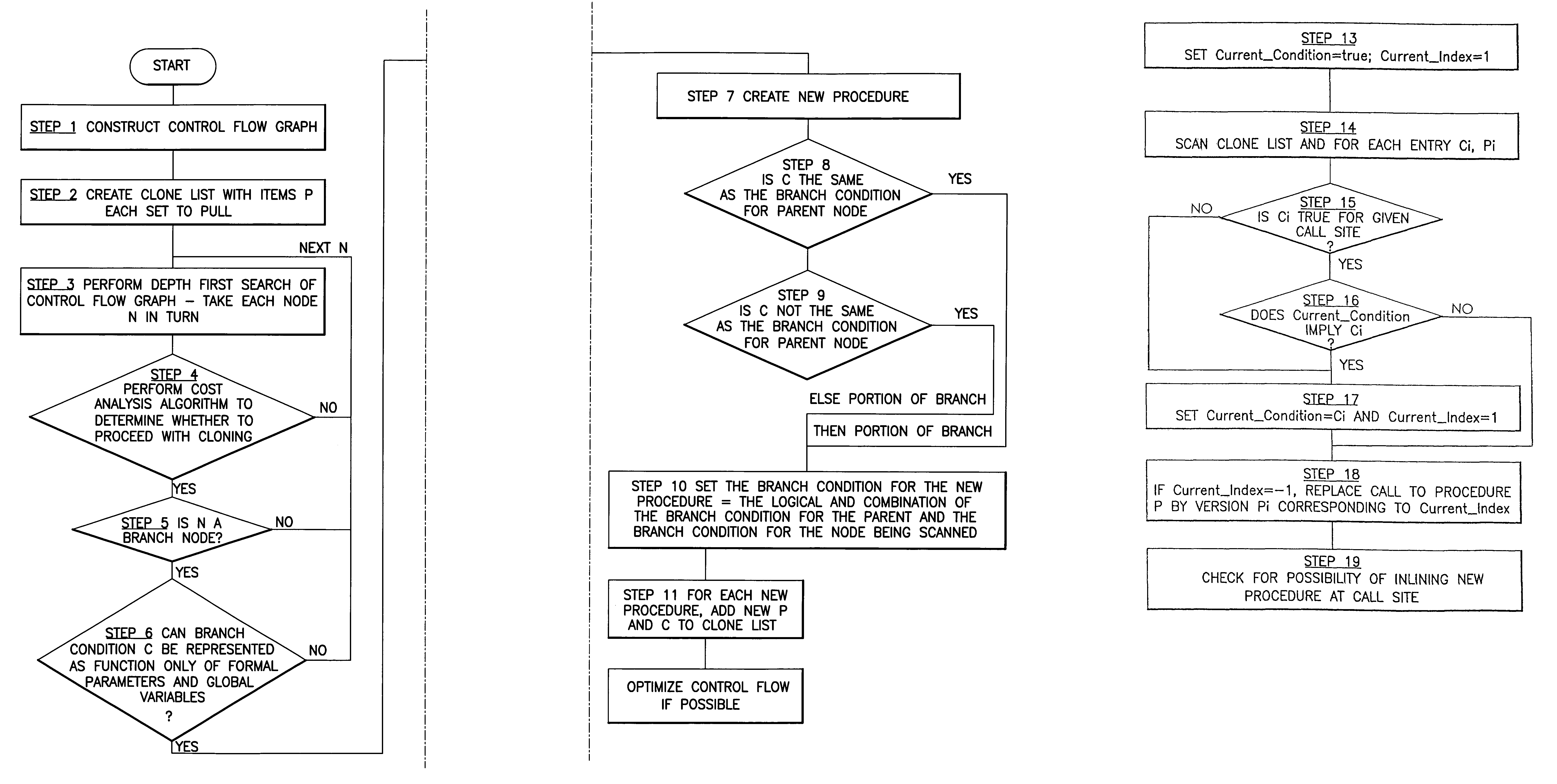

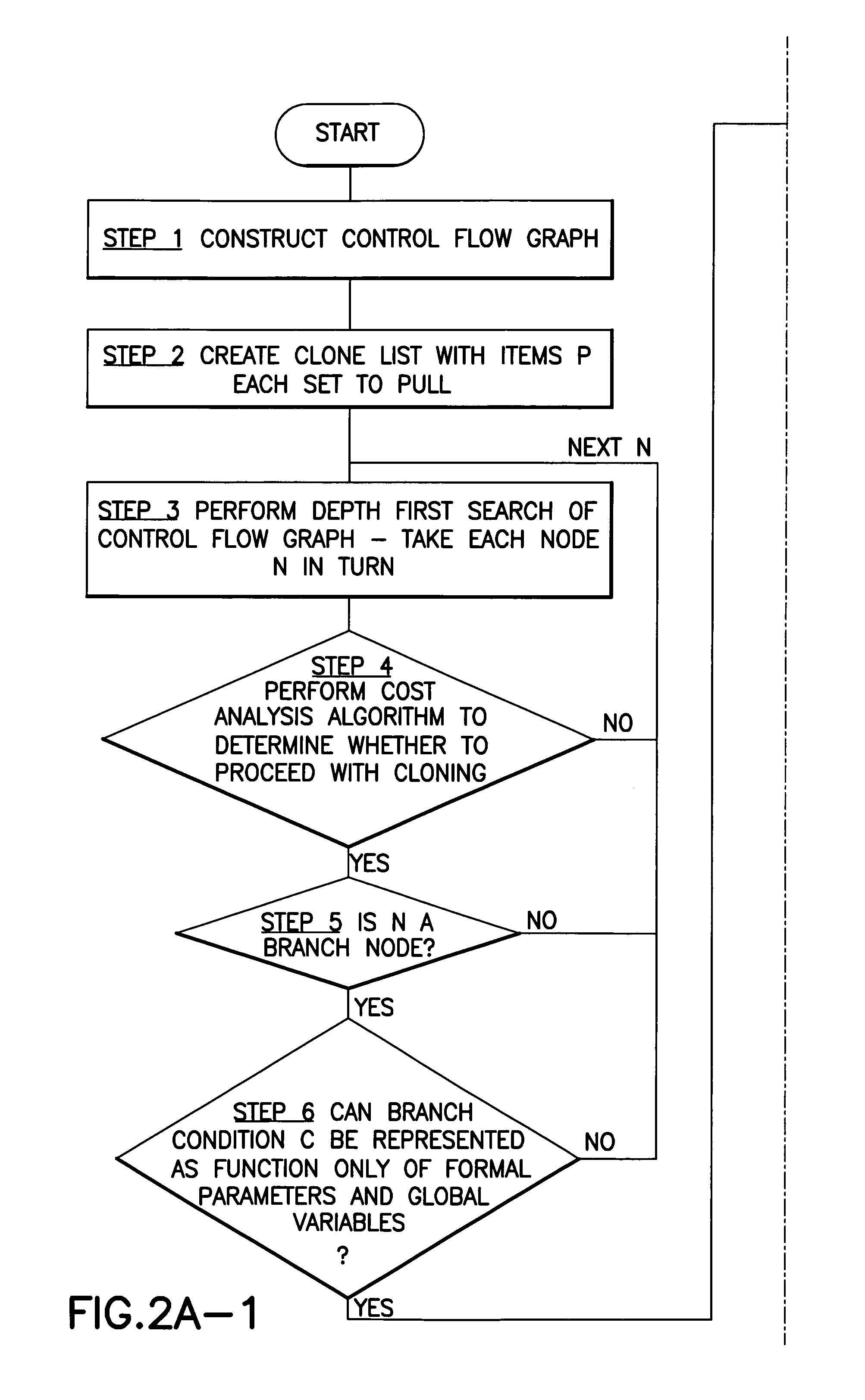

System, method and program product for optimising computer software by procedure cloning

Inactive · US7058561B1 · Facilitate further analysis and optimisation · Easy to calculate · Program control · Memory systems · Dead code · Control flow

A method, system and program product for optimizing software in which procedure clones are created based on the control flow information for the procedure body. In an example, a control flow graph for a called procedure is constructed and, for a branching node which can direct program flow to two or more code branches of the procedure, respective clones or new procedures are formed one for each code branch. A list containing pointers to the clones and the respective branch conditions for those clones is formed. Then, for each call site, the list is scanned to see if a particular call could be replaced by a call to a clone. Meanwhile, each clone is optimized and this may lead to removal of dead code or the replacement of a particular call statement by a constant.

Owner:IBM CORP

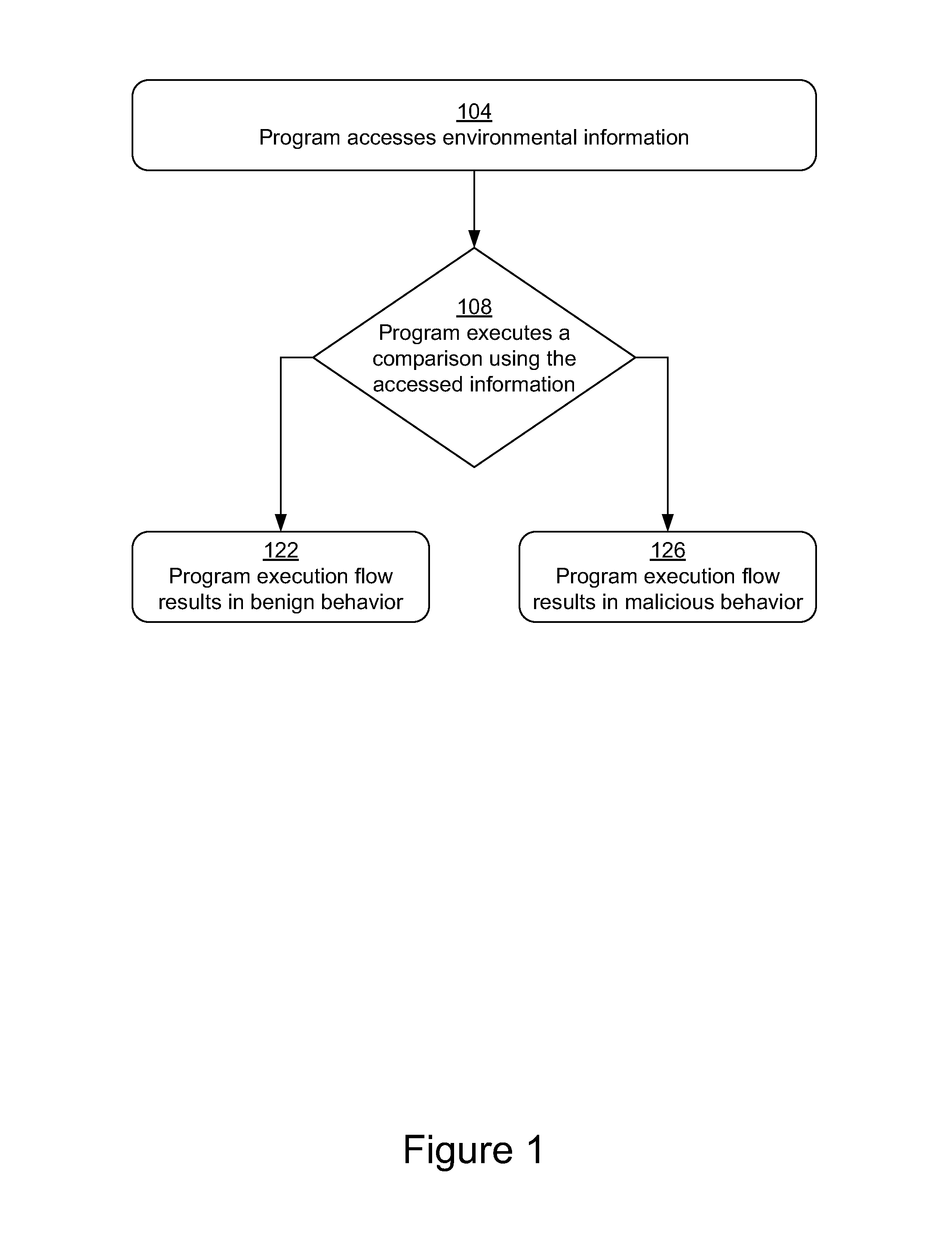
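A rough illustration of the clone list and call-site scan described in the abstract above. It is a hand-written sketch, not the patented method: the procedure `to_metres`, its two clones, and the helper `resolve_call` are hypothetical names, and the clones are written by hand rather than derived from a control flow graph.

```python
def to_metres(unit, value):
    """Original procedure: one branching node with two code branches."""
    if unit == "km":
        return value * 1000
    else:
        return value


# One clone per code branch; the branch test is gone from each clone body.
def to_metres_km(value):
    return value * 1000


def to_metres_m(value):
    return value


# List of (branch condition, clone) pairs, as in the abstract.
CLONES = [
    (lambda unit: unit == "km", to_metres_km),
    (lambda unit: unit != "km", to_metres_m),
]


def resolve_call(constant_unit):
    """Pick the callee for a call site whose `unit` argument is a known constant.

    `None` means the argument is not known at optimisation time, so the
    original procedure must still be called (with both arguments).
    """
    if constant_unit is None:
        return to_metres
    for condition, clone in CLONES:
        if condition(constant_unit):
            return clone
    return to_metres


callee = resolve_call("km")  # call site: to_metres("km", x)
result = callee(2)
```

Because each clone contains only one code branch, later passes could simplify it further, for example folding `to_metres_m(5)` to the constant `5`.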

Methods and systems for malware detection based on environment-dependent behavior

The present disclosure is directed to methods and systems for malware detection based on environment-dependent behavior. Generally, an analysis environment is used to determine how input collected from an execution environment is used by suspicious software. The methods and systems described identify use of environmental information to decide between execution paths leading to malicious behavior or benign activity. In one aspect, one embodiment of the invention relates to a method comprising monitoring execution of suspect computer instructions; recognizing access by the instructions to an item of environmental information; identifying a plurality of execution paths in the instructions dependent on a branch in the instructions based on a value of the accessed item of environmental information; and determining that a first execution path results in benign behavior and that a second execution path results in malicious behavior. The method comprises classifying the computer instructions as evasive malware responsive to the determination.

Owner:VMWARE INC
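The monitoring and classification steps in the abstract above might be sketched as follows. Everything here is a simplifying assumption: the suspect code is a plain Python function that reports its own behavior label, the environment is a dictionary, and `TrackedEnv`, `suspect_sample`, and `classify` are hypothetical names. A real analysis environment would observe behavior rather than trust a returned label.

```python
class TrackedEnv(dict):
    """Environment that records which items the suspect code reads."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.accessed = set()

    def __getitem__(self, key):
        self.accessed.add(key)
        return super().__getitem__(key)


def suspect_sample(env):
    """Stand-in for suspect instructions that branch on an environment value."""
    if env["cpu_count"] < 2:  # looks like an analysis sandbox
        return "benign"
    return "malicious"


def classify(sample, base_env, candidate_values):
    # Step 1: monitor execution and recognise accessed environment items.
    probe = TrackedEnv(base_env)
    sample(probe)
    # Step 2: explore the execution paths selected by each accessed item.
    behaviors = set()
    for key in probe.accessed:
        for value in candidate_values.get(key, []):
            env = dict(base_env)
            env[key] = value
            behaviors.add(sample(env))
    # Step 3: one benign path plus one malicious path means evasive malware.
    if {"benign", "malicious"} <= behaviors:
        return "evasive malware"
    return "not evasive"


verdict = classify(suspect_sample, {"cpu_count": 1}, {"cpu_count": [1, 8]})
```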

Instruction cache way prediction for jump targets

Active | US7406569B2 | Easy retrieval | Memory architecture accessing/allocation | Energy efficient ICT | Decision circuit | Parallel computing

A typical cache architecture provides a single cache way prediction memory for use in predicting a cache way for both sequential and non-sequential instructions contained within a program stream. Unfortunately, one of the drawbacks of the prior-art cache way prediction scheme lies in its efficiency when dealing with instructions that vary the PC in a non-sequential manner, such as branch instructions, including jump instructions. To facilitate caching of non-sequential instructions, an additional cache way prediction memory is provided to deal with the non-sequential instructions. Thus, during program execution, a decision circuit determines whether to use a sequential cache way prediction array or a non-sequential cache way prediction array depending on the type of instruction. Advantageously, the improved cache way prediction scheme provides an increased cache hit percentage when used with non-sequential instructions.

Owner:NYTELL SOFTWARE LLC
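The split between a sequential and a non-sequential prediction array, selected by instruction type, can be modeled in a few lines. This is a software sketch only, with assumed details: the class name `WayPredictor`, indexing by program counter, and a default prediction of way 0 are illustrative choices, not taken from the patent.

```python
class WayPredictor:
    """Toy model of two cache way prediction arrays."""

    def __init__(self):
        self.sequential = {}      # pc -> predicted way for sequential fetches
        self.non_sequential = {}  # pc -> predicted way for branch/jump targets

    def _table(self, is_branch):
        # Plays the role of the decision circuit: pick an array by instruction type.
        return self.non_sequential if is_branch else self.sequential

    def predict(self, pc, is_branch):
        return self._table(is_branch).get(pc, 0)  # default to way 0

    def update(self, pc, is_branch, actual_way):
        self._table(is_branch)[pc] = actual_way


predictor = WayPredictor()
predictor.update(0x40, is_branch=True, actual_way=3)  # a jump at 0x40 hit way 3
jump_prediction = predictor.predict(0x40, is_branch=True)
sequential_prediction = predictor.predict(0x40, is_branch=False)
```

Keeping the two arrays separate means training on a jump target does not disturb the prediction used for straight-line fetches at the same index.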

Methods and apparatuses for automatic type checking via poisoned pointers

Active | US20130205285A1 | Calculation can be costly | Optimize data | Software engineering | Error detection/correction | Branch

A method and an apparatus that modify pointer values pointing to typed data with type information are described. The type information can be automatically checked against the typed data by leveraging hardware-based safety check mechanisms when performing memory access operations on the typed data via the modified pointer values. As a result, built-in hardware logic can be used for a broad class of programming-language safety checks when executing software code that uses modified pointers subject to the safety check, without executing compare and branch instructions in the software code.

Owner:APPLE INC

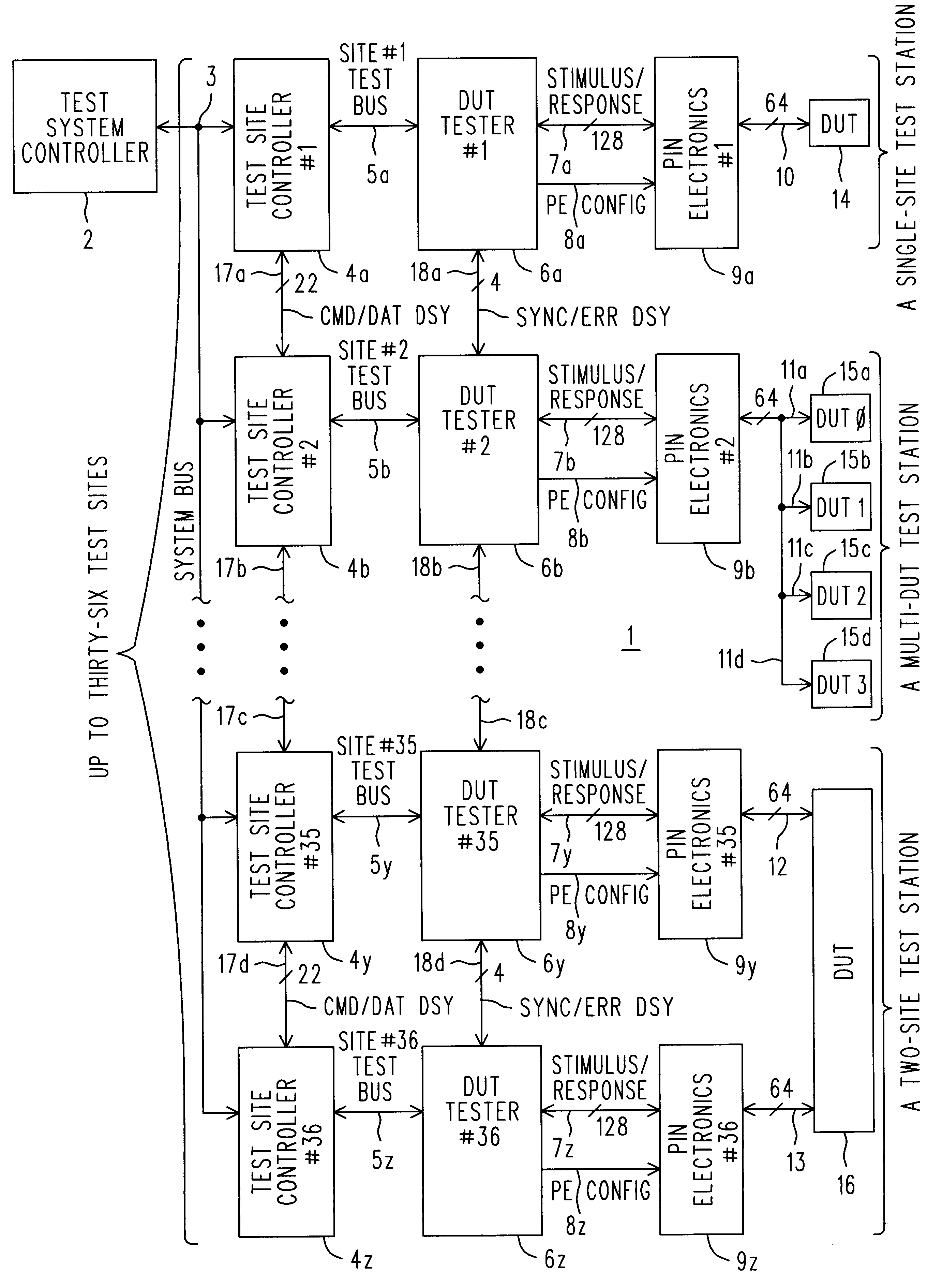

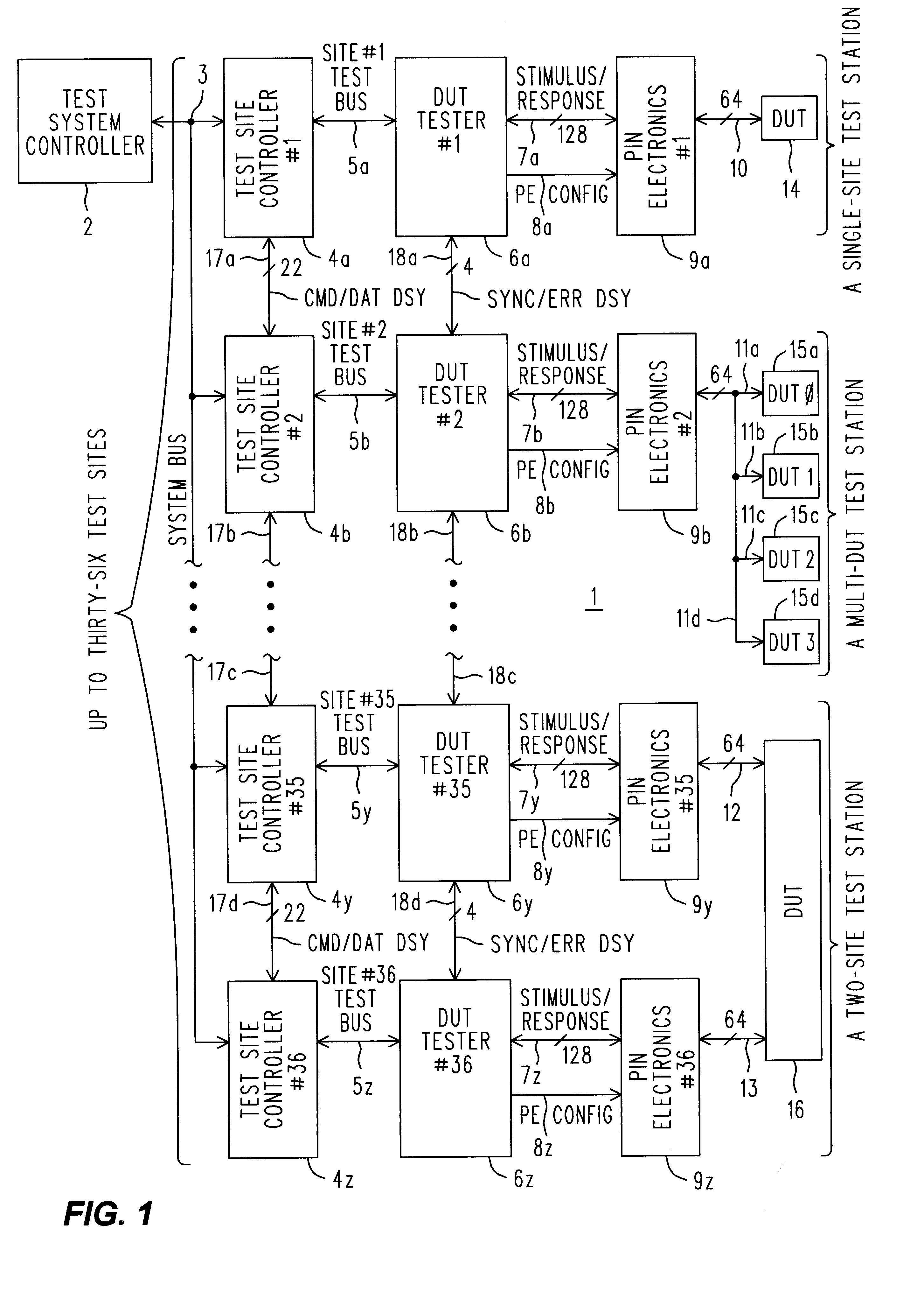
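One way to picture a pointer-poisoning scheme like the one in the abstract above is a toy simulation in which a type tag is added to the pointer and removed again by the accessing code, so that a type mismatch produces an unmapped address. The abstract does not give the encoding; the tag shift, the type ids, and the names `ToyMemory`, `poison`, and `load_as` below are all assumptions made for illustration.

```python
TAG_SHIFT = 48                    # assumed: tag lives above the usable address bits
TYPE_IDS = {"int": 1, "str": 2}   # assumed type ids


class HardwareFault(Exception):
    """Stands in for the trap raised by the memory system on a bad address."""


class ToyMemory:
    def __init__(self):
        self.cells = {}

    def load(self, address):
        # Models the hardware's own address check, not a check in program code.
        if address not in self.cells:
            raise HardwareFault(hex(address))
        return self.cells[address]


def poison(address, type_name):
    """Fold the type tag into the pointer value."""
    return address + (TYPE_IDS[type_name] << TAG_SHIFT)


def load_as(memory, pointer, expected_type):
    # No compare-and-branch on the type: subtract the expected tag and let
    # the memory access itself fault if the tag did not match.
    return memory.load(pointer - (TYPE_IDS[expected_type] << TAG_SHIFT))


memory = ToyMemory()
memory.cells[0x1000] = 42
int_pointer = poison(0x1000, "int")
value = load_as(memory, int_pointer, "int")
```

Reading `int_pointer` as a `"str"` leaves a residual tag difference in the address, so the access lands outside mapped memory and raises `HardwareFault`.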

Algorithmically programmable memory tester with history FIFO's that aid in error analysis and recovery

Inactive | US6574764B2 | Increase overhead | Digital circuit testing | Marginal checking | Memory tester | Tester device

The problem is to branch back to an appropriate location within a memory tester test program, and also restore its state of algorithmic control, when an error associated with that location is reported later in time at the DUT. The error is reported late owing to delays in the pipelines connecting the program execution environment to the DUT and back again. These delays allow the program to advance arbitrarily beyond the point where the stimulus was given, which makes it difficult to determine the exact circumstances associated with the error. A branch based on the error signal can restart a section of the test program, but that section is likely only a template needing further test algorithm control information that varies dynamically as the test program executes. The solution is to equip the memory tester with History FIFO's whose depths are adjusted to account for the sum of the delays of the pipelines, relative to the location of that History FIFO. When the error flag is generated, the desired program location and state information is present at the bottom of an appropriate History FIFO.

Owner:ADVANTEST CORP

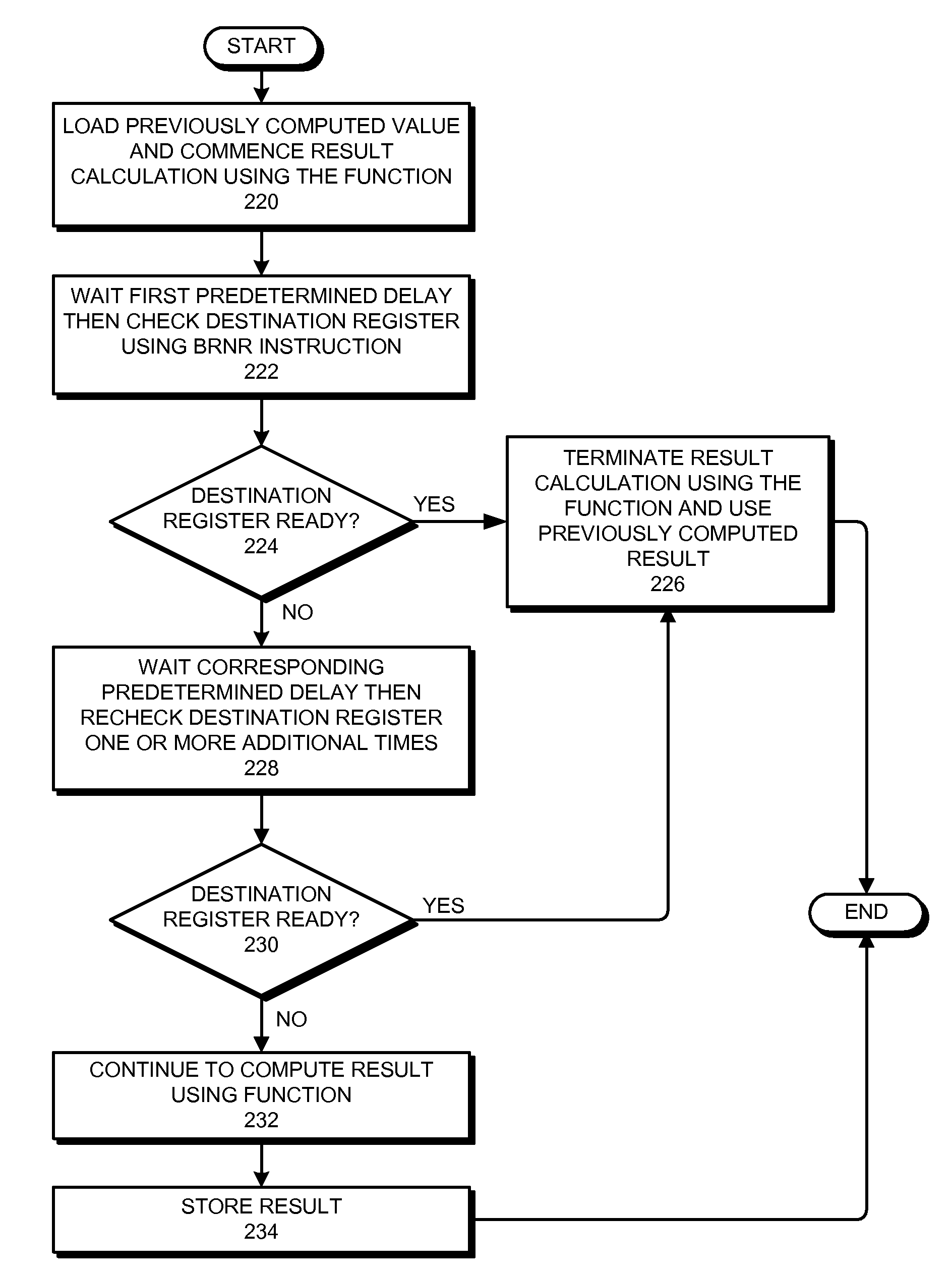

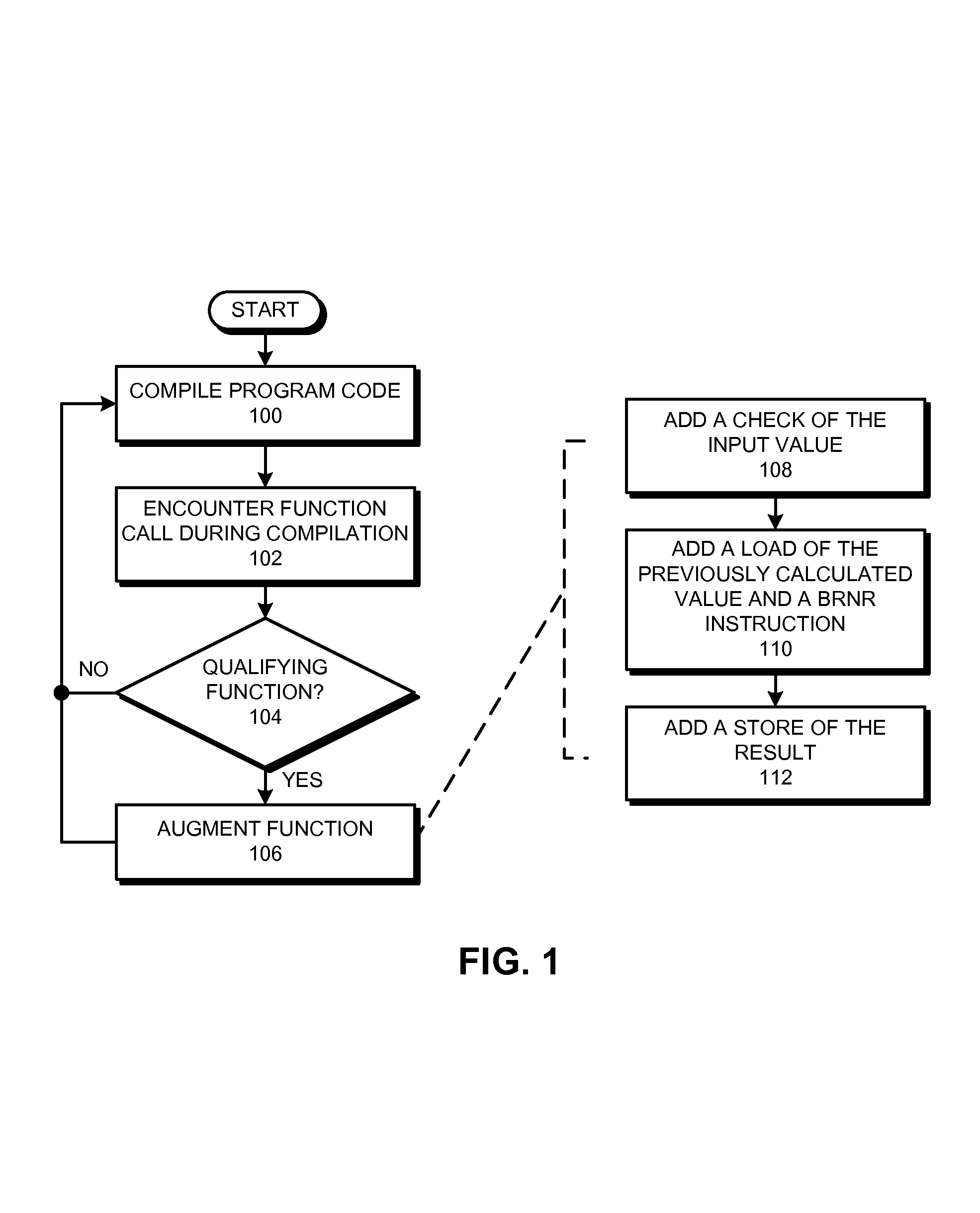

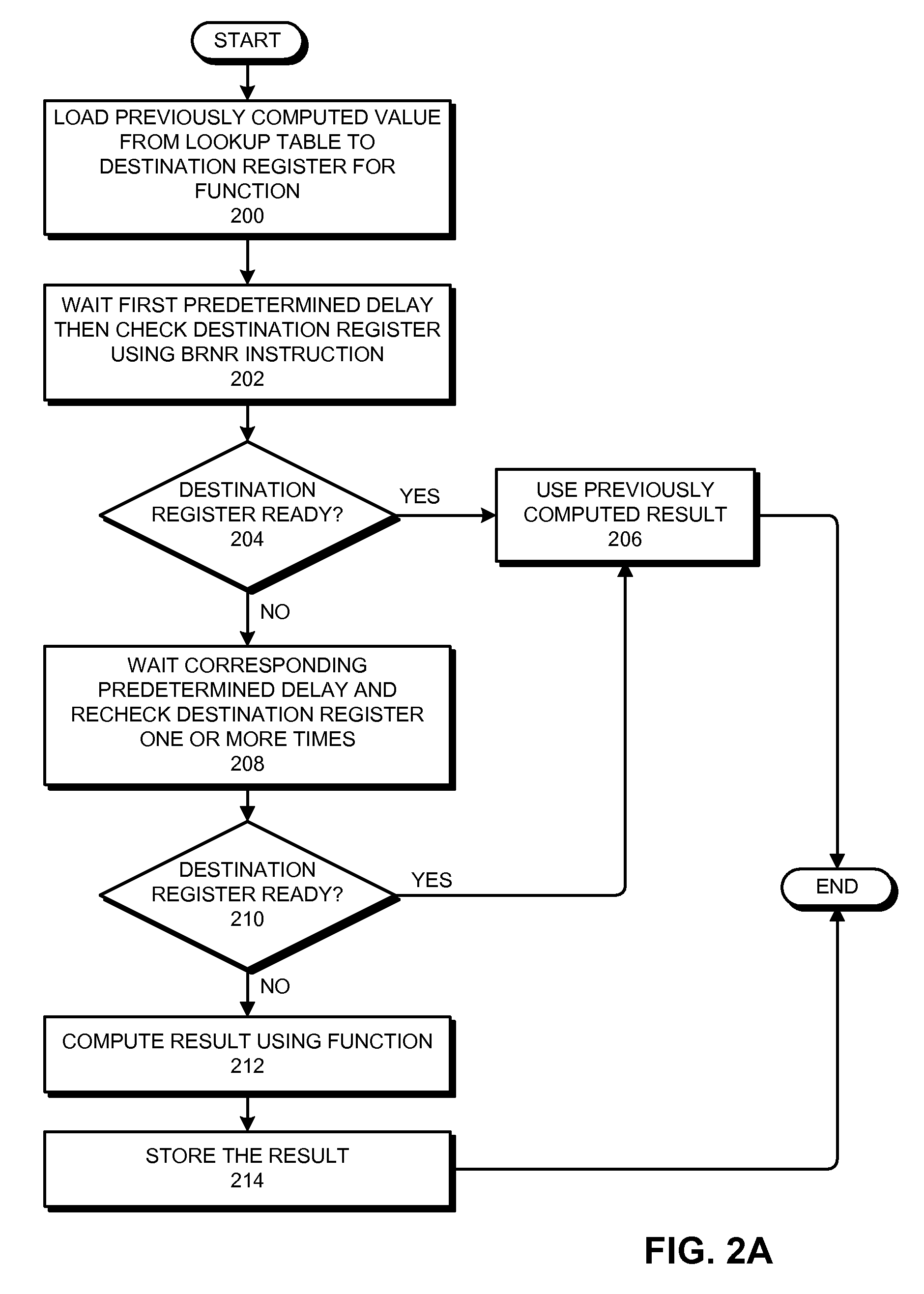
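The role of a History FIFO whose depth matches the pipeline delay can be sketched with a bounded queue. This is a simplified software model under assumptions: a single FIFO, a fixed delay measured in program steps, and hypothetical names (`locate_error`, `program`, `error_flags`); it also assumes no flag arrives before `pipeline_delay` steps have run.

```python
from collections import deque


def locate_error(program, pipeline_delay, error_flags):
    """Return the (location, state) entry that caused the first error flag.

    program:     list of (program_location, algorithmic_state) per step
    error_flags: error_flags[i] is True if an error flag arrives at step i,
                 which refers to the stimulus issued `pipeline_delay` steps earlier
    """
    # Depth covers the current step plus the steps still in the pipeline.
    history = deque(maxlen=pipeline_delay + 1)
    for step, entry in enumerate(program):
        history.append(entry)
        if error_flags[step]:
            return history[0]  # bottom of the FIFO: the stimulus behind the error
    return None


program = [(100, "a"), (101, "b"), (102, "c"), (103, "d"), (104, "e"), (105, "f")]
flags = [False] * len(program)
flags[4] = True  # the stimulus at step 1 fails; its flag arrives 3 steps later
culprit = locate_error(program, pipeline_delay=3, error_flags=flags)
```

Because the depth equals the delay plus one, the oldest entry is always the step whose result is just now returning from the DUT, so no search is needed when the flag arrives.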

Using register readiness to facilitate value prediction

Active | US7539851B2 | Avoids unnecessarily calculating | Increase value | Digital computer details | Specific program execution arrangements | Processor register | Parallel computing

One embodiment of the present invention provides a system for using register readiness to facilitate value prediction. The system starts by loading a previously computed result for a function to a destination register for the function from a lookup table. The system then checks the destination register for the function by using a Branch-Register-Not-Ready (BRNR) instruction to check the readiness of the destination register. If the destination register is ready, the system uses the previously computed result in the destination register as the result of the function. Loading the value from the lookup table in this way avoids unnecessarily calculating the result of the function when that result has previously been computed.

Owner:ORACLE INT CORP
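The control flow around the Branch-Register-Not-Ready check in the abstract above can be imitated in software. This sketch makes several assumptions: register readiness is modeled as a boolean flag, a lookup-table miss leaves the register not ready, and storing a freshly computed result back into the table is an added convenience not stated in the abstract. `Register`, `cached_call`, and `square` are hypothetical names.

```python
class Register:
    """Toy destination register with a readiness flag."""

    def __init__(self):
        self.value = None
        self.ready = False


def cached_call(func, arg, table, reg):
    # Start a load of the previously computed result into the destination register.
    reg.ready = False
    if arg in table:
        reg.value = table[arg]
        reg.ready = True
    # BRNR: take the slow path only if the register is not ready.
    if not reg.ready:
        reg.value = func(arg)
        table[arg] = reg.value  # assumption: remember the result for next time
        reg.ready = True
    return reg.value


calls = []


def square(x):
    calls.append(x)  # record each real computation
    return x * x


table, reg = {}, Register()
first = cached_call(square, 3, table, reg)
second = cached_call(square, 3, table, reg)
```

The second call finds the register ready after the table load, so `square` is not executed again.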


© 2025 PatSnap. All rights reserved.