1070 results about "Next instruction address formation" patented technology

Method and system for e-mail message transmission

Inactive | US7127741B2 | Facilitates transmission method | Digital data processing details | User identity/authority verification | Internet privacy | Electronic mail

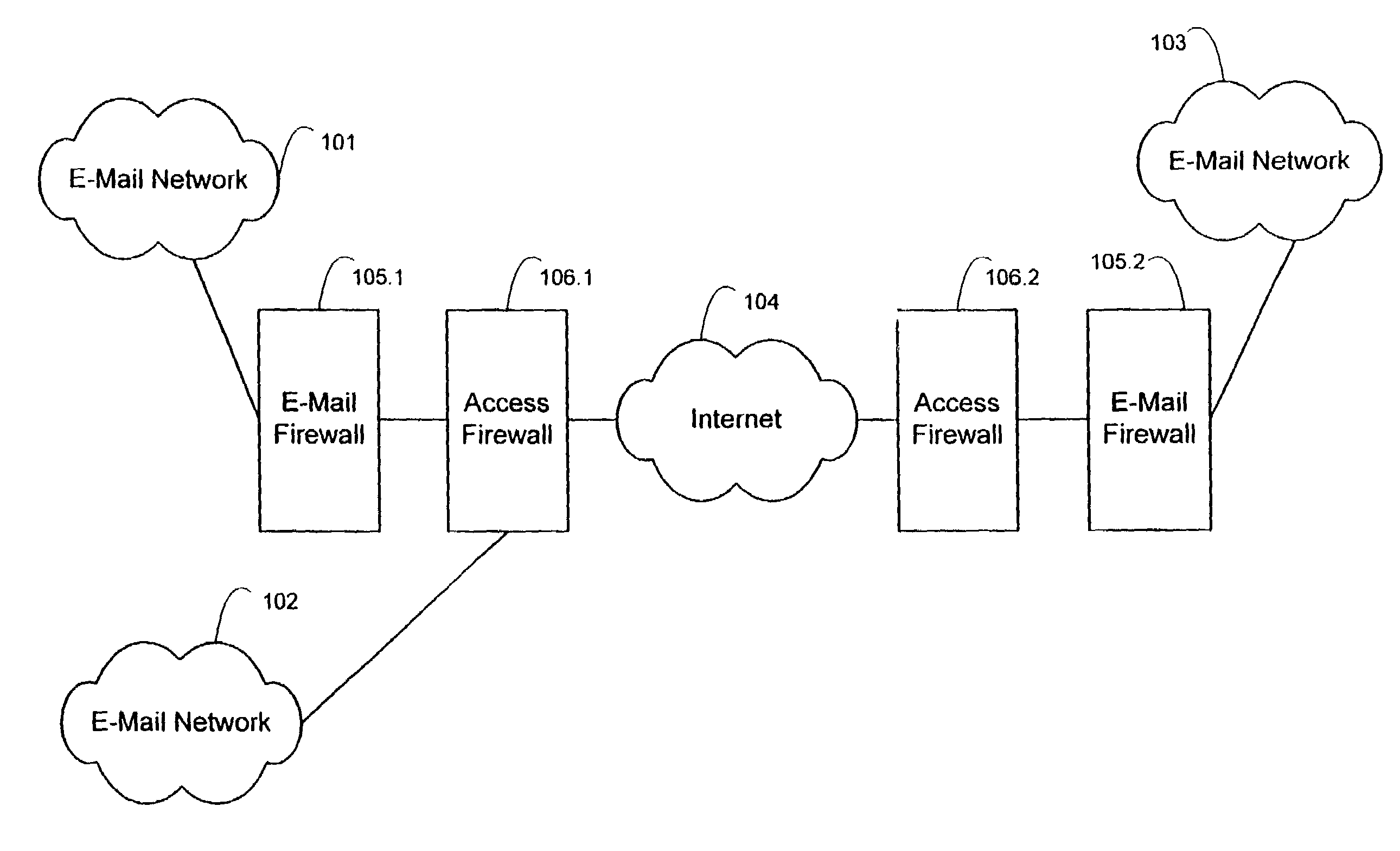

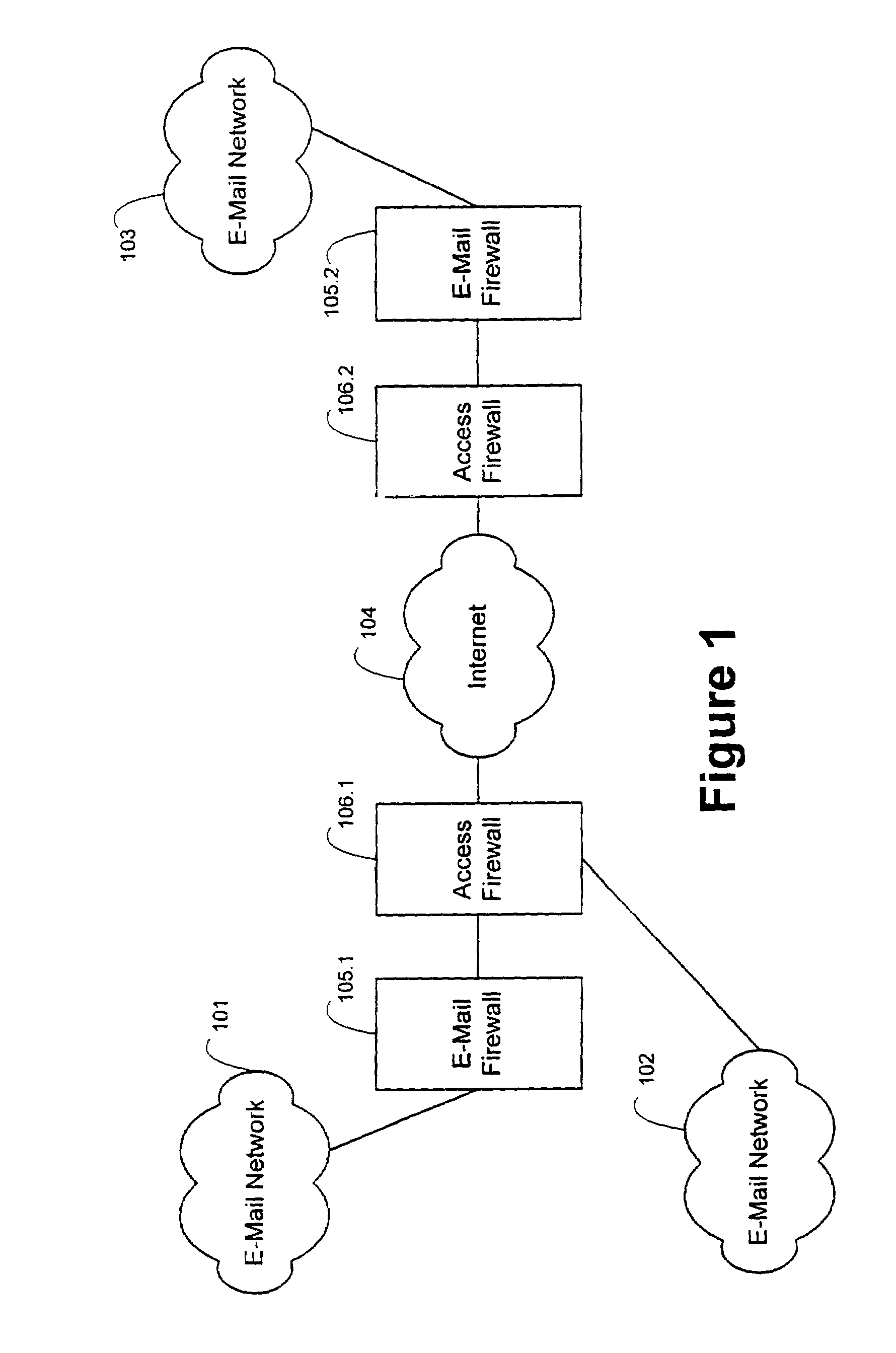

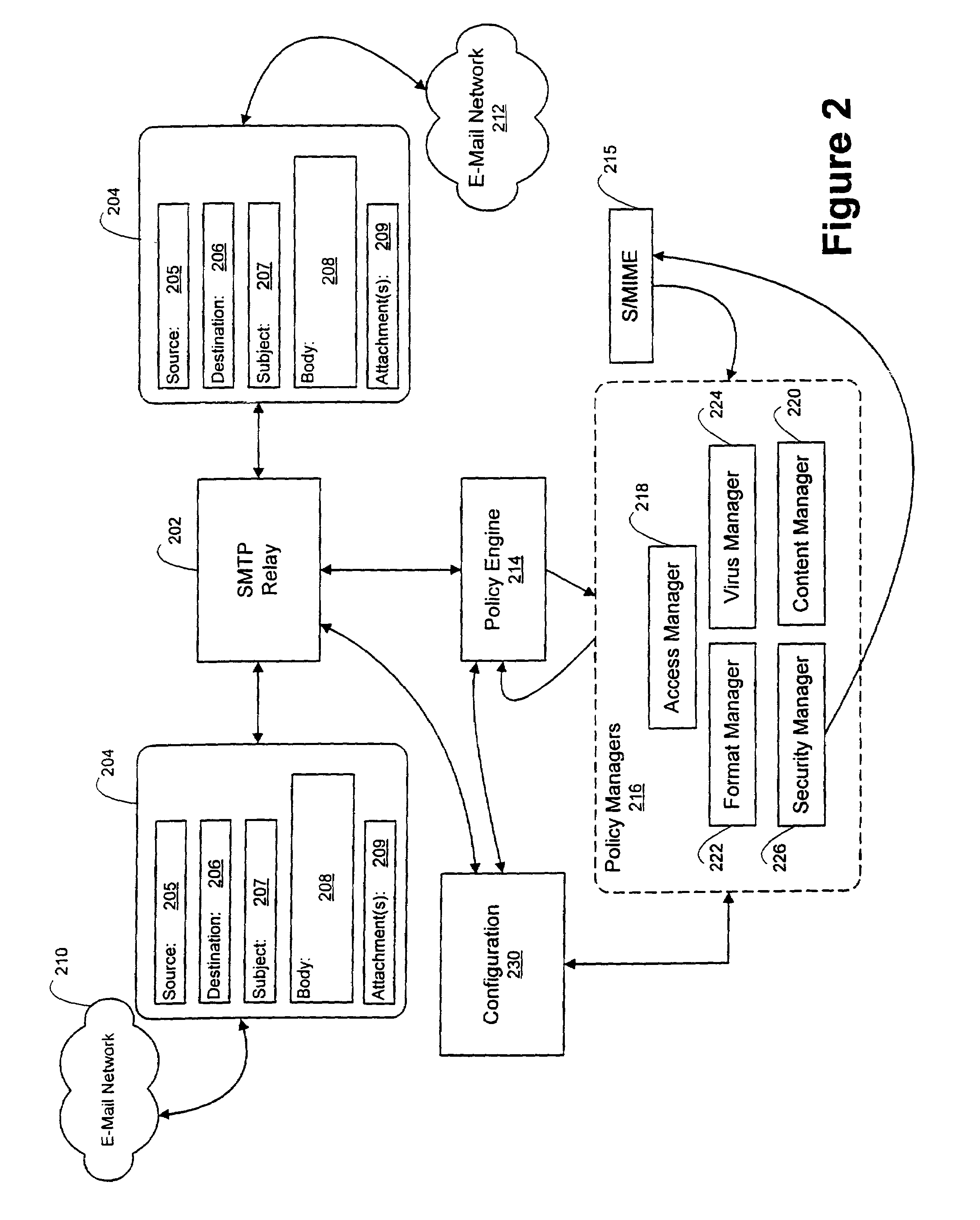

An e-mail firewall applies policies to e-mail messages transmitted between a first site and a plurality of second sites. The e-mail firewall includes a plurality of mail transfer relay modules for transferring e-mail messages between the first site and one of the second sites. Policy managers are used to enforce and administer selectable policies. The policies are used to determine security procedures for the transmission and reception of e-mail messages. The e-mail firewall employs signature verification processes to verify signatures in received encrypted e-mail messages. The e-mail firewall is further adapted to employ external servers for verifying signatures. External servers are also used to retrieve data that is employed to encrypt and decrypt e-mail messages received and transmitted by the e-mail firewall, respectively.

Owner:AXWAY

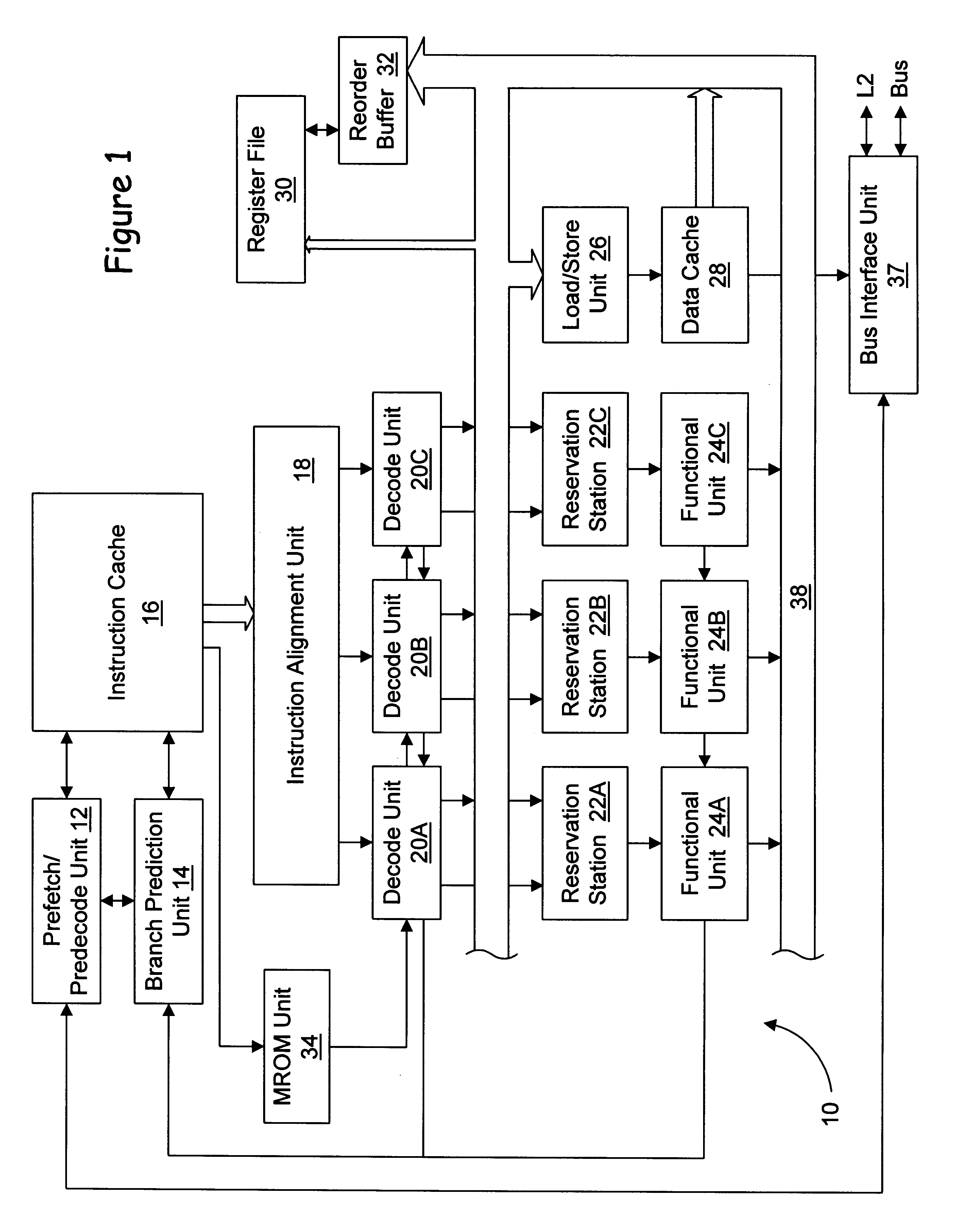

Microprocessors

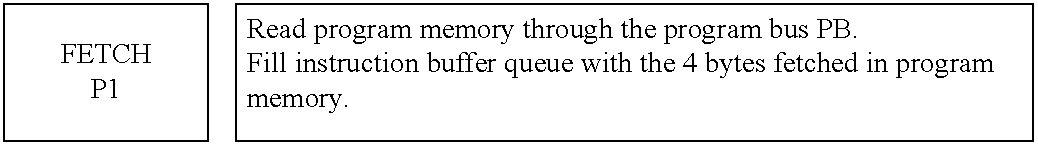

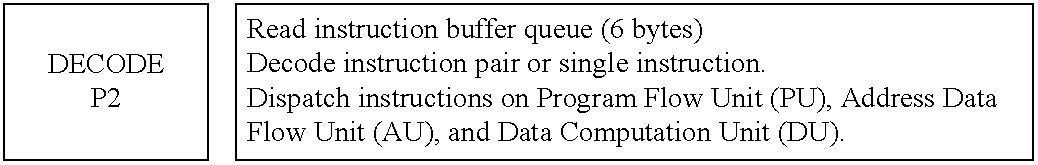

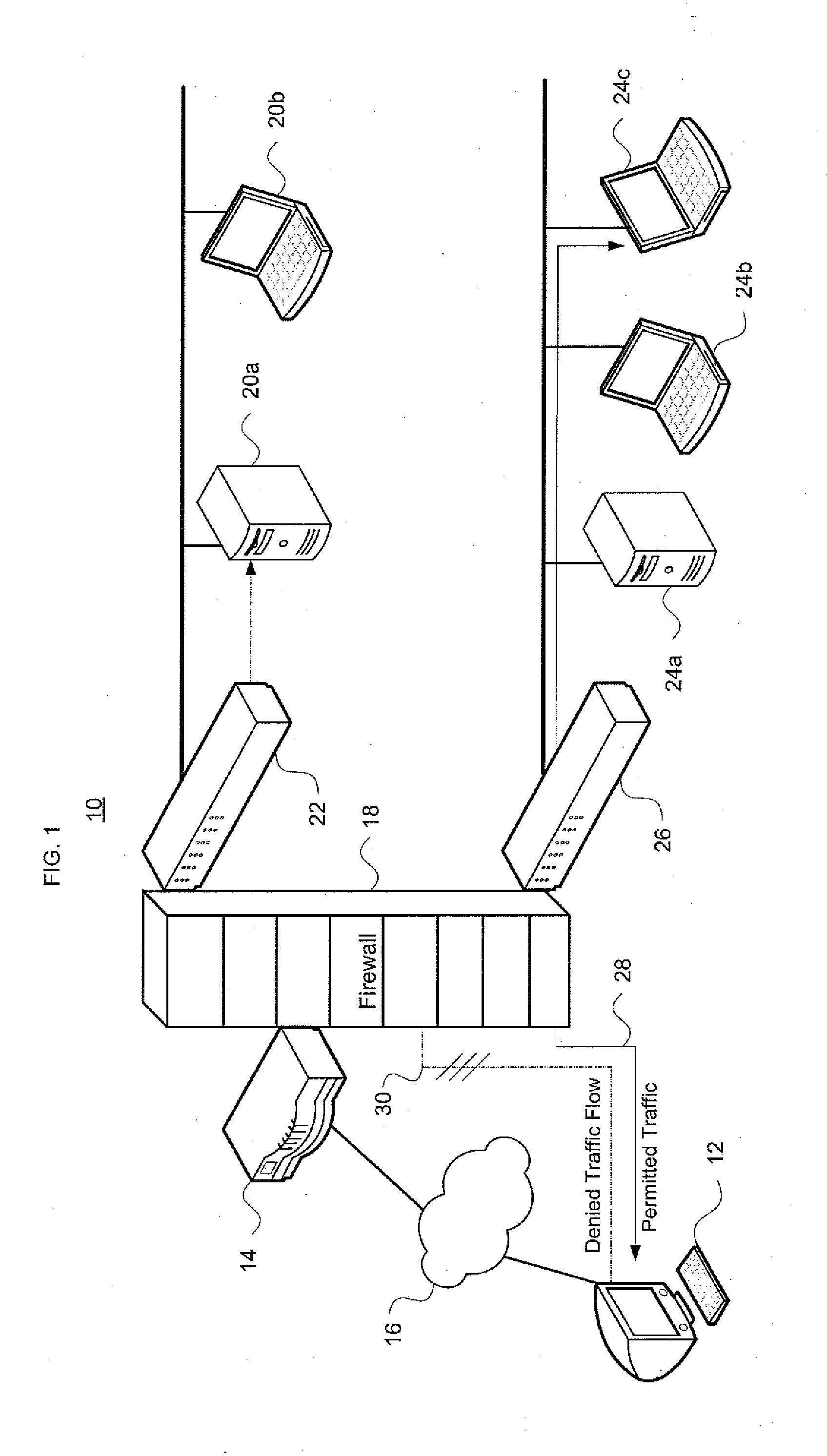

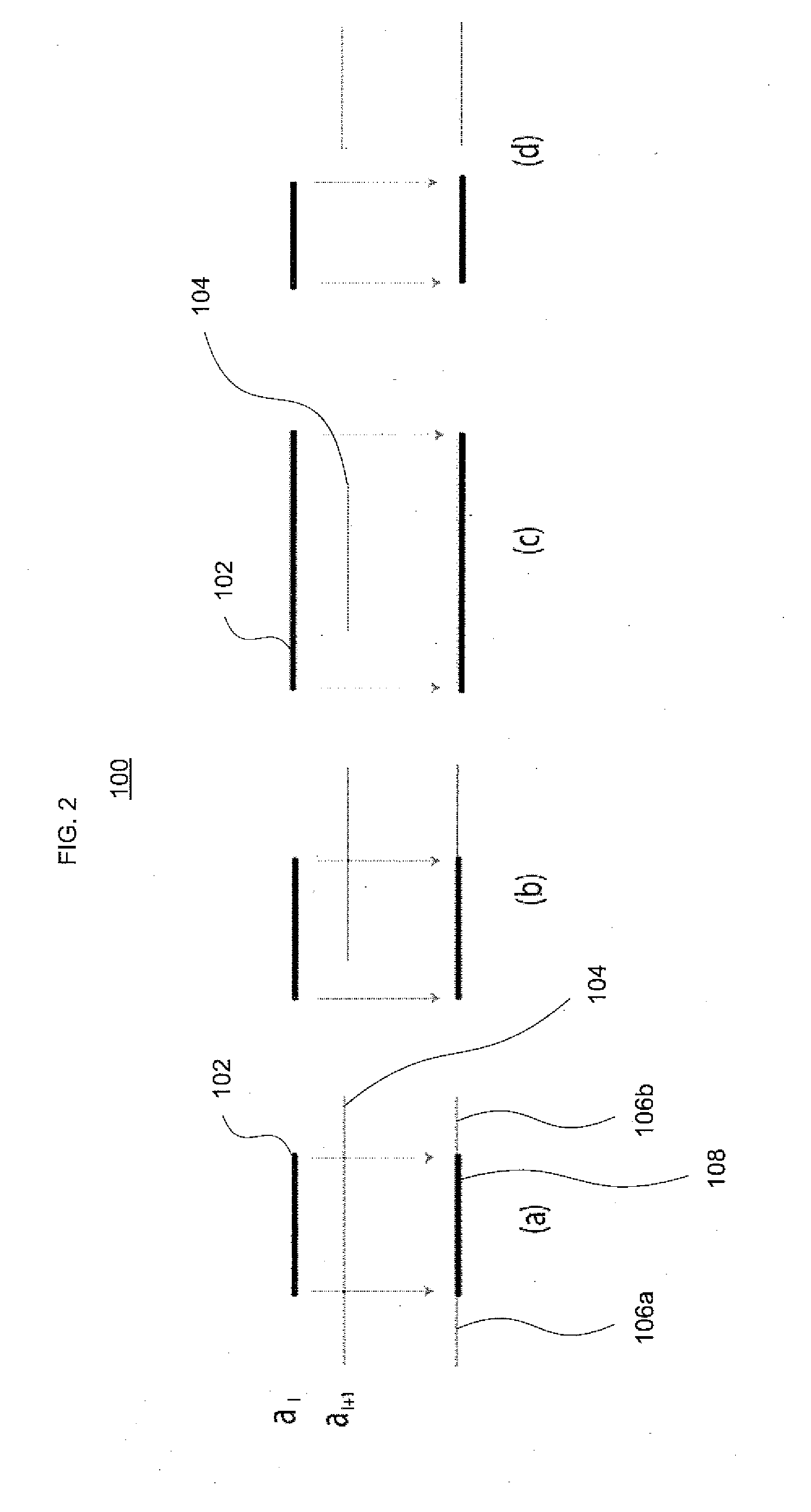

A processor (100) is provided that is a programmable fixed point digital signal processor (DSP) with variable instruction length, offering both high code density and easy programming. Architecture and instruction set are optimized for low power consumption and high efficiency execution of DSP algorithms, such as for wireless telephones, as well as pure control tasks. The processor includes an instruction buffer unit (106), a program flow control unit (108), an address / data flow unit (110), a data computation unit (112), and multiple interconnecting busses. Dual multiply-accumulate blocks improve processing performance. A memory interface unit (104) provides parallel access to data and instruction memories. The instruction buffer is operable to buffer single and compound instructions pending execution thereof. A decode mechanism is configured to decode instructions from the instruction buffer. The use of compound instructions enables effective use of the bandwidth available within the processor. A soft dual memory instruction can be compiled from separate first and second programmed memory instructions. Instructions can be conditionally executed or repeatedly executed. Bit field processing and various addressing modes, such as circular buffer addressing, further support execution of DSP algorithms. The processor includes a multistage execution pipeline with pipeline protection features. Various functional modules can be separately powered down to conserve power. The processor includes emulation and code debugging facilities with support for cache analysis.

Owner:TEXAS INSTR INC

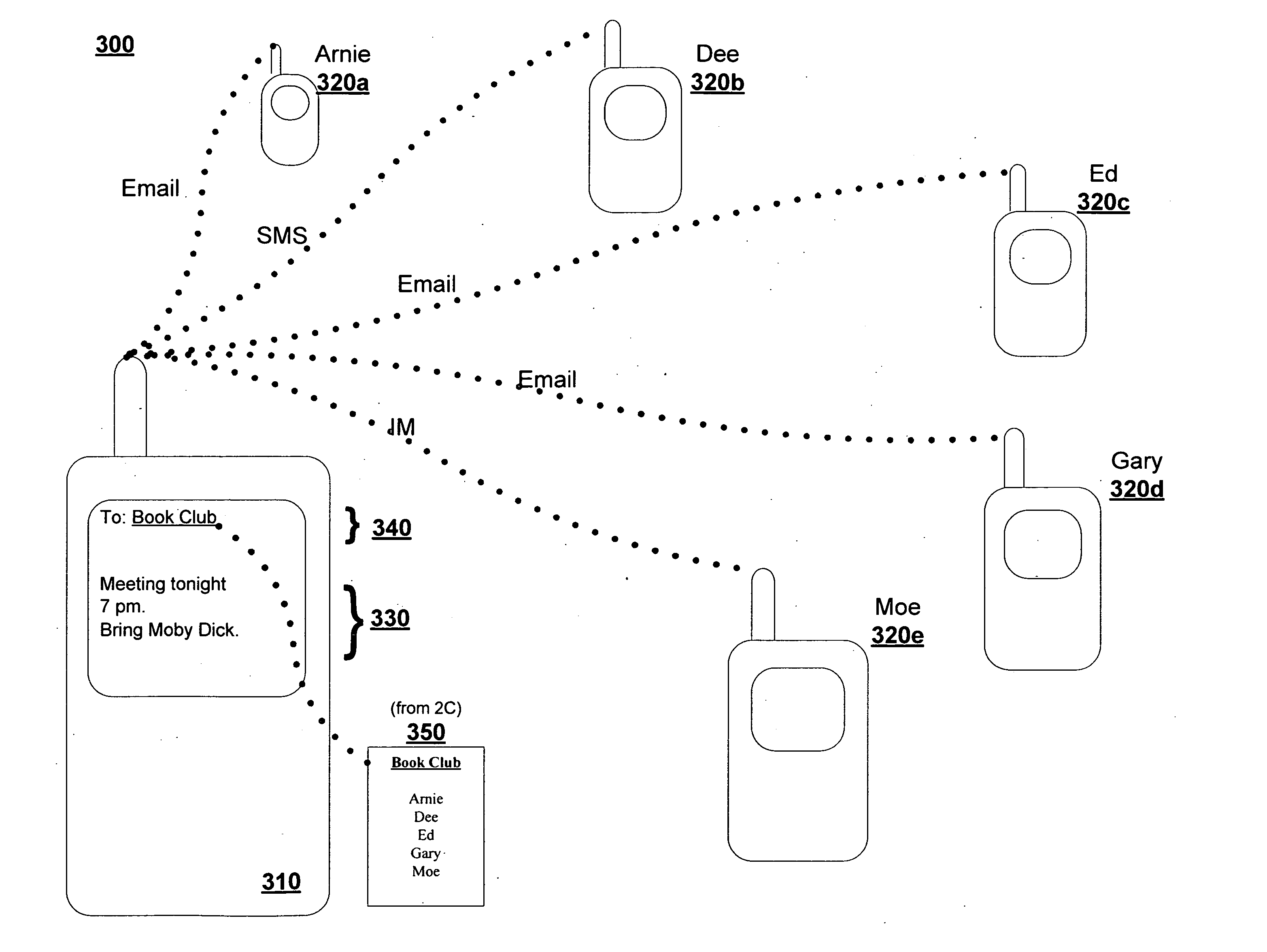

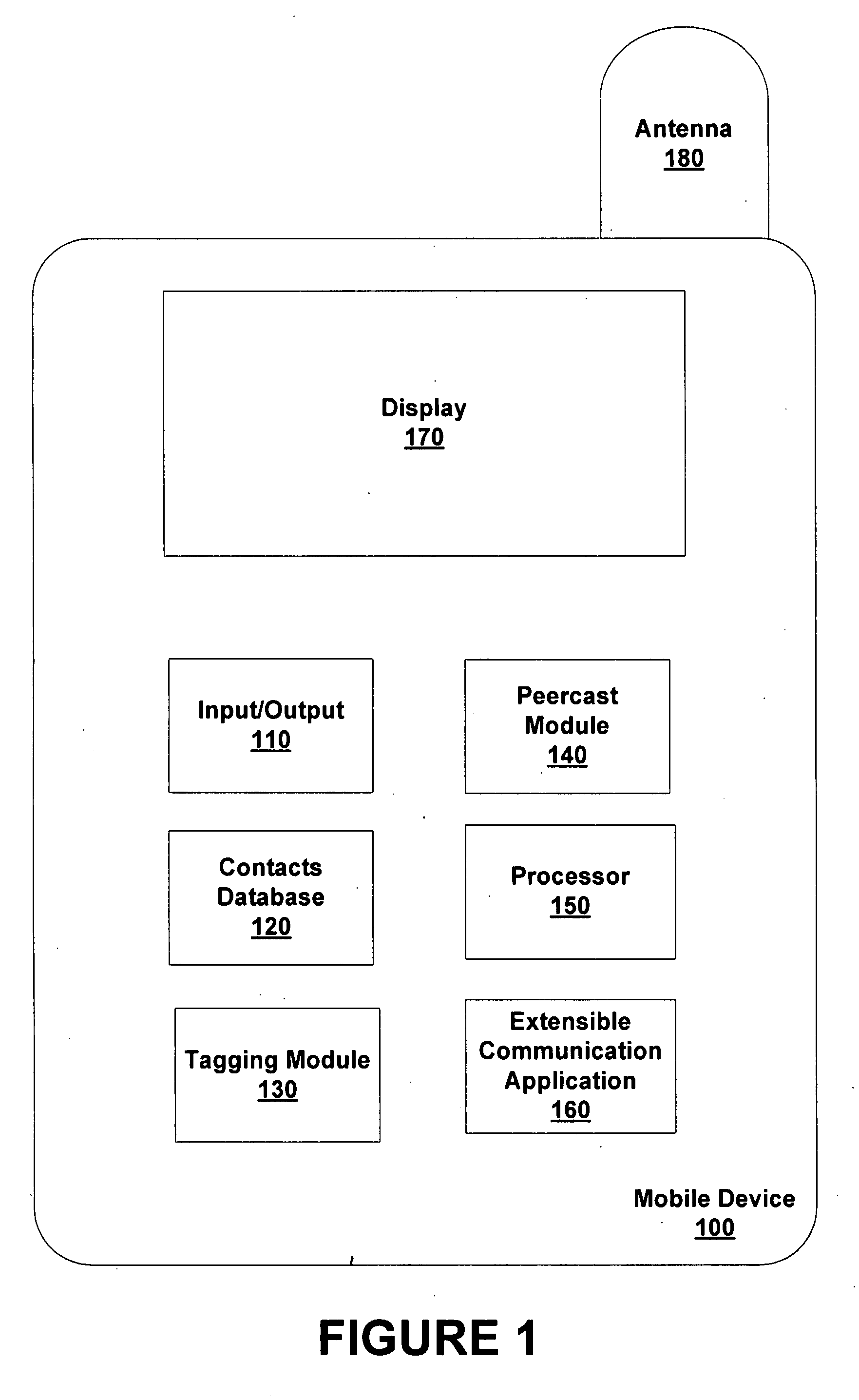

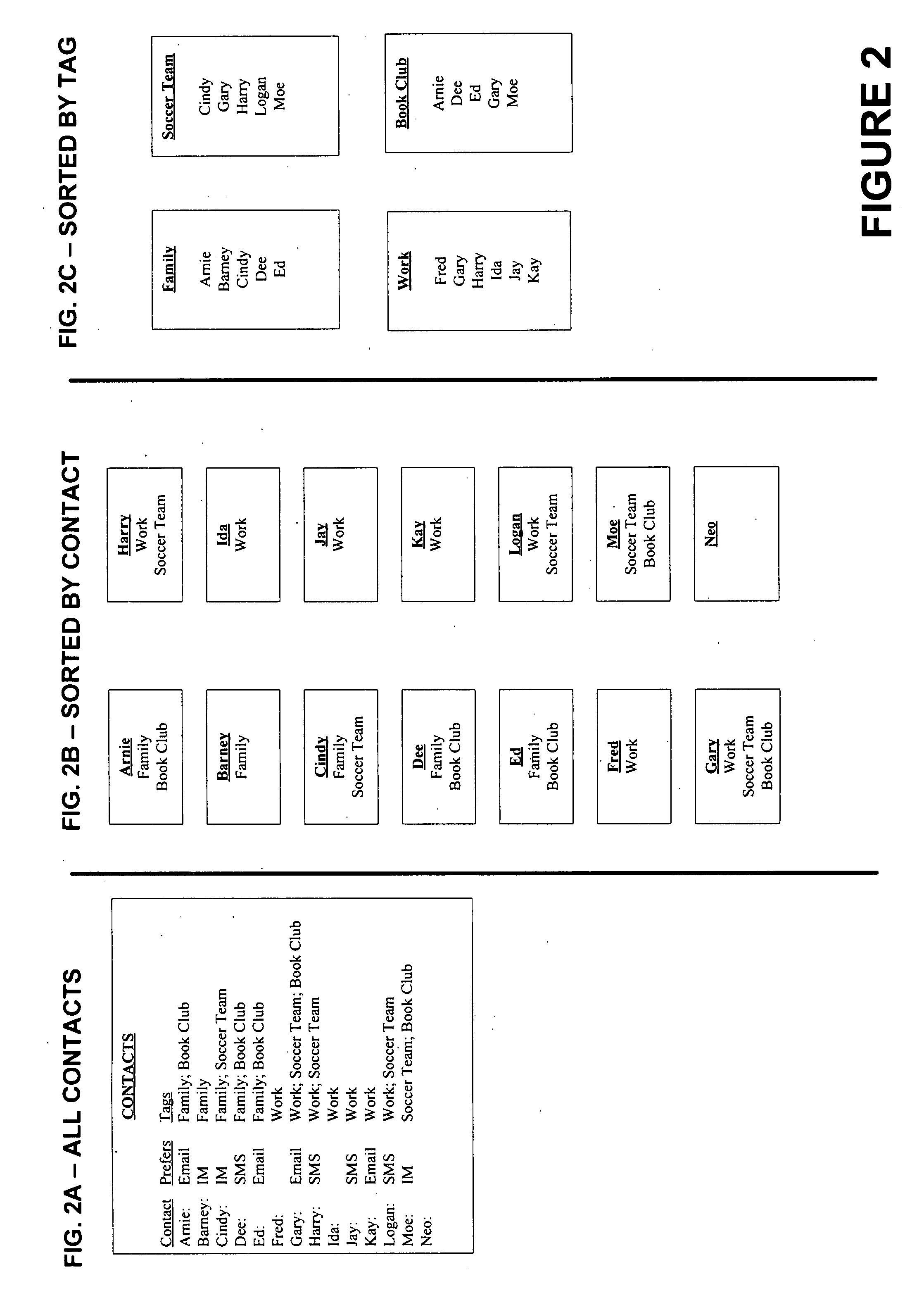

Systems and methods for group messaging

Inactive | US20070290787A1 | Electronic switching | Next instruction address formation | Message passing | Computer science

Owner:SEVEN NETWORKS INC

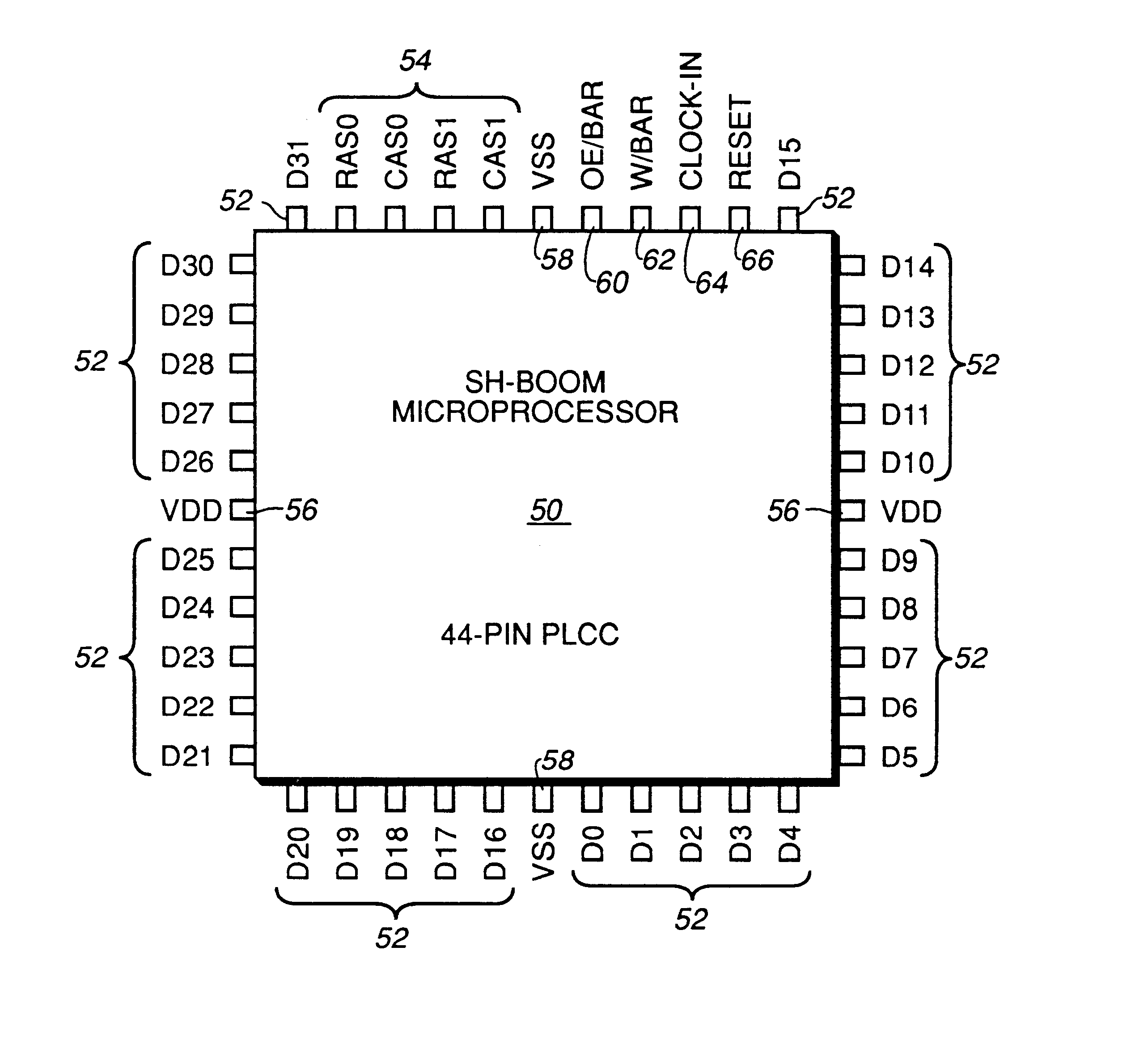

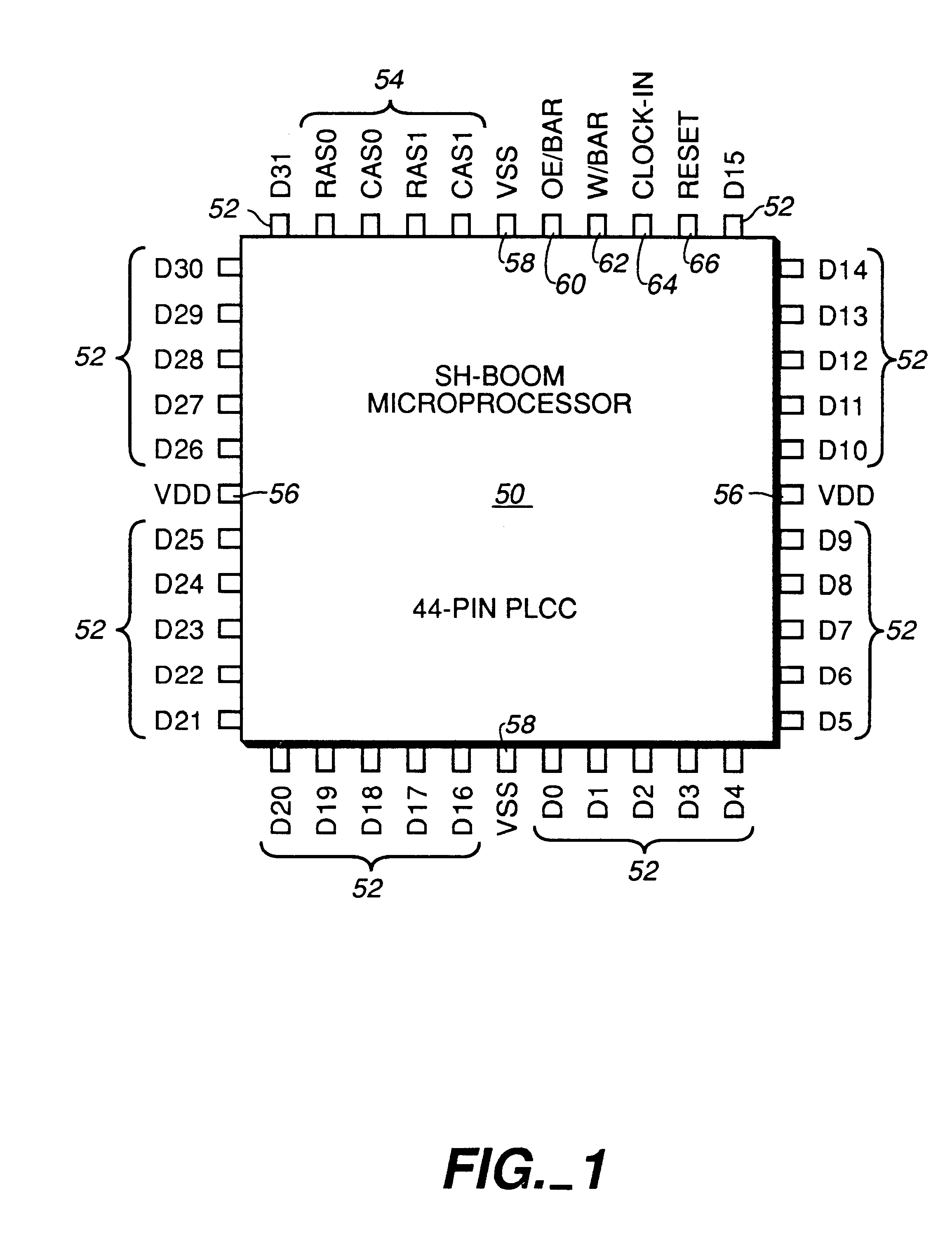

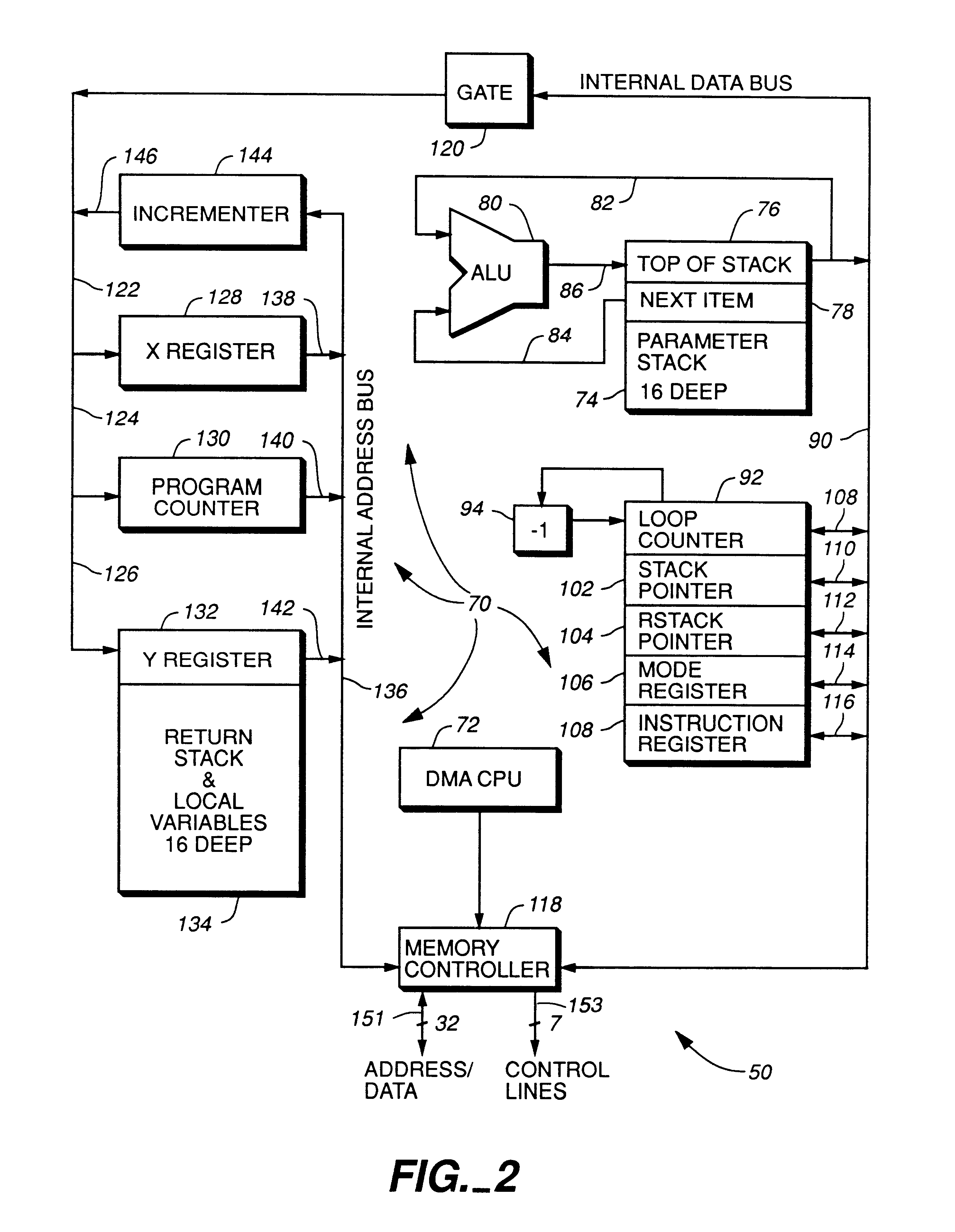

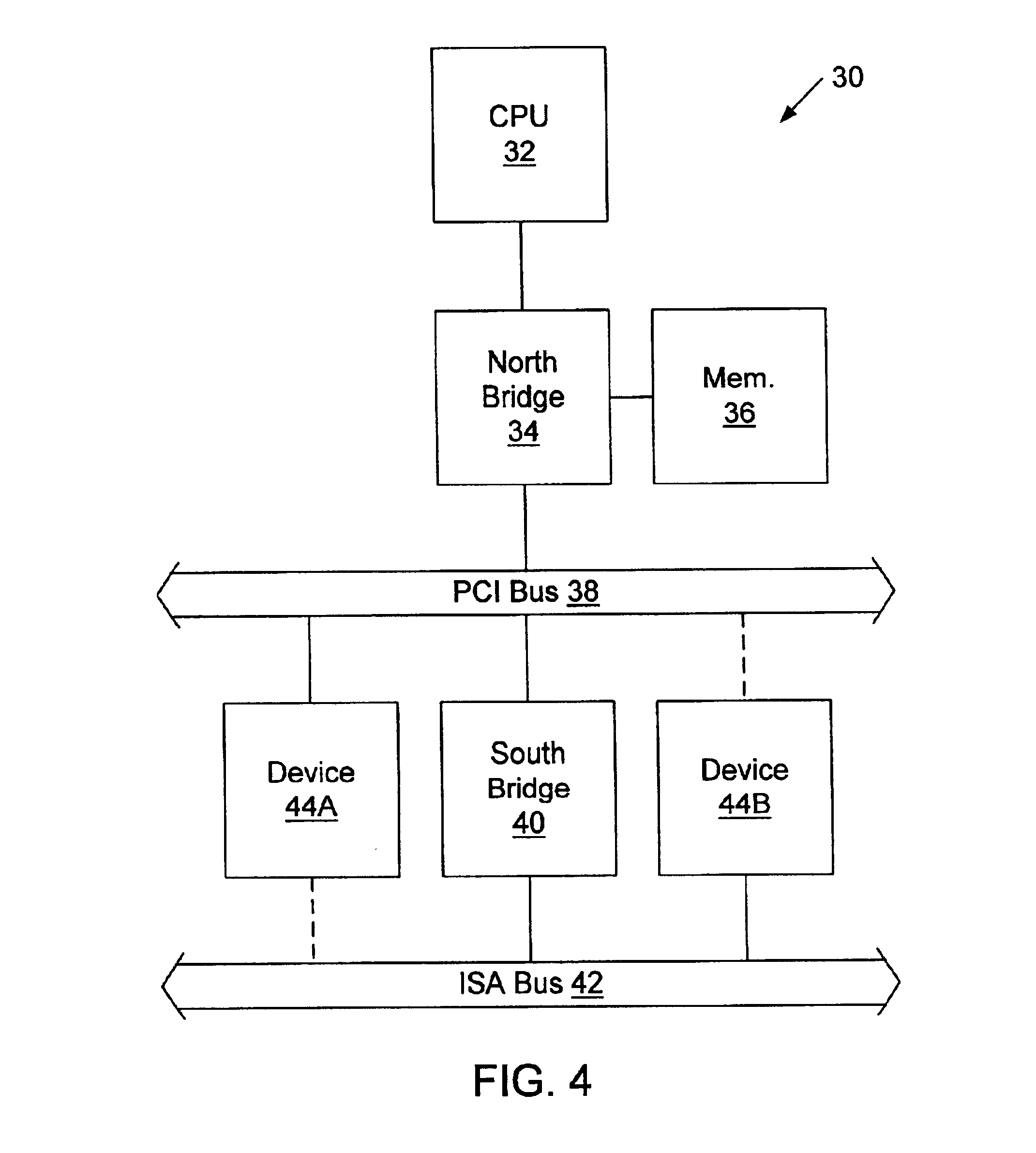

High performance microprocessor having variable speed system clock

Inactive | US6598148B1 | Improve performance | Without sacrificing microprocessor speed | Random number generators | Instruction analysis | Computer architecture | Static random-access memory

A microprocessor integrated circuit including a processing unit disposed upon an integrated circuit substrate is disclosed herein. The processing unit is designed to operate in accordance with a predefined sequence of program instructions stored within an instruction register. A memory, capable of storing information provided by the processing unit and occupying a larger area of the integrated circuit substrate than the processing unit, is also provided within the microprocessor integrated circuit. The memory may be implemented using, for example, dynamic or static random-access memory. A variable output frequency system clock, such as generated by a ring oscillator, is also disposed on the integrated circuit substrate.

Owner:MOORE CHARLES H TTE UTD 03 21 2006 THE EQUINOX TRUST

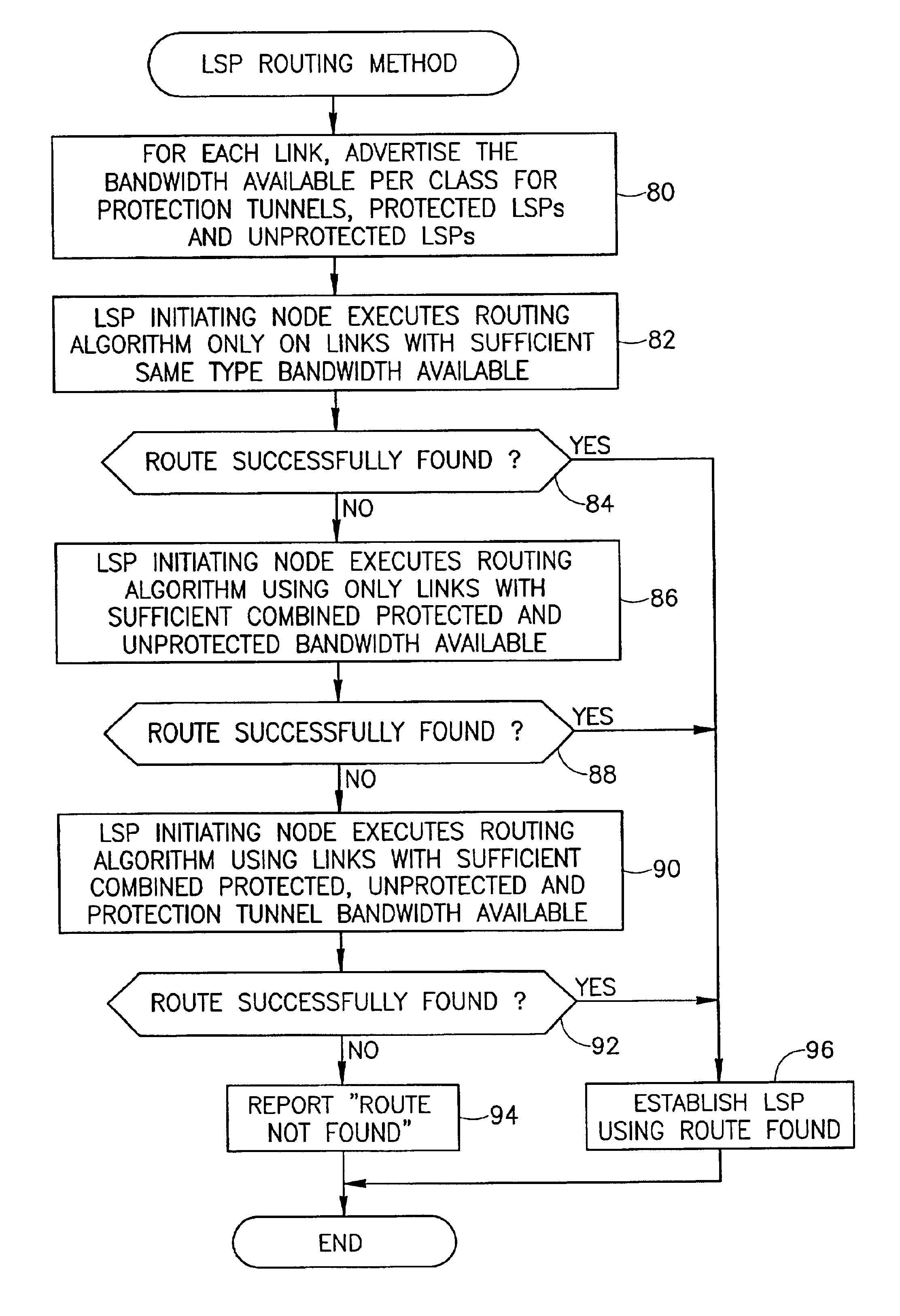

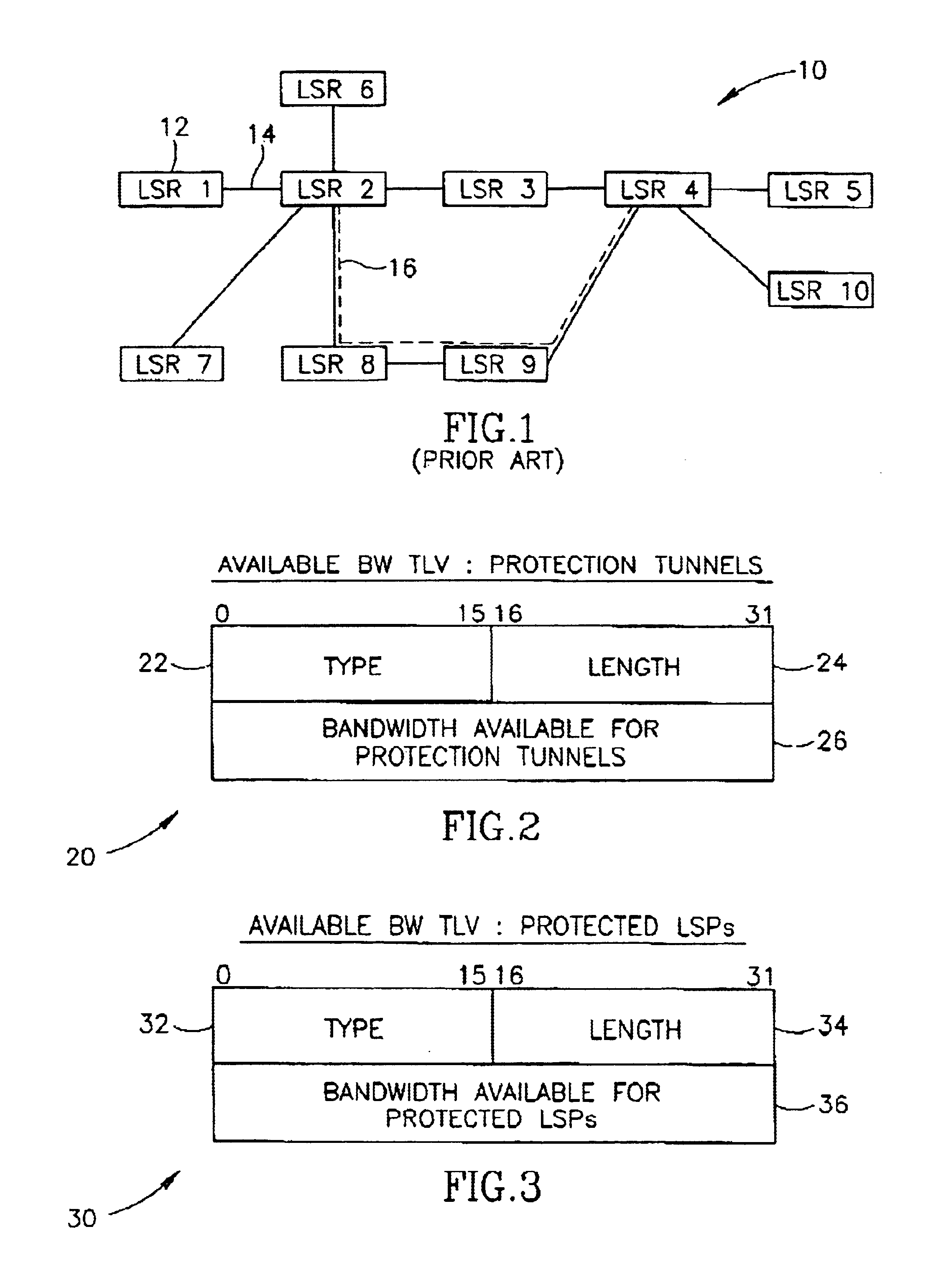

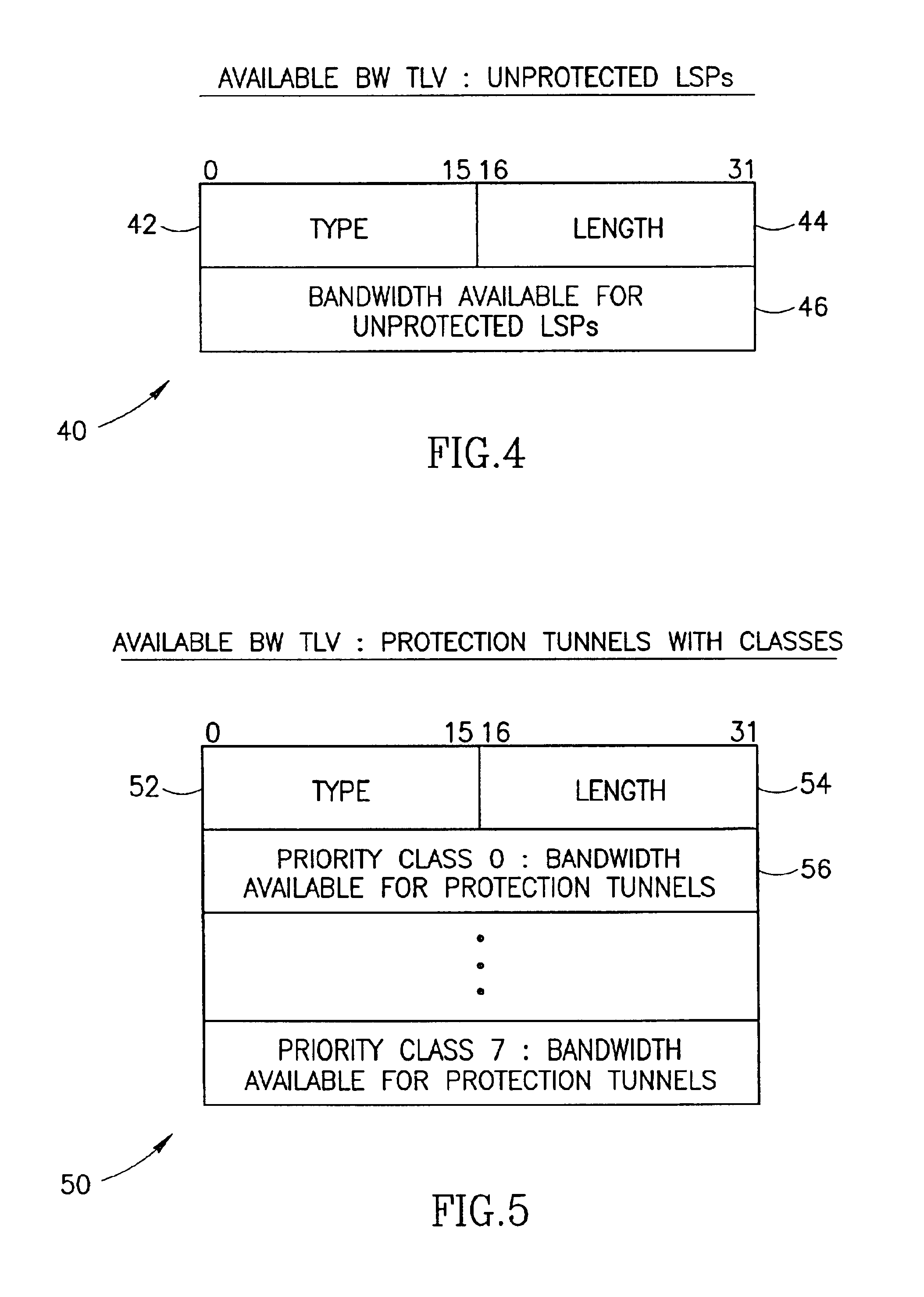

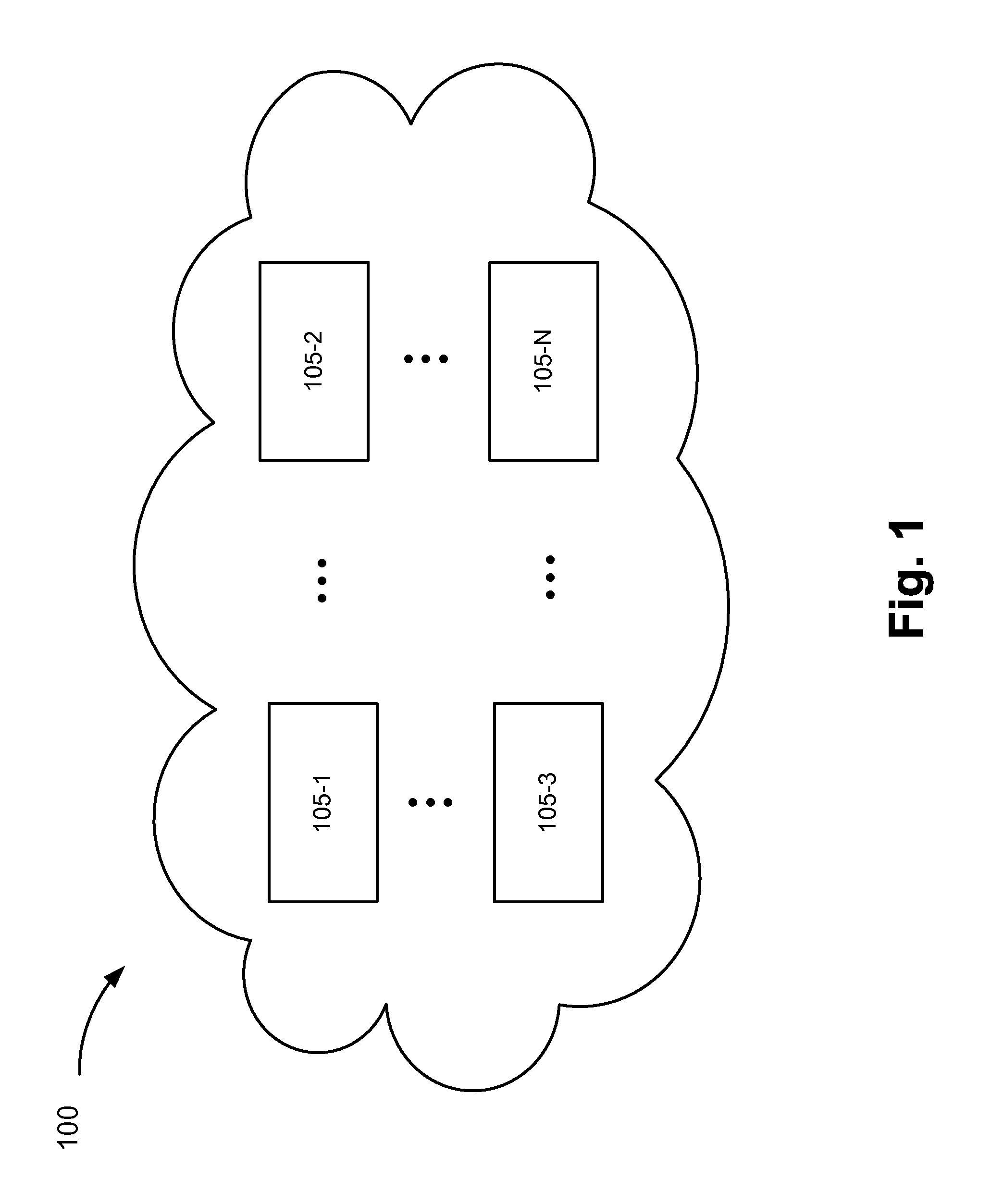

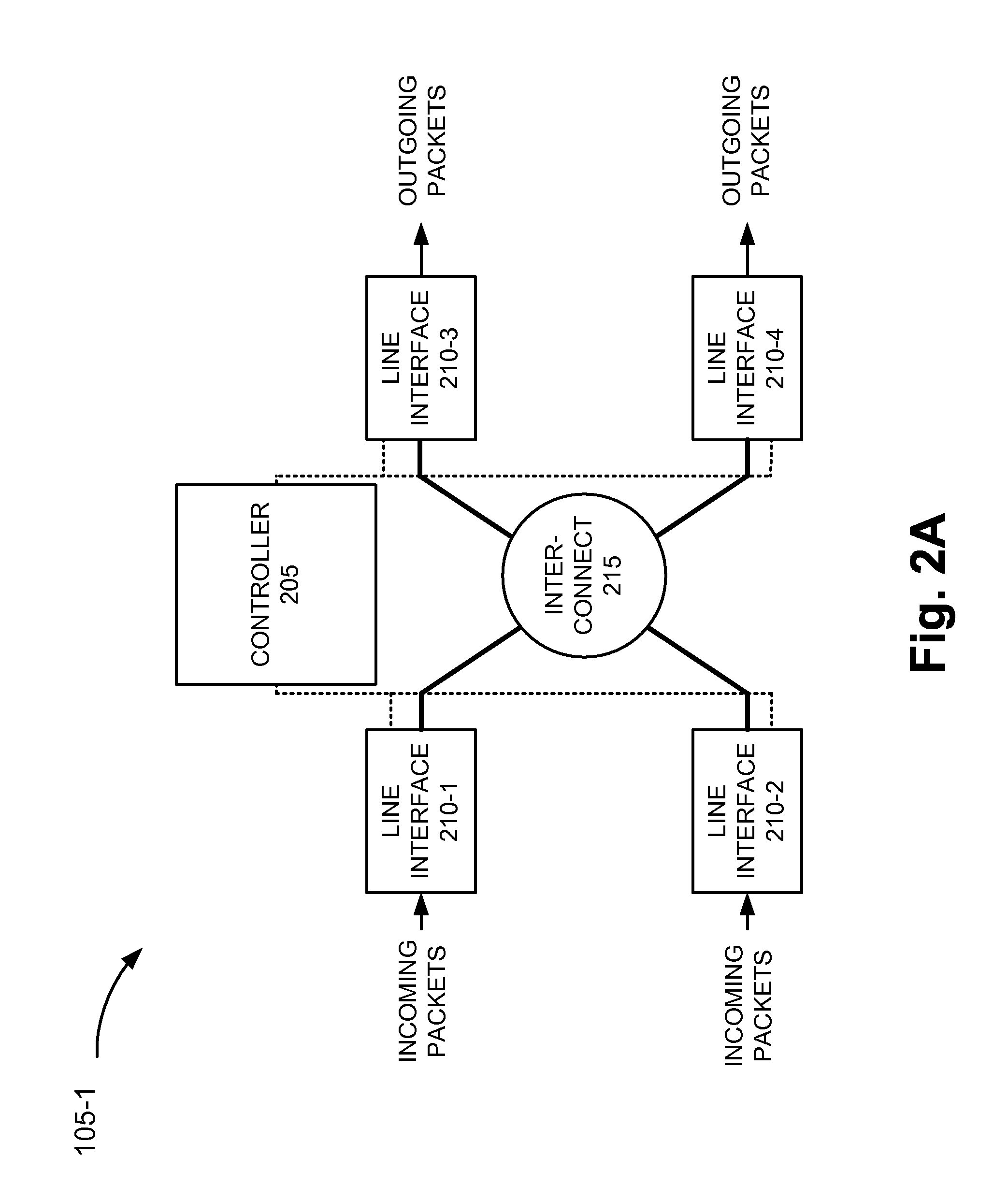

Path rerouting mechanism utilizing multiple link bandwidth allocations

Inactive | US6895441B1 | Error prevention | Frequency-division multiplex details | Traffic capacity | Distributed computing

A path reroute mechanism for use in communication networks comprising multiple searches for a routing path to restore traffic following a failure that could not be protected by a previously established protection route (i.e. protection tunnel, bypass, etc.) or for routing or rerouting of traffic paths for optimization or any other purpose. Each node advertises TLVs that include bandwidth allocation information used to derive the actual amount of bandwidth available for protection purposes, protected paths and unprotected paths or a portion of this information such as in the case where unprotected paths are not supported. Searches are performed on larger and larger portions of the available bandwidth until a route for the path is found.

Owner:ATRICA INC
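The "larger and larger portions of the available bandwidth" search in the abstract above can be pictured with a small sketch. It is only an illustration under stated assumptions: each link carries a hypothetical list of cumulative bandwidth tiers (tier 0 being the most conservative pool), the path search is a plain breadth-first search, and the names `find_path` and `reroute` are invented here, not taken from the patent.

```python
from collections import deque


def find_path(links, src, dst, demand, level):
    """Breadth-first search over links whose tier `level` bandwidth covers `demand`."""
    adjacency = {}
    for (a, b), tiers in links.items():
        if tiers[level] >= demand:
            adjacency.setdefault(a, []).append(b)
            adjacency.setdefault(b, []).append(a)
    previous = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the predecessor chain back to the source.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in adjacency.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


def reroute(links, src, dst, demand):
    """Try each bandwidth tier in turn; return (tier, path) for the first success."""
    levels = len(next(iter(links.values())))
    for level in range(levels):
        path = find_path(links, src, dst, demand, level)
        if path is not None:
            return level, path
    return None


# Hypothetical topology: (node, node) -> [tier 0 bandwidth, tier 1 bandwidth]
links = {("A", "B"): [5, 10], ("B", "C"): [2, 10], ("A", "C"): [0, 3]}
print(reroute(links, "A", "C", 4))
```

With these made-up numbers the first tier has no usable route for a demand of 4, so the search falls through to the second tier.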

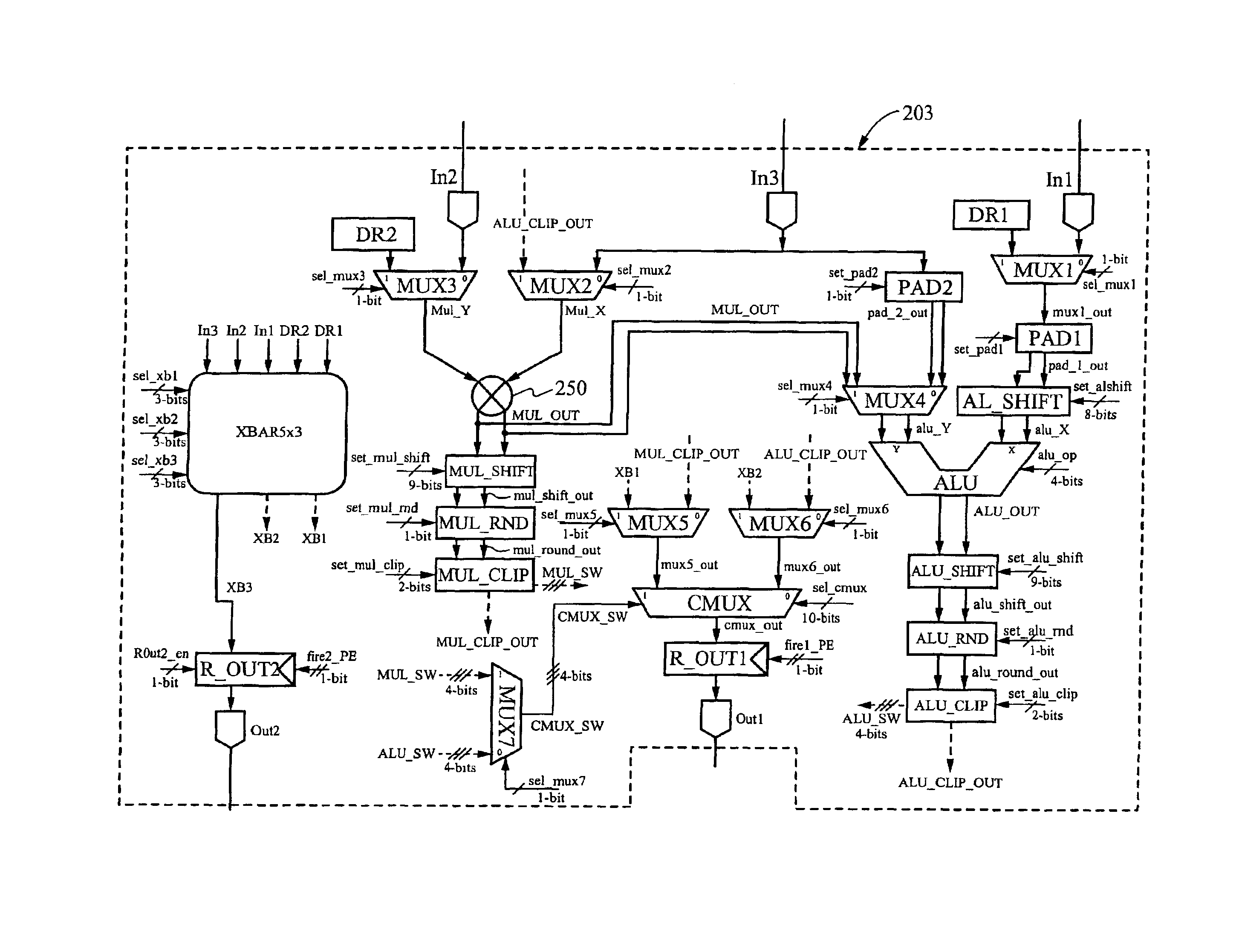

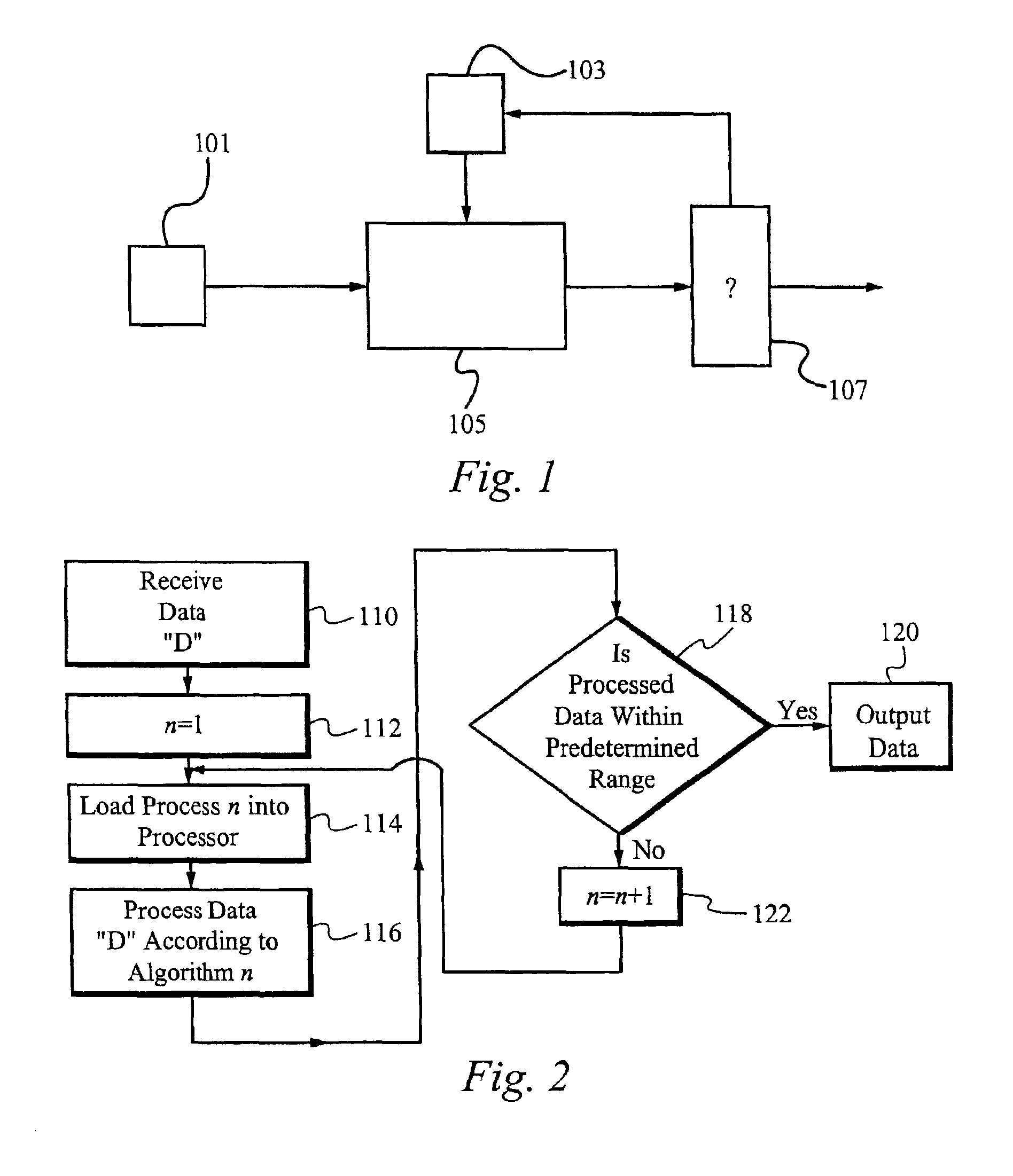

Reconfigurable data path processor

Inactive | US6883084B1 | Eliminates branching | Cycle simple | Energy efficient ICT | Conditional code generation | Multiplexer | Processing element

A reconfigurable data path processor comprises a plurality of independent processing elements. Each of the processing elements advantageously comprises an identical architecture. Each processing element comprises a plurality of data processing means for generating a potential output. Each processor is also capable of through-putting an input as a potential output with little or no processing. Each processing element comprises a conditional multiplexer having a first conditional multiplexer input, a second conditional multiplexer input and a conditional multiplexer output. A first potential output value is transmitted to the first conditional multiplexer input, and a second potential output value is transmitted to the second conditional multiplexer input. The conditional multiplexer couples either the first conditional multiplexer input or the second conditional multiplexer input to the conditional multiplexer output, according to an output control command. The output control command is generated by processing a set of arithmetic status bits through a logical mask. The conditional multiplexer output is coupled to a first processing element output. A first set of arithmetic status bits is generated according to the processing of the first processable value. A second set of arithmetic status bits may be generated from a second processing operation. The selection of the arithmetic status bits is performed by an arithmetic status bit multiplexer that selects the desired set of arithmetic status bits from among the first and second sets of arithmetic status bits. The conditional multiplexer evaluates the selected arithmetic status bits according to a logical mask defining an algorithm for evaluating the arithmetic status bits.

Owner:STC UNM +1
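A rough software analogue of the conditional multiplexer may help show how a masked status word can replace a branch. The sketch below is an assumption-laden illustration: the abstract does not say how the mask is applied, so here the status bits are ANDed with the mask and any surviving bit selects the second input. The flag layout (`NEGATIVE`, `ZERO`) and all function names are hypothetical.

```python
# Hypothetical status-bit layout; the patent does not specify one.
NEGATIVE = 0b01
ZERO = 0b10


def status_of(value):
    """Produce arithmetic status bits for a computed value."""
    bits = 0
    if value < 0:
        bits |= NEGATIVE
    if value == 0:
        bits |= ZERO
    return bits


def conditional_mux(first, second, status_bits, mask):
    """Pass `second` through when any masked status bit is set, else `first`."""
    select_second = (status_bits & mask) != 0
    return second if select_second else first


def maximum(a, b):
    """Both candidate outputs exist up front; the masked status of a - b picks one."""
    return conditional_mux(a, b, status_of(a - b), NEGATIVE)


print(maximum(3, 7), maximum(7, 3))
```

Both potential outputs are produced unconditionally and only the final selection depends on the status bits, which is the sense in which such a design avoids branching.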

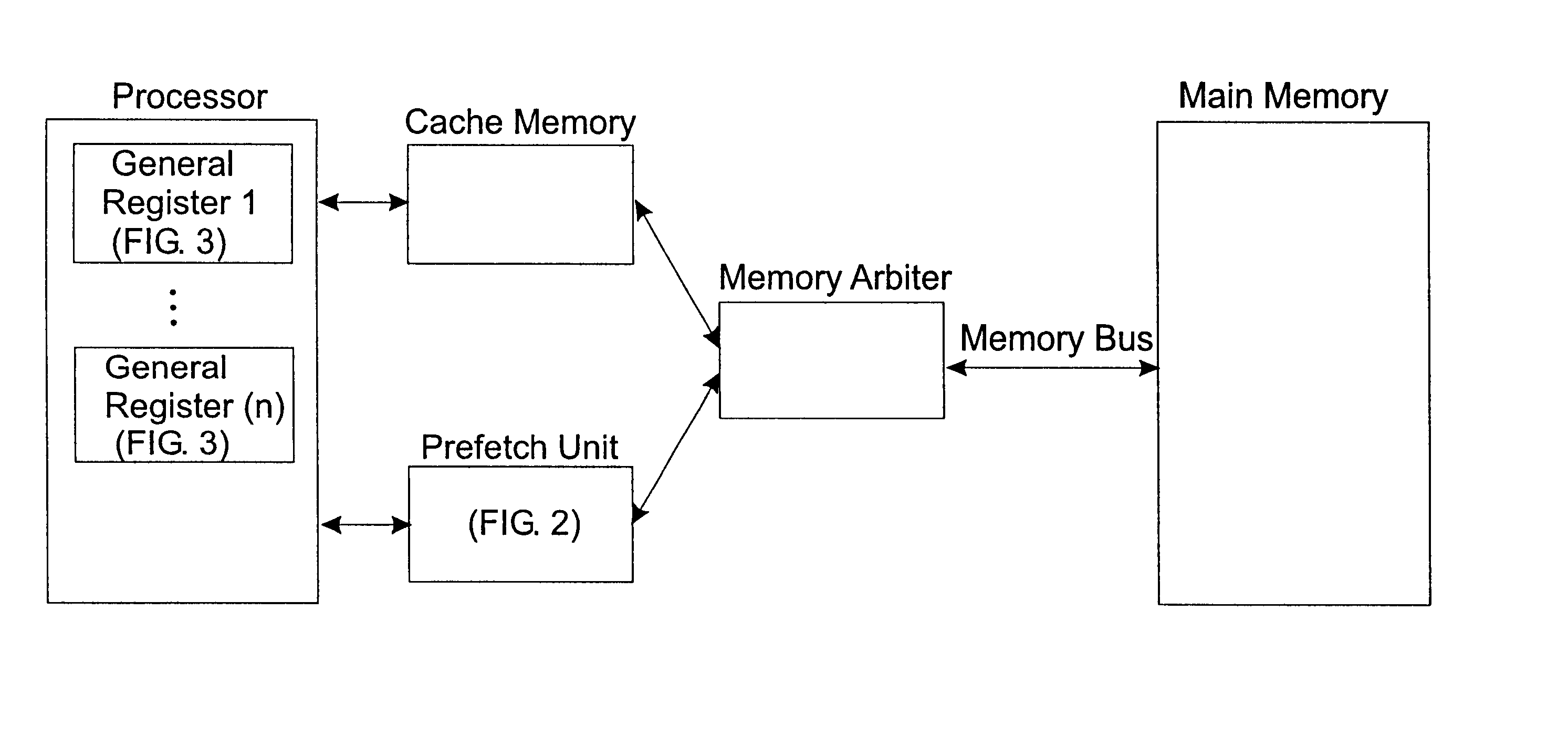

Method for prefetching structured data

Inactive | US6311260B1 | Memory addressing/allocation/relocation | Digital computer details | Idle time | Computer science

A method for prefetching structured data, and more particularly a mechanism for observing address references made by a processor, and learning from those references the patterns of accesses made to structured data. Structured data means aggregates of related data such as arrays, records, and data containing links and pointers. When subsequent accesses are made to data structured in the same way, the mechanism generates in advance the sequence of addresses that will be needed for the new accesses. This sequence is utilized by the memory to obtain the data somewhat earlier than the instructions would normally request it, and thereby eliminate idle time due to memory latency while awaiting the arrival of the data.

Owner:NEC CORP
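As a loose illustration of "learning from address references and generating addresses in advance", the sketch below uses a much simpler technique than the patent describes: a constant-stride detector. It is a stand-in, not the patented mechanism, and the class name, the `depth` parameter, and the two-observation confirmation rule are all assumptions made for the example.

```python
class StridePrefetcher:
    """Toy address-pattern learner: detects a constant stride and predicts ahead."""

    def __init__(self, depth=2):
        self.depth = depth        # how many future addresses to predict
        self.last_addr = None     # most recently observed address
        self.stride = None        # last observed difference between addresses
        self.confirmed = False    # True once the same stride is seen twice in a row

    def observe(self, addr):
        """Record one address reference and return the predicted next addresses."""
        if self.last_addr is not None:
            new_stride = addr - self.last_addr
            self.confirmed = new_stride == self.stride and new_stride != 0
            self.stride = new_stride
        self.last_addr = addr
        if not self.confirmed:
            return []
        return [addr + self.stride * i for i in range(1, self.depth + 1)]


prefetcher = StridePrefetcher()
for address in (100, 108, 116):
    print(address, prefetcher.observe(address))
```

A real structured-data prefetcher would also have to follow links and pointers, which a pure stride model cannot capture.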

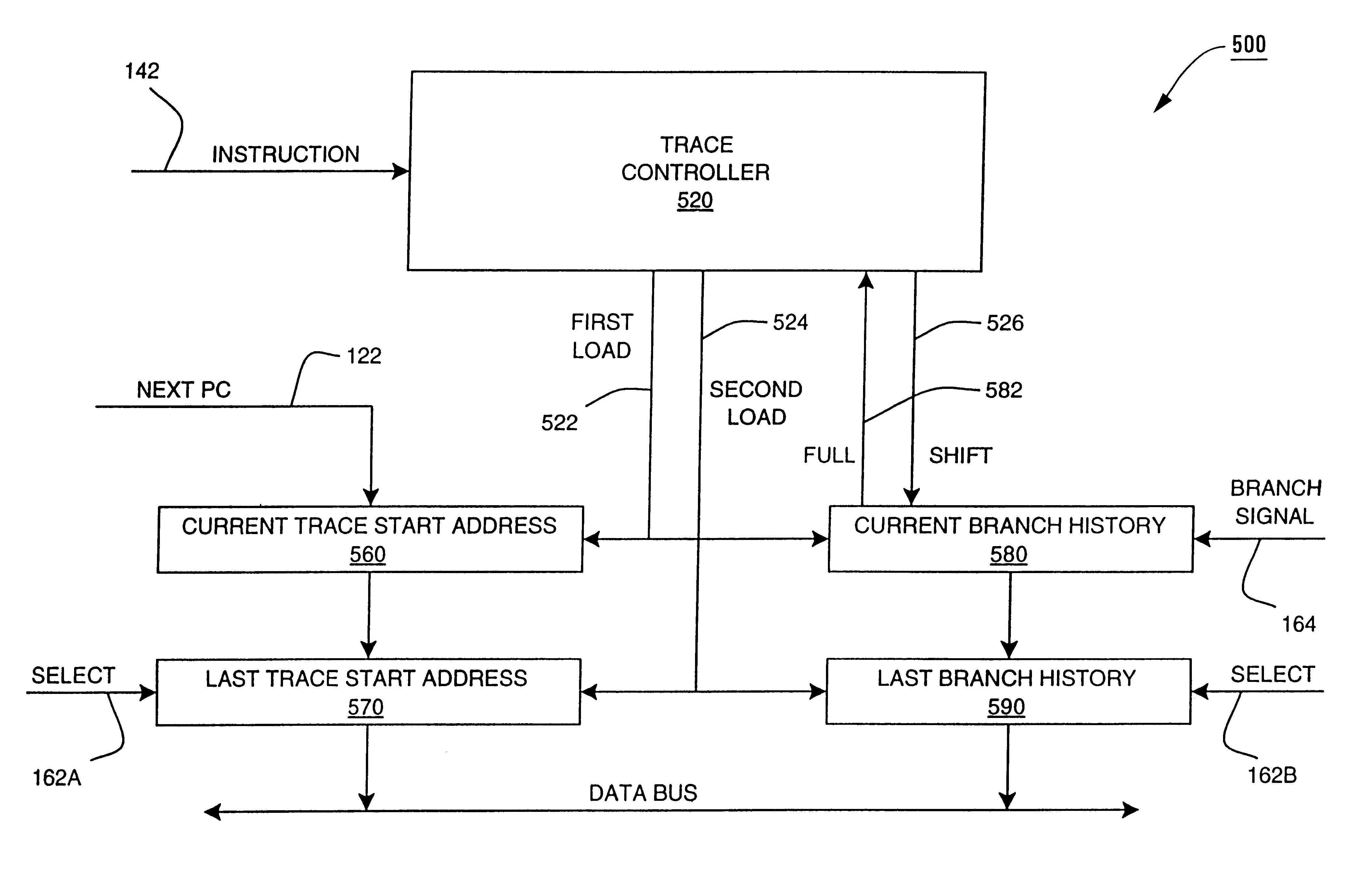

Method and apparatus for profiling of non-instrumented programs and dynamic processing of profile data

Inactive | US6233678B1 | Digital computer details | Next instruction address formation | Processor register | Parallel computing

An apparatus and method are shown for collecting a branch history value of a program executing in a processor. A current start address register latches a program count value in response to a trace termination condition, such as an indirect branch instruction. A current branch history register is cleared in response to the trace termination condition and shifts in a branch outcome value of the processor in response to a conditional direct branch instruction. A last trace start address latches the content of the current trace start address and a last branch history register latches the content of the current branch history register when a trace termination condition occurs.

Owner:HEWLETT PACKARD CO
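The four registers named in the abstract above can be modeled in a few lines. This is a minimal behavioral sketch, assuming an 8-bit history register and assuming the latched program count is simply passed in by the caller; the class and method names are hypothetical.

```python
class TraceProfiler:
    """Behavioral model of the current/last start-address and branch-history registers."""

    def __init__(self, history_bits=8):
        self.mask = (1 << history_bits) - 1   # assumed register width
        self.cur_start = 0
        self.cur_history = 0
        self.last_start = None
        self.last_history = None

    def conditional_branch(self, taken):
        """Shift one branch outcome (1 = taken) into the current history register."""
        self.cur_history = ((self.cur_history << 1) | int(taken)) & self.mask

    def trace_termination(self, pc):
        """On a trace termination condition (e.g. an indirect branch):
        copy current registers into the 'last' registers, latch the new
        start address, and clear the current history."""
        self.last_start = self.cur_start
        self.last_history = self.cur_history
        self.cur_start = pc
        self.cur_history = 0


profiler = TraceProfiler()
profiler.trace_termination(0x100)
for outcome in (True, False, True):
    profiler.conditional_branch(outcome)
profiler.trace_termination(0x200)
print(hex(profiler.last_start), bin(profiler.last_history))
```

The pair (last start address, last branch history) then identifies the trace that just ended, without any instrumentation in the profiled program.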

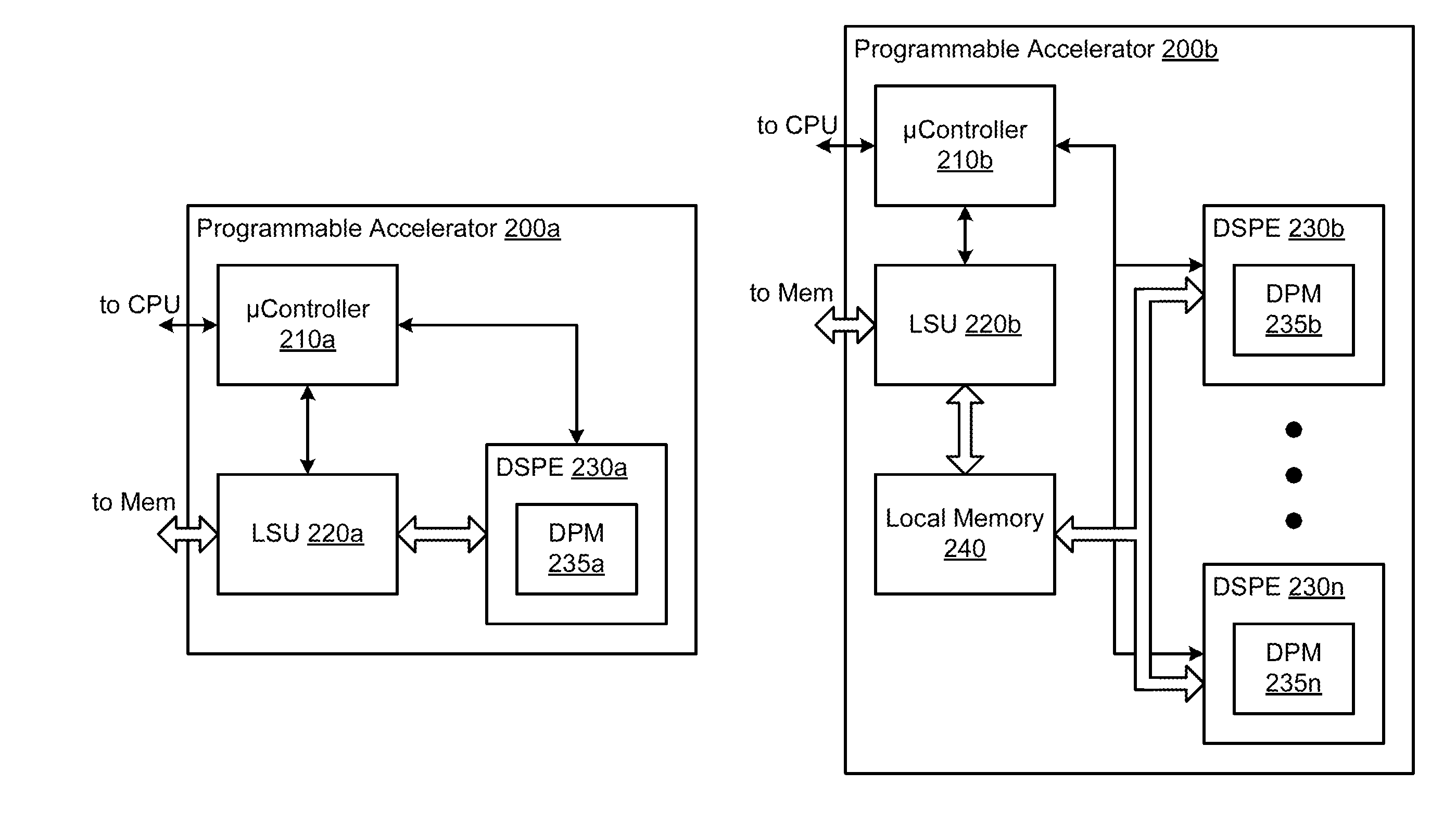

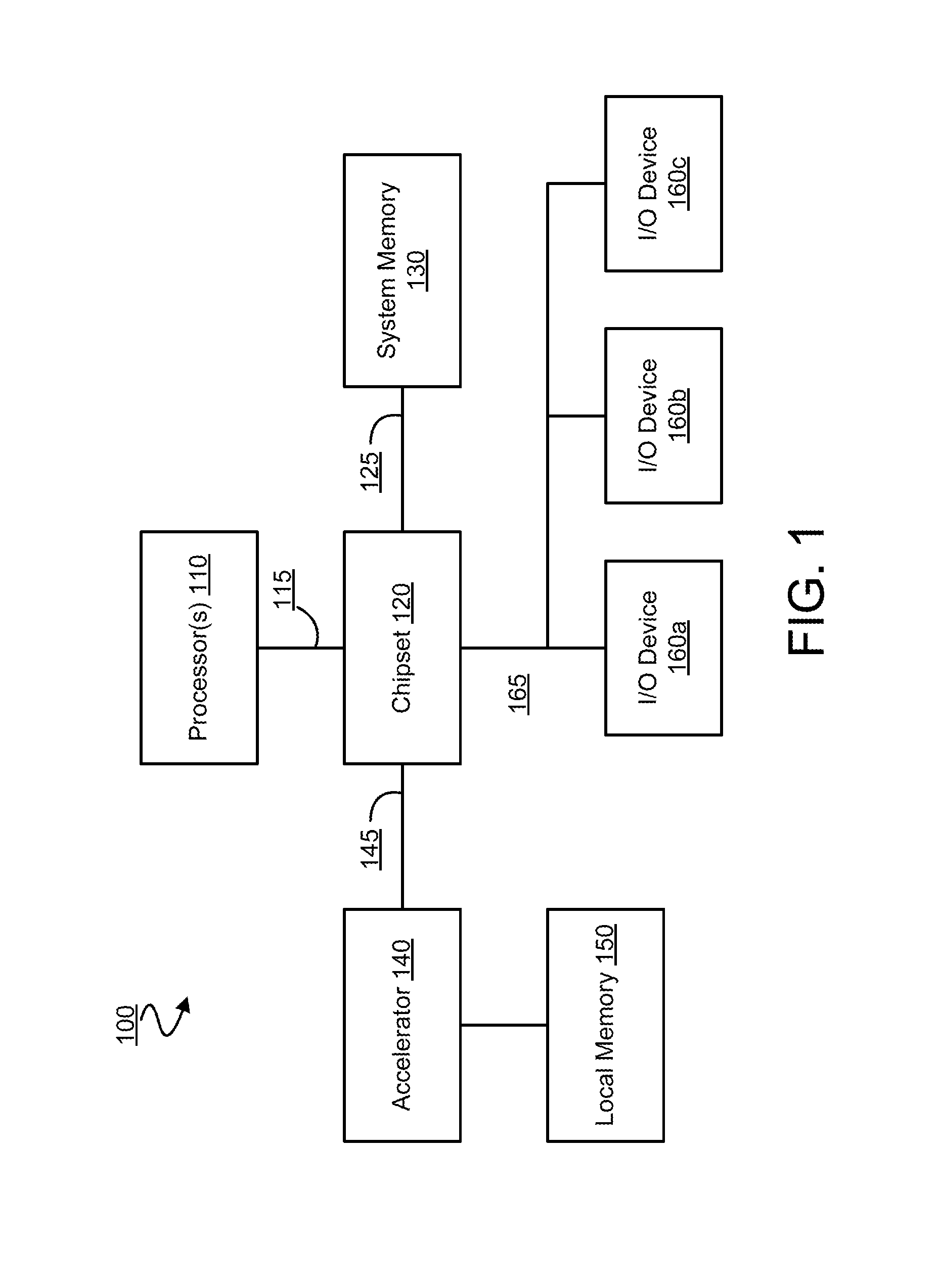

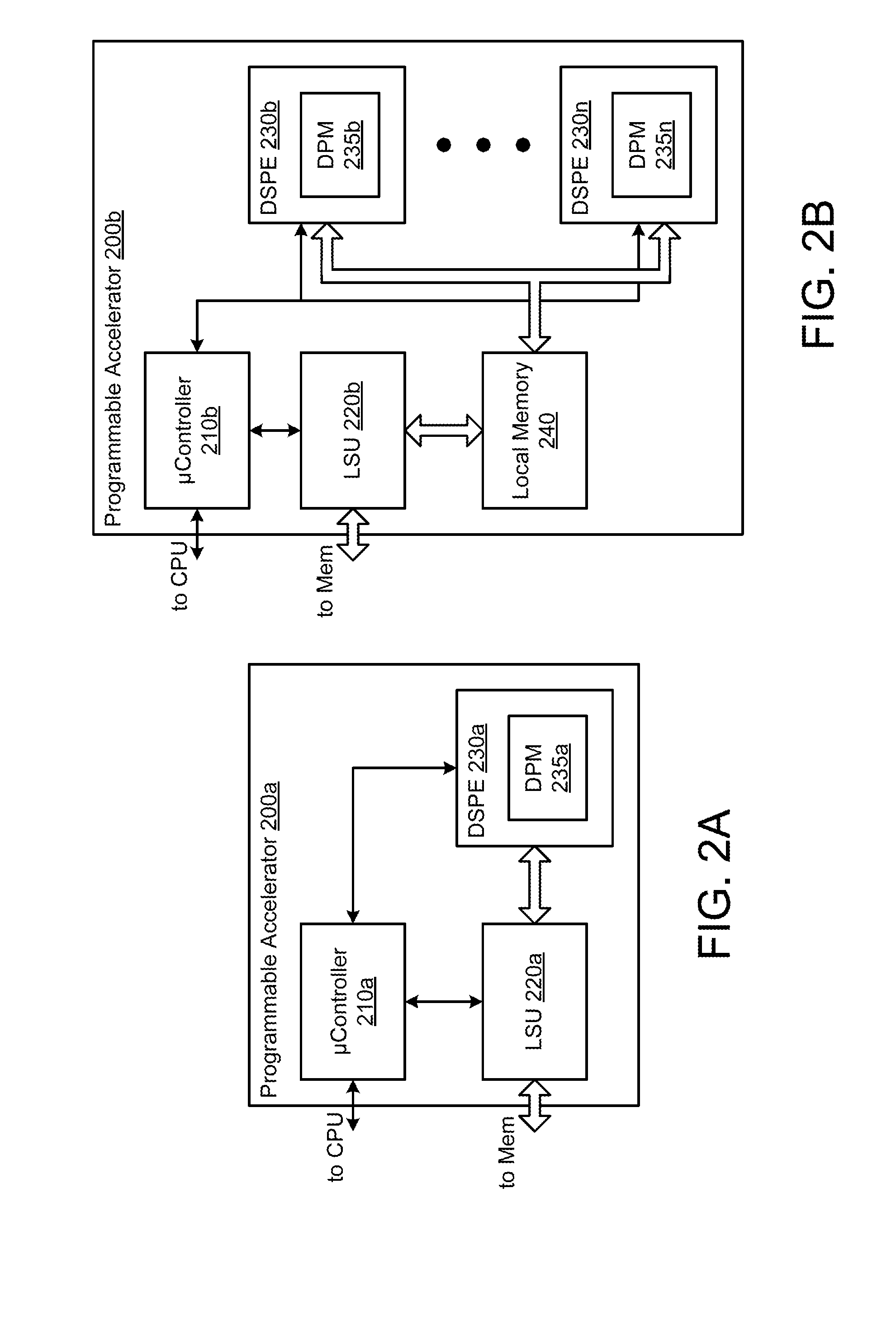

Method, device and system for control signalling in a data path module of a data stream processing engine

Inactive | US20150033001A1 | Next instruction address formation | Multiple digital computer combinations | Control signal | Data stream processing

Techniques and mechanisms for exchanging control signals in a data path module of a data stream processing engine. In an embodiment, the data path module may be configured to form a set of one or more data paths corresponding to an instruction which is to be executed. In another embodiment, data processing units of the data path module may be configured to exchange one or more control signals for elastic execution of the instruction.

Owner:INTEL CORP

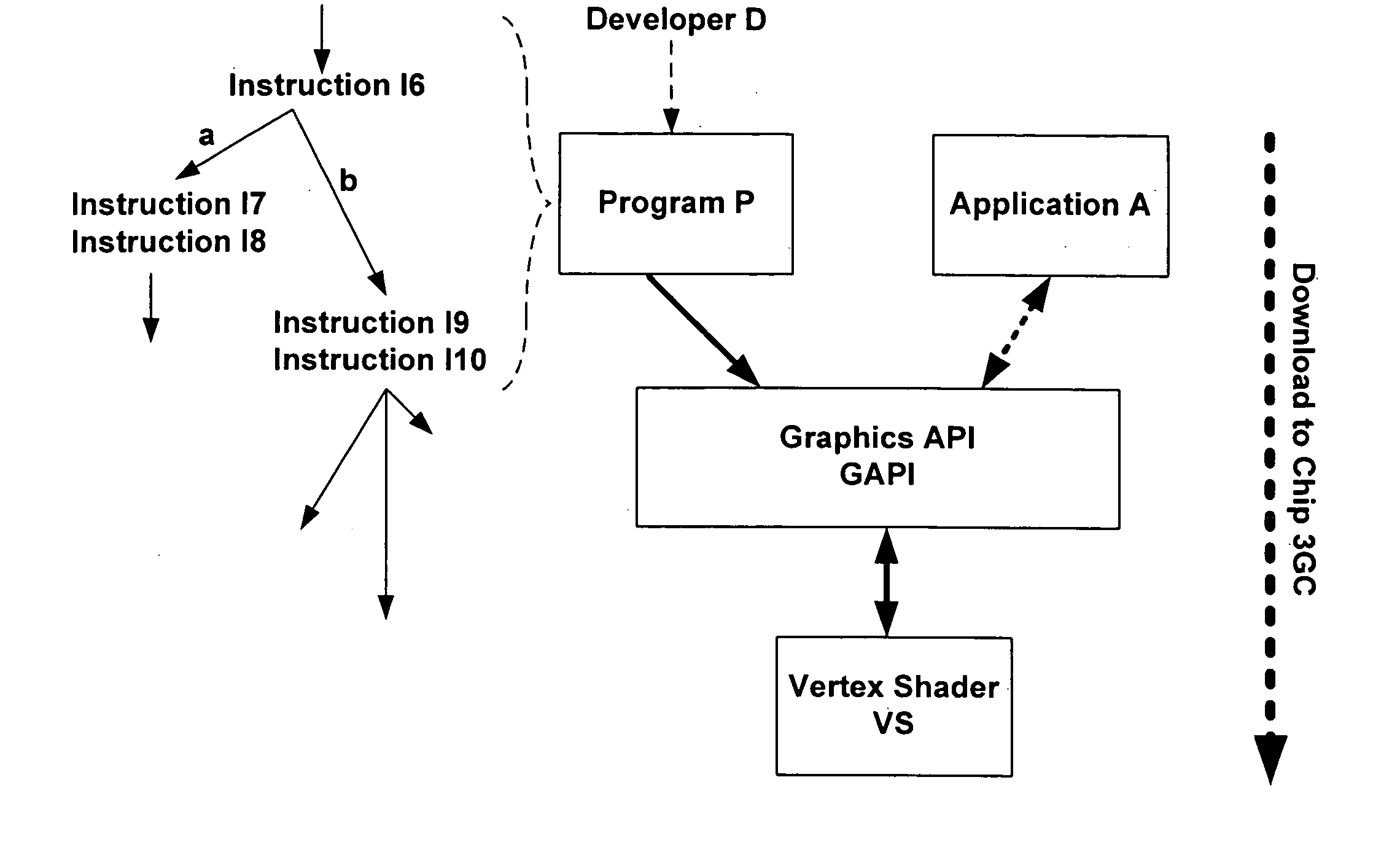

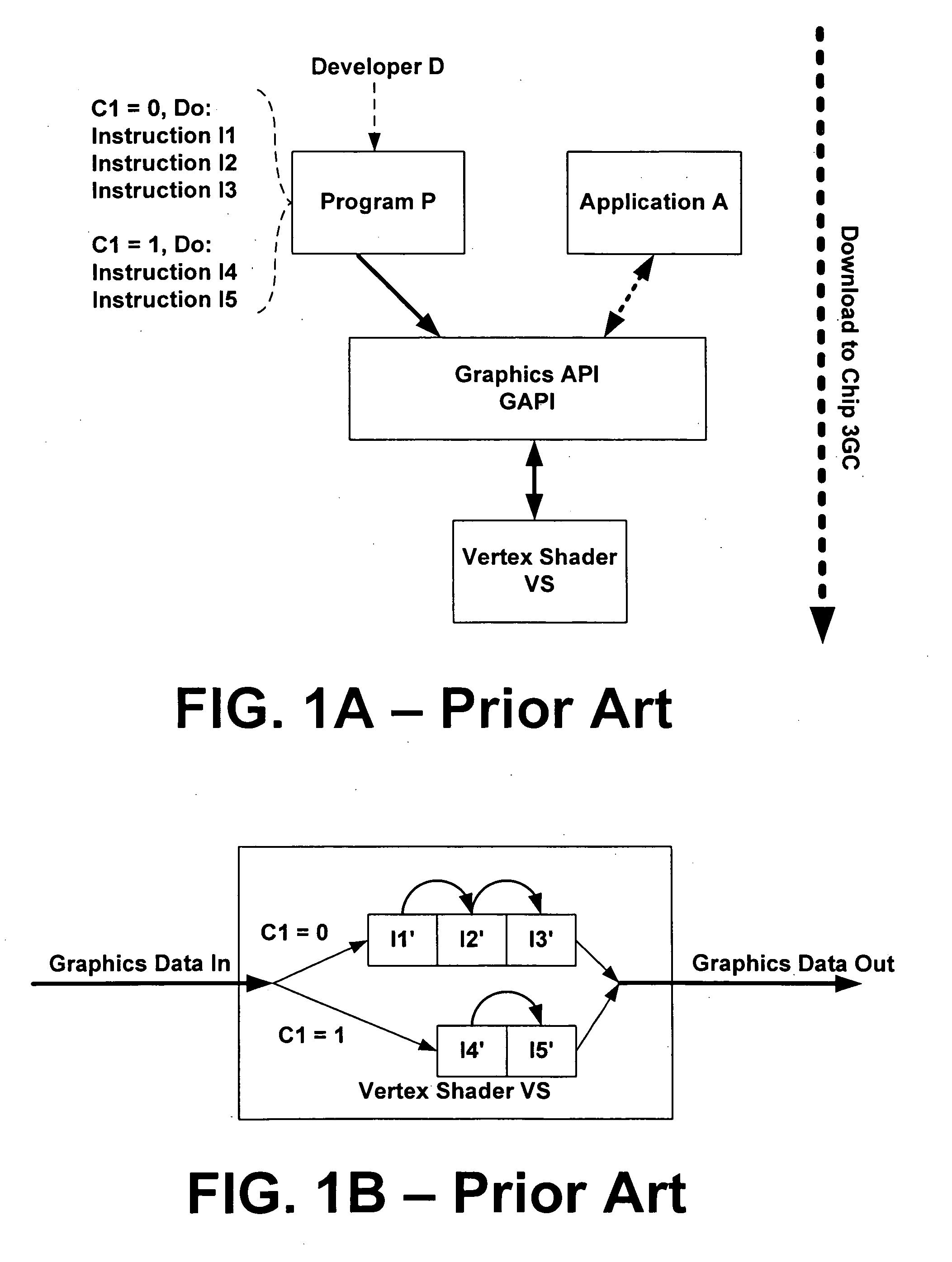

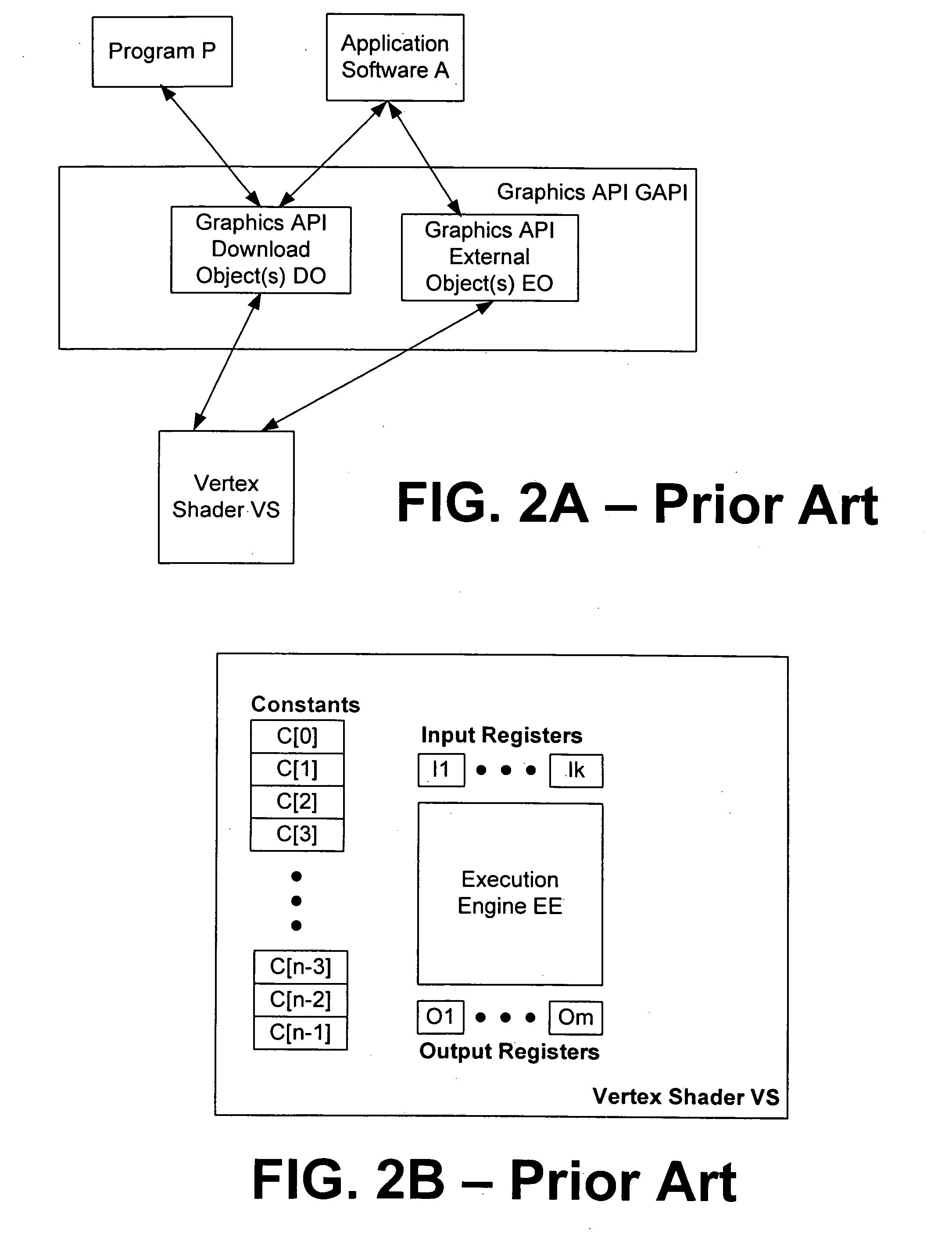

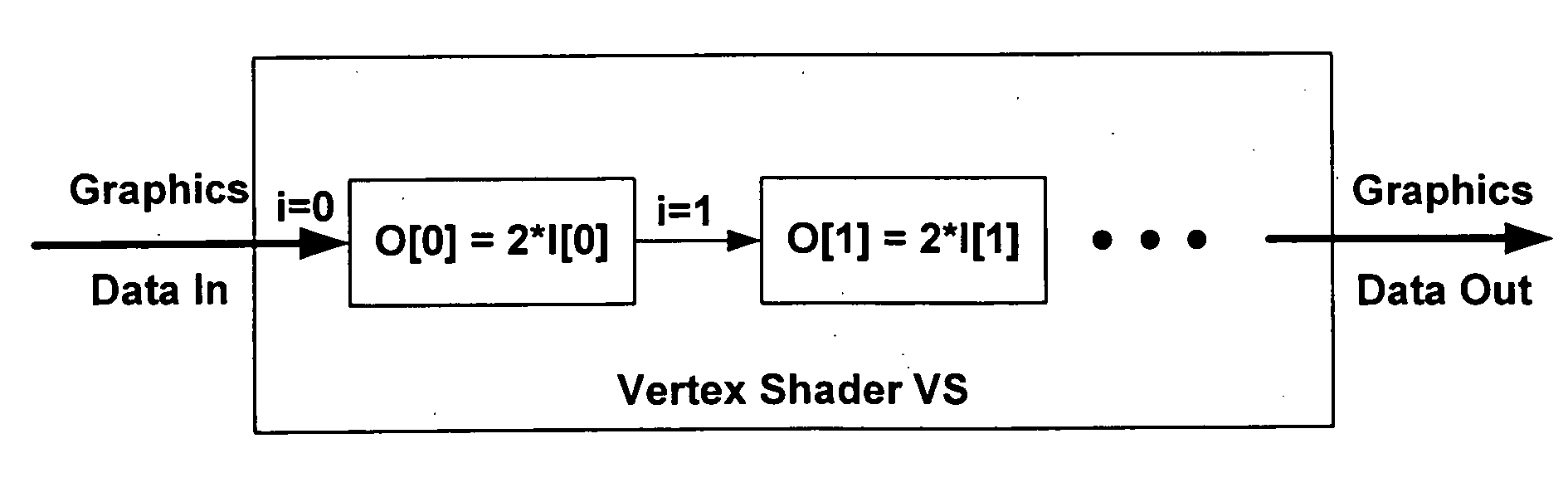

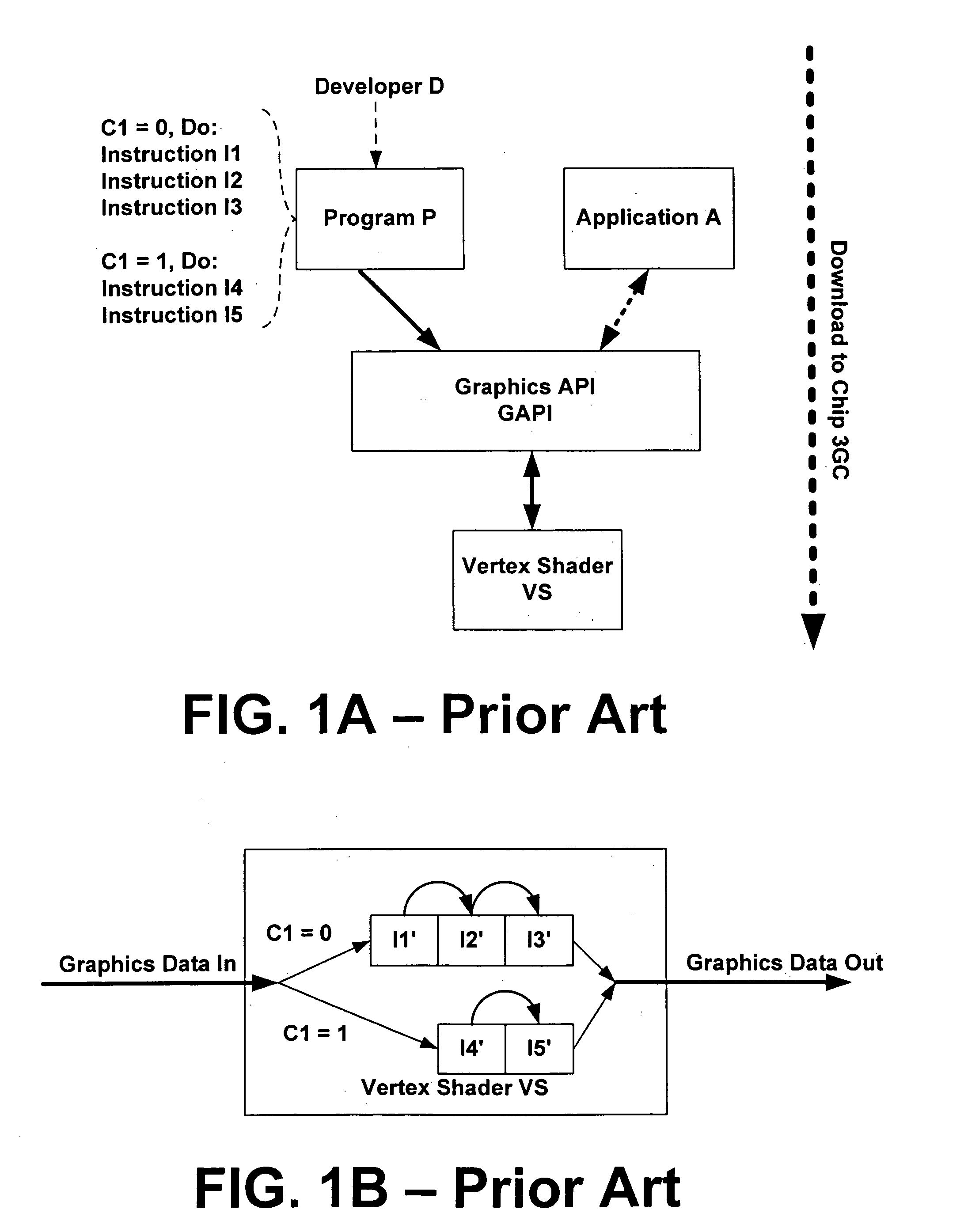

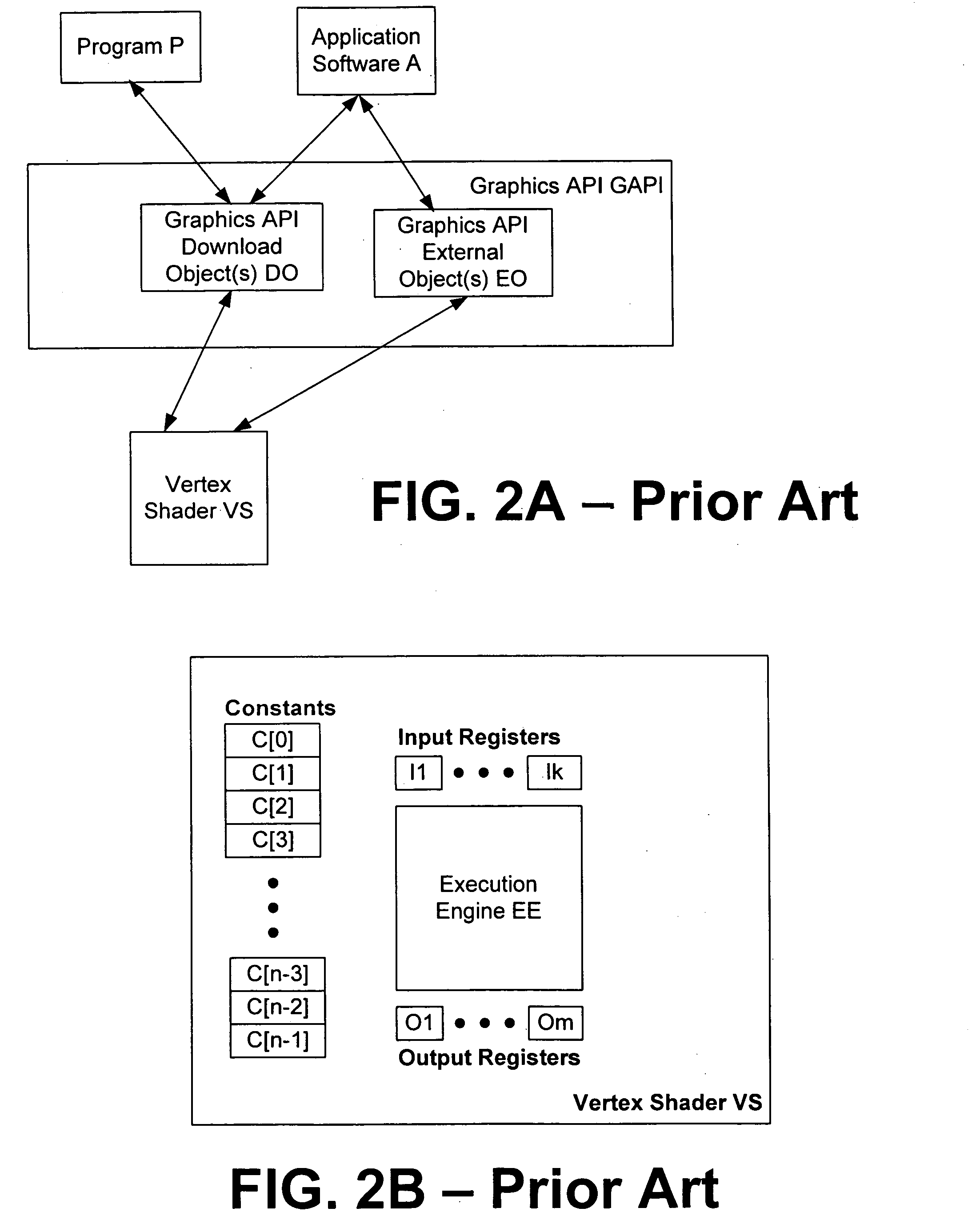

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding techniques

Active | US20050122330A1 | Improve abilities | Sophisticated effect | Digital computer details | Next instruction address formation | Computational science | Coprocessor

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding processing and communication techniques are provided. For an improved graphics pipeline, the invention provides a class of co-processing device, such as a graphics processor unit (GPU), providing improved capabilities for an abstract or virtual machine for performing graphics calculations and rendering. The invention allows for runtime-predicated flow control of programs downloaded to coprocessors, enables coprocessors to include indexable arrays of on-chip storage elements that are readable and writable during execution of programs, provides native support for textures and texture maps and corresponding operations in a vertex shader, provides frequency division of vertex streams input to a vertex shader with optional support for a stream modulo value, provides a register storage element on a pixel shader and associated interfaces for storage associated with representing the “face” of a pixel, provides vertex shaders and pixel shaders with more on-chip register storage and the ability to receive larger programs than any existing vertex or pixel shaders and provides 32 bit float number support in both vertex and pixel shaders.

Owner:MICROSOFT TECH LICENSING LLC

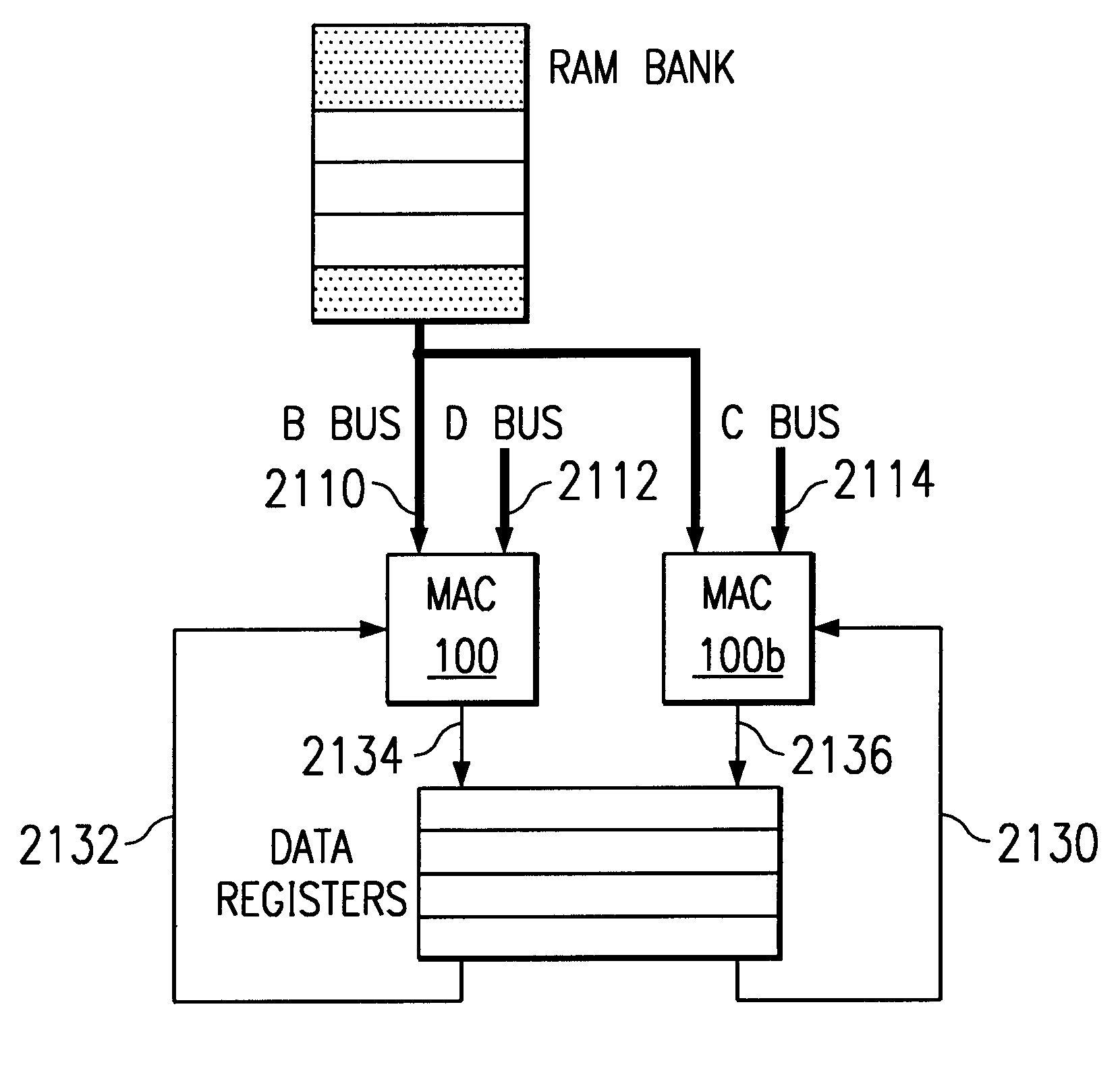

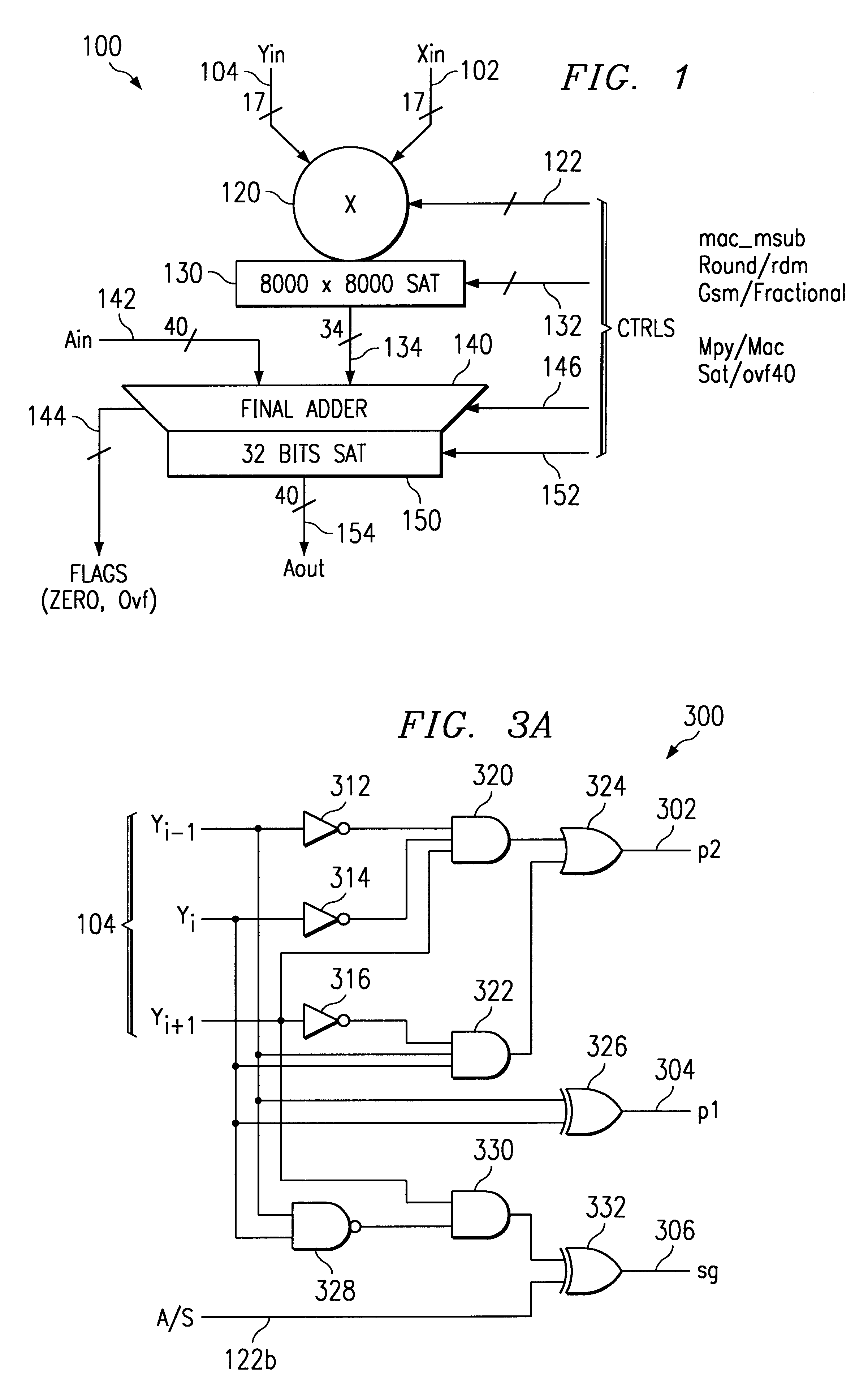

Multiplier accumulator circuits

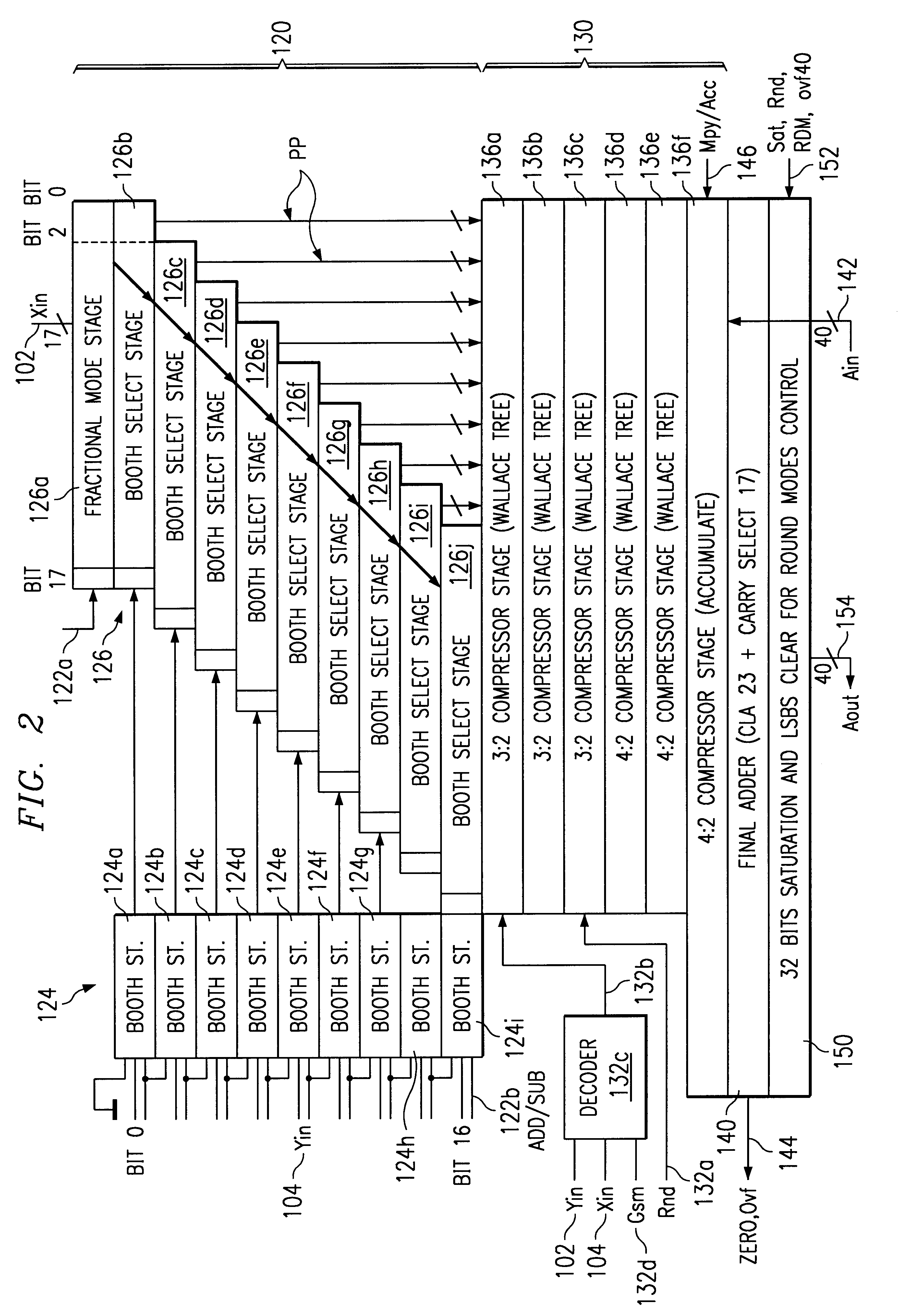

A multiply-accumulate (MAC) unit, having a first binary operand X, a second binary operand Y, a third binary operand, Booth recode logic for generating a plurality of partial products from said first and second operands, a Wallace tree adder for reducing the partial products and for selectively arithmetically combining the reduced partial products with said third operand, a final adder for generating a final sum, and a saturation circuitry for selectively rounding or saturating said final sum is provided. A dual MAC unit is also provided.

Owner:TEXAS INSTR INC
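The arithmetic effect of a saturating multiply-accumulate can be shown without modeling the Booth recoding or the Wallace tree, which are hardware implementation details. The sketch below assumes a hypothetical 40-bit signed accumulator; the width and the function names are illustrative choices, not values from the abstract.

```python
def saturate(value, bits=40):
    """Clamp a signed integer to the range of a `bits`-wide two's-complement register."""
    high = (1 << (bits - 1)) - 1
    low = -(1 << (bits - 1))
    return max(low, min(high, value))


def mac(x, y, acc, bits=40):
    """Multiply x by y, add the third operand, and saturate the final sum."""
    return saturate(acc + x * y, bits)


print(mac(3, 4, 5))
print(mac(2**20, 2**20, 0) == 2**39 - 1)
```

Saturation pins an overflowing result at the largest representable value instead of letting it wrap around, which is usually the preferred behavior in signal-processing loops.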

Fast stack save and restore system and method

Inactive | US6449709B1 | Memory addressing/allocation/relocation | Digital computer details | Data access | Parallel computing

A processor includes a stack that operates as a circular stack and appears to the address space in the memory of the processor as a single point address location. The stack supports read and write data access functions in addition to CALL (push) and RETURN (pop) programming operations. The processor may be programmed to save the stack in a typical manner with one instruction atomically transferring each element in the stack directly from the stack to a save storage. To restore the stack, the processor may be programmed to individually restore each element. The processor supports a special MOV instruction that transfers a plurality of bytes in a single operation. The special MOV instruction has one argument that identifies the beginning transfer source address, another that defines the byte count indicating the number of bytes to be transferred, and a third that identifies the beginning transfer destination address. The processor may be programmed to perform a stack save operation with only a single instruction that moves the contents of the stack to the save storage. To further reduce context switching time and reduce the stack save and restore operation to a minimum number of instructions while maintaining the proper entry relationship for both stack read and write operations, the processor includes a "stack read forward" option to the special MOV instruction. The option to the special MOV instruction operates to read data in a forward direction even when no valid data is stored in the locations. The read operation begins at the start address specified by an argument to the MOV instruction, reads forward, and wraps around in a binary fashion back to the start address.

Owner:PMC-SIERRA
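A toy model can make the "read forward and wrap around" behavior more concrete. The sketch assumes a four-entry stack and invents the names `read_forward` and `write_forward`; it models only the ordering of entries during a save and restore, not the MOV instruction encoding or the single-address mapping described in the abstract.

```python
class CircularStack:
    """Fixed-size stack whose index wraps around instead of overflowing."""

    def __init__(self, size=4):
        self.size = size
        self.slots = [0] * size
        self.top = 0                      # index of the next free slot

    def push(self, value):
        self.slots[self.top] = value
        self.top = (self.top + 1) % self.size

    def pop(self):
        self.top = (self.top - 1) % self.size
        return self.slots[self.top]

    def read_forward(self, start):
        """Read every slot once, starting at `start` and wrapping back around.
        Slots are read even if they hold no valid data."""
        return [self.slots[(start + i) % self.size] for i in range(self.size)]

    def write_forward(self, start, values):
        """Write values back in the same wrapped order used by read_forward."""
        for i, value in enumerate(values):
            self.slots[(start + i) % self.size] = value


stack = CircularStack()
for value in (1, 2, 3):
    stack.push(value)
saved_top, saved = stack.top, stack.read_forward(stack.top)   # one-shot save
stack.push(9)                                                 # context switch clobbers entries
stack.write_forward(saved_top, saved)                         # restore
stack.top = saved_top
print(stack.pop(), stack.pop(), stack.pop())
```

Because the save and the restore walk the slots in the same wrapped order from the same start index, the relative position of every entry is preserved.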

High data density RISC processor

Inactive | US6282633B1 | High density | Increase in CPI | Instruction analysis | Digital computer details | Program instruction | Processor register

A RISC processor implements an instruction set which, in addition to optimizing a relationship between the number of instructions required for execution of a program, clock period and average number of clocks per instruction, also is designed to optimize the equation S=IS * BI, where S is the size of program instructions in bits, IS is the static number of instructions required to represent the program (not the number required by an execution) and BI is the average number of bits per instruction. Compared to conventional RISC architectures, this processor lowers both BI and IS with minimal increases in clock period and average number of clocks per instruction. The processor provides good code density in a fixed-length high-performance encoding based on RISC principles, including a general register with load / store architecture. Further, the processor implements a simple variable-length encoding that maintains high performance.

Owner:TENSILICA
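The equation S = IS * BI from the abstract above is easy to work through numerically. The instruction counts and widths below are invented purely for illustration and do not come from the patent; only the formula itself does.

```python
def program_size_bits(static_instructions, avg_bits_per_instruction):
    """S = IS * BI: static program size in bits."""
    return static_instructions * avg_bits_per_instruction


# Hypothetical comparison: a 32-bit fixed-length encoding versus a denser
# 24-bit encoding that needs 10% more static instructions.
baseline = program_size_bits(1000, 32)
denser = program_size_bits(1100, 24)
print(baseline, denser)
```

In this made-up case the denser encoding still wins on total size even though it needs more instructions, which shows why IS and BI have to be considered together rather than one at a time.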

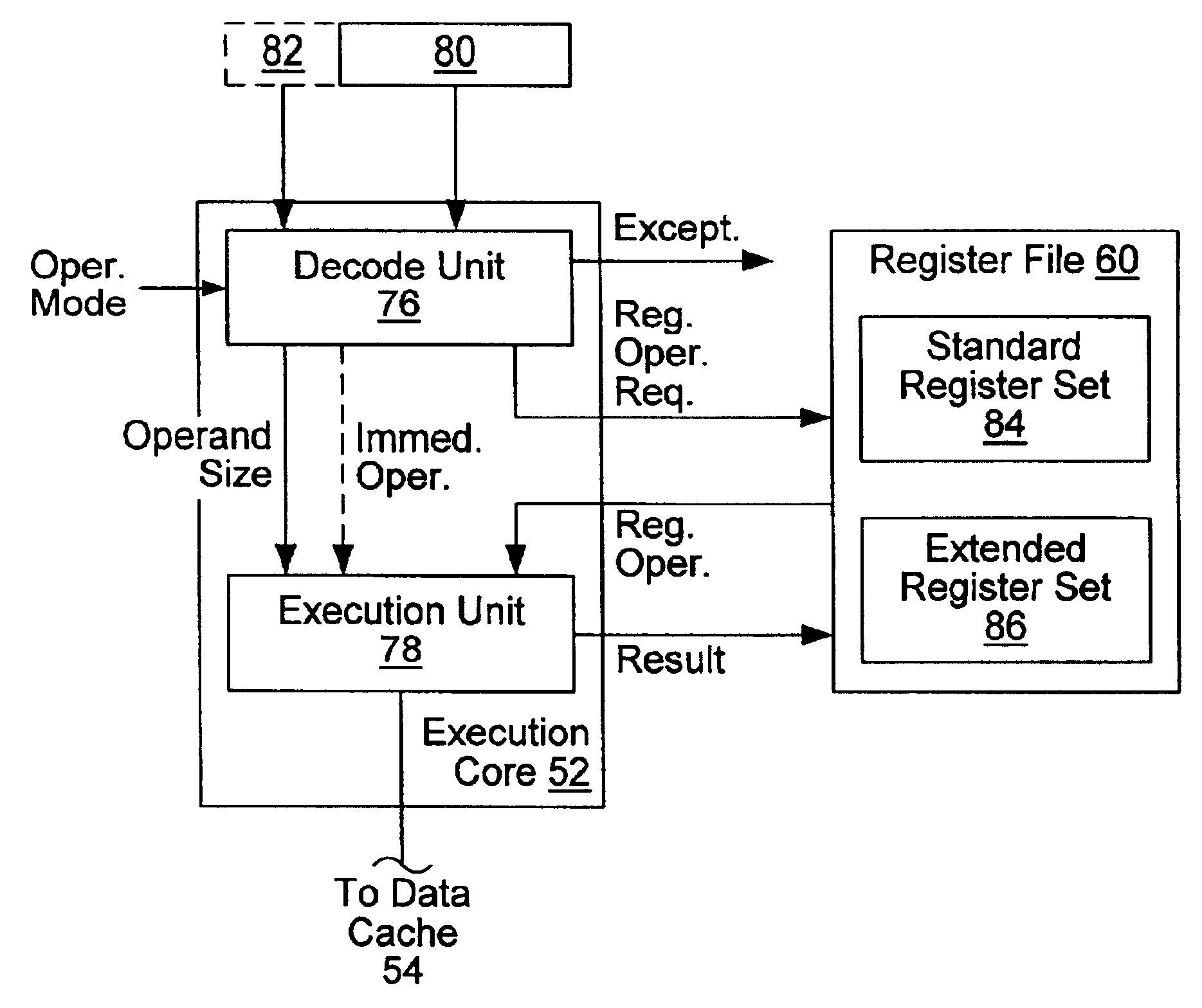

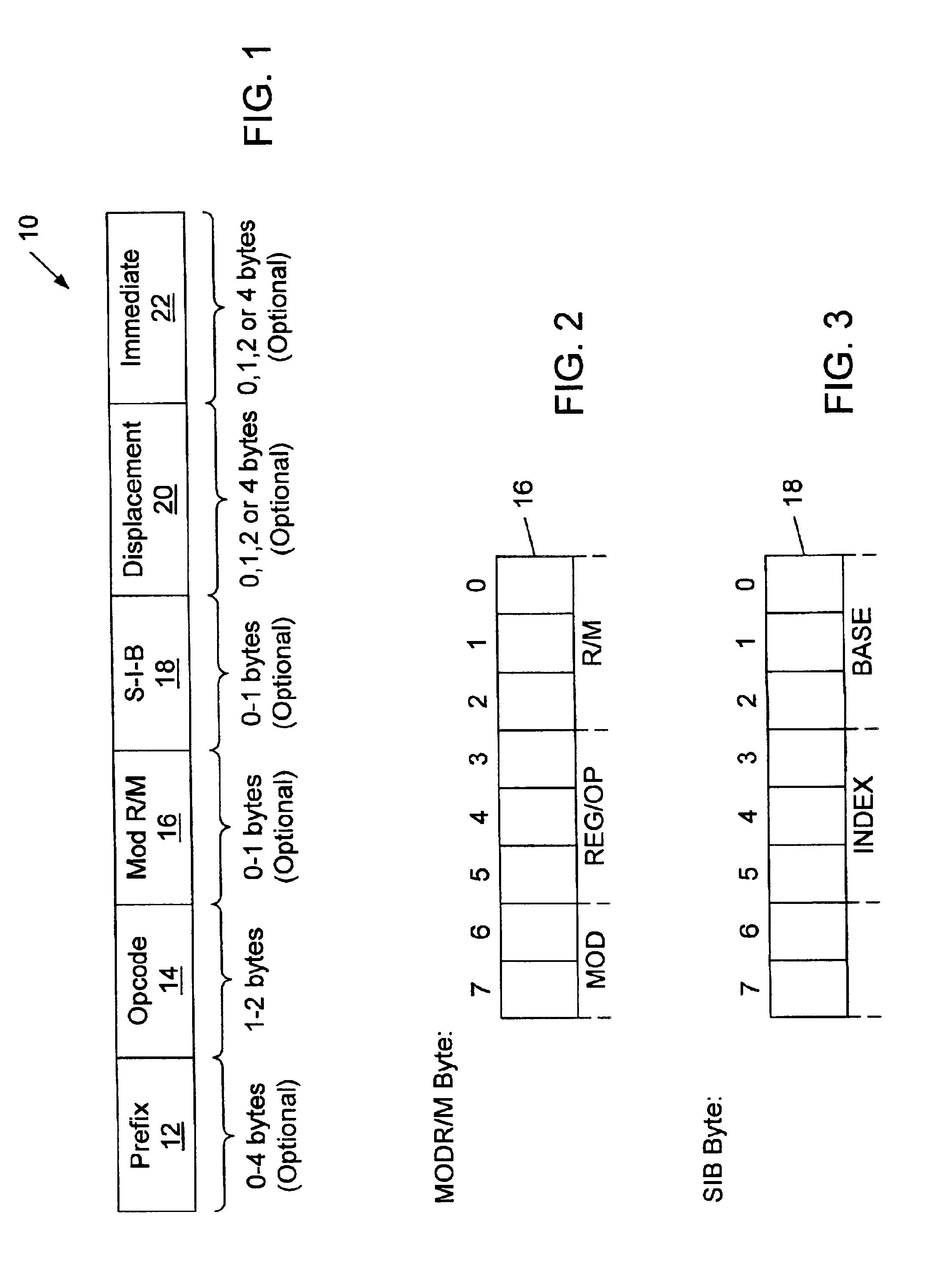

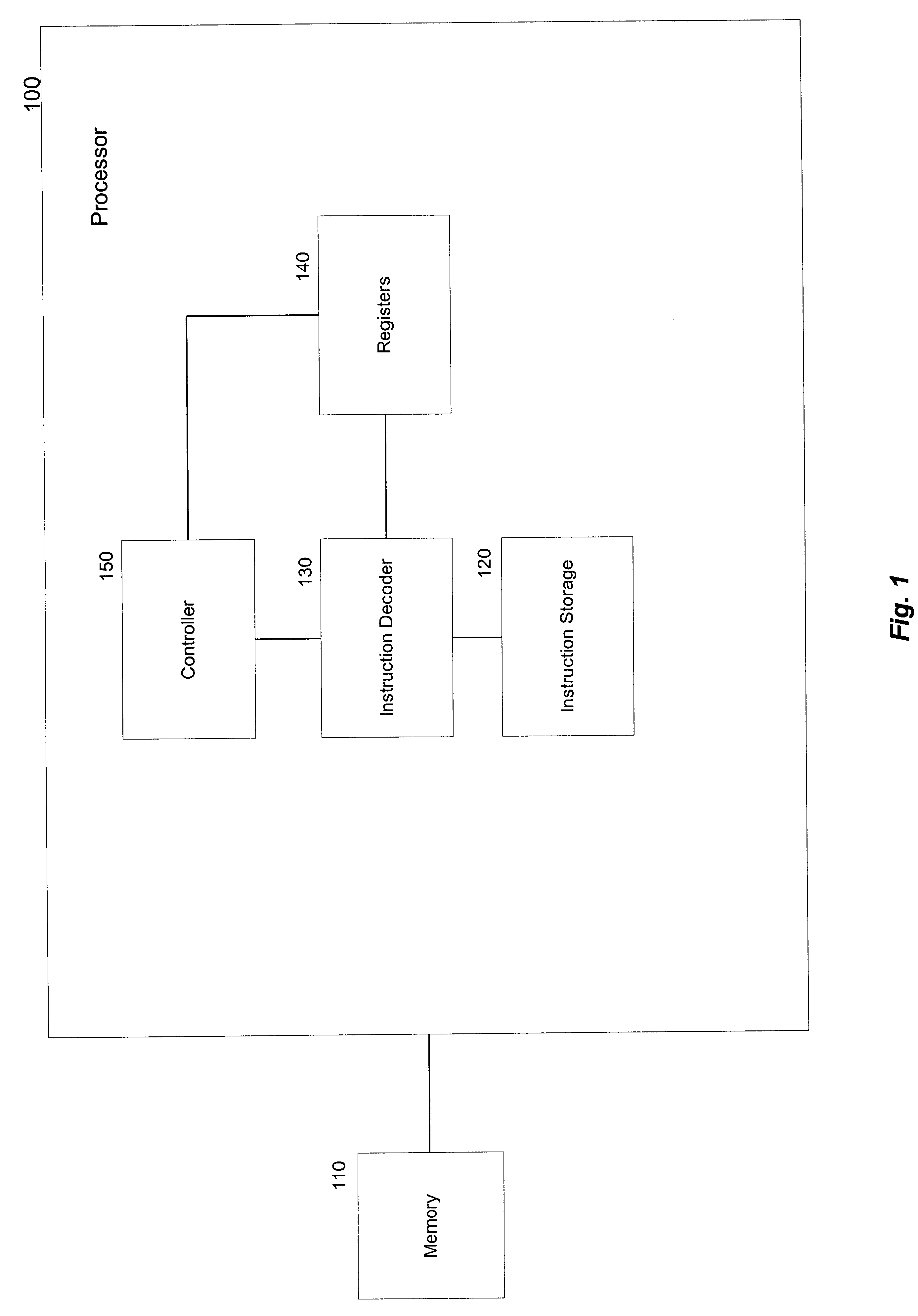

Central processing unit (CPU) accessing an extended register set in an extended register mode

Inactive | US6877084B1 | Resource allocation | Memory addressing/allocation/relocation | General purpose | Computer architecture

A central processing unit (CPU) is described including a register file and an execution core coupled to the register file. The register file includes a standard register set and an extended register set. The standard register set includes multiple standard registers, and the extended register set includes multiple extended registers. The execution core fetches and executes instructions, and receives a signal indicating an operating mode of the CPU. The execution core responds to an instruction by accessing at least one extended register if the signal indicates the CPU is operating in an extended register mode and the instruction includes a prefix portion including information needed to access the at least one extended register. The standard registers may be general purpose registers of a CPU architecture associated with the instruction. The number of extended registers may be greater than the number of general purpose registers defined by the CPU architecture. In this case, the additional register identification information in the prefix portion is needed to identify a selected one of the extended registers. A width of the extended registers may also be greater than a width of the standard registers. In this case, the prefix portion may also include an indication that the entire contents of the at least one extended register are to be accessed. In this way, instruction operand sizes may selectively be increased when the CPU is operating in the extended register mode. A computer system including the CPU is also described.

Owner:GLOBALFOUNDRIES INC

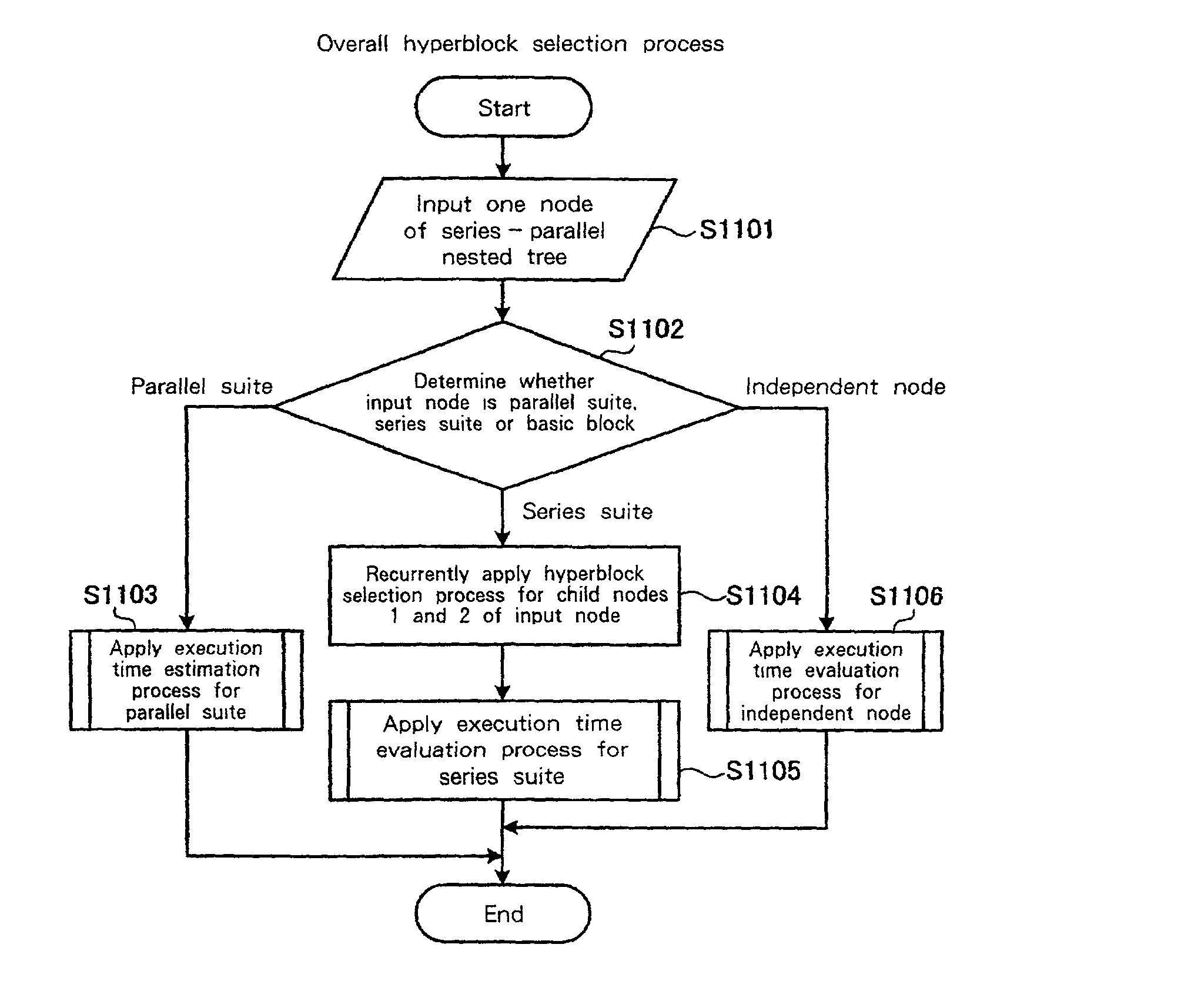

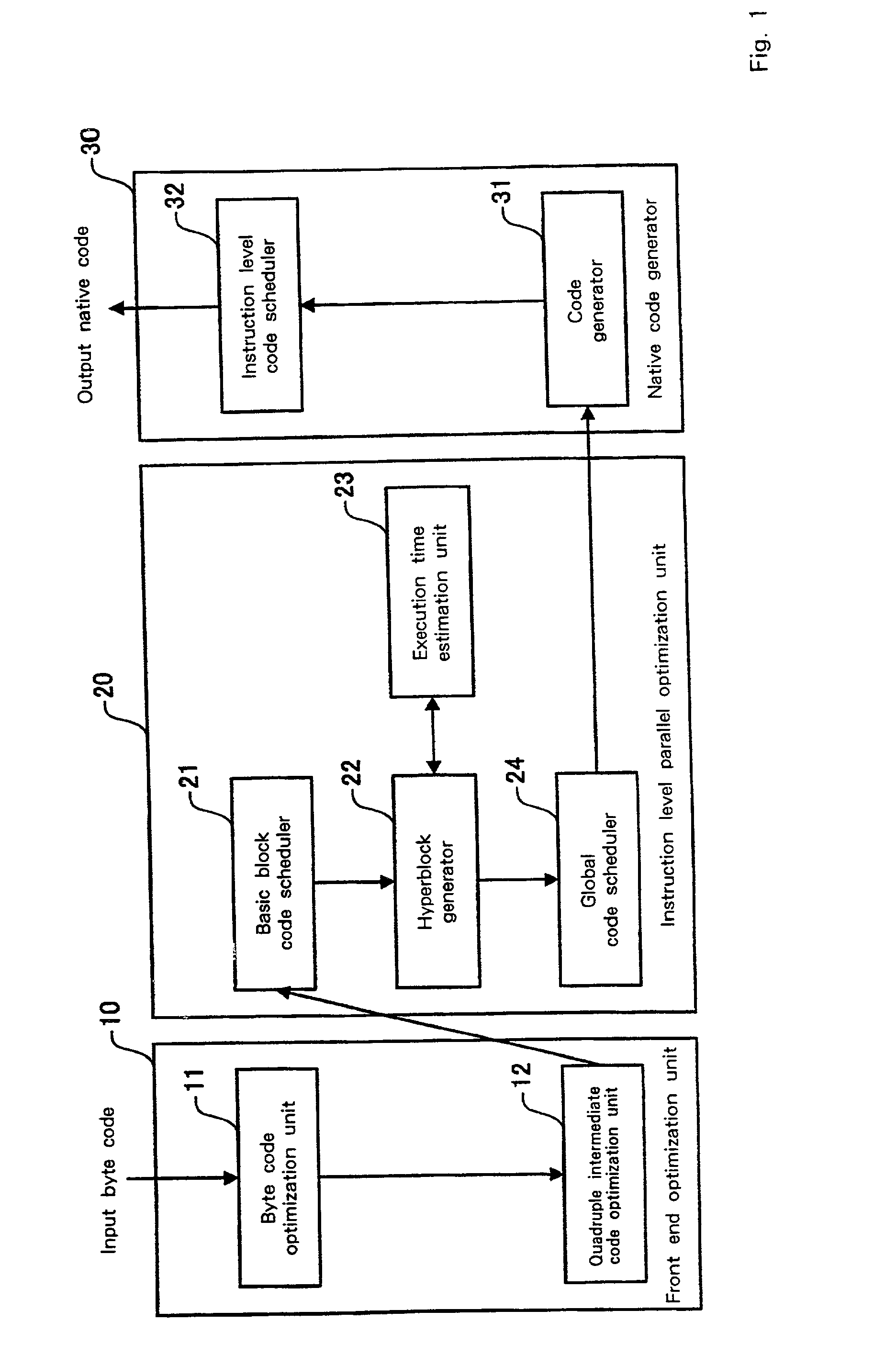

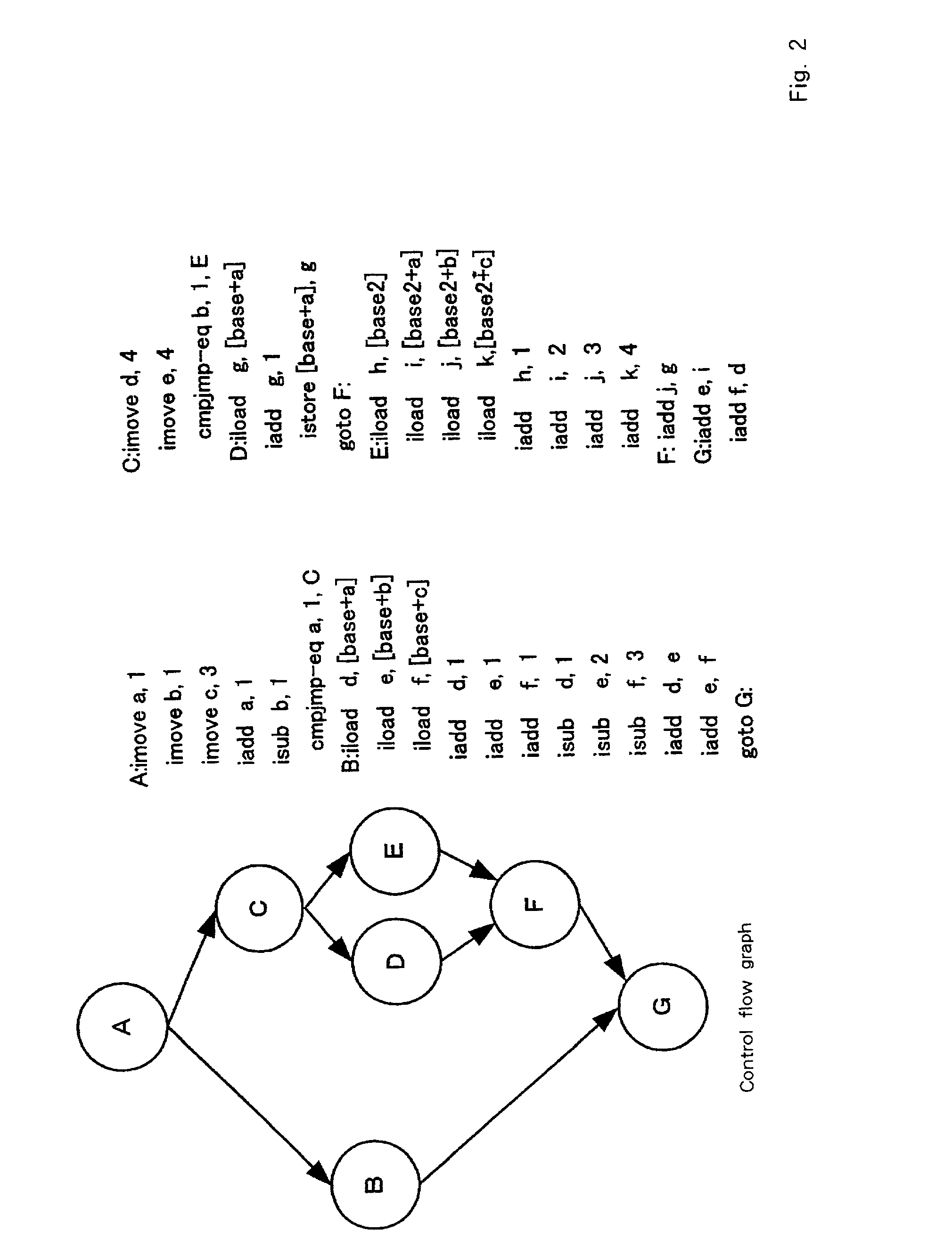

Program optimization method, and compiler using the same

Inactive | US20020095666A1 | Short processing time | Efficient execution | Software engineering | Digital computer details | Source code | Basic block

An optimization method and apparatus for converting source code for a program written in a programming language into machine language and for optimizing the program includes employing a basic block as a unit to estimate an execution time for the program to be processed, generating a nested tree that represents the connections of the basic blocks using a nesting structure, when a conditional branch is accompanied by a node in the nested tree, employing the execution time estimated by using the basic blocks as units to obtain an execution time at the node of the program when a conditional branching portion of a program is directly executed and when the conditional branching portion is executed in parallel, and defining the node as a parallel execution area group when the execution time required for the parallel execution is shorter or dividing multiple child nodes of the nodes into multiple parallel execution areas when the execution time for the conditional branching portion is shorter.

Owner:IBM CORP

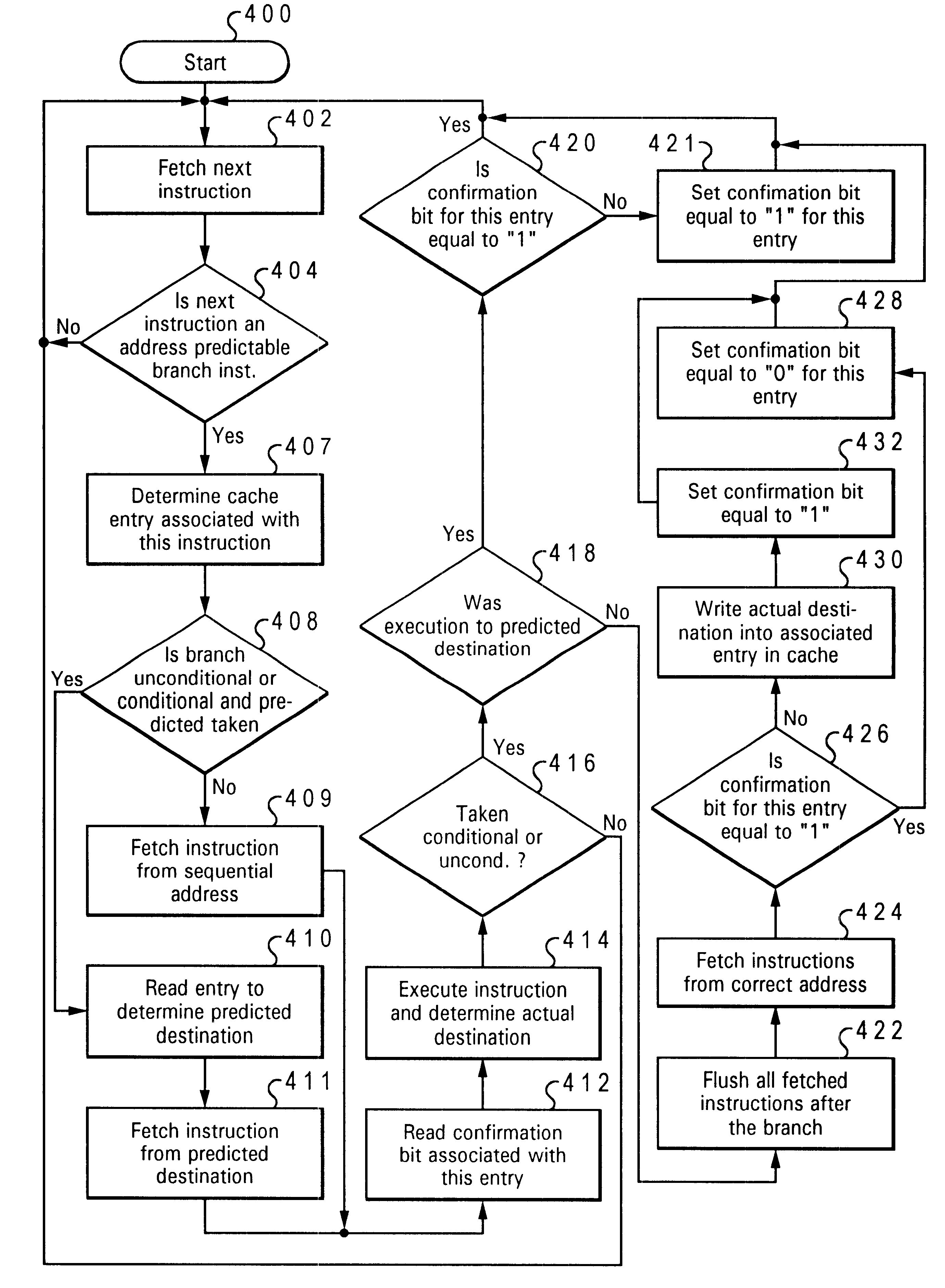

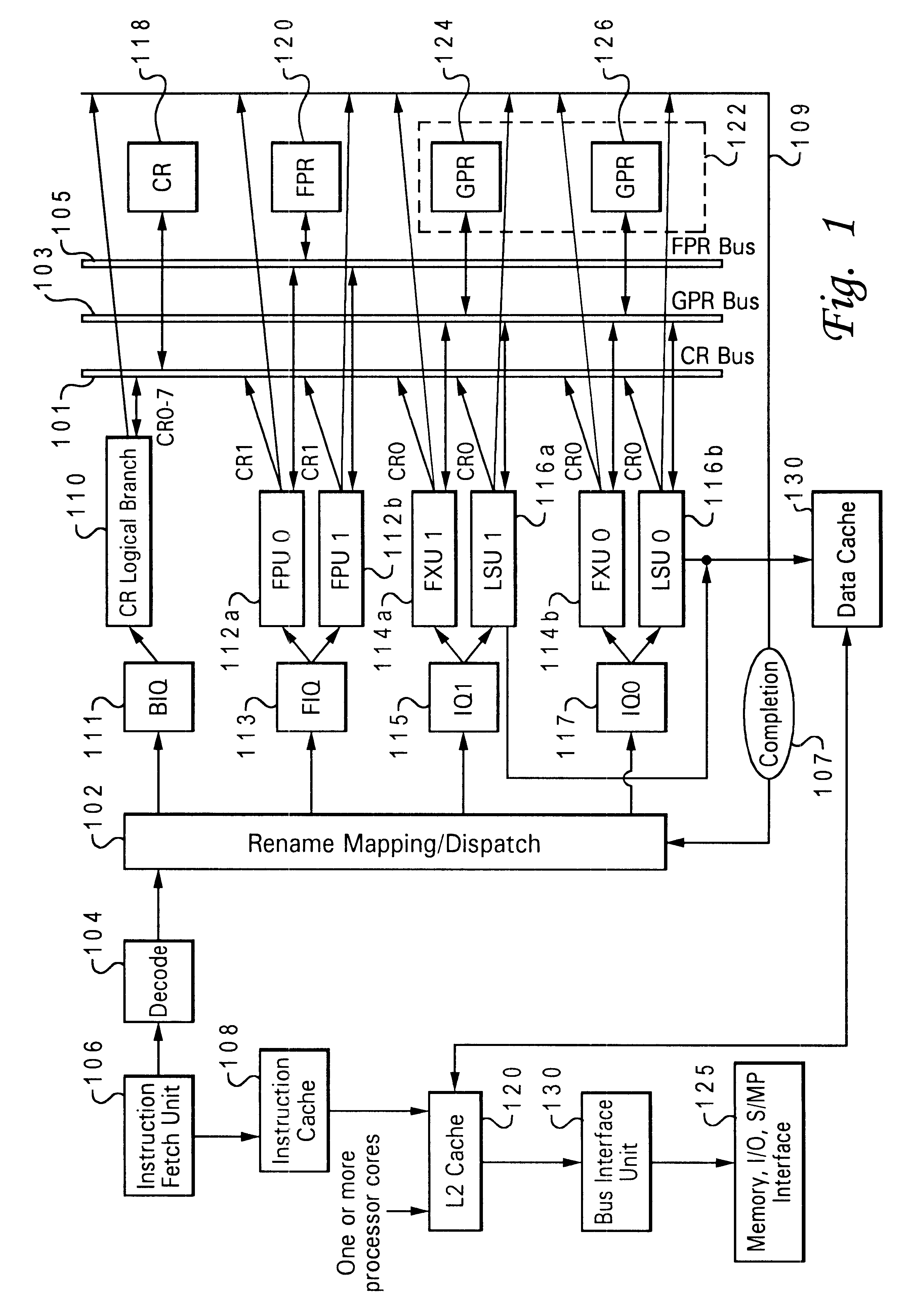

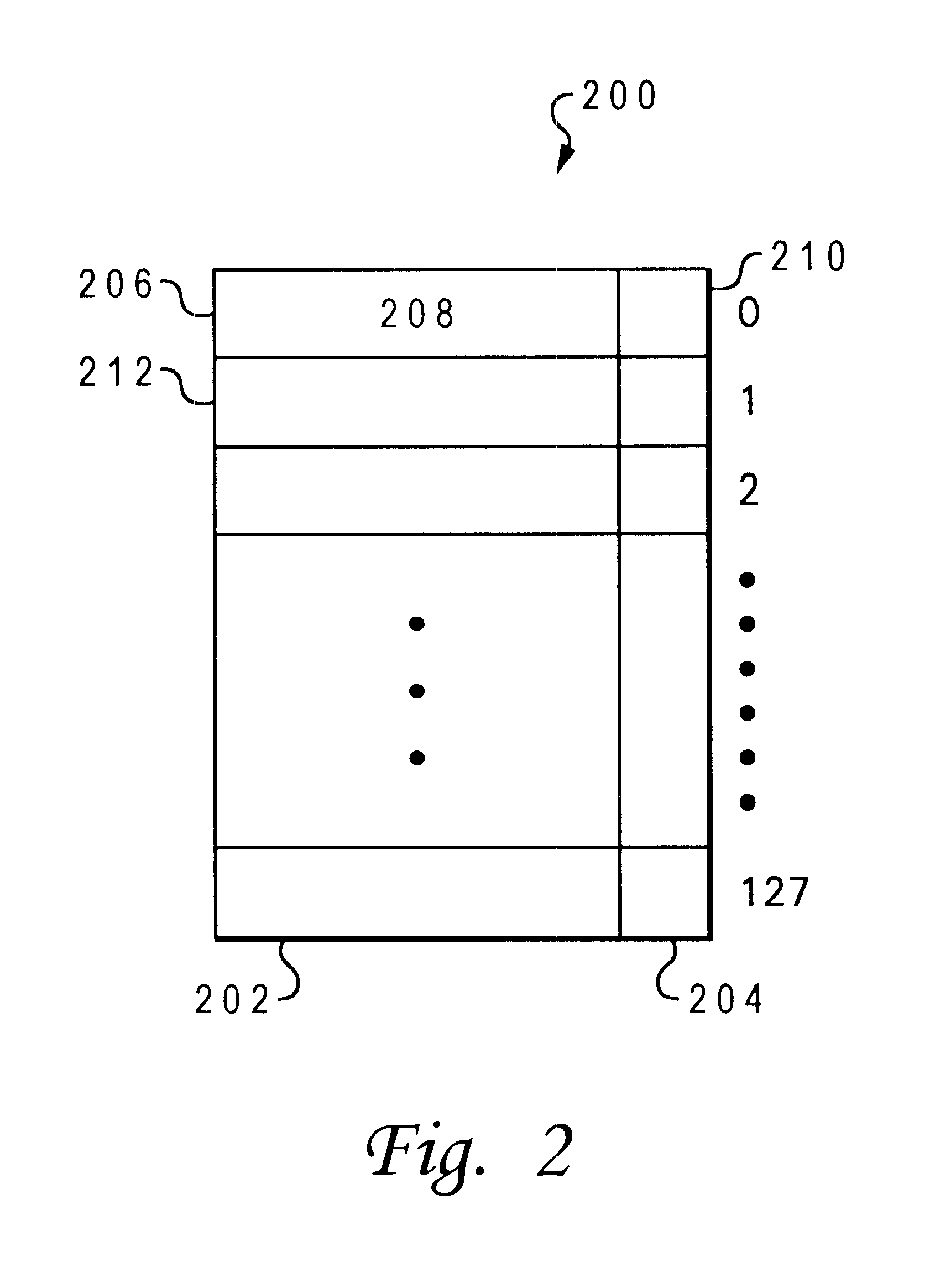

Processor and method including a cache having confirmation bits for improving address predictable branch instruction target predictions

Inactive | US6457120B1 | Memory addressing/allocation/relocation | Digital computer details | Scalar processor | Superscalar

A superscalar processor and method are disclosed for improving the accuracy of predictions of a destination of a branch instruction utilizing a cache. The cache is established including multiple entries. Each of multiple branch instructions is associated with one of the entries of the cache. One of the entries of the cache includes a stored predicted destination for the branch instruction associated with this entry of the cache. The predicted destination is a destination the branch instruction is predicted to branch to upon execution of the branch instruction. The stored predicted destination is updated in the one of the entries of the cache only in response to two consecutive mispredictions of the destination of the branch instruction, wherein the two consecutive mispredictions were made utilizing the one of the entries of the cache.

Owner:IBM CORP
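The "update only after two consecutive mispredictions" rule can be sketched with a single confirmation flag per cache entry. This is a minimal model under assumptions: the flag starts set, it is set again after the stored target is replaced, and the class name and method names are hypothetical rather than taken from the patent.

```python
class TargetCacheEntry:
    """One cache entry: a predicted branch target plus a confirmation bit."""

    def __init__(self, target):
        self.target = target
        self.confirmed = True     # assumed initial state of the confirmation bit

    def predict(self):
        return self.target

    def update(self, actual_target):
        """Apply the outcome of one executed branch to this entry."""
        if actual_target == self.target:
            self.confirmed = True             # correct prediction re-confirms the entry
        elif self.confirmed:
            self.confirmed = False            # first miss: keep the stored target
        else:
            self.target = actual_target       # second consecutive miss: replace it
            self.confirmed = True             # assumption: new target starts confirmed


entry = TargetCacheEntry(0x40)
entry.update(0x80)            # one-off misprediction
print(hex(entry.predict()))   # stored target unchanged
entry.update(0x80)            # second miss in a row
print(hex(entry.predict()))   # stored target replaced
```

The hysteresis keeps a single atypical destination from evicting a target that is correct most of the time.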

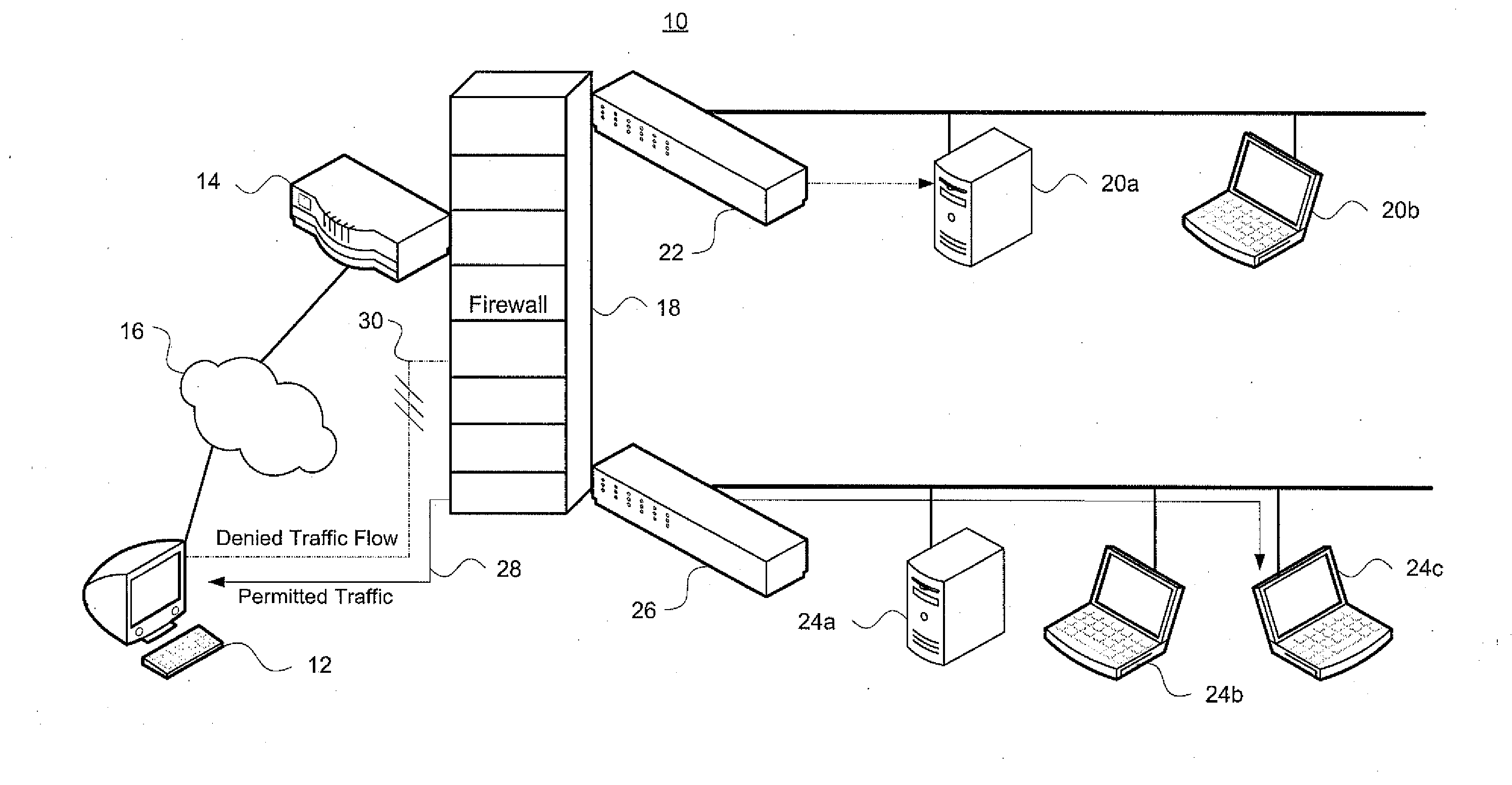

System and method for determining semantic equivalence between access control lists

Inactive | US20100199346A1 | Effective judgment | Efficiently determined | Next instruction address formation | Multiple digital computer combinations | Semantic equivalence | Database

Aspects of the invention pertain to analyzing and modifying access control lists that are used in computer networks. Access control lists may have many individual rules that indicate whether information can be passed between certain devices in a computer network. The access control lists may include redundant or conflicting rules. An aspect of the invention determines whether two or more access control lists are equivalent or not. Order-dependent access control lists are converted into order-independent access control lists, which enable checking of semantic equivalence of different access control lists. Upon conversion to an order-independent access control list, lower-precedence rules in the order-free list are checked for overlap with a current higher precedence entry. If overlap exists, existing order-free rules are modified so that spinoff rules have no overlap with the current entry. This is done while maintaining semantic equivalence.

Owner:TT GOVERNMENT SOLUTIONS
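The idea of converting an order-dependent list into an order-independent one and then comparing can be illustrated on a deliberately simplified rule format. The sketch assumes each rule is a half-open integer range with an action and that the first matching rule wins; real ACLs match on several header fields. It also trims each new lower-precedence rule against the rules already placed, whereas the abstract describes modifying existing order-free rules, so this is a related variant rather than the patented procedure. All names are hypothetical.

```python
def subtract(rng, cut):
    """Return the parts of half-open range `rng` that `cut` does not cover."""
    lo, hi = rng
    cut_lo, cut_hi = cut
    if cut_hi <= lo or hi <= cut_lo:
        return [rng]                      # no overlap
    parts = []
    if lo < cut_lo:
        parts.append((lo, cut_lo))
    if cut_hi < hi:
        parts.append((cut_hi, hi))
    return parts


def to_order_free(acl):
    """acl: list of (lo, hi, action), first match wins.
    Returns non-overlapping rules with the same meaning."""
    result = []
    for lo, hi, action in acl:
        pieces = [(lo, hi)]
        for r_lo, r_hi, _ in result:
            pieces = [p for piece in pieces for p in subtract(piece, (r_lo, r_hi))]
        result.extend((p_lo, p_hi, action) for p_lo, p_hi in pieces)
    return result


def canonical(acl):
    """Sort the order-free rules and merge adjacent pieces with the same action."""
    merged = []
    for lo, hi, action in sorted(to_order_free(acl)):
        if merged and merged[-1][1] == lo and merged[-1][2] == action:
            merged[-1] = (merged[-1][0], hi, action)
        else:
            merged.append((lo, hi, action))
    return merged


def equivalent(acl_a, acl_b):
    return canonical(acl_a) == canonical(acl_b)


a = [(0, 10, "deny"), (0, 100, "permit")]
b = [(10, 100, "permit"), (0, 10, "deny")]
c = [(0, 100, "permit"), (0, 10, "deny")]
print(equivalent(a, b), equivalent(a, c))
```

Lists `a` and `b` order their rules differently but decide every address the same way, while in `c` the deny rule is shadowed and never takes effect.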

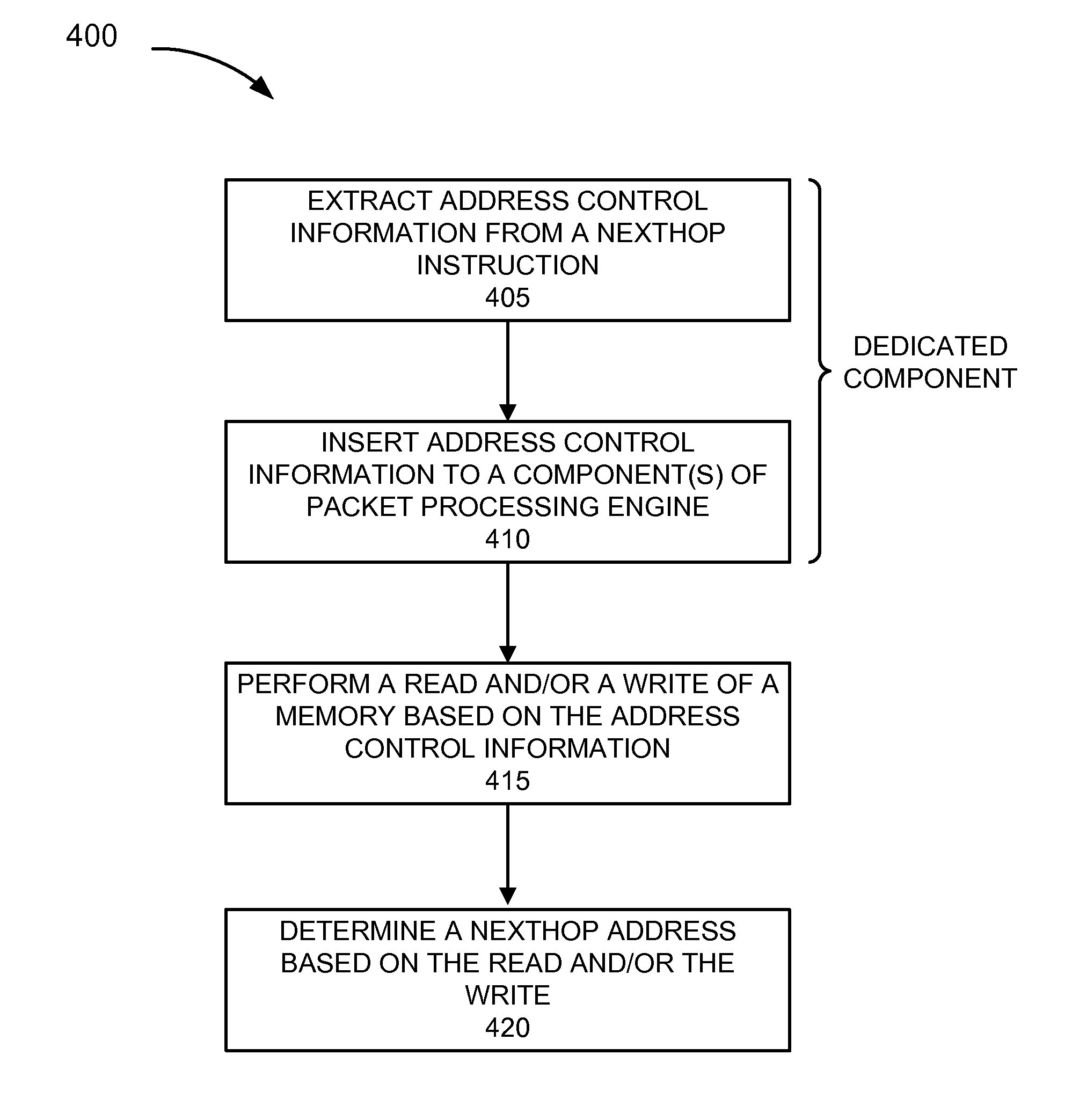

Hardware support for instruction set emulation

Active | US8069023B1 | Instruction analysis | Analogue computers for electric apparatus | Bit field | Network addressing

A method for emulating a nexthop instruction in microcode with the assistance of dedicated hardware to extract read and write addressing from the nexthop instruction instead of performing this operation in microcode. A method for emulating a nexthop instruction in microcode with the assistance of dedicated hardware to compare a nexthop read address to a special value and to indicate whether the nexthop read address matches the special value, instead of performing this operation in microcode. A method for determining a network address by performing a single extraction of bit fields of a tree instruction to allow multiple tree search processes to be performed.

Owner:JUNIPER NETWORKS INC

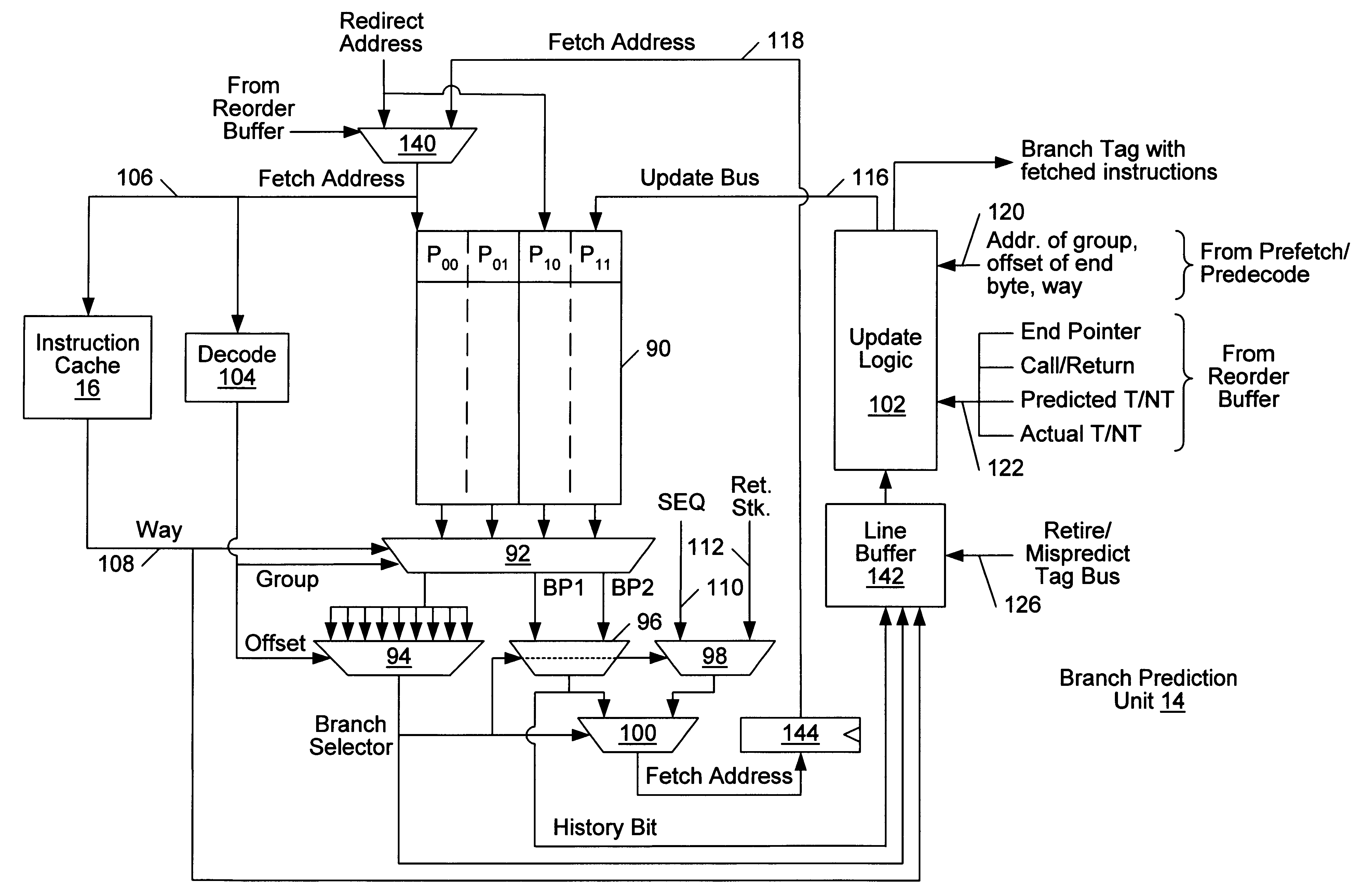

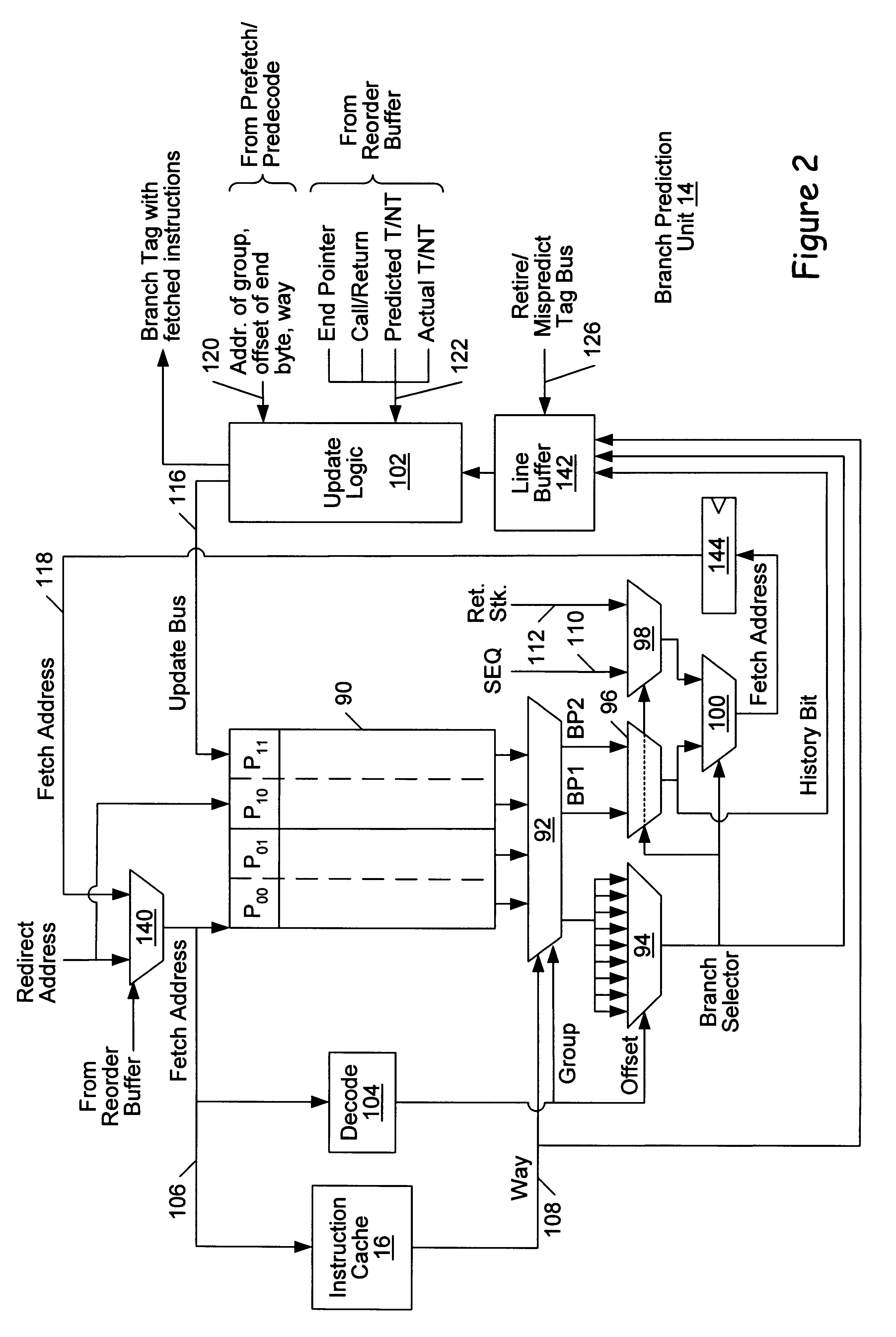

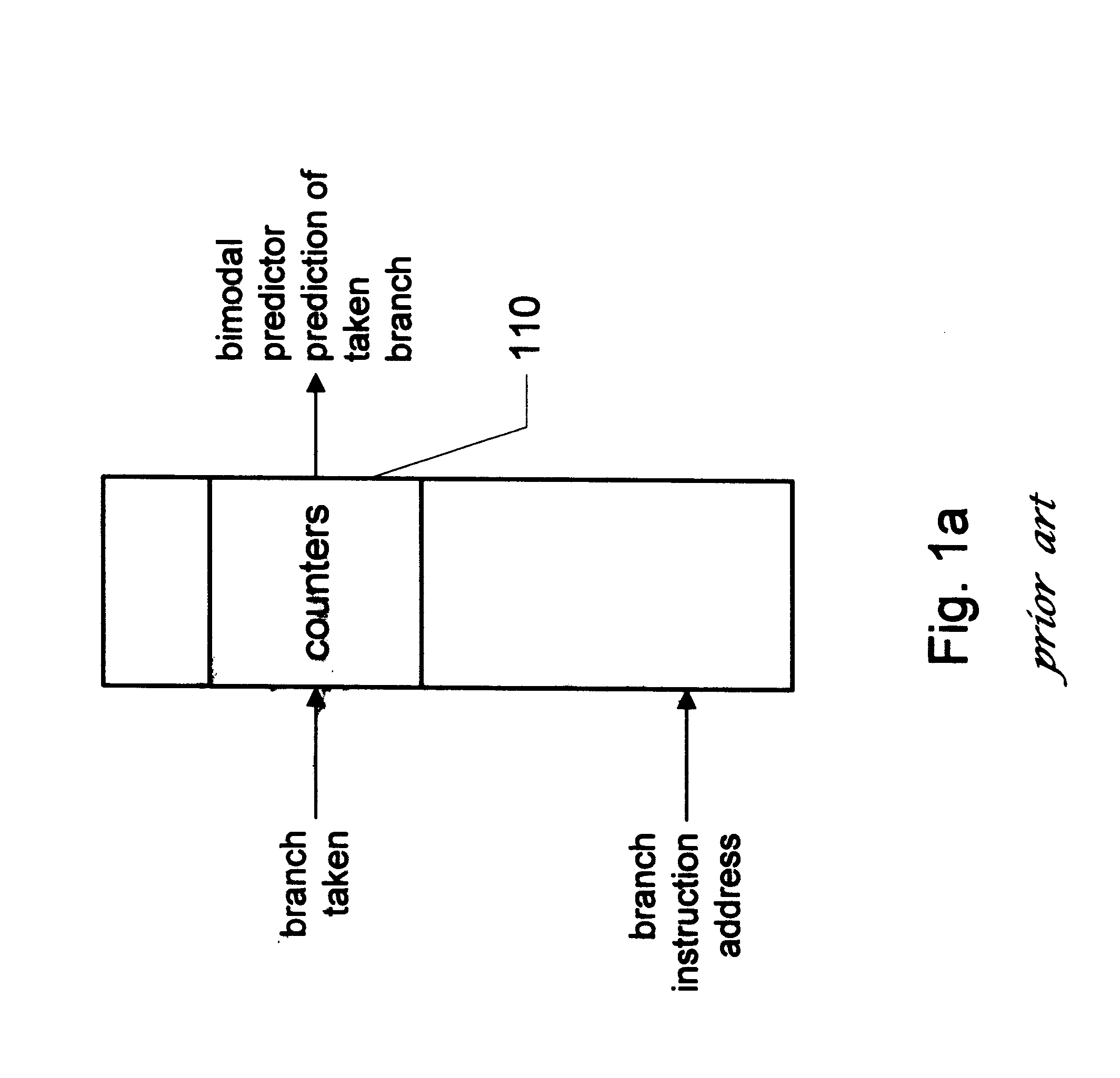

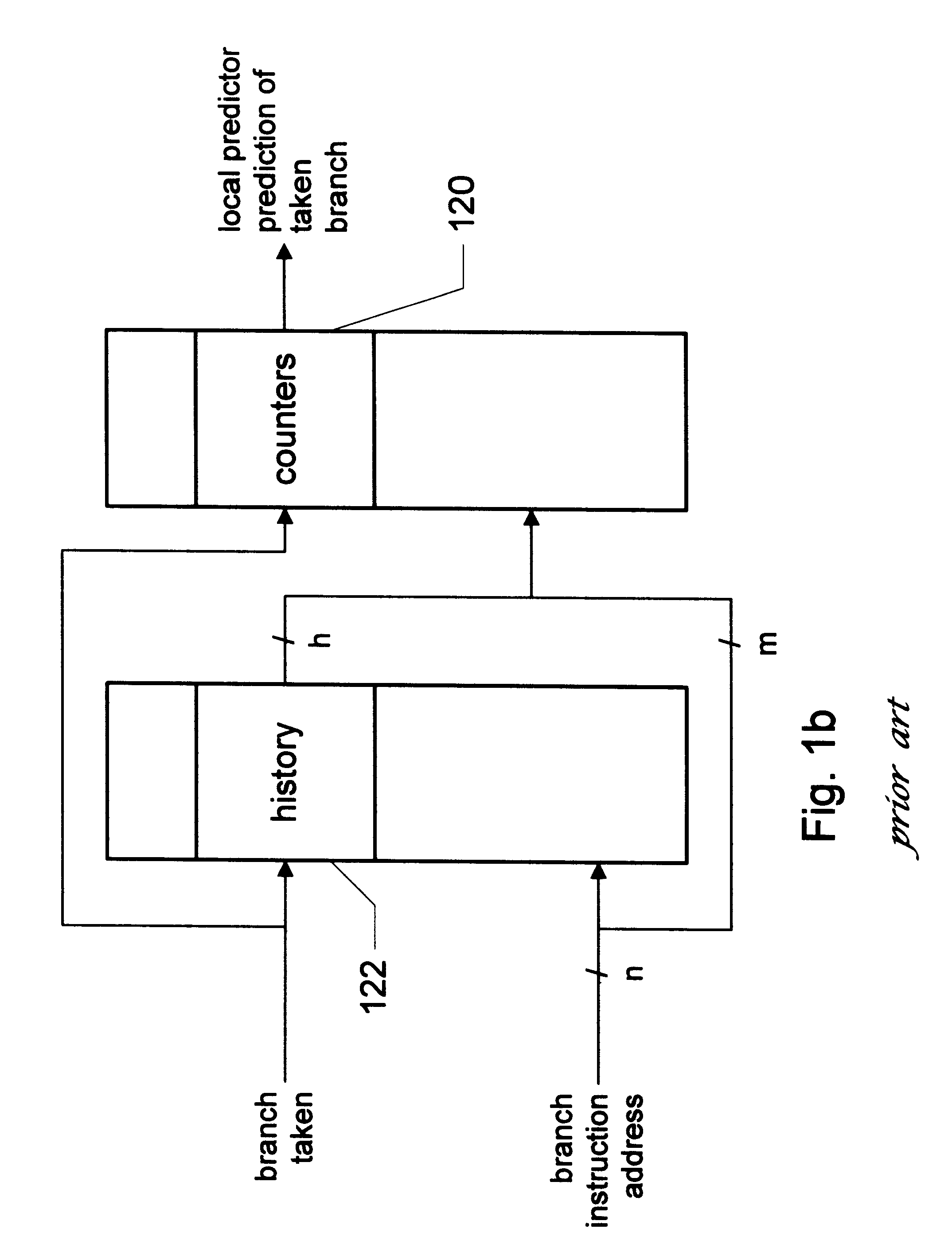

Three state branch history using one bit in a branch prediction mechanism

A branch prediction unit stores a set of branch prediction history bits and branch selectors corresponding to each of a group of contiguous instruction bytes stored in an instruction cache. While only one bit is used to represent branch prediction history, three distinct states are represented in conjunction with the absence of a branch prediction. This provides for the storage of fewer bits, while maintaining a high degree of branch prediction accuracy. Each branch selector identifies the branch prediction to be selected if a fetch address corresponding to that branch selector is presented. In order to minimize the number of branch selectors stored for a group of contiguous instruction bytes, the group is divided into multiple byte ranges. The largest byte range may include a number of bytes comprising the shortest branch instruction in the instruction set (exclusive of the return instruction). For example, the shortest branch instruction may be two bytes in one embodiment. Therefore, the largest byte range is two bytes in the example. Since the branch selectors as a group change value (i.e. indicate a different branch instruction) only at the end byte of a predicted-taken branch instruction, fewer branch selectors may be stored than the number of bytes within the group.

Owner:GLOBALFOUNDRIES INC

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding techniques

Active | US20050122334A1 | Improve abilities | Sophisticated effect | Digital computer details | Next instruction address formation | Computational science | Coprocessor

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding processing and communication techniques are provided. For an improved graphics pipeline, the invention provides a class of co-processing device, such as a graphics processor unit (GPU), providing improved capabilities for an abstract or virtual machine for performing graphics calculations and rendering. The invention allows for runtime-predicated flow control of programs downloaded to coprocessors, enables coprocessors to include indexable arrays of on-chip storage elements that are readable and writable during execution of programs, provides native support for textures and texture maps and corresponding operations in a vertex shader, provides frequency division of vertex streams input to a vertex shader with optional support for a stream modulo value, provides a register storage element on a pixel shader and associated interfaces for storage associated with representing the “face” of a pixel, provides vertex shaders and pixel shaders with more on-chip register storage and the ability to receive larger programs than any existing vertex or pixel shaders and provides 32 bit float number support in both vertex and pixel shaders.

Owner:MICROSOFT TECH LICENSING LLC

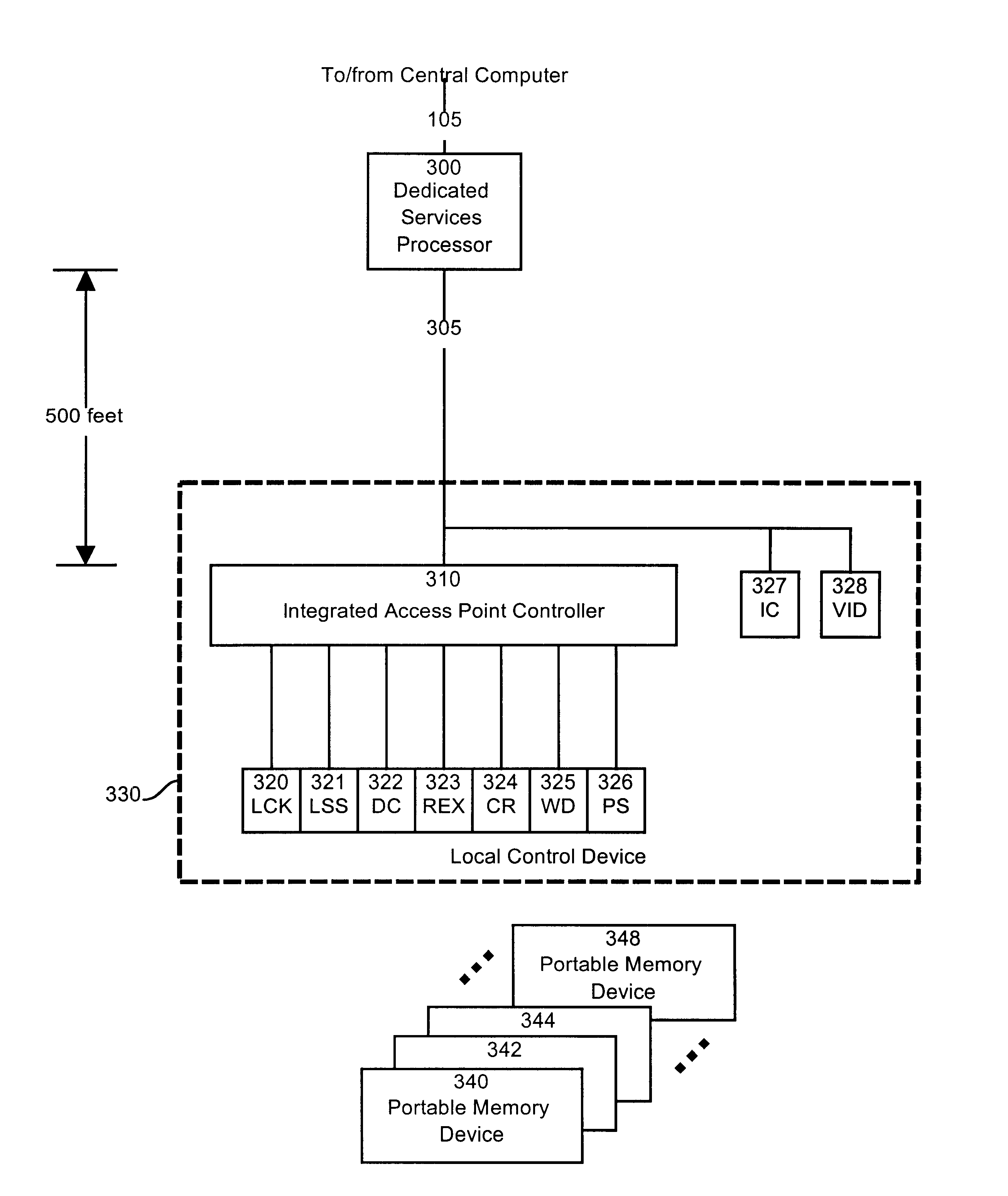

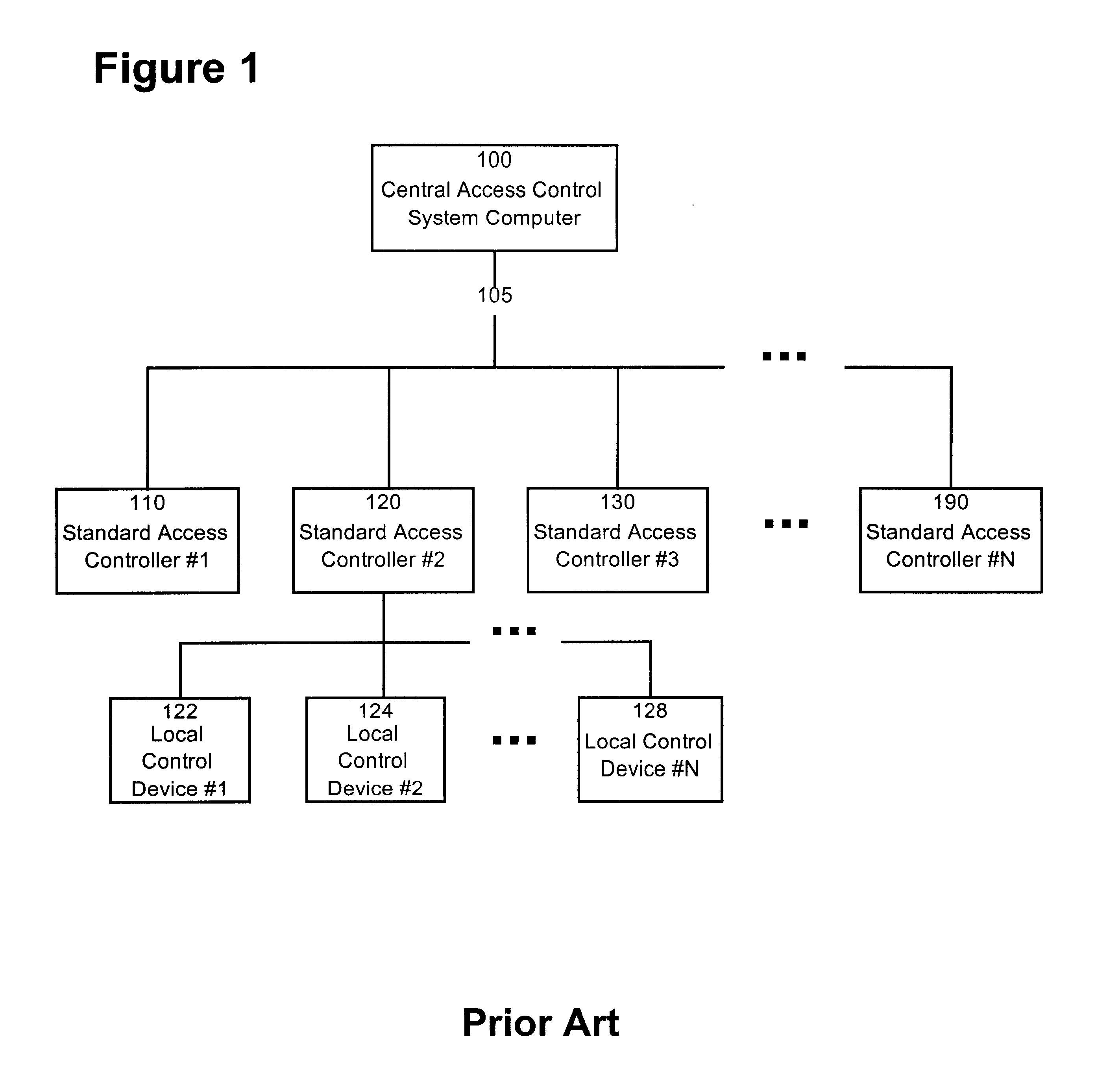

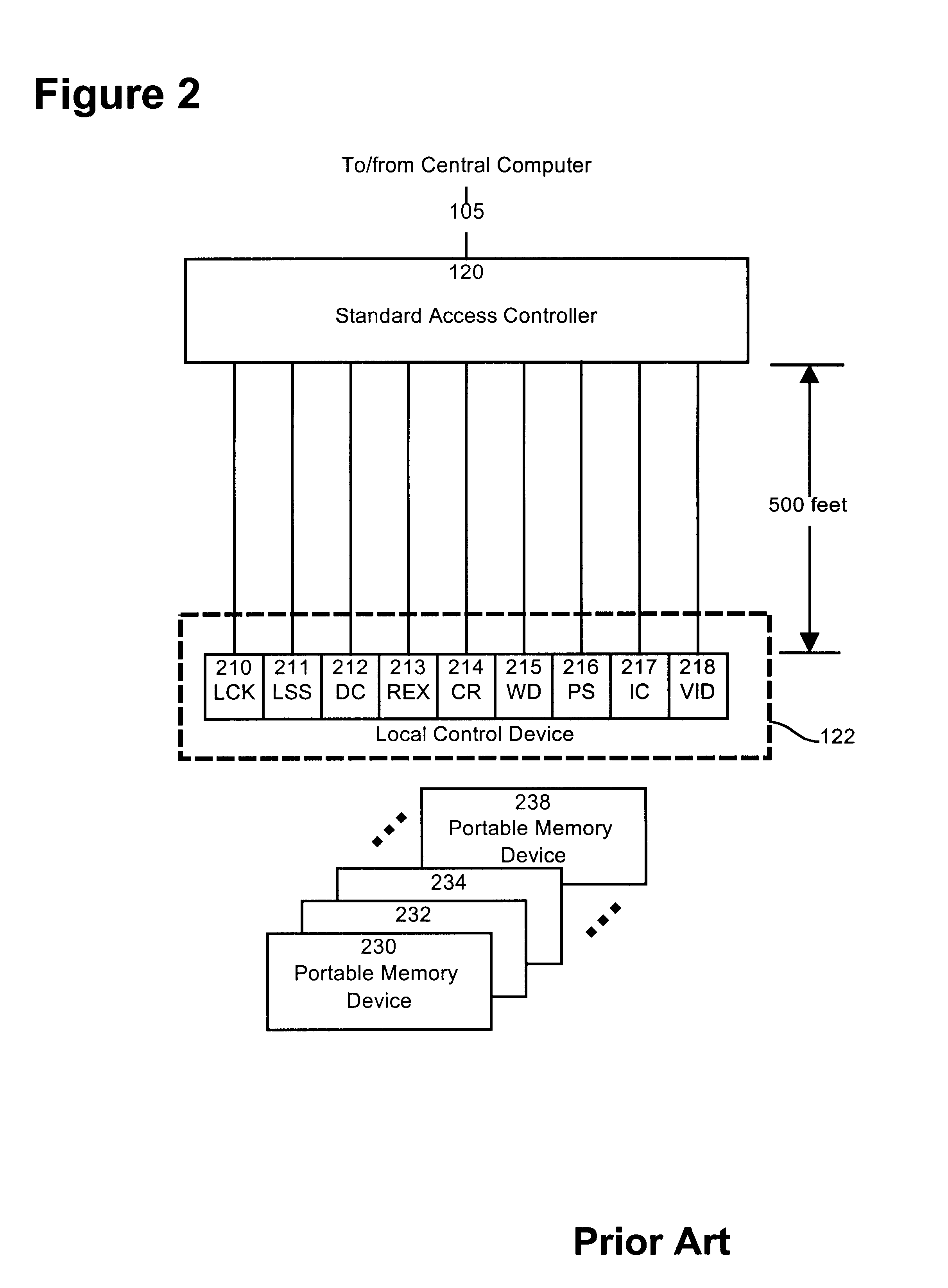

Shared intelligence automated access control system

Inactive | US6374356B1 | Fast response time | Less bandwidth | Frequency-division multiplex details | Digital data processing details | Control system | Specific function

The present invention relates to access control systems, more commonly referred to as security systems. In a preferred embodiment of the invention, the access control system uses a memory card and a network of access control subsystems. Among the specific characteristics of the invention are the loading of schedule data on the memory card and the devolution of specific functions such as schedule validation to the access control subsystem level. These characteristics of the system provide, among other advantages, a reduction of the amount of wiring, a reduction in the hardware and installation costs, a considerable memory saving for the hardware and an increase in the system's capacity and flexibility.

Owner:INTEGRAL TECHNOLOGY INC

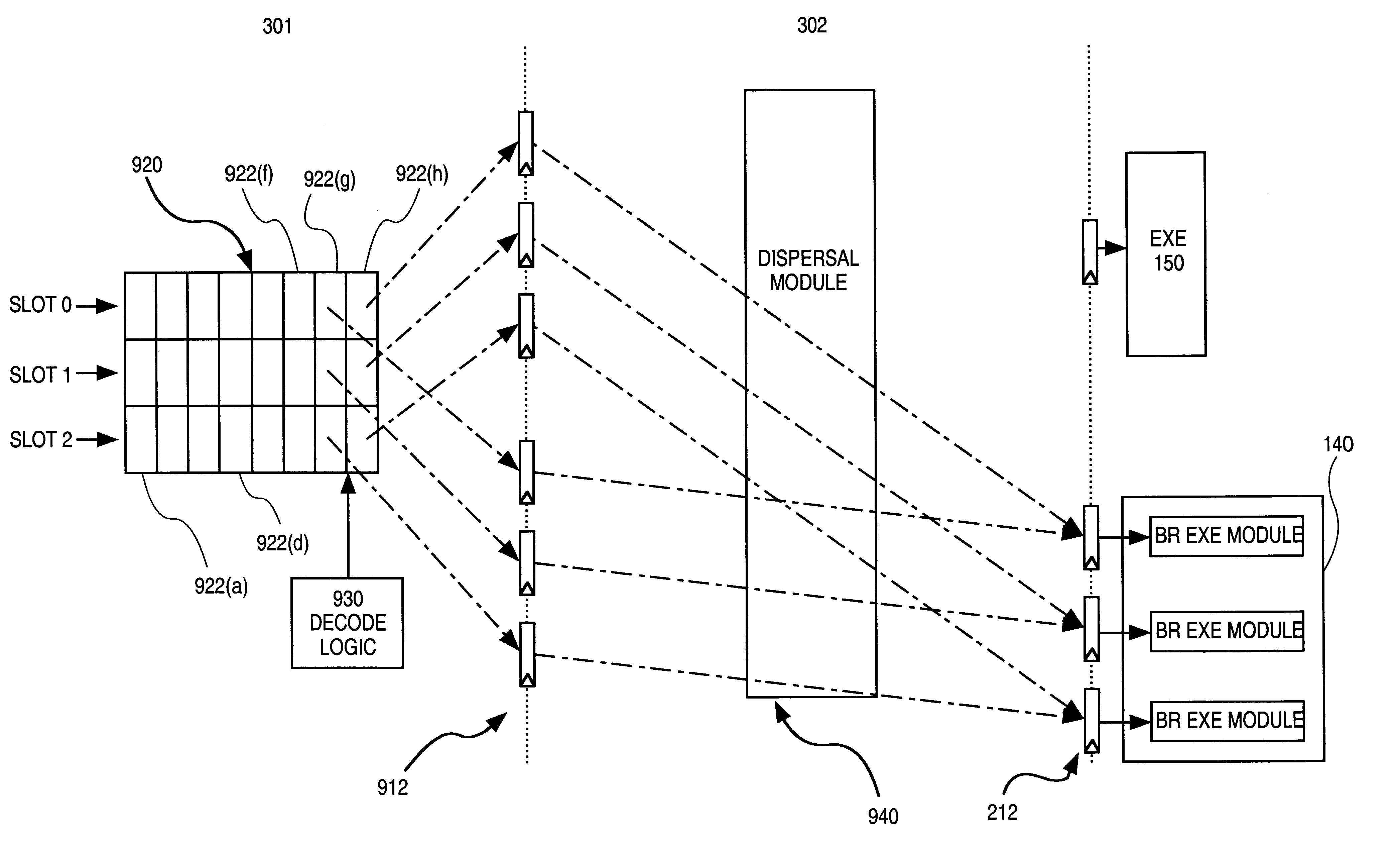

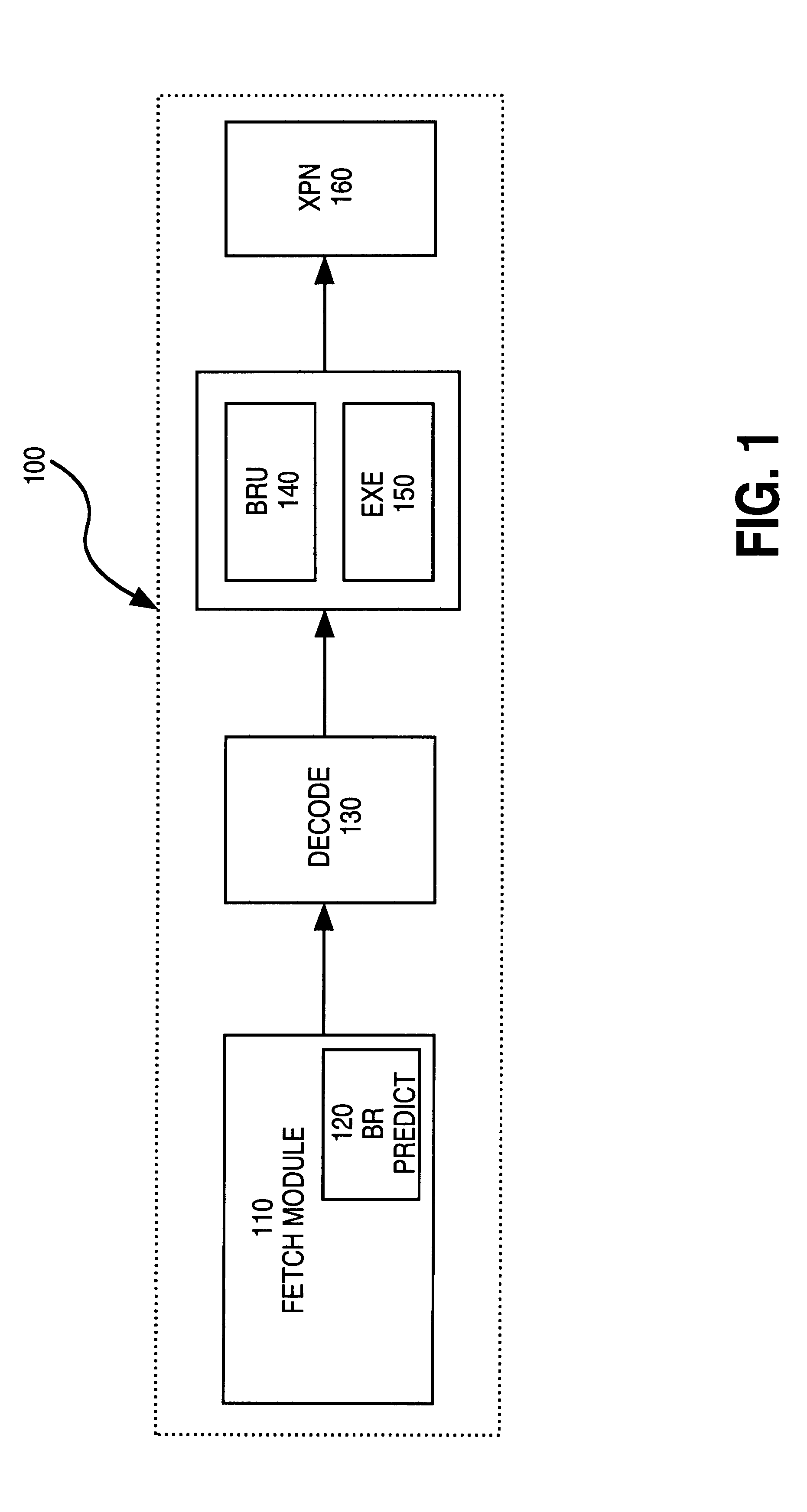

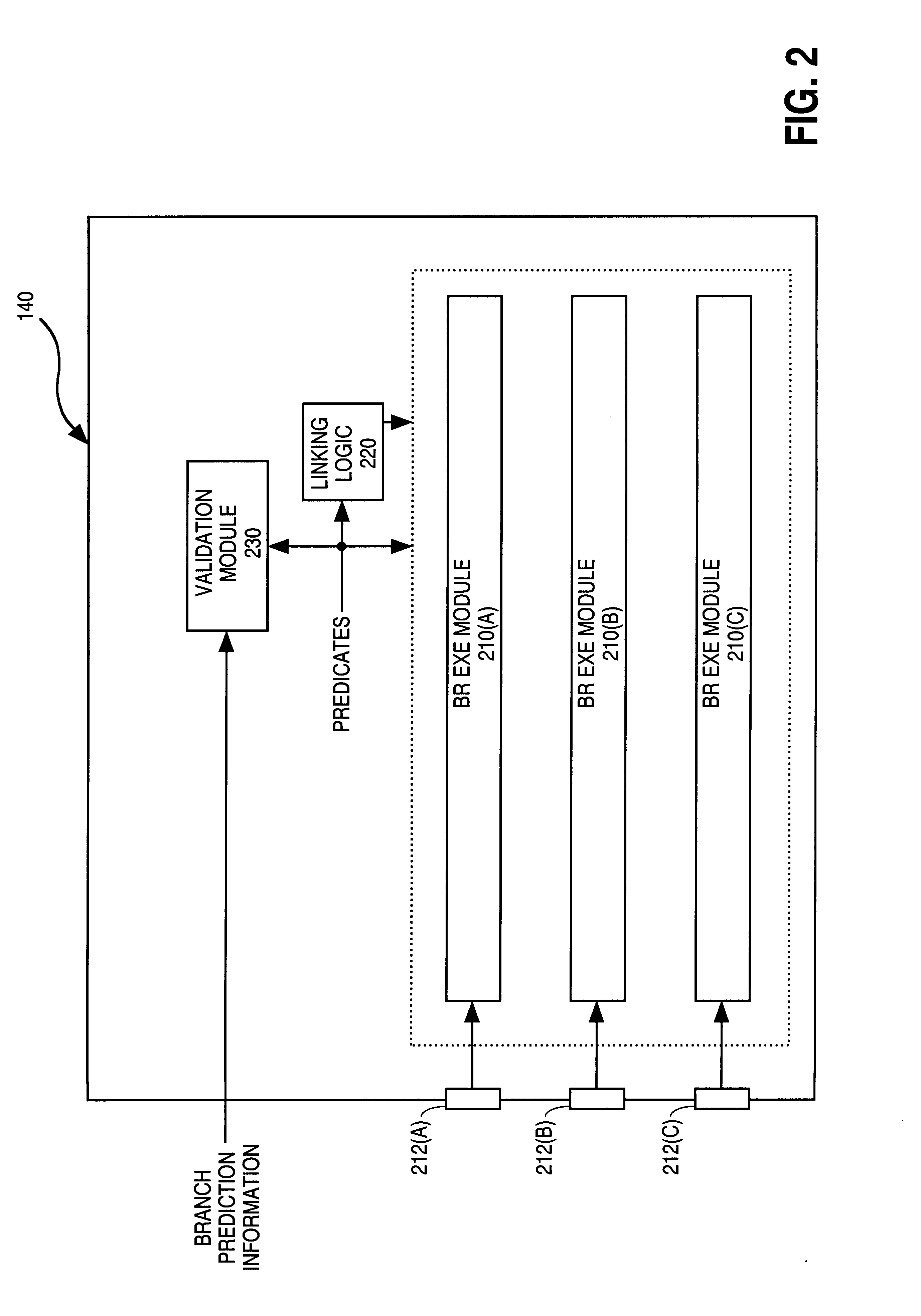

System for processing a cluster of instructions where the instructions are issued to the execution units having a priority order according to a template associated with the cluster of instructions

Inactive | US6240510B1 | Conditional code generation | Digital computer details | Processing Instruction | Parallel computing

A system is provided for processing concurrently one or more branch instructions in an instruction bundle. The system includes multiple branch execution pipelines, each capable of executing a branch instruction to determine a branch direction, target address, and any side effects. Linking logic receives the resolved branch information and identifies a first branch instruction in execution order for which the branch direction is taken.

Owner:INTEL CORP

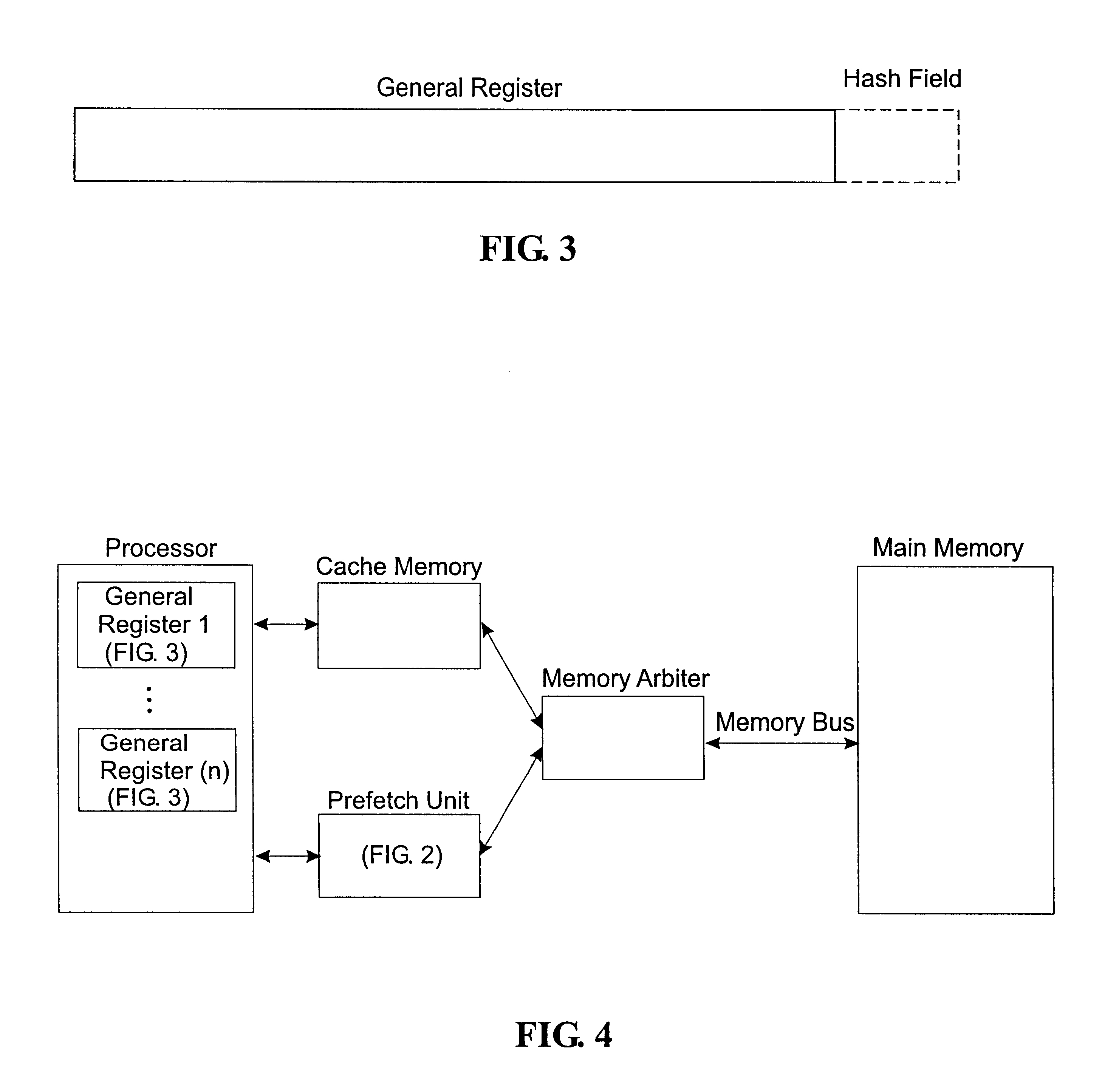

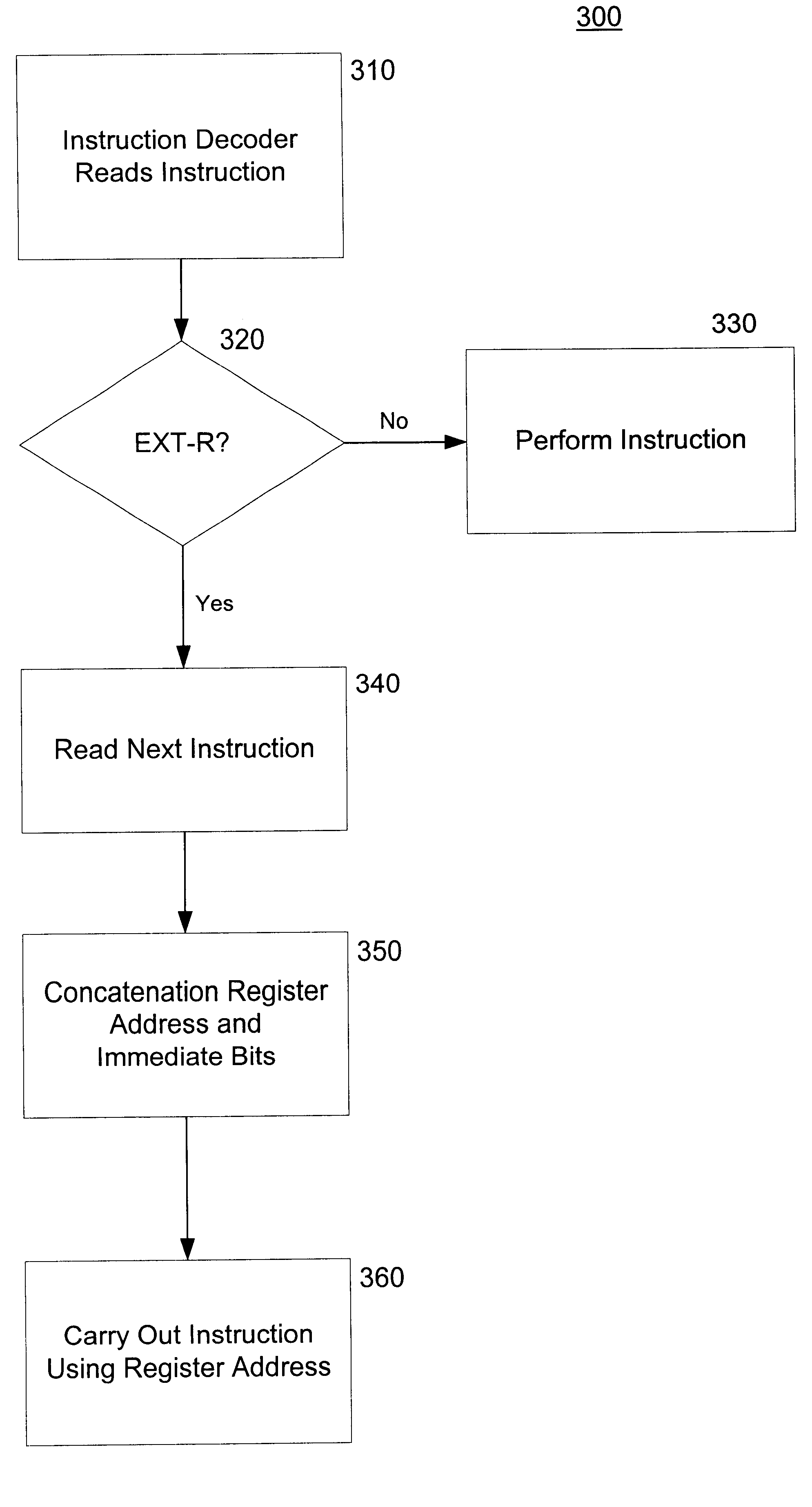

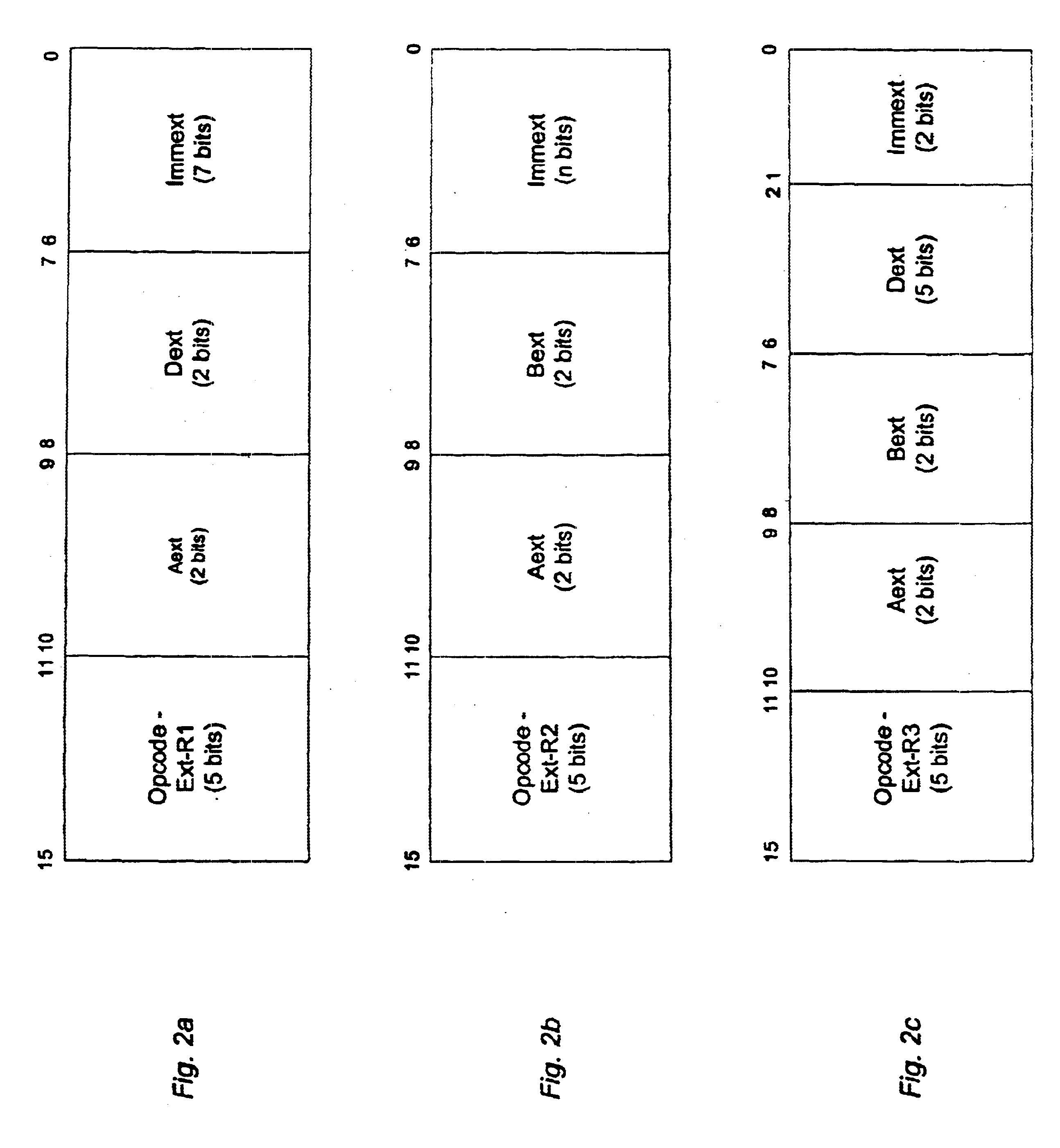

Register set extension for compressed instruction set

Inactive | US6651160B1 | Digital computer details | Next instruction address formation | Processor register | Computer science

Owner:ARM FINANCE OVERSEAS LTD

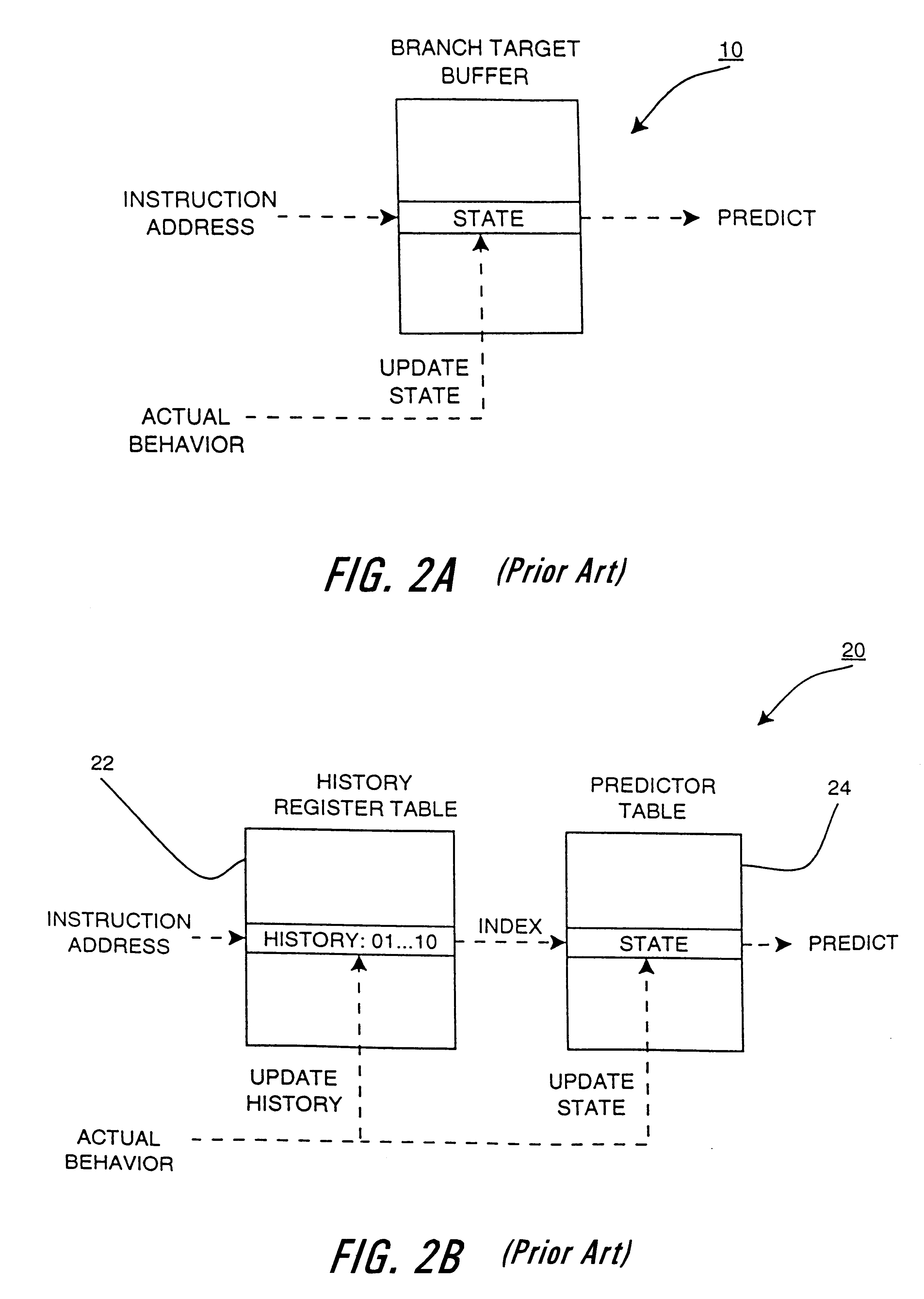

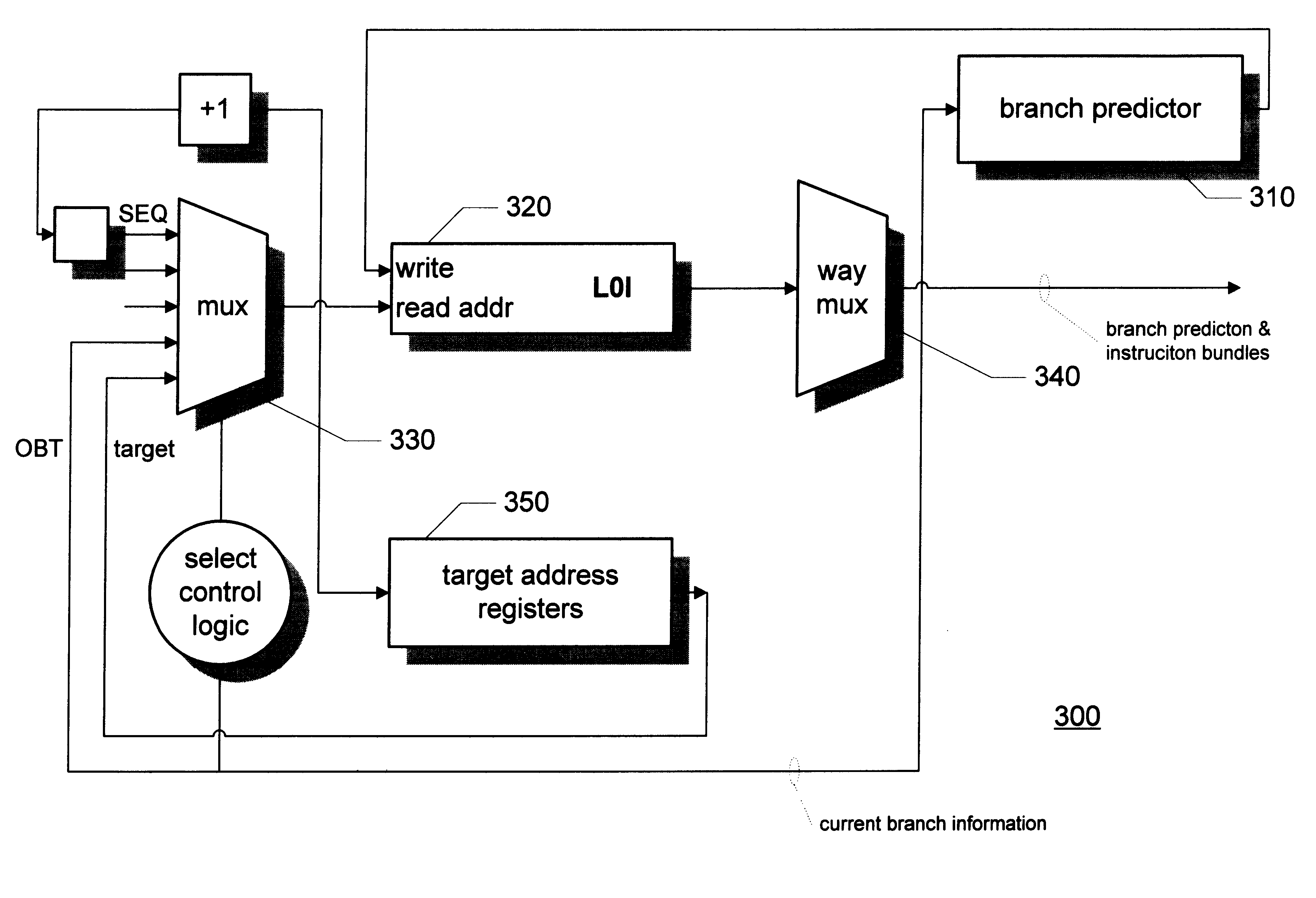

Branch prediction architecture

Inactive | US6332189B1 | Digital computer details | Next instruction address formation | Processor register | Trace Cache

A branch prediction architecture is disclosed, having a branch predictor, a target address register, first and second multiplexors, a cache memory, and a trace cache. The branch predictor may advantageously be a series-parallel branch predictor, and alternatively may be a serial-BLG branch predictor or a choosing branch predictor. The first multiplexor receives an input from the target address register, and provides an output to the cache memory. The cache memory receives output from both the branch predictor and the first multiplexor, and provides an output to the second multiplexor. The trace cache receives the output from the branch predictor, and provides an output received by the second multiplexor. The second multiplexor, receiving input from both the trace cache and the cache memory, outputs branch predictions and instruction bundles.

Owner:INTEL CORP

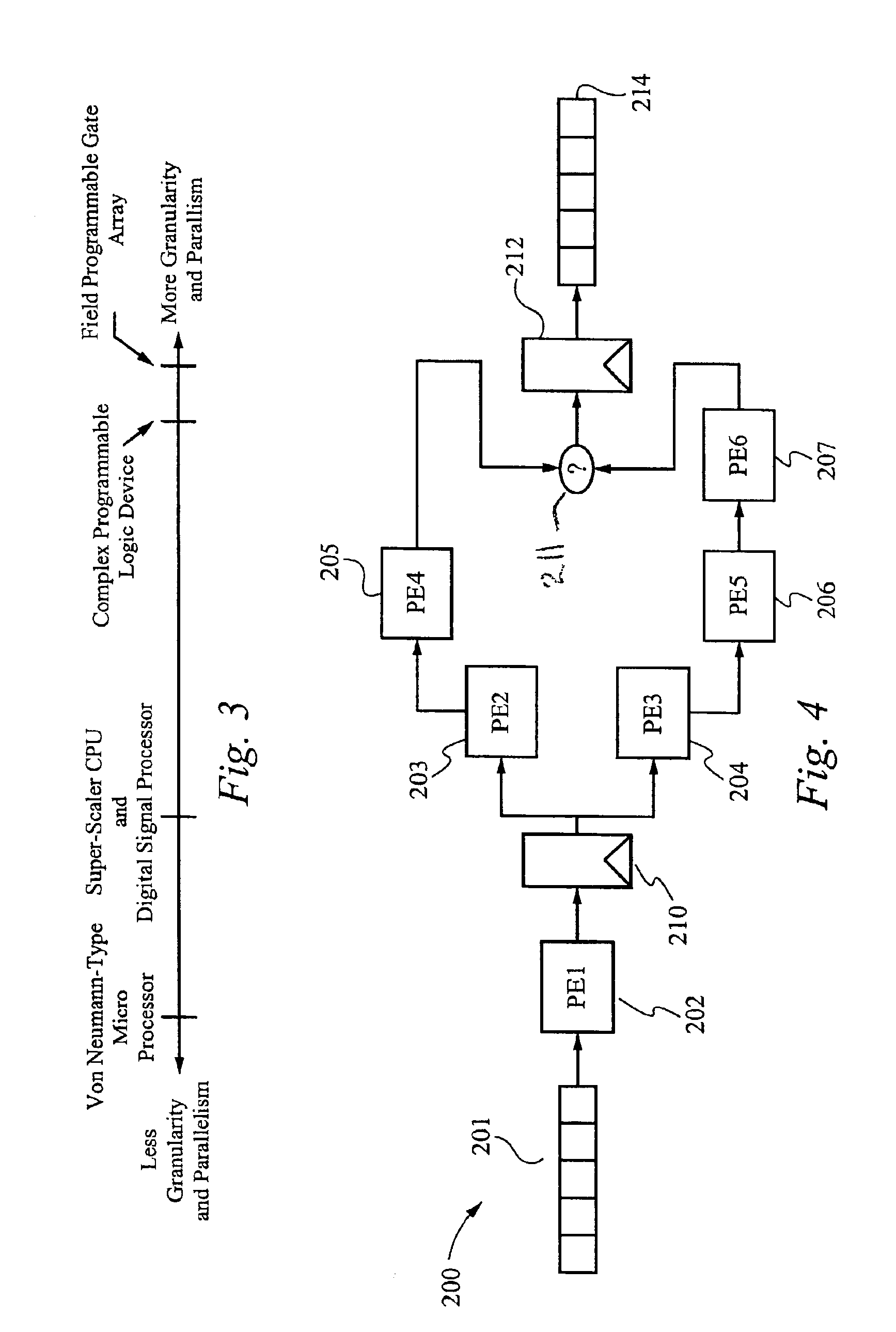

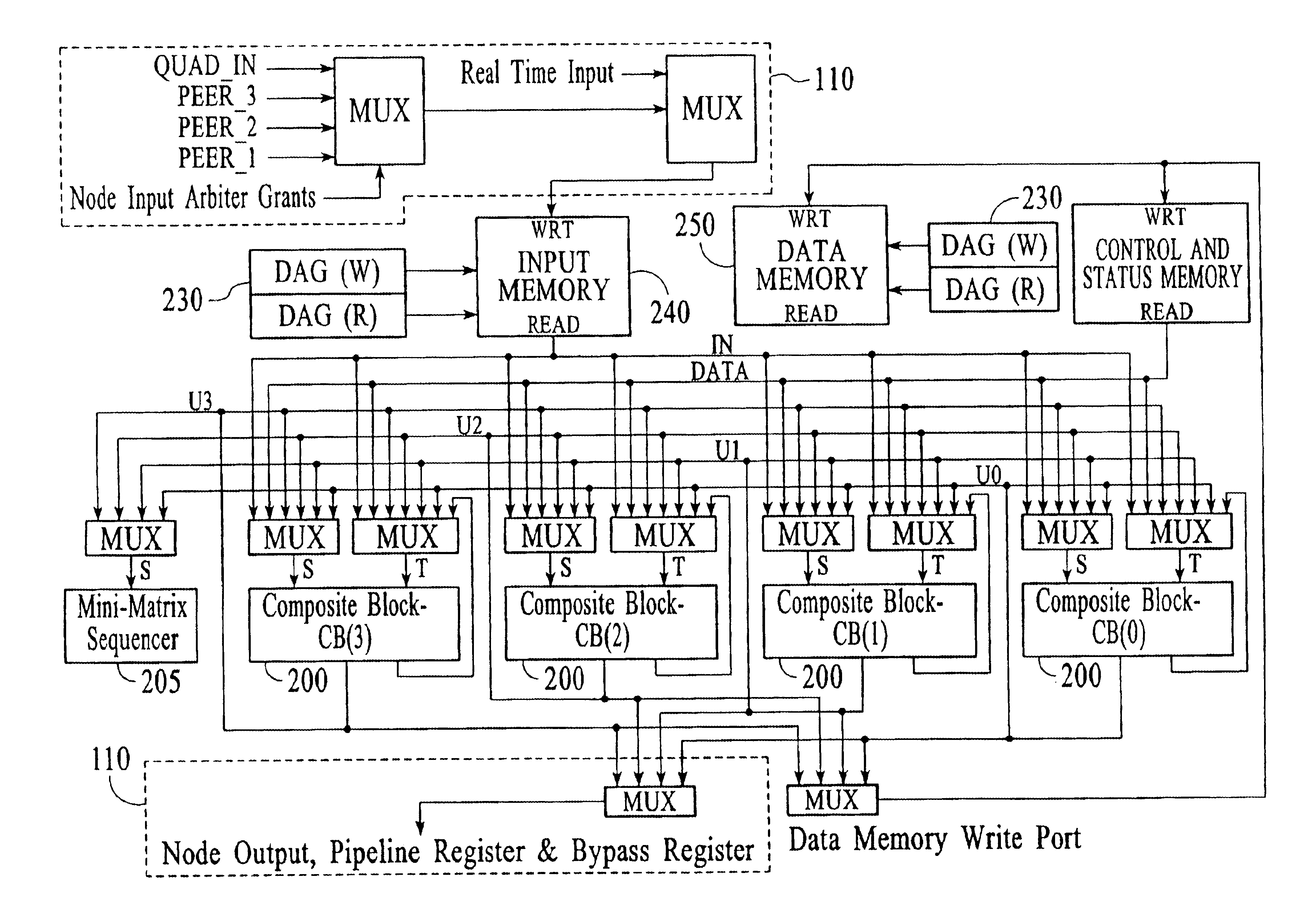

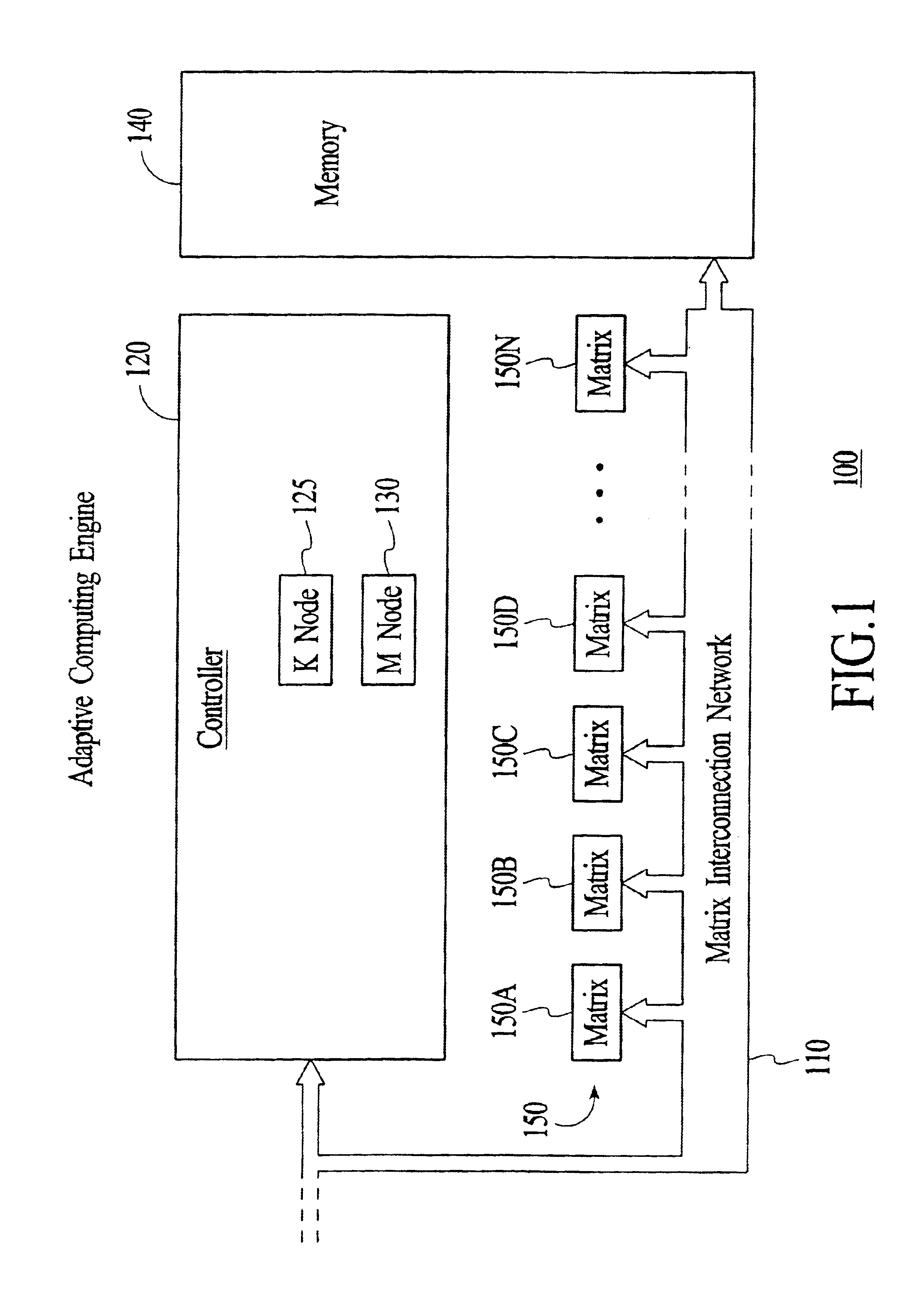

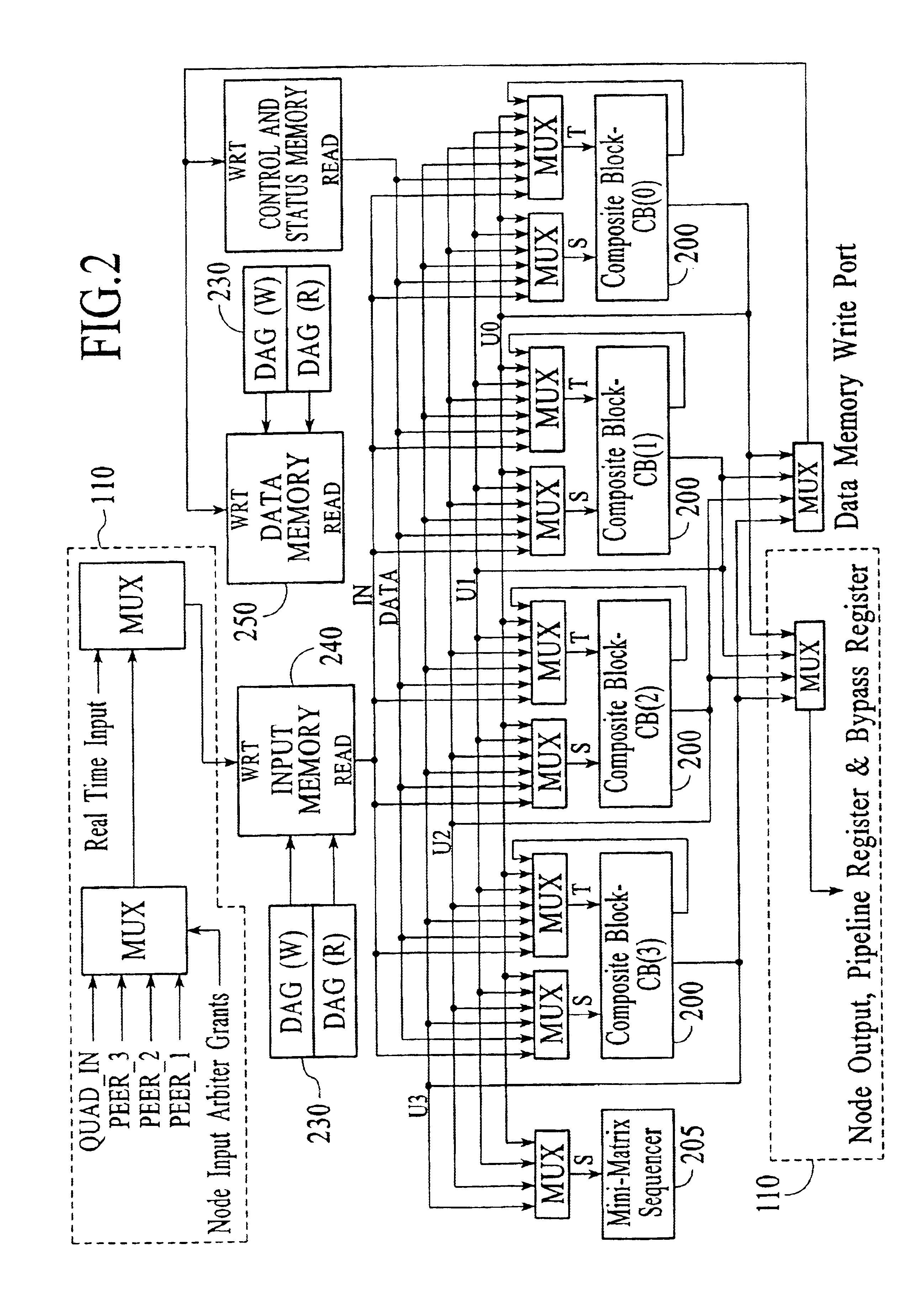

Adaptive computing engine with dataflow graph based sequencing in reconfigurable mini-matrices of composite functional blocks

Inactive | US6874079B2 | Single instruction multiple data multiprocessors | Next instruction address formation | Digital signal processing | Parallel computing

Aspects of a method and system for digital signal processing within an adaptive computing engine are described. These aspects include a mini-matrix, the mini-matrix comprising a set of composite blocks, each composite block capable of executing a predetermined set of instructions. A sequencer is included for controlling the set of composite blocks and directing instructions among the set of composite blocks based on a data-flow graph. Further, a data network is included and transmits data to and from the set of composite blocks and to the sequencer, while a status network routes status word data resulting from instruction execution in the set of composite blocks. With the present invention, an effective combination of hardware resources is provided in a manner that provides multi-bit digital signal processing capabilities for an embedded system environment, particularly in an implementation of an adaptive computing engine. These and other advantages will become readily apparent from the following detailed description and accompanying drawings.

Owner:CORNAMI INC

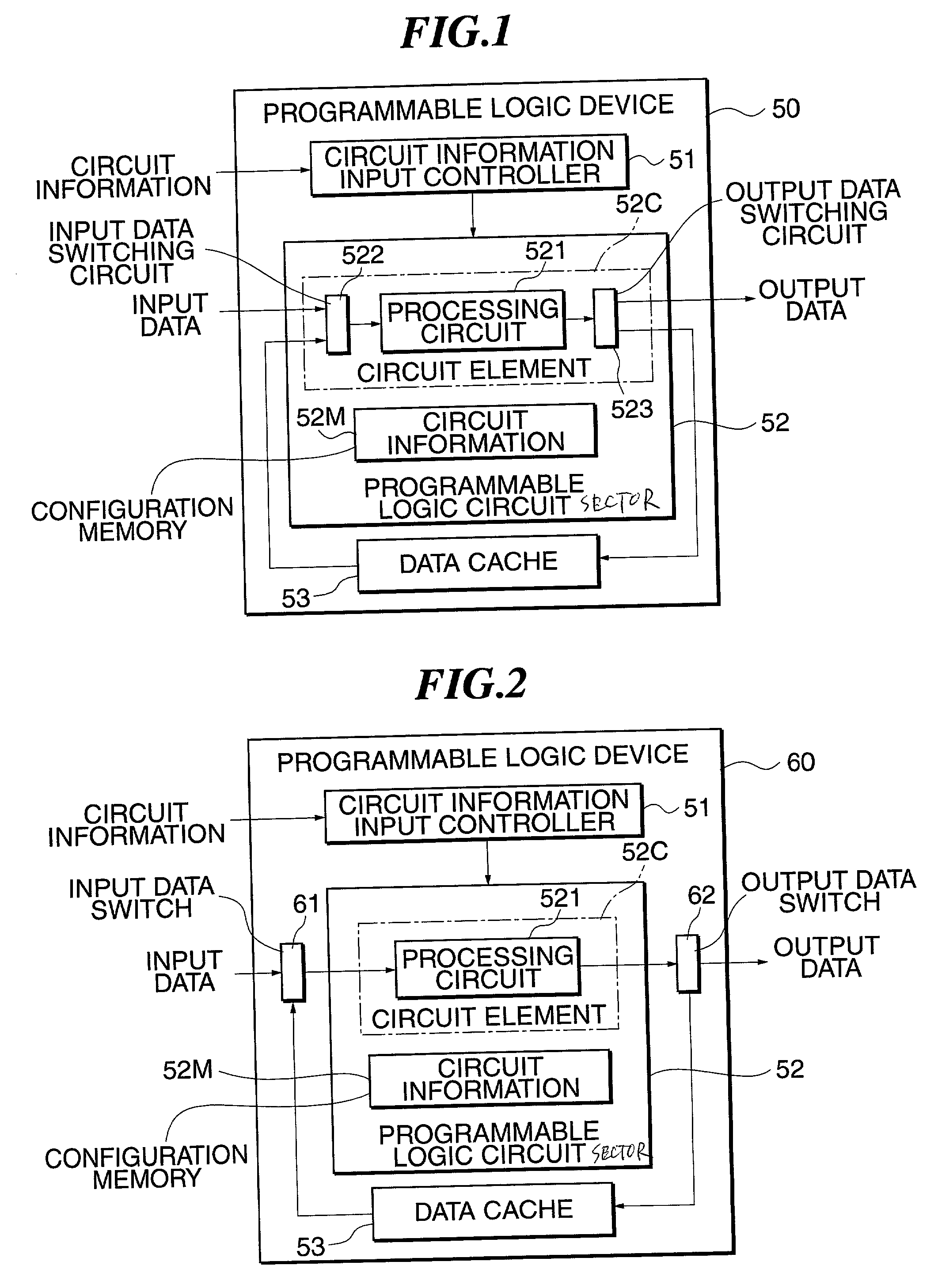

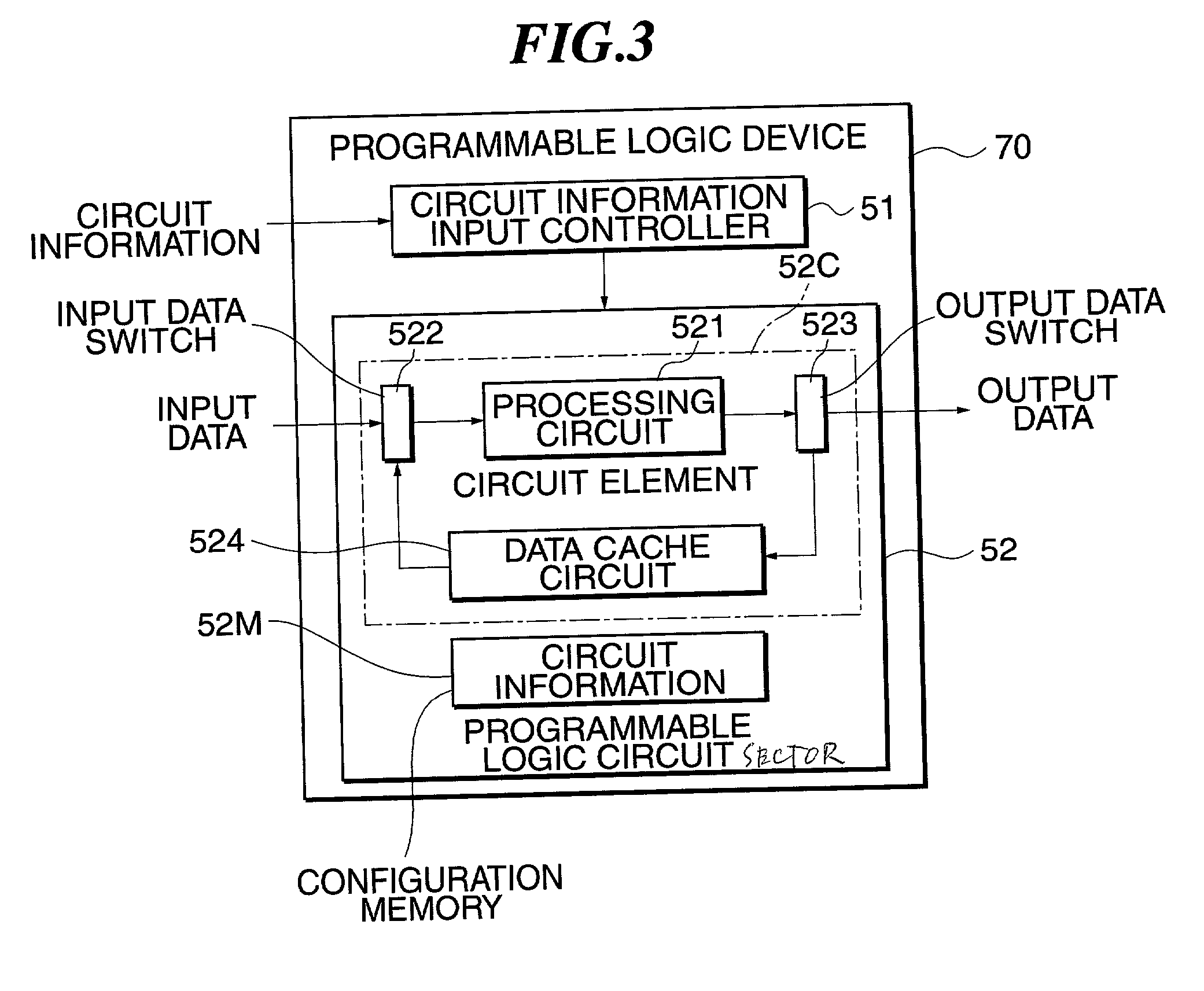

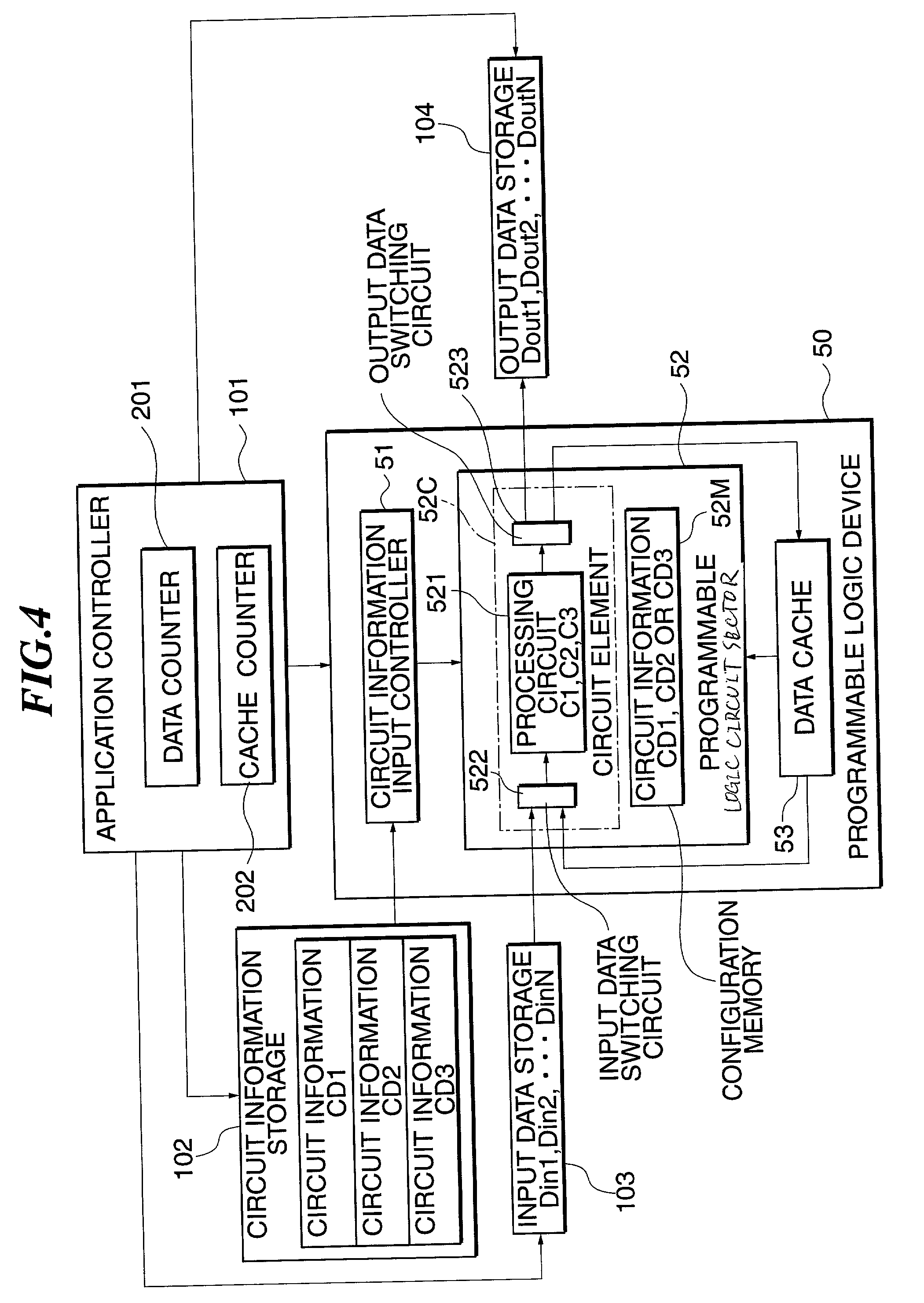

Data processing method by programmable logic device, programmable logic device, information processing system and method of reconfiguring circuit in programmable logic

Inactive | US20010010074A1 | Increase the number of | Program control using stored programs | Next instruction address formation | Information processing | Programmable logic device

To provide a method of implementing a cache logic technique in which total data processing time can be reduced, input data divided into blocks is sequentially processed in units of blocks in plural circuits using a programmable logic device provided with a circuit information input controller, a programmable logic circuit sector and a data cache. The plural circuits are sequentially reconfigured in the programmable logic device and execute processing per plural blocks which can be stored in the data cache. Intermediate data in units of plural blocks is stored in the data cache to be input data to a reconfigured circuit, and intermediate data as the result of the processing by the reconfigured circuit is overwritten to the data cache. When the processing of the plural circuits is finished, the result of the processing is output to an external device without being stored in the data cache.

Owner:FUJIFILM BUSINESS INNOVATION CORP

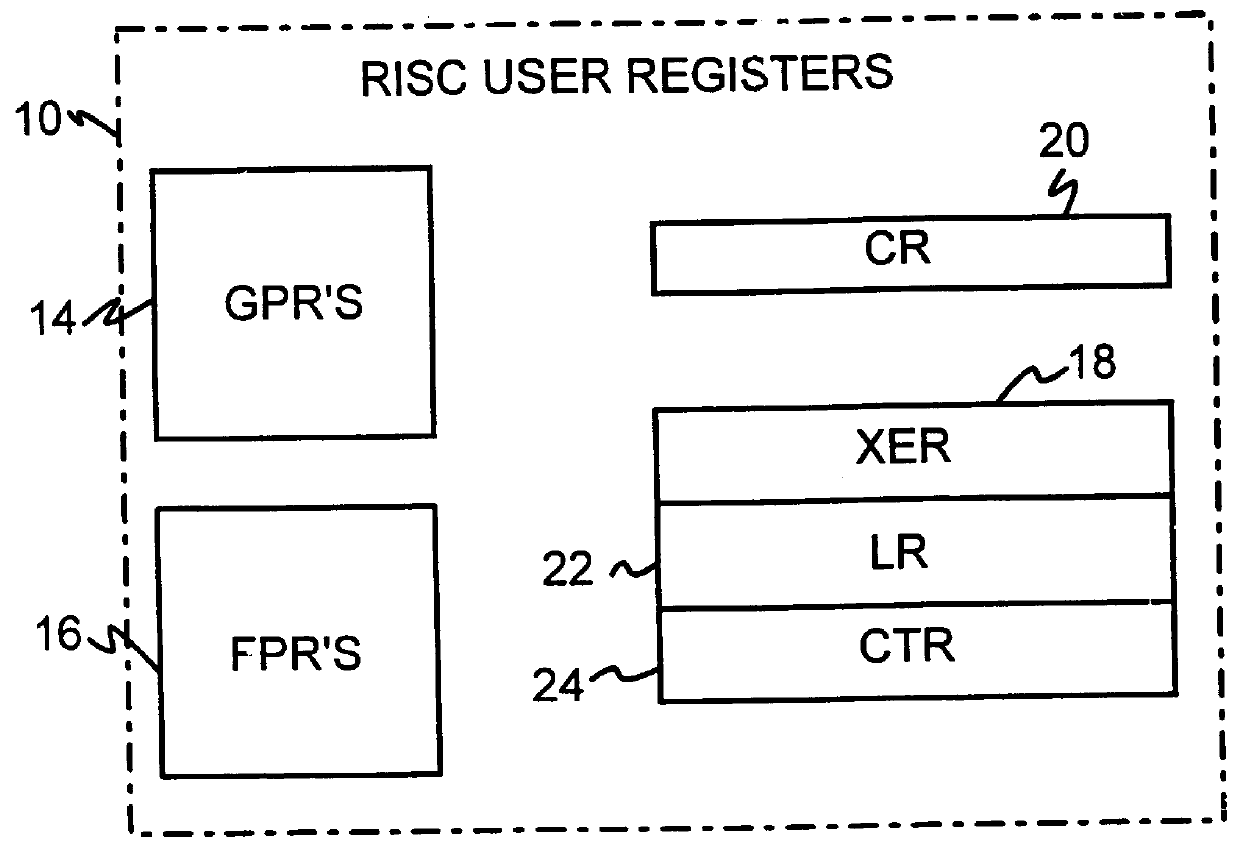

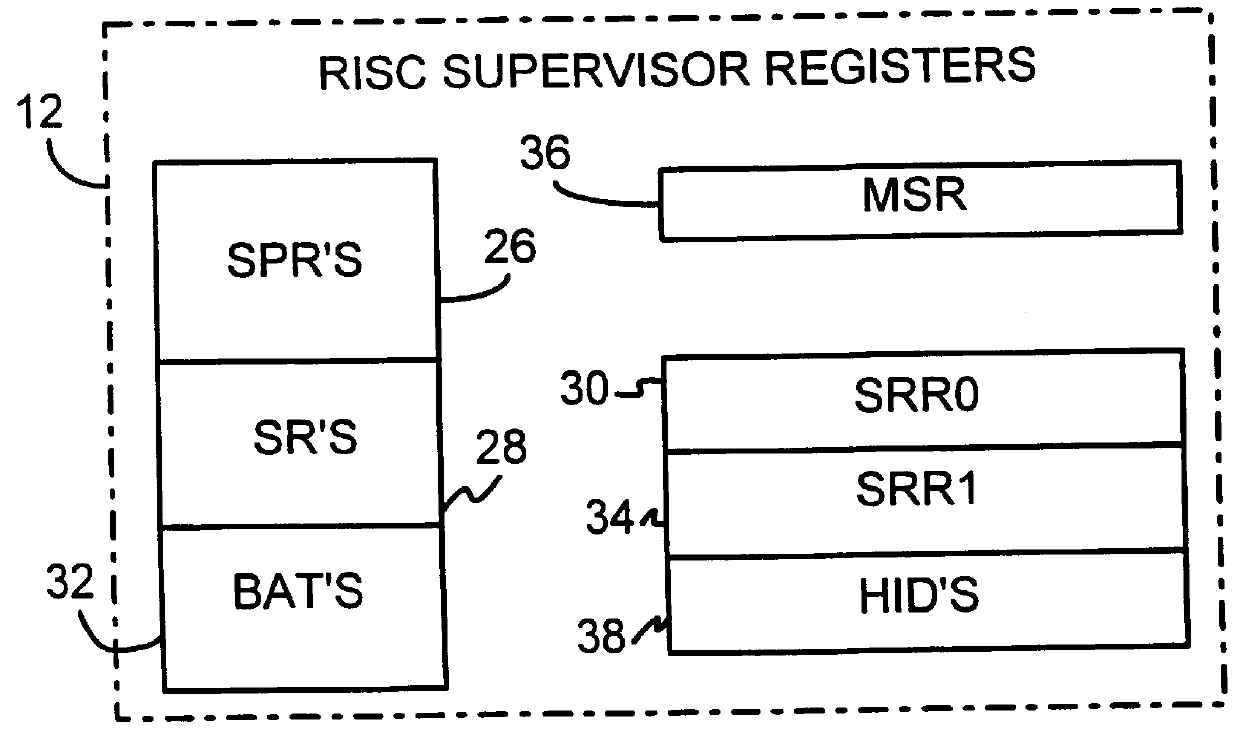

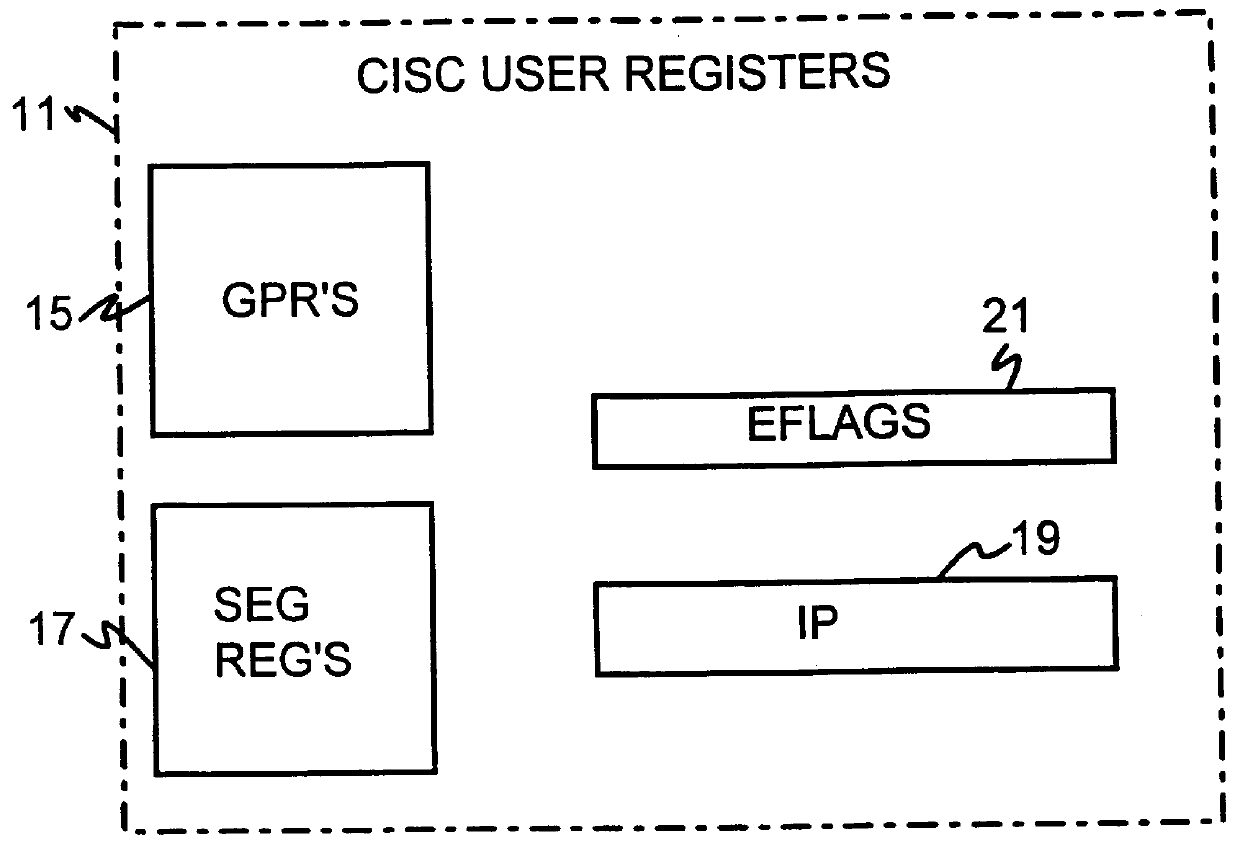

Shared register architecture for a dual-instruction-set CPU to facilitate data exchange between the instruction sets

A dual-instruction set central processing unit (CPU) is capable of executing instructions from a reduced instruction set computer (RISC) instruction set and from a complex instruction set computer (CISC) instruction set. Data and address information may be transferred from a CISC program to a RISC program running on the CPU by using shared registers. The architecturally-defined registers in the CISC instruction set are merged or folded into some of the architecturally-defined registers in the RISC architecture so that these merged registers are shared by the two instruction sets. In particular, the flags or condition code registers defined by each architecture are merged together so that CISC instructions and RISC instructions will implicitly update the same merged flags register when performing computational instructions. The RISC and CISC registers are folded together so that the CISC flags are at one end of the register while the frequently used RISC flags are at the other end, but the RISC instructions can read or write any bit in the merged register. The CISC code segment base address is stored in the RISC branch count register, while the CISC floating point instruction address is stored in the RISC branch link register. The general-purpose registers (GPR's) are also merged together, allowing a CISC program to pass data to a RISC program merely by writing one of its GPR's, switching control to the RISC program, and the RISC program reading one of its GPR's that is merged with and corresponds to the CISC GPR that was written to by the CISC program.

Owner:SAMSUNG ELECTRONICS CO LTD
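
As a rough illustration of the merged flags register described in the abstract above, the following sketch keeps the CISC flags at the low end of one 32-bit value and the RISC condition flags at the high end. The bit positions, the class name `MergedFlags`, and the methods `cisc_add` and `risc_compare` are all assumptions made for this example; the abstract does not specify a layout.

```python
# Hypothetical bit layout: CISC flags at the low end, RISC flags at the high end.
CISC_CARRY = 1 << 0
CISC_ZERO = 1 << 6
RISC_LT = 1 << 31
RISC_GT = 1 << 30
RISC_EQ = 1 << 29


class MergedFlags:
    """One flags register updated implicitly by both instruction sets."""

    def __init__(self) -> None:
        self.value = 0

    def _set(self, mask: int, on: bool) -> None:
        self.value = (self.value | mask) if on else (self.value & ~mask)

    def cisc_add(self, a: int, b: int, bits: int = 32) -> int:
        """Model a CISC add that updates the low-end carry and zero flags."""
        total = a + b
        result = total & ((1 << bits) - 1)
        self._set(CISC_CARRY, (total >> bits) != 0)
        self._set(CISC_ZERO, result == 0)
        return result

    def risc_compare(self, a: int, b: int) -> None:
        """Model a RISC compare that updates the high-end condition flags."""
        self._set(RISC_LT, a < b)
        self._set(RISC_GT, a > b)
        self._set(RISC_EQ, a == b)

    def read_bit(self, index: int) -> int:
        """Any bit of the merged register is readable, whichever side set it."""
        return (self.value >> index) & 1


flags = MergedFlags()
wrapped = flags.cisc_add(0xFFFFFFFF, 1)  # CISC-side update
flags.risc_compare(1, 2)                 # RISC-side update, same register
```

After both calls, the RISC side can still read the carry and zero bits that the CISC add left behind, because the two flag sets occupy different ends of the same register and neither update clears the other.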

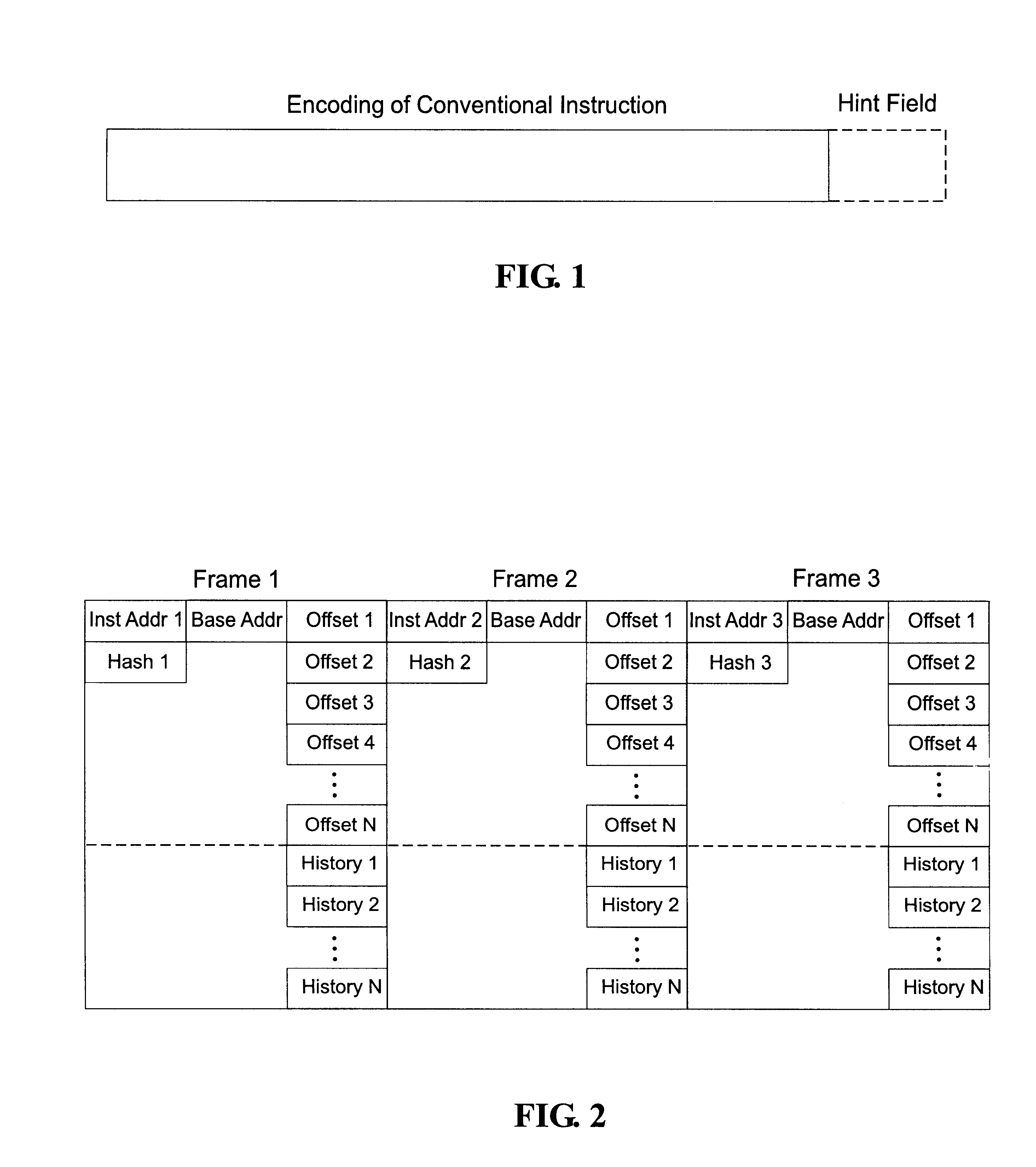

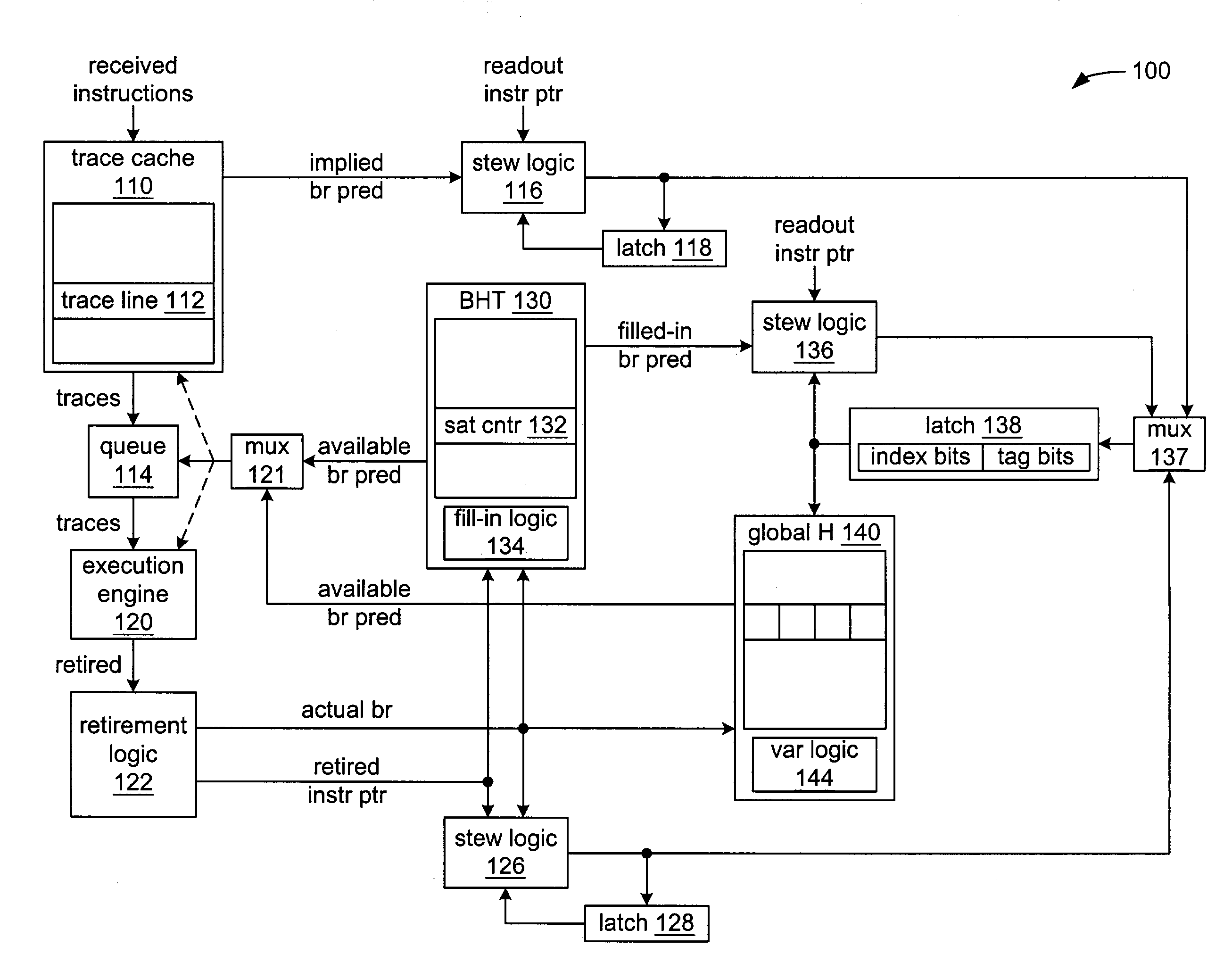

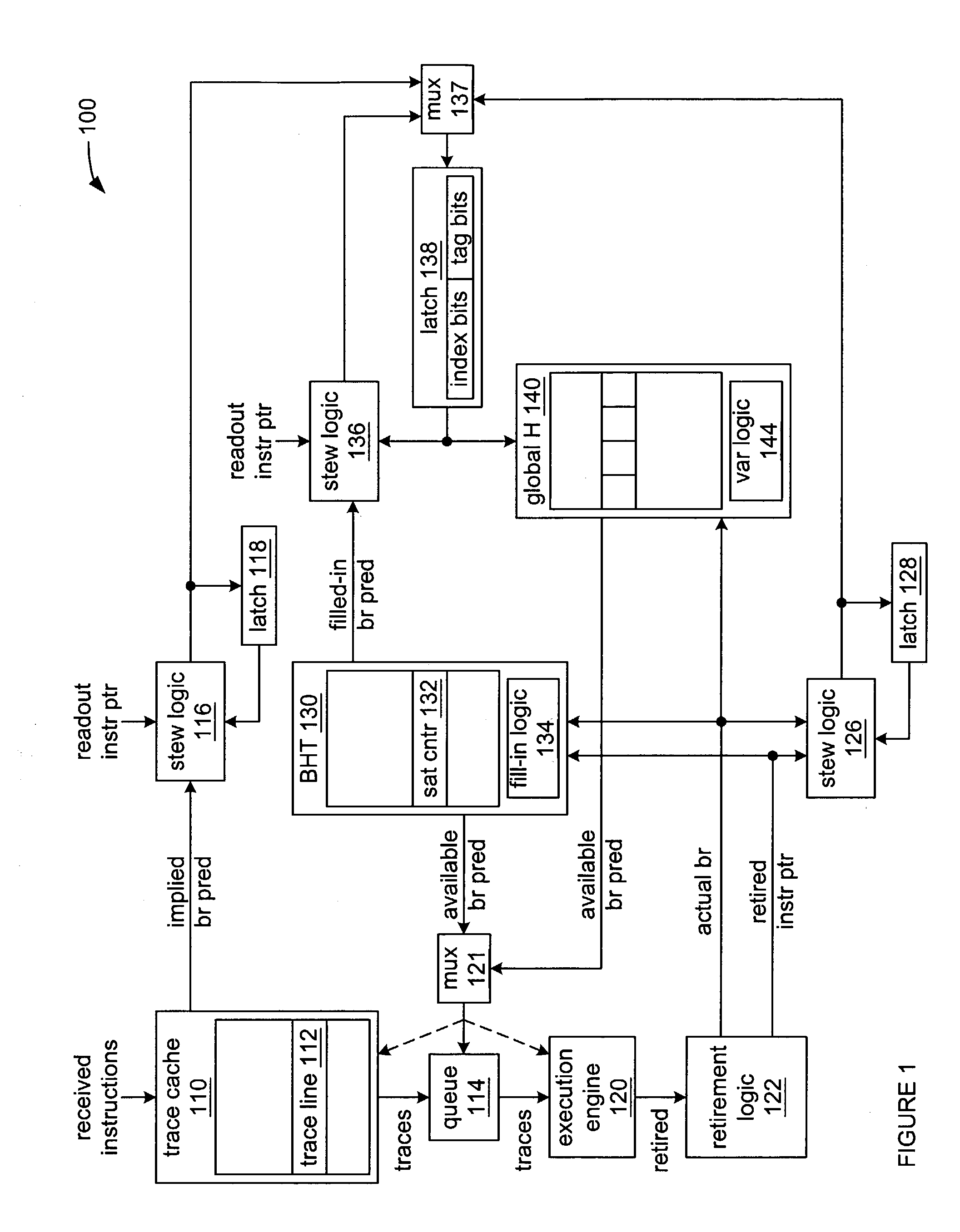

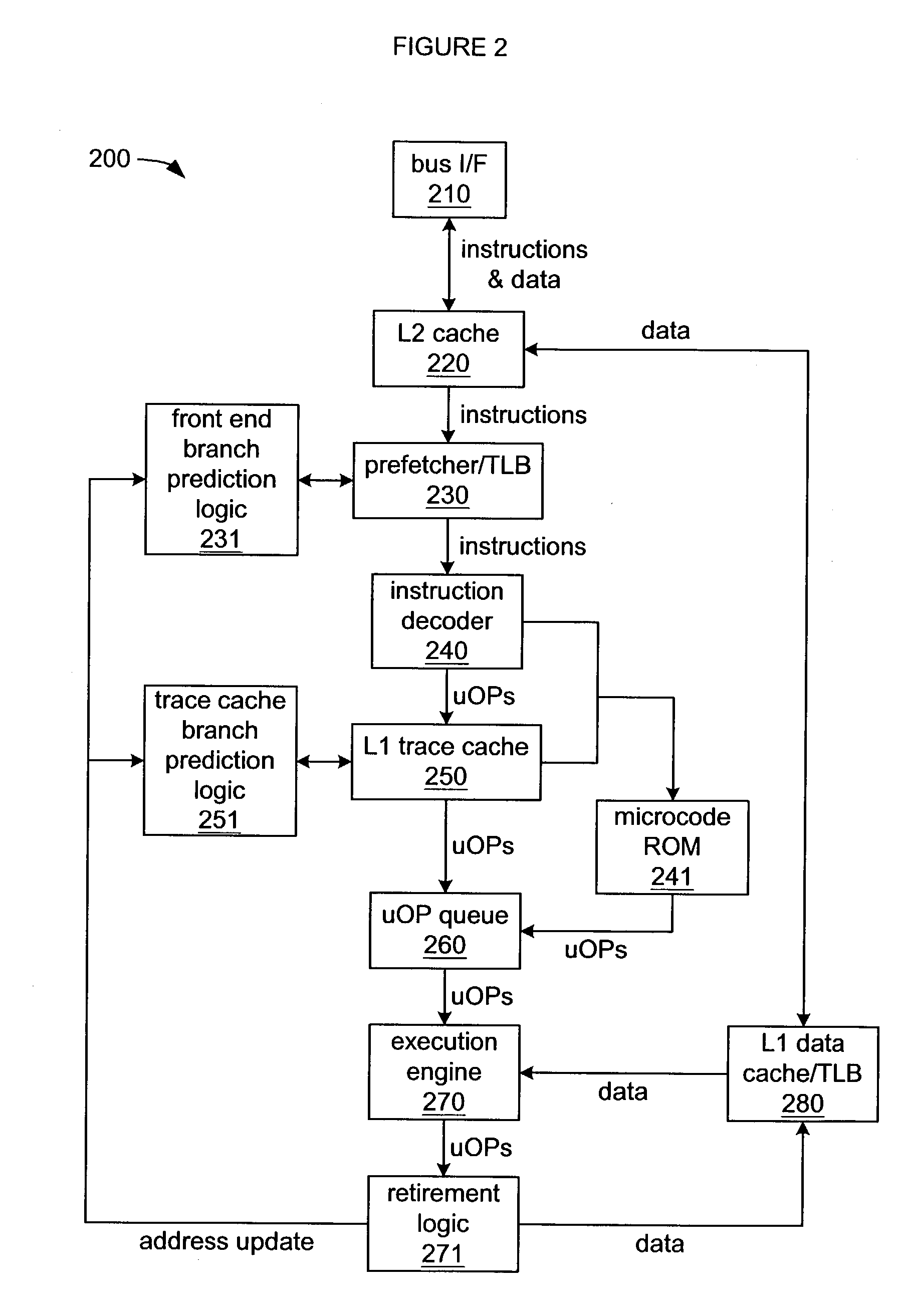

Method and apparatus for dynamic branch prediction utilizing multiple stew algorithms for indexing a global history

Inactive | US7143273B2 | Digital computer details | Next instruction address formation | Parallel computing | Instruction sequence

Toggling between two ways of accessing an entry in a global history: with a stew created from branch predictions implied by the ordering of instructions within a trace of a trace cache, when a trace is read out of the trace cache; and with repeatable variations of a stew, when there is more than one branch instruction within a trace within the trace cache and at least a second branch instruction is read out.

Owner:INTEL CORP

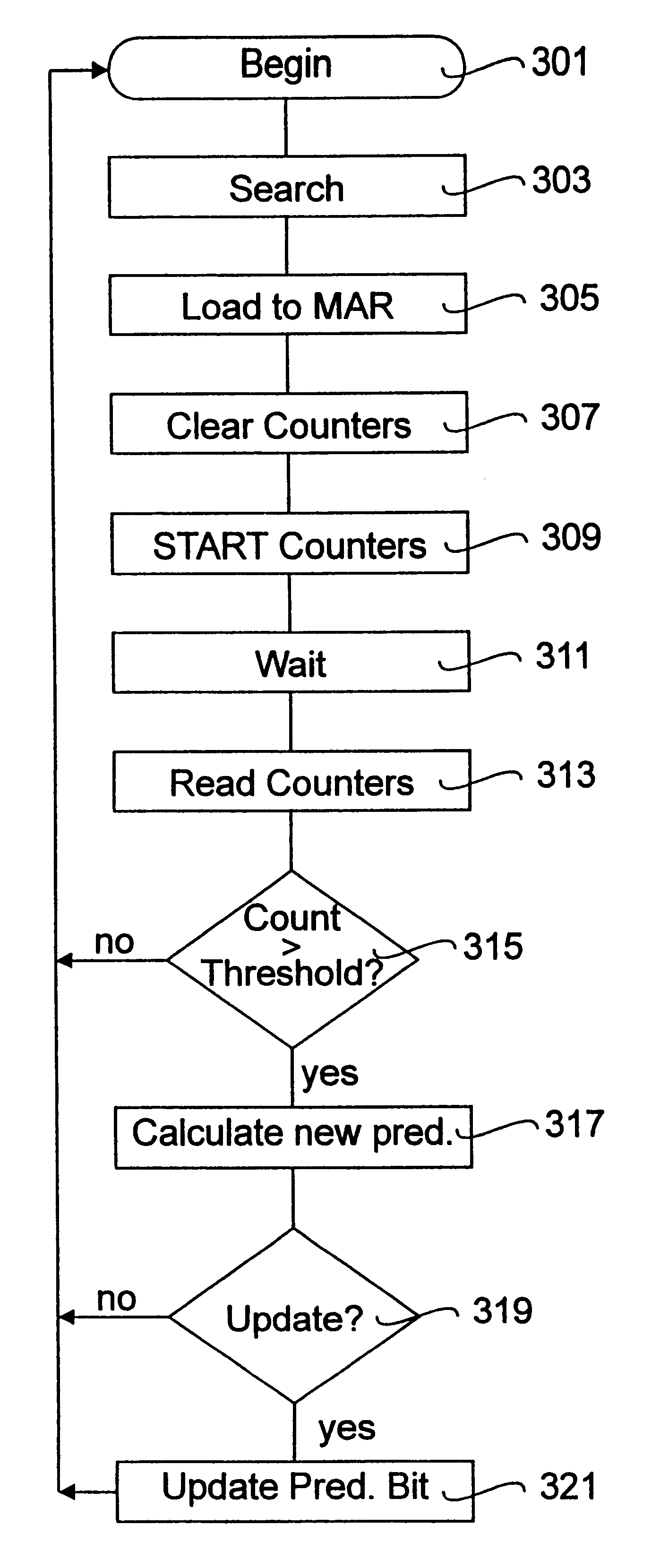

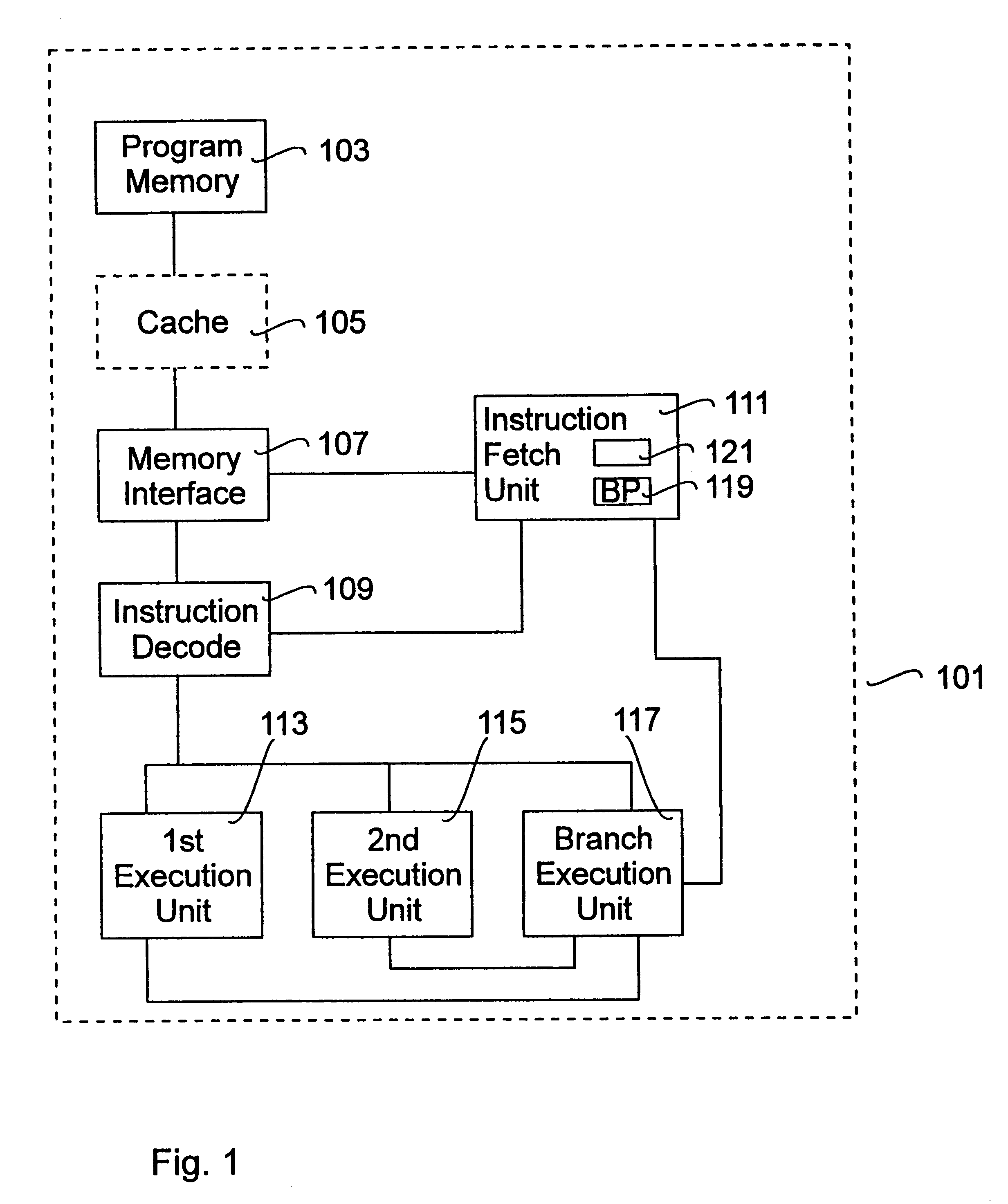

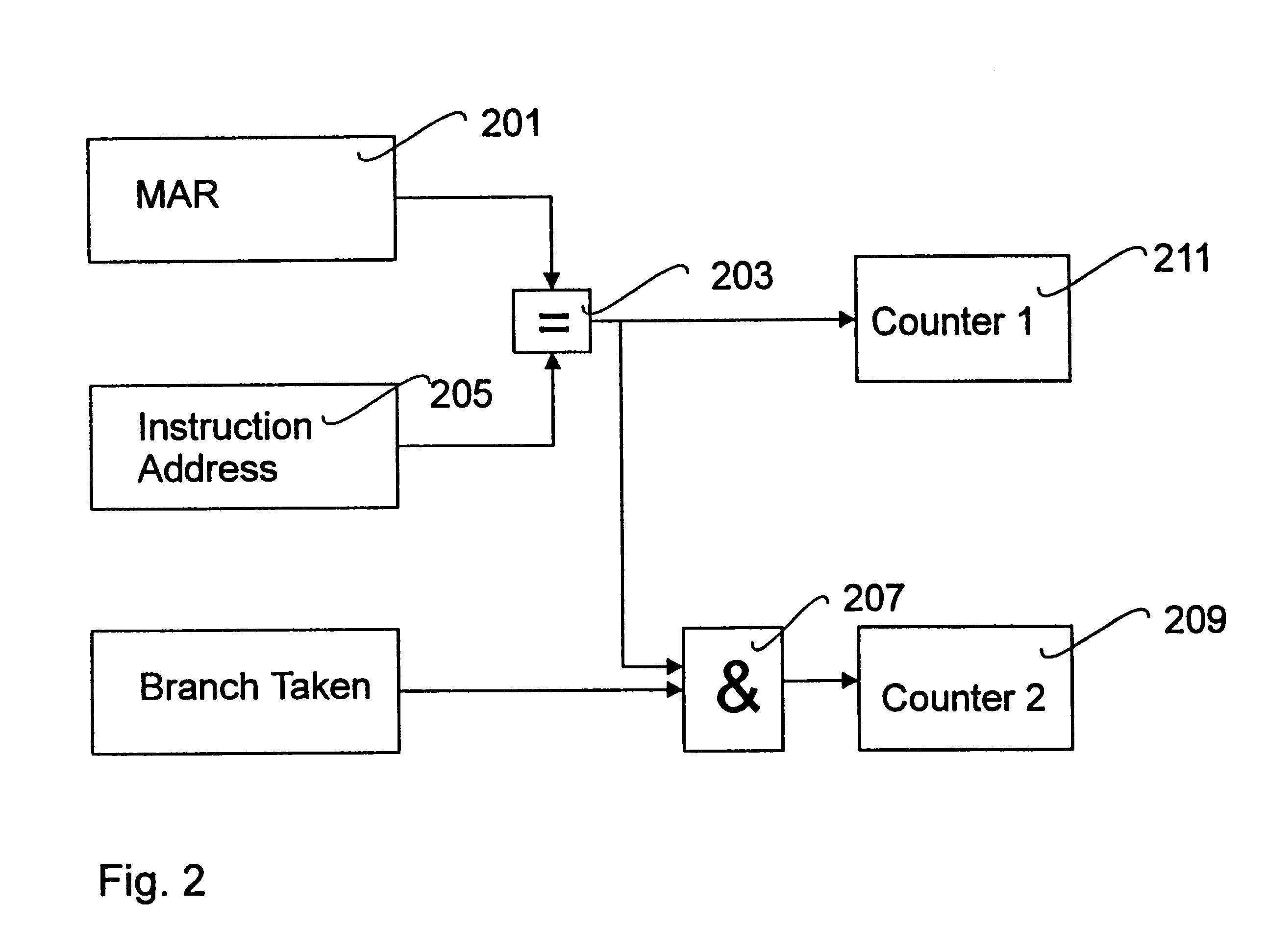

Method and system for branch prediction

Inactive | US6233679B1 | Digital computer details | Next instruction address formation | Prediction system | System usage

A system for branch prediction is arranged in a computer. The branch prediction system uses a scanning mechanism (303) to scan the program memory for conditional branch instructions while the program is running. On finding such an instruction, the system records statistics for that specific conditional branch instruction during a preset time interval (311) and sets a branch prediction bit in the instruction accordingly (321). The system then starts to scan for the next conditional branch instruction in the program memory. The system can also be used for updating a branch history table (BHT) while a program is running. The system is particularly useful in applications where a program runs for a relatively long time, such as a program used in a telephone switch. It also allows branch predictions to be changed during the run of a program.

Owner:TELEFON AB LM ERICSSON (PUBL)
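
The scan-observe-update loop in the abstract above might look roughly like the sketch below. It is a simplified model under stated assumptions: `Instruction`, `scan_and_update`, and the `observe` callback are hypothetical names, the opcode `"bcond"` stands in for any conditional branch, and a simple majority rule decides the prediction bit, which the abstract does not specify.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Instruction:
    opcode: str
    predict_taken: bool = False  # the branch prediction bit in the instruction


def scan_and_update(
    program: List[Instruction],
    observe: Callable[[int], List[bool]],
) -> List[int]:
    """Scan program memory and refresh the prediction bit of each branch.

    `observe(address)` returns the taken/not-taken outcomes recorded for the
    branch at `address` during one preset interval (assumed interface).
    Returns the addresses whose prediction bits were refreshed.
    """
    updated: List[int] = []
    for address, instruction in enumerate(program):
        if instruction.opcode != "bcond":
            continue  # only conditional branches carry a prediction bit
        outcomes = observe(address)
        if not outcomes:
            continue  # branch never executed in the interval: keep the old bit
        taken = sum(1 for outcome in outcomes if outcome)
        # Assumed rule: predict taken only on a strict majority of taken outcomes.
        instruction.predict_taken = taken * 2 > len(outcomes)
        updated.append(address)
    return updated


program = [
    Instruction("add"),
    Instruction("bcond"),
    Instruction("bcond", predict_taken=True),
    Instruction("bcond"),
]
recorded = {1: [True, True, False], 2: [False, False, True], 3: []}
updated = scan_and_update(program, lambda address: recorded.get(address, []))
```

Because the bit lives in the instruction itself, rerunning `scan_and_update` later with fresh observations changes the predictions of a long-running program without restarting it, which matches the use case the abstract mentions.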

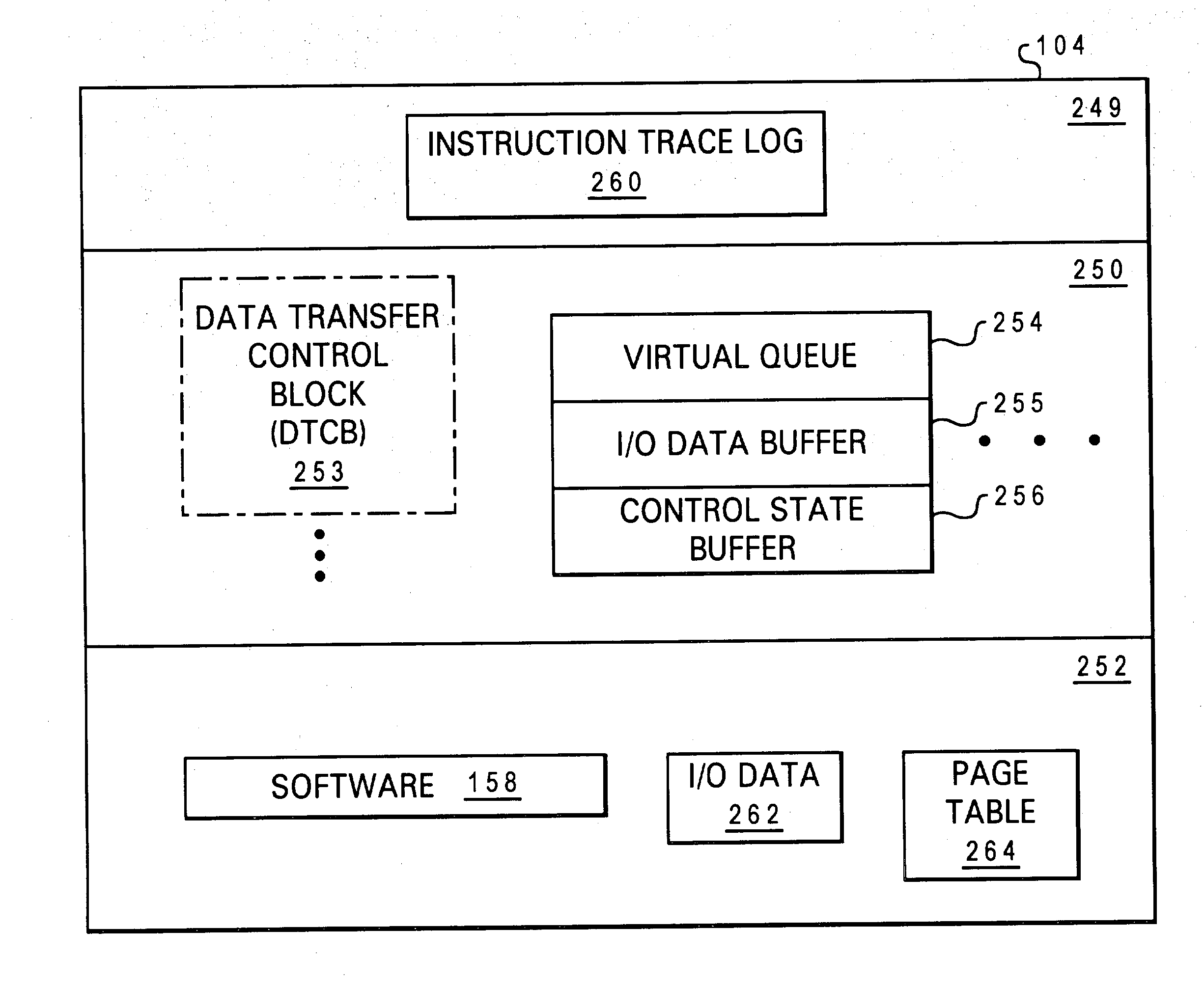

Hardware-enabled instruction tracing

Inactive | US20040139305A1 | Error detection/correction | Digital computer details | Data processing system | Execution unit

A data processing system includes an instruction pipeline, including one or more execution units that execute instructions and an instruction sequencing unit that dispatches instructions to the execution units for execution. The data processing system further includes a memory controller for a memory containing an instruction trace log and an interconnect coupled to the instruction pipeline and to the memory controller. The interconnect transmits instructions processed within the instruction pipeline to the memory controller for storage in the instruction trace log.

Owner:IBM CORP


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com