145 results about "Cpu architecture" patented technology

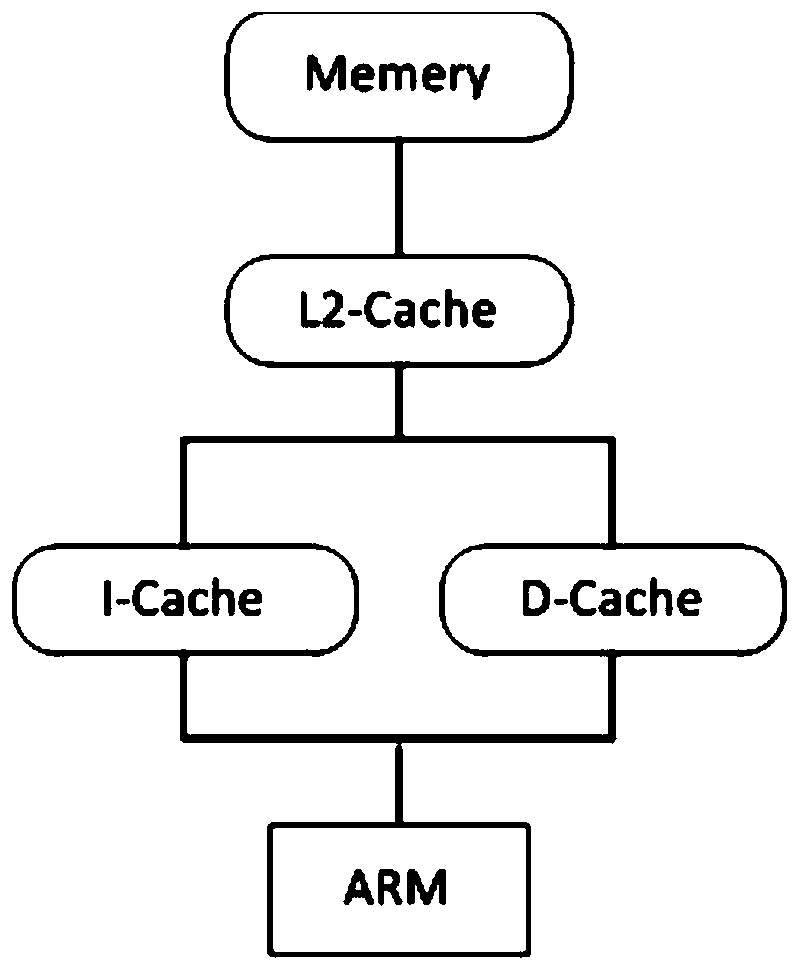

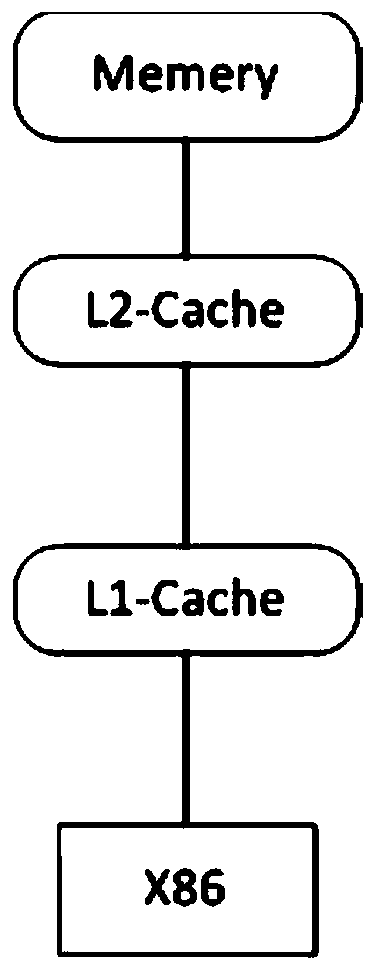

CPU Architecture. The processor (really a short form for microprocessor and also often called the CPU or central processing unit) is the central component of the PC. This vital component is in some way responsible for every single thing the PC does.

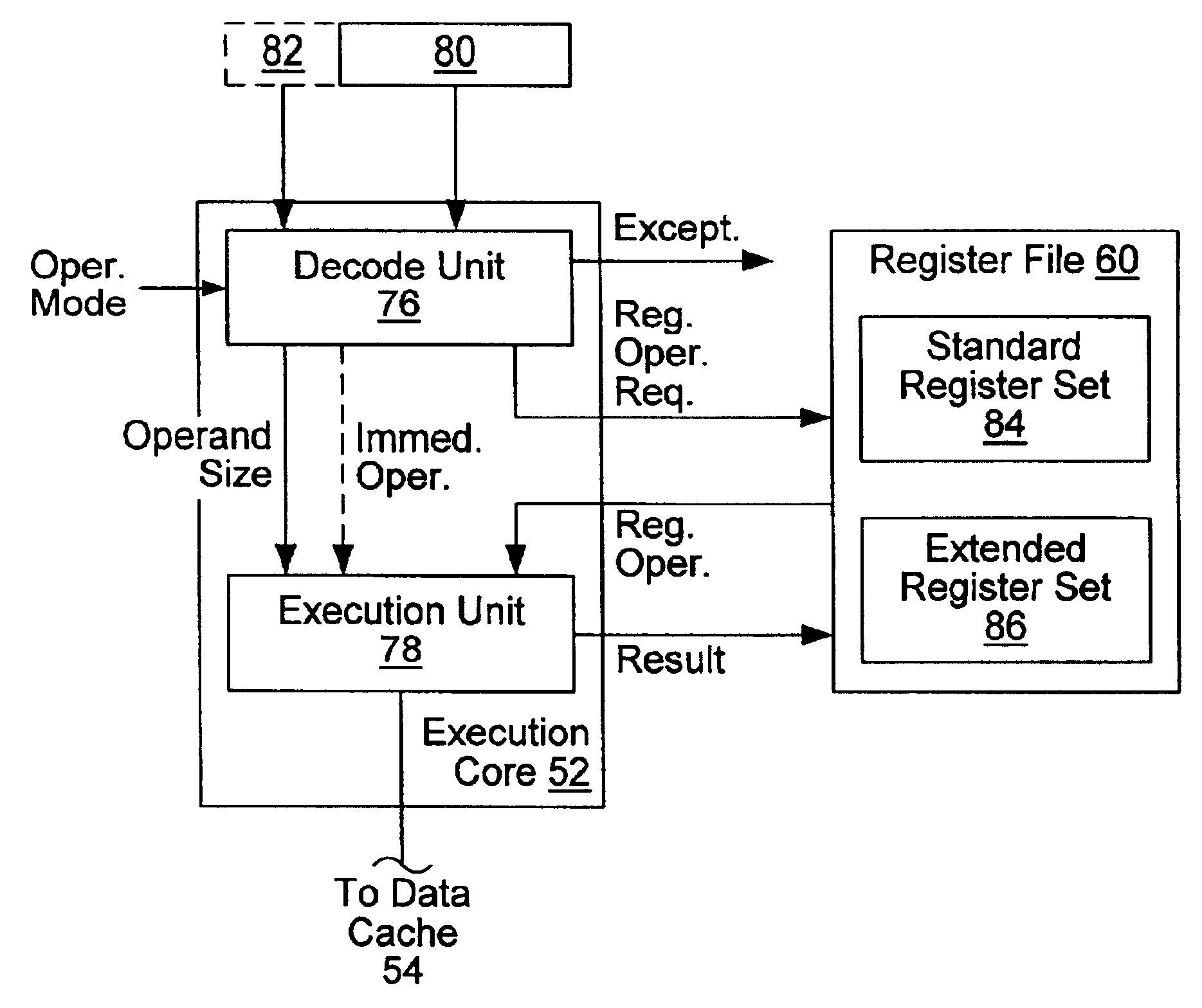

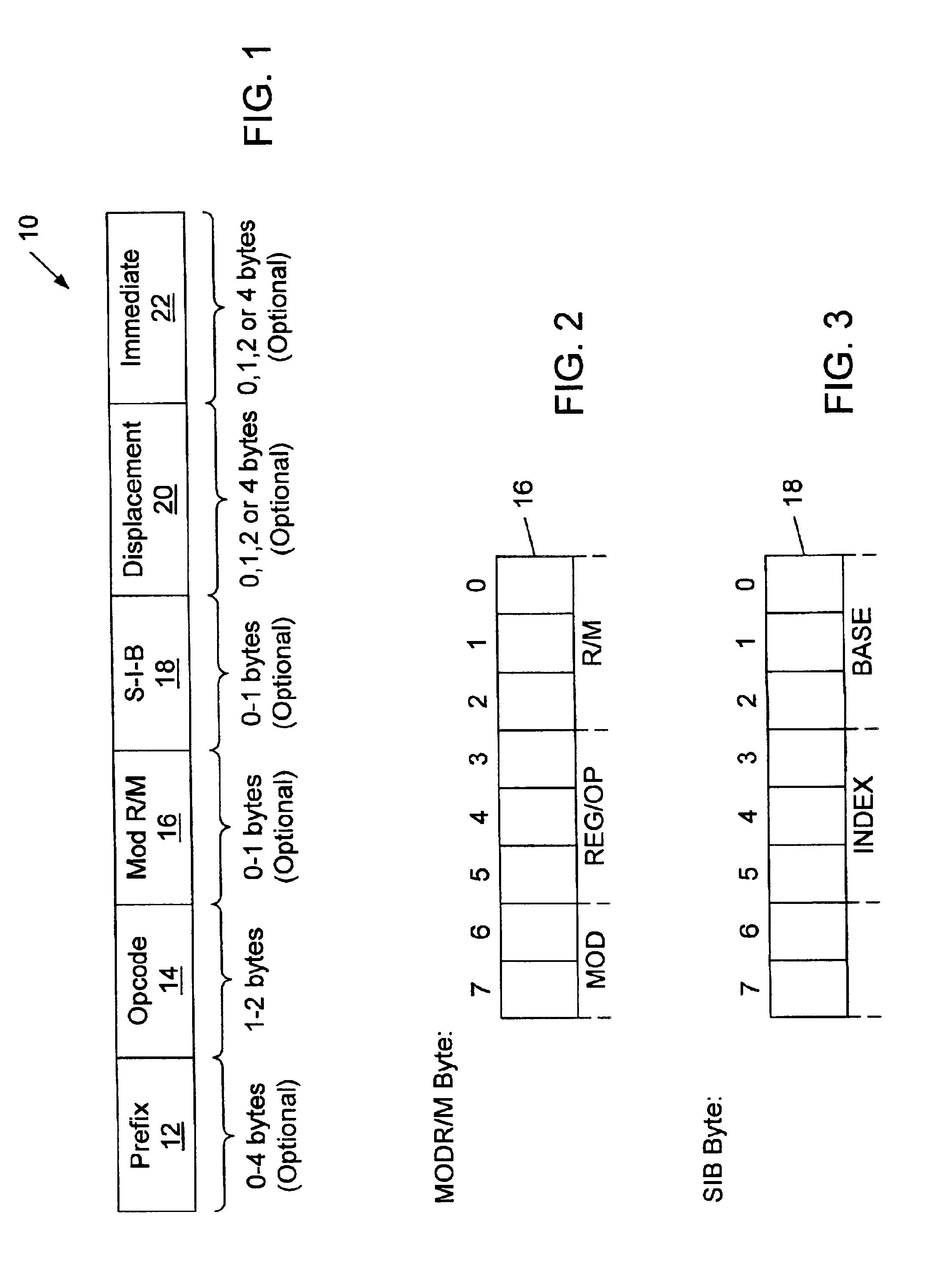

Central processing unit (CPU) accessing an extended register set in an extended register mode

Inactive | US6877084B1 | Resource allocation | Memory addressing/allocation/relocation | General purpose | Computer architecture

A central processing unit (CPU) is described including a register file and an execution core coupled to the register file. The register file includes a standard register set and an extended register set. The standard register set includes multiple standard registers, and the extended register set includes multiple extended registers. The execution core fetches and executes instructions, and receives a signal indicating an operating mode of the CPU. The execution core responds to an instruction by accessing at least one extended register if the signal indicates the CPU is operating in an extended register mode and the instruction includes a prefix portion including information needed to access the at least one extended register. The standard registers may be general purpose registers of a CPU architecture associated with the instruction. The number of extended registers may be greater than the number of general purpose registers defined by the CPU architecture. In this case, the additional register identification information in the prefix portion is needed to identify a selected one of the extended registers. A width of the extended registers may also be greater than a width of the standard registers. In this case, the prefix portion may also include an indication that the entire contents of the at least one extended register are to be accessed. In this way, instruction operand sizes may selectively be increased when the CPU is operating in the extended register mode. A computer system including the CPU is also described.
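The register-selection rule in this abstract can be illustrated with a minimal sketch. It assumes a 3-bit register field in the base instruction, a prefix that contributes one extra high-order index bit plus a full-width flag, and 32-bit standard versus 64-bit extended widths. The names `Prefix` and `select_register` and all field sizes are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

STANDARD_REGS = 8  # assumed: 3-bit register field in the base encoding


@dataclass(frozen=True)
class Prefix:
    """Hypothetical prefix carrying extra register-identification info."""
    reg_ext: int = 0          # assumed high-order bit of the register index
    full_width: bool = False  # assumed flag: access the full extended width


def select_register(reg_field: int,
                    prefix: Optional[Prefix],
                    extended_mode: bool) -> Tuple[int, int]:
    """Return (register index, operand width in bits) for one operand."""
    if not 0 <= reg_field < STANDARD_REGS:
        raise ValueError("reg_field must fit in 3 bits")
    # Extended registers are reachable only when BOTH conditions hold:
    # the mode signal is asserted and the instruction carries the prefix.
    if extended_mode and prefix is not None:
        index = (prefix.reg_ext << 3) | reg_field
        width = 64 if prefix.full_width else 32  # widths are assumptions
        return index, width
    # Otherwise the instruction sees only the standard register set.
    return reg_field, 32
```

In this sketch the prefix is ignored outside the extended register mode, which mirrors the abstract's requirement that both the mode signal and the prefix be present.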

Owner:GLOBALFOUNDRIES INC

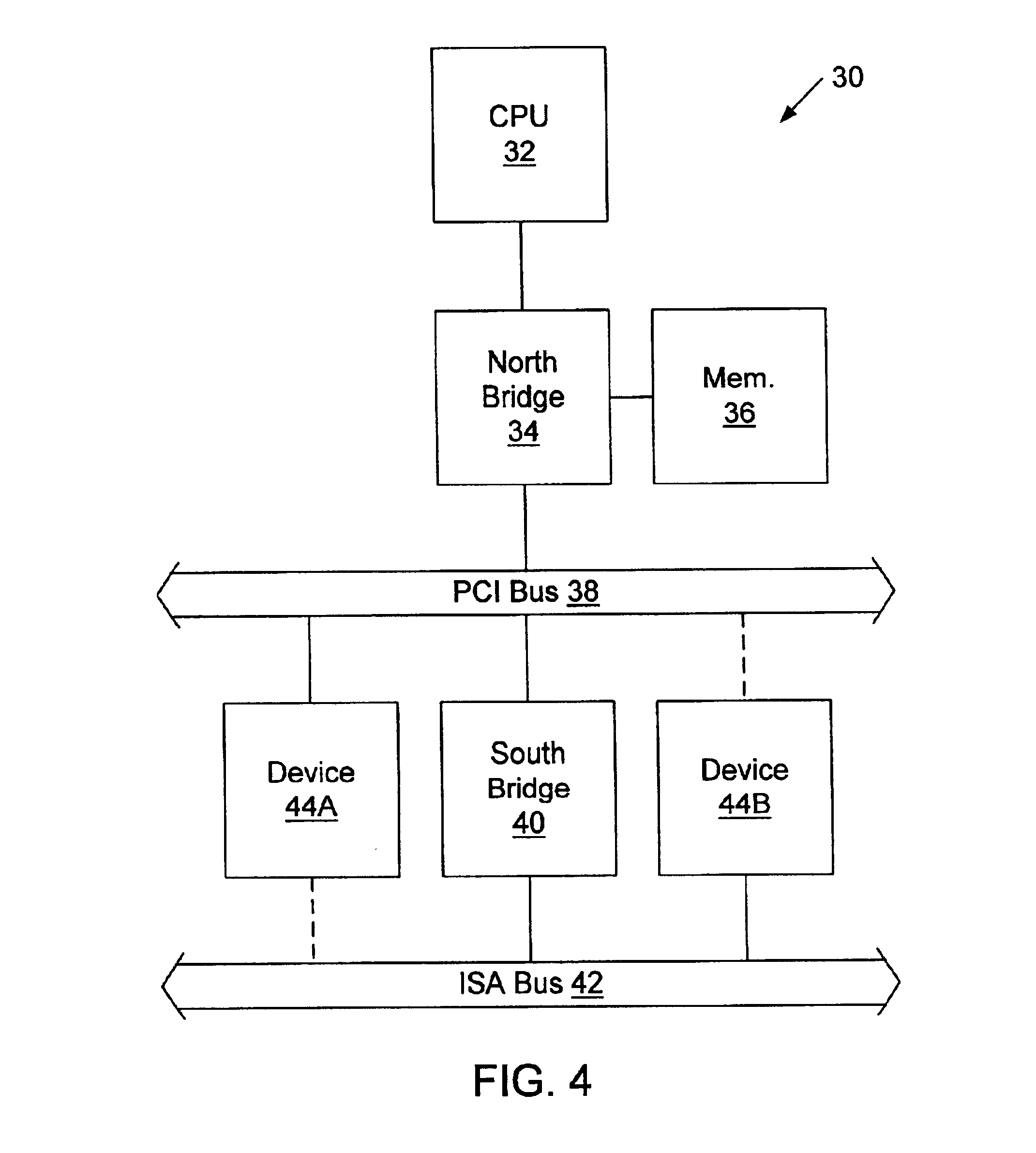

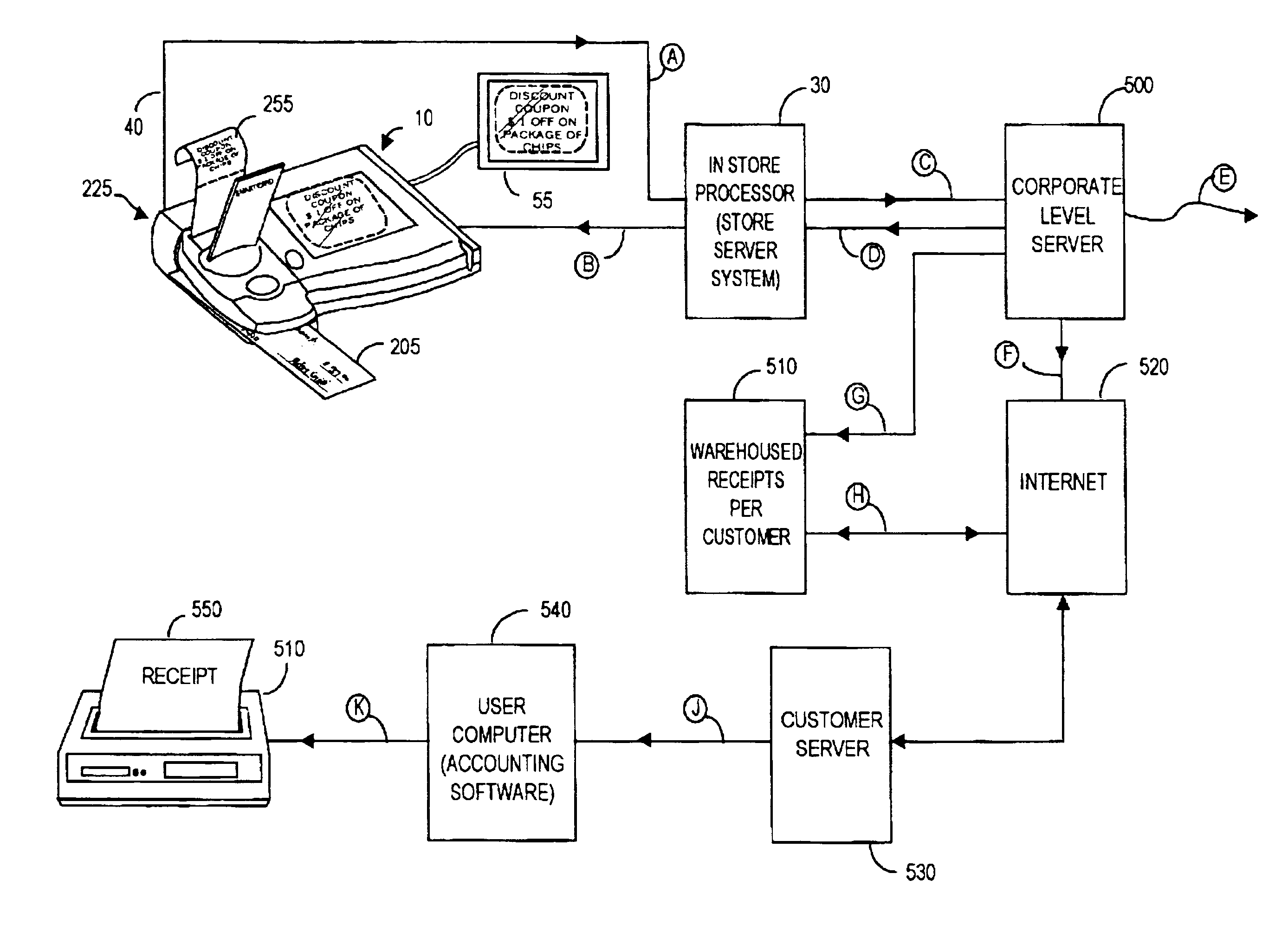

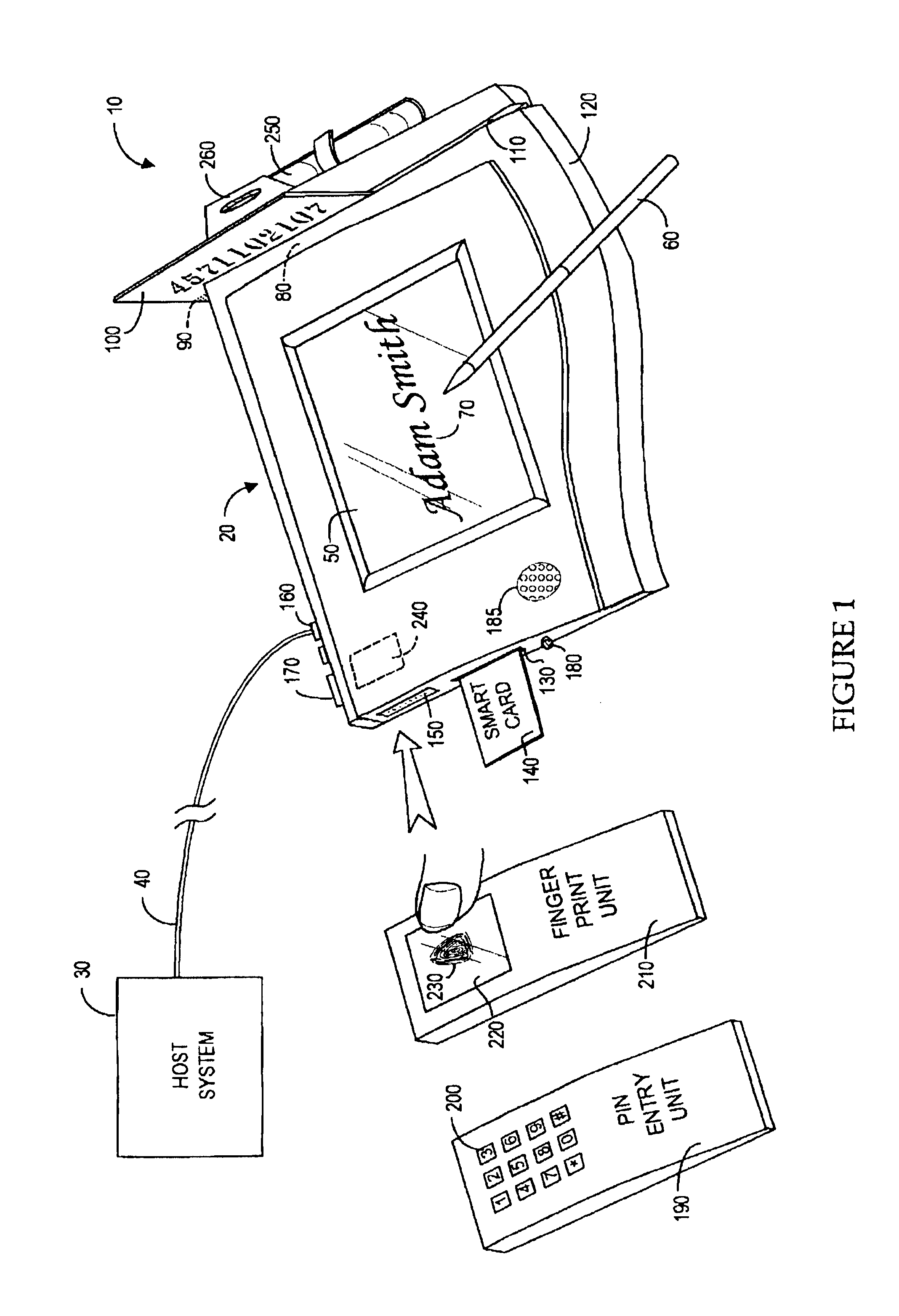

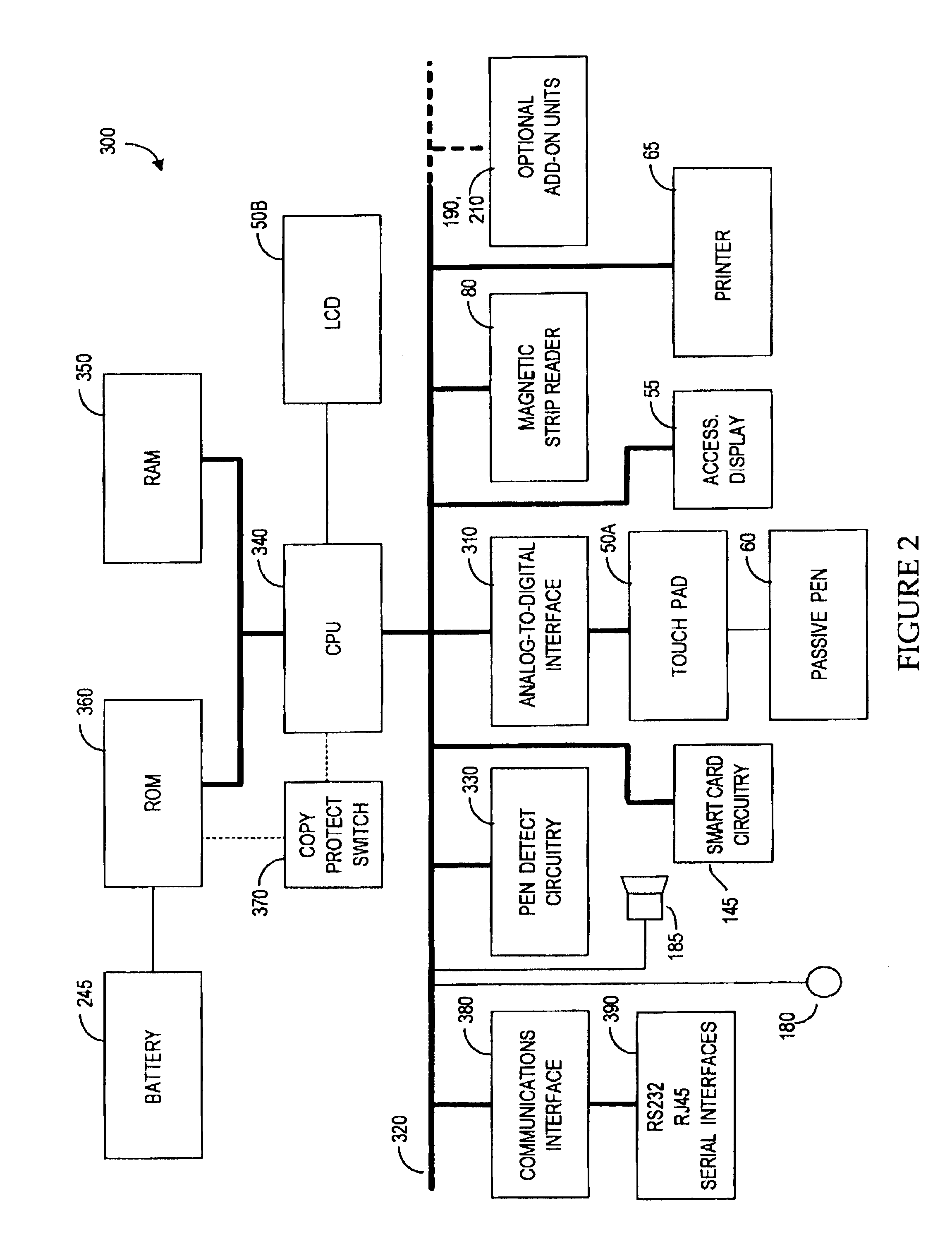

Modular signature and data-capture system and point of transaction payment and reward system

Inactive | USRE41716E1 | Increase flexibility | Minimizes paper-work | Complete banking machines | Finance | Reward system | Compatible accessories

A modular signature and data capture device employs a standardized ISA bus, standardized communication ports, and a standardized x86 CPU architecture to promote flexibility in using past, present, and future software and accessories. A VGA-caliber backlit LCD is superimposed on a pressure touch pad that is usable with a passive stylus. The LCD displays pen-drawn signatures and graphics in real time, and can display images and data stored in the device or downloaded from a host system, including advertisements. The LCD can also display menus, device instructions, virtual pressure-sensitive data keys, and control keys. The device includes a built-in three-stripe magnetic card reader unit. The device accepts PCMCIA-compatible accessories including solid state memory units and smartcards, and is compatible with plug-in accessories including an external PIN keypad entry unit, a fingerprint unit, and an omnibus unit including a printer and check processor in addition to a fingerprint unit. Security is provided by DES-encrypting PIN data and/or using Master/Session and/or DUKPT key management, or by using fingerprint token data as a PIN. The invention may be used to conduct paperless transactions in which the merchant is paid in real time. Further, merchant purchase profiles may be generated on a per-user basis to promote more effective advertising.

Owner:SYMBOL TECH LLC

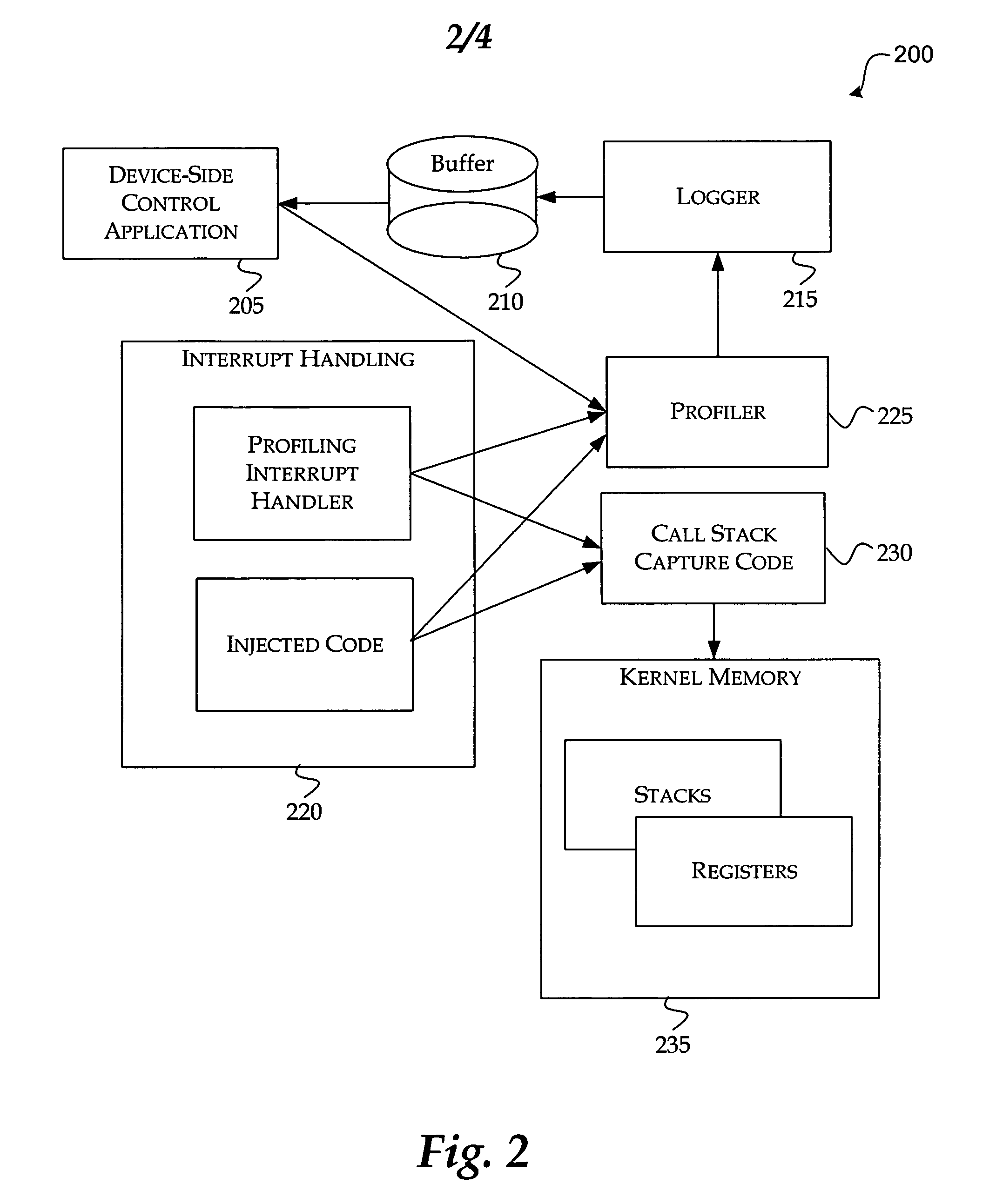

Call stack capture in an interrupt driven architecture

Inactive | US20060059486A1 | Simplify work | Error detection/correction | Multiprogramming arrangements | Call stack | CPU architecture

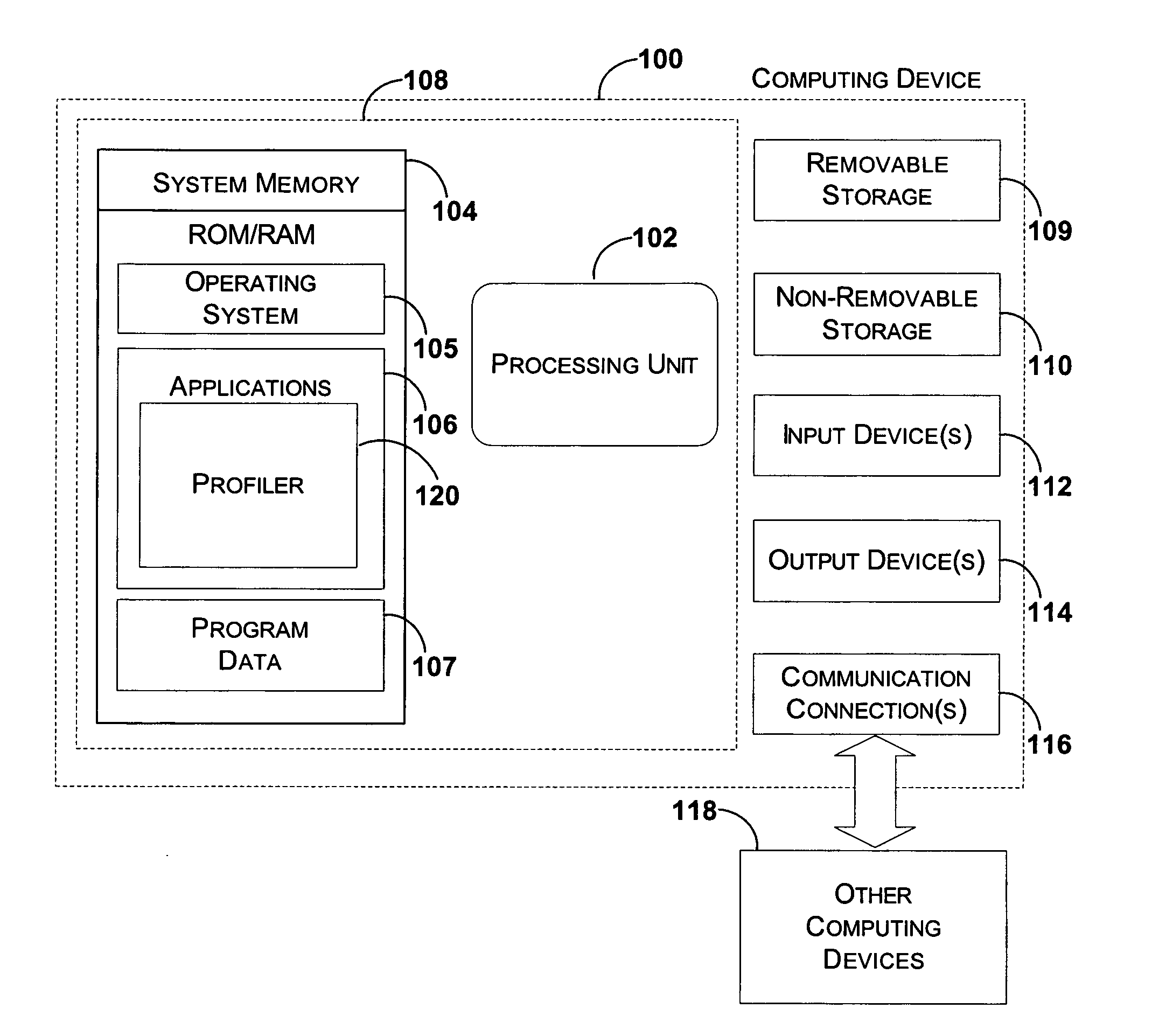

The present invention provides a method and system for capturing the call stack of a currently-running thread at the time a profiler interrupt occurs. The thread context of the thread is determined before a full push of the thread context is performed by the CPU architecture. The hardware state at the time of the interrupt is used to aid in determining which portions of memory to search for portions of the thread context. Based on the hardware state and the software state of the thread at the time of the interrupt the thread context is captured. Code may also be injected into a thread to capture a thread's call stack. The state of the thread is altered to induce the thread to invoke the kernel's call stack API itself, using its own context.

Owner:MICROSOFT TECH LICENSING LLC

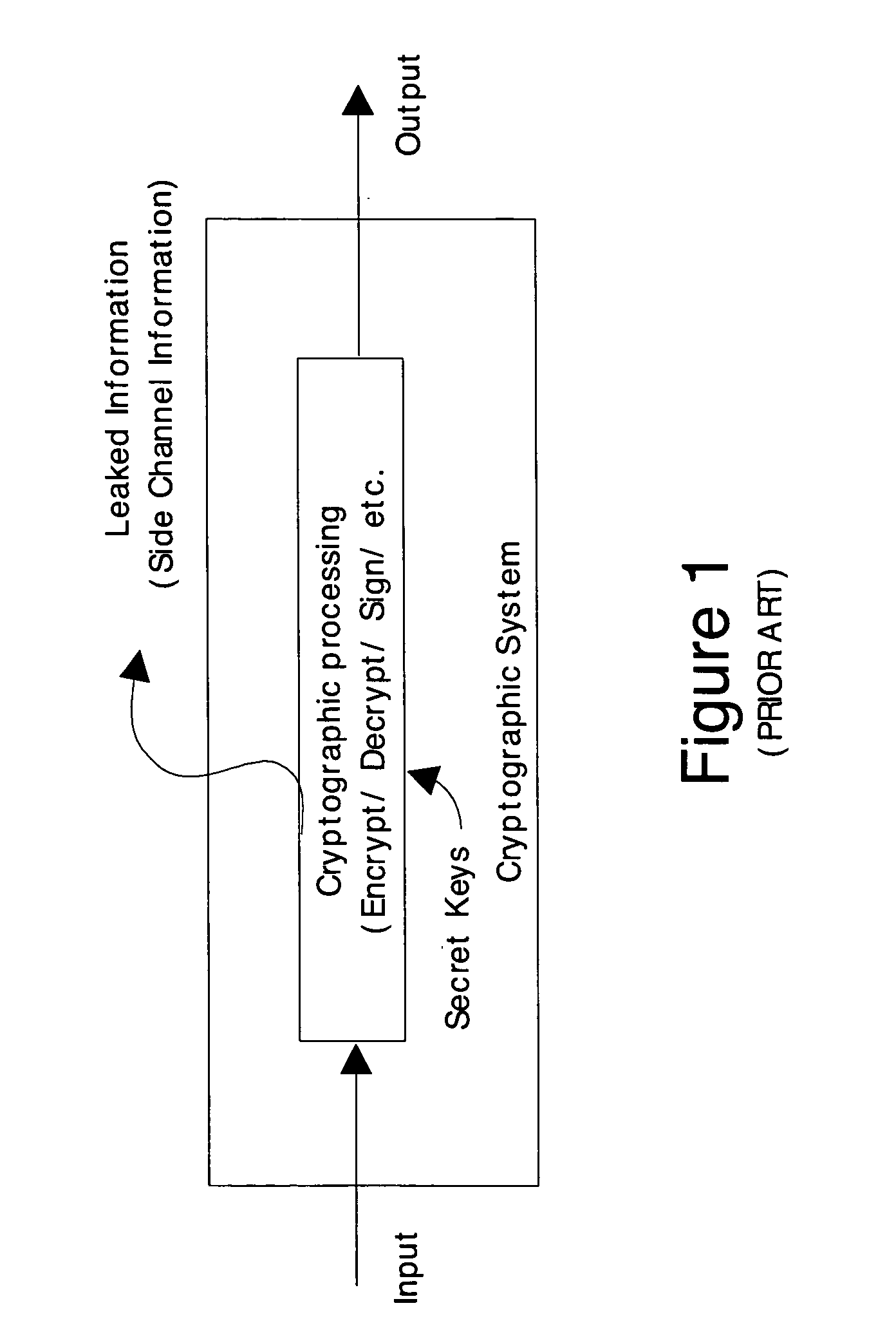

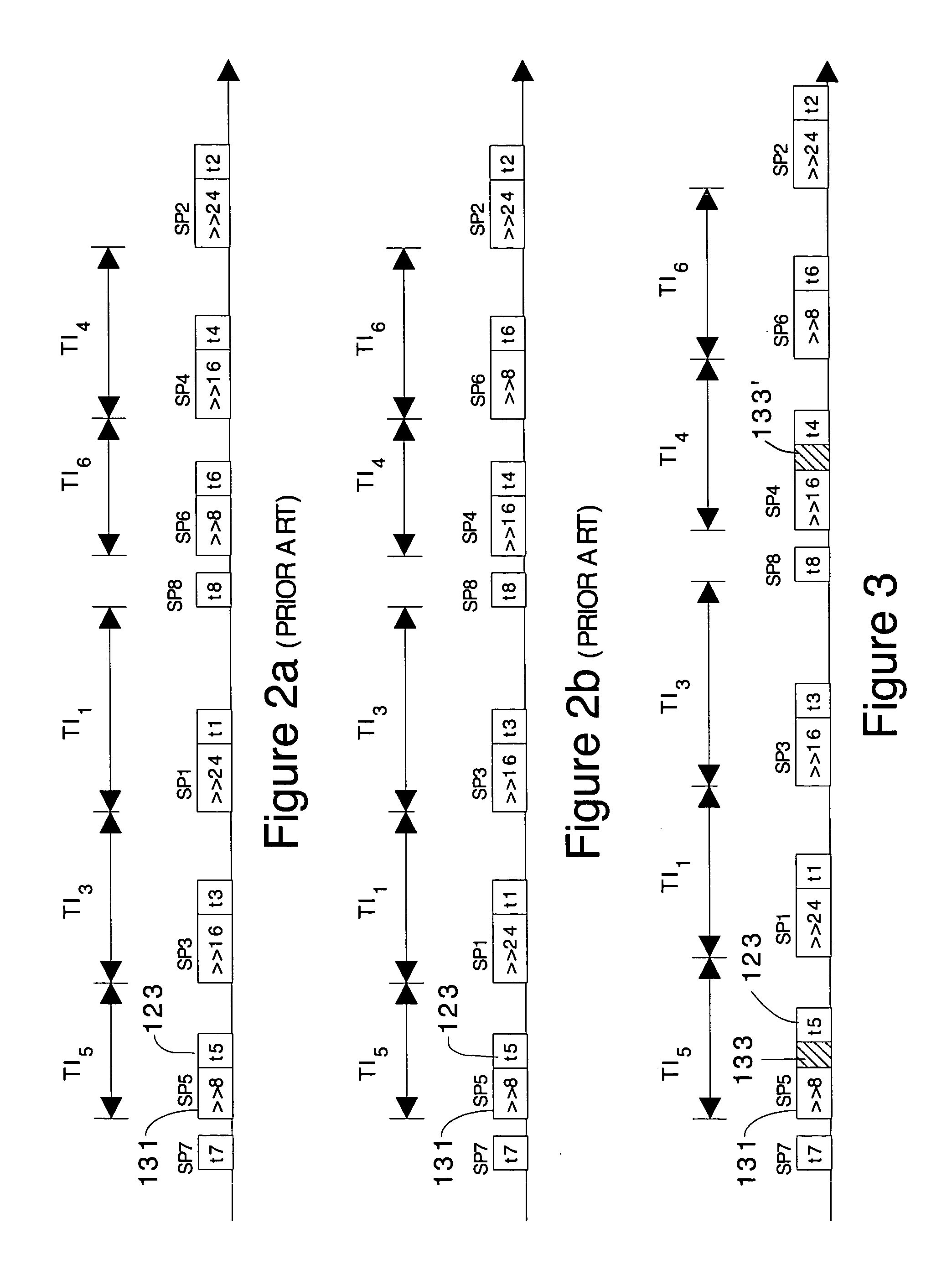

Cryptographic CPU architecture with random instruction masking to thwart differential power analysis

Inactive | US20050273631A1 | Memory loss protection | Encryption apparatus with shift registers/memories | Computer hardware | Power analysis

An apparatus and method for preventing information leakage attacks that utilize timeline alignment. The apparatus and method insert a random number of instructions into an encryption algorithm such that the leaked information cannot be aligned in time to allow an attacker to break the encryption.
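The idea of inserting a random number of instructions can be sketched in software as follows. This is only an illustration of the scheduling principle, assuming each "instruction" is a Python callable and that dummy work is a discarded XOR. The function names and the `max_dummies` parameter are hypothetical, and a real countermeasure would operate at the hardware instruction level.

```python
import secrets


def masked_schedule(real_ops, max_dummies=3):
    """Place a random number (0..max_dummies) of dummy ops before each real op."""
    schedule = []
    for op in real_ops:
        for _ in range(secrets.randbelow(max_dummies + 1)):
            schedule.append(("dummy", None))
        schedule.append(("real", op))
    return schedule


def run(schedule, state):
    """Execute the schedule; dummy ops do work whose result is discarded."""
    scratch = 0
    for kind, op in schedule:
        if kind == "real":
            state = op(state)
        else:
            scratch ^= 0x5A  # filler work, never observed by the caller
    return state
```

Because the real operations keep their order, the result is unchanged, while the time at which each real operation runs varies from one execution to the next.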

Owner:HRL LAB

Finite element methods and systems

Active | US20150120261A1 | Address limitations | Computation using non-denominational number representation | Design optimisation/simulation | Multicore architecture | CPU architecture

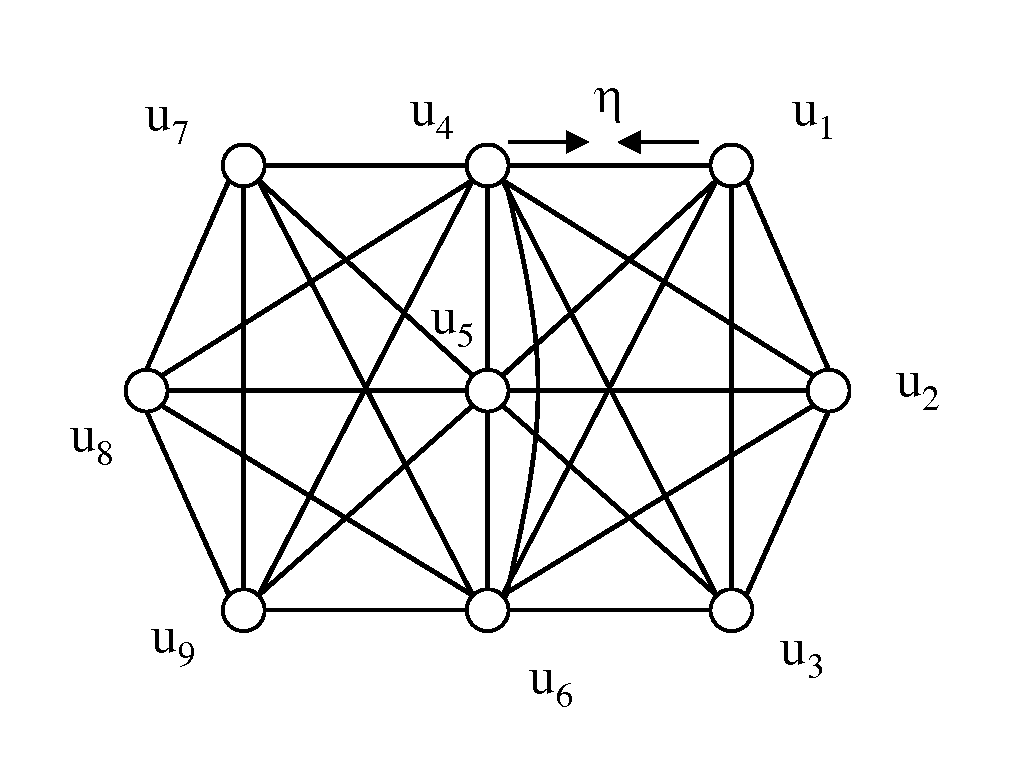

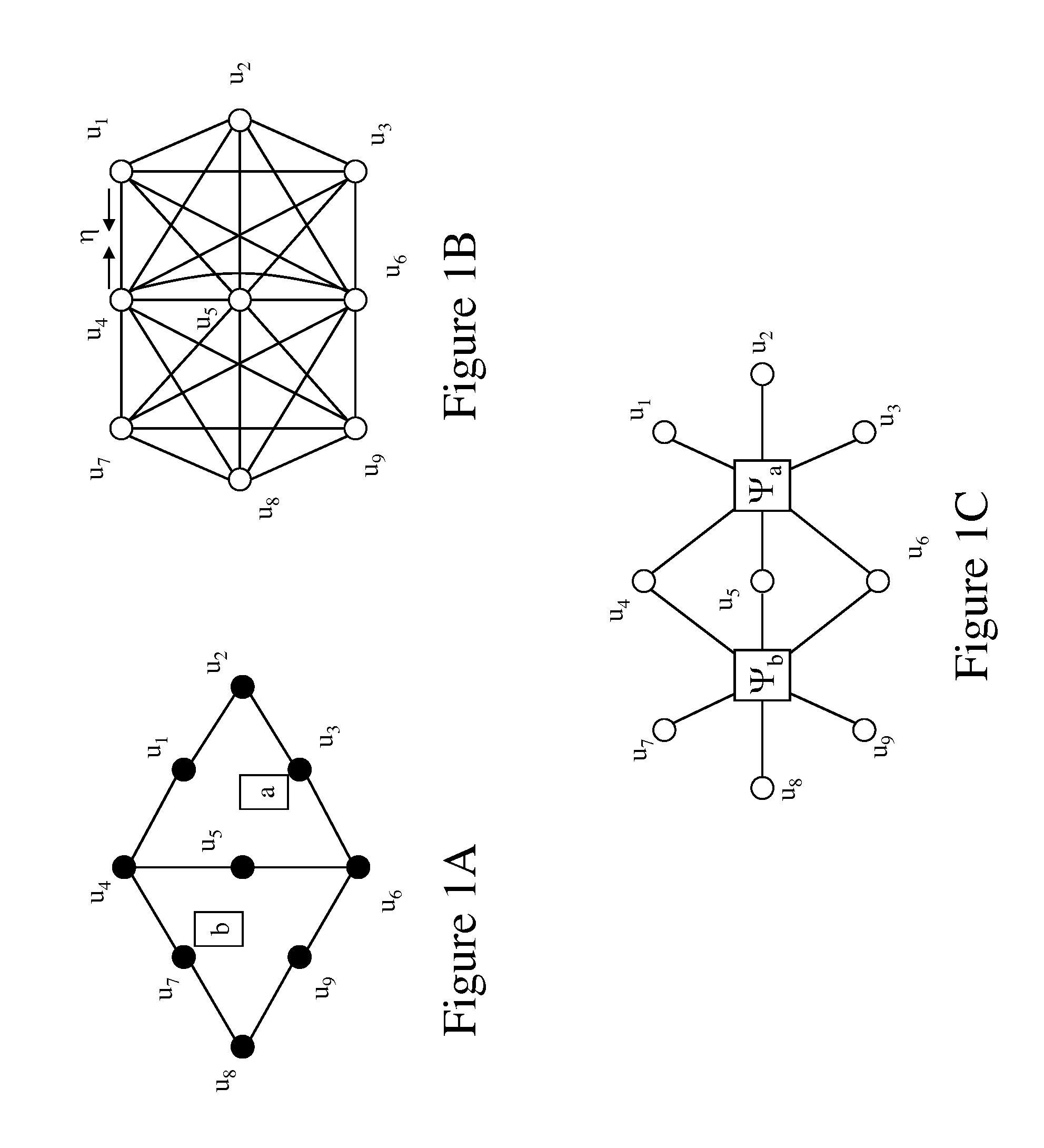

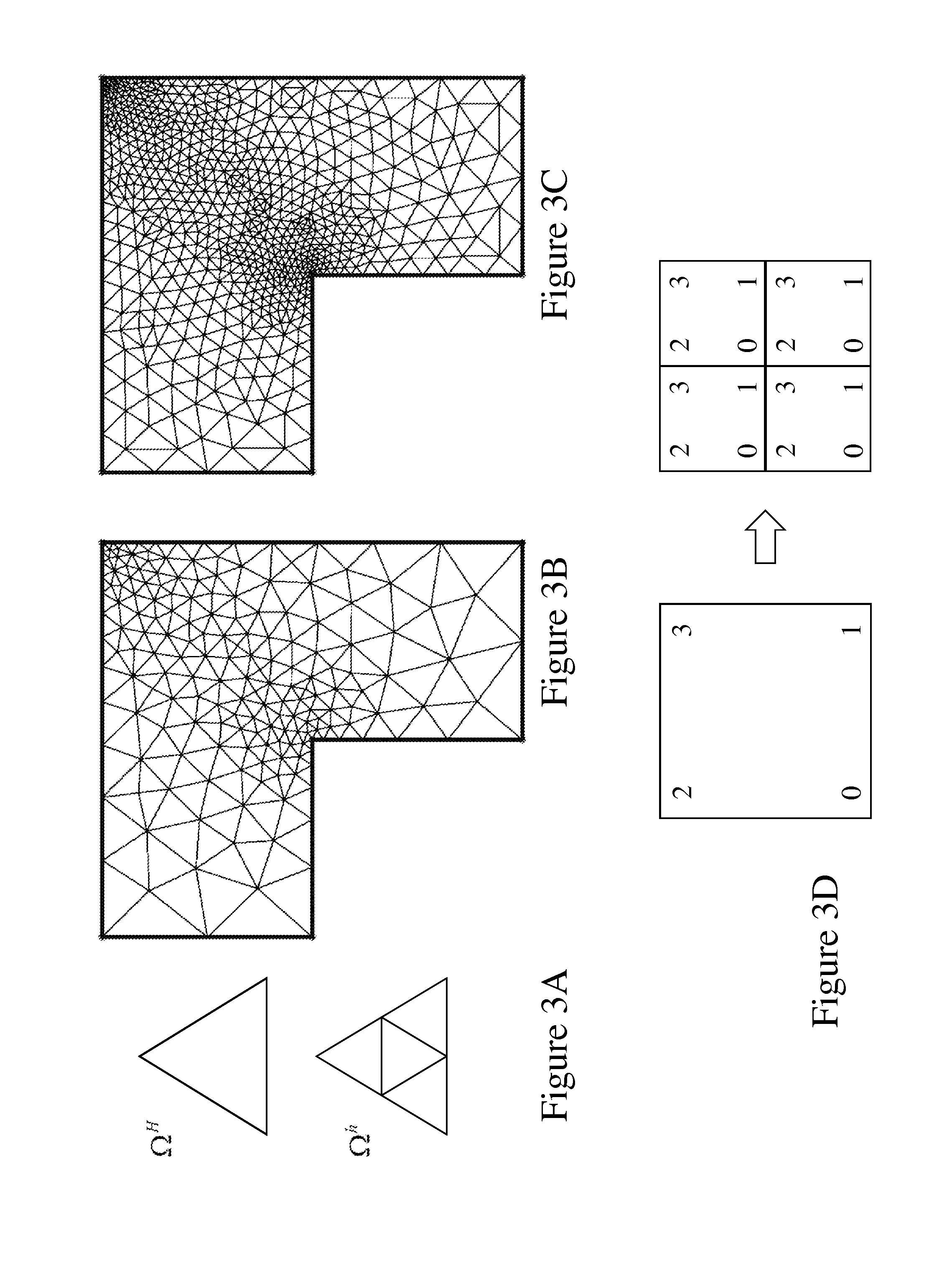

The computational efficiency of Finite Element Methods (FEM) on parallel architectures is typically severely limited by sparse iterative solvers. Standard iterative solvers are based on sequential steps of global algebraic operations, which limit their parallel efficiency, and prior art techniques rely on sophisticated programming tailored to specific CPU architectures to improve performance. The inventors present a FEM Multigrid Gaussian Belief Propagation (FMGaBP) technique that eliminates global algebraic operations and sparse data structures by reformulating the variational FEM as a probabilistic inference problem based on graphical models. Further, the inventors present new formulations of FMGaBP that improve its computation and communication complexity and that leverage the parallel features of FMGaBP on multicore architectures.

Owner:MCGILL UNIV

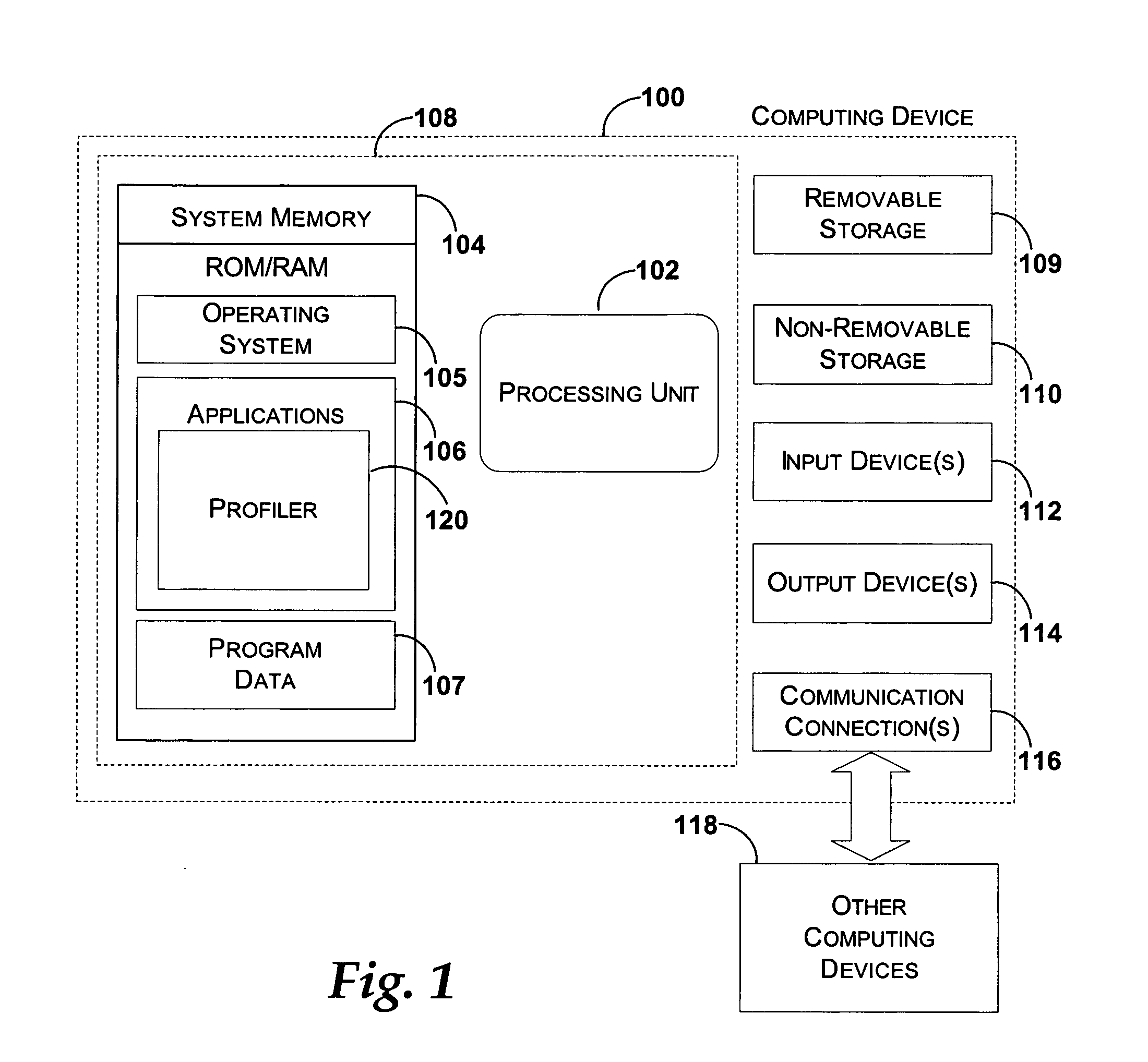

Intelligent Platform

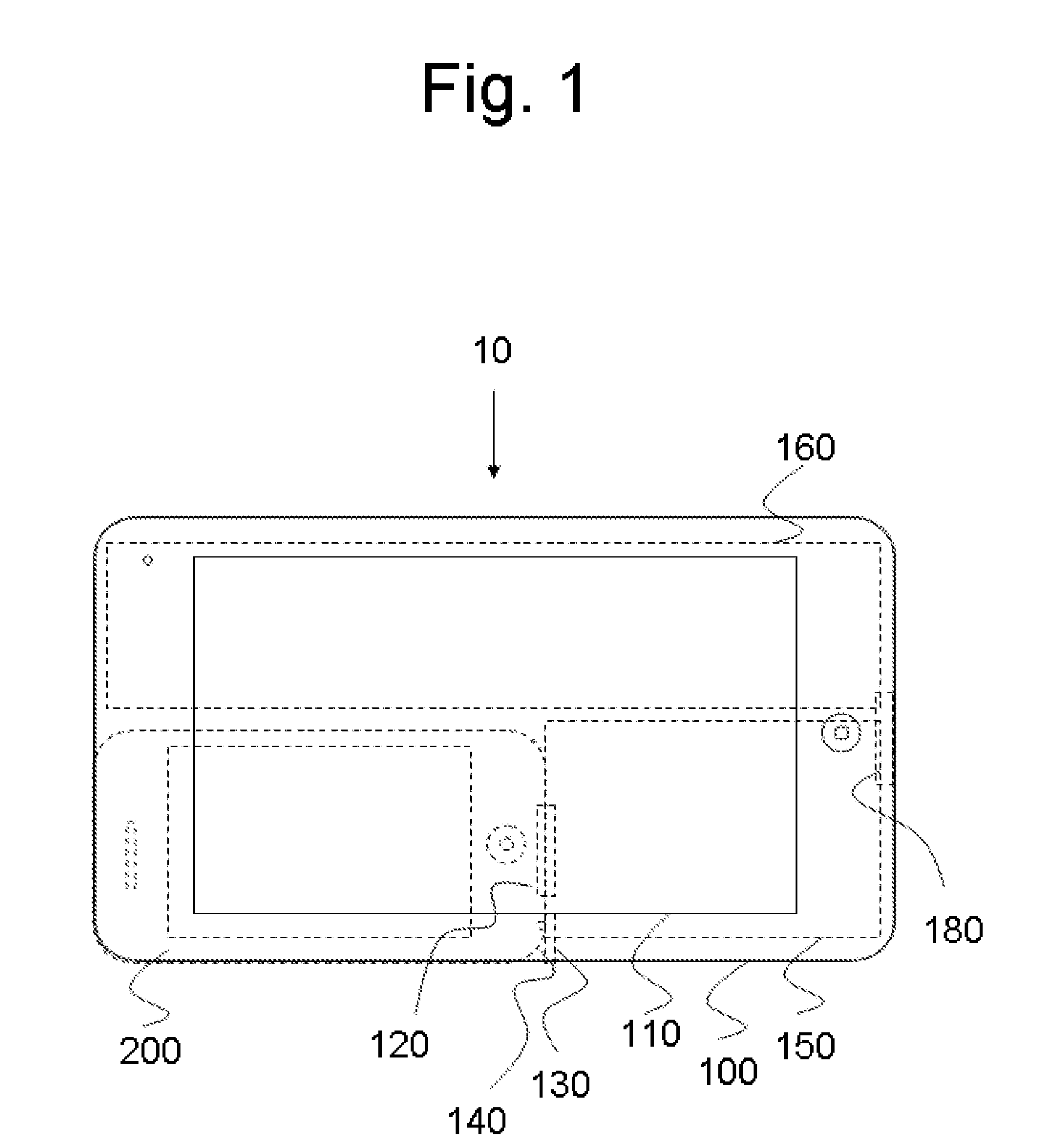

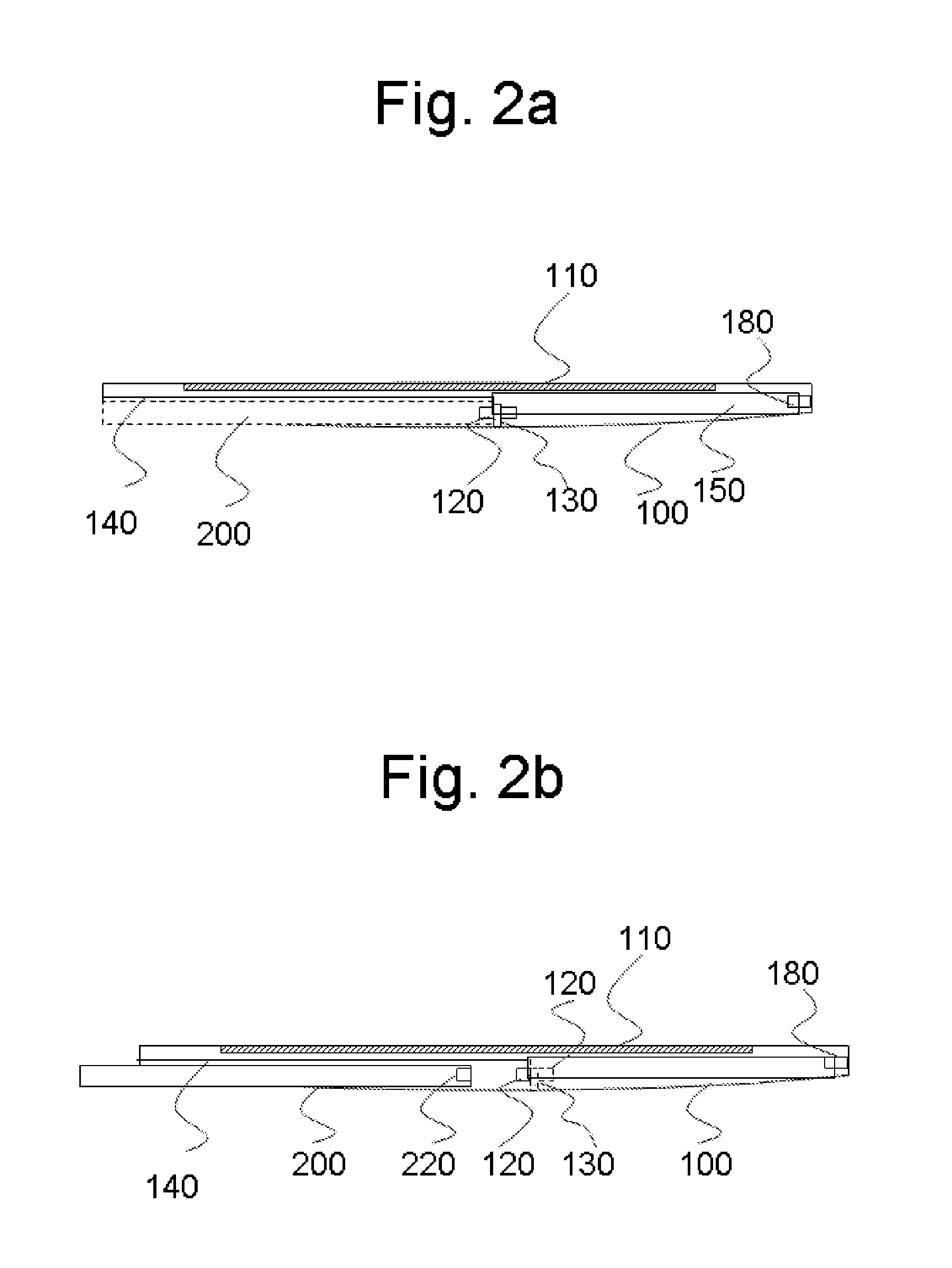

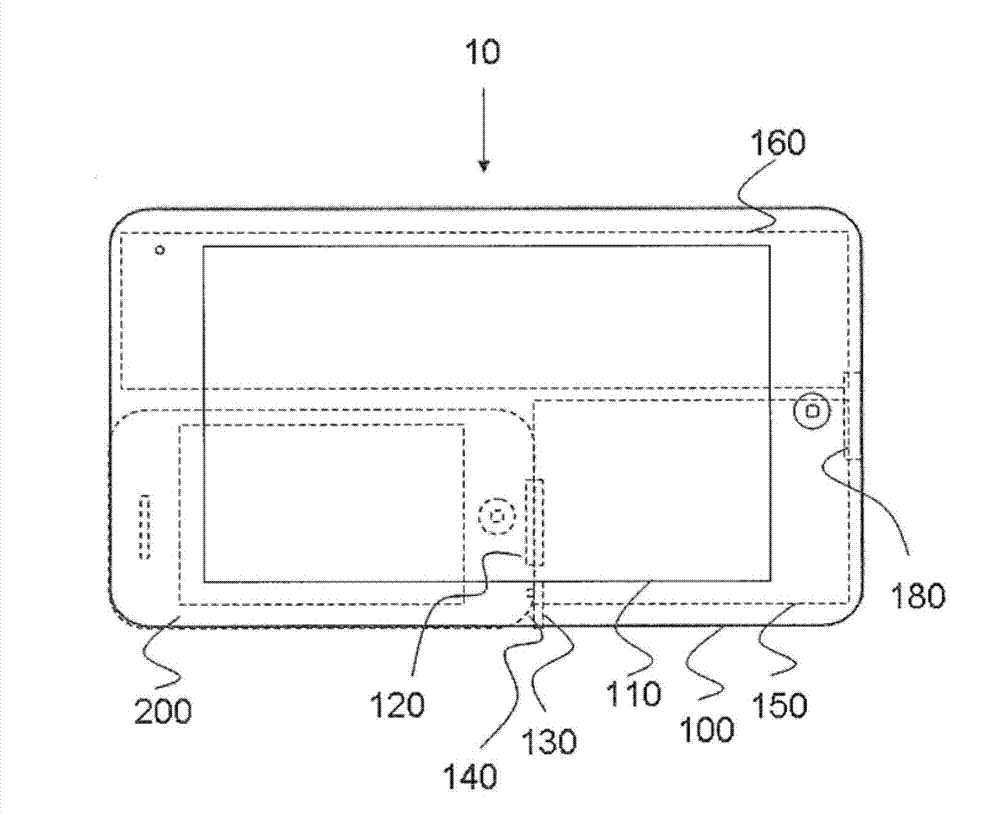

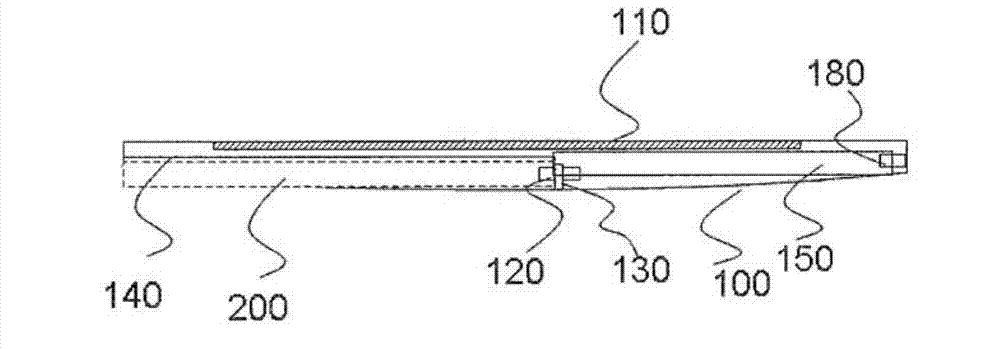

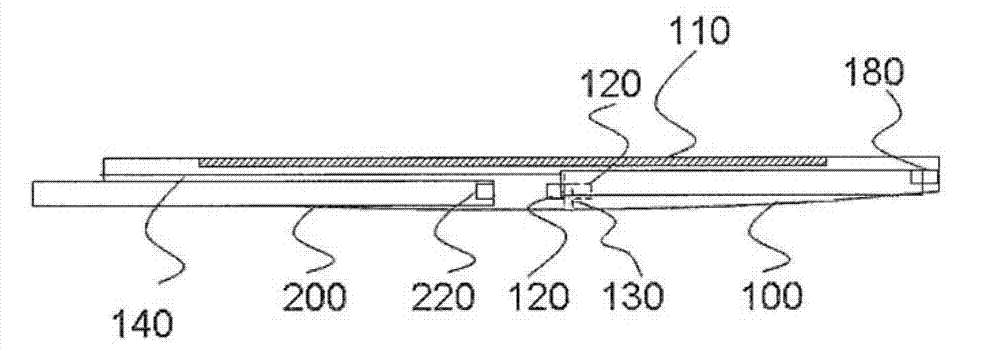

Active | US20120011293A1 | Cost-effective | Compact integration | Digital data processing details | Tablet computer | Operational system

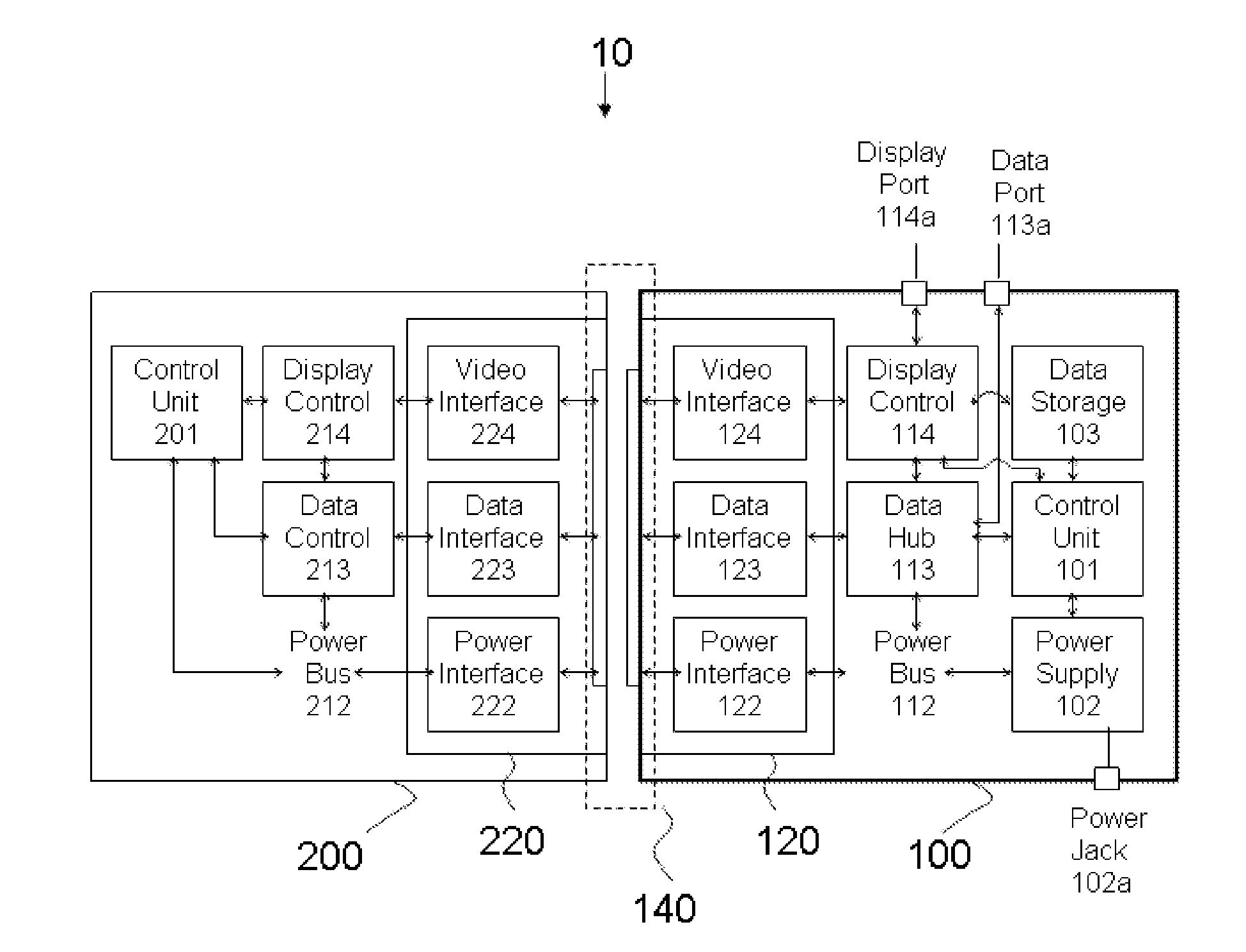

An intelligent platform integrates with an intelligent portable device or intelligent core to provide a dynamic computer that may serve as any of: a pad, a tablet computing device, a netbook computer, and a notebook computer. The operations of the integrated device are determined by the connected intelligent core's CPU architecture and its installed operating system. The intelligent platform includes a housing and a core slot located behind a display for accommodating the intelligent core. A core connector is provided on an inner wall of the core slot for interconnecting with a compatible connector of the inserted intelligent core. A control unit continually communicates with the intelligent core through signals carried by the connector, refreshes the image received from the intelligent core on its touch-sensitive display, and sends touch-input commands from the touchable panel of the touch-sensitive display to the intelligent core. The battery on the intelligent platform provides backup power to the intelligent core.

Owner:ICE COMP

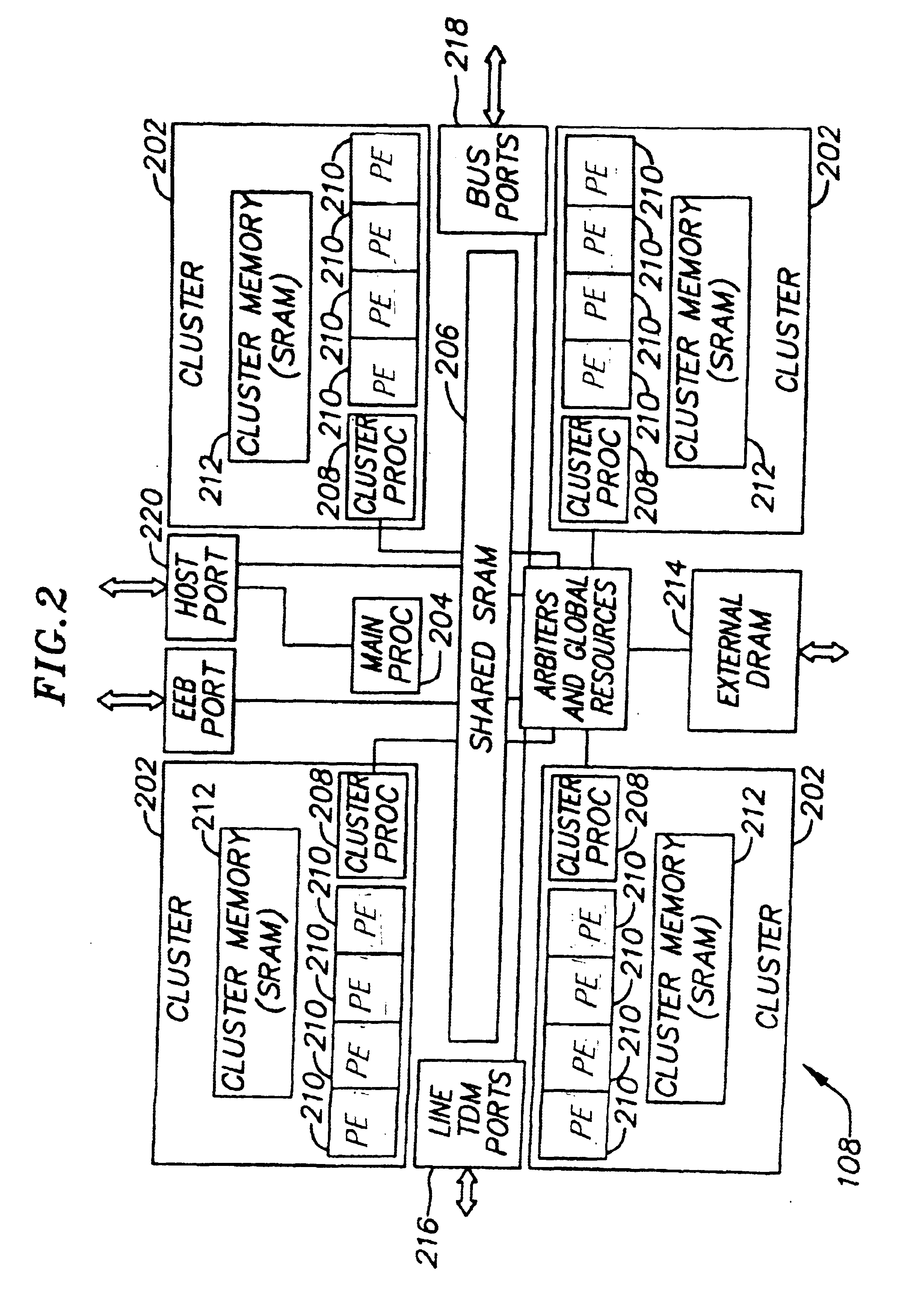

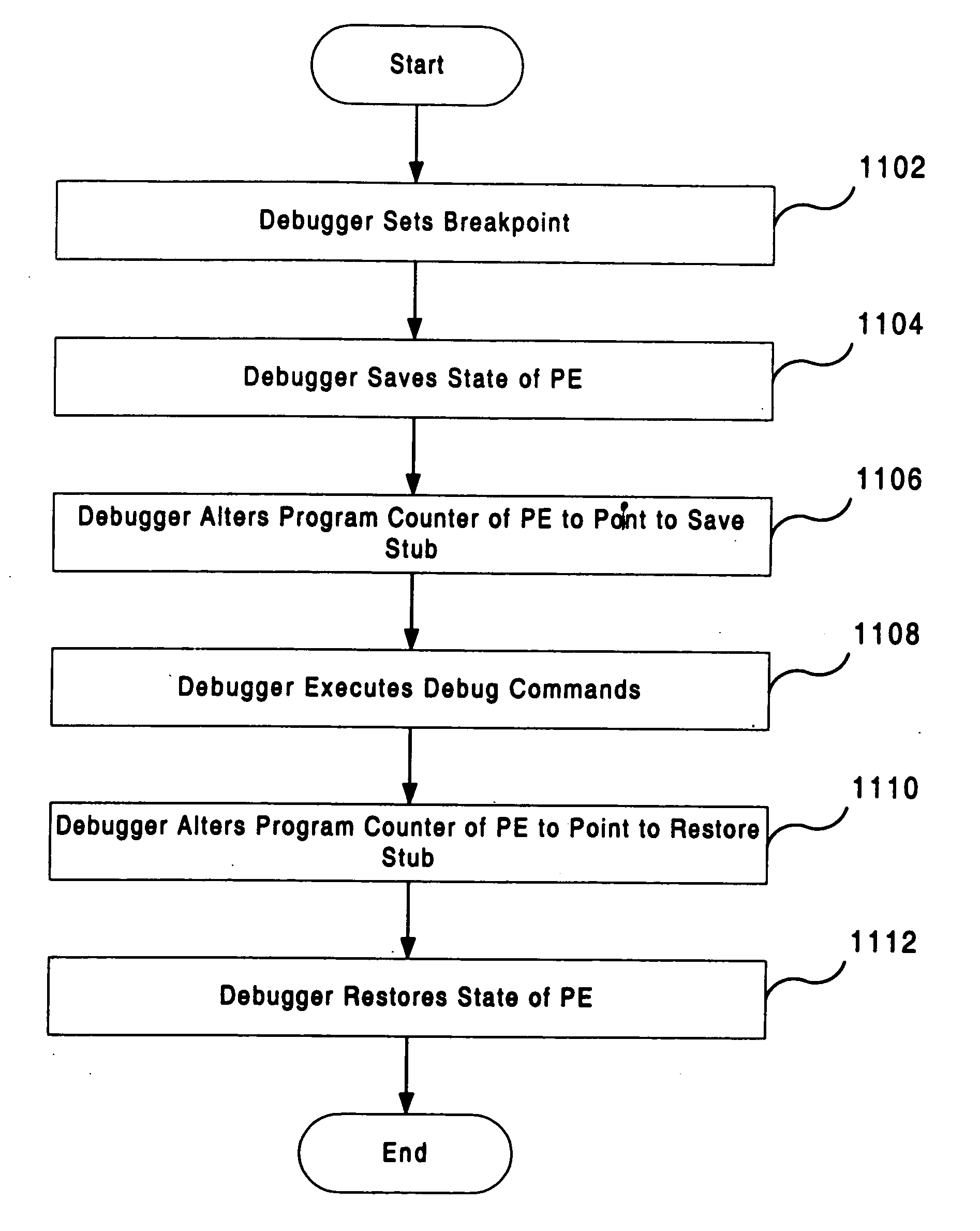

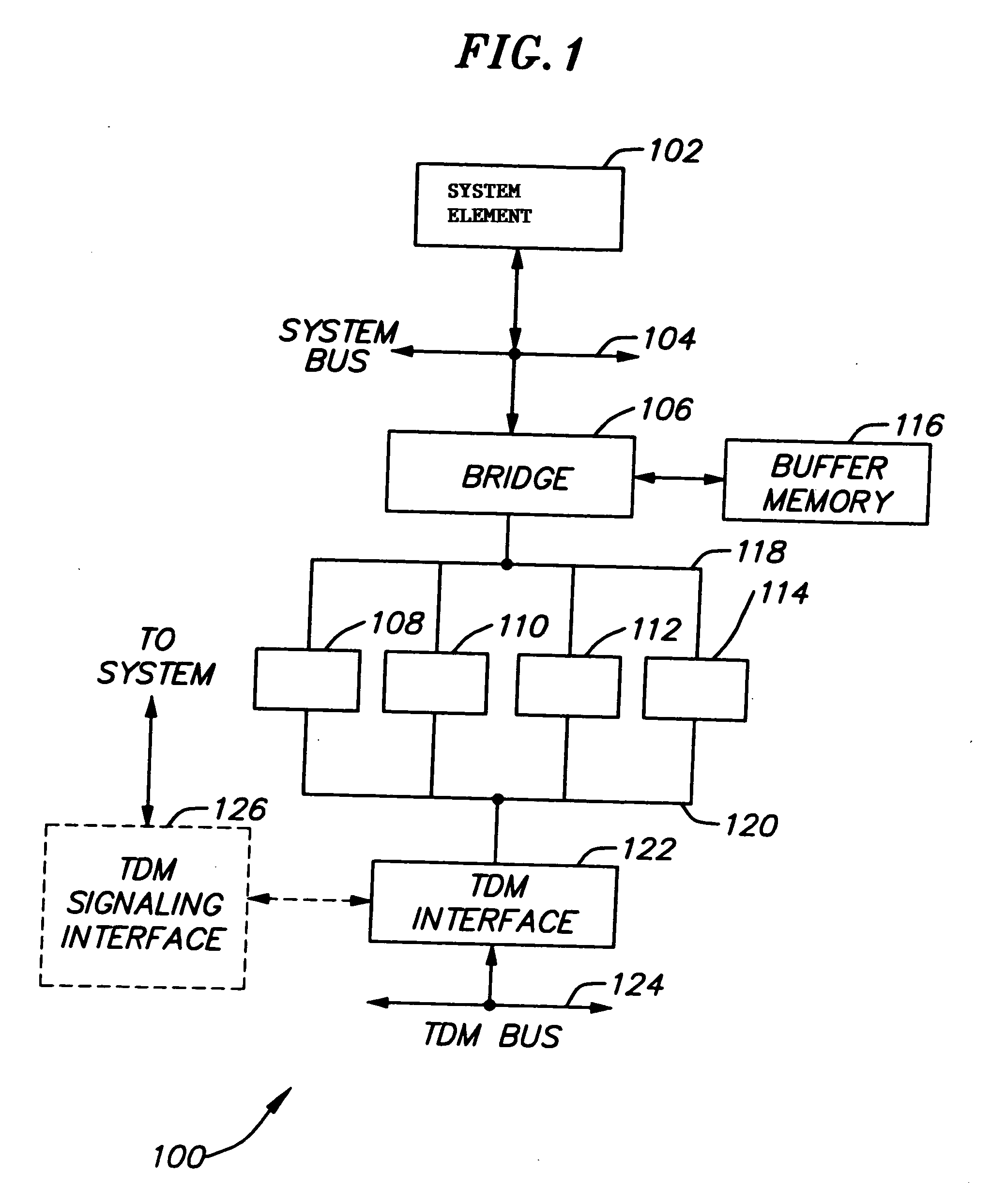

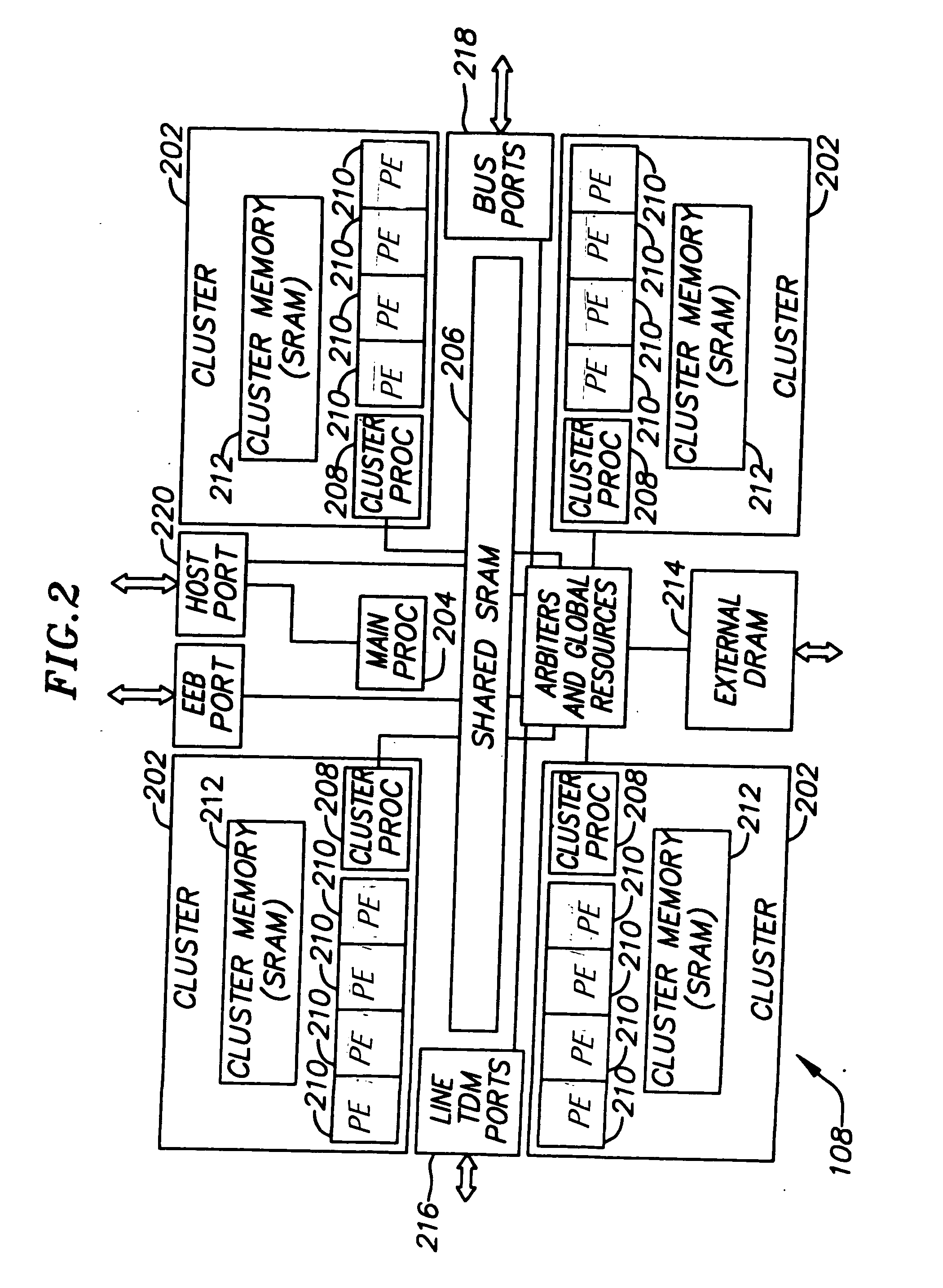

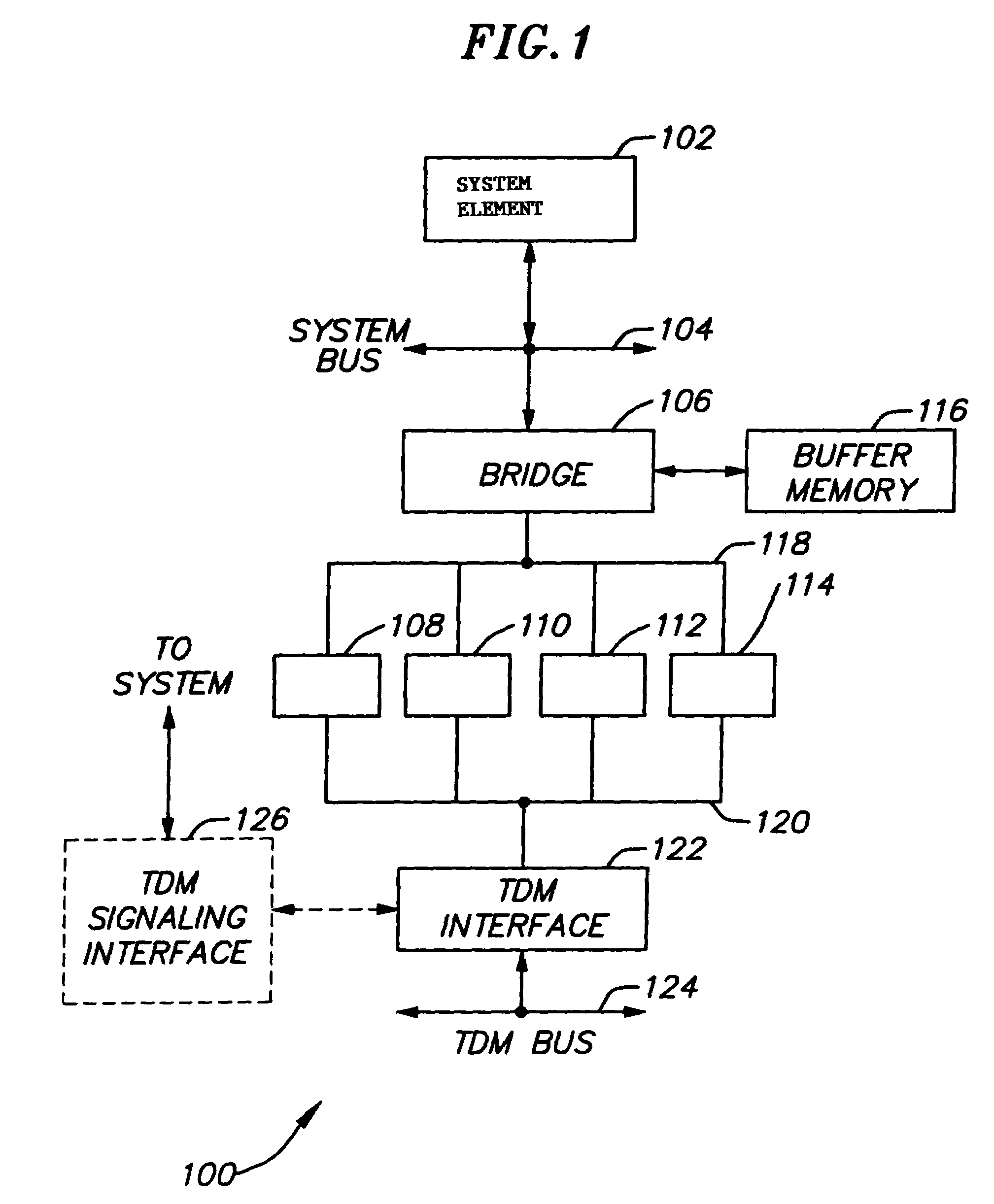

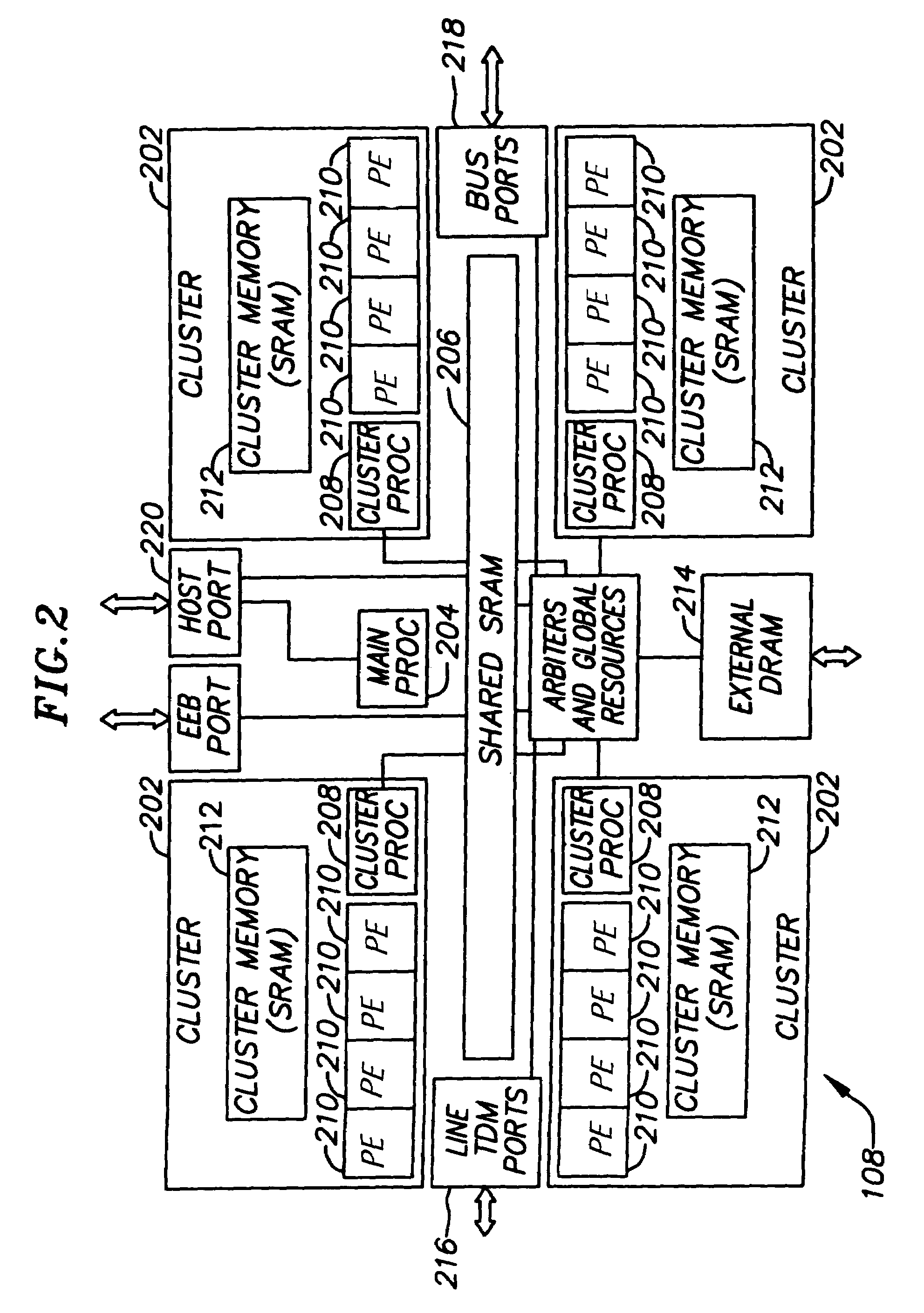

Multi-channel, multi-service debug on a pipelined CPU architecture

Inactive | US6934937B1 | Digital computer details | Software testing/debugging | CPU architecture | Parallel computing

A method and system for debugging an executing service on a pipelined CPU architecture are described. In one embodiment, a breakpoint within an executing service is set and a minimum state of the executing service is saved. In addition, a program counter of the executing service is altered. The program counter is restored and the state of the executing service is restored.

Owner:AVAGO TECH INT SALES PTE LTD

Multi-channel, multi-service debug on a pipelined CPU architecture

Inactive | US20050188358A1 | Software testing/debugging | Specific program execution arrangements | Parallel computing | CPU architecture

Owner:AVAGO TECH INT SALES PTE LTD

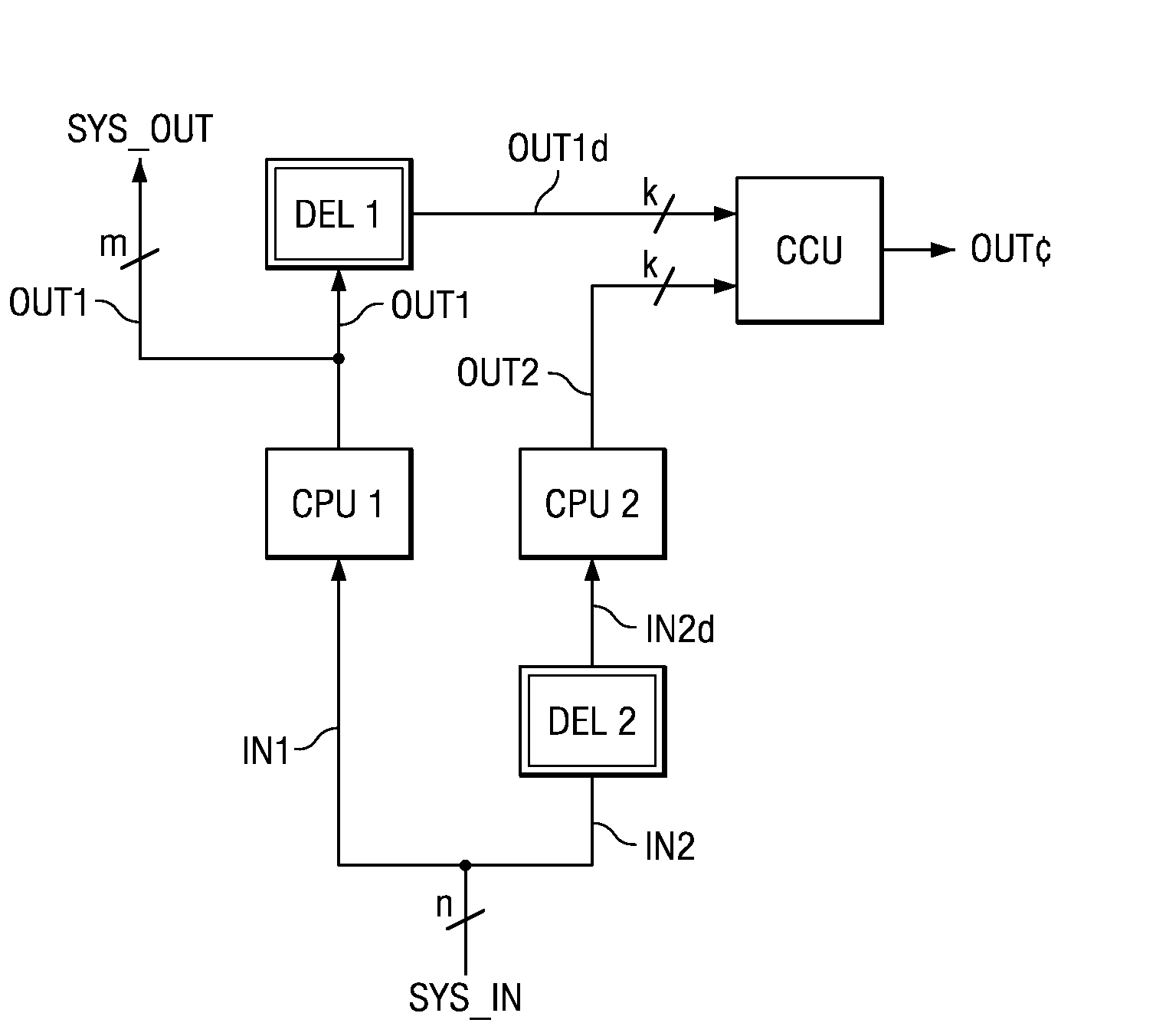

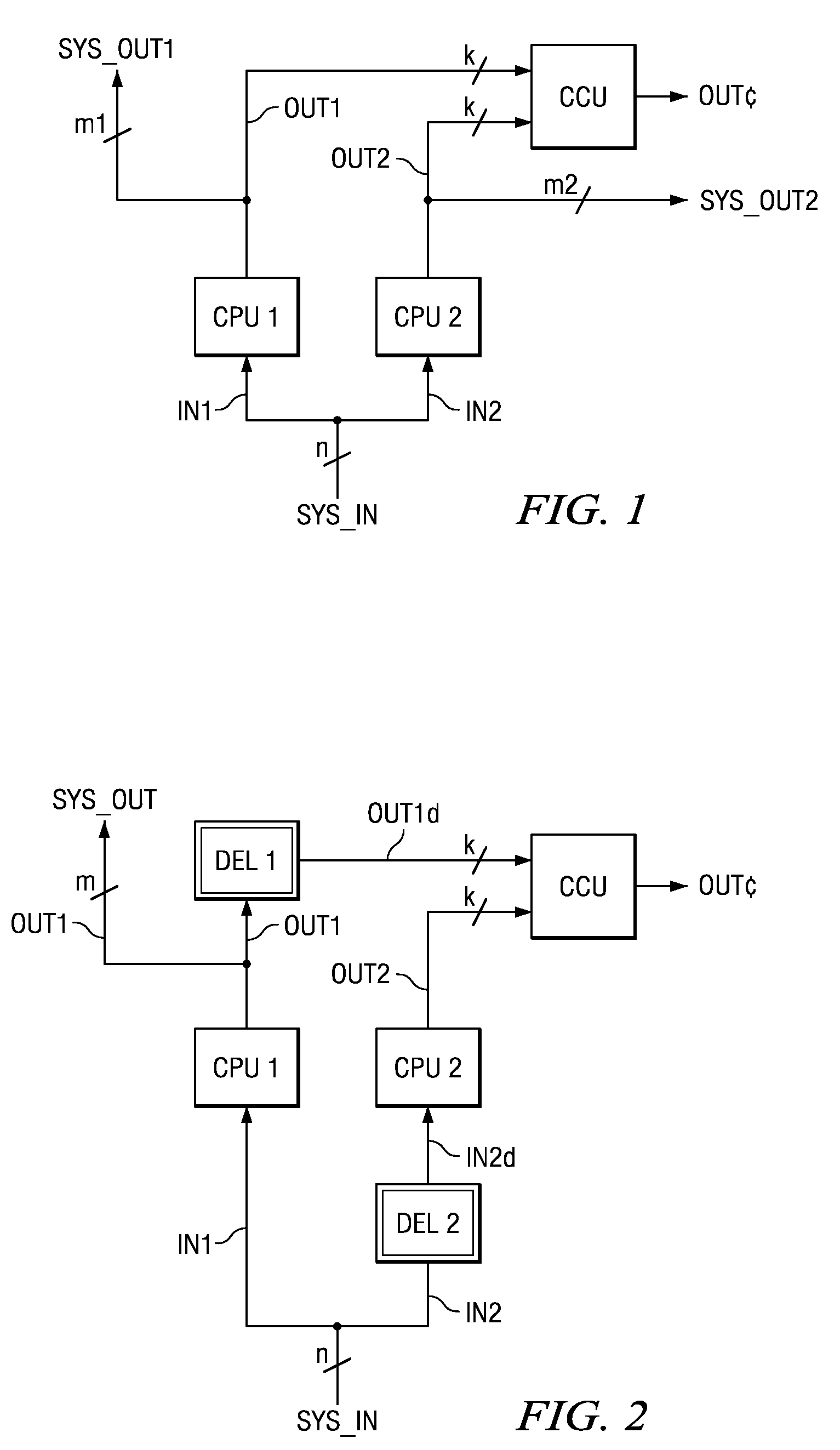

Delayed lock-step CPU compare

Inactive | US20080244305A1 | Error detection/correction | General purpose stored program computer | Microcontroller | CPU architecture

The present invention relates to an electronic device comprising a first CPU, a second CPU, a first delay stage and a second delay stage for delaying data propagating on a bus, and a CPU compare unit. The first delay stage is coupled to an output of the first CPU and to a first input of the CPU compare unit; an input of the first CPU is coupled to a system input bus; the second delay stage is coupled to the system input bus and to an input of the second CPU; and an output of the second CPU is coupled to the CPU compare unit. The first CPU and the second CPU are adapted to execute the same program code, and the CPU compare unit is adapted to compare an output signal of the first delay stage, which is a delayed output signal of the first CPU, with an output signal of the second CPU. In one embodiment, the present invention relates to a method for lock-step comparison of CPU outputs of an electronic device, in particular a microcontroller, having a dual CPU architecture. The method comprises executing the same program code on a first CPU and a second CPU in response to data provided via a system input bus, delaying output data of the first CPU by a predetermined first delay to obtain delayed output data, delaying the data to be input to the second CPU by a predetermined second delay, and comparing the output data of the second CPU with the delayed output data of the first CPU.
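The delayed comparison can be modelled cycle by cycle. The sketch below assumes both delays are equal, that each CPU is a pure function of its current input, and that inputs are never `None` (which the model reserves for "pipeline not yet filled"). The function name `lockstep_compare` and the `delay` parameter are illustrative only.

```python
from collections import deque


def lockstep_compare(inputs, cpu1, cpu2, delay=2):
    """Return (cycle, match) pairs once both delayed paths hold valid data."""
    out_delay = deque([None] * delay)  # first delay stage: CPU1 output
    in_delay = deque([None] * delay)   # second delay stage: CPU2 input
    results = []
    for cycle, value in enumerate(inputs):
        # CPU1 sees the bus immediately; its output enters the first stage.
        out_delay.append(cpu1(value))
        # The same bus value enters the second stage on its way to CPU2.
        in_delay.append(value)
        delayed_out1 = out_delay.popleft()
        delayed_in2 = in_delay.popleft()
        if delayed_in2 is None:
            continue  # delay stages still filling
        # Compare unit: delayed CPU1 output versus fresh CPU2 output.
        results.append((cycle, delayed_out1 == cpu2(delayed_in2)))
    return results
```

With `delay=2`, the first comparison happens at cycle 2 and checks the two CPUs' results for the input that arrived at cycle 0, so the two CPUs never process the same data in the same cycle.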

Owner:TEXAS INSTR INC

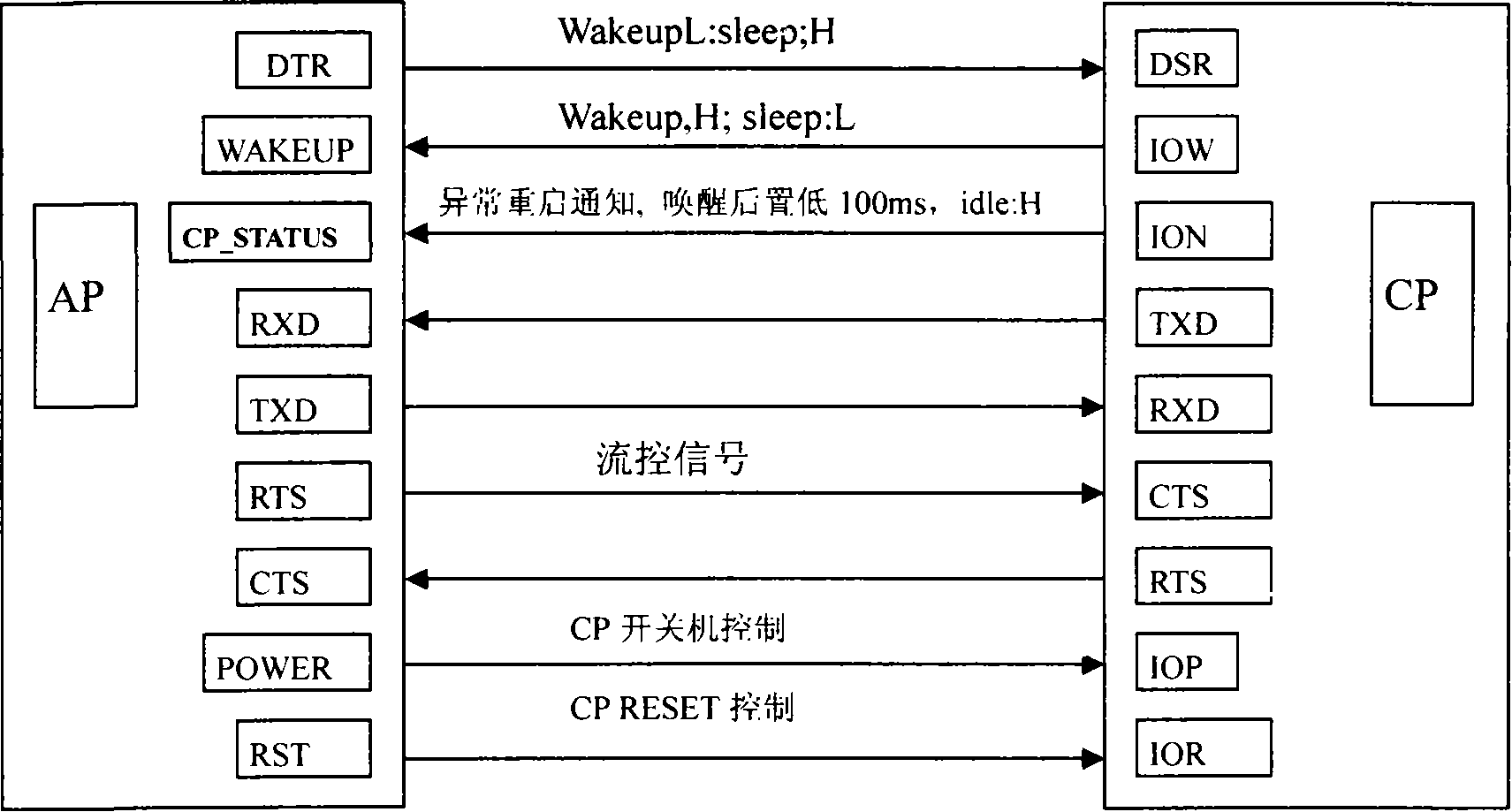

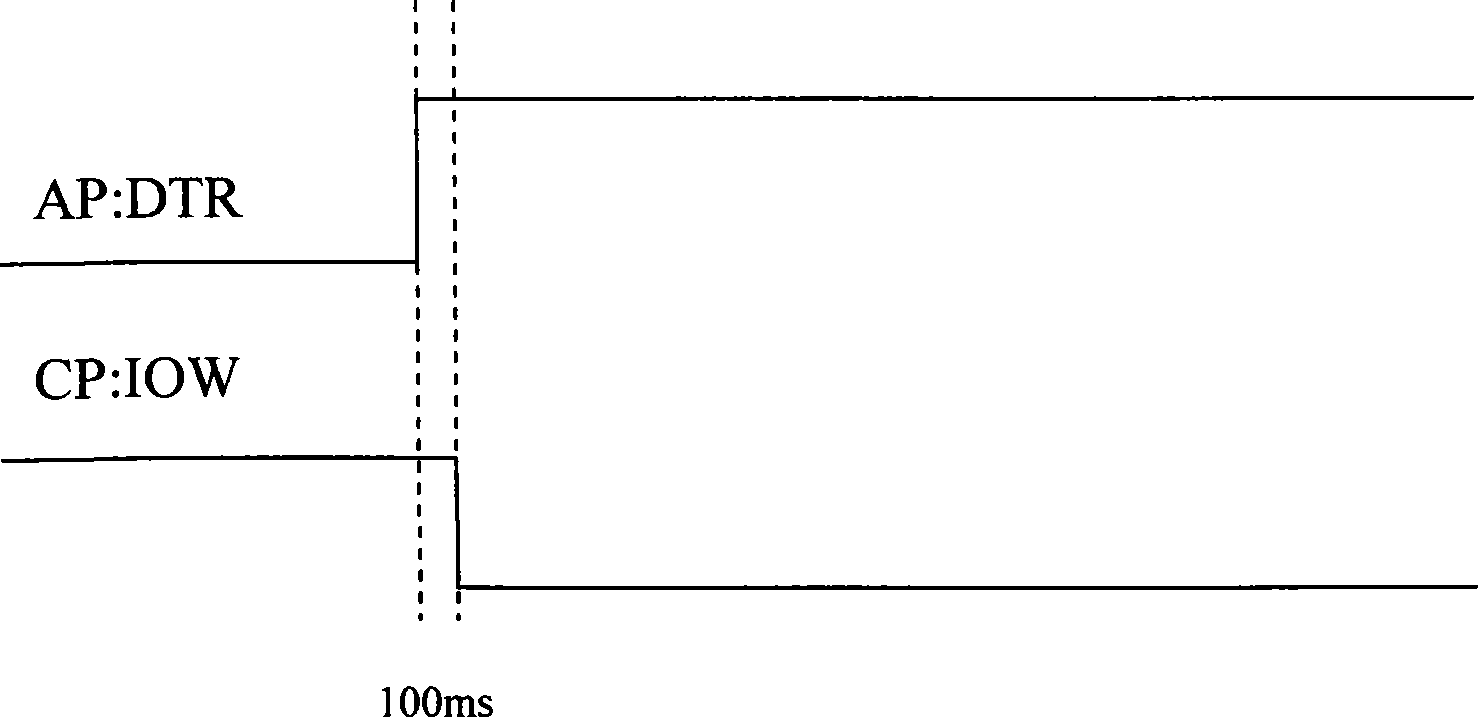

An intelligent mobile phone based on dual-CPU architecture and communication control method

Active | CN101242447A | Meet power saving requirements | Current supply arrangements | Power supply for data processing | User needs | Sleep state

The invention relates to a dual-CPU architecture smartphone and a communication control method thereof. According to the technology, the AP and CP are in a sleep state most of the time during standby; when a user operates only the AP, the CP remains in the sleep state; when the user needs to operate the CP, the AP actively wakes up the CP to transmit data; when a URC is reported to the CP, the AP is woken up to transmit data; when an accidental restart occurs during CP operation, the AP is first woken up, and then a 100 to 200 ms falling-edge interrupt is generated at the ION pin to notify the AP to reinitialize the CP and enter MUX mode. The invention not only achieves stable communication but also minimizes power consumption.

Owner:SHANGHAI WINGTECH ELECTRONICS TECH

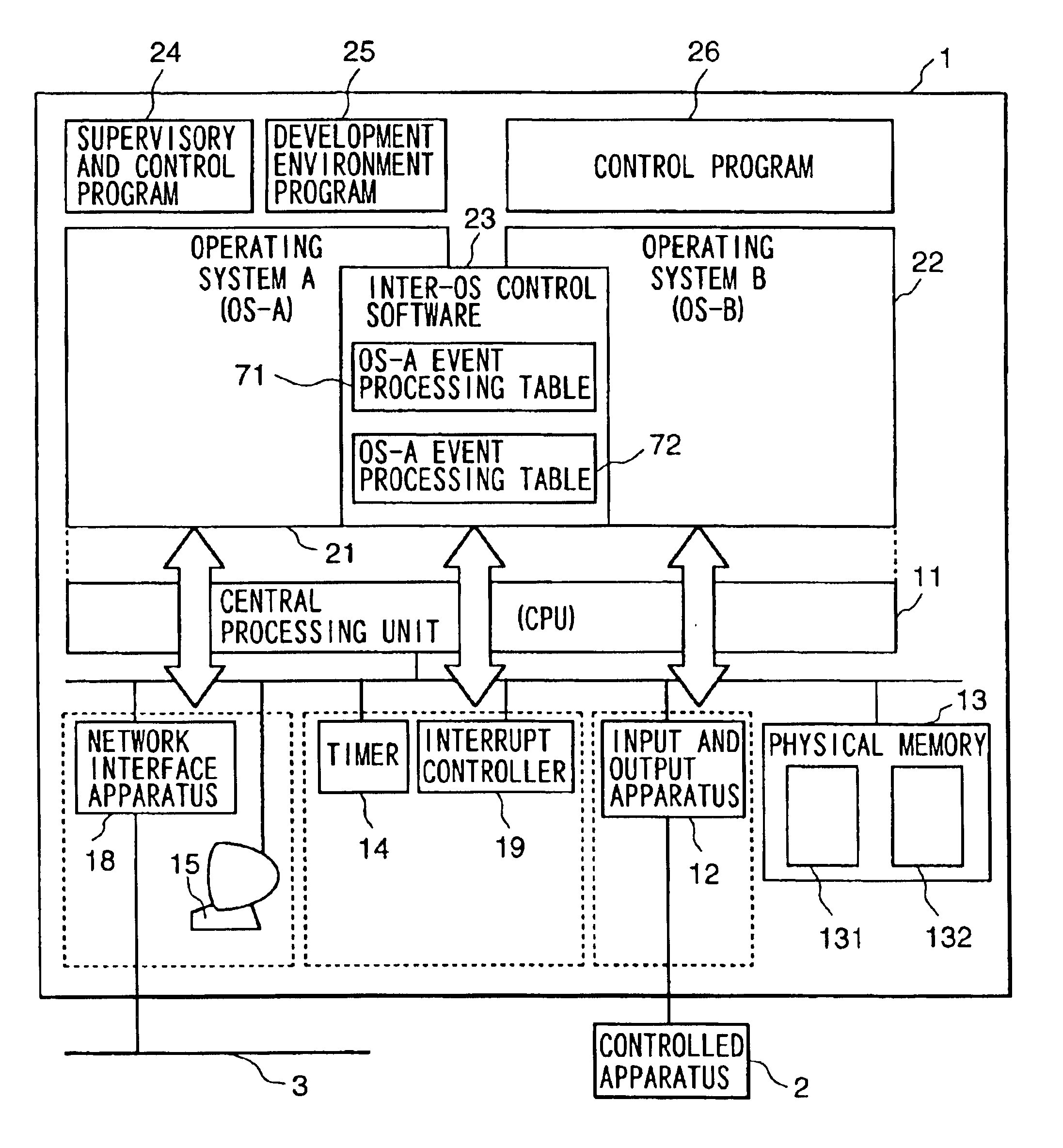

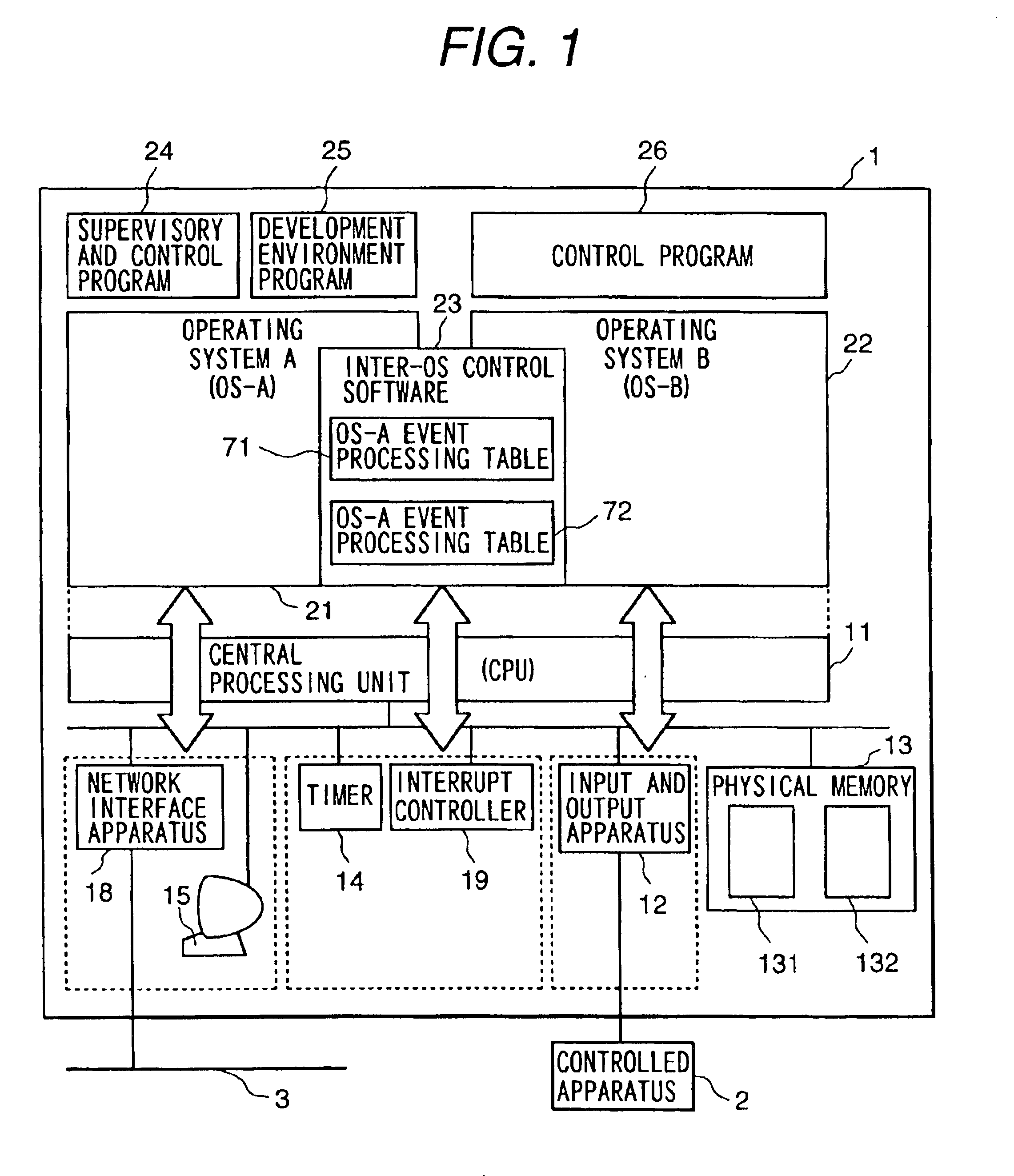

Multiple operating system control method

Inactive | US6892261B2 | Improve reliability | Function increase | Program initiation/switching | Digital computer details | Operational system | CPU architecture

Inter-OS control software that switches the operating system currently running on a single CPU is installed, and multiple OSs are executed alternately. A control program that controls the controlled apparatus is executed exclusively on one OS. A supervisory control program and a development environment program are executed on another OS, and the memory space is divided so that they do not affect the operation of the control program. Higher real-time performance and reliability can thus be achieved with a single CPU architecture.

Owner:HITACHI LTD

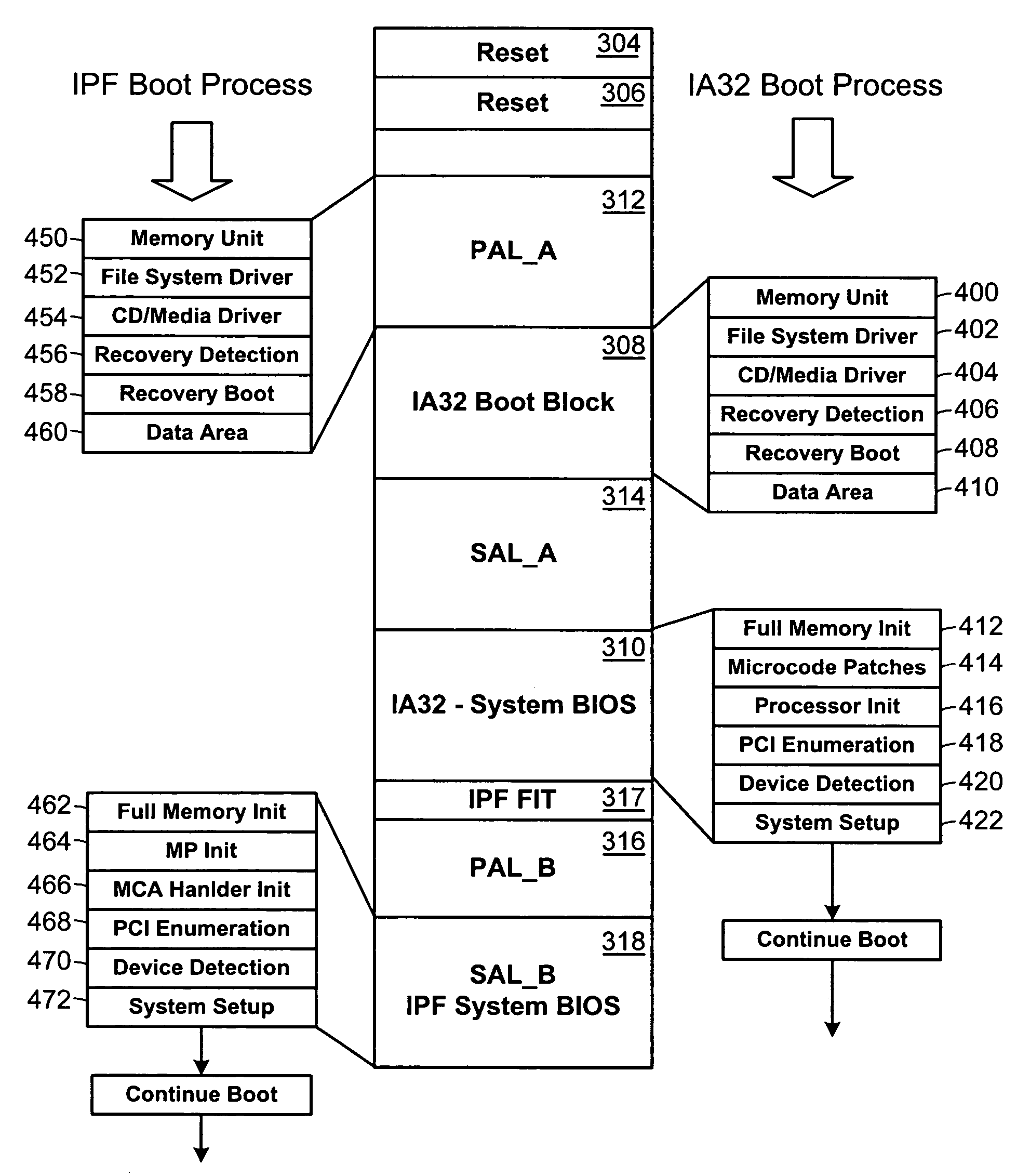

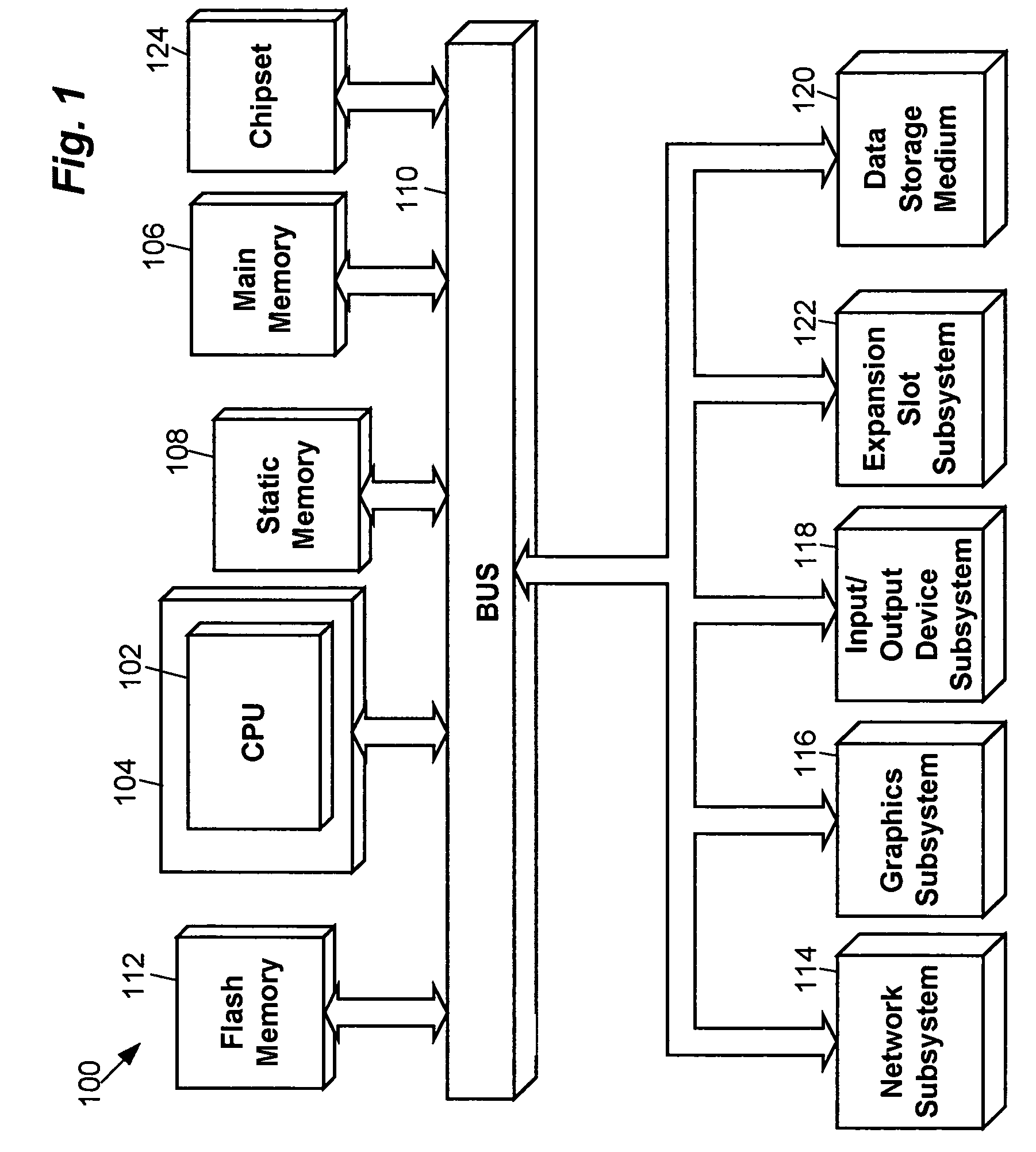

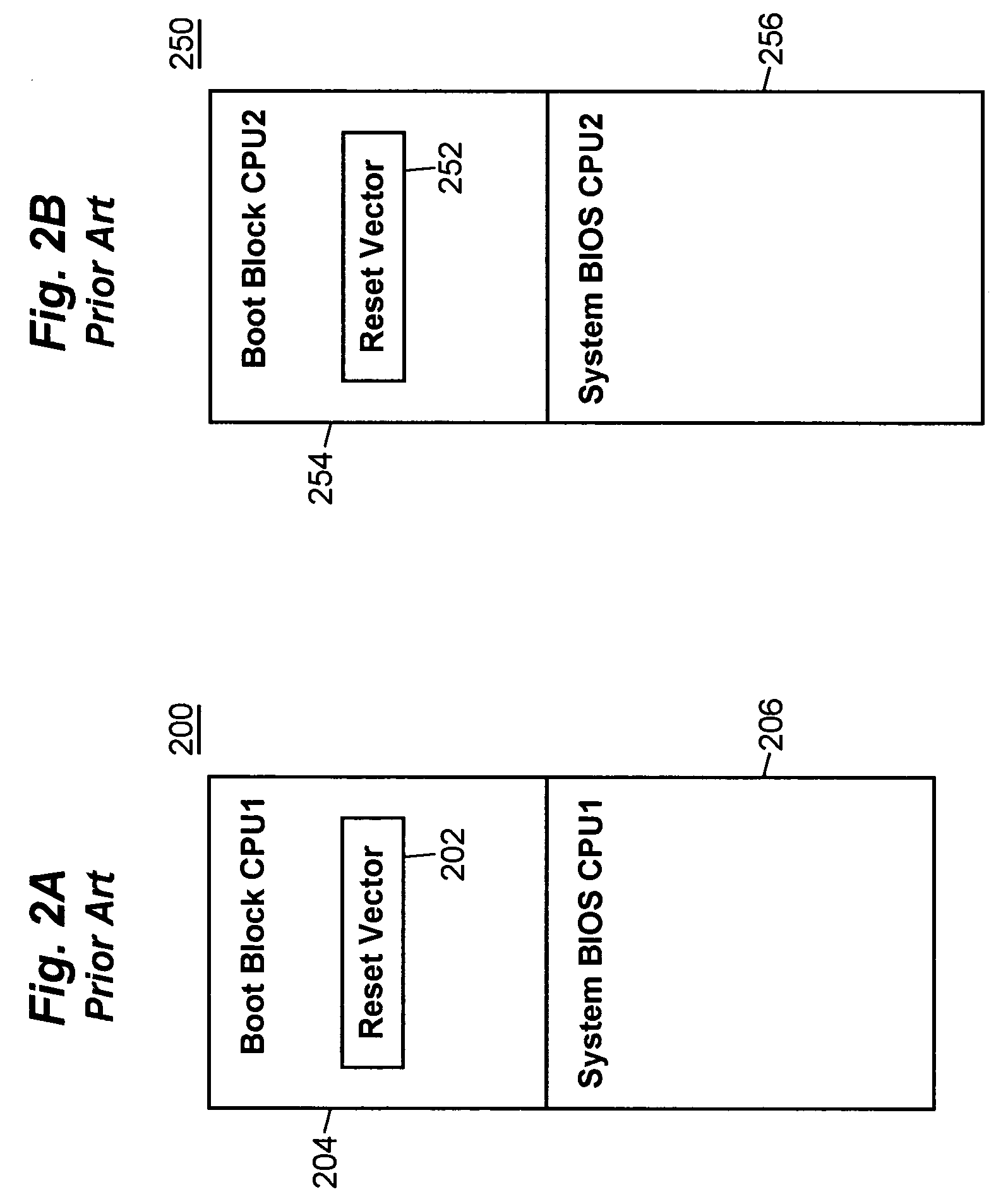

Interleaved boot block to support multiple processor architectures and method of use

Owner:TAHOE RES LTD

Dynamic load balancing method for CPU+GPU CPPC

Inactive | CN103902387A | Improve platform adaptability | Improve efficiency | Resource allocation | Dynamic load balancing | Parallel computing

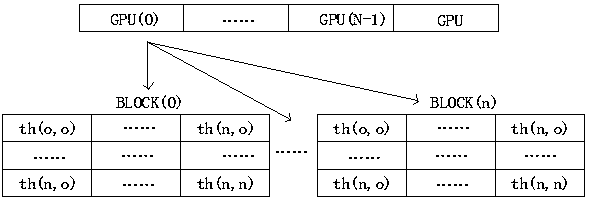

The invention discloses a dynamic load balancing method for CPU+GPU CPPC. The method mainly comprises the following steps: within a single node, a main thread processes the input parameters and completes the corresponding initialization; 1+N computing-device control threads are created to control the CPU device and the N GPU devices in the node respectively; the CPU control thread creates multiple parallel computing threads according to the number of compute cores in the node; and a dynamic load balancing scheme is adopted between the CPU and GPU devices to optimize parallel efficiency. The number of enabled GPU computing devices and the number of CPU computing threads can be configured manually according to user demand. The load balancing scheme covers both pure CPU architecture platforms and CPU+GPU hybrid architecture platforms, supports workstation systems with various types of GPU devices, and greatly improves the platform adaptability, parallel efficiency, and overall running performance of the software.
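One common way to realize dynamic load balancing between device control threads is a shared work queue from which each thread pulls its next task when it becomes idle. The sketch below assumes that approach. The abstract does not specify the balancing mechanism, so `run_balanced`, the worker names, and the use of plain Python callables in place of real CPU or GPU kernels are all illustrative assumptions.

```python
import queue
import threading


def run_balanced(tasks, workers):
    """Run tasks on 1+N control threads that pull work from a shared queue.

    tasks:   list of task inputs
    workers: dict mapping a device name to a callable(task) -> result
    Returns (results in task order, per-device task counts).
    """
    pending = queue.Queue()
    for index, task in enumerate(tasks):
        pending.put((index, task))

    results = [None] * len(tasks)
    counts = {name: 0 for name in workers}
    lock = threading.Lock()

    def control_thread(name, compute):
        # Each device keeps taking work until the queue is empty, so a
        # faster device naturally ends up processing more tasks.
        while True:
            try:
                index, task = pending.get_nowait()
            except queue.Empty:
                return
            value = compute(task)
            with lock:
                results[index] = value
                counts[name] += 1

    threads = [threading.Thread(target=control_thread, args=(name, fn))
               for name, fn in workers.items()]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results, counts
```

A call such as `run_balanced(list(range(20)), {"cpu": f, "gpu0": f})` returns the results in task order together with how many tasks each device handled; the split between devices depends on their relative speed and is not fixed in advance.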

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

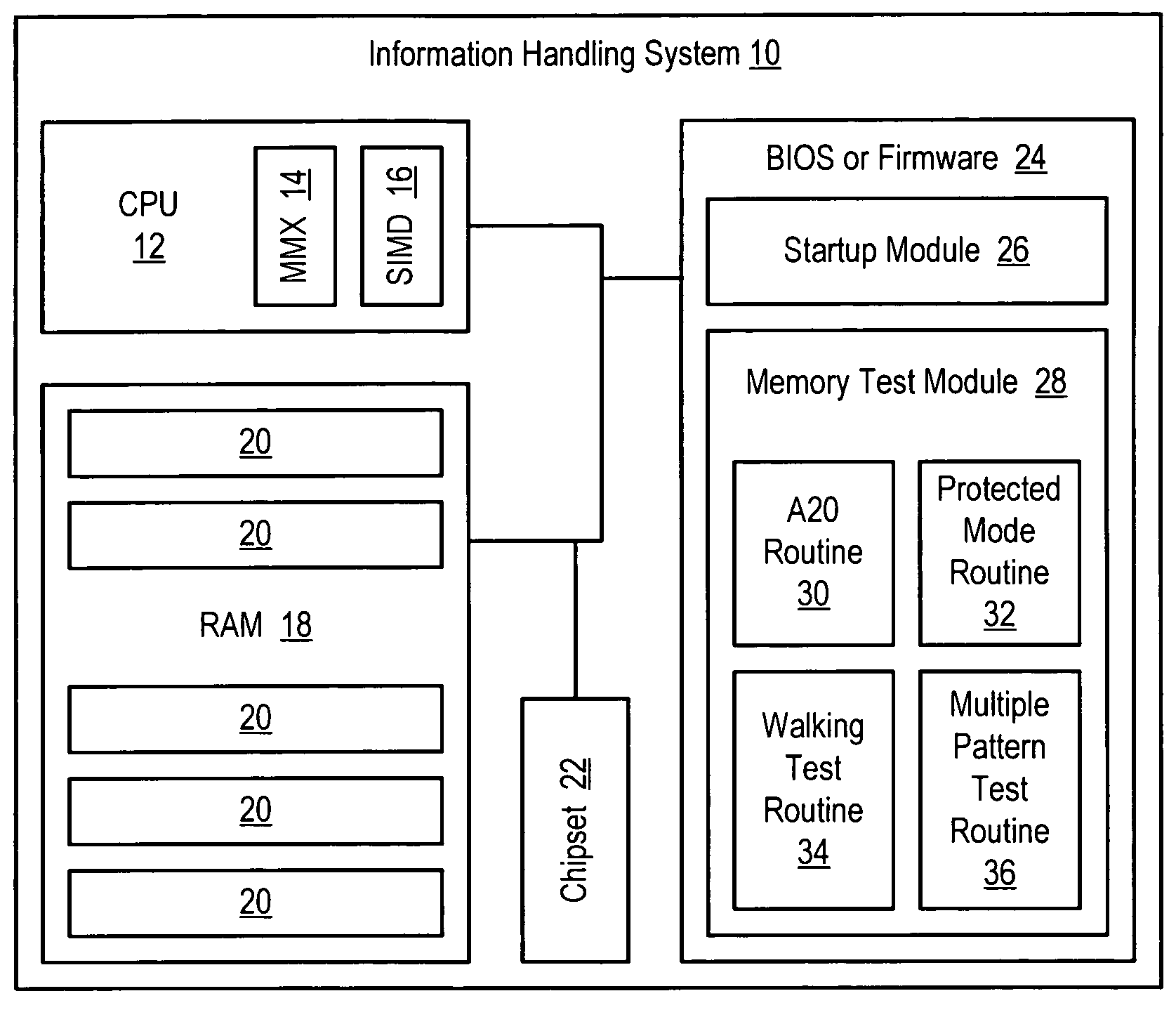

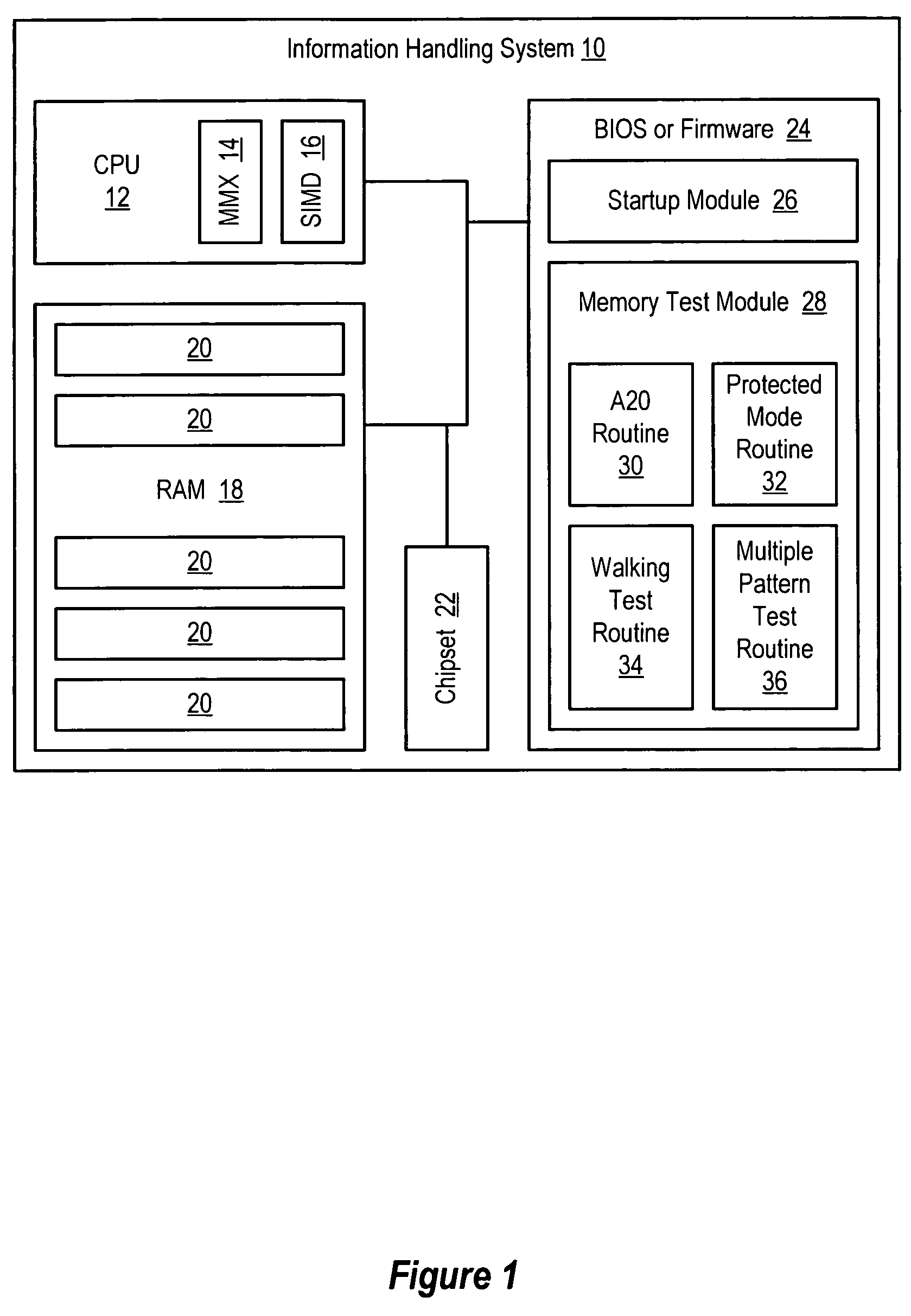

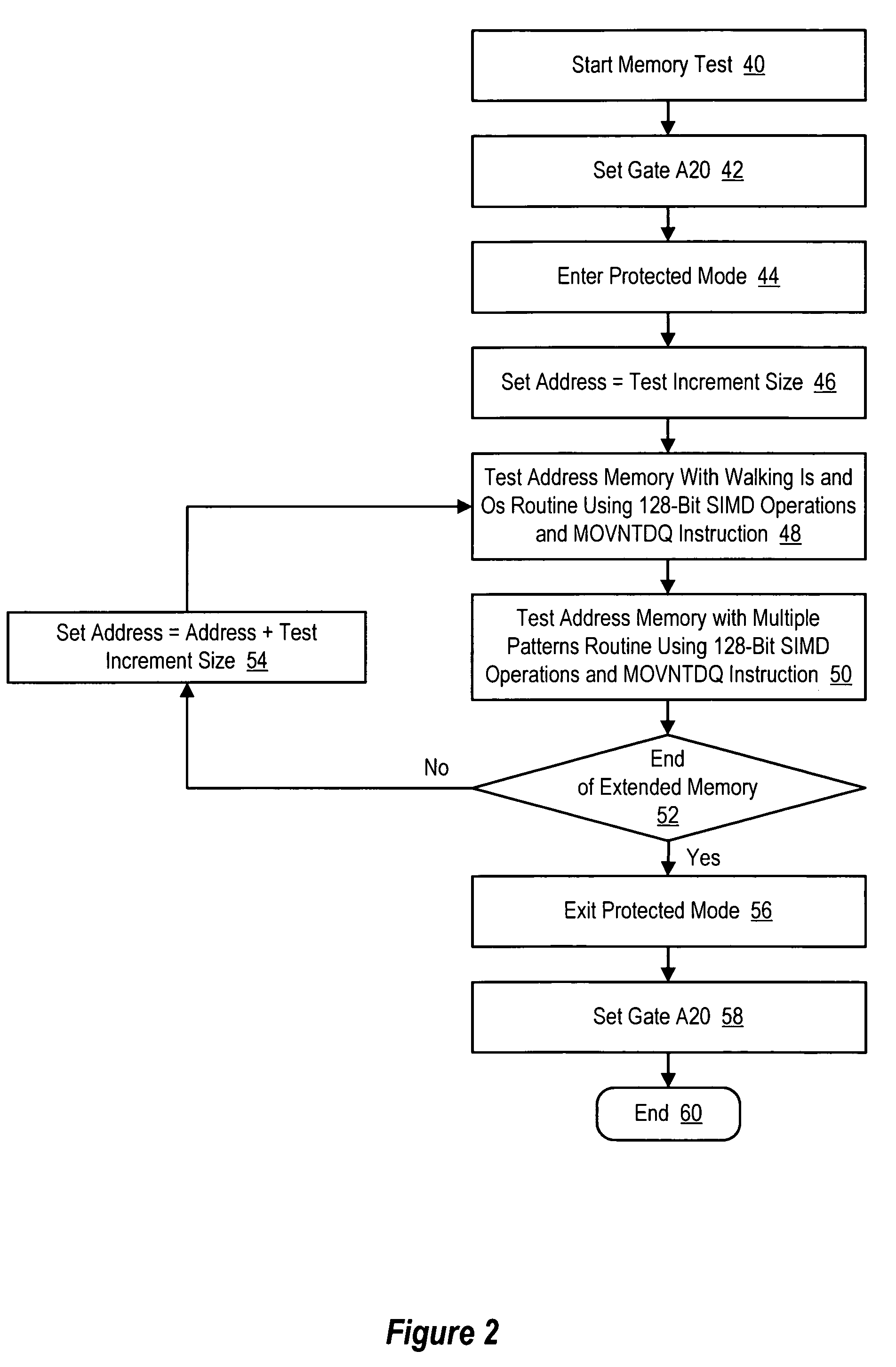

System and method for accelerated information handling system memory testing

Active | US7325176B2 | Easy to test | Reduce disadvantages | Error detection/correction | Digital storage | CPU architecture | Protected mode

Owner:DELL PROD LP

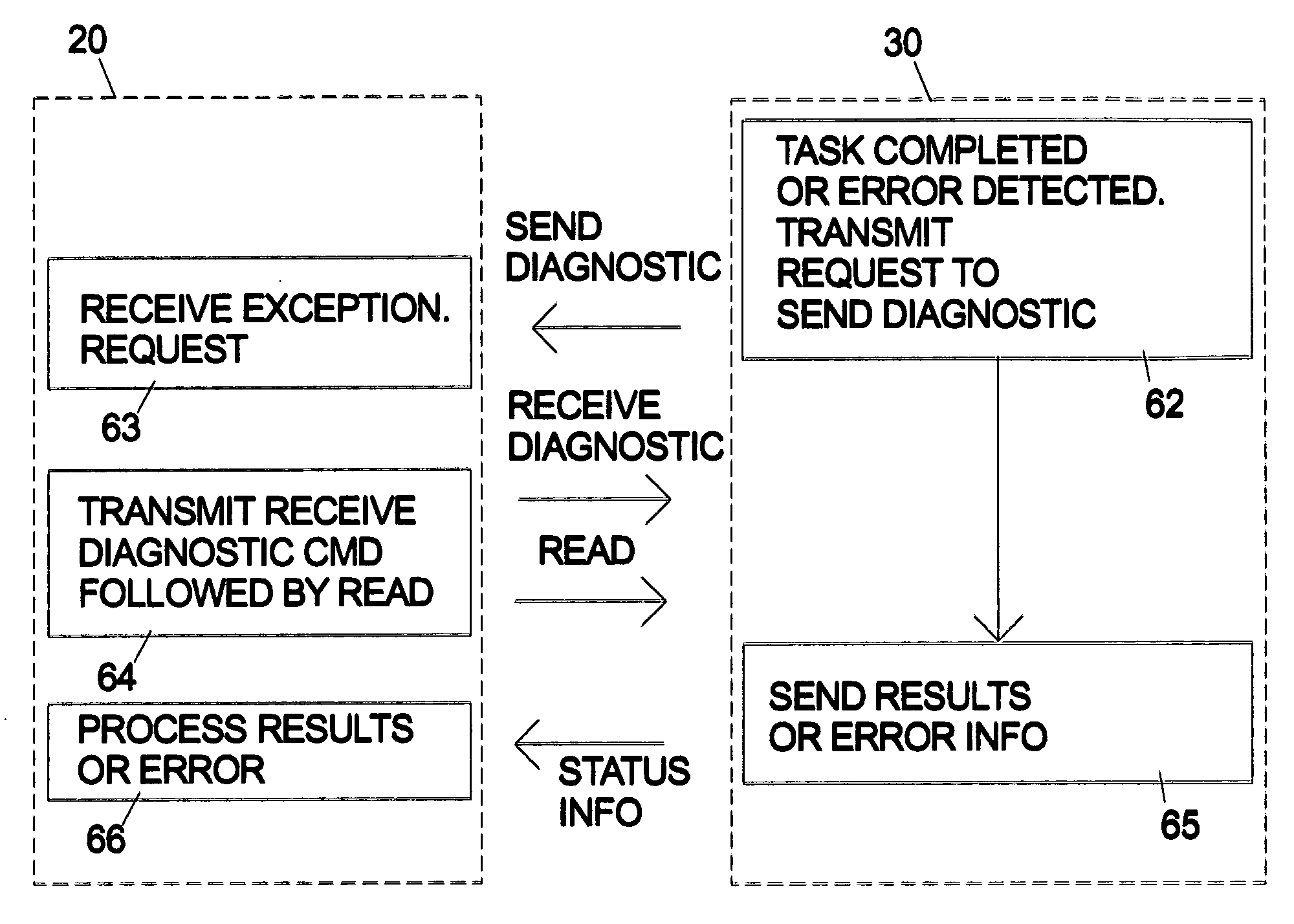

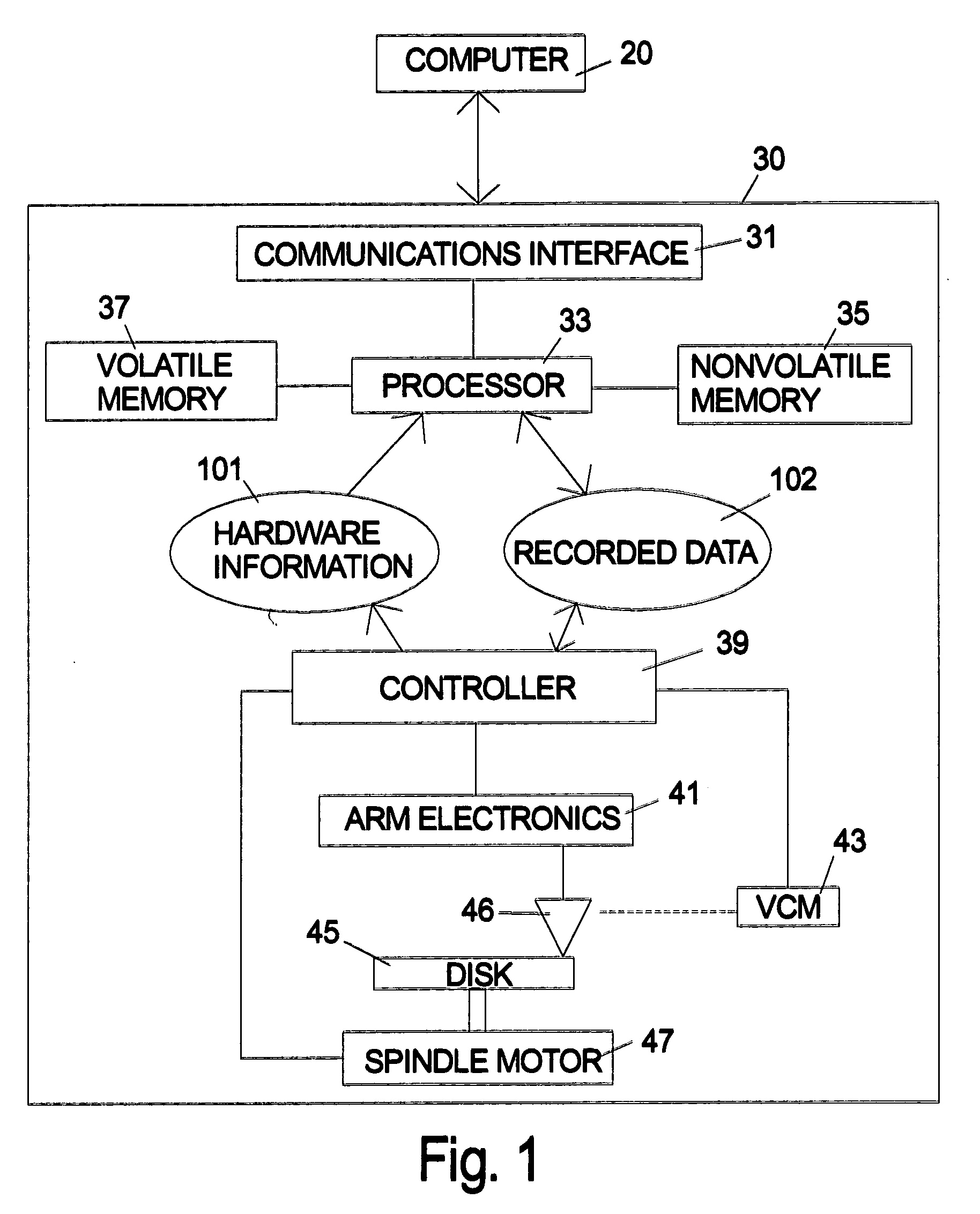

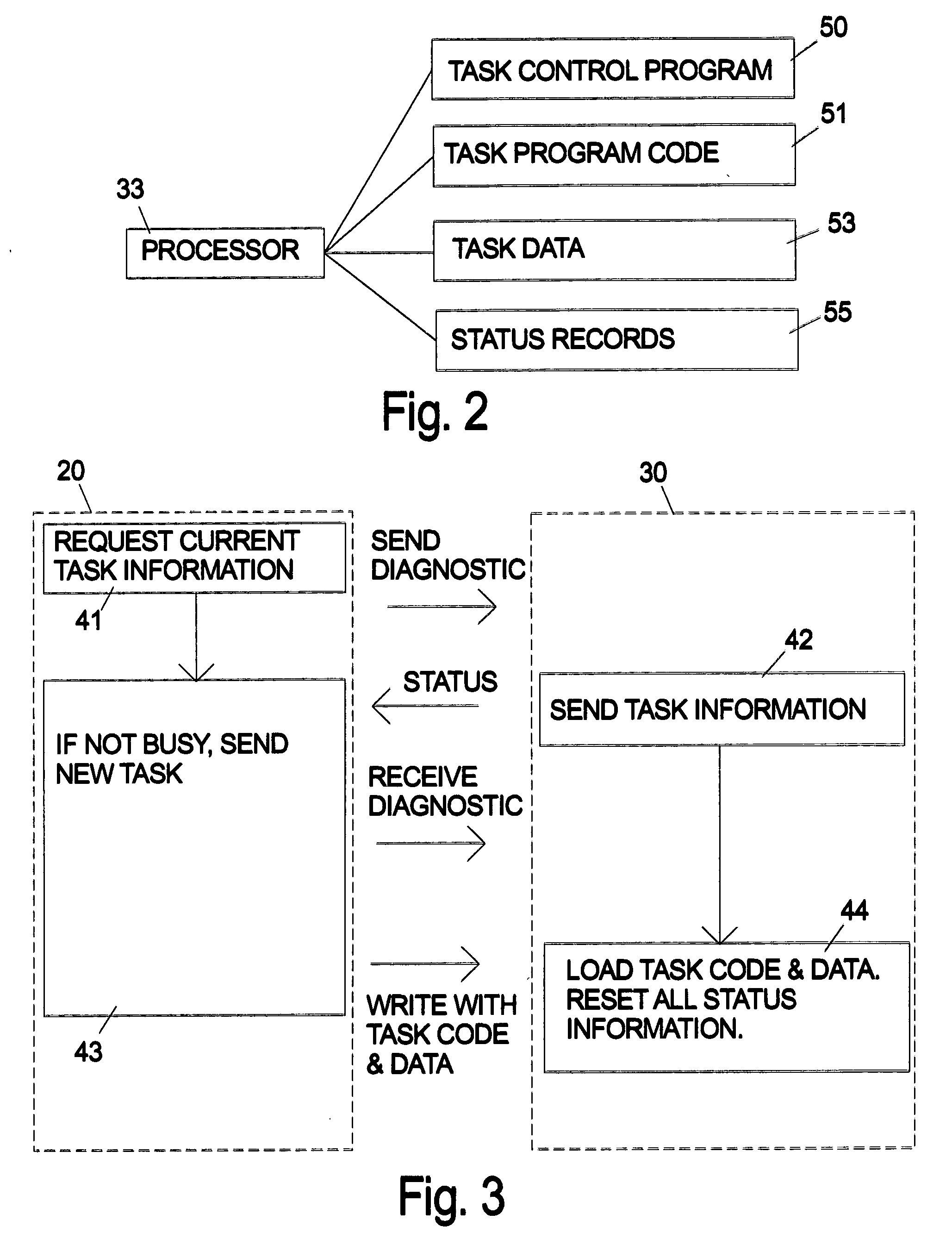

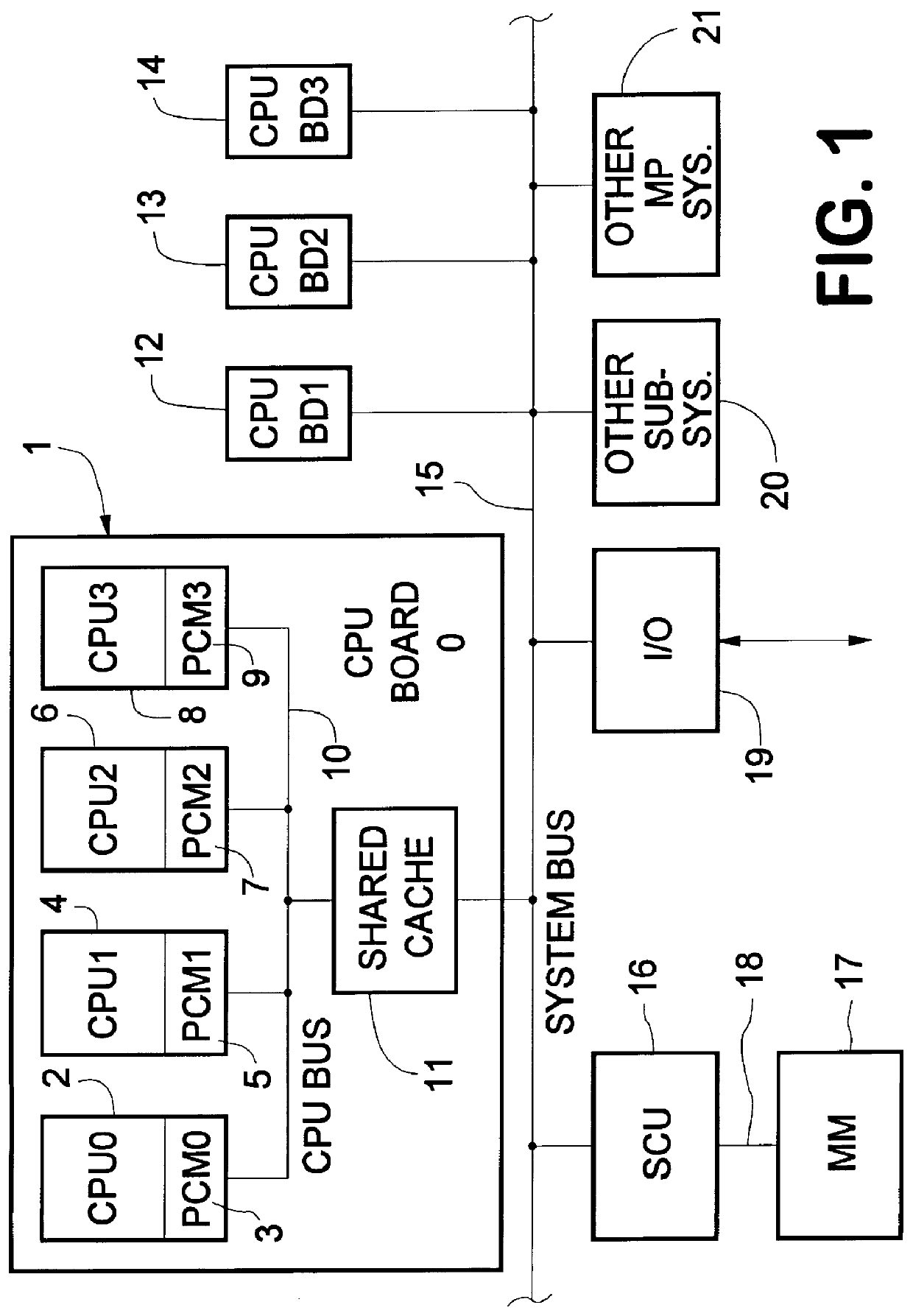

Disk drive/CPU architecture for distributed computing

Inactive | US20060195842A1 | Less resources | Error detection/correction | Interprogram communication | Communication interface | Mass storage

A disk drive is described which includes means for executing distributed computing tasks, including a CPU and associated memory. The communication interface with the host computer is modified to allow the host computer to send executable code for a task to the drive and to allow the drive to communicate the results and status information about the task to the host computer. In a preferred embodiment, the disk drive has a task control program, task program code, task data, and status information for the distributed task. In alternative embodiments, the disk drive can communicate with other similar disk drives on the bus to provide the results of computation to the other processors. The RAM and mass storage are intimately connected through the associated hard disk controller such that the exact location of the required data and program instructions is known.

Owner:WESTERN DIGITAL TECH INC

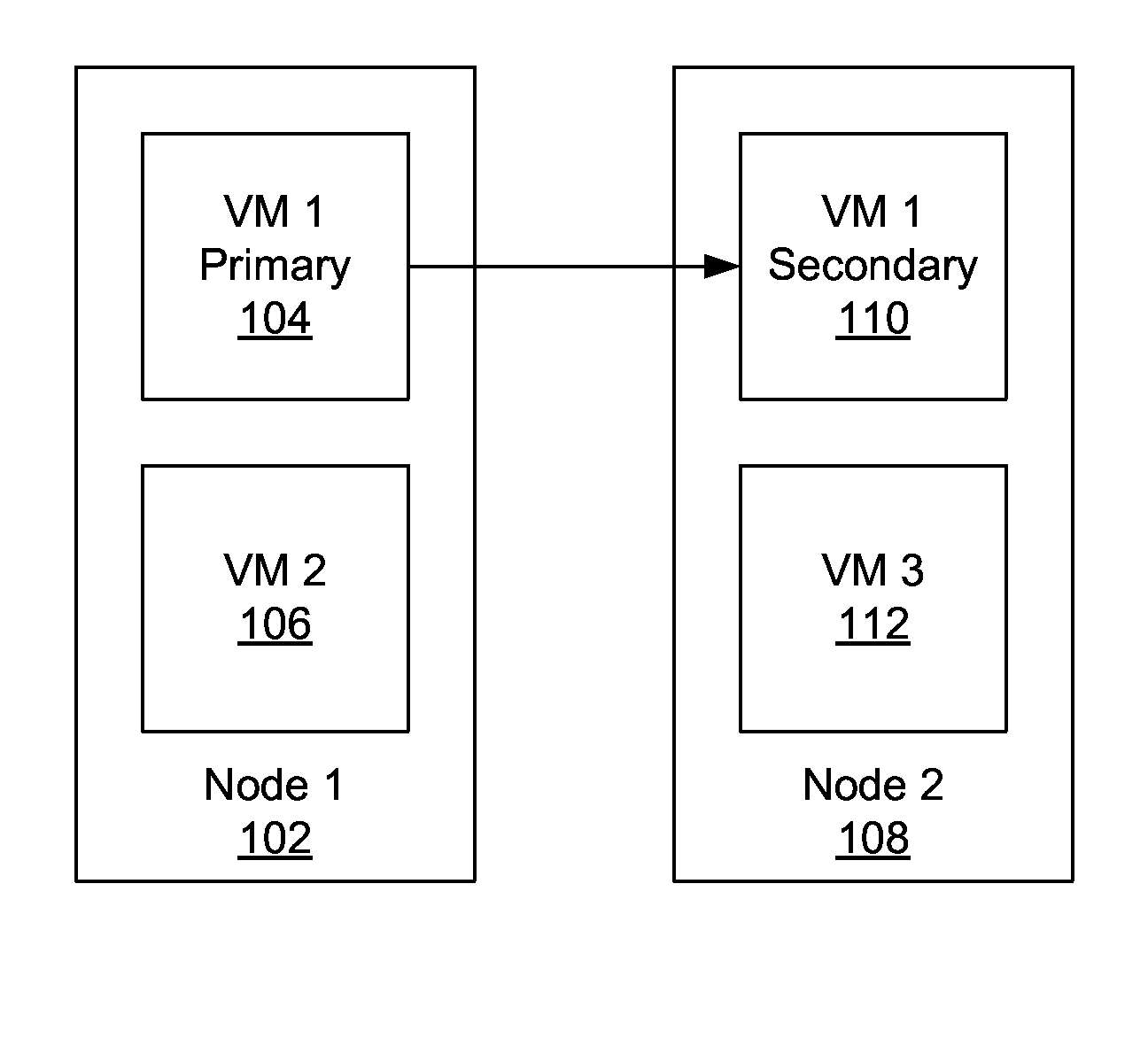

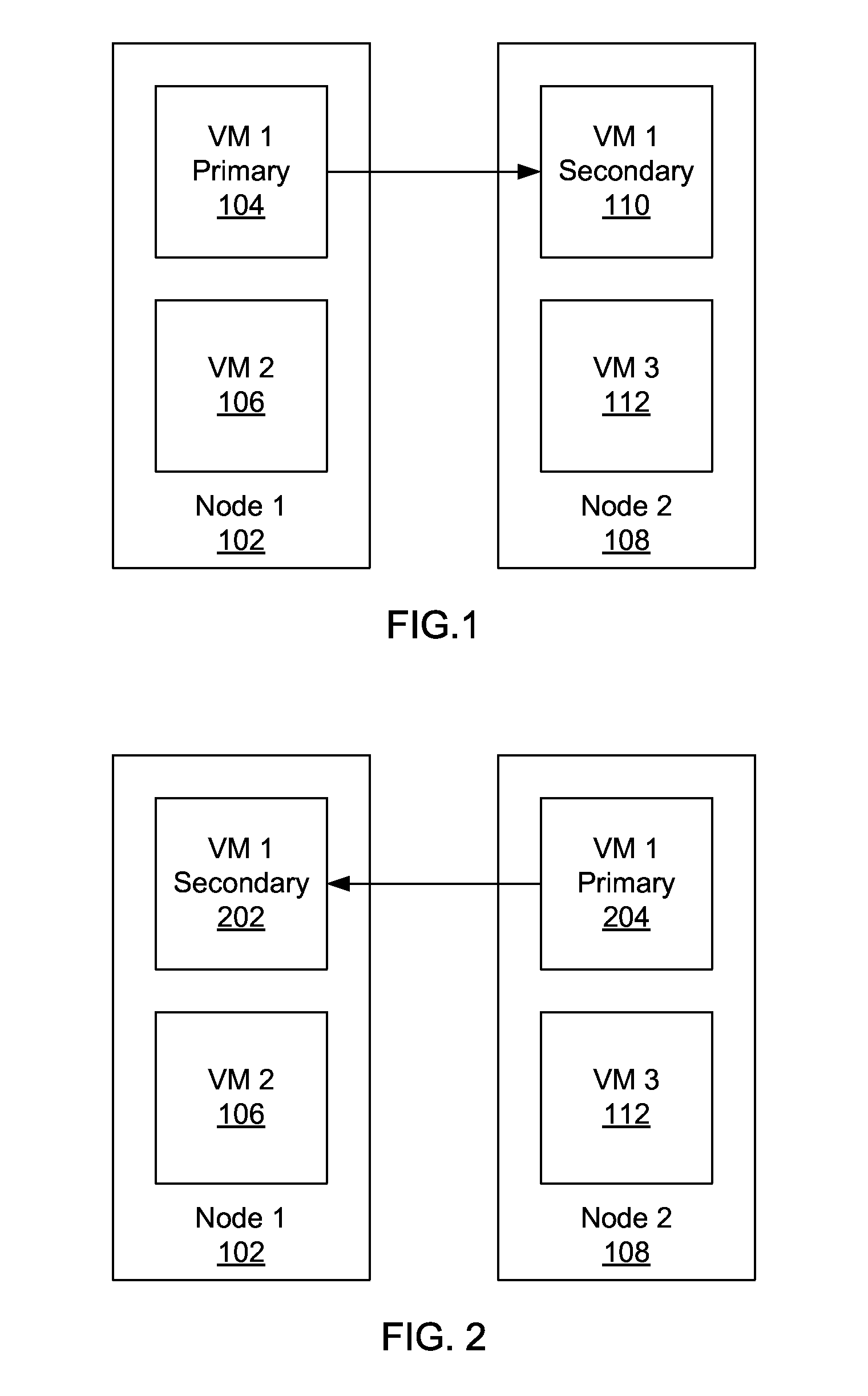

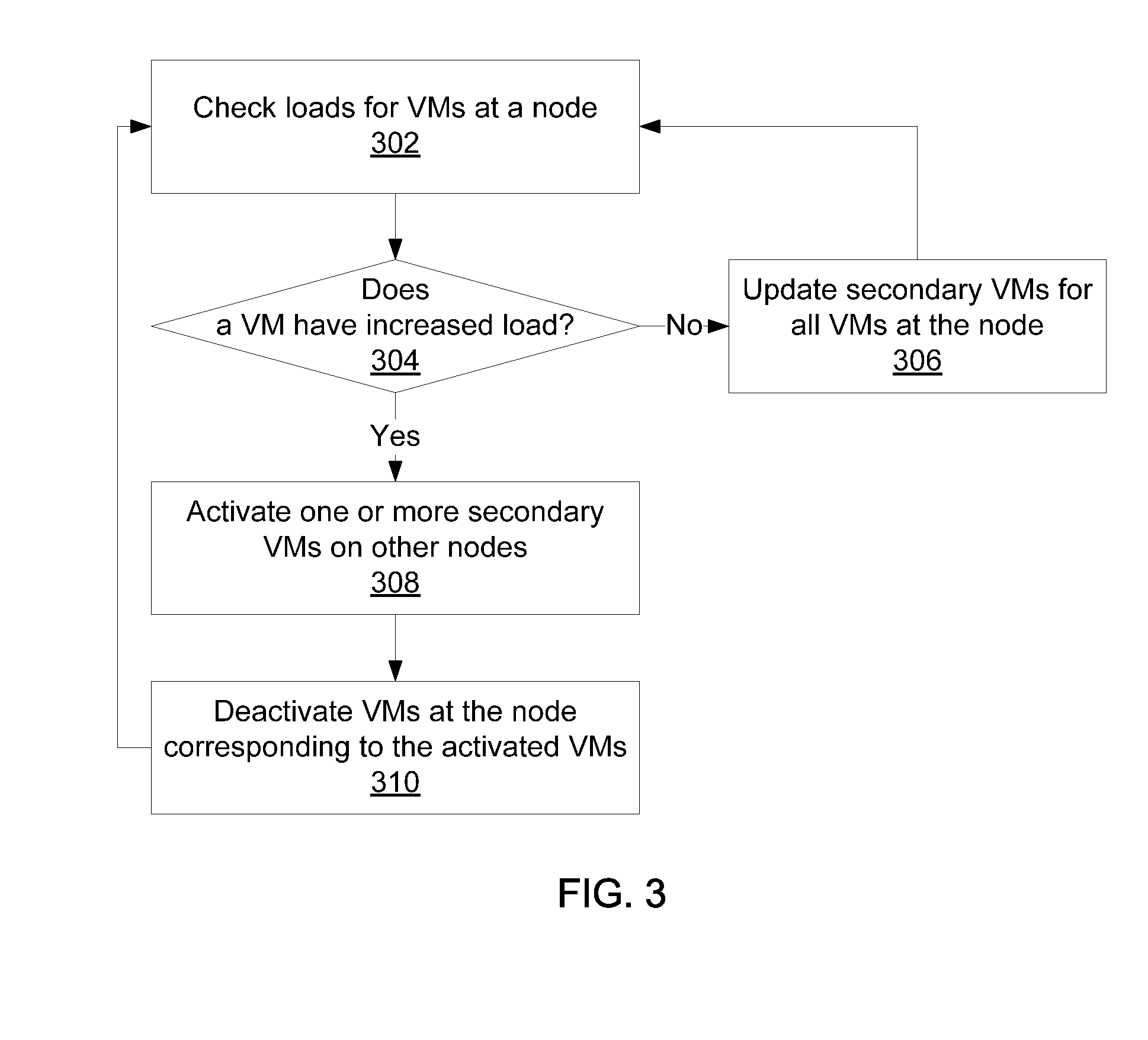

Agile VM load balancing through micro-checkpointing and multi-architecture emulation

Active | US20150331704A1 | Easy loading | Free resource | Resource allocation | Software simulation/interpretation/emulation | CPU architecture | Parallel computing

Methods and systems for agile load balancing include detecting an increased load for a first primary virtual machine (VM) on a first node that has a plurality of additional primary VMs running on a processor; deactivating one or more of the additional primary VMs, reducing said one or more deactivated VMs to a secondary state, to free resources at the first node for the first primary VM; and activating secondary VMs, located at one or more additional nodes, that correspond to the one or more deactivated VMs, raising said secondary VMs to a primary state. Activation and deactivation through micro-checkpointing may involve nodes of different CPU architectures during transient periods of peak load.

Owner:IBM CORP

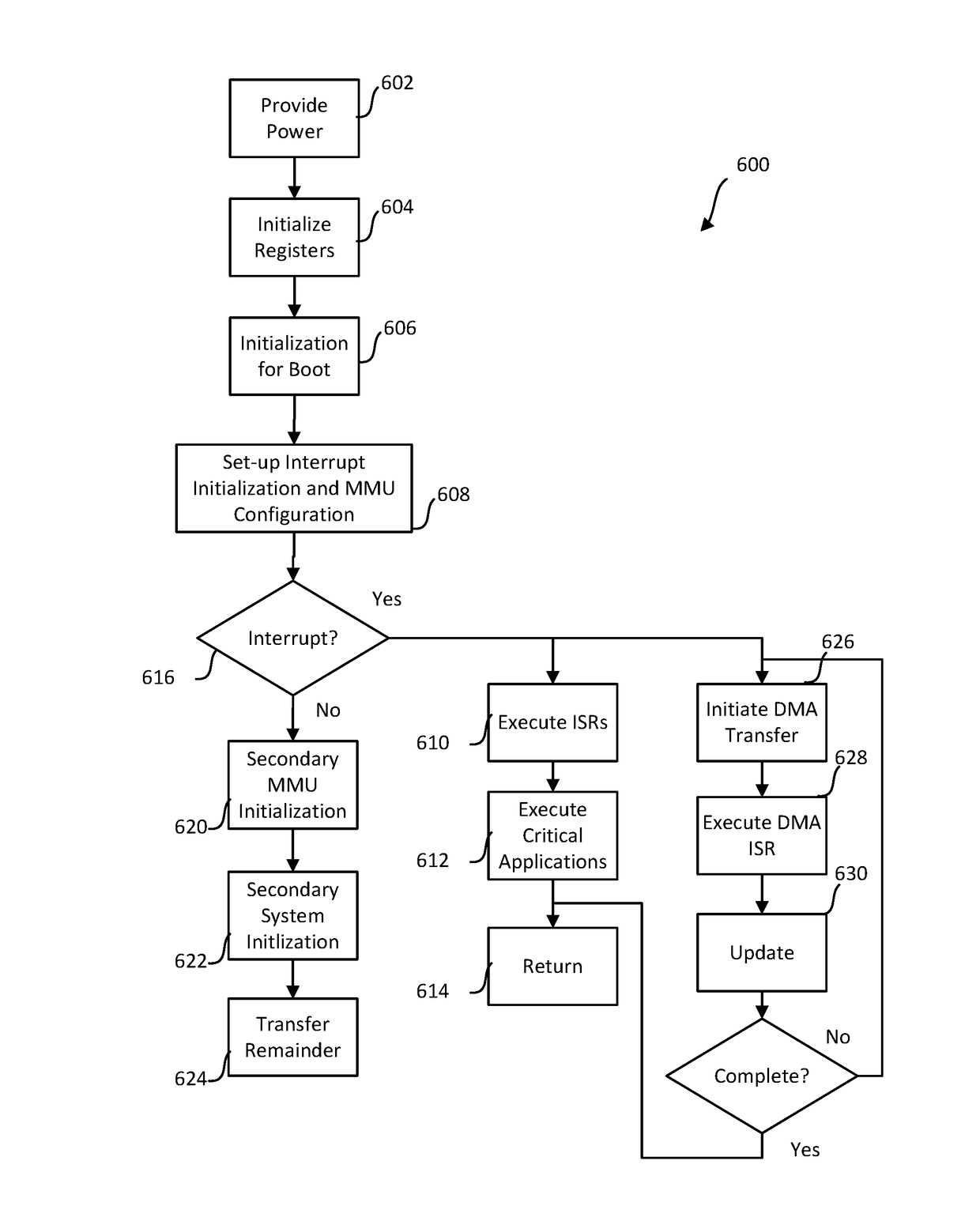

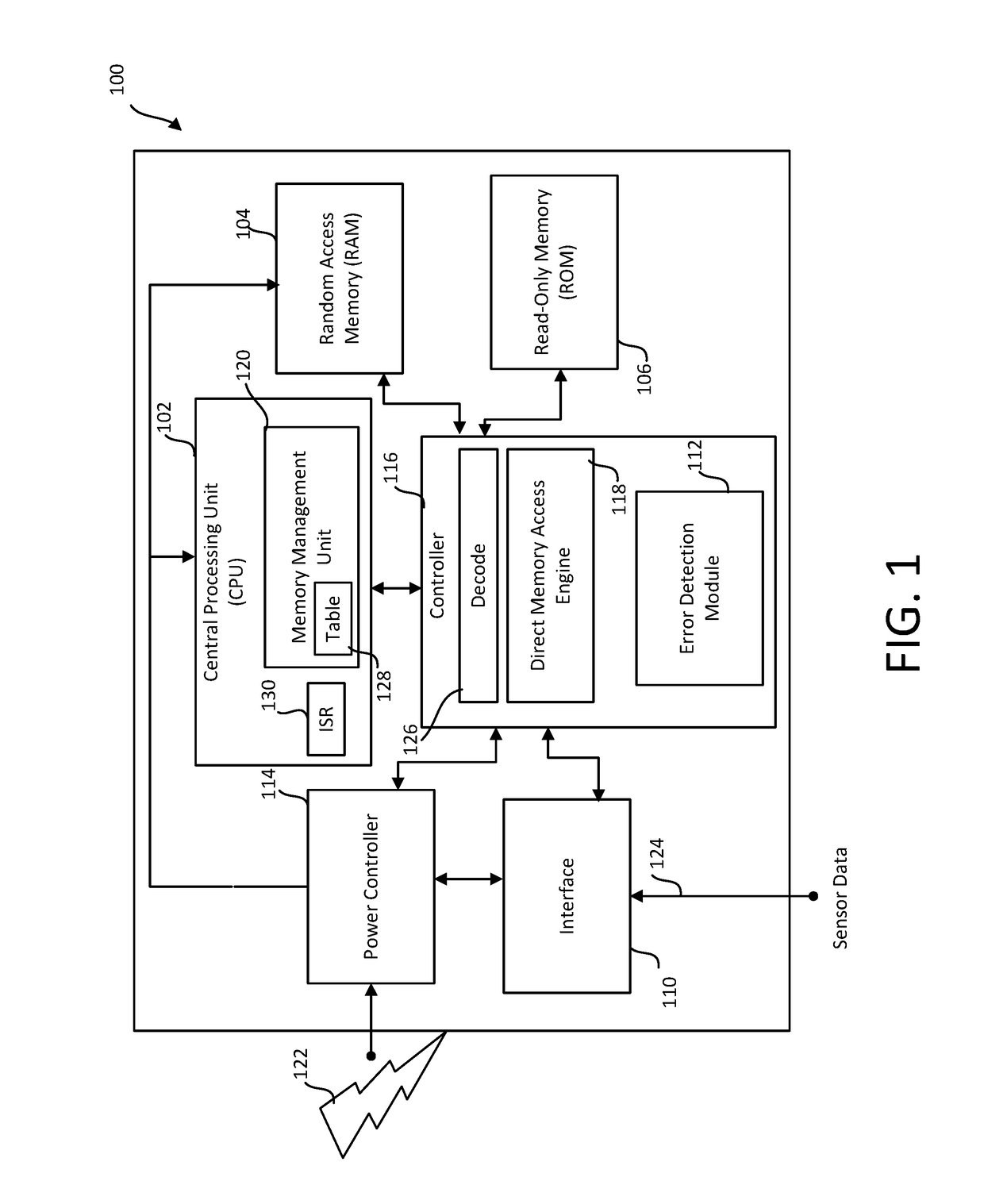

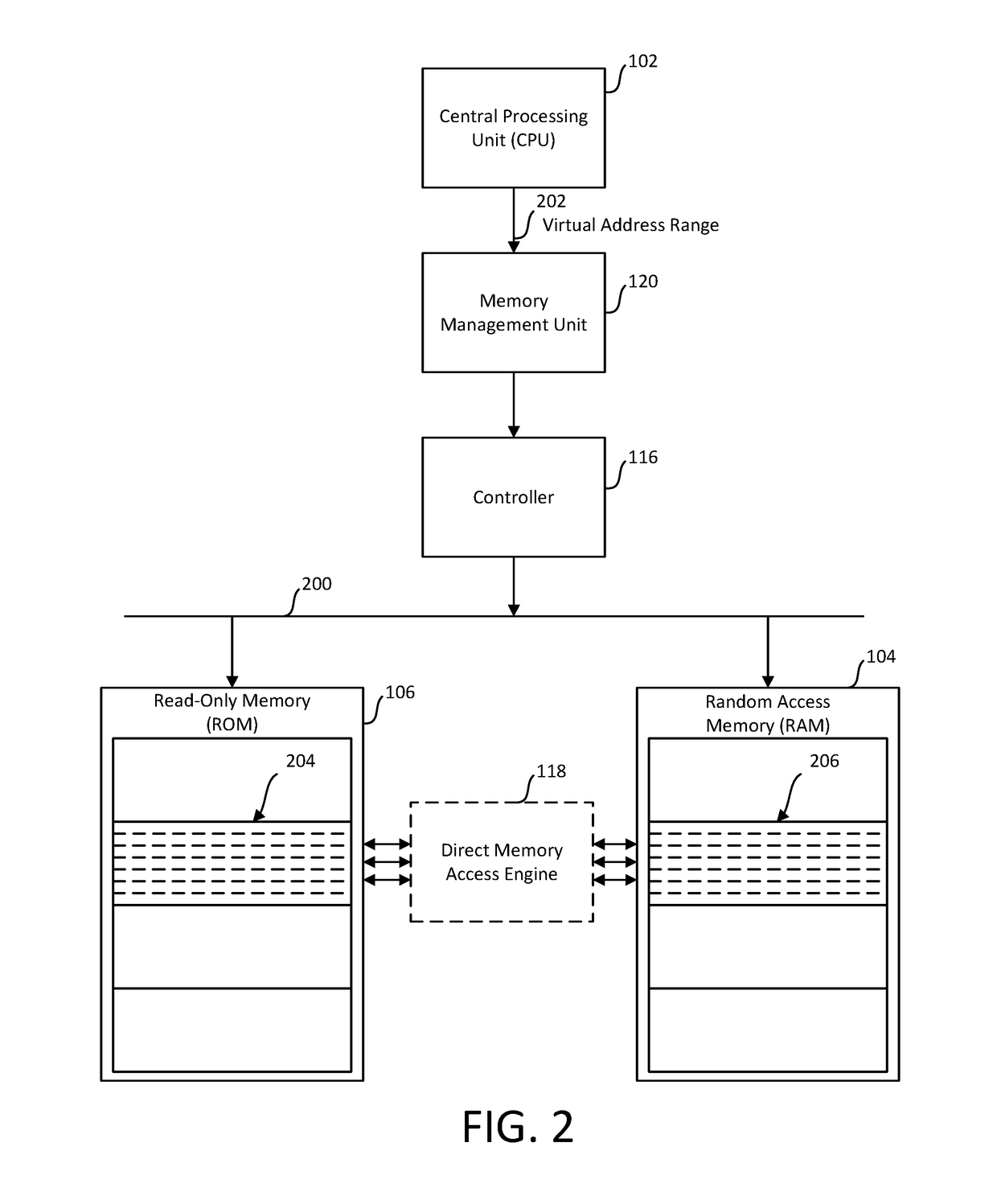

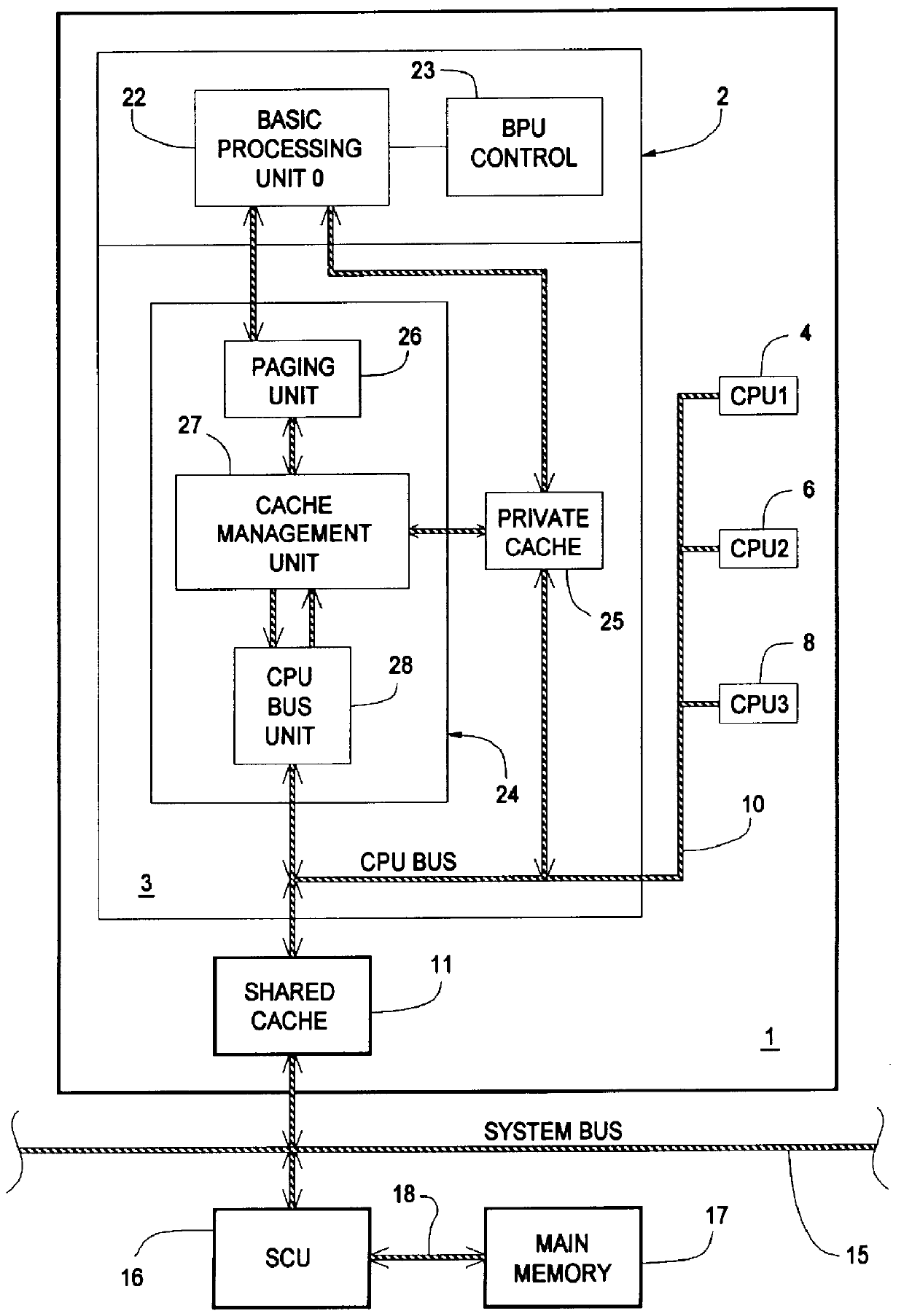

Central processing unit architecture and methods for high availability systems

Active | US20180039580A1 | Fast startup | Promote recovery | Memory architecture accessing/allocation | Bootstrapping | Recovery method | Memory address

Aspects are directed to a start-up or recovery method and a central processing unit (CPU) architecture. In one example, the CPU architecture includes read-only memory (ROM) storing instructions addressable by a first range of physical memory addresses, random access memory (RAM), a direct memory access (DMA) engine, the DMA engine controllable to transfer the instructions from the ROM to RAM, the instructions addressable by a second range of physical memory addresses at the RAM, a memory management unit configured to translate a range of virtual addresses to the first range while in ROM, and translate the range of virtual memory addresses to the second range while in RAM, and a CPU to execute a DMA interrupt service routine to update the memory management unit to translate the range of virtual addresses to the second range of physical memory addresses while the DMA engine transfers the instructions to RAM.
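The remapping step can be illustrated with a toy model in which the virtual addresses of the boot code stay fixed while their physical backing moves from ROM to RAM. The sketch assumes word-granular addresses, a tiny page size, and one interrupt per copied page. The class `Mmu`, the function `boot`, and the base addresses are hypothetical and are not taken from the patent.

```python
PAGE = 4  # words per page (deliberately tiny, for illustration)


class Mmu:
    """Toy MMU: maps a virtual page number to a physical page base address."""

    def __init__(self):
        self.page_table = {}

    def map_page(self, vpage, phys_base):
        self.page_table[vpage] = phys_base

    def translate(self, vaddr):
        vpage, offset = divmod(vaddr, PAGE)
        return self.page_table[vpage] + offset


def boot(rom_image, rom_base=0x1000, ram_base=0x8000):
    """Copy the ROM image to RAM page by page, remapping as each page lands."""
    memory = {rom_base + i: word for i, word in enumerate(rom_image)}
    mmu = Mmu()
    n_pages = (len(rom_image) + PAGE - 1) // PAGE

    # At reset, the virtual range translates to the first (ROM) range.
    for page in range(n_pages):
        mmu.map_page(page, rom_base + page * PAGE)

    for page in range(n_pages):
        # Stand-in for the DMA engine: copy one page from ROM to RAM.
        for offset in range(PAGE):
            src = rom_base + page * PAGE + offset
            if src in memory:
                memory[ram_base + page * PAGE + offset] = memory[src]
        # Stand-in for the DMA interrupt service routine: retarget this
        # virtual page to the second (RAM) range.
        mmu.map_page(page, ram_base + page * PAGE)

    return mmu, memory
```

Code that fetches through `mmu.translate` reads the same words before and after the copy, which is the property that lets execution continue across the switch from ROM to RAM.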

Owner:RAYTHEON CO

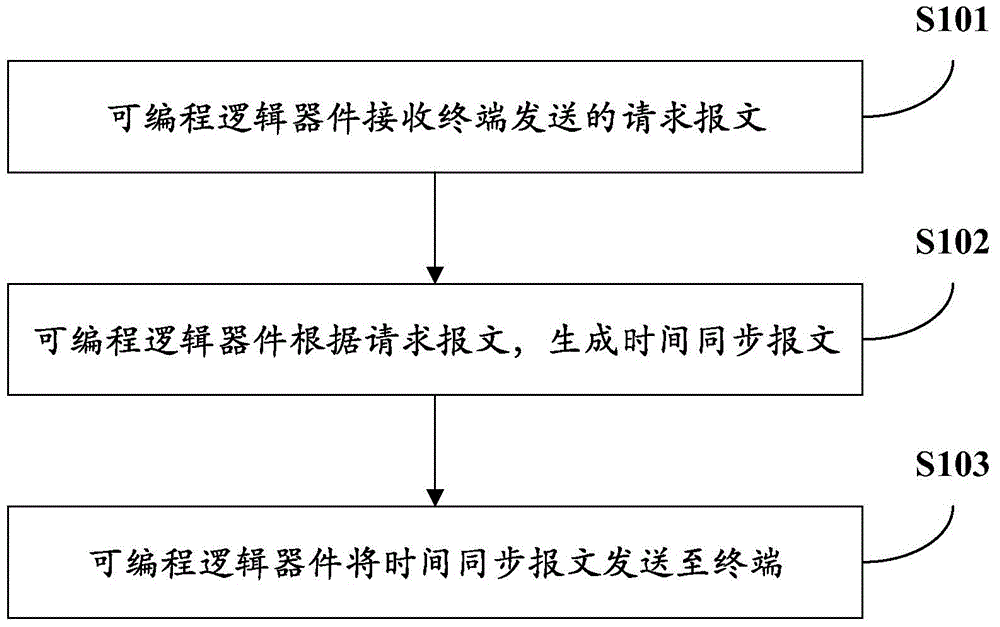

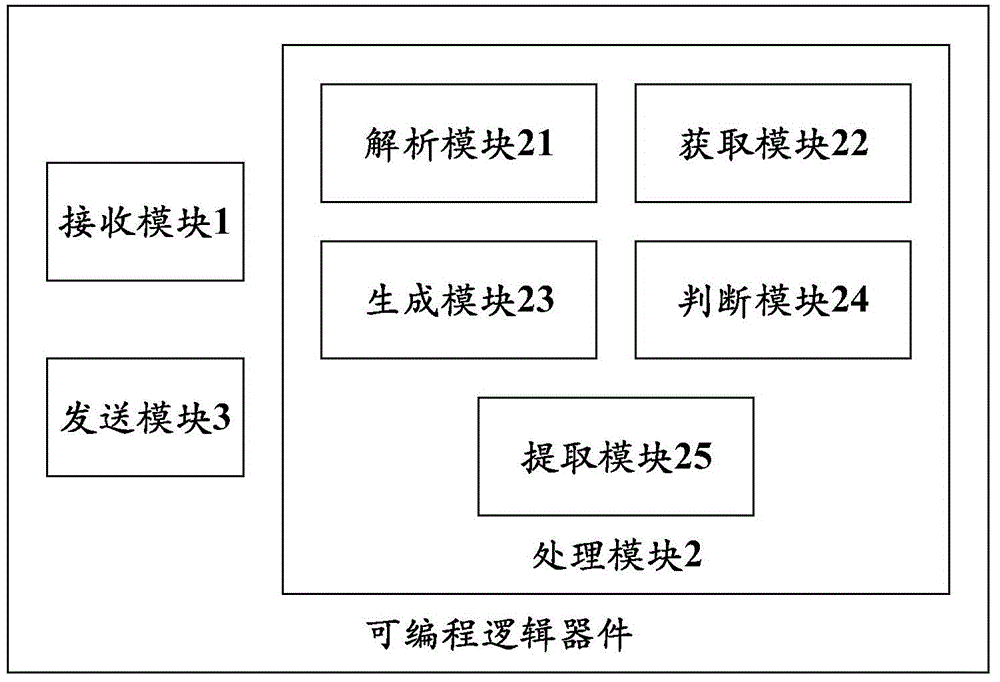

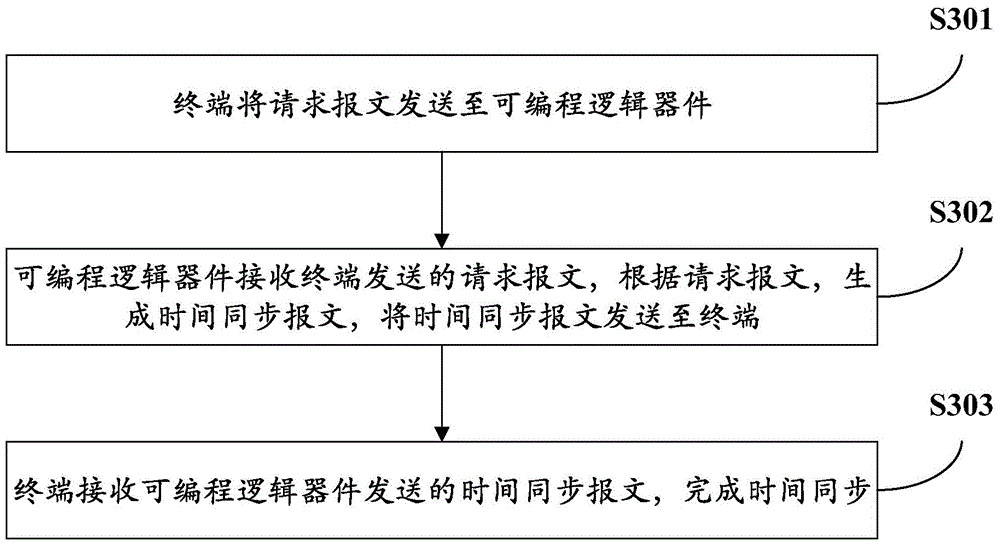

Time synchronization method, programmable logic device, single board and network element

Active | CN105262555A | Easy to handle | Precise packet sending interval | Time-division multiplex | Synchronisation signal speed/phase control | Programmable logic device | CPU architecture

The invention provides a time synchronization method, a programmable logic device, a single board and a network element. The method comprises the following steps: the programmable logic device receives a request message sent by a terminal; the programmable logic device generates a time synchronization message according to the request message; and the programmable logic device sends the time synchronization message to the terminal. The time synchronization method solves the problem that, because of the limitations of a CPU architecture, a time synchronization response must be completed through a software interrupt, which results in deficient packet sending capability. The programmable logic device frames and processes the time synchronization message and directly receives and sends it, which comprehensively improves message processing capability. The high-frequency, accurate timing of the programmable logic device ensures a more accurate packet sending interval and further optimizes time synchronization performance, so that message transceiving capability is limited only by the bandwidth of the Ethernet link, which is used to the utmost.

Owner:ZTE CORP

Panoramic video transcoding method, apparatus and device

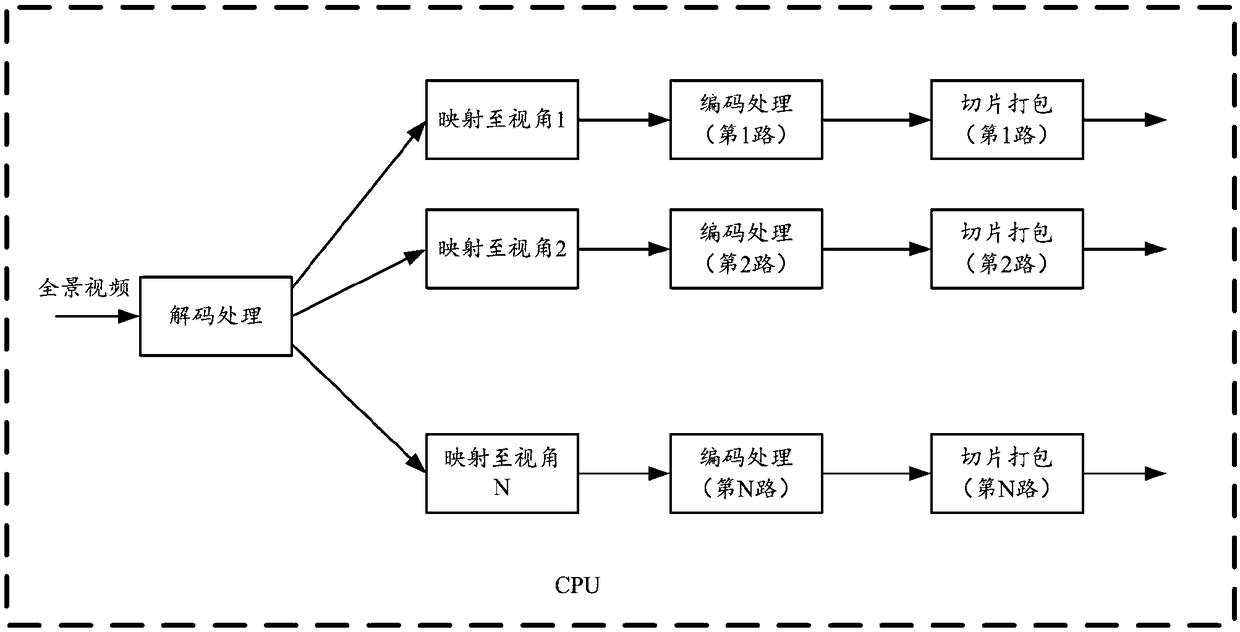

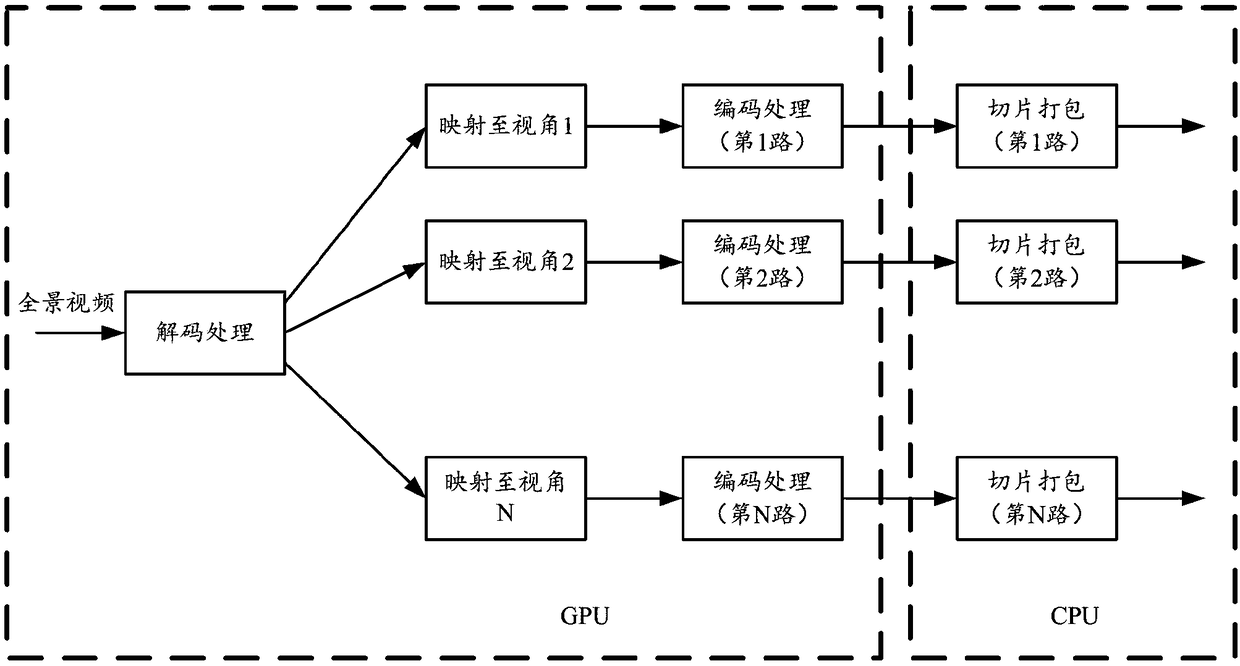

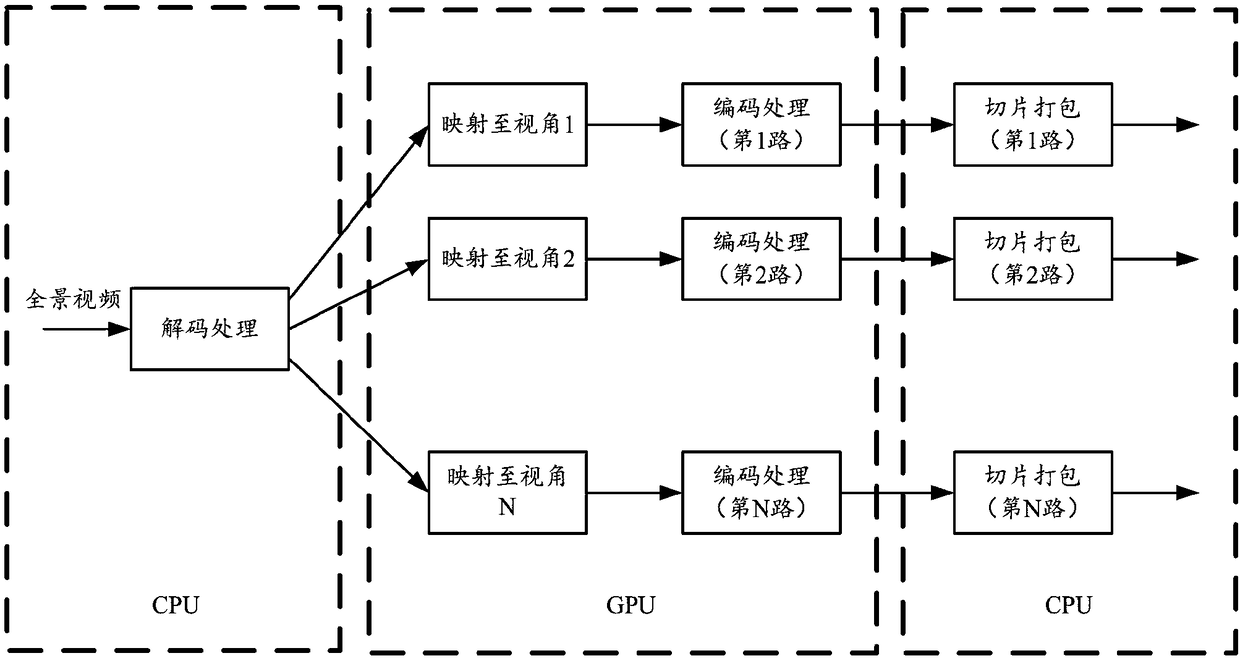

Active | CN108289228A | Meet the needs of live streaming | Improve real-time performance | Processor architectures/configuration | Digital video signal modification | Graphics | Transcoding

The invention provides a panoramic video transcoding method, apparatus and device. The method comprises the following steps: decoding a panoramic video; mapping the decoded video data to N viewing angles to obtain N paths of video data, where N is a preset positive integer; encoding each of the N paths of video data to obtain N video streams; and slicing and packing each of the N video streams. Part or all of the decoding, mapping, and encoding is executed by a graphics processing unit (GPU). Accelerating panoramic video transcoding with GPU resources improves real-time performance compared with prior-art transcoding on a pure CPU architecture, so that the needs of VR live video are met.

Owner:ALIBABA GRP HLDG LTD

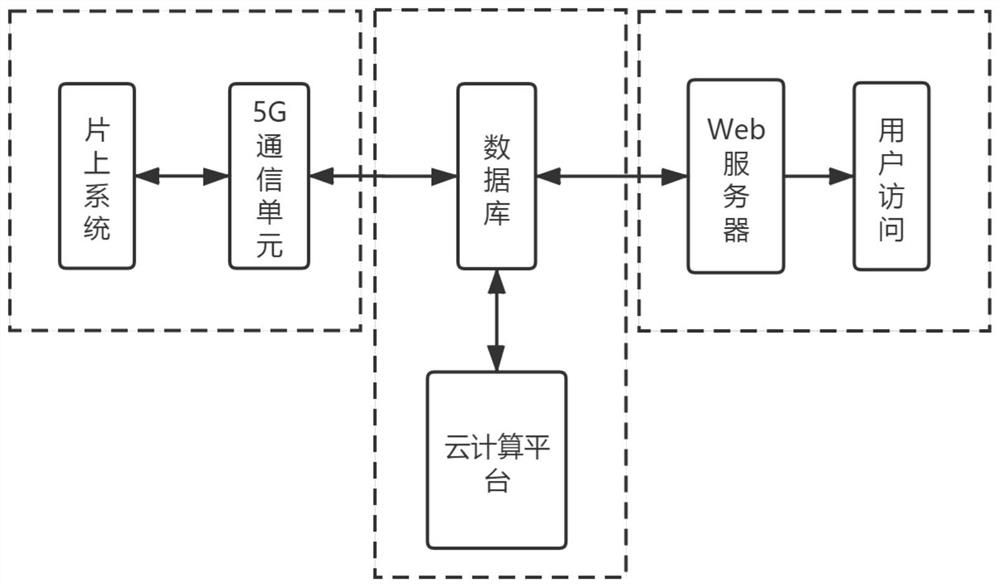

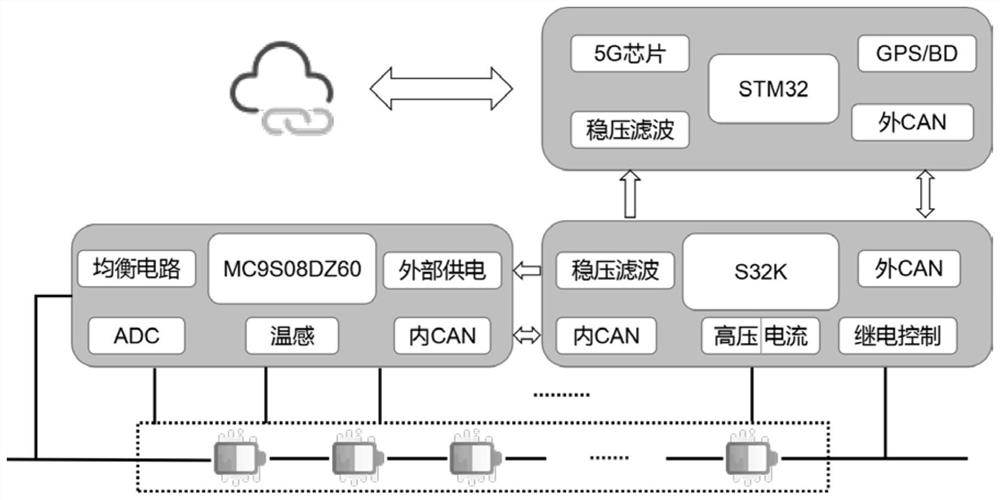

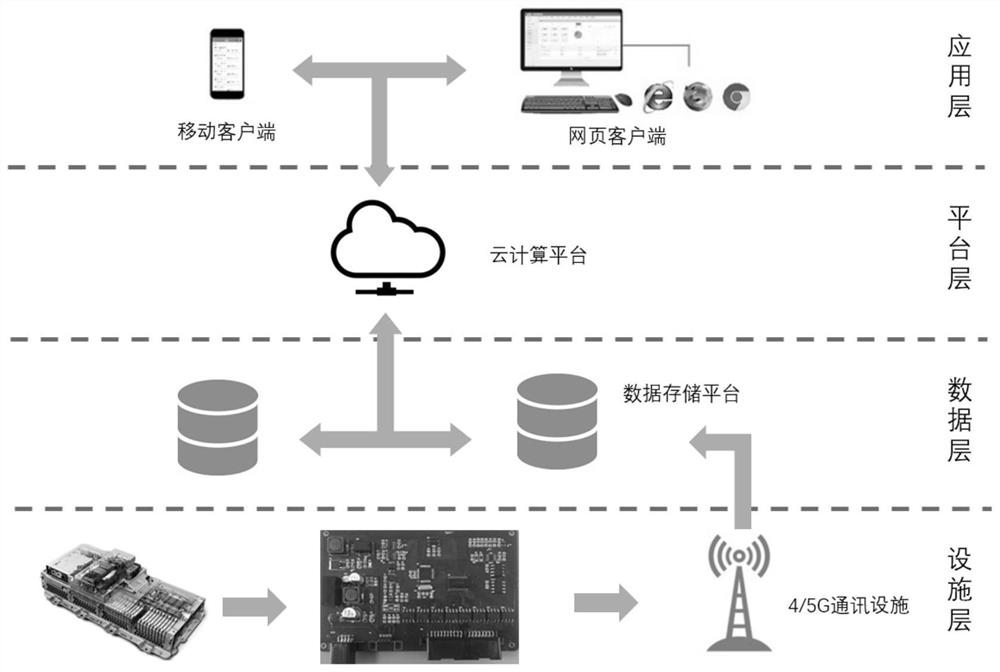

Intelligent battery charging and discharging management system and method based on 5G cloud computing platform

Pending | CN111913985A | Increase flexibility | Improve inconsistency | Electric devices | Database management systems | CPU architecture | Embedded system

The invention discloses an intelligent battery charging and discharging management system and method based on a 5G cloud computing platform. The system comprises a battery management system-on-chip based on a dual-CPU architecture, a 5G communication unit, a database server, the cloud computing platform, a web server and a visual terminal. The dual-CPU battery management system-on-chip acquires the operating state data of each battery pack and performs SOC estimation, SOH estimation, fault diagnosis, and charging and discharging control of the batteries based on the battery data. The 5G communication unit is connected to the dual-CPU battery management system-on-chip; it transmits the operating state and position information of the battery pack to the cloud computing platform in real time and receives the control parameters and signals transmitted by the cloud computing platform. The cloud computing platform performs multi-dimensional battery parameter extraction, implements the BMS control strategy, and provides cooperative control for the cloud-assisted battery management system. With 5G as a carrier, active safety management and intelligent charging and discharging control of a battery pack are achieved through edge computing and cloud-based state estimation and correction.

Owner:UNIV OF SCI & TECH OF CHINA

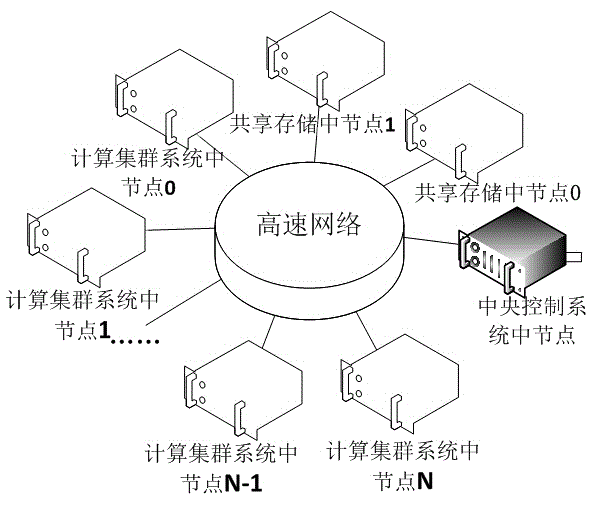

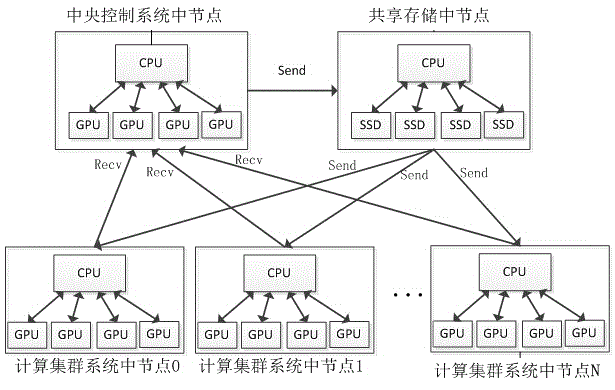

Efficient dynamic load balancing system and method for processing large-scale data

The invention discloses an efficient dynamic load balancing system and method for processing large-scale data, and belongs to the technical field of processing the large-scale data. The efficient dynamic load balancing system structurally comprises a central control system, a computing cluster system, a storage system and a high-speed network. A CPU and GPU mixed heterogeneous architecture is adopted in a middle node of the central control system; a CPU and GPU mixed heterogeneous architecture is adopted in middle nodes of the computing cluster system, or a CPU architecture is adopted in nodes of the computing cluster system; the storage system is divided into a shared storage and a local storage, a CPU architecture is adopted in middle nodes of the shared storage, and the local storage is used for storing data of nodes of the central control system or the nodes of the computing cluster system; the high-speed network is used for connecting the middle node of the central control system, the middle nodes of the computing cluster system and the middle nodes of the shared storage to form the centralized system for processing the large-scale data. The problem that a current server computing system is insufficient in network bandwidth and small in storage capacity and therefore cannot process the large-scale data is solved.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

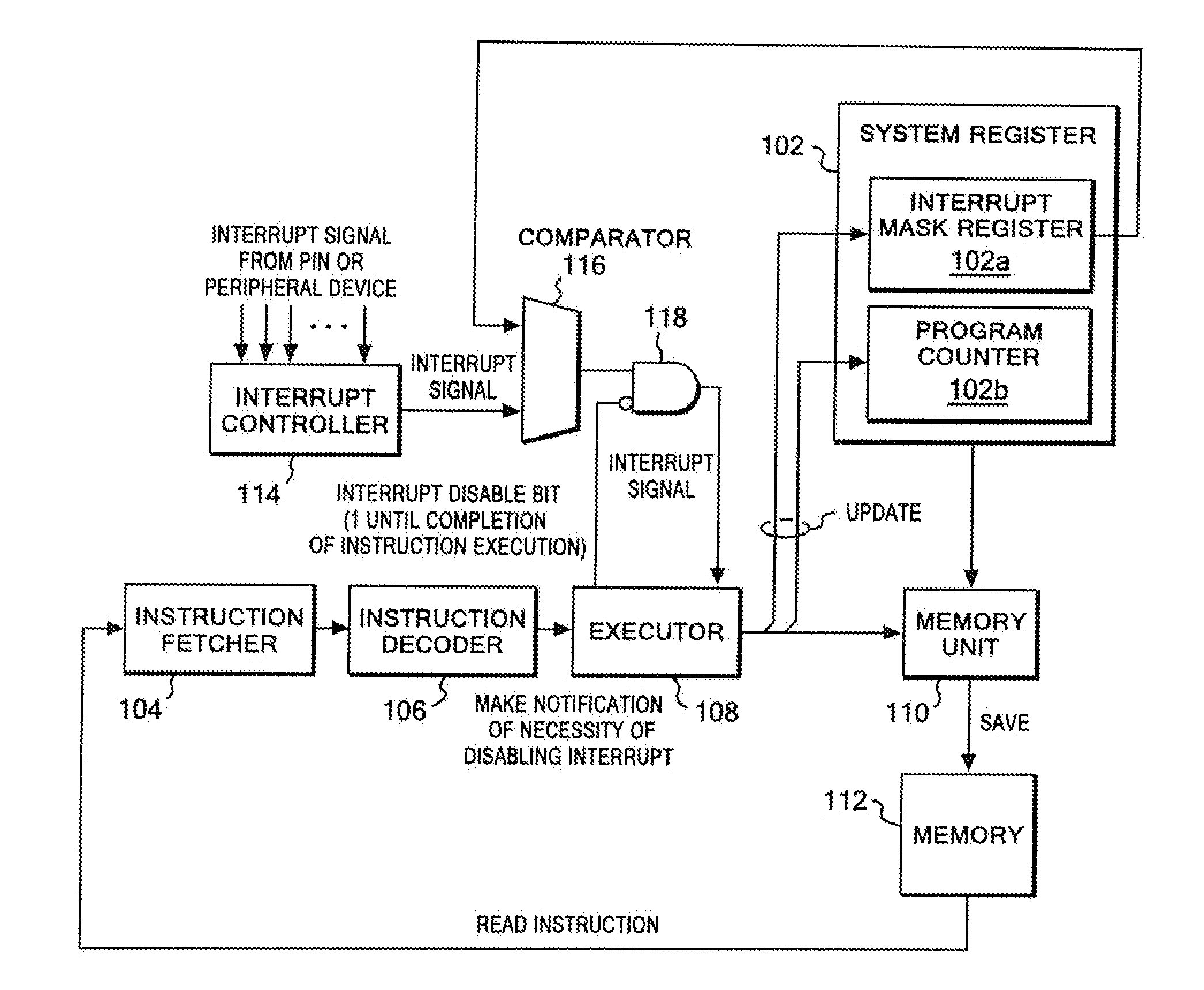

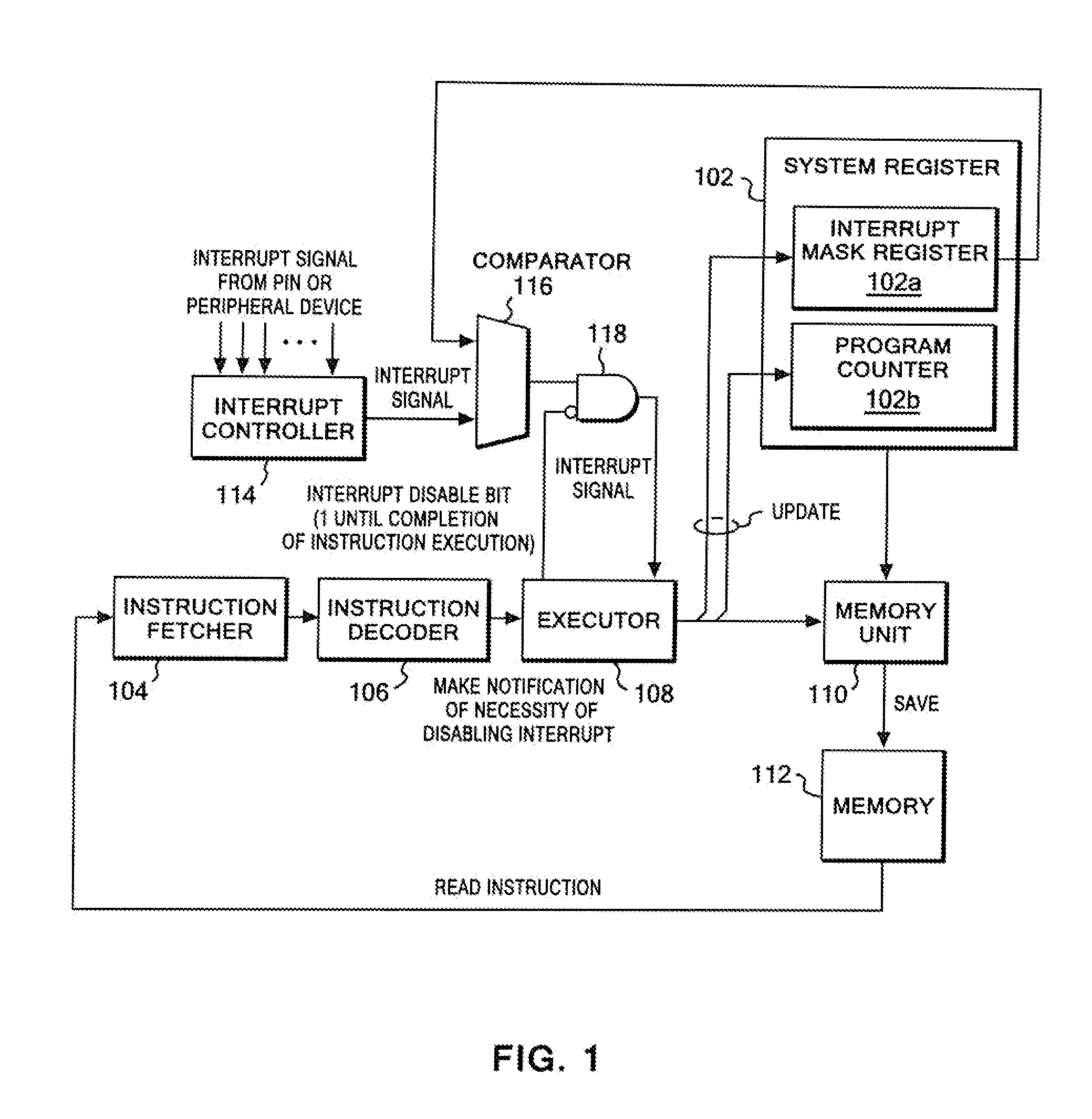

Computer system and method of controlling computer system

CPU architecture is modified so that content of the interrupt mask register can be changed directly based on a decoding result of an instruction decoder of a CPU. Such modification does not require a great deal of labor in changing a CPU design. In addition, an extended CALL instruction and an extended software interrupt instruction are added to the CPU, and each of the extended CALL instruction and the extended software interrupt instruction additionally has a function of changing the value of the interrupt mask register. Atomicity is achieved by: allowing such a single instruction to concurrently execute a call of a process and a value change of the interrupt mask register; and disabling other interrupts during execution of the single instruction.

Owner:IBM CORP

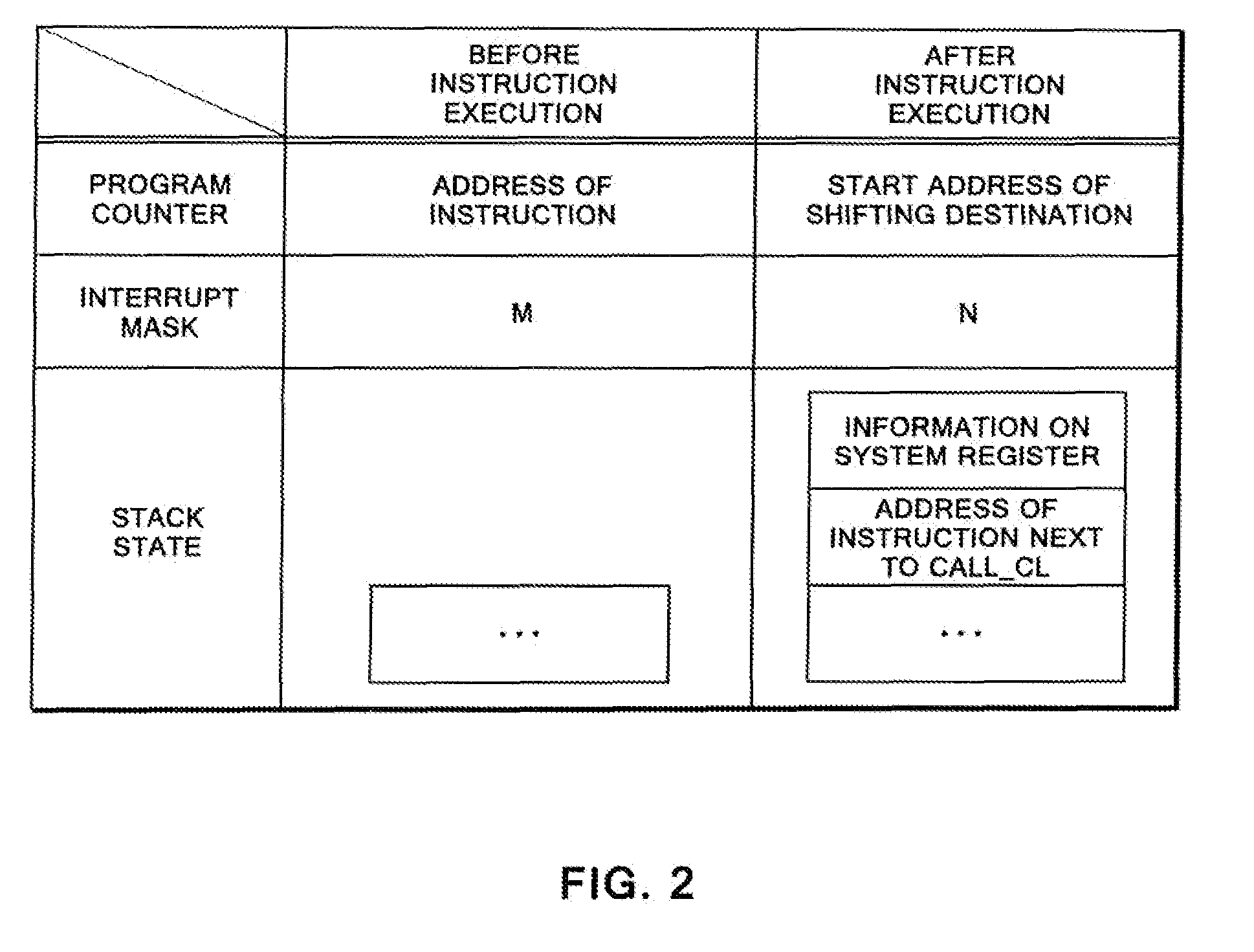

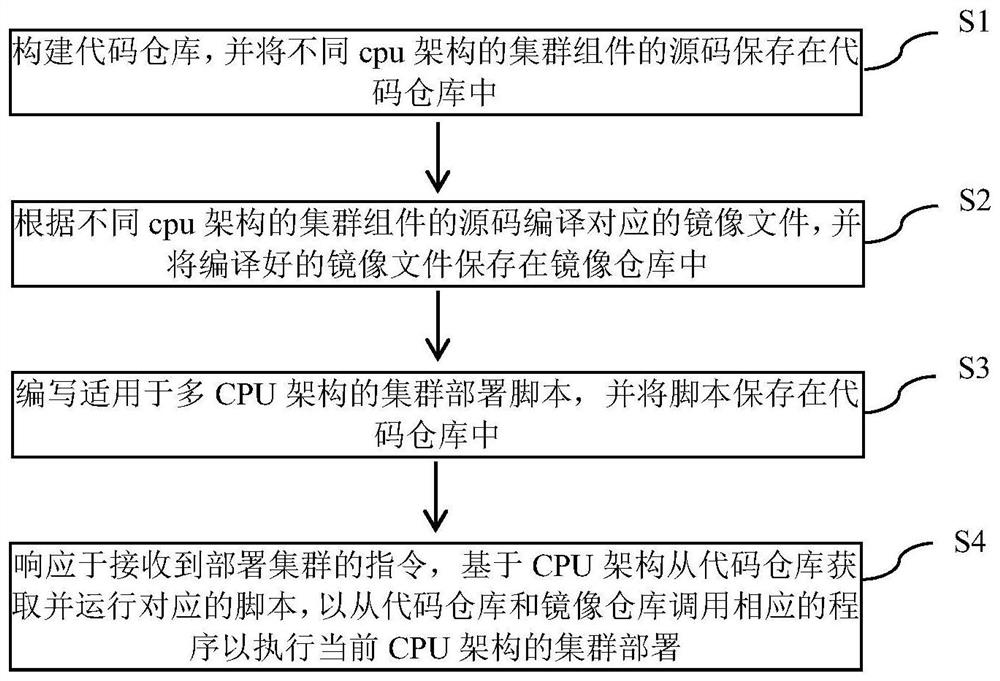

Method for operating multi-CPU (Central Processing Unit) architecture service in Kubernetes

Pending | CN111309401A | Support update delete operation | Meet the requirements of daily use | Multiple digital computer combinations | Program loading/initiating | Manifest file | CPU architecture

The invention discloses a method for operating a multi-CPU (Central Processing Unit) architecture service in Kubernetes. The method comprises the following steps: S1, Kubernetes components are supported and deployed on the AMD64 architecture by default; S2, according to the CPU architectures that are deployed in the Kubernetes cluster and to which a service is to be adapted, an ARM64 container image and an AMD64 container image are built respectively; S3, the container cloud platform customizes the name of the service image according to a specified format; S4, an image is obtained according to the image name and the adapted CPU architecture type. The method further comprises establishing a container cloud platform; establishing a container image repository integrated with the container cloud platform; establishing a Docker manifest file and pushing it to the container image repository integrated with the container cloud platform; and managing container images for multiple CPU architectures through the image management function of the container cloud platform. With this method, a multi-CPU architecture service is operated in Kubernetes, the requirements of daily use are met, and hosts of multiple CPU architectures can be managed within one Kubernetes cluster, so that Linux containers for each CPU architecture run on the corresponding hosts.
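The relationship between a per-architecture image name and a manifest that groups those images can be sketched as a simple lookup. The naming format `service:version-arch`, the function names, and the dictionary layout below are hypothetical stand-ins. The abstract does not state the actual format, and this sketch does not call Docker or Kubernetes.

```python
def image_name(service, version, arch):
    """Build an architecture-specific image name in an assumed format."""
    return f"{service}:{version}-{arch}"


def build_manifest(service, version, archs=("amd64", "arm64")):
    """Group the per-architecture images under one architecture-neutral name."""
    return {
        "name": f"{service}:{version}",
        "images": {arch: image_name(service, version, arch) for arch in archs},
    }


def resolve(manifest, node_arch):
    """Pick the image that matches the CPU architecture of the target node."""
    try:
        return manifest["images"][node_arch]
    except KeyError:
        raise LookupError(
            f"{manifest['name']} has no image for architecture {node_arch!r}"
        ) from None
```

A deployment can then reference the single name in `manifest["name"]`, while each node receives the image built for its own architecture.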

Owner:广西梯度科技股份有限公司

Intelligent platform

An intelligent platform integrates with an intelligent portable device or intelligent core to provide a dynamic computer that may serve as any of: a pad, a tablet computing device, a netbook computer, and a notebook computer. The operations of the integrated device are determined by the connected intelligent core's CPU architecture and its installed operating system. The intelligent platform includes a housing and a core slot located behind a display for accommodating the intelligent core. A core connector is provided on an inner wall of the core slot for interconnecting with a compatible connector of the inserted intelligent core. A control unit communicates with the intelligent core through signals carried by the connector, refreshes the image received on its touch-sensitive display, and sends touch-input commands from the touchable panel of the touch-sensitive display to the intelligent core. The battery on the intelligent platform provides backup power to the intelligent core.

Owner:ICE COMP

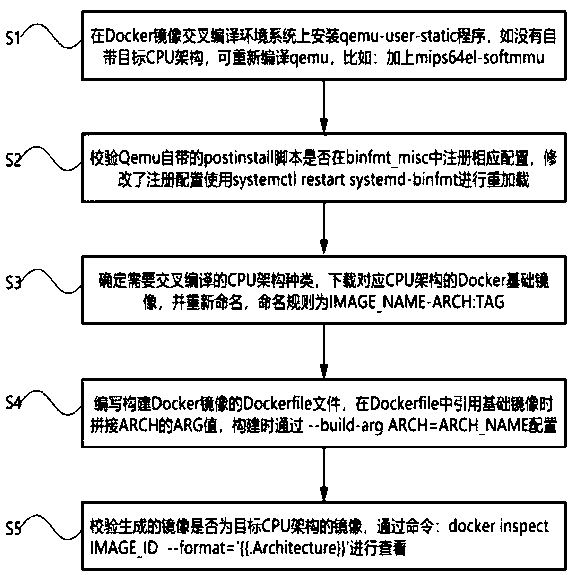

Method for realizing cross compiling of Docker mirror image

Active | CN111309451A | Fix fast boot issues | Solve the problem of cross compilation | Energy efficient computing | Software simulation/interpretation/emulation | Computer hardware | CPU architecture

The invention discloses a method for cross-compiling Docker images. The method comprises the following steps: S1, installing the qemu-user-static service program on the system that hosts the Docker image cross-compilation environment; S2, checking whether binfmt_misc in the Linux system has registered the corresponding emulator configuration; S3, determining the CPU architecture type of the Docker image to be cross-compiled; S4, writing a Dockerfile for building the Docker image, so that Docker images for different target CPU architectures are built from the same Dockerfile; and S5, determining whether the generated Docker image is an image for the target CPU architecture by checking the identifier in the generated image. The invention relates to the technical field of computer programs. With this method, when the application compilation environment is started, differences in the underlying hardware are completely shielded, which solves the problem of cross-platform cross-compilation while allowing the cross-compilation environment to start quickly.
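The final check (step S5) can be sketched as a small parser over image metadata. The example assumes the metadata is the JSON array printed by `docker image inspect`, whose entries carry `Os` and `Architecture` fields. The function names and the `linux/arm64` default are illustrative, and the sketch ignores the optional `Variant` field used by some ARM images.

```python
import json


def image_platform(inspect_output: str) -> str:
    """Return 'os/architecture' from `docker image inspect` JSON output."""
    records = json.loads(inspect_output)
    if not records:
        raise ValueError("inspect output contains no image records")
    first = records[0]
    return f'{first["Os"]}/{first["Architecture"]}'


def is_target_platform(inspect_output: str, target: str = "linux/arm64") -> bool:
    """Check whether the built image matches the intended target platform."""
    return image_platform(inspect_output) == target
```

In practice the string passed in would be captured from the command's standard output; here it is kept as a plain argument so the check can be exercised without a Docker daemon.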

Owner:广西梯度科技股份有限公司

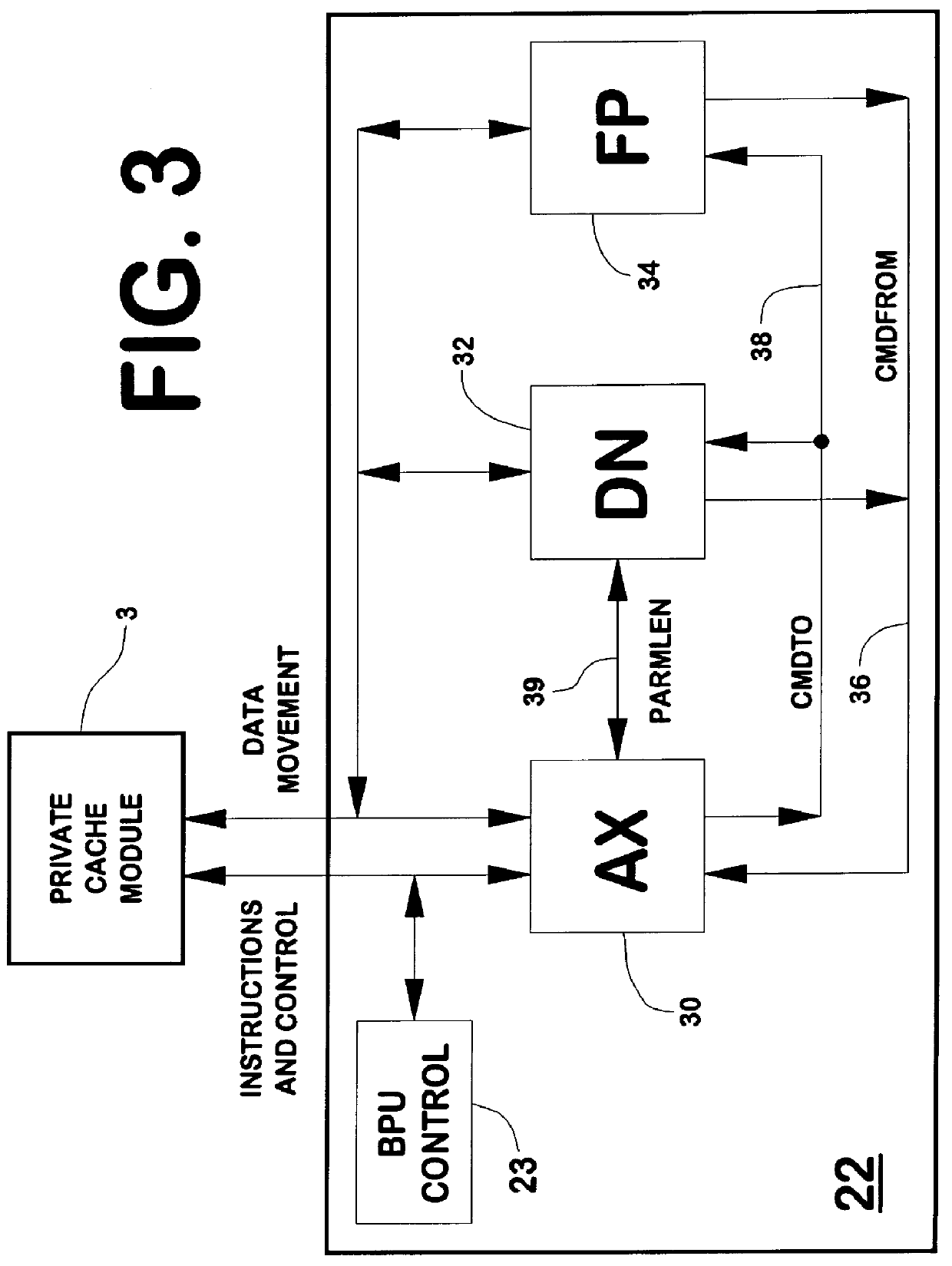

Fast domain switch and error recovery in a secure CPU architecture

Inactive | US6014757A | Easy to use | Convenient time | Concurrent instruction execution | Redundant operation error correction | Processor register | CPU architecture

In order to gather, store temporarily and efficiently deliver safestore information in a CPU having data manipulation circuitry including a register bank, first and second serially oriented safestore buffers are employed. At suitable times during the processing of information, a copy of the instantaneous contents of the register bank is transferred into the first safestore buffer. After a brief delay, a copy of the first safestore buffer is transferred into the second safestore buffer. If a call for a domain change (which might include a process change or a fault) is sensed, a safestore frame is sent to cache, and the first safestore buffer is loaded from the second safestore buffer rather than from the register bank. Later, during a climb operation, if a restart of the interrupted process is undertaken and the restoration of the register bank is directed to be taken from the first safestore buffer, this source, rather than the safestore frame stored in cache, is employed to obtain a corresponding increase in the rate of restart. In one embodiment, the transfer of information between the register bank and the safestore buffers is carried out on a bit-by-bit basis to achieve additional flexibility of operation and also to conserve integrated circuit space.

Owner:BULL HN INFORMATION SYST INC

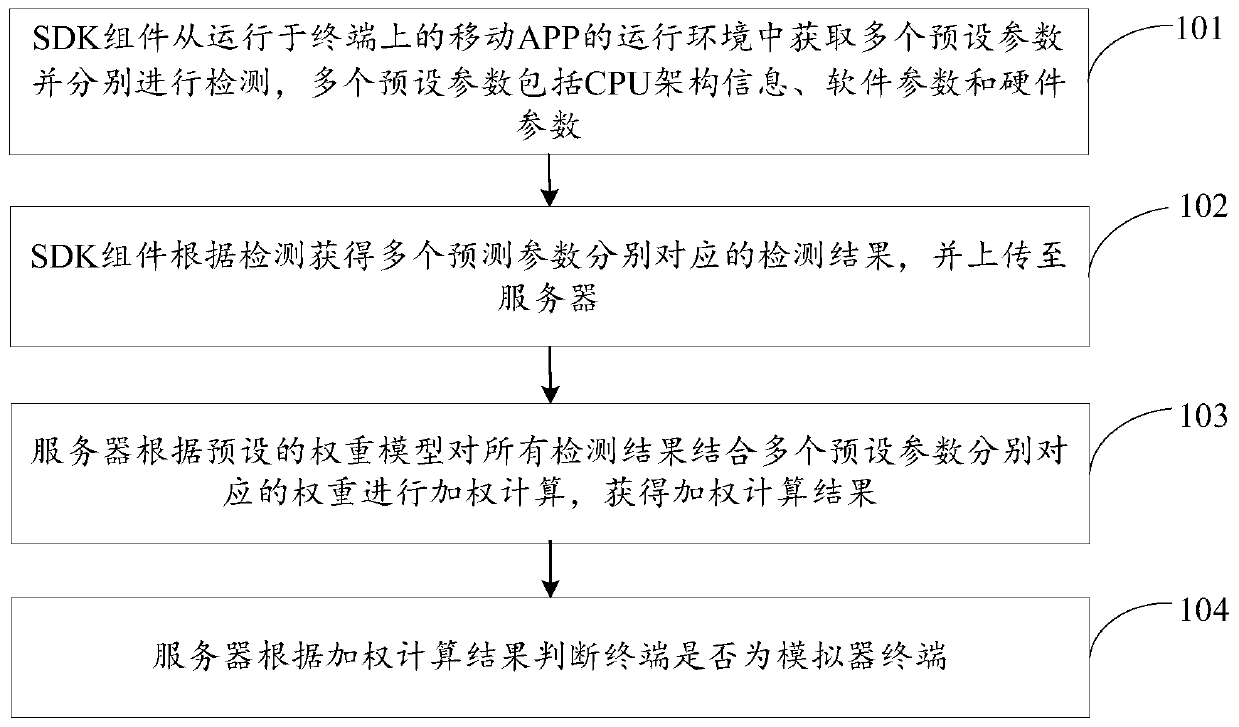

Simulator detection method and system

Inactive | CN110619210A | Improve accuracy | Improve stability | Platform integrity maintenance | Mobile apps | Simulation

The invention discloses a simulator detection method and system, and belongs to the technical field of computers. The method comprises the following steps: an SDK component obtains a plurality of preset parameters from the running environment of a mobile app running on a terminal and checks each of them, where the preset parameters comprise CPU architecture information, software parameters and hardware parameters; the SDK component obtains a detection result for each preset parameter and uploads the detection results to the server; the server performs a weighted calculation over all the detection results according to a preset weight model, using the weight assigned to each preset parameter, to obtain a weighted result; and the server judges whether the terminal is a simulator according to the weighted result. Whether a mobile app is running in an environment provided by a simulator can thus be detected effectively, so that malicious network behavior in the mobile app is prevented.
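The server-side weighted decision can be sketched as a weighted sum compared against a threshold. The weights, the threshold, and the three parameter keys below are invented for illustration. The abstract says only that a preset weight model is used, without giving values.

```python
# Hypothetical weight model: one weight per preset parameter group.
WEIGHTS = {"cpu_arch": 0.5, "software": 0.2, "hardware": 0.3}
THRESHOLD = 0.6  # assumed decision threshold


def simulator_score(detections, weights=WEIGHTS):
    """Sum the weights of every parameter whose check flagged a simulator."""
    return sum(weight for key, weight in weights.items() if detections.get(key))


def is_simulator(detections, weights=WEIGHTS, threshold=THRESHOLD):
    """Judge the terminal from the weighted result of all detection results."""
    return simulator_score(detections, weights) >= threshold
```

Combining several weak signals this way means that a single suspicious parameter, such as an unusual software property on a real device, does not by itself classify the terminal as a simulator.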

Owner:SUNING CLOUD COMPUTING CO LTD
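
The server-side weighted calculation described in the abstract above might look roughly like the following. This is a minimal sketch, not the patented weight model: the parameter names, the integer weights, and the 0.5 threshold are all hypothetical values chosen for illustration.

```python
from typing import Dict

# Hypothetical weights per preset parameter; the abstract does not give values.
DEFAULT_WEIGHTS: Dict[str, int] = {
    "cpu_architecture": 5,
    "software": 3,
    "hardware": 2,
}


def weighted_score(detections: Dict[str, bool], weights: Dict[str, int]) -> float:
    """Return the share of total weight carried by parameters flagged as suspicious."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # A parameter missing from the detection results counts as not flagged.
    flagged = sum(w for name, w in weights.items() if detections.get(name, False))
    return flagged / total


def is_simulator(
    detections: Dict[str, bool],
    weights: Dict[str, int] = DEFAULT_WEIGHTS,
    threshold: float = 0.5,  # hypothetical decision threshold
) -> bool:
    """Judge the terminal to be a simulator when the weighted score reaches the threshold."""
    return weighted_score(detections, weights) >= threshold


# Detection results as they might be uploaded by the SDK for one terminal.
uploaded = {"cpu_architecture": True, "software": True, "hardware": False}
verdict = is_simulator(uploaded)
```

Combining several weak signals in this way means no single parameter has to be decisive, which is presumably why the abstract lists improved accuracy and stability as the intended effects.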

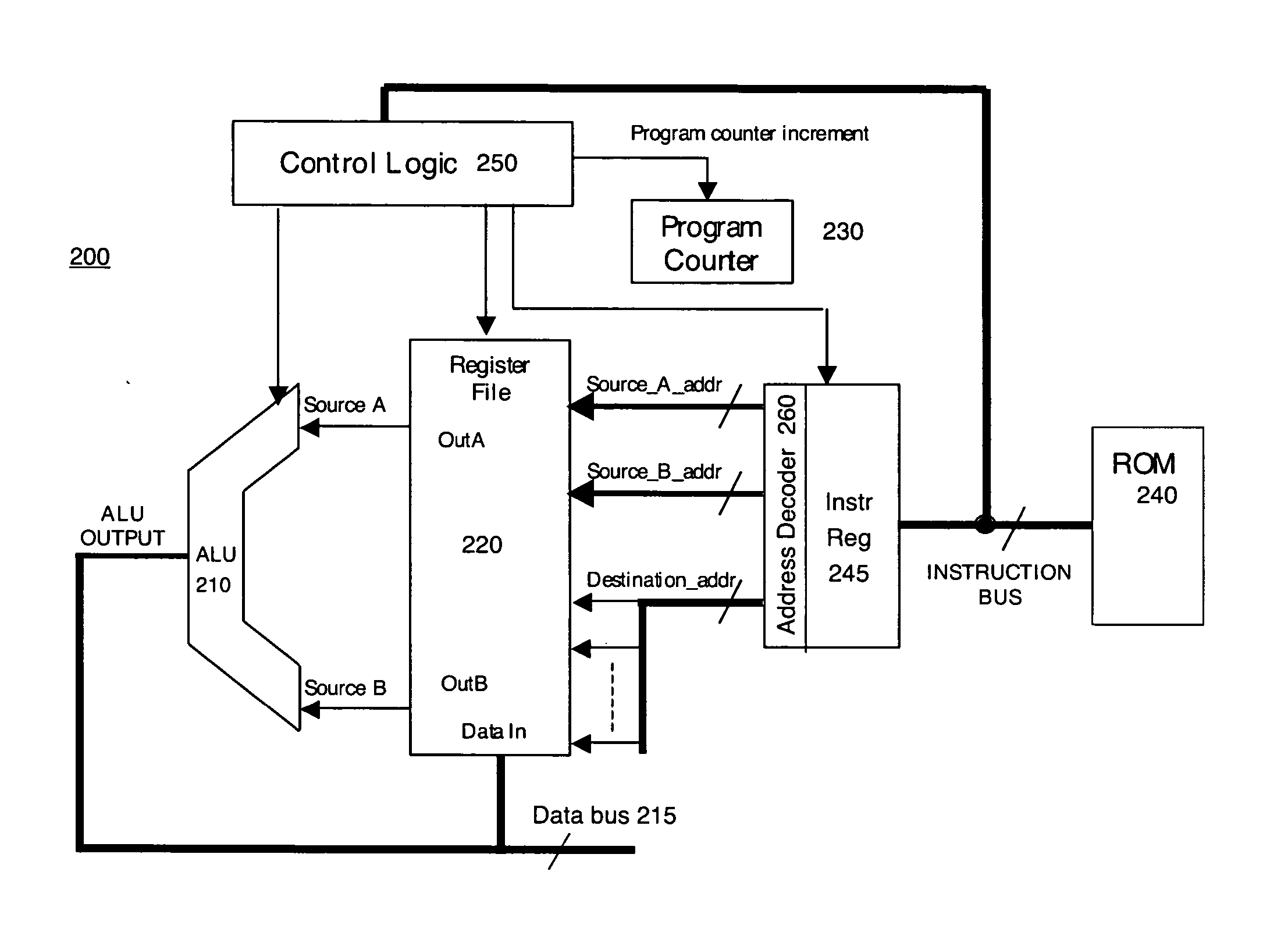

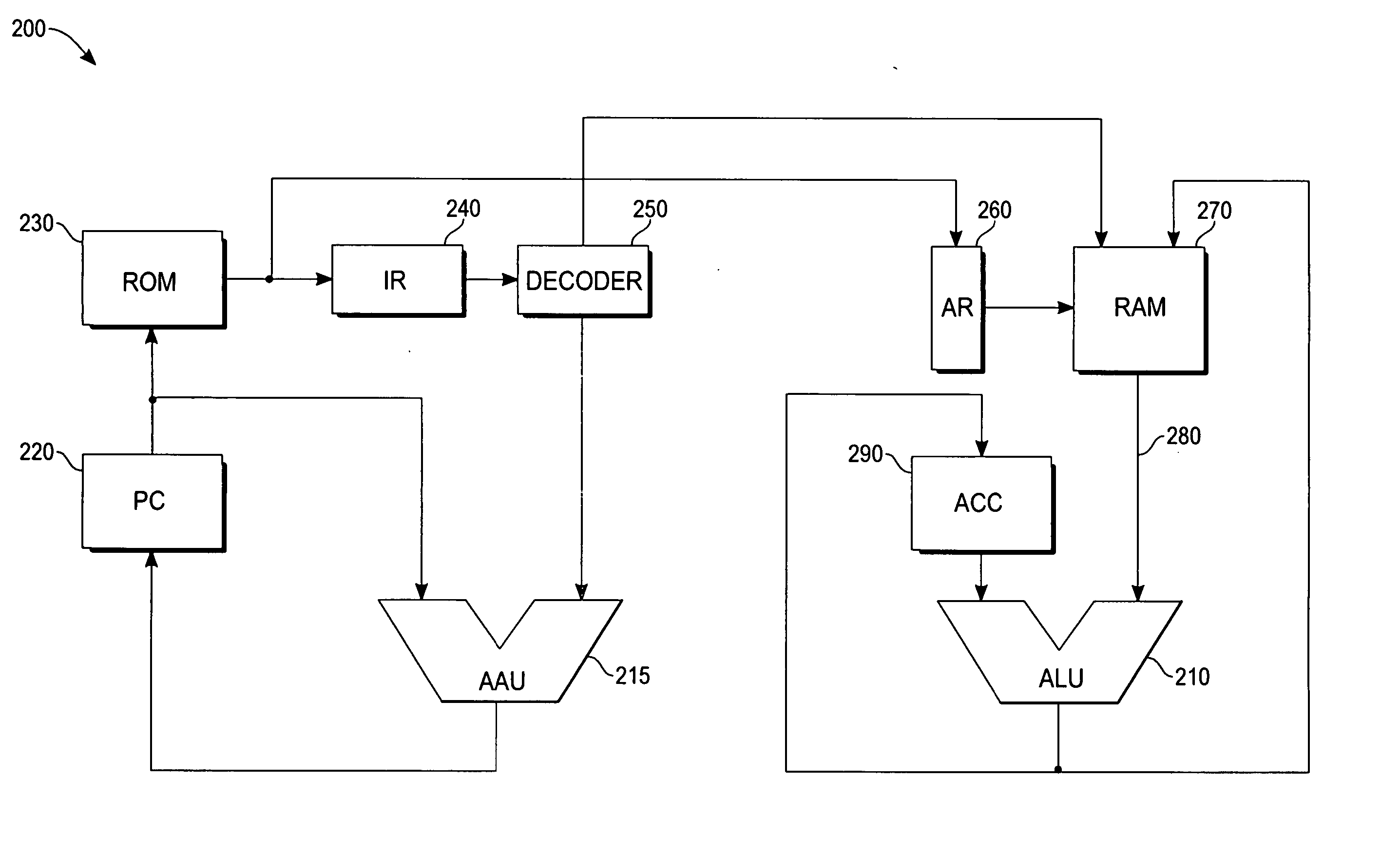

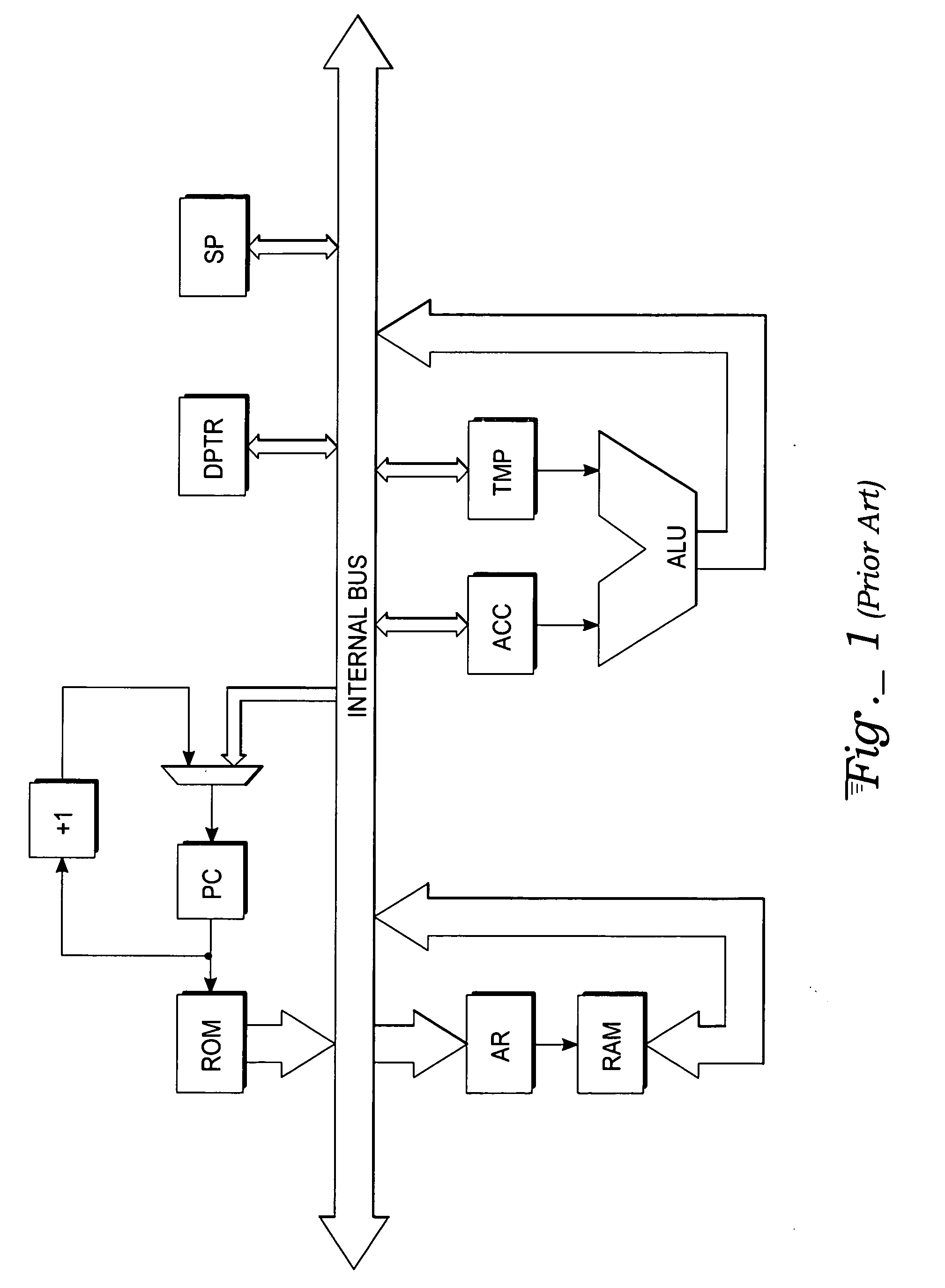

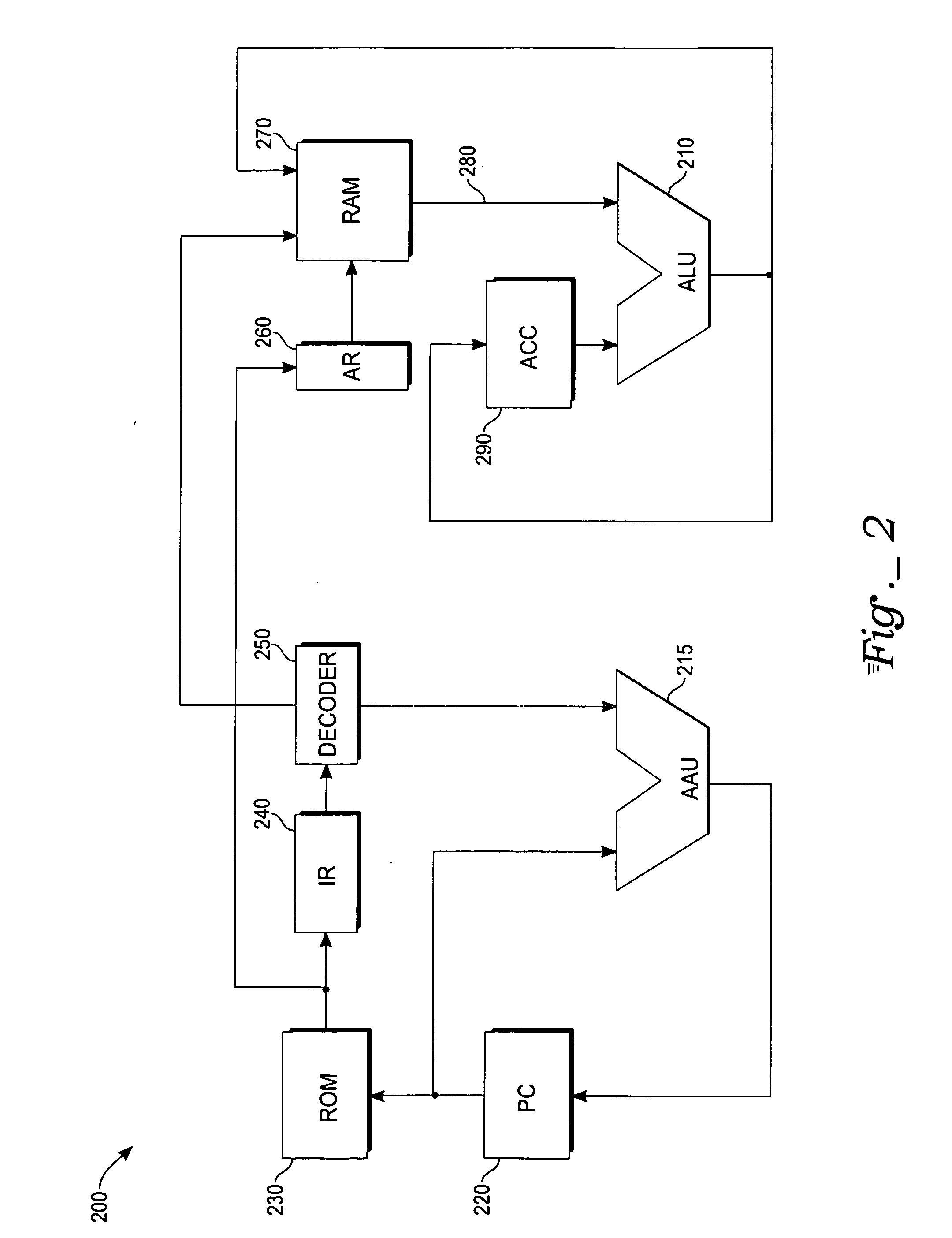

Single-cycle low-power CPU architecture

InactiveUS20060200650A1Effectively complete operationEnabling capabilityRegister arrangementsDigital computer detailsArithmetic logic unitRandom access memory

An architecture for implementing an instruction pipeline within a CPU comprises an arithmetic logic unit (ALU), an address arithmetic unit (AAU), a program counter (PC), a read-only memory (ROM) coupled to the program counter, to an instruction register, and to an instruction decoder coupled to the arithmetic logic unit. A random access memory (RAM) is coupled to the instruction decoder, to the arithmetic logic unit, and to a RAM address register.

Owner:ATMEL CORP

Multi-channel, multi-service debug on a pipelined CPU architecture

InactiveUS7565644B2Software testing/debuggingSpecific program execution arrangementsParallel computingCpu architecture

A method and system for debugging an executing service on a pipelined CPU architecture are described. In one embodiment, a breakpoint within an executing service is set and a minimum state of the executing service is saved. In addition, a program counter of the executing service is altered. The program counter is restored and the state of the executing service is restored.

Owner:AVAGO TECH INT SALES PTE LTD
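
The breakpoint sequence in the abstract above (save state, alter the program counter, then restore both) can be mimicked in a toy software model. This is a sketch only: the class names and the debug handler address are hypothetical, and the model saves the whole register dictionary rather than the "minimum state" the abstract refers to.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass
class ServiceContext:
    """Execution state of one service: a program counter plus registers."""
    pc: int = 0
    registers: Dict[str, int] = field(default_factory=dict)


class ToyDebugger:
    def __init__(self, debug_handler_pc: int) -> None:
        self.debug_handler_pc = debug_handler_pc  # hypothetical handler address
        self.breakpoints: Set[int] = set()
        self._saved: Optional[Tuple[int, Dict[str, int]]] = None

    def set_breakpoint(self, address: int) -> None:
        self.breakpoints.add(address)

    def enter(self, ctx: ServiceContext) -> bool:
        """If the service is at a breakpoint, save its state and redirect its PC."""
        if ctx.pc not in self.breakpoints:
            return False
        self._saved = (ctx.pc, dict(ctx.registers))
        ctx.pc = self.debug_handler_pc
        return True

    def resume(self, ctx: ServiceContext) -> None:
        """Restore the program counter first, then the saved state."""
        if self._saved is None:
            raise RuntimeError("no saved state to restore")
        saved_pc, saved_registers = self._saved
        ctx.pc = saved_pc
        ctx.registers = dict(saved_registers)
        self._saved = None


ctx = ServiceContext(pc=0x40, registers={"r0": 7})
debugger = ToyDebugger(debug_handler_pc=0x1000)
debugger.set_breakpoint(0x40)
hit = debugger.enter(ctx)   # the service is now redirected to the handler
debugger.resume(ctx)        # the service continues from 0x40 with r0 == 7
```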

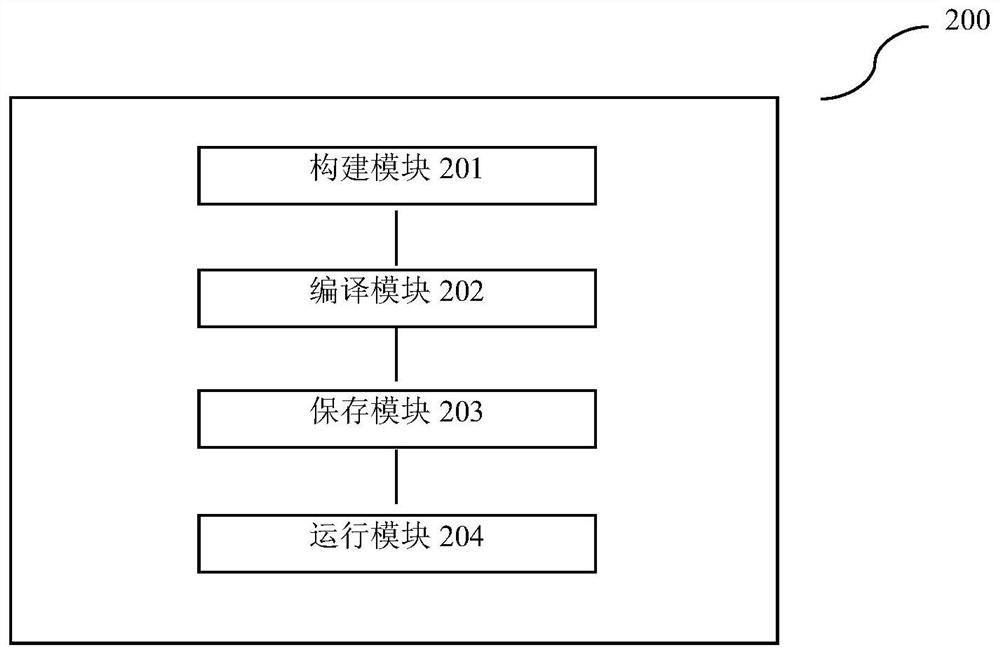

Cluster deployment method and device of multi-CPU architecture

InactiveCN111966366AReduce deployment exceptionsReduce failureSoftware simulation/interpretation/emulationSoftware deploymentComputer architectureCpu architecture

The invention provides a cluster deployment method and device for a multi-CPU architecture. The method comprises the following steps: constructing a code warehouse, and storing source codes of cluster components of different CPU architectures in the code warehouse; compiling corresponding mirror image files according to the source codes of the cluster components of different CPU architectures, and storing the compiled mirror image files in a mirror image warehouse; compiling a cluster deployment script suitable for the multi-CPU architecture, and storing the script in the code warehouse; in response to a received instruction to deploy the cluster, obtaining and running the corresponding script from the code warehouse based on the CPU architecture, so as to call the corresponding program from the code warehouse and the mirror image warehouse to execute cluster deployment for the current CPU architecture. By using the scheme provided by the invention, deployment exceptions or failures caused by personnel errors during deployment in different CPU architecture environments can be greatly reduced; the method has the advantages of one-time configuration and repeated reuse, shorter deployment time and improved deployment efficiency.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
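
The step of choosing a deployment script based on the CPU architecture could be sketched as follows. The alias table and the script paths are hypothetical placeholders, not taken from the patent; only `platform.machine()` is a real standard-library call, and it reports the architecture of the machine the code runs on.

```python
import platform
from typing import Dict, Optional

# Hypothetical mapping from reported machine names to canonical architectures.
ARCH_ALIASES: Dict[str, str] = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}

# Hypothetical script locations inside the code warehouse.
DEPLOY_SCRIPTS: Dict[str, str] = {
    "amd64": "deploy/amd64/deploy.sh",
    "arm64": "deploy/arm64/deploy.sh",
}


def normalize_arch(machine: str) -> str:
    """Map a raw machine string to a canonical architecture name."""
    key = machine.strip().lower()
    if key not in ARCH_ALIASES:
        raise ValueError(f"unsupported CPU architecture: {machine!r}")
    return ARCH_ALIASES[key]


def select_deploy_script(machine: Optional[str] = None) -> str:
    """Pick the deployment script for the given, or the current, CPU architecture."""
    if machine is None:
        machine = platform.machine()
    return DEPLOY_SCRIPTS[normalize_arch(machine)]


script_for_arm = select_deploy_script("aarch64")
```

Failing loudly on an unknown architecture, instead of falling back to a default script, fits the abstract's stated aim of reducing deployment failures caused by manual mistakes.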

